52 patent results for "Speech Acoustics"

The acoustic aspects of speech in terms of frequency, intensity, and time.

Speech bandwidth extension

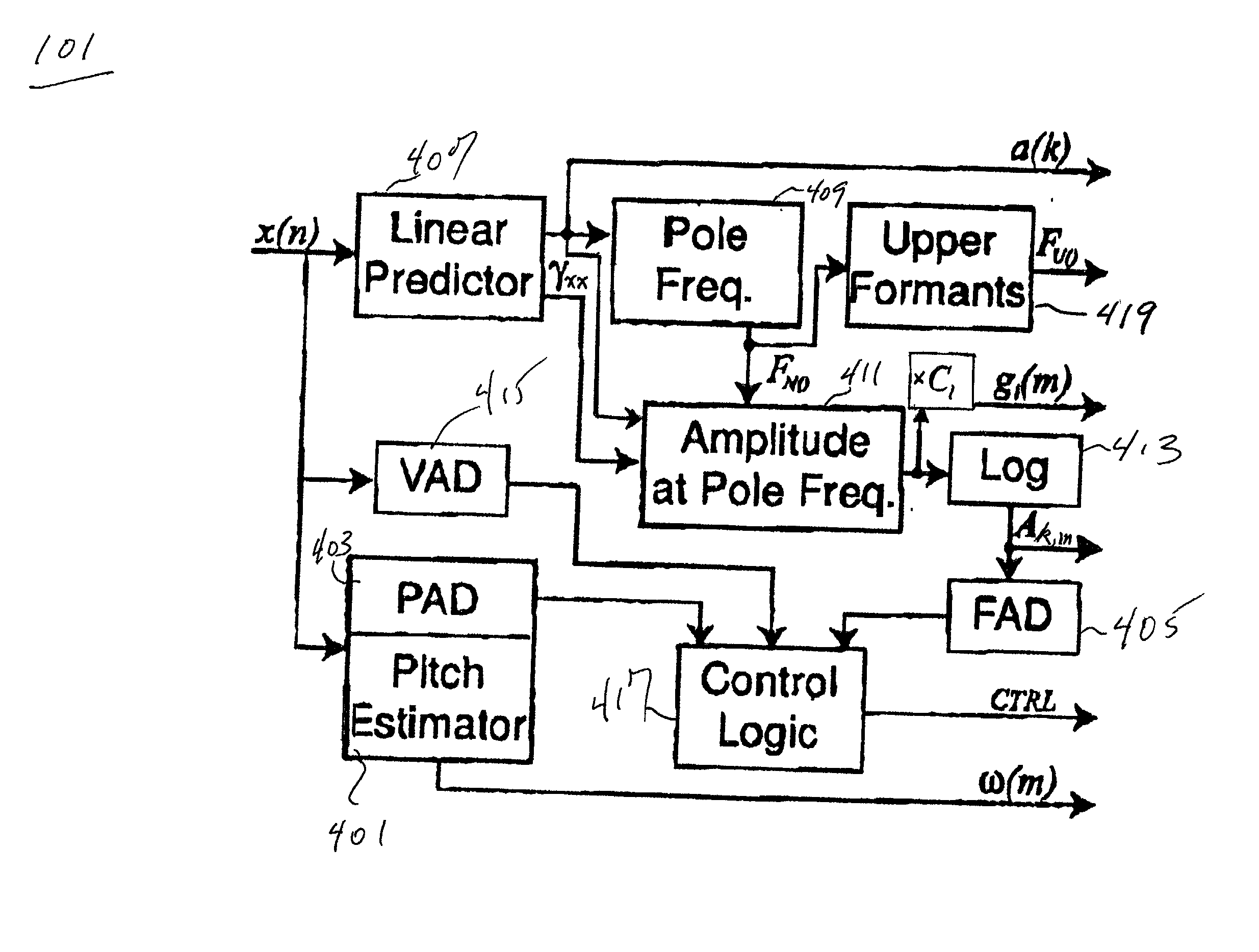

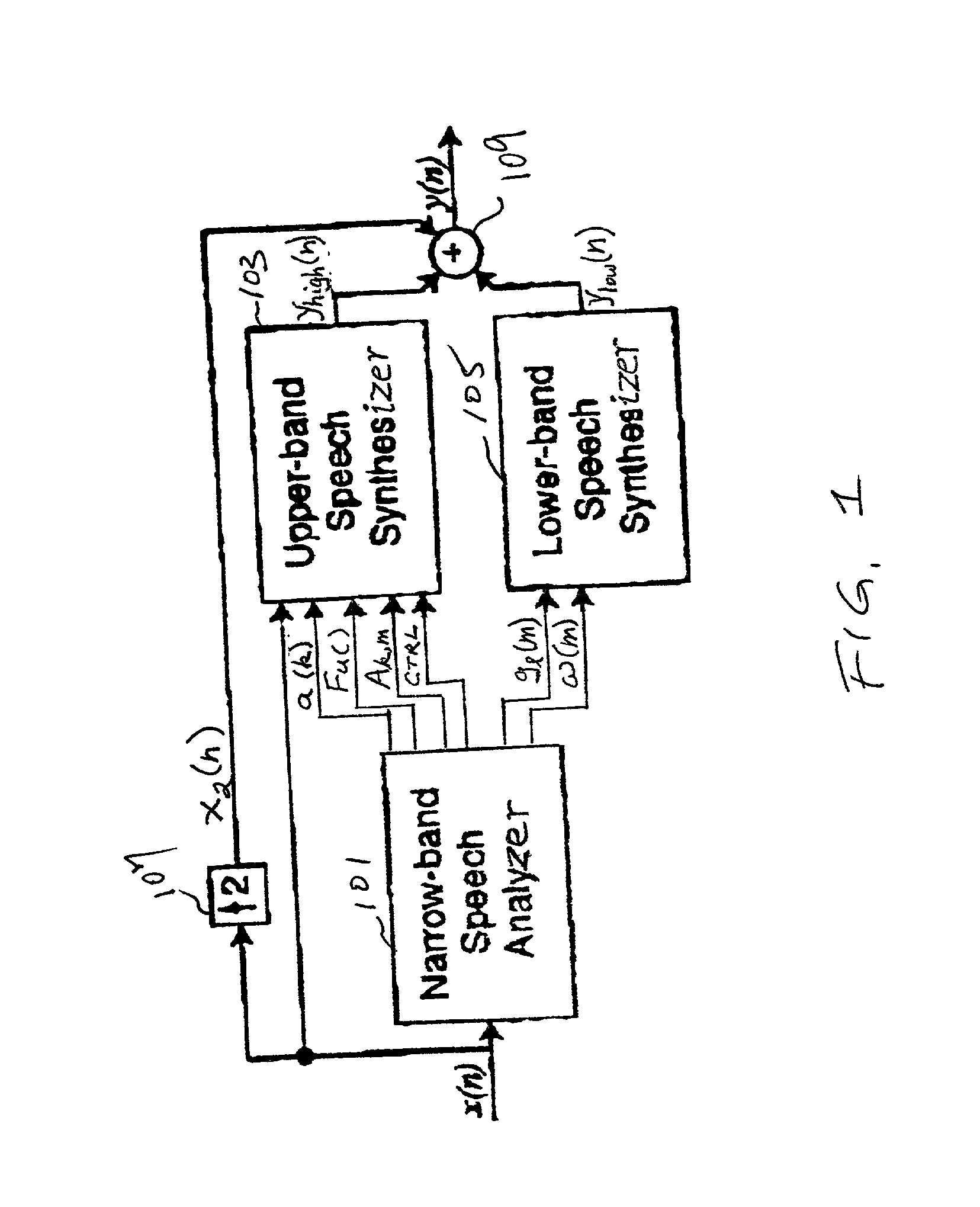

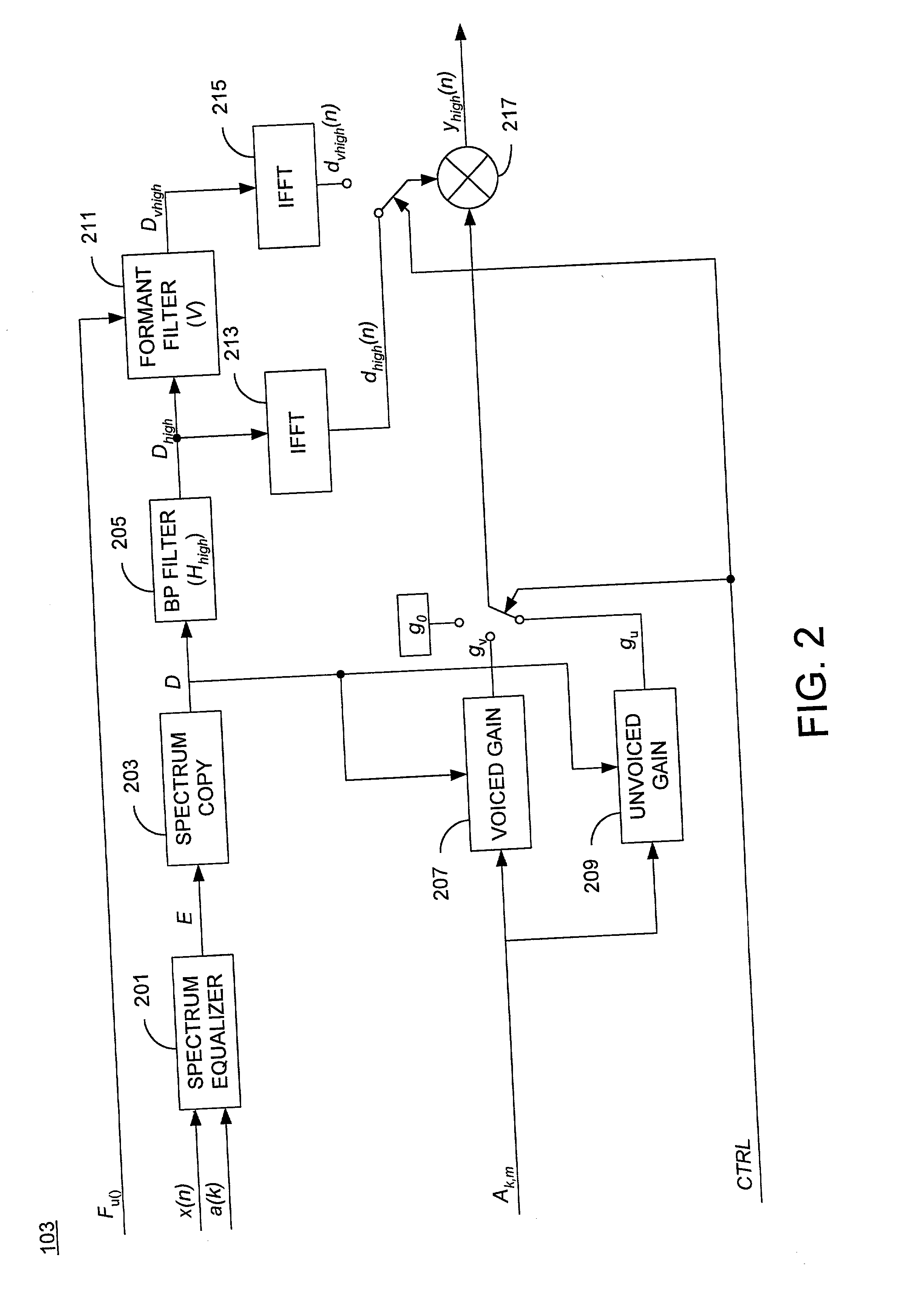

A common narrow-band speech signal is expanded into a wide-band speech signal. The expanded signal gives the impression of a wide-band speech signal regardless of what type of vocoder is used in the receiver. The robust techniques suggested herein are based on speech acoustics and the fundamentals of human hearing. That is, the techniques extend the harmonic structure of the speech signal during voiced speech segments and introduce a linearly estimated amount of speech energy in the wide frequency band. During unvoiced speech segments, fricated noise may be introduced in the upper frequency band.

Owner:TELEFON AB LM ERICSSON (PUBL)
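
A minimal sketch of the voiced/unvoiced extension rule described above, assuming a 3400 Hz narrow-band edge, a 7000 Hz wide-band edge, a known pitch for voiced frames, and hypothetical slope/intercept values for the linear energy estimate (none of these numbers come from the patent). It only decides which upper-band components to add and how much energy each receives; waveform synthesis and filtering are left out.

```python
import random

NB_CUTOFF_HZ = 3400.0  # assumed upper edge of the narrow band
WB_CUTOFF_HZ = 7000.0  # assumed upper edge of the extended band


def upper_band_harmonics(f0_hz, nb_cutoff=NB_CUTOFF_HZ, wb_cutoff=WB_CUTOFF_HZ):
    """Continue the harmonic series k * f0 into the band (nb_cutoff, wb_cutoff]."""
    if f0_hz <= 0:
        raise ValueError("f0 must be positive for voiced frames")
    k = int(nb_cutoff // f0_hz) + 1  # first harmonic above the narrow band
    harmonics = []
    while k * f0_hz <= wb_cutoff:
        harmonics.append(k * f0_hz)
        k += 1
    return harmonics


def estimate_upper_band_energy(nb_energy, slope=0.1, intercept=0.0):
    """Linear estimate of upper-band energy from narrow-band energy.

    slope and intercept are hypothetical; in practice they would be fitted offline.
    """
    return max(0.0, slope * nb_energy + intercept)


def extend_frame(voiced, nb_energy, f0_hz=None, n_noise=8, seed=0):
    """Return (frequencies, per-component energies) to add to the upper band."""
    target = estimate_upper_band_energy(nb_energy)
    if voiced:
        freqs = upper_band_harmonics(f0_hz)
        if not freqs:
            return [], []
        return freqs, [target / len(freqs)] * len(freqs)
    # Unvoiced: spread the energy over random, fricative-like noise components.
    rng = random.Random(seed)
    freqs = sorted(rng.uniform(NB_CUTOFF_HZ, WB_CUTOFF_HZ) for _ in range(n_noise))
    return freqs, [target / n_noise] * n_noise
```

For a 200 Hz voice, `upper_band_harmonics(200.0)` continues the series from 3600 Hz up to 7000 Hz in 200 Hz steps.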

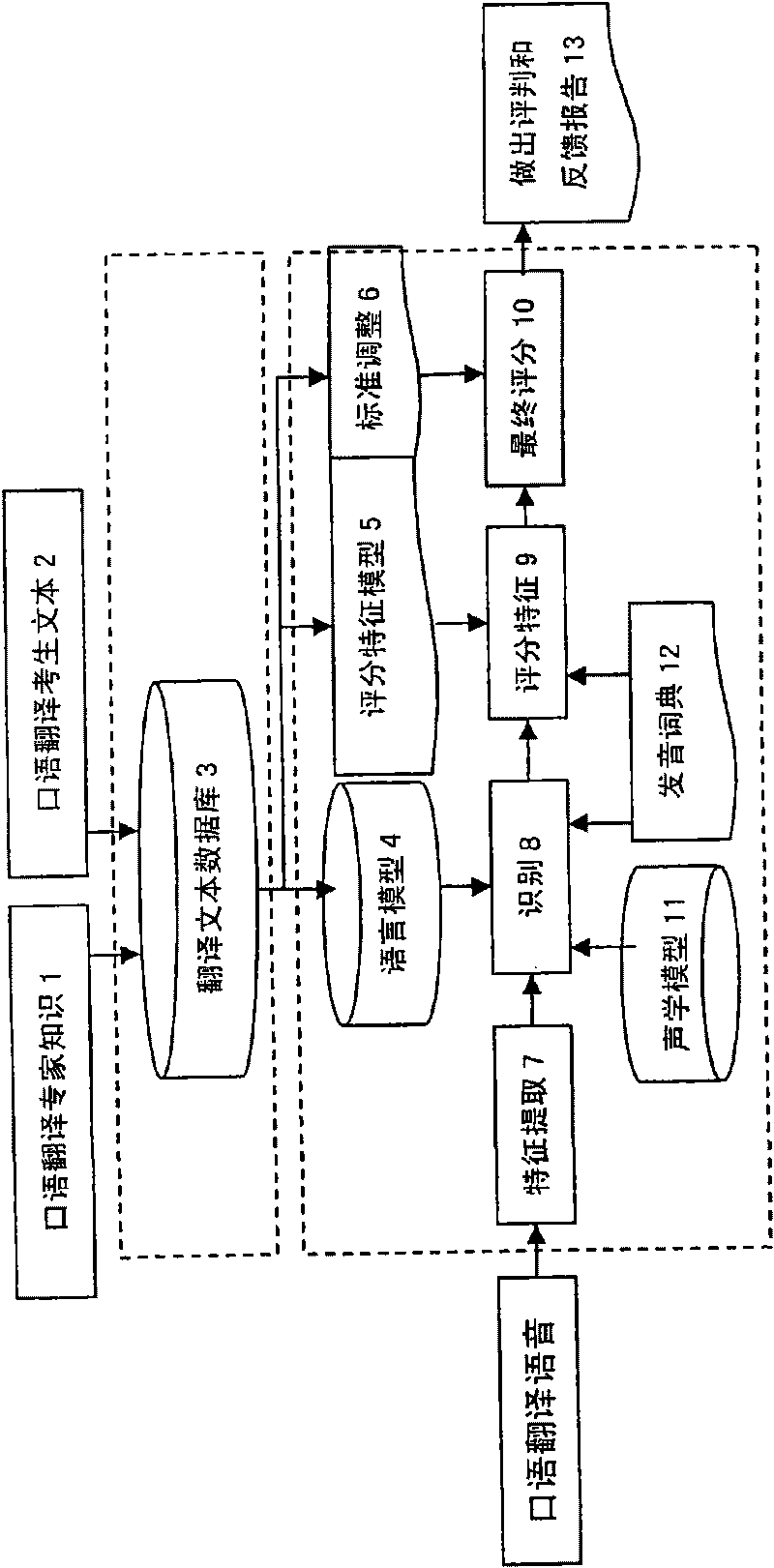

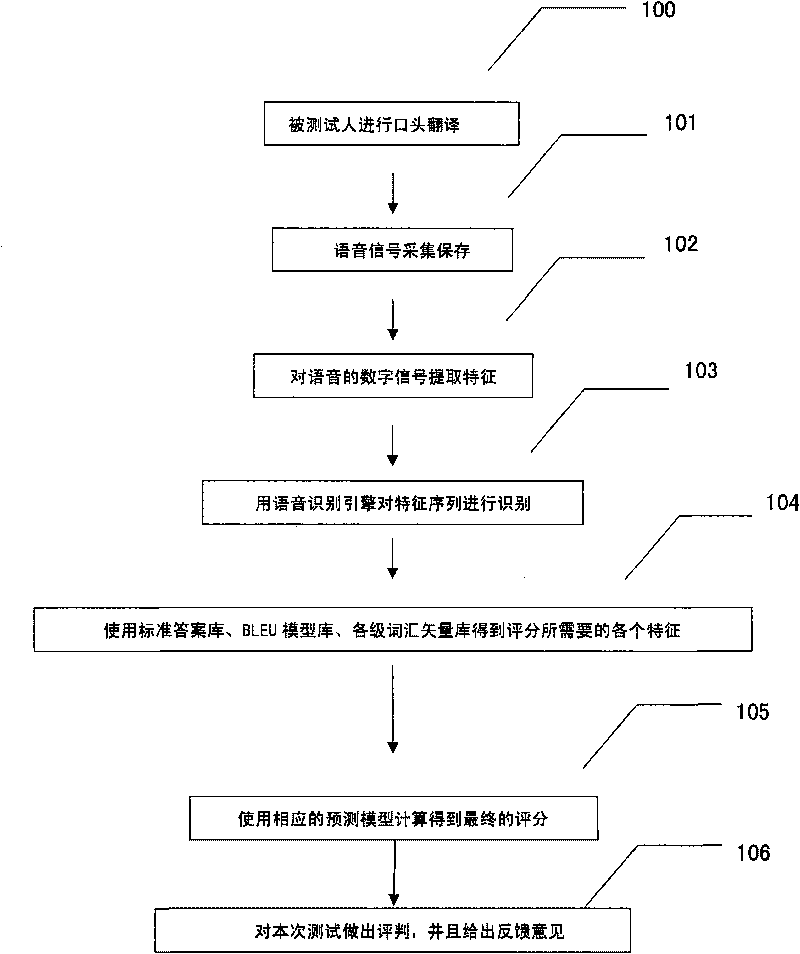

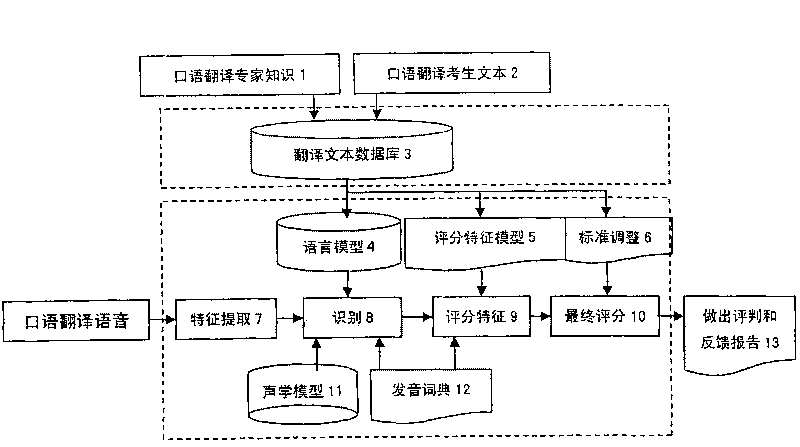

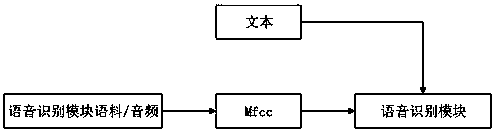

Method for scoring interpretation quality using a computer

The invention discloses a method for scoring interpretation quality by computer, which combines computer speech recognition, pronunciation assessment, and translation-quality evaluation technologies to obtain the interpretation quality of a test taker. The method comprises the following steps: establishing a database tailored to the pronunciation characteristics of the test-taker population; training on the database with a large-vocabulary continuous speech acoustic model training platform to obtain an acoustic model; collecting the relevant expert knowledge and translated-text corpora for each translation question to form the language model, scoring model, and standard adjustment model required for recognition; and finally, integrating the output of the speech recognizer with a linguistic processing mechanism to output the test taker's interpretation-quality score and provide feedback. The machine evaluation essentially approaches the level of expert evaluation; in addition, the method can give the test taker suggestions on pronunciation, vocabulary use, and sentence patterns during evaluation to guide correction.

Owner:北京中自投资管理有限公司

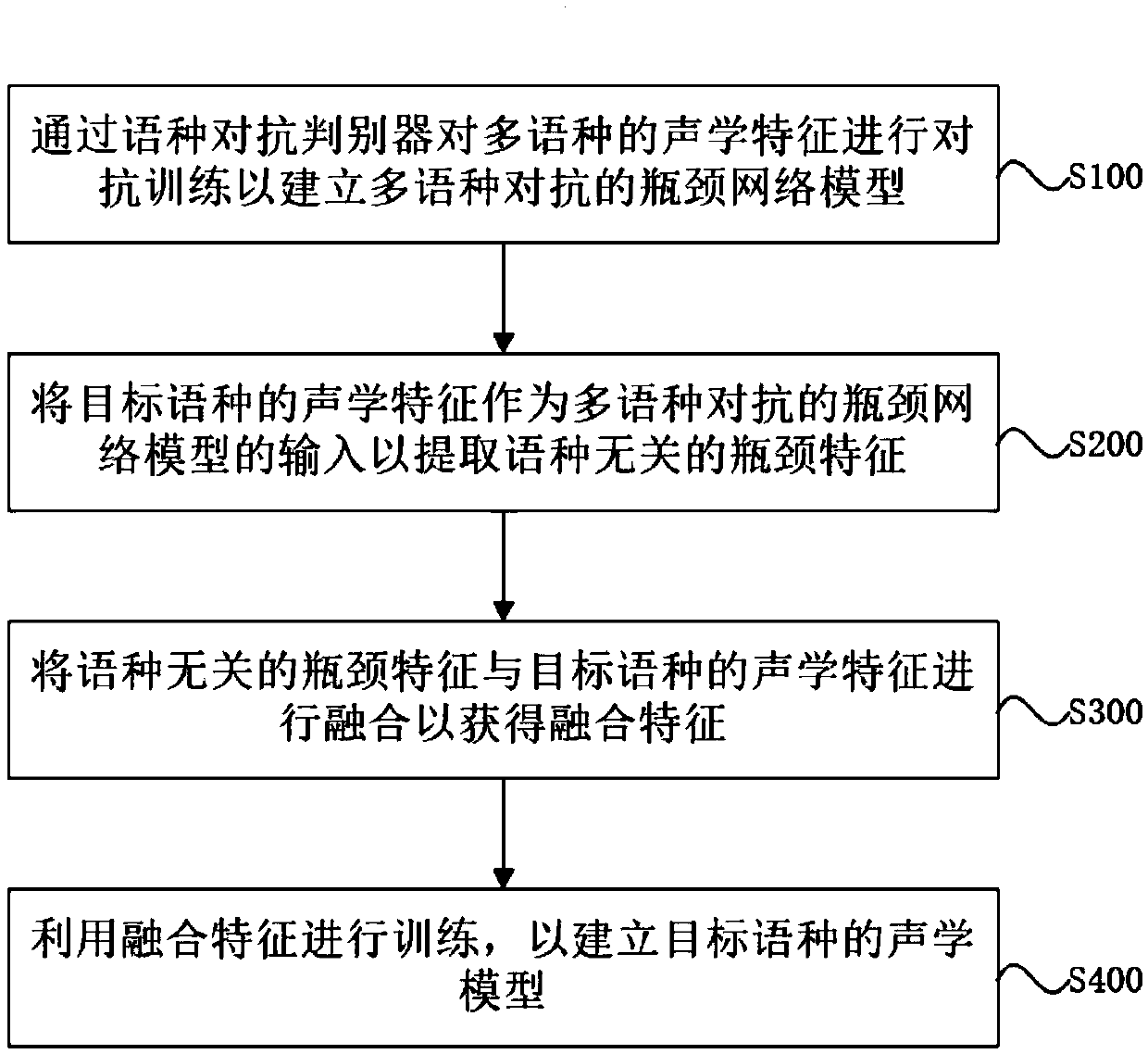

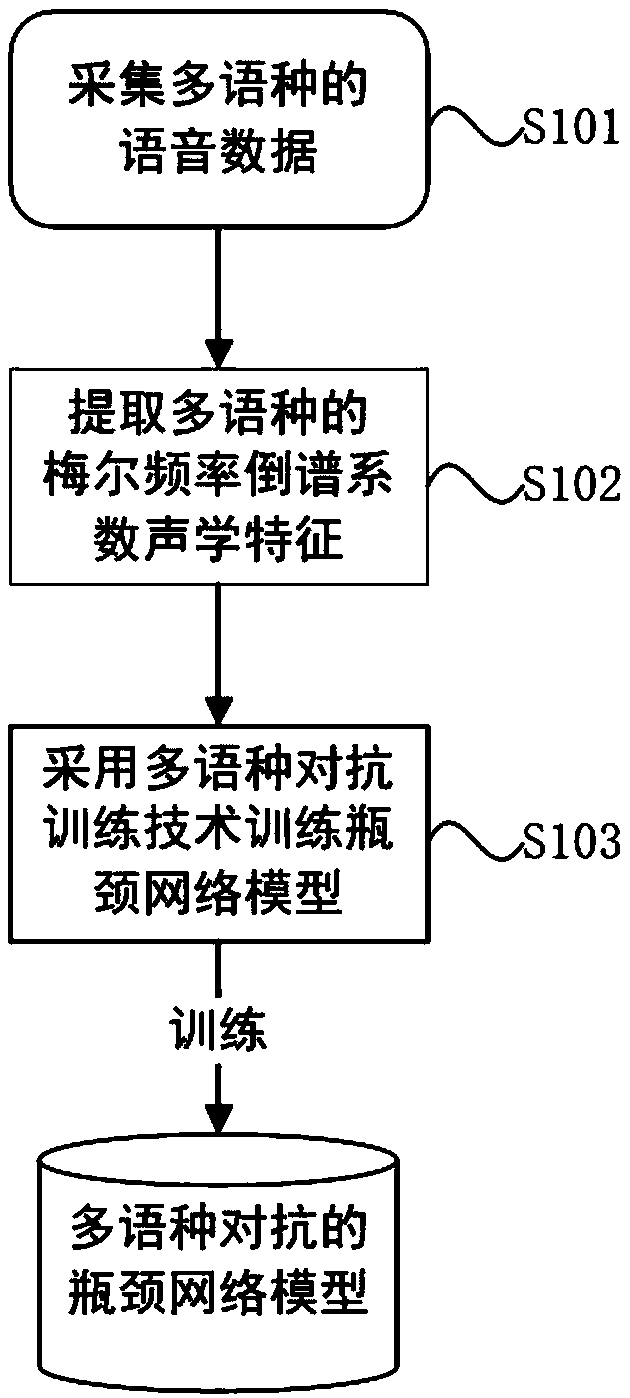

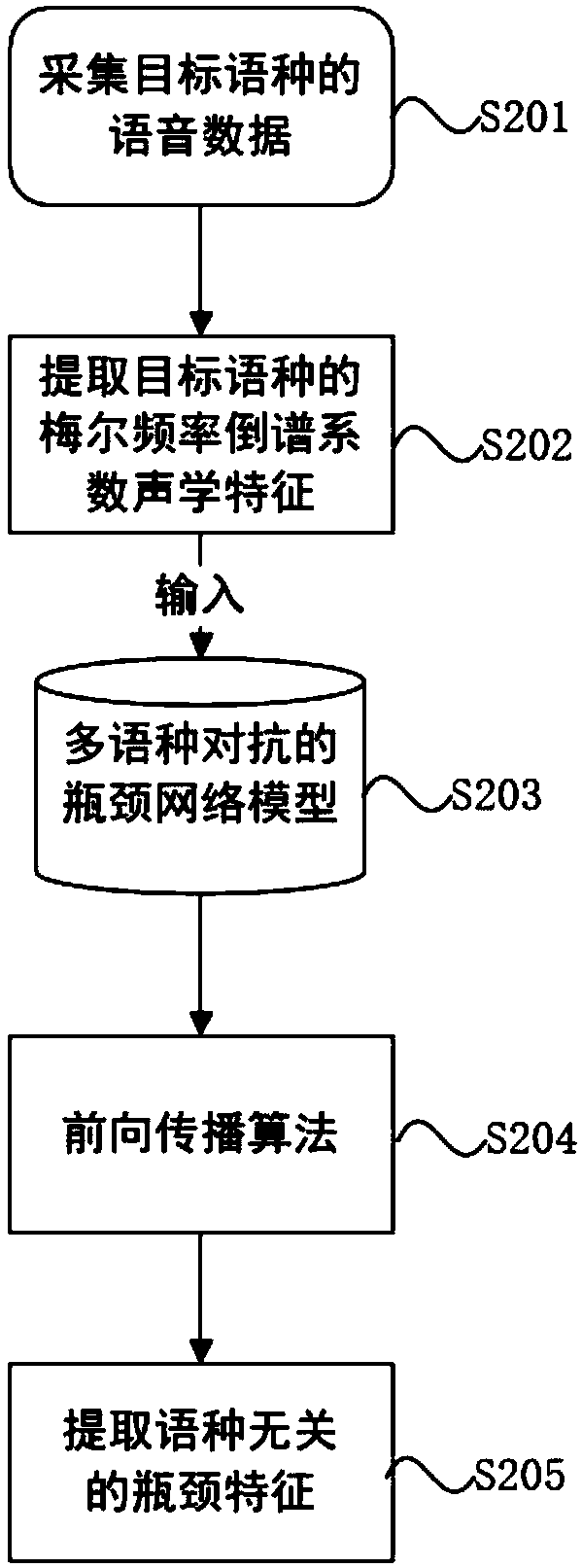

Small-data speech acoustic modeling method for speech recognition

The invention belongs to the technical field of signal processing in the electronics industry and addresses the low discriminative performance of acoustic models for a target language that has only a small amount of labeled data. To solve this problem, the invention provides a small-data speech acoustic modeling method for speech recognition, comprising the steps of: performing adversarial training on the acoustic features of multiple languages through a language adversarial discriminator to build a multilingual adversarial bottleneck network model; feeding the acoustic features of the target language into the multilingual adversarial bottleneck network model to extract language-independent bottleneck features; fusing the language-independent bottleneck features with the acoustic features of the target language to obtain fused features; and training on the fused features to build an acoustic model of the target language. In the prior art, the bottleneck features still carry language-specific information, so recognition performance on the target language improves only marginally and can even suffer negative transfer; the method overcomes this defect and thereby improves speech recognition accuracy for the target language.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

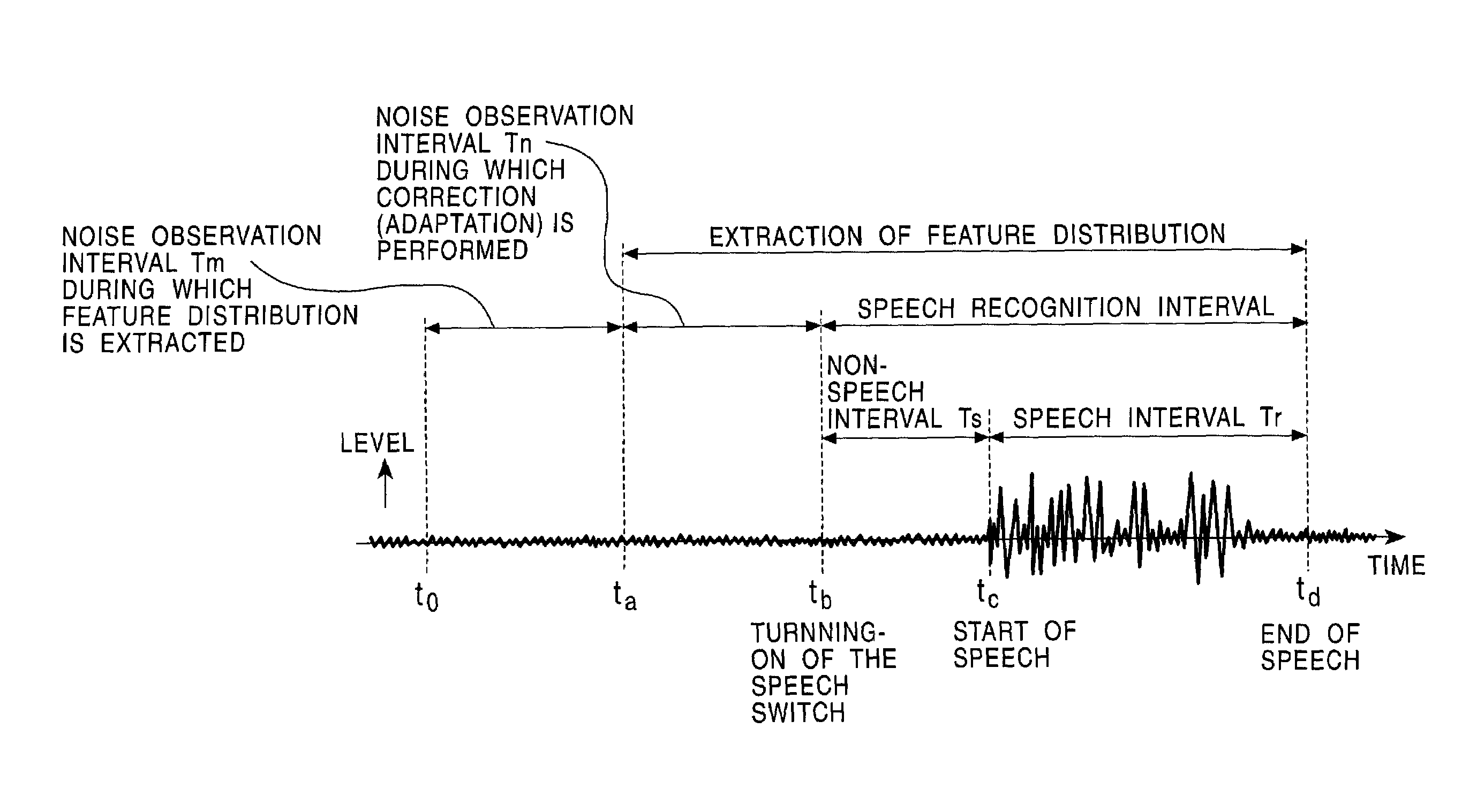

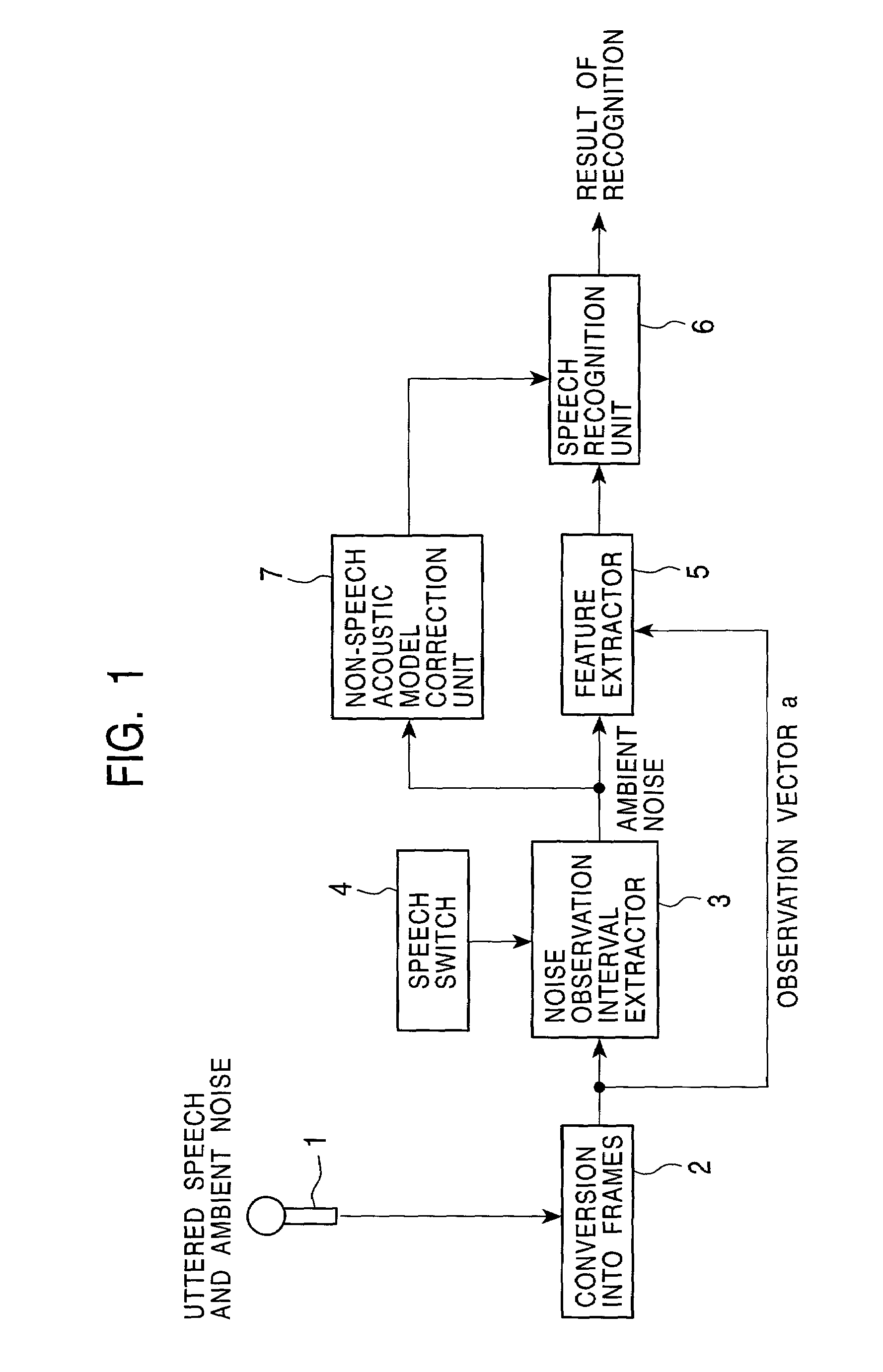

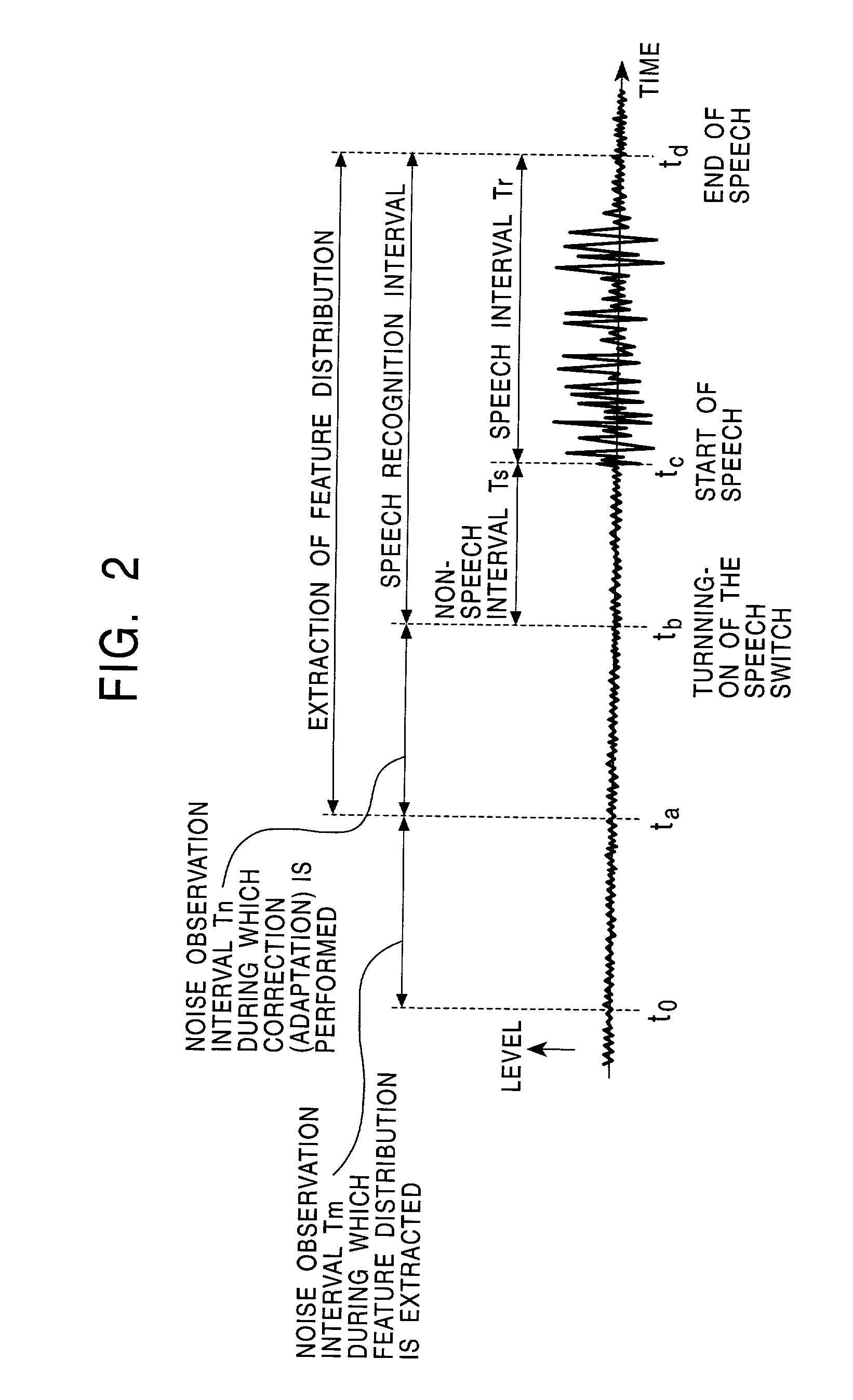

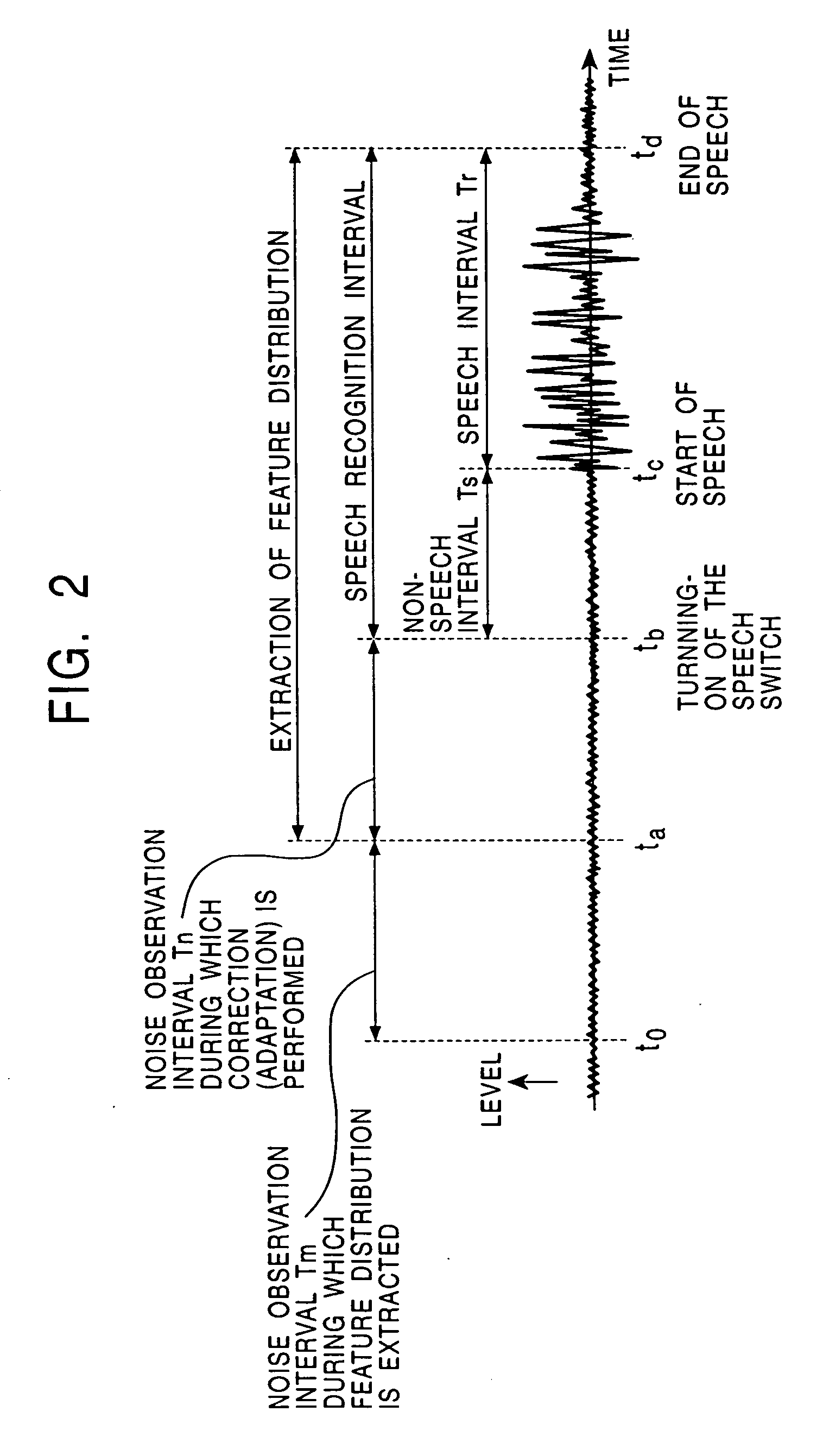

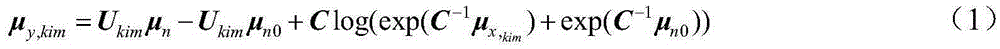

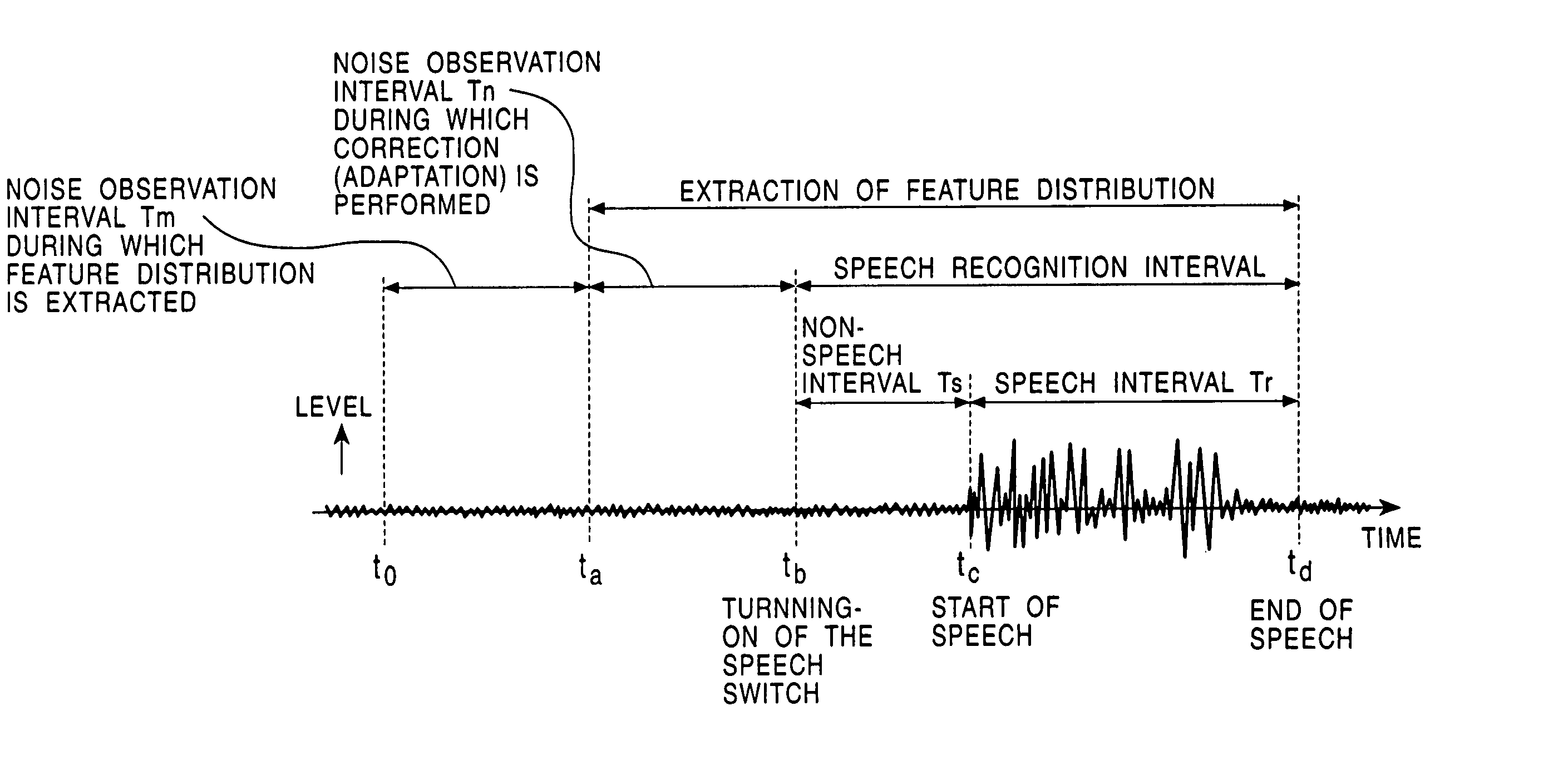

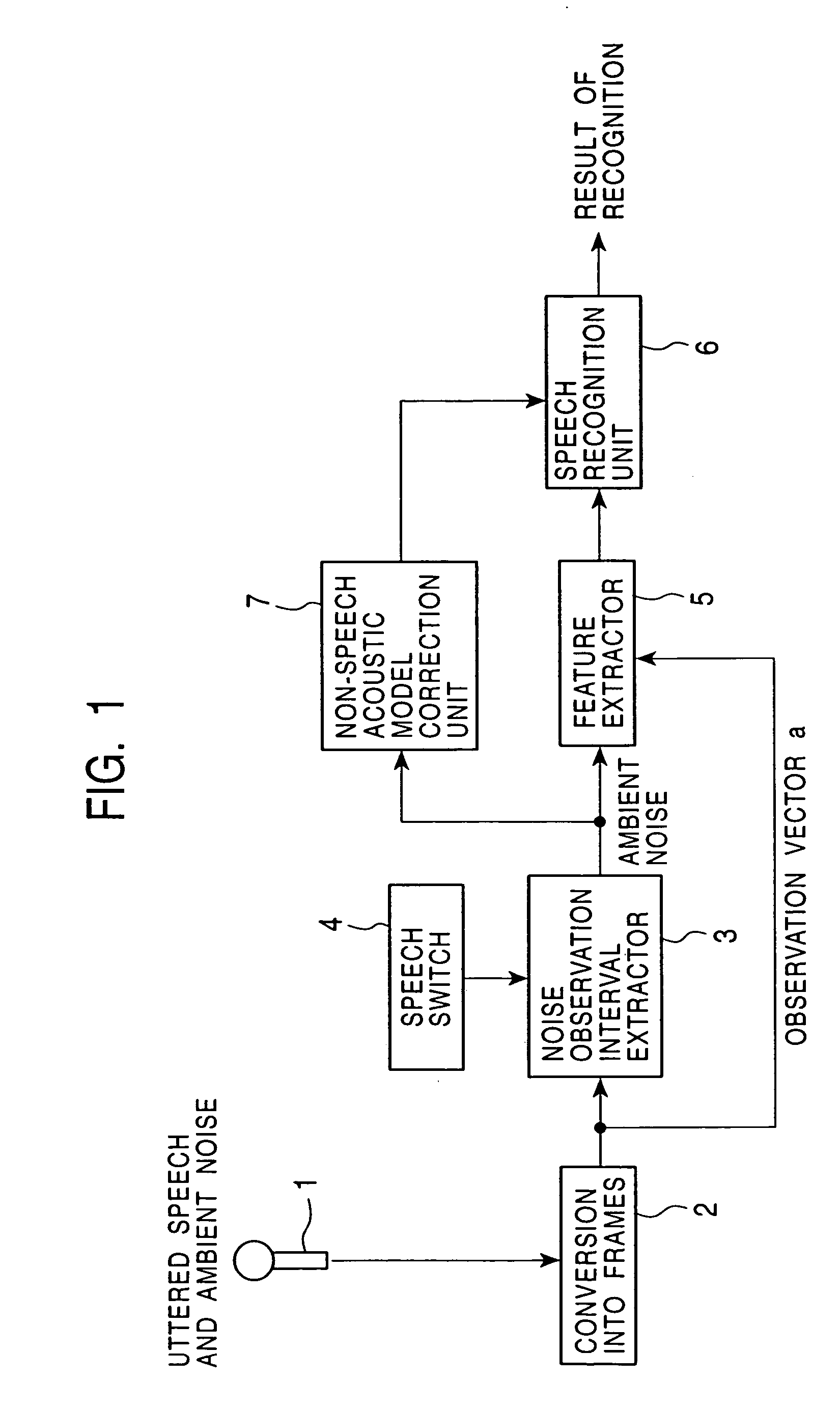

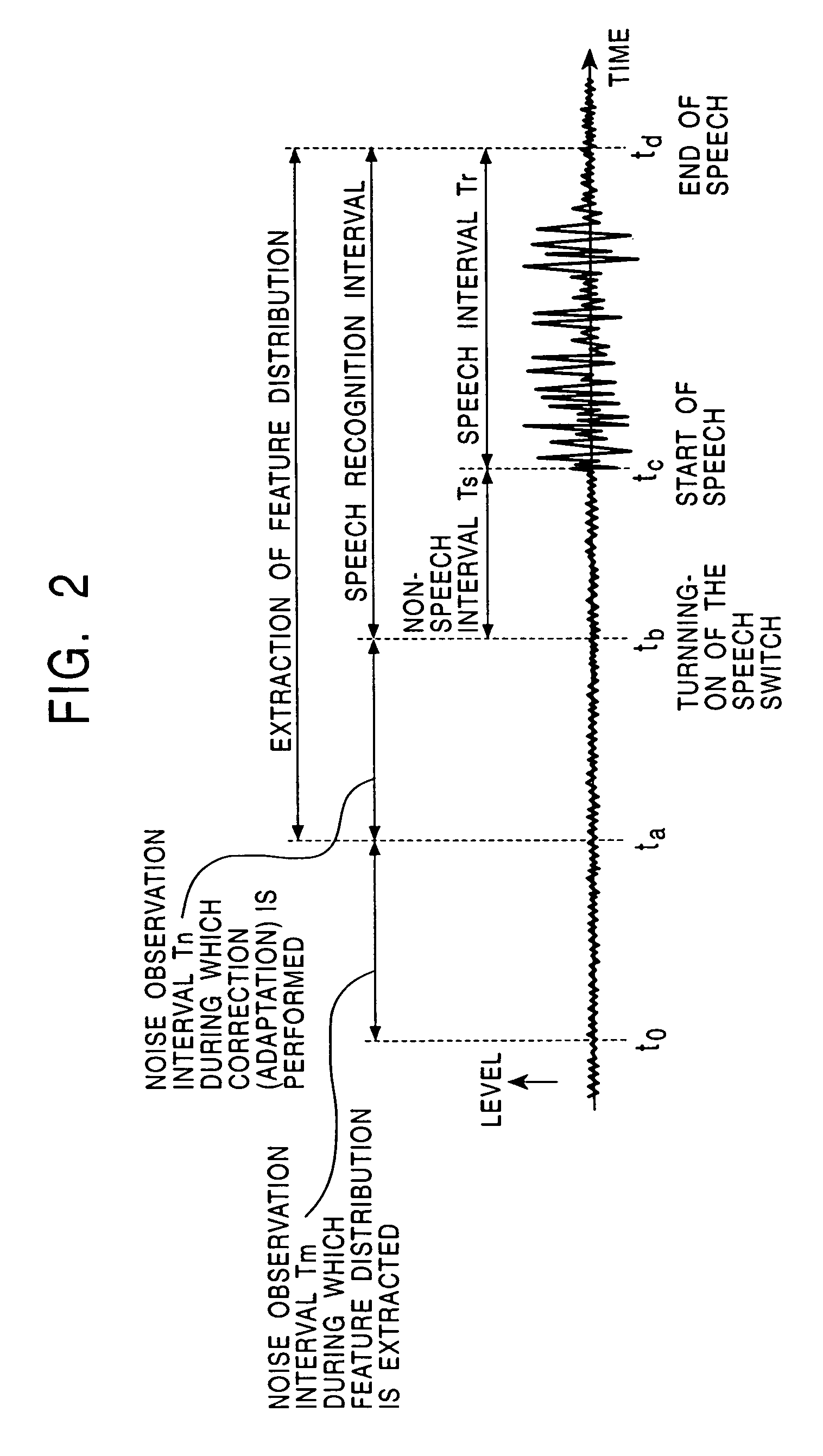

Model adaptation apparatus, model adaptation method, storage medium, and pattern recognition apparatus

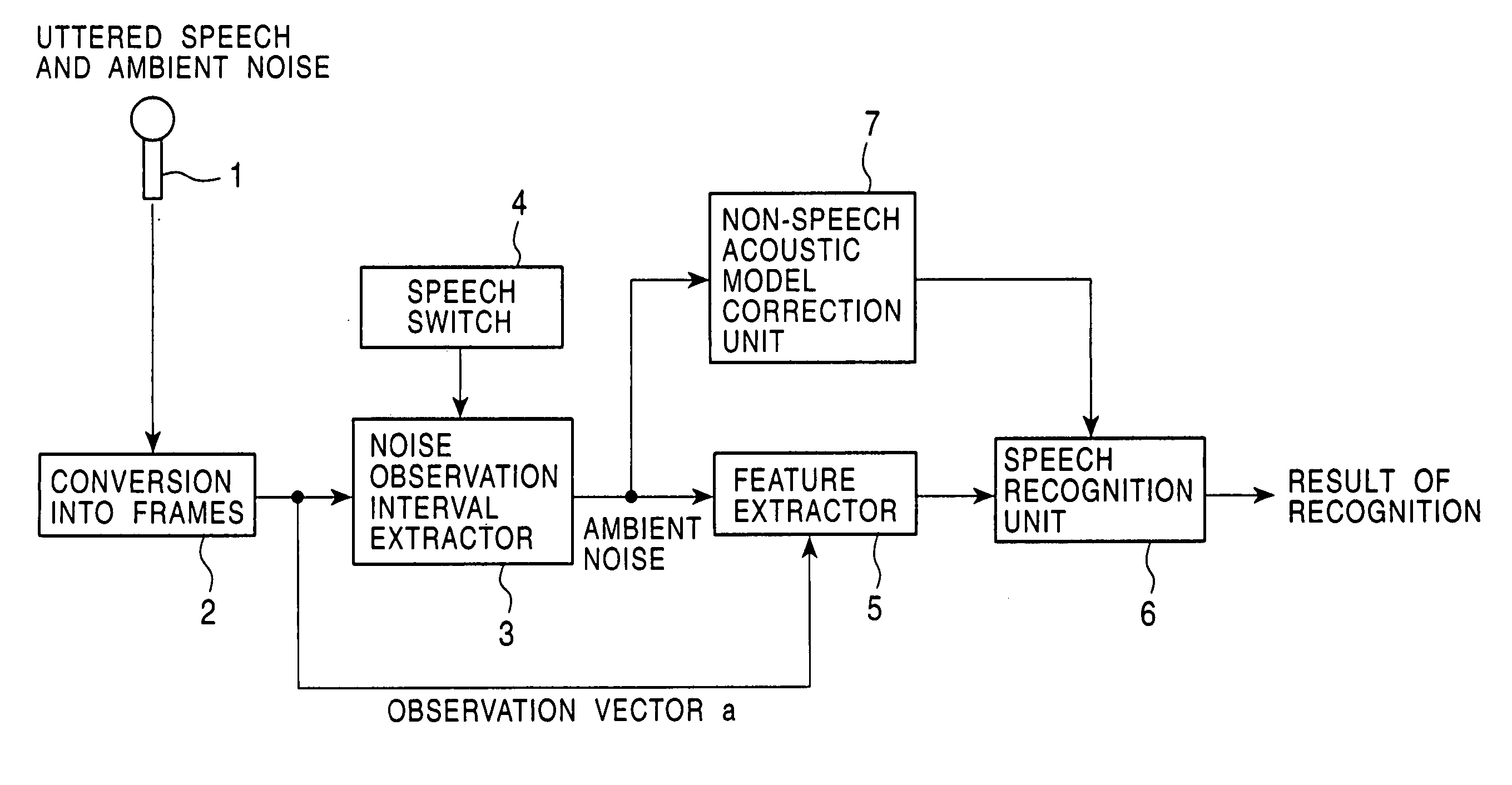

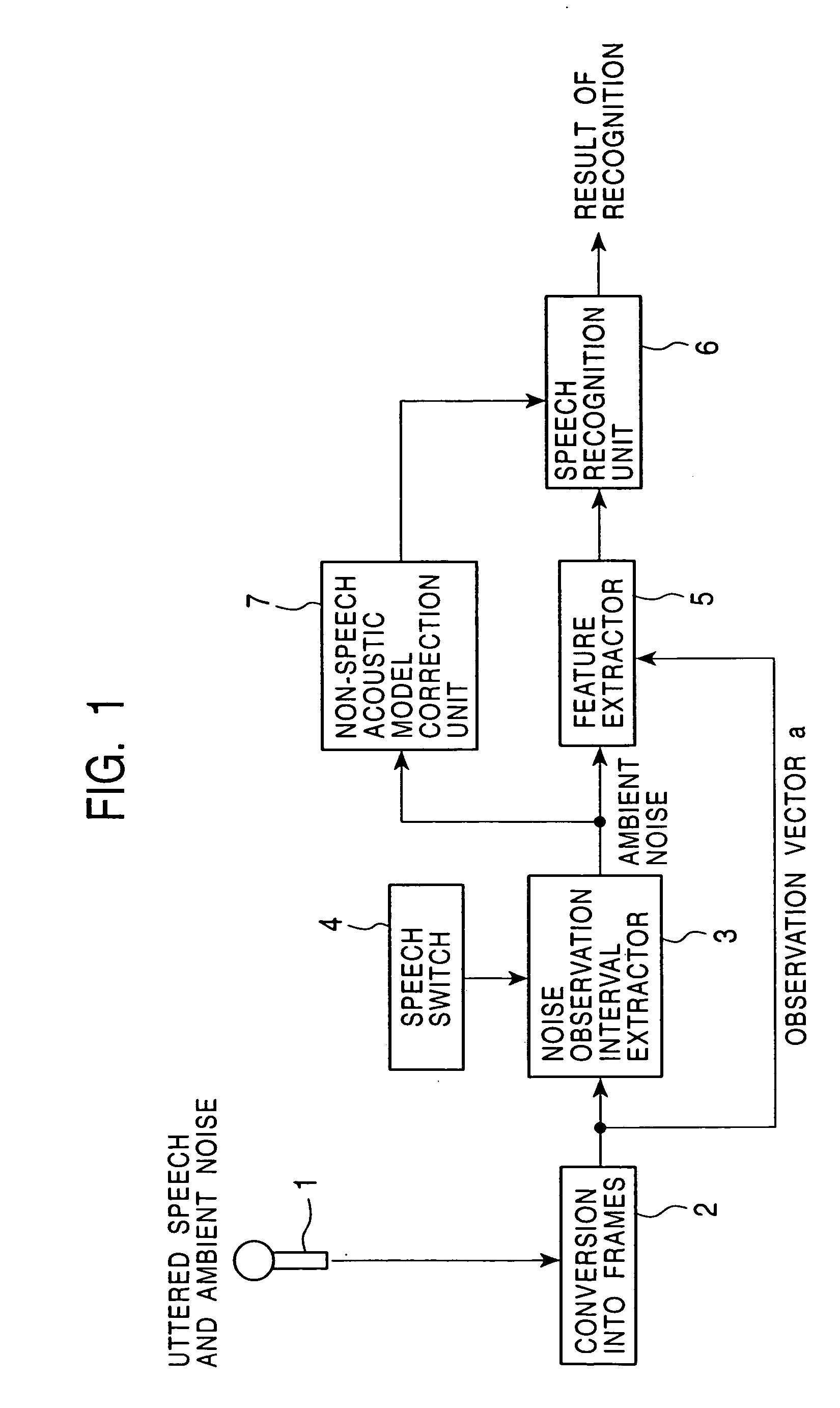

To improve recognition performance, a non-speech acoustic model correction unit adapts a non-speech acoustic model representing a non-speech state, using input data observed during the interval immediately before the speech recognition interval (the interval during which speech recognition is performed), by means of one of the maximum likelihood method, the complex statistic method, and the minimum distance-maximum separation theorem.

Owner:SONY CORP
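
A sketch of the maximum-likelihood option only, assuming the non-speech model is a single diagonal Gaussian over feature frames. `non_speech_frames_before` and the window length are illustrative names, not Sony's; the complex statistic and minimum distance-maximum separation criteria are not shown.

```python
import math


def non_speech_frames_before(features, speech_start, window):
    """Select the `window` frames immediately preceding the recognition interval."""
    return features[max(0, speech_start - window):speech_start]


def adapt_non_speech_model(frames):
    """Maximum-likelihood diagonal-Gaussian estimate from feature frames."""
    n = len(frames)
    if n == 0:
        raise ValueError("need at least one non-speech frame")
    dim = len(frames[0])
    mean = [sum(f[d] for f in frames) / n for d in range(dim)]
    # The ML variance divides by n (not n - 1); a small floor keeps it positive.
    var = [max(sum((f[d] - mean[d]) ** 2 for f in frames) / n, 1e-6) for d in range(dim)]
    return mean, var


def log_likelihood(frame, mean, var):
    """Log density of one frame under the diagonal Gaussian."""
    return sum(
        -0.5 * (math.log(2 * math.pi * v) + (x - m) ** 2 / v)
        for x, m, v in zip(frame, mean, var)
    )
```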

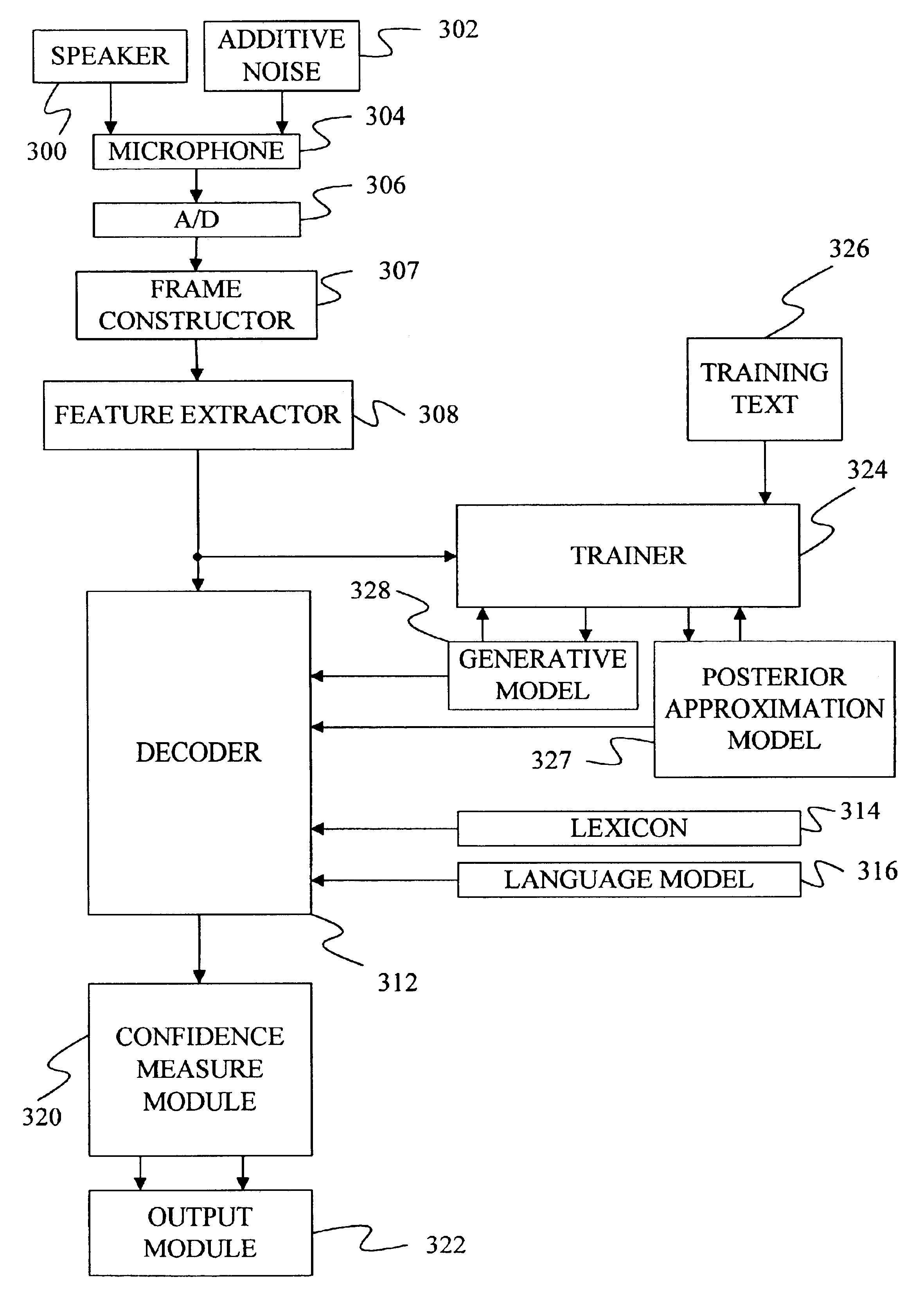

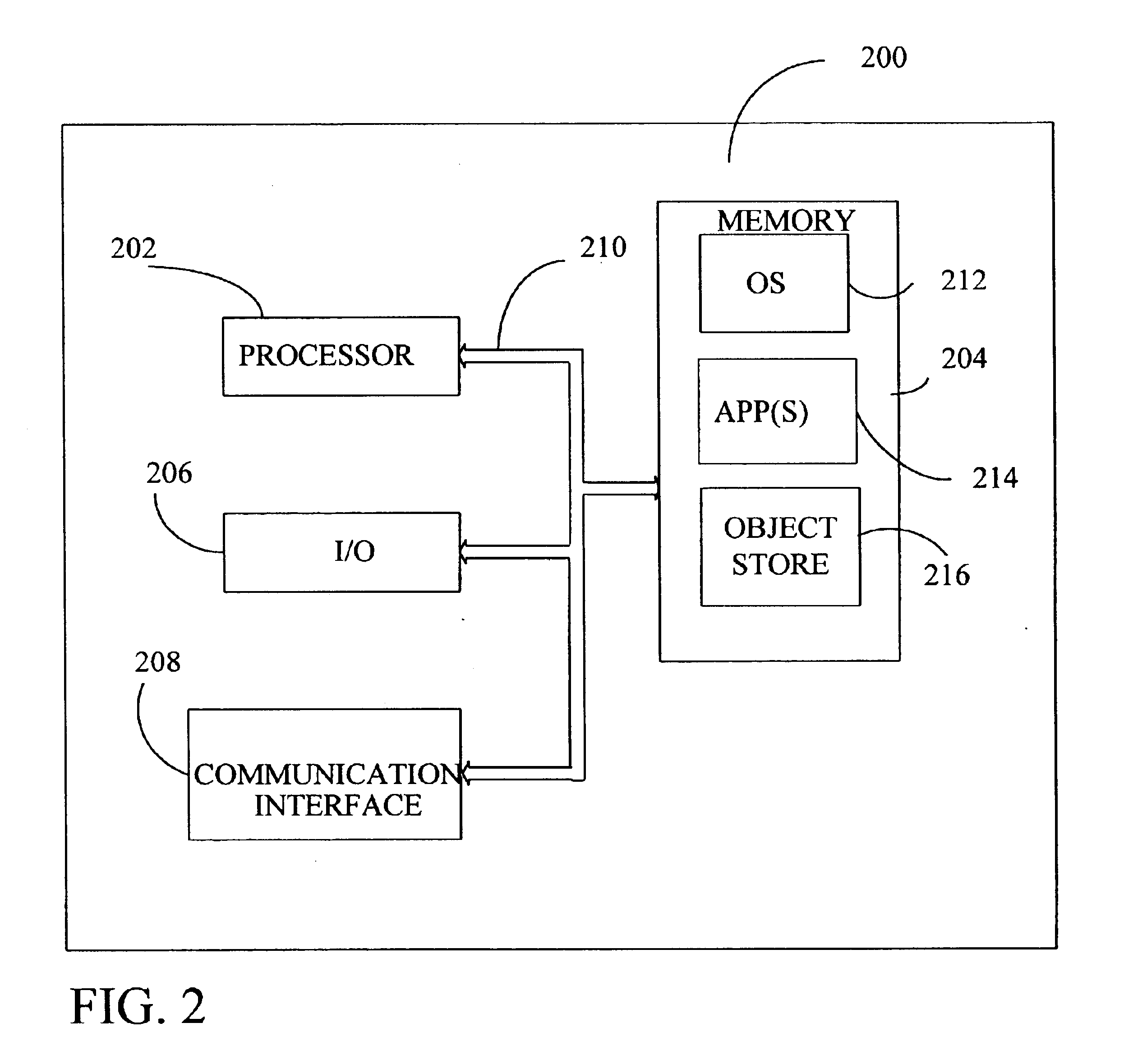

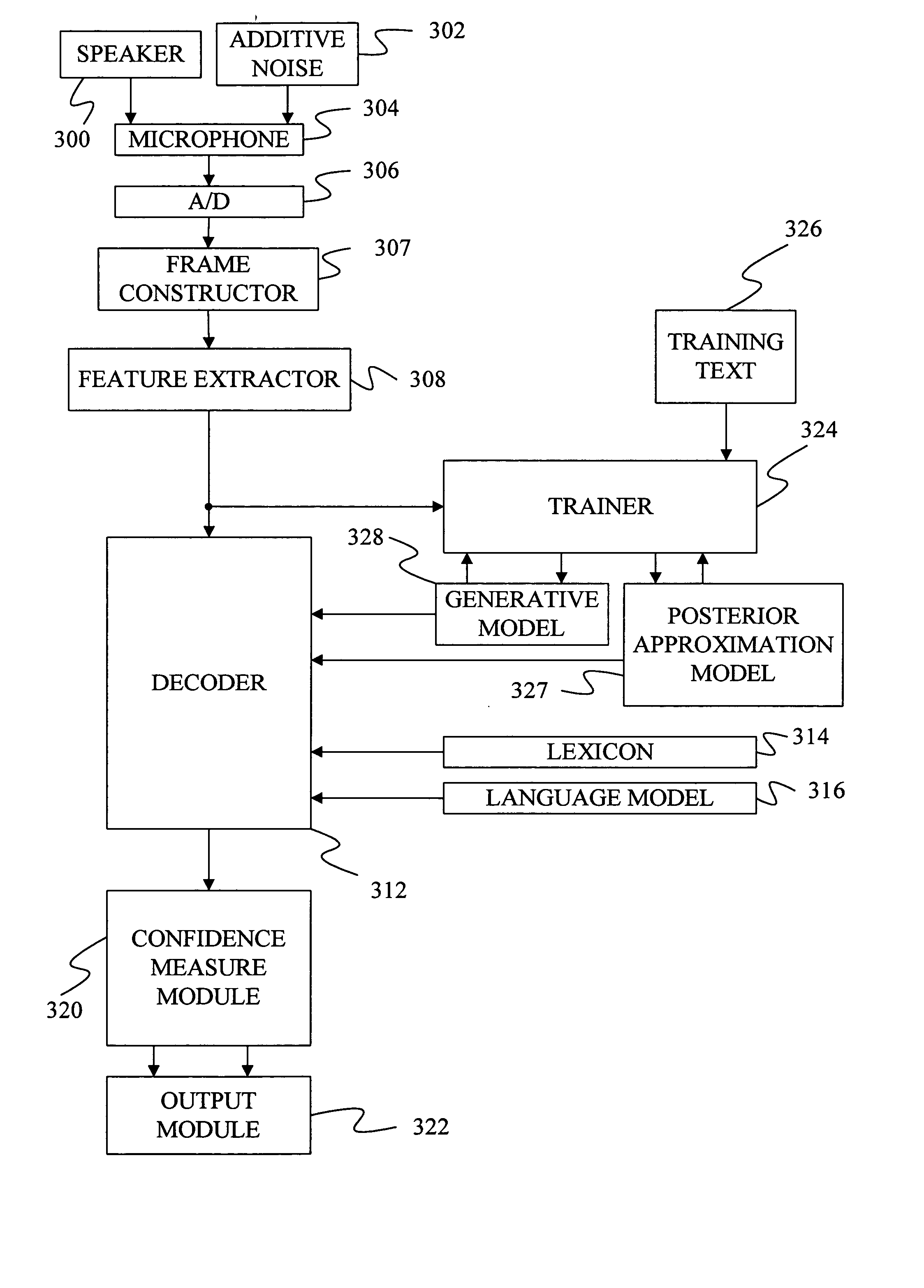

Method of speech recognition using variational inference with switching state space models

Status: Inactive | Publication: US6931374B2 | Tags: Fatty/oily/floating substances removal devices; Sedimentation separation; State space; Speech identification

A method is developed that includes 1) defining a switching state space model for a continuous-valued hidden production-related parameter and the observed speech acoustics, and 2) approximating a posterior probability that provides the likelihood of a sequence of the hidden production-related parameters and a sequence of speech units based on a sequence of observed input values. In approximating the posterior probability, the boundaries of the speech units are not fixed but are optimally determined. Under one embodiment, a mixture-of-Gaussians approximation is used. In another embodiment, an HMM posterior approximation is used.

Owner:MICROSOFT TECH LICENSING LLC
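
Step 1 of the method (defining the model) can be illustrated by simulating a one-dimensional switching state space model. The target-directed recursion and the linear observation mapping below are assumptions chosen for simplicity, and all parameters are hypothetical; the variational posterior approximation of step 2 is not implemented here.

```python
import random


def simulate_switching_ssm(units, params, x0=0.0, noise_std=0.0, seed=0):
    """Simulate a 1-D switching state space model.

    units  : sequence of speech-unit labels s_t, one per frame
    params : dict label -> (r, target, h_gain, h_bias); all values hypothetical
    Hidden:   x_t = r_s * x_{t-1} + (1 - r_s) * target_s + w_t
    Observed: y_t = h_gain_s * x_t + h_bias_s + v_t
    """
    rng = random.Random(seed)
    x, hidden, observed = x0, [], []
    for s in units:
        r, target, gain, bias = params[s]
        # The active unit s switches which dynamics drive the hidden parameter.
        x = r * x + (1.0 - r) * target + rng.gauss(0.0, noise_std)
        hidden.append(x)
        observed.append(gain * x + bias + rng.gauss(0.0, noise_std))
    return hidden, observed
```

With `noise_std=0` the hidden parameter moves geometrically toward each unit's target, which is the continuous, smoothly varying behavior the model is meant to capture.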

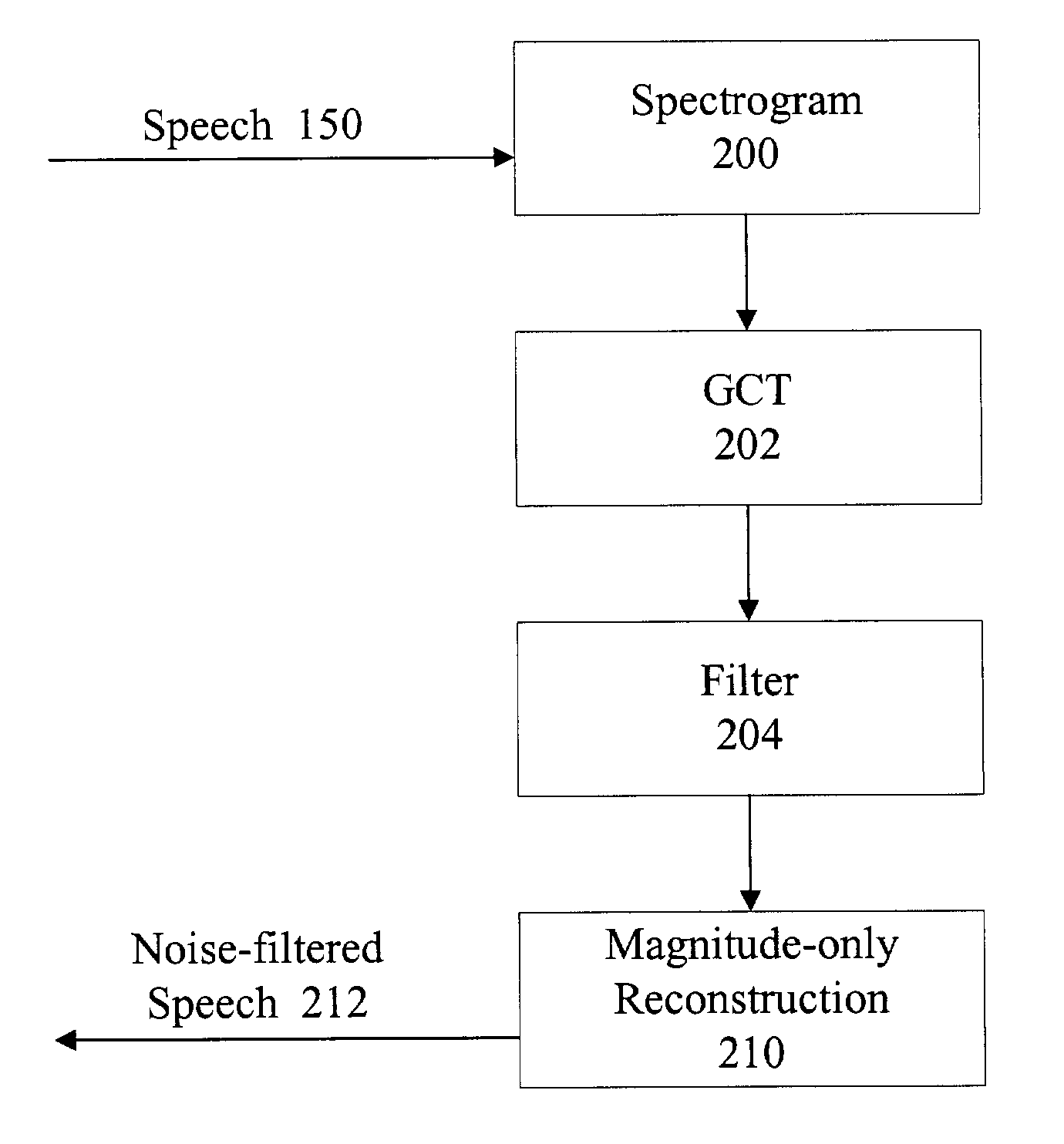

2-D processing of speech

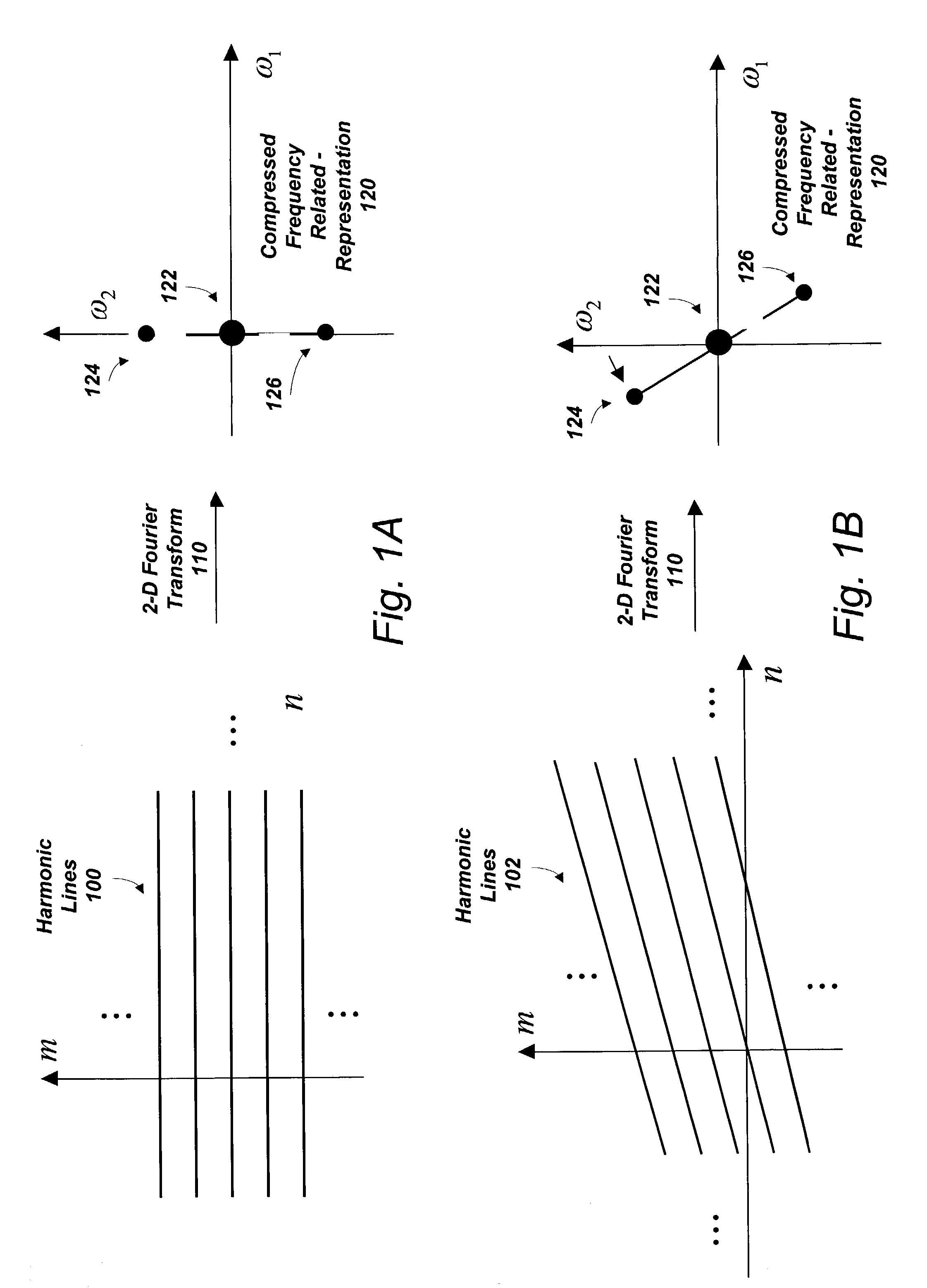

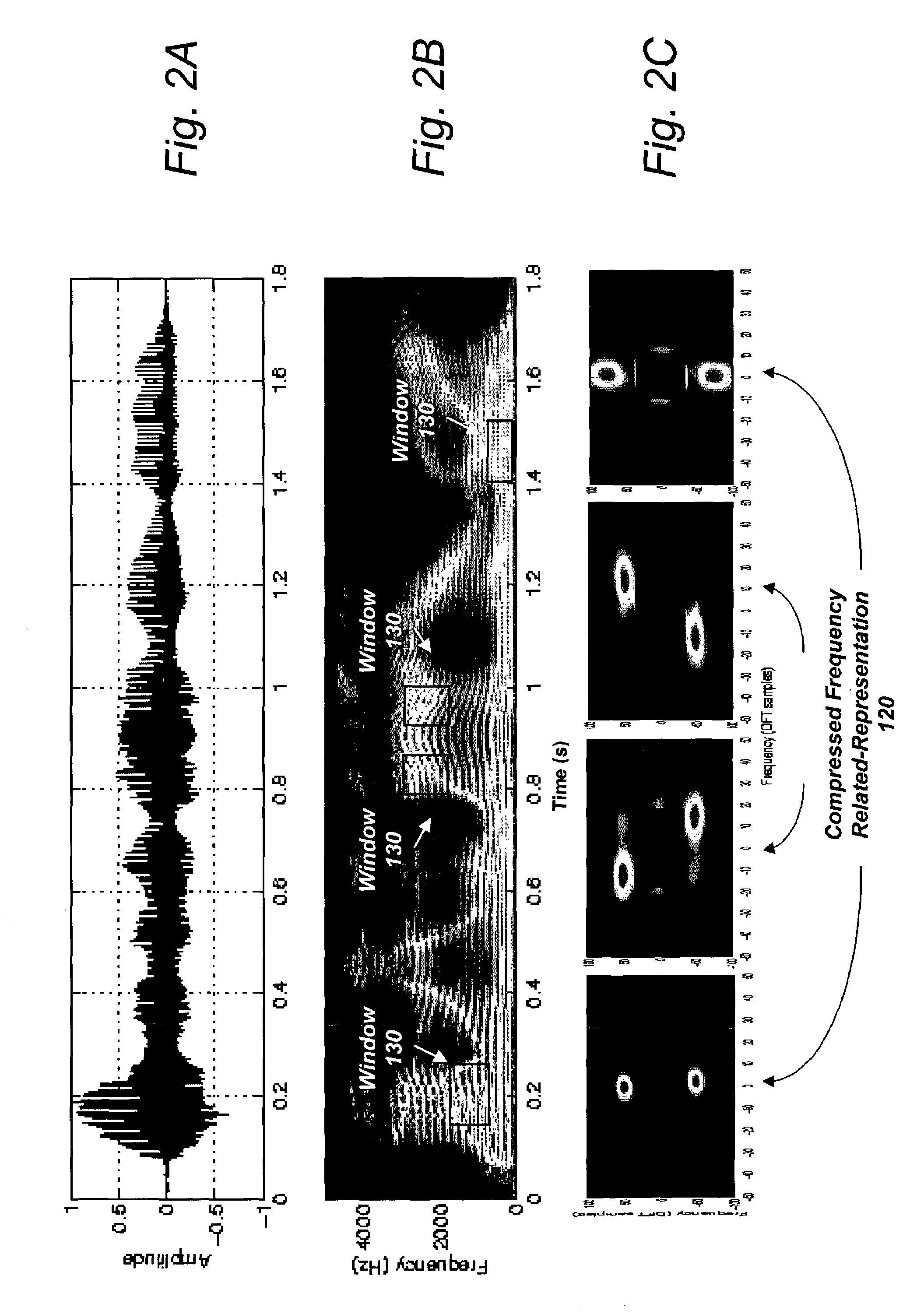

Acoustic signals are analyzed by two-dimensional (2-D) processing of the one-dimensional (1-D) speech signal in the time-frequency plane. The short-space 2-D Fourier transform of a frequency-related representation (e.g., spectrogram) of the signal is obtained. The 2-D transformation maps harmonically related signal components to a concentrated entity in the new 2-D plane (a compressed frequency-related representation). The series of operations that produces the compressed frequency-related representation is referred to as the “grating compression transform” (GCT), because sine-wave grating patterns in the frequency-related representation are reduced to smeared impulses. The GCT provides for speech pitch estimation. The operations may, for example, determine pitch estimates of voiced speech or provide noise filtering or speaker separation in a multi-speaker acoustic signal.

Owner:MASSACHUSETTS INST OF TECH
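
A simplified illustration of the GCT idea, assuming a single unwindowed spectrogram patch and a naive 2-D DFT in pure Python (a real implementation would use short-space windows and an FFT library). A harmonic series spaced P bins apart forms a sinusoidal grating along frequency, whose 2-D transform peaks at frequency-axis index k = cols / P, so the pitch can be read off as bin_hz * cols / k.

```python
import cmath
import math


def dft2(patch):
    """Naive 2-D DFT of a small real patch (rows = time, cols = frequency)."""
    rows, cols = len(patch), len(patch[0])
    out = [[0j] * cols for _ in range(rows)]
    for m in range(rows):
        for k in range(cols):
            acc = 0j
            for t in range(rows):
                for f in range(cols):
                    angle = -2j * math.pi * (m * t / rows + k * f / cols)
                    acc += patch[t][f] * cmath.exp(angle)
            out[m][k] = acc
    return out


def gct_pitch_estimate(patch, bin_hz):
    """Estimate pitch from the strongest non-DC peak along the frequency axis."""
    spectrum = dft2(patch)
    cols = len(patch[0])
    best_k, best_mag = None, -1.0
    for row in spectrum:
        for k in range(1, cols // 2 + 1):  # skip DC; real input is symmetric
            if abs(row[k]) > best_mag:
                best_k, best_mag = k, abs(row[k])
    return bin_hz * cols / best_k
```

For a patch whose rows are `1 + cos(2*pi*f/4)` over 16 bins of 50 Hz, the peak sits at k = 4, giving a 200 Hz pitch estimate.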

Model adaptation apparatus, model adaptation method, storage medium, and pattern recognition apparatus

To achieve an improvement in recognition performance, a non-speech acoustic model correction unit adapts a non-speech acoustic model representing a non-speech state using input data observed during an interval immediately before a speech recognition interval during which speech recognition is performed, by means of one of the most likelihood method, the complex statistic method, and the minimum distance-maximum separation theorem.

Owner:SONY CORP

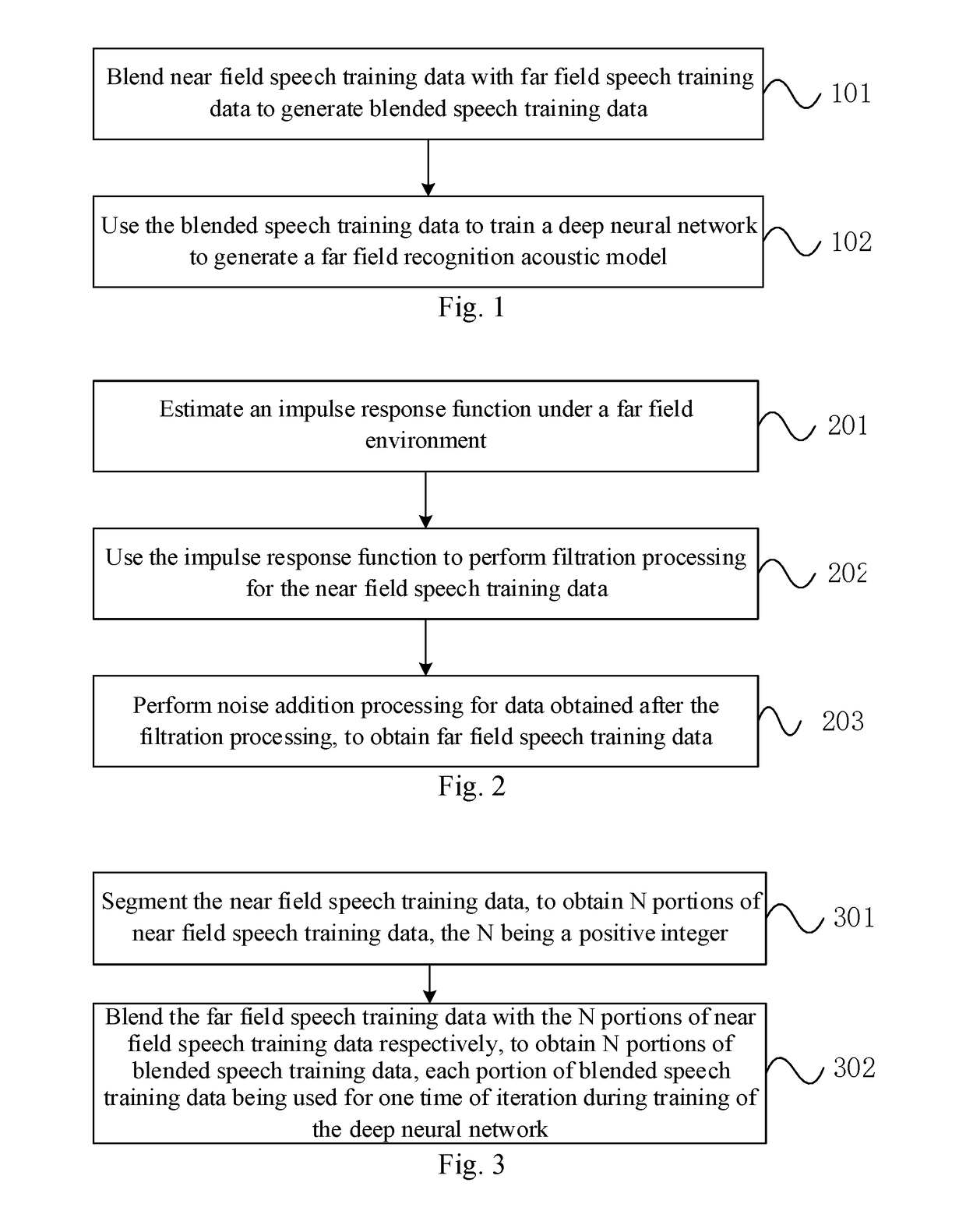

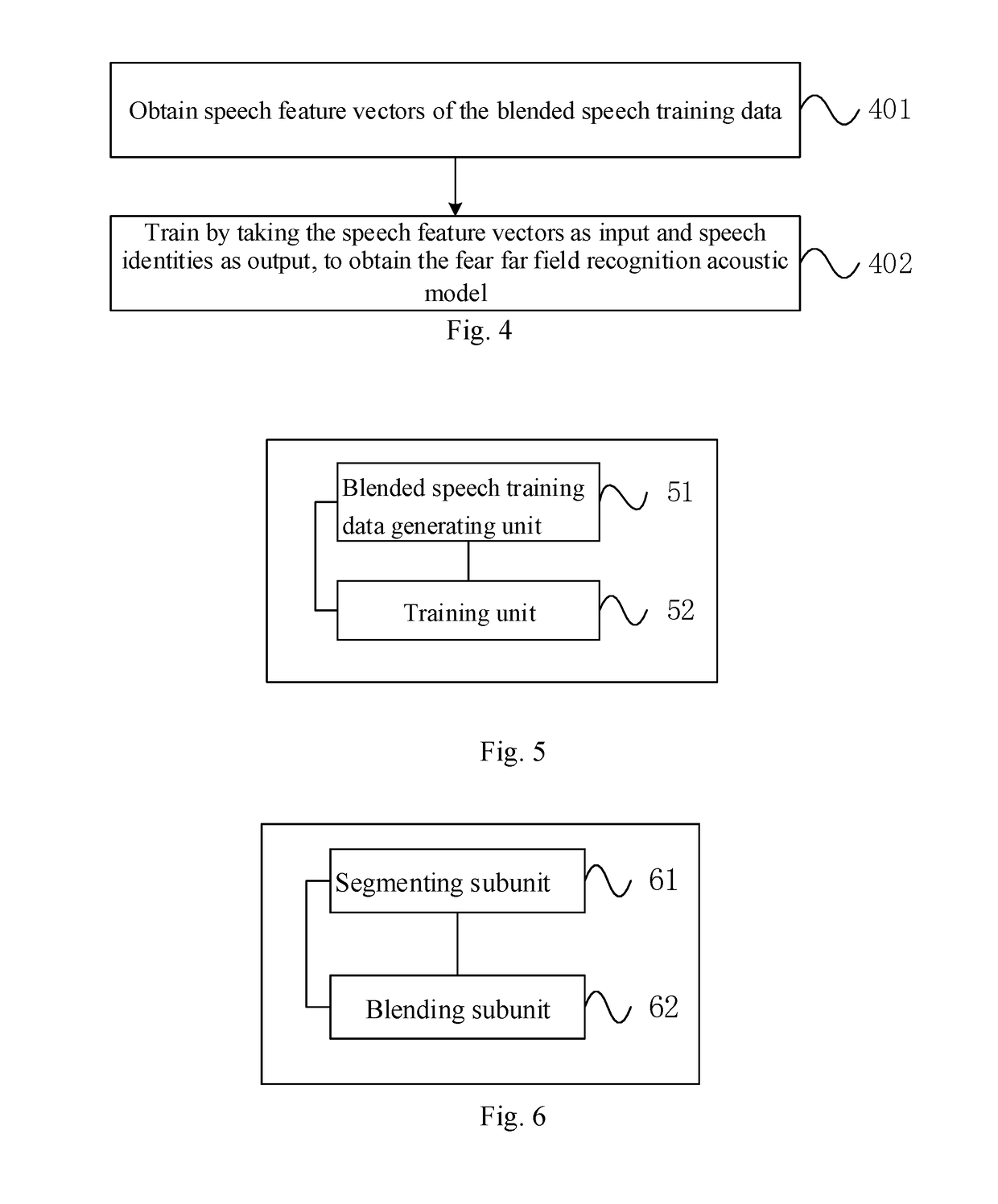

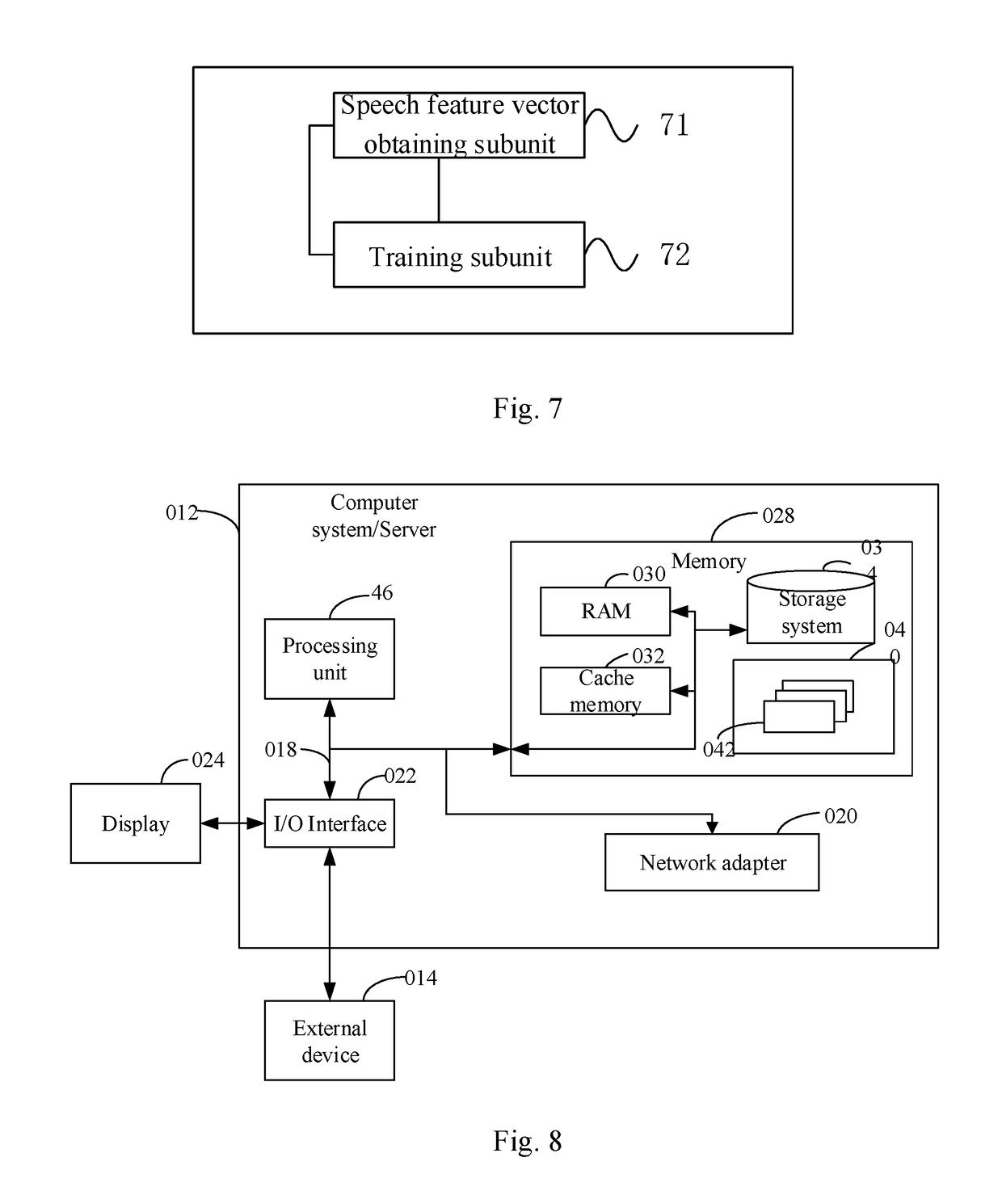

Far field speech acoustic model training method and system

Status: Inactive | Publication: US20190043482A1 | Tags: Shorten the time; Low cost; Speech recognition; Neural learning methods; Speech training; Acoustic model

The present disclosure provides a far-field speech acoustic model training method and system. The method comprises: blending near-field speech training data with far-field speech training data to generate blended speech training data, where the far-field speech training data is obtained by applying data augmentation to the near-field speech training data; and using the blended speech training data to train a deep neural network to generate a far-field recognition acoustic model. The disclosure avoids the substantial time and money that the prior art spends recording far-field speech data, reduces the cost of obtaining far-field speech data, and improves far-field speech recognition.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
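
The augmentation and blending steps can be sketched as follows, assuming far-field data is simulated by convolving near-field audio with a room impulse response (RIR) and adding noise at a chosen SNR. The patent does not specify its augmentation; the RIR, noise, and SNR here are hypothetical inputs, and the DNN training step is omitted.

```python
import math
import random


def convolve(signal, rir):
    """Full linear convolution of a signal with a room impulse response."""
    out = [0.0] * (len(signal) + len(rir) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(rir):
            out[i + j] += s * h
    return out


def power(x):
    """Mean-square power of a sequence."""
    return sum(v * v for v in x) / len(x)


def add_noise_at_snr(signal, noise, snr_db):
    """Scale `noise` so that 10*log10(P_signal / P_noise) equals snr_db."""
    noise = (noise * (len(signal) // len(noise) + 1))[:len(signal)]  # tile, then trim
    scale = math.sqrt(power(signal) / (power(noise) * 10 ** (snr_db / 10)))
    return [s + scale * n for s, n in zip(signal, noise)]


def simulate_far_field(near, rir, noise, snr_db):
    """Reverberate a near-field utterance, then add noise."""
    return add_noise_at_snr(convolve(near, rir), noise, snr_db)


def blend(near_set, far_set, seed=0):
    """Mix near-field and simulated far-field utterances into one training set."""
    mixed = [("near", u) for u in near_set] + [("far", u) for u in far_set]
    random.Random(seed).shuffle(mixed)
    return mixed
```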

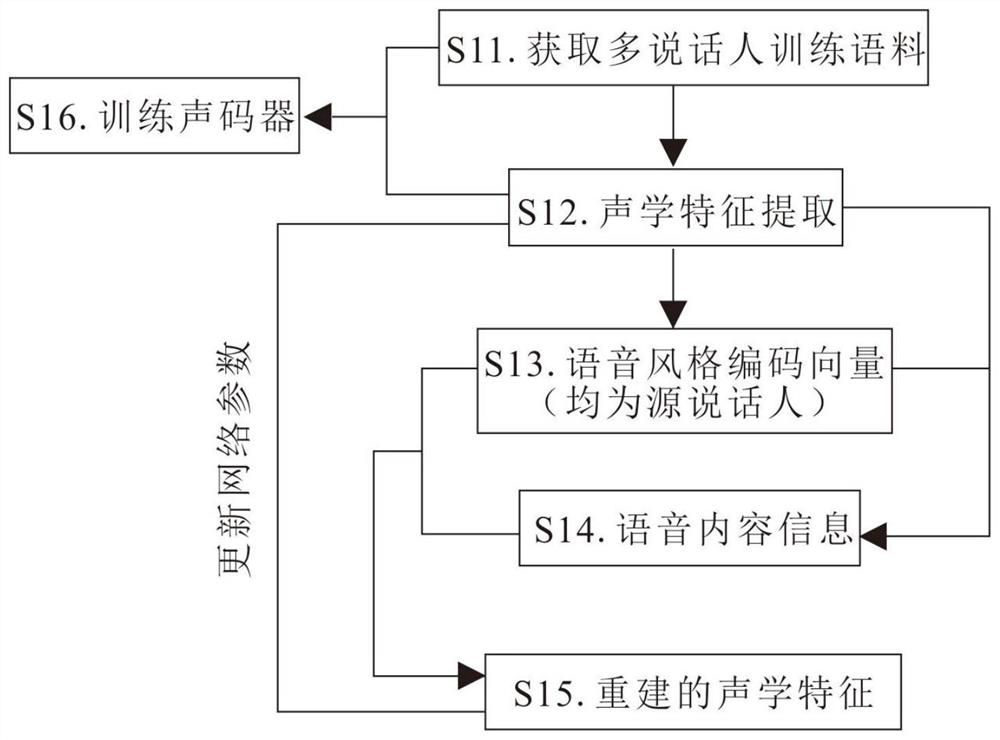

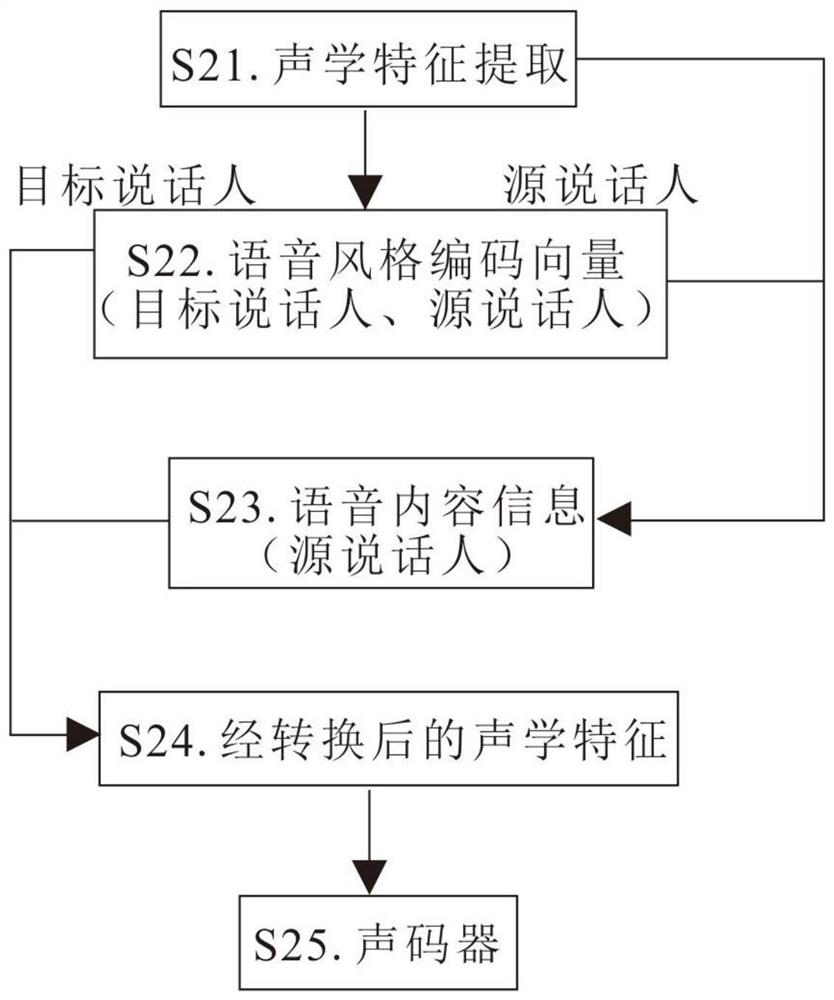

Voice conversion method and device with emotion and rhythm

Status: Active | Publication: CN111883149A | Tags: High similarity; Improve voice quality; Speech analysis; Neural architectures; Speech Acoustics; Voice transformation

The invention discloses a voice conversion method with emotion and rhythm, comprising a training stage and a conversion stage, characterized in that a style coding layer with an attention mechanism is used to compute a style coding vector of the speaker; the style coding vector and the speaker's speech acoustic features are input together into a self-encoding (autoencoder) network with a box link for training and conversion; and finally the acoustic features are converted into audio by a vocoder. Building on traditional voice conversion methods, the method introduces the speaker's rhythm and emotion information, so that the converted speech carries the emotion and rhythm of the target speaker's voice, and it achieves high similarity and high voice quality in many-to-many voice conversion tasks, including intra-set and extra-set pairings.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD
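
One common way to realize an attention-based style coding layer is a bank of style tokens weighted by scaled dot-product attention, as in global style token (GST) models. Whether the patent uses this exact design is an assumption; the query and token vectors below are placeholders for learned quantities.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def style_embedding(query, style_tokens):
    """Scaled dot-product attention over a bank of style tokens.

    query        : summary vector of the speaker's reference utterance (hypothetical)
    style_tokens : list of token vectors (learned in a real model; fixed here)
    Returns (attention weights, weighted sum = style coding vector).
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * t for q, t in zip(query, tok)) for tok in style_tokens]
    weights = softmax(scores)
    dim = len(style_tokens[0])
    vector = [sum(w * tok[d] for w, tok in zip(weights, style_tokens)) for d in range(dim)]
    return weights, vector
```

The resulting vector would then be fed, together with the acoustic features, into the conversion network.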

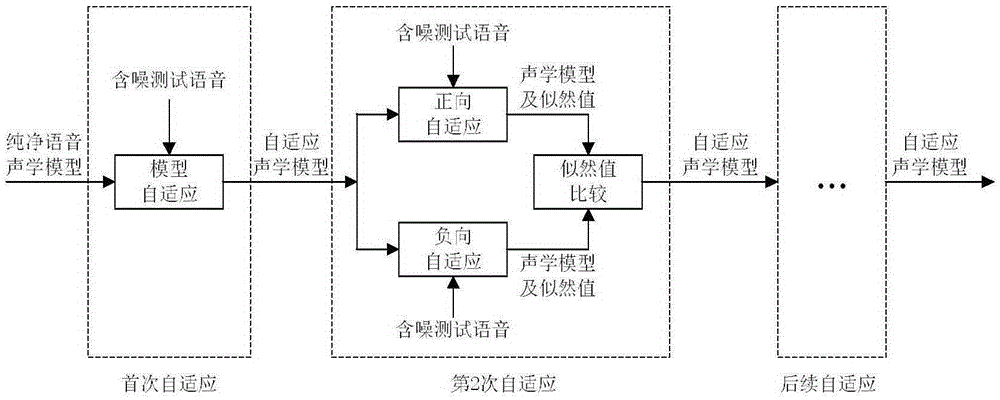

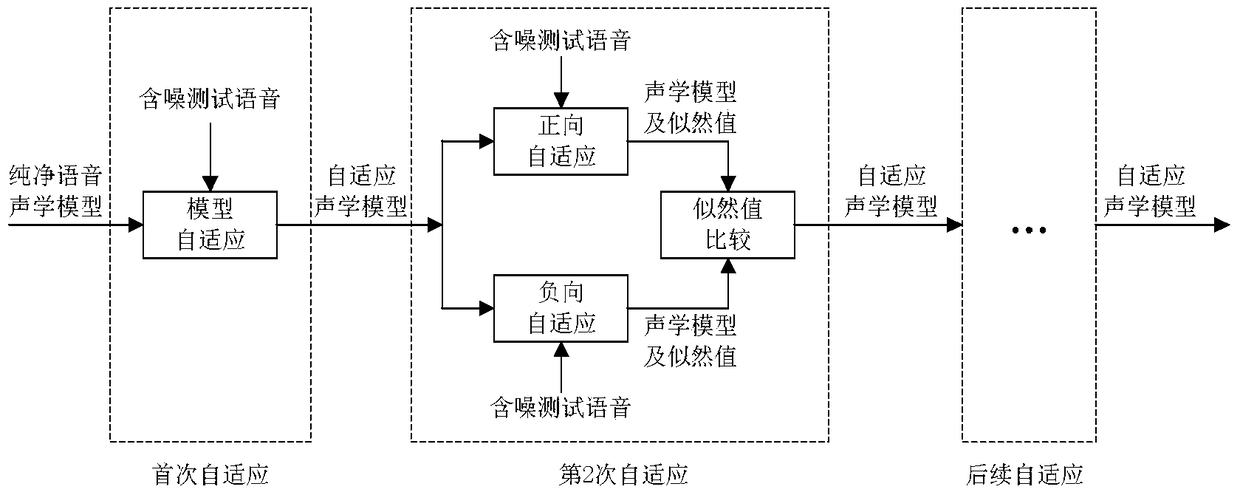

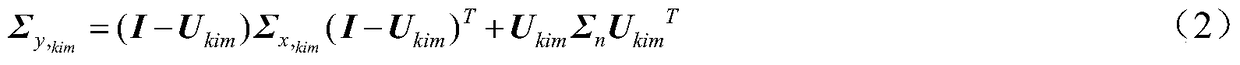

Model-compensation speech recognition method based on multiple adaptation

The invention discloses a model-compensation speech recognition method based on multiple adaptation. First, the parameters of a clean-speech acoustic model trained in advance in a training environment are transformed to obtain a noisy-speech acoustic model matched to the actual test environment. Then, the noisy-speech acoustic model obtained from this first adaptation is used as a base-environment acoustic model; a transformation relation is constructed between the noisy speech corresponding to the base-environment acoustic model and the noisy test speech of the actual environment, and the base-environment acoustic model is adapted again, where this adaptation includes positive adaptation and negative adaptation. Finally, the output likelihood values of the positive and negative adaptations are compared, and the noisy-speech acoustic model with the larger likelihood is taken as the adaptation result. The method further improves adaptation precision, yielding a noisy-speech acoustic model that better matches the actual test environment.

Owner:HOHAI UNIV
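
The final selection step compares the likelihoods of the two adapted candidates on the test speech. In this sketch each model is a single diagonal Gaussian, and positive/negative adaptation is modeled as a hypothetical mean bias that is added or subtracted; the abstract does not give the actual transformation relation.

```python
import math


def diag_gauss_loglik(frames, mean, var):
    """Total log-likelihood of frames under a diagonal Gaussian."""
    total = 0.0
    for x in frames:
        for xi, m, v in zip(x, mean, var):
            total += -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
    return total


def shift_mean(mean, bias, sign):
    """Hypothetical mean-bias transform; sign is +1 or -1."""
    return [m + sign * b for m, b in zip(mean, bias)]


def select_adapted_model(base_mean, var, bias, test_frames):
    """Return the candidate (label, mean) with the larger output likelihood."""
    candidates = {
        "positive": shift_mean(base_mean, bias, +1),
        "negative": shift_mean(base_mean, bias, -1),
    }
    scores = {k: diag_gauss_loglik(test_frames, m, var) for k, m in candidates.items()}
    best = max(scores, key=scores.get)
    return best, candidates[best]
```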

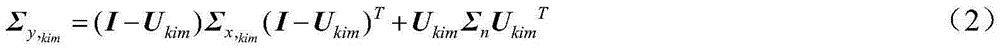

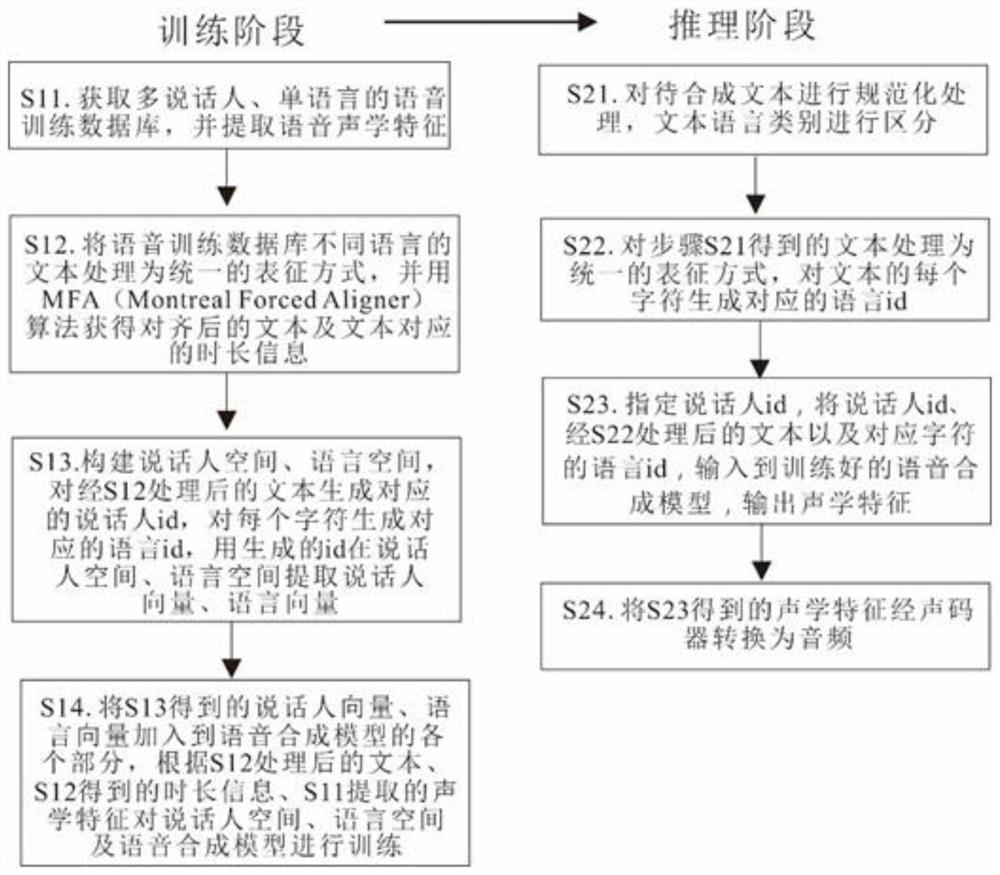

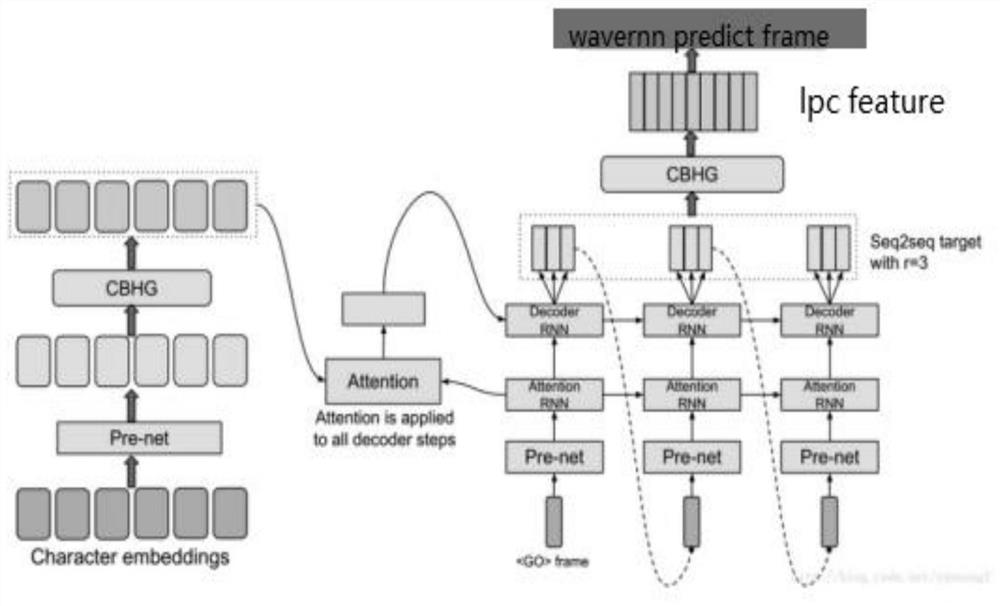

Multi-speaker and multi-language speech synthesis method and system thereof

Status: Active | Publication: CN112435650A | Tags: Keep Tonal Consistency; Achieve conversion; Speech synthesis; Synthesis methods; Speech Acoustics

The invention discloses a multi-speaker and multi-language speech synthesis method. The method comprises the following steps: extracting speech acoustic features; processing texts in different languages into a unified representation and aligning the audio with the texts to obtain duration information; constructing a speaker space and a language space, generating speaker ids and language ids, extracting speaker vectors and language vectors, adding them to an initial speech synthesis model, and training the initial model with the aligned text, duration information, and speech acoustic features to obtain a speech synthesis model; processing the text to be synthesized to generate the speaker id and language id; and inputting the speaker id, the text, and the language id into the speech synthesis model, which outputs speech acoustic features that are then converted into audio. A system is also disclosed. The method disentangles speaker characteristics from language characteristics, so the speaker or the language can be switched simply by changing the corresponding id.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD

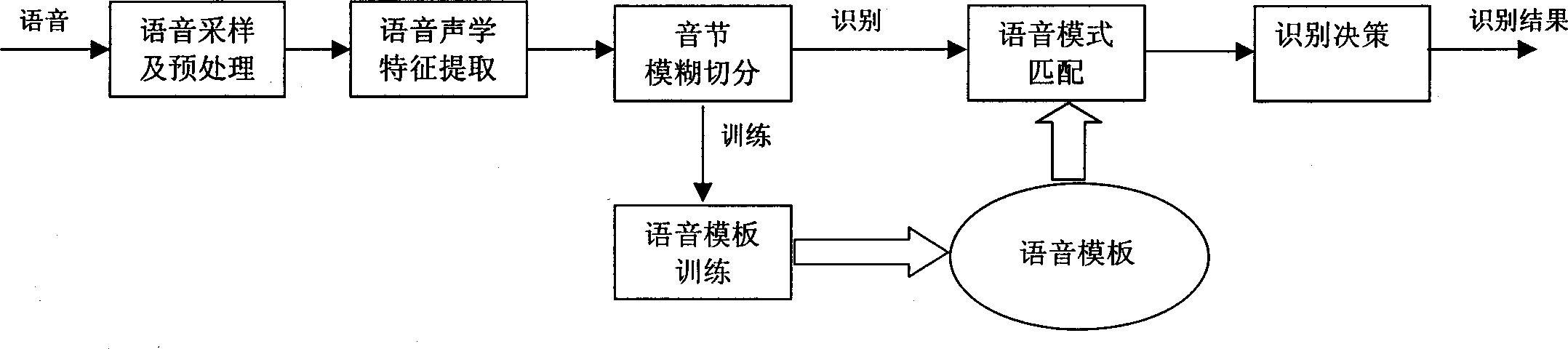

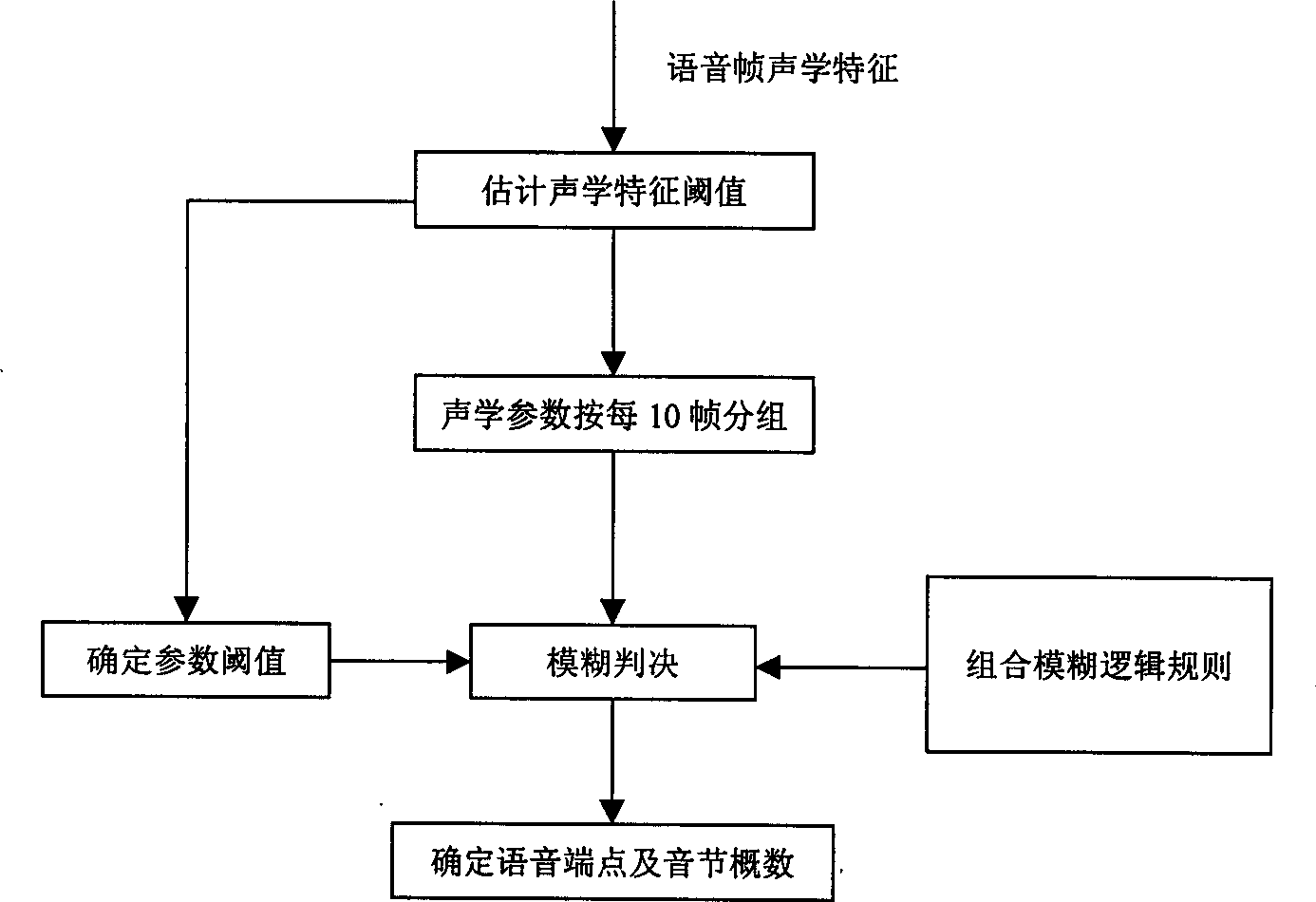

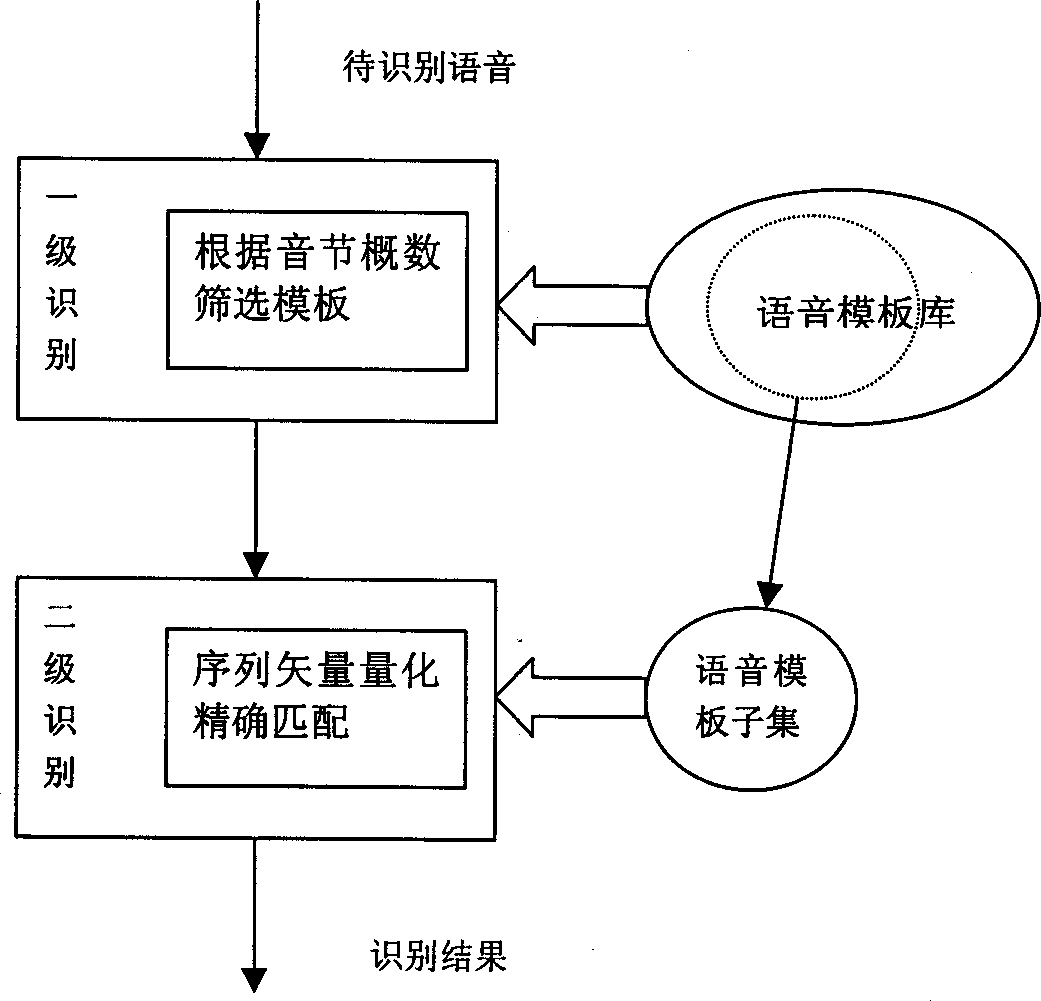

Fast speaker-dependent voice recognition method for Chinese phrases

The method comprises speech sampling and preprocessing, speech acoustic feature extraction, fuzzy syllable segmentation, speech template training, speech pattern matching, and recognition decision. It adopts a distinctive fast signal-processing method to support Chinese phrase recognition and has the advantages of low resource consumption, fast operation, and a high recognition rate. The method is applicable to products such as toys, personal digital processing devices, and communication terminals.

Owner:北京安可尔通讯技术有限公司
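
The abstract does not disclose its fast matching method. As a stand-in, speaker-dependent template matching is classically done with dynamic time warping (DTW); the sketch below compares an utterance against stored templates and picks the nearest. Template labels and feature values are illustrative only.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two feature sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            # Euclidean distance between frame i of a and frame j of b.
            d = sum((x - y) ** 2 for x, y in zip(a[i - 1], b[j - 1])) ** 0.5
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def recognize(utterance, templates):
    """Return the phrase label whose stored template is closest under DTW."""
    return min(templates, key=lambda label: dtw_distance(utterance, templates[label]))
```

Because DTW absorbs differences in speaking rate, a slower rendition of a trained phrase still matches its own template.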

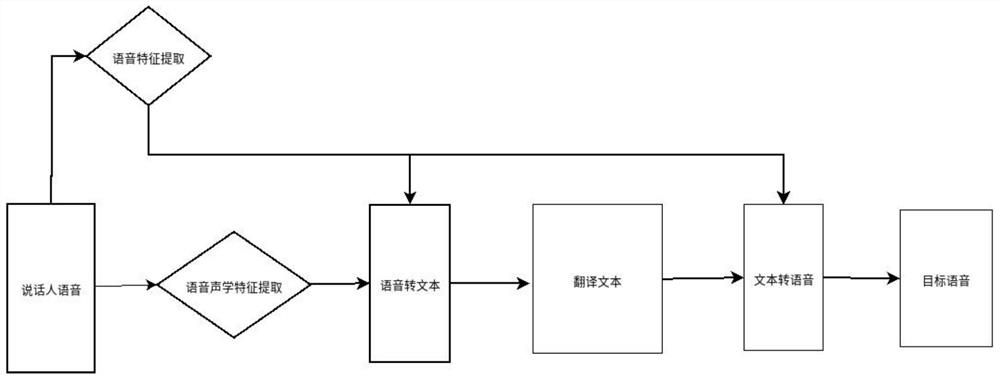

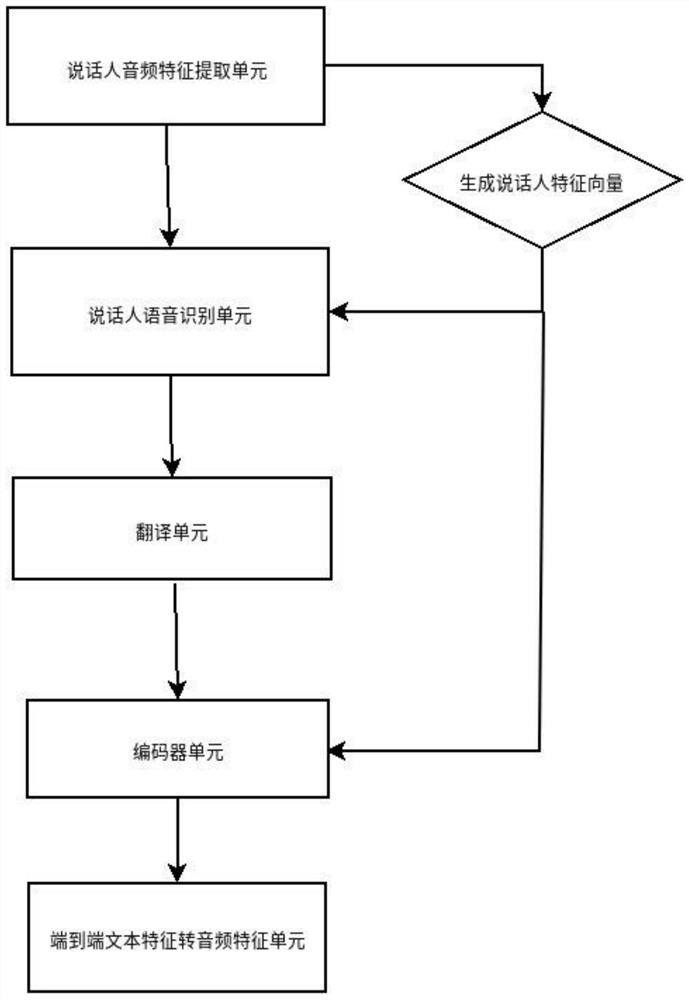

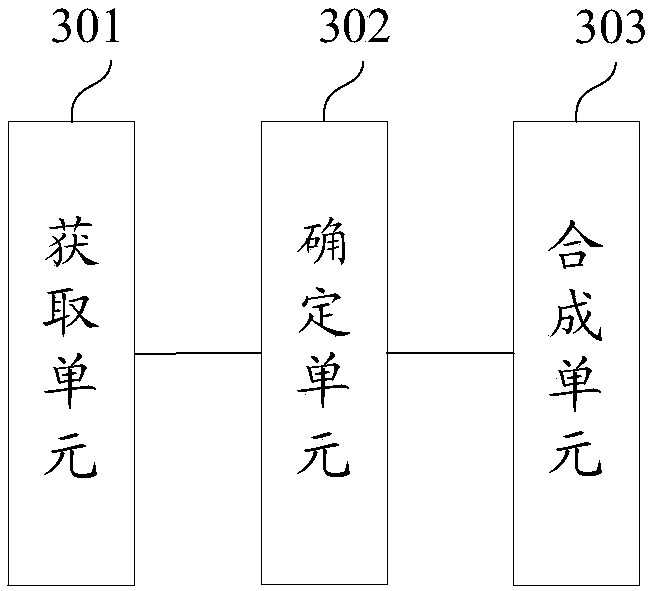

Personalized speech translation method and device based on speaker features

Status: Active | Publication: CN111785258A | Tags: Accurate translation; Realize the function of simultaneous interpretation; Natural language translation; Speech recognition; Text recognition; Feature extraction

The invention discloses a personalized speech translation method based on speaker features, comprising the following steps: collecting speaker speech, extracting its speech acoustic features, and converting them into speaker feature vectors; recognizing the speaker's text by combining the speaker feature vectors with the speaker's speech acoustic features; translating the speaker's text into text in a target language; combining the target-language text encoding generated in the previous step with the speaker feature vectors generated in the first step to obtain a target text vector carrying speaker features; and generating target speech from this target text vector with a text-to-speech model. By adding a speaker feature extraction network, the speaker's mood and tone can be carried into both speech recognition and text-to-speech conversion, so the speaker's meaning is translated more accurately. The invention further discloses a personalized speech translation device based on speaker features.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD

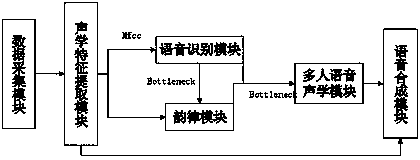

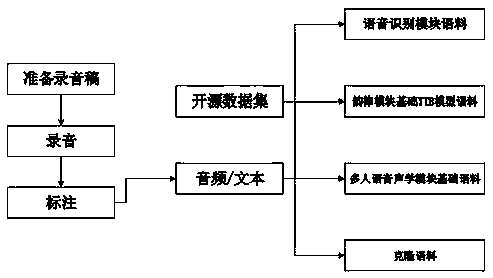

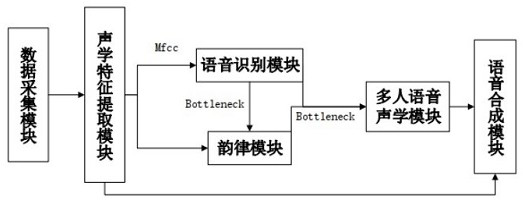

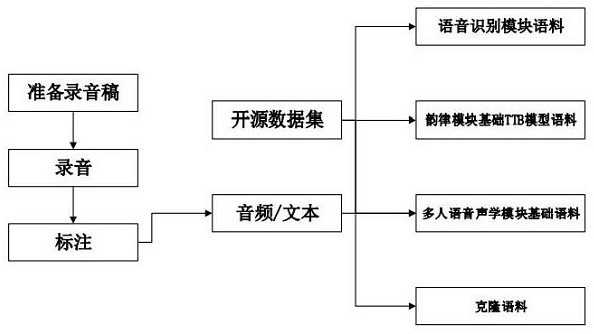

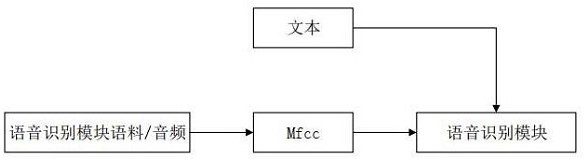

System and method for training cloned tone and rhythm based on Bottleneck features

Status: Active | Publication: CN111210803A | Tags: Quality improvement; Reduce clone samples; Speech recognition; Speech synthesis; Feature extraction; Data acquisition

The invention relates to the technical fields of speech synthesis, speech recognition, and voice cloning, and provides a voice cloning scheme based on Bottleneck features (linguistic features of audio) that combines speech synthesis, speech recognition, and transfer learning. It includes a training system and a training method. A TTS service with high naturalness and similarity, carrying the target user's characteristics, is built from a small number of samples, which addresses the large sample sizes, long production cycles, and high labor costs of conventional speech synthesis. The training system comprises a data acquisition module, an acoustic feature extraction module, a speech recognition module, a rhythm module, a multi-speaker speech acoustic module, and a speech synthesis module. The invention further provides a training method based on this system, comprising training corpus preparation, acoustic feature extraction, training and fine-tuning of all modules, and speech synthesis.

Owner:NANJING SILICON INTELLIGENCE TECH CO LTD

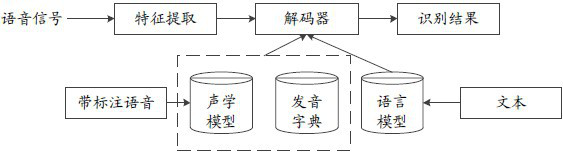

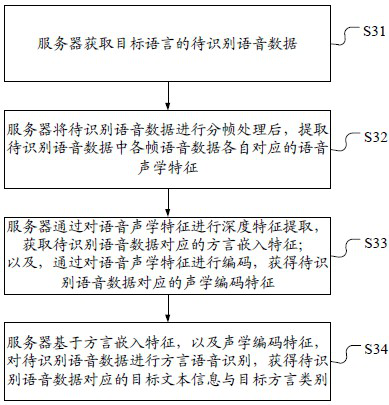

Speech recognition method and device, electronic equipment and storage medium

Status: Active | Publication: CN113823262A | Tags: Efficient and accurate identification; Realize identification; Speech recognition; Neural learning methods; Feature extraction; Speech Acoustics

The invention relates to the technical field of speech recognition, in particular to a speech recognition method and device, electronic equipment, and a storage medium, which can be applied in scenarios such as cloud technology, artificial intelligence, intelligent transportation, and assisted driving, and are used to recognize speech efficiently and accurately across multiple dialects of a target language. The method comprises the following steps: acquiring to-be-recognized speech data in the target language; extracting the speech acoustic features of each frame of the to-be-recognized speech data; performing deep feature extraction on the speech acoustic features to obtain dialect embedding features; encoding the speech acoustic features to obtain acoustic encoding features; and, based on the dialect embedding features and the acoustic encoding features, performing dialect speech recognition on the to-be-recognized speech data to obtain the corresponding target text and target dialect category. Because the dialect embedding features and acoustic encoding features are learned jointly, speech in various dialects can be recognized efficiently and accurately.

Owner:TENCENT TECH (SHENZHEN) CO LTD

Model adaptation apparatus, model adaptation method, storage medium, and pattern recognition apparatus

To improve recognition performance, a non-speech acoustic model correction unit adapts a non-speech acoustic model representing a non-speech state, using input data observed during the interval immediately before the speech recognition interval (the interval during which speech recognition is performed), by means of one of the maximum likelihood method, the complex statistic method, and the minimum distance-maximum separation theorem.

Owner:SONY GRP CORP

Method of speech recognition using variational inference with switching state space models

Status: Inactive | Publication: US20050119887A1 | Tags: Fatty/oily/floating substances removal devices; Sedimentation separation; State space; Speech Acoustics

A method is developed that includes 1) defining a switching state space model for a continuous-valued hidden production-related parameter and the observed speech acoustics, and 2) approximating a posterior probability that provides the likelihood of a sequence of the hidden production-related parameters and a sequence of speech units based on a sequence of observed input values. In approximating the posterior probability, the boundaries of the speech units are not fixed but are optimally determined. Under one embodiment, a mixture-of-Gaussians approximation is used. In another embodiment, an HMM posterior approximation is used.

Owner:MICROSOFT TECH LICENSING LLC

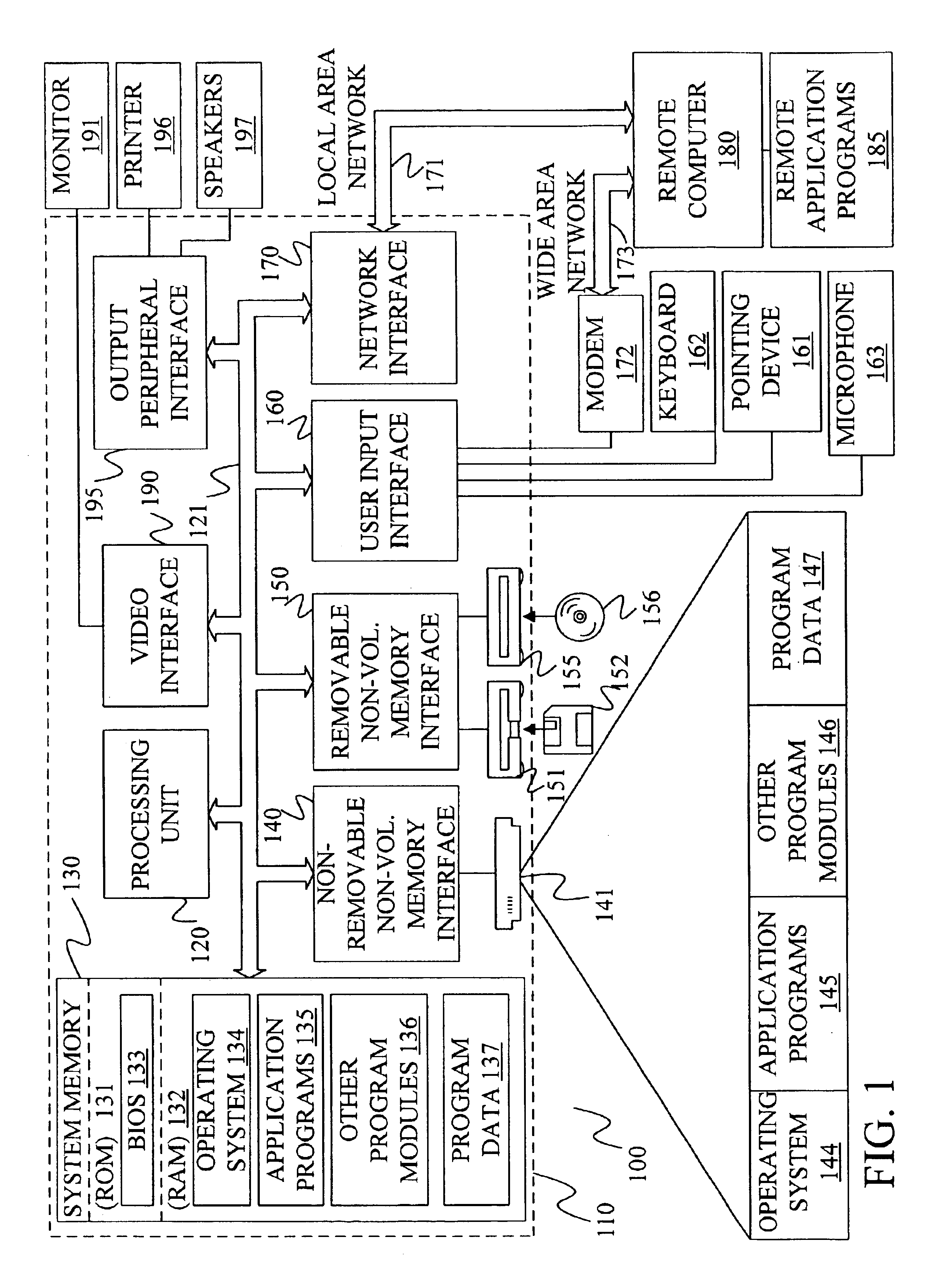

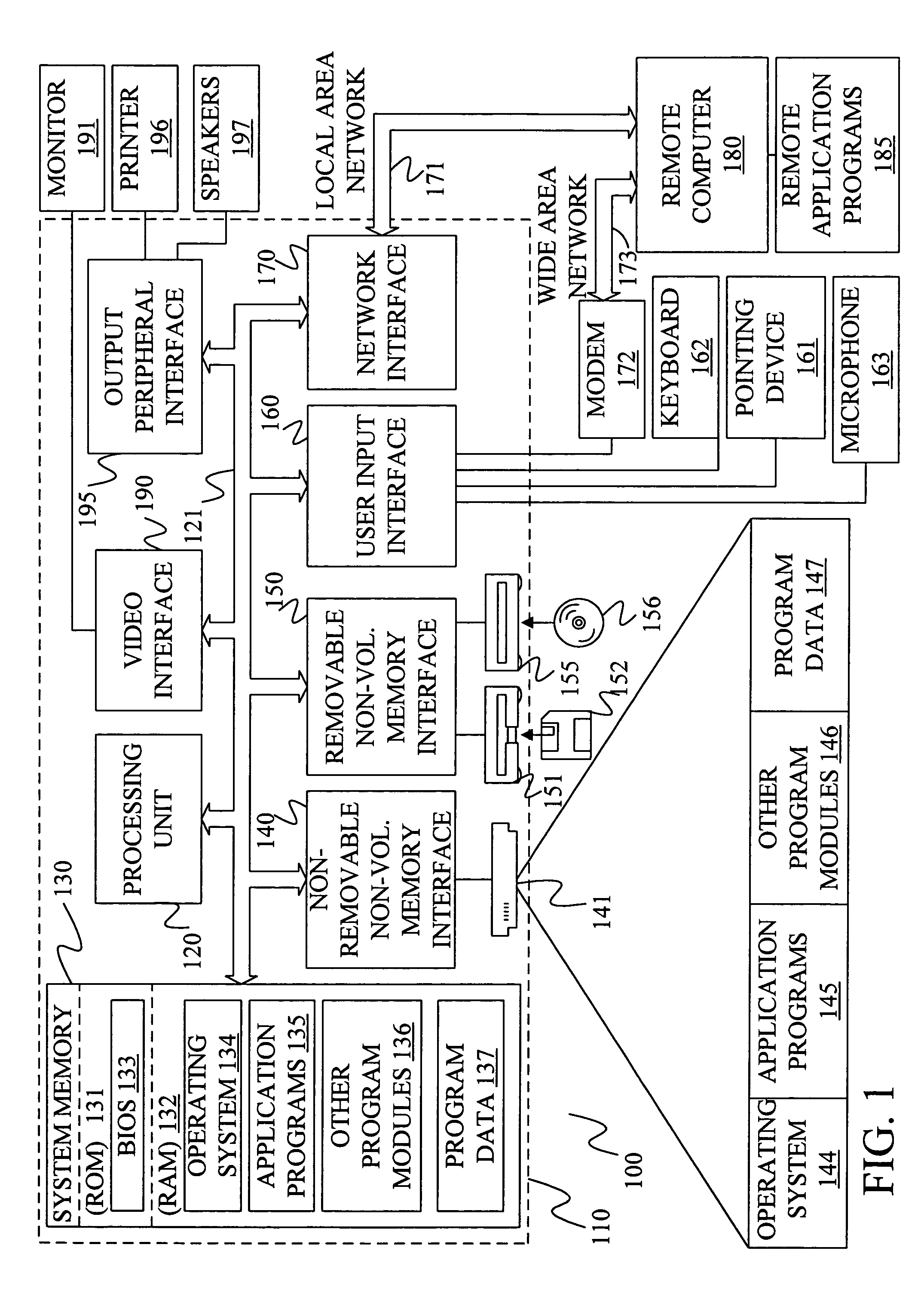

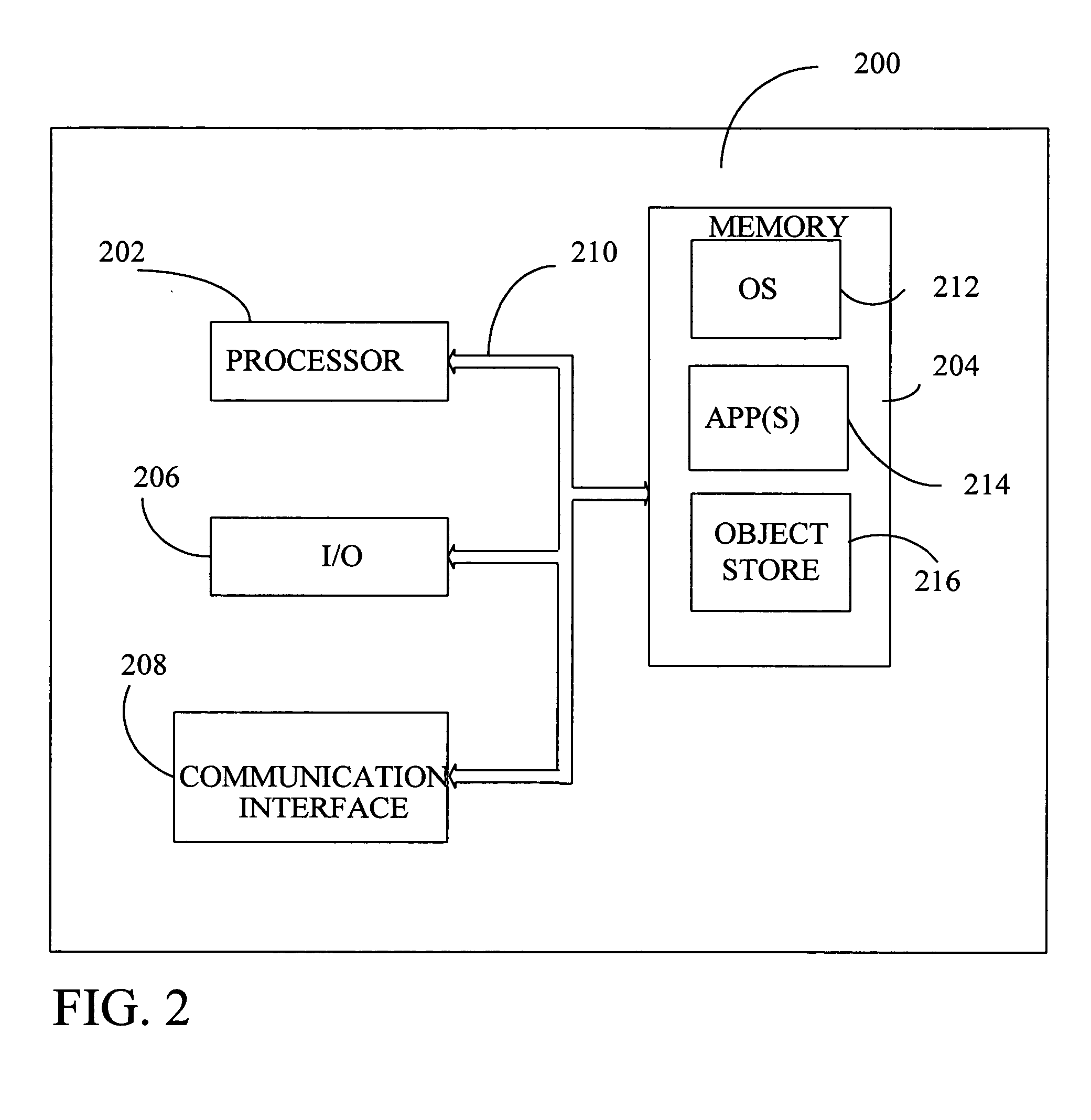

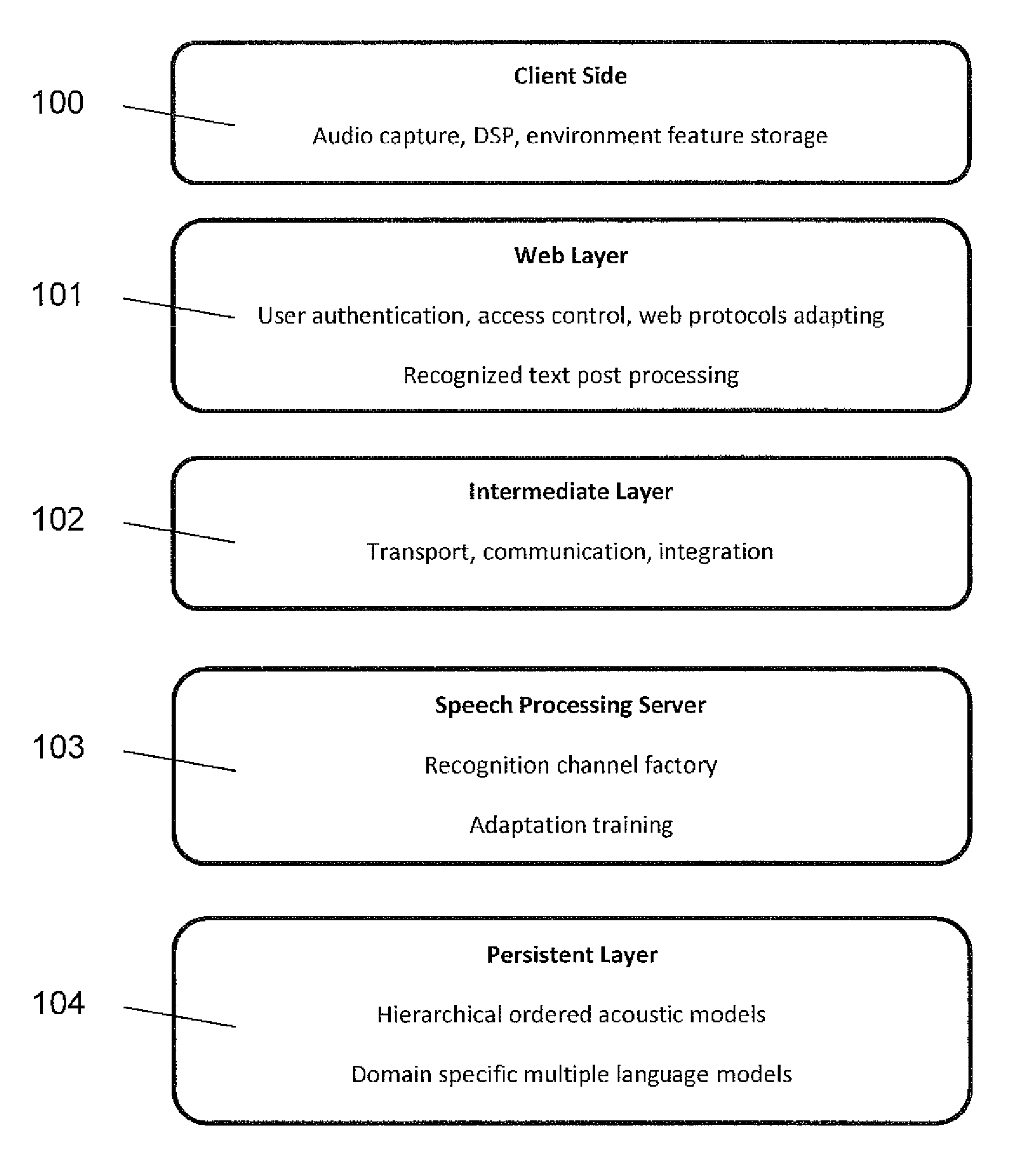

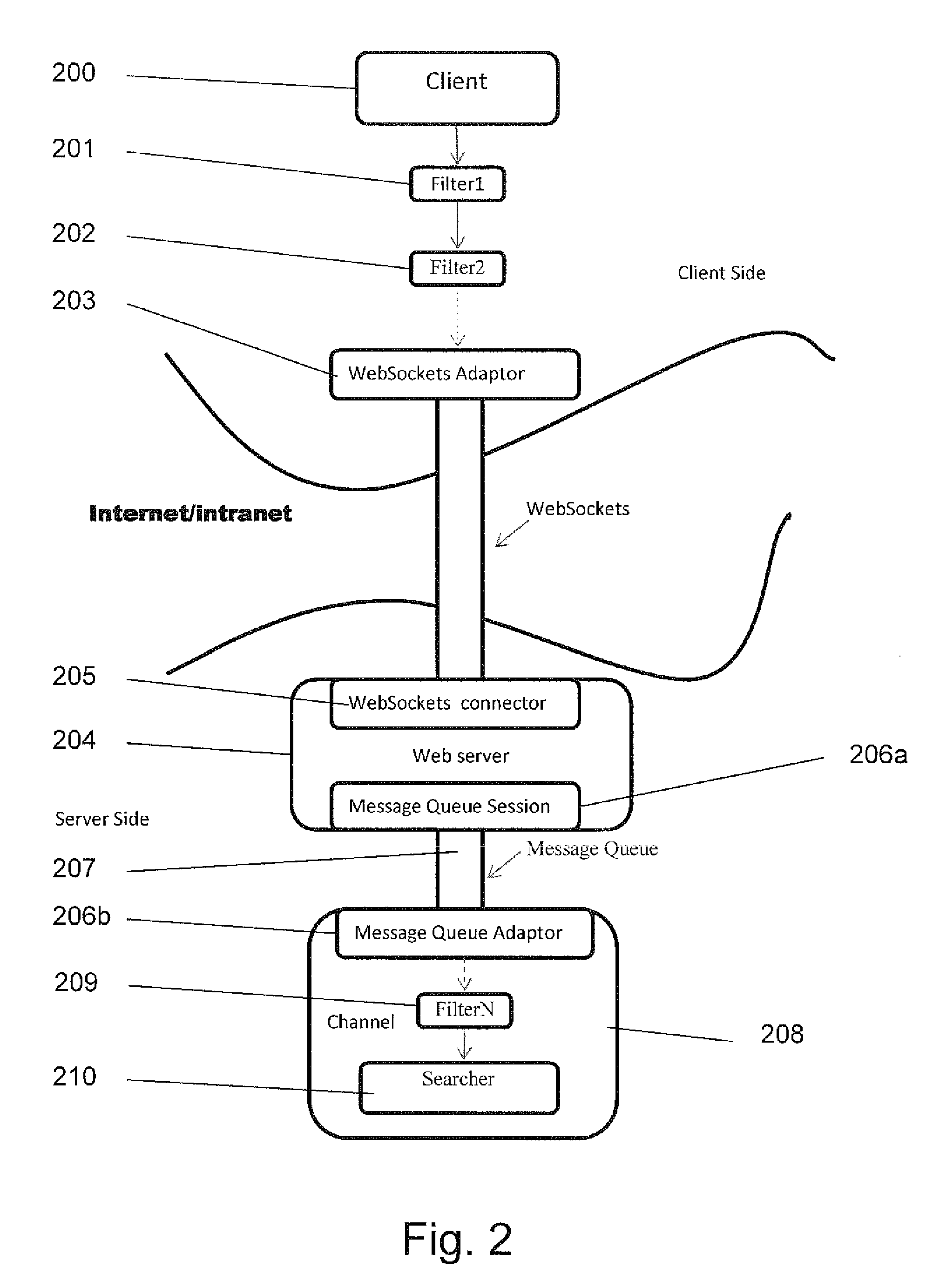

Client-server architecture for automatic speech recognition applications

A client-server architecture for Automatic Speech Recognition (ASR) applications includes: (a) a client side including: a client that is part of a distributed front end for converting acoustic waves into feature vectors; a VAD for separating speech from non-speech acoustic signals; and an adaptor for WebSockets; and (b) a server side including: a web layer using HTTP protocols and including a Web Server with a Servlet Container; an intermediate transport layer based on Message-Oriented Middleware acting as a message broker; a recognition server and an adaptation server, both connected to the intermediate layer; a Speech processing server; a Recognition Server that instantiates one recognition channel per client; an Adaptation Server for adapting acoustic and linguistic models to each speaker; a bidirectional communication channel between the Speech processing server and the client side; and a Persistent layer, connected to the Speech processing server, for storing a Language Knowledge Base.

Owner:DIXILANG
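
The client-side VAD can be as simple as an energy threshold. This sketch assumes 160-sample frames (10 ms at 16 kHz), treats the first few frames as background noise, and uses a hypothetical threshold ratio; the abstract does not say which VAD the architecture uses, and production VADs are usually more elaborate.

```python
def frame_energies(samples, frame_len):
    """Mean-square energy of consecutive non-overlapping frames."""
    return [
        sum(s * s for s in samples[i:i + frame_len]) / frame_len
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def energy_vad(samples, frame_len=160, threshold_ratio=4.0, noise_frames=5):
    """Label each frame True (speech) or False (non-speech).

    The noise floor is the mean energy of the first `noise_frames` frames,
    assumed to be silence; frames above threshold_ratio * floor count as speech.
    """
    energies = frame_energies(samples, frame_len)
    floor = sum(energies[:noise_frames]) / max(1, min(noise_frames, len(energies)))
    floor = max(floor, 1e-12)  # avoid a zero floor on digital silence
    return [e > threshold_ratio * floor for e in energies]
```

Only frames labeled as speech would then be converted to feature vectors and streamed to the server over the WebSocket adaptor.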

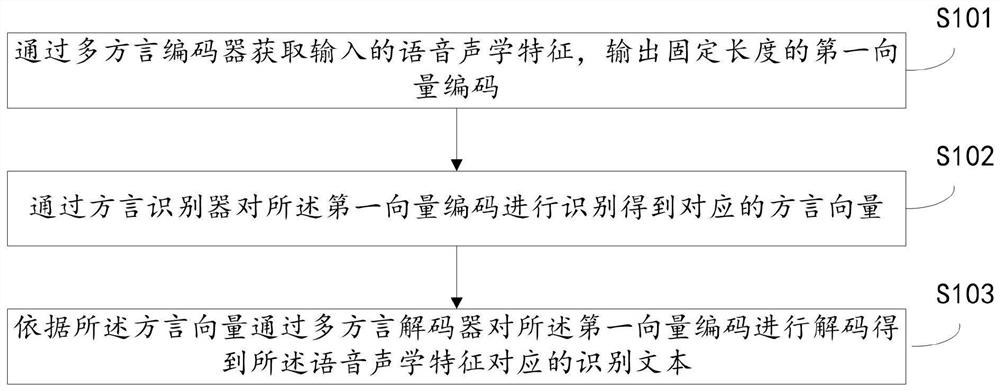

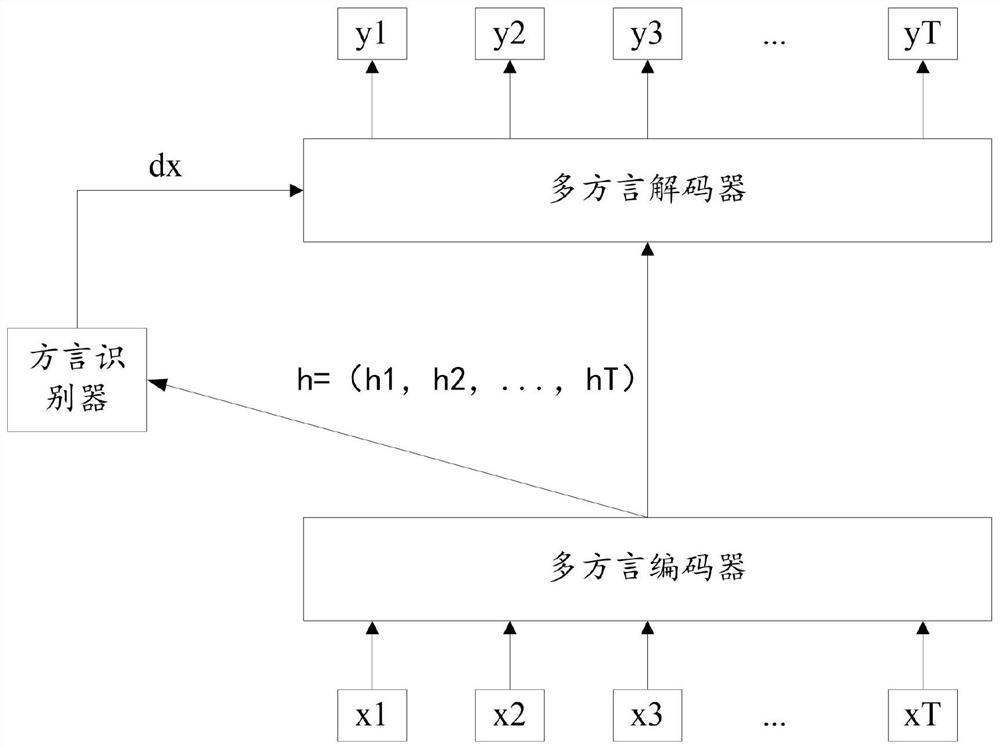

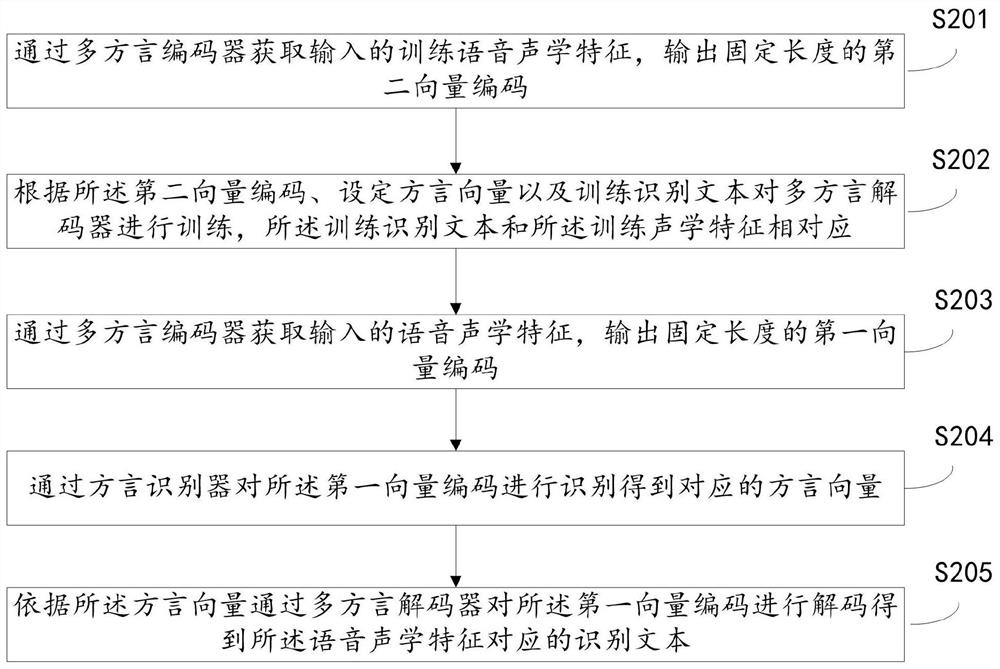

Multi-dialect speech recognition method, device and apparatus, and storage medium

Status: Pending | Publication: CN112652300A | Tags: Improve recognition efficiency; Improve recognition accuracy; Speech recognition; Speech Acoustics; Speech sound

The embodiment of the invention discloses a multi-dialect speech recognition method, device, apparatus, and storage medium. The method comprises: encoding input speech acoustic features with a multi-dialect encoder to output a fixed-length first vector code; identifying the first vector code with a dialect identifier to obtain the corresponding dialect vector; and decoding the first vector code with a multi-dialect decoder, according to the dialect vector, to obtain the recognized text corresponding to the speech acoustic features. The scheme improves dialect recognition efficiency, does not require a large amount of sample data, and achieves better recognition accuracy than existing schemes.

Owner:BIGO TECH PTE LTD

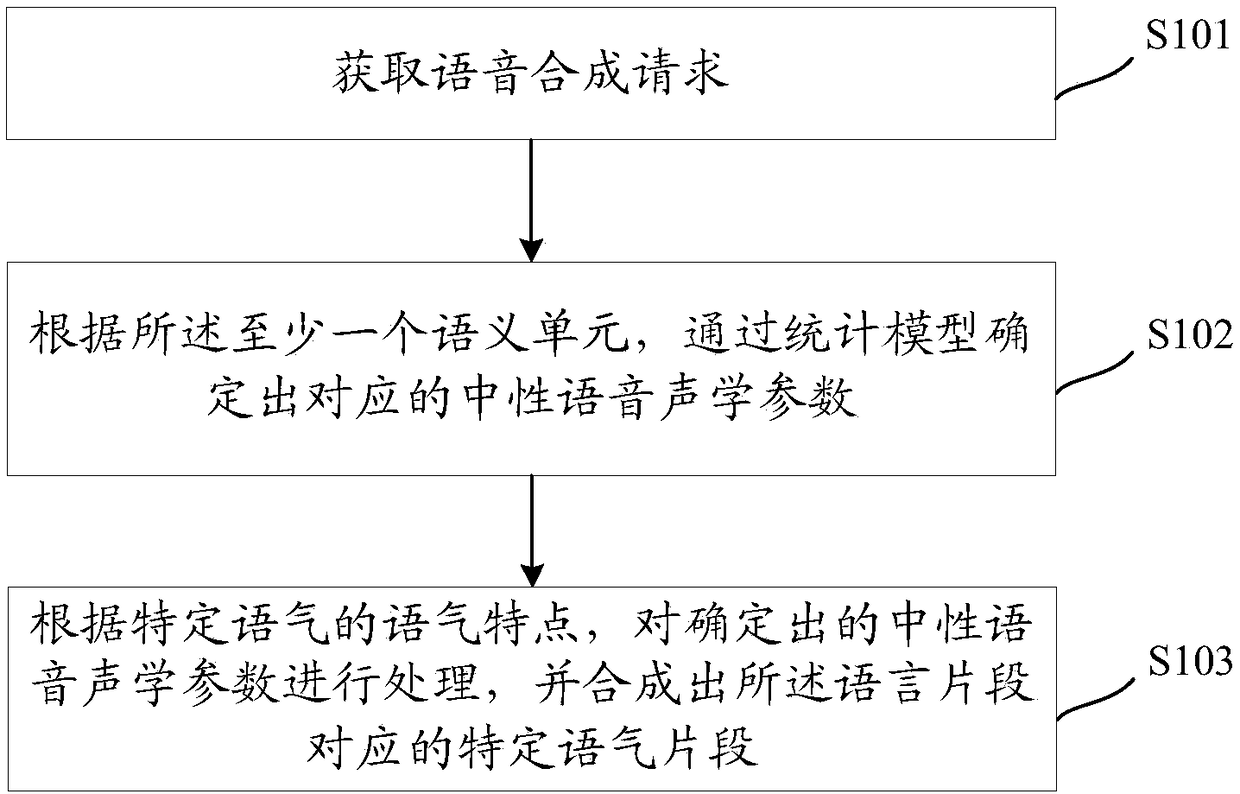

Speech synthesis method and device

An embodiment of the invention discloses a speech synthesis method and device. When a speech synthesis request is received, the corresponding neutral speech acoustic parameters are determined from at least one semantic unit contained in the language segment to be synthesized, using a statistical model built on neutral speech acoustic parameters; the determined neutral parameters are then processed according to the characteristics of a specific mood to obtain a segment in that mood. The method and device can therefore synthesize speech in a specific mood from the neutral statistical model and the mood characteristics, without recording large amounts of speech in each mood in advance, which reduces the cost of speech synthesis. Because a segment in any required mood can be synthesized from the statistical model and the corresponding mood characteristics, the application range of the synthesis scheme is greatly enlarged.

Owner:BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD

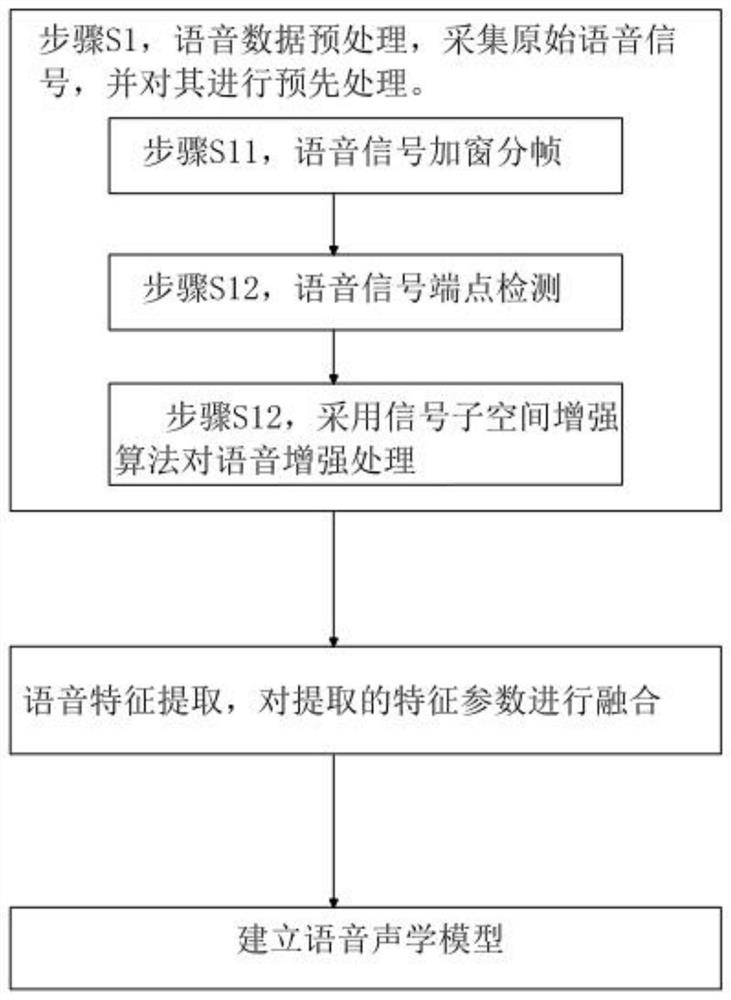

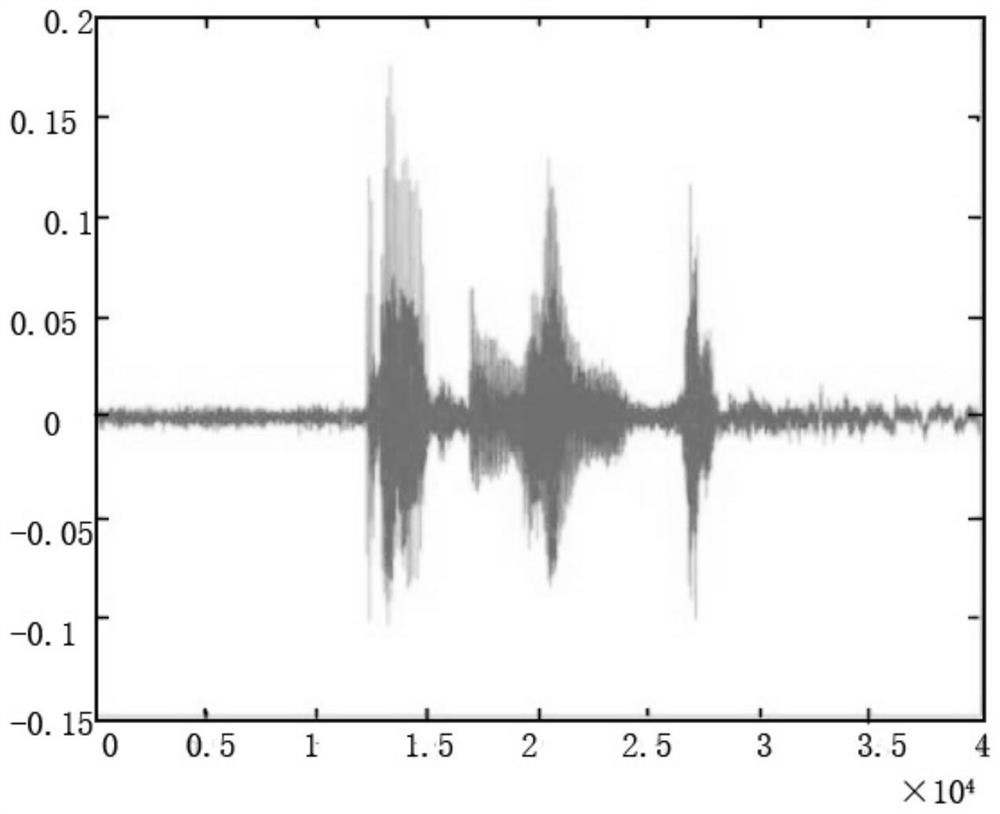

Intelligent recognition method for incomplete voice of elderly people

Status: Pending | Publication: CN112071307A | Tags: Easy to identify; Rapid positioning; Speech recognition; Environmental noise; Vocal organ

The invention relates to the technical field of speech recognition, and in particular to an intelligent recognition method for the incomplete speech of elderly people. The method comprises the following steps: S1, preprocessing the voice data: acquiring the original voice signal and preprocessing it, specifically windowing and framing the signal, detecting its endpoints, and enhancing it with a signal subspace enhancement algorithm; S2, extracting voice features and fusing the extracted feature parameters; and S3, establishing a voice acoustic model. The method mitigates the low vocal amplitude and strong sensitivity to environmental noise caused by the aging of elderly people's vocal organs; the fused features better approximate the voice characteristics of elderly people, so data that comprehensively represent those characteristics can be acquired, and recognition of incomplete and unclear speech from elderly people is improved.

Owner:JIANGSU HUIMING SCI & TECH
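
The windowing and framing in step S1 might look like the following, assuming 16 kHz audio, 25 ms frames with a 10 ms hop, and a Hamming window; the patent does not state these parameters. Endpoint detection and subspace enhancement are not shown.

```python
import math


def hamming(n):
    """Hamming window of length n."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def frame_signal(samples, frame_len=400, hop=160):
    """Split samples into overlapping frames and apply a Hamming window.

    The defaults correspond to 25 ms frames with a 10 ms hop at 16 kHz (an
    assumption). Trailing samples that do not fill a whole frame are dropped.
    """
    window = hamming(frame_len)
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        chunk = samples[start:start + frame_len]
        frames.append([s * w for s, w in zip(chunk, window)])
    return frames
```

One second of 16 kHz audio yields 98 frames with these settings.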

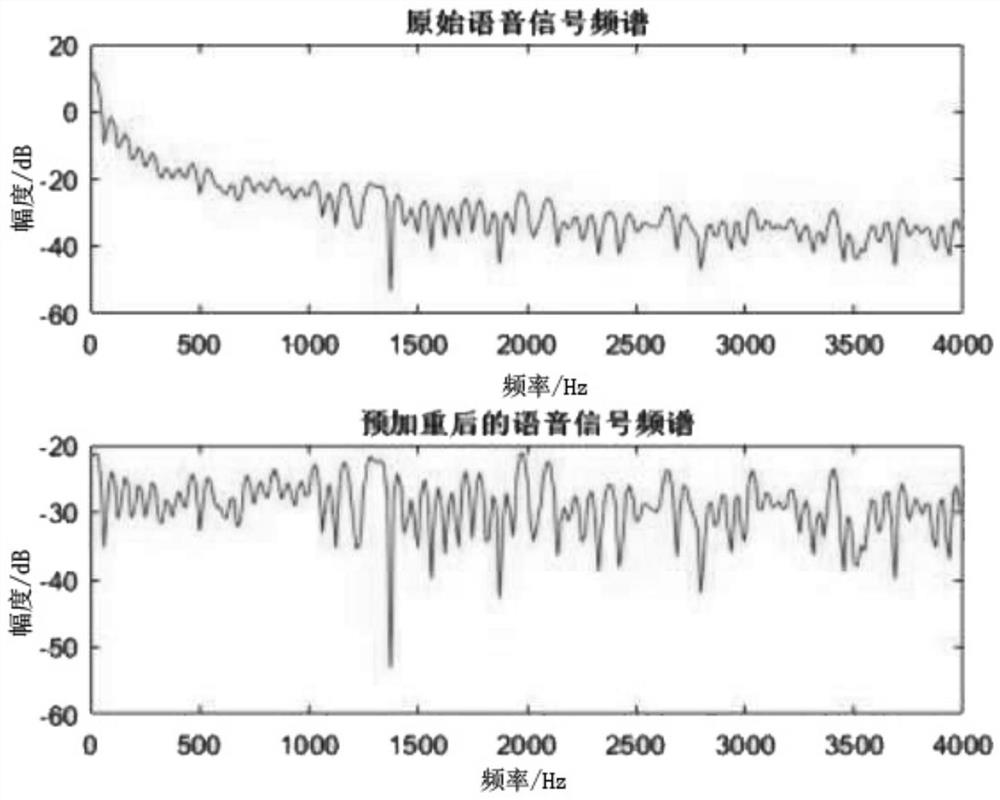

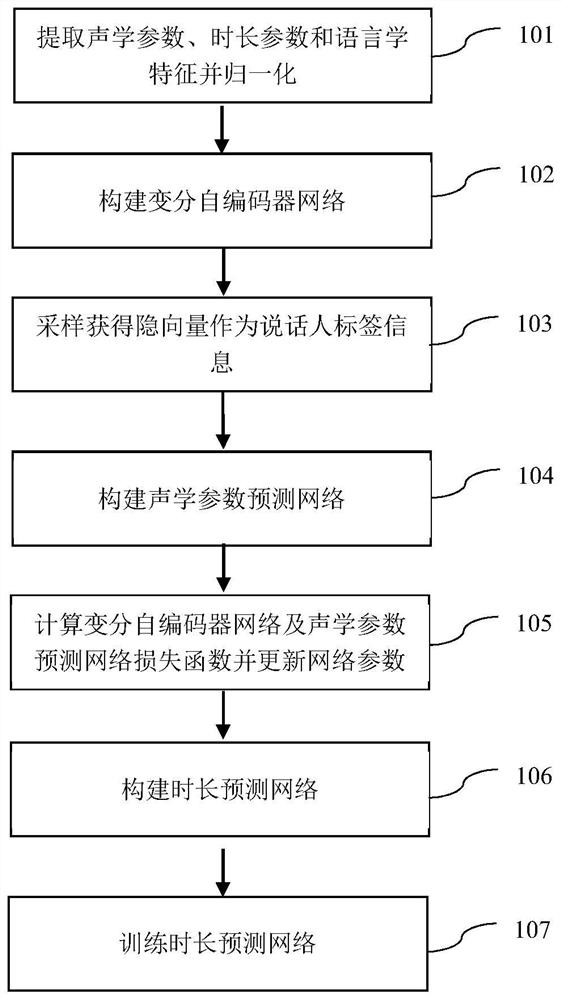

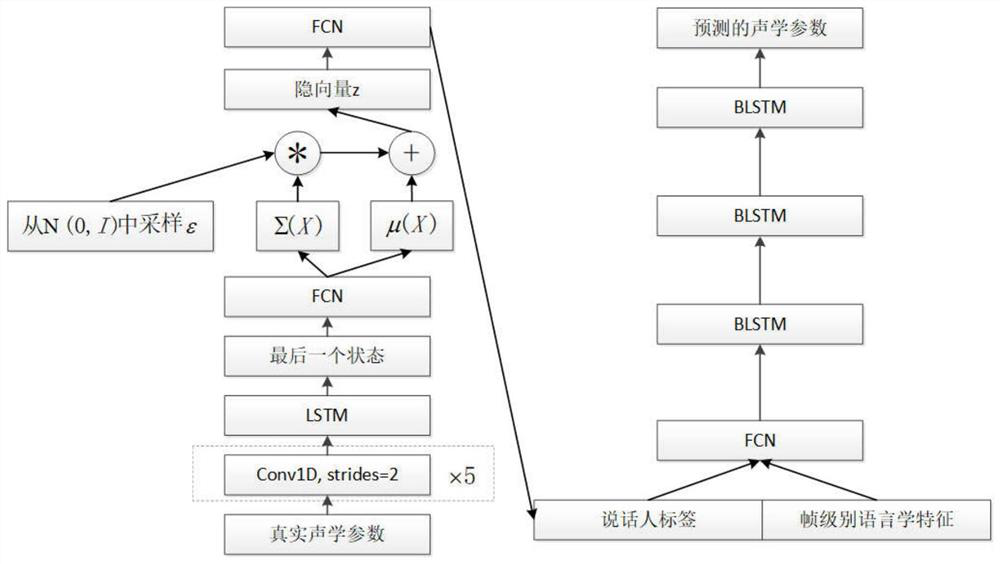

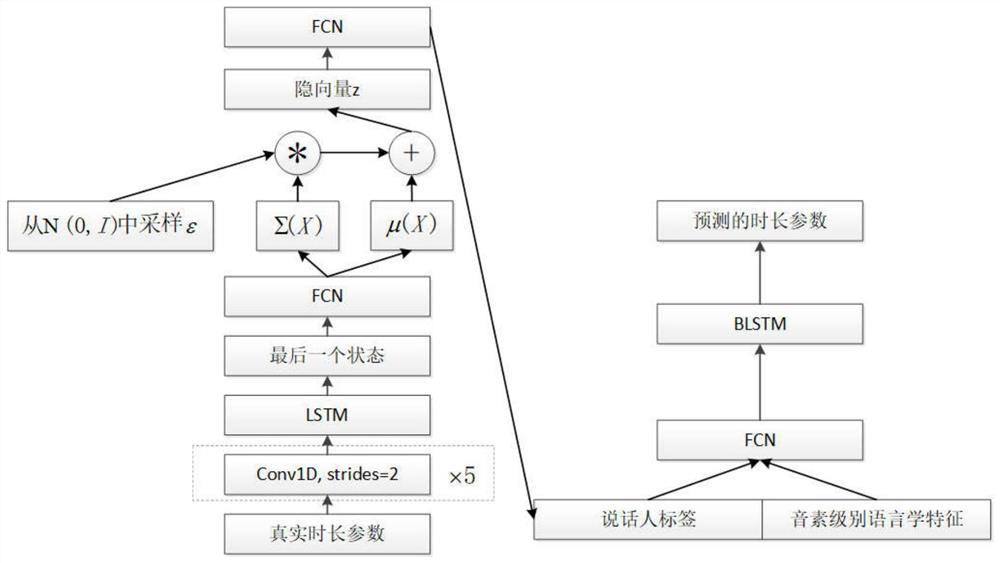

Multi-speaker voice synthesis method based on variational auto-encoder

The invention discloses a multi-speaker speech synthesis method based on a variational auto-encoder. The method comprises the following steps: extracting phoneme-level duration parameters and frame-level acoustic parameters from clean speech of the speaker to be synthesized; inputting the normalized phoneme-level duration parameters into a first variational auto-encoder, which outputs a duration speaker label; inputting the normalized frame-level acoustic parameters into a second variational auto-encoder, which outputs an acoustic speaker label; extracting frame-level and phoneme-level linguistic features from the speech signals to be synthesized, which include multiple speakers; inputting the duration speaker label and the normalized phoneme-level linguistic features into a duration prediction network, which outputs the predicted duration of the current phoneme; obtaining the frame-level linguistic features of the phoneme from this predicted duration and inputting them, together with the acoustic speaker label, into an acoustic parameter prediction network, which outputs the normalized predicted acoustic parameters; and inputting the normalized predicted speech acoustic parameters into a vocoder, which outputs a synthesized speech signal.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1

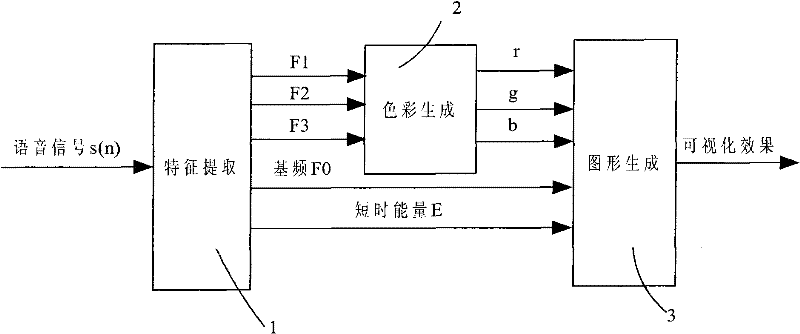

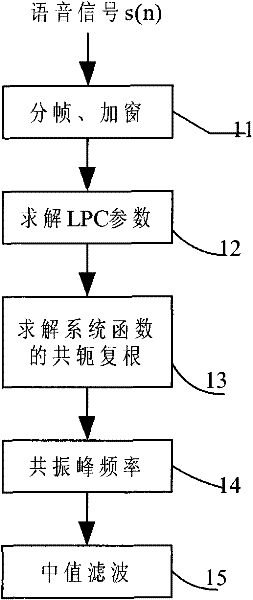

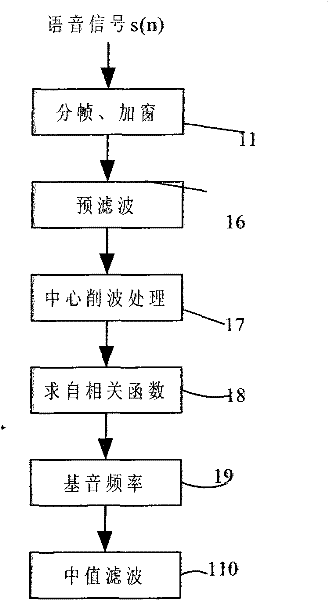

Formant-frequency-based Mandarin single final voice visualization method

Status: Inactive | Publication: CN102176313B | Tags: Obvious color difference; Easy to identify; Speech analysis; Subjective perception; Speech Acoustics

The invention provides a formant-frequency-based Mandarin single final voice visualization method. The method comprises the following steps: framing and windowing the original voice signal; extracting the short-time energy, formant frequencies, and fundamental (pitch) frequency of each frame; correcting erroneous formant and pitch values with median smoothing; mapping different pronunciations to different colors using the formant frequencies, and correcting the mapping; reflecting the variation of pronunciation duration, energy, and pitch in the image; and distinguishing different Mandarin single final pronunciations by color. The method is easy to implement because it extracts only three acoustic-phonetic parameters of the voice signal: short-time energy, formant frequency, and pitch. Soft decision is introduced: instead of a hard decision, each pronunciation is represented by a different color, and the visualization of the same pronunciation by different speakers follows the principle of seeking common ground while preserving differences, so the decision on pronunciation better matches human subjective perception.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
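
Two of the per-frame steps, short-time energy and median smoothing of a formant or pitch track, are sketched below. The window width of 3 frames is an assumption; formant extraction itself and the color mapping are not shown.

```python
import statistics


def short_time_energy(frames):
    """Sum of squared samples in each frame."""
    return [sum(s * s for s in frame) for frame in frames]


def median_smooth(track, width=3):
    """Median filter for a per-frame parameter track (e.g. F1, F2 or pitch).

    Isolated estimation errors (single-frame jumps) are replaced by the median
    of their neighbourhood; the window shrinks at the edges.
    """
    if width % 2 == 0:
        raise ValueError("width must be odd")
    half = width // 2
    return [
        statistics.median(track[max(0, i - half):i + half + 1])
        for i in range(len(track))
    ]
```

A spurious 2100 Hz value in an F1 track around 700 Hz, for example, is replaced by a neighbouring value instead of distorting the color assigned to that frame.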

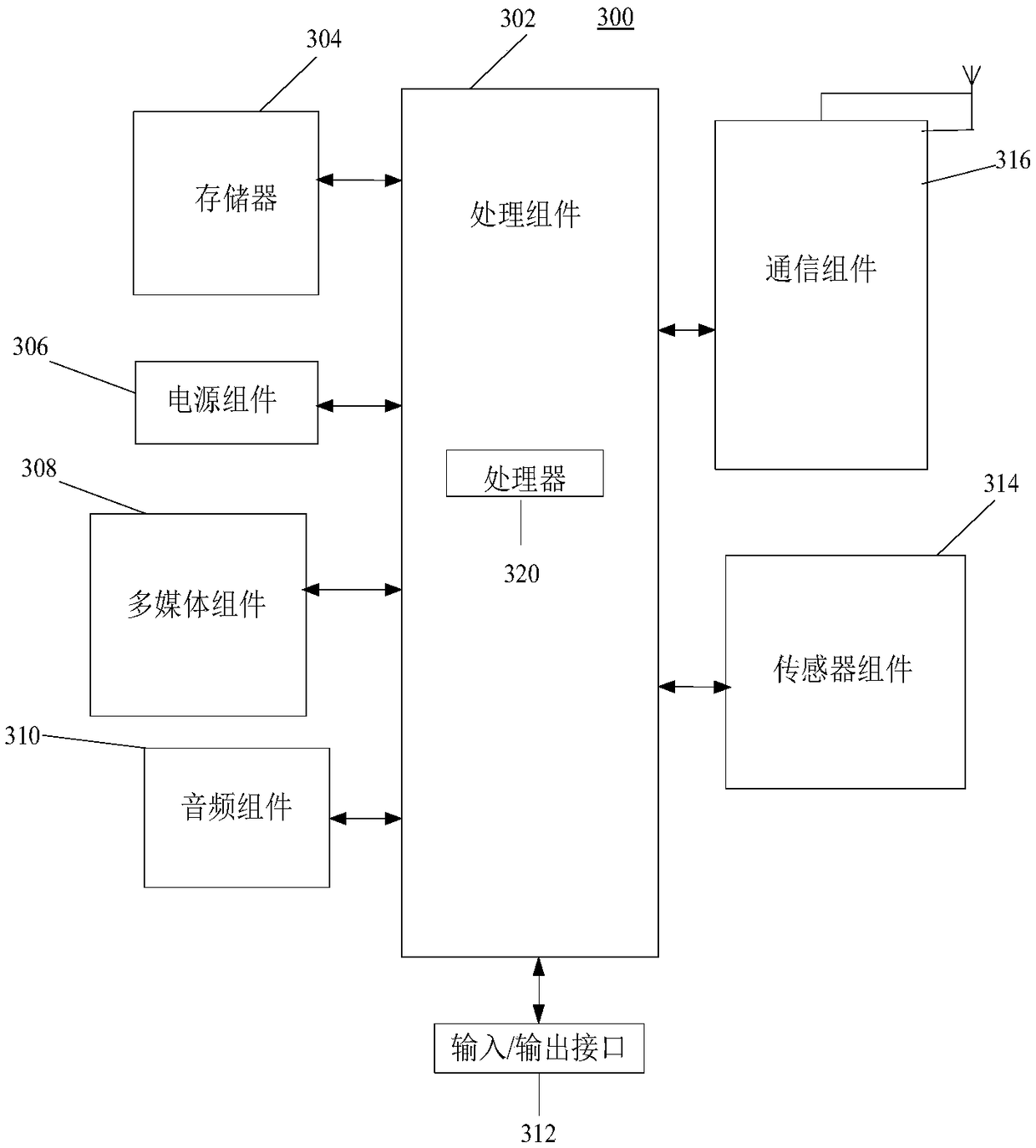

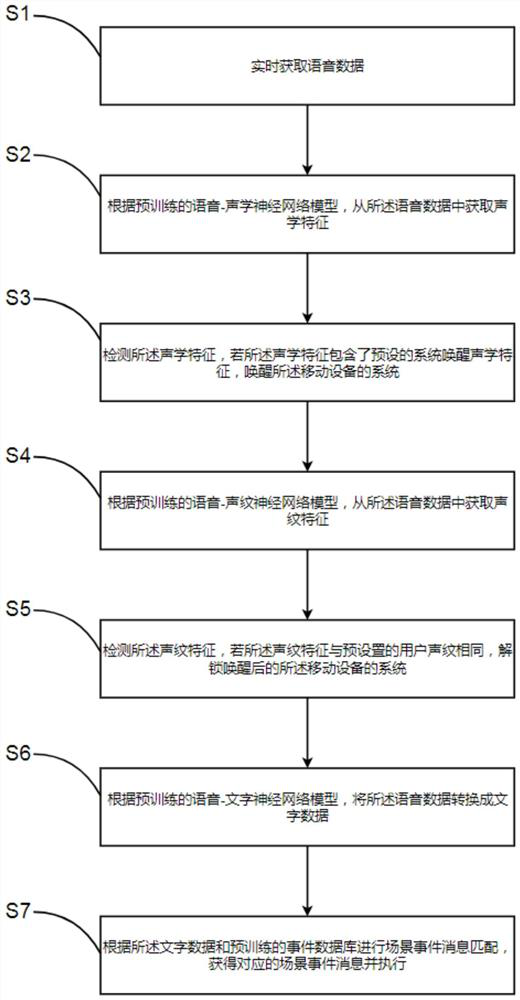

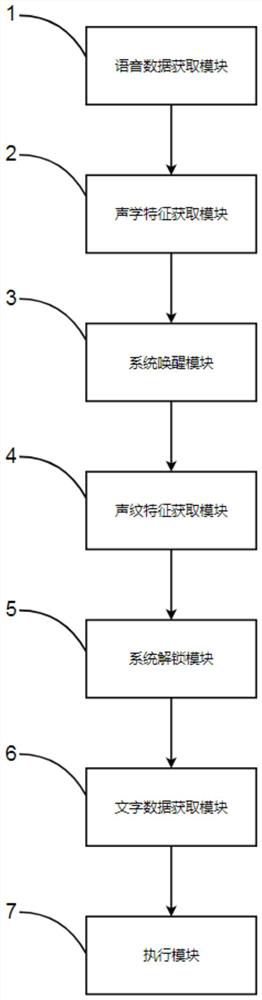

Voice control scene method and voice control scene system

Status: Pending | Publication: CN113870857A | Tags: Improve experience; Improve the efficiency of the scene; Speech recognition; Nerve network; Speech Acoustics

The invention provides a voice control scene method and a voice control scene system which are applied to a system of mobile equipment. The method comprises the following steps: obtaining voice data in real time; acquiring acoustic features from the voice data according to a pre-trained voice-acoustic neural network model; detecting the acoustic features, and if the acoustic features contain a preset system wake-up acoustic feature, waking up the system of the mobile device; acquiring voiceprint features from the voice data according to a pre-trained voice-voiceprint neural network model; detecting the voiceprint features, and if the voiceprint features are the same as those of the pre-trained user voiceprint, unlocking the system of the awakened mobile device; converting the voice data into character data according to a pre-trained voice-character neural network model; and performing scene event message matching according to the character data and a pre-trained event database to obtain a corresponding scene event message, and executing the scene event message. The efficiency of controlling the scene of the mobile device through voice can be improved, and the user experience of using the mobile device is improved.

Owner:SHENZHEN HUALONG XUNDA INFORMATION TECH
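
The control flow of the method (wake-up, then voiceprint unlock, then scene matching) can be sketched with the three neural models replaced by their outputs. The cosine-similarity threshold for the voiceprint check and the keyword-based event lookup are hypothetical simplifications of "the same as the pre-trained user voiceprint" and "matching against the event database".

```python
import math


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def handle_utterance(wake_detected, voiceprint, enrolled, text, events, threshold=0.8):
    """Gate a voice command: wake-up, then voiceprint unlock, then scene matching.

    wake_detected : output of a wake-word detector (stand-in for the acoustic model)
    voiceprint    : embedding from a speaker model, compared with `enrolled`
    text          : transcript from the speech-to-text model
    events        : dict keyword -> scene event message (hypothetical database)
    """
    if not wake_detected:
        return "asleep"
    if cosine(voiceprint, enrolled) < threshold:
        return "locked"
    for keyword, message in events.items():
        if keyword in text:
            return message
    return "no_match"
```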

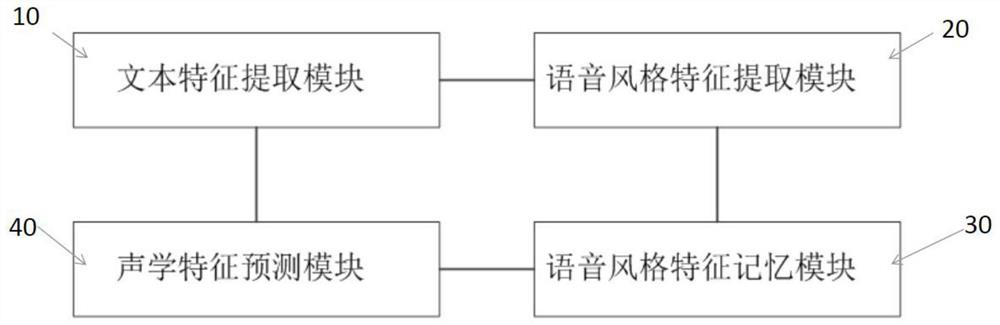

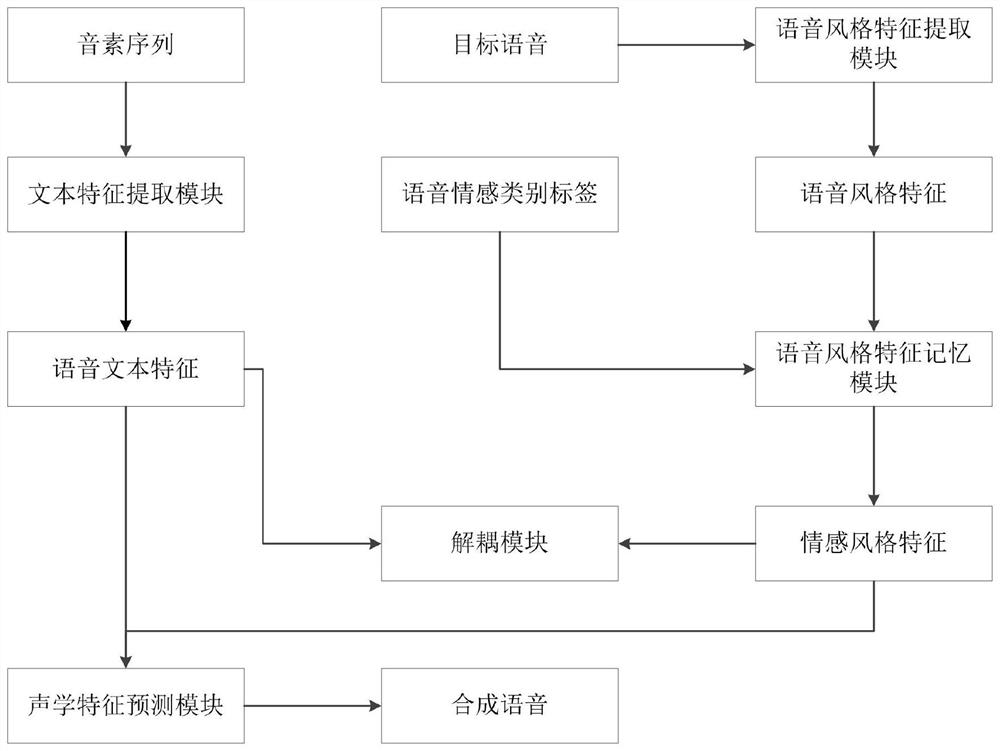

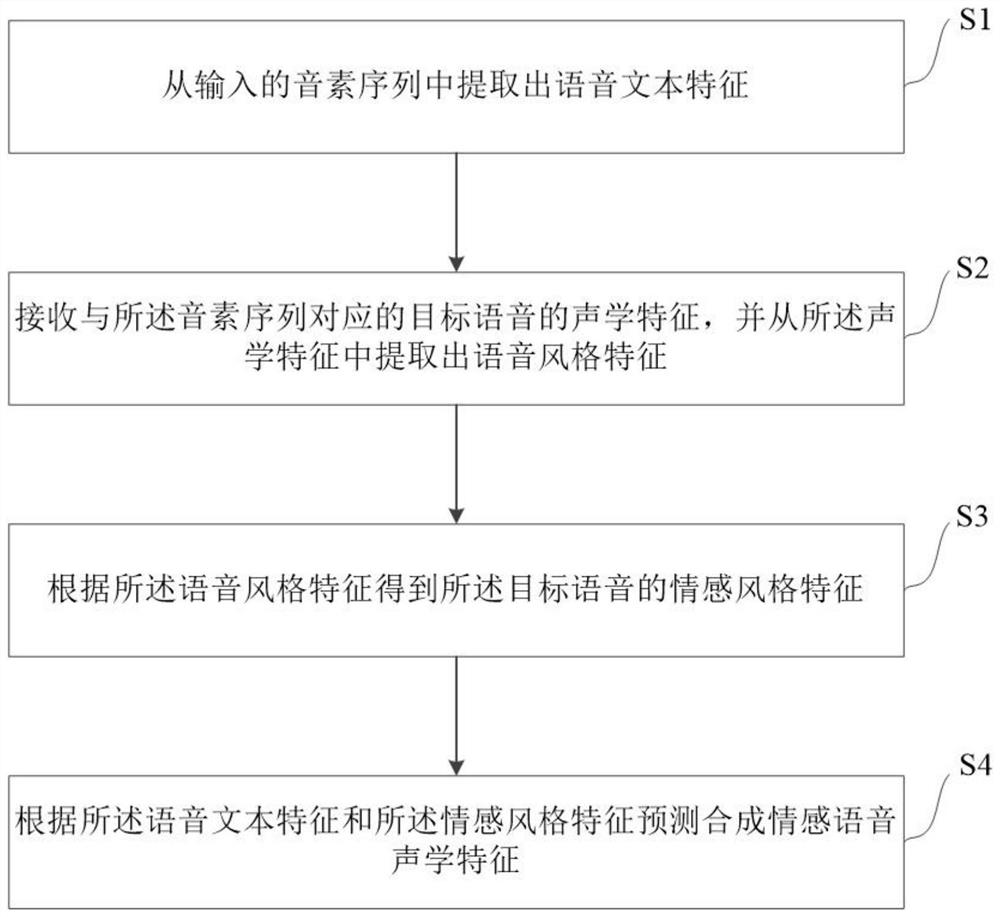

Controllable emotion speech synthesis method and system based on emotion category labels

Status: Pending | Publication: CN113327572A | Tags: Improve decoupling; Improve expressiveness; Speech synthesis; Energy efficient computing; Synthesis methods; Speech Acoustics

The invention discloses a controllable emotional speech synthesis system and method based on emotion category labels. The method comprises: a text feature extraction step, which extracts speech text features from an input phoneme sequence; a voice style feature extraction step, which receives acoustic features of a target voice corresponding to the phoneme sequence and extracts voice style features from them; a voice style feature memorization step, which obtains the emotional style features of the target voice from the voice style features; and an acoustic feature prediction step, which predicts synthetic emotional speech acoustic features from the speech text features and the emotional style features. The method improves the decoupling of voice style features from voice text features, so style control of the synthesized voice is not limited by the text content, which improves the controllability and flexibility of the synthesized voice; the emotion labels and emotional data distribution of the voices in the corpus can be used effectively, so the voice style features of each emotion can be extracted more efficiently.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV

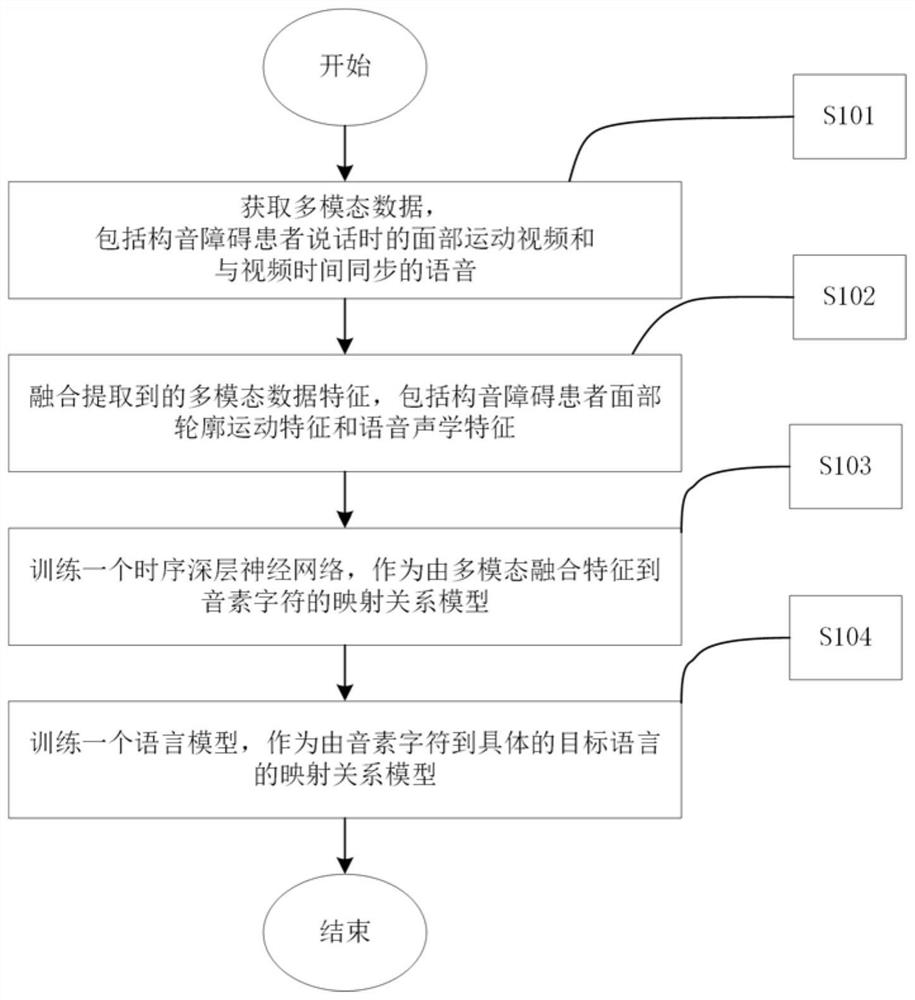

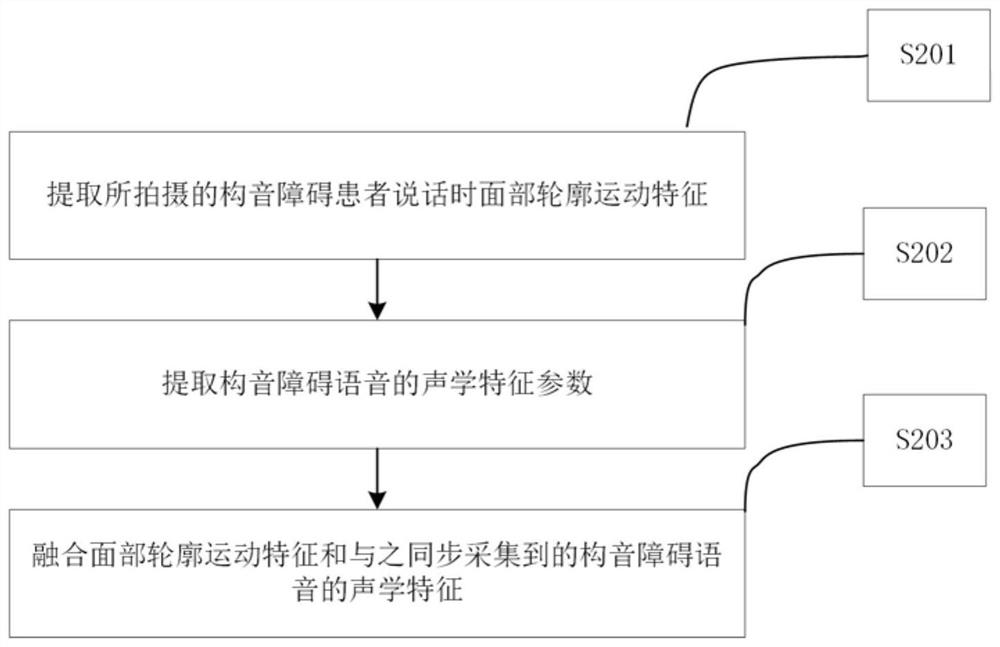

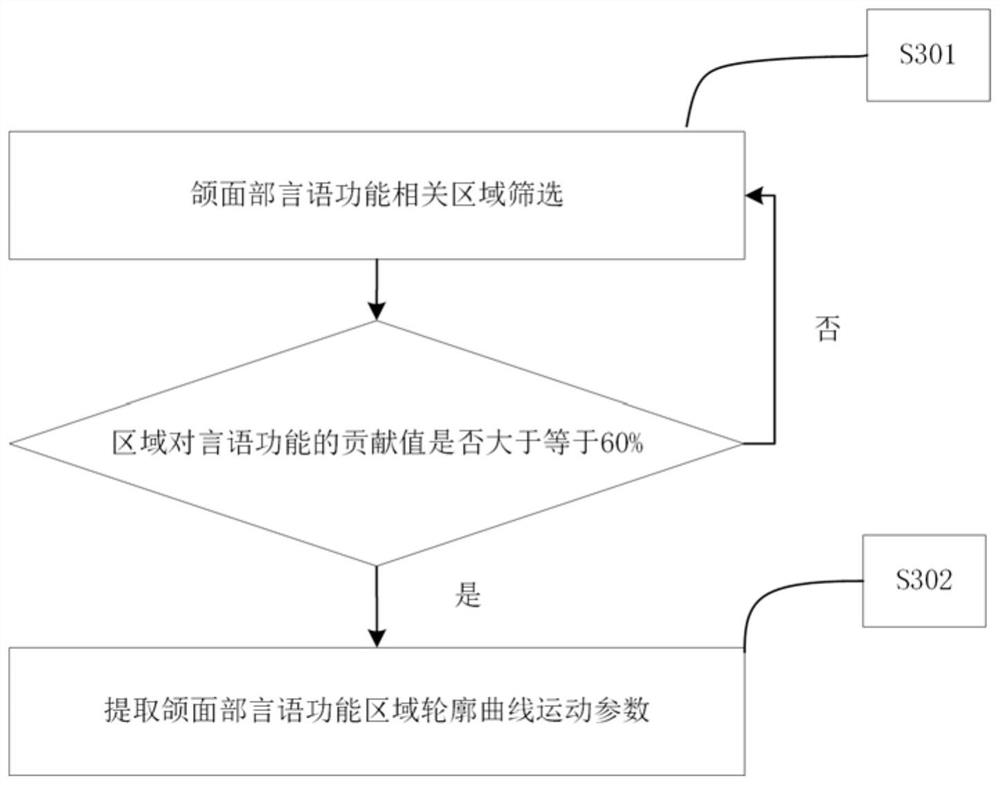

Speech recognition method and system for dysarthria based on visual facial contour movement

The invention discloses a method and system for recognizing dysarthric speech based on visual facial contour movement. The system includes a multi-modal data acquisition module, a multi-modal fusion feature calculation module, a multi-modal speech recognition calculation module, and a language model calculation module. The multi-modal data acquisition module obtains video of the dysarthric speaker's facial contour movement together with speech data synchronized with the video; the multi-modal fusion feature calculation module fuses the facial contour motion features with the speech acoustic features; the multi-modal speech recognition calculation module obtains the mapping from multi-modal features to phoneme characters; and the language model calculation module obtains the mapping from phoneme characters to Chinese sentences. By fusing the speech acoustic feature parameters with the articulatory movements of the dysarthric speaker and using the fused multi-modal features for recognition, the invention effectively improves dysarthric speech recognition accuracy.

Owner:BEIJING TECHNOLOGY AND BUSINESS UNIVERSITY
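
The abstract above does not specify how the fusion module combines the two streams. The following is a minimal sketch of one common option, early fusion by frame alignment and concatenation, under assumed rates of 100 acoustic frames per second (10 ms hop) and 25 video frames per second. The function name `fuse_features` and both rates are hypothetical.

```python
from typing import List

Frame = List[float]


def fuse_features(
    acoustic: List[Frame],
    visual: List[Frame],
    acoustic_hz: int = 100,
    video_fps: int = 25,
) -> List[Frame]:
    """Early fusion of synchronized audio and facial-contour feature streams.

    Acoustic frame i starts at i / acoustic_hz seconds. It is paired with the
    video frame covering that instant (sample-and-hold upsampling of the
    slower visual stream) and the two feature vectors are concatenated.
    """
    if not visual:
        raise ValueError("visual stream is empty")
    fused = []
    for i, a in enumerate(acoustic):
        # Integer arithmetic avoids floating-point drift in the frame index;
        # min() clamps to the last video frame if the audio runs longer.
        j = min(i * video_fps // acoustic_hz, len(visual) - 1)
        fused.append(a + visual[j])
    return fused
```

With these rates, each video frame is shared by four consecutive acoustic frames, so `fuse_features([[float(i)] for i in range(8)], [[10.0, 11.0], [20.0, 21.0]])` pairs frames 0 to 3 with the first video frame and frames 4 to 7 with the second.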

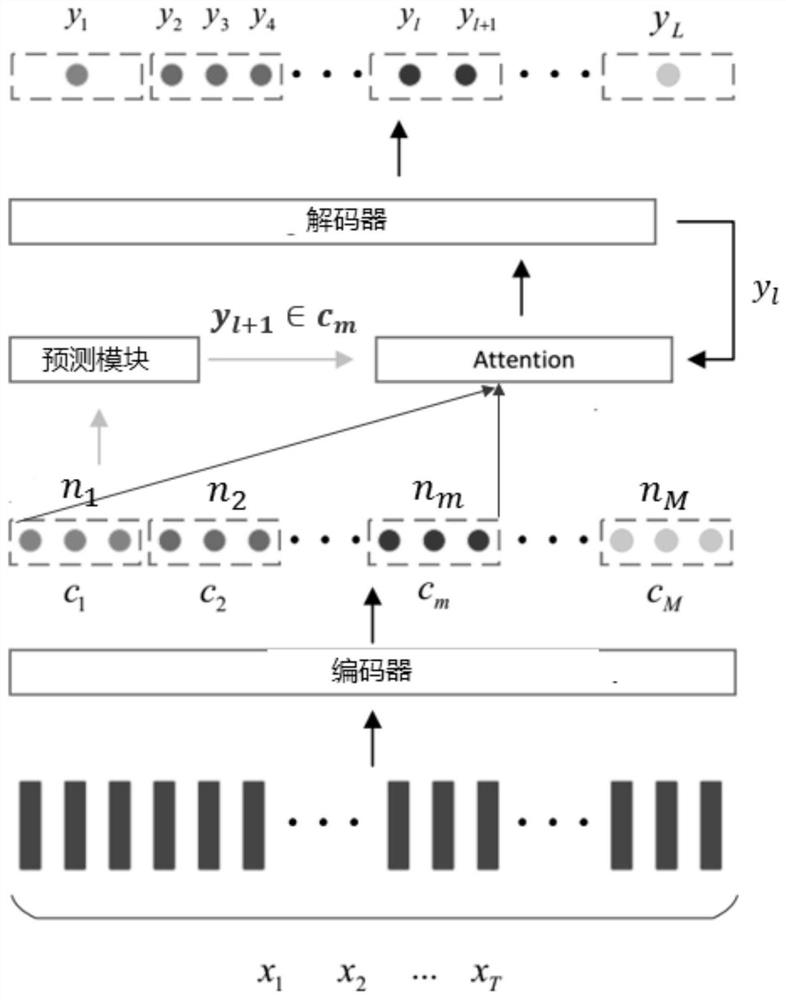

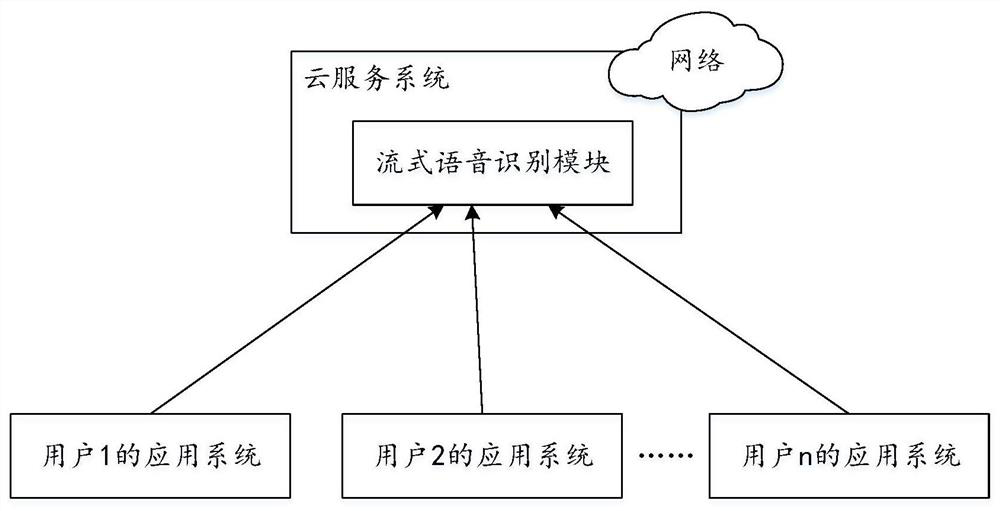

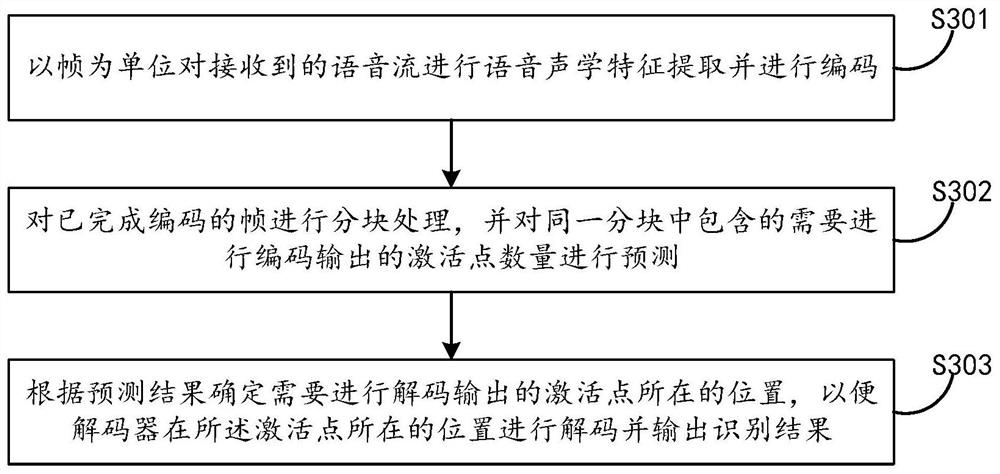

Streaming end-to-end speech recognition method and device, and electronic equipment

The embodiment of the invention discloses a streaming end-to-end speech recognition method and device, and electronic equipment. The method comprises the following steps: extracting speech acoustic features from a received speech stream frame by frame and encoding them; dividing the encoded frames into blocks and predicting, for each block, the number of activation points it contains that need to be encoded and output; and determining, from that prediction, the positions of the activation points to be decoded and output, so that a decoder decodes at those positions and outputs the recognition result. According to the embodiment, the streaming end-to-end speech recognition system becomes more robust to noise, and its performance and accuracy are improved.

Owner:ALIBABA GRP HLDG LTD
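
The abstract above does not say how the number and positions of activation points are predicted. The following is a minimal sketch assuming a continuous integrate-and-fire (CIF) style mechanism, which is a swapped-in technique and not confirmed by the abstract. It assumes the encoder already emits one non-negative weight per frame; the weights, block size, and function names are hypothetical.

```python
from typing import List


def block_count(block: List[float]) -> int:
    """Predicted number of activation points in one block: the block's total
    weight rounded half-up (weights are assumed to be non-negative)."""
    return int(sum(block) + 0.5)


def predict_block_counts(weights: List[float], block_size: int) -> List[int]:
    """Split per-frame weights into fixed-size blocks and predict a count for each."""
    return [
        block_count(weights[start:start + block_size])
        for start in range(0, len(weights), block_size)
    ]


def locate_activation_points(weights: List[float], block_size: int) -> List[int]:
    """Return frame indices at which the decoder should emit a token.

    Within each block the weights are rescaled to sum to the predicted count,
    then accumulated frame by frame; a point fires each time the running sum
    crosses the next whole number, as in integrate-and-fire.
    """
    positions: List[int] = []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        count = block_count(block)
        if count == 0:
            continue  # nothing to decode in this block
        scale = count / sum(block)  # safe: count >= 1 implies sum >= 0.5
        acc = 0.0
        fired = 0
        for offset, w in enumerate(block):
            acc += w * scale
            # Small tolerance so rounding error cannot suppress the last firing.
            while fired < count and acc >= fired + 1 - 1e-9:
                positions.append(start + offset)
                fired += 1
    return positions
```

For example, with weights `[0.1, 0.9, 0.2, 0.8, 0.3, 0.3, 0.3, 0.1]` and a block size of 4, the predicted counts are `[2, 1]` and the activation points fall at frames `[1, 3, 7]`. Because each block is handled independently, the decoder can start as soon as a block is complete, which is what makes the scheme streamable.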

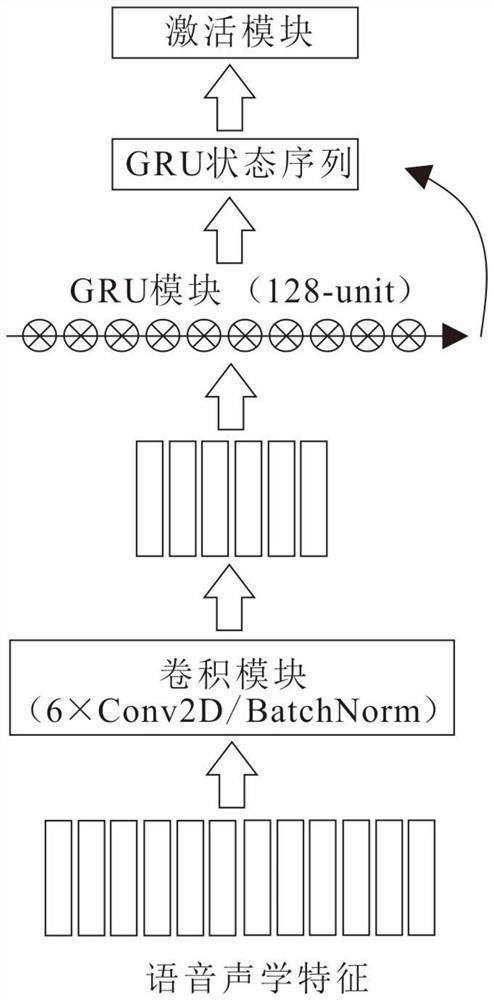

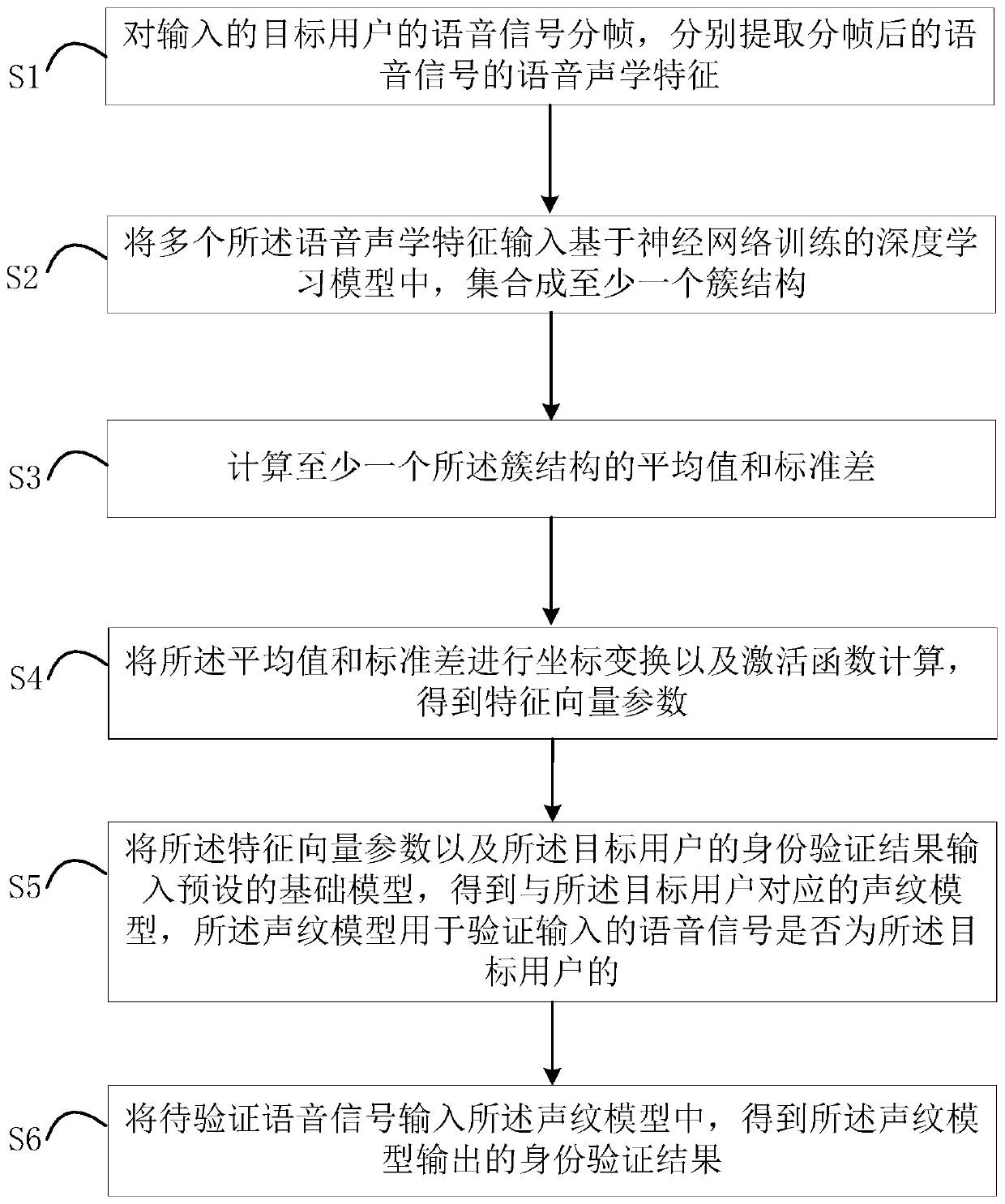

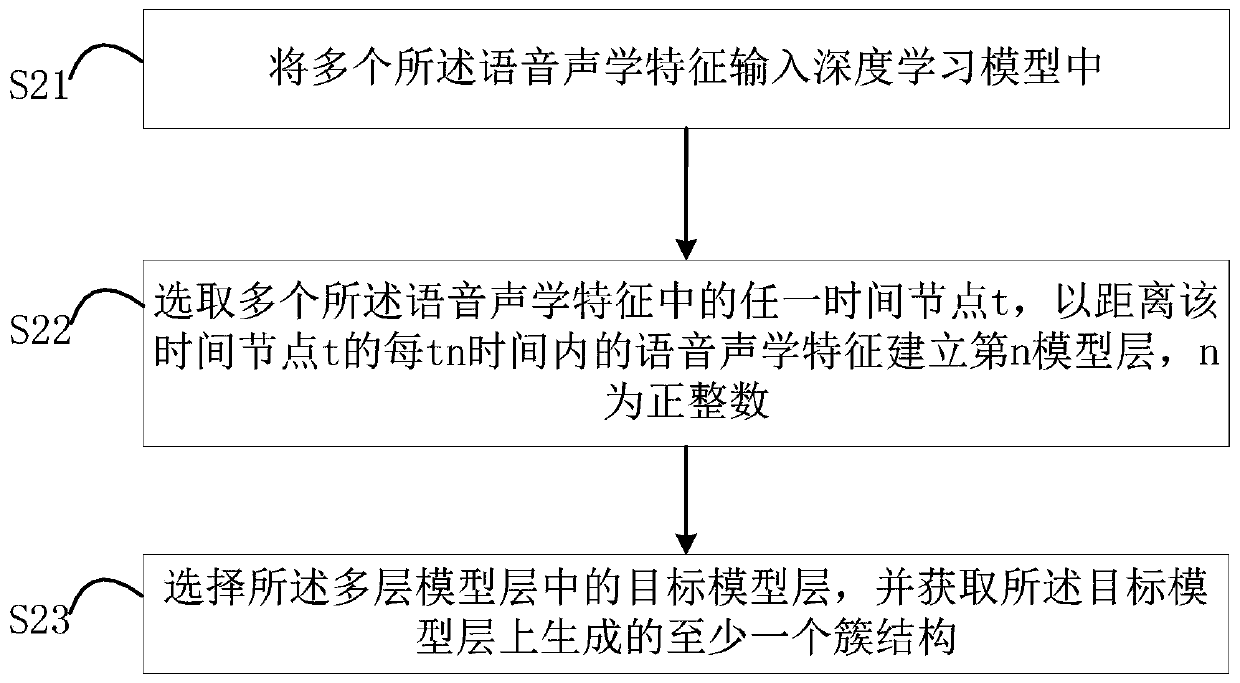

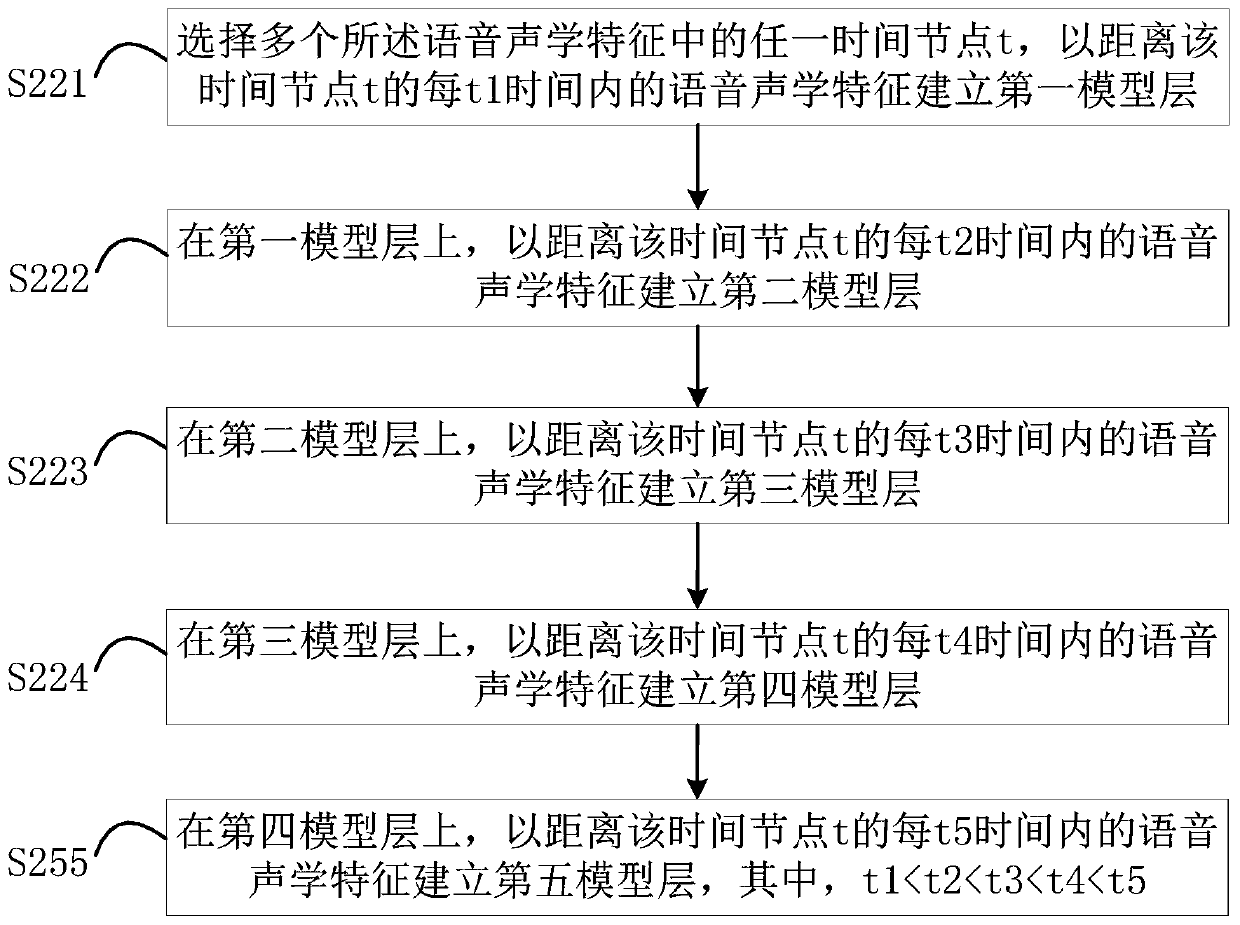

Method, device, computer equipment and storage medium for establishing voiceprint model

Active | CN108806696B | Reduce the error rate of voice recognition | Speech recognition | Neural learning methods | Activation function | Speech Acoustics

The present application discloses a method, device, computer equipment and storage medium for establishing a voiceprint model. The method includes: dividing the input voice signal of the target user into frames and extracting speech acoustic features from each framed signal; inputting the speech acoustic features into a deep learning model trained as a neural network, where they are assembled into at least one cluster structure; calculating the mean and standard deviation of the at least one cluster structure; performing a coordinate transformation on the mean and standard deviation and applying an activation function to obtain feature vector parameters; and inputting the feature vector parameters and the identity verification result of the target user into a preset basic model to obtain a voiceprint model corresponding to the target user. Because the voiceprint model is obtained by applying coordinate mapping and an activation function to the cluster structure that the deep neural network forms from the extracted speech acoustic features, the voice recognition error rate of the voiceprint model can be reduced.

Owner:PING AN TECH (SHENZHEN) CO LTD
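
The abstract above leaves the features, the cluster structure, and the coordinate transformation unspecified. The following is a minimal sketch that reads the mean and standard deviation step as statistics pooling over frame-level features (an x-vector-style interpretation, assumed here), followed by an affine transform and a tanh activation. The toy features (log energy and zero-crossing rate), the weights, and all function names are hypothetical.

```python
import math
from typing import List, Sequence


def frame_signal(signal: Sequence[float], frame_len: int, hop: int) -> List[List[float]]:
    """Split a signal into overlapping frames; a trailing partial frame is dropped."""
    return [
        list(signal[start:start + frame_len])
        for start in range(0, len(signal) - frame_len + 1, hop)
    ]


def frame_features(frame: List[float]) -> List[float]:
    """Toy per-frame acoustic features: log energy and zero-crossing rate.
    A real system would more likely use filterbank or MFCC features."""
    energy = sum(x * x for x in frame)
    log_energy = math.log(energy + 1e-10)  # offset avoids log(0) on silence
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return [log_energy, crossings / (len(frame) - 1)]


def stats_pool(features: List[List[float]]) -> List[float]:
    """Concatenate the per-dimension mean and population standard deviation."""
    n = len(features)
    dim = len(features[0])
    means = [sum(f[d] for f in features) / n for d in range(dim)]
    stds = [
        math.sqrt(sum((f[d] - means[d]) ** 2 for f in features) / n)
        for d in range(dim)
    ]
    return means + stds


def embed(pooled: List[float], weight: List[List[float]], bias: List[float]) -> List[float]:
    """Affine transform of the pooled statistics followed by a tanh activation."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, pooled)) + b)
        for row, b in zip(weight, bias)
    ]
```

A usage pass might frame a 400-sample signal with `frame_signal(signal, 80, 40)` (nine frames), pool `[frame_features(f) for f in frames]`, and project the four pooled statistics with a 2 x 4 weight matrix to get a two-dimensional feature vector. Pooling makes the vector length independent of utterance duration, which is why this kind of step is commonly used before speaker modeling.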

A system and method for training cloned timbre and rhythm based on bottleneck features

Active | CN111210803B | Preserve prosodic information | Preserve the sense of rhythm | Speech recognition | Speech synthesis | Feature extraction | Data acquisition

The present invention relates to the technical fields of speech synthesis, speech recognition, and voice cloning. It combines speech synthesis, speech recognition, and transfer learning to provide a voice cloning scheme based on bottleneck features (linguistic features of audio), comprising a training system and a training method. Using only a small number of samples, it provides highly natural TTS services with high similarity to the target user's voice, addressing the large sample size, long production cycle, and high labor cost of conventional speech synthesis services. The training system includes a data acquisition module, an acoustic feature extraction module, a speech recognition module, a prosody module, a multi-speaker speech acoustic module, and a speech synthesis module. The invention also provides a training method based on this system, comprising training corpus preparation, acoustic feature extraction, training and fine-tuning of each module, and speech synthesis.

Owner:NANJING SILICON INTELLIGENCE TECH CO LTD

© 2025 PatSnap. All rights reserved.