38 results about "Vocal organ" patented technology

Vocal organ (noun): any of the organs involved in speech production; a movable speech organ; the vocal apparatus of the larynx, that is, the true vocal folds and the space between them where the voice tone is generated.

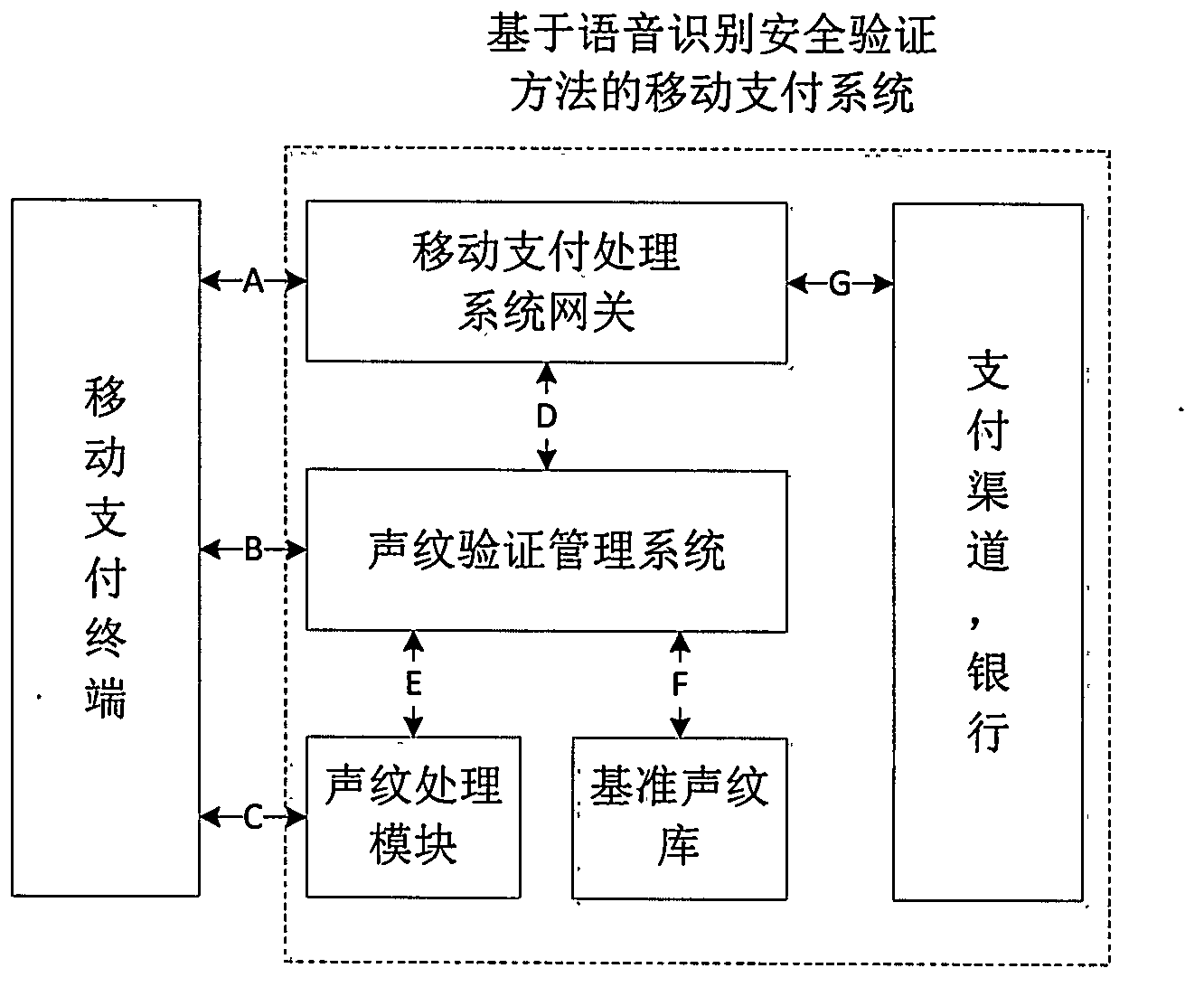

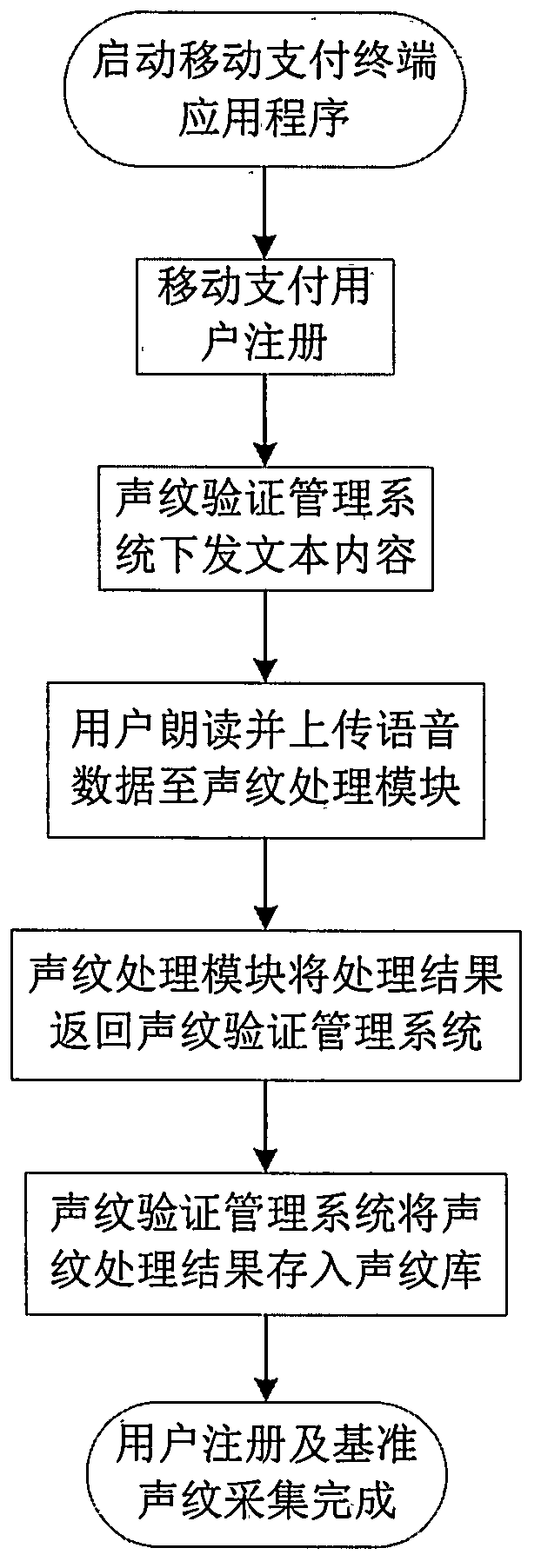

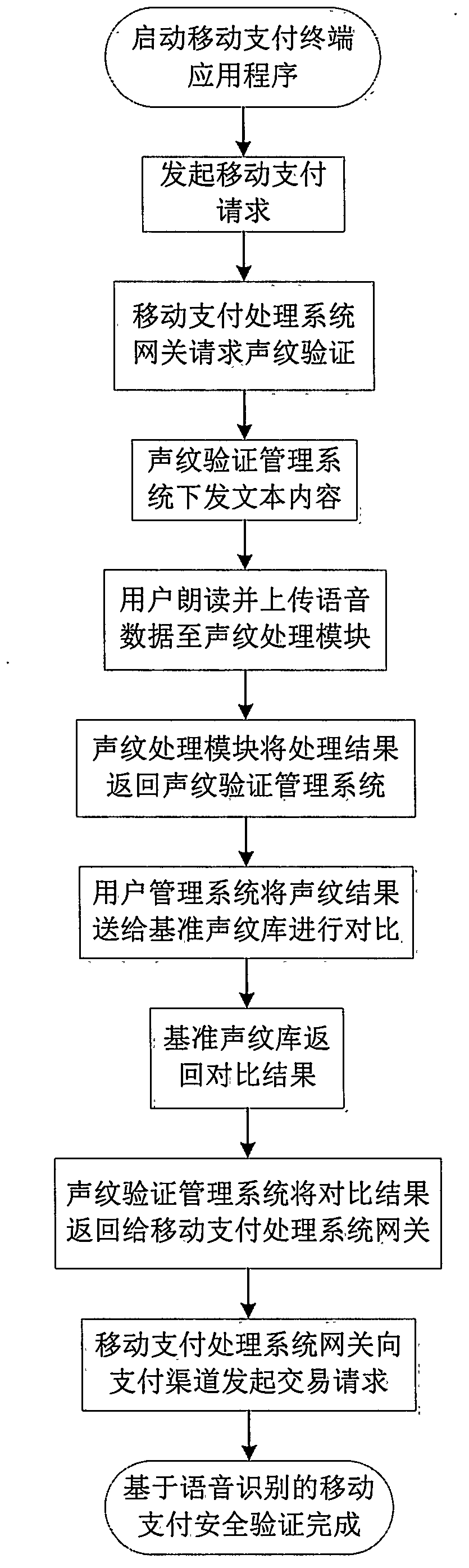

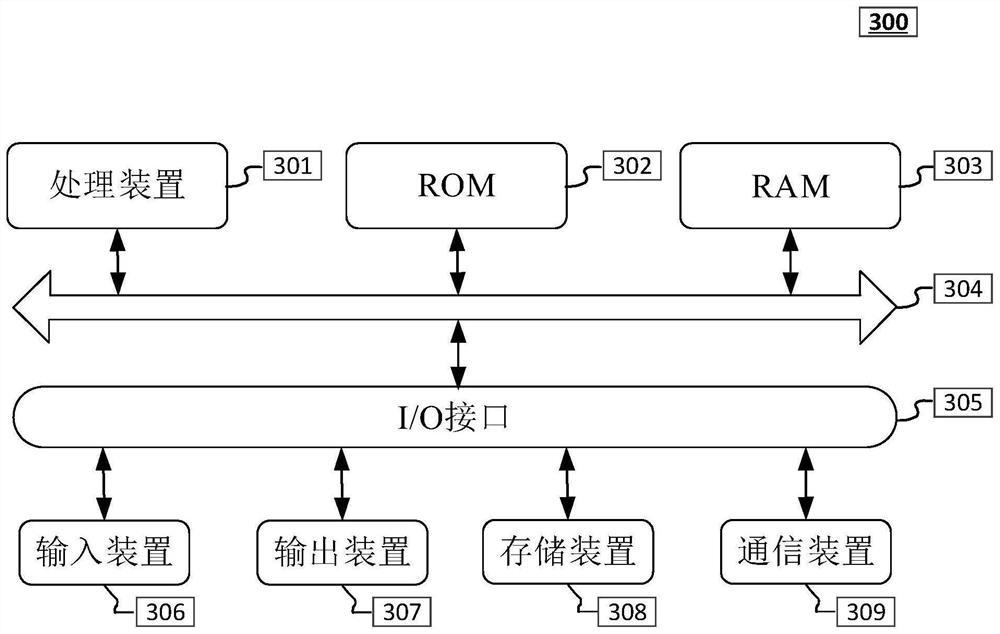

Mobile payment safety verification method based on voice recognition

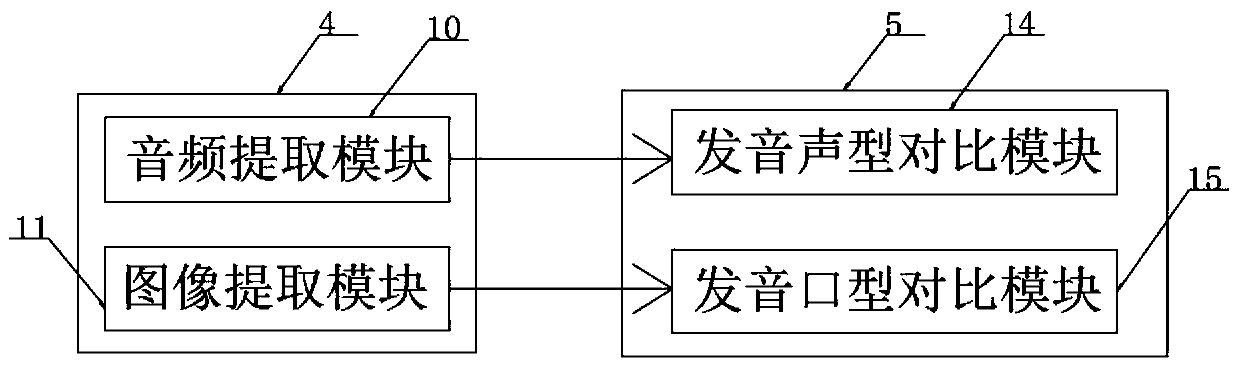

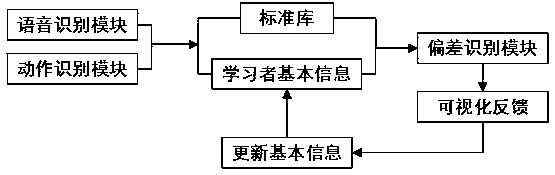

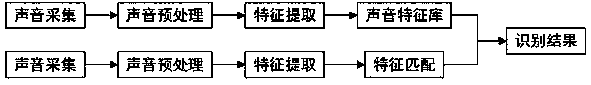

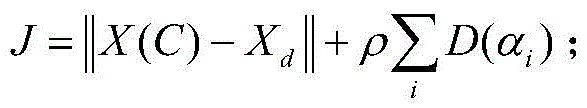

Inactive | CN103325037A | Improve security level | Effective combination | Payment protocols | Speech recognition | Vocal organ | System structure

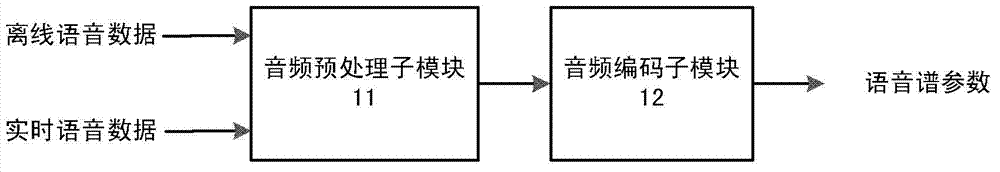

The invention applies voice recognition technology to safety verification in the mobile payment process. It provides a mobile payment safety verification method based on voice recognition that relies on the uniqueness of voiceprints, which results from differences in the physical structure of human vocal organs. The method supports both text-dependent and text-independent voiceprint verification and can be combined effectively with existing mobile payment products. It simplifies the payment process while raising the security level of the payment. The overall system structure is shown in Figure 1.

Owner:SHANGHAI CARDINFOLINK DATA SERVICE

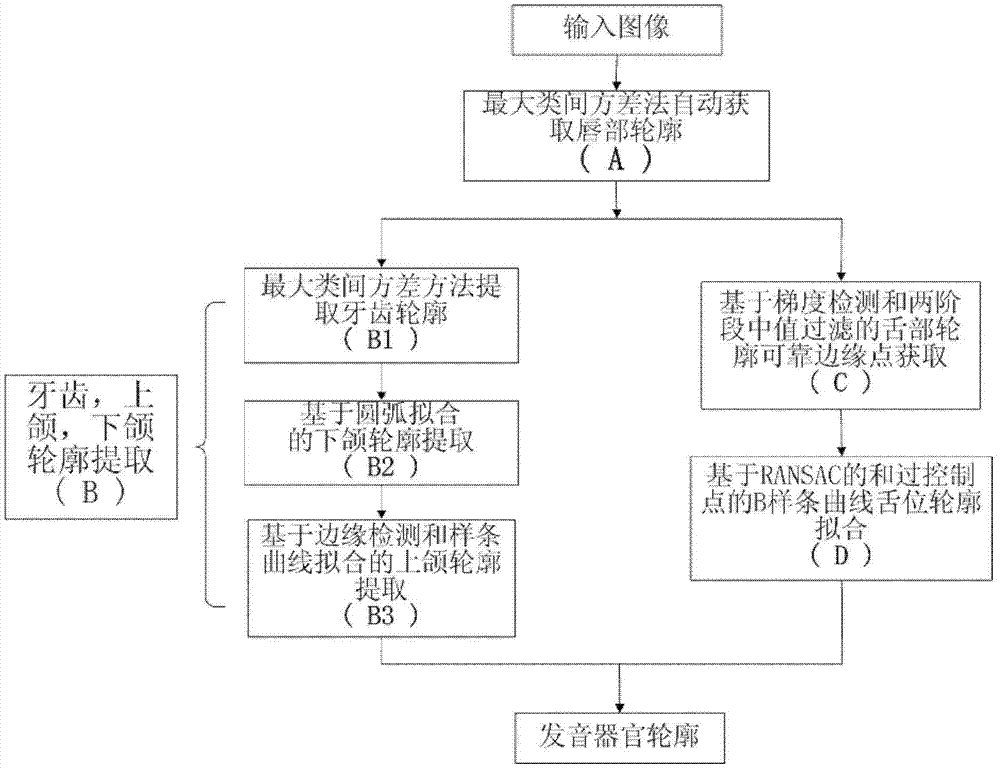

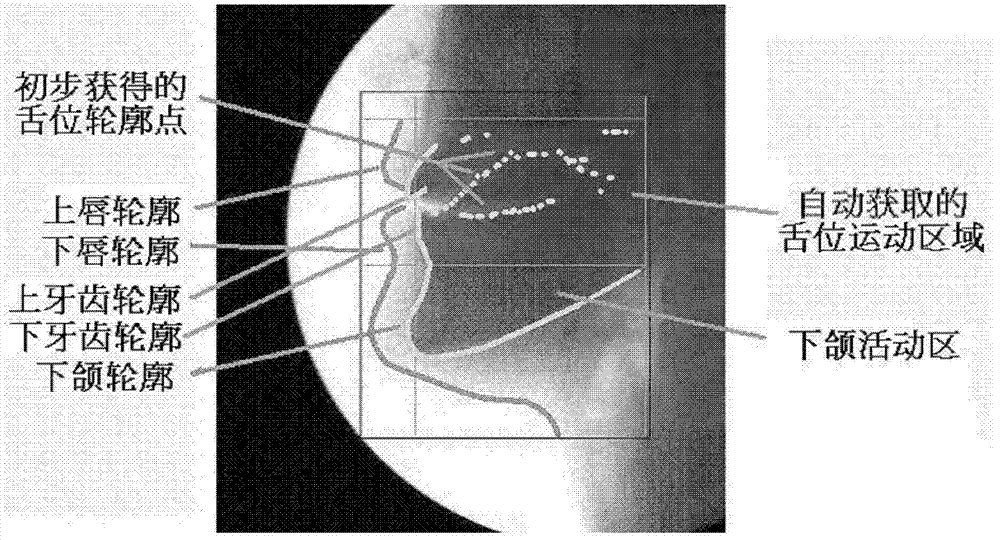

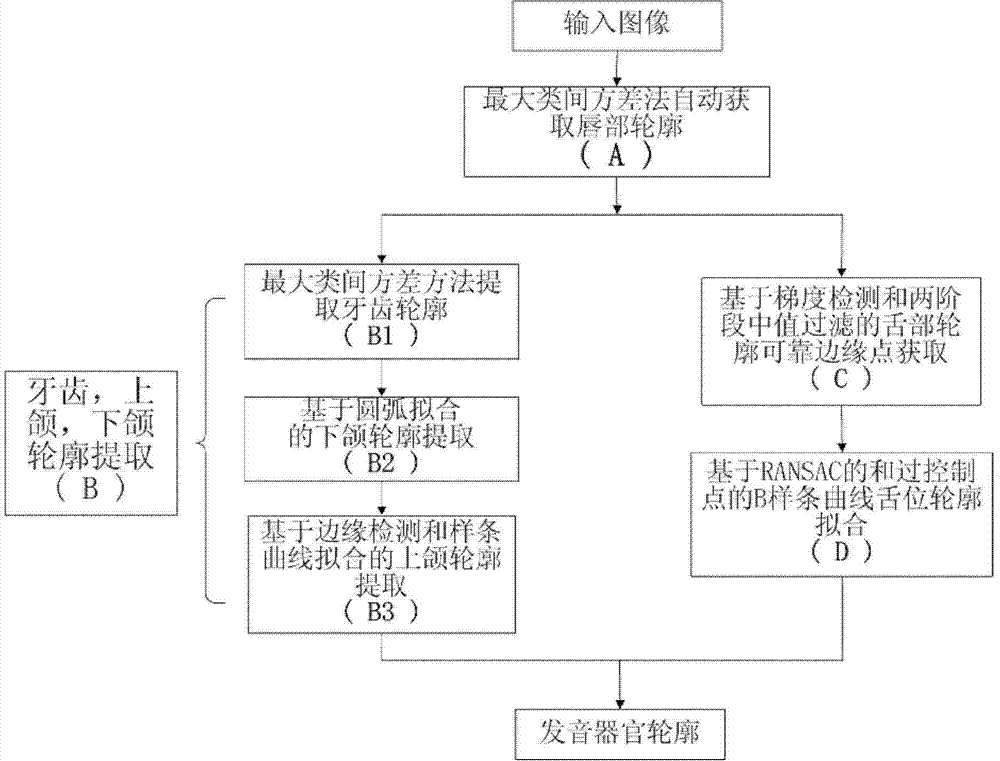

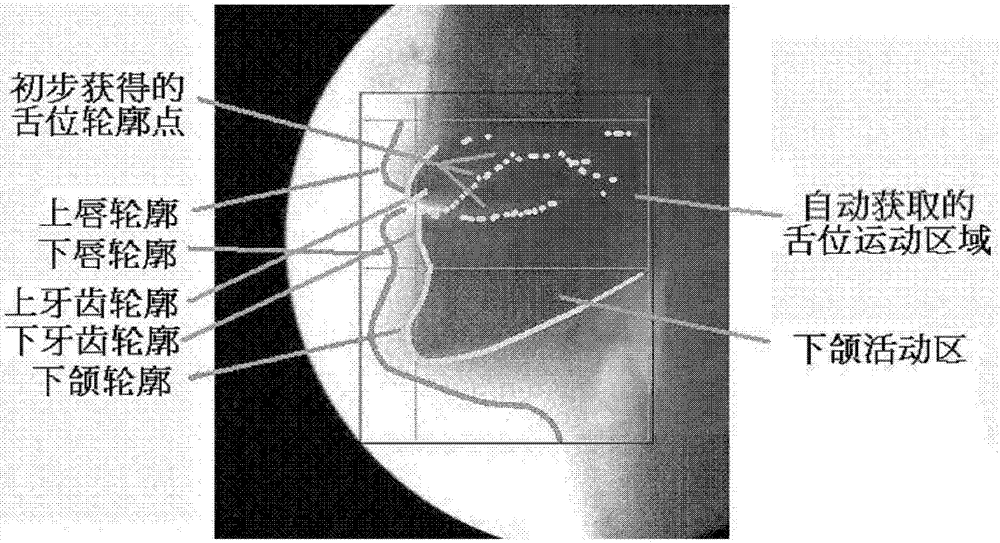

Method for acquiring vocal organ profile in medical image

Active | CN102831606A | No human interaction required | Get Tongue Profile | Image analysis | Vocal organ | Automatic segmentation

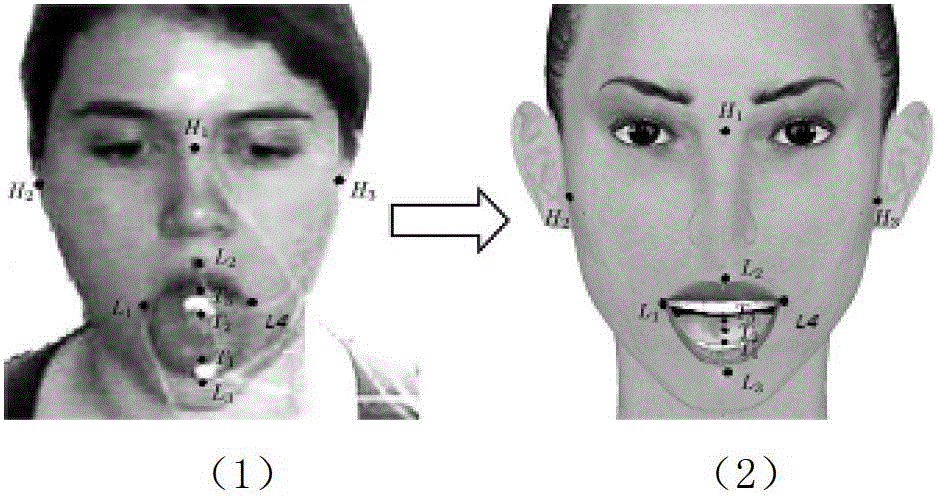

The invention provides a method for acquiring a vocal organ profile in a medical image. The method comprises the following steps: binarizing the lip and background areas of the medical image using an automatic lip/background segmentation threshold to acquire a lip profile; extracting the upper tooth, lower tooth, upper jaw and lower jaw profiles within the face range enclosed by the lip profile; acquiring reliable edge points of the tongue profile in the image area between the upper jaw profile and the lower jaw profile; and fitting a tongue edge profile to those reliable edge points. With this method, the head and the vocal organ areas can be segmented automatically from the image background, and the whole process is completed without manual interaction.

Owner:中科极限元(杭州)智能科技股份有限公司
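The abstract above does not say how the automatic lip/background threshold is chosen. As a minimal sketch, assuming Otsu's method (a common choice for automatic thresholding, not confirmed by the source) and a grayscale image flattened to a list of integer levels, the binarization step might look like the following. The function names `otsu_threshold` and `binarize` are hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level that maximizes between-class variance (Otsu's method).

    Assumption: the patent only says "automatic segmentation threshold";
    Otsu's method is swapped in here purely for illustration.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels at or below the candidate threshold
    sum0 = 0  # intensity sum of those pixels
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so the variance is undefined
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def binarize(pixels, threshold):
    """Map each pixel to 1 (brighter than the threshold) or 0 (otherwise)."""
    return [1 if p > threshold else 0 for p in pixels]
```

Which of the two classes corresponds to the lip depends on the imaging modality, so the 0/1 labels here carry no anatomical meaning on their own.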

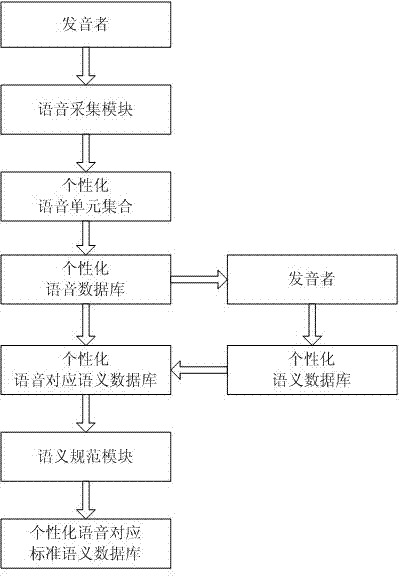

Individualized voice collection and semantics determination system and method

Active | CN102831195A | High standard | Low accuracy | Sound input/output | Special data processing applications | Personalization | Vocal organ

The invention discloses an individualized voice collection and semantics determination system and method. The system comprises a voice collection module, a voice unit module, a voice database, a semantics database, a voice correspondence semantics database, a semantics normalization module and a voice correspondence standard semantics database. The voice collection module collects speech sounds from the vocal organs of a speaker. The voice unit module classifies the collected speech and establishes voice units from monosyllables, bisyllables and multisyllables. The voice database is built with the voice units as its basic units, and the voice correspondence semantics database is built by mapping the voice units of the voice database one-to-one onto the semantics database. In the invention, the speaker directly determines the correspondence between speech and semantic text words; no demands are placed on the speaker, neither the speaker nor the system needs to be trained, and the stated accuracy is 99.9%.

Owner:HENAN BAITENG ELECTRONICS TECH

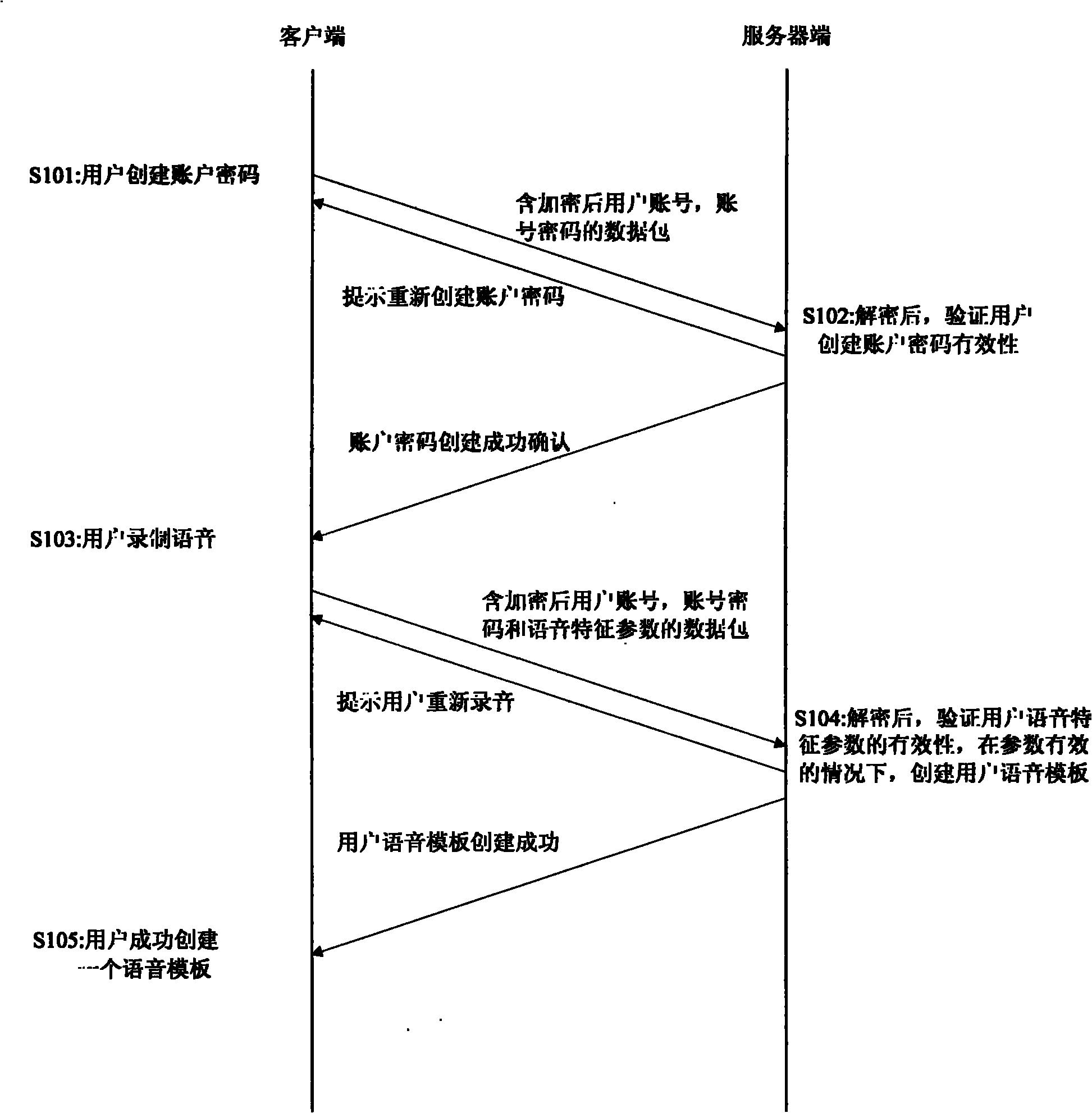

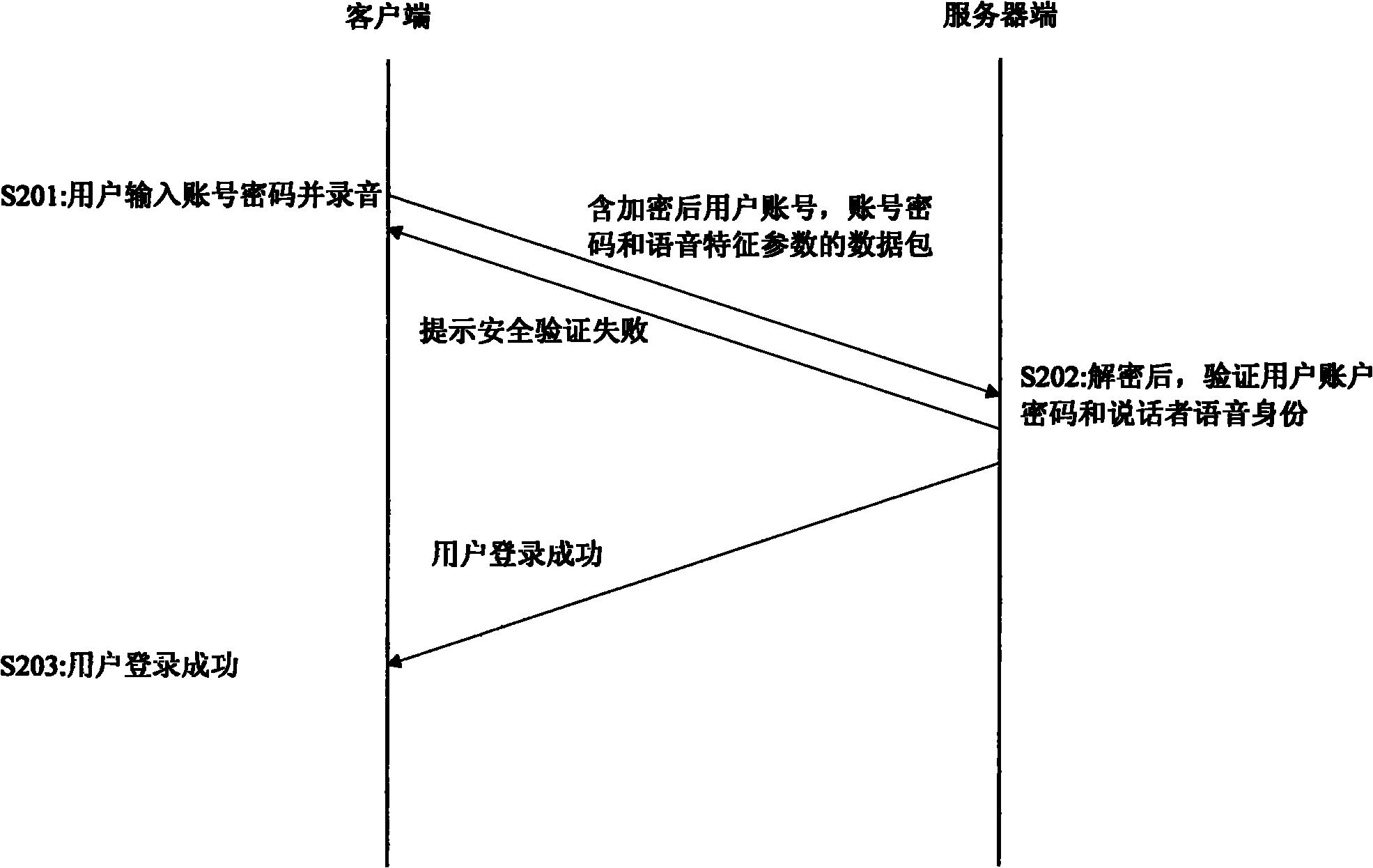

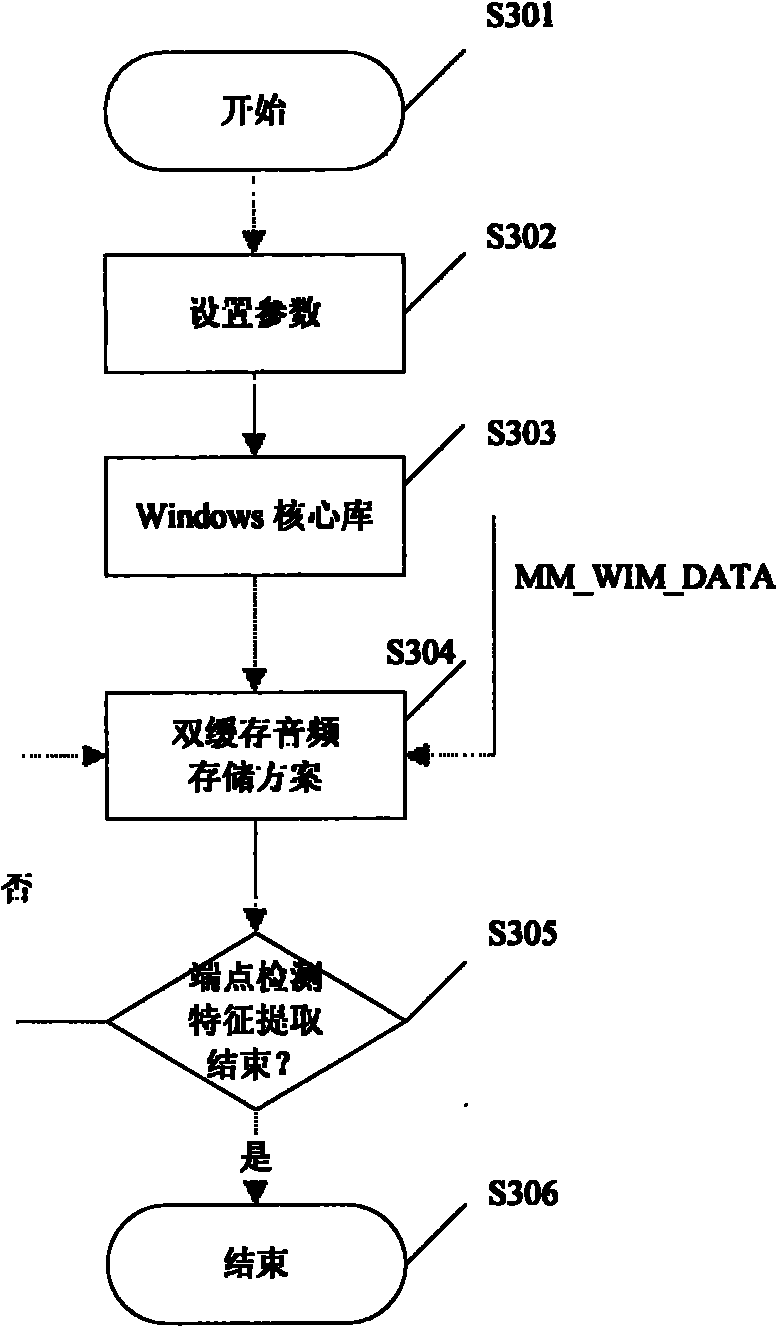

User registration and logon method by combining speaker speech identity authentication and account code protection in network games

The invention discloses a user registration and logon method for network games that combines speaker speech identity authentication with account code protection. The client receives and processes the speech signal, and the server authenticates the speaker's identity against speech templates. The method uses text-dependent speaker authentication together with account code protection and provides an anti-theft function. At the client, the method comprises a dual-cache sound storage scheme that acquires and stores the sound signal, followed by end-point detection and feature extraction: end points are detected in the sampled sound signal to determine the start and end frames of the effective speech signal, and the feature parameters (linear prediction cepstral coefficients) of each frame are extracted. At the server, the method uses dynamic programming to compute the degree of matching between the speaker's speech parameters and the speech templates. If the account code is stolen, an illegal user cannot easily pass the speech identity authentication, because that user's vocal process and vocal organs differ from those of the registered user. Even if the illegal user logs on by copying the account code and the speech parameters, the server can compare them with the prestored speech parameters and detect exact parameter conformity, which causes the speech identity authentication to fail. After successfully registering the account code, the user registers a speech code by speaking and repeating the same text content until enough speech templates have been generated. The user must speak the speech code to log on. If the speaker speech identity authentication succeeds, the server confirms logon success either immediately or after the user has entered the correct account code; if it fails, the server either determines logon failure immediately or confirms logon success after requiring the user to enter the correct account code.

Owner:朱建政
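The abstract above says only that the server uses dynamic programming to score how well the speaker's frame features match a stored template. A minimal sketch of one such technique, dynamic time warping (DTW), is given below. Treating the dynamic programming step as DTW, using Euclidean distance between frames, and accepting on a fixed threshold are all assumptions, and the names `dtw_distance` and `authenticate` are hypothetical.

```python
import math


def dtw_distance(seq_a, seq_b):
    """Dynamic time warping distance between two sequences of feature frames.

    Each frame is a tuple of floats (for example, cepstral coefficients).
    Smaller values mean a closer match.
    """
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = best accumulated cost aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = math.dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # stretch seq_b's frame over seq_a
                cost[i][j - 1],      # stretch seq_a's frame over seq_b
                cost[i - 1][j - 1],  # advance both sequences
            )
    return cost[n][m]


def authenticate(features, templates, threshold):
    """Accept when the closest enrolled template is within the threshold."""
    best = min(dtw_distance(features, template) for template in templates)
    return best <= threshold
```

DTW tolerates differences in speaking rate because a single template frame may align with several input frames, which is why a slower repetition of the same phrase can still score a distance of zero.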

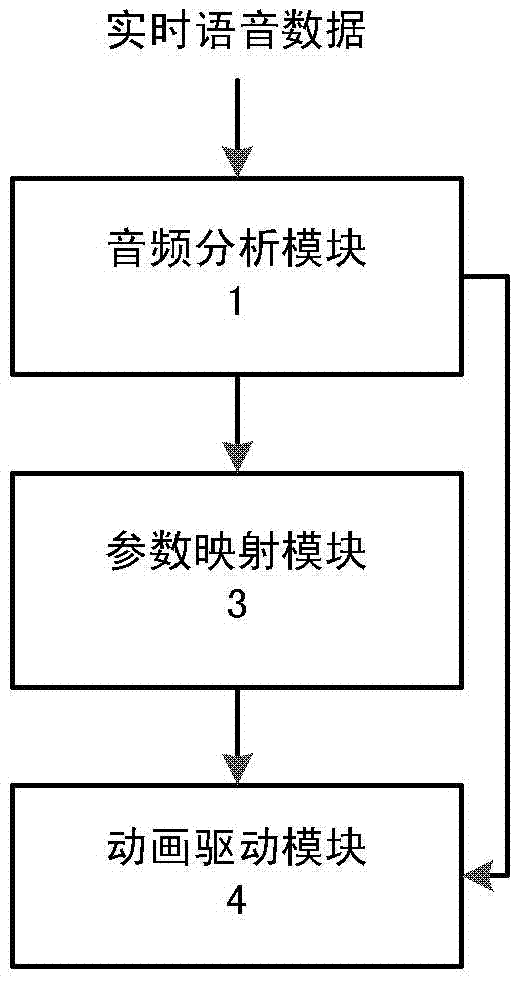

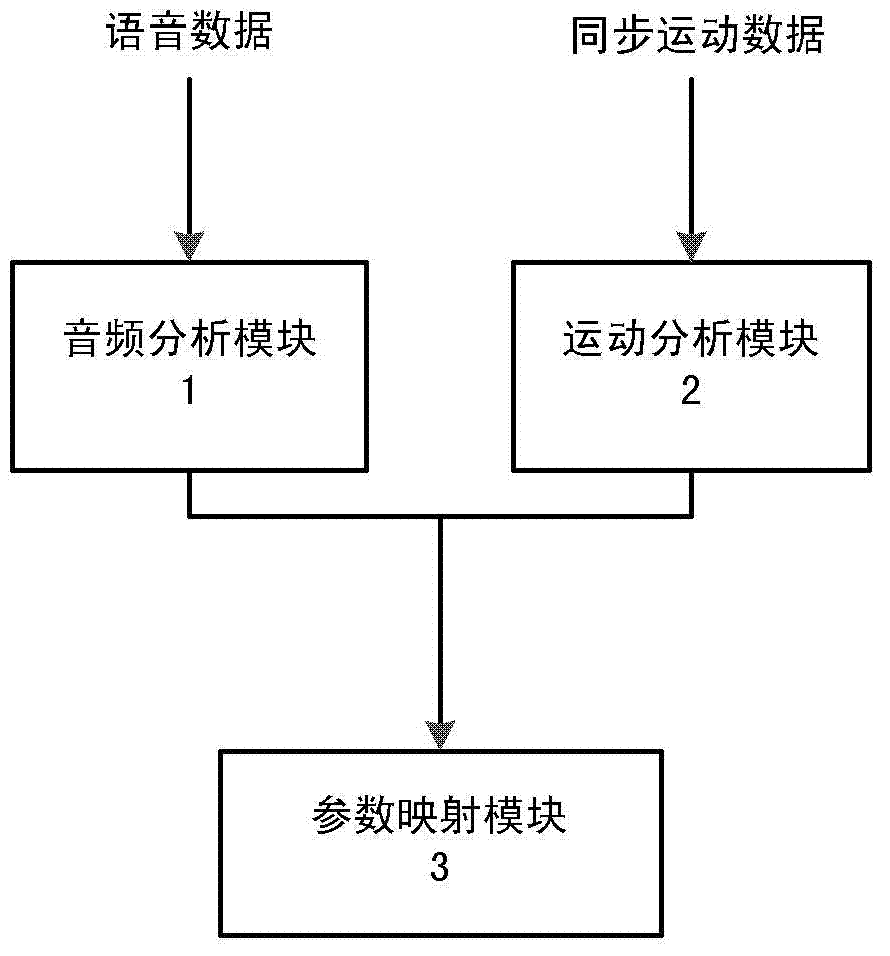

Vocal organ visible speech synthesis system

Active | CN102820030A | High conversion sensitivity | Small amount of calculation | Speech synthesis | Visible Speech | Voice frequency

The invention provides a vocal organ visible speech synthesis system comprising a voice frequency analysis module, a parameter mapping module, an animation drive module and a motion analysis module. The voice frequency analysis module receives the speaker's input speech signal, identifies silent segments from energy information, encodes the non-silent speech and outputs line spectral pair (LSP) parameters. The parameter mapping module receives the LSP parameters from the voice frequency analysis module in real time and converts them into model motion parameters using a trained Gaussian mixture model. The animation drive module receives the model motion parameters generated in real time by the parameter mapping module and drives the key points of a virtual vocal organ model, which in turn drives the motion of the whole model. Because the model's motion is driven by motion parameters generated directly from frequency-domain parameters of the input speech, the system is free from the limitations of an online database and a physiological model.

Owner:中科极限元(杭州)智能科技股份有限公司
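The abstract above mentions that silent segments are identified from energy information before the remaining speech is encoded. A minimal sketch of that idea, assuming non-overlapping frames, mean squared amplitude as the energy measure and a fixed threshold (none of which the source specifies), could look like this. The function names are hypothetical.

```python
def frame_energies(samples, frame_len):
    """Mean squared amplitude of each non-overlapping frame."""
    return [
        sum(s * s for s in samples[start:start + frame_len]) / frame_len
        for start in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def silent_frames(samples, frame_len, energy_threshold):
    """Flag each frame as silent (True) when its energy is below the threshold."""
    return [e < energy_threshold for e in frame_energies(samples, frame_len)]
```

Only the frames flagged `False` would be passed on to the encoder; a practical system would likely adapt the threshold to the background noise level rather than fix it in advance.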

Automatic tongue contour extraction method based on nuclear magnetic resonance images

Inactive | CN102750549A | Small research error effects | Shorten the time | Character and pattern recognition | Data set | Data extraction

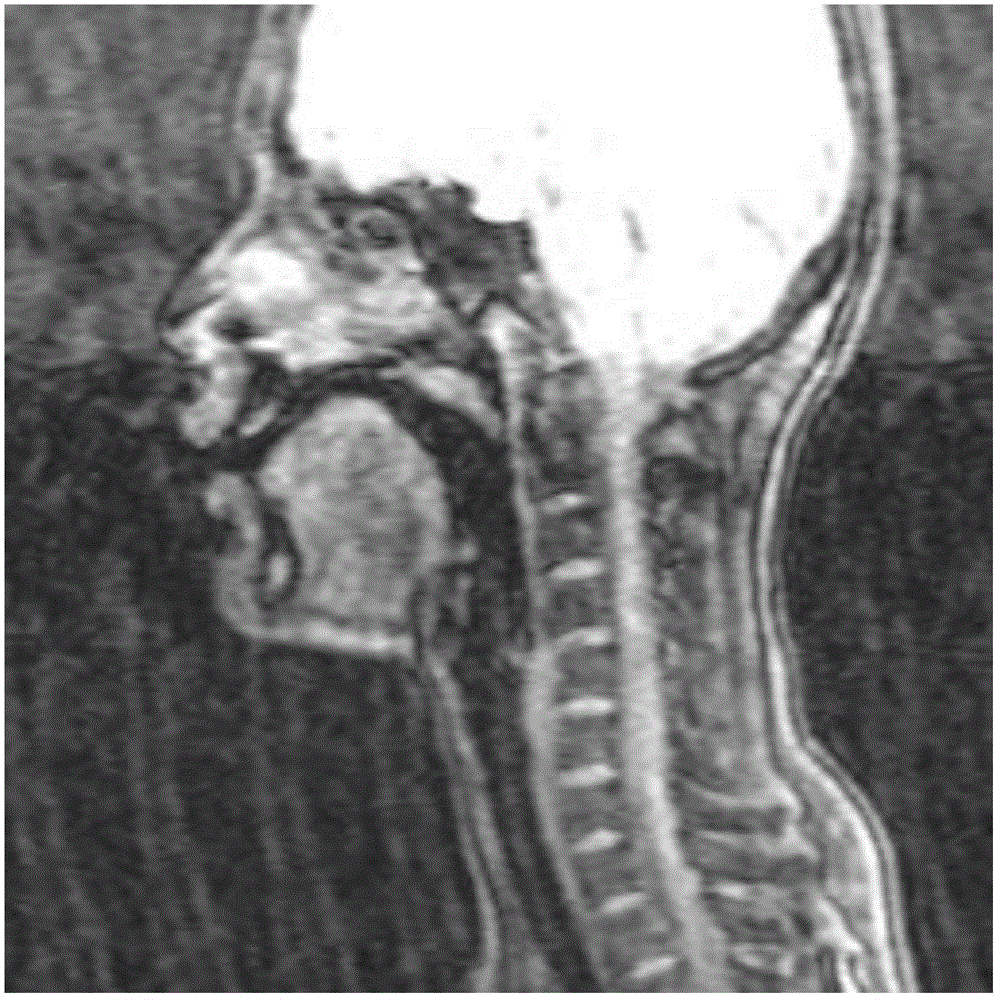

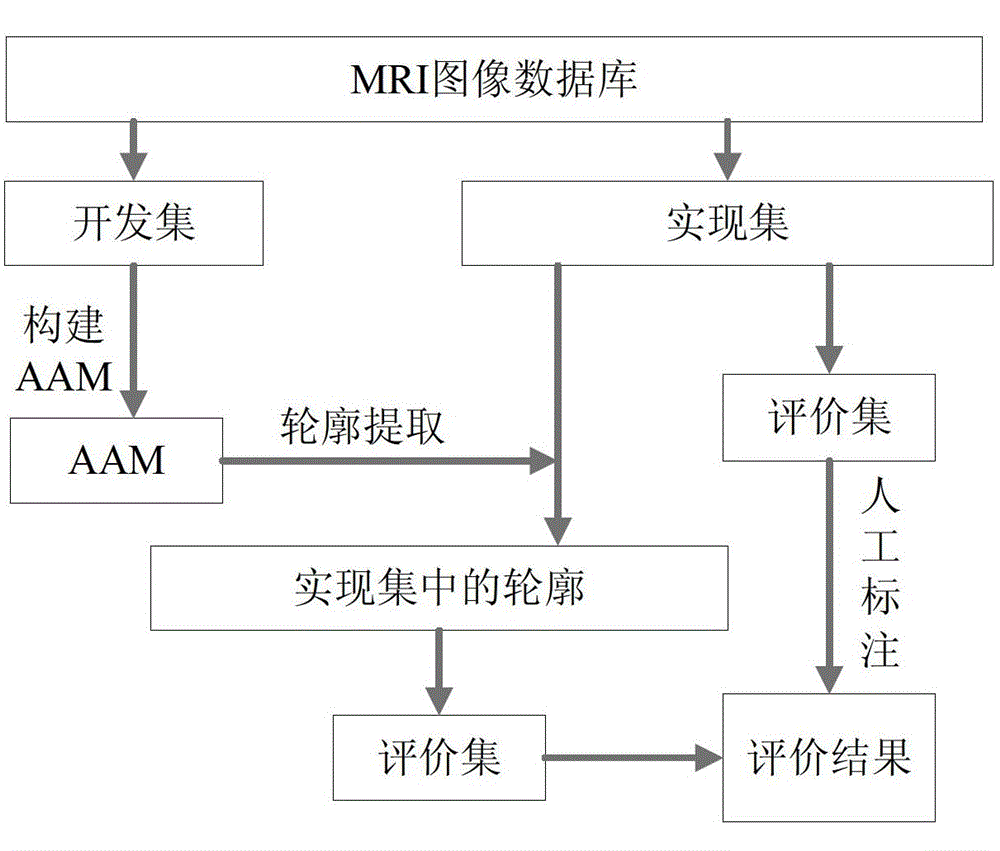

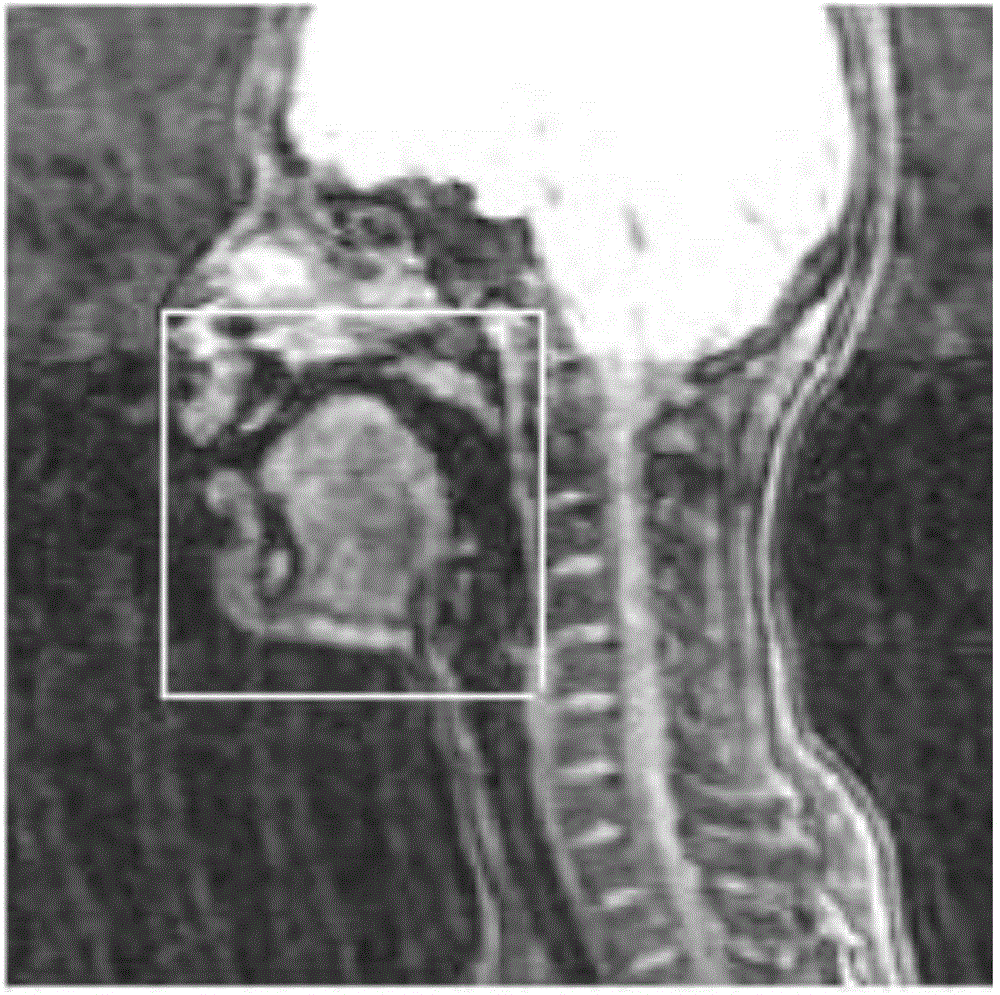

The invention discloses an automatic tongue contour extraction method based on nuclear magnetic resonance images. The method is based on the active appearance model (AAM) algorithm and uses magnetic resonance images (MRI) of the vocal organs as its data source. It comprises two steps: first, annotating the images and dividing the data into a development set and an evaluation set; second, constructing an AAM and using it to annotate the tongue model automatically. Compared with the prior art, the method extracts tongue contours with small errors whose effect on later studies is negligible. Because the number of MRIs to be processed is large, extracting tongue contours through AAM-based automatic annotation saves a great deal of time and labor. The method is of considerable value to a range of speech studies.

Owner:TIANJIN UNIV

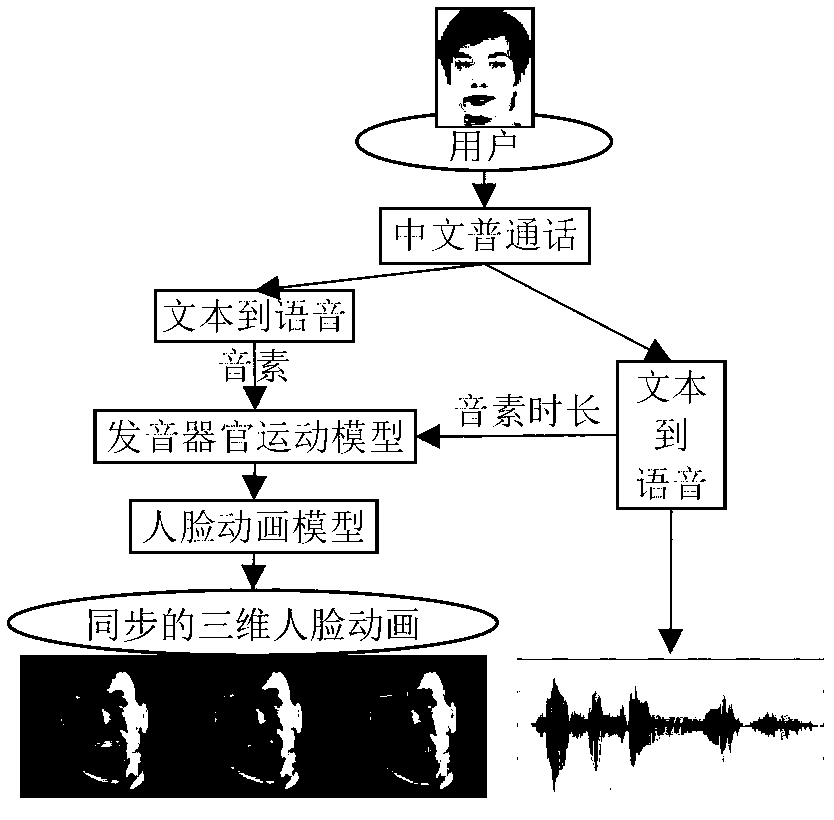

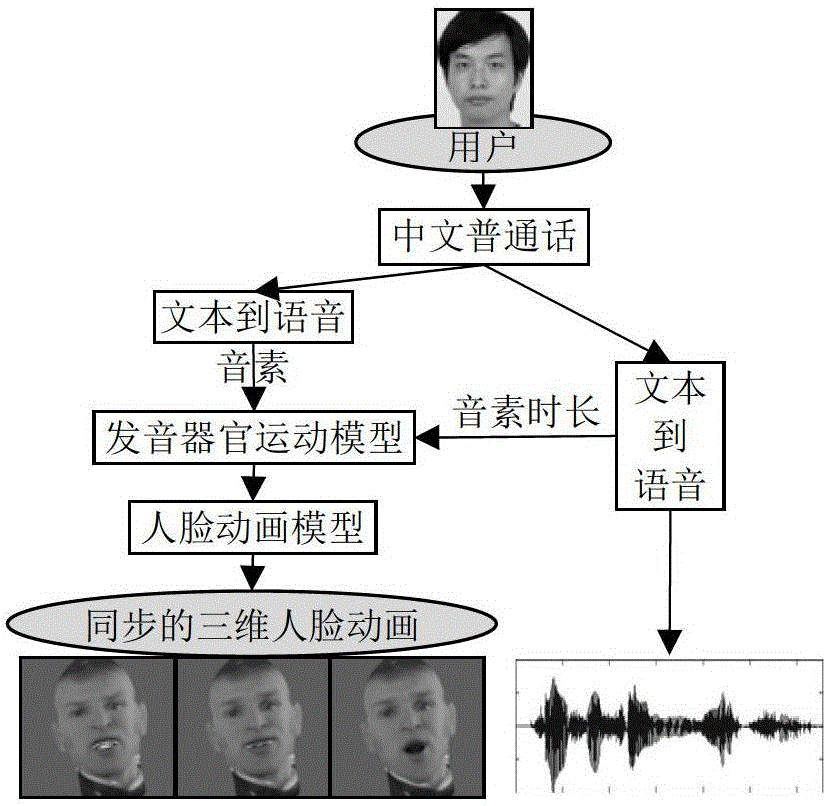

Pronunciation method of a three-dimensional visual Mandarin Chinese pronunciation dictionary with pronunciation rich in emotional expression ability

The invention provides a pronunciation method of a three-dimensional visual Mandarin Chinese pronunciation dictionary with pronunciation rich in emotional expression ability, and relates to the technical fields of voice visualization, language teaching, vocal organ animation and facial animation. The method produces vocal organ animation and, at the same time, facial animation with vivid expressions. Because it is based on captured real motion data, the physiological motion mechanism of the vocal organs and a hidden Markov model, the resulting vocal organ animation is coordinated and consistent with the facial animation, and coarticulation in continuous speech animation can be fully described. Data-driven models are embedded into physiological models so as to combine the strengths of both in describing local facial detail and realism, and highly realistic facial animation is generated. Objective and subjective performance tests of the system verify the effectiveness of the method for intelligent assisted language teaching.

Owner:UNIV OF SCI & TECH OF CHINA

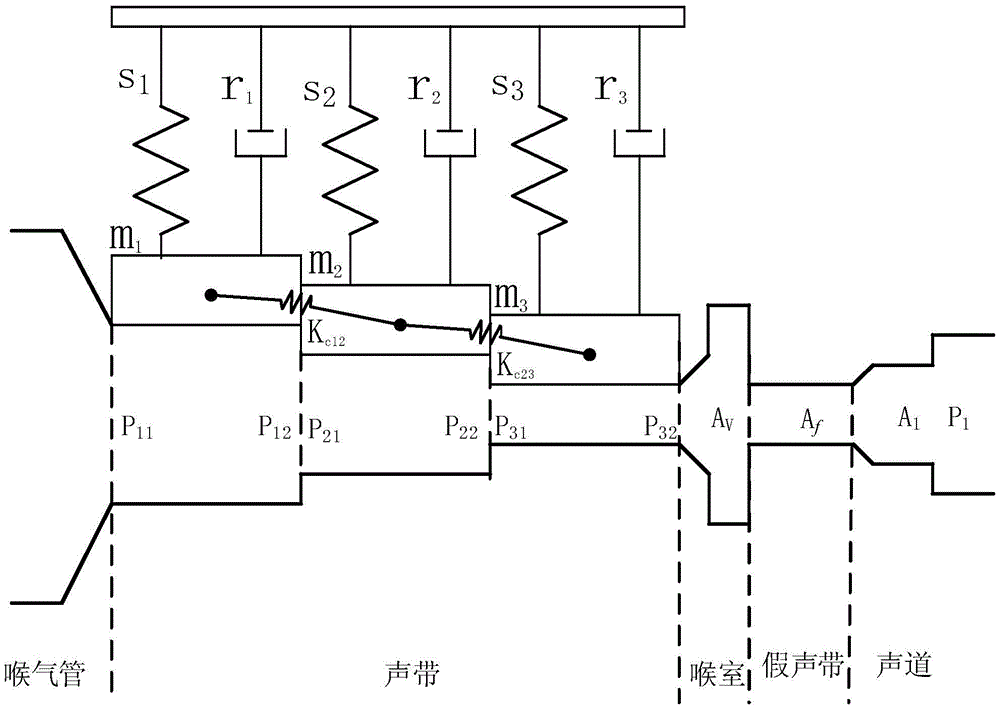

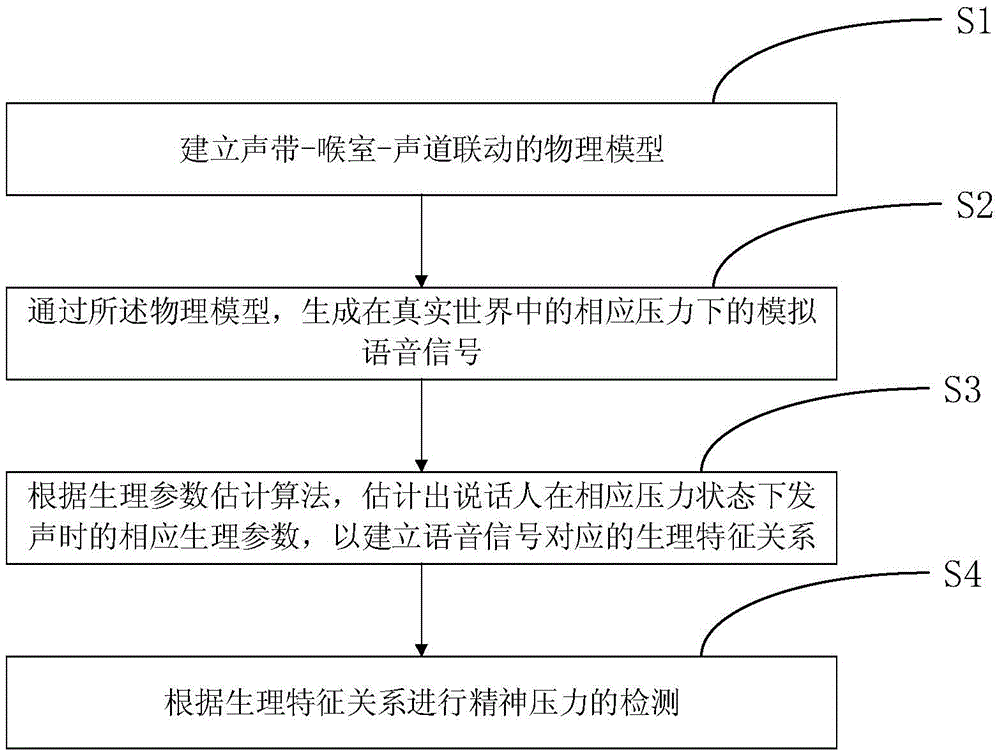

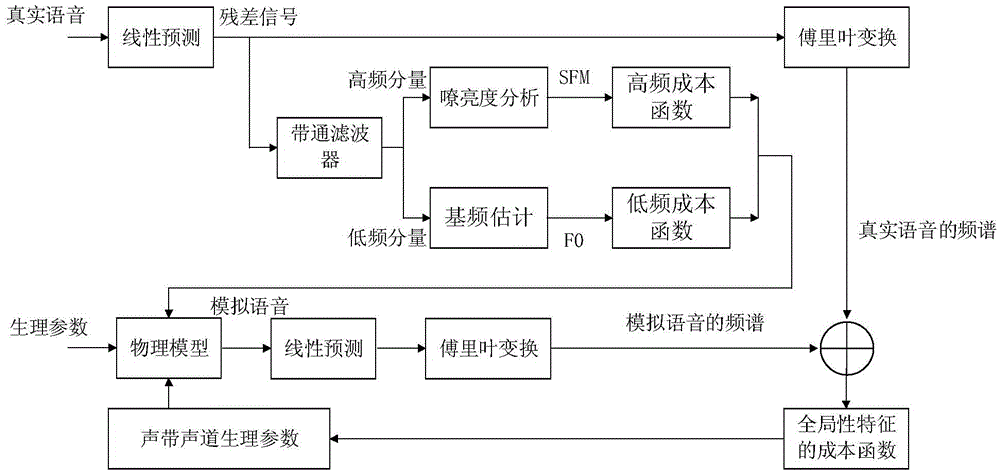

Vocal cord-laryngeal ventricle-vocal tract linked physical model and mental pressure detection method

The invention relates to a vocal cord-laryngeal ventricle-vocal tract linked physical model and a mental pressure detection method. The physical model includes a set of mechanical equations describing vocal cord motion and a set of aerodynamic equations describing the pressure drop distribution along the glottal depth direction and along the laryngeal ventricle-false vocal cord-vocal tract direction. A physiological parameter estimation algorithm is designed on the basis of this linked model in order to study how phonation changes physiologically when the speaker is under pressure. Physiological feature parameters of the vocal cords and the laryngeal ventricle are extracted while a speaker phonates under pressure, and a mapping from real voice signals to physiological features is established. From the estimated physiological parameters, the method obtains the variation features of the vocal organs and the state of the airflow within them under the influence of pressure, and uses these variation features to detect mental pressure. Detection precision and reliability are improved.

Owner:HOHAI UNIV CHANGZHOU

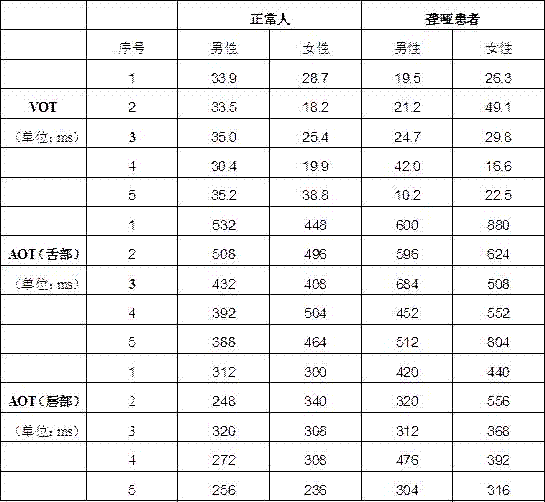

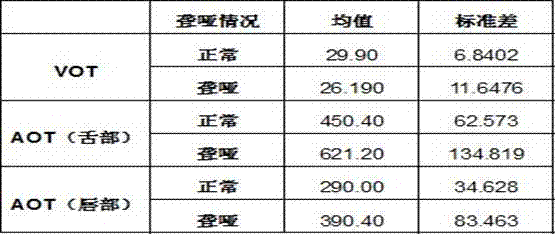

Method for evaluating hearing damage degree of deaf-mute

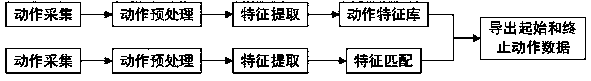

Inactive | CN106875956A | Valid for degree of hearing impairment | Improve language skills | Health-index calculation | Speech analysis | Vocal organ | Start time

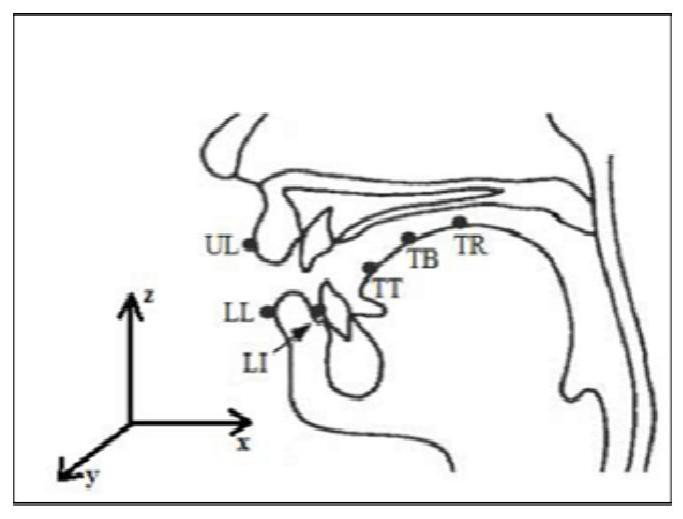

The invention relates to the fields of medical treatment and education for people with disabilities, and in particular to a method for judging the degree of hearing impairment of deaf-mute patients. A three-dimensional electromagnetic pronunciation instrument (electromagnetic articulograph) is used to obtain the pronunciation start time and the start time of the pronunciation organs of several people with normal hearing as they read a test corpus. The same instrument is then used to obtain the corresponding pronunciation start time and start time of the pronunciation organs of the deaf-mute patient as the patient reads the test corpus, and the two sets of measurements are compared to judge the patient's degree of hearing impairment.

Owner:TAIYUAN UNIV OF TECH

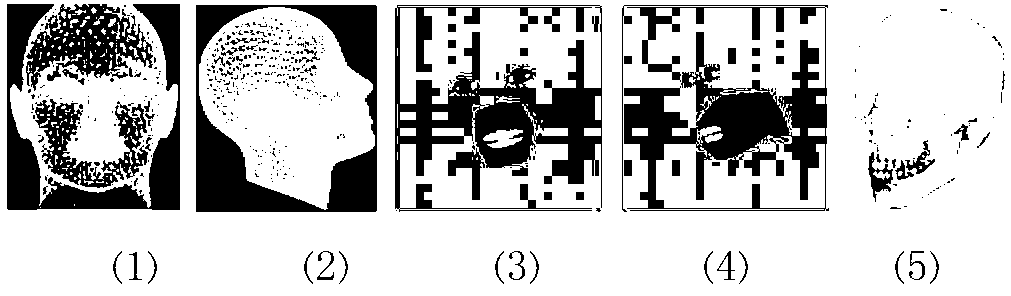

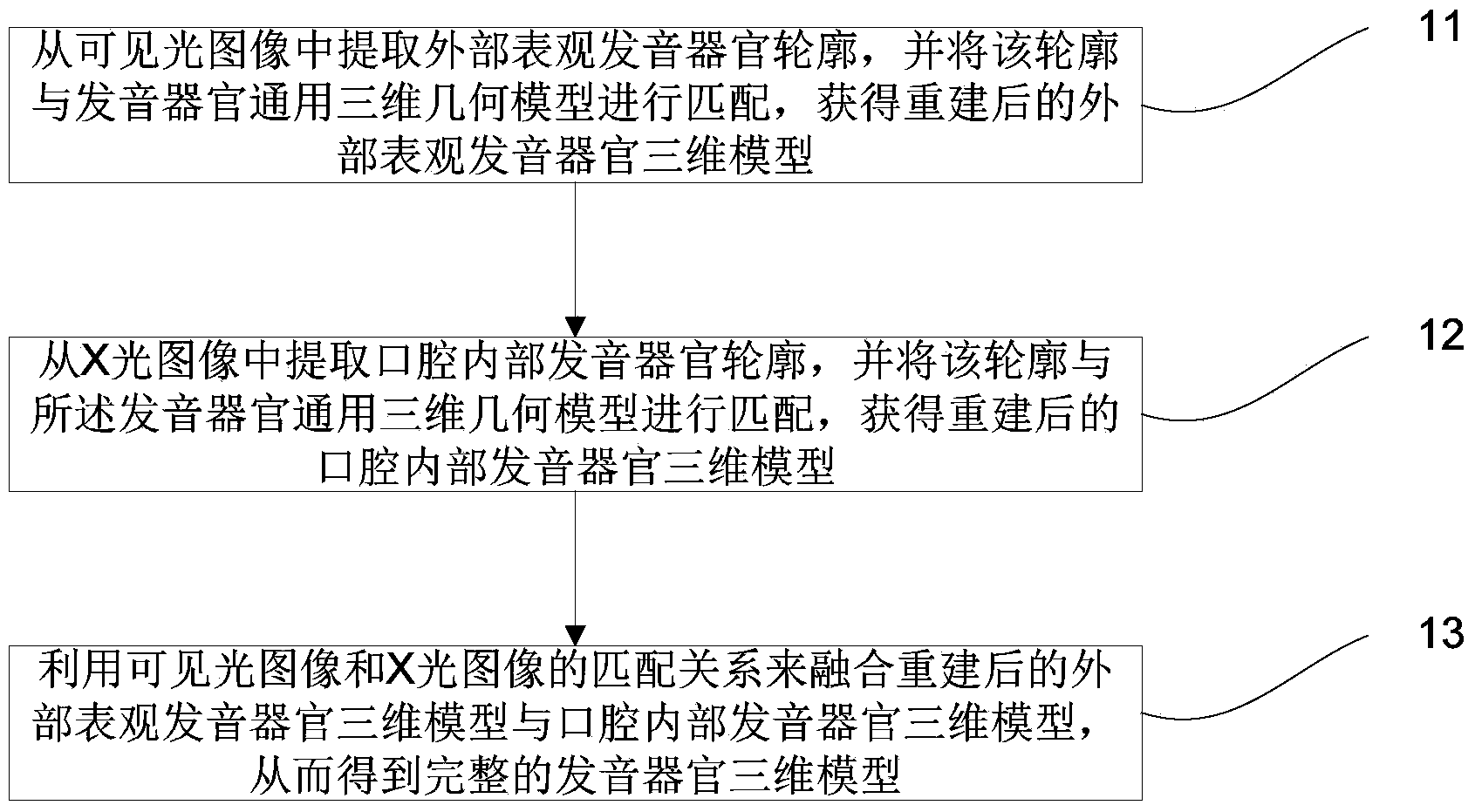

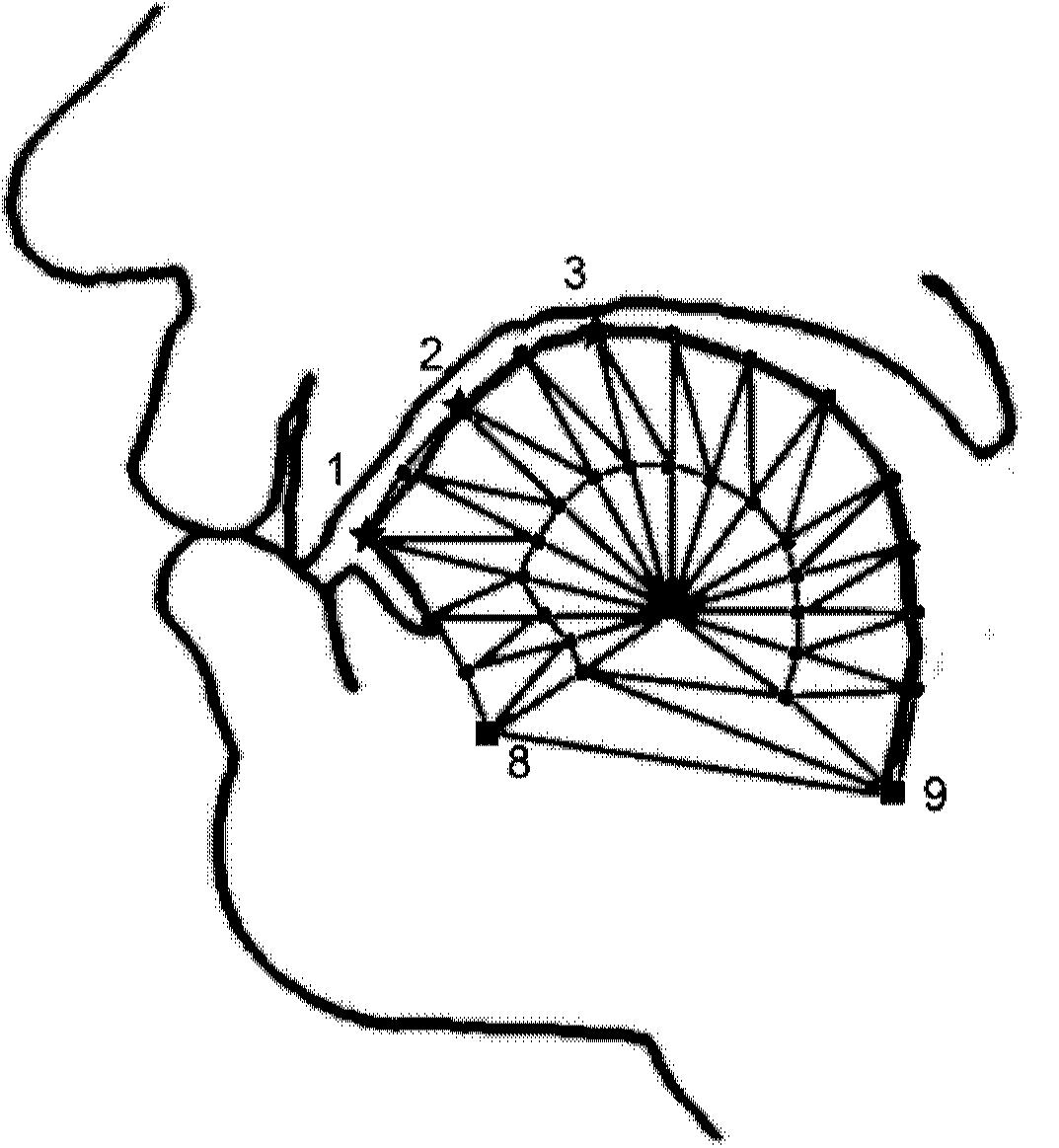

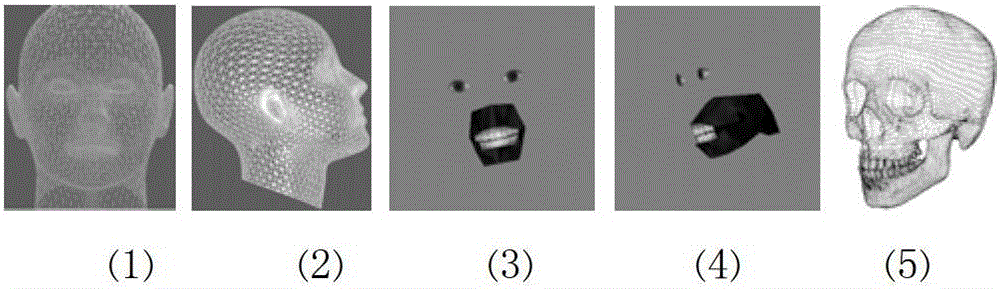

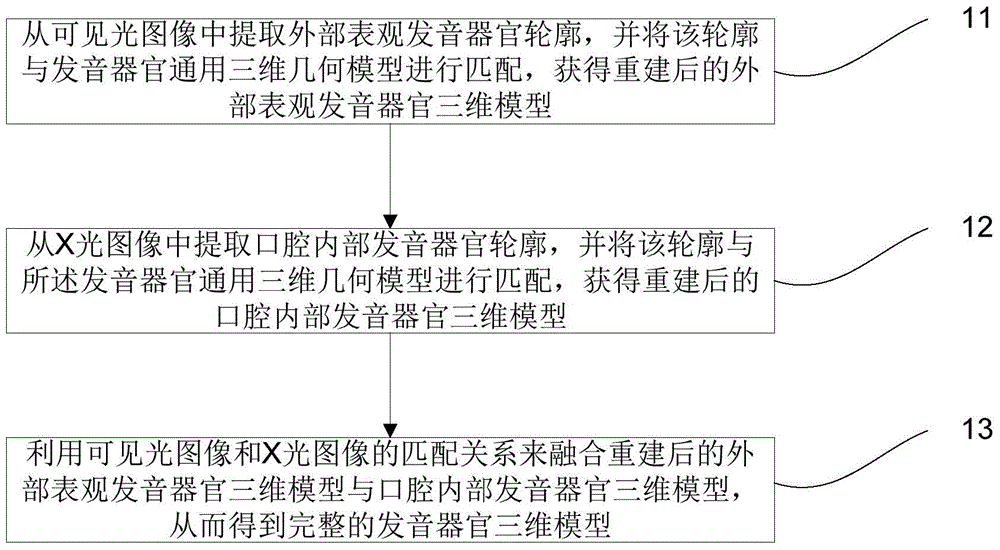

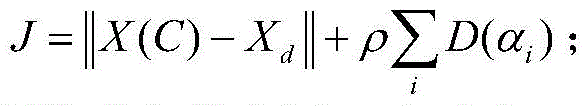

Vocal organ three-dimensional modeling method

Active | CN104318615A | High precision requirements | Image generation | 3D modelling | Vocal organ | Dimensional modeling

The invention discloses a vocal organ three-dimensional modeling method comprising the following steps. The contour of the externally visible vocal organs is extracted from a visible-light image and matched with a generic three-dimensional geometric model of the vocal organs to obtain a reconstructed three-dimensional model of the external vocal organs. The contour of the vocal organs inside the oral cavity is extracted from an X-ray image and matched with the same generic model to obtain a reconstructed three-dimensional model of the intraoral vocal organs. Using the matching relation between the visible-light image and the X-ray image, the two reconstructed models are fused into a complete three-dimensional vocal organ model. The method accurately and quickly yields a model that reflects the real shape of the vocal organs, inside and out, during pronunciation.

Owner:UNIV OF SCI & TECH OF CHINA

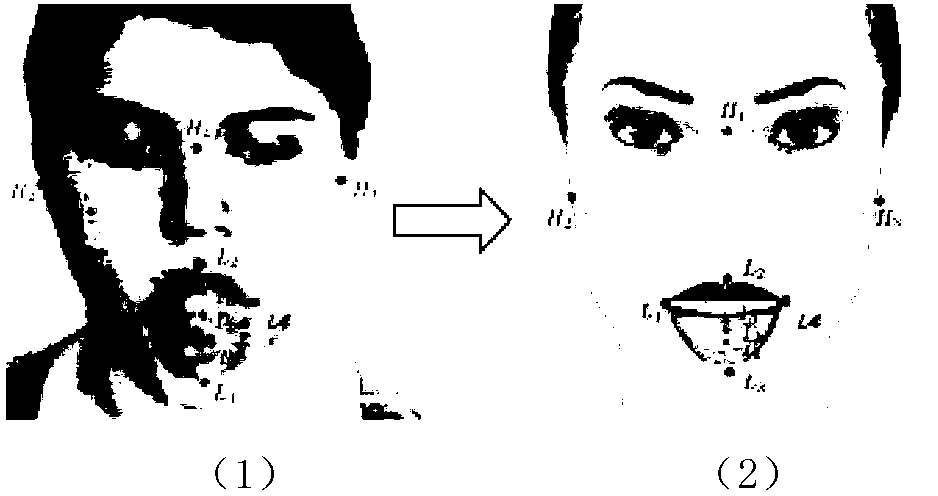

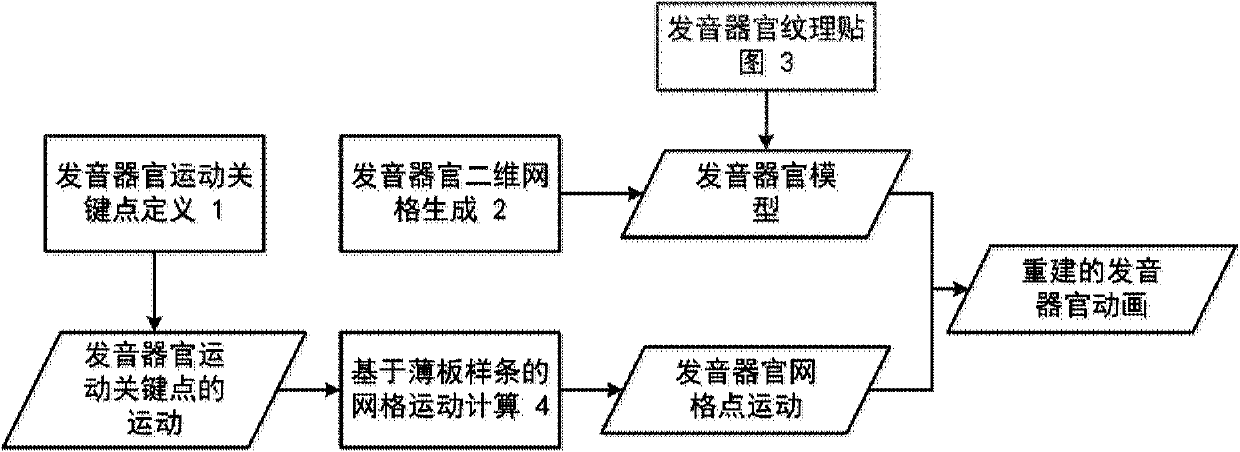

Method for generating lattice animation of vocal organs

The invention provides a method for generating lattice animation of vocal organs. The method comprises the following steps: defining vocal organ movement key points; generating a two-dimensional lattice of the midsagittal plane of each vocal organ; obtaining texture maps of the vocal organs; and performing lattice movement calculation based on thin plate splines. The shape of the midsagittal plane of each vocal organ is calculated from the positions of the movement key points, so the movement of the whole vocal organ on the midsagittal plane is obtained directly from the movement of those key points. The method simplifies the parameters needed to describe vocal organ movement and makes vocal organ animation easier to produce.

Owner:中科极限元(杭州)智能科技股份有限公司

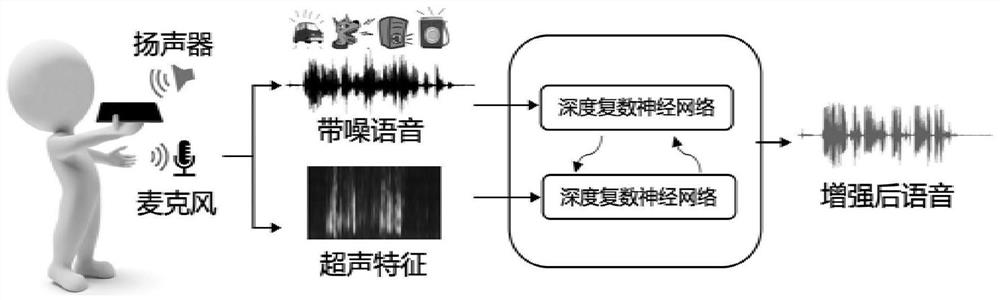

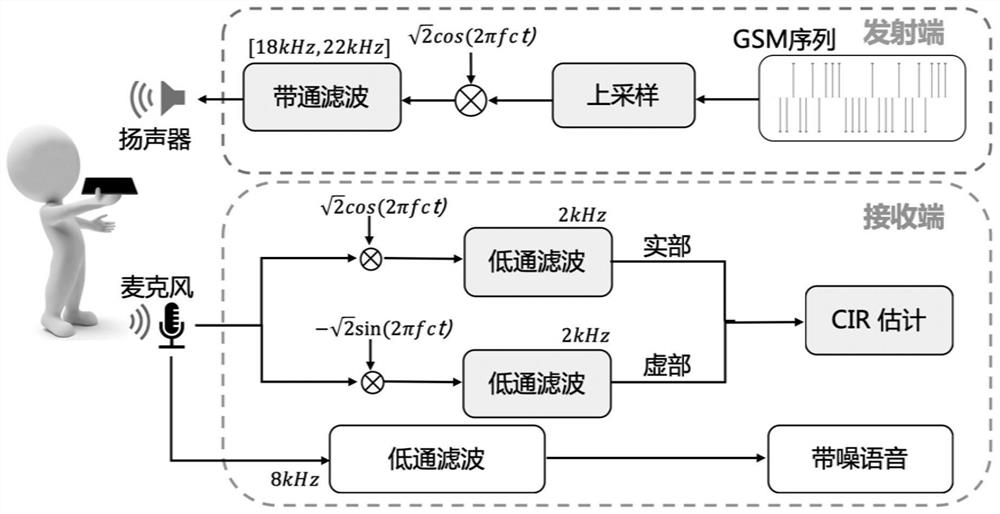

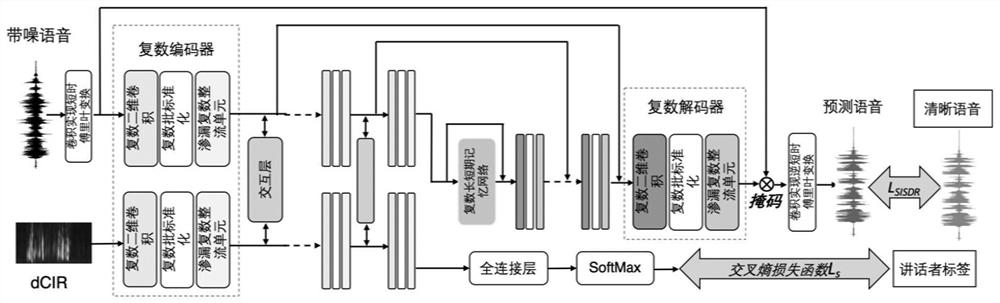

Speech enhancement method and system fusing ultrasonic signal features

Pending | CN114067824A | Lossless reconstruction | Prediction is accurate | Baseband system details | Speech analysis | Phonic Tic | Vocal organ

The invention discloses a speech enhancement method and system that fuse ultrasonic signal features. The method first predefines an ultrasonic signal, then actively transmits and receives it through the loudspeaker and microphone of a device. Channel estimation is performed to obtain a channel impulse response, which is fed to a neural network to achieve speech enhancement. The channel impulse response reflects the motion features of the facial vocal organs while the user speaks, and these motion features serve as supplementary modal information for the speech. By making full use of the characteristics of the user's speaking movements, the invention assists the speech enhancement task, improves the enhancement effect and has wide application prospects.

Owner:XI AN JIAOTONG UNIV

Pronunciation detection method and apparatus, and phonetic-category learning method and system

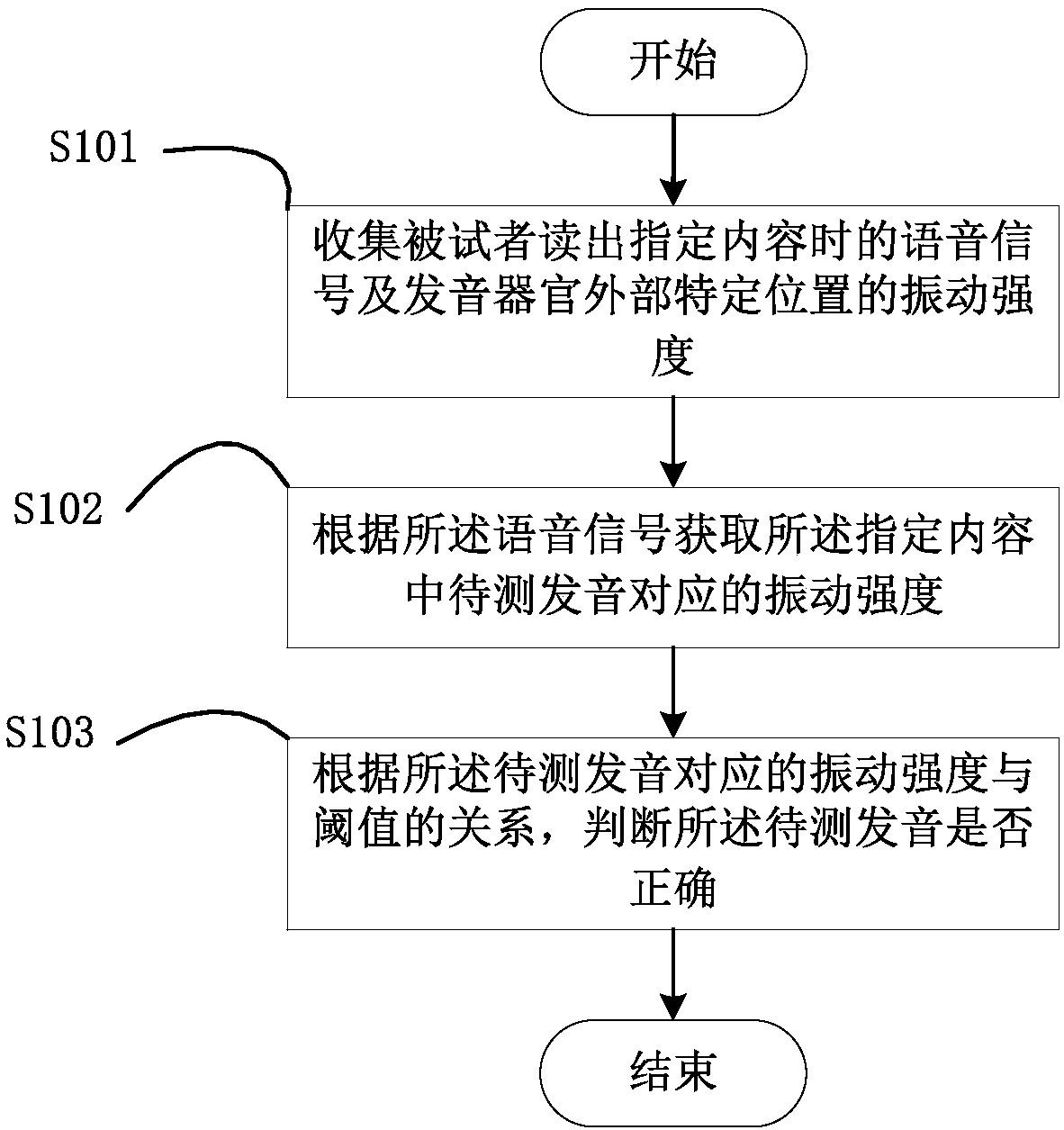

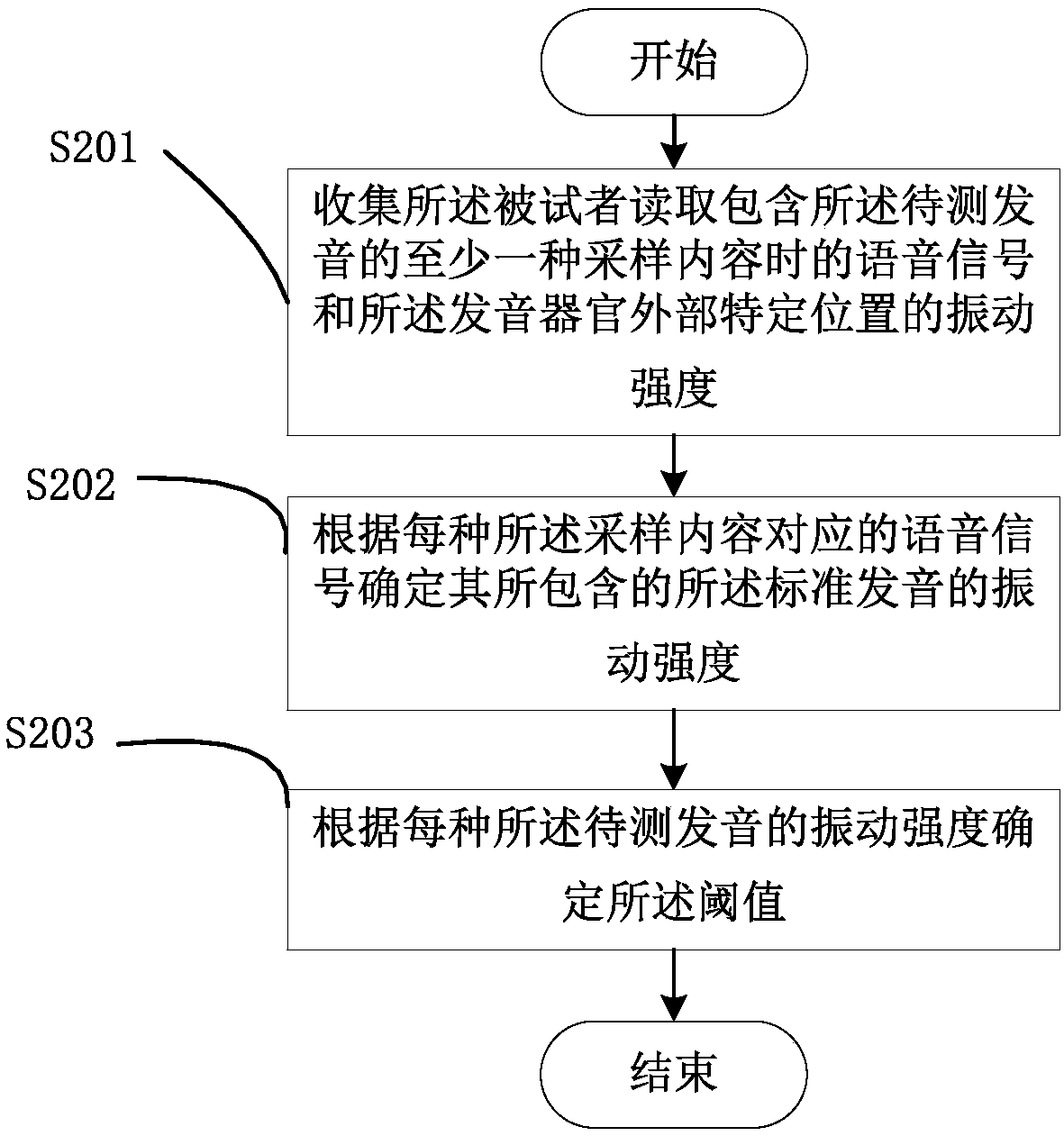

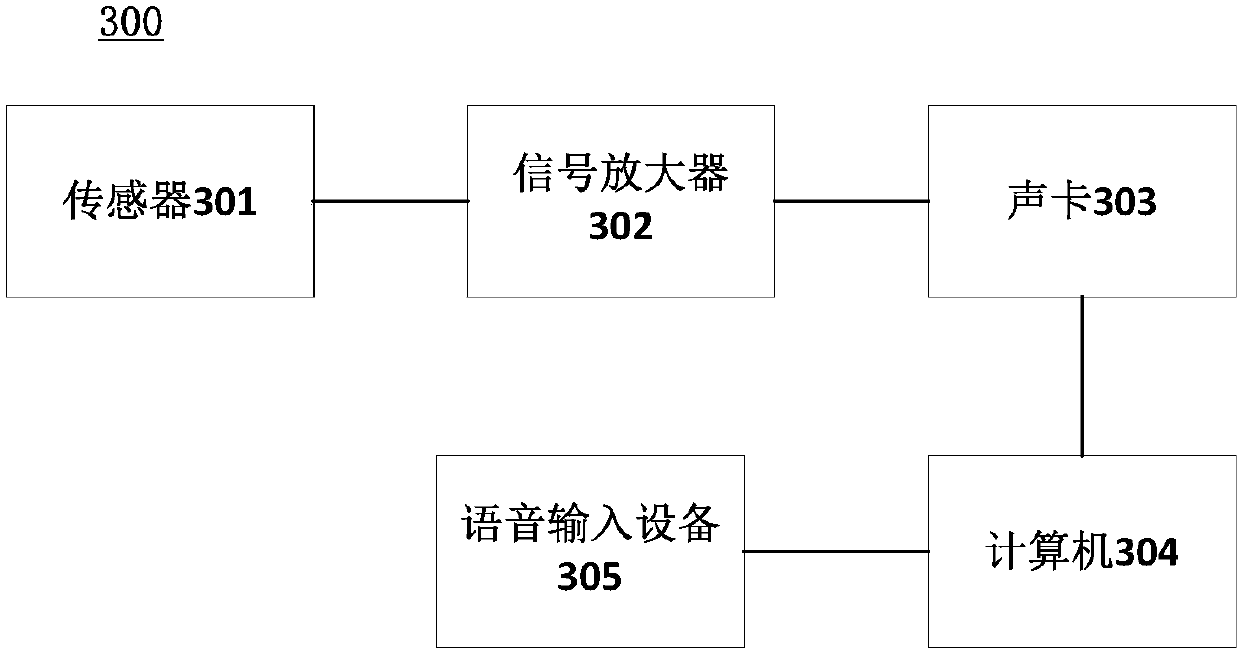

Active | CN107591163A | The result is accurate | Low cost | Speech recognition | Teaching apparatus | Vocal organ | Study methods

The invention relates to the field of computer technology and provides a pronunciation detection method. The method comprises: collecting the speech signal generated when a tested person reads designated content, together with the vibration intensity at a specific position outside a vocal organ; using the speech signal to obtain the vibration intensity corresponding to the pronunciation under test within the designated content; and determining whether that pronunciation is correct from the relationship between its vibration intensity and a threshold. Because the detection is non-invasive, operation is simple, cost is lower, and the tested person feels safe and comfortable. The invention also provides a pronunciation detection apparatus and a phonetic-category learning method and system based on the pronunciation detection method.

Owner:XIAMEN KUAISHANGTONG TECH CORP LTD
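The abstract above describes locating the pronunciation under test in the speech signal, reading off the vibration intensity for that span, and comparing it with a threshold. The sketch below illustrates that flow under several assumptions not stated in the source: the time span comes from some external alignment of the speech signal, intensity is the mean absolute value of the vibration samples, and whether vibration is expected depends on the sound (the hypothetical `expect_vibration` flag).

```python
def segment_intensity(vibration, sample_rate, start_s, end_s):
    """Mean absolute vibration over the time span of the pronunciation under test.

    start_s and end_s (in seconds) are assumed to come from aligning the
    speech signal with the designated content.
    """
    lo = int(start_s * sample_rate)
    hi = int(end_s * sample_rate)
    segment = vibration[lo:hi]
    if not segment:
        raise ValueError("time span contains no vibration samples")
    return sum(abs(v) for v in segment) / len(segment)


def is_pronunciation_correct(intensity, threshold, expect_vibration=True):
    """Compare the measured intensity with the threshold.

    expect_vibration says whether the target sound should make the sensor
    position vibrate; the source does not state which direction counts as
    correct, so this flag is an illustrative assumption.
    """
    vibrating = intensity >= threshold
    return vibrating == expect_vibration
```

For example, a sound that should produce vibration at the sensor position would be judged correct only when the measured intensity reaches the threshold.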

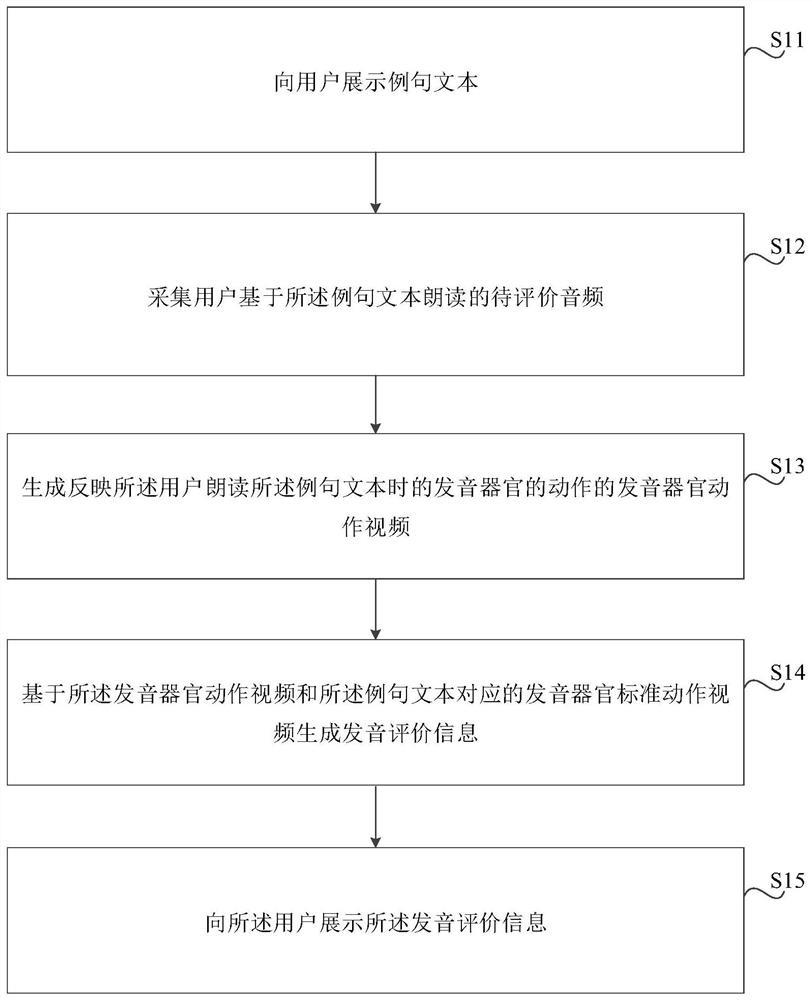

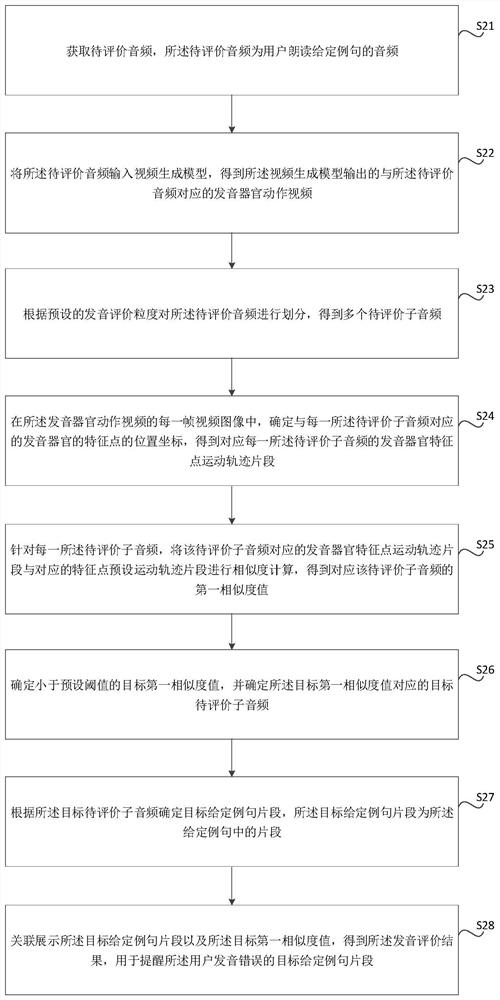

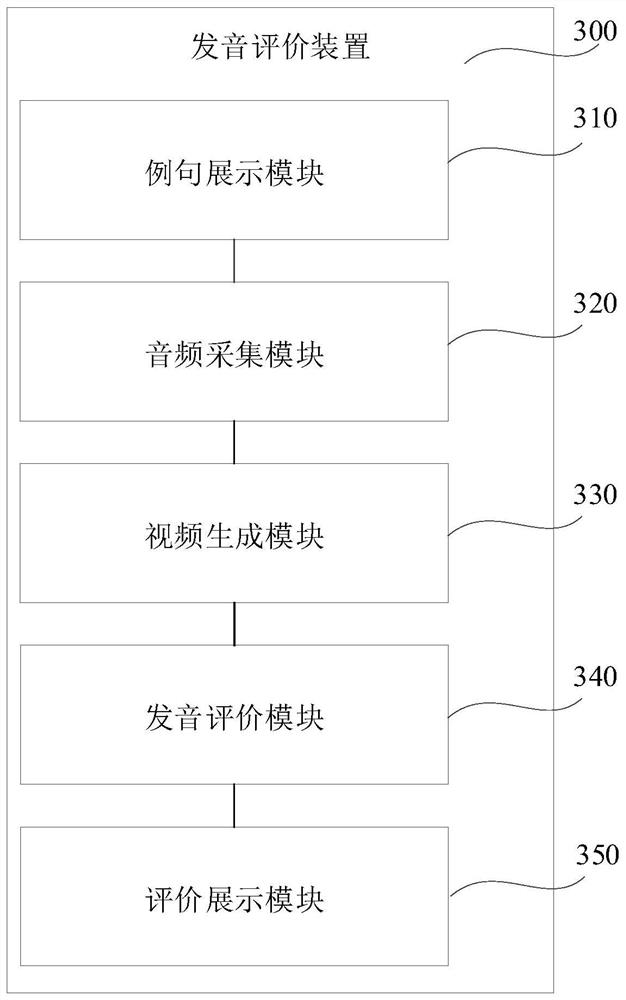

Pronunciation evaluation method and device, storage medium and electronic equipment

The invention relates to a pronunciation evaluation method and device, a storage medium and electronic equipment. The method comprises the steps of displaying an example sentence text to a user; collecting a to-be-evaluated audio read by the user based on the example sentence text; generating a vocal organ action video reflecting the action of a vocal organ when the user reads the example sentence text; generating pronunciation evaluation information based on the vocal organ action video and a vocal organ standard action video corresponding to the example sentence text; and displaying the pronunciation evaluation information to the user. The pronunciation of the user can be accurately evaluated, and whether the pronunciation of the user is accurate or not can be visually reflected.

Owner:BEIJING YOUZHUJU NETWORK TECH CO LTD

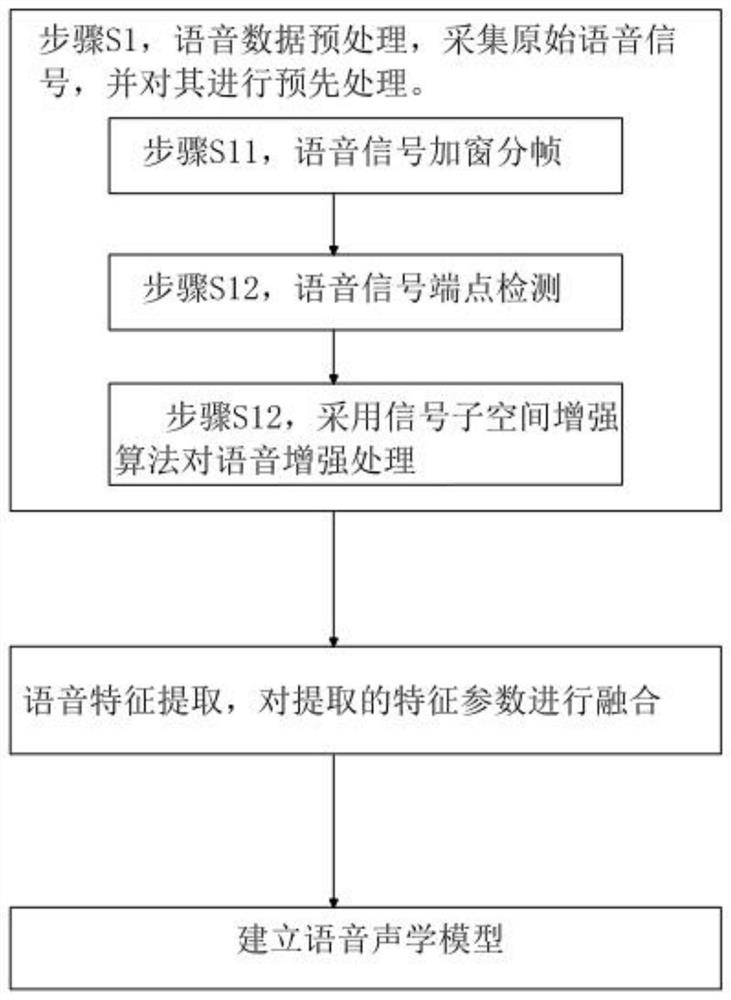

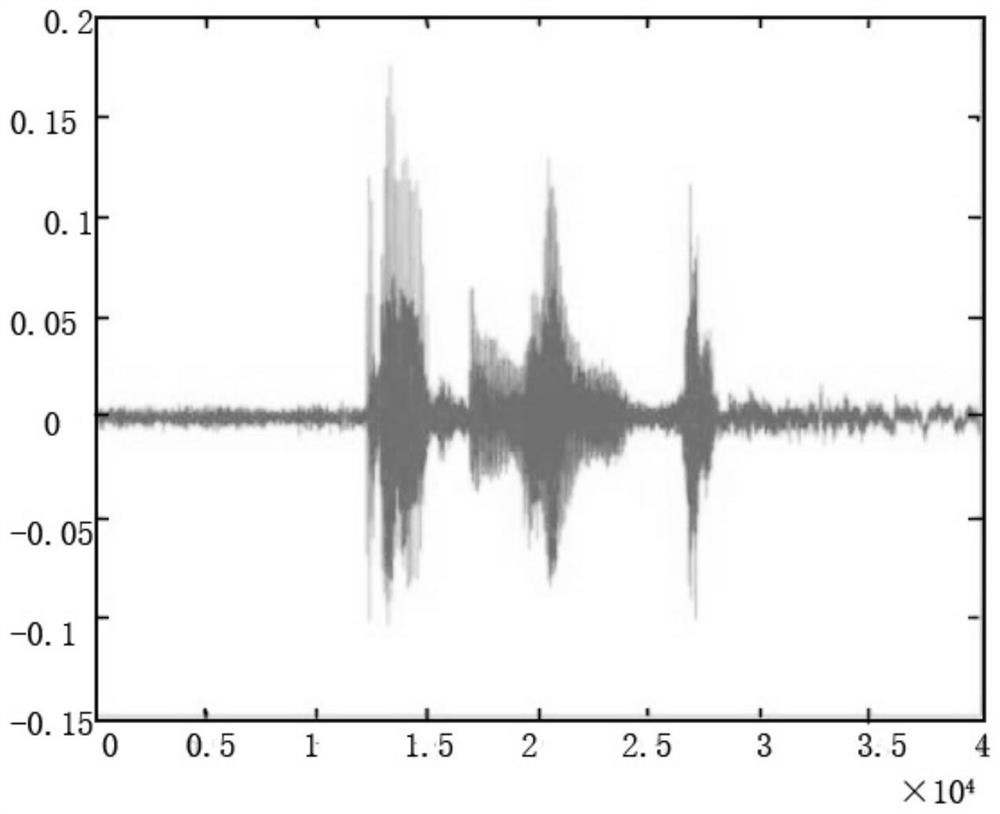

Intelligent recognition method for incomplete voice of elderly people

Pending | CN112071307A | Easy to identify | Rapid positioning | Speech recognition | Environmental noise | Vocal organ

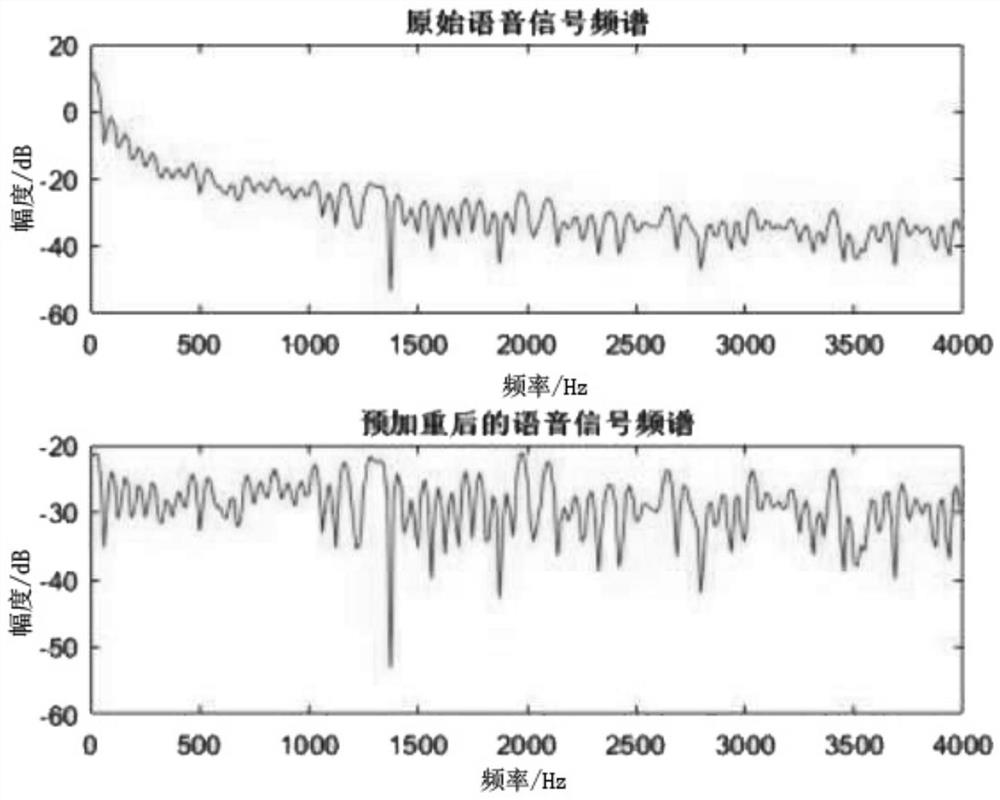

The invention relates to the technical field of voice recognition, and in particular to an intelligent recognition method for incomplete voice of elderly people. The method comprises the following steps: S1, voice data preprocessing, in which an original voice signal is acquired and preprocessed by windowing and framing the signal, detecting the voice signal endpoints, and applying voice enhancement with a signal subspace enhancement algorithm; S2, extracting voice features and fusing the extracted feature parameters; and S3, establishing a voice acoustic model. The method mitigates the low sound amplitude and strong influence of environmental noise caused by aging of the vocal organs of elderly people. The fused voice features are closer to the actual voice characteristics of elderly people, so data that comprehensively represents those characteristics can be acquired, and recognition of incomplete and indistinct voice of elderly people is improved.

Owner:JIANGSU HUIMING SCI & TECH
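Step S1 in the abstract above begins with windowing and framing the voice signal. As a minimal sketch of that preprocessing, assuming a Hamming window and overlapping frames (the source names neither the window type nor the hop size), the step might be written as follows. The function names are hypothetical.

```python
import math


def hamming(n):
    """Hamming window of length n (symmetric form)."""
    if n == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def frame_signal(samples, frame_len, hop):
    """Split samples into overlapping frames and apply a Hamming window to each.

    Trailing samples that do not fill a whole frame are dropped.
    """
    window = hamming(frame_len)
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        chunk = samples[start:start + frame_len]
        frames.append([s * w for s, w in zip(chunk, window)])
    return frames
```

Endpoint detection and feature extraction would then operate on the windowed frames rather than on the raw sample stream.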

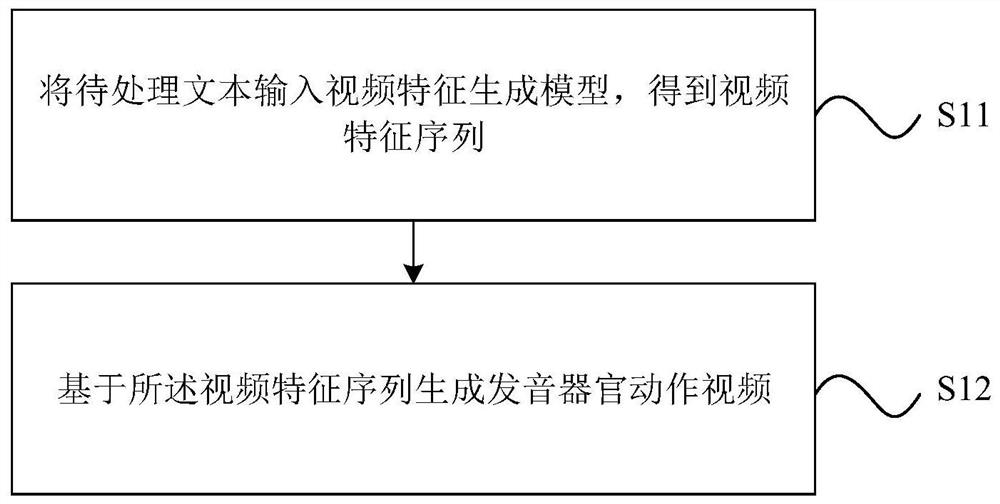

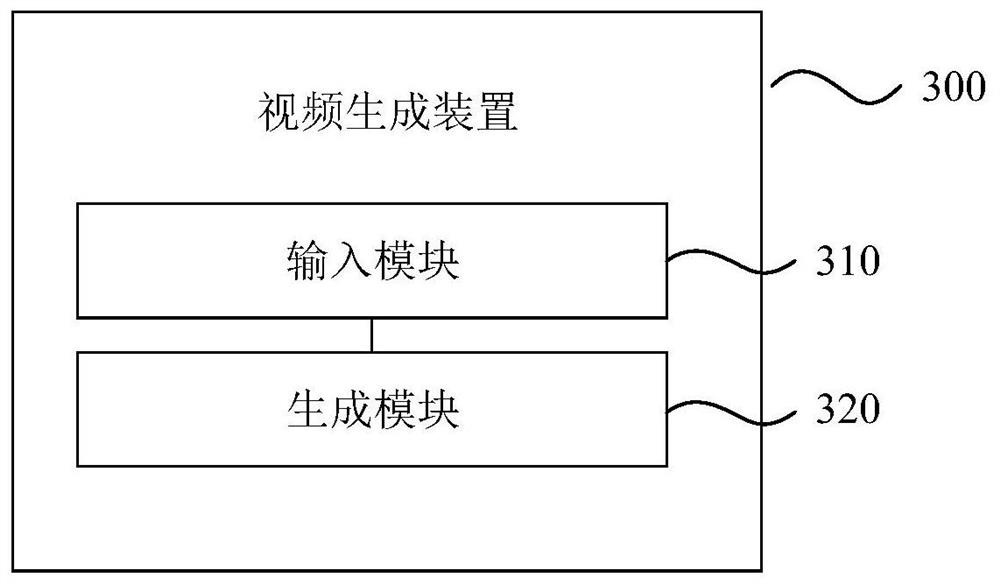

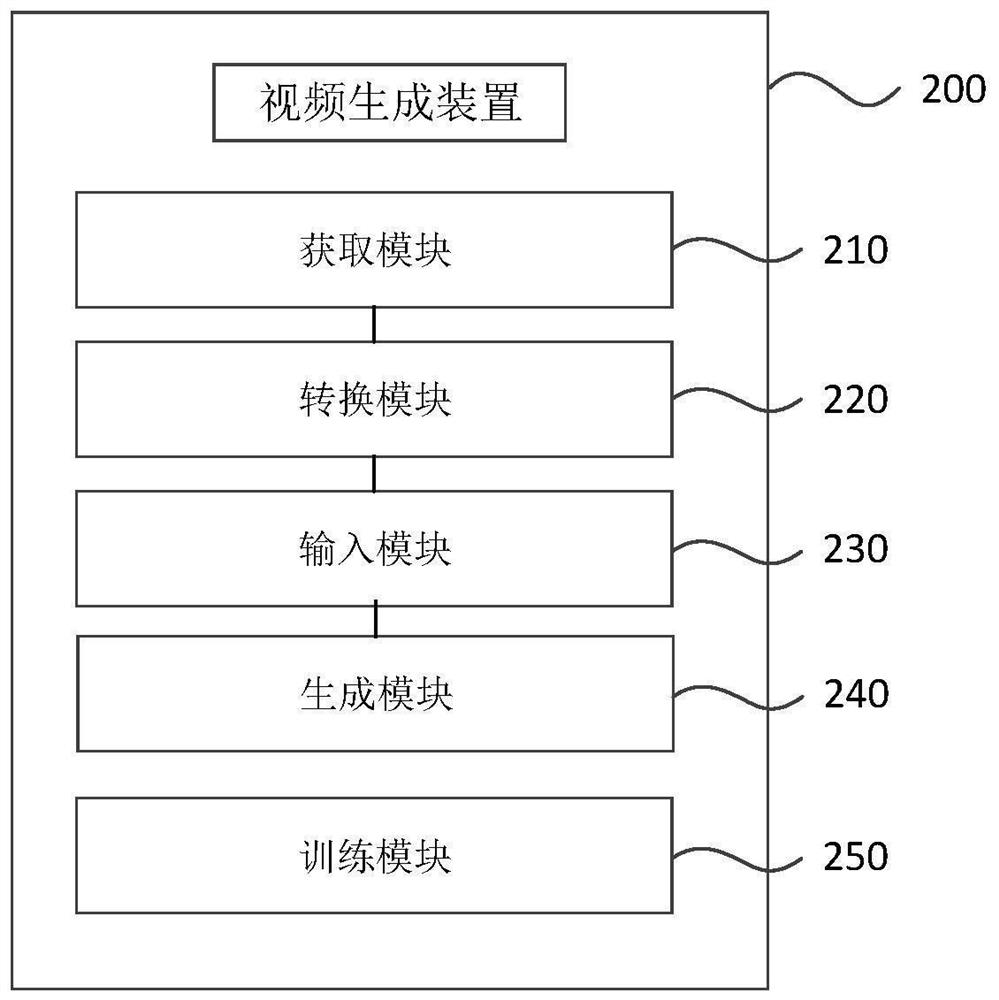

Video generation method and device, storage medium and electronic equipment

Active | CN113079328A | Improve production efficiency | Television system details | Color television details | Vocal organ | Computer graphics (images)

The invention relates to a video generation method and device, a storage medium and electronic equipment, and the method comprises the steps: inputting a to-be-processed text into a video feature generation model to obtain a video feature sequence; and generating a vocal organ action video based on the video feature sequence. The vocal organ action video generation efficiency can be improved.

Owner:BEIJING YOUZHUJU NETWORK TECH CO LTD

3D face animation synthesis method and system

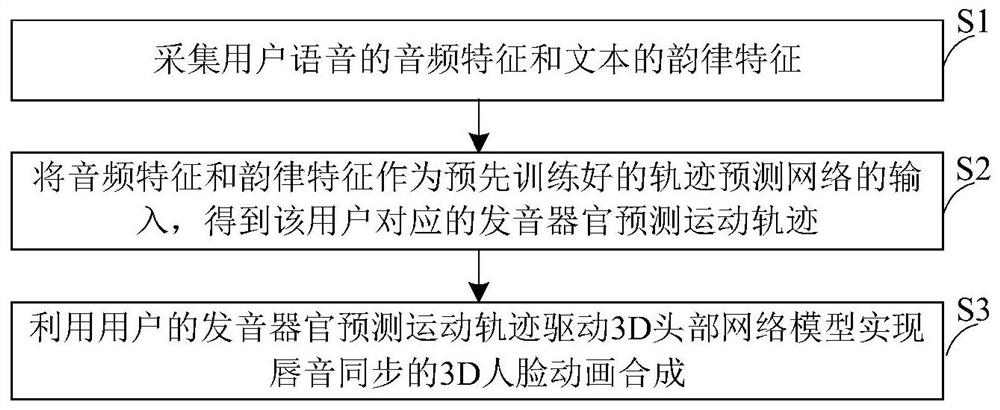

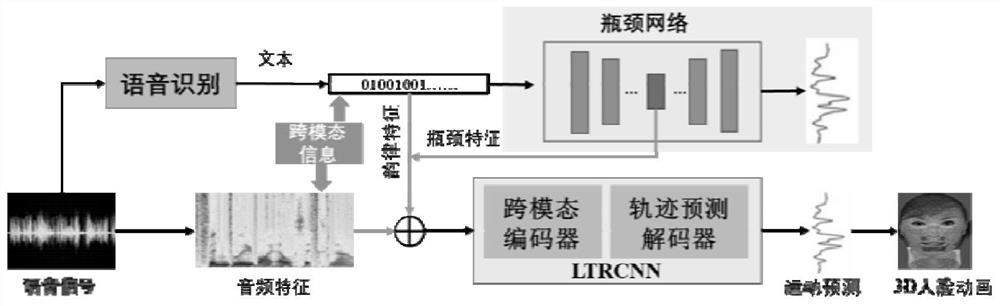

Pending | CN113160366A | Small amount of calculation | Improve practicality | Input/output for user-computer interaction | Animation | Vocal organ | Synthesis methods

The invention discloses a 3D face animation synthesis method and system and belongs to the technical field of artificial intelligence. The method comprises the steps of: collecting the audio features of the user's voice and the rhythm features of a text; feeding the audio features and rhythm features into a pre-trained trajectory prediction network to obtain the vocal organ motion trajectory corresponding to the user; and driving the 3D head network model with that motion trajectory to synthesize lip-synchronized 3D face animation. Because the motion trajectory of the vocal organs serves as the animation parameter of the 3D face model, lip-synchronized face animation is achieved with a greatly reduced amount of calculation.

Owner:合肥综合性国家科学中心人工智能研究院

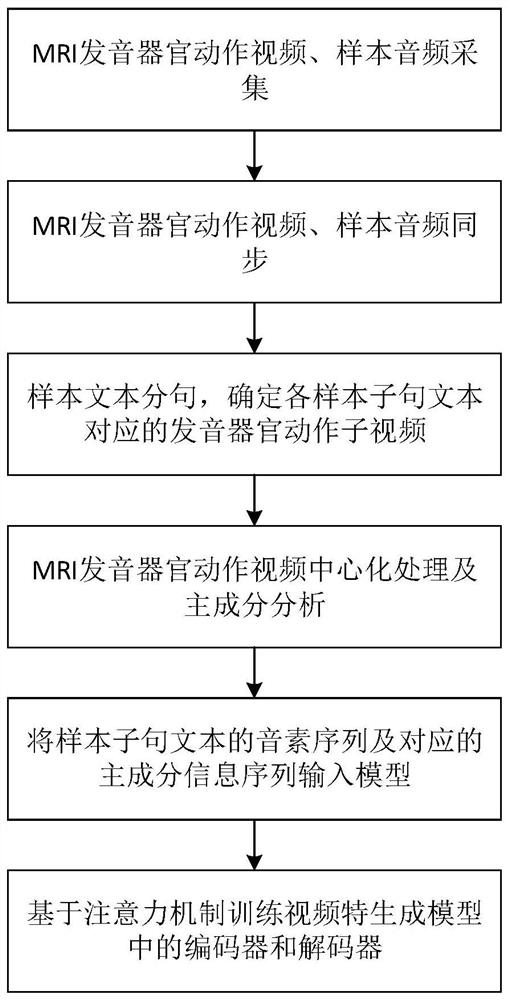

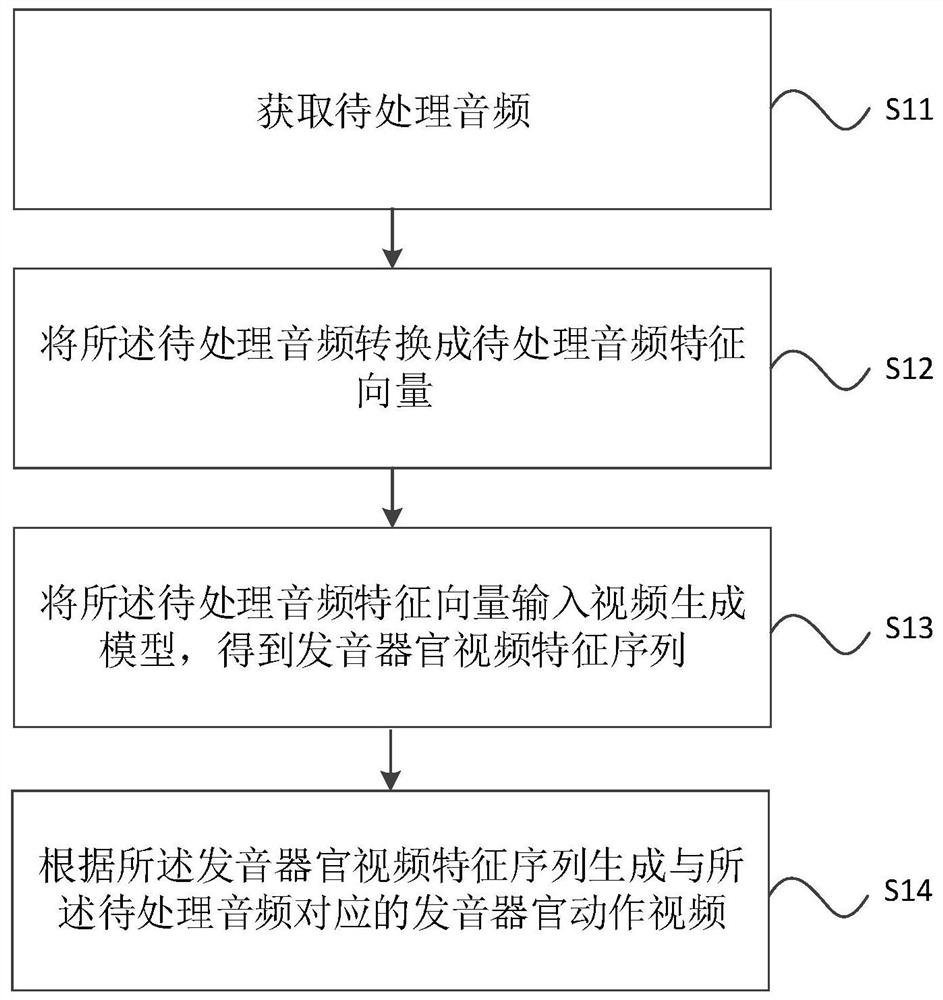

Video generation method and device, storage medium and electronic equipment

Pending | CN113079327A | Improve production efficiency | Visually display the real pronunciation action process | Television system details | Color television details | Vocal organ | Video restoration

The invention relates to a video generation method and device, a storage medium and electronic equipment. The method comprises the steps of obtaining a to-be-processed audio; converting the to-be-processed audio into a to-be-processed audio feature vector; inputting the to-be-processed audio feature vector into a video generation model to obtain a vocal organ video feature sequence; generating a vocal organ action video corresponding to the to-be-processed audio according to the vocal organ video feature sequence, wherein the video generation model is trained by: constructing model training data according to a sample audio feature vector converted from a sample audio and a sample vocal organ video feature sequence of the sample vocal organ action video corresponding to the sample audio, and training the video generation model according to the model training data. According to the invention, the generation efficiency of the vocal organ action video is improved, and the real vocal action process of the vocal organ is restored and visually displayed by using the vocal organ action video.

Owner:BEIJING YOUZHUJU NETWORK TECH CO LTD

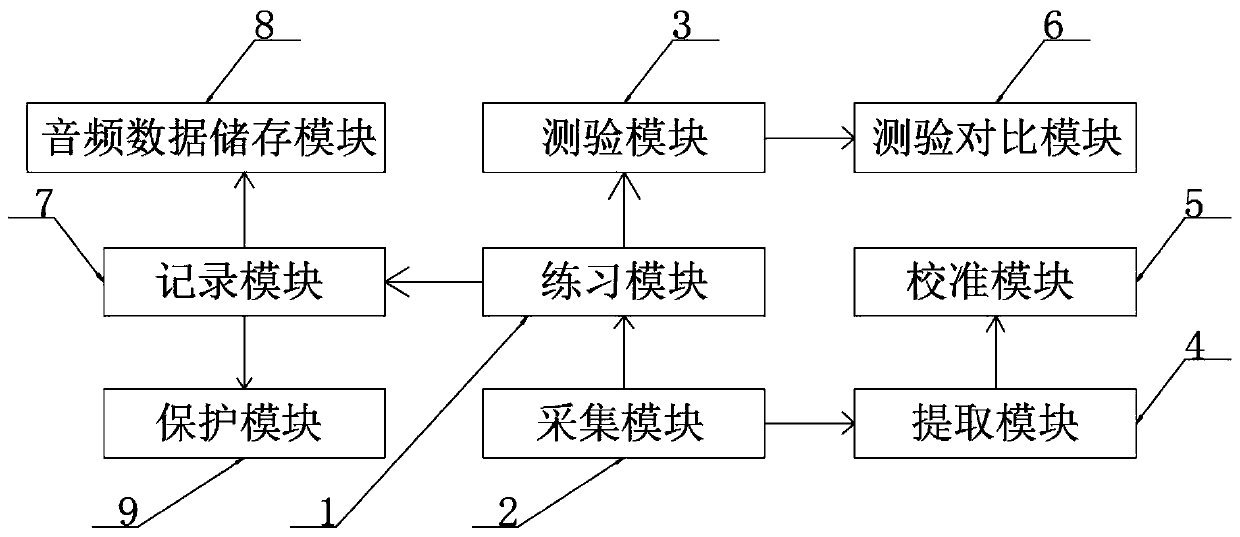

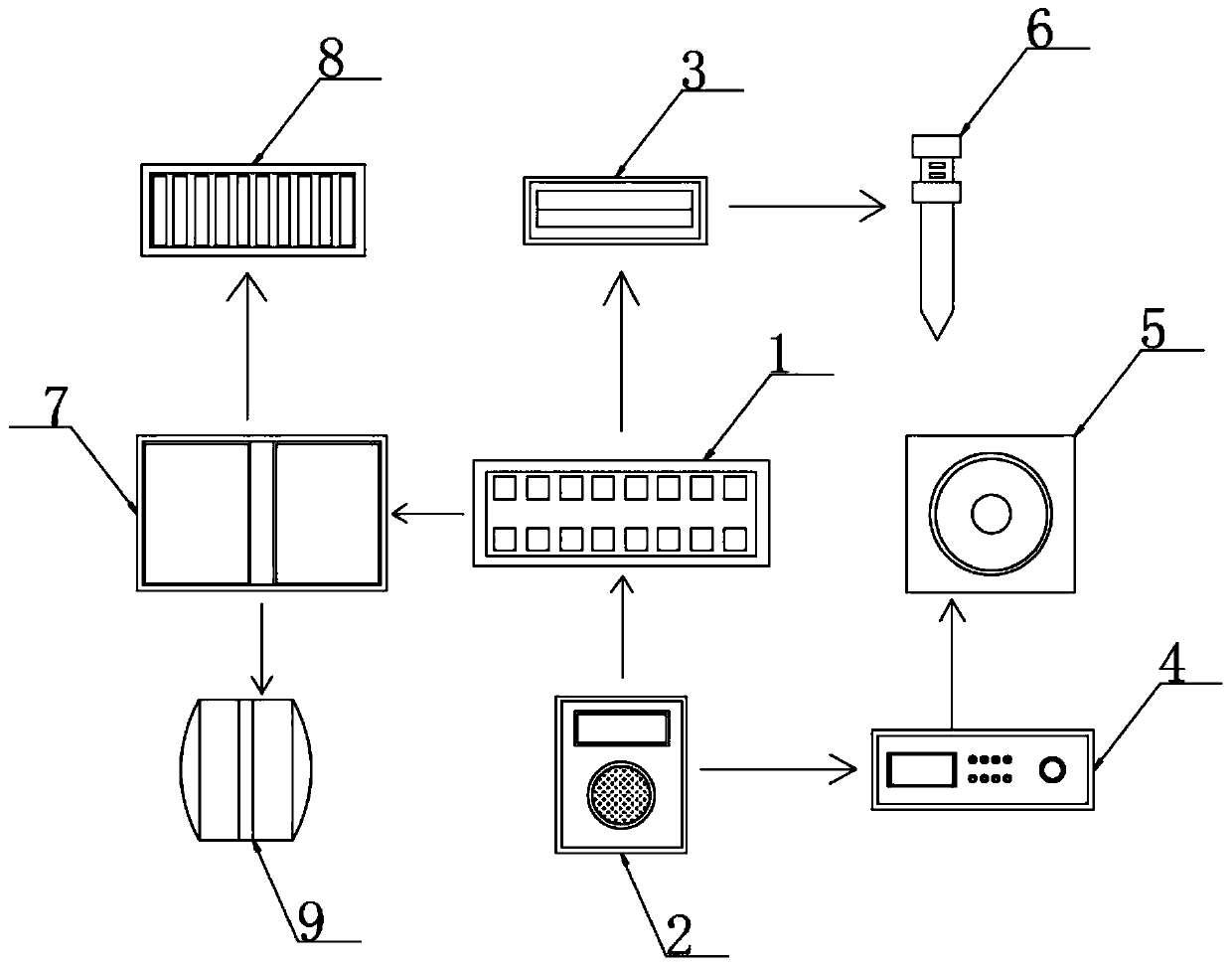

Electronic auxiliary pronunciation system for vocal music learning

The invention discloses an electronic auxiliary pronunciation system for vocal music learning and relates in particular to the field of vocal music learning. The system comprises a practice module whose input end is provided with an acquisition module and whose output end is provided with a test module. The system also includes a protection module, an extraction module and a recording module. During sound production practice, the recording module records the practice process, and the classification module identifies and classifies the original sound into bass, alto and high pitch, each of which uses different sounding organs. The timing module records the practice time for bass, alto and high pitch, and the practice time is tallied by the audio data storage module. When the practice time for bass, alto or high pitch becomes too long, the alarm in the protection module sounds to remind the user to watch the practice time and protect their sounding organs.

Owner:PINGDINGSHAN UNIVERSITY

Vehicle rearview mirror control method and device, vehicle and storage medium

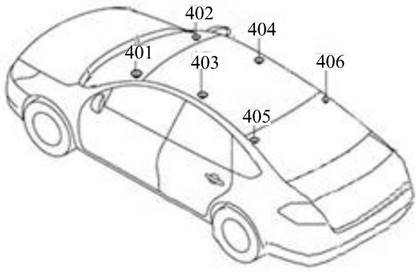

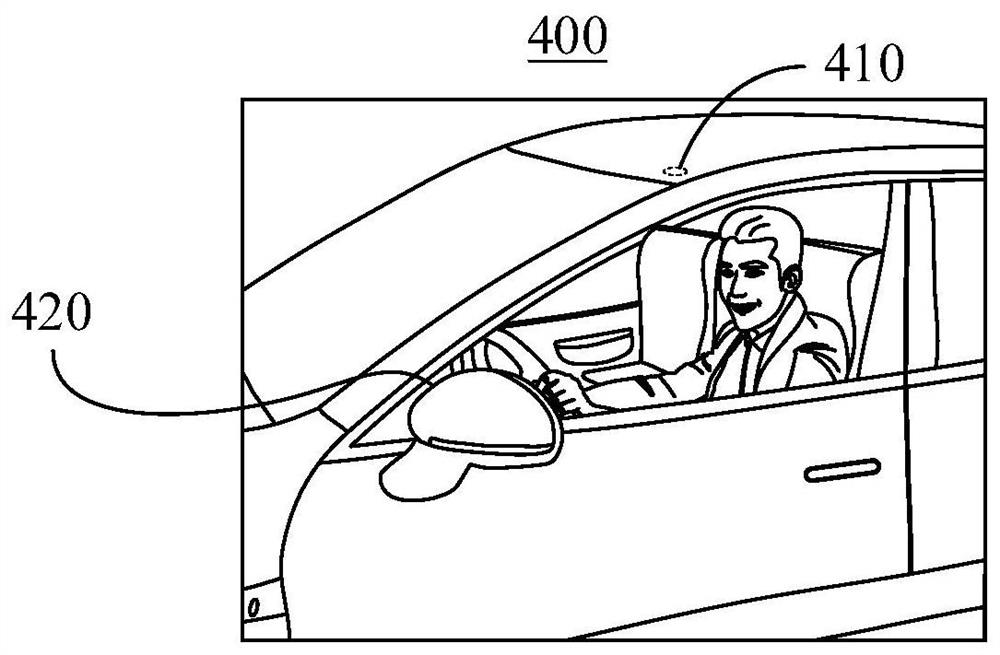

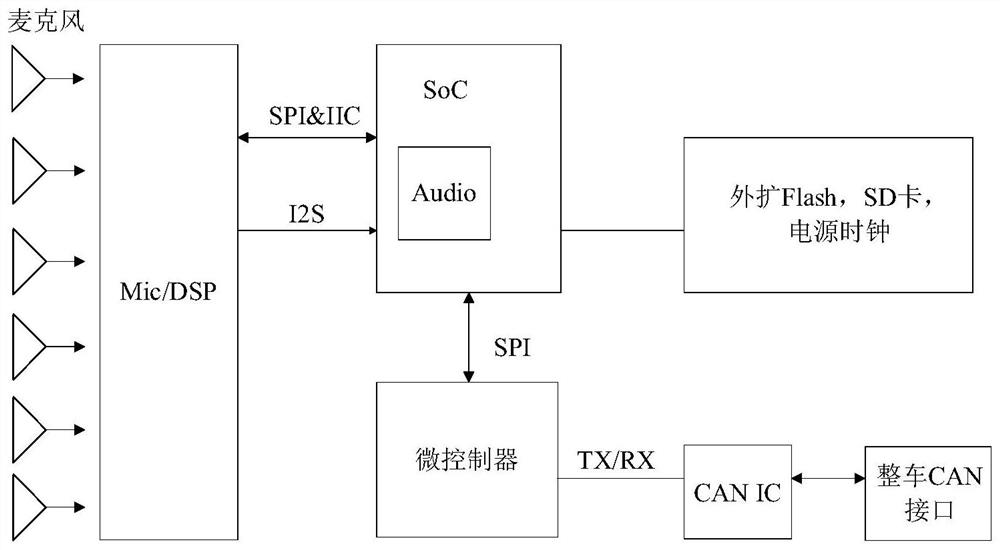

Pending | CN113291247A | Improve regulation efficiency | Easy to drive | Electric/fluid circuit | Sound source location | Vocal organ

The invention discloses a vehicle rearview mirror control method and device, a vehicle and a storage medium. The method comprises the steps of: obtaining the sound source position of a target object, where the sound source position represents the spatial position of the sound production organ with which the target object makes a sound; determining the binocular position of the target object from the sound source position; and adjusting the vehicle rearview mirror to the target position according to the binocular position. The rearview mirror is thus adjusted through sound source localization, which makes driving more convenient for the user.

Owner:GUANGZHOU XIAOPENG MOTORS TECH CO LTD

Pronunciation correction method based on vocal organ form and behavior deviation visualization

Inactive | CN108877319A | Foreign language learning is convenient and smart | Increase interest in learning | Speech recognition | Electrical appliances | Vocal organ | Correction method

The invention discloses a pronunciation correction method based on visualization of vocal organ form and behavior deviation. The method comprises the steps of: building a foreign language learning model for the learner; acquiring the learner's voice content and the organ motion corresponding to the pronunciation; calling the corresponding pronunciation module from a standard library according to the target foreign language selected by the learner; comparing the learner's pronunciation content and organ movement patterns with those of the same content in the standard library to find the deviations in lip position, tongue position, tooth position, expiratory volume and jaw height; visually feeding back to the learner the differences in organ form between the learner and the standard pronunciation, together with the size of the deviation; and then updating the learner's basic information. The method records the learner's learning ability at any time and gives the learner a coherent, practicable way of learning foreign languages.

Owner:HAINAN UNIVERSITY

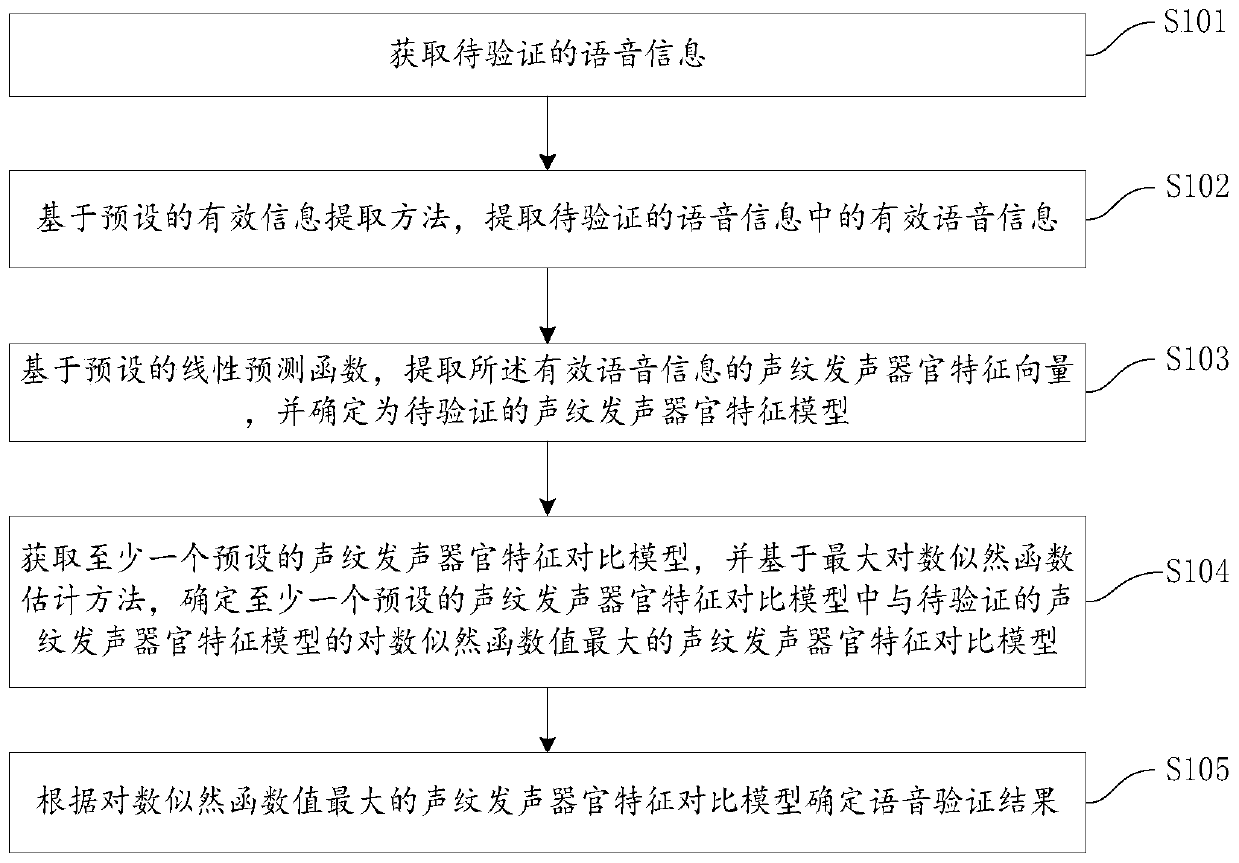

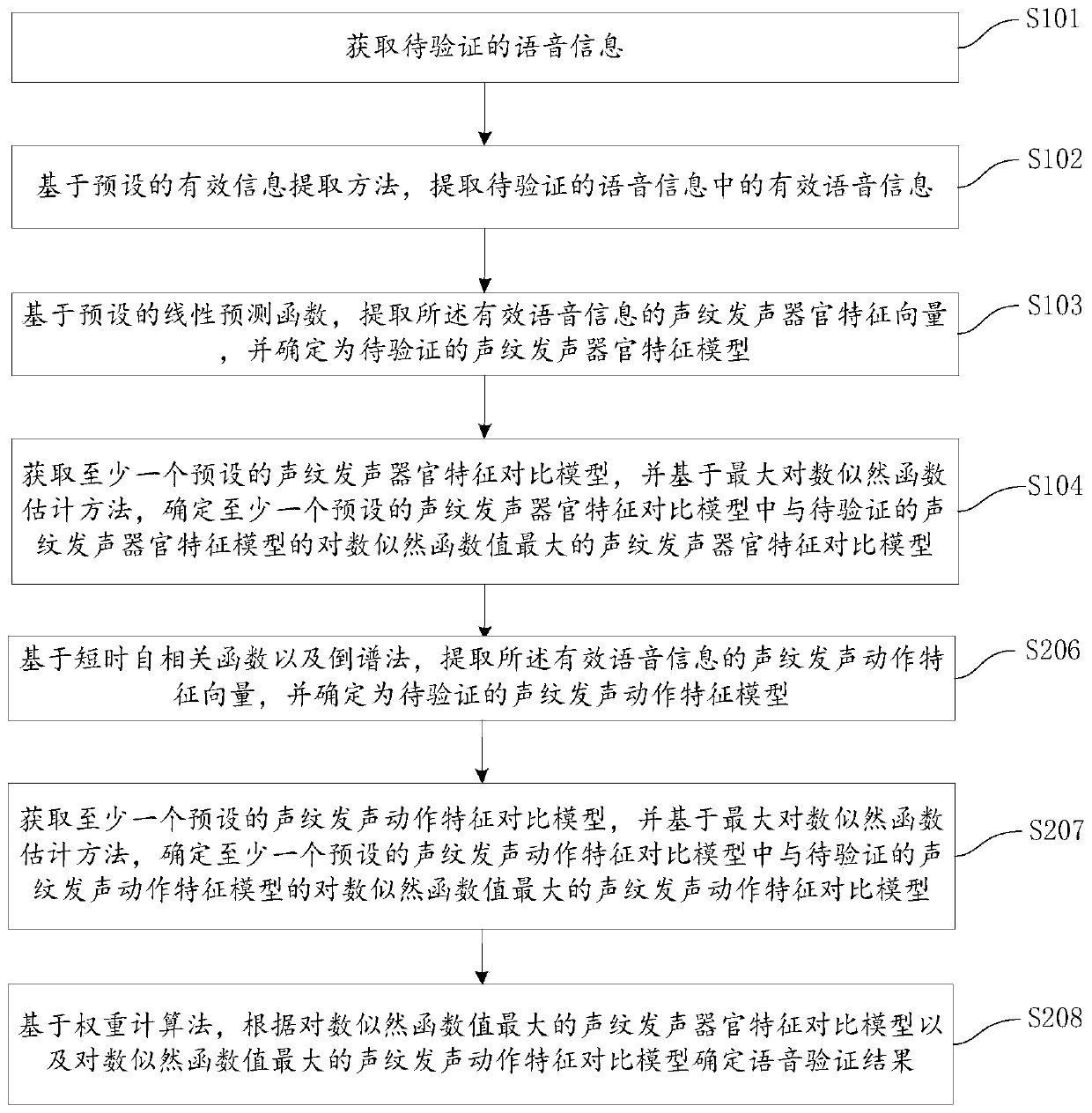

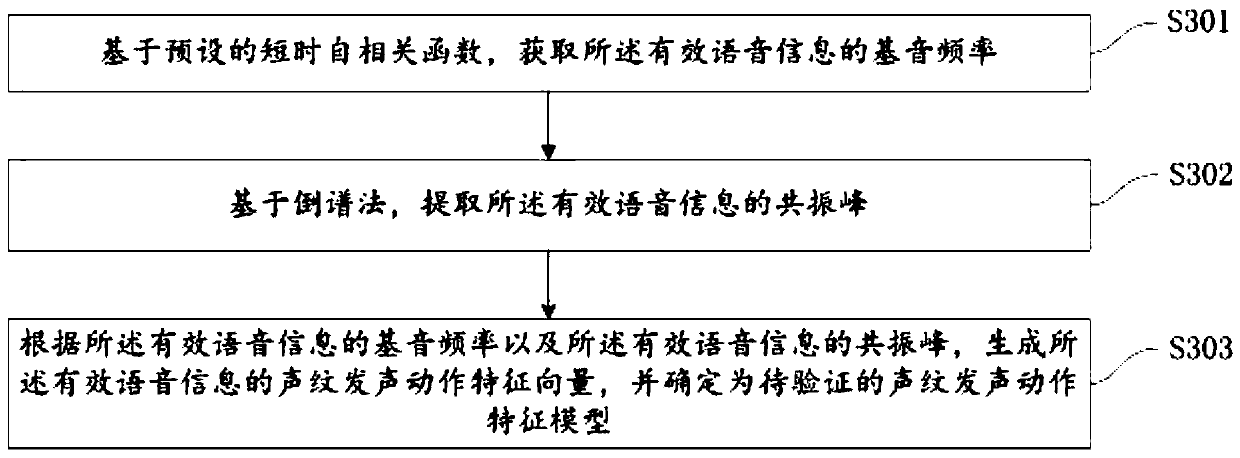

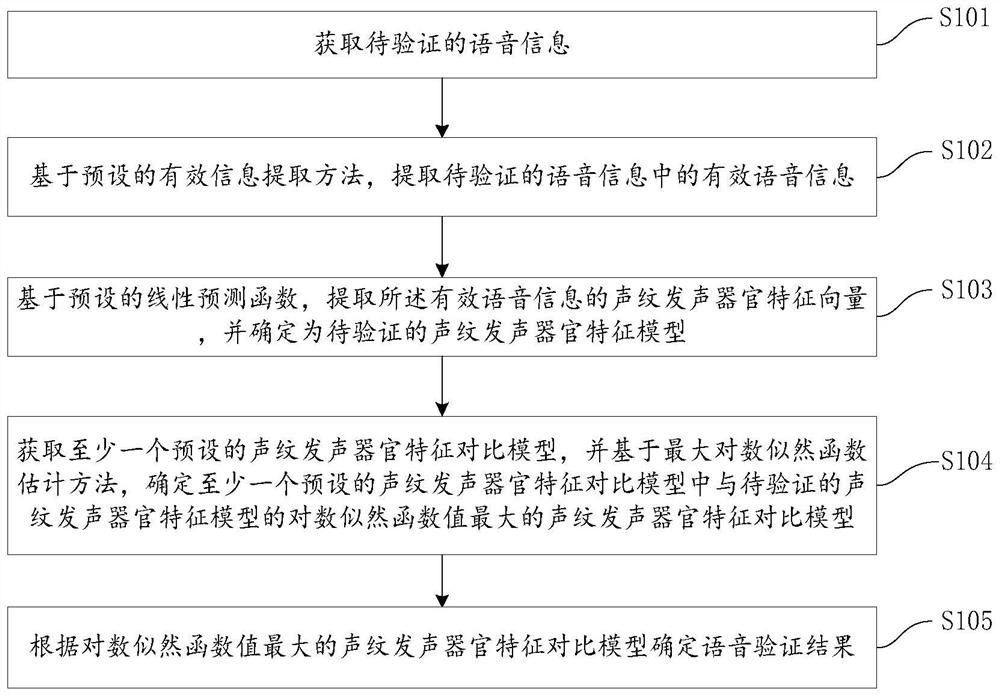

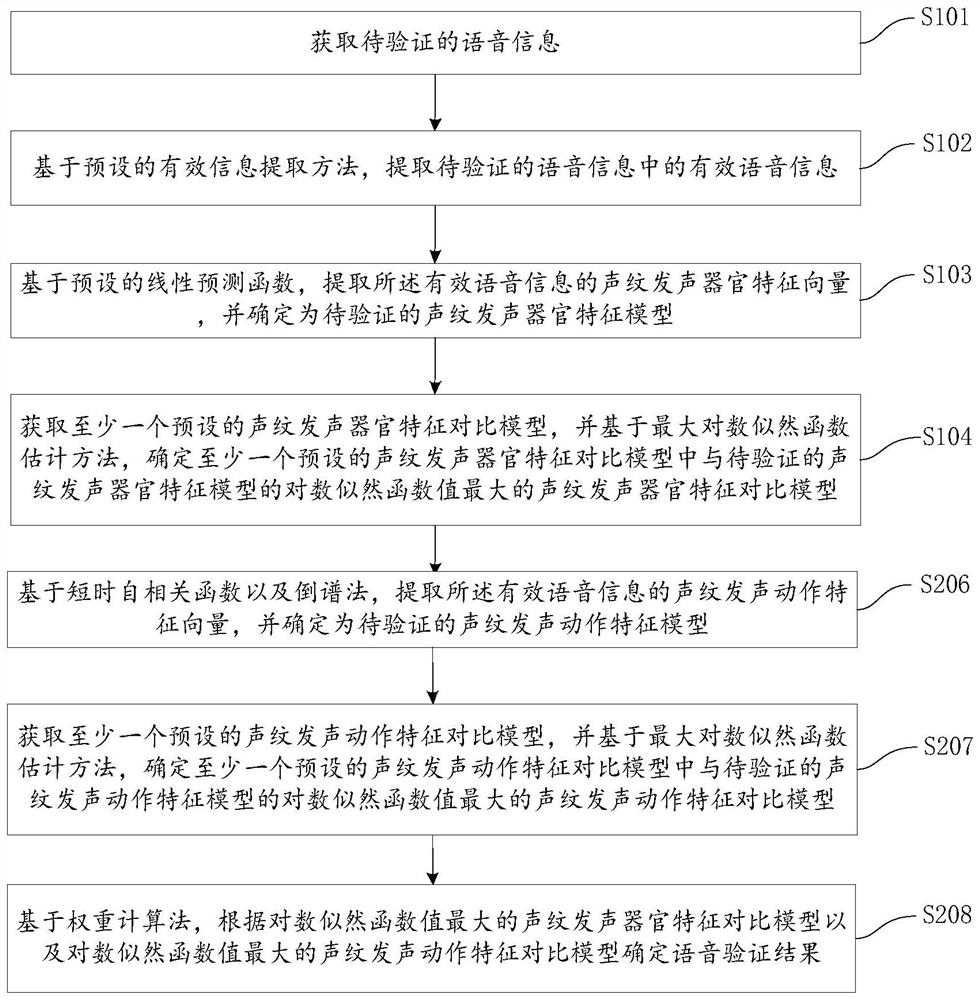

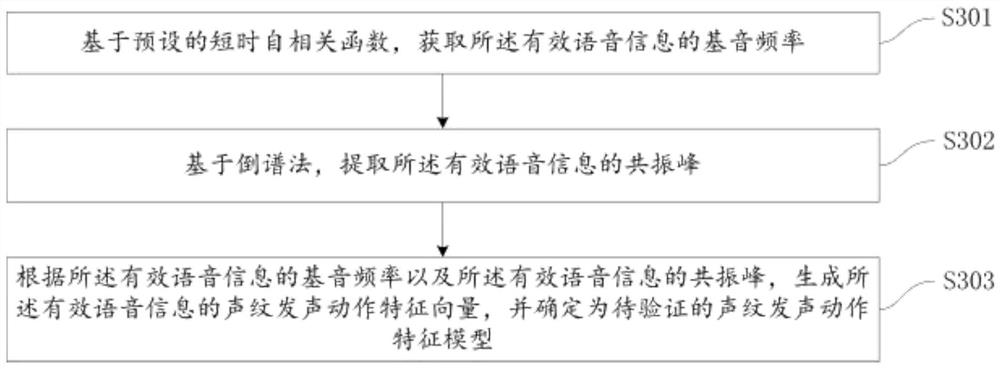

Voice verification method and device, computer equipment and storage medium

The invention is applicable to the technical field of computers and provides a voice verification method and device, computer equipment and a storage medium. The voice verification method comprises the steps of: acquiring the voice information to be verified; extracting the effective voice information from it using a preset effective information extraction method; extracting a voiceprint sounding organ feature vector from the effective voice information using a preset linear prediction function, and taking it as the voiceprint sounding organ feature model to be verified; obtaining at least one preset voiceprint sounding organ feature comparison model and determining, for the feature model to be verified, the comparison model with the maximum log likelihood function value; and determining the voice verification result according to that comparison model. The method can improve the accuracy of voice verification and can be applied to text-independent voiceprint recognition.

Owner:效生软件科技(上海)有限公司
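The abstract above selects the comparison model with the maximum log likelihood for the extracted feature vectors, but does not say what form the comparison models take. A minimal sketch, assuming each enrolled model is a single diagonal-covariance Gaussian described by a mean vector and a variance vector (an assumption made only for illustration), is shown below. The names `log_likelihood` and `best_matching_model` are hypothetical.

```python
import math


def log_likelihood(vectors, mean, var):
    """Total log likelihood of feature vectors under a diagonal Gaussian.

    mean and var are per-dimension lists; var entries must be positive.
    """
    total = 0.0
    for x in vectors:
        for xi, mu, v in zip(x, mean, var):
            total += -0.5 * (math.log(2 * math.pi * v) + (xi - mu) ** 2 / v)
    return total


def best_matching_model(vectors, models):
    """Return the name of the enrolled model with the maximum log likelihood.

    models maps a speaker name to a (mean, var) pair.
    """
    return max(models, key=lambda name: log_likelihood(vectors, *models[name]))
```

A verification decision would then compare the winning model's identity (and possibly its score) with the claimed identity; that final rule is left out here because the source does not describe it.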

Pronunciation method of a three-dimensional visualized Mandarin Chinese pronunciation dictionary with rich emotional expression ability

Inactive | CN103258340B | Coherent | Fully describe the coarticulation phenomenon | Animation | Vocal organ | Animation

Owner:UNIV OF SCI & TECH OF CHINA

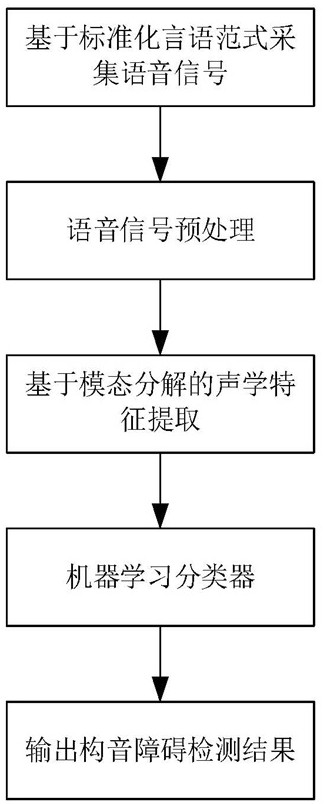

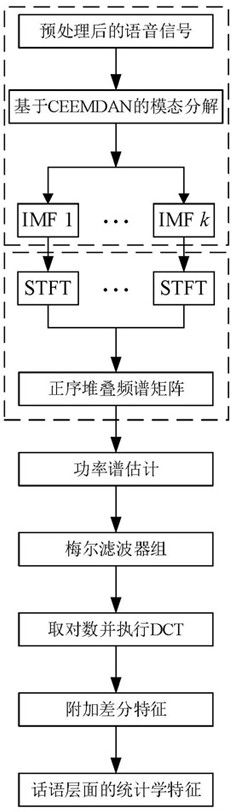

Rapid dysarthria detection method based on modal decomposition

The invention relates to a rapid dysarthria detection method based on modal decomposition. The method comprises the following steps: collecting an original voice signal; preprocessing the original voice signal; performing acoustic feature extraction, based on modal decomposition, on the framed and windowed signal S to obtain statistical features; and feeding the statistical features into a machine learning classifier, a support vector machine (SVM) model, to detect dysarthria. The method overcomes the limitations of traditional acoustic features in a nonlinear time-varying system. The intrinsic mode functions (IMFs) obtained by decomposition contain time-frequency information of the original audio signal at different levels, so they capture the voice physiological information of dysarthria patients well, reflect pathological changes of the vocal organs and make that physiological information more accurate. The accuracy and robustness of dysarthria detection are improved, and the method adapts to nonlinear, non-stationary voice signals.

Owner:HEFEI INSTITUTES OF PHYSICAL SCIENCE - CHINESE ACAD OF SCI

Voice verification method, device, computer equipment and storage medium

Owner:效生软件科技(上海)有限公司

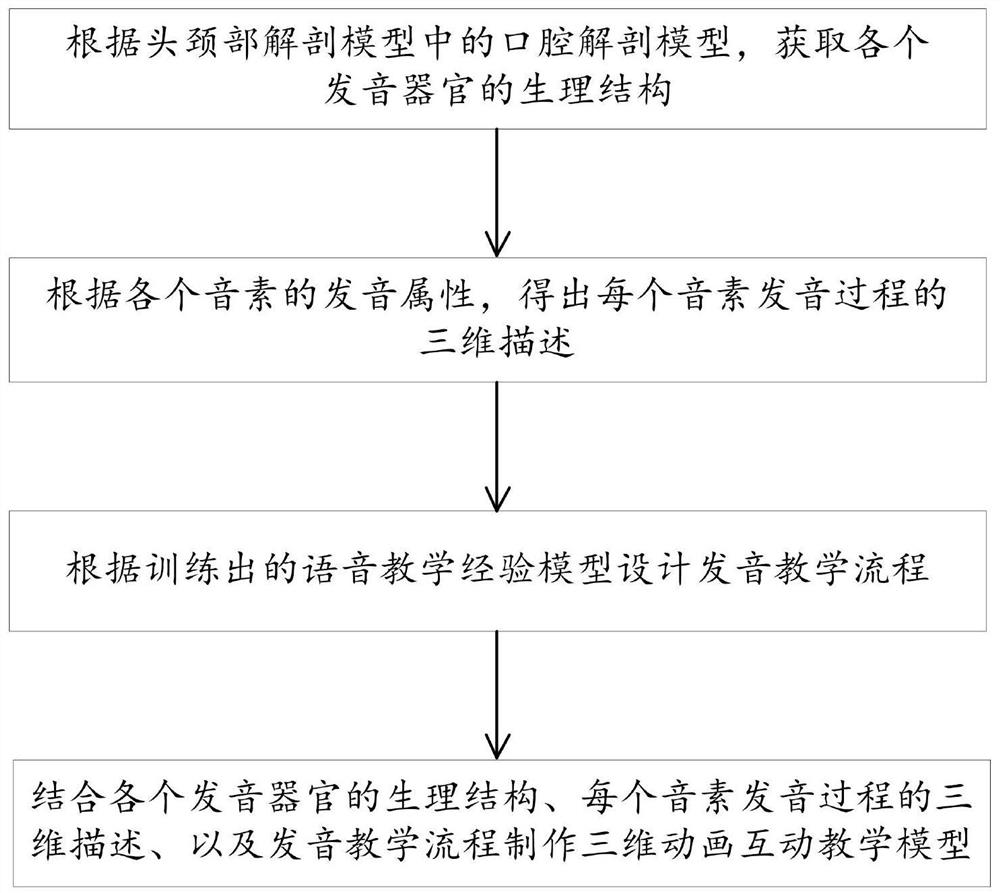

Construction method of dynamic pronunciation teaching model based on 3D modeling and oral anatomy

Active | CN112381913B | Precise change | Accurate capture | Animation | Electrical appliances | Vocal organ | Physical medicine and rehabilitation

The invention discloses a method for constructing a dynamic pronunciation teaching model based on 3D modeling and oral anatomy. The method includes: obtaining the physiological structure of each pronunciation organ from the oral anatomy model within a head and neck anatomy model; obtaining a three-dimensional description of the pronunciation process of each phoneme; designing the pronunciation teaching process according to a trained phonetic teaching experience model; and combining the physiological structure of each pronunciation organ, the three-dimensional description of each phoneme's pronunciation process and the pronunciation teaching process to create a three-dimensional animated interactive teaching model. The invention is based on a three-dimensional oral anatomy model, incorporates the pronunciation attributes of each phoneme and draws on phonetic teaching experience, so that the way each phoneme is produced can be shown visually by a three-dimensional animation model. This provides intuitive 3D graphical teaching feedback in online pronunciation teaching, lets learners capture changes in the key pronunciation parts more accurately, and improves the teaching effect.

Owner:BEIJING LANGUAGE AND CULTURE UNIVERSITY

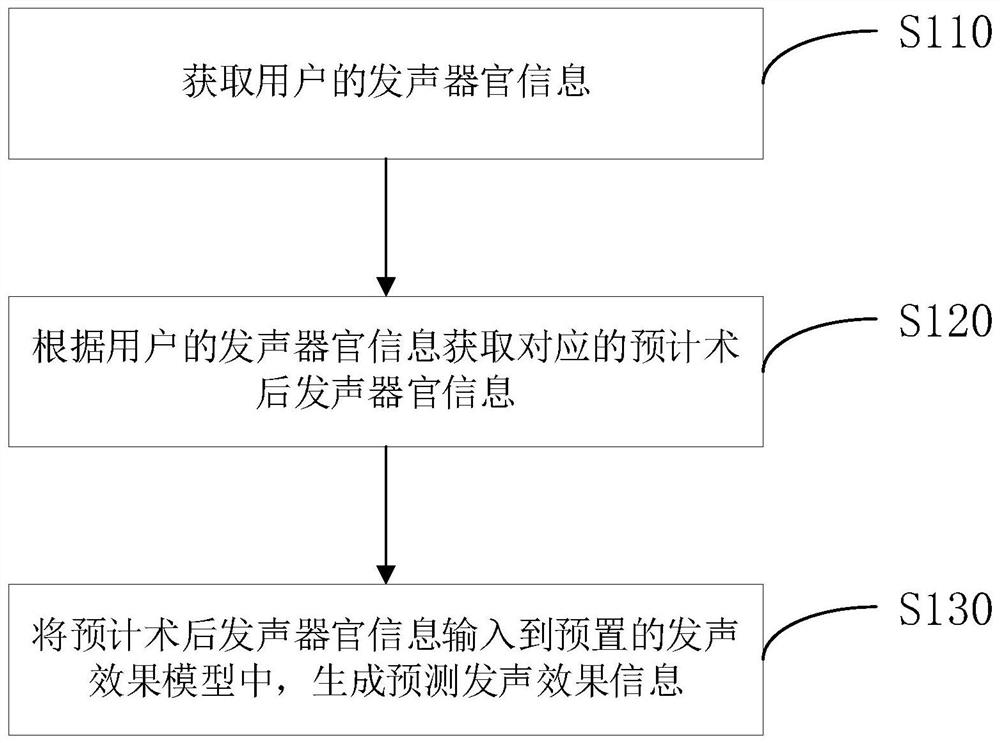

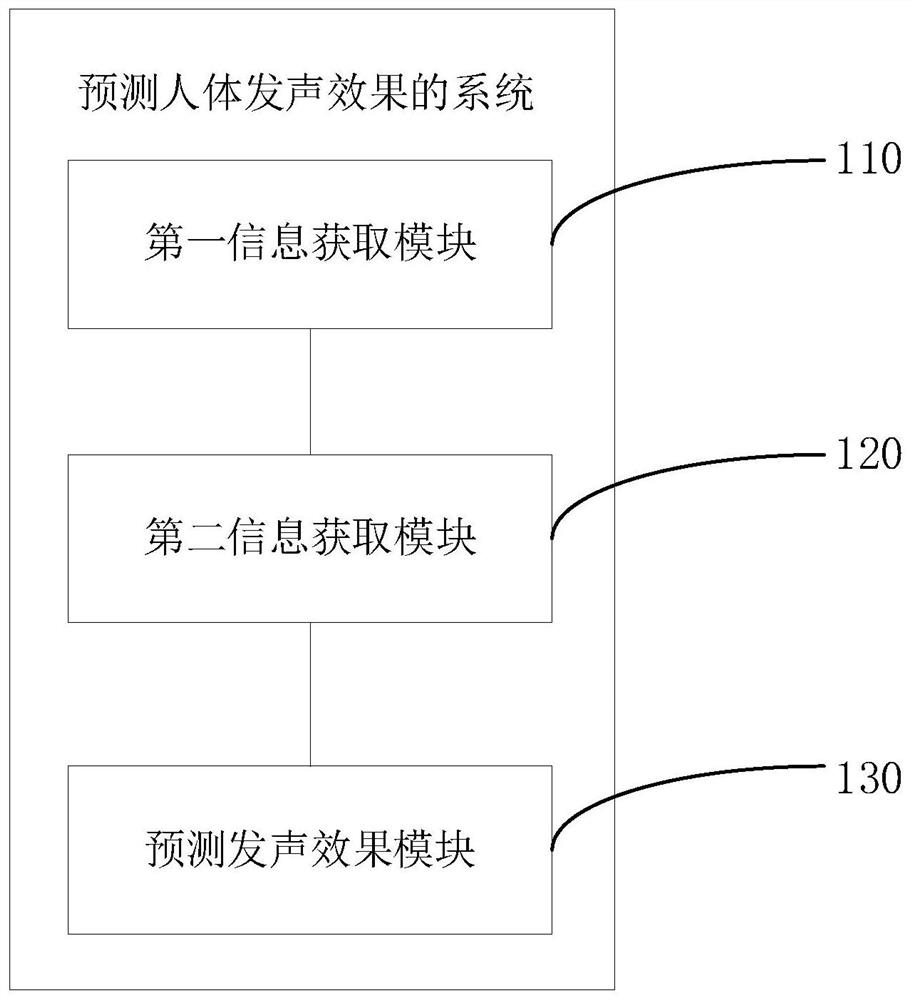

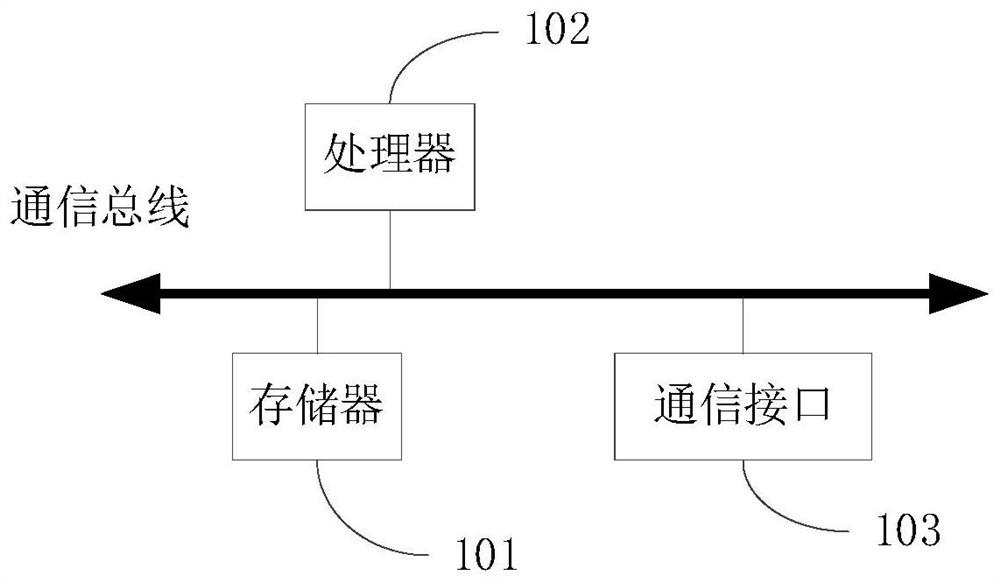

Method and system for predicting human body sound production effect

Pending | CN113143217A | Conducive to decision | Easy to understand | Medical simulation | Mechanical/radiation/invasive therapies | Vocal organ | Human body

The invention provides a method and system for predicting a human body sound production effect, and relates to the technical field of voice improvement. The method comprises the following steps: acquiring sound production organ information of a user; deriving the corresponding predicted postoperative sound production organ information from the user's sound production organ information; and inputting the predicted postoperative sound production organ information into a preset sound production effect model to generate predicted sound production effect information. The preset sound production effect model contains the sound production organ information and sound production effects of a plurality of samples, and the predicted sound production effect information is obtained by comparing the input predicted postoperative sound production organ information with the sound production organ information in the model. The prediction lets the user know the postoperative sound production effect before the operation, provides data support for the expected operation result, and makes the operation scheme and its effect easier to understand.

Owner:张育青
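The comparison step in the abstract above, matching predicted postoperative organ information against stored samples, can be sketched as a nearest-neighbour lookup. This is an assumption about how the comparison might work, not the patent's stated algorithm. The feature names (`fold_length_mm`, `glottal_gap_mm`), the effect field (`pitch_hz`), and every sample value are invented purely for illustration.

```python
import math

# Hypothetical reference samples: (organ measurements, recorded sound effect).
# All names and numbers are invented for illustration only.
SAMPLES = [
    ({"fold_length_mm": 14.0, "glottal_gap_mm": 0.5}, {"pitch_hz": 210.0}),
    ({"fold_length_mm": 17.0, "glottal_gap_mm": 0.4}, {"pitch_hz": 160.0}),
    ({"fold_length_mm": 21.0, "glottal_gap_mm": 0.6}, {"pitch_hz": 115.0}),
]


def distance(a, b):
    """Euclidean distance between two measurement dictionaries with the same keys."""
    return math.sqrt(sum((a[key] - b[key]) ** 2 for key in a))


def predict_effect(post_op_info, samples):
    """Return the recorded effect of the sample closest to the predicted organ state."""
    if not samples:
        raise ValueError("the effect model contains no samples")
    _, effect = min(samples, key=lambda s: distance(post_op_info, s[0]))
    return effect
```

In practice the measurements would need to be normalised so that no single feature dominates the distance, and averaging over several neighbours would likely be more robust than taking a single closest sample.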

A Method for Obtaining the Contours of Speech Organs in Medical Images

Active | CN102831606B | No human interaction required | Good for athletic features | Image analysis | Vocal organ | Automatic segmentation

The invention provides a method for acquiring a vocal organ profile in a medical image. The method comprises the following steps: binarizing the lip and background areas of the medical image using an automatic lip/background segmentation threshold to acquire a lip profile; extracting an upper tooth profile, a lower tooth profile, an upper jaw profile, and a lower jaw profile within the face range delimited by the lip profile; acquiring reliable edge points of the tongue profile in the image area between the upper jaw profile and the lower jaw profile; and fitting the tongue edge profile to those reliable edge points. With this method, the head region and the vocal organ regions can be segmented automatically from the image background, and the whole process is completed without manual interaction.

Owner:中科极限元(杭州)智能科技股份有限公司
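The abstract above does not say how the automatic segmentation threshold is chosen. As one possible illustration, the sketch below uses Otsu's method, a standard automatic thresholding technique that is substituted here as an assumption, to binarize a small grayscale image given as nested lists of integers in the range 0 to 255.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level that maximises between-class variance (Otsu's method)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0
    for t in range(levels):
        w_bg += hist[t]              # pixels at or below t form the background
        if w_bg == 0:
            continue
        w_fg = total - w_bg          # pixels above t form the foreground
        if w_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        between_var = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_var, best_t = between_var, t
    return best_t


def binarize(image, threshold):
    """Map pixels above the threshold to 1 and all others to 0."""
    return [[1 if p > threshold else 0 for p in row] for row in image]


def segment(image):
    """Choose a threshold automatically, then binarize the image with it."""
    flat = [p for row in image for p in row]
    return binarize(image, otsu_threshold(flat))
```

The resulting binary mask would be the starting point for tracing a lip contour; the later steps (tooth, jaw, and tongue profiles) are not covered by this sketch.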

A three-dimensional modeling method for vocal organs

Active | CN104318615B | High precision requirements | Image generation | 3D modelling | Vocal organ | Dimensional modeling

The invention discloses a three-dimensional modeling method for vocal organs, comprising the following steps: extracting the contour of the externally visible vocal organs from a visible-light image and matching it with a generic three-dimensional geometric model of the vocal organs to obtain a reconstructed three-dimensional model of the externally visible vocal organs; extracting the contour of the vocal organs inside the oral cavity from an X-ray image and matching it with the same generic three-dimensional geometric model to obtain a reconstructed three-dimensional model of the intra-oral vocal organs; and, using the matching relation between the visible-light image and the X-ray image, fusing the two reconstructed models to obtain a complete three-dimensional vocal organ model. The method accurately and quickly yields a vocal organ model that reflects the real shape of the vocal organs, from inside to outside, during pronunciation.

Owner:UNIV OF SCI & TECH OF CHINA
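The abstract above does not describe how an extracted contour is matched to the generic model. As a simplified illustration only, the sketch below fits a least-squares 2-D similarity transform (rotation, uniform scale, translation) between corresponding contour points, using complex numbers for the closed-form solution. Working in 2-D and assuming the point correspondences are already known are both assumptions made here; the patent's actual matching procedure may differ.

```python
def fit_similarity(source, target):
    """Least-squares similarity transform mapping source points onto target points.

    Points are (x, y) tuples in corresponding order. Returns complex numbers
    (a, b) such that a * z + b approximates the target for each source point z;
    abs(a) is the scale and the phase of a is the rotation angle.
    """
    if len(source) != len(target) or not source:
        raise ValueError("need equally many source and target points")
    n = len(source)
    z = [complex(x, y) for x, y in source]
    w = [complex(x, y) for x, y in target]
    z_mean, w_mean = sum(z) / n, sum(w) / n
    zc = [p - z_mean for p in z]
    wc = [p - w_mean for p in w]
    denom = sum(abs(p) ** 2 for p in zc)
    if denom == 0:
        raise ValueError("source points must not all coincide")
    a = sum(p.conjugate() * q for p, q in zip(zc, wc)) / denom
    b = w_mean - a * z_mean
    return a, b


def apply_similarity(a, b, points):
    """Apply the transform z -> a * z + b to a list of (x, y) points."""
    moved = [a * complex(x, y) + b for x, y in points]
    return [(p.real, p.imag) for p in moved]
```

A non-rigid deformation of the generic model would normally follow such a coarse alignment; that step is outside the scope of this sketch.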

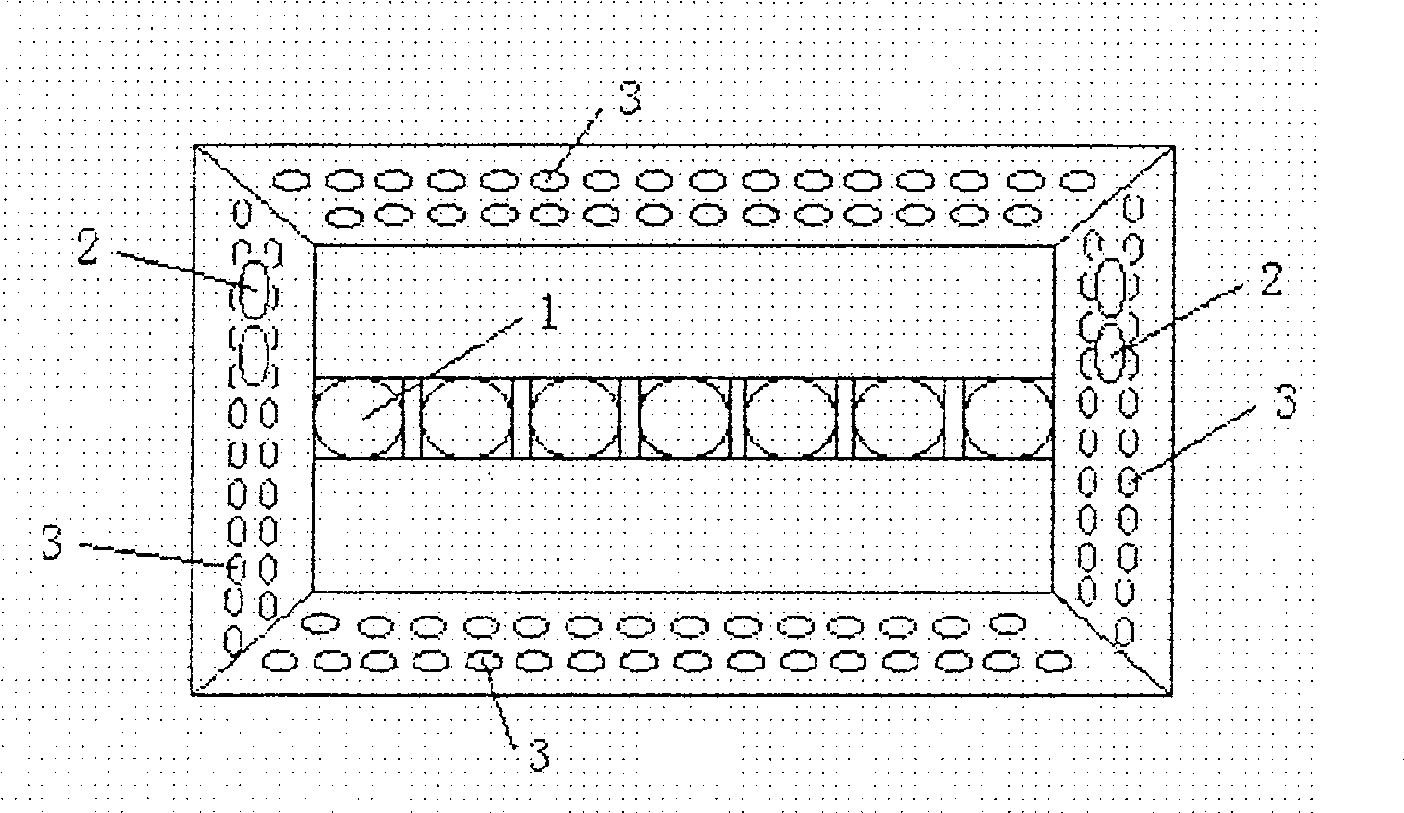

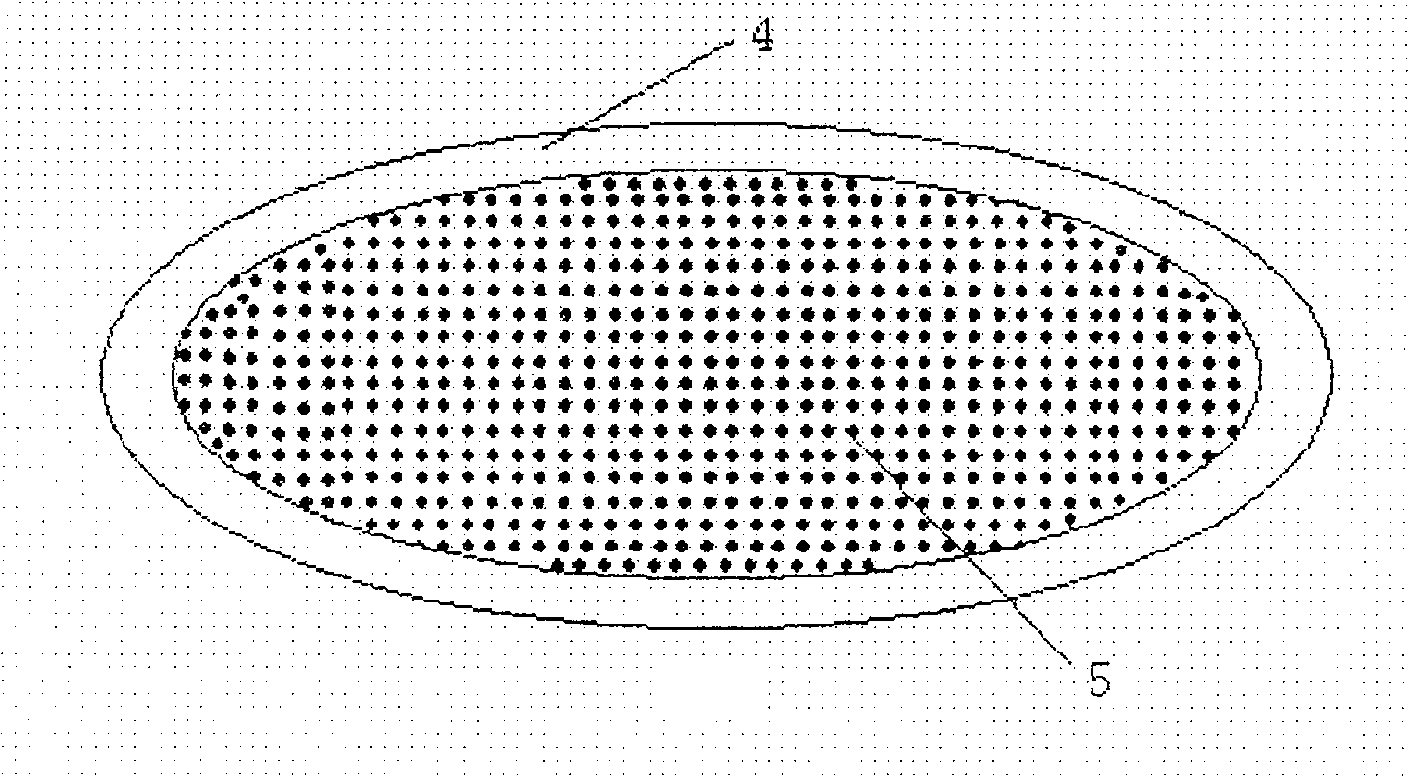

Organ protective cover

Inactive | CN100508020C | Avoid destruction | Avoid getting stuck | Wind musical instruments | Vocal organ | Engineering

To overcome the lack of effective protective devices for the bellows of existing pedal organs, a protective cover for the organ is provided. It effectively protects the sounding system and other mechanical devices of the organ, ensures the normal operation of the organ, saves maintenance costs, and extends the service life.

Owner:肖萍


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com