81 results about "Video eeg" patented technology

Method and system for recommending video resource

Inactive | CN103686236A | Realize grouping; Improve recommendation effect; Selective content distribution; Specific time; Video eeg

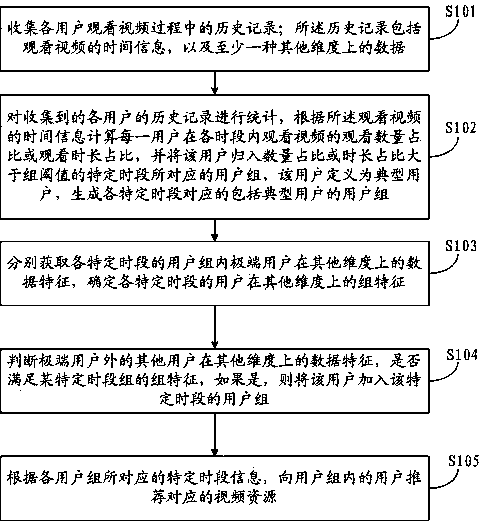

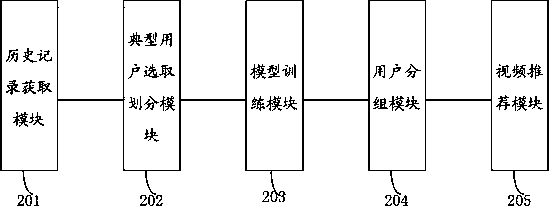

The invention provides a method and system for recommending video resources. The method comprises: collecting the historical viewing records of all users; computing statistics on the collected records and, from the time information of the watched videos, calculating for each user the count ratio or duration ratio of videos watched in each time period; assigning each user to the user group of every specific time period whose count ratio or duration ratio exceeds a group threshold, so that a user group of typical users is generated for each specific time period; acquiring the data characteristics of the typical users in each group on other dimensions and determining the group characteristics of each specific time period on those dimensions; adding other users whose characteristics match these group characteristics to the corresponding user groups; and recommending video resources to the users in each group according to the specific time period associated with that group. With this method and system, recommendations can be targeted to the periods in which users actually watch videos, which improves the recommendation effect.

Owner:LE SHI ZHI ZIN ELECTRONIC TECHNOLOGY (TIANJIN) LTD
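
A minimal Python sketch of the grouping step described in the entry above, assuming hour-of-day time periods and a group threshold of 0.5 (both hypothetical; the abstract fixes neither). It covers only the assignment of typical users by count or duration ratio, not the later matching of other users on further dimensions.

```python
from collections import defaultdict

# Hypothetical time periods keyed by hour of day; the patent does not define them.
PERIODS = {
    "night": range(0, 6),
    "morning": range(6, 12),
    "afternoon": range(12, 18),
    "evening": range(18, 24),
}


def period_of(hour: int) -> str:
    """Map an hour of day (0-23) to the name of its time period."""
    for name, hours in PERIODS.items():
        if hour in hours:
            return name
    raise ValueError(f"hour out of range: {hour}")


def group_users(history, threshold=0.5, by_duration=False):
    """Return {period: set of typical users} using count or duration ratios.

    history: iterable of (user_id, hour_watched, duration_minutes) records.
    A user joins every period whose share of their viewing exceeds threshold.
    """
    totals = defaultdict(float)
    per_period = defaultdict(lambda: defaultdict(float))
    for user, hour, minutes in history:
        weight = minutes if by_duration else 1.0
        totals[user] += weight
        per_period[user][period_of(hour)] += weight

    groups = defaultdict(set)
    for user, periods in per_period.items():
        for period, amount in periods.items():
            if totals[user] > 0 and amount / totals[user] > threshold:
                groups[period].add(user)
    return dict(groups)
```

For example, if two of a user's three viewing records fall between 18:00 and 24:00, the count ratio for "evening" is about 0.67 and the user becomes a typical evening user; switching `by_duration=True` weights each record by minutes watched instead.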

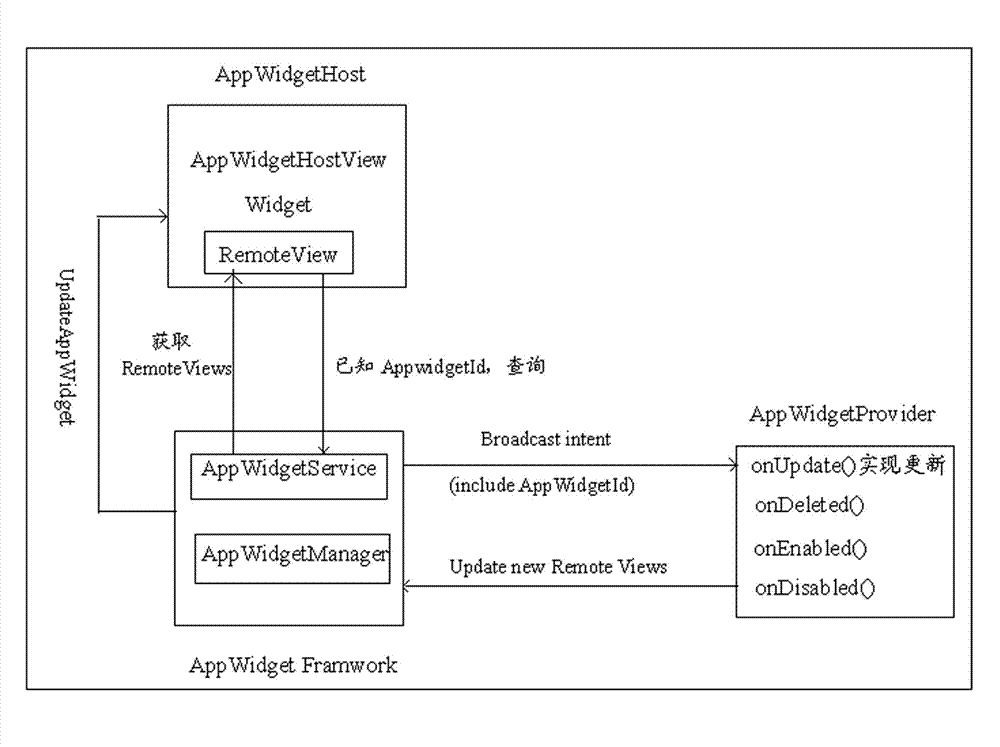

Method and system for controlling video playing based on android operating system

Inactive | CN103049258A | Easy to operate; Specific program execution arrangements; Input/output processes for data processing; Engineering; World Wide Web

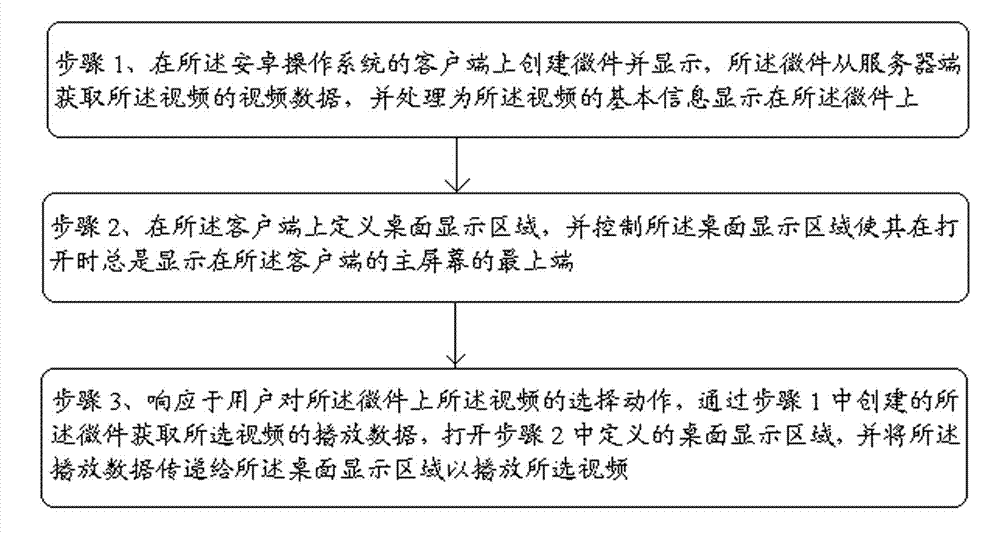

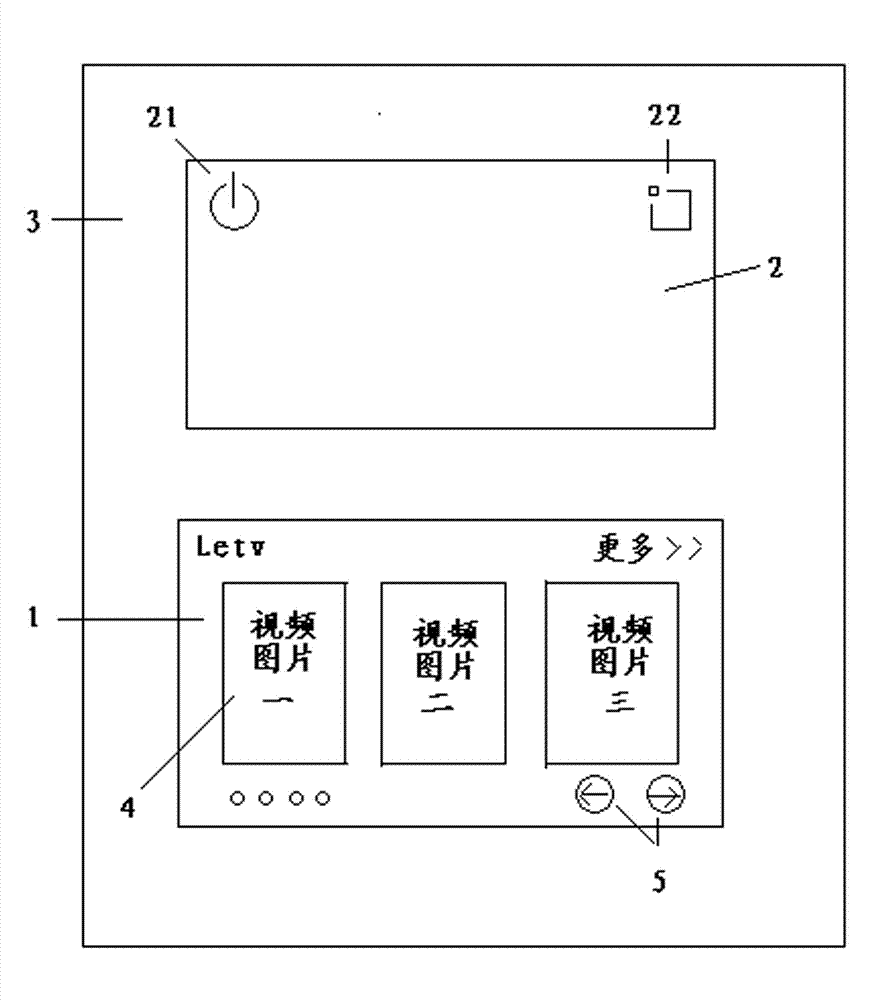

The invention discloses a method and system for controlling video playing based on the Android operating system. The method includes: creating and displaying a web widget on the Android client; defining a desktop display area on the client; and, in response to a user selecting a video on the web widget, obtaining the video's playing data through the created web widget, opening the defined desktop display area, and transmitting the playing data to that area to play the video. The system comprises a web widget creating module, a display control module and a video interaction module. By creating the web widget and defining the desktop display area, the method combines web widget technology with desktop display technology: basic information about a video can be looked up through the web widget, and the video can be played in the defined desktop display area when the user wants to watch it. This makes looking up videos more efficient and simplifies user operation.

Owner:LETV INFORMATION TECH BEIJING

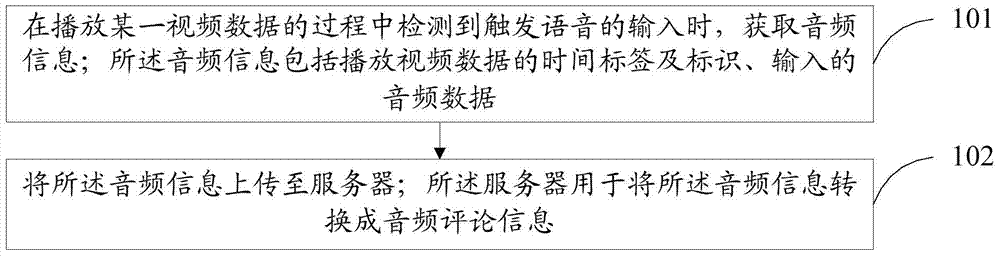

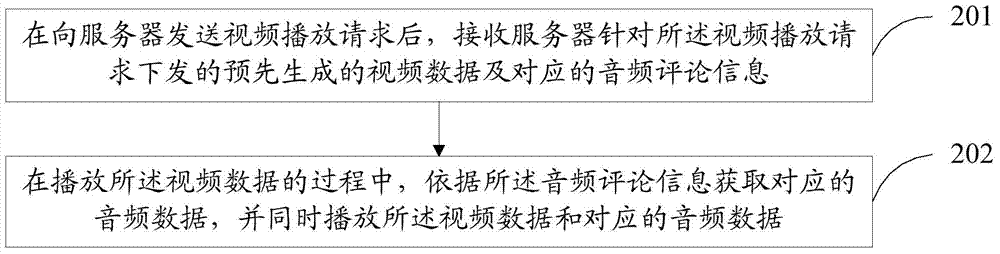

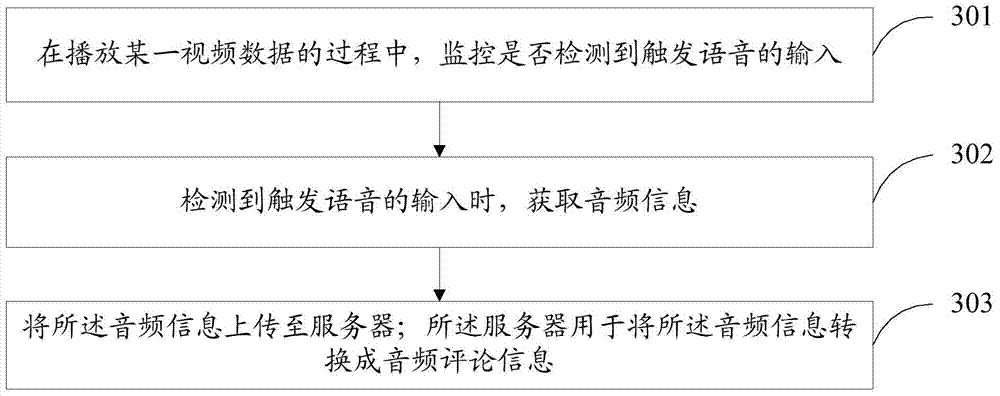

Audio comment information generating method and device and audio comment playing method and device

Inactive | CN104125491A | Simplify the build process; Improve general performance; Selective content distribution; Speech sound; Multimedia

The invention provides an audio comment information generating method and device and an audio comment playing method and device, which address two problems during video playback: text comments are difficult to produce, and browsing them disrupts the viewing experience. The audio comment information generating method comprises: obtaining audio information when an input that triggers audio capture is detected during playback of certain video data; uploading the audio information to a server; and converting the audio information into audio comment information on the server. The audio comment playing method comprises: after sending a video playing request to the server, receiving the pre-generated video data and the corresponding audio data issued by the server. With these methods and devices, generating audio comments is simple and broadly applicable; during video playback the user can play the audio data at the same time, and the user experience is enhanced by conveying the commenters' own voices and tone.

Owner:LETV INFORMATION TECH BEIJING

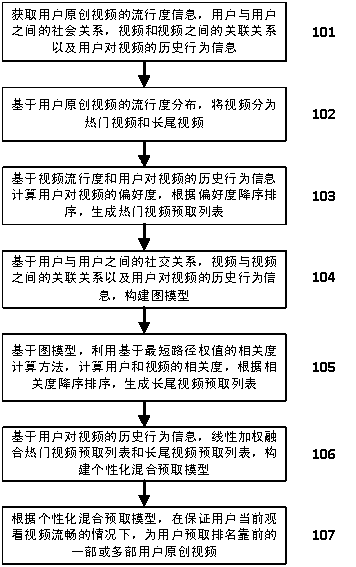

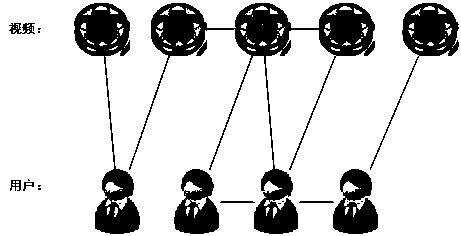

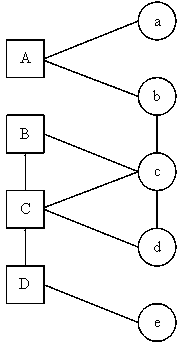

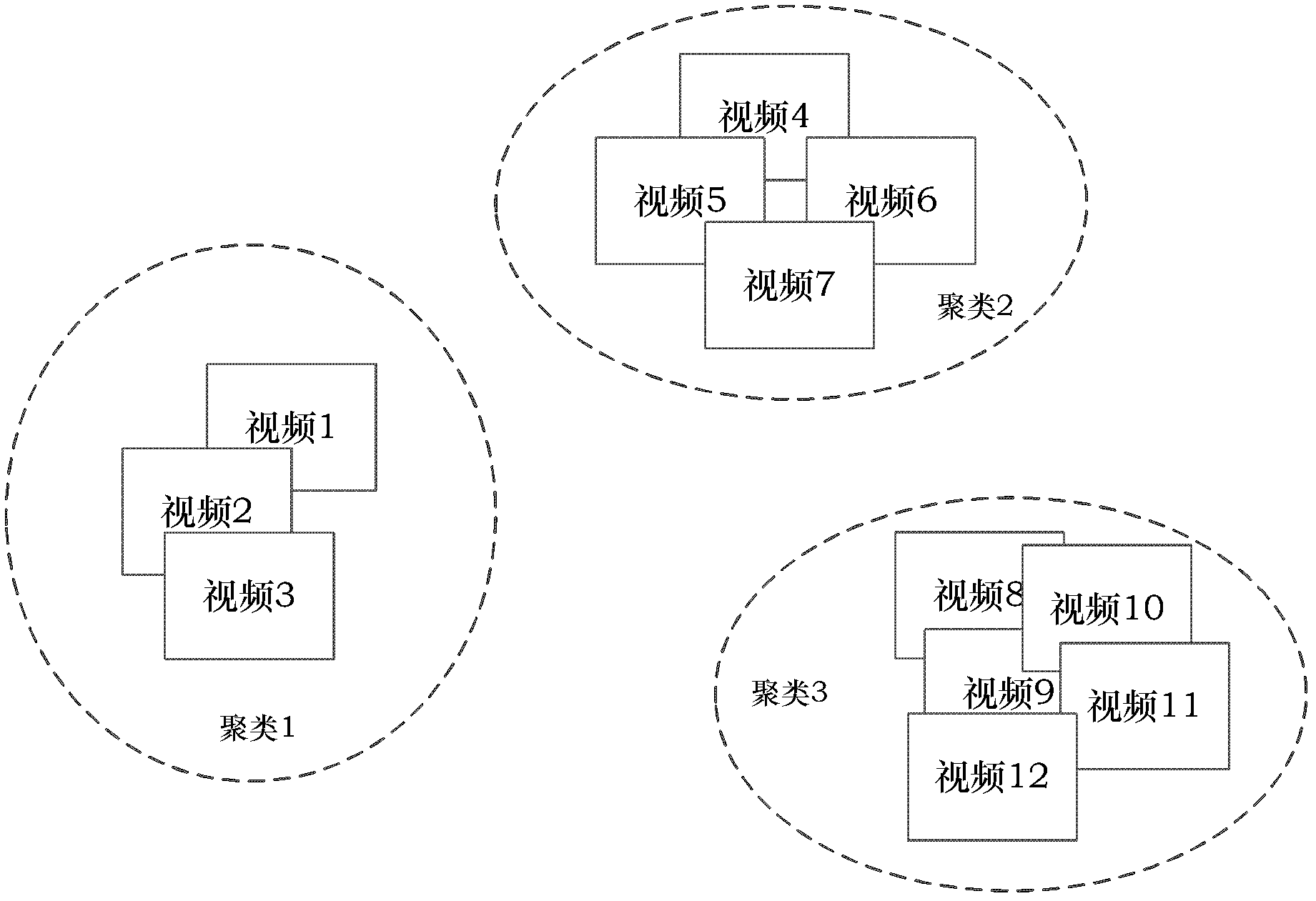

Personalized user-generated video prefetching method and system based on popularity and social networks

Active | CN103974097A | Improve hit rate and accuracy; Quality improvement; Selective content distribution; Personalization; Service quality

The invention discloses a personalized user-generated video prefetching method and system based on popularity and social networks. The method comprises the following steps: first, video popularity information, social relations between users, association relations between videos, and users' historical behavior are collected; based on the popularity information, user-generated videos are divided into popular videos and long-tail videos; users' preference degrees for the popular videos are calculated and ranked to generate a popular video prefetching list; a graph model is built to measure the relevance between users and videos, and a long-tail video prefetching list is generated for each user; the popular and long-tail prefetching lists are combined by weighted linear fusion to build a personalized hybrid prefetching model; and, while the video the user is currently watching plays smoothly, one or more top-ranked videos are prefetched for the user based on this model. The method and system improve the hit rate and accuracy of user-generated video prefetching, the quality of user-generated video services, and the user's viewing experience.

Owner:南京大学镇江高新技术研究院
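
The weighted linear fusion step in the entry above can be sketched as follows. This is a minimal illustration, assuming both lists are already scored in [0, 1] and using a hypothetical weight `alpha`; the patent does not state its weights, score scales or list length.

```python
def fuse_prefetch_lists(popular, long_tail, alpha=0.6, top_k=2):
    """Blend two scored prefetch lists as alpha * popular + (1 - alpha) * long_tail.

    popular, long_tail: dicts mapping video_id -> score, assumed normalized to [0, 1].
    A video missing from one list contributes 0 from that list.
    Returns the top_k video ids by fused score (ties broken by id for determinism).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    fused = {
        vid: alpha * popular.get(vid, 0.0) + (1 - alpha) * long_tail.get(vid, 0.0)
        for vid in set(popular) | set(long_tail)
    }
    ranked = sorted(fused, key=lambda v: (-fused[v], v))
    return ranked[:top_k]
```

Lowering `alpha` shifts the prefetch toward the personalized long-tail candidates; raising it favors globally popular videos.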

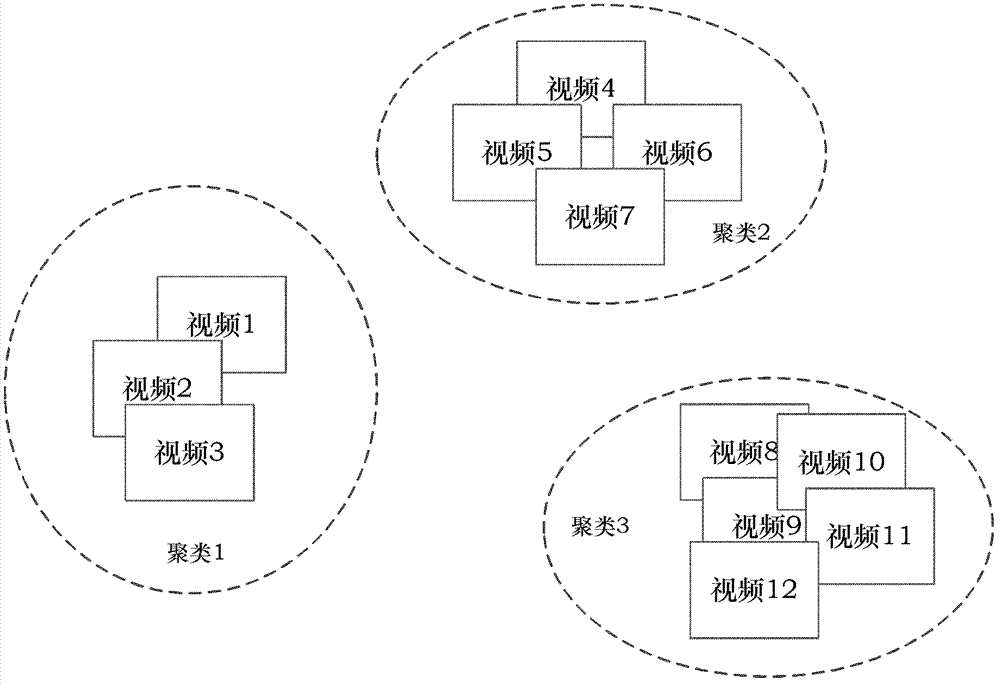

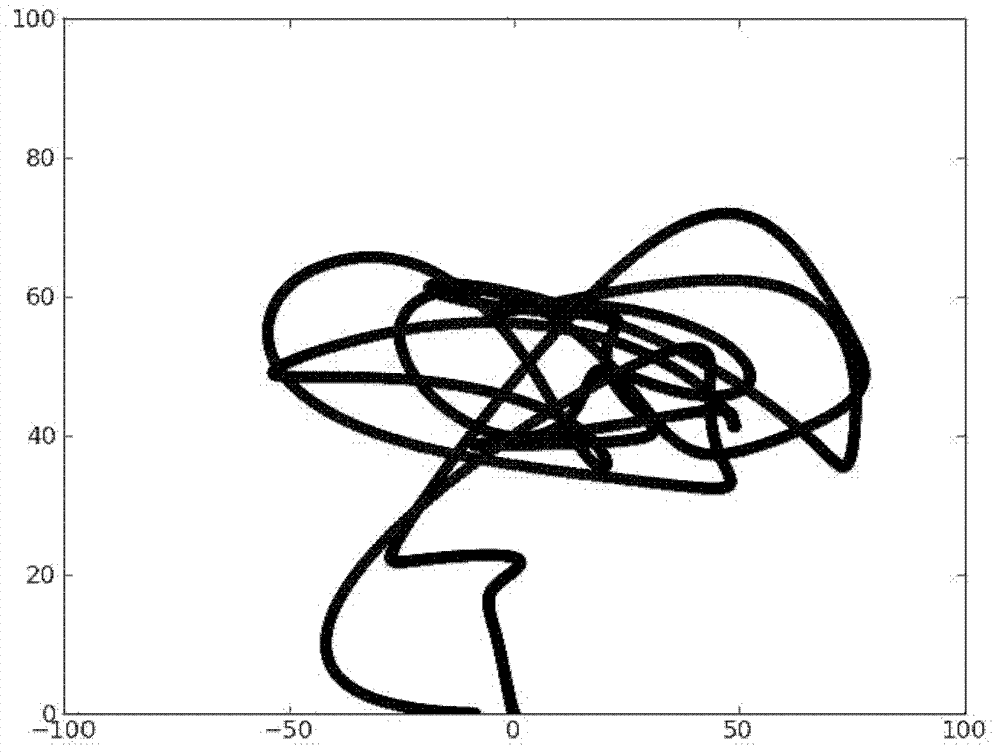

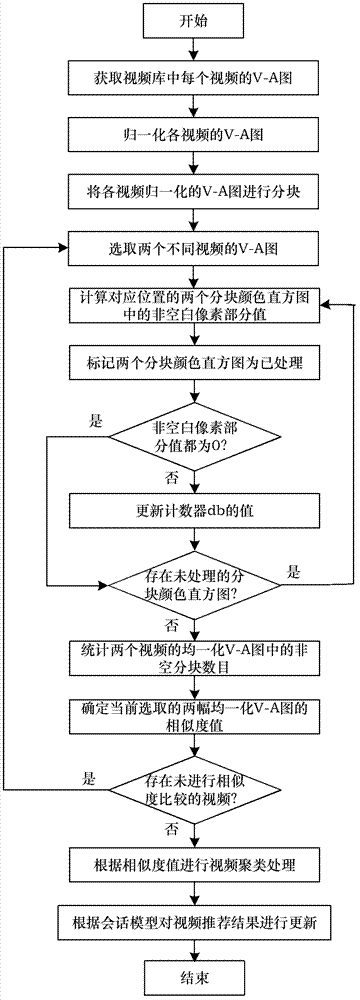

Video recommending method based on video affective characteristics and conversation models

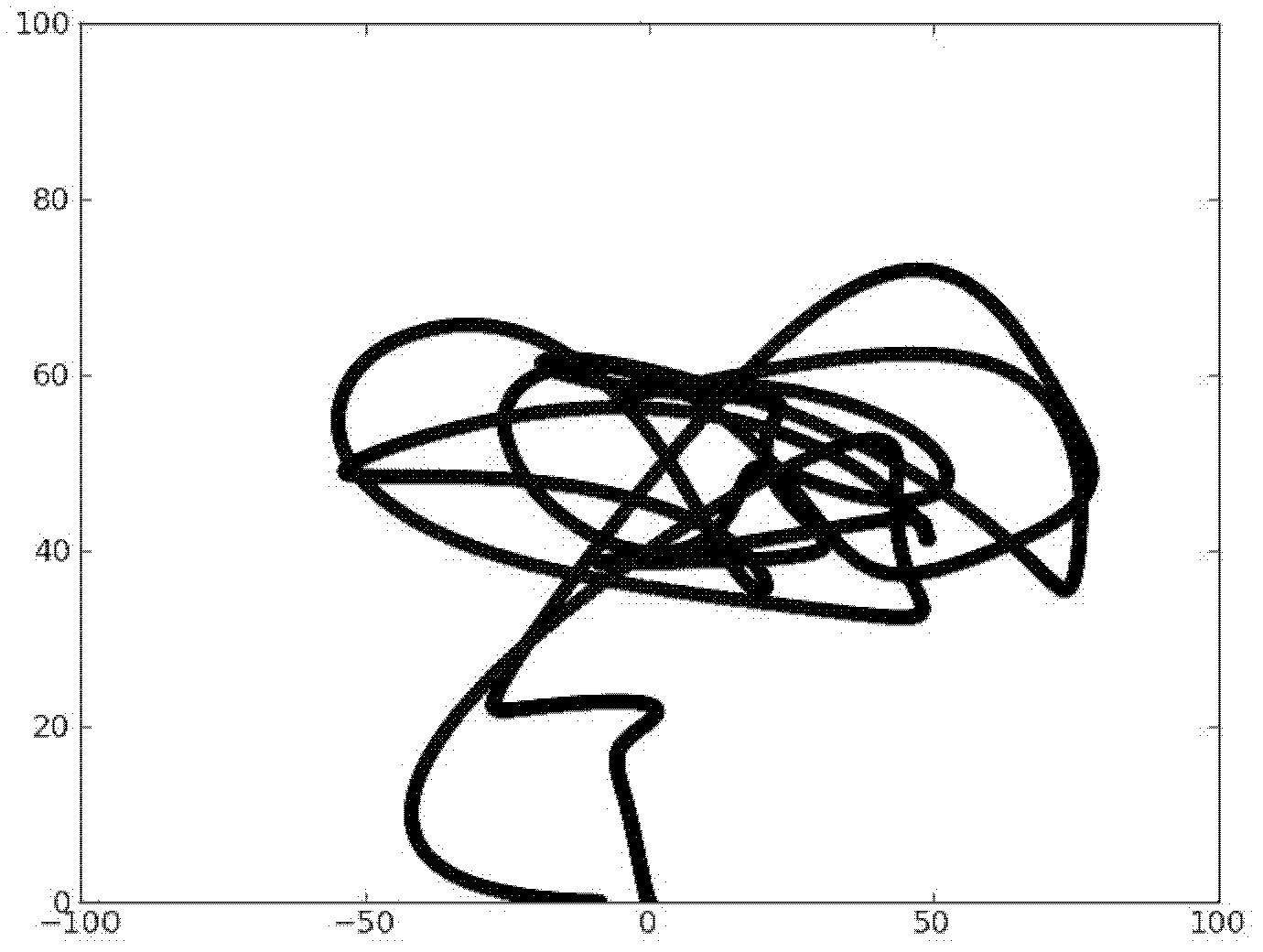

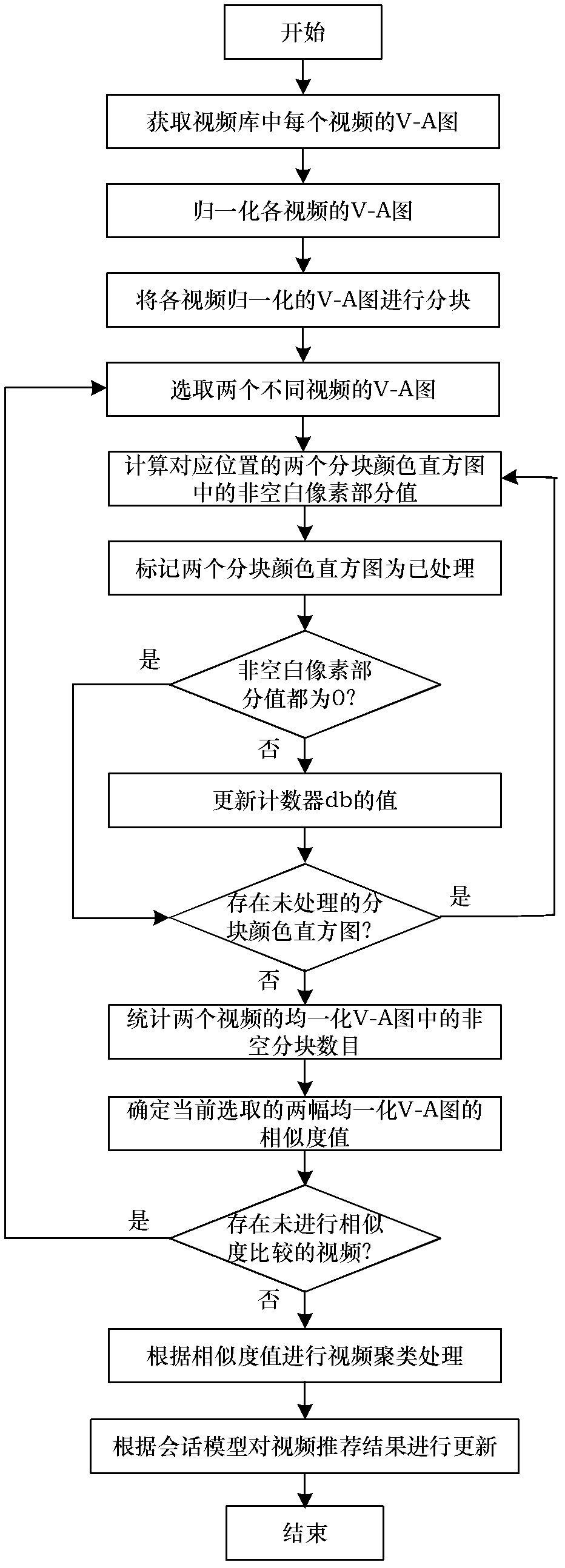

Inactive | CN102495873A | Provide accurately; Improve viewing experience; Special data processing applications; Pattern recognition; Arousal

The invention provides a video recommending method based on video affective characteristics and conversation models. Affective characteristics of videos serve as the basis for comparison: multiple affective features are extracted from a video and its accompanying soundtrack and synthesized into a valence-arousal curve diagram (V-A diagram); the V-A diagram is normalized and divided into a fixed number of equal-sized blocks, and a color block diagram is determined for each block; the differences between the color block diagrams at corresponding positions of two videos' diagrams are compared with a threshold to obtain a block difference and a coverage difference, from which a similarity value for the two videos is obtained; and the result of clustering these similarity values is used as the video recommendation result. The method also uses a conversation model to update the recommendation result as the user continues watching. As a result, the recommendations better match the user's current affective state, and both the click-through rate on recommended videos and the number of videos watched consecutively can be increased.

Owner:BEIHANG UNIV
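
One hypothetical reading of the block comparison in the entry above, sketched in Python: each V-A diagram is approximated by binning its normalized (valence, arousal) points into an n x n grid, the block difference counts corresponding blocks that differ by more than a threshold, and the coverage difference compares how many blocks each curve touches. The grid size, threshold and weight `w` are assumptions, and the patent's "color block diagram" may be defined differently.

```python
def va_histogram(points, n=4):
    """Bin (valence, arousal) points, each normalized to [0, 1], into an n x n grid of fractions."""
    grid = [[0.0] * n for _ in range(n)]
    for v, a in points:
        i = min(int(a * n), n - 1)  # row index from arousal
        j = min(int(v * n), n - 1)  # column index from valence
        grid[i][j] += 1
    total = len(points)
    return [[c / total for c in row] for row in grid] if total else grid


def va_similarity(p, q, n=4, threshold=0.1, w=0.5):
    """Hypothetical similarity in [0, 1] built from a block difference and a coverage difference."""
    hp, hq = va_histogram(p, n), va_histogram(q, n)
    cells = n * n
    # Block difference: fraction of corresponding blocks whose values differ by more than threshold.
    block_diff = sum(
        abs(hp[i][j] - hq[i][j]) > threshold for i in range(n) for j in range(n)
    ) / cells
    # Coverage difference: gap between the fractions of blocks each curve occupies at all.
    cov_p = sum(c > 0 for row in hp for c in row) / cells
    cov_q = sum(c > 0 for row in hq for c in row) / cells
    coverage_diff = abs(cov_p - cov_q)
    return 1.0 - (w * block_diff + (1 - w) * coverage_diff)
```

Pairwise similarities produced this way could then be fed to any clustering method to group videos for recommendation.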

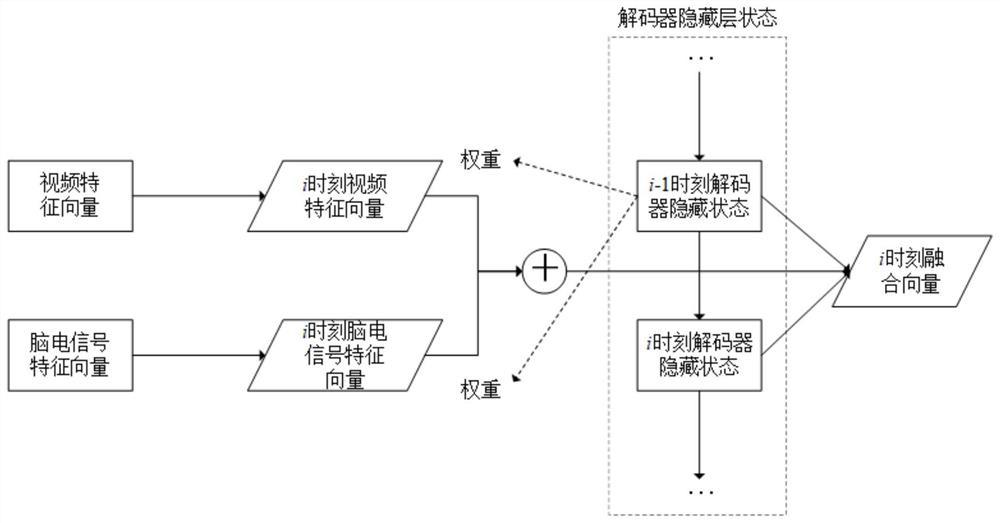

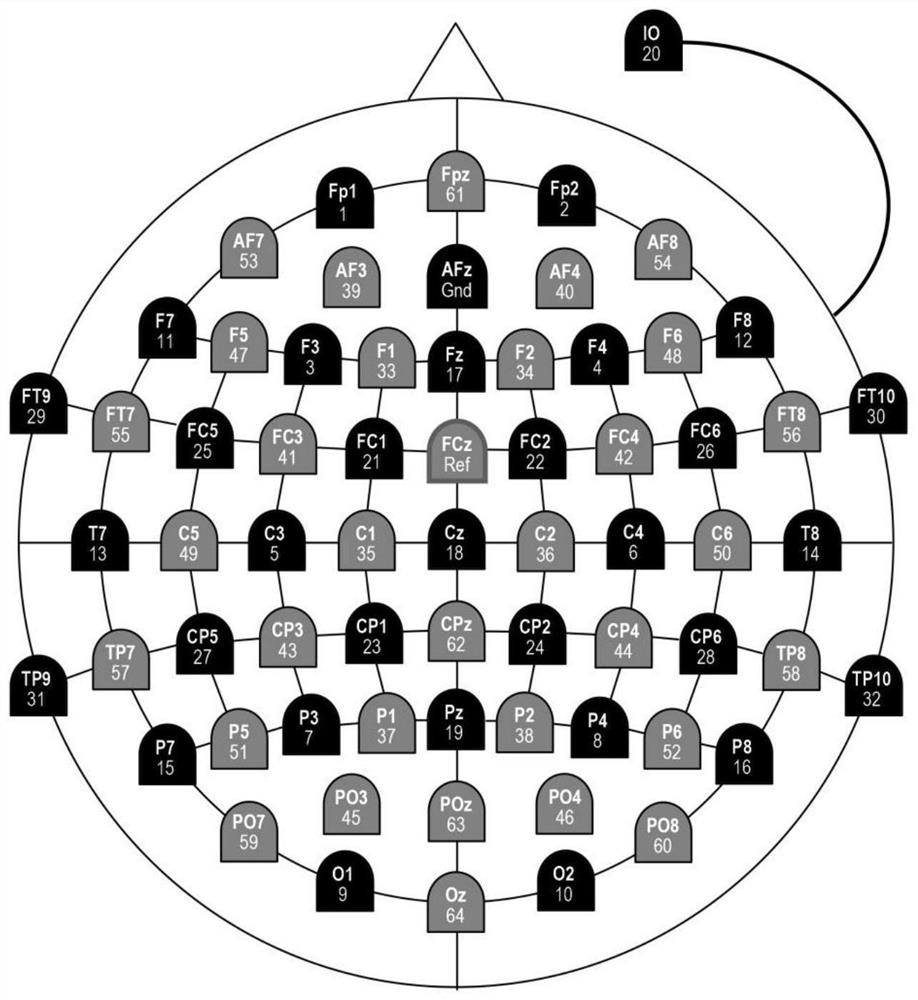

Video emotion classification method and system fusing electroencephalogram and stimulation source information

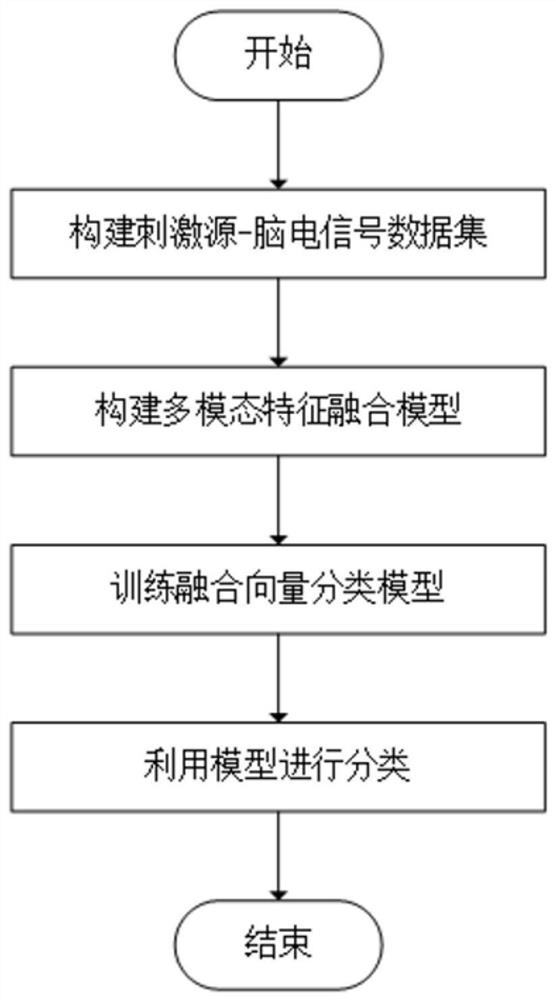

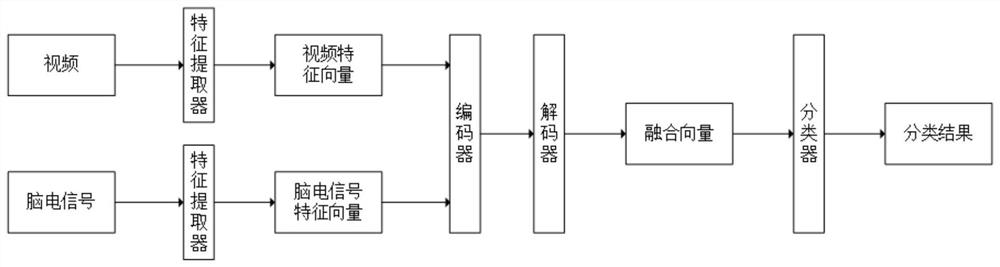

Active | CN113095428A | Improve generalization ability; Improve accuracy; Character and pattern recognition; Sensors; Data set; Medicine

The invention discloses a video emotion classification method and system fusing electroencephalogram (EEG) and stimulation source information. The method comprises the following steps. Constructing a stimulation source-EEG signal data set: a subject watches video clips while an EEG scanner records the subject's EEG signals, and the stimulation source-EEG data set is built from these recordings. Constructing a multi-modal feature fusion model: for the training data set, video features and EEG signal features are extracted separately, and a fusion vector is generated by an attention-based multi-modal information fusion method. Training a fusion vector classification model: the fusion vector is fed into a fully connected neural network layer for prediction, and the network weights are updated according to the difference between the prediction and the true label. Classifying with the trained model: EEG signals are collected while a subject watches the video to be classified, video features and EEG features are extracted and fused, and the fusion vector is input into the trained neural network to obtain the classification result.

Owner:XI AN JIAOTONG UNIV

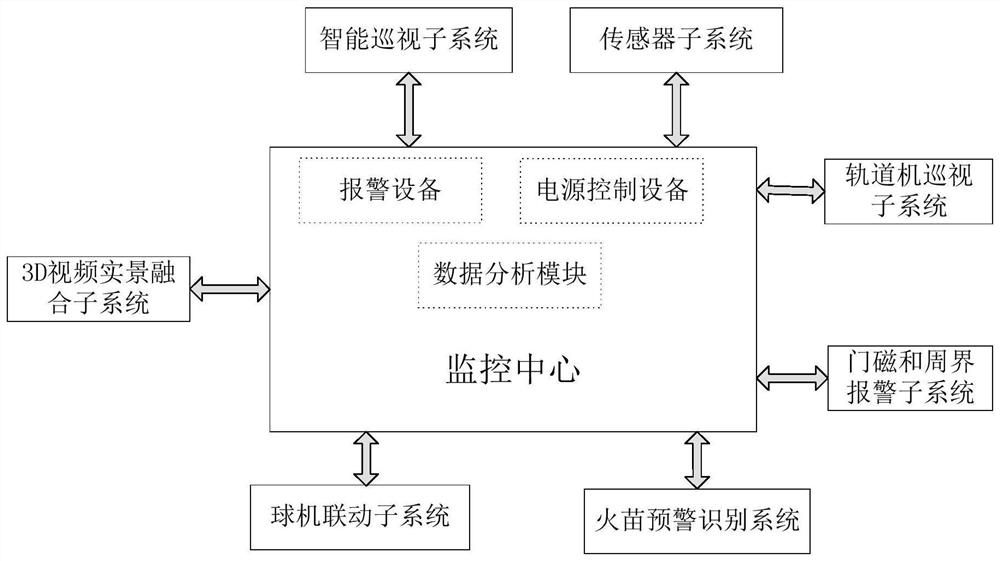

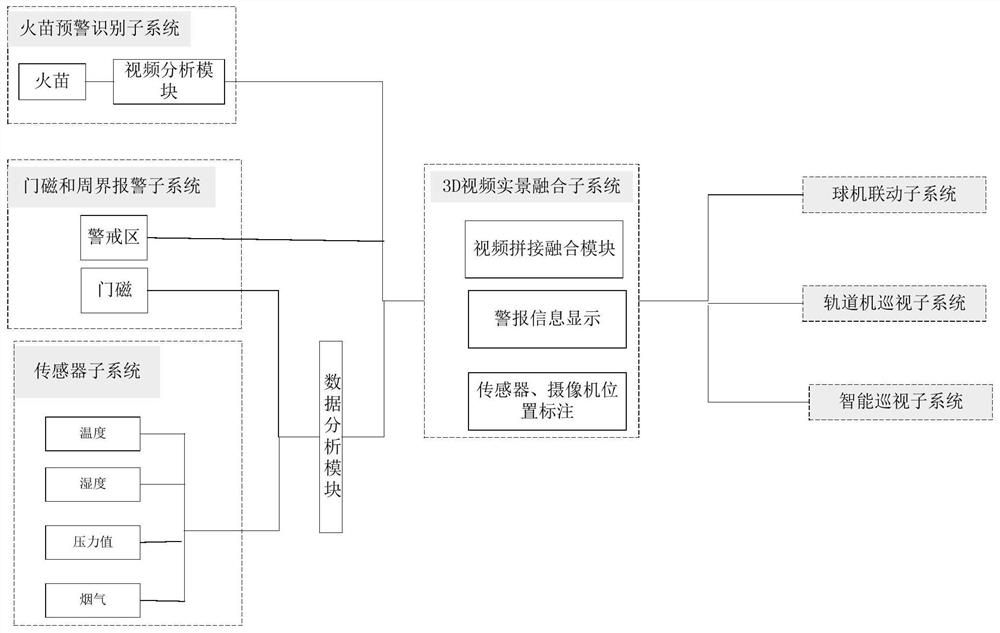

Three-dimensional visual unattended transformer substation intelligent linkage system based on video fusion

Pending | CN112288984A | Realize intelligent monitoring; Real-time; Closed circuit television systems; Fire alarm electric actuation; Transformer; Smart surveillance

The invention relates to a three-dimensional visual unattended substation intelligent linkage system based on video fusion. The system comprises a 3D video live-action fusion subsystem, an intelligent inspection subsystem, a sensor subsystem, a rail-mounted inspection machine subsystem, a door magnet and perimeter alarm subsystem, a dome camera linkage subsystem, a flame recognition and early warning subsystem, and a monitoring center; all subsystems are interconnected with and communicate through the monitoring center. By fusing three-dimensional video, the system provides all-weather, all-round, uninterrupted 24-hour intelligent monitoring of a substation, free browsing and viewing of a three-dimensional real scene of the substation, and alarm prompts for faults in the substation. A worker can watch the videos from the monitoring room and carry out real-time monitoring and dispatching command for multiple substations under their administration without inspecting the power transformation facilities and equipment every day, which improves monitoring capacity and working efficiency.

Owner:刘禹岐

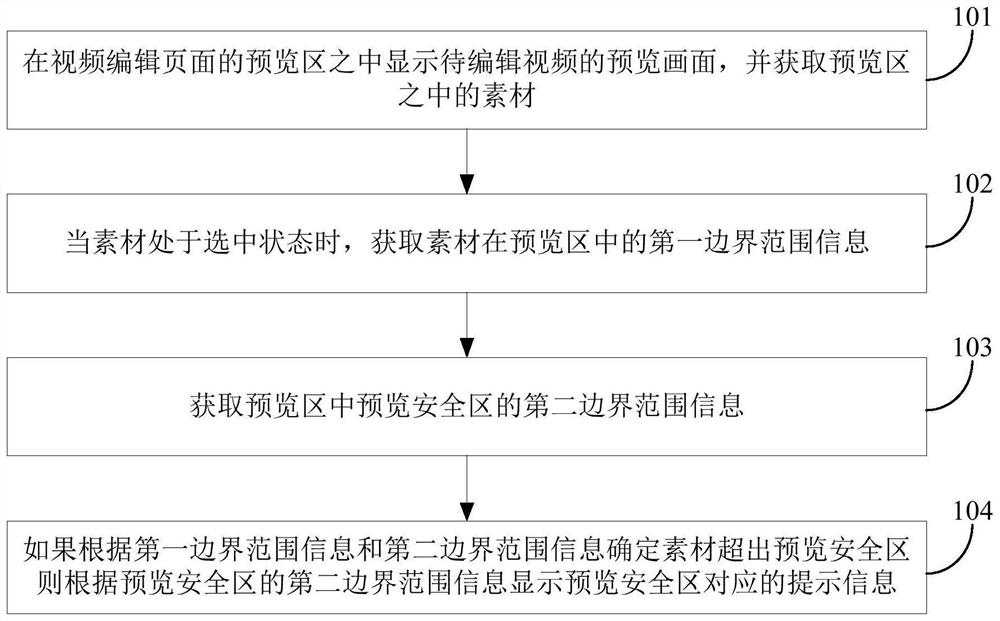

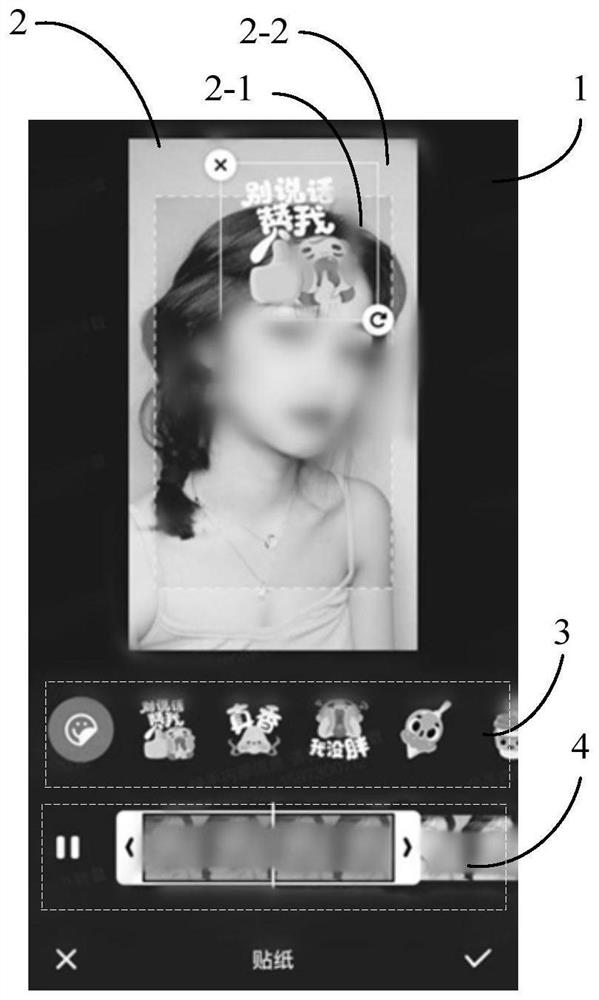

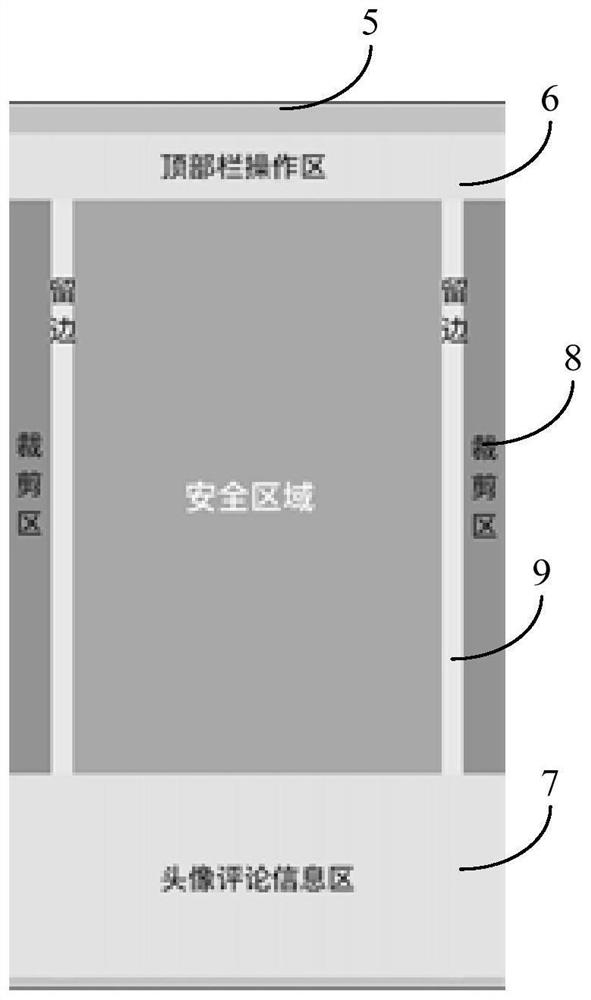

Video editing prompting method and device and electronic equipment

Active | CN111770381A | Satisfy the viewing effect; Guaranteed production requirements; Electronic editing digitised analogue information signals; Carrier indicating arrangements; Safety zone; Video production

The invention relates to a video editing prompting method and device, electronic equipment and a storage medium, in the technical field of software applications. The method comprises: displaying a preview image of a video to be edited in the preview region of a video editing page and obtaining the materials in the preview region; when a material is selected, obtaining first boundary range information of the material in the preview region; acquiring second boundary range information of a preview safety area within the preview region; and, if the first and second boundary range information show that the material extends beyond the preview safety area, displaying prompt information for the preview safety area according to its second boundary range information. By prompting whenever added material exceeds the preview safety area, the produced video displays well for more viewers, improving their viewing experience while still meeting the production requirements of video creators.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD
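
The boundary comparison in the entry above reduces to an axis-aligned rectangle containment test. A minimal sketch, assuming both the material and the preview safety area are given as (left, top, right, bottom) boxes in the same preview coordinates; the `Rect` type and the prompt text are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned box in preview coordinates: (left, top, right, bottom)."""
    left: float
    top: float
    right: float
    bottom: float


def exceeds_safe_area(material: Rect, safe: Rect) -> bool:
    """True if any edge of the selected material lies outside the preview safety area."""
    return (
        material.left < safe.left
        or material.top < safe.top
        or material.right > safe.right
        or material.bottom > safe.bottom
    )


def prompt_for(material: Rect, safe: Rect):
    """Return a hypothetical prompt built from the safety area's bounds, or None if not needed."""
    if exceeds_safe_area(material, safe):
        return f"Keep content inside ({safe.left}, {safe.top})-({safe.right}, {safe.bottom})"
    return None
```

The check runs only while a material is selected, so it can be re-evaluated on every drag or resize event.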

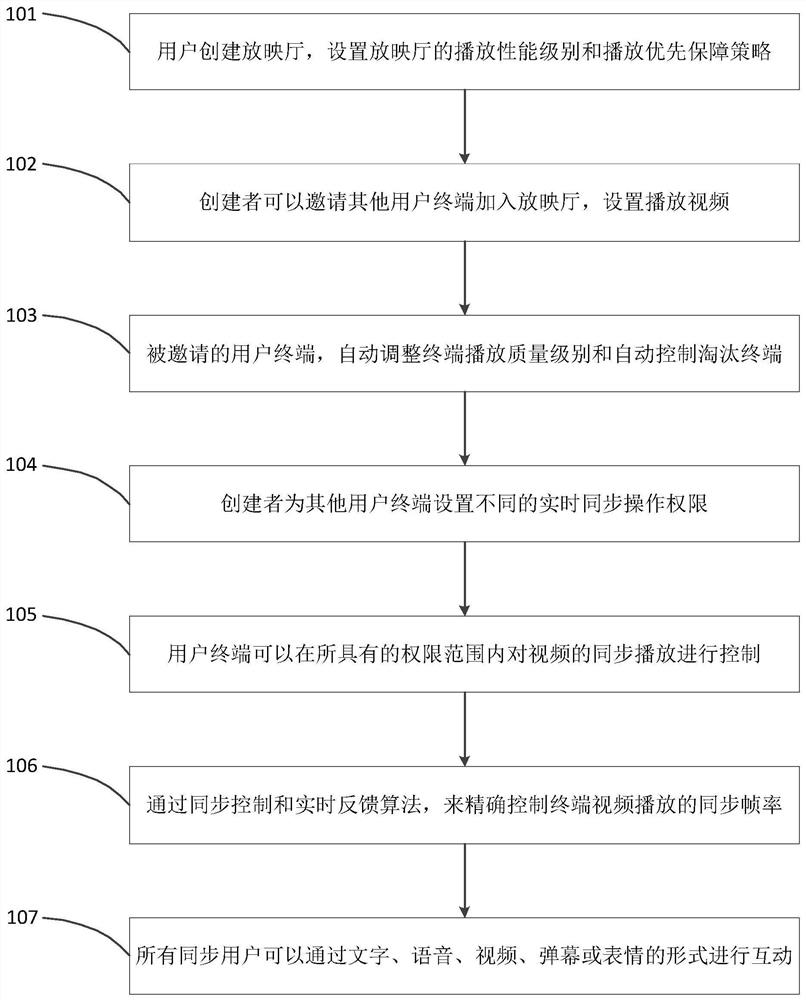

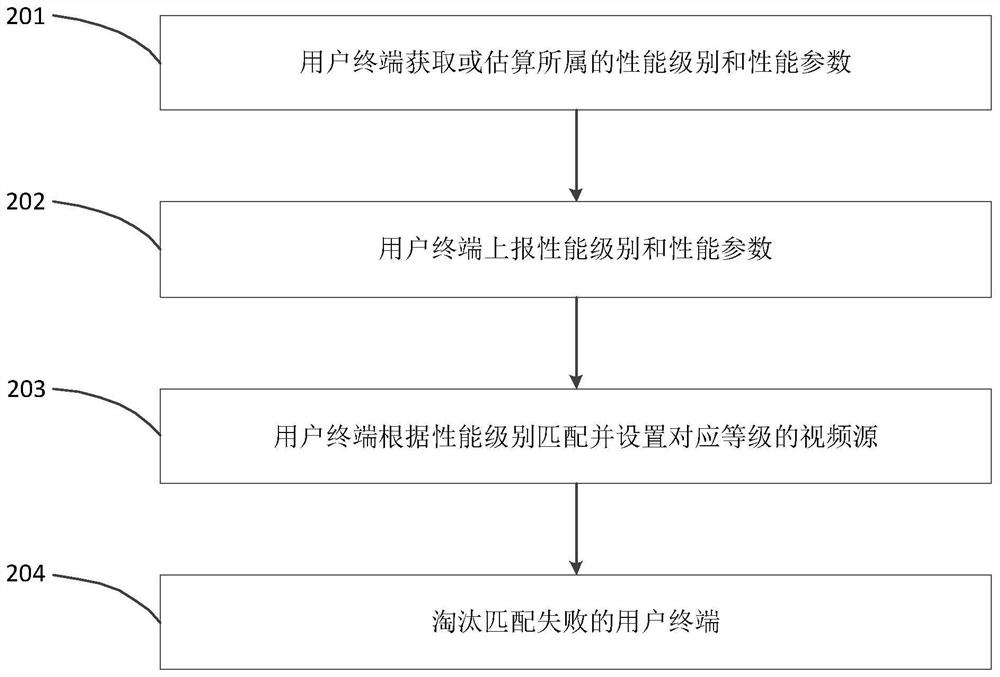

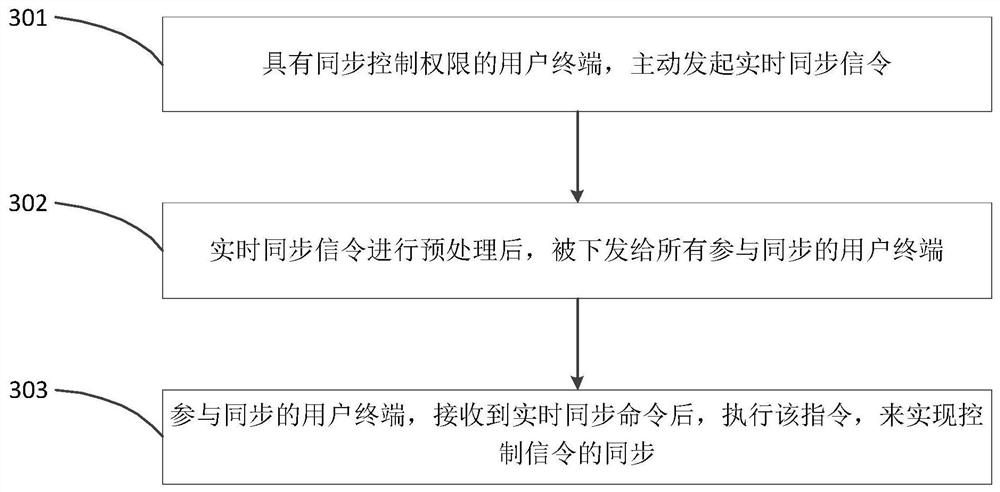

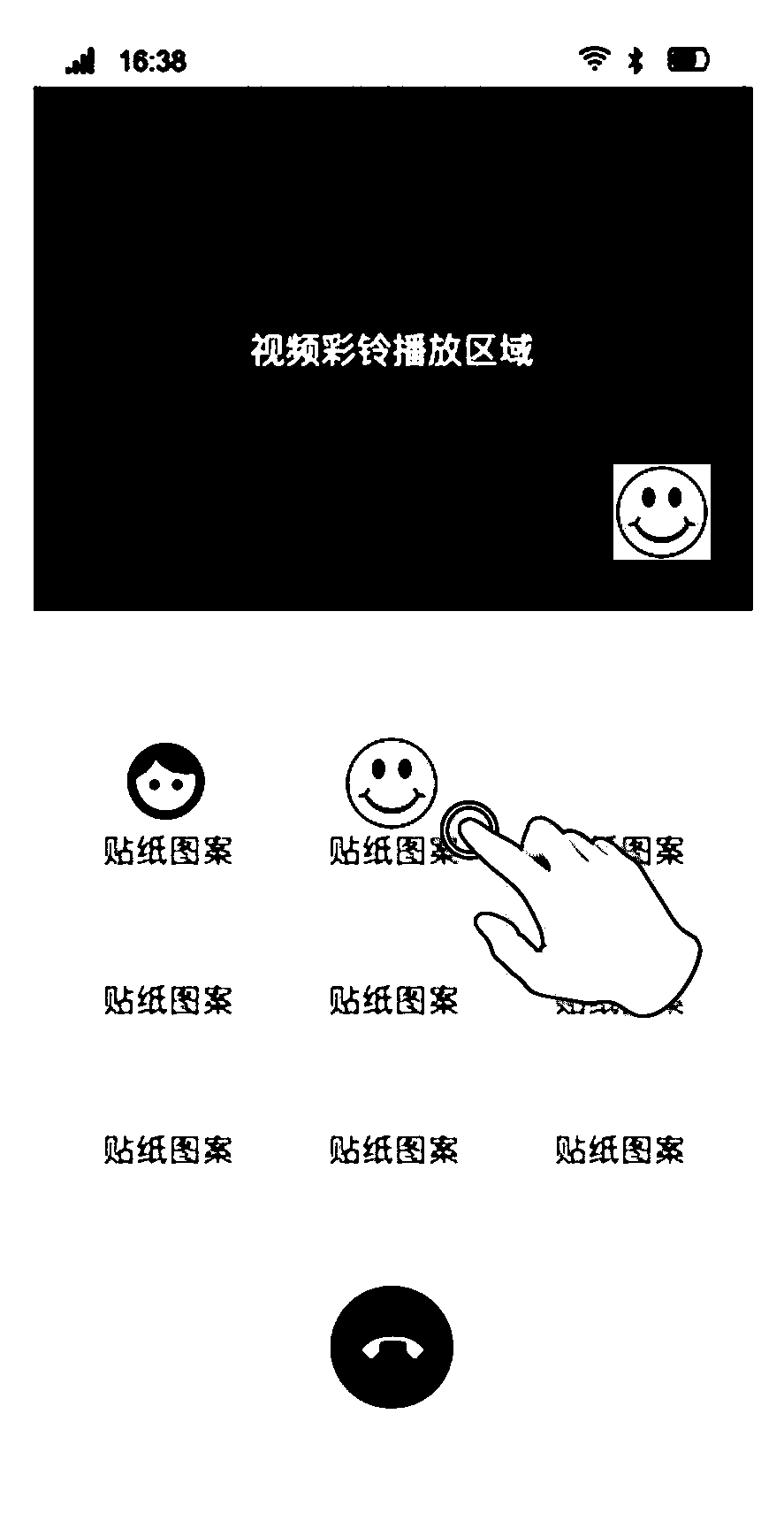

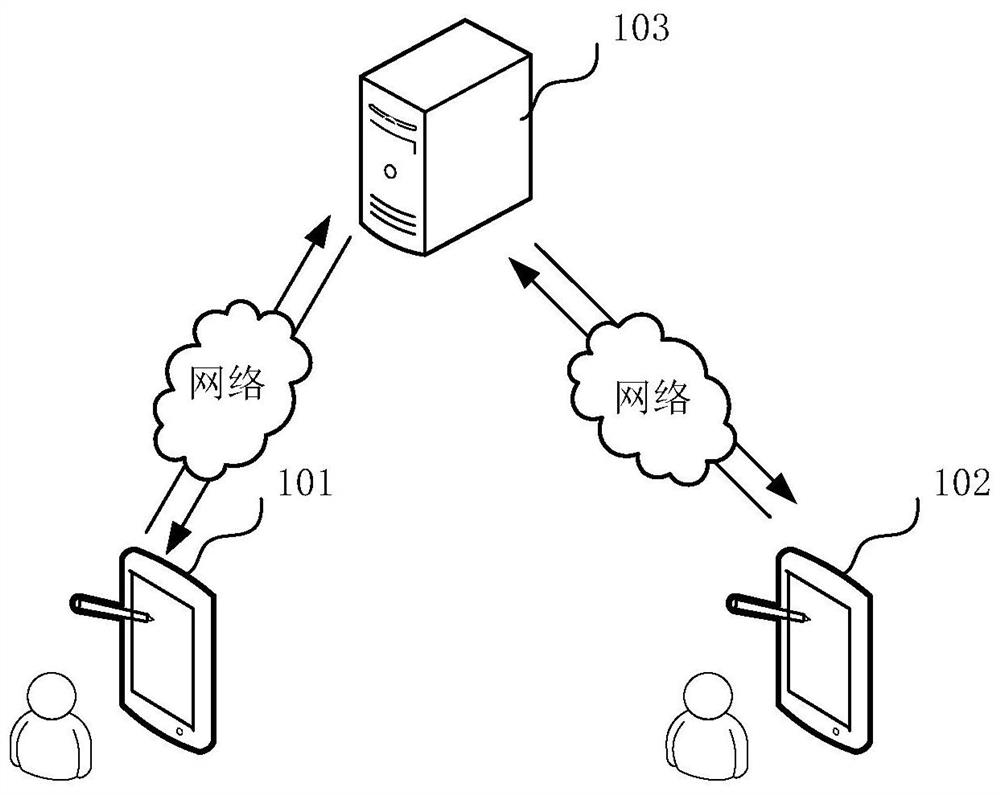

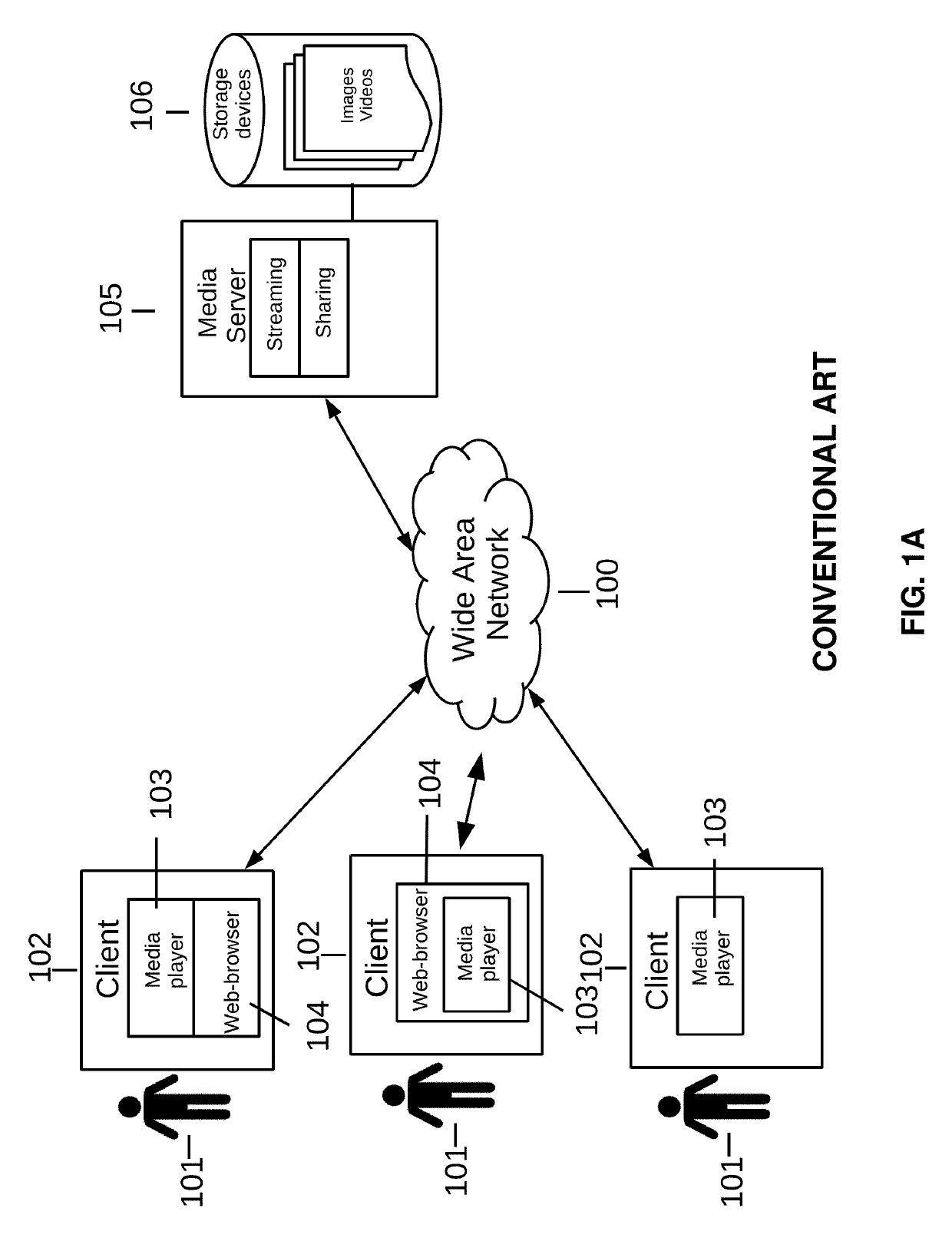

Method and system for synchronously watching videos and interacting in real time by multiple users

Pending | CN112104904A | Precisely control sync frame rate; Troubleshoot out-of-sync issues; Selective content distribution; Video eeg; Live feedback

The invention discloses a method and system for multiple users to watch videos synchronously and interact in real time. It addresses two problems: the pictures of the same video watched by multiple users in real time are difficult to keep synchronized, and real-time interaction among the users while they watch is difficult. The method comprises the following steps: a user creates a studio and sets its playing performance level and playing priority guarantee strategy; the creator invites other user terminals to join the studio and sets the video to be played; each invited user terminal automatically adjusts its own playing quality level and automatically controls terminal elimination; the creator assigns different real-time synchronization instruction permissions to the other user terminals; users can control synchronized playback of the video within their permission range; synchronized playback across terminals is precisely controlled through synchronization control and a real-time feedback algorithm; and all synchronized users can interact in real time through text, voice, video, bullet comments or emoticons.

Owner:北京我声我视科技有限公司
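
The abstract above does not disclose its real-time feedback algorithm. As one common approach, sketched here under stated assumptions, a client compares its playback position with the host's: a large drift triggers a hard seek, and a small drift is corrected by nudging the playback rate. The seek threshold, gain and rate clamp are hypothetical values.

```python
def sync_command(host_pos_s, client_pos_s, seek_threshold_s=1.0, max_rate_delta=0.05, gain=0.1):
    """Return ("seek", target_s) for large drift, else ("rate", playback_rate).

    drift > 0 means the client is ahead of the host. Small drifts are corrected
    proportionally (slower when ahead, faster when behind), clamped to +/- max_rate_delta.
    All thresholds and the proportional gain are illustrative assumptions.
    """
    drift = client_pos_s - host_pos_s
    if abs(drift) >= seek_threshold_s:
        return ("seek", host_pos_s)
    delta = max(-max_rate_delta, min(max_rate_delta, -gain * drift))
    return ("rate", 1.0 + delta)
```

Rate nudging avoids visible jumps for small offsets, while the seek path bounds how far any terminal can fall out of sync.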

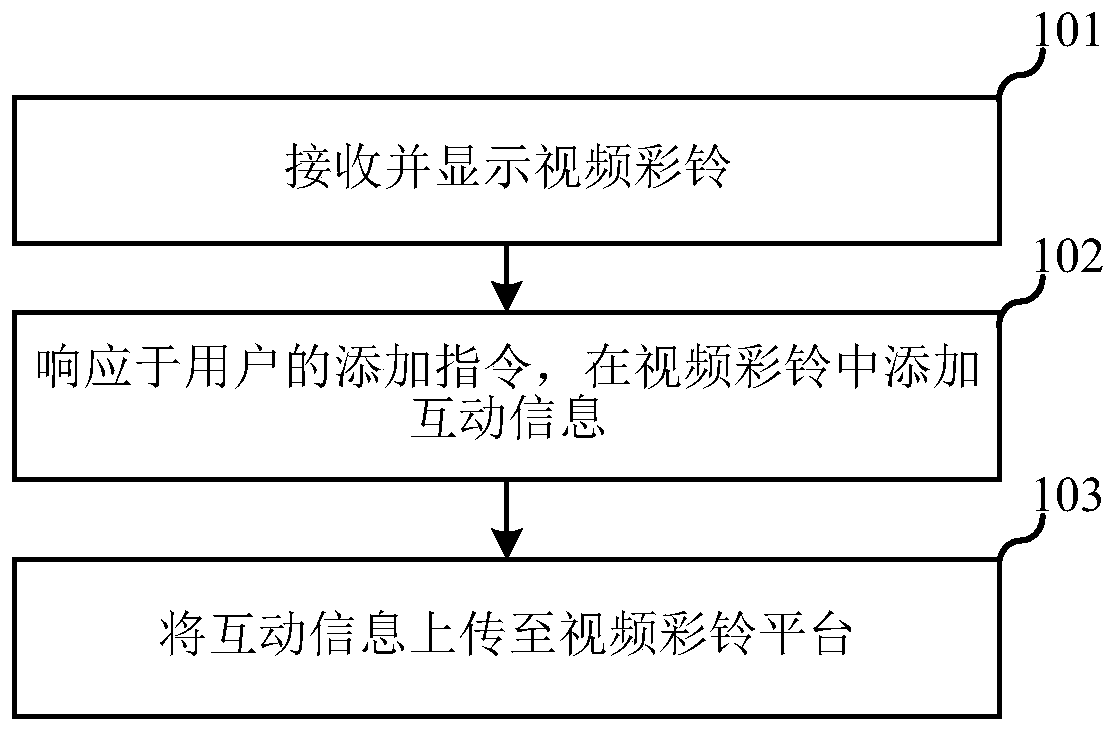

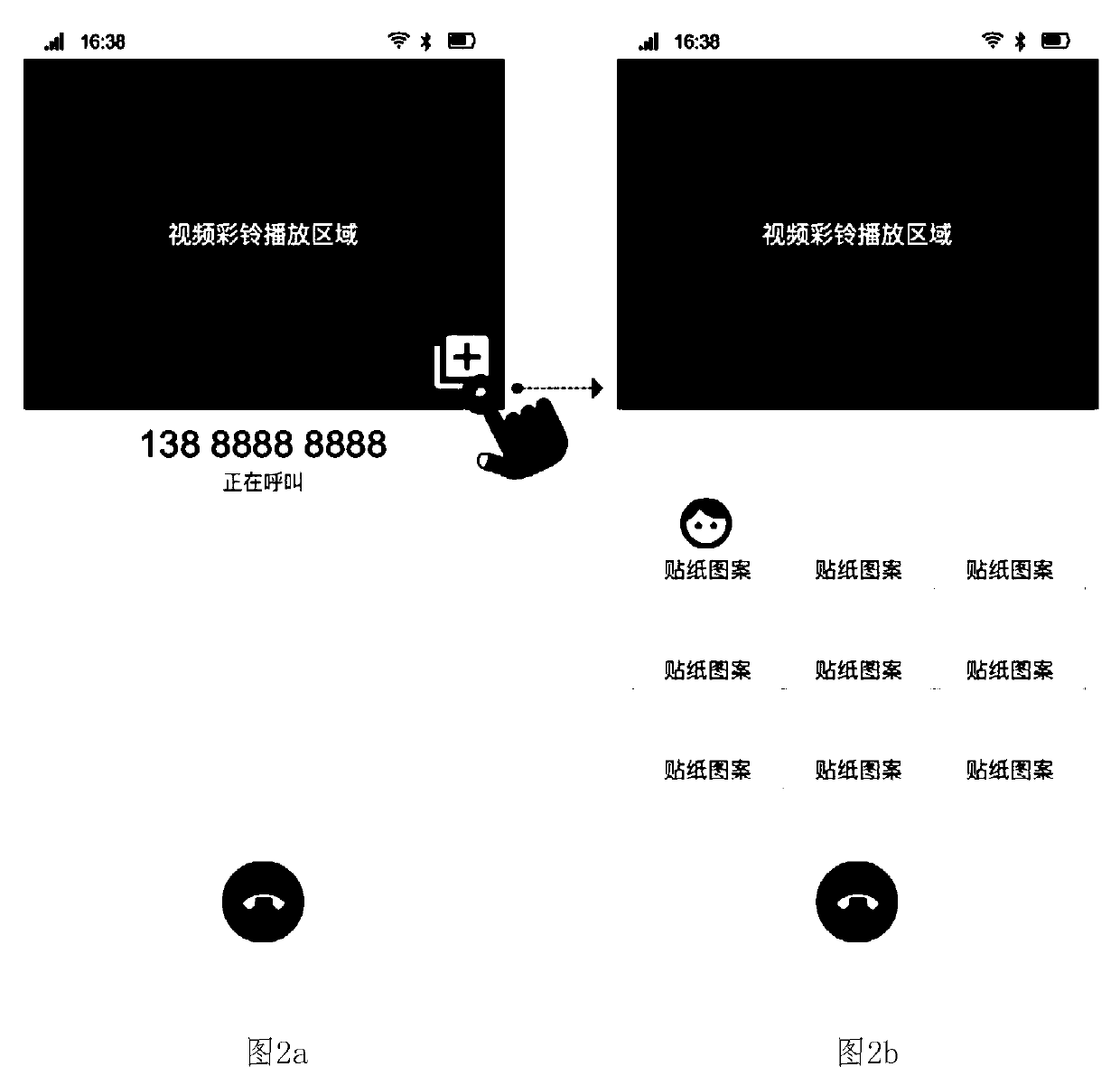

Operation method and system for video polyphonic ringtone, electronic device and storage medium

Active | CN111246022A | Improve information interactivity; Improve experience; Special service for subscribers; Substation equipment; Engineering; Electric devices

The embodiment of the invention relates to the technical field of mobile terminals, and discloses an operation method and system for a video polyphonic ringtone, an electronic device and a storage medium. The method comprises the following steps: receiving and displaying a video polyphonic ringtone; in response to an adding instruction of a user, adding interactive information into the video polyphonic ringtone; and uploading the interaction information to a video polyphonic ringtone platform, so that the video polyphonic ringtone platform sends the interaction information and the video polyphonic ringtone together when receiving a request instruction of the video polyphonic ringtone. According to the invention, the user can add the interaction information into the video polyphonic ringtone while watching the video polyphonic ringtone, and the added interaction information can be viewed when other users or the user watches the video polyphonic ringtone again, so that a certain interaction behavior can be added in the process of watching the video polyphonic ringtone, and the watching experience of the user is improved.

Owner:MIGU MUSIC CO LTD +2

Video interface setting method and device, electronic equipment and storage medium

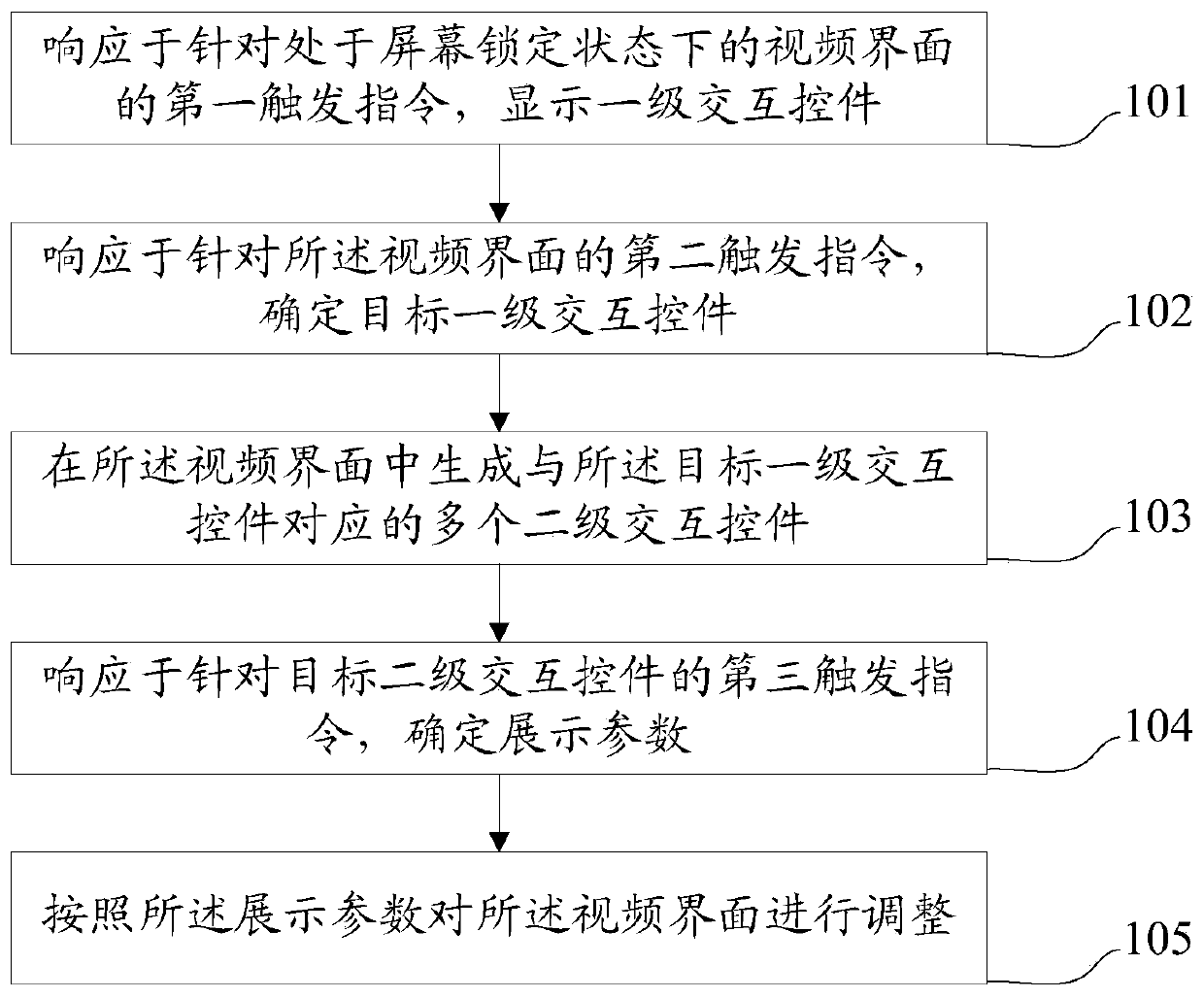

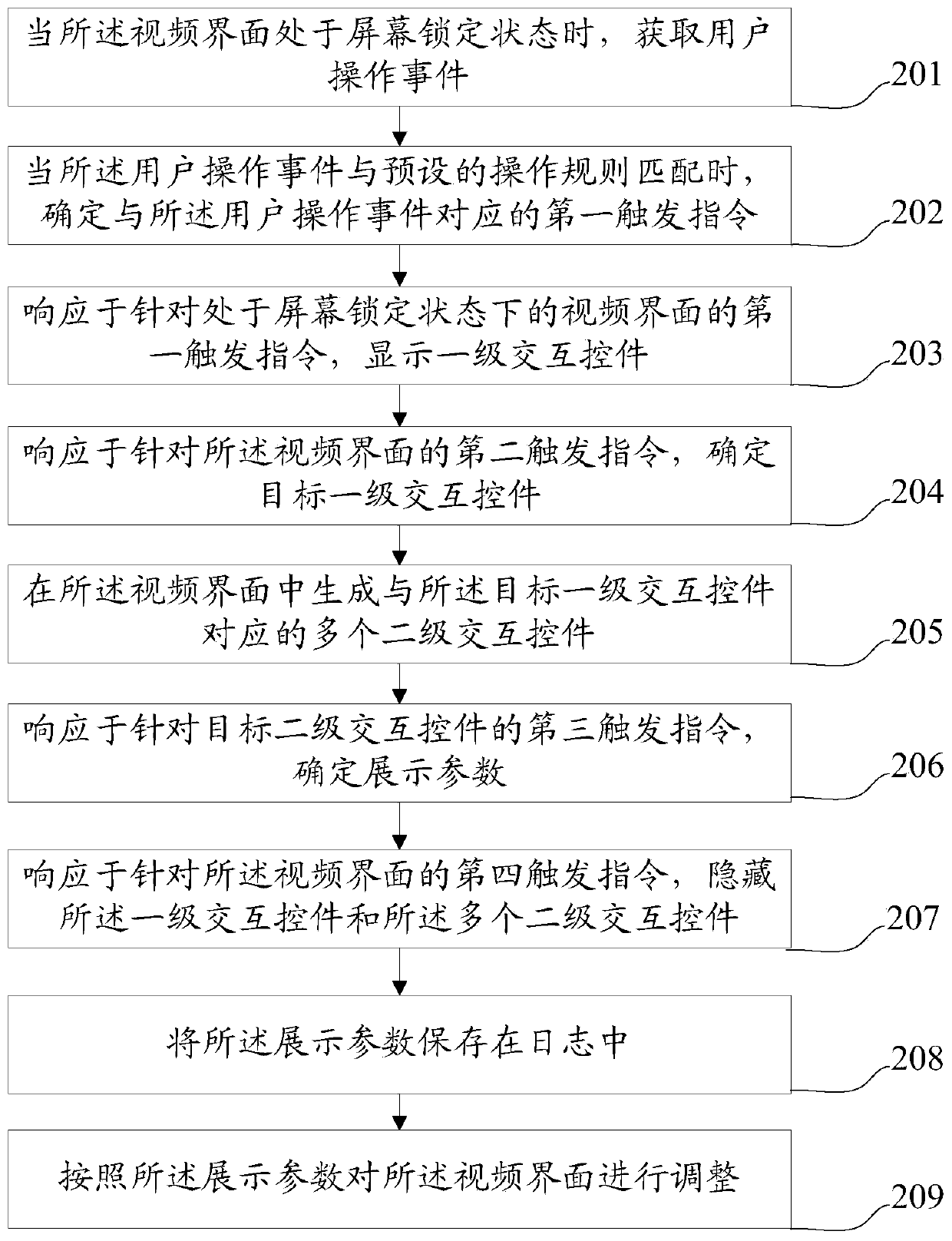

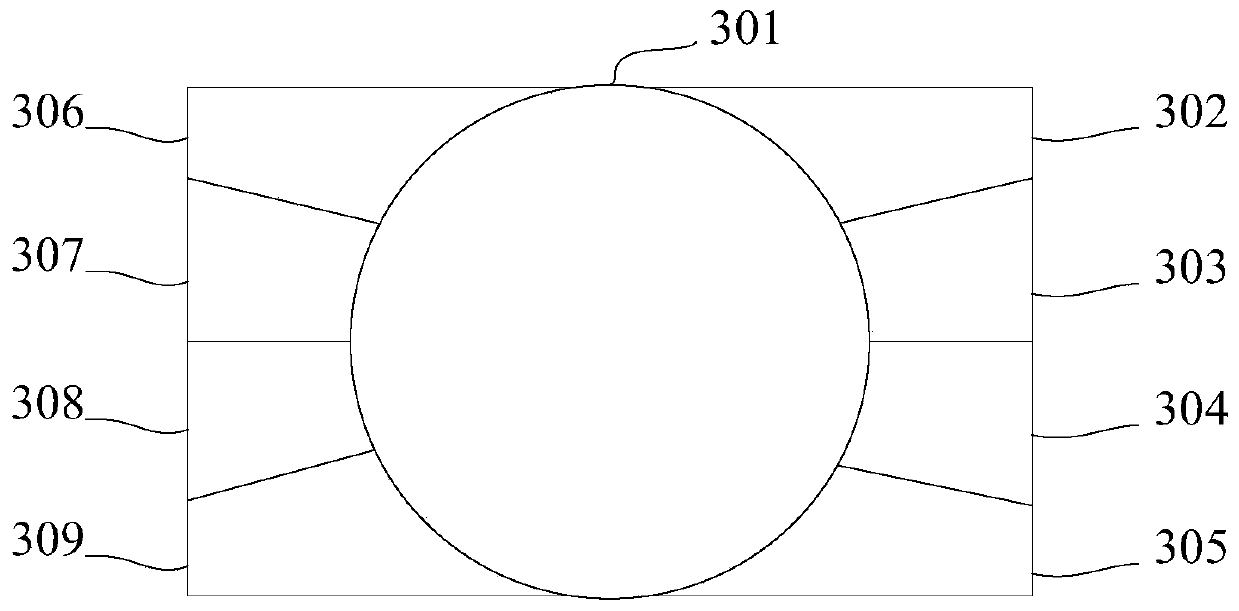

Pending | CN111309214A | Easy to adjust; Improve the experience of watching videos; Input/output processes for data processing; Computer hardware; Interaction control

The embodiment of the invention provides a video interface setting method applied to a terminal: a software application runs on the terminal's processor and renders a video interface on the terminal's touch display. The method comprises: in response to a first trigger instruction on the video interface while it is in the screen-locked state, displaying first-level interaction controls; in response to a second trigger instruction on the video interface, determining a target first-level interaction control; generating, in the video interface, a plurality of second-level interaction controls corresponding to the target first-level interaction control; in response to a third trigger instruction on a target second-level interaction control, determining display parameters; and adjusting the live broadcast room video interface according to the display parameters. The user can therefore adjust video interface settings quickly and conveniently without unlocking the video interface; the operation is simple, and the user's video watching experience is effectively improved.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

Method and system for categorizing video

Inactive | CN102143327A | Reduce labor intensity; Timely processing; Television system details; Color television details; Computer graphics (images); Engineering

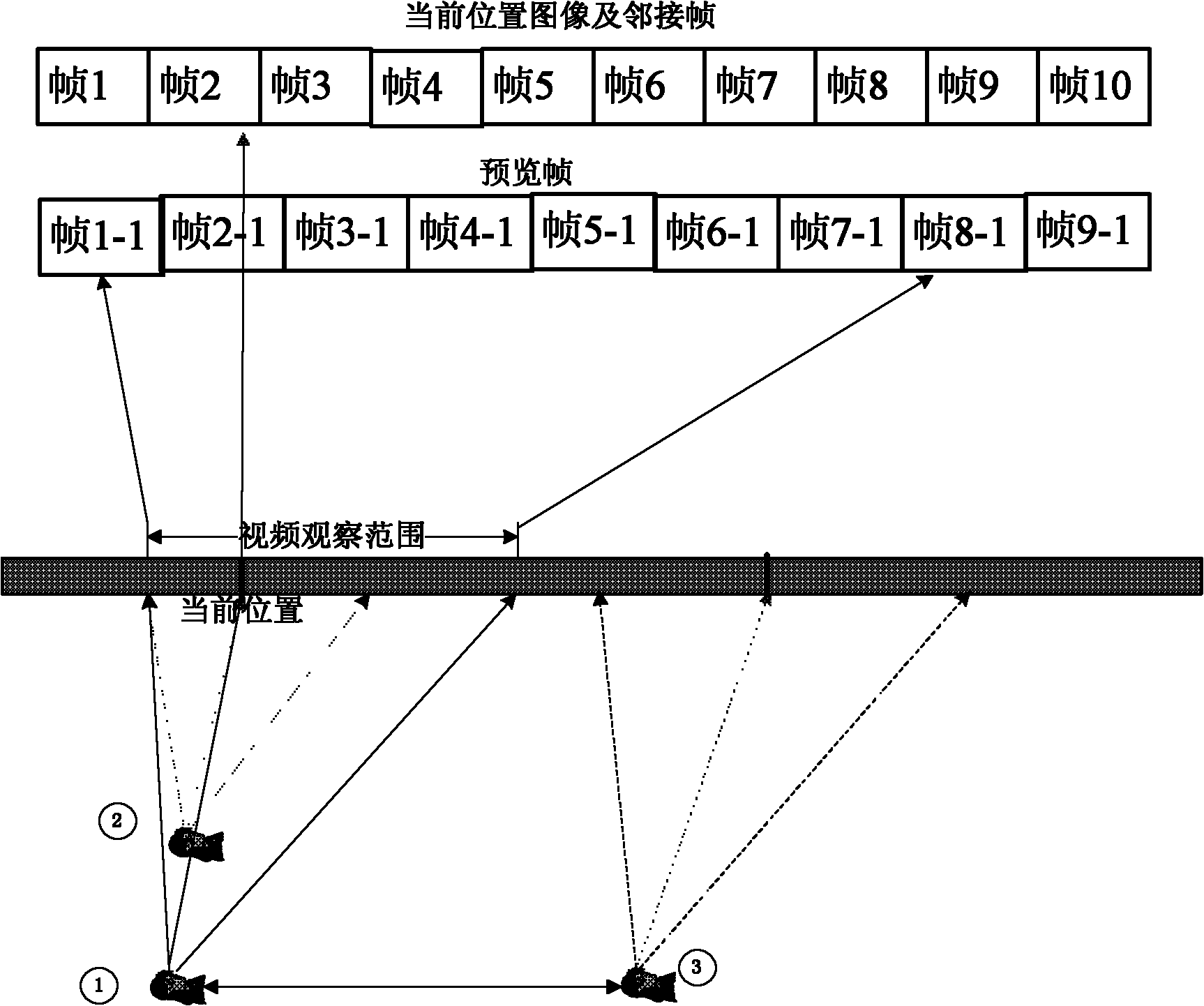

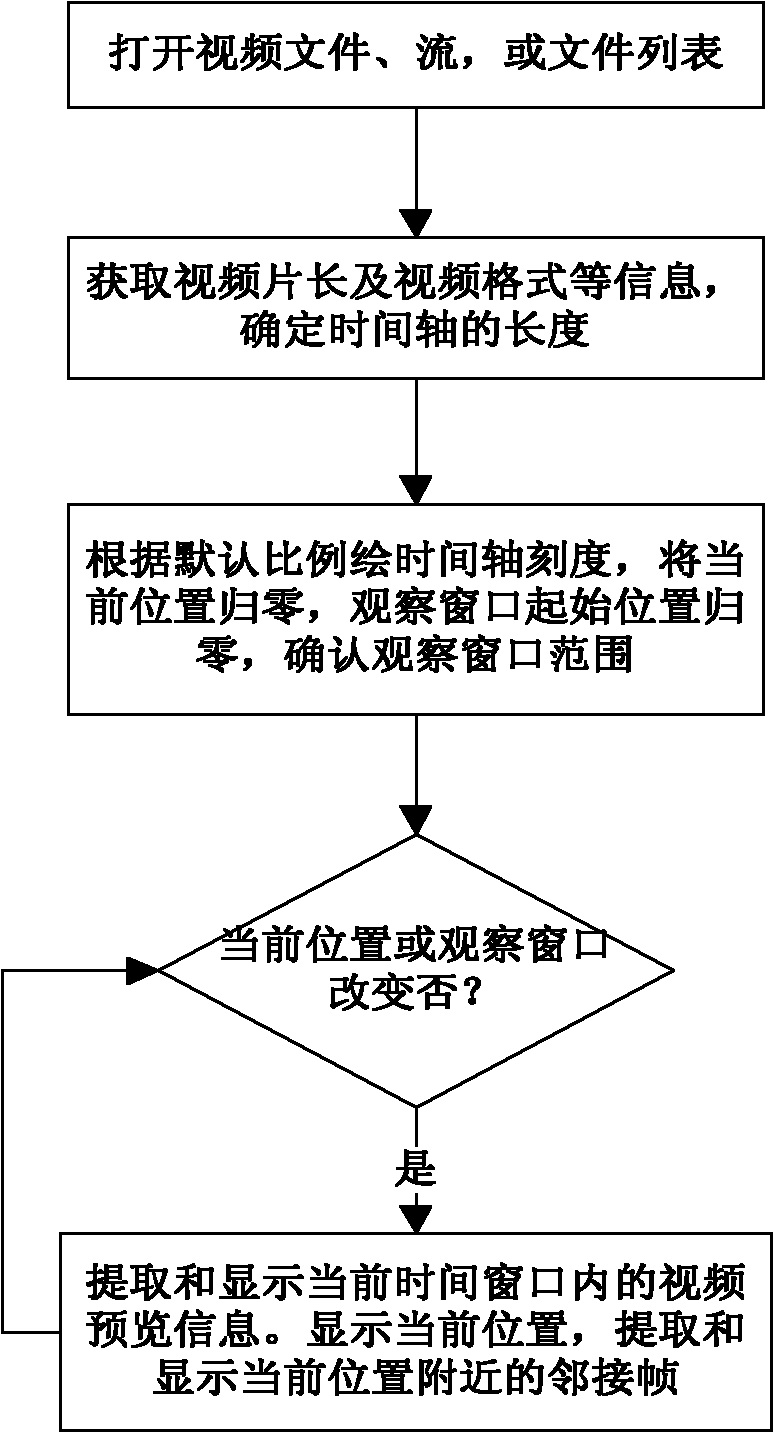

The invention provides a method and system for categorizing a video, addressing the problems of existing manual video categorization: low efficiency, high cost, and a preview observation precision that cannot be adjusted. The method comprises the following steps: 1, loading the video, acquiring its running time and format information, and determining the length of the timeline; 2, determining the current observation position in the video, decoding and displaying the frames adjacent to that position, and updating them whenever the observation position changes; and 3, determining an initial preview display scale, decoding and displaying preview frames, and updating them whenever the preview display scale or the current observation position changes. With the method and system, a categorization operator can first browse the video's preview frames instead of watching the whole video, and then examine the adjacent frames after preliminary positioning. Categorization positioning therefore becomes more accurate, categorization efficiency improves, the labor intensity of categorization work is reduced, and users are better able to process and use video resources in time.

Owner:北京新岸线网络技术有限公司

Video music matching method and device, electronic equipment and storage medium

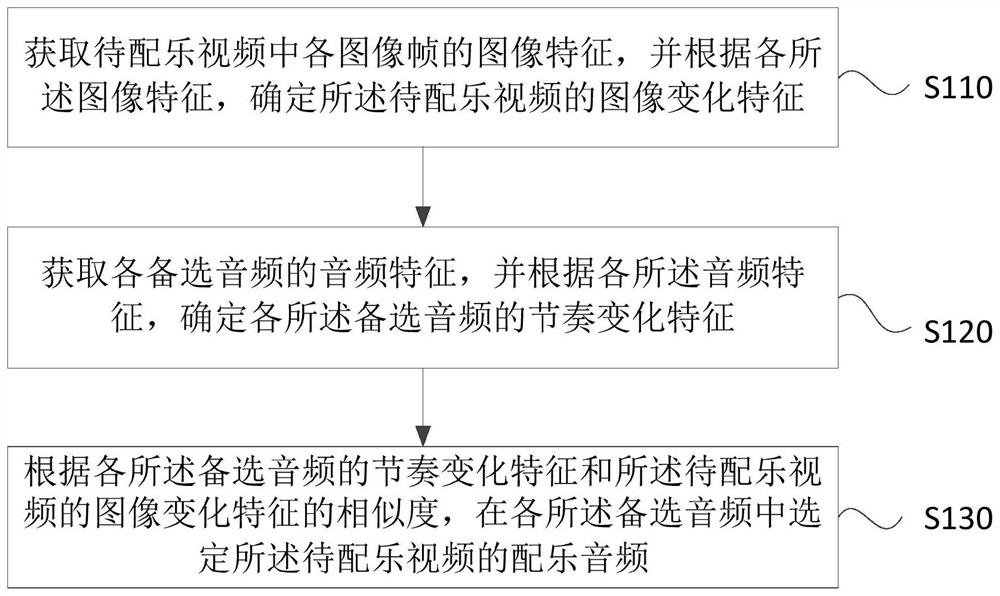

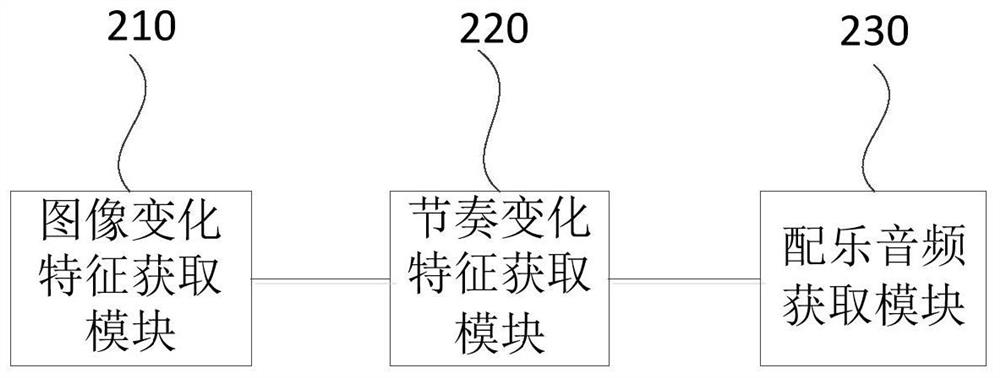

Active | CN112153460A | Easy to resonate; Improve viewing experience; Television system details; Speech analysis; Computer graphics (images); Music festival

The embodiment of the invention discloses a video music matching method and device, electronic equipment and a storage medium. The method comprises: obtaining the image features of all image frames in a video to be matched and determining the video's image change features from them; acquiring the audio features of each candidate audio and determining each candidate's rhythm change features from them; and selecting, from the candidate audios, the audio that matches the video according to the similarity between each candidate's rhythm change features and the video's image change features. In this technical scheme, changes in the video content are matched with changes in the musical rhythm, so the video's content is expressed more accurately, the video is more likely to resonate with viewers, and their viewing experience is improved.

Owner:BEIJING BYTEDANCE NETWORK TECH CO LTD
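
A minimal sketch of the matching rule in the entry above, assuming each video frame and each audio step has already been reduced to one scalar feature on a shared time grid (a simplification; real image and audio features are vectors). "Change features" are taken as first differences, and cosine similarity stands in for the unspecified similarity measure.

```python
import math


def changes(features):
    """First differences of a 1-D feature sequence (e.g. per-frame brightness or per-step energy)."""
    return [b - a for a, b in zip(features, features[1:])]


def cosine(x, y):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(x, y))
    nx = math.sqrt(sum(a * a for a in x))
    ny = math.sqrt(sum(b * b for b in y))
    return dot / (nx * ny) if nx and ny else 0.0


def match_music(video_features, candidates):
    """Pick the candidate whose rhythm-change curve best matches the video's image-change curve.

    video_features: per-frame scalar image features.
    candidates: dict of audio_id -> scalar audio features sampled on the same time grid.
    """
    video_change = changes(video_features)
    scores = {aid: cosine(video_change, changes(feats)) for aid, feats in candidates.items()}
    return max(scores, key=scores.get)
```

Comparing changes rather than raw values means an audio track with a different overall loudness can still match if its rhythm rises and falls with the picture.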

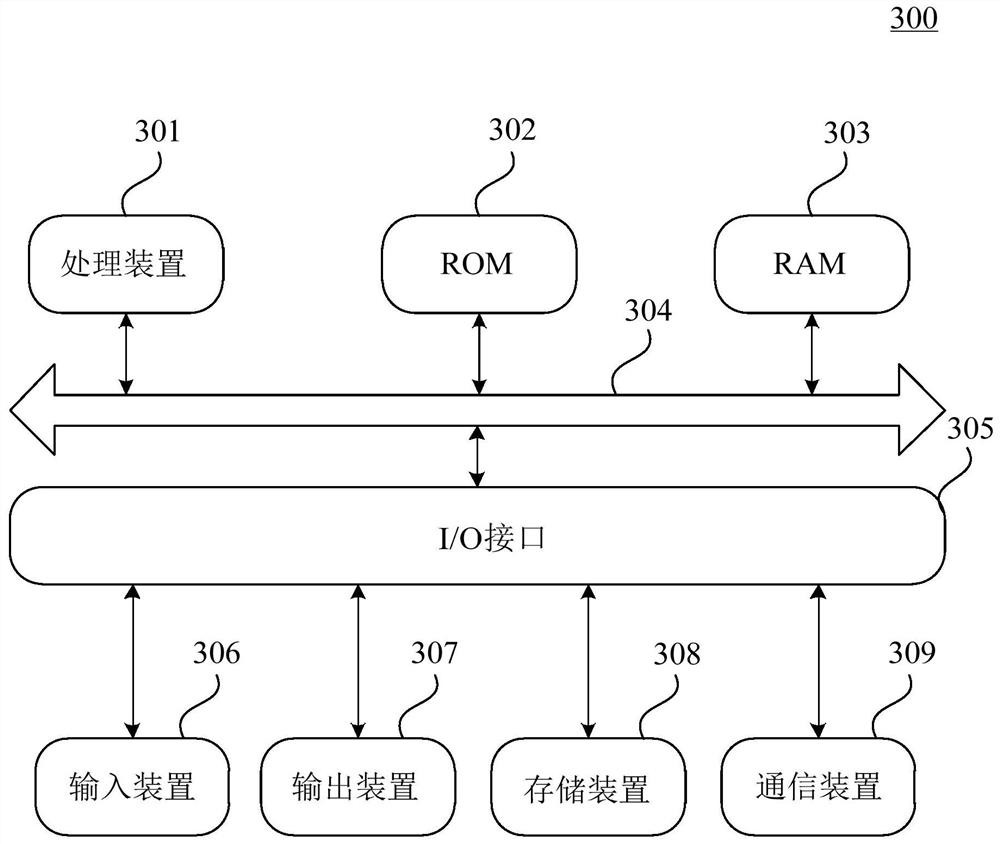

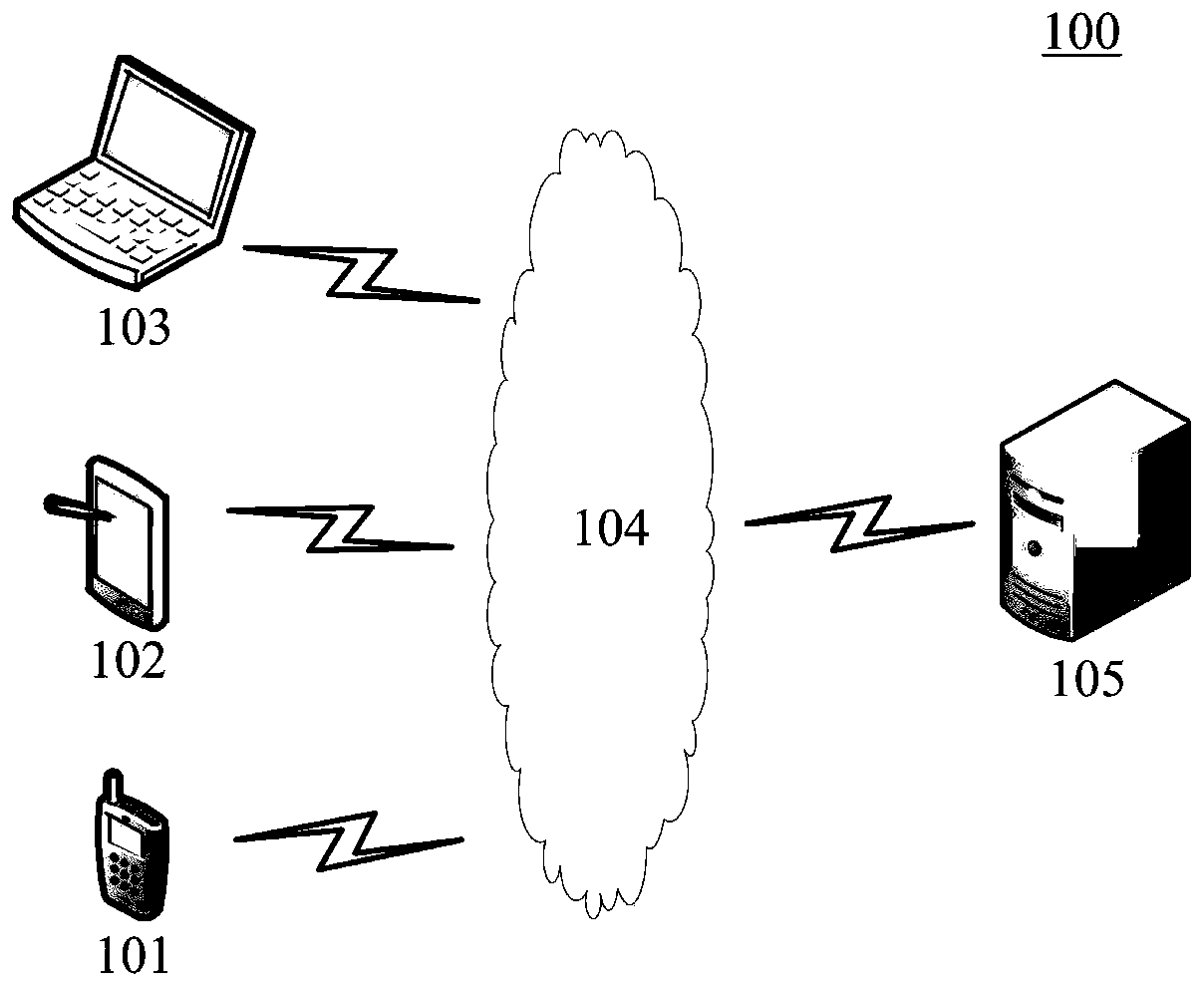

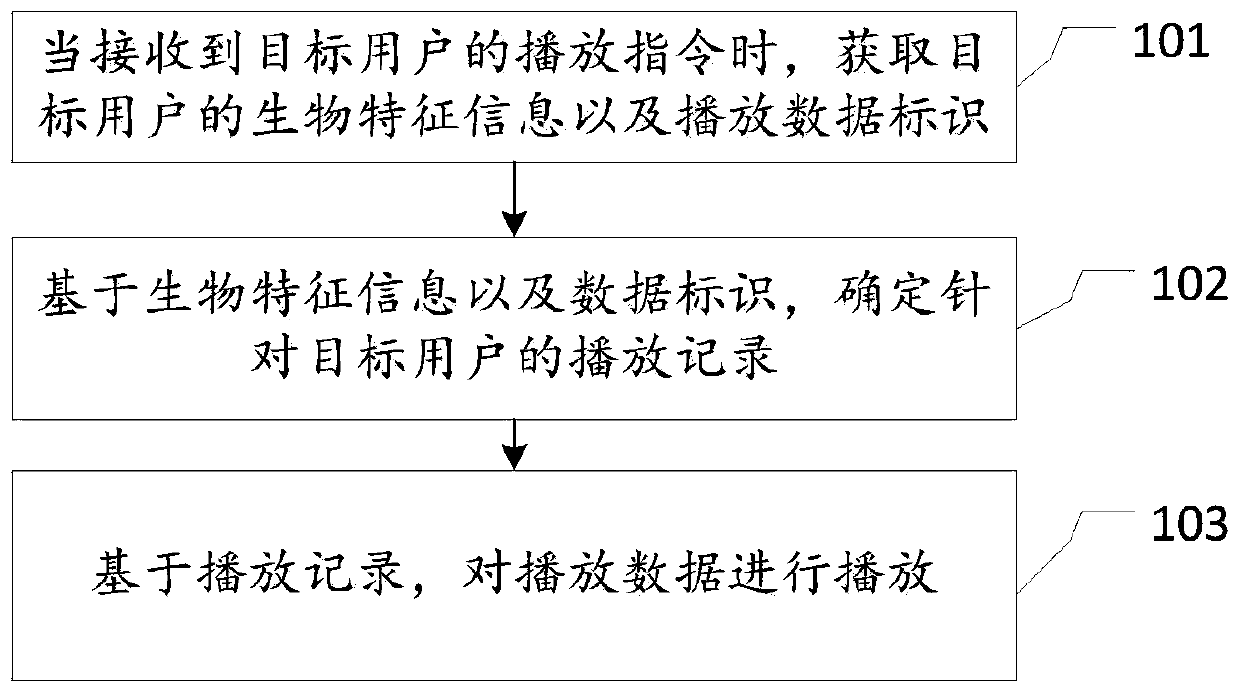

Playing method and device, electronic equipment and medium

Inactive | CN110933468A | Issues affecting the viewing experience; Selective content distribution; Video eeg; Engineering

The invention discloses a playing method and device, electronic equipment and a medium. When a play instruction from a target user is received, the target user's biometric characteristic information and the identifier of the data to be played are obtained; the target user's playing record is determined from the biometric information and the data identifier, and the data is played according to that record. Specifically, when a user's instruction to play video data is received, the database is searched, using the user's biometric information and the video data identifier, for whether this user has played the video data before, and the video is played from that user's historical playing progress. This avoids a problem in the prior art: when multiple users watch videos on the same mobile terminal, their different playing progresses interfere with one another's viewing experience.

Owner:YULONG COMPUTER TELECOMM SCI (SHENZHEN) CO LTD
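
The lookup in the entry above amounts to keying playback progress by user and video rather than by video alone. A minimal in-memory sketch, assuming the biometric match has already produced a stable user key (the `user_key` values are hypothetical; the patent uses a database and does not describe the biometric step in detail).

```python
class PlaybackRecords:
    """In-memory stand-in for the playback-progress database.

    Keys are (user_key, video_id). user_key is a hypothetical stable identifier
    produced by matching the user's biometric features (e.g. an enrolled template ID).
    """

    def __init__(self):
        self._progress = {}

    def save(self, user_key: str, video_id: str, position_s: float) -> None:
        """Store how far this user has watched this video, in seconds."""
        self._progress[(user_key, video_id)] = position_s

    def start_position(self, user_key: str, video_id: str) -> float:
        """Resume from this user's own history, or start at 0 if they never played the video."""
        return self._progress.get((user_key, video_id), 0.0)
```

Because the key includes the user, a second person on the same device starts the video from the beginning instead of inheriting someone else's position.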

Video key frame self-adaptive extraction method under emotion encourage

Inactive | CN104008175A | Representative; Effective; Image analysis; Special data processing applications; Pattern recognition; Self adaptive

The invention relates to a video key frame self-adaptive extraction method under emotion encourage. Taking the viewer's emotional fluctuation into account, the method comprises: computing the motion intensity of each video frame as the visual emotion incentive degree experienced by the viewer, and computing short-time average energy and pitch as the auditory emotion incentive degree; linearly fusing the visual and auditory emotion incentive degrees to obtain the video emotion incentive degree of each frame, which yields a video emotion incentive curve for the scene; allocating a number KN of key frames to the scene according to the change in its video emotion incentive; and finally taking the video frames at the KN highest crests of the video emotion incentive curve as the scene's key frames. The method is simple, works from the perspective of the viewer's emotional fluctuation, and uses the video emotion incentive degree to give key frame extraction semantic guidance, so the extracted key frames are more representative and effective.

Owner:FUZHOU UNIV
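
The pipeline in the entry above (fuse visual and auditory incentive, allocate KN, pick crests) can be sketched as below. The equal fusion weights, min-max normalization and the KN allocation rule are assumptions for illustration; the abstract only says KN follows the change in emotion incentive.

```python
def normalize(xs):
    """Scale a sequence to [0, 1]; a constant sequence maps to all zeros."""
    lo, hi = min(xs), max(xs)
    return [(x - lo) / (hi - lo) if hi > lo else 0.0 for x in xs]


def incentive_curve(motion, energy, pitch, w_visual=0.5):
    """Linearly fuse visual (motion) and auditory (energy, pitch) incentive per frame."""
    visual = normalize(motion)
    audio = [(e + p) / 2 for e, p in zip(normalize(energy), normalize(pitch))]
    return [w_visual * v + (1 - w_visual) * a for v, a in zip(visual, audio)]


def key_frame_count(curve, keys_per_unit_change=2.0):
    """Hypothetical allocation rule: more total fluctuation -> more key frames (at least 1)."""
    fluctuation = sum(abs(b - a) for a, b in zip(curve, curve[1:]))
    return max(1, round(fluctuation * keys_per_unit_change))


def key_frames(curve, kn):
    """Indices of the kn highest crests (local maxima) of the curve, in temporal order."""
    peaks = [i for i in range(1, len(curve) - 1)
             if curve[i - 1] < curve[i] >= curve[i + 1]]
    if not peaks and curve:
        # Monotonic curve: fall back to the single highest frame.
        peaks = [max(range(len(curve)), key=curve.__getitem__)]
    top = sorted(peaks, key=lambda i: curve[i], reverse=True)[:kn]
    return sorted(top)
```

Typical use is `key_frames(curve, key_frame_count(curve))` per scene, so calm scenes get few key frames and turbulent ones get more.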

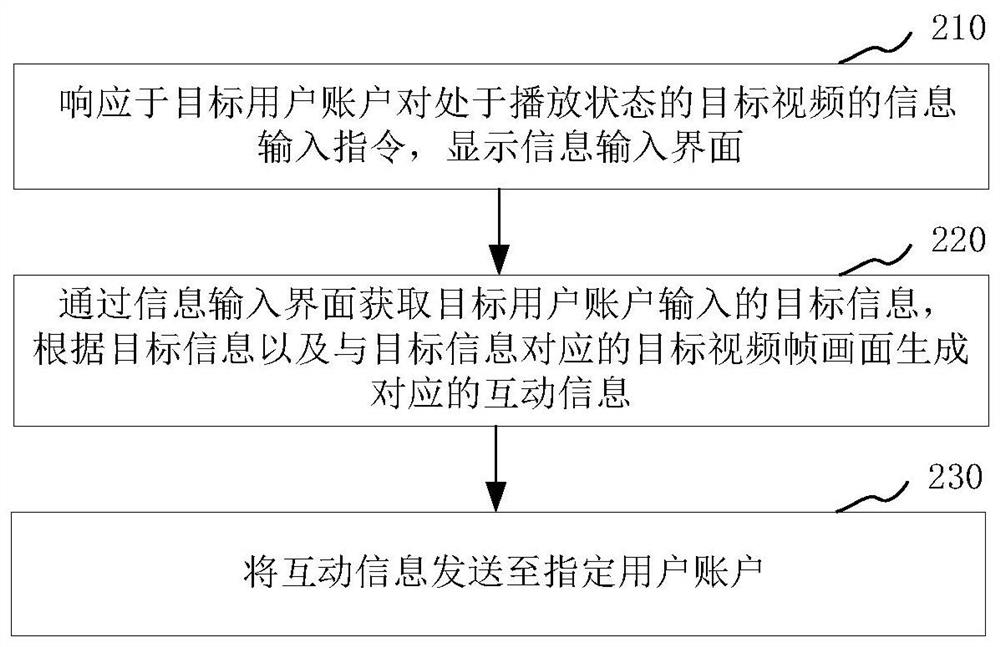

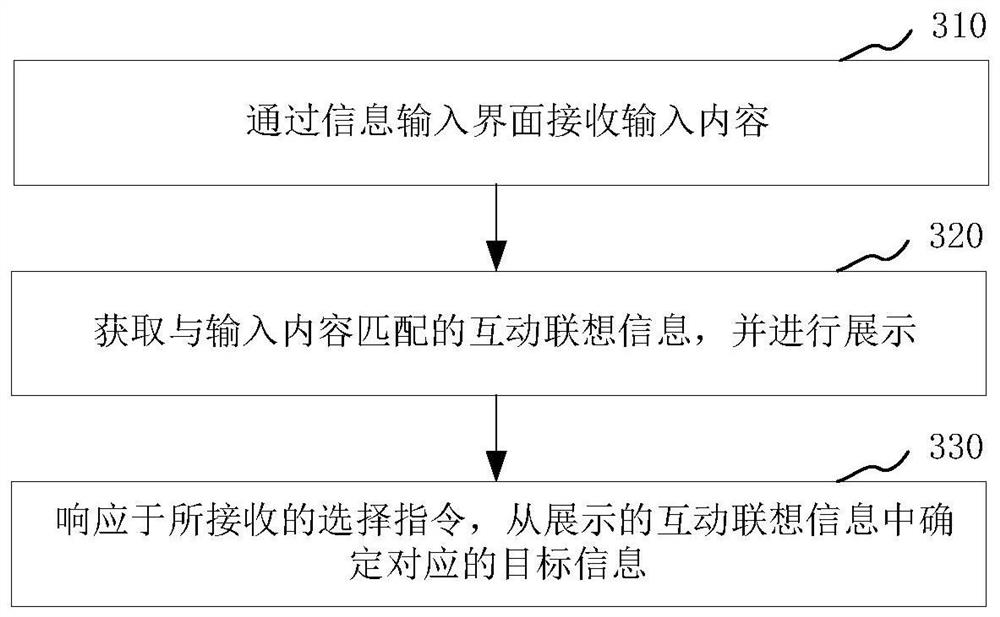

Video interaction method and device, electronic equipment and storage medium

Inactive | CN111836114A | Improve interaction efficiency; Accurate interaction; Selective content distribution; Text database querying; Human–computer interaction; Computer engineering

The invention relates to a video interaction method and device, electronic equipment and a storage medium. The method comprises: in response to an information input instruction from a target user account for a target video that is playing, displaying an information input interface and obtaining the target information entered by that account, so that the user can ask questions in real time while watching; generating interaction information from the entered target information and the target video frame corresponding to it; and sending the interaction information to a specified user account. This enables accurate interaction between users based on the video content during viewing and improves video interaction efficiency.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD

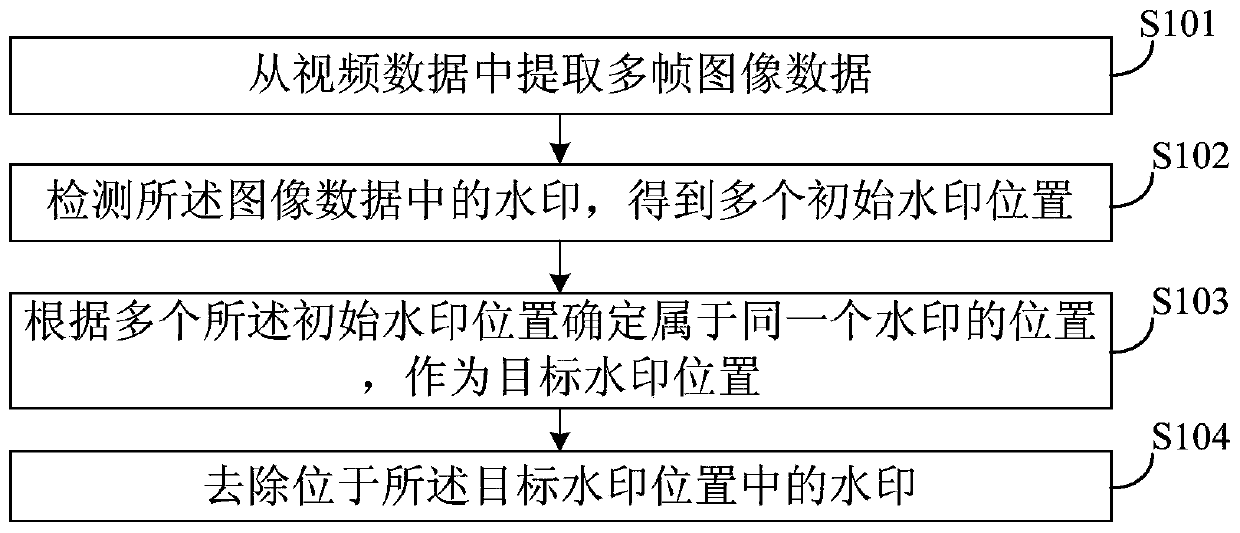

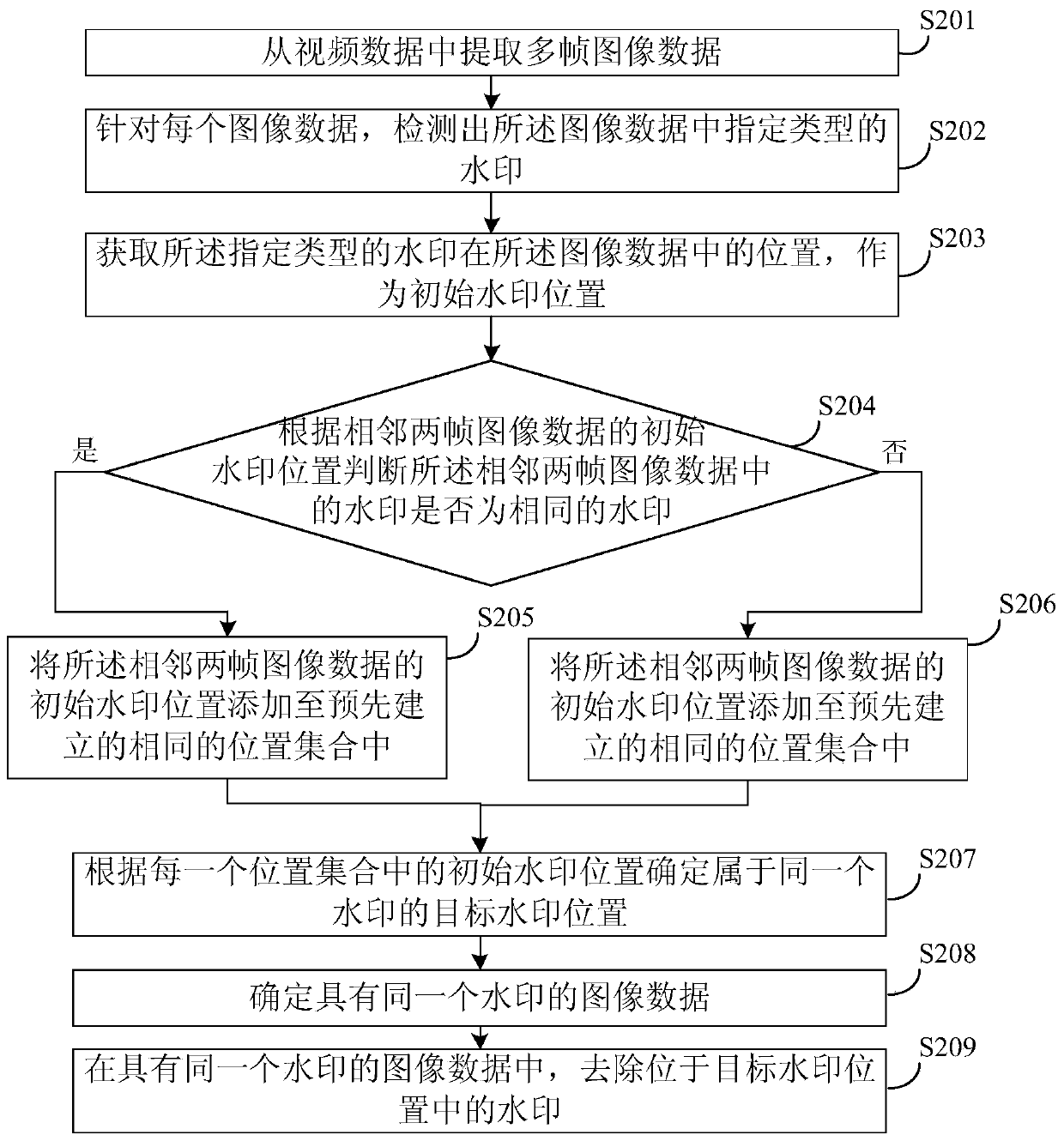

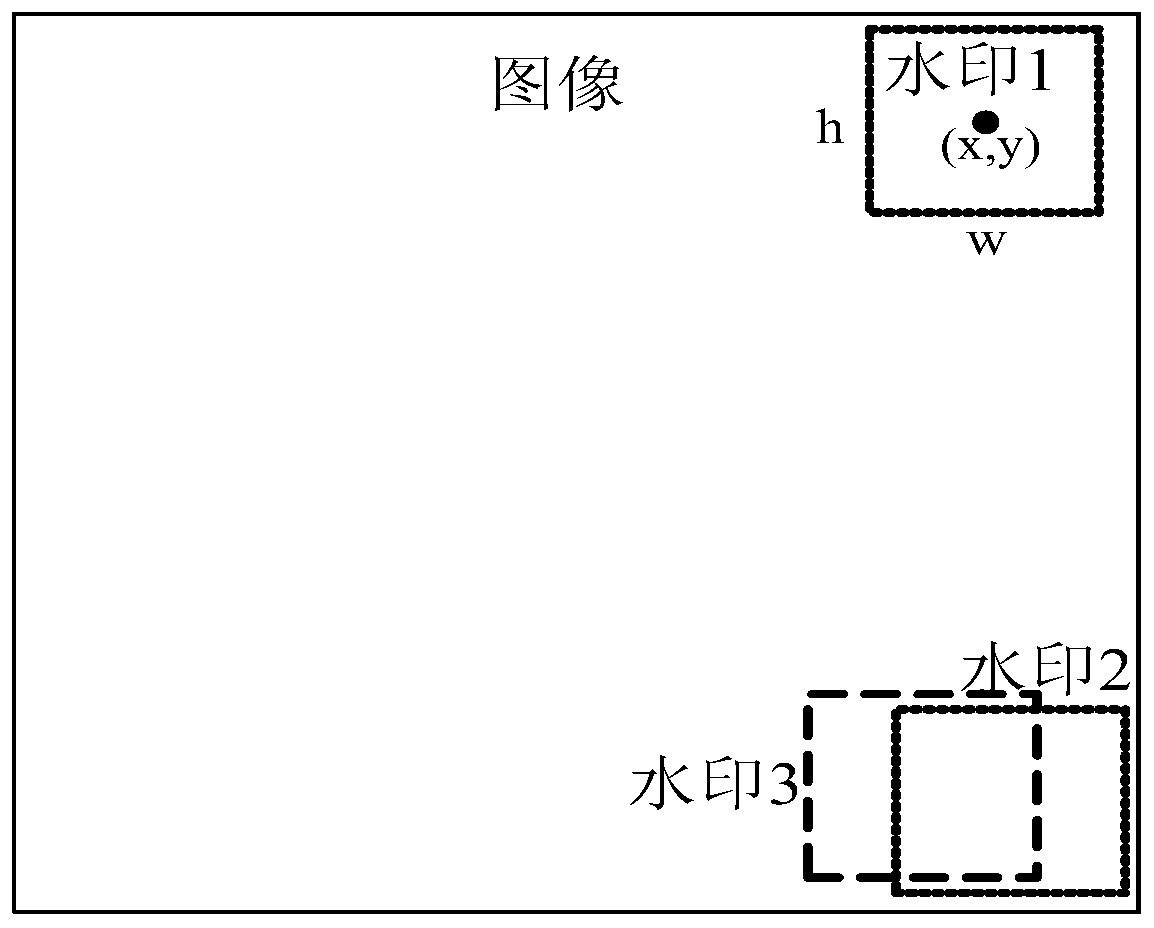

Video watermark removing method, video data publishing method and related devices

Active | CN110798750A | Improve experience; Avoid jitter; Selective content distribution; Pattern recognition; Radiology

The embodiment of the invention discloses a video watermark removing method, a video data publishing method and related devices. The video watermark removing method comprises the following steps: extracting multiple frames of image data from video data; detecting watermarks in the image data to obtain a plurality of initial watermark positions; determining, from these initial positions, the positions that belong to the same watermark and taking them as a target watermark position; and removing the watermark at the target watermark position. Because the target watermark position is determined from all initial positions of the same watermark, it can cover that watermark's initial position in every frame, so jitter in the detected initial positions does not affect removal. This solves the problem that watermarks cannot be completely removed when a detected initial position deviates from the real position: after the watermark in each frame is removed based on the target watermark position, clean video data is obtained and the user's viewing experience is improved.

Owner:BIGO TECH PTE LTD
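
The consolidation step in the entry above can be sketched by clustering per-frame detections with intersection-over-union (IoU) and keeping one covering box per cluster, so the target position includes every frame's initial position. The IoU criterion, greedy first-match clustering and `min_iou` value are assumptions; the patent does not say how detections are grouped.

```python
def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union else 0.0


def target_positions(detections, min_iou=0.3):
    """Merge per-frame detections of the same watermark into one covering box each.

    detections: list of (x1, y1, x2, y2) boxes detected across sampled frames.
    Each box joins the first cluster whose running union box overlaps it by at least
    min_iou; otherwise it starts a new cluster (i.e. a different watermark).
    """
    clusters = []  # running union box of each cluster
    for box in detections:
        for k, ub in enumerate(clusters):
            if iou(box, ub) >= min_iou:
                clusters[k] = (min(ub[0], box[0]), min(ub[1], box[1]),
                               max(ub[2], box[2]), max(ub[3], box[3]))
                break
        else:
            clusters.append(tuple(box))
    return clusters
```

Using the union box trades a slightly larger inpainting region for robustness against detections that drift by a few pixels between frames.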

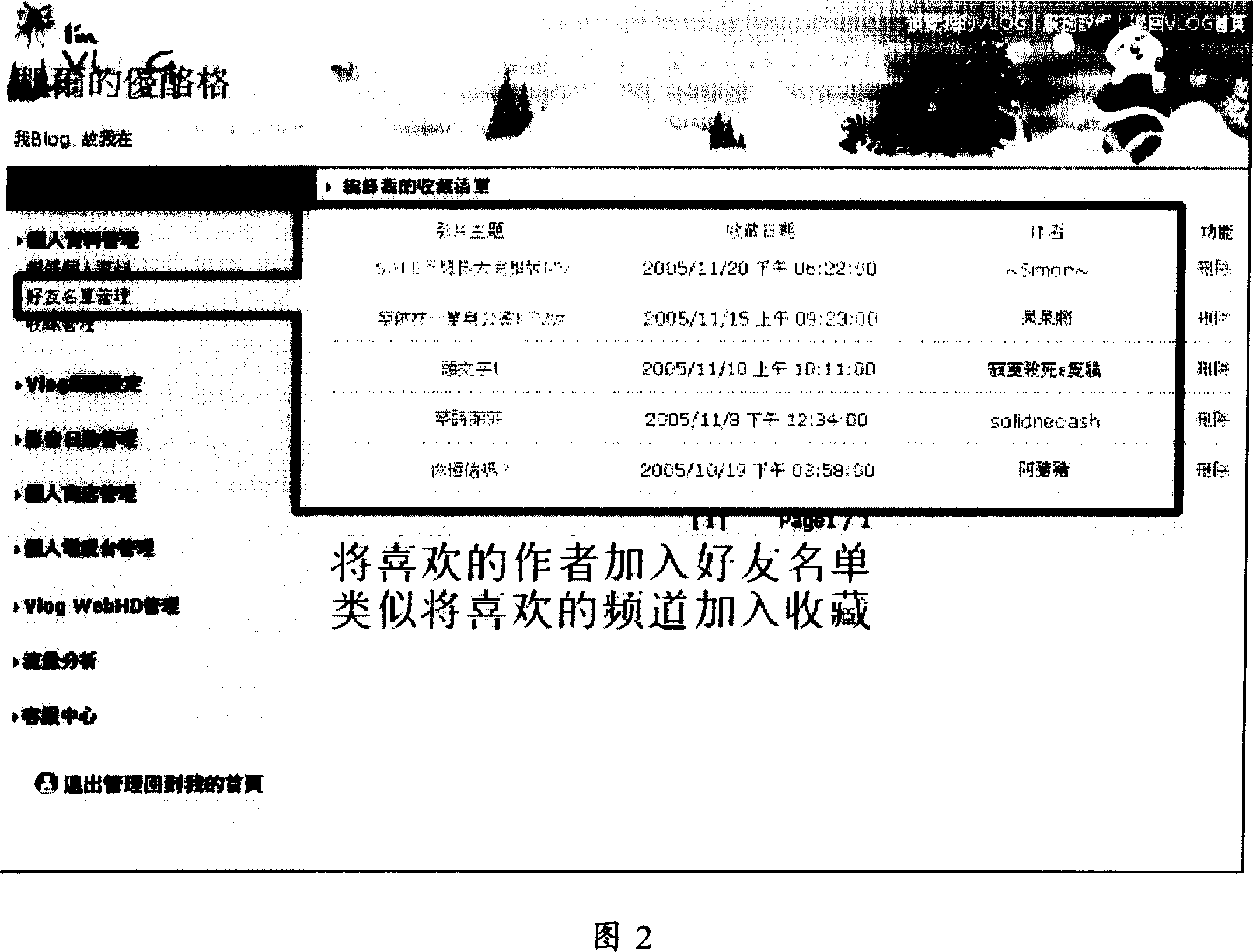

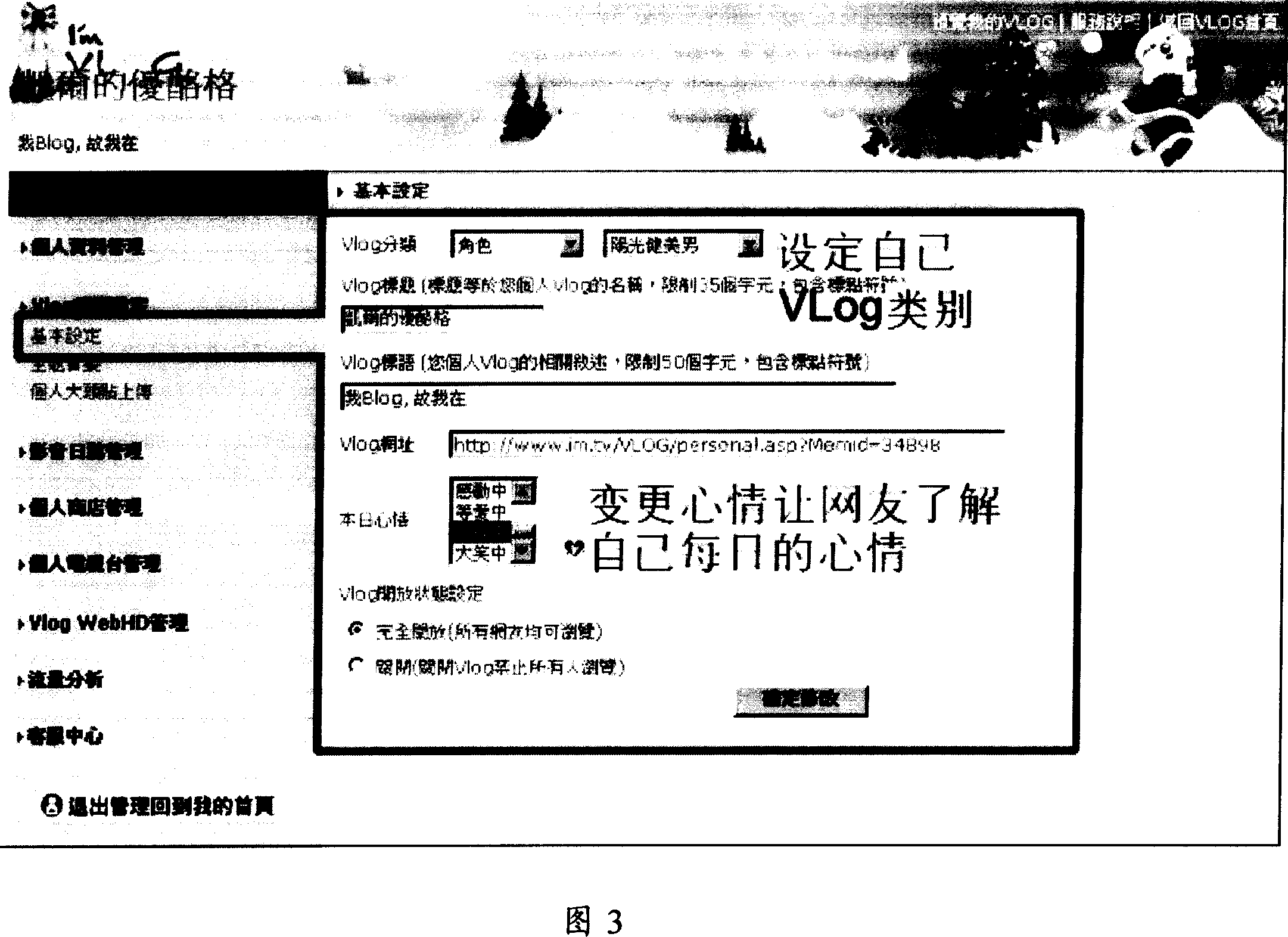

Video weblog system

Inactive | CN101030868A | Simple coding; Improve personalization; Data processing applications; Multimedia data retrieval; Personalization; Video storage

A web-based video log that allows users to present video segments online in chronological order is disclosed. A user-friendly interface and system allow users to place video or other multimedia in a personal website space. Users can record video directly to a central server without first recording it locally on their computer and then uploading the file via file transfer protocol. Alternatively, users can upload video files in any format, and codecs on the server automatically convert the original format into a common format so that all users can view the video. Video effects can be added automatically, and the amount of each effect and its parameters can be selected. Meta-tags are added so that videos can be searched for and located. Users can personalize their VLOG by customizing the background.

Owner:ERA DIGITAL MEDIA

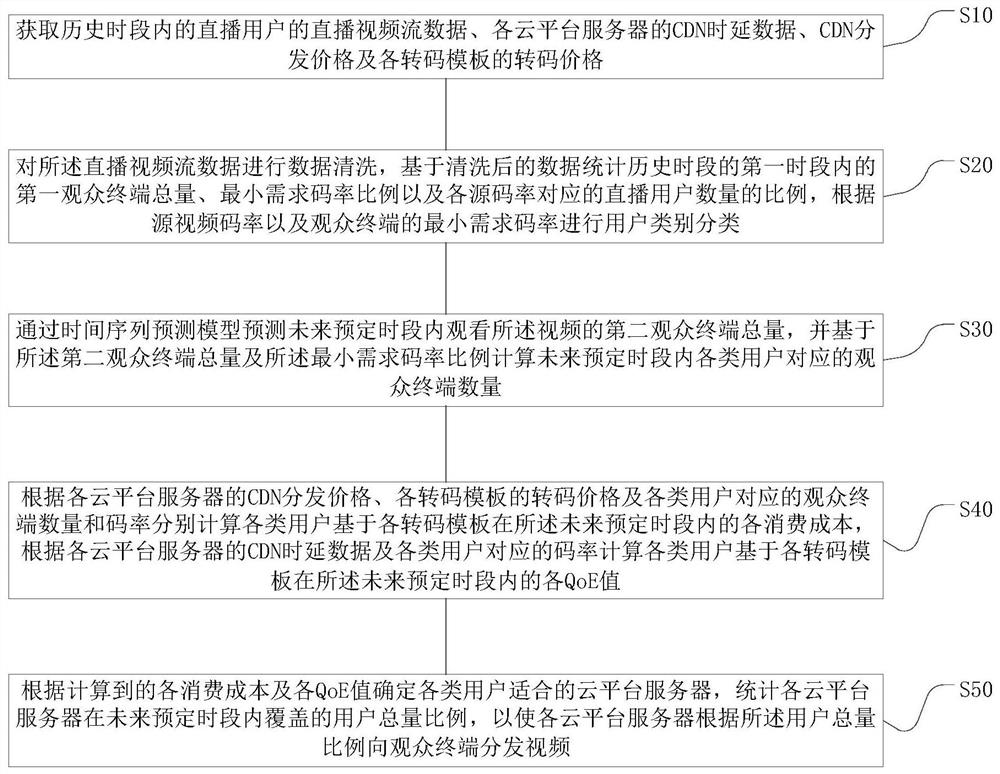

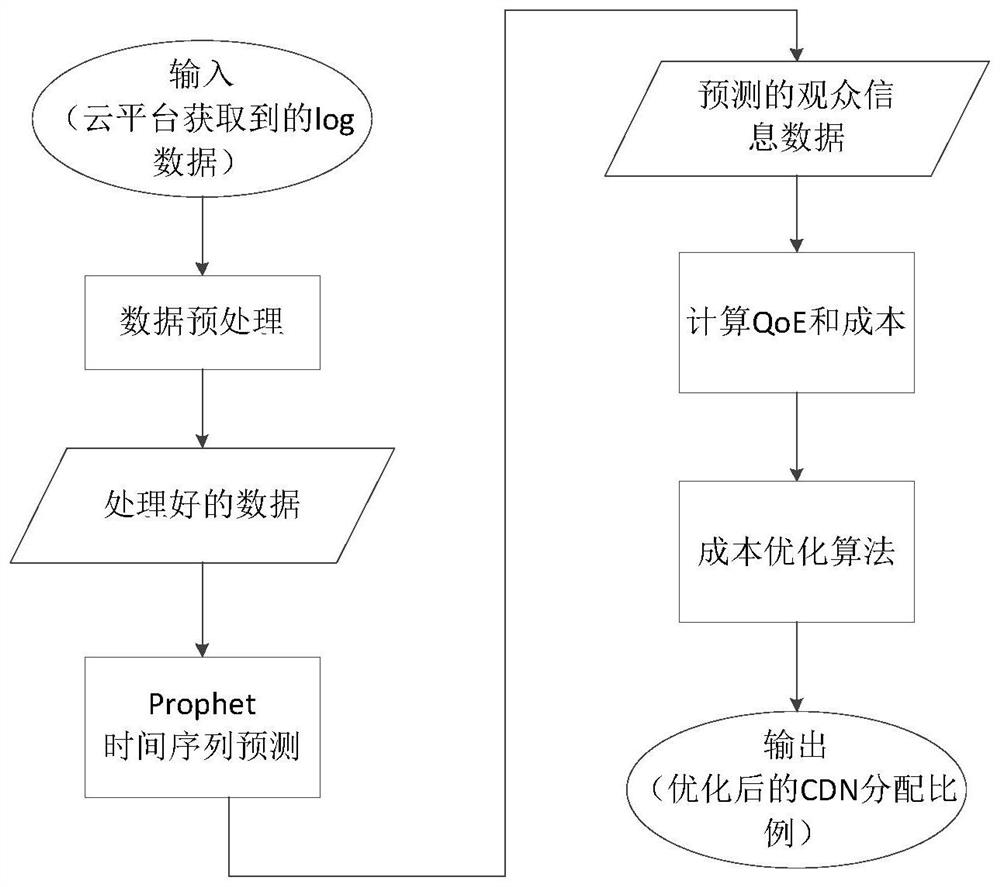

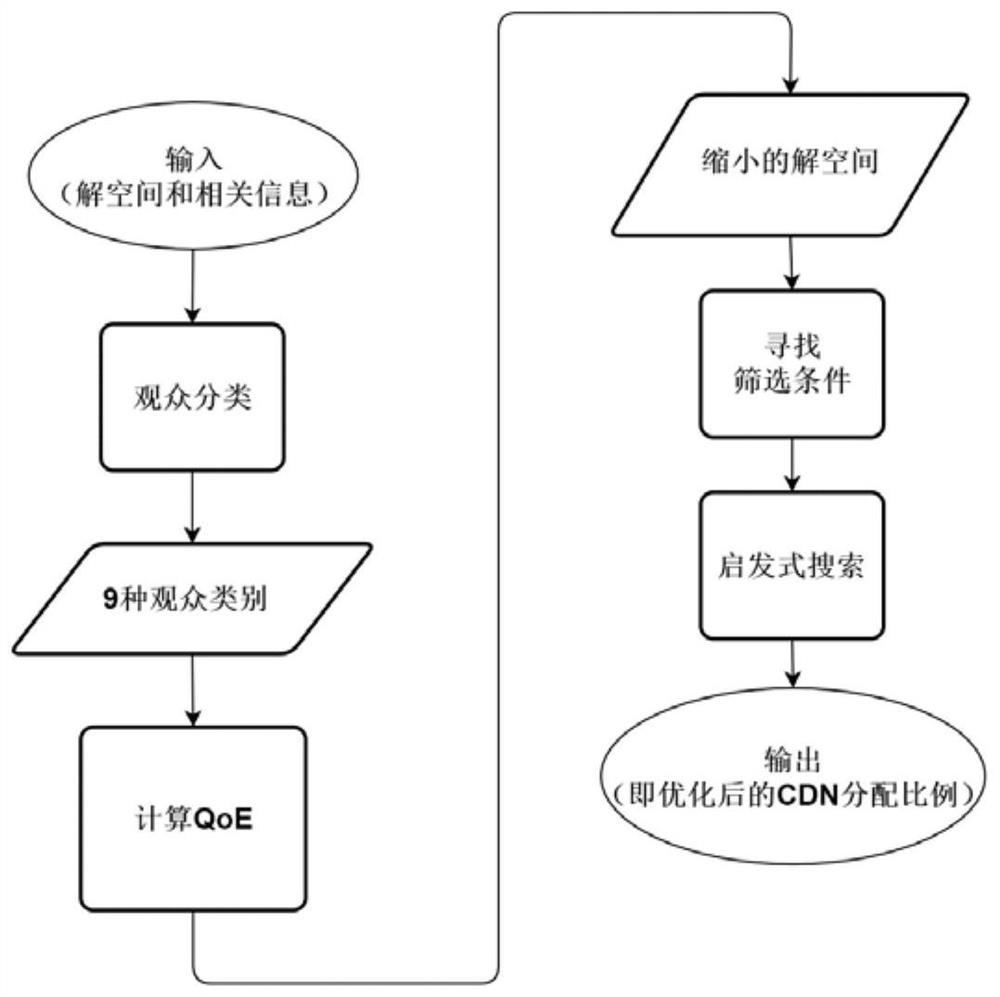

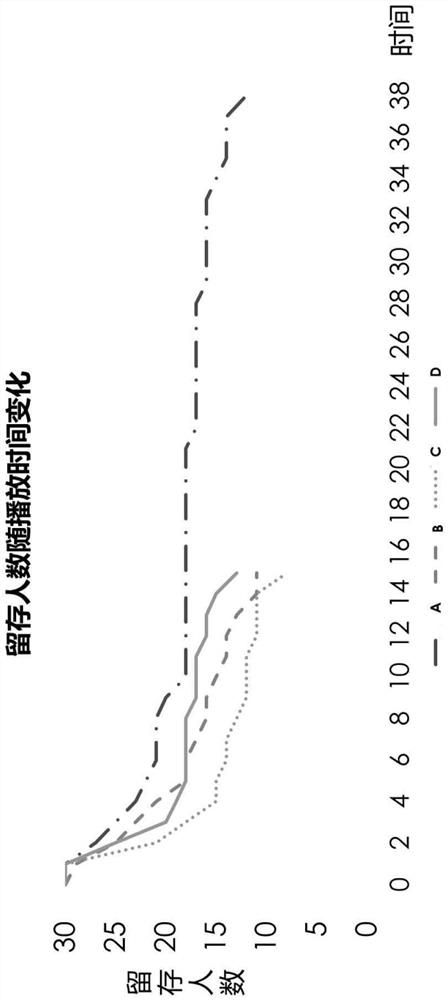

Multi-cloud video distribution strategy optimization method and system

Active | CN113645471A | Lower service costs; Guaranteed viewing experience; Selective content distribution; Time delays; Stream data

The invention provides a multi-cloud video distribution strategy optimization method and system. The method comprises the following steps: acquiring live video stream data, CDN time delay data of each cloud platform server, CDN distribution price and transcoding price of each transcoding template in a historical time period; cleaning the live broadcast video stream data, counting the total amount of first audience terminals in a first time period of a historical time period, the minimum demand code rate proportion and the proportion of the number of live broadcast users corresponding to each source code rate, and performing user category classification according to the source video code rate and the minimum demand code rate of the audience terminals; predicting the total number of second audience terminals watching videos in a future predetermined time period, calculating the number of audience terminals corresponding to various users in the future predetermined time period, and respectively calculating each consumption cost and each QoE value of each user in the future predetermined time period based on each transcoding template; and determining a cloud platform server suitable for each type of users according to each consumption cost and each QoE value, and performing statistics on the proportion of the total number of users covered by each cloud platform server in a future predetermined time period.

Owner:BEIJING UNIV OF POSTS & TELECOMM
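
The final step of the entry above (choosing a cloud platform per user category from cost and QoE, then computing each platform's share of users) can be sketched as a constrained choice. The rule used here, cheapest option whose predicted QoE meets a floor, with a highest-QoE fallback, is an assumption; the abstract does not say how cost and QoE are traded off.

```python
def choose_clouds(viewers, options, min_qoe):
    """Assign each user category to a cloud and report each cloud's share of viewers.

    viewers: dict category -> predicted number of audience terminals in the future period.
    options: dict category -> dict cloud -> (cost, qoe) for that category's transcoding template.
    Picks the cheapest cloud with qoe >= min_qoe; if none qualifies, the highest-QoE cloud.
    Returns (assignment, share), share being each cloud's fraction of all predicted viewers.
    """
    assignment = {}
    for cat in viewers:
        feasible = {c: cq for c, cq in options[cat].items() if cq[1] >= min_qoe}
        if feasible:
            assignment[cat] = min(feasible, key=lambda c: feasible[c][0])
        else:
            assignment[cat] = max(options[cat], key=lambda c: options[cat][c][1])
    total = sum(viewers.values()) or 1  # guard against an empty forecast
    share = {}
    for cat, cloud in assignment.items():
        share[cloud] = share.get(cloud, 0) + viewers[cat] / total
    return assignment, share
```

A weighted objective such as `cost - lam * qoe` would be an equally plausible alternative to the QoE floor used here.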

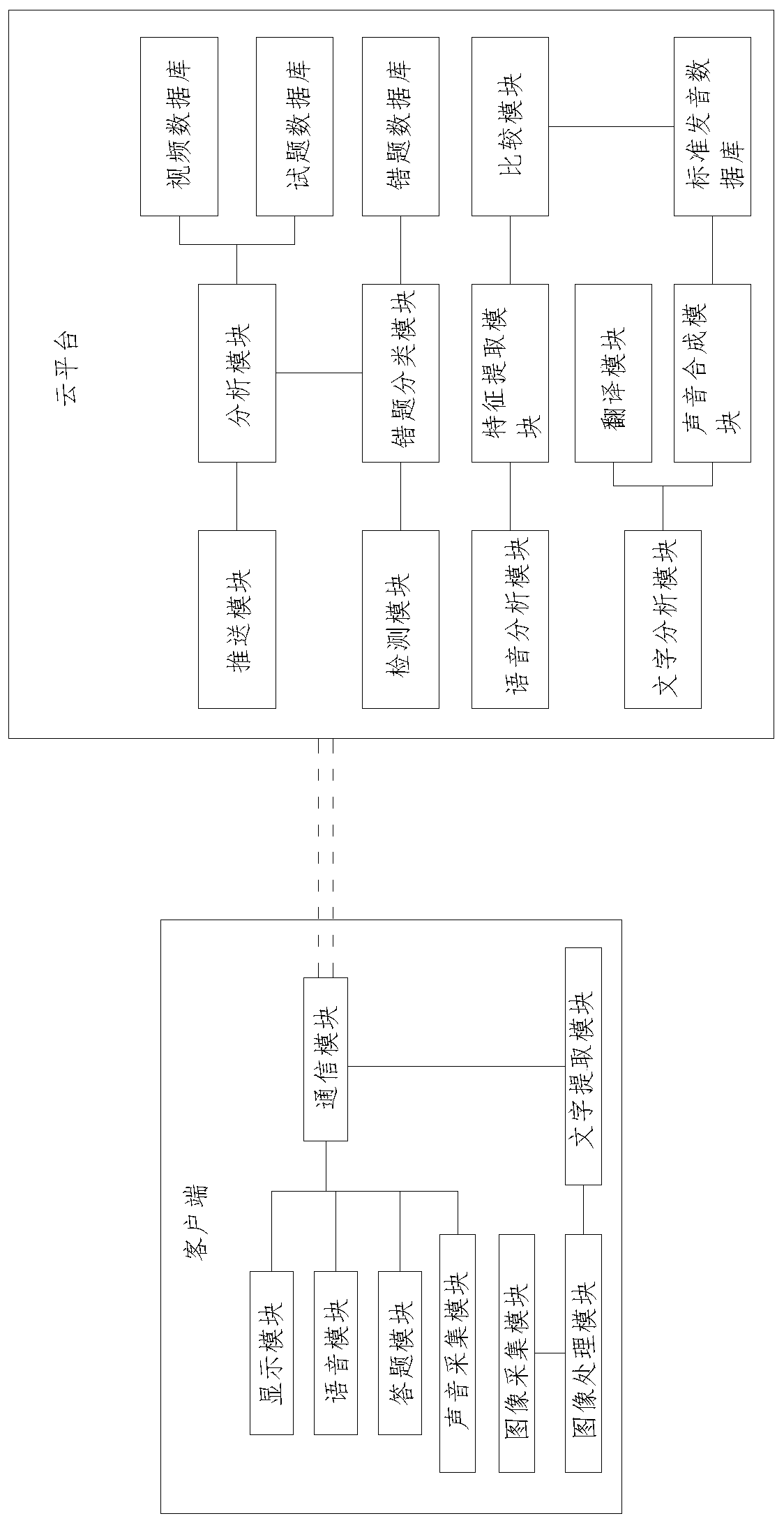

Intelligent English teaching system for English teaching

Inactive | CN111489597A | Judging the learning effect; Improve learning effect; Electrical appliances; Personalization; Personalized learning

The invention discloses an intelligent English teaching system comprising a client and a cloud platform connected through a communication module. The client contains a display module, a voice module, an answering module, a sound acquisition module and an image acquisition module; the cloud platform contains a pushing module, an analysis module and a video database that stores teaching videos, which are pushed to the client through the pushing module. Because an interactive answering module is added on top of traditional teaching video playback, a user can test themselves right after watching a video, judge how well they have learned the current knowledge points, reinforce that learning, and keep statistics on wrongly answered questions. A personalized learning route is pushed to the user; audio and images are captured to correct mispronunciation; and the system provides a translation function, making learning easier.

Owner:HUNAN INST OF TECH

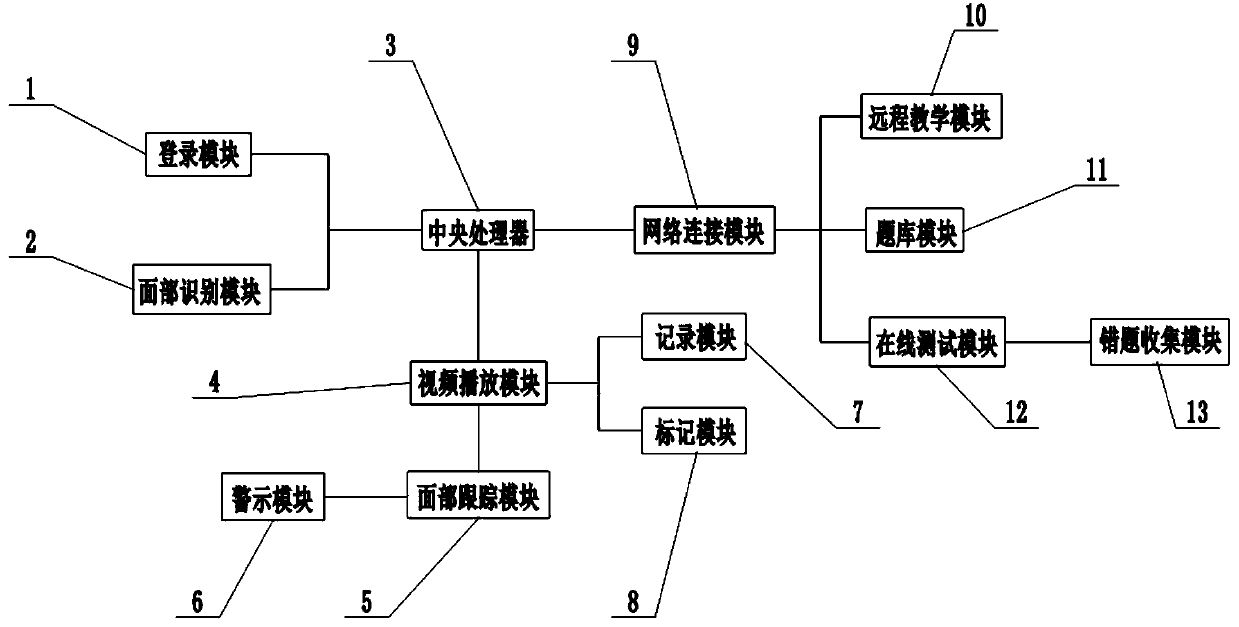

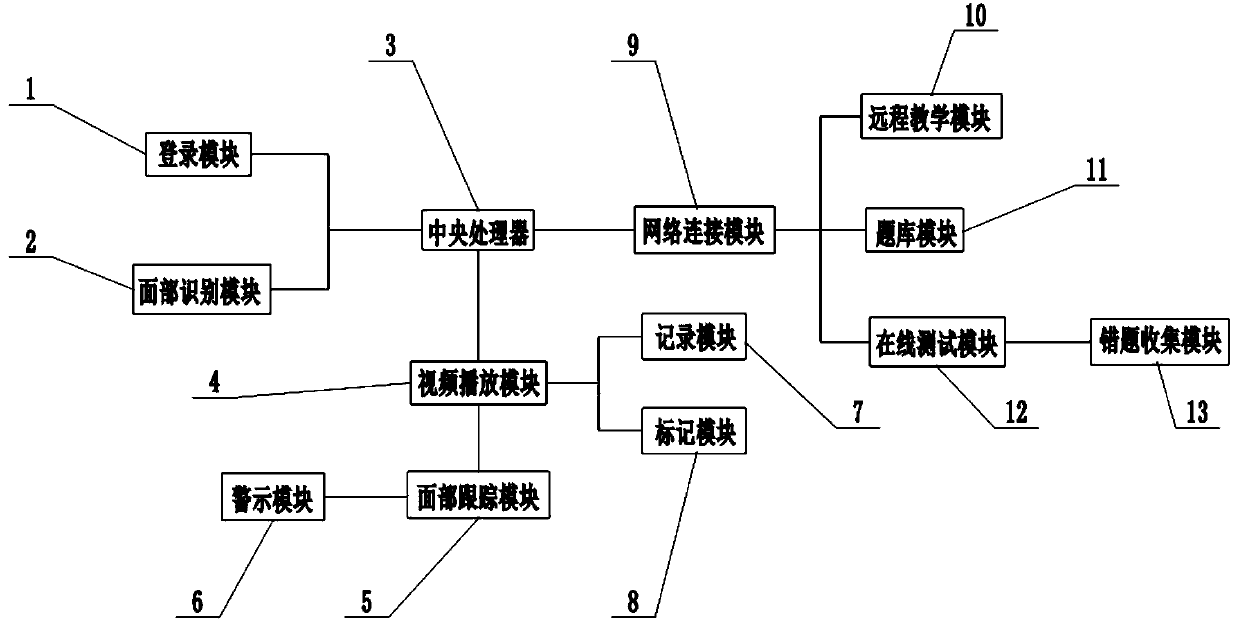

Network teaching system

Pending | CN111145055A | Solve the problem of lack of supervision of users and poor teaching effect; Play a supervisory role; Data processing applications; Electrical appliances; Engineering; Module network

The invention relates to the field of education, and in particular to a network teaching system comprising a login module, a face recognition module, a central processing unit, a video playing module, a face tracking module, a warning module, a recording module, a marking module, a network connection module, a remote teaching module and a question bank module. The login module and the face recognition module are each connected to the central processing unit and are used to log in to the system; the face tracking module is connected to the video playing module; and the recording module and the marking module are each connected to the video playing module. A user can log in either with an account password or by face recognition, according to personal preference. Through the video playing module, the teaching video playing module and the face tracking module, the system monitors the user's face orientation in real time and reminds the user to stay focused while watching the video, improving the teaching effect.

Owner:HUANGHE S & T COLLEGE
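
Here is a minimal sketch of the face-orientation reminder. It assumes the face tracking module reports a head yaw angle per frame, or `None` when no face is found. The function name, the 30-degree limit and the 3-second tolerance are hypothetical choices, not values from the patent.

```python
def attention_warnings(yaw_degrees, fps=10, max_yaw=30.0, max_away_seconds=3.0):
    """Return the frame indices at which a 'please focus' reminder would fire.

    yaw_degrees: per-frame head yaw from a face tracker (0 = facing the screen,
    None = no face detected). These inputs and thresholds are hypothetical.
    One reminder fires each time the face has been turned away for longer
    than max_away_seconds.
    """
    limit = int(max_away_seconds * fps)  # frames allowed to look away
    warnings = []
    away = 0
    for i, yaw in enumerate(yaw_degrees):
        if yaw is None or abs(yaw) > max_yaw:
            away += 1
            if away == limit + 1:  # first frame past the allowed limit
                warnings.append(i)
        else:
            away = 0  # attention regained: reset the counter
    return warnings


print(attention_warnings([0, 45, 45, 45, 45, 0, None, None, None],
                         fps=1, max_away_seconds=2))  # [3, 8]
```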

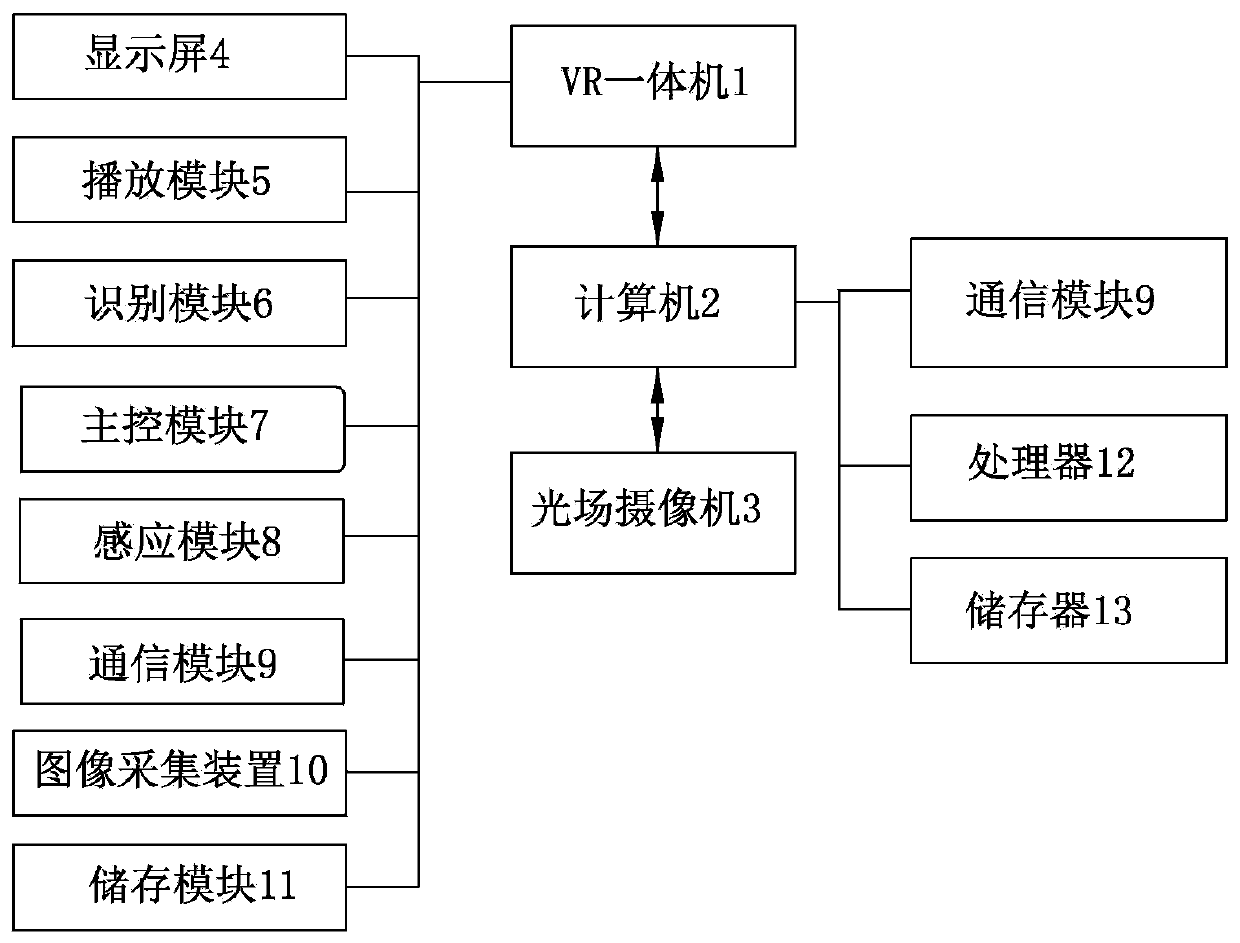

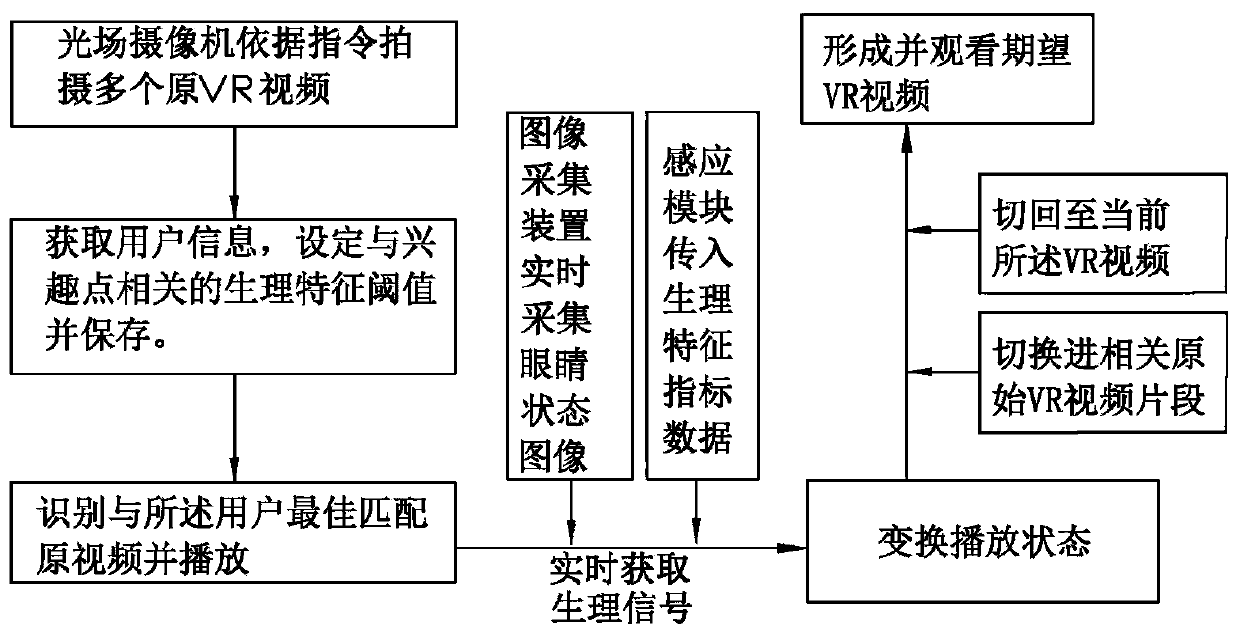

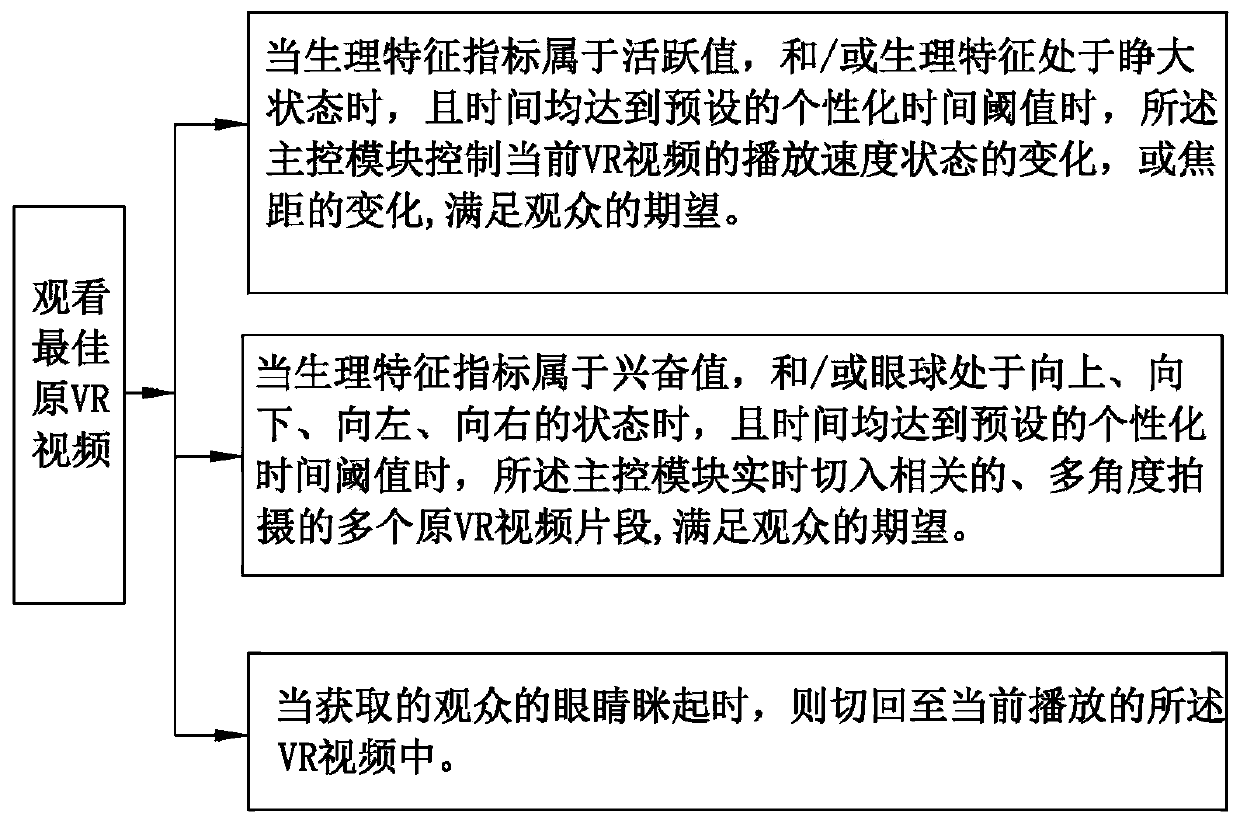

Method and device for controlling video content in real time according to physiological signals

Inactive | CN111193964A | Keep healthy; Meet the shocking experience; Selective content distribution; Physical health; Computer graphics (images)

The invention discloses a method and device for controlling video content in real time according to physiological signals, intended for VR equipment. The method comprises the following steps:
- having a photographing device shoot a plurality of original videos according to an instruction;
- acquiring user information, and setting and storing a physiological feature threshold related to the points of interest;
- identifying and playing the original video that best matches the user;
- acquiring the user's physiological signal in real time, comparing it with the physiological feature threshold, and changing the playing state of the original video in real time according to the result.

With the method and device, an optimal set of original VR videos can first be selected according to personal preferences and physical condition. During viewing, the playing state of the VR video can be adjusted at any time according to the user's physiological needs, including cutting in or cutting back to original VR video clips in real time. The user can enjoy a stunning VR viewing experience in a multi-direction, multi-view and multi-season mode. Interest and satisfaction are greatly improved while the user's physical health is safeguarded, and the method and device are user-friendly.

Owner:未来新视界科技(北京)有限公司
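
The threshold comparison step could look like the sketch below. It assumes heart rate as the physiological signal and two pre-shot clips named `intense` and `gentle`. The `Thresholds` class, the clip names and the numbers are hypothetical. The sketch also adds its own design choice: two thresholds (hysteresis), so that playback does not flip back and forth when the reading hovers near a single limit.

```python
from dataclasses import dataclass


@dataclass
class Thresholds:
    """Hypothetical per-user heart-rate limits, in beats per minute."""
    max_heart_rate: float     # above this, cut back to a calmer clip
    resume_heart_rate: float  # below this, cut in to the intense clip again


def playback_action(heart_rate, current_clip, t):
    """Pick the clip to play after one physiological reading.

    Assumed policy: 'intense' -> 'gentle' when the reading exceeds
    max_heart_rate; 'gentle' -> 'intense' once it drops below resume_heart_rate.
    """
    if current_clip == "intense" and heart_rate > t.max_heart_rate:
        return "gentle"
    if current_clip == "gentle" and heart_rate < t.resume_heart_rate:
        return "intense"
    return current_clip


def simulate(readings, t, start="intense"):
    """Apply playback_action to a stream of readings and record each state."""
    clip, history = start, []
    for hr in readings:
        clip = playback_action(hr, clip, t)
        history.append(clip)
    return history


print(simulate([90, 125, 110, 95, 130], Thresholds(120, 100)))
# ['intense', 'gentle', 'gentle', 'intense', 'gentle']
```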

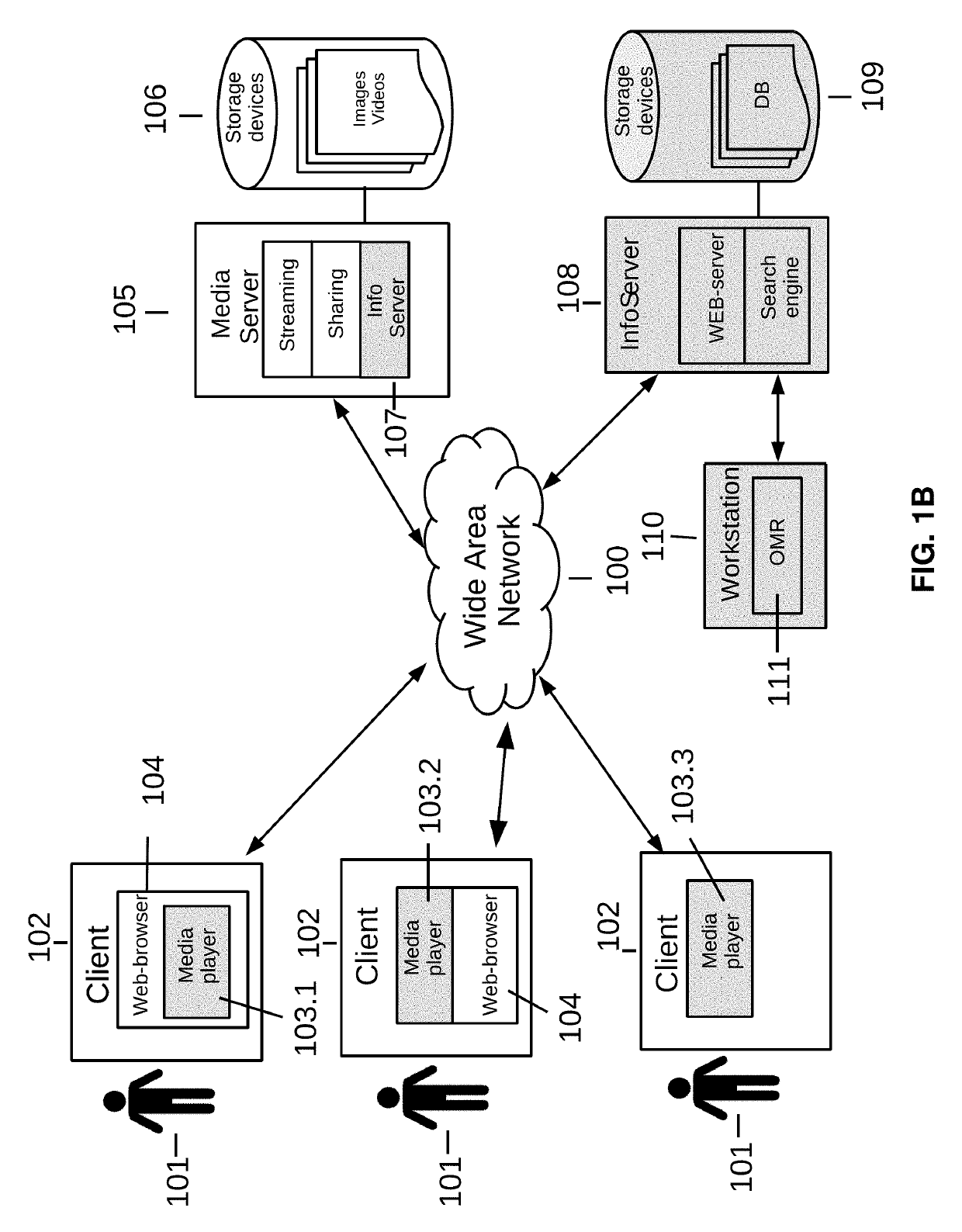

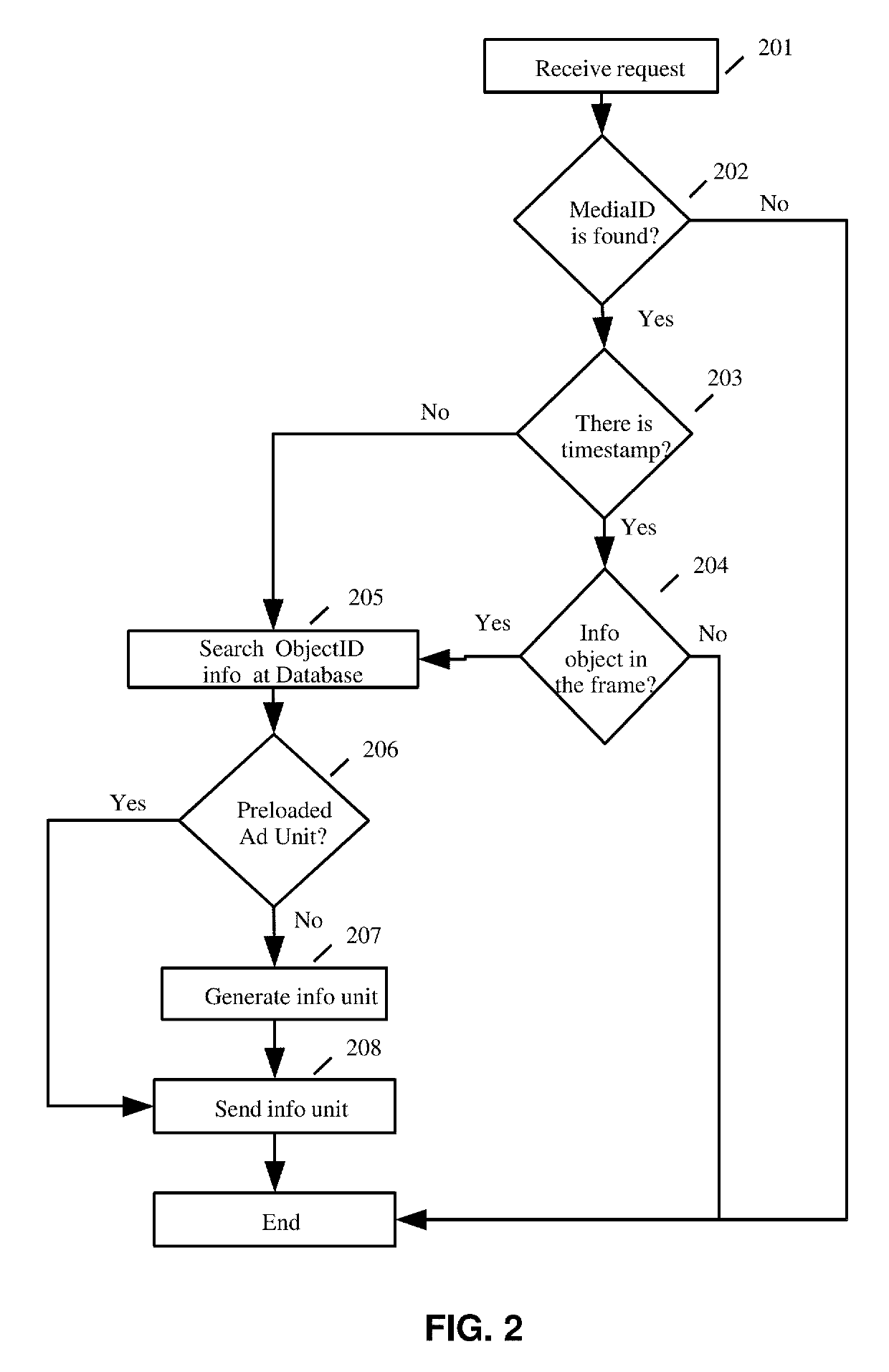

Method of displaying advertising during a video pause

Active | US10299010B2 | Advertisements; Selective content distribution; Computer graphics (images); Information networks

A system and method for displaying information while watching videos and viewing still images. The user is provided with additional information about objects in still images and videos. This improves the information value of implicit advertising for the end user, increases advertising efficiency and increases the monetization of videos and still images. An embodiment of the invention provides a system and method for equipping videos and still images transmitted through an information network with additional information about the depicted objects. When the user activates the pause function while watching a video, or is idle while viewing a still image, a query is generated for the presence of objects in the frame that have an associated information unit (advertisement). The query is analyzed at the information server, and the result is presented as an information (advertising) unit visually related to the information item.

Owner:KACHKOVA VALERIA +2
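
Here is a rough sketch of the pause-time query. It assumes that, for each video, the information server keeps a list of objects, each with the time interval in which it is visible and a linked advertising unit. `ObjectAnnotation` and `query_on_pause` are hypothetical names. A simple time-interval lookup stands in for whatever object recognition the actual system uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectAnnotation:
    """Hypothetical server-side record of an object visible in a video."""
    label: str
    start_s: float  # first second the object is on screen
    end_s: float    # second at which it leaves the screen (exclusive)
    ad_unit: str    # information (advertising) unit linked to the object


def query_on_pause(annotations, paused_at_s):
    """Return the ad units of all objects on screen at the pause time."""
    return [a.ad_unit for a in annotations if a.start_s <= paused_at_s < a.end_s]


catalog = [
    ObjectAnnotation("sneakers", 10.0, 20.0, "ad:sneakers"),
    ObjectAnnotation("watch", 15.0, 30.0, "ad:watch"),
    ObjectAnnotation("car", 40.0, 50.0, "ad:car"),
]
print(query_on_pause(catalog, 16.0))  # ['ad:sneakers', 'ad:watch']
```

A linear scan is enough for a short catalog. A server with many annotations per video would more likely use an interval index.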

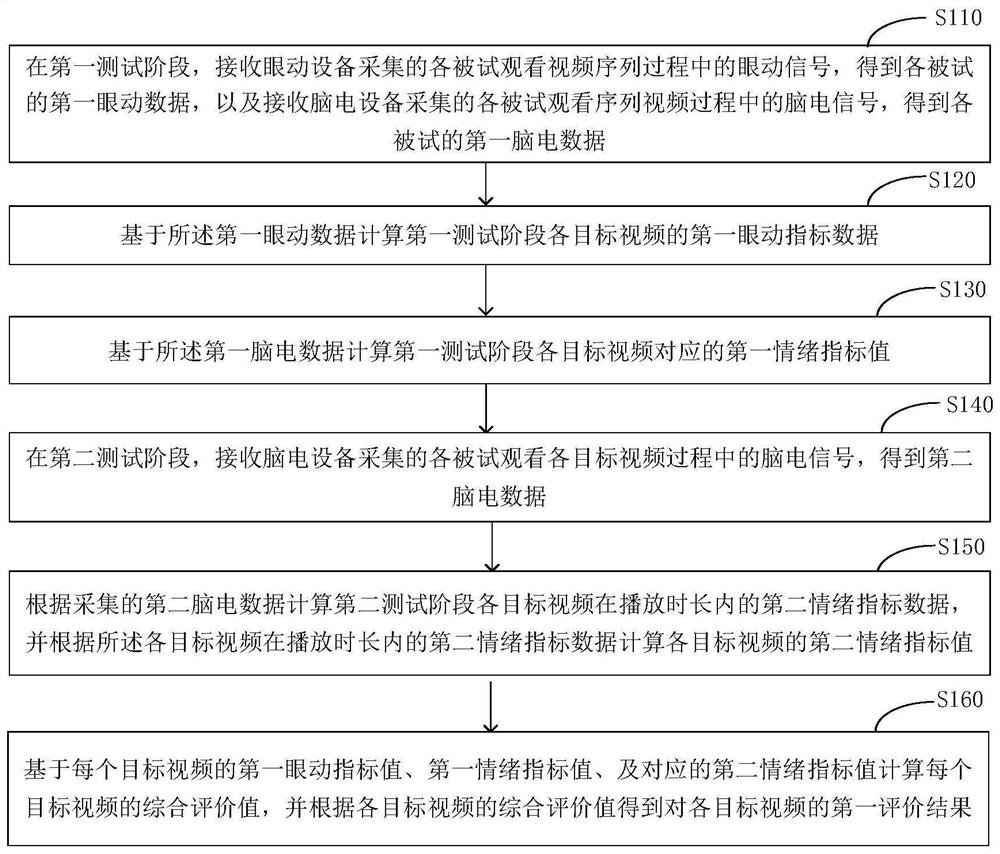

Video content evaluation method and video content evaluation system

Active | CN112637688A | The result is objective; With real-time monitoring; Television systems; Selective content distribution; Evaluation result; Video sequence

The invention provides a video content evaluation method and system. The method comprises the following steps:
- In a first test stage, receive the eye movement signals collected by eye-tracking equipment while each test subject watches the video sequence, to obtain first eye movement data.
- In the same stage, receive the electroencephalogram (EEG) signals collected by EEG equipment, to obtain first EEG data.
- Calculate first eye movement index data from the first eye movement data.
- From the first EEG data, calculate a first emotion index value for each target video in the first test stage.
- In a second test stage, receive the EEG signals acquired by the EEG equipment, to obtain second EEG data.
- Calculate second emotion index data from the second EEG data, and a second emotion index value from the second emotion index data.
- Calculate a comprehensive evaluation value of the target video from the first eye movement index value, the first emotion index value and the second emotion index value, and obtain a first evaluation result from the comprehensive evaluation value.

These steps make the evaluation result objective, direct and accurate.

Owner:北京意图科技有限公司
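
The abstract does not specify how the indices are combined. The sketch below makes four assumptions: the eye movement index is the share of fixation time spent inside a region of interest; the emotion indices are already normalized to [0, 1]; the indices are combined as a weighted sum with hypothetical weights; and the grade cut-offs are hypothetical.

```python
def dwell_ratio(fixations, roi):
    """Fraction of fixation time that falls inside a rectangular region of interest.

    fixations: list of (x, y, duration_ms); roi: (x0, y0, x1, y1).
    Used here as a stand-in for the 'eye movement index'.
    """
    x0, y0, x1, y1 = roi
    total = sum(d for _, _, d in fixations)
    inside = sum(d for x, y, d in fixations if x0 <= x <= x1 and y0 <= y <= y1)
    return inside / total if total else 0.0


def comprehensive_score(eye_index, emotion_1, emotion_2, weights=(0.4, 0.3, 0.3)):
    """Hypothetical weighted sum of three indices, each normalized to [0, 1]."""
    for value in (eye_index, emotion_1, emotion_2):
        if not 0.0 <= value <= 1.0:
            raise ValueError("indices are assumed to be normalized to [0, 1]")
    w_eye, w_e1, w_e2 = weights
    return w_eye * eye_index + w_e1 * emotion_1 + w_e2 * emotion_2


def grade(score):
    """Map a score to an evaluation result using assumed cut-offs."""
    if score >= 0.8:
        return "excellent"
    if score >= 0.6:
        return "good"
    return "poor"


eye = dwell_ratio([(100, 100, 300), (500, 400, 100)], roi=(0, 0, 200, 200))
score = comprehensive_score(eye, 0.8, 0.7)
print(round(score, 2), grade(score))  # 0.75 good
```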

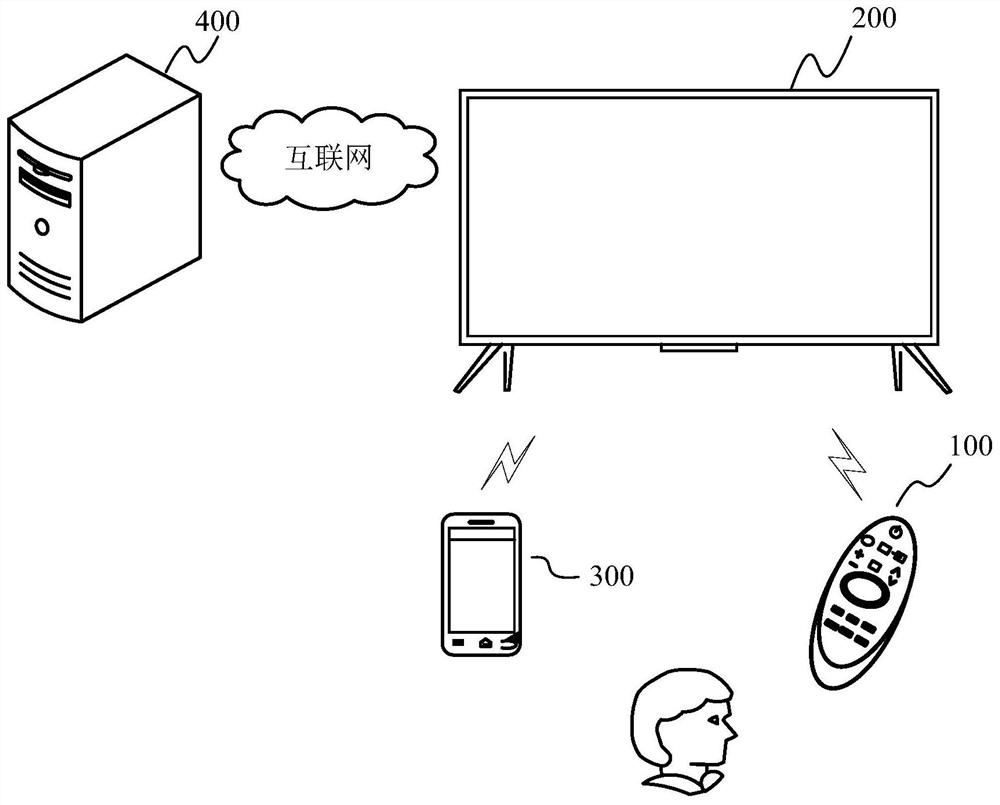

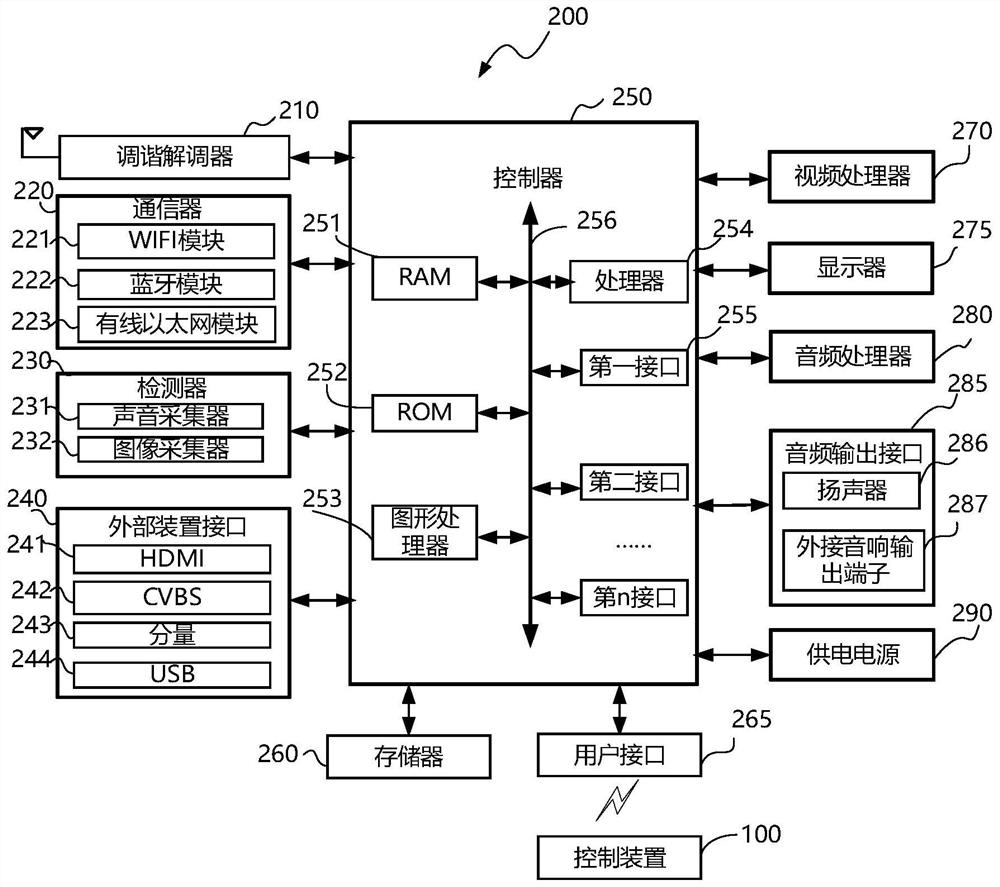

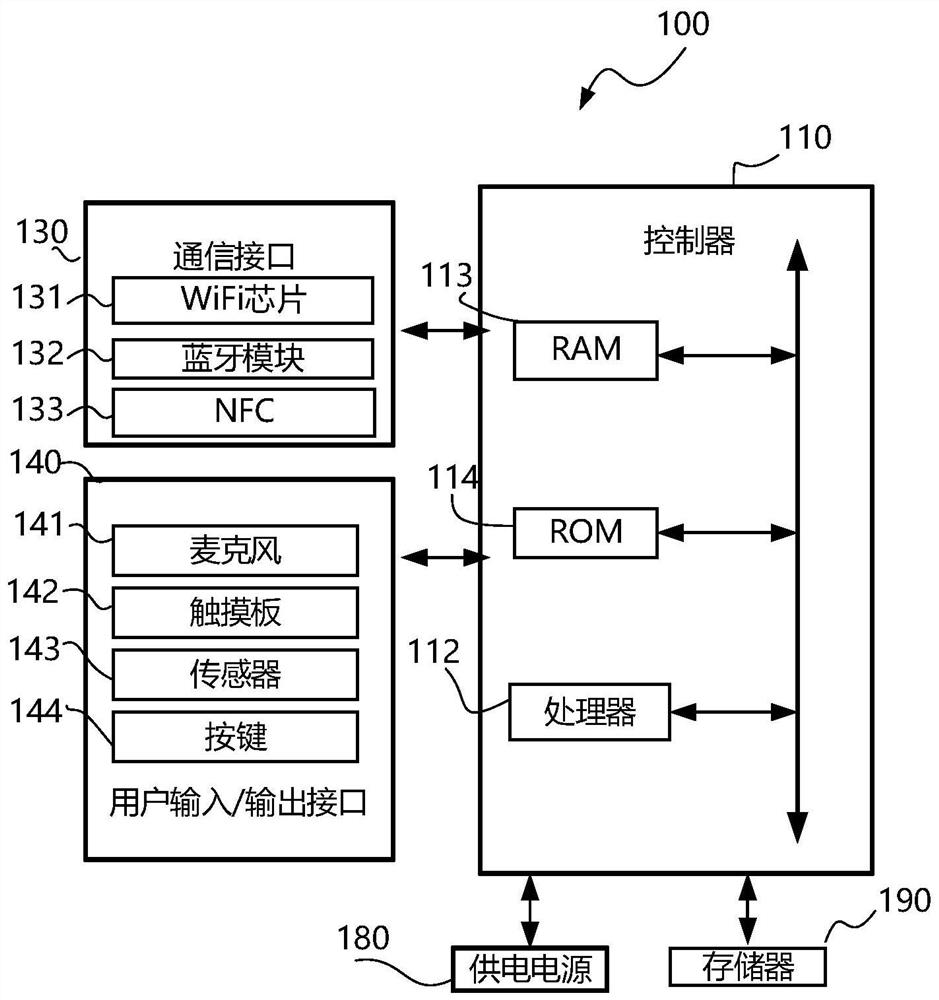

Double-browser application loading method and display equipment

Active | CN111935510A | Improve loading speed; Improve interactivity; Selective content distribution; Video eeg; Engineering

The invention provides a double-browser application loading method and display equipment. The method comprises the following steps:
- When the user selects a video playing operation, a first browser notifies a downloading agent to pre-download video resources related to the film source information of the video.
- After the session ID fed back by the downloading agent is obtained, a second browser is triggered to start and load the video playing application.
- The second browser continuously obtains the pre-downloaded video resources from the downloading agent using the session ID and the film source information, and plays them in the video playing application.

Instead of a single browser, the method uses two browsers. The first browser, which has low resource occupation, is used while the user browses information, and the second browser is used when the user watches a video. Applying the technical scheme provided by the invention therefore shortens the screen projection process and further improves screen projection efficiency.

Owner:VIDAA(荷兰)国际控股有限公司
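
The session-ID handoff between the two browsers can be modeled roughly as follows. `DownloadAgent`, `pre_download`, `fetch` and the chunk format are hypothetical stand-ins. They are not an API from the patent or from any browser engine, and the sketch only shows the order of operations.

```python
import itertools
from typing import Optional


class DownloadAgent:
    """Hypothetical agent that pre-downloads video chunks and serves them by session ID."""

    def __init__(self):
        self._ids = itertools.count(1)
        self._sessions = {}  # session ID -> (film source info, remaining chunks)

    def pre_download(self, source_info: str, chunks: list) -> int:
        # Called by the lightweight first browser; returns a session ID.
        session_id = next(self._ids)
        self._sessions[session_id] = (source_info, list(chunks))
        return session_id

    def fetch(self, session_id: int, source_info: str) -> Optional[bytes]:
        # Called repeatedly by the second browser; None means nothing is left.
        stored_source, chunks = self._sessions[session_id]
        if stored_source != source_info:
            raise KeyError("session does not match the requested film source")
        return chunks.pop(0) if chunks else None


def play_with_two_browsers(agent, source_info, chunks):
    """Run the handoff end to end and return the bytes the player received."""
    session_id = agent.pre_download(source_info, chunks)  # first browser
    played = []
    # Second browser: load the player, then pull pre-downloaded data by session ID.
    while (chunk := agent.fetch(session_id, source_info)) is not None:
        played.append(chunk)
    return b"".join(played)


print(play_with_two_browsers(DownloadAgent(), "film-42", [b"ab", b"cd"]))  # b'abcd'
```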

Online open course video quality quantitative evaluation method

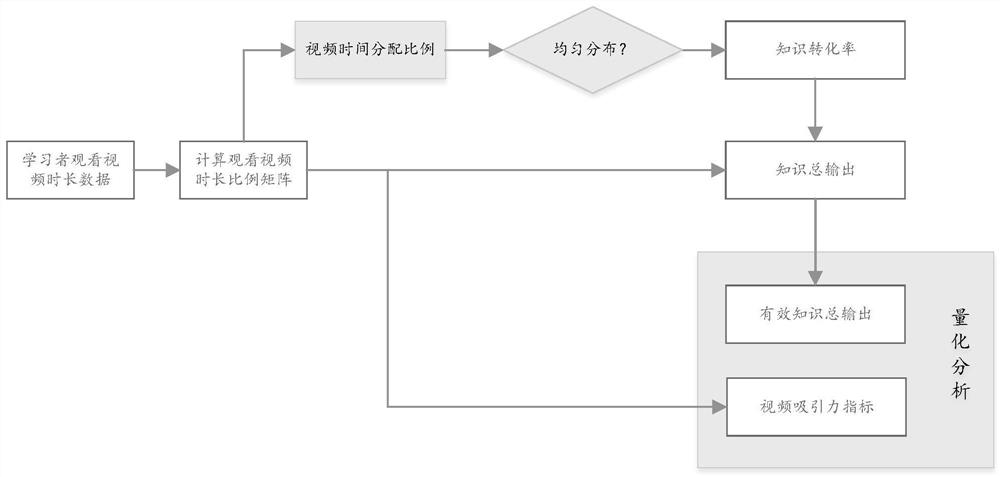

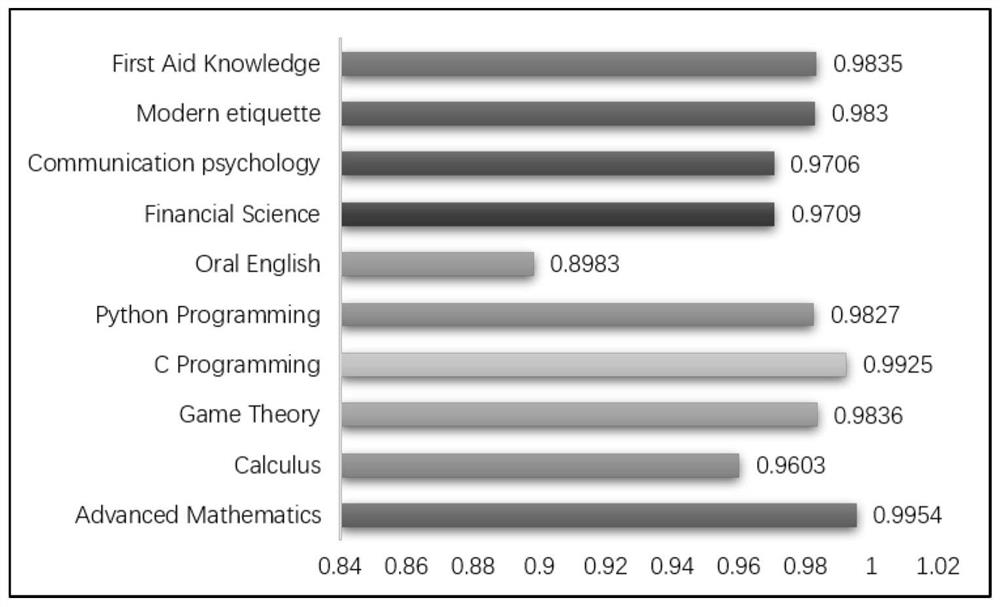

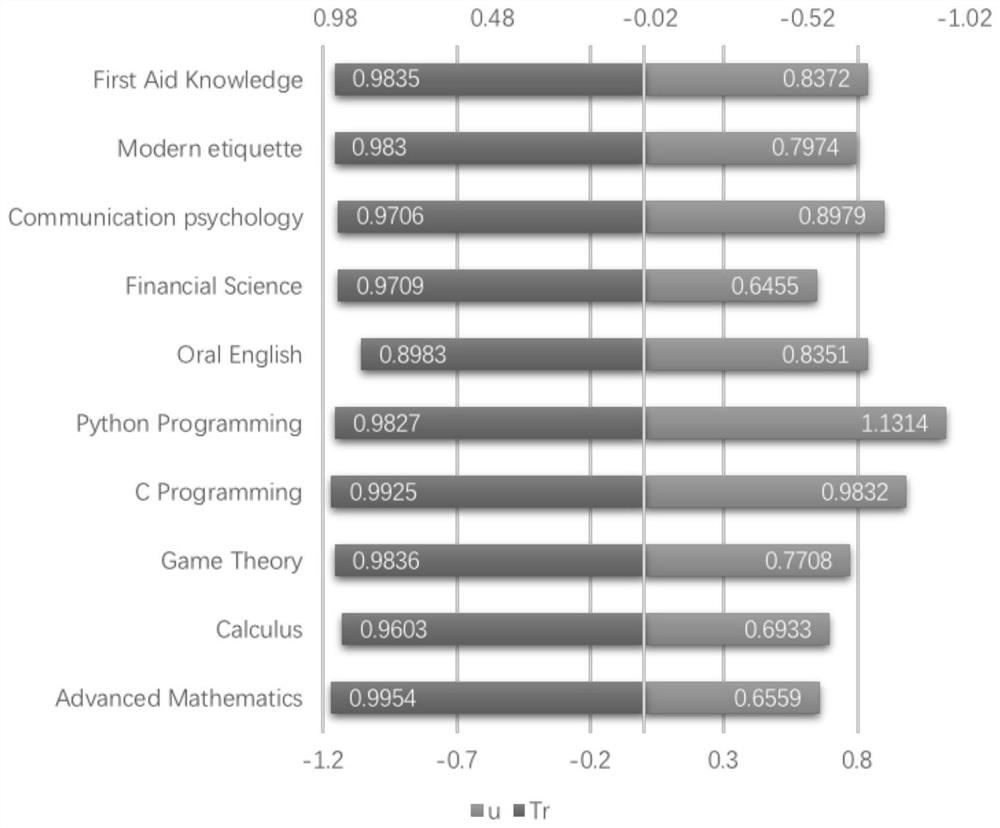

Pending | CN112163778A | Realize quantitative and objective evaluation; Break through subjectivity; Resources; Informatization; Knowledge conversion

The invention belongs to the field of education informatization, and particularly relates to a quantitative evaluation method for the video quality of online open courses. The method comprises the following steps:
- S1: calculate a matrix of video watching duration proportions.
- S2: calculate the total knowledge output and the video attraction index of the course video.
- S3: calculate a knowledge conversion rate.
- S4: calculate the effective total knowledge output of the video.

Compared with the prior art, the invention has the following beneficial effects:
(1) Video quality is evaluated quantitatively and objectively, based on education big data analysis and statistical methods, which breaks through the subjectivity of traditional evaluation.
(2) The method is universal and can evaluate the quality of online video resources on any platform, which solves the problems caused by traditional subjective scoring and inconsistent evaluation standards.
(3) The evaluation method addresses how to quantify knowledge and convert it into a mathematical mechanism, and has a certain scientific and theoretical value.

Owner:NAT UNIV OF DEFENSE TECH
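
The four steps can be illustrated with assumed formulas, because the abstract names the quantities but not how they are computed. In this sketch, knowledge output is the number of knowledge points in a video multiplied by the summed watch ratios. The attraction index is the mean watch ratio, and the knowledge conversion rate is taken to be quiz accuracy. All three definitions are hypothetical.

```python
def watch_ratio_matrix(watched_s, lengths_s):
    """S1: p[i][j] = share of video j watched by learner i, capped at 1."""
    return [[min(w / length, 1.0) for w, length in zip(row, lengths_s)]
            for row in watched_s]


def evaluate_videos(watched_s, lengths_s, knowledge_points, quiz_accuracy):
    """Hypothetical versions of steps S2 to S4 for each video j."""
    p = watch_ratio_matrix(watched_s, lengths_s)
    n_learners = len(p)
    results = []
    for j, k in enumerate(knowledge_points):
        column = [p[i][j] for i in range(n_learners)]
        output = k * sum(column)                # S2: total knowledge output
        attraction = sum(column) / n_learners   # S2: attraction index
        conversion = quiz_accuracy[j]           # S3: assumed = quiz accuracy
        results.append({
            "output": output,
            "attraction": attraction,
            "conversion": conversion,
            "effective": output * conversion,   # S4: effective total output
        })
    return results


# Two learners, two videos (600 s and 200 s long).
for r in evaluate_videos([[300, 100], [600, 50]], [600, 200], [4, 2], [0.5, 1.0]):
    print(r)
# {'output': 6.0, 'attraction': 0.75, 'conversion': 0.5, 'effective': 3.0}
# {'output': 1.5, 'attraction': 0.375, 'conversion': 1.0, 'effective': 1.5}
```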

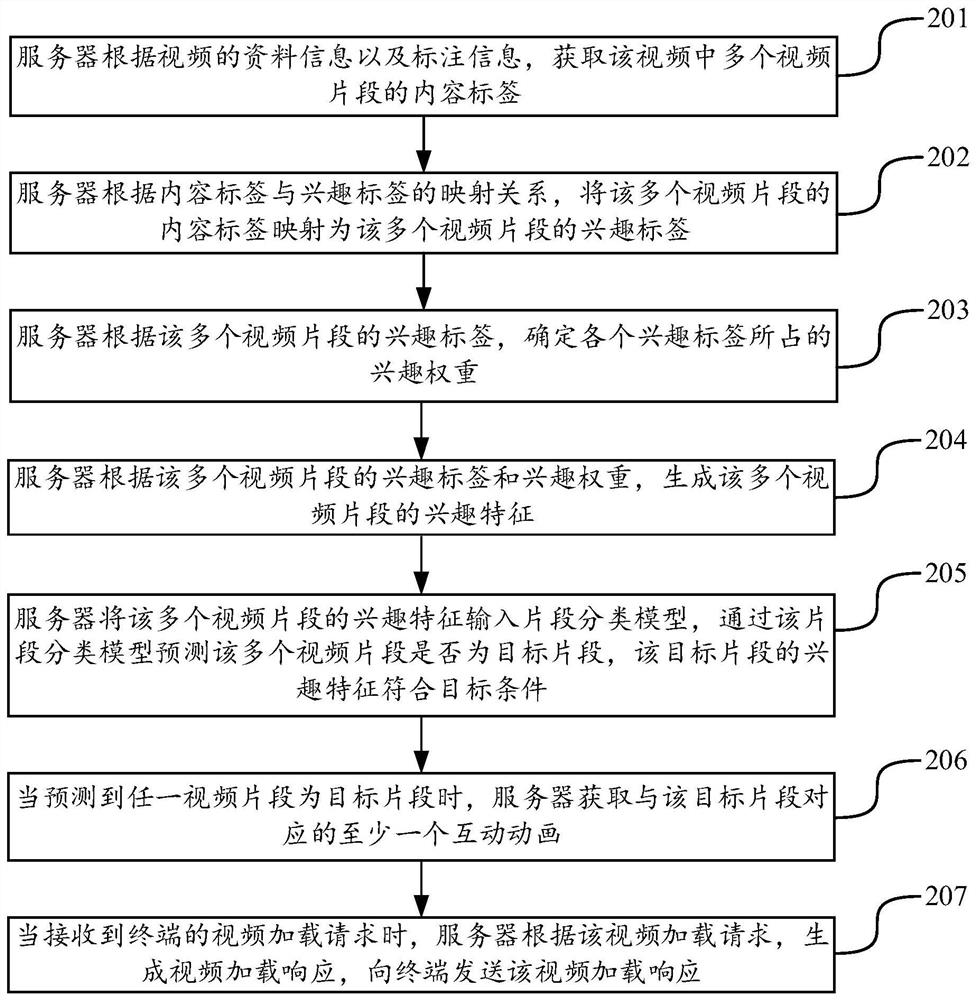

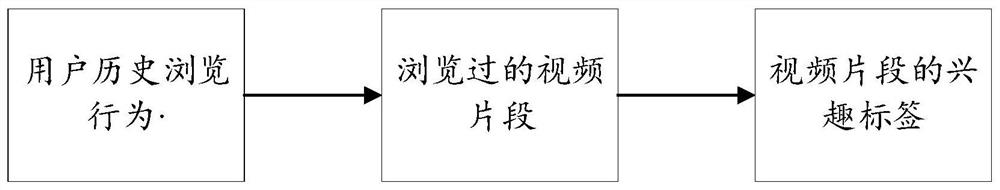

Animation display method and device, electronic equipment and storage medium

Active | CN112235635A | Improve experience; Rich interaction; Selective content distribution; Animation; Engineering

The invention discloses an animation display method and device, electronic equipment and a storage medium, belonging to the technical field of multimedia. When a target segment of a video is played, a face image of the user watching the video is obtained. Because the interest characteristics of the target segment meet the target condition, a user watching it usually shows a noticeable expression. The expression category is determined from the face image, and a corresponding interactive animation is displayed in the video playing interface. This enriches the interaction modes that the terminal offers the user, makes video playback on the terminal more engaging, and improves the user's viewing experience.

Owner:TENCENT TECH (BEIJING) CO LTD
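
The choice of which animation to show can be sketched as a lookup keyed on the recognized expression category, active only inside target segments. The segment times, the category names and the animation names are hypothetical, and the expression classifier itself is not shown.

```python
# Hypothetical configuration: high-interest target segments (seconds) and the
# interactive animation shown for each recognized expression category.
TARGET_SEGMENTS = [(30.0, 45.0), (90.0, 100.0)]
ANIMATIONS = {"happy": "confetti", "surprised": "exclamation", "sad": "tissue"}


def animation_for(position_s, expression, segments=TARGET_SEGMENTS, animations=ANIMATIONS):
    """Return the animation to overlay at this playback position, or None.

    `expression` stands in for the output of a facial-expression classifier
    run on the captured face image; no real classifier is included here.
    """
    in_target = any(start <= position_s < end for start, end in segments)
    if not in_target:
        return None  # only react during target segments
    return animations.get(expression)  # None for unmapped expressions


print(animation_for(35.0, "happy"))    # confetti
print(animation_for(50.0, "happy"))    # None
print(animation_for(95.0, "neutral"))  # None
```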

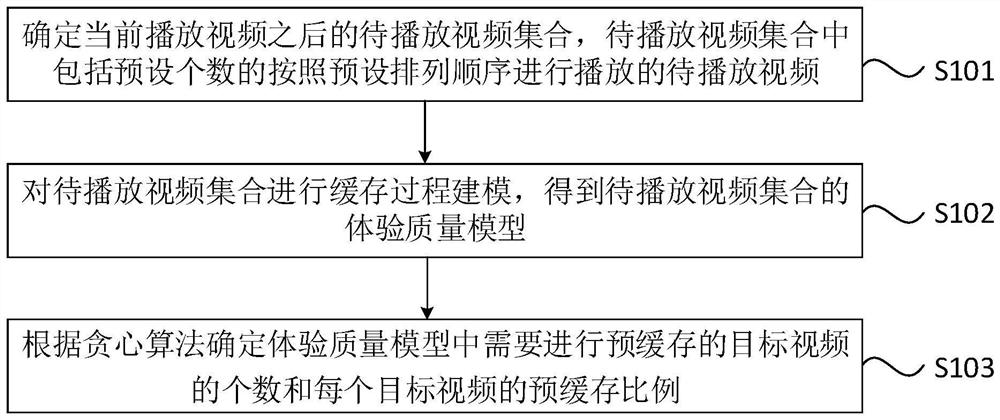

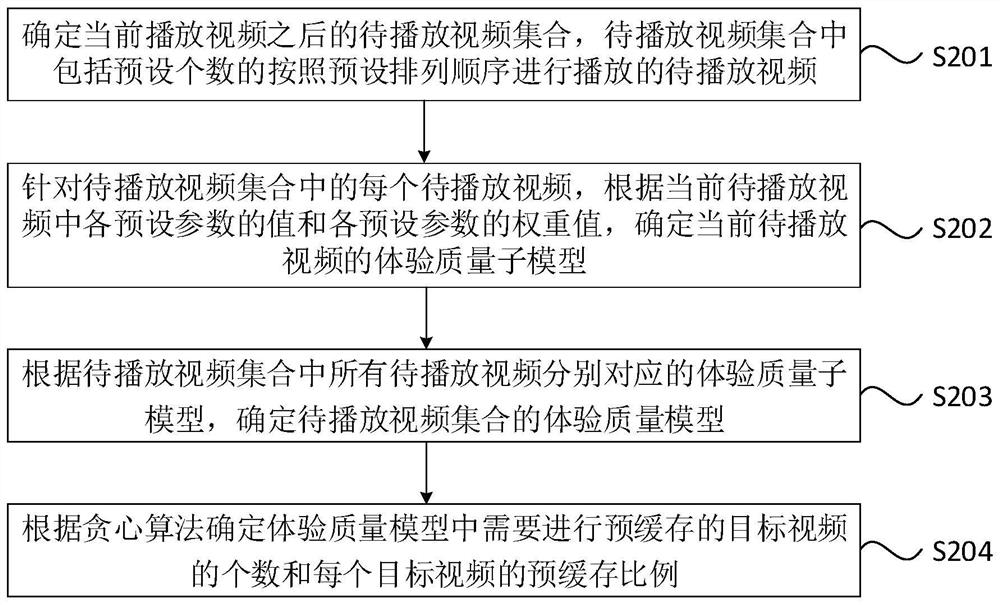

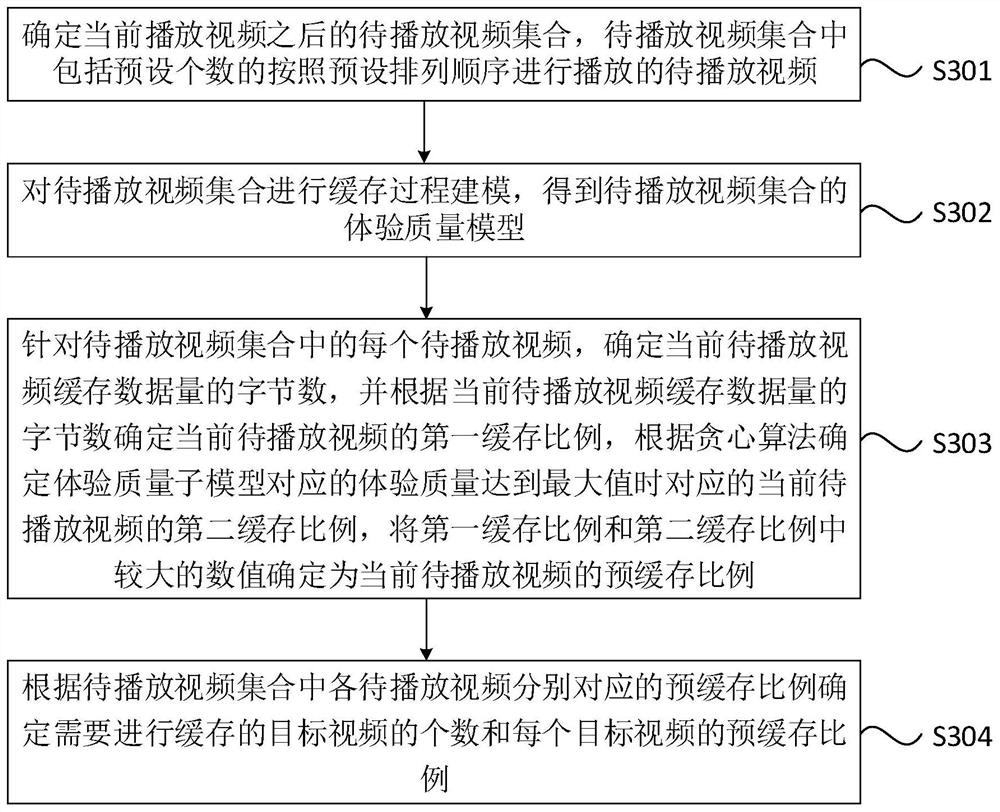

Video caching method and device, equipment and storage medium

Active | CN112752117A | Avoid stuttering; Reduce the probability of stuttering; Selective content distribution; Greedy algorithm; MediaFLO

The embodiment of the invention discloses a video caching method and device, equipment and a storage medium. The method comprises the following steps:
- Determine a to-be-played video set following the currently played video. The set comprises a preset number of to-be-played videos in a preset play order.
- Model the caching process of the to-be-played video set to obtain a quality-of-experience (QoE) model of the set.
- Using a greedy algorithm, determine the number of target videos to pre-cache in the QoE model and the pre-caching proportion of each target video.

Because the caching process is modeled and the resulting QoE model is solved with a greedy algorithm, the number of videos to cache and the pre-caching proportion of each video can be determined reasonably. This addresses stuttering during playback: during continuous playback of streaming video, the stutter rate is lower and the proportion of video watched is higher.

Owner:BIGO TECH PTE LTD
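
The greedy allocation can be illustrated with a toy quality-of-experience model. The sketch assumes equal-sized chunks, a known play probability per upcoming video, and a geometrically shrinking gain for each extra cached chunk; none of these come from the patent. Because the modeled gains shrink with every extra chunk, picking the largest marginal gain at each step is optimal for this toy model.

```python
import heapq


def greedy_precache(play_probs, chunks_per_video, budget_chunks, decay=0.5):
    """Greedily allocate a pre-cache budget (in chunks) across upcoming videos.

    play_probs[i] is an assumed probability that the i-th upcoming video is
    played. The marginal QoE gain of caching chunk k of video i is modeled,
    hypothetically, as play_probs[i] * decay**k: early chunks matter most
    because they prevent startup stalls. Returns each video's pre-cache
    proportion; videos with a nonzero proportion are the target videos.
    """
    if chunks_per_video < 1:
        raise ValueError("chunks_per_video must be at least 1")
    cached = [0] * len(play_probs)
    # Max-heap via negated gains: (negative marginal gain, video index).
    heap = [(-p, i) for i, p in enumerate(play_probs)]
    heapq.heapify(heap)
    for _ in range(budget_chunks):
        if not heap:
            break  # every video is fully cached
        _, i = heapq.heappop(heap)  # video with the largest marginal gain
        cached[i] += 1
        if cached[i] < chunks_per_video:
            heapq.heappush(heap, (-play_probs[i] * decay ** cached[i], i))
    return [c / chunks_per_video for c in cached]


print(greedy_precache([0.9, 0.5, 0.2], chunks_per_video=4, budget_chunks=5))
# [0.75, 0.5, 0.0]
```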

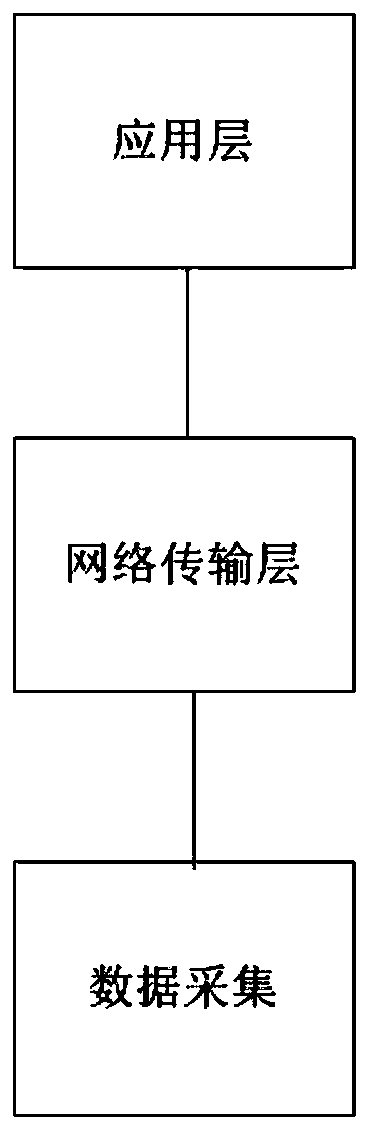

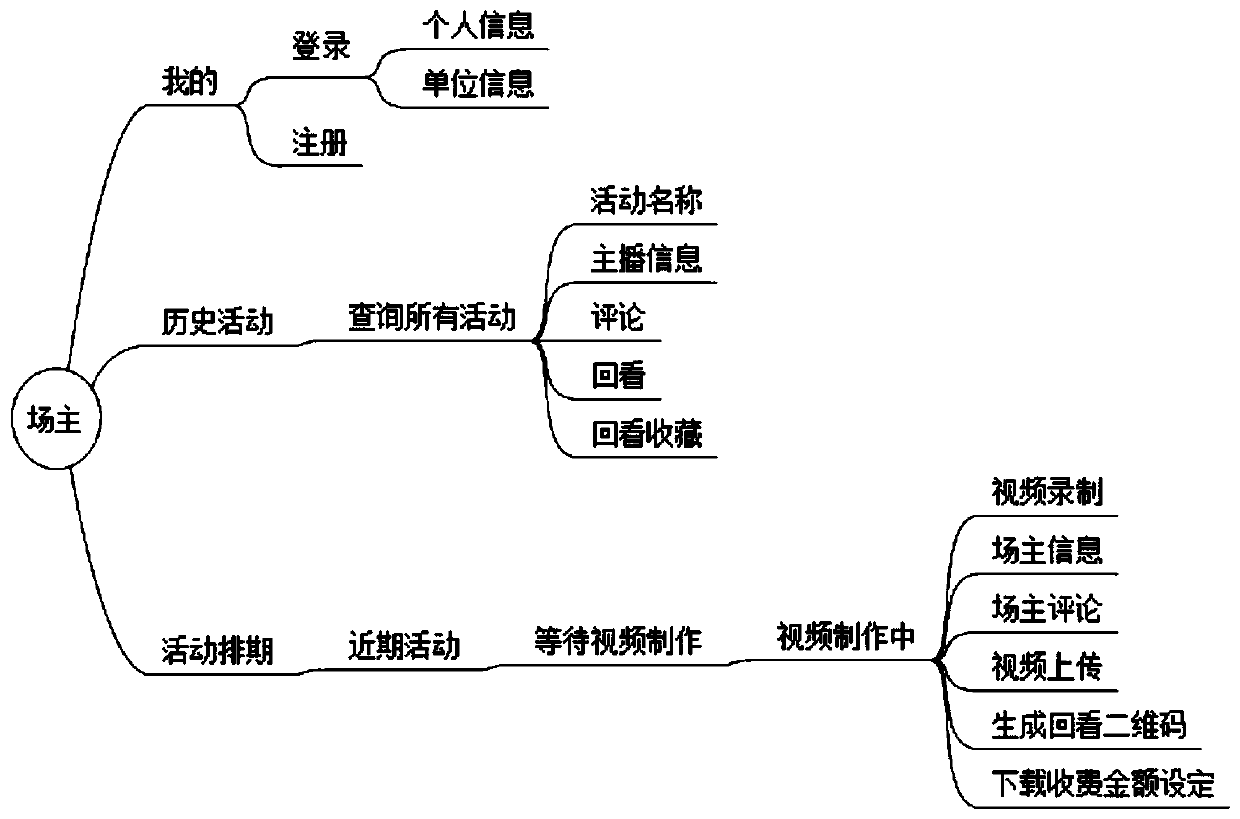

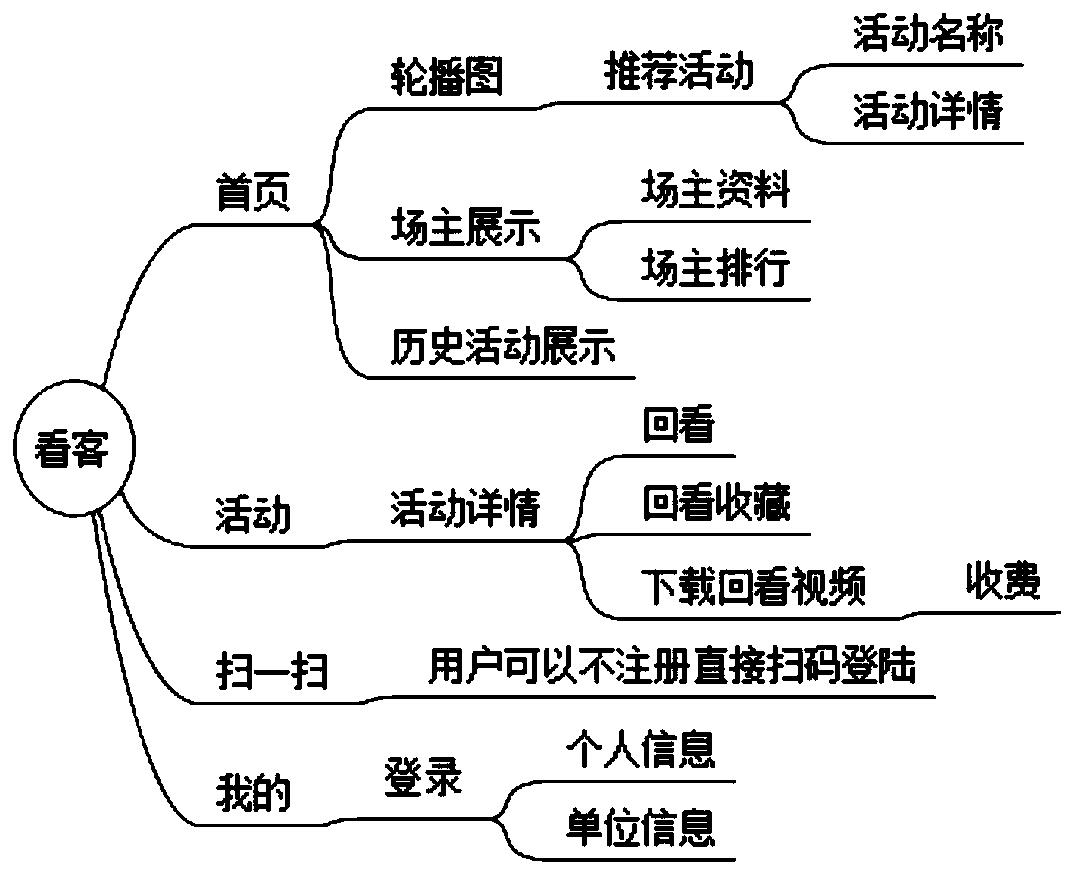

Field broadcasting system

Inactive | CN110691266A | Addressing Selfie Behavior; Solve the poor picture effect; Selective content distribution; Video record; Large screen

The invention relates to a field broadcasting system. The system comprises three modules:
- A mobile phone app module. A user logs in to its main page by scanning a code, and the playback categories on the main page display different playback information according to activity type.
- A user PC-end module with the same interface and functions as the mobile phone app module, so that the user can watch videos synchronously on either module.
- An operation background module that provides an information interface for the mobile phone app module and the user PC-end module. It generates a look-back two-dimensional code, sets whether the user may download the two-dimensional code, and sets a time limit on the video content. The generated two-dimensional code is printed on an admission ticket or projected on a large on-site screen.

The invention addresses the selfie behavior, poor picture quality and poor sound quality that occur when visitors live-broadcast events themselves, and provides professional audio and video recordings from the optimal shooting position.

Owner:季国存
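
The look-back code's download flag and time limit could be carried in a signed token like the one sketched below; this token is the string a QR code would encode. The payload fields, the signing key and the HMAC-SHA256 signing scheme are assumptions. Rendering the actual QR image is left to a QR library.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"replace-with-a-server-side-secret"  # hypothetical signing key


def make_replay_code(video_id, allow_download, valid_seconds, now=None):
    """Build the string a look-back QR code would encode (signed, time-limited)."""
    now = time.time() if now is None else now
    payload = {"v": video_id, "dl": allow_download, "exp": int(now + valid_seconds)}
    body = base64.urlsafe_b64encode(json.dumps(payload, sort_keys=True).encode()).decode()
    sig = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{sig}"


def check_replay_code(code, now=None):
    """Return the payload if the code is authentic and unexpired, else None."""
    now = time.time() if now is None else now
    body, _, sig = code.rpartition(".")
    expected = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):
        return None  # tampered or forged code
    payload = json.loads(base64.urlsafe_b64decode(body.encode()))
    return payload if now < payload["exp"] else None


code = make_replay_code("gala-2020", allow_download=False, valid_seconds=3600, now=1000)
print(check_replay_code(code, now=2000))  # {'dl': False, 'exp': 4600, 'v': 'gala-2020'}
print(check_replay_code(code, now=5000))  # None (expired)
```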

Video recommending method based on video affective characteristics and conversation models

Inactive | CN102495873B | Provide accurately; Improve viewing experience; Special data processing applications; Pattern recognition; Degree of similarity

The invention provides a video recommending method based on video affective characteristics and conversation models. Affective characteristics of a video are used as the basis of comparison. The method proceeds as follows:
- Multiple affective characteristics are extracted from the video and its accompanying sound track to synthesize a valence-arousal curve diagram (V-A diagram).
- The V-A diagram is normalized and divided into a fixed number of identical blocks, and a color block diagram is determined for each block.
- The difference between the color block diagrams at corresponding positions of two pictures is compared with a threshold to obtain a block difference and a coverage difference.
- The similarity value of the two videos is obtained, and the result of clustering the similarity values is used as the video recommendation result.

The method also uses a conversation model to update the recommendation result as the user continues watching. As a result, the recommendations better match the user's current affective state, and both the click rate on recommended videos and the number of videos watched consecutively can be increased.

Owner:BEIHANG UNIV
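
The block comparison of two V-A diagrams might be sketched as follows. The sketch rests on three assumptions: the V-A curve is rasterized onto a small grid; block means stand in for the color block diagrams; and similarity is the share of blocks whose means differ by no more than a threshold. The coverage difference and the clustering step are omitted.

```python
def rasterize(curve, size=4):
    """Map (valence, arousal) points in [-1, 1] onto a size x size grid.

    Each cell holds the fraction of points falling into it, which plays the
    role of the normalized V-A diagram here.
    """
    grid = [[0.0] * size for _ in range(size)]
    for valence, arousal in curve:
        col = min(int((valence + 1) / 2 * size), size - 1)
        row = min(int((arousal + 1) / 2 * size), size - 1)
        grid[row][col] += 1 / len(curve)
    return grid


def block_means(grid, n_blocks):
    """Split a square grid into n_blocks x n_blocks equal blocks; return block means."""
    size = len(grid)
    if size % n_blocks:
        raise ValueError("grid size must be divisible by n_blocks")
    b = size // n_blocks
    means = []
    for bi in range(n_blocks):
        for bj in range(n_blocks):
            cells = [grid[r][c]
                     for r in range(bi * b, (bi + 1) * b)
                     for c in range(bj * b, (bj + 1) * b)]
            means.append(sum(cells) / len(cells))
    return means


def va_similarity(grid_a, grid_b, n_blocks=2, threshold=0.05):
    """Share of corresponding blocks whose means differ by at most threshold."""
    ma, mb = block_means(grid_a, n_blocks), block_means(grid_b, n_blocks)
    same = sum(abs(x - y) <= threshold for x, y in zip(ma, mb))
    return same / len(ma)


calm = rasterize([(-0.8, -0.9), (-0.6, -0.7), (-0.7, -0.8), (-0.5, -0.6)])
tense = rasterize([(0.6, 0.8), (0.7, 0.9), (0.8, 0.7), (0.9, 0.6)])
print(va_similarity(calm, calm))   # 1.0
print(va_similarity(calm, tense))  # 0.5
```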

© 2025 PatSnap. All rights reserved.