31 results about How to "Increase winning percentage" patented technology

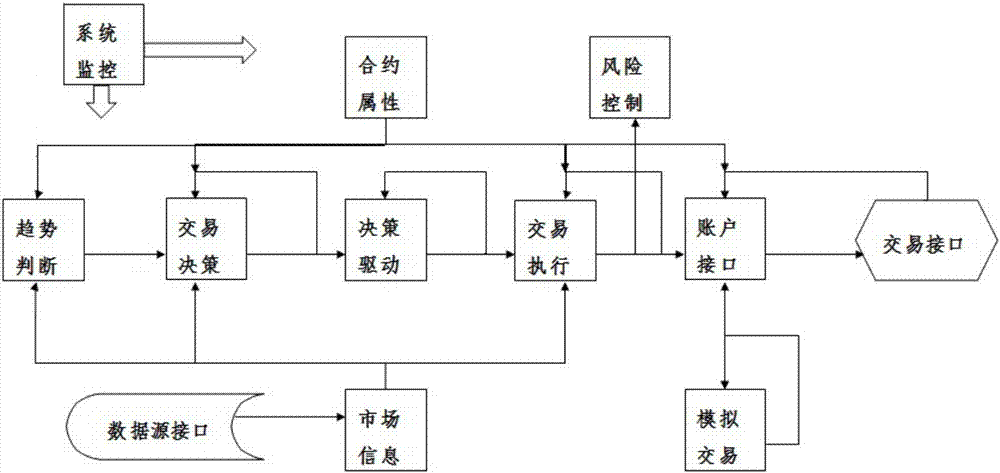

Multi-module automatic trading system based on network distributed computing

Inactive | CN106934716A | Reduce human error | Simplify quantitative modeling procedures | Finance | Risk control | Transaction management

The invention discloses a multi-module automatic trading system based on network distributed computing. The system comprises a historical and real-time market data processing module, a real-time market probability distribution prediction module, a trading strategy design and development module, a trading strategy historical backtesting and evaluation module, a trading strategy market access and transaction management module, a system monitoring and risk control module, and a transaction report and quantitative analysis module. The system serves various types of quantitative traders: it simplifies quantitative modeling, lowers the barrier to quantitative trading, improves the efficiency of strategy testing and evaluation, and safeguards transaction security. It automatically analyzes market and trading trends, provides real-time price probability distribution predictions for the main traded products, and supplies trading strategies with low-latency, high-success-rate signals so that timely and accurate trading signals are generated. It also gives users an efficient tool and comprehensive, accurate data for constructing, backtesting and optimizing their strategies.

Owner:燧石科技(武汉)有限公司

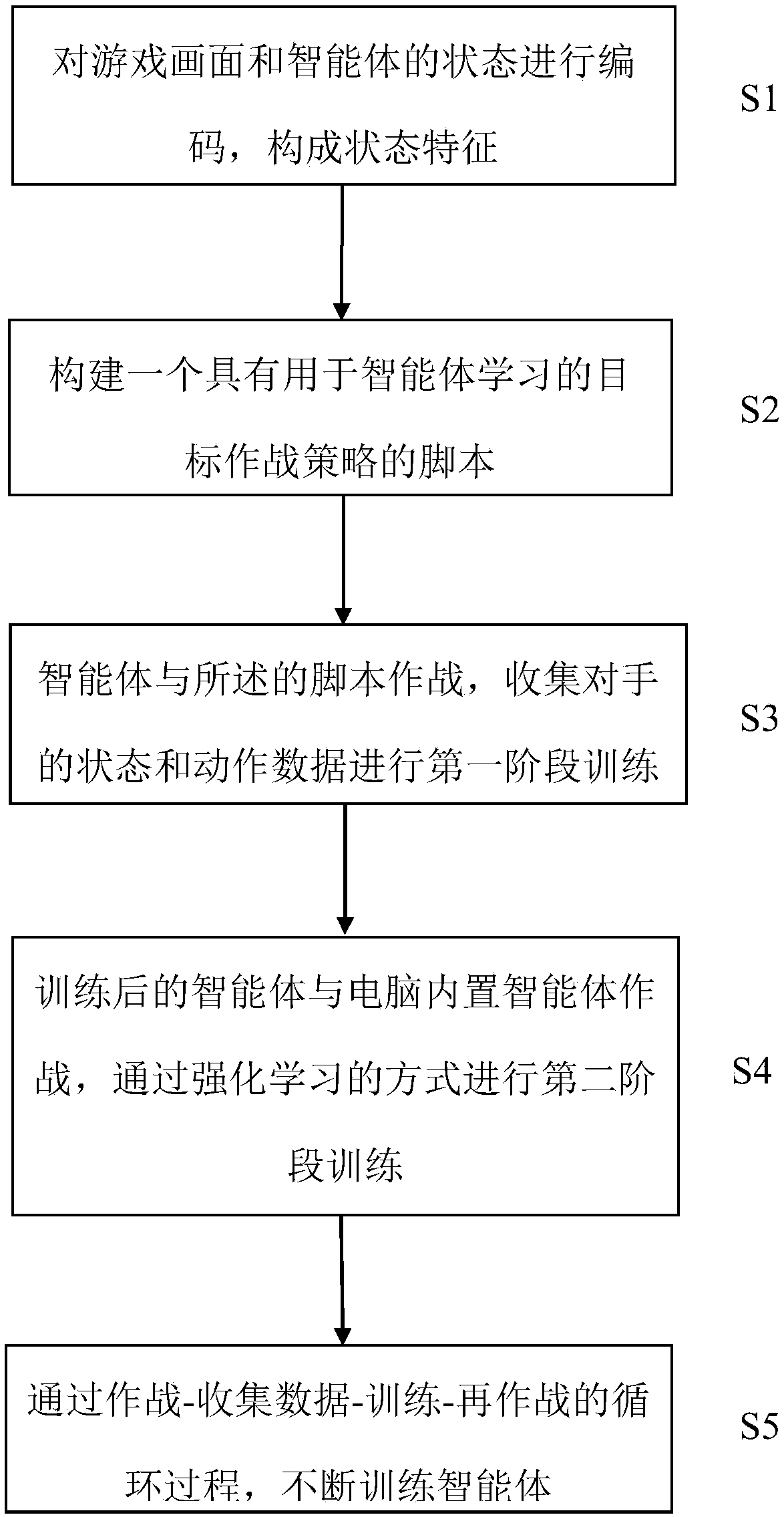

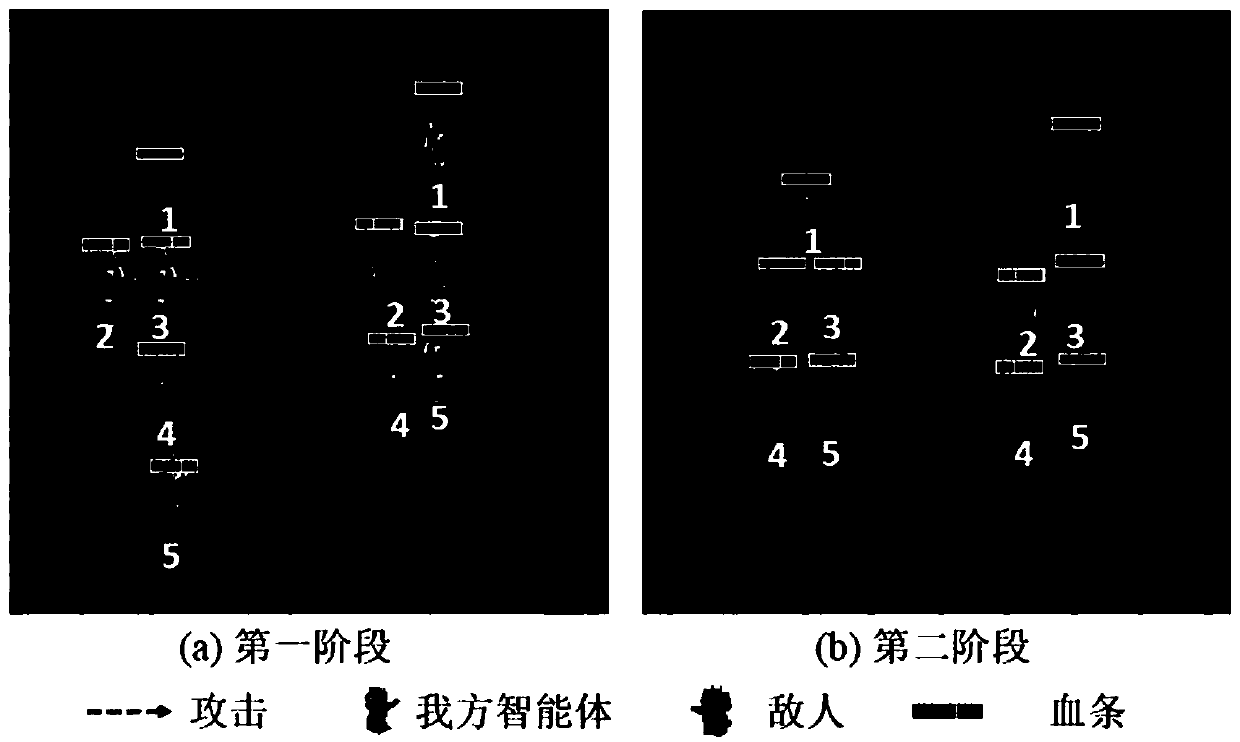

Agent learning method based on knowledge guidance-tactical perception

Active | CN108629422A | Reduce training time | Good initial model | Computing models | Video games | Micro-operation | Phacus

The invention discloses an agent learning method based on knowledge guidance-tactical perception. In a two-party fight game environment, human knowledge is used to train, through two stages of training, an agent that fights with a specific tactical strategy. The method comprises the steps that 1) the game screen and the state of the agent are encoded to form a state feature; 2) a script with a specific fight strategy is written manually; 3) the agent fights against the script, and the state and action data of the opponent are collected for the first stage of training; 4) the trained agent fights against the computer's built-in AI, and the second stage of training is carried out through reinforcement learning; and 5) the learning framework is used to train the agent to fight with specific tactics. The method is applicable to agent training in a two-party fight mode in a micro-operation environment, and achieves a good winning rate across various micro-operation fight scenes.

Owner:ZHEJIANG UNIV

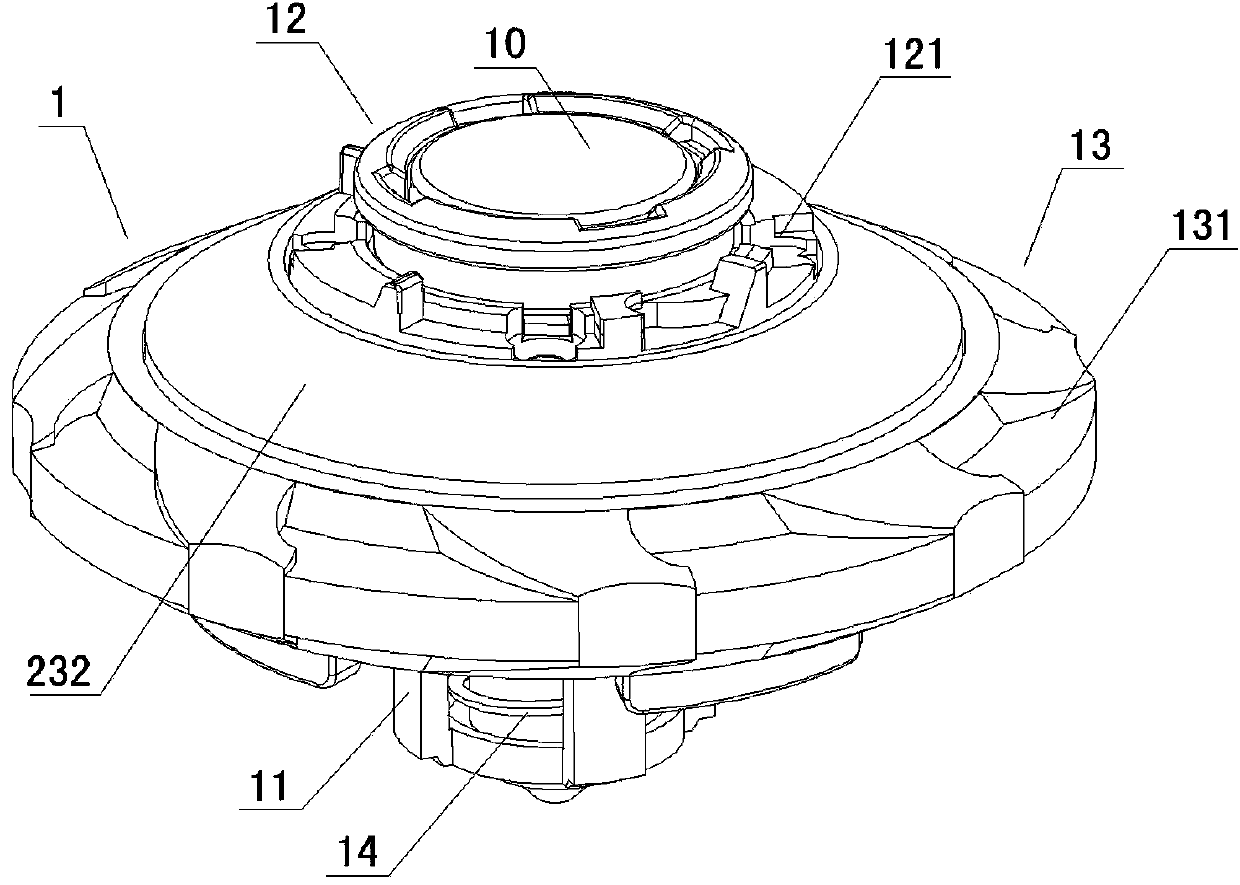

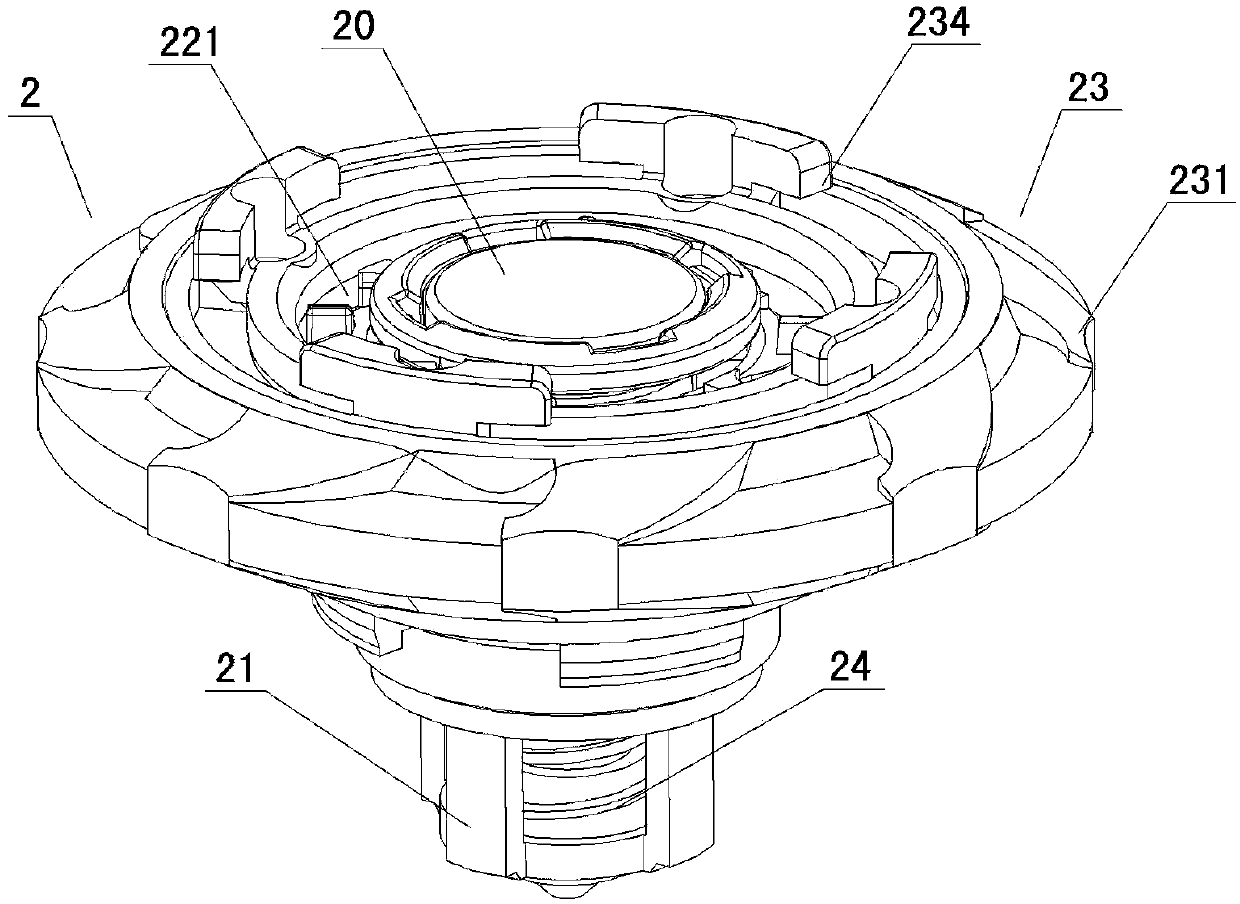

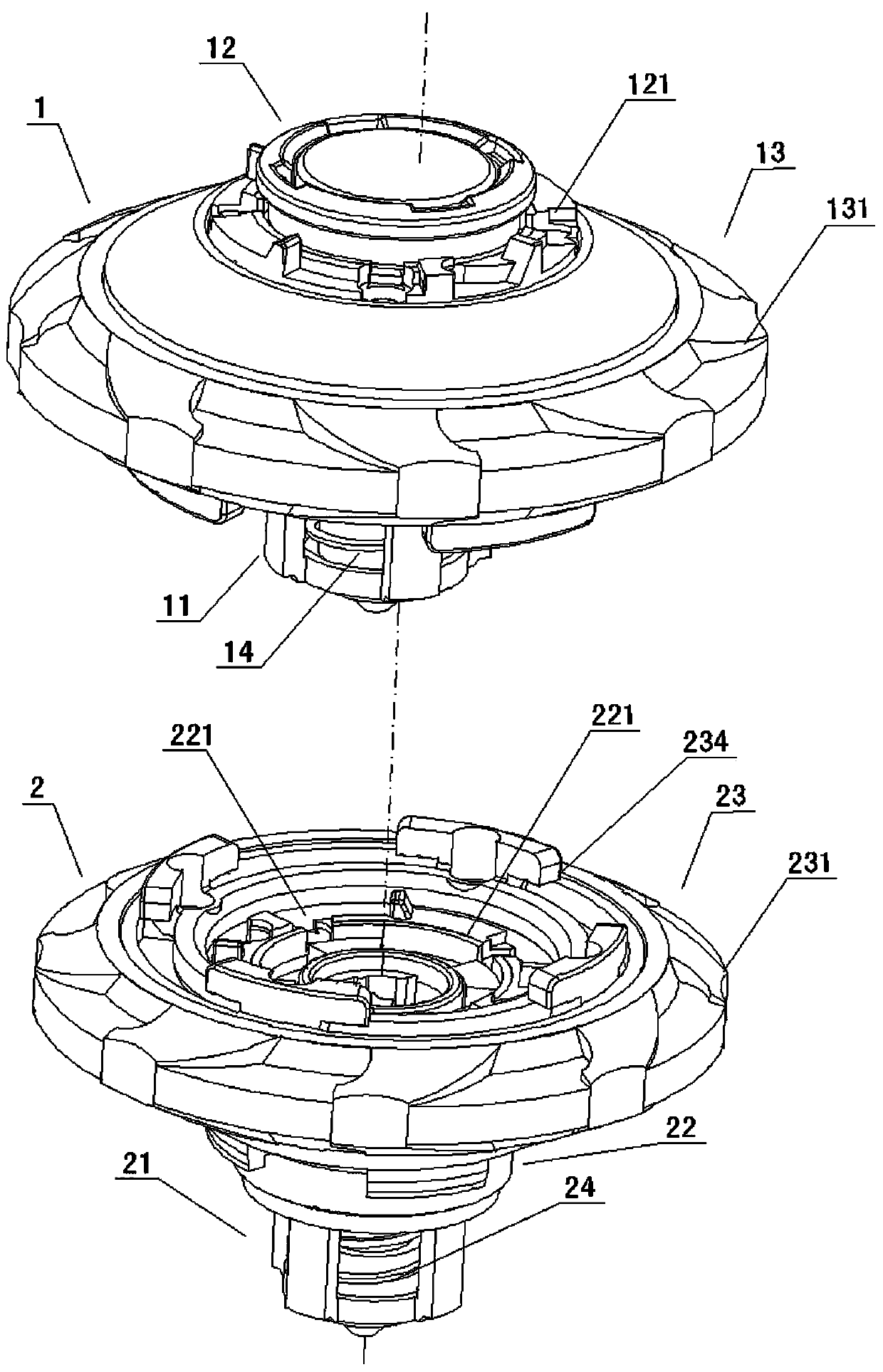

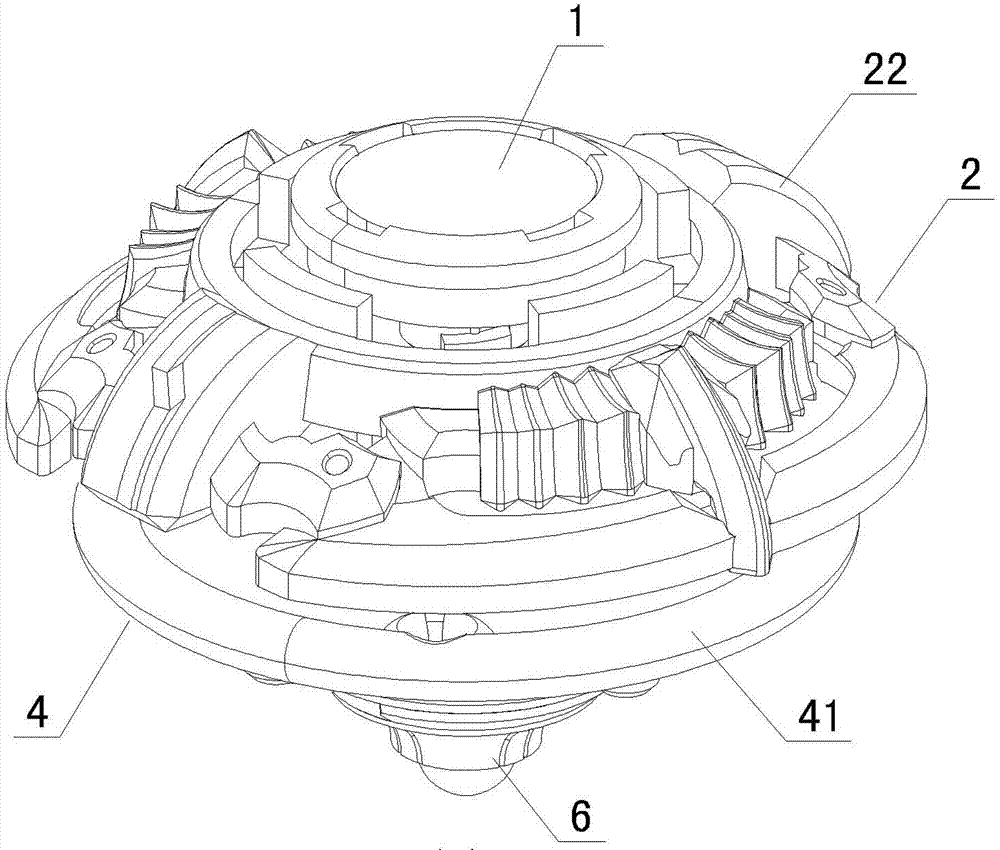

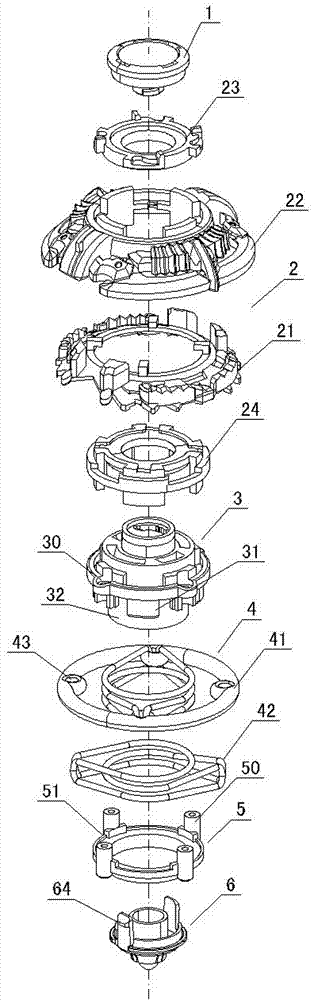

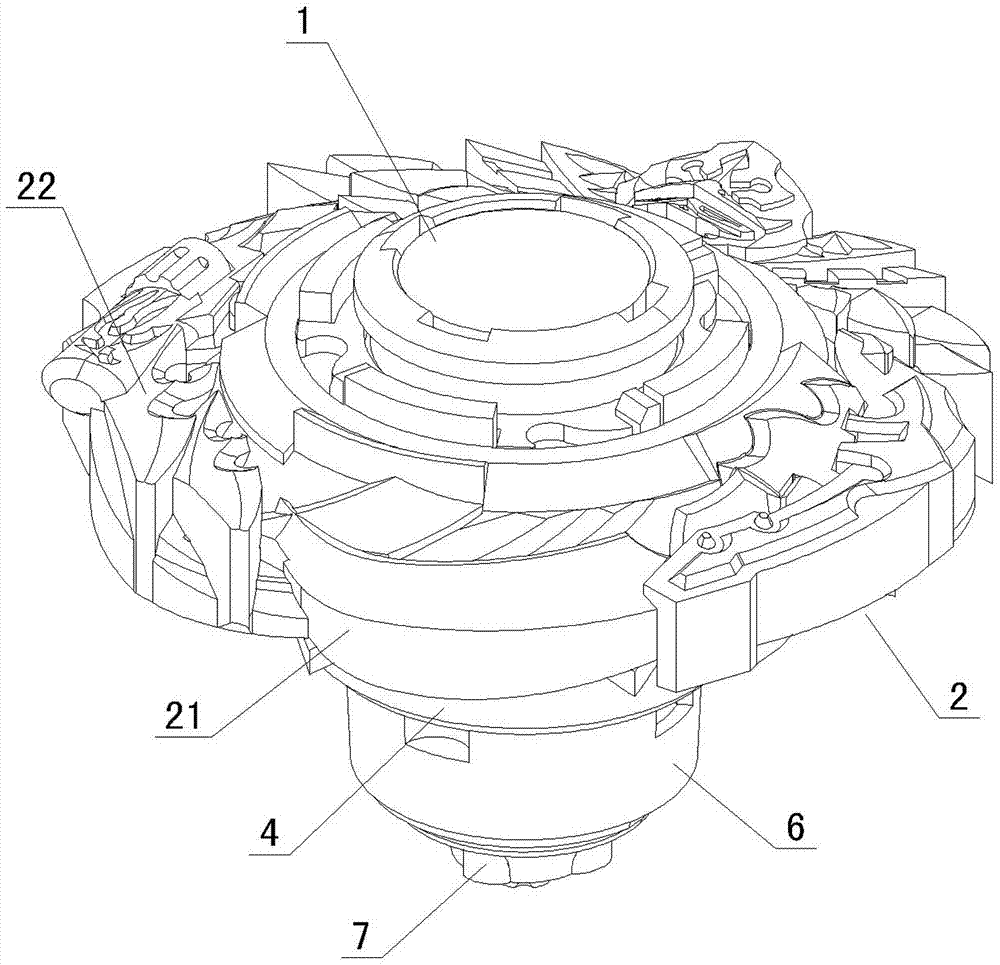

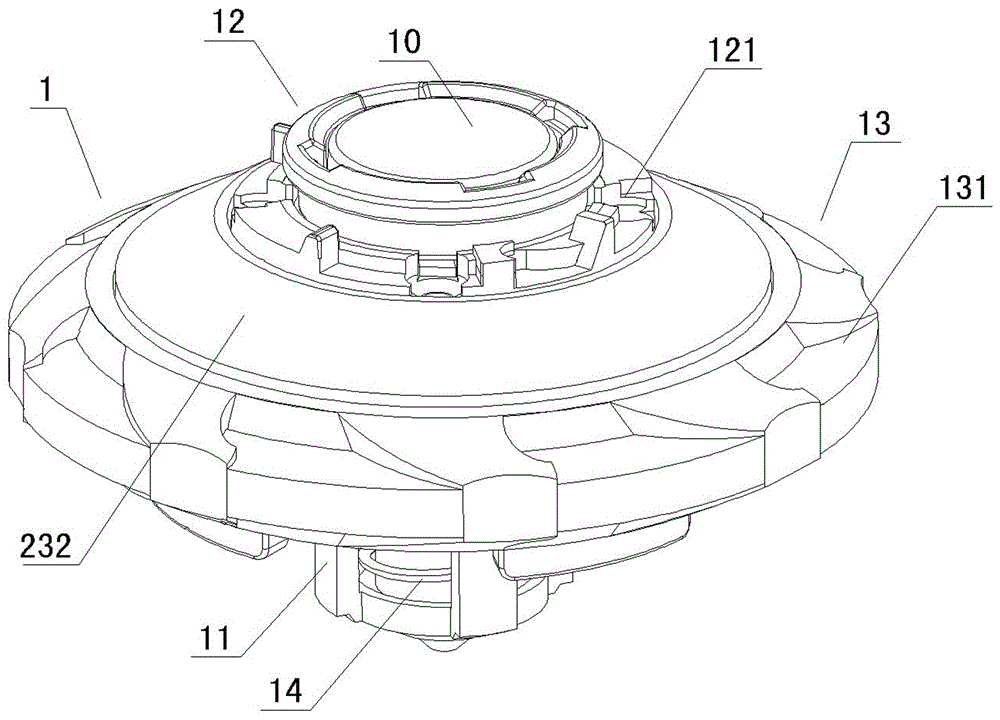

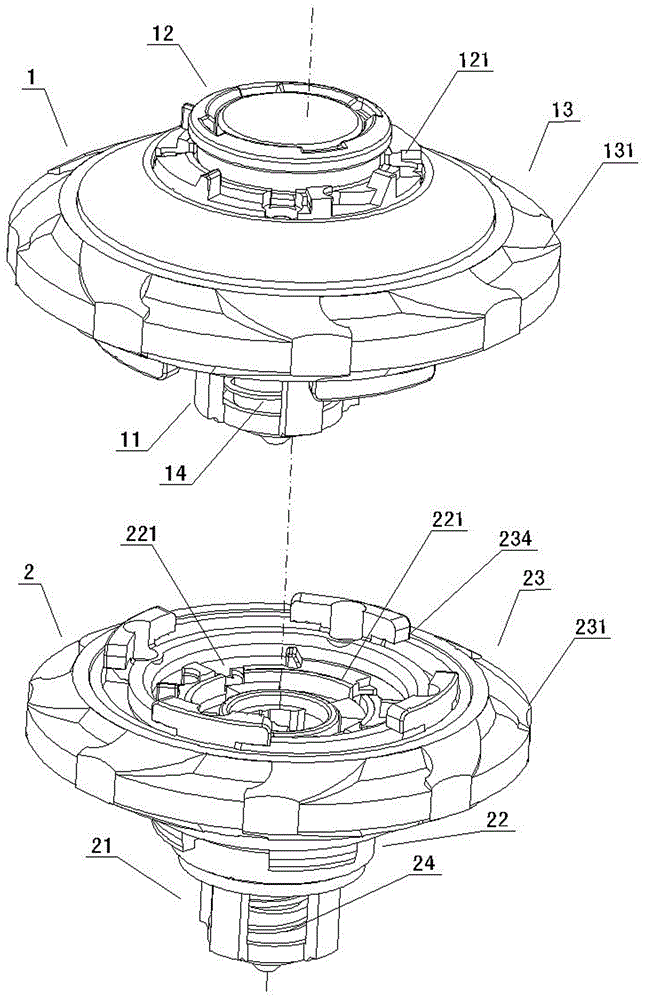

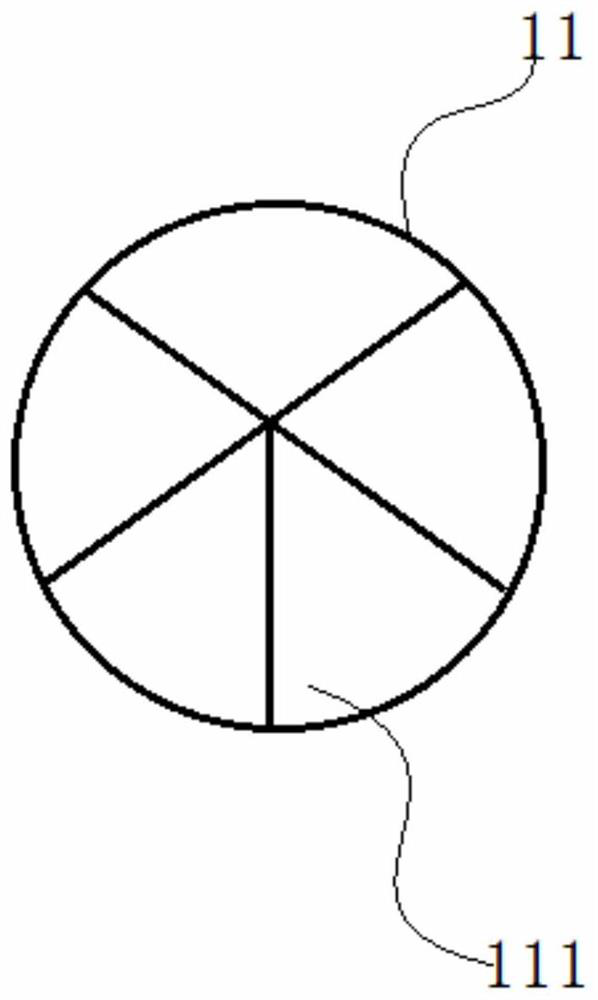

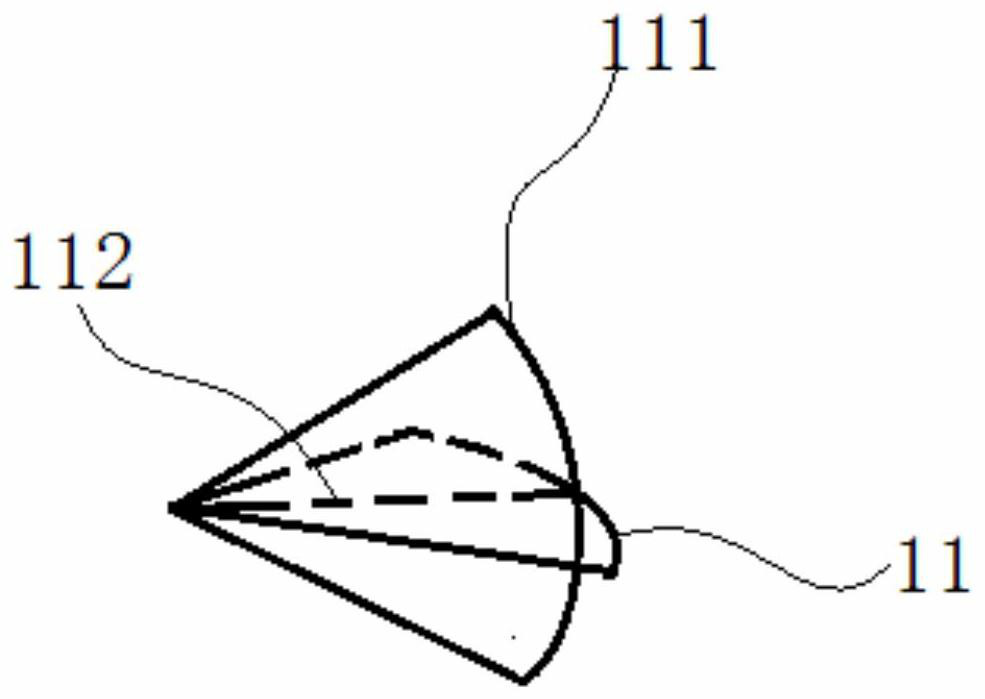

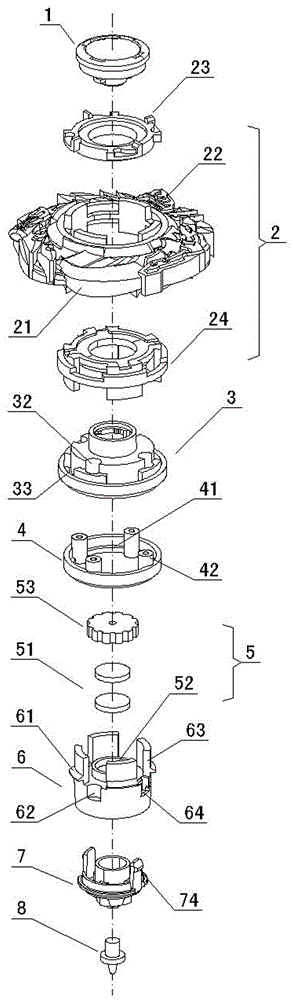

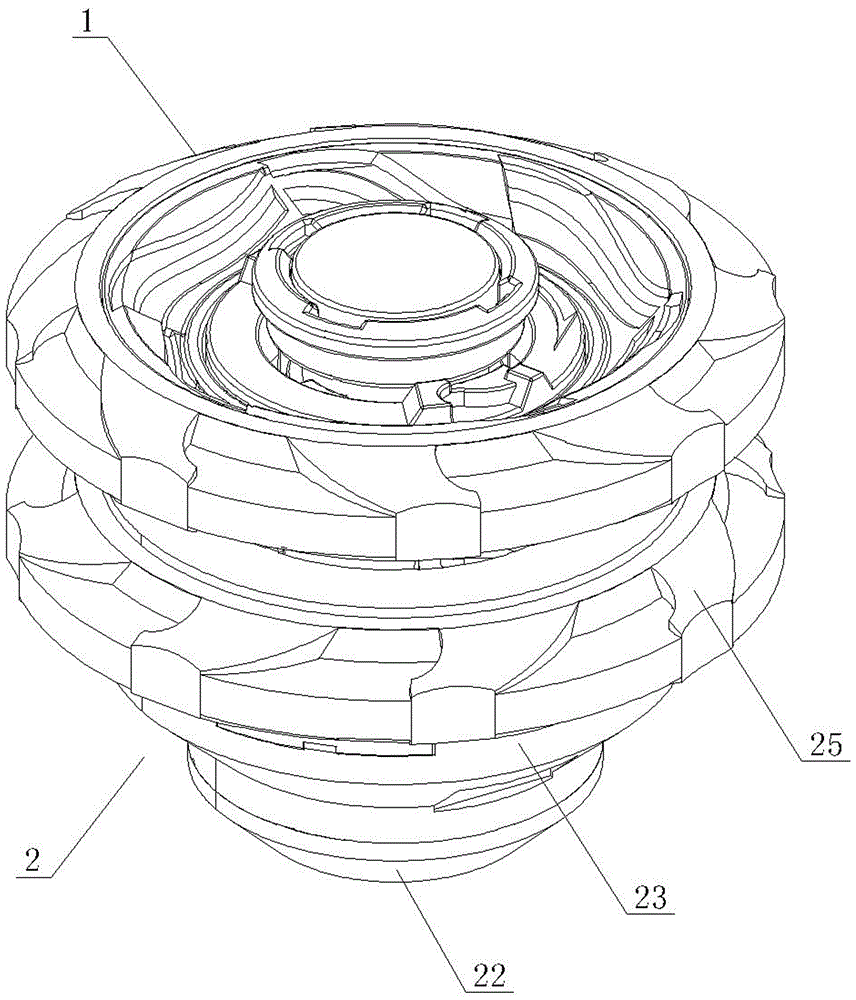

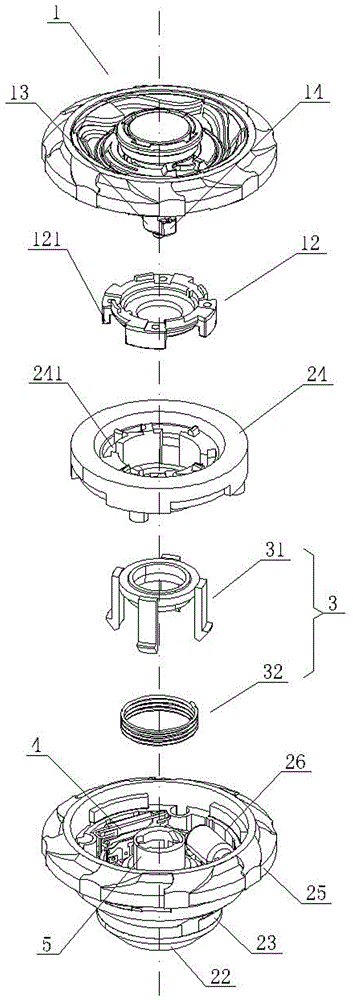

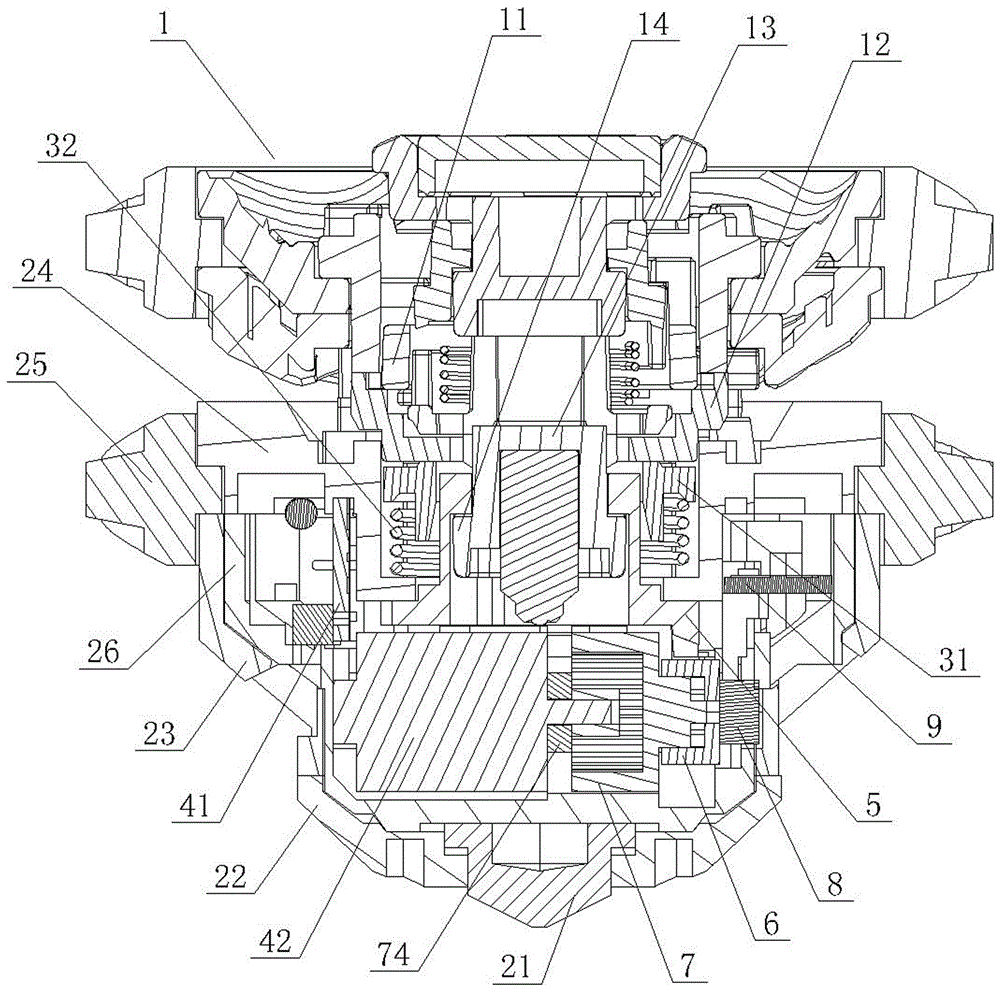

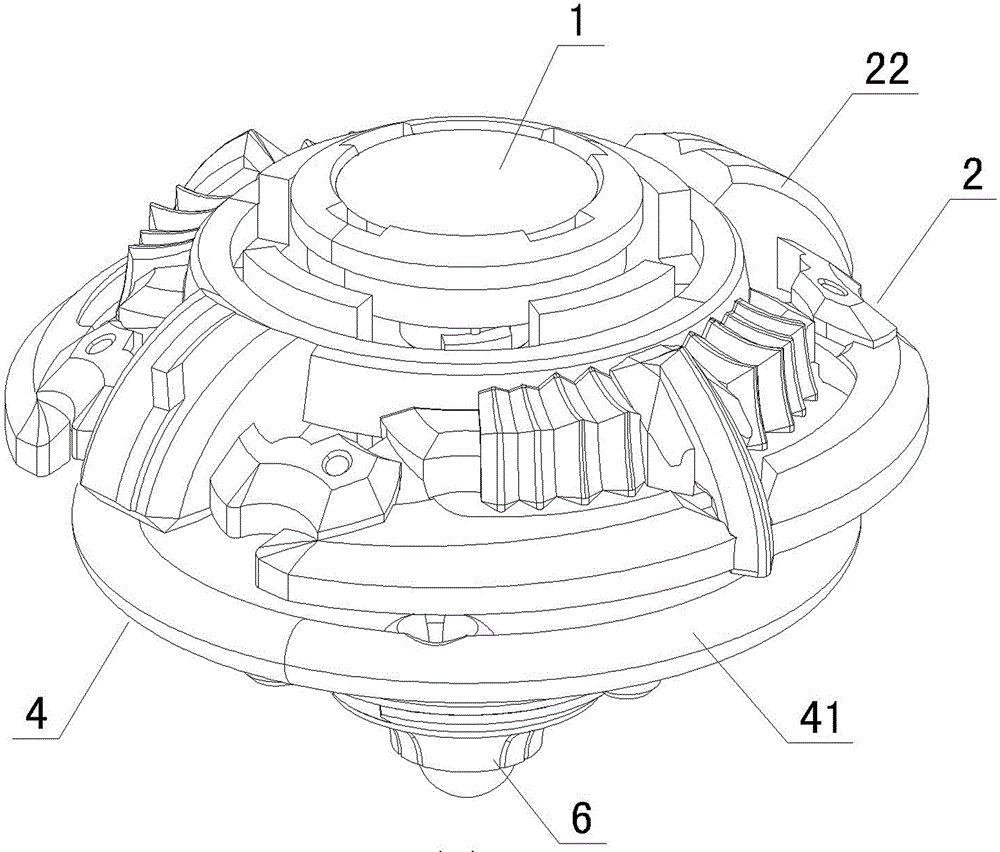

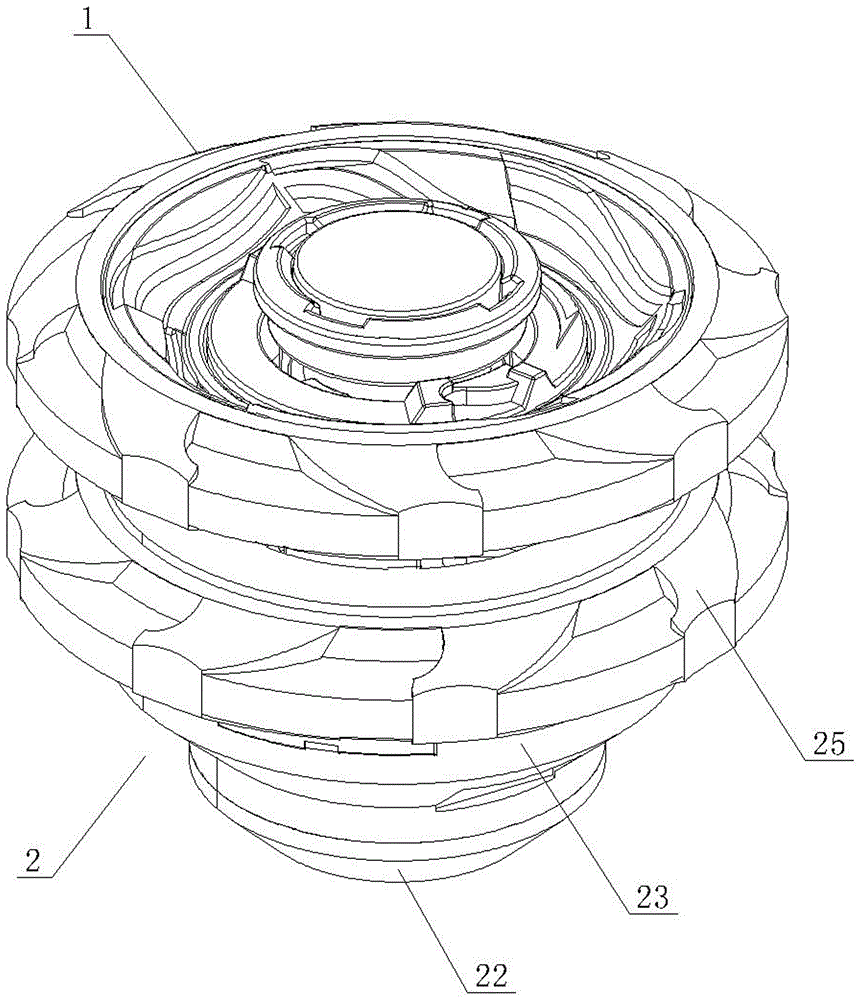

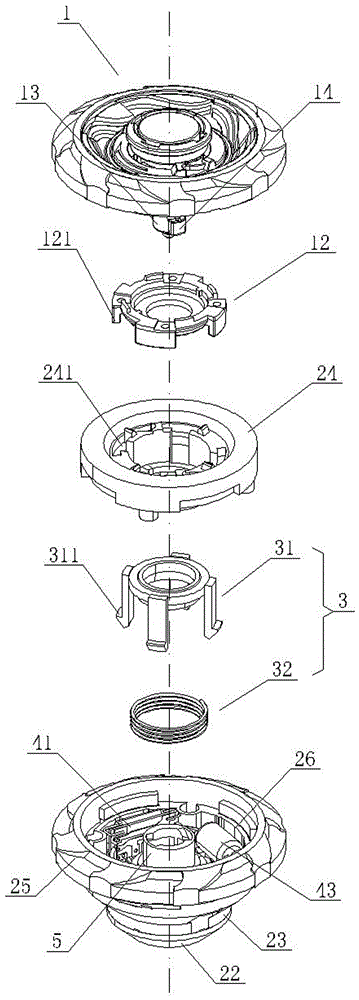

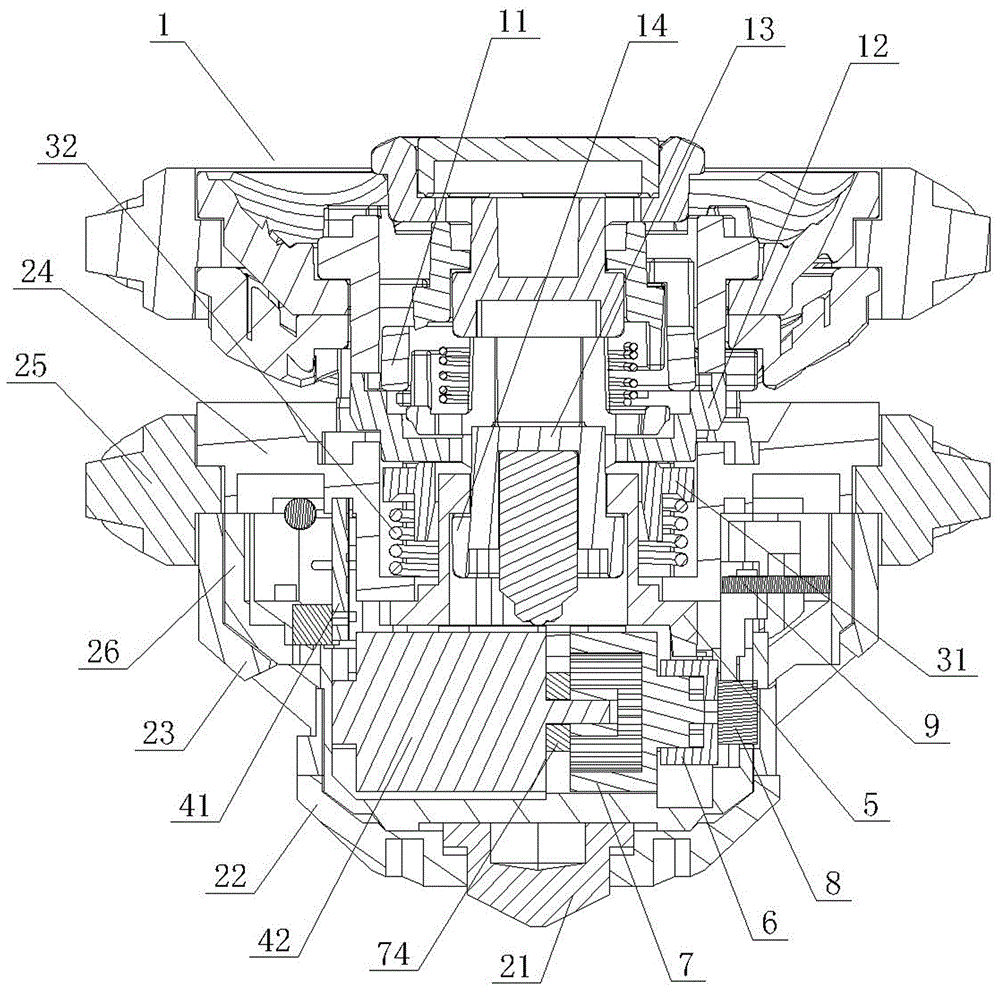

Combined type toy spinning top capable of automatically splitting

The invention discloses a combined type toy spinning top capable of automatically splitting. The combined type toy spinning top is characterized by comprising at least two spinning top bodies which are butted vertically, wherein the spinning top tip of each spinning top body is an elastic spinning top tip; the upper spinning top body in the spinning top can automatically separate from the lower spinning top body when being impacted or blocked during the rotation, and ejects out under the action of the elastic spinning top tip of the upper spinning top body, so that the two spinning top bodies rotating independently respectively are formed. According to the invention, when a user plays the spinning top, the fact that the spinning top can be split into two parts or three parts during a competition can be realized, so that the attack force of the spinning top is greatly improved, and further the win rate is higher; besides, the spinning top tips of the spinning top bodies are elastic spinning top tips, and when the spinning top is split during a game, the upper spinning top body ejects out mainly under the action of the elasticity of the spinning top tip of the upper spinning top body, so that the impact to the lower spinning top body is not large; besides, the soft landing can be realized through the utilization of the buffer action of the elasticity during landing, so that the spinning top tips can be effectively protected, and the operation stability can be kept.

Owner:ALPHA GRP CO LTD +2

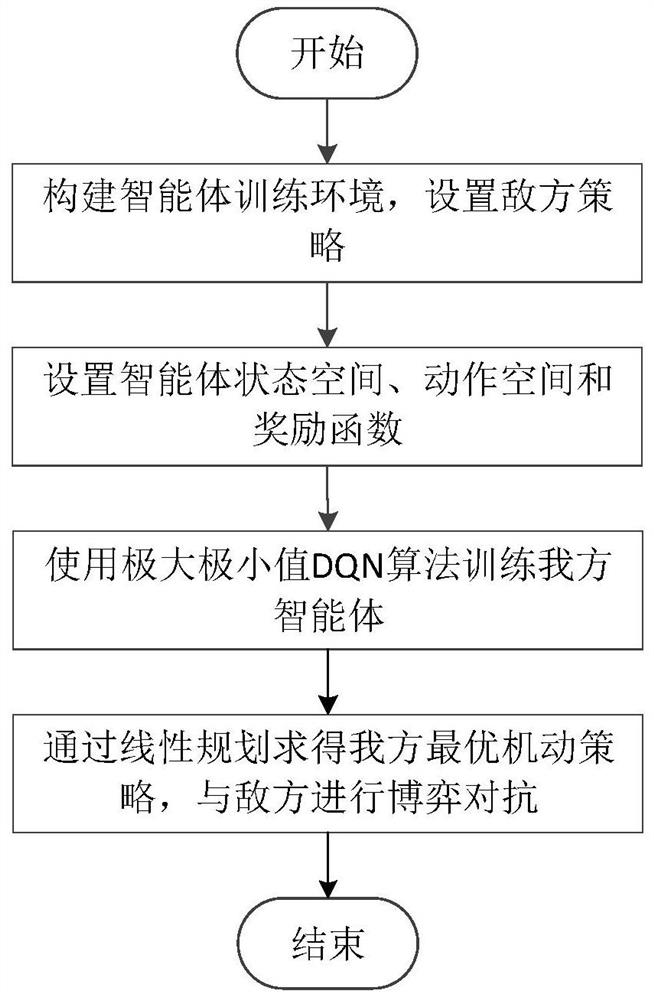

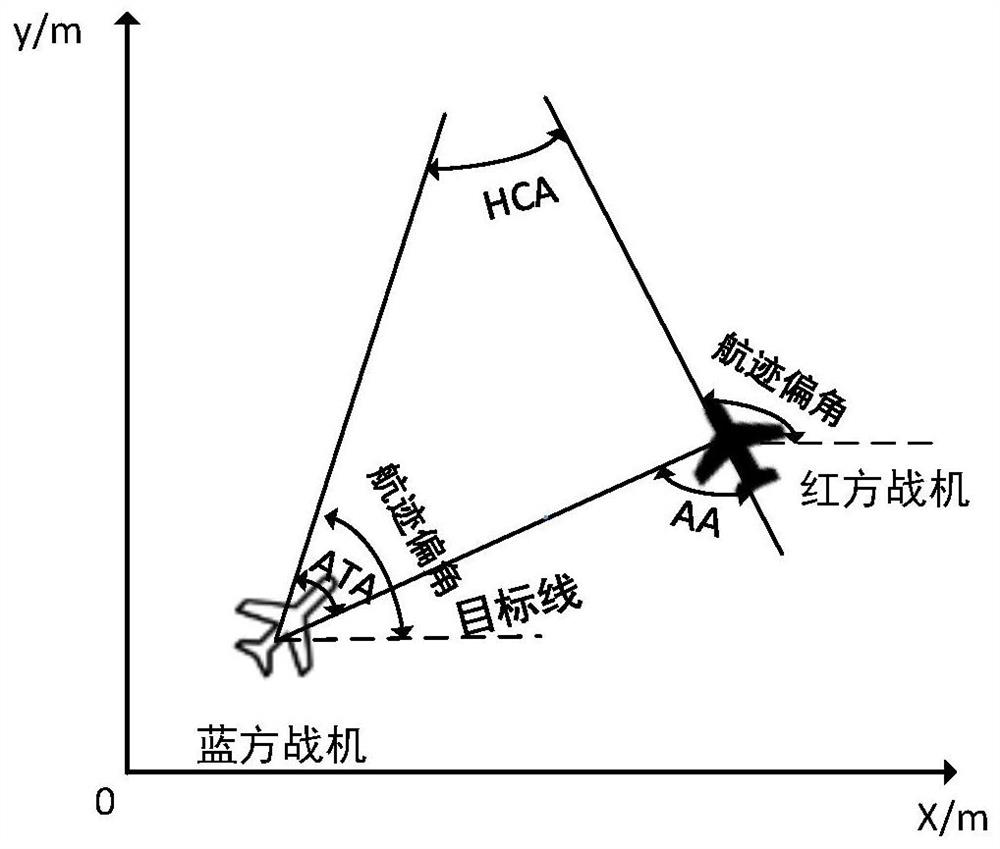

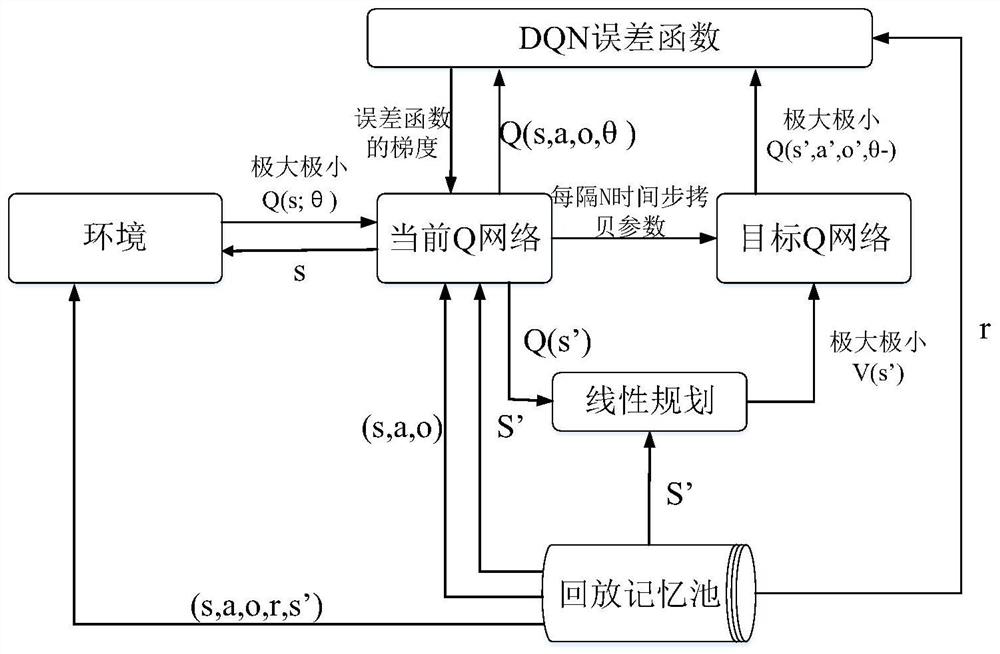

Air combat maneuvering strategy generation technology based on deep random game

Inactive | CN112052511A | Guaranteed real-time | Improve computing efficiency | Geometric CAD | Design optimisation/simulation | Simulation | Intelligent agent

The invention discloses a short-distance air combat maneuvering strategy generation technology based on a deep random game. The technology comprises the following steps: first, constructing a training environment for combat aircraft game confrontation according to a 1V1 short-distance air combat flow, and setting an enemy maneuvering strategy; second, taking a random game as the standard, constructing agents for both sides of the air combat confrontation, and determining the state space, action space and reward function of each agent; third, constructing a neural network with a maximum-and-minimum-value (minimax) DQN algorithm that combines the random game with deep reinforcement learning, and training our intelligent agent; and finally, using the trained neural network to obtain the optimal maneuvering strategy for an air combat situation through linear programming, and performing game confrontation with the enemy. By combining the ideas of the random game and deep reinforcement learning, the minimax DQN algorithm yields the optimal air combat maneuver strategy, which can be applied to existing air combat maneuver guidance systems to make effective decisions accurately and in real time, guiding a fighter plane into a favorable position.

Owner:CHENGDU RONGAO TECH

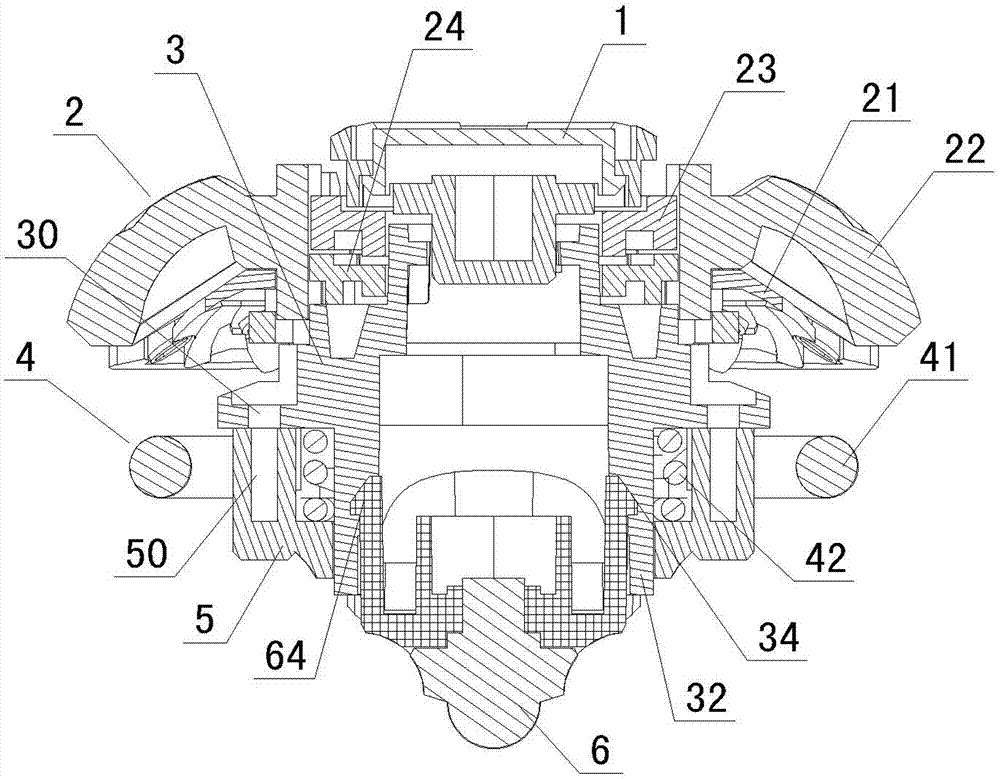

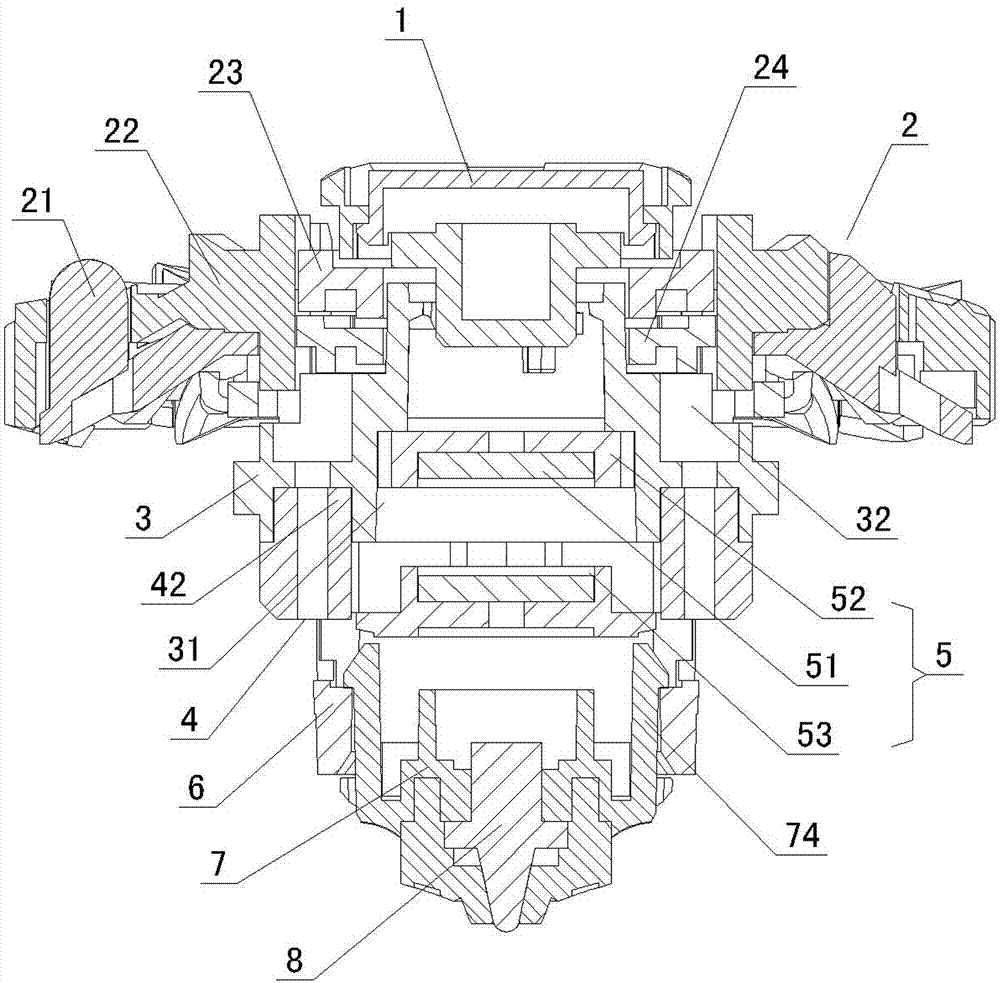

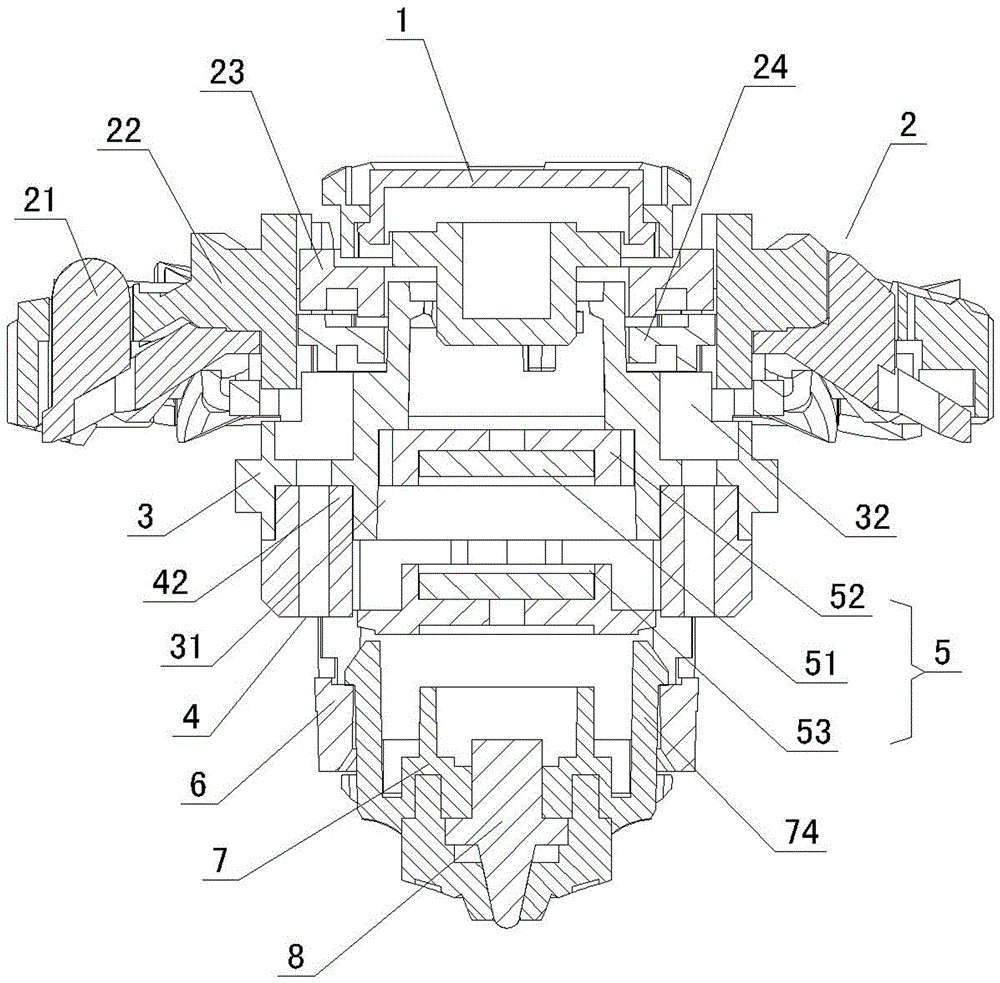

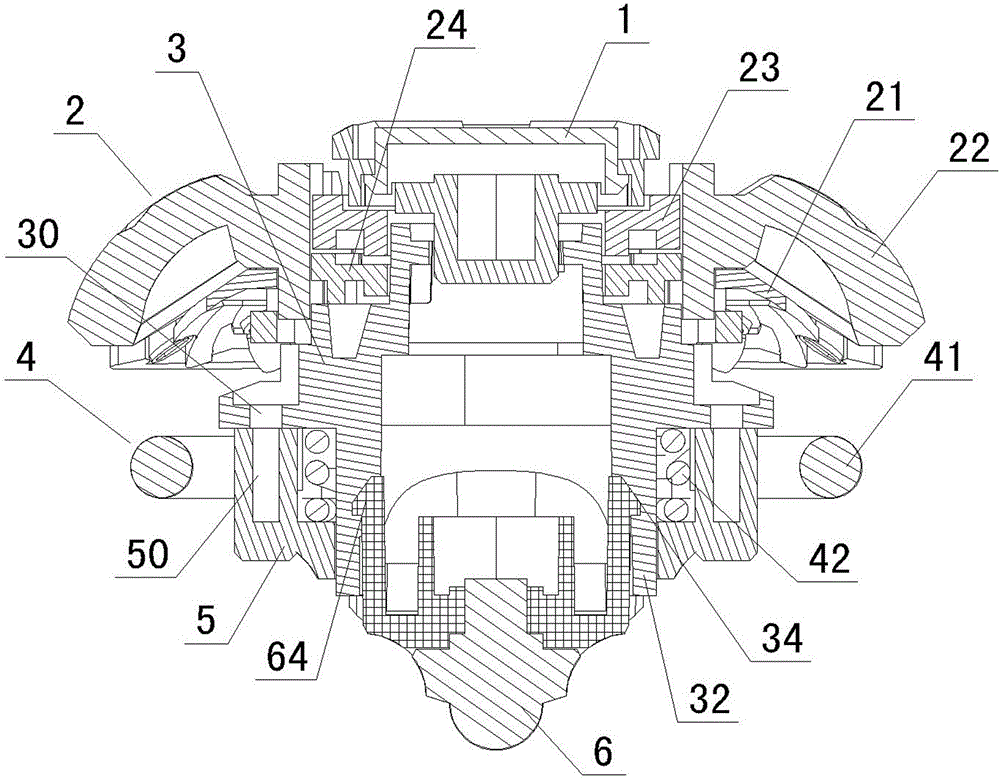

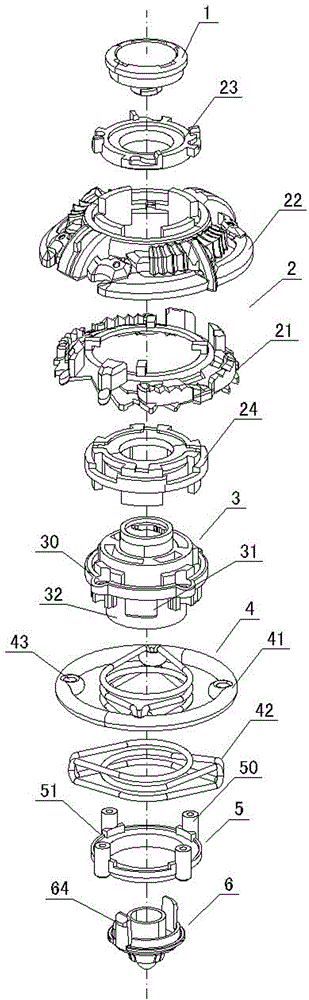

Toy gyro good in defensiveness

The invention provides a toy gyro with good defensiveness. The toy gyro comprises a gyro cover, a gyro piece, a gyro base and a gyro tip, and is characterized by further comprising an elastic shock absorption piece that buffers the impact force when the gyro is hit. The shock absorption piece has an elastic part for resisting impact and a connection part fixedly connected to the gyro base. Through this fixed connection, the elastic part sits on the periphery of the gyro base. When the gyro competes with other gyros and an opponent hits the elastic part, the elastic deformation of the elastic part reduces the impact force, so the gyro keeps rotating stably; at the same time, a counter-impact force is returned to the opponent and disturbs it, which improves the win rate of the gyro. The toy gyro is highly entertaining: the player can change the elastic property of the shock absorption piece according to his or her own judgment to achieve a better anti-impact effect. The way of playing is novel, can attract more players, and trains the manipulative and competitive abilities of children.

Owner:ALPHA GRP CO LTD +2

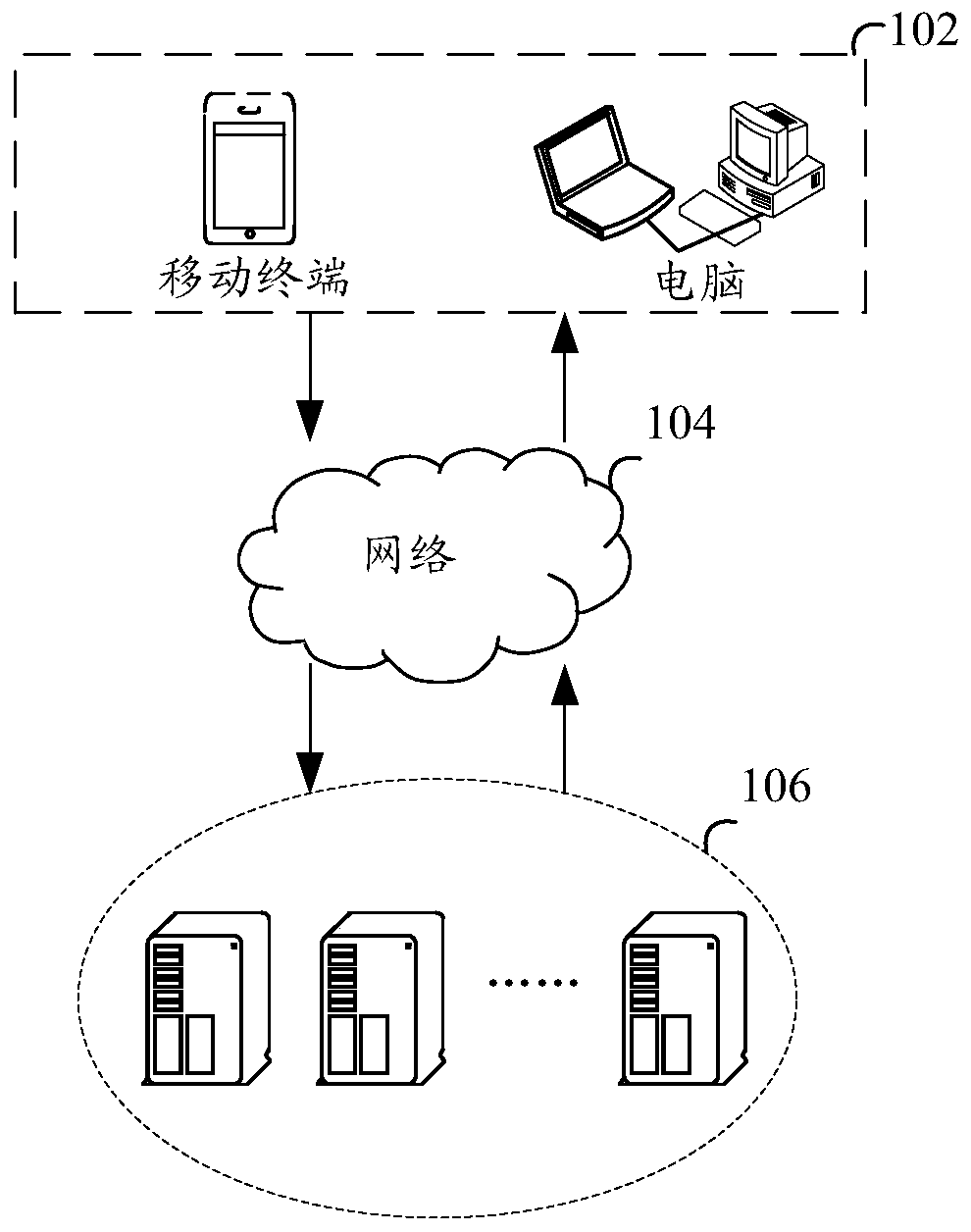

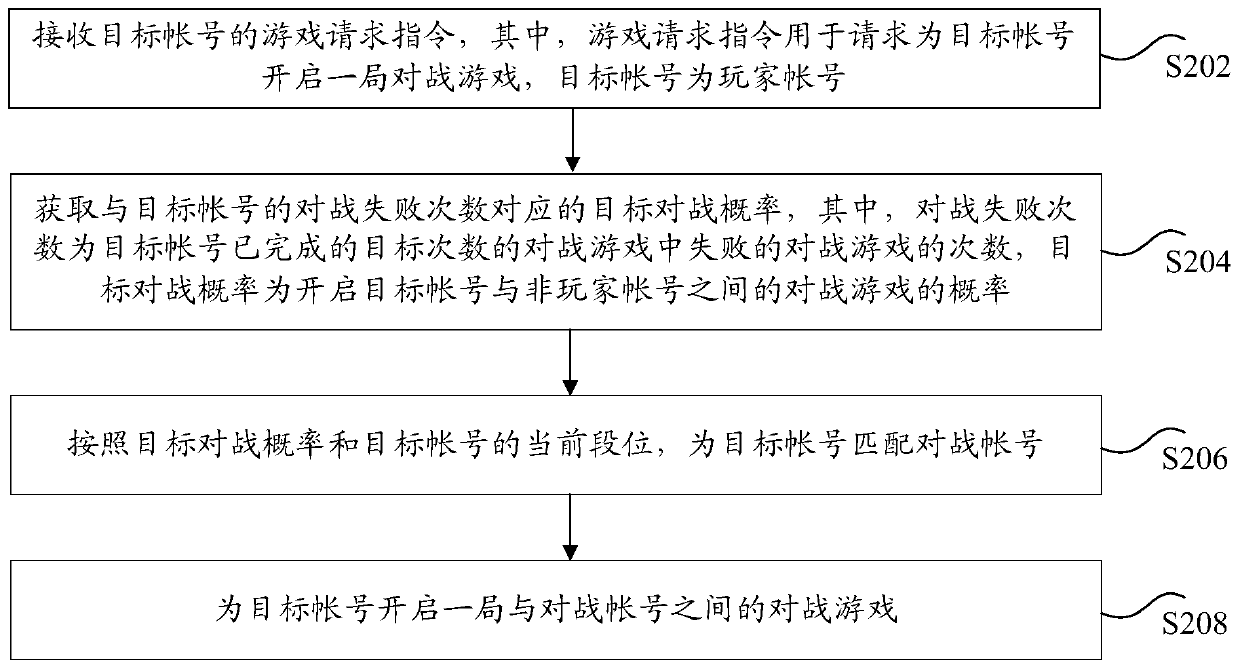

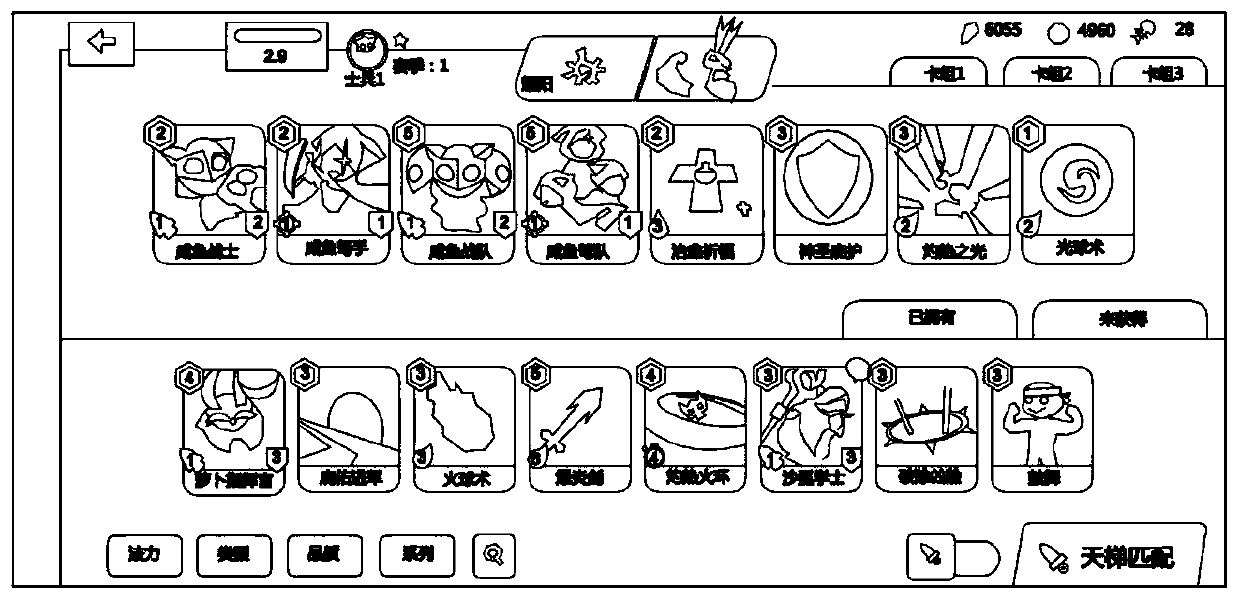

Game account matching method and device, storage medium and electronic device

Inactive | CN110585729A | Reduce the probability of consecutive failures | Improve gaming experience | Video games | Simulation

Owner:TENCENT TECH (SHENZHEN) CO LTD

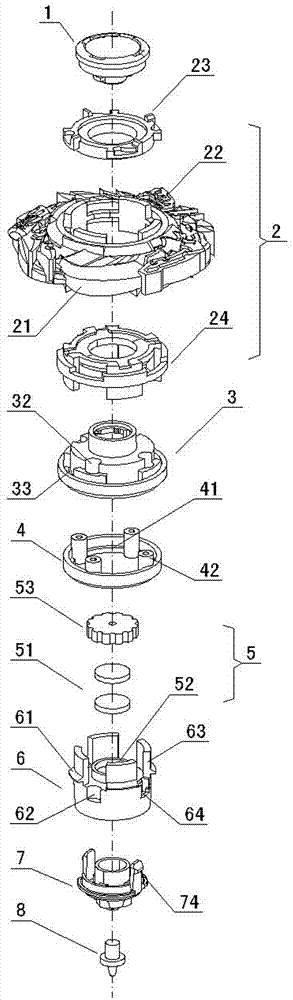

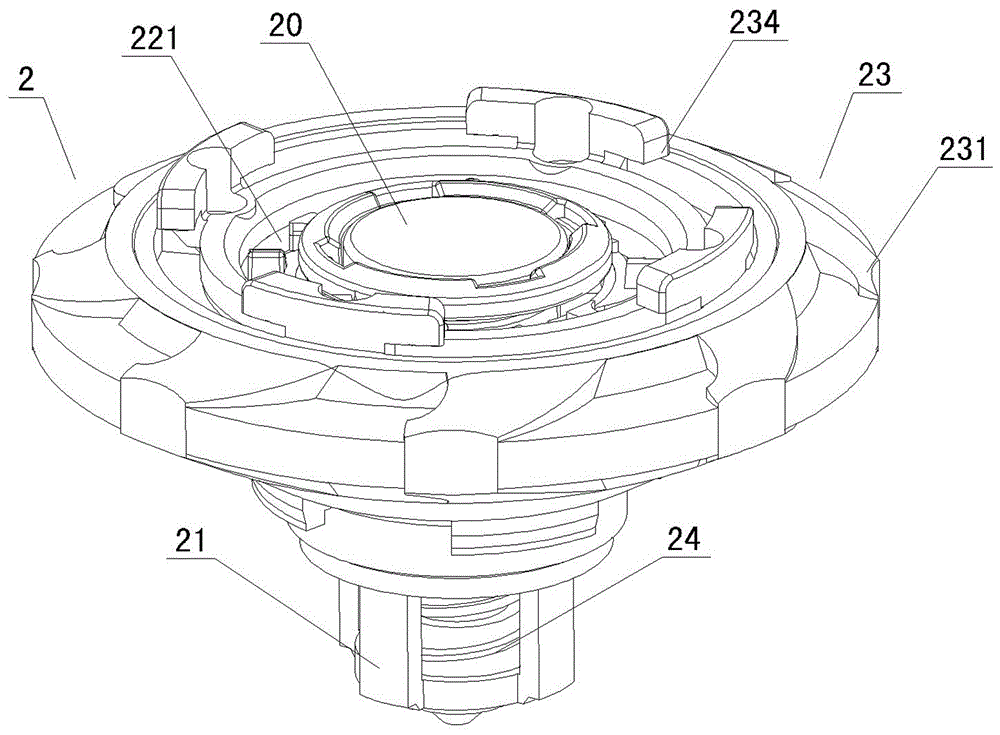

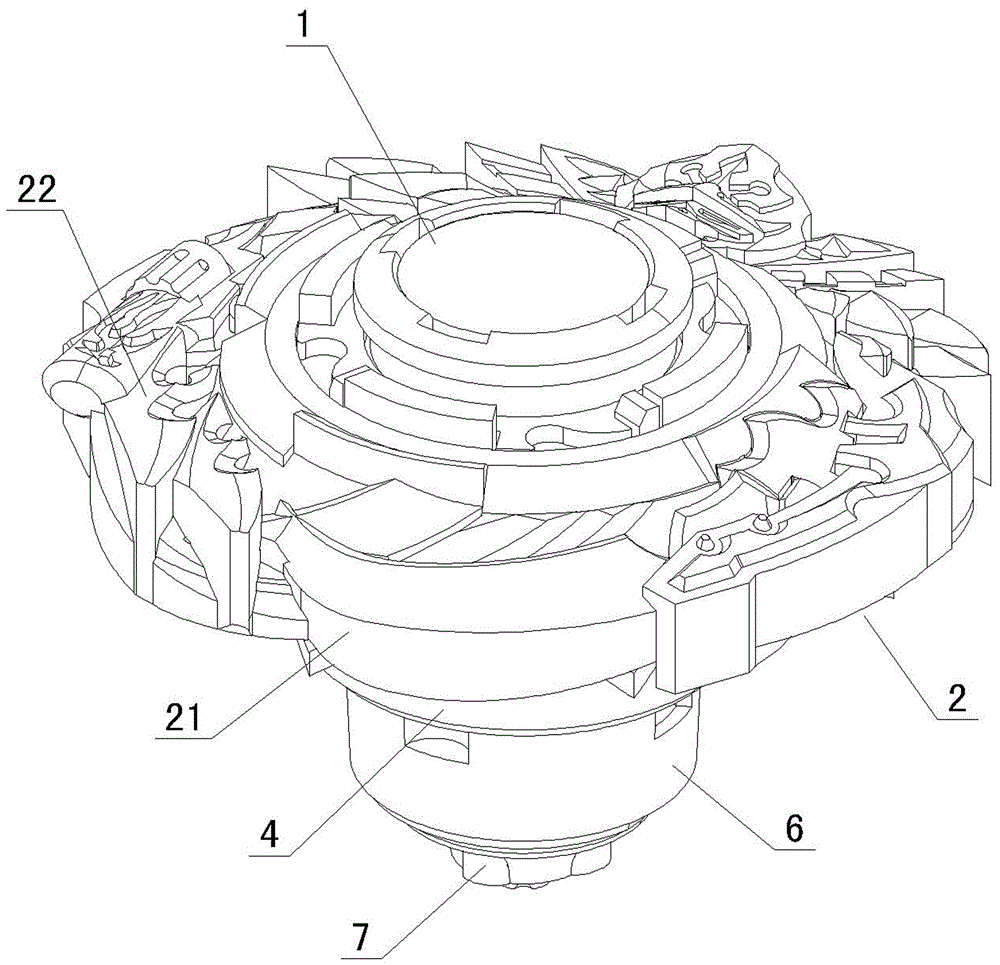

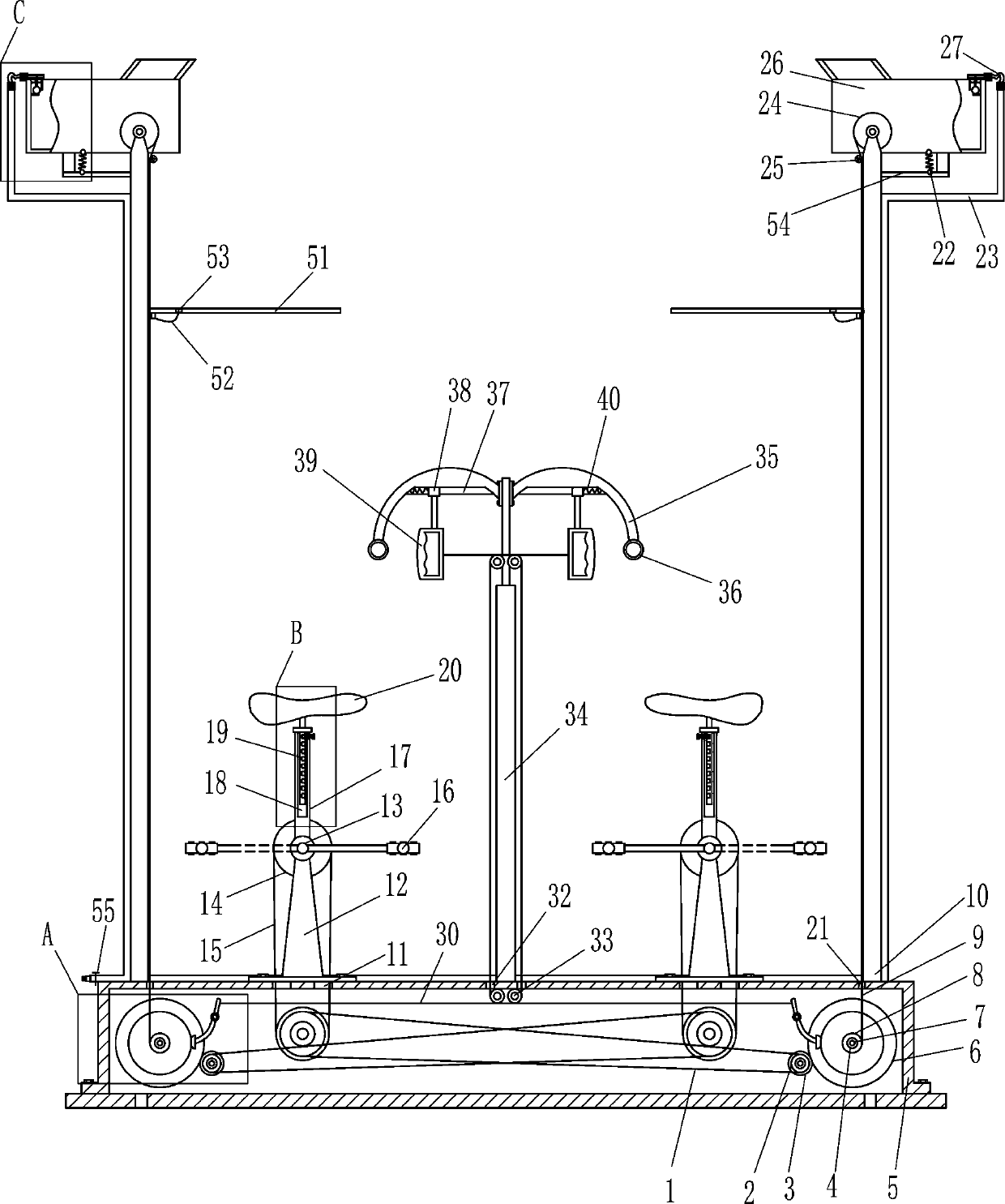

Bounceable toy gyro

The invention provides a bounceable toy gyro. The toy gyro comprises a gyro cover, a gyro piece, a gyro base and a gyro tip, wherein the gyro cover is located at the top; the gyro piece is located below the gyro cover; the gyro base is used for fixing the gyro piece; and the gyro tip is located at the bottom. The toy gyro is characterized in that a bouncing moving part is arranged between the gyro base and the gyro tip, fixedly connected with the gyro tip and connected with the gyro base in an up-down bouncing manner, so that when the gyro falls to the ground from a launcher, the bouncing moving part and the gyro tip move upwards under the effect of the reactive force of the gravity, then recover elastically, conduct downward returning movement and then move upwards again under the effect of inertia force, the process is repeated, bouncing actions are formed, and the gyro is quite interesting; in addition, in competitions, the gyro can bounce to hit a gyro of an opponent downwards, so that the gyro of the opponent is easier to damage, the aggressiveness of the gyro is greatly increased, and the winning rate is higher; besides, a playing method of the gyro is novel, so that more players like the gyro; and meanwhile, the operational ability and the competitive ability of children can be trained.

Owner:ALPHA GRP CO LTD +2

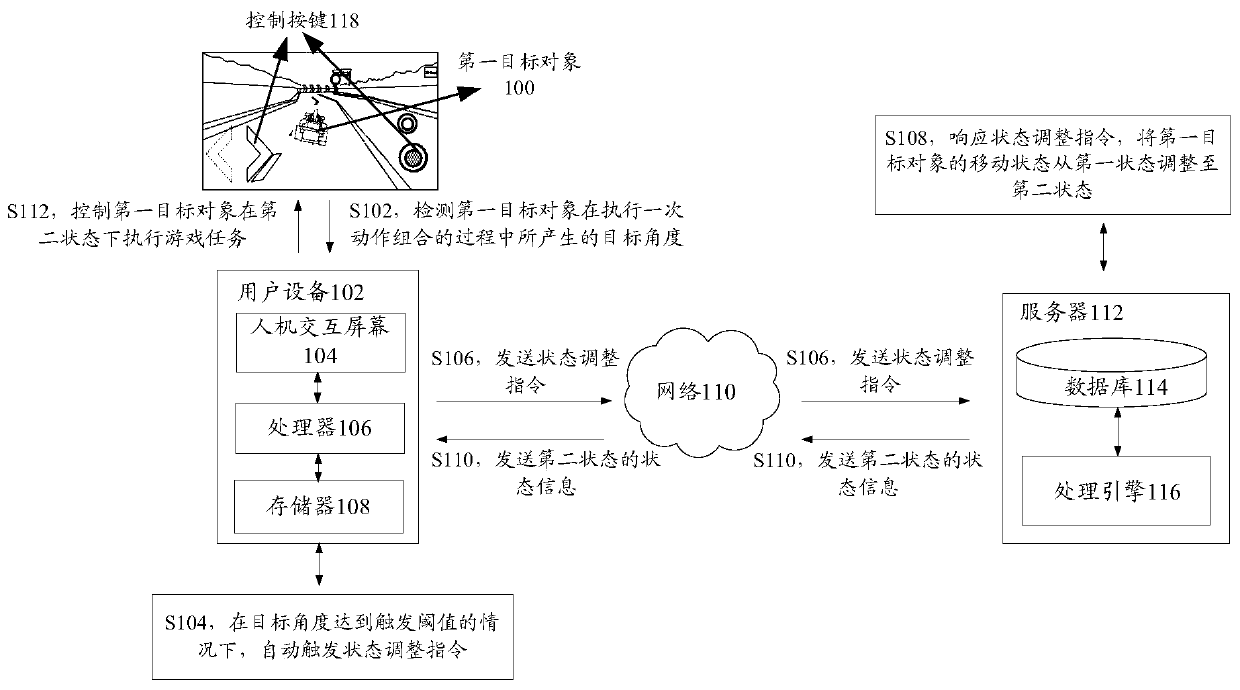

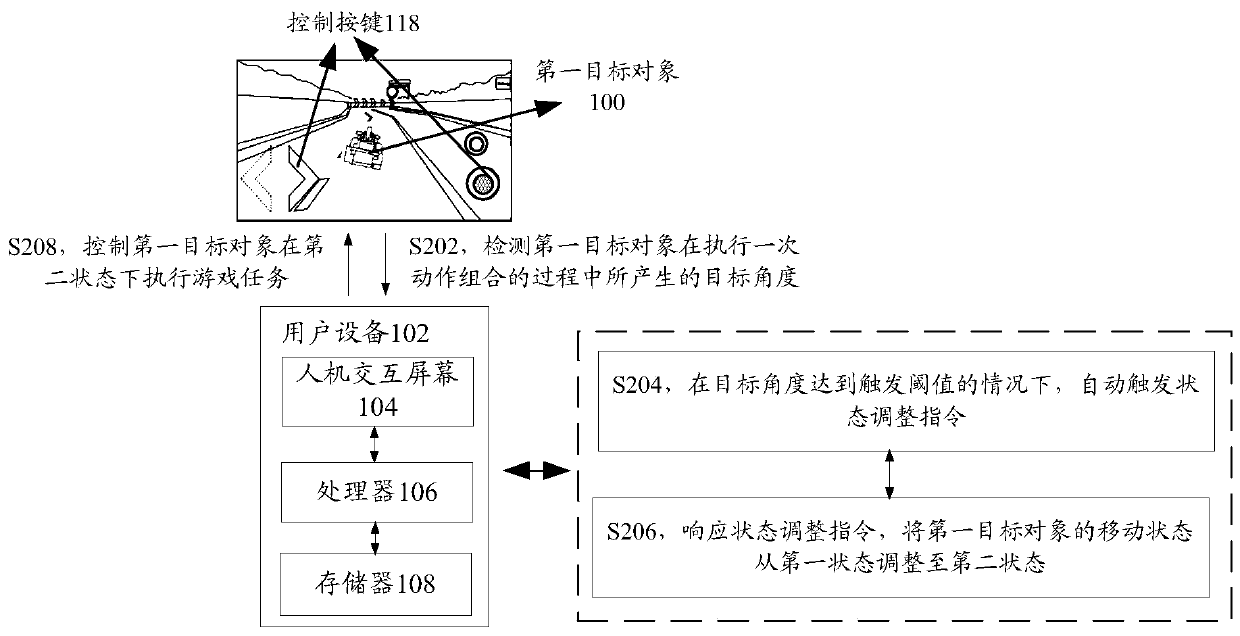

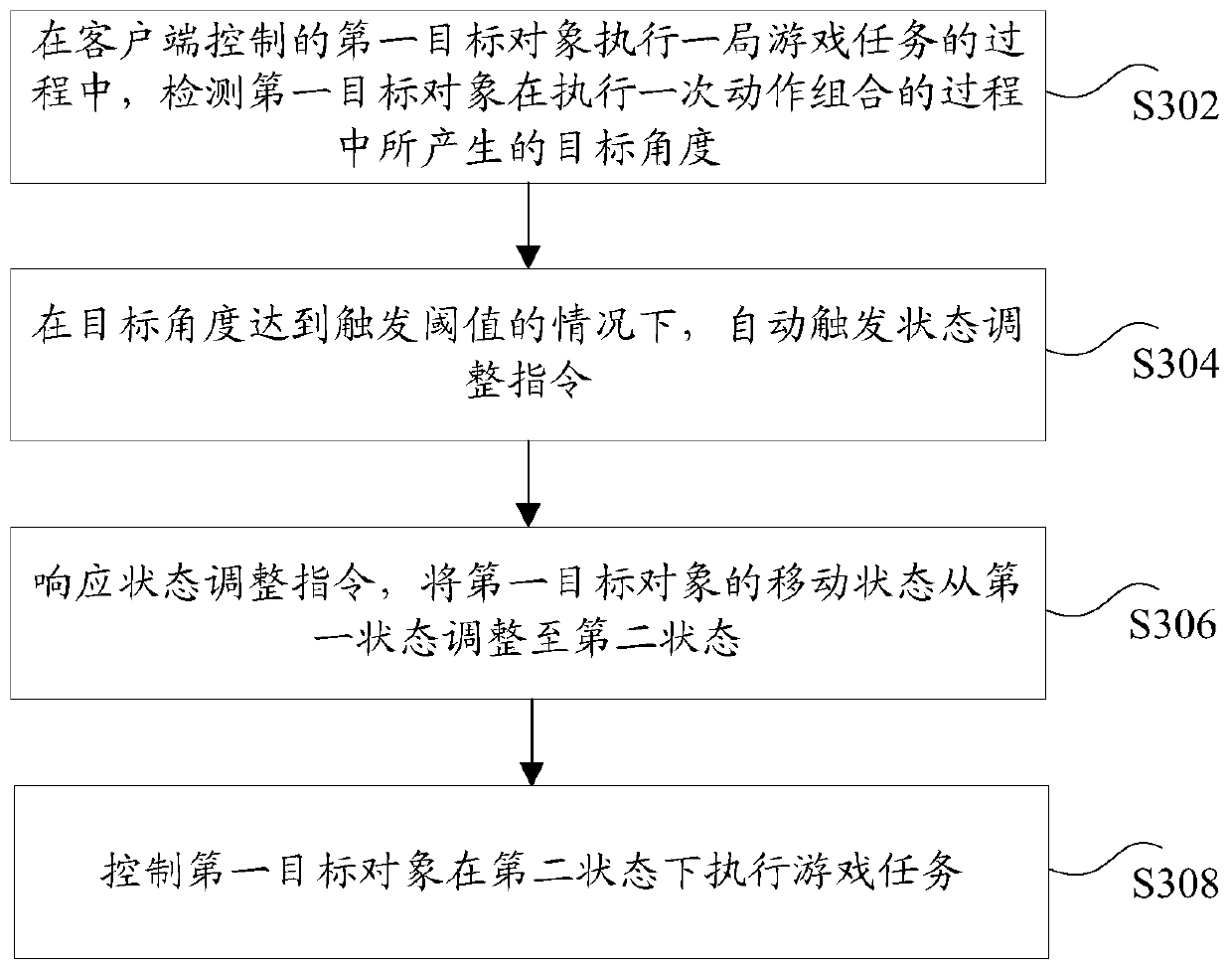

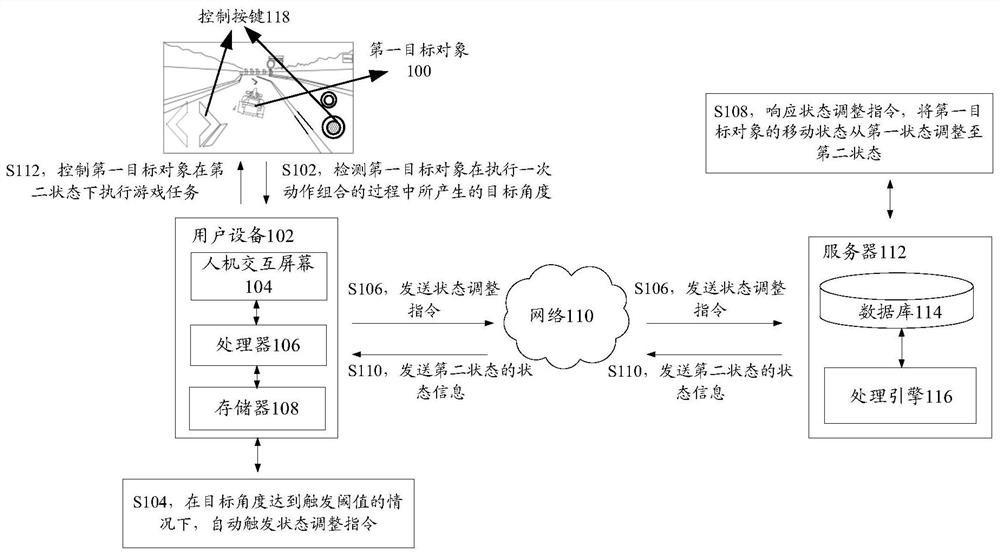

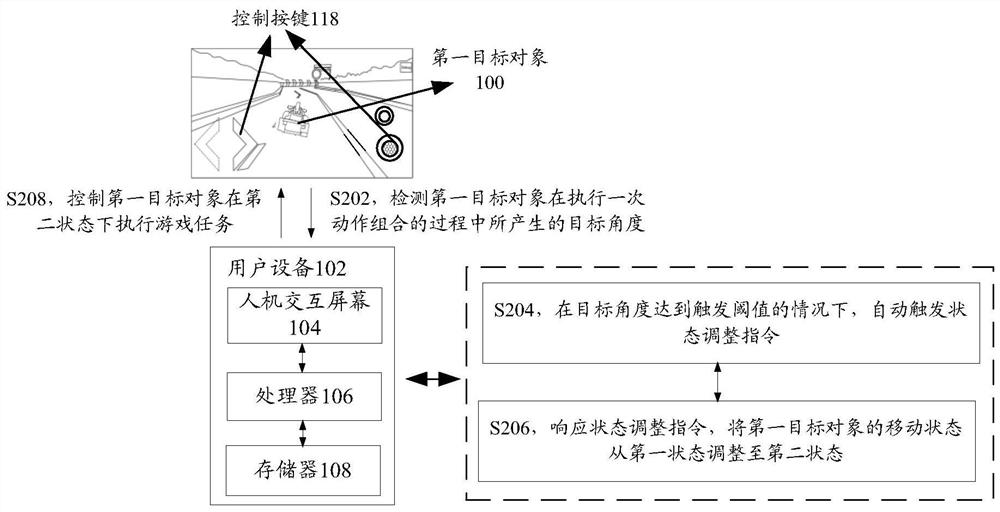

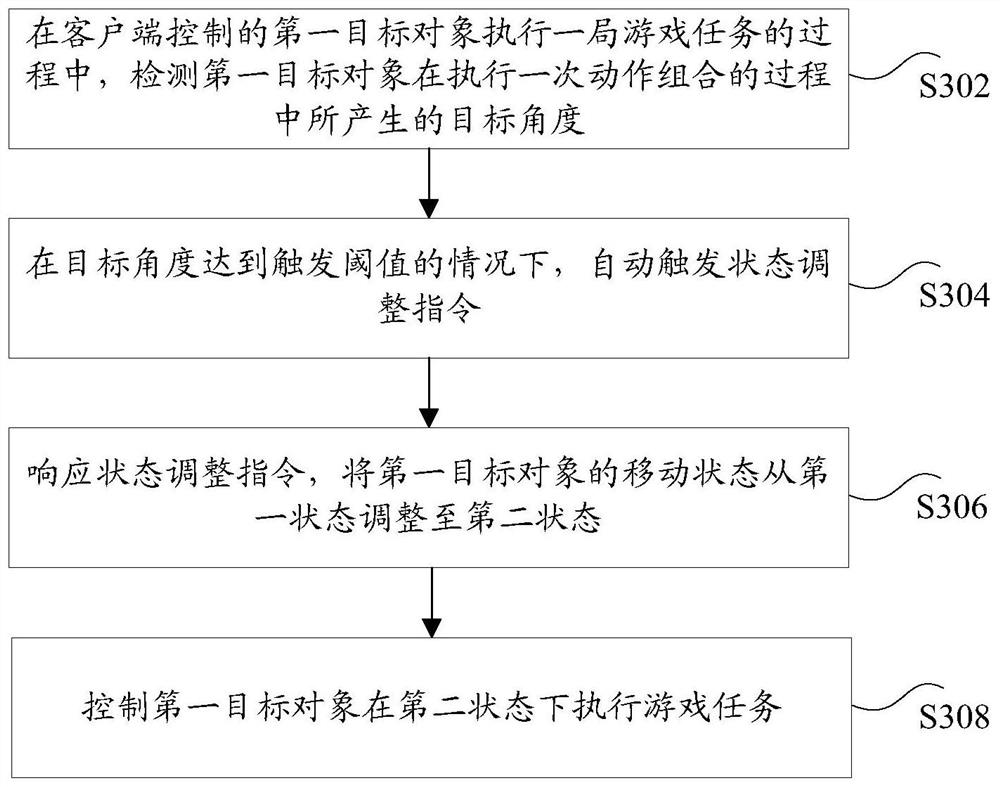

Object control method and device, storage media and electronic device

Active | CN110201387A | Shorten control time | Increase winning percentage | Video games | Target–action | Human–computer interaction

The invention discloses an object control method and device, a storage medium and an electronic device. The object control method includes the following steps: while a first target object controlled by a client executes a game task, a target angle generated by the first target object during an action combination is detected, where the action combination includes two or more target actions executed in the same direction; when the target angle reaches a trigger threshold, a status adjustment instruction is triggered automatically; in response to the status adjustment instruction, the movement state of the first target object is adjusted from a first state to a second state, where the first time taken by the first target object to complete the game task in the first state is longer than the second time taken to complete it in the second state; and the first target object is controlled to execute the game task in the second state. The object control method and device, the storage medium and the electronic device solve the technical problem of increased control time caused by high operation complexity.

Owner:TENCENT TECH (SHENZHEN) CO LTD

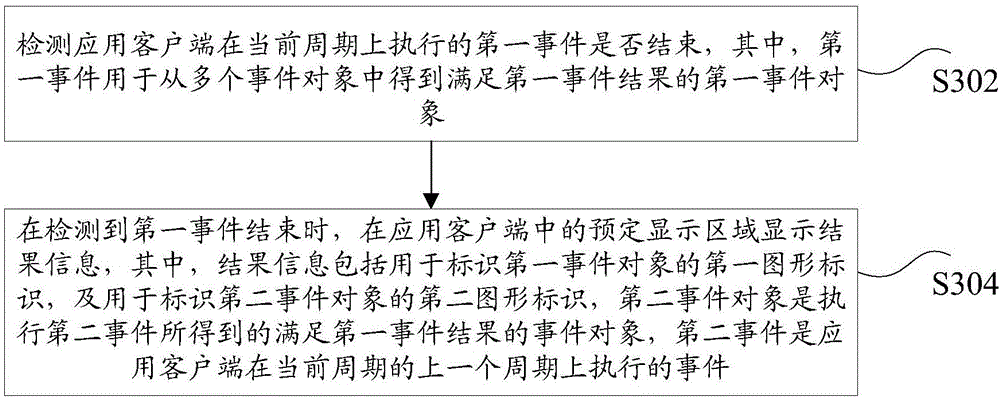

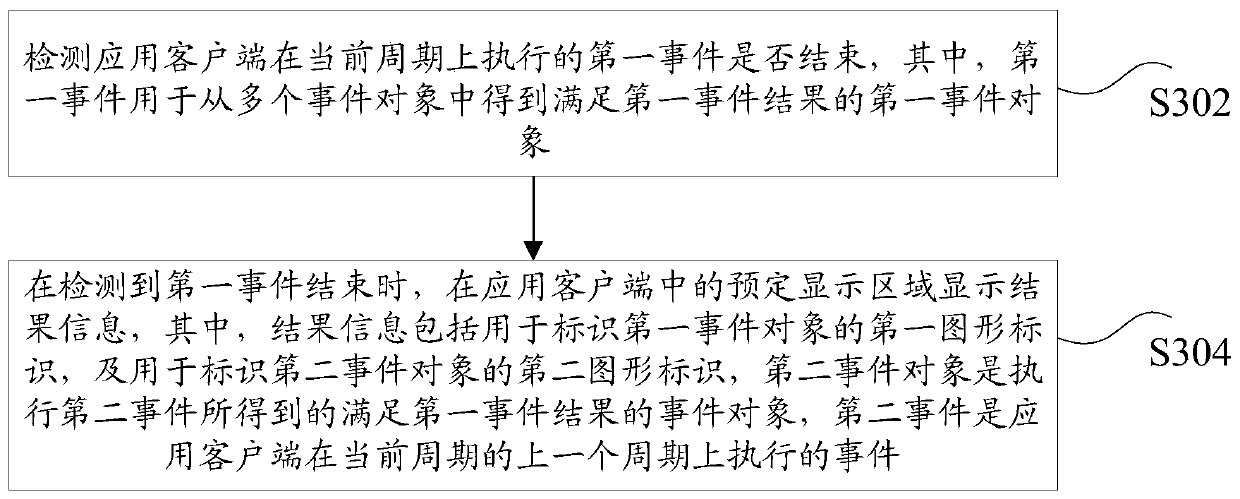

Event display method and apparatus

Active | CN106484395A | Steps to simplify display | Overcome the problem of low display efficiency | Execution for user interfaces | Input/output processes for data processing | Graphics | Client-side

The present invention discloses an event display method and apparatus. The method comprises the steps of detecting whether a first event executed by an application client in a current cycle is finished, wherein the first event is used for obtaining a first event object meeting a first event result among multiple event objects; and when it is detected that the first event is finished, displaying result information in a predetermined display area of the application client, wherein the result information comprises a first figure identifier for identifying the first event object, and a second figure identifier for identifying a second event object, the second event object is an event object that meets the first event result and is obtained in execution of a second event, and the second event is an event executed by the application client in a previous cycle of the current cycle. The existing display method is low in display efficiency. The event display method solves the problem in the existing display method.

Owner:TENCENT TECH (SHENZHEN) CO LTD

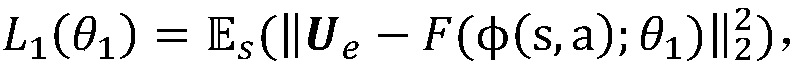

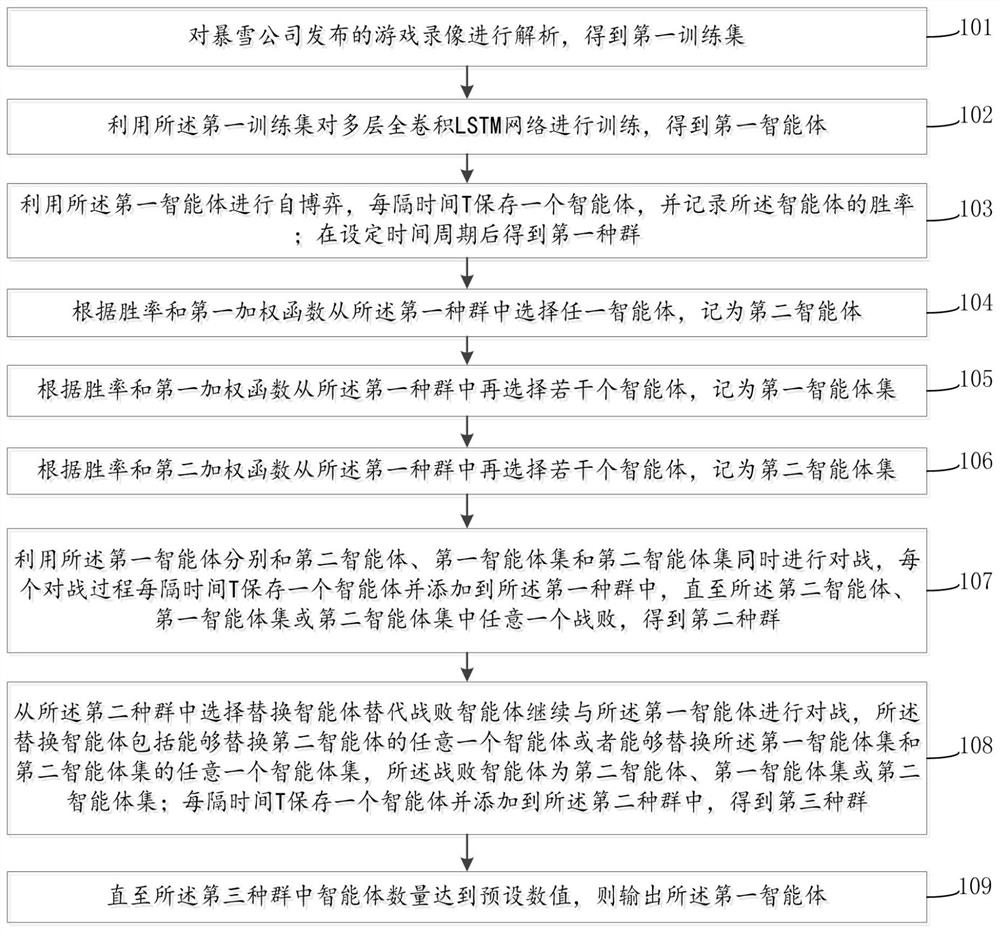

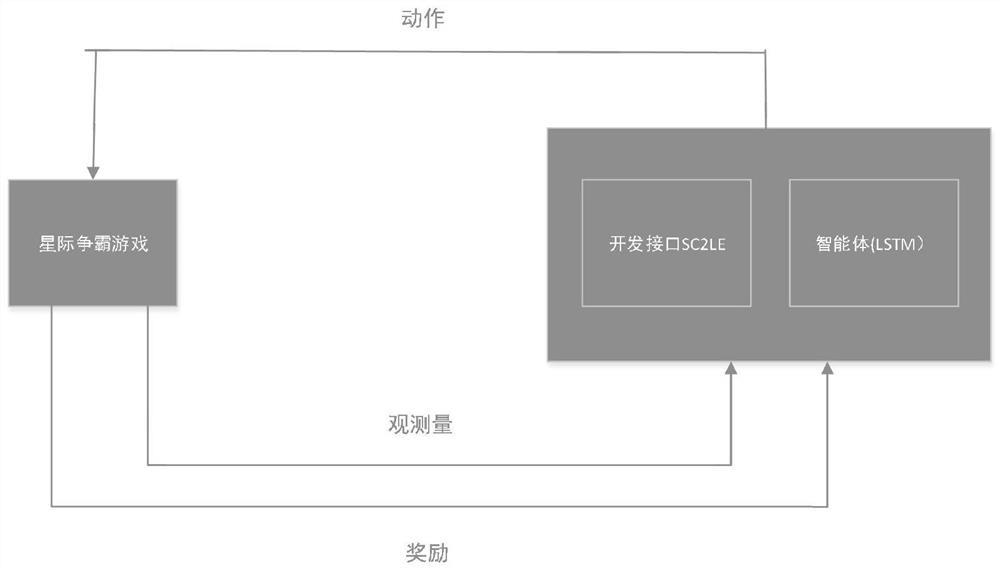

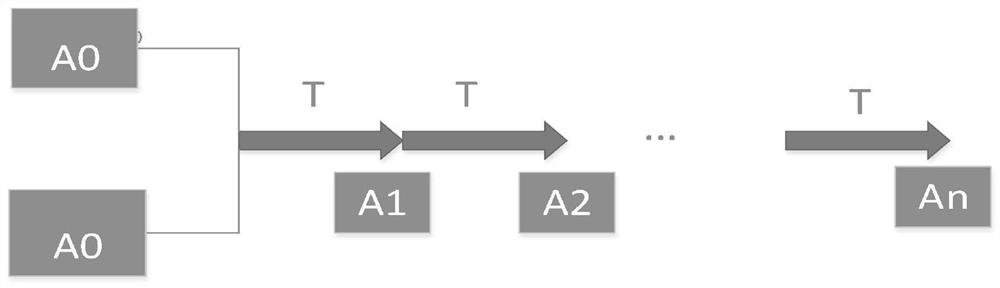

Multi-agent reinforcement learning method and system based on population training

Active | CN112561032A | Solve the small amount of training data | Solve the problem of small amount of training data | Artificial life | Neural architectures | Specific population | Engineering

The invention relates to a multi-agent reinforcement learning method and system based on population training. The method comprises the steps of obtaining a first training set from game videos; training a multi-layer fully convolutional LSTM network with the first training set to obtain a first intelligent agent; having the first intelligent agent play against itself to obtain a first population after a set time period; selecting a second intelligent agent, a first intelligent agent set and a second intelligent agent set from the first population; having the first intelligent agent fight the three selected groups of intelligent agents at the same time, storing and updating the first population until any one of the three selected groups goes wrong, so as to obtain a second population; selecting a replacement agent from the second population to replace the battle agent and continue fighting the first agent, storing and updating the second population to obtain a third population; and outputting the first agent once the number of agents in the third population reaches a preset value. The invention can train an intelligent agent capable of simulating unmanned system combat command and control.

Owner:NO 15 INST OF CHINA ELECTRONICS TECH GRP

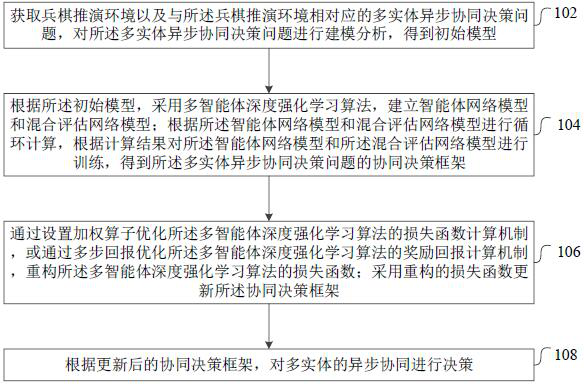

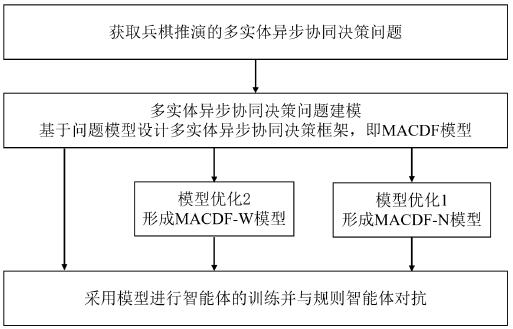

Wargame multi-entity asynchronous collaborative decision-making method and device based on reinforcement learning

Active | CN114880955A | Fast learning | High winning rate | Artificial life | Design optimisation/simulation | Reinforcement learning algorithm | Engineering

The invention belongs to the technical field of intelligent decision making, and relates to a war game multi-entity asynchronous collaborative decision-making method and device based on reinforcement learning. The method comprises the steps of: obtaining a war game deduction environment and a multi-entity asynchronous collaborative decision-making problem, and modeling and analyzing that problem to obtain an initial model; establishing, according to the initial model, an agent network model and a hybrid evaluation network model with a multi-agent deep reinforcement learning algorithm; training the agent network model and the hybrid evaluation network model to obtain a collaborative decision framework; reconstructing the loss function of the multi-agent deep reinforcement learning algorithm, either by setting a weighting operator or by optimizing the algorithm through multi-step return; updating the collaborative decision framework with the reconstructed loss function; and making decisions on the asynchronous collaboration of multiple entities according to the updated framework. The method realizes multi-entity asynchronous collaborative decision making in war game deduction.

Owner:NAT UNIV OF DEFENSE TECH
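
The abstract above mentions optimizing the learning algorithm "through multi-step return" but does not give a formula. As a minimal sketch, assuming the standard n-step return from the reinforcement learning literature (not necessarily the patent's exact target, and without its weighting operator), the target sums n discounted rewards and then bootstraps from a value estimate. The function name `n_step_return` and its parameters are hypothetical.

```python
from typing import Sequence


def n_step_return(rewards: Sequence[float], bootstrap_value: float, gamma: float) -> float:
    """Standard n-step return: r_0 + gamma*r_1 + ... + gamma^n * bootstrap_value.

    `rewards` holds the n rewards observed after the current step;
    `bootstrap_value` is the value estimate of the state reached after them.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    g = bootstrap_value
    # Fold from the last reward backwards so each step applies one discount.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Three observed rewards, then a bootstrap from an estimated value of 10.
target = n_step_return([1.0, 0.0, 2.0], bootstrap_value=10.0, gamma=0.5)
```

With an empty reward list the function simply returns the bootstrap value, which corresponds to a zero-step target.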

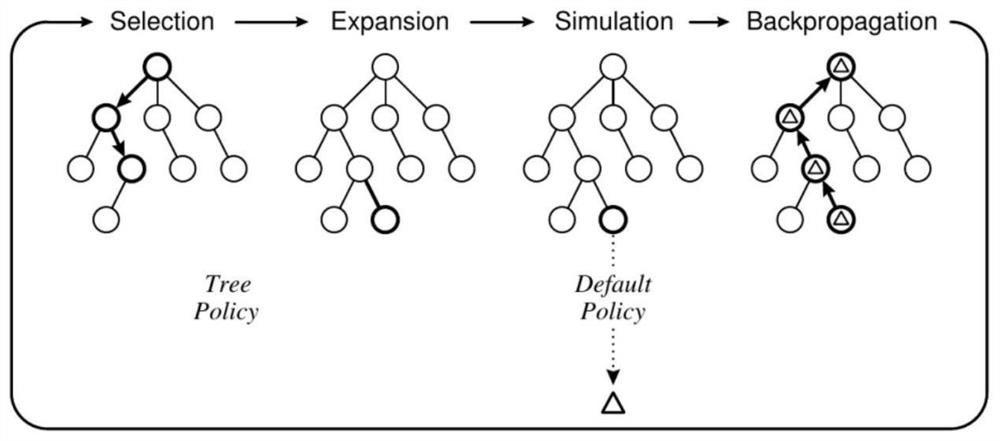

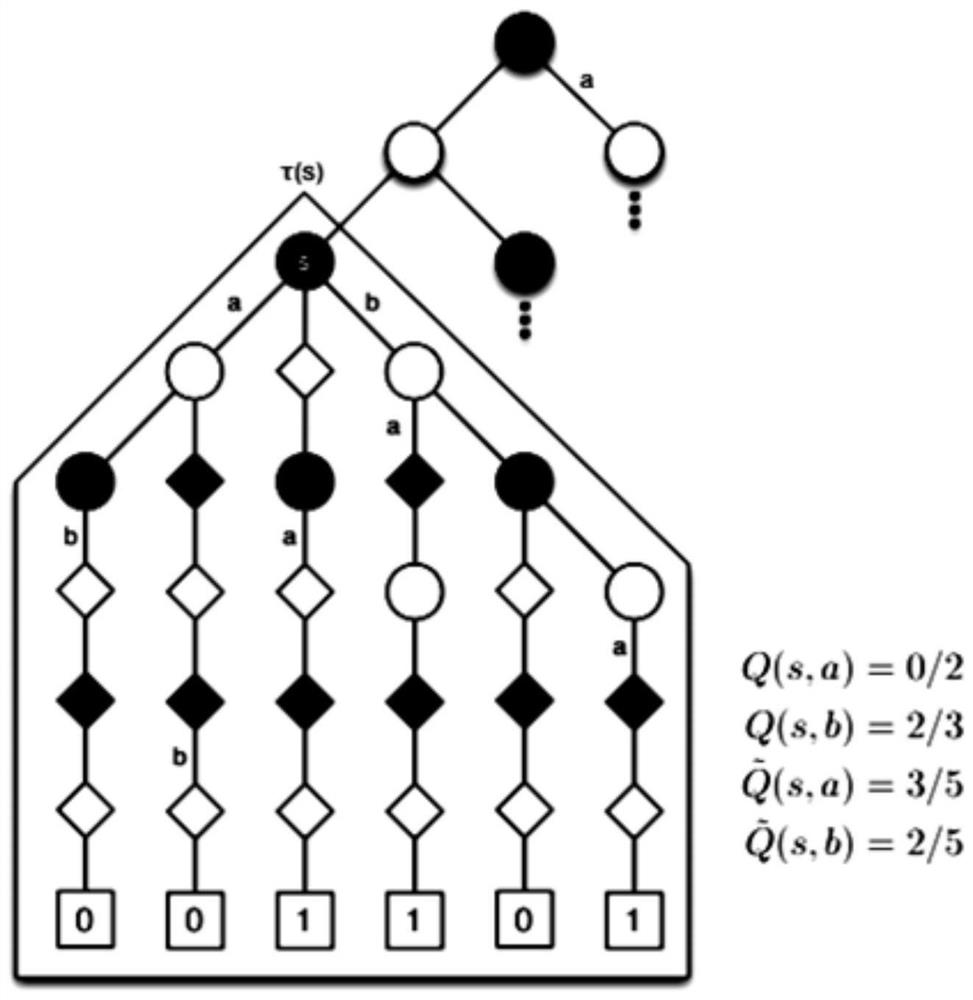

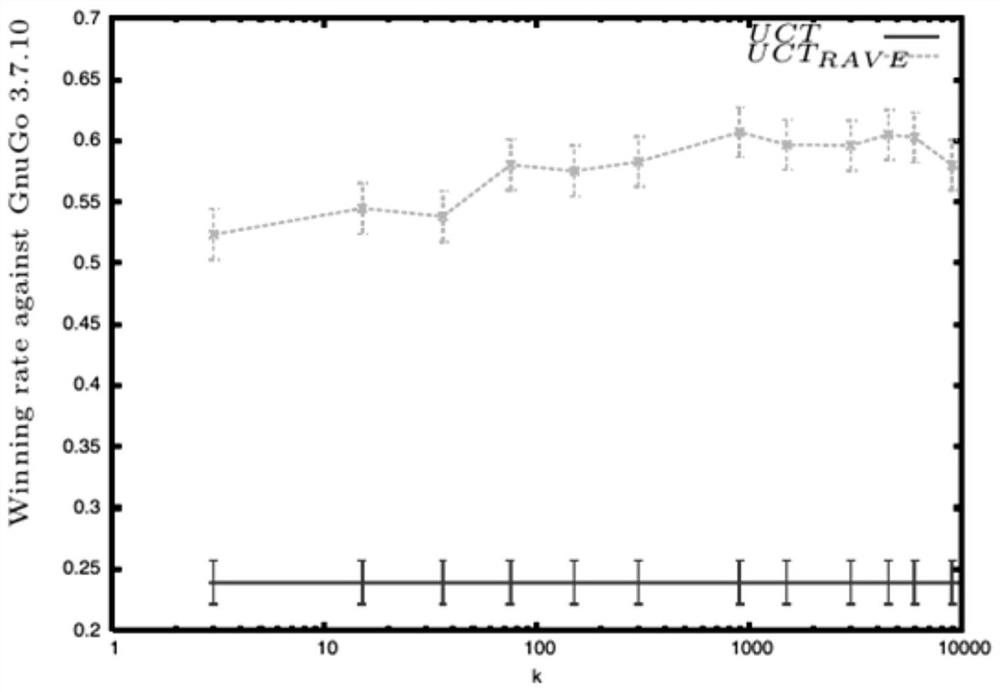

Strategy improvement method of search game tree on Go

Pending | CN113377779A | Increase winning percentage | Fast convergence | Special data processing applications | Database indexing | Theoretical computer science | Improved algorithm

The invention relates to a strategy improvement method for a search game tree in Go. The method comprises the following steps: establishing a search tree with the current state as the root node; selecting a child node of the root node for simulation, where, if some child node has not yet been simulated, a child is selected at random from the child nodes of the root node for simulation, and, if all child nodes have been simulated at least once, the child node with the highest UCB value is selected; simulating from the selected child node down to a leaf node, with a simulation strategy that combines uniform sampling and a minimax strategy; propagating the final simulation result back to the root node, and updating the Q value and the N value of the action value function for all nodes on the path; and repeating these steps many times, finally selecting the node with the highest exploitation-term score in the UCB. The improved algorithm is applied to the search strategy of Go and evaluated with GNUGo and CGOS; the final experimental results show that the algorithm improves the accuracy of game search in Go.

Owner:沈阳雅译网络技术有限公司
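
The selection and back-propagation steps in the abstract above can be sketched as follows. This is a minimal illustration that assumes the common UCB1 formula, Q/N + c·sqrt(ln N_parent / N); the abstract only says "UCB", so the exact score, the constant `c`, and all class and function names here are hypothetical. The patent's simulation strategy (uniform sampling combined with minimax) is not shown.

```python
import math
import random
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    q: float = 0.0  # accumulated simulation reward (Q value)
    n: int = 0      # number of simulations through this node (N value)
    children: List["Node"] = field(default_factory=list)


def ucb(child: Node, parent_visits: int, c: float = math.sqrt(2)) -> float:
    """UCB1 score: exploitation term plus exploration bonus (assumed form)."""
    exploit = child.q / child.n
    explore = c * math.sqrt(math.log(parent_visits) / child.n)
    return exploit + explore


def select_child(node: Node, rng=random) -> Node:
    """Pick an unsimulated child at random; otherwise the highest-UCB child."""
    unvisited = [ch for ch in node.children if ch.n == 0]
    if unvisited:
        return rng.choice(unvisited)
    return max(node.children, key=lambda ch: ucb(ch, node.n))


def backpropagate(path: List[Node], reward: float) -> None:
    """Update Q and N for every node on the path from root to leaf."""
    for node in path:
        node.n += 1
        node.q += reward


def best_move(root: Node) -> Node:
    """Final choice: child with the highest exploitation term Q/N."""
    visited = [ch for ch in root.children if ch.n > 0]
    return max(visited, key=lambda ch: ch.q / ch.n)


a, b = Node(q=3.0, n=4), Node(q=0.5, n=1)
root = Node(q=3.5, n=5, children=[a, b])
explored = select_child(root)   # b: fewer visits, larger exploration bonus
final = best_move(root)         # a: higher average reward
```

Separating `select_child` from `best_move` mirrors the abstract: the full UCB score drives exploration during search, while only the exploitation term decides the move that is finally played.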

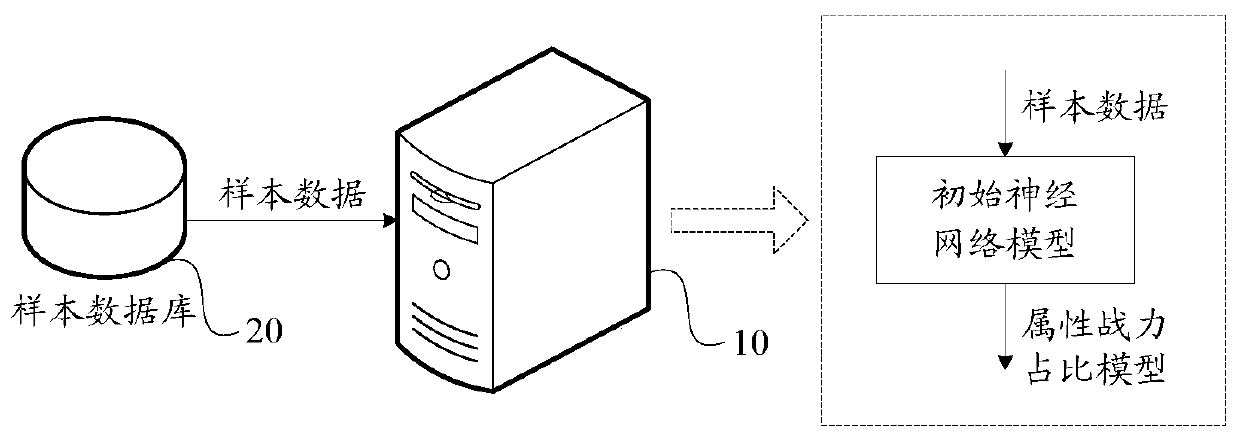

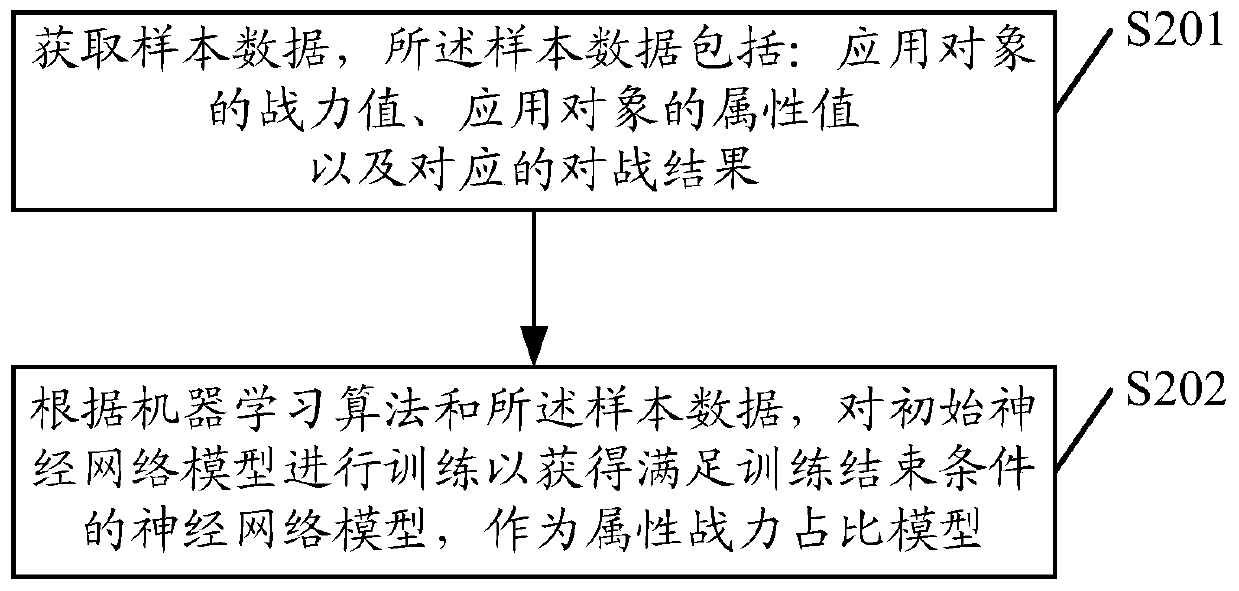

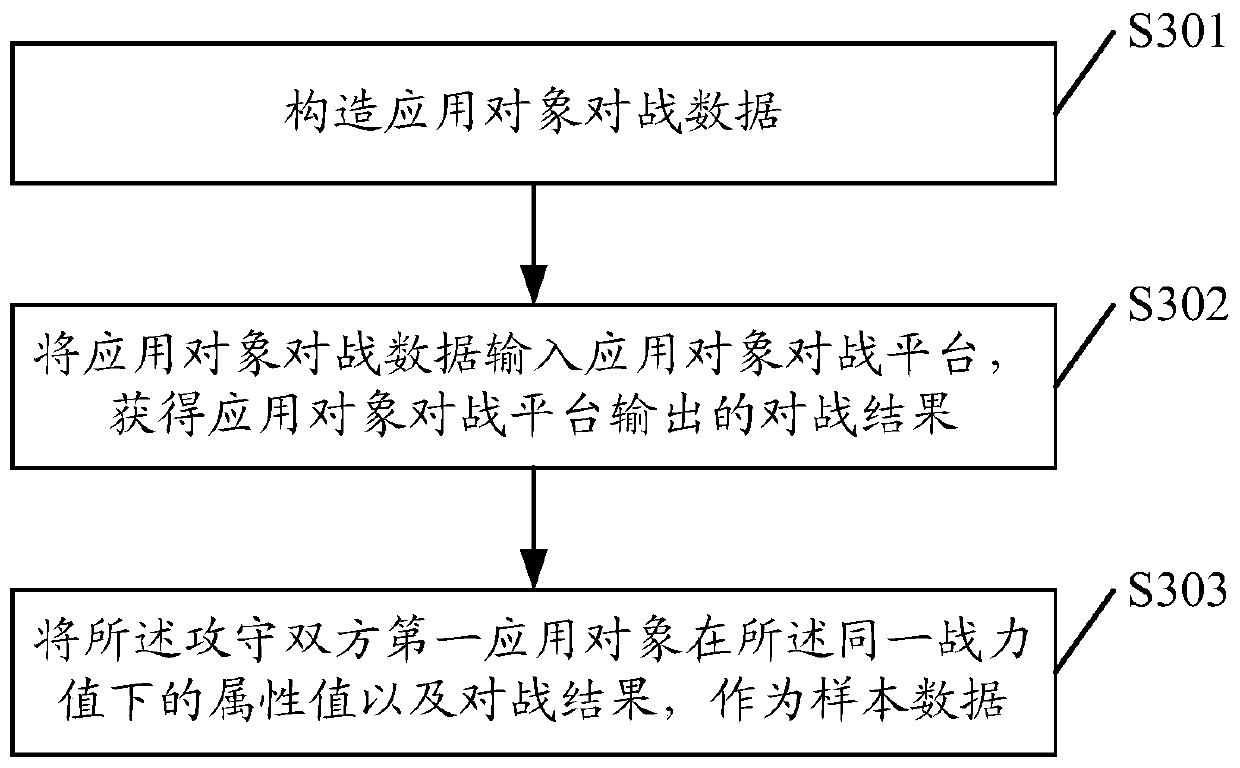

A configuration method, device, equipment and medium for application object attributes

Active | CN109107159B | Increase winning percentage | Improve performance | Video games | Interaction time | Resource utilization

Owner:SHENZHEN TENCENT NETWORK INFORMATION TECH CO LTD

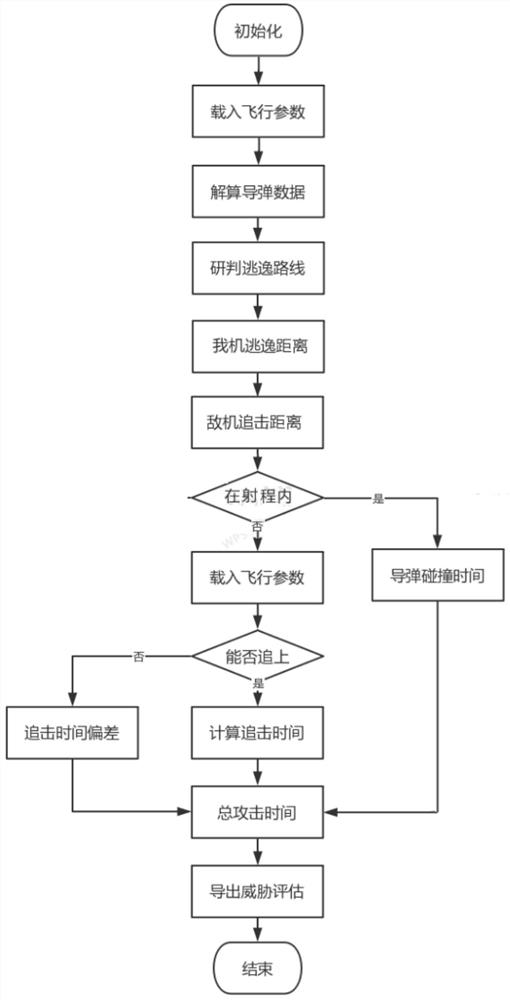

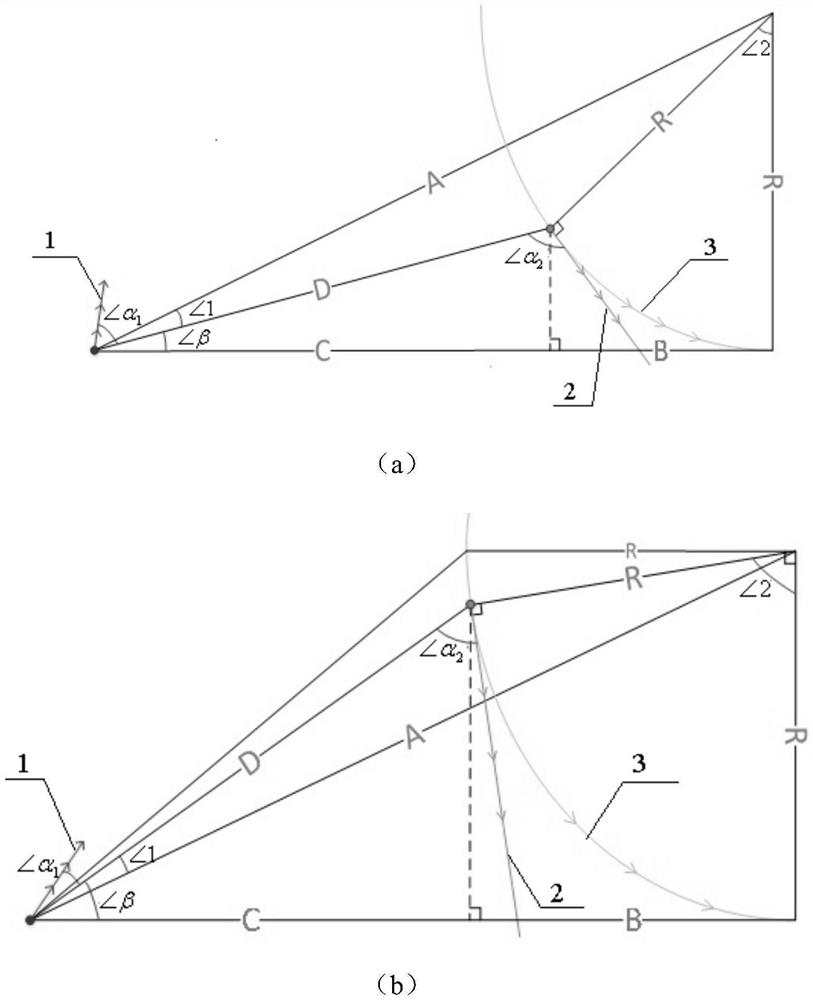

Threat evaluation method for aerial target in beyond-visual-range air combat

Active | CN111832193A | Improve judgment accuracy | Improve accuracy | Design optimisation/simulation | Special data processing applications | Classical mechanics | Control theory

The invention provides a threat evaluation method for an air target in over-the-horizon air combat, and relates to the technical field of threat evaluation in over-the-horizon air combat. The method comprises the steps of first establishing a missile model, and calculating the flight data of a missile carried by a target enemy plane; determining the turning angle of our aircraft when it escapes according to the azimuth angle of the enemy plane, and from that the escape route and the folded escape distance of our aircraft; determining the turning angle and turning time of the enemy plane according to its azimuth angle and the direction of its speed vector; judging whether our aircraft is within range of the enemy missile, and, combining the flight speeds of the enemy plane and our aircraft, judging the result of the enemy plane's attack on our aircraft and the total attack time; and finally determining a threat evaluation value of the enemy plane to our aircraft according to that attack result. The method takes energy as its basis and time as a unified dimension, which effectively improves the judgment precision of absolute threat evaluation; and because the units of the output quantities are unified, the judgment precision of relative threat evaluation is also effectively improved.

Owner:SHENYANG AEROSPACE UNIVERSITY

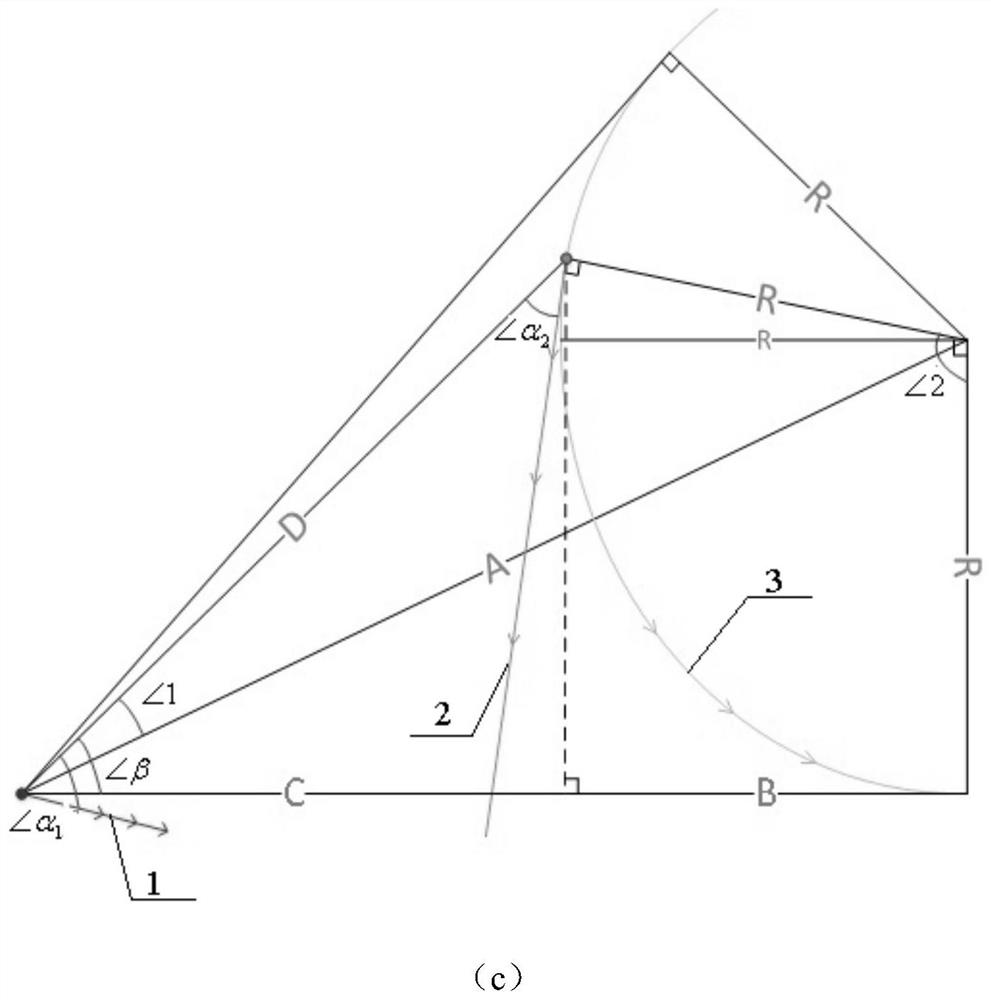

Soccer robot, and control system and control method of soccer robot

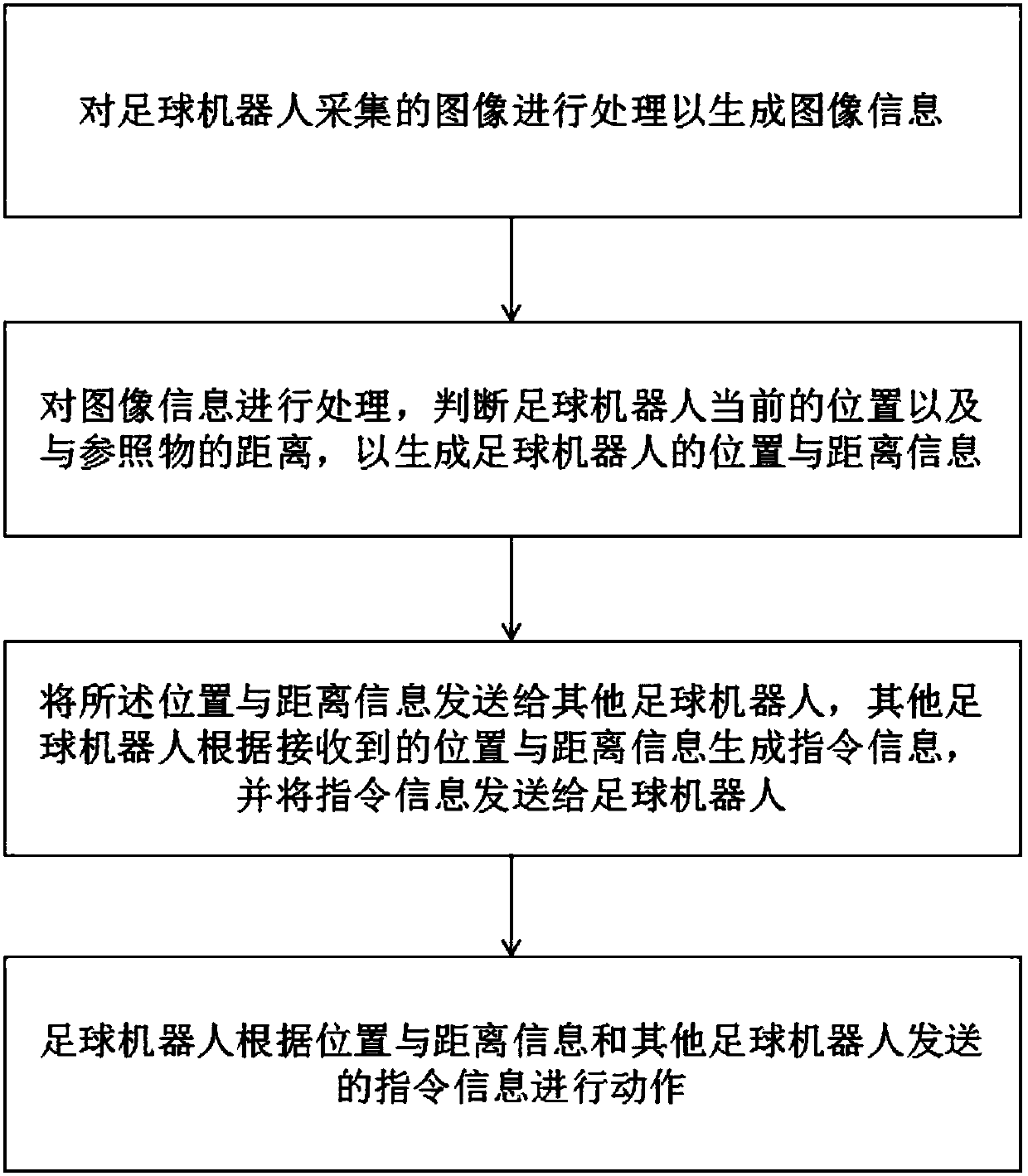

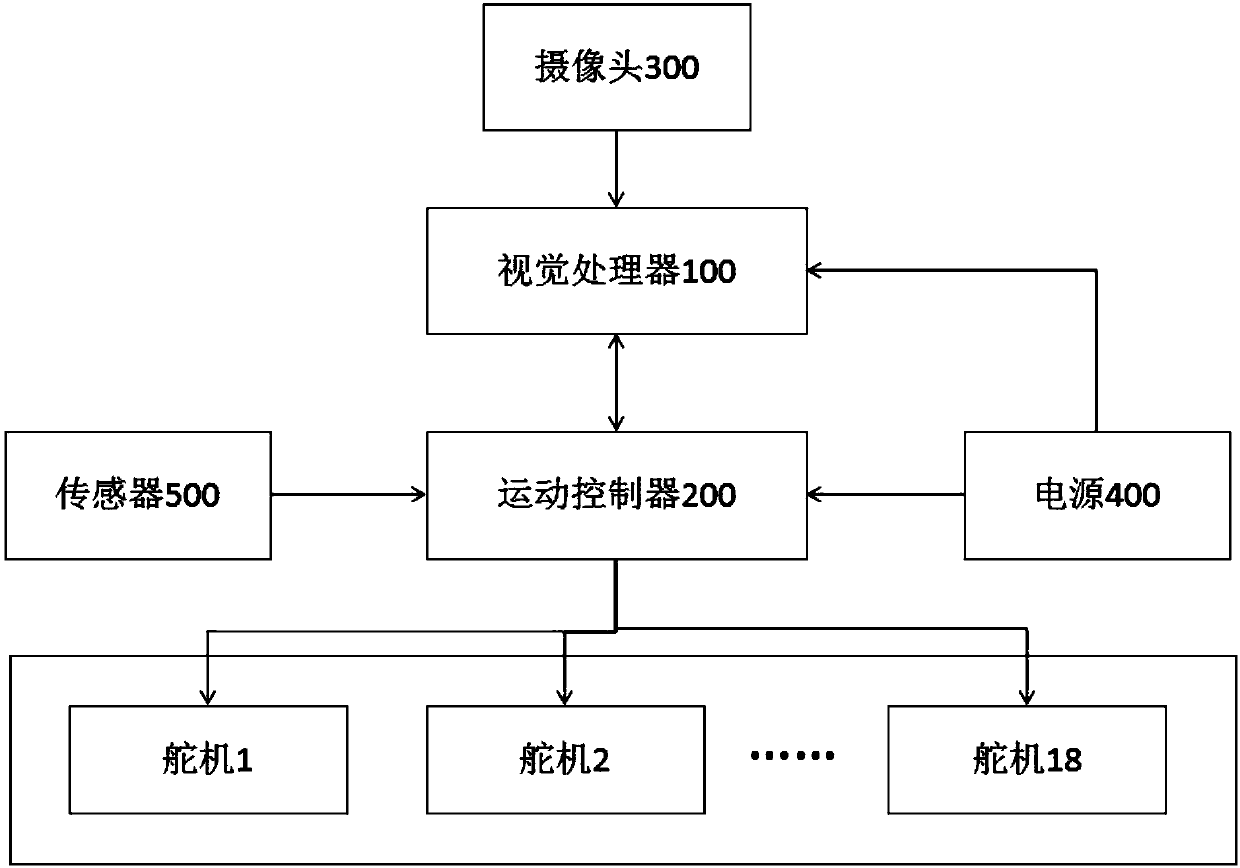

Inactive | CN109976330A | Increase winning percentage | Improve accuracy | Position/course control in two dimensions | Computer vision | Soccer robot

The invention discloses a control system of a soccer robot. The control system comprises a visual processor and a motion controller. The visual processor comprises an image processing module, a positioning module and a communication module: the image processing module processes an image collected by the soccer robot to generate image information; the positioning module is connected with the image processing module and processes the image information to generate the position and distance information of the soccer robot; and the communication module is connected with the positioning module, sends the position and distance information to other soccer robots, and receives instruction information sent by the other soccer robots. The motion controller includes a motion state management and decision making module, which is connected with the visual processor, receives the position and distance information and the instruction information, and controls the movement of the soccer robot accordingly. With the control system of the present invention, teamwork among a plurality of soccer robots can be realized.

Owner:SHENYANG SIASUN ROBOT & AUTOMATION

A combined toy top that can be automatically separated

The combined toy top that can be automatically separated is characterized in that at least two top bodies are docked one above the other, and the tips of the top bodies are all elastic tips. When the upper top body is bumped or blocked while rotating, it automatically releases its connection with the lower top body and is ejected under the action of its own elastic tip, forming two top bodies that rotate independently. During play, the spinning top can therefore split into two or three during a match, which greatly increases its attack power and makes the winning rate higher. Because the tips are elastic, the upper top body is ejected mainly by the elasticity of its own tip when the top splits, so the impact on the lower top body is small; the elastic buffering also allows a soft landing, which effectively protects the tip and maintains the stability of operation.

Owner:ALPHA GRP CO LTD +2

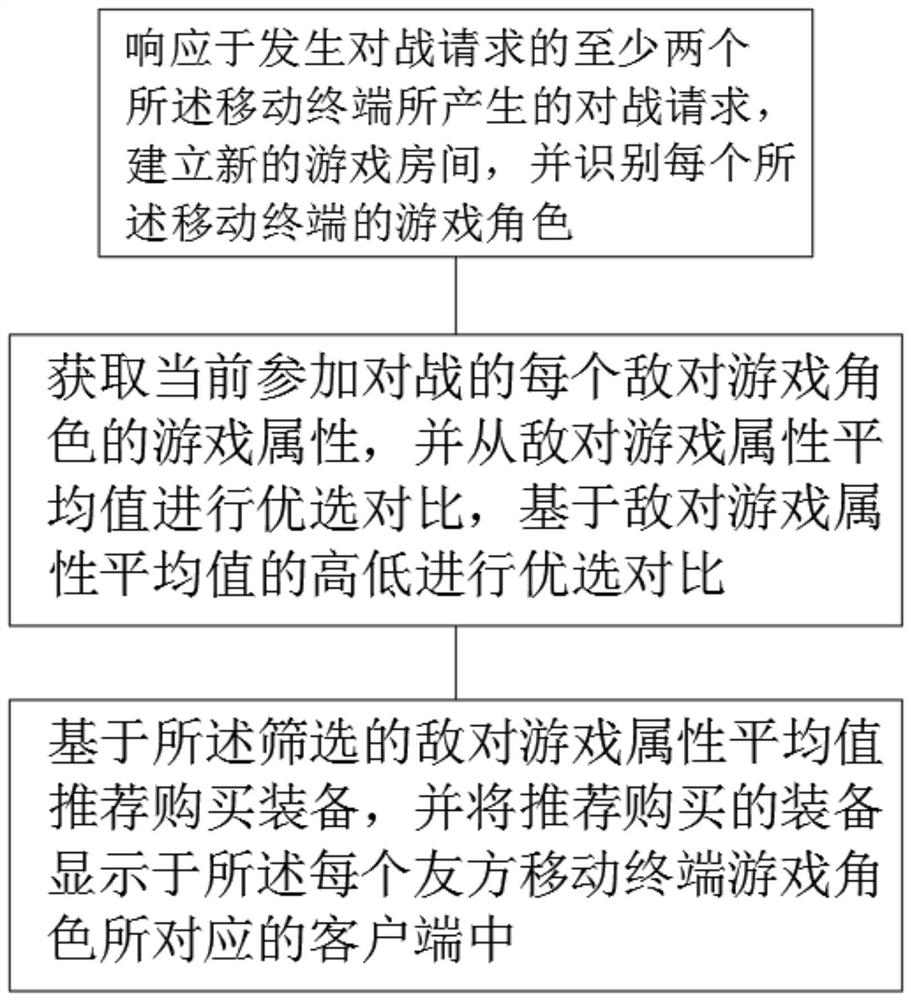

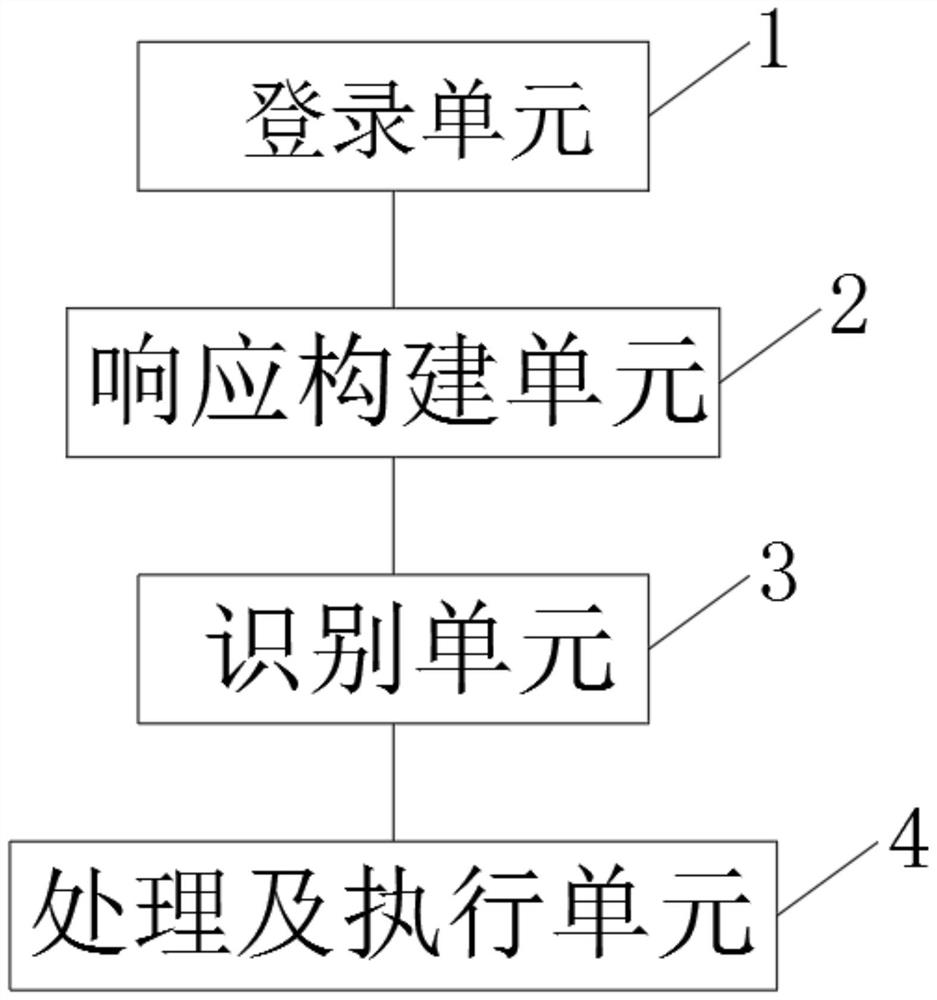

Method, system and device for recommending equipment in real time based on MOBA games

Pending | CN112138395A | Increase winning percentage | Improve gaming experience | Video games | Computer security | Operations research

Owner:BEIJING ELEX TECH

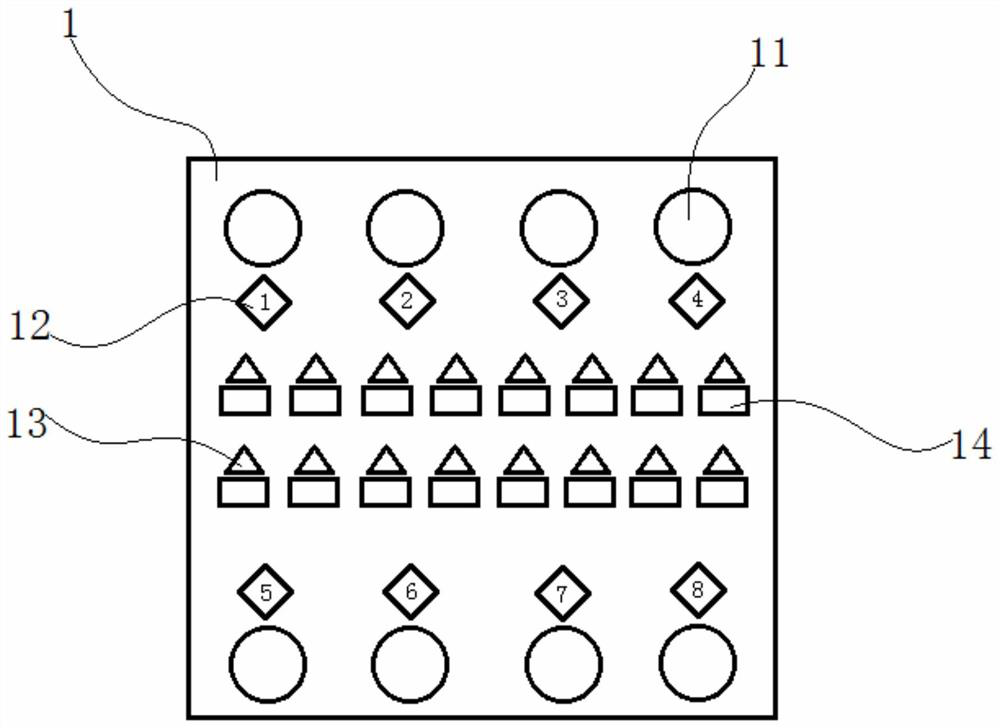

Game auxiliary tool for Killers of the Three Kingdoms

Inactive | CN112023381A | Efficient recording | Increase winning percentage | Card games | Game design | Computer engineering

The invention relates to a game auxiliary tool for Killers of the Three Kingdoms, which comprises a panel, an identity plate, number marks and a counting assembly. Through holes are formed in the upper and lower ends of the panel, the identity plate is arranged on the through holes, the number marks are matched with the identity plate, and the counting assembly comprises name marks and a counter. The tool is designed for Killers of the Three Kingdoms. It efficiently records information during the game, especially the number of cards, helps players better analyze the incomplete information of the game, improves the winning rate of the players, and improves their game experience.

Owner:淮南市九方皋科技咨询有限公司

Polypropylene short-term Delta sequence query method

The invention discloses a polypropylene short-term Delta sequence query method. The method quickly queries the short-term Delta sequence by searching the period and serial number. The Delta sequence is an objectively existing sequence that does not depend on human will, and its mean value and variance can be used for better trading. When investors know the mean value of the short-term Delta sequence, they can trade according to different trading strategies. They should not, however, trade blindly according to time alone; they should wait for the verification signal of a trend change within the variance time, so that the winning rate of the transaction can be improved. Compared with traditional technical analysis, Delta is more trustworthy and reassuring, because it is a law that exists in the market and guides the direction of the market. Traditional technical analysis, by contrast, processes data that have already appeared in order to guess that the market will most probably continue in the same way; it is essentially a prediction based on the continuation of the trend rather than an objective law.

Owner:SHAANXI SCI TECH UNIV

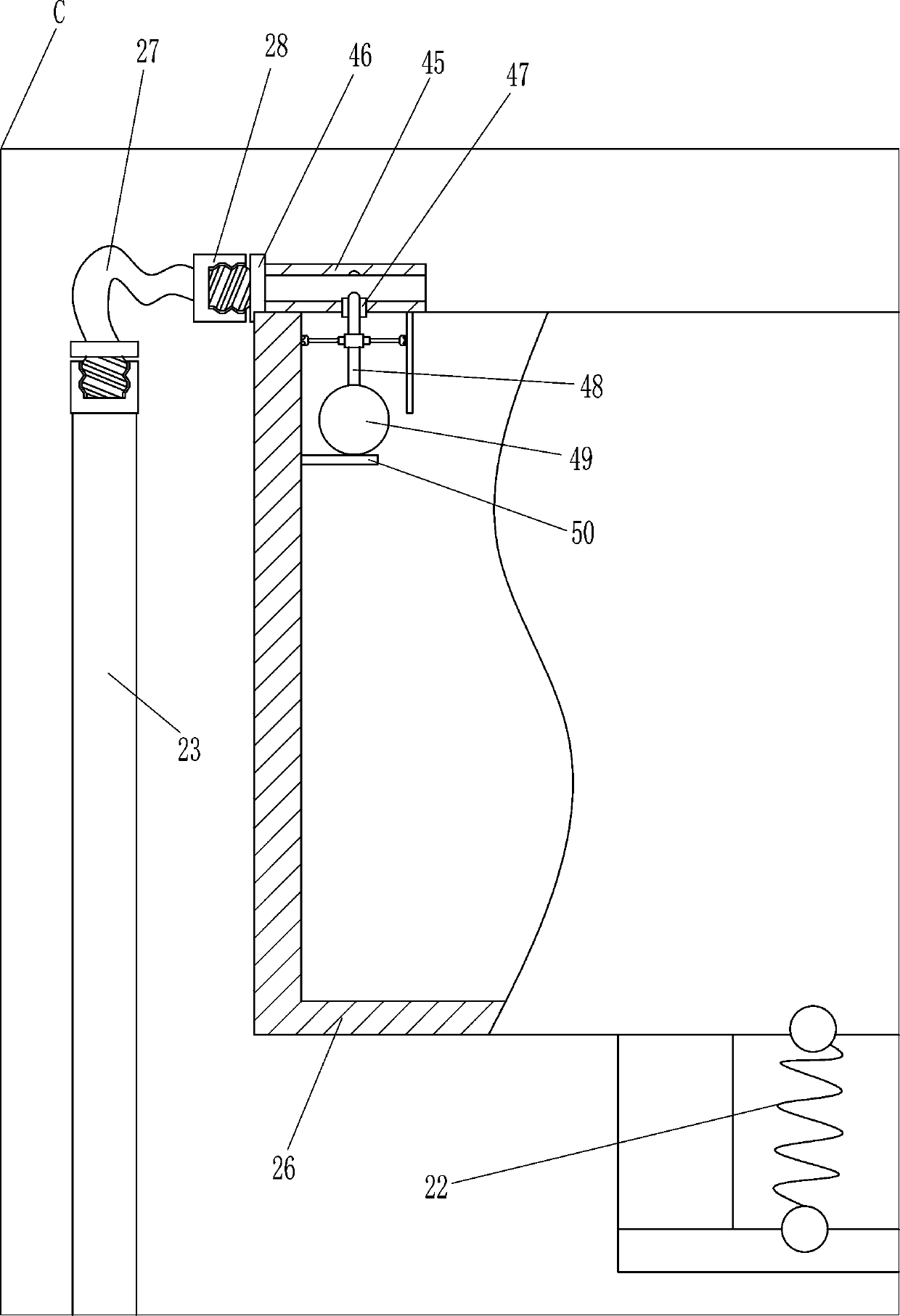

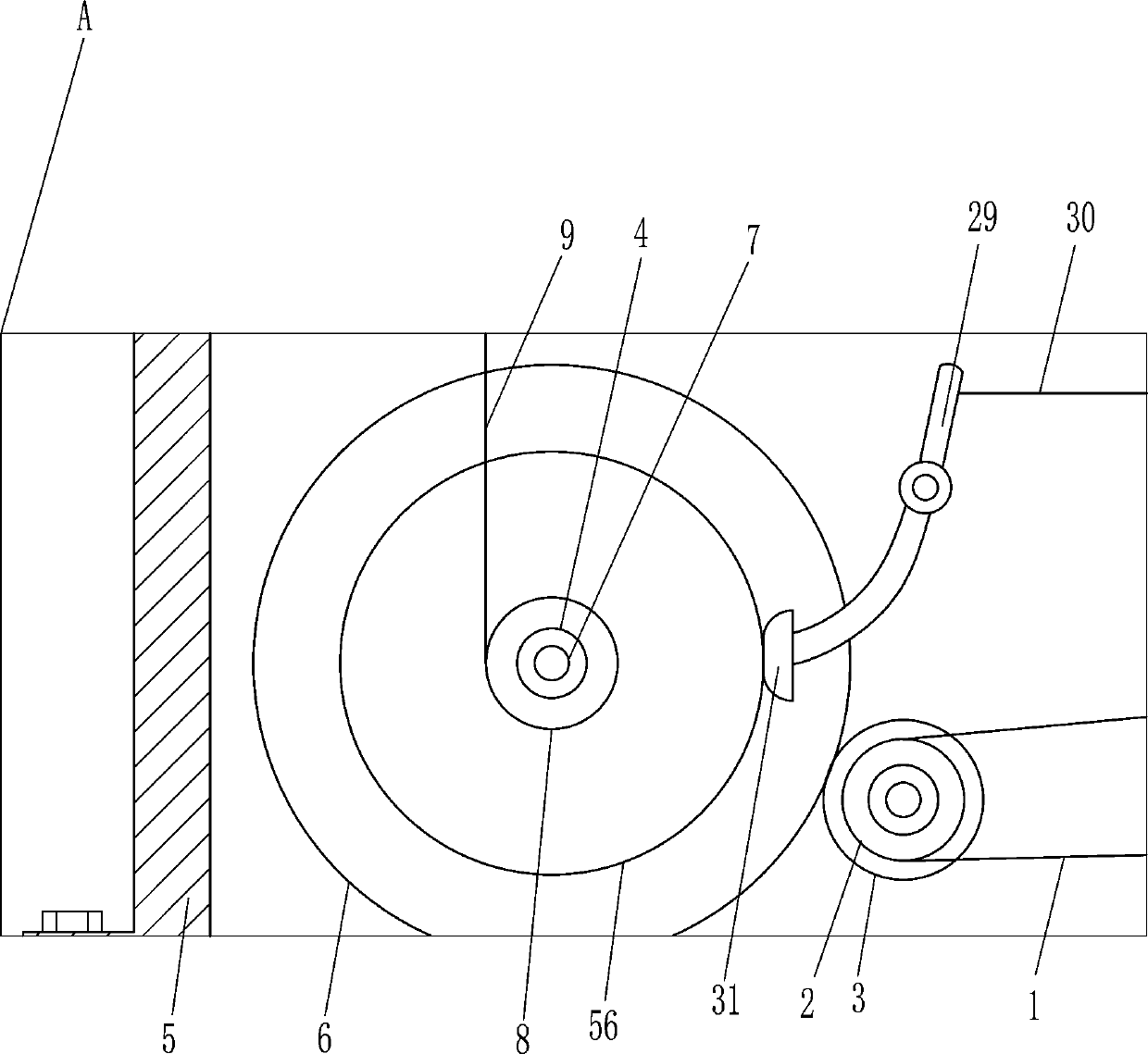

A bouncing toy top

A bouncing toy spin-top, comprising a gyro cap (1) located above, a gyro piece (2) located below the gyro cap (1), a gyro base (3) used for fixing the gyro piece (2), a top tip (8) located at the bottom, and a resilient movable member located between the gyro base (3) and the top tip (8), the resilient movable member being fixedly connected to the top tip (8) and connected to the gyro base (3) in such a way as to allow upward and downward resilient motion. When the spin-top lands on the ground from the launcher, the resilient movable member and the top tip (8) move upward under the reaction force of gravity, then resiliently recover and move back to their original positions, then move upward under the inertial force, repeating in this manner and thus forming a bouncing action. In a competitive confrontation, the toy spin-top can strike an opposing spin-top from above by means of jumping up, thus more easily damaging the opposing spin-top; the aggressiveness of the spin-top is significantly increased, making the odds of winning higher.

Owner:ALPHA GRP CO LTD +2

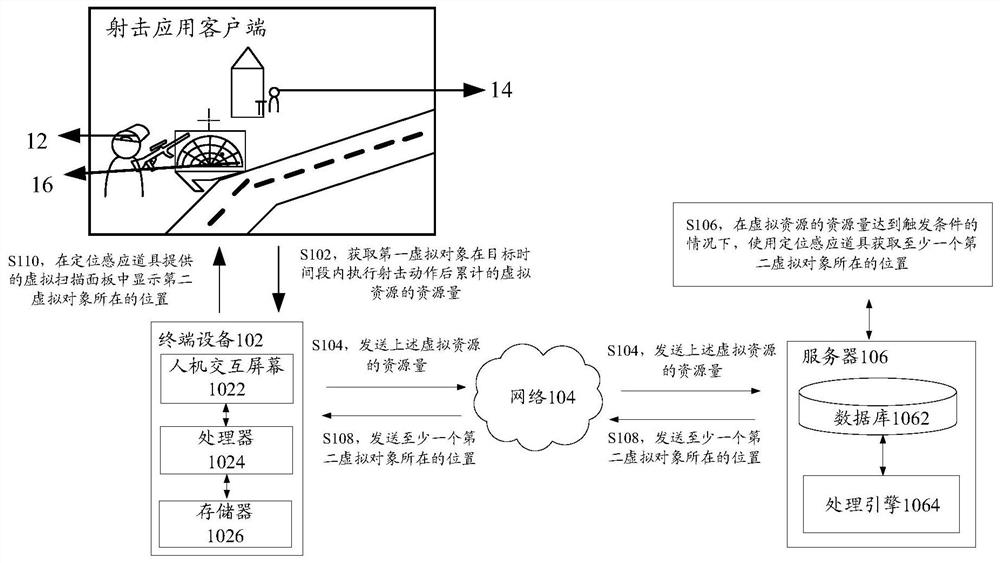

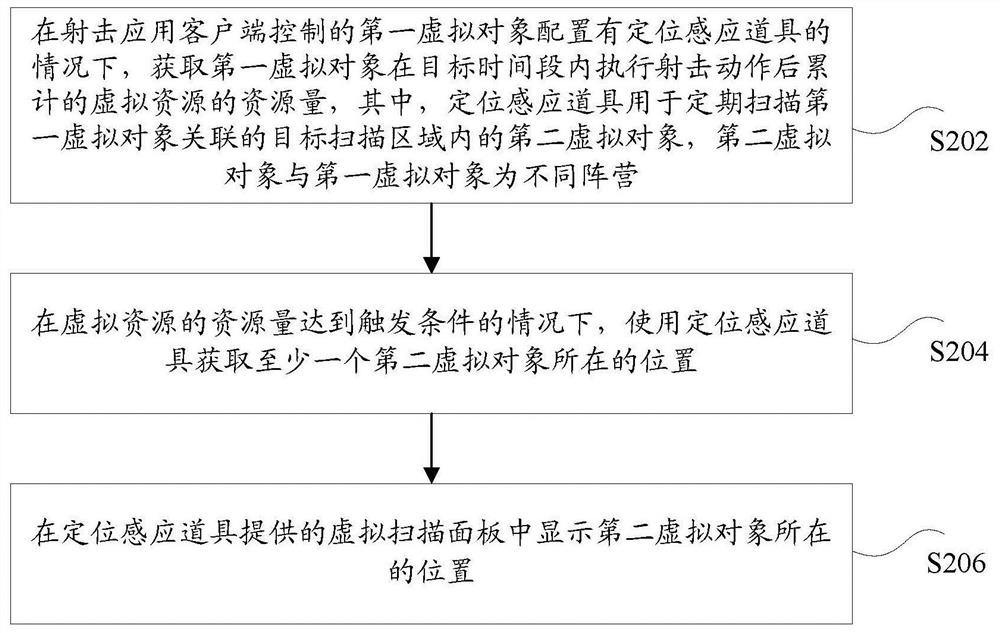

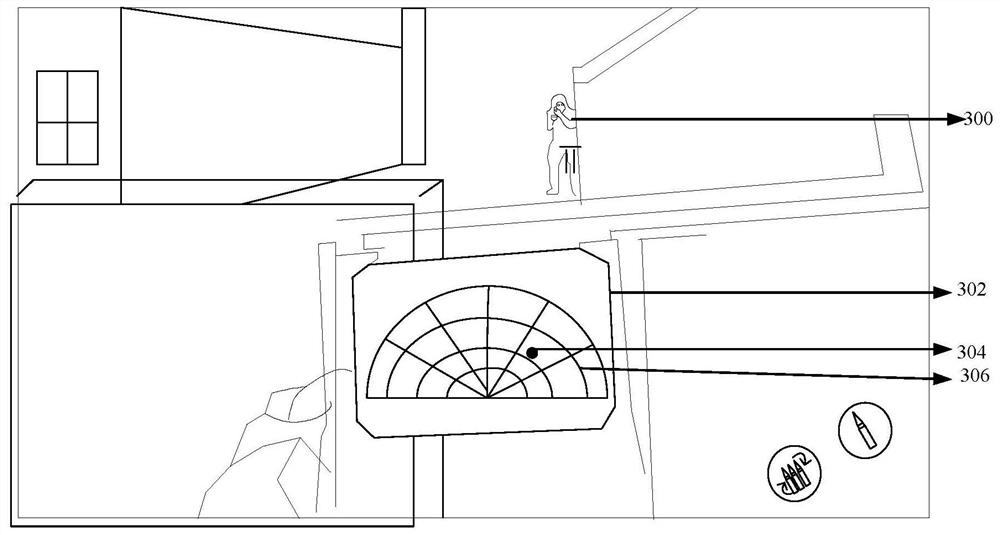

Object positioning method and device, storage medium and electronic equipment

Active | CN111888764A | Improve positioning efficiency | Increase winning percentage | Video games | Computer graphics (images) | Engineering

The invention discloses an object positioning method and device, a storage medium and electronic equipment. The method comprises the following steps: when a first virtual object controlled by a shooting application client is equipped with a positioning sensing prop, the quantity of virtual resources accumulated after the first virtual object executes shooting actions in a target time period is obtained, where the positioning sensing prop scans, at regular intervals, second virtual objects in a target scanning area associated with the first virtual object, and the second virtual objects and the first virtual object are in different camps; when the quantity of virtual resources reaches a triggering condition, the position of at least one second virtual object is obtained with the positioning sensing prop; and the positions of the second virtual objects are displayed in a virtual scanning panel provided by the positioning sensing prop. The invention solves the technical problem of low positioning efficiency in the object positioning methods of the prior art.

Owner:TENCENT TECH (SHENZHEN) CO LTD

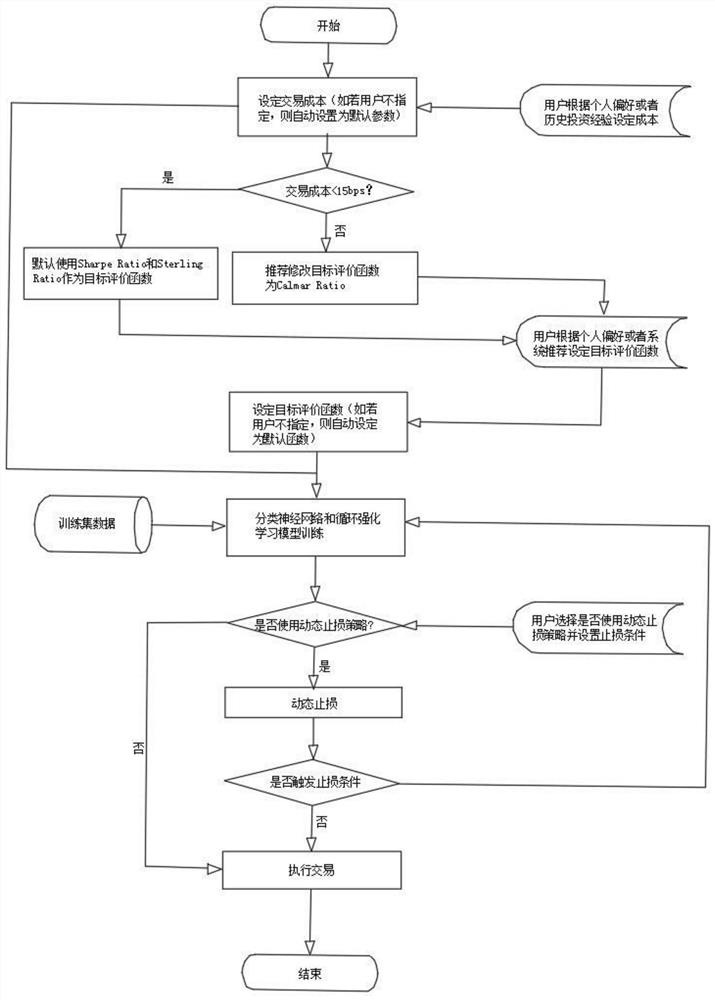

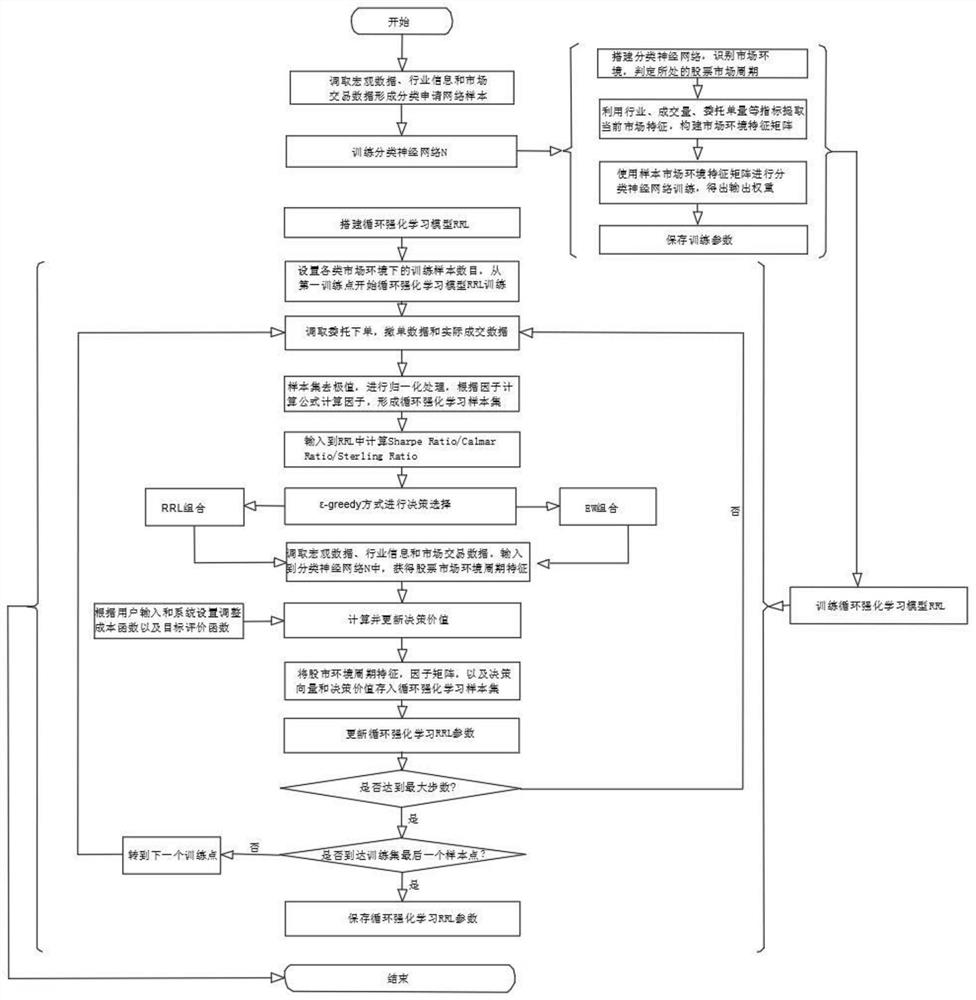

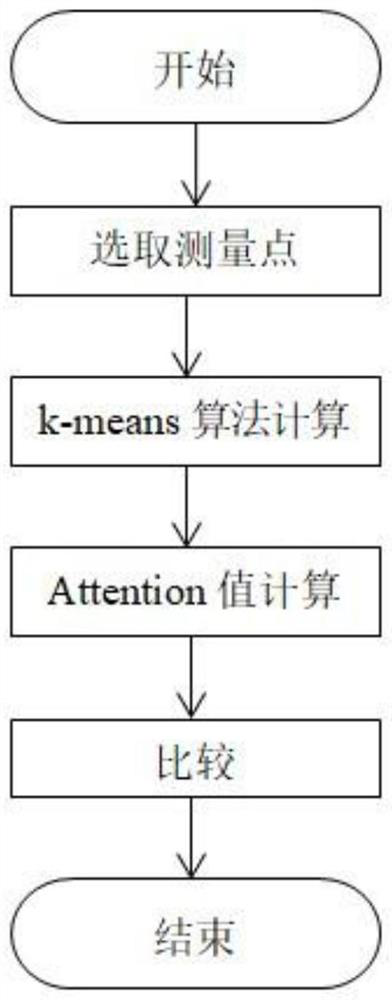

Stock trading method based on reinforcement learning

Pending | CN112884576A | Meet the needs of transaction decision-making | High speed | Finance | Neural learning methods | Reinforcement learning algorithm | Engineering

The invention discloses a stock trading method based on reinforcement learning, and relates to the field of machine learning and quantitative trading. Stock trading is carried out with an adaptive recurrent reinforcement learning (RRL) algorithm. The method comprises a user interaction interface and a stage in which a classification neural network N is trained; in the RRL training stage, the three operations of buying, holding and selling are executed in the different stock market cycle scenes that have been recognized; after RRL training is completed, an automatic transaction execution and stop-loss stage is entered, in which dynamic stop-loss is performed according to a stop-loss strategy set by the user and transactions are executed automatically. The invention satisfies the user's specific preference for risk and return, reduces the risk of manual transaction errors, and reduces the cost of manual decision making. Compared with a traditional linear model and the Q-learning method, it adapts better to price changes, makes investment decisions that are timely and effective, and can greatly improve the transaction winning rate.

Owner:上海卡方信息科技有限公司

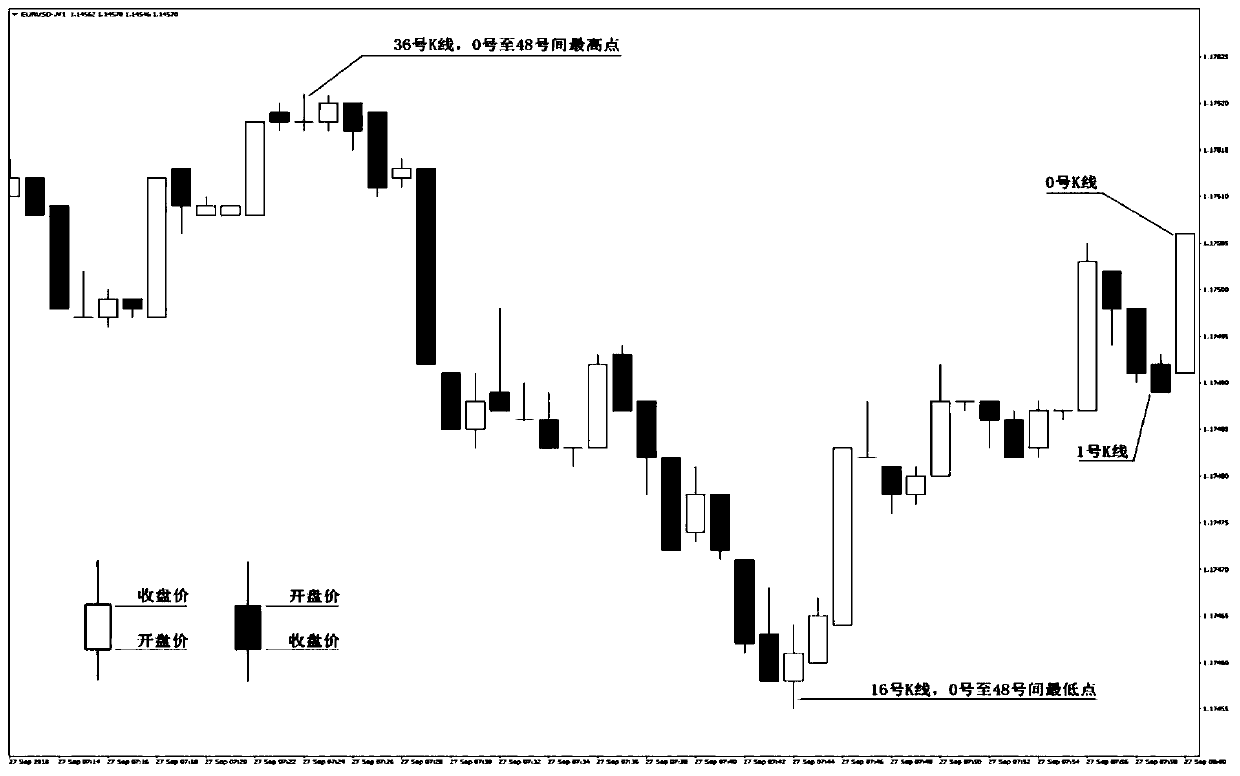

Algorithm for predicting price rise and fall by combining energy values with big data and application

Pending | CN109741085A | Good entry position | Small stop loss possibility | Marketing | Data validation | Market prediction

The invention discloses an algorithm, and its application, for predicting price rise and fall by combining energy values with big data. Starting from a K-line chart of the price trend on a minute-chart basis, the opening price of each K line is subtracted from its closing price to obtain the energy value of that K line. If the energy value is positive, the K line is a positive line; if it is negative, the K line is a negative line. The energy values of the K lines at different stages are accumulated to obtain energy values over different durations, such as extremely short term, short term, middle term, middle and long term, long term and ultra-long term. The method is accurate and timely: it quantifies the rising or falling energy of different stages, and supports timely decisions to enter or to decline entry. The winning rate of market prediction with this method is relatively high. After verification on historical big data and parameter optimization, an automatic transaction system or index based on the energy value relies on an objective energy value and probability when it executes an automatic transaction (or sends a transaction signal), so that a relatively high winning rate can be achieved.

Owner:上海句石智能科技有限公司
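
The energy-value arithmetic in the abstract above (closing price minus opening price per K line, accumulated over a stage) can be sketched as follows. The names `KLine`, `energy`, `line_type` and `accumulated_energy` are hypothetical, the window lengths are illustrative only since the abstract does not define how long each "term" is, and the handling of a zero energy value is an assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class KLine:
    open: float   # opening price of the bar
    close: float  # closing price of the bar


def energy(k: KLine) -> float:
    """Energy value of one K line: closing price minus opening price."""
    return k.close - k.open


def line_type(k: KLine) -> str:
    """Classify a K line by the sign of its energy value."""
    e = energy(k)
    if e > 0:
        return "positive"
    if e < 0:
        return "negative"
    return "flat"  # assumption: the abstract does not say how zero is treated


def accumulated_energy(klines: List[KLine], window: int) -> float:
    """Sum the energy values of the most recent `window` K lines."""
    if window <= 0:
        raise ValueError("window must be positive")
    return sum(energy(k) for k in klines[-window:])


# Three one-minute bars; window sizes stand in for "short" and "longer" terms.
bars = [KLine(10.0, 11.0), KLine(11.0, 10.5), KLine(10.5, 12.0)]
short_term = accumulated_energy(bars, window=2)
longer_term = accumulated_energy(bars, window=3)
```

How the accumulated values are turned into entry or rejection signals is not described in the abstract, so the sketch stops at the accumulation step.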

Method for confirming the time to kill epic-level jungle monsters based on MOBA game lineups

Inactive | CN114768257A | Increase winning percentage | Less distracting factors | Character and pattern recognition | Neural architectures | English characters | Operations research

The invention discloses a method for confirming the time to kill epic-level jungle monsters based on MOBA game lineups. The method comprises a hero character sample set module, a hero character strength analysis module, and a killing advantage coefficient module. The method takes the attack advantage coefficient of the two opposing teams as its output and detects the point in time at which to attack an epic-level jungle monster in a MOBA game. It reduces interference factors in the esports decision-making process and promotes the development of esports.

Owner:NANJING COLLEGE OF INFORMATION TECH

A combined toy top with induction control and separation

Owner:ALPHA GRP CO LTD +2

A defensive toy top

A toy gyroscope with strong defensiveness comprises a gyroscope cap (1), a gyroscope disc (2), a gyroscope base (3) and a gyroscope point (6), and also comprises an elastic absorber for reducing the impact force when the gyroscope is struck. The elastic absorber comprises an elastic part (4) that meets the collision and a connecting part (5) that is fixedly connected to the gyroscope base (3), so that the elastic part (4) sits at the periphery of the gyroscope base (3). When the gyroscope competes with other gyroscopes and an opposing gyroscope collides with the elastic absorber, the absorber deforms elastically to reduce the impact force, cushioning the blow and keeping the gyroscope rotating stably. At the same time, the elastic absorber returns a reaction force to the opposing gyroscope and disturbs it, improving the winning rate of the player's own gyroscope and adding to the fun. In addition, a player can change the elastic performance of the elastic absorber as they judge best to achieve a better anti-collision effect. The gyroscope provides a novel way to play, can appeal to more players, and can cultivate children's hands-on and competitive abilities.

Owner:ALPHA GRP CO LTD +2

Event display method and device

Active | CN106484395B | Steps to Simplify Display | Overcome the problem of low display efficiency | Execution for user interfaces | Input/output processes for data processing | Graphics | Data mining

The present invention discloses an event display method and apparatus. The method comprises: detecting whether a first event executed by an application client in a current cycle is finished, wherein the first event is used for obtaining, among multiple event objects, a first event object that meets a first event result; and, when it is detected that the first event is finished, displaying result information in a predetermined display area of the application client. The result information comprises a first figure identifier identifying the first event object and a second figure identifier identifying a second event object. The second event object is an event object that meets the first event result and was obtained by executing a second event, and the second event is the event executed by the application client in the cycle preceding the current cycle. The method addresses the low display efficiency of existing display methods.
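The display logic in this abstract can be illustrated with a small sketch. It only assumes what the abstract states: result information is produced once the current cycle's event has finished, and it pairs the identifier from the current cycle with the one from the previous cycle. The names `CycleEvent`, `result_object_id`, and `build_result_info` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CycleEvent:
    """State of the event run in one cycle (hypothetical representation)."""
    finished: bool
    # Identifier of the event object that met the event result, once known.
    result_object_id: Optional[str] = None


def build_result_info(current: CycleEvent, previous: CycleEvent) -> Optional[Dict[str, Optional[str]]]:
    """Return the identifiers to show in the display area, or None if not ready.

    Nothing is displayed until the current cycle's event has finished.
    """
    if not current.finished:
        return None
    return {
        "first_identifier": current.result_object_id,    # current cycle
        "second_identifier": previous.result_object_id,  # previous cycle
    }


previous = CycleEvent(finished=True, result_object_id="object_B")
current = CycleEvent(finished=True, result_object_id="object_A")
print(build_result_info(current, previous))
```

Showing both identifiers in one step is presumably what saves the user from navigating to a separate history view, although the abstract does not spell this out.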

Owner:TENCENT TECH (SHENZHEN) CO LTD

A combined toy top with magnetic control separation

Active | CN104623901B | Solved the problem that the gyro could not be separated normally | Reduce power consumption | Tops | Engineering | Operability

Owner:ALPHA GRP CO LTD +2

Object control method and device, storage medium and electronic device

Active | CN110201387B | Shorten control time | Increase winning percentage | Video games | Simulation | Computer engineering

The invention discloses an object control method and device, a storage medium and an electronic device. The method includes: while a first target object controlled by the client is executing a game task, detecting the target angle generated by the first target object as it performs an action combination, where the action combination includes at least two target actions performed in the same direction; when the target angle reaches a trigger threshold, automatically triggering a state adjustment command; in response to the state adjustment command, adjusting the movement state of the first target object from a first state to a second state, where the first duration the first target object needs to complete the game task in the first state is greater than the second duration it needs in the second state; and controlling the first target object to perform the game task in the second state. The invention solves the technical problem that high operation complexity increases the control duration.
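The trigger logic described above can be sketched as follows. This is a minimal illustration under stated assumptions: the abstract does not say how the target angle is computed, so the sketch assumes it is the sum of the angles of the individual actions, and the threshold value, the `Action` type, and the state names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

TRIGGER_THRESHOLD_DEG = 90.0  # hypothetical value; the abstract gives no number


@dataclass
class Action:
    """One target action in an action combination (hypothetical representation)."""
    direction: str    # e.g. "left" or "right"
    angle_deg: float  # angle contributed by this action (assumption)


def target_angle(actions: List[Action]) -> float:
    """Angle produced by a valid action combination, else 0.

    A combination is valid only if it has at least two actions and all of
    them are performed in the same direction, as the abstract requires.
    """
    if len(actions) < 2:
        return 0.0
    if len({a.direction for a in actions}) != 1:
        return 0.0
    return sum(a.angle_deg for a in actions)


def next_state(state: str, actions: List[Action],
               threshold: float = TRIGGER_THRESHOLD_DEG) -> str:
    """Automatically switch from the first state to the second at the threshold."""
    if state == "first" and target_angle(actions) >= threshold:
        return "second"  # the state in which the task completes faster
    return state


combo = [Action("left", 50.0), Action("left", 45.0)]
print(next_state("first", combo))
```

Because the switch happens automatically once the threshold is reached, the player does not need a separate input to enter the faster state, which matches the abstract's stated goal of reducing operation complexity.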

Owner:TENCENT TECH (SHENZHEN) CO LTD

© 2025 PatSnap. All rights reserved.