84 results about "Floating point multiplication" patented technology

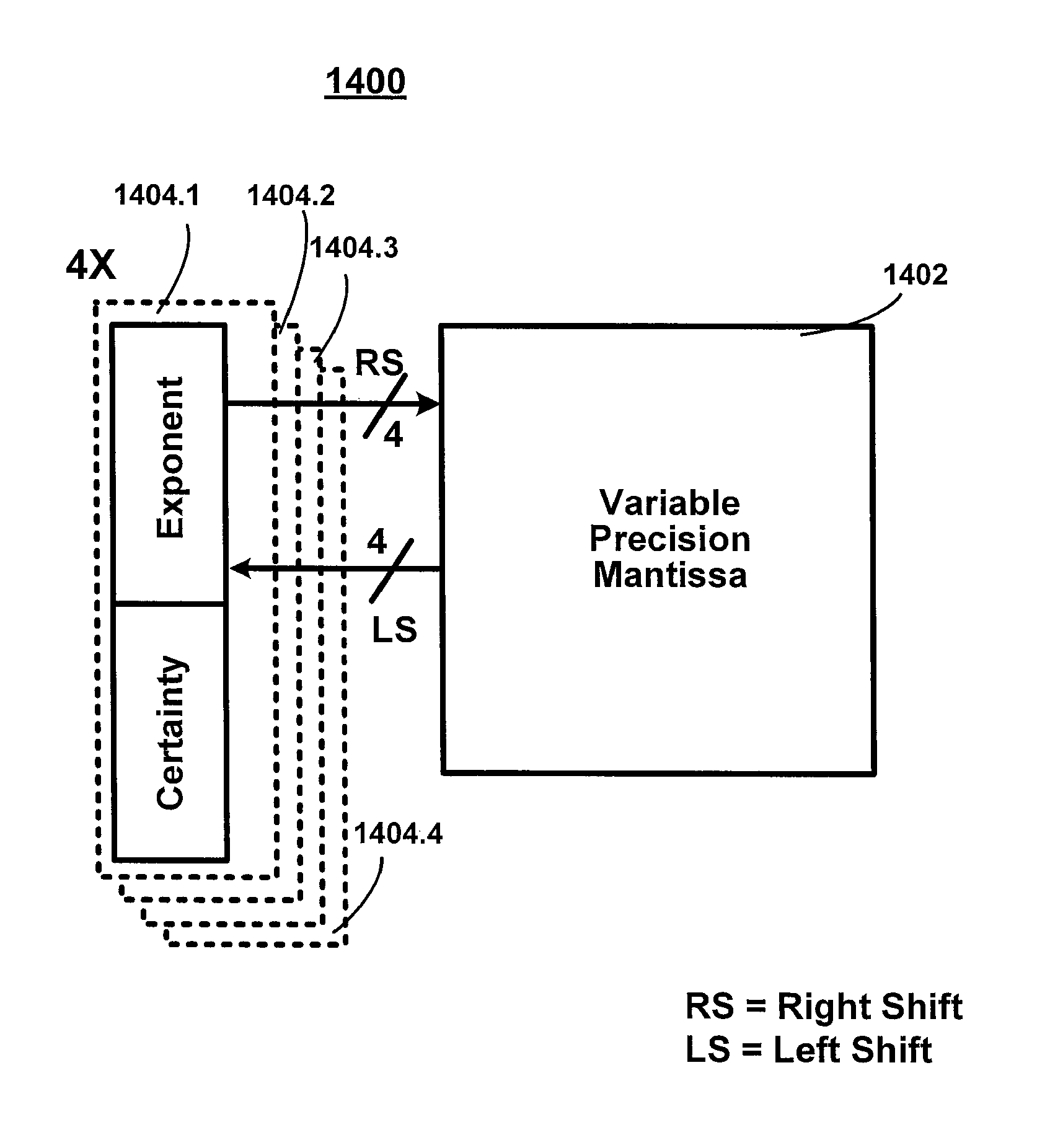

Variable precision floating point multiply-add circuit

Owner:INTEL CORP

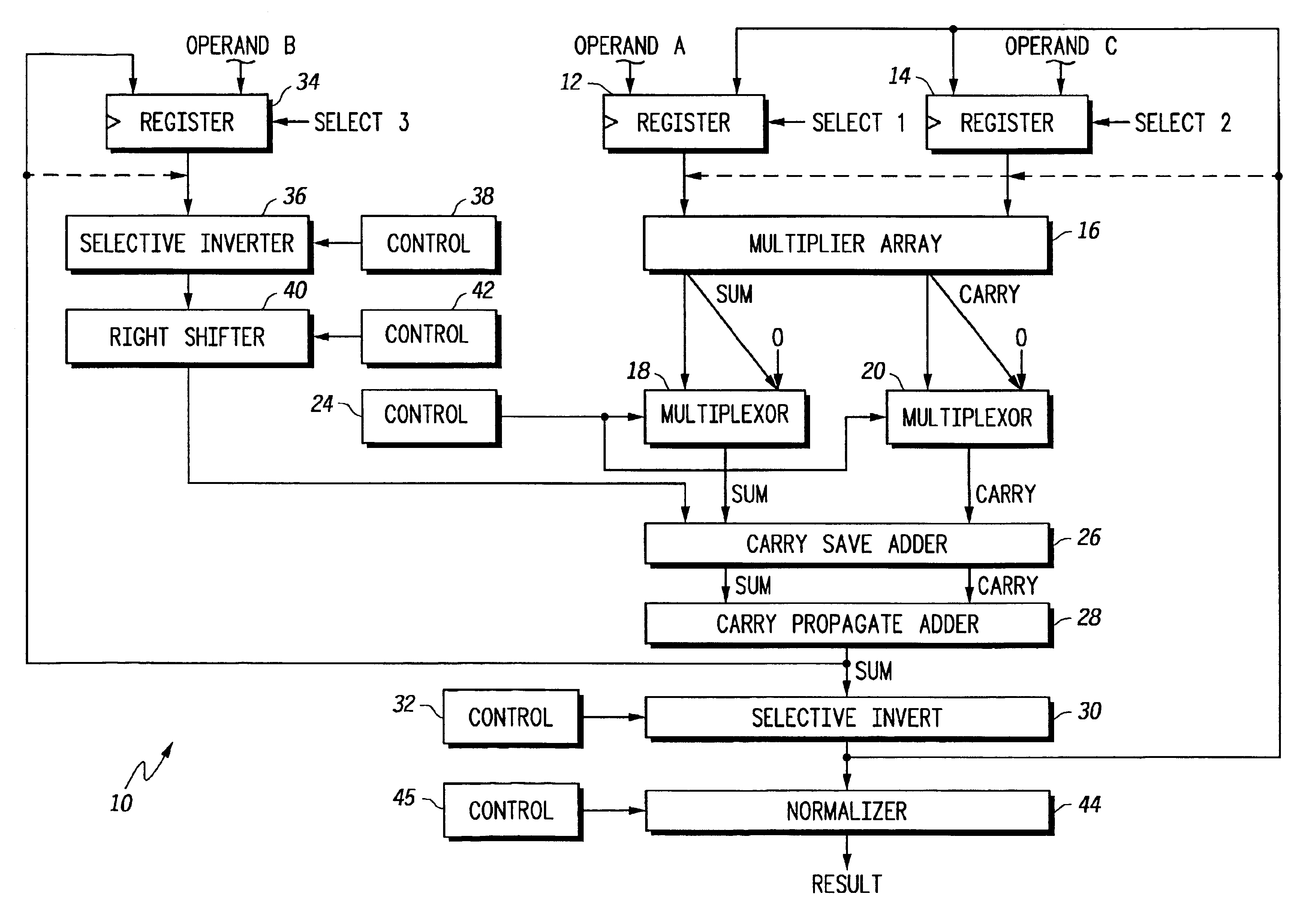

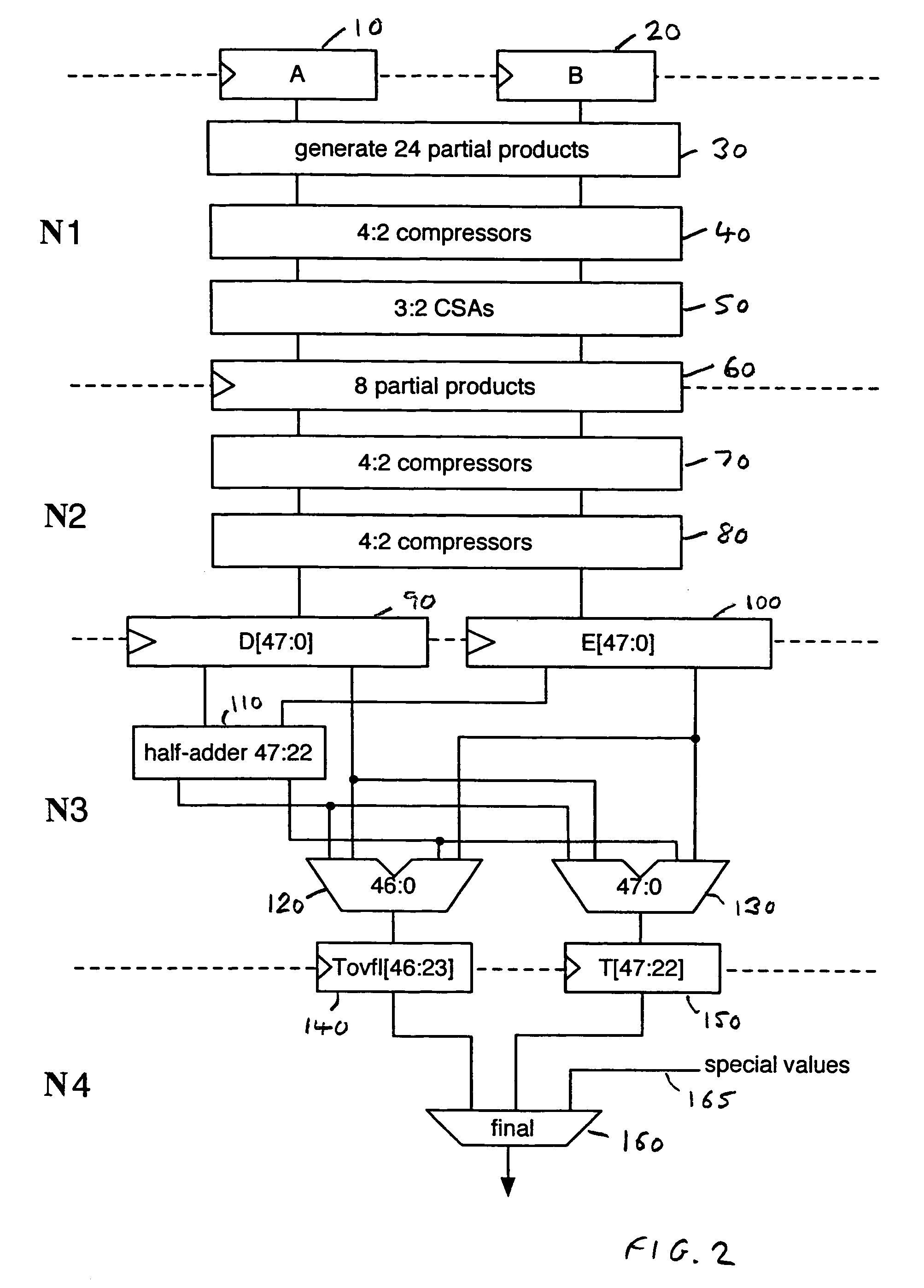

Floating point multiplier/accumulator with reduced latency and method thereof

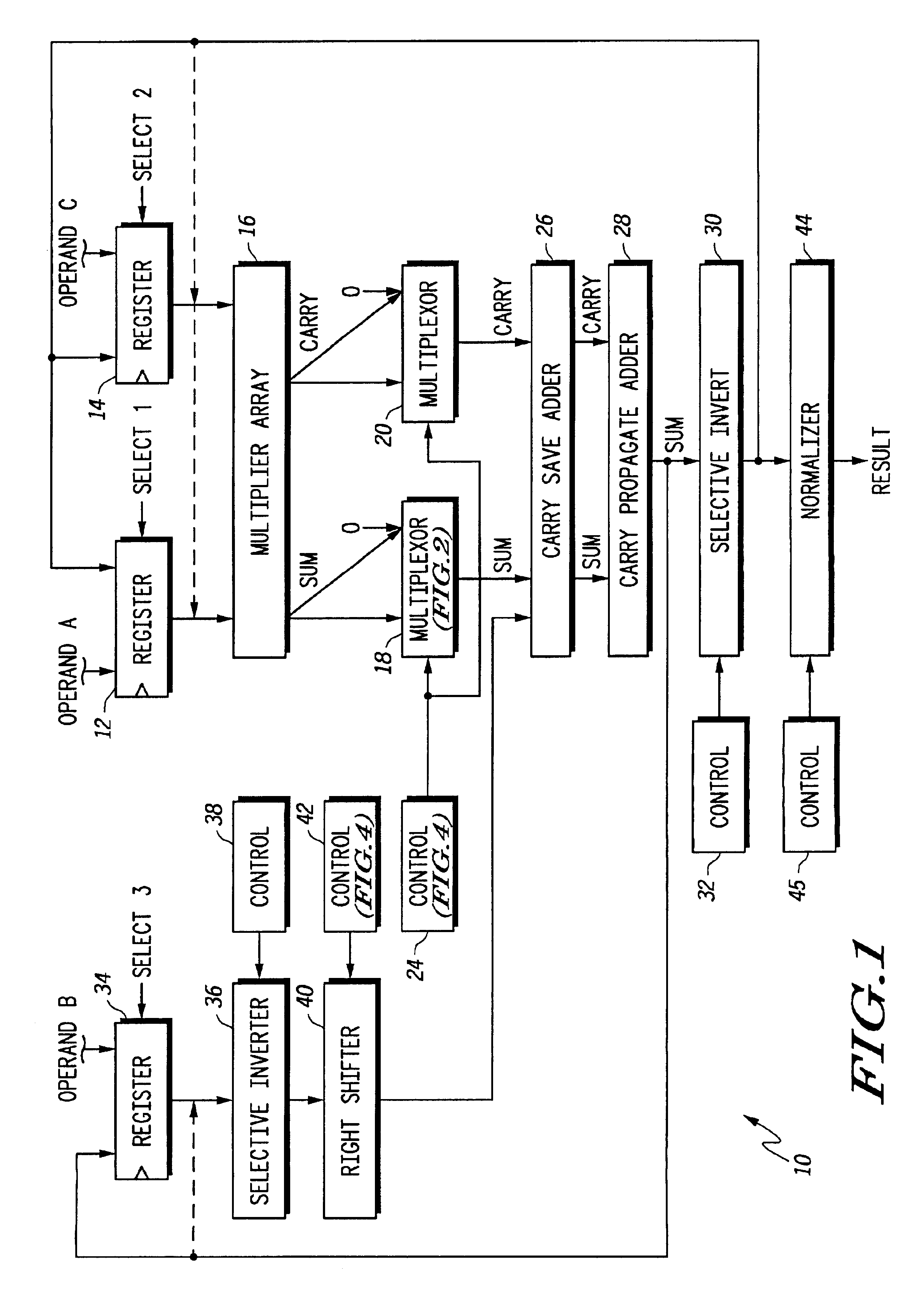

A circuit (10) for multiplying two floating point operands (A and C) while adding or subtracting a third floating point operand (B) removes latency associated with normalization and rounding from a critical speed path for dependent calculations. An intermediate representation of a product and a third operand are selectively shifted to facilitate use of prior unnormalized dependent resultants. Logic circuitry (24, 42) implements a truth table for determining when and how much shifting should be made to intermediate values based upon a resultant of a previous calculation, upon exponents of current operands and an exponent of a previous resultant operand. Normalization and rounding may be subsequently implemented, but at a time when a new cycle operation is not dependent on such operations even if data dependencies exist.

Owner:NORTH STAR INNOVATIONS

Data processing apparatus and method for performing floating point multiplication

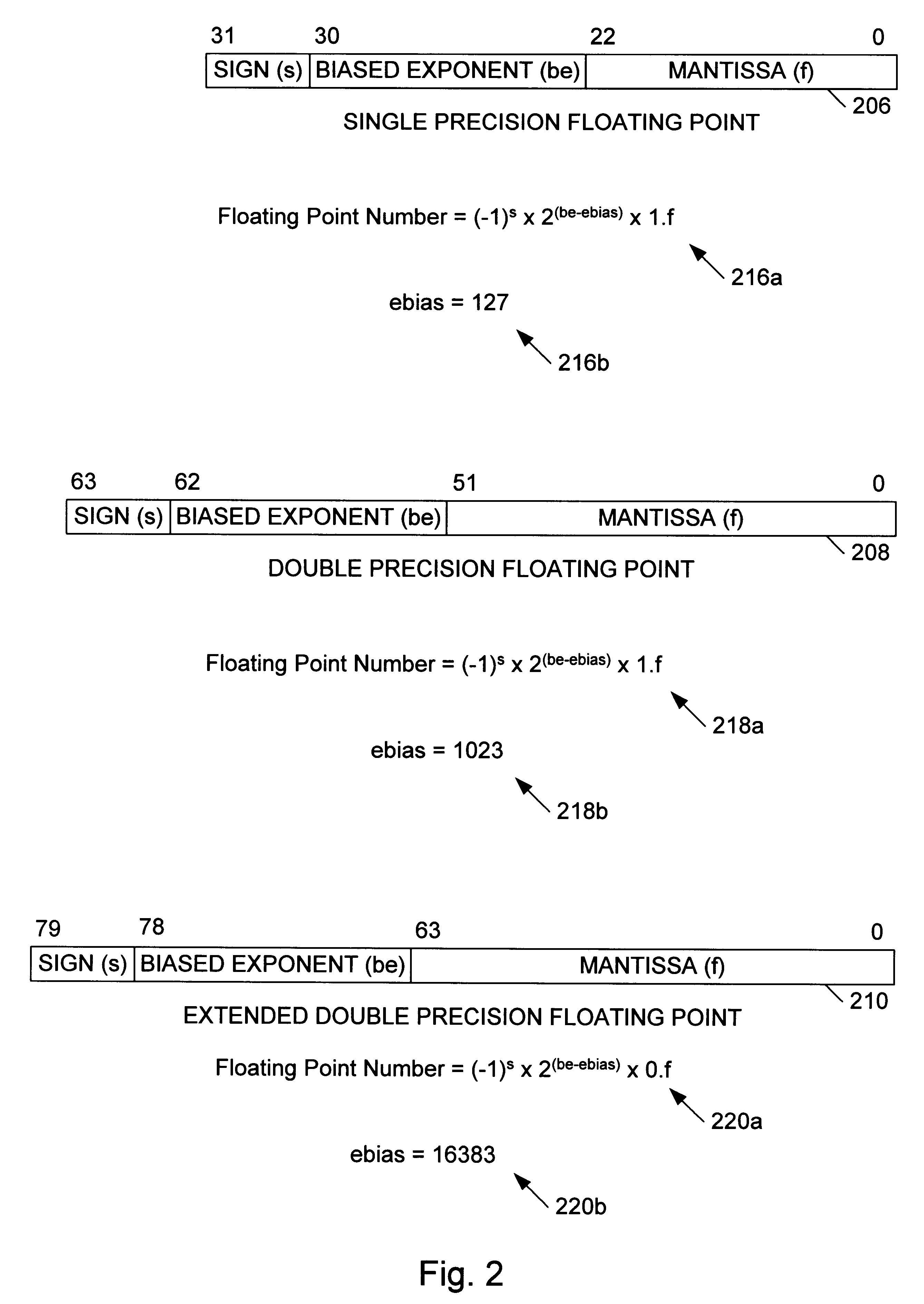

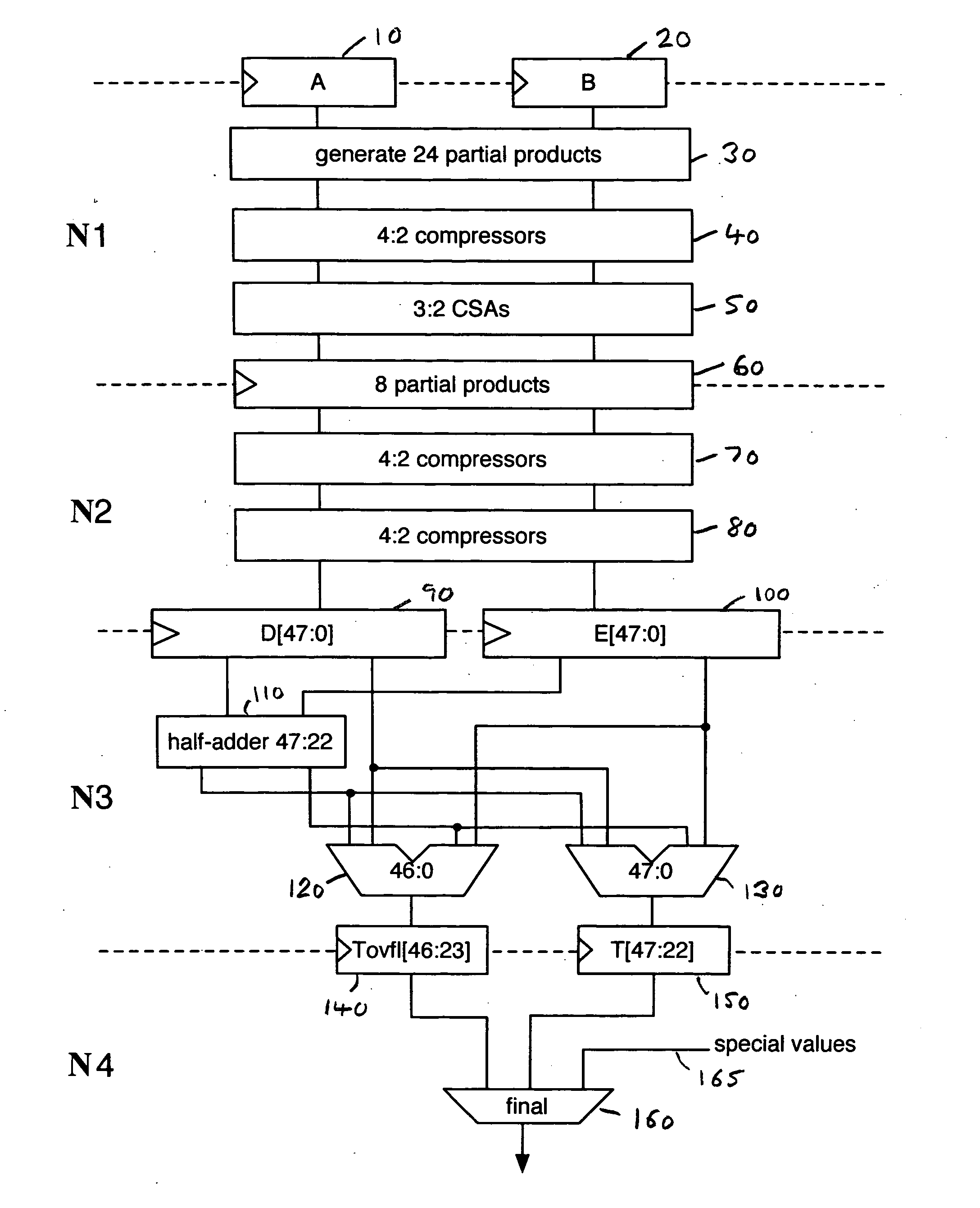

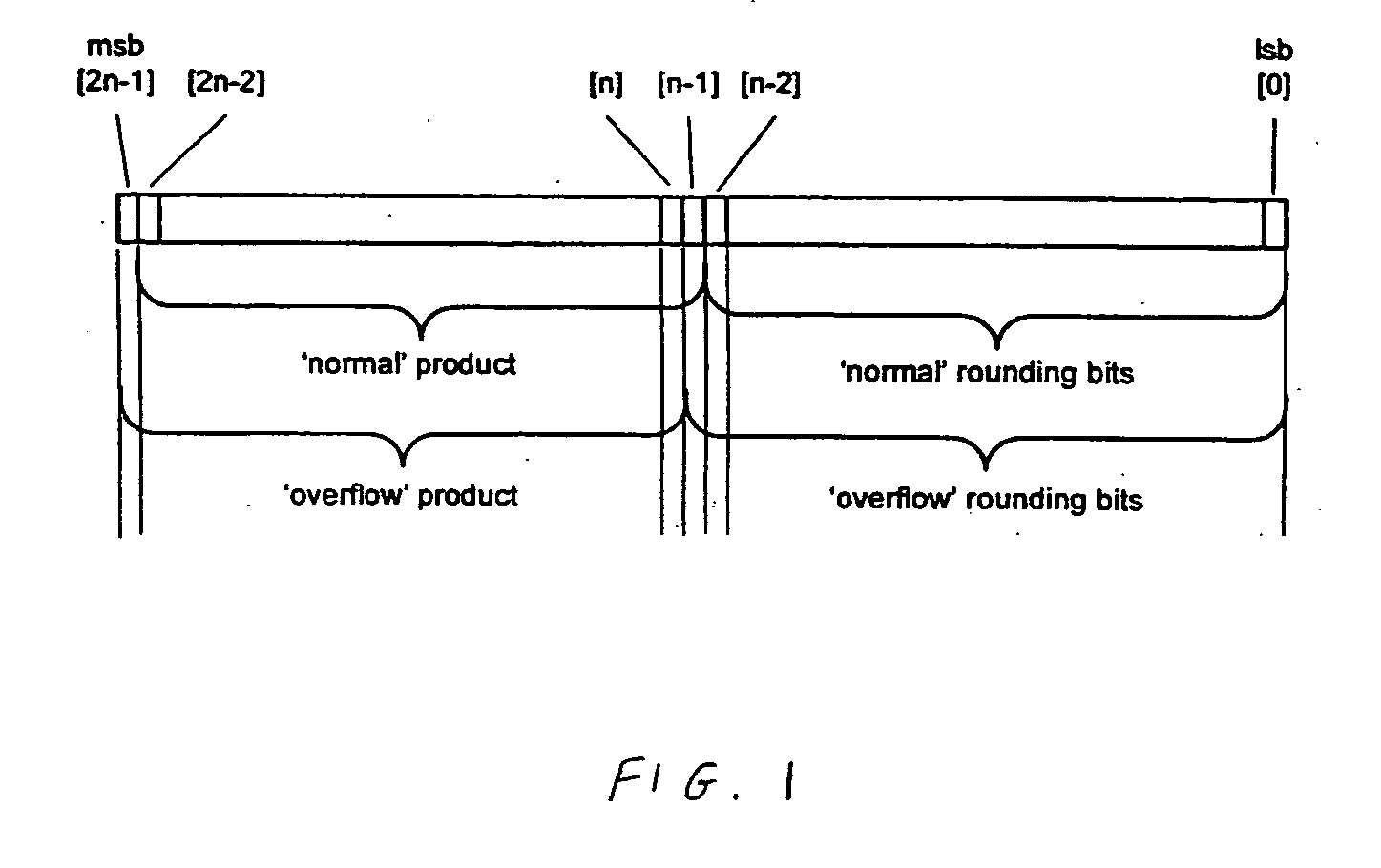

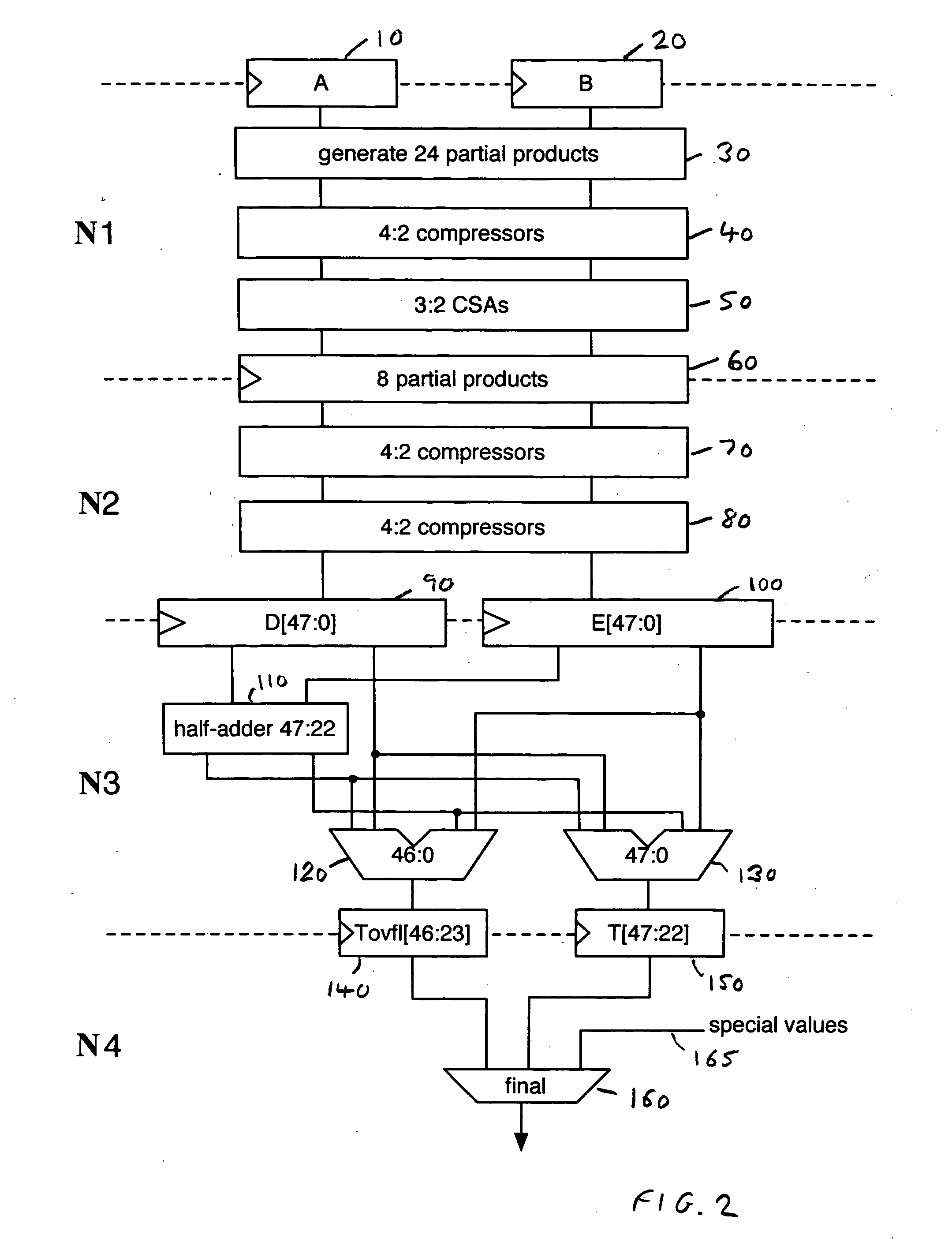

Active | US7668896B2 | Efficient detection | Avoid the need | Computations using contact-making devices | Computation using non-contact making devices | Least significant bit | Floating point multiplication

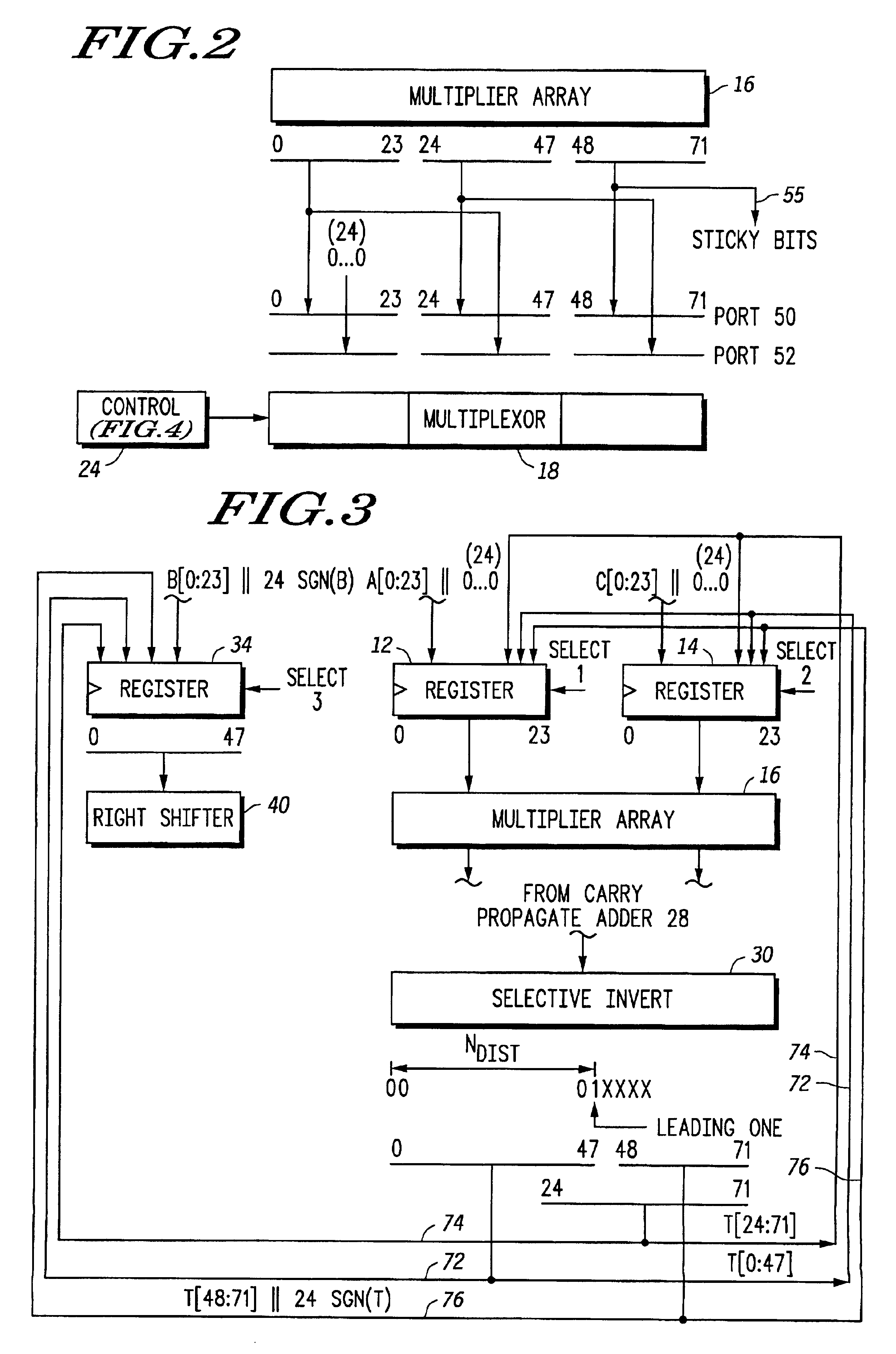

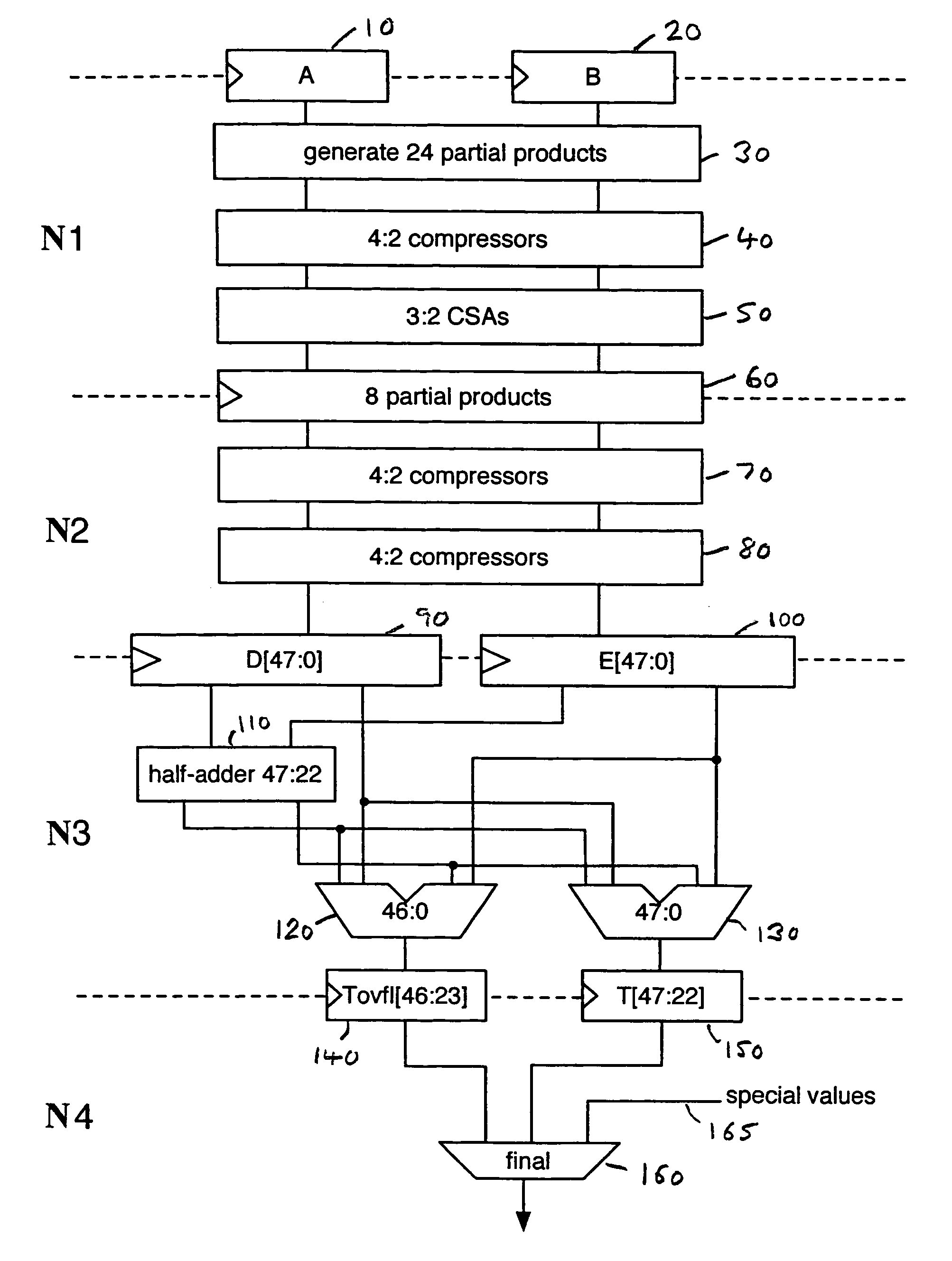

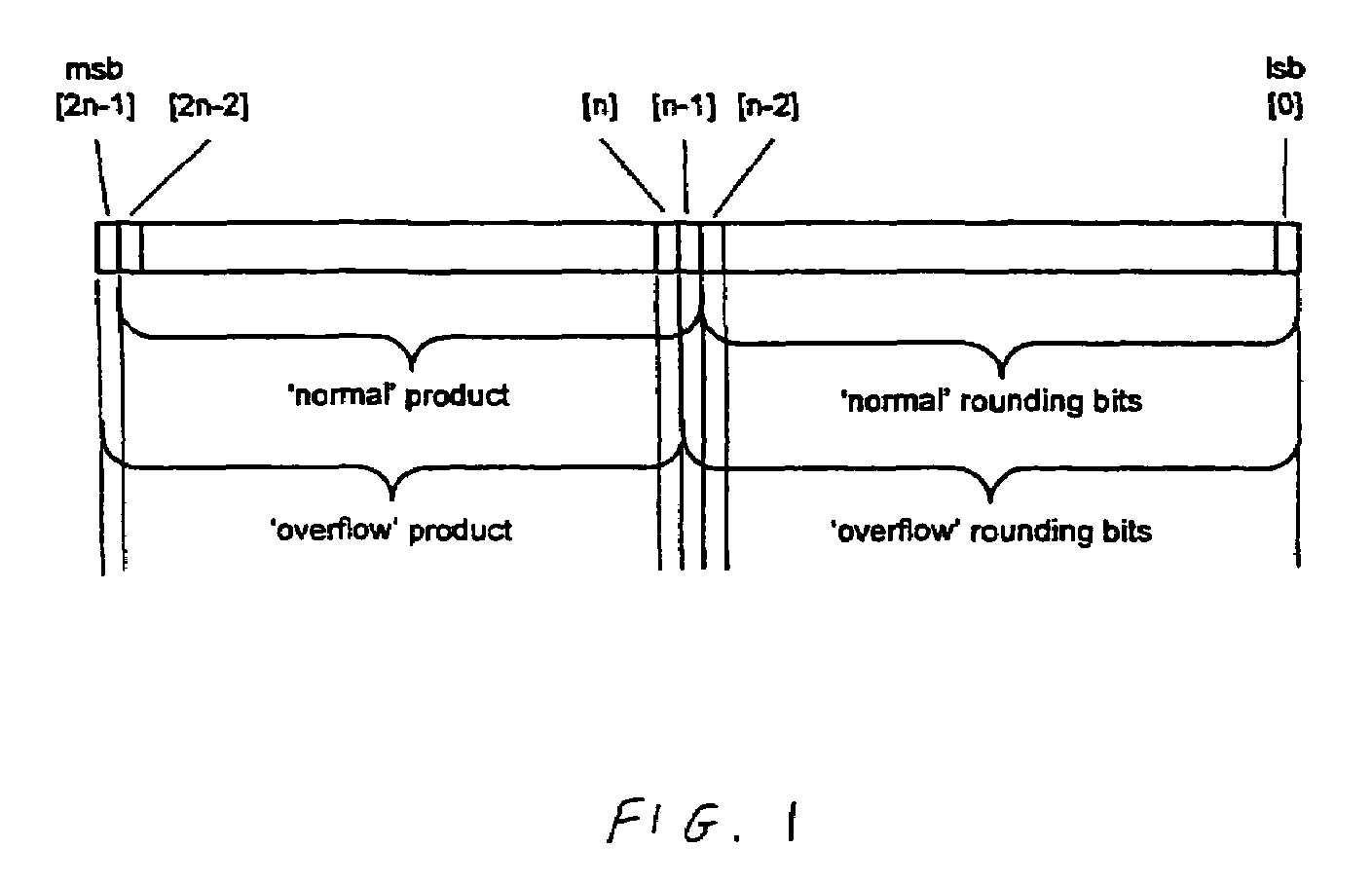

First and second n-bit significands are multiplied to produce a pair of 2n-bit vectors, and half adder logic produces a corresponding plurality of carry and sum bits. A product exponent is checked for correspondence with a predetermined exponent value. A first sum operation generates a first rounded result equivalent to the addition of the pair of 2n-bit vectors. First adder logic uses the corresponding m carry and sum bits, with the least significant of the m carry bits replaced by the rounding increment value before the first adder logic performs the first sum operation. Second adder logic performs a second sum operation using the corresponding m−1 carry and sum bits, with the least significant of the m−1 carry bits replaced by the rounding increment value before the second sum operation. The n-bit result is derived from the first rounded result, the second rounded result, or a predetermined result value.

Owner:ARM LTD

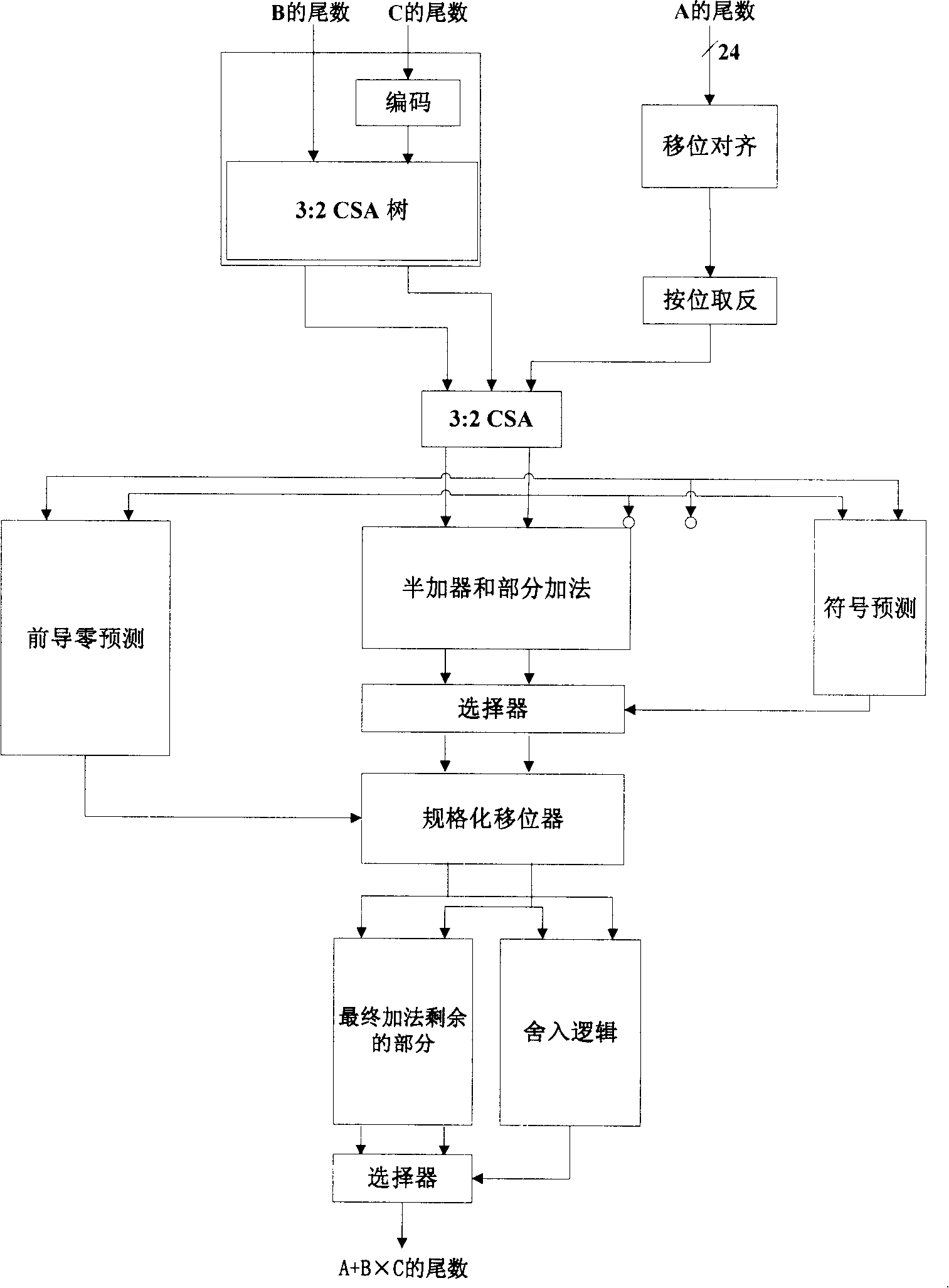

Five-stage pipeline structure of a floating-point fused multiply-add unit

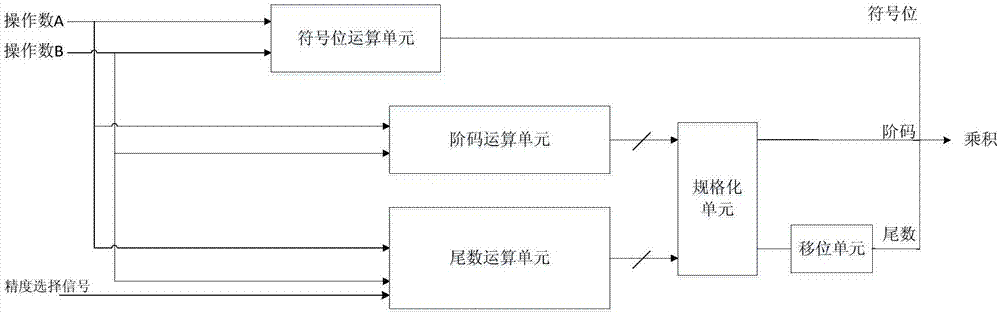

Active | CN101174200A | High precision | Digital data processing details | Symbol of a differential operator | Leading zero

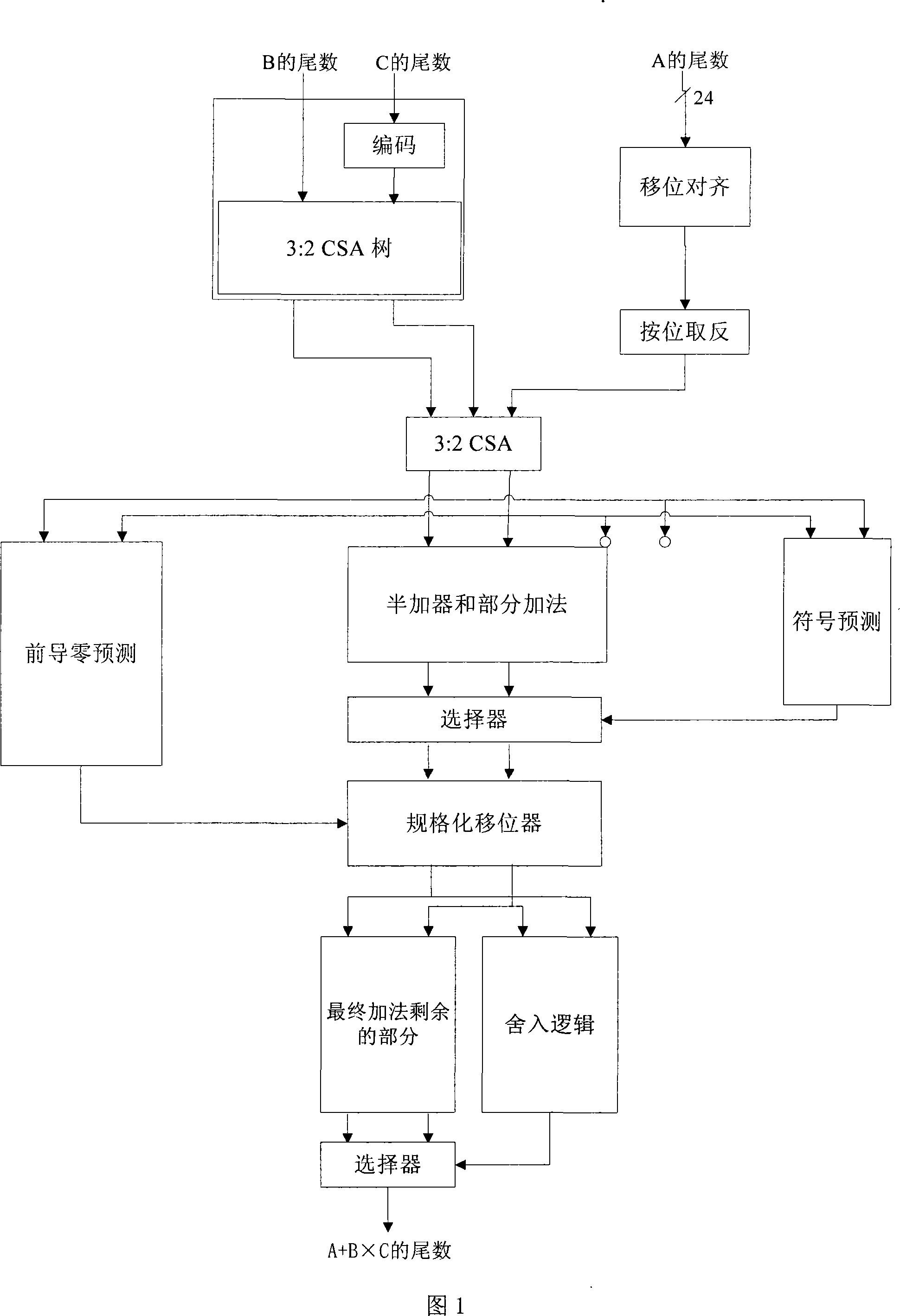

The invention discloses a fully pipelined single-precision floating-point fused multiply-add unit that computes A + B × C in five pipeline stages. In the first stage, the exponent difference is calculated and part of the multiplication is completed. In the second stage, A and B × C are aligned according to the exponent difference, effective subtraction and complementing are performed, and the rest of the multiplication is completed; at the same time, the exponent is classified into one of six states, and the normalization shift amount is calculated differently in each state. In the third stage, the number of leading zeros and the sign of the final result are predicted in parallel, and the first normalization shift is performed. In the fourth stage, the second normalization shift is performed, followed by the addition and part of the rounding. In the last stage, the addition and rounding are completed, the exponent is corrected, and the third normalization shift is completed within the rounding interval. The design achieves high performance and high precision at low hardware cost.

Owner:TSINGHUA UNIV

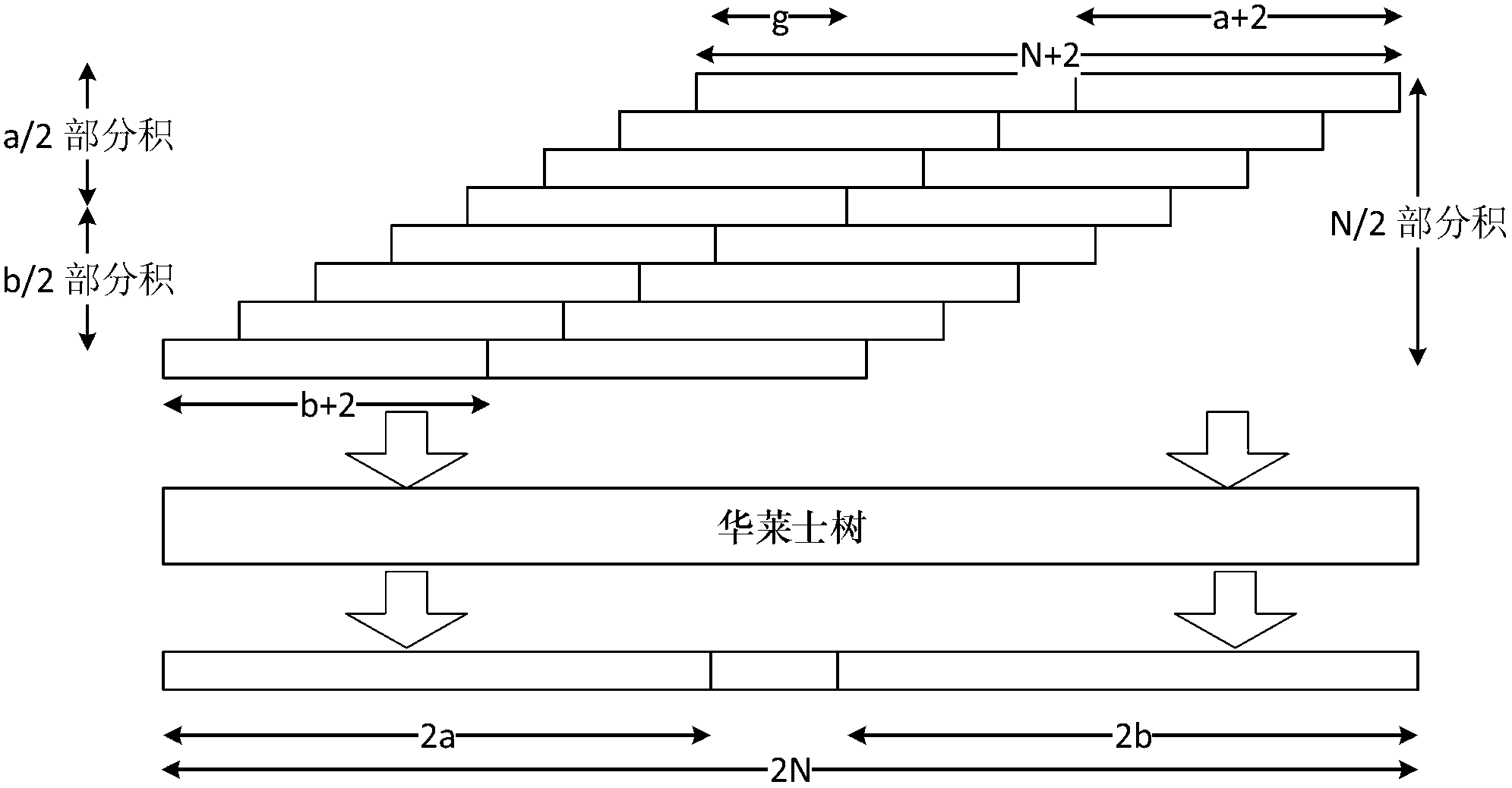

Single-precision matrix multiplication optimization method based on the Loongson 3A chip

Inactive | CN102214160A | Improve computing efficiency | Overcoming the problem of invalid prefetching | Complex mathematical operations | Data set | Parallel computing

The invention discloses a single-precision matrix multiplication optimization method based on the Loongson 3A chip. The method comprises the following steps: dividing the two single-precision source matrices into sub-matrices according to the principle that they are no larger than half of the level-1 cache and no larger than half of the level-2 cache; and, in the matrix multiplication kernel code built from the Loongson 3A 32-bit access instruction, single-precision floating-point multiply-add instruction and prefetch instruction, using the Loongson 3A 128-bit access instruction and parallel single-precision floating-point instruction, and prefetching data with a prefetch address calculated by subtracting the size of an operation data CDS from the first address CACAS of an operation data set, so that the floating-point unit can run at close to full load. The method solves the problem of invalid prefetching of address-unaligned data, so that the execution efficiency of an address-unaligned single-precision matrix multiplication approaches that of an address-aligned one. Compared with the basic linear algebra subprogram library GotoBLAS version 2-1.07, single-precision matrix multiplication optimized by this method runs more than 90 percent faster on average.

Owner:UNIV OF SCI & TECH OF CHINA
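
The cache-blocking idea in this abstract can be sketched in a few lines. This is only an illustration of splitting a matrix product into sub-matrix products; the `block` parameter is a hypothetical tile size, and the Loongson-specific 128-bit loads, prefetch instructions and cache-size rule from the abstract are not modeled.

```python
def blocked_matmul(a, b, block=2):
    """Multiply matrices a (n x k) and b (k x m) tile by tile.

    `block` is a hypothetical tile edge; a real kernel would choose it so
    that the working tiles fit in the cache budget described in the abstract.
    """
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, block):
        for k0 in range(0, k, block):
            for j0 in range(0, m, block):
                # Multiply one tile of a by one tile of b into a tile of c.
                for i in range(i0, min(i0 + block, n)):
                    row_a, row_c = a[i], c[i]
                    for kk in range(k0, min(k0 + block, k)):
                        a_ik, row_b = row_a[kk], b[kk]
                        for j in range(j0, min(j0 + block, m)):
                            row_c[j] += a_ik * row_b[j]
    return c
```

Each inner tile touches only `block`-sized slices of the three matrices, which is what lets a tuned kernel keep its operands resident in cache.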

Parallel floating-point multiply-add unit

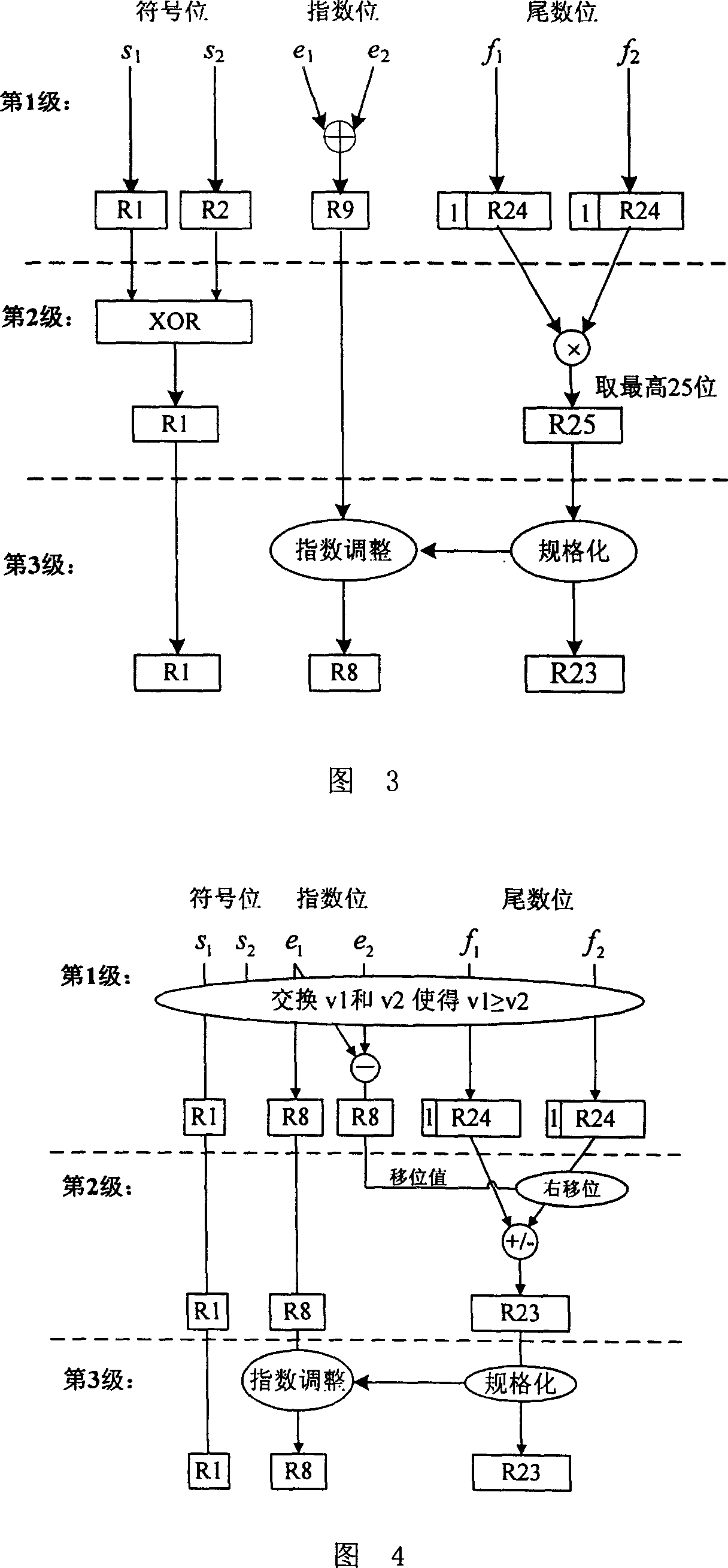

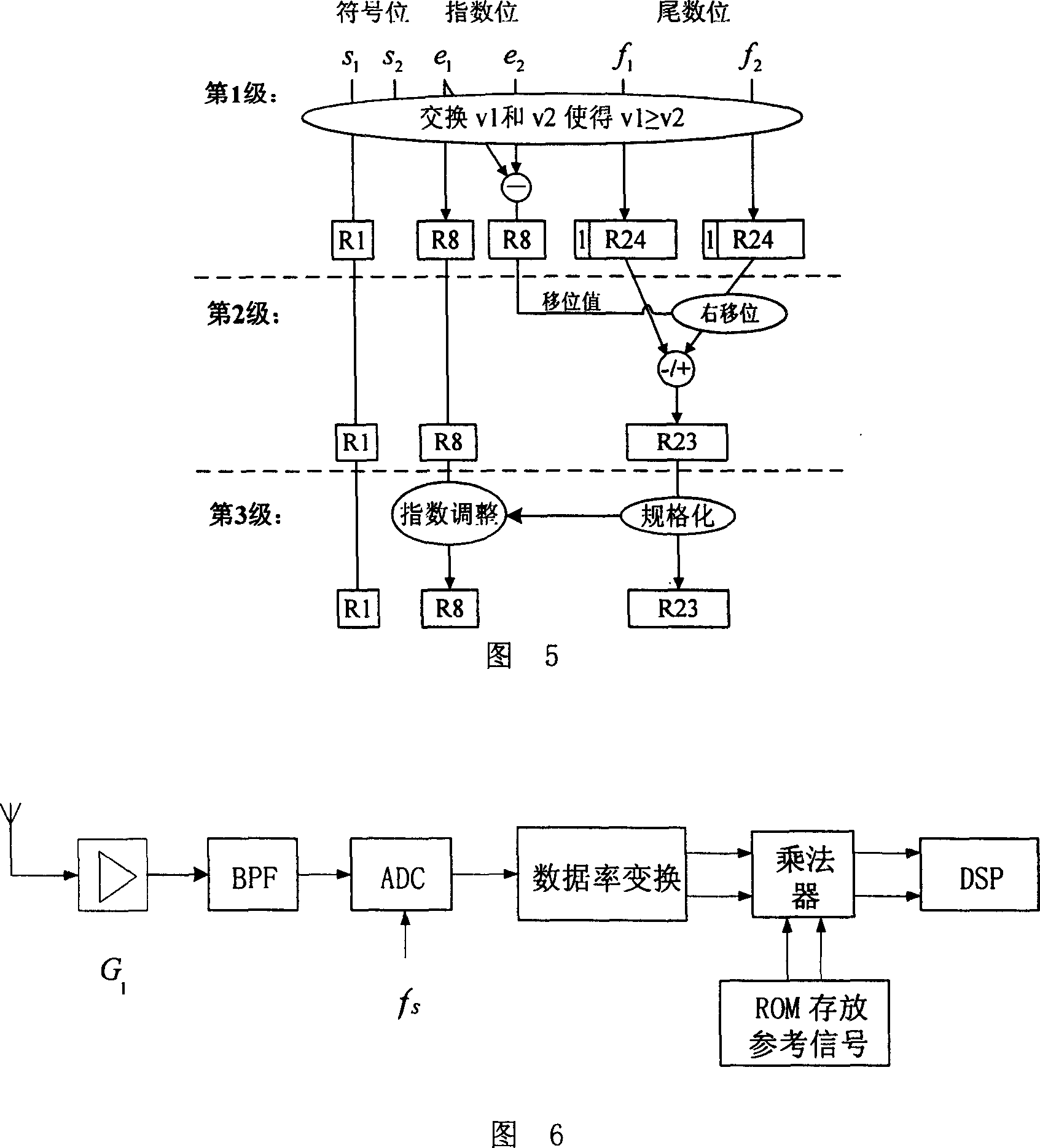

Active | CN101178645A | Achieving Instruction Level Parallelism | Digital data processing details | Concurrent instruction execution | Production line | Rounding

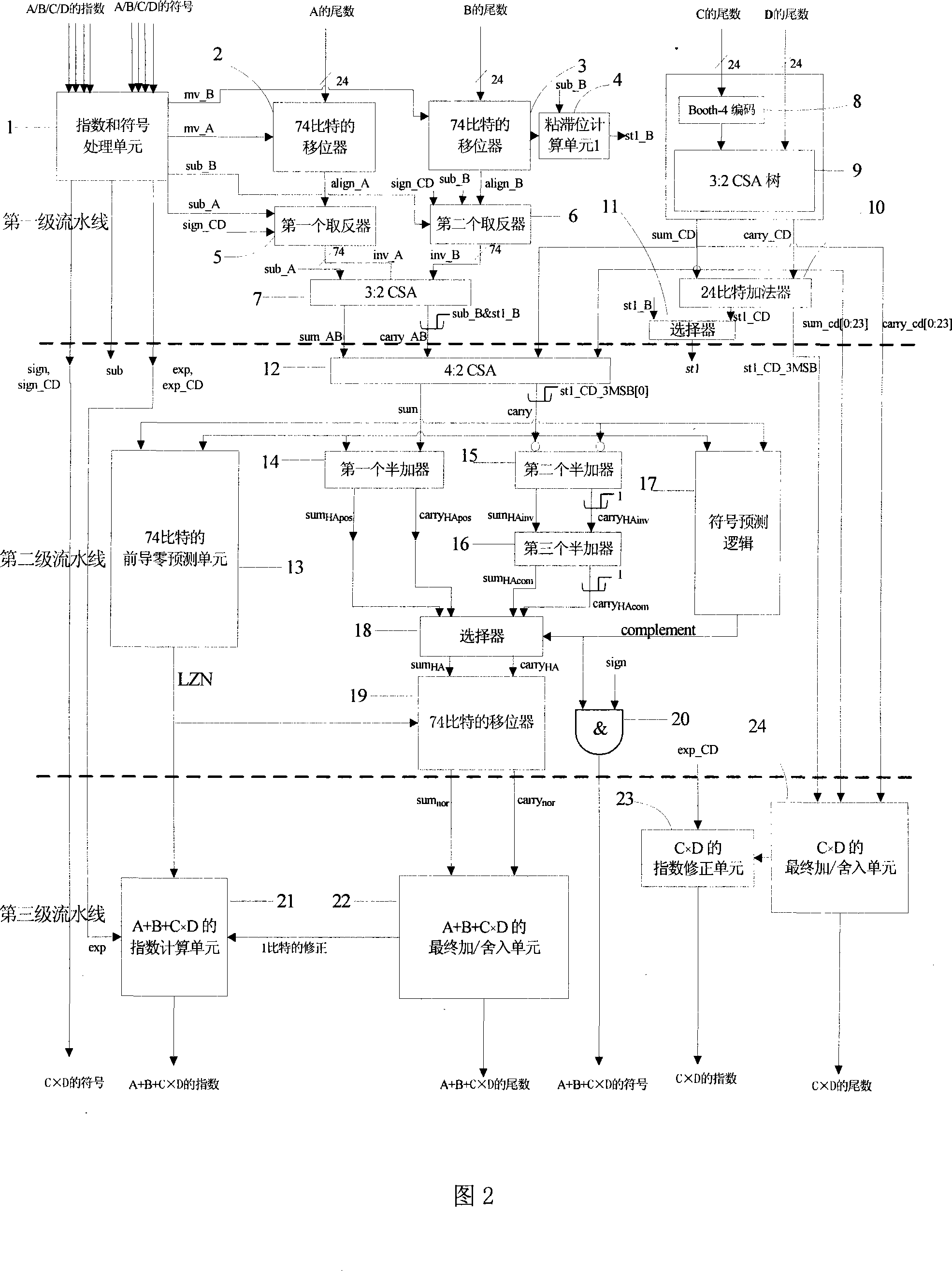

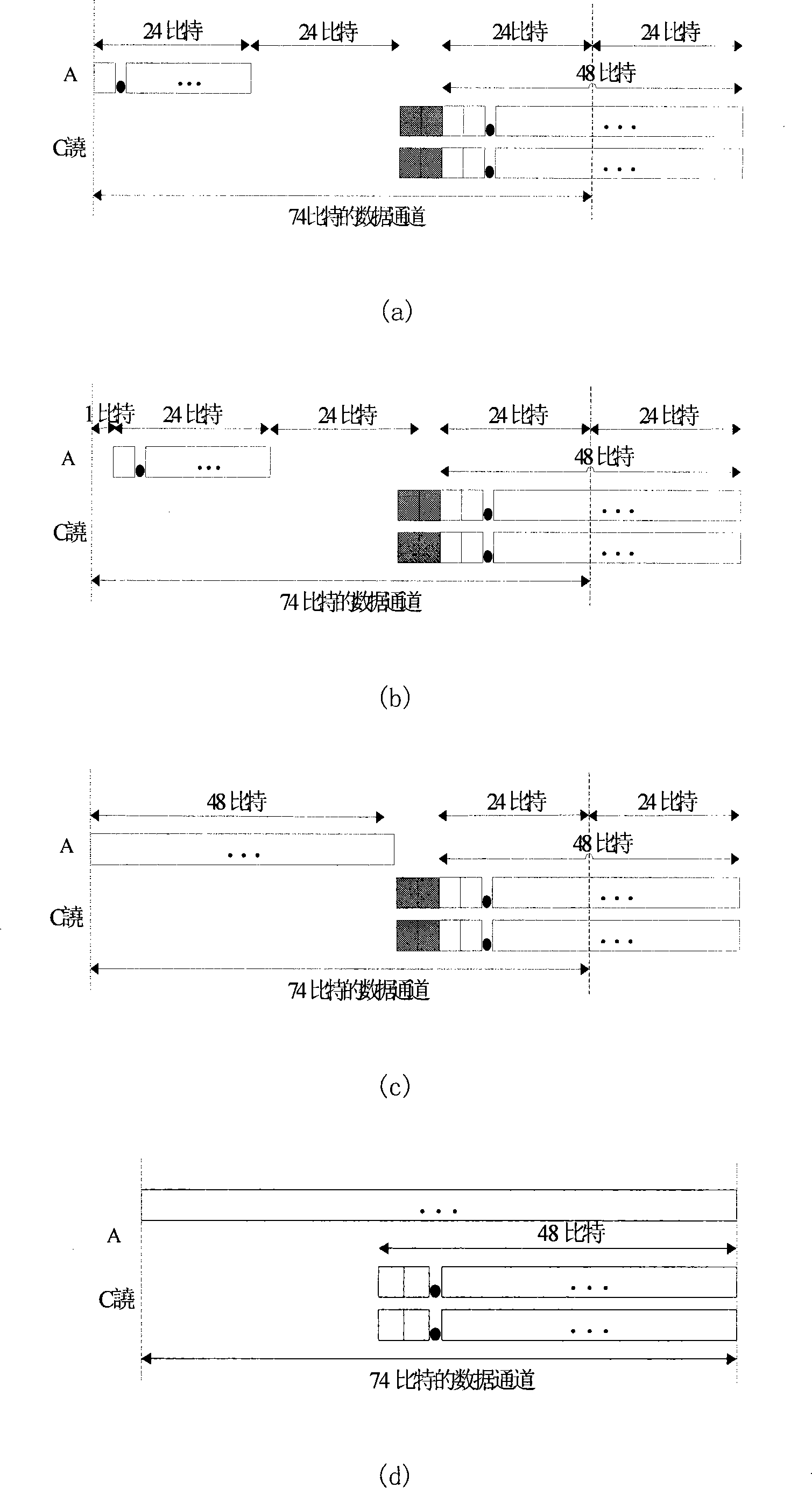

The invention relates to a parallel floating-point fused multiply-add unit that simplifies comparable designs, computes A + B + C × D (A ≥ B) and also produces the result of C × D, using a three-stage pipeline. In the first stage, A and B are shifted and aligned, C × D is encoded, and part of C × D is compressed. In the second stage, the shifted and aligned result of A and B and the partially compressed C × D are compressed in a 4:2 CSA, and then leading-zero prediction, sign prediction, the half-add operation and the normalization shift are performed. In the third stage, the final addition and rounding of A + B + C × D are completed and the exponent is calculated, and the mantissa and exponent of C × D are calculated from the output of the first stage. The invention achieves instruction-level parallelism: it completes an add instruction and a multiply instruction at the same time, and it can also accelerate two consecutive data-dependent instructions.

Owner:TSINGHUA UNIV

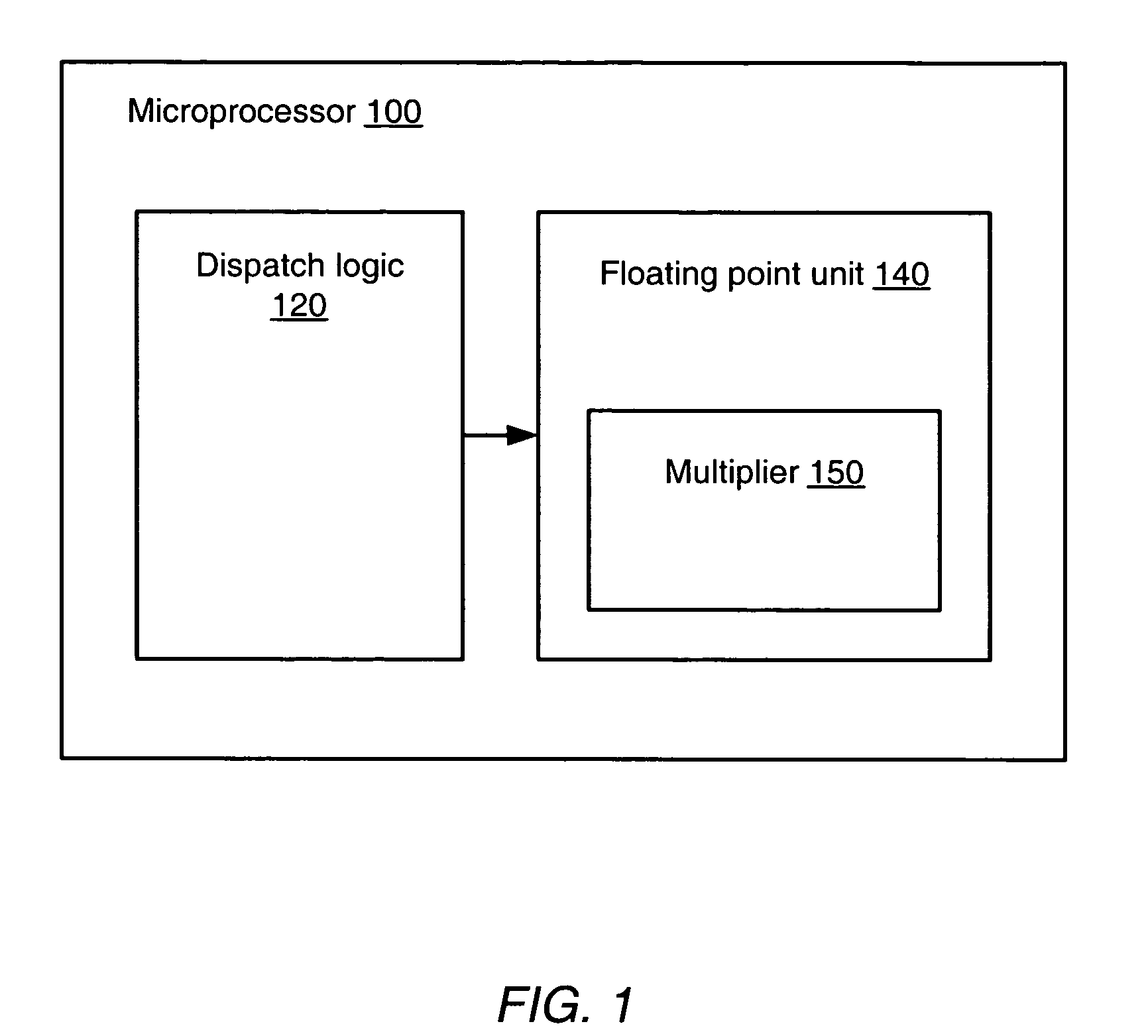

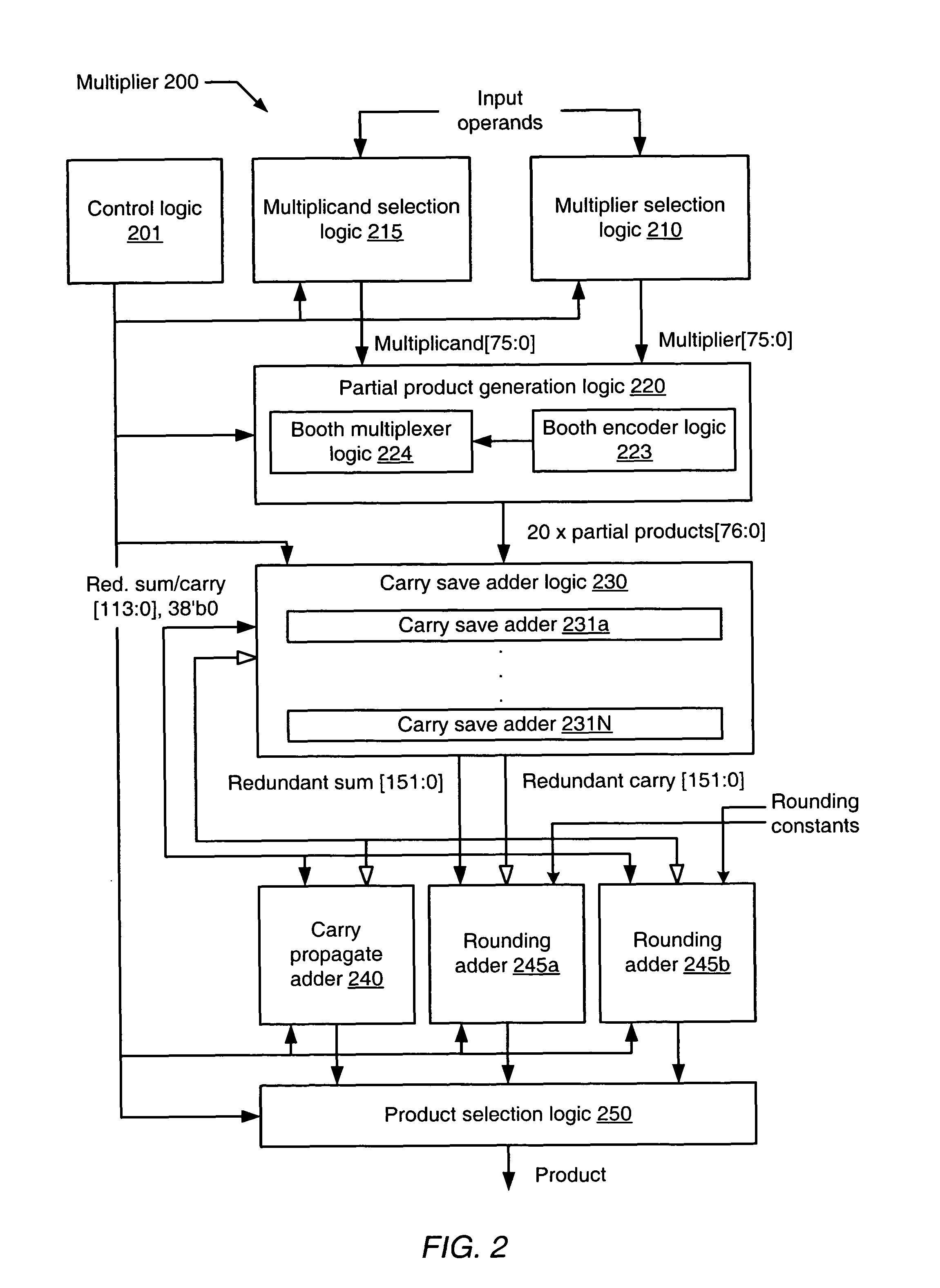

Apparatus and method for multiple pass extended precision floating point multiplication

Inactive | US8019805B1 | Computations using contact-making devices | Computation using non-contact making devices | Computational science | Carry-save adder

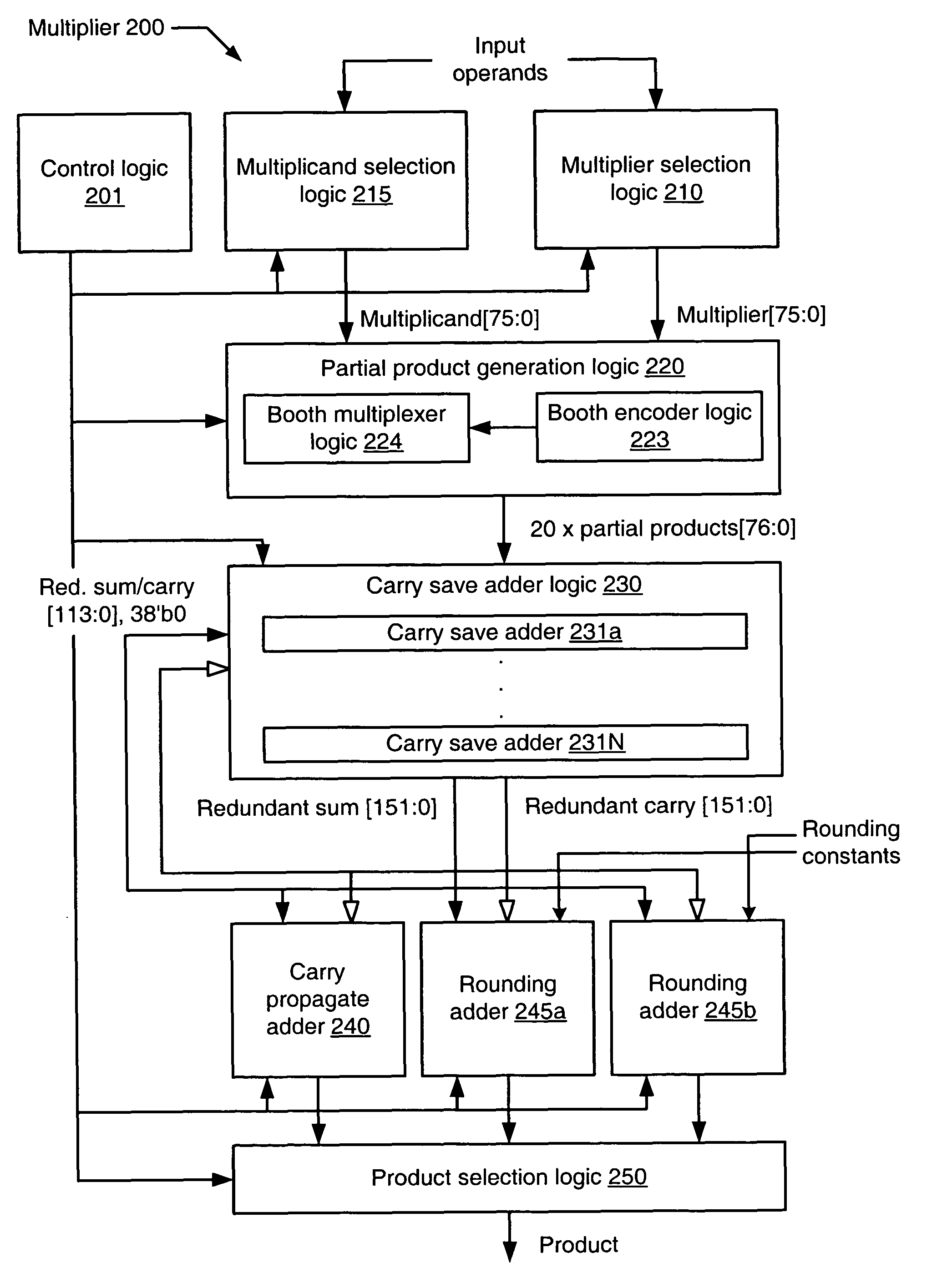

A floating point multiplier circuit includes partial product generation logic configured to generate a plurality of partial products from multiplicand and multiplier values. The plurality of partial products corresponds to a first and second portion of the multiplier value during respective first and second partial product execution phases. The multiplier also includes a plurality of carry save adders configured to accumulate the plurality of partial products generated during the first and second partial product execution phases into a redundant product during respective first and second carry save adder execution phases. The multiplier further includes a first carry propagate adder coupled to the plurality of carry save adders and configured to reduce a first and second portion of the redundant product to a multiplicative product during respective first and second carry propagate adder phases. The first carry propagate adder phase begins after the second carry save adder execution phase completes.

Owner:GLOBALFOUNDRIES INC

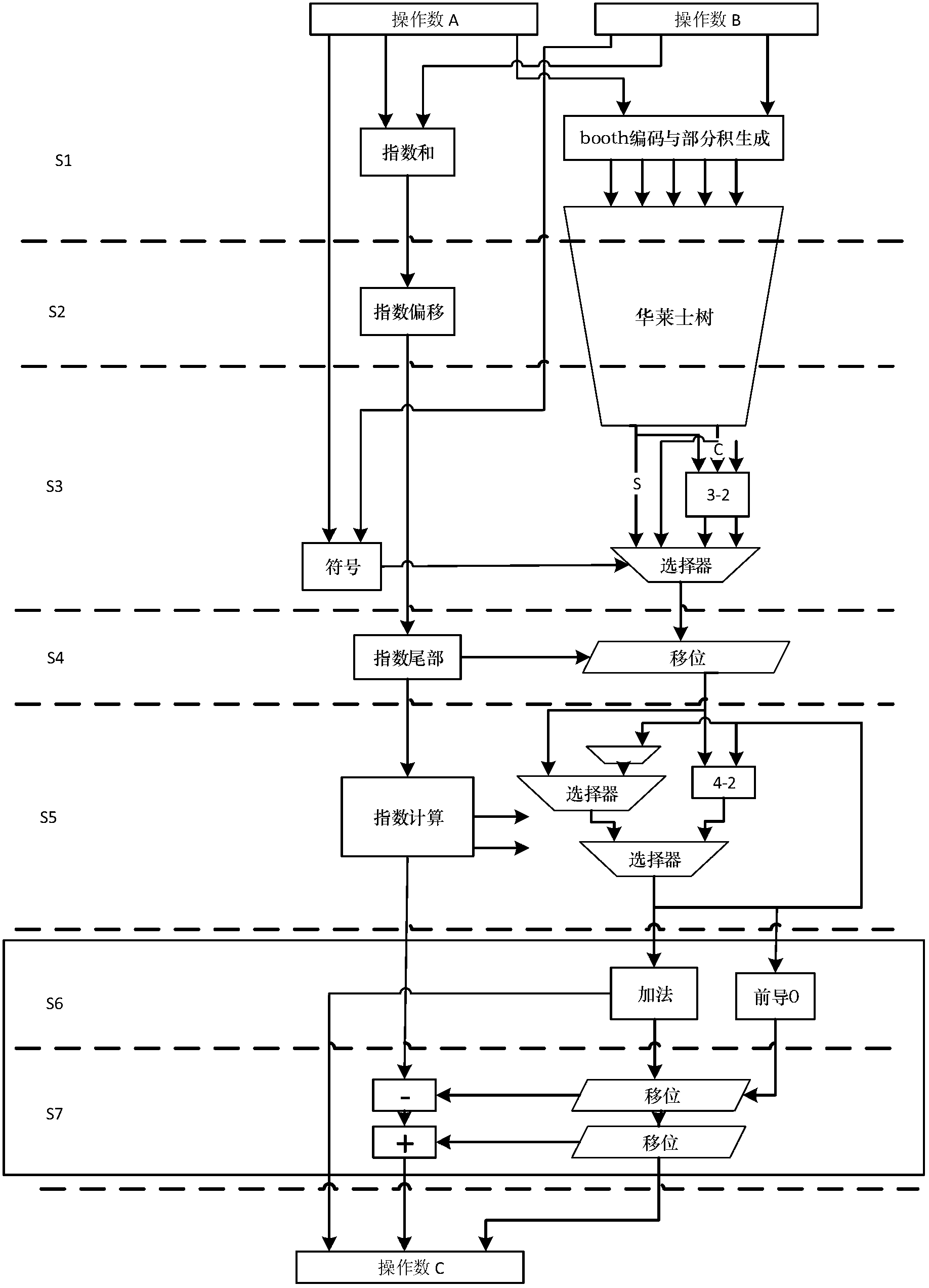

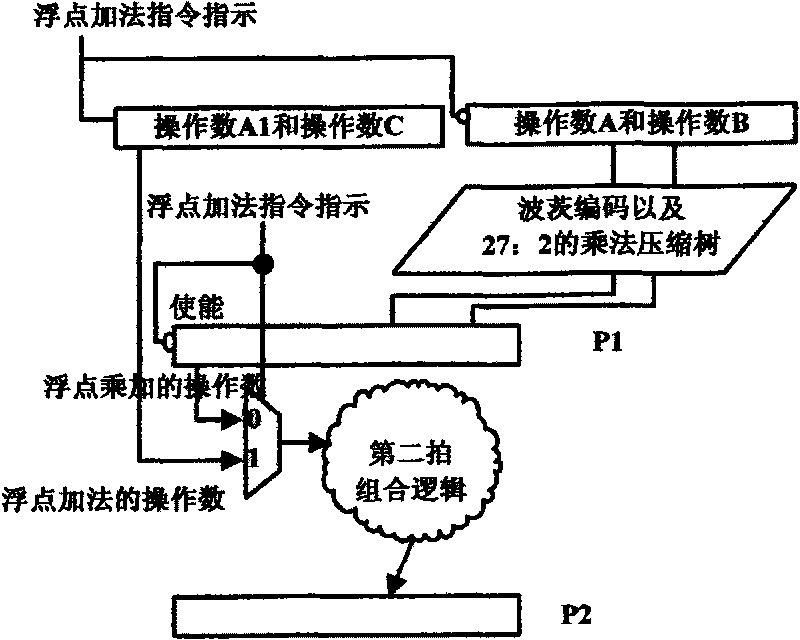

Implementation method of a low-power, high-throughput floating-point multiply-accumulate unit

Inactive | CN103176767A | Avoid frequent access operations | Reduce power consumption | Computation using non-contact making devices | Production line | Processor register

The invention discloses a method for implementing a low-power, high-throughput floating-point multiply-accumulate unit. The method comprises the following steps: (1) when a vector dot product is calculated, one pair of operands A and B is input in each of N cycles, and the floating-point multiplication of A and B is performed by the first, second and third pipeline stages; (2) in the fourth pipeline stage the product is transformed so that the mantissa bit width is increased and the exponent bit width is reduced; (3) in the fifth pipeline stage the transformed product is accumulated, with one accumulation per cycle; (4) the sixth and seventh pipeline stages recover the product, and the final accumulated result is output at cycle N + 6. The method can compute a vector dot product of arbitrary length N with one multiply-accumulate per cycle, which avoids frequent accesses to the processor's registers. The operation completes in N + 6 cycles, is compatible with both single-precision and double-precision floating-point numbers, and effectively reduces the power consumption of floating-point operations.

Owner:ZHEJIANG UNIV

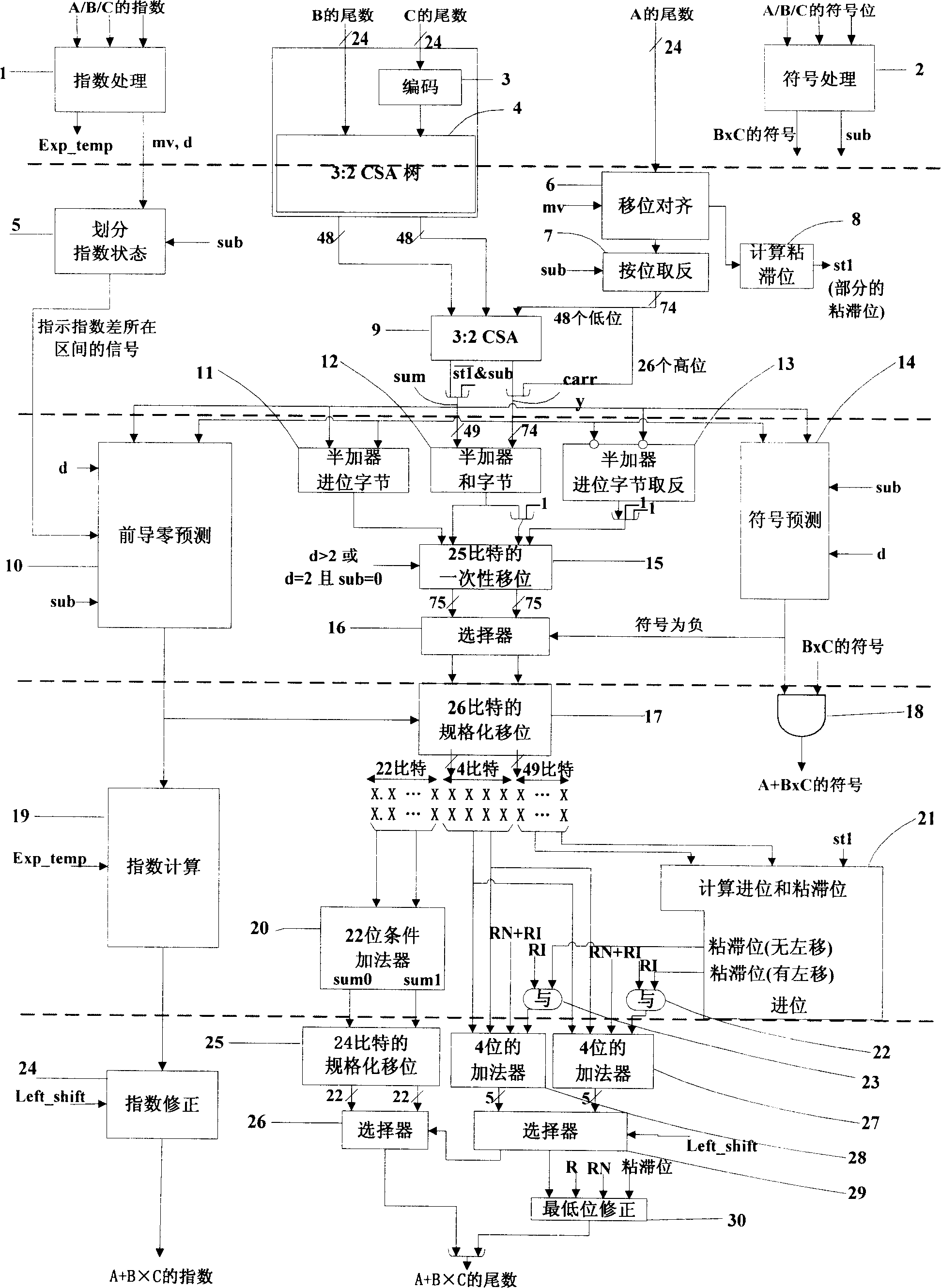

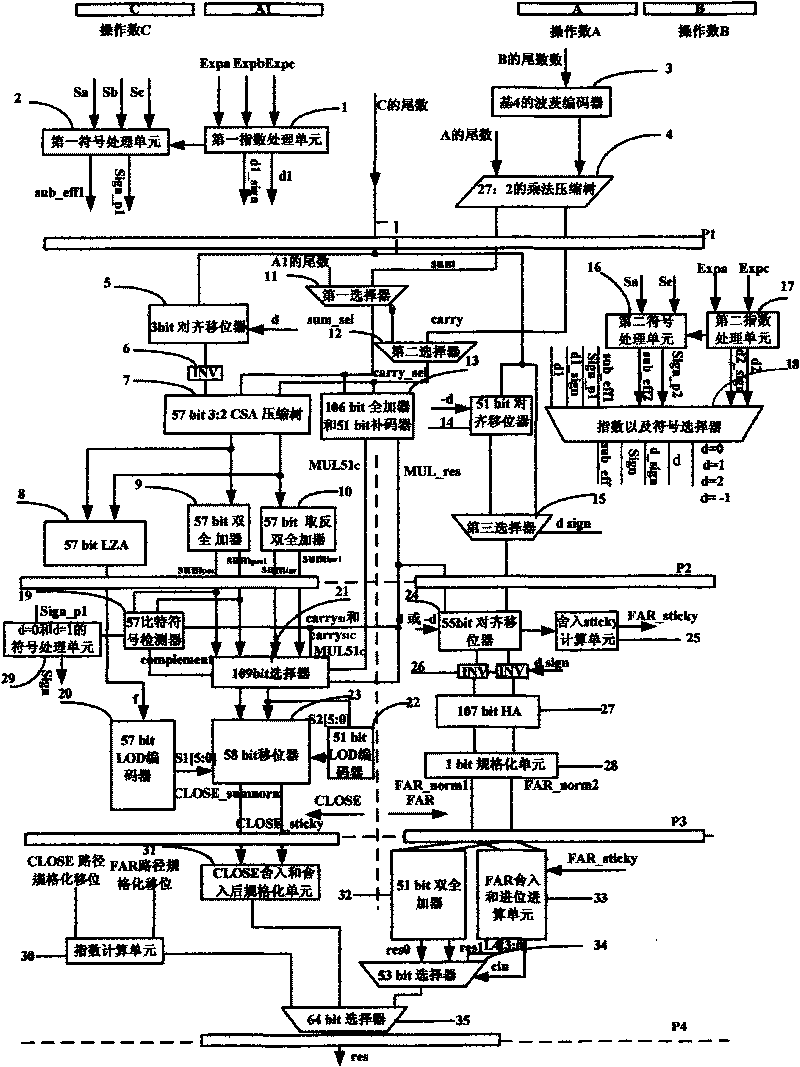

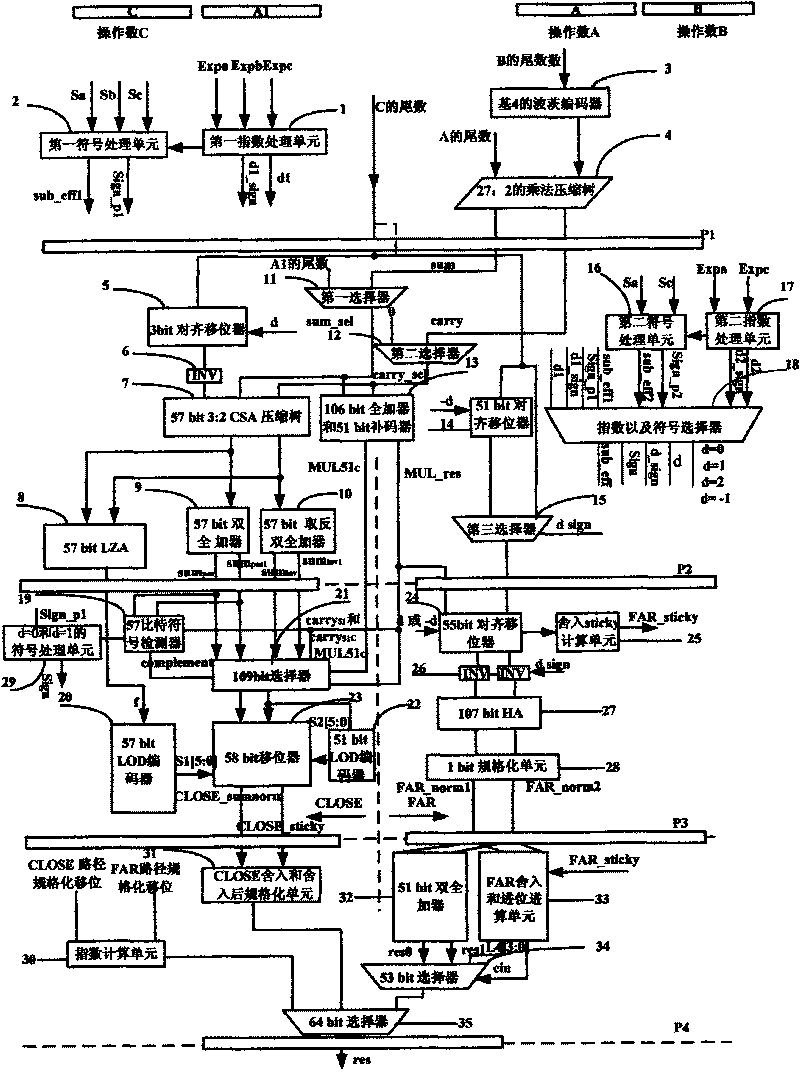

64-bit floating-point multiply accumulator and pipeline processing method for its floating-point operations

The invention discloses a 64-bit floating-point multiply accumulator and a pipeline processing method for its floating-point operations. A first exponent processing unit of the multiply accumulator calculates the exponent difference in floating-point multiply-add and floating-point multiply operations; a first sign processing unit determines the sign of the results of those operations and whether effective subtraction is performed; a second exponent processing unit processes the exponents of the operands when only an addition is performed; a second sign processing unit processes the signs of the operands when only an addition is performed; and an exponent and sign selector selects either the results of the first exponent and sign processing units or the results of the second exponent and sign processing units, and evaluates the exponent difference d. If d is 0, 1, 2 or −1 and effective subtraction is performed, the operation goes through a CLOSE path; otherwise it goes through a FAR path, which reduces the delay of the multiply accumulator.

Owner:LOONGSON TECH CORP
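
The path-selection rule at the end of this abstract is small enough to write down directly. The sketch below only restates that rule; the function names are hypothetical, and the definition of effective subtraction used here (operands of opposite sign being added) is the usual one rather than something taken from the patent text.

```python
def is_effective_subtraction(sign_addend: int, sign_product: int) -> bool:
    """Adding two values of opposite sign subtracts their magnitudes."""
    return sign_addend != sign_product


def select_path(exp_diff: int, effective_subtraction: bool) -> str:
    """Return "CLOSE" when d is in {-1, 0, 1, 2} and magnitudes are subtracted."""
    if effective_subtraction and exp_diff in (-1, 0, 1, 2):
        return "CLOSE"
    return "FAR"
```

The CLOSE path is the case where massive cancellation can occur, so it needs a large normalization shift but only a small alignment shift; every other case can take the FAR path.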

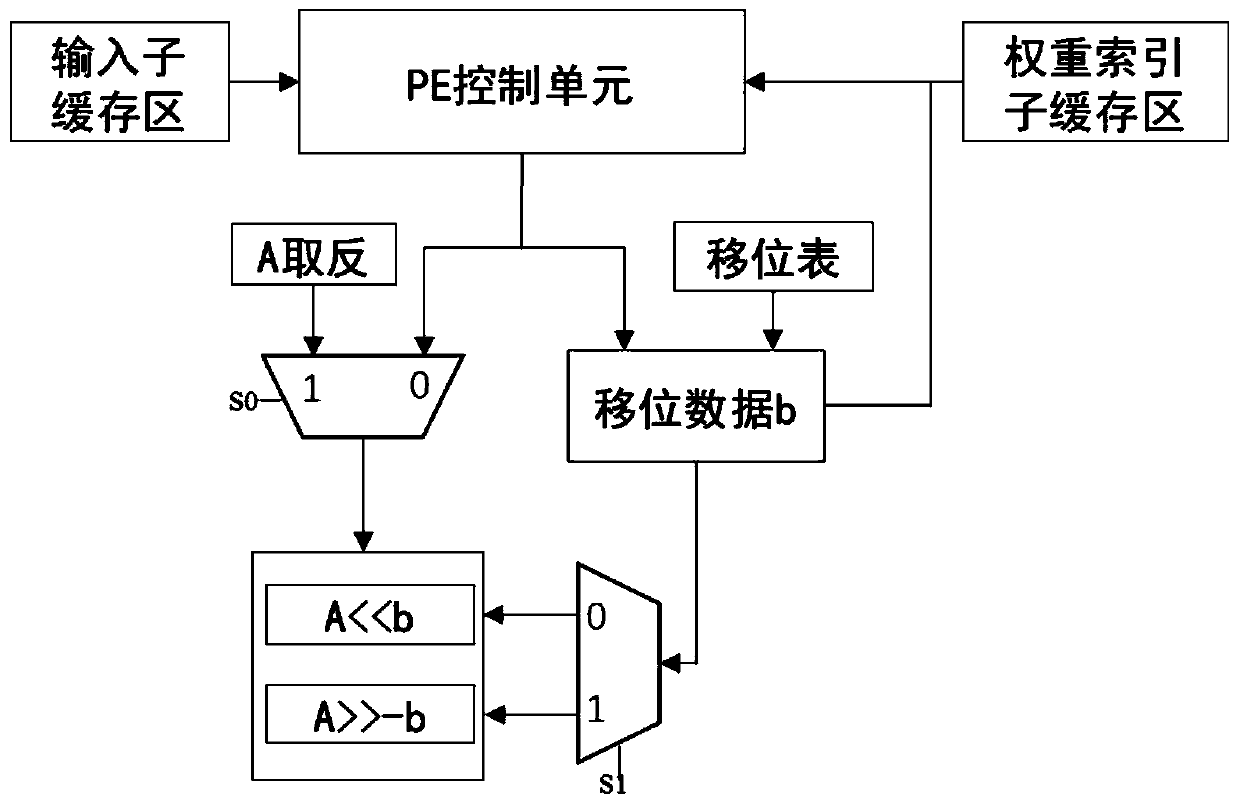

Approximate floating-point multiplier for neural network processor and floating-point multiplication

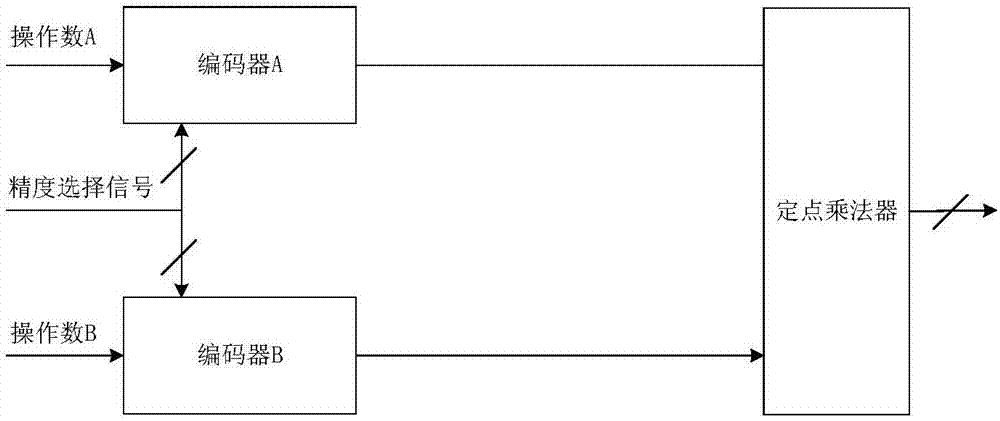

Active | CN107273090A | Improve work efficiency | Improve performance | Digital data processing details | Physical realisation | Operand | Approximate computing

The invention discloses an approximate floating-point multiplier for a neural network processor and a floating-point multiplication method. When the multiplier multiplies the fractional parts of the operands, it takes some of the high-order bits of each operand's fractional part according to a specified precision and adds a 1 in front of and behind the selected bits to obtain two new fractional parts. The two new fractional parts are multiplied to obtain an approximate fractional part of the product, and zeros are appended to the low-order end of the normalized approximate fractional part so that it has the same number of bits as the fractional part of the operands, giving the fractional part of the product. Because the multiplier uses approximate computation and selects a different number of fractional bits depending on the required precision, it lowers the energy consumed by multiplication and increases multiplication speed, improving the performance of the neural network processing system.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
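
A rough software model of this truncate-and-multiply scheme, for float32 operands, might look like the following. It rests on two assumptions that the abstract does not spell out: the 1 added in front is taken to be the implicit leading bit, and the 1 added behind is taken to be a single bit appended below the kept fraction bits. The function names and the parameter `k` (number of fraction bits kept) are hypothetical, and only normal inputs are handled.

```python
import struct


def _to_bits(x: float) -> int:
    return struct.unpack("<I", struct.pack("<f", x))[0]


def _from_bits(b: int) -> float:
    return struct.unpack("<f", struct.pack("<I", b))[0]


def _short_significand(bits: int, k: int) -> int:
    """Leading 1, the top k fraction bits, then a trailing 1 (k + 2 bits)."""
    top = (bits & 0x7FFFFF) >> (23 - k)
    return (1 << (k + 1)) | (top << 1) | 1


def approx_mul(x: float, y: float, k: int = 4) -> float:
    """Approximate float32 product using k high fraction bits per operand."""
    if not 0 <= k <= 23:
        raise ValueError("k must be between 0 and 23")
    bx, by = _to_bits(x), _to_bits(y)
    ex, ey = (bx >> 23) & 0xFF, (by >> 23) & 0xFF
    if ex in (0, 0xFF) or ey in (0, 0xFF):
        raise ValueError("this sketch handles normal inputs only")
    sign = (bx ^ by) >> 31
    prod = _short_significand(bx, k) * _short_significand(by, k)
    frac_bits = 2 * (k + 1)        # fraction bits if the product is in [1, 2)
    exp = ex + ey - 127
    if prod >> (frac_bits + 1):    # product in [2, 4): normalize
        frac_bits += 1
        exp += 1
    if not 1 <= exp <= 254:
        raise OverflowError("result leaves the normal float32 range")
    frac = prod & ((1 << frac_bits) - 1)   # drop the leading 1
    if frac_bits <= 23:
        frac <<= 23 - frac_bits            # zero-fill the low bits
    else:
        frac >>= frac_bits - 23
    return _from_bits((sign << 31) | (exp << 23) | frac)
```

With `k = 4`, `approx_mul(1.5, 2.5)` gives 3.923828125 against an exact product of 3.75, an error of under 5 percent from a 6-bit by 6-bit integer multiply.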

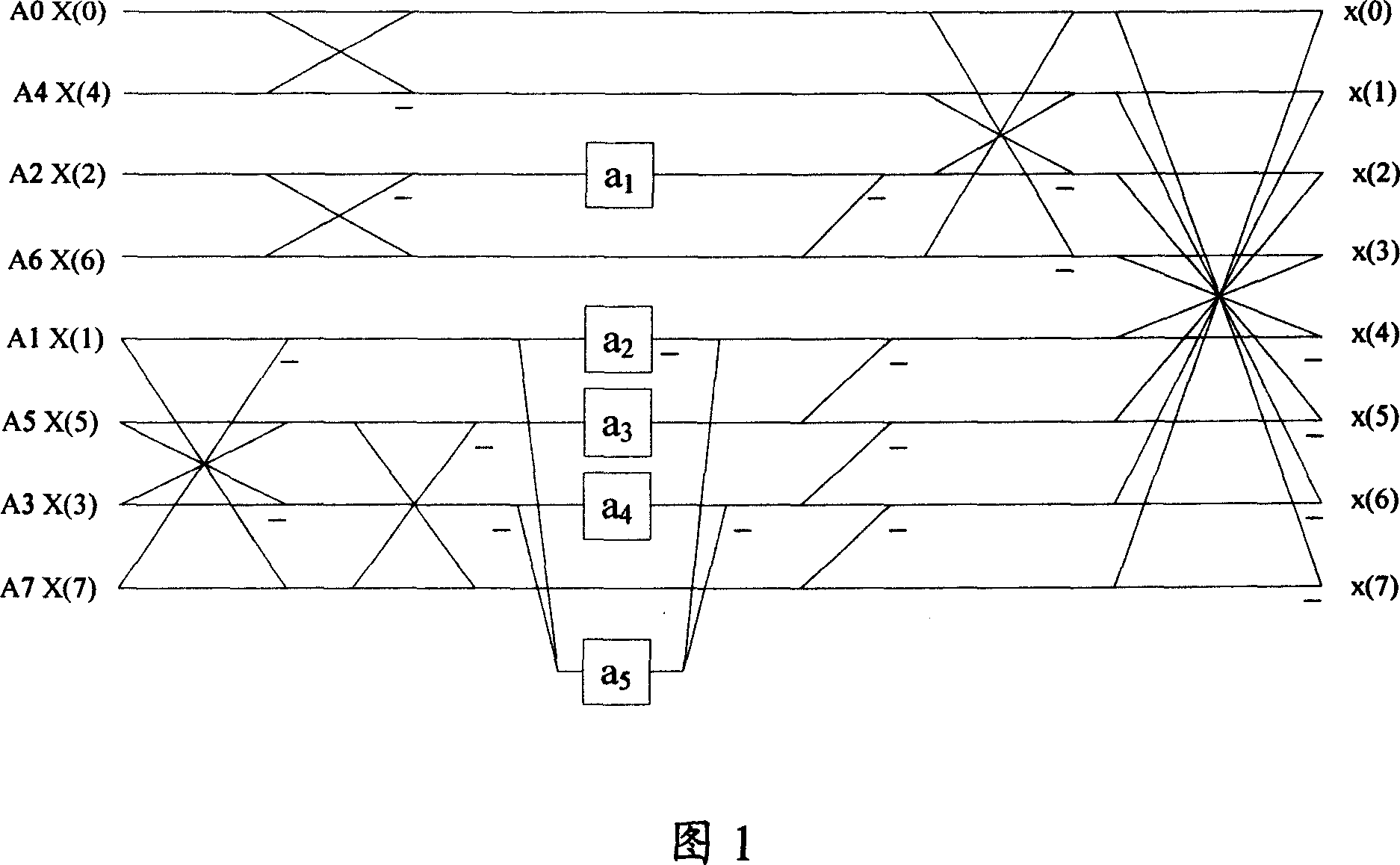

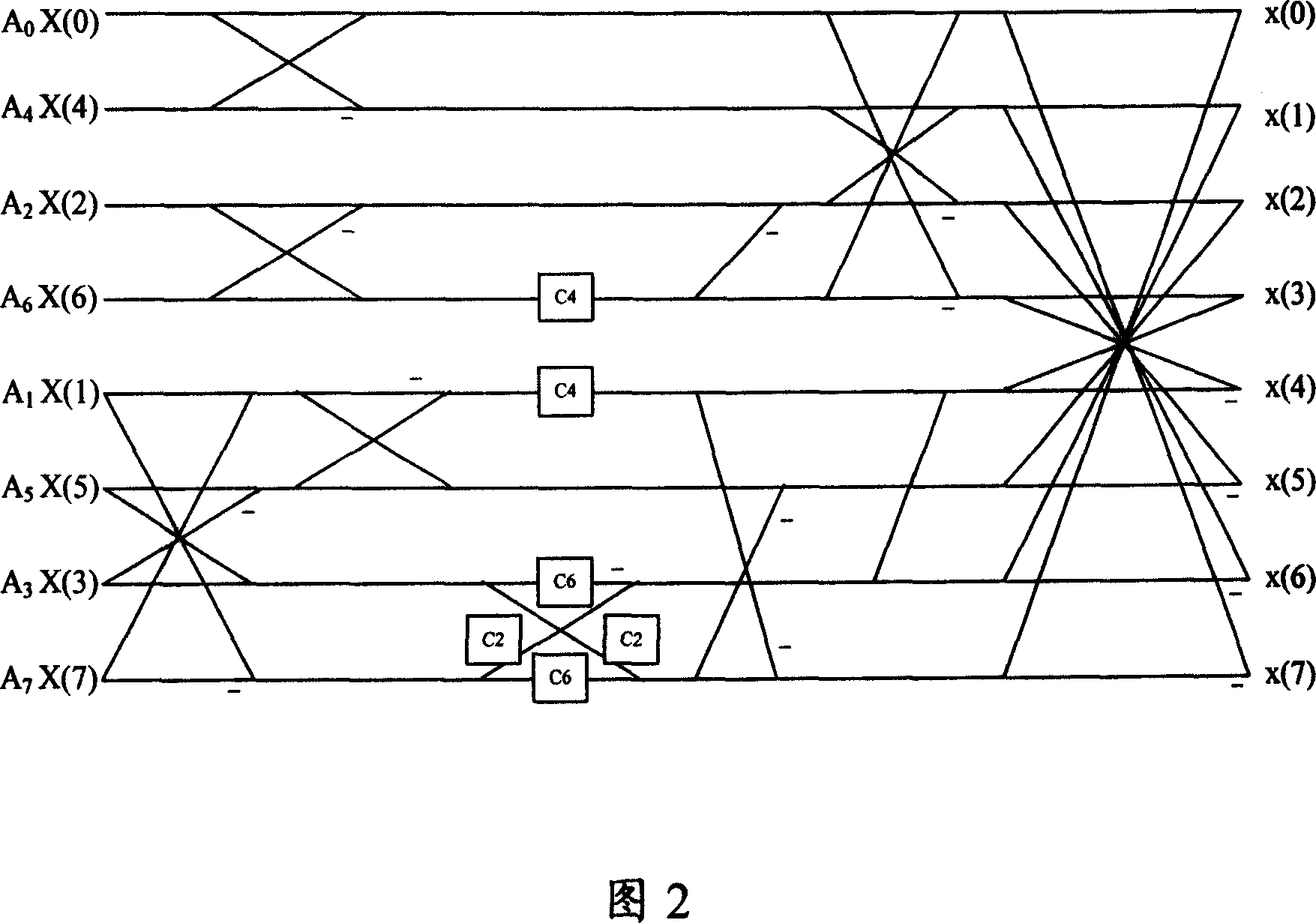

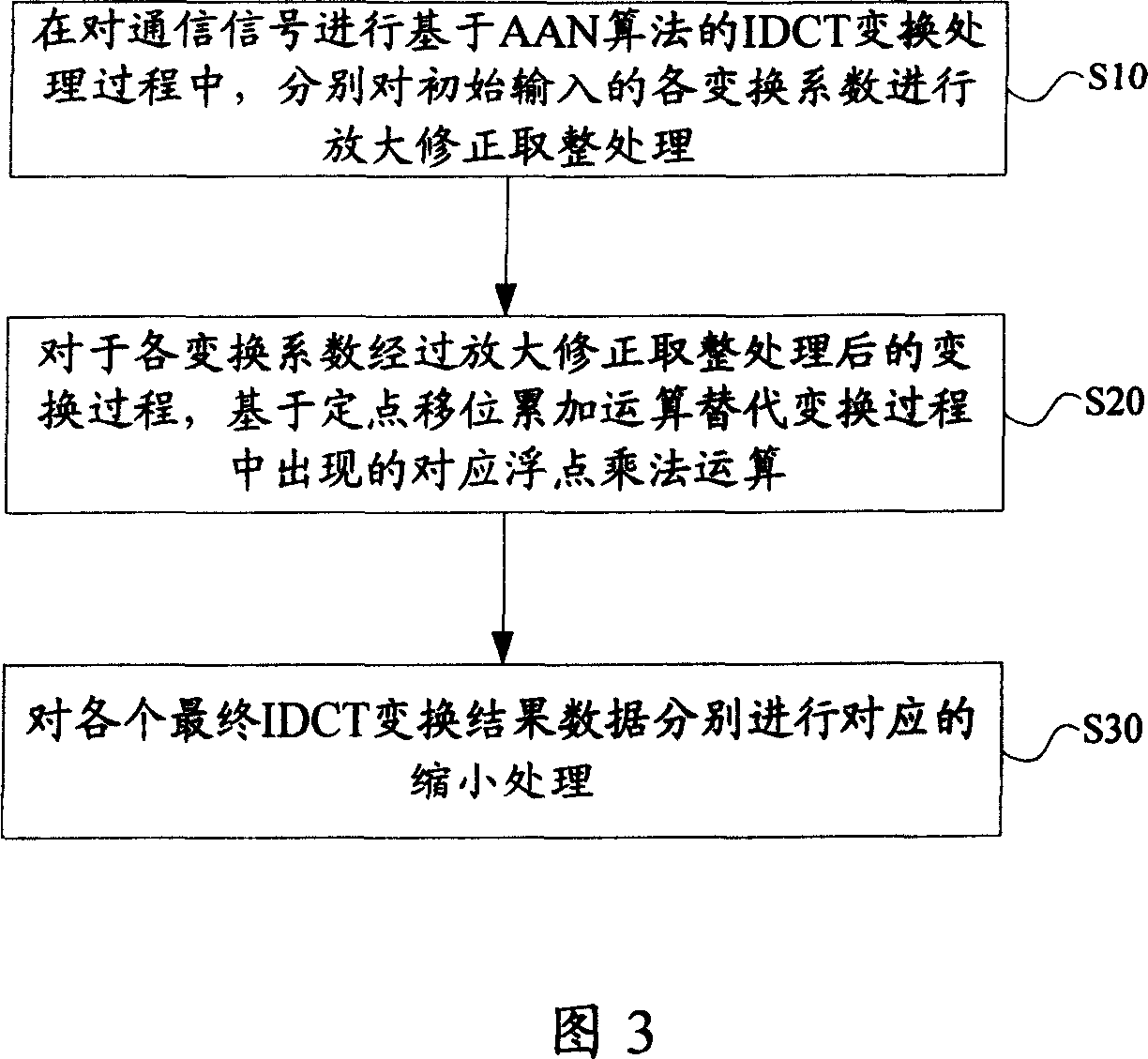

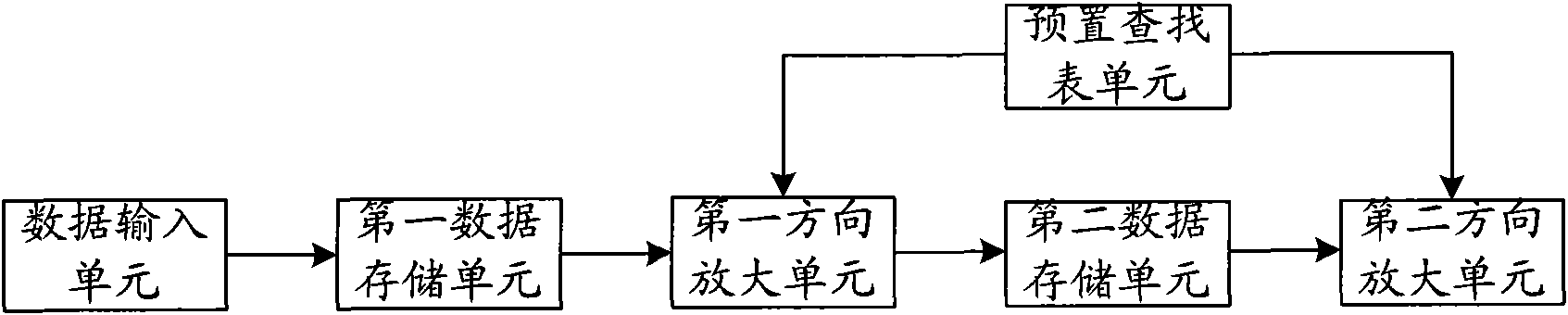

Discrete cosine inverse transformation method and its device

Inactive | CN101047849A | Guarantee the accuracy of the transformation result | Speed up transform processing | Pulse modulation television signal transmission | Digital video signal modification | Floating point multiplication | Computer science

The invention discloses an inverse discrete cosine transform method. When digital signals are inverse-transformed using the AAN algorithm, the initially input transform coefficients are scaled up, corrected and rounded, so that the floating-point multiplications that appear in the transform can be replaced by fixed-point shift-and-accumulate operations; the final inverse transform results are then scaled back down accordingly. The invention also discloses a corresponding device.

Owner:HUAWEI TECH CO LTD
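
To make the shift-and-accumulate substitution concrete, here is a minimal sketch of one constant multiplication done that way. The constant cos(π/4) ≈ 0.7071 does appear in AAN-style transforms, but the 8-bit scale factor and the function name are assumptions chosen for illustration; the patent's actual scaling and correction factors are not given in the abstract.

```python
def mul_cos_pi_4(x: int) -> int:
    """Approximate x * cos(pi/4) using shifts and adds only.

    cos(pi/4) is approximated as 181 / 256, and 181 = 128 + 32 + 16 + 4 + 1,
    so x * 181 is a sum of shifted copies of x. Adding 128 before the final
    shift rounds the result instead of truncating it.
    """
    acc = (x << 7) + (x << 5) + (x << 4) + (x << 2) + x  # x * 181
    return (acc + 128) >> 8                               # scale back down
```

For example, `mul_cos_pi_4(1000)` returns 707, close to the exact value 707.1, without any floating-point multiply.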

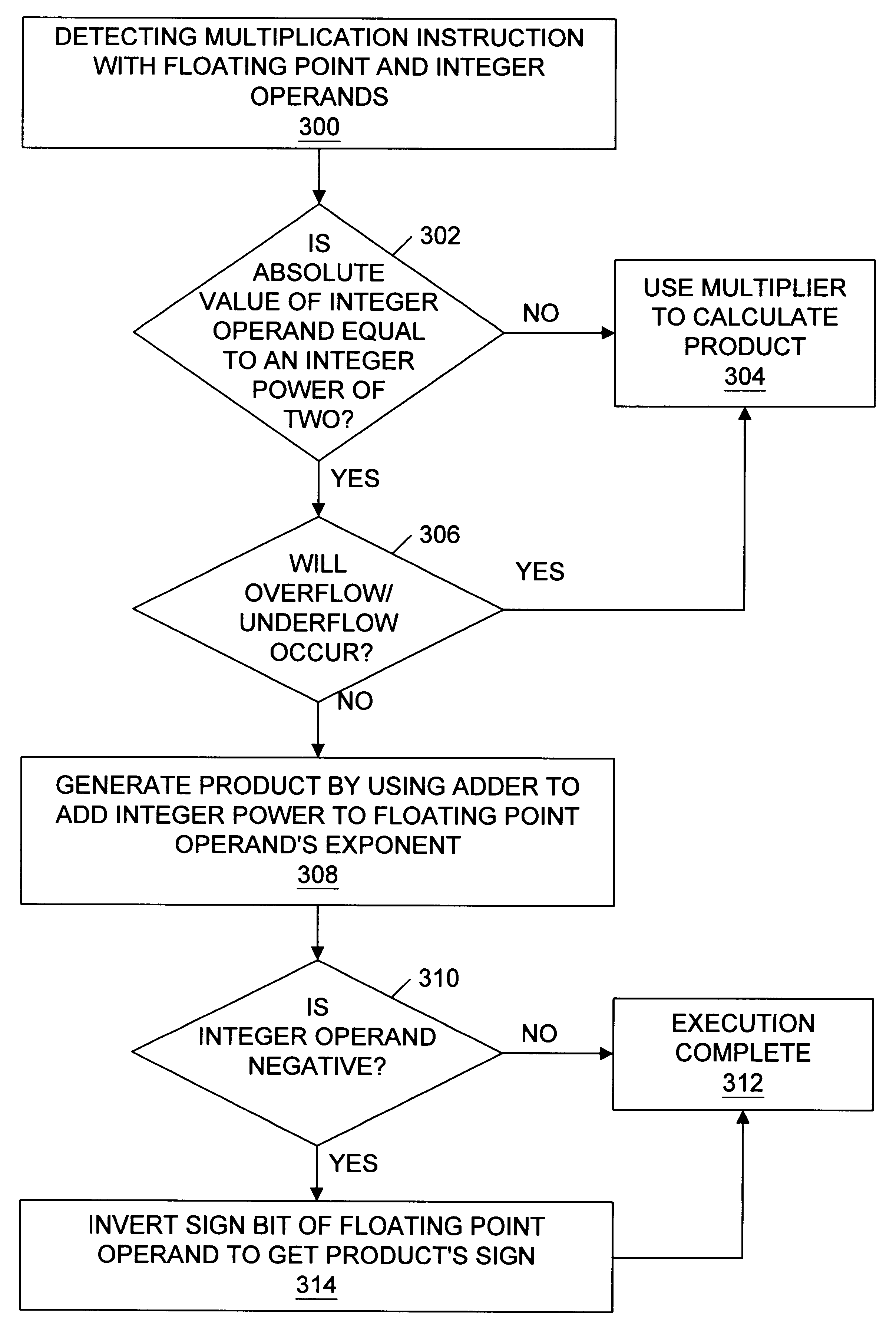

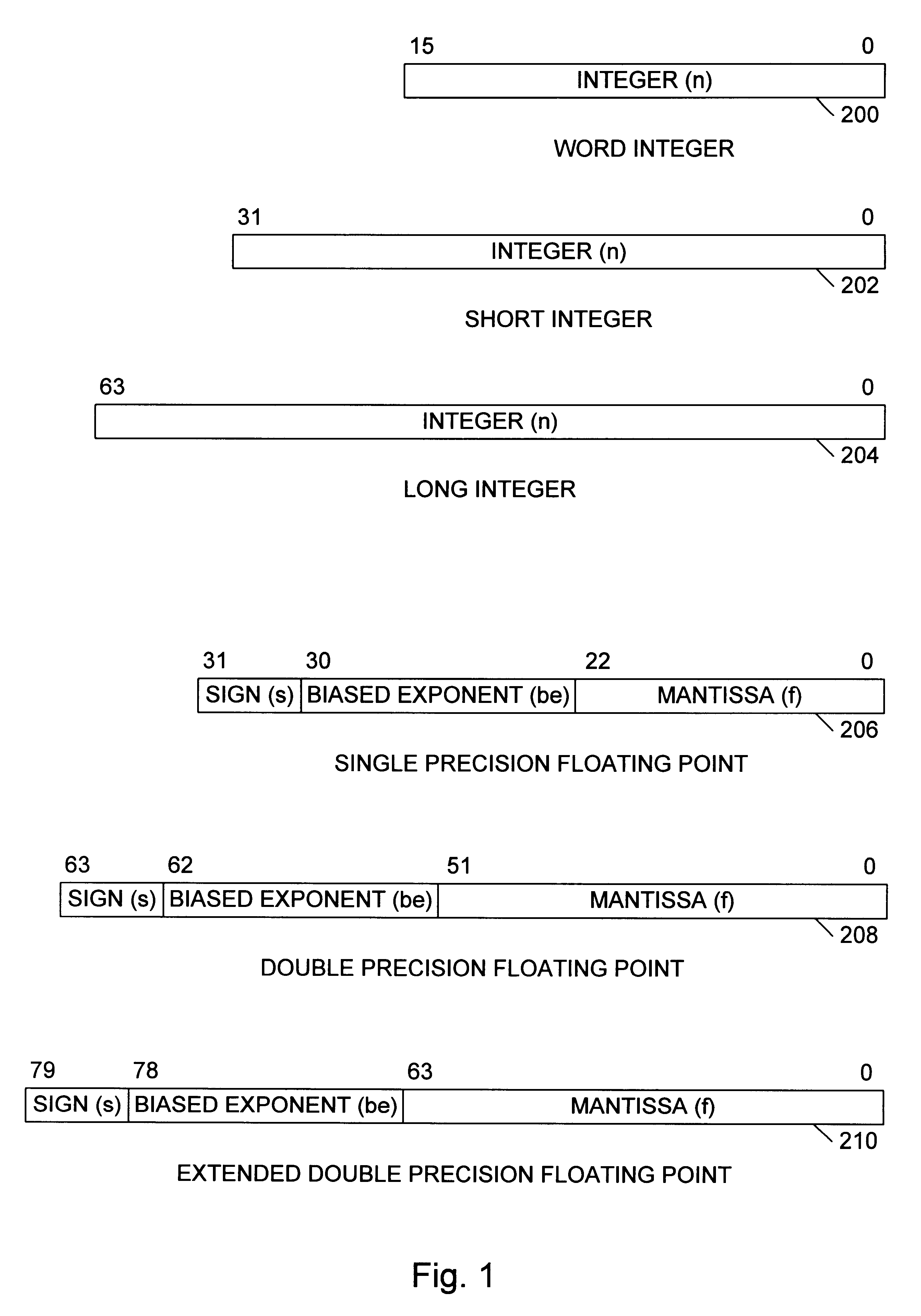

Fast multiplication of floating point values and integer powers of two

Inactive | US6233595B1 | Computations using contact-making devices | Computation using non-contact making devices | Sign bit | Operand

A method for performing fast multiplication in a microprocessor is disclosed. The method comprises detecting multiplication operations that have a floating point operand and an integer operand, wherein the integer operand is an integer power of two. Once detected, a multiplication operation meeting these criteria may be executed by using an integer adder to sum the integer power and the floating point operand's exponent to form a product exponent. The bias of the integer operand's exponent may also be subtracted. A product mantissa is simply copied from the floating point operand's mantissa. The floating point operand's sign bit may be inverted to form the product's sign bit if the integer operand is negative. Advantageously, the product is generated using integer addition, which is faster than floating point multiplication. The method may be implemented in hardware or software.

Owner:ADVANCED MICRO DEVICES INC
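
The exponent-add trick described in this abstract can be shown in software on the float32 bit layout. The sketch below is an assumption-laden illustration, not the patented circuit: the function name is hypothetical, the power `k` is passed in directly rather than detected from an integer operand, and zeros, subnormals, infinities and NaNs are rejected instead of handled.

```python
import struct


def mul_by_pow2(x: float, k: int, negative: bool = False) -> float:
    """Multiply float32 x by +2**k (or -2**k) using only an integer add."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    sign = bits >> 31
    exponent = (bits >> 23) & 0xFF
    mantissa = bits & 0x7FFFFF
    if exponent in (0, 0xFF):
        raise ValueError("this sketch handles normal inputs only")
    new_exponent = exponent + k          # the integer add replaces the multiply
    if not 1 <= new_exponent <= 254:
        raise OverflowError("result leaves the normal float32 range")
    if negative:
        sign ^= 1                        # negative power-of-two operand flips the sign
    out = (sign << 31) | (new_exponent << 23) | mantissa  # mantissa copied as-is
    return struct.unpack("<f", struct.pack("<I", out))[0]
```

For instance, `mul_by_pow2(1.5, 3)` returns 12.0 and `mul_by_pow2(3.0, 1, negative=True)` returns -6.0, with the mantissa bits never touched.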

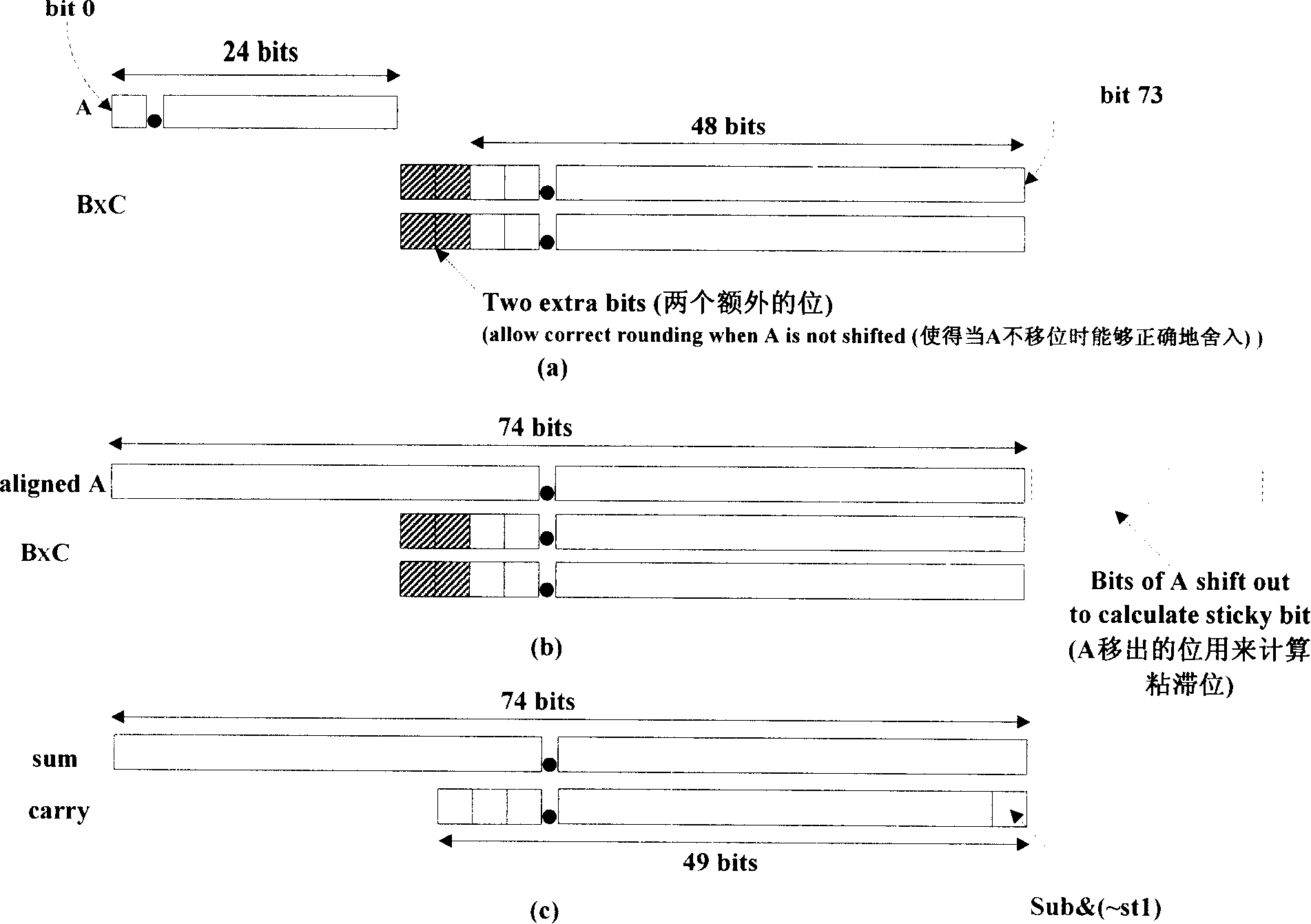

Data processing apparatus and method for performing floating point multiplication

Active | US20060117080A1 | Avoid the need | Computation using non-contact making devices | Binary multiplier | Least significant bit

A data processing apparatus and method are provided for multiplying first and second n-bit significands of first and second floating point operands to produce an n-bit result. The data processing apparatus comprises multiplier logic operable to multiply the first and second n-bit significands to produce a pair of 2n-bit vectors. Half adder logic is then arranged to produce a plurality of carry and sum bits representing a corresponding plurality of most significant bits of the pair of 2n-bit vectors. The first adder logic then performs a first sum operation in order to generate a first rounded result equivalent to the addition of the pair of 2n-bit vectors with a rounding increment injected at a first predetermined rounding position appropriate for a non-overflow condition. To achieve this, the first adder logic uses as the m most significant bits of the pair of 2n-bit vectors the corresponding m carry and sum bits, the least significant of the m carry bits being replaced with a rounding increment value prior to the first adder logic performing the first sum operation. Second adder logic is arranged to perform a second sum operation in order to generate a second rounded result equivalent to the addition of the pair of 2n-bit vectors with a rounding increment injected at a second predetermined rounding position appropriate for an overflow condition. To achieve this, the second adder logic uses as the m−1 most significant bits of the pair of 2n-bit vectors the corresponding m−1 carry and sum bits, with the least significant of the m−1 carry bits being replaced with the rounding increment value prior to the second adder logic performing the second sum operation. The required n-bit result is then derived from either the first rounded result or the second rounded result. The data processing apparatus takes advantage of a property of the half adder form to enable a rounding increment value to be injected prior to performance of the first and second sum operations without requiring full adders to be used to inject the rounding increment value.

Owner:ARM LTD

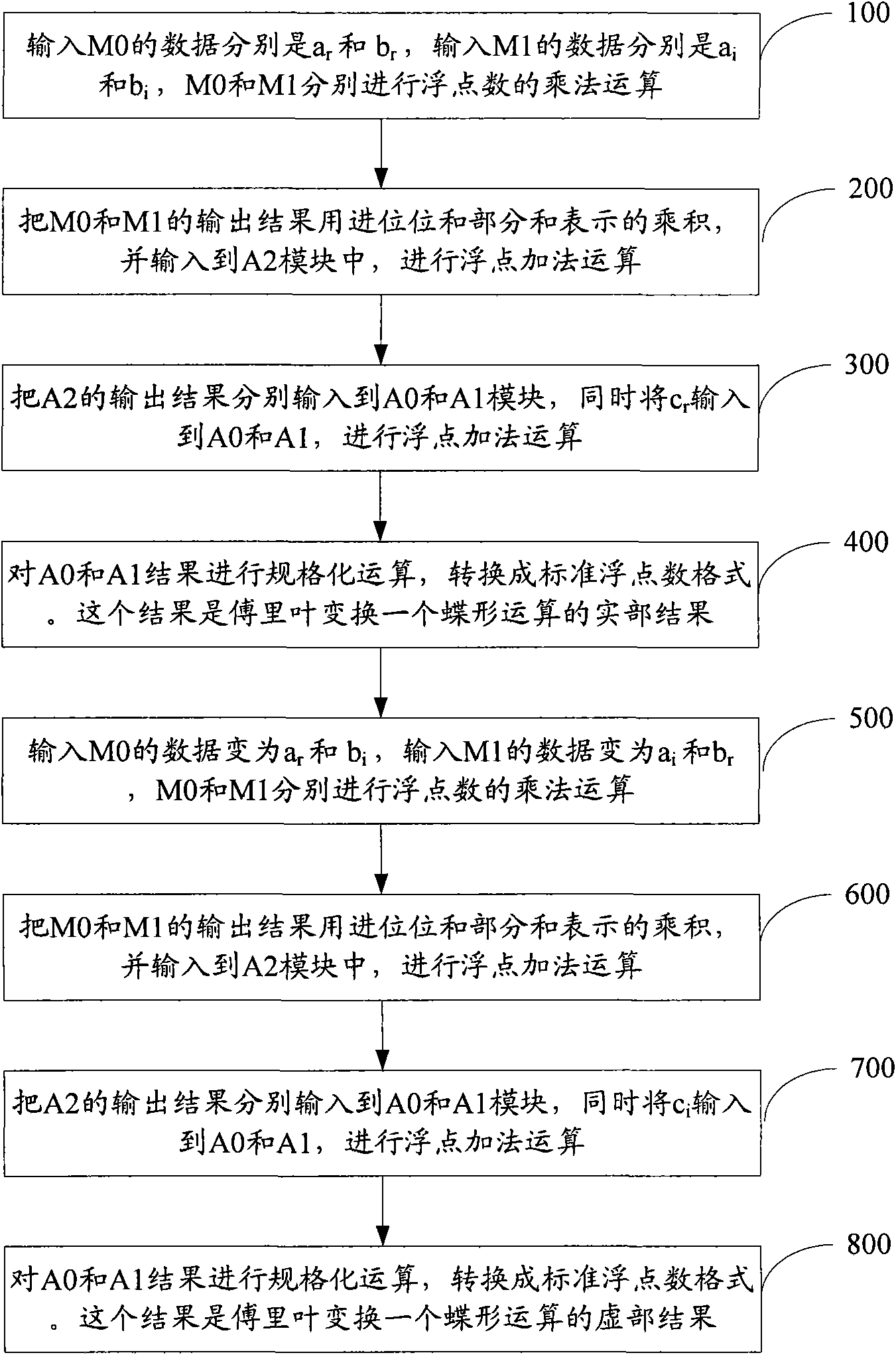

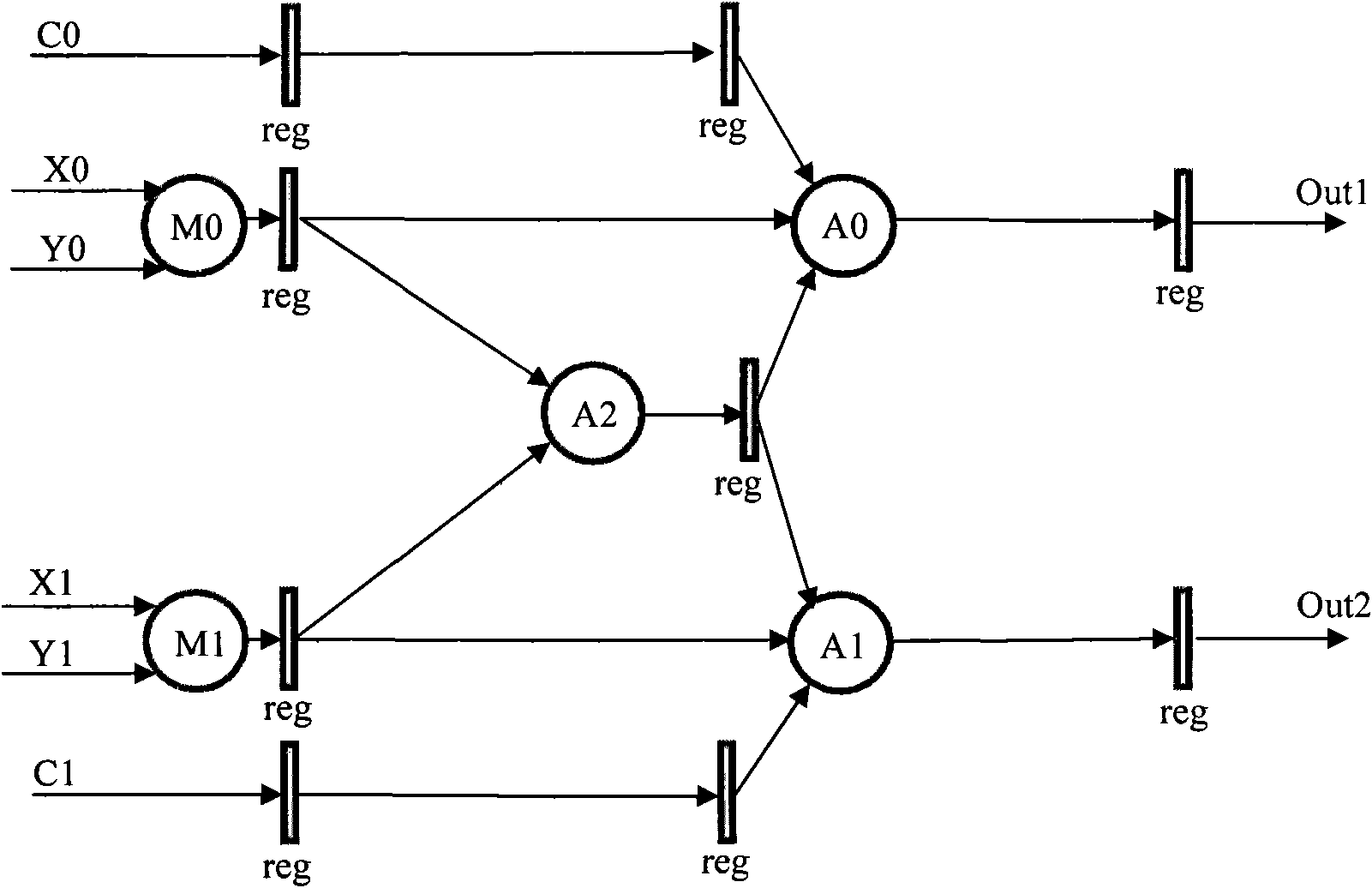

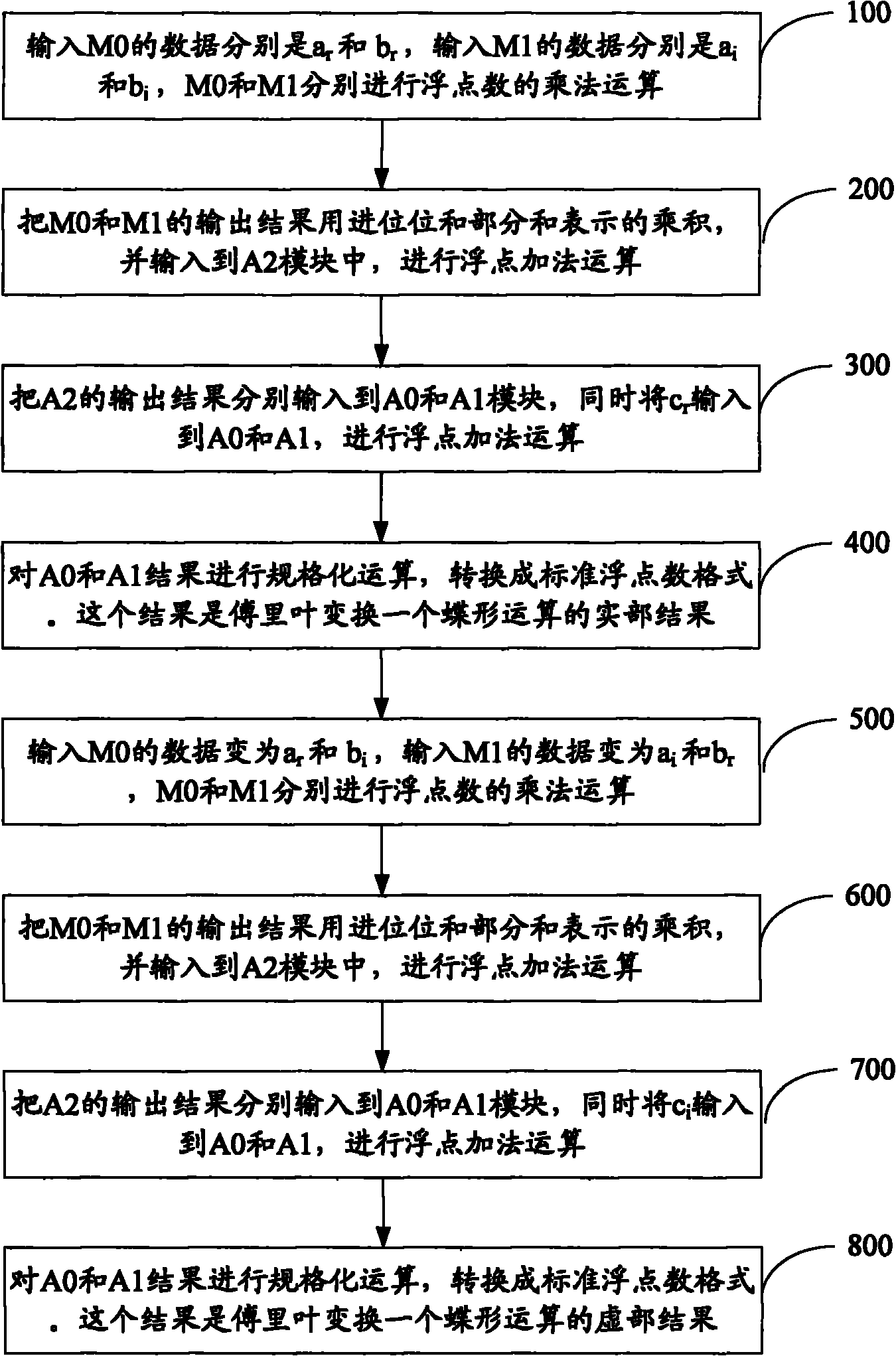

Fusion processing device and method for floating-point number multiplication-addition device

Active | CN102339217A | Simplify operation steps | Save resources | Digital data processing details | Computer module | Parallel computing

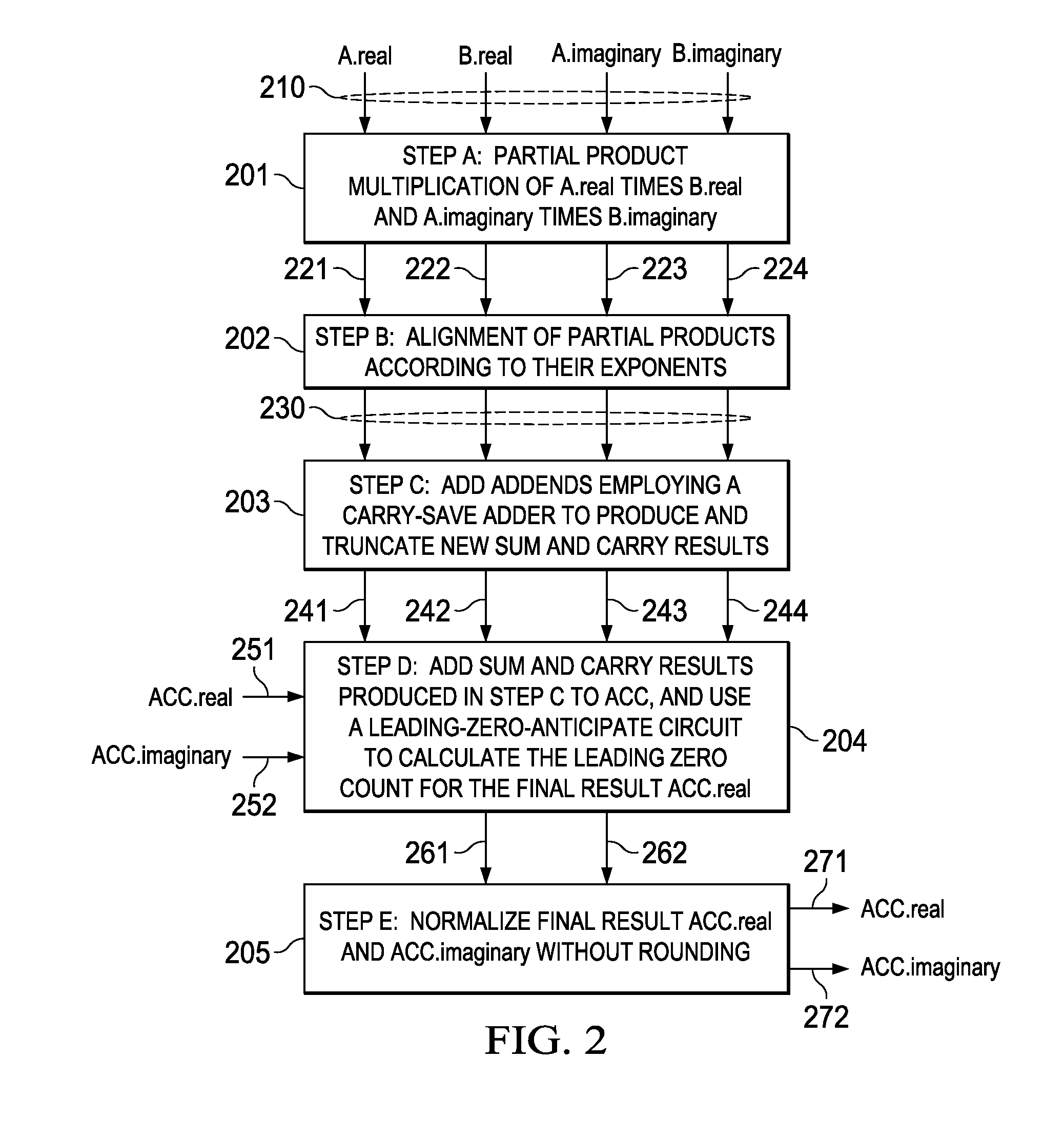

The invention provides a fusion processing device and method for a floating-point multiply-add unit. The method comprises the following steps: inputting the real and imaginary parts of the multiplier and the multiplicand of a floating-point complex number into floating-point multiplication modules M0 and M1 and performing floating-point multiplication, with the products output in carry and partial-sum form; inputting the products into floating-point addition module A2 and performing floating-point addition, with the result again output in carry and partial-sum form; inputting that result into floating-point addition modules A0 and A1 simultaneously; inputting externally supplied addends into A0 and A1 and performing floating-point addition; and outputting the results. The device and method are well suited to the butterfly computation of the Fourier transform; they simplify the operation steps, save hardware resources, and implement complex floating-point multiply-add with fewer resources.

Owner:SANECHIPS TECH CO LTD

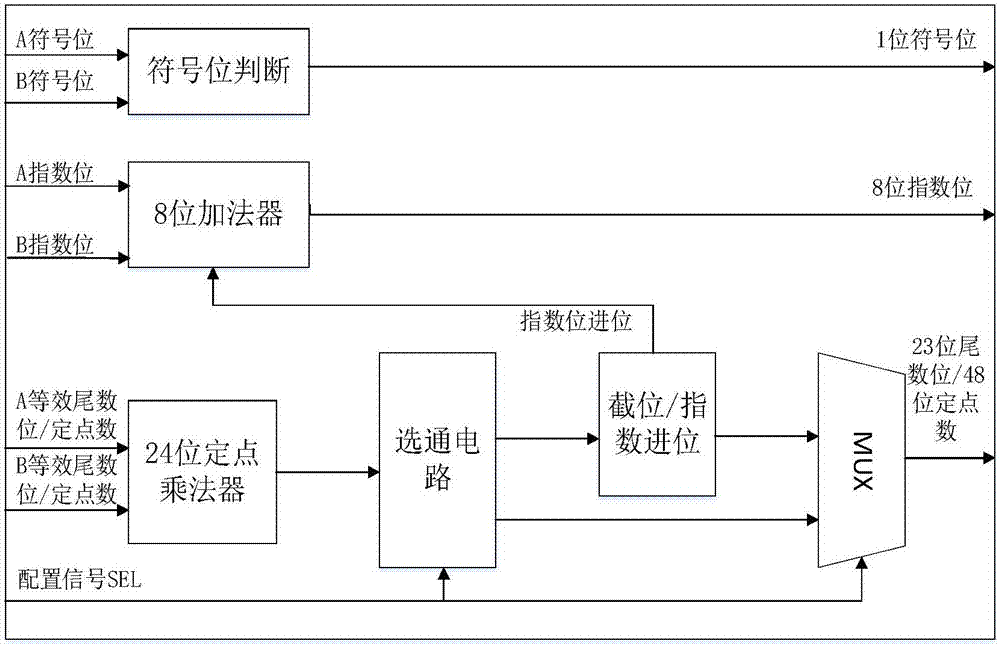

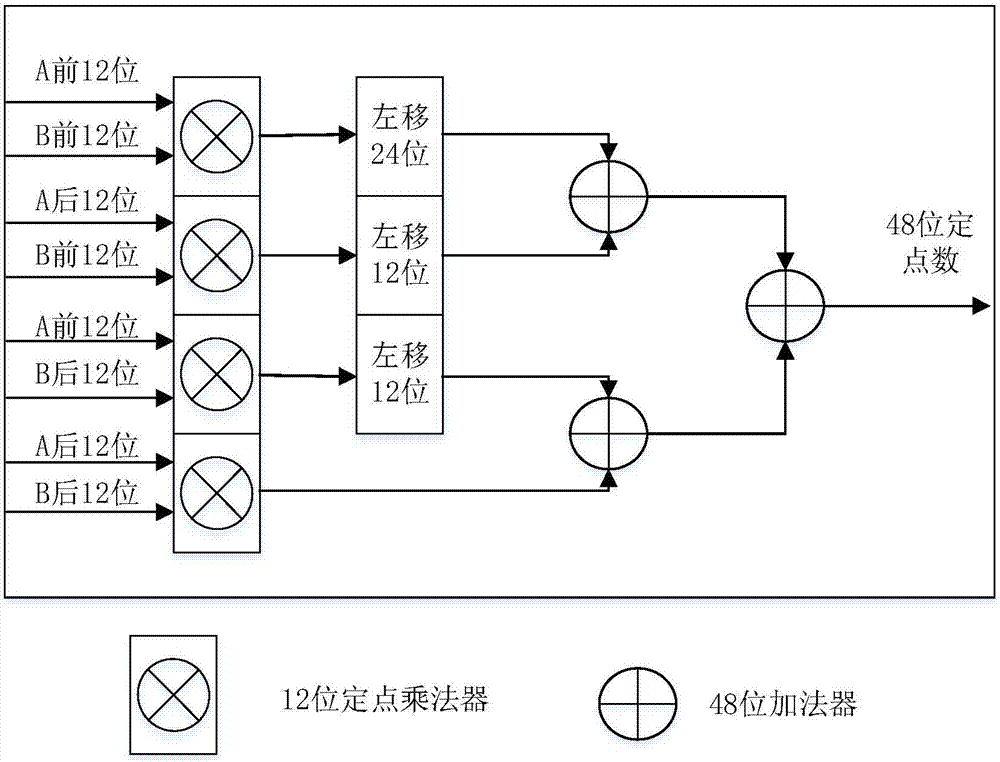

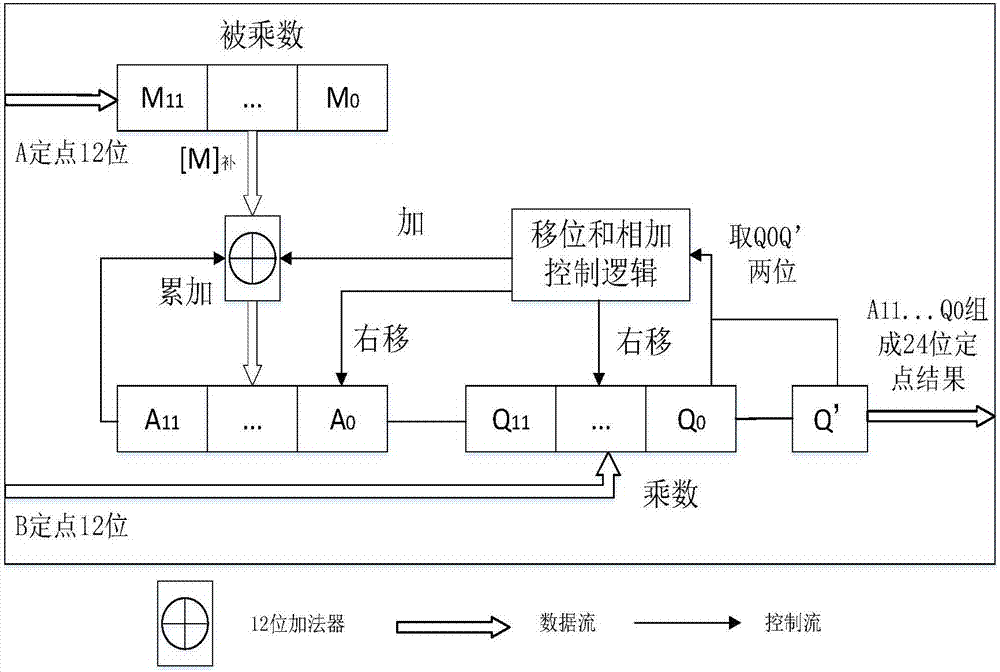

Reconfigurable universal fixed floating-point multiplier

Active | CN106951211A | Improve versatility | Improve computing efficiency | Digital data processing details | Binary multiplier | Floating point multiplication

The invention provides a universal fixed-point/floating-point multiplier that supports both 24-bit fixed-point multiplication and 32-bit single-precision floating-point multiplication. The fixed-point multiplier is separated from the main body structure, and the 24-bit fixed-point multiplier is reconfigured for use as a single-precision floating-point multiplier. The 24-bit fixed-point multiplier consists of four 12-bit multipliers, each of which uses the Booth algorithm and completes its operation through a compact multiply-accumulate structure, which improves multiplication efficiency and reduces resource overhead. Apart from the 24-bit fixed-point multiplier, the design occupies few additional resources, so versatility improves while operation precision and data throughput are maintained.

Owner:NANJING UNIV
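
The way four 12-bit multipliers combine into one 24-bit multiplier follows from splitting each operand into a high and a low half. The sketch below shows that decomposition only; `mul12` stands in for the hardware unit multiplier as a plain integer multiply (the Booth encoding mentioned in the abstract is not modeled), and both function names are hypothetical.

```python
MASK12 = 0xFFF


def mul12(a: int, b: int) -> int:
    """Stand-in for one 12-bit x 12-bit unit multiplier."""
    assert 0 <= a <= MASK12 and 0 <= b <= MASK12
    return a * b


def mul24(a: int, b: int) -> int:
    """24-bit x 24-bit unsigned product built from four 12-bit products."""
    a_hi, a_lo = a >> 12, a & MASK12
    b_hi, b_lo = b >> 12, b & MASK12
    hh = mul12(a_hi, b_hi)
    hl = mul12(a_hi, b_lo)
    lh = mul12(a_lo, b_hi)
    ll = mul12(a_lo, b_lo)
    # Shift each partial product to its weight and add them up.
    return (hh << 24) + ((hl + lh) << 12) + ll
```

A 24-bit operand width matches the float32 significand (23 stored bits plus the implicit leading 1), which is why the same array can serve the single-precision mantissa multiply.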

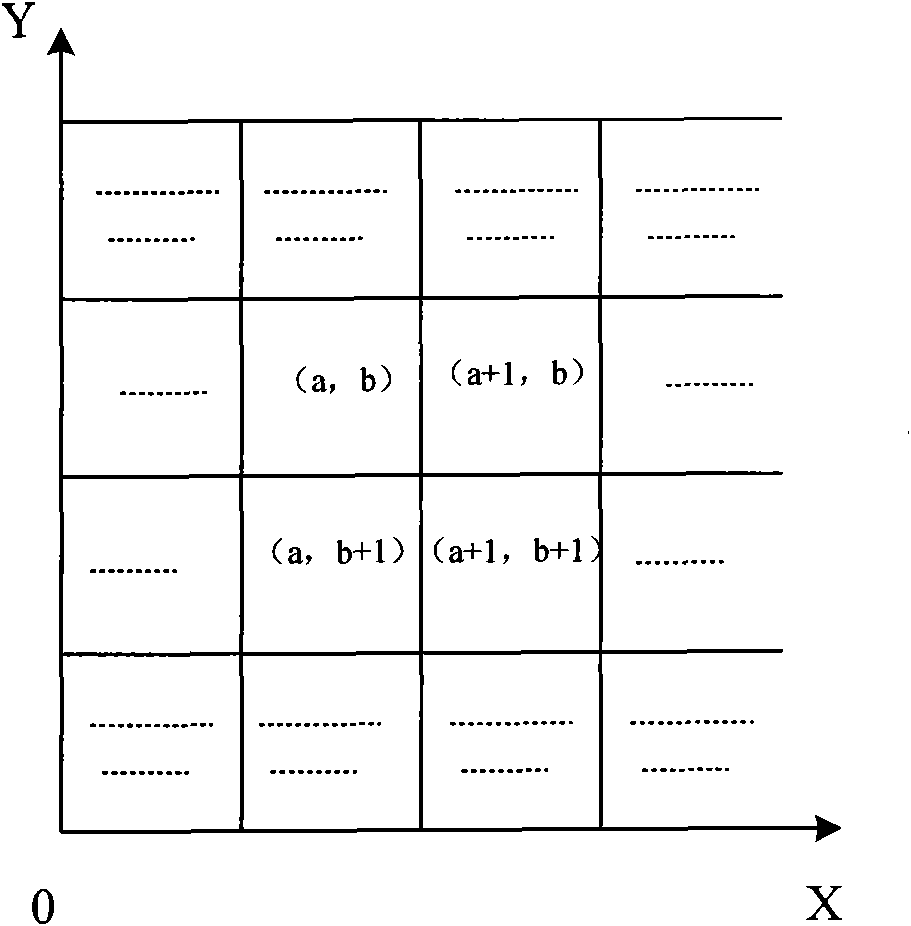

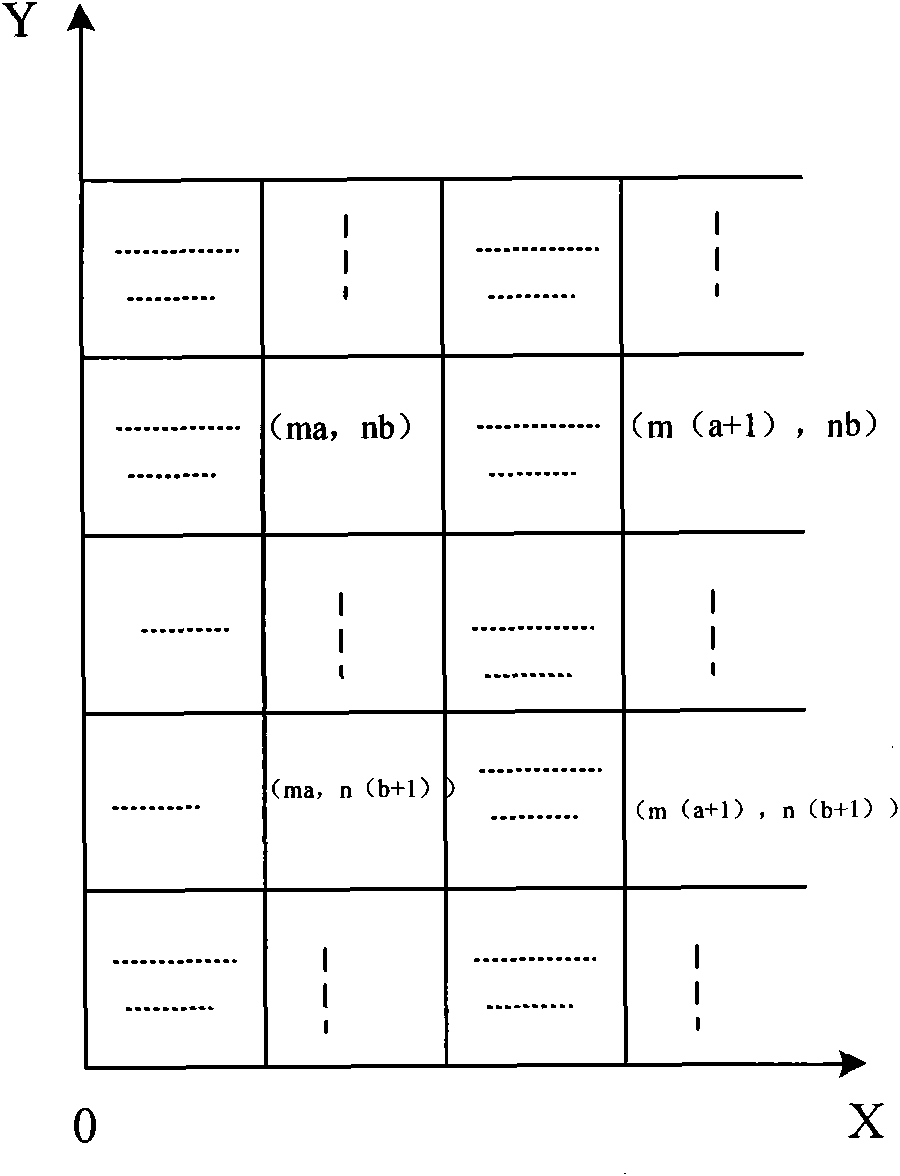

Image amplification method and device

Active | CN101853488A | Save resources | Geometric image transformation | Floating point multiplication | Computer science

The invention discloses an image amplification method and device. Based on bilinear interpolation, the calculation of the gray value of each pixel to be inserted into the amplified target image is converted into additions and power-of-two shifts of the gray values of the two adjacent pixels in the insertion direction; those gray values are added and shifted accordingly to obtain the gray value of each inserted pixel. Because the gray values of the inserted pixels are calculated without complex floating-point multiplication, image amplification runs faster.

Owner:KUSN INFOVISION OPTOELECTRONICS
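
For the simplest case, where one pixel is inserted between each pair of neighbors, the add-and-shift interpolation reduces to a midpoint computed with a one-bit right shift. The sketch below covers only that case under that assumption; the function names are hypothetical, and other amplification factors would need different weights and shift amounts.

```python
def upscale_row_2x(row):
    """Insert one pixel between each pair of neighbors using add and shift."""
    if not row:
        return []
    out = []
    for left, right in zip(row, row[1:]):
        out.append(left)
        out.append((left + right) >> 1)  # midpoint without a multiply
    out.append(row[-1])
    return out


def upscale_image_2x(img):
    """Apply the row interpolation along rows, then along columns."""
    rows = [upscale_row_2x(r) for r in img]
    cols = [upscale_row_2x(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]
```

For example, `upscale_row_2x([10, 20, 40])` returns `[10, 15, 20, 30, 40]`.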

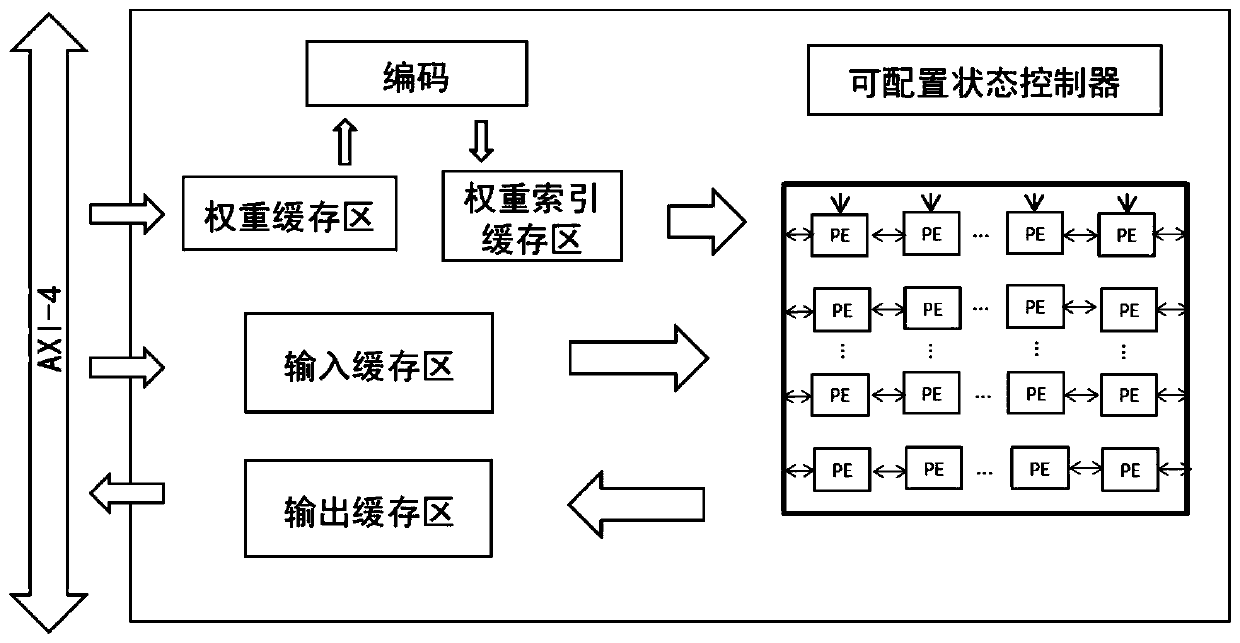

Deep neural network hardware accelerator based on power exponent quantization

Active | CN110390383A | Improve computing efficiency | Reduce usage | Digital data processing details | Program control | Networking hardware | Parallel computing

Owner:SOUTHEAST UNIV

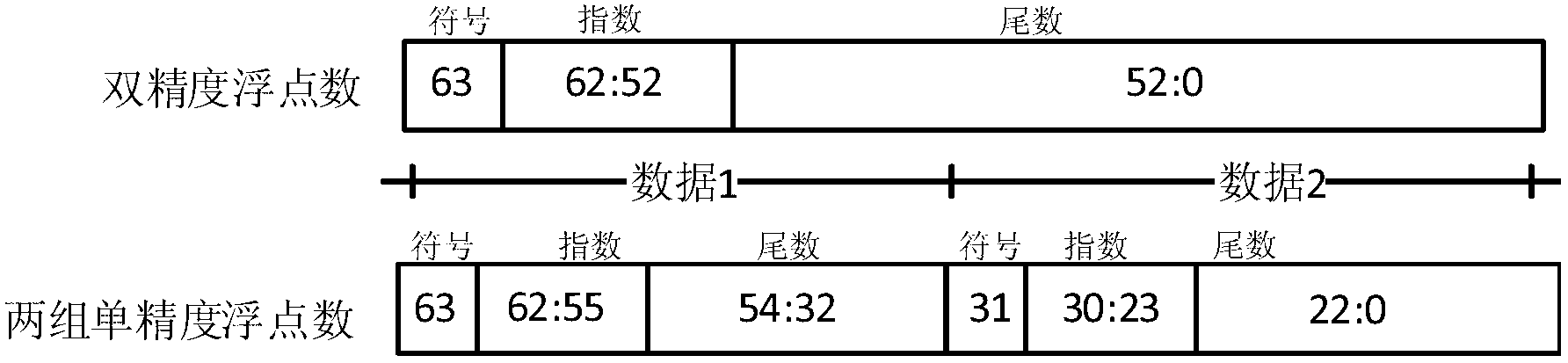

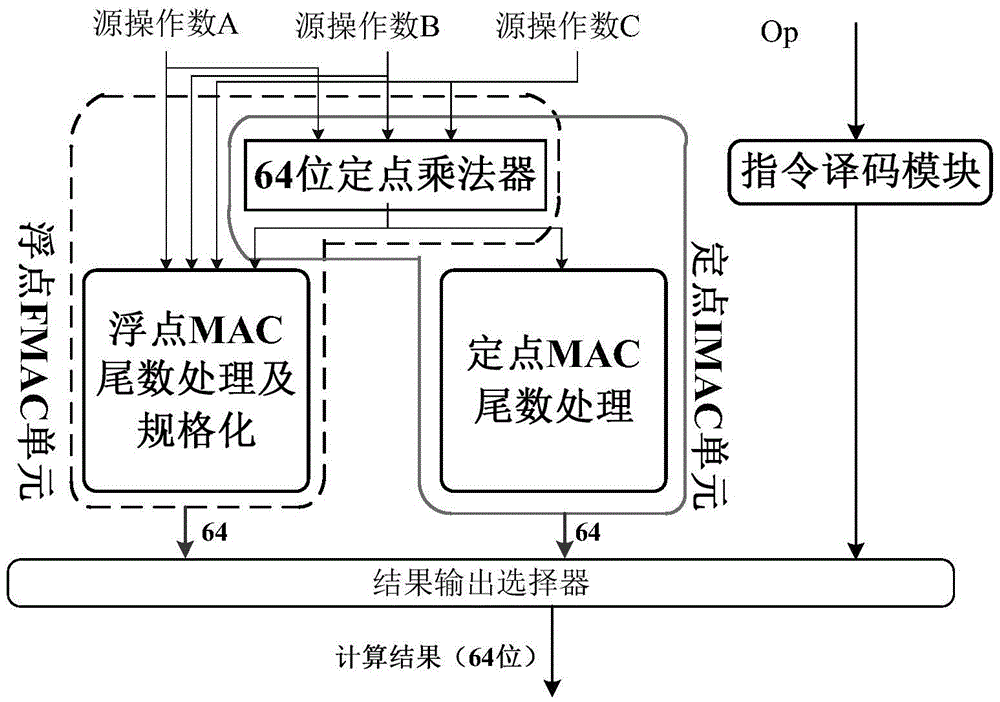

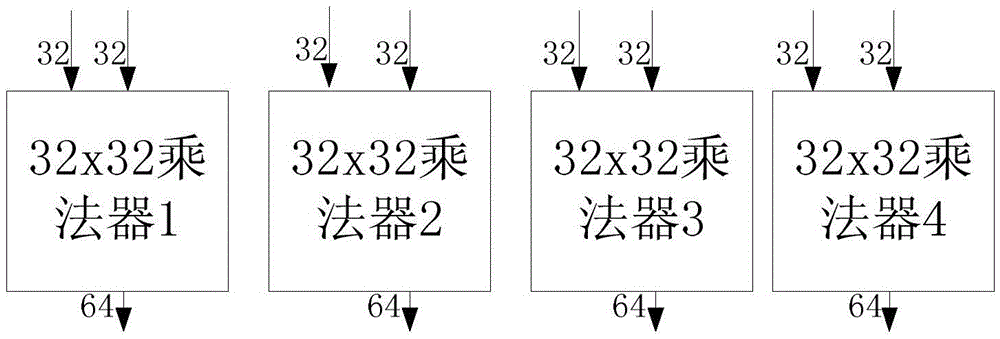

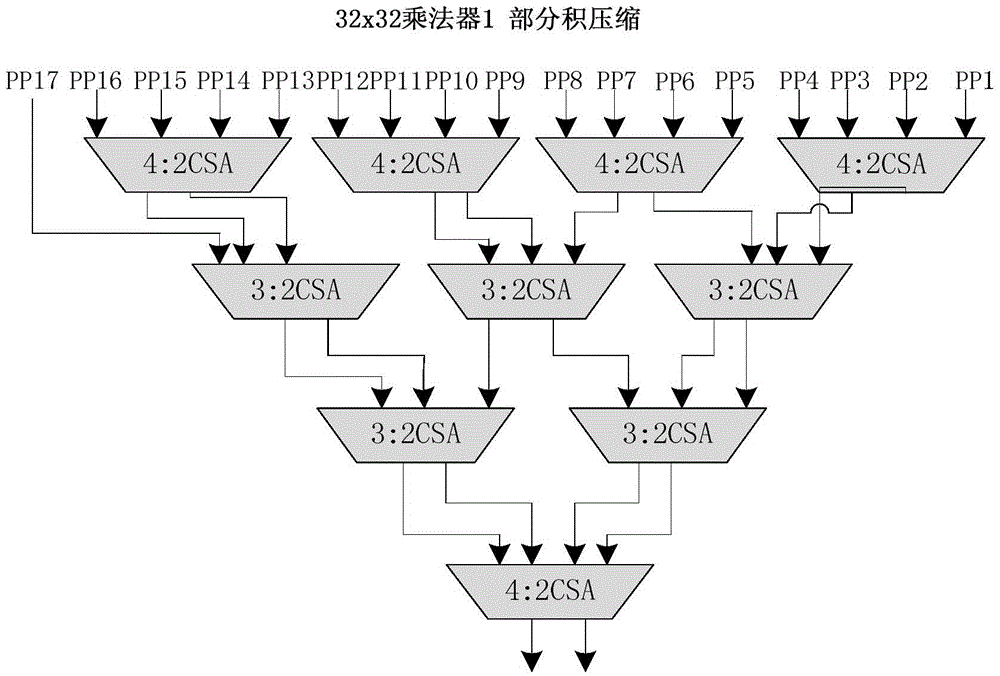

Fixed-point and floating-point operation part with shared multiplier structure in GPDSP

Inactive | CN105607889A | Reduce area | Improve resource utilization | Computation using non-contact making devices | General purpose | Binary multiplier

The invention discloses a fixed-point and floating-point operation part with a shared multiplier structure in a GPDSP (General-Purpose Digital Signal Processor). The fixed-point and floating-point operation part comprises a floating-point multiplier-adder unit, a fixed-point multiplier-adder unit and a 64-bit fixed-point multiplier, wherein the floating-point multiplier-adder unit is used for supporting double-precision floating-point operation and double / single-precision floating point multiplication, multiplication-addition, multiplication-subtraction and complex multiplication operations of an SIMD structure; the fixed-point multiplier-adder unit is used for supporting 64-bit signed or unsigned fixed-point multiplication operation and double 32-bit signed or unsigned fixed-point multiplication operation of the SIMD structure; and the 64-bit fixed-point multiplier performs operation by regarding floating-point mantissa multiplication as unsigned fixed-point multiplication by multiplexing the structure of the same multiplier. The fixed-point and floating-point operation part has the advantages of capabilities of increasing the hardware utilization rate and reducing the chip area, and the like.

Owner:NAT UNIV OF DEFENSE TECH

High Efficiency Computer Floating Point Multiplier Unit

Active | US20150370537A1 | Reduce the required power | Significant power saving | Computations using contact-making devices | Operand | Floating point multiplication

Owner:WISCONSIN ALUMNI RES FOUND

Deep neural network reasoning method and computing device

The present application relates to the field of neural networks, in particular to a deep neural network inference method and a computing device. The method includes: receiving input features for an operation layer in a first deep neural network model; determining an index value corresponding to the operation layer; determining a codebook for the operation layer according to that index value; quantizing the input features according to a preset first quantization rule; and, in the operation layer, performing the operation on the input features using the codewords found by looking up the quantized input features in the codebook. In an embodiment of the present application, each quantized model parameter is multiplied by each quantized input feature to build the codebook. Once an actual input feature has been quantized, its floating-point multiplication result can be read directly from the codebook, so the operation completes quickly and deep neural network inference is greatly accelerated without performing the floating-point multiplications themselves.

Owner:HUAWEI TECH CO LTD
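
The codebook lookup described here amounts to precomputing every product of a quantized weight and a quantized input level, then replacing each multiply at inference time with a table read. The sketch below illustrates that for a single dot product; the function names, the nearest-level quantization rule and the level lists are all assumptions, since the abstract does not define the quantization rule or codebook layout.

```python
def build_codebook(weight_levels, input_levels):
    """Precompute weight_level * input_level for every pair of levels."""
    return [[w * x for x in input_levels] for w in weight_levels]


def quantize(value, levels):
    """Return the index of the level closest to value (assumed rule)."""
    return min(range(len(levels)), key=lambda i: abs(levels[i] - value))


def dot_with_codebook(weight_indices, inputs, input_levels, codebook):
    """Dot product that reads products from the codebook instead of multiplying."""
    total = 0.0
    for w_idx, x in zip(weight_indices, inputs):
        x_idx = quantize(x, input_levels)
        total += codebook[w_idx][x_idx]   # table lookup replaces w * x
    return total
```

The multiplications all happen once, when the codebook is built; the inference loop then needs only comparisons, lookups and additions.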

Floating point number multiplication rounding method and device

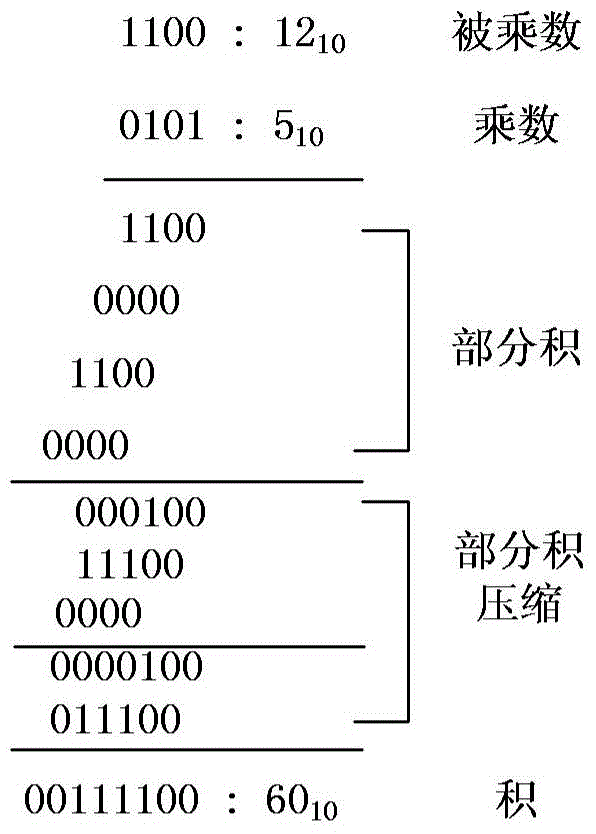

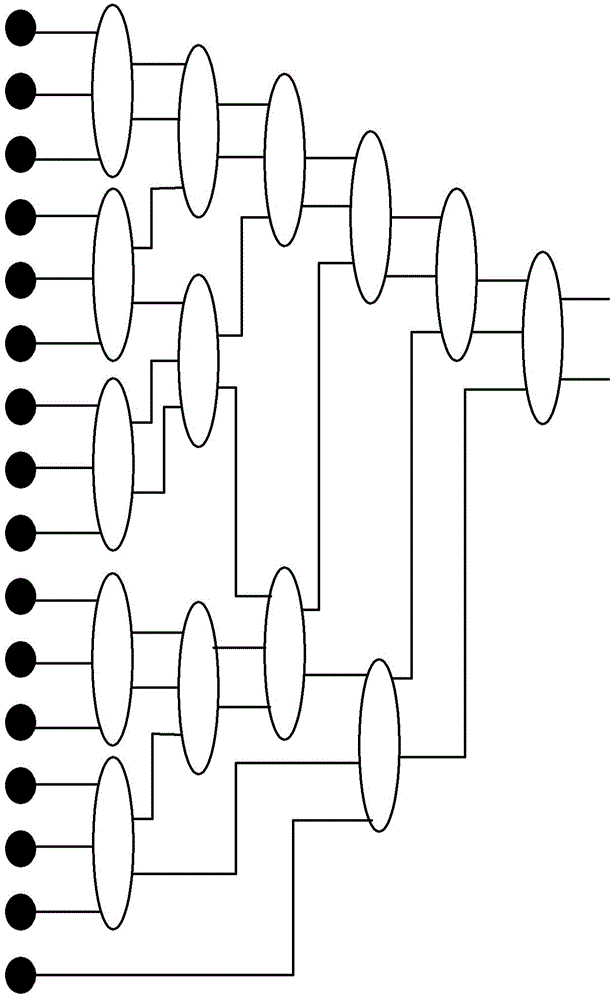

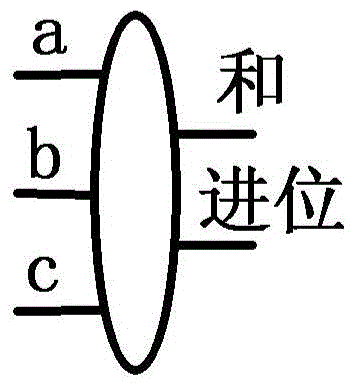

The invention discloses a floating-point multiplication rounding method and device. During partial product compression, a predetermined value is introduced as an additional partial product. The predetermined value depends on the rounding mode of the floating-point multiplication result: for round toward zero, the value is 0; for round to nearest even, the value is 2^(N−2); for round toward positive infinity, the value is 2^(N−1) − 1 if the sign of the result is positive and 0 otherwise; for round toward negative infinity, the value is 2^(N−1) − 1 if the sign of the result is negative and 0 otherwise. N is the length of the floating-point mantissa. Introducing this value in advance, at the partial product compression stage, simplifies the subsequent mantissa rounding and improves floating-point multiplication performance.

Owner:BEIJING SMART LOGIC TECH CO LTD

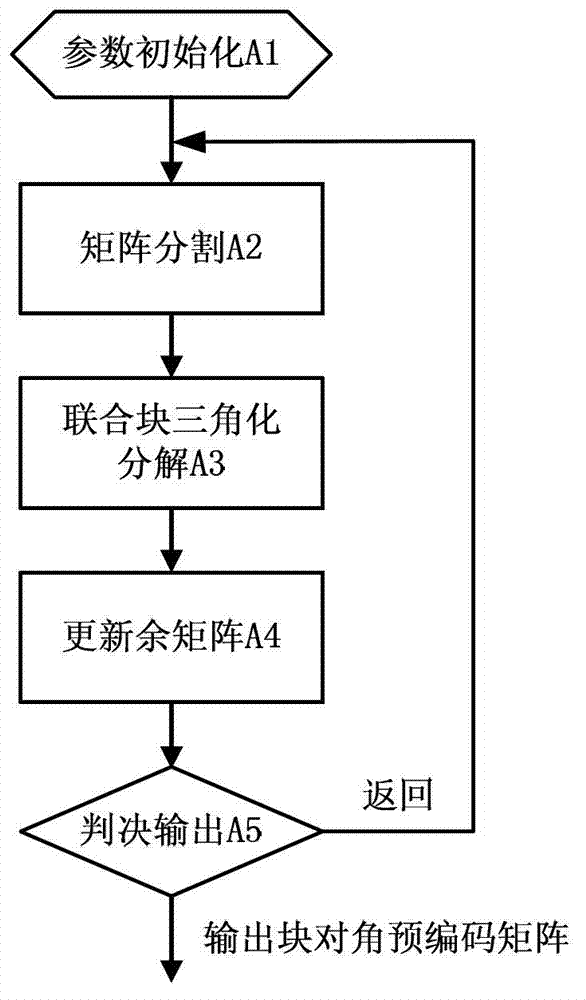

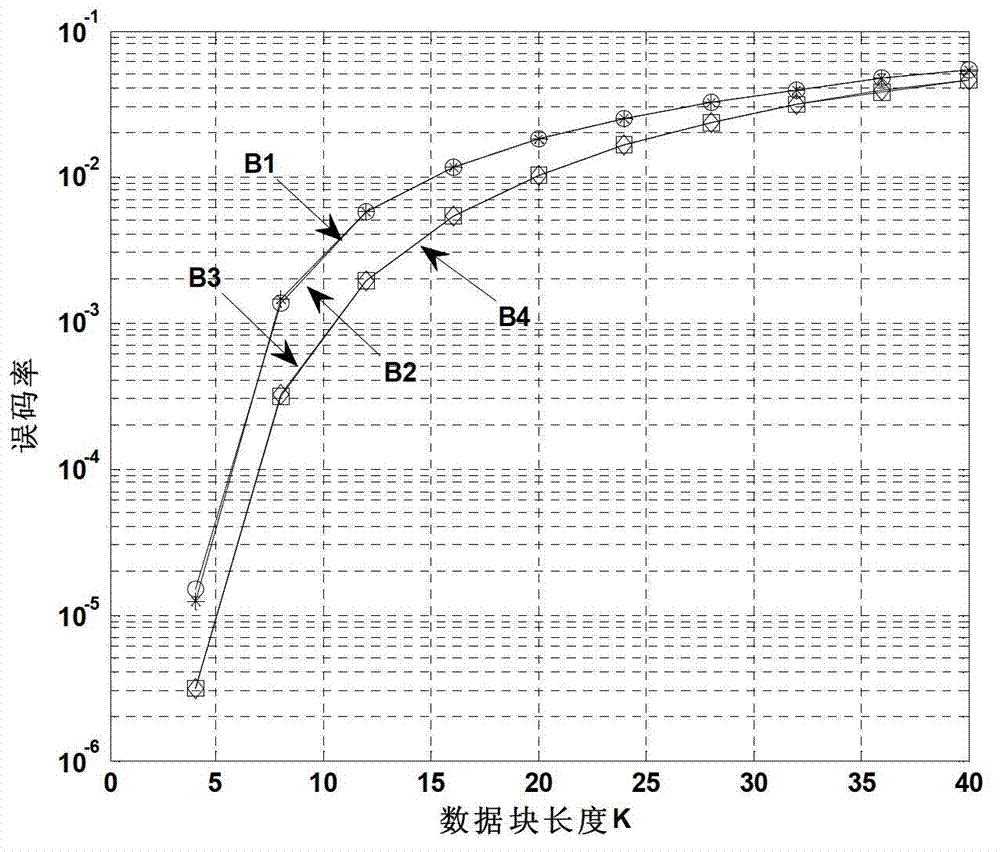

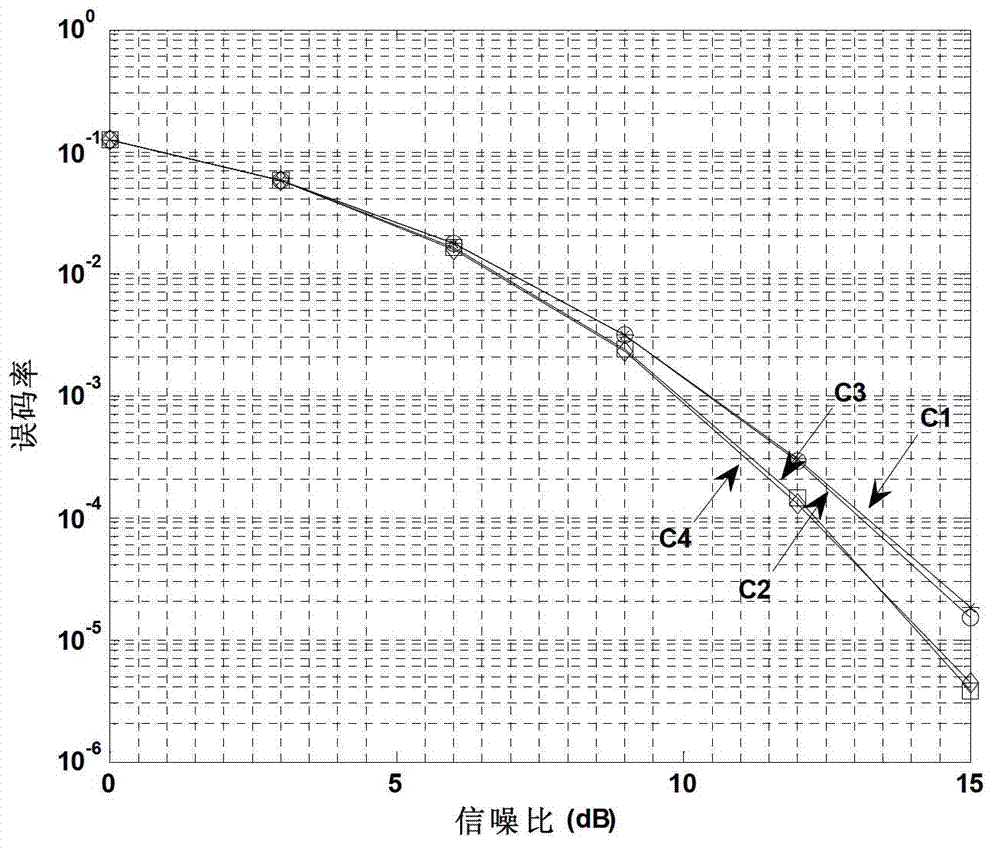

Physical layer multicast and multithread transmission method based on combined block triangularization

Inactive | CN103199963A | Improve bit error performance | Good bit error performance | Error prevention/detection by diversity reception | Decomposition | Physical layer

The invention discloses a physical layer multicast and multithread transmission method based on combined block triangularization. Using a block-matrix computation approach, a block diagonal precoding matrix is constructed iteratively through a combined triangularization decomposition algorithm for a physical layer multicast wireless scenario with a single transmitting base station and two receiving users. Compared with the existing combined triangularization decomposition algorithm, the method both reduces the number of floating-point multiplications needed when the block diagonal precoding matrix is used for data transmission and achieves better bit error performance. It is suitable for systems with large-scale transmit and receive antenna arrays and frequency-selective fading wireless channels, and can be conveniently implemented in new-generation broadband wireless and mobile communication systems that use multiple-input multiple-output technology.

Owner:UNIV OF SCI & TECH OF CHINA

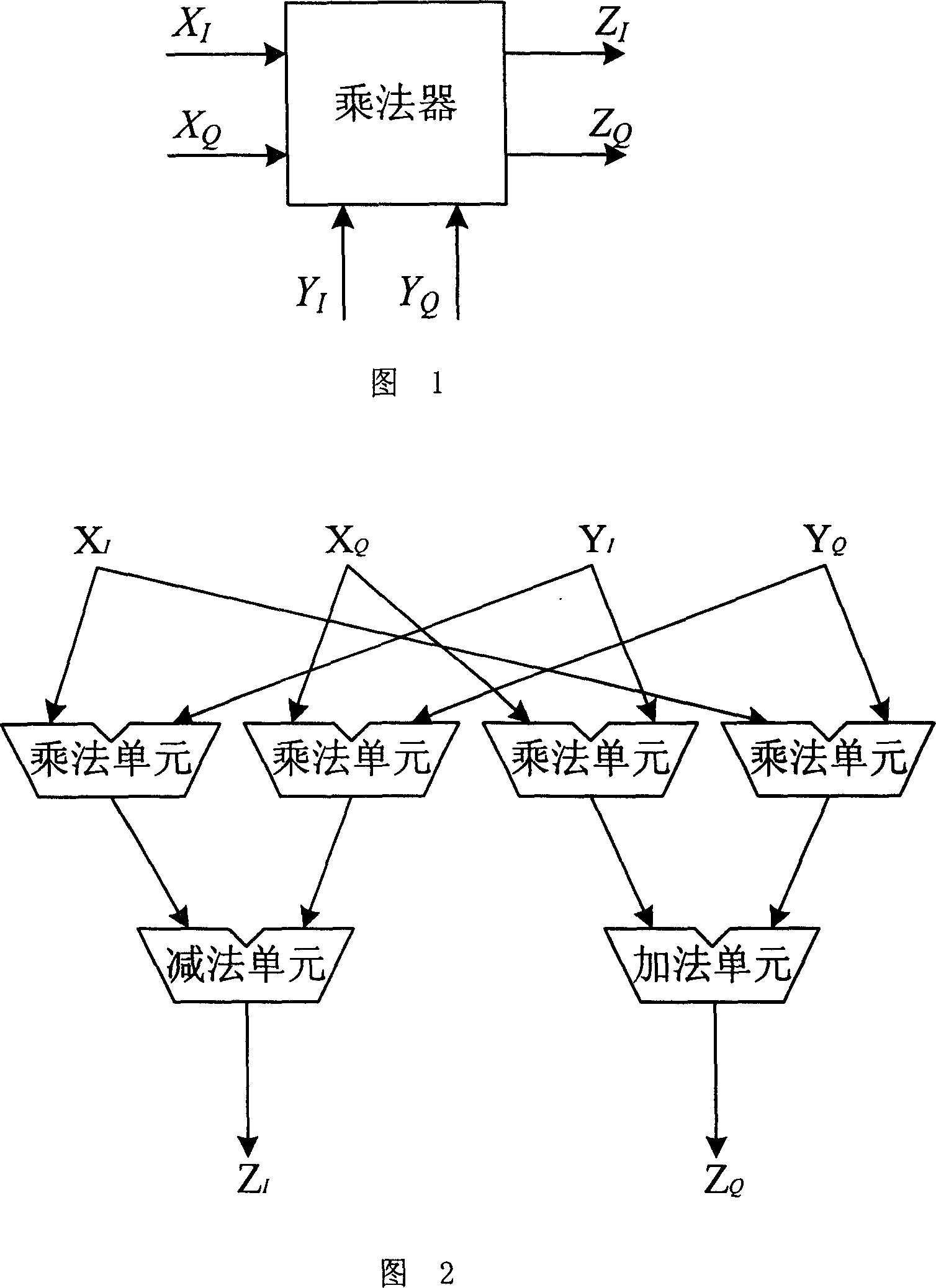

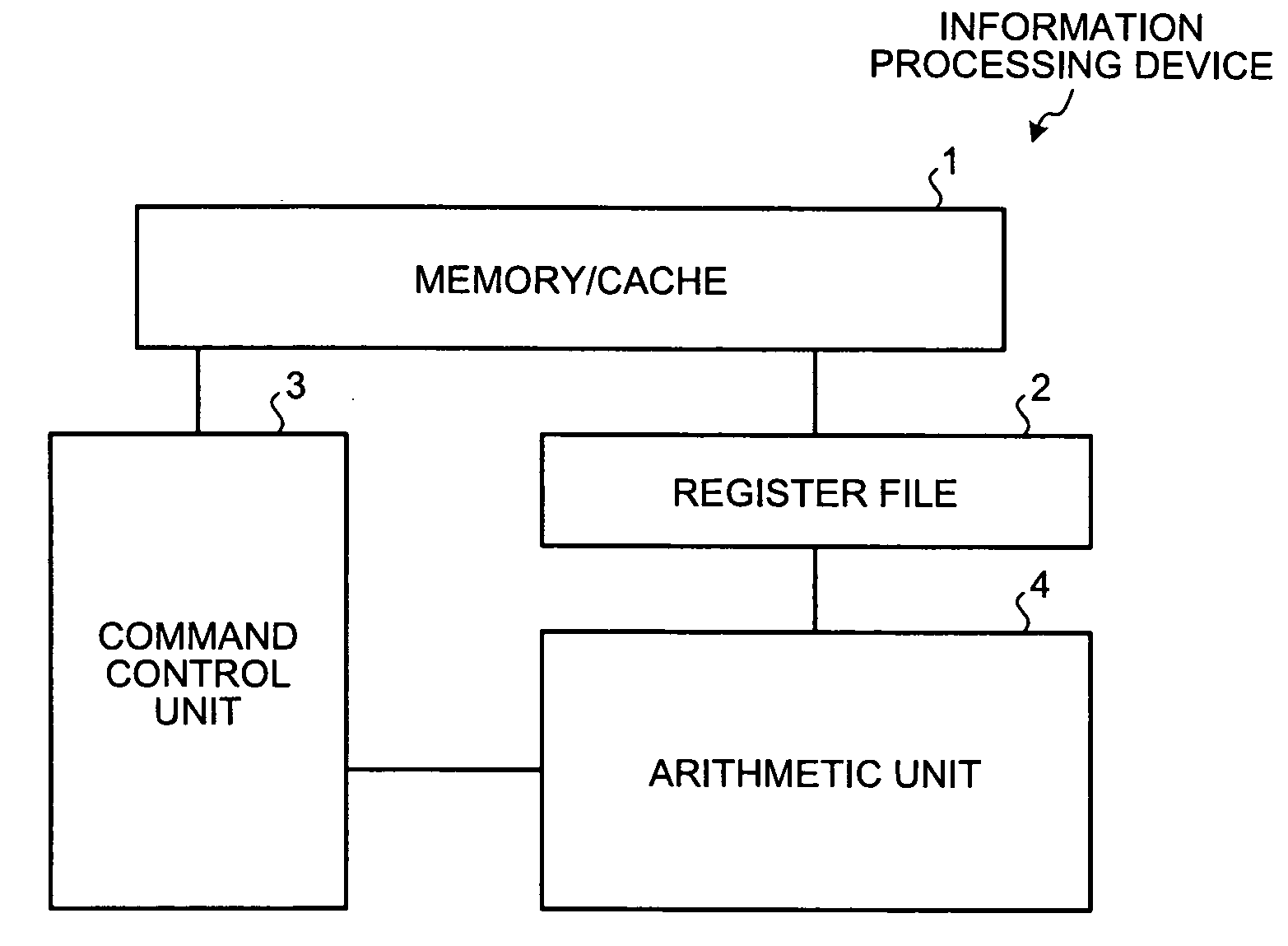

Floating-point complex multiplier

Inactive | CN1996235A | Improve reliability | Improve versatility | Wave based measurement systems | Digital data processing details | Radar | Engineering

The floating-point complex multiplier comprises a data interface, a floating-point addition unit, a floating-point subtraction unit and four floating-point multiplication units. The multiplier is integrated into the all-digital receiver of a high-frequency ground wave radar, where it performs the floating-point complex multiplication needed for real-time digital pulse compression and improves data processing speed and precision. It offers high reliability, good versatility, small size and low cost.

Owner:WUHAN UNIV
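
The unit count in this abstract (four multipliers, one adder, one subtractor) matches the textbook expansion (a + bi)(c + di) = (ac − bd) + (ad + bc)i. A minimal sketch of that data flow, with a hypothetical function name and plain Python floats standing in for the hardware units:

```python
def complex_mul(a_re, a_im, b_re, b_im):
    """Complex product using four multiplies, one subtract and one add."""
    p0 = a_re * b_re   # multiplier 1
    p1 = a_im * b_im   # multiplier 2
    p2 = a_re * b_im   # multiplier 3
    p3 = a_im * b_re   # multiplier 4
    real = p0 - p1     # subtraction unit
    imag = p2 + p3     # addition unit
    return real, imag
```

For example, `complex_mul(1.0, 2.0, 3.0, 4.0)` returns `(-5.0, 10.0)`, since (1 + 2i)(3 + 4i) = −5 + 10i.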

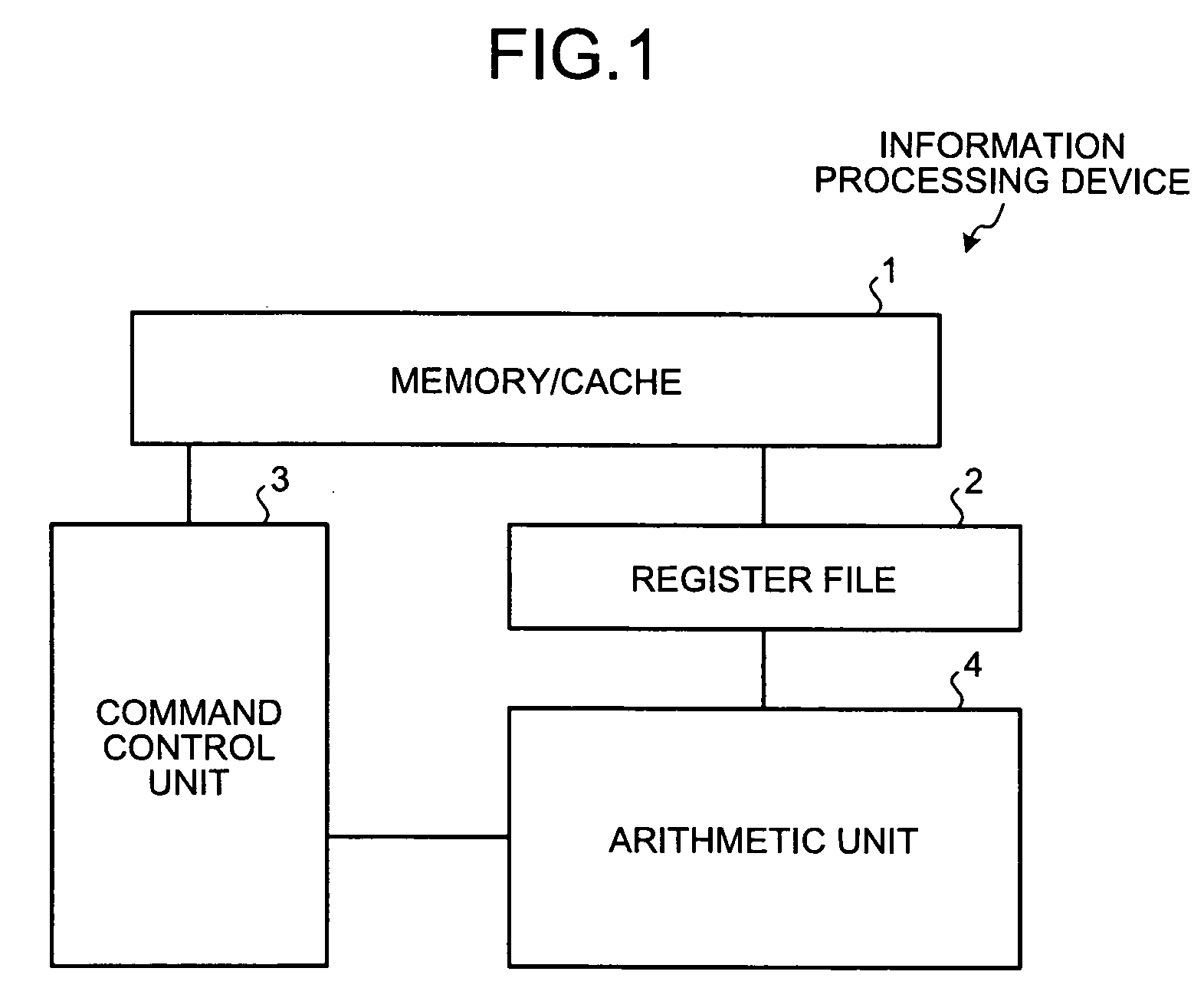

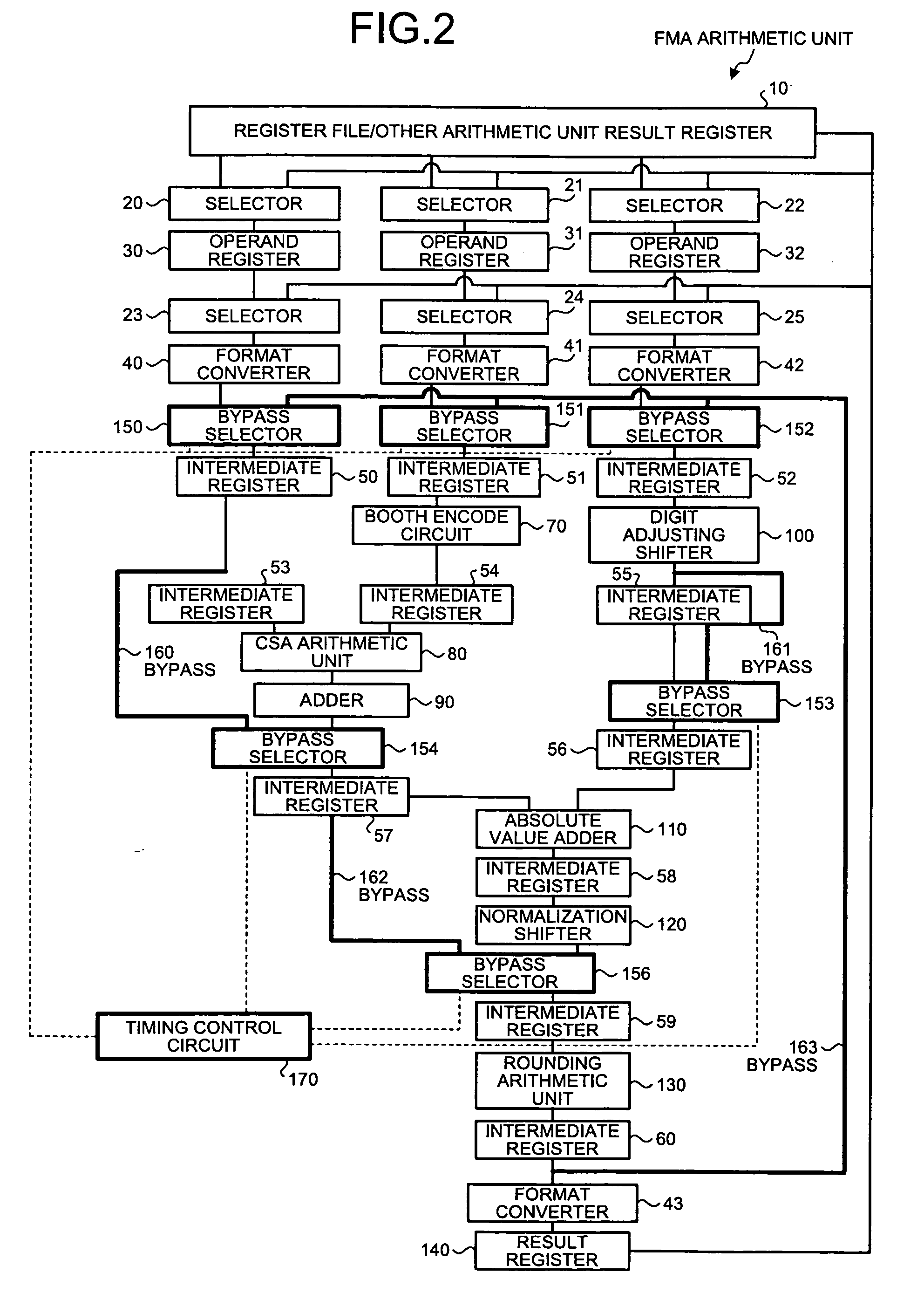

Arithmetic device and arithmetic method

Inactive | US20080307029A1 | Solve problems | Computations using contact-making devices | Processor register | Floating point

An FMA arithmetic unit has a timing control circuit. The timing control circuit controls bypass selectors to bypass intermediate registers when performing floating point addition / subtraction, controls another bypass selector to bypass another intermediate register when performing floating point multiplication, and controls still other bypass selectors to bypass a register file / other arithmetic unit result register and operand registers when performing successive FMA arithmetic operations.

Owner:FUJITSU LTD

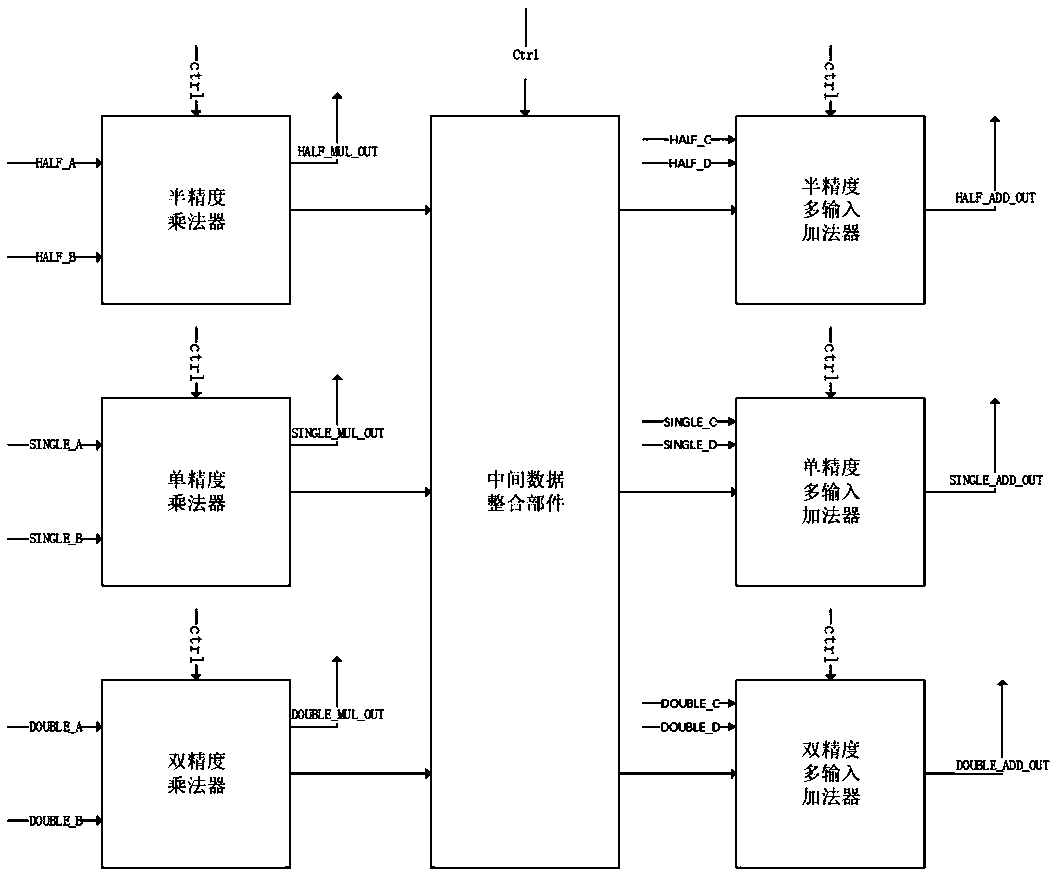

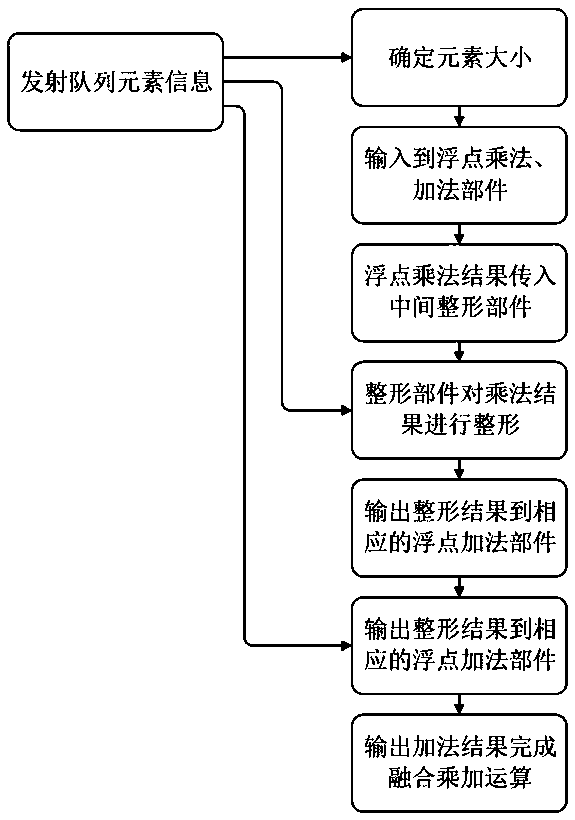

A floating-point fusion multiplier and adder supporting mixed data types and an application method thereof

The invention discloses a floating-point fused multiplier-adder supporting mixed data types and an application method thereof. The floating-point fused multiplier-adder includes three floating-point multipliers, an intermediate data integration section and three multi-input adders. The application method comprises classifying the mixed data, inputting it to the corresponding floating-point multiplication unit, and outputting the unrounded intermediate multiplication result to the intermediate data integration section, which shapes the multiplication result and outputs it to the floating-point addition unit of the corresponding data type. The invention is designed for floating-point multiply-add operations on mixed data types: it can complete fused multiply-add calculations between different data types, and it preserves the precision of the intermediate multiplication result when a low-precision multiplication is fused with a high-precision addition.

Owner:PHYTIUM TECH CO LTD

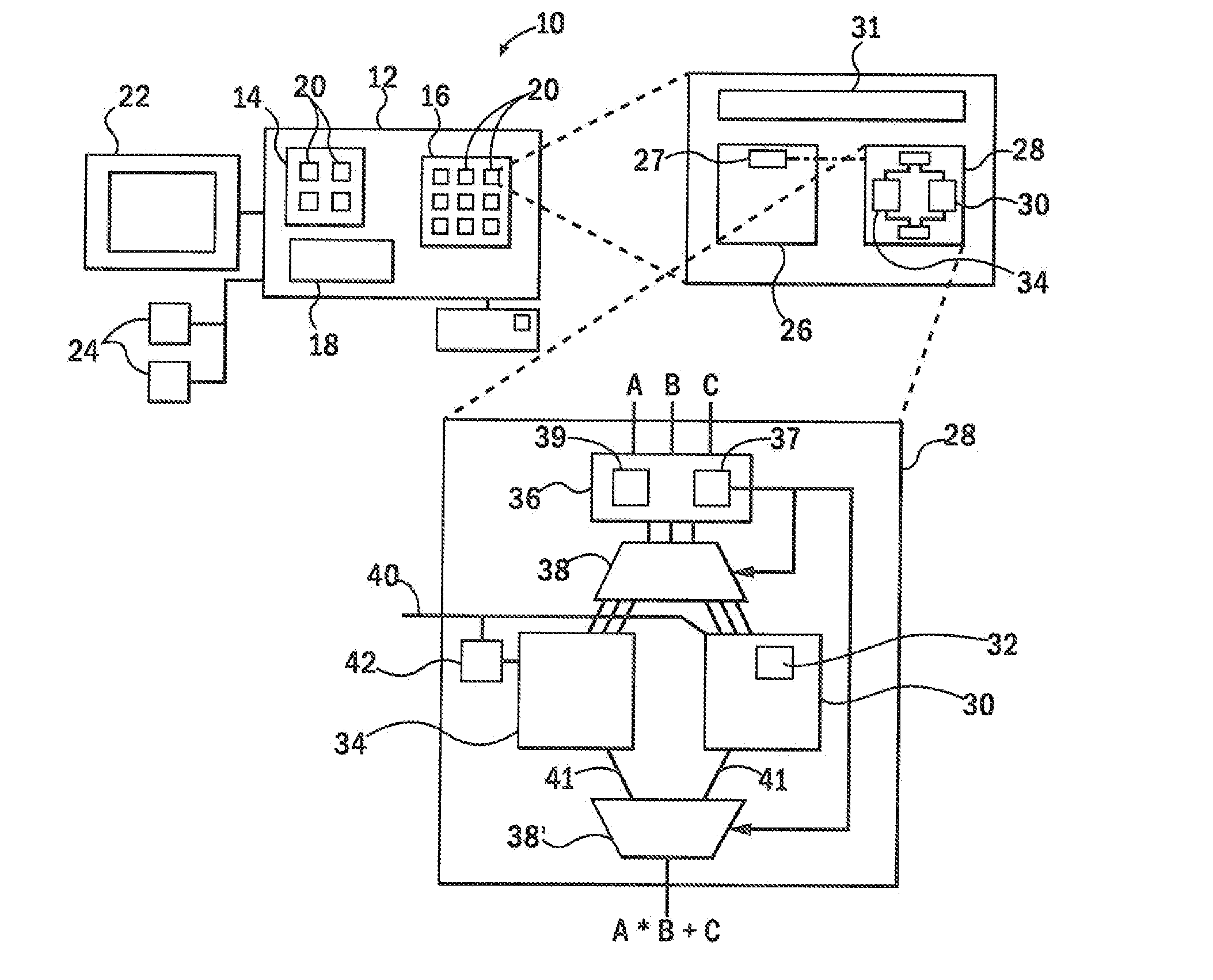

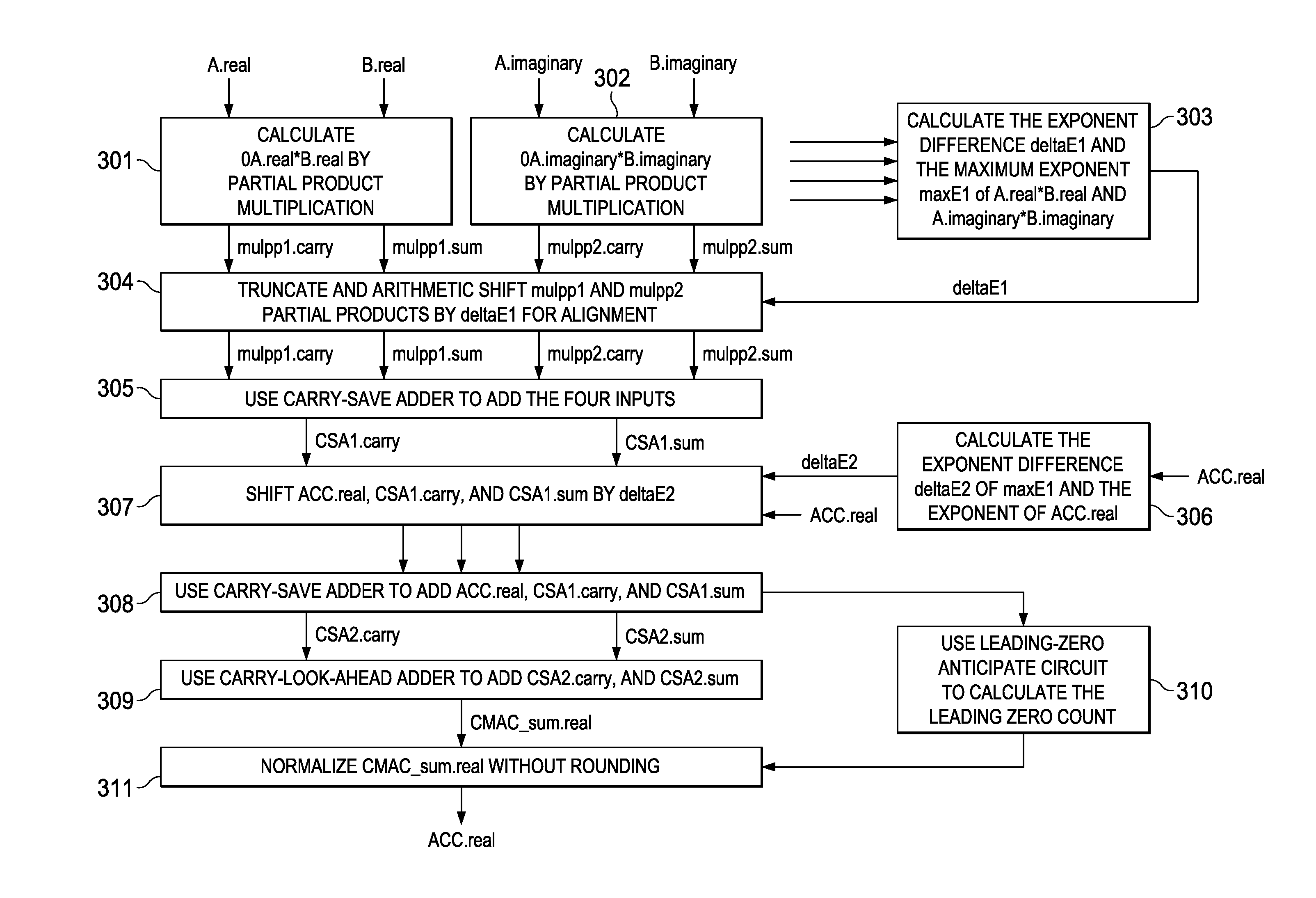

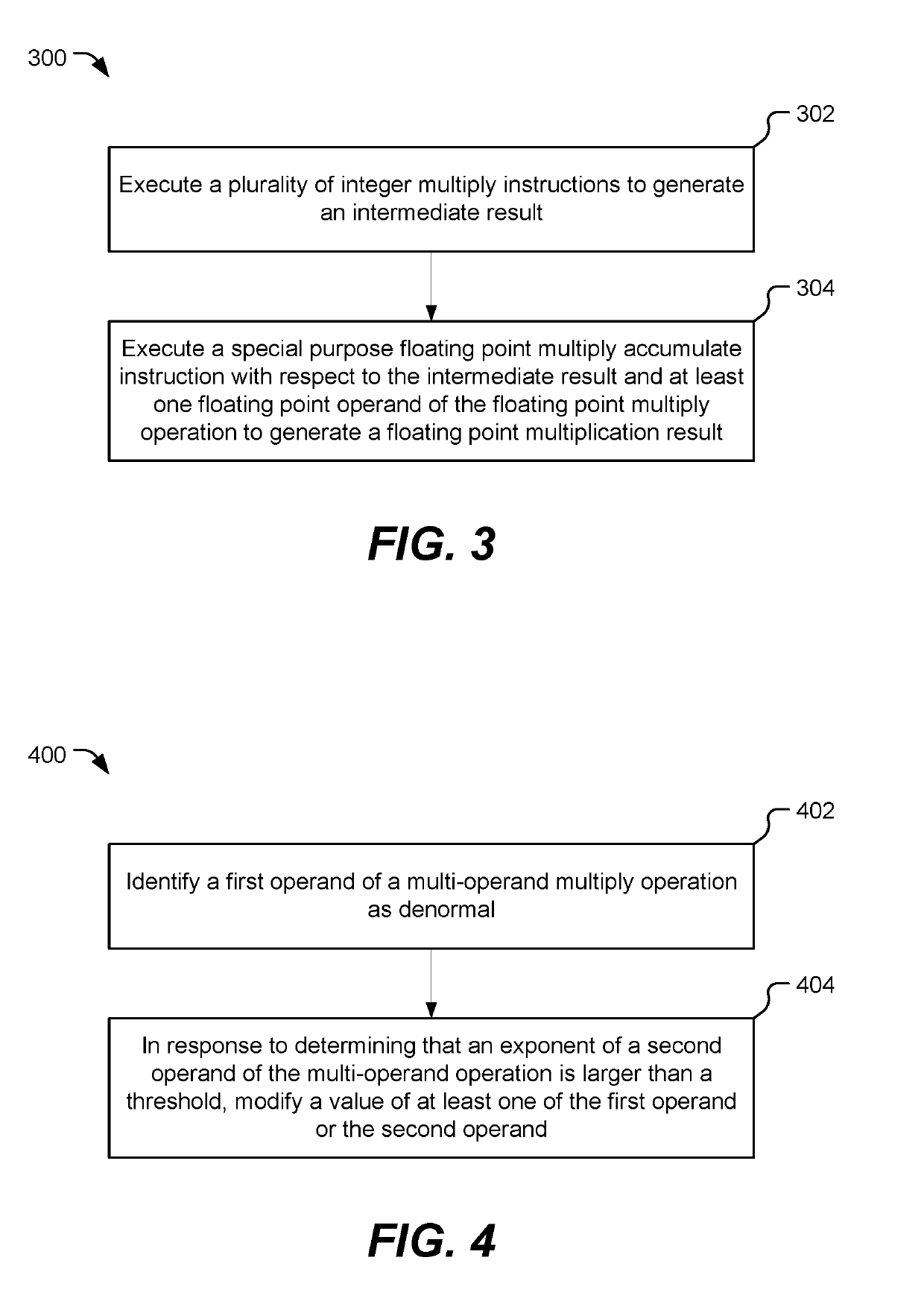

Systems and Methods for a Floating-Point Multiplication and Accumulation Unit Using a Partial-Product Multiplier in Digital Signal Processors

Inactive | US20130282783A1 | Computation using denominational number representation | Floating point | Floating point multiplication

Owner:FUTUREWEI TECH INC

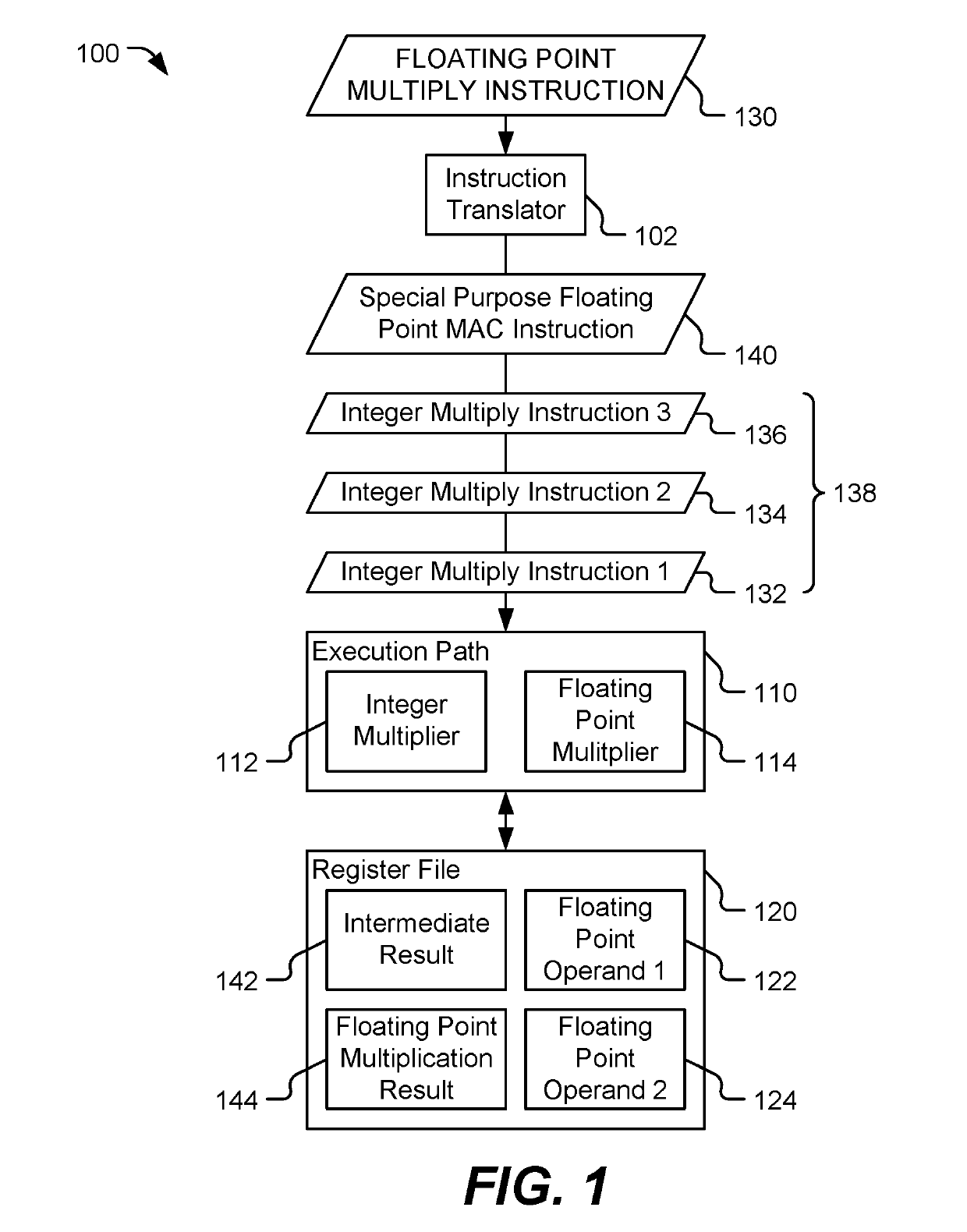

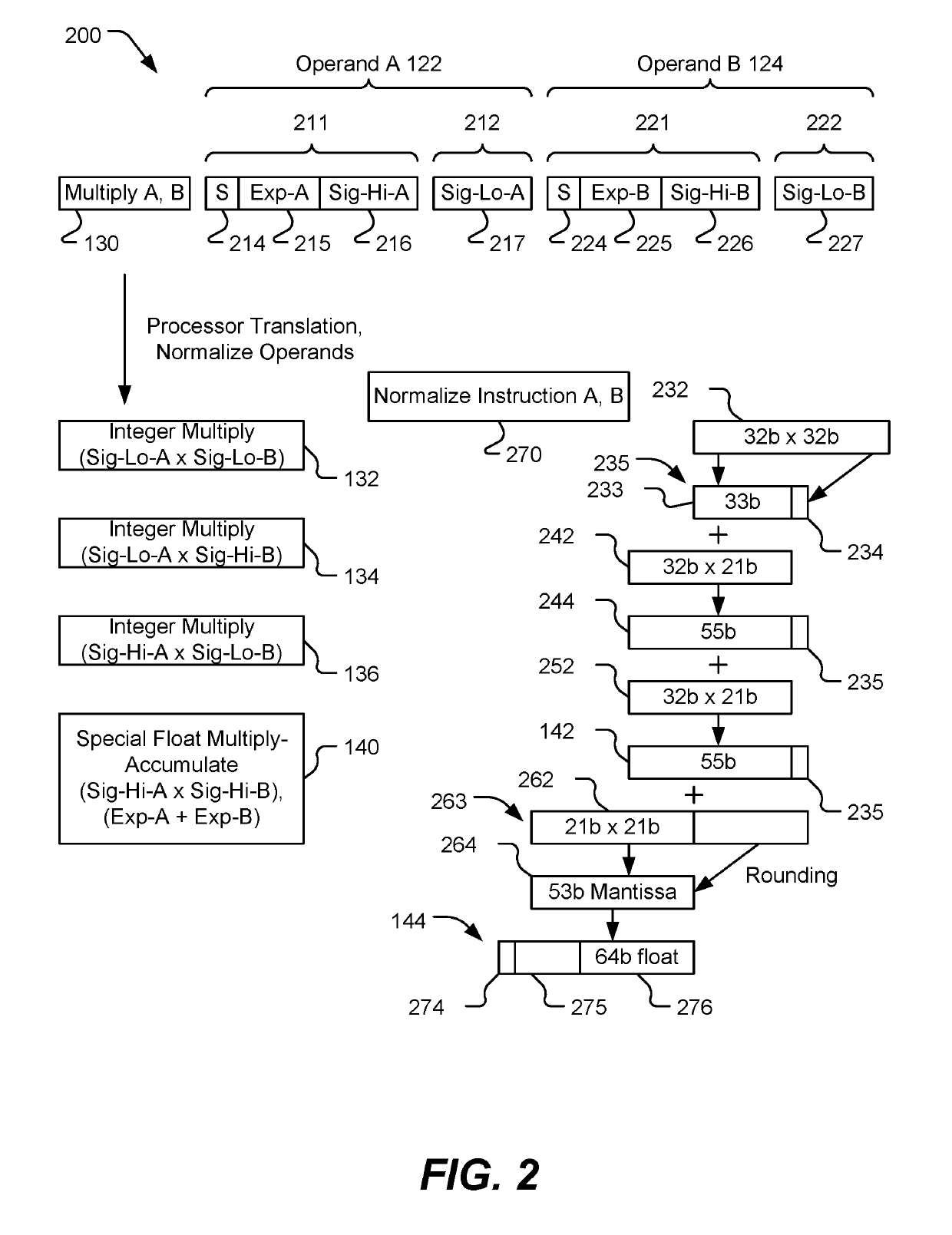

System and method of floating point multiply operation processing

Active | US20190196785A1 | Sufficient bit width | Increase width | Digital data processing details | Operand | Floating point multiplication

A processor includes an integer multiplier configured to execute an integer multiply instruction to multiply significand bits of at least one floating point operand of a floating point multiply operation. The processor also includes a floating point multiplier configured to execute a special purpose floating point multiply accumulate instruction with respect to an intermediate result of the floating point multiply operation and the at least one floating point operand to generate a final floating point multiplication result.

Owner:QUALCOMM INC
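
As a rough software analogy for the first step in the abstract above (multiplying significand bits with an integer multiplier), the sketch below multiplies two binary64 values through their integer significands. It does not model the special purpose multiply accumulate instruction, and the function name `fmul_via_int` is hypothetical. It assumes finite inputs and a result in the normal binary64 range.

```python
import math


def fmul_via_int(a: float, b: float) -> float:
    """Multiply two finite floats using an integer significand product.

    Assumption: the result is a normal binary64 number, so the final
    scaling by a power of two does not round a second time.
    """
    ma, ea = math.frexp(a)  # a == ma * 2**ea with 0.5 <= |ma| < 1
    mb, eb = math.frexp(b)
    ia = int(ma * 2**53)    # significand as an exact (signed) integer
    ib = int(mb * 2**53)
    prod = ia * ib          # exact integer product, up to 106 bits
    # One rounding when converting the wide integer to a float,
    # then an exact power-of-two scaling back to the right exponent.
    return math.ldexp(float(prod), ea + eb - 106)


print(fmul_via_int(1.5, 2.25))  # 3.375
```

The integer product is exact, so the only rounding happens when the wide product is converted back to a floating point value.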

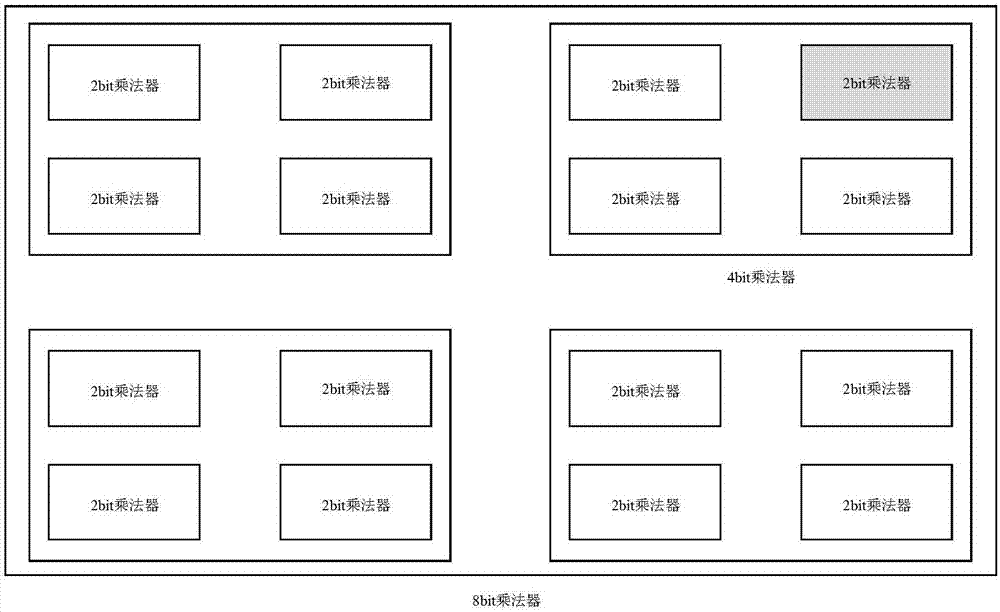

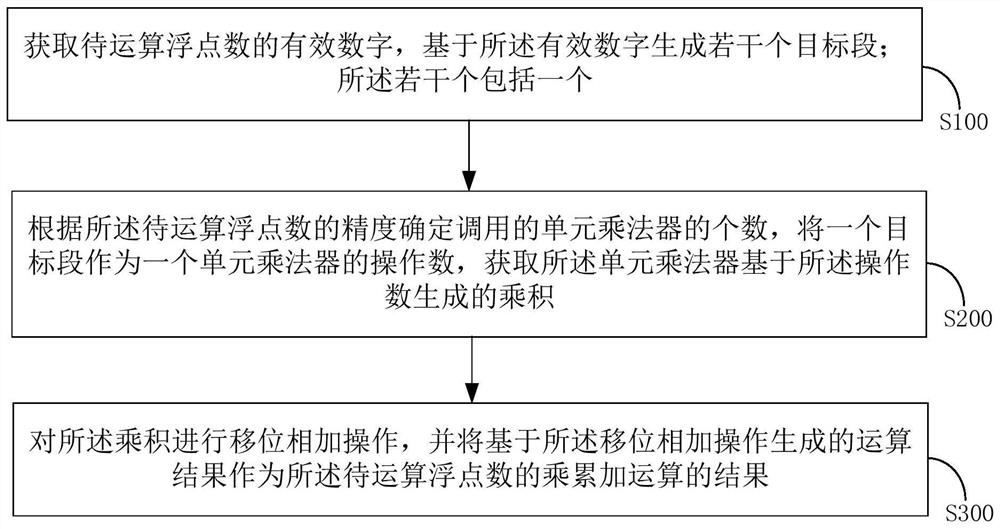

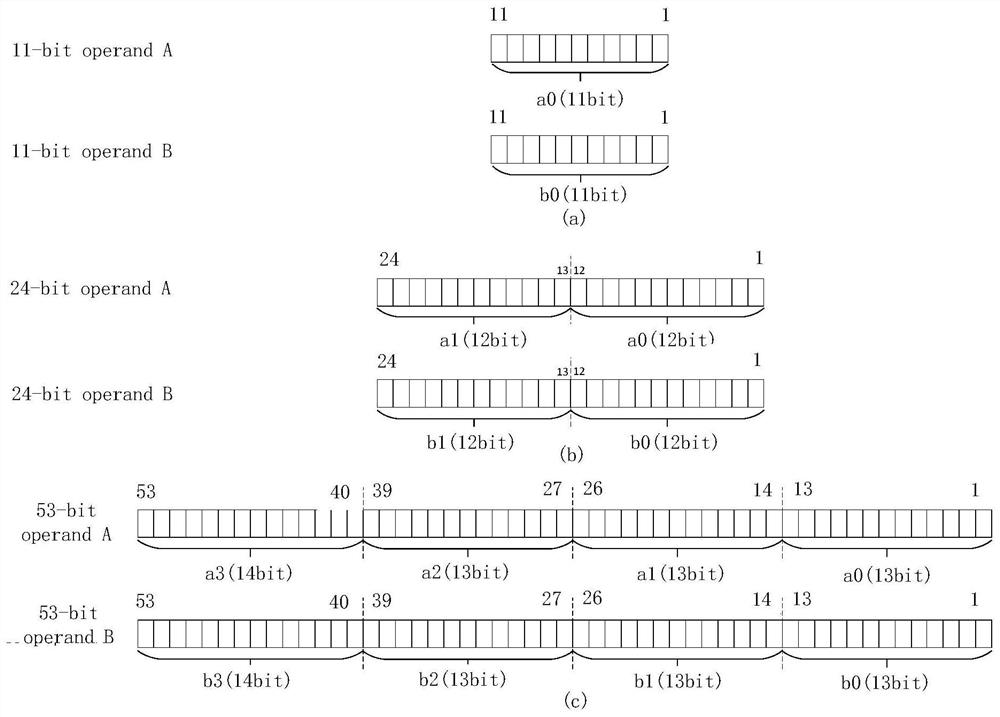

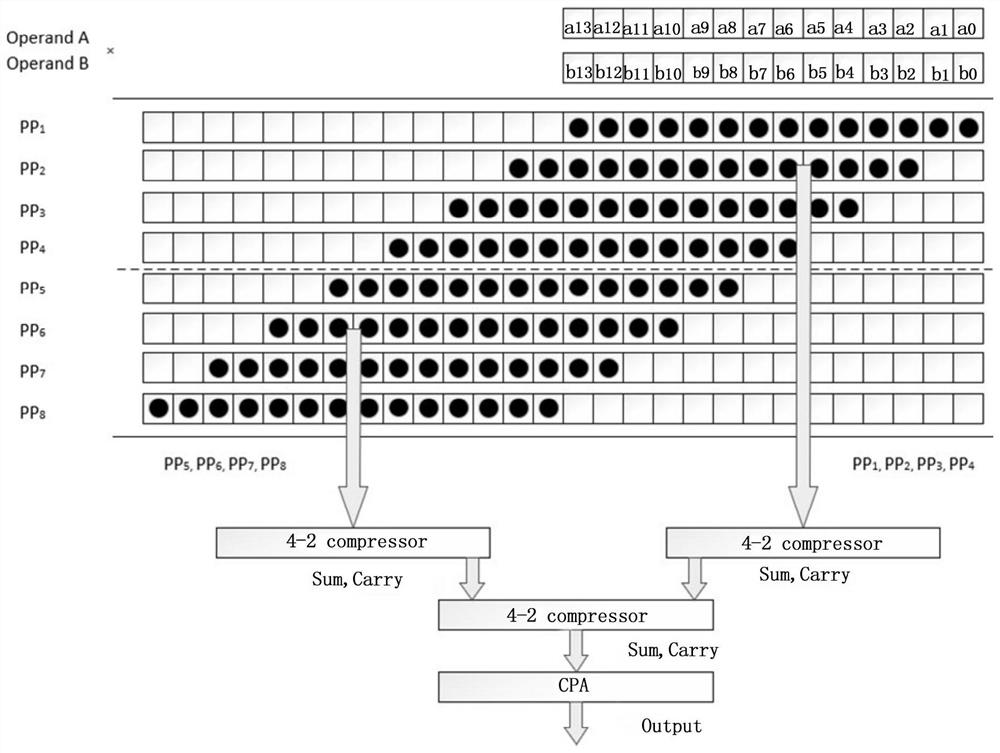

Reconfigurable floating point multiply-add operation unit and method suitable for multi-precision calculation

Active | CN112860220A | Avoid the problem of bit redundancy | Solve the characteristics | Digital data processing details | Energy efficient computing | Computer hardware | Binary multiplier

The invention discloses a reconfigurable floating point multiply-add operation unit and method suitable for multi-precision calculation. The method comprises: dividing the mantissas of floating-point numbers of different precisions by a unified scheme to obtain a plurality of bit segments; calling different numbers of unit multipliers of the same type to multiply the bit segments in one cycle and output the corresponding products; and then performing a shift-add operation on the products to obtain the multiply-accumulate result of the floating-point numbers. Bit redundancy is avoided by adopting a unified mantissa division scheme, and hardware utilization is improved by adopting a unified unit multiplier. The unit supports multiply-accumulate operations on half-precision floating-point numbers, single-precision dot-product floating-point numbers and double-precision floating-point numbers. This addresses the bit redundancy, low hardware utilization and similar problems of prior-art methods that support multi-precision floating point multiplication.

Owner:SOUTH UNIVERSITY OF SCIENCE AND TECHNOLOGY OF CHINA
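
The segment-and-shift-add idea in the abstract above can be sketched as follows. The listing does not give the actual segment width or division scheme, so the 12-bit segment width, the function names and the significand widths used here (11, 24 and 53 bits for half, single and double precision) are assumptions made for illustration only.

```python
def split_segments(mantissa: int, seg_bits: int, n_segs: int) -> list:
    """Split an unsigned mantissa into n_segs segments, least significant first."""
    mask = (1 << seg_bits) - 1
    return [(mantissa >> (i * seg_bits)) & mask for i in range(n_segs)]


def unit_multiply(x: int, y: int) -> int:
    """Stand-in for one fixed-size unit multiplier (seg_bits x seg_bits)."""
    return x * y


def segmented_multiply(ma: int, mb: int, width: int, seg_bits: int = 12) -> int:
    """Multiply two width-bit mantissas using only unit multipliers.

    Each pair of segments goes through one unit multiplier, and the
    partial products are combined by shift-and-add.
    """
    n_segs = -(-width // seg_bits)  # ceiling division
    sa = split_segments(ma, seg_bits, n_segs)
    sb = split_segments(mb, seg_bits, n_segs)
    total = 0
    for i, x in enumerate(sa):
        for j, y in enumerate(sb):
            total += unit_multiply(x, y) << ((i + j) * seg_bits)
    return total


# Under the assumed 12-bit segments: half uses 1 segment per operand,
# single uses 2, double uses 5, all with the same unit multiplier.
for width in (11, 24, 53):
    m = (1 << width) - 1
    assert segmented_multiply(m, m, width) == m * m
```

Because every precision reuses the same unit multiplier, only the number of multiplier invocations changes with the operand width.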

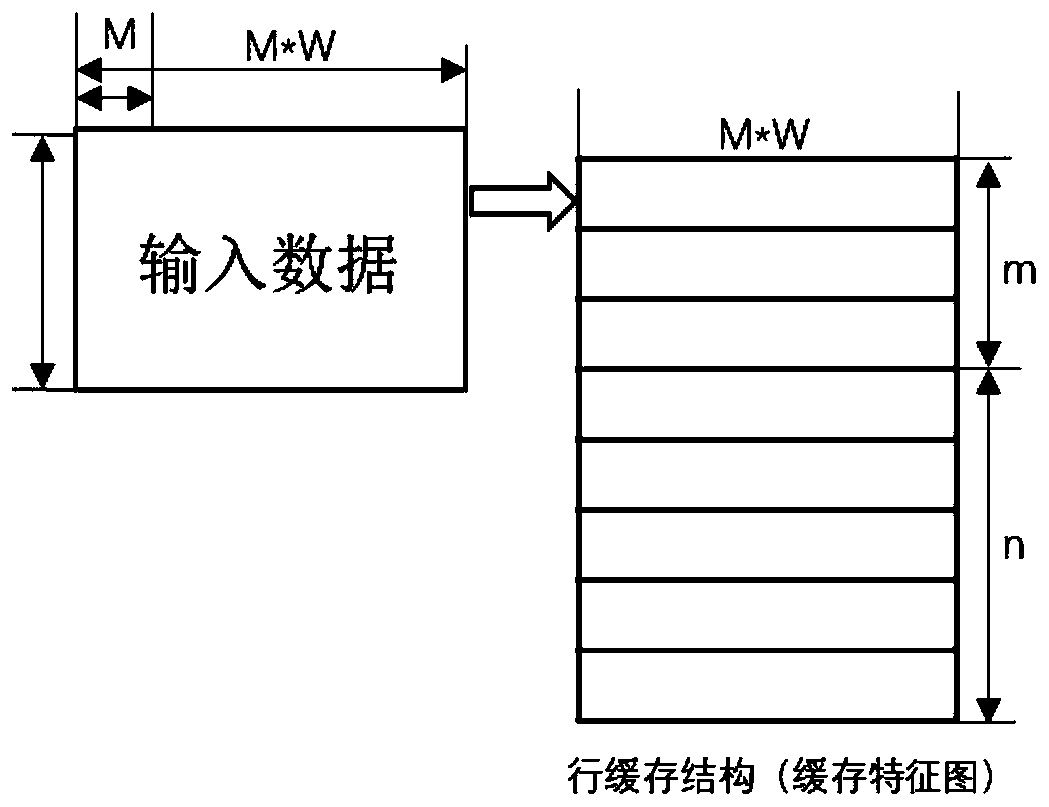

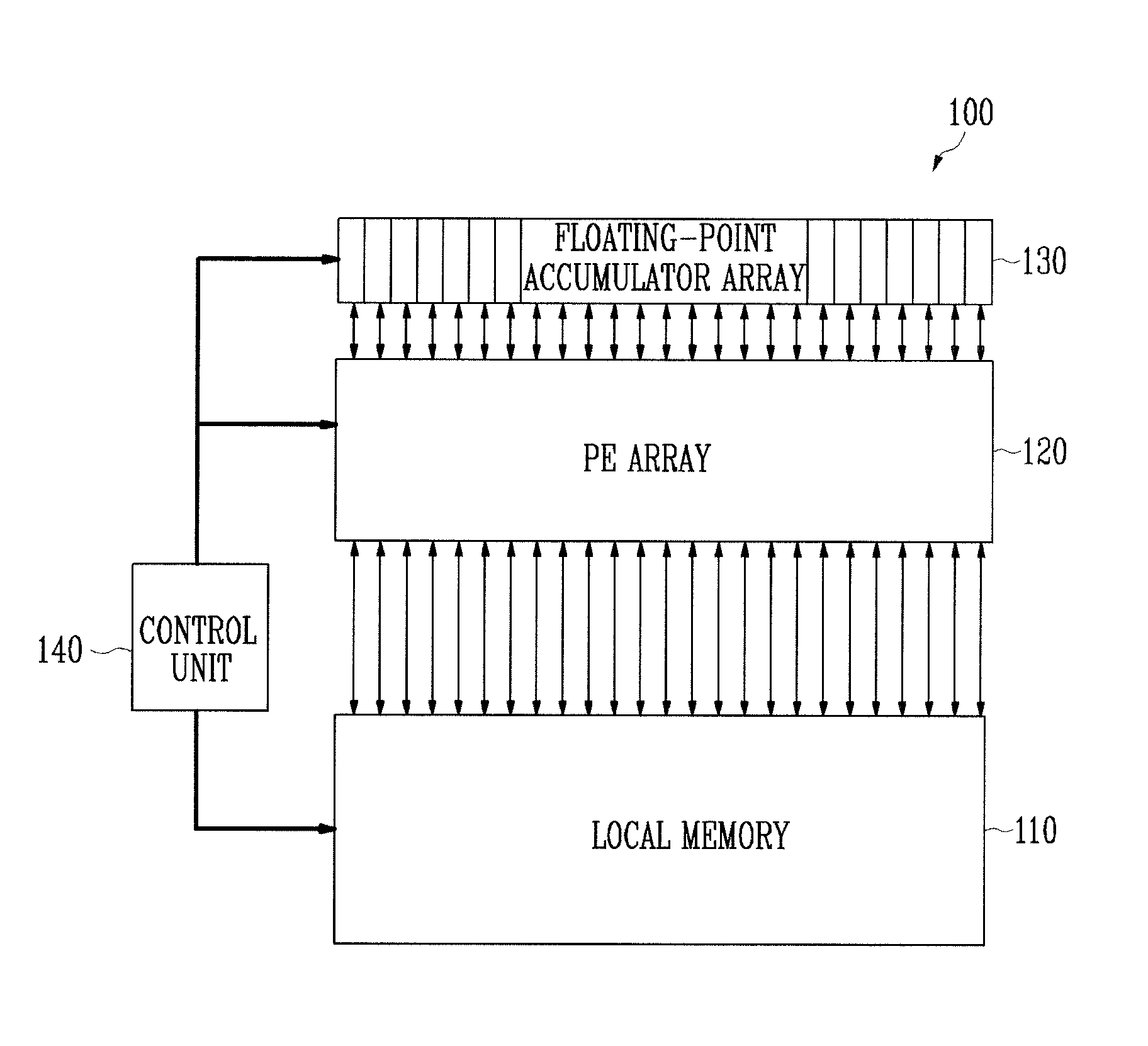

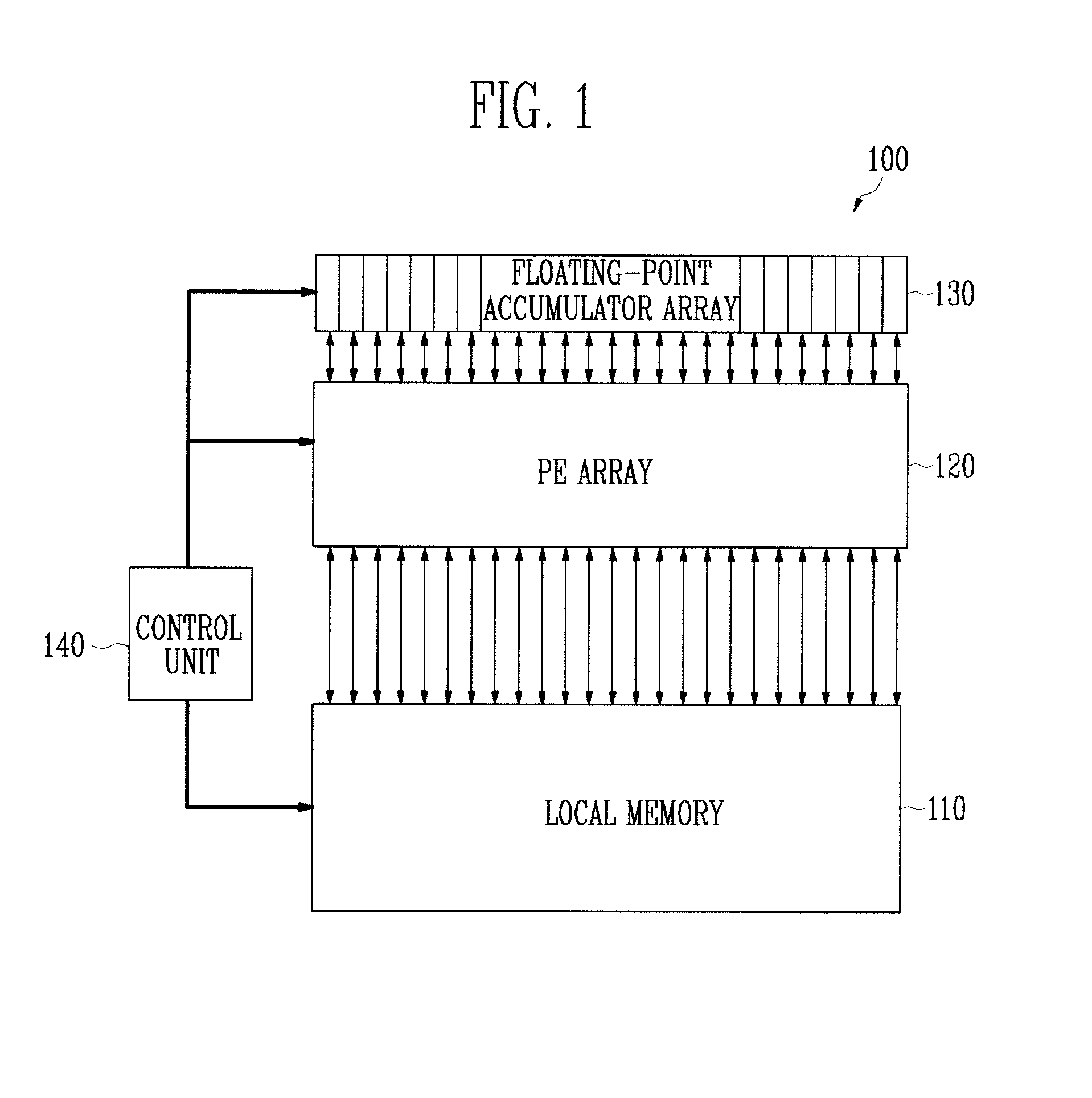

Parallel processor for efficient processing of mobile multimedia

Inactive | US20080294875A1 | Efficient processing | Low hardware cost | Single instruction multiple data multiprocessors | Program control using wired connections | Graphics | Parallel algorithm

Provided is a parallel processor for supporting a floating-point operation. The parallel processor has a flexible structure for easy development of a parallel algorithm involving multimedia computing, requires low hardware cost, and consumes low power. To support floating-point operations, the parallel processor uses floating-point accumulators and a flag for floating-point multiplication. Using the parallel processor, it is possible to process a geometric transformation operation in a 3-dimensional (3D) graphics process at low cost. Also, the cost of a bus width for instructions can be minimized by a partitioned Single-Instruction Multiple-Data (SIMD) method and a method of conditionally executing instructions.

Owner:ELECTRONICS & TELECOMM RES INST

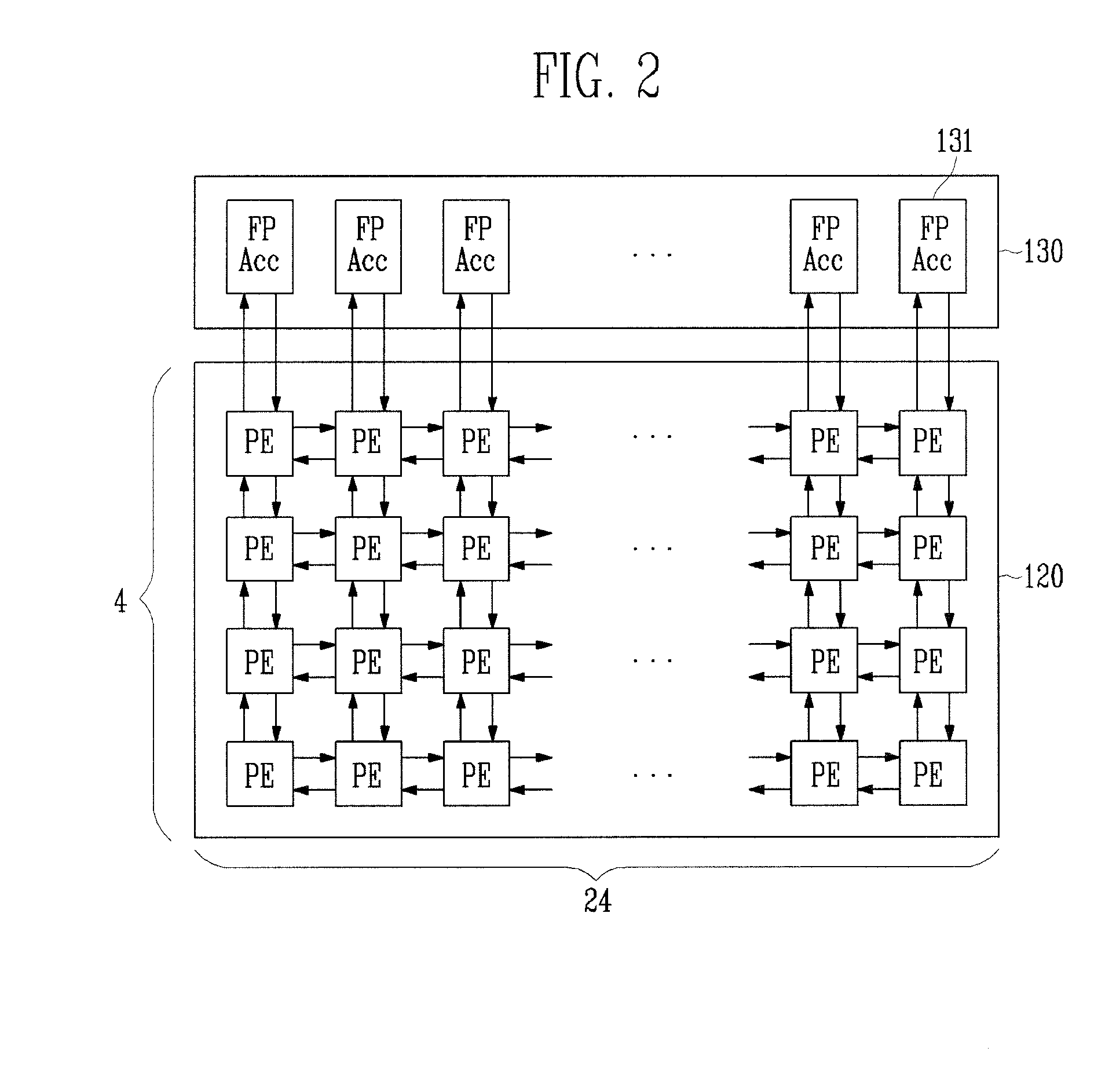

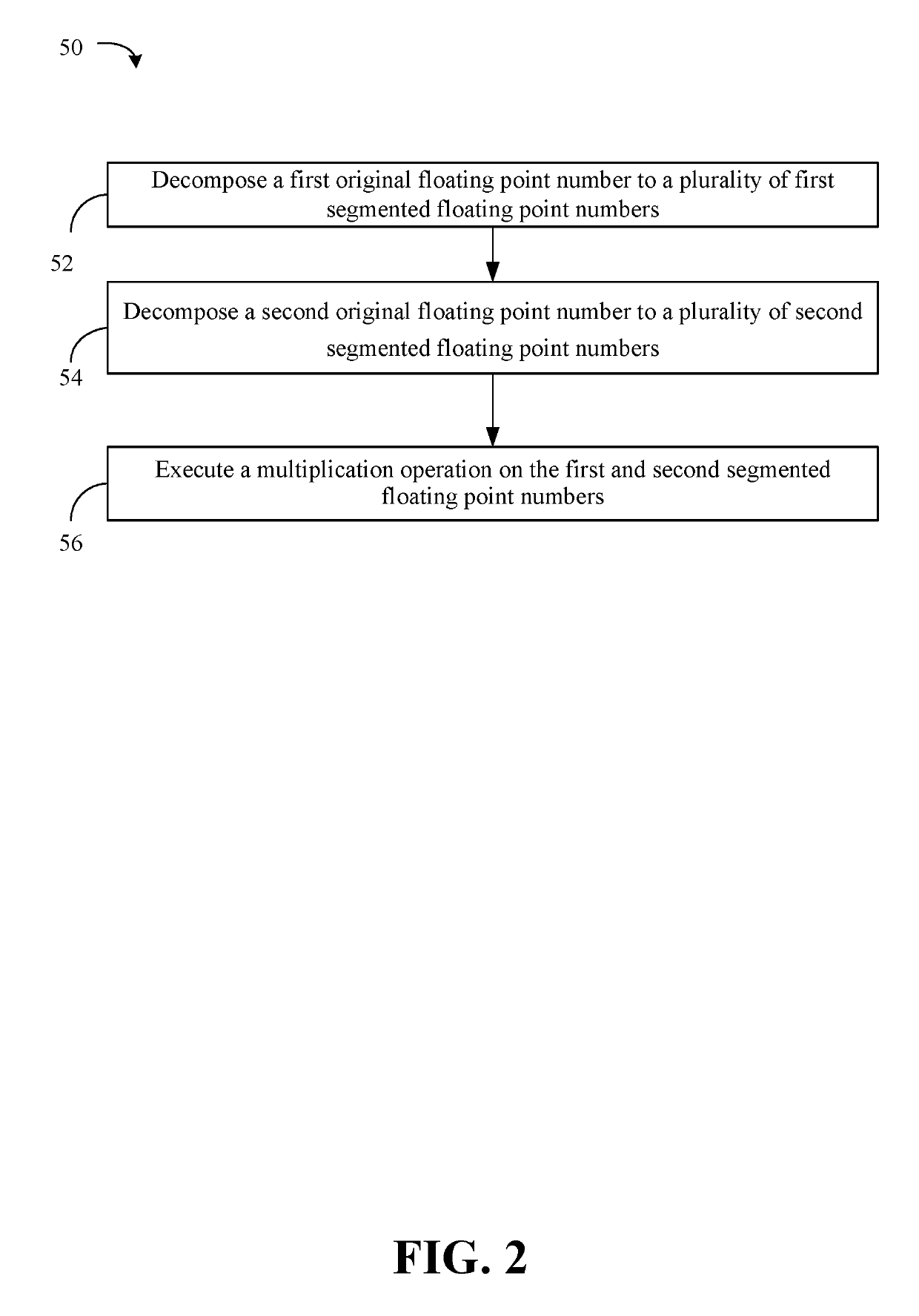

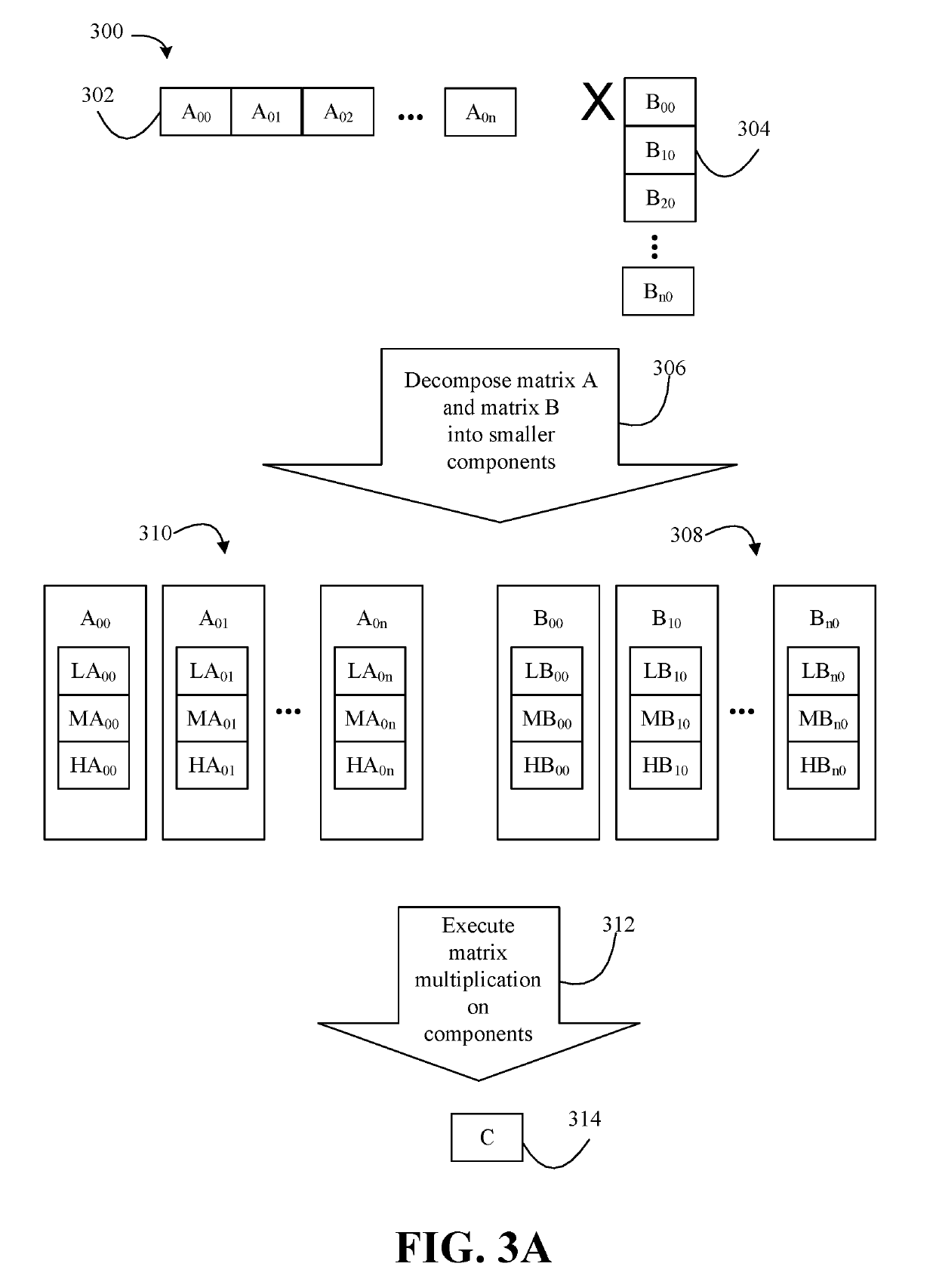

Decomposed floating point multiplication

Active | US20190324723A1 | Digital data processing details | Complex mathematical operations | Parallel computing | Floating point

Systems, apparatuses and methods may provide for technology that in response to an identification that one or more hardware units are to execute on a first type of data format, decomposes a first original floating point number to a plurality of first segmented floating point numbers that are to be equivalent to the first original floating point number. The technology may further in response to the identification, decompose a second original floating point number to a plurality of second segmented floating point numbers that are to be equivalent to the second original floating point number. The technology may further execute a multiplication operation on the first and second segmented floating point numbers to multiply the first segmented floating point numbers with the second segmented floating point numbers.

Owner:INTEL CORP
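
The decomposition described in the abstract above can be illustrated with a small sketch. The listing does not name the data formats involved, so this example assumes the original numbers are binary64 and the hardware format is binary32; the helper names `to_f32`, `decompose` and `decomposed_multiply` are hypothetical. It also assumes inputs well inside the binary32 range, so that no segment overflows or underflows.

```python
import math
import struct


def to_f32(x: float) -> float:
    """Round a Python float (binary64) to the nearest binary32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def decompose(x: float, n_segs: int = 3) -> list:
    """Split x into binary32 segments whose exact sum is x.

    Each segment captures up to 24 significand bits of what is left,
    so three segments cover the 53-bit binary64 significand.
    """
    segs = []
    residual = x
    for _ in range(n_segs):
        seg = to_f32(residual)
        segs.append(seg)
        residual -= seg  # exact: seg is the rounded residual
    return segs


def decomposed_multiply(x: float, y: float) -> float:
    """Multiply x and y through pairwise products of their segments."""
    xs, ys = decompose(x), decompose(y)
    # Each binary32 x binary32 product is exact in binary64 (<= 48 bits).
    partials = [a * b for a in xs for b in ys]
    return math.fsum(partials)  # accurate summation of the partial products


segs = decompose(0.1)
assert math.fsum(segs) == 0.1
print(decomposed_multiply(0.1, 0.3), 0.1 * 0.3)
```

Every segment is representable in the narrower format, which is what lets narrower multipliers handle the wider operands.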
