38 results on how to "Reduce write latency" (patented technology)

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

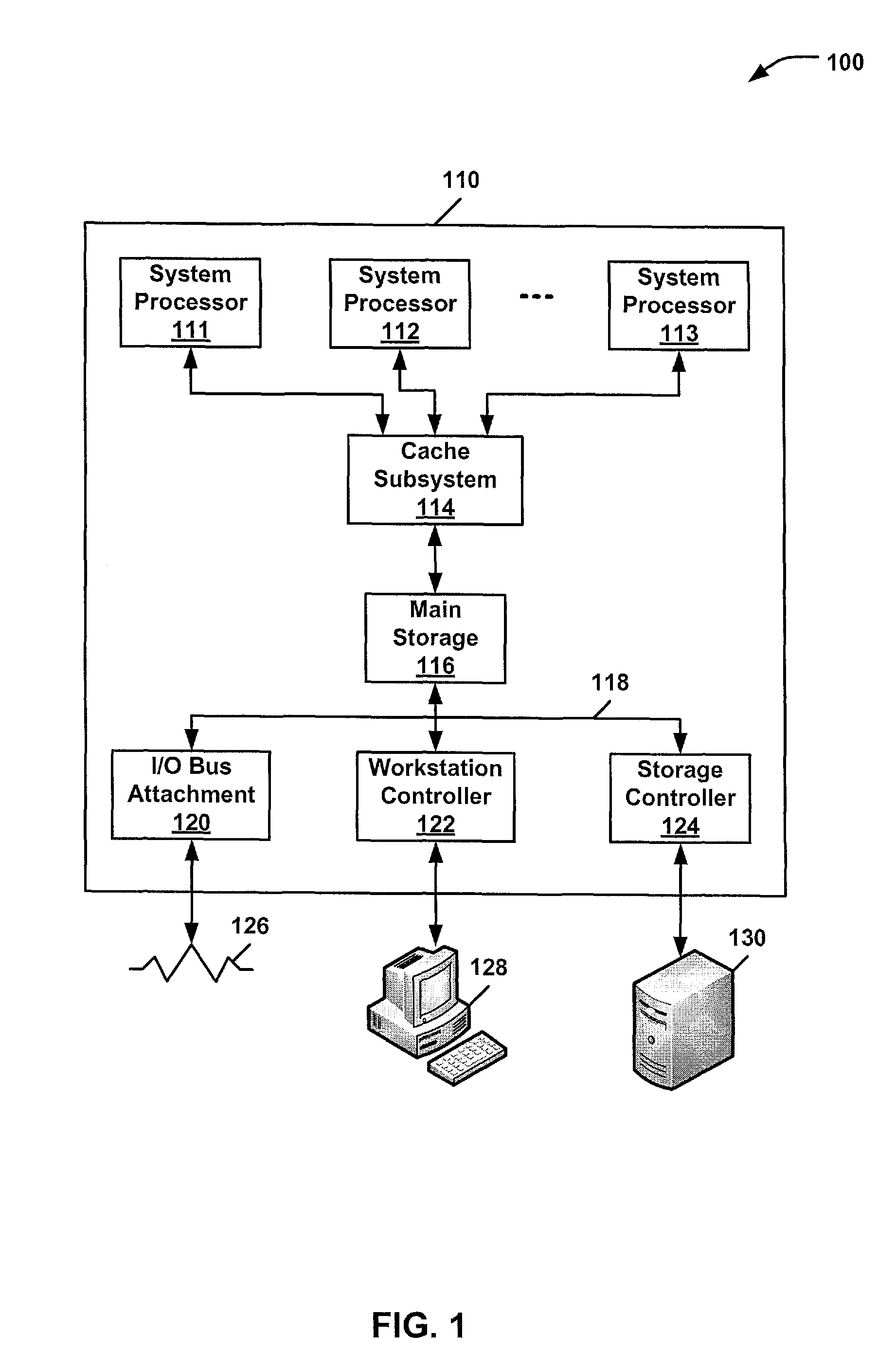

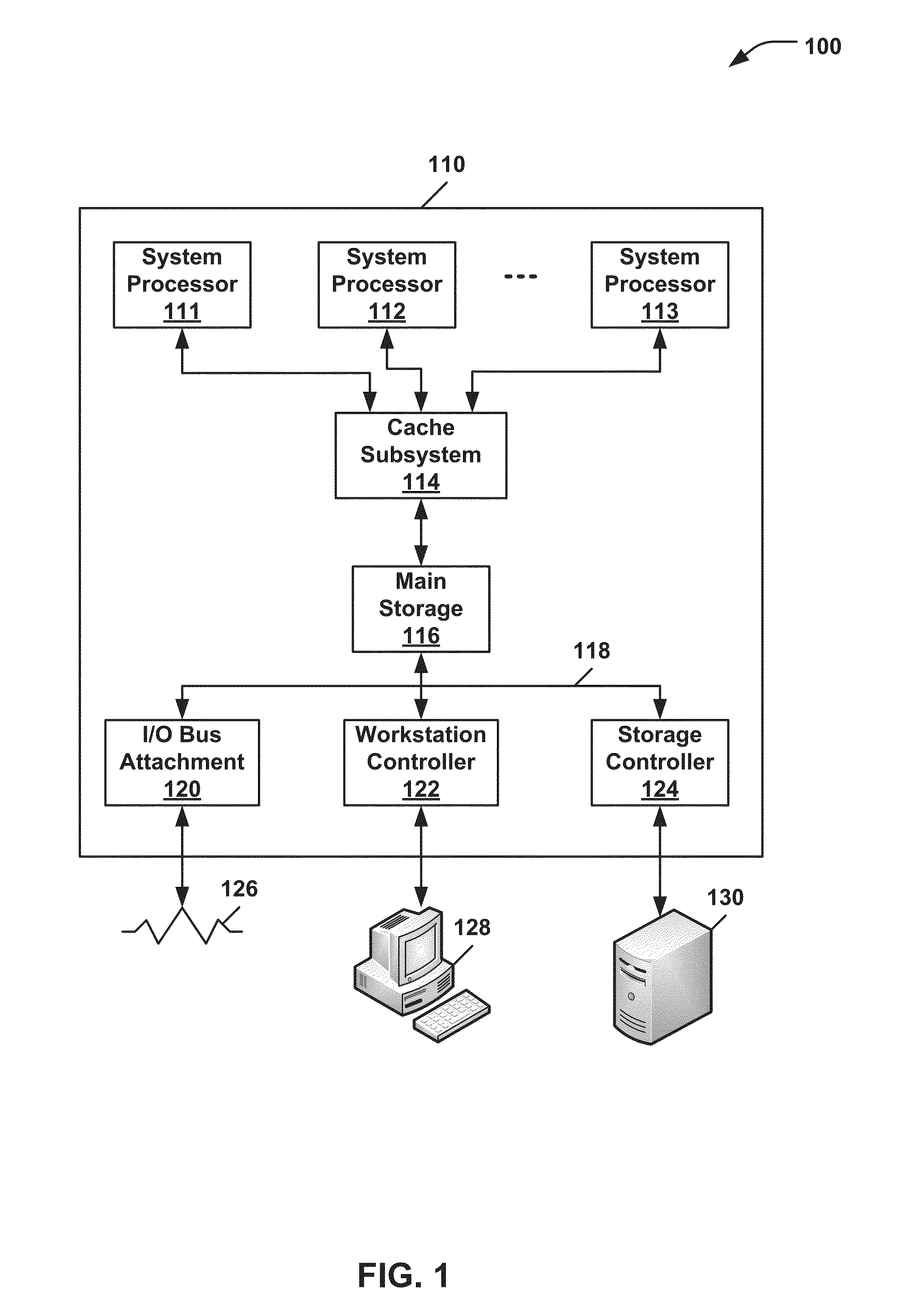

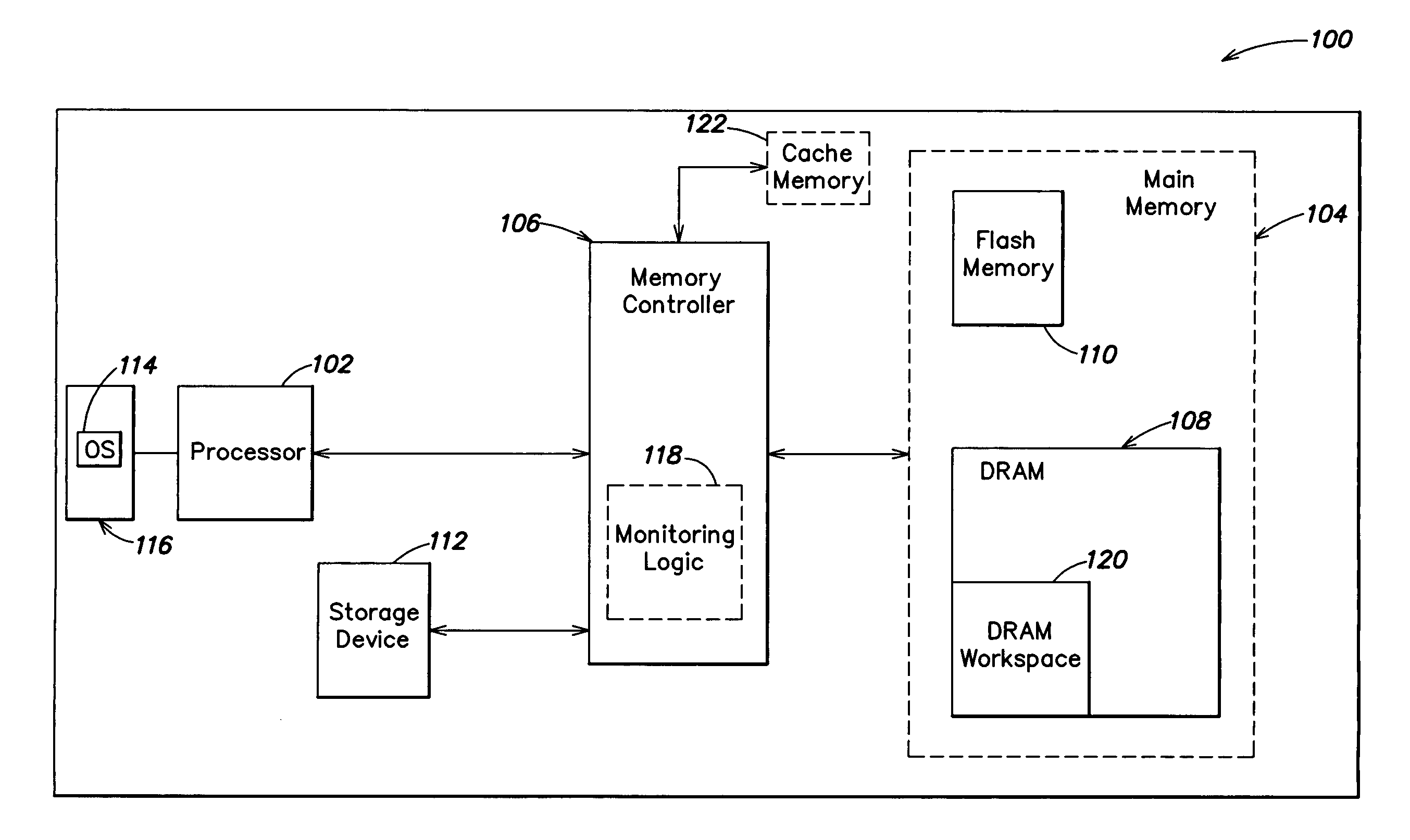

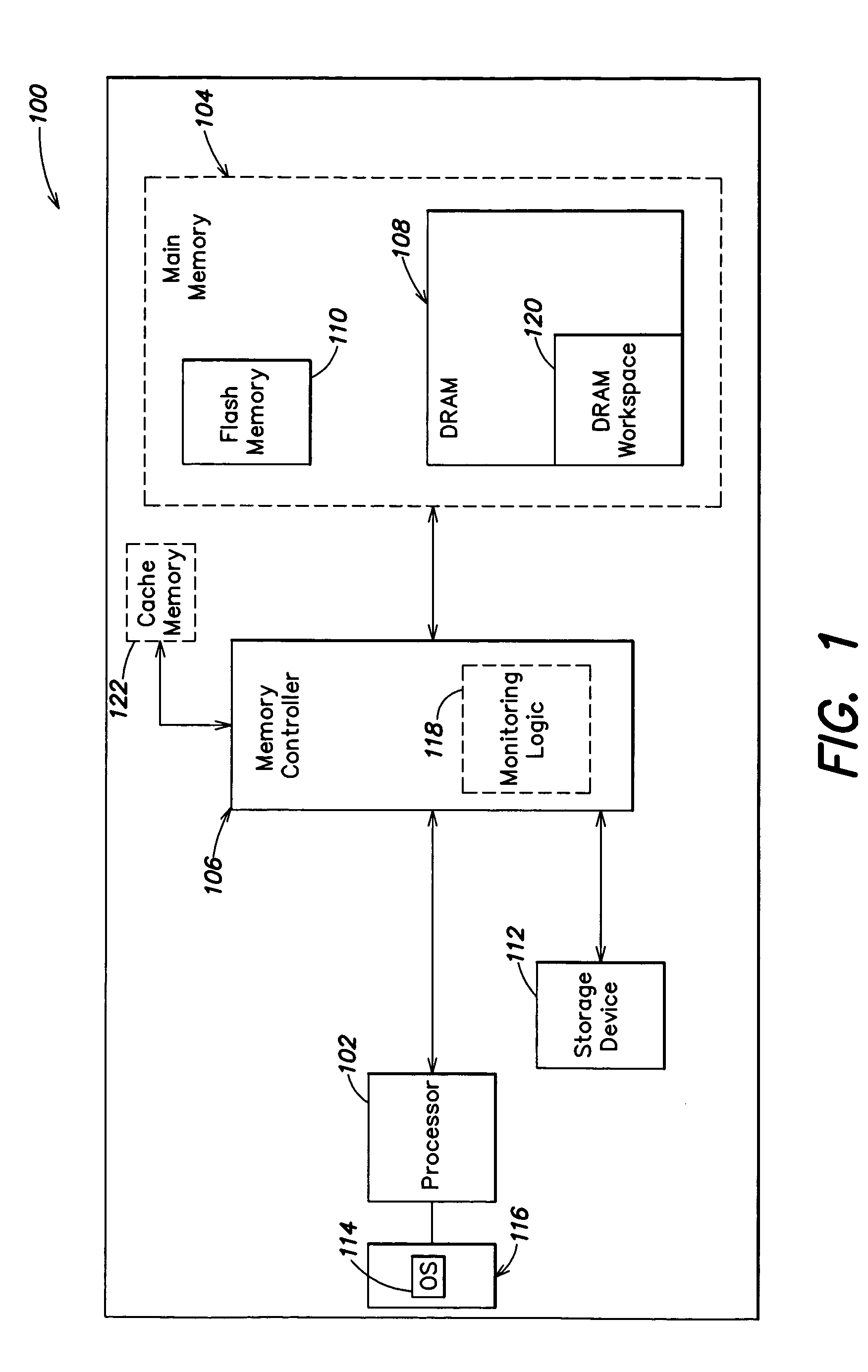

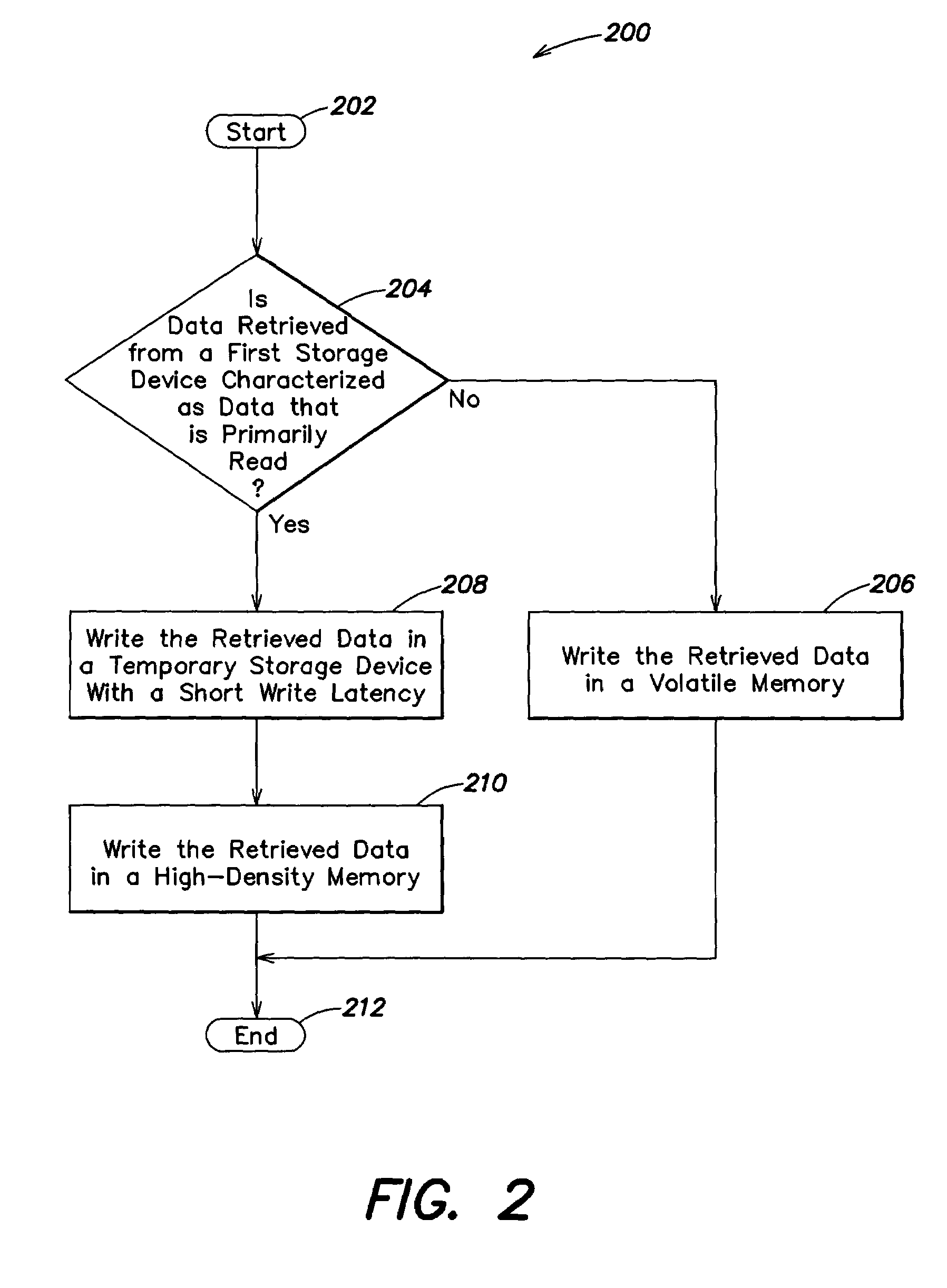

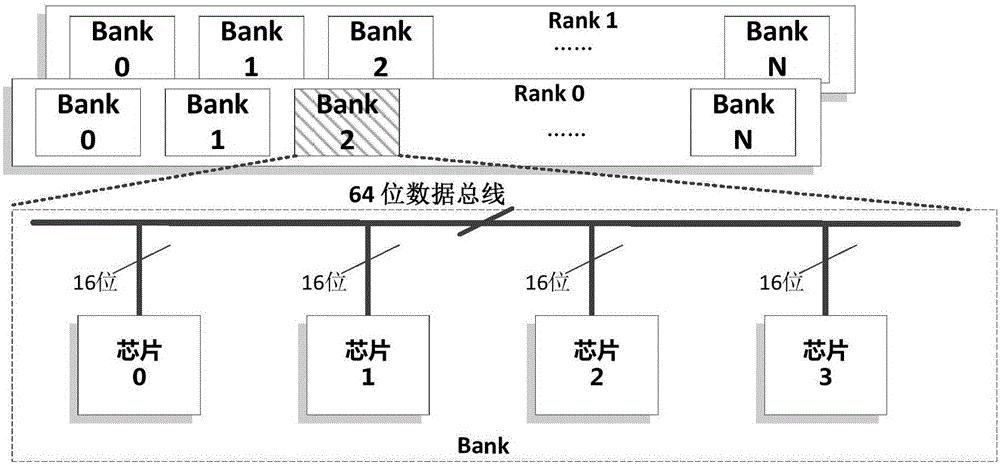

Methods and apparatus for efficient memory usage

Status: Active | Publication: US20060106984A1 | Tags: Efficient memory usage, Reduce write latency, Memory architecture accessing/allocation, Energy efficient ICT, High density, Parallel computing

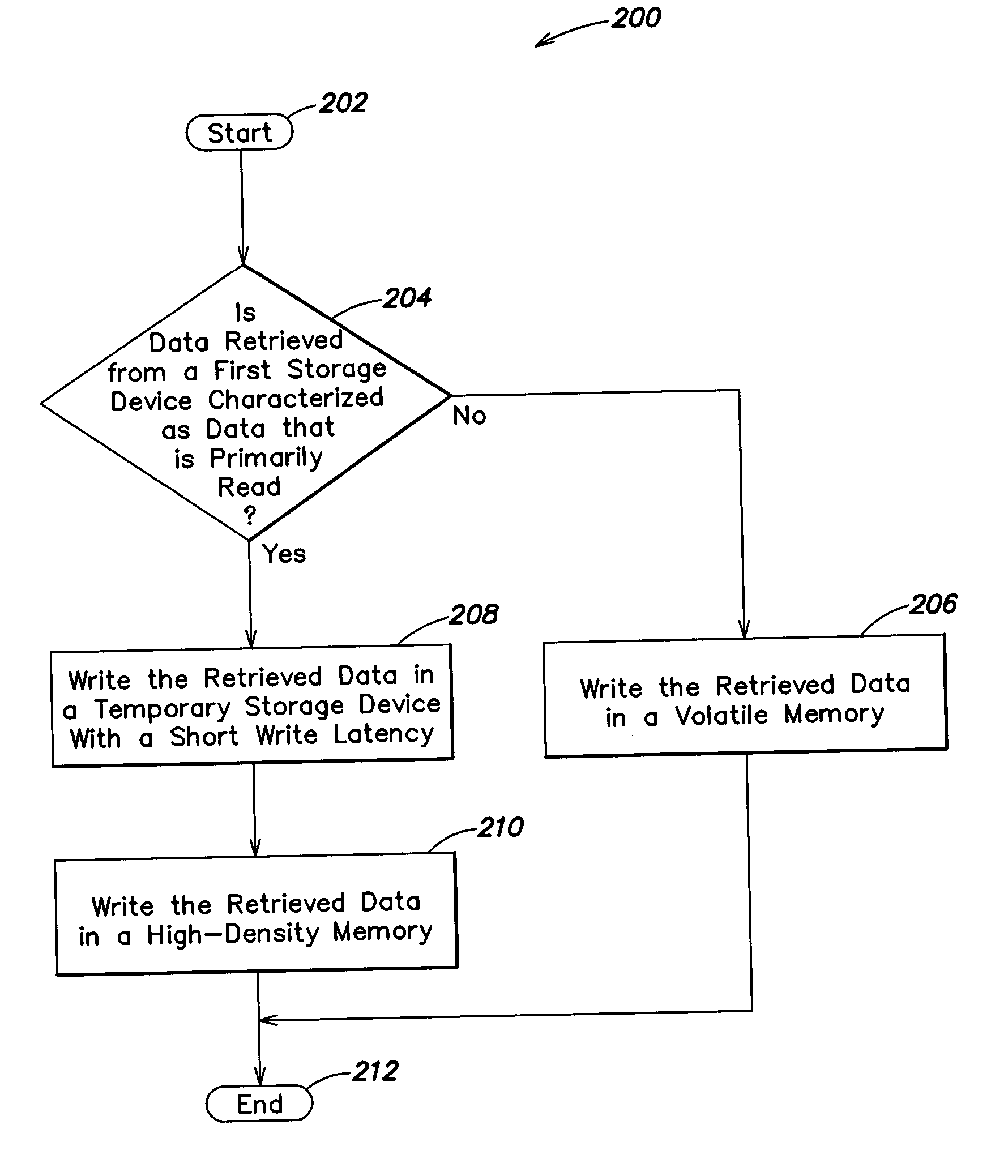

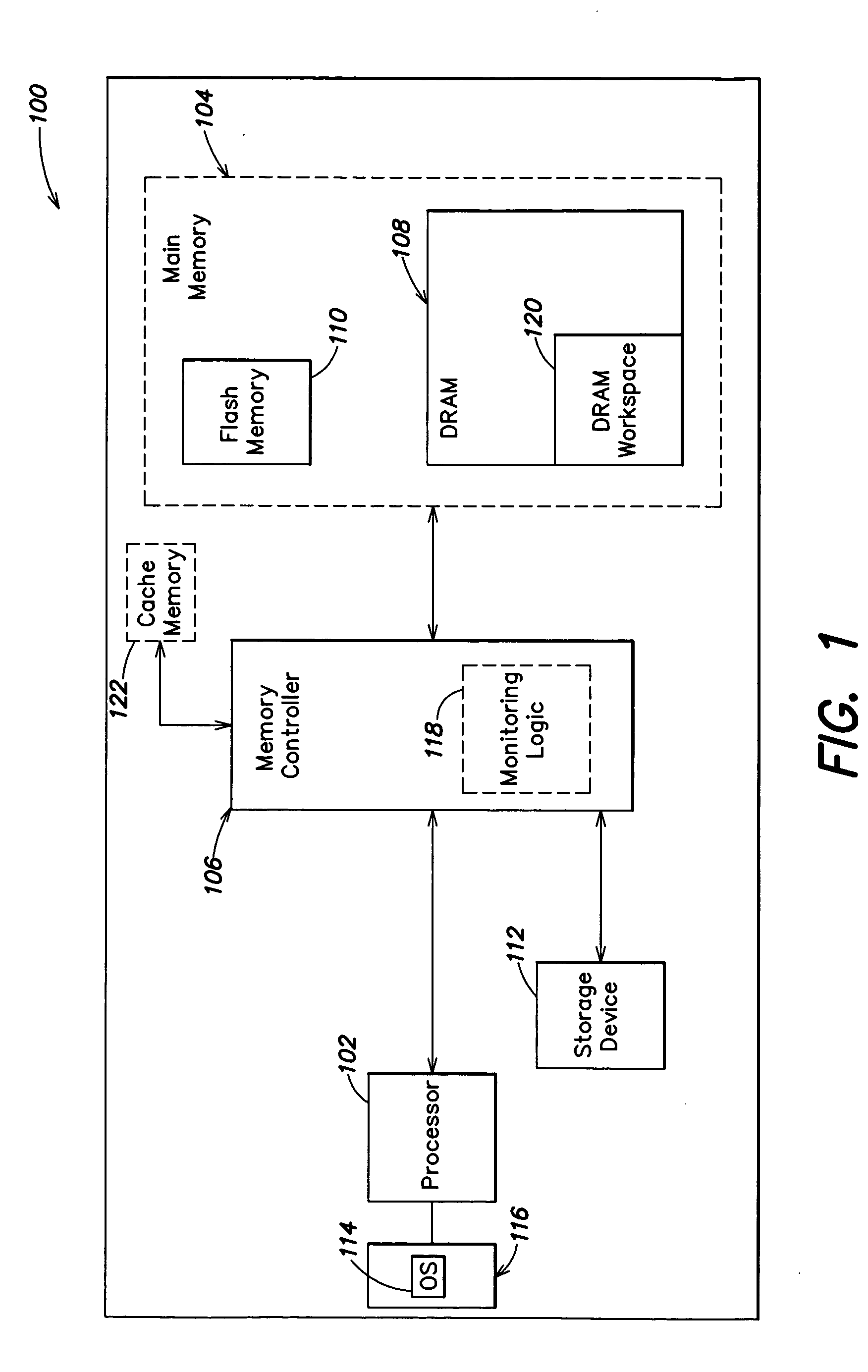

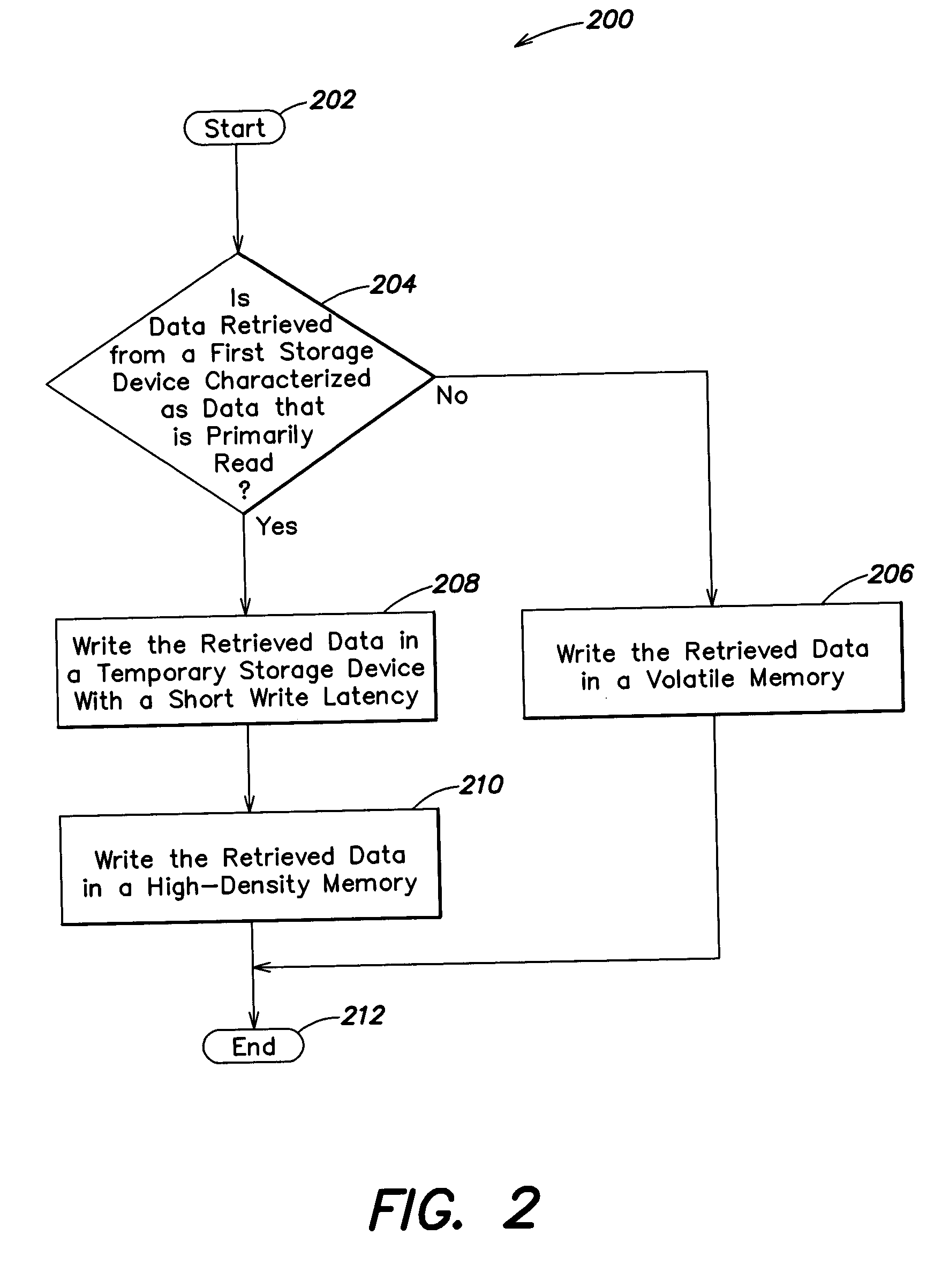

In a first aspect, a first method is provided for efficient memory usage. The first method includes the steps of (1) determining whether data retrieved from a first storage device is characterized as data that is primarily read; and (2) if data retrieved from the first storage device is characterized as data that is primarily read (a) writing the retrieved data in a temporary storage device with short write latency; and (b) writing the retrieved data in a high-density memory. Numerous other aspects are provided.

Owner:LENOVO GLOBAL TECH INT LTD
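
A minimal Python sketch of the two-step method in this abstract follows. The read-ratio threshold that decides whether data is "primarily read", and the dictionaries standing in for the temporary storage device and the high-density memory, are hypothetical; the abstract does not say how the characterization is made or how the two writes are scheduled.

```python
from dataclasses import dataclass, field


@dataclass
class TieredMemory:
    """Hypothetical stand-ins: dicts model the short-write-latency temporary
    store and the high-density memory named in the abstract."""
    read_ratio_threshold: float = 0.9  # assumed cut-off for "primarily read"
    temp_store: dict = field(default_factory=dict)
    high_density: dict = field(default_factory=dict)

    def is_primarily_read(self, reads: int, writes: int) -> bool:
        total = reads + writes
        return total > 0 and reads / total >= self.read_ratio_threshold

    def on_fetch(self, key, data, reads: int, writes: int) -> bool:
        """Handle data retrieved from the first storage device.
        Returns True if the data was placed in both tiers."""
        if not self.is_primarily_read(reads, writes):
            return False
        # (a) write into the temporary store with short write latency
        self.temp_store[key] = data
        # (b) write into the high-density memory
        self.high_density[key] = data
        return True
```

Here data fetched with 95 reads and 5 writes is placed in both stores, while data with an even read/write mix is left alone. A real system would presumably let the fast temporary store absorb the write and finish the high-density write in the background; the sketch performs both writes synchronously for clarity.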

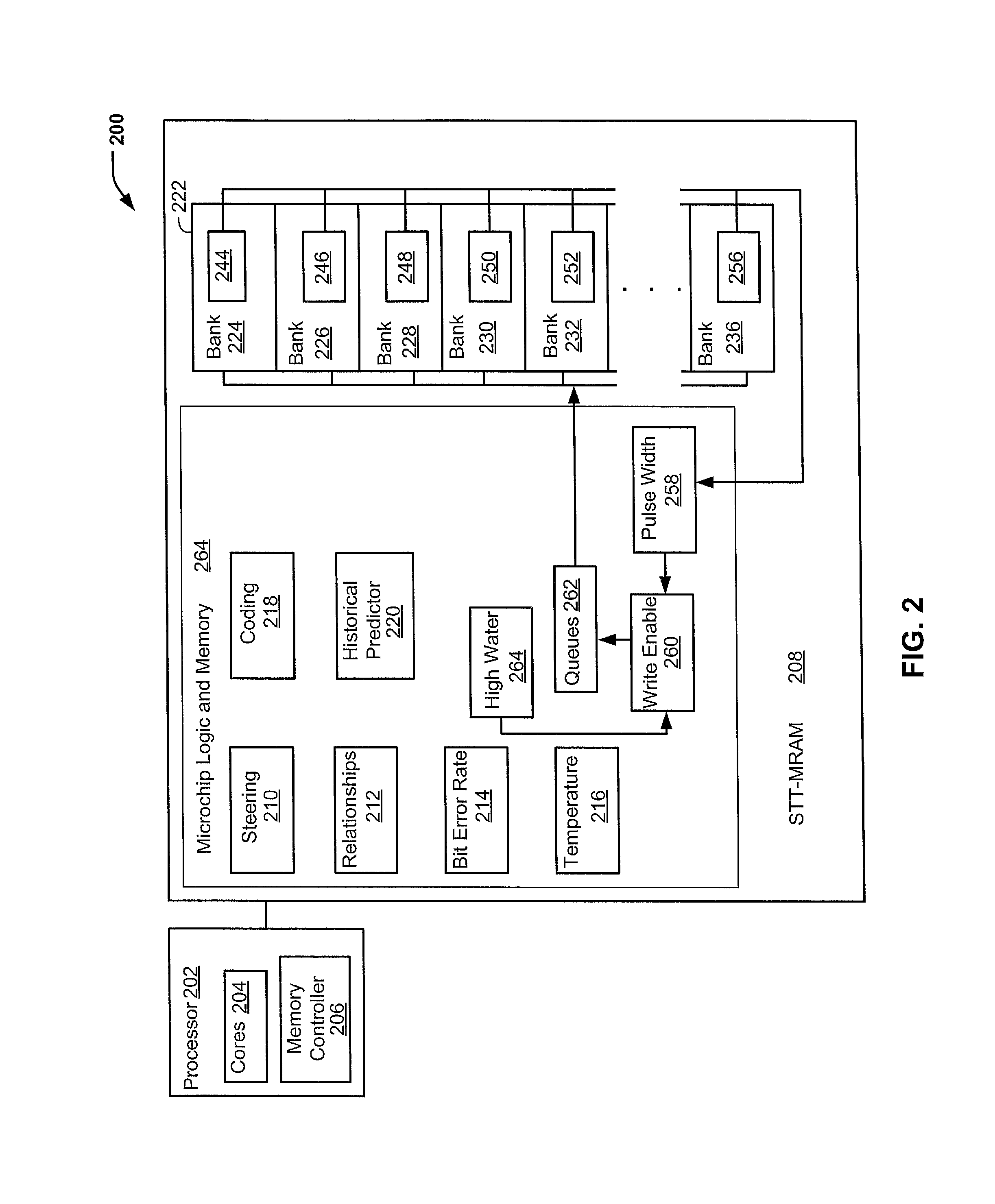

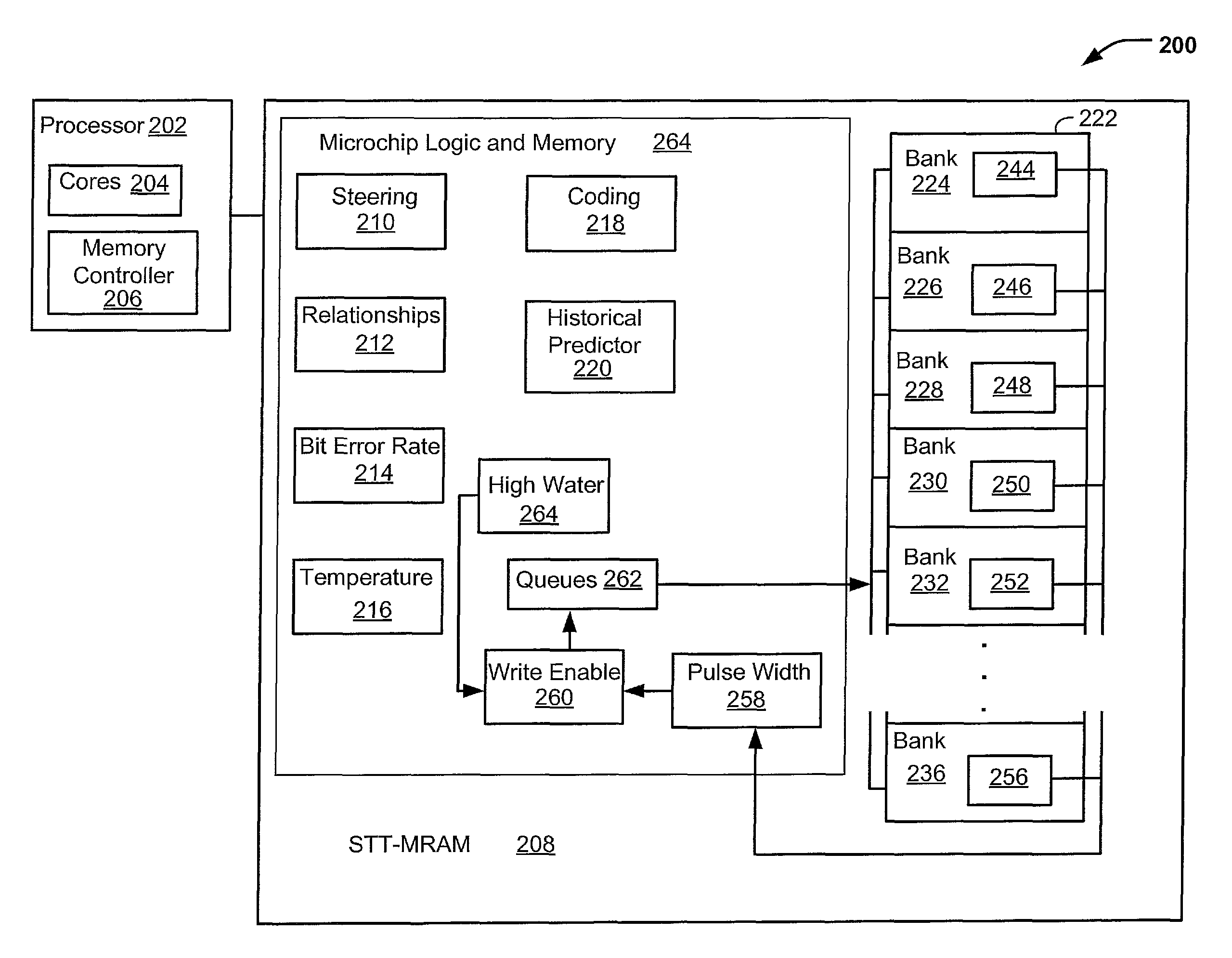

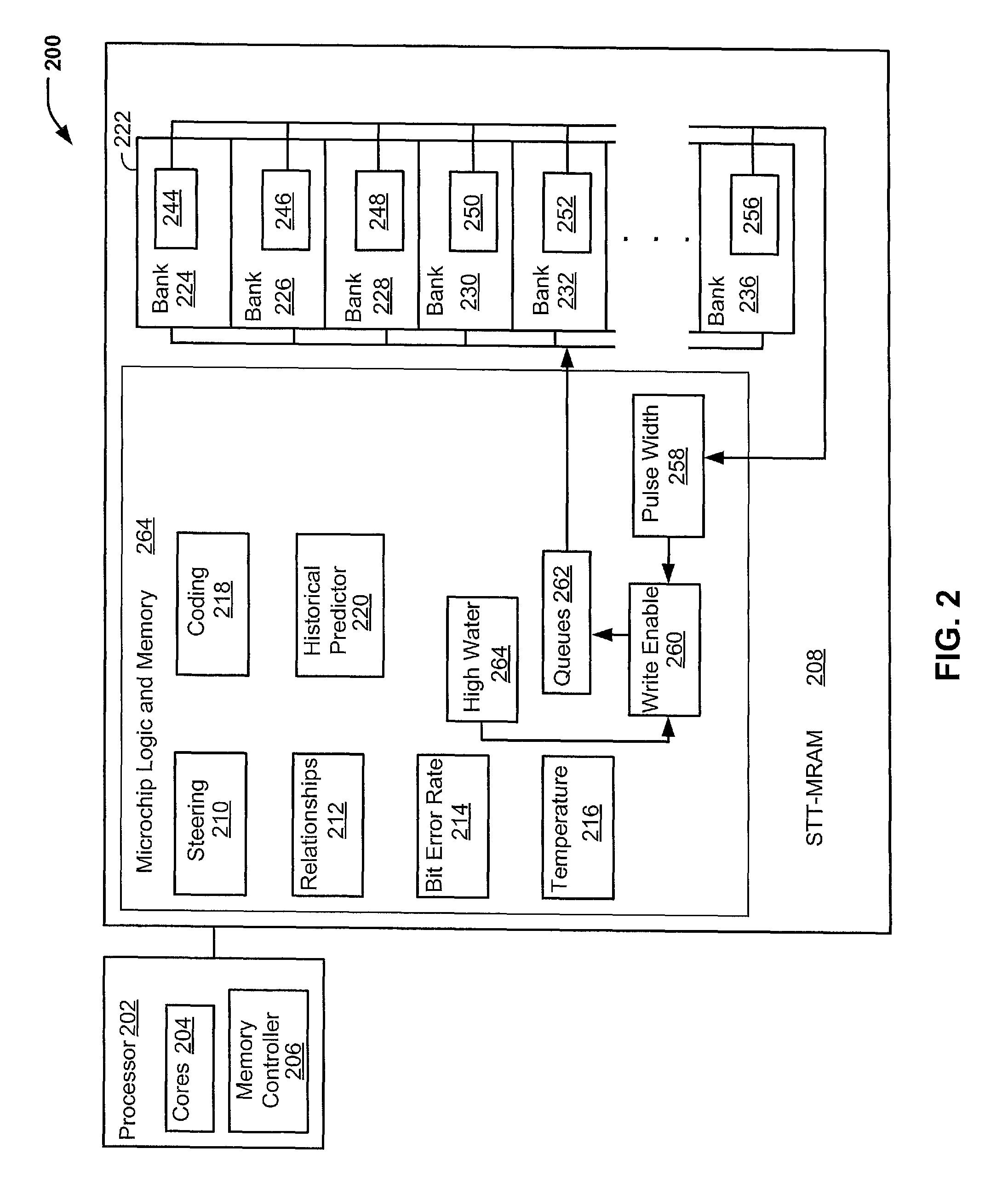

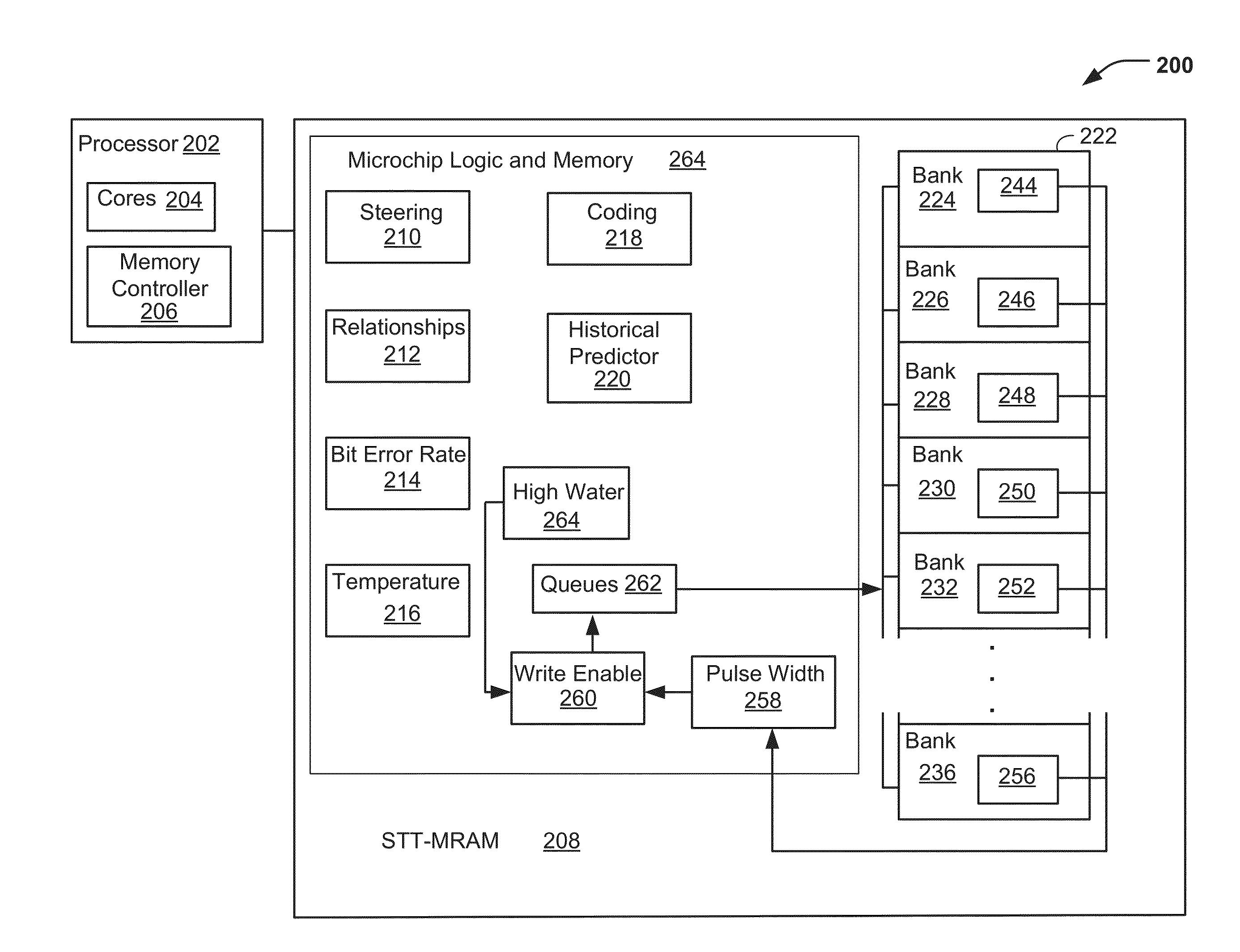

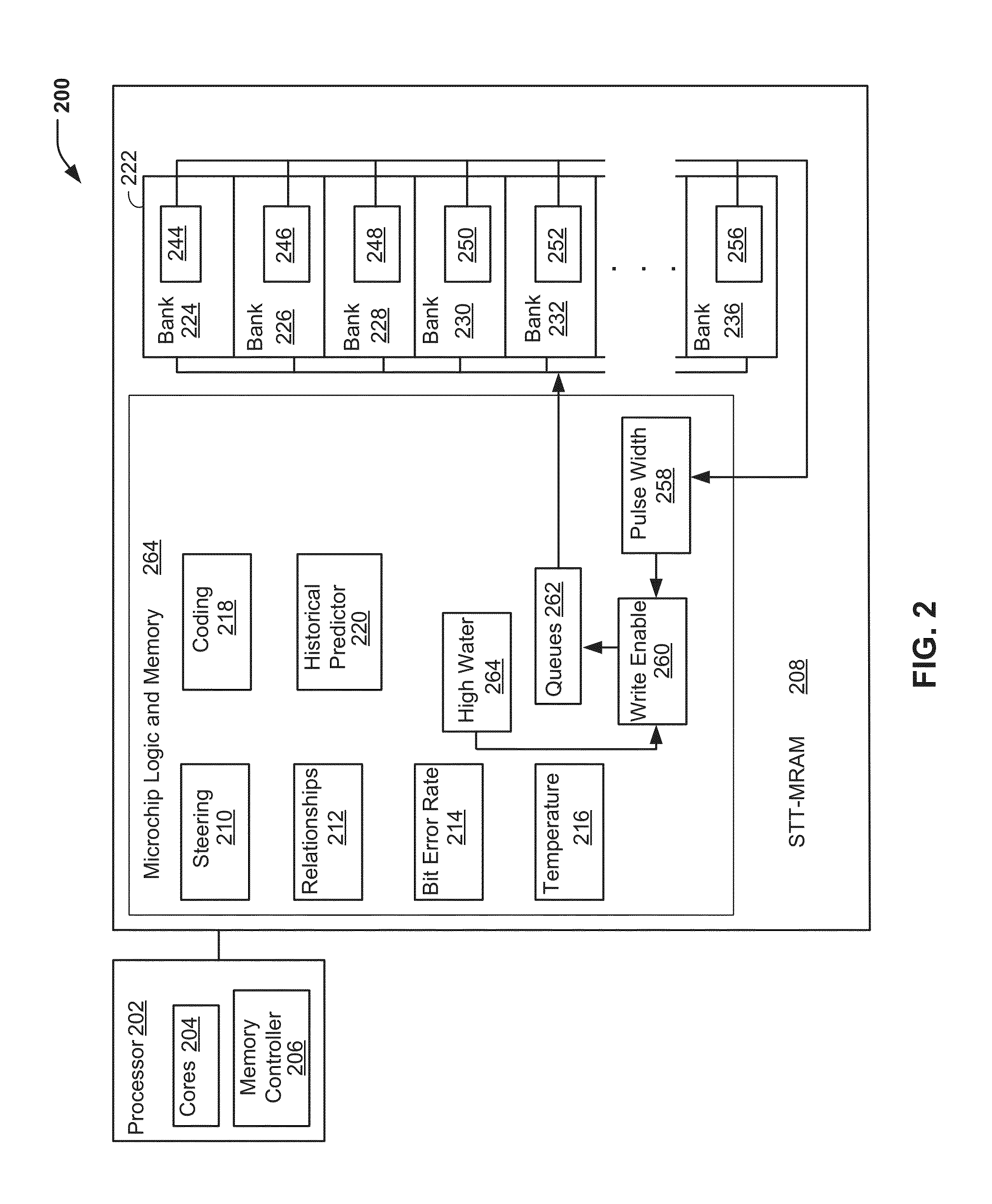

Dynamic temperature adjustments in spin transfer torque magnetoresistive random-access memory (STT-MRAM)

Status: Active | Publication: US20150206569A1 | Tags: Decrease write latency, Reduce power consumption, Walking aids, Wheelchairs/patient conveyance, Spin-transfer torque, Parallel computing

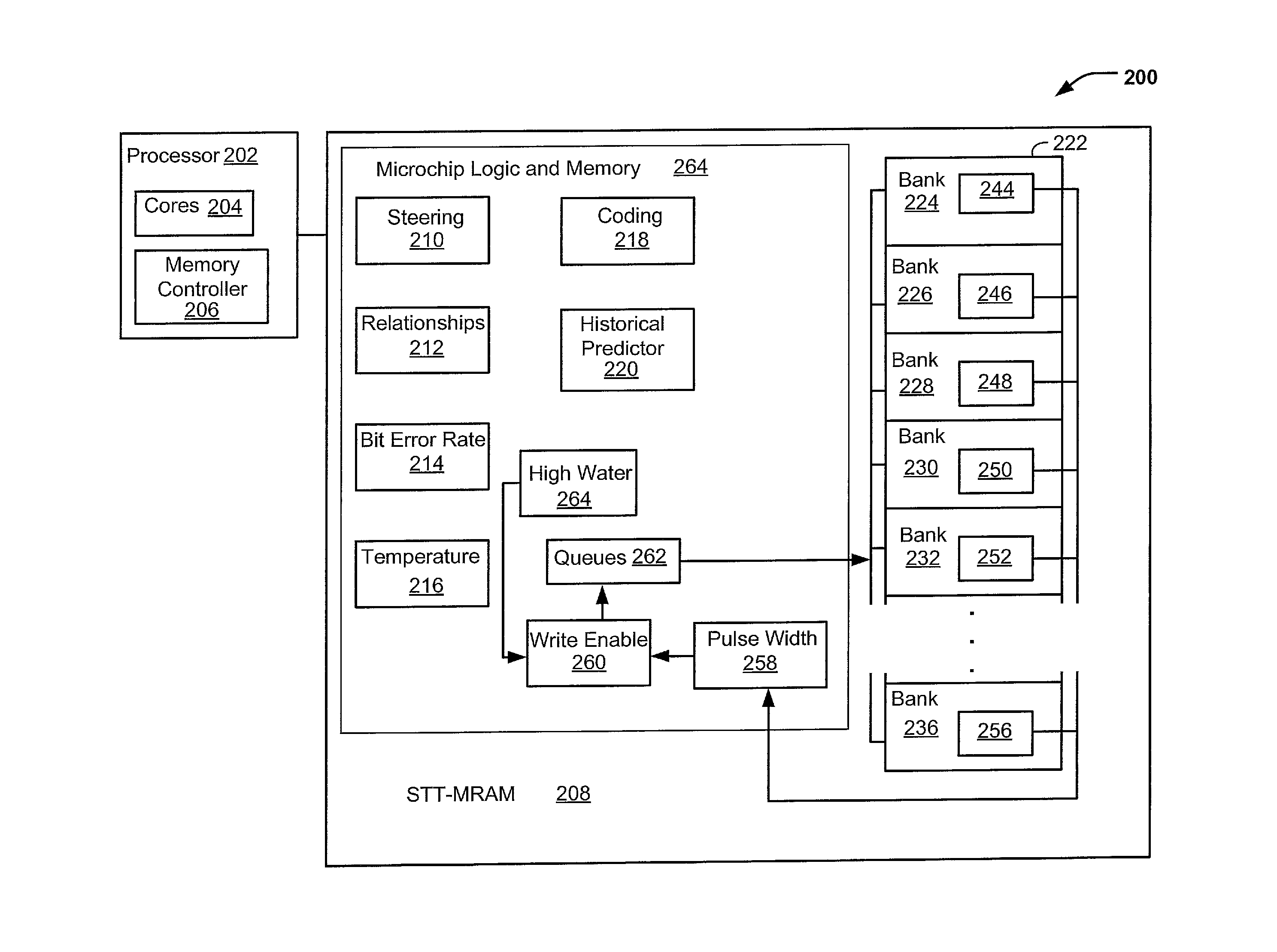

Systems and methods to manage memory on a spin transfer torque magnetoresistive random-access memory (STT-MRAM) are provided. A particular method of managing memory includes determining a temperature associated with the memory and determining a level of write queue utilization associated with the memory. A write operation may be performed based on the level of write queue utilization and the temperature.

Owner:IBM CORP

Dynamic temperature adjustments in spin transfer torque magnetoresistive random-access memory (STT-MRAM)

Status: Active | Publication: US9351899B2 | Tags: Increase temperature, Reduce consumption, Wheelchairs/patient conveyance, Walking aids, Spin-transfer torque, Parallel computing

Systems and methods to manage memory on a spin transfer torque magnetoresistive random-access memory (STT-MRAM) are provided. A particular method of managing memory includes determining a temperature associated with the memory and determining a level of write queue utilization associated with the memory. A write operation may be performed based on the level of write queue utilization and the temperature.

Owner:IBM CORP

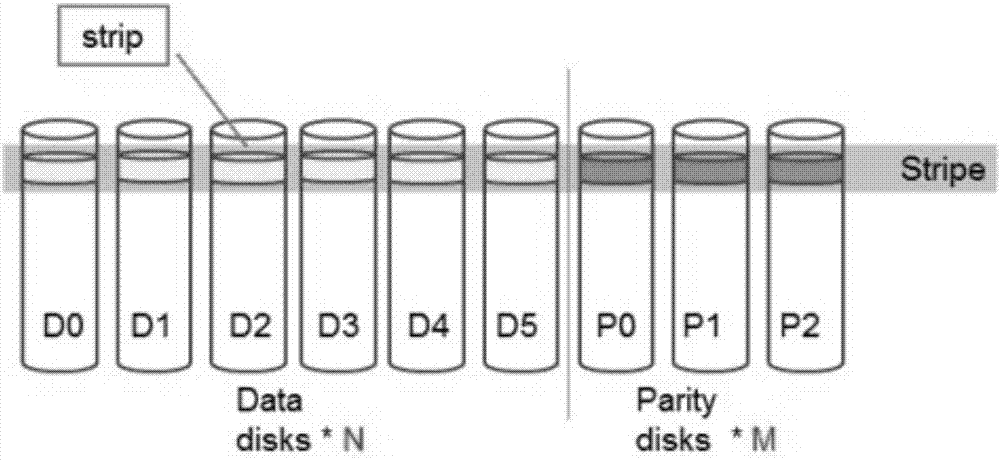

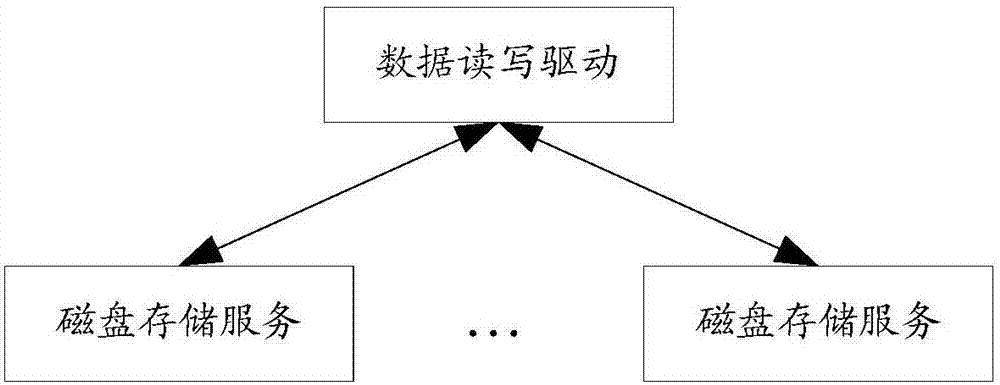

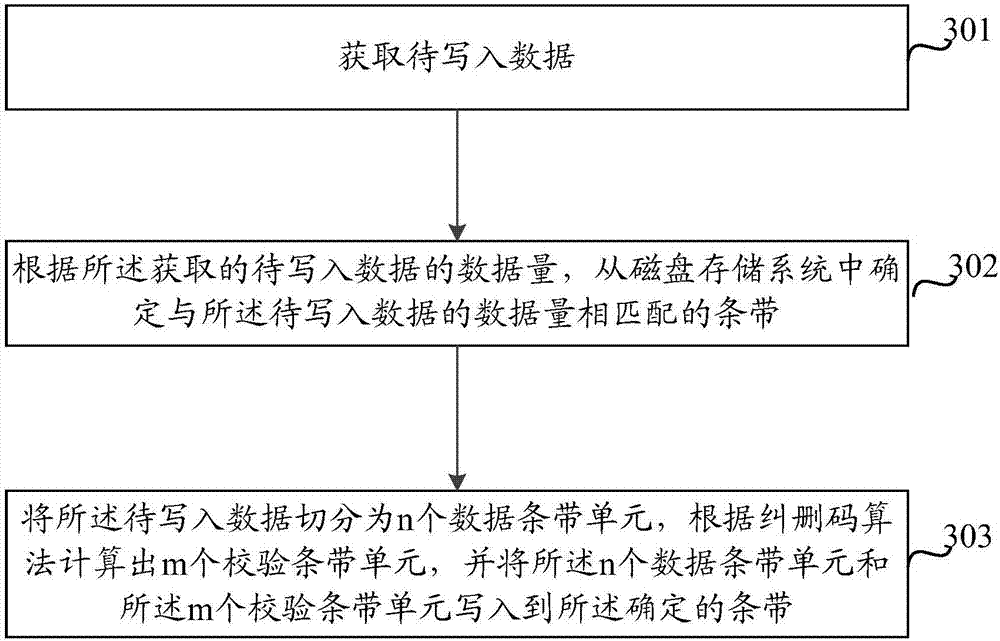

Method and device for writing data

Status: Active | Publication: CN107273048A | Tags: Low data write latency, Reduce risk, Input/output to record carriers, Redundant data error correction, Computer architecture, Data set

The invention discloses a method and device for writing data. The method includes: obtaining the data to be written; determining, from a disk storage system and according to the volume of that data, a stripe matched to the volume, where the disk storage system includes multiple stripes of different depths and each stripe includes n data stripe units and m parity stripe units; dividing the data to be written into n data stripe units; calculating the m parity stripe units with an erasure code algorithm; and writing the n data stripe units and the m parity stripe units into the determined stripe. Because the stripe depth is chosen according to the volume of the data, a deep stripe is selected for large writes to improve throughput and a shallow stripe is selected for small writes, which reduces the risks of delayed writes and data loss.

Owner:ZHEJIANG DAHUA TECH CO LTD
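
The stripe-selection and encoding steps can be sketched in Python as follows. The list of available stripe depths is hypothetical, and a single XOR parity unit (m = 1) stands in for the unspecified erasure code algorithm; a configuration with m > 1 would need a code such as Reed-Solomon.

```python
from functools import reduce


def choose_stripe_depth(data_len: int, n: int, depths: list[int]) -> int:
    """Return the shallowest stripe depth whose n data units can hold the data,
    falling back to the deepest stripe for very large writes."""
    for depth in sorted(depths):
        if n * depth >= data_len:
            return depth
    return max(depths)


def xor_parity(units: list[bytes]) -> bytes:
    """Single XOR parity unit (m = 1), the simplest erasure code."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*units))


def encode_stripe(data: bytes, n: int, depths: list[int]):
    depth = choose_stripe_depth(len(data), n, depths)
    if len(data) > n * depth:
        raise ValueError("data spans several stripes; not handled in this sketch")
    padded = data.ljust(n * depth, b"\0")  # zero-pad to fill the stripe
    units = [padded[i * depth:(i + 1) * depth] for i in range(n)]
    return depth, units, xor_parity(units)
```

For `encode_stripe(b"abcdef", n=3, depths=[2, 4, 8])` the sketch picks depth 2 and splits the data into `b"ab"`, `b"cd"` and `b"ef"`; a lost unit can be rebuilt by XOR-ing the parity with the surviving units. A 20-byte write with the same settings selects the depth-8 stripe instead.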

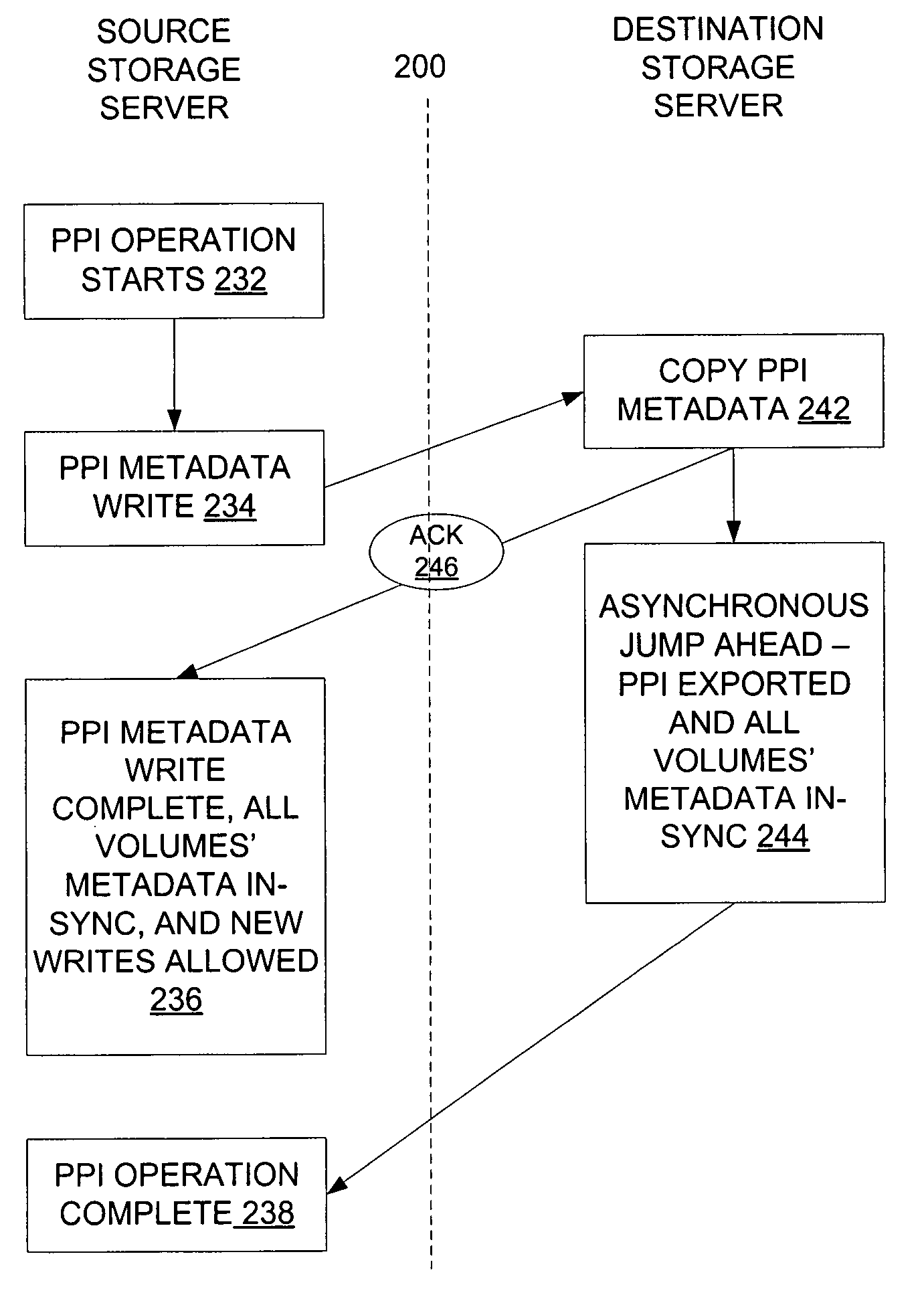

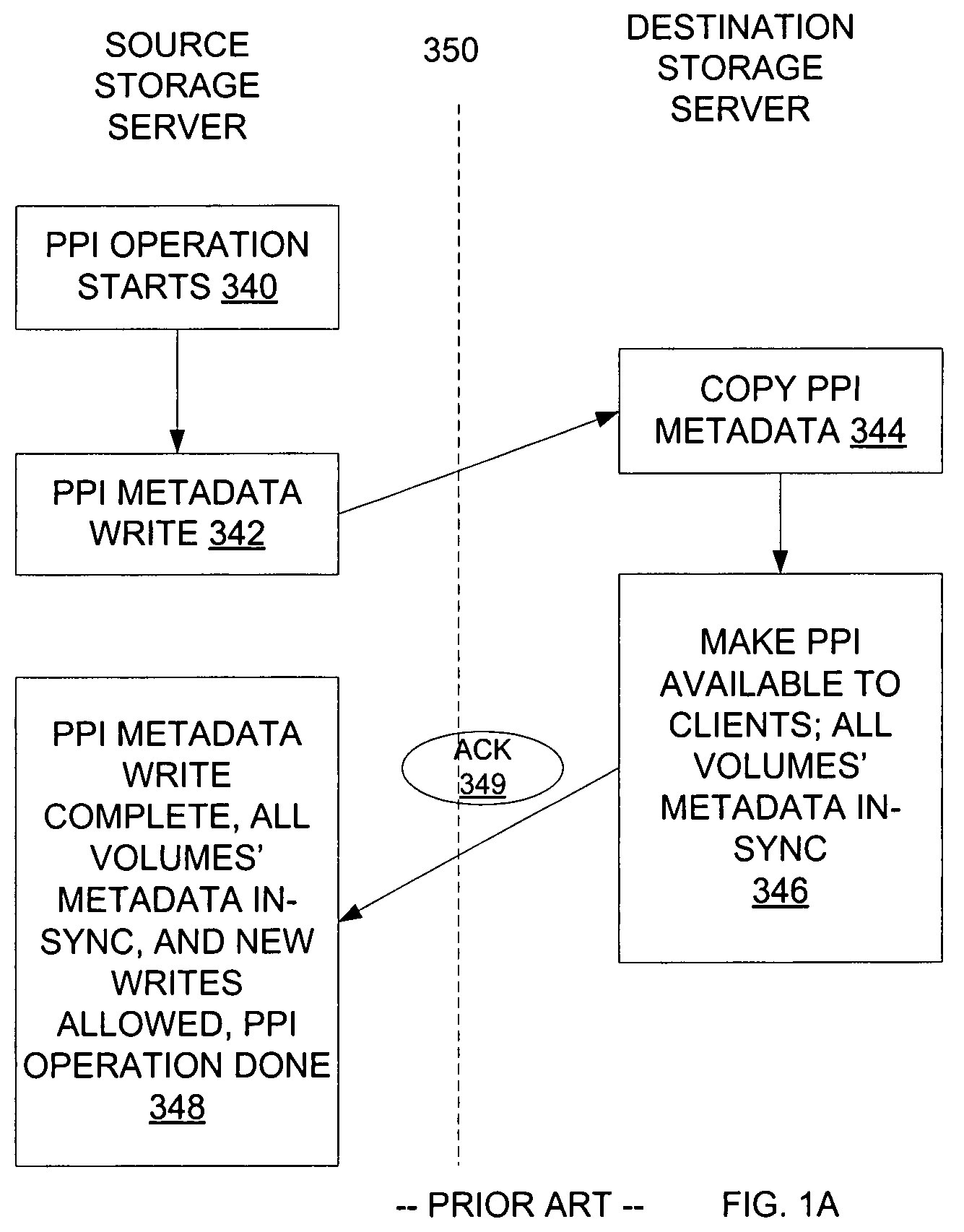

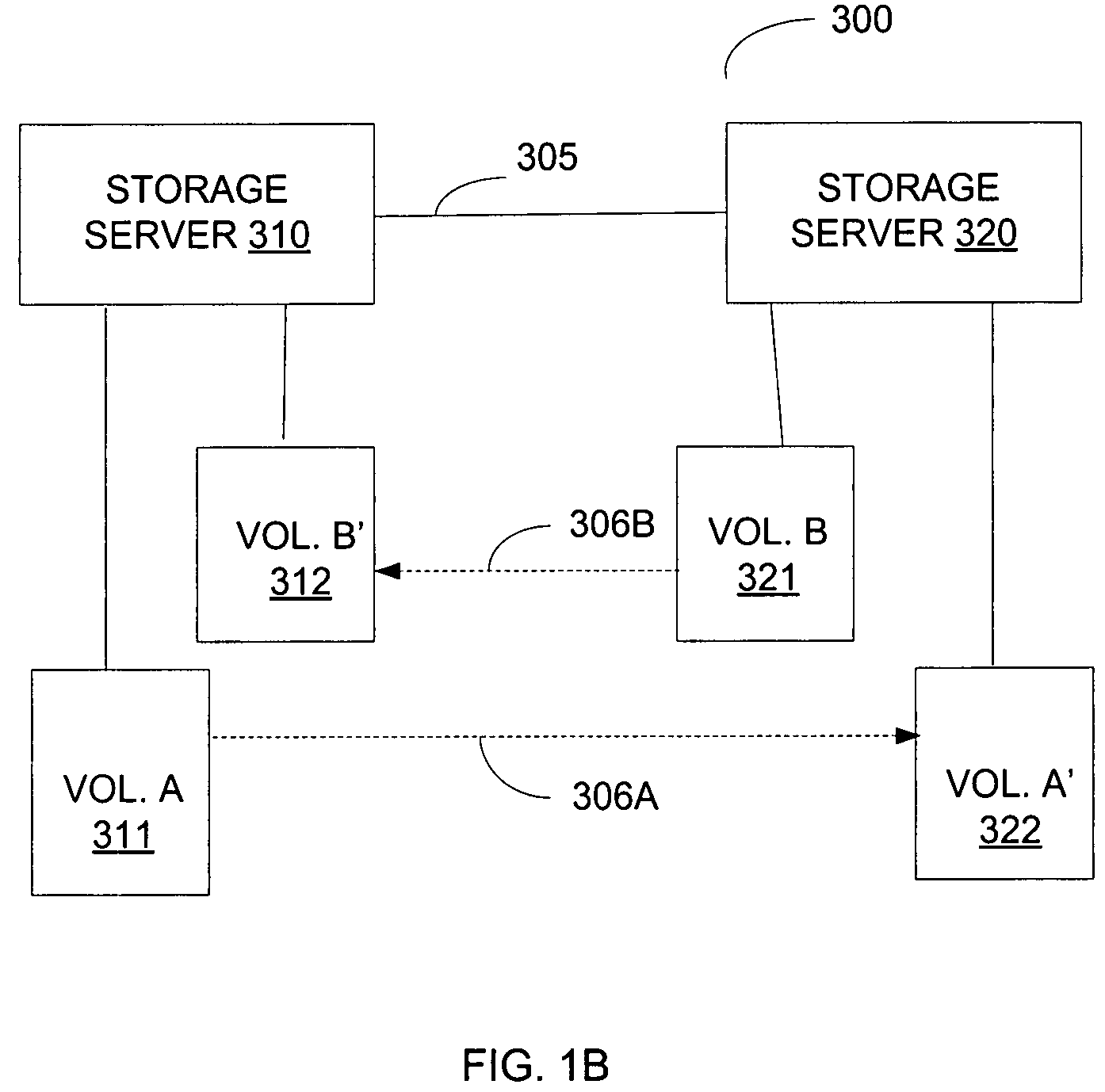

Apparatus and a method to eliminate deadlock in a bi-directionally mirrored data storage system

Status: Active | Publication: US8001307B1 | Tags: Eliminate deadlock, Avoid deadlock, Digital data processing details, Multiple digital computer combinations, Data store, Deadlock

An apparatus and a method to eliminate deadlock in a bi-directionally mirrored data storage system are presented. In some embodiments, a first and a second storage servers have established a mirroring relationship. To prevent deadlock between the storage servers and to reduce write latency, the second storage server may hold data received from the first storage server in a replication queue and send an early confirmation to the first storage server before writing the data to a destination volume if the first storage server is held up due to a lack of confirmation. In another embodiment, when the first storage server writes metadata of a persistent point-in-time image (PPI) to the second storage server, the second storage server may send a confirmation to the first storage server after copying the metadata, but before exporting the PPI at the second storage server.

Owner:NETWORK APPLIANCE INC
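
The early-confirmation rule in the first embodiment can be modeled as below. The `primary_waiting` flag, which stands for "the first storage server is held up waiting for a confirmation", and the in-memory queue and volume are hypothetical simplifications of the two-server setup.

```python
from collections import deque


class SecondaryServer:
    """Hypothetical model of the second (mirroring target) storage server."""

    def __init__(self):
        self.replication_queue = deque()  # entries: (block_id, data, confirmed_early)
        self.destination_volume = {}
        self.confirmations = []           # messages sent back to the first server

    def receive(self, block_id, data, primary_waiting: bool):
        if primary_waiting:
            # Early confirmation: the data is only queued, not yet on the volume.
            self.confirmations.append(("early", block_id))
        self.replication_queue.append((block_id, data, primary_waiting))

    def drain(self):
        """Apply queued data to the destination volume in arrival order."""
        while self.replication_queue:
            block_id, data, confirmed_early = self.replication_queue.popleft()
            self.destination_volume[block_id] = data
            if not confirmed_early:
                self.confirmations.append(("normal", block_id))
```

The design choice shown is that an entry confirmed early is not confirmed a second time once it reaches the destination volume, while entries received without a waiting first server are confirmed only after they are written.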

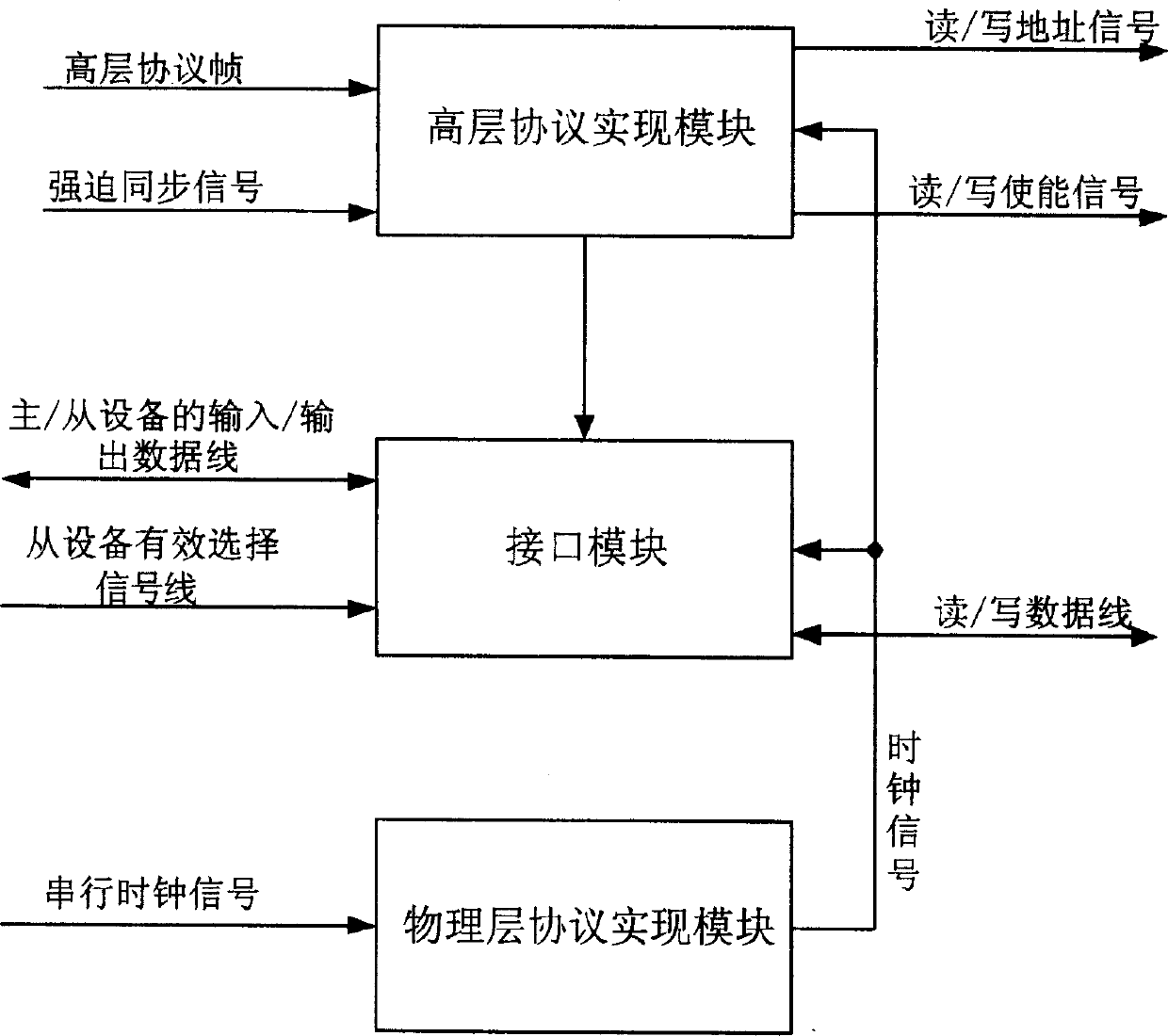

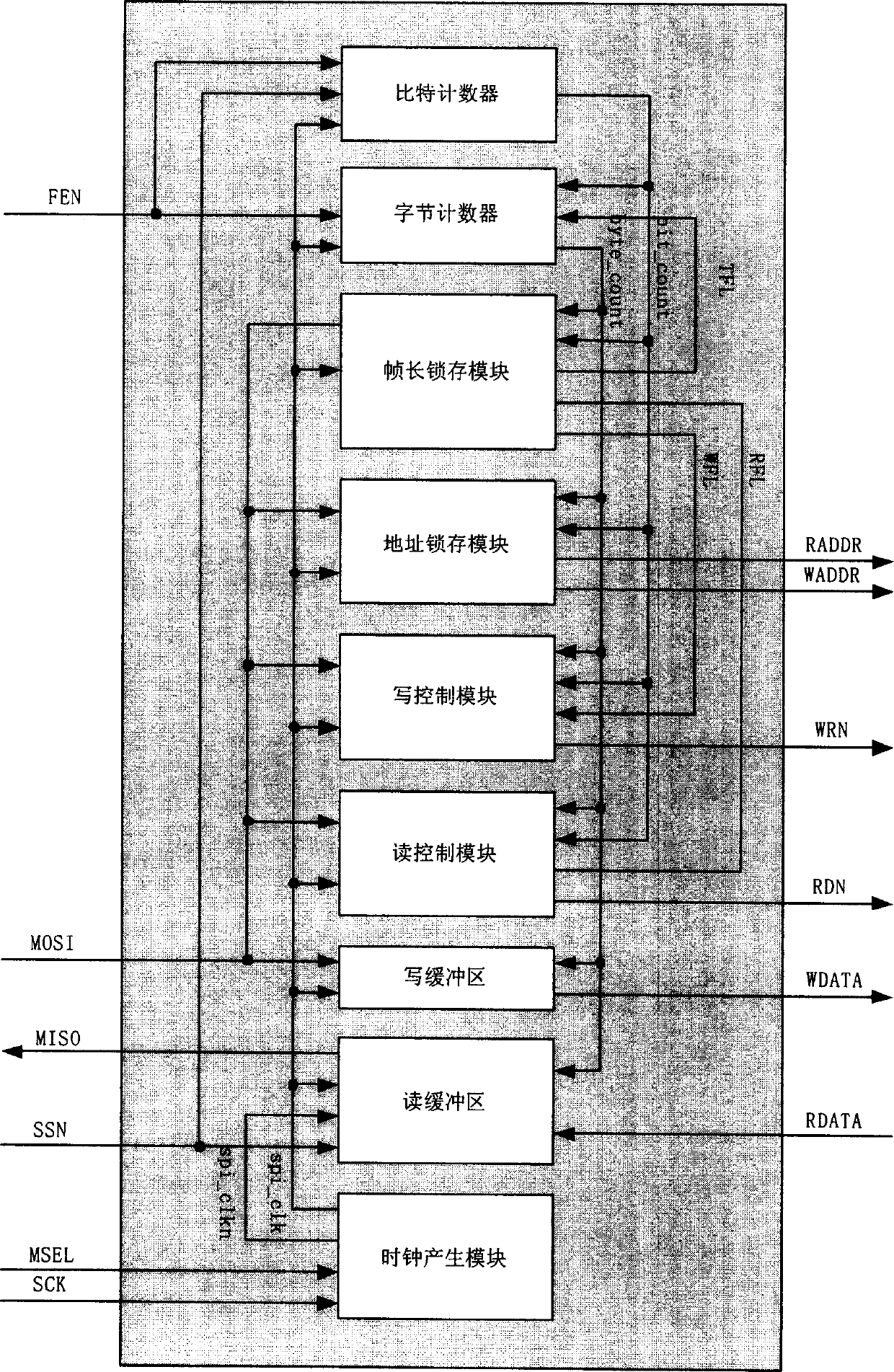

Serial communication bus peripheral interface

Status: Inactive | Publication: CN1588337A | Tags: Improve transmission efficiency, Improve reliability, Electric digital data processing, Physical layer, Master/slave

The serial communication bus peripheral interface consists of a physical-layer protocol module, a higher-layer protocol module and an interface module. In SPI mode, the physical-layer protocol module receives the serial clock signal and generates a clock signal that it outputs to the higher-layer protocol module and the interface module. The interface module connects the slave-select line, the master/slave I/O data lines and the read/write data lines to form the read/write data channel of the interface. The higher-layer protocol module receives higher-layer protocol frames for data communication, receives a forced synchronization signal from the master device to keep master and slave synchronized, and outputs read/write address signals and read/write enable signals. The invention offers high reliability, universality and high transmission efficiency.

Owner:VIMICRO CORP

Dynamic temperature adjustments in spin transfer torque magnetoresistive random-access memory (STT-MRAM)

Status: Active | Publication: US20150206567A1 | Tags: Reduces power (or energy) consumption, Reduces processing latency, Wheelchairs/patient conveyance, Walking aids, Spin-transfer torque, Parallel computing

Systems and methods to manage memory on a spin transfer torque magnetoresistive random-access memory (STT-MRAM) are provided. A particular method of managing memory includes determining a temperature associated with the memory and determining a level of write queue utilization associated with the memory. A write operation may be performed based on the level of write queue utilization and the temperature.

Owner:IBM CORP

Efficient memory usage in systems including volatile and high-density memories

Status: Active | Publication: US7613870B2 | Tags: Efficient use of, Reduce write latency, Memory architecture accessing/allocation, Energy efficient ICT, High density, Operating system

A first method for efficient memory usage includes (1) determining whether data retrieved from a first storage device is characterized as data that is primarily read; and (2) if data retrieved from the first storage device is characterized as data that is primarily read (a) writing the retrieved data in a temporary storage device with short write latency; and (b) writing the retrieved data in a high-density memory. Numerous other aspects are provided.

Owner:LENOVO GLOBAL TECH INT LTD

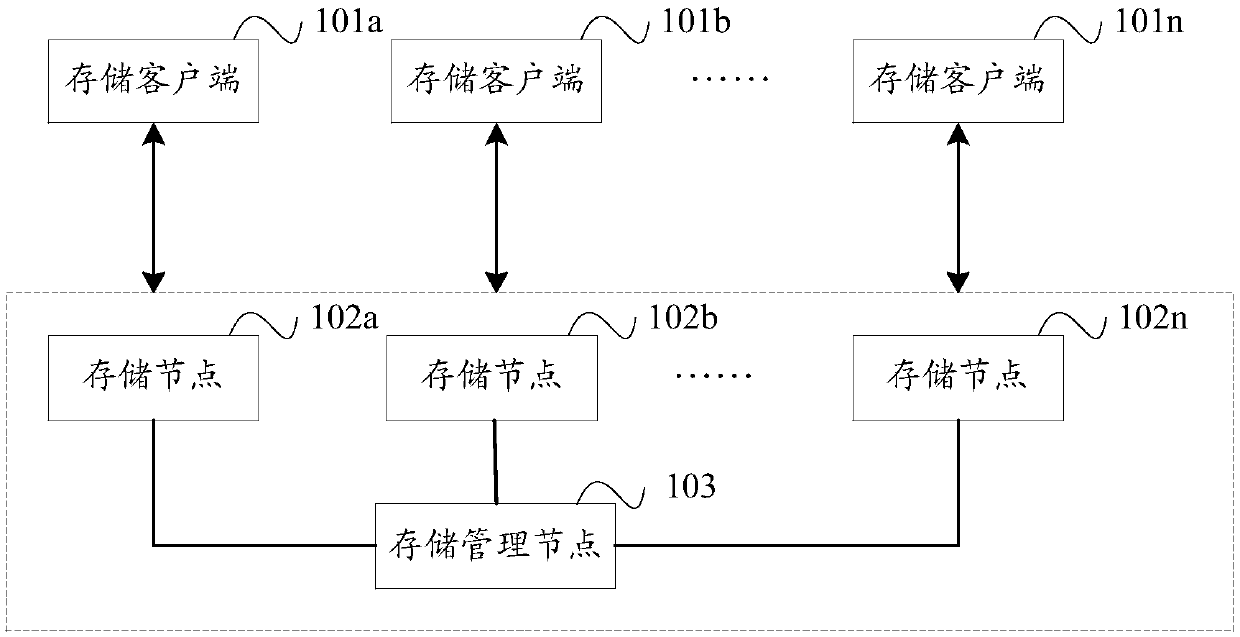

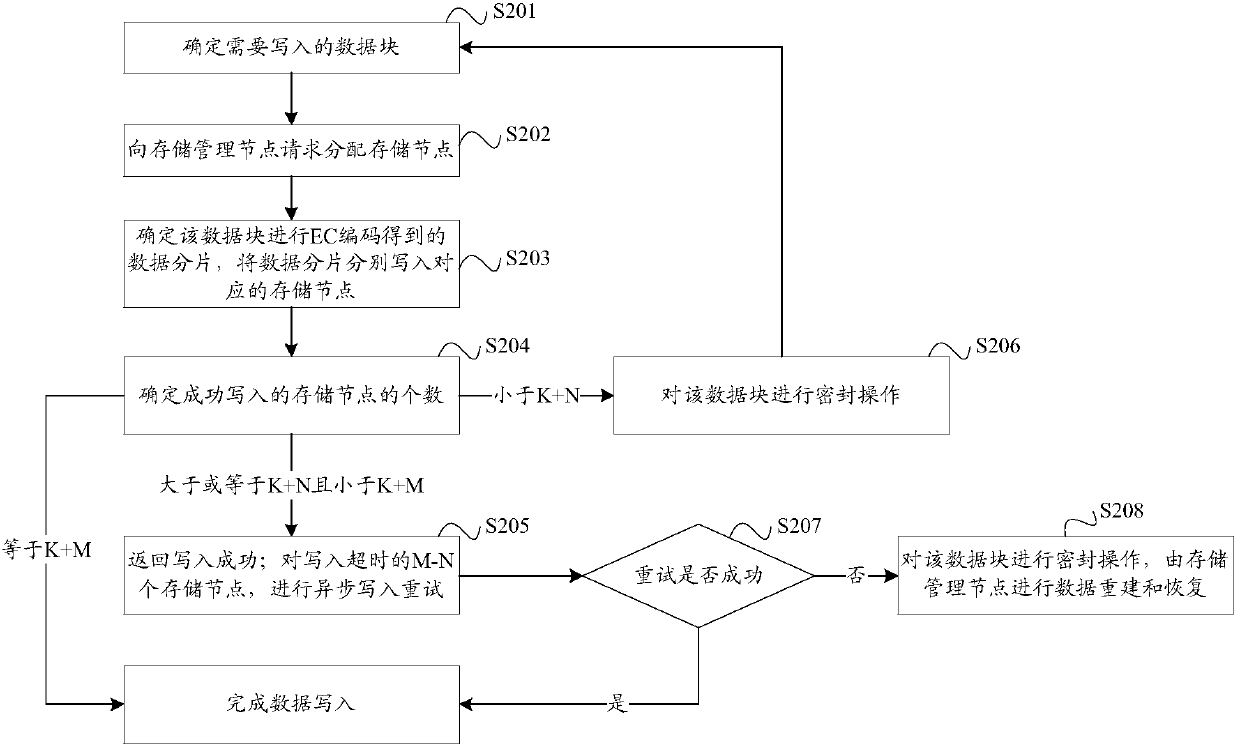

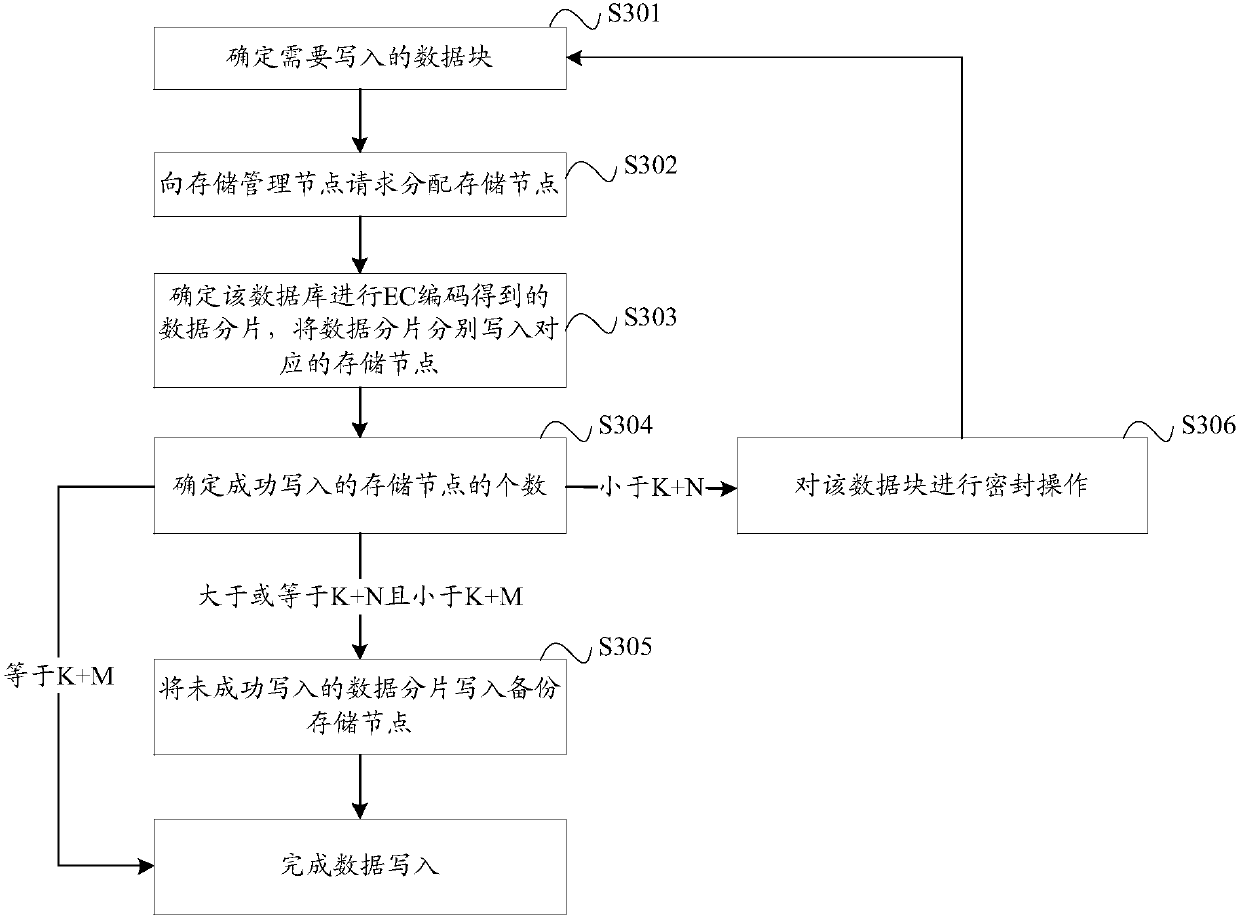

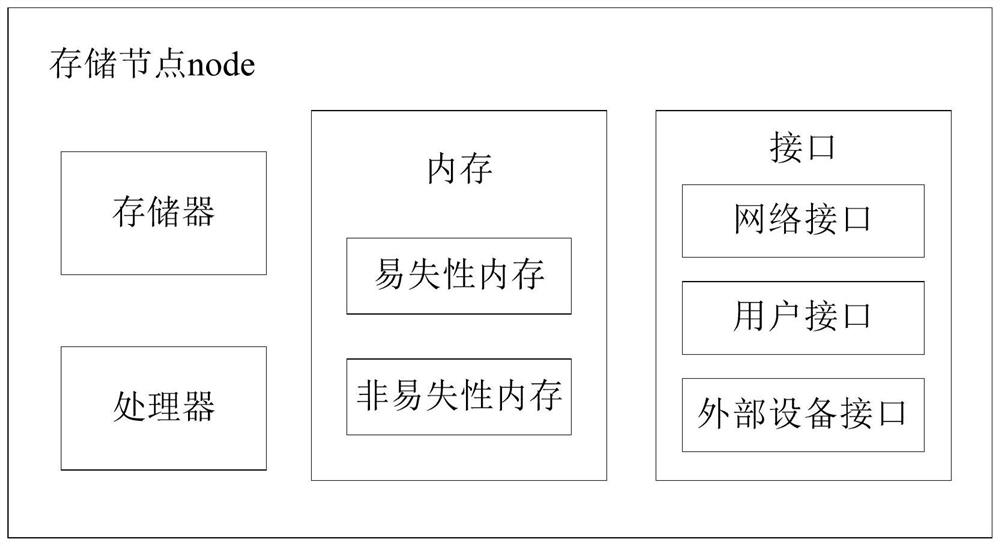

Data storage method, device and system

Status: Active | Publication: CN110018783A | Tags: Improve performance and stability, Reduce write latency, Input/output to record carriers, Error detection/correction, Erasure code, Data store

The invention discloses a data storage method, device and system. The data storage method comprises: performing erasure coding on a data block to obtain a plurality of corresponding data fragments; writing the data fragments to their respectively assigned storage nodes; and, if the number of storage nodes that successfully write their data fragments within a first set duration is greater than or equal to a set value, determining that the data block has been written successfully. The set value is at least the number of data-block (non-parity) fragments among the fragments and at most the total number of fragments. This reduces data write latency and improves performance stability when a storage node fails.

Owner:ALIBABA GRP HLDG LTD
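
The success rule for an erasure-coded block can be written as a small predicate. It assumes k data fragments and n total fragments, and takes simulated per-node results as input instead of performing real network writes; the function and parameter names are hypothetical. Requiring the set value to be at least k means that, for an MDS code such as Reed-Solomon, enough fragments to reconstruct the block are stored before success is reported, and choosing it below n lets the write complete without waiting for a slow or failed node.

```python
def block_write_succeeded(results, k: int, n: int, set_value: int, deadline_s: float) -> bool:
    """Decide whether an erasure-coded block counts as written.

    results: one (ok, latency_s) pair per storage node that was sent a fragment.
    k: number of data fragments; n: total fragments (data + parity).
    """
    if not k <= set_value <= n:
        raise ValueError("set value must satisfy k <= set_value <= n")
    # Count only nodes that reported success within the set duration.
    acked = sum(1 for ok, latency in results if ok and latency <= deadline_s)
    return acked >= set_value
```

With k = 4, n = 6 and a set value of 5, five fast acknowledgements are enough even if the sixth node is slow, while four acknowledgements are not.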

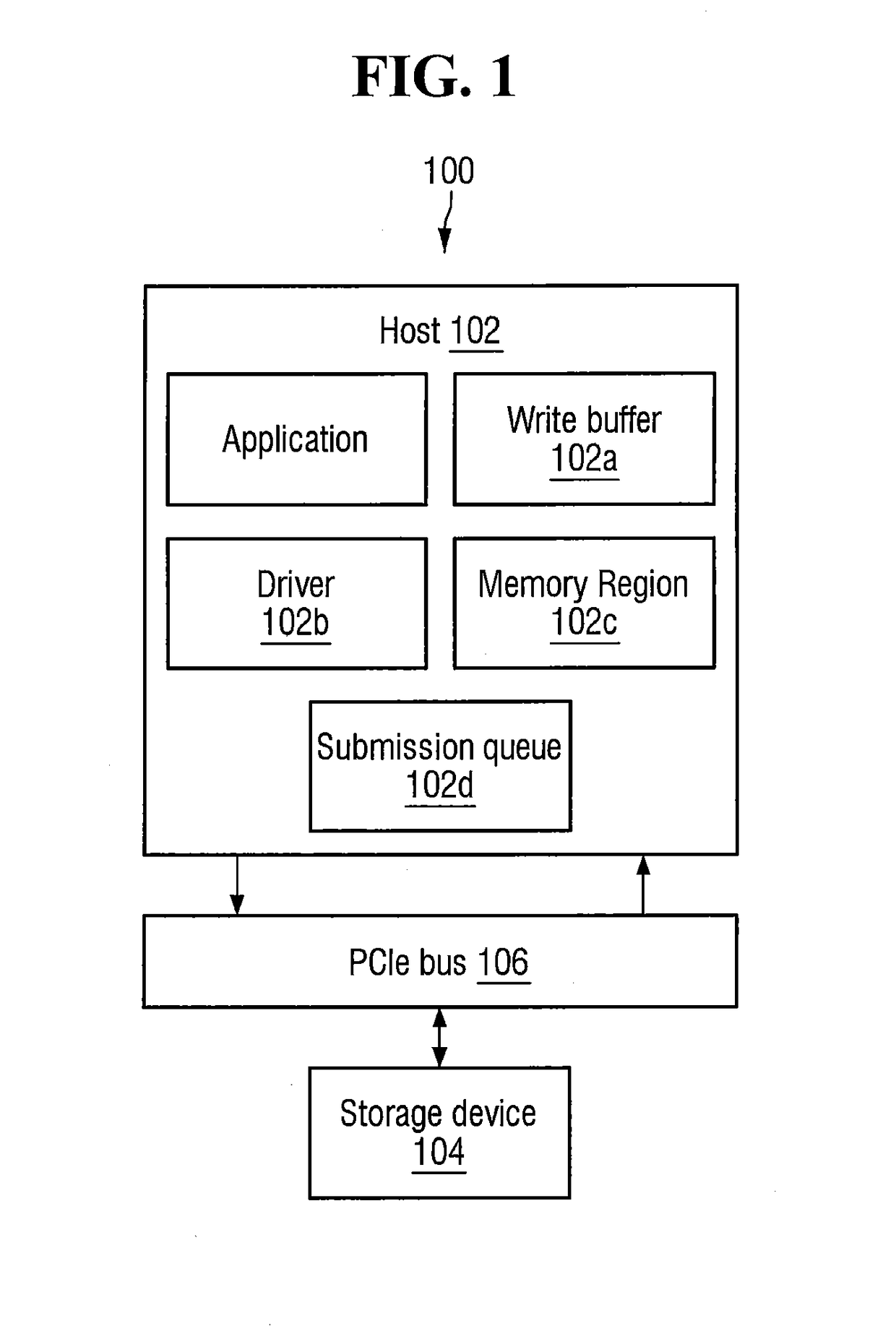

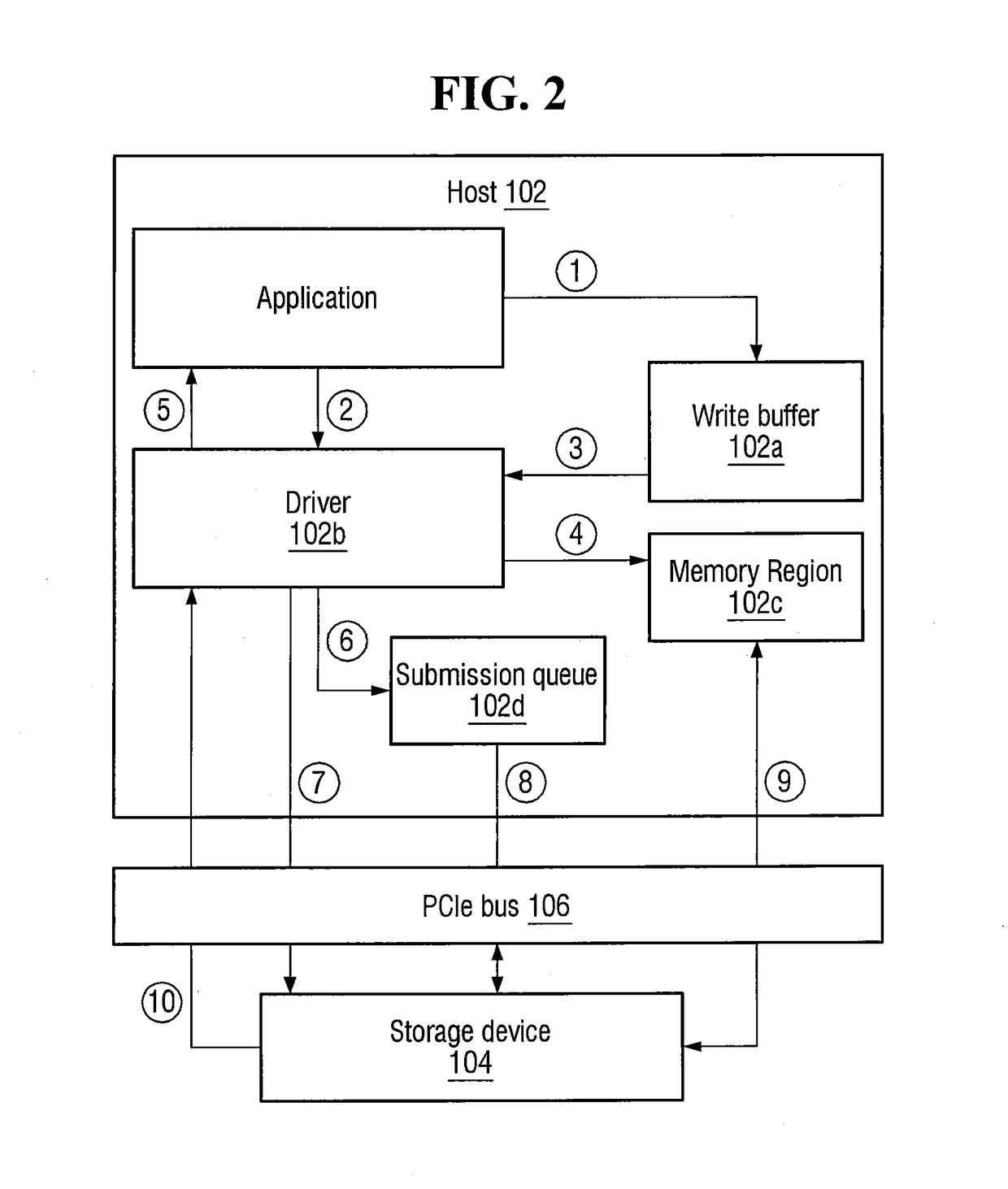

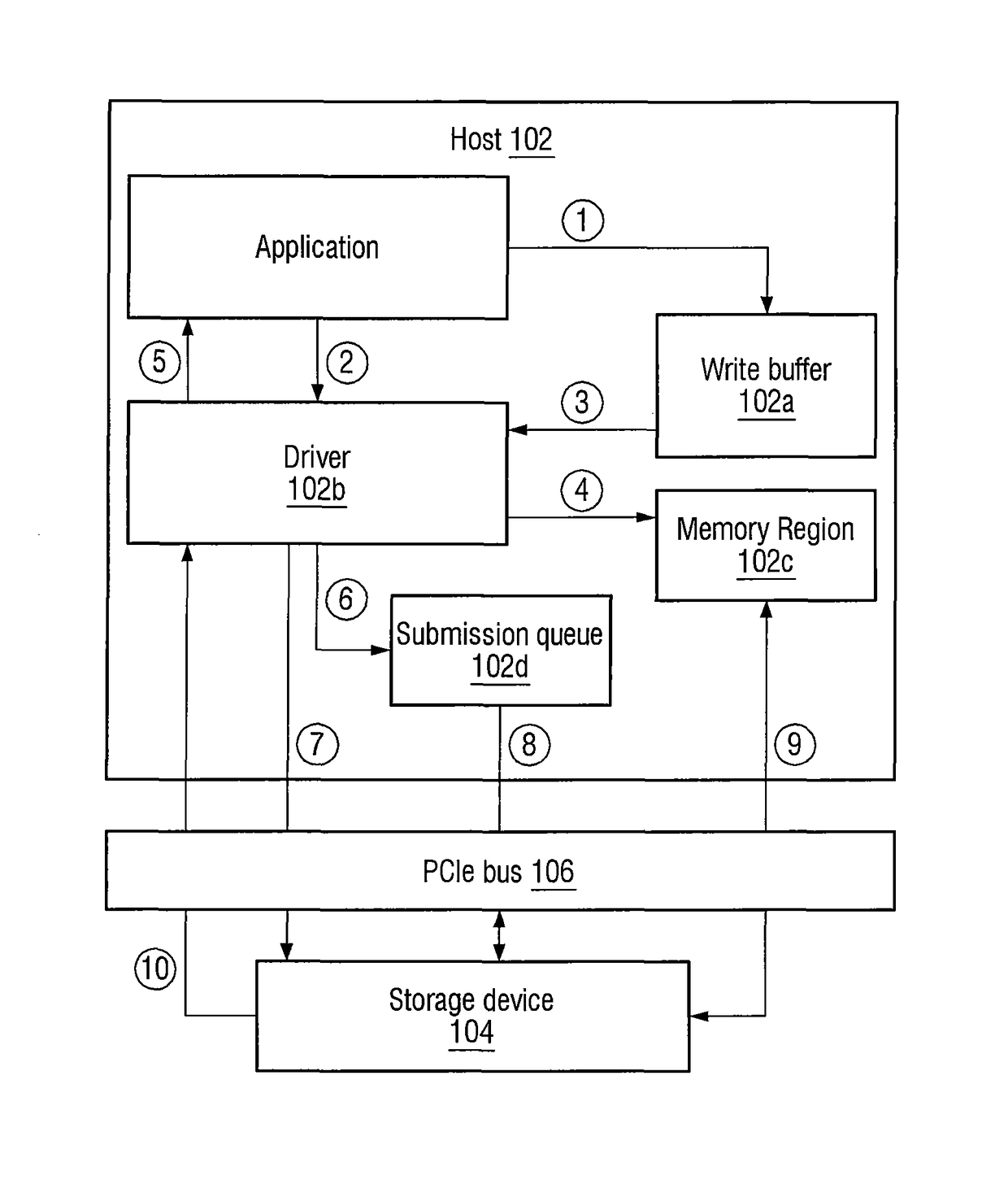

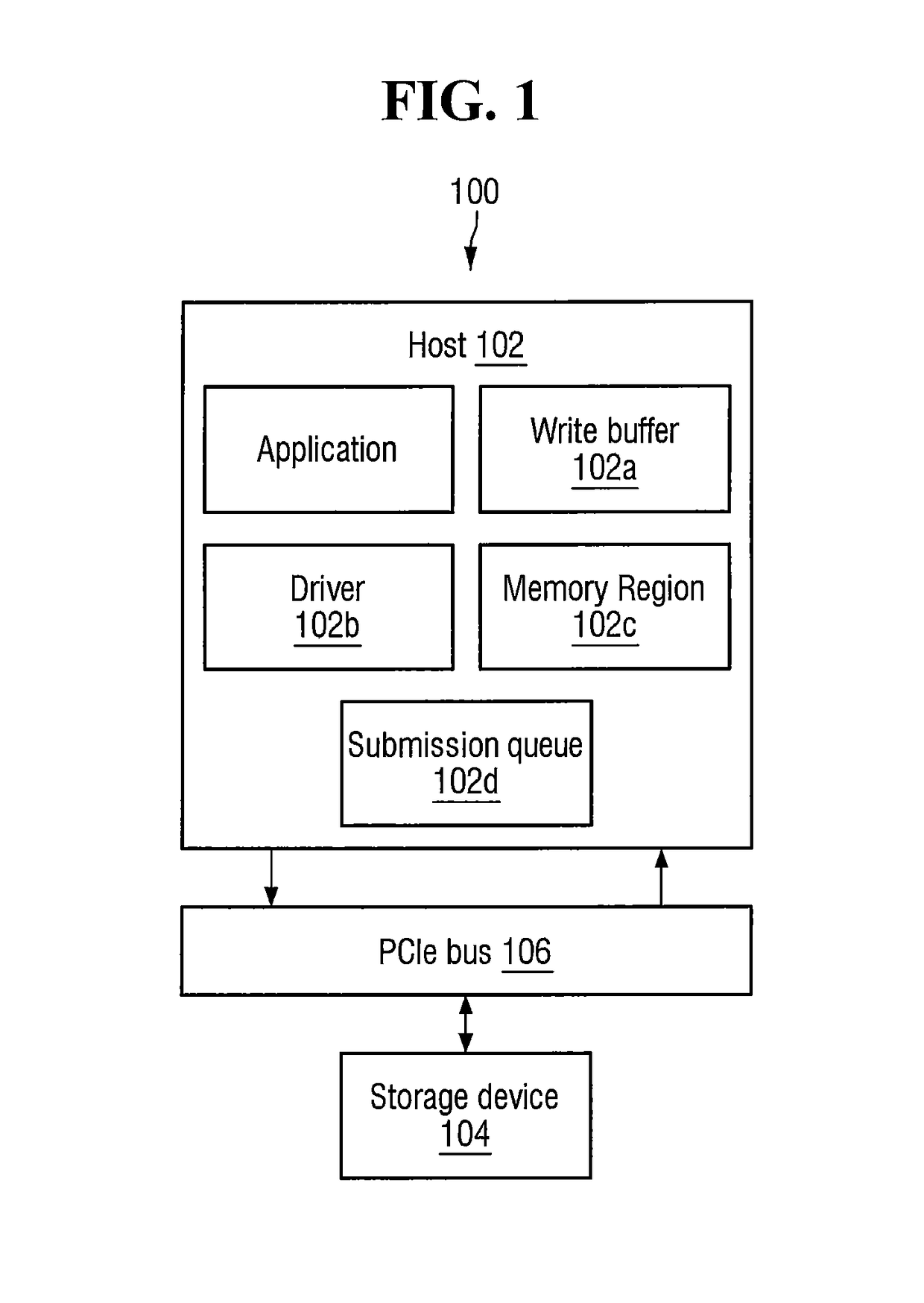

Method of achieving low write latency in a data storage system

Status: Active | Publication: US20180024949A1 | Tags: Reduce write latency, Input/output to record carriers, Computer hardware, Write buffer

A data storage system includes a host having a write buffer, a memory region, a submission queue and a driver therein. The driver is configured to: (i) transfer data from the write buffer to the memory region in response to a write command, (ii) generate a write command completion notice; and (iii) send at least an address of the data in the memory region to the submission queue. The host may also be configured to transfer the address to a storage device external to the host, and the storage device may use the address during an operation to transfer the data in the memory region to the storage device.

Owner:SAMSUNG ELECTRONICS CO LTD
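
The driver steps (i) to (iii) and the device's later fetch can be sketched as follows. The integer slot addresses, the completion string and the `StorageDevice.service` loop are hypothetical stand-ins for host memory addresses, the completion notice and the device's data-transfer operation.

```python
import itertools
from collections import deque


class HostDriver:
    """Hypothetical host-side driver following steps (i)-(iii)."""

    def __init__(self):
        self.memory_region = {}          # host memory the device can read from
        self.submission_queue = deque()  # addresses handed to the device
        self._slots = itertools.count()

    def write(self, write_buffer: bytes) -> str:
        addr = next(self._slots)
        self.memory_region[addr] = bytes(write_buffer)  # (i) copy out of the write buffer
        completion = "write complete"                   # (ii) completion, before the device has the data
        self.submission_queue.append(addr)              # (iii) post the address
        return completion


class StorageDevice:
    def __init__(self):
        self.media = []

    def service(self, driver: HostDriver):
        """Later, the device uses each posted address to pull the data."""
        while driver.submission_queue:
            addr = driver.submission_queue.popleft()
            self.media.append(driver.memory_region.pop(addr))
```

The point of the design shows up in use: `write` returns its completion notice while the device's media is still empty, so the host does not wait for the transfer to the storage device.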

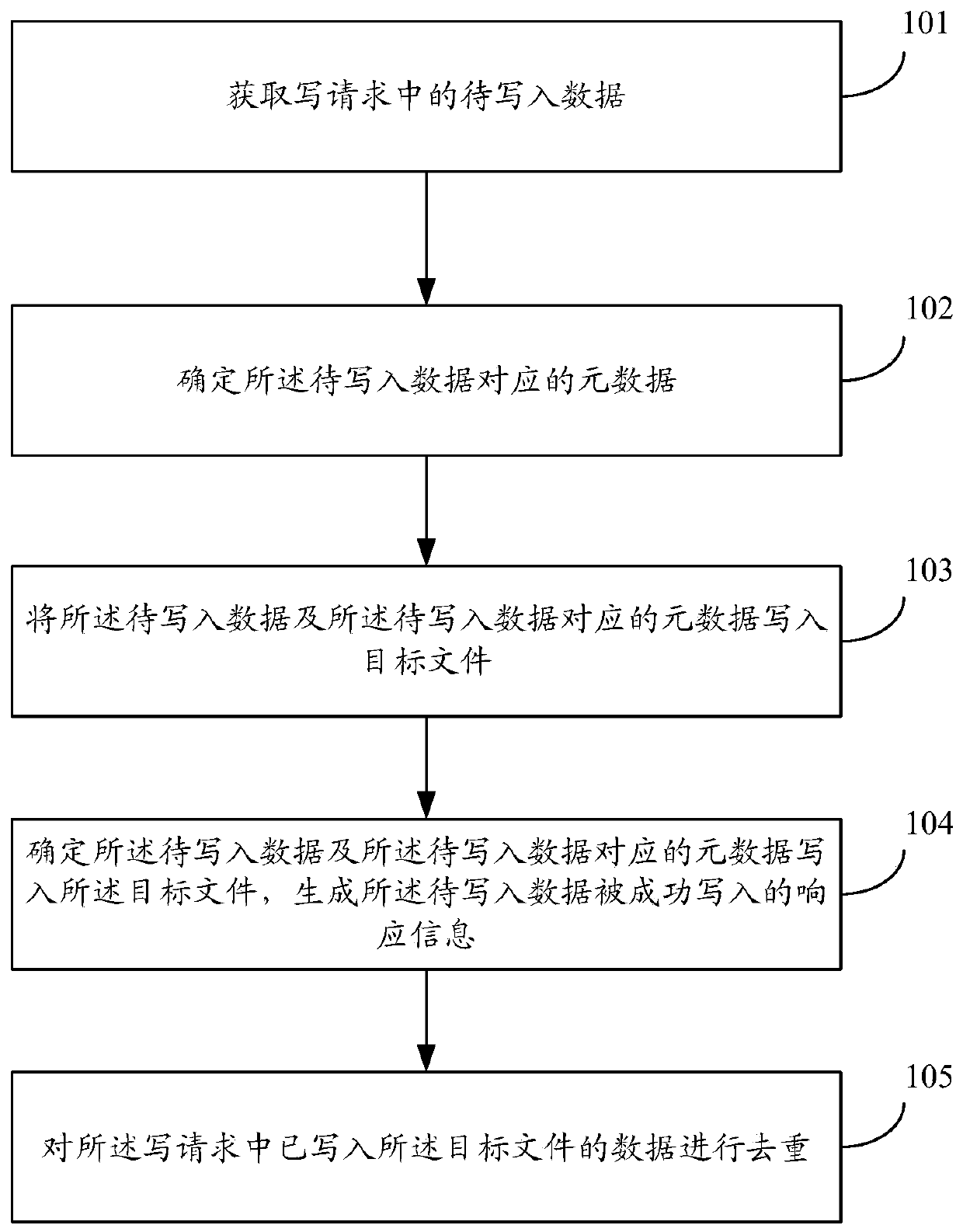

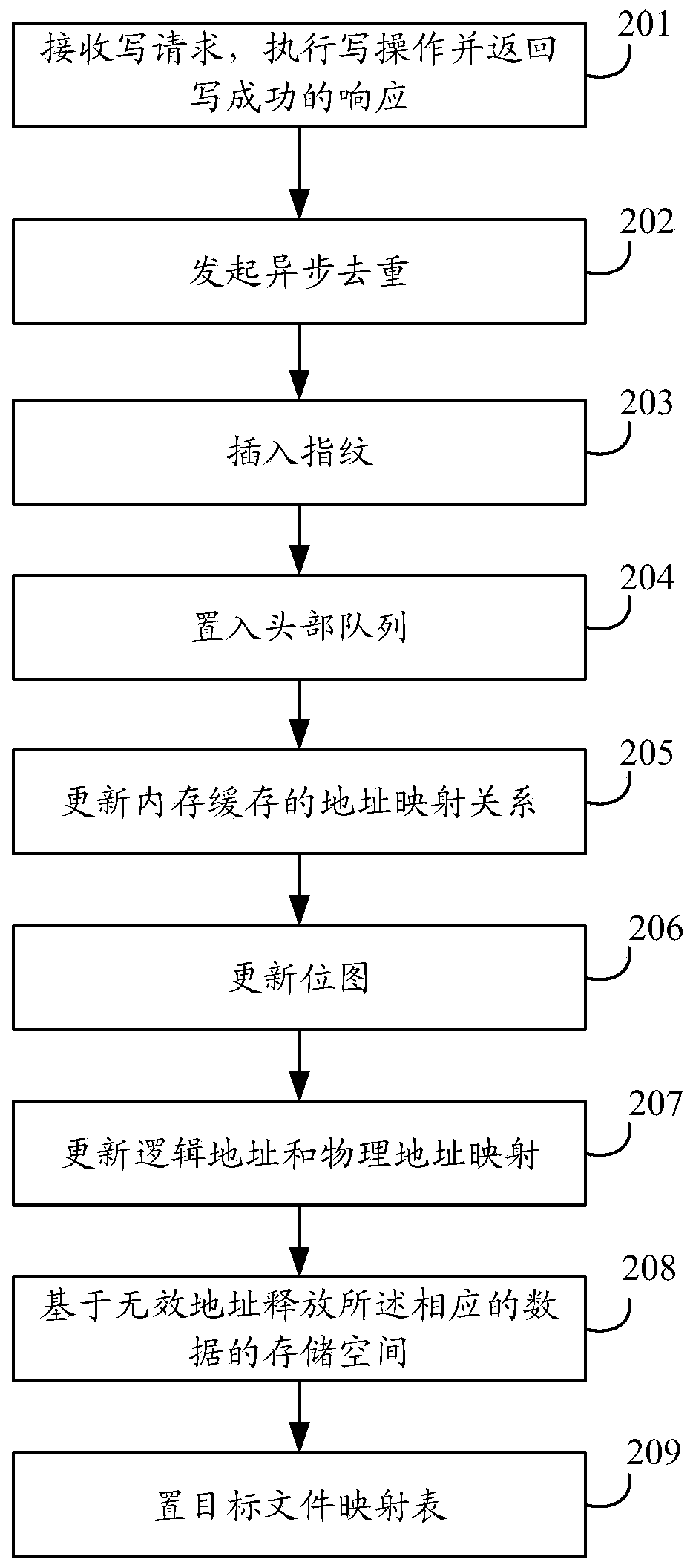

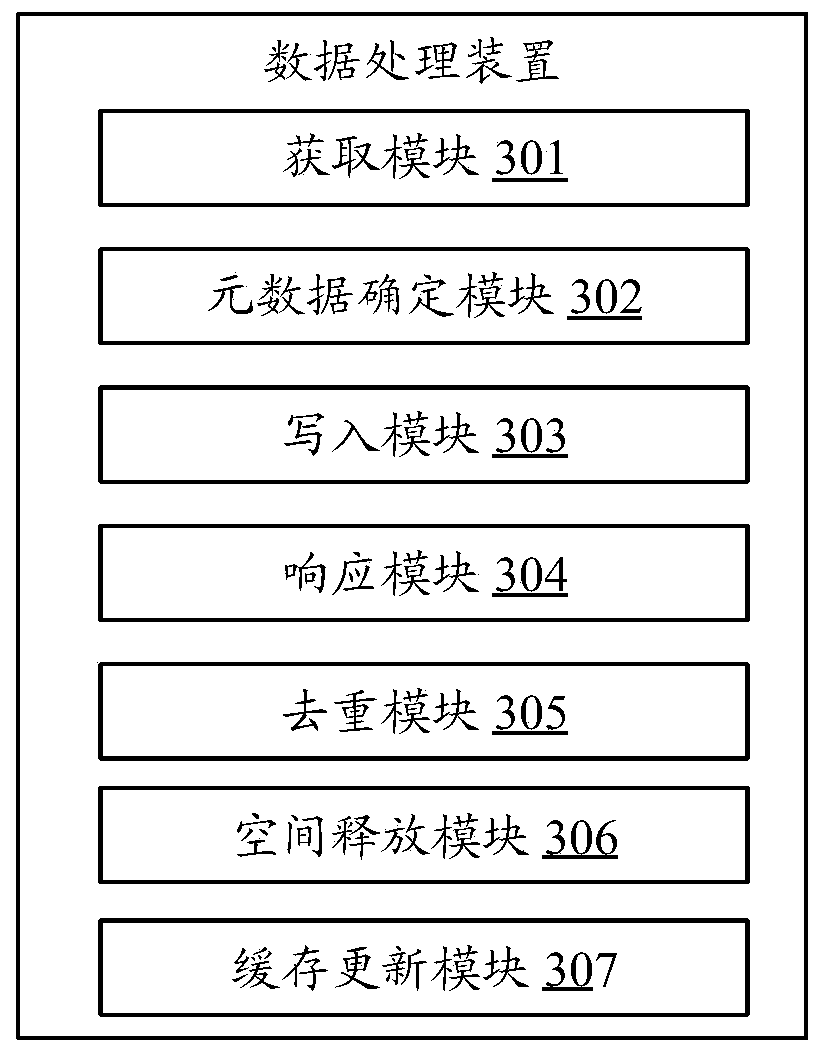

Data processing method, device, equipment and storage medium

Status: Pending | Publication: CN111381779A | Tags: Deduplication implementation, Reduce write latency, Input/output to record carriers, Engineering, Metadata

The invention discloses a data processing method, device, equipment and storage medium. The method comprises: obtaining the to-be-written data in a write request; determining the metadata corresponding to that data; writing the data and its metadata into a target file; once the data and metadata are determined to have been written into the target file, generating a response indicating that the data was written successfully; and then deduplicating the data written into the target file. Because the write request is answered before deduplication, the write latency seen by upper-layer services is reduced, while deduplication still takes place and storage-space utilization improves.

Owner:SANGFOR TECH INC
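
The acknowledge-then-deduplicate flow can be sketched as an append-only store. The SHA-256 content hash, the record layout and the `ref` field that points a duplicate at its first copy are hypothetical choices; the abstract does not say how duplicates are detected or represented.

```python
import hashlib


class DedupAfterAckStore:
    """Hypothetical append-only target file with deferred deduplication."""

    def __init__(self):
        self.target_file = []  # records: [metadata, payload or None]
        self._first_seen = {}  # content hash -> position of first copy

    def write(self, data: bytes) -> dict:
        meta = {"sha256": hashlib.sha256(data).hexdigest(), "length": len(data)}
        self.target_file.append([meta, data])  # data and metadata written together
        # Respond as soon as the record is in the file; dedup happens later.
        return {"status": "ok", "pos": len(self.target_file) - 1}

    def deduplicate(self):
        """Later pass: replace repeated payloads with a reference to the first copy."""
        for pos, record in enumerate(self.target_file):
            meta, payload = record
            if payload is None:
                continue  # already deduplicated in an earlier pass
            first = self._first_seen.setdefault(meta["sha256"], pos)
            if first != pos:
                meta["ref"] = first
                record[1] = None

    def read(self, pos: int) -> bytes:
        meta, payload = self.target_file[pos]
        return payload if payload is not None else self.target_file[meta["ref"]][1]
```

`write` returns as soon as the record is appended, and `deduplicate` (intended to run afterwards, for example in the background) replaces the payload of a repeated write with a reference, so `read` still returns the original bytes.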

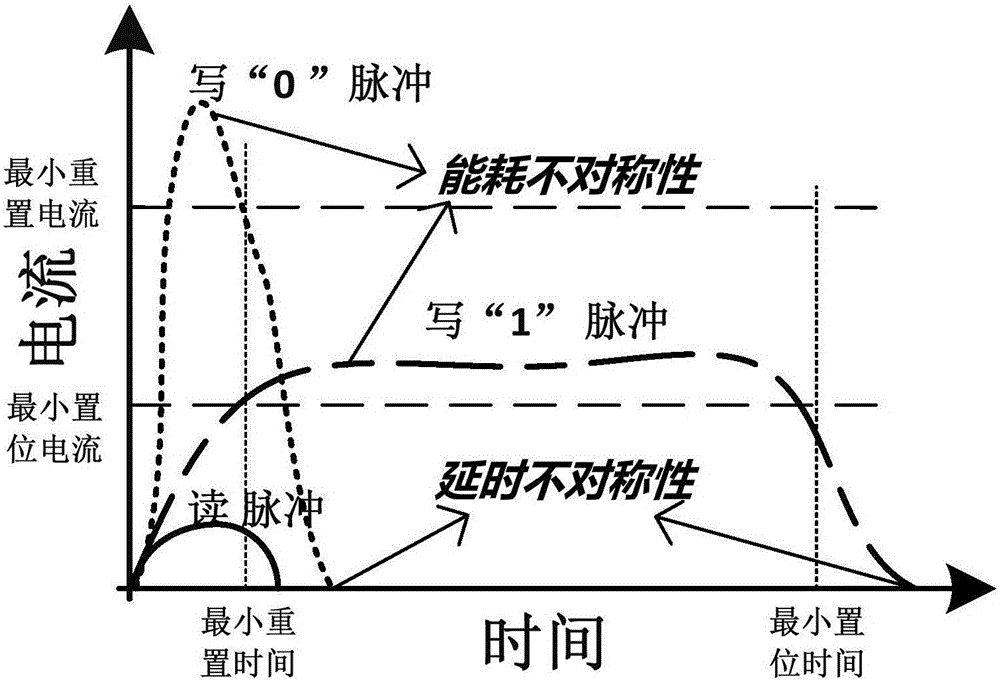

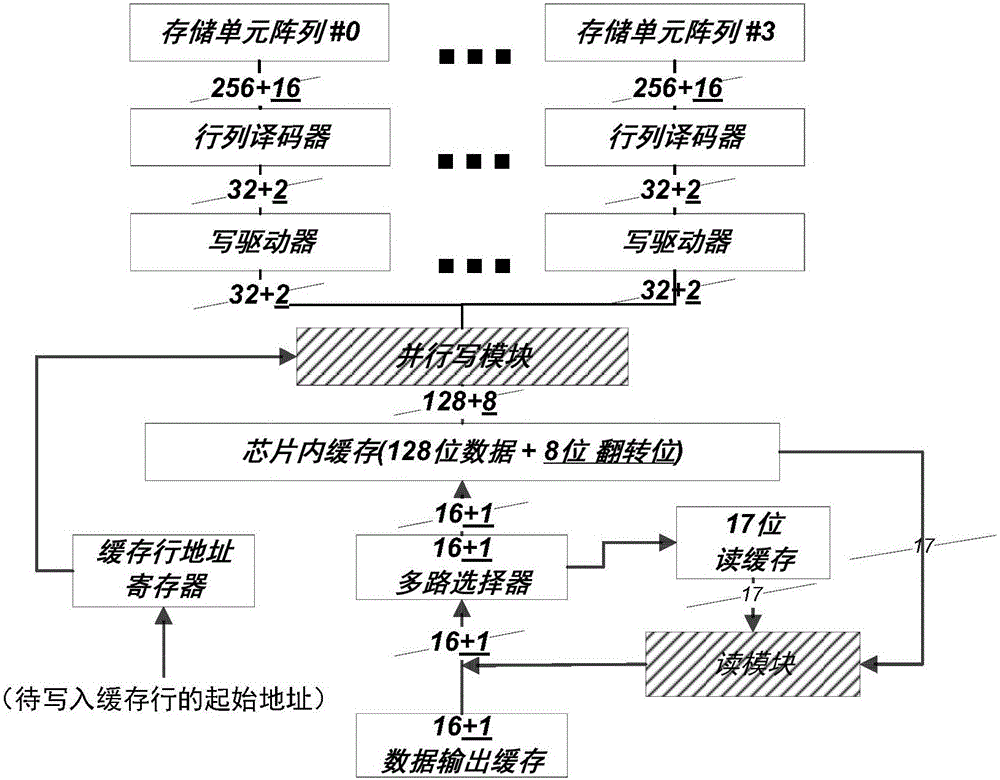

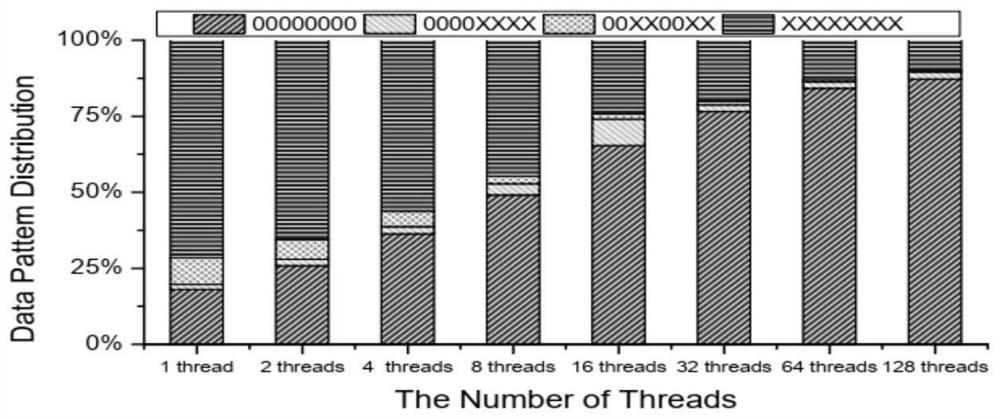

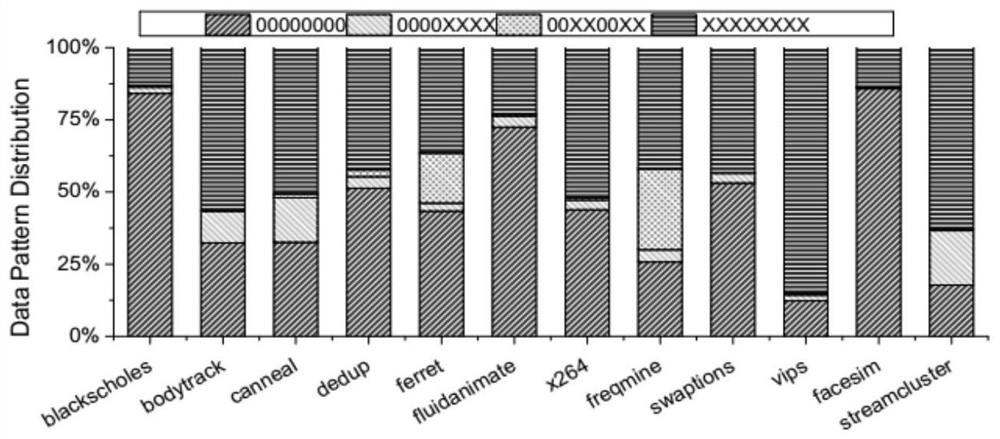

Phase change memory data writing method

Status: Active | Publication: CN106057236A | Tags: Extend lifespan, Reduce the total number of digits, Digital storage, Computer architecture, Analysis data

This phase change memory data writing method belongs to the field of memory data storage. It addresses the problem that existing phase change memory (PCM) writing methods do not make maximum use of the power budget, with the aims of improving PCM write performance and prolonging its lifetime. The method comprises the steps of reading data, analyzing the data and writing the data. Exploiting the asymmetry between '0' writes and '1' writes in PCM write latency and energy consumption, the method records, for each data unit, the number of '0' bits and '1' bits to be modified, and readjusts the writing order of the data units so that the power budget is used as fully as possible. This further shortens PCM write latency, improving write performance and prolonging its lifetime.

Owner:HUAZHONG UNIV OF SCI & TECH
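
The per-unit bit counting and power-aware reordering can be sketched as follows. The per-bit power costs `p_one` and `p_zero` are hypothetical placeholders, and first-fit-decreasing bin packing is a swapped-in heuristic used only to illustrate filling each write round up to the power budget; the abstract does not specify the actual reordering algorithm. Only bits that differ from the old contents are counted, which assumes a differential (read-before-write) scheme.

```python
def bits_to_change(old: int, new: int) -> tuple[int, int]:
    """Return (bits to write as '1', bits to write as '0'),
    counting only bits whose value actually changes."""
    diff = old ^ new
    return bin(diff & new).count("1"), bin(diff & old).count("1")


def schedule_writes(old_units, new_units, power_budget, p_one=1, p_zero=2):
    """Group data units into write rounds whose summed power stays within budget.

    p_one / p_zero: hypothetical power cost per bit for writing '1' / '0'.
    Returns a list of rounds, each a list of data-unit indices.
    """
    jobs = []
    for i, (old, new) in enumerate(zip(old_units, new_units)):
        ones, zeros = bits_to_change(old, new)
        cost = ones * p_one + zeros * p_zero
        if cost > power_budget:
            raise ValueError(f"unit {i} alone exceeds the power budget")
        if cost:  # unchanged units need no write at all
            jobs.append((cost, i))
    rounds = []  # each entry: [remaining budget, unit indices]
    for cost, i in sorted(jobs, reverse=True):  # first-fit decreasing
        for rnd in rounds:
            if rnd[0] >= cost:
                rnd[0] -= cost
                rnd[1].append(i)
                break
        else:
            rounds.append([power_budget - cost, [i]])
    return [units for _, units in rounds]
```

With the default costs and a budget of 8, a unit flipping four 1s to 0s (cost 8) fills a round by itself, while a unit setting four bits (cost 4) and one setting a single bit (cost 1) share the next round; an unchanged unit is skipped.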

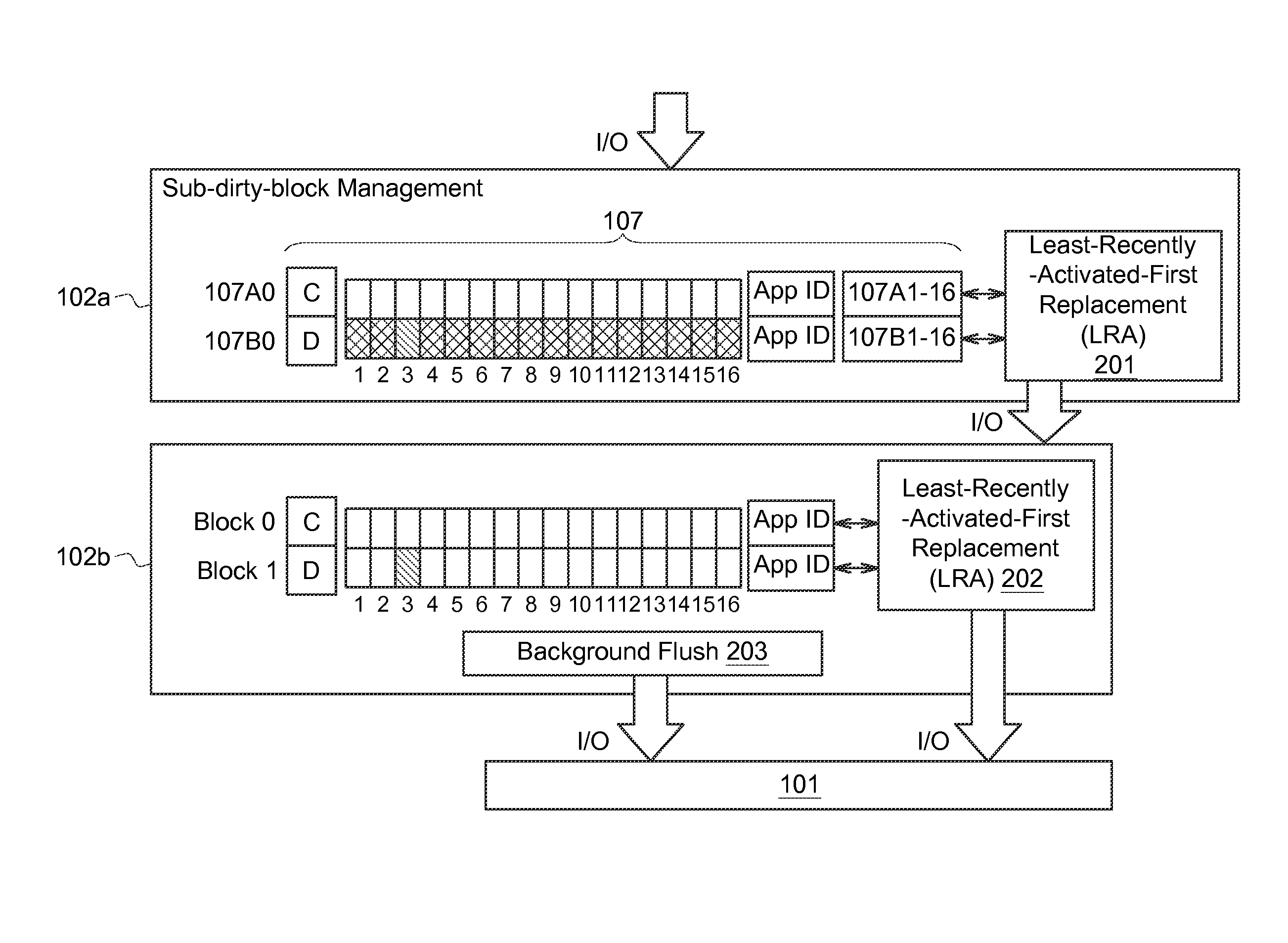

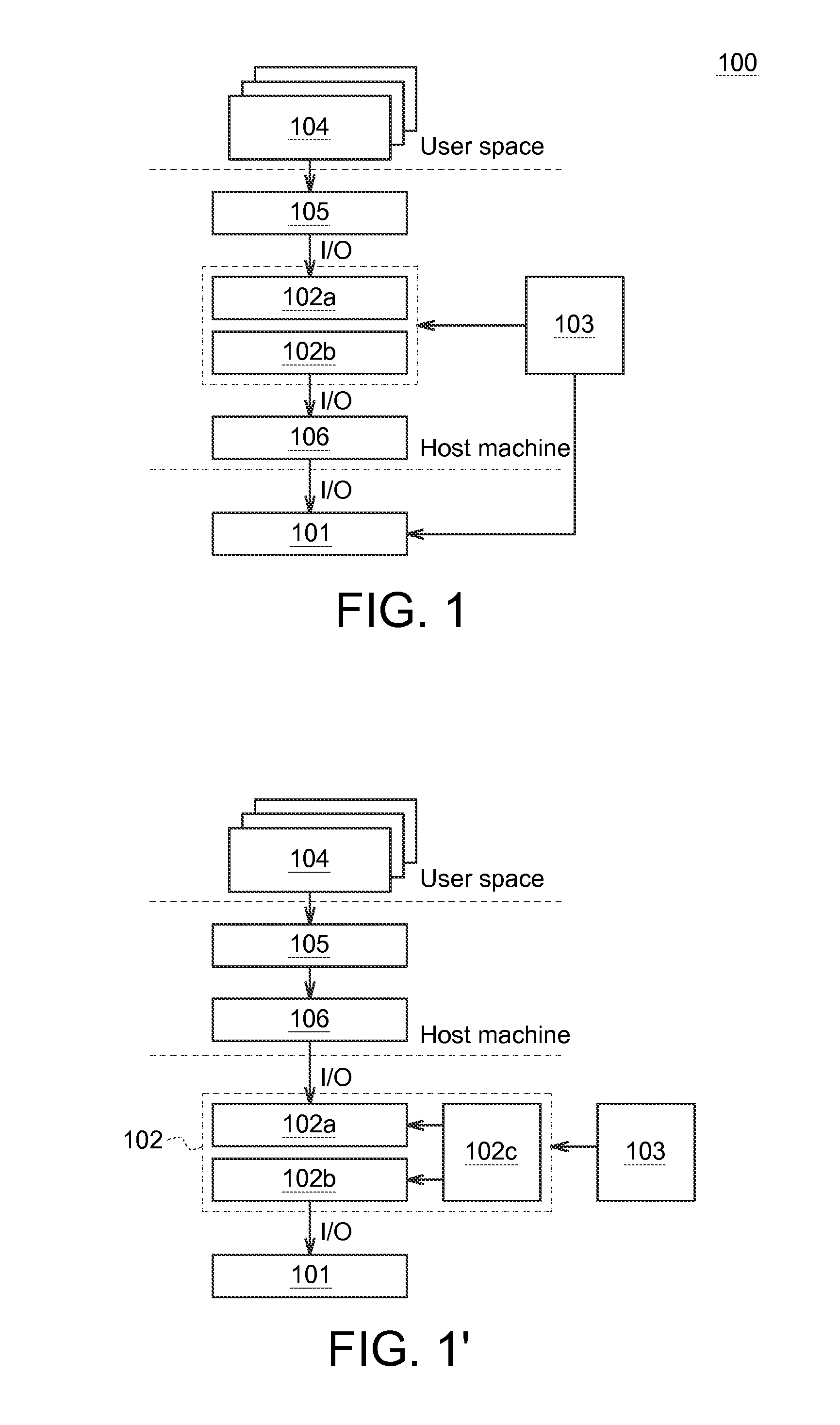

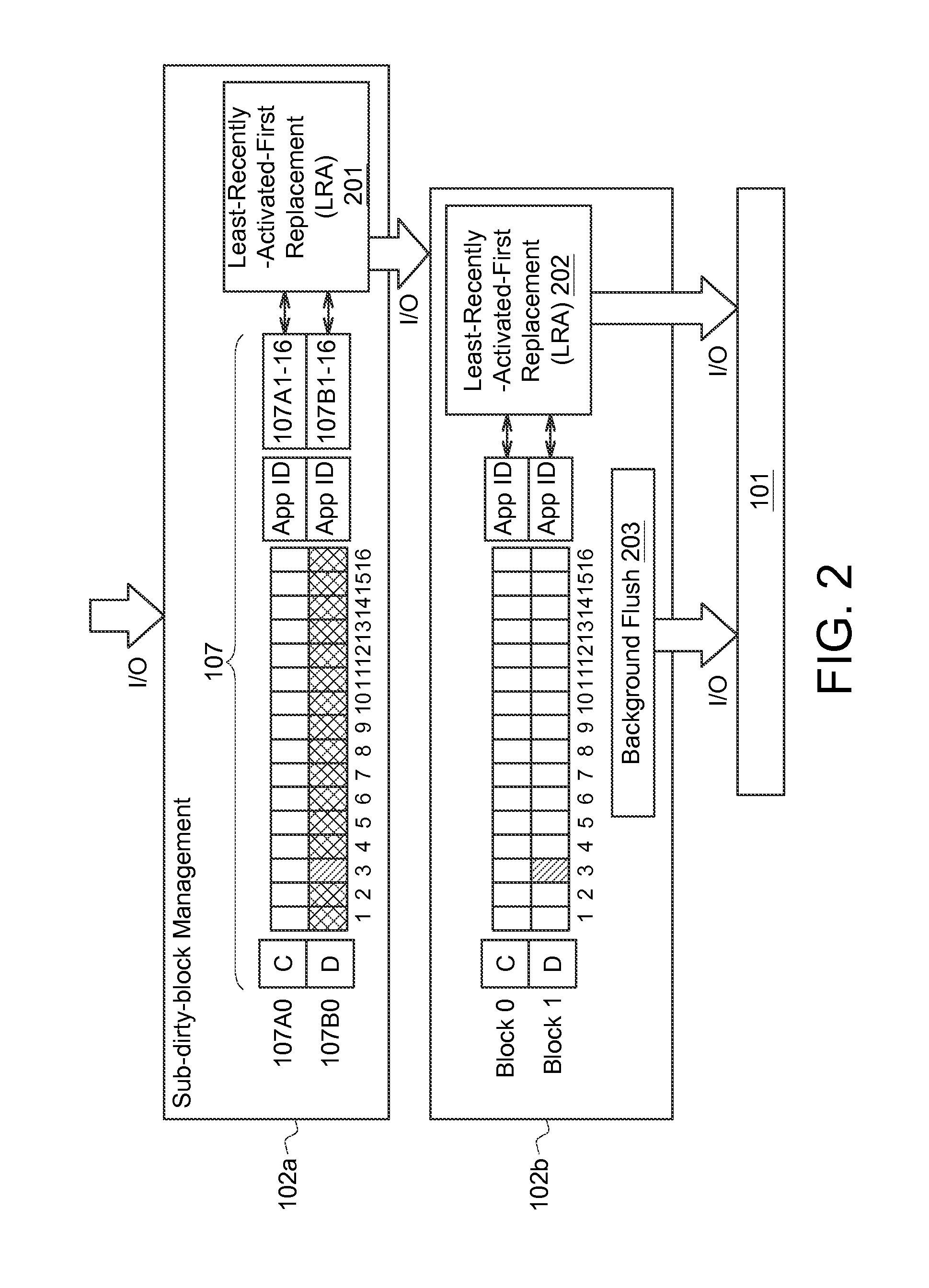

Buffer cache device, method for managing the same, and applying system thereof

Status: Inactive | Publication: US20170052899A1 | Tags: Inconsistency problem, Enhance write access, Memory architecture accessing/allocation, Input/output to record carriers, Parallel computing, Data storing

A buffer cache device used to get at least one data from at least one application is provided, wherein the buffer cache device includes a first-level cache memory, a second-level cache memory and a controller. The first-level cache memory is used to receive and store the data. The second-level cache memory has a memory cell architecture different from that of the first-level cache memory. The controller is used to write the data stored in the first-level cache memory into the second-level cache memory.

Owner:MACRONIX INT CO LTD

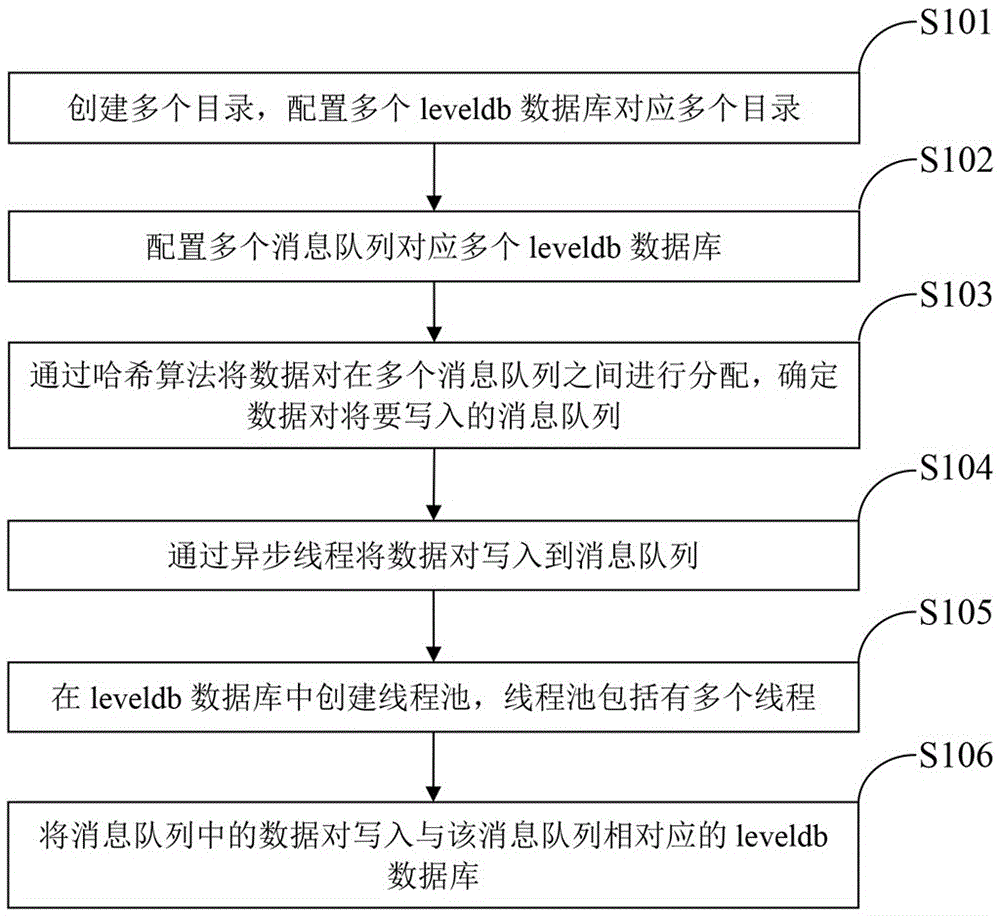

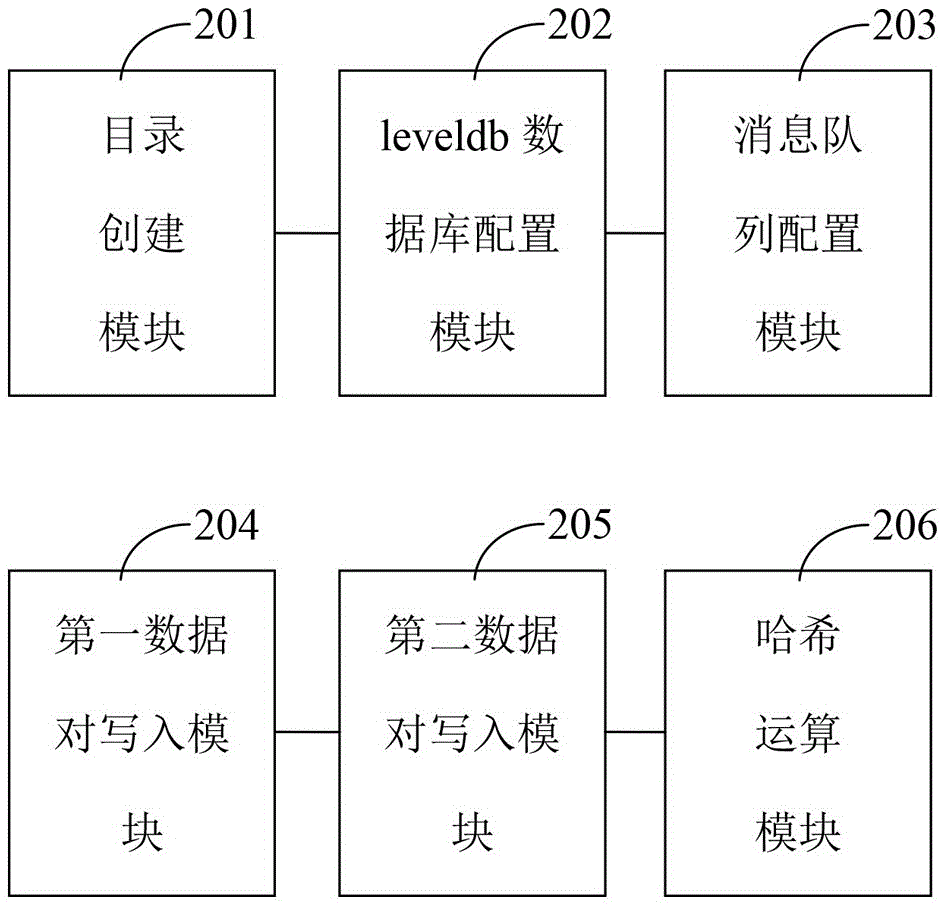

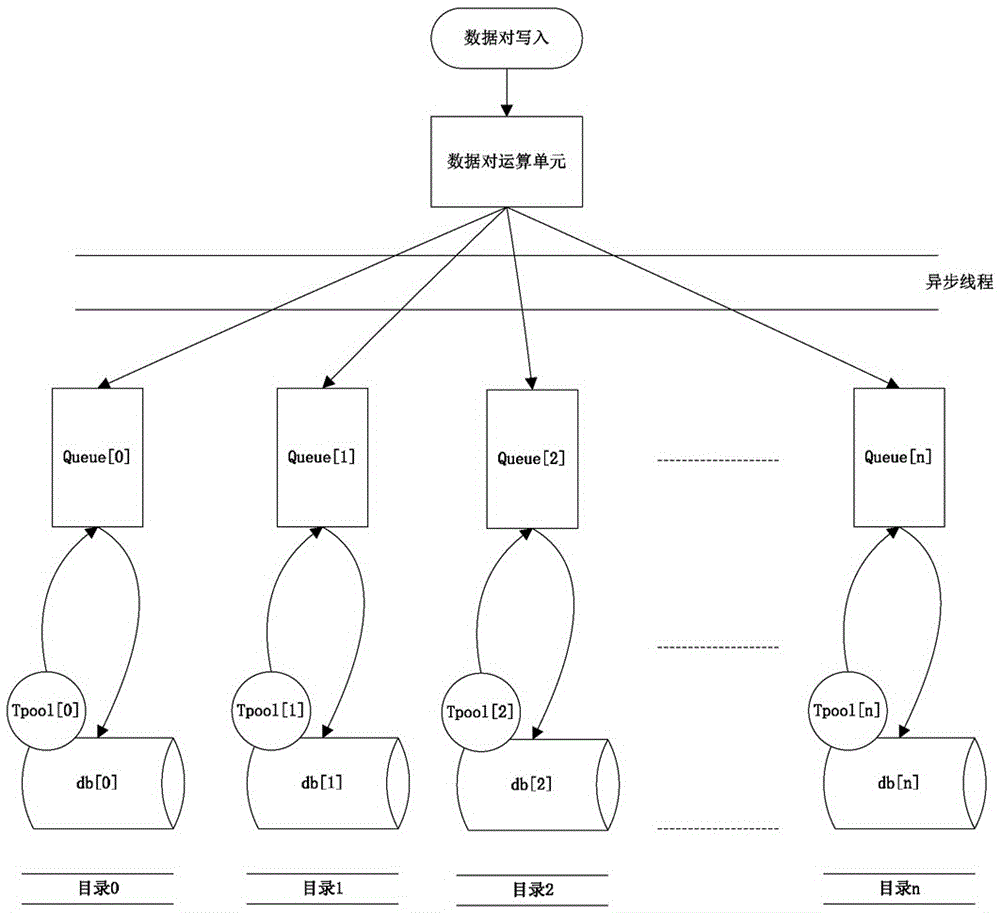

Method, device and system for writing data pairs in LevelDB databases

Status: Inactive | Publication: CN106682134A | Tags: Improve writing efficiency, Improve write performance, Special data processing applications, Message queue, Computer data storage

The invention relates to the technical field of computer data storage, in particular to a method, device and system for writing data pairs into LevelDB databases. The method comprises: configuring multiple LevelDB databases corresponding to multiple message queues; writing data pairs into a message queue; and writing the data pairs in each message queue into the LevelDB database corresponding to that queue. Before the databases are configured, multiple directories are created and mapped to the multiple LevelDB databases. Writing the data pairs into a message queue includes distributing them among the message queues with a hash algorithm to determine which queue each data pair is written to. This solves the problem of low LevelDB write performance under high load and improves write performance.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD
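
The hash-based distribution of data pairs across message queues, each backed by its own database in its own directory, might look like this. Plain dictionaries stand in for LevelDB handles (no LevelDB binding is used), and the `shard-<i>` directory names are hypothetical. `zlib.crc32` is used instead of Python's built-in `hash` because the latter is randomized per process for `str` and `bytes`, which would route the same key to different queues across restarts.

```python
import zlib
from collections import deque


class ShardedWriter:
    def __init__(self, num_queues: int, base_dir: str = "db"):
        # One directory, one queue and one database per shard (hypothetical layout).
        self.dirs = [f"{base_dir}/shard-{i}" for i in range(num_queues)]
        self.queues = [deque() for _ in range(num_queues)]
        self.dbs = {d: {} for d in self.dirs}  # dict stands in for a LevelDB handle

    def queue_for(self, key: bytes) -> int:
        """Pick the message queue for a key with a stable hash."""
        return zlib.crc32(key) % len(self.queues)

    def put(self, key: bytes, value: bytes):
        self.queues[self.queue_for(key)].append((key, value))

    def flush(self):
        """Drain each queue into the database that corresponds to it."""
        for queue, directory in zip(self.queues, self.dirs):
            db = self.dbs[directory]
            while queue:
                key, value = queue.popleft()
                db[key] = value
```

In a real deployment each queue would presumably be drained by its own writer thread, so writes to different databases proceed in parallel; `flush` drains them one after another here for simplicity.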

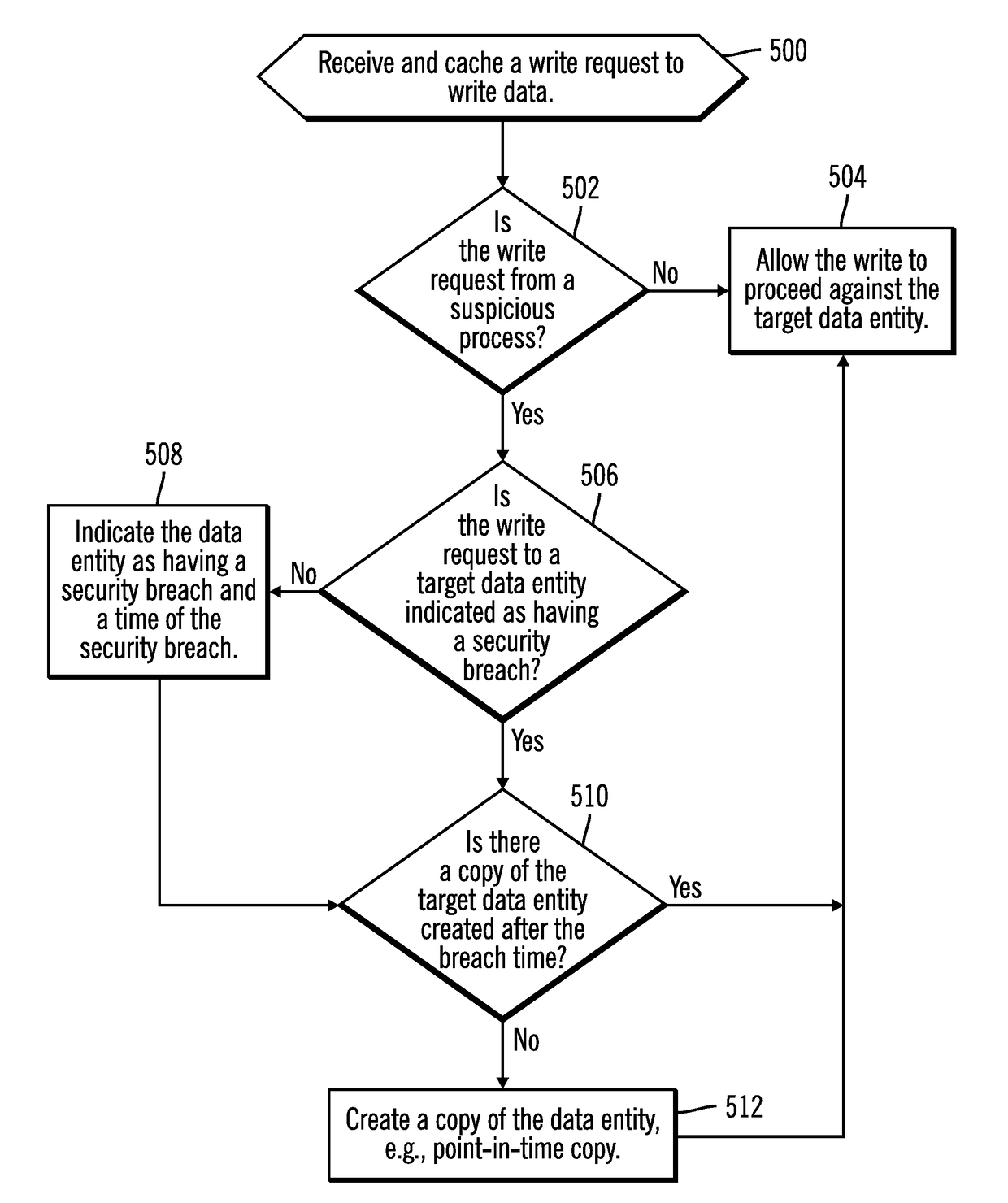

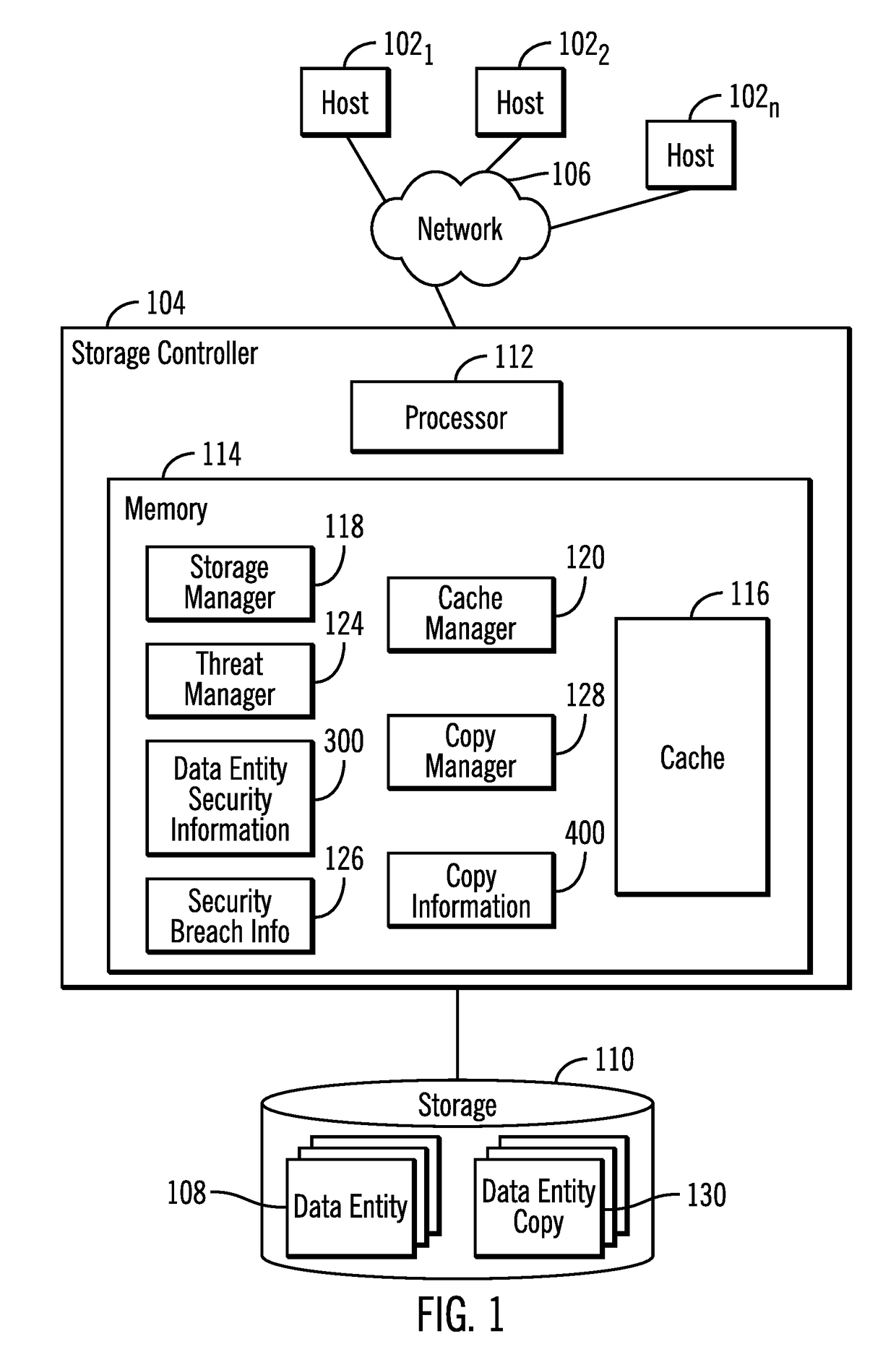

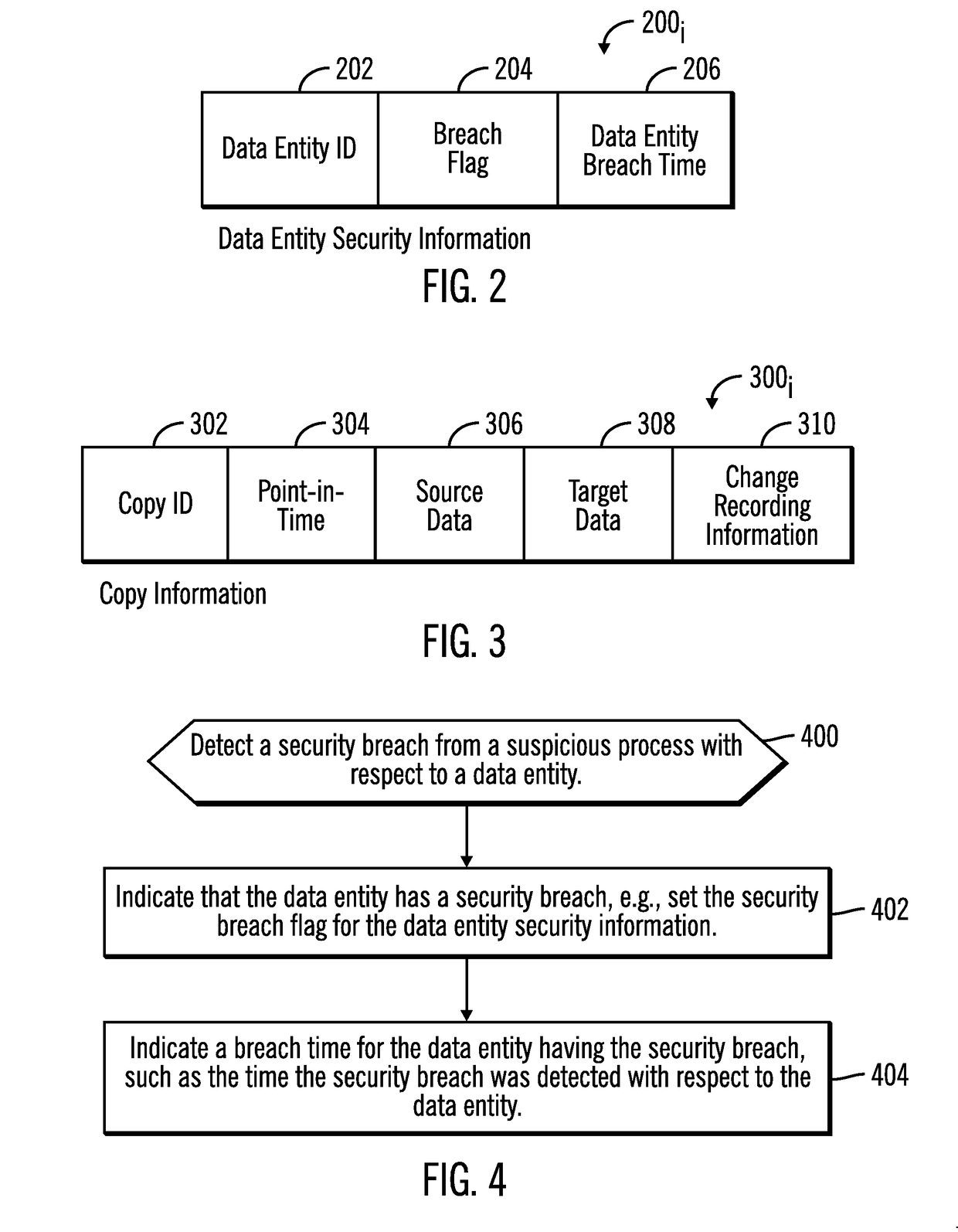

Managing reads and writes to data entities experiencing a security breach from a suspicious process

Status: Active | Publication: US20180285566A1 | Tags: Minimize read latency, Reduce write latency, Digital data protection, Platform integrity maintenance, Data entity, Vulnerability

Provided are a computer program product, system, and method for managing reads and writes to data entities experiencing a security breach from a suspicious process. A suspicious process is detected that is determined to have malicious code. A breach time for a data entity is indicated in response to detecting the suspicious process performing an operation with respect to the data entity. A determination is made whether there is a copy of the data entity created after the breach time for the data entity. A copy of the data entity is created when there is no copy of the data entity created after the breach time.

Owner:IBM CORP
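
The breach-handling steps can be sketched as follows. Keeping only the first breach time per entity, and treating a copy made at the moment of detection as satisfying the "created after the breach time" check, are assumptions made so that repeated suspicious operations do not each create a copy; the class and method names are hypothetical.

```python
class BreachAwareStore:
    """Hypothetical store applying the copy-on-breach rule."""

    def __init__(self):
        self.data = {}
        self.breach_time = {}  # entity -> time the breach was first indicated
        self.copies = {}       # entity -> list of (created_at, snapshot)

    def on_suspicious_operation(self, entity: str, now: float) -> bool:
        """Indicate the breach time, then copy the entity unless a copy created
        at or after that time already exists. Returns True if a copy was made."""
        t = self.breach_time.setdefault(entity, now)
        existing = self.copies.setdefault(entity, [])
        if any(created >= t for created, _ in existing):
            return False
        existing.append((now, self.data[entity]))
        return True
```

A second suspicious operation on the same entity finds the copy made at the first detection and does not create another one.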

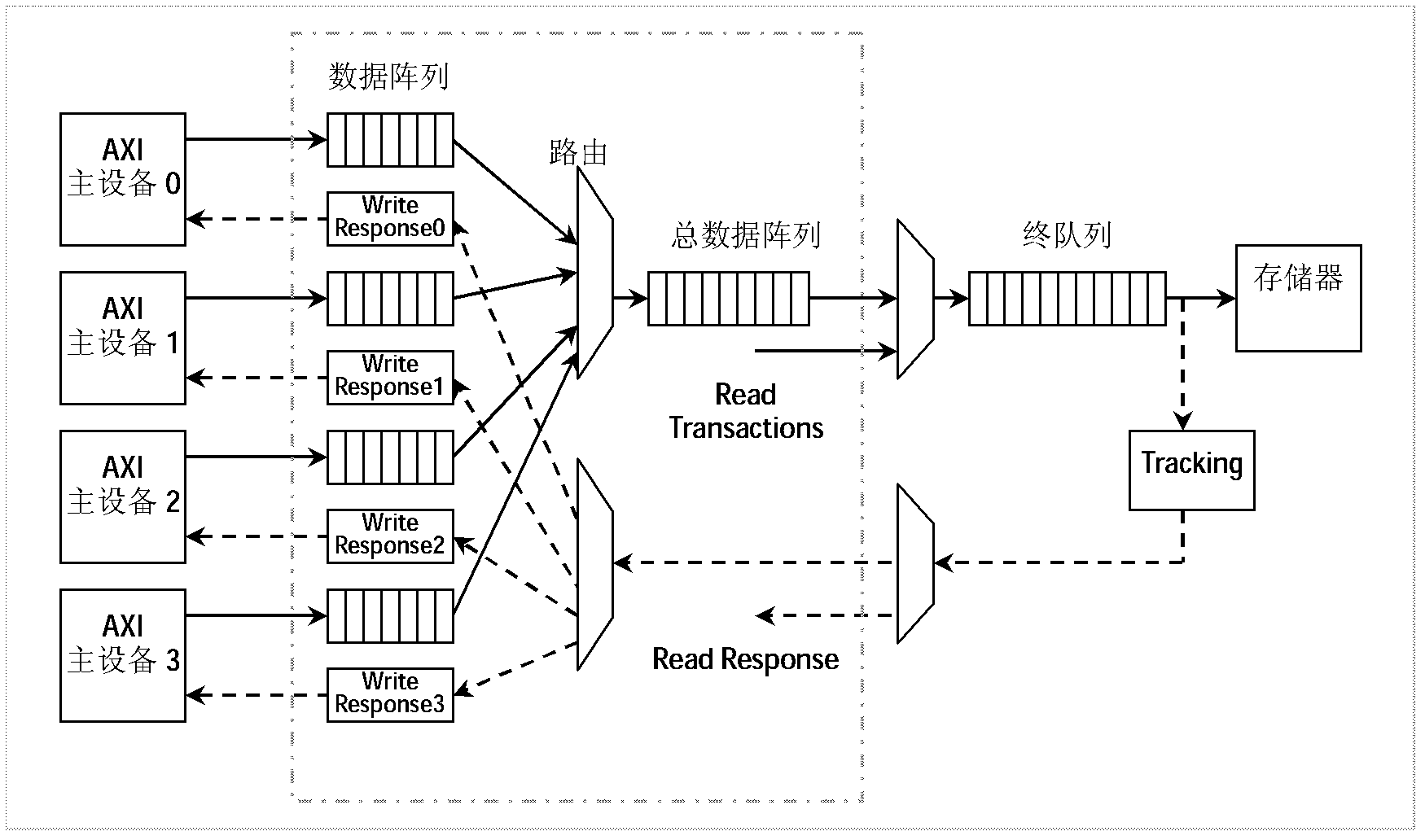

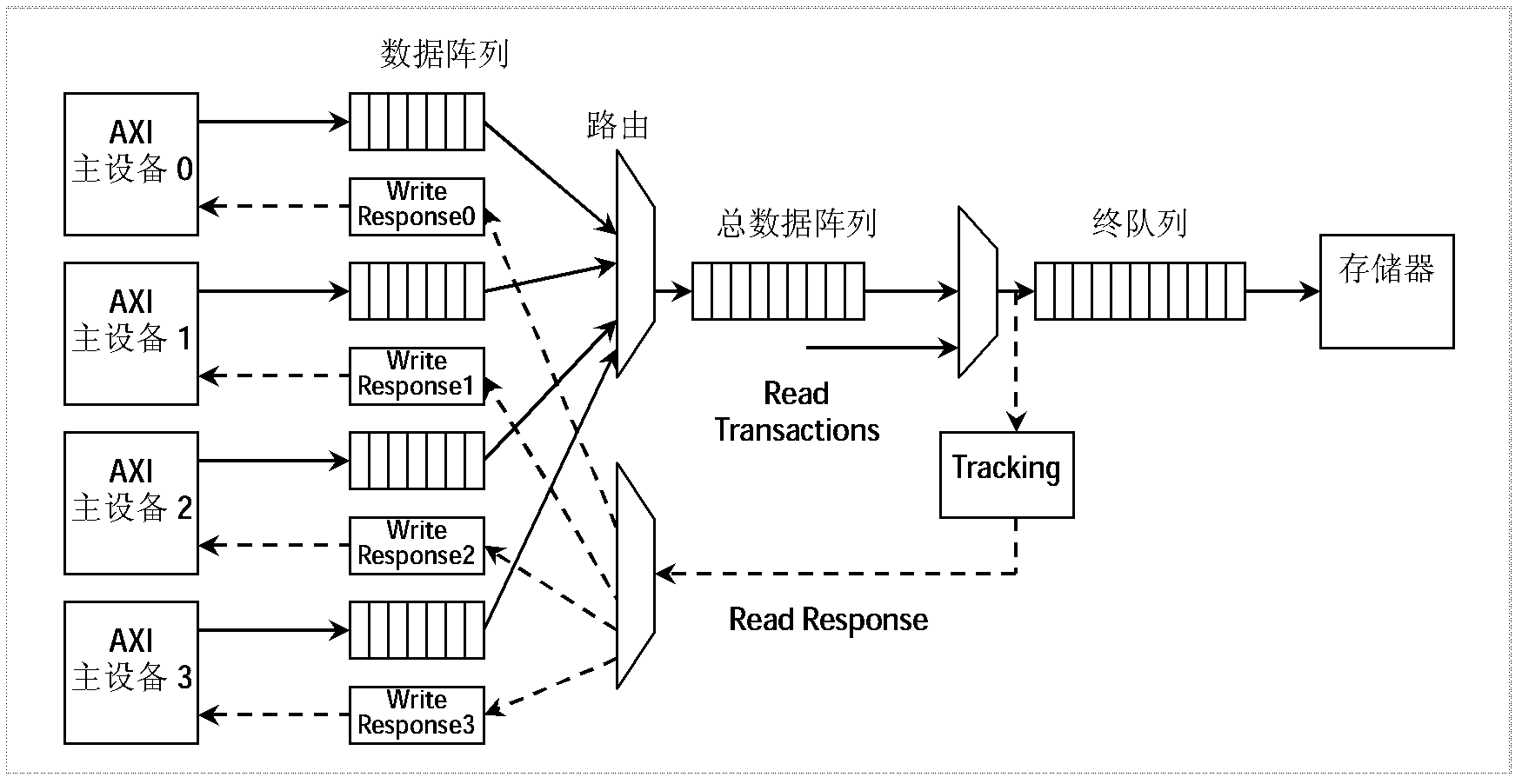

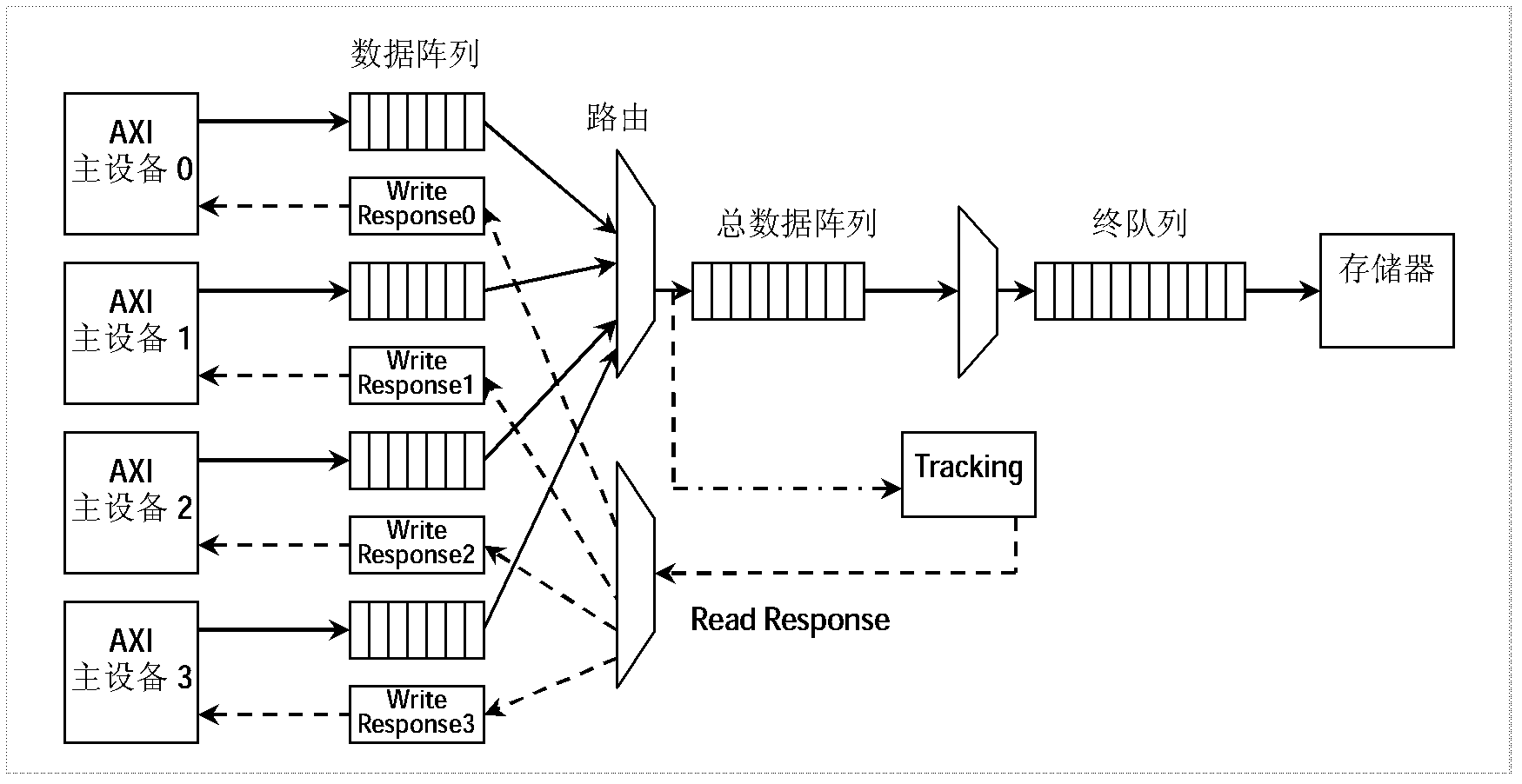

Method and equipment for writing data in AXI (Advanced Extensible Interface) bus

Status: Active | Publication: CN103077136A | Tags: Improve performance, Reduce write latency, Electric digital data processing, Embedded system

The invention provides a method and equipment for writing data on an AXI (Advanced Extensible Interface) bus. The method comprises: writing the data into a buffer array; returning a response message indicating that the data has been written; and releasing the buffer array as the data is written into storage, where the response message is returned before the data is written into storage. Introducing this early write response (EWR) function into AXI bus equipment reduces write latency and thereby improves bus performance.

Owner:GUANGDONG NUFRONT COMP SYST CHIP
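
The early write response can be modeled behaviorally in Python (this is not RTL). The buffer depth, the method names and the use of the AXI `OKAY` response code for every buffered write are illustrative assumptions.

```python
from collections import deque


class EarlyResponseWriteSlave:
    """Hypothetical AXI write slave with an early write response (EWR)."""

    def __init__(self, buffer_depth: int):
        self.buffer = deque()
        self.buffer_depth = buffer_depth
        self.memory = {}
        self.responses = []  # write responses returned to the master

    def write(self, addr: int, data: bytes) -> bool:
        if len(self.buffer) >= self.buffer_depth:
            return False  # buffer full: the master must wait
        self.buffer.append((addr, data))
        self.responses.append(("OKAY", addr))  # respond before the data reaches storage
        return True

    def drain_one(self) -> None:
        """Move the oldest buffered write into storage, releasing its buffer entry."""
        if self.buffer:
            addr, data = self.buffer.popleft()
            self.memory[addr] = data
```

The trade-off of responding early is that an error occurring when the buffered data is later committed can no longer be reported in that write's response, and a read that arrives before the buffer drains has to be served from, or ordered behind, the buffer.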

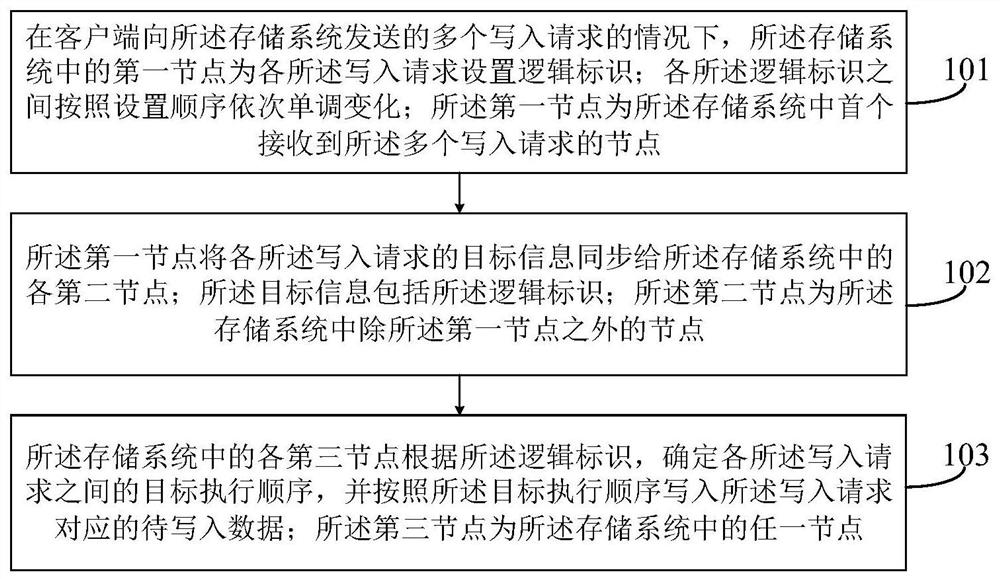

Data processing method and device, electronic device and computer readable storage medium

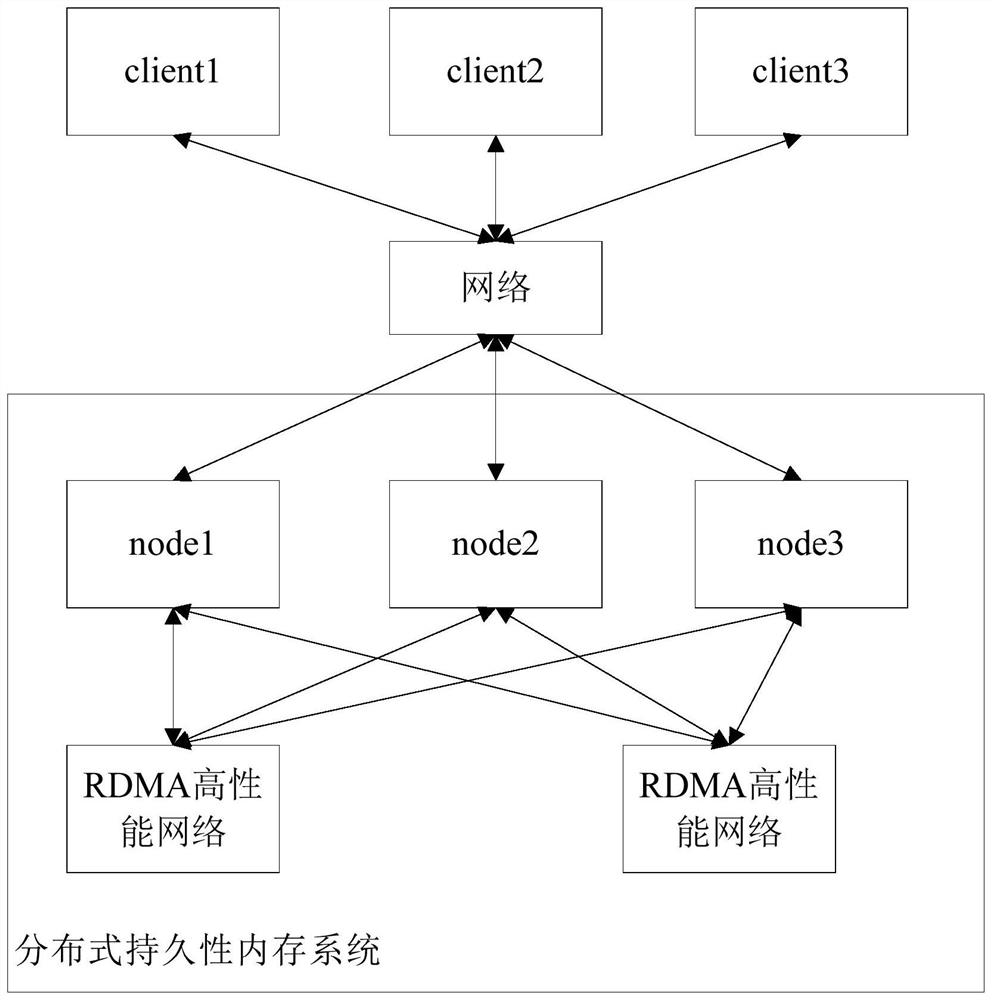

Status: Pending | Publication: CN113253924A | Tags: Easy to operate, Reduce write latency, Input/output to record carriers, Computer hardware, Time delays

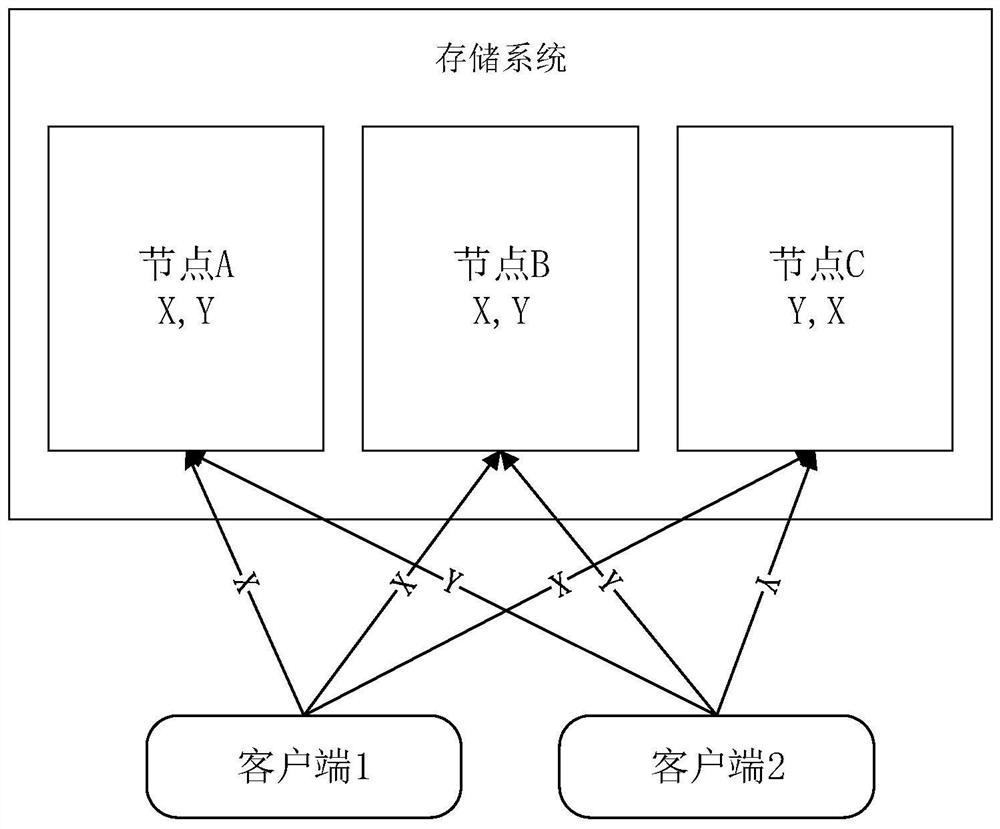

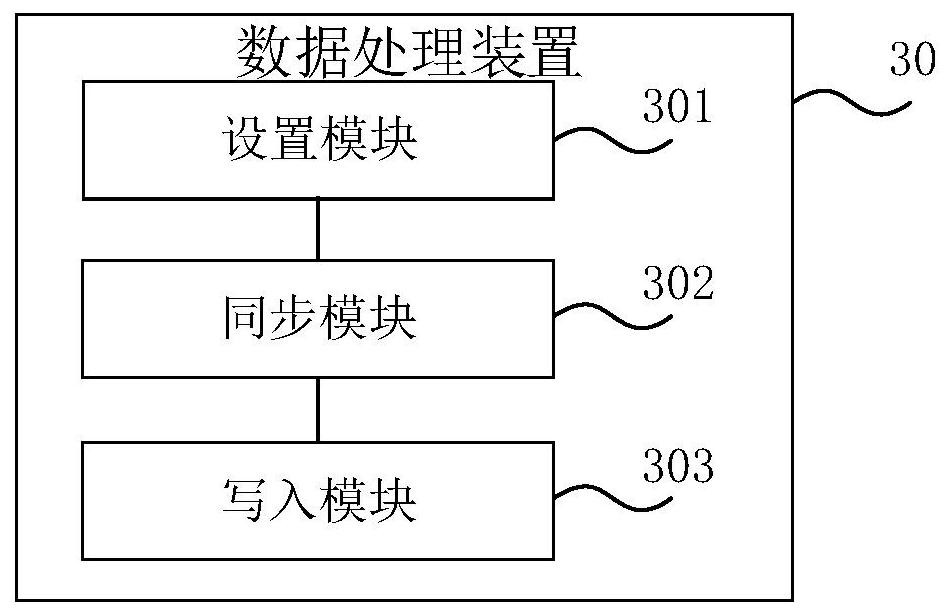

The invention provides a data processing method and device, an electronic device and a computer-readable storage medium, in the technical field of electronic devices. When a client sends a plurality of write requests to a storage system, a first node in the storage system assigns logical identifiers to the write requests, with the identifiers changing monotonically in the order they are assigned. The first node then synchronizes the target information of the write requests to the second nodes in the storage system, and each third node in the storage system determines a target execution order among the write requests from the logical identifiers and writes the corresponding data in that order. The nodes therefore do not need to elect a master node or negotiate the write order: the execution order of each write request is fixed directly by the first node to receive it. This ensures write consistency, simplifies the operation steps and reduces write latency.

Owner:BIGO TECH PTE LTD
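
The ordering scheme can be sketched with a counter on the first node and a min-heap on each replica. The abstract only requires the logical identifiers to change monotonically; using consecutive integers starting at 1 is an assumption that lets a replica tell when no earlier write is still missing. The class names are hypothetical.

```python
import heapq
import itertools


class FirstNode:
    """Node that first receives the client's writes and stamps them."""

    def __init__(self):
        self._ids = itertools.count(1)  # assumed: consecutive integers

    def tag(self, requests):
        return [(next(self._ids), req) for req in requests]


class ReplicaNode:
    """Node that applies writes strictly in logical-identifier order."""

    def __init__(self):
        self._pending = []  # min-heap of (logical_id, request)
        self._next_id = 1
        self.log = []       # writes applied so far, in execution order

    def receive(self, tagged):
        heapq.heappush(self._pending, tagged)
        # Apply every write whose predecessors have all arrived.
        while self._pending and self._pending[0][0] == self._next_id:
            _, req = heapq.heappop(self._pending)
            self.log.append(req)
            self._next_id += 1
```

Two replicas end with the same log even if one receives the writes in reverse order, which is the consistency property the abstract claims without master election or order negotiation.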

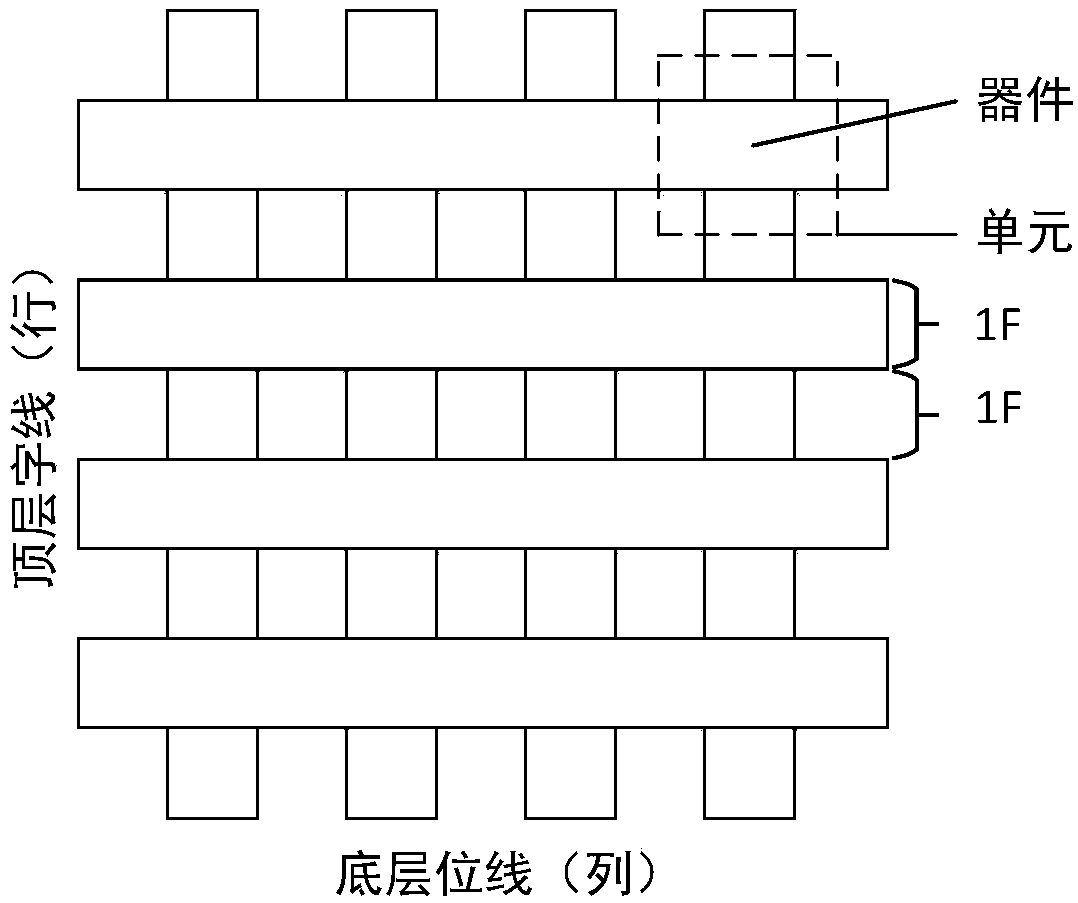

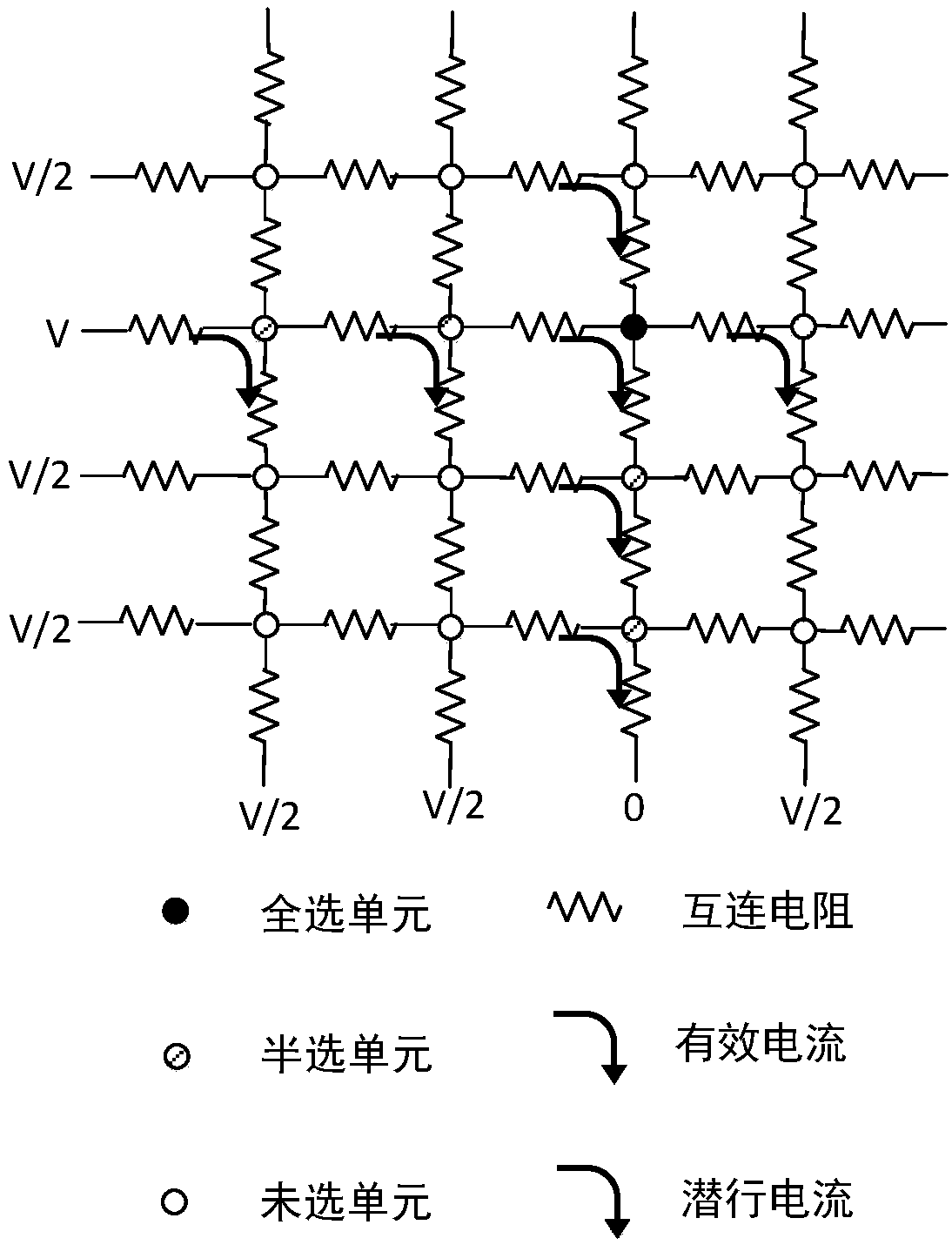

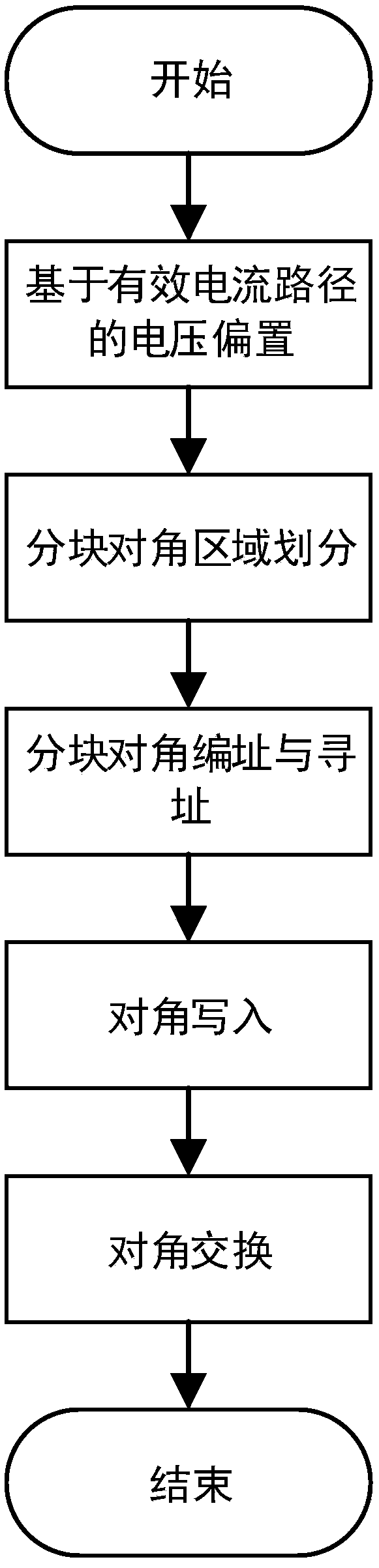

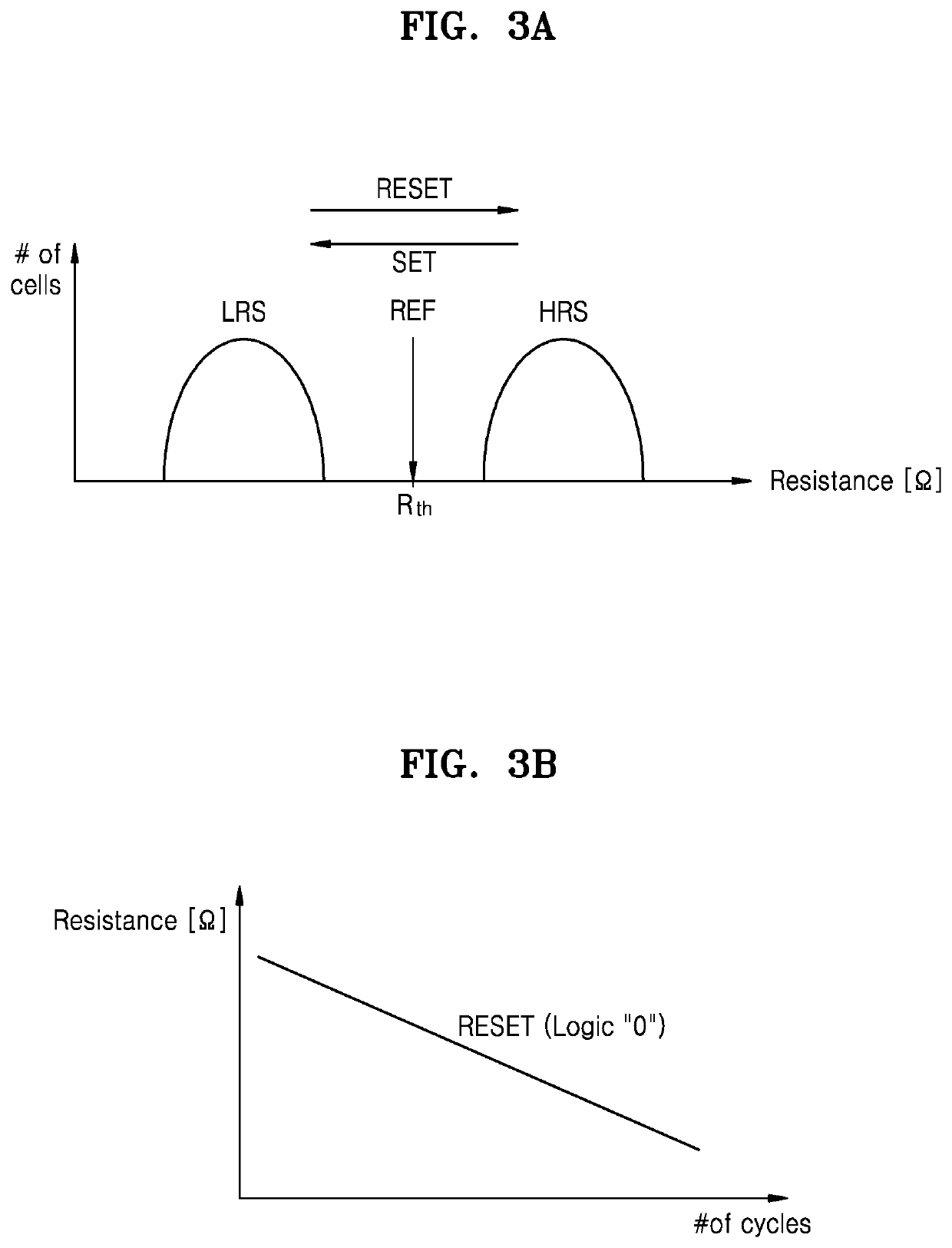

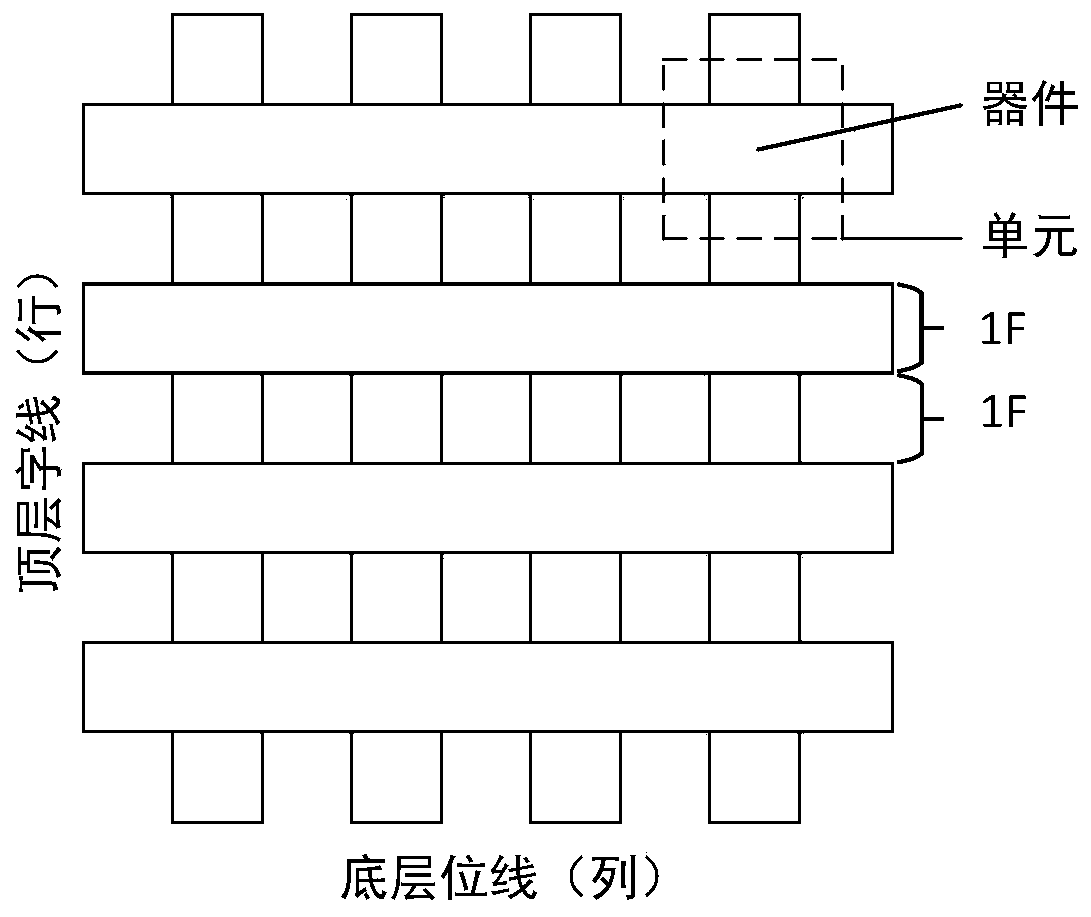

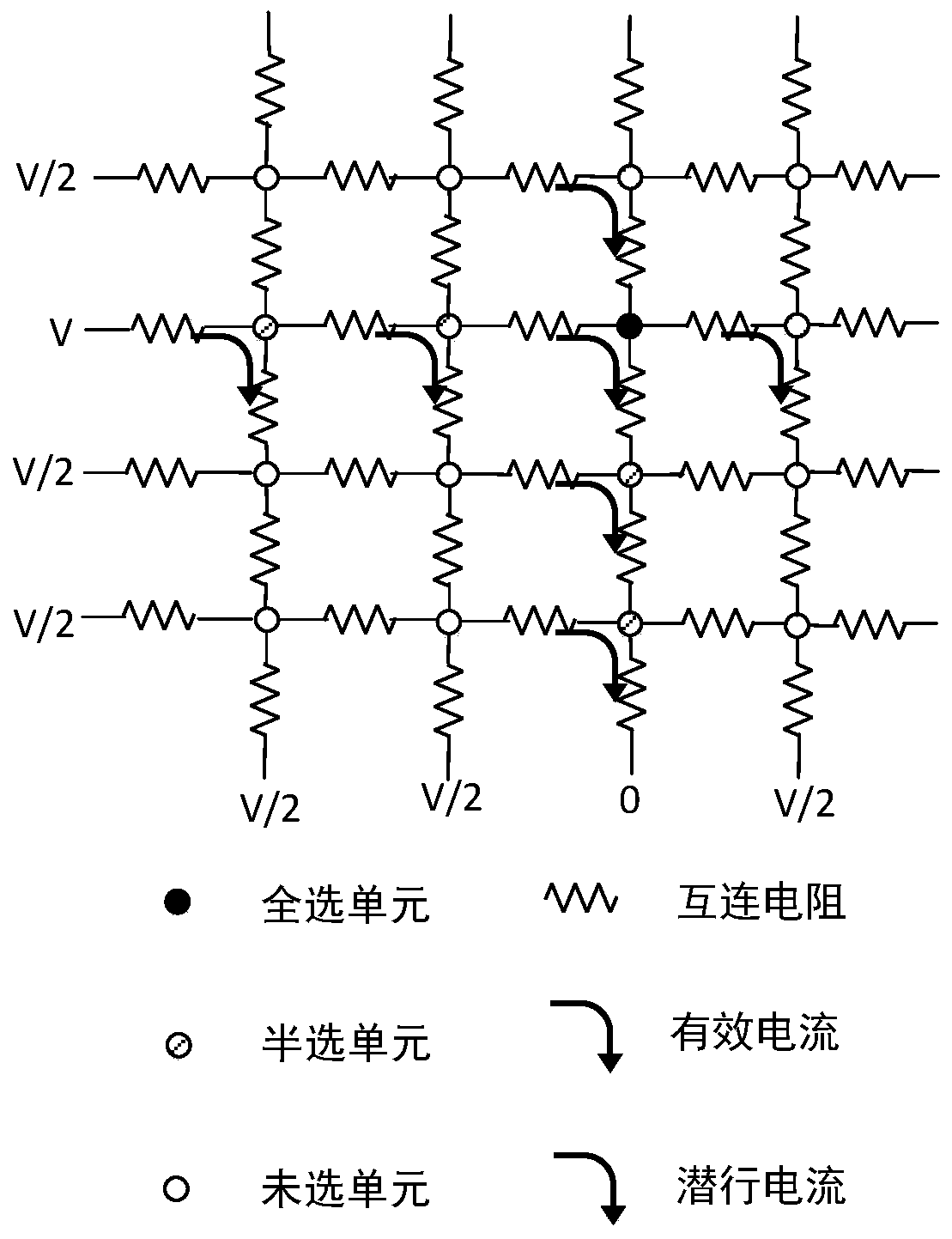

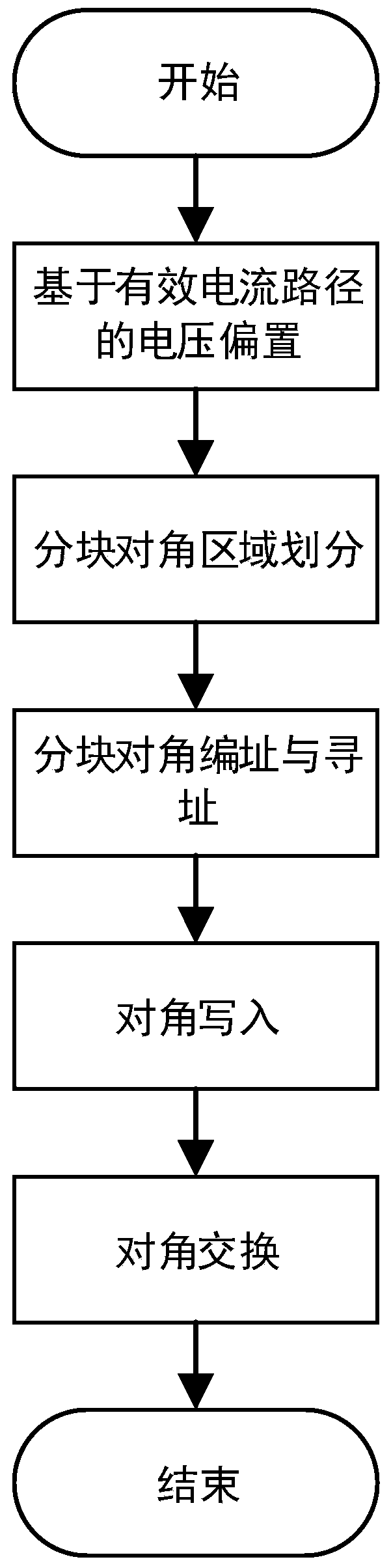

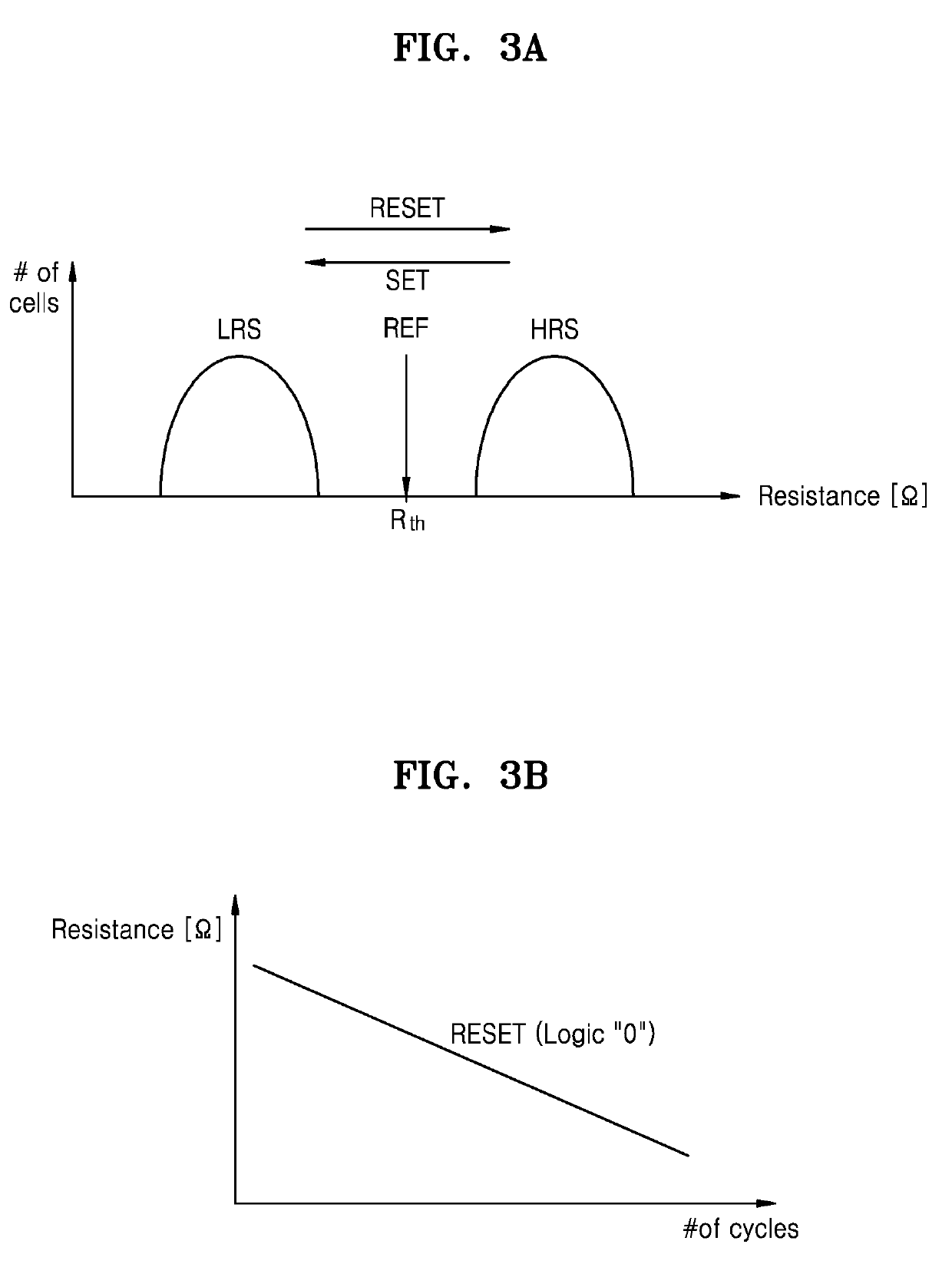

Writing method for resistive random access memory based on crosspoint array

Status: Active | Publication: CN108053852A | Tags: Narrowing down the write latency variance, Guaranteed parallelism, Read-only memories, Digital storage, Random access memory, Voltage drop

The invention discloses a writing method for a resistive random access memory (RRAM) based on a crosspoint array, in the field of information storage. The method increases the effective voltage and reduces write latency by dynamically selecting the path with the shortest voltage drop. A regional division scheme reduces the differences among write latencies within each region, so per-region write latency is reduced while cell-level parallelism is preserved. An addressing mode establishes the mapping between physical addresses and cell locations so that write latency increases with the physical address, which facilitates the optimization of address mapping, memory allocation and compilation. A parallel addressing circuit speeds up the addressing process, and, by means of a specific voltage bias scheme, SET and RESET operations are overlapped for cells in different rows and columns, exploiting row-level parallelism in the crosspoint array. The method reduces RRAM write latency, increases write bandwidth and lowers write power consumption.

Owner:HUAZHONG UNIV OF SCI & TECH

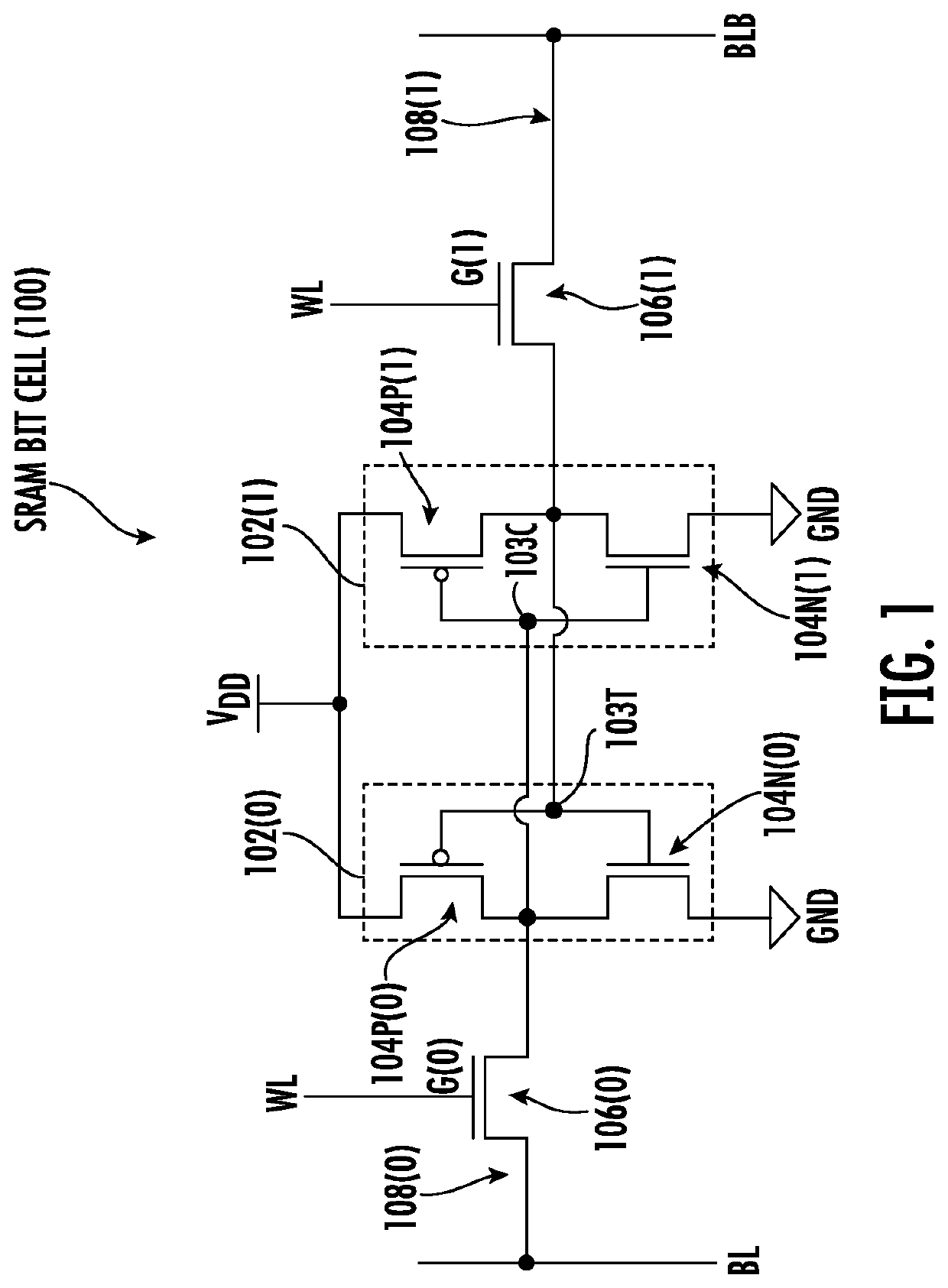

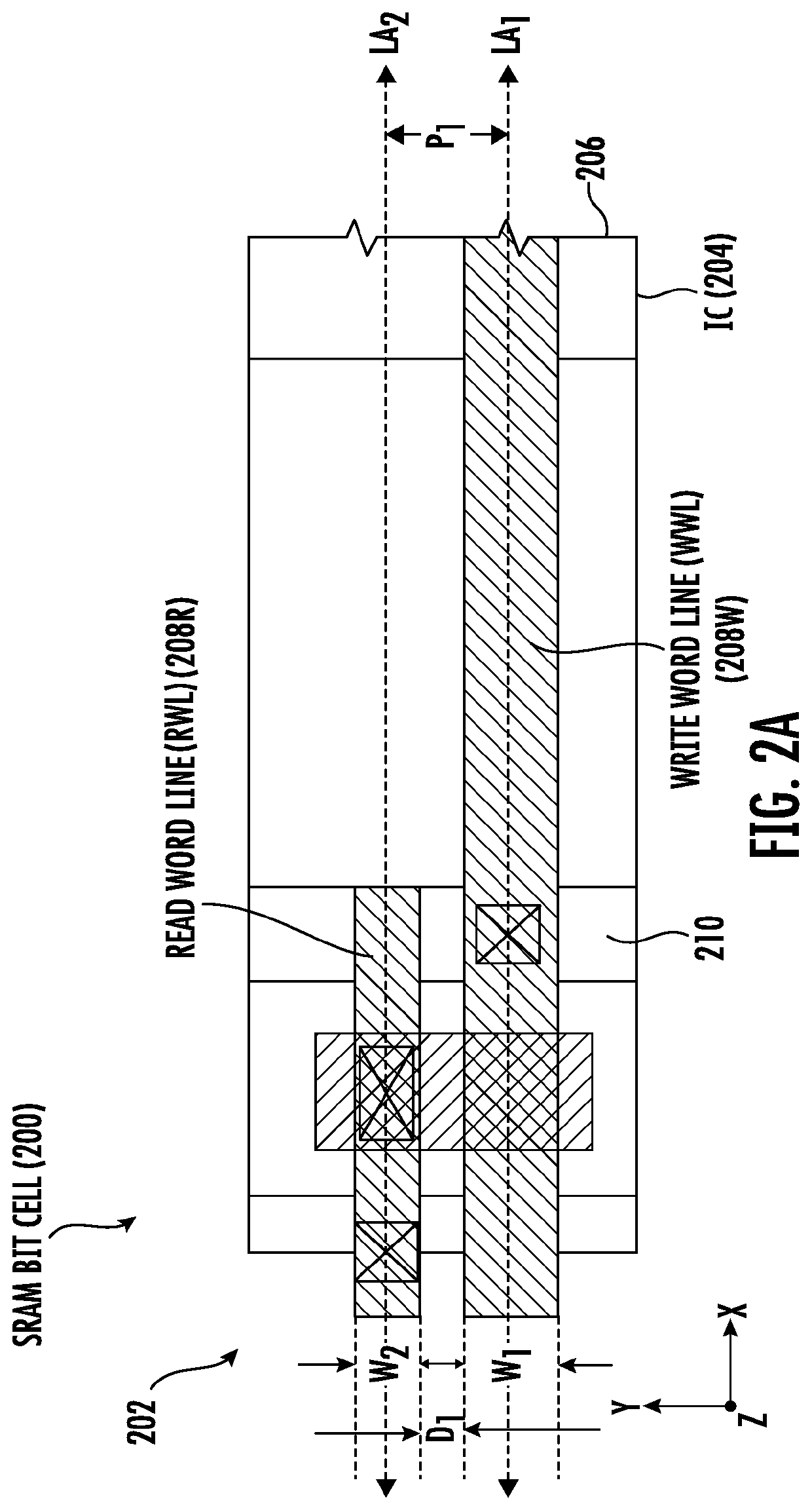

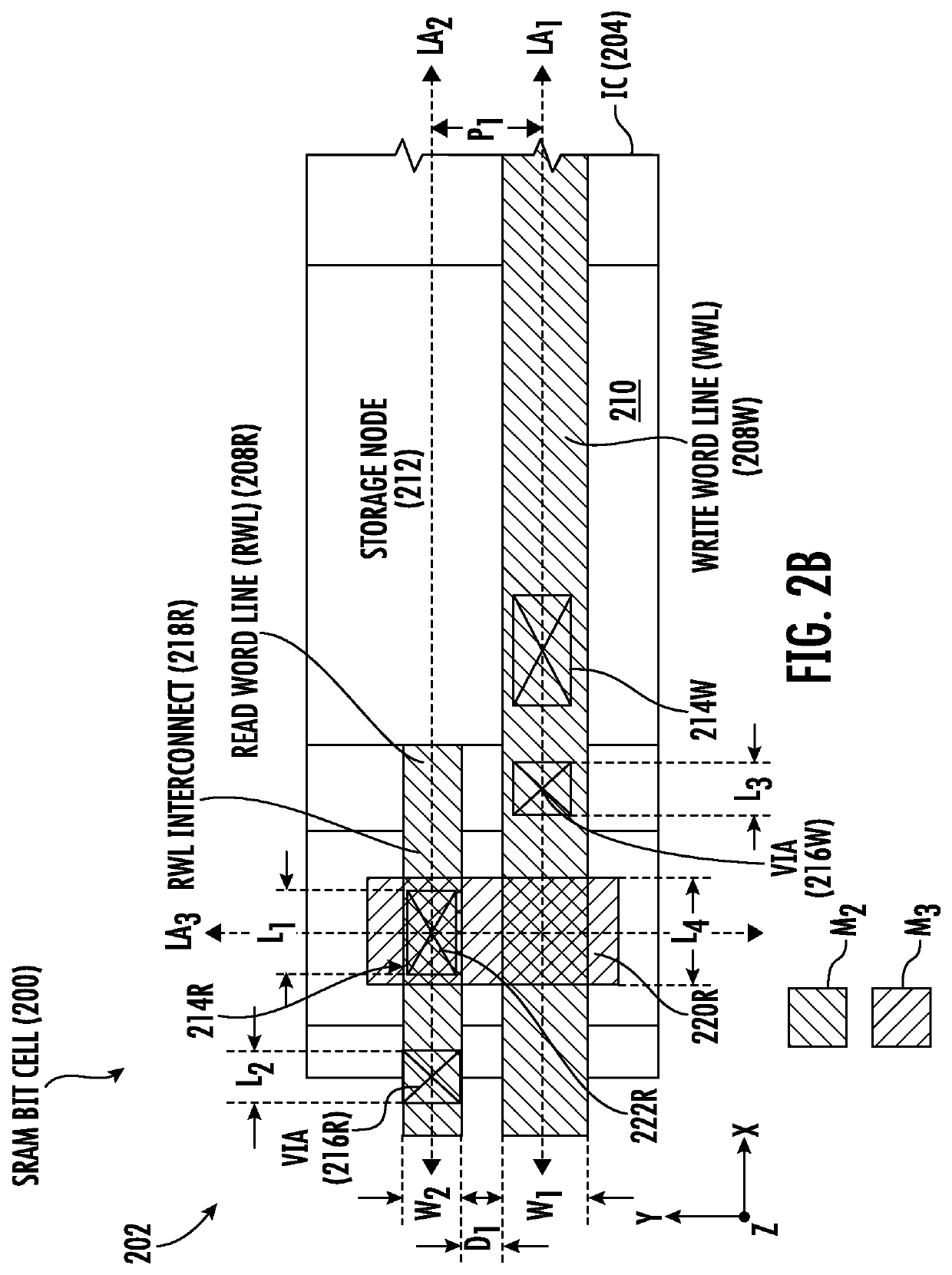

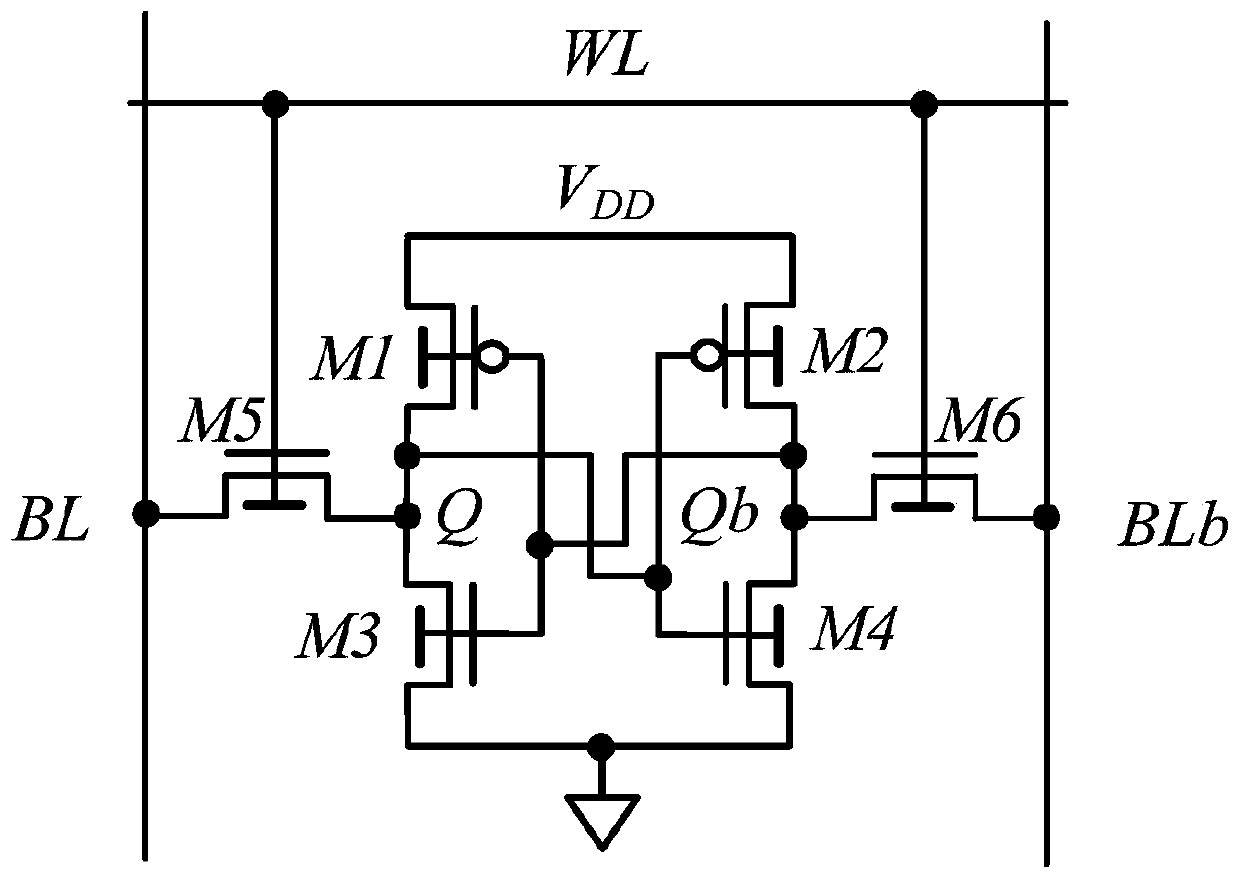

Static random access memory (SRAM) bit cells employing asymmetric width read and write word lines, and related methods

Status: Active | Publication: US11222846B1 | Tags: Increased width write word line, Reduce resistance, Transistor, Semiconductor/solid-state device details, Computer architecture, Static random-access memory

Owner:QUALCOMM INC

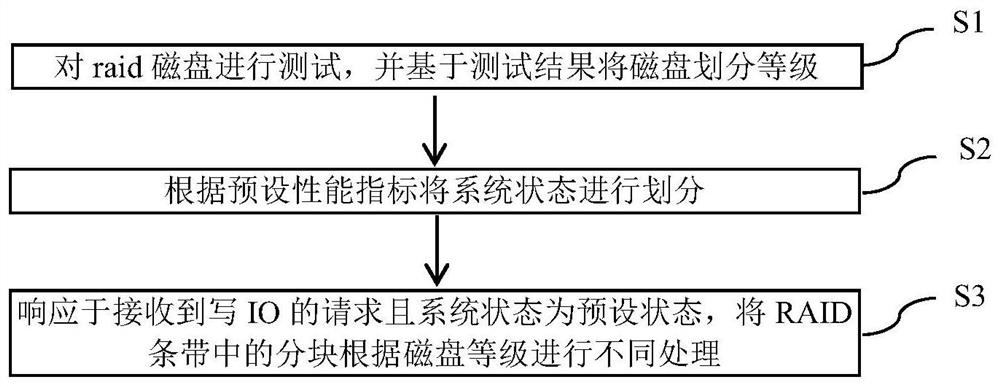

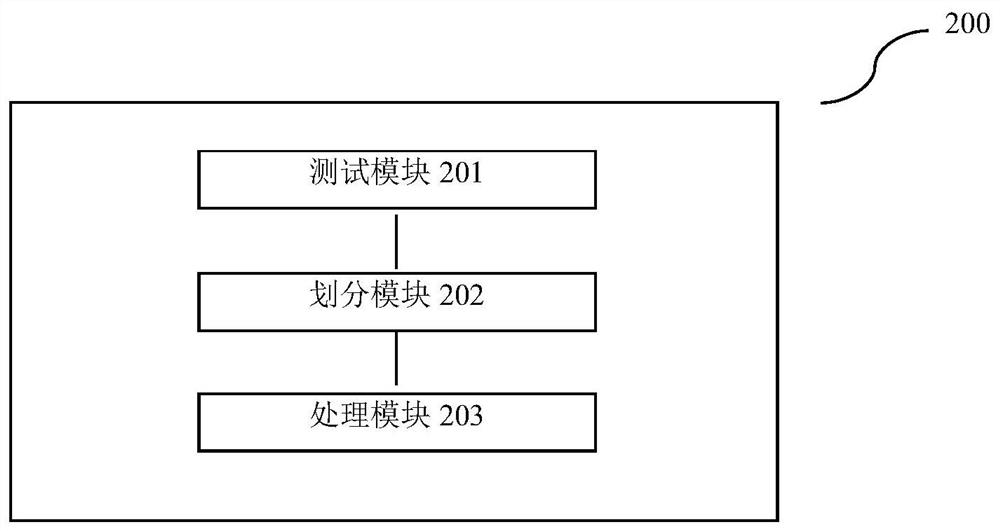

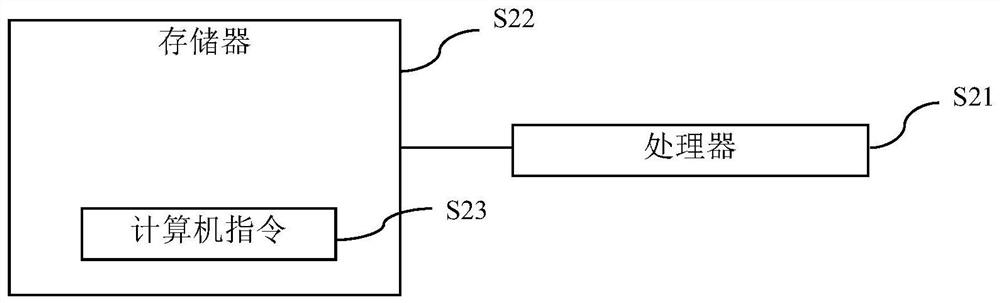

Method, device and equipment for writing IO based on a RAID stripe, and readable medium

Status: Active | Publication: CN113805800A | Tags: Reduce write latency, Improve performance, Input/output to record carriers, Faulty hardware testing methods, RAID, Time delays

The invention provides a method, device and equipment for writing IO based on a RAID stripe, and a readable medium. The method comprises: testing the RAID disks and grading them based on the test results; classifying the system state according to a preset performance index; and, in response to a received write IO request while the system is in a preset state, processing the blocks in the RAID stripe differently according to the grade of each disk. The scheme reduces RAID stripe write latency, increases data bandwidth and improves storage-system performance.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

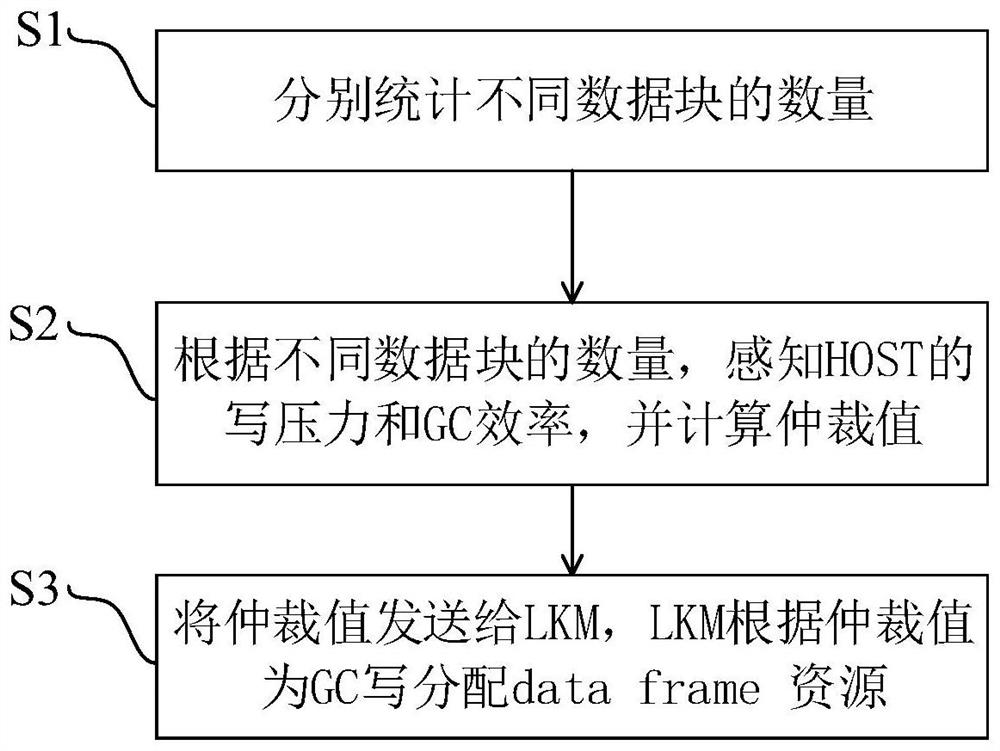

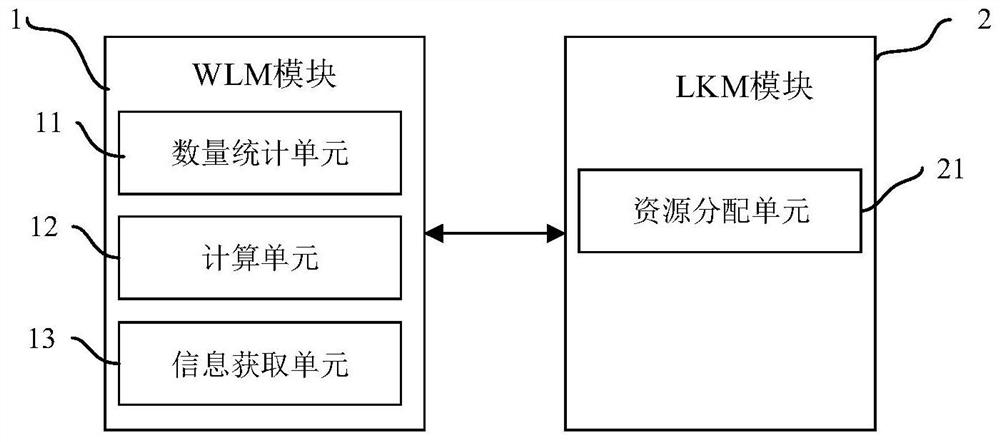

A method and device for reducing write latency during GC (garbage collection) of a solid-state hard disk

Status: Active | Publication: CN110569201B | Tags: Improve energy utilization, Improve resource utilization, Memory architecture accessing/allocation, Input/output to record carriers, Computer hardware, Embedded system

Owner:SUZHOU METABRAIN INTELLIGENT TECH CO LTD

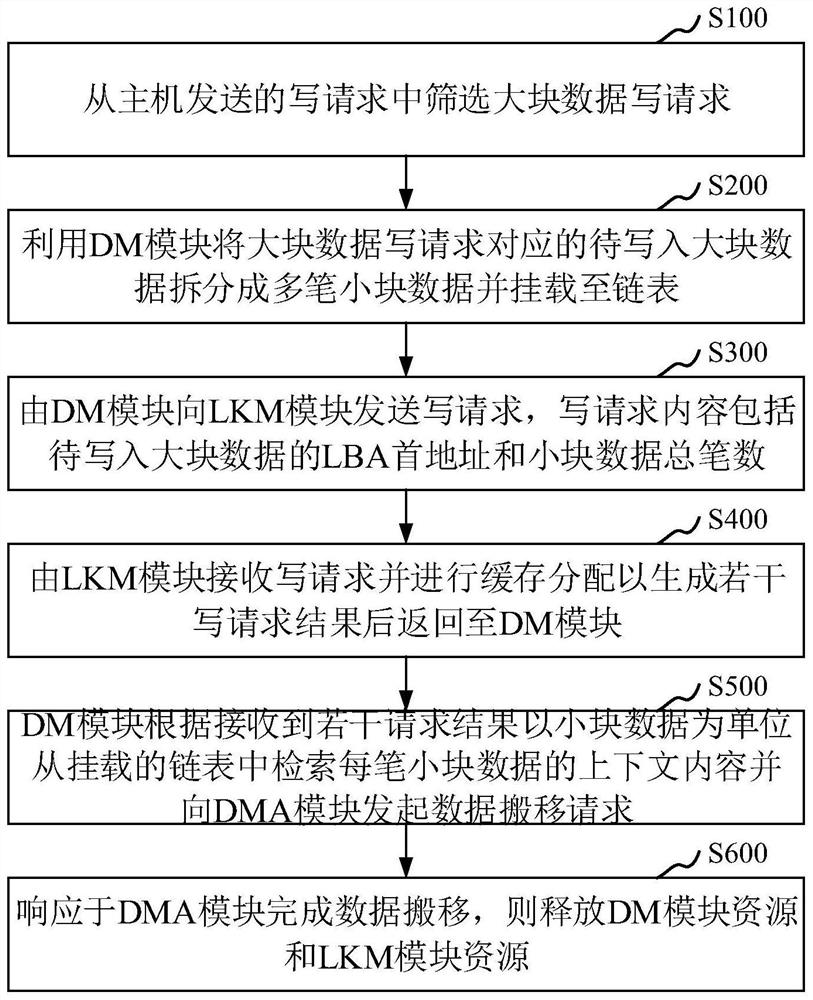

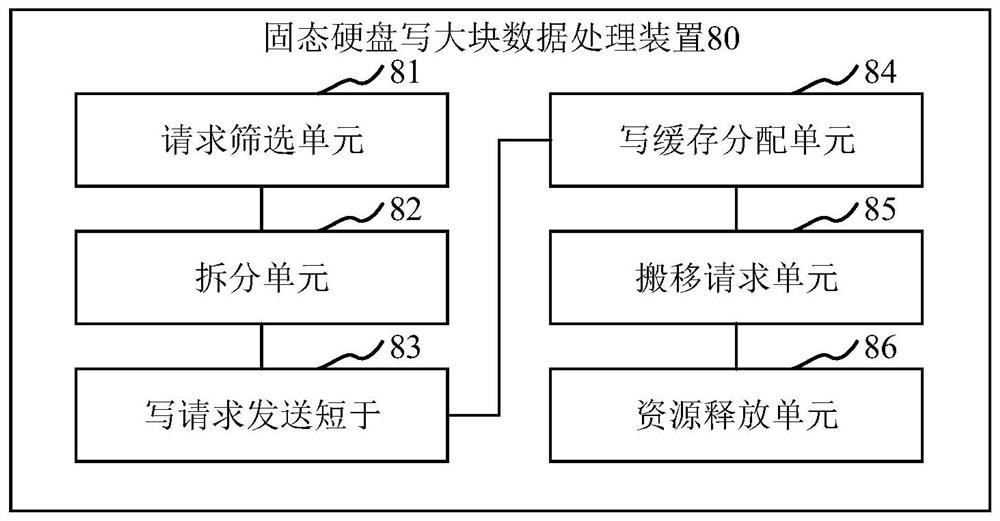

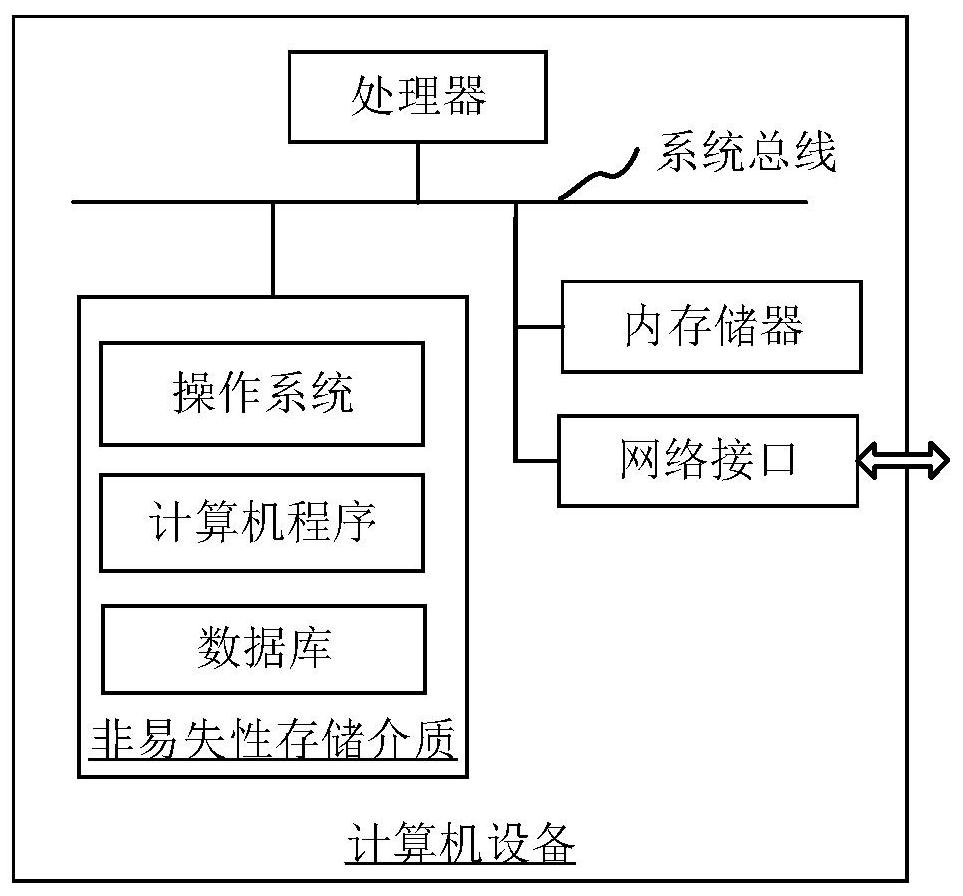

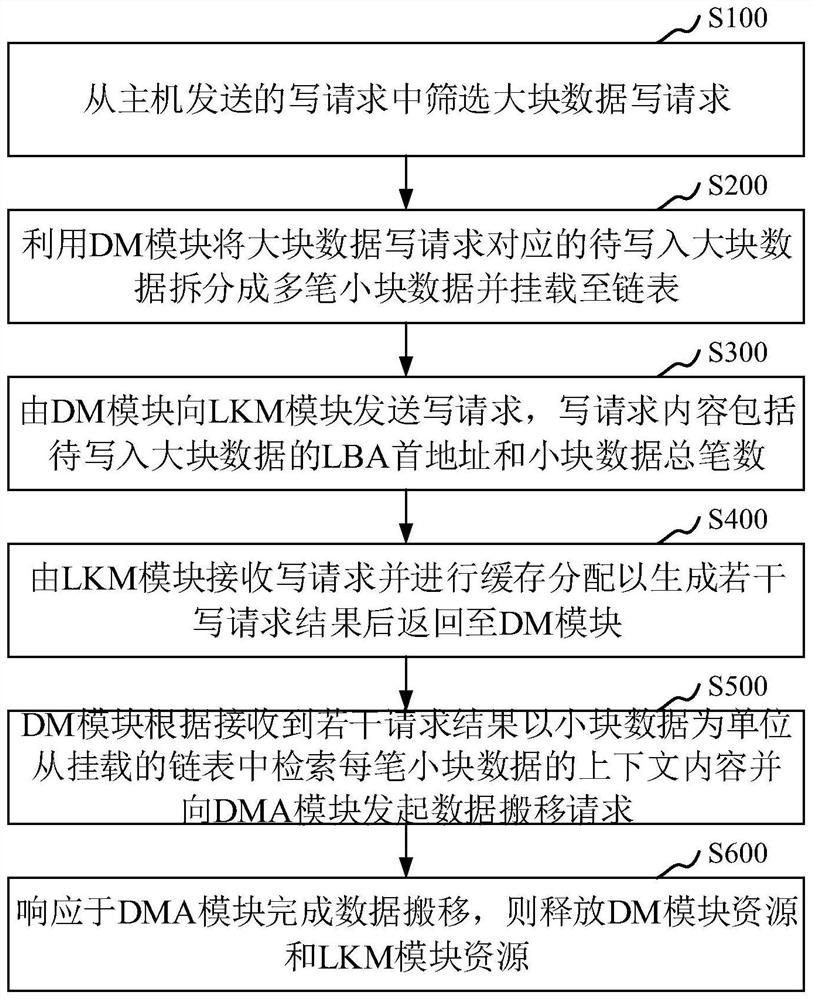

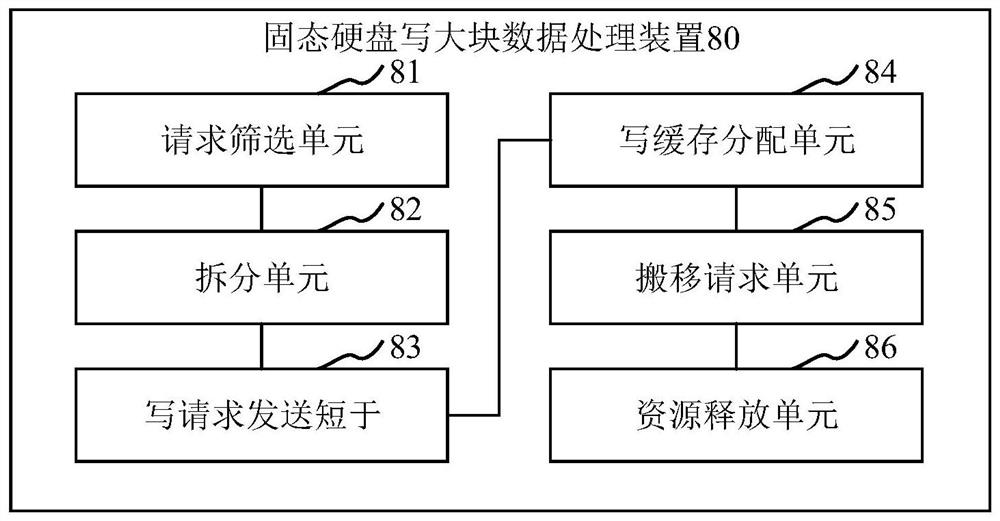

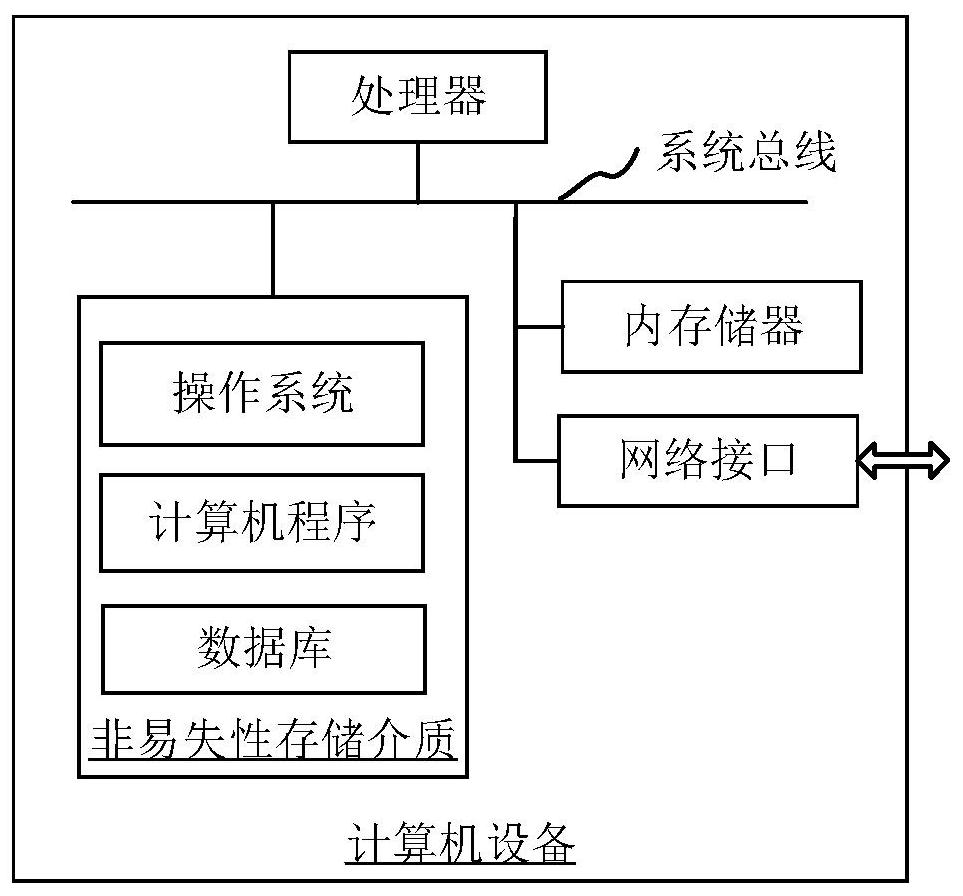

A method, device, equipment and medium for processing large block data of a solid-state hard disk

Status: Active | Publication: CN113448517B | Tags: Improve writing efficiency, Reduce in quantity, Input/output to record carriers, Computer hardware, Linked list

The invention discloses a method, device, equipment and medium for writing large block data to a solid-state hard disk. The method includes: screening large-block data write requests out of the write requests sent by the host; using a DM module to split the large-block data to be written into multiple small blocks and mount them on a linked list; the DM module sending a write request to the LKM module, the content of which includes the first LBA of the large block to be written and the total number of small blocks; the LKM module receiving the write request, performing cache allocation to generate several write request results, and returning them to the DM module; the DM module retrieving the context of each small block from the mounted linked list, one small block at a time, according to the received request results, and initiating a data transfer request to the DMA module; and, in response to the DMA module completing the data transfer, releasing the DM module resources and the LKM module resources. The scheme reduces the number of communications between modules, makes large-block writes more efficient, reduces write latency and increases write bandwidth.

Owner:SHANDONG YINGXIN COMP TECH CO LTD
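
The DM/LKM interaction can be sketched as follows. The `Chunk` linked list, the `LKM` allocator that hands out consecutive cache slots, and the sector-based sizes are hypothetical; the point illustrated is that one DM-to-LKM request (first LBA plus block count) covers the whole large write, after which the DM walks the list to issue one DMA request per small block.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Chunk:
    """One small block of the large write, linked into a singly linked list."""
    lba: int
    sectors: int
    next: Optional["Chunk"] = None


def split_to_list(start_lba: int, total_sectors: int, chunk_sectors: int) -> Optional[Chunk]:
    head = tail = None
    lba, end = start_lba, start_lba + total_sectors
    while lba < end:
        node = Chunk(lba, min(chunk_sectors, end - lba))
        if tail is None:
            head = tail = node
        else:
            tail.next = node
            tail = node
        lba += node.sectors
    return head


class LKM:
    """Hypothetical cache allocator: one request covers the whole large write."""

    def __init__(self):
        self.requests = 0
        self._next_slot = 0

    def allocate(self, first_lba: int, n_chunks: int) -> list[int]:
        # first_lba mirrors the message contents; this toy allocator ignores it.
        self.requests += 1
        slots = list(range(self._next_slot, self._next_slot + n_chunks))
        self._next_slot += n_chunks
        return slots


def dm_write_large(start_lba: int, total_sectors: int, chunk_sectors: int, lkm: LKM):
    head = split_to_list(start_lba, total_sectors, chunk_sectors)
    n_chunks, node = 0, head
    while node:
        n_chunks, node = n_chunks + 1, node.next
    slots = lkm.allocate(start_lba, n_chunks)  # single DM -> LKM message
    transfers, node = [], head
    for slot in slots:  # walk the list: one DMA request per small block
        transfers.append((slot, node.lba, node.sectors))
        node = node.next
    return transfers
```

Splitting 10 sectors at LBA 1000 into 4-sector blocks yields three DMA requests but only one allocation request to the LKM module.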

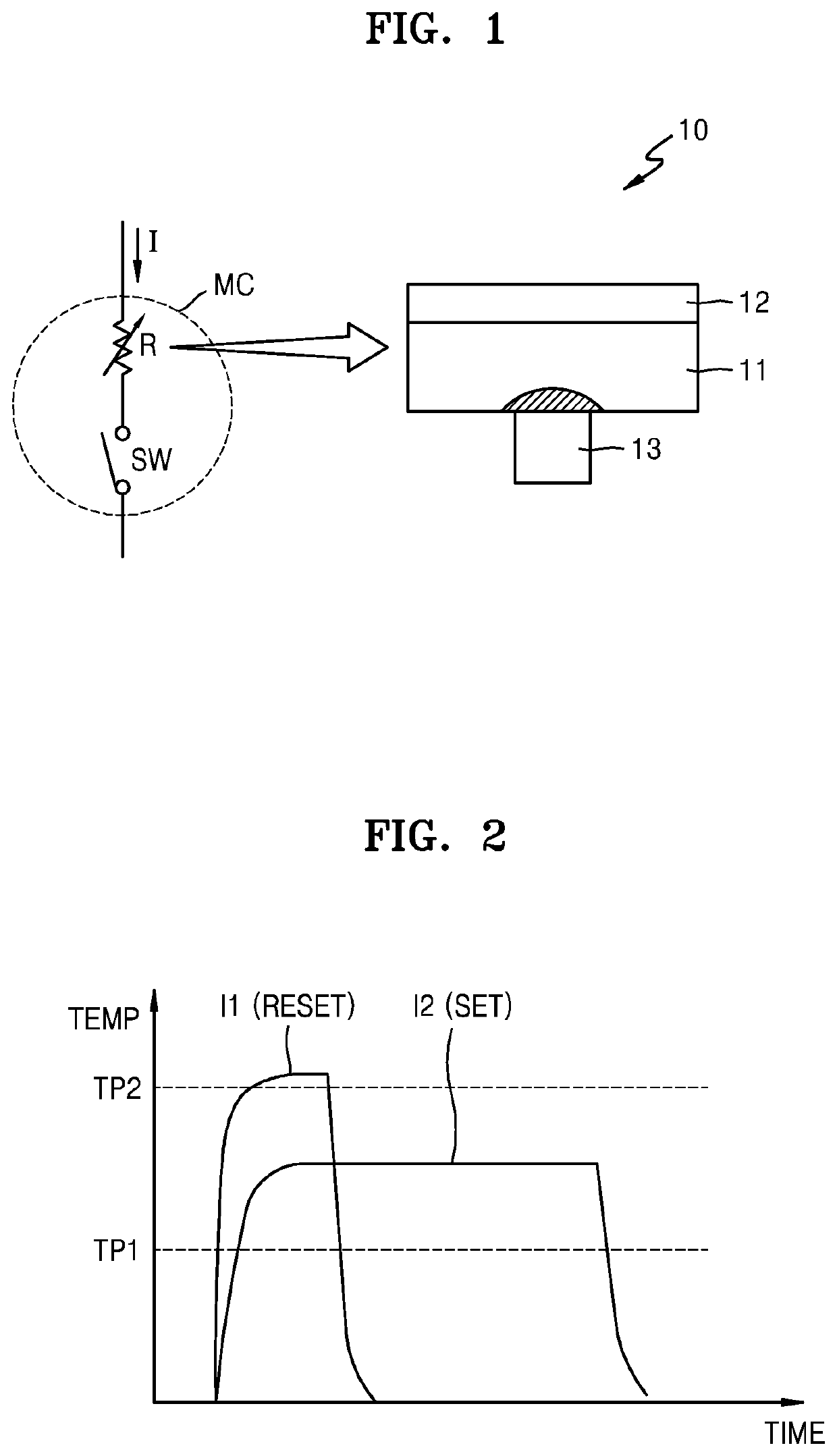

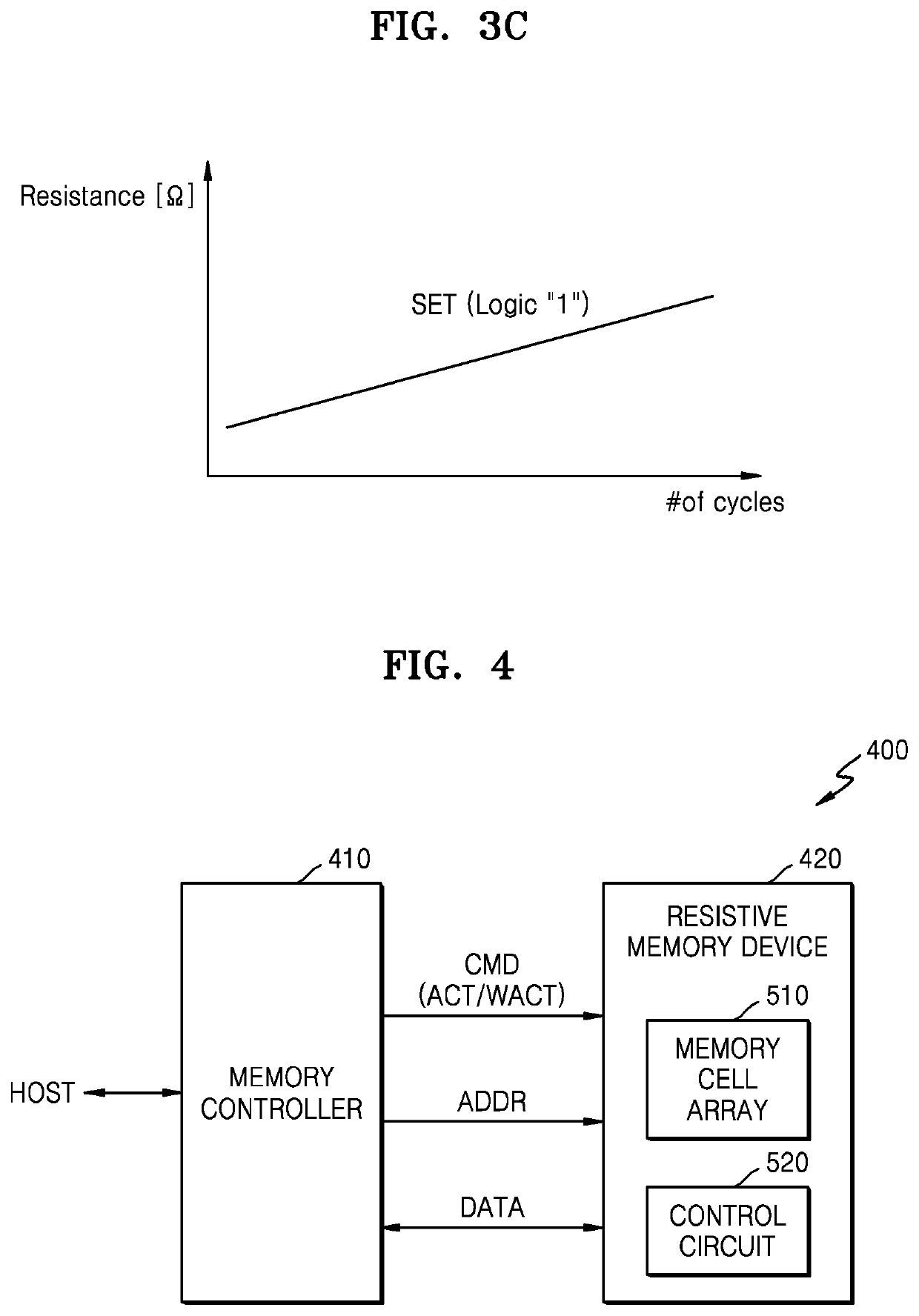

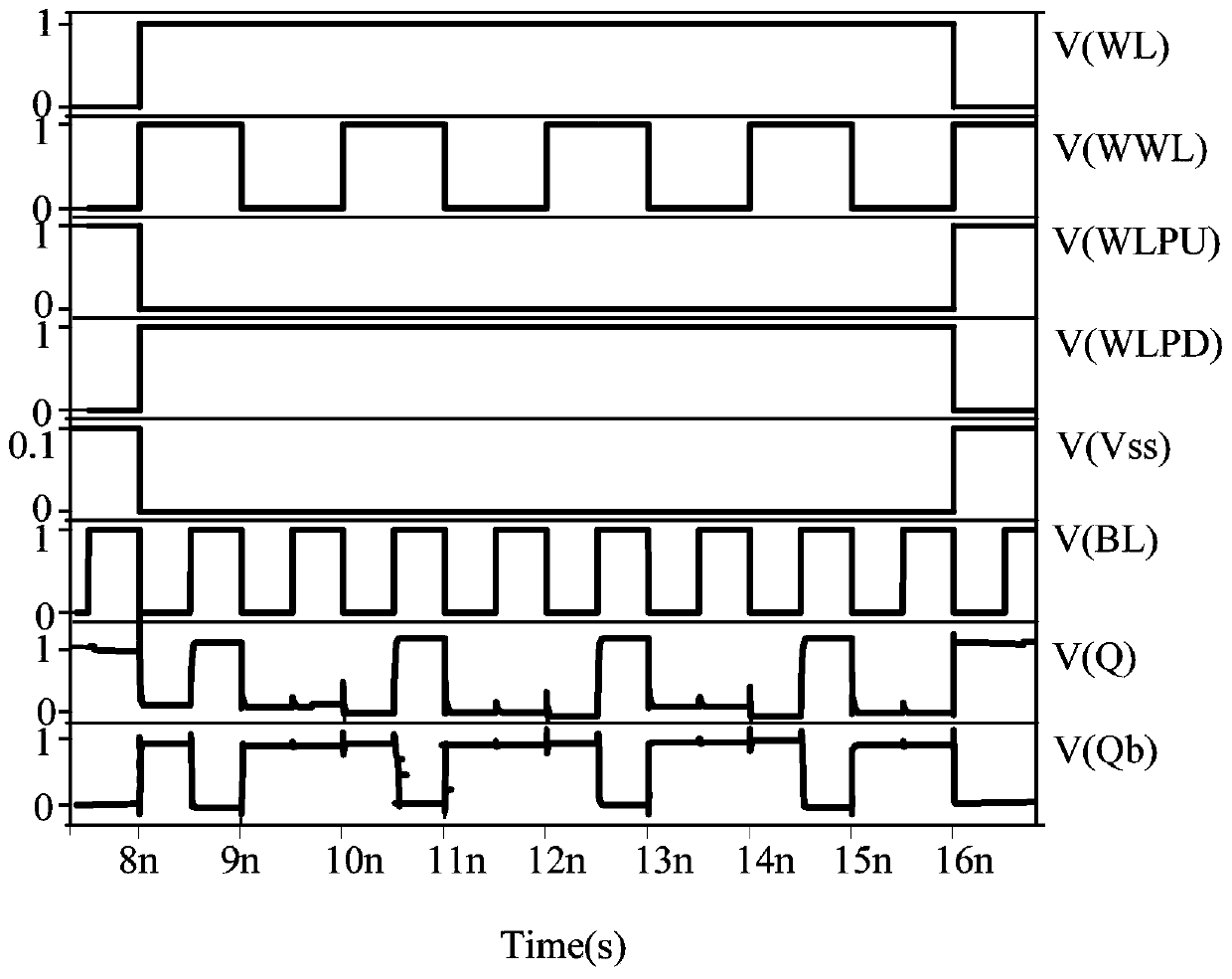

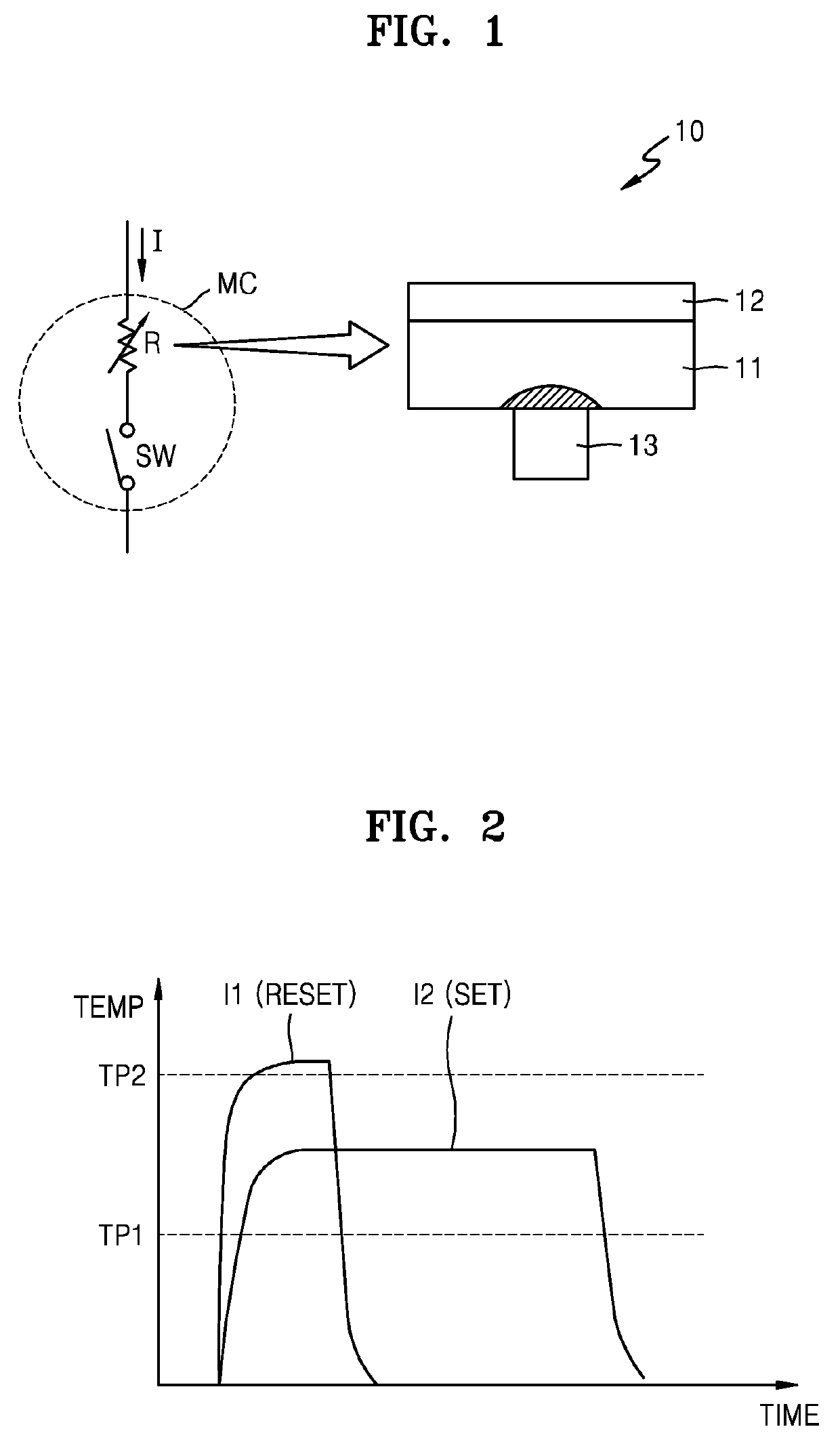

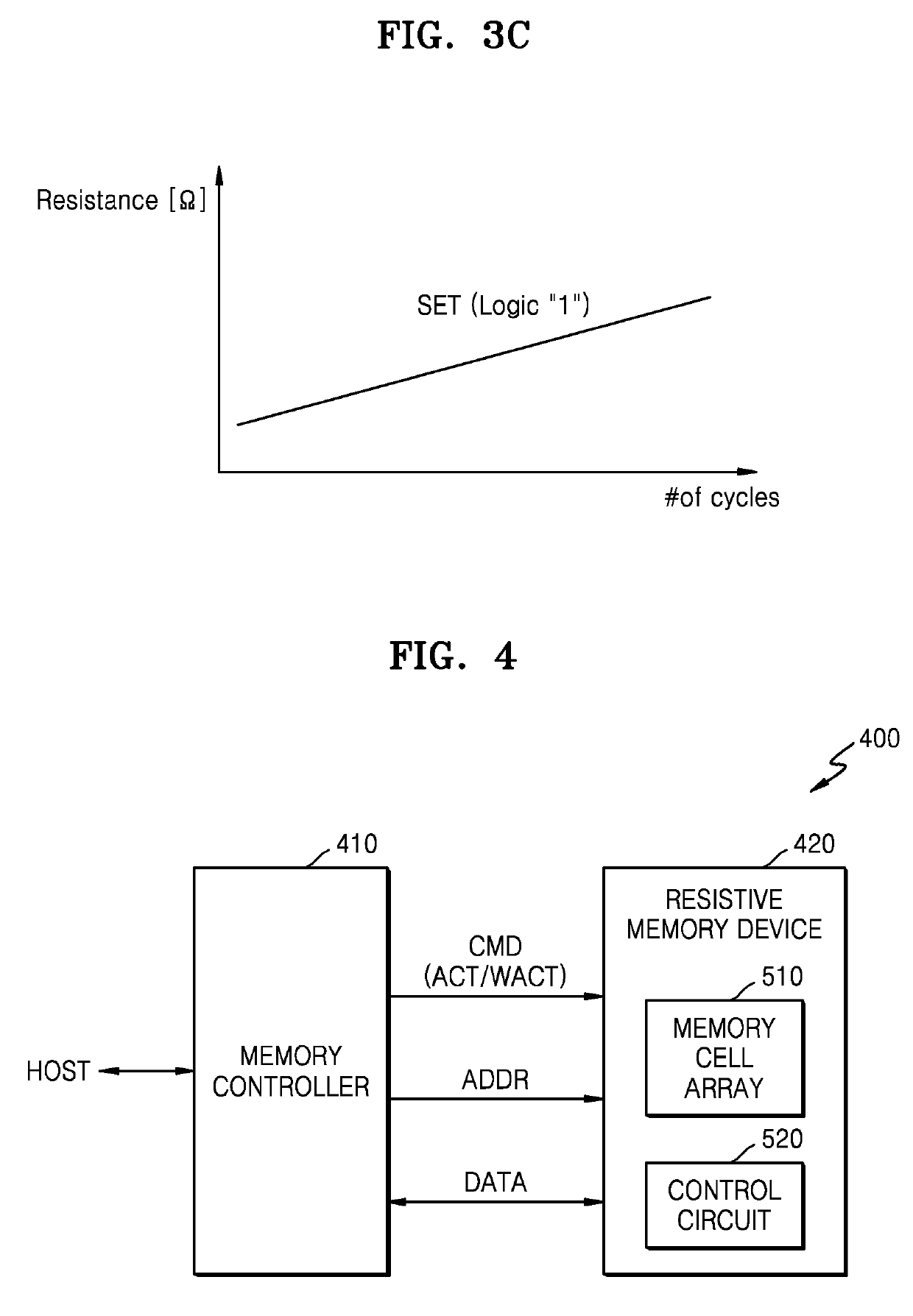

Method of operating resistive memory device capable of reducing write latency

Provided is a method of operating a resistive memory device including a memory cell array. The method includes the resistive memory device performing a first write operation in response to an active command and a write command and performing a second write operation in response to a write active command and the write command. The first write operation includes a read data evaluation operation for latching data read from the memory cell array in response to the active command. The second write operation excludes the read data evaluation operation.

Owner:SAMSUNG ELECTRONICS CO LTD

A writing method for resistive memory based on a cross-point array

Status: Active | Publication: CN108053852B | Tags: Narrowing down the write latency variance, Guaranteed parallelism, Read-only memories, Digital storage, Computer architecture, Parallel computing

The invention discloses a writing method for a resistive random access memory (RRAM) based on a crosspoint array, in the field of information storage. The method increases the effective voltage and reduces write latency by dynamically selecting the path with the shortest voltage drop. A regional division scheme reduces the differences among write latencies within each region, so per-region write latency is reduced while cell-level parallelism is preserved. An addressing mode establishes the mapping between physical addresses and cell locations so that write latency increases with the physical address, which facilitates the optimization of address mapping, memory allocation and compilation. A parallel addressing circuit speeds up the addressing process, and, by means of a specific voltage bias scheme, SET and RESET operations are overlapped for cells in different rows and columns, exploiting row-level parallelism in the crosspoint array. The method reduces RRAM write latency, increases write bandwidth and lowers write power consumption.

Owner:HUAZHONG UNIV OF SCI & TECH

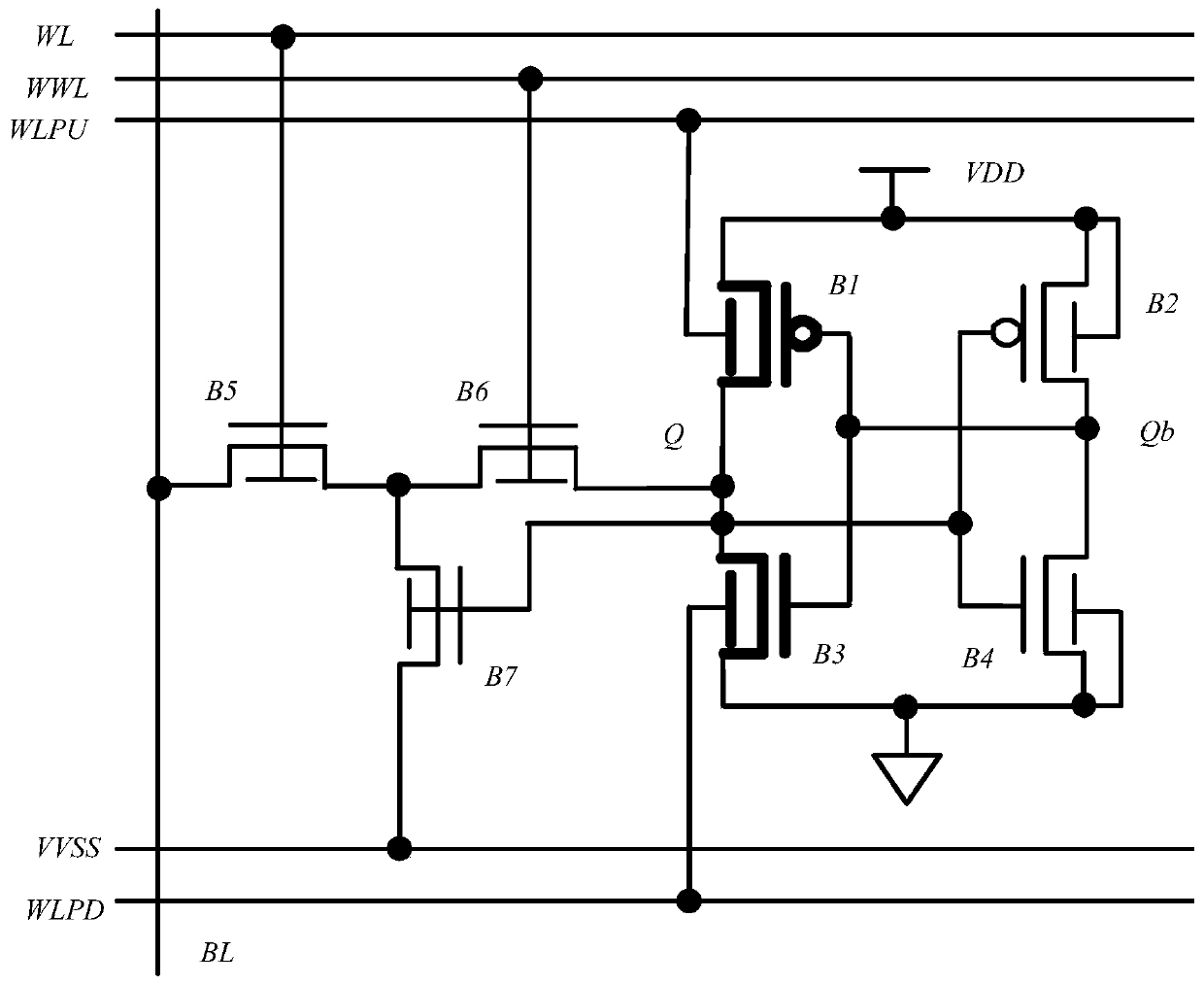

A FinFET-based single-ended read-write storage unit

Status: Active | Publication: CN108461104B | Tags: With power control function, Stable function, Digital storage, Control theory, Storage cell

The invention discloses a single-ended read-write storage cell based on FinFETs. The cell comprises first through seventh FinFET transistors, a bit line, a word line, a write word line, an upper write word line, a lower write word line and a dotted line. The first and second FinFET transistors are P-type, and the third through seventh FinFET transistors are N-type. While guaranteeing read stability, the cell achieves a high write noise margin; the stored value is stable, the circuit functions stably, leakage power consumption is low, delay is small, and data can be accessed quickly and stably.

Owner:NINGBO UNIV

Optimization method for improving PCM writing performance

Status: Inactive | Publication: CN112068775A | Tags: Reduce the total number of writes, Reduce write latency, Input/output to record carriers, Memory addressing/allocation/relocation, Programming language, Computer engineering

Owner:NANCHANG HANGKONG UNIVERSITY

IO scheduling method and device

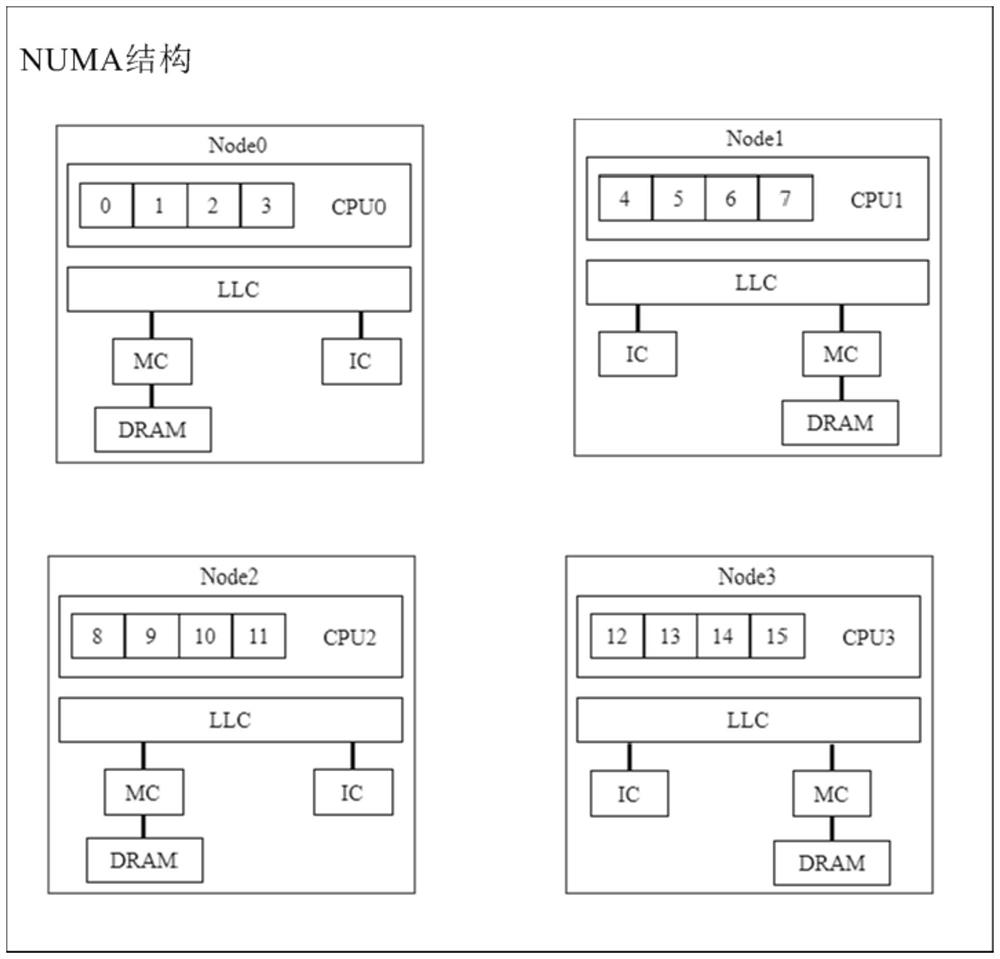

Status: Pending | Publication: CN114595043A | Tags: Reduce round trip overhead, Reduce write latency, Program initiation/switching, Resource allocation, Thread scheduling, Parallel computing

The invention discloses an IO scheduling method and device in the field of computer system architecture. In one embodiment, the method comprises: receiving an input/output (IO) request, which is either a data read request or a data write request; for a read request, determining the target CPU corresponding to the request, scheduling an IO process or thread onto a core of that CPU to perform the read, and returning the data read; and for a write request, determining the target node corresponding to the request and scheduling an IO process or thread to write the data into the CPU memory of that node. The scheduling decision takes into account the read/write mode, the IO size, and the performance characteristics and utilization of every node and CPU, which avoids scheduling errors, reduces the overhead of moving IO processes/threads back and forth between CPU cores, and improves the IO access performance of the system.

Owner:CCB FINTECH CO LTD

Processing method, device and equipment for writing bulk data in solid state disk and medium

Status: Active | Publication: CN113448517A | Tags: Improve writing efficiency, Reduce in quantity, Input/output to record carriers, Software engineering, Small data

The invention discloses a processing method, device and equipment for writing bulk data in a solid state disk and a medium. The method comprises the following steps: large data write requests are screened from write requests sent by a host; the DM module is used for splitting to-be-written large data corresponding to the large data writing request into multiple pieces of small data and mounting the small data to a linked list; the DM module sends a write request to the LKM module, wherein the content of the write request comprises an LBA initial address of large data to be written and the total number of small data; the LKM module receives the write request, performs cache allocation to generate a plurality of write request results and returns the write request results to the DM module; the DM module retrieves the context content of each piece of small data from the mounted linked list by taking the small data as a unit according to a plurality of received request results and initiates a data migration request to the DMA module; and the DM module resources and the LKM module resources are released in response to completion of data migration of the DMA module. According to the scheme, the number of communication times between modules is reduced, the efficiency of large block writing is optimized, the writing delay is reduced, and the writing bandwidth is improved.

Owner:SHANDONG YINGXIN COMP TECH CO LTD

© 2025 PatSnap. All rights reserved.