84 results about "Context values" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

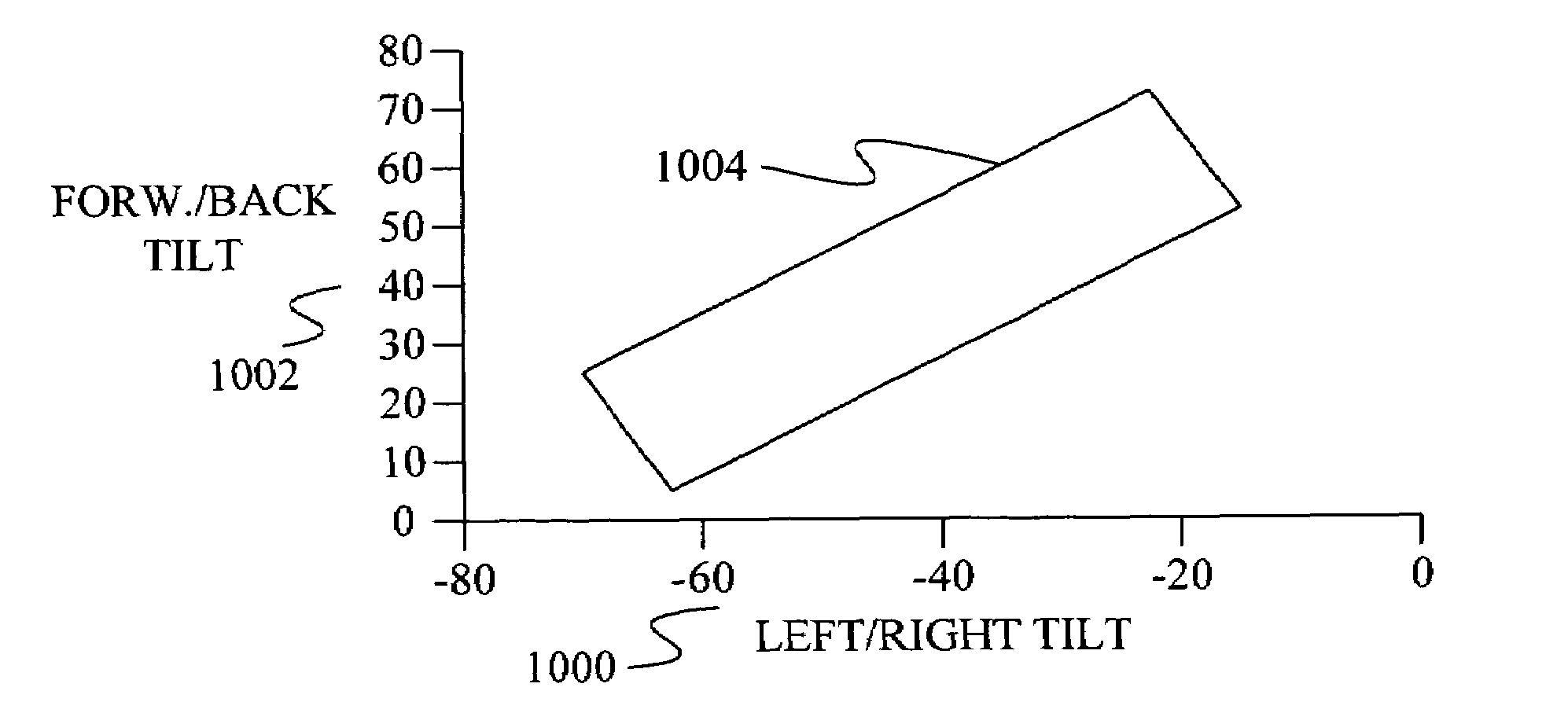

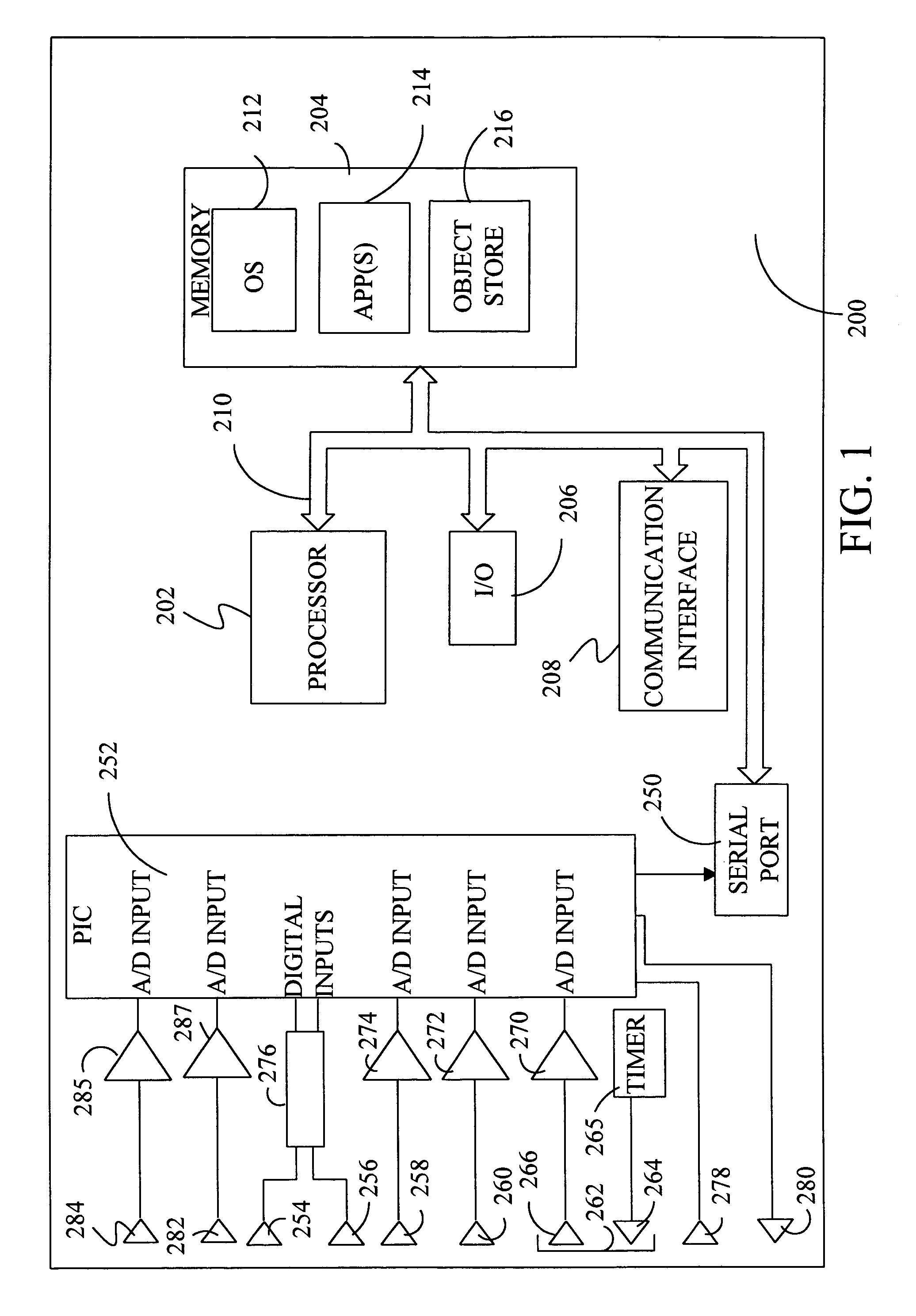

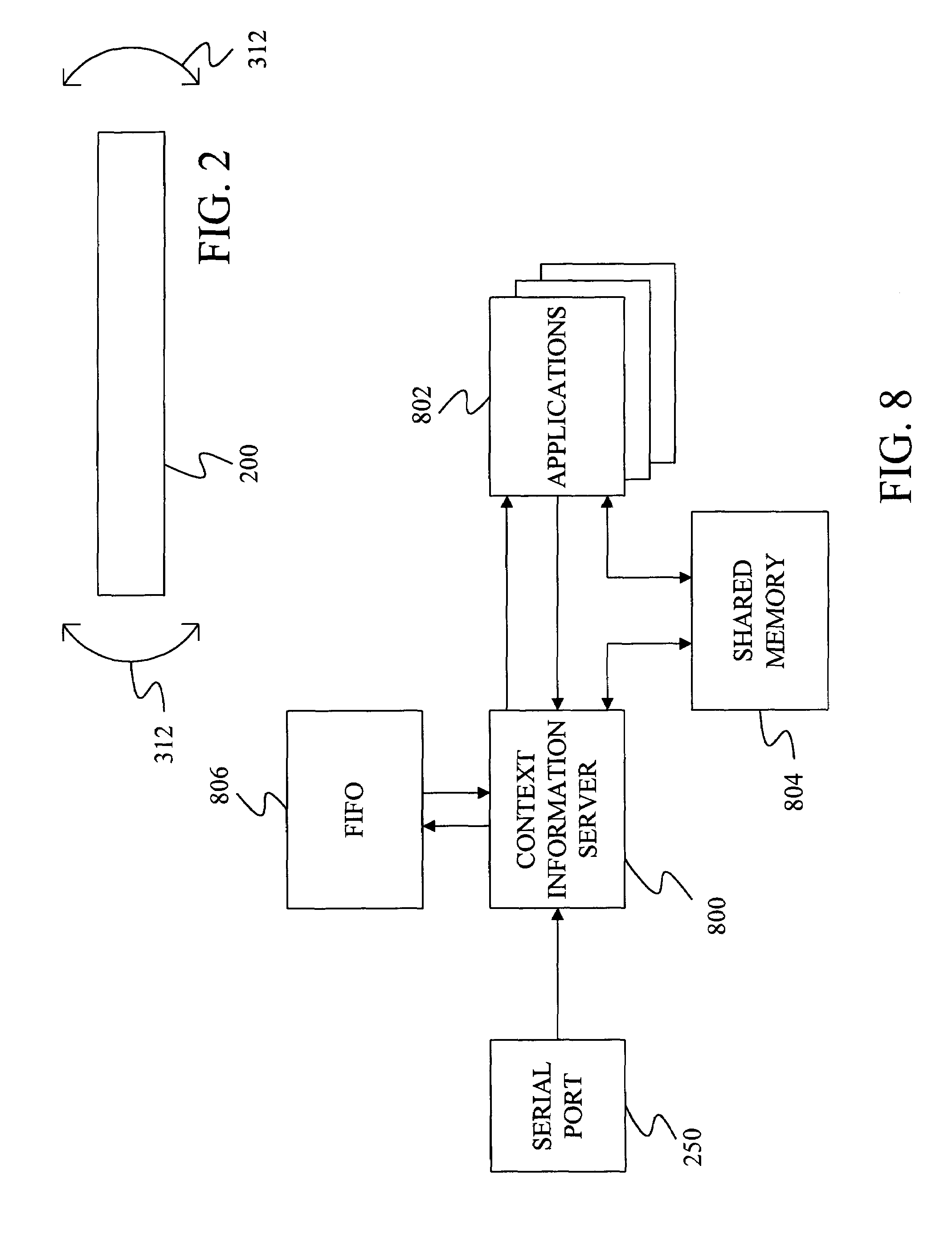

Method and apparatus using multiple sensors in a device with a display

In a device having a display, at least one sensor signal is generated from a sensor in the device. One or more context values are then generated from the sensor signal. The context values indicate how the device is situated relative to one or more objects. At least one of the context values is then used to control the operation of one or more aspects of the device.

Owner:MICROSOFT TECH LICENSING LLC

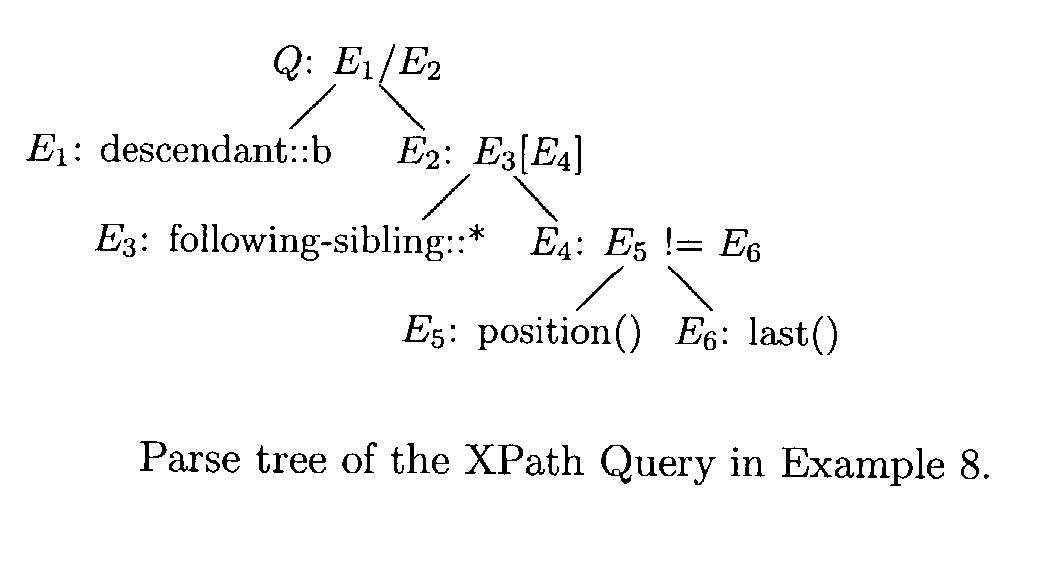

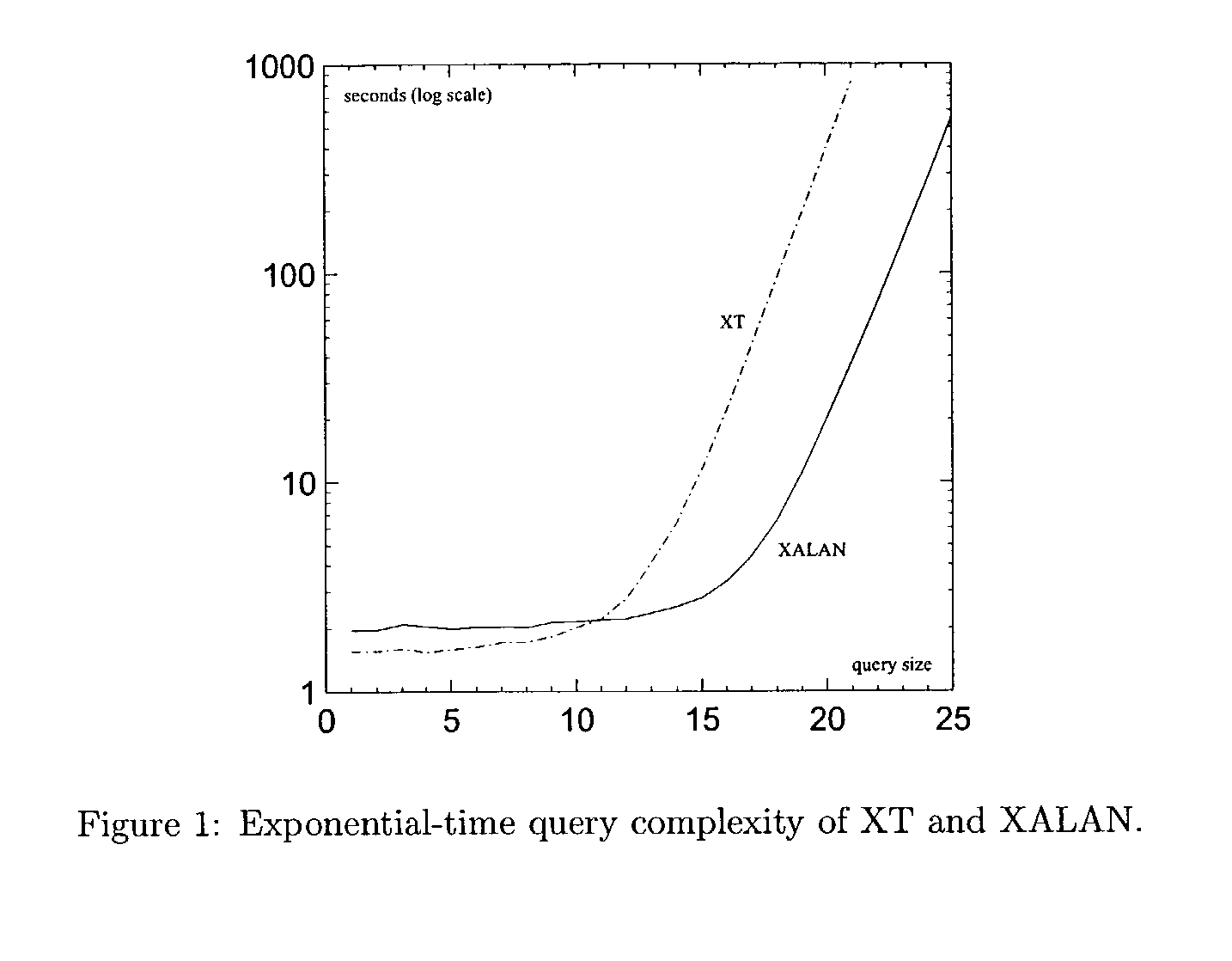

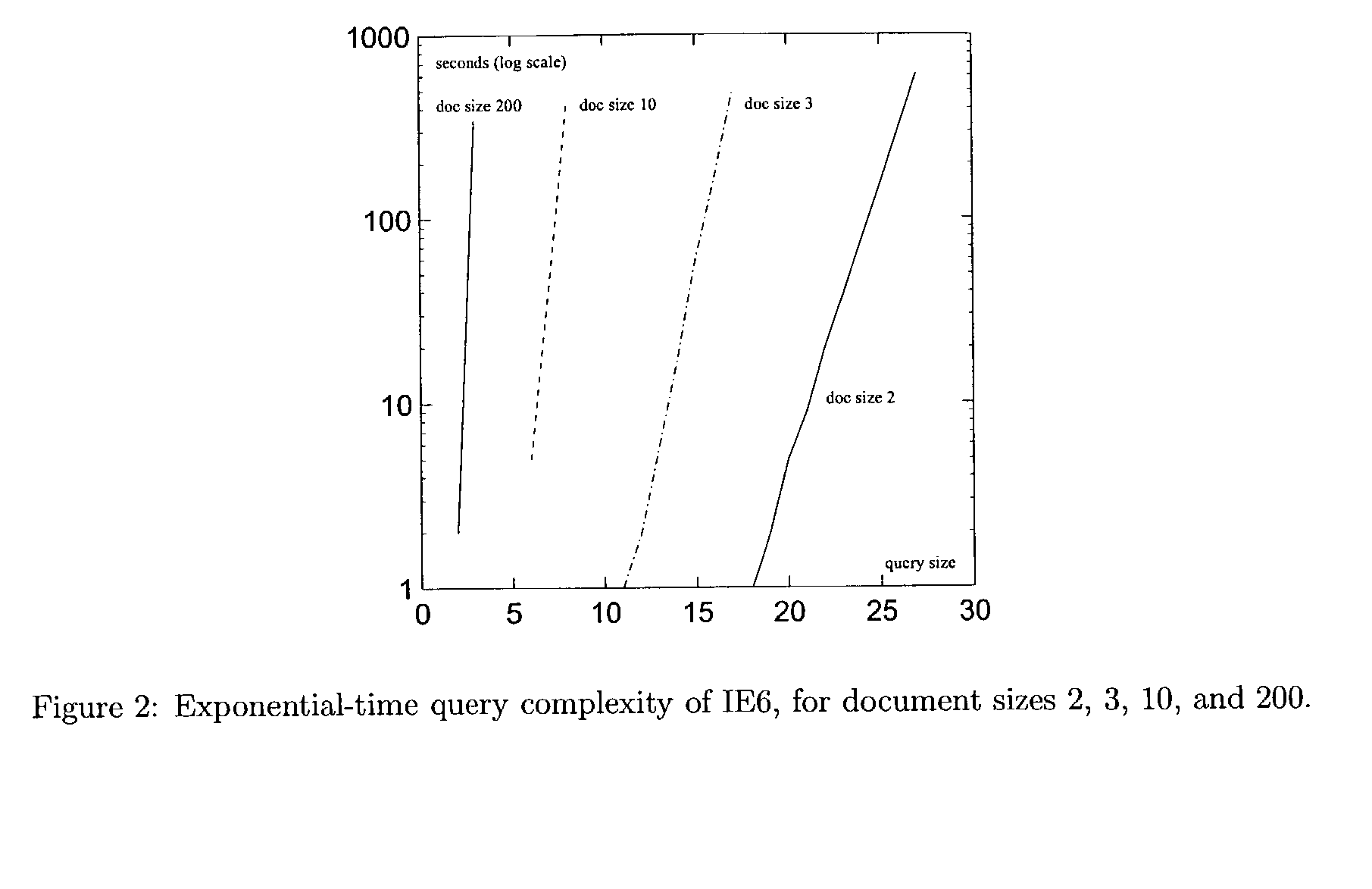

Efficient processing of XPath queries

Inactive | US7162485B2 | Digital data information retrieval | Data processing applications | Round complexity | XSLT

The disclosed teachings provide methods and systems for efficient evaluation of XPath queries. In particular, the disclosed evaluation methods require only polynomial time with respect to the total size of an input XPath query and an input XML document. Crucial to the new methods is the notion of “context-value tables”. This idea can be further refined for queries in Core XPath and XSLT Patterns to yield a linear-time evaluation method. Moreover, the disclosed methods can be used to improve existing methods and systems for processing XPath expressions so as to guarantee polynomial worst-case complexity.

Owner:GOTTLOB GEORG +2

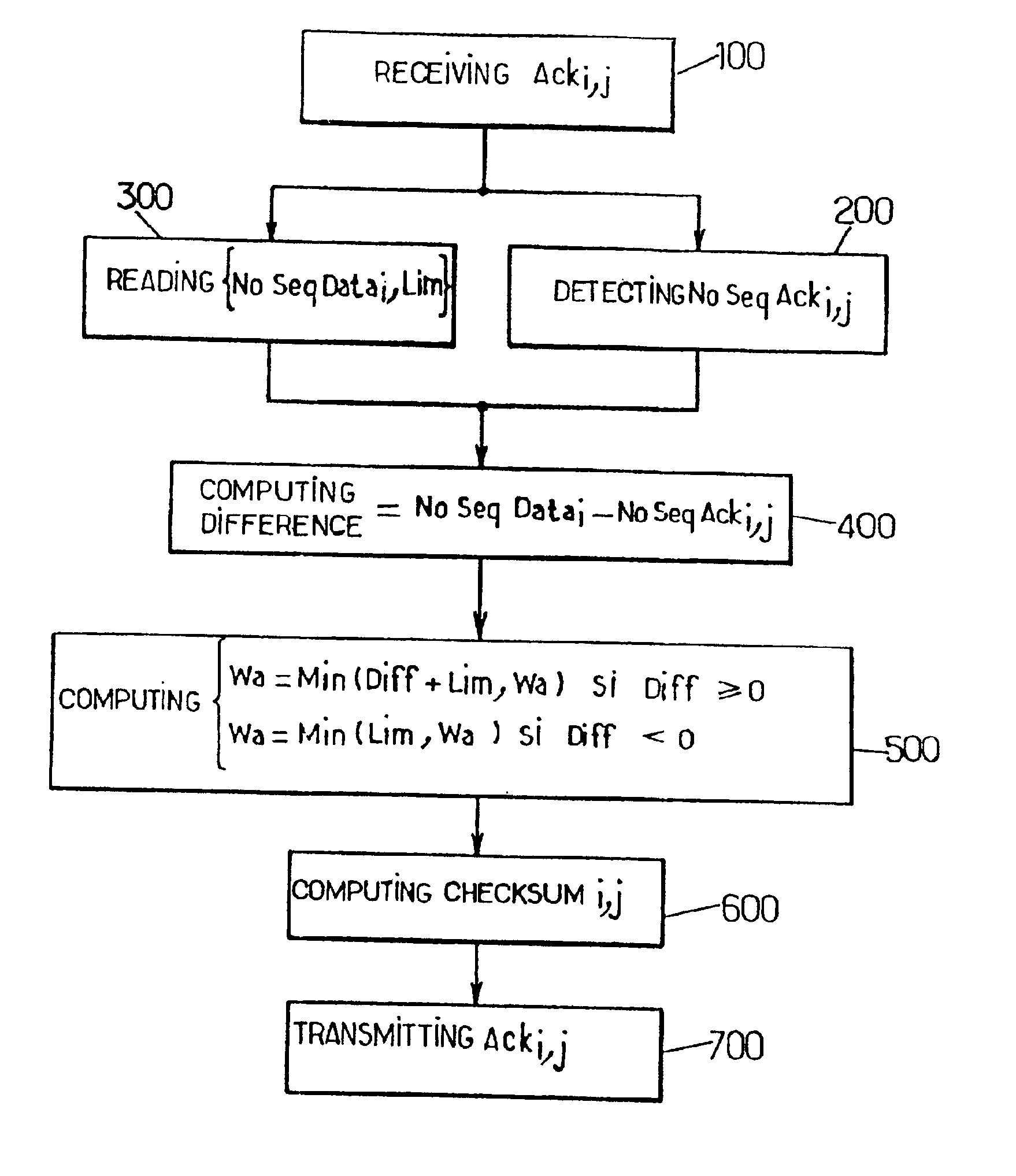

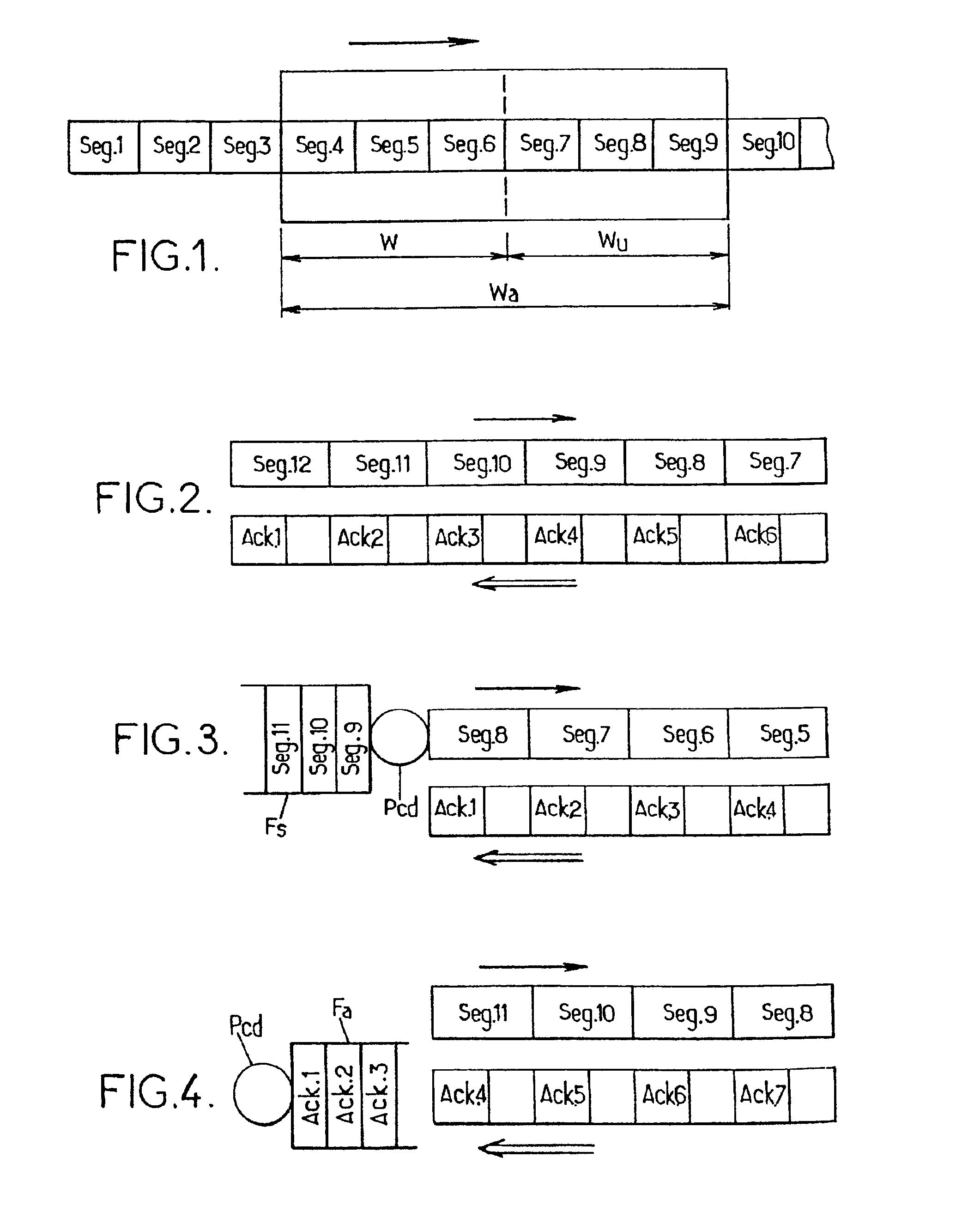

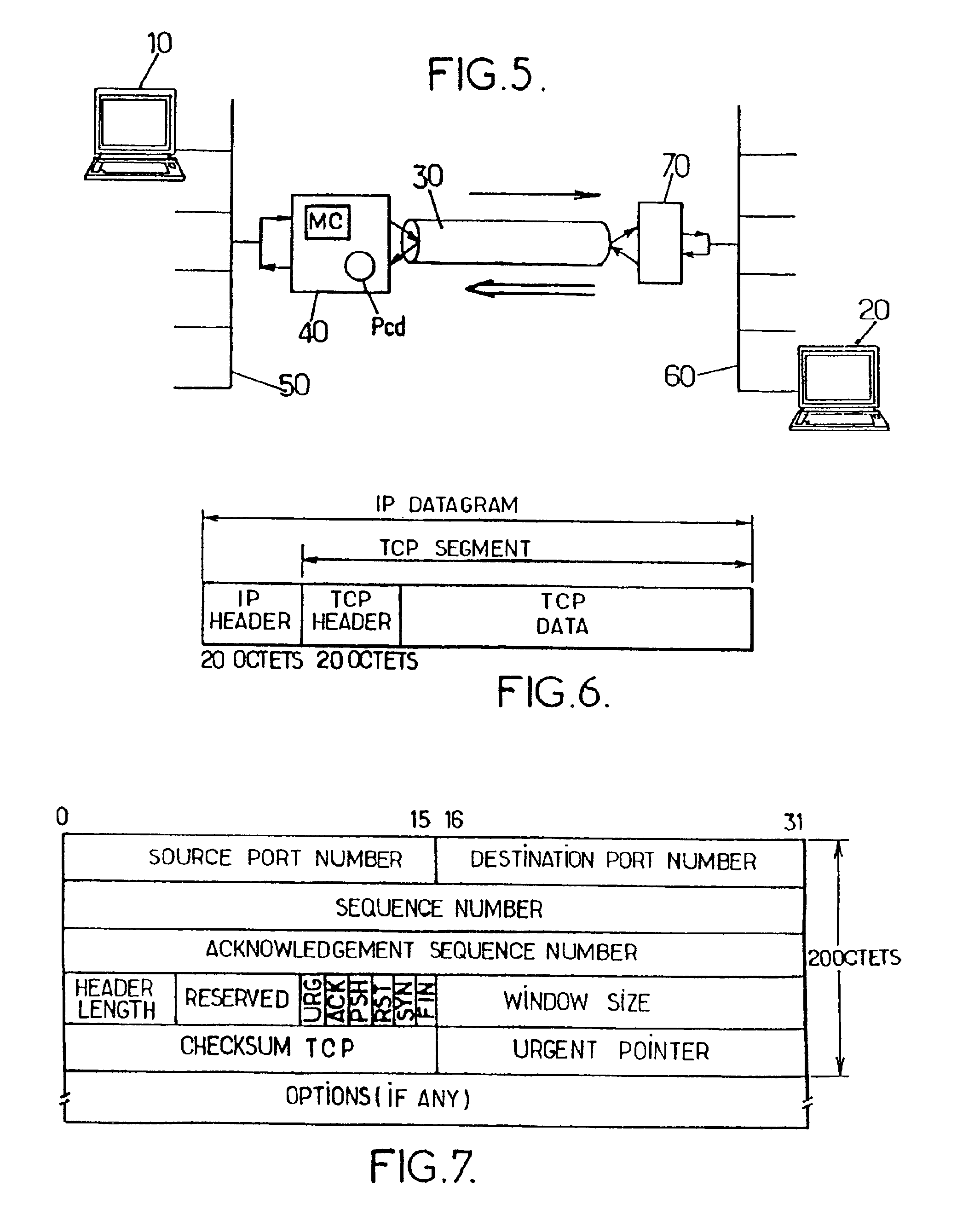

Method and unit for controlling the flow of a TCP connection on a flow controlled network

Inactive | US6925060B2 | Easy to implement | Avoid congestion | Error prevention/detection by using return channel | Transmission systems | Multiplexing | Computer science

The invention proposes a method and a unit for controlling the flow of at least one TCP connection between a sender and a receiver. The method controls, at a given multiplexing node through which the connection's TCP segments pass, a window size parameter contained in acknowledgement segments sent back by the receiver. The method comprises the steps of:

a) receiving an acknowledgement segment from the receiver on the up link (receiver to sender) of the connection at said multiplexing node;

b) controlling the window size parameter contained in said acknowledgement segment on the basis of the difference between, firstly, a first context value associated with the TCP connection, defined as the sequence number of the last segment transmitted from said multiplexing node on the down link (sender to receiver) of the connection plus the length of that segment, and, secondly, the sequence number indicated in said acknowledgement segment;

c) transmitting the acknowledgement segment, with the window size parameter thus controlled, from said multiplexing node to the sender on the up link of the connection.

Owner:MITSUBISHI ELECTRIC CORP
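
As a rough illustration of step b), the following Python sketch computes the context value and the number of bytes forwarded but not yet acknowledged, then clamps the advertised window. The abstract does not say how the difference is turned into a window size, so the `budget` parameter and the clamping rule are assumptions made here for illustration, as are all function and parameter names.

```python
SEQ_MOD = 2 ** 32  # TCP sequence numbers wrap around at 32 bits


def controlled_window(advertised, ack_seq, last_seq, last_len, budget):
    """Return the window size to write into an acknowledgement segment.

    advertised -- window size announced by the receiver
    ack_seq    -- sequence number carried by the acknowledgement
    last_seq   -- sequence number of the last segment forwarded downstream
    last_len   -- length of that segment in bytes
    budget     -- hypothetical per-connection byte budget at the node
    """
    # Context value as described in the abstract: last sequence number
    # forwarded on the down link plus the length of that segment.
    context_value = (last_seq + last_len) % SEQ_MOD
    # Difference between the context value and the acknowledged sequence
    # number, computed modulo 2**32 to survive wraparound.
    in_flight = (context_value - ack_seq) % SEQ_MOD
    # Assumed policy: never advertise more than the remaining budget.
    return min(advertised, max(0, budget - in_flight))
```

With `advertised=65535`, `ack_seq=1000`, `last_seq=4000`, `last_len=1000` and `budget=8000`, the difference is 4000 bytes, so the sketch rewrites the window to 4000.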

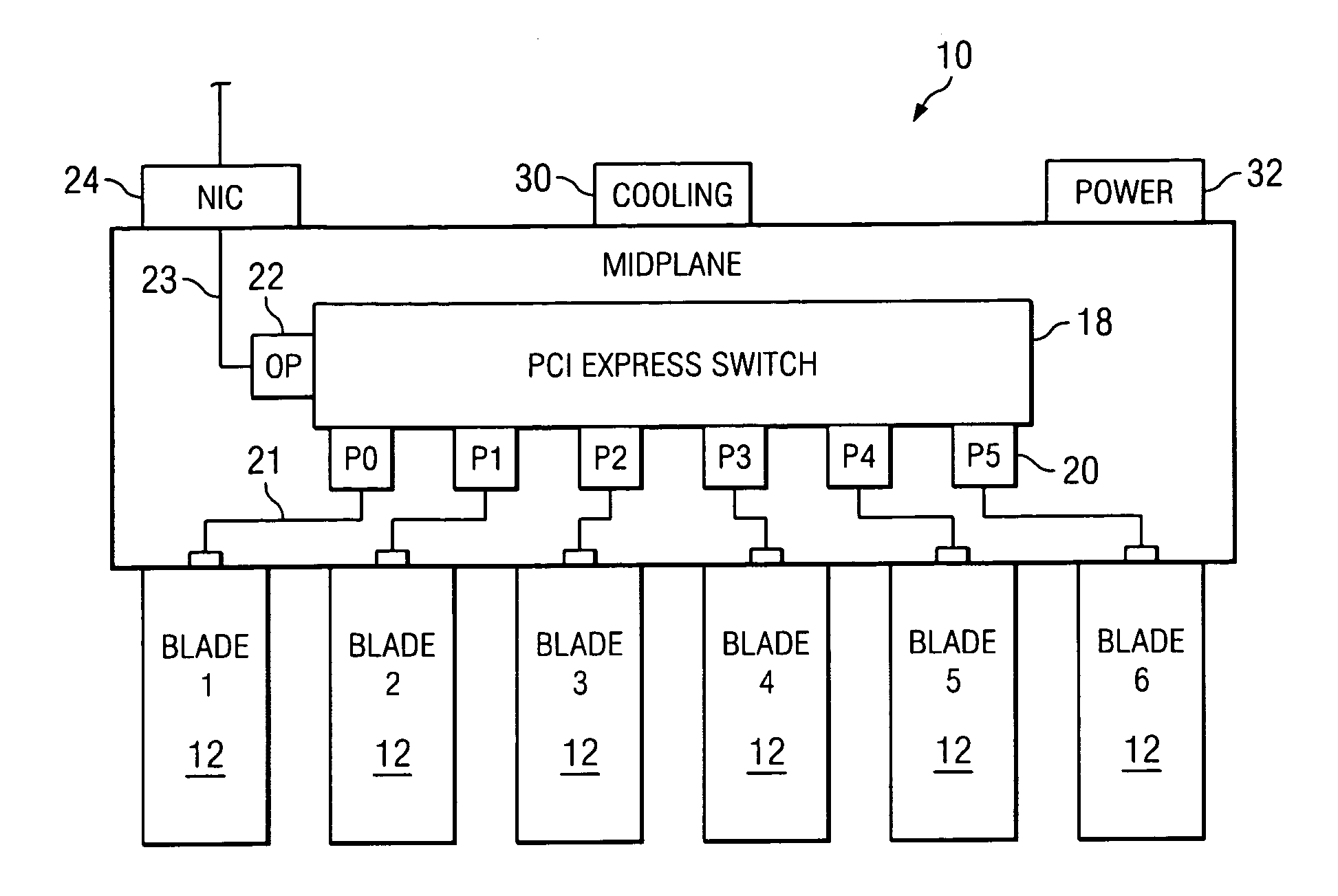

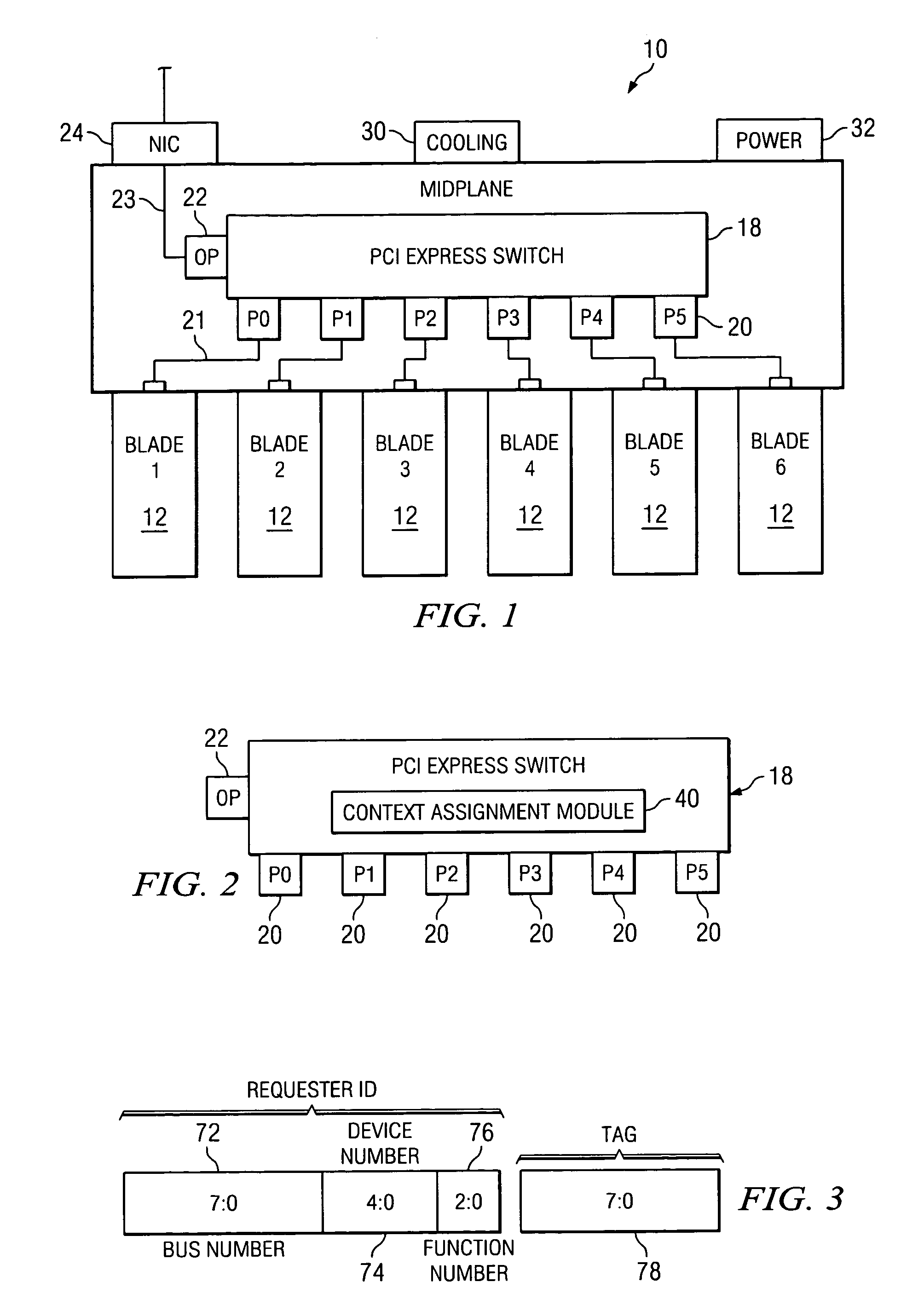

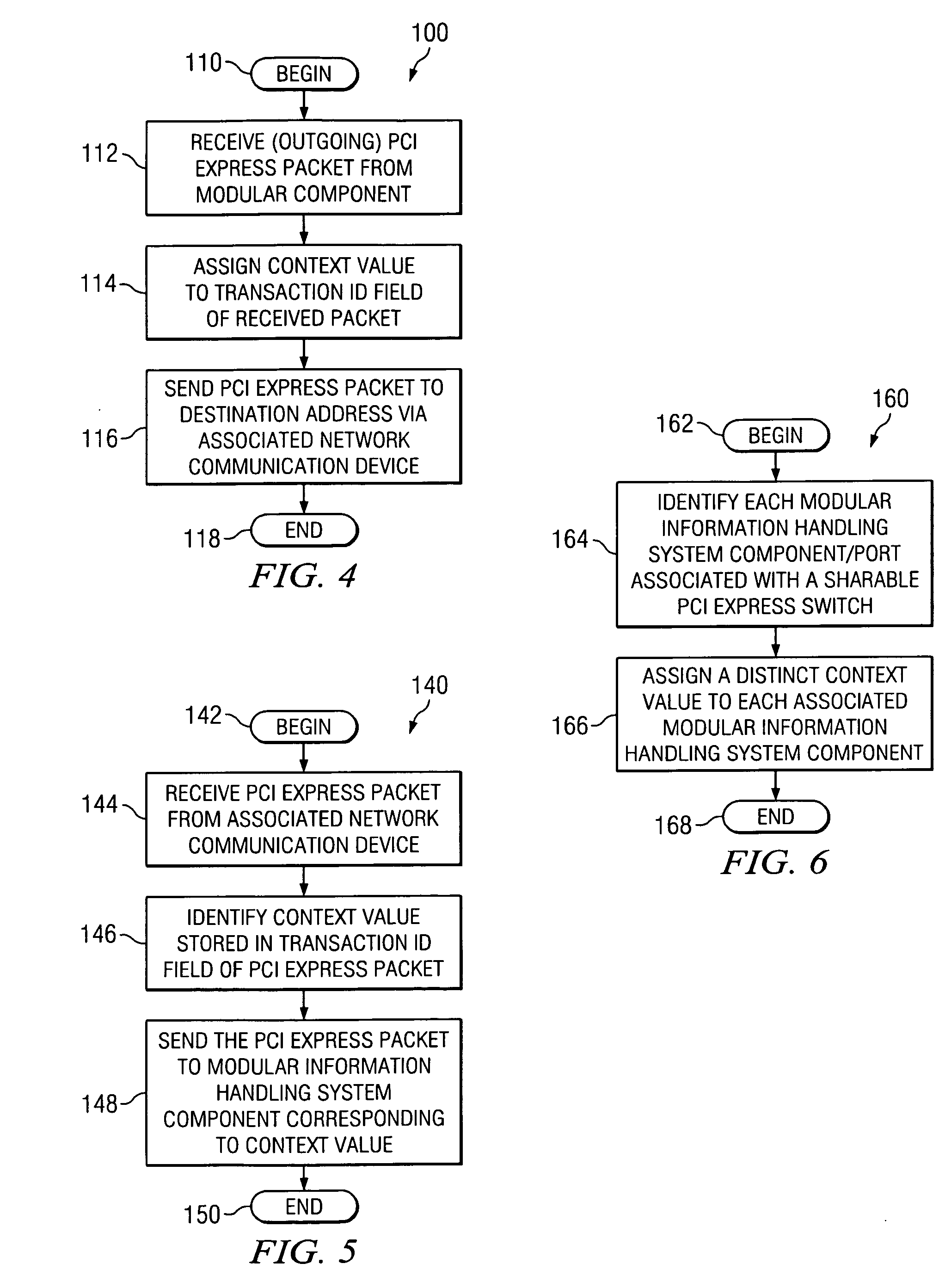

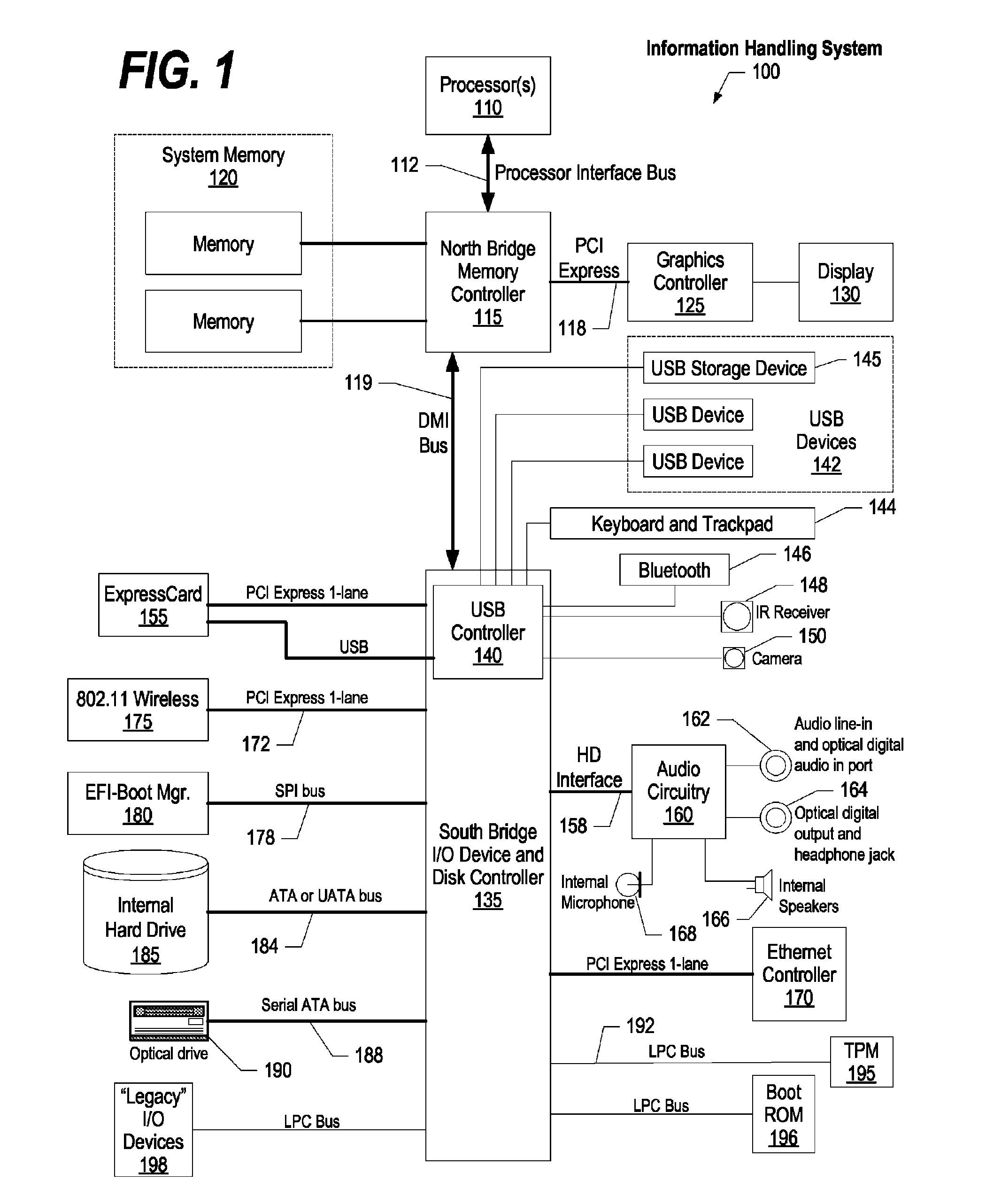

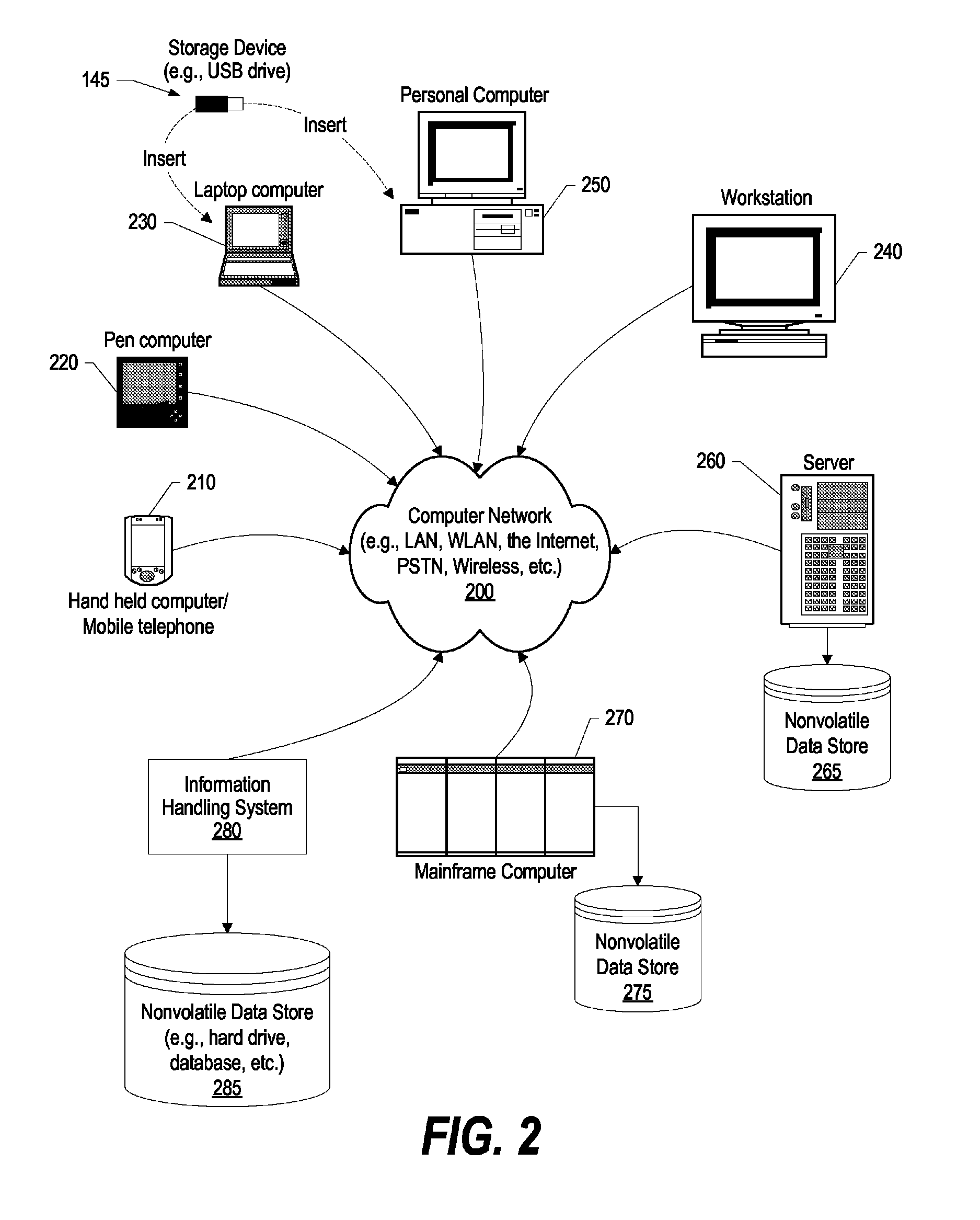

System and method for providing a shareable input/output device in a PCI express environment

The midplane of a modular information handling system includes a shareable PCI Express switch that is serially connected with each associated modular information handling system component and a network communication device. The shareable PCI Express switch receives PCI Express packets from associated information handling system components, with each packet including a Transaction ID field. The shareable PCI Express switch assigns a context value that identifies a particular modular information handling system component within the Transaction ID field of each PCI Express packet received from the connected modular information handling system component.

Owner:DELL PROD LP

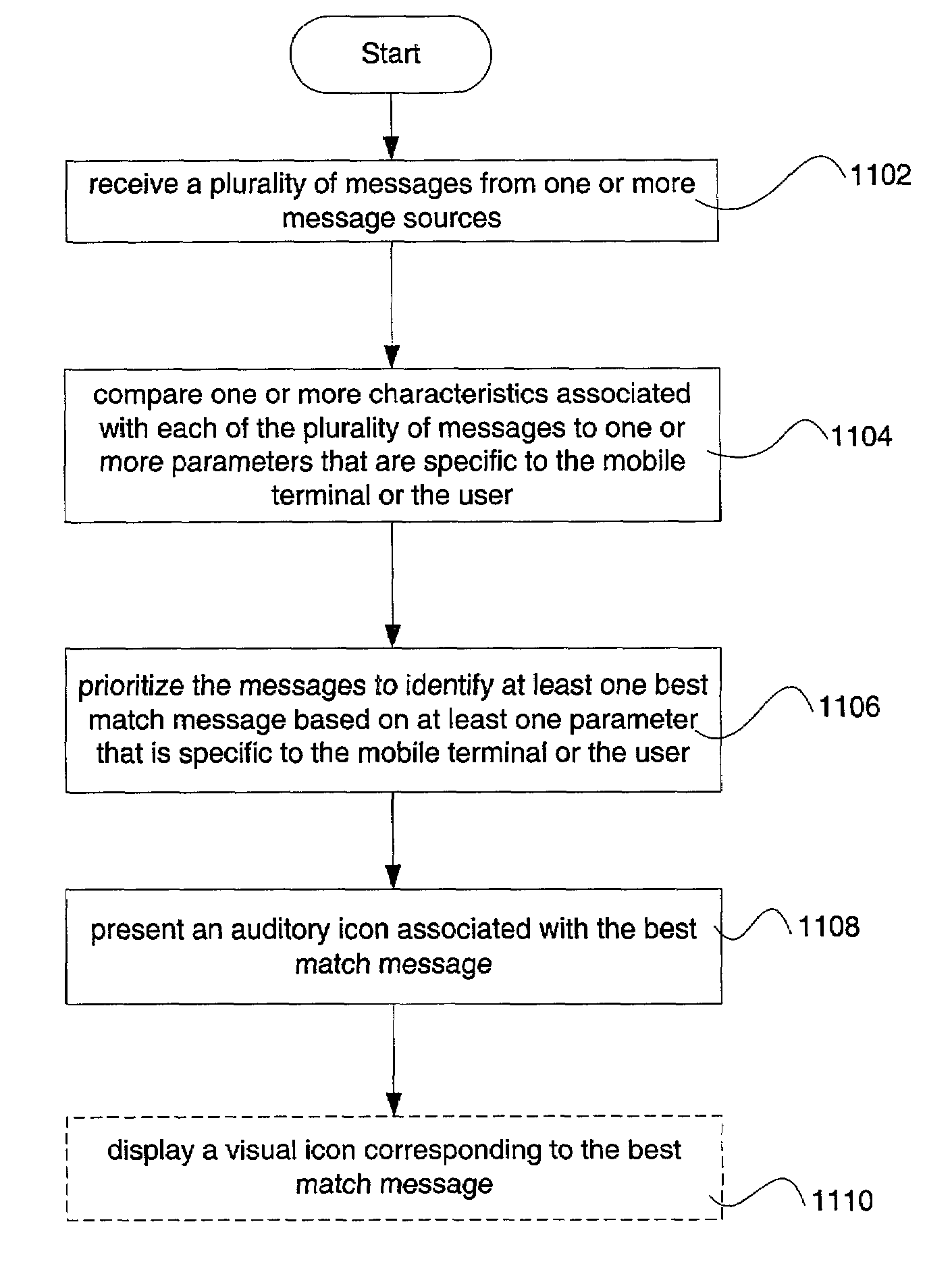

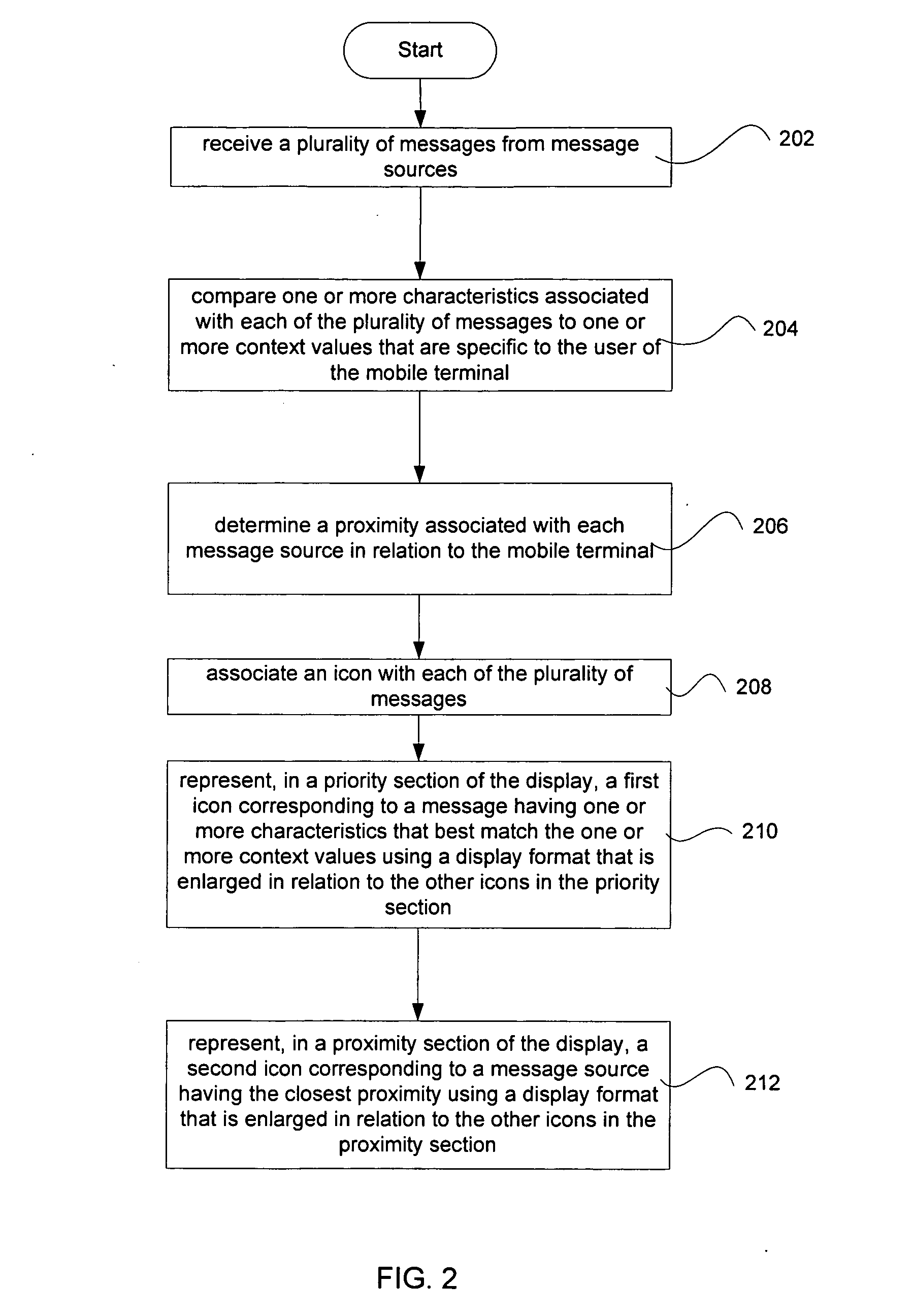

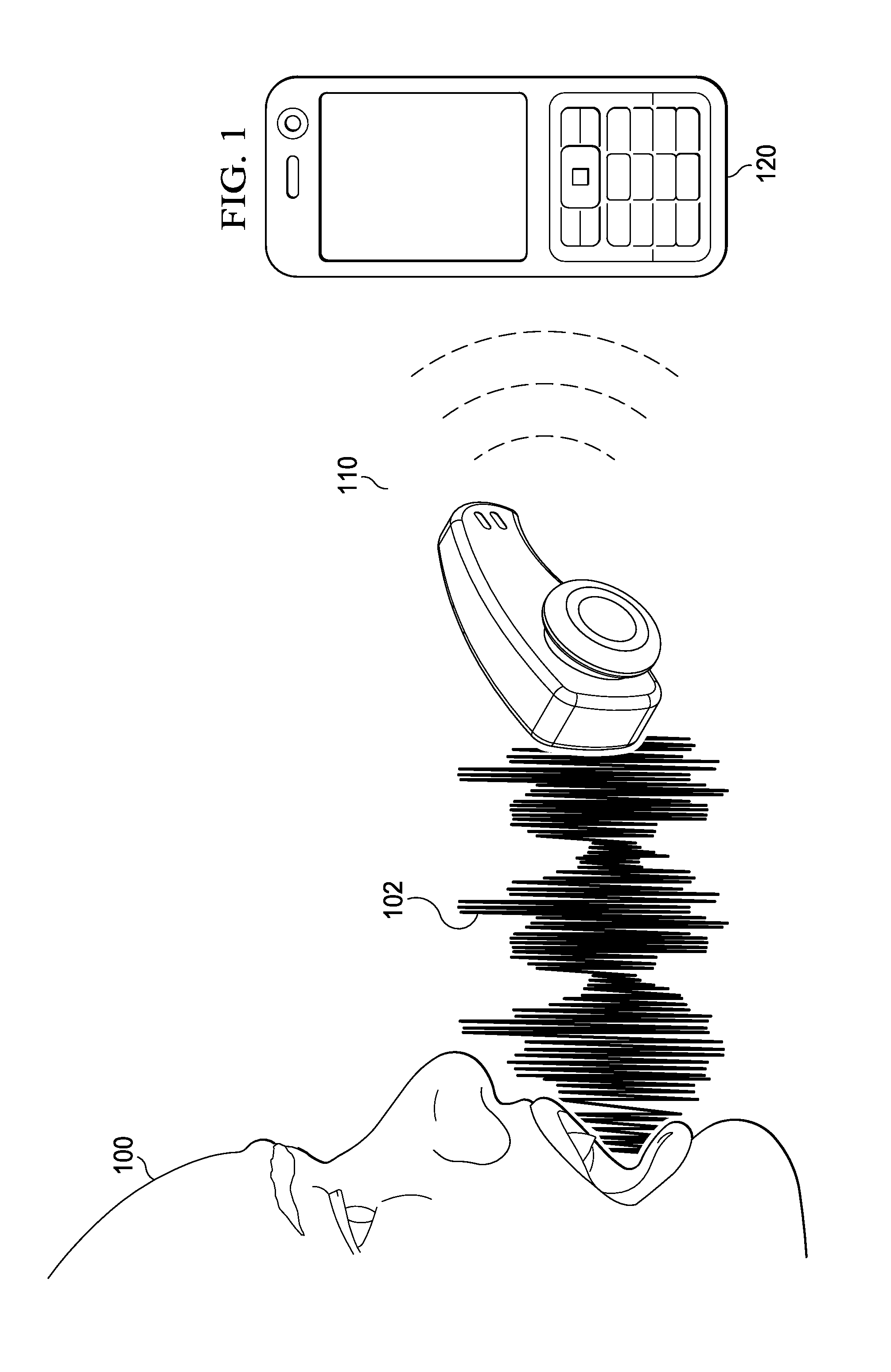

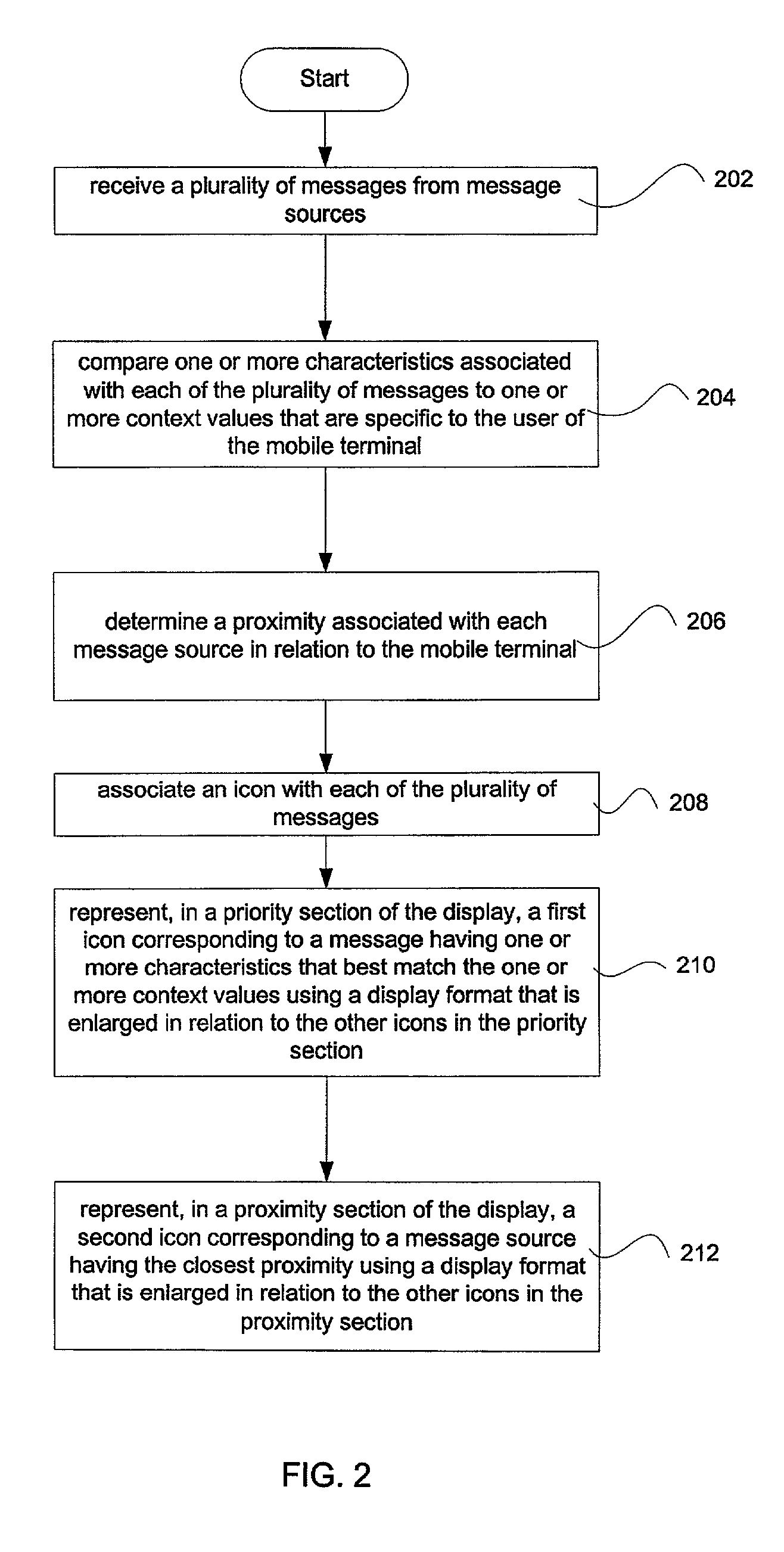

Method and apparatus for presenting auditory icons in a mobile terminal

Inactive | US6996777B2 | Quick identification | Substation equipment | Sound input/output | Multiple context | Display device

An apparatus and method for presenting one or more auditory icons are provided to deliver contextual information in a timely manner to the user without requiring the user to view the mobile terminal's display. The auditory icons may correspond to visual icons that are provided on a display of the mobile terminal, for example, a navigational bar. The mobile terminal receives a plurality of messages from one or more message sources, prioritizes them according to one or more context values, and presents to the user auditory icons associated with the messages in accordance with any number of pre-set preferences set by the user.

Owner:NOKIA TECHNOLOGIES OY

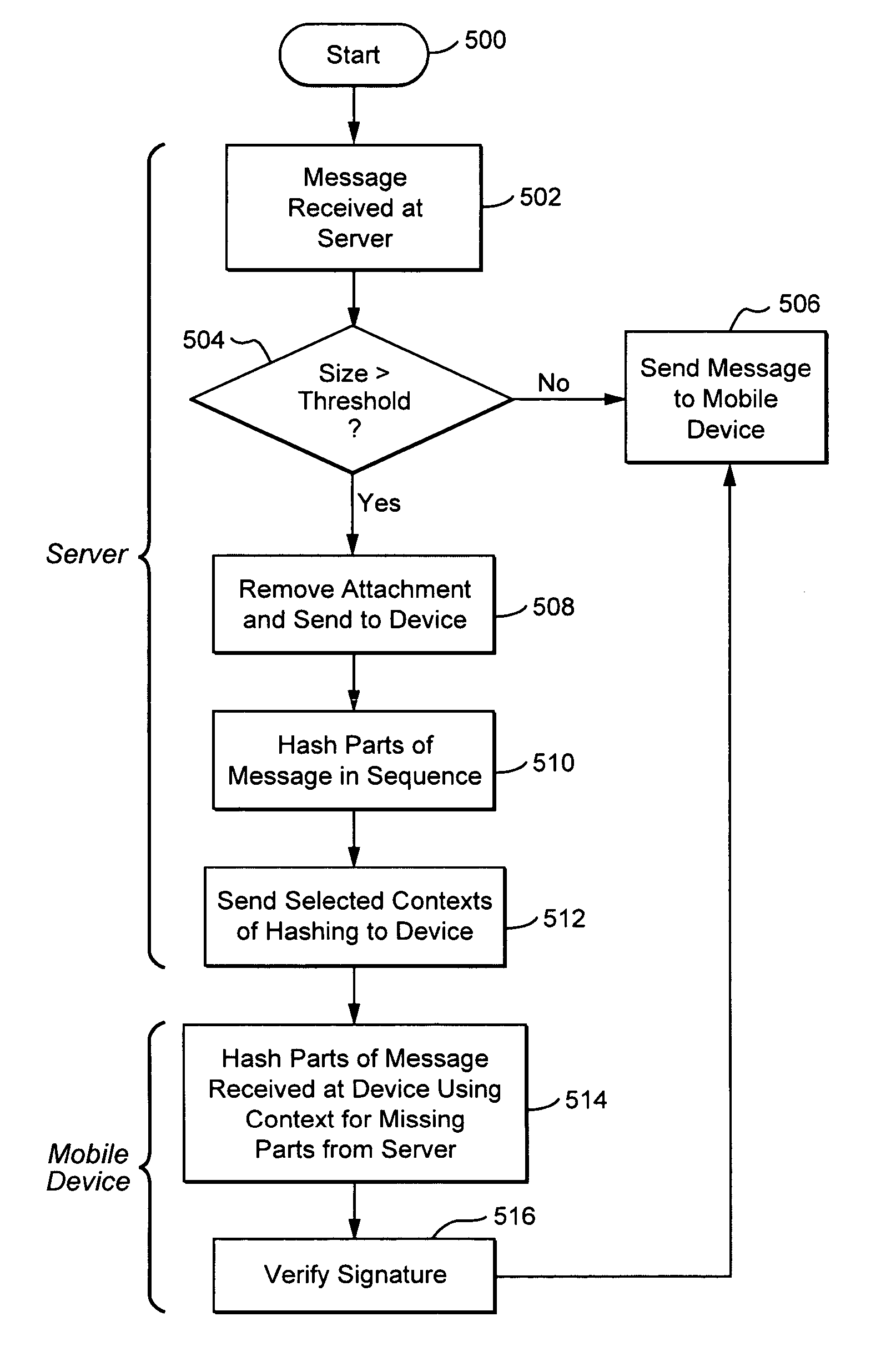

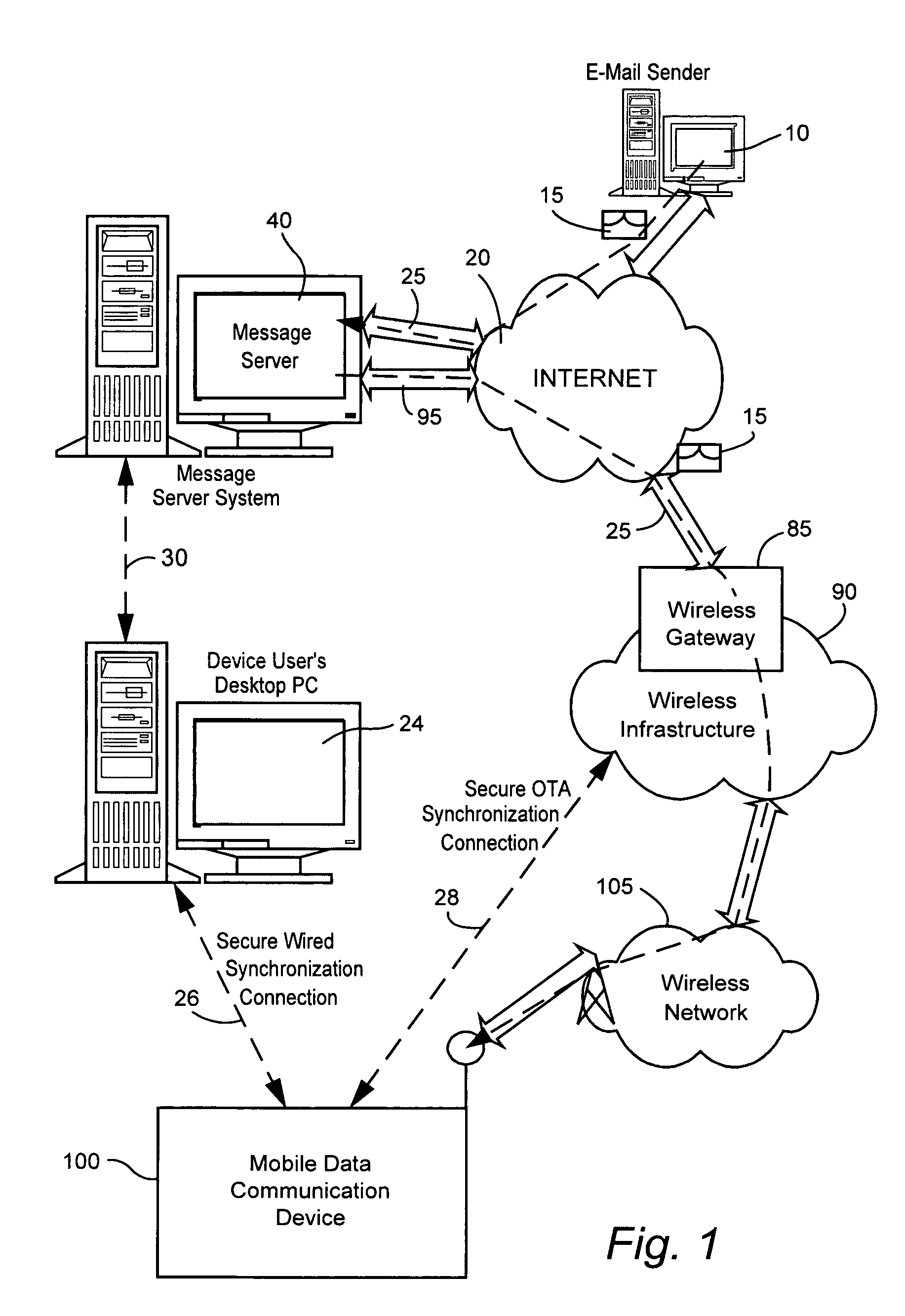

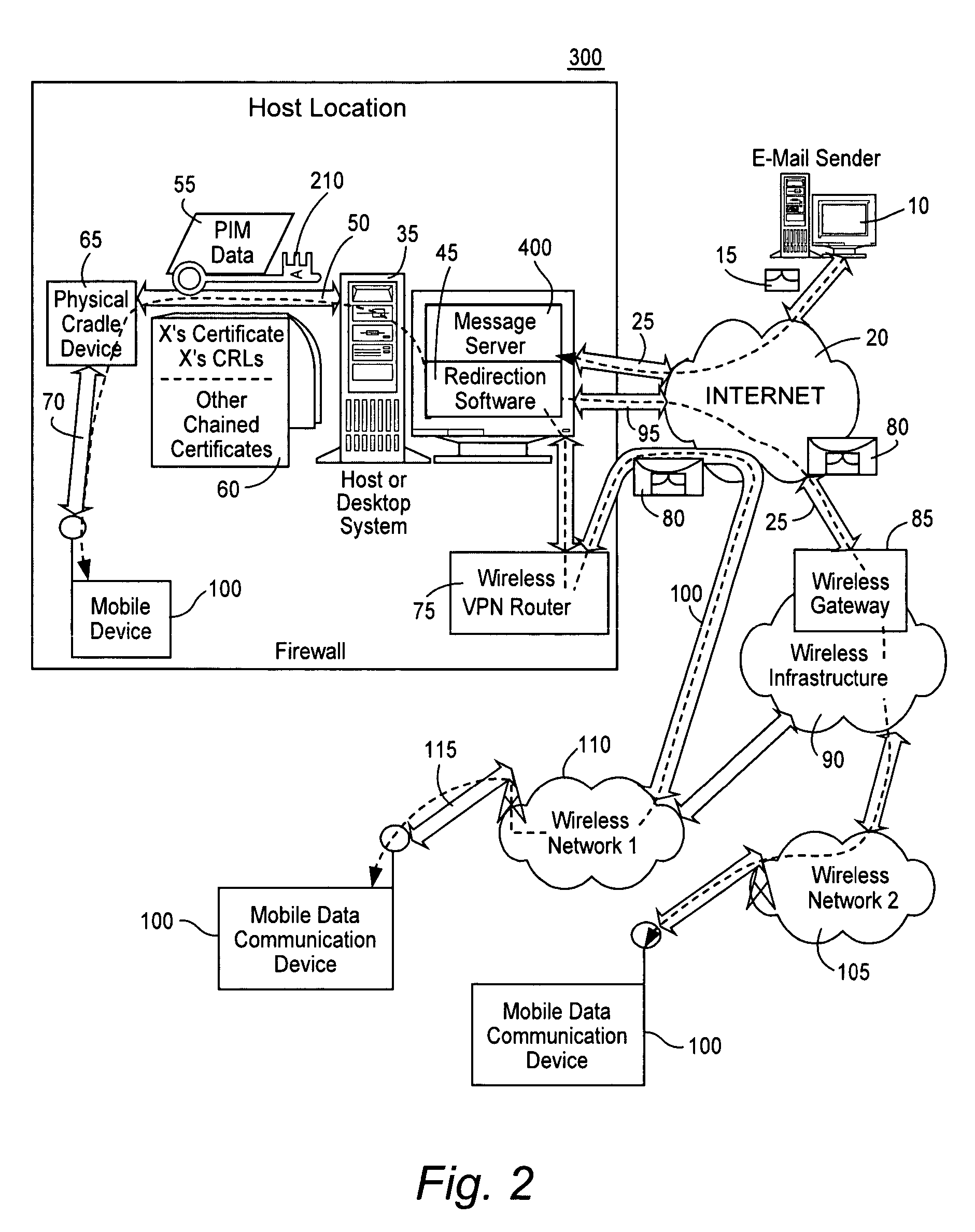

Server verification of secure electronic messages

Active | US20060036865A1 | Easy to implement | Reduce data volume | Synchronising transmission/receiving encryption devices | User identity/authority verification | Computer hardware | Communications system

Systems and methods for processing encoded messages within a wireless communications system are disclosed. A server within the wireless communications system determines whether the size of an encoded message is too large for a wireless communications device. If the message is too large, the server removes part of the message and sends an abbreviated message to the wireless device, together with additional information relating to processing of the encoded message, such as, for example, hash context values, that assist the wireless communications device in verifying the abbreviated message.

Owner:MALIKIE INNOVATIONS LTD

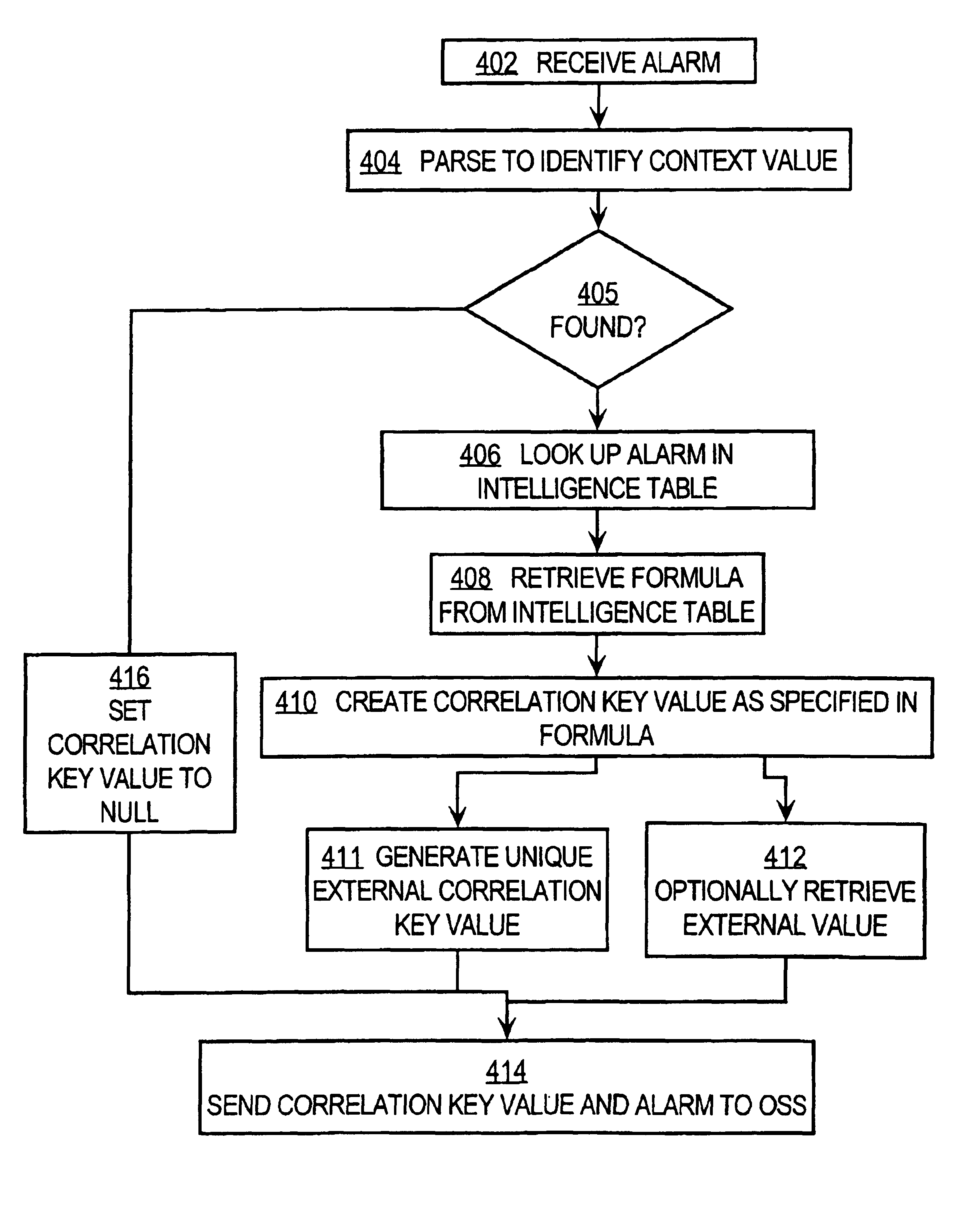

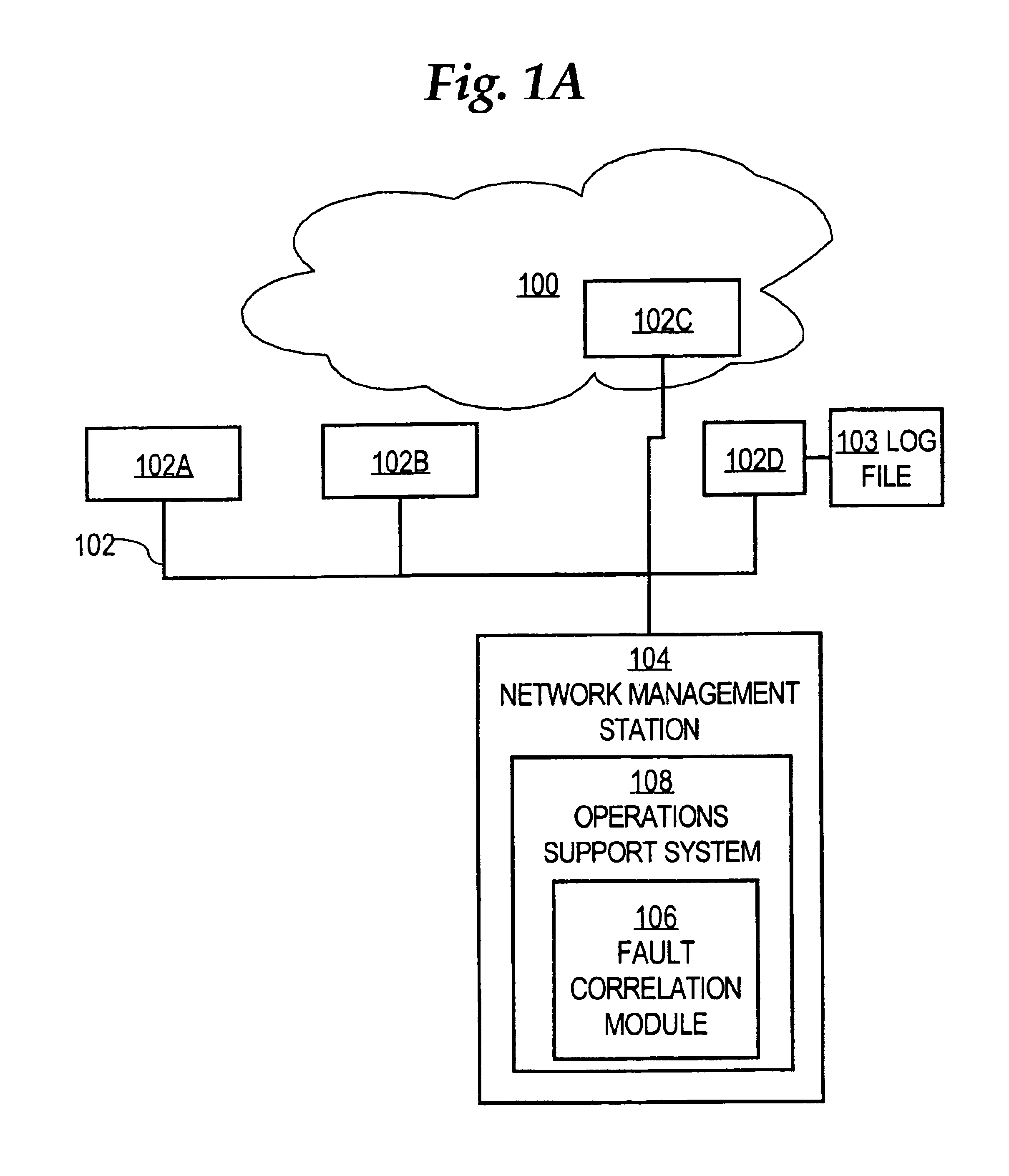

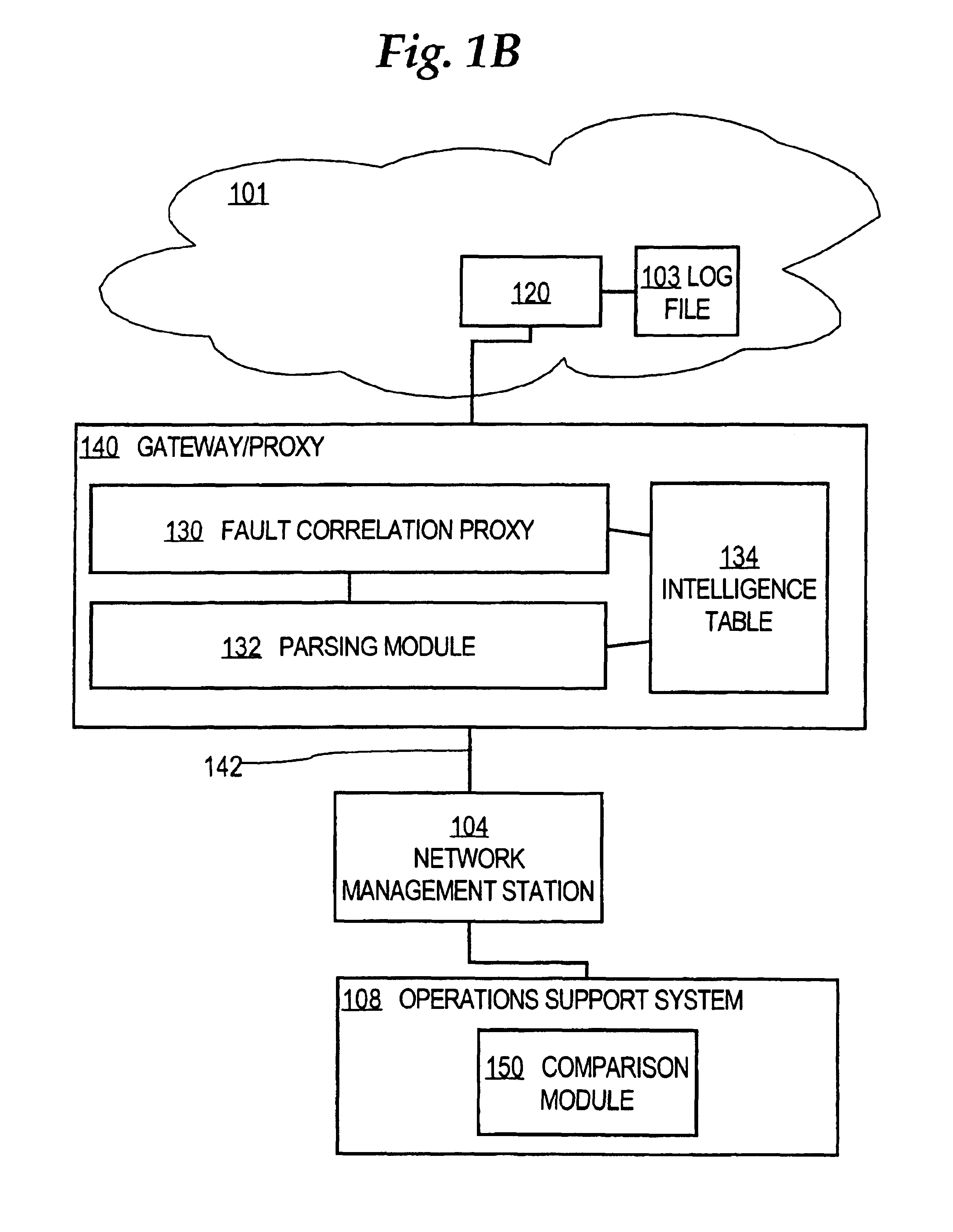

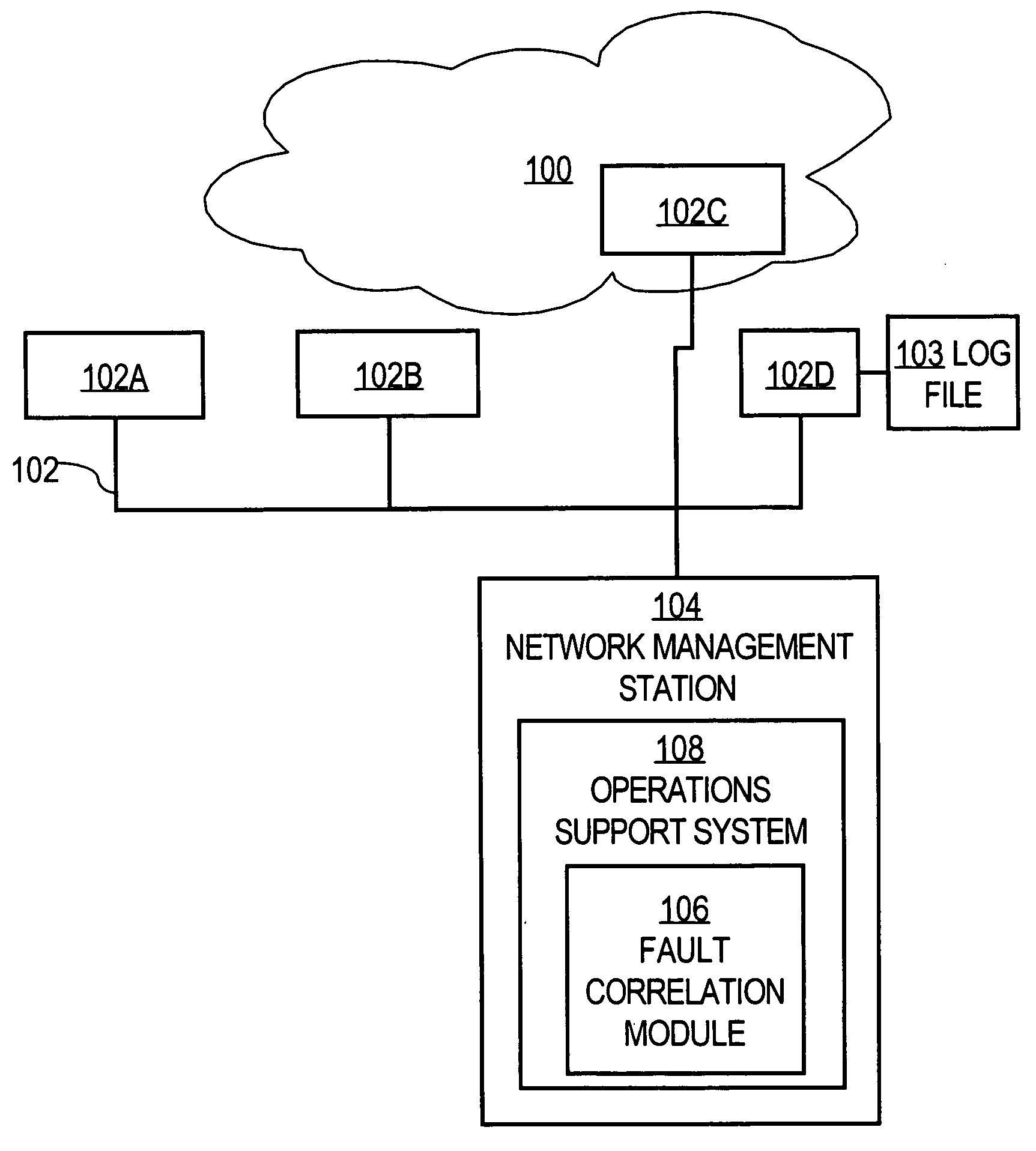

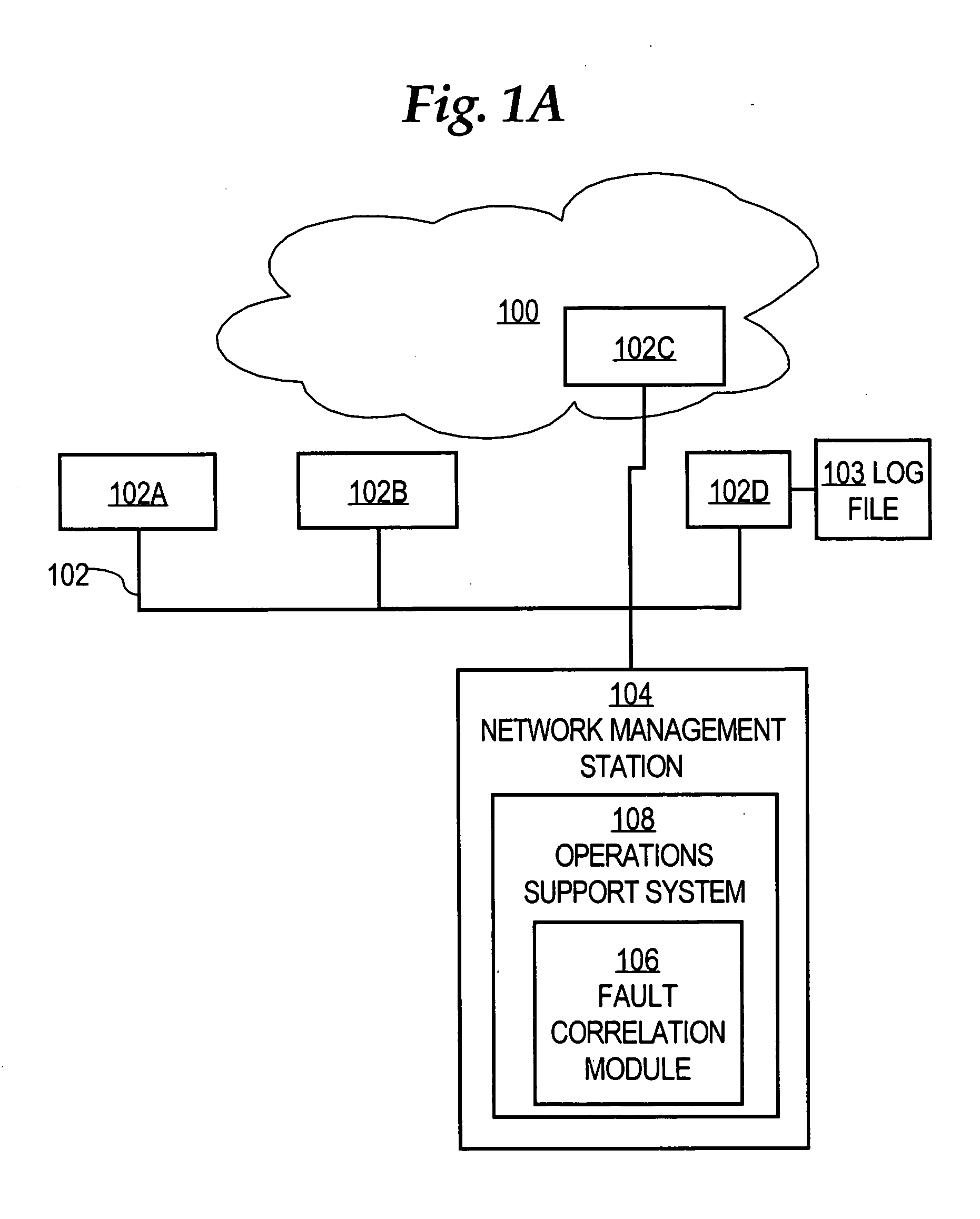

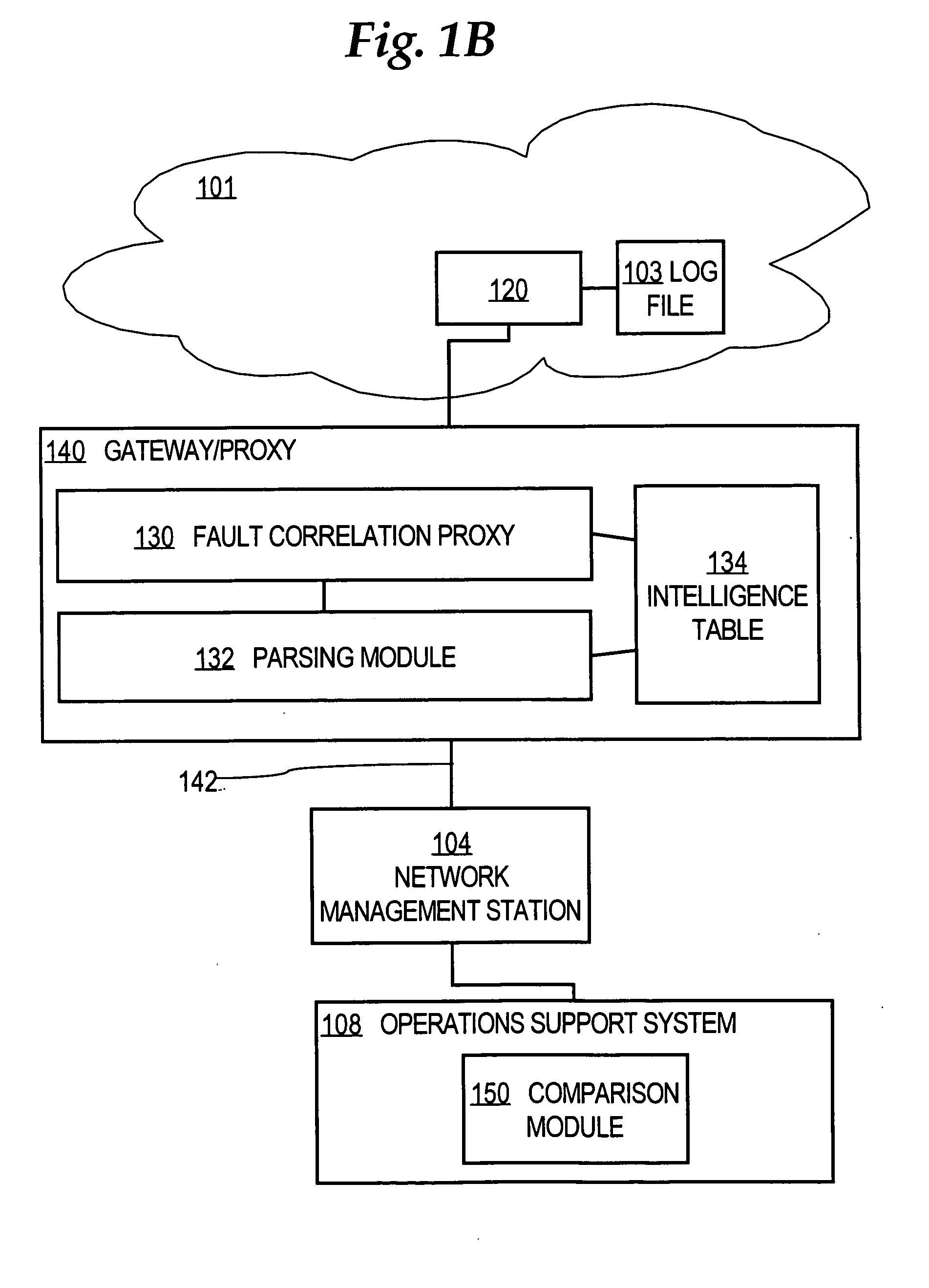

Method of labeling alarms to facilitate correlating alarms in a telecommunications network

Inactive | US6862698B1 | Low cost | Degrades interpretation | Digital computer details | Non-redundant fault processing | Alarm message | Telecommunications network

A method for generating compressed correlation key values for use in correlating alarms generated by network elements in a telecommunications network is disclosed. An alarm message generated by a network element is received. A context value in the alarm message is identified. A table that associates context values to correlation key value formulas is maintained. A formula specifying how to generate the correlation key value is retrieved from the table. Each formula may specify, for an associated context value, one or more ordinal positions of fields in the alarm message, a concatenation of which yields the correlation key value. The correlation key value is created based on the formula. A unique ordinal number is generated to represent the correlation key value, which acts as a context key. The alarm message and correlation key value are sent to an external system for use in correlating alarms.

Owner:CISCO TECH INC
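
The table-driven key generation described in this abstract can be sketched in Python as follows. This is only an illustration under stated assumptions: the alarm is modelled as a list of string fields, the context value is assumed to sit at a fixed field index, and the table contents, the `|` separator and all names are hypothetical.

```python
from itertools import count

# Hypothetical table: context value -> ordinal positions (0-based) of the
# alarm fields whose concatenation forms the correlation key value.
FORMULAS = {
    "LINK_DOWN": (0, 2),
    "CARD_FAIL": (0, 1),
}

_key_ids = {}        # correlation key value -> compact ordinal number
_next_id = count(1)  # source of unique ordinal numbers


def correlation_key(fields, context_index=3):
    """Return (key, ordinal) for one alarm message given as a list of fields."""
    context = fields[context_index]          # identify the context value
    positions = FORMULAS[context]            # retrieve the formula
    key = "|".join(fields[p] for p in positions)
    if key not in _key_ids:                  # first time this key is seen
        _key_ids[key] = next(_next_id)
    return key, _key_ids[key]


alarm = ["node7", "slot2", "port5", "LINK_DOWN"]
print(correlation_key(alarm))  # ('node7|port5', 1)
```

Alarms that yield the same key receive the same ordinal number, which is what allows the short number to stand in for the longer key downstream.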

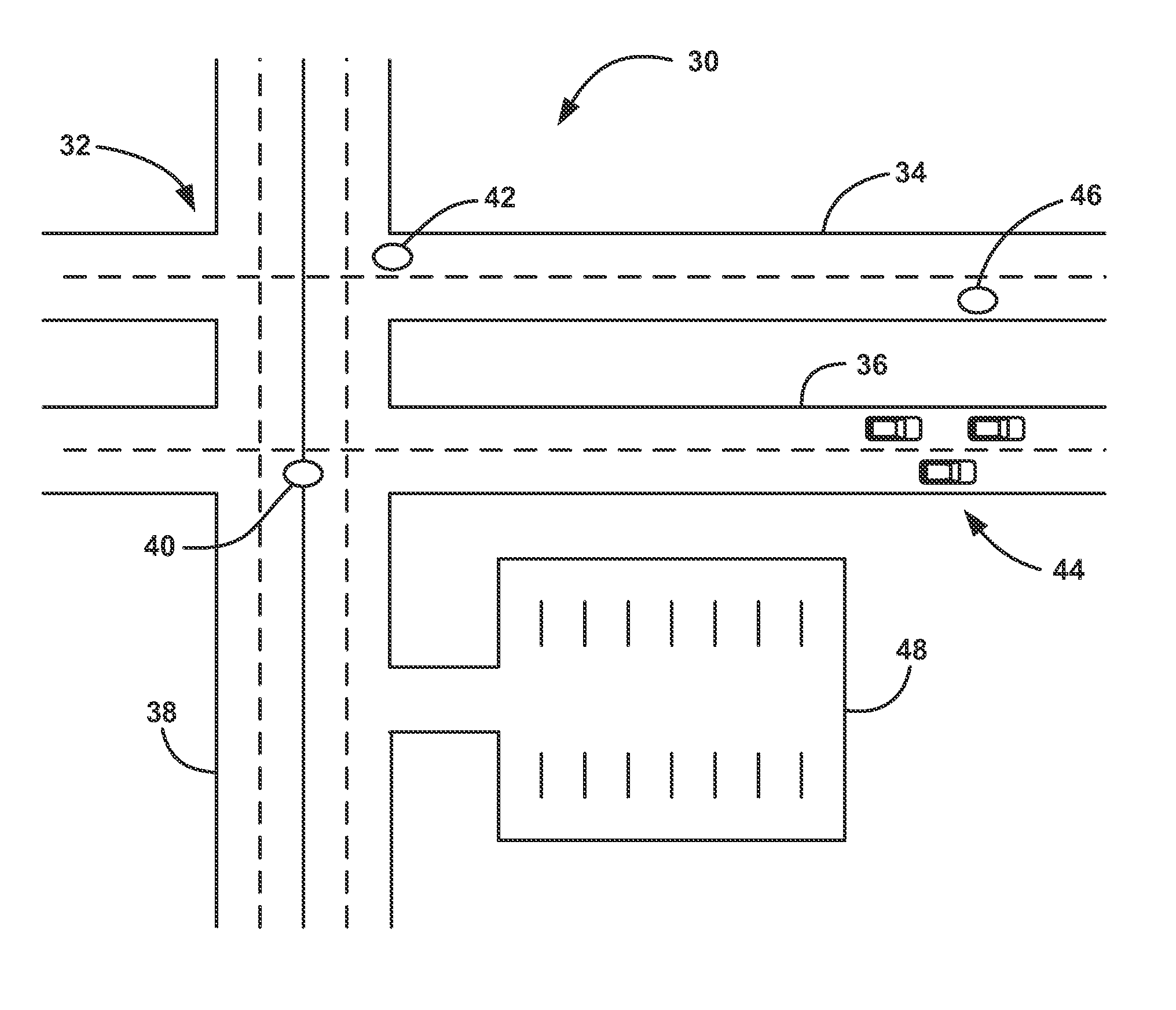

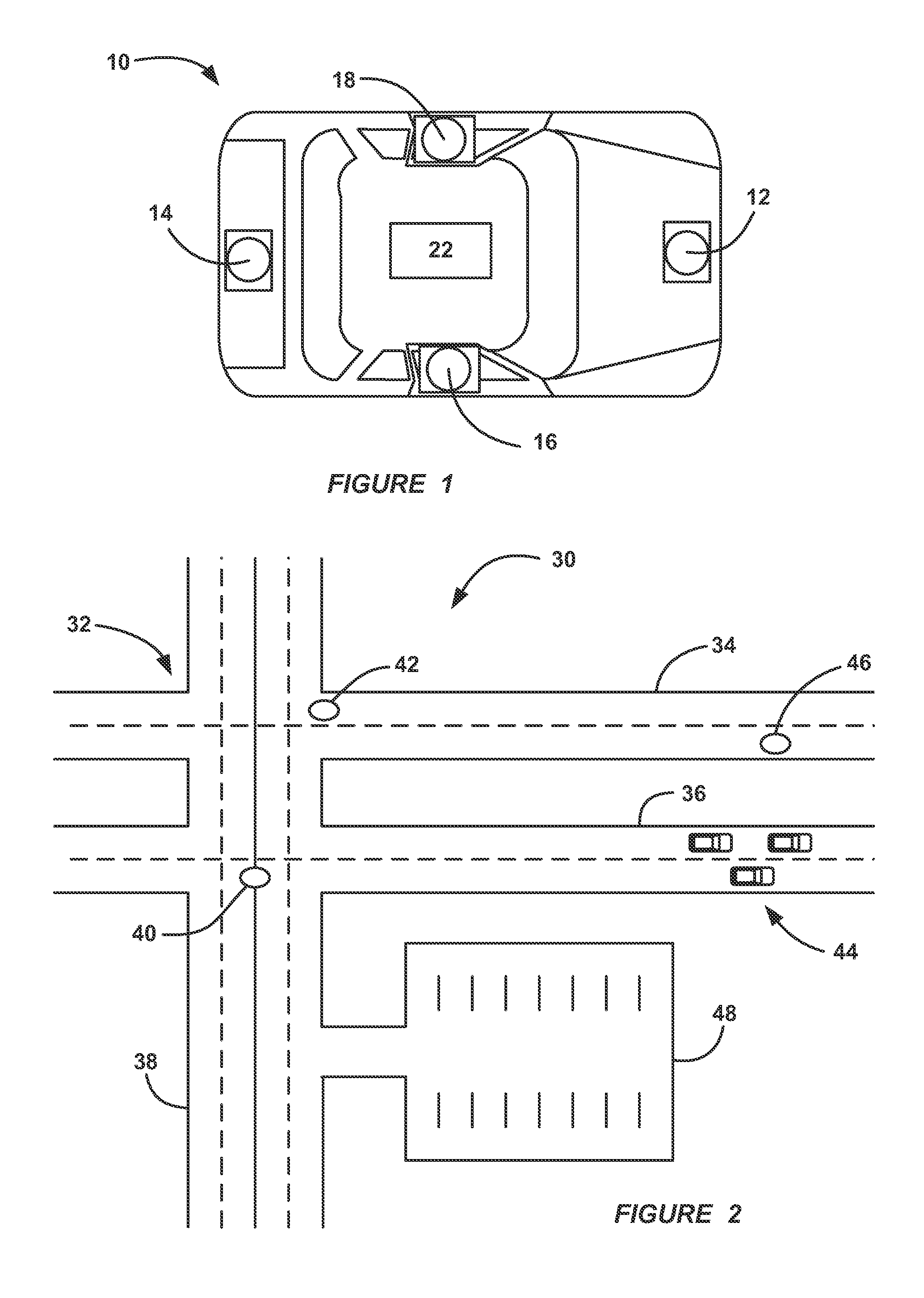

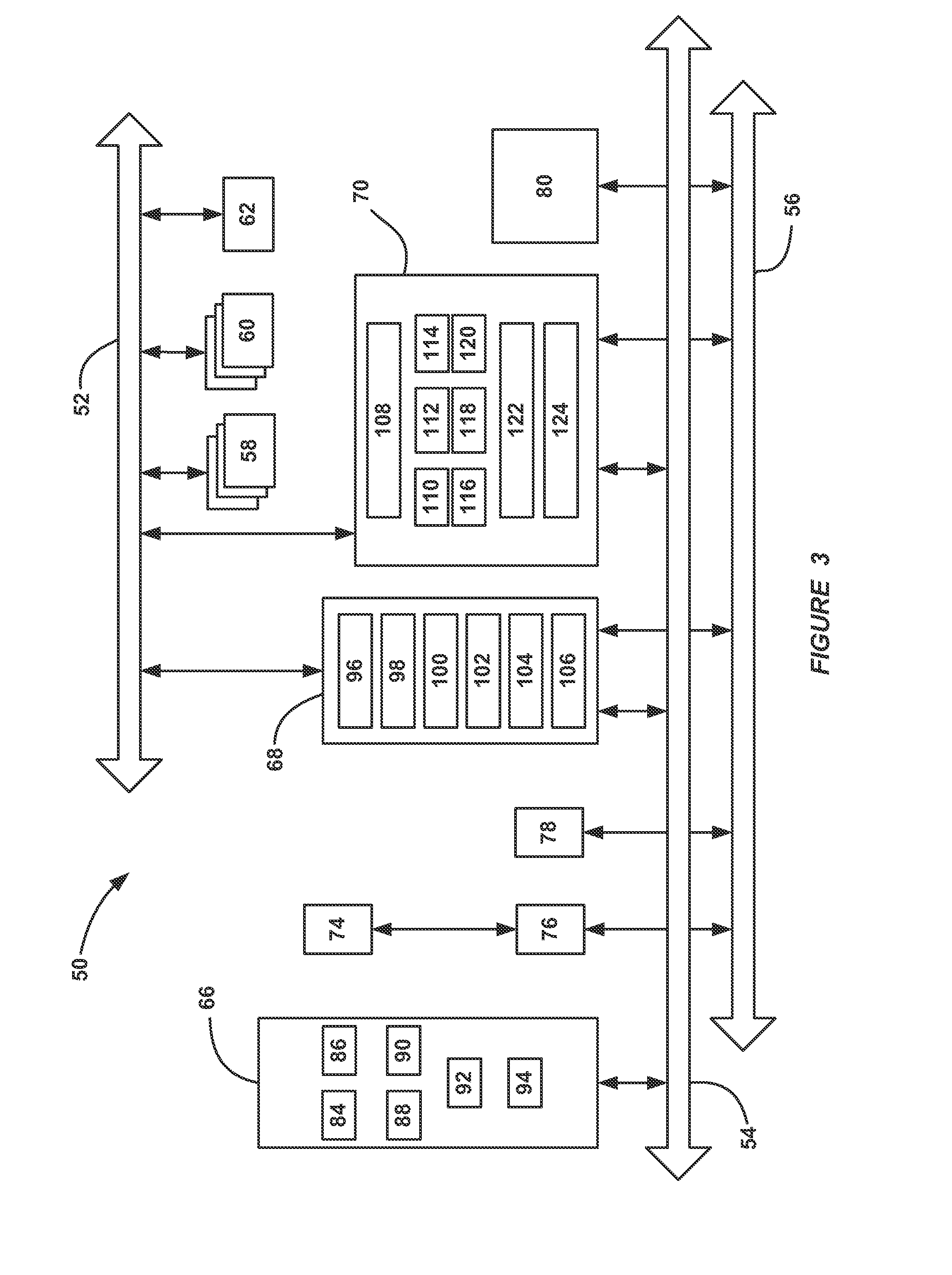

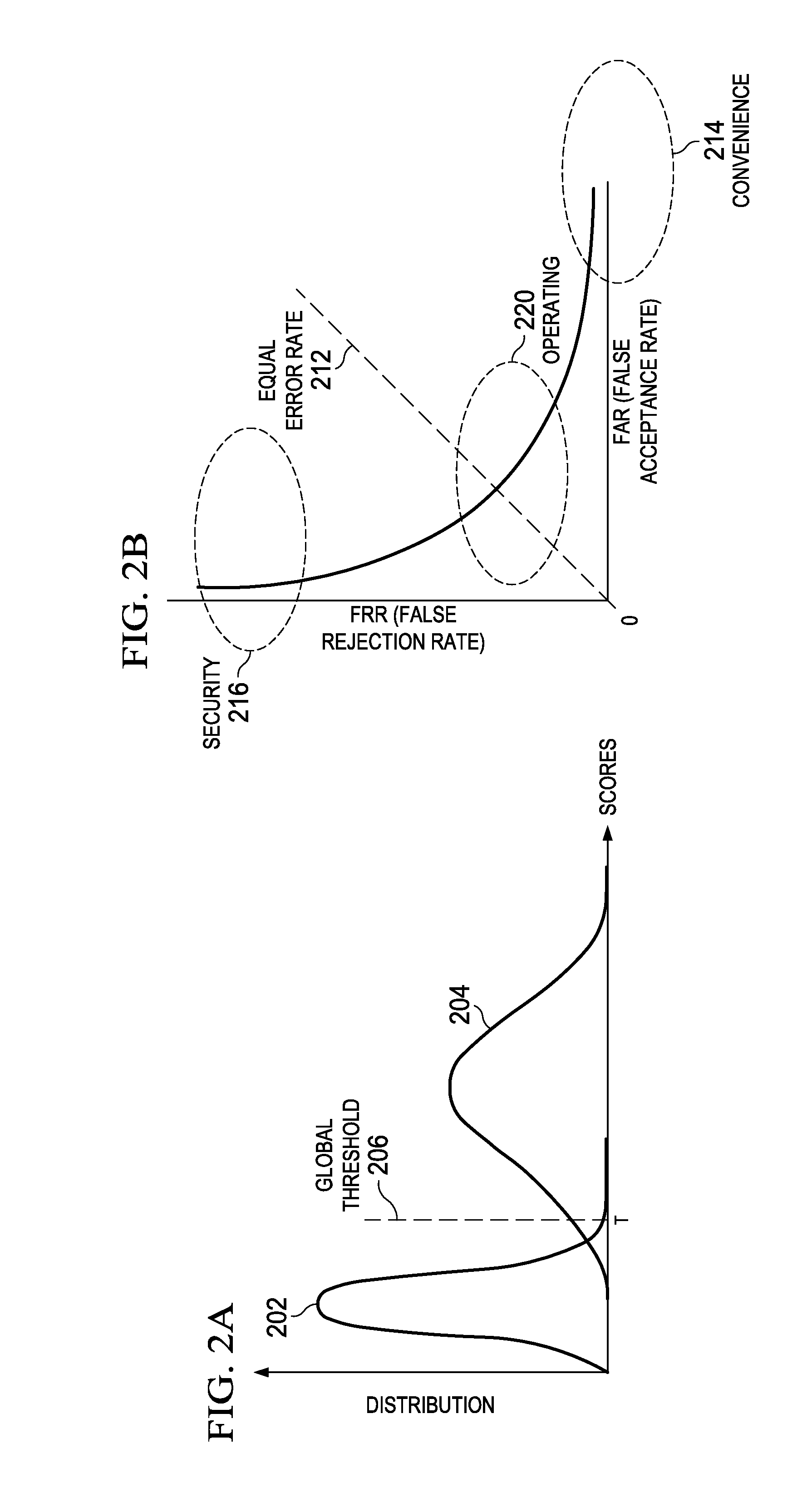

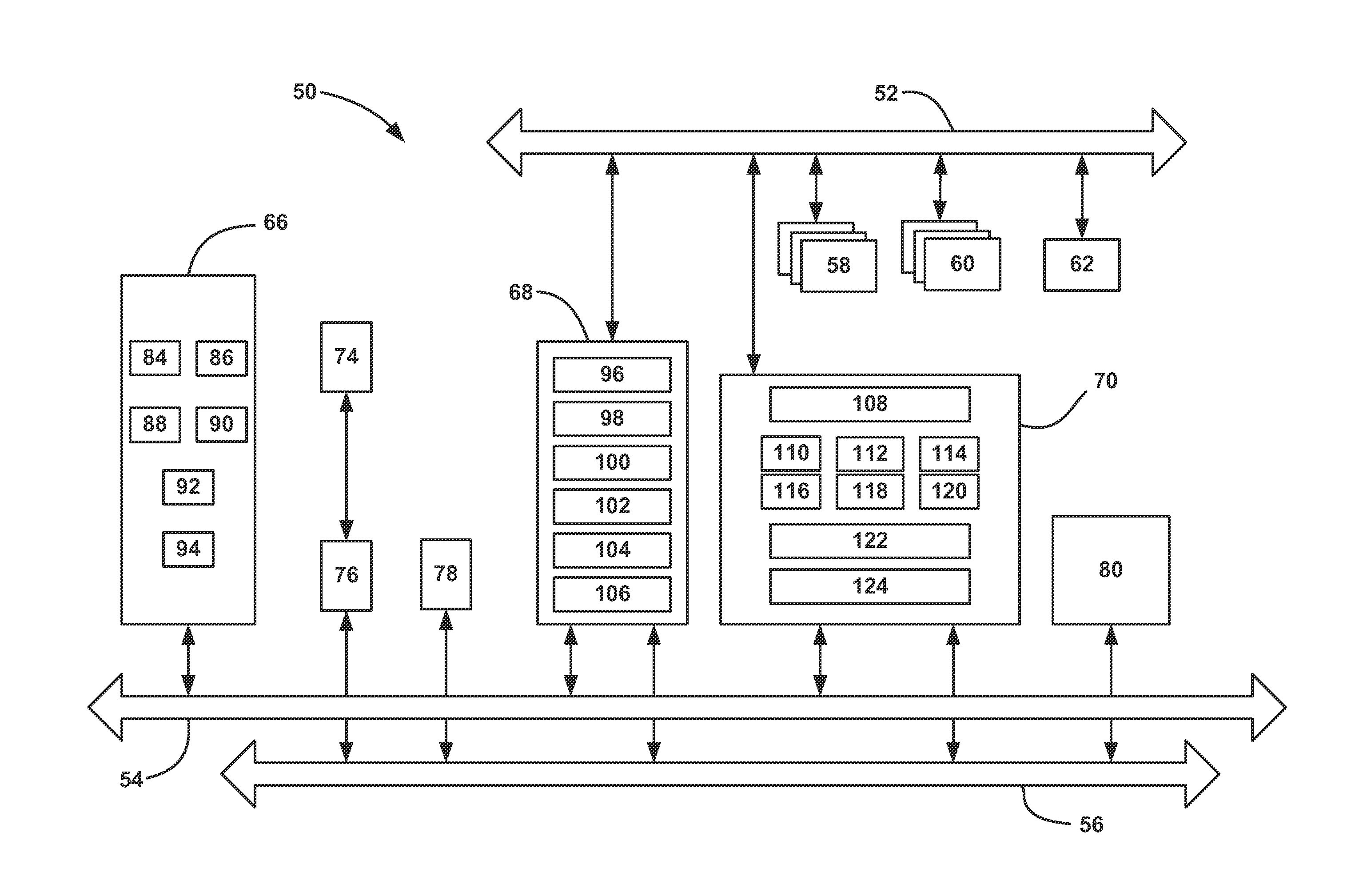

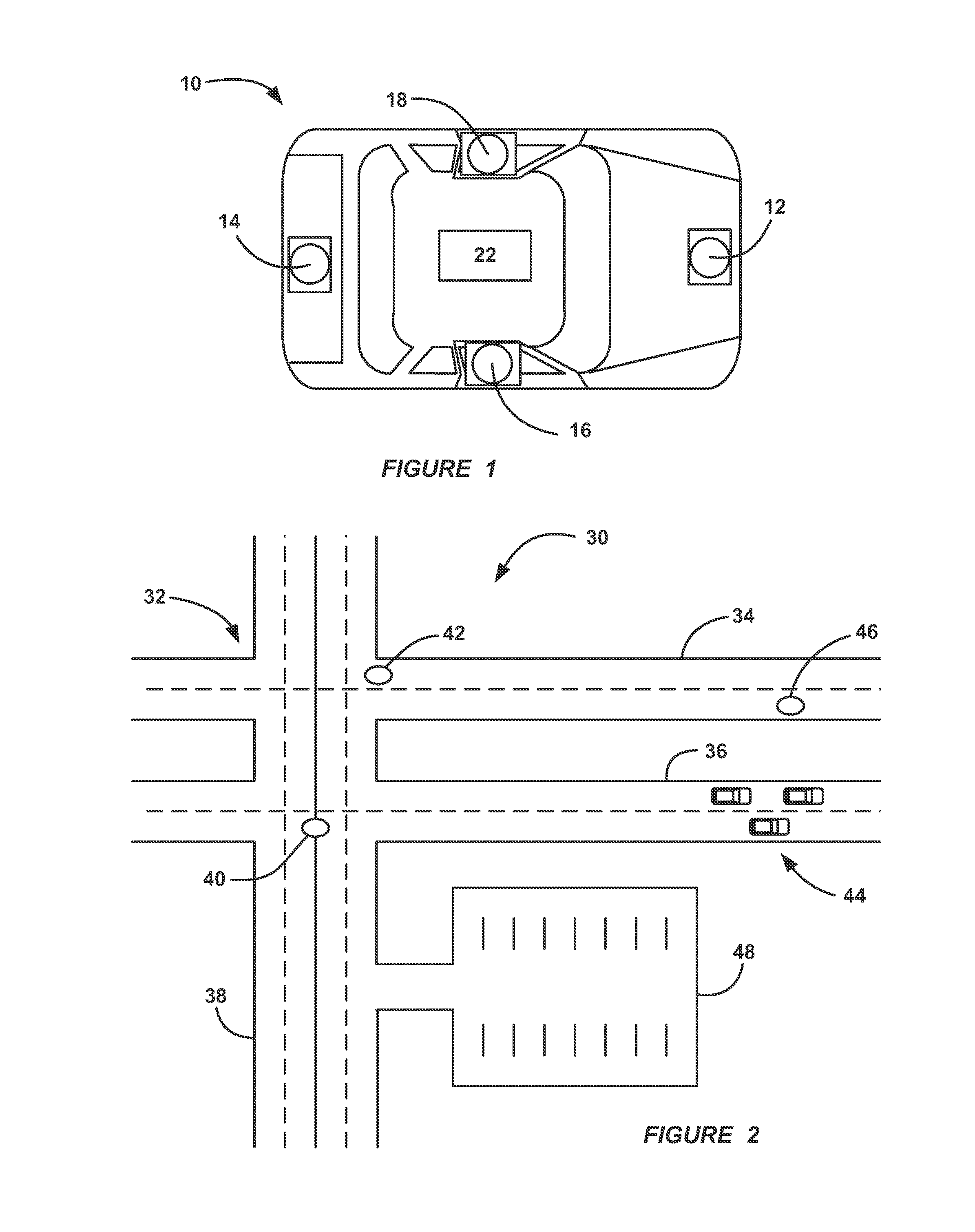

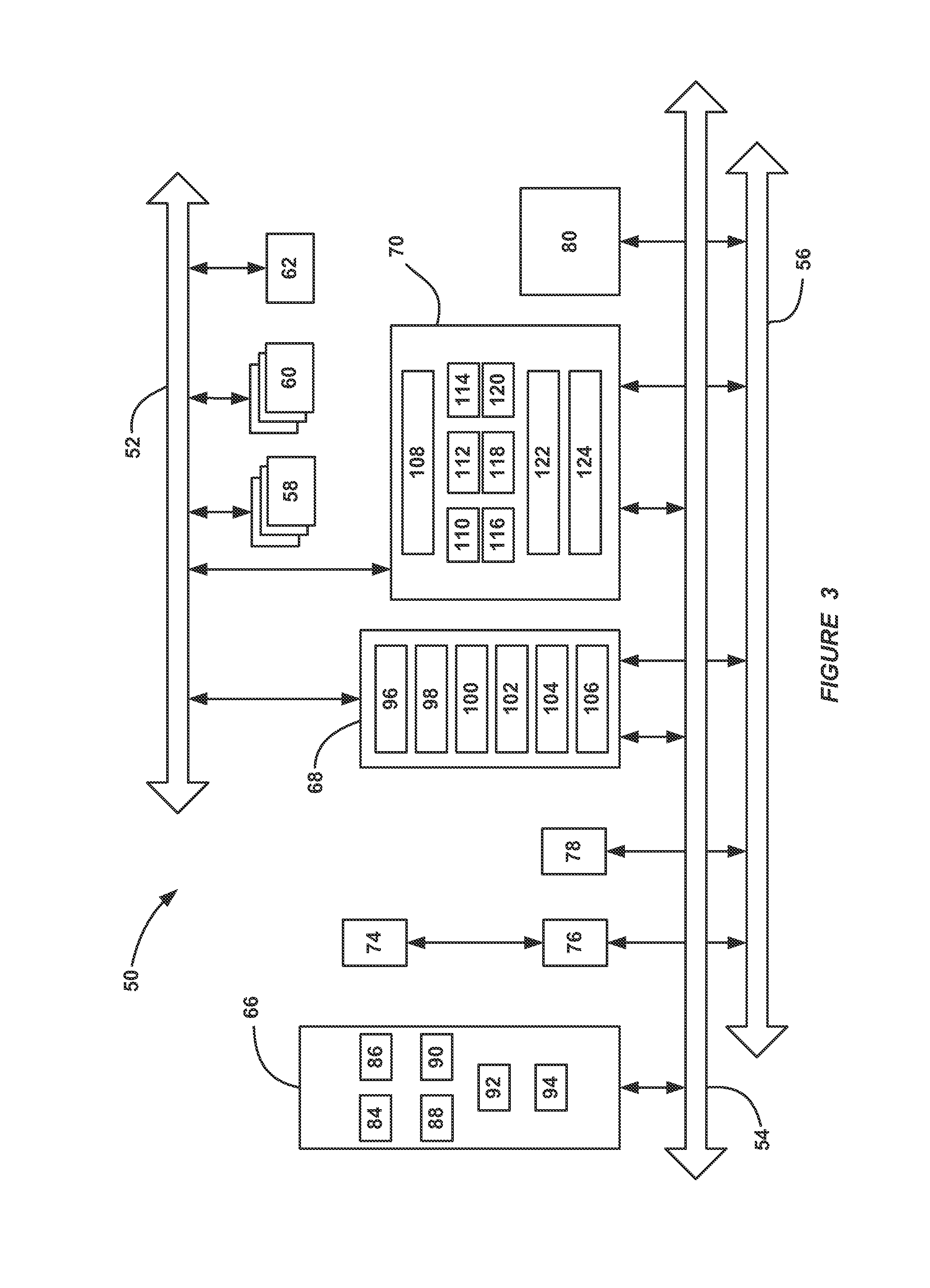

Context-aware threat response arbitration

Active | US20150057891A1 | Reduce probability | Improve convenience | Analogue computers for traffic | Steering initiations | Threat level | Throttle

A method for prioritizing potential threats identified by vehicle active safety systems. The method includes providing context information including map information, vehicle position information, traffic assessment information, road condition information, weather condition information, and vehicle state information. The method calculates a system context value for each active safety system using the context information. Each active safety system provides a system threat level value, a system braking value, a system steering value, and a system throttle value. The method calculates an overall threat level value using all of the system context values and all of the system threat level values. The method then provides a braking request value to vehicle brakes based on all of the system braking values, a throttle request value to a vehicle throttle based on all of the system throttle values, and a steering request value to vehicle steering based on all of the system steering values.

Owner:GM GLOBAL TECH OPERATIONS LLC

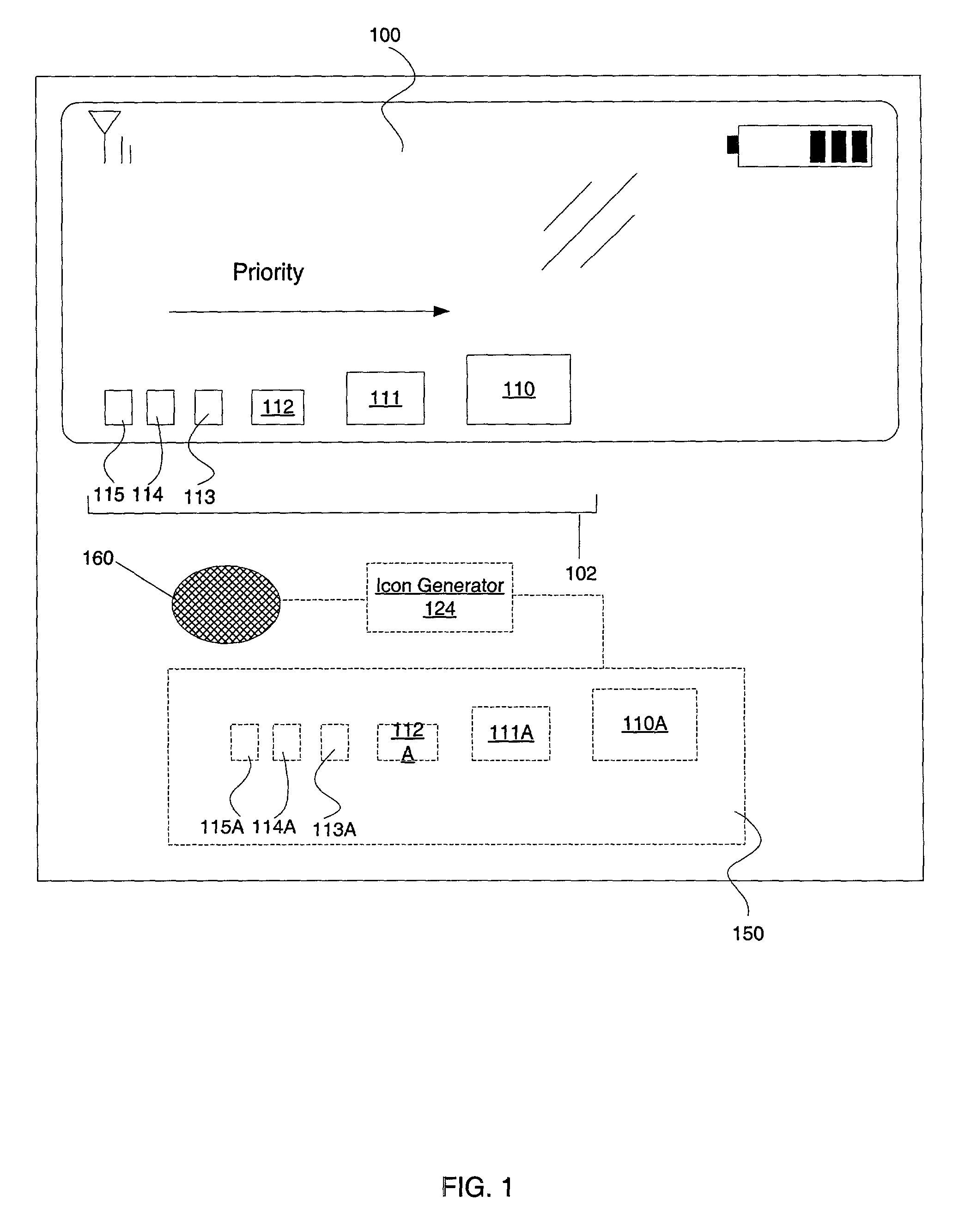

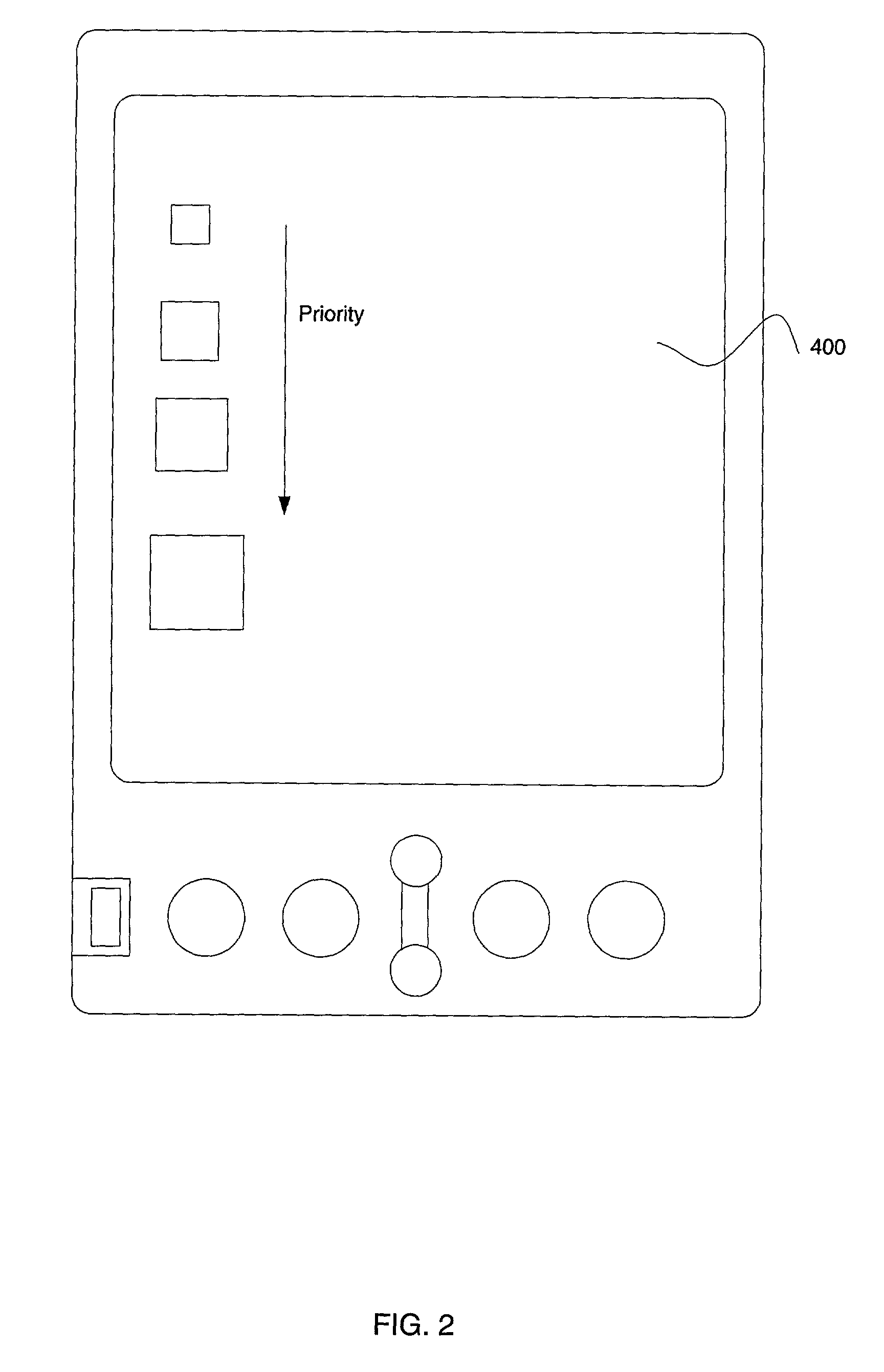

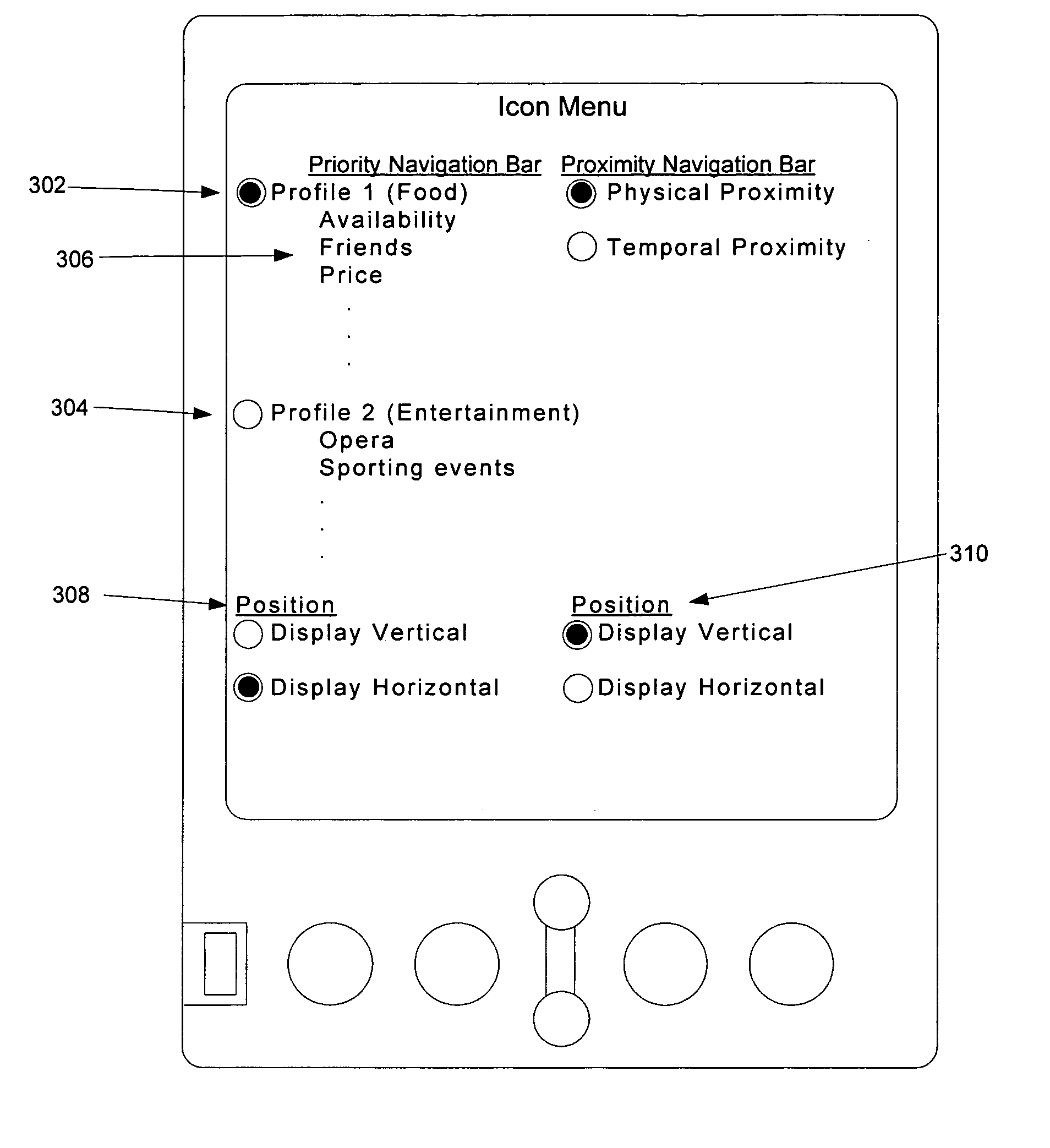

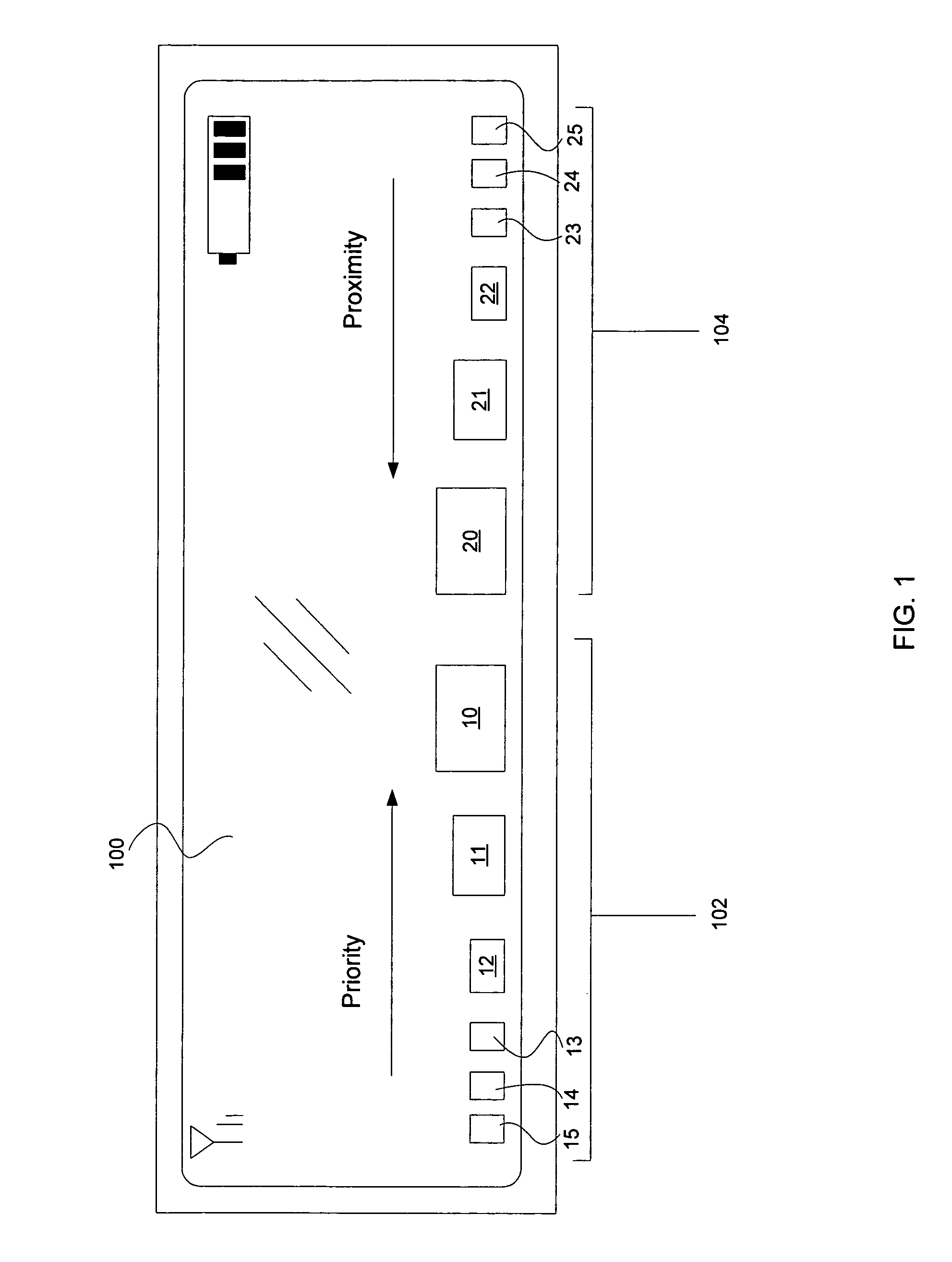

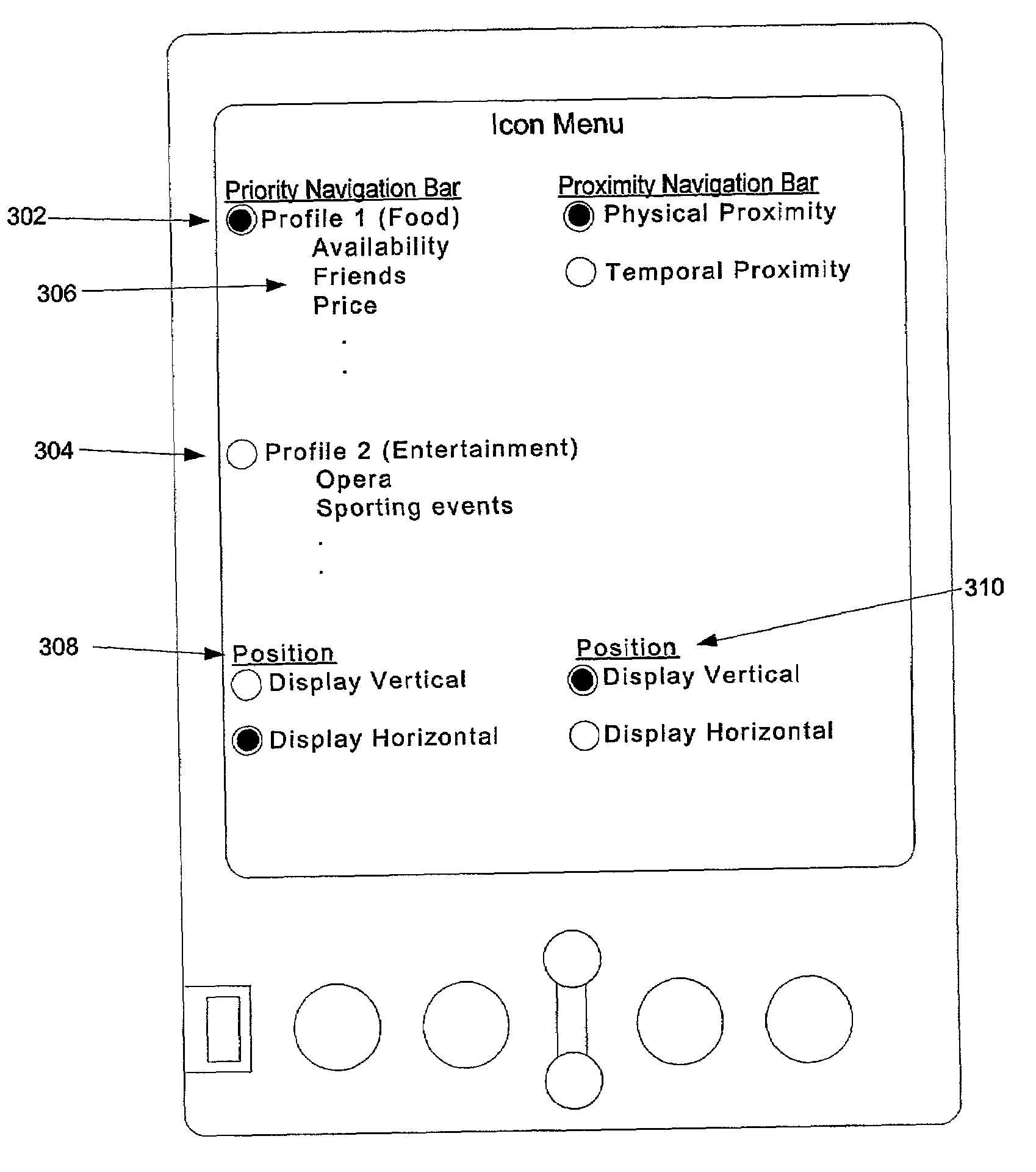

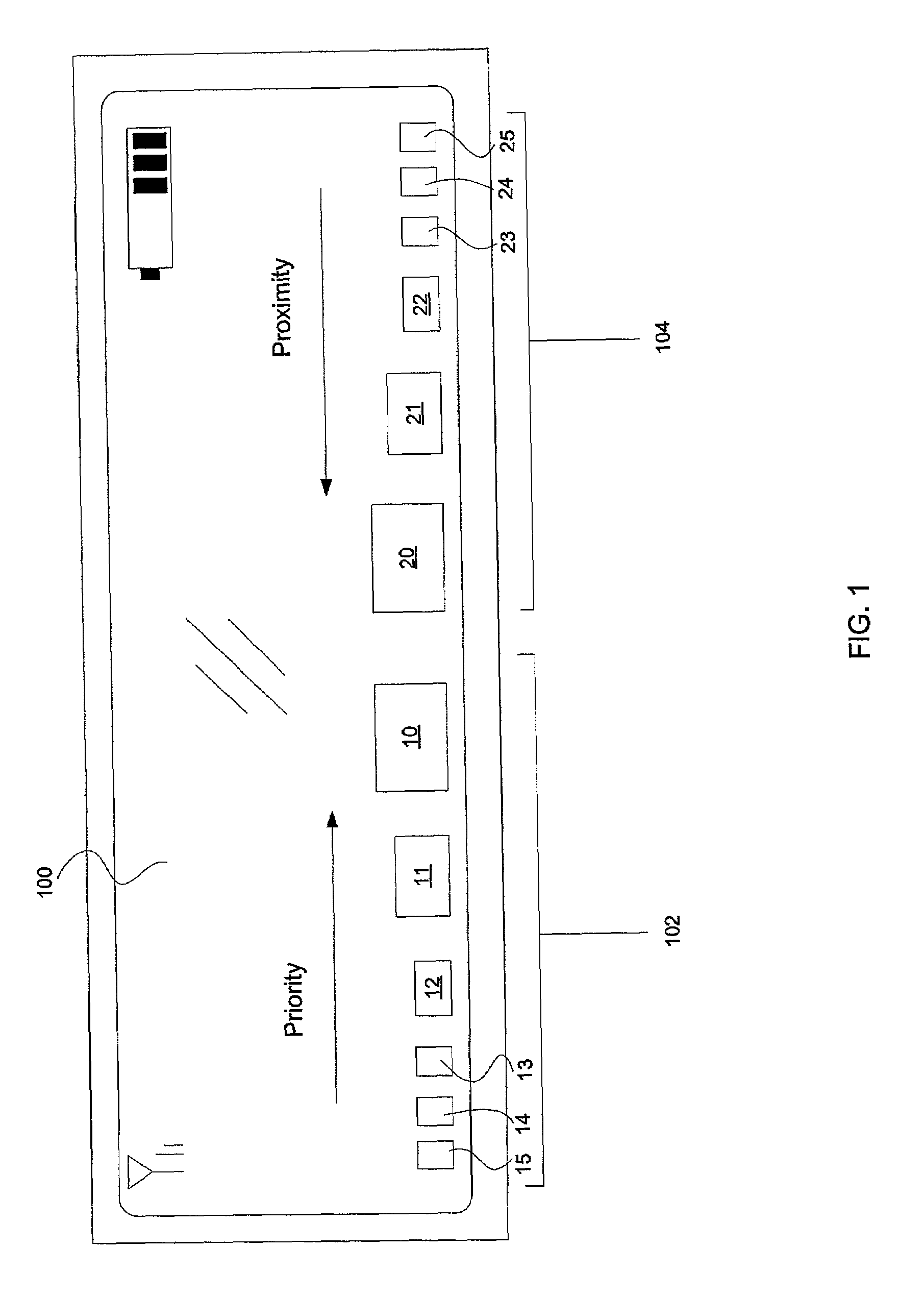

Multilevel sorting and displaying of contextual objects

Inactive | US20060053392A1 | Quick identification | Substation equipment | Digital output to display device | Multiple context | Display device

An apparatus and method for displaying a plurality of icons on the display of a mobile terminal are provided. Icons are displayed in at least two different sections. The first section includes icons having sizes determined by comparing characteristics of associated messages to one or more context values, such as time of day, geographic area, or user profile characteristics. The second section includes icons having sizes determined by the proximities of the message sources to the mobile terminal.

Owner:NOKIA CORP

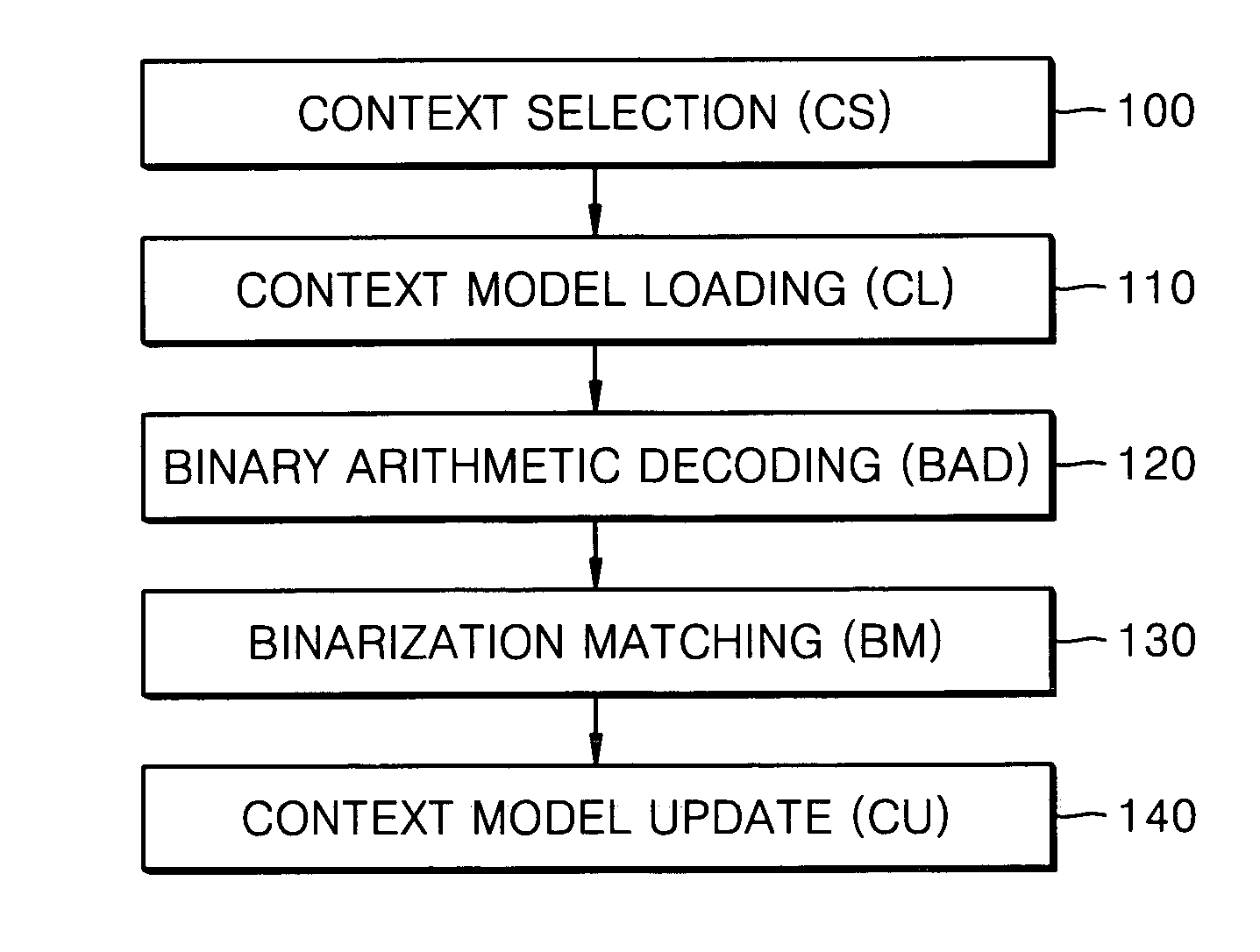

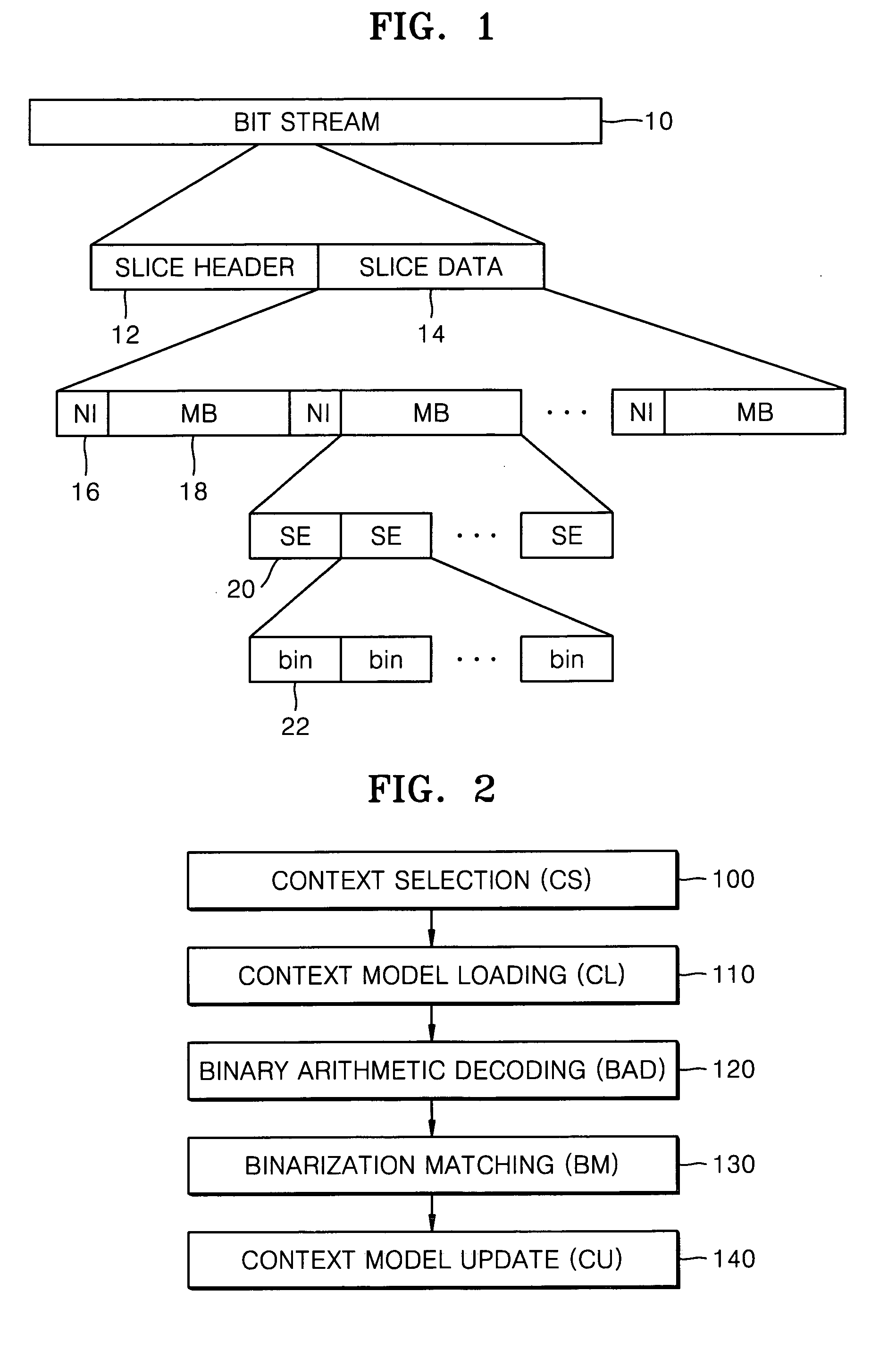

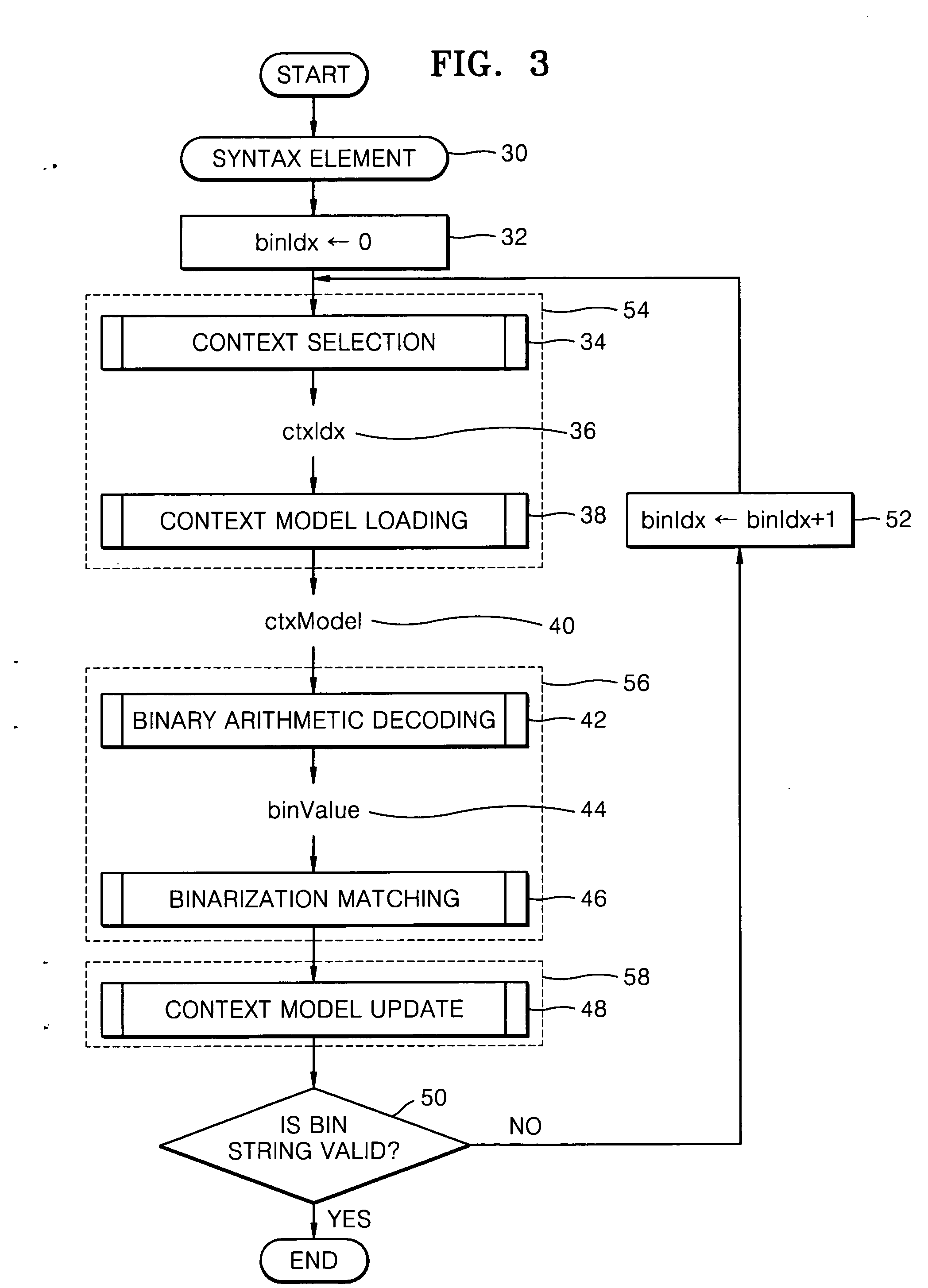

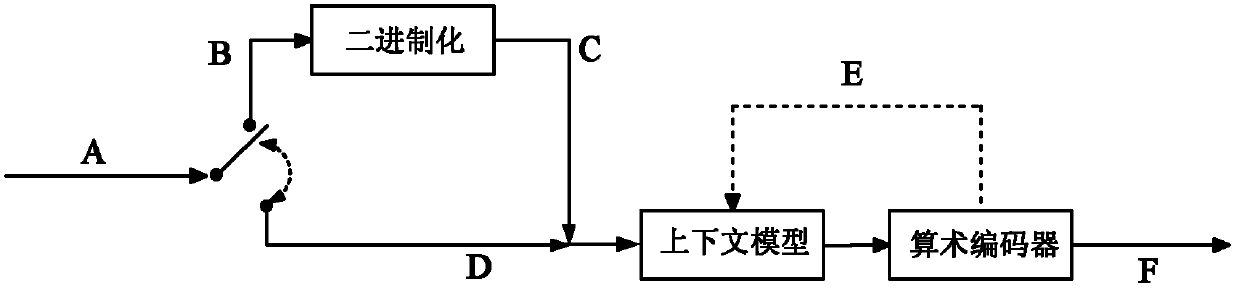

Method of decoding bin values using pipeline architecture and decoding device therefor

Inactive | US20070115154A1 | Pulse modulation television signal transmission | Code conversion | Context model | Theoretical computer science

A method and device for decoding bin values using a pipeline architecture in a CABAC decoder are provided. The method includes reading a first context model required to decode a first bin value, from a memory; determining whether a second context model required to decode a second bin value is the same as the first context model, while decoding the first bin value using the first context model; determining whether a third context model required to decode a third bin value is the same as the second context model, while decoding the second bin value using the second context model, if it is determined that the second context model is the same as the first context model; and reading the second context model from the memory, if it is determined that the second context model is not the same as the first context model.

Owner:SAMSUNG ELECTRONICS CO LTD

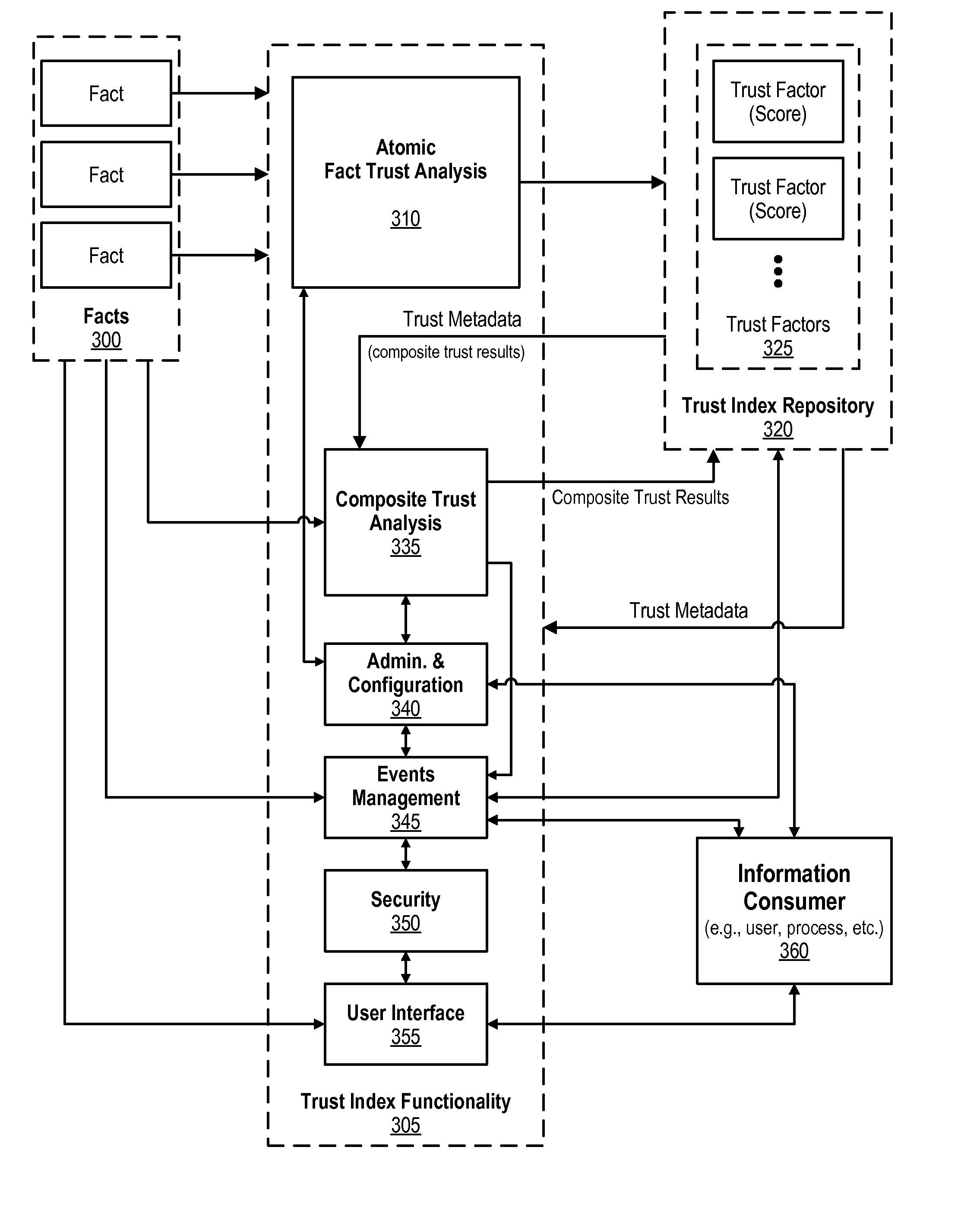

Configurable trust context assignable to facts and associated trust metadata

An approach is provided for selecting a trust factor from trust factors that are included in a trust index repository. A trust metaphor is associated with the selected trust factor. The trust metaphor includes various context values. Range values are received and the trust metaphor, context values, and range values are associated with the selected trust factor. A request is received from a data consumer, the request corresponding to a trust factor metadata score that is associated with the selected trust factor. The trust factor metadata score is retrieved and matched with the range values. The matching results in one of the context values being selected based on the retrieved trust factor metadata score. The selected context value is then provided to the data consumer.

Owner:IBM CORP
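
The matching of a trust factor metadata score against range values can be illustrated with a small Python sketch. The "traffic light" metaphor, the boundary numbers and the function name are hypothetical; the abstract does not specify how ranges are encoded, so half-open ranges with sorted boundaries are assumed here.

```python
from bisect import bisect_right

# Hypothetical trust metaphor with three context values. BOUNDS holds the
# sorted range boundaries: [0, 40) -> red, [40, 75) -> yellow, 75+ -> green.
BOUNDS = [40, 75]
CONTEXT_VALUES = ["red", "yellow", "green"]


def context_for(score):
    """Select the context value whose range contains the metadata score."""
    return CONTEXT_VALUES[bisect_right(BOUNDS, score)]
```

A data consumer asking about a trust factor whose score is 62 would receive `"yellow"` under these assumed ranges.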

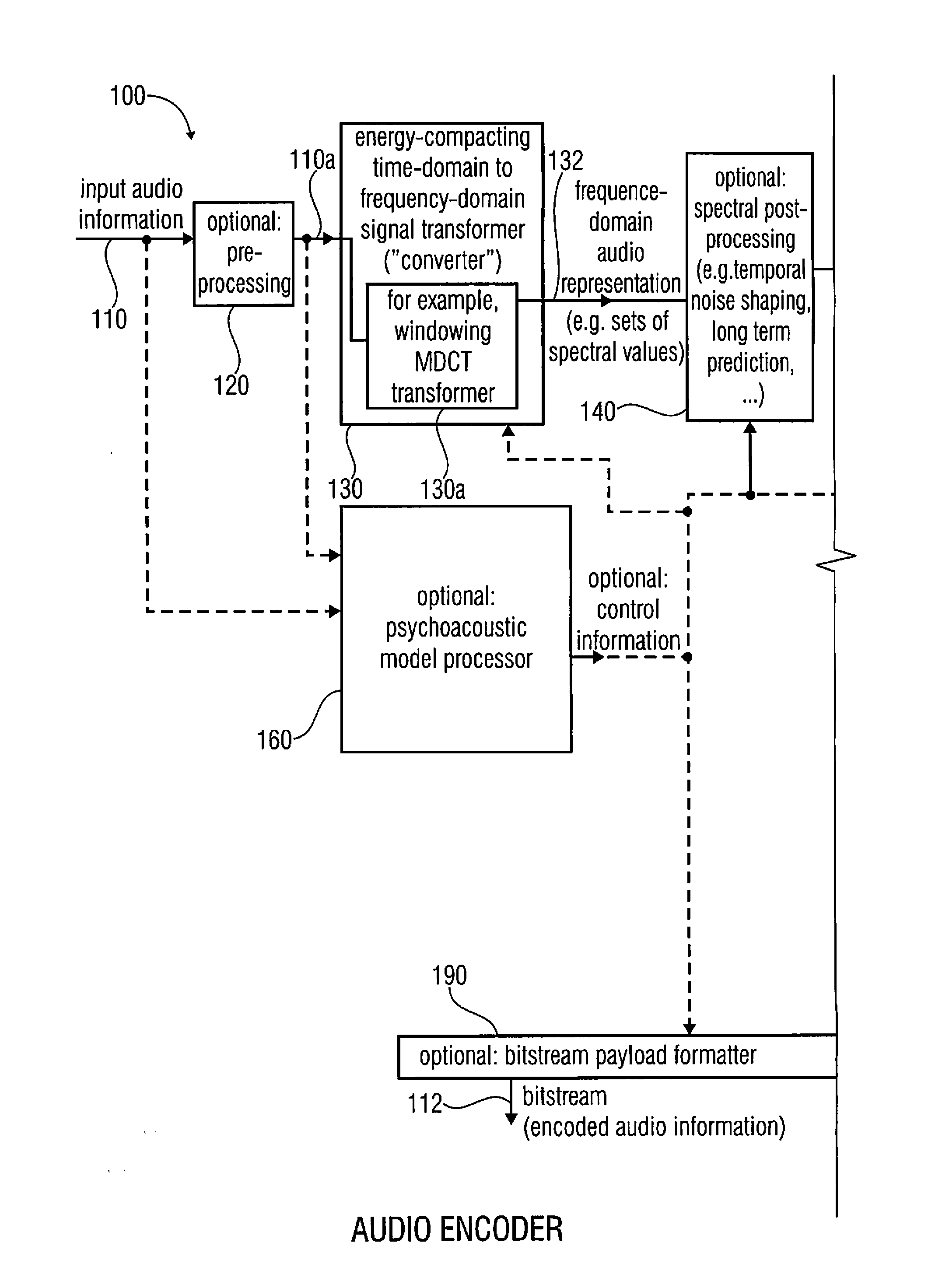

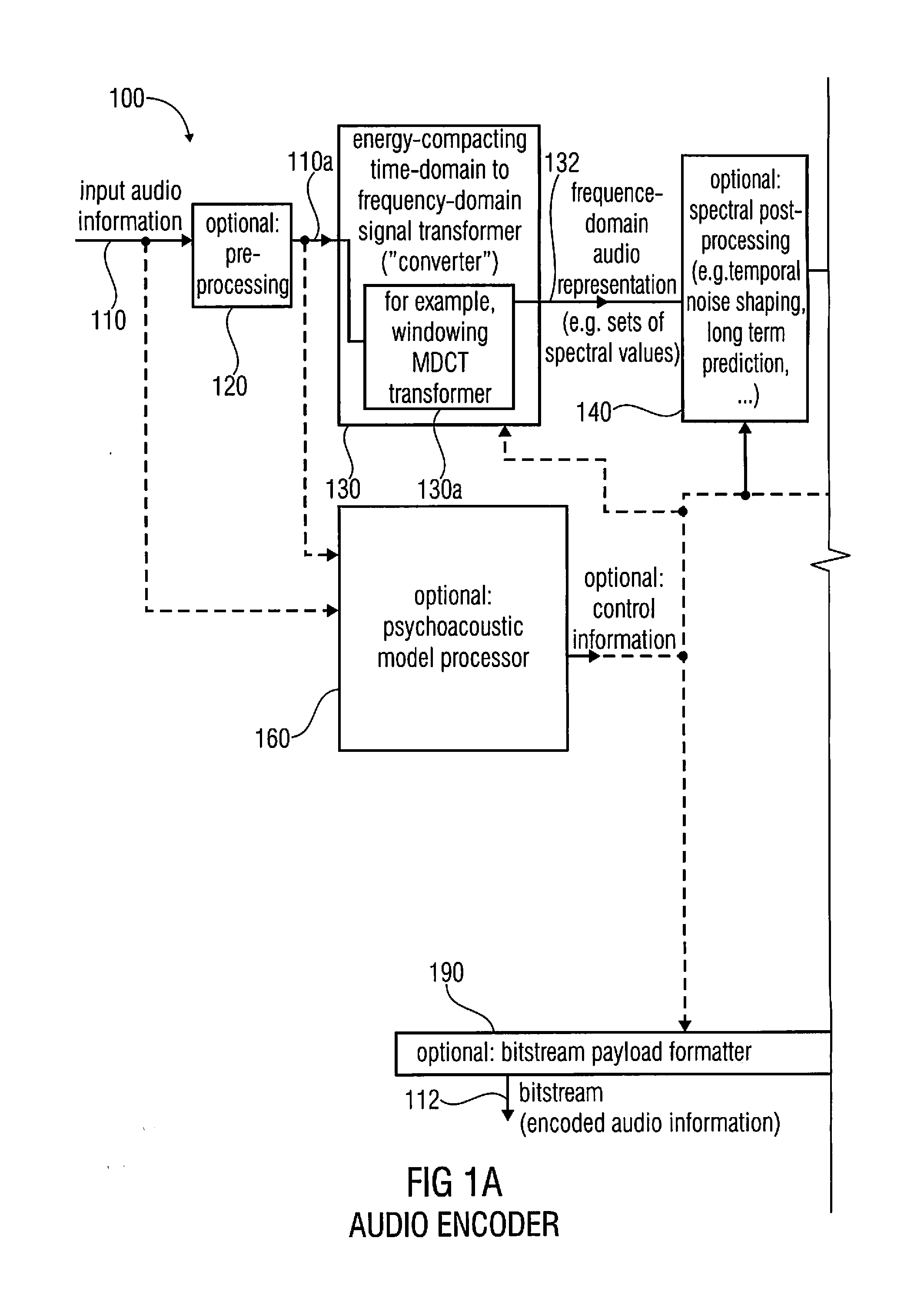

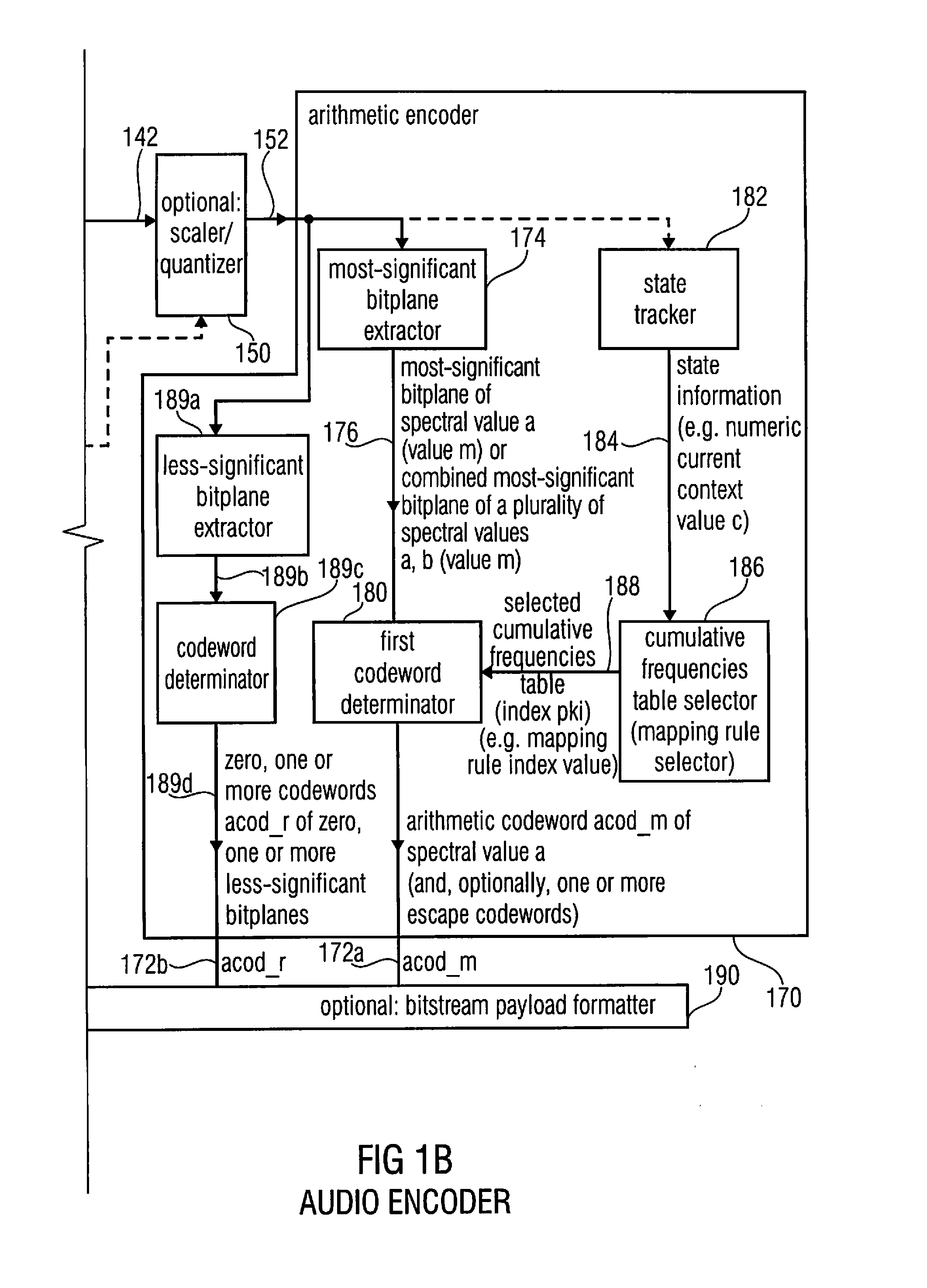

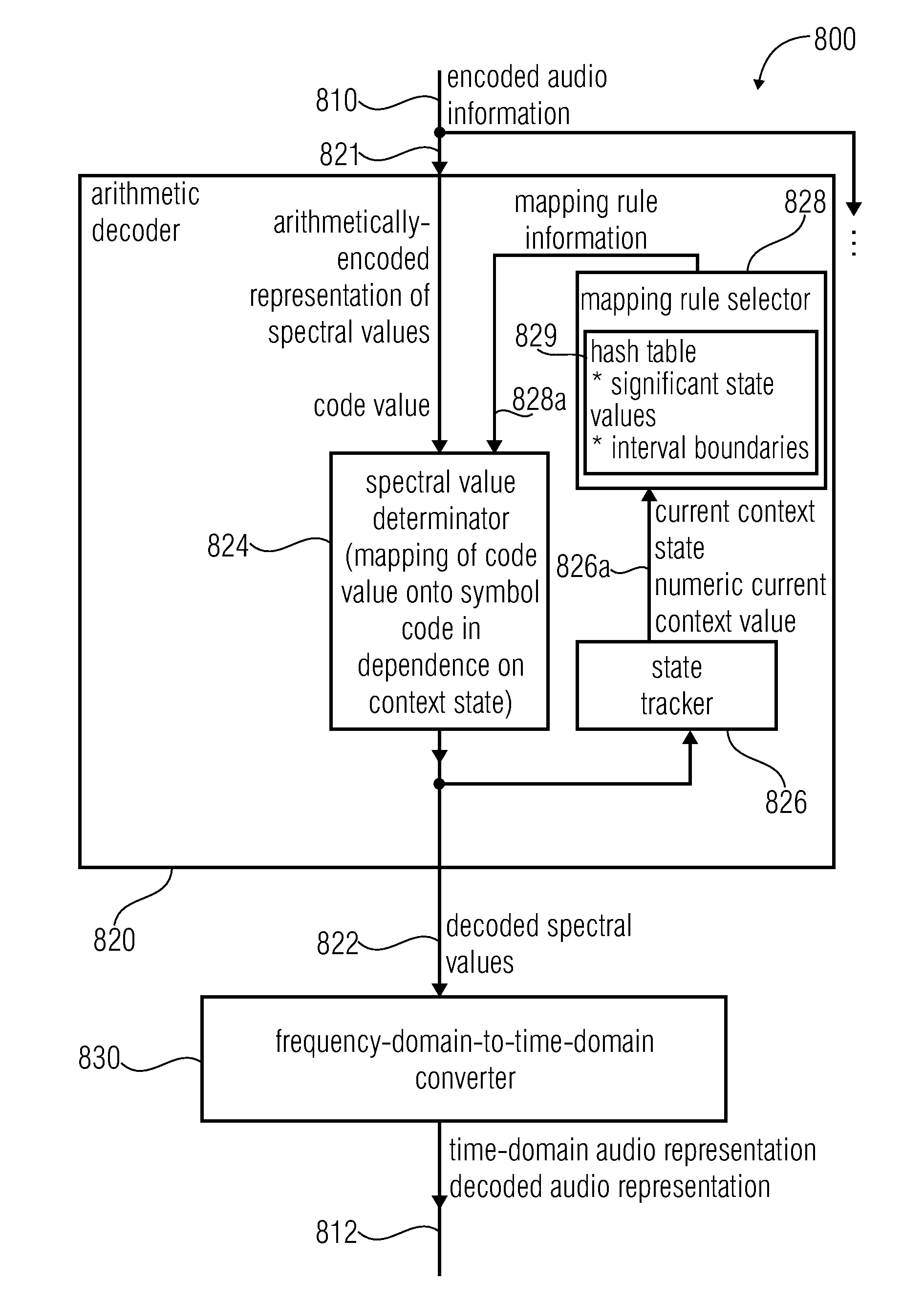

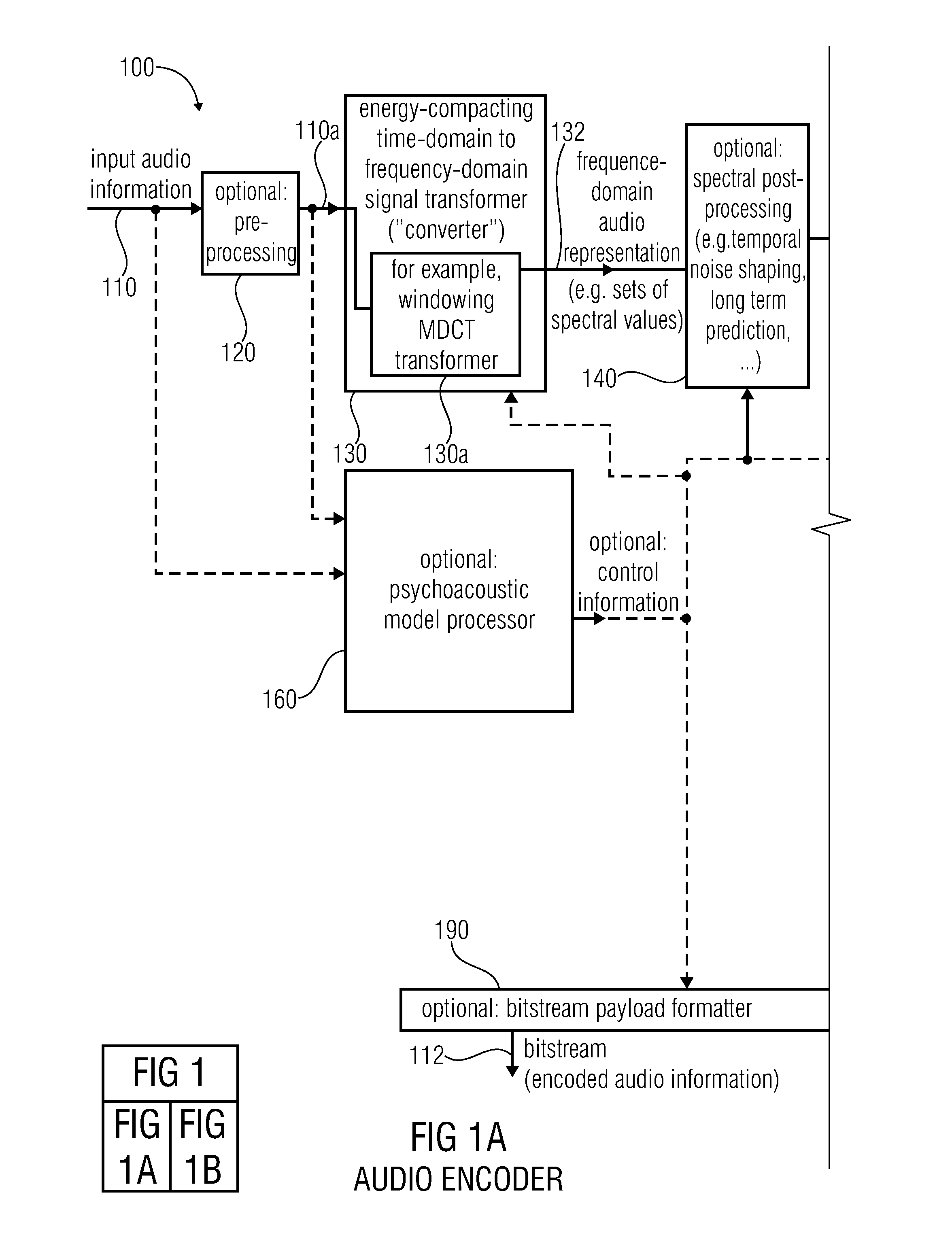

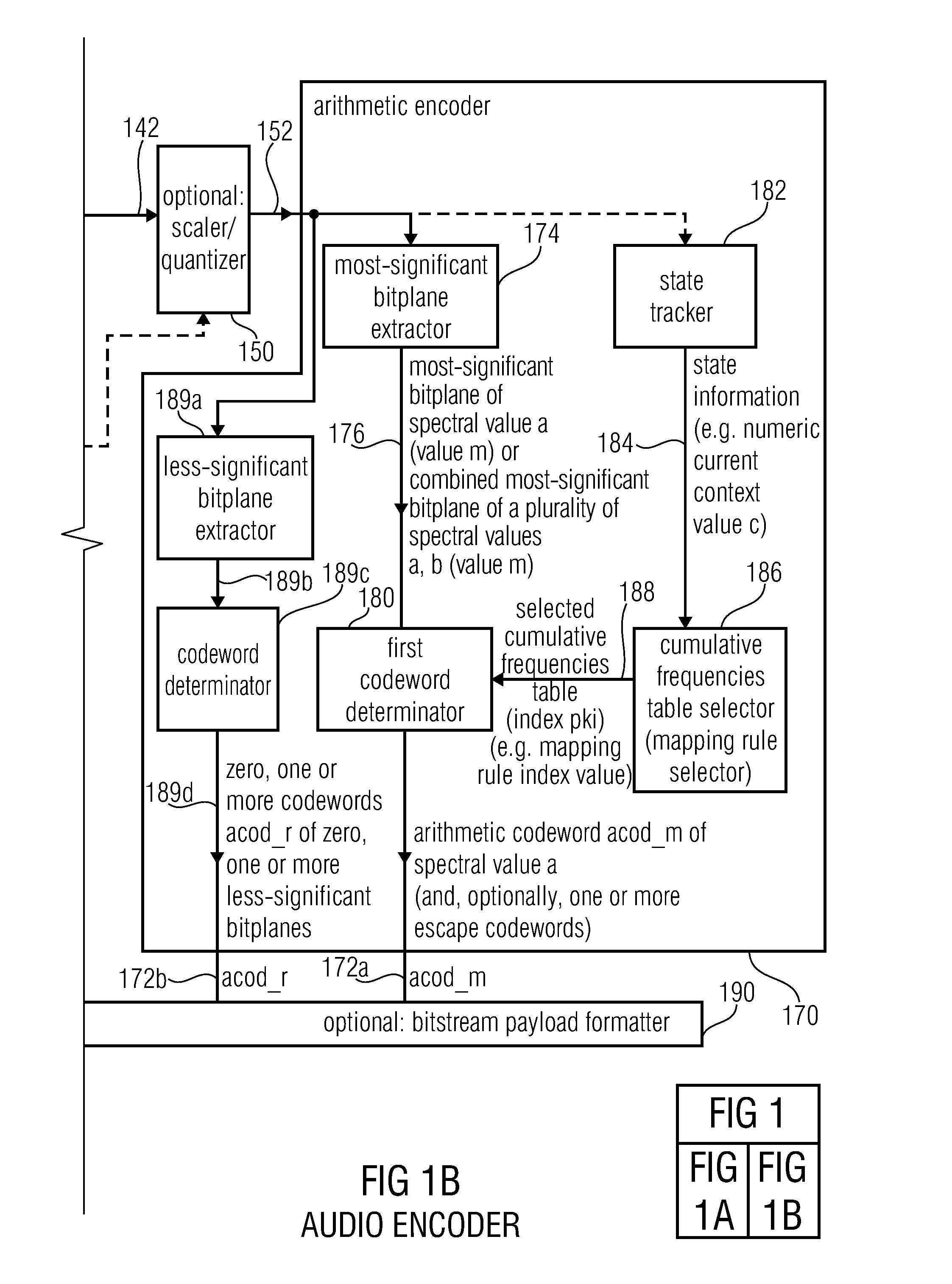

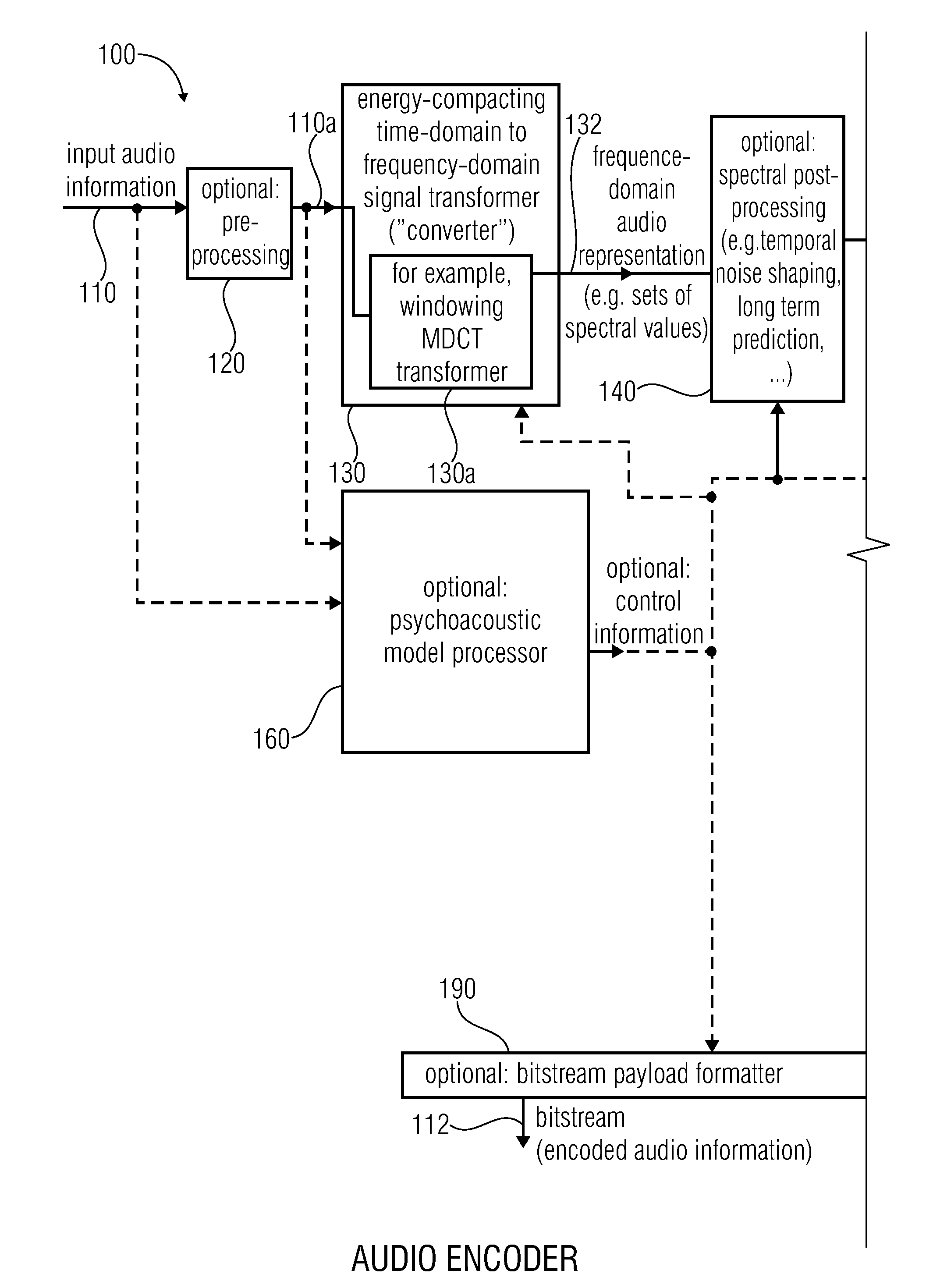

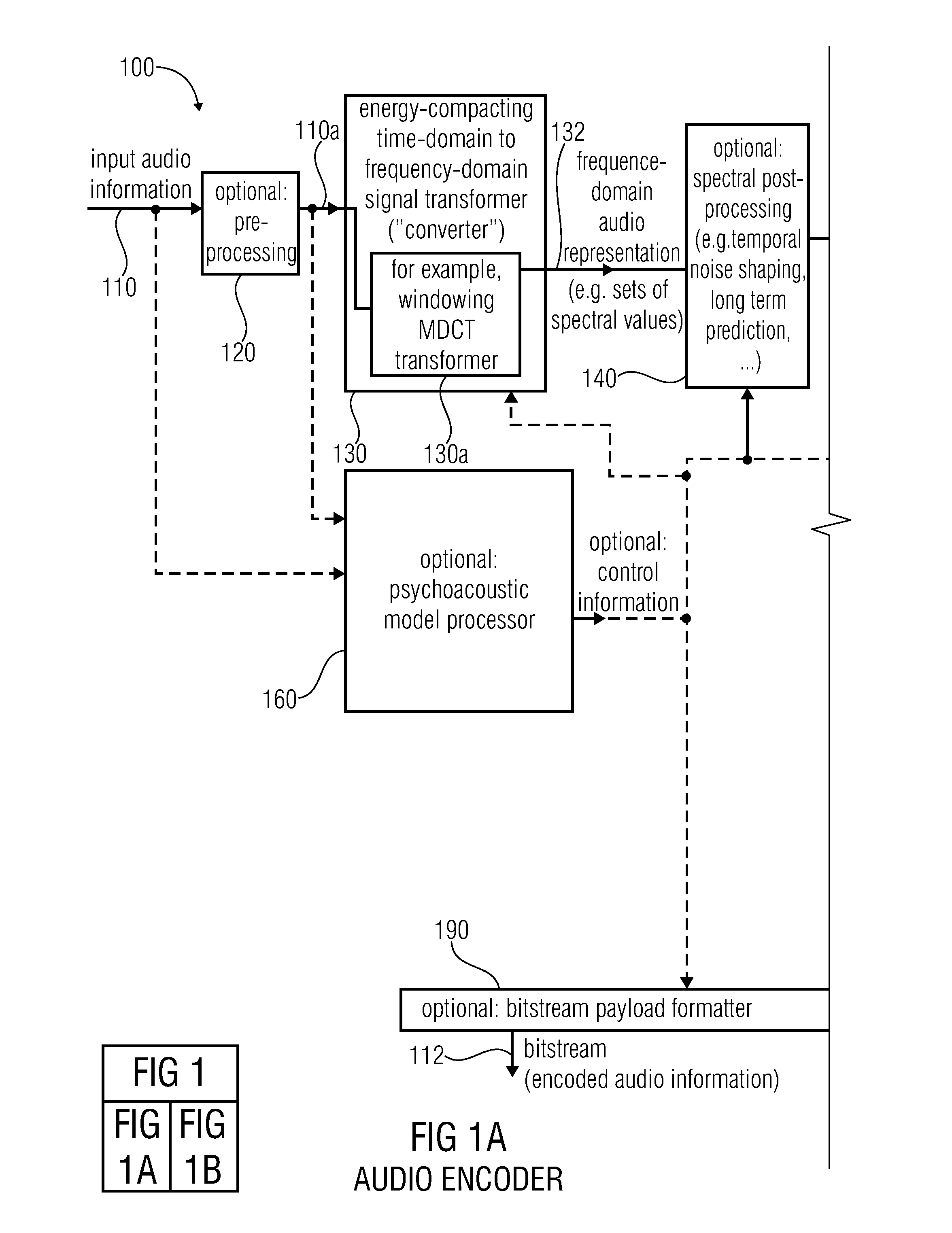

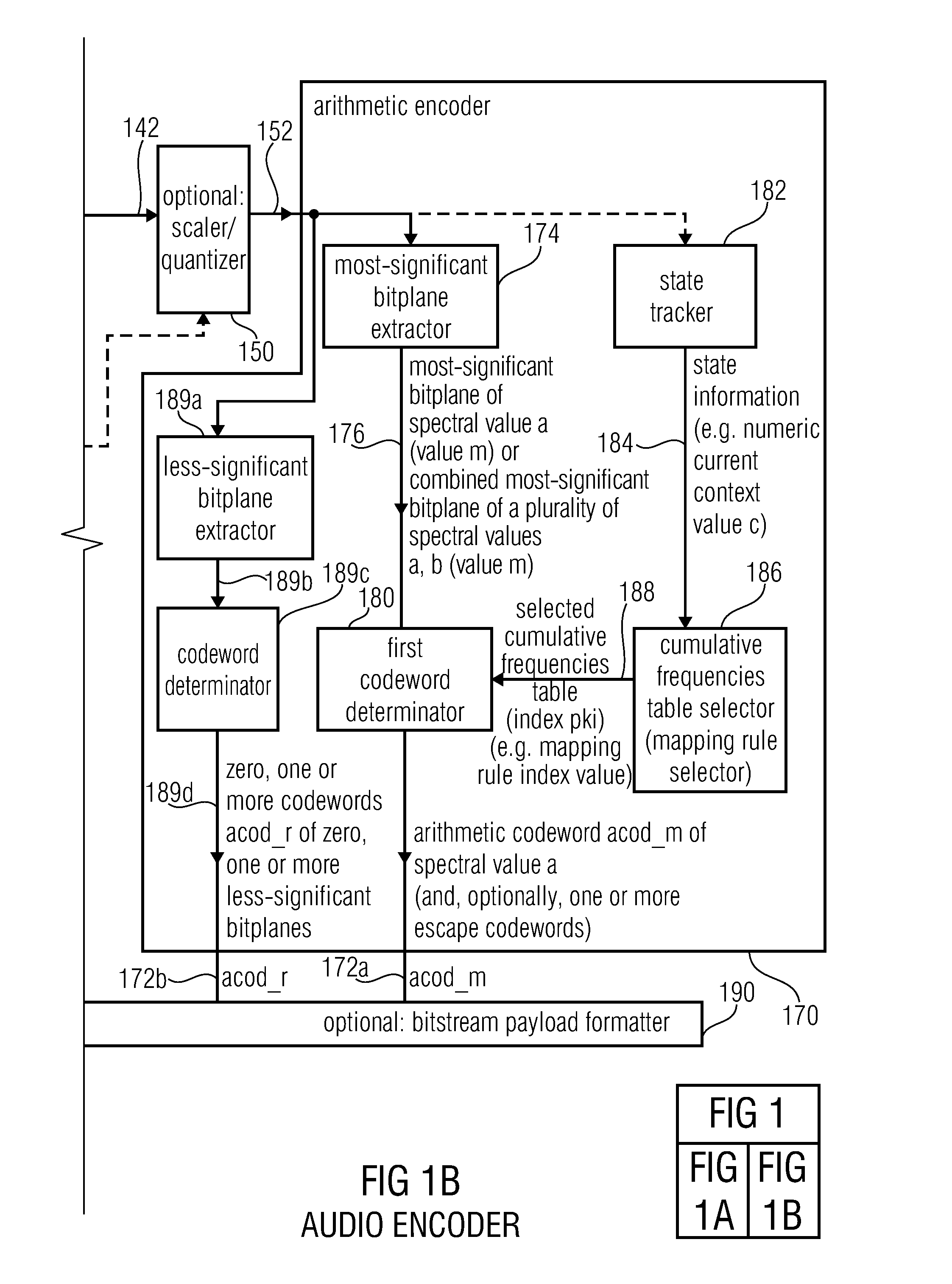

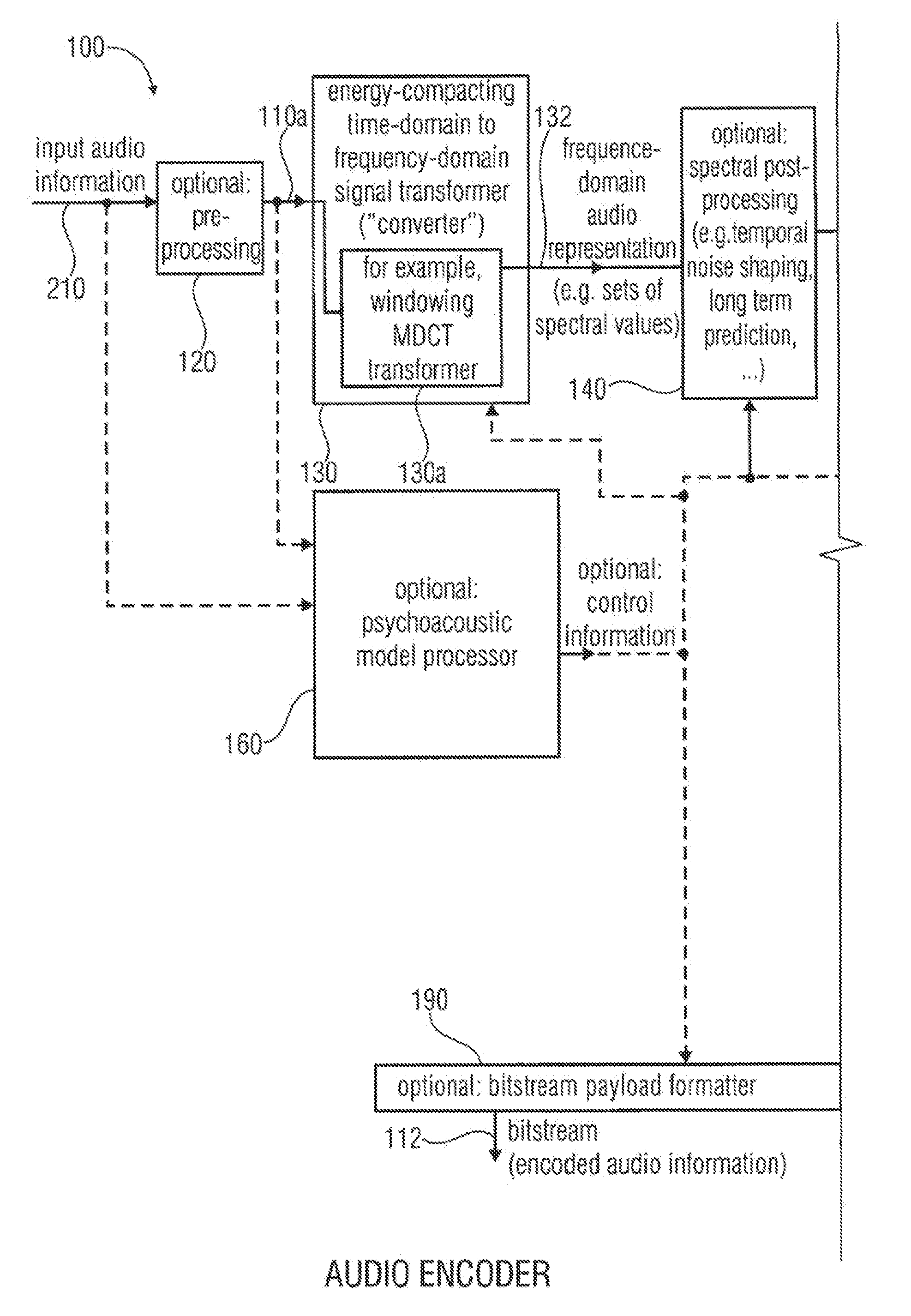

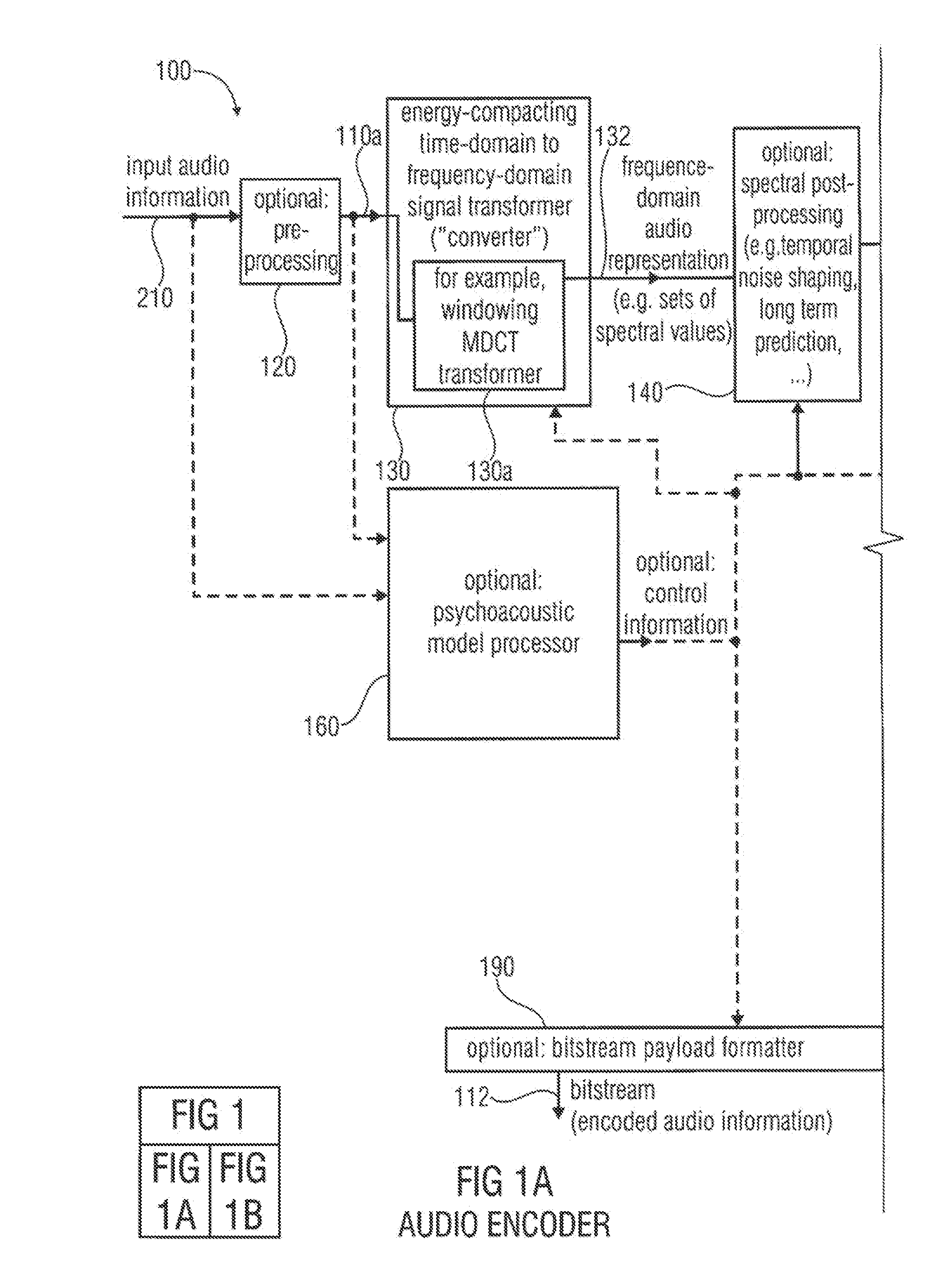

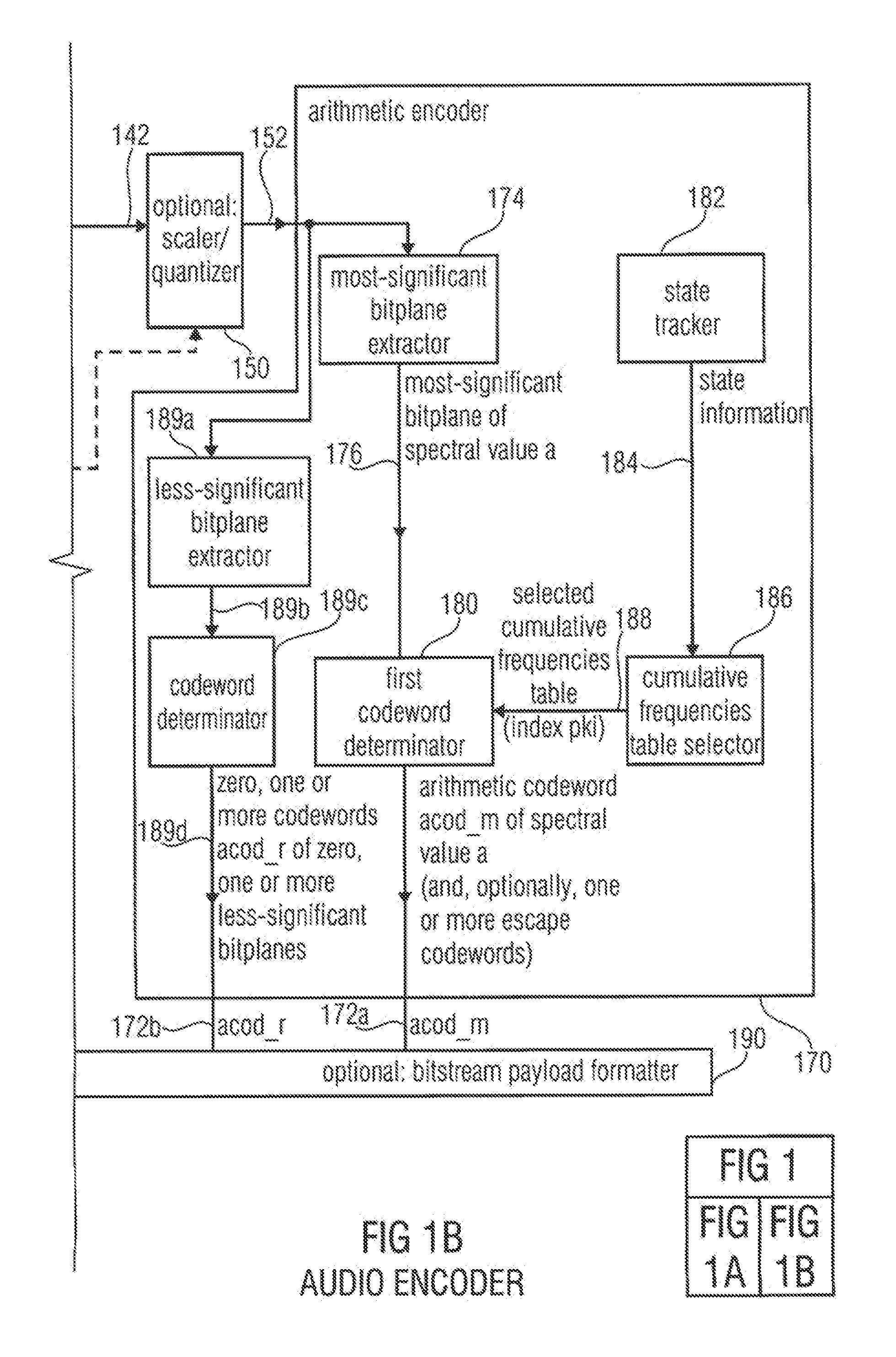

Audio encoder, audio decoder, method for encoding and audio information, method for decoding an audio information and computer program using an optimized hash table

An audio decoder includes an arithmetic decoder for providing decoded spectral values on the basis of an arithmetically encoded representation thereof, and a frequency-domain-to-time-domain converter for providing a time-domain audio representation. The arithmetic decoder selects a mapping rule describing a mapping of a code value onto a symbol code representing a spectral value, or a most significant bit-plane thereof, in a decoded form, in dependence on a context state described by a numeric current context value. The arithmetic decoder determines the numeric current context value in dependence on a plurality of previously decoded spectral values. It evaluates a hash table, entries of which define both significant state values amongst the numeric context values and boundaries of intervals of numeric context values, in order to select the mapping rule, wherein the hash table ari_hash_m is defined as given in FIGS. 22(1), 22(2), 22(3) and 22(4).

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

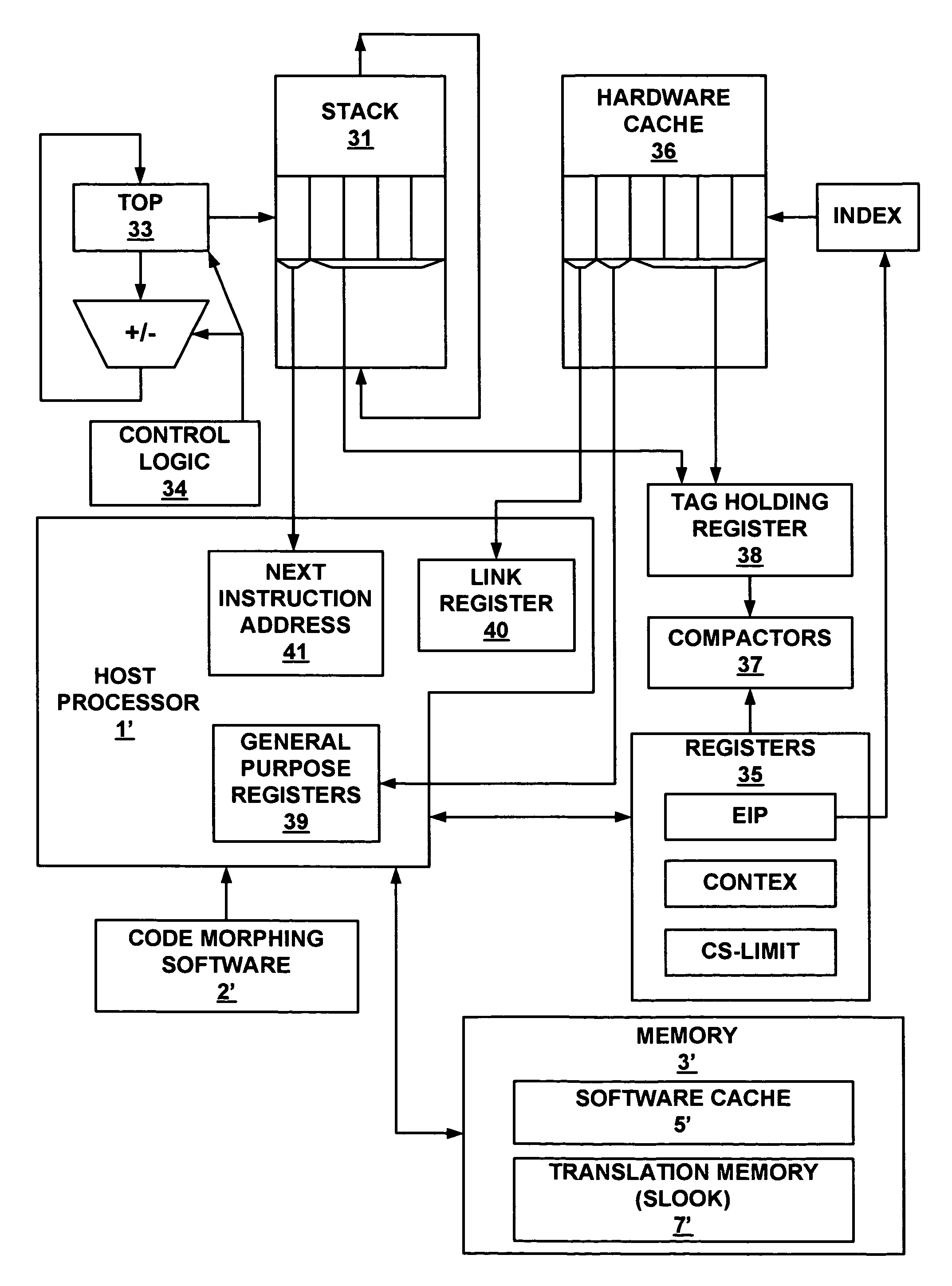

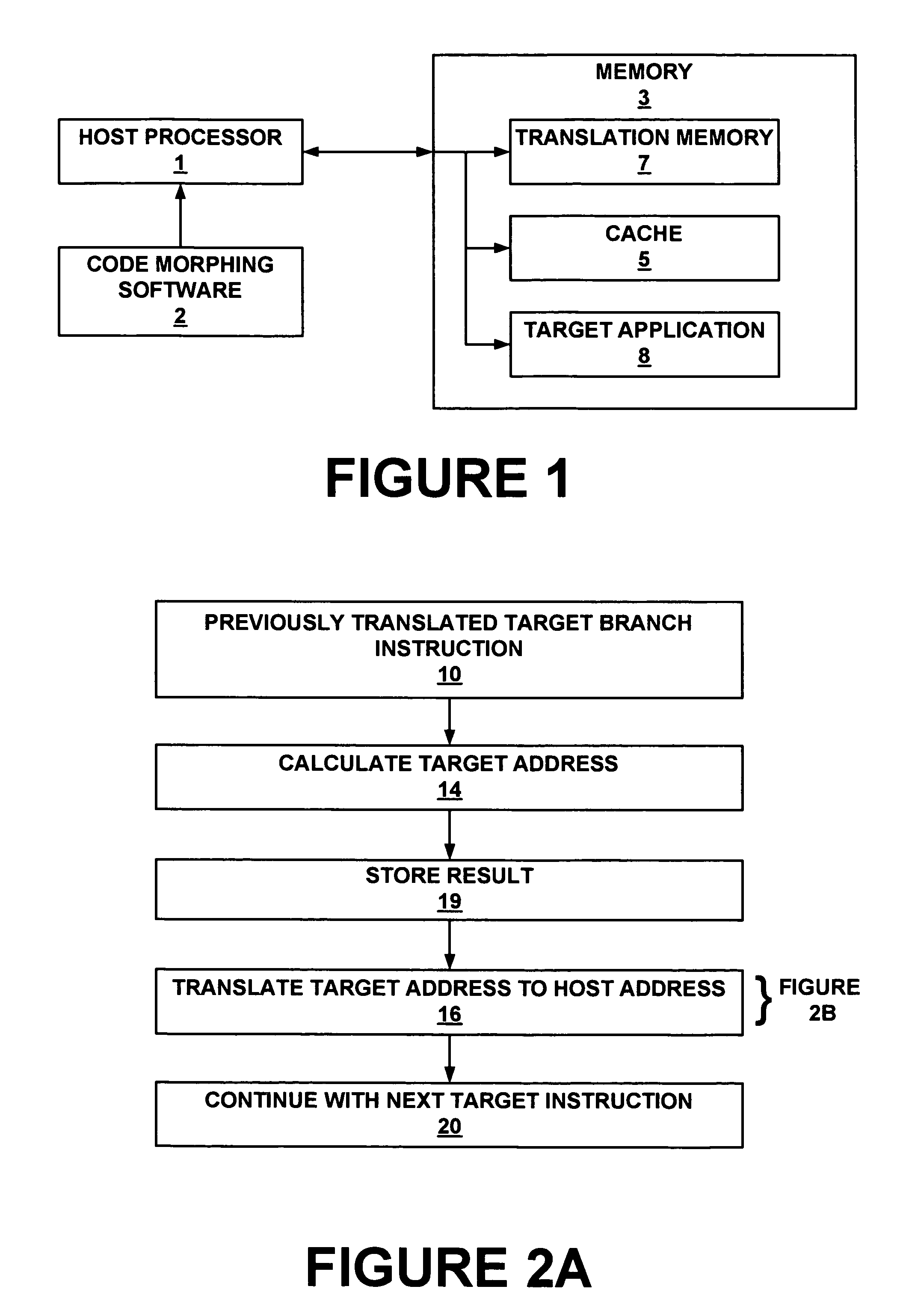

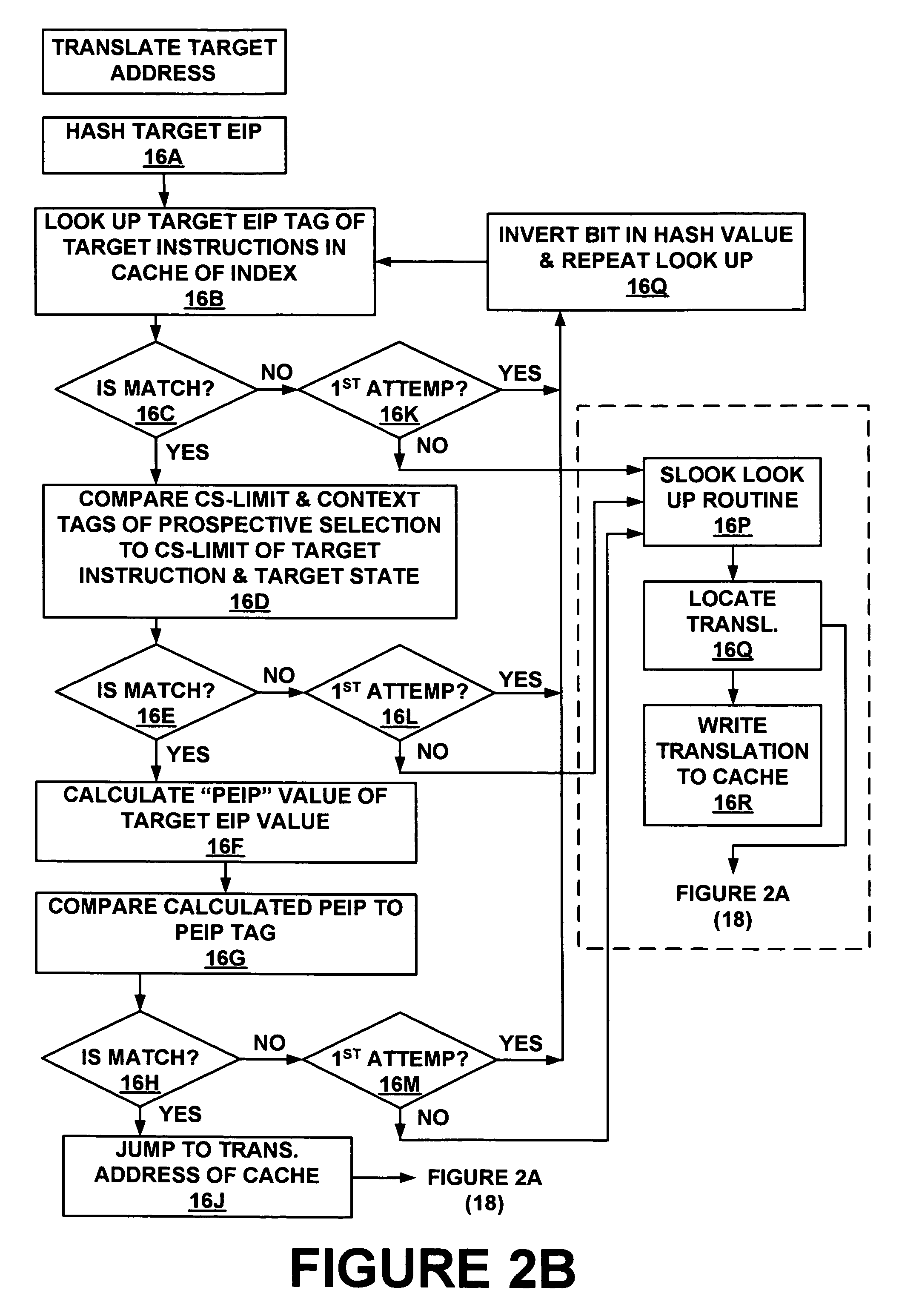

Method and system for storing and retrieving a translation of target program instruction from a host processor using fast look-up of indirect branch destination in a dynamic translation system

Inactive | US7644210B1 | Fast execution | Program control using stored programs | Digital computer details | Program instruction | Parallel computing

Dynamic translation of indirect branch instructions of a target application by a host processor is enhanced by including a cache to provide access to the addresses of the most frequently used translations of a host computer, minimizing the need to access the translation buffer. Entries in the cache have a host instruction address and tags that may include a logical address of the instruction of the target application, the physical address of that instruction, the code segment limit to the instruction, and the context value of the host processor associated with that instruction. The cache may be a software cache apportioned by software from the main processor memory or a hardware cache separate from main memory.

Owner:INTELLECTUAL VENTURES HOLDING 81 LLC

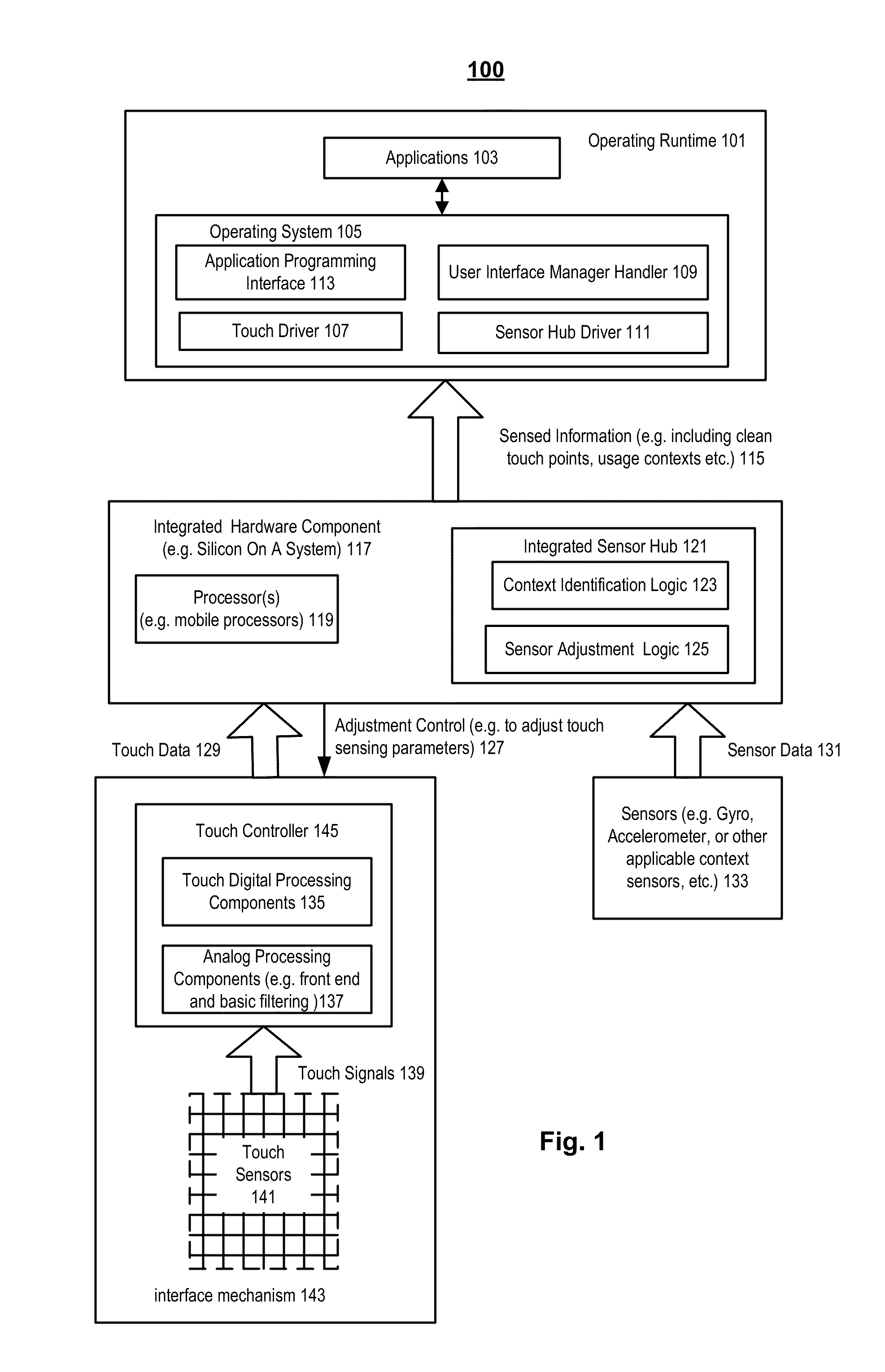

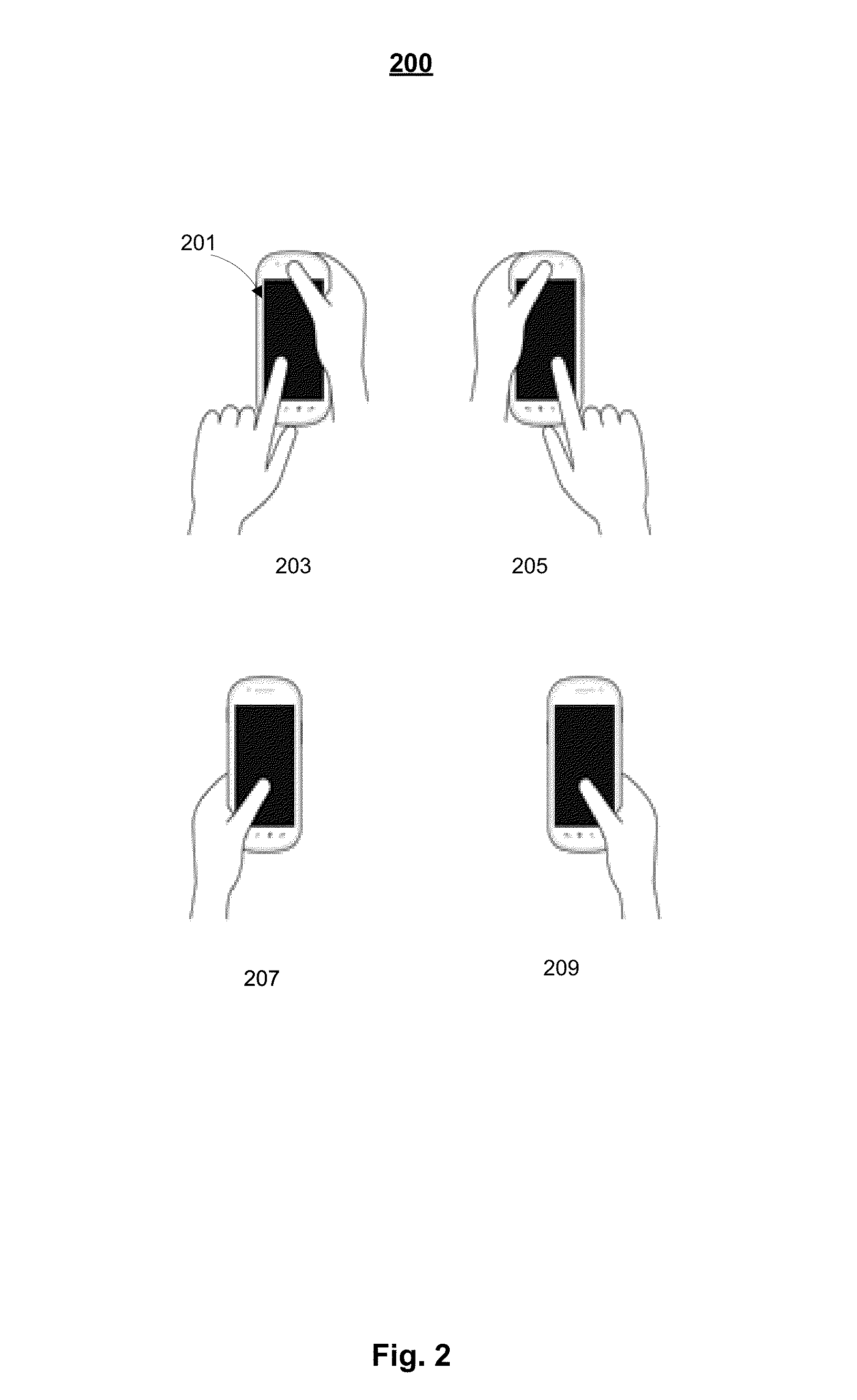

Adapting interface based on usage context

Methods and apparatuses that present a user interface via a touch panel of a device are described. The touch panel can have touch sensors to generate touch events to receive user inputs from a user using the device. Sensor data may be provided via one or more context sensors. The sensor data can be related to a usage context of the device by the user. Context values may be determined based on the sensor data of the context sensors to represent the usage context. The user interface may be updated when the context values indicate a change of the usage context to adapt the device for the usage context.

Owner:INTEL CORP

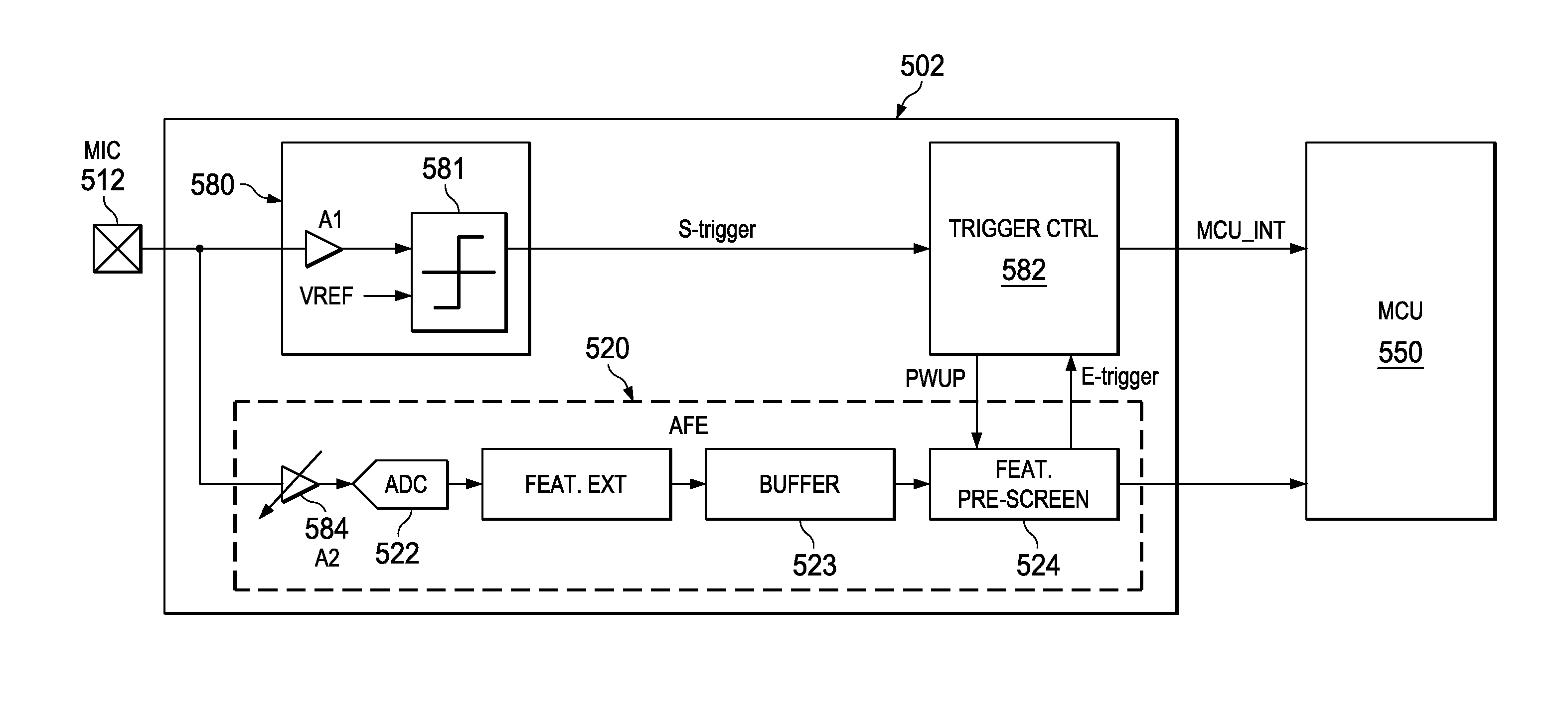

Context Aware Sound Signature Detection

A low power sound recognition sensor is configured to receive an analog signal that may contain a signature sound. Sparse sound parameter information is extracted from the analog signal. The extracted sound parameter information is sampled in a periodic manner and a context value is updated to indicate a current environmental condition. The sparse sound parameter information is compared to both the context value and a signature sound parameter database stored locally with the sound recognition sensor to identify sounds or speech contained in the analog signal, such that identification of sound or speech is adaptive to the current environmental condition.

Owner:TEXAS INSTR INC

Audio encoder, audio decoder, method for encoding and audio information, method for decoding an audio information and computer program using a hash table describing both significant state values and interval boundaries

Active | US8645145B2 | Comprehensive performance is small | Simple process | Speech analysis | Code conversion | Time domain | Frequency spectrum

An audio decoder includes an arithmetic decoder for providing a plurality of decoded spectral values on the basis of an arithmetically encoded representation of the spectral values, and a frequency-domain-to-time-domain converter for providing a time-domain audio representation using the decoded spectral values. The arithmetic decoder selects a mapping rule describing a mapping of a code value onto a symbol code in dependence on a context state described by a numeric current context value. The arithmetic decoder determines the numeric current context value in dependence on a plurality of previously decoded spectral values. The arithmetic decoder evaluates a hash table, entries of which define both significant state values and boundaries of intervals of numeric context values, in order to select the mapping rule. A mapping rule index value is individually associated to a numeric context value being a significant state value.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV
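
The idea of one table whose entries serve both as significant state values and as interval boundaries can be sketched in Python with a sorted list and a binary search. The table contents below are invented for illustration and are not the patent's actual tables; the names `STATES`, `STATE_RULE` and `INTERVAL_RULE` are hypothetical.

```python
from bisect import bisect_left

# Hypothetical sorted significant state values. Each one also acts as a
# boundary between two intervals of numeric context values.
STATES = [10, 50, 200]
# Mapping rule index individually associated with each significant state.
STATE_RULE = [3, 7, 1]
# Mapping rule index for each interval: below 10, between 10 and 50,
# between 50 and 200, above 200.
INTERVAL_RULE = [0, 2, 4, 5]


def mapping_rule(context_value):
    """Select a mapping rule index for a numeric current context value."""
    i = bisect_left(STATES, context_value)
    if i < len(STATES) and STATES[i] == context_value:
        return STATE_RULE[i]      # exact hit on a significant state
    return INTERVAL_RULE[i]       # otherwise the enclosing interval decides
```

A single search therefore answers both questions: whether the context value is a significant state, and if not, which interval it falls into.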

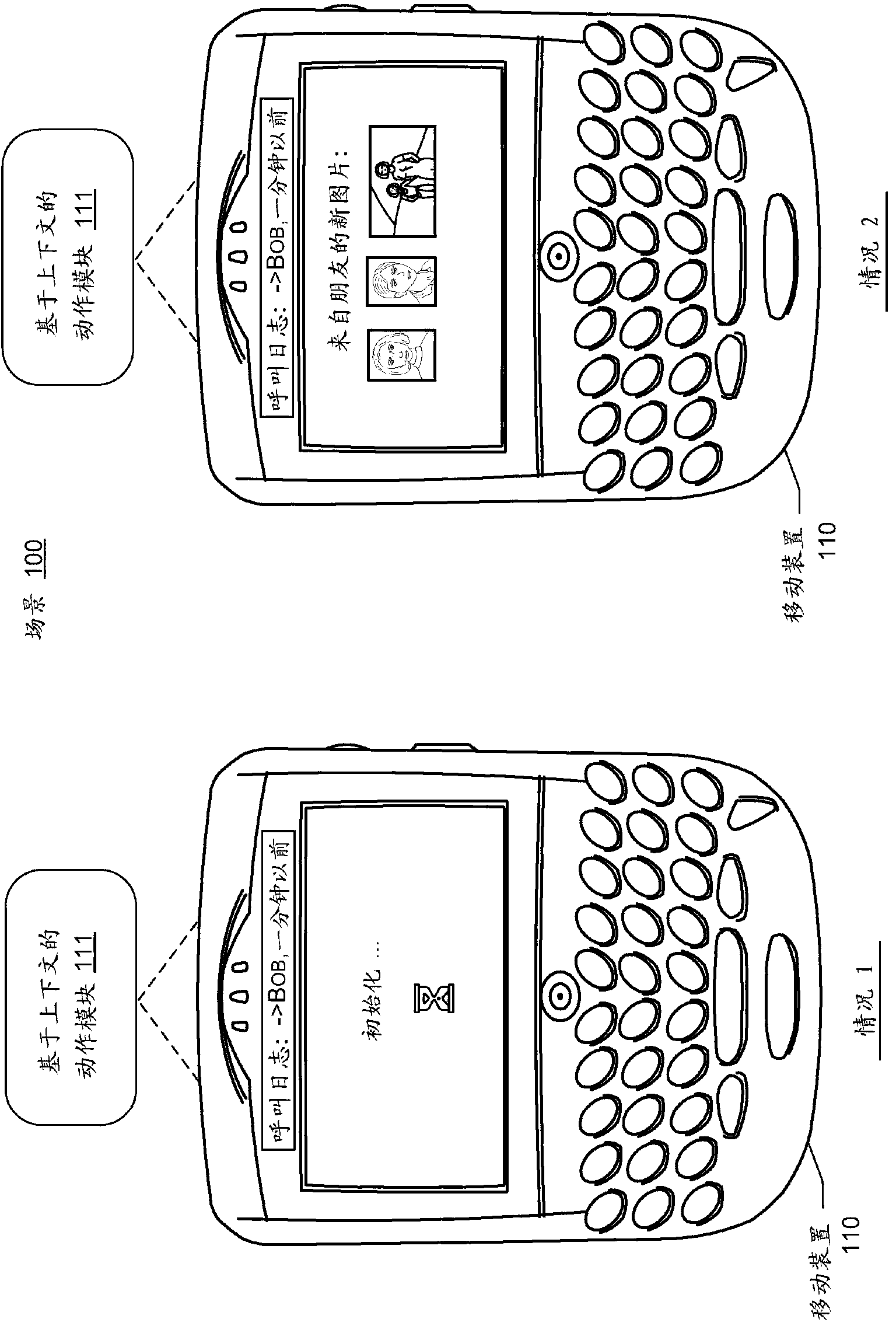

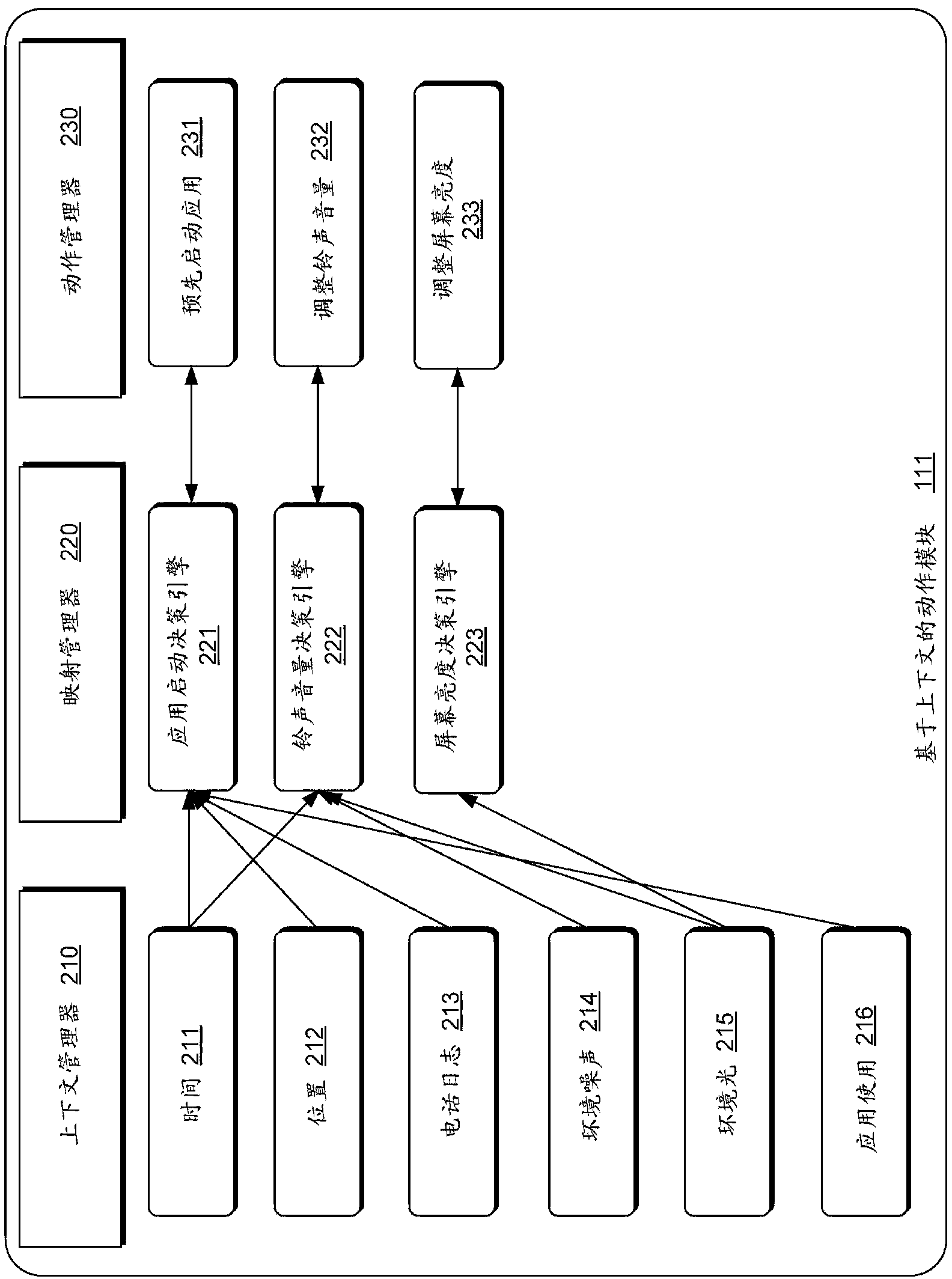

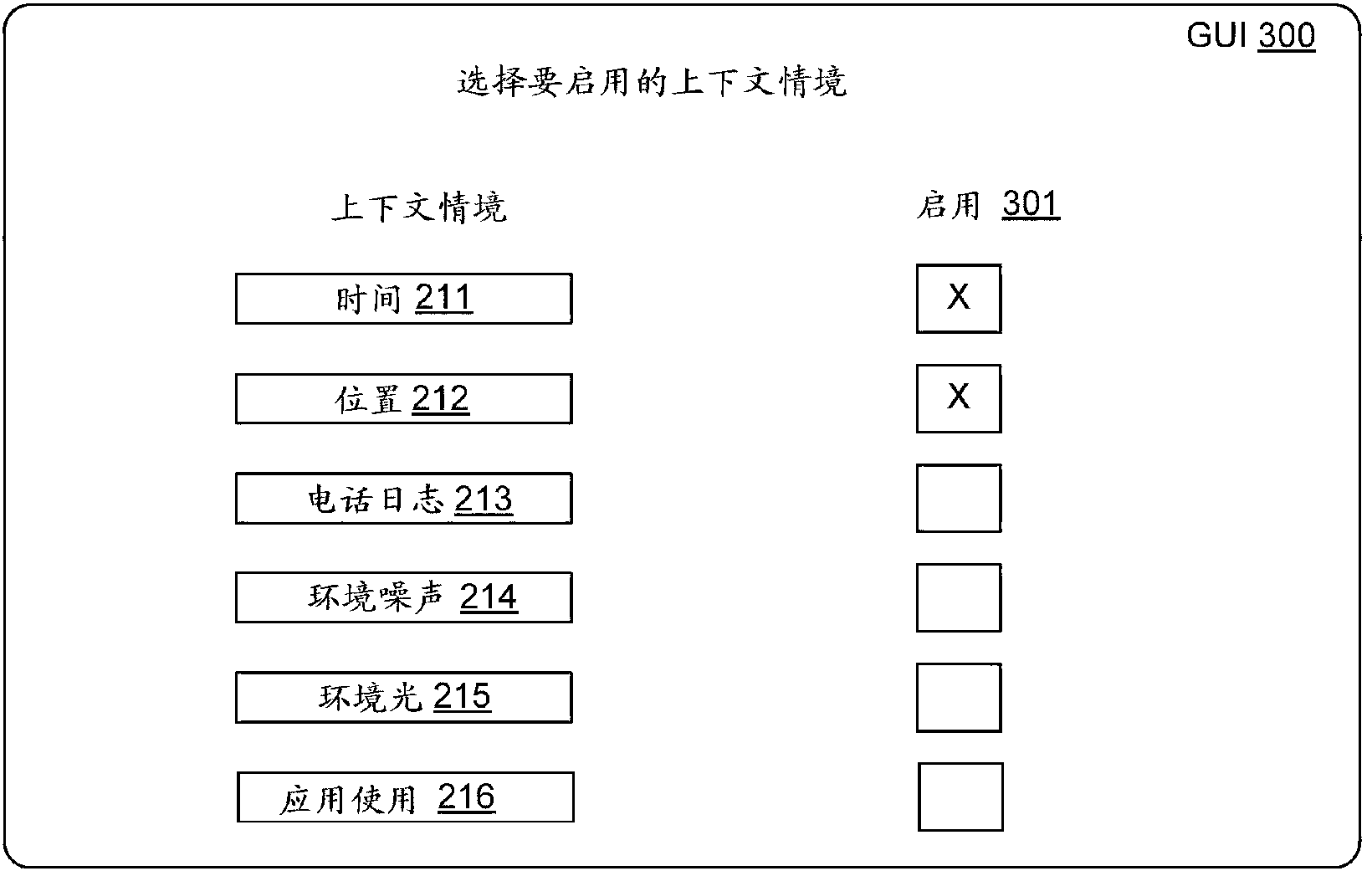

Method and system for forecasting motion of device based on context

Active | CN103077224A | Natural language data processing | Program loading/initiating | Decision taking | Context based

The present invention discloses a method and a system for predicting device actions based on context. The described embodiments relate to performing device actions automatically. One embodiment obtains a context value of a situational context. A determination engine then determines, based on the context value, whether to perform an action on a computing device. If the determination engine determines that the action should be performed, the embodiment performs the action on the computing device. Feedback related to the action can be used to update the determination engine. In a specific embodiment, the action launches an application before the user requests its execution. Pre-launching the application can reduce the application latency that would otherwise result from waiting for the user's request before launching it.

Owner:MICROSOFT TECH LICENSING LLC

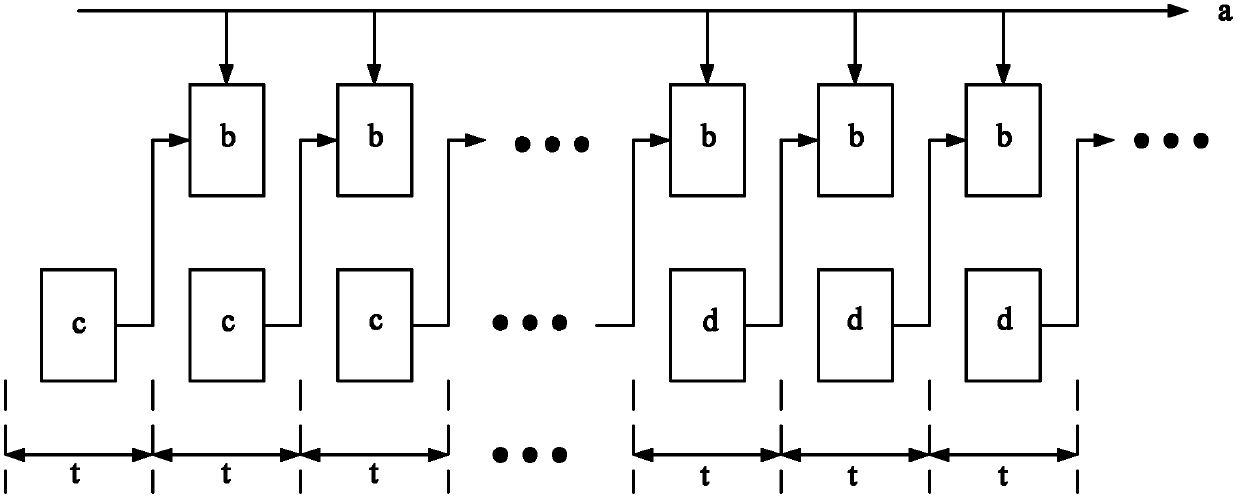

Parallel non-zero coefficient context modeling method for binary arithmetic coding

Inactive | CN102186087A | Resolve dependencies | Television systems | Digital video signal modification | Multiple context | Nonzero coefficients

The invention discloses a parallel non-zero coefficient context modeling method for binary arithmetic coding, and relates to a context modeling technology for video coding. The method is proposed to solve the problem that the data throughput rate of a coding system is reduced because the conventional binary arithmetic coding generates a data dependency relationship on the context in the context modeling process of non-zero coefficients. The method comprises the following steps of: 1, defining the number of coefficients and non-zero coefficients in a transform quantification block; 2, performing binarization on the non-zero coefficients to obtain a bin sequence; 3, performing context modeling on a first context according to the position information of the non-zero coefficients and the number of the non-zero coefficients in the transform quantification block; 4, calculating the probability distribution of the non-zero coefficients, the absolute value of which is abs (Li) in the first context value; 5, subtracting 1 from the absolute value of Li, and performing binarization; and 6, performing context modeling by using the equal probability distribution. By using the method, the context modeling processes of different non-zero coefficients can be simultaneously performed, and parallel execution of multiple context modeling processes in the coding process is realized.

Owner:HARBIN INST OF TECH

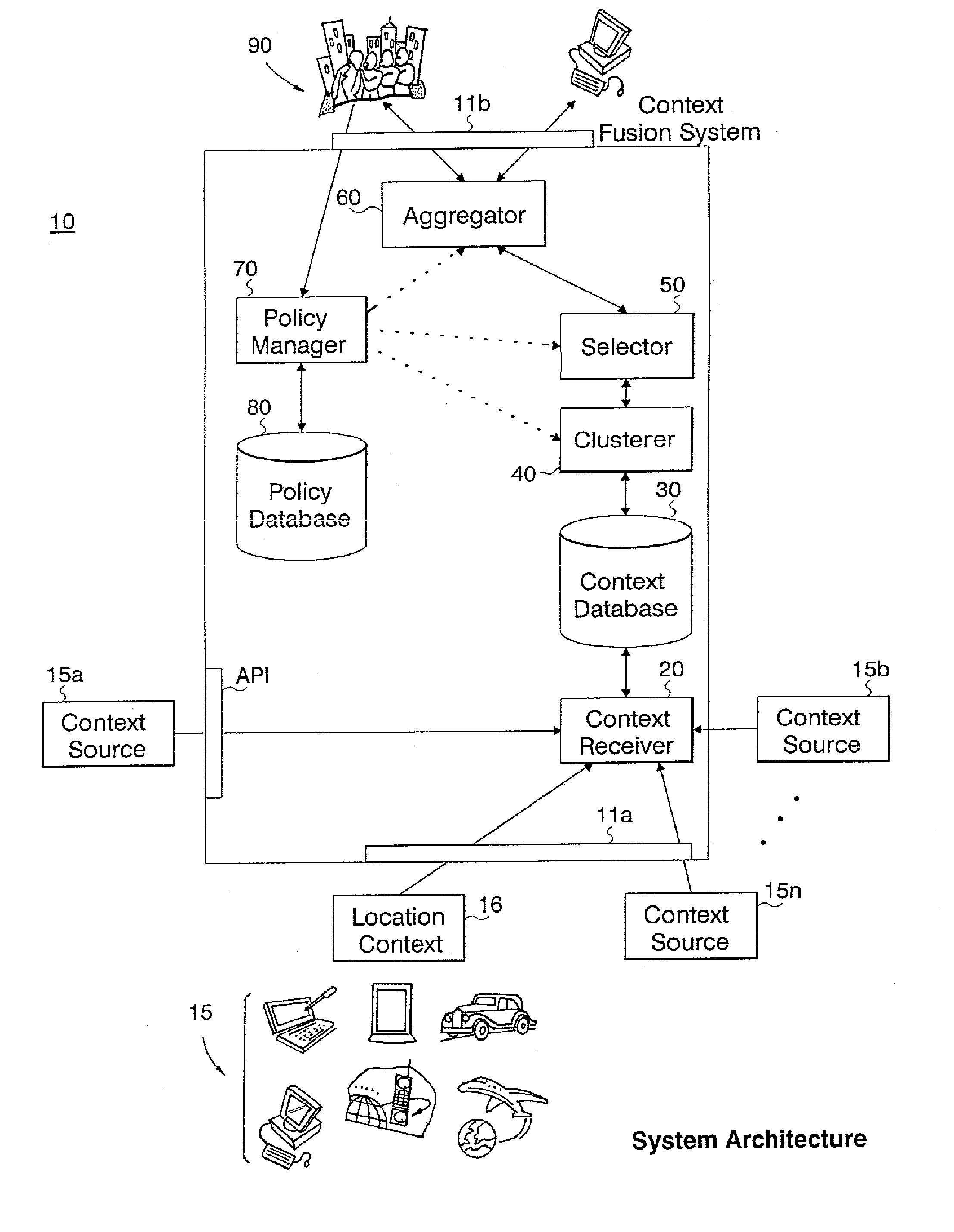

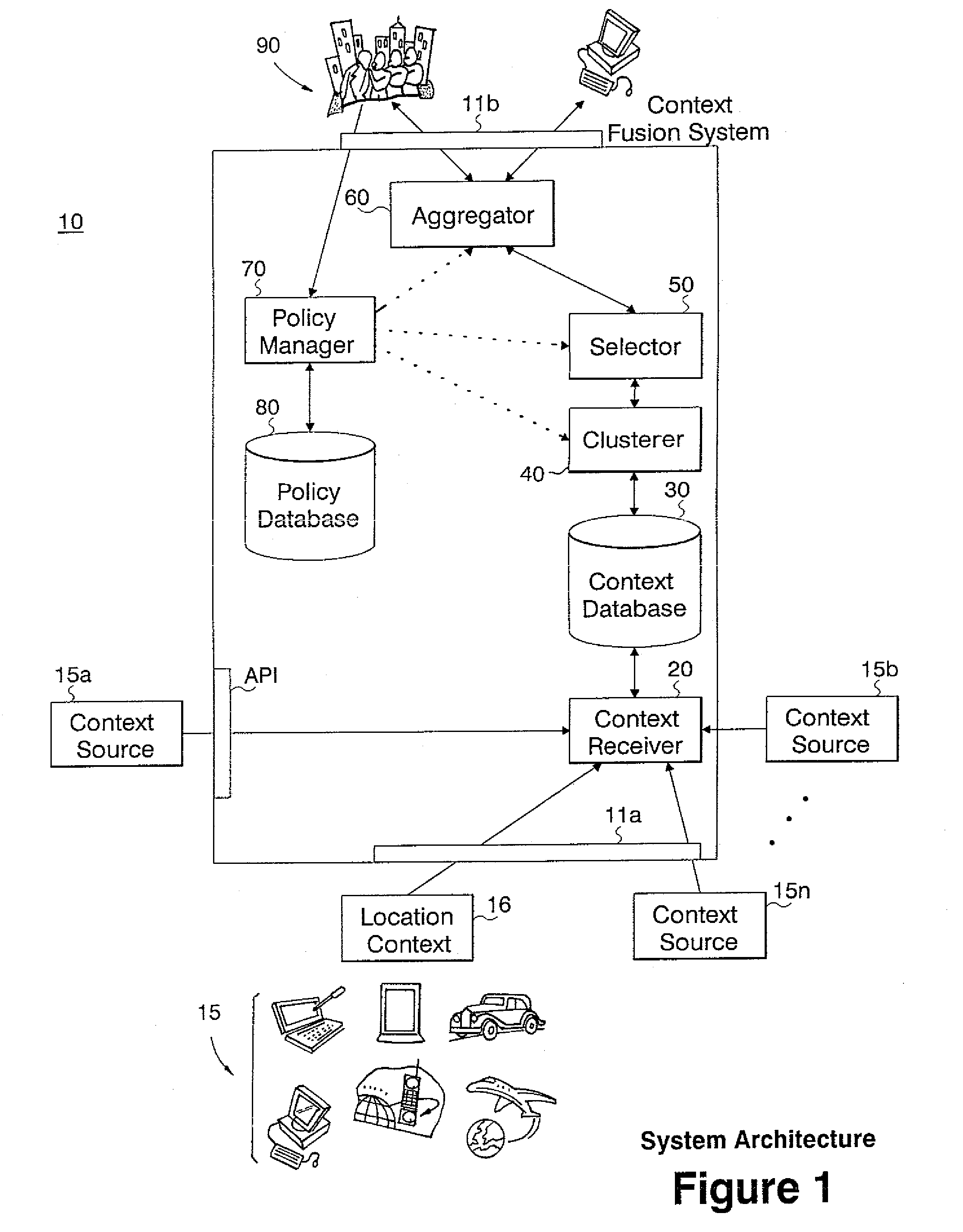

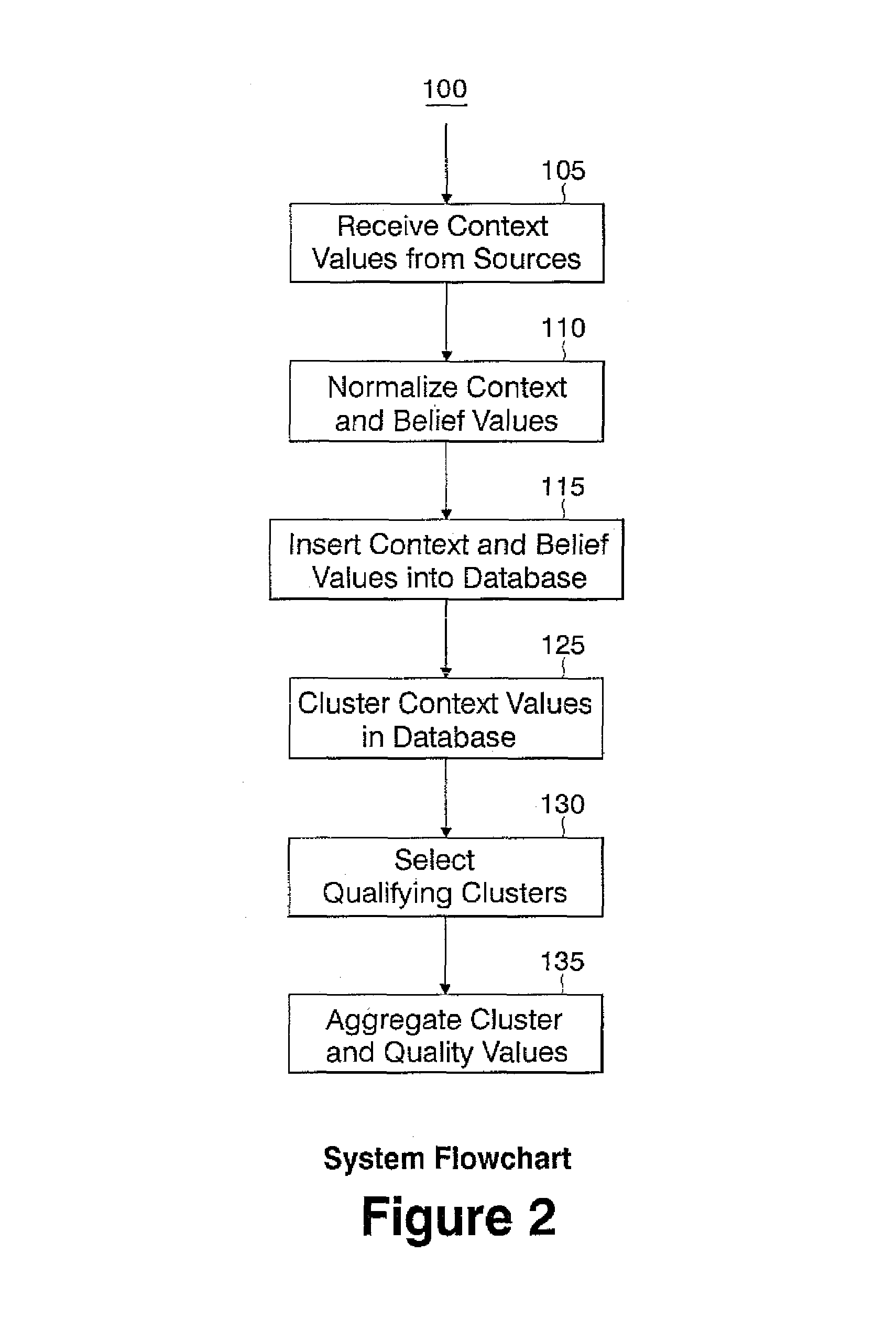

Method and apparatus for fusing context data

Inactive | US7392247B2 | Reduce the possibility | Improve satisfaction | Data processing applications | Digital data processing details | Algorithm | Ambiguity

A context fusing system and methodology includes the steps of receiving context data from a plurality of information sources; computing a quality measure for each input context value; organizing context values into one or more clusters, and assigning a single context value and a single quality measure to each cluster; and, selecting one or more clusters according to one or more criteria and aggregating the context values and quality measures of selected clusters to generate a single context value and single quality measure. The single context value and single quality measure are usable by a context aware application to avoid conflict and ambiguity among different information sources providing the context data.

Owner:GOOGLE LLC
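
A minimal Python sketch of the cluster-then-select flow in this abstract follows. It assumes numeric context values (for example temperature readings) with a strictly positive quality measure already attached to each. The gap-based clustering, the quality-weighted mean, the "share of total quality" cluster score and the choice to keep only the top cluster are all simplifications chosen here, not details taken from the patent.

```python
def fuse(readings, gap=1.0):
    """Fuse (value, quality) pairs into one (value, quality) pair.

    Assumes a non-empty list, numeric values and strictly positive qualities.
    """
    total_quality = sum(q for _, q in readings)

    # Cluster the sorted values: a new cluster starts when the distance
    # to the previous value exceeds `gap`.
    clusters = []
    for value, quality in sorted(readings):
        if clusters and value - clusters[-1][-1][0] <= gap:
            clusters[-1].append((value, quality))
        else:
            clusters.append([(value, quality)])

    # Give each cluster a single value (quality-weighted mean) and a
    # single quality (its share of the total quality).
    summaries = []
    for members in clusters:
        cluster_quality = sum(q for _, q in members)
        value = sum(v * q for v, q in members) / cluster_quality
        summaries.append((value, cluster_quality / total_quality))

    # Selection criterion used here: keep only the best-supported cluster.
    return max(summaries, key=lambda s: s[1])
```

For the readings `[(21.0, 0.9), (21.4, 0.8), (35.0, 0.3)]`, the two nearby sources form one cluster that outweighs the outlier, so the fused value is close to 21.2 rather than an average pulled towards 35.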

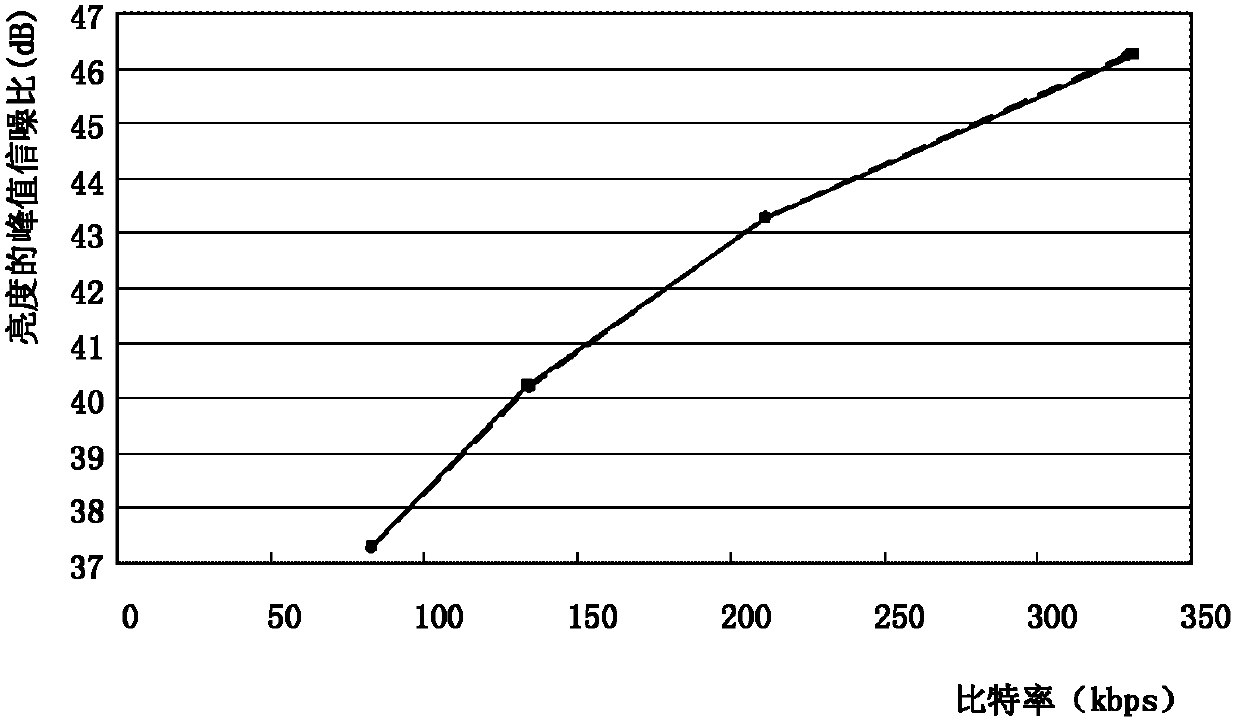

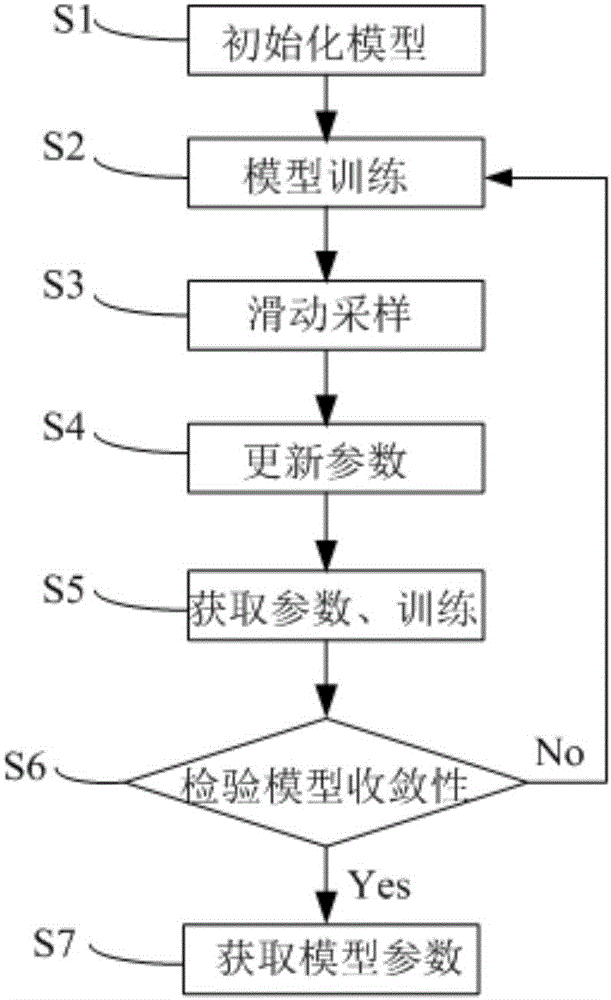

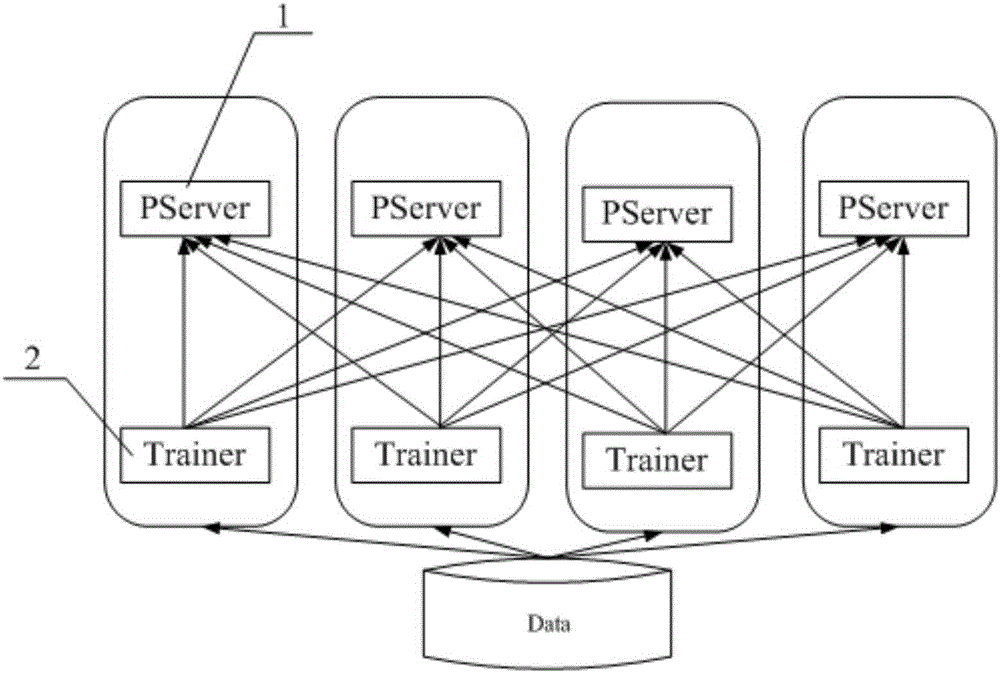

Sliding window sampling-based distributed machine learning training method and system thereof

Inactive | CN106779093A | Improve perception | Alleviate the problem of poor training convergence | Machine learning | Slide window | Model parameters

The invention provides a sliding window sampling-based distributed machine learning training method and system thereof. The method comprises the steps of initializing parameters of a machine learning model; obtaining a data fragment of all data and independently carrying out model training; collecting multiple rounds of historical gradient expiration degree samples, sampling the samples through sliding, calculating a gradient expiration degree context value, adjusting the learning rate and then initiating a gradient update request; asynchronously collecting the multiple gradient expiration degree samples, updating global model parameters by using the adjusted learning rates and pushing updated parameters; asynchronously obtaining pushed global parameters for updating, and further carrying out next training; checking the model convergence, if the model is not convergent, carrying out model training cycle; and if the model is convergent, obtaining model parameters. The learning rate of a learning device is controlled by using the expiration gradient, the stability and the convergence effect of distributed training are improved, the training fluctuation caused by the distributed system is reduced and the robustness of distributed training is improved.

Owner:SHANGHAI ADVANCED RES INST CHINESE ACADEMY OF SCI
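
The sliding-window step can be illustrated with a short Python sketch. The abstract does not give the formula for the gradient expiration (staleness) context value or for the learning-rate adjustment, so the window mean and the `base_lr / (1 + context)` scaling below are assumptions, and the class and method names are hypothetical.

```python
from collections import deque


class StalenessWindow:
    """Keep the most recent staleness samples and derive a learning rate."""

    def __init__(self, base_lr, size=4):
        self.base_lr = base_lr
        self.samples = deque(maxlen=size)  # old samples slide out automatically

    def adjusted_lr(self, staleness):
        """Record one staleness sample and return the adjusted learning rate."""
        self.samples.append(staleness)
        # Assumed context value: mean staleness over the current window.
        context_value = sum(self.samples) / len(self.samples)
        # Assumed rule: the staler the recent gradients, the smaller the step.
        return self.base_lr / (1.0 + context_value)
```

A worker would call `adjusted_lr` with the staleness of each gradient before sending its update request, so that a burst of stale gradients lowers the step size only for as long as it stays inside the window.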

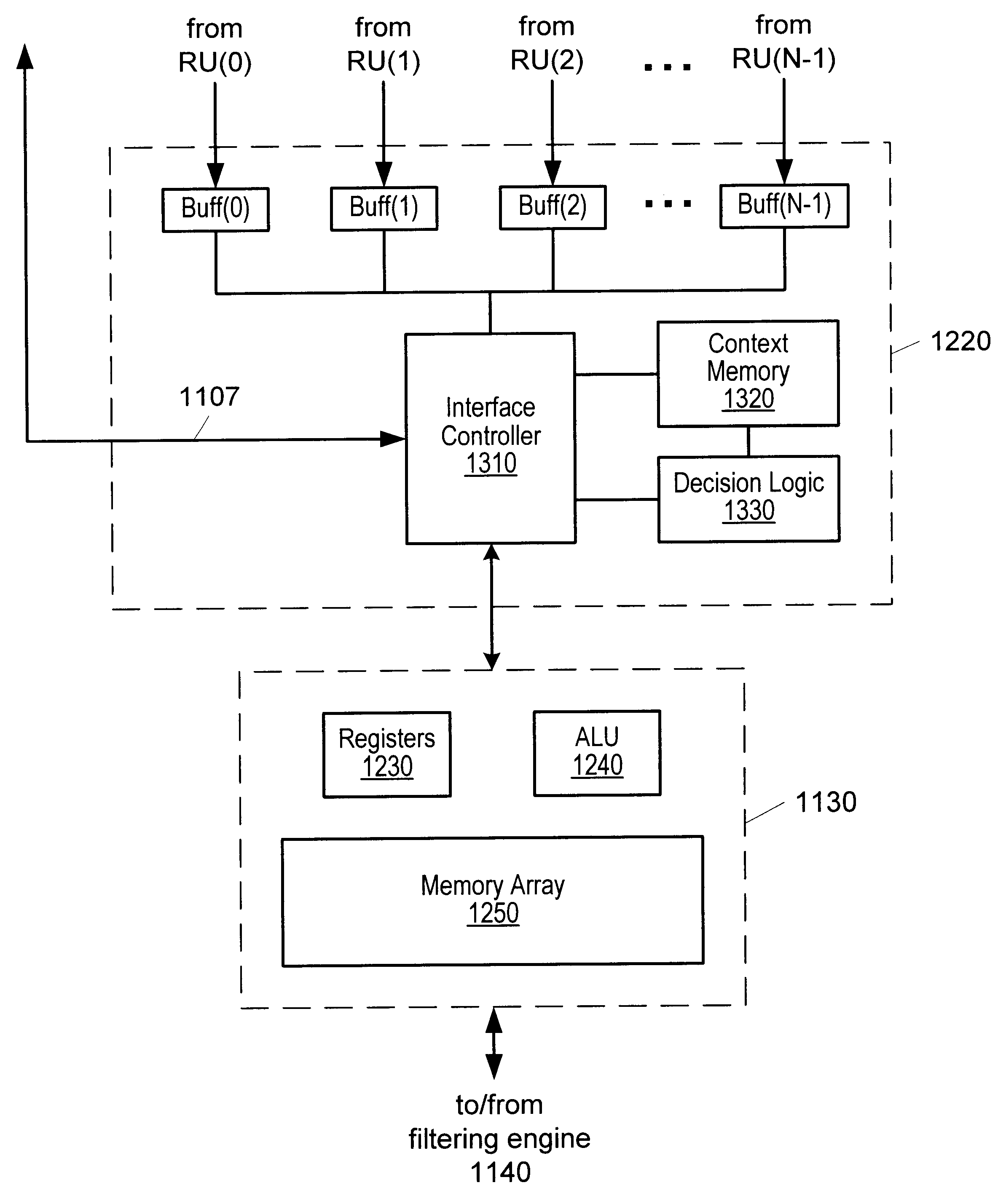

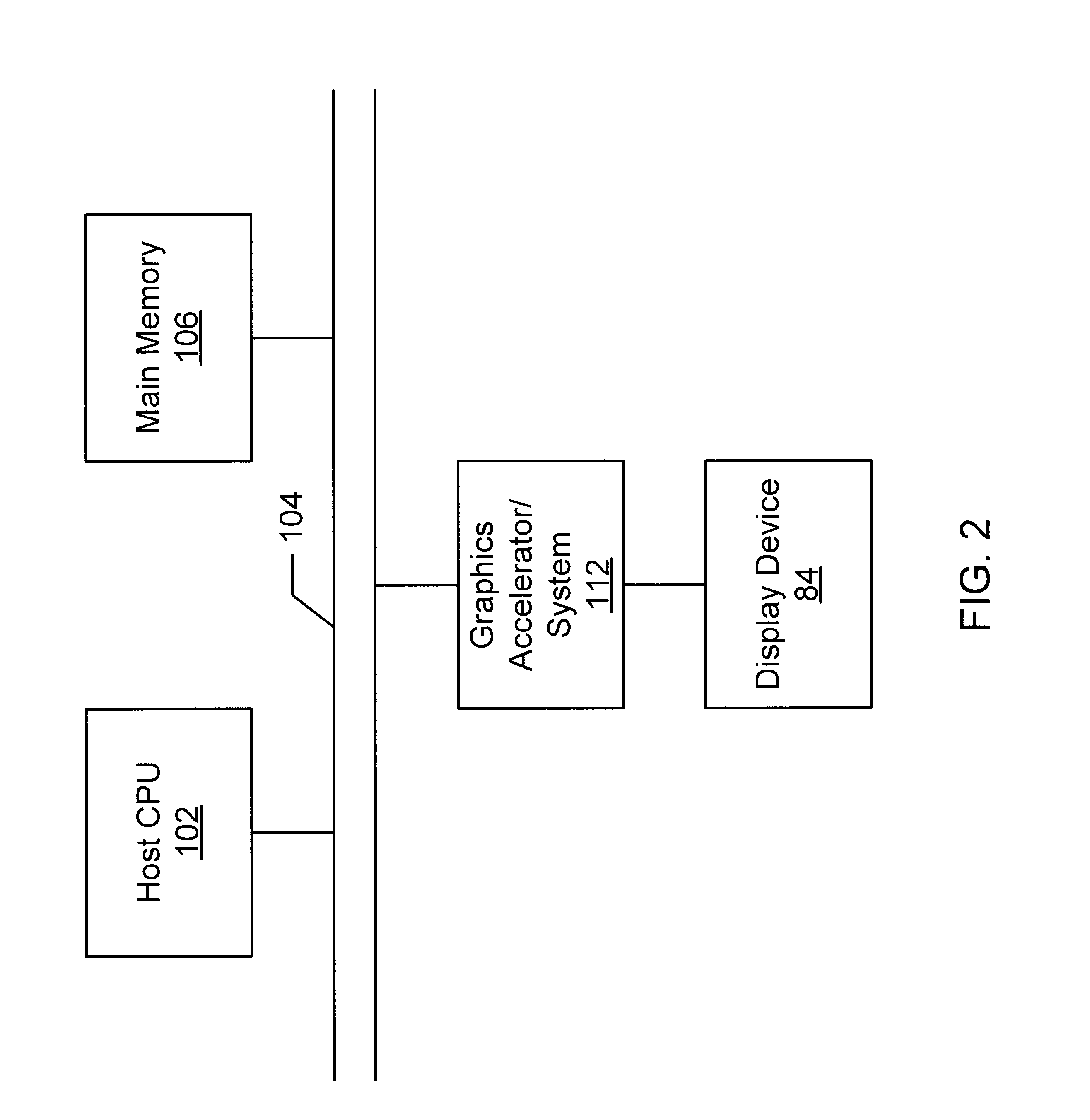

Graphics system configured to switch between multiple sample buffer contexts

Inactive | US6753870B2 | Cathode-ray tube indicators | Electric digital data processing | Graphics | Arithmetic logic unit

A graphics system comprising a programmable sample buffer and a sample buffer interface. The sample buffer interface is configured to (a) buffer N streams of samples in N corresponding input buffers, wherein N is greater than or equal to two, (b) store N sets of context values corresponding to the N input buffers respectively, (c) terminate transfer of samples from a first of the input buffers to the programmable sample buffer, (d) selectively update a subset of state registers in the programmable sample buffer with context values corresponding to a next input buffer of the input buffers, (e) initiate transfer of samples from the next input buffer to the programmable sample buffer. The context values stored in the state registers of the programmable sample buffer determine the operation of an arithmetic logic unit internal to the programmable sample buffer on samples data.

Owner:ORACLE INT CORP

Audio encoder, audio decoder, method for encoding and audio information, method for decoding an audio information and computer program using a hash table describing both significant state values and interval boundaries

Active | US20130013301A1 | Reduce computational complexity | Reduce complexity | Speech analysis | Time domain | Frequency spectrum

An audio decoder includes an arithmetic decoder for providing a plurality of decoded spectral values on the basis of an arithmetically encoded representation of the spectral values, and a frequency-domain-to-time-domain converter for providing a time-domain audio representation using the decoded spectral values. The arithmetic decoder selects a mapping rule describing a mapping of a code value onto a symbol code in dependence on a context state described by a numeric current context value. The arithmetic decoder determines the numeric current context value in dependence on a plurality of previously decoded spectral values. The arithmetic decoder evaluates a hash table, entries of which define both significant state values and boundaries of intervals of numeric context values, in order to select the mapping rule. A mapping rule index value is individually associated to a numeric context value being a significant state value.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

Method of labeling alarms to facilitate correlating alarms in a telecommunications network

Inactive | US20050166099A1 | Save bandwidth | Large and expensive to transmit | Digital computer details | Non-redundant fault processing | Alarm message | Telecommunications network

A method for generating compressed correlation key values for use in correlating alarms generated by network elements in a telecommunications network is disclosed. An alarm message generated by a network element is received. A context value in the alarm message is identified. A table that associates context values to correlation key value formulas is maintained. A formula specifying how to generate the correlation key value is retrieved from the table. Each formula may specify, for an associated context value, one or more ordinal positions of fields in the alarm message, a concatenation of which yields the correlation key value. The correlation key value is created based on the formula. A unique ordinal number is generated to represent the correlation key value, which acts as a context key. The alarm message and correlation key value are sent to an external system for use in correlating alarms.

Owner:CISCO TECH INC

Audio encoder, audio decoder, method for encoding an audio information, method for decoding an audio information and computer program using a region-dependent arithmetic coding mapping rule

Active | US20120278086A1 | Quality improvement | Improvement efforts | Speech analysis | Time domain | Frequency spectrum

An audio decoder for providing a decoded audio information includes an arithmetic decoder for providing a plurality of decoded spectral values on the basis of an arithmetically-encoded representation of the spectral values and a frequency-domain-to-time-domain converter for providing a time-domain audio representation using decoded spectral values. The arithmetic decoder is configured to select a mapping rule describing a mapping of a code value onto a symbol code in dependence on a context state. The arithmetic decoder is configured to determine a numeric current context value describing the current context state in dependence on a plurality of previously decoded spectral values and also in dependence on whether a spectral value to be decoded is in a first predetermined frequency region or in a second predetermined frequency region. An audio encoder provides an encoded audio information on the basis of an input audio information.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

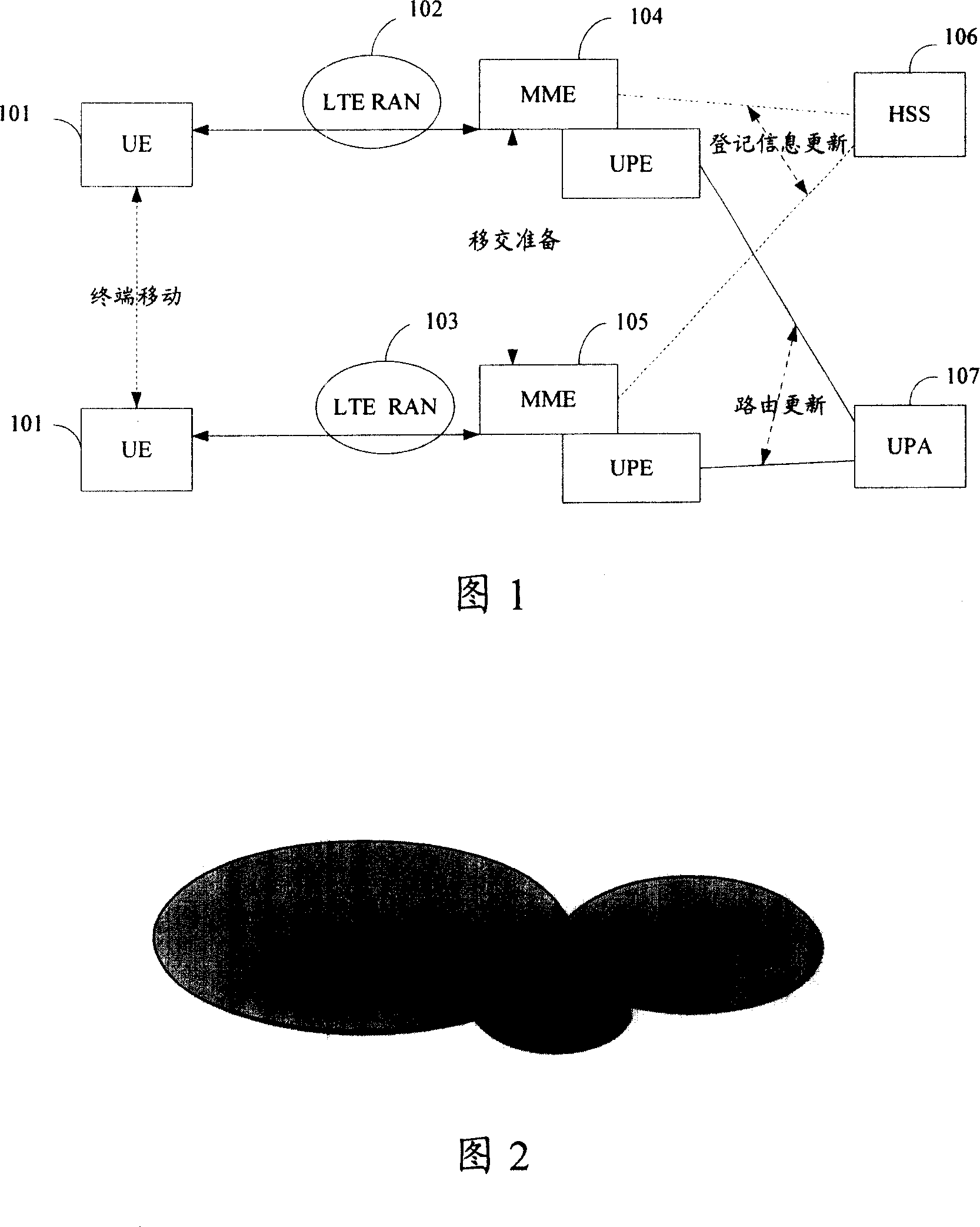

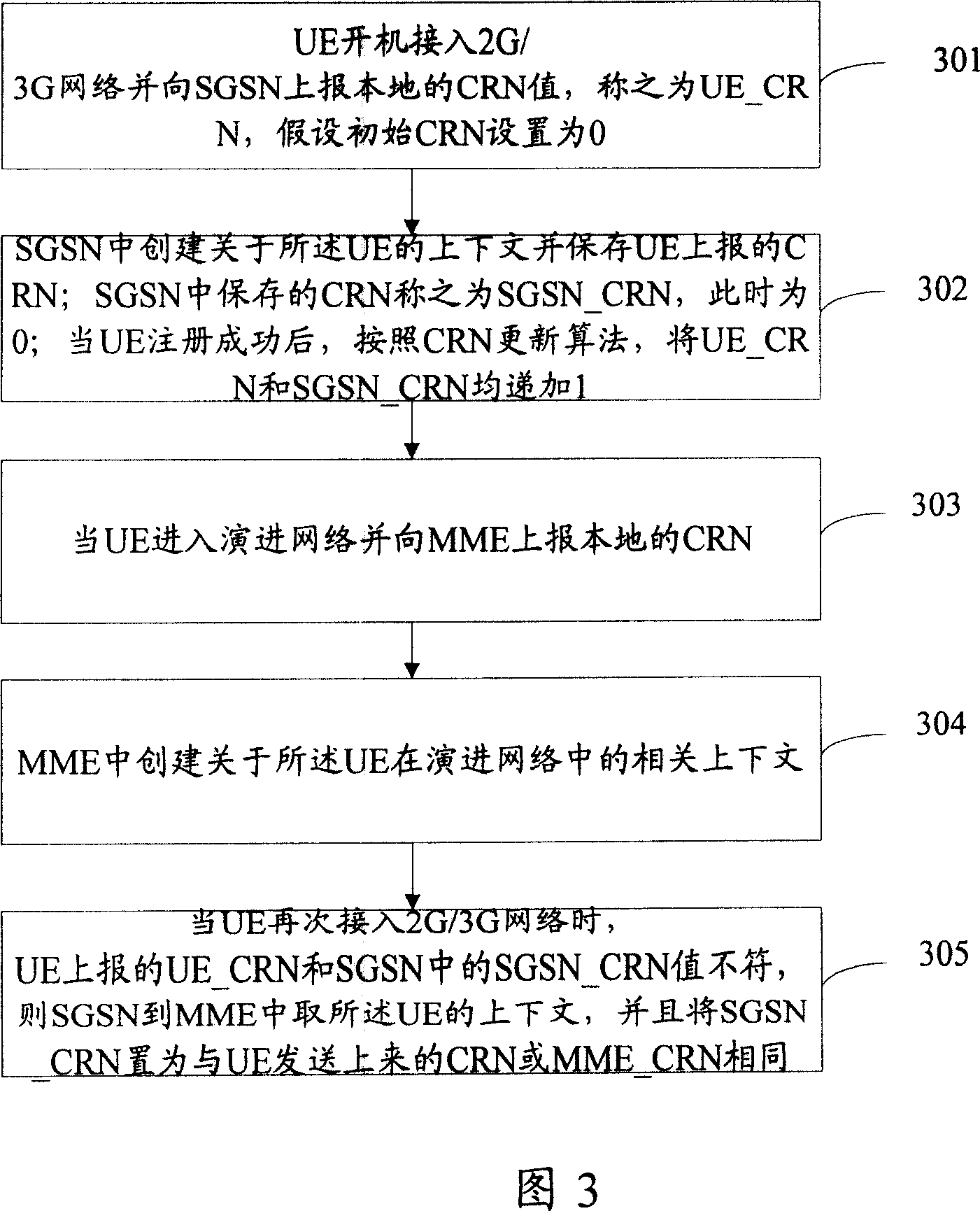

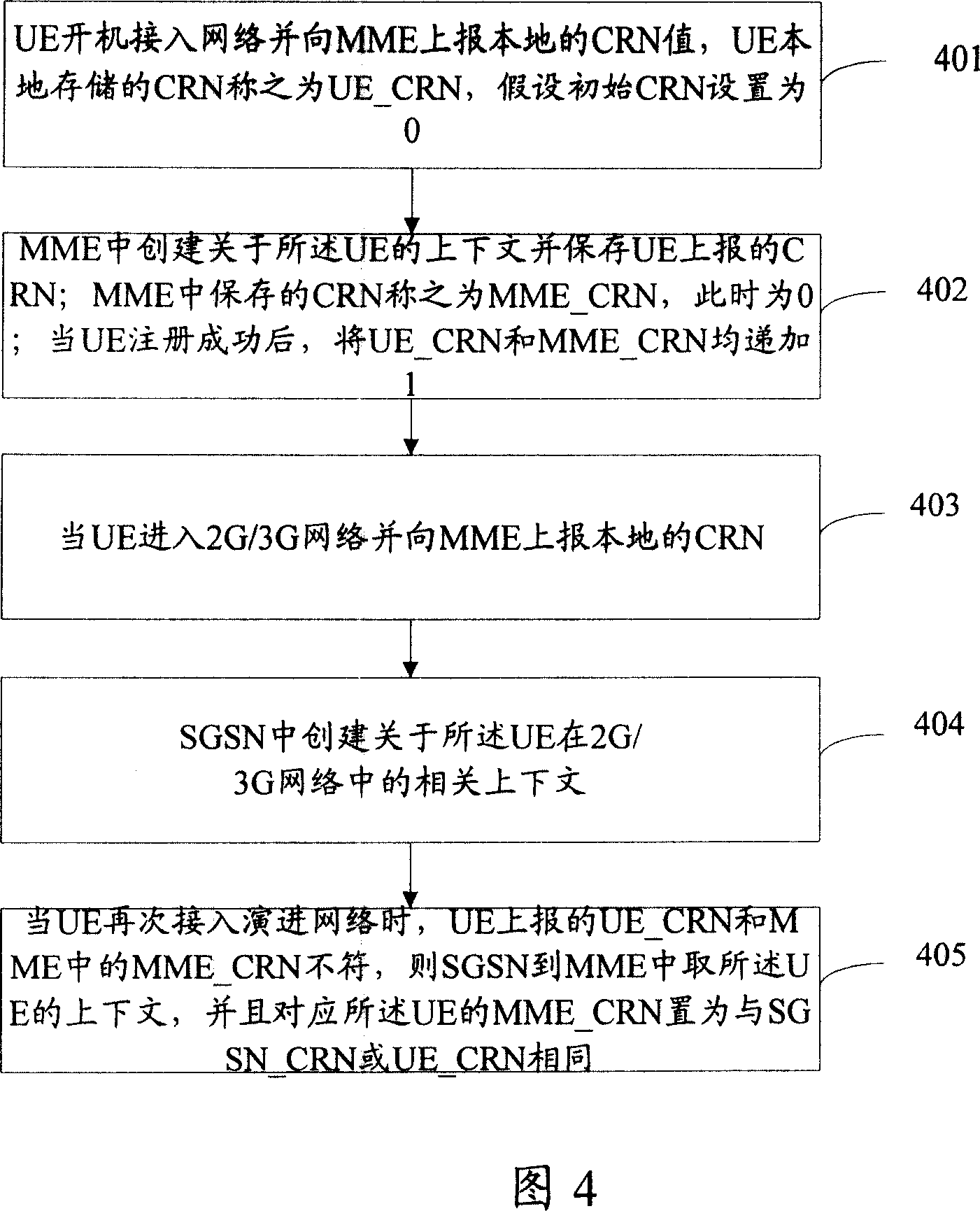

Context negotiation method

Inactive | CN101128030A | Save air interface resources | Connection management | Radio/inductive link selection arrangements | Telecommunications | Context values

The invention relates to the technical field of mobile communication access and discloses a context negotiation method in which a user equipment (UE) and a radio access entity each store a context value (CRN) of the UE. The method comprises the following steps: A. the UE sends an access request carrying the CRN to a first RAT entity; B. the first RAT entity judges whether the received CRN matches the CRN stored in the RAT entity for that UE; if not, step C is executed; otherwise the flow exits; C. the first RAT entity obtains the context of the UE from a second RAT entity, and the first RAT entity and the UE update the UE context and CRN stored locally. The invention also discloses another context negotiation method. The invention avoids unnecessary context synchronization.

Owner:HUAWEI TECH CO LTD

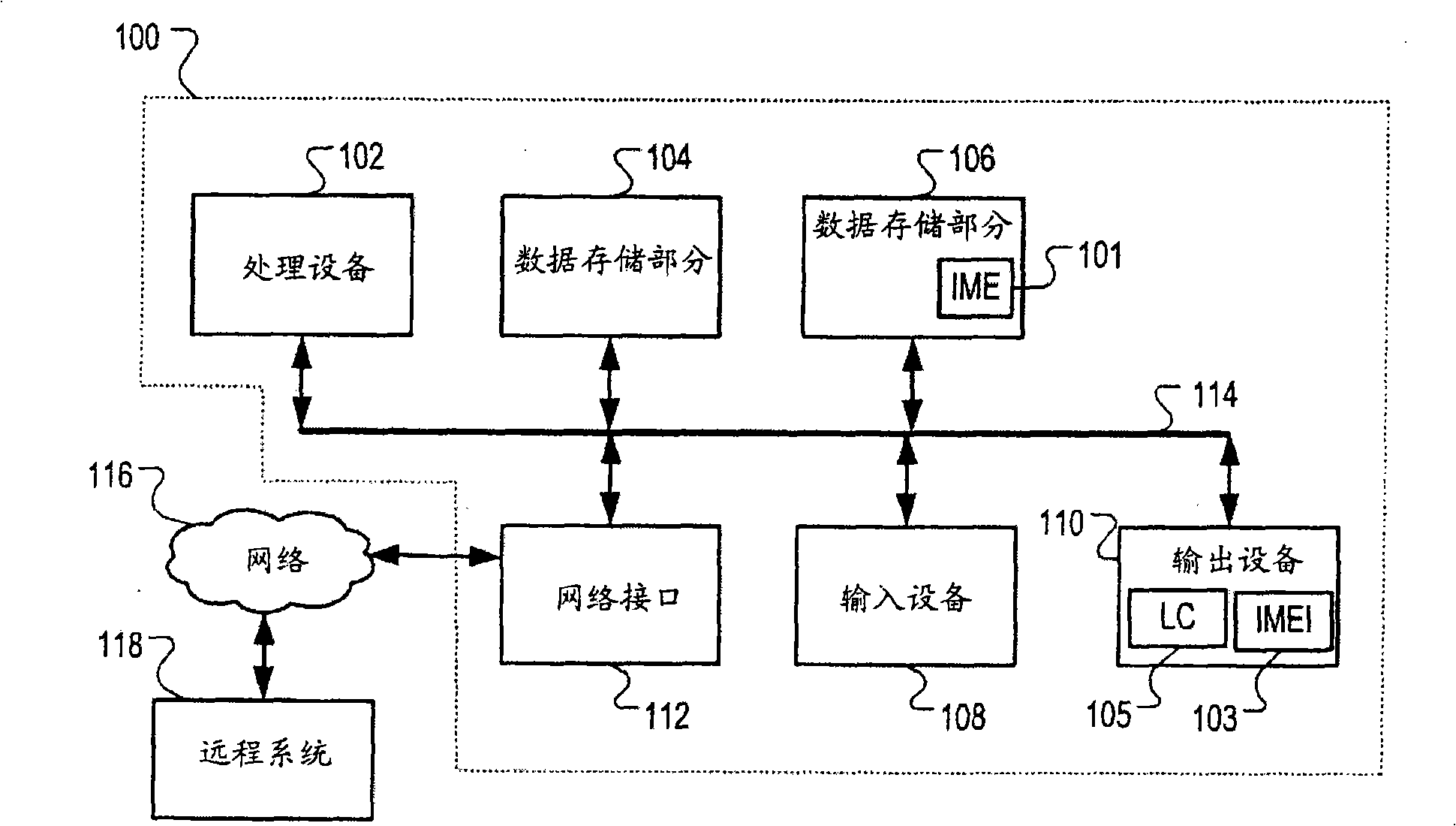

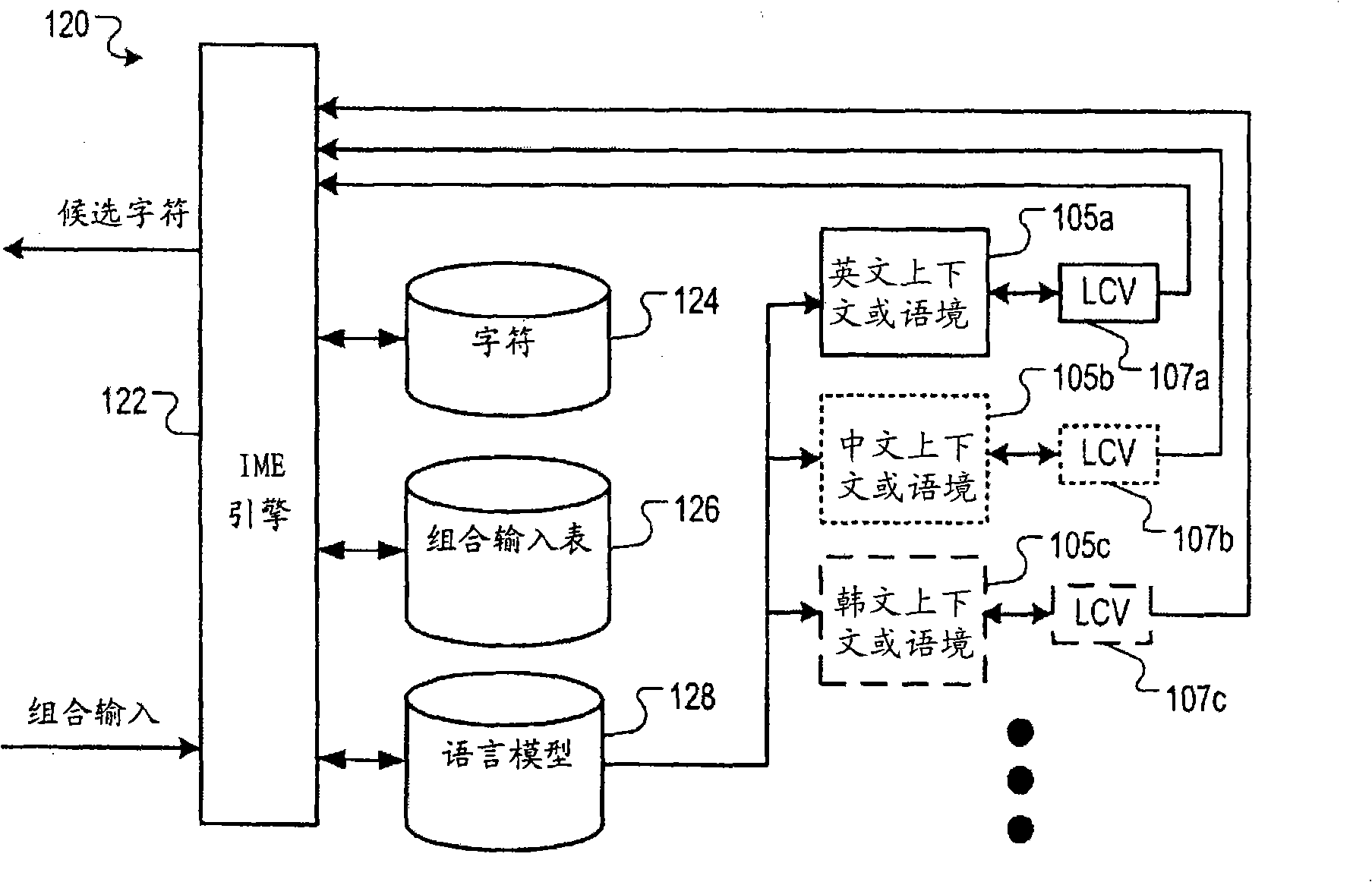

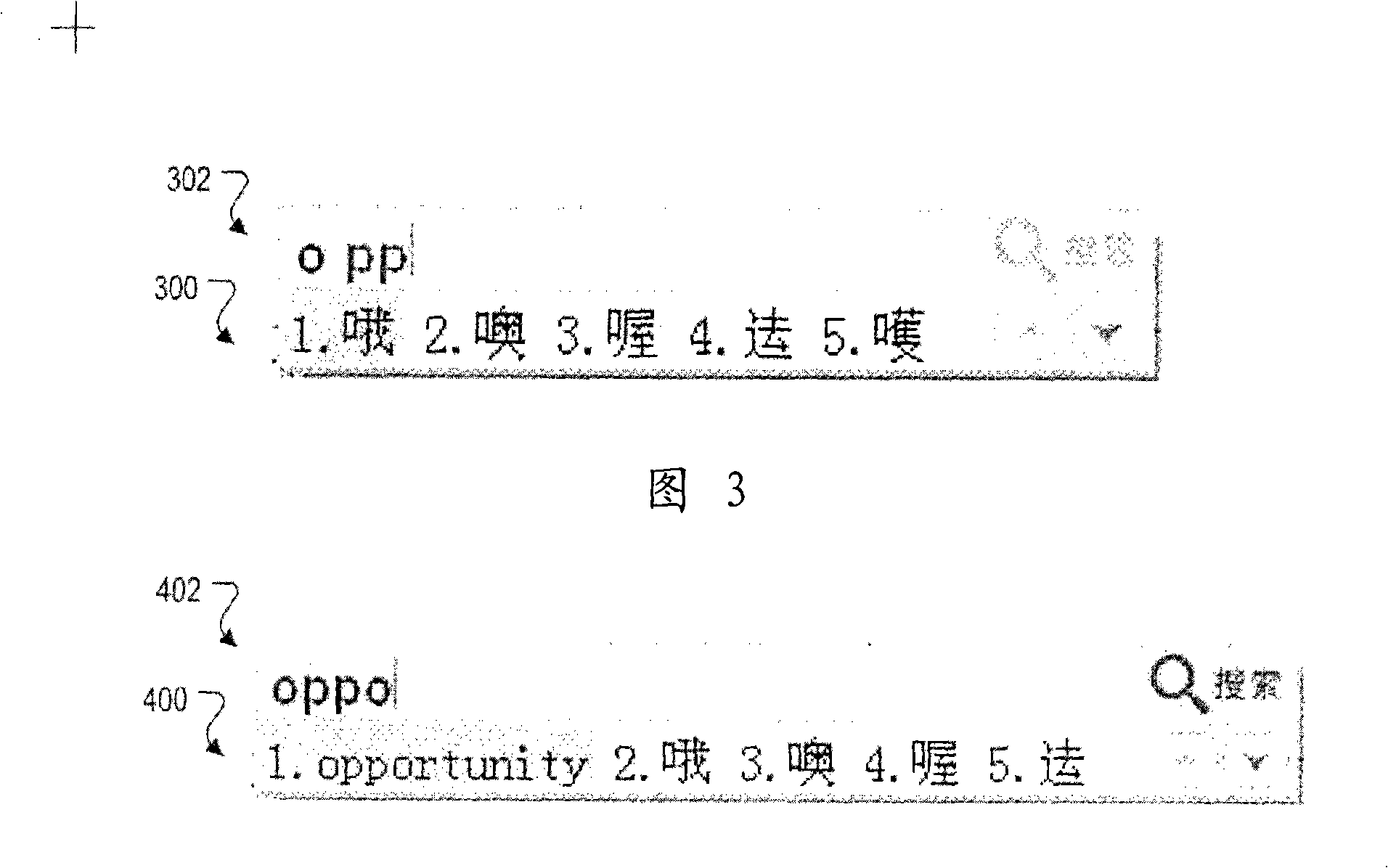

Multi-mode input method editor

Inactive | CN101286094A | Natural language translation | Special data processing applications | Software engineering | Data mining

Methods, systems, and apparatus, including computer program products, in which an input method editor receives composition inputs and determines language context values based on the composition inputs. Candidate selections based on the language context values and the composition inputs are identified.

Owner:GOOGLE LLC

Context-aware threat response arbitration

A method for prioritizing potential threats identified by vehicle active safety systems. The method includes providing context information including map information, vehicle position information, traffic assessment information, road condition information, weather condition information, and vehicle state information. The method calculates a system context value for each active safety system using the context information. Each active safety system provides a system threat level value, a system braking value, a system steering value, and a system throttle value. The method calculates an overall threat level value using all of the system context values and all of the system threat level values. The method then provides a braking request value to vehicle brakes based on all of the system braking values, a throttle request value to a vehicle throttle based on all of the system throttle values, and a steering request value to vehicle steering based on all of the system steering values.

Owner:GM GLOBAL TECH OPERATIONS LLC

Multilevel sorting and displaying of contextual objects

Inactive | US7032188B2 | Quick identification | Substation equipment | Visual presentation | Multiple context | Display device

An apparatus and method for displaying a plurality of icons on the display of a mobile terminal are provided. Icons are displayed in at least two different sections. The first section includes icons having sizes determined by comparing characteristics of associated messages to one or more context values, such as time of day, geographic area, or user profile characteristics. The second section includes icons having sizes determined by the proximities of the message sources to the mobile terminal.

Owner:NOKIA CORP

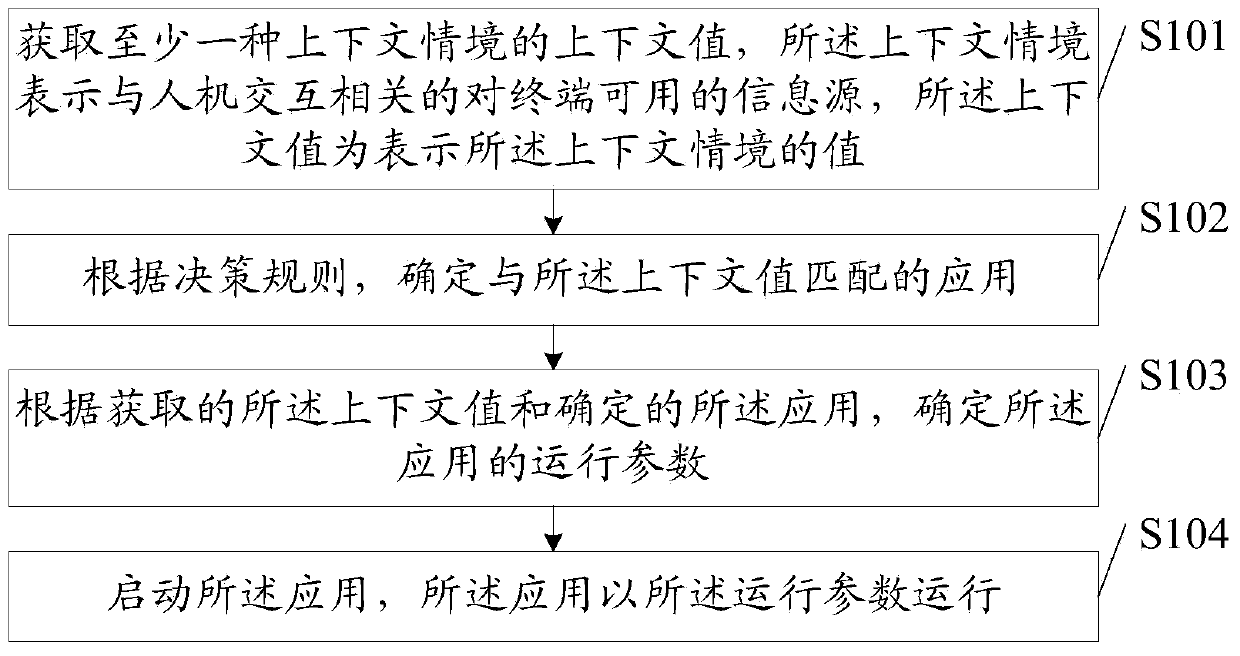

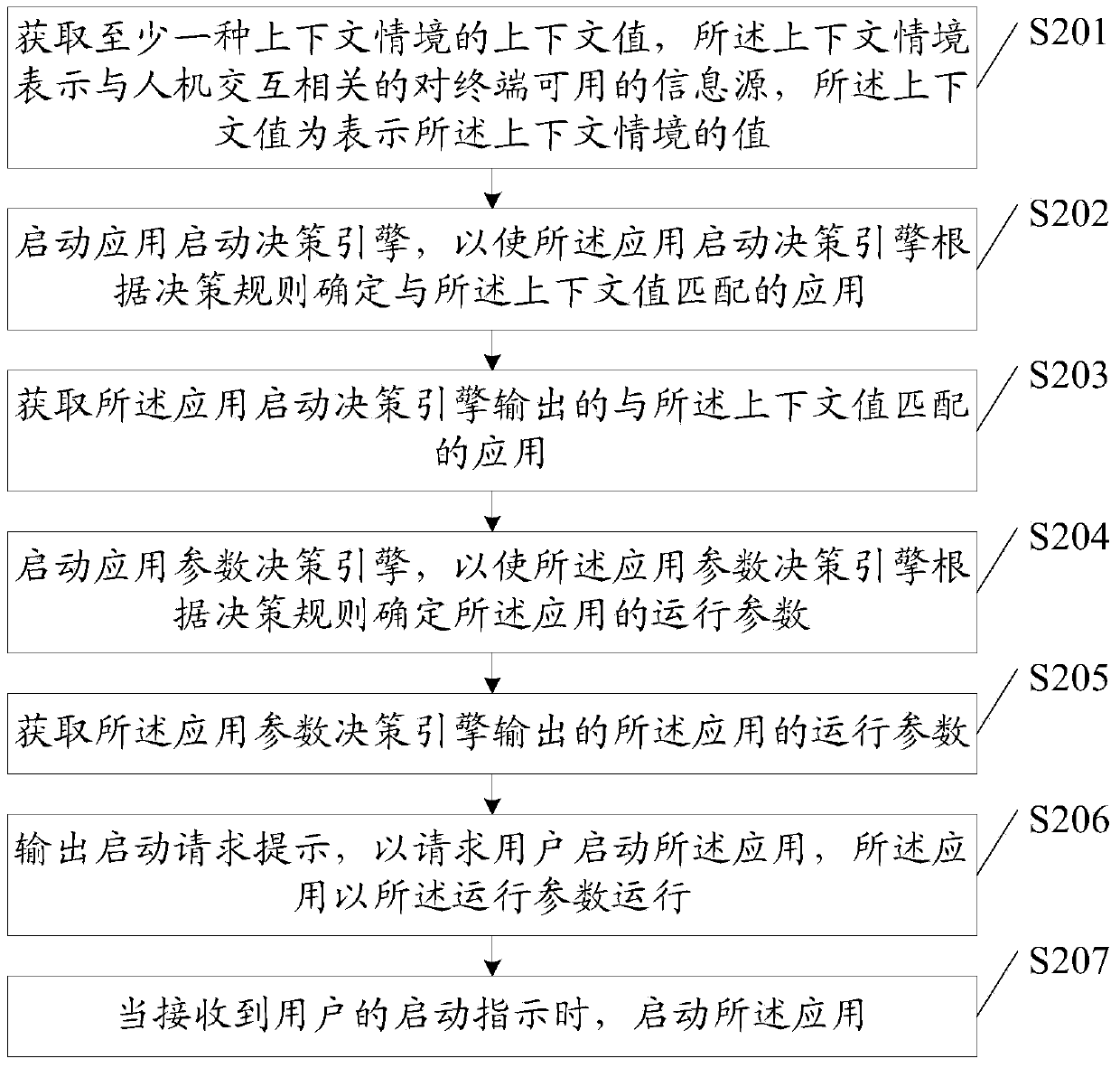

Terminal application starting method and terminal

Active | CN103995716A | Improve experience | Implement autostart | Program loading/initiating | Machine learning | Data mining | Human–computer interaction

The invention discloses a terminal application starting method and a terminal. The method comprises the steps that the context value of at least one context situation is obtained, the context situation represents an information source available for the terminal and related to man-machine interaction, and the context value represents the value of the context situation; an application corresponding to the context value is determined according to the decision rule; operating parameters of the application are determined according to the context value and the determined application; the application is started, and the application is operated according to the operating parameters. The invention further discloses the corresponding terminal and another corresponding method and terminal. According to the method and terminal, the application of the terminal can be automatically started and operated or the application can be automatically selected and started according to the context value of the context situation, and the user experience is enhanced.

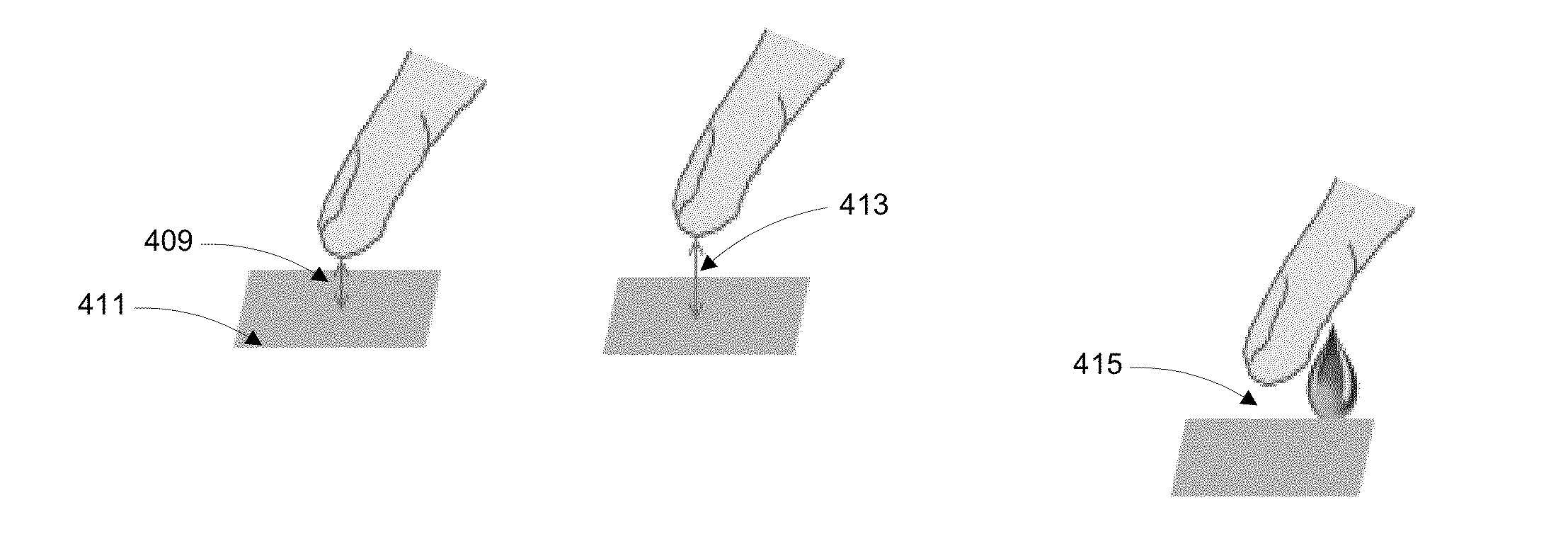

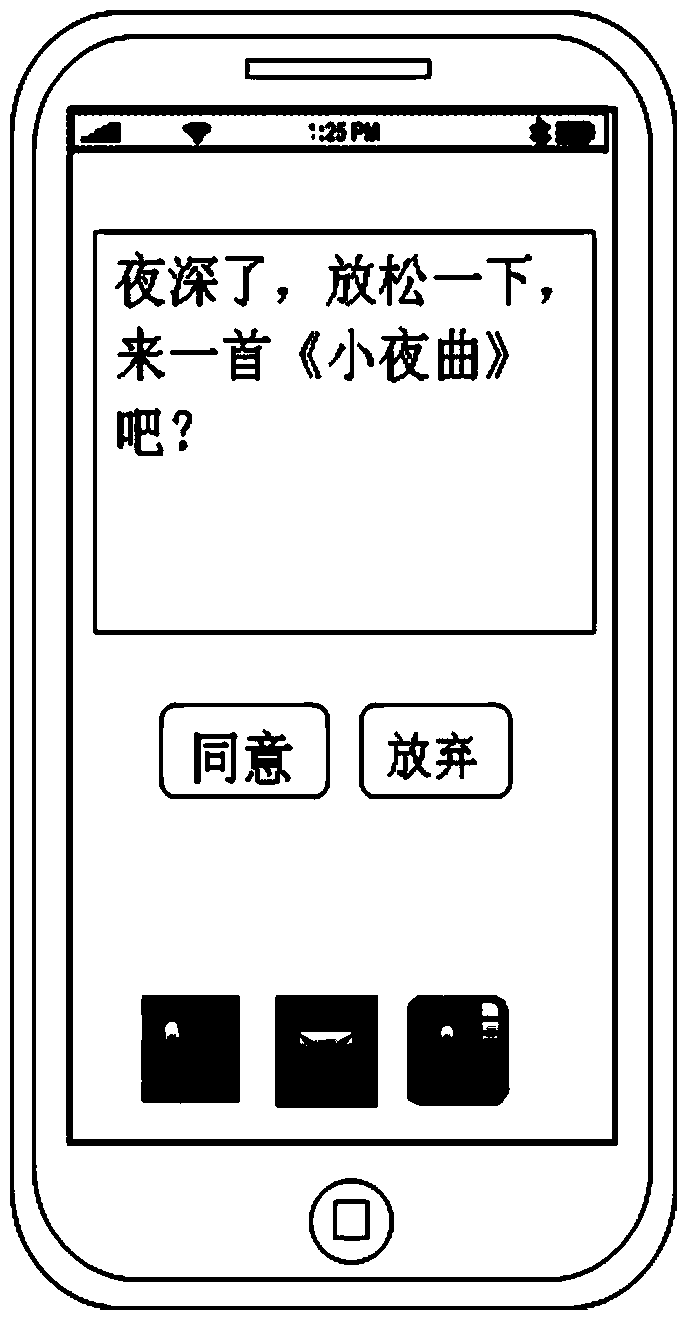

Method and apparatus for displaying prioritized icons in a mobile terminal

An apparatus and method for displaying a plurality of icons on the display (412) of a mobile terminal (401) are provided. One or more characteristics associated with each icon are compared to one or more context values (408), such as time of day, geographic area, or user profile characteristics. Icons that best match one or more context values are represented in a display format that is enlarged in relation to other icons on the display device (412). The context values (408) may include dynamically changing information, such as a current location of the user, so that as the user moves to a different geographic area, different icons are enlarged on the display device (412). The icons can correspond to application programs; logos (such as a corporate logo); documents; Web sites; or other objects. The icons can be grouped into a context bar that is displayed along an edge of the display device (412), and can be arranged in a horizontal, vertical, or mixed fashion. A magnifying glass metaphor can be moved over icons to highlight and select an icon.

Owner:NOKIA TECHNOLOGIES OY
