80 results about "Producer consumer" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

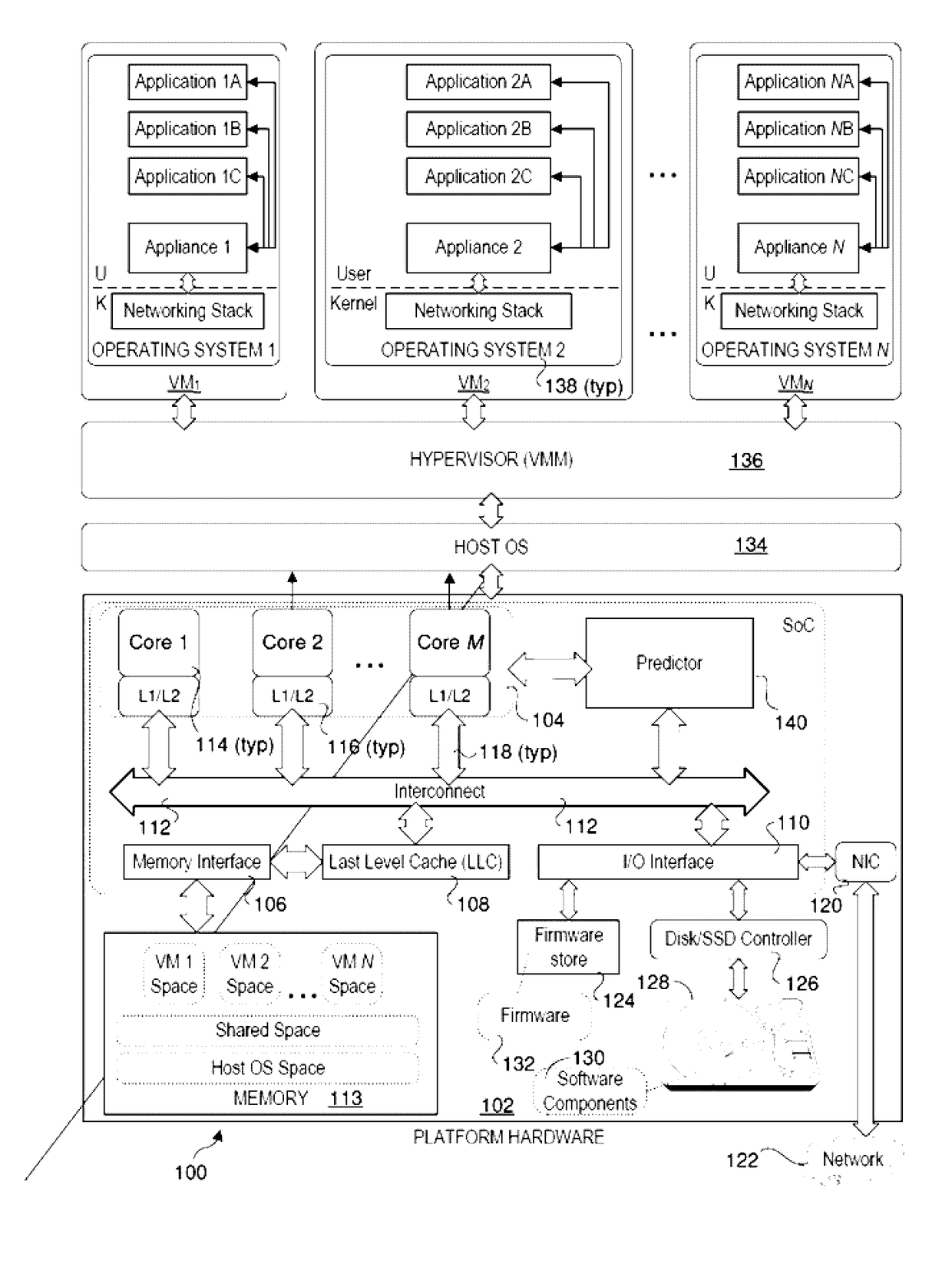

Hardware/software co-optimization to improve performance and energy for inter-VM communication for NFVs and other producer-consumer workloads

Status: Active | Publication: US20160188474A1 | Topics: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Cache hierarchy; Structure of Management Information

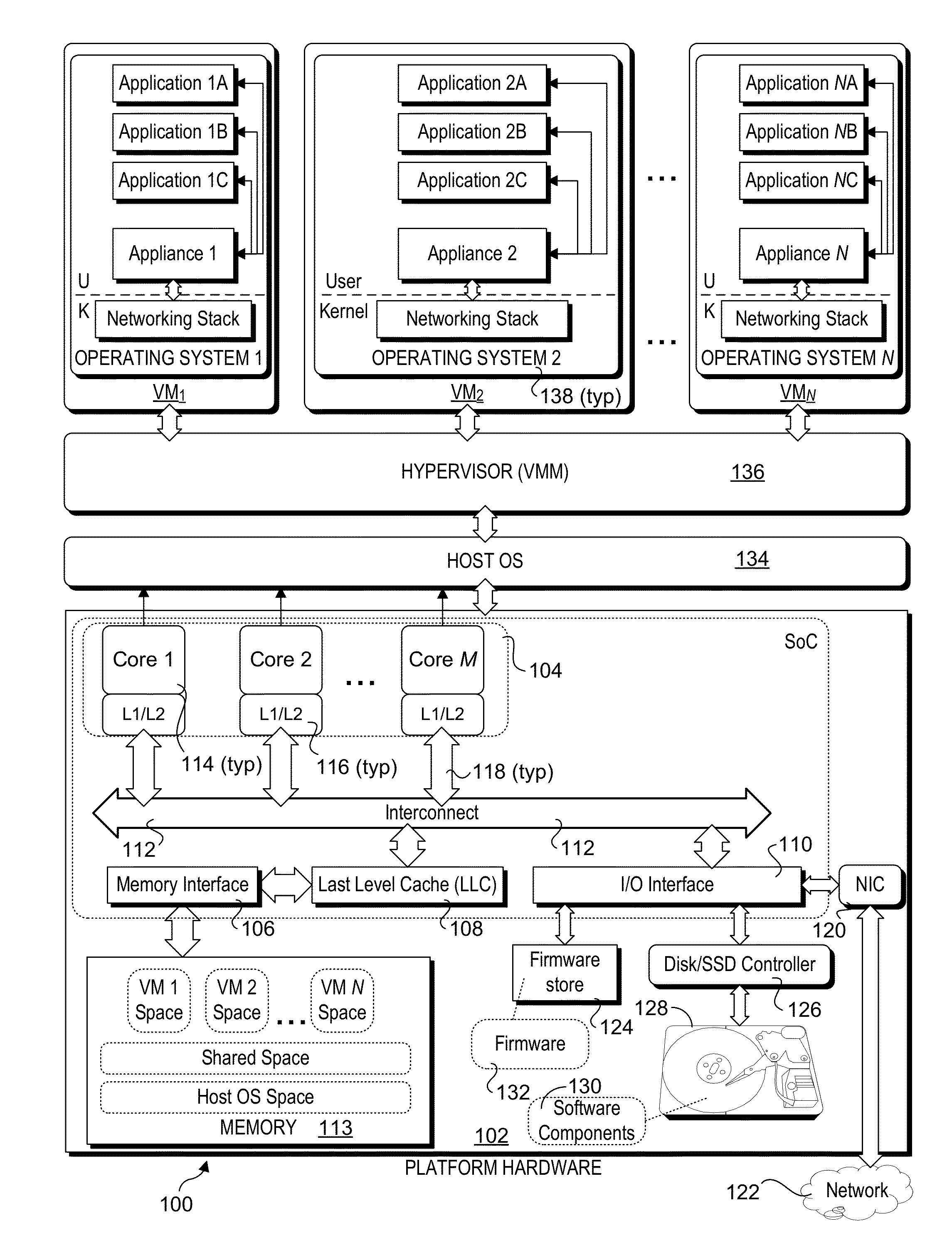

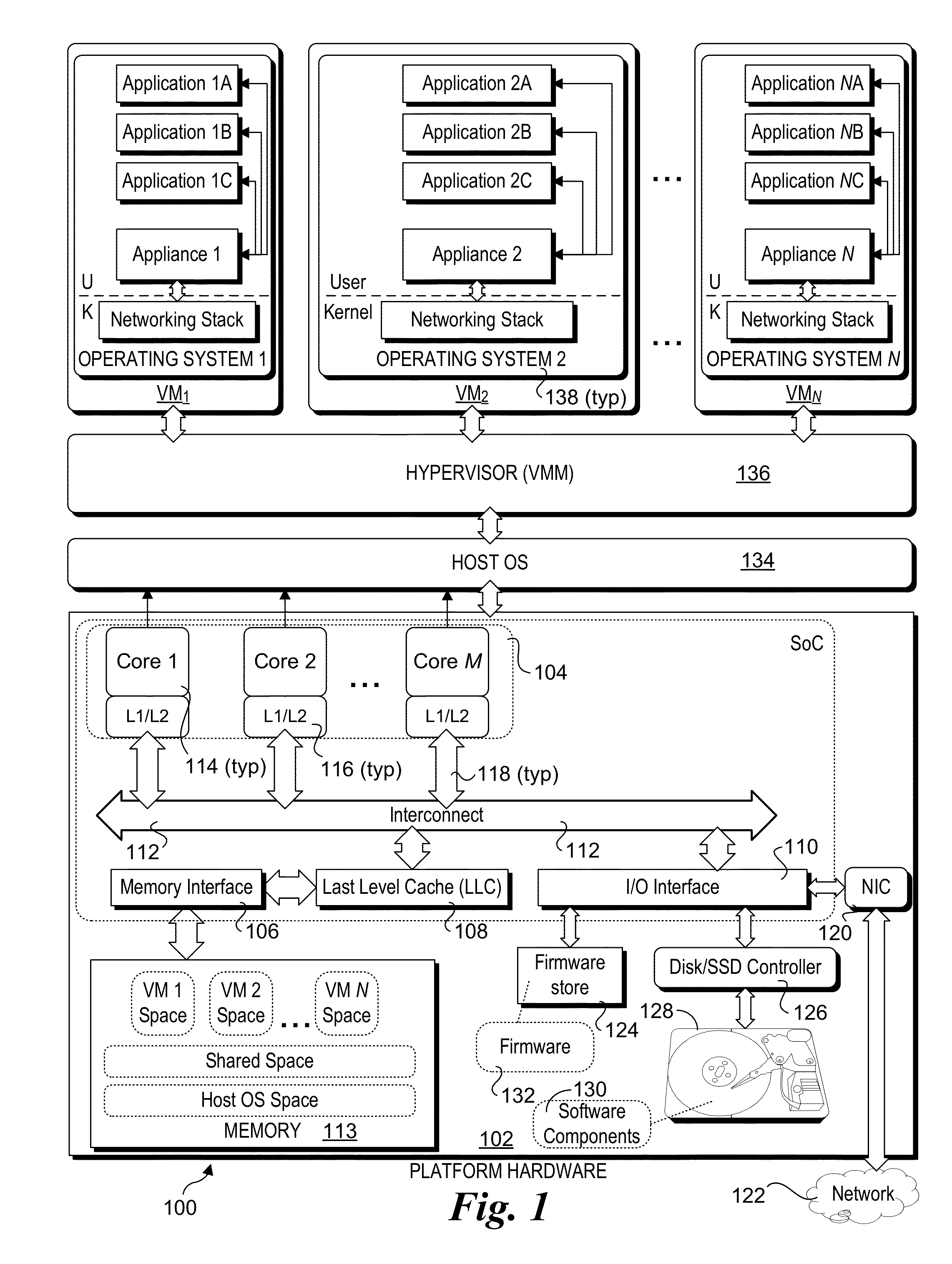

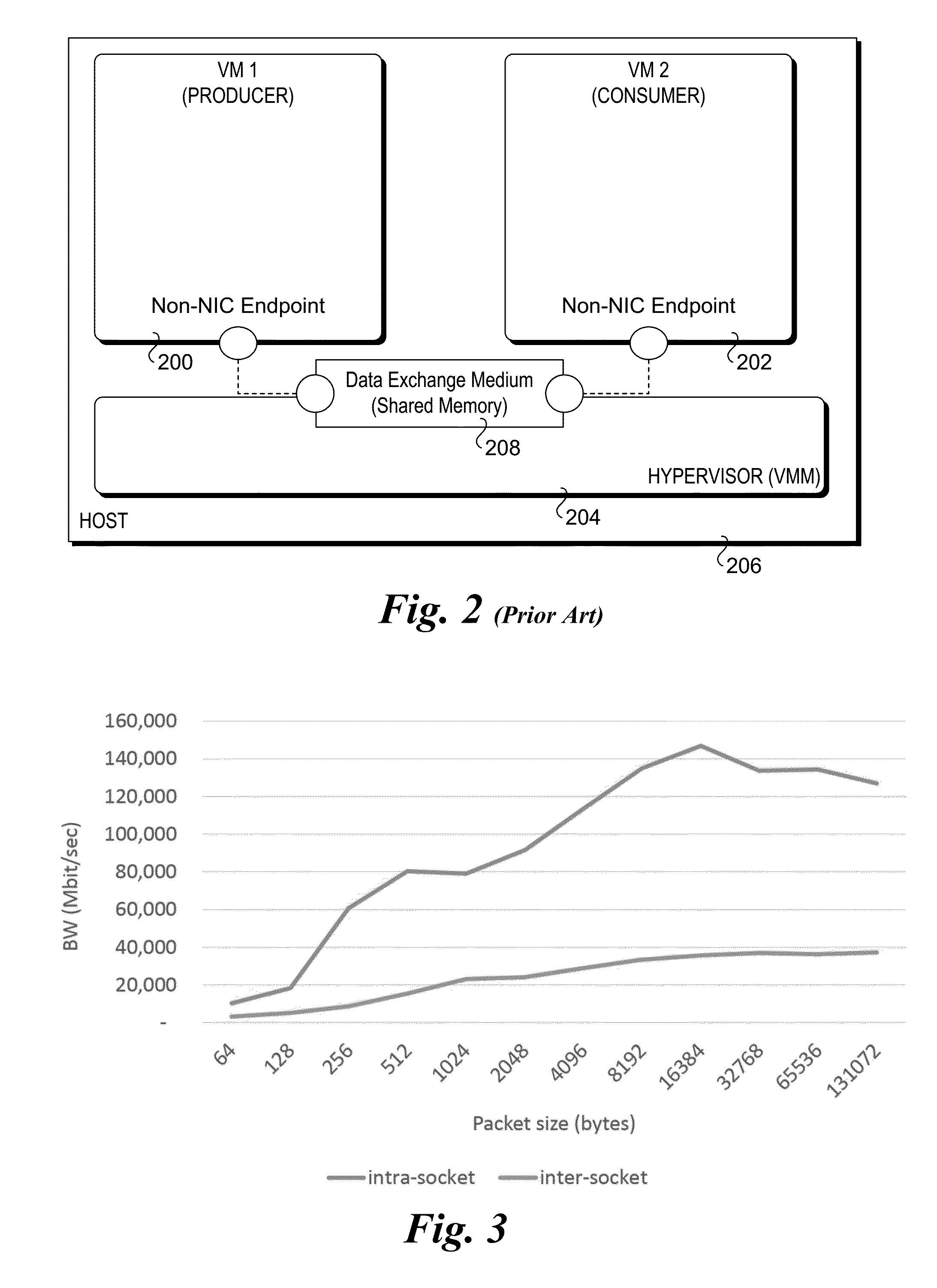

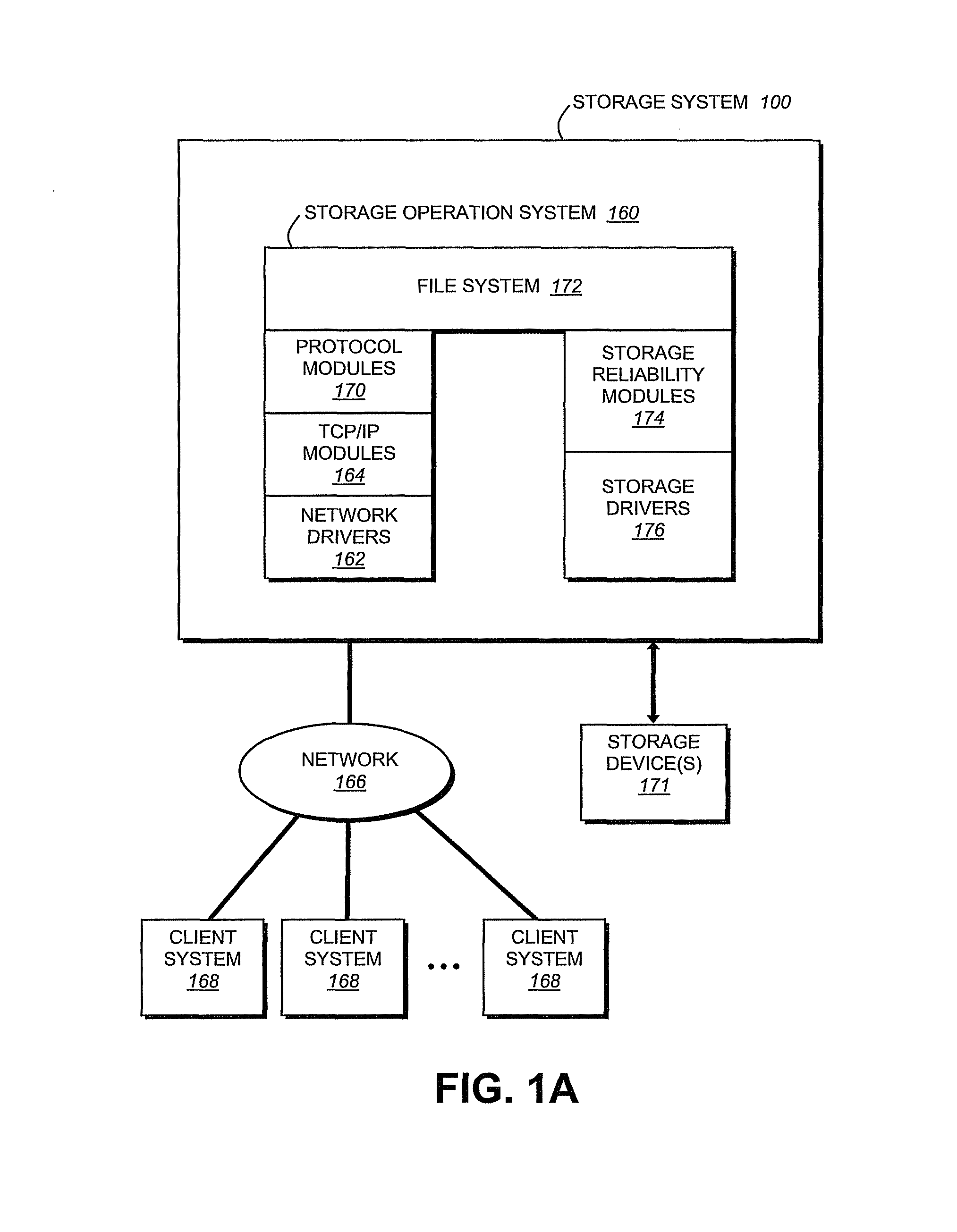

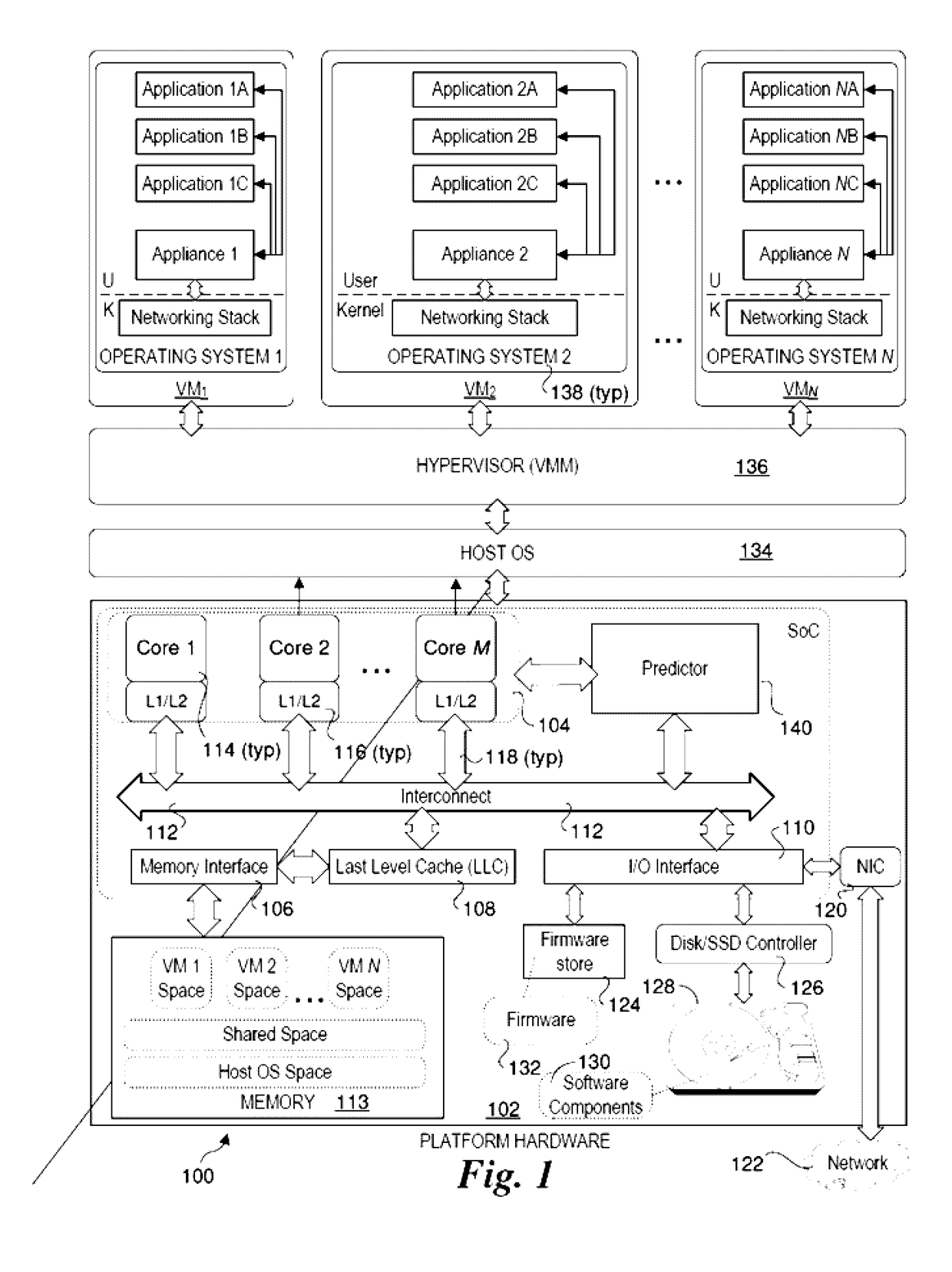

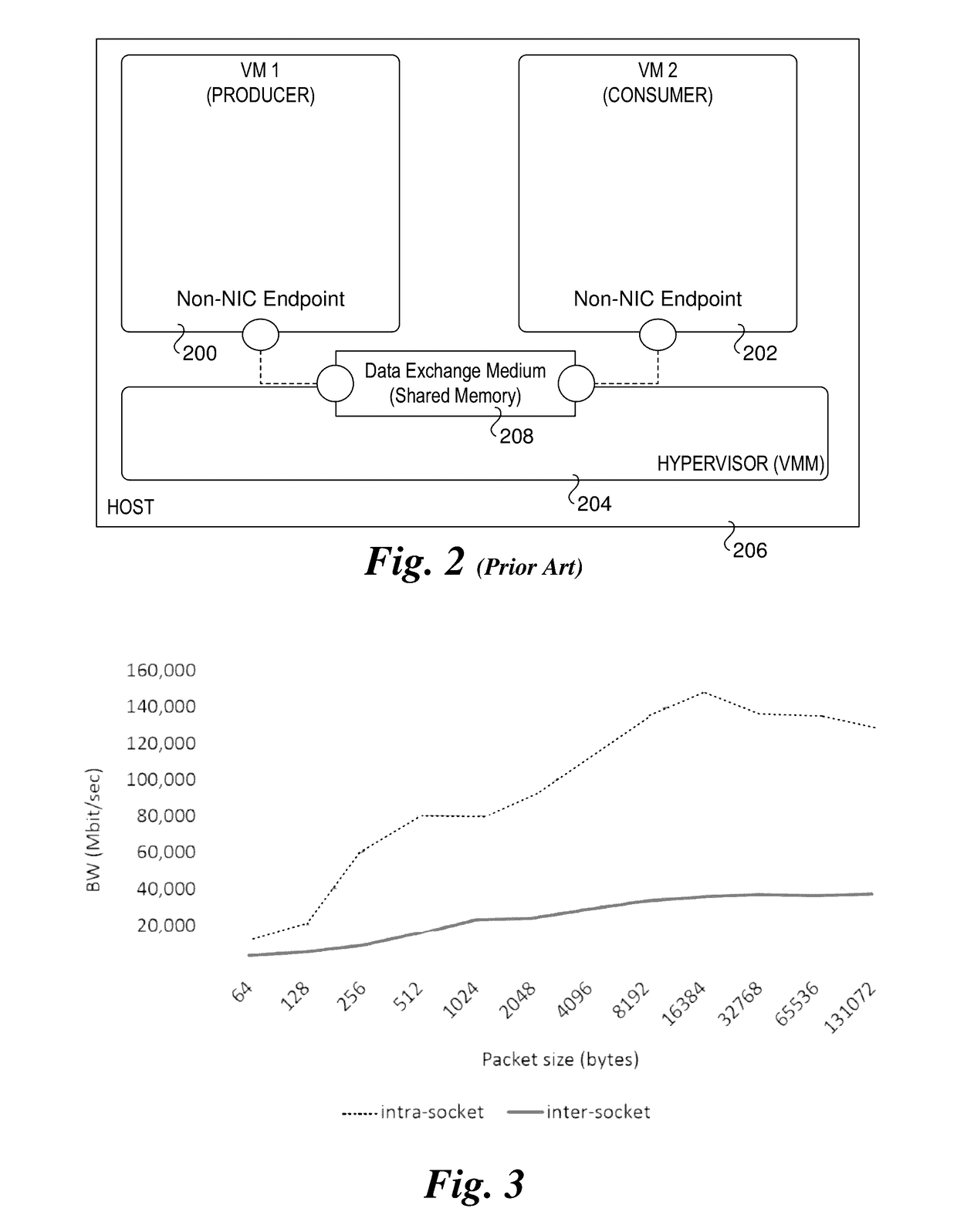

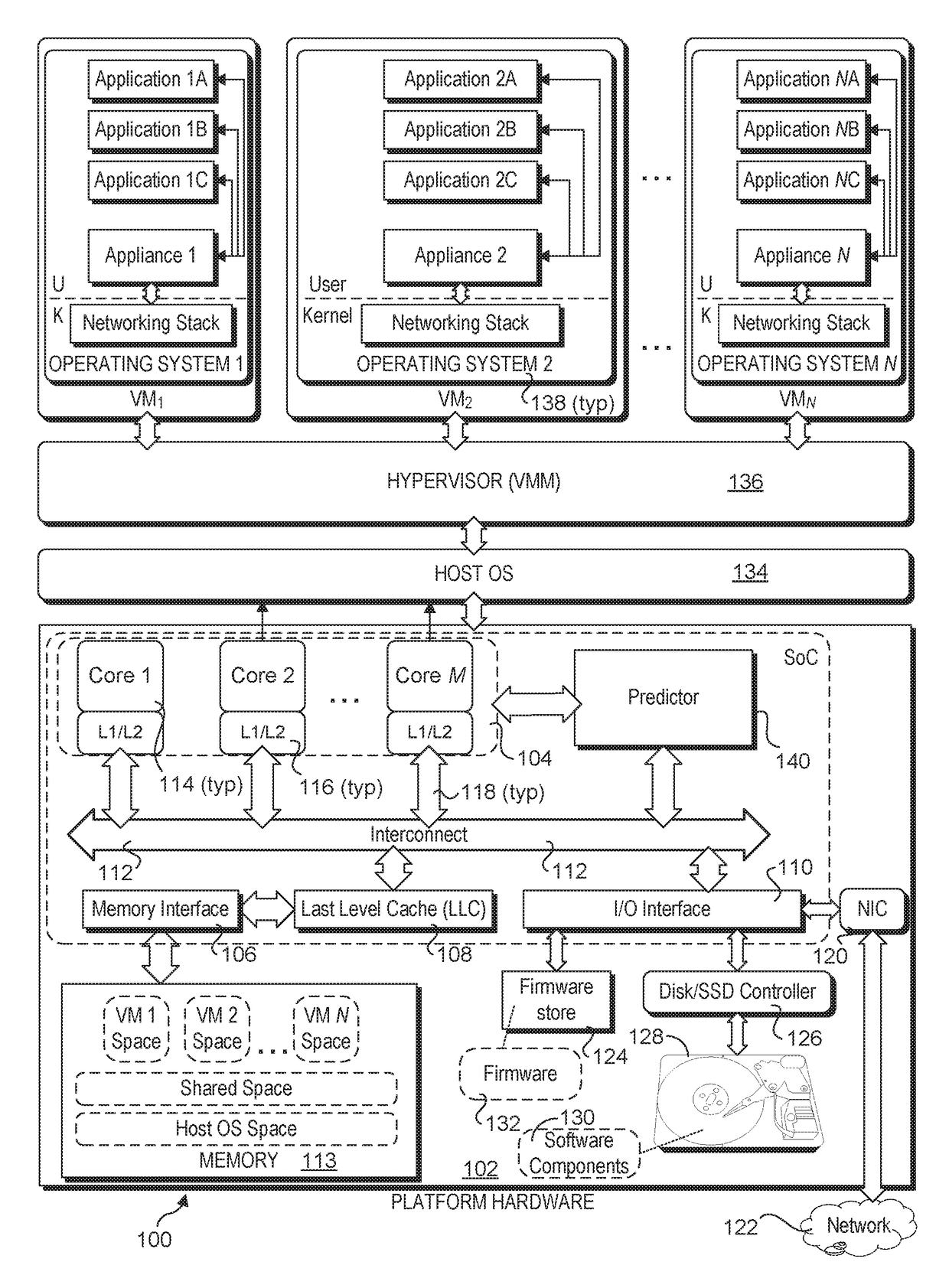

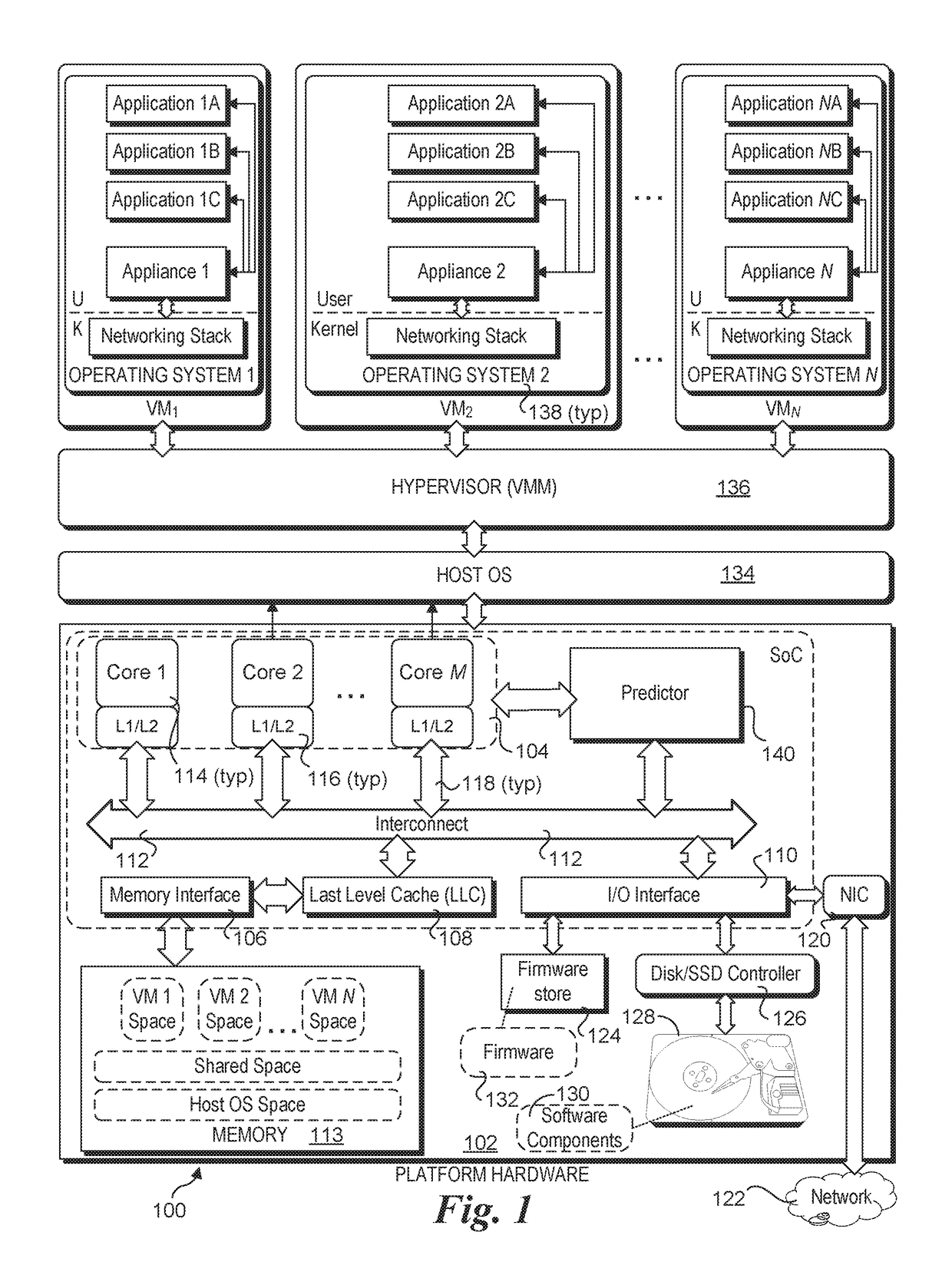

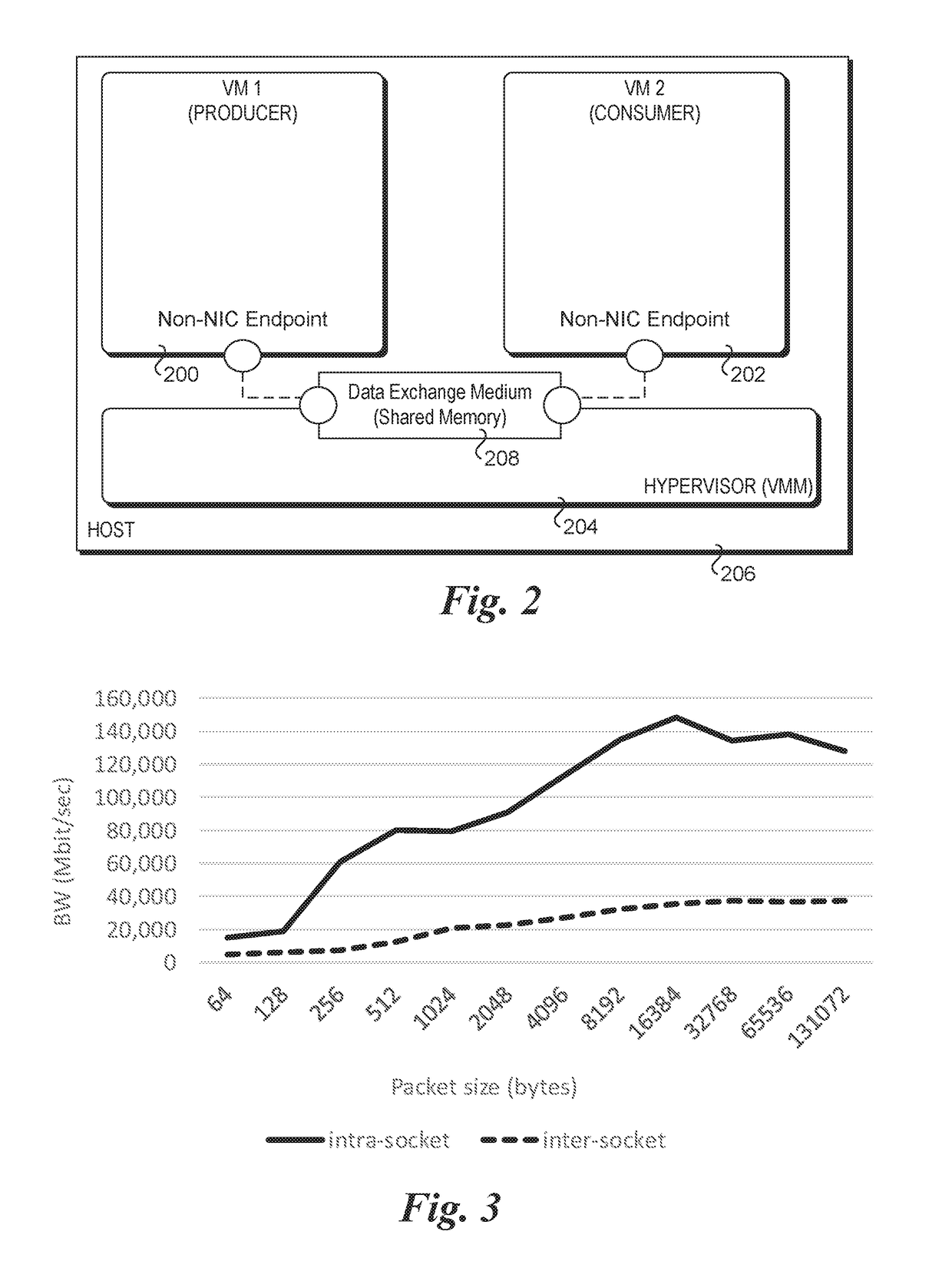

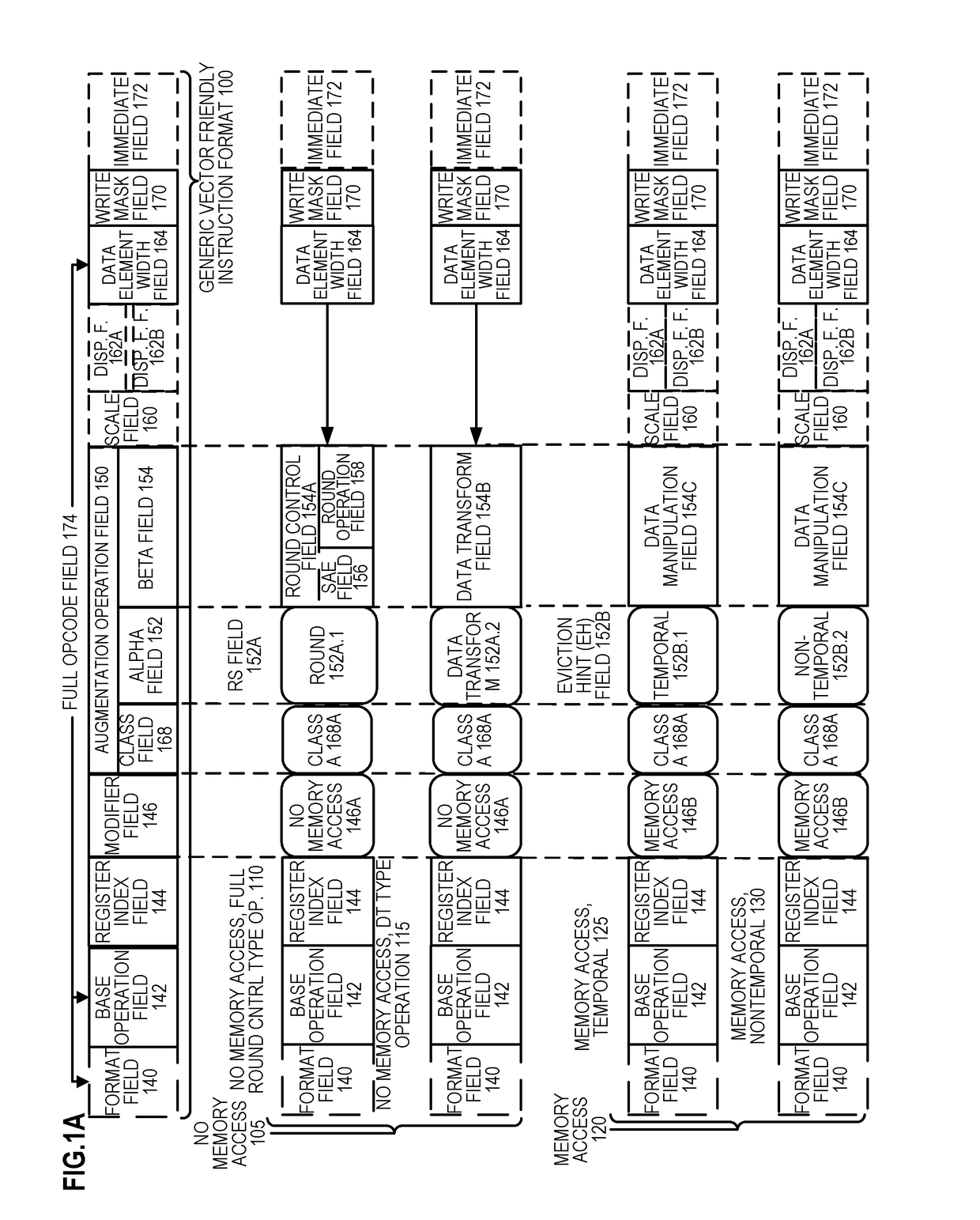

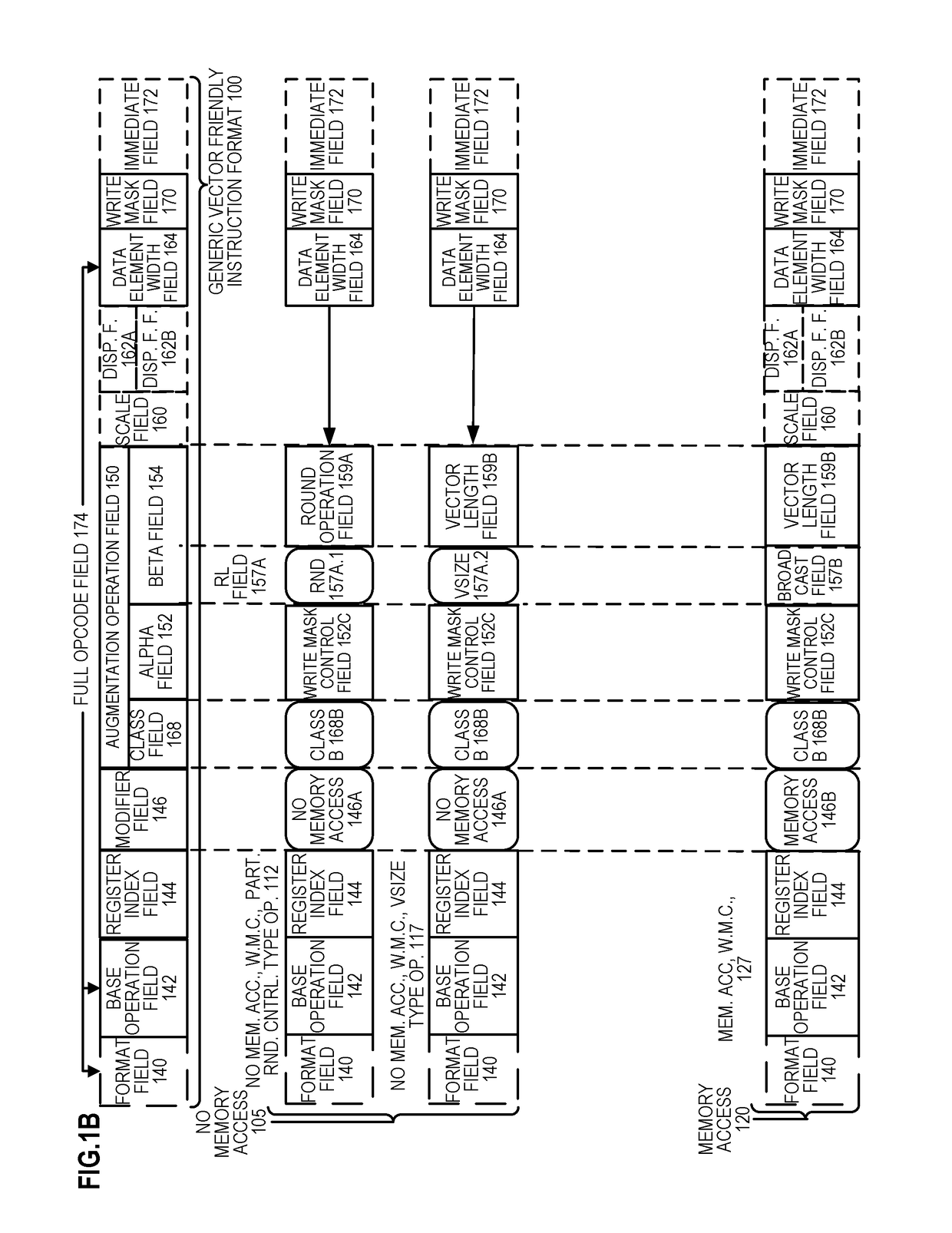

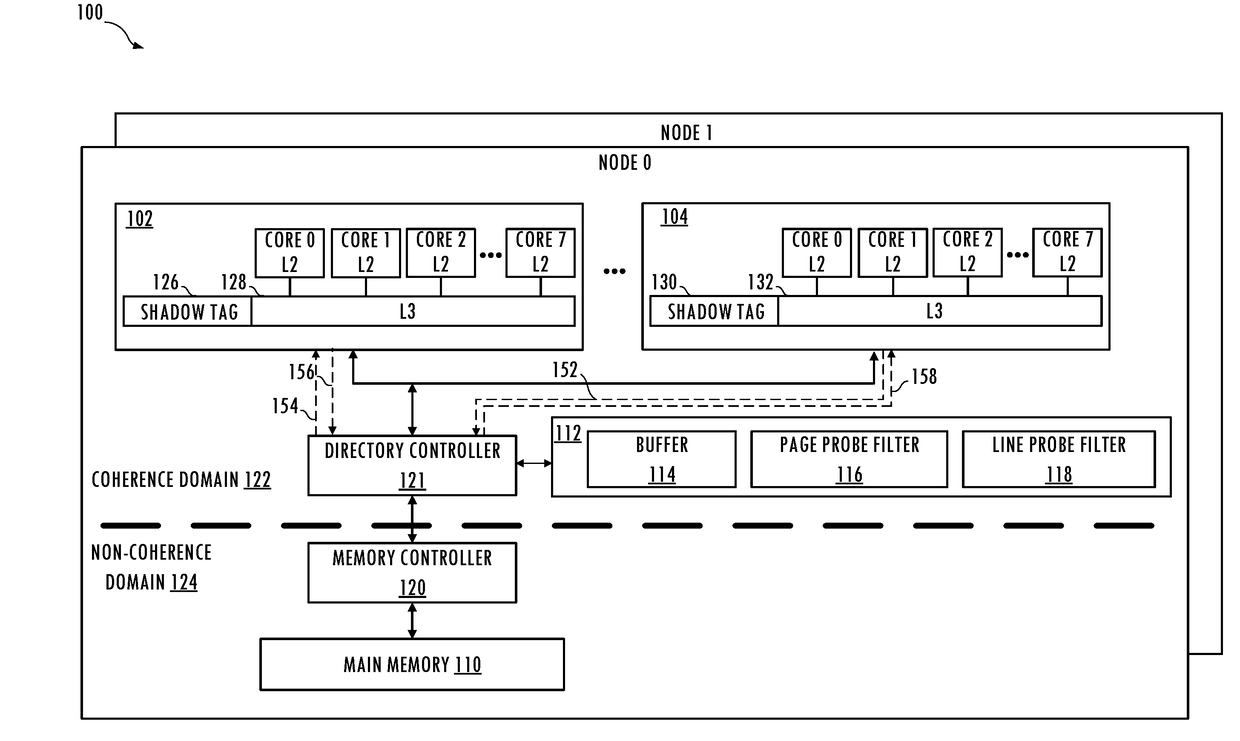

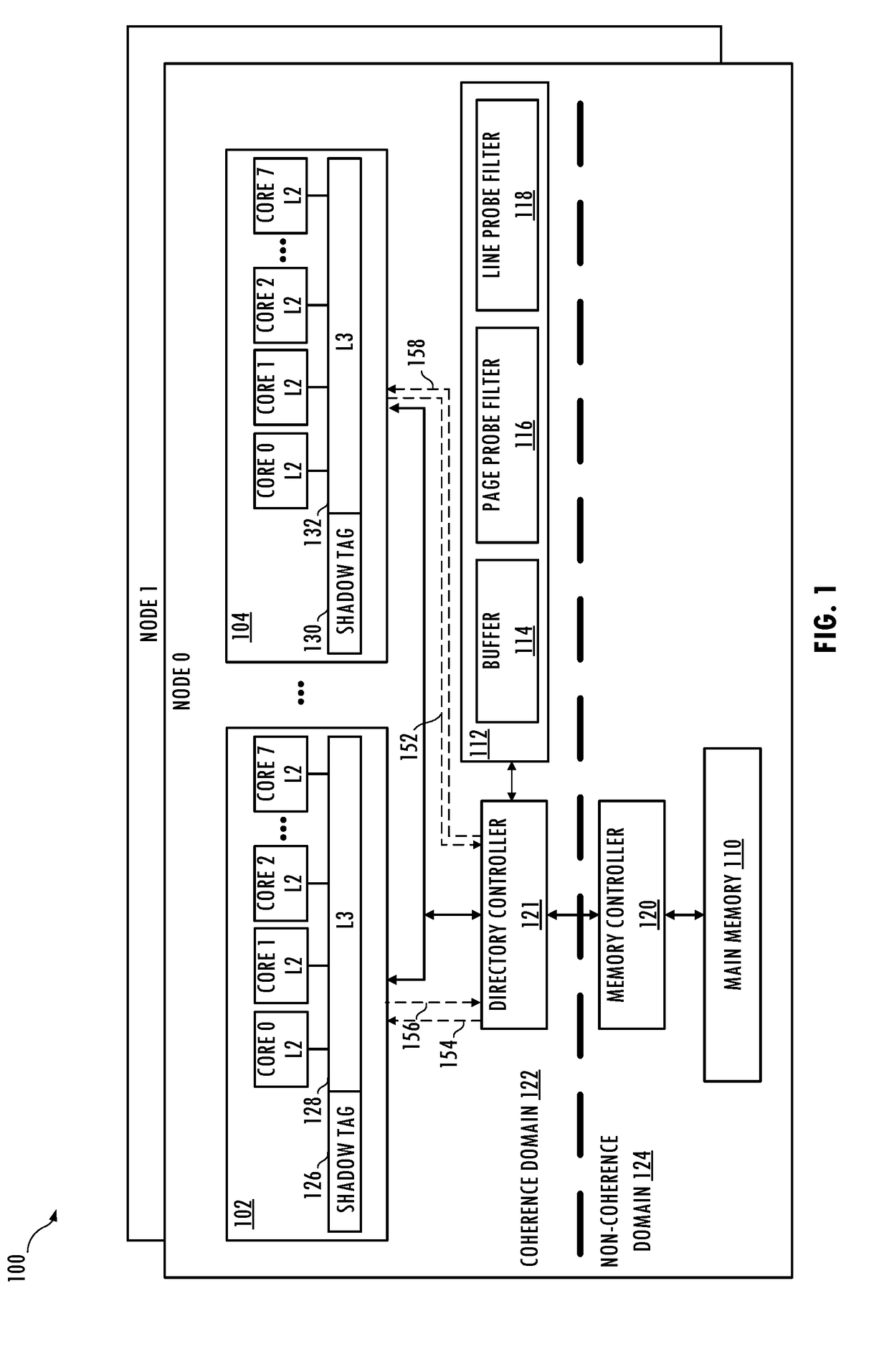

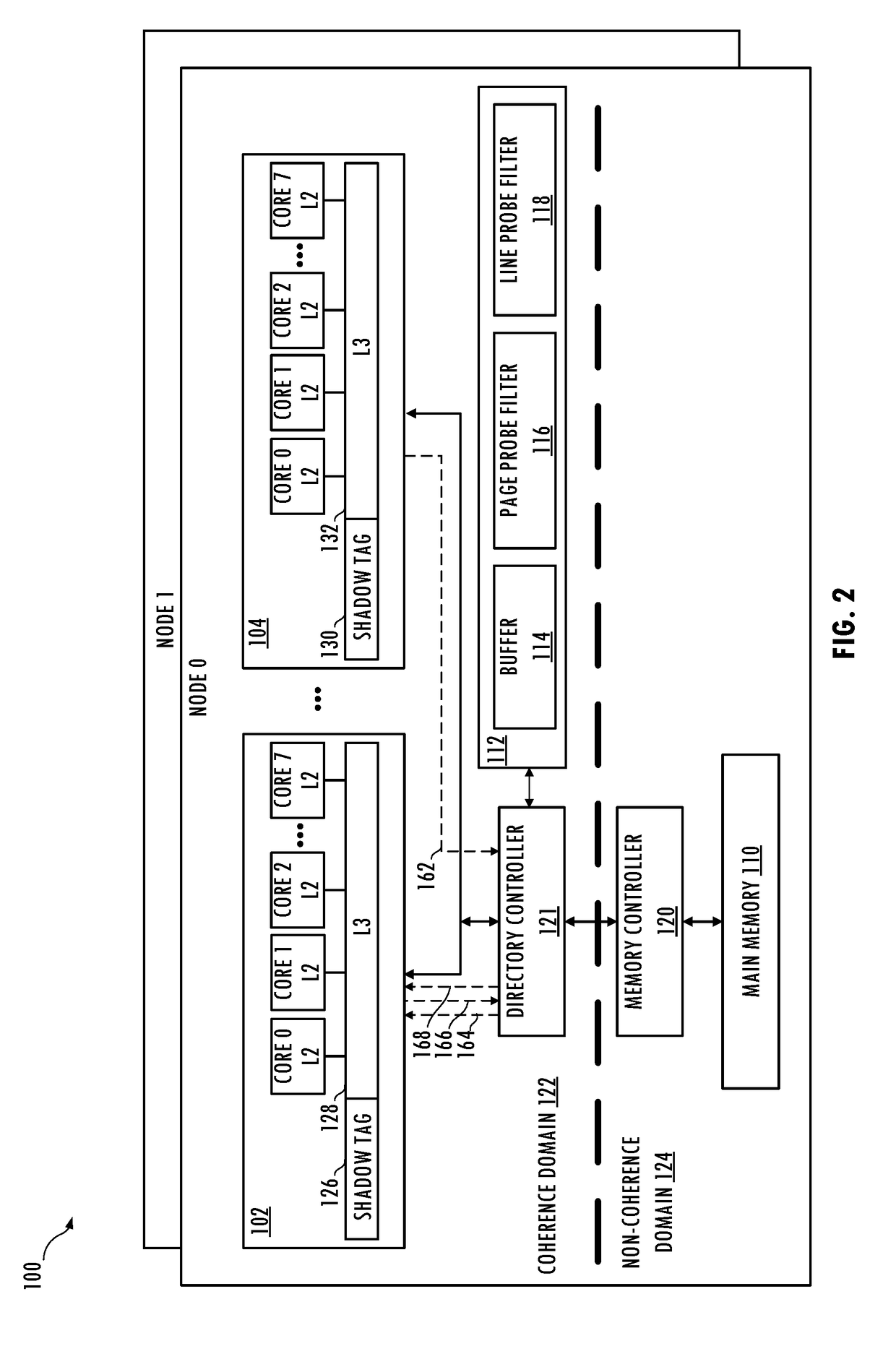

Methods and apparatus implementing hardware/software co-optimization to improve performance and energy for inter-VM communication for NFVs and other producer-consumer workloads. The apparatus includes multi-core processors with multi-level cache hierarchies, including L1 and L2 caches for each core and a shared last-level cache (LLC). One or more machine-level instructions are provided for proactively demoting cachelines from lower cache levels to higher cache levels, including demoting cachelines from the L1/L2 caches to the LLC. Techniques are also provided for implementing hardware/software co-optimization in multi-socket NUMA architecture systems, wherein cachelines may be selectively demoted and pushed to an LLC in a remote socket. In addition, techniques are disclosed for implementing early snooping in multi-socket systems to reduce latency when accessing cachelines on remote sockets.

Owner:INTEL CORP
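To illustrate why demoting cachelines helps a producer-consumer pair, the following toy software model may help. It is an assumption-laden simulation, not Intel's instructions or coherence protocol: each core has a single private cache, all cores share an LLC, and `demote` is a hypothetical stand-in for a cacheline-demotion instruction. It counts how a consumer core's reads are served with and without the producer demoting the lines it just wrote.

```python
class ToyHierarchy:
    """Toy model (assumption): one private cache per core plus a shared LLC."""

    def __init__(self, num_cores):
        self.private = [dict() for _ in range(num_cores)]  # per-core {addr: value}
        self.llc = {}
        self.snoops = 0    # reads served from another core's private cache
        self.llc_hits = 0  # reads served from the shared LLC

    def write(self, core, addr, value):
        # Write-invalidate: the writer becomes the only holder of the line.
        for other, cache in enumerate(self.private):
            if other != core:
                cache.pop(addr, None)
        self.llc.pop(addr, None)
        self.private[core][addr] = value

    def demote(self, core, addr):
        # Hypothetical demotion hint: move the line from the private cache to the LLC.
        if addr in self.private[core]:
            self.llc[addr] = self.private[core].pop(addr)

    def read(self, core, addr):
        if addr in self.private[core]:
            return self.private[core][addr]
        if addr in self.llc:
            self.llc_hits += 1
            value = self.llc[addr]
        else:
            # Cross-core snoop into whichever private cache holds the line
            # (the toy model assumes some core always holds it).
            holder = next(c for c in self.private if addr in c)
            self.snoops += 1
            value = holder[addr]
        self.private[core][addr] = value  # fill the reader's private cache
        return value


def run(demote):
    h = ToyHierarchy(num_cores=2)
    for addr in range(4):
        h.write(0, addr, addr * 10)  # producer on core 0
        if demote:
            h.demote(0, addr)
    values = [h.read(1, addr) for addr in range(4)]  # consumer on core 1
    return values, h.snoops, h.llc_hits


print(run(demote=False))  # ([0, 10, 20, 30], 4, 0)
print(run(demote=True))   # ([0, 10, 20, 30], 0, 4)
```

In this model, demotion turns four cross-core snoops into four LLC hits; real latencies and coherence states are considerably more involved.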

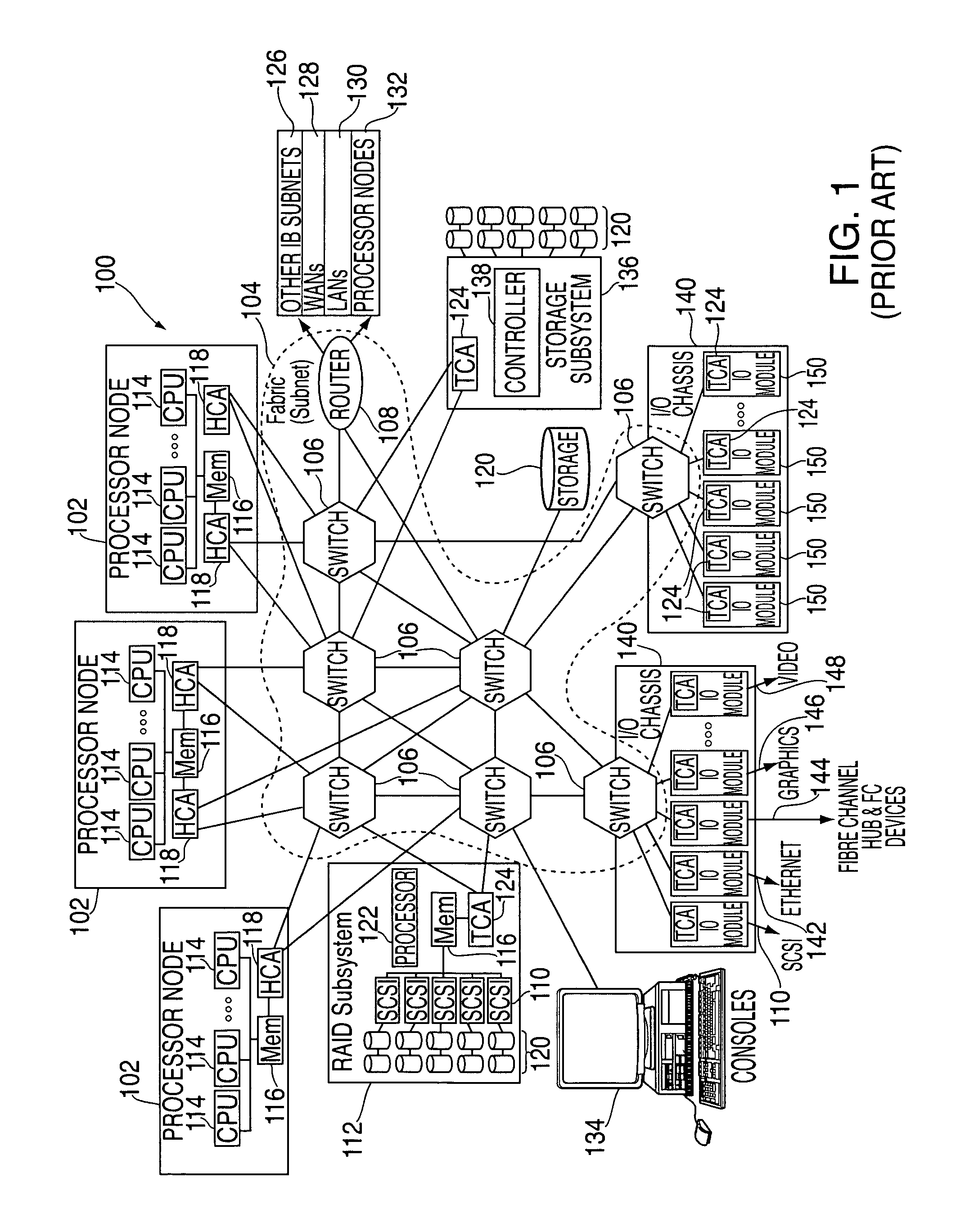

Apparatus and method for efficient communication of producer/consumer buffer status

Status: Inactive | Publication: US20070174411A1 | Topics: Response latency; Minimize power consumption; Digital computer details; Electric digital data processing; Data processing system; Real-time computing

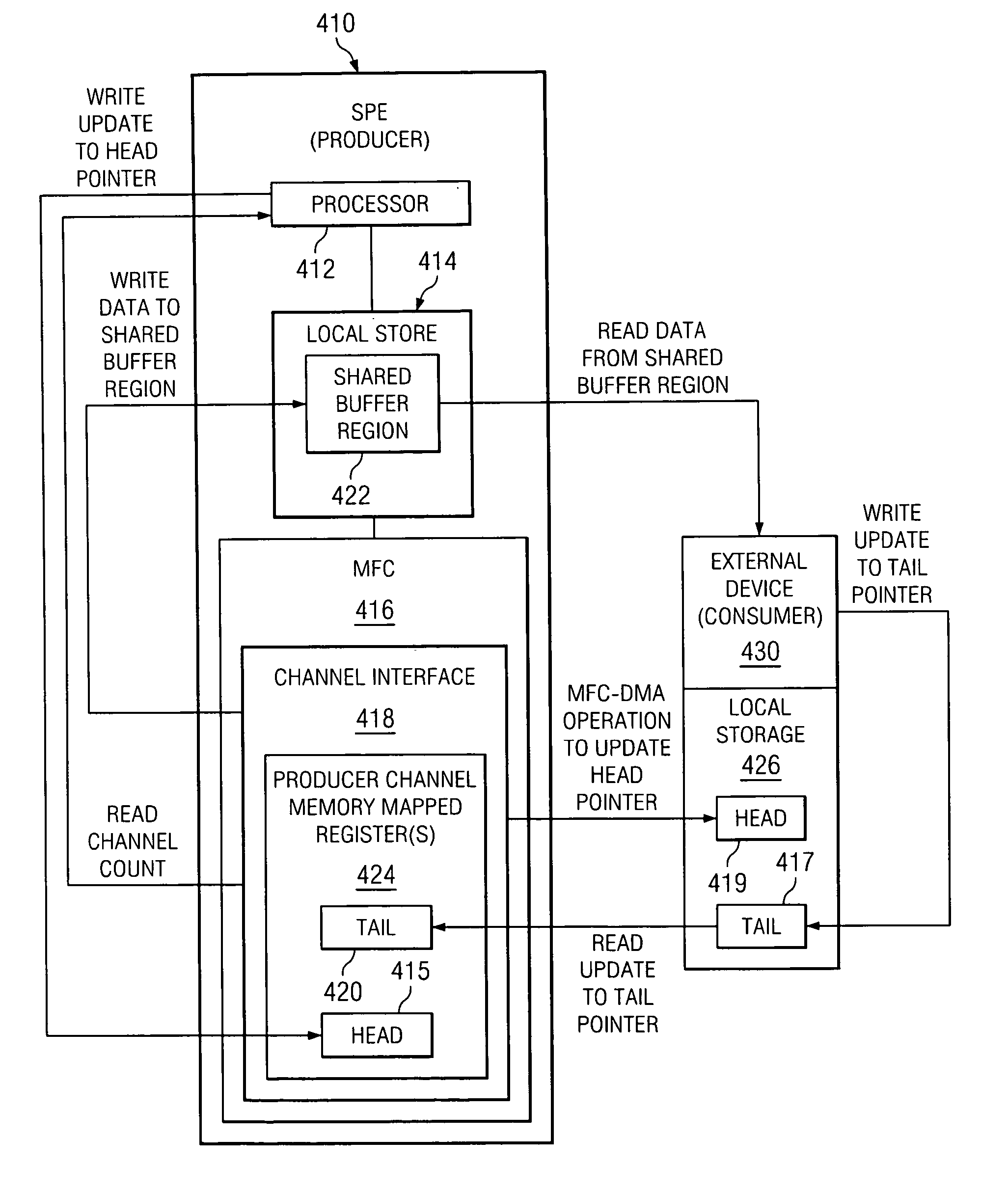

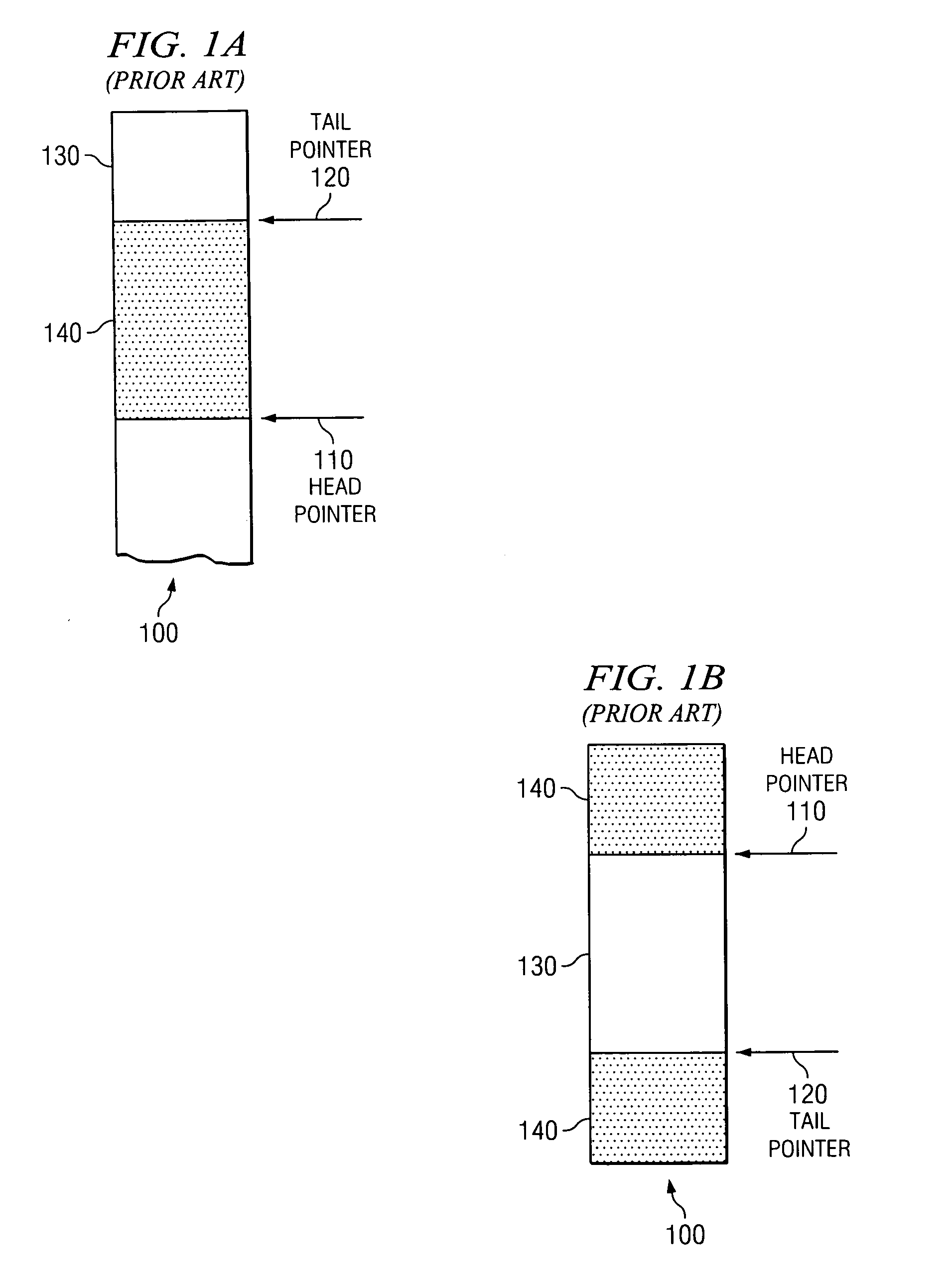

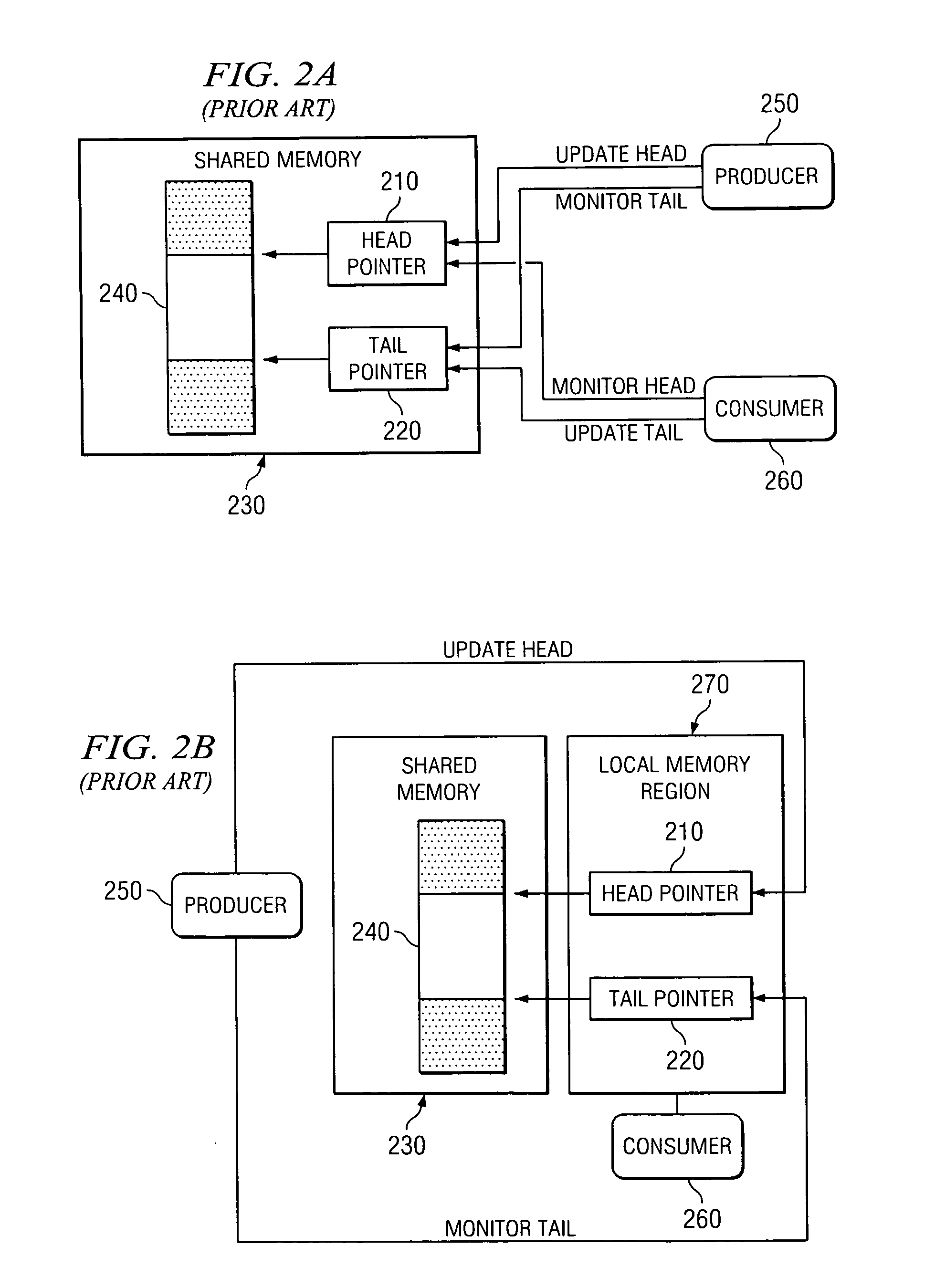

An apparatus and method for efficient communication of producer/consumer buffer status are provided. With the apparatus and method, devices in a data processing system that operate on a shared buffer region notify each other of updates to the region's head and tail pointers through the devices' signal notification channels. Thus, when a producer device writes data to the shared buffer region, it writes a head-pointer update to a signal notification channel of the consumer device. When a consumer device reads data from the shared buffer region, it writes a tail-pointer update to a signal notification channel of the producer device. In addition, the channels may operate in a blocking mode, so that the corresponding device is kept in a low-power state until an update is received over the channel.

Owner:INT BUSINESS MACHINES CORP +1
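The head/tail notification scheme in the abstract above can be sketched in software. This is a minimal sketch under stated assumptions: two threads stand in for the devices, `queue.Queue` objects stand in for the signal notification channels (a blocking `get()` loosely mirrors the low-power blocking mode), and the `None` end-of-stream marker is an addition the abstract does not describe.

```python
import queue
import threading

CAPACITY = 4  # illustrative shared-buffer size (assumption)


def producer(buf, items, consumer_chan, producer_chan):
    head = 0        # monotonically increasing count of slots written
    known_tail = 0  # latest tail value reported by the consumer
    for item in items:
        while head - known_tail == CAPACITY:
            # Buffer full: block on our own channel until a tail update arrives.
            known_tail = producer_chan.get()
        buf[head % CAPACITY] = item
        head += 1
        consumer_chan.put(head)  # head-pointer update to the consumer's channel
    consumer_chan.put(None)      # end-of-stream marker (assumption)


def consumer(buf, out, consumer_chan, producer_chan):
    tail = 0
    while True:
        head = consumer_chan.get()  # blocking wait for a head-pointer update
        if head is None:
            return
        while tail < head:
            out.append(buf[tail % CAPACITY])
            tail += 1
        producer_chan.put(tail)     # tail-pointer update to the producer's channel


buf, out = [None] * CAPACITY, []
to_consumer, to_producer = queue.Queue(), queue.Queue()
worker = threading.Thread(target=consumer, args=(buf, out, to_consumer, to_producer))
worker.start()
producer(buf, list(range(10)), to_consumer, to_producer)
worker.join()
print(out)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

Neither side polls the other's pointer; each learns of progress only through its own channel, which is the property the abstract relies on for low-power waiting.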

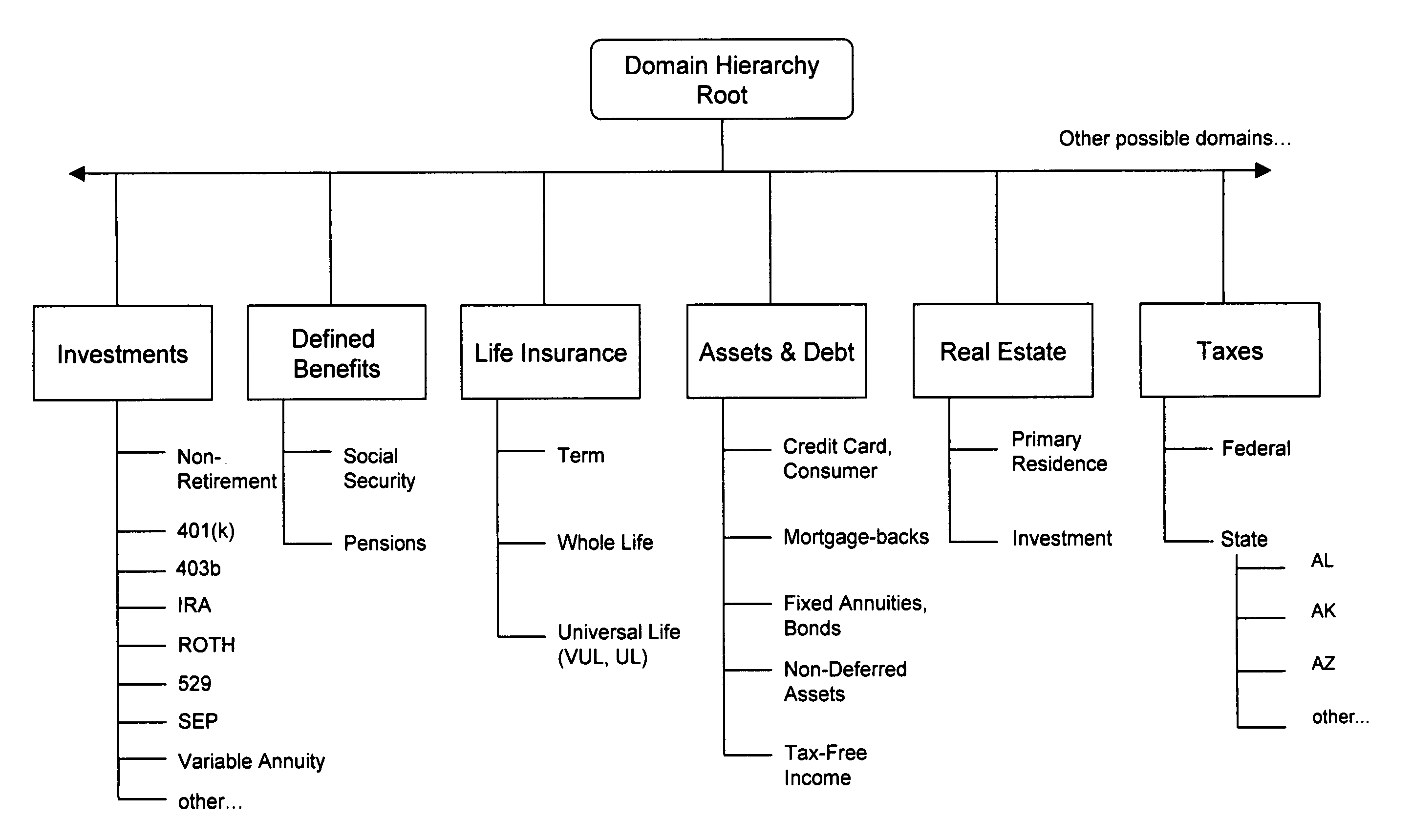

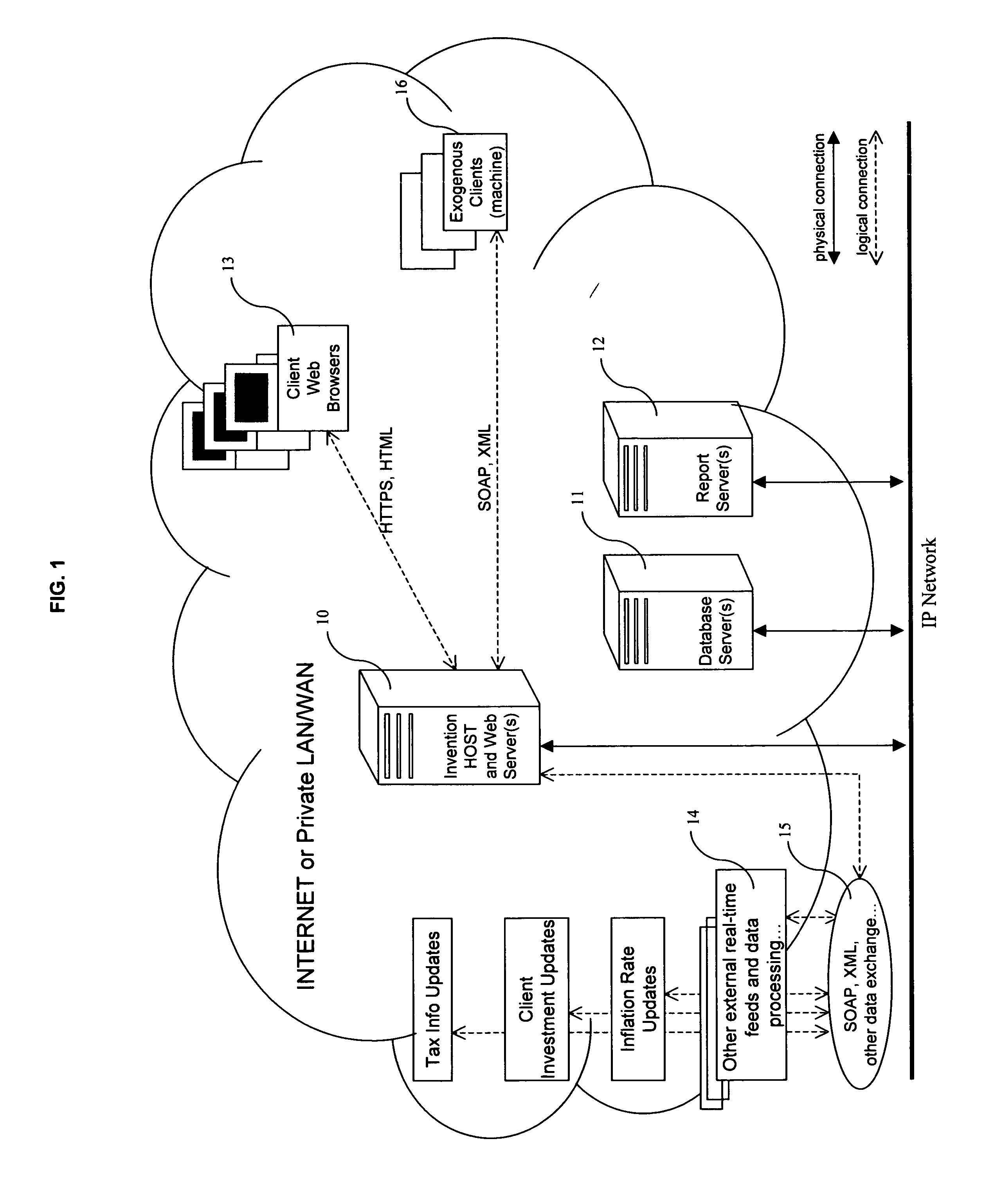

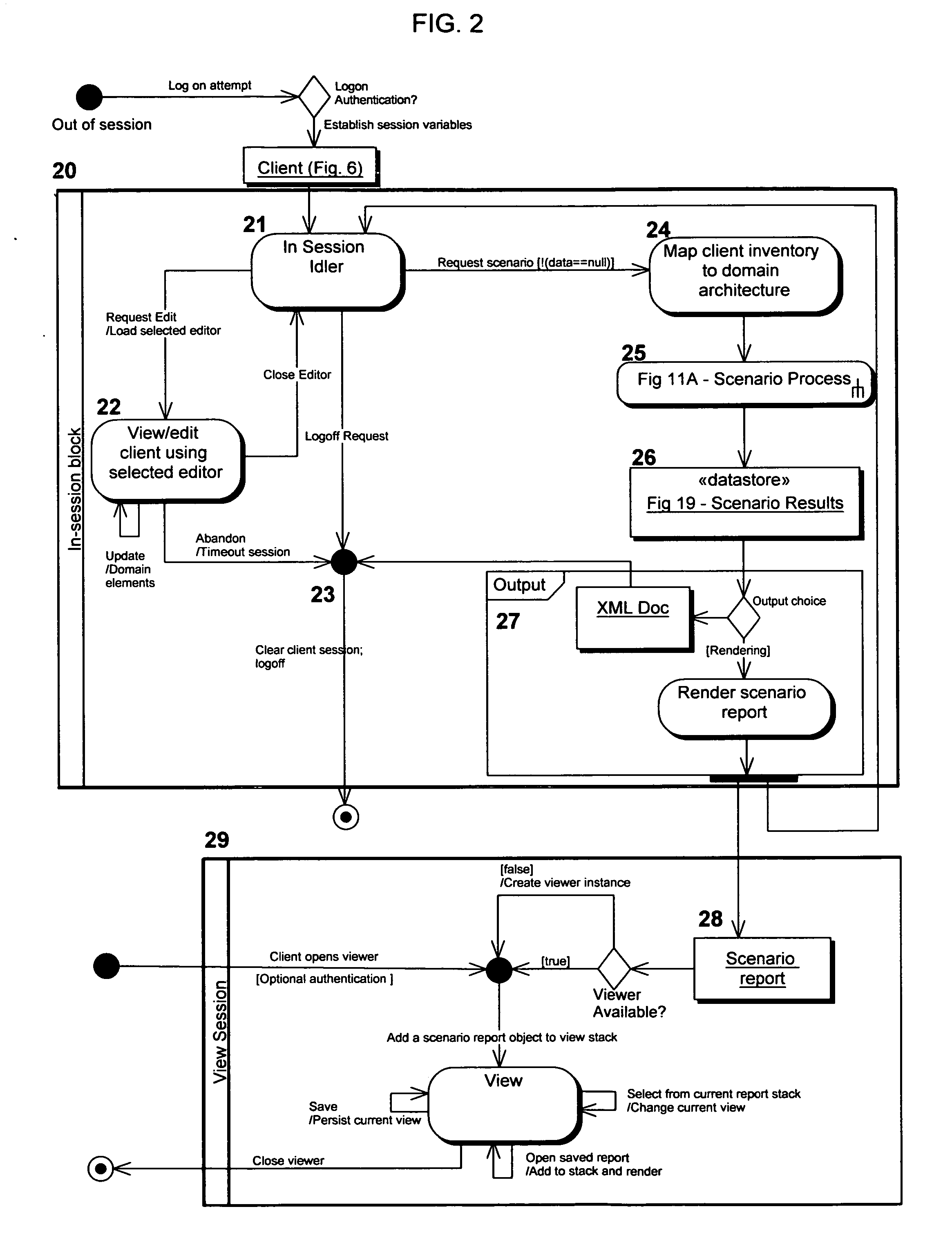

Systems and methods for strategic financial independence planning

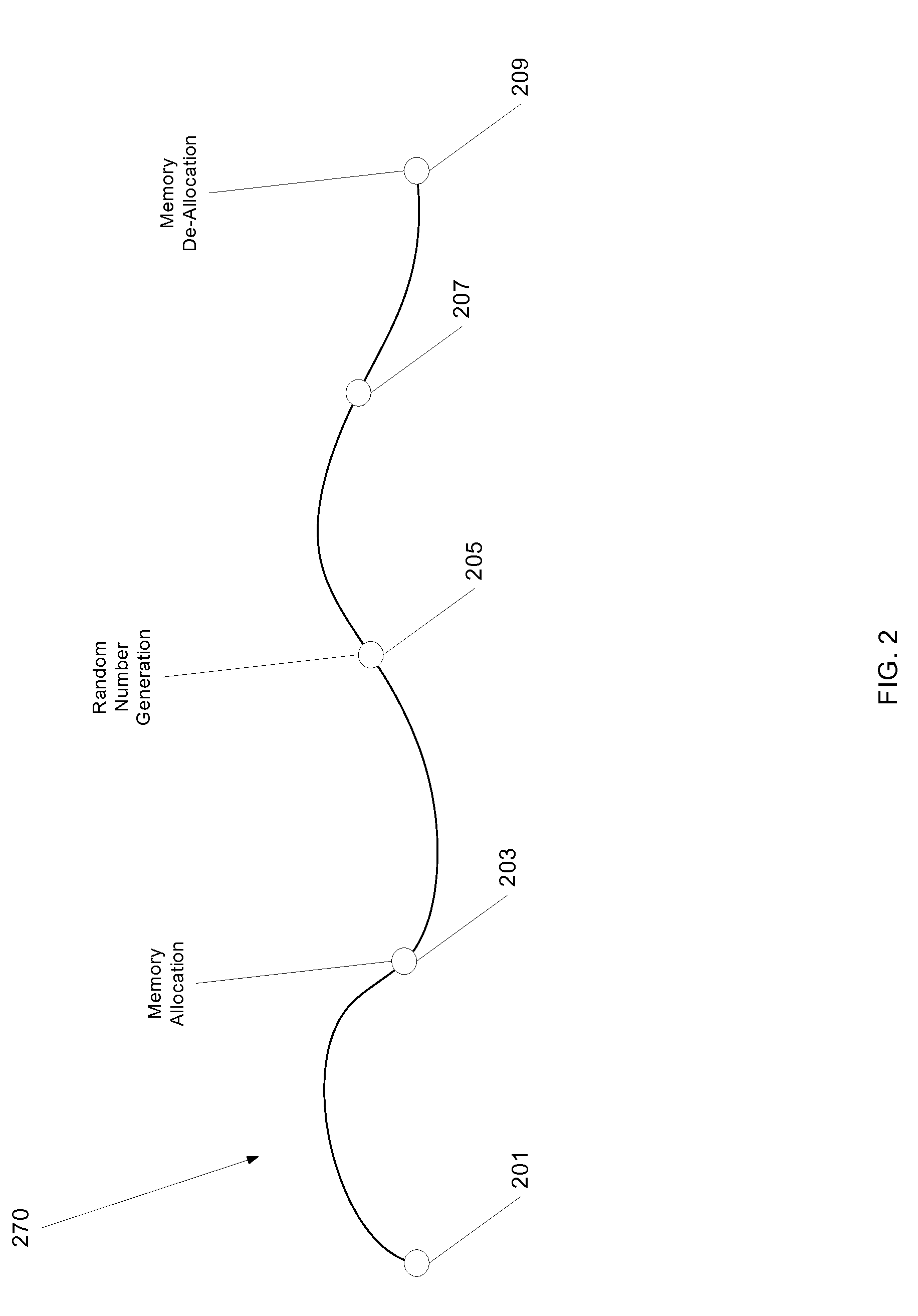

The present invention relates to financial planning and retirement planning systems, and particularly to systems and methods for deriving and measuring the feasibility of a client's retirement across a diverse set of financial domains. Output is available in a variety of formats suited to different client needs, including views, formatted documents, and document exports that external computing systems can access on demand or on a schedule. The intention is to help clients take primary ownership of issues related to their retirement readiness and to improve strategic decision-making within an experimental framework capable of deriving, comparing, and optimizing many cases that simulate future uncertainty. The invention specifies a client-server and producer-consumer distributed architecture, decomposed and integrated into various data and functional layers. One result of this architecture is modularity, which allows the invention to be deployed in various configurations.

Owner:CUSCOVITCH SAMUEL THOMAS +2

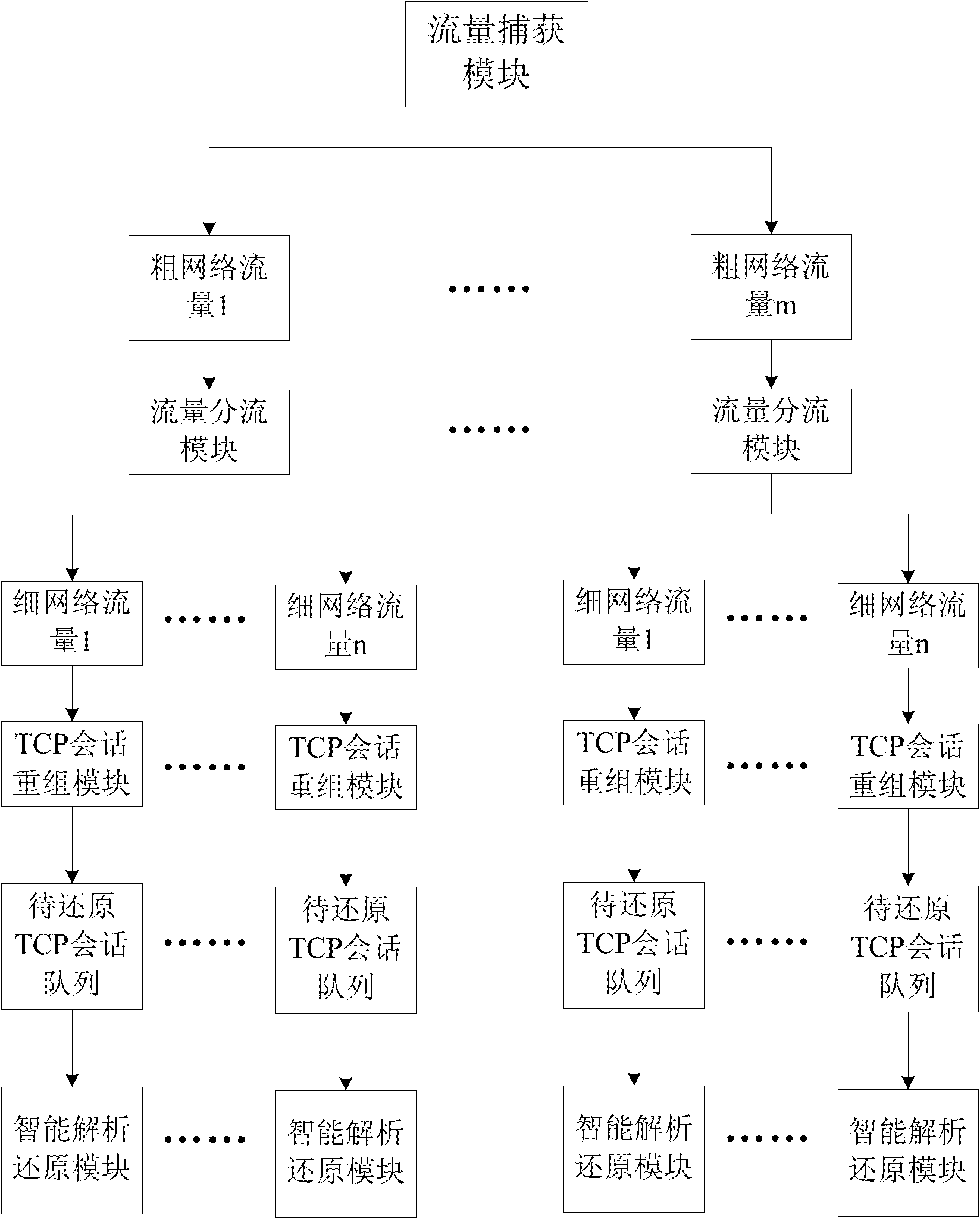

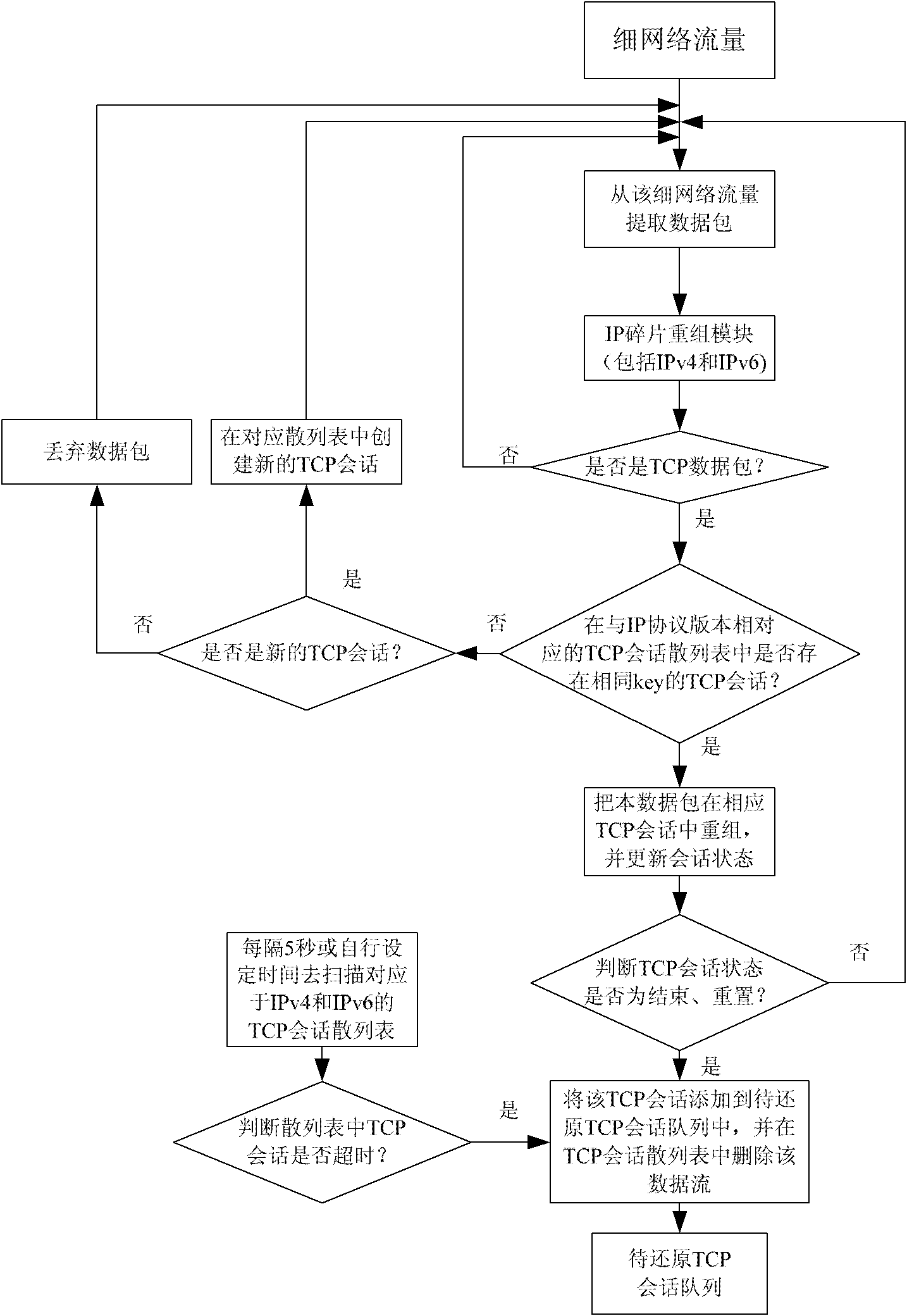

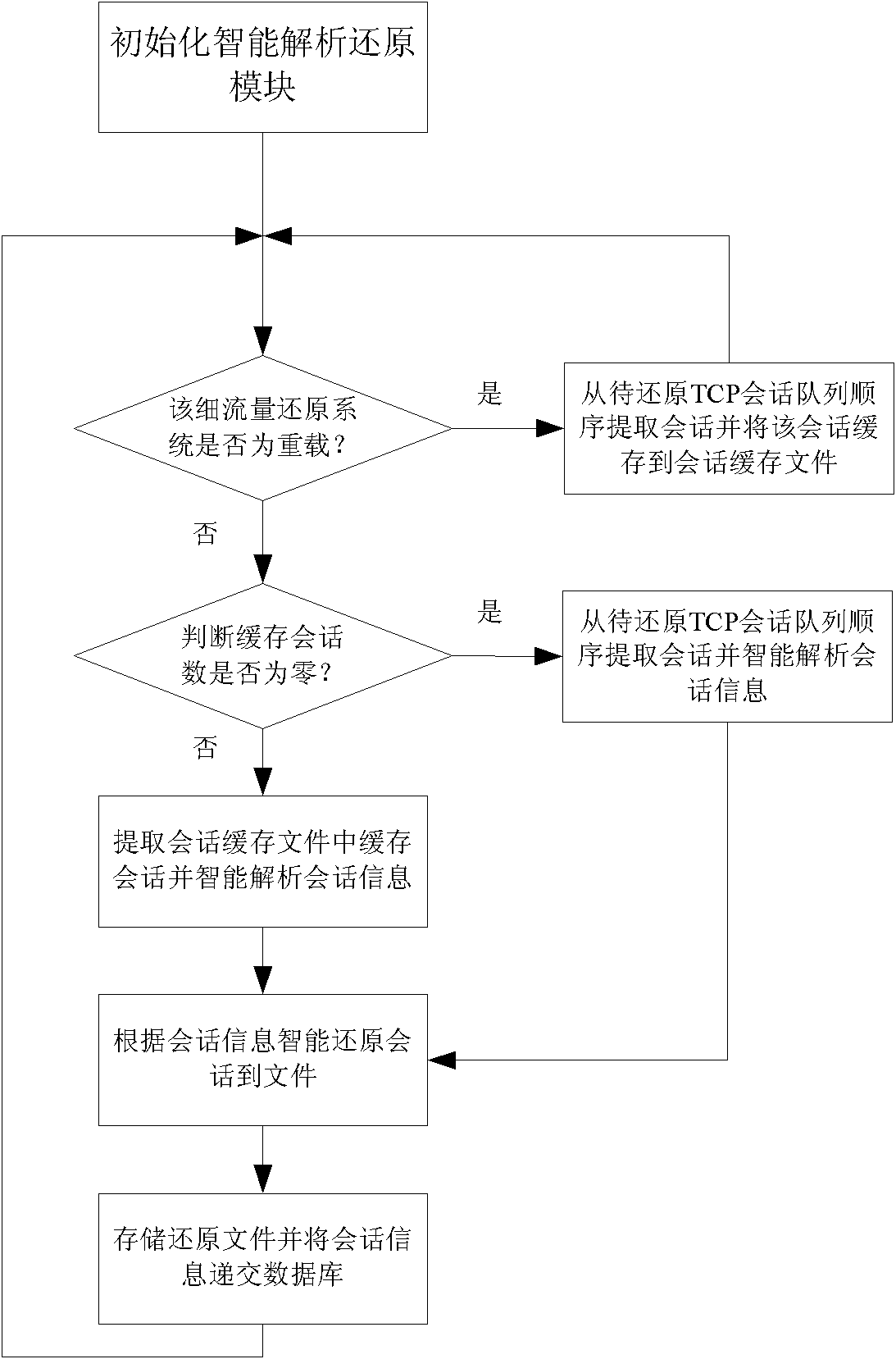

Network flow recovery method

The invention relates to a network flow recovery method in the field of Internet technology, and discloses a method for recovering network flows into files. The method adopts a two-stage parallel strategy to make full use of the processing capacity of a multi-core computer, with the following steps. First, high-speed traffic is captured and split twice, using an XOR of the MAC addresses and an XOR of the IP addresses, so that the captured initial flow is resolved into multiple thin flows and flow resolution is parallelized in two stages. Then, a loosely coupled multithreading framework based on the producer-consumer model is adopted between the working modules that pass data along each thin flow's recovery path, achieving parallelism at the thread level. In addition, the method balances load across the thin flows and supports both IPv4 and IPv6. The invention aims to convert 'invisible' network traffic into information that a computer can process directly under high network bandwidth, and provides technical support for identifying and blocking illegal network information transmission.

Owner:XI AN JIAOTONG UNIV
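The two-stage XOR split described above can be sketched as a function that maps a packet's addresses to a thin-flow index. The abstract does not say how the XOR results are reduced, so the byte-folding step, the 4-way fan-out at each stage, and the function names are illustrative assumptions. A useful property of XOR is that it is symmetric, so both directions of a connection land in the same thin flow.

```python
import ipaddress


def xor_fold(a: bytes, b: bytes) -> int:
    """XOR two equal-length addresses byte by byte and fold the result into one byte."""
    acc = 0
    for x, y in zip(a, b):
        acc ^= x ^ y
    return acc


def mac_to_bytes(mac: str) -> bytes:
    return bytes(int(part, 16) for part in mac.split(":"))


def thin_flow_id(src_mac, dst_mac, src_ip, dst_ip, stage1_ways=4, stage2_ways=4):
    # Stage 1: split the captured traffic on the XOR of the two MAC addresses.
    stage1 = xor_fold(mac_to_bytes(src_mac), mac_to_bytes(dst_mac)) % stage1_ways
    # Stage 2: split each stage-1 flow again on the XOR of the two IP addresses.
    # ip_address() accepts IPv4 and IPv6; .packed gives 4 or 16 raw bytes.
    src = ipaddress.ip_address(src_ip).packed
    dst = ipaddress.ip_address(dst_ip).packed
    stage2 = xor_fold(src, dst) % stage2_ways
    return stage1 * stage2_ways + stage2  # index of the thin flow (worker queue)


fwd = thin_flow_id("00:1a:2b:3c:4d:5e", "00:aa:bb:cc:dd:ee", "10.0.0.1", "10.0.0.2")
rev = thin_flow_id("00:aa:bb:cc:dd:ee", "00:1a:2b:3c:4d:5e", "10.0.0.2", "10.0.0.1")
print(fwd == rev)  # True: both directions of the connection share a thin flow
```

Each thin-flow index would then select the producer-consumer queue feeding one recovery pipeline.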

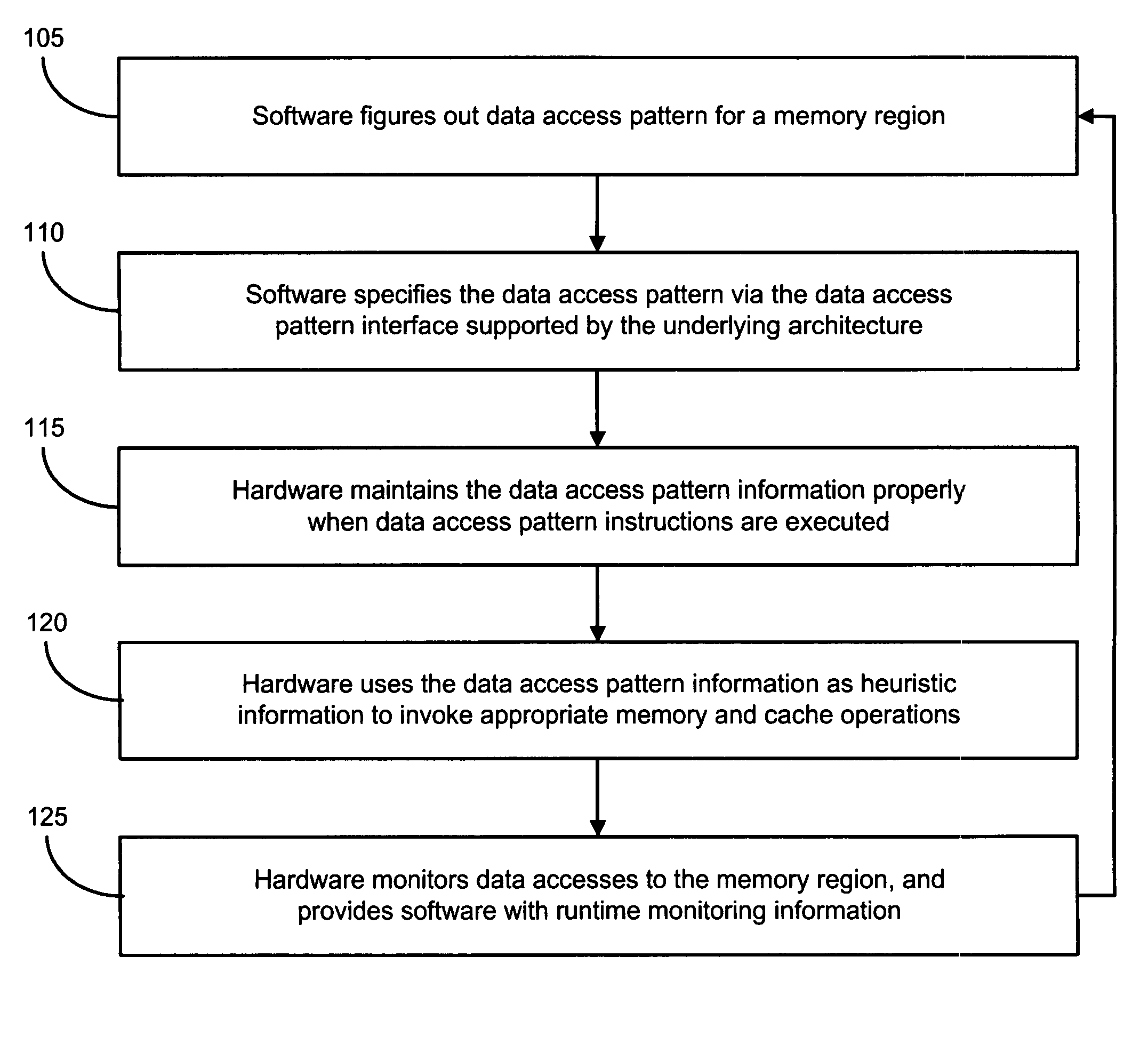

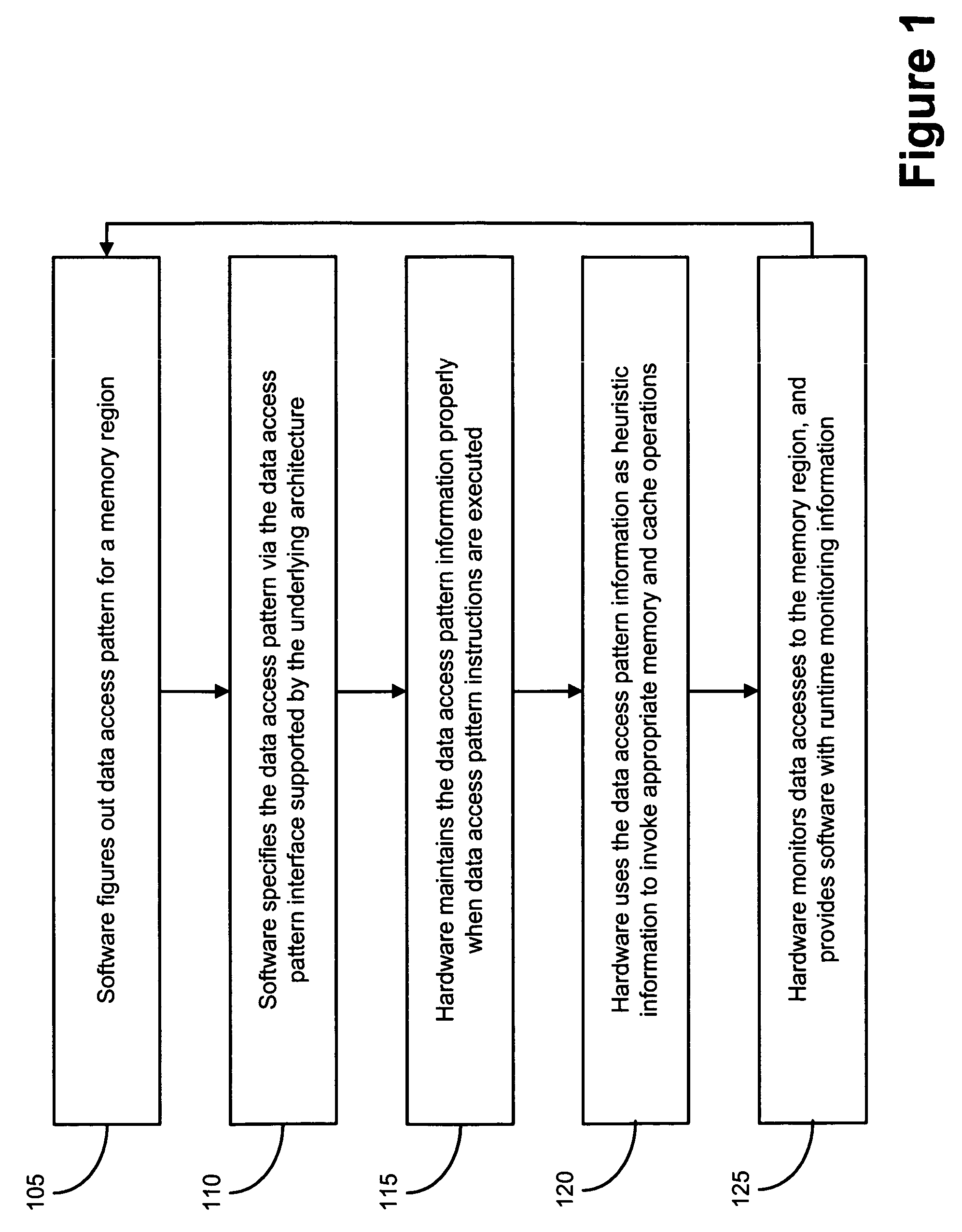

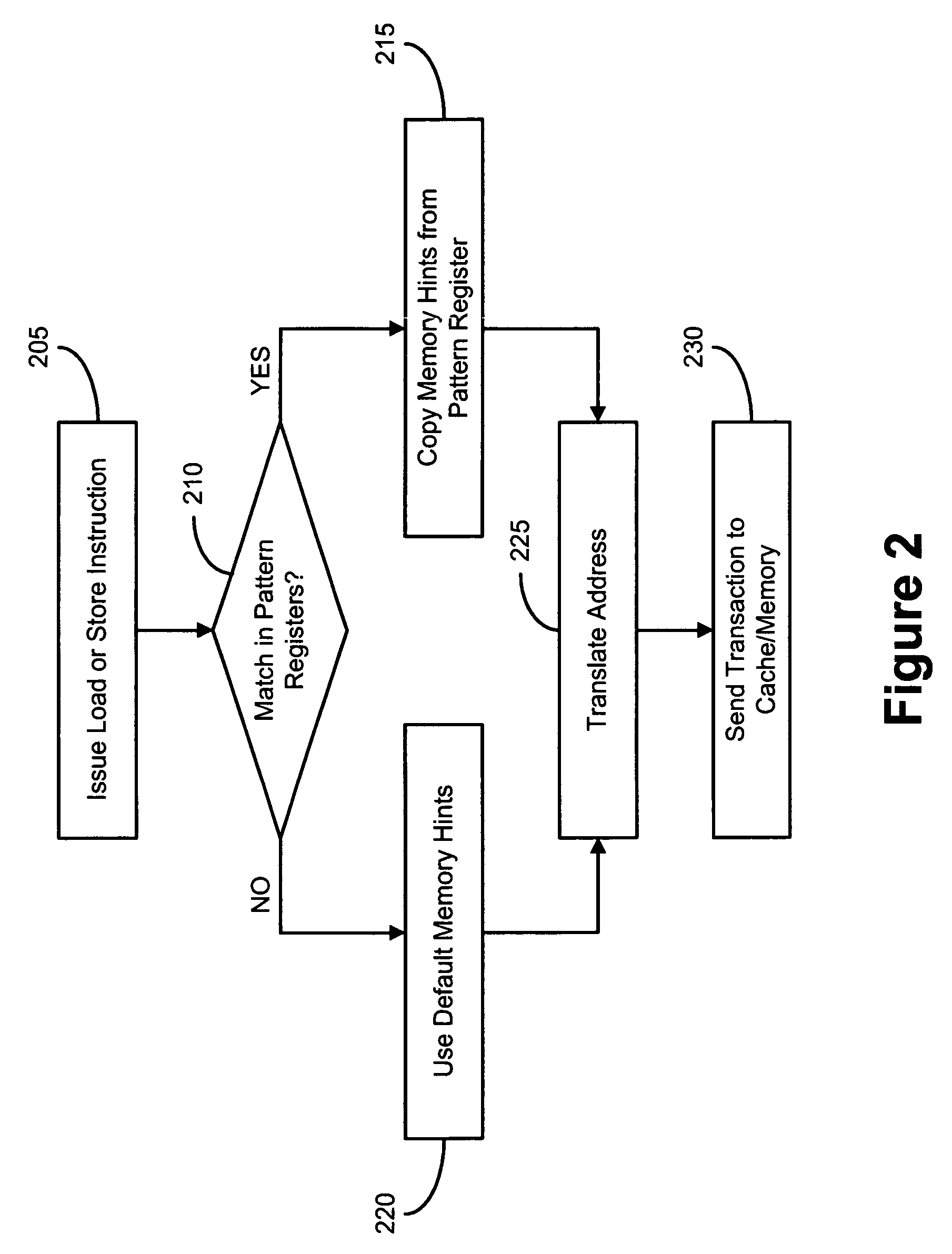

Mechanisms and methods for using data access patterns

Status: Active | Publication: US20070088919A1 | Topics: Memory architecture accessing/allocation; Memory systems; Parallel computing; Data access

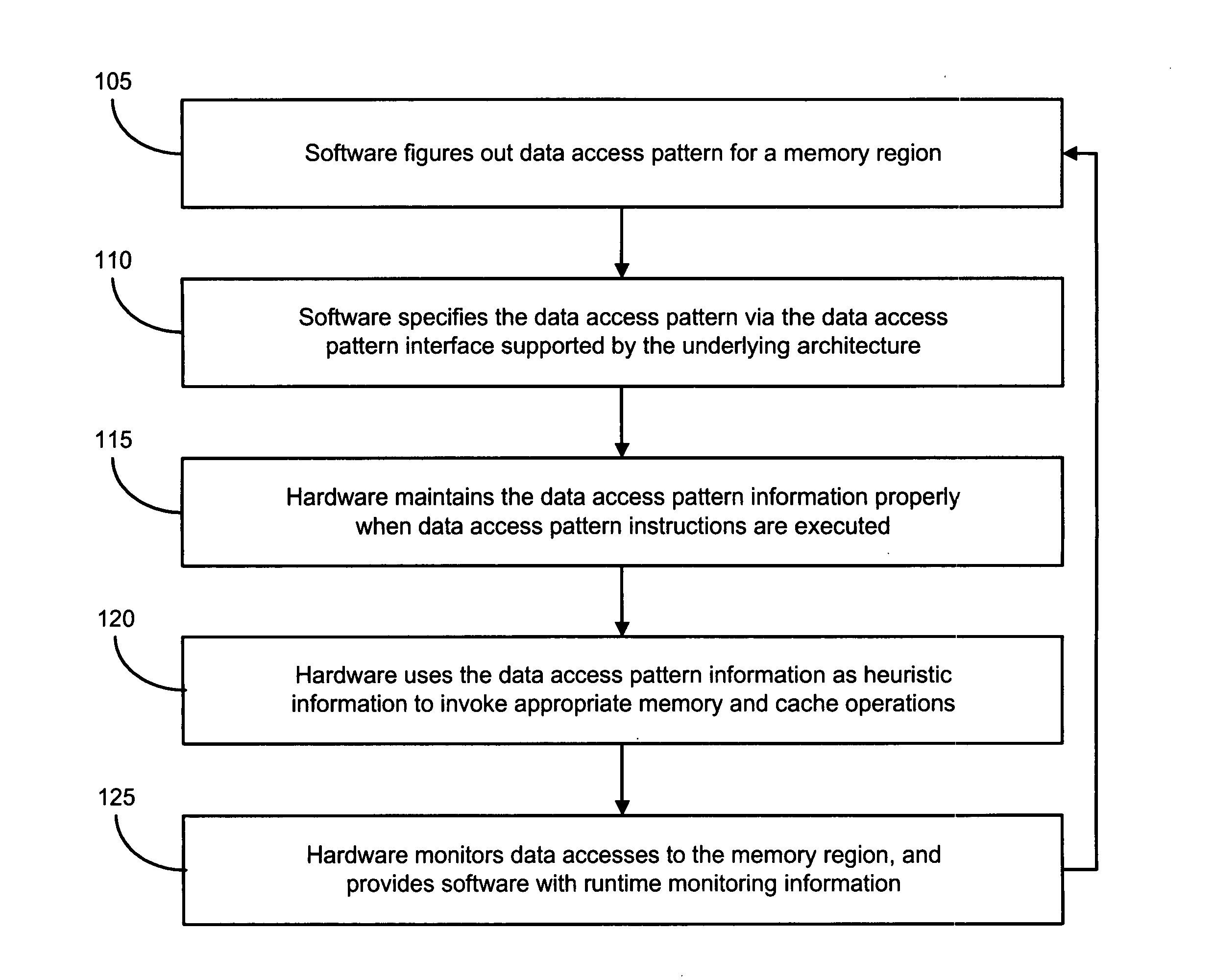

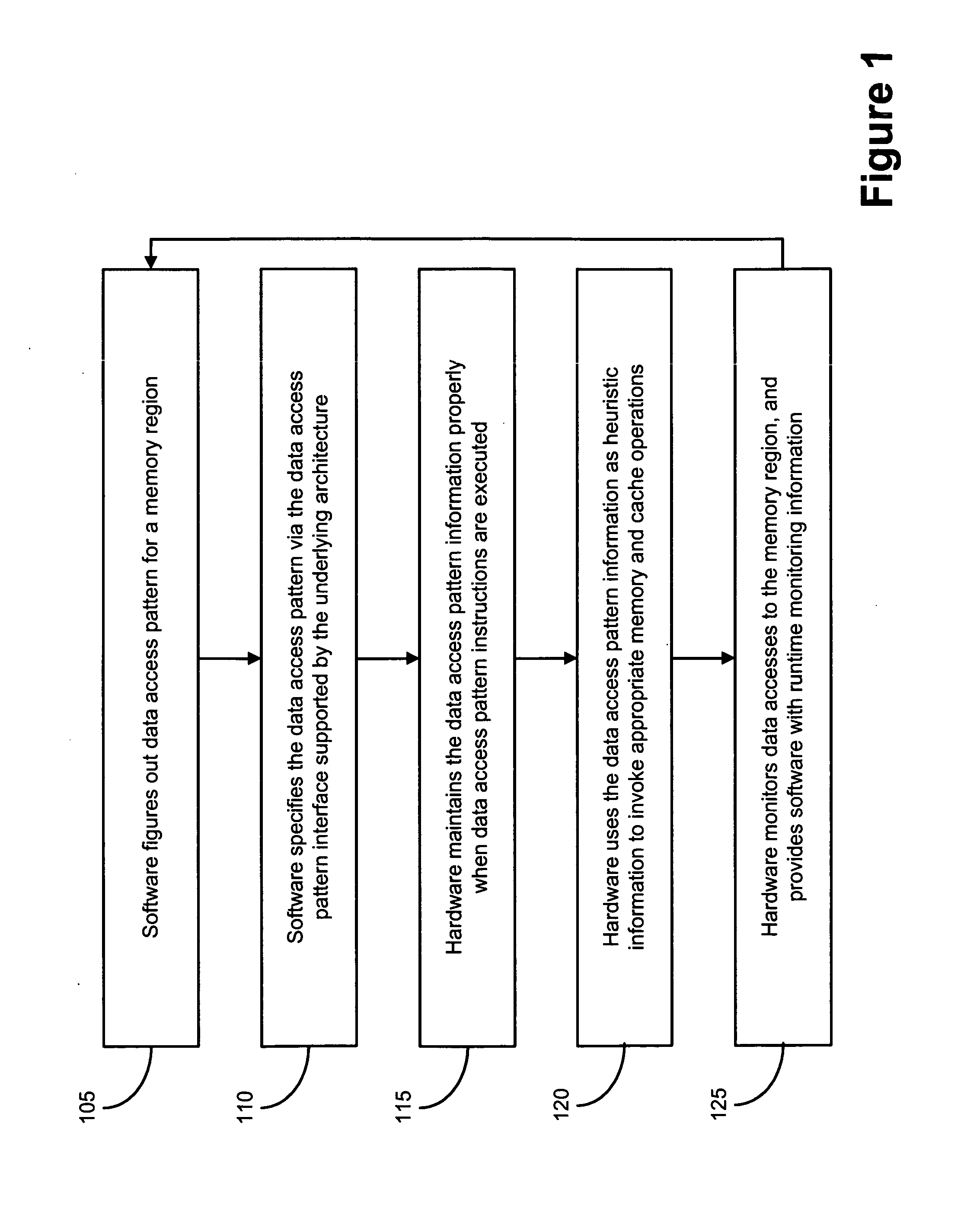

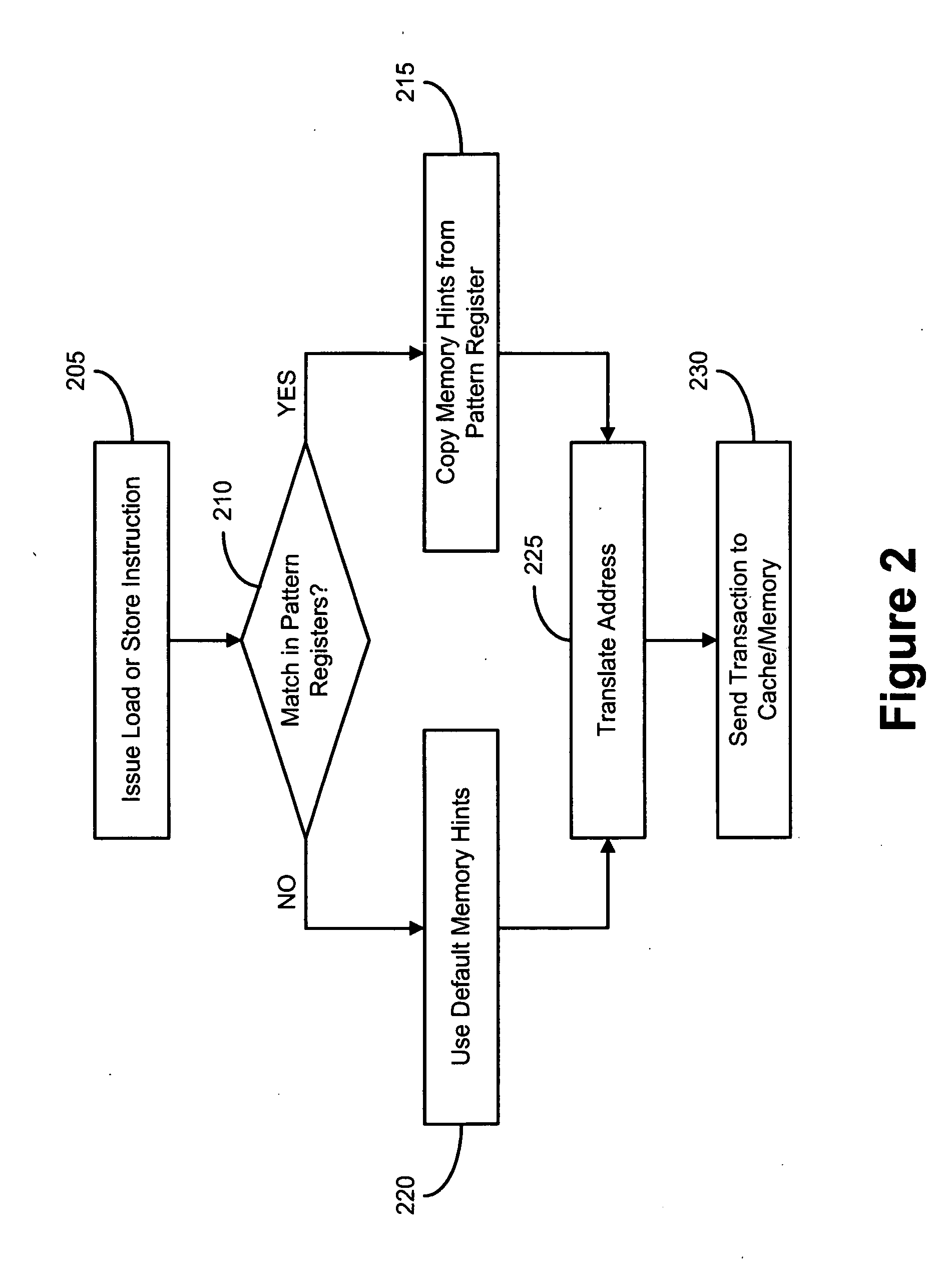

The present invention comprises a data access pattern interface that allows software to specify one or more data access patterns such as stream access patterns, pointer-chasing patterns and producer-consumer patterns. Software detects a data access pattern for a memory region and passes the data access pattern information to hardware via proper data access pattern instructions defined in the data access pattern interface. Hardware maintains the data access pattern information properly when the data access pattern instructions are executed. Hardware can then use the data access pattern information to dynamically detect data access patterns for a memory region throughout the program execution, and voluntarily invoke appropriate memory and cache operations such as pre-fetch, pre-send, acquire-ownership and release-ownership. Further, hardware can provide runtime monitoring information for memory accesses to the memory region, wherein the runtime monitoring information indicates whether the software-provided data access pattern information is accurate.

Owner:IBM CORP

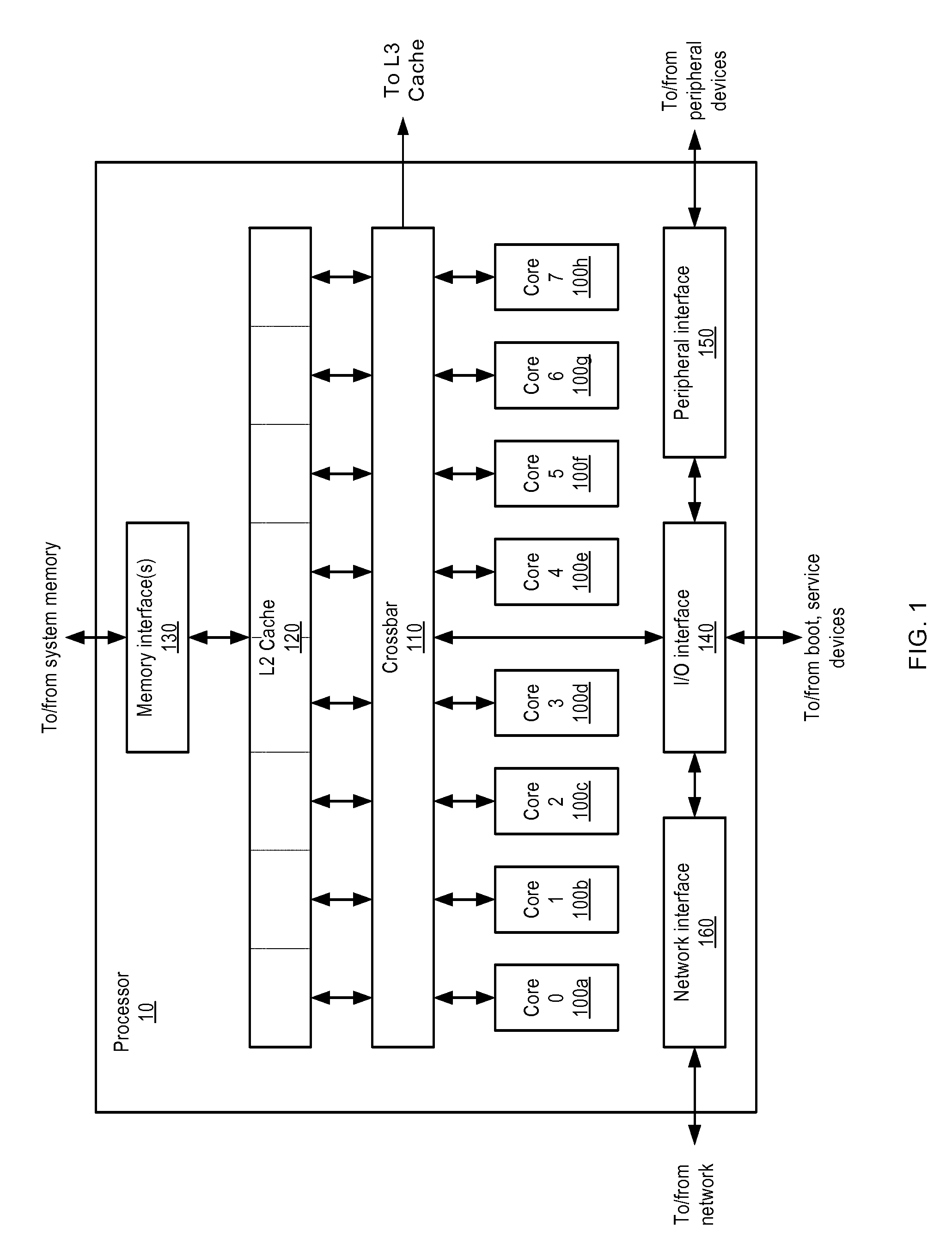

Method and apparatus for efficient helper thread state initialization using inter-thread register copy

Status: Inactive | Publication: US20110296431A1 | Topics: Digital computer details; Multiprogramming arrangements; Memory hierarchy; Access time

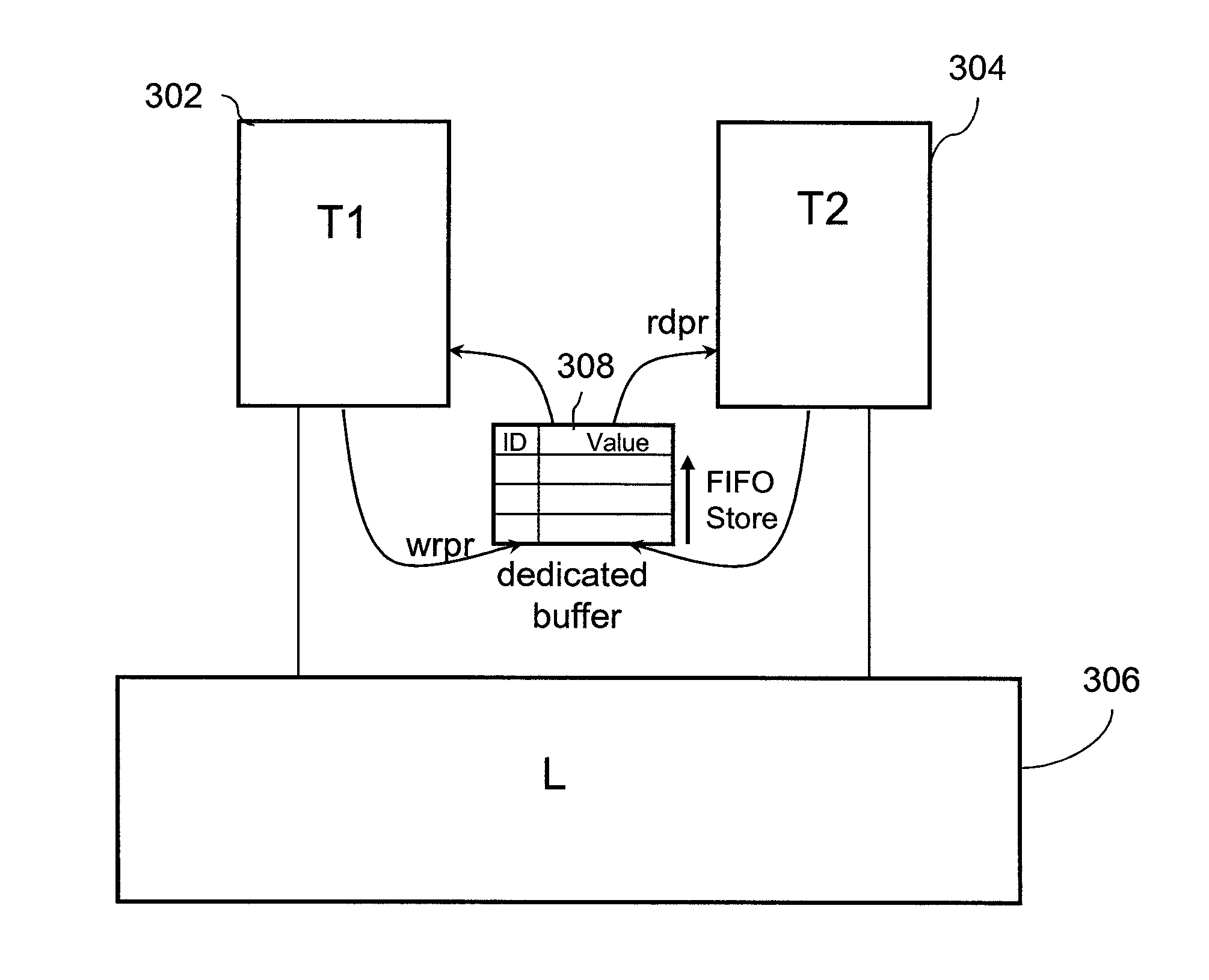

This disclosure describes a method and system that may enable fast, hardware-assisted, producer-consumer style communication of values between threads. The method, in one aspect, uses a dedicated hardware buffer as an intermediary storage for transferring values from registers in one thread to registers in another thread. The method may provide a generic, programmable solution that can transfer any subset of register values between threads in any given order, where the source and target registers may or may not be correlated. The method also may allow for determinate access times, since it completely bypasses the memory hierarchy. Also, the method is designed to be lightweight, focusing on communication, and keeping synchronization facilities orthogonal to the communication mechanism. It may be used by a helper thread that performs data prefetching for an application thread, for example, to initialize the upward-exposed reads in the address computation slice of the helper thread code.

Owner:IBM CORP

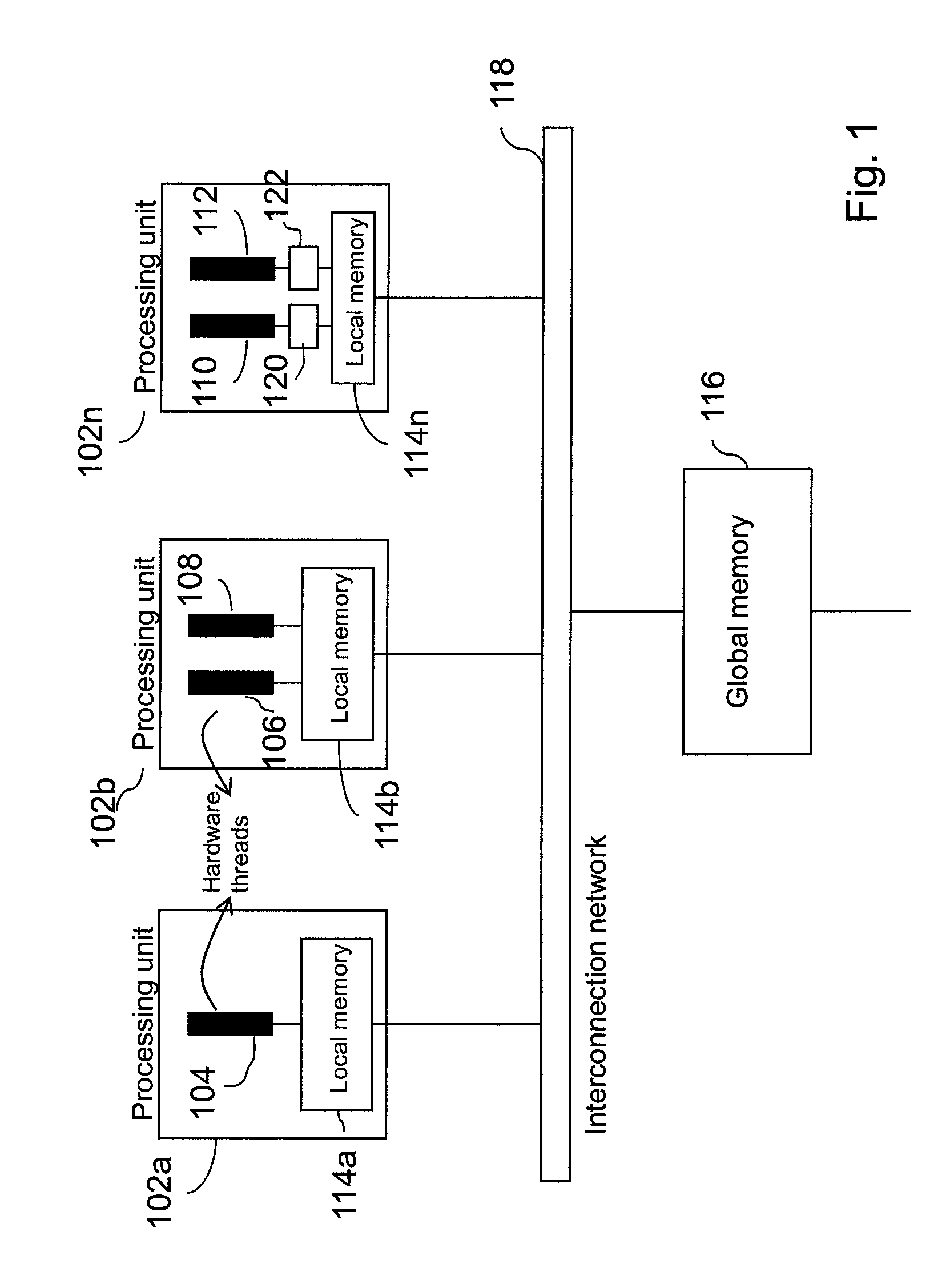

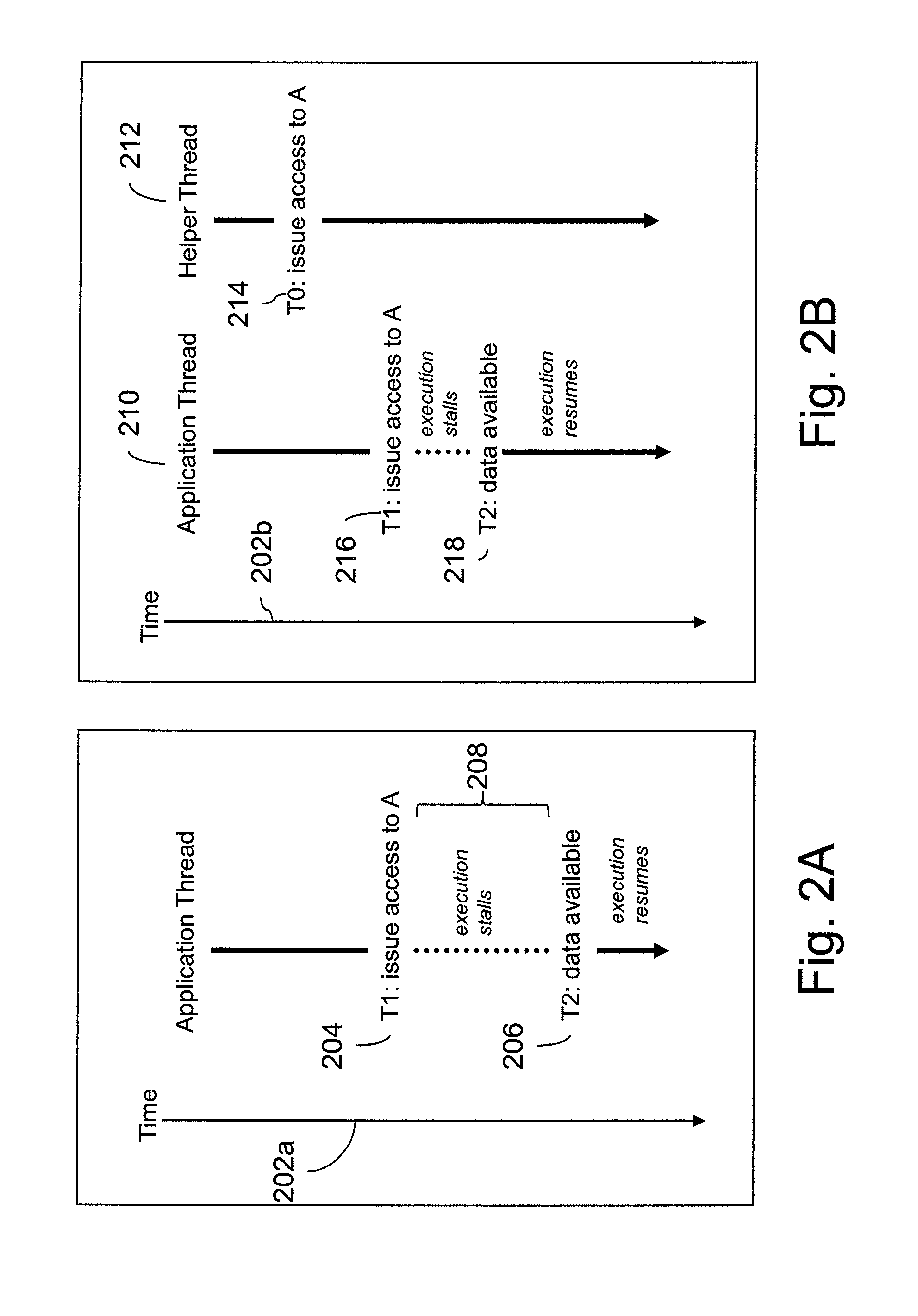

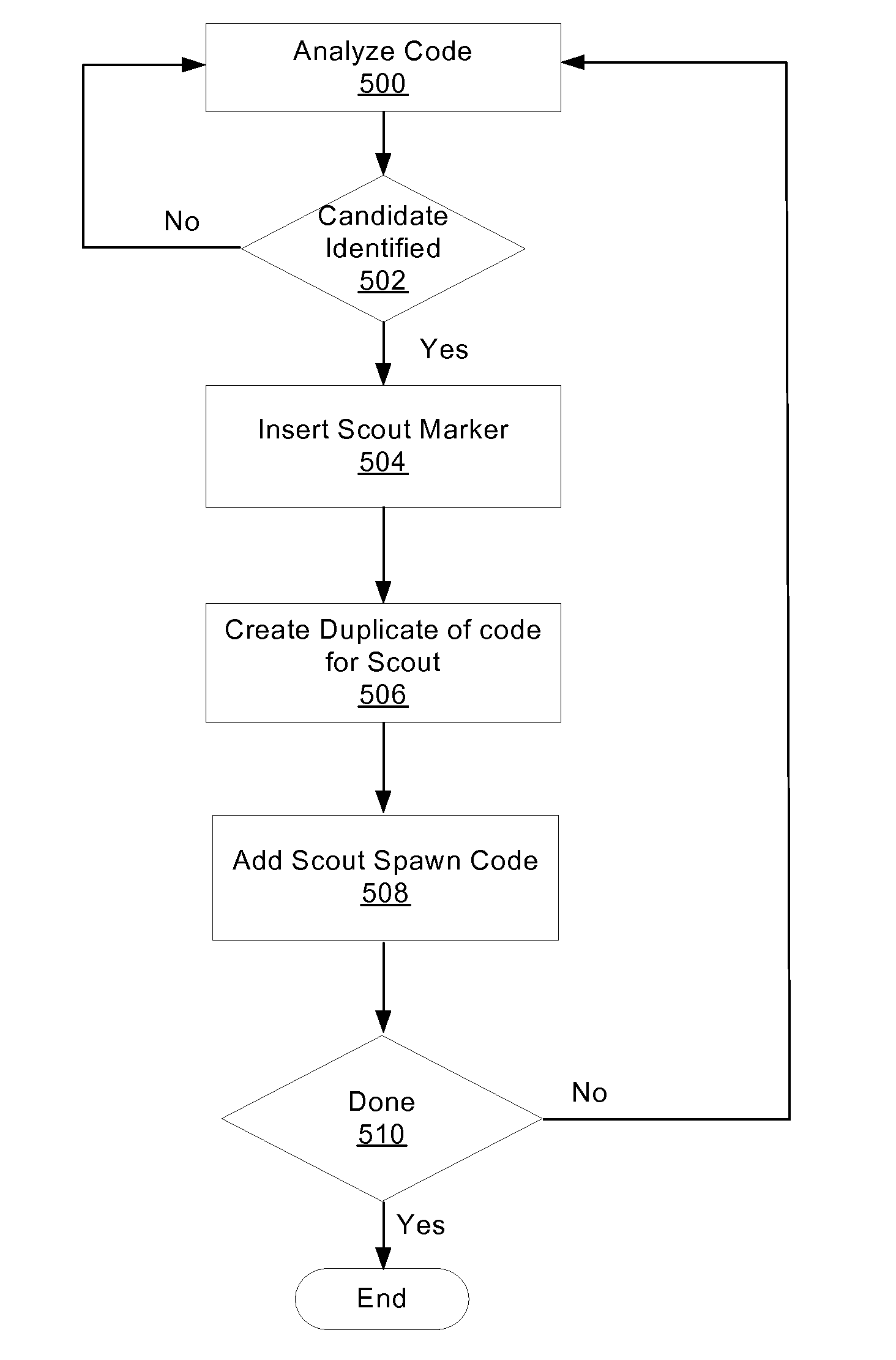

Utility function execution using scout threads

Status: Inactive | Publication: US20080141268A1 | Topics: Improve performance; Multiprogramming arrangements; Memory systems; Parallel computing; Program code

A method and mechanism for using threads in a computing system. A multithreaded computing system is configured to execute a first thread and a second thread. The first and second threads are configured to operate in a producer-consumer relationship. The second thread is configured to execute utility type functions in advance of the first thread reaching the functions in the program code. The second thread executes in parallel with the first thread and produces results from the execution which are made available for consumption by the first thread. Analysis of the program code is performed to identify such utility functions and modify the program code to support execution of the functions by the second thread.

Owner:SUN MICROSYSTEMS INC
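The scout-thread relationship above can be sketched with a worker thread that runs utility calls ahead of the main thread and hands back results. In this sketch the call sites are chosen by hand, whereas the abstract describes program analysis that identifies utility functions and rewrites the code; `checksum` is a hypothetical utility function, and a single-worker `ThreadPoolExecutor` stands in for the second thread. Python's GIL limits true parallelism for CPU-bound work, so this shows the producer-consumer structure rather than a speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def checksum(block: bytes) -> int:
    """Hypothetical 'utility function' that the main thread will need later."""
    return sum(block) & 0xFFFF


def main_thread(blocks):
    results = []
    # A single-worker pool plays the scout (producer) thread.
    with ThreadPoolExecutor(max_workers=1) as scout:
        # The scout starts running the utility calls ahead of the main thread.
        pending = [scout.submit(checksum, b) for b in blocks]
        for block, fut in zip(blocks, pending):
            # ... the main thread's own work on `block` would go here ...
            results.append(fut.result())  # consume the precomputed result
    return results


print(main_thread([b"ab", b"\xff\xff"]))  # [195, 510]
```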

Bridges performing remote reads and writes as uncacheable coherent

Status: Inactive | Publication: US20050080948A1 | Topics: High bandwidth; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data processing system; Term memory

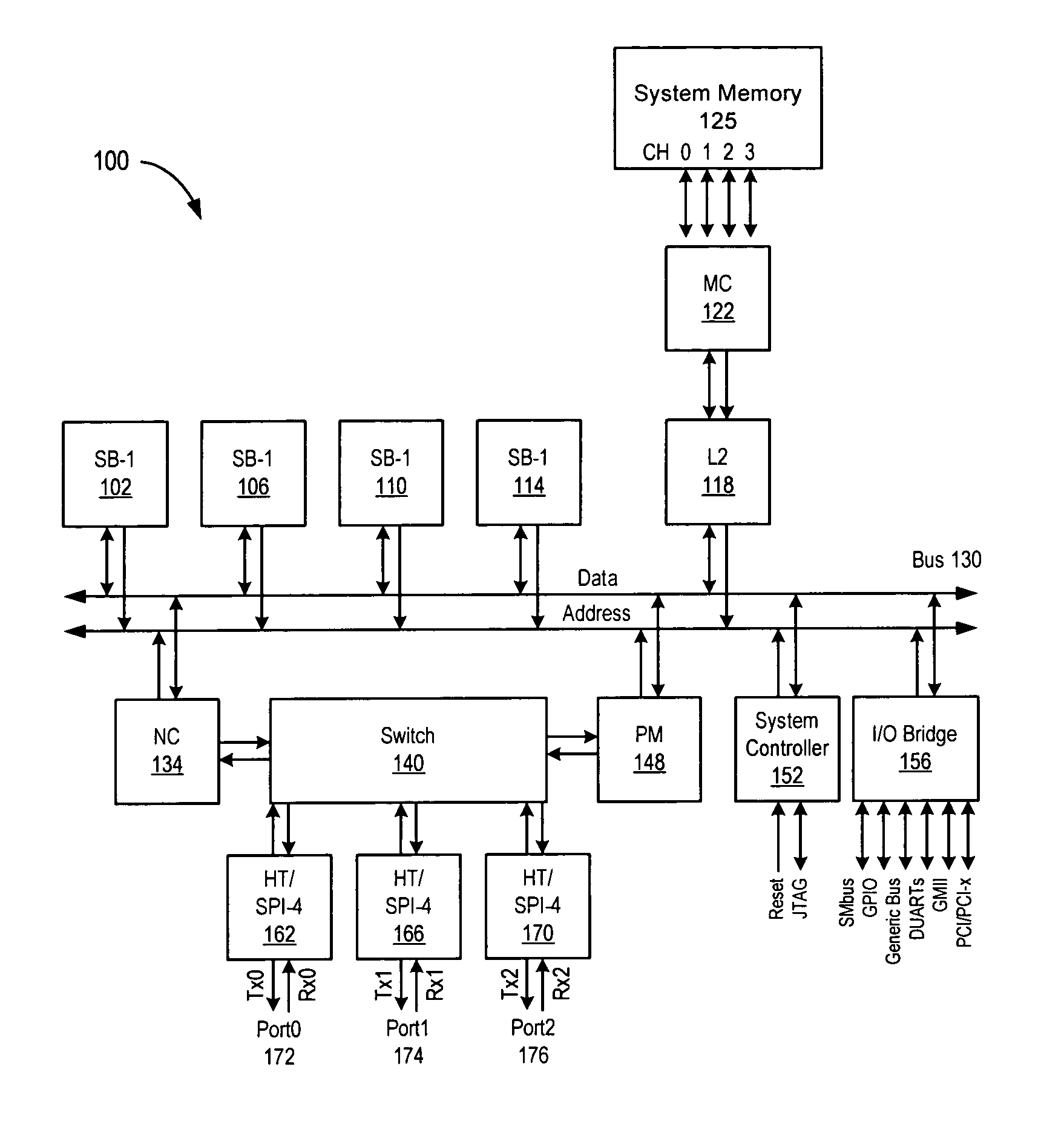

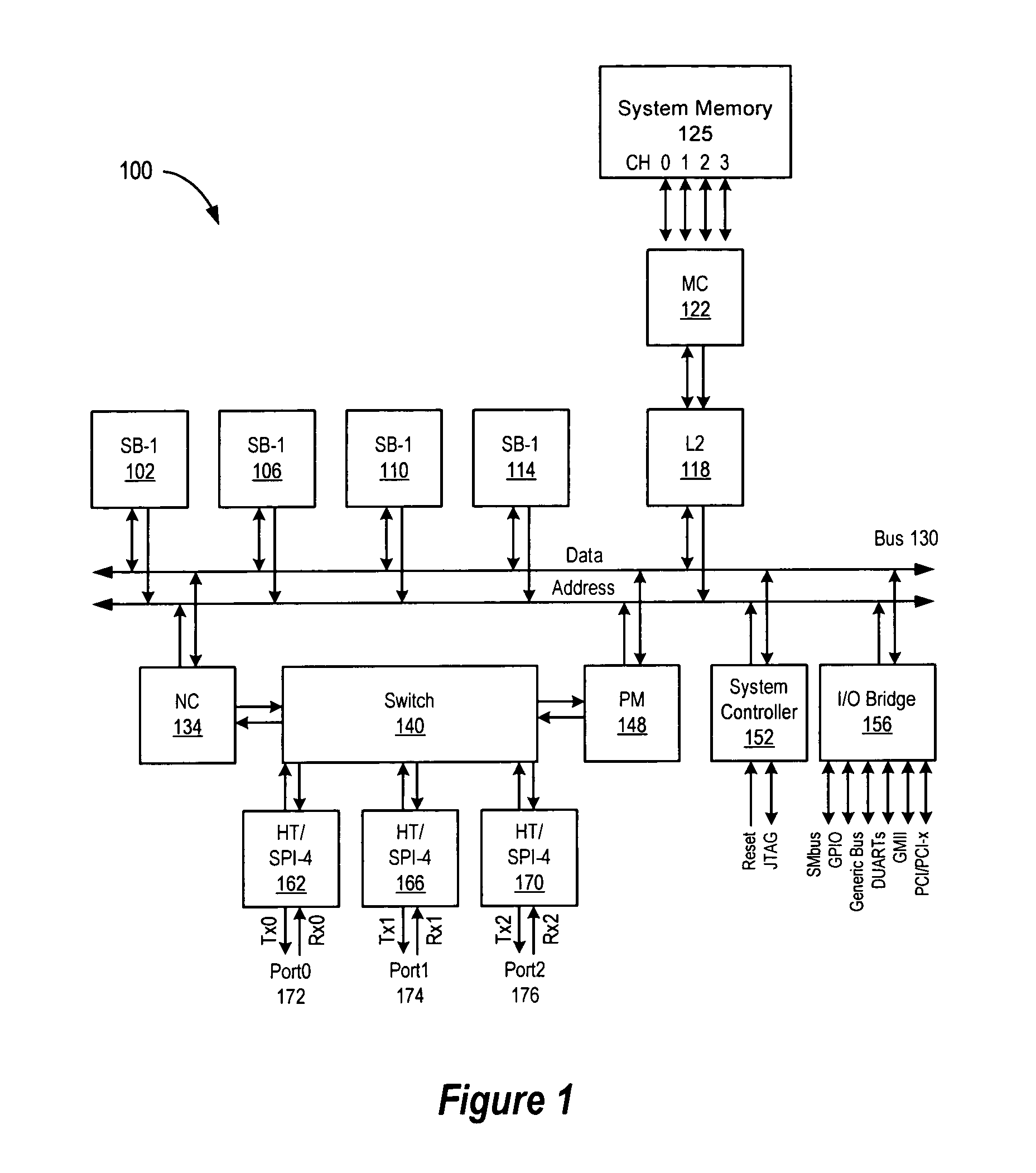

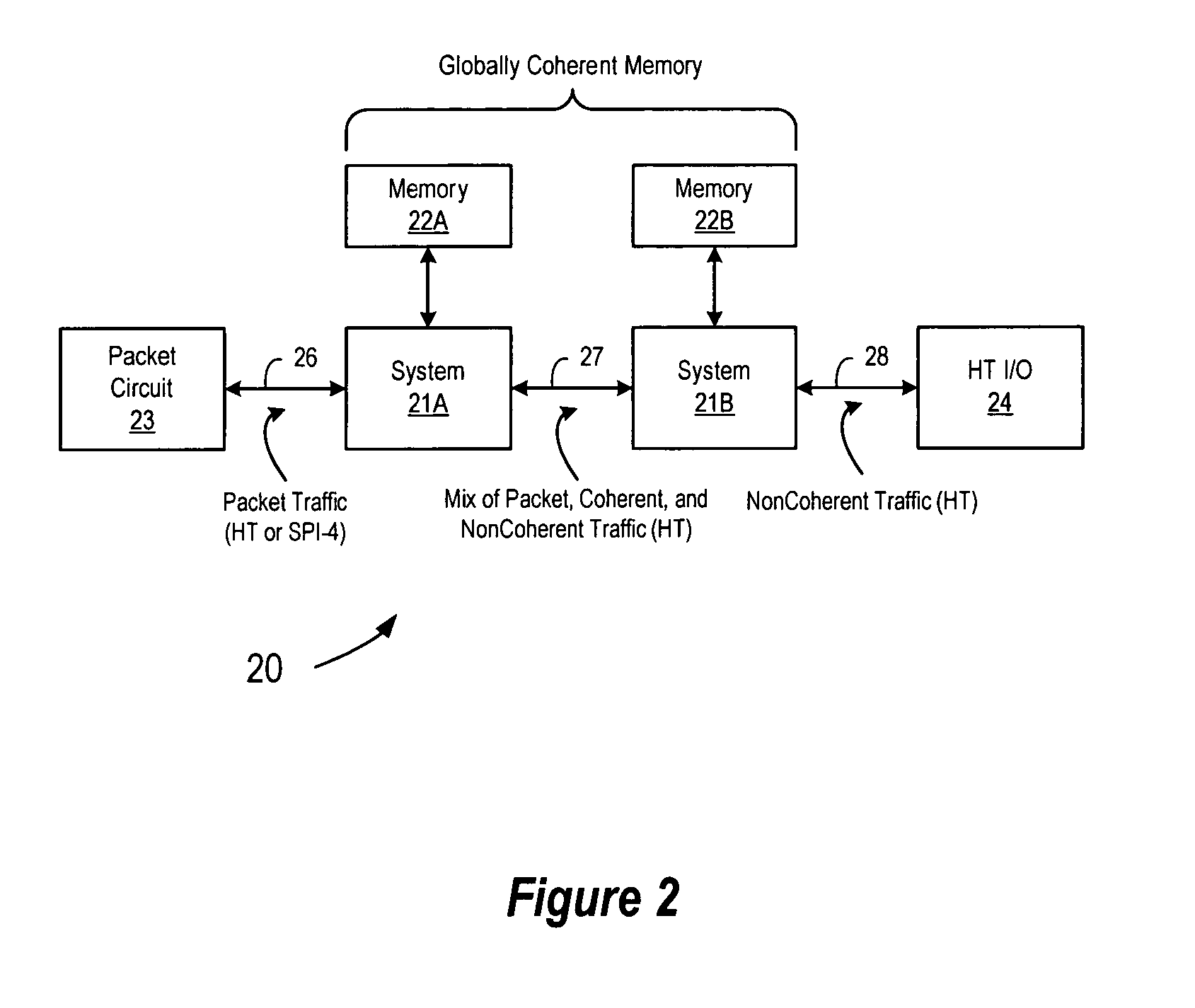

A system and method for improving the bandwidth of data read and write operations in a multi-node system by sending uncacheable read and write commands to a home node, so that the home node can determine whether the commands need to enter the coherent memory space. In one embodiment, where nodes are connected via HyperTransport (HT) interfaces, posted commands are used to transmit uncacheable write commands over the HT fabric to a remote home node, so that no response is required from the home node. When cacheable and uncacheable memory operations are mixed in a multi-node system, a producer-consumer software model may be used that requires the data and the flag to be co-located in the home node's memory and the producer to write both the data and the flag using regular HT I/O commands. In one embodiment, a system for managing data in multiple data processing devices using common data paths comprises a first data processing system comprising a memory, wherein the memory comprises a cacheable coherent memory space; and a second data processing system communicatively coupled to the first data processing system, the second data processing system comprising at least one bridge, wherein the bridge is operable to perform an uncacheable remote access to the cacheable coherent memory space of the first data processing system. In some embodiments, the access performed by the bridge comprises a data write to the memory of the first data processing system for incorporation into its cacheable coherent memory space. In other embodiments, the access performed by the bridge comprises a data read from the cacheable coherent memory space of the first data processing system.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

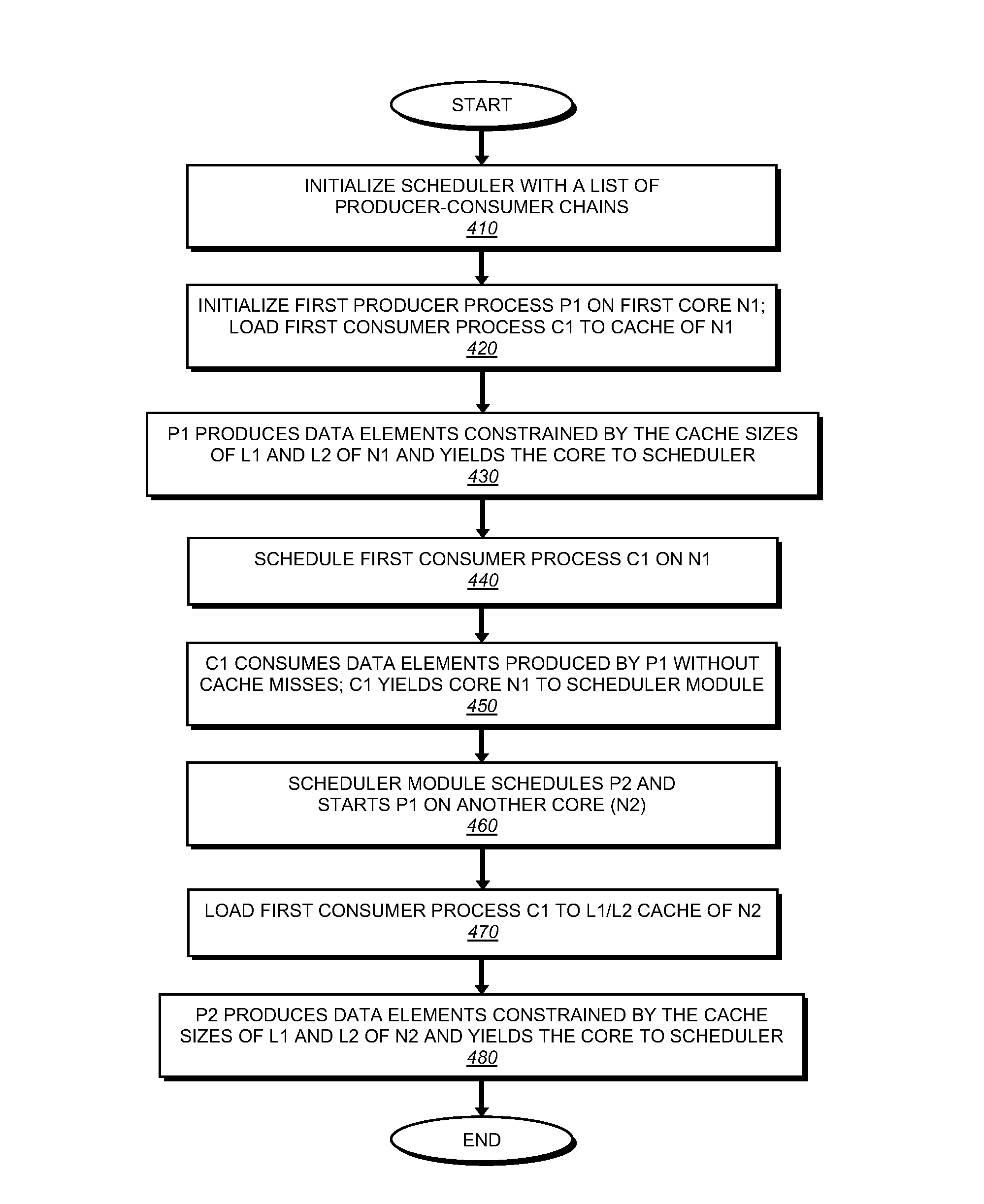

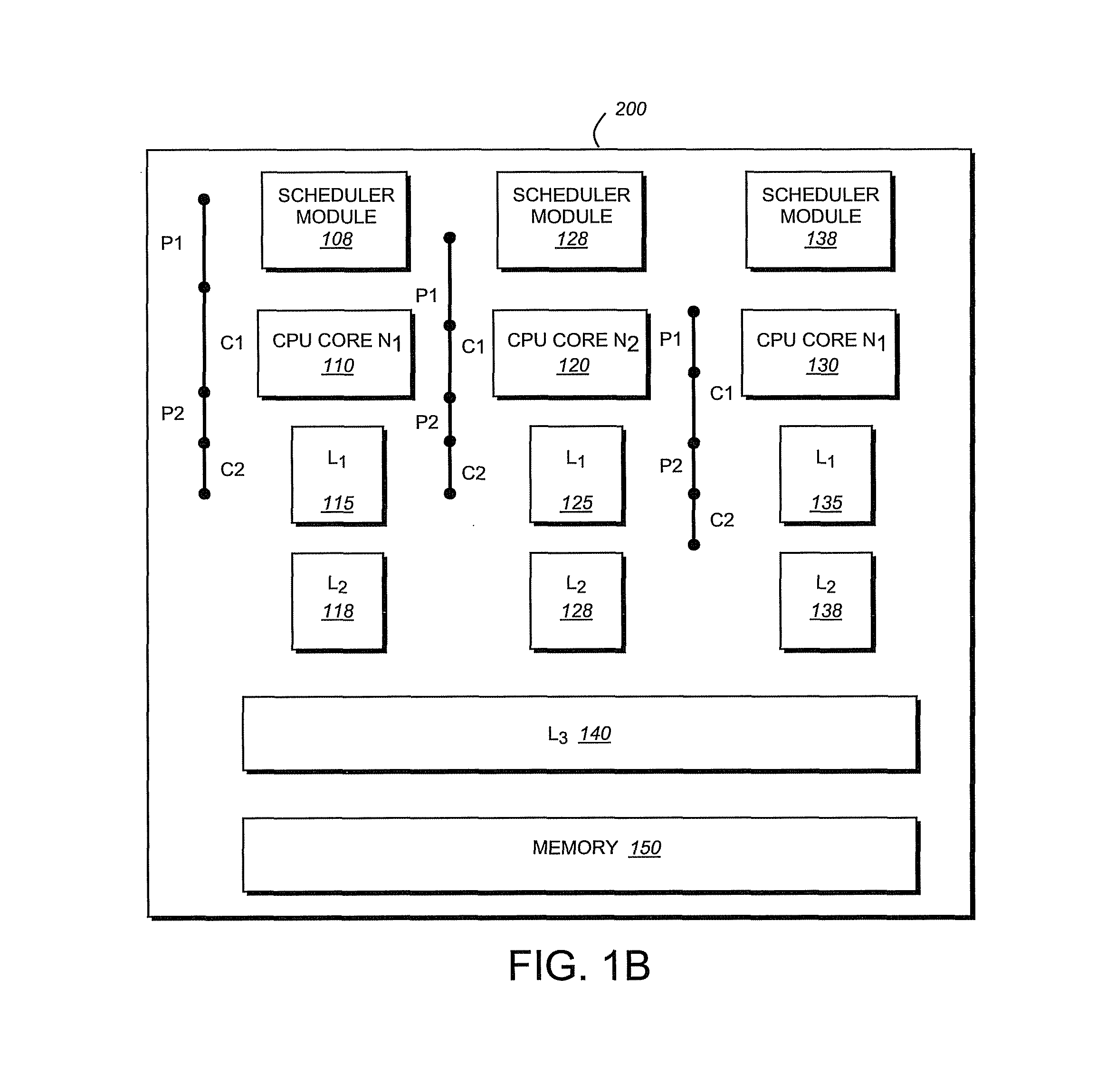

Effective scheduling of producer-consumer processes in a multi-processor system

Status: Active | Publication: US8621184B1 | Topics: Improve throughput; Latency can be optimized; Digital computer details; Specific program execution arrangements; Multi processor; Parallel computing

A novel technique for improving throughput in a multi-core system, in which data is processed according to a producer-consumer relationship, by eliminating latencies caused by compulsory cache misses. The producer and consumer entities run as multiple slices of execution. Each slice has an associated execution context comprising the code and data that the slice accesses. The execution contexts of the producer and consumer slices are small enough to fit in the processor caches simultaneously. When a producer entity scheduled on a first core completes production of data elements, as constrained by the size of the cache memories, a consumer entity is scheduled on that same core to consume the produced data elements. Meanwhile, a second slice of the producer entity is moved to another core, and a second slice of the consumer entity is scheduled to consume the elements produced by the second slice of the producer.

Owner:NETWORK APPLIANCE INC

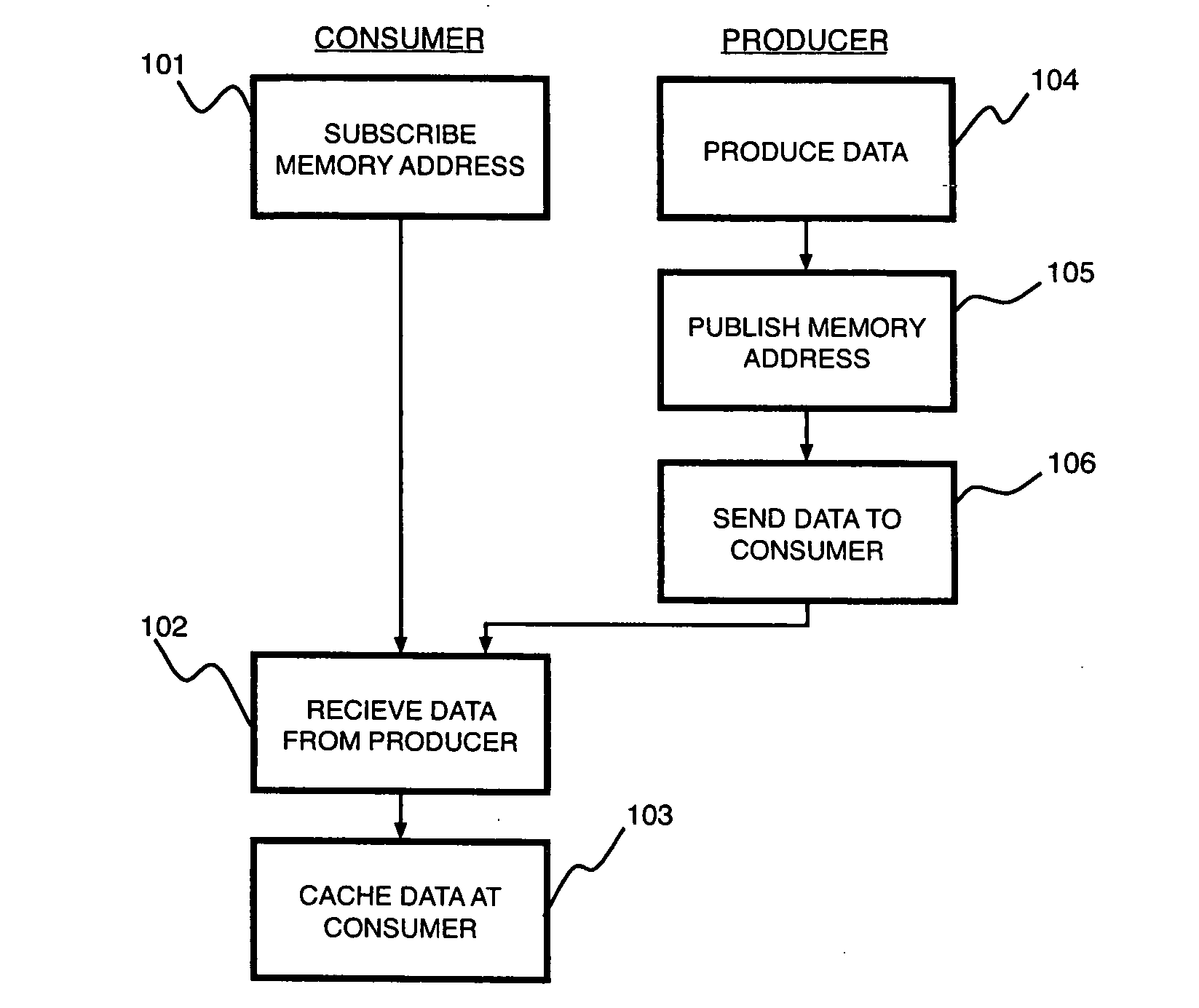

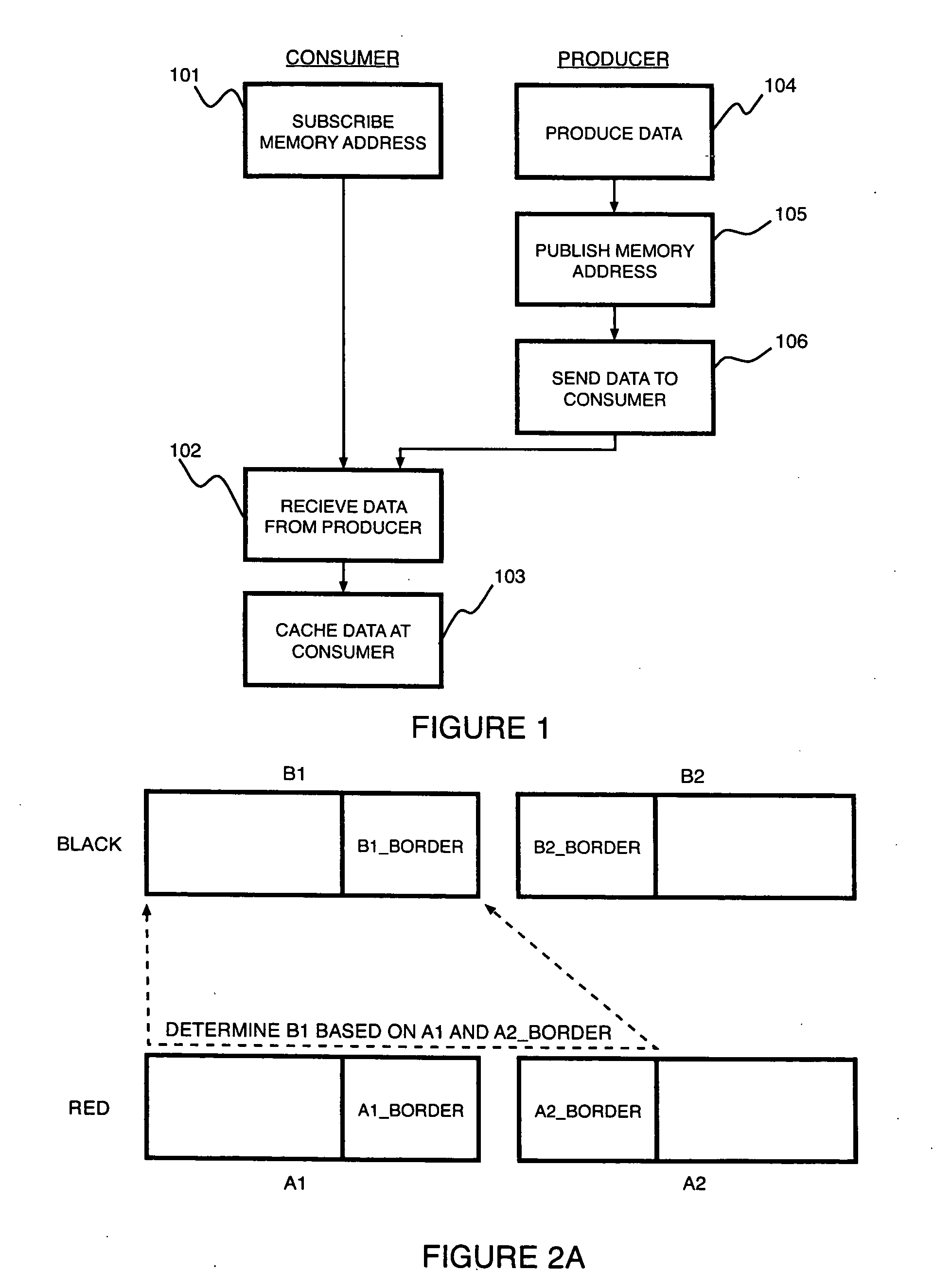

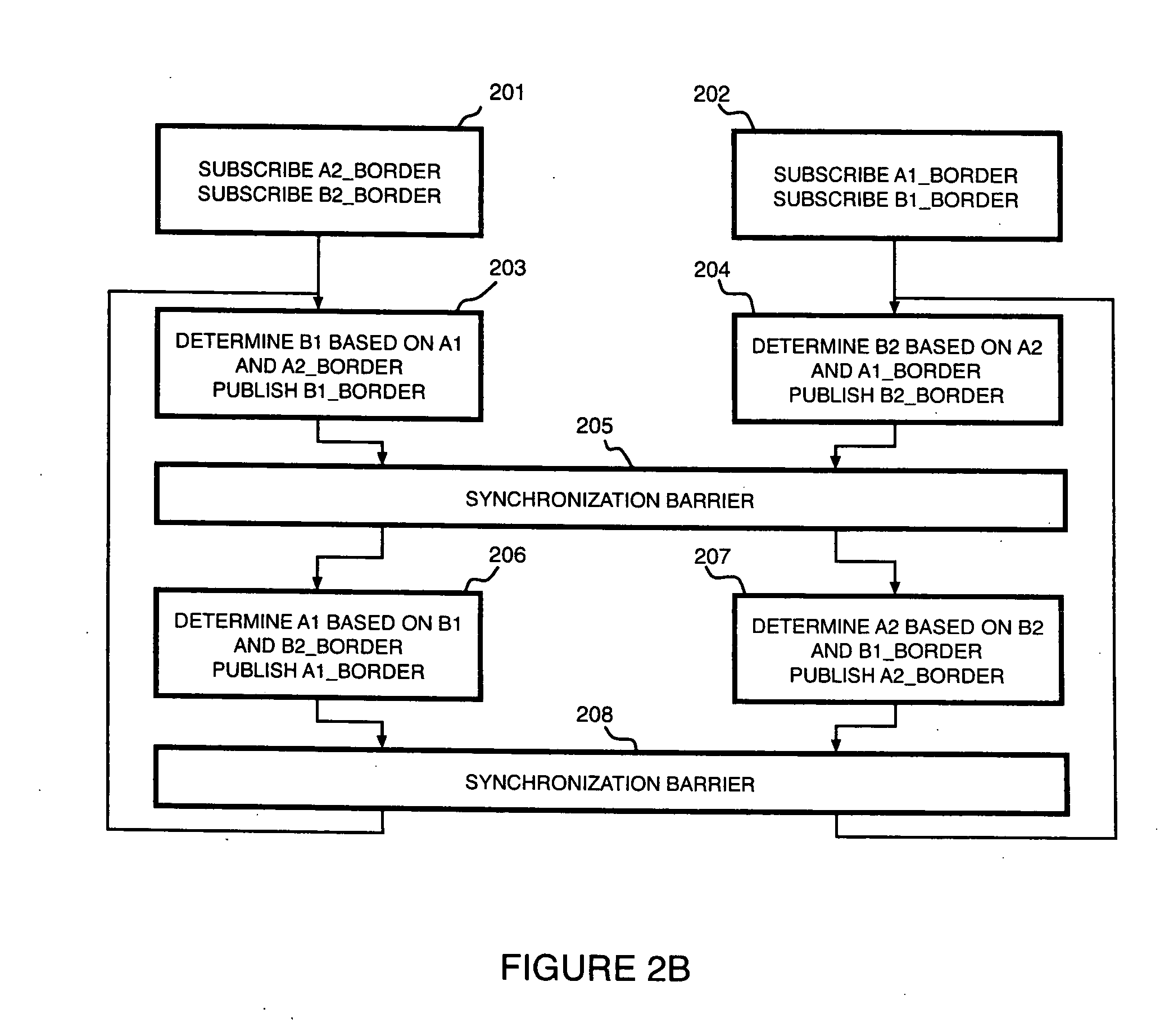

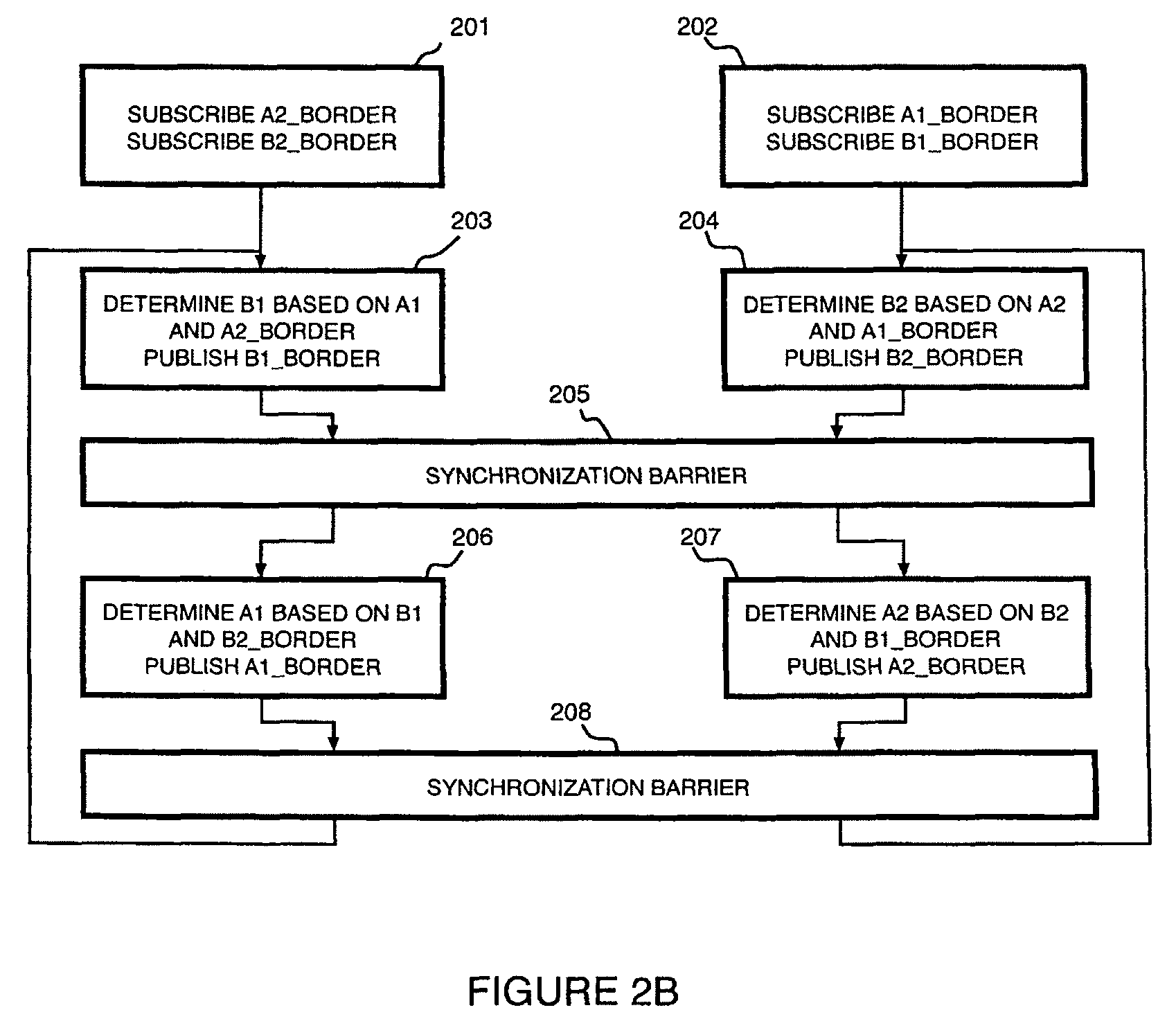

Data subscribe-and-publish mechanisms and methods for producer-consumer pre-fetch communications

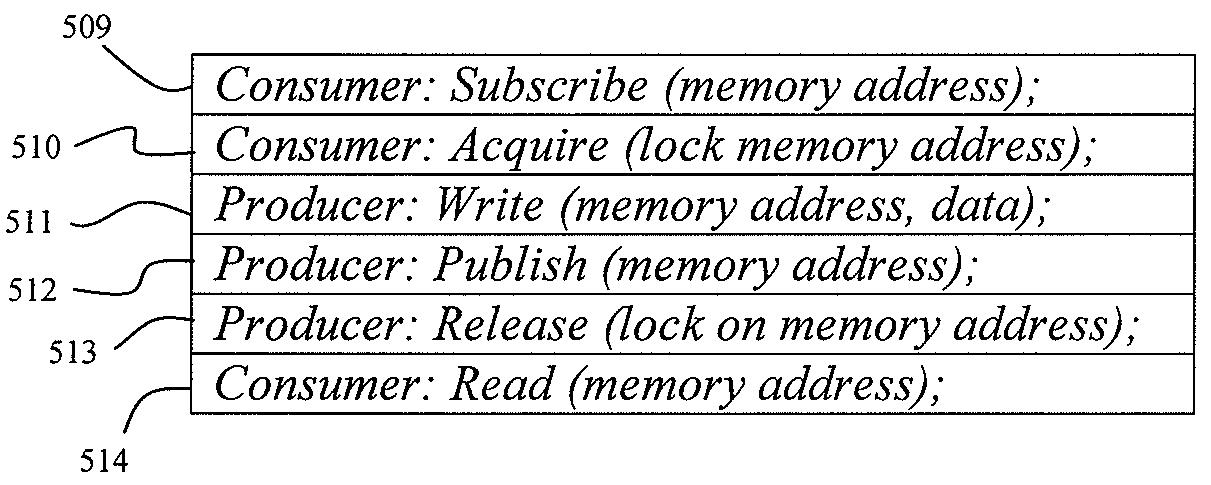

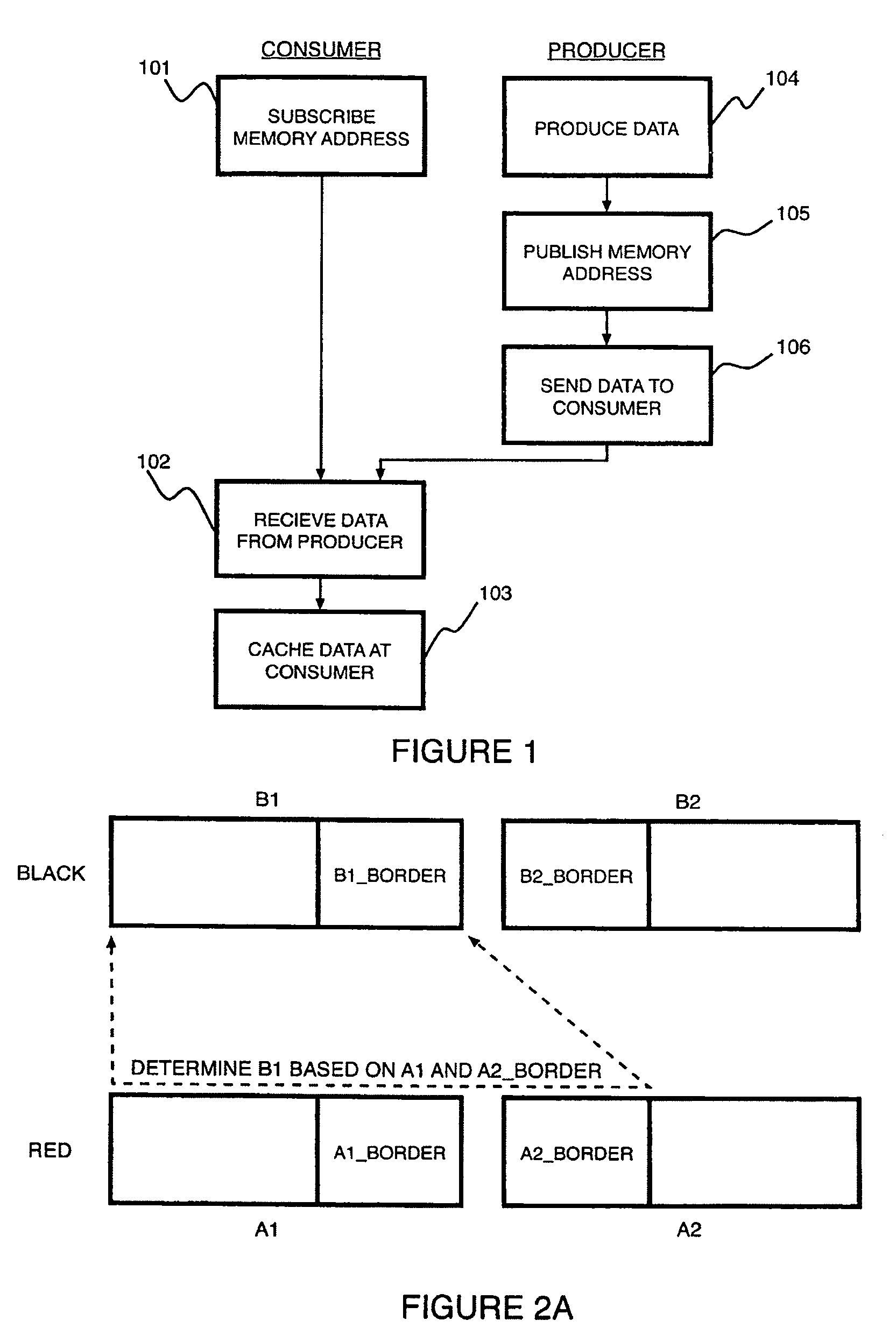

Status: Inactive | Publication: US20100241813A1 | Topics: Memory architecture accessing/allocation; Program synchronisation; Technical communication; Memory address

A system supporting producer-consumer pre-fetch communications includes a first processor, wherein the first processor is a producer node, and a second processor, wherein the second processor is a consumer node. The system further includes a data subscribe mechanism for performing a data subscribe operation at the consumer node, wherein the data subscribe operation records that a memory address is subscribed at the consumer node; a data publish mechanism for performing a data publish operation at the producer node, wherein the data publish operation sends data of the memory address from the producer node to the consumer node if the memory address is subscribed at the consumer node; and a communication network coupled to the producer node and the consumer node for enabling communication between the producer node and the consumer node.

Owner:IBM CORP
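The subscribe/publish flow above can be modeled in a few lines. This is a minimal sketch, not IBM's hardware mechanism: `Node` and `Network` are hypothetical classes, a set records subscribed addresses at each consumer, and "sending data" is modeled as writing into a per-node dictionary that stands in for data pushed ahead of the consumer's reads.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.subscriptions = set()  # addresses this node has subscribed to
        self.local = {}             # data pushed to this node ahead of its reads


class Network:
    """Hypothetical interconnect that delivers published data to subscribers."""

    def __init__(self, nodes):
        self.nodes = nodes

    def subscribe(self, consumer, addr):
        # Data subscribe: record at the consumer that `addr` is wanted.
        consumer.subscriptions.add(addr)

    def publish(self, producer, addr, value):
        # Data publish: push the data only to nodes that subscribed to `addr`.
        delivered = []
        for node in self.nodes:
            if node is not producer and addr in node.subscriptions:
                node.local[addr] = value
                delivered.append(node.name)
        return delivered


p, c1, c2 = Node("P"), Node("C1"), Node("C2")
net = Network([p, c1, c2])
net.subscribe(c1, 0x1000)
print(net.publish(p, 0x1000, 42))  # ['C1']
print(c1.local, c2.local)          # {4096: 42} {}
```

Because only subscribed consumers receive the data, the producer's publish costs no traffic to nodes that never asked for the address.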

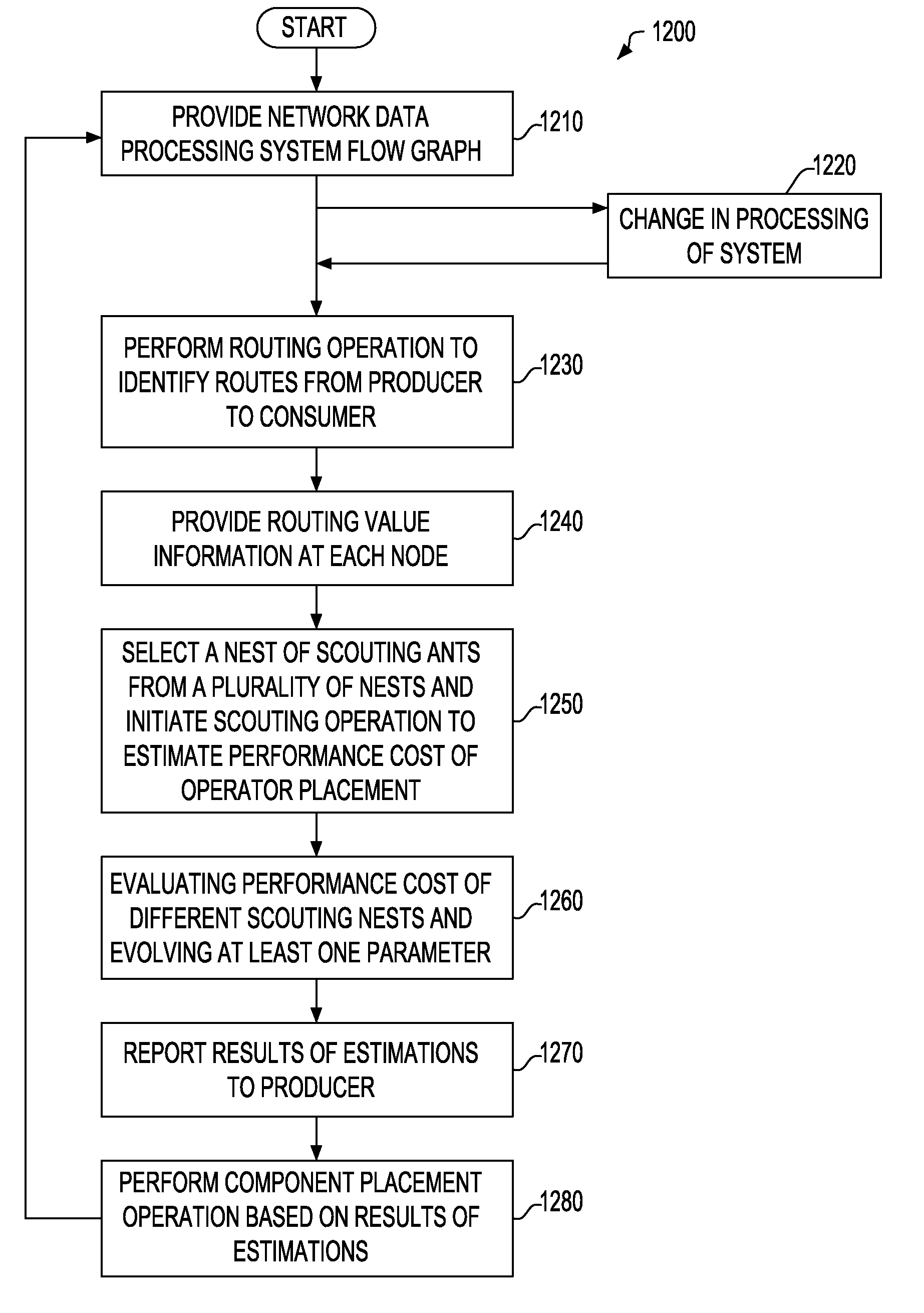

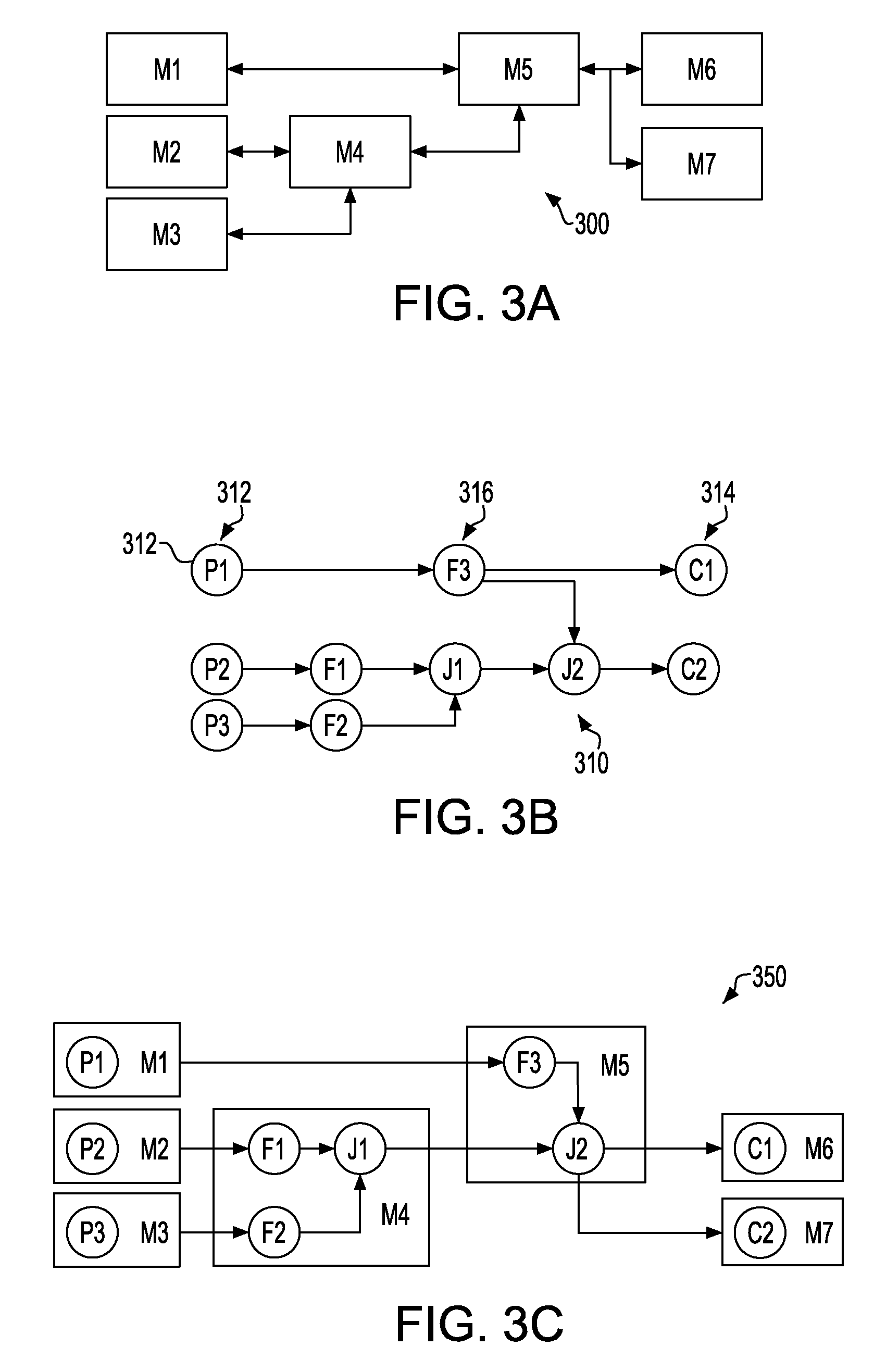

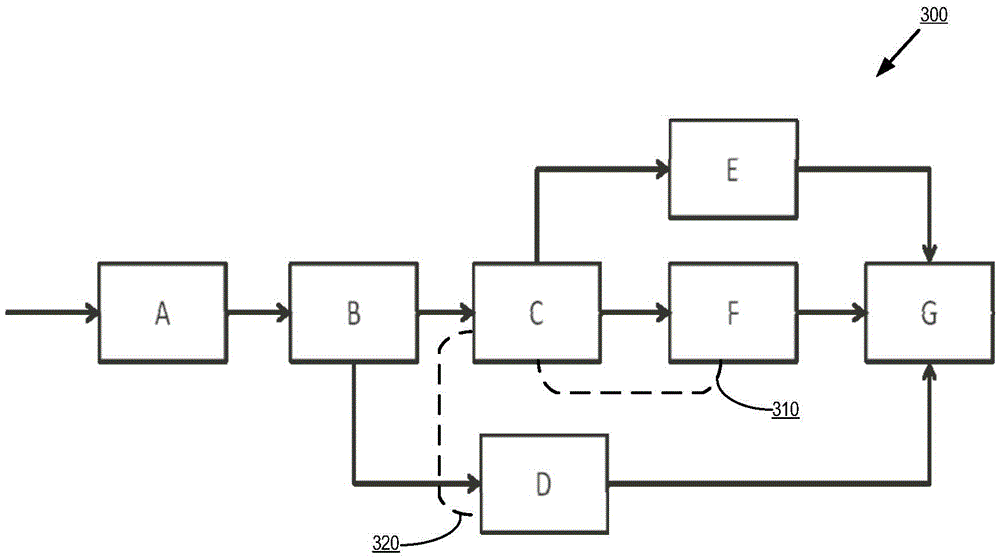

Dynamic and evolutionary placement in an event-driven component-oriented network data processing system

Status: Inactive | Publication: US20110055426A1 | Topics: Avoid performance cost; Low cost; Multiple digital computer combinations; Program control; Data processing system; NODAL

Method, system and computer readable program code for dynamic and evolutionary component placement in an event processing system having producers, consumers, a plurality of nodes between the producers and the consumers, and a flow graph representing operator components to be executed between the producers and the consumers. A description of a change to the system is received. At each node, next-hop neighbor nodes for each consumer are identified. A routing value is assigned to each next-hop neighbor node for each consumer and the routing values are updated according to an update rule that represents a chromosome in a routing probe. The update rule in a routing probe is selectively updated from a plurality of update rules at the consumer. The probability of selecting a particular update rule is reinforced or decayed based on the success of an update rule in allowing routing probes to create many different efficient routes. At each producer, nests of scouting probes are adaptively selected from an available set of nests and dispatched to execute hypothetical placement of a query by an independent agent called a “leader”. A placement of the operator components that minimizes performance cost of the system relative to the hypothetical placement is selected. Each scouting probe contains chromosomes that guide placement. Scouting probes in two different nests have different chromosomes. The performance cost of the hypothetical changed placement is evaluated and the performance evaluation is used to evolve at least one chromosome of a scouting ant in each nest.

Owner:IBM CORP

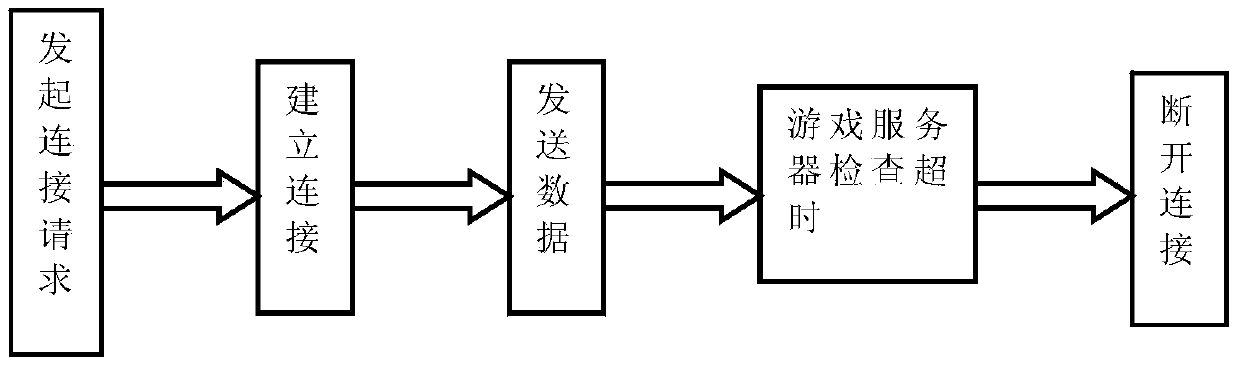

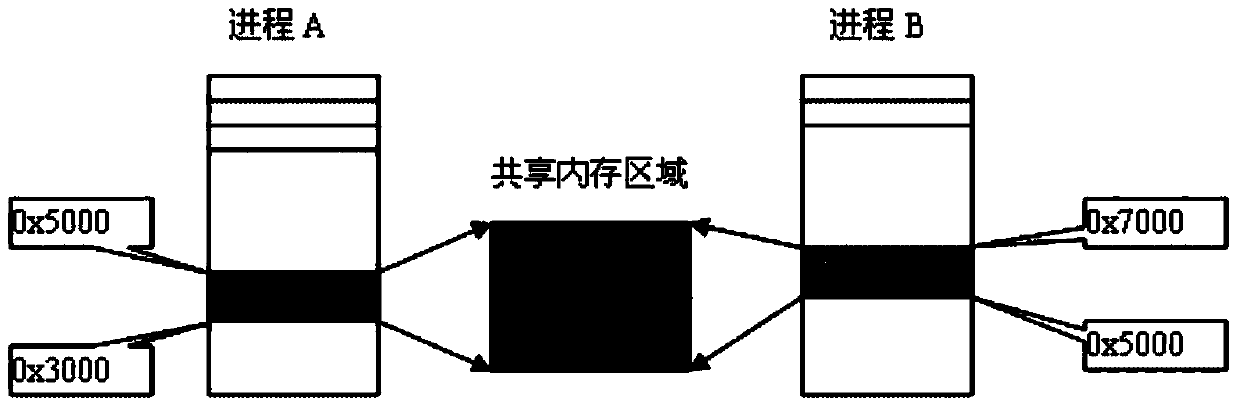

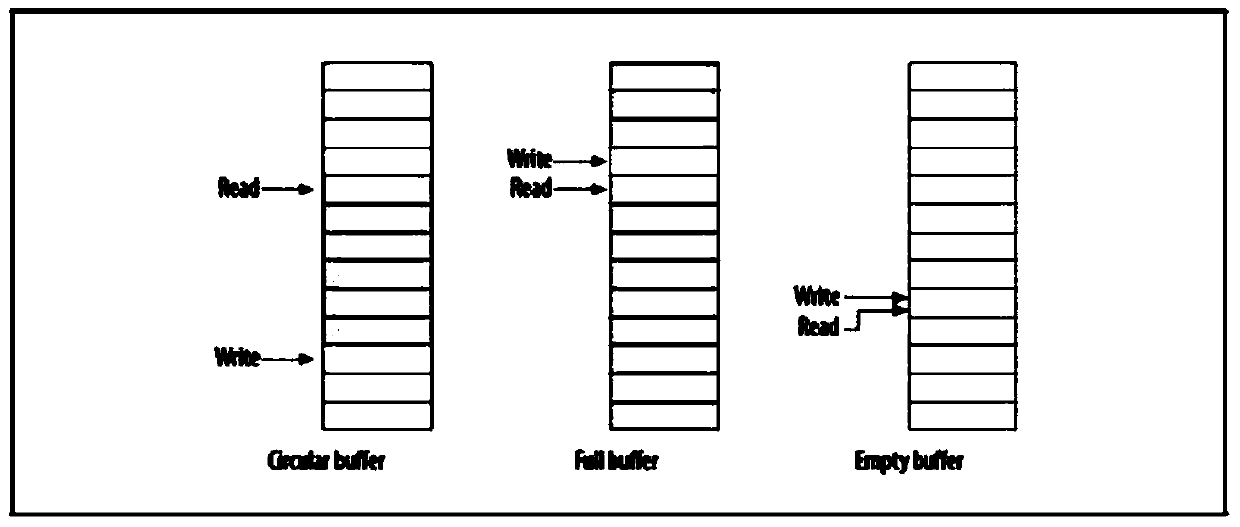

Server optimizing method based on mobile terminal and server system

Status: Inactive | Publication: CN103995755A | Topics: Reduce overhead; Low cost; Fault response; Hardware monitoring; Network connection; Structure of Management Information

The invention provides a server optimization method for mobile terminals and a corresponding server system. In the method, a new technical architecture is designed around long-lived TCP connections, which replace short TCP connections; shared memory is used in the game server; and reads and writes are separated at the data access layer by deploying a database cache server. In addition, data rollback caused by server shutdown is avoided, improving the user experience of mobile games. A ring buffer based on the producer-consumer model is set up in the game server, along with a monitoring module that monitors the connection state of the mobile terminal. In this way, multiple aspects, including the network connection mode, data caching, and the network buffer structure, are improved, further improving the user experience of mobile games.

Owner:北京乐动卓越信息技术有限公司
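The abstract above does not describe the ring buffer's internals. As an illustration only, a conventional bounded ring buffer shared by a producer thread (for example, a network receive loop) and a consumer thread (for example, game logic) can be built from one lock and two condition variables; the capacity and the thread roles are assumptions.

```python
import threading


class RingBuffer:
    """Bounded circular buffer shared by one or more producers and consumers."""

    def __init__(self, capacity):
        self.slots = [None] * capacity
        self.capacity = capacity
        self.head = 0   # index of the oldest item
        self.count = 0  # number of items currently stored
        lock = threading.Lock()
        self.not_full = threading.Condition(lock)
        self.not_empty = threading.Condition(lock)

    def put(self, item):
        with self.not_full:
            while self.count == self.capacity:
                self.not_full.wait()  # producer waits for free space
            self.slots[(self.head + self.count) % self.capacity] = item
            self.count += 1
            self.not_empty.notify()

    def get(self):
        with self.not_empty:
            while self.count == 0:
                self.not_empty.wait()  # consumer waits for data
            item = self.slots[self.head]
            self.slots[self.head] = None
            self.head = (self.head + 1) % self.capacity
            self.count -= 1
            self.not_full.notify()
            return item


rb, received = RingBuffer(8), []
reader = threading.Thread(target=lambda: received.extend(rb.get() for _ in range(100)))
reader.start()
for packet in range(100):  # producer: e.g. a network receive loop
    rb.put(packet)
reader.join()
print(received == list(range(100)))  # True
```

A fixed-size ring avoids per-message allocation, and the two conditions let a full buffer throttle the producer instead of dropping data.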

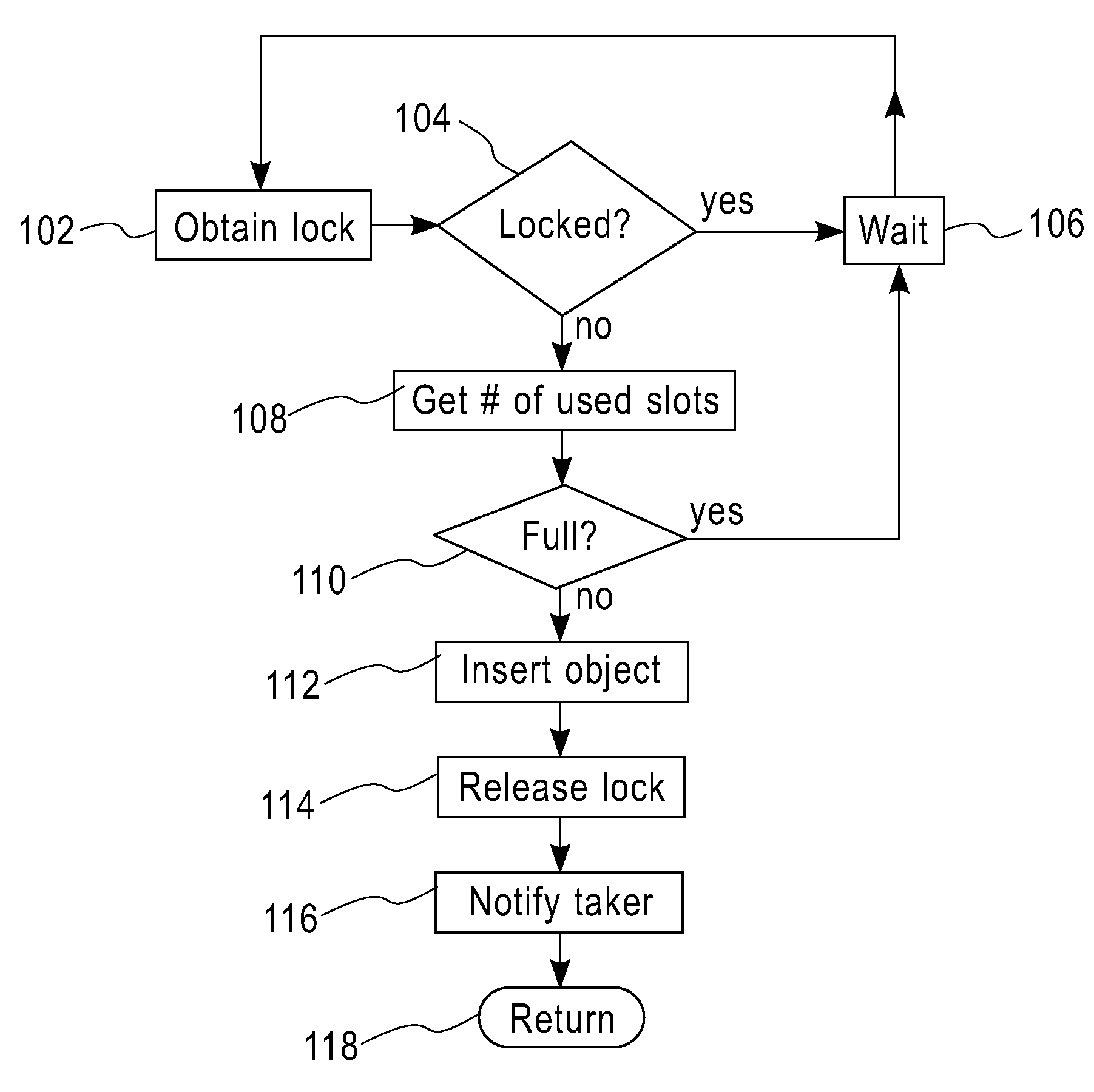

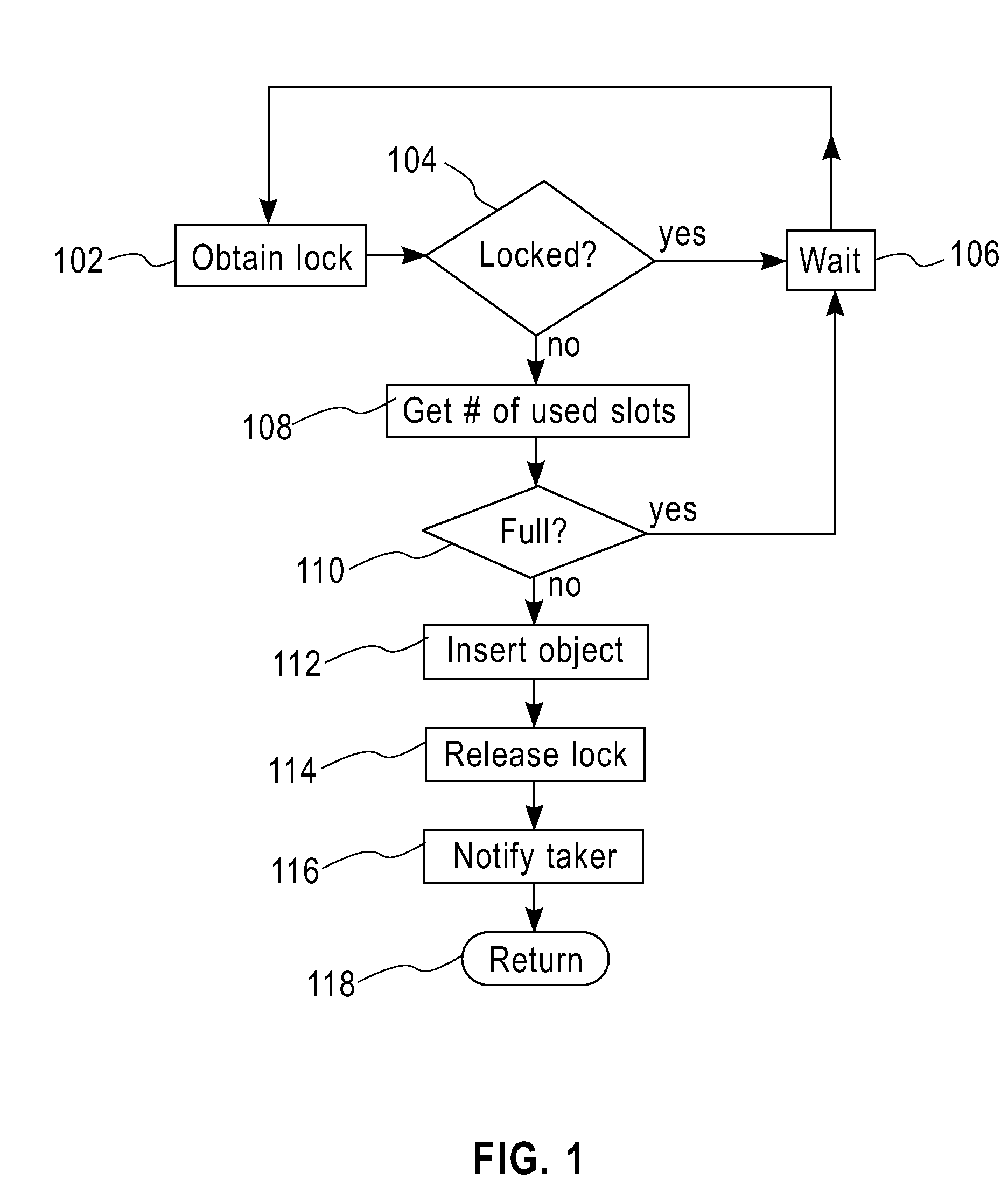

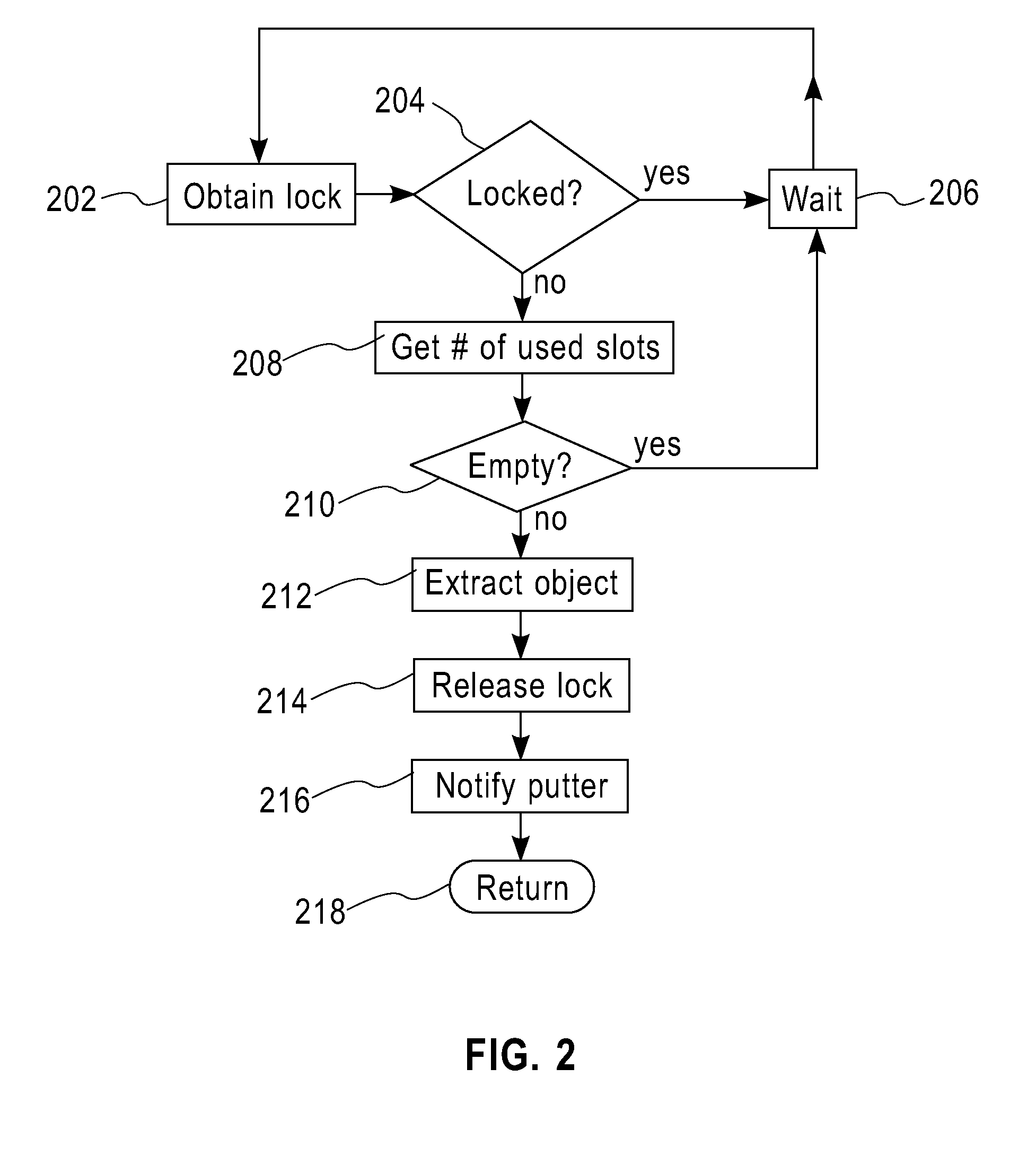

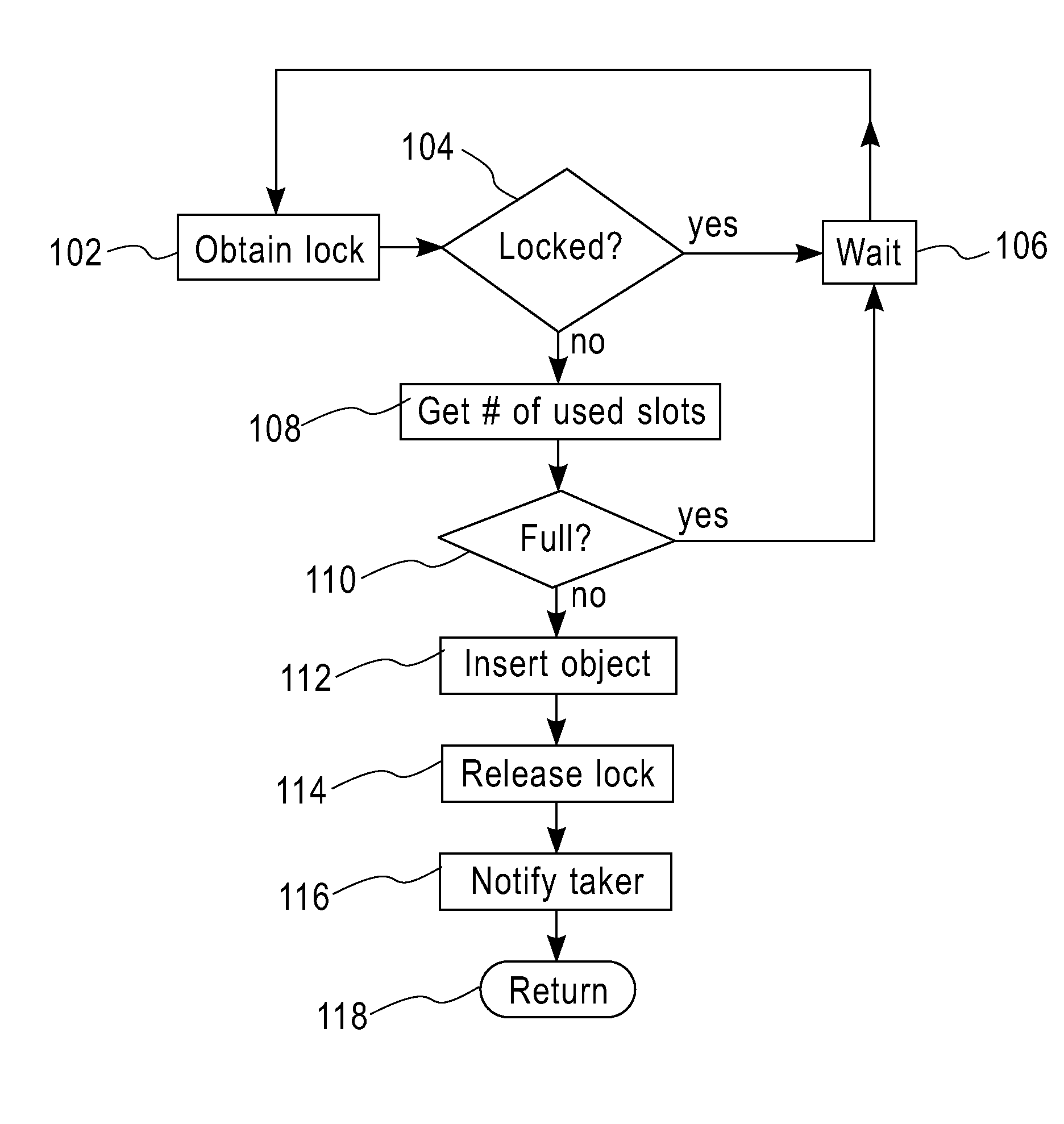

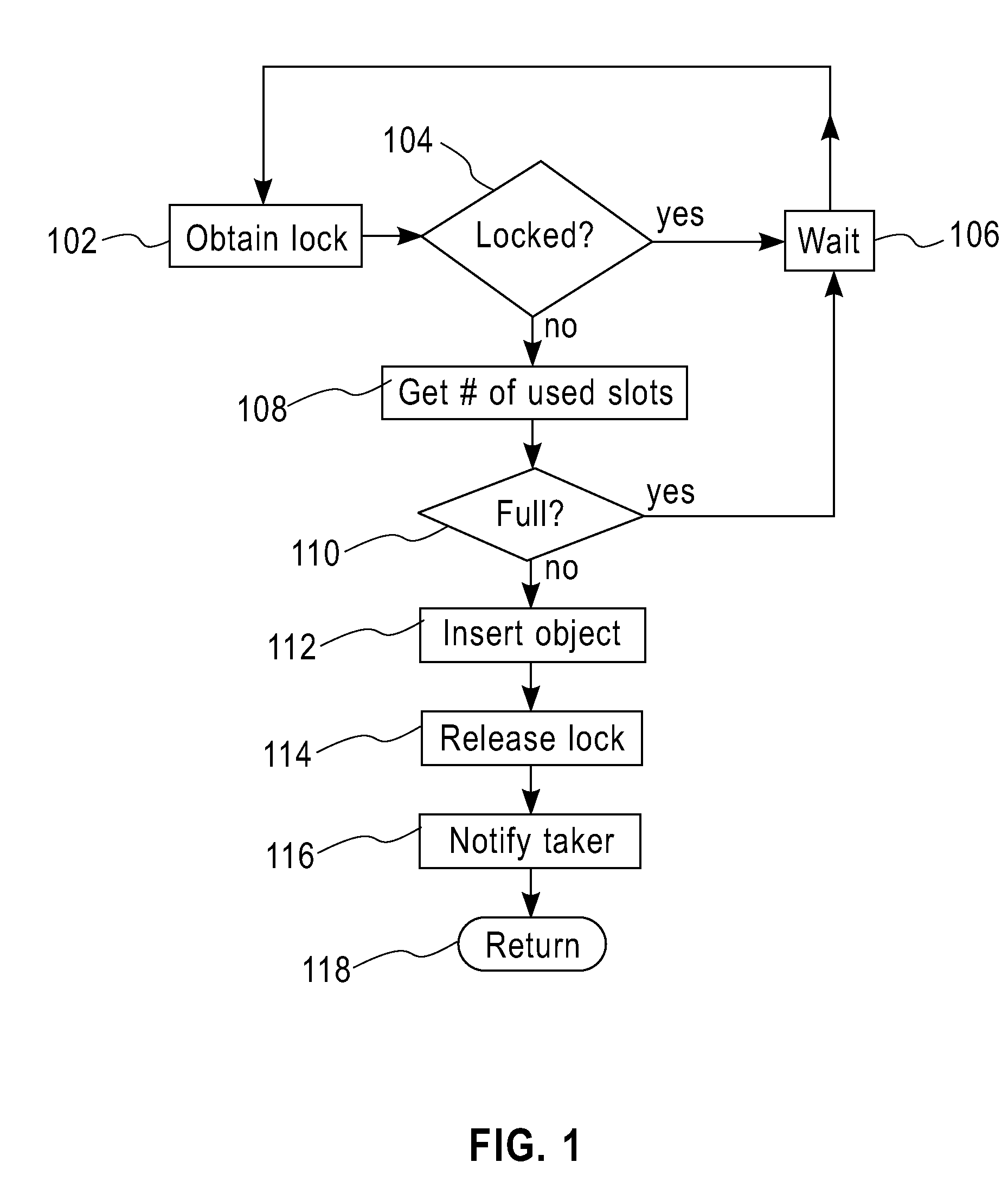

Method for implementing concurrent producer-consumer buffers

Status: Inactive | Publication: US20080288496A1 | Topics: Program control; Special data processing applications; Computer architecture; Producer consumer

A method and a system for implementing concurrent producer-consumer buffers are provided. In one aspect, the method and system use separate locks, one for putter threads and another for taker threads operating on a concurrent producer-consumer buffer. The locks operate independently of a notify-wait process.

Owner:IBM CORP
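The abstract above does not give the algorithm. A well-known design with the same property, separate locks for putters and takers, is the two-lock linked queue of Michael and Scott; it is swapped in here as an illustration, not as the patented method. A dummy head node keeps the two ends apart so a put and a take never need the same lock, and `take` returns `None` instead of waiting, so no notify-wait step is involved (which also means `None` cannot be stored as an item).

```python
import threading


class _Node:
    __slots__ = ("value", "next")

    def __init__(self, value=None):
        self.value = value
        self.next = None


class TwoLockBuffer:
    """Unbounded FIFO: putters share one lock, takers share another."""

    def __init__(self):
        self.head = self.tail = _Node()  # dummy node separates the two ends
        self.put_lock = threading.Lock()
        self.take_lock = threading.Lock()

    def put(self, value):
        node = _Node(value)
        with self.put_lock:   # contended only by other putters
            self.tail.next = node
            self.tail = node

    def take(self):
        """Return the oldest item, or None if the buffer is empty (no waiting)."""
        with self.take_lock:  # contended only by other takers
            first = self.head.next
            if first is None:
                return None
            self.head = first  # the taken node becomes the new dummy
            value, first.value = first.value, None
            return value


buf = TwoLockBuffer()
putters = [
    threading.Thread(target=lambda k=k: [buf.put(k * 1000 + i) for i in range(250)])
    for k in range(4)
]
for t in putters:
    t.start()
for t in putters:
    t.join()
items = []
while (item := buf.take()) is not None:
    items.append(item)
print(len(items))  # 1000
```

In a language without CPython's interpreter lock, the `next` and `head` fields would additionally need appropriate memory-ordering guarantees.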

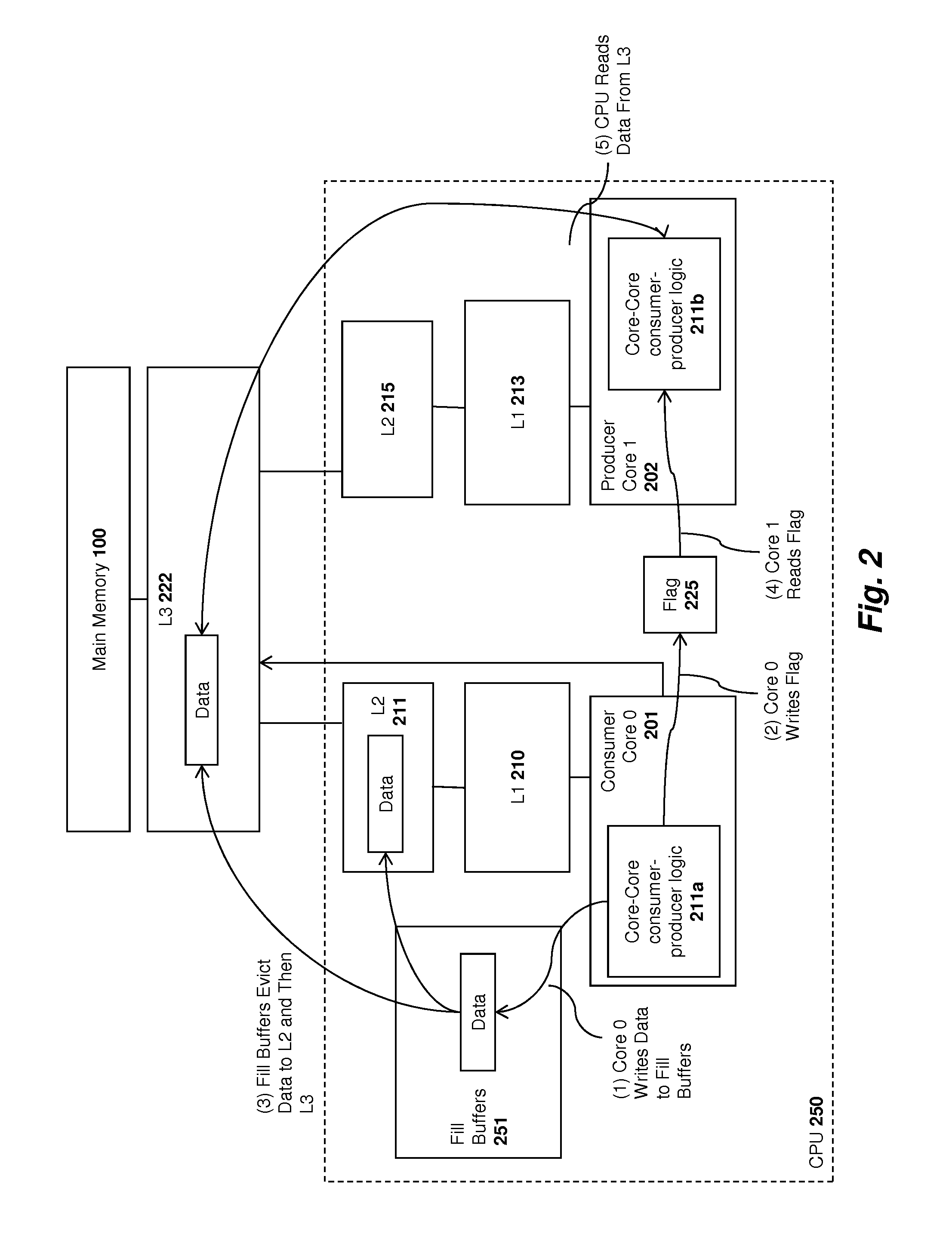

Low-overhead hardware predictor to reduce performance inversion for core-to-core data transfer optimization instructions

Status: Active | Publication: US20170091090A1 | Topics: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Cache hierarchy; Workload

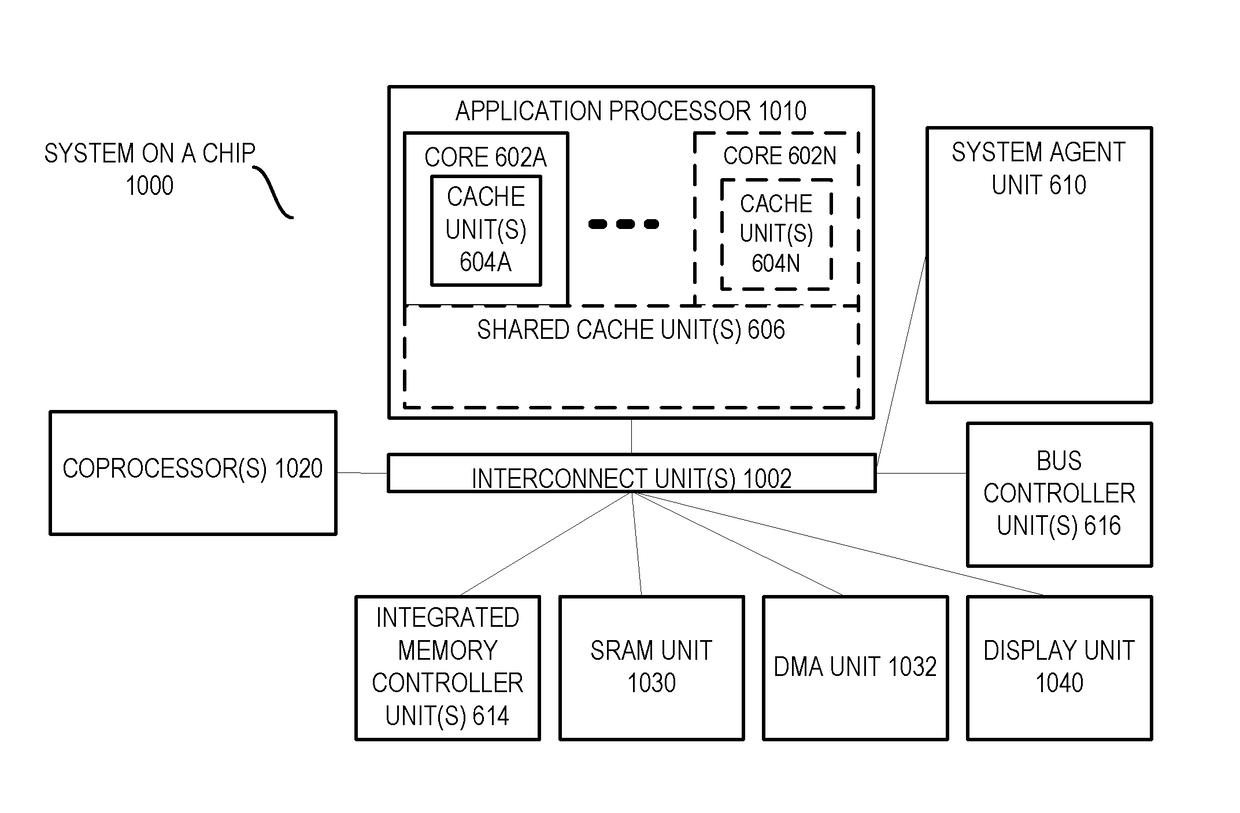

Apparatus and methods implementing a hardware predictor for reducing performance inversions caused by intra-core data transfer during inter-core data transfer optimization for NFVs and other producer-consumer workloads. The apparatus includes multi-core processors with multi-level cache hierarchies, including an L1 cache and an L2 or mid-level cache (MLC) for each core and a shared L3 or last-level cache (LLC). A hardware predictor monitors accesses to sample cache lines and, based on these accesses, adaptively controls the enablement of cache line demotion instructions for proactively demoting cache lines from lower cache levels to higher cache levels, including demoting cache lines from the L1 or L2 caches (MLC) to the L3 cache (LLC).

Owner:INTEL CORP
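The abstract above leaves the predictor's design open. A minimal sketch, assuming a single saturating counter: accesses to a monitored line from another core suggest demotion pays off, while accesses from the owning core suggest demotion would cause a performance inversion (the line would have to come back from the LLC). The counter width, threshold, and starting value are illustrative assumptions, not Intel's circuit.

```python
class DemotionPredictor:
    """Toy saturating-counter predictor (an assumed design, not Intel's circuit)."""

    def __init__(self, bits=4, threshold=8):
        self.max = (1 << bits) - 1
        self.threshold = threshold
        self.counter = threshold  # start at the decision boundary (assumption)

    def record_access(self, accessing_core, owner_core):
        """Call on each access to a monitored (sampled) cache line."""
        if accessing_core != owner_core:
            self.counter = min(self.max, self.counter + 1)  # inter-core: demotion pays off
        else:
            self.counter = max(0, self.counter - 1)         # intra-core: demotion hurts

    def demotion_enabled(self):
        return self.counter >= self.threshold


p = DemotionPredictor()
for _ in range(5):
    p.record_access(accessing_core=0, owner_core=0)  # producer re-reads its own lines
print(p.demotion_enabled())  # False
for _ in range(10):
    p.record_access(accessing_core=1, owner_core=0)  # consumer on another core
print(p.demotion_enabled())  # True
```

Saturation keeps the predictor responsive: after a long inter-core phase, only a few intra-core accesses are needed to turn demotion back off.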

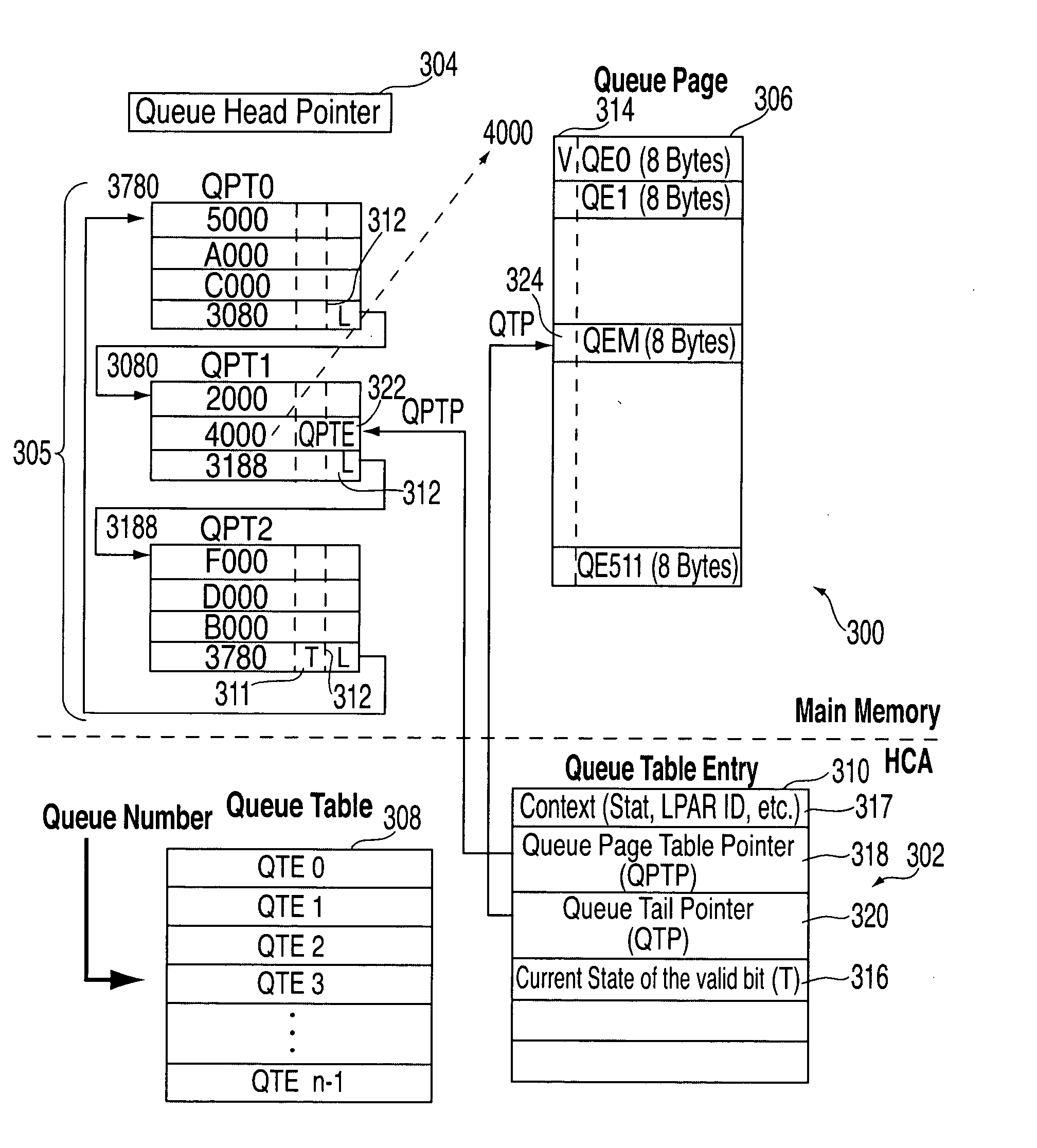

Moving, resizing, and memory management for producer-consumer queues

Status: Inactive | Publication: US20060095610A1 | Topics: Multiplex system selection arrangements; Data switching by path configuration; Term memory; Systems approaches

Systems, methods, and software products are provided for moving and/or resizing a producer-consumer queue in memory without stopping all activity, so that no data is lost or accidentally duplicated during the move. The queue has a software consumer and a hardware producer, such as a host channel adapter.

Owner:IBM CORP

Mechanisms and methods for using data access patterns

Status: Active | Publication: US7395407B2 | Topics: Memory architecture accessing/allocation; Memory systems; Data access; Parallel computing

Owner:INT BUSINESS MASCH CORP

Hardware predictor using a cache line demotion instruction to reduce performance inversion in core-to-core data transfers

Status: Active | Publication: US10019360B2 | Topics: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Workload

Apparatus and methods implementing a hardware predictor for reducing performance inversions caused by intra-core data transfer during inter-core data transfer optimization for network function virtualizations (NFVs) and other producer-consumer workloads. An apparatus embodiment includes a plurality of hardware processor cores each including a first cache, a second cache shared by the plurality of hardware processor cores, and a predictor circuit to track the number of inter-core versus intra-core accesses to a plurality of monitored cache lines in the first cache and control enablement of a cache line demotion instruction, such as a cache line LLC allocation (CLLA) instruction, based upon the tracked accesses. An execution of the cache line demotion instruction by one of the plurality of hardware processor cores causes a plurality of unmonitored cache lines in the first cache to be moved to the second cache, such as from L1 or L2 caches to a shared L3 or last level cache (LLC).

Owner:INTEL CORP

Apparatus and method for triggered prefetching to improve I/O and producer-consumer workload efficiency

Status: Active | Publication: US20170286295A1 | Topics: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Workload

An apparatus and method are described for a triggered prefetch operation. For example, one embodiment of a processor comprises: a first core comprising a first cache to store a first set of cache lines; a second core comprising a second cache to store a second set of cache lines; a cache management circuit to maintain coherency between one or more cache lines in the first cache and the second cache, the cache management circuit to allocate a lock on a first cache line to the first cache; a prefetch circuit comprising a prefetch request buffer to store a plurality of prefetch request entries including a first prefetch request entry associated with the first cache line, the prefetch circuit to cause the first cache line to be prefetched to the second cache in response to an invalidate command detected for the first cache line.

Owner:INTEL CORP

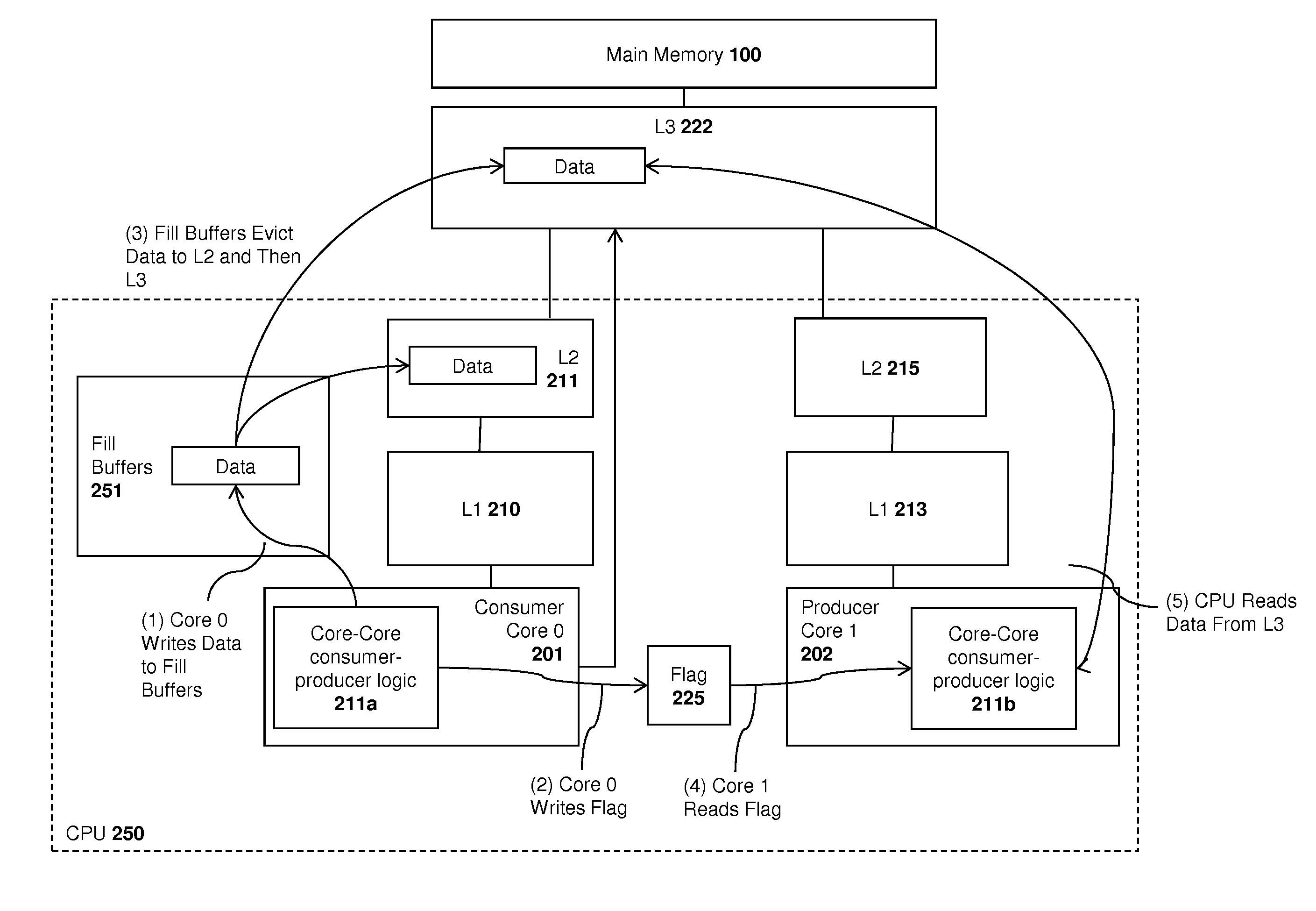

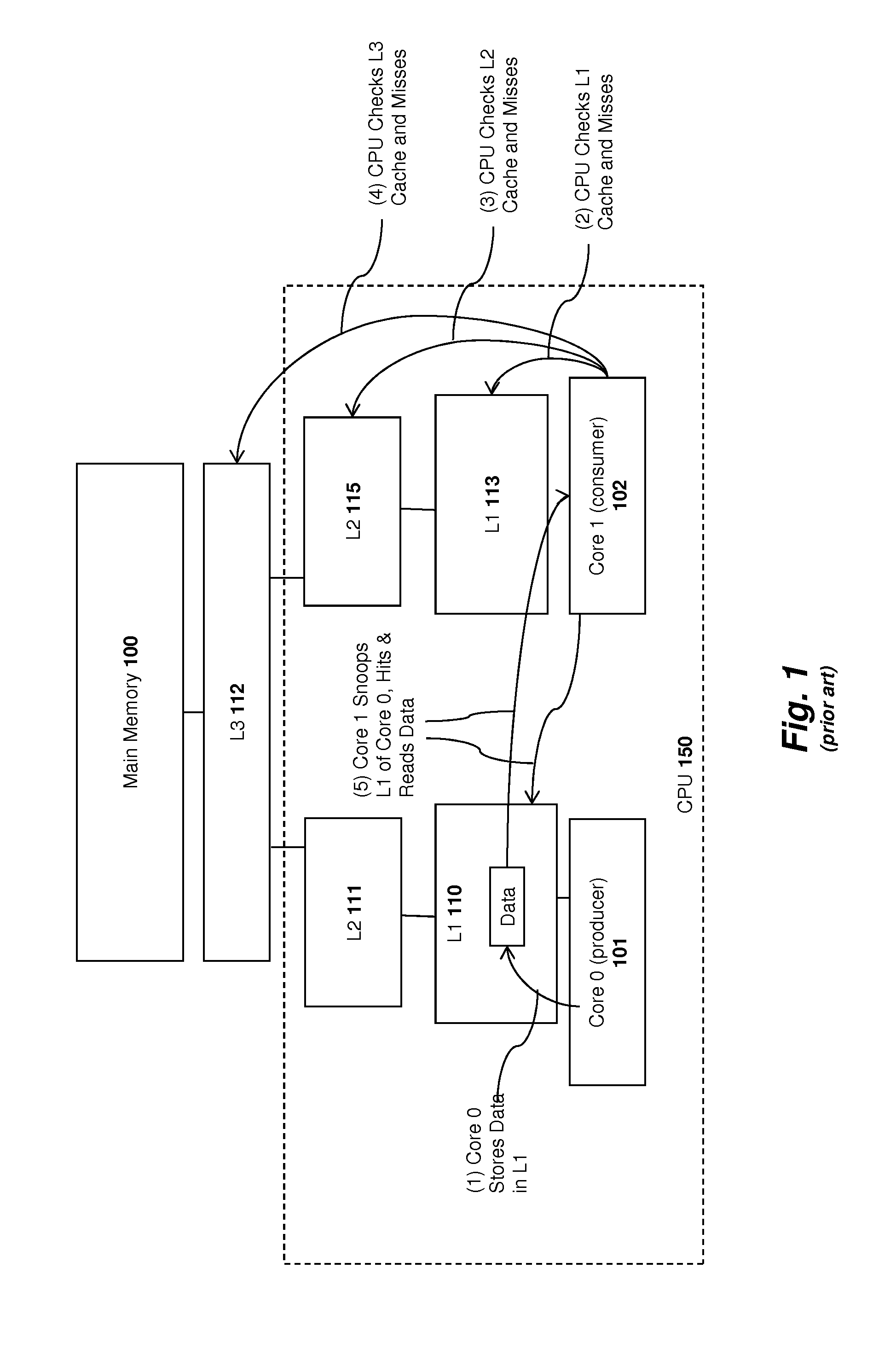

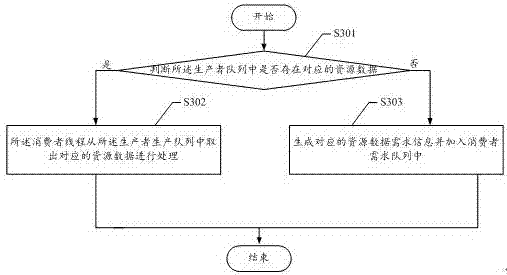

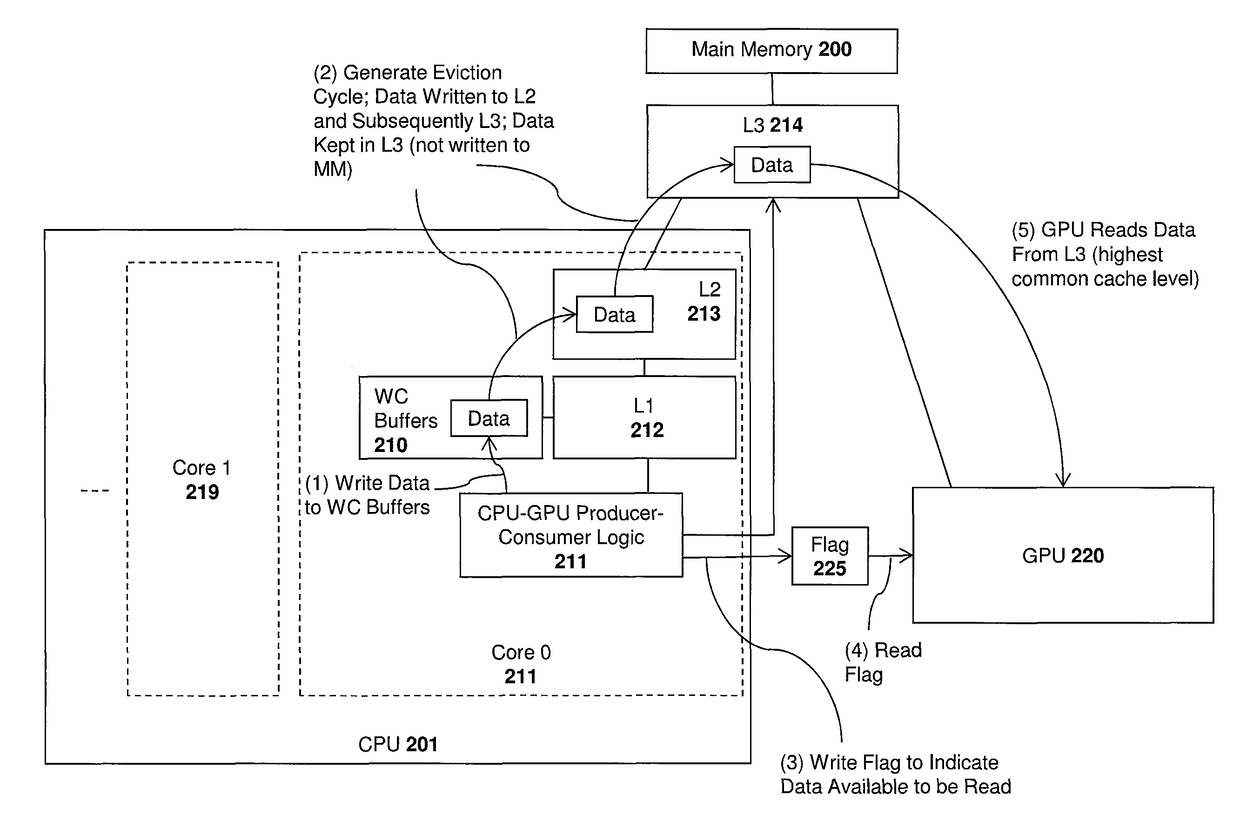

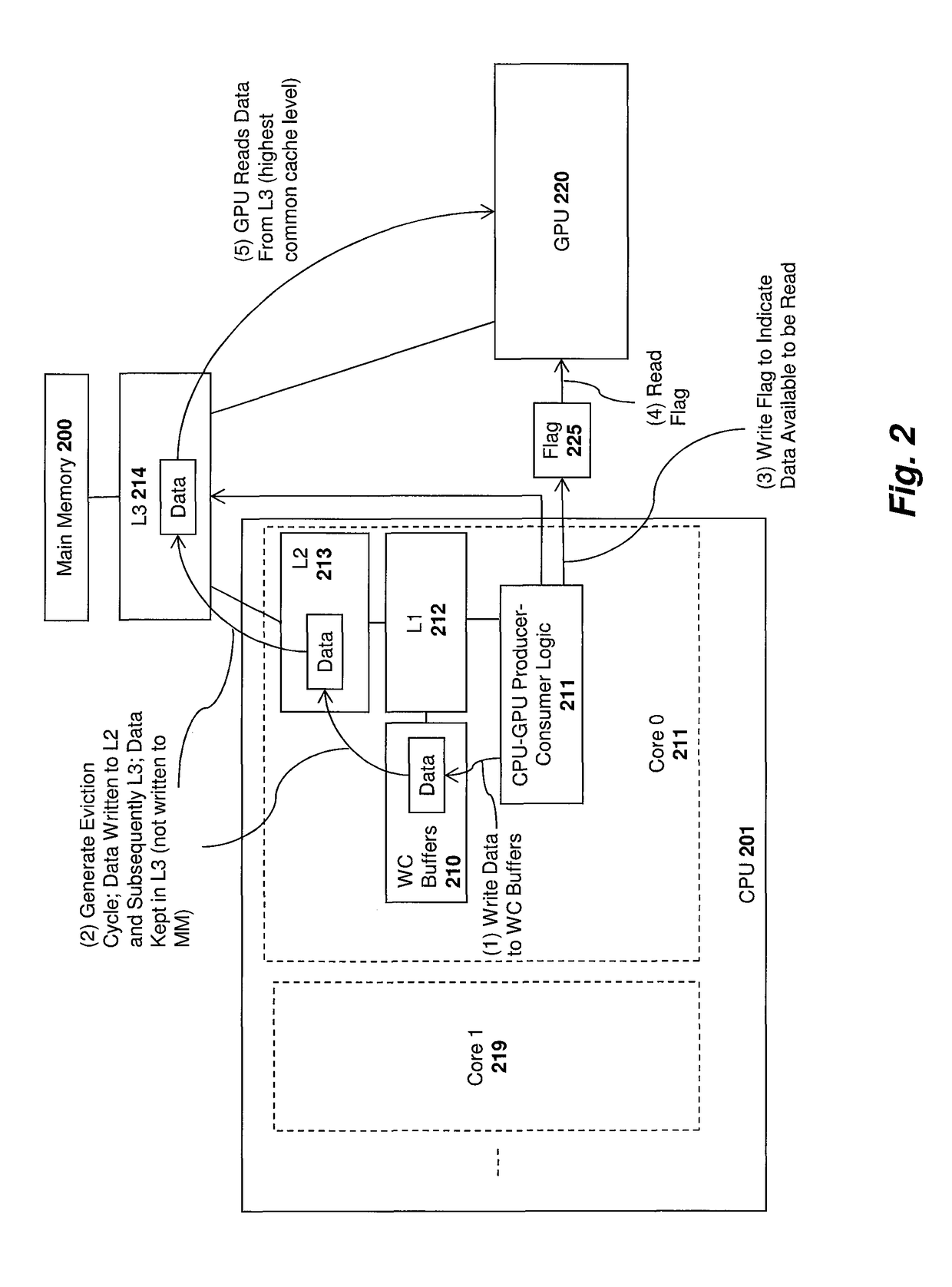

Apparatus and method for memory-hierarchy aware producer-consumer instructions

Status: Inactive | Publication: US20140208031A1 | Topics: Memory addressing/allocation/relocation; Digital computer details; Memory hierarchy; Receipt

An apparatus and method are described for efficiently transferring data from a producer core to a consumer core within a central processing unit (CPU). For example, one embodiment is a method for transferring a chunk of data from a producer core of a CPU to a consumer core of the CPU, comprising: writing data to a buffer within the producer core until a designated amount of data has been written; upon detecting that the designated amount of data has been written, responsively generating an eviction cycle that causes the data to be transferred from the buffer to a cache accessible by both the producer core and the consumer core; and, upon the consumer core detecting that data is available in the cache, providing the data to the consumer core from the cache upon receipt of a read signal from the consumer core.

Owner:INTEL CORP
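The ordering of steps in the method above can be modeled in software. This sketch illustrates only the sequence (buffer fill, eviction of the whole chunk, "data available" indication, consumer read), not cache hardware: the chunk size of four items is an assumption, plain lists stand in for the core's buffer and the shared cache, and `threading.Event` stands in for the availability indication.

```python
import threading

CHUNK = 4  # "designated amount of data", in items (assumption)


class SharedCache:
    """Stands in for a cache level reachable by both producer and consumer cores."""

    def __init__(self):
        self.lines = []
        self.available = threading.Event()  # the 'data available' indication


class ProducerCore:
    def __init__(self, shared):
        self.buffer = []  # stands in for the buffer inside the producer core
        self.shared = shared

    def write(self, item):
        self.buffer.append(item)
        if len(self.buffer) == CHUNK:  # designated amount reached
            self._evict()

    def _evict(self):
        # Eviction cycle: move the whole chunk to the shared cache at once.
        self.shared.lines.extend(self.buffer)
        self.buffer.clear()
        self.shared.available.set()


def consumer_read(shared, timeout=1.0):
    """Return the available chunk, or None if none arrives within `timeout` seconds."""
    if not shared.available.wait(timeout):
        return None
    data, shared.lines = shared.lines, []
    shared.available.clear()
    return data


shared = SharedCache()
core = ProducerCore(shared)
for x in range(3):
    core.write(x)
print(consumer_read(shared, timeout=0))  # None: chunk not complete yet
core.write(3)
print(consumer_read(shared))             # [0, 1, 2, 3]
```

Pushing the chunk out as one unit means the consumer sees either none of it or all of it, rather than pulling partially written lines from the producer's private cache.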

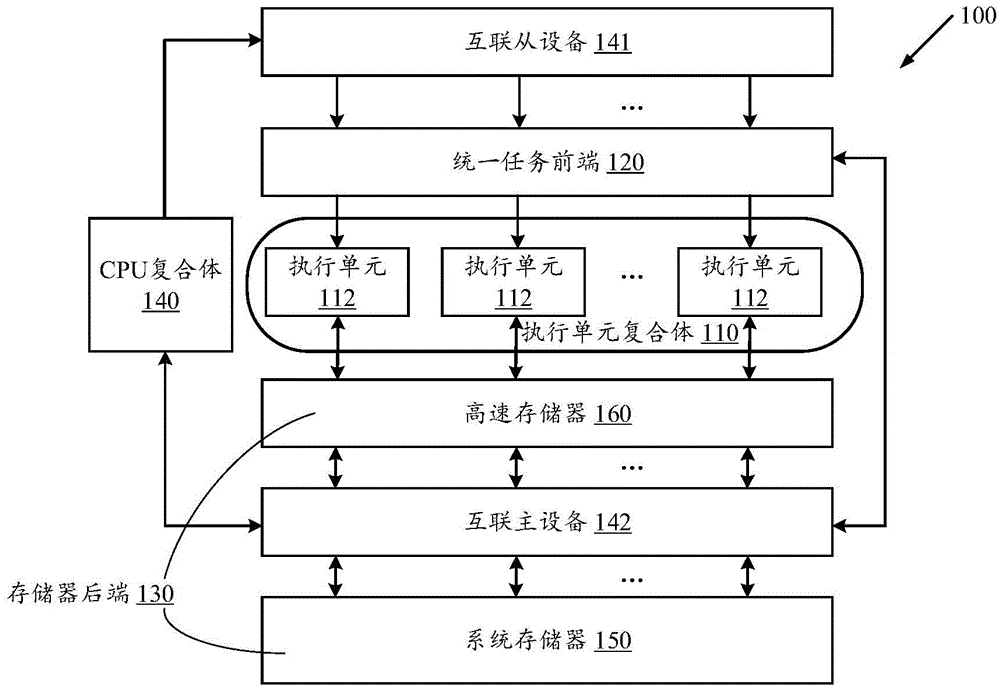

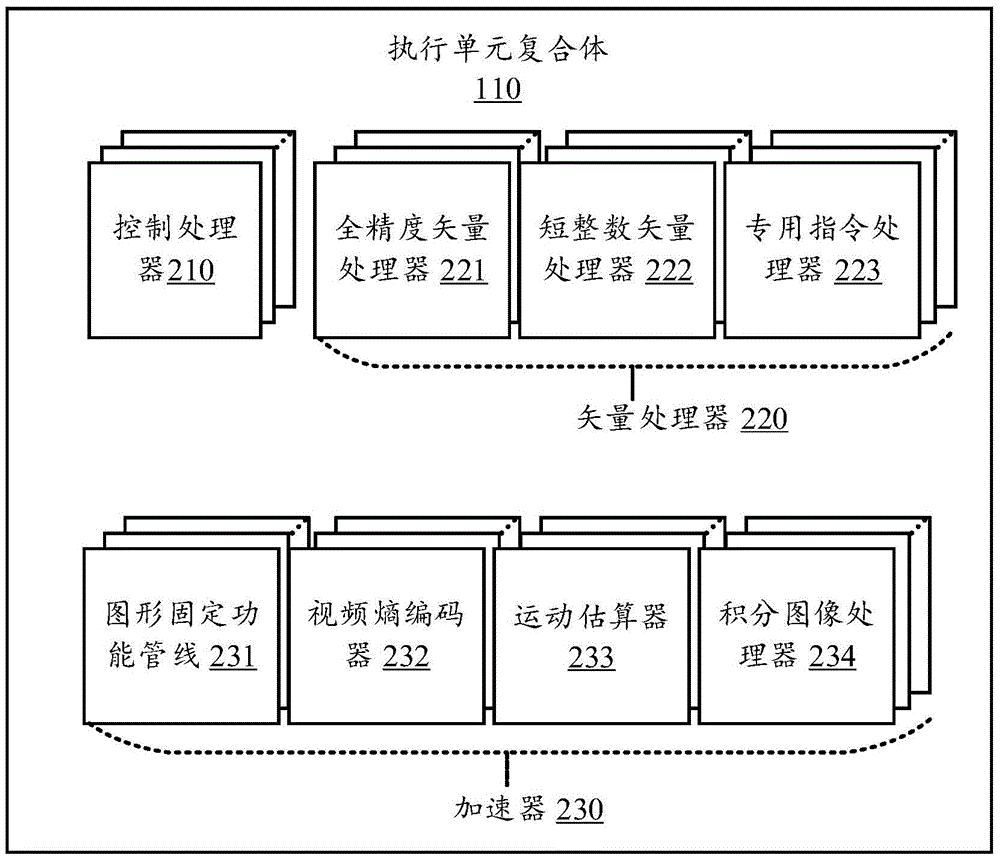

Heterogeneous computing system and method thereof

Status: Inactive | Publication: CN106502782A | Topics: Improve energy efficiency; Improve computing efficiency; Resource allocation; Energy efficient computing; Computerized system; Multimedia signal processing

A heterogeneous computing system described herein has an energy-efficient architecture that exploits producer-consumer locality, task parallelism and data parallelism. The heterogeneous computing system includes a task frontend that dispatches tasks and updated tasks from queues for execution based on properties associated with the queues, and execution units that include a first subset acting as producers to execute the tasks and generate the updated tasks, and a second subset acting as consumers to execute the updated tasks. The execution units include one or more control processors to perform control operations, vector processors to perform vector operations, and accelerators to perform multimedia signal processing operations. The heterogeneous computing system also includes a memory backend containing the queues to store the tasks and the updated tasks for execution by the execution units.

Owner:MEDIATEK INC

Real-time monitoring and early warning system and real-time monitoring and early warning method based on internet data

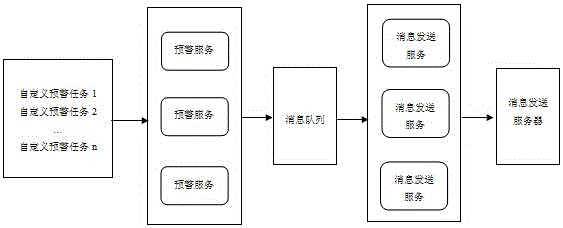

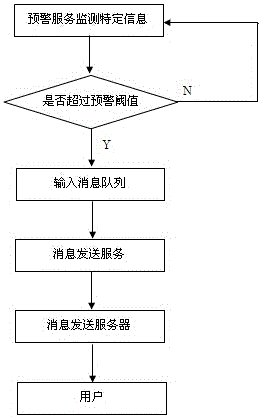

Status: Inactive | Publication: CN105939379A | Topics: Will not block; Improve stability; Messaging/mailboxes/announcements; Alarms; Message queue; Early warning system

The invention provides a real-time monitoring and early warning system based on internet data. The system comprises early warning services, a message queue, message sending services, and message sending servers, and adopts a producer-consumer mode: the early warning services act as producers that output early warning information to the message queue, and the message sending services push the messages in the queue through the message sending servers. There are multiple early warning services and multiple message sending services. In the corresponding real-time monitoring and early warning method, the early warning services and the message sending services are decoupled, with the early warning services serving as producers and the message sending services serving as consumers. The two kinds of service run independently without interfering with each other, so rapid growth in data does not cause message congestion. Meanwhile, messages are pushed by multiple message sending servers over different communication channels, which further improves stability in the message sending stage after an early warning is raised.

Owner:HYLANDA INFORMATION TECH
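The decoupling described above is the classic multi-producer, multi-consumer queue. A minimal sketch, assuming threads stand in for the services, an in-process `queue.Queue` stands in for the message queue (a real deployment would use a message broker), and "pushing" a message is modeled by appending it to a list; the `None` shutdown sentinel is an addition not described in the abstract.

```python
import queue
import threading


def early_warning_service(service_id, count, mq):
    """Producer: writes early-warning messages to the shared message queue."""
    for n in range(count):
        mq.put(f"warning {service_id}-{n}")


def message_sending_service(mq, sent, lock):
    """Consumer: takes messages off the queue and 'pushes' them (recorded here)."""
    while True:
        msg = mq.get()
        if msg is None:  # shutdown sentinel (assumption, not in the abstract)
            return
        with lock:
            sent.append(msg)


mq, sent, lock = queue.Queue(), [], threading.Lock()
senders = [threading.Thread(target=message_sending_service, args=(mq, sent, lock))
           for _ in range(3)]
warners = [threading.Thread(target=early_warning_service, args=(i, 5, mq))
           for i in range(2)]
for t in senders + warners:
    t.start()
for t in warners:
    t.join()
for _ in senders:
    mq.put(None)  # one sentinel per consumer, after all producers finish
for t in senders:
    t.join()
print(len(sent))  # 10
```

Producers never wait on consumers here: a slow sender only lengthens the queue, which is the non-blocking behavior the abstract attributes to the decoupled design.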

Data subscribe-and-publish mechanisms and methods for producer-consumer pre-fetch communications

Status: Inactive | Publication: US7913048B2 | Topics: Memory architecture accessing/allocation; Program synchronisation; Memory address; Technical communication

A system supporting producer-consumer pre-fetch communications includes a first processor, wherein the first processor is a producer node, and a second processor, wherein the second processor is a consumer node. The system further includes a data subscribe mechanism for performing a data subscribe operation at the consumer node, wherein the data subscribe operation records that a memory address is subscribed at the consumer node; a data publish mechanism for performing a data publish operation at the producer node, wherein the data publish operation sends data of the memory address from the producer node to the consumer node if the memory address is subscribed at the consumer node; and a communication network coupled to the producer node and the consumer node for enabling communication between the producer node and the consumer node.

Owner:INT BUSINESS MASCH CORP

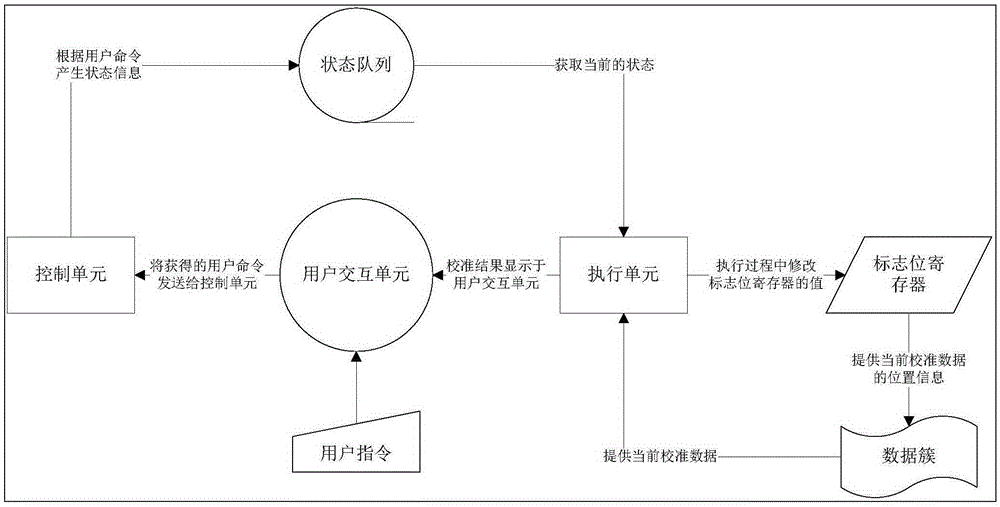

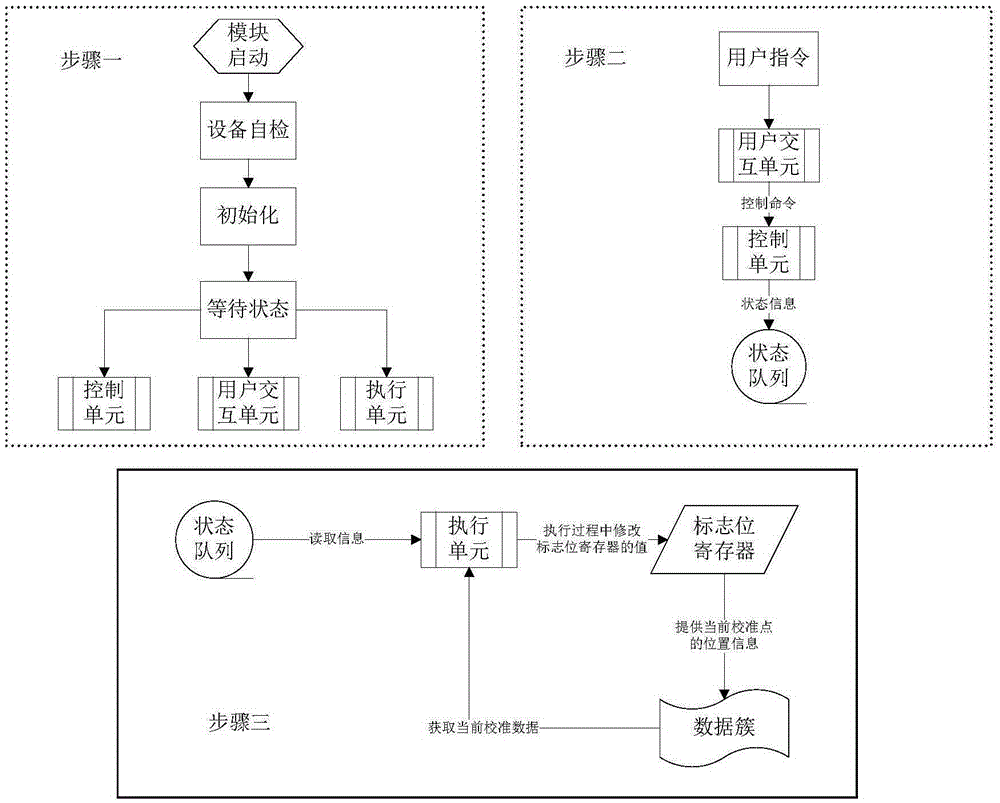

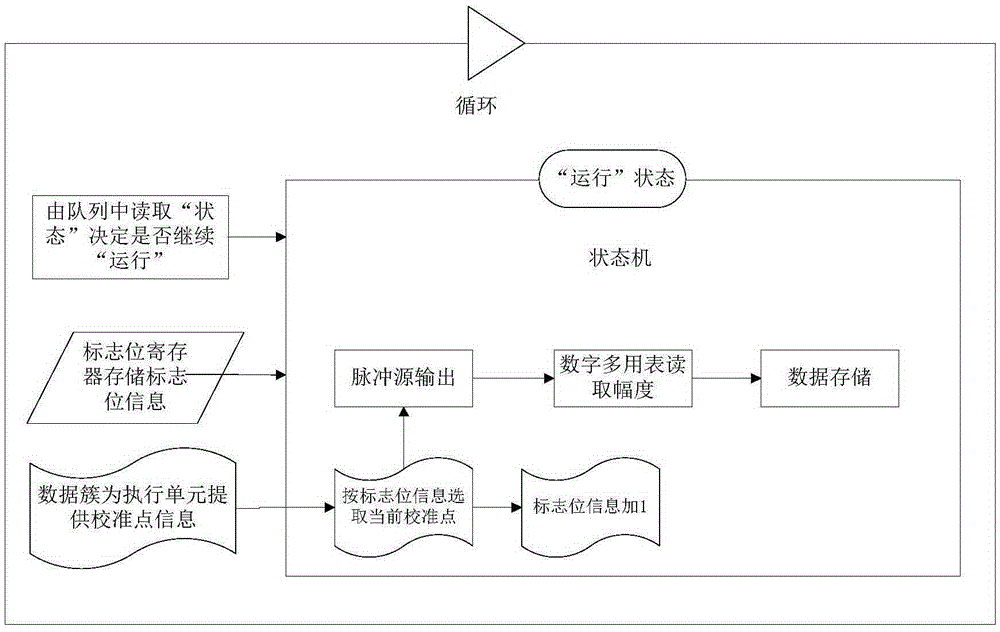

Device and method for controlling auto-calibration process of pulse signal generator

Status: Active | Publication: CN105302006A | Topics: Simple structure; Improve the level of full control; Programme control in sequence/logic controllers; Extensibility; Electricity

The invention relates to a device and a method for controlling the auto-calibration process of a pulse signal generator, in the technical field of electrical measurement. A control module is built on a LabVIEW state machine, a producer/consumer loop structure, and a queue design. The module provides real-time control of the auto-calibration of a pulse signal's amplitude, period, pulse width, and time delay. The control module comprises three major parts: a user interaction unit, a control unit, and an execution unit. The user interaction unit is the main user interface; it responds to user commands and displays calibration data. The control unit generates state information from the user commands received by the user interaction unit and controls the execution of the calibration process. The execution unit runs the calibration process according to control commands and generates calibration results. The module and method provide full control over the whole calibration process of a pulse interference module: the process can be paused, terminated, or exited at any time, and after a pause it can be resumed from where it left off, so versatility and scalability are high.

Owner:BEIJING CHANGCHENG INST OF METROLOGY & MEASUREMENT AVIATION IND CORP OF CHINA
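LabVIEW's producer/consumer pattern is graphical, so the sketch below is only a rough textual analogue of the structure described above: a user-interface loop (the producer) enqueues commands, and a consumer loop implemented as a small state machine executes calibration steps, supporting pause, resume, stop, and exit. The state names, command strings, and one-step-per-iteration model are assumptions; the four calibrated quantities come from the abstract.

```python
import queue

STEPS = ["amplitude", "period", "pulse width", "time delay"]  # quantities from the abstract


def calibration_loop(commands, log):
    """Consumer loop: a small state machine driven by queued user commands."""
    state, step = "idle", 0
    while True:
        try:
            # Block for a command while idle or paused; poll while running.
            cmd = commands.get(block=state != "running")
        except queue.Empty:
            cmd = None
        if cmd == "exit":
            log.append("exited")
            return
        if cmd in ("start", "resume") and state in ("idle", "paused"):
            state = "running"
        elif cmd == "pause" and state == "running":
            state = "paused"
        elif cmd == "stop":
            state, step = "idle", 0  # terminate and discard progress
        if state == "running":
            log.append(f"calibrated {STEPS[step]}")
            step += 1
            if step == len(STEPS):
                log.append("done")
                state, step = "idle", 0


# The user interaction unit (producer loop) would enqueue commands; here they are preloaded.
commands, log = queue.Queue(), []
for cmd in ["start", "pause", "resume", "exit"]:
    commands.put(cmd)
calibration_loop(commands, log)
print(log)  # ['calibrated amplitude', 'calibrated period', 'exited']
```

Because the step index survives a pause, "resume" continues with the next quantity rather than restarting, mirroring the resume-from-progress behavior the abstract describes.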

Acceleration of cache-to-cache data transfers for producer-consumer communication

Status: Active | Publication: US20180239708A1 | Topics: Memory architecture accessing/allocation; Memory systems; Traffic capacity; Parallel computing

Owner:ADVANCED MICRO DEVICES INC

Method for implementing concurrent producer-consumer buffers

Status: Inactive | Publication: US8122168B2 | Topics: Program control; Input/output processes for data processing; Computer architecture; Producer consumer

A method and a system for implementing concurrent producer-consumer buffers are provided. In one aspect, the method and system use separate locks, one for putter threads and another for taker threads operating on a concurrent producer-consumer buffer. The locks operate independently of a notify-wait process.

Owner:IBM CORP

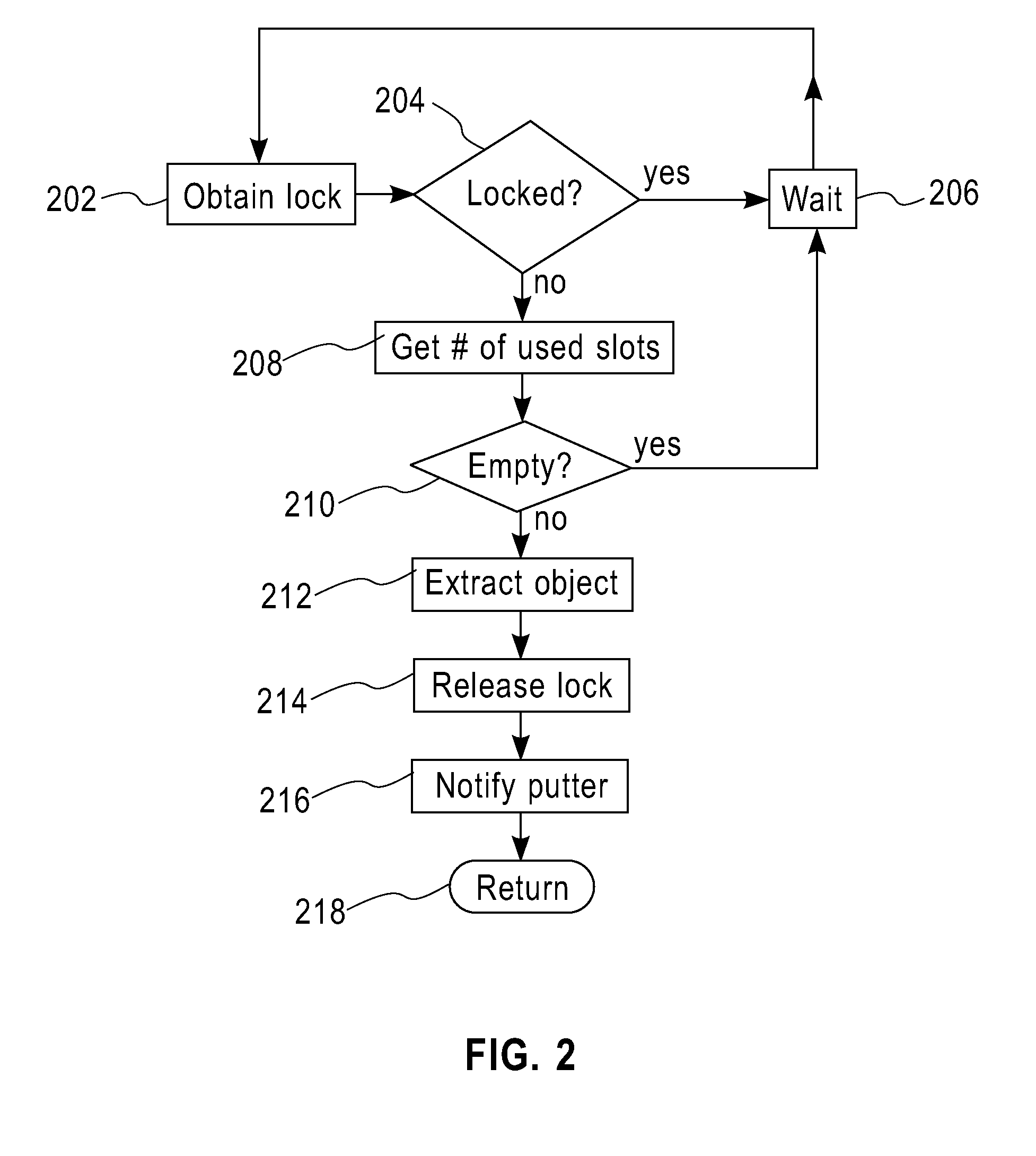

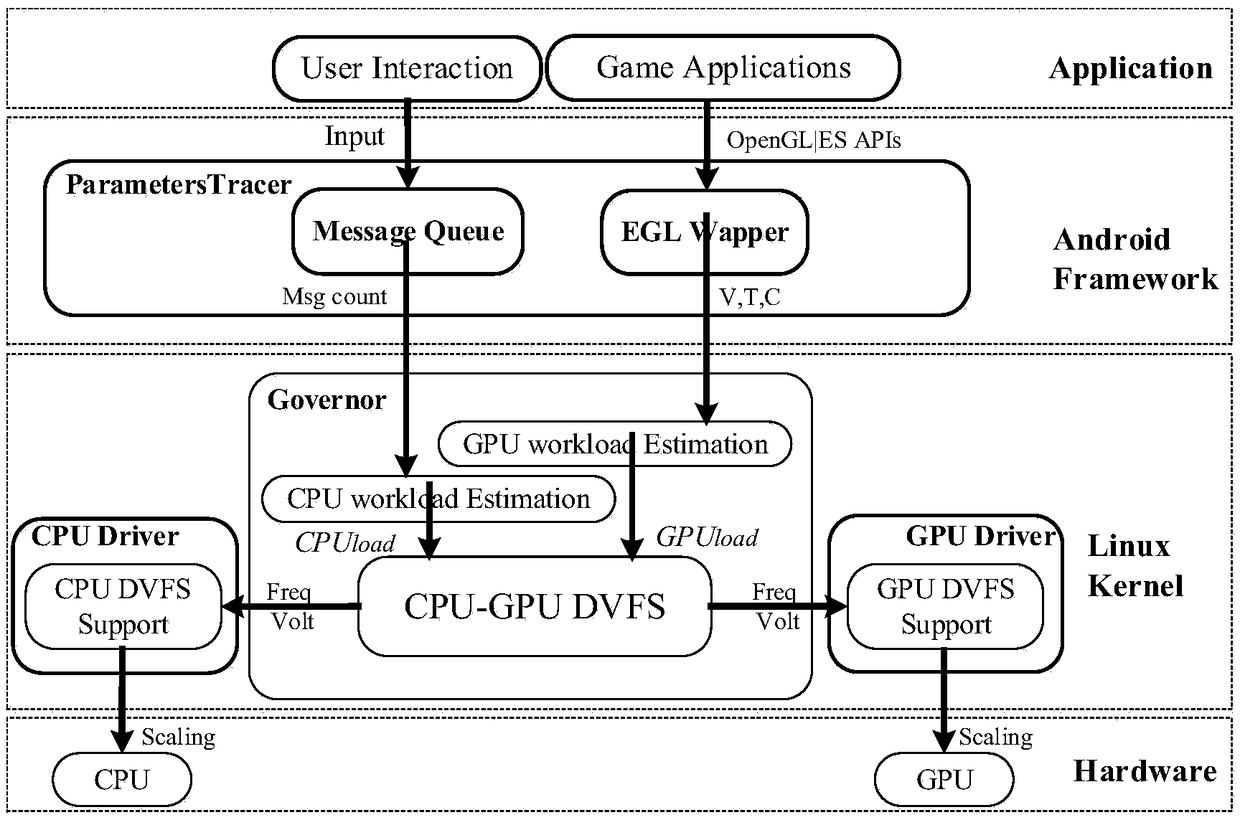

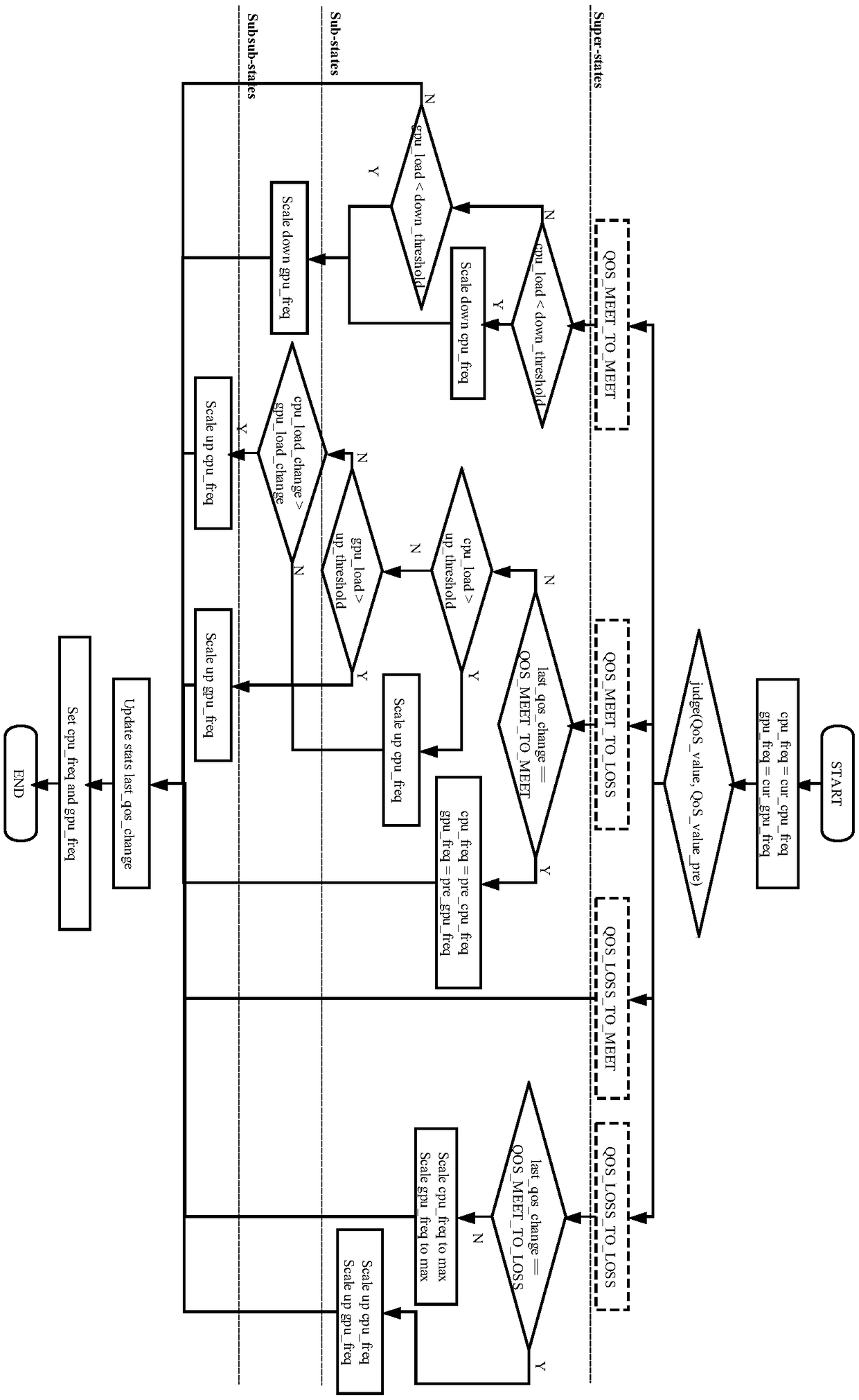

Processor power consumption optimization method suitable for Android games

Status: Inactive | Publication: CN108919938A | Topics: Lower latency; Improve accuracy; Power supply for data processing; Parallel computing; Control logic

The invention discloses a processor power consumption optimization method suitable for Android games. A power management framework for Android game applications is constructed, comprising a game characteristic data collection module and a CPU-GPU coordinated voltage-frequency control module. The data collection module collects user interaction data and GPU rendering data while an Android game runs, supporting CPU and GPU load evaluation based on Android game characteristics and improving timeliness and accuracy. The coordinated voltage-frequency control module uses a hierarchical state machine to describe changes in both the game's running state and the processors' running state, and indicates when and in which direction the voltage-frequency control logic should act. The control logic achieves coordinated CPU-GPU power management based on the producer-consumer collaborative relationship between the CPU and the GPU in an Android game, improving CPU-GPU energy efficiency while preserving the user experience.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

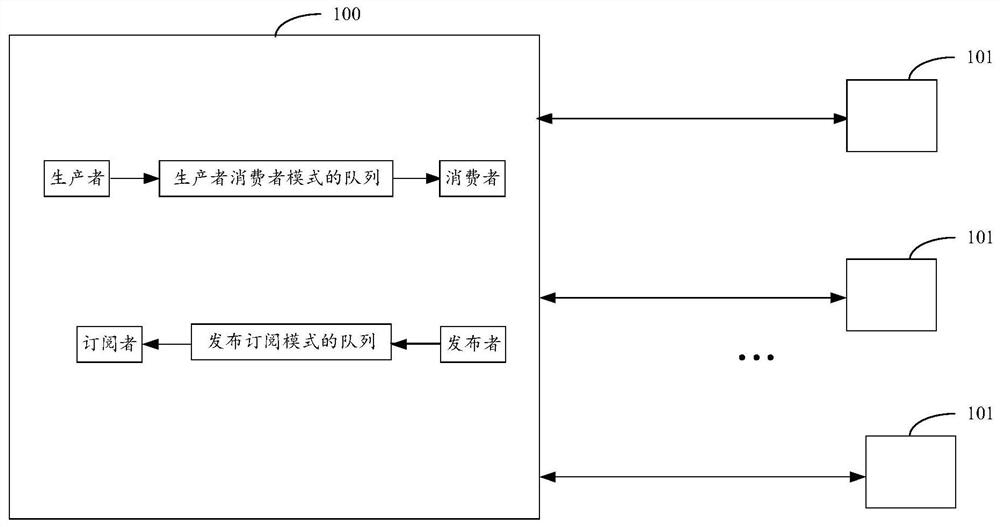

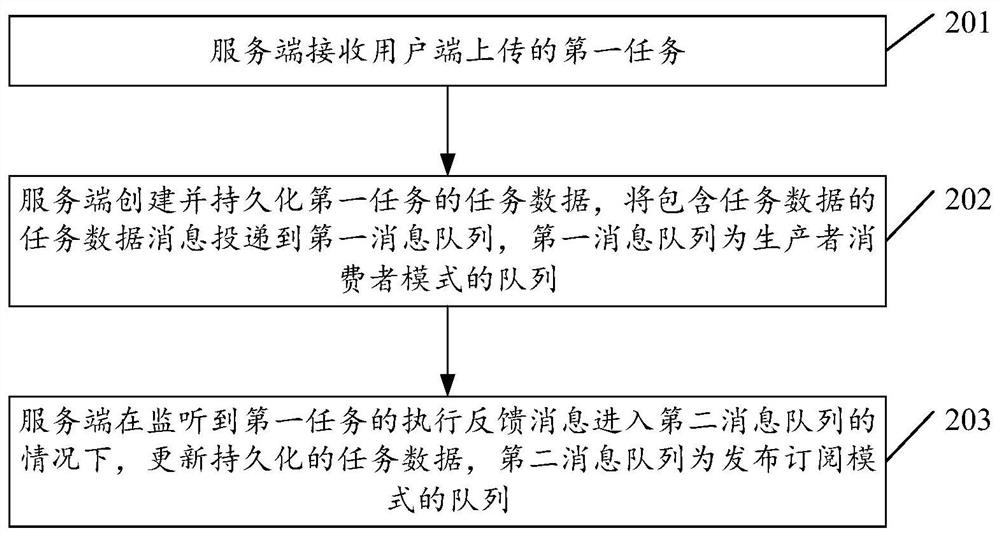

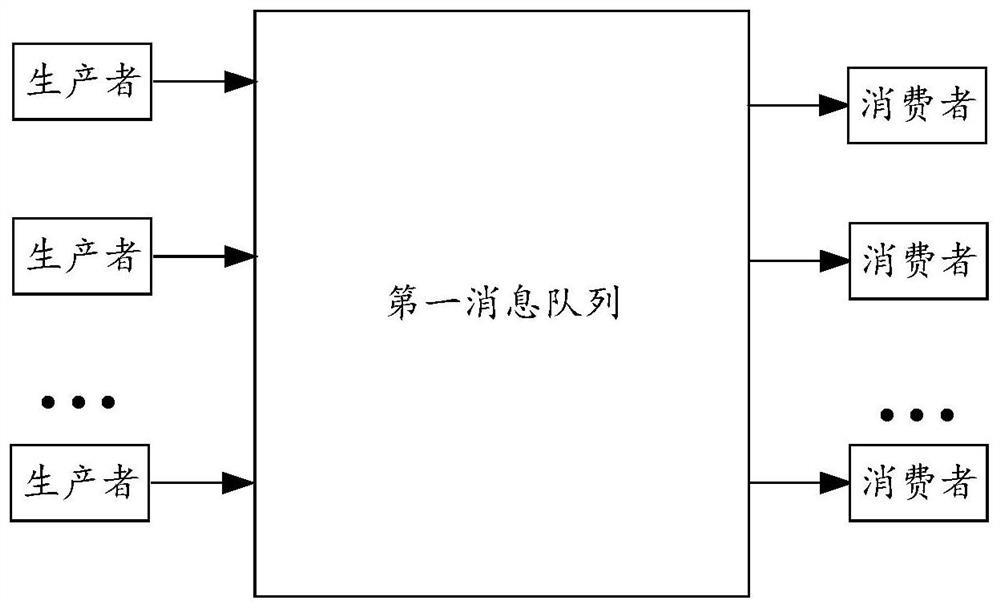

Task processing method and related product

Status: Pending | Publication: CN113220435A | Topics: Ensure consistency; Traceable status; Program initiation/switching; Interprogram communication; Message queue; Processing

Embodiments of the invention provide a task processing method and a related product. In the task processing method, a server side receives a first task uploaded by a user side; creates and persists the task data of the first task and delivers a task data message containing the task data to a first message queue, which is a producer-consumer queue; and, when it detects that an execution feedback message for the first task has entered a second message queue, which is a publish-subscribe queue, updates the persisted task data. The method ensures that task states remain consistent.

Owner:SHENZHEN SENSETIME TECH CO LTD

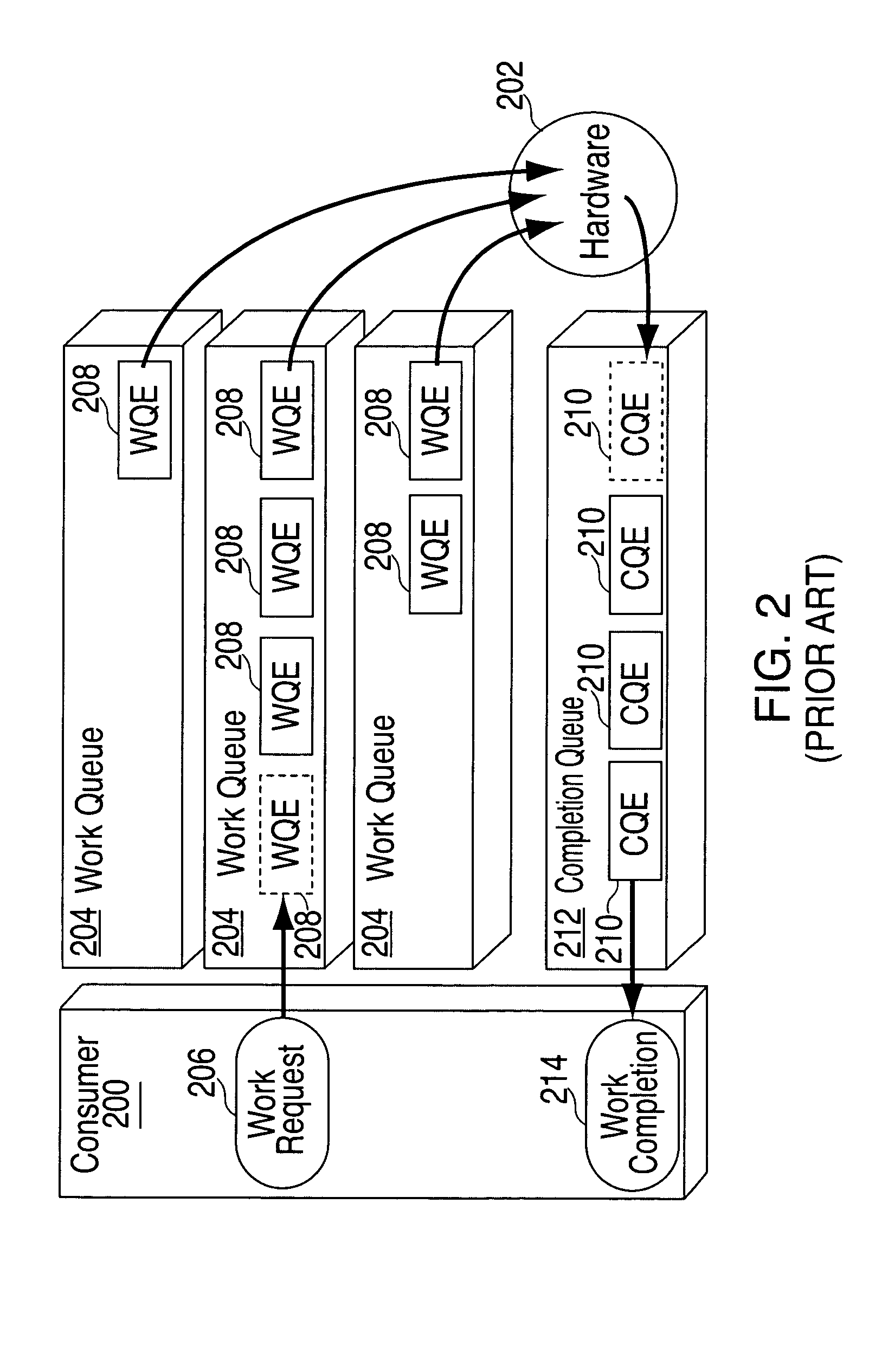

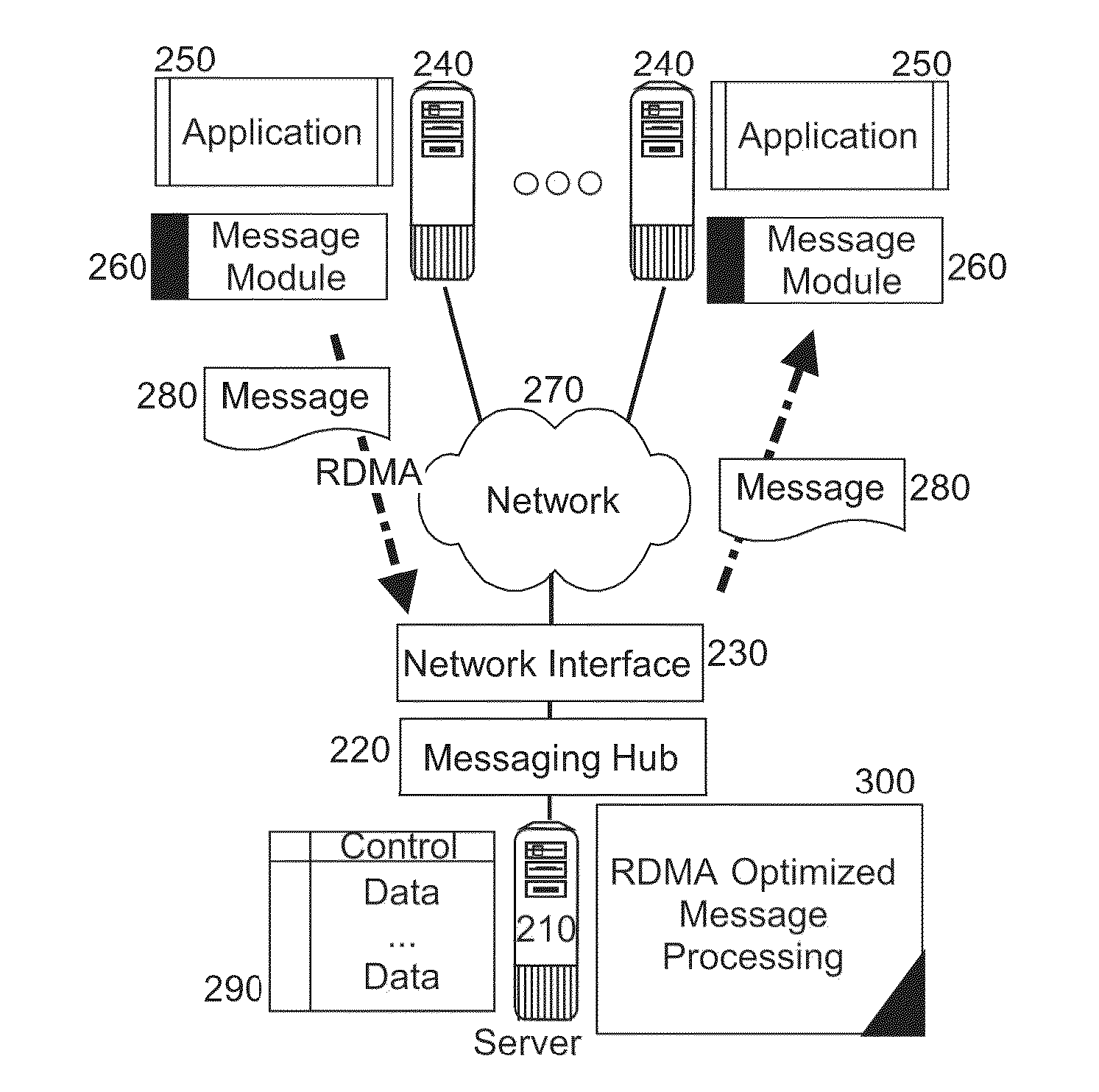

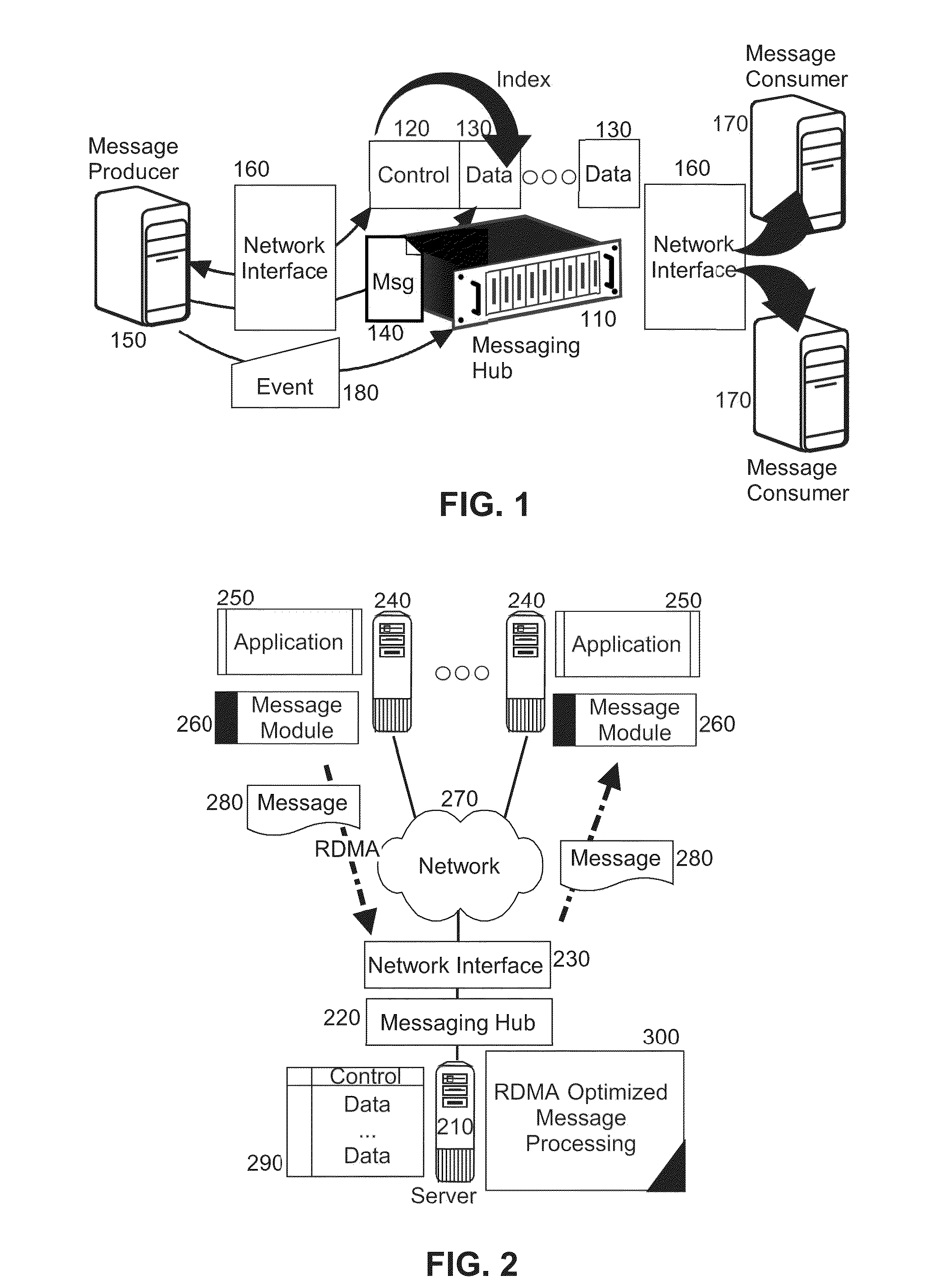

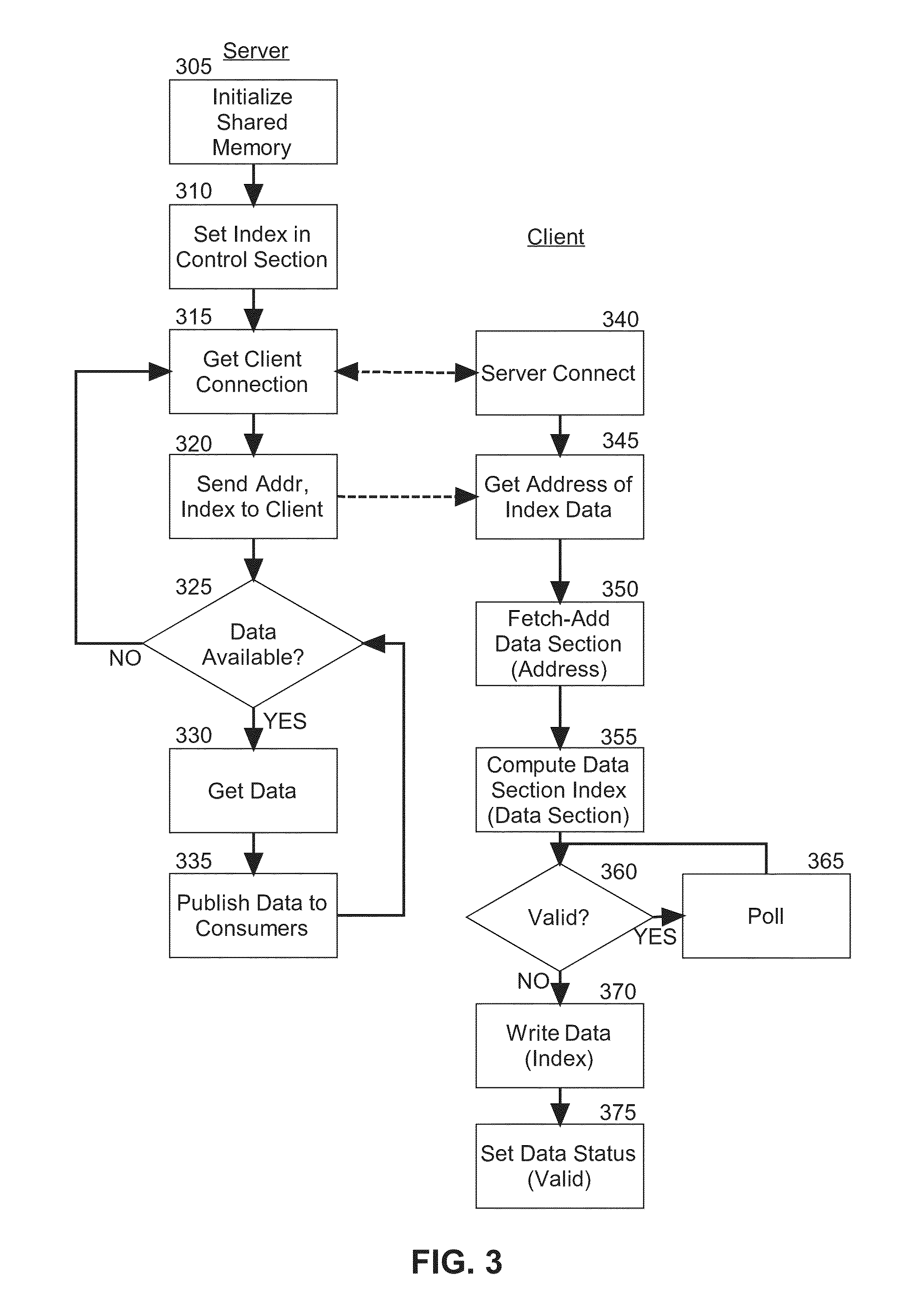

Remote direct memory access (RDMA) high performance producer-consumer message processing

Status: Active | Publication: US20150186331A1 | Topics: Digital computer details; Transmission; Direct memory access; Remote direct memory access

A method, system and computer program product for remote direct memory access (RDMA) optimized producer-consumer message processing in a messaging hub is provided. The method includes initializing a shared memory region in memory of a host server hosting operation of a messaging hub. The initialization provides for a control portion and one or more data portions, the control portion storing an index to an available one of the data portions. The method also includes transmitting to a message producer an address of the shared memory region and receiving a message in one of the data portions of the shared memory region from the message producer by way of an RDMA write operation on a network interface of the host server. Finally, the method includes retrieving the message from the one of the data portions and processing the message in the messaging hub in response to the receipt of the message.

Owner:IBM CORP
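A one-sided RDMA write cannot be reproduced without RDMA hardware and a verbs library, so the sketch below models only the memory layout described above: a control portion holding the index of the available data portion, followed by fixed-size data portions. A `bytearray` stands in for the registered shared region, `rdma_write` is a hypothetical stand-in for an RDMA write that copies bytes into the host's memory without involving the hub's CPU, and the 32-bit index, 64-byte slots, and 2-byte length prefix are all assumptions.

```python
import struct

CONTROL_SIZE = 4  # control portion: little-endian u32 index of the available slot
SLOT_SIZE = 64    # bytes per data portion (assumption)
NUM_SLOTS = 4


class SharedRegion:
    def __init__(self):
        self.mem = bytearray(CONTROL_SIZE + NUM_SLOTS * SLOT_SIZE)

    def available_slot(self):
        return struct.unpack_from("<I", self.mem, 0)[0]

    def advance(self):
        nxt = (self.available_slot() + 1) % NUM_SLOTS
        struct.pack_into("<I", self.mem, 0, nxt)

    def slot_offset(self, slot):
        return CONTROL_SIZE + slot * SLOT_SIZE


def rdma_write(region, offset, payload):
    """Hypothetical stand-in for a one-sided RDMA write into the host's memory."""
    region.mem[offset:offset + len(payload)] = payload


def produce(region, message: bytes):
    slot = region.available_slot()  # how the producer learns this is assumed
    frame = struct.pack("<H", len(message)) + message  # 2-byte length prefix
    if len(frame) > SLOT_SIZE:
        raise ValueError("message too large for one data portion")
    rdma_write(region, region.slot_offset(slot), frame)
    return slot


def hub_consume(region, slot):
    base = region.slot_offset(slot)
    (length,) = struct.unpack_from("<H", region.mem, base)
    message = bytes(region.mem[base + 2:base + 2 + length])
    region.advance()  # the next data portion becomes the available one
    return message


region = SharedRegion()
slot = produce(region, b"hello")
print(slot, hub_consume(region, slot), region.available_slot())  # 0 b'hello' 1
```

Keeping the slot index in a small control portion lets producers target a data portion directly, so the hub only has to read the message, not copy it in from a socket.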

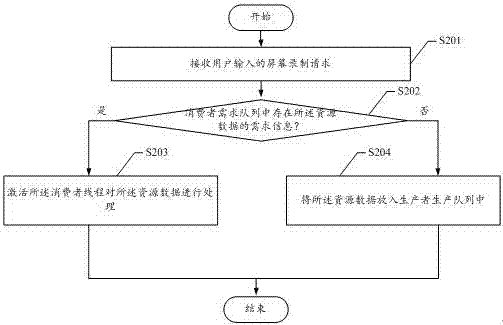

Data processing method and device

Status: Active | Publication: CN107239343A | Topics: Improve real-time performance; Shorten the time; Resource allocation; Interprogram communication; Source data; Real-time computing

The invention provides a data processing method and device. In the method, when a producer thread produces source data, it determines whether a consumer thread needs that source data; if so, the consumer thread is activated to process it. When source data is processed with a producer-consumer model, this method and device can improve the real-time performance of source data processing.

Owner:INSPUR FINANCIAL INFORMATION TECH CO LTD
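The check-then-activate step above can be sketched with a condition variable. This is a minimal sketch, assuming the consumer's needs are expressed as a predicate (here, hypothetically, "even numbers only"), that the main thread acts as the producer, and that doubling an item stands in for real processing. Items the consumer does not need never wake it.

```python
import threading
from collections import deque


class OnDemandConsumer:
    def __init__(self, needs):
        self.needs = needs  # predicate: does this consumer want a given item?
        self.cond = threading.Condition()
        self.pending = deque()
        self.processed = []

    def offer(self, item):
        """Called by the producer thread right after producing `item`."""
        if not self.needs(item):
            return False           # not needed: the consumer is left asleep
        with self.cond:
            self.pending.append(item)
            self.cond.notify()     # needed: activate the consumer
        return True

    def run(self, count):
        for _ in range(count):
            with self.cond:
                while not self.pending:
                    self.cond.wait()
                item = self.pending.popleft()
            self.processed.append(item * 2)  # stand-in for real processing


consumer = OnDemandConsumer(needs=lambda x: x % 2 == 0)
worker = threading.Thread(target=consumer.run, args=(5,))
worker.start()
for x in range(10):  # producer: the main thread here
    consumer.offer(x)
worker.join()
print(consumer.processed)  # [0, 4, 8, 12, 16]
```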

Apparatus and method for memory-hierarchy aware producer-consumer instruction

An apparatus and method are described for efficiently transferring data from a core of a central processing unit (CPU) to a graphics processing unit (GPU). For example, one embodiment of a method comprises: writing data to a buffer within the core of the CPU until a designated amount of data has been written; upon detecting that the designated amount of data has been written, responsively generating an eviction cycle, the eviction cycle causing the data to be transferred from the buffer to a cache accessible by both the core and the GPU; setting an indication to indicate to the GPU that data is available in the cache; and upon the GPU detecting the indication, providing the data to the GPU from the cache upon receipt of a read signal from the GPU.

Owner:INTEL CORP


© 2025 PatSnap. All rights reserved.