38 results about "Performance per watt" patented technology

In computing, performance per watt is a measure of the energy efficiency of a particular computer architecture or piece of computer hardware. It measures the rate of computation that a computer delivers for every watt of power consumed. When comparing computing systems, this rate is typically measured by performance on the LINPACK benchmark.
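The definition above can be made concrete with a minimal sketch. The system names and figures below are hypothetical and chosen only for illustration; the only thing taken from the text is the ratio itself, computation rate divided by power consumed.

```python
def performance_per_watt(flops: float, watts: float) -> float:
    """Return the computation rate delivered per watt of power consumed."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return flops / watts


# Hypothetical systems: (sustained benchmark FLOPS, average power in watts).
systems = {
    "system_a": (2.0e12, 400.0),
    "system_b": (1.5e12, 250.0),
}

# Rank the systems from most to least energy efficient.
ranking = sorted(
    systems,
    key=lambda name: performance_per_watt(*systems[name]),
    reverse=True,
)
```

In this made-up comparison the system with the lower absolute performance ranks first, because it delivers more computation for each watt it draws.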

Apparatus and method for realizing accelerator of sparse convolutional neural network

Inactive | CN107239824A | Improve computing power | Reduce response latency | Digital data processing details | Neural architectures | Algorithm | Broadband

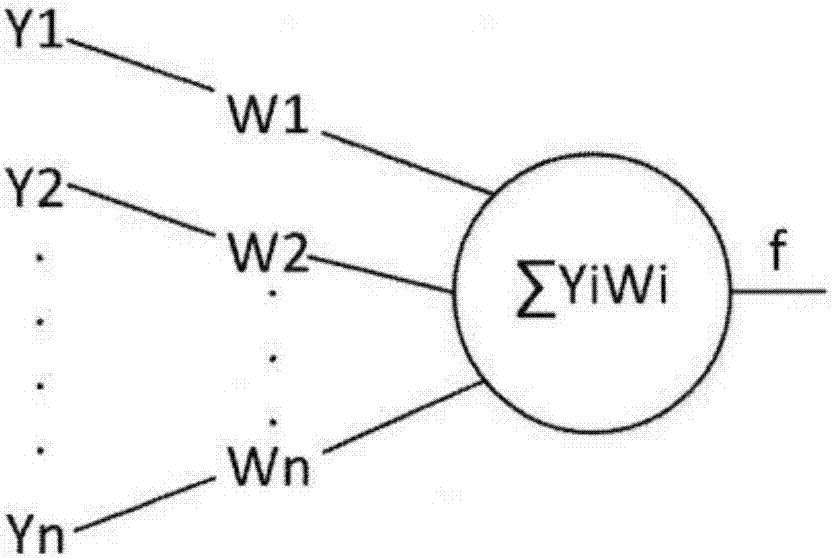

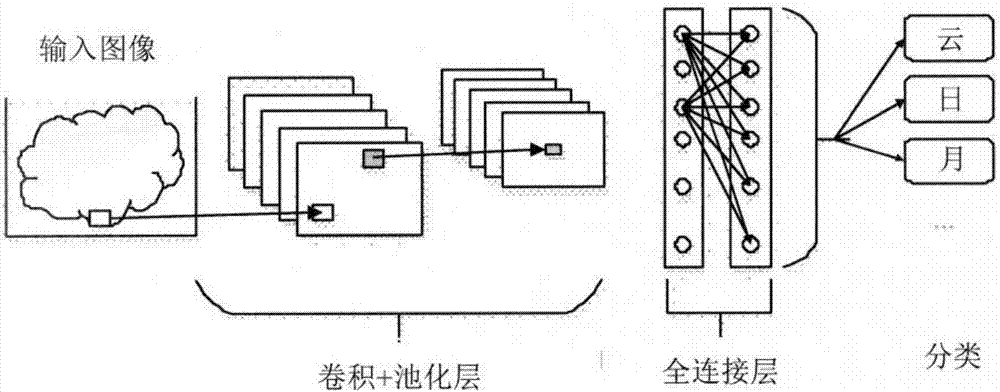

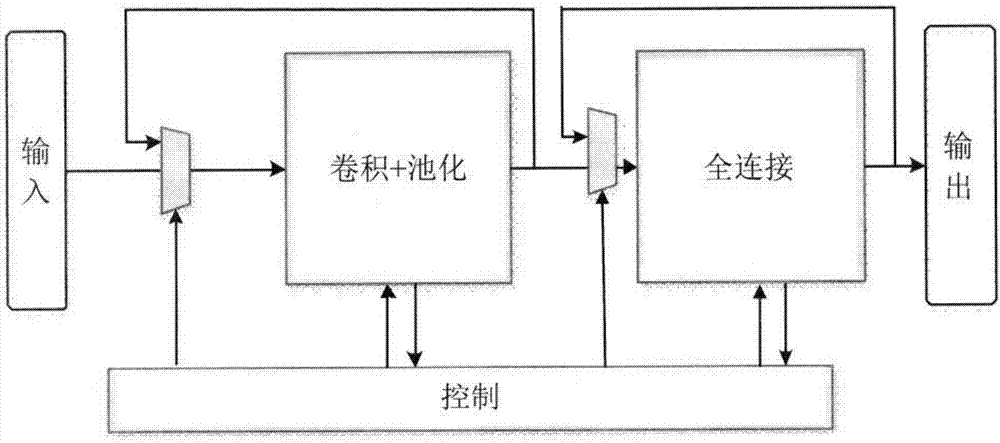

The invention provides an apparatus and method for realizing an accelerator for a sparse convolutional neural network. The apparatus includes a convolution and pooling unit, a fully connected unit, and a control unit. The method includes the following steps: on the basis of control information, reading convolution parameter information, input data, and intermediate computing data, and reading fully connected layer weight matrix position information; in accordance with the convolution parameter information, performing convolution and pooling on the input data for a first number of iterations; then, on the basis of the fully connected layer weight matrix position information, performing the fully connected computation for a second number of iterations. Each input datum is divided into a plurality of sub-blocks, and the convolution and pooling unit and the fully connected unit each operate on the sub-blocks in parallel. The apparatus uses a dedicated circuit, supports sparse convolutional neural networks with fully connected layers, uses a parallel ping-pong buffer design and a pipeline design, effectively balances I/O bandwidth against computing efficiency, and achieves a better performance-to-power ratio.

Owner:XILINX INC

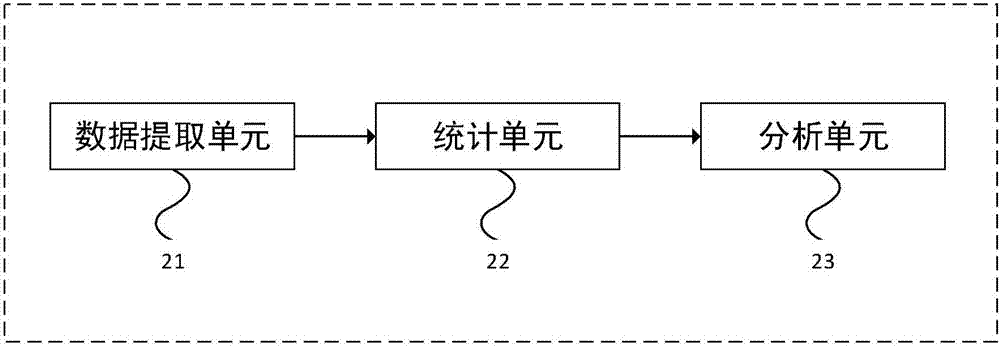

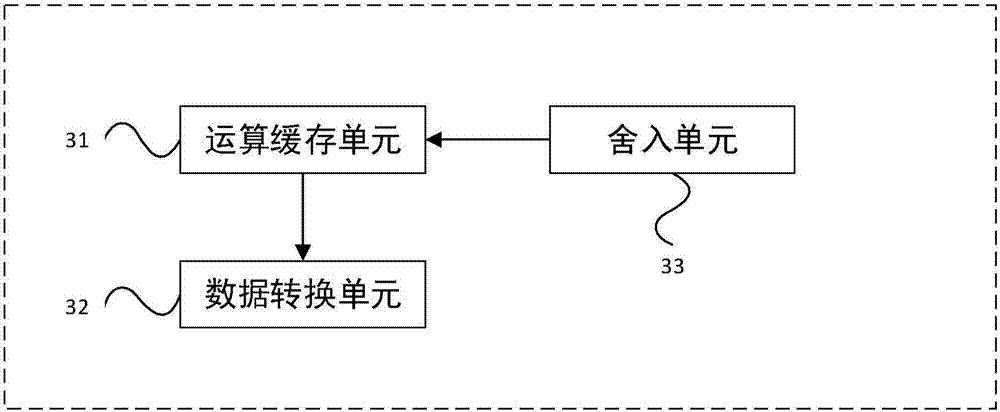

Device and method for executing forward operation of artificial neural network

Pending | CN107330515A | Small area overhead | Reduce area overhead and optimize hardware area power consumption | Digital data processing details | Code conversion | Data operations | Computer module

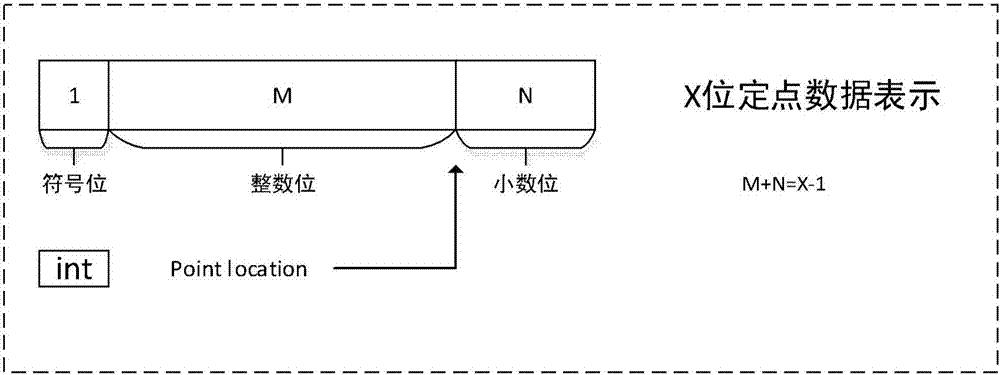

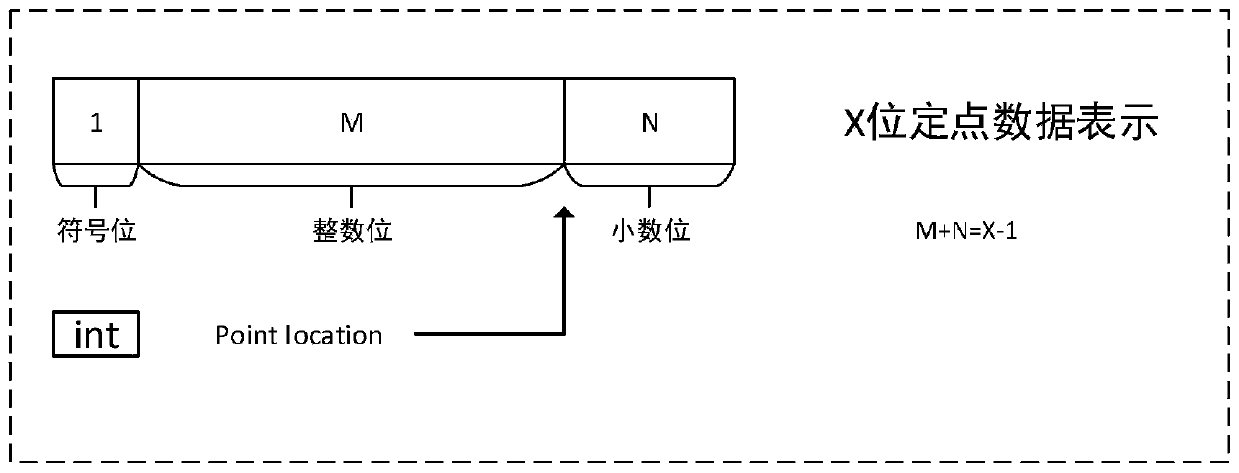

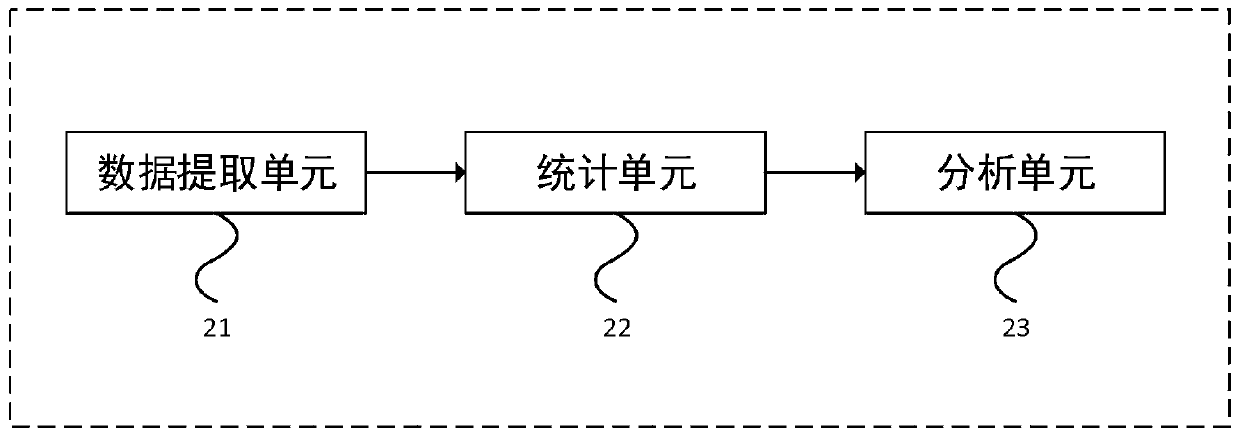

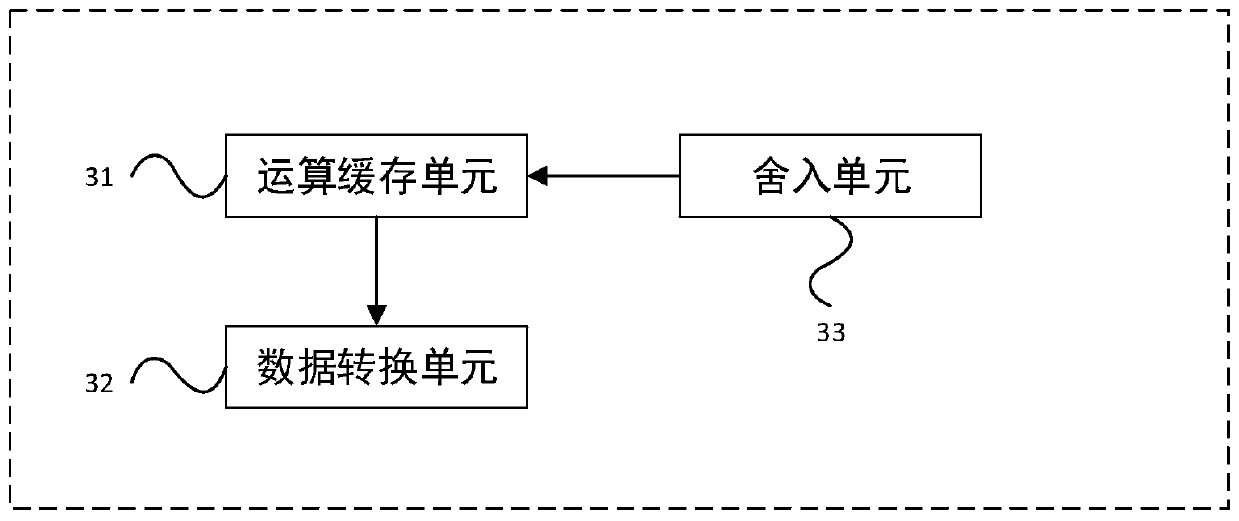

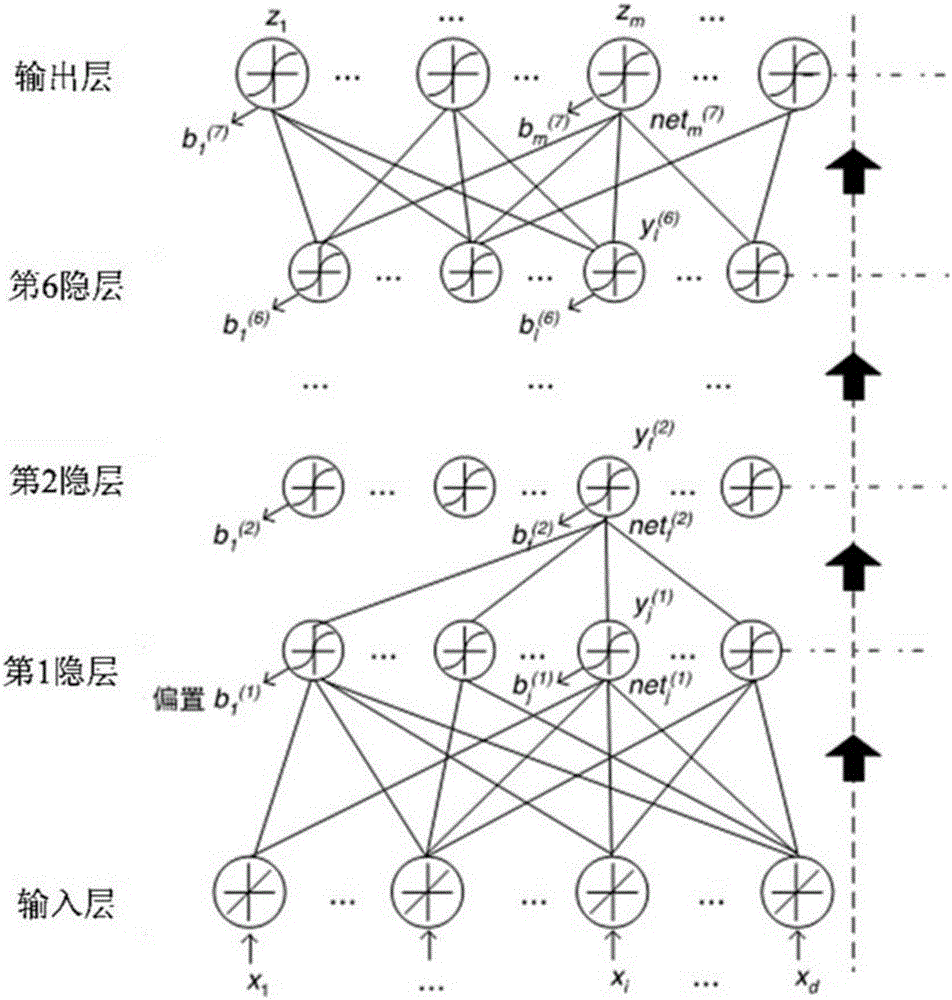

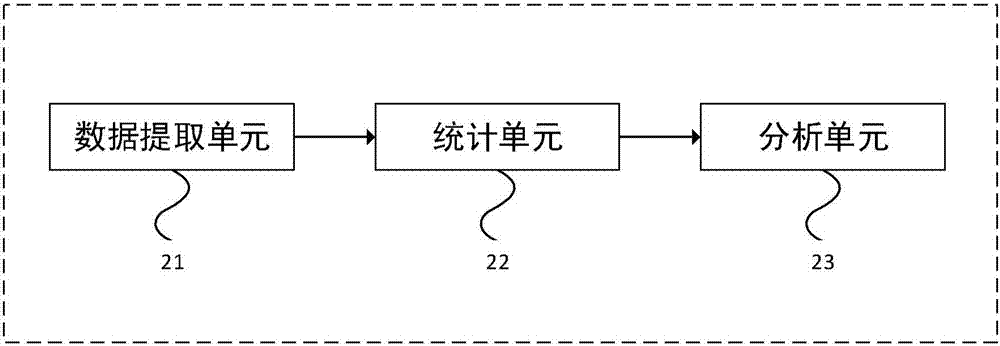

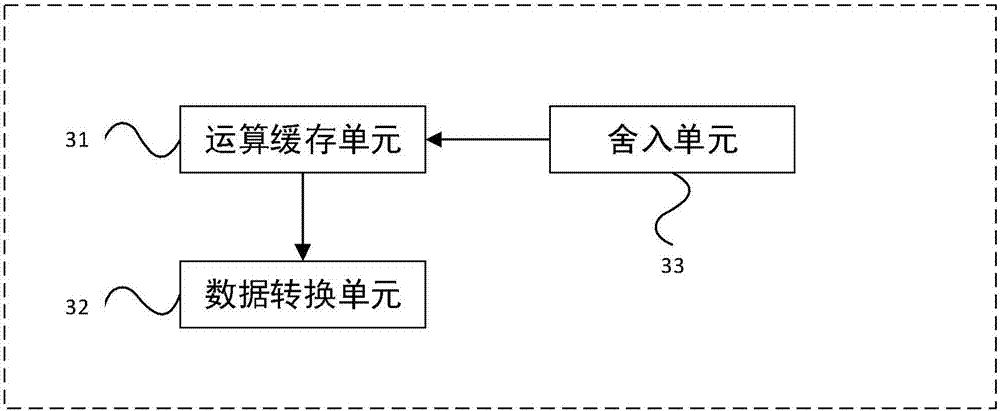

The invention provides a device and a method for executing a forward operation of an artificial neural network. The device comprises a floating point data statistics module, which performs statistical analysis on the various types of required data and obtains the point location of the fixed point data; a data conversion unit, which converts data from a long-bit floating point data type to a short-bit fixed point data type according to the point location of the fixed point data; and a fixed point data operation module, which performs the artificial neural network forward operation on the short-bit fixed point data. By representing the data in the forward operation of the multilayer artificial neural network as short-bit fixed point numbers and using the corresponding fixed point data operation module, the device realizes the forward operation on short-bit fixed point data, thereby greatly improving the performance-to-power ratio of the hardware.
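The three stages described in this abstract (statistics, conversion, fixed point operation) can be sketched roughly as follows. This is an illustrative assumption, not the patented design: the 16-bit width, the rule for choosing the point location from the largest magnitude, and all function names are hypothetical.

```python
import math


def choose_point_location(values, total_bits=16):
    """Statistics step: pick the number of fractional bits so that the
    largest magnitude in `values` still fits in a signed word."""
    max_abs = max(abs(v) for v in values)
    # Bits needed for the integer part, excluding the sign bit.
    int_bits = max(0, math.floor(math.log2(max_abs)) + 1) if max_abs > 0 else 0
    return total_bits - 1 - int_bits


def to_fixed(value, point_location, total_bits=16):
    """Conversion step: float to saturated signed fixed point integer."""
    scaled = round(value * 2.0 ** point_location)
    lo, hi = -(1 << (total_bits - 1)), (1 << (total_bits - 1)) - 1
    return max(lo, min(hi, scaled))


def fixed_dot(weights, inputs, w_point, x_point):
    """Operation step: integer multiply-accumulate, rescaled at the end.
    Python integers do not overflow; hardware would need a wider accumulator."""
    acc = sum(
        to_fixed(w, w_point) * to_fixed(x, x_point)
        for w, x in zip(weights, inputs)
    )
    return acc / 2.0 ** (w_point + x_point)


weights = [0.5, -0.25, 0.75]
inputs = [1.0, 2.0, -1.5]
w_point = choose_point_location(weights)
x_point = choose_point_location(inputs)
result = fixed_dot(weights, inputs, w_point, x_point)
```

The example values are exactly representable in the chosen formats, so the fixed point result matches the floating point dot product; in general a small quantization error would remain.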

Owner:CAMBRICON TECH CO LTD

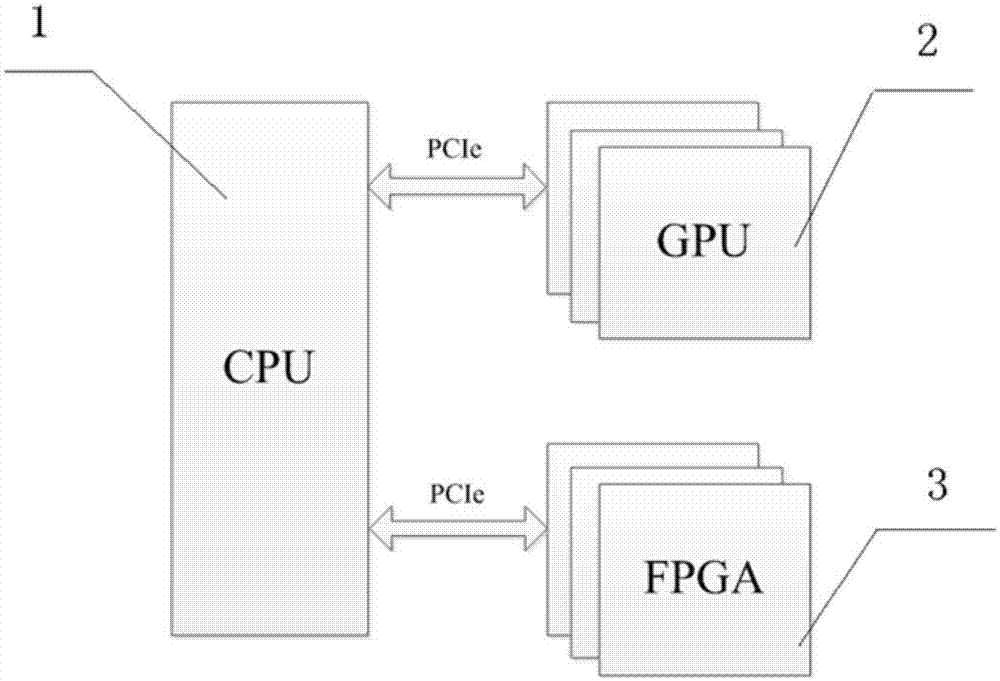

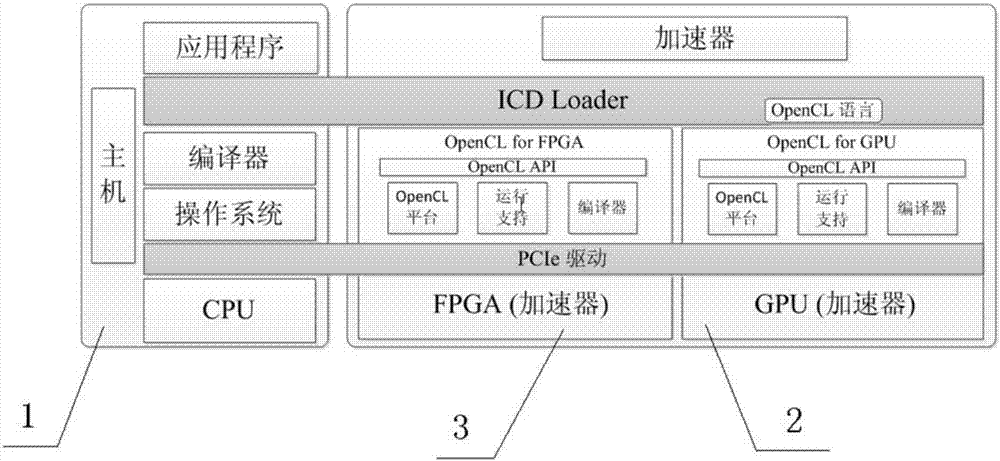

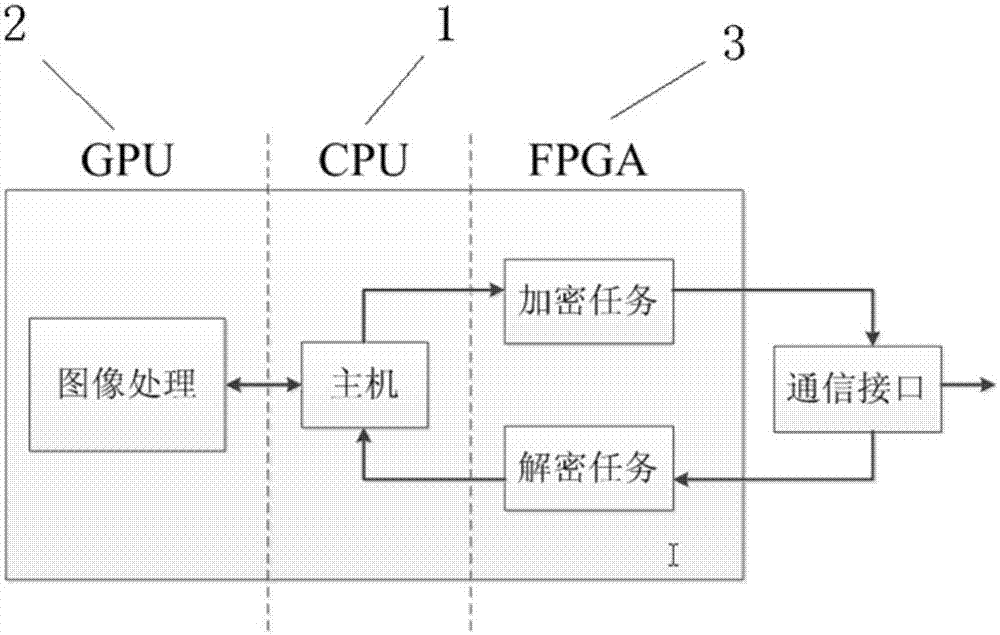

Heterogeneous computing system and method based on CPU+GPU+FPGA architecture

Inactive | CN107273331A | Give full play to the advantages of management and control | Take full advantage of parallel processing | Architecture with single central processing unit | Energy efficient computing | FPGA architecture | Resource management

The invention provides a heterogeneous computing system based on a CPU+GPU+FPGA architecture. The system comprises a CPU host unit, one or more GPU heterogeneous acceleration units, and one or more FPGA heterogeneous acceleration units. The CPU host unit is in communication connection with the GPU heterogeneous acceleration units and the FPGA heterogeneous acceleration units. The CPU host unit manages resources and allocates processing tasks to the GPU heterogeneous acceleration units and/or the FPGA heterogeneous acceleration units. The GPU heterogeneous acceleration units process tasks from the CPU host unit in parallel. The FPGA heterogeneous acceleration units process tasks from the CPU host unit serially or in parallel. The heterogeneous computing system makes full use of the control advantages of the CPU, the parallel processing advantages of the GPU, and the performance-to-power ratio and flexible configuration advantages of the FPGA, so it can adapt to different application scenarios and satisfy different kinds of task demands. The invention also provides a heterogeneous computing method based on the CPU+GPU+FPGA architecture.

Owner:SHANDONG CHAOYUE DATA CONTROL ELECTRONICS CO LTD

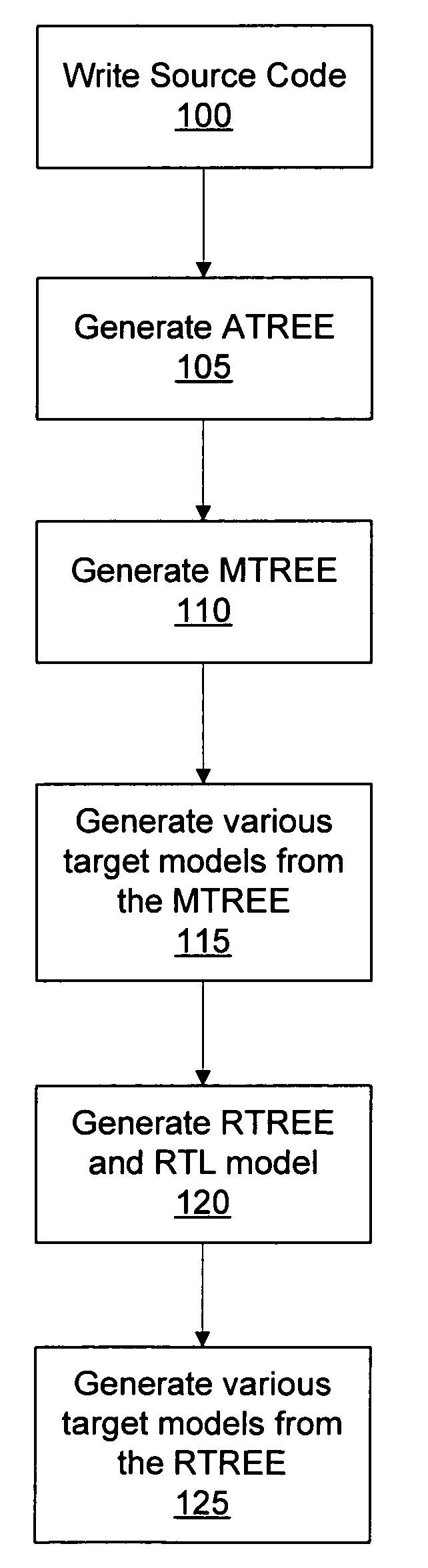

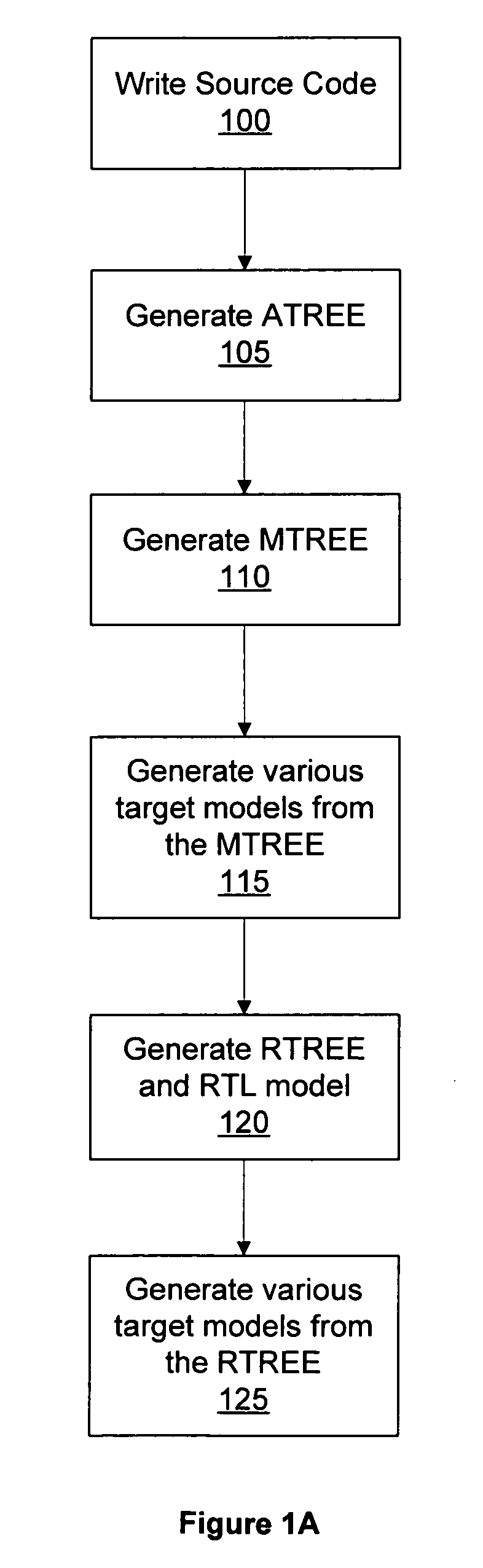

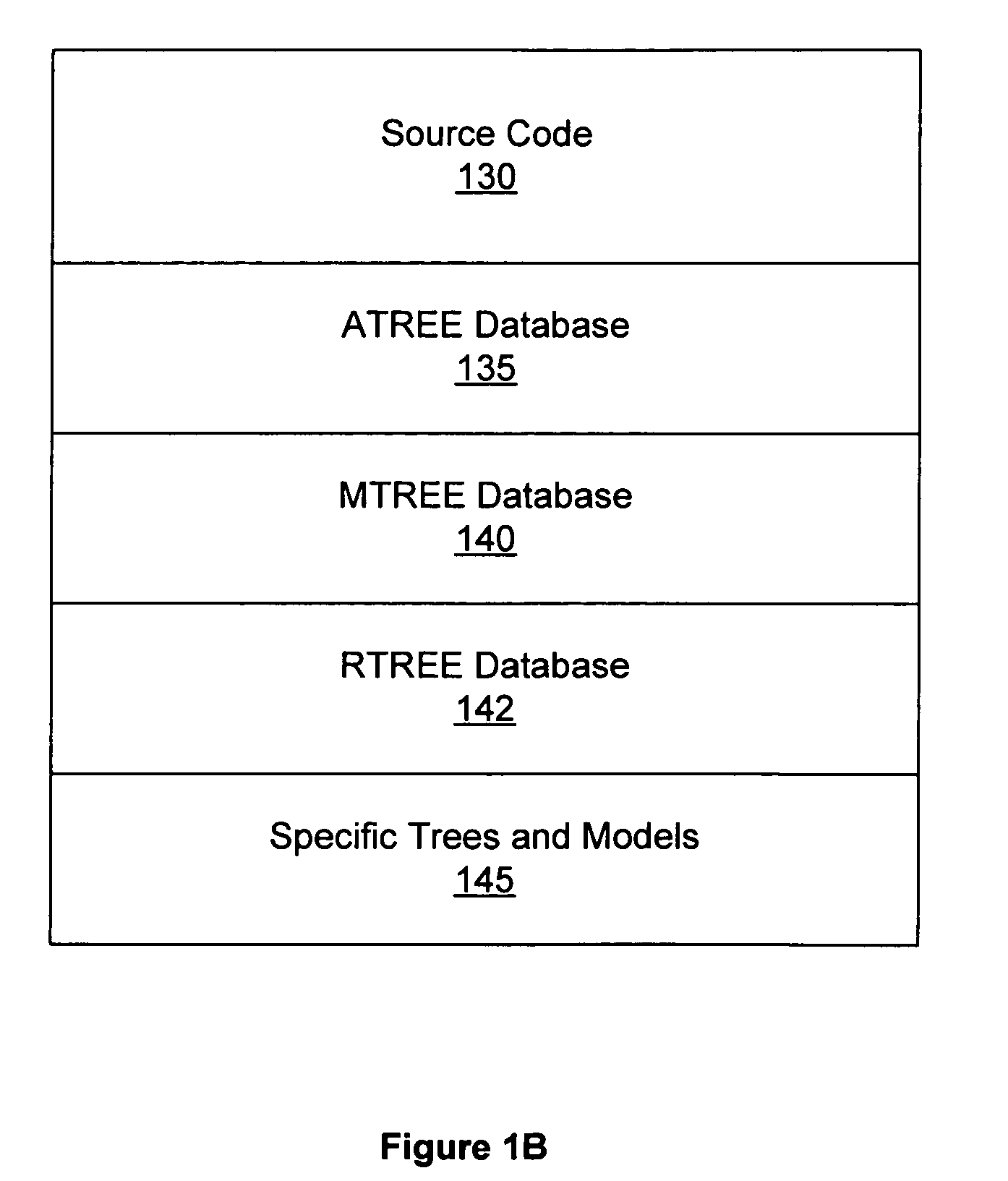

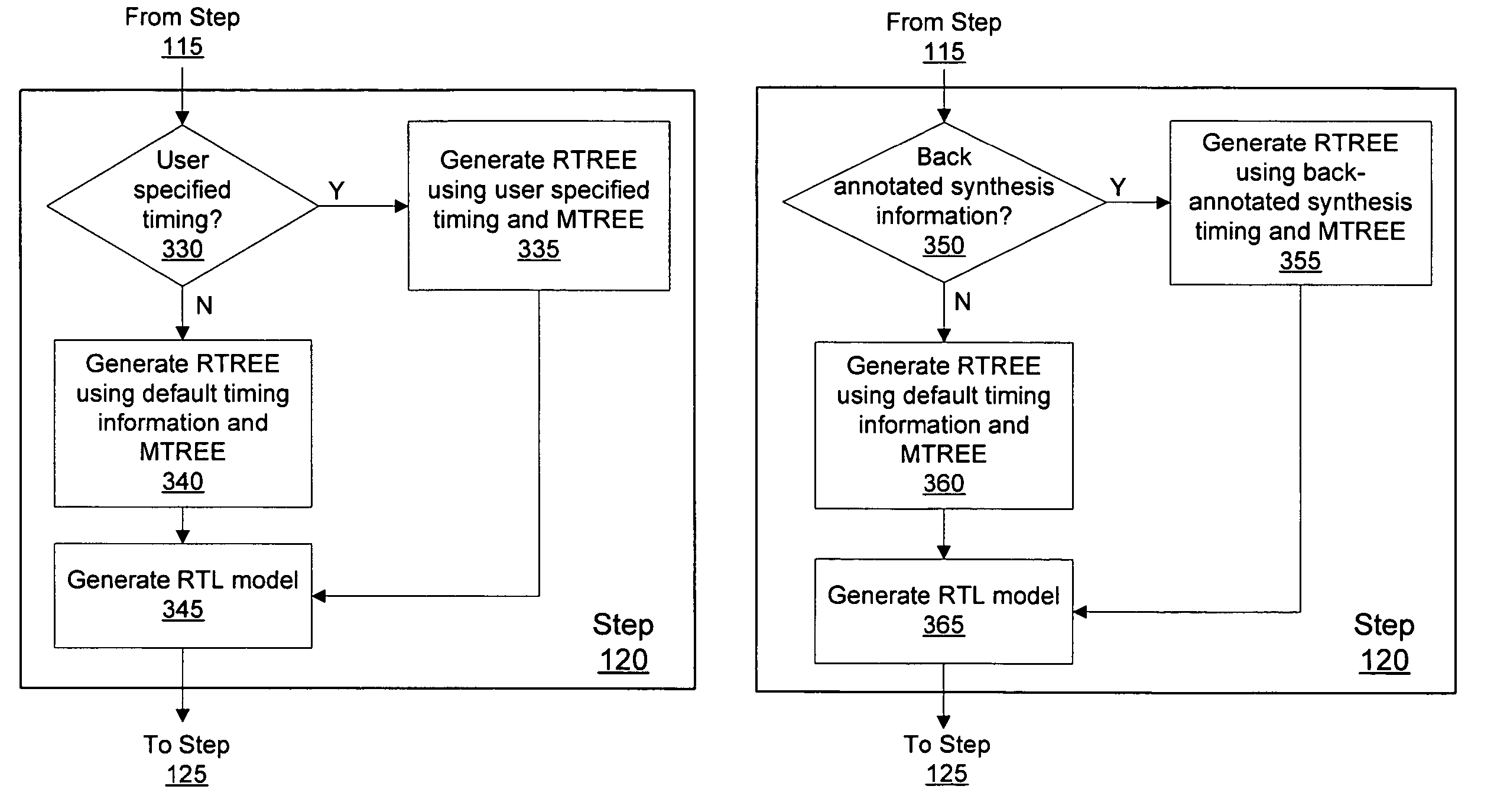

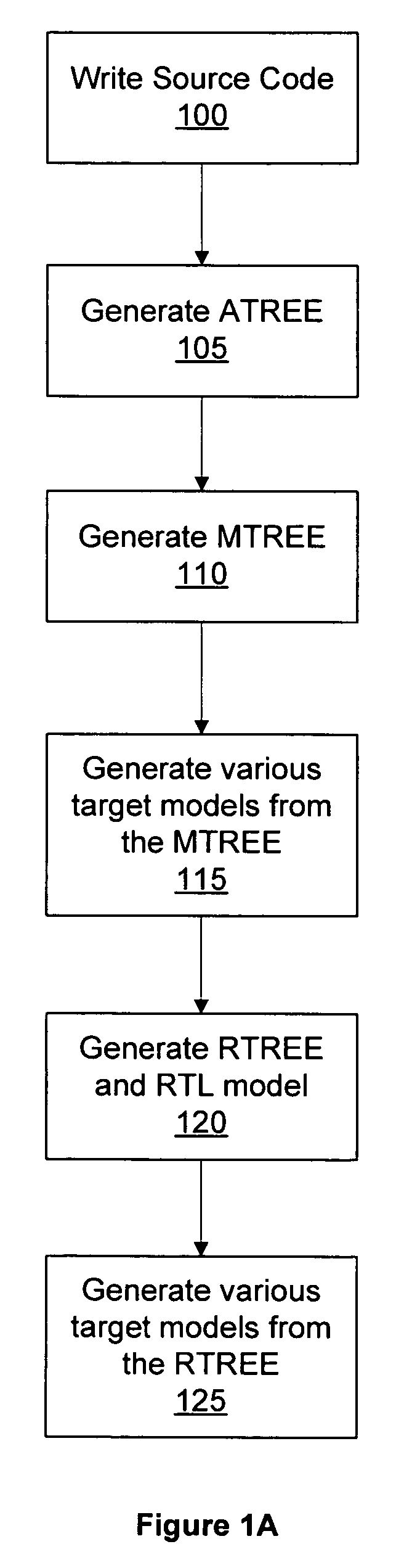

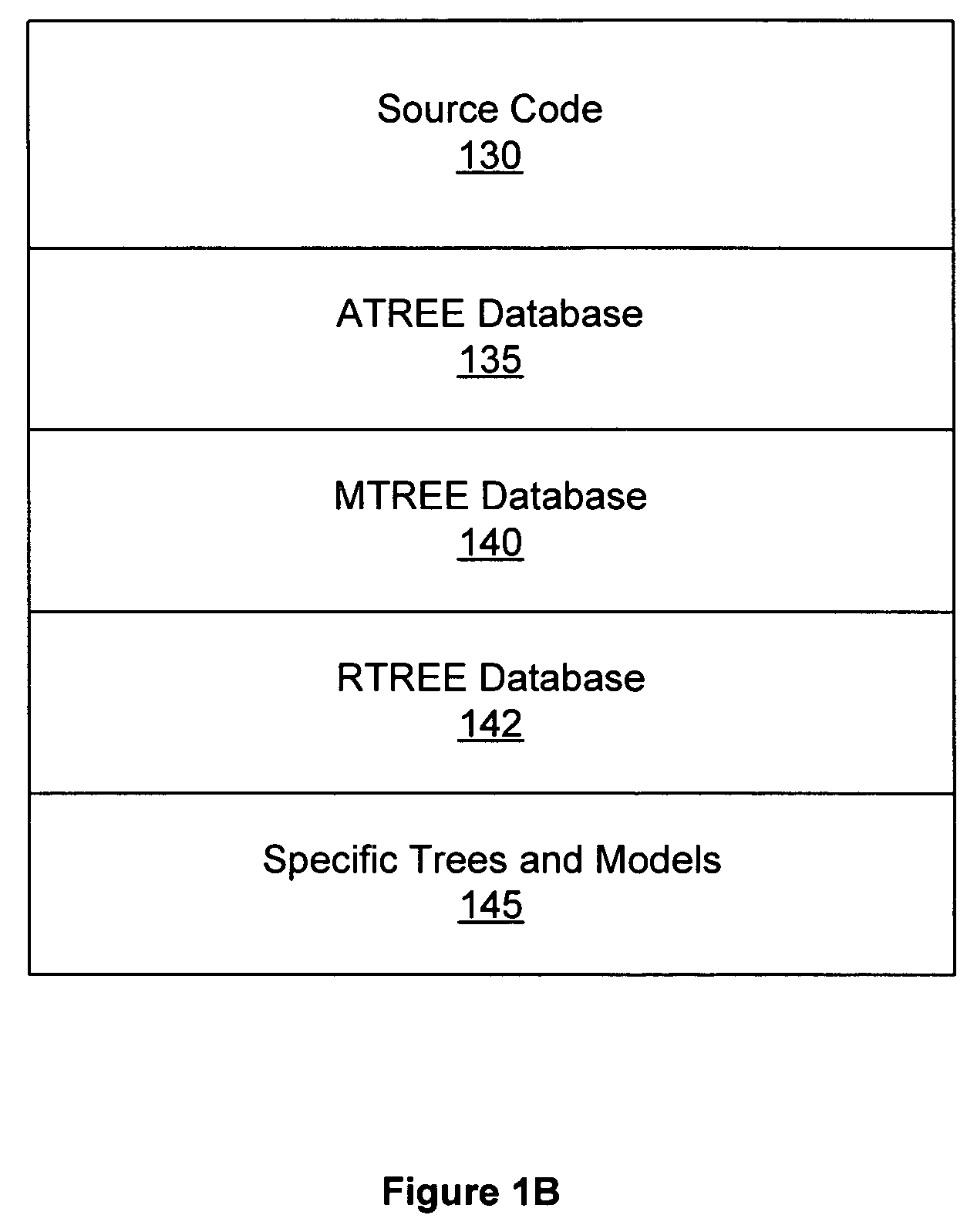

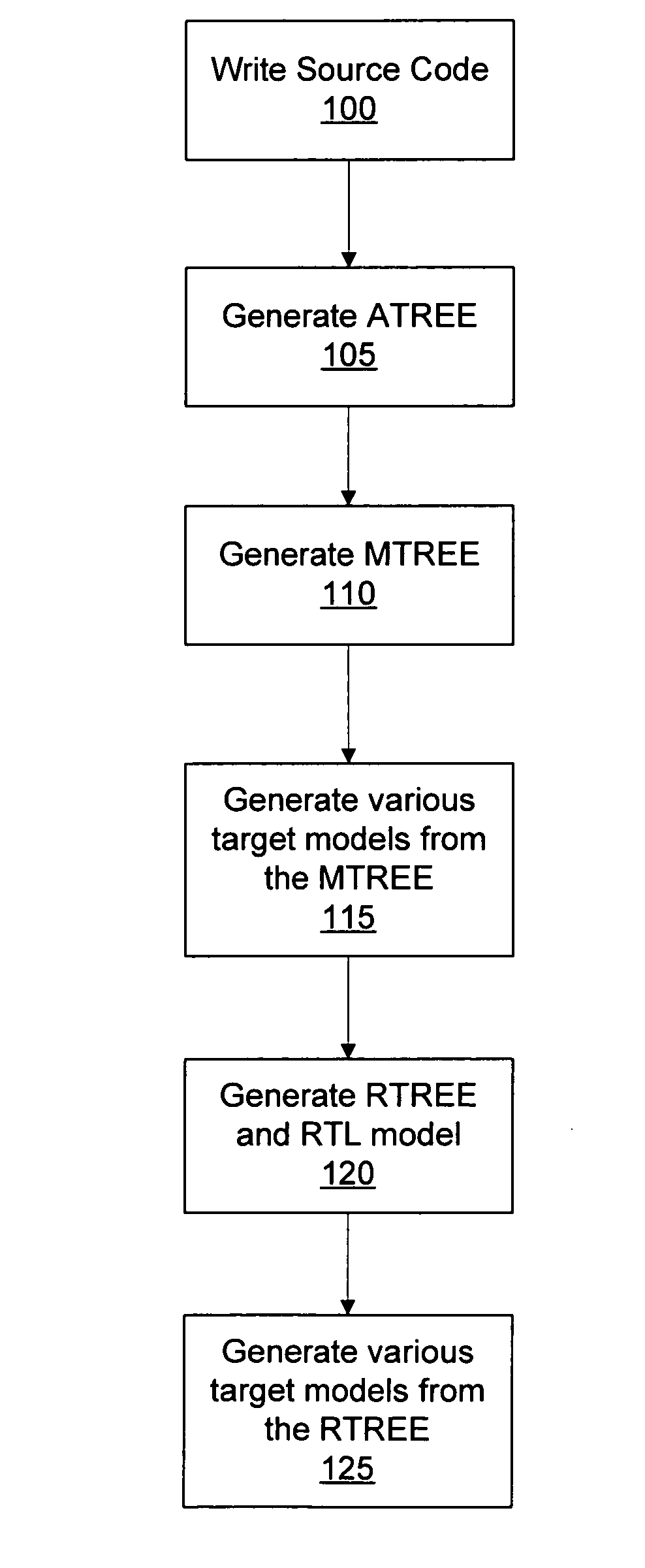

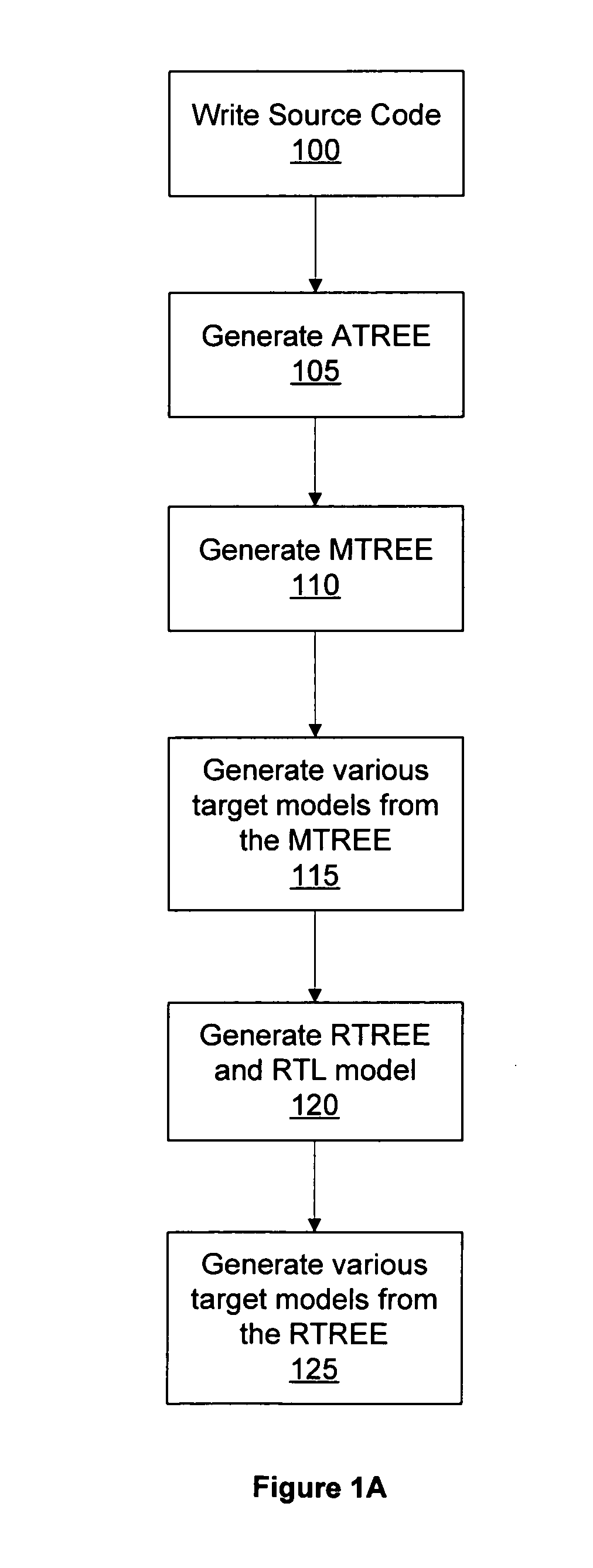

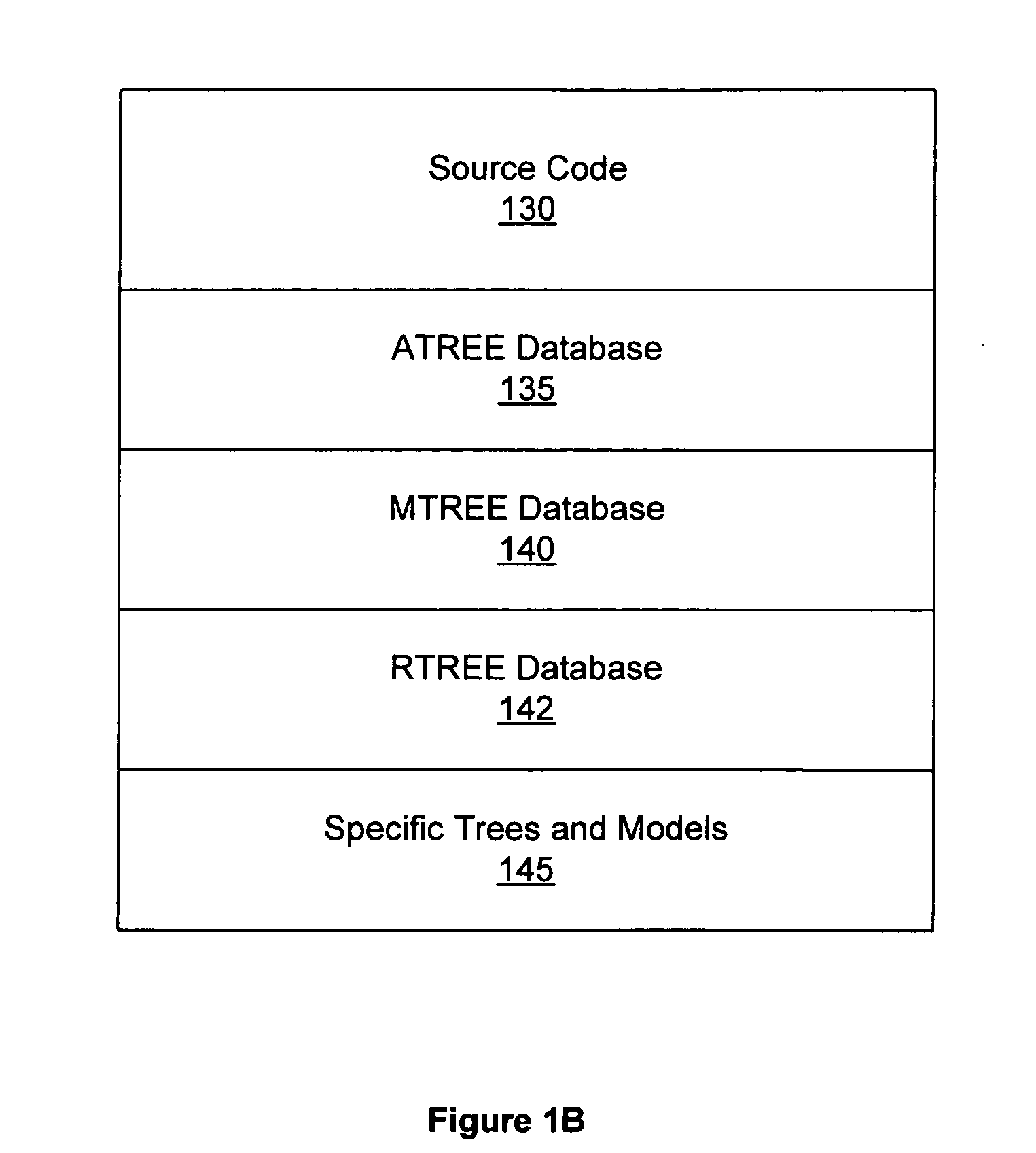

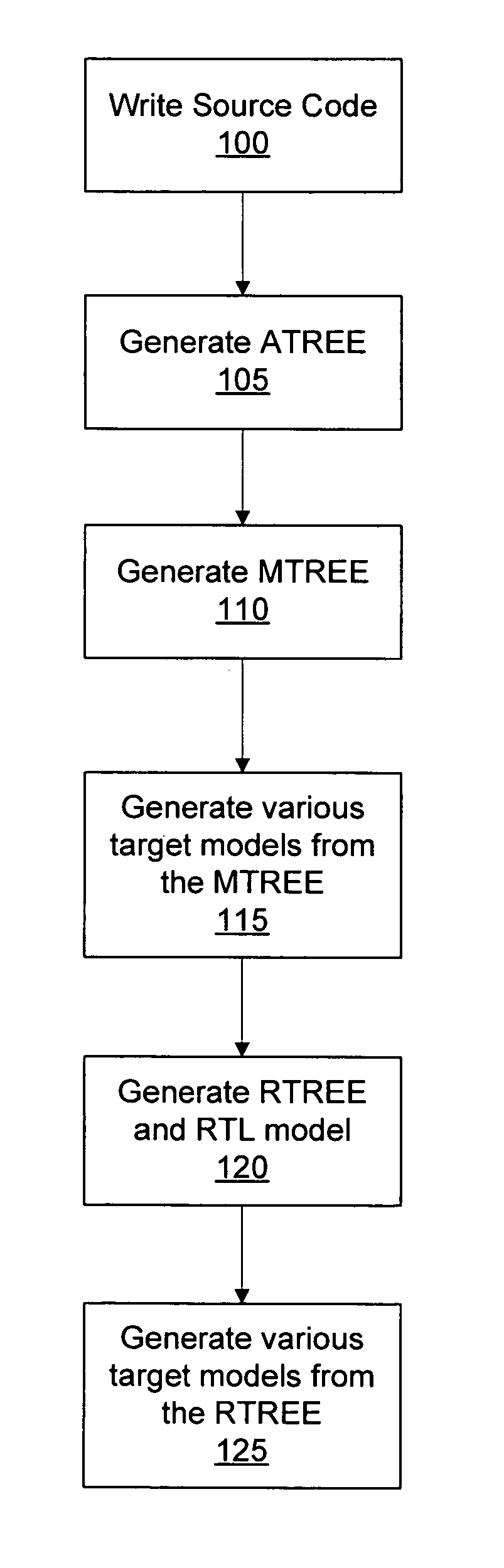

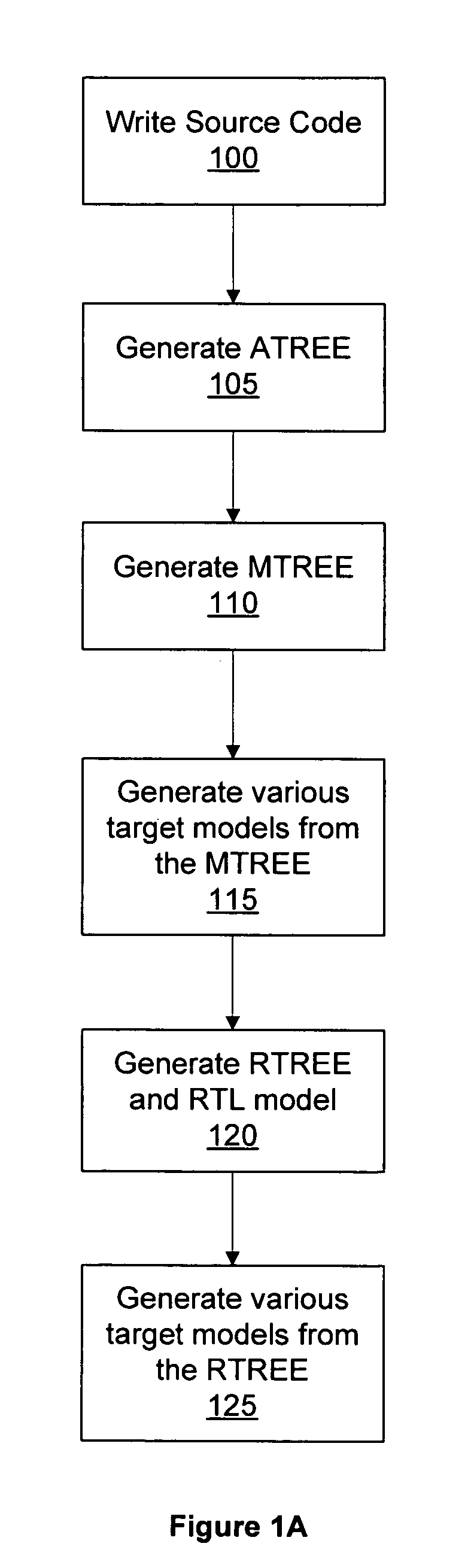

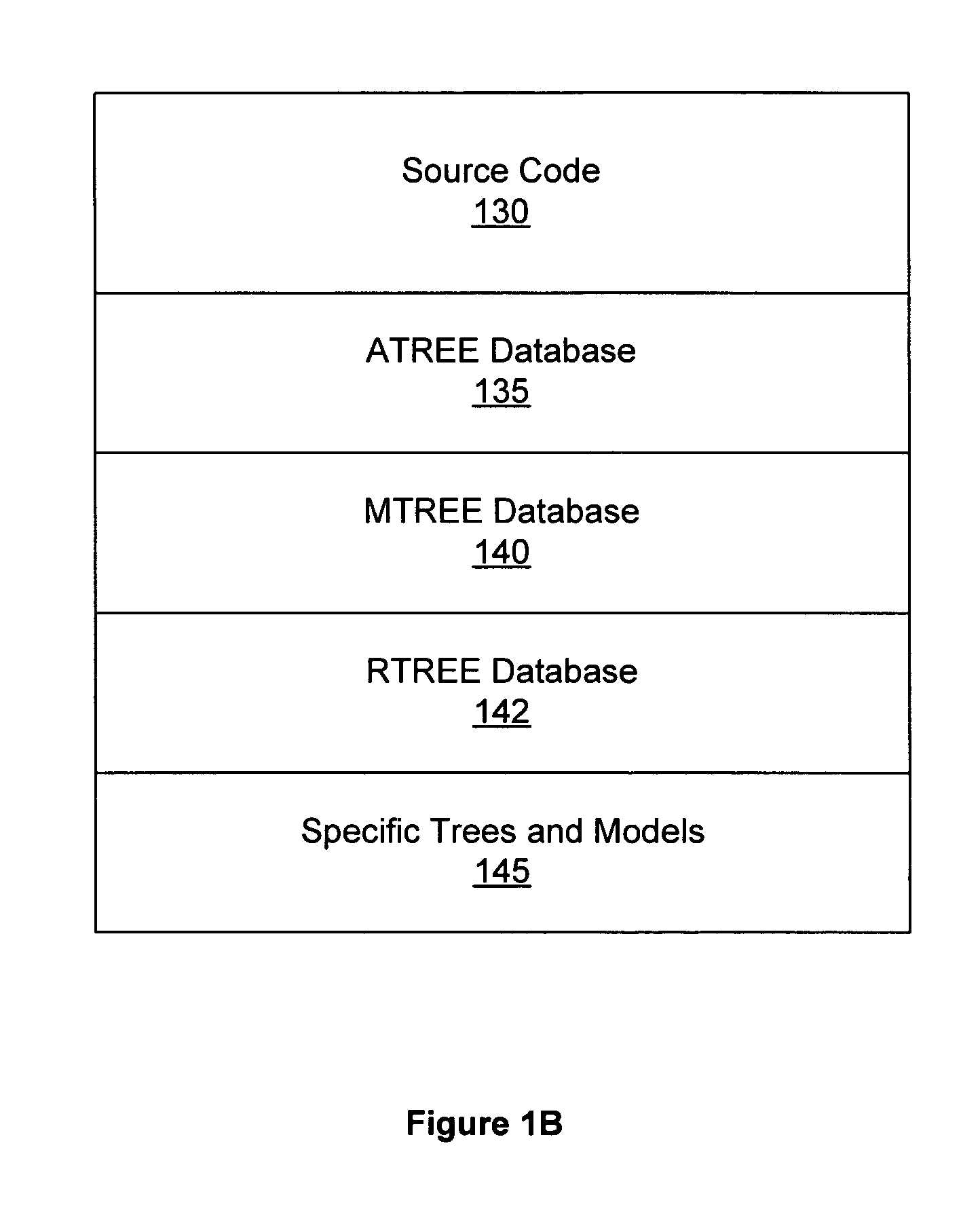

Building integrated circuits using a common database

Inactive | US7363610B2 | Improved performance per watt of power consumption | CAD circuit design | Software simulation/interpretation/emulation | Computer architecture | High-level programming language

Systems and methods for designing and generating integrated circuits using a high-level language are described. The high-level language is used to generate performance models, functional models, synthesizable register transfer level code defining the integrated circuit, and verification environments. The high-level language may be used to generate templates for custom computation logical units for specific user-determined functionality. The high-level language and compiler permit optimizations for power savings and custom circuit layout, resulting in integrated circuits with improved performance per watt of power consumption.

Owner:NVIDIA CORP

An apparatus and method for performing an artificial neural network forward operation

Active | CN109934331A | Realize forward operation | Small area overhead | Digital data processing details | Code conversion | Algorithm | Data operations

The invention discloses a device and a method for executing a forward operation of an artificial neural network. The device comprises a floating point data statistics module, which performs statistical analysis on the various types of required data and obtains the decimal point position (point location) of the fixed point data; a data conversion unit, which converts data from the long-bit floating point data type to the short-bit fixed point data type according to the decimal point position of the fixed point data; and a fixed point data operation module, which performs the artificial neural network forward operation on the short-bit fixed point data. By representing the data in the forward operation of the multilayer artificial neural network as short-bit fixed point numbers and using the corresponding fixed point operation module, the device realizes the short-bit fixed point forward operation of the artificial neural network and greatly improves the performance-to-power ratio of the hardware.

Owner:CAMBRICON TECH CO LTD

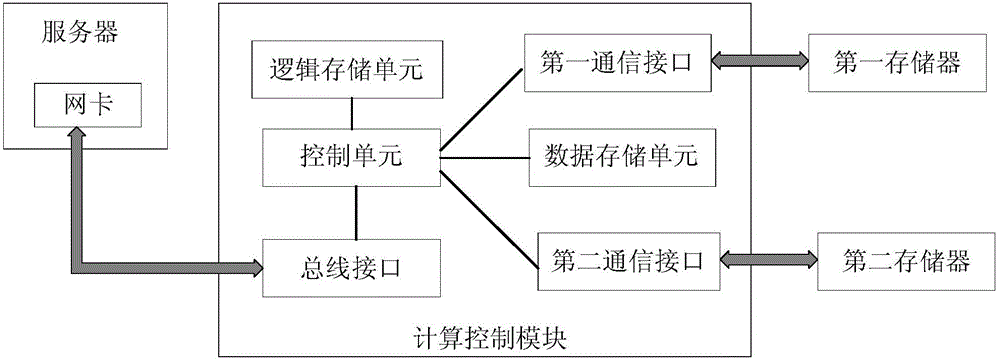

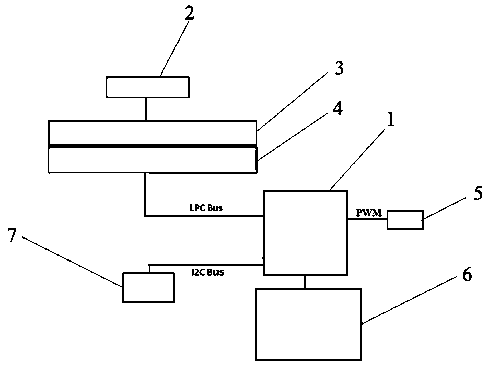

Acceleration device and method for deep learning service

Active | CN106156851A | Excellent performance per watt | Neural learning methods | Communication interface | Programmable logic device

The invention discloses an acceleration device for a deep learning service, used to perform deep learning calculations on to-be-processed data in a server. The device comprises a network card arranged on the server side, a calculation control module connected to the server through a bus, a first memory, and a second memory. The calculation control module is a programmable logic device and comprises a control unit, a data storage unit, a logic storage unit, a bus interface, a first communication interface, and a second communication interface, wherein the bus interface, the first communication interface, and the second communication interface communicate with the network card, the first memory, and the second memory respectively. The logic storage unit stores the deep learning control logic. The first memory stores the weight data and the offset (bias) data of each layer of the network. The device effectively improves calculation efficiency and also improves the performance-to-power ratio.

Owner:IFLYTEK CO LTD

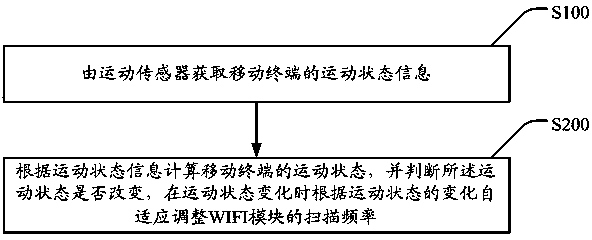

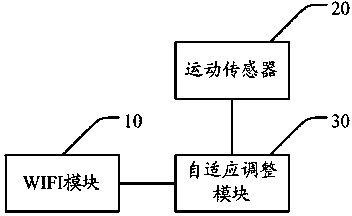

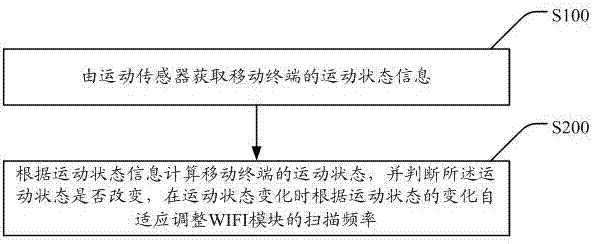

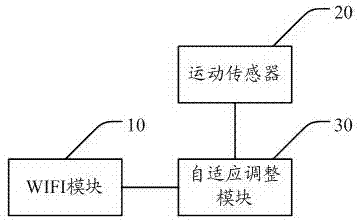

Method and mobile terminal for adjusting WIFI scanning frequency based on motion state

Active | CN103458440A | Guaranteed to work | Improve performance per power ratio | Power management | Network topologies | Computer module | Computer terminal

The invention discloses a method and mobile terminal for adjusting WIFI scanning frequency based on a motion state. The method comprises the following steps: A, a motion sensor acquires motion state information of the mobile terminal; B, the motion state of the mobile terminal is calculated from the motion state information, whether the motion state has changed is judged, and, when it has changed, the scanning frequency of the WIFI module is adaptively adjusted according to the change. Because the scanning frequency of the WIFI module adapts to changes in the motion state, the WIFI module works more efficiently, the performance per watt is improved, the battery life of the mobile terminal in WIFI working mode is prolonged, and the user experience is improved.
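Steps A and B above might look roughly like the following sketch. The motion states, speed thresholds, and scan intervals are hypothetical; the abstract does not say how the motion state is classified or in which direction the scanning frequency is adjusted, so the mapping here is an assumption made for illustration only.

```python
# Hypothetical mapping from motion state to seconds between WIFI scans.
SCAN_INTERVAL_S = {"stationary": 120, "walking": 30, "driving": 10}


def classify_motion(speed_m_s):
    """Assumed classifier: derive a motion state from an estimated speed."""
    if speed_m_s < 0.2:
        return "stationary"
    if speed_m_s < 3.0:
        return "walking"
    return "driving"


class WifiScanScheduler:
    """Keeps the current motion state and adjusts the scan interval
    only when the state actually changes."""

    def __init__(self, initial_state="stationary"):
        self.state = initial_state
        self.interval_s = SCAN_INTERVAL_S[initial_state]

    def on_motion_sample(self, speed_m_s):
        """Process one sensor-derived sample; return True if the interval changed."""
        new_state = classify_motion(speed_m_s)
        if new_state == self.state:
            return False
        self.state = new_state
        self.interval_s = SCAN_INTERVAL_S[new_state]
        return True


scheduler = WifiScanScheduler()
changes = [scheduler.on_motion_sample(s) for s in (0.0, 1.4, 1.5, 12.0)]
```

Only the samples that cross into a new state trigger an adjustment, which mirrors the "judge whether the motion state has changed" step.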

Owner:威海神舟信息技术研究院有限公司

Building integrated circuits using logical units

Active | US7483823B2 | Improved performance per watt of power consumption | CAD circuit design | Special data processing applications | Computer architecture | Unit system

Systems and methods for designing and generating integrated circuits using a high-level language are described. The high-level language is used to generate performance models, functional models, synthesizable register transfer level code defining the integrated circuit, and verification environments. The high-level language may be used to generate templates for custom computation logical units for specific user-determined functionality. The high-level language and compiler permit optimizations for power savings and custom circuit layout, resulting in integrated circuits with improved performance per watt of power consumption.

Owner:NVIDIA CORP

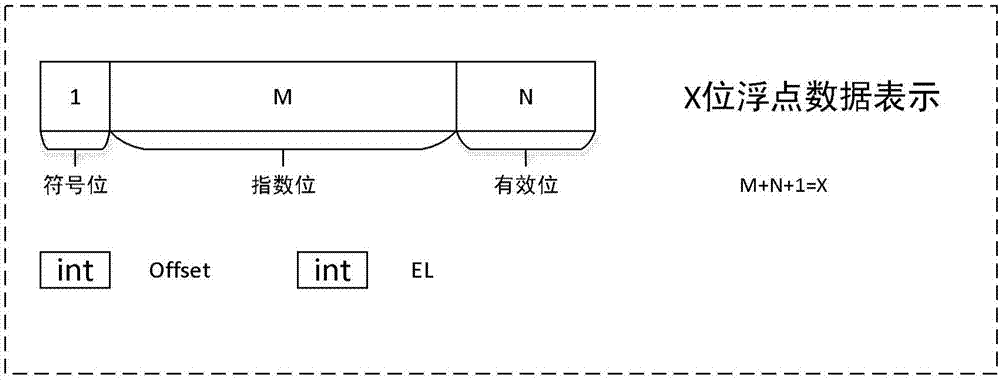

Device and method for neural network operation supporting floating point number with few digits

Active | CN107340993A | Small area overhead | Reduce area overhead and optimize hardware area power consumption | Digital data processing details | Physical realisation | Data operations | Computer module

The invention provides a device and a method for executing an artificial neural network forward operation. The device comprises a floating point data statistics module, a floating point data conversion unit, and a floating point data operation module. The floating point data statistics module performs statistical analysis on all types of required data to obtain the exponent bit offset and the exponent bit length EL. The floating point data conversion unit converts data from a long-bit floating point data type to a short-bit floating point data type according to the exponent bit offset and the exponent bit length EL. The floating point data operation module performs the artificial neural network forward operation on the short-bit floating point data. By expressing the data in the forward operation of the multilayer artificial neural network as short-bit floating point numbers and using the corresponding floating point operation module, the device realizes the short-bit floating point forward operation of the artificial neural network and thereby greatly improves the performance-to-power ratio of the hardware.

Owner:CAMBRICON TECH CO LTD

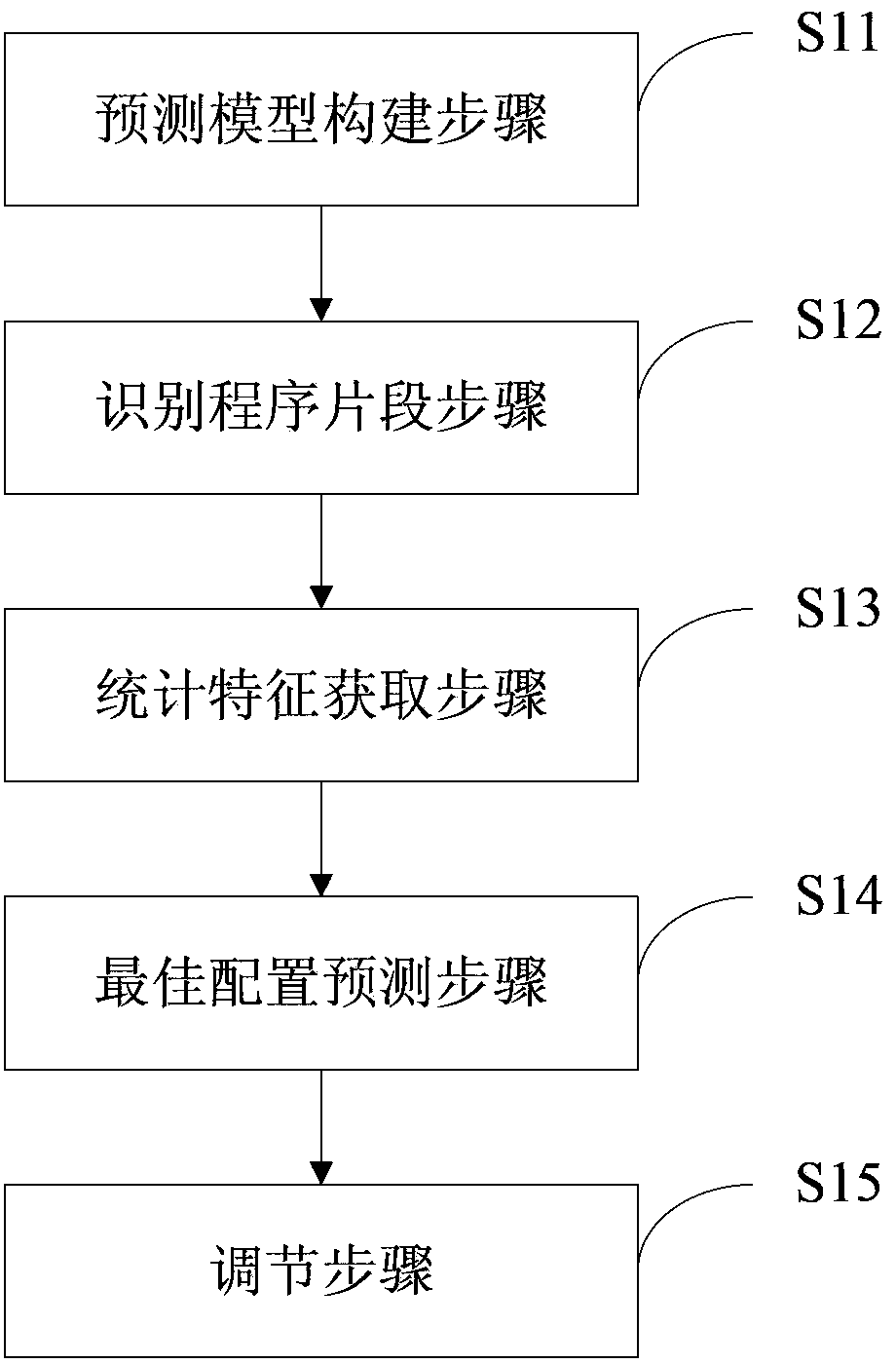

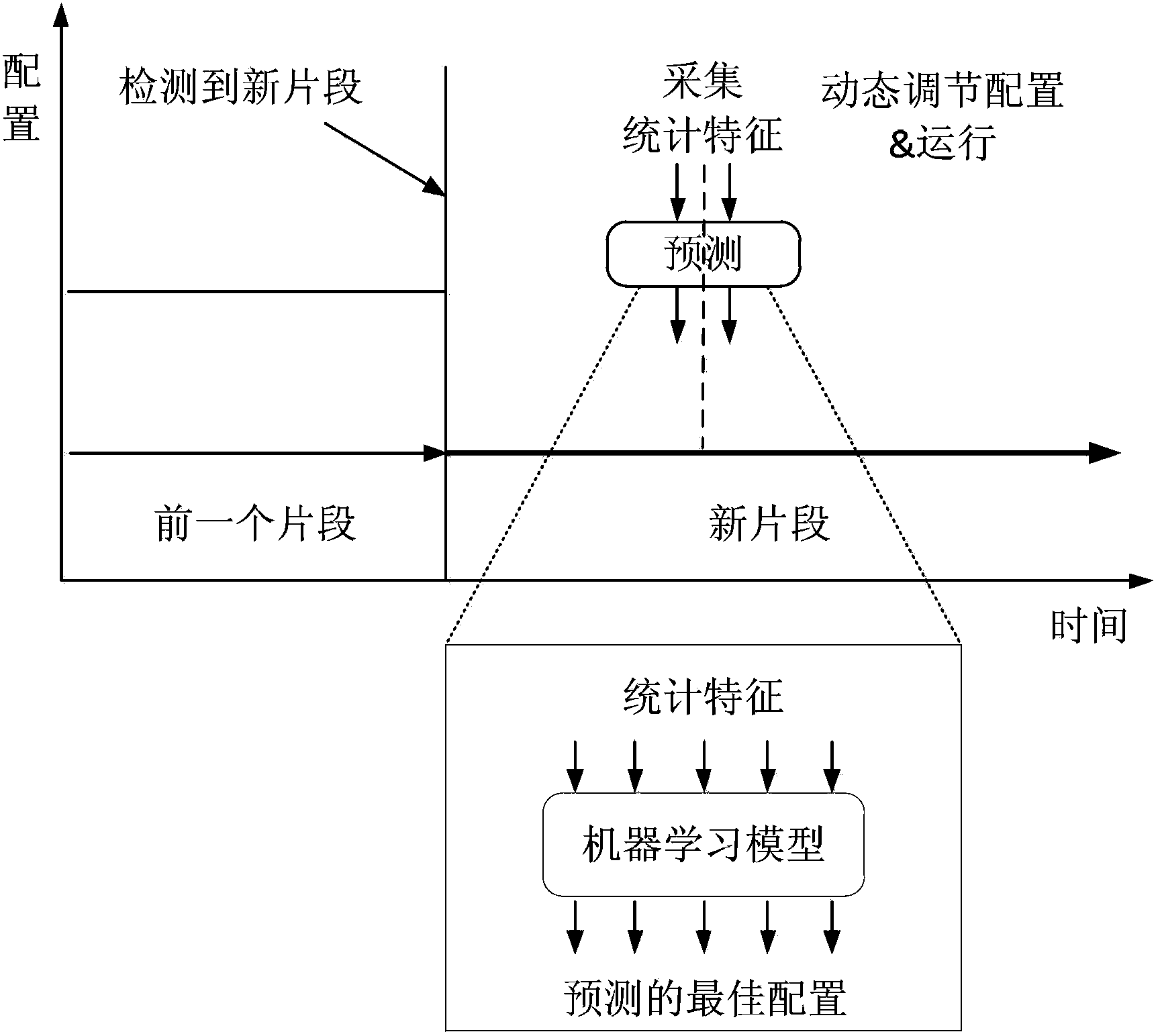

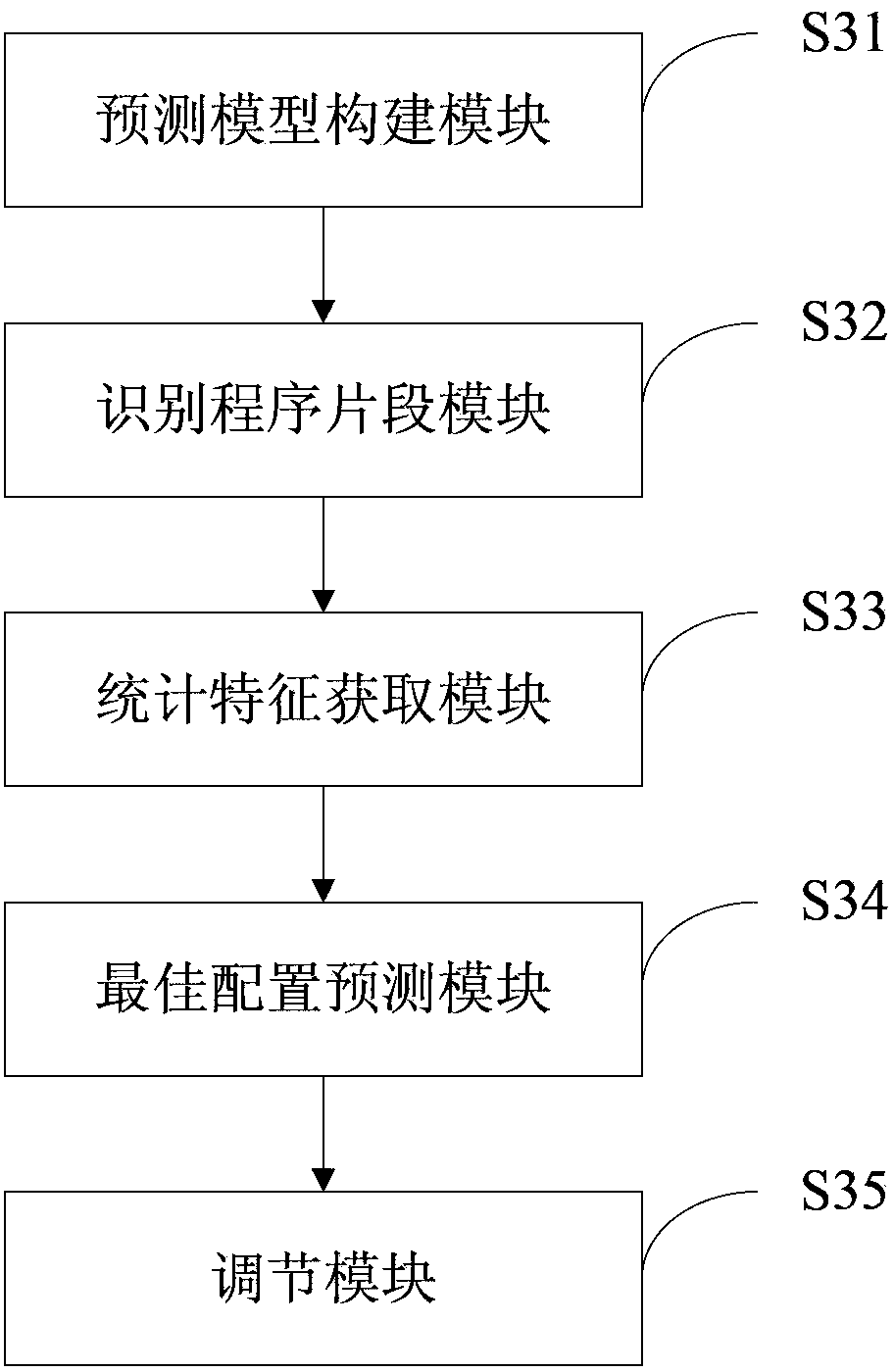

Method and system for reducing soft error rate of processor

Active | CN103365731A | Reduce soft error rate | Maintain or improve performance per watt | Error detection/correction | Program segment | Learning methods

The invention discloses a method and system for reducing the soft error rate of a processor. The method comprises the following steps. Constructing a prediction model: a machine learning method is used to construct a prediction model that predicts the optimum processor configuration for reducing the soft error rate at low cost. Recognizing program segments: the program is divided into a plurality of continuous program segments while it runs. Obtaining statistical characteristics: the statistical characteristics of each program segment are obtained within a short period of time when the segment first starts running. Predicting the optimum configuration: the obtained statistical characteristics are input into the prediction model to predict the optimum processor configuration for the program segment. Adjusting: the configuration of the processor components is adjusted according to the prediction, so as to reduce the soft error rate of the processor while maintaining or improving the performance-to-power ratio. By dynamically adjusting the configuration of the processor components, the method and system reduce the soft error rate of the processor at low cost.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

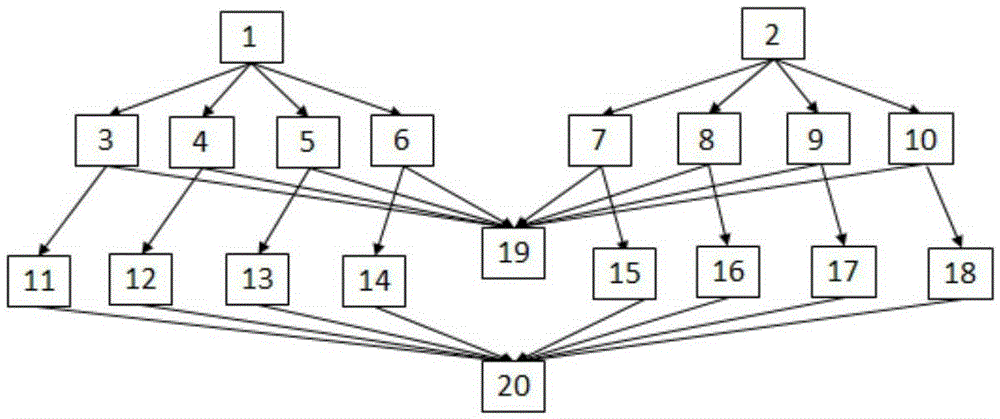

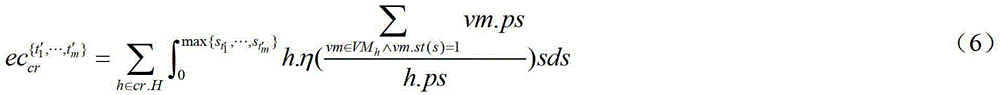

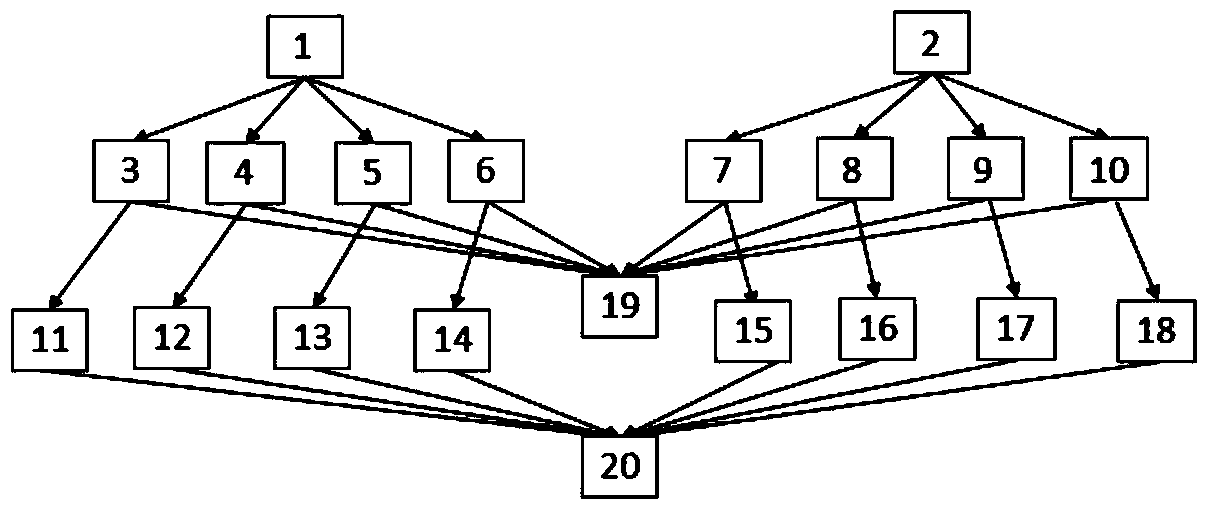

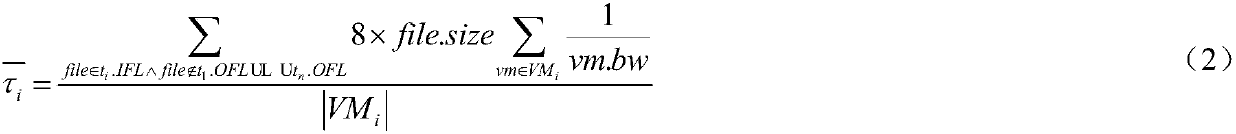

Energy consumption-oriented cloud workflow scheduling optimization method

Inactive | CN105260005A | Implement energy consumption calculation method | Keep execution time efficient | Resource allocation | Power supply for data processing | Cloud workflow | Workflow scheduling

The invention discloses an energy consumption-oriented cloud workflow scheduling optimization method. The method comprises the following steps: (1) establishing an energy consumption-oriented cloud workflow process model and resource model; (2) calculating task priorities; (3) taking the task t with the highest priority from the task set T, finding the set VMt of virtual machines capable of executing t, and, for each virtual machine in VMt, calculating the energy consumed if t is assigned to it and it completes all of its assigned tasks; (4) finding the VM with minimal energy consumption: if only one VM has the minimal energy consumption, assigning t to it; if several VMs share the minimal energy consumption, assigning t to the one among them whose host has the highest performance per watt; then deleting t from T and, if T is not empty, returning to step (3), otherwise going to step (5); and (5) outputting the workflow scheduling scheme. Because the method takes energy consumption into account, it effectively reduces the energy that hosts consume in processing tasks while keeping workflow execution time efficient.
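Steps (3) to (5) above amount to a greedy loop, which can be sketched as follows. The energy model (power multiplied by total assigned work divided by speed), the capability tags, and all field names are hypothetical simplifications; the abstract does not specify how priorities or energy are actually computed.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    power_w: float             # assumed average power draw while busy
    speed: float               # assumed work units processed per second
    host_perf_per_watt: float  # performance per watt of the hosting machine
    capabilities: frozenset
    assigned_work: float = 0.0

    def energy_if_assigned(self, work):
        """Energy (joules) to finish all assigned work plus the new task."""
        return self.power_w * (self.assigned_work + work) / self.speed


@dataclass
class Task:
    name: str
    work: float
    priority: float
    requires: str


def schedule(tasks, vms):
    """Assign tasks in priority order to the VM with minimal energy,
    breaking ties by the highest host performance per watt."""
    plan = {}
    for task in sorted(tasks, key=lambda t: t.priority, reverse=True):
        candidates = [vm for vm in vms if task.requires in vm.capabilities]
        if not candidates:
            raise ValueError(f"no VM can execute task {task.name}")
        best = min(
            candidates,
            key=lambda vm: (vm.energy_if_assigned(task.work), -vm.host_perf_per_watt),
        )
        best.assigned_work += task.work
        plan[task.name] = best.name
    return plan


vms = [
    VM("vm1", 100.0, 10.0, 5.0, frozenset({"cpu"})),
    VM("vm2", 100.0, 10.0, 8.0, frozenset({"cpu"})),
    VM("vm3", 50.0, 2.0, 3.0, frozenset({"cpu", "gpu"})),
]
tasks = [
    Task("t1", 20.0, 3.0, "cpu"),
    Task("t2", 10.0, 2.0, "cpu"),
    Task("t3", 4.0, 1.0, "gpu"),
]
plan = schedule(tasks, vms)
```

In this example `vm1` and `vm2` tie on energy for the first task, so the tie-break picks `vm2`, whose host has the higher performance per watt.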

Owner:南京喜筵网络信息技术有限公司

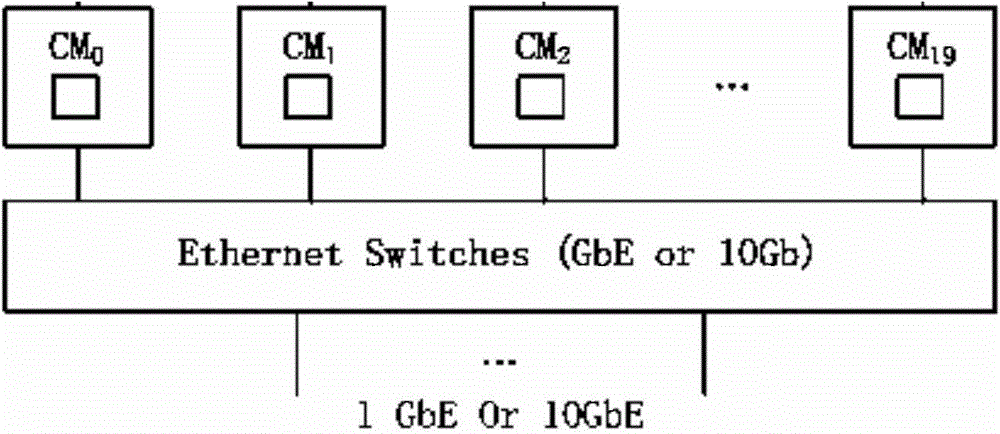

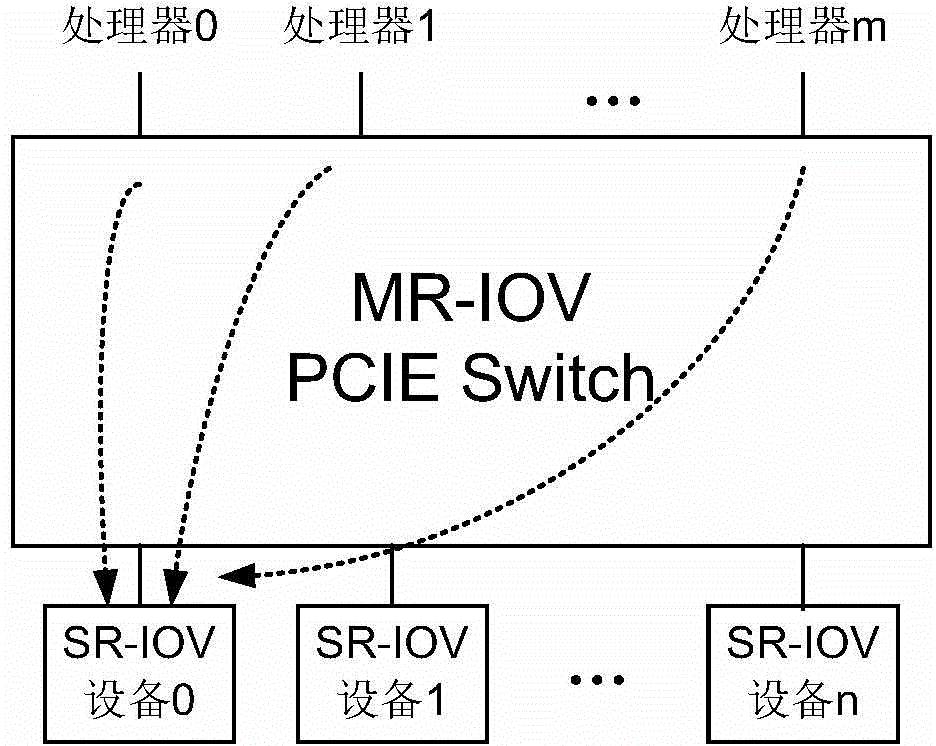

Cloud server system

Inactive | CN104601684A | Meet design needs | Guaranteed performance | Transmission | Energy efficient computing | Virtualization | Performance per watt

The invention discloses a cloud server system. The cloud server system comprises a plurality of MR-IOV PCIE (multi-root input/output virtualization, peripheral component interconnect express) switches that are connected with one another. The structure of a cloud server system based on MR-IOV PCIE switches meets the design demands of a cloud server well: high performance per watt and overall service capability, low cost, low power consumption, and high efficiency. The structure also achieves I/O virtualization and guarantees the performance of the server to the greatest extent.

Owner:DAWNING CLOUD COMPUTING TECH CO LTD +1

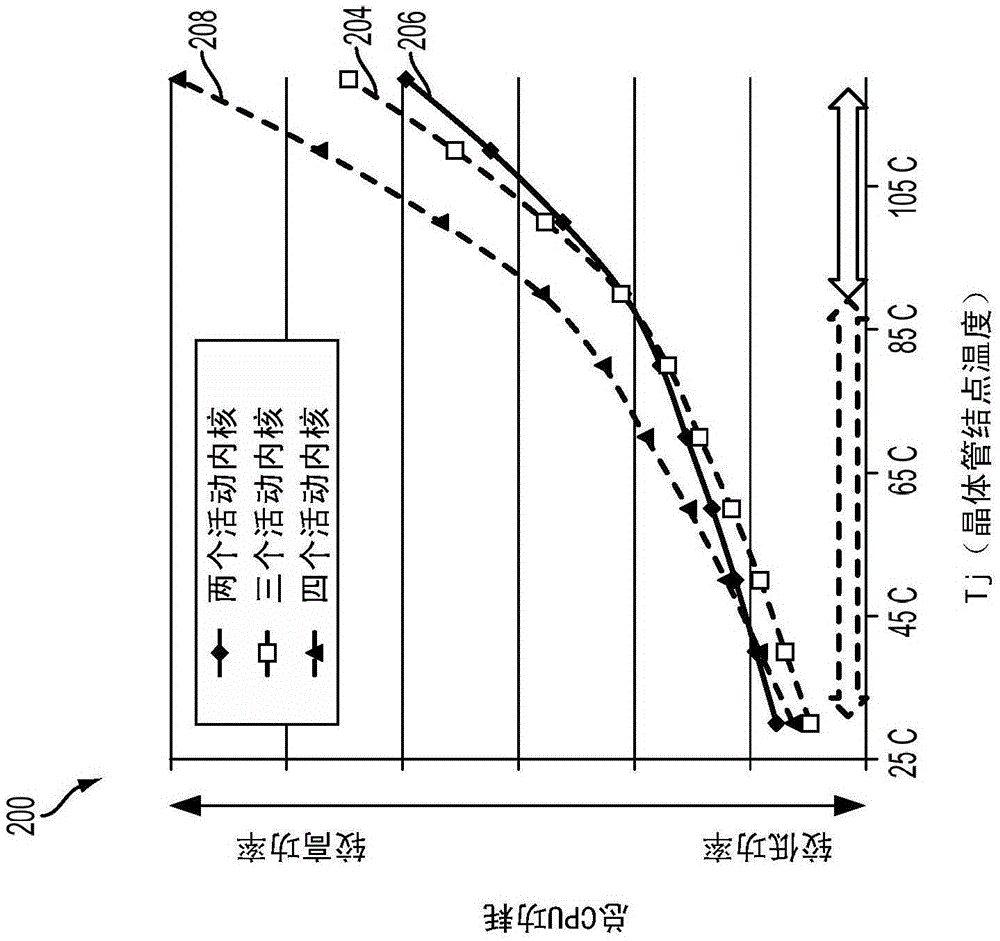

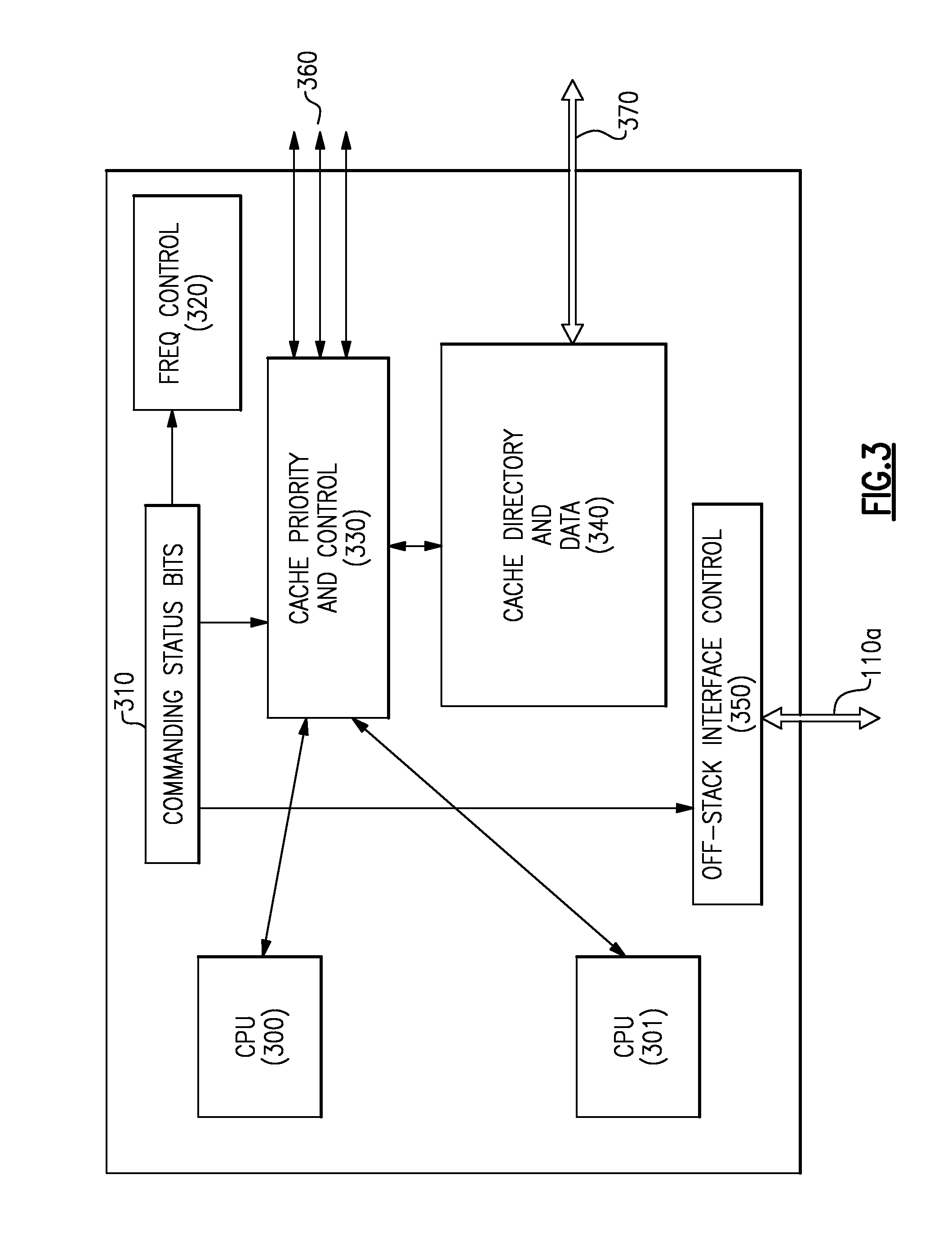

Intelligent multicore control for optimal performance per watt

The various aspects provide for a device and methods for intelligent multicore control of a plurality of processor cores of a multicore integrated circuit. The aspects may identify and activate an optimal set of processor cores to achieve the lowest level of power consumption for a given workload or the highest performance for a given power budget. The optimal set of processor cores may be the number of active processor cores or a designation of specific active processor cores. When a temperature reading of the processor cores is below a threshold, a set of processor cores may be selected to provide the lowest power consumption for the given workload. When the temperature reading of the processor cores is above the threshold, a set of processor cores may be selected to provide the best performance for a given power budget.
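The two selection modes described above can be illustrated with a small sketch. The configuration table, the fallback behavior when nothing is feasible, and the choice to treat a reading exactly at the threshold as "above" are all assumptions; the abstract only states the two goals and the temperature condition that switches between them.

```python
def select_core_count(configs, temperature_c, threshold_c, workload, power_budget_w):
    """Choose how many cores to activate.

    configs maps a core count to (throughput, power_w); the values are
    hypothetical characterization data, not measured figures.
    """
    if temperature_c < threshold_c:
        # Cool: lowest power among configurations that can handle the workload.
        feasible = [n for n, (perf, _) in configs.items() if perf >= workload]
        if not feasible:
            # Assumed fallback: run the fastest configuration available.
            return max(configs, key=lambda n: configs[n][0])
        return min(feasible, key=lambda n: configs[n][1])
    # Hot: best performance among configurations within the power budget.
    feasible = [n for n, (_, power) in configs.items() if power <= power_budget_w]
    if not feasible:
        # Assumed fallback: run the lowest-power configuration available.
        return min(configs, key=lambda n: configs[n][1])
    return max(feasible, key=lambda n: configs[n][0])


configs = {1: (100.0, 1.0), 2: (180.0, 2.2), 4: (300.0, 5.0)}
cool_choice = select_core_count(configs, 40.0, 70.0, workload=150.0, power_budget_w=1.5)
hot_choice = select_core_count(configs, 80.0, 70.0, workload=150.0, power_budget_w=1.5)
```

With the same workload and budget, the cool case activates two cores to meet demand at the least power, while the hot case drops to one core to stay inside the budget.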

Owner:QUALCOMM INC

Building integrated circuits using a common database

Inactive | US20070005329A1 | Improved performance per watt of power consumption | CAD circuit design | Software simulation/interpretation/emulation | Computer architecture | Performance per watt

Systems and methods for designing and generating integrated circuits using a high-level language are described. The high-level language is used to generate performance models, functional models, synthesizable register transfer level code defining the integrated circuit, and verification environments. The high-level language may be used to generate templates for custom computation logical units for specific user-determined functionality. The high-level language and compiler permit optimizations for power savings and custom circuit layout, resulting in integrated circuits with improved performance per watt of power consumption.

Owner:NVIDIA CORP

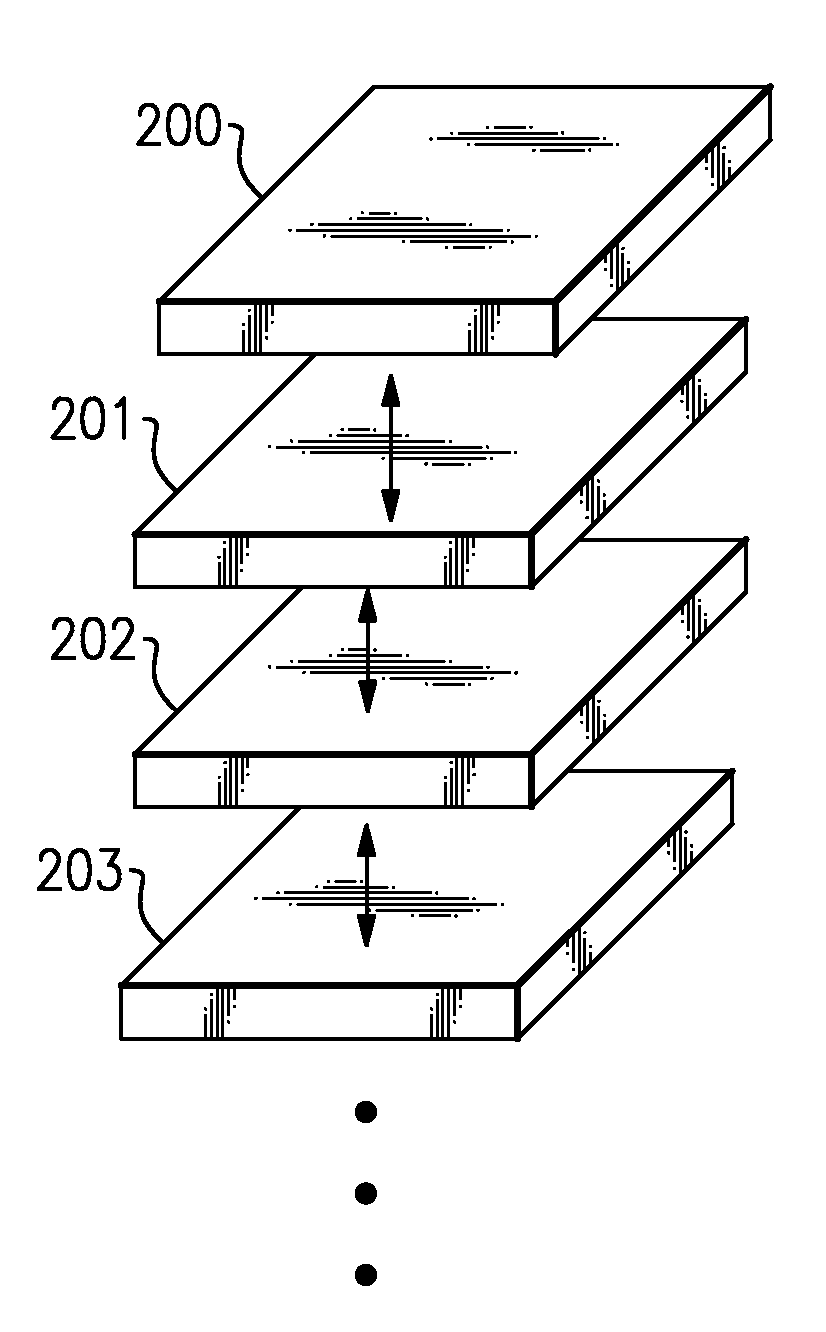

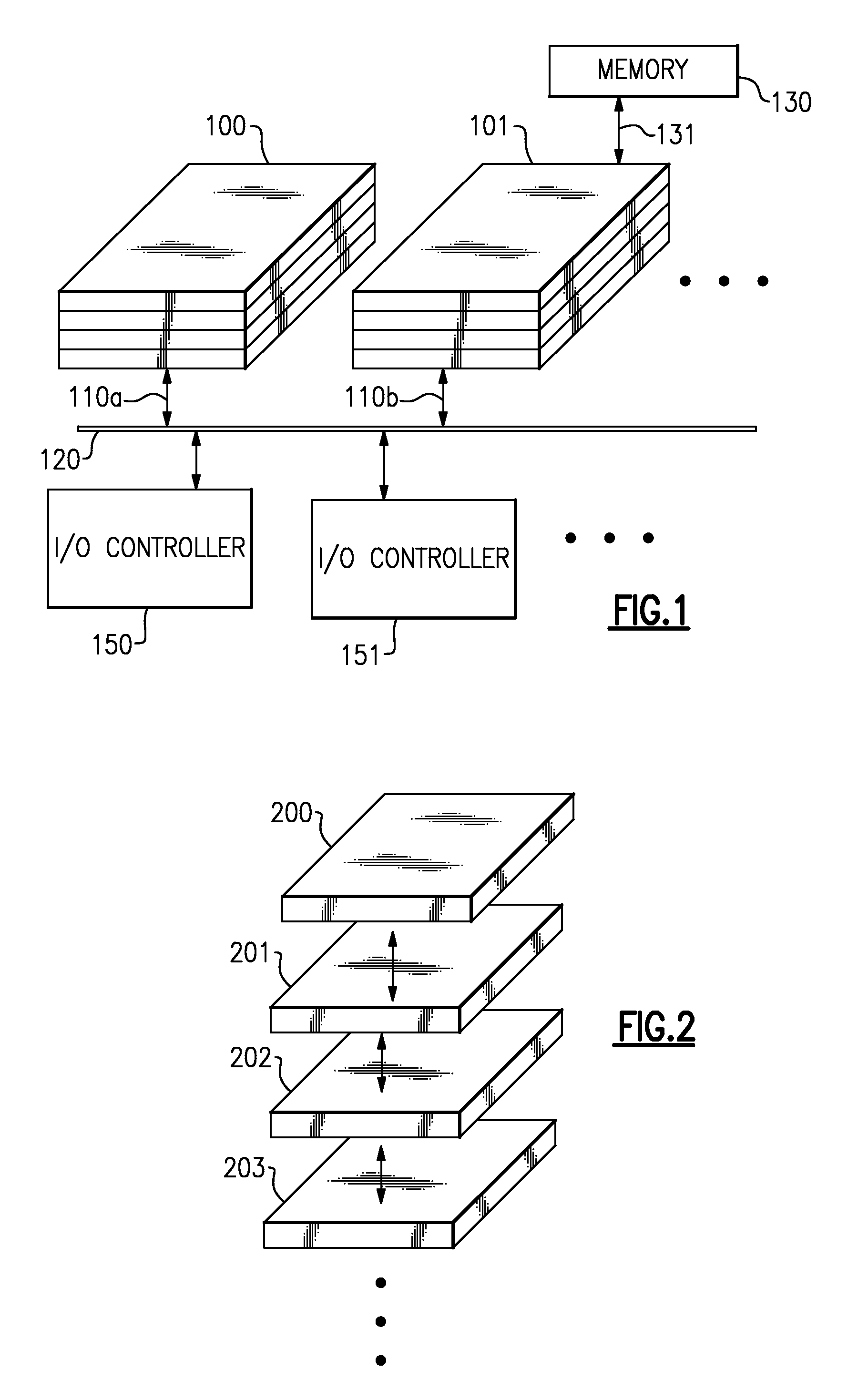

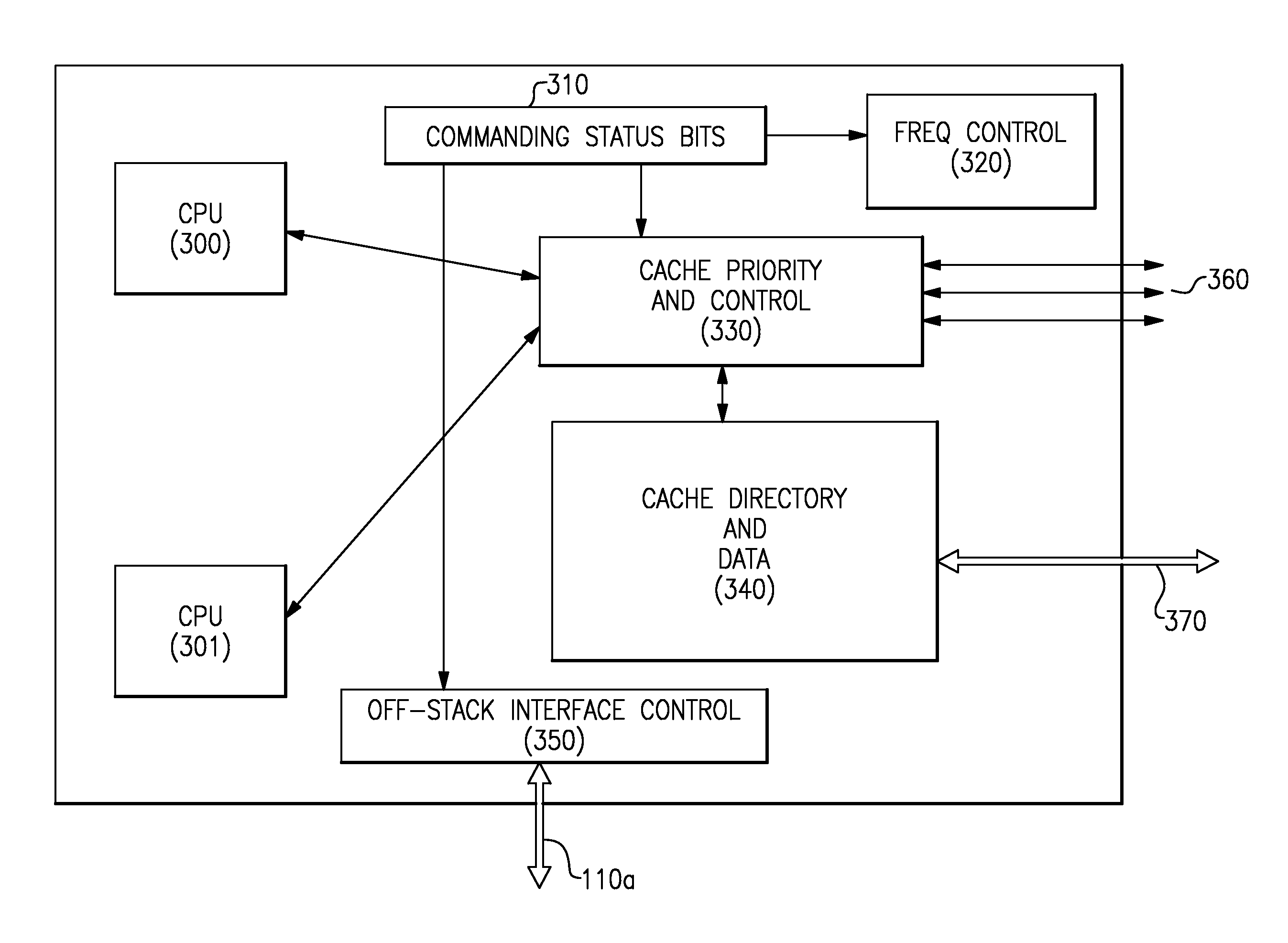

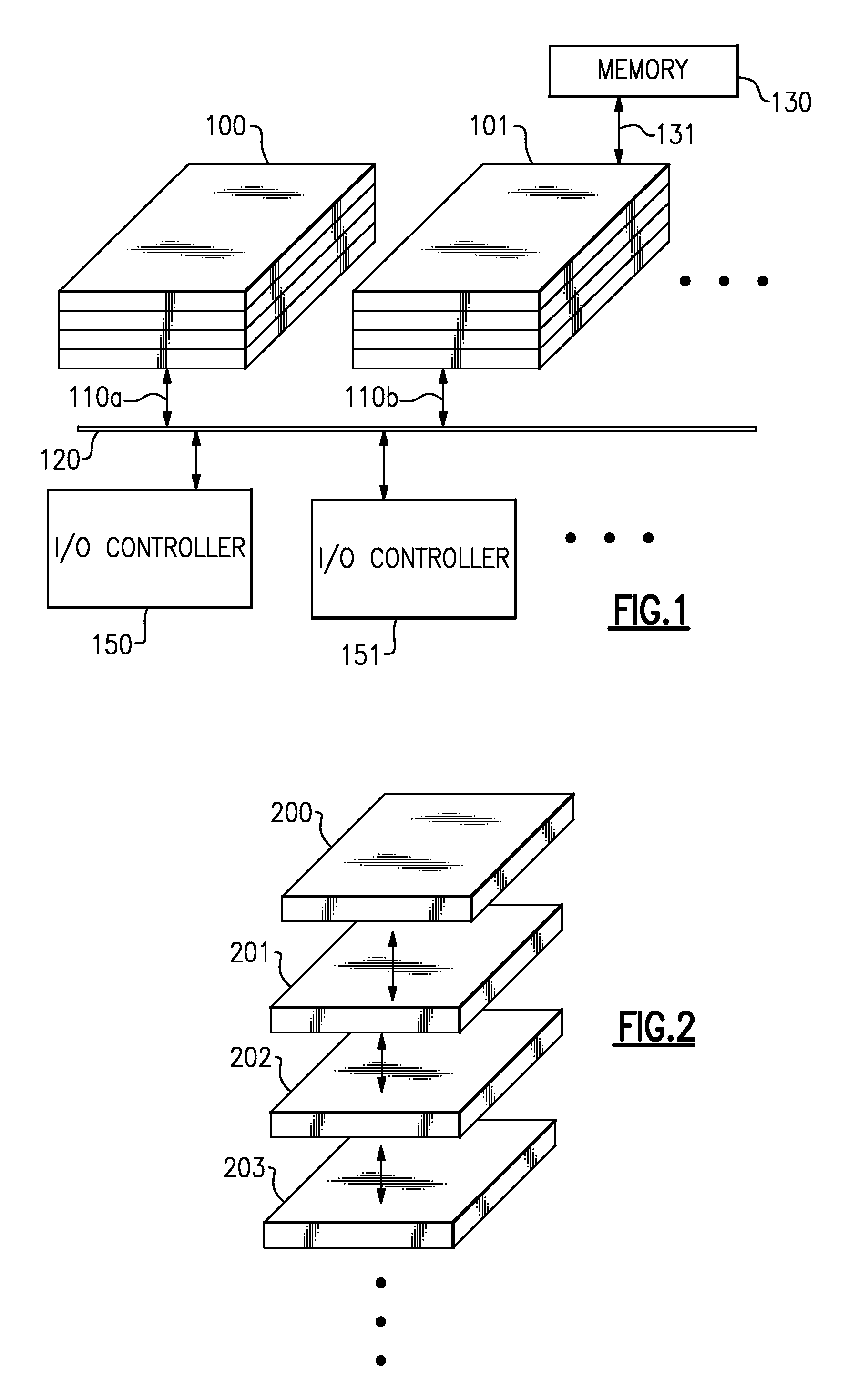

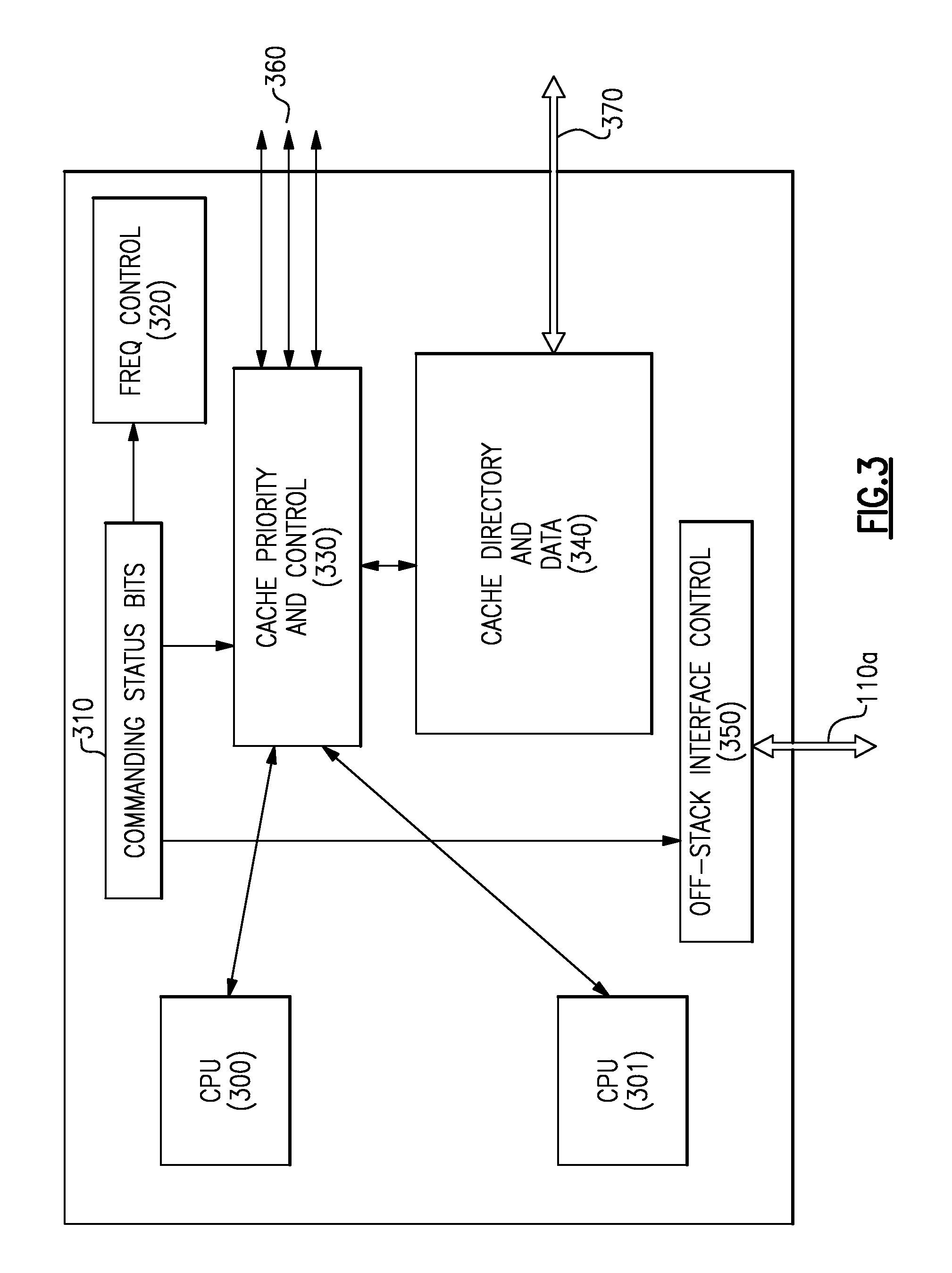

Power Efficient Stack of Multicore Microprocessors

Inactive | US20110119508A1 | Increased performance per watt of power consumed | Existing design | Energy efficient ICT | Program control using stored programs | Operational system | Power efficient

A computing system has a stack of microprocessor chips that are designed to work together in a multiprocessor system. The chips are interconnected with 3D through vias, or alternatively by compatible package carriers having the interconnections, while logically the chips in the stack are interconnected via specialized cache coherent interconnections. All of the chips in the stack use the same logical chip design, even though they can be easily personalized by setting specialized latches on the chips. One or more of the individual microprocessor chips utilized in the stack are implemented in a silicon process that is optimized for high performance while others are implemented in a silicon process that is optimized for power consumption i.e. for the best performance per Watt of electrical power consumed. The hypervisor or operating system controls the utilization of individual chips of a stack.

Owner:IBM CORP

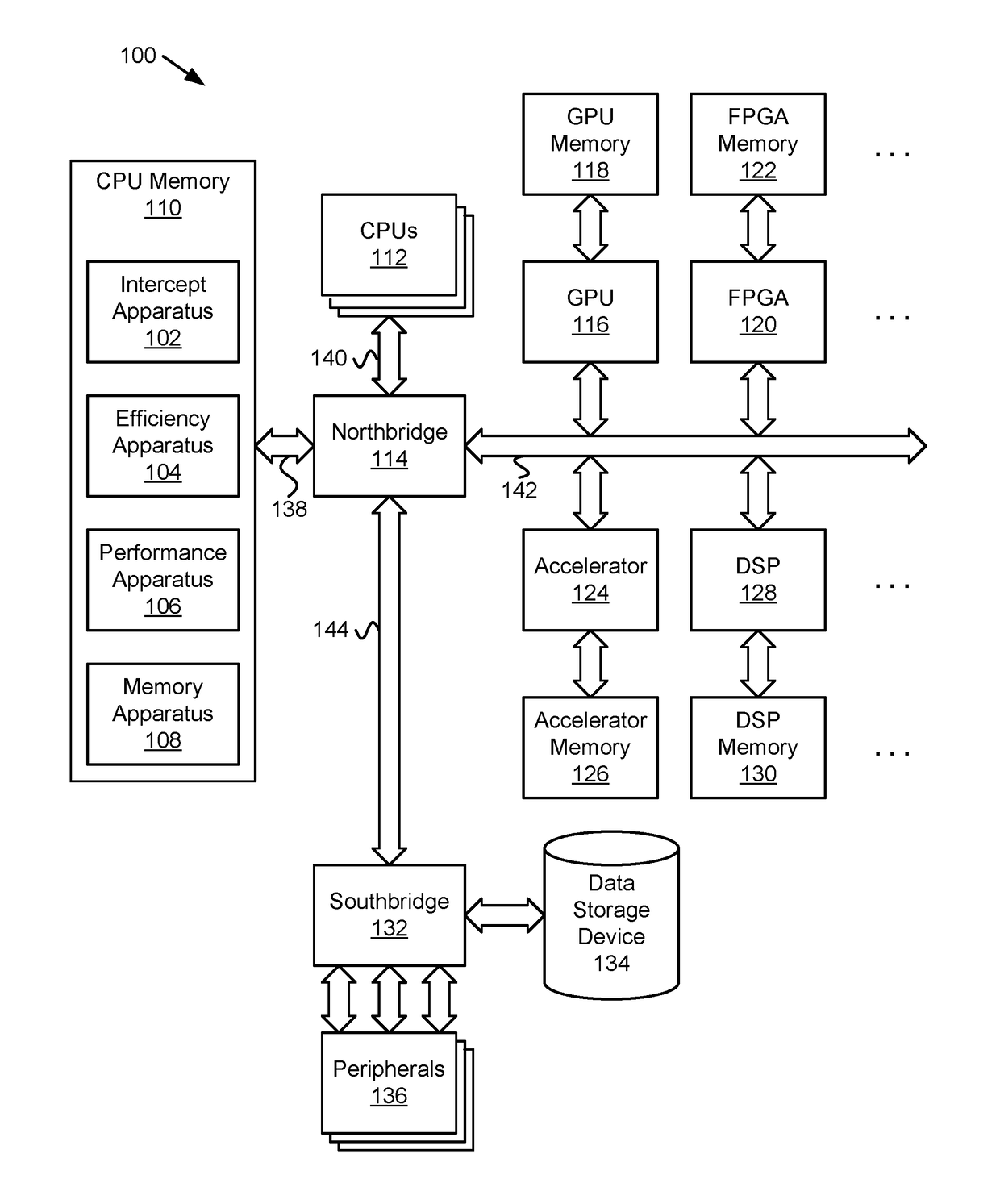

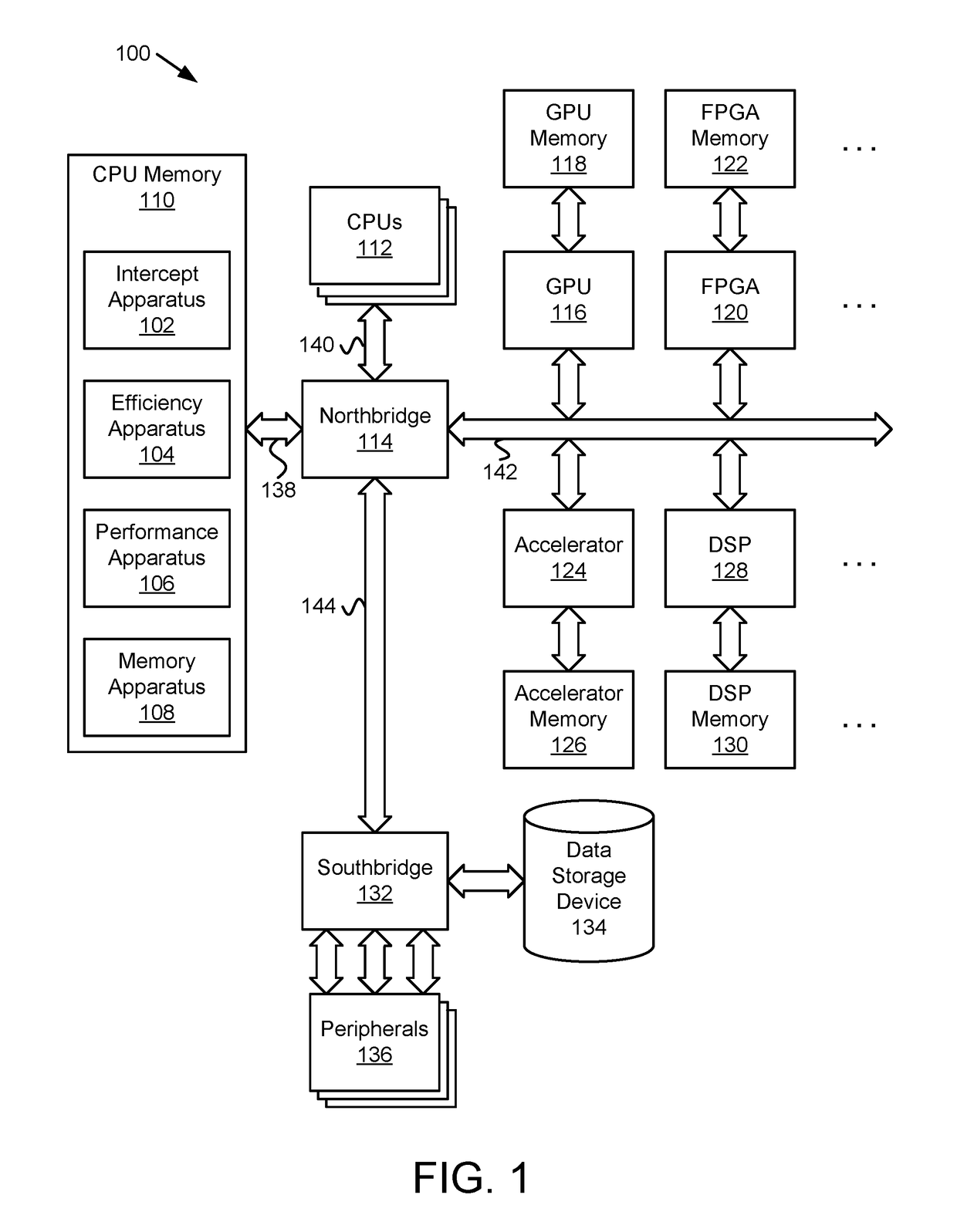

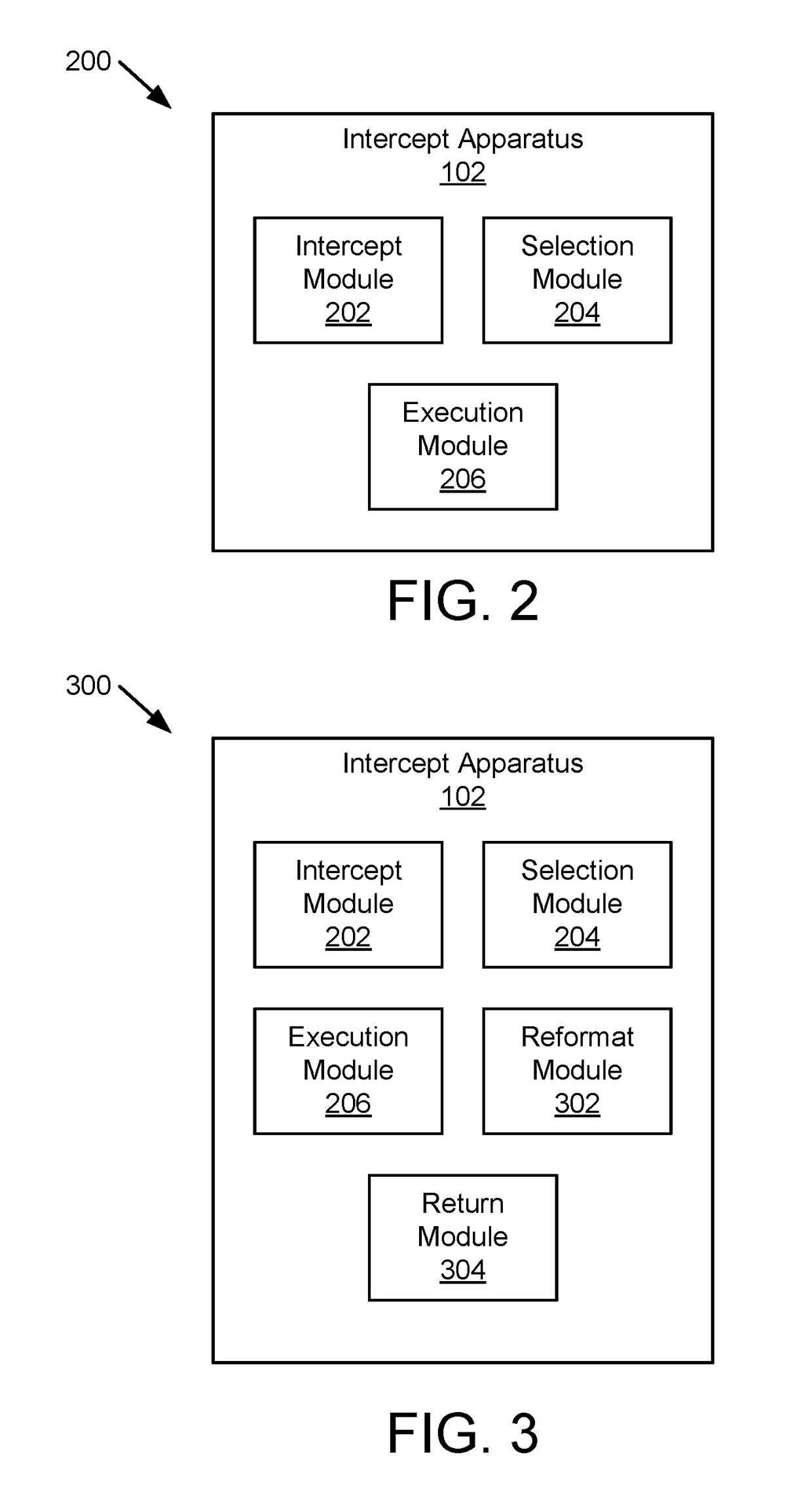

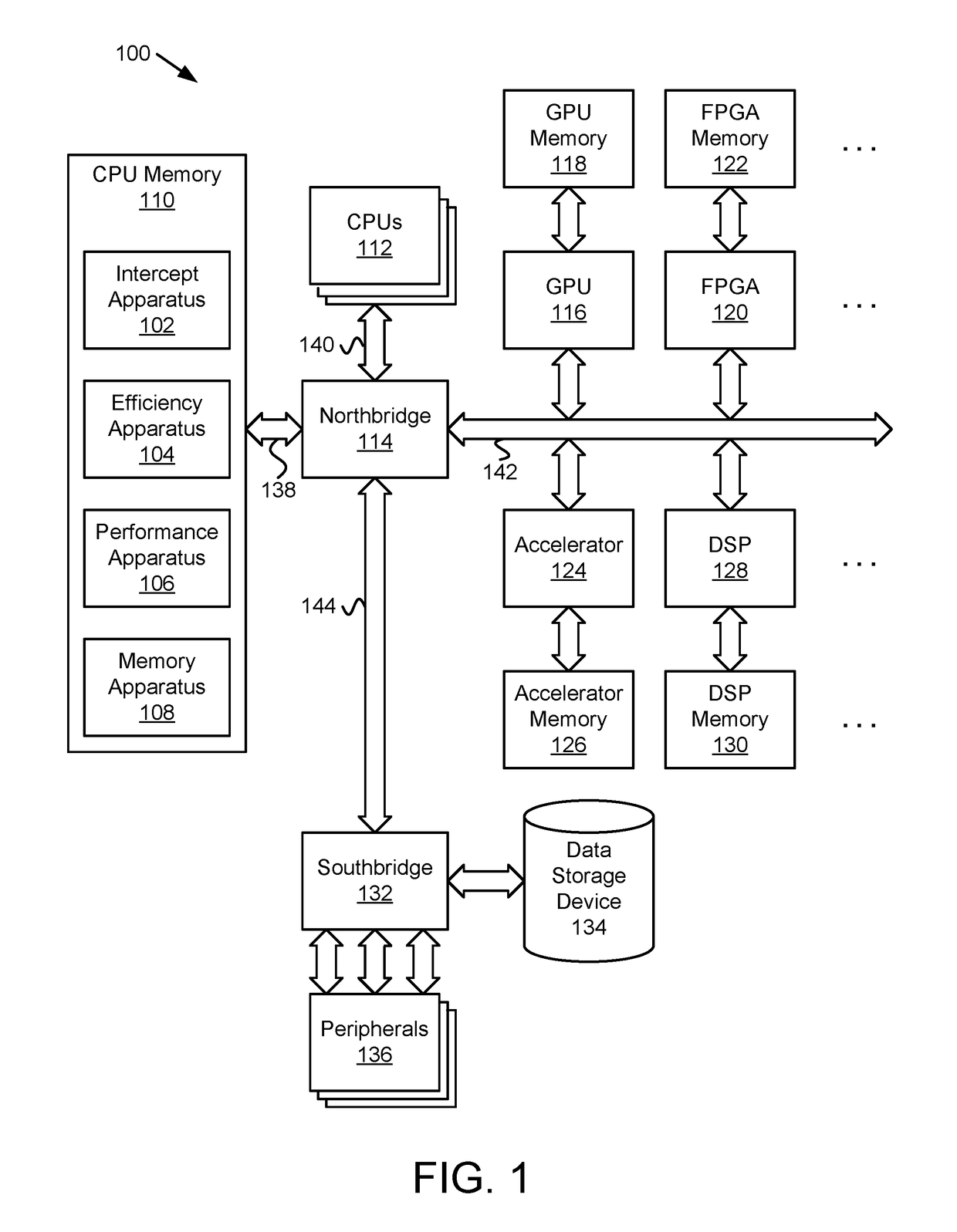

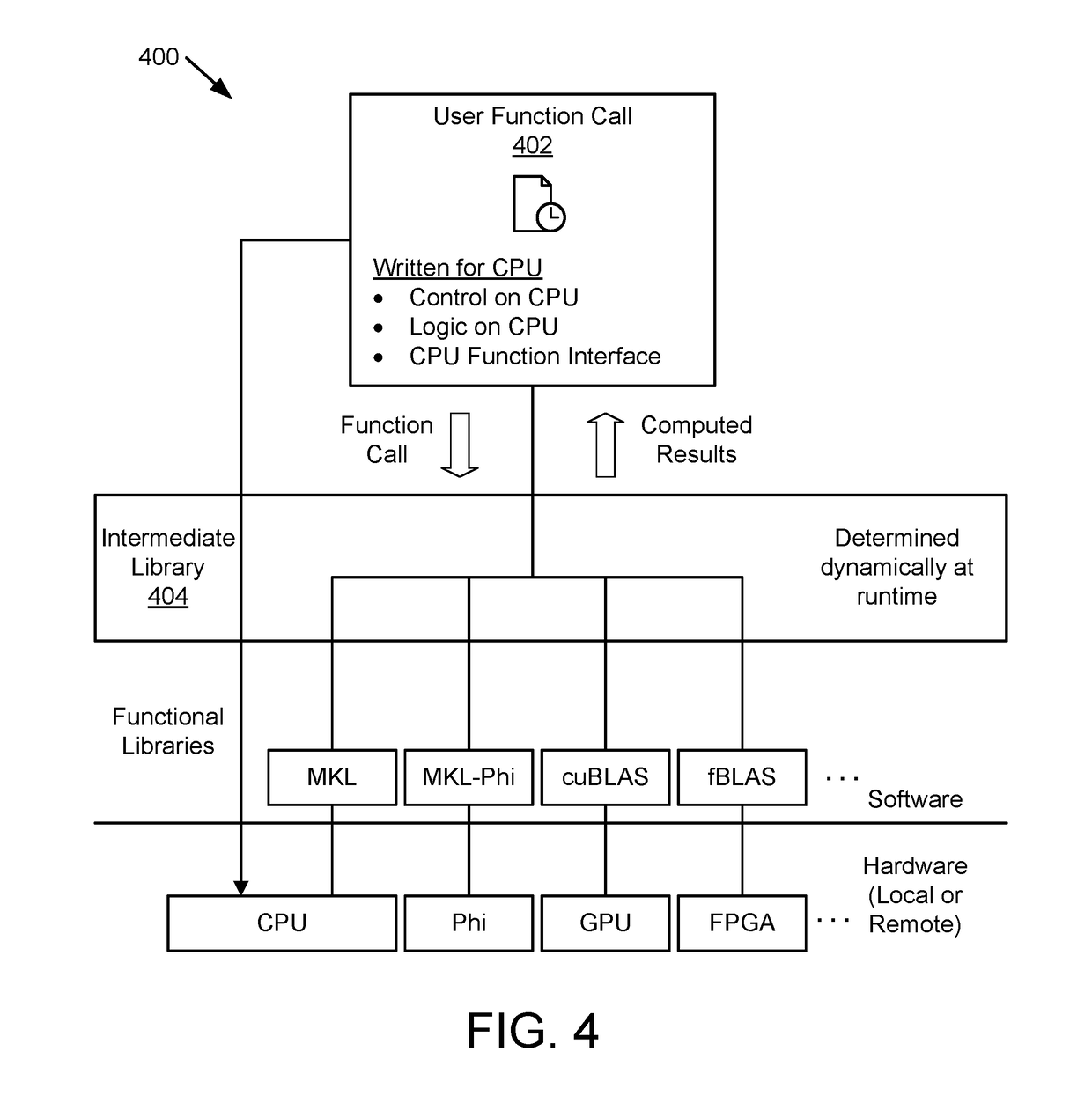

Workload placement based on heterogeneous compute performance per watt

Active | US20170277246A1 | Power supply for data processing | Energy efficient computing | Computer module | Parallel computing

An apparatus for selecting a function includes a comparison module that compares energy consumption characteristics of a plurality of processors available for execution of a function, where each energy consumption characteristic varies as a function of function size. The apparatus includes a selection module that selects, based on the size of the function, a processor from the plurality of processors with a lowest energy consumption for execution of the function. The apparatus includes an execution module that executes the function on the selected processor.
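The comparison and selection modules described above can be sketched as a lookup over per-processor energy models. The linear models (a fixed overhead plus a per-element cost) and the processor names are hypothetical; the abstract only says that each energy consumption characteristic varies with function size.

```python
# Hypothetical energy models: joules consumed as a function of function size.
ENERGY_MODELS = {
    "cpu": lambda size: 0.5 + 0.010 * size,   # low overhead, higher per-element cost
    "gpu": lambda size: 5.0 + 0.001 * size,   # high overhead, lower per-element cost
}


def select_processor(energy_models, size):
    """Return the processor whose model predicts the lowest energy for this size."""
    return min(energy_models, key=lambda name: energy_models[name](size))


def execute_on_selected(function, args, size, energy_models=ENERGY_MODELS):
    """Select a processor, then run the function (here simply called in-process)."""
    target = select_processor(energy_models, size)
    return target, function(*args)


small_target = select_processor(ENERGY_MODELS, 100)
large_target = select_processor(ENERGY_MODELS, 10_000)
target, total = execute_on_selected(sum, ([1, 2, 3],), size=3)
```

Under these assumed models the crossover sits at a size of 500: below it the fixed overhead of the accelerator dominates, above it the lower per-element cost wins.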

Owner:LENOVO GLOBAL TECH INT LTD

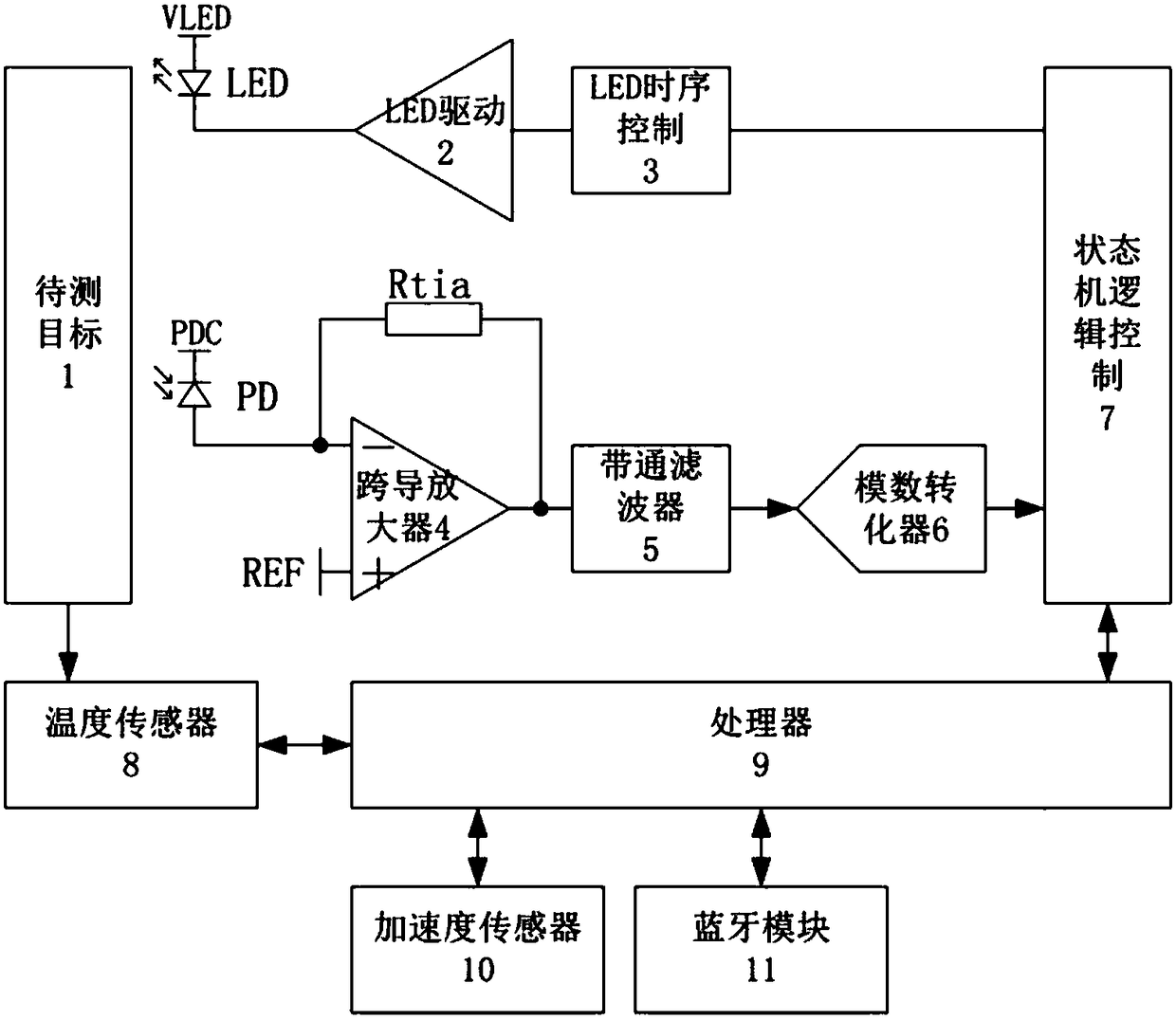

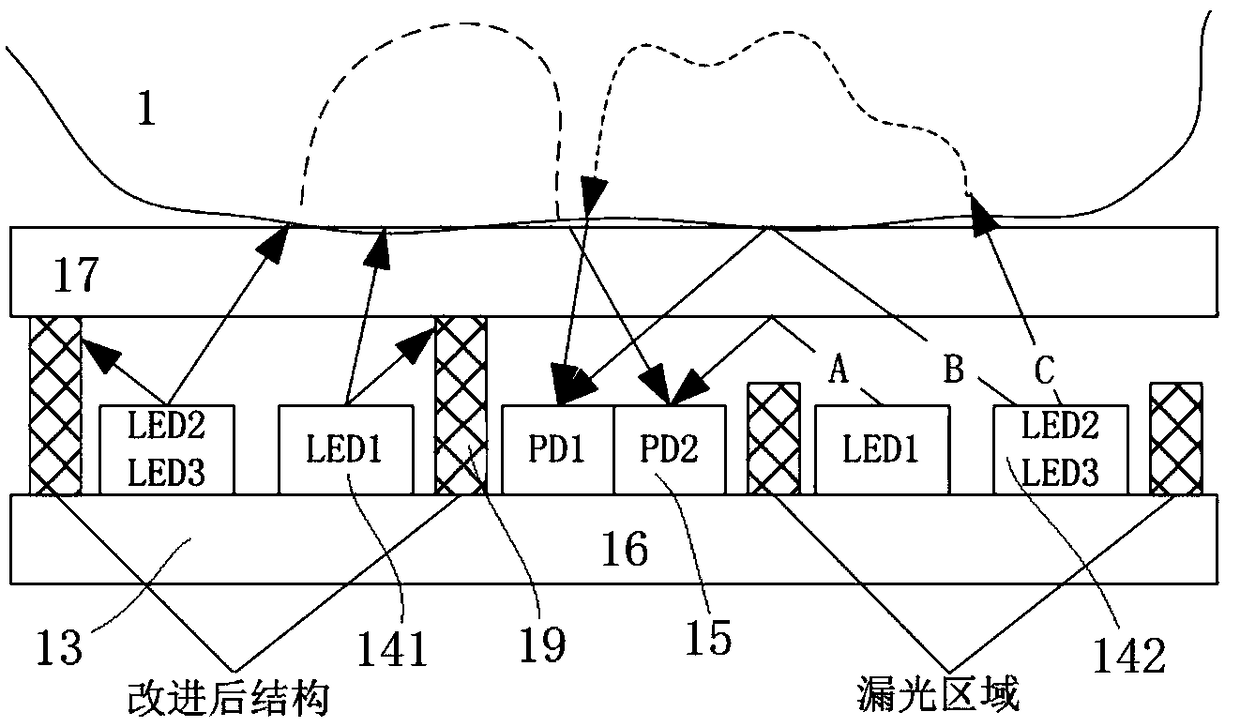

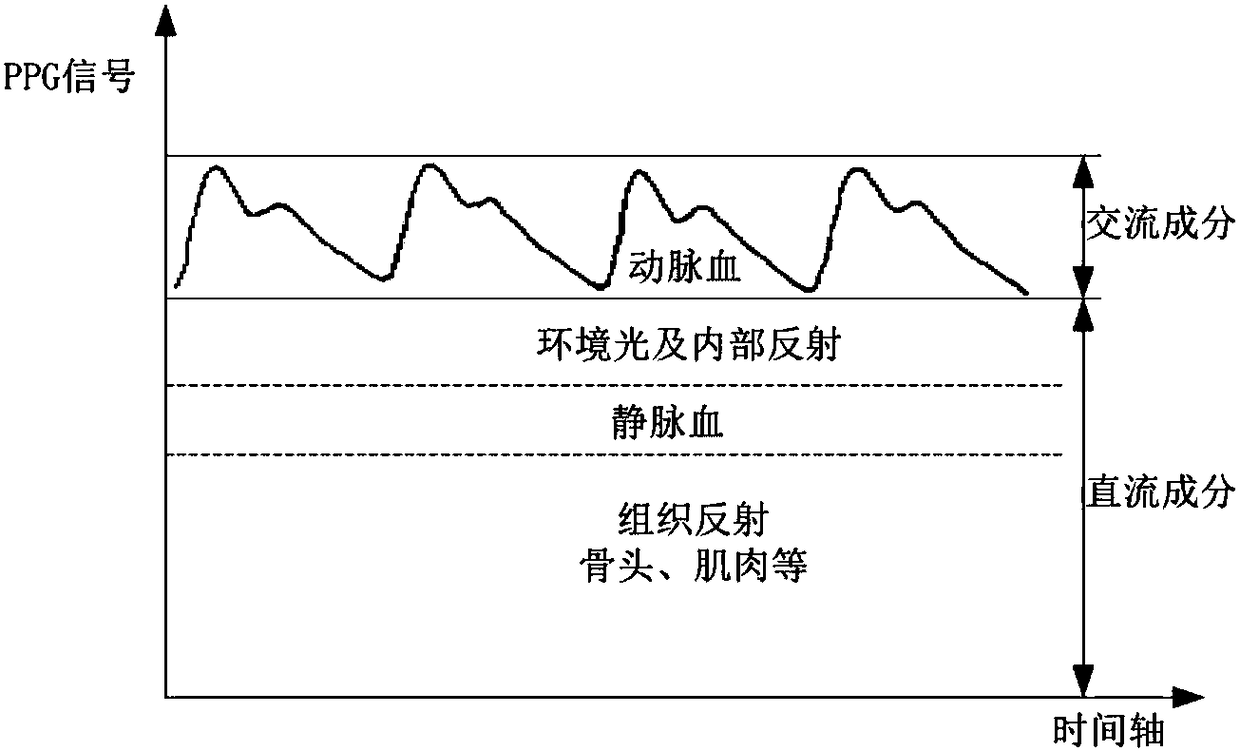

Multi-spectral skin color calibration and power consumption optimization device and working method of PPG technology

Active | CN108606801A | Improve battery life | Overcoming the problem of not being able to collect smoothly | Diagnostic recording/measuring | Sensors | Band-pass filter | Skin color

The invention discloses a multi-spectral skin color calibration and power consumption optimization device based on PPG technology. The device comprises a detection device body, which includes an LED, a PD, and a processor. The LED and the PD are disposed on the same side of the detection device body, facing the target to be detected. The processor controls the LED through an LED timing controller and an LED driver connected in sequence, and the PD communicates with the processor through a transconductance amplifier, a band-pass filter, and an analog-to-digital converter connected in sequence. Compared with traditional methods, the working method of the device achieves two major purposes. First, two-color light is used to calibrate for skin color, and light with a longer wavelength, such as infrared light, is used to overcome the problem that signals cannot be collected smoothly from dark skin, so that the device adapts to more user groups and the user experience is significantly improved. Second, a method of dynamically adjusting the PPG detection parameters is proposed, so that the device enters its working response state as quickly as possible, collects data at the best performance-to-power ratio, and significantly prolongs the runtime of the detection device.

Owner:福建守众安智能科技有限公司

Building integrated circuits using logical units

Active | US20070005321A1 | Improved performance per watt of power consumption | CAD circuit design | Special data processing applications | Computer architecture | Unit system

Systems and methods for designing and generating integrated circuits using a high-level language are described. The high-level language is used to generate performance models, functional models, synthesizable register transfer level code defining the integrated circuit, and verification environments. The high-level language may be used to generate templates for custom computation logical units for specific user-determined functionality. The high-level language and compiler permit optimizations for power savings and custom circuit layout, resulting in integrated circuits with improved performance per watt of power consumption.

Owner:NVIDIA CORP

Method for testing performance per watt of server cluster system through SPECPOWER

Inactive | CN104184631A | Reasonable design | Easy to use | Data switching networks | Test performance | Cluster systems

The invention provides a method for testing the performance per watt of a server cluster system through SPECPOWER. The tested server systems are configured to be accessible without passwords through ssh or rsh; a test environment for the tested server systems is built according to the description documents; in each stage, the first and last power values are removed and the remaining values are averaged to obtain the average consumed power for that stage; the ssj_ops value is read from the test control end; and the performance per watt is calculated according to the formula Σ ssj_ops / Σ power. Unlike the way SPECPower itself obtains the performance per watt, this method calculates it from the consumed power and performance data acquired for the whole cluster system, so the application range of the tool extends to cover server cluster systems.
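The calculation described above (trim the first and last power readings of each stage, average the rest, then divide the summed ssj_ops by the summed stage power) can be sketched as follows. The sample readings and function names are hypothetical, and the sketch does not call or reproduce any SPECpower tooling.

```python
def stage_average_power(samples):
    """Drop the first and last power readings of a stage and average the rest."""
    if len(samples) < 3:
        raise ValueError("need at least three power samples per stage")
    trimmed = samples[1:-1]
    return sum(trimmed) / len(trimmed)


def cluster_performance_per_watt(stages):
    """stages is a list of (ssj_ops, power_samples) pairs, one per stage.
    Returns the sum of ssj_ops divided by the sum of average stage power."""
    total_ops = sum(ops for ops, _ in stages)
    total_power = sum(stage_average_power(samples) for _, samples in stages)
    return total_ops / total_power


# Hypothetical readings for two stages (power in watts).
stages = [
    (1000.0, [90.0, 100.0, 110.0, 120.0, 300.0]),
    (500.0, [10.0, 50.0, 50.0, 50.0, 999.0]),
]
overall = cluster_performance_per_watt(stages)
```

Trimming the endpoints keeps the outlying first and last readings of each stage from skewing the averages used in the ratio.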

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

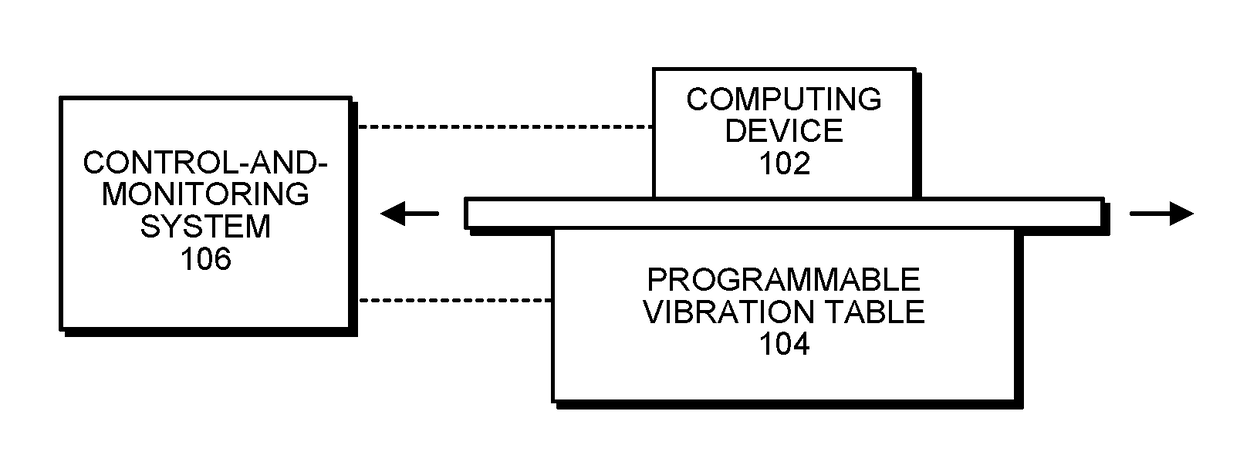

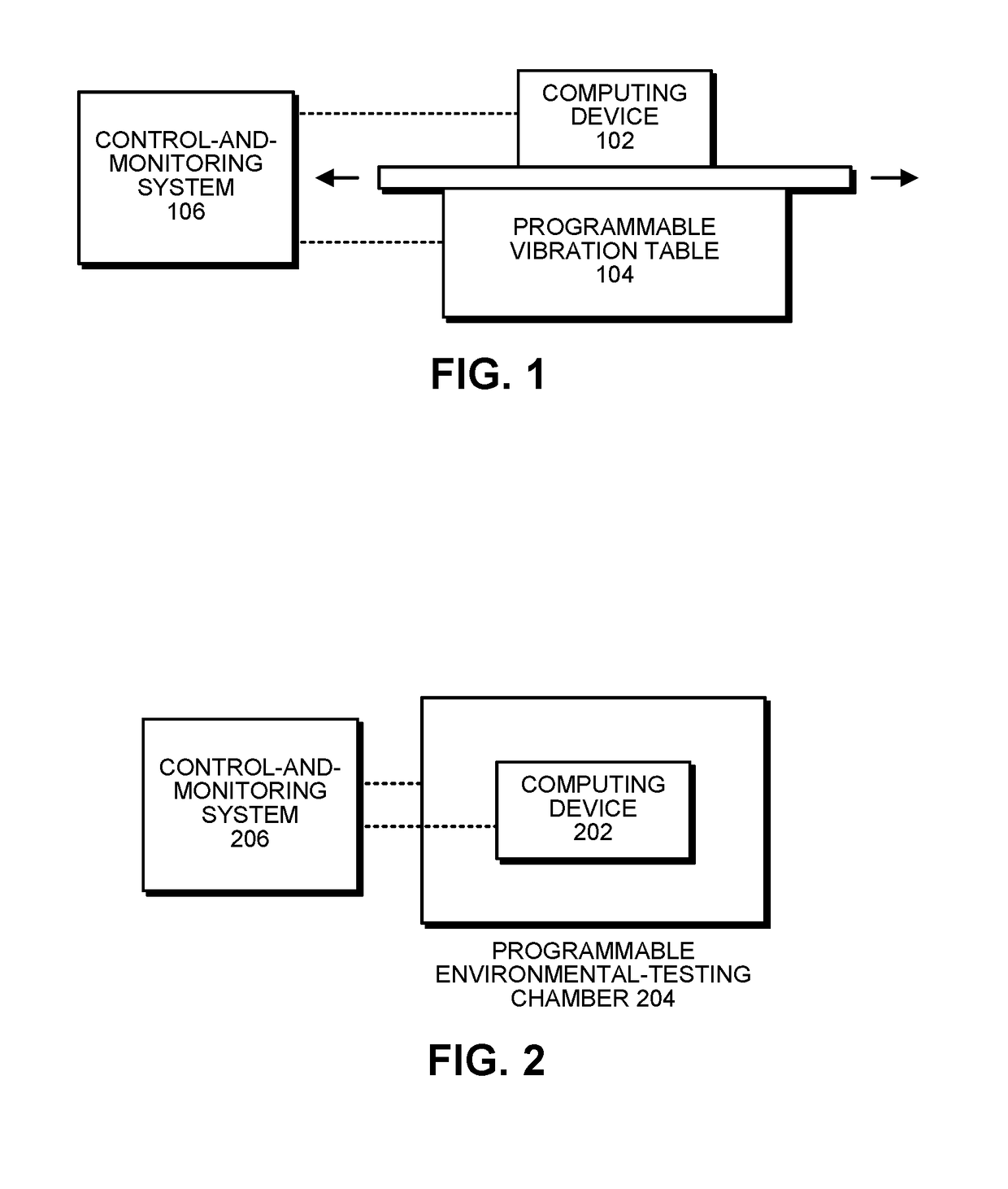

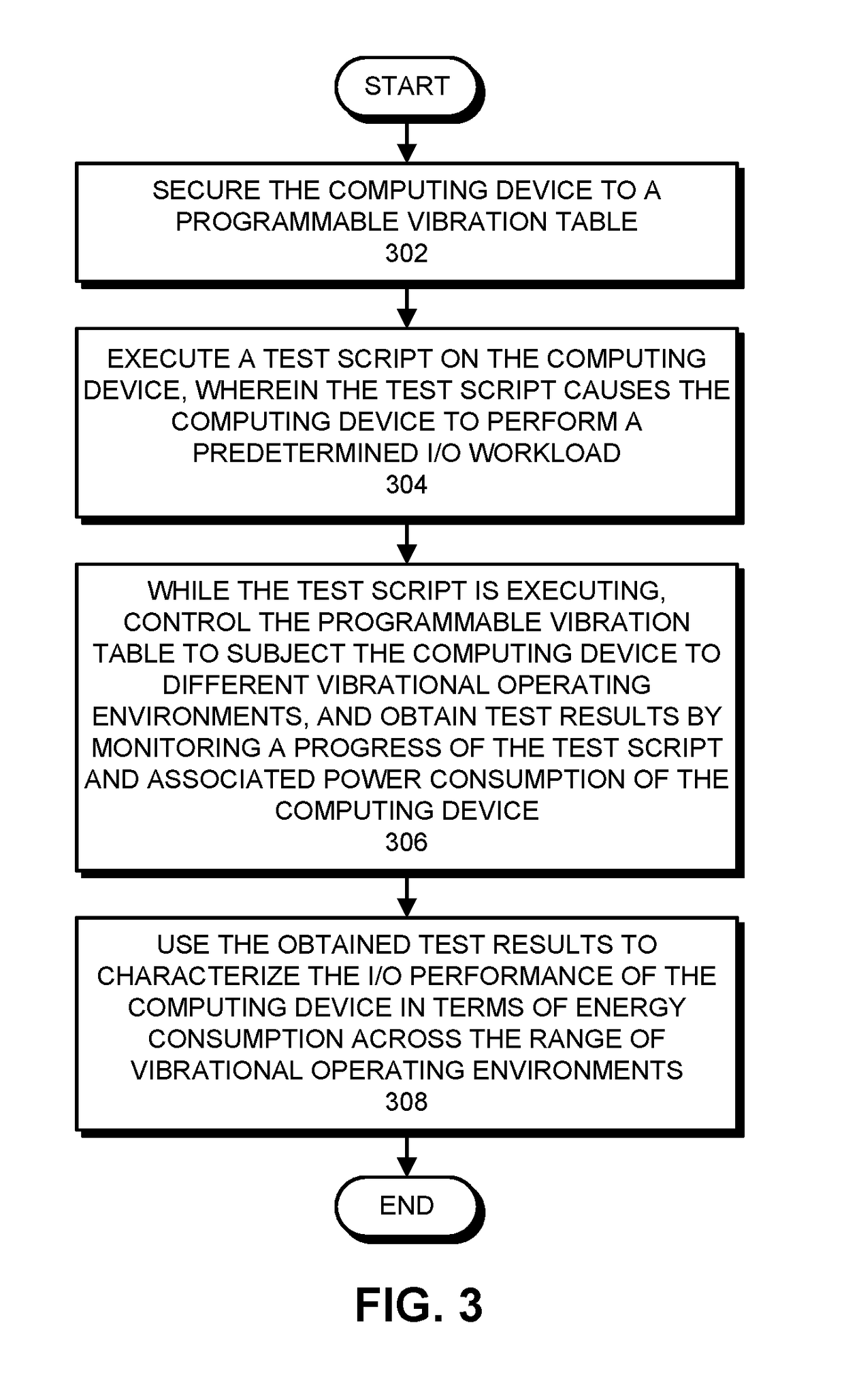

Characterizing the i/o-performance-per-watt of a computing device across a range of vibrational operating environments

Active | US20180058976A1 | Record information storage | Power measurement by digital technique | Computer hardware | Test script

The disclosed embodiments relate to a system that characterizes I/O performance of a computing device in terms of energy consumption across a range of vibrational operating environments. During operation, the system executes a test script on a computing device that is affixed to a programmable vibration table, wherein the test script causes the computing device to perform a predetermined I/O workload. While the test script is executing, the system controls the programmable vibration table to subject the computing device to different vibrational operating environments. At the same time, the system obtains test results by monitoring a progress of the test script and an associated power consumption of the computing device. Finally, the system uses the obtained test results to characterize the I/O performance of the computing device in terms of energy consumption across the range of vibrational operating environments.

Owner:ORACLE INT CORP

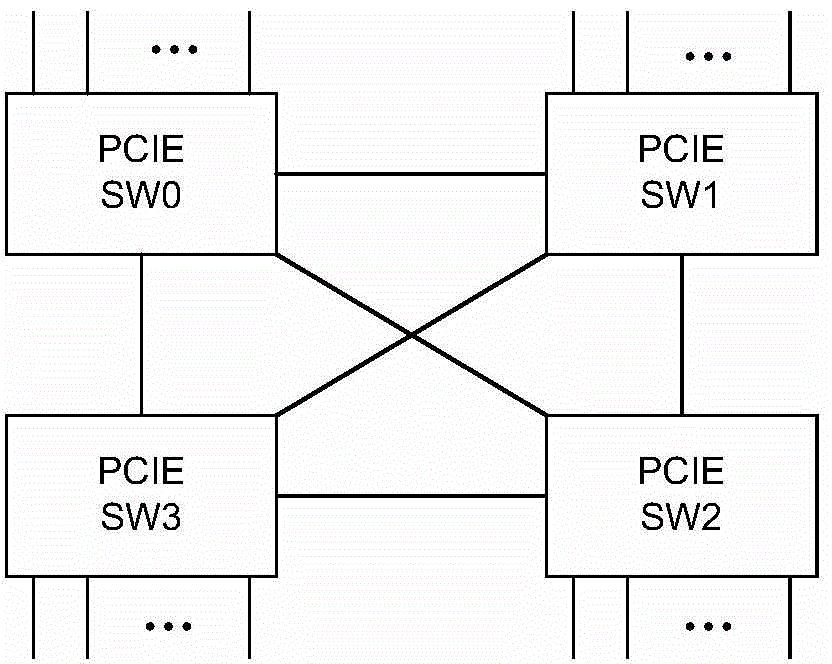

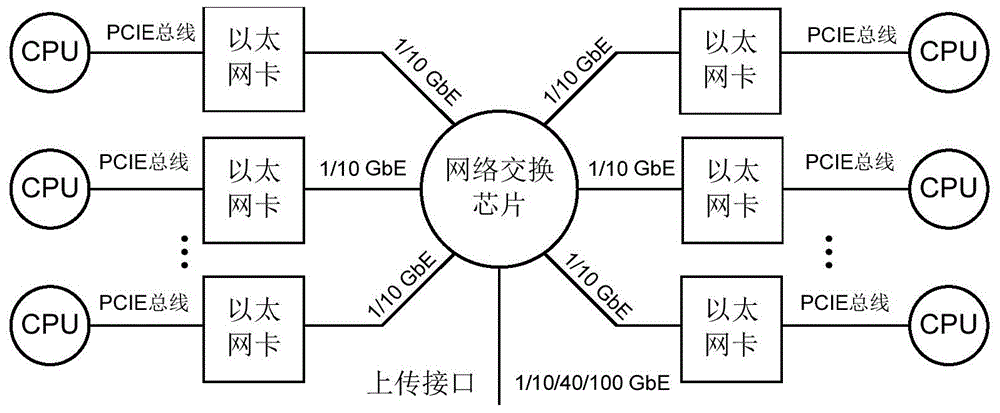

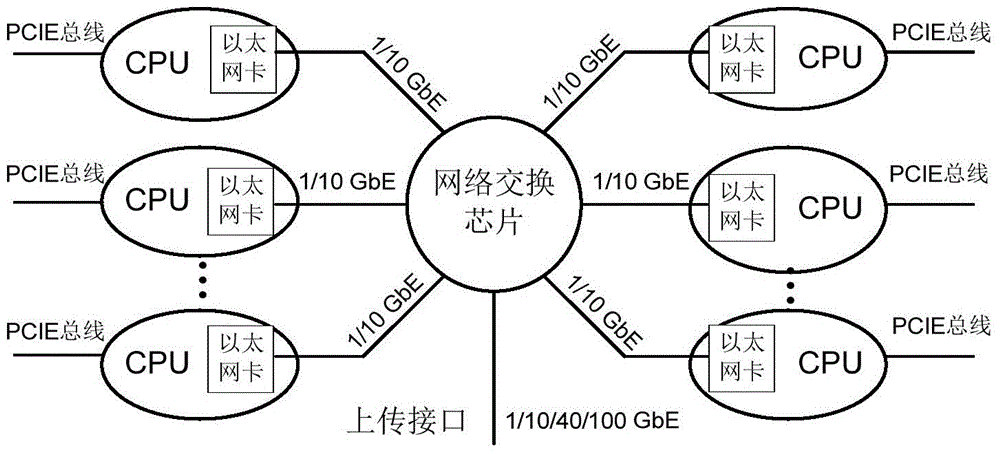

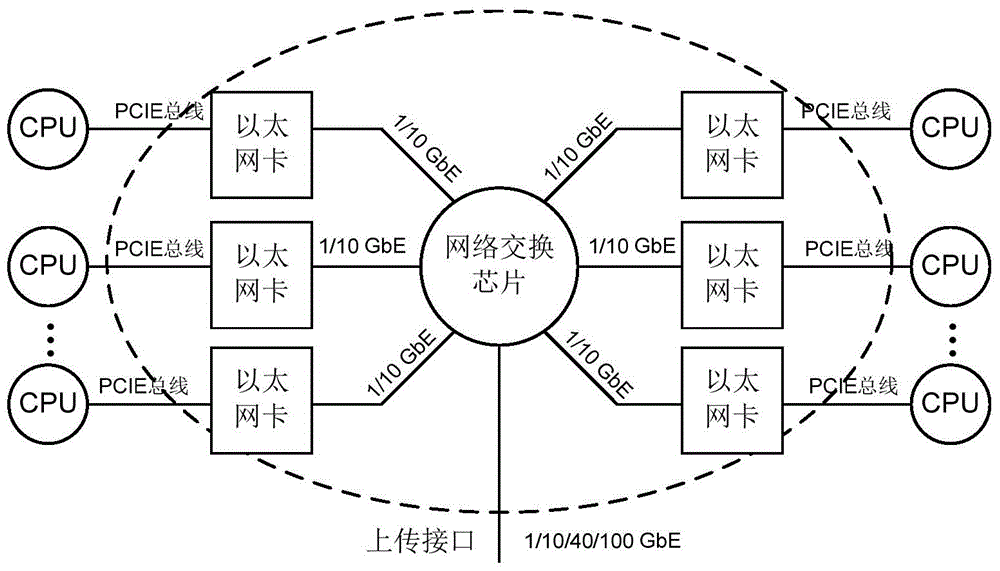

Network chip and cloud server system

Inactive | CN104699655A | Meet design needs | Guaranteed performance | Transmission | Energy efficient computing | Network virtualization platform | Ethernet

The invention discloses a network chip and a cloud server system. The network chip comprises a plurality of Ethernet NICs (network interface cards) and an Ethernet switch, wherein the plurality of NICs are connected with the Ethernet switch. The NICs and the Ethernet switch are integrated into a single network chip, and the cloud server system is built based on the chip. The structure of the cloud server system can meet the design requirements of a cloud server very well, that is, the performance per watt and the integrated service capacity are high, the cost and power consumption are low, and high performance is realized. Network virtualization is realized on the framework, and the performance of the server can be guaranteed to the largest extent.

Owner:DAWNING CLOUD COMPUTING TECH CO LTD +1

Power efficient stack of multicore microprocessors

Inactive | US8417974B2 | Improve performance | Energy efficient ICT | Semiconductor/solid-state device details | Power efficient | Interconnection

A computing system has a stack of microprocessor chips that are designed to work together in a multiprocessor system. The chips are interconnected with 3D through vias, or alternatively by compatible package carriers having the interconnections, while logically the chips in the stack are interconnected via specialized cache coherent interconnections. All of the chips in the stack use the same logical chip design, even though they can be easily personalized by setting specialized latches on the chips. One or more of the individual microprocessor chips utilized in the stack are implemented in a silicon process that is optimized for high performance while others are implemented in a silicon process that is optimized for power consumption i.e. for the best performance per Watt of electrical power consumed. The hypervisor or operating system controls the utilization of individual chips of a stack.

Owner:INT BUSINESS MACHINES CORP

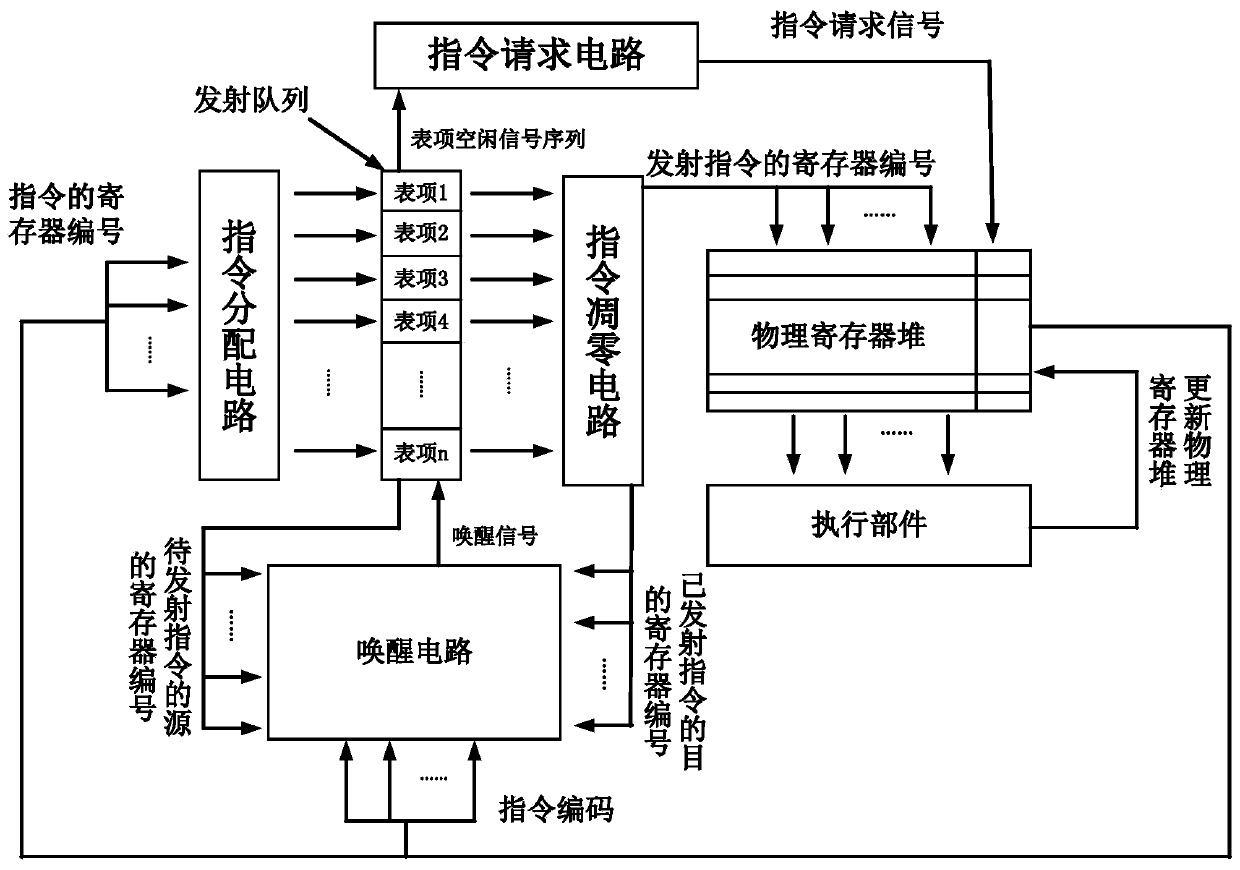

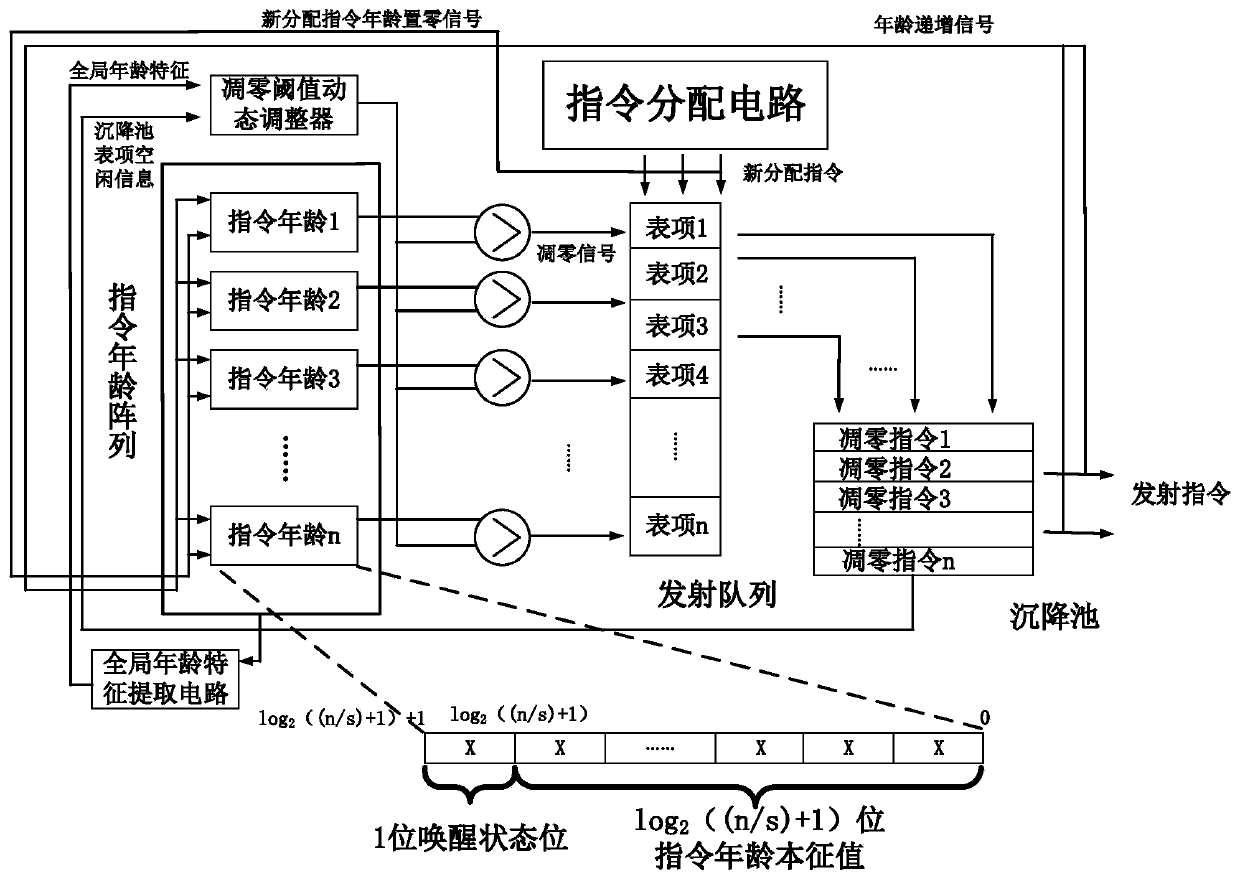

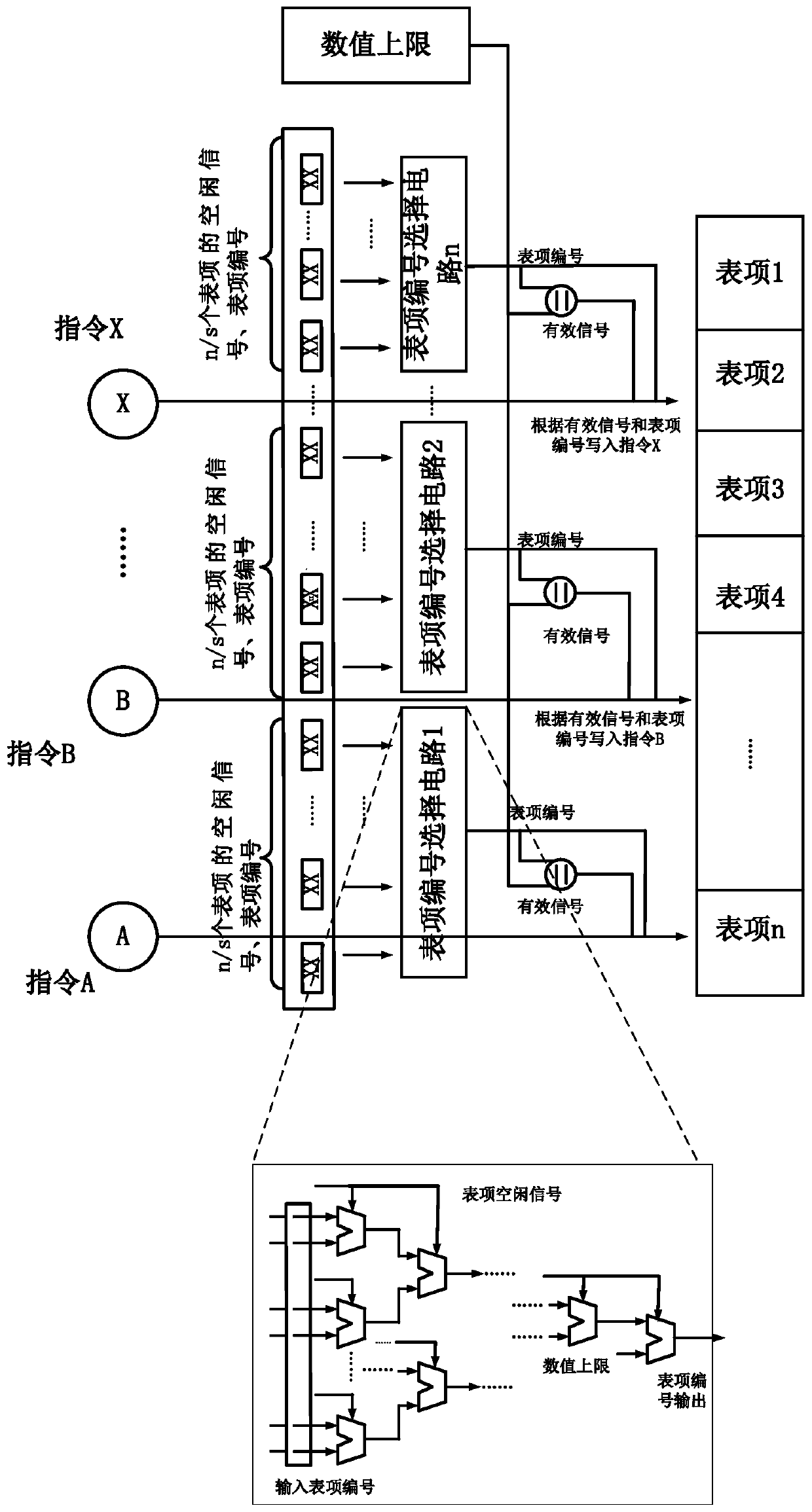

Multi-instruction out-of-order transmitting method based on instruction withering and processor

Active | CN111538534A | Solve the problem of not being able to increase the number of entries in the launch queue | Solve the problem of increasing latency | Concurrent instruction execution | Energy efficient computing | Engineering | Low delay

The invention discloses a multi-instruction out-of-order transmitting method based on instruction withering, and a processor, and belongs to the field of processor design. The invention abandons the redundant arbitration structure of the traditional transmitting architecture, adds an instruction withering circuit, and uses an instruction age array to represent how long instructions have been stored in the CPU. In addition, a wake-up status bit is added, and instructions exceeding the withering threshold are stored in a settling pond so that the CPU can transmit them directly. Circuit structures such as the instruction request circuit, the instruction distribution circuit, and the wake-up circuit are improved, which effectively improves the timing of the critical path for multi-instruction transmission in the processor. When instructions are woken up, wake-up is delayed for instructions with a short execution period, and instructions with a long execution period are woken up in advance, so that instructions can execute back to back. This meets the requirements of a high performance-to-power ratio, low latency, and high IPC in a modern superscalar out-of-order processor, and solves the prior-art problems that the number of entries in a processor's launch queue cannot keep increasing and that latency keeps growing.

Owner:JIANGNAN UNIV

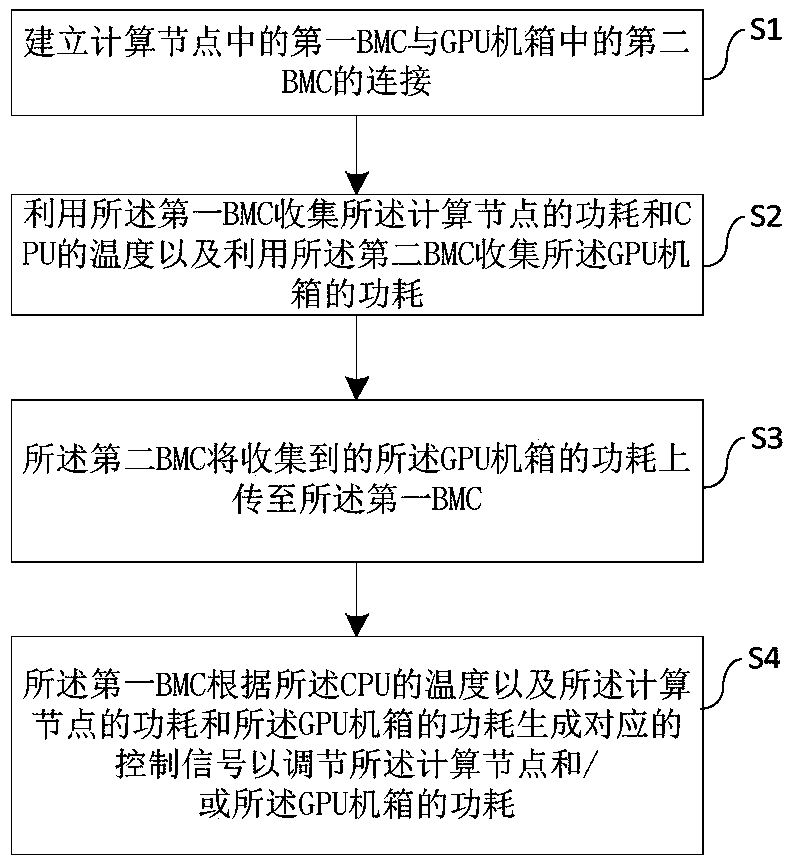

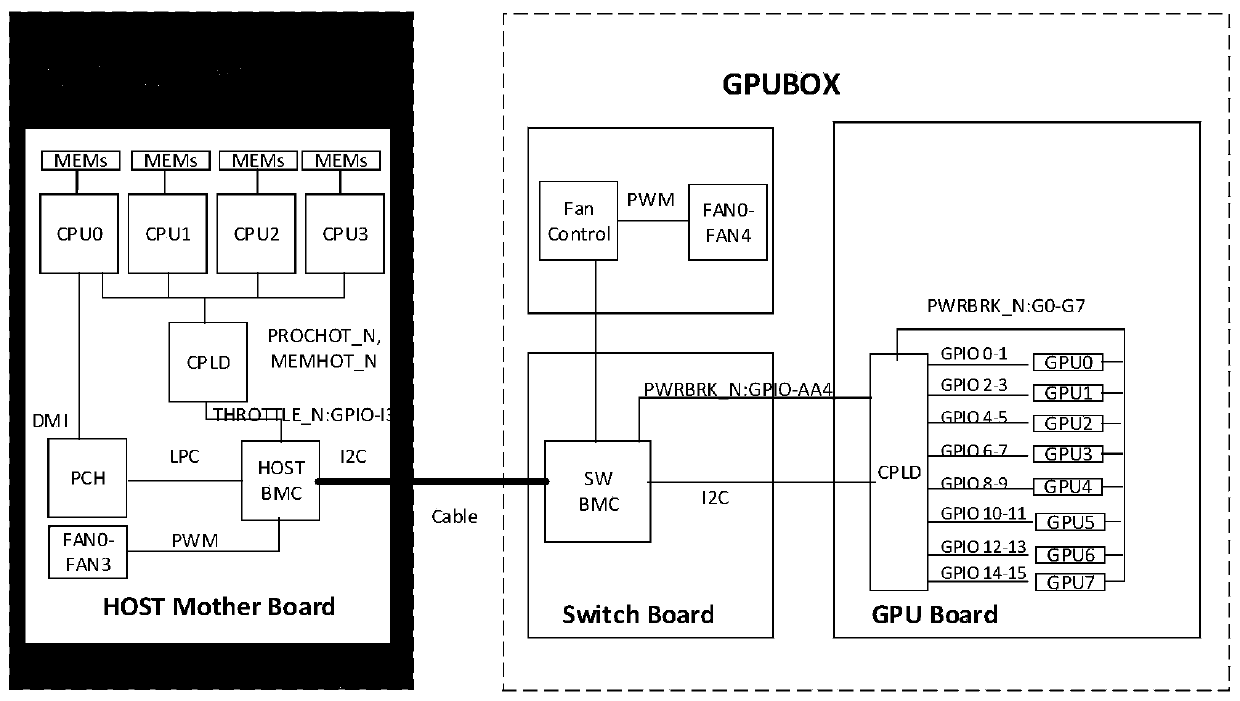

Server power consumption management method and equipment, and medium

Inactive | CN111427744A | Best performance per watt | Digital data processing details | Hardware monitoring | Computer hardware | Control signal

The invention discloses a server power consumption management method. The method comprises the following steps: establishing a connection between a first BMC in a computing node and a second BMC in a GPU chassis; collecting the power consumption of the computing node and the temperature of the CPU with the first BMC, and collecting the power consumption of the GPU chassis with the second BMC; having the second BMC upload the collected power consumption of the GPU chassis to the first BMC; and having the first BMC generate a corresponding control signal according to the temperature of the CPU, the power consumption of the computing node, and the power consumption of the GPU chassis, so as to adjust the power consumption of the computing node and/or the GPU chassis. The invention further discloses computer equipment and a readable storage medium. The scheme realizes integrated dynamic power consumption management between the computing node and the GPU box and, at the same time, achieves the optimal performance-to-power ratio between them.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

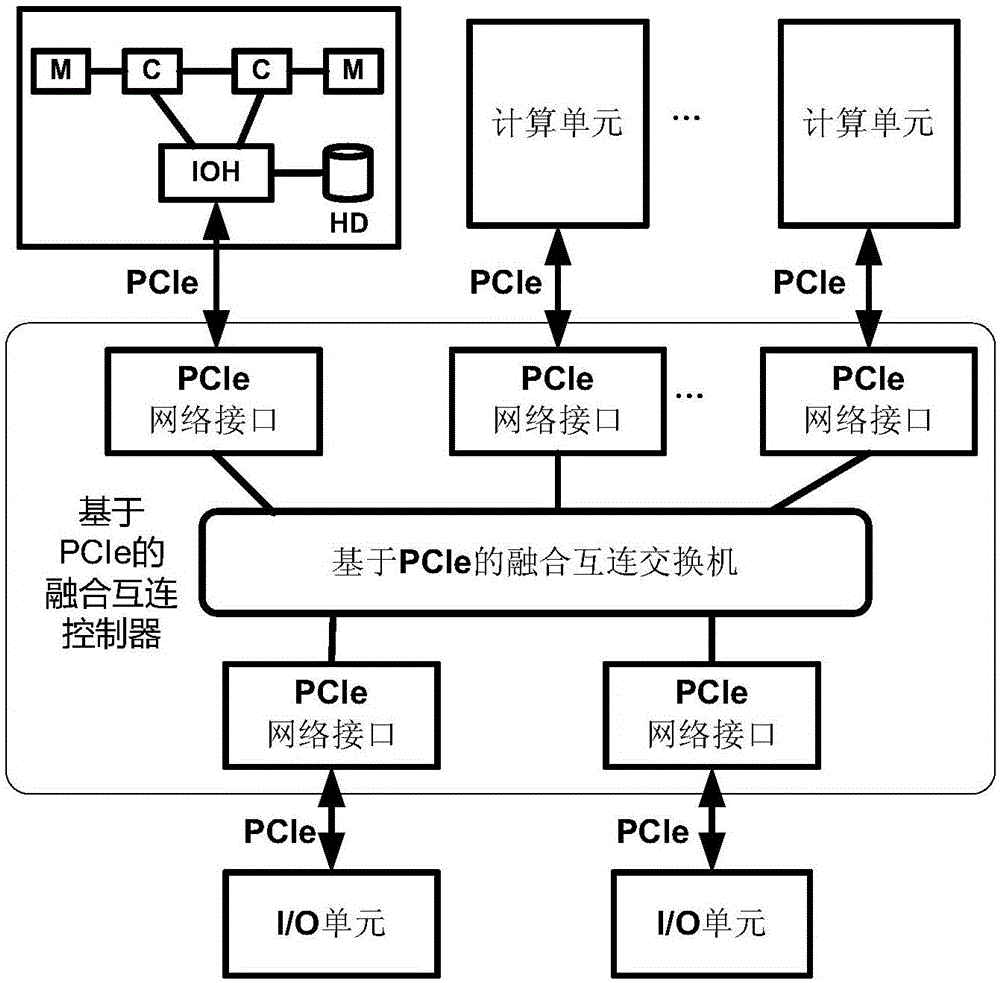

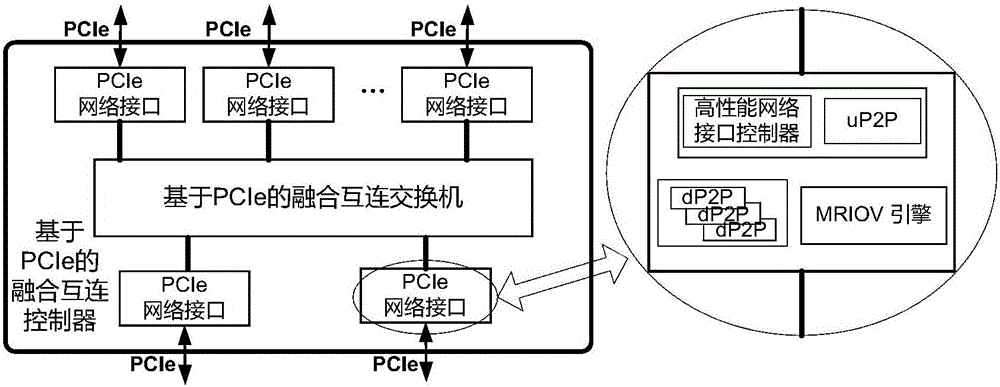

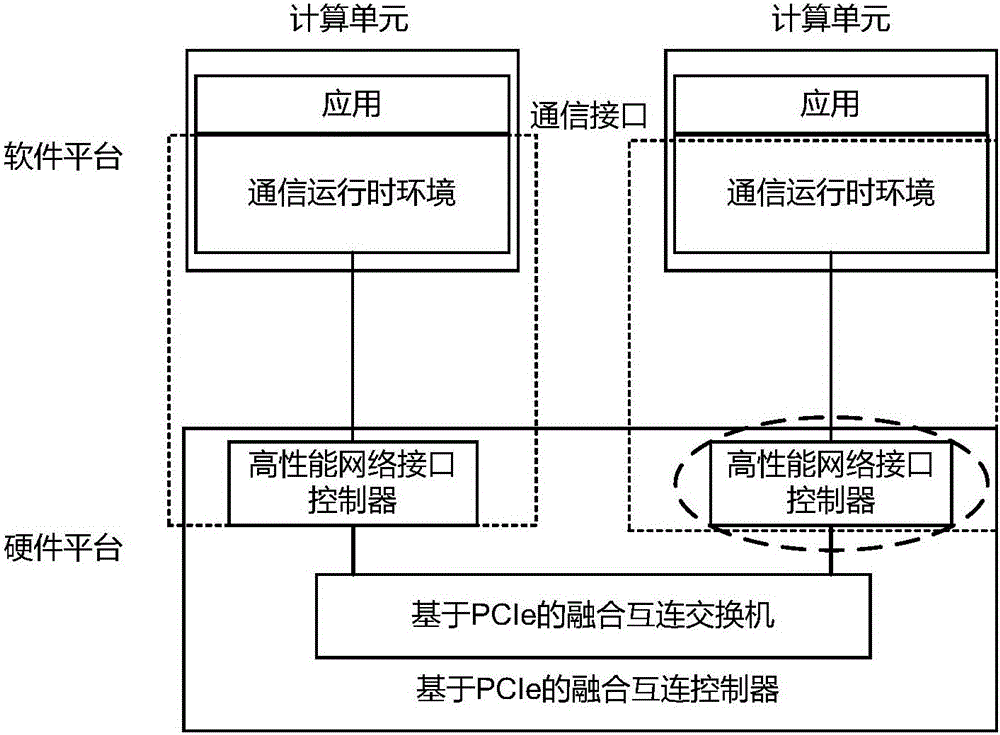

Configurable multiprocessor computer system and implementation method

ActiveCN106844263AReduce power consumptionLow costEnergy efficient computingElectric digital data processingCommunication interfaceHigh speed memory

The invention provides a configurable multiprocessor computer system and an implementation method, and relates to the technical field of computer system structures. The system comprises general purpose computation units, a high performance network communication interface, a PCIe-based fusion interconnection controller and an I/O unit, wherein the general purpose computation units are connected to the PCIe-based fusion interconnection controller through the high performance network communication interface, the I/O unit is connected to the PCIe-based fusion interconnection controller through a standard PCIe interface, and the I/O unit is shared by the multiple general purpose computation units through the PCIe-based fusion interconnection controller. With this efficiently interconnected, configurable multiprocessor architecture, the numbers and working modes of the general purpose computation units, acceleration computation units, network devices, high-speed memories and the like are configured according to application needs, so that an optimal system can be constructed and the best performance-to-power consumption ratio and the best cost performance are achieved.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI +1

Workload placement based on heterogeneous compute performance per watt

ActiveUS10203747B2Power supply for data processingEnergy efficient computingParallel computingEnergy expenditure

An apparatus for selecting a function includes a comparison module that compares energy consumption characteristics of a plurality of processors available for execution of a function, where each energy consumption characteristic varies as a function of function size. The apparatus includes a selection module that selects, based on the size of the function, a processor from the plurality of processors with a lowest energy consumption for execution of the function. The apparatus includes an execution module that executes the function on the selected processor.
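The comparison, selection and execution modules described above can be sketched as follows. This is an illustrative sketch only: the energy models `cpu_energy` and `gpu_energy` and their coefficients are invented for the example, and the patent does not specify how the energy consumption characteristics are obtained.

```python
from typing import Callable, Dict, Tuple

# Hypothetical energy characteristics: joules consumed as a function of
# function size. The coefficients are made up for illustration.
def cpu_energy(size: int) -> float:
    return 0.5 + 0.010 * size   # low fixed cost, higher per-element cost

def gpu_energy(size: int) -> float:
    return 5.0 + 0.001 * size   # high fixed cost, lower per-element cost

def select_processor(models: Dict[str, Callable[[int], float]], size: int) -> str:
    """Compare the energy characteristics at this size and pick the lowest."""
    return min(models, key=lambda name: models[name](size))

def execute_on_selected(models: Dict[str, Callable[[int], float]],
                        size: int,
                        func: Callable[[int], int]) -> Tuple[str, int]:
    """Select a processor, then run the function (dispatch is simulated here)."""
    chosen = select_processor(models, size)
    return chosen, func(size)


if __name__ == "__main__":
    models = {"cpu": cpu_energy, "gpu": gpu_energy}
    for n in (100, 10_000):
        print(n, execute_on_selected(models, n, lambda s: sum(range(s))))
```

With these made-up coefficients the two curves cross at a size of 500, so small functions are placed on the CPU and large ones on the GPU, which illustrates why the characteristic has to be evaluated at the actual function size rather than compared as a single number.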

Owner:LENOVO GLOBAL TECH INT LTD

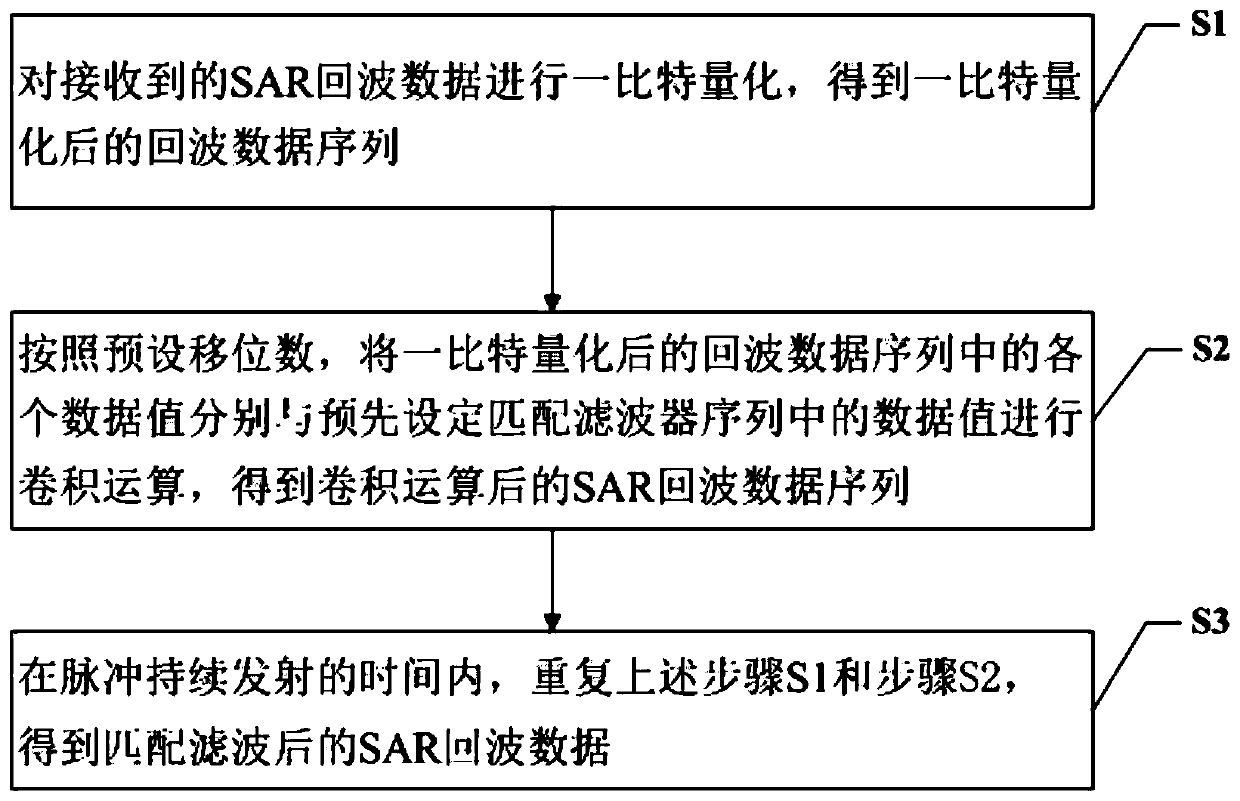

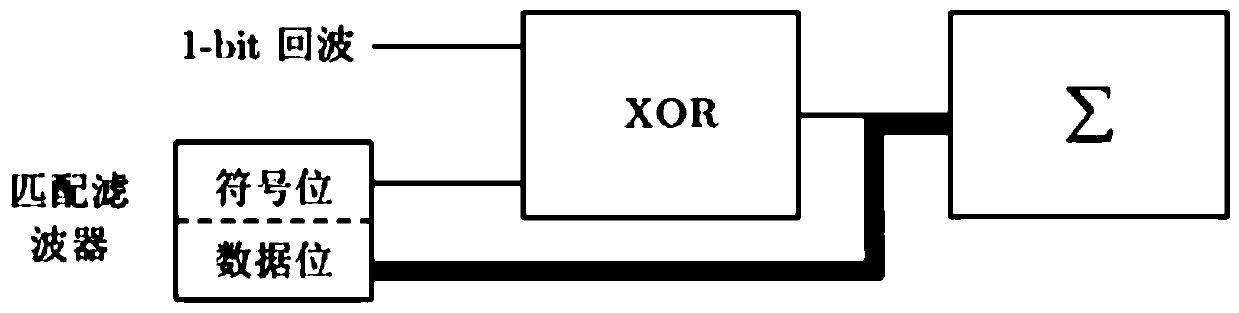

Method and system for processing one-bit SAR echo data

ActiveCN110045375AReduce the amount of calculationReduce complexityRadio wave reradiation/reflectionImaging qualityMatched filter

The invention provides a method and a system for processing one-bit SAR echo data. The method comprises the following steps: performing one-bit quantization on received SAR echo data to obtain a one-bit quantized echo sequence; and performing a convolution operation between the data values in the one-bit quantized echo sequence and the data values in a matched filter sequence to obtain a convolved SAR echo data sequence. The computation and complexity of the convolution operation are reduced, the cost of the SAR system is lowered, and the operation speed and efficiency of the system are improved, while the imaging quality of the matched-filtered SAR image is preserved. The data processing therefore has better real-time behavior and higher performance per watt.
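The two steps can be illustrated with a small pure-Python sketch. It assumes complex baseband samples, quantizes the real and imaginary parts separately to ±1, and uses a direct (non-FFT) convolution; these choices, and the function names, are assumptions for illustration and not details given in the abstract.

```python
def one_bit_quantize(samples):
    """Keep only the sign of the real and imaginary parts of each sample."""
    def sign(x):
        return 1 if x >= 0 else -1
    return [complex(sign(s.real), sign(s.imag)) for s in samples]

def convolve(x, h):
    """Direct linear convolution of two sequences (output length len(x)+len(h)-1)."""
    out = [0j] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            out[i + j] += xi * hj
    return out


if __name__ == "__main__":
    echo = [0.3 - 2.0j, -1.5 + 0.1j, 0.7 + 0.7j]   # made-up echo samples
    matched_filter = [1 + 0j, 0 - 1j]               # made-up filter taps
    quantized = one_bit_quantize(echo)
    print(quantized)
    print(convolve(quantized, matched_filter))
```

Because every quantized value is ±1 in each component, the multiplications inside the convolution reduce to sign changes and additions, which is where the reduction in computation comes from.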

Owner:SHENZHEN UNIV

An Energy-Consumption-Oriented Cloud Workflow Scheduling Optimization Method

InactiveCN105260005BImplement energy consumption calculation methodKeep Execution Time EfficientResource allocationPower supply for data processingParallel computingCloud workflow

The invention discloses an energy consumption-oriented cloud workflow scheduling optimization method. The method comprises the following steps: (1) establishing energy consumption-oriented cloud workflow process model and resource model; (2) calculating a task priority; (3) taking out a task t with highest priority from a task set T, finding out a virtual machine set VMt capable of executing the task t, and calculating energy consumption for distributing the task t to each virtual machine in the VMt and completing all distributed tasks; (4) finding out a vm with minimal energy consumption, if only one vm has the minimal energy consumption, distributing the task t to the vm, and if a plurality of vms have the minimal energy consumption, distributing the task t to the vm with characteristics that the vm has the minimal energy consumption and a host in which the vm is located has highest performance per watt; deleting the task t from the task set T, and if the task set T is not null, going to the step (3), or otherwise, going to the step (5); and (5) outputting a workflow scheduling scheme. According to the scheduling optimization method provided by the invention, an energy consumption factor is considered, so that the energy consumption for task processing by the host is effectively reduced while workflow execution time efficiency is kept.
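Steps (3) to (5) amount to a greedy loop, which can be sketched as below. The energy model (`power_watts * total_work / speed`), the `VM` fields and the precomputed priorities are hypothetical stand-ins: the abstract does not state how priorities from step (2) or the energy figures are calculated.

```python
from dataclasses import dataclass

@dataclass
class VM:
    name: str
    power_watts: float         # hypothetical: power drawn while busy
    speed: float               # hypothetical: work units processed per second
    host_perf_per_watt: float  # performance per watt of the hosting machine
    assigned_work: float = 0.0

    def energy_if_assigned(self, work: float) -> float:
        """Energy to finish everything already assigned plus this task."""
        return self.power_watts * (self.assigned_work + work) / self.speed

def schedule(tasks, vms, eligible):
    """tasks: name -> (priority, work); eligible: task name -> list of VM names."""
    plan = {}
    pending = dict(tasks)
    while pending:                                    # loop until T is empty
        t = max(pending, key=lambda n: pending[n][0])  # highest priority first
        _, work = pending.pop(t)
        candidates = [vms[v] for v in eligible[t]]
        # Minimal energy wins; ties go to the host with the highest perf/watt.
        best = min(candidates,
                   key=lambda vm: (vm.energy_if_assigned(work),
                                   -vm.host_perf_per_watt))
        best.assigned_work += work
        plan[t] = best.name
    return plan                                       # the scheduling scheme


if __name__ == "__main__":
    vms = {"a": VM("a", 100.0, 2.0, 1.0), "b": VM("b", 50.0, 1.0, 2.0)}
    tasks = {"t1": (2, 4.0), "t2": (1, 2.0)}
    print(schedule(tasks, vms, {"t1": ["a", "b"], "t2": ["a", "b"]}))
```

In this made-up example both VMs need the same energy for `t1`, so the tie is broken in favor of the VM whose host has the higher performance per watt.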

Owner:南京喜筵网络信息技术有限公司

Openpower system performance and power consumption ratio optimization display system

PendingCN109871112AOptimization and display performanceOptimize and display power consumptionDigital data processing detailsHardware monitoringControl powerPerformance computing

The invention discloses an Openpower system performance and power consumption ratio optimization display system. The system includes a BMC module, an OS operating system, a performance service module, a performance calculation algorithm module, a BMC control power consumption module and a BMC Web display module. The OS operating system is connected with the performance service module and the performance calculation algorithm module; the performance service module and the performance calculation algorithm module are connected with the BMC module; and the BMC module is further connected with the BMC control power consumption module and the BMC Web display module. With this technical scheme, the invention provides the user with a reference system for the performance-power consumption ratio: the user can set a target performance-power consumption ratio, and the real-time ratio is then displayed dynamically. The server can thus optimize and display the balance between performance and power consumption, and the user can freely select the target to meet the actual requirements of the data center.
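The ratio being displayed is simply measured performance divided by measured power. A minimal sketch of comparing a real-time reading with a user-set target follows; the function names, the returned fields and the choice of performance unit are assumptions, since the abstract does not describe the calculation algorithm.

```python
def performance_per_watt(performance: float, power_watts: float) -> float:
    """Performance (in any consistent unit, e.g. operations/s) per watt."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return performance / power_watts

def compare_to_target(performance: float, power_watts: float,
                      target_ratio: float) -> dict:
    """Build a record a display layer could show next to the user's target."""
    ratio = performance_per_watt(performance, power_watts)
    return {"ratio": ratio,
            "target": target_ratio,
            "meets_target": ratio >= target_ratio}


if __name__ == "__main__":
    print(compare_to_target(performance=1200.0, power_watts=400.0, target_ratio=2.5))
```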

Owner:SUZHOU POWERCORE TECH CO LTD

Method and mobile terminal for adjusting wifi scanning frequency based on motion state

ActiveCN103458440BGuaranteed to workImprove performance per power ratioPower managementNetwork topologiesComputer moduleComputer terminal

Owner:威海神舟信息技术研究院有限公司
