45 results about "Aggregate level" patented technology

The aggregate level cost method is an actuarial accounting method that matches and allocates the cost and benefits of a pension plan over the span of the plan's life. It typically takes the present value of future benefits minus the value of plan assets and spreads this excess over the present value of the participants' future payroll.
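The following Python sketch is a rough illustration of the arithmetic described above. It computes one year's normal cost under the aggregate method, assuming the common textbook form in which the excess of benefits over assets is spread as a level percentage of the present value of future payroll. The function name, the clamp at zero when assets already exceed benefits, and the sample figures are assumptions, not part of the source.

```python
def aggregate_normal_cost(pv_future_benefits: float,
                          plan_assets: float,
                          pv_future_payroll: float,
                          current_payroll: float) -> float:
    """Normal cost for one year under the aggregate level cost method (sketch).

    The unfunded present value of benefits is spread as a level percentage
    of the present value of future payroll, and that percentage is applied
    to the current year's payroll.
    """
    if pv_future_payroll <= 0:
        raise ValueError("present value of future payroll must be positive")
    # Assumption: if assets already cover the benefits, no normal cost is due.
    excess = max(pv_future_benefits - plan_assets, 0.0)
    normal_cost_rate = excess / pv_future_payroll
    return normal_cost_rate * current_payroll


# Illustrative inputs: (10.0m - 4.0m) / 60.0m = 10% of a 5.0m payroll,
# so the normal cost is about 500,000.
cost = aggregate_normal_cost(10_000_000, 4_000_000, 60_000_000, 5_000_000)
```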

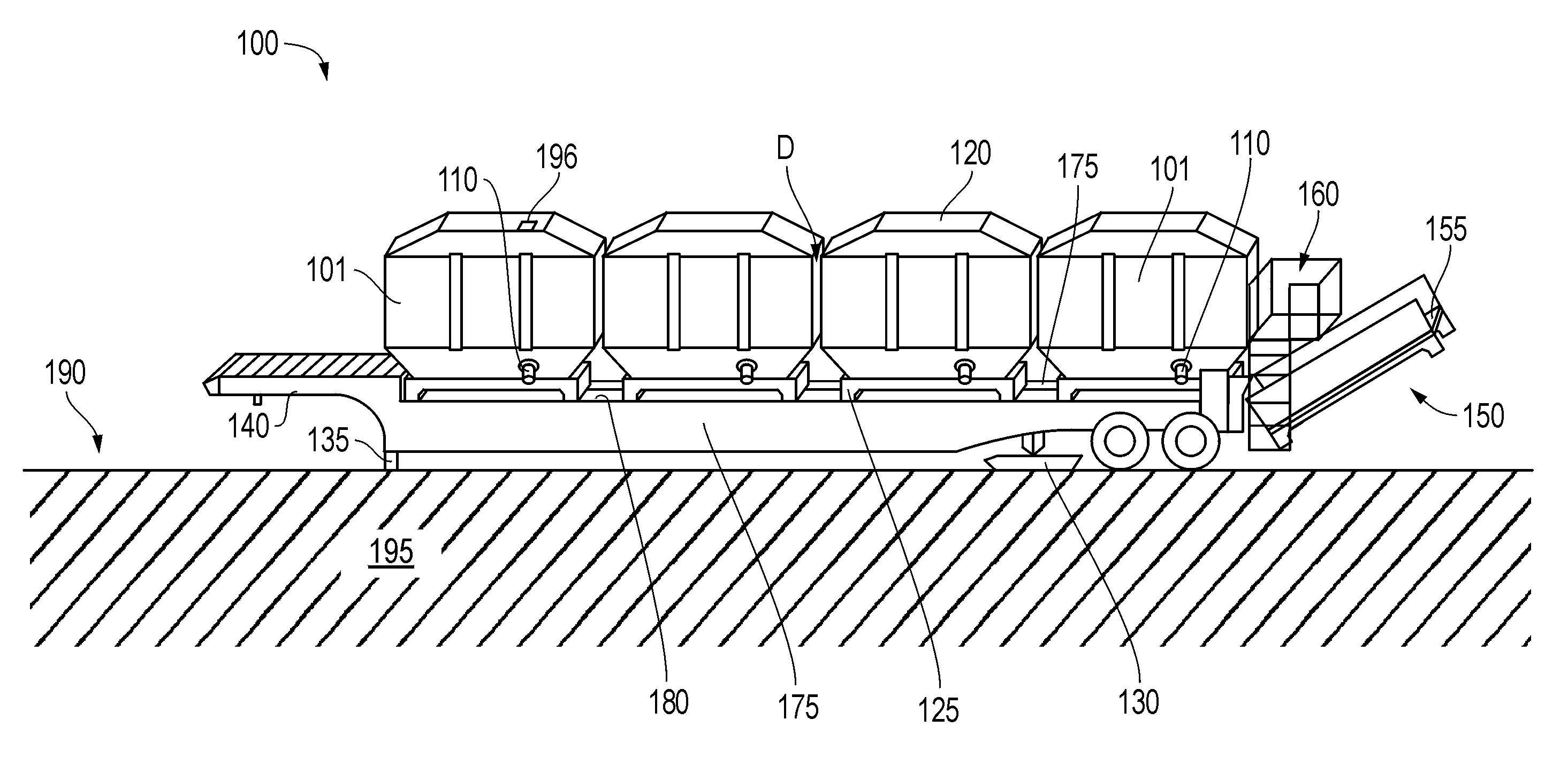

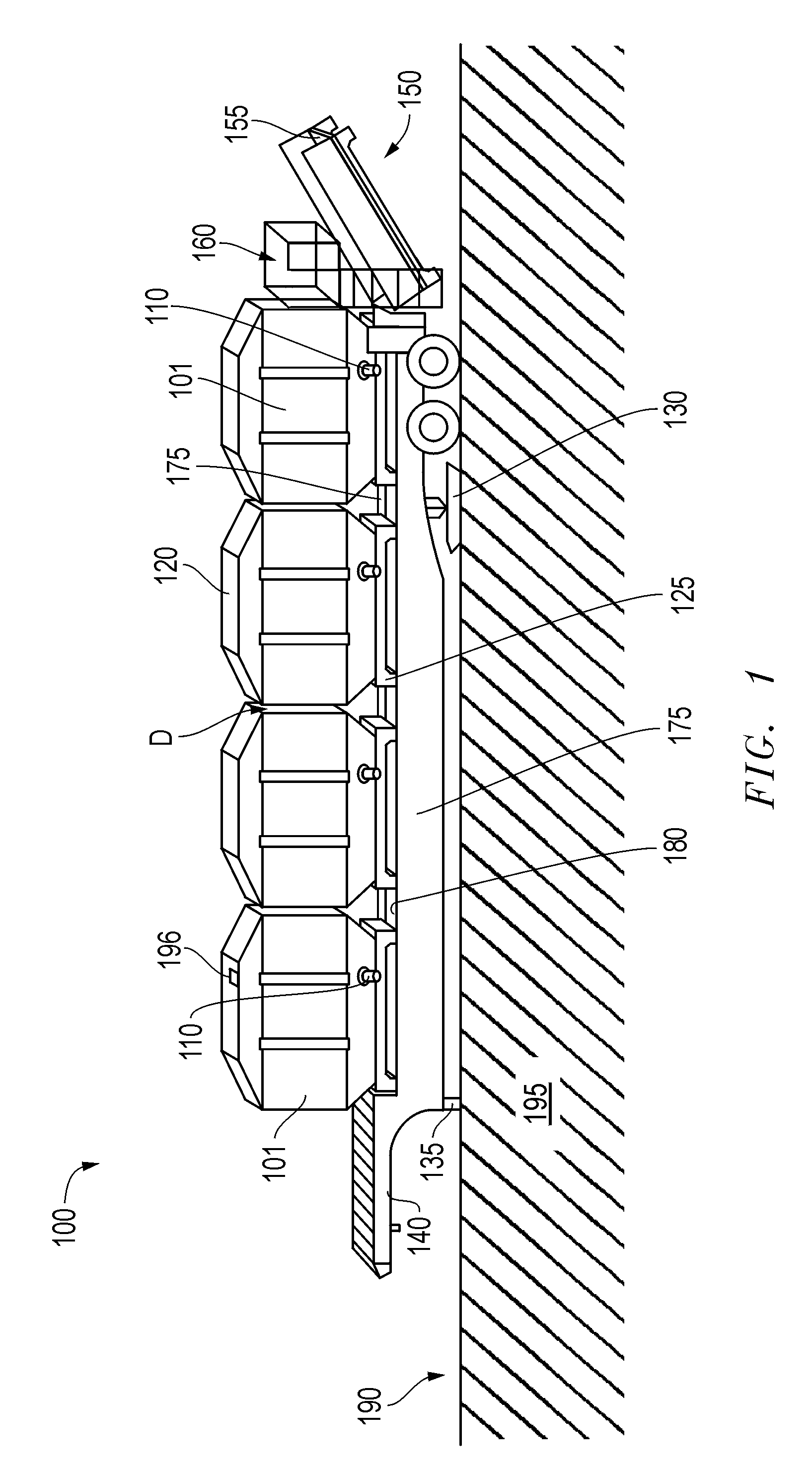

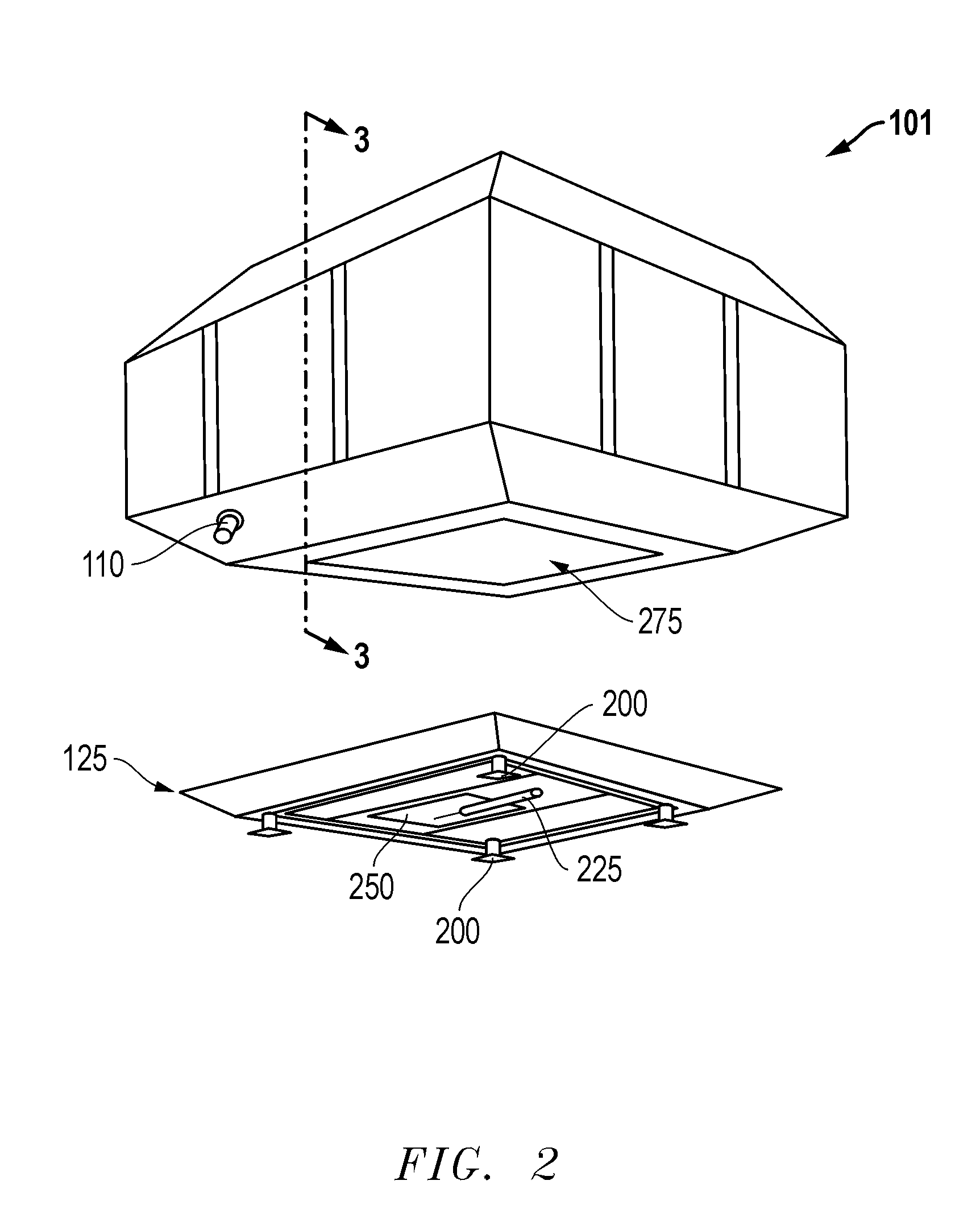

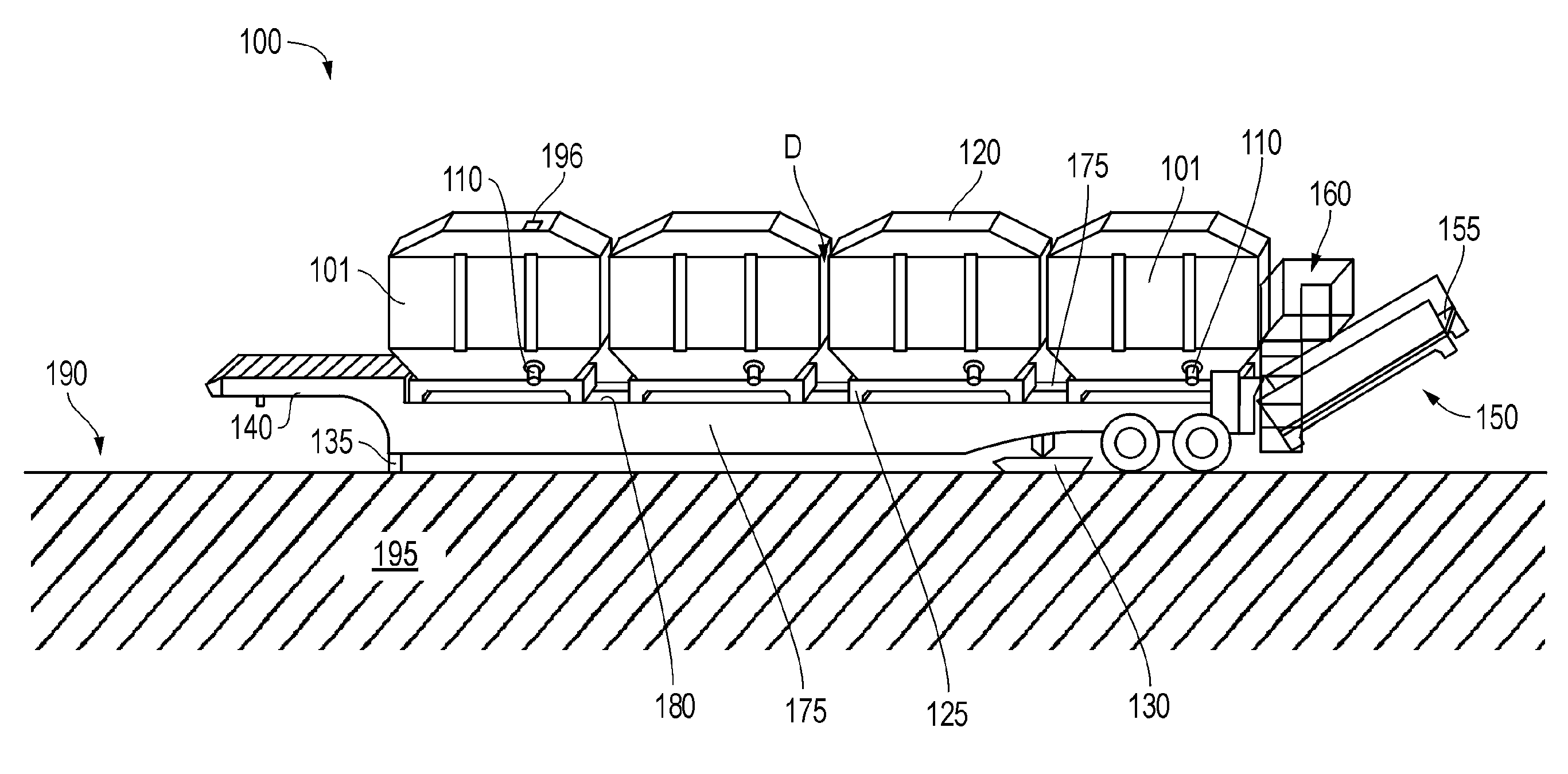

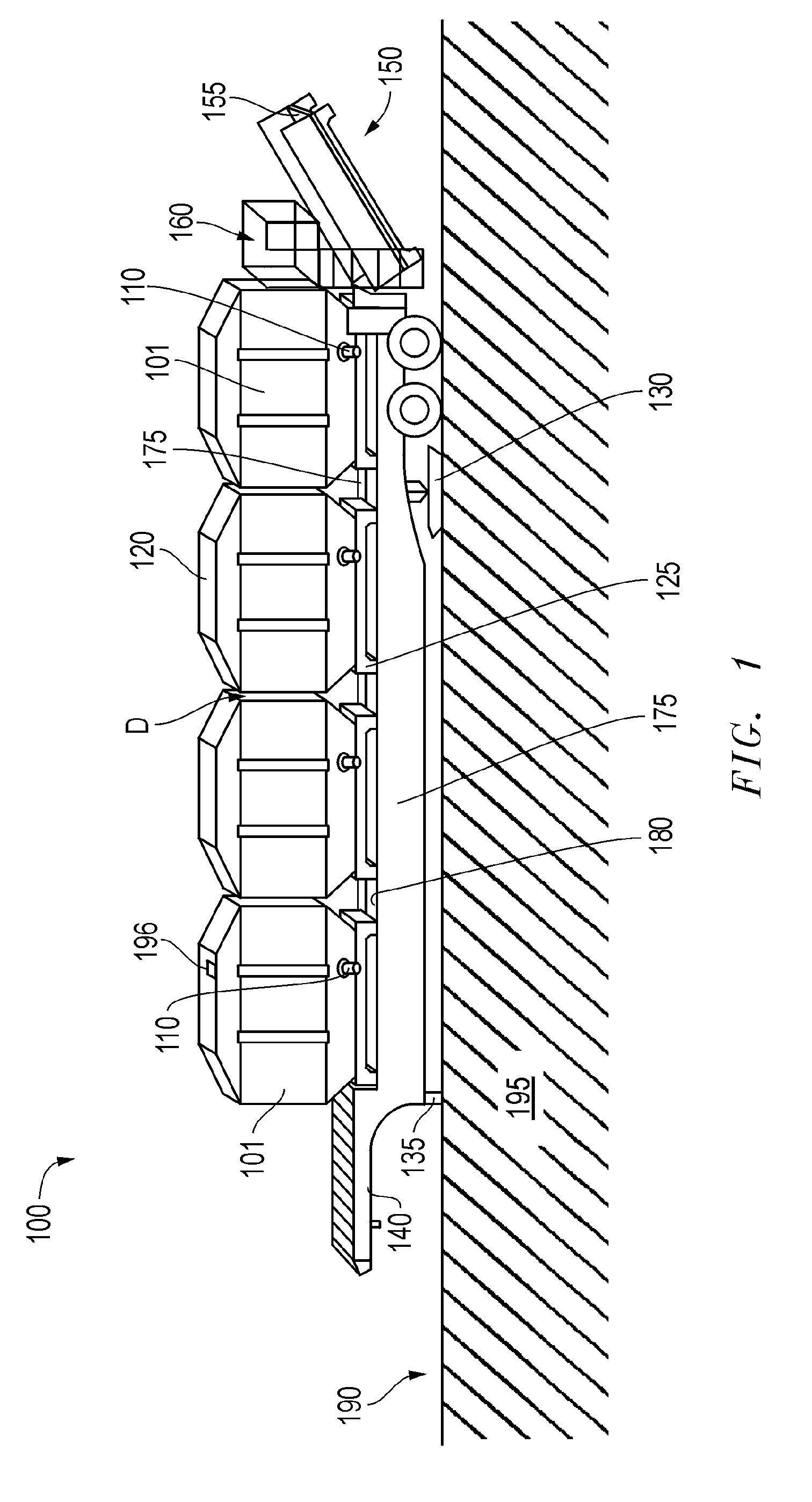

Aggregate Delivery Unit

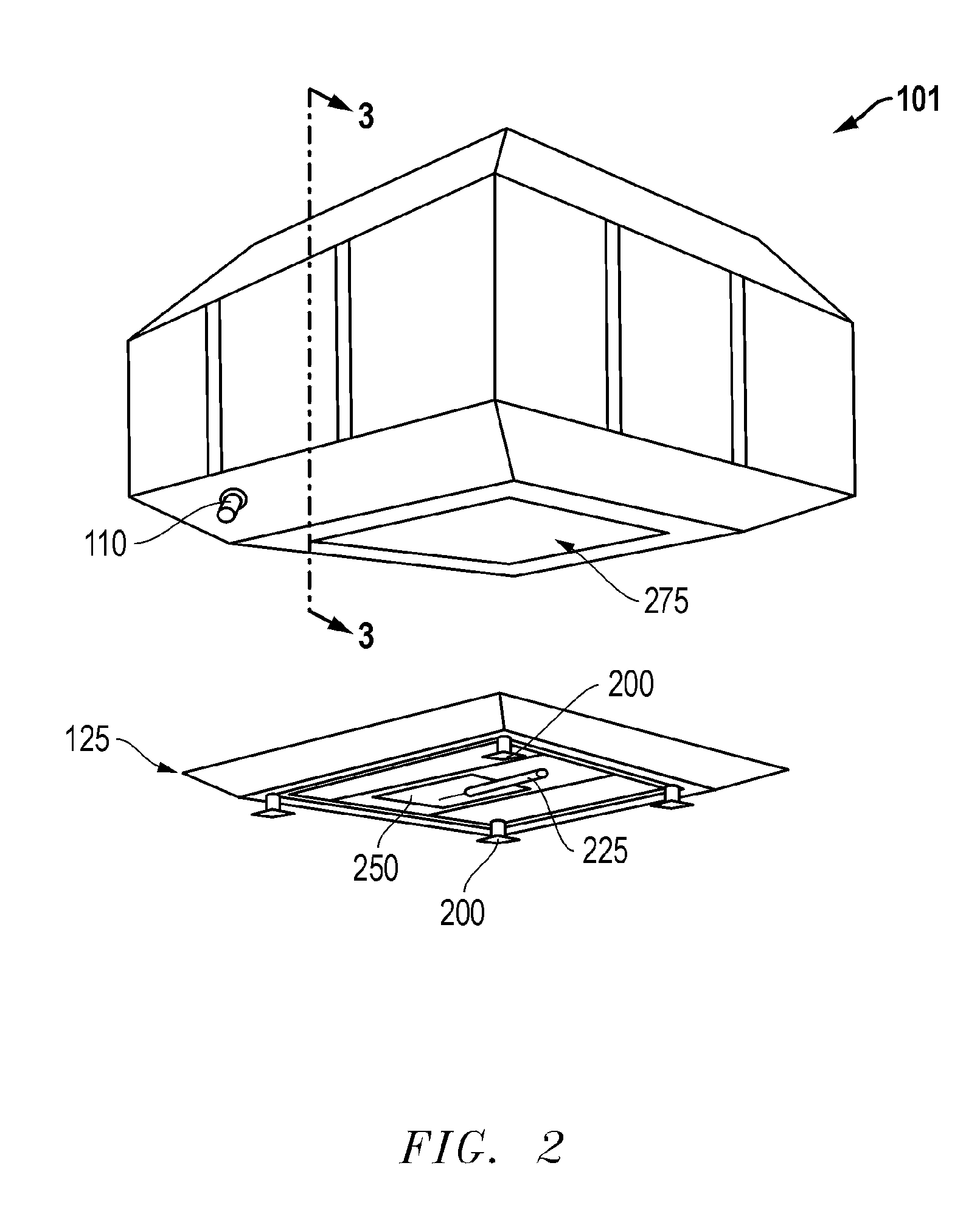

A delivery unit for providing aggregate to a worksite, such as a wellsite location. The unit may include a mobile chassis that accommodates a plurality of modular containers, which in turn house the aggregate. A weight measurement device may be located between each container and the chassis to monitor the aggregate level within each container over time. The units may be particularly well suited for monitoring and controlling aggregate delivery during a fracturing operation at an oilfield. The modular containers may be interchangeable. Furthermore, a control device, preferably wireless, may be provided for monitoring and directing aggregate delivery from a relatively remote location.

Owner:SCHLUMBERGER TECH CORP
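The weight-based level monitoring described above might be sketched as follows. This Python example is hypothetical: it assumes each load cell reports the gross weight of one container, that the container's empty (tare) weight and full aggregate capacity are known, and that the delivery rate is estimated from the first and last readings. None of these names come from the patent.

```python
from dataclasses import dataclass


@dataclass
class Container:
    """One modular container on the chassis (hypothetical model)."""
    name: str
    tare_kg: float      # weight of the empty container
    capacity_kg: float  # aggregate mass when full

    def fill_fraction(self, gross_kg: float) -> float:
        """Aggregate level inferred from a load-cell reading, clamped to [0, 1]."""
        net_kg = gross_kg - self.tare_kg
        return min(max(net_kg / self.capacity_kg, 0.0), 1.0)


def delivery_rate_kg_per_min(readings: list[tuple[float, float]]) -> float:
    """Average outflow from (minute, gross_kg) samples taken over time."""
    (t0, w0), (t1, w1) = readings[0], readings[-1]
    if t1 <= t0:
        raise ValueError("samples must span a positive time interval")
    return (w0 - w1) / (t1 - t0)


container = Container("A", tare_kg=2000.0, capacity_kg=10000.0)
level = container.fill_fraction(7000.0)
rate = delivery_rate_kg_per_min([(0.0, 12000.0), (5.0, 10500.0), (10.0, 9000.0)])
```

Clamping keeps sensor noise near empty or full from reporting impossible levels; a remote control device could poll `fill_fraction` per container to decide which one to draw from next.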

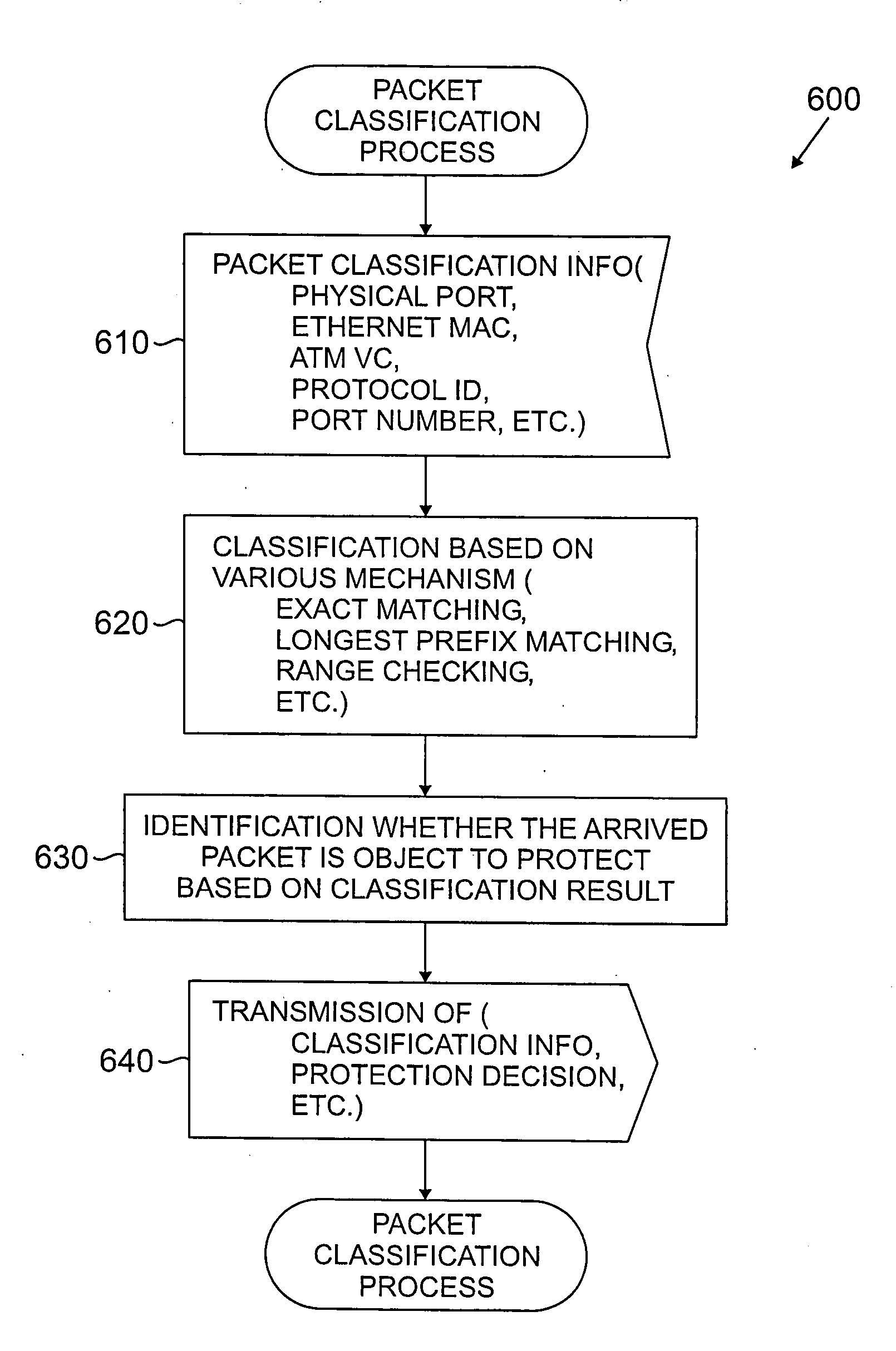

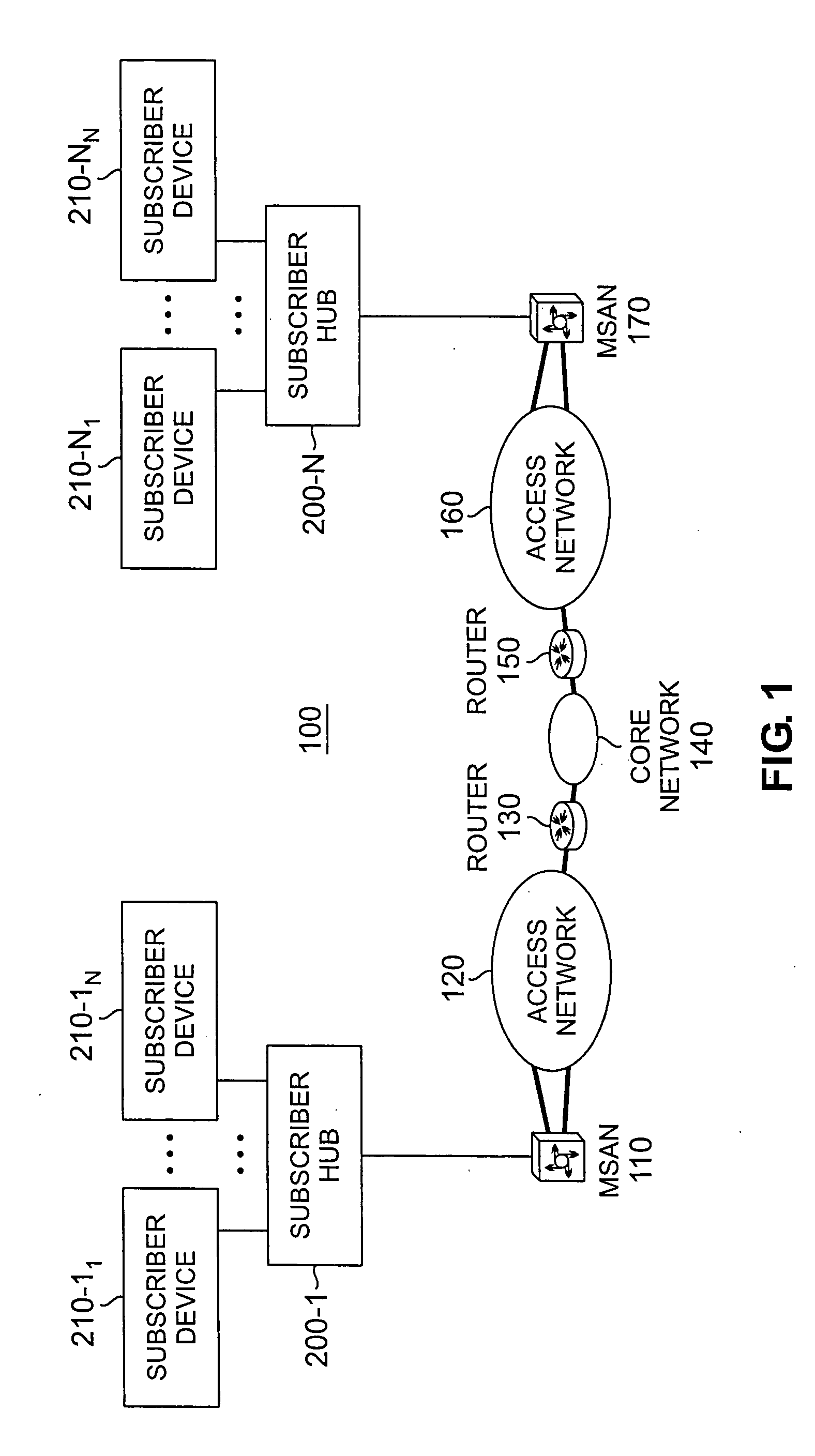

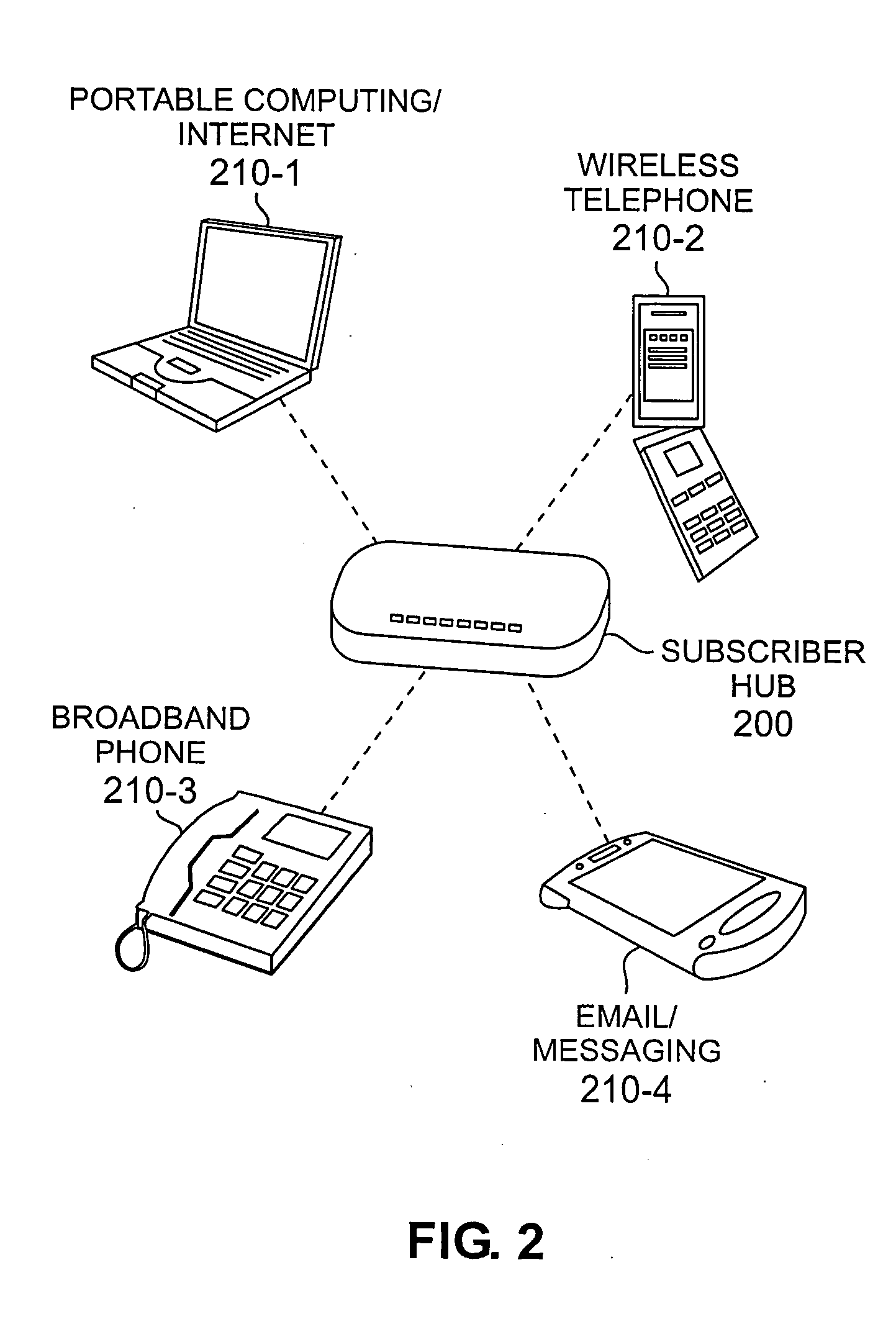

Method and apparatus for per-service fault protection and restoration in a packet network

Inactive | US20060013210A1 | Quickly and efficiently restored; Lower Level Requirements; Error prevention; Transmission systems; Service flow; Traffic capacity

A method and apparatus are disclosed for per-service flow protection and restoration of data in one or more packet networks. The disclosed protection and restoration techniques allow traffic to be prioritized and protected from the aggregate level down to a micro-flow level. Thus, protection can be limited to those services that are fault sensitive. Protected data is duplicated over a primary path and one or more backup data paths. Following a link failure, protected data can be quickly and efficiently restored without significant service interruption. A received packet is classified at each end point based on information in a header portion of the packet, using one or more rules that determine whether the received packet should be protected. At an ingress node, if the packet classification determines that the received packet should be protected, then the received packet is transmitted on at least two paths. At an egress node, if the packet classification determines that the received packet is protected, then multiple versions of the received packet are expected and only one version of the received packet is transmitted.

Owner:AGERE SYST INC
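Here is a minimal Python sketch of the ingress and egress behavior described above. The header rule (protecting traffic by destination port), the per-flow sequence number used to recognize copies, and all names are assumptions made for illustration. The abstract does not specify how duplicates are identified.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    dst_port: int
    seq: int  # per-flow sequence number (assumed to be carried in the header)


# Hypothetical classification rule: only these services are fault sensitive.
PROTECTED_PORTS = {5060, 5004}


def is_protected(pkt: Packet) -> bool:
    """Classify a packet using header fields only."""
    return pkt.dst_port in PROTECTED_PORTS


def ingress(pkt: Packet, paths: list[list[Packet]]) -> None:
    """Send protected packets on every path; others use the primary path only."""
    for path in (paths if is_protected(pkt) else paths[:1]):
        path.append(pkt)


class Egress:
    """Forward exactly one copy of each protected packet."""

    def __init__(self) -> None:
        self.seen: set[tuple[str, str, int, int]] = set()

    def receive(self, pkt: Packet) -> bool:
        if not is_protected(pkt):
            return True  # unprotected traffic is forwarded as-is
        key = (pkt.src, pkt.dst, pkt.dst_port, pkt.seq)
        if key in self.seen:
            return False  # a copy that arrived over another path: discard
        self.seen.add(key)
        return True  # first copy to arrive: forward


primary, backup = [], []
ingress(Packet("a", "b", 5060, 1), [primary, backup])  # protected: both paths
ingress(Packet("a", "b", 80, 1), [primary, backup])    # unprotected: primary only
egress = Egress()
forwarded = [p for p in primary + backup if egress.receive(p)]
```

In this sketch the `seen` set grows without bound. A practical egress node would need to expire entries, for example with a sliding sequence-number window.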

Method and apparatus for simplified and flexible selection of aggregate and cross product levels for a data warehouse

Inactive | US6163774A | Simpler and powerful selection; Specification is simple; Finance; Resources; Data warehouse; Aggregate level

A method of defining aggregate levels to be used in aggregation in a data store having one or more dimensions. Levels are defined corresponding to attributes in the dimension, so that data can be aggregated into aggregates corresponding to values of those attributes. The invention provides for the definition of sub-levels which act as levels but define which detail entries in the associated dimension will contribute to the sub-level. The invention also provides for the definition of level groups. A level group can replace a level in a level cross-product and such a cross product is then expanded before aggregation into a set of cross products, each containing one of the level group entries.

Owner:COMP ASSOC THINK INC
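The following small Python sketch makes the level-group expansion concrete. The level names and row format are illustrative assumptions, as is the `where` predicate, which stands in here for a sub-level that restricts which detail entries contribute. This is not the patented data model.

```python
from collections import defaultdict
from itertools import product


def expand_cross_product(cross_product):
    """Expand level groups (tuples) into a list of plain level cross-products."""
    choices = [e if isinstance(e, tuple) else (e,) for e in cross_product]
    return list(product(*choices))


def aggregate(rows, levels, measure="sales", where=None):
    """Sum `measure` for each combination of level values.

    `where` optionally limits which detail rows contribute, loosely
    mirroring a sub-level.
    """
    totals = defaultdict(float)
    for row in rows:
        if where is None or where(row):
            totals[tuple(row[level] for level in levels)] += row[measure]
    return dict(totals)


rows = [
    {"Brand": "A", "Month": "Jan", "Quarter": "Q1", "sales": 10.0},
    {"Brand": "A", "Month": "Feb", "Quarter": "Q1", "sales": 5.0},
    {"Brand": "B", "Month": "Jan", "Quarter": "Q1", "sales": 2.0},
]
# ("Month", "Quarter") is a level group: it expands into two cross-products.
for levels in expand_cross_product(["Brand", ("Month", "Quarter")]):
    print(levels, aggregate(rows, levels))
```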

Aggregate delivery unit

Active | US20140321950A1 | Large containers; Vehicle with removable loading; Wireless control; Measurement device

A delivery unit for providing aggregate to a worksite, such as a wellsite location. The unit may include a mobile chassis that accommodates a plurality of modular containers, which in turn house the aggregate. A weight measurement device may be located between each container and the chassis to monitor the aggregate level within each container over time. The units may be particularly well suited for monitoring and controlling aggregate delivery during a fracturing operation at an oilfield. The modular containers may be interchangeable. Furthermore, a control device, preferably wireless, may be provided for monitoring and directing aggregate delivery from a relatively remote location.

Owner:LIBERTY OILFIELD SERVICES LLC

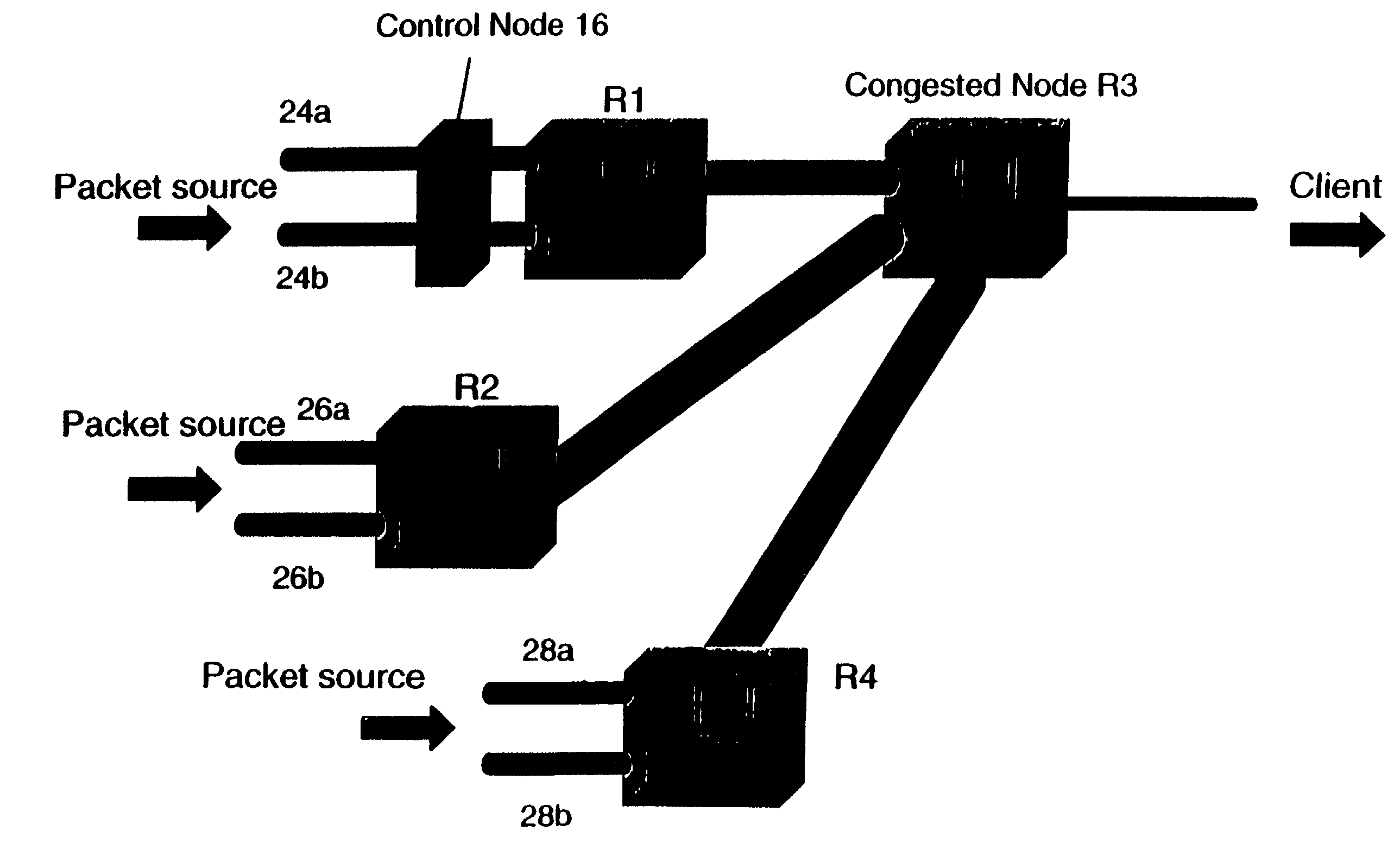

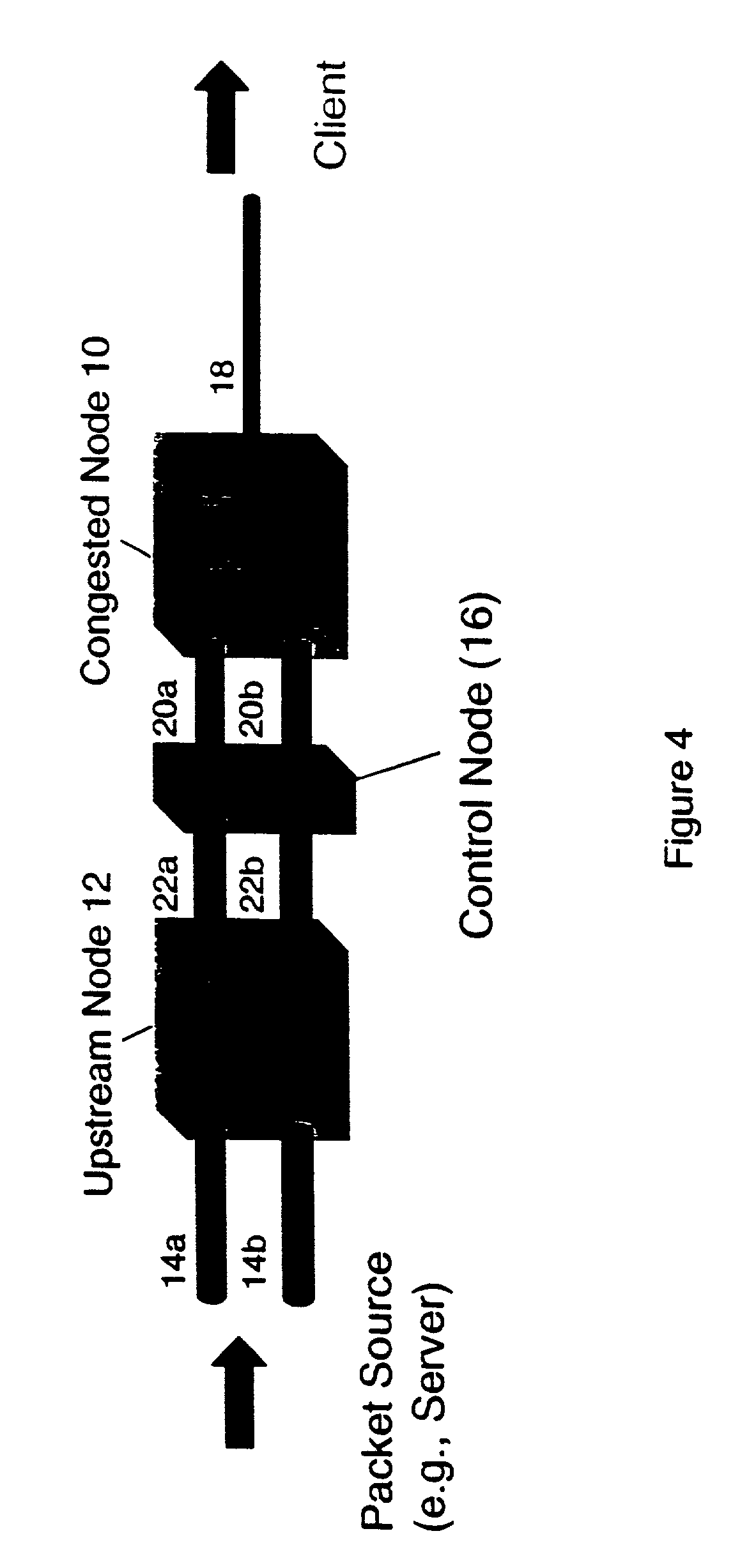

Method for reducing fetch time in a congested communication network

Congestion within a communication network is controlled by rate limiting packet transmissions over selected communication links within the network and modulating the rate limiting according to buffer occupancies at control nodes within the network. Preferably, though not necessarily, the rate limiting of the packet transmissions is performed at an aggregate level for all traffic streams utilizing the selected communication links. The rate limiting may also be performed dynamically in response to measured network performance metrics, such as the throughput of the selected communication links input to the control points and/or the buffer occupancy level at the control points. The network performance metrics may be measured according to at least one of: a moving average of the measured quantity, a standard average of the measured quantity, or another filtered average of the measured quantity. The rate limiting may be achieved by varying an inter-packet delay time over the selected communication links at the control points. The control points themselves may be located upstream or downstream (or both) of congested nodes within the network and need only be located on a few of the communication links that are coupled to a congested node. More generally, the control points need only be associated with a fraction of the total number of traffic streams applied to a congested node within the network.

Owner:RIVERBED TECH LLC
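Below is a minimal Python sketch of a control point that adjusts its inter-packet delay from a smoothed buffer-occupancy measurement. It assumes an exponentially weighted moving average as the filter and a simple integral-style update. The target, gain, and delay bounds are hypothetical tuning values, not figures from the patent.

```python
class InterPacketDelayController:
    """Control point that rate-limits a link via the gap between packets (sketch)."""

    def __init__(self, target_occupancy: float, alpha: float = 0.2,
                 gain: float = 0.001, min_delay: float = 0.0,
                 max_delay: float = 0.05) -> None:
        self.target = target_occupancy  # desired buffer occupancy, 0..1
        self.alpha = alpha              # EWMA smoothing factor
        self.gain = gain                # seconds of delay change per unit of error
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.avg_occupancy = 0.0
        self.delay = min_delay          # current inter-packet delay, seconds

    def update(self, occupancy_sample: float) -> float:
        """Feed one buffer-occupancy measurement; return the new inter-packet delay."""
        # Filtered (exponentially weighted moving) average of the measurement.
        self.avg_occupancy += self.alpha * (occupancy_sample - self.avg_occupancy)
        # Lengthen the gap while the buffer runs above target; shorten it below.
        error = self.avg_occupancy - self.target
        self.delay = min(max(self.delay + self.gain * error, self.min_delay),
                         self.max_delay)
        return self.delay


controller = InterPacketDelayController(target_occupancy=0.5)
for occupancy in (0.9, 0.9, 0.9, 0.9, 0.9):  # a persistently congested buffer
    gap = controller.update(occupancy)
```

Smoothing the measurement keeps short bursts from whipsawing the delay. Because a single controller handles all streams crossing the link, the limiting acts at the aggregate level.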

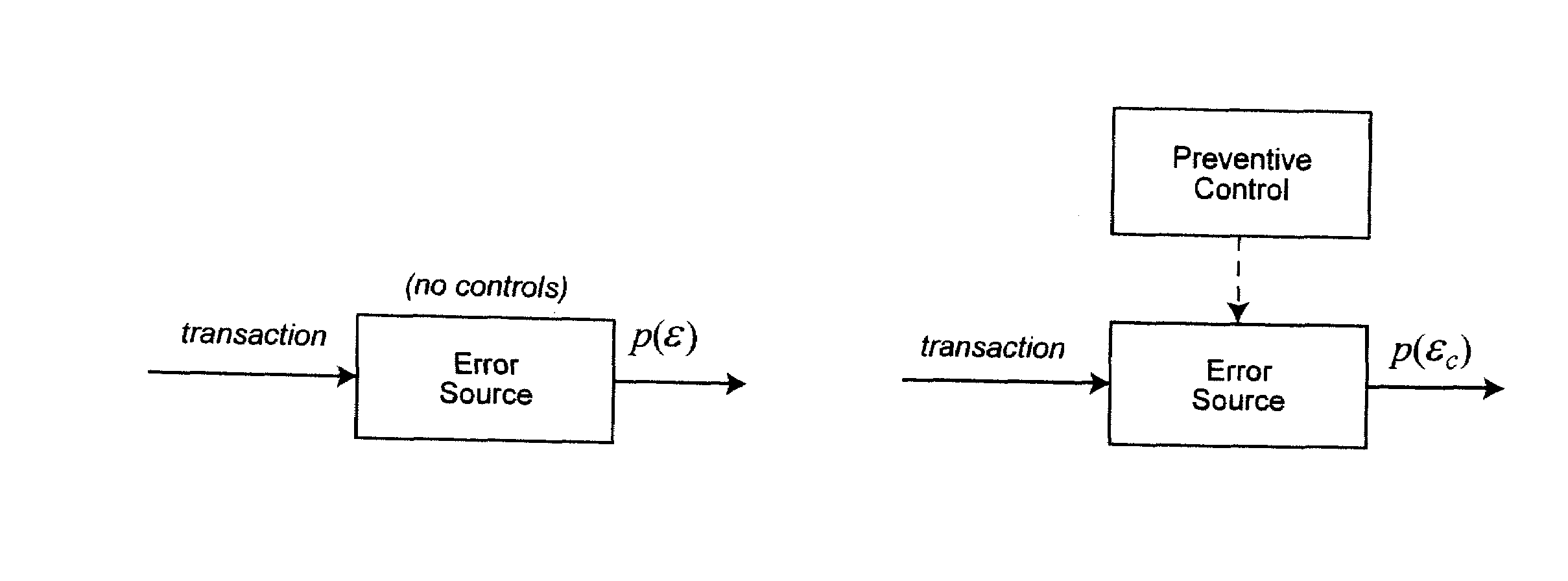

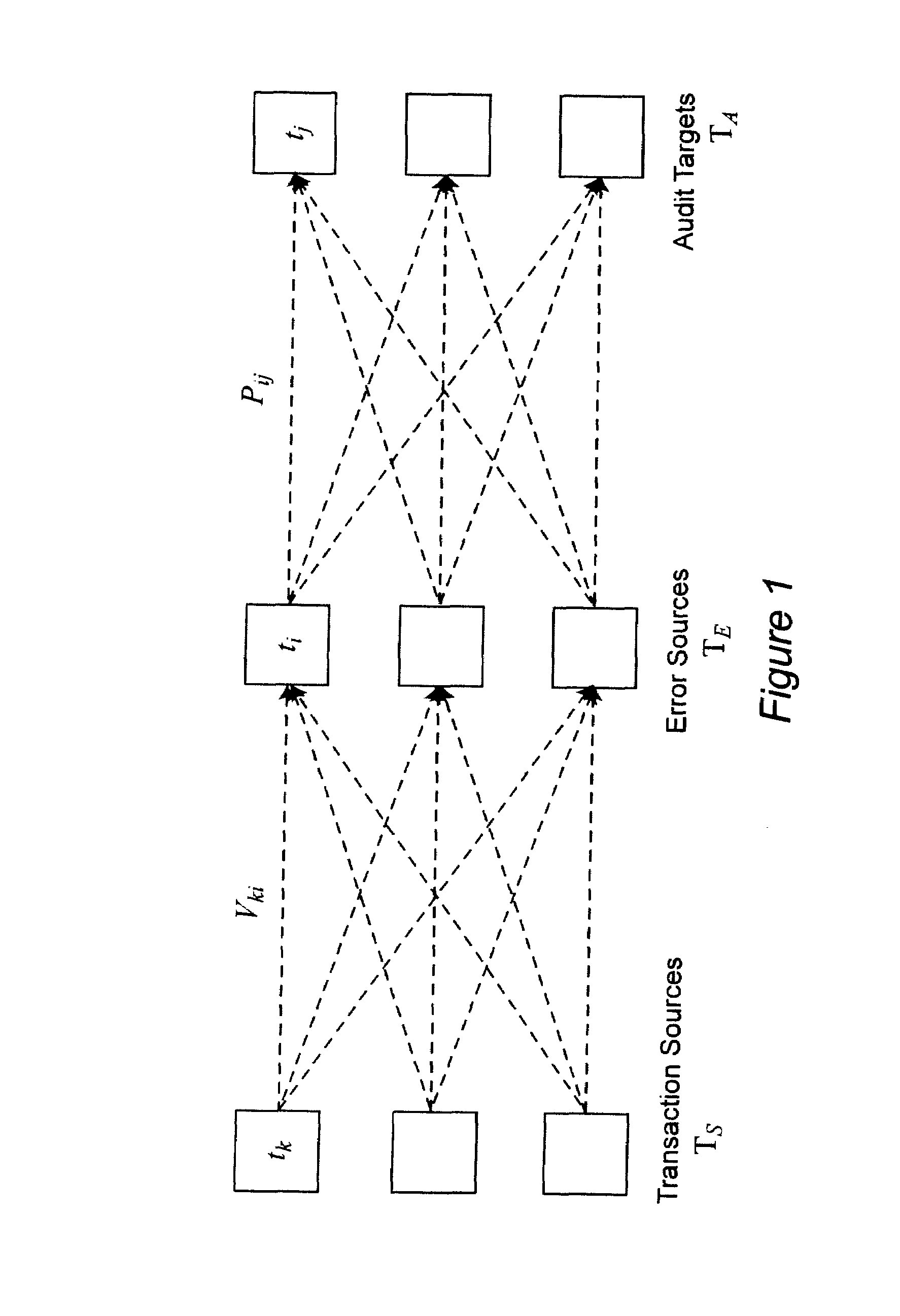

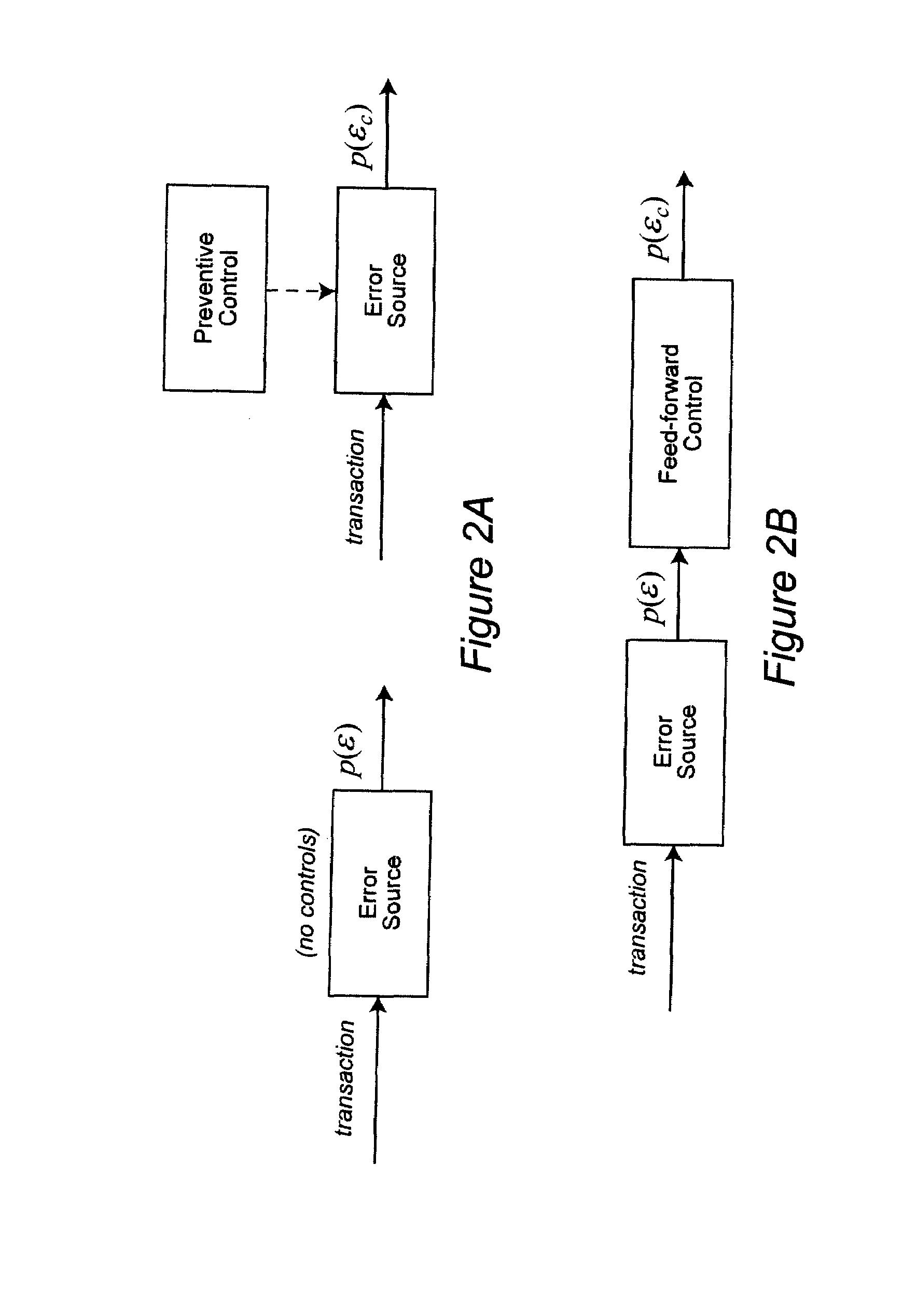

Data quality management using business process modeling

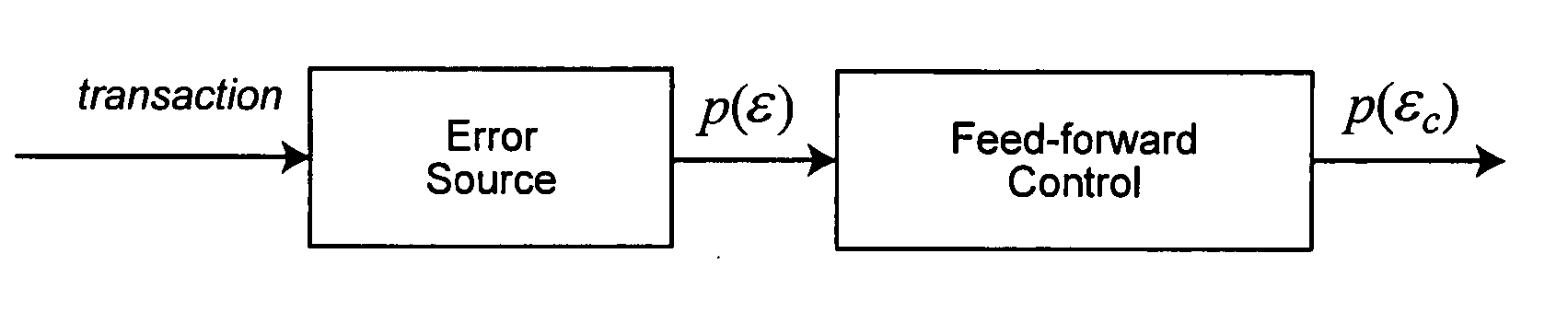

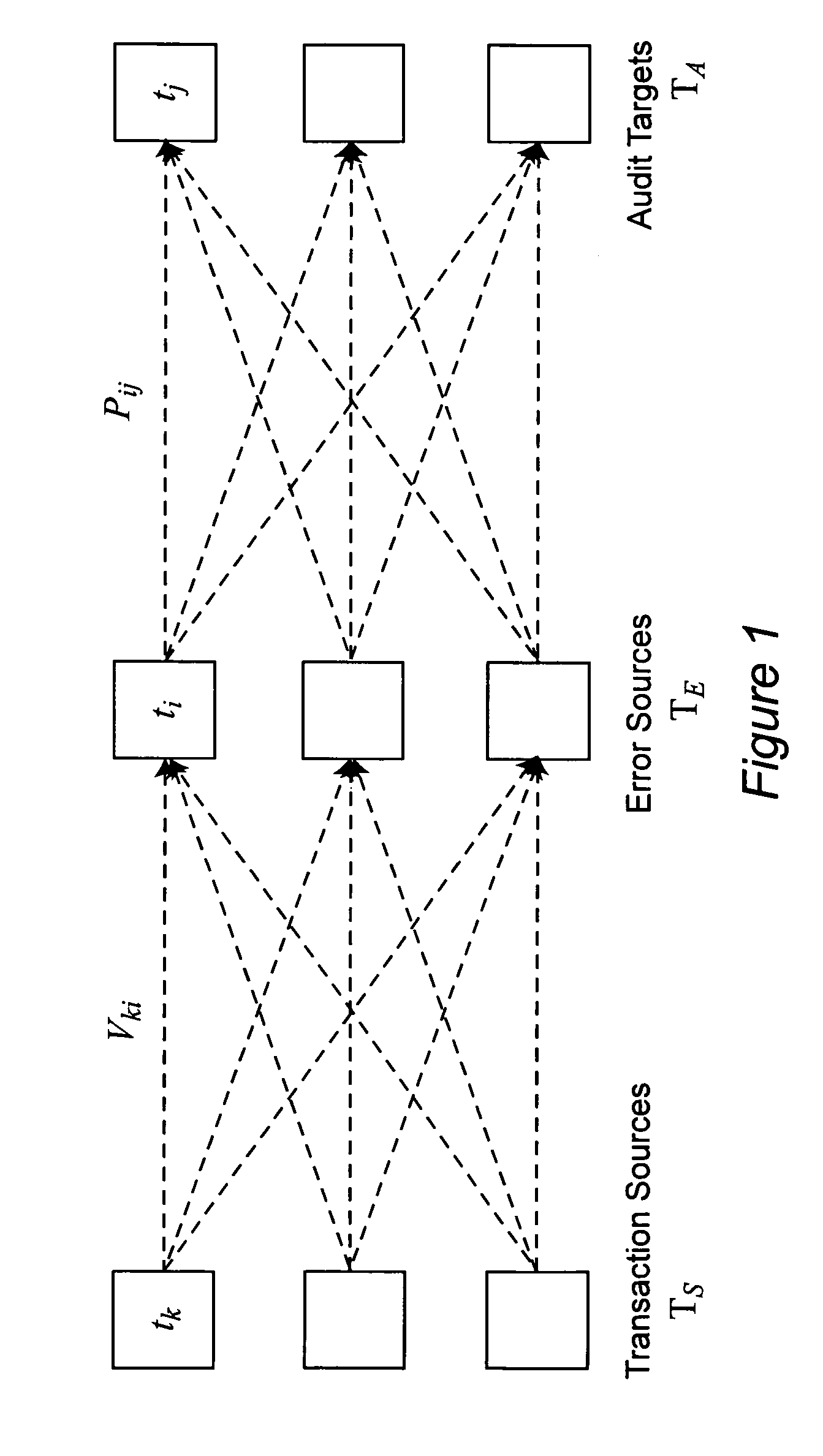

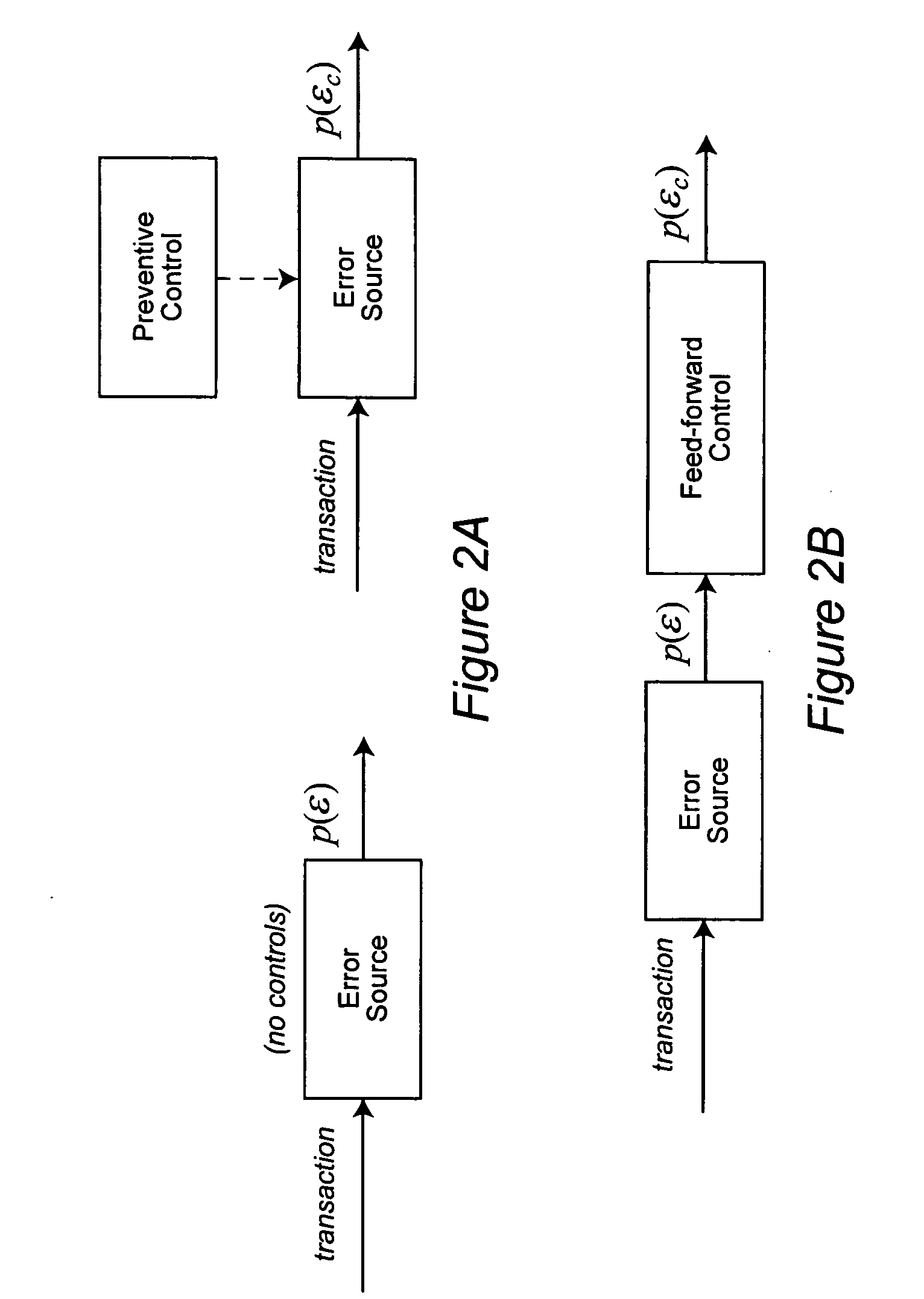

Inactive | US20070198312A1 | Impacts the overall quality of sales data; Low cost; Resources; Complex mathematical operations; Information processing; Dashboard

A business process modeling framework is used for data quality analysis. The modeling framework represents the sources of transactions entering the information processing system, the various tasks within the process that manipulate or transform these transactions, and the data repositories in which the transactions are stored or aggregated. A subset of these tasks is identified as potential sources of errors, and the rate and magnitude of various error classes at each such task are modeled probabilistically. This model can be used to predict how changes in transaction volumes and business processes affect data quality at the aggregate level in the data repositories. The model can also account for the presence of error-correcting controls and assess how the placement and effectiveness of these controls alter the propagation and aggregation of errors. Optimization techniques are used to place error-correcting controls that meet target quality requirements while minimizing the cost of operating these controls. This analysis also contributes to the development of business "dashboards" that allow decision-makers to monitor and react to key performance indicators (KPIs) based on aggregation of the transactions being processed. Real-time data quality estimation provides the accuracy of these KPIs (in terms of the probability that a KPI is above or below a given value), which may inform the action the decision-maker takes.

Owner:DOORDASH INC
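The probabilistic error model and control placement described above can be sketched in Python as follows. The assumptions are strong and are stated here. Each transaction is either correct or erroneous, and tasks introduce errors independently. A control removes a fixed fraction of all erroneous transactions that reach it. Placement is found by exhaustive search, which is workable only for a handful of tasks. The abstract does not say which optimization technique is used.

```python
from itertools import combinations


def error_rate(tasks, controls):
    """Probability that a transaction reaches the repository with an error.

    tasks:    list of (error_prob, control_effectiveness, control_cost)
    controls: set of task indices where an error-correcting control is placed
    """
    p_bad = 0.0
    for i, (p_err, effectiveness, _cost) in enumerate(tasks):
        # A still-correct transaction may be corrupted at this task.
        p_bad += (1.0 - p_bad) * p_err
        if i in controls:
            # The control catches a fraction of all erroneous transactions.
            p_bad *= 1.0 - effectiveness
    return p_bad


def cheapest_placement(tasks, target):
    """Lowest-cost set of controls meeting the target error rate, or None."""
    best = None
    for k in range(len(tasks) + 1):
        for subset in combinations(range(len(tasks)), k):
            chosen = set(subset)
            if error_rate(tasks, chosen) <= target:
                cost = sum(tasks[i][2] for i in chosen)
                if best is None or cost < best[0]:
                    best = (cost, chosen)
    return best


# Hypothetical three-task process: (error probability, effectiveness, cost).
tasks = [(0.02, 0.9, 5.0), (0.05, 0.8, 3.0), (0.01, 0.5, 1.0)]
print(error_rate(tasks, set()), cheapest_placement(tasks, target=0.025))
```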

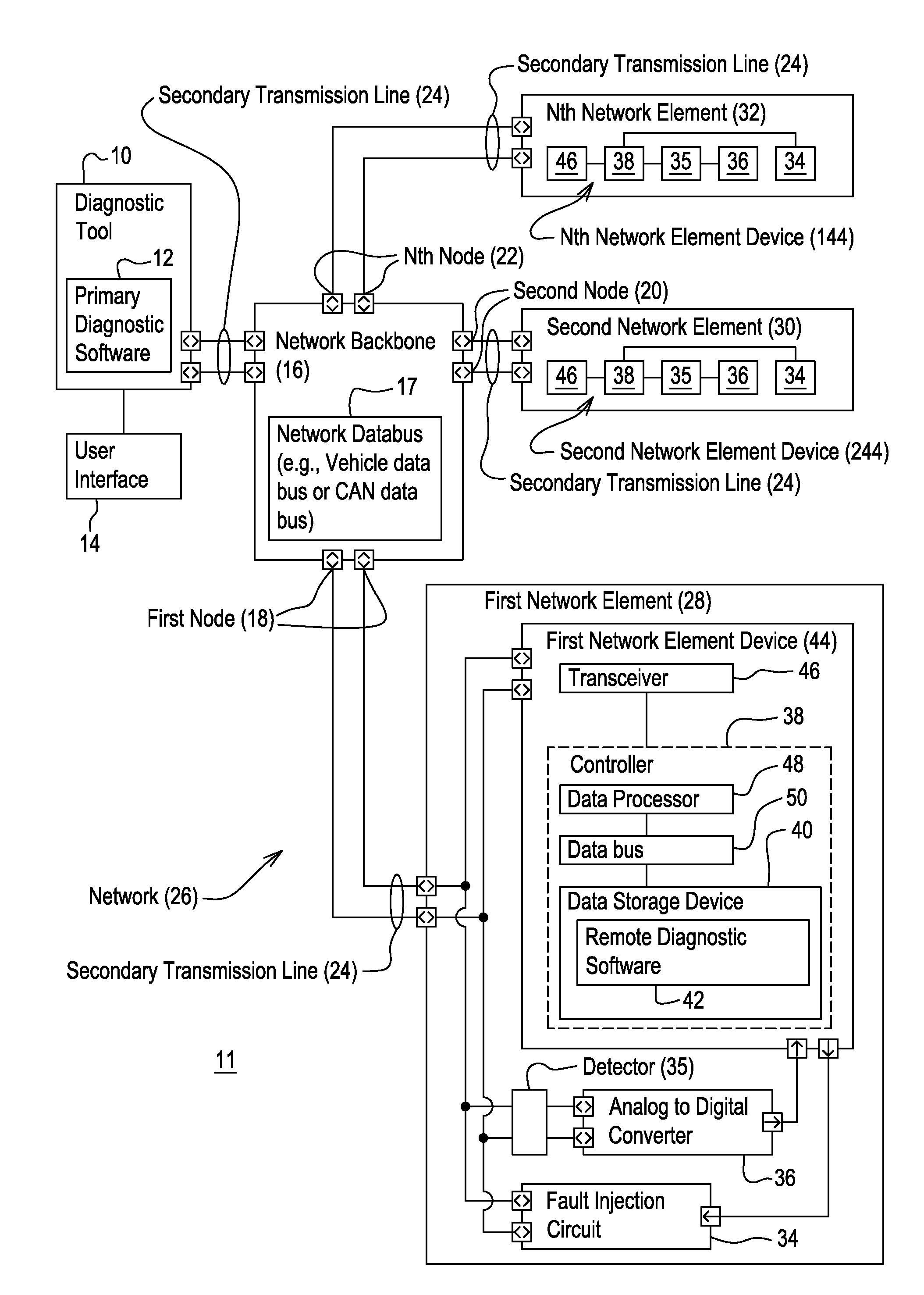

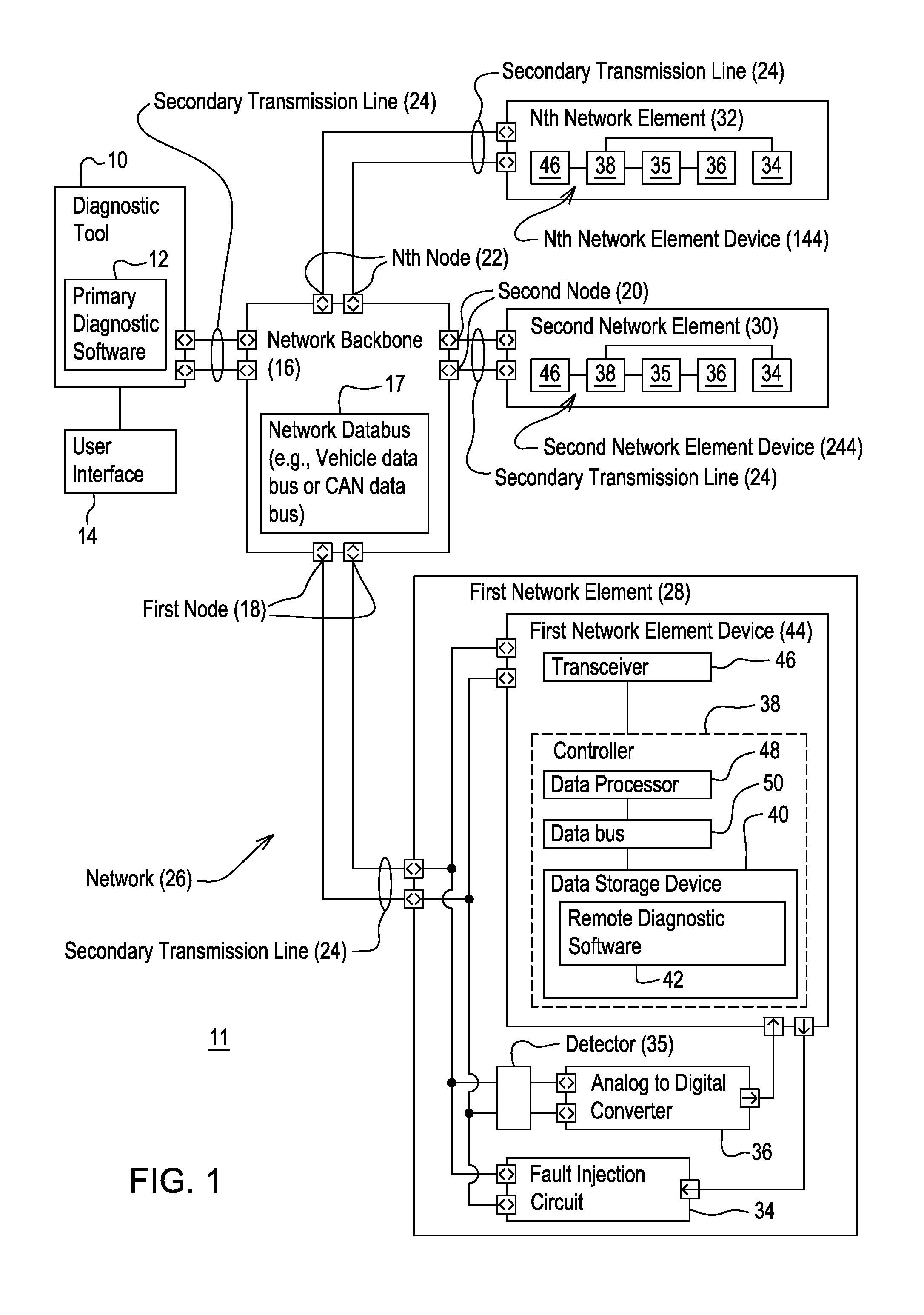

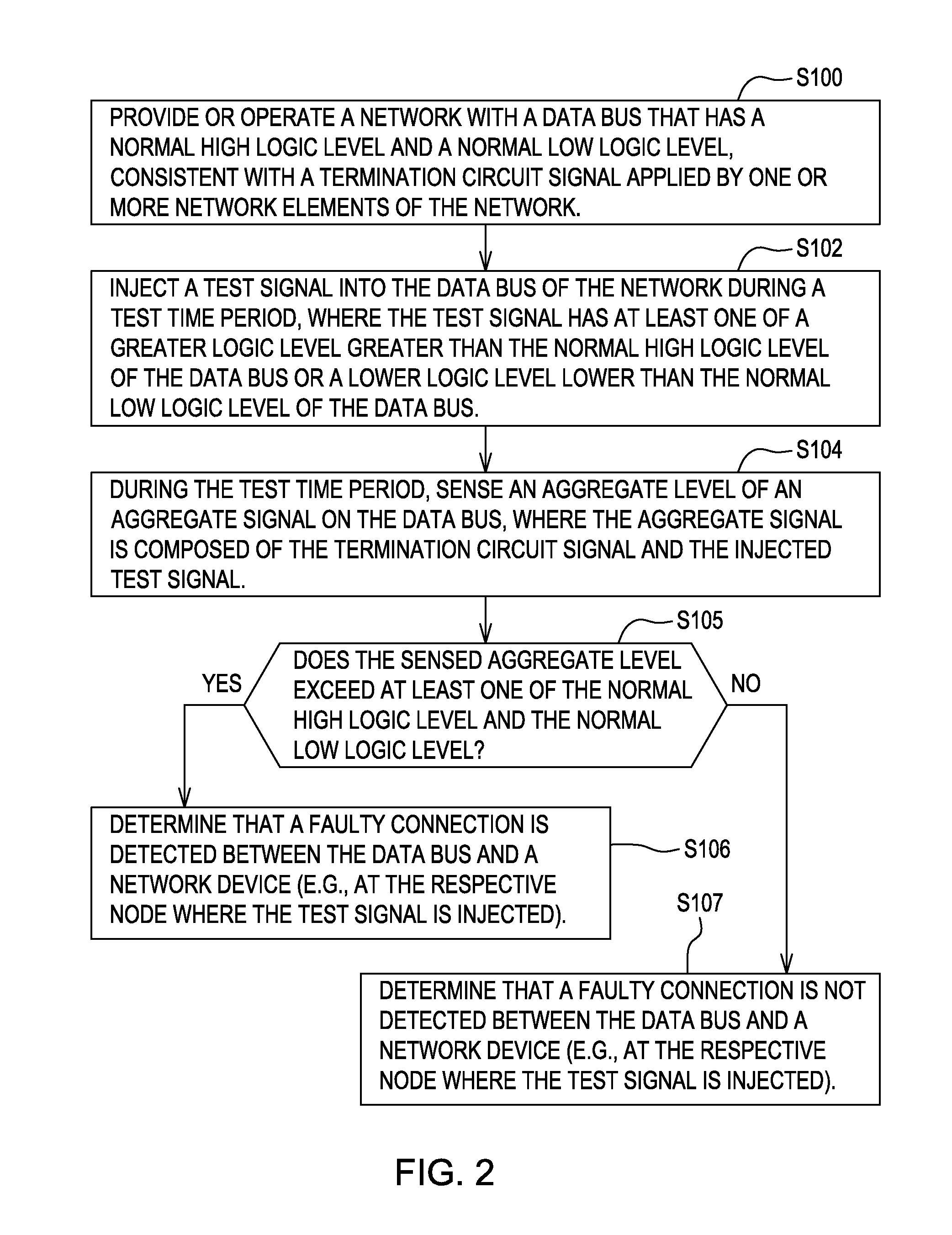

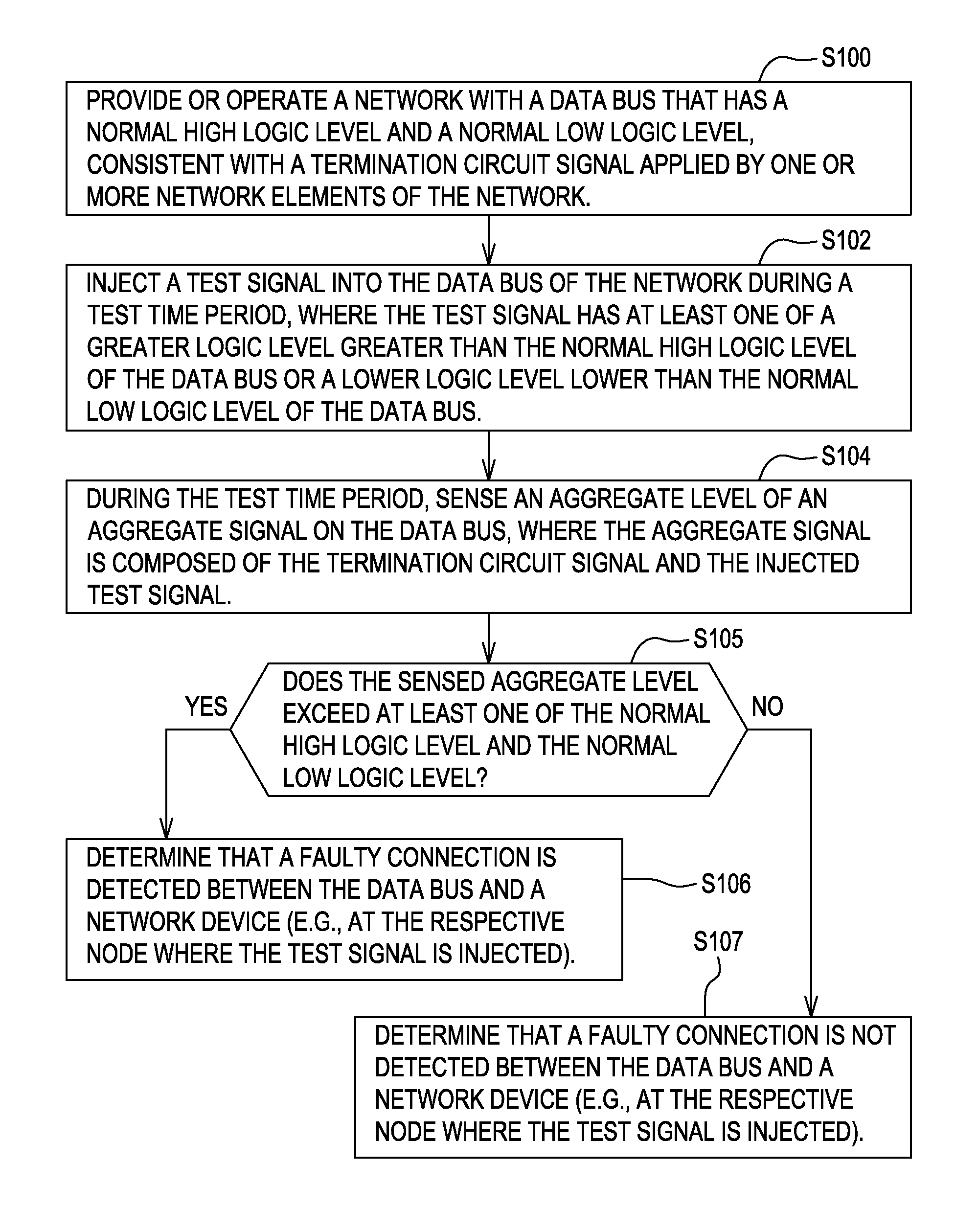

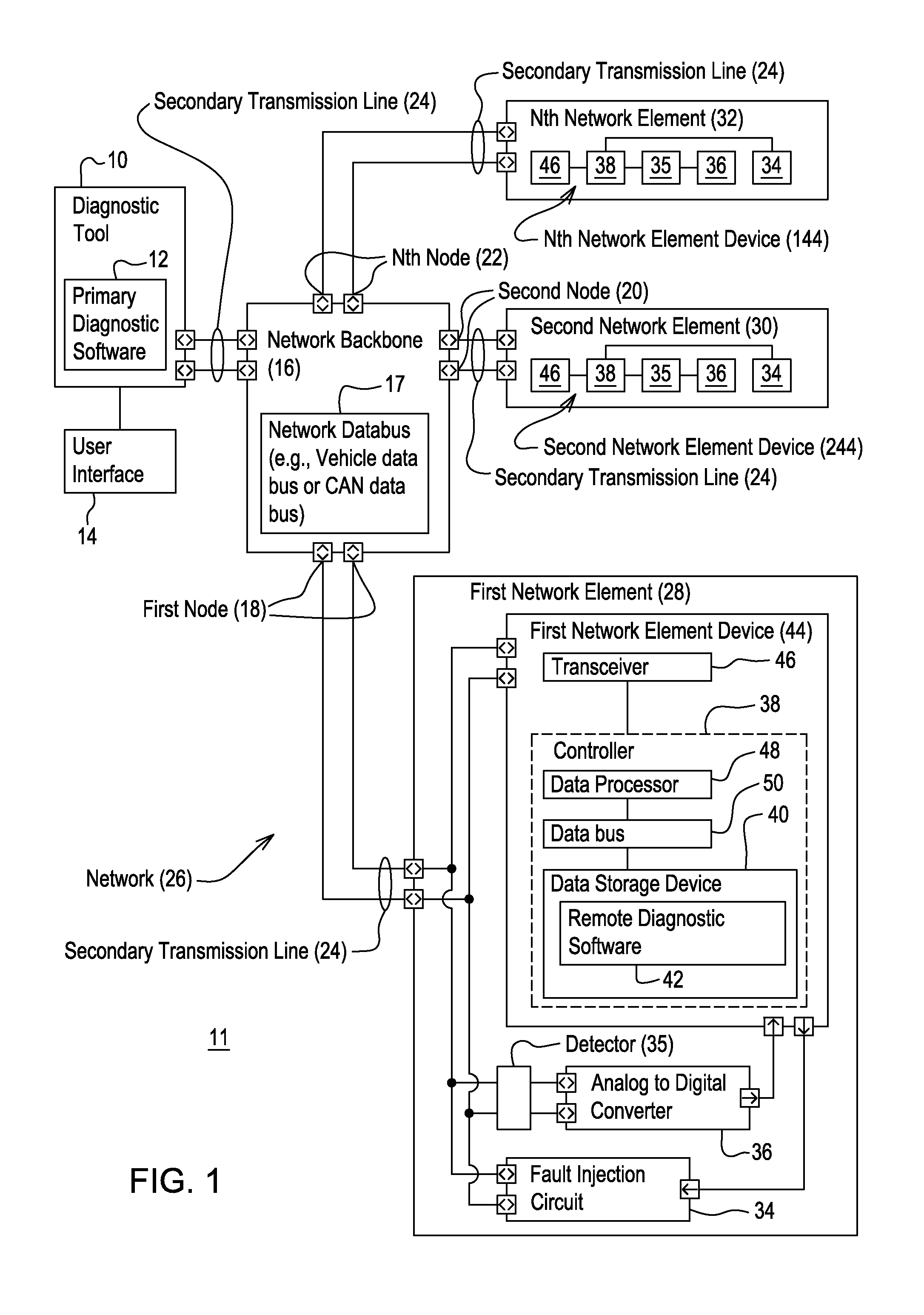

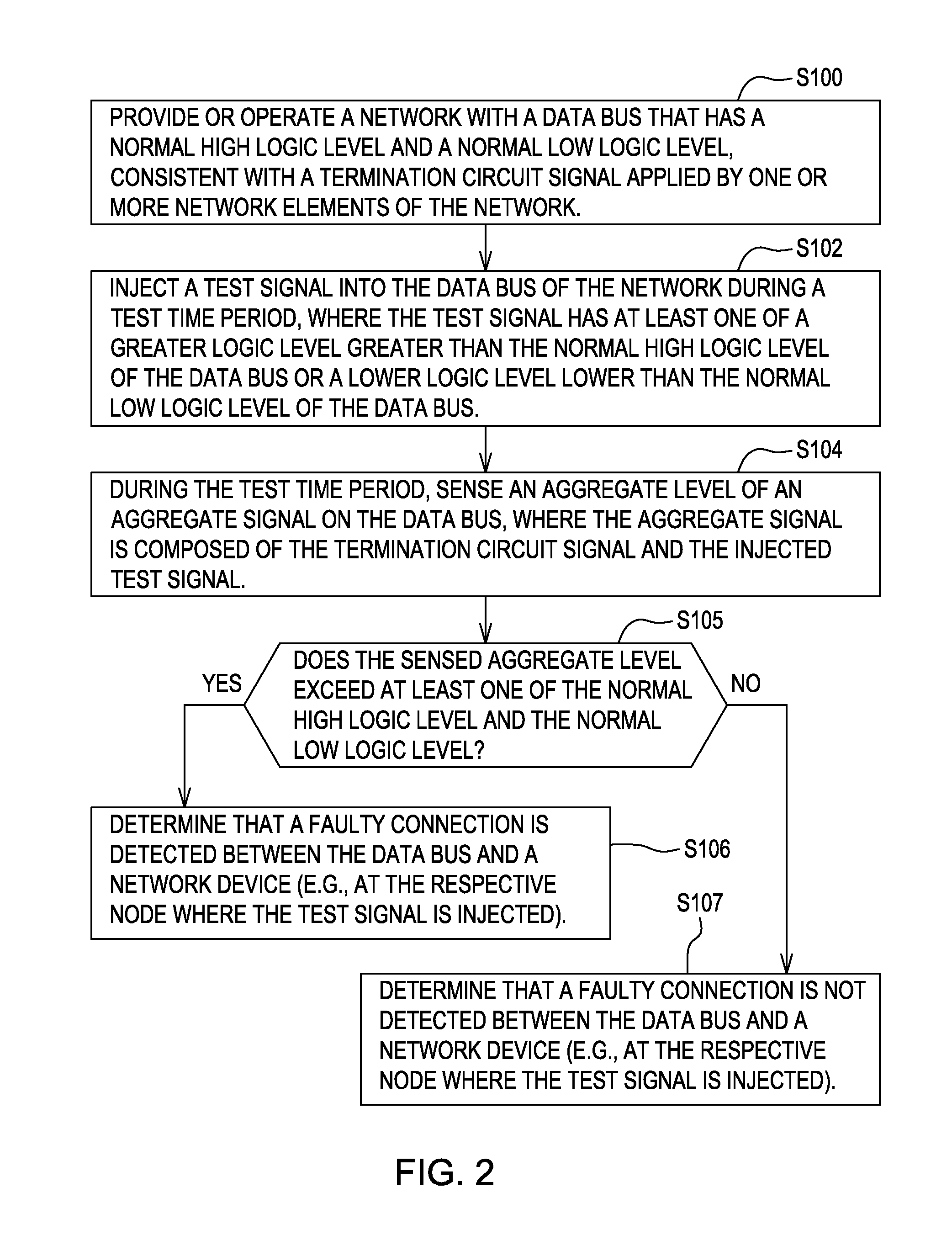

Method and system for diagnosing a fault or open circuit in a network

A fault injection circuit injects a test signal into a data bus that has a normal high logic level and a normal low logic level. The test signal has a logic level greater than the normal high logic level of the data bus or lower than the normal low logic level of the data bus. An analog-to-digital converter is coupled to a voltage level detector for sensing the aggregate level of an aggregate signal on the data bus. The aggregate signal is composed of the termination circuit signal and the test signal. A diagnostic tool determines that a faulty connection exists between the data bus and a network device when the sensed aggregate level exceeds at least one of the normal high logic level and the normal low logic level.

Owner:DEERE & CO
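The decision rule can be sketched as a simple range check on the converted ADC reading. This Python example assumes a 10-bit converter with a 5 V reference. It also reads "exceeds the normal low logic level" as falling below that level. The thresholds in the example are hypothetical, not values from the patent.

```python
def adc_to_volts(counts: int, vref: float = 5.0, bits: int = 10) -> float:
    """Convert a raw ADC reading to volts (full scale assumed to equal vref)."""
    return counts * vref / ((1 << bits) - 1)


def connection_faulty(aggregate_volts: float, normal_low: float,
                      normal_high: float) -> bool:
    """Flag a faulty or open connection when the sensed aggregate level
    lies outside the bus's normal logic range.

    Assumption: a healthy termination circuit pulls the aggregate back
    inside the range, while an open connection lets the out-of-range test
    signal dominate.
    """
    return aggregate_volts > normal_high or aggregate_volts < normal_low


# Hypothetical bus with normal logic levels of 1.0 V (low) and 3.5 V (high).
print(connection_faulty(adc_to_volts(860), normal_low=1.0, normal_high=3.5))
```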

Data quality management using business process modeling

Inactive | US20080195440A1 | Impacts the overall quality of sales data; Low cost; Resources; Complex mathematical operations; Dashboard; Information processing

A business process modeling framework is used for data quality analysis. The modeling framework represents the sources of transactions entering the information processing system, the various tasks within the process that manipulate or transform these transactions, and the data repositories in which the transactions are stored or aggregated. A subset of these tasks is identified as potential sources of errors, and the rate and magnitude of various error classes at each such task are modeled probabilistically. This model can be used to predict how changes in transaction volumes and business processes affect data quality at the aggregate level in the data repositories. The model can also account for the presence of error-correcting controls and assess how the placement and effectiveness of these controls alter the propagation and aggregation of errors. Optimization techniques are used to place error-correcting controls that meet target quality requirements while minimizing the cost of operating these controls. This analysis also contributes to the development of business "dashboards" that allow decision-makers to monitor and react to key performance indicators (KPIs) based on aggregation of the transactions being processed. Real-time data quality estimation provides the accuracy of these KPIs (in terms of the probability that a KPI is above or below a given value), which may inform the action the decision-maker takes.

Owner:DOORDASH INC

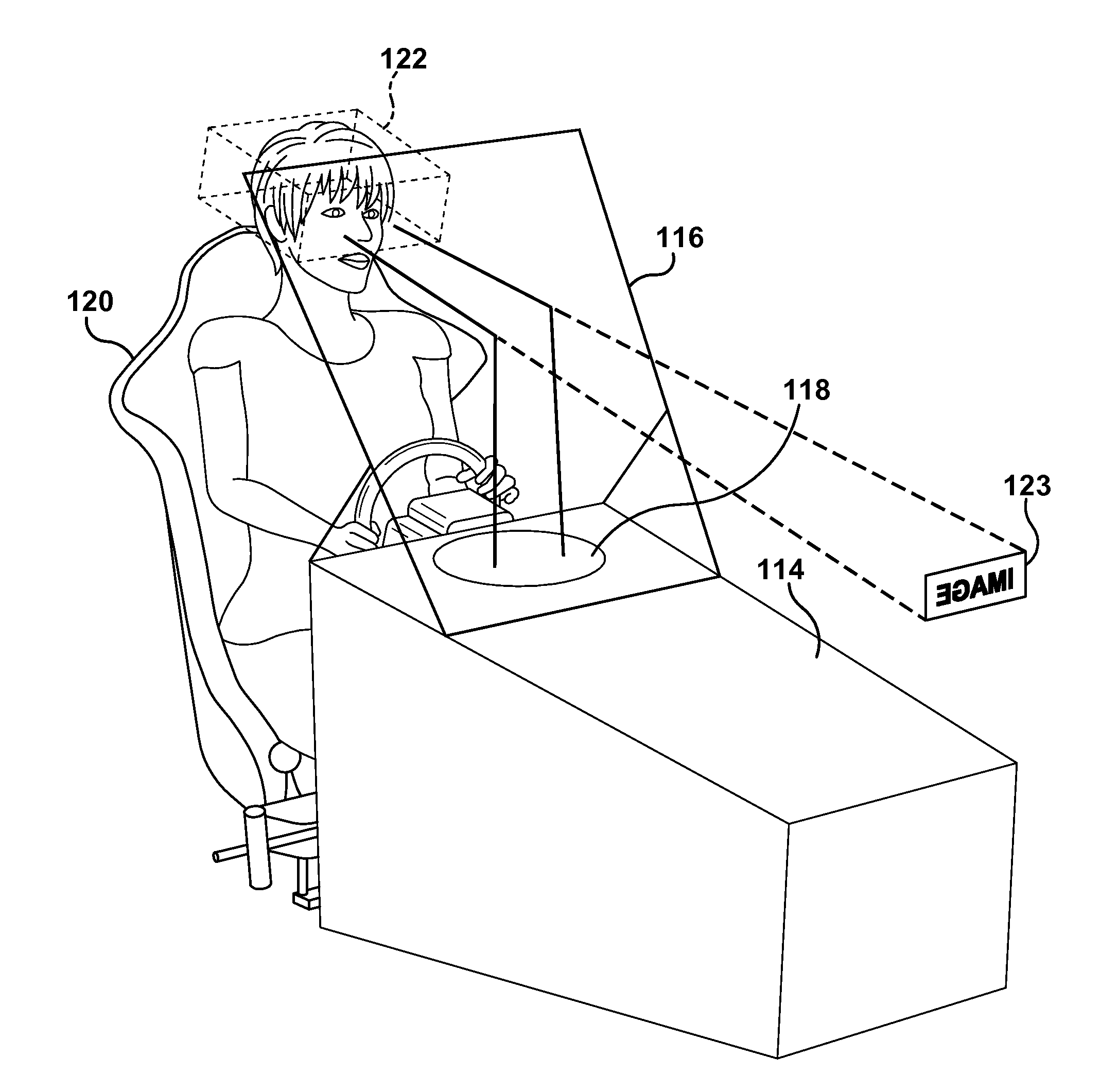

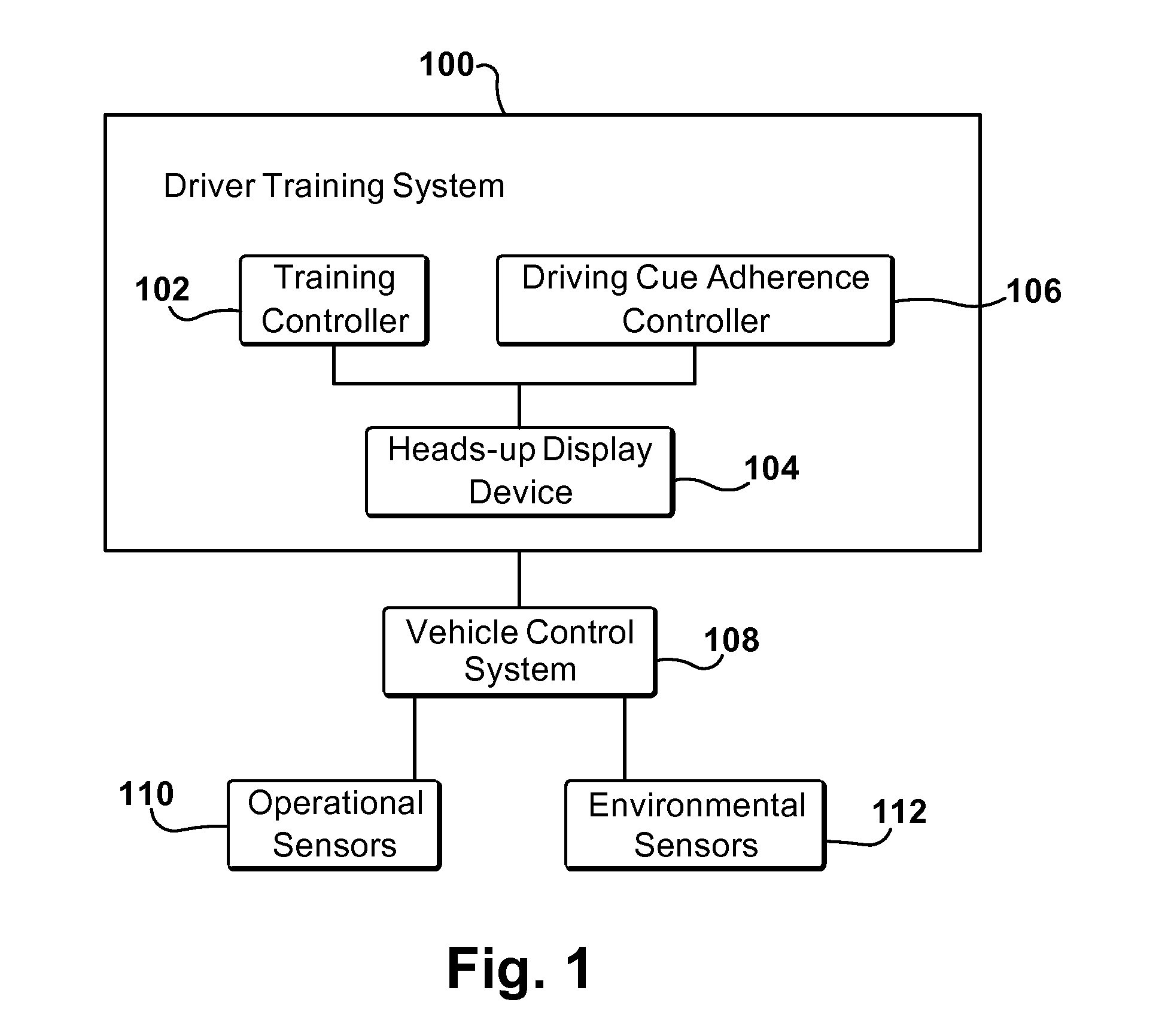

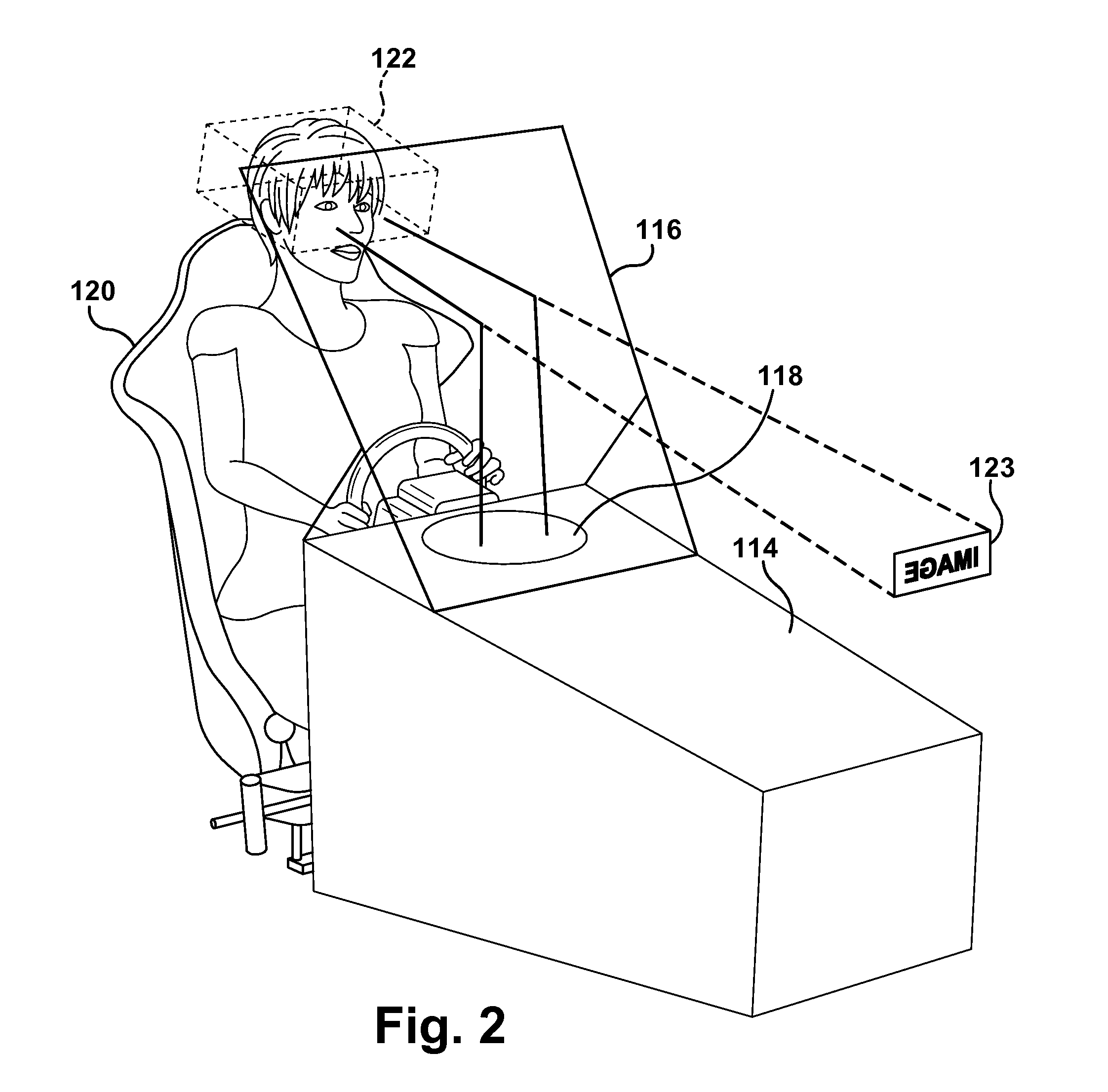

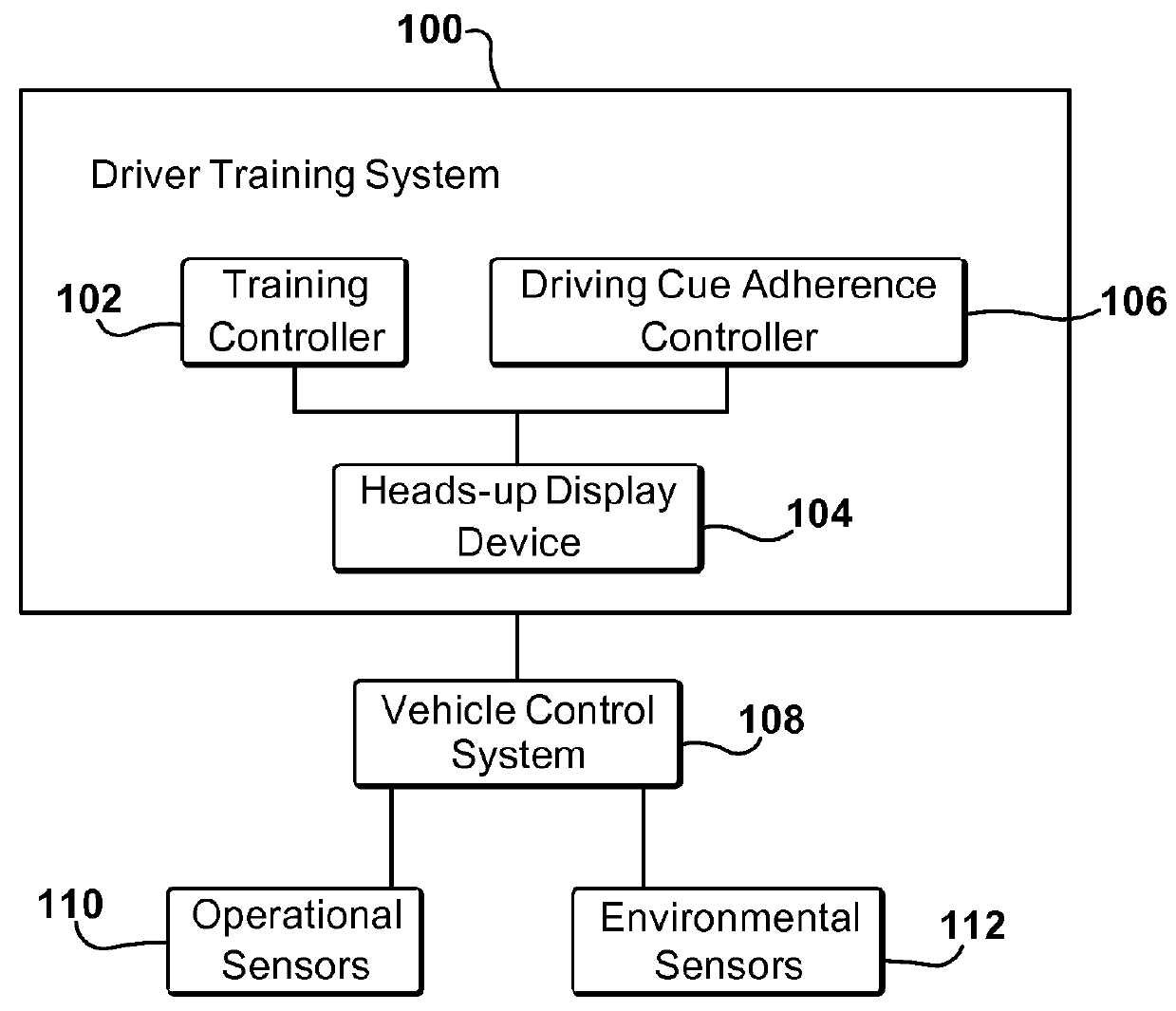

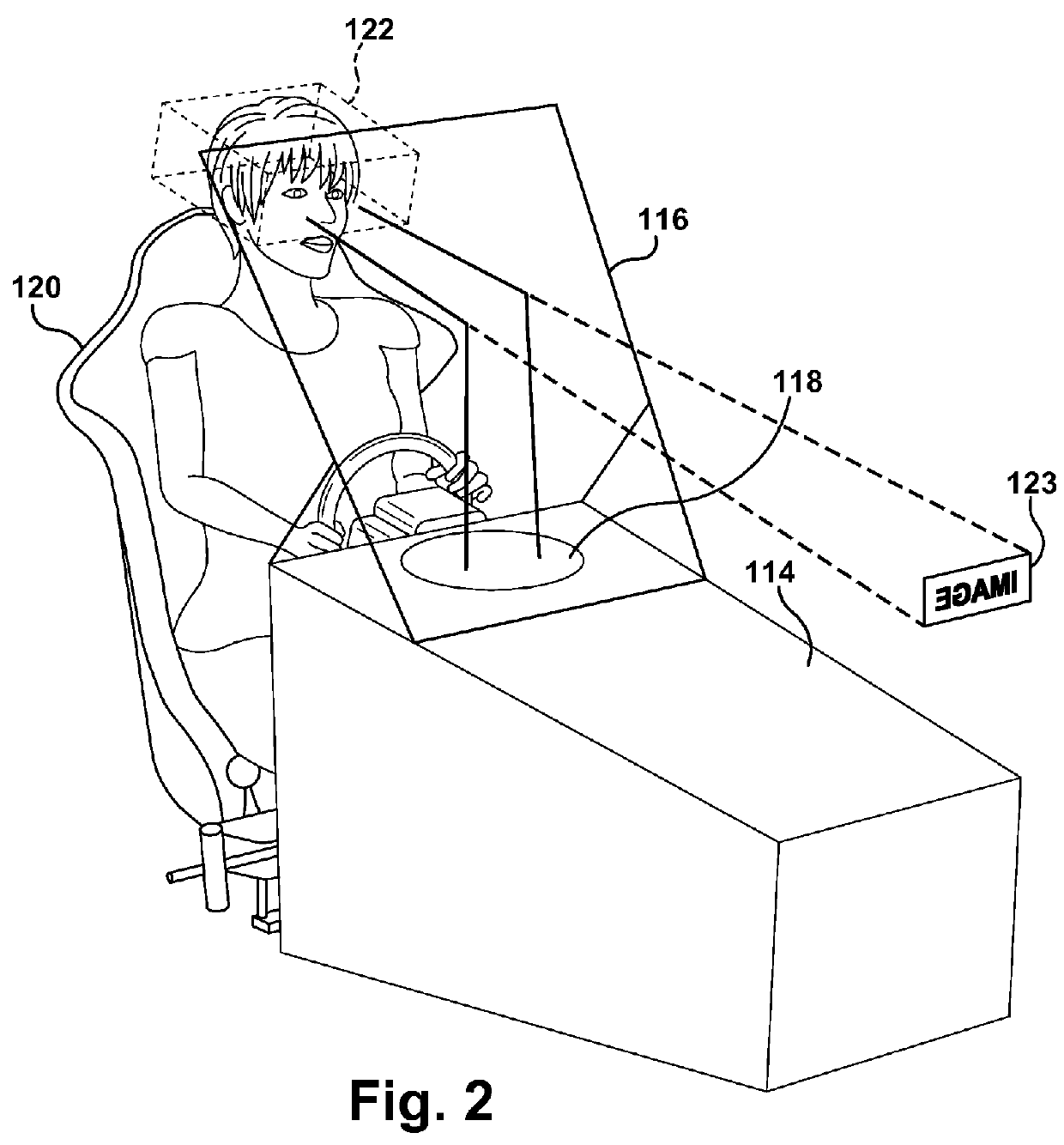

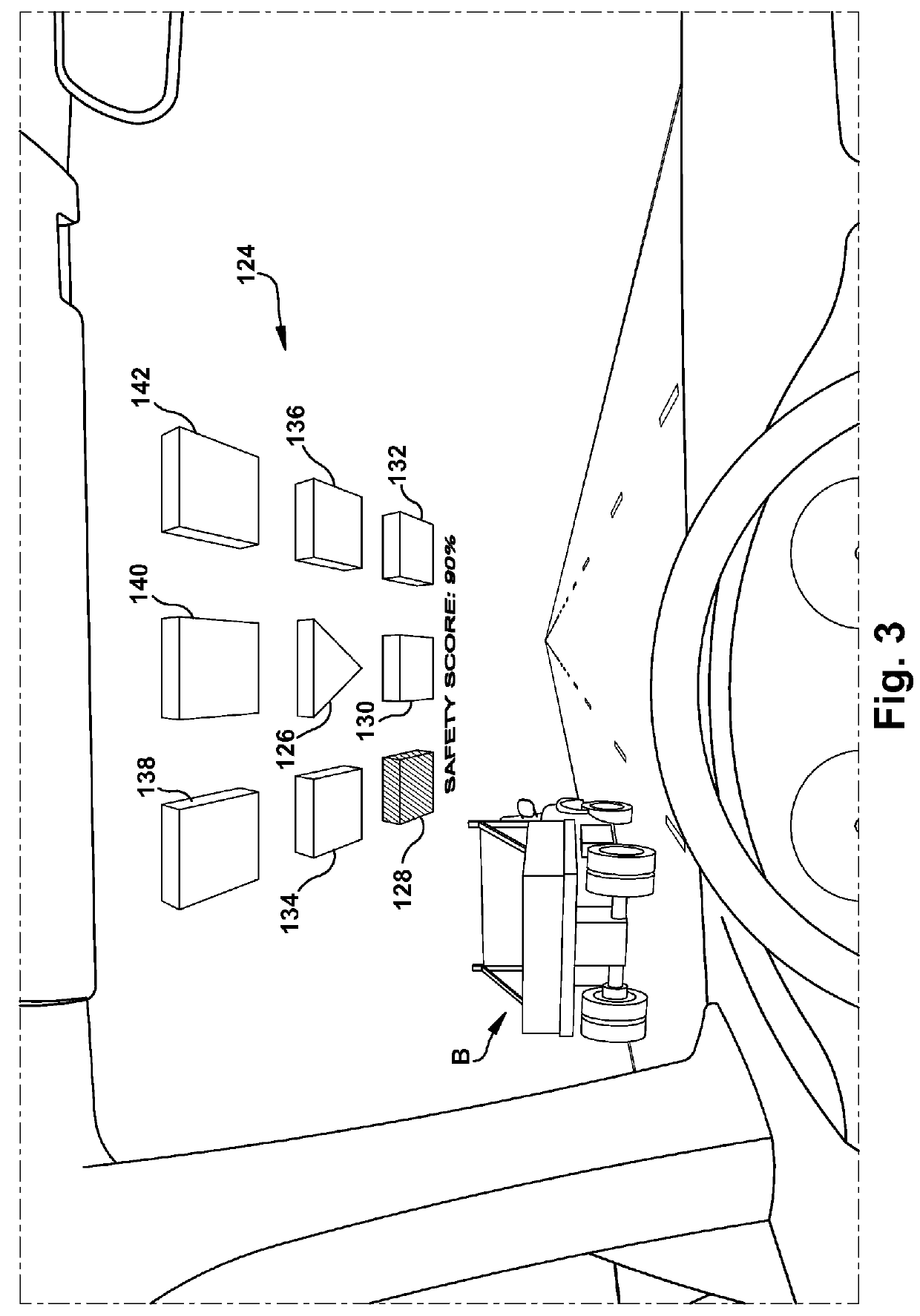

Driver training system using heads-up display augmented reality graphics elements

A driver training system includes a training controller, a heads-up display device, and a driving cue adherence controller. The training controller is configured to receive inputs related to an operational state of a vehicle and an environment surrounding the vehicle, and to determine a driving cue based on the received inputs. The heads-up display device is configured to present the driving cue as an augmented reality graphic element in view of a driver by projecting graphic elements on a windshield of the vehicle. The driving cue adherence controller is configured to continuously determine a current level of adherence to the driving cue, and an aggregate level of adherence to the driving cue based on the continuously determined current level of adherence to the driving cue over a predetermined time period. The heads-up display device is configured to present the continuously determined aggregate level of adherence in view of the driver.

Owner:HONDA MOTOR CO LTD
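One way to compute the aggregate adherence value is a sliding-window average of the current adherence samples. In this hypothetical Python sketch, current adherence to a speed cue is scored as 1 minus the relative speed error, floored at 0. The aggregate is the unweighted mean over the last `window_s` seconds. The patent does not specify either formula.

```python
from collections import deque


def current_adherence(actual_speed: float, cue_speed: float) -> float:
    """Hypothetical score: 1.0 when on the cue, falling with relative error."""
    return max(0.0, 1.0 - abs(actual_speed - cue_speed) / cue_speed)


class AdherenceTracker:
    """Aggregate adherence over a sliding time window (sketch)."""

    def __init__(self, window_s: float) -> None:
        self.window_s = window_s
        self.samples: deque = deque()  # (timestamp_s, current adherence)

    def add(self, t: float, current: float) -> float:
        """Record a current-adherence sample and return the aggregate level."""
        self.samples.append((t, current))
        # Drop samples that have fallen out of the time window.
        while self.samples and self.samples[0][0] < t - self.window_s:
            self.samples.popleft()
        return sum(v for _, v in self.samples) / len(self.samples)


tracker = AdherenceTracker(window_s=10.0)
aggregate = tracker.add(0.0, current_adherence(45.0, 50.0))
```

A heads-up display would then render `aggregate` alongside the cue, updating it each time a new sample arrives.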

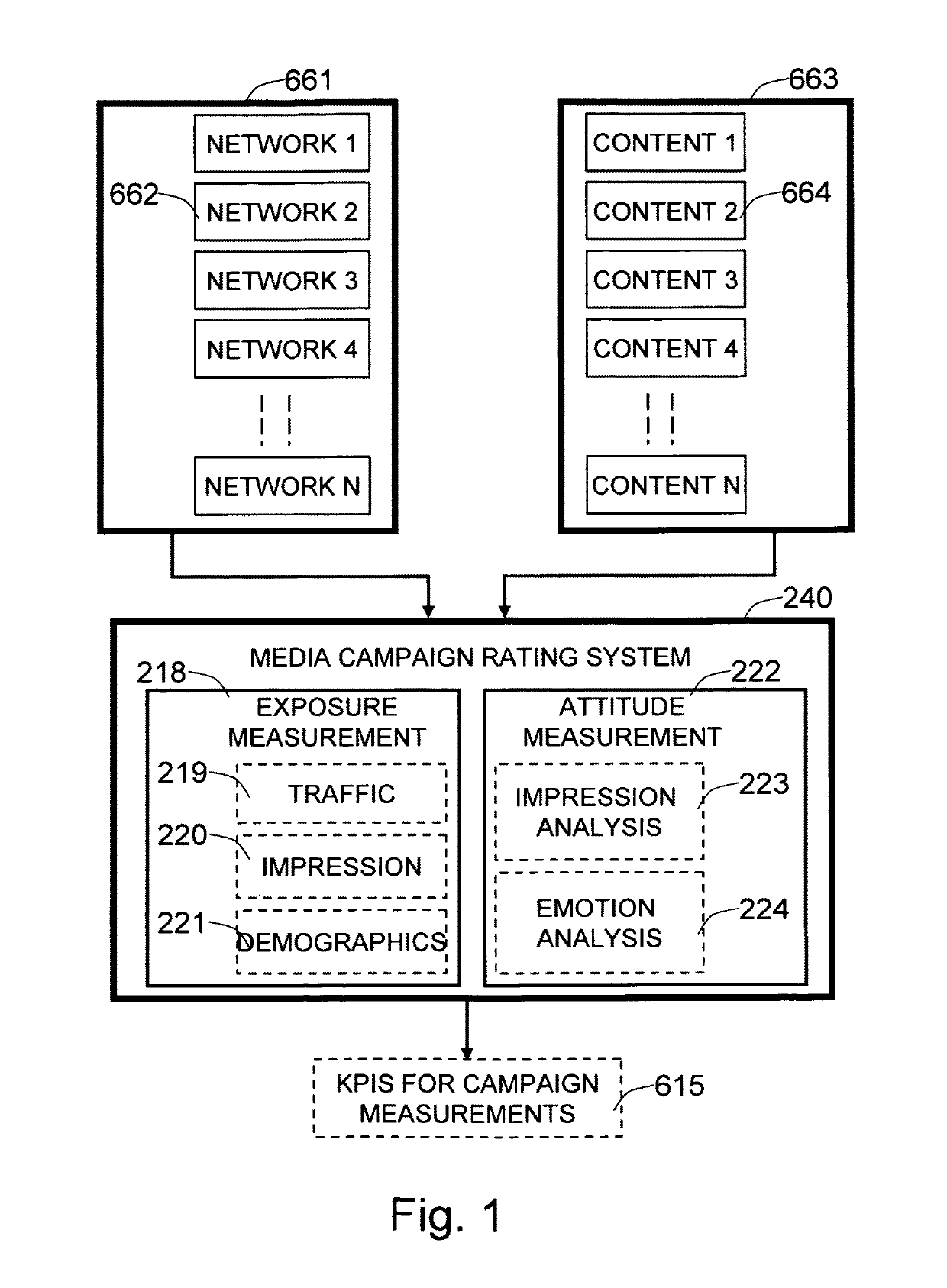

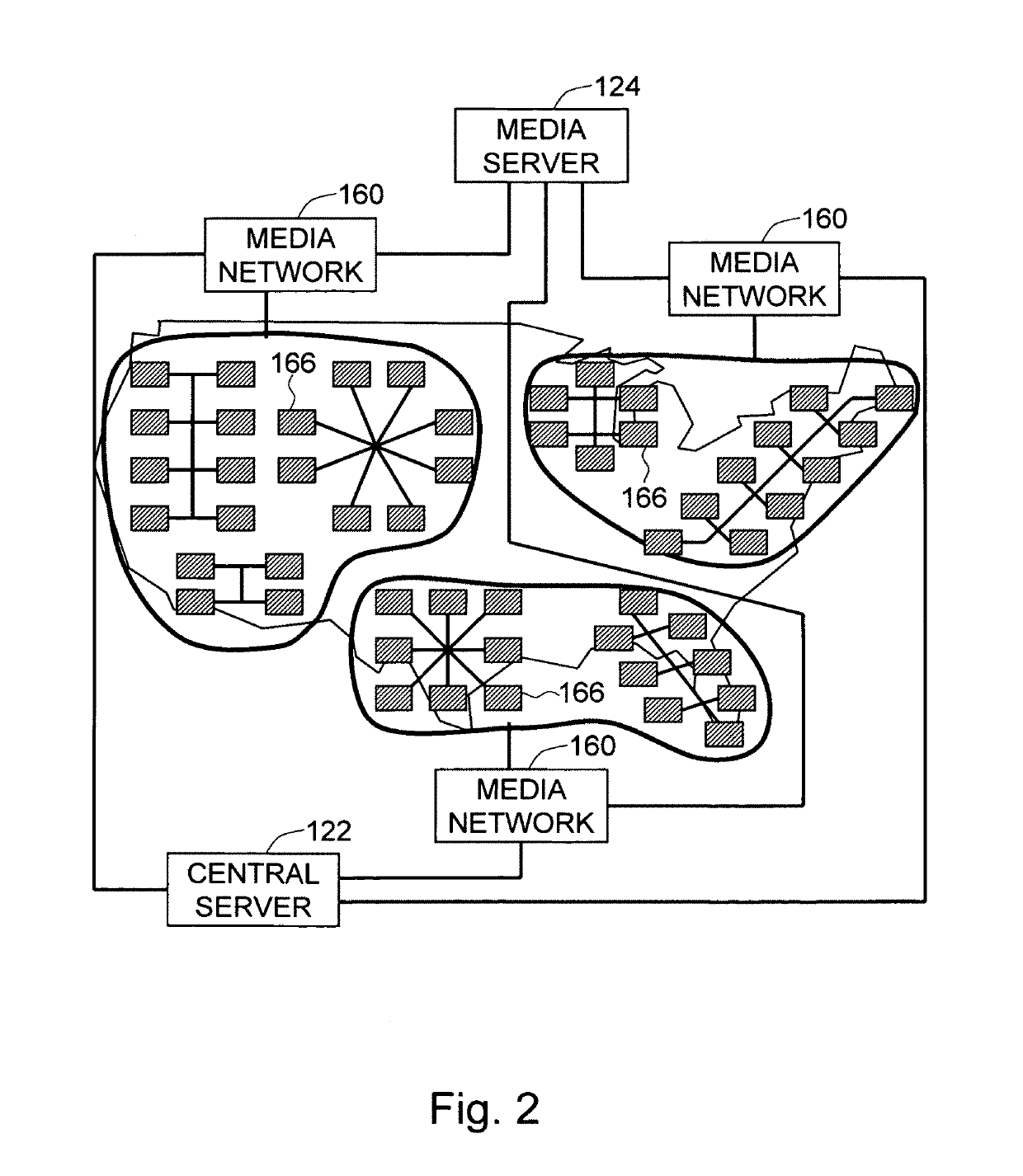

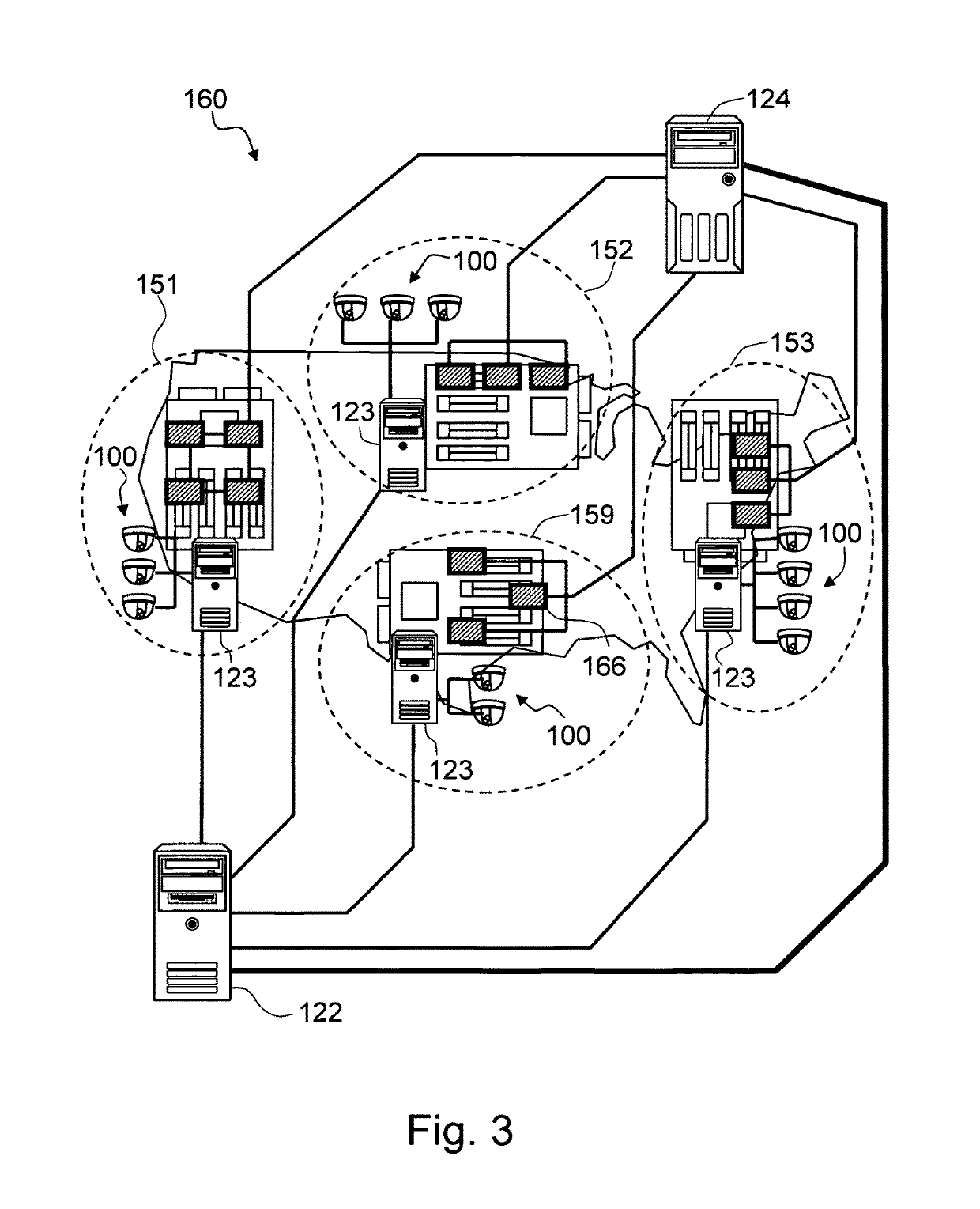

Method and system for measuring effectiveness of a marketing campaign on digital signage

The present invention is a system and method for measuring the effectiveness of a marketing campaign on digital signage across many different signage networks, by measuring how efficiently the campaign reaches its targeted audience and how effectively it conveys its message. This invention addresses the challenges created by the wide variety of measurements and their lack of accuracy. By using automated audience measurement, the invention can collect large, statistically significant data sets for analysis. Non-intrusive, computer-based measurement also ensures that the data is free from any biases. The media content rating system provides a quantitative measure of how many people the campaign reached and what effect it had. The data is available at the aggregate level, at the network level, and down to the screen level.

Owner:VIDEOMINING CORP

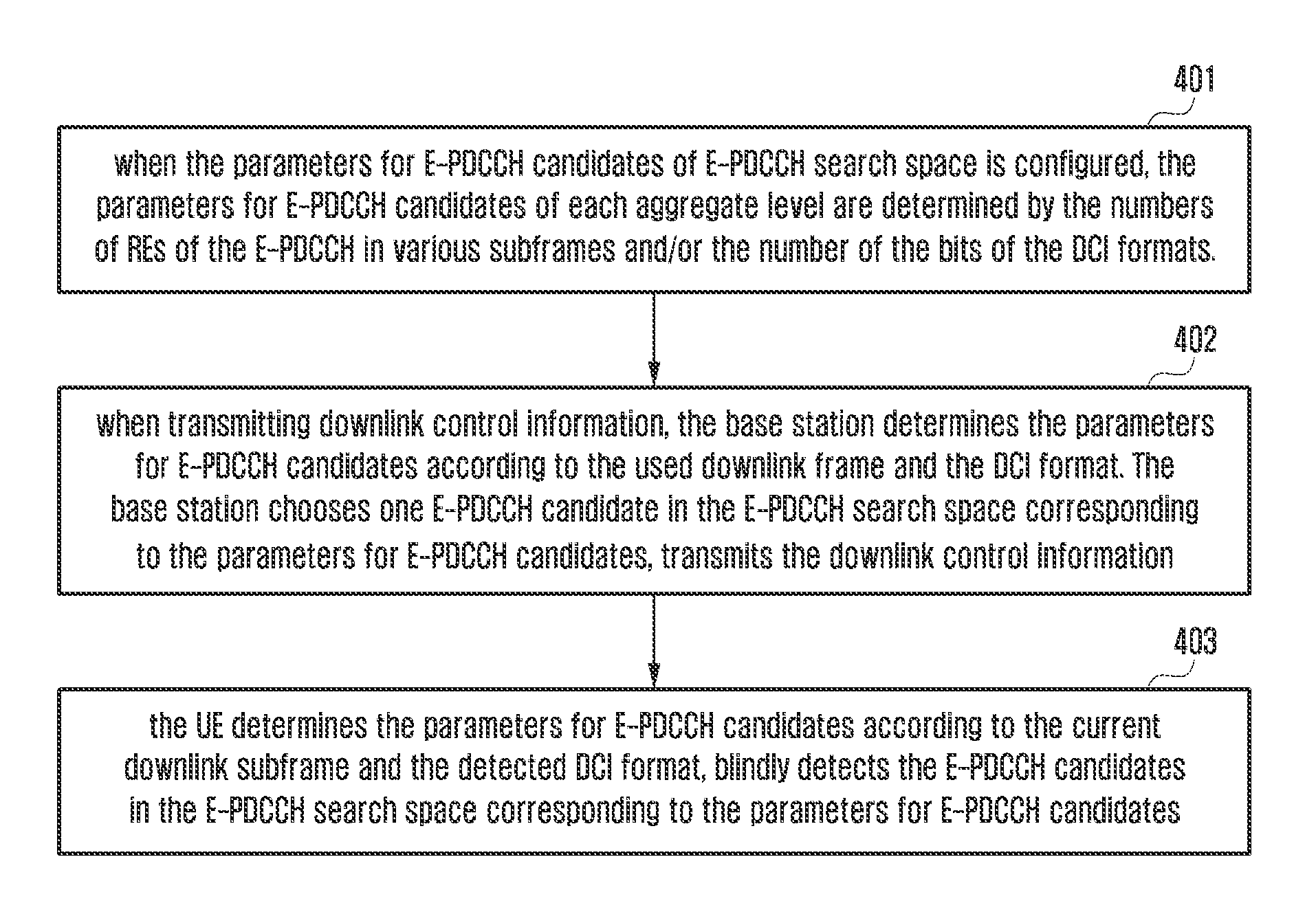

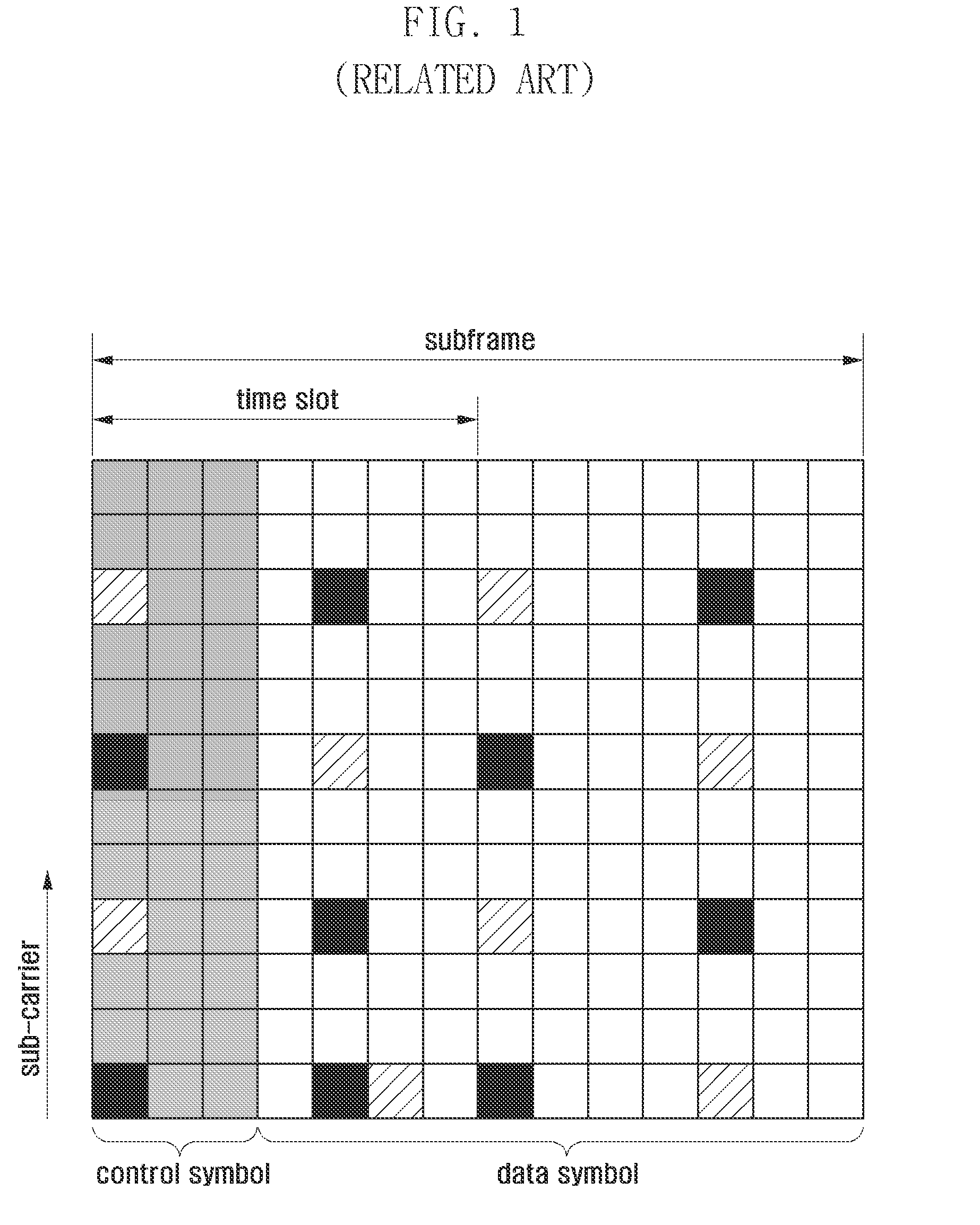

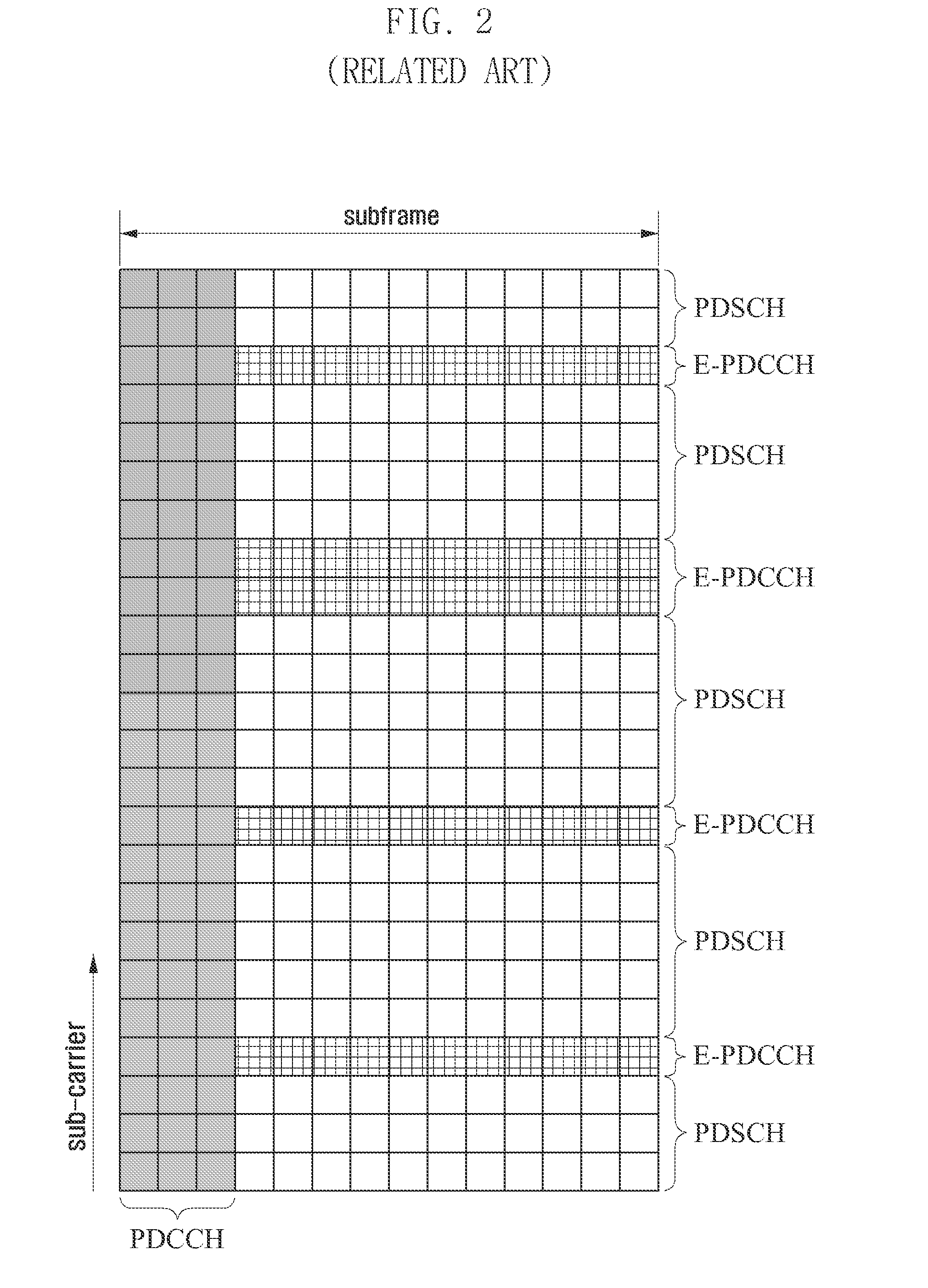

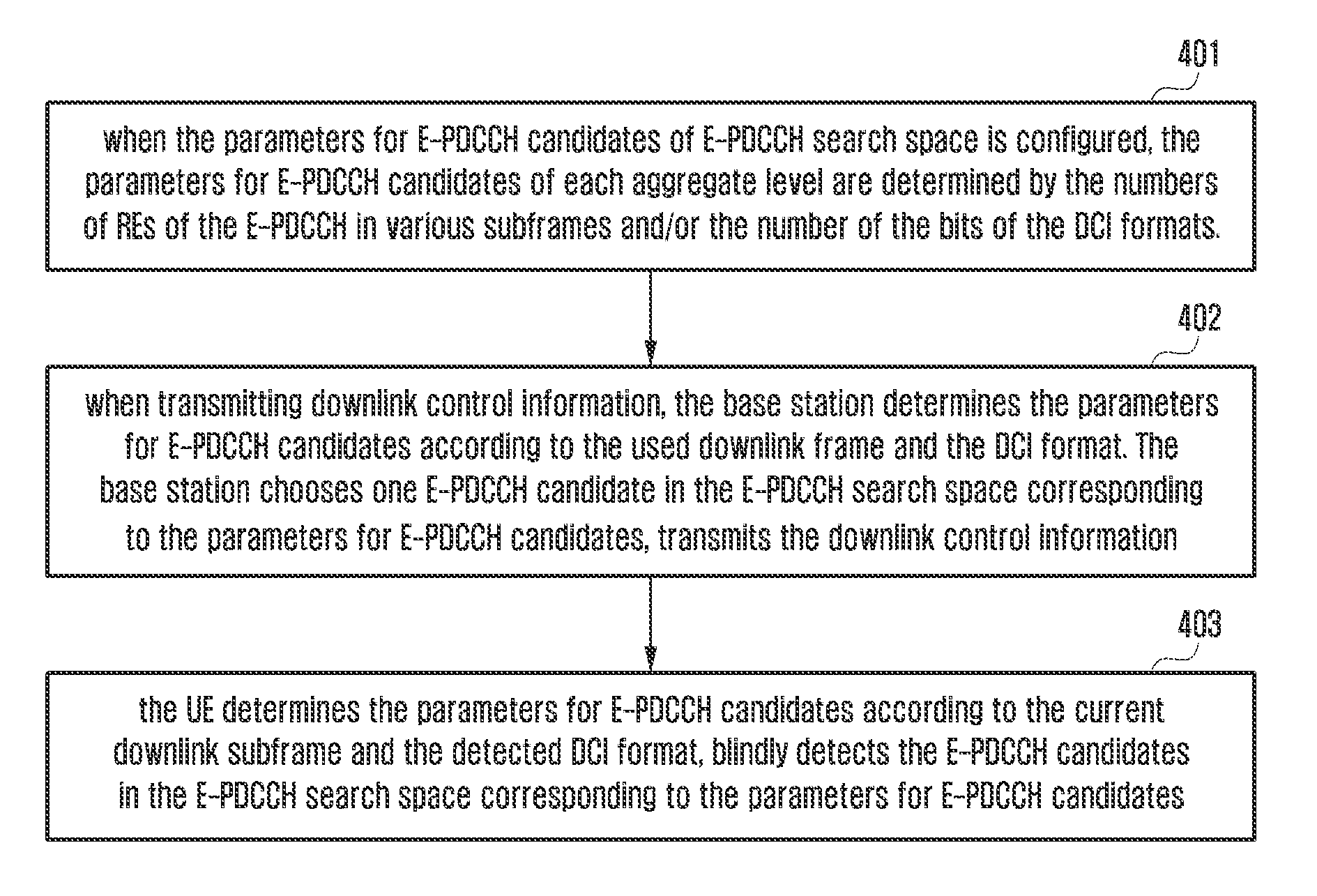

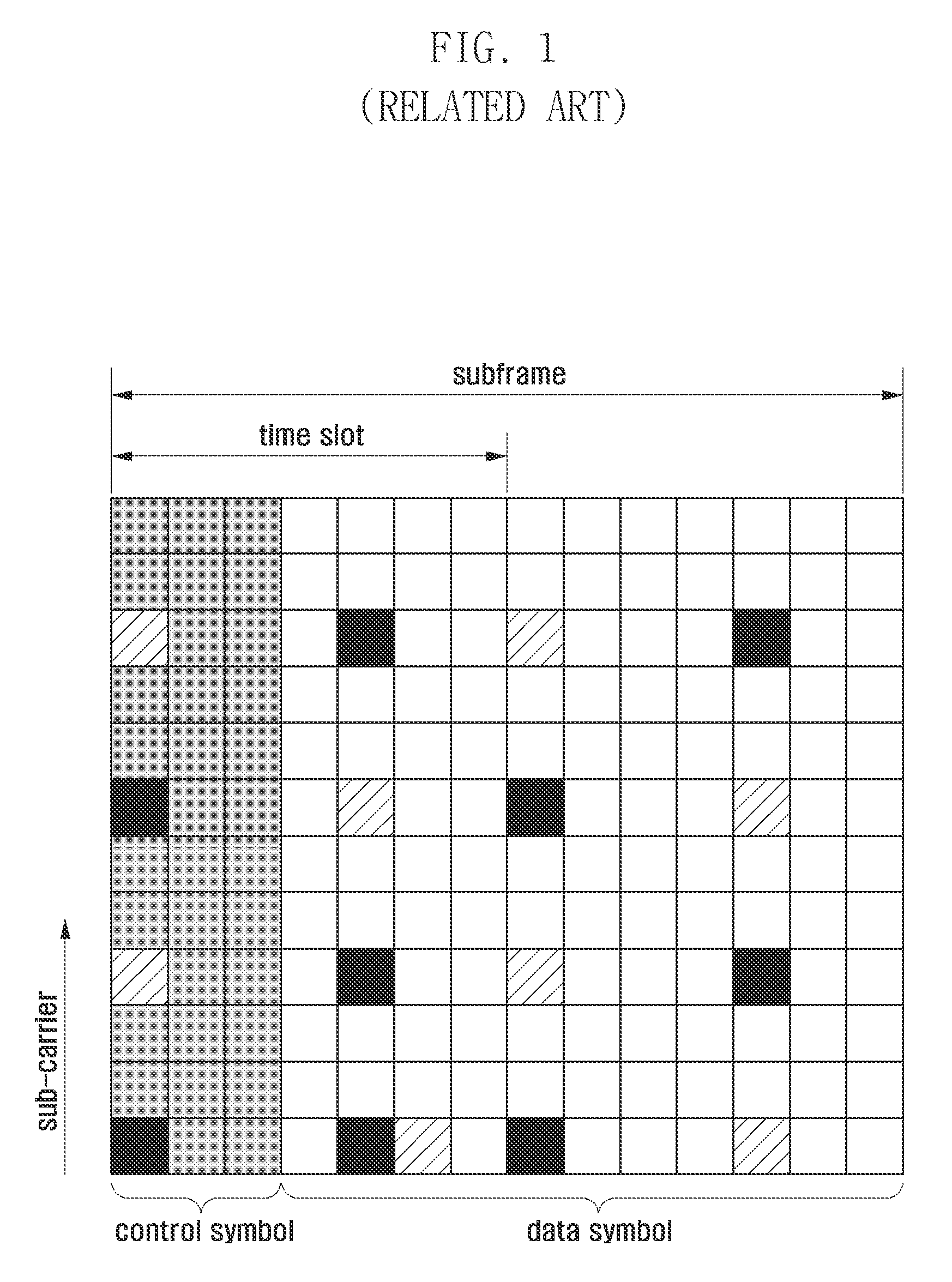

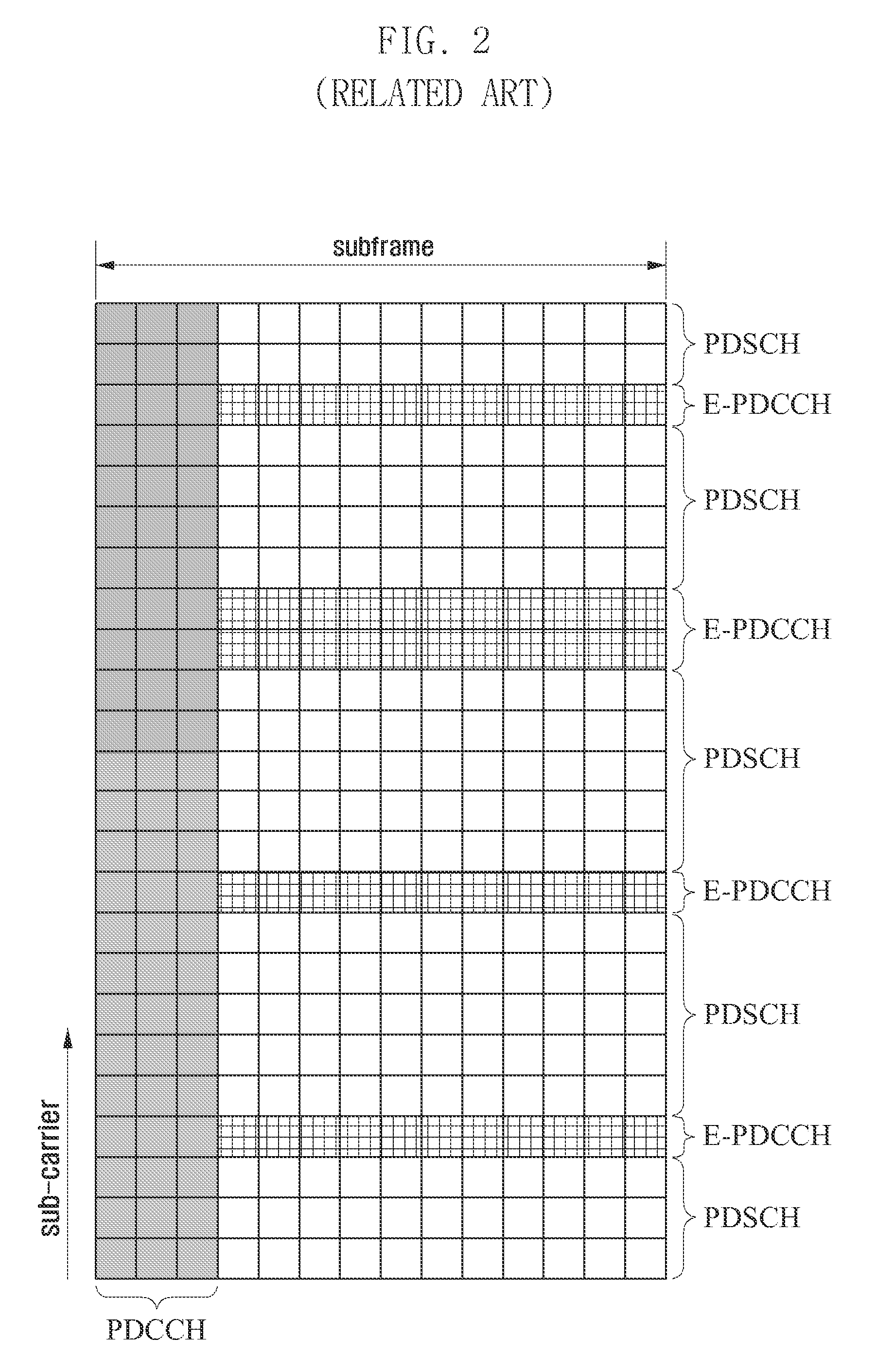

Method and apparatus for configuring search space of a downlink control channel

Active | US20130242906A1 | Increase flexibility; Increase the station; Error prevention; Signal allocation; Resource element; Aggregate level

A method for configuring a search space of a downlink control channel is provided. The method includes: determining the parameters for Enhanced Physical Downlink Control Channel (E-PDCCH) candidates of each aggregate level according to the number of Resource Elements (REs) in a subframe and/or the number of bits of Downlink Control Information (DCI) formats, when the parameters for the E-PDCCH candidates of the E-PDCCH search space are configured; determining, by a User Equipment (UE), the parameters for E-PDCCH candidates according to the current downlink subframe and a detected DCI format; and blindly detecting, by the UE, the E-PDCCH candidates in the E-PDCCH search space corresponding to those parameters. The present invention also provides a UE and a base station. Applying the present invention can improve the flexibility of base station scheduling and reduce the likelihood that the E-PDCCHs of different UEs block each other.

Owner:SAMSUNG ELECTRONICS CO LTD
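The following Python sketch is a hypothetical illustration of tying candidate allocation to the resource count and the payload size. It drops aggregation levels whose code rate would be too high for a given DCI payload, then shifts a fixed candidate template onto the remaining levels. The QPSK assumption (2 bits per RE), the 0.8 code-rate limit, and the shift rule are assumptions made for this example. The template (6, 6, 2, 2) is borrowed from the LTE PDCCH UE-specific search space and is not taken from this patent.

```python
AGGREGATION_LEVELS = (1, 2, 4, 8, 16)
BITS_PER_RE = 2          # QPSK (assumption)
MAX_CODE_RATE = 0.8      # hypothetical usability limit
TEMPLATE = (6, 6, 2, 2)  # candidate counts, lowest usable level first


def candidates_per_level(res_per_ecce: int, dci_bits: int) -> dict[int, int]:
    """Number of E-PDCCH candidates to monitor at each aggregation level."""
    usable = [
        level for level in AGGREGATION_LEVELS
        # Code rate = payload bits / coded bits the level can carry.
        if dci_bits / (level * res_per_ecce * BITS_PER_RE) <= MAX_CODE_RATE
    ]
    counts = {level: 0 for level in AGGREGATION_LEVELS}
    # Shift the template up so that it starts at the lowest usable level.
    for level, n in zip(usable, TEMPLATE):
        counts[level] = n
    return counts


print(candidates_per_level(res_per_ecce=36, dci_bits=43))
print(candidates_per_level(res_per_ecce=20, dci_bits=43))
```

In this sketch, the base station and the UE can evaluate the same function from the subframe's RE count and the DCI size. Both sides therefore arrive at the same candidate parameters before the UE starts blind decoding.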

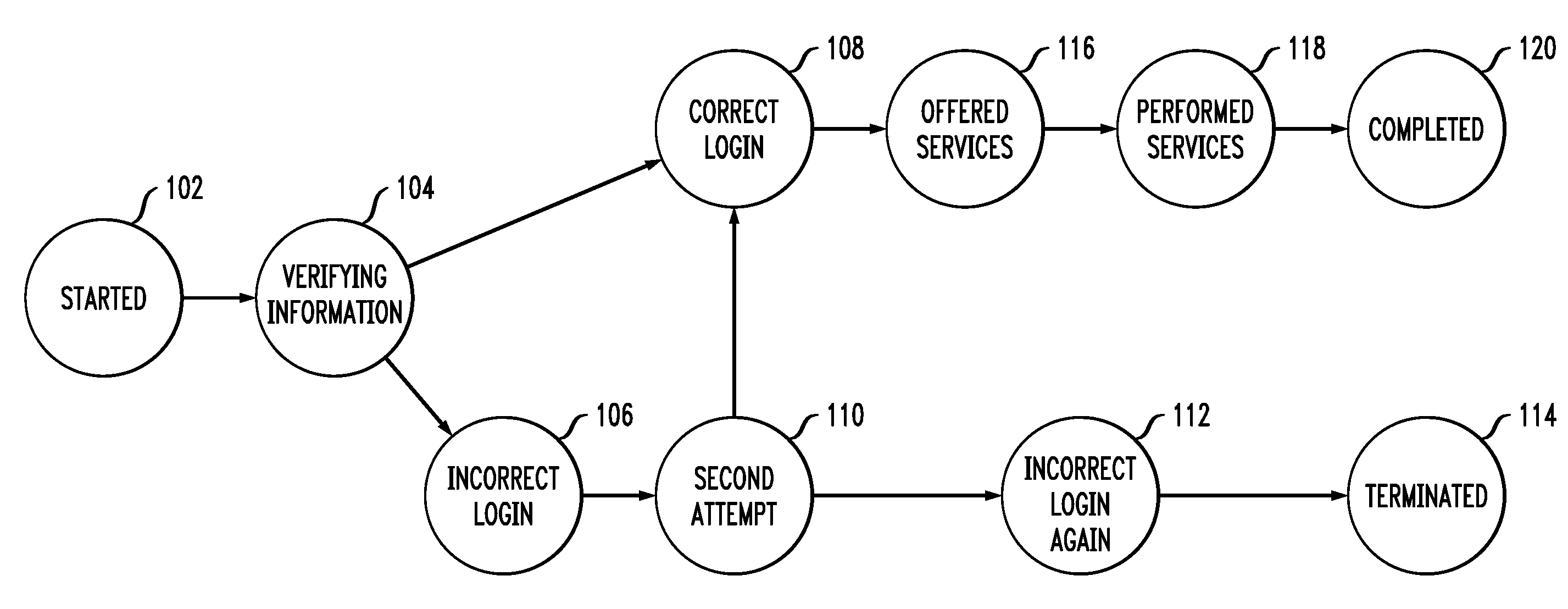

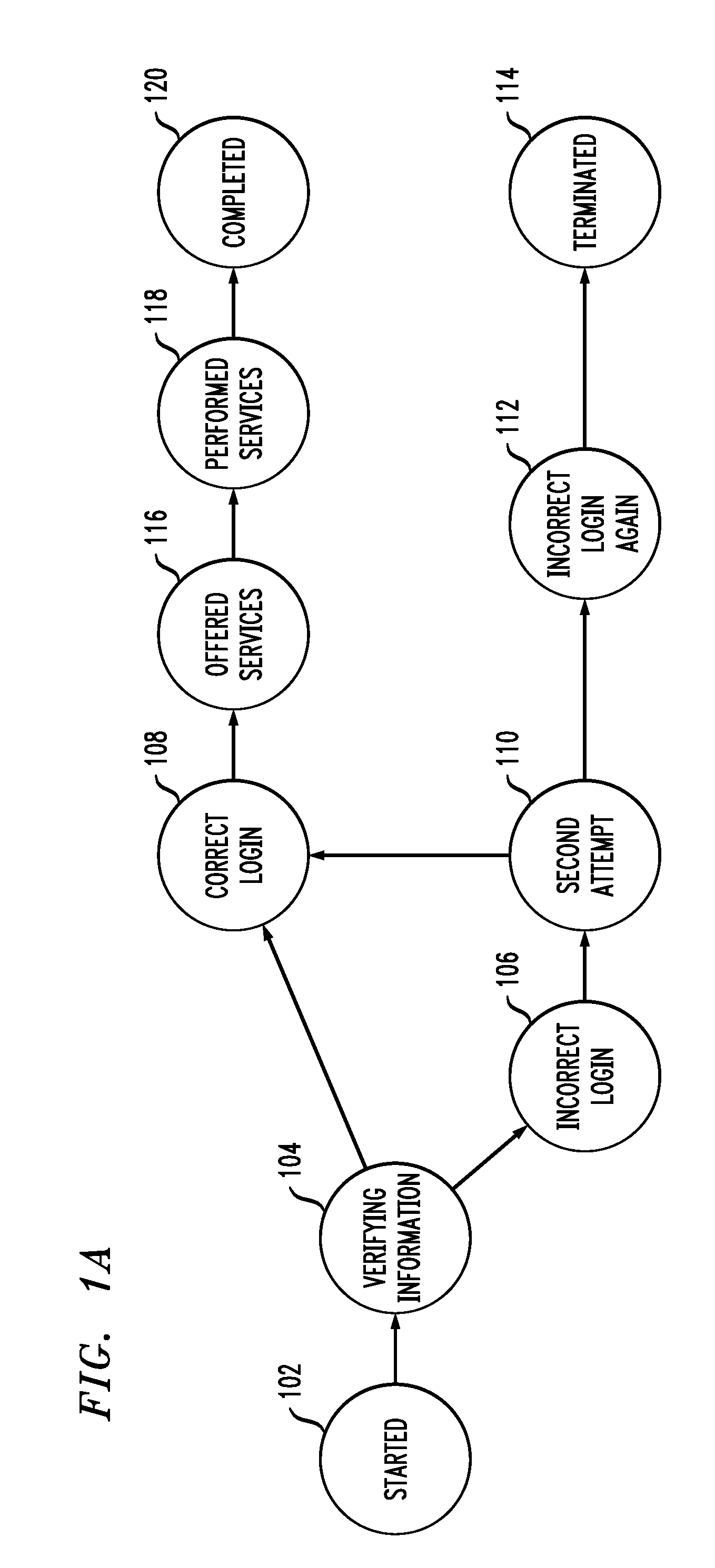

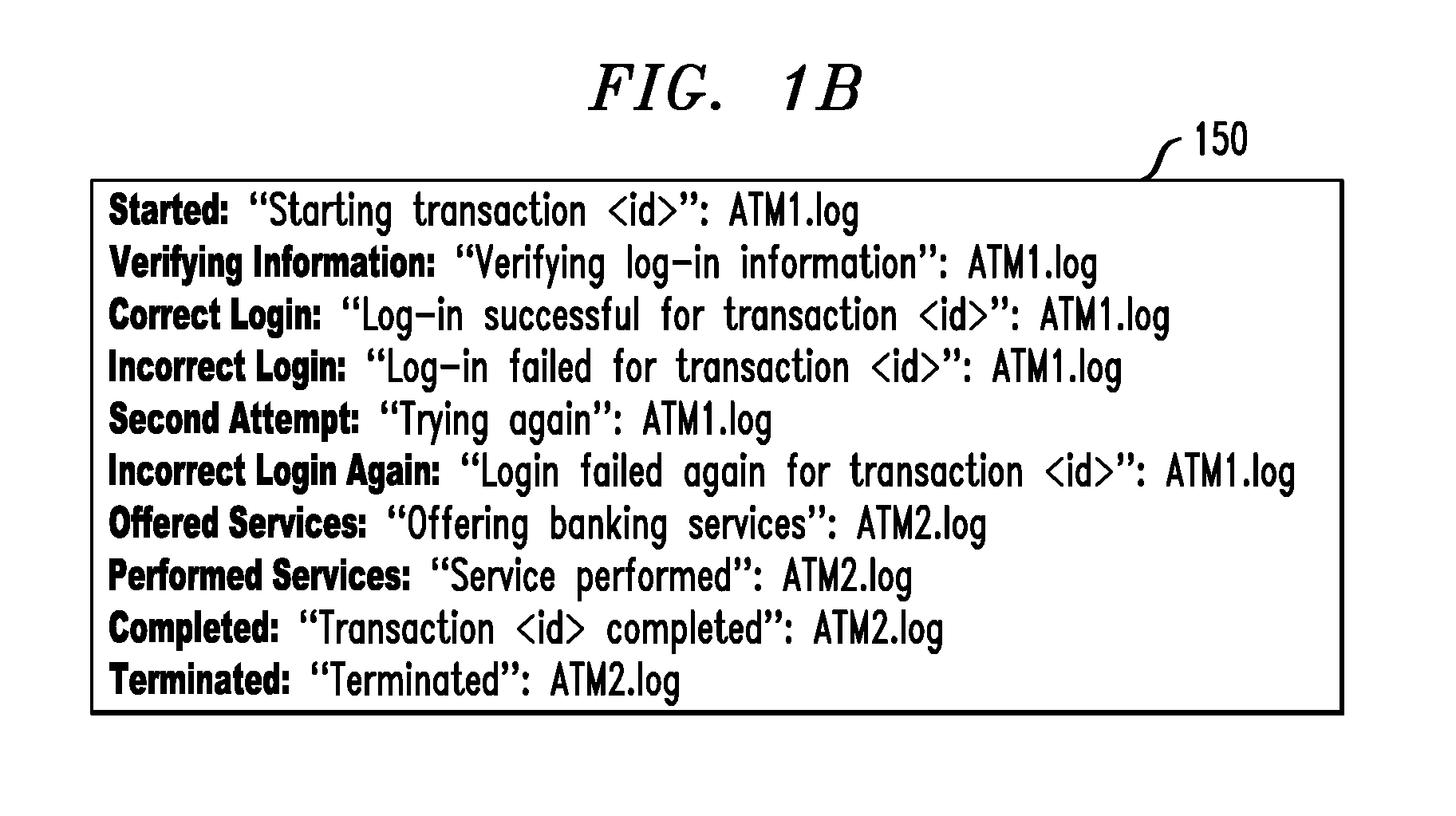

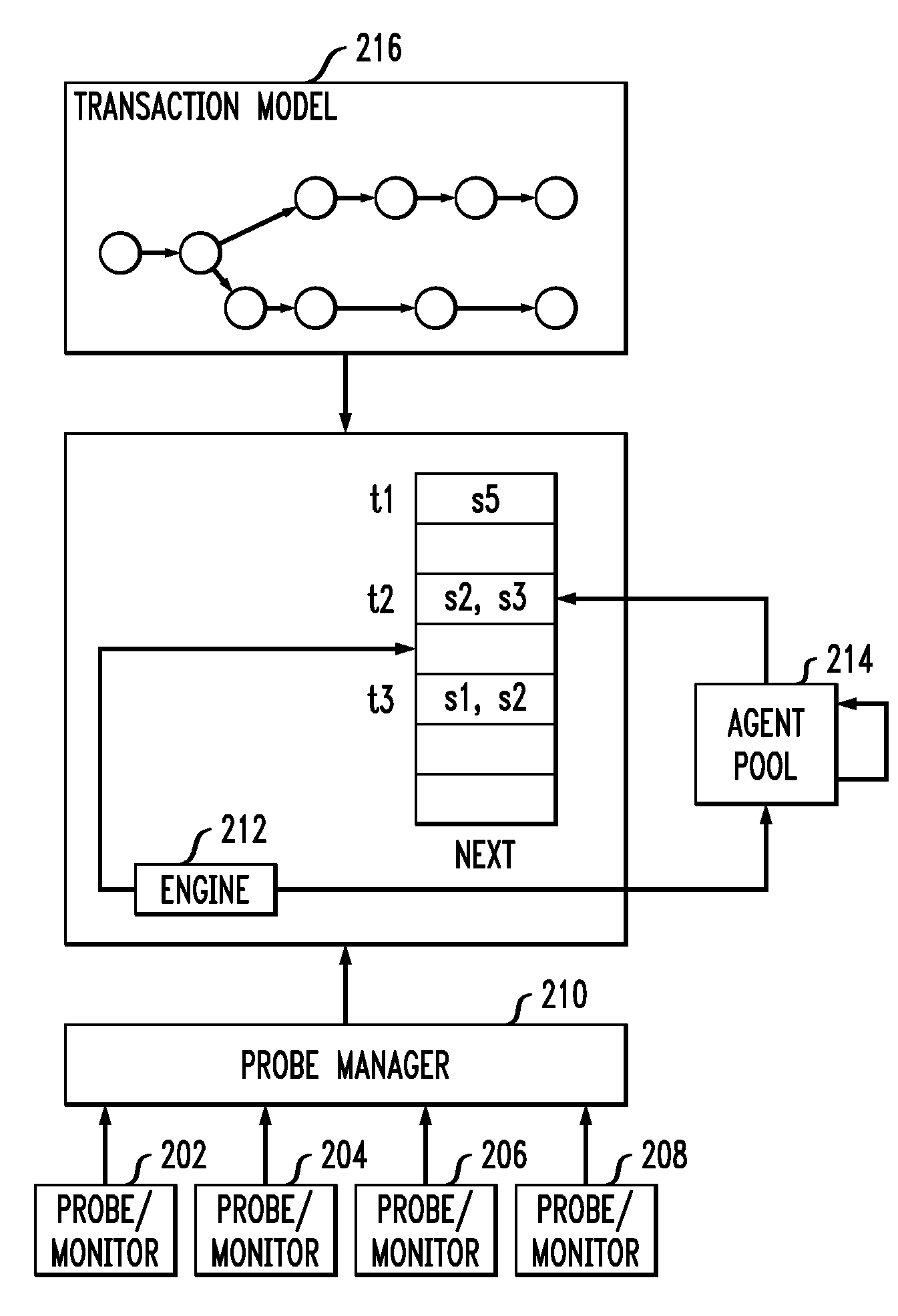

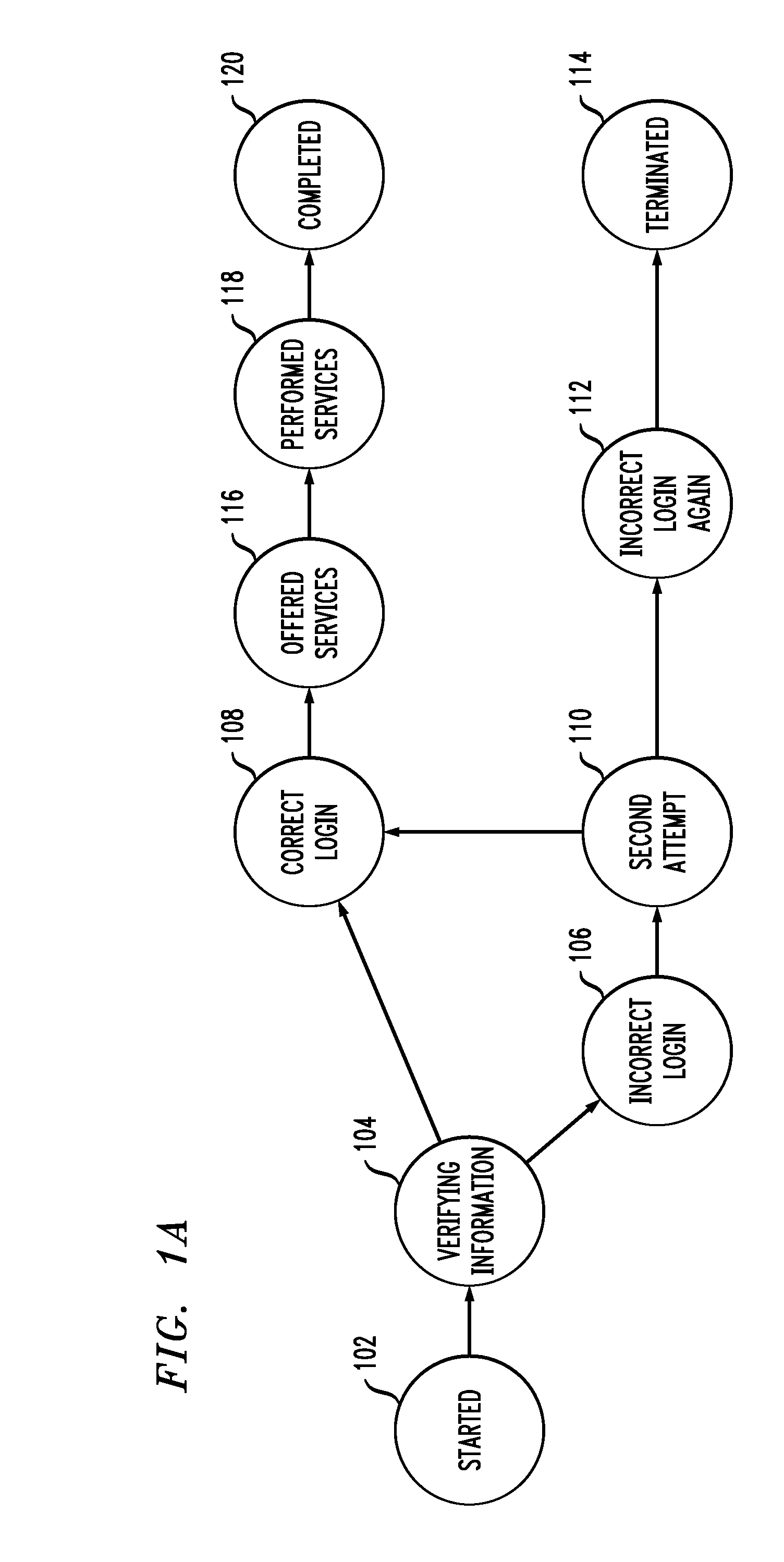

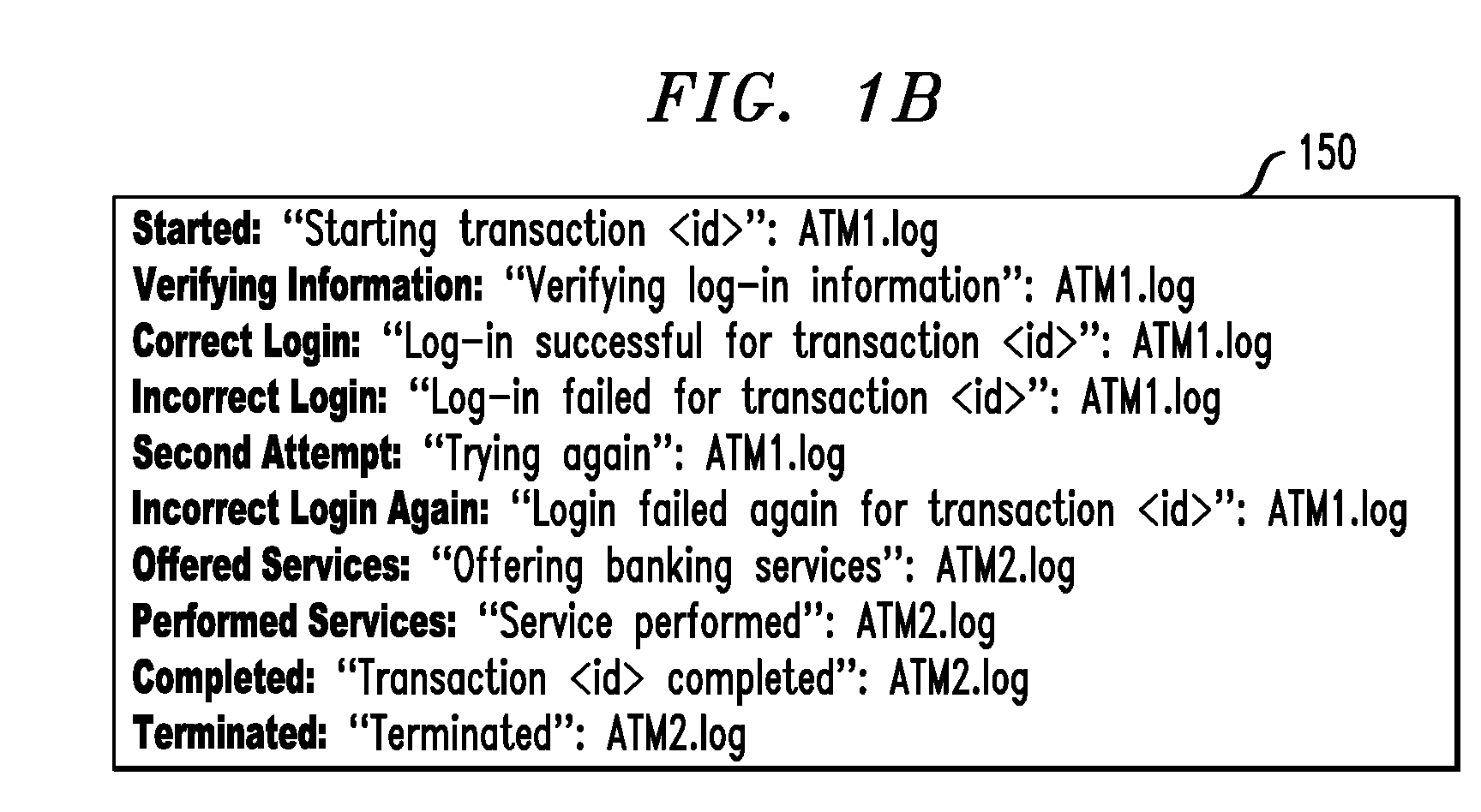

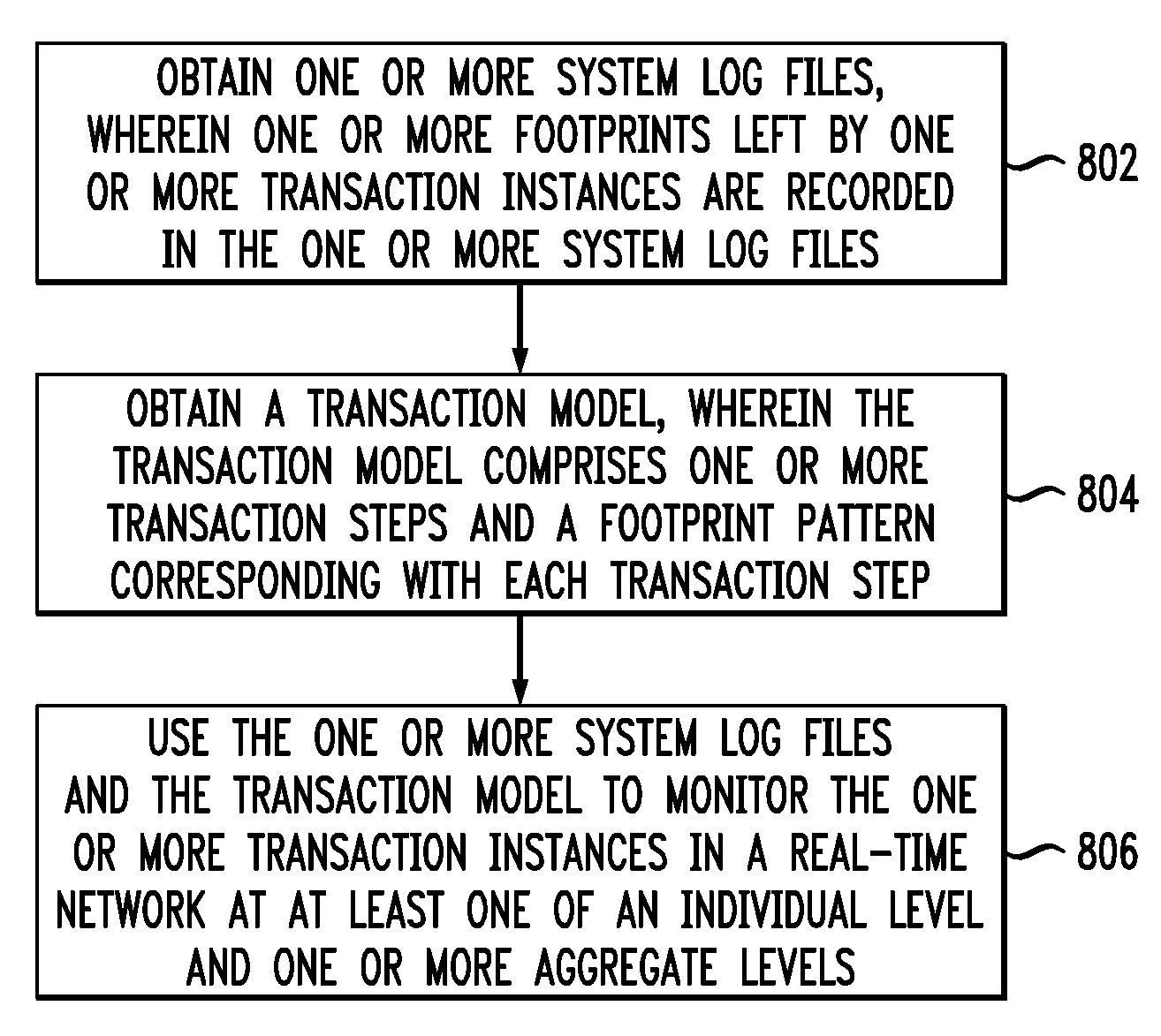

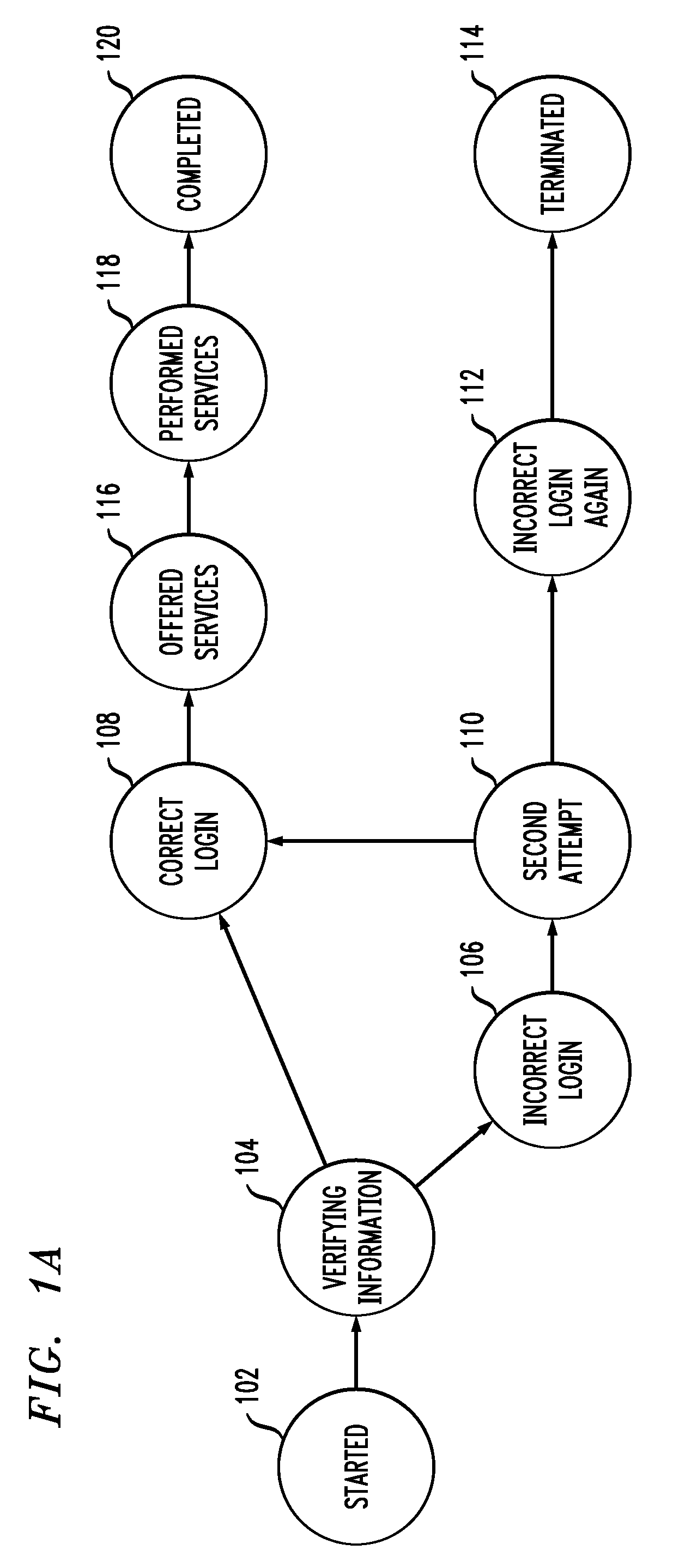

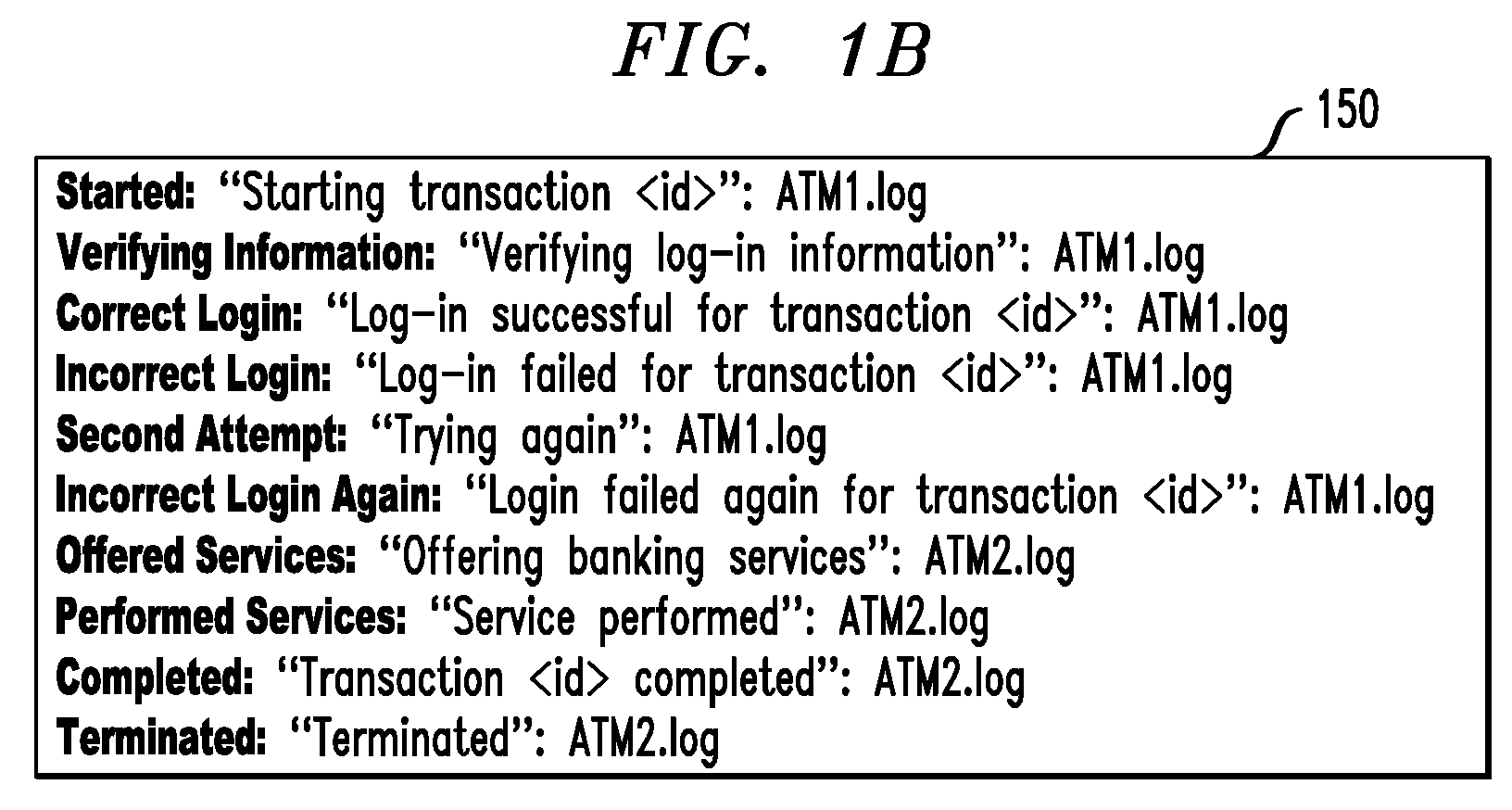

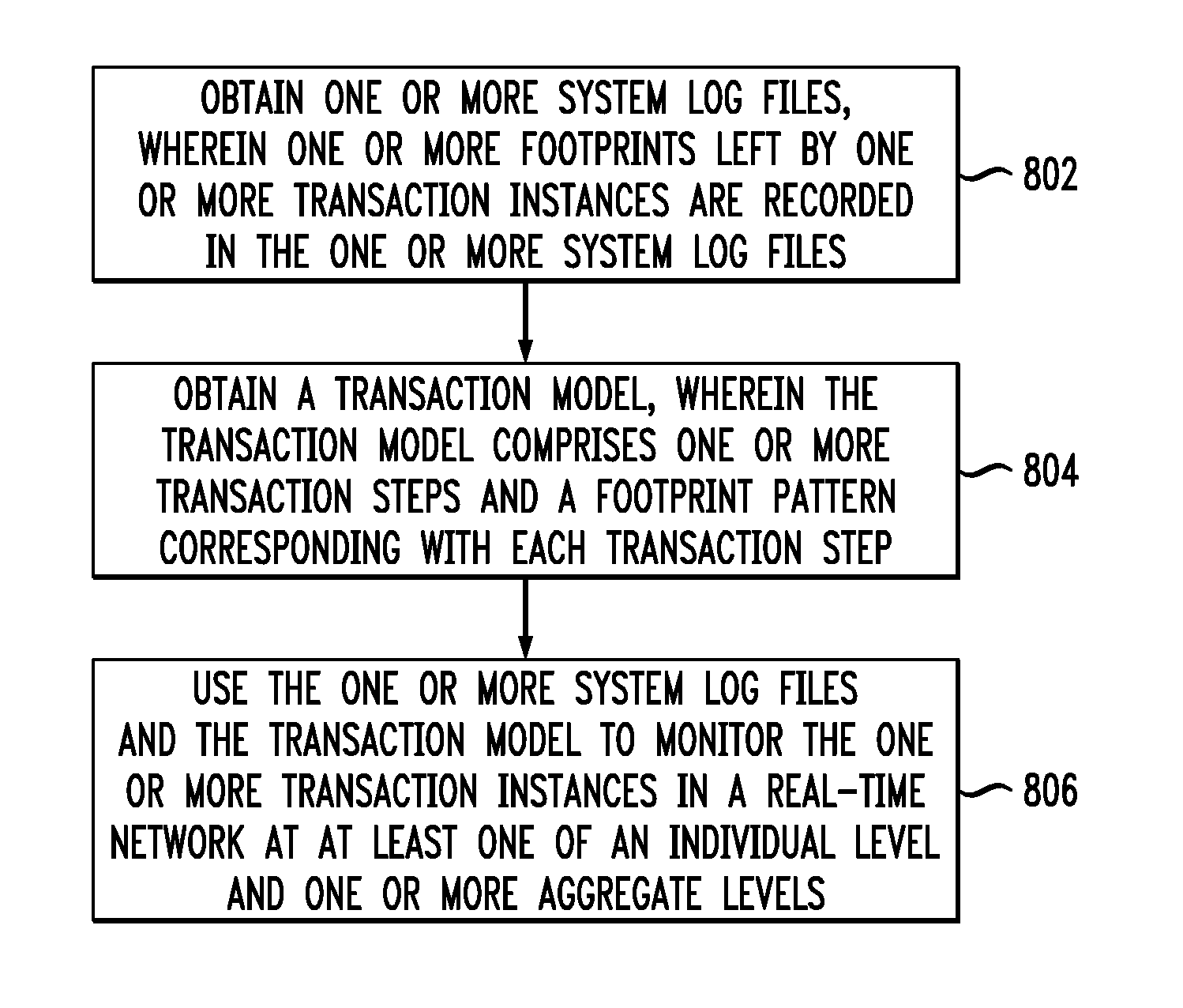

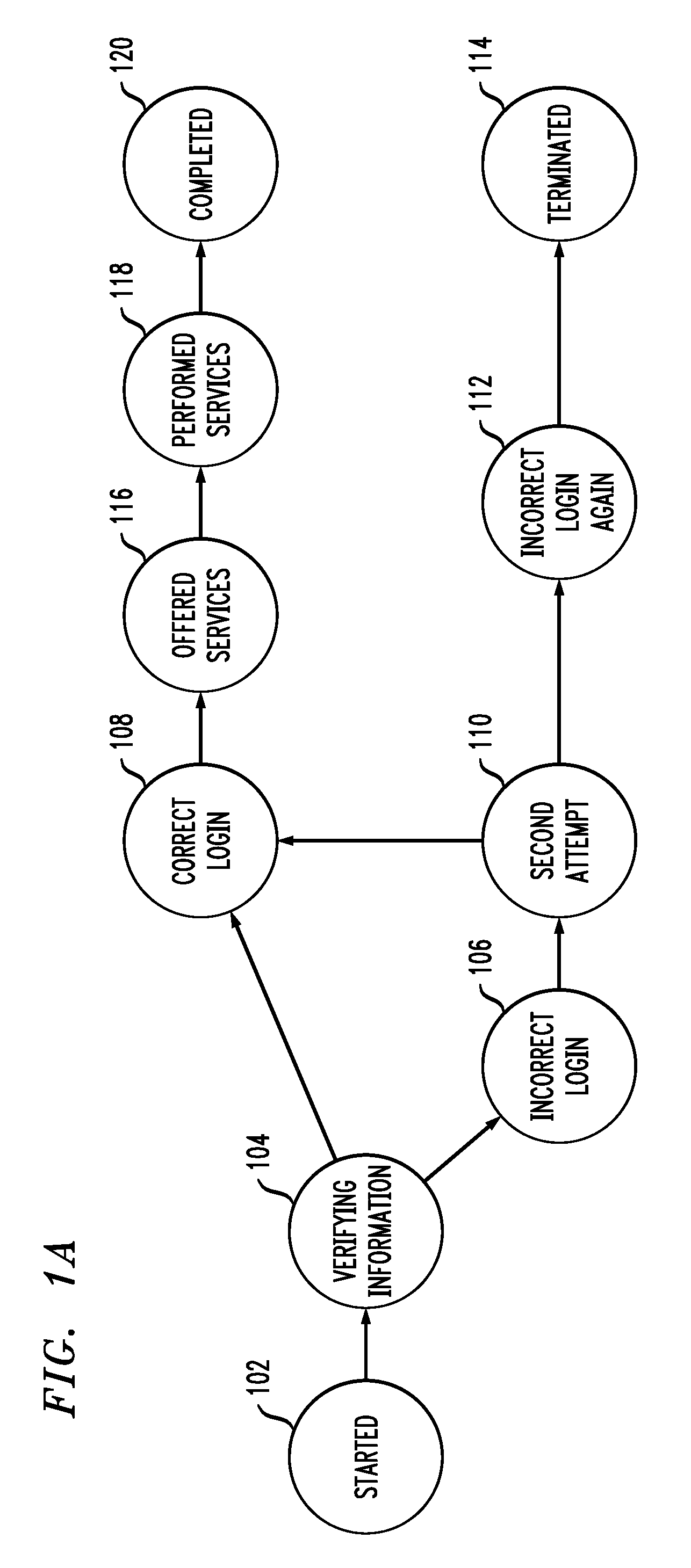

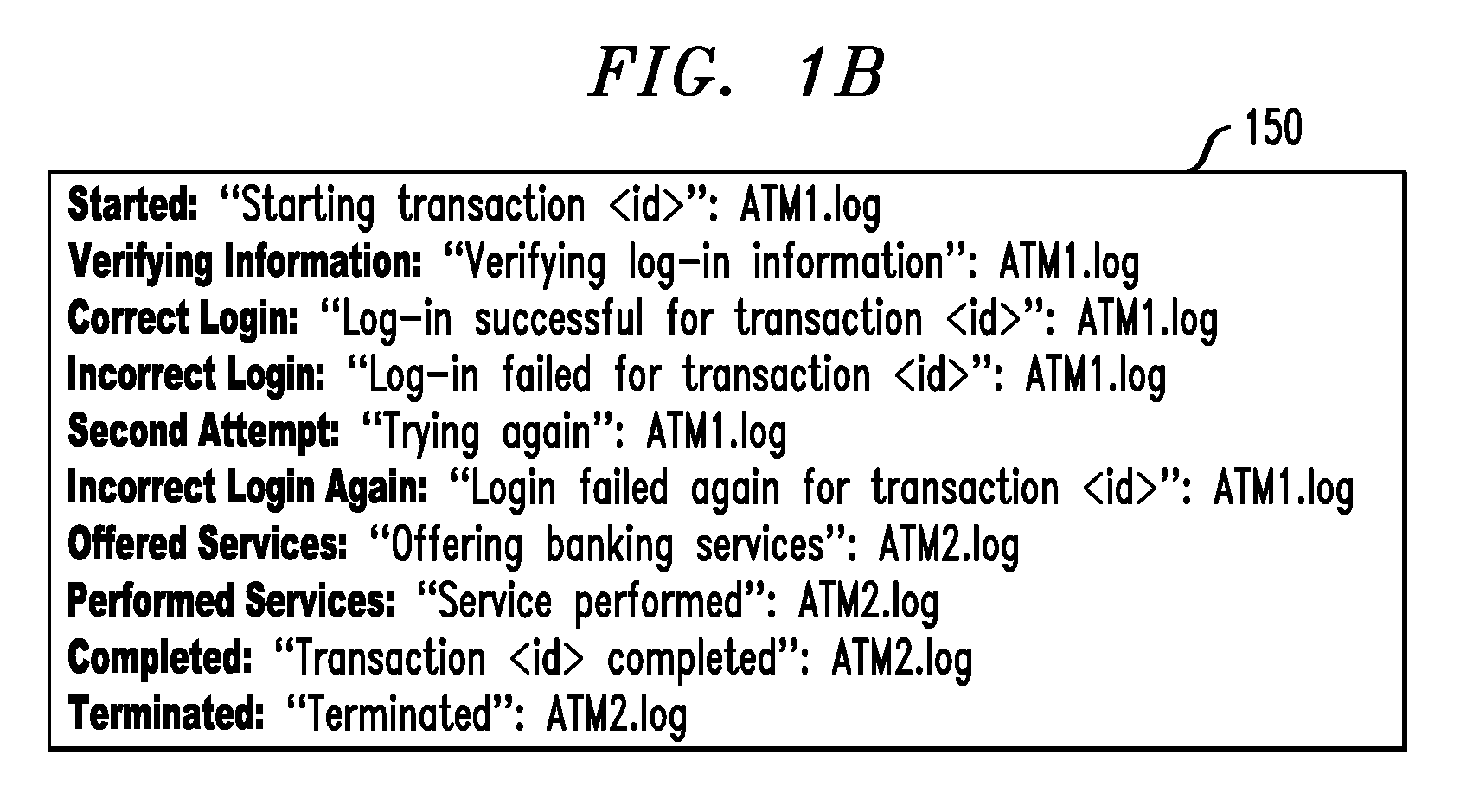

System and computer program product for monitoring transaction instances

Inactive | US20090193112A1 | Error detection/correction; Digital computer details; Transaction model; Aggregate level

Techniques for monitoring one or more transaction instances in a real-time network are provided. The techniques include: obtaining one or more system log files in which footprints left by one or more transaction instances are recorded; obtaining a transaction model that comprises one or more transaction steps and a footprint pattern corresponding to each transaction step; and using the system log files and the transaction model to monitor the transaction instances in a real-time network at an individual level, at one or more aggregate levels, or both.

Owner:IBM CORP
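The following small Python sketch shows footprint-based monitoring. Each step of a hypothetical transaction model is paired with a regular expression that matches its log footprint and captures the instance ID. The log format, the step names, and the "furthest step reached" rule are assumptions for illustration.

```python
import re
from collections import Counter

# Hypothetical transaction model: ordered steps, each with a footprint
# pattern that captures the transaction instance ID from a log line.
TRANSACTION_MODEL = [
    ("received", re.compile(r"order (?P<tid>\w+) received")),
    ("paid", re.compile(r"payment ok for order (?P<tid>\w+)")),
    ("shipped", re.compile(r"order (?P<tid>\w+) shipped")),
]


def track(log_lines):
    """Individual level: the furthest step each instance has reached."""
    progress = {}
    for line in log_lines:
        for index, (_step, pattern) in enumerate(TRANSACTION_MODEL):
            match = pattern.search(line)
            if match:
                tid = match.group("tid")
                progress[tid] = max(progress.get(tid, -1), index)
                break
    return {tid: TRANSACTION_MODEL[i][0] for tid, i in progress.items()}


def summarize(progress):
    """Aggregate level: number of instances currently at each step."""
    return Counter(progress.values())


logs = [
    "10:00 order A1 received",
    "10:01 order B2 received",
    "10:02 payment ok for order A1",
    "10:05 order A1 shipped",
    "10:06 order C3 received",
]
print(summarize(track(logs)))
```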

Method for monitoring transaction instances

Techniques for monitoring one or more transaction instances in a real-time network are provided. The techniques include: obtaining one or more system log files in which footprints left by one or more transaction instances are recorded; obtaining a transaction model that comprises one or more transaction steps and a footprint pattern corresponding to each transaction step; and using the system log files and the transaction model to monitor the transaction instances in a real-time network at an individual level, at one or more aggregate levels, or both.

Owner:IBM CORP

Method using footprints in system log files for monitoring transaction instances in real-time network

Owner:INT BUSINESS MASCH CORP

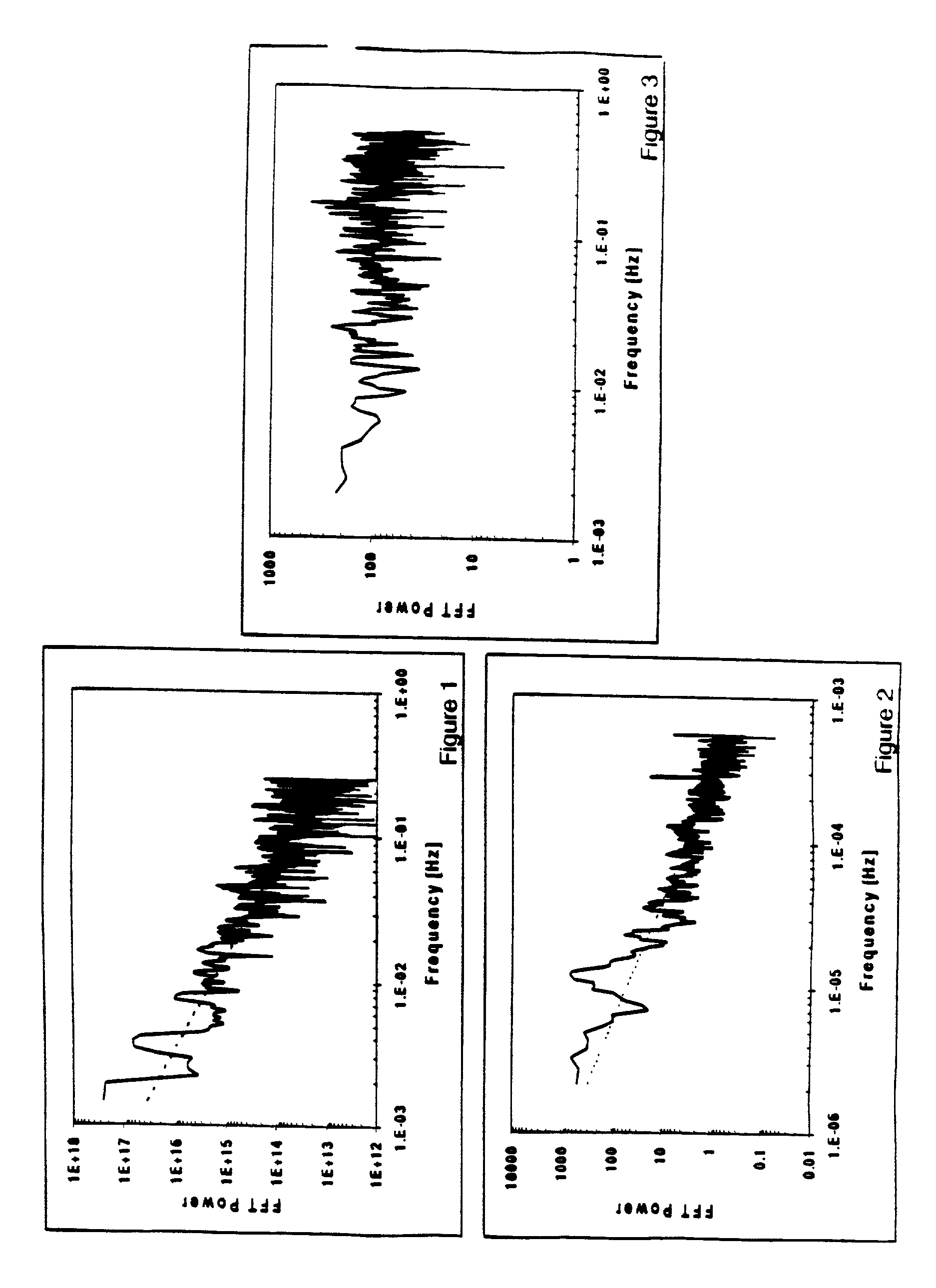

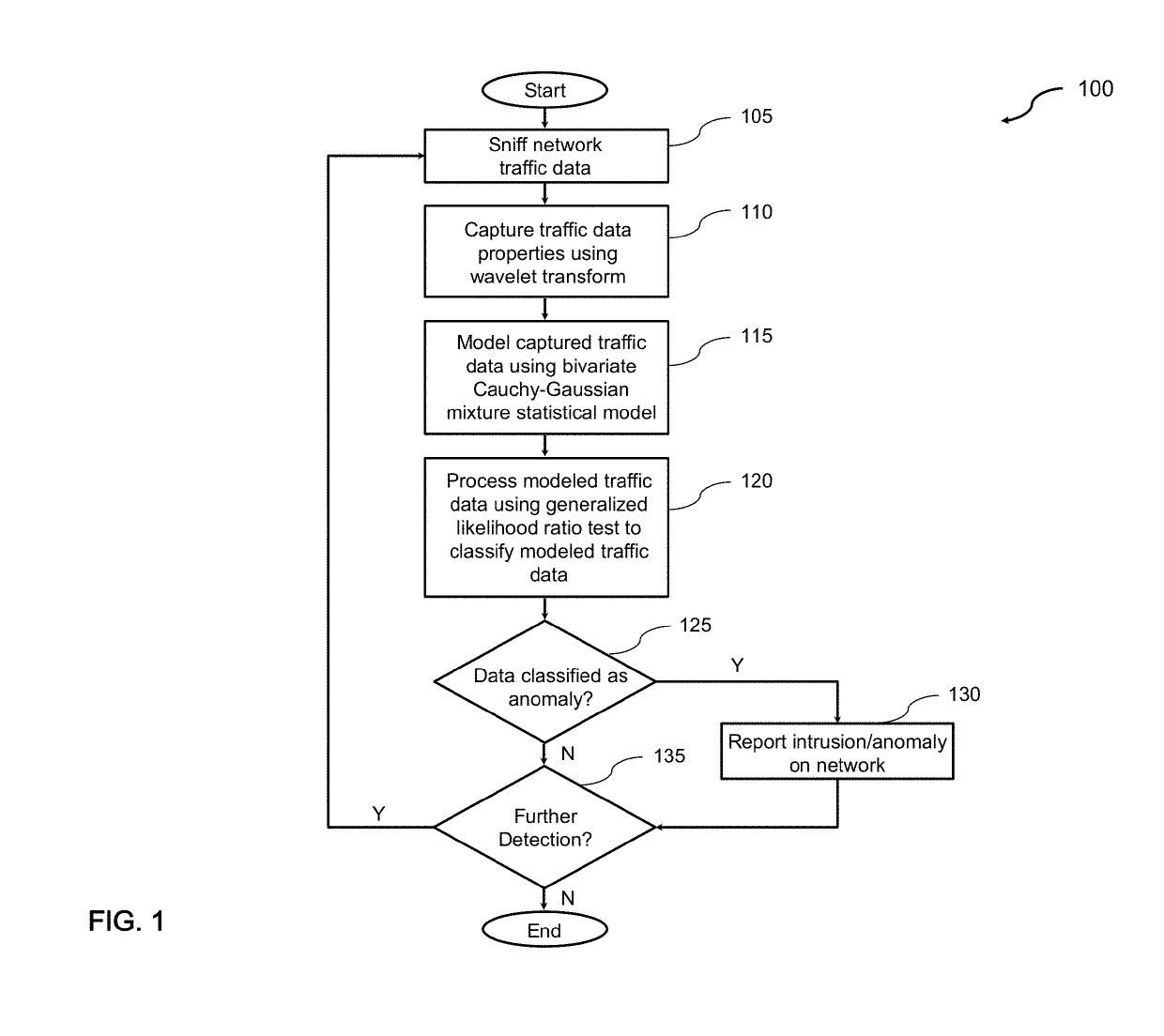

Generalized likelihood ratio test (GLRT) based network intrusion detection system in wavelet domain

Active | US20190158522A1 | Improve accuracy; Increase analytical tractability; Mathematical models; Computer security arrangements; Fast algorithm; Likelihood-ratio test

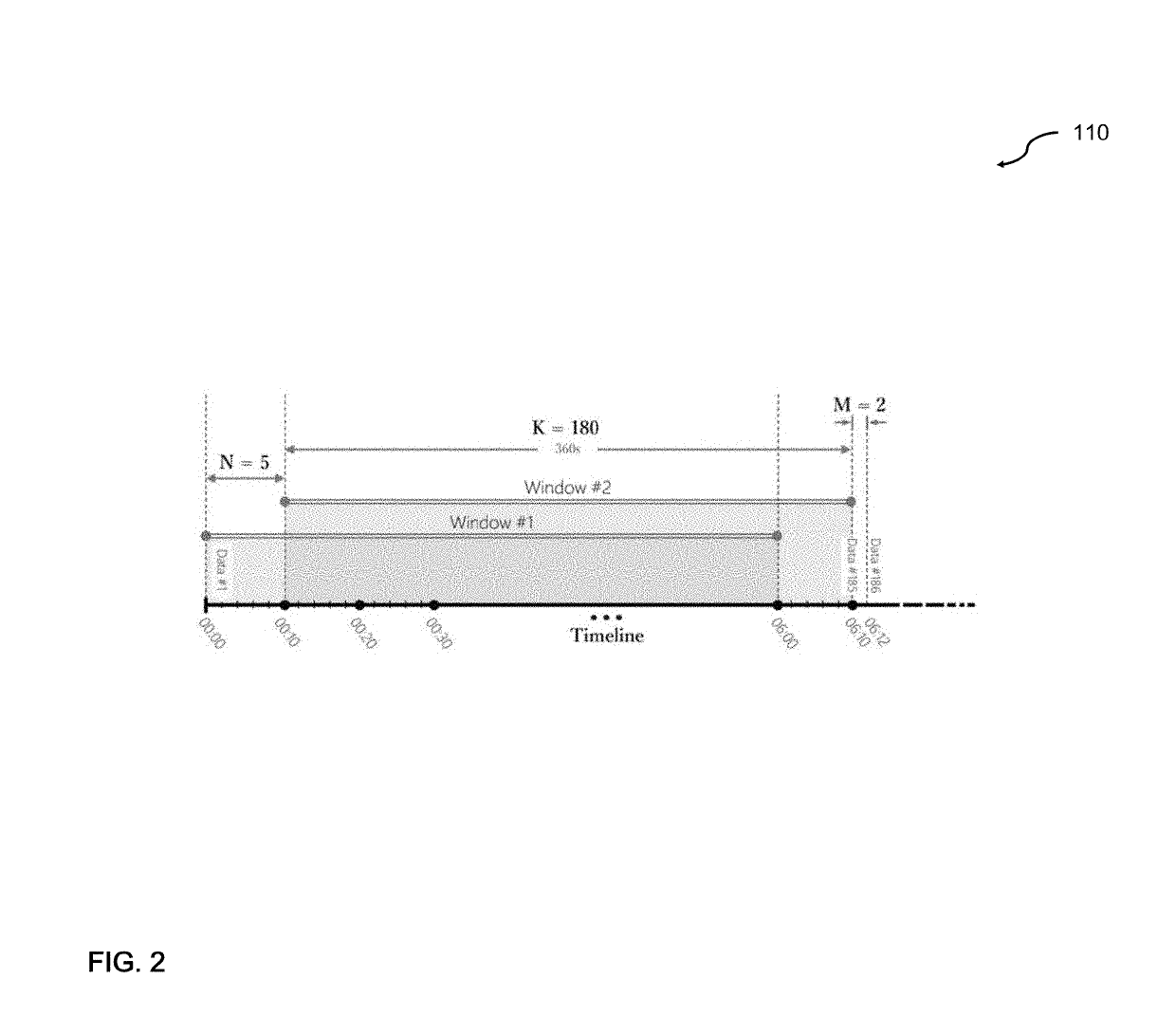

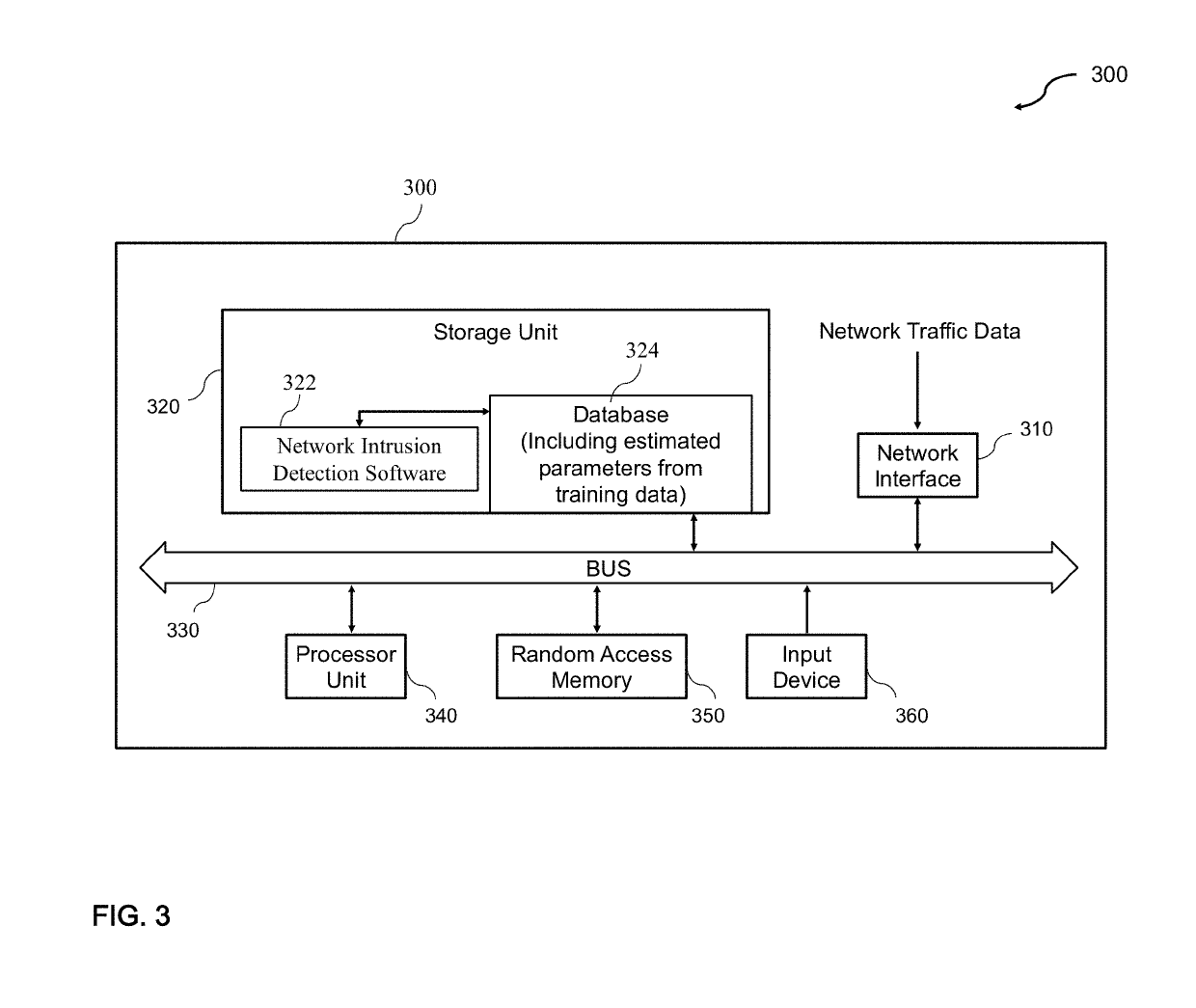

An improved system and method for detecting network anomalies comprises, in one implementation, a computer device and a network anomaly detector module executed by the computer device, arranged to electronically sniff network traffic data at an aggregate level using a windowing approach. The windowing approach views the network traffic data through a plurality of time windows, each of which represents a sequence of a feature such as packets per second or flows per second. The network anomaly detector module is configured to execute a wavelet transform that captures properties of the network traffic data, such as long-range dependence and self-similarity. The wavelet transform is a multiresolution transform and can be configured to decompose and simplify the statistics of the network traffic data, yielding a simple and fast algorithm. The network anomaly detector module is also configured to execute a bivariate Cauchy-Gaussian mixture (BCGM) statistical model for processing and modeling the network traffic data in the wavelet domain. The BCGM statistical model approximates the α-stable model and, unlike it, offers a closed-form expression for the probability density function, which increases accuracy and analytical tractability and facilitates parameter estimation. Finally, the network anomaly detector module is configured to execute a generalized likelihood ratio test to detect the network anomalies.

Owner:AMIRMAZLAGHANI MARYAM +2
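The pipeline can be approximated in a few lines of Python, with one substitution named plainly. Instead of the bivariate Cauchy-Gaussian mixture, this sketch models the wavelet detail coefficients as zero-mean Gaussian, which gives a closed-form GLRT for a change in variance. The single-level Haar transform, the window size, and the threshold are also assumptions of this sketch. The threshold is about 10.83, the 0.999 quantile of a chi-square distribution with one degree of freedom, which the statistic follows asymptotically under the null hypothesis.

```python
import math


def windows(series, size):
    """Non-overlapping windows of a per-second feature such as packets per second."""
    return [series[i:i + size] for i in range(0, len(series) - size + 1, size)]


def haar_details(x):
    """Single-level Haar wavelet detail coefficients from pairs of samples."""
    return [(x[2 * i] - x[2 * i + 1]) / math.sqrt(2) for i in range(len(x) // 2)]


def glrt_variance(details, sigma0_sq):
    """2 log(GLR) for H0: variance == sigma0_sq vs H1: unknown variance.

    Assumes independent, zero-mean Gaussian coefficients (not the BCGM model).
    """
    n = len(details)
    s_sq = sum(d * d for d in details) / n  # MLE of the variance under H1
    if s_sq == 0.0:
        return math.inf  # the statistic grows without bound as s_sq -> 0
    r = s_sq / sigma0_sq
    return n * (r - 1.0 - math.log(r))


THRESHOLD = 10.83  # approx. chi-square(1) 0.999 quantile


def detect(series, baseline_sigma0_sq, size=16):
    """Indices of windows whose detail-coefficient variance looks anomalous."""
    return [i for i, w in enumerate(windows(series, size))
            if glrt_variance(haar_details(w), baseline_sigma0_sq) > THRESHOLD]


# Hypothetical traffic: two calm windows, then a burst with large swings.
series = [100, 102] * 16 + [100, 140] * 8
print(detect(series, baseline_sigma0_sq=2.0))
```

Detail coefficients difference out slowly varying levels, so the test reacts to changes in short-term variability rather than to the traffic volume itself.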

Method and apparatus for configuring search space of a downlink control channel

Active | US9155091B2 | Increase flexibility; Increase the station; Error prevention; Signal allocation; Resource element; Aggregate level

A method for configuring a search space of a downlink control channel is provided. The method includes: determining the parameters for Enhanced Physical Downlink Control Channel (E-PDCCH) candidates of each aggregate level according to the number of Resource Elements (REs) in a subframe and/or the number of bits of Downlink Control Information (DCI) formats, when the parameters for the E-PDCCH candidates of the E-PDCCH search space are configured; determining, by a User Equipment (UE), the parameters for E-PDCCH candidates according to the current downlink subframe and a detected DCI format; and blindly detecting, by the UE, the E-PDCCH candidates in the E-PDCCH search space corresponding to those parameters. The present invention also provides a UE and a base station. Applying the present invention can improve the flexibility of base station scheduling and reduce the likelihood that the E-PDCCHs of different UEs block each other.

Owner:SAMSUNG ELECTRONICS CO LTD

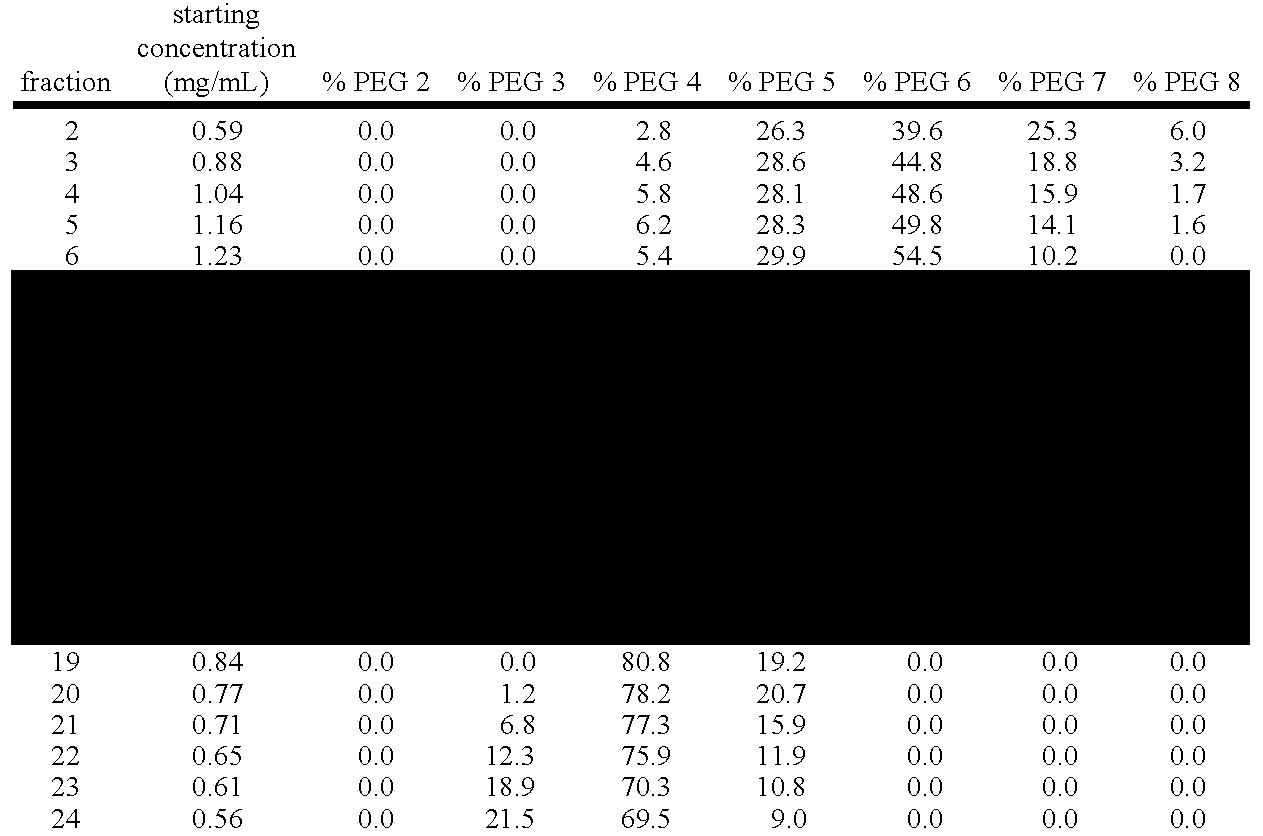

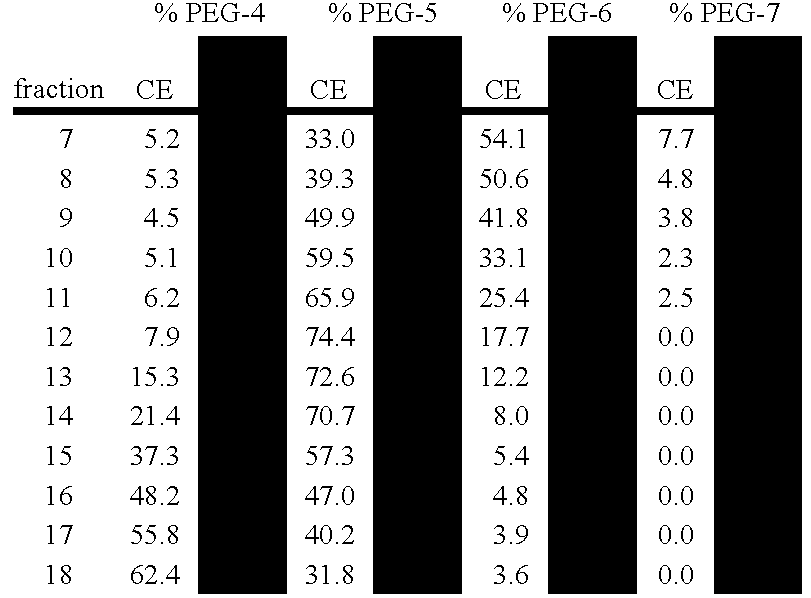

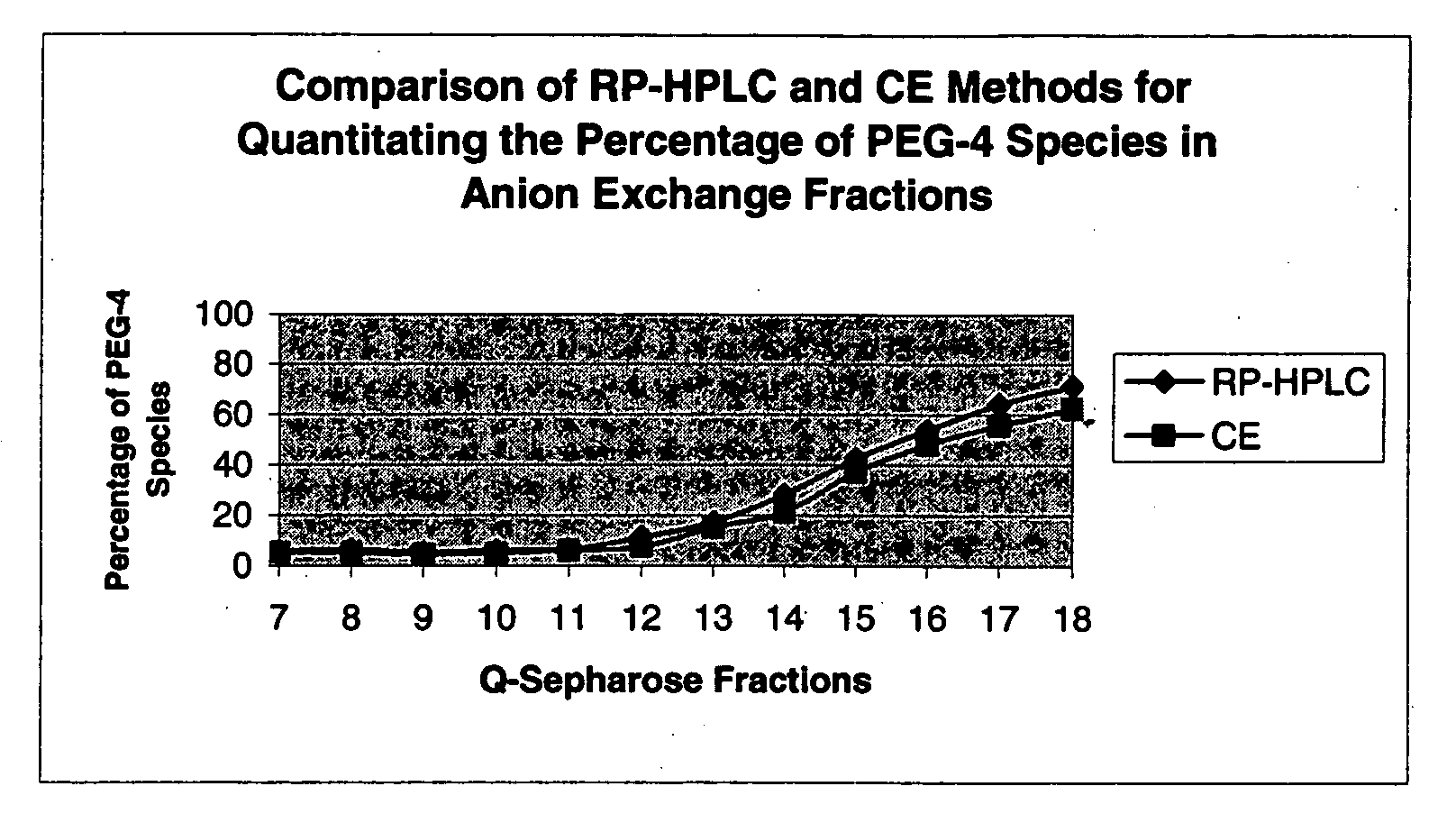

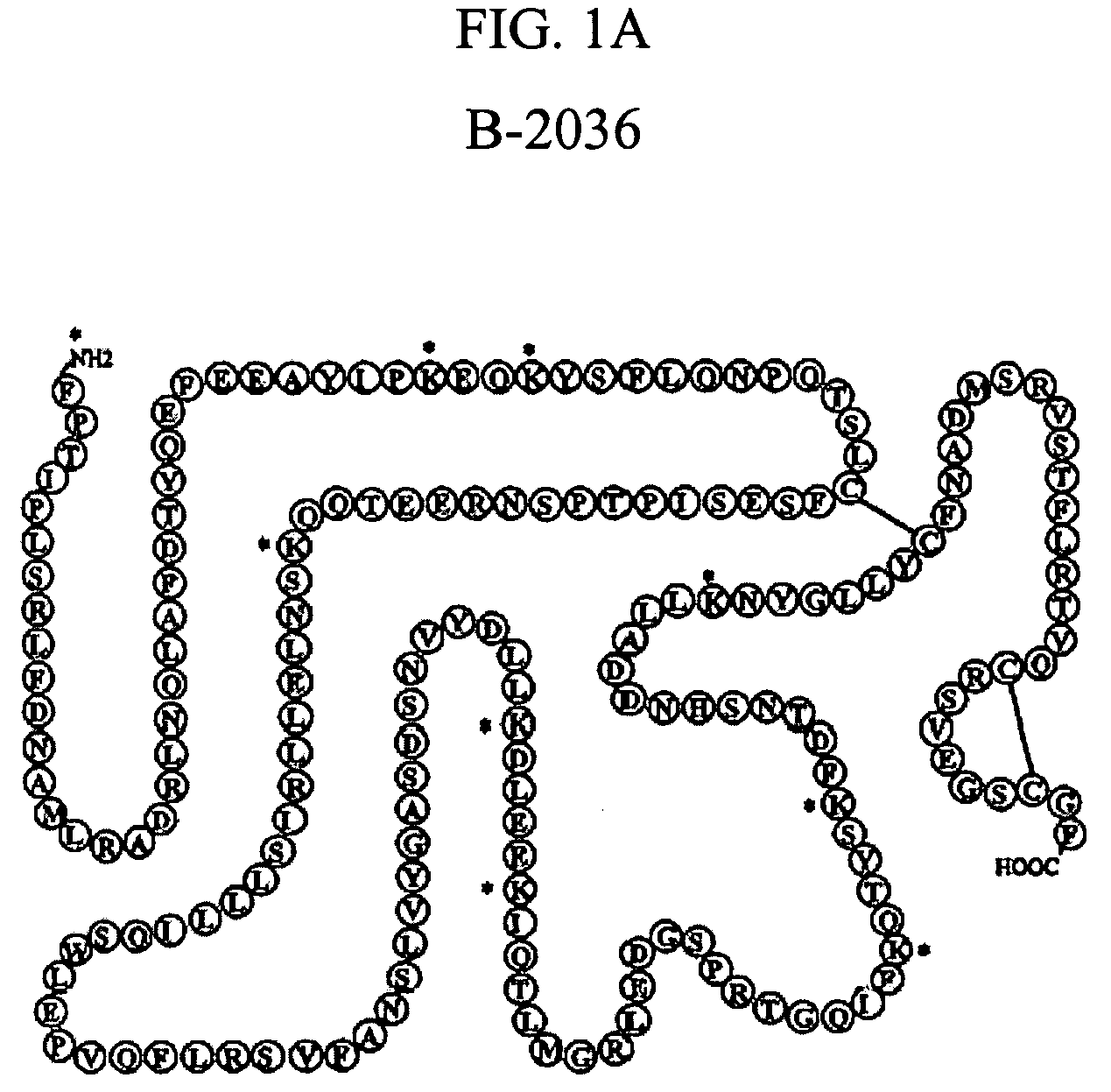

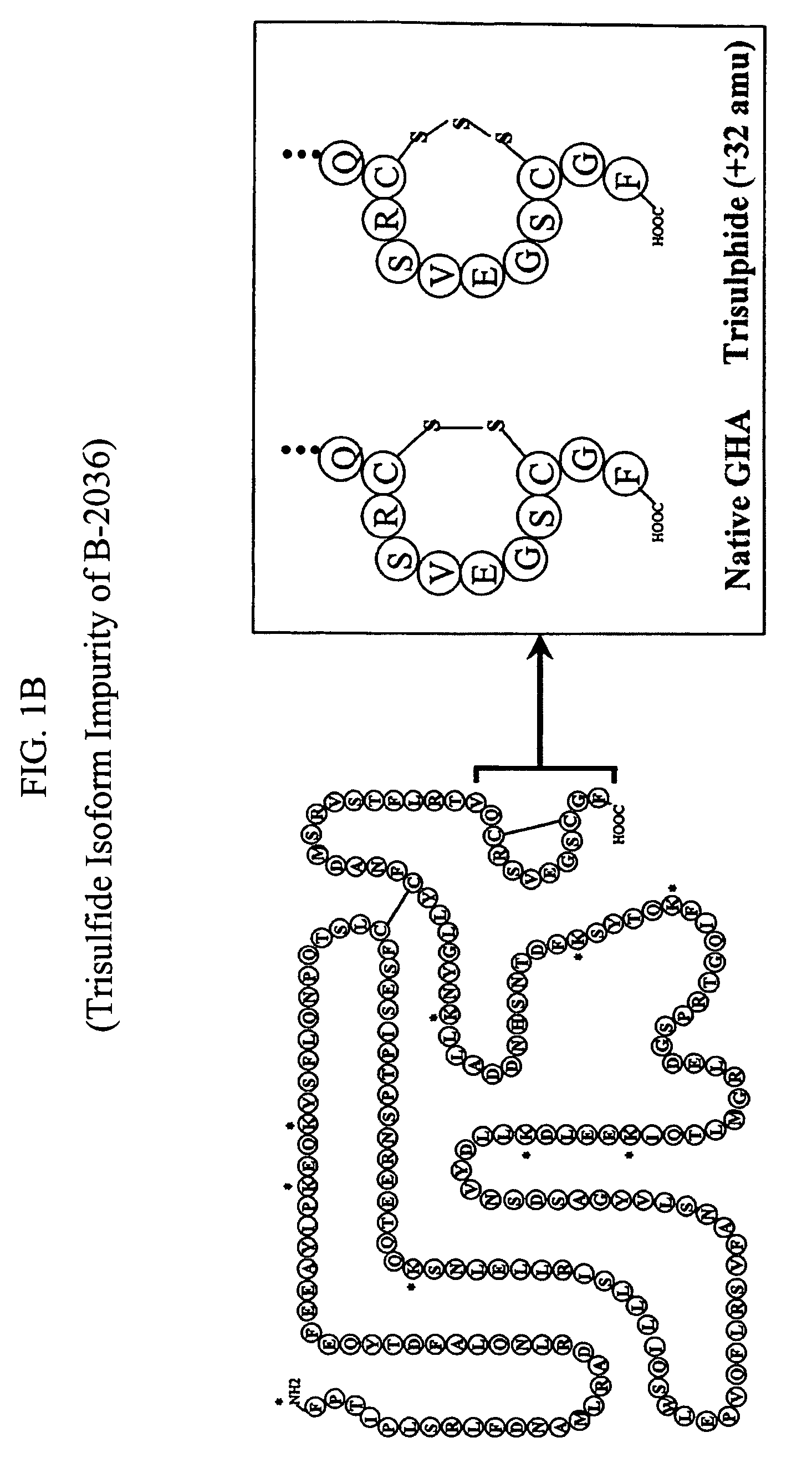

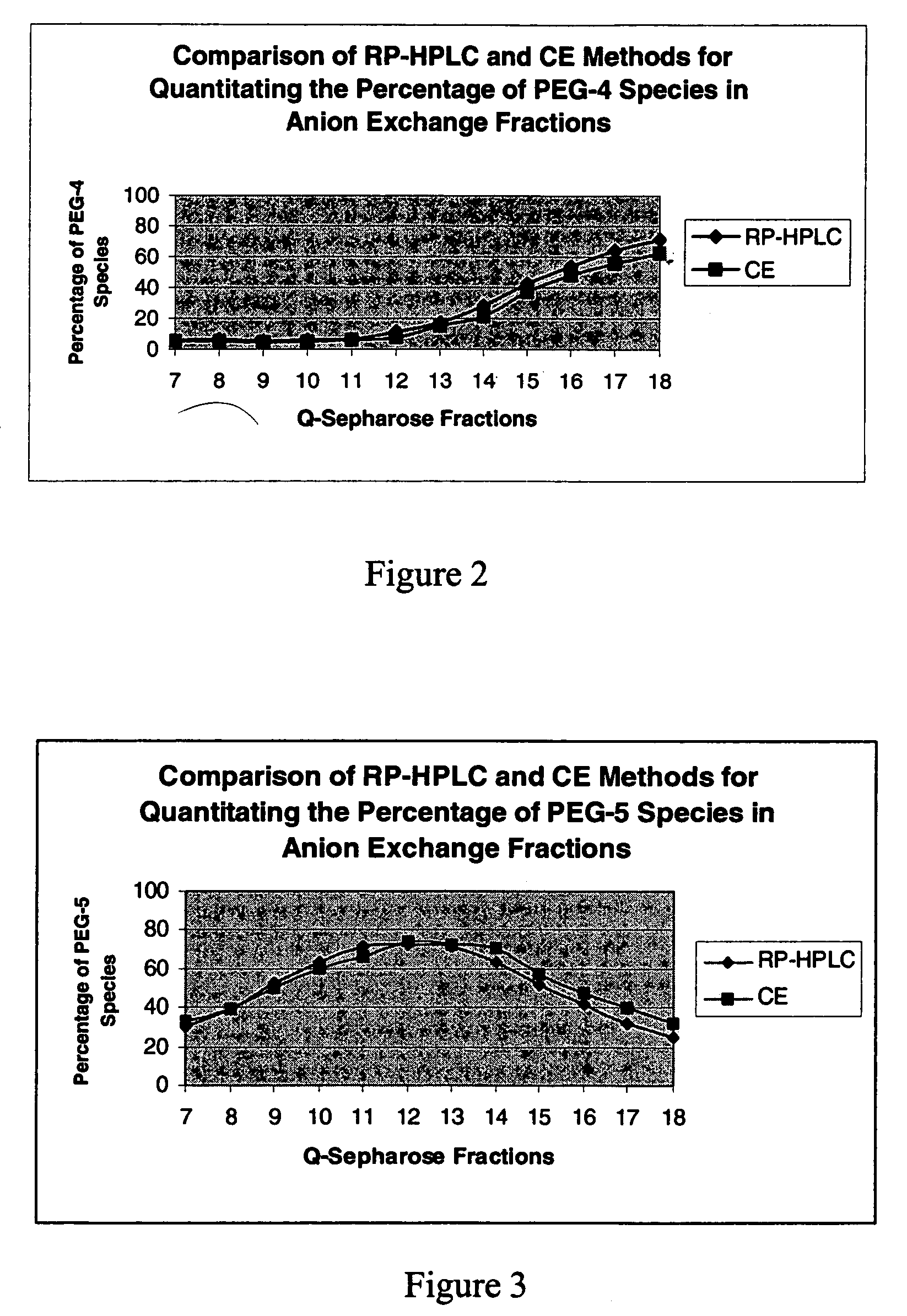

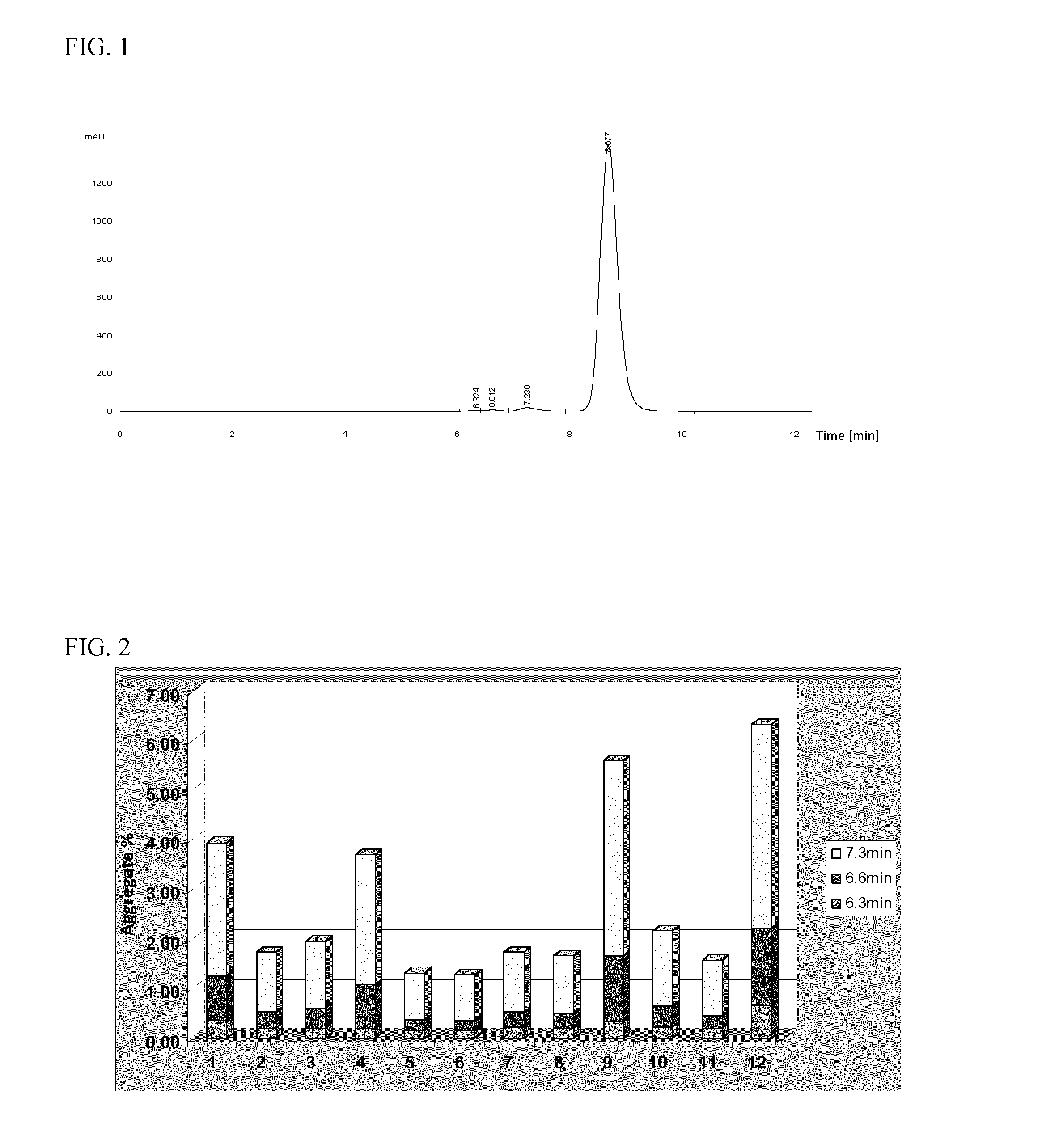

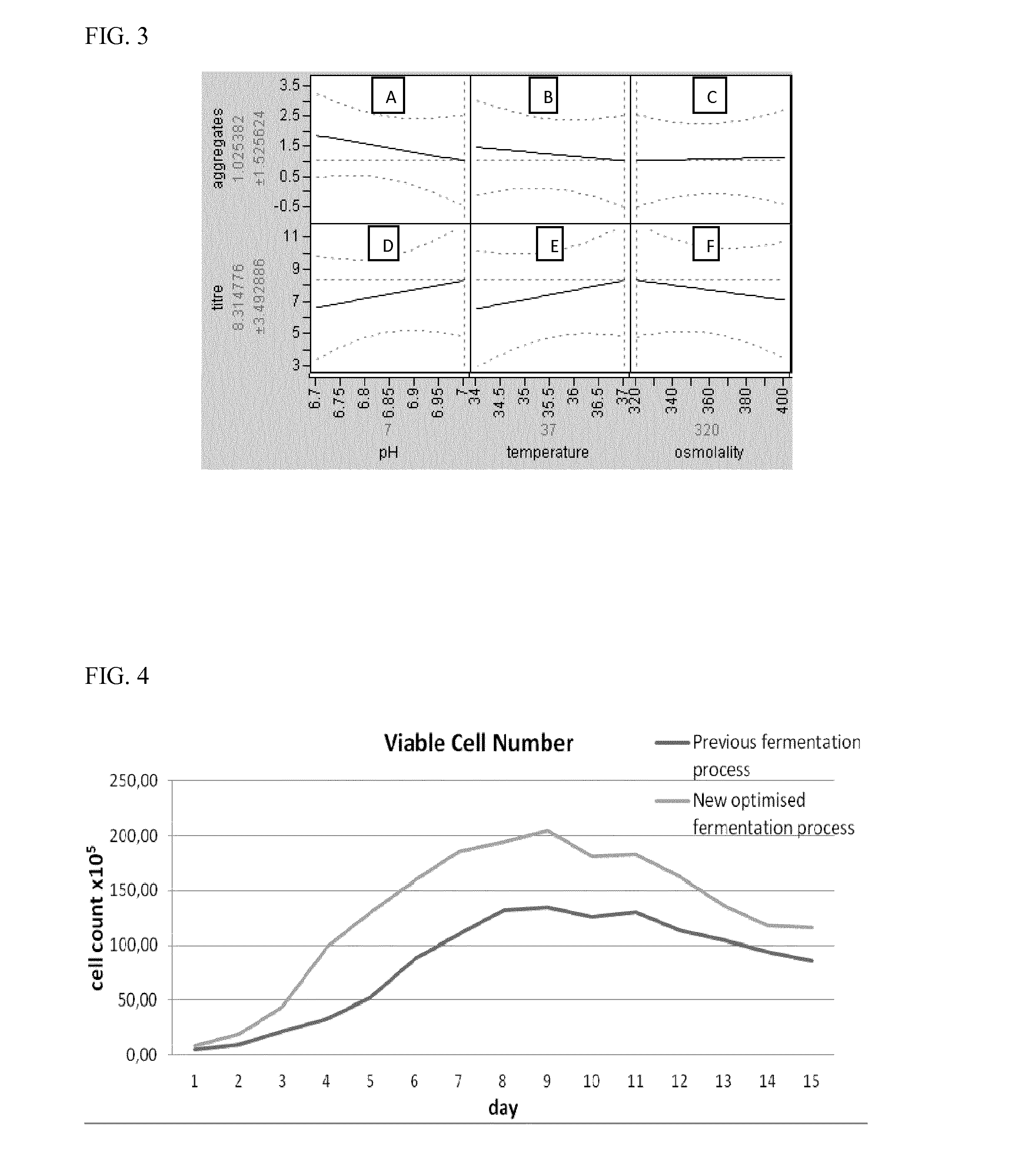

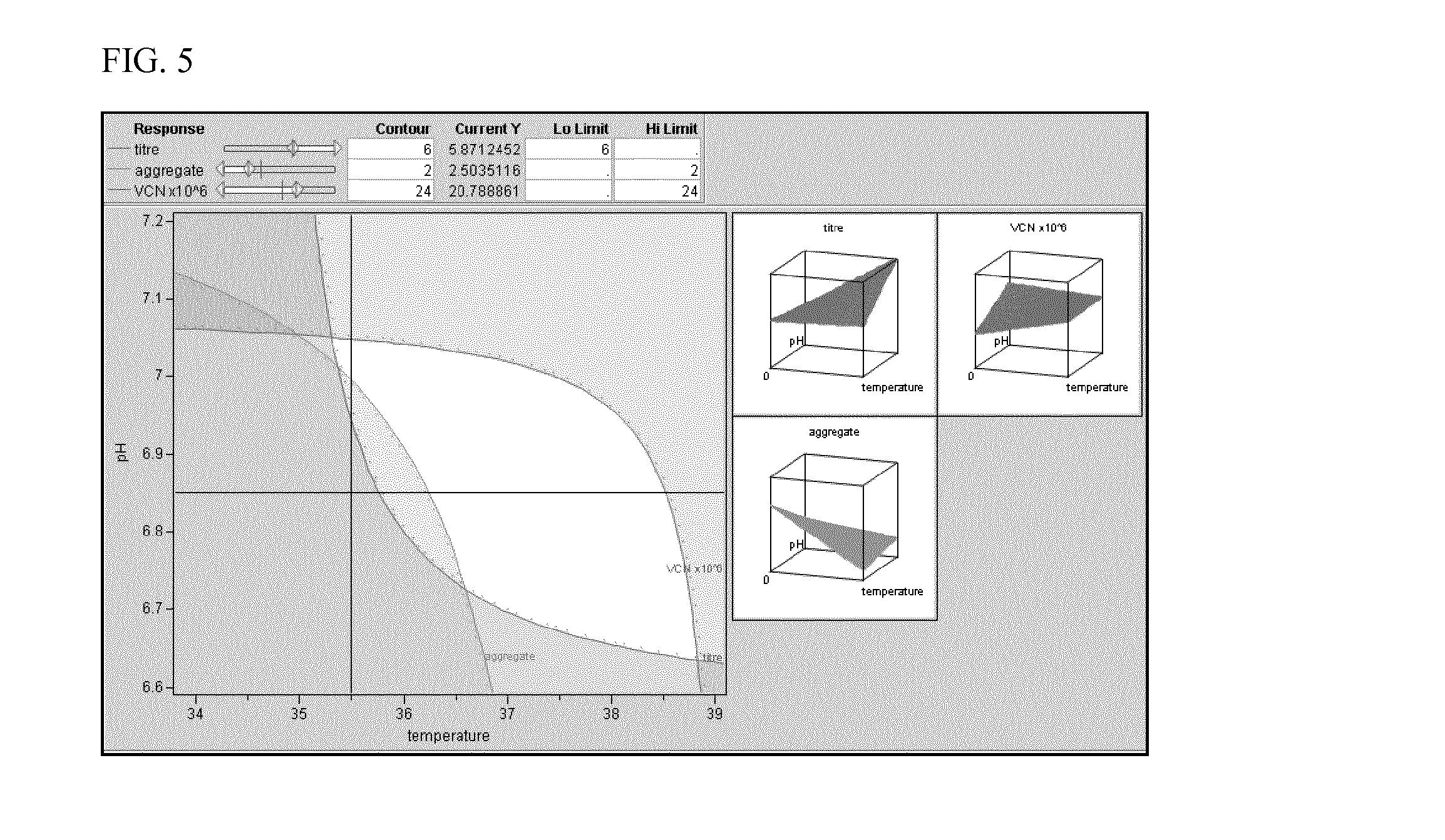

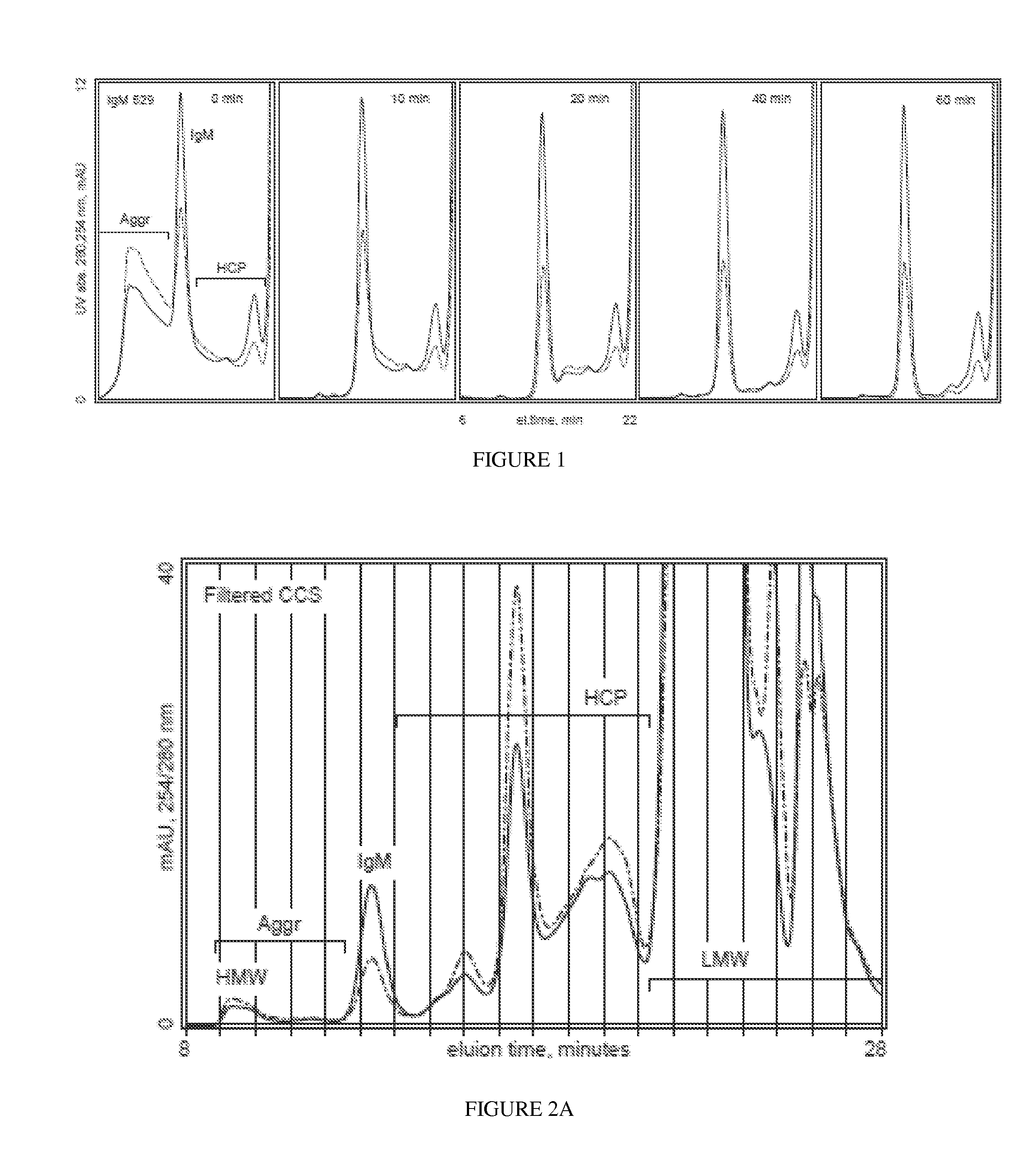

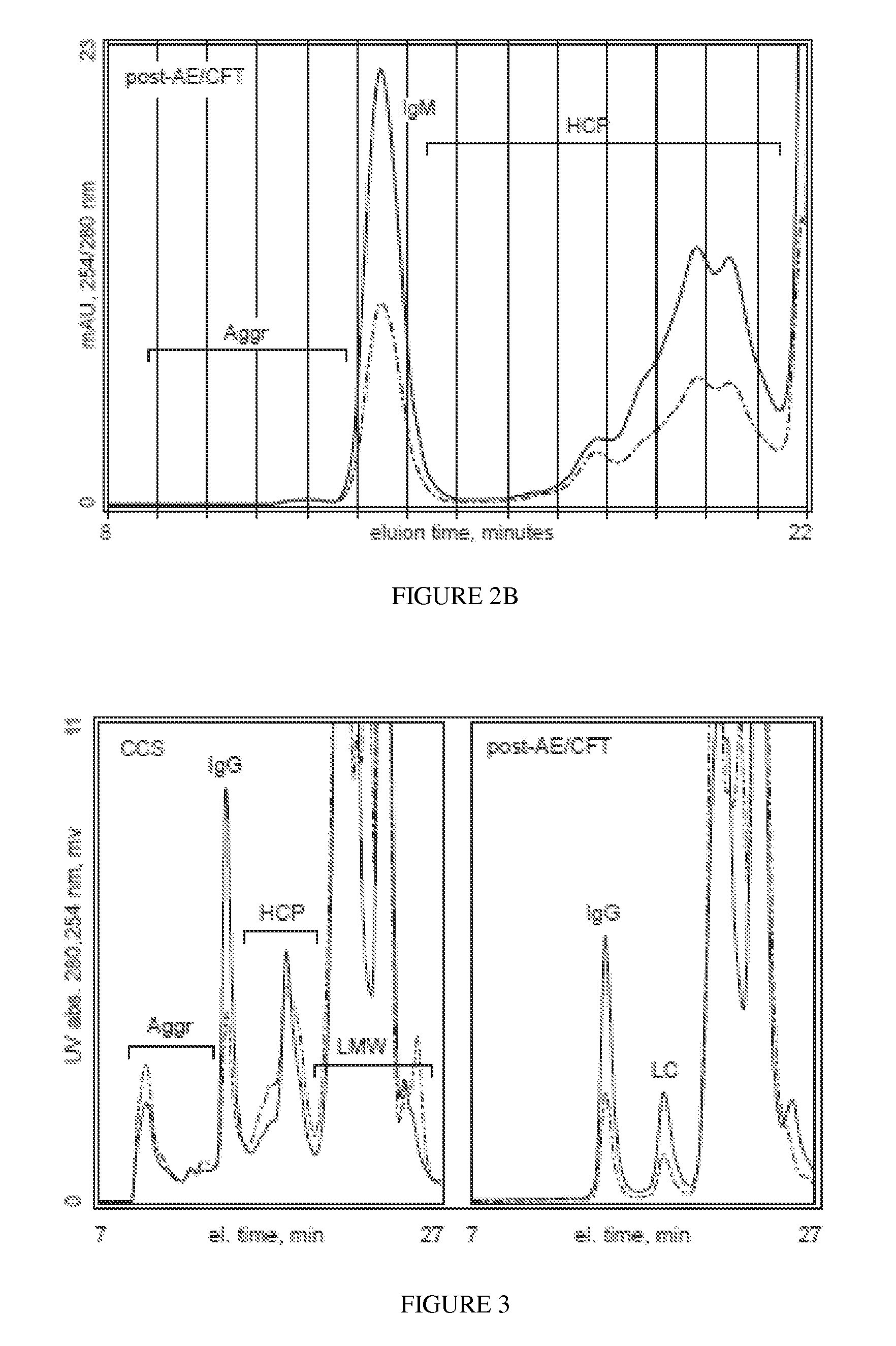

Process for reducing antibody aggregate levels and antibodies produced thereby

Inactive | US20150005475A1 | Reduce aggregation; Immunoglobulins against growth factors; Fermentation; Monoclonal antibody; Aggregate level

The disclosure provides a method of reducing aggregates in a preparation of monoclonal antibody by modifying at least three parameters in the bioreactor culture process.

Owner:MEDIMMUNE LTD

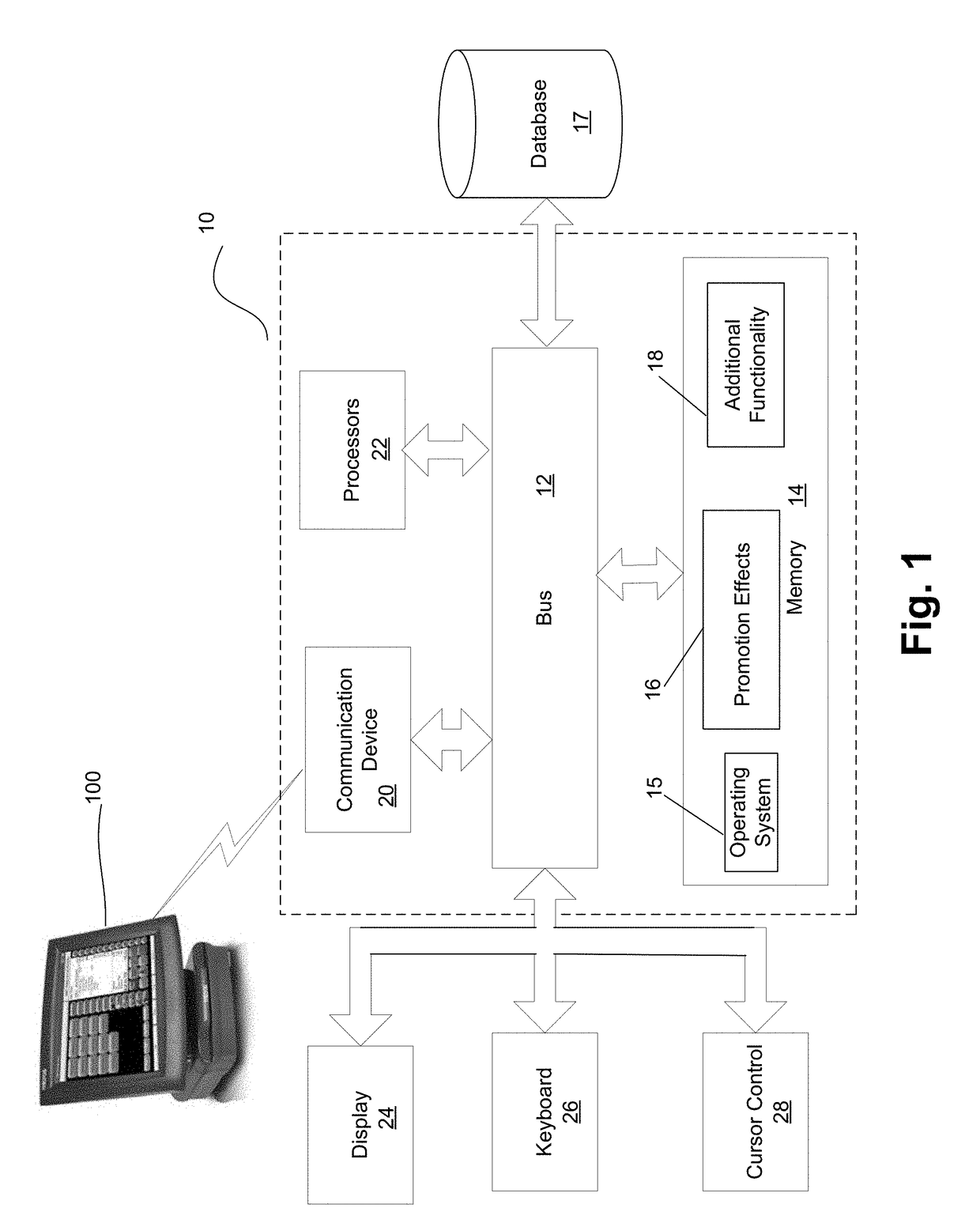

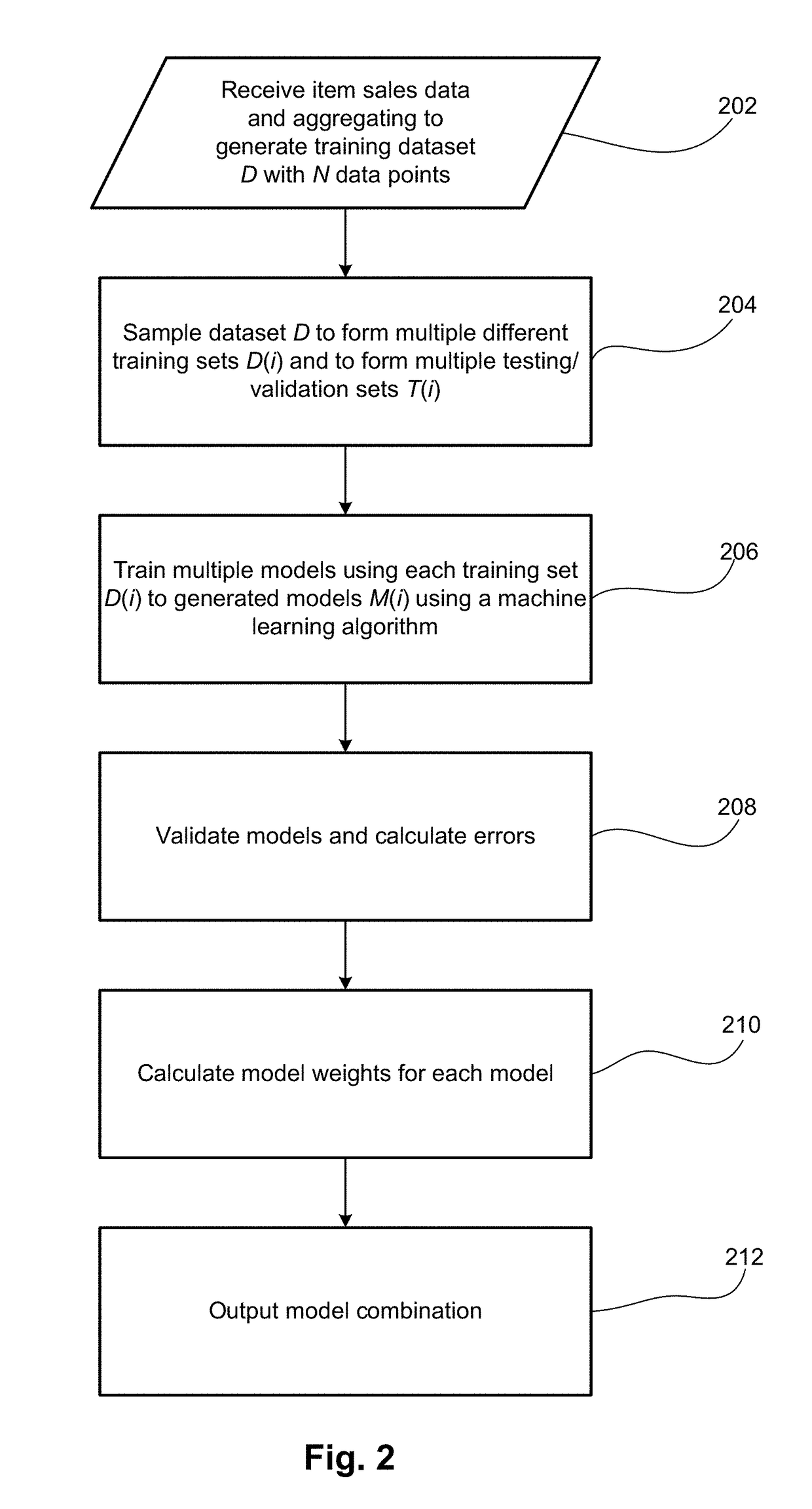

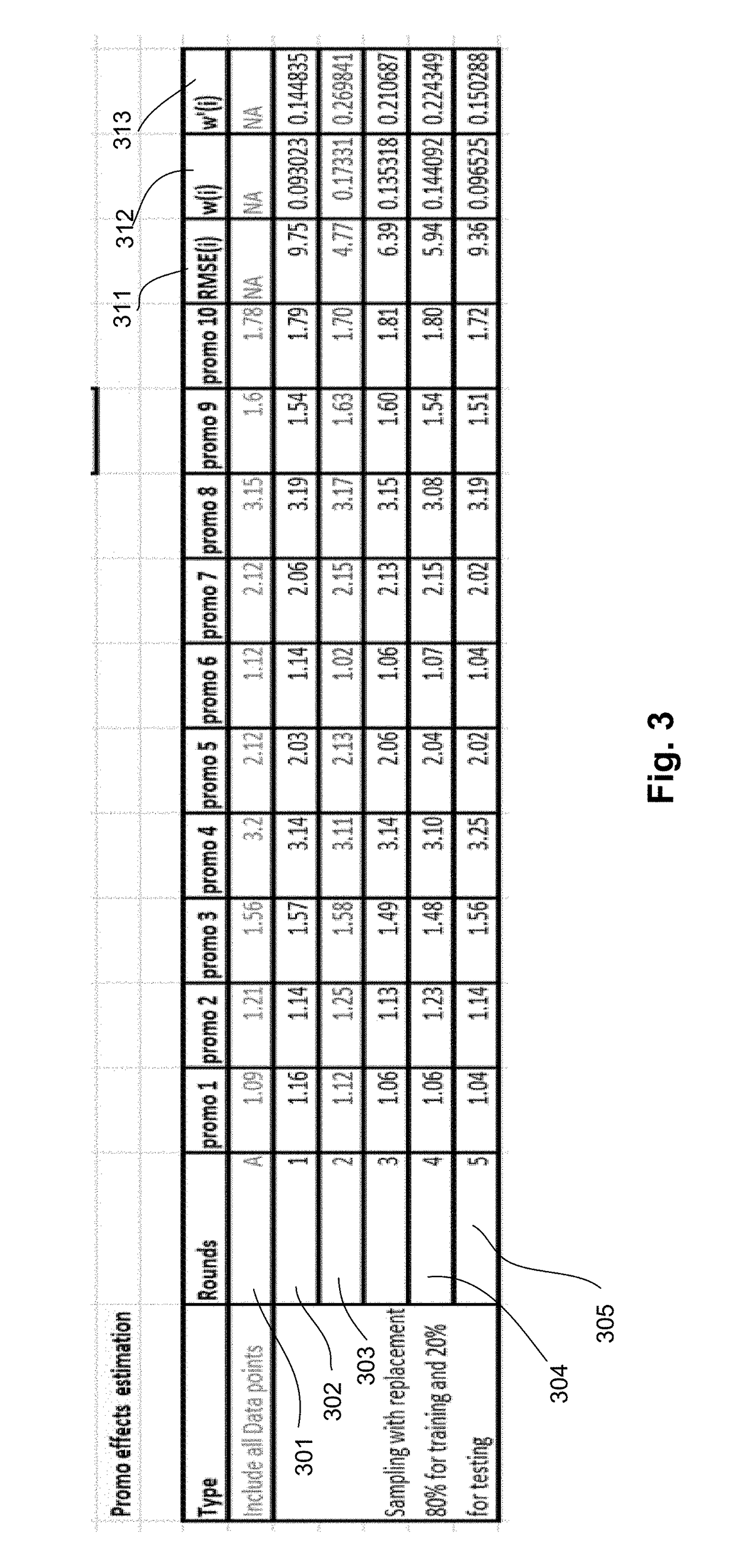

Promotion effects determination at an aggregate level

A system for forecasting sales of a retail item receives historical sales data for a class of retail item, including past sales and promotions of the item across a plurality of past time periods. The system aggregates the historical sales to form a training dataset having a plurality of data points. It then randomly samples the training dataset to form a plurality of different training sets and a plurality of corresponding validation sets, where each training set combined with its validation set covers all of the data points. The system trains multiple models on each training set, uses each corresponding validation set to validate each trained model and calculate an error, and then calculates a weight for each model. Finally, it outputs a model combination that includes a forecast and a weight for each model, and generates a forecast of future sales based on that model combination.

Owner:ORACLE INT CORP
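Below is a compact Python sketch of the resample, train, weight, and combine loop. The two member models (a historical mean and a least-squares trend) are simplifying assumptions, as are the inverse-MSE weighting and the omission of promotion features. The abstract does not say which models or weighting scheme are used.

```python
import random


def fit_mean(train):
    """Member model 1: predict the historical mean."""
    mean = sum(y for _, y in train) / len(train)
    return lambda t: mean


def fit_trend(train):
    """Member model 2: ordinary least-squares line through (period, sales)."""
    n = len(train)
    mt = sum(t for t, _ in train) / n
    my = sum(y for _, y in train) / n
    sxx = sum((t - mt) ** 2 for t, _ in train)
    slope = 0.0 if sxx == 0 else sum((t - mt) * (y - my) for t, y in train) / sxx
    return lambda t: my + slope * (t - mt)


def ensemble_forecast(data, horizon_t, n_splits=5, val_fraction=0.25, seed=0):
    """Weighted forecast from models trained on random train/validation splits."""
    rng = random.Random(seed)
    members = []  # (model, weight)
    for _ in range(n_splits):
        shuffled = data[:]
        rng.shuffle(shuffled)
        cut = max(1, int(len(shuffled) * val_fraction))
        # Each training set plus its validation set covers every data point.
        val, train = shuffled[:cut], shuffled[cut:]
        for fit in (fit_mean, fit_trend):
            model = fit(train)
            mse = sum((model(t) - y) ** 2 for t, y in val) / len(val)
            members.append((model, 1.0 / (mse + 1e-9)))  # inverse-error weight
    total = sum(w for _, w in members)
    return sum(w / total * model(horizon_t) for model, w in members)


# Hypothetical aggregated history: (period, units sold).
history = [(t, 10.0 + 2.0 * t) for t in range(20)]
print(ensemble_forecast(history, horizon_t=20))
```

Inverse-error weighting lets the better-validated model dominate without discarding the others. Other schemes would fit the same loop.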

System using footprints in system log files for monitoring transaction instances in real-time network

Inactive | US7908365B2 | Error detection/correction; Digital computer details; Transaction model; Aggregate level

Owner:INT BUSINESS MASCH CORP

Methods for reducing levels of protein-contaminant complexes and aggregates in protein preparations by treatment with electropositive organic additives

Inactive | US20150148526A1 | Reduce hydrophobic interactions; Improve abilities; Factor VII; Peptide preparation methods; Purification methods; Organic solvent

Methods for reduction of aggregate levels in antibody and other protein preparations through treatment with low concentrations of electropositive organic additives (e.g., ethacridine, chlorhexidine, or polyethylenimine) in combination with ureides (e.g., urea, uric acid, or allantoin) or organic modulators (e.g., nonionic organic polymers, surfactants, organic solvent or ureides). Some aspects of the invention relate to methods for reducing the level of aggregates in conjunction with clarification of cell culture harvest. It further relates to the integration of these capabilities with other purification methods to achieve the desired level of final purification.

Owner:AGENCY FOR SCI TECH & RES

Semi-aromatic polyamide and preparation method thereof

The invention discloses a semi-aromatic polyamide and a preparation method thereof. The preparation method comprises the following steps: (a) a dicarboxylic acid and a diamine undergo a neutralization reaction with water as the solvent, forming a semi-aromatic polyamide salt solution; (b) the salt solution obtained in step (a) is heated further, polycondensation is promoted by continuously removing water, and prepolymerization yields a semi-aromatic polyamide prepolymer; (c) the prepolymer obtained in step (b) undergoes a viscosity-increasing reaction to give the semi-aromatic polyamide. Because the method uses aggregate-level dicarboxylic acid and diamine, the process is simplified. An online diamine separation and recovery device separates and recycles the diamine during prepolymerization, which effectively reduces the diamine lost through volatilization with water vapor and avoids generating pollution. The molar ratio of diamine to dicarboxylic acid is maintained during prepolymerization, which increases the yield coefficient and gives a product of stable quality.

Owner:ZHEJIANG NHU SPECIAL MATERIALS

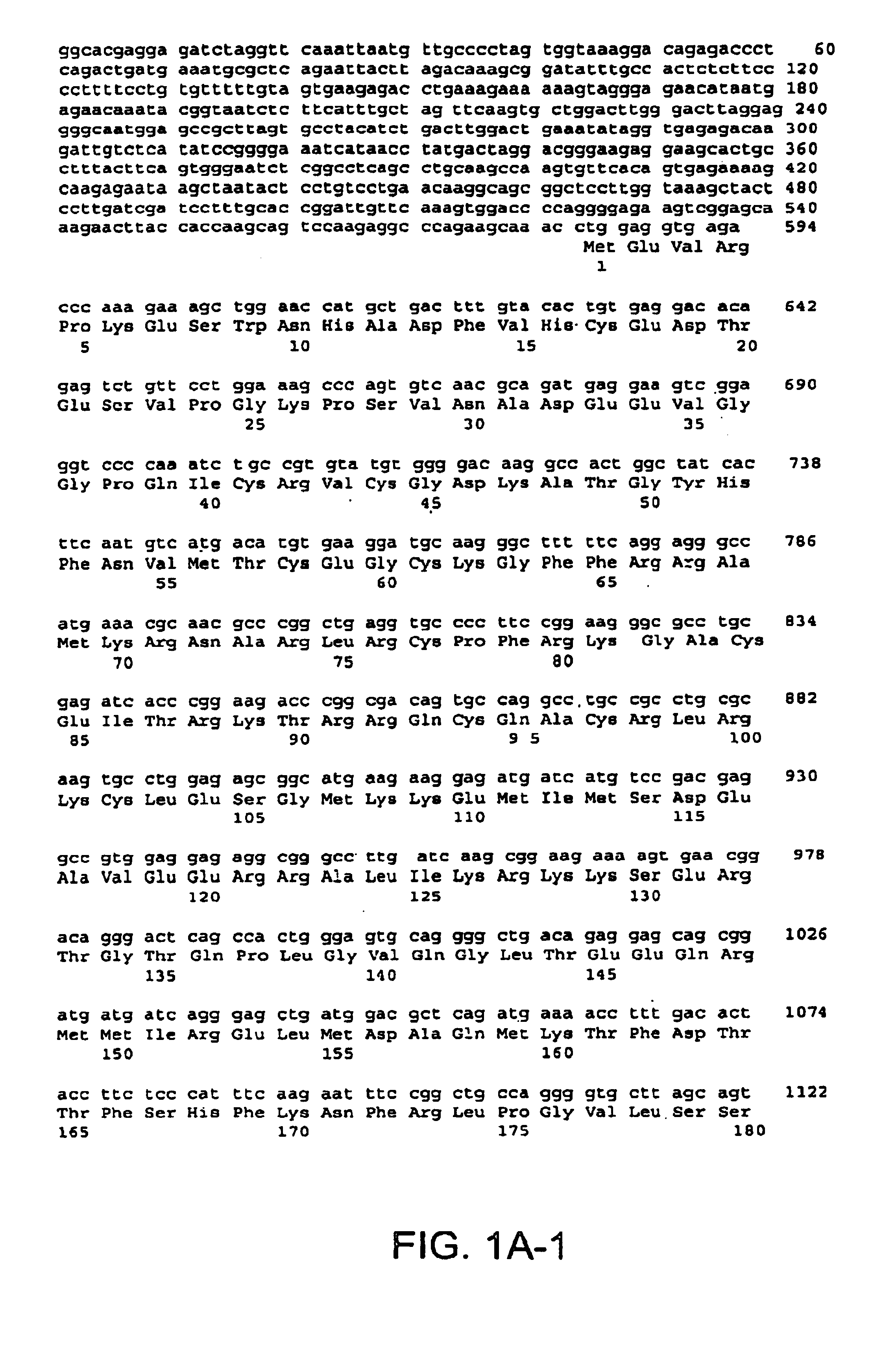

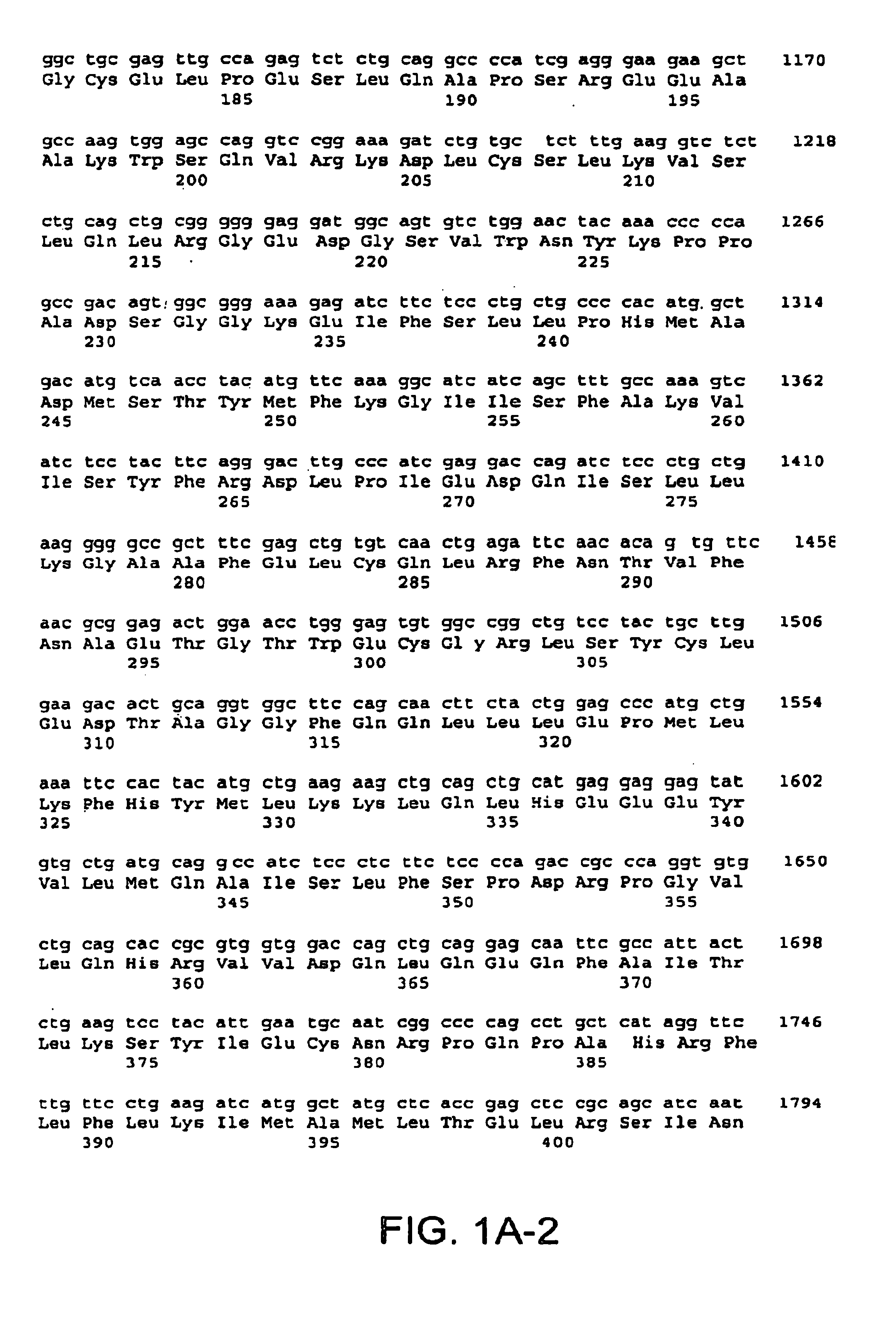

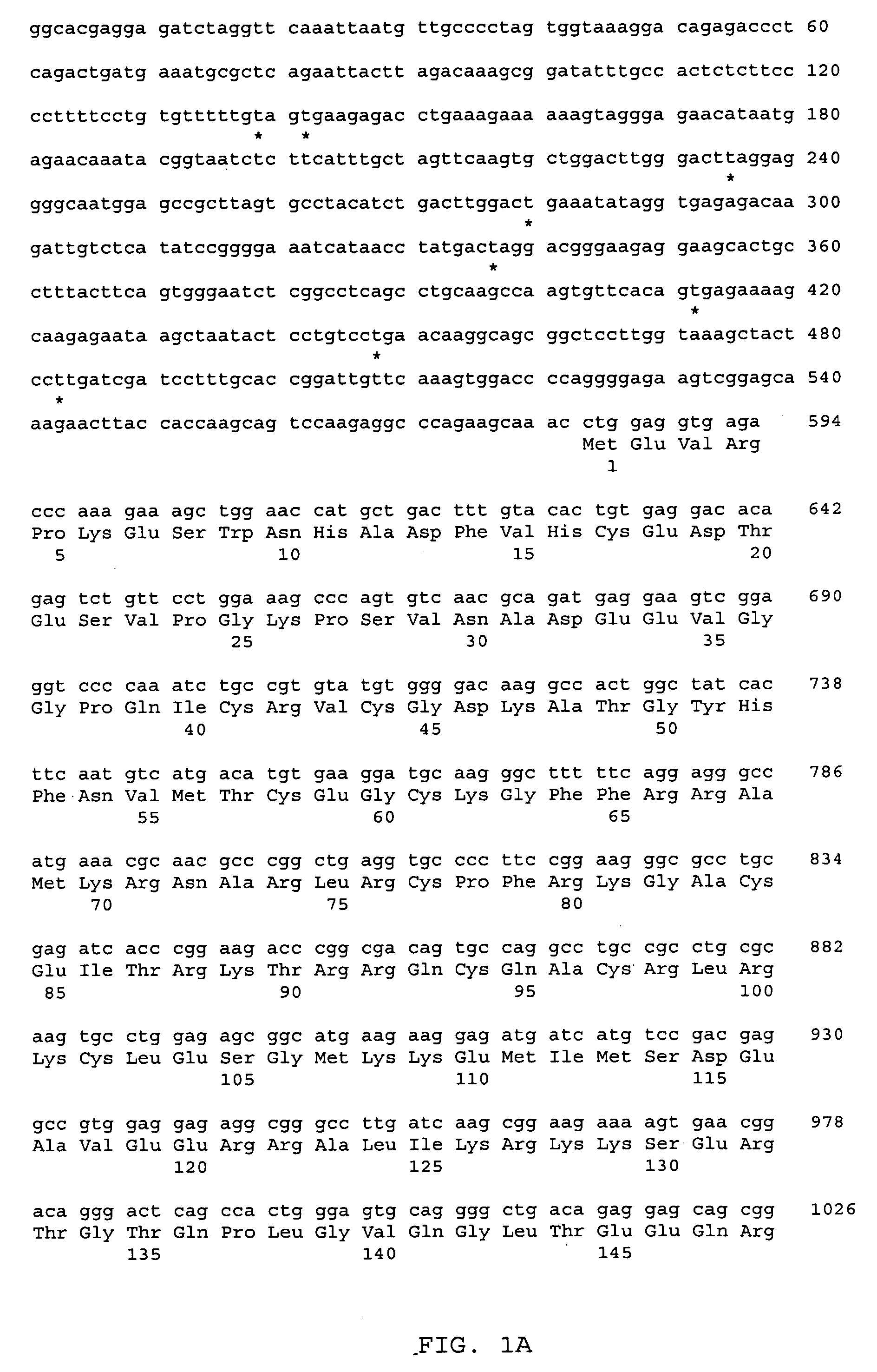

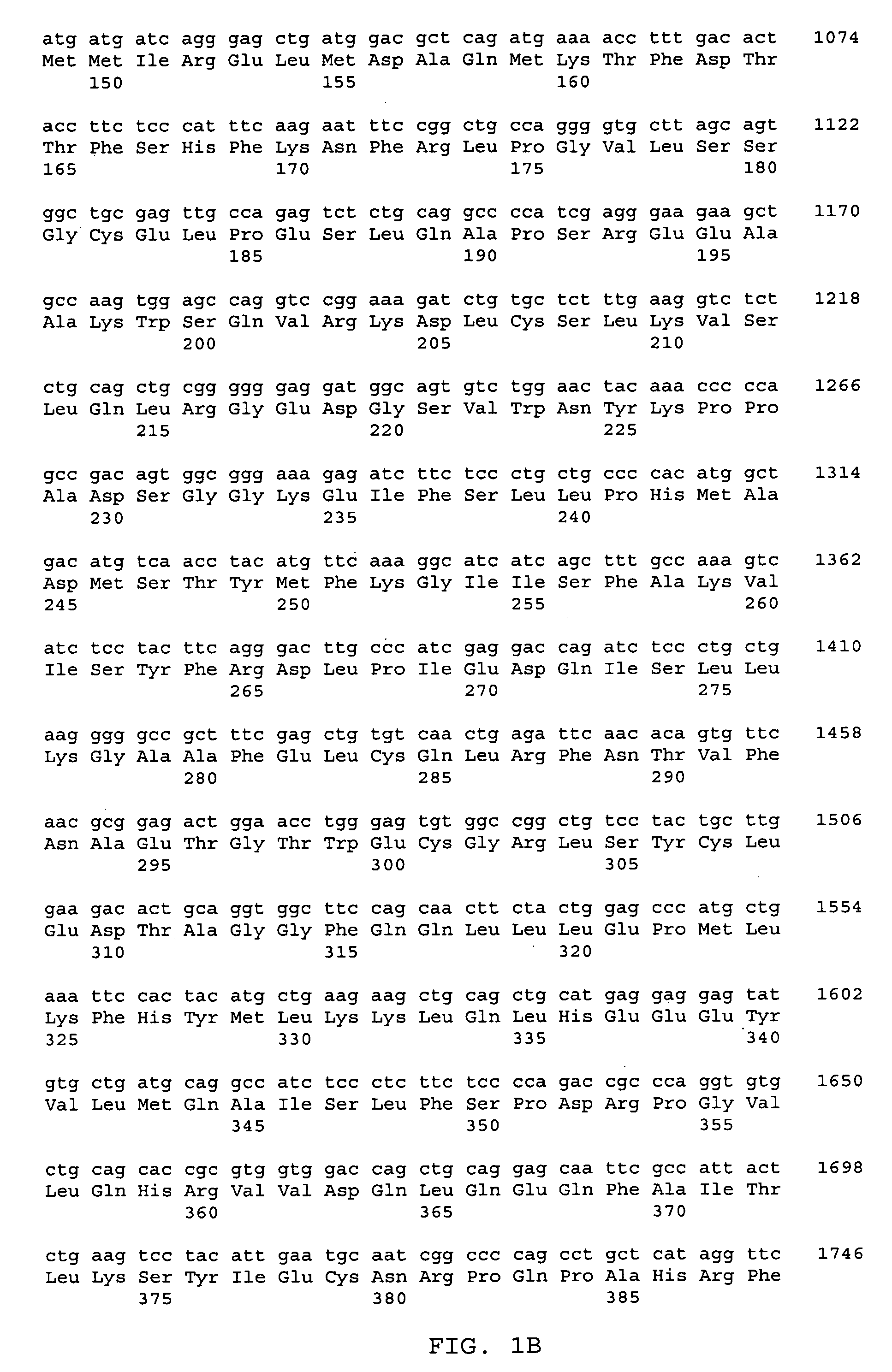

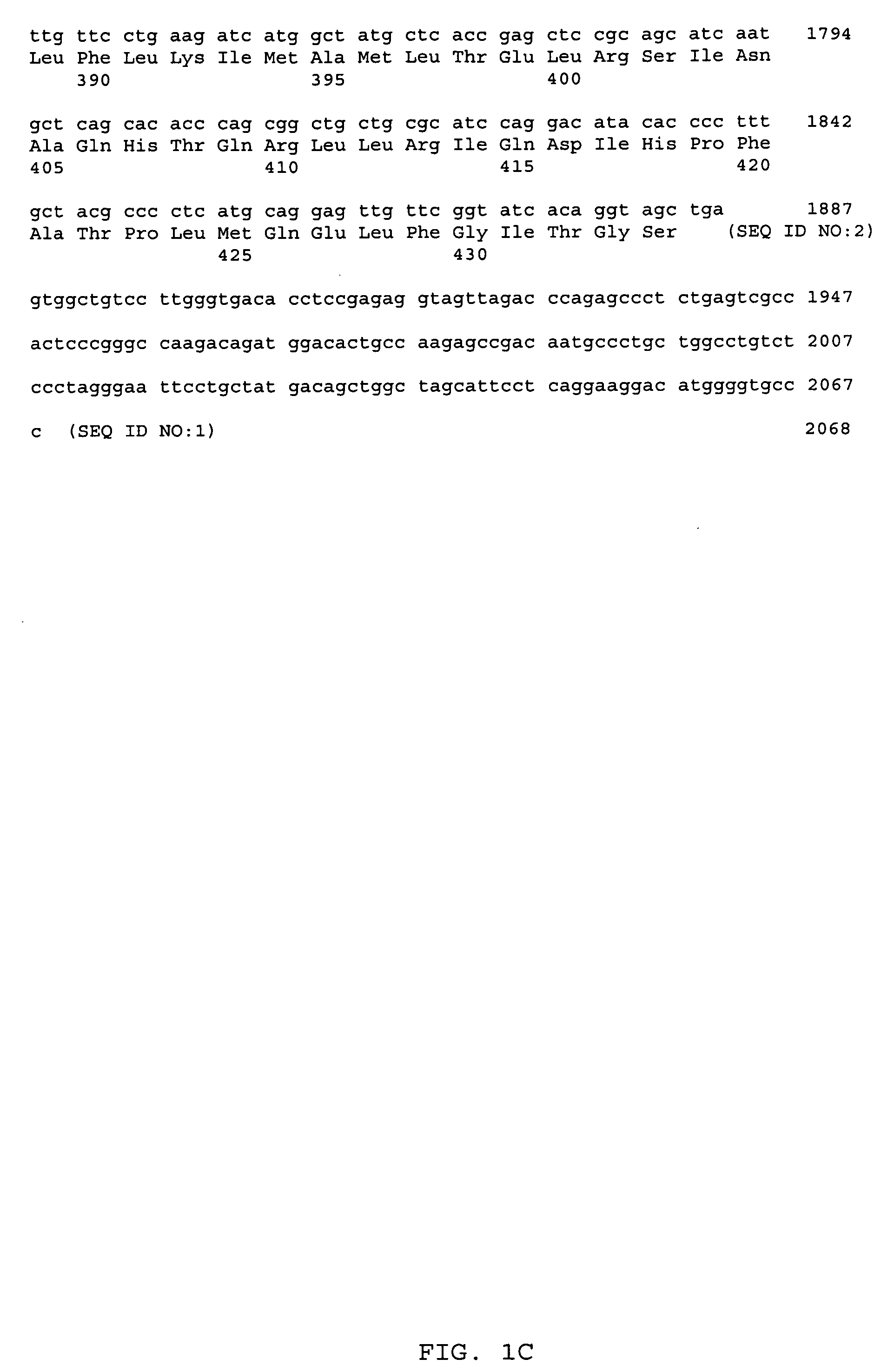

Transgenic mice expressing a human SXR receptor polypeptide

A novel nuclear receptor, termed the steroid and xenobiotic receptor (SXR), has been discovered; it is a broad-specificity sensing receptor that forms a novel branch of the nuclear receptor superfamily. SXR forms a heterodimer with RXR that can bind to and induce transcription from response elements present in steroid-inducible cytochrome P450 genes in response to hundreds of natural and synthetic compounds with biological activity, including therapeutic steroids as well as dietary steroids and lipids. Instead of relying on hundreds of receptors, one for each inducing compound, the SXR receptors of the invention monitor aggregate levels of inducers to trigger production of metabolizing enzymes in a coordinated metabolic pathway. Agonists and antagonists of SXR are administered to subjects to achieve a variety of therapeutic goals that depend on modulating the metabolism of one or more endogenous steroids or xenobiotics to establish homeostasis. An assay is provided for identifying steroid drugs that are likely to cause drug interactions if administered to a subject in therapeutic amounts. Transgenic animals that express human SXR are also provided, serving as useful models of the human response to agents that may affect P450-dependent metabolic processes.

Owner:SALK INST FOR BIOLOGICAL STUDIES

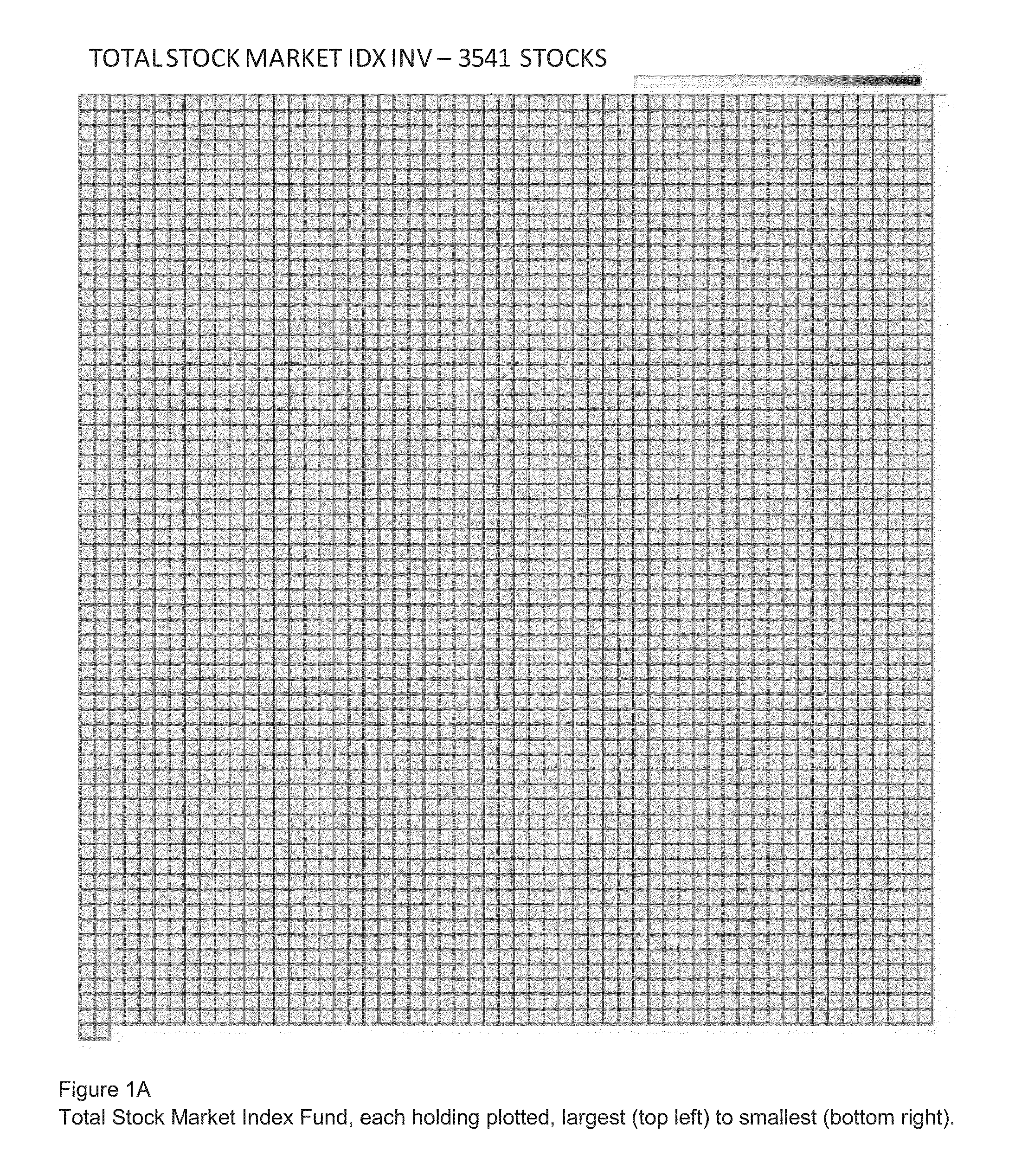

2-D and 3-D Graphical Visualization of Diversification of Portfolio Holdings

Data visualization processes are provided for expressing diversification of an investment based on each underlying holding weight in the investment relative to each holding's market capitalization weight in the investment and each holding's market capitalization weight in the broad market or by its absolute holding percentage. Visualizations can be depicted in both 2-D and 3-D formats, with area fill, volume fill, color, and / or opacity depicting percentage of coverage. Visualization can show diversification down to the individual holding level and in aggregate levels, such as mega-cap, mid-cap and small-cap.

Owner:THE VANGUARD GROUP
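The weights behind such a visualization might be computed as shown below. This hypothetical Python sketch compares each holding's weight with its market-capitalization weight, both inside the investment and in a broad-market universe. It then rolls holdings up into size buckets. The input format and the bucket labels are assumptions, and the 2-D/3-D rendering itself is not shown.

```python
from collections import defaultdict


def diversification(holdings, market_caps, size_bucket):
    """Compare holding weights with market-cap weights (sketch).

    holdings:    {ticker: amount held}
    market_caps: {ticker: market capitalization} for the broad-market universe
    size_bucket: {ticker: bucket label such as "mega", "mid" or "small"}
    """
    total_held = sum(holdings.values())
    held_cap = sum(market_caps[t] for t in holdings)
    market_cap = sum(market_caps.values())

    per_holding = {}
    per_bucket = defaultdict(lambda: {"weight": 0.0, "market_weight": 0.0})
    for ticker, amount in holdings.items():
        weight = amount / total_held
        cap_weight_in_fund = market_caps[ticker] / held_cap
        cap_weight_in_market = market_caps[ticker] / market_cap
        per_holding[ticker] = {
            "weight": weight,
            # Ratios above 1 mean the holding is overweight relative to cap weight.
            "vs_fund_cap_weight": weight / cap_weight_in_fund,
            "vs_market_cap_weight": weight / cap_weight_in_market,
        }
        per_bucket[size_bucket[ticker]]["weight"] += weight
        per_bucket[size_bucket[ticker]]["market_weight"] += cap_weight_in_market
    return per_holding, dict(per_bucket)


holdings = {"A": 50.0, "B": 50.0}
caps = {"A": 300.0, "B": 100.0, "C": 100.0}
buckets = {"A": "mega", "B": "mid", "C": "small"}
per_holding, per_bucket = diversification(holdings, caps, buckets)
```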

Methods for use of mixed multifunctional surfaces for reducing aggregate content in protein preparations

Inactive | US20150183879A1 | Reduce content; Factor VII; Immunoglobulins against cell receptors/antigens/surface-determinants; Chemical Moiety; Chlorhexidine

Methods for reduction of aggregate levels in antibody and other protein preparations comprising the steps of treatment with an organic multivalent cation (e.g., ethacridine, chlorhexidine, or polyethylenimine) and treatment with a composition having a combination of surfaces bearing electronegative chemical moieties and surfaces bearing electropositive chemical moieties.

Owner:AGENCY FOR SCI TECH & RES

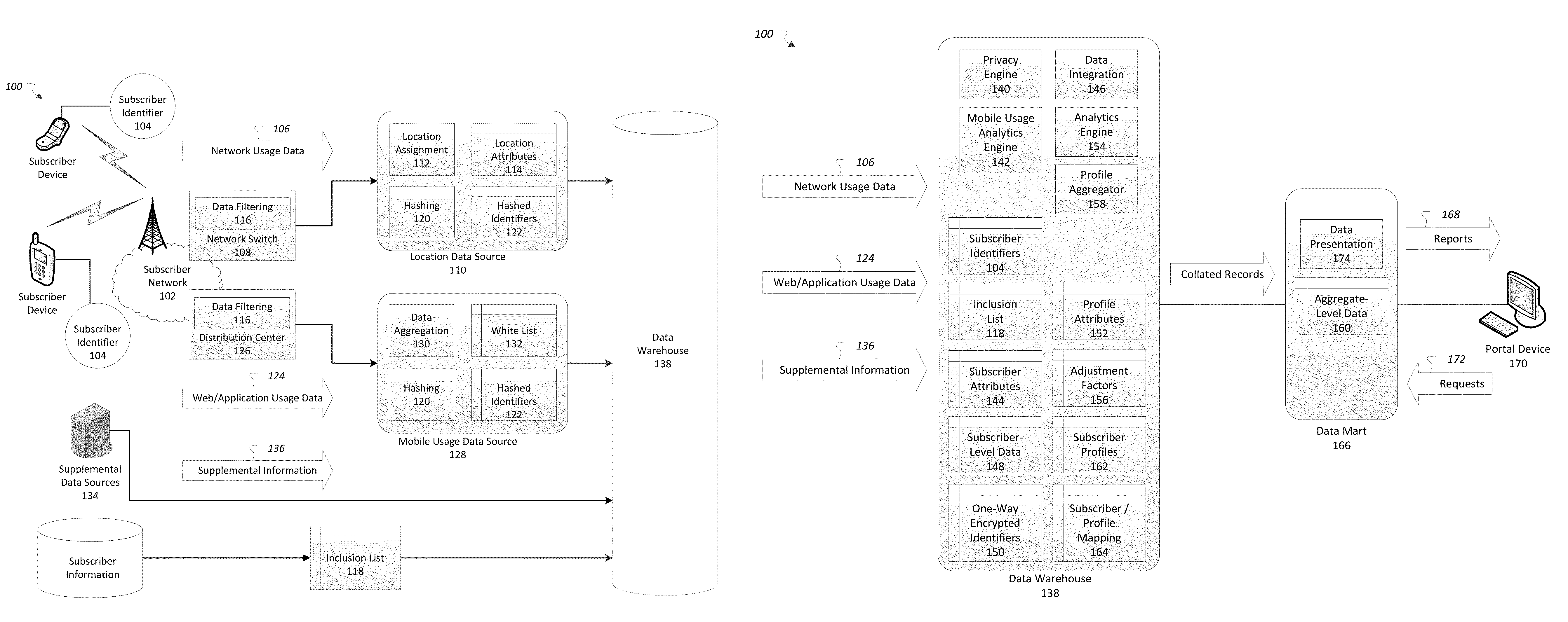

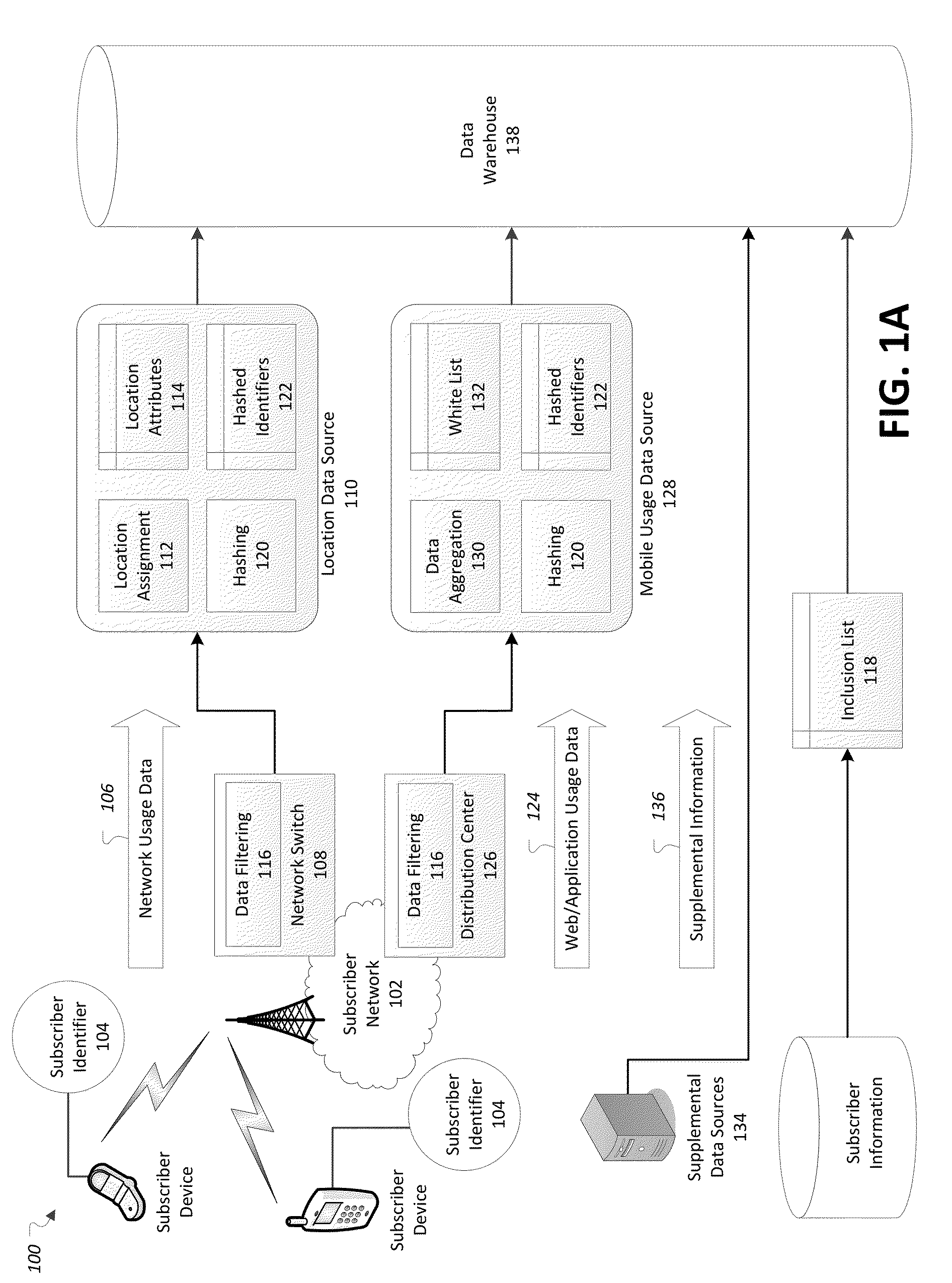

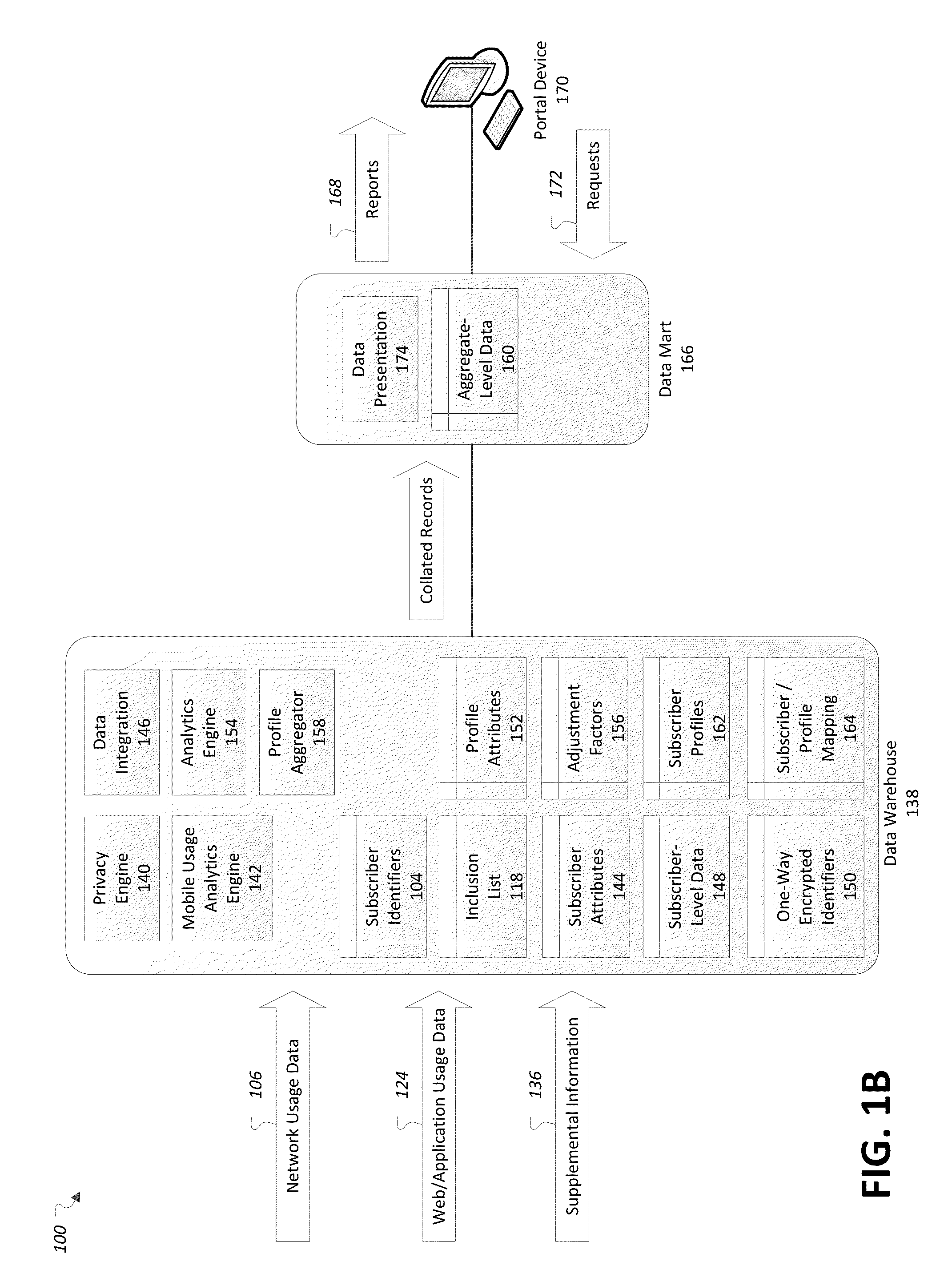

Out of home media measurement

A system may include at least one data source configured to provide network usage data indicative of the existence of communications with subscriber devices, and web and application usage data indicative of data usage of the subscriber network by the subscriber devices. The system may also include a data warehouse server configured to perform operations including correlating the network usage data and web and application usage data into subscriber-level data; associating, with the subscriber-level data, subscriber attributes indicative of a preference of the subscriber for content in a particular category of content, and profile attributes indicative of demographic characteristics of the subscriber; matching the subscriber-level data with a set of subscriber profiles, each of the set of subscriber profiles including a set of subscriber attributes and profile attributes associated with the respective subscriber profile; and aggregating the subscriber-level data into aggregate-level data according to the matching subscriber profiles.

Owner:CELLCO PARTNERSHIP INC
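The correlate, match, and aggregate flow described above can be sketched in Python. The record shapes are hypothetical. So is the profile-matching rule, which picks the first profile whose required attributes all match, meaning profile order matters. Dropping unmatched subscribers is also an assumption of this sketch.

```python
from collections import defaultdict


def subscriber_level(network_records, web_records):
    """Correlate both usage sources into one record per subscriber.

    network_records: iterable of subscriber IDs, one per observed communication
    web_records:     iterable of (subscriber_id, bytes_used) pairs
    """
    subs = defaultdict(lambda: {"network_events": 0, "bytes": 0})
    for sub_id in network_records:
        subs[sub_id]["network_events"] += 1
    for sub_id, nbytes in web_records:
        subs[sub_id]["bytes"] += nbytes
    return dict(subs)


def match_profile(attributes, profiles):
    """Return the first profile whose required attributes all match, or None."""
    for name, required in profiles.items():
        if all(attributes.get(key) == value for key, value in required.items()):
            return name
    return None


def aggregate_by_profile(subs, attributes, profiles):
    """Roll subscriber-level records up to one aggregate record per profile."""
    totals = defaultdict(lambda: {"subscribers": 0, "network_events": 0, "bytes": 0})
    for sub_id, record in subs.items():
        profile = match_profile(attributes.get(sub_id, {}), profiles)
        if profile is None:
            continue  # unmatched subscribers are left out of the aggregates
        totals[profile]["subscribers"] += 1
        totals[profile]["network_events"] += record["network_events"]
        totals[profile]["bytes"] += record["bytes"]
    return dict(totals)


subs = subscriber_level(["s1", "s1", "s2", "s3"], [("s1", 500), ("s2", 200), ("s3", 100)])
attributes = {
    "s1": {"interest": "sports", "age_band": "18-34"},
    "s2": {"interest": "sports", "age_band": "35-54"},
    "s3": {"interest": "news", "age_band": "18-34"},
}
profiles = {
    "young_sports": {"interest": "sports", "age_band": "18-34"},
    "sports": {"interest": "sports"},
}
report = aggregate_by_profile(subs, attributes, profiles)
```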

Novel steroid-activated nuclear receptors and uses therefor

Owner:SALK INST FOR BIOLOGICAL STUDIES

© 2025 PatSnap. All rights reserved.