44 results about "SIMD architecture" patented technology

SIMD defined. A SIMD (single instruction, multiple data) architecture applies a single, identical operation simultaneously to multiple data elements, whether that operation loads, computes on, or stores information. One example is adding two arrays element by element, with one instruction processing several elements at a time.

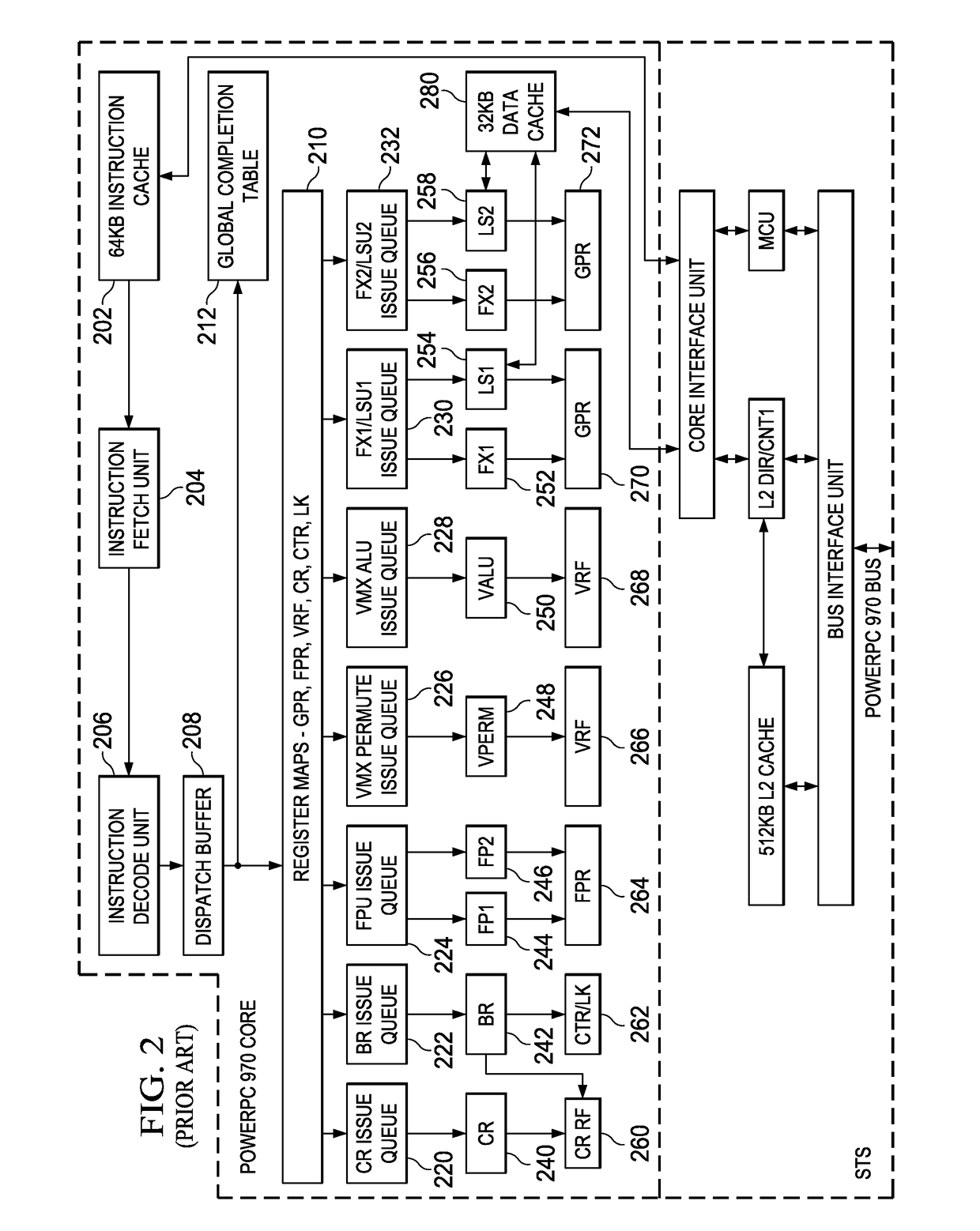

Translation of SIMD instructions in a data processing system

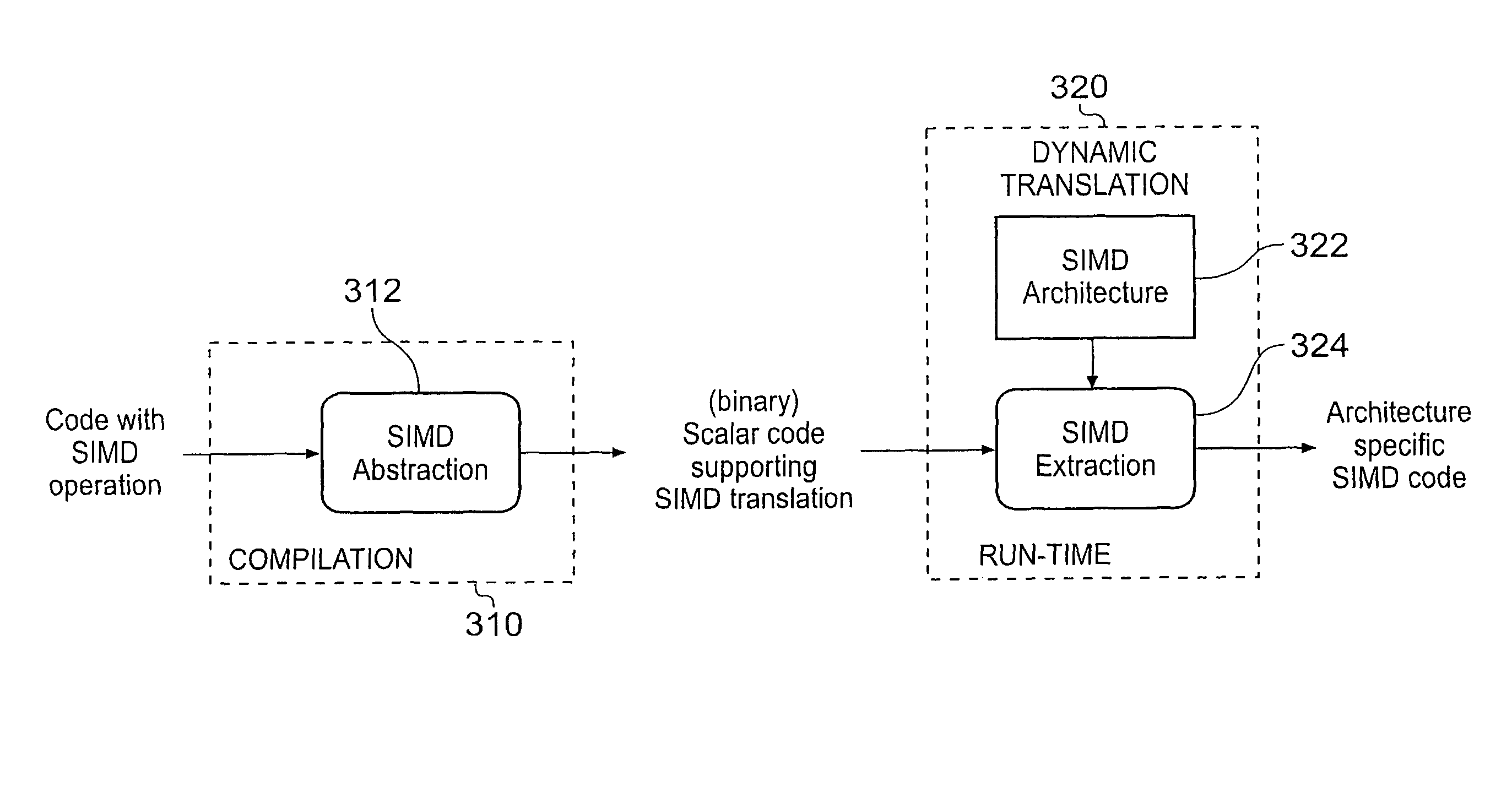

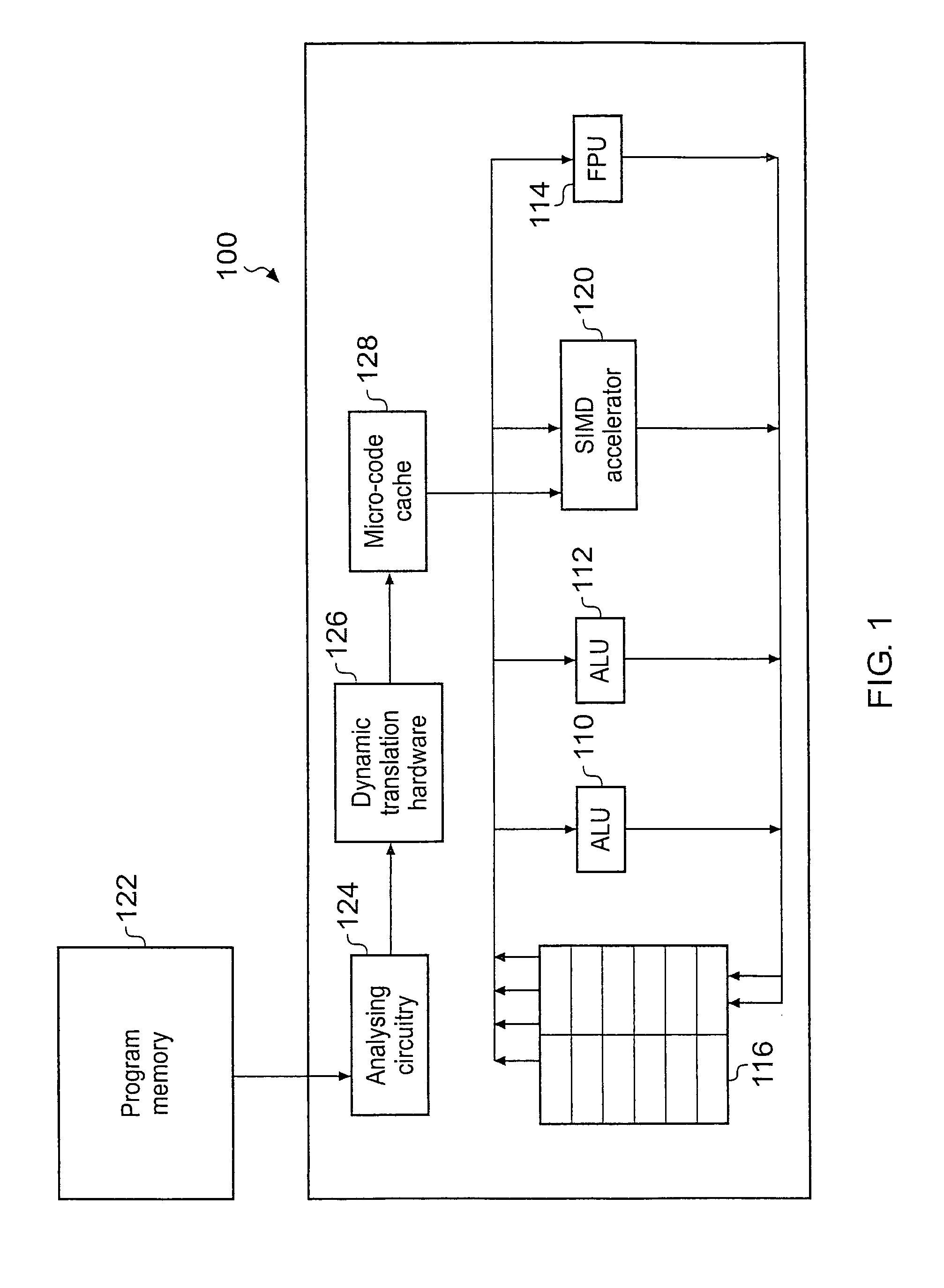

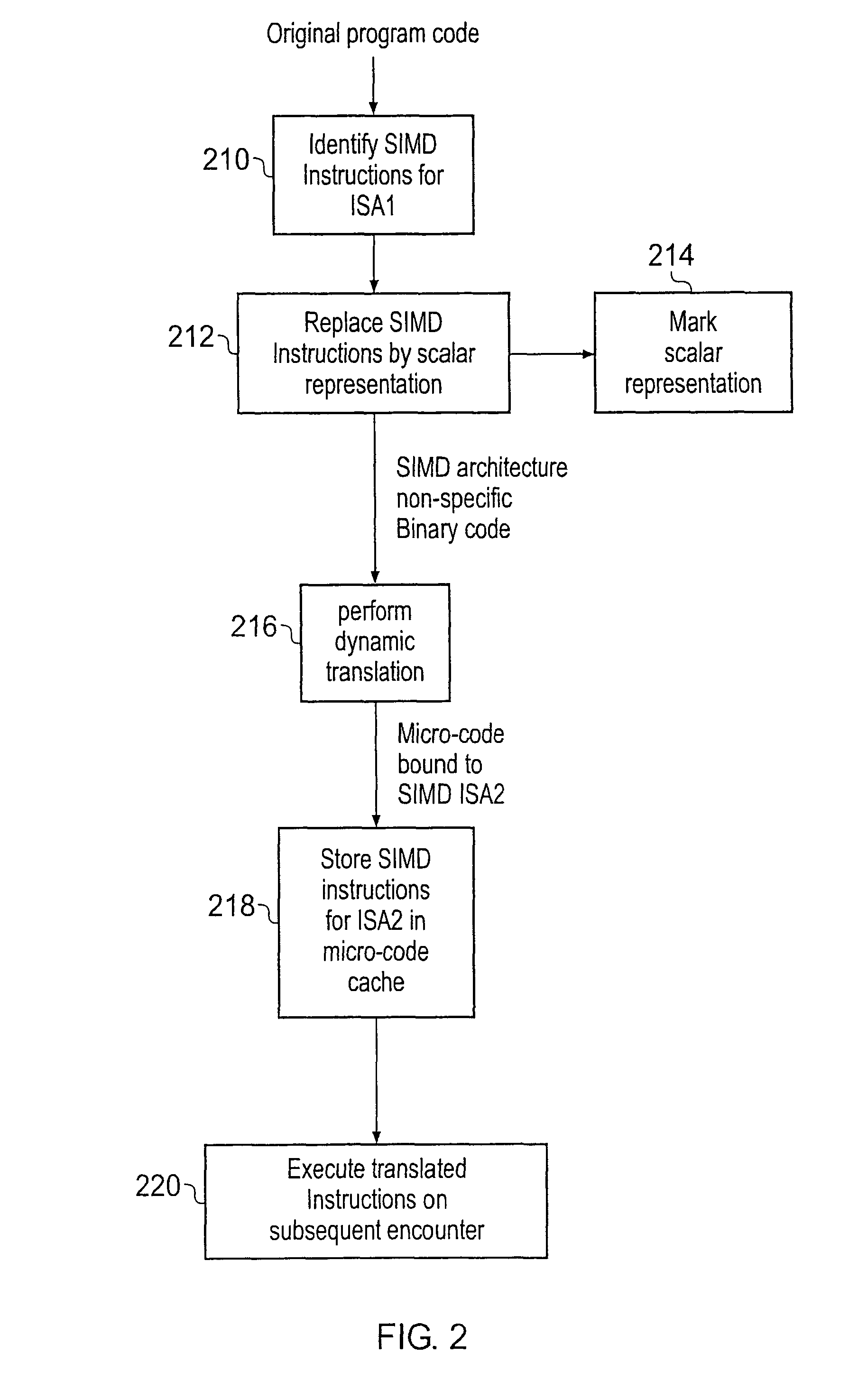

Status: Active | Patent: US20080141012A1 | Topics: Little overhead; Efficiently translated; Digital computer details; Specific program execution arrangements; Data processing system; Instruction set

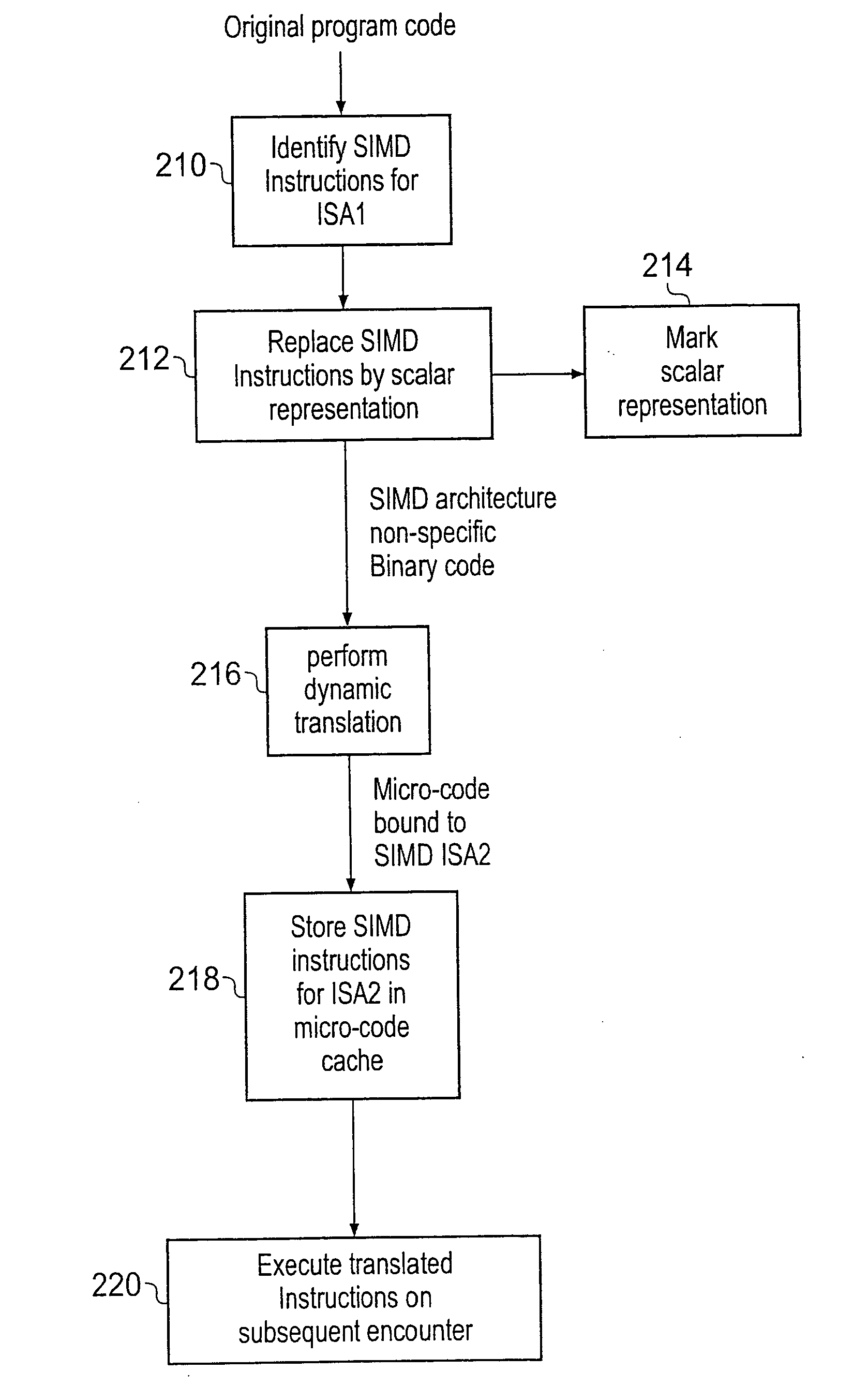

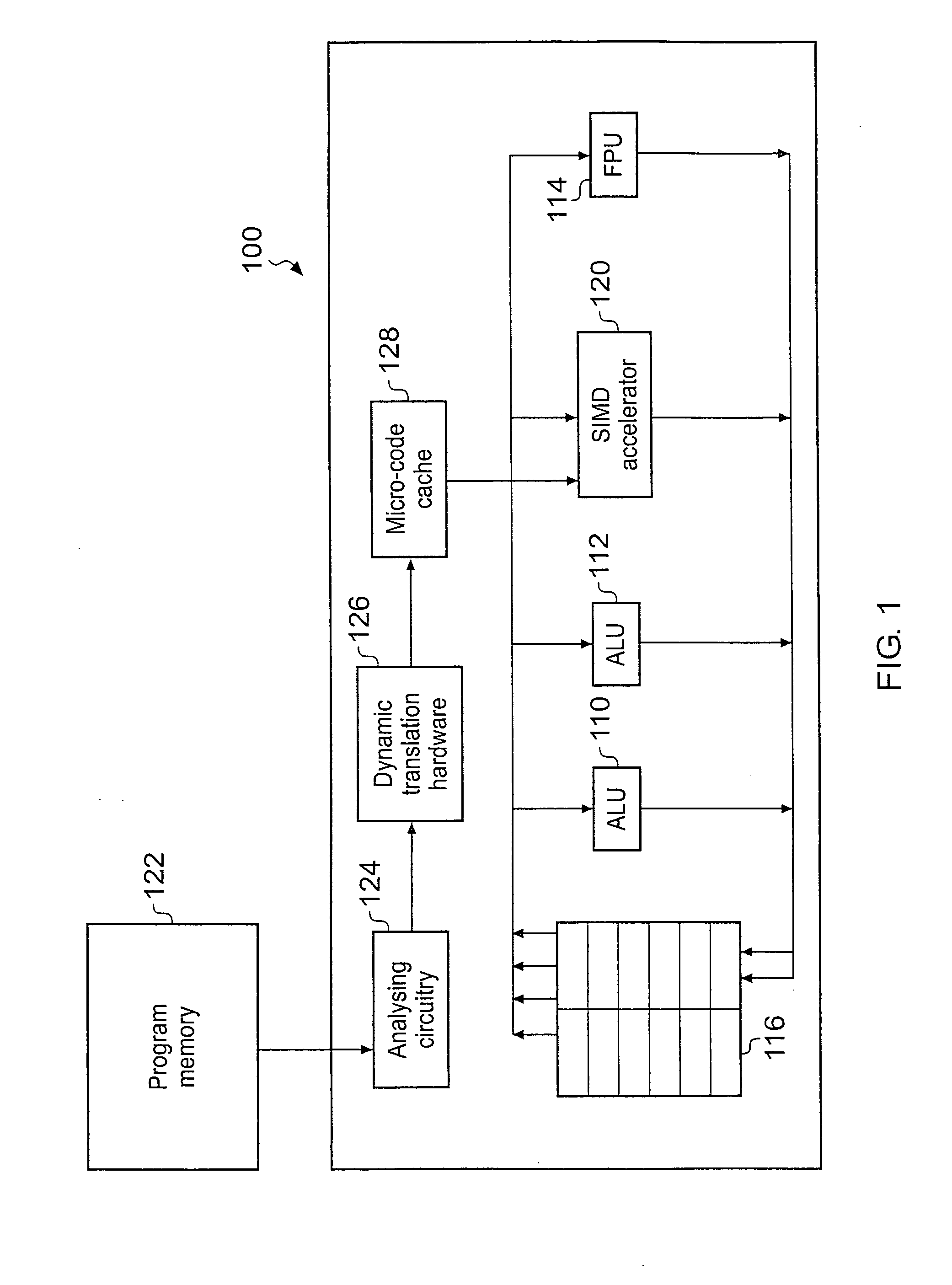

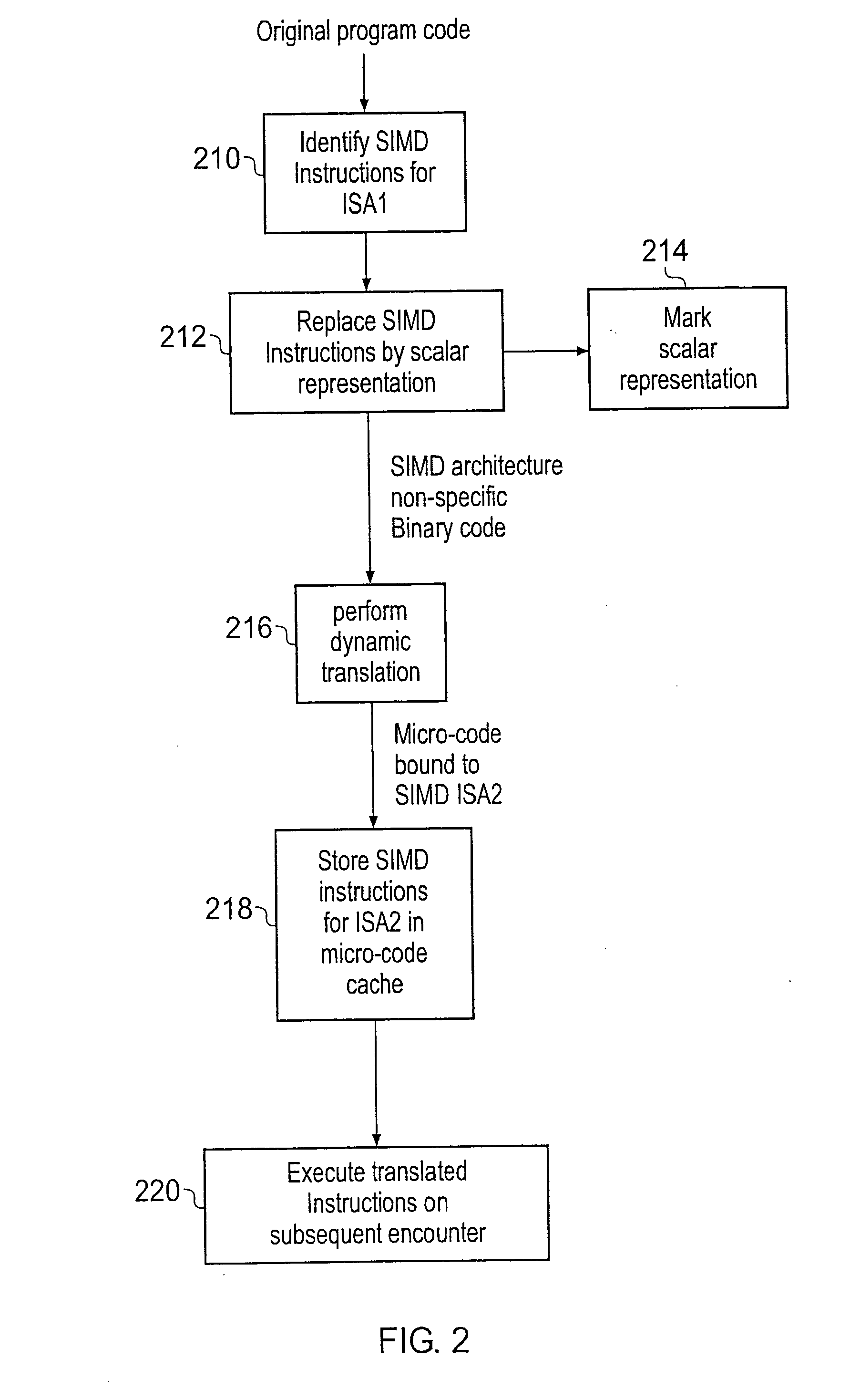

A data processing system is provided having a processor and analysing circuitry that identifies a SIMD instruction associated with a first SIMD instruction set, replaces it with a functionally equivalent scalar representation, and marks that representation. When the program executes, translation circuitry dynamically translates the marked scalar representation into one or more translated instructions for an instruction set architecture different from the first SIMD architecture of the identified SIMD instruction.

Owner:RGT UNIV OF MICHIGAN +1

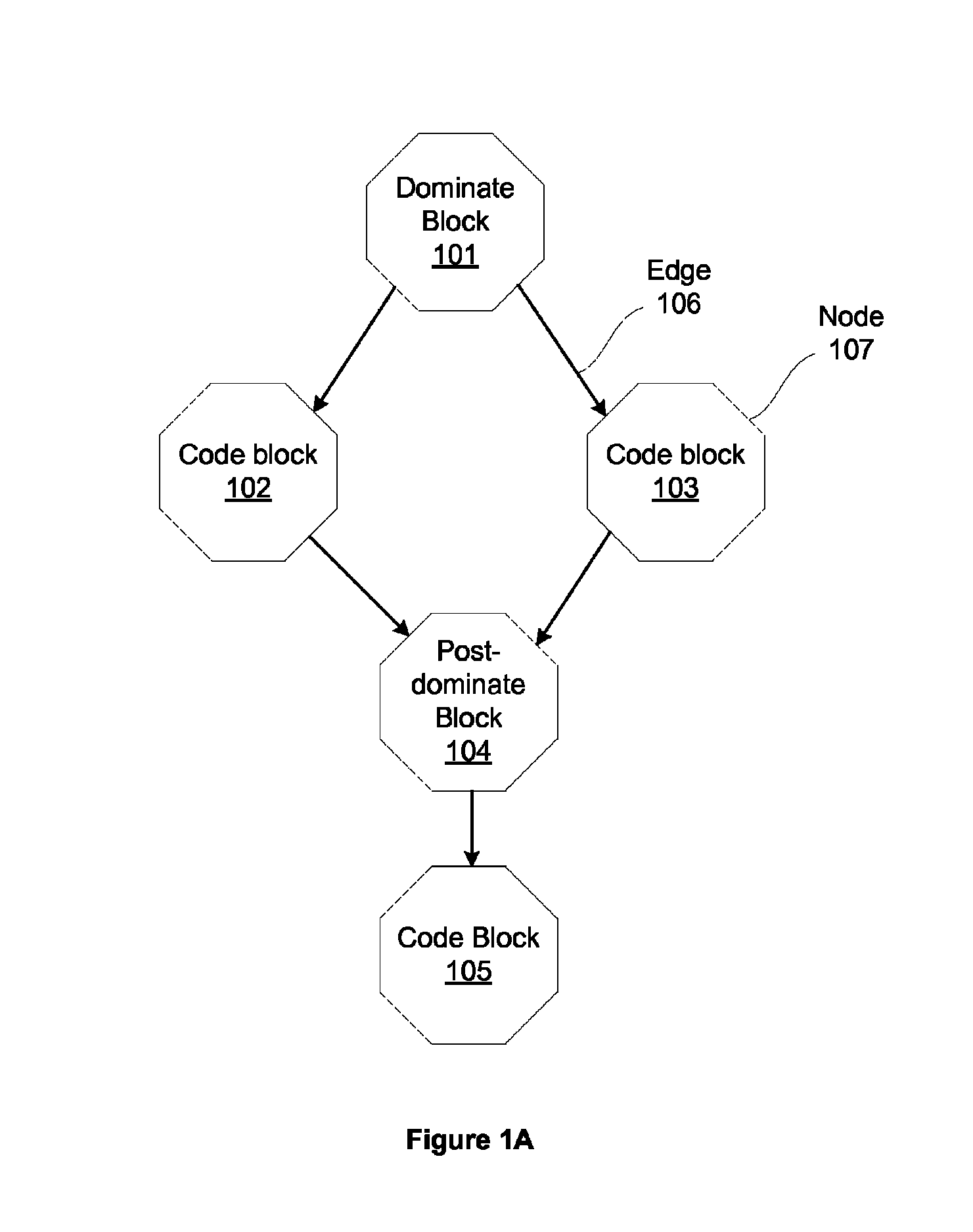

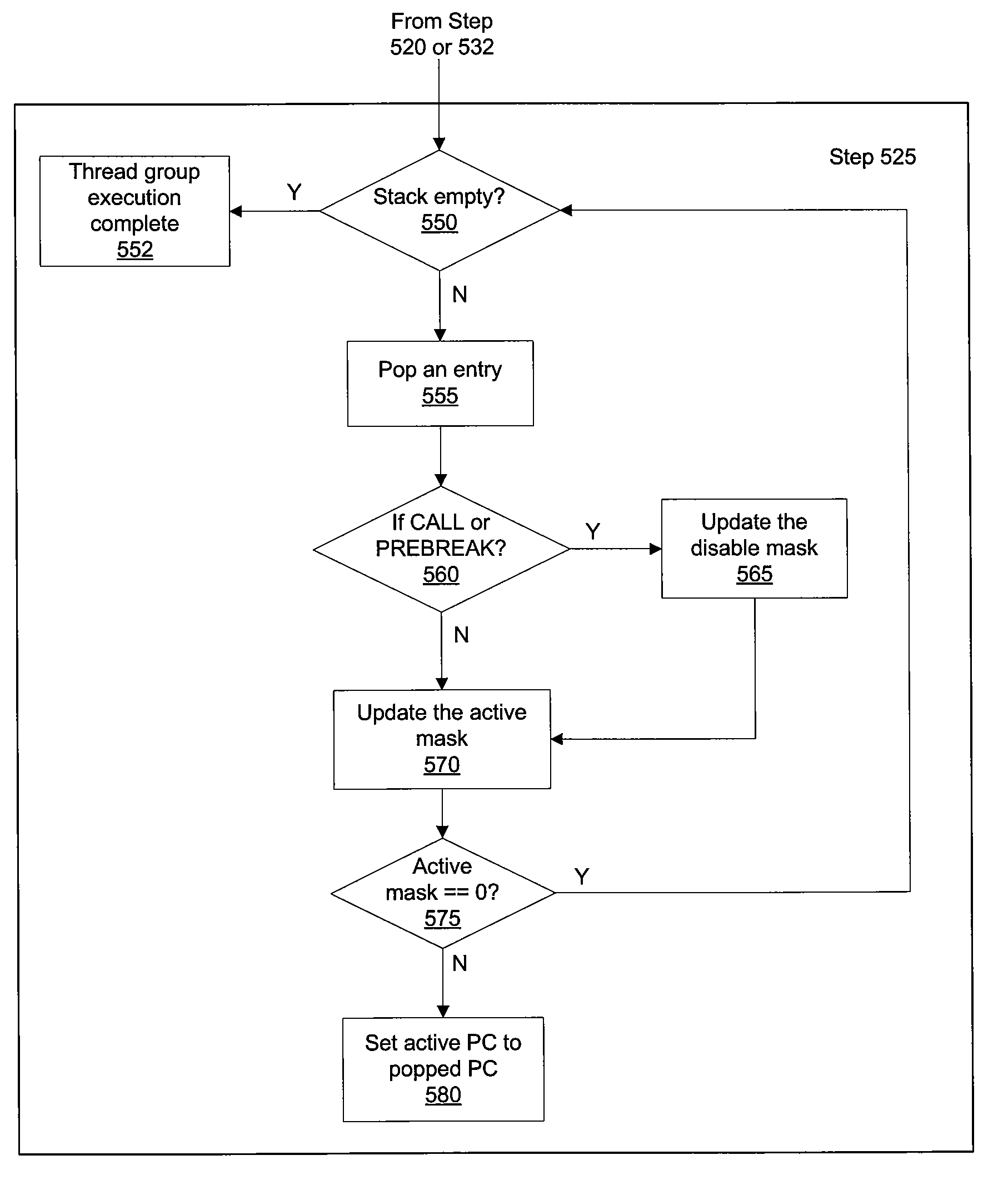

Structured programming control flow using a disable mask in a SIMD architecture

Status: Active | Patent: US7617384B1 | Topics: Efficient mechanism; Specific program execution arrangements; Memory systems; Control flow; Parallel processing

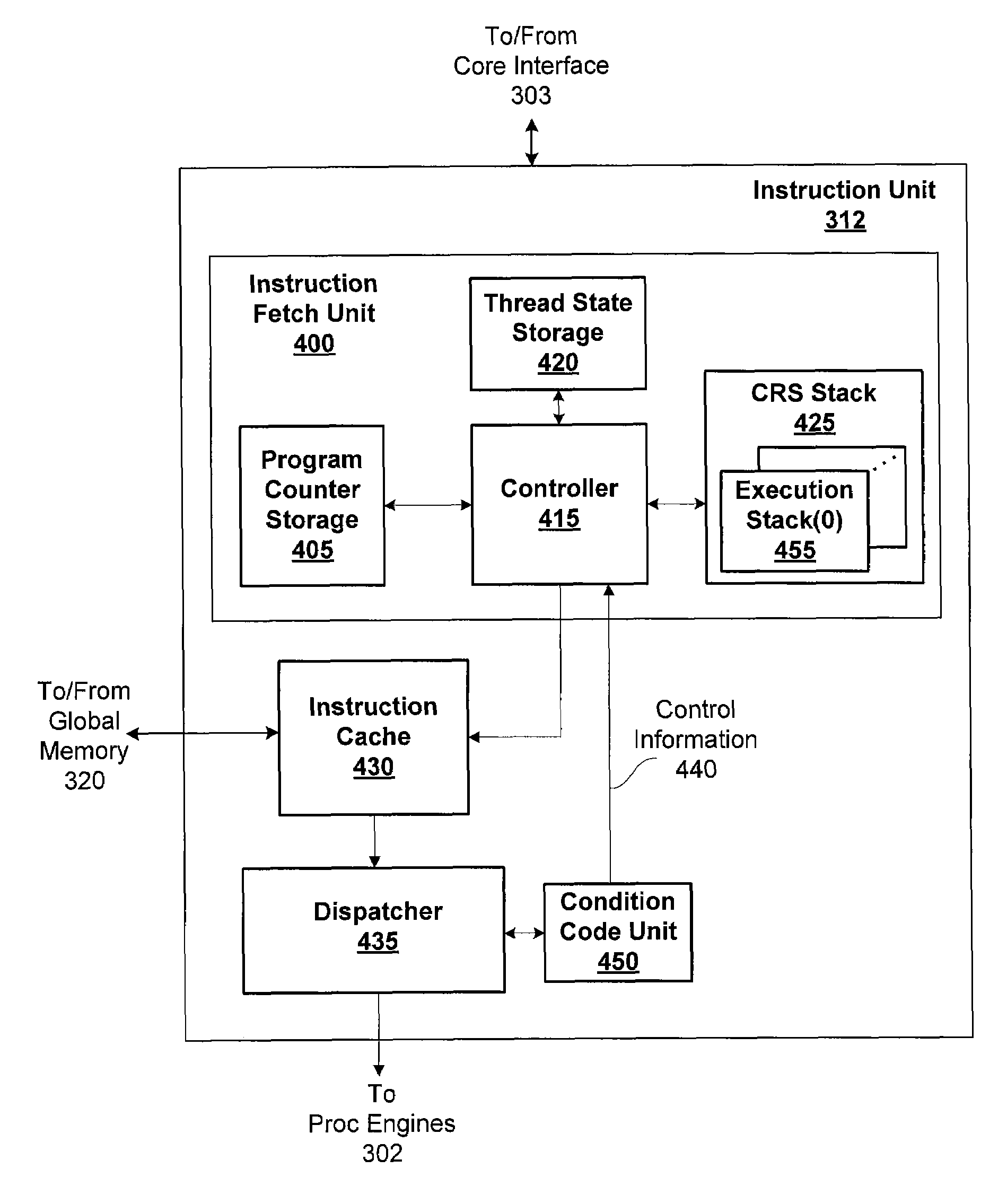

One embodiment of a computing system configured to manage divergent threads in a SIMD thread group includes a stack configured to store state information for processing control instructions. A parallel processing unit is configured to perform the steps of determining if one or more threads diverge during execution of a conditional control instruction. Threads that exit a program are identified as idle by a disable mask. Other threads that are disabled may be enabled once the divergent threads reach an instruction that enables the disabled threads. Use of the disable mask allows for the use of conditional return and break instructions in a multithreaded SIMD architecture.

Owner:NVIDIA CORP
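
The disable-mask idea in the abstract above can be illustrated with a small lane-by-lane simulation. This is only a sketch under stated assumptions: the function name `simd_loop_with_break`, the per-lane `limits`, and the way the mask is cleared after the loop are all hypothetical, and the patent's stack and control instructions are not modeled.

```python
def simd_loop_with_break(limits):
    """Simulate one SIMD thread group where each lane runs:
        n = 0
        while True:
            if n >= limit: break
            n += 1
    A lane that takes the break is disabled instead of branching away."""
    lanes = len(limits)
    counts = [0] * lanes
    disabled = [False] * lanes      # disable mask: True means the lane is idle
    steps = 0                       # passes through the loop issued for the group
    while not all(disabled):
        # Conditional break: lanes whose condition holds are marked disabled.
        for i in range(lanes):
            if not disabled[i] and counts[i] >= limits[i]:
                disabled[i] = True
        # Loop body: the same instruction is issued once, only enabled lanes act.
        for i in range(lanes):
            if not disabled[i]:
                counts[i] += 1
        steps += 1
    # Assumed reconvergence point after the loop: every lane is enabled again.
    disabled = [False] * lanes
    return counts, steps
```

For `limits = [2, 0, 3, 1]` the group keeps issuing the loop until the slowest lane breaks, so the number of passes is governed by the largest limit rather than by the sum of all limits.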

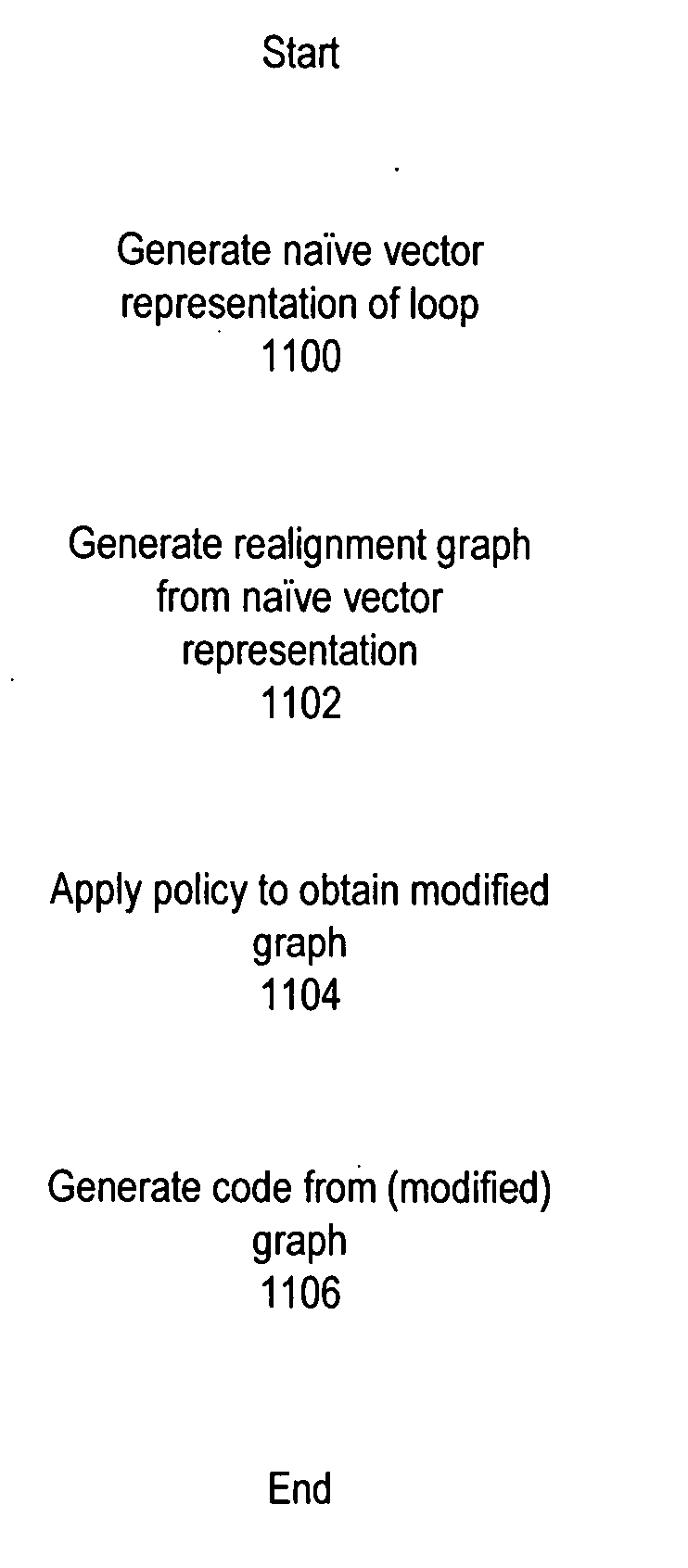

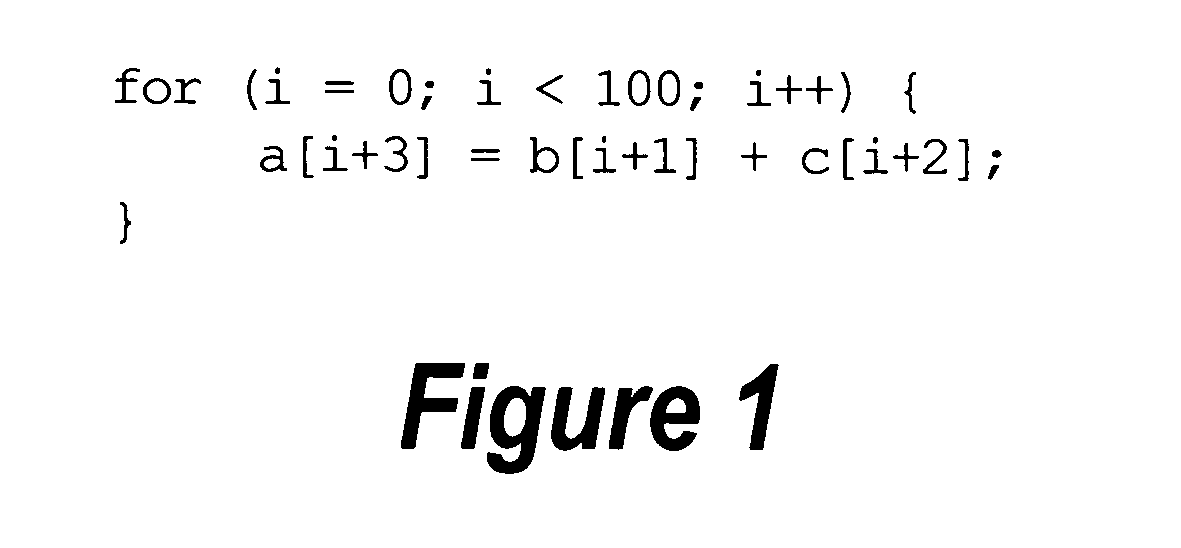

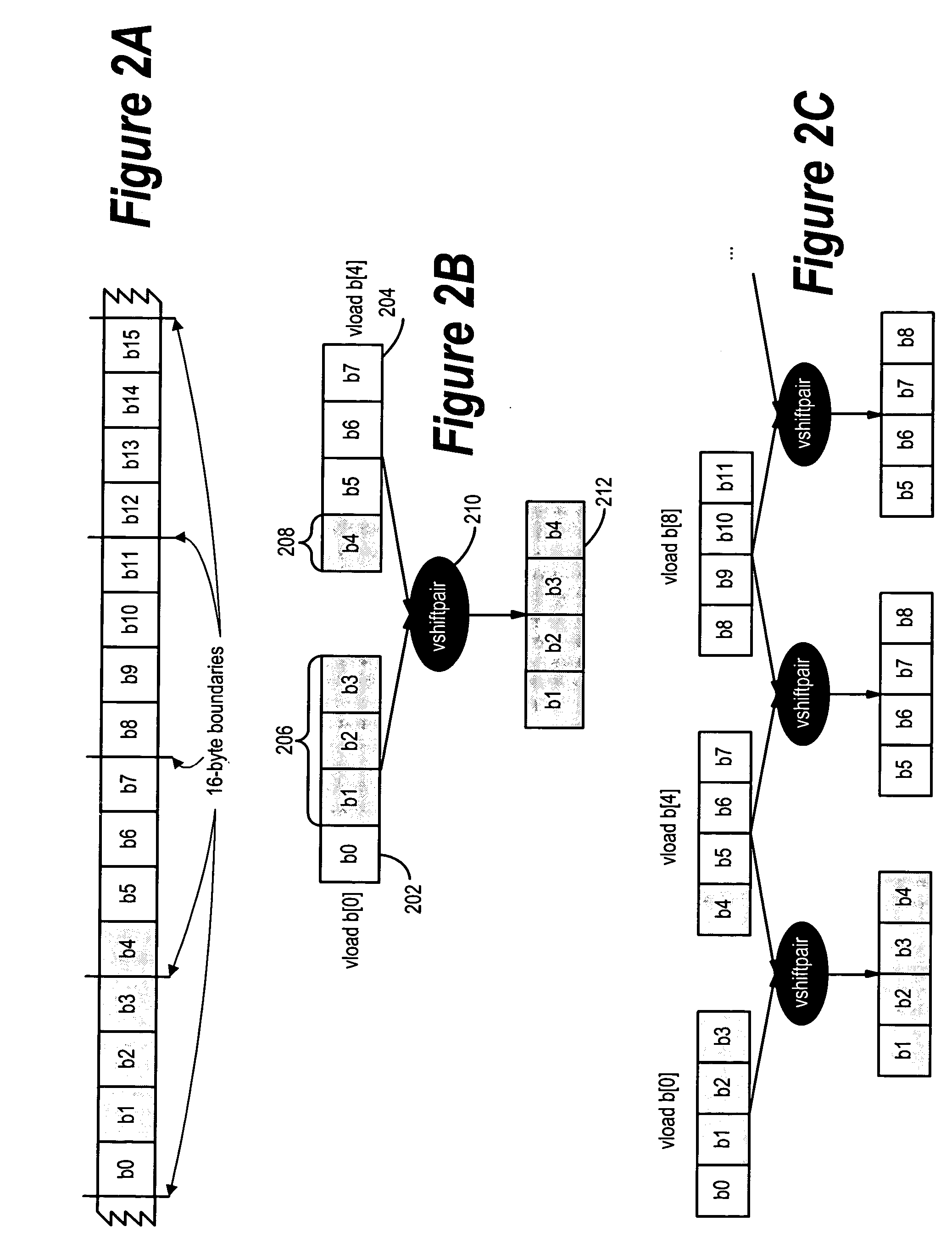

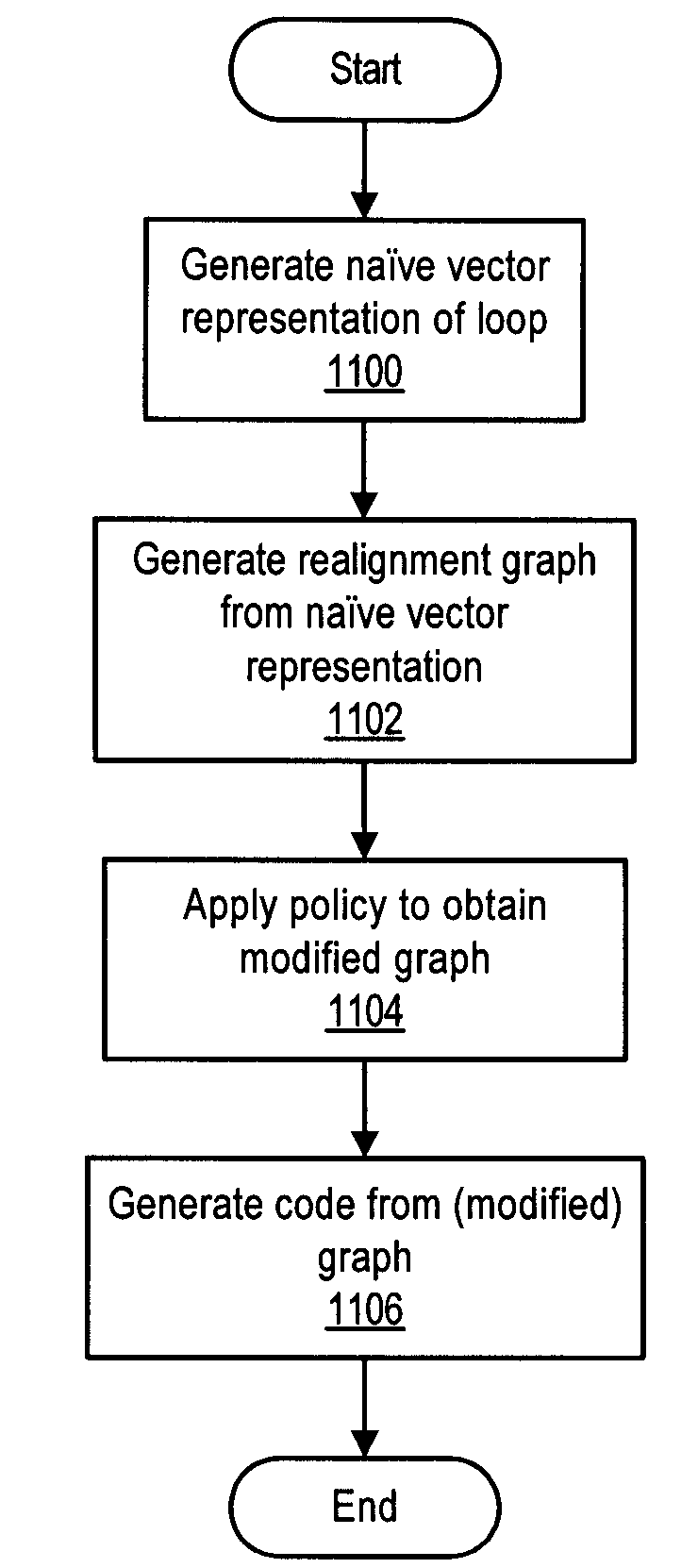

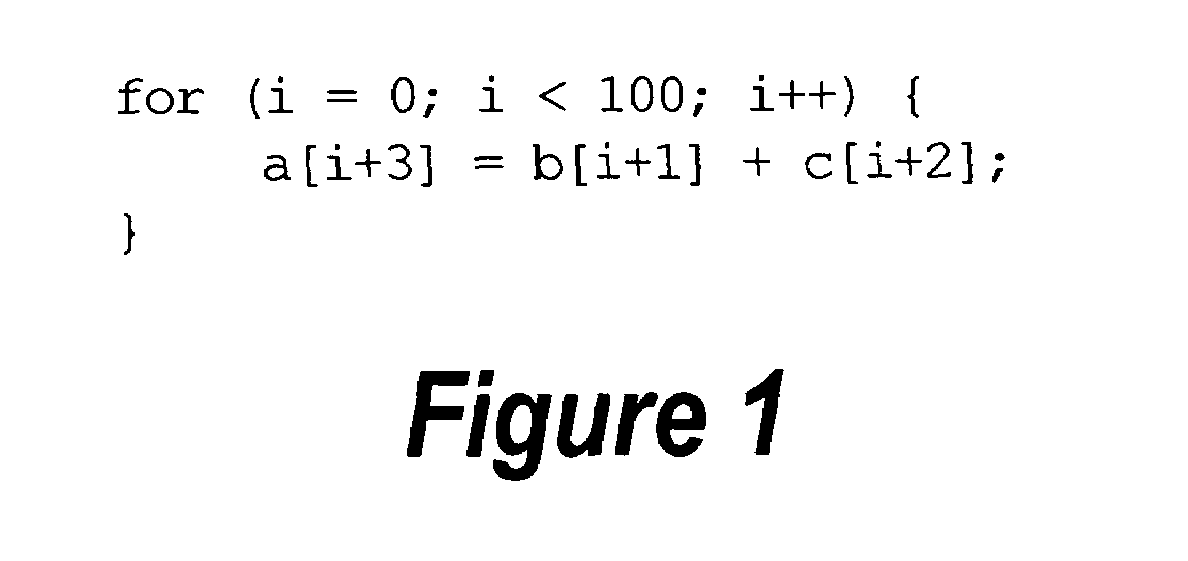

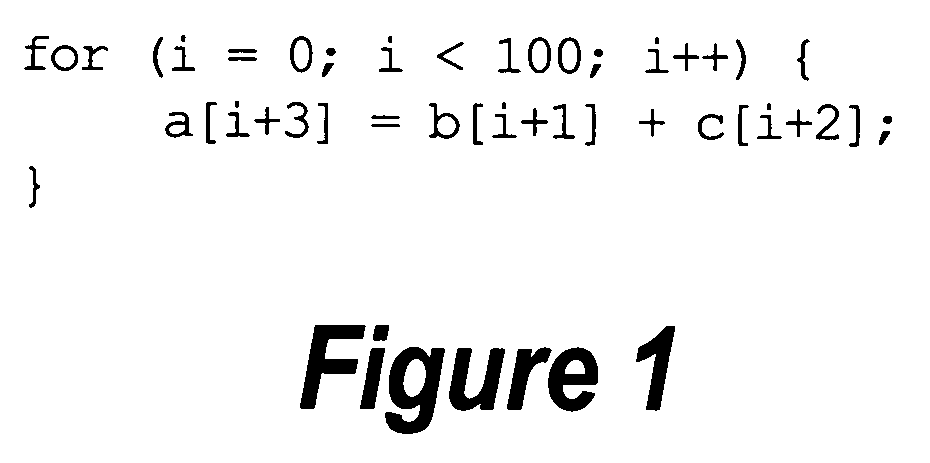

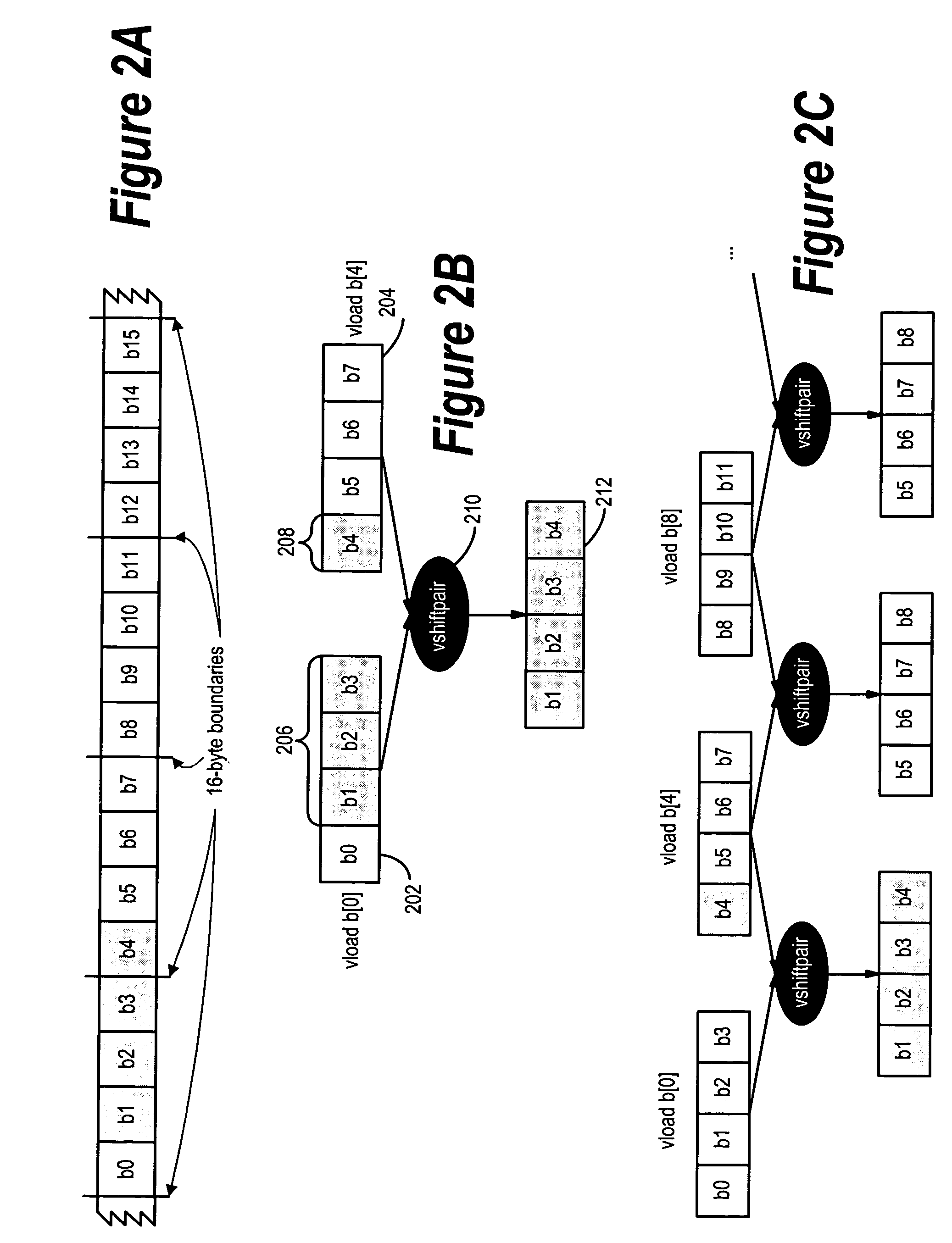

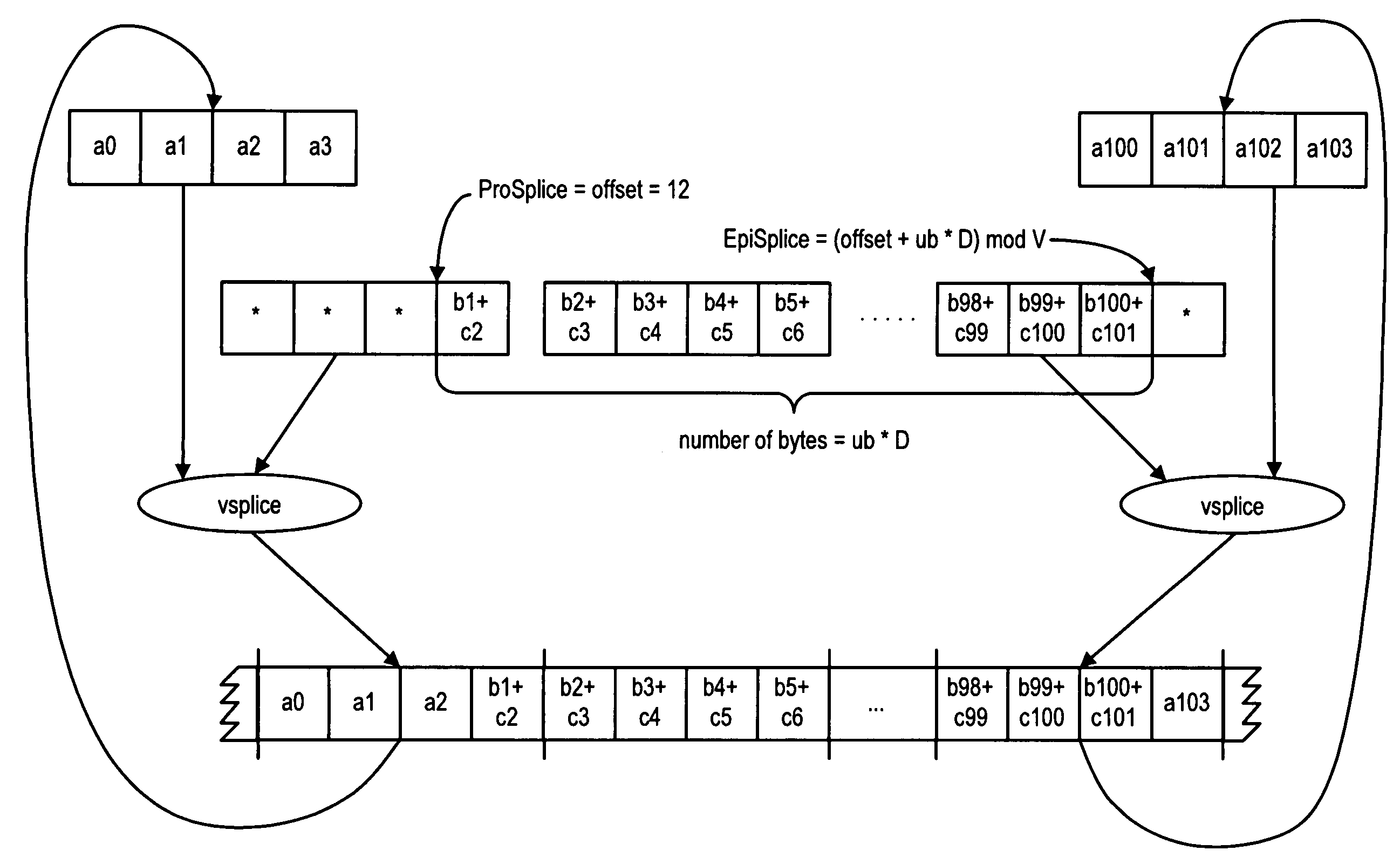

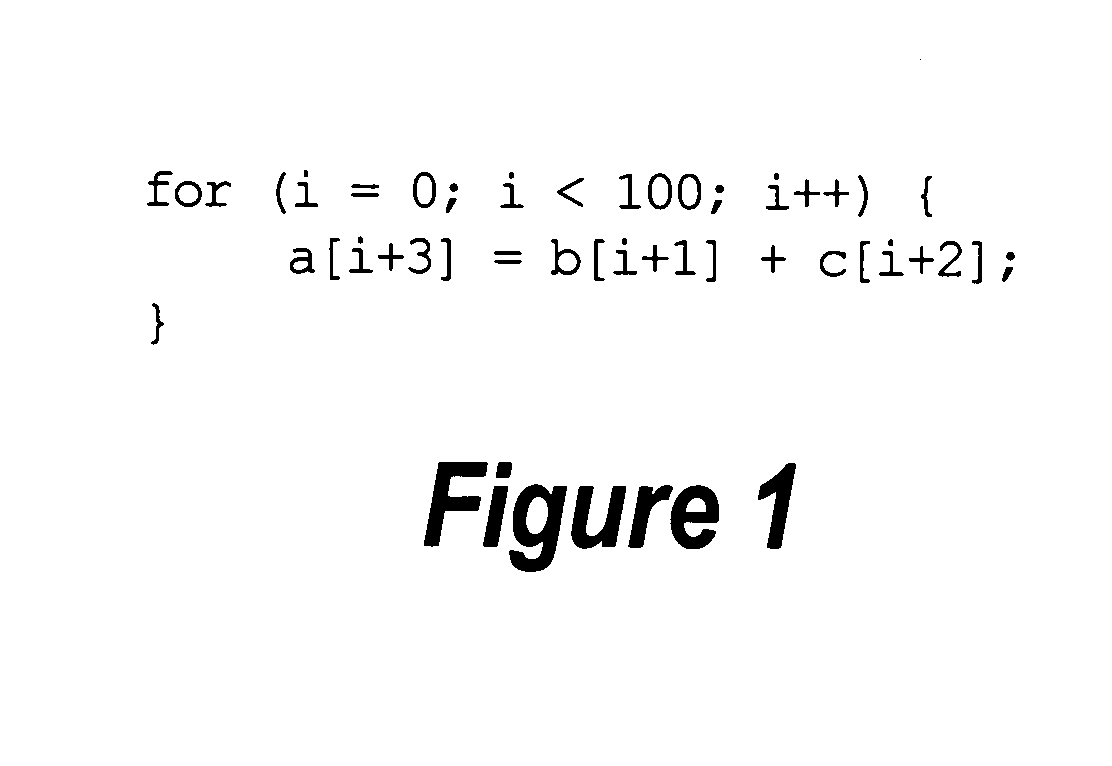

Framework for efficient code generation using loop peeling for SIMD loop code with multiple misaligned statements

Status: Inactive | Patent: US20050283773A1 | Topics: Reduce computational overhead; Increase computational overhead; Software engineering; General purpose stored program computer; Data reorganization; Steady state

A system and method is provided for vectorizing misaligned references in compiled code for SIMD architectures that support only aligned loads and stores. In this framework, a loop is first simdized as if the memory unit imposes no alignment constraints. The compiler then inserts data reorganization operations to satisfy the actual alignment requirements of the hardware. Finally, the code generation algorithm generates SIMD codes based on the data reorganization graph, addressing realistic issues such as runtime alignments, unknown loop bounds, residual iteration counts, and multiple statements with arbitrary alignment combinations. Loop peeling is used to reduce the computational overhead associated with misaligned data. A loop prologue and epilogue are peeled from individual iterations in the simdized loop, and vector-splicing instructions are applied to the peeled iterations, while the steady-state loop body incurs no additional computational overhead.

Owner:IBM CORP
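
The loop structure described in the abstract above (prologue, steady-state body, epilogue) can be sketched as follows. This is a simplified illustration, not the patented method: it assumes a vector width `V` of four elements, handles a single array, and uses scalar code for the peeled iterations where the abstract applies vector-splicing instructions. The name `add_peeled` is hypothetical.

```python
V = 4  # assumed vector width in elements


def add_peeled(mem, start, n, k):
    """Add k to mem[start:start+n] in place.
    Returns (prologue, vector_iters, epilogue) iteration counts."""
    i = start
    end = start + n
    # Prologue: peel iterations until the address is aligned to V.
    prologue = 0
    while i < end and i % V != 0:
        mem[i] += k
        i += 1
        prologue += 1
    # Steady state: whole aligned vectors, no realignment work in the body.
    vector_iters = 0
    while i + V <= end:
        mem[i:i + V] = [x + k for x in mem[i:i + V]]  # stands in for one aligned SIMD add
        i += V
        vector_iters += 1
    # Epilogue: leftover elements that do not fill a vector.
    epilogue = 0
    while i < end:
        mem[i] += k
        i += 1
        epilogue += 1
    return prologue, vector_iters, epilogue
```

With `start = 3` and `n = 10`, one element is peeled at the front, two aligned vectors run in the steady state, and one element remains for the epilogue.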

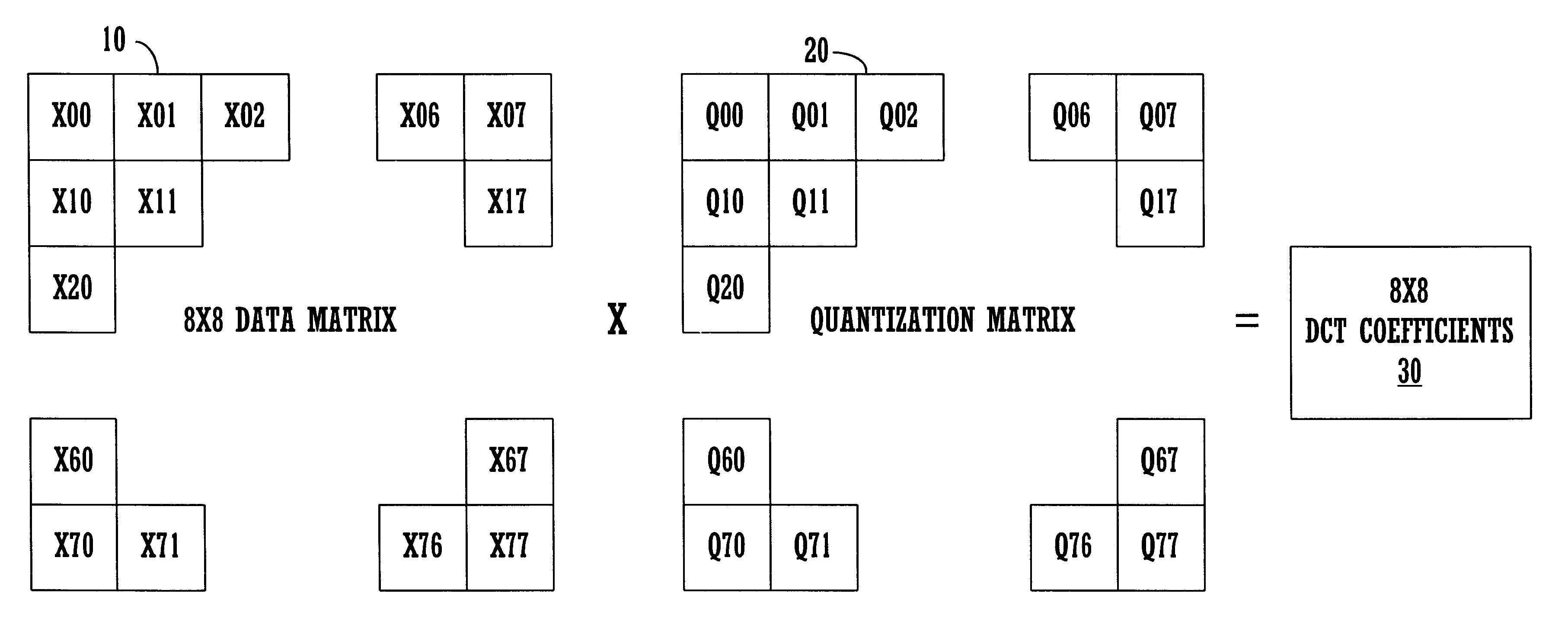

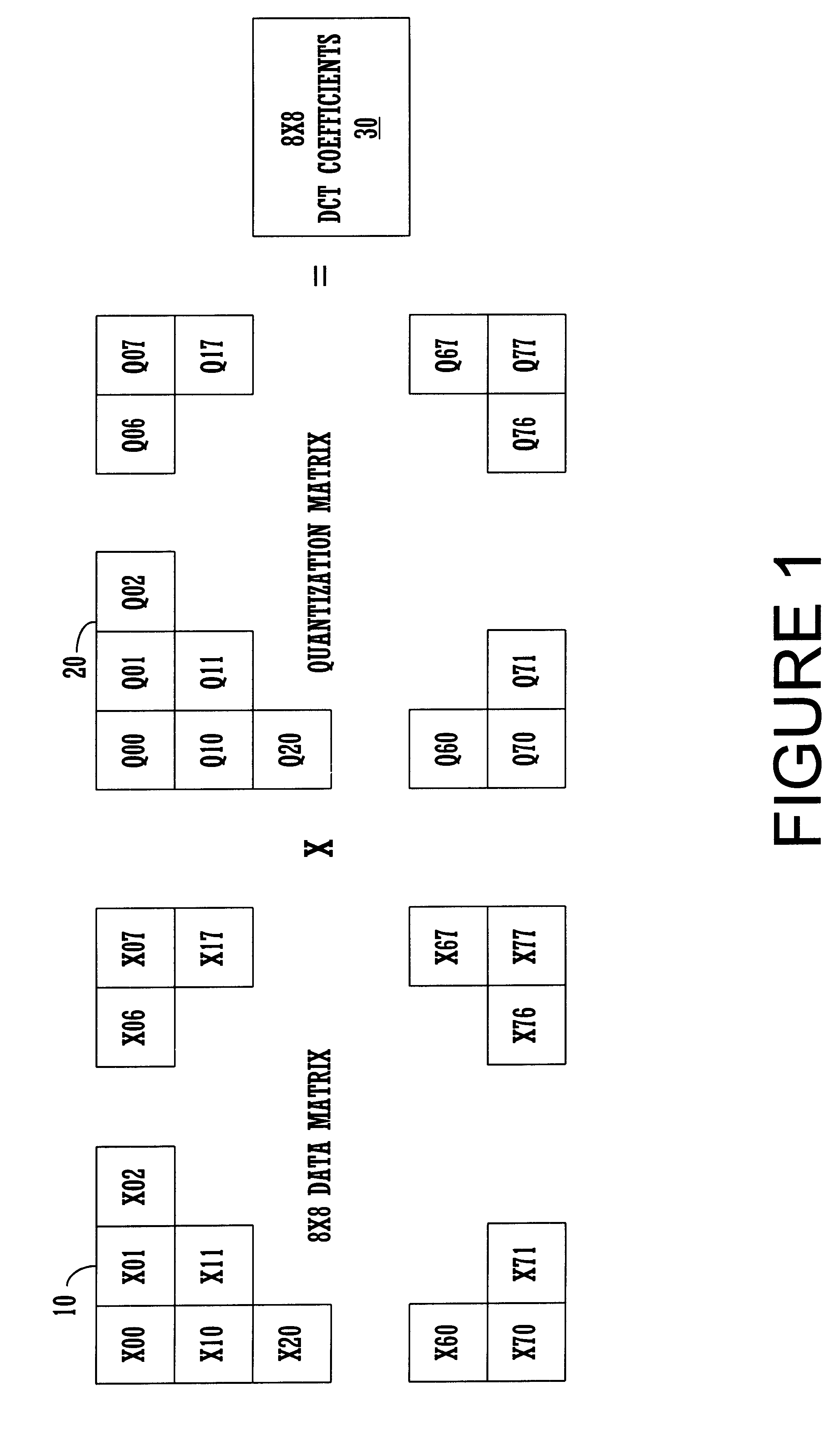

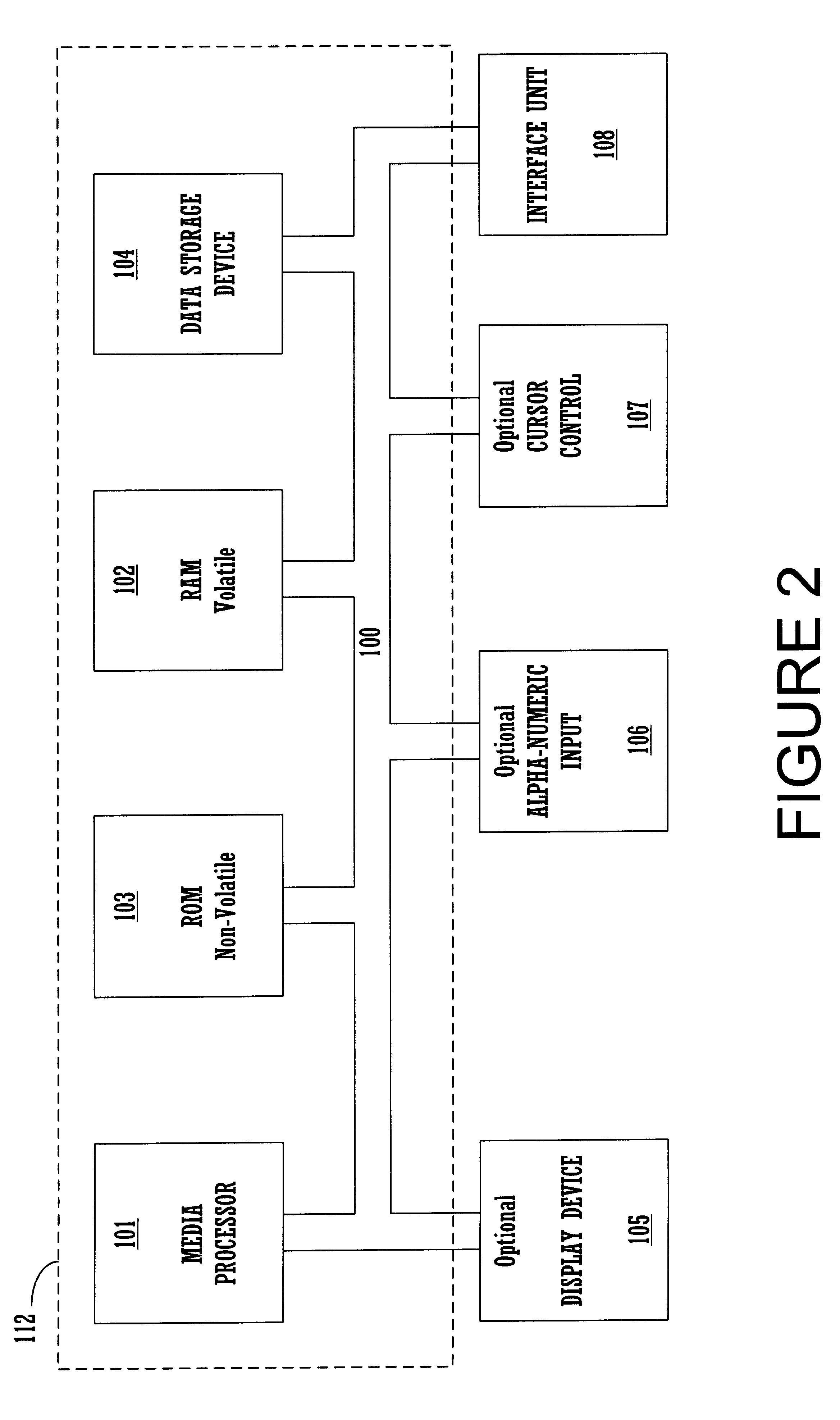

Efficient de-quantization in a digital video decoding process using a dynamic quantization matrix for parallel computations

Status: Inactive | Patent: US6507614B1 | Topics: Efficient production; Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Digital video; Array data structure

An efficient digital video (DV) decoder process that utilizes a specially constructed quantization matrix allowing an inverse quantization subprocess to perform parallel computations, e.g., using SIMD processing, to efficiently produce a matrix of DCT coefficients. The present invention utilizes a first look-up table (for 8x8 DCT) which produces a 15-valued quantization scale based on class number information and a QNO number for an 8x8 data block ("data matrix") from an input encoded digital bit stream to be decoded. The 8x8 data block is produced from a deframing and variable length decoding subprocess. An individual 8-valued segment of the 15-value output array is multiplied by an individual 8-valued segment, e.g., "a row," of the 8x8 data matrix to produce an individual row of the 8x8 matrix of DCT coefficients ("DCT matrix"). The above eight multiplications can be performed in parallel using a SIMD architecture to simultaneously generate a row of eight DCT coefficients. In this way, eight passes through the 8x8 block are used to produce the entire 8x8 DCT matrix, in one embodiment consuming only 33 instructions per 8x8 block. After each pass, the 15-valued output array is shifted by one value position for proper alignment with its associated row of the data matrix. The DCT matrix is then processed by an inverse discrete cosine transform subprocess that generates decoded display data. A second lookup table can be used for 2x4x8 DCT processing.

Owner:SONY ELECTRONICS INC +1
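
The sliding-window multiplication described in the abstract above can be sketched in a few lines. The sketch assumes that "shifting by one value position" means row `r` uses entries `r` through `r + 7` of the 15-valued array; the shift direction, the lookup table contents, and the function name `dequantize_block` are assumptions, not details from the patent.

```python
def dequantize_block(data, scale15):
    """Multiply each row of an 8x8 block by an 8-valued window of a 15-valued array.
    Row r uses scale15[r:r+8], so the window slides by one position per pass."""
    if len(scale15) != 15 or len(data) != 8:
        raise ValueError("expected an 8x8 block and a 15-valued scale array")
    dct = []
    for r in range(8):
        window = scale15[r:r + 8]
        # The eight products below model one 8-lane SIMD multiply.
        dct.append([d * s for d, s in zip(data[r], window)])
    return dct
```

Under this assumption the scale applied at position (row, column) depends only on row + column, which is why 15 values are enough to cover an 8x8 block.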

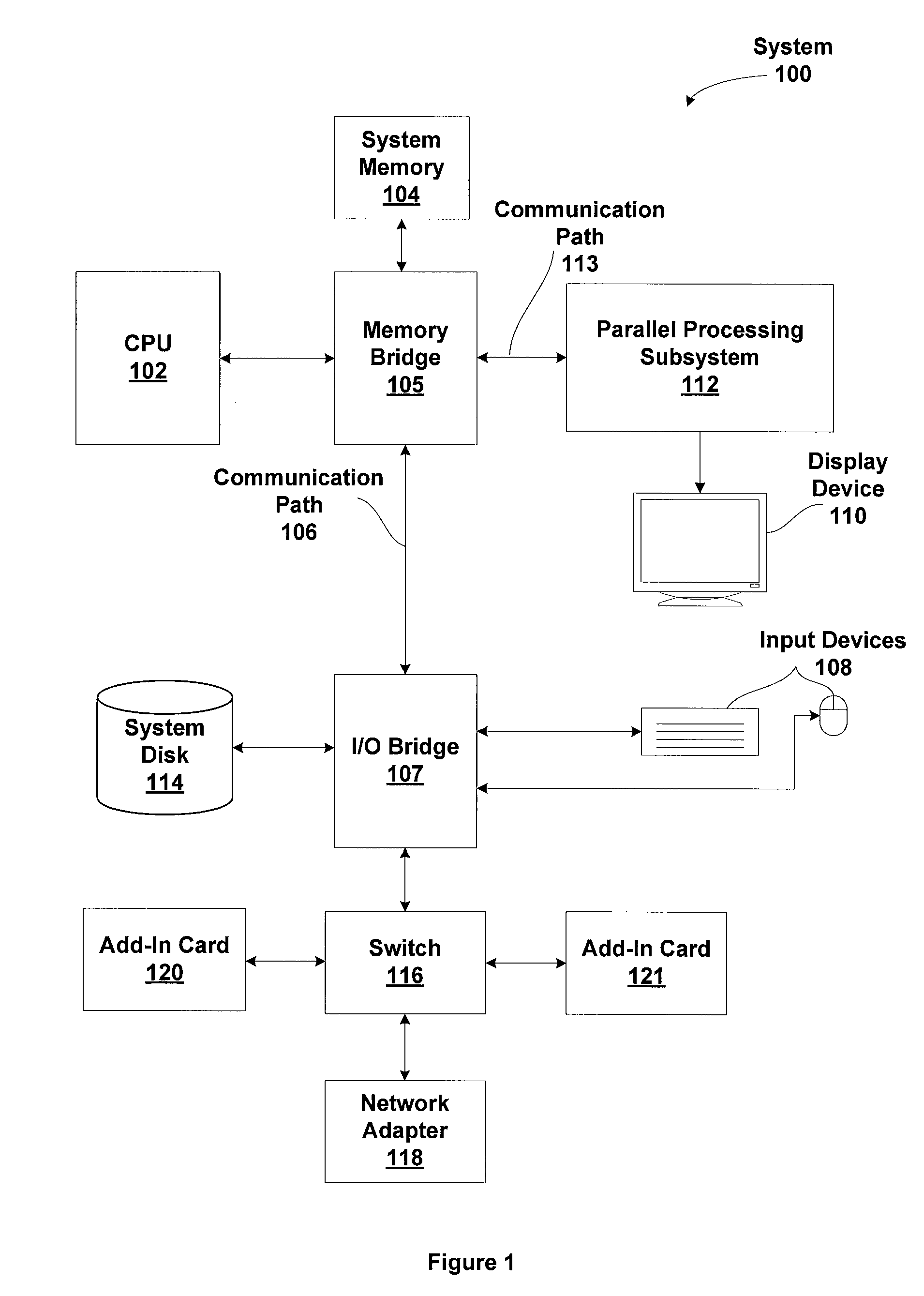

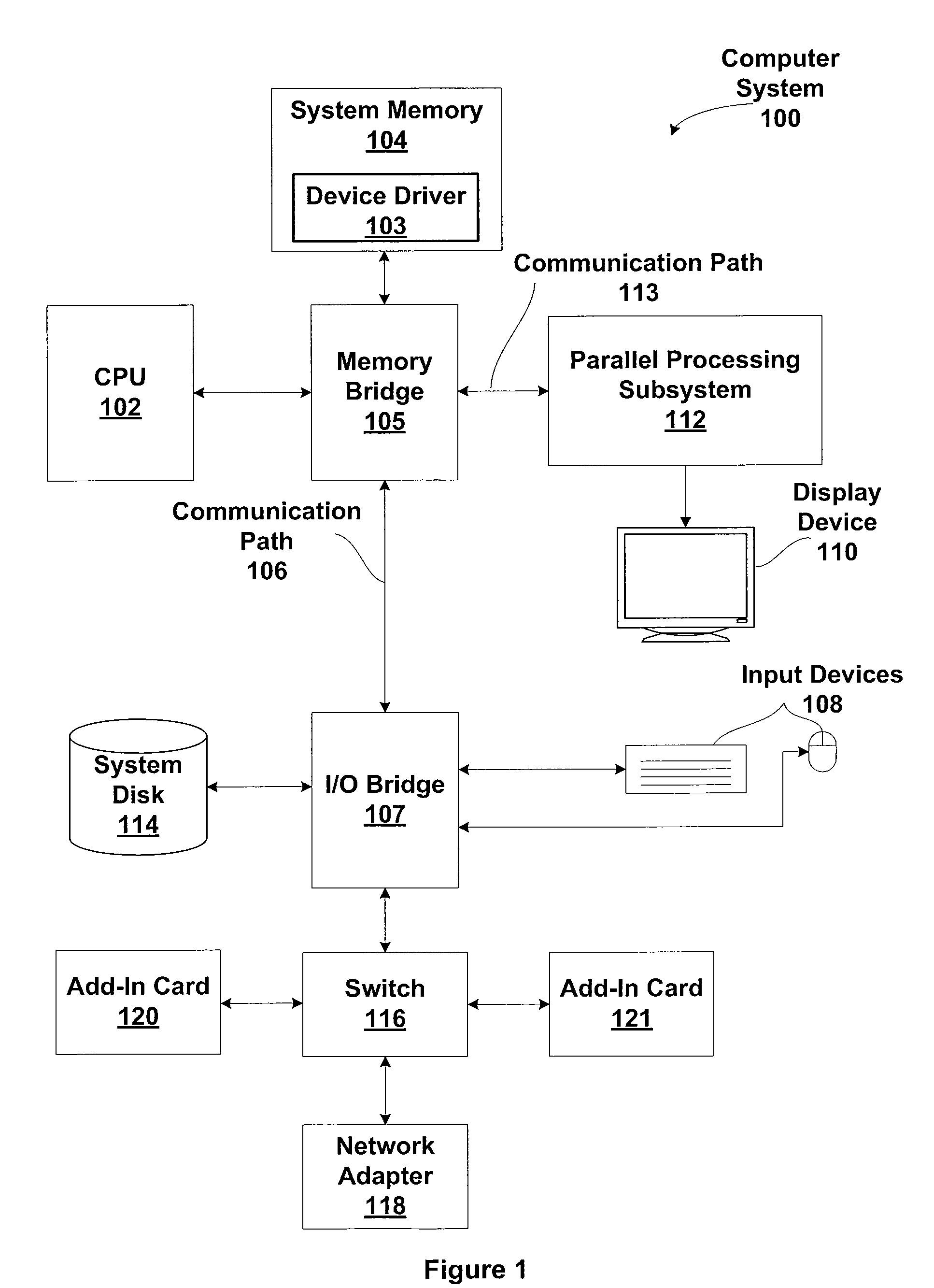

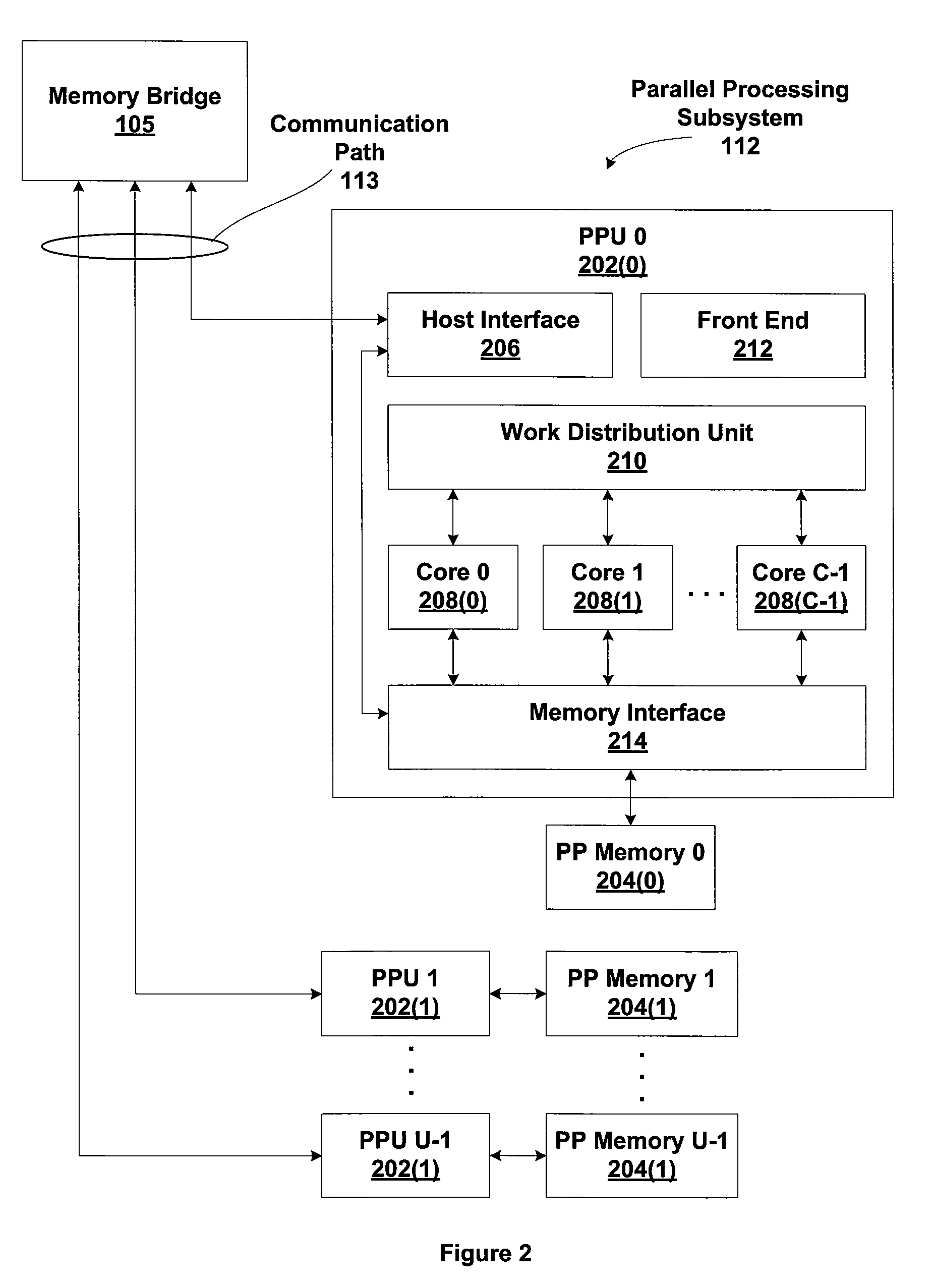

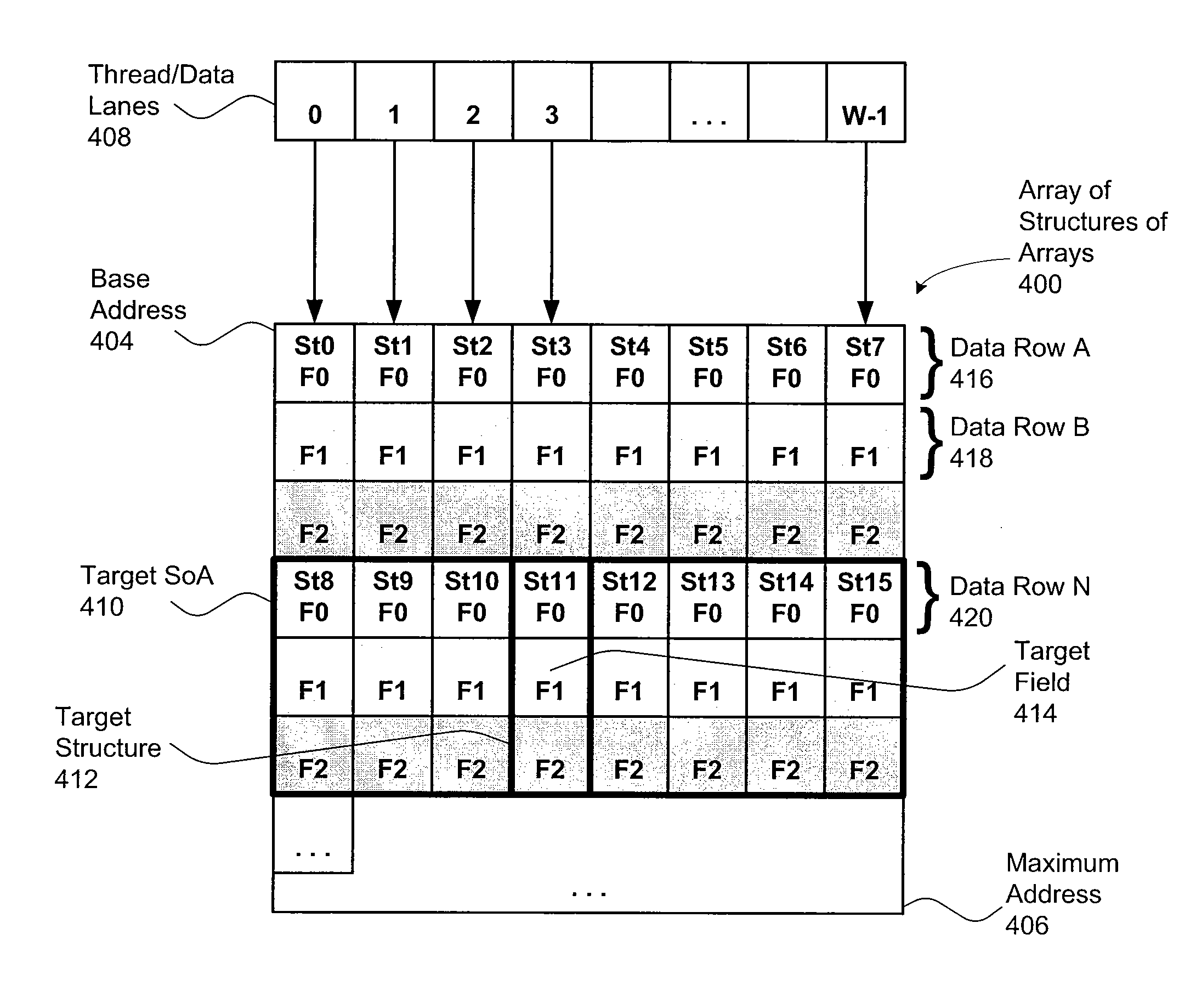

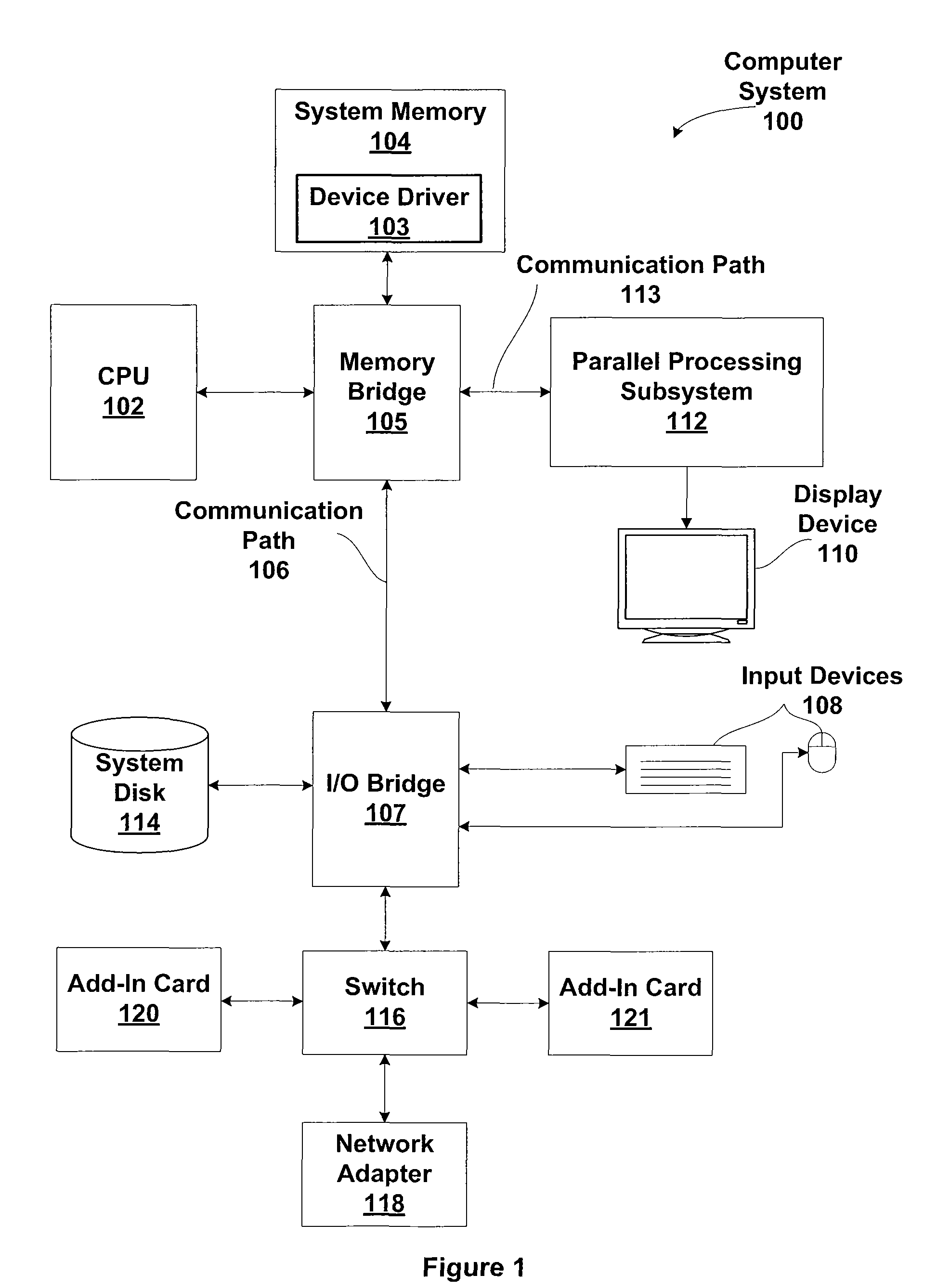

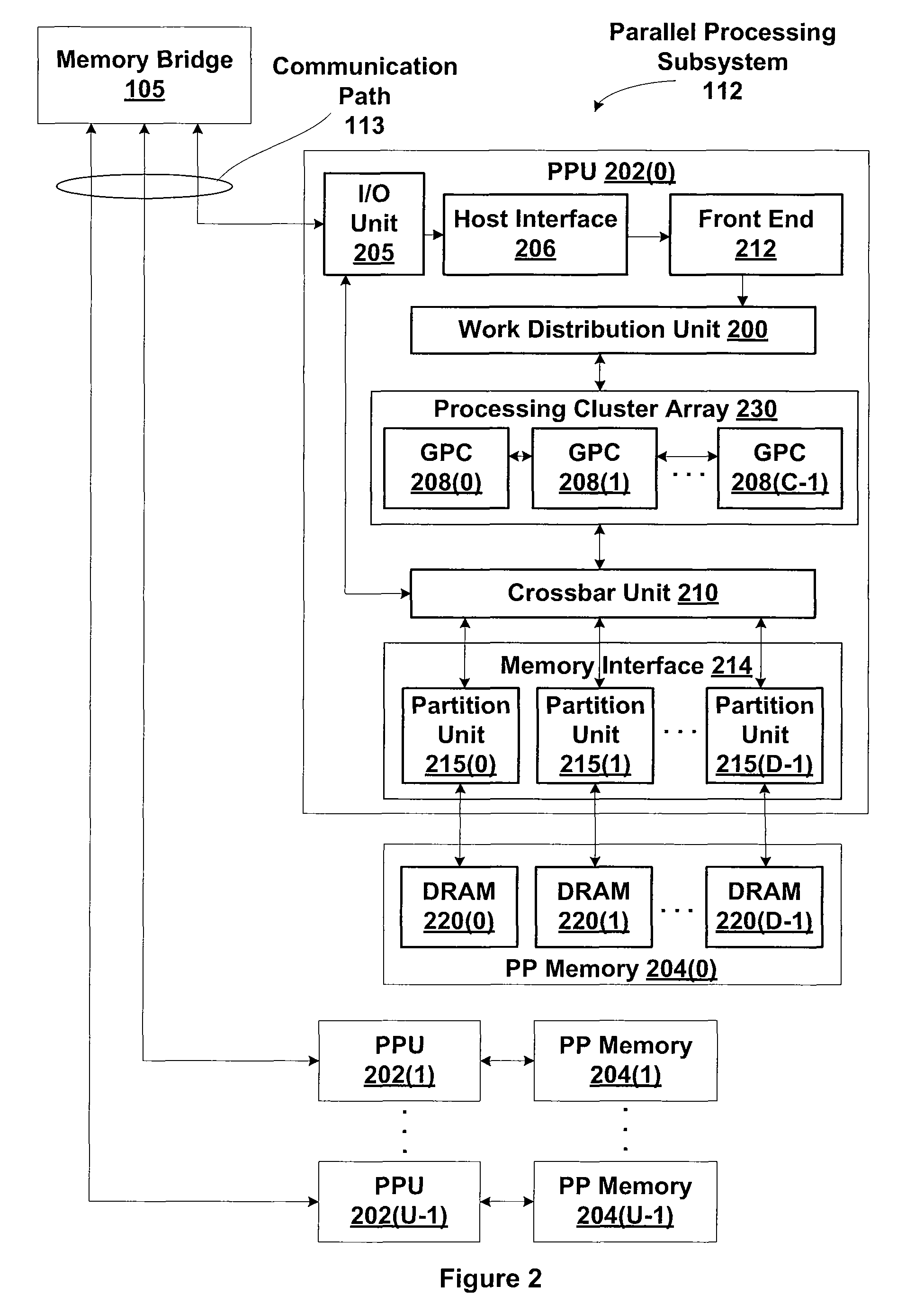

Efficient implementation of arrays of structures on SIMT and SIMD architectures

Status: Active | Patent: US20120089792A1 | Topics: Improve processing efficiency; Processor architectures/configuration; Program control; Access method; Joint Implementation

One embodiment of the present invention sets forth a technique providing an optimized way to allocate and access memory across a plurality of thread / data lanes. Specifically, the device driver receives an instruction targeted to a memory set up as an array of structures of arrays. The device driver computes an address within the memory using information about the number of thread / data lanes and parameters from the instruction itself. The result is a memory allocation and access approach where the device driver properly computes the target address in the memory. Advantageously, processing efficiency is improved where memory in a parallel processing subsystem is internally stored and accessed as an array of structures of arrays, proportional to the SIMT / SIMD group width (the number of threads or lanes per execution group).

Owner:NVIDIA CORP
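
One common way to lay out an array of structures of arrays is shown below as a rough illustration of the address computation the abstract above refers to. The abstract does not give the actual formula, so the layout, the parameter names, and the function `aosoa_index` are assumptions.

```python
def aosoa_index(element, field, num_fields, width):
    """Flat index of (element, field) in an array-of-structures-of-arrays layout.
    Elements are grouped into blocks of `width` lanes; inside a block, each
    field is stored as `width` consecutive values."""
    block, lane = divmod(element, width)
    return (block * num_fields + field) * width + lane
```

With `width = 4` and three fields, elements 0 to 3 store field 0 at indices 0 to 3, field 1 at 4 to 7, and field 2 at 8 to 11, so the lanes of one group read consecutive addresses when they access the same field.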

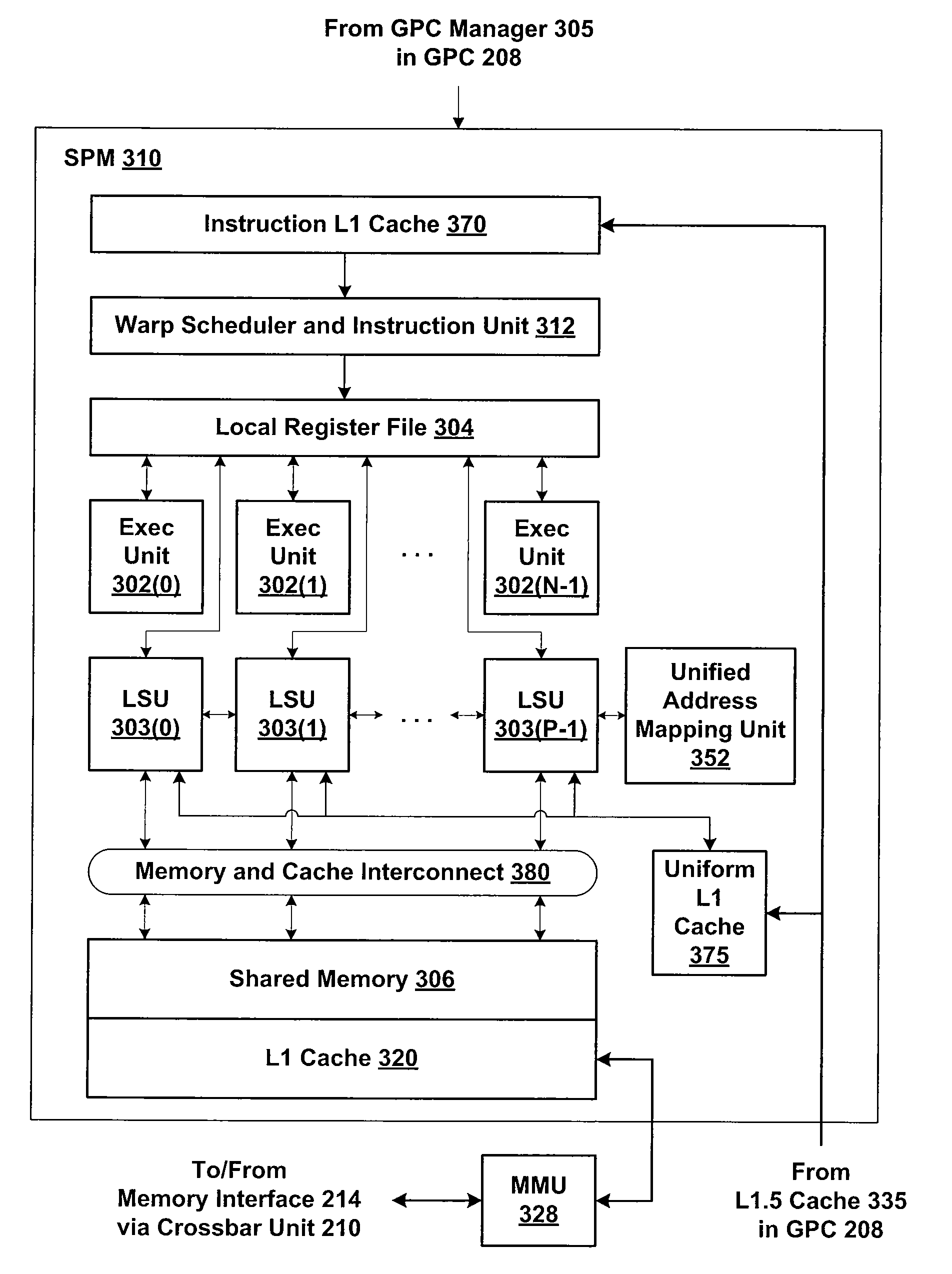

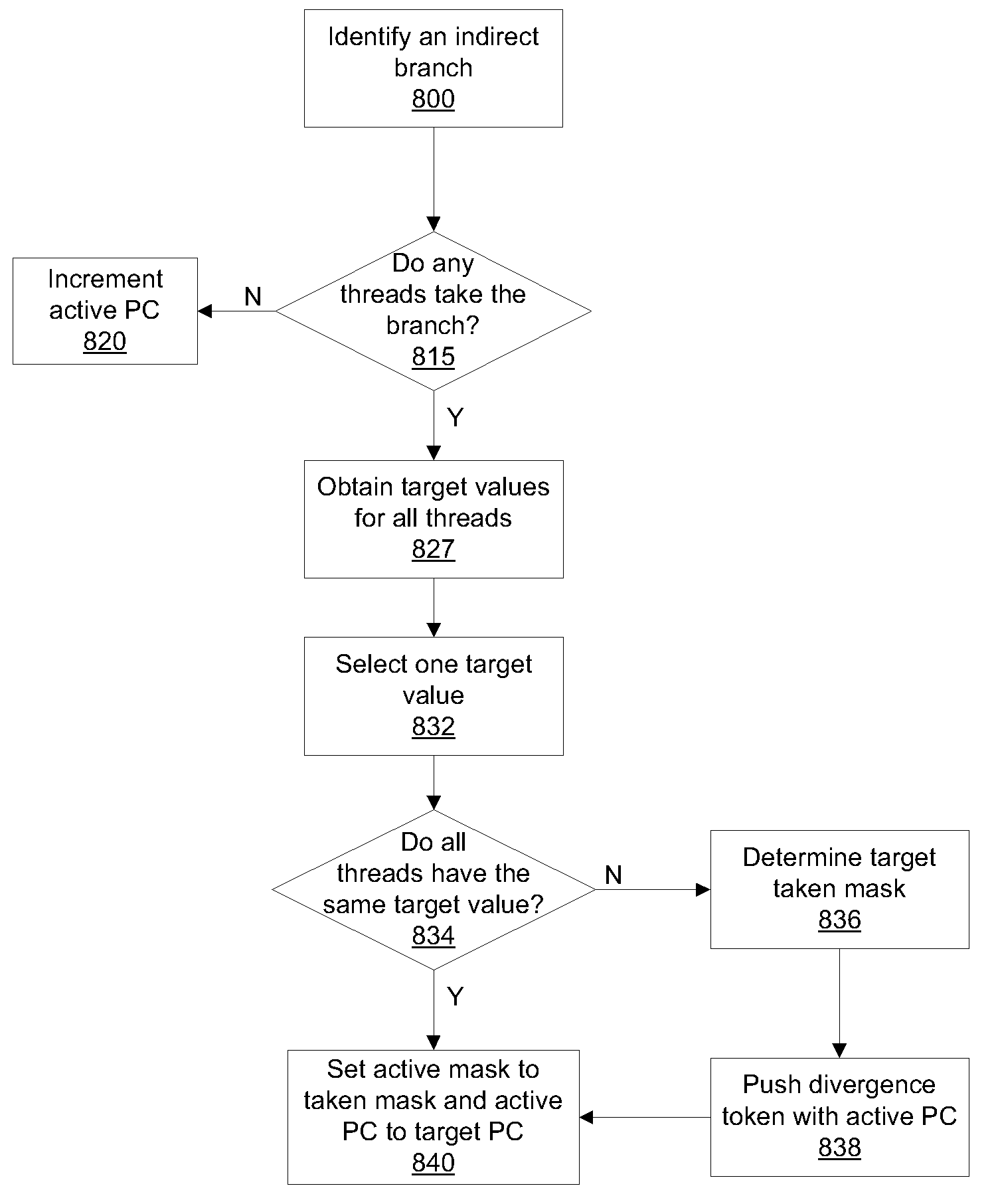

Processing an indirect branch instruction in a SIMD architecture

Status: Active | Patent: US7761697B1 | Topics: Minimal performance degradation; Efficient mechanism; Digital computer details; Specific program execution arrangements; Program instruction; Parallel computing

One embodiment of a computing system configured to manage divergent threads in a thread group includes a stack configured to store at least one token and a multithreaded processing unit. The multithreaded processing unit is configured to perform the steps of fetching a program instruction, determining that the program instruction is an indirect branch instruction, and processing the indirect branch instruction as a sequence of two-way branches to execute an indirect branch instruction with multiple branch addresses. Indirect branch instructions may be used to allow greater flexibility since the branch address or multiple branch addresses do not need to be determined at compile time.

Owner:NVIDIA CORP
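
The idea of processing an indirect branch as a sequence of two-way branches can be sketched as follows. This is an illustrative simulation only: the choice of the first pending lane's target, the contents of the stack, and the name `serialize_indirect_branch` are assumptions, and the patent's token format is not modeled.

```python
def serialize_indirect_branch(targets):
    """targets[i] is the branch address computed by lane i.
    Returns a list of (address, lanes) passes, one per distinct address."""
    lanes = list(range(len(targets)))
    stack = [lanes] if lanes else []   # deferred lane groups waiting to branch
    passes = []
    while stack:
        pending = stack.pop()
        chosen = targets[pending[0]]   # assumed policy: take the first lane's target
        # Two-way branch: lanes matching the chosen address are taken,
        # the rest are pushed back and handled by a later two-way branch.
        taken = [lane for lane in pending if targets[lane] == chosen]
        not_taken = [lane for lane in pending if targets[lane] != chosen]
        if not_taken:
            stack.append(not_taken)
        passes.append((chosen, taken))
    return passes
```

The number of passes equals the number of distinct target addresses in the group, so a group whose lanes all agree on the target needs a single pass.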

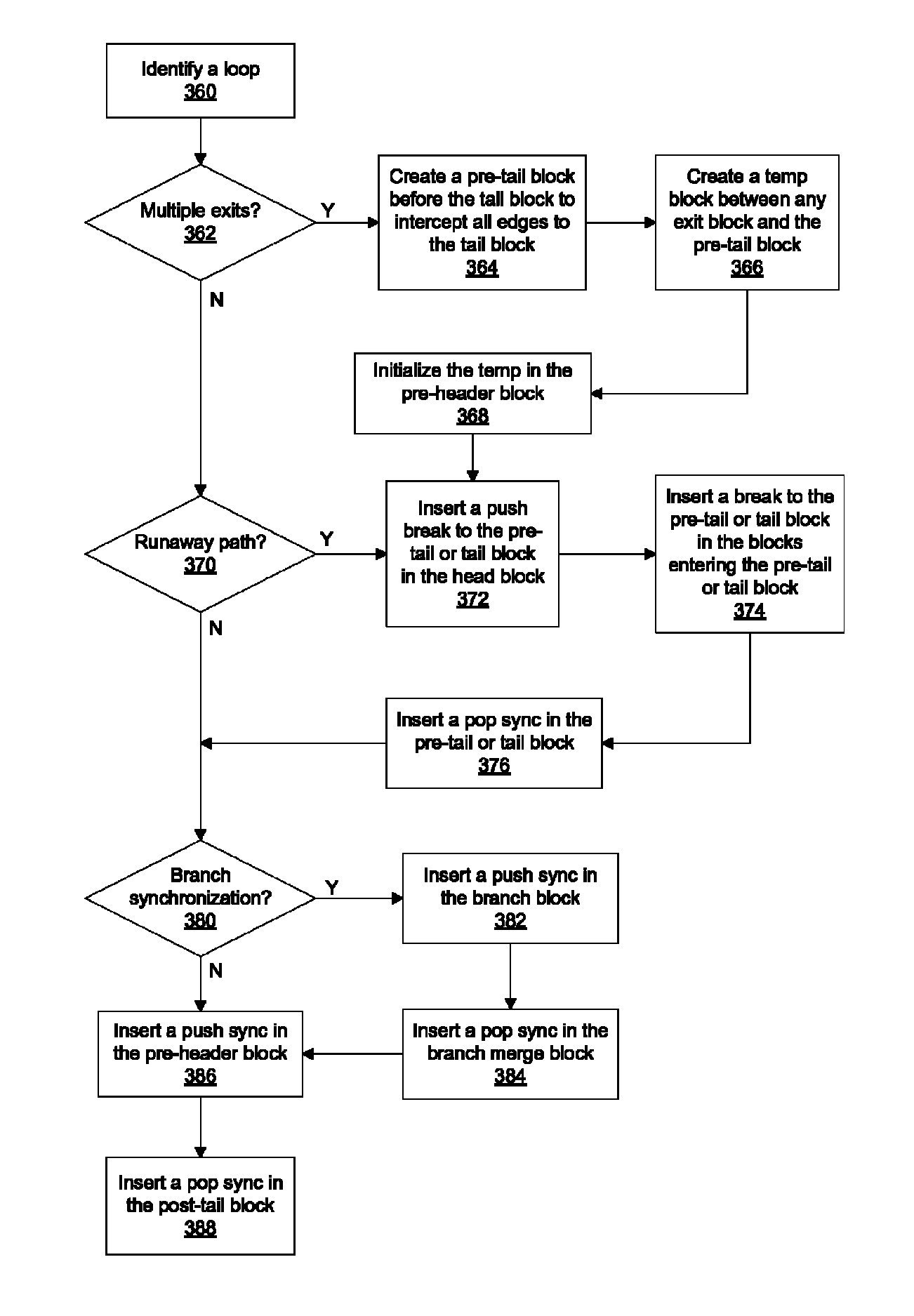

Insertion of multithreaded execution synchronization points in a software program

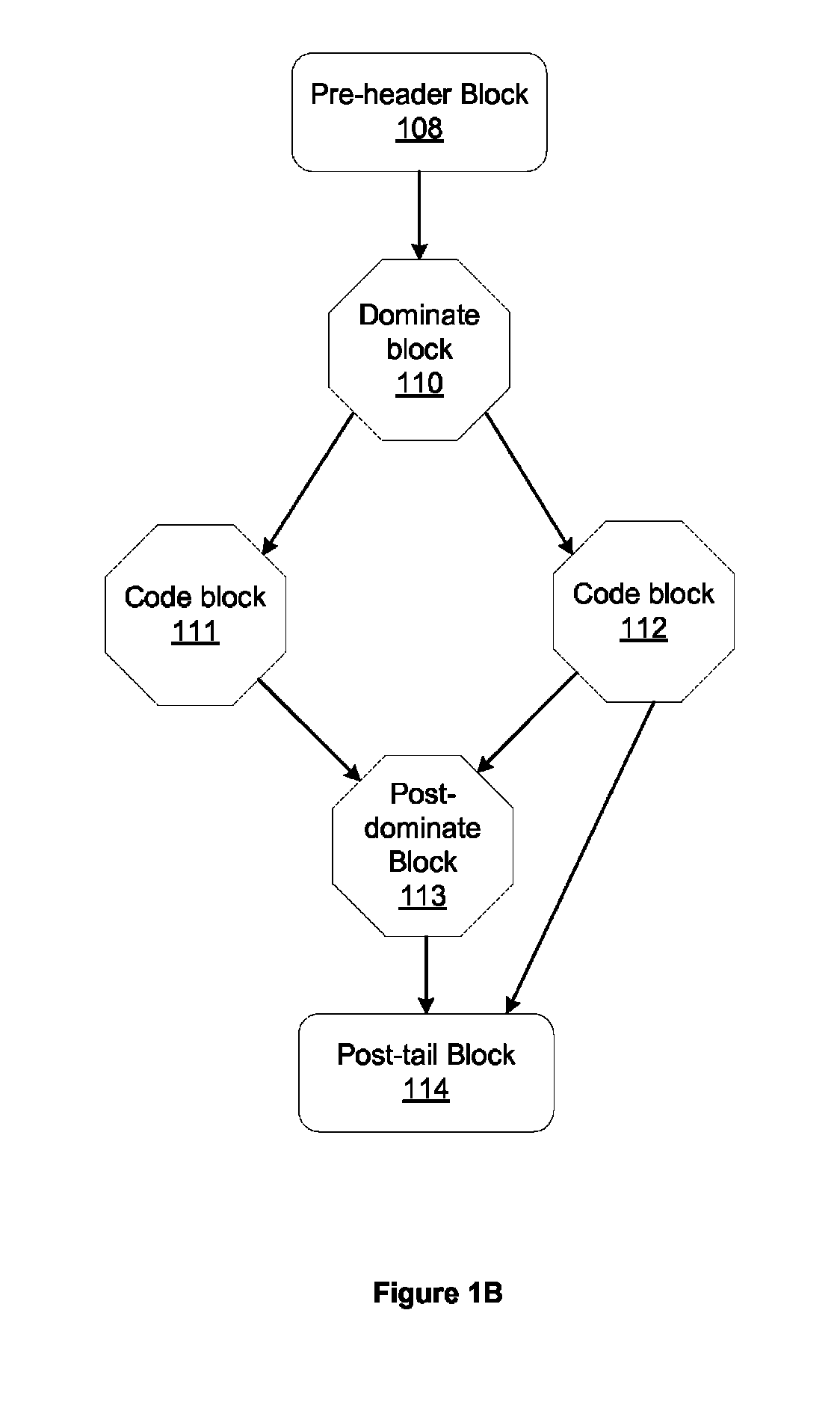

Status: Active | Patent: US8381203B1 | Topics: Good benefit; Improve performance; Software engineering; Multiprogramming arrangements; Graphics; MIMD

A compiler is configured to determine a set of points in a flow graph for a software program where multithreaded execution synchronization points are inserted to synchronize divergent threads for SIMD processing. MIMD execution of divergent threads is allowed, and execution of the divergent threads proceeds until a synchronization point is reached. When all of the threads reach the synchronization point, synchronous execution resumes. The synchronization points are needed to ensure proper execution of certain instructions that require synchronous execution as defined in some graphics APIs, and they are also inserted where synchronous execution improves performance on a SIMD architecture.

Owner:NVIDIA CORP

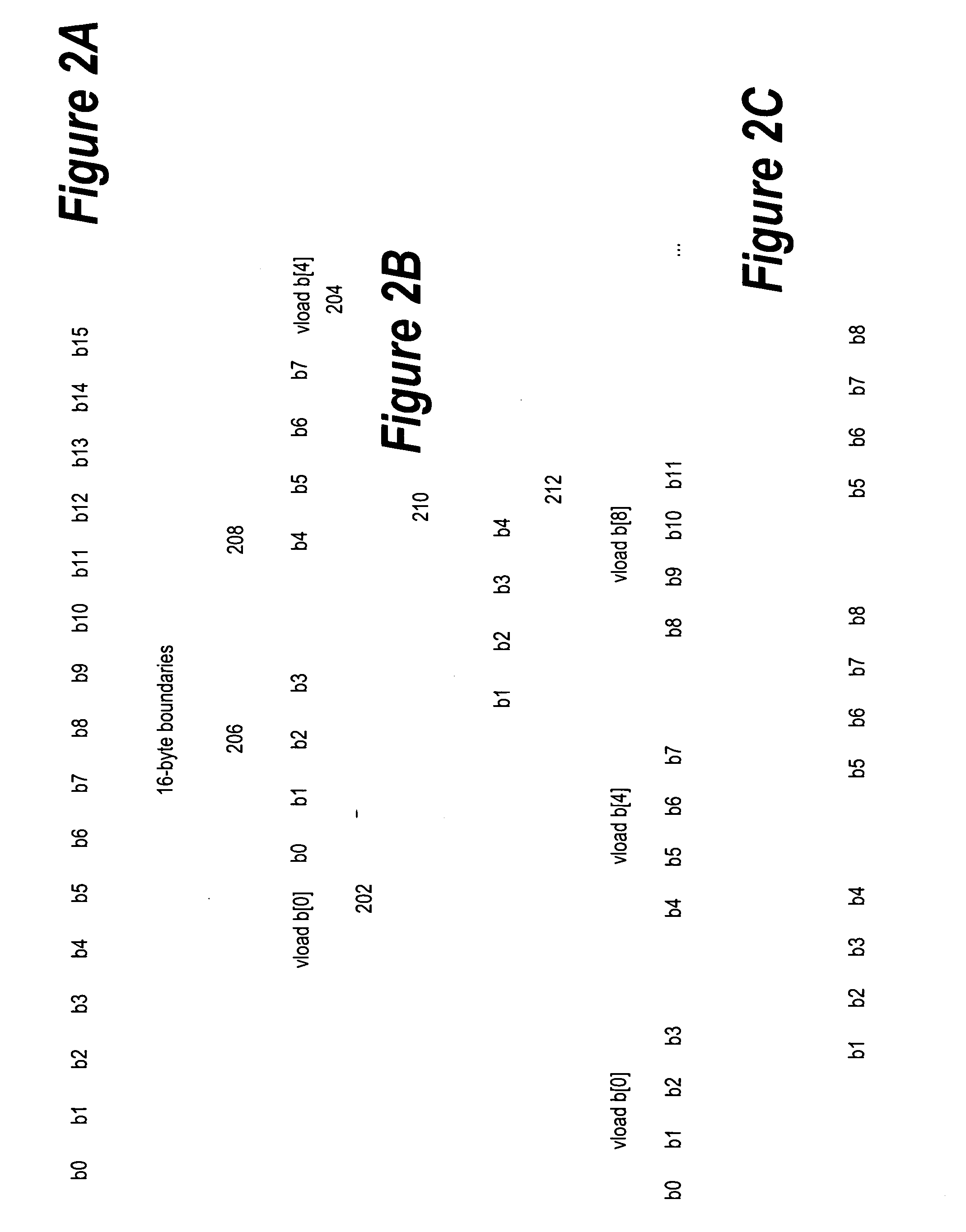

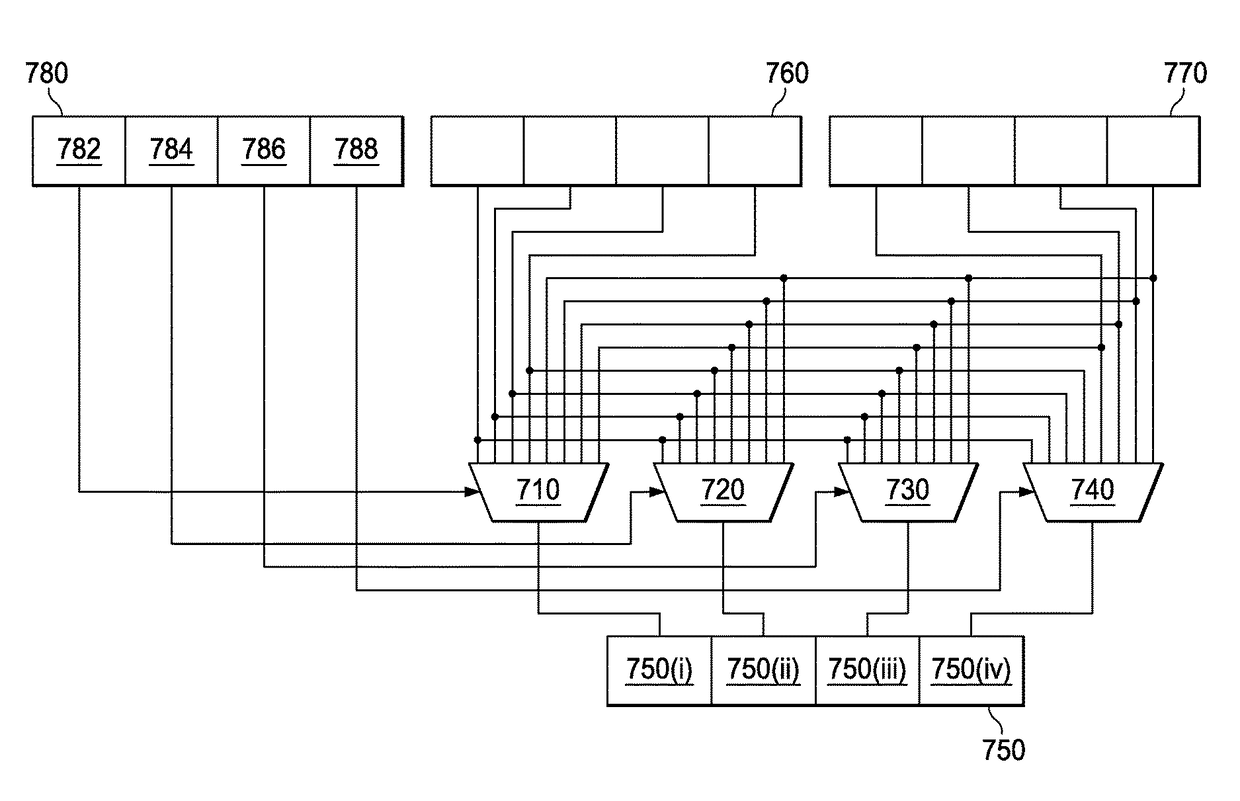

Dynamic Data Driven Alignment and Data Formatting in a Floating-Point SIMD Architecture

Status: Inactive | Patent: US20100095087A1 | Topics: Minimizing amount of data; Quantity minimization; Program control using stored programs; Handling data according to predetermined rules; Control vector; Processor register

Mechanisms are provided for dynamic data driven alignment and data formatting in a floating point SIMD architecture. At least two operand inputs are input to a permute unit of a processor. Each operand input contains at least one floating point value upon which a permute operation is to be performed by the permute unit. A control vector input, having a plurality of floating point values that together constitute the control vector input, is input to the permute unit of the processor for controlling the permute operation of the permute unit. The permute unit performs a permute operation on the at least two operand inputs according to a permutation pattern specified by the plurality of floating point values that constitute the control vector input. Moreover, a result output of the permute operation is output from the permute unit to a result vector register of the processor.

Owner:IBM CORP

Structured programming control flow in a SIMD architecture

Status: Active | Patent: US7877585B1 | Topics: Efficient mechanism; General purpose stored program computer; Specific program execution arrangements; Control flow; Parallel processing

One embodiment of a computing system configured to manage divergent threads in a SIMD thread group includes a stack configured to store state information for processing control instructions. A parallel processing unit is configured to perform the steps of determining if one or more threads diverge during execution of a conditional control instruction. A disable mask allows for the use of conditional return and break instructions in a multithreaded SIMD architecture. Additional control instructions are used to set up thread processing target addresses for synchronization, breaks, and returns.

Owner:NVIDIA CORP

Efficient hardware instructions for single instruction multiple data processors

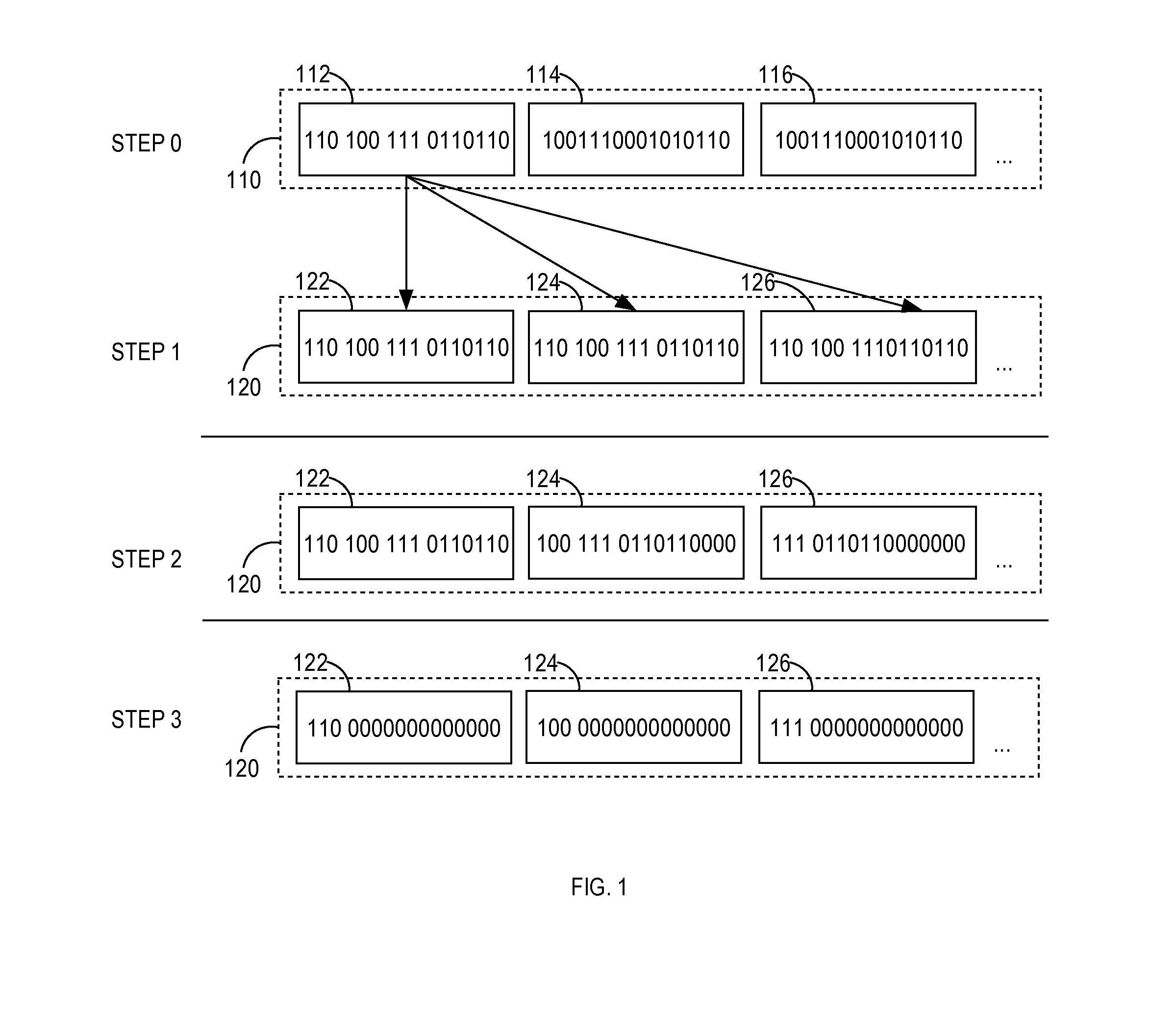

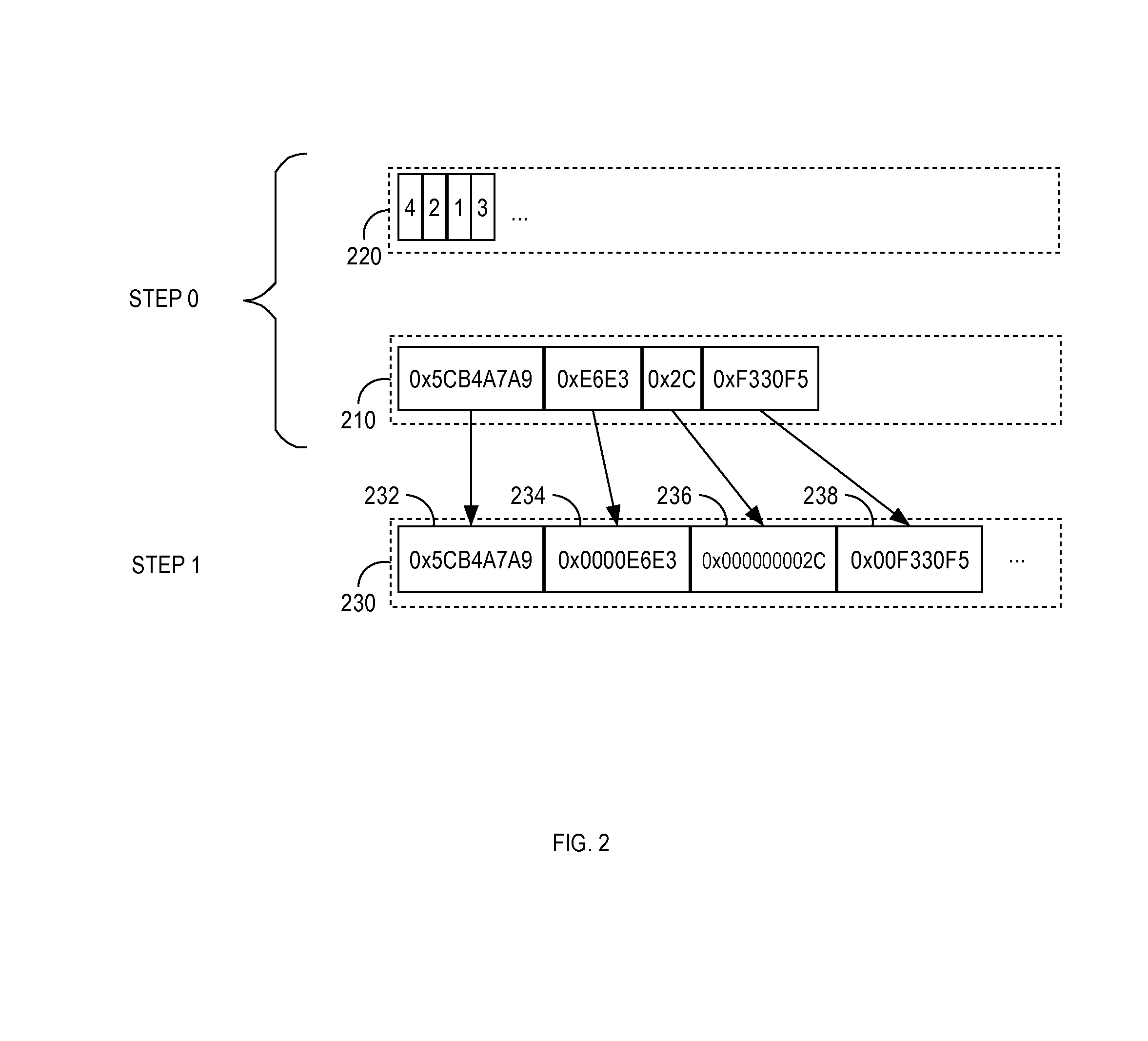

Status: Active | Patent: US20140013078A1 | Topics: General purpose stored program computer; Concurrent instruction execution; Variable length; Byte

A method and apparatus for efficiently processing data in various formats in a single instruction multiple data (“SIMD”) architecture is presented. Specifically, a method to unpack fixed-width bit values in a bit stream to a fixed-width byte stream in a SIMD architecture is presented. A method to unpack variable-length byte-packed values in a byte stream in a SIMD architecture is presented. A method to decompress a run-length-encoded compressed bit-vector in a SIMD architecture is presented. A method to return the offset of each bit set to one in a bit-vector in a SIMD architecture is presented. A method to fetch bits from a bit-vector at specified offsets relative to a base in a SIMD architecture is presented. A method to compare values stored in two SIMD registers is presented.

Owner:ORACLE INT CORP
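
Two of the operations listed in the abstract above are easy to state in scalar form, which helps clarify what the hardware instructions are meant to accelerate. The sketch below assumes most-significant-bit-first packing for the bit stream and least-significant-bit-first numbering for bit-vector offsets; both conventions and the function names are assumptions, not details from the patent.

```python
def unpack_bits(packed: bytes, width: int, count: int) -> bytes:
    """Unpack `count` values of `width` bits (1..8), packed MSB-first,
    into one byte per value."""
    if not 1 <= width <= 8:
        raise ValueError("width must be between 1 and 8 bits")
    total_bits = len(packed) * 8
    if count * width > total_bits:
        raise ValueError("not enough input bits")
    stream = int.from_bytes(packed, "big")
    mask = (1 << width) - 1
    out = bytearray()
    for i in range(count):
        shift = total_bits - (i + 1) * width   # bit position of value i
        out.append((stream >> shift) & mask)
    return bytes(out)


def set_bit_offsets(bits: int, length: int) -> list:
    """Offsets (LSB = offset 0) of every bit set to one in a bit-vector."""
    return [i for i in range(length) if (bits >> i) & 1]
```

A SIMD implementation would produce several output bytes per instruction, but the mapping from input bits to output bytes is the same as in this scalar reference.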

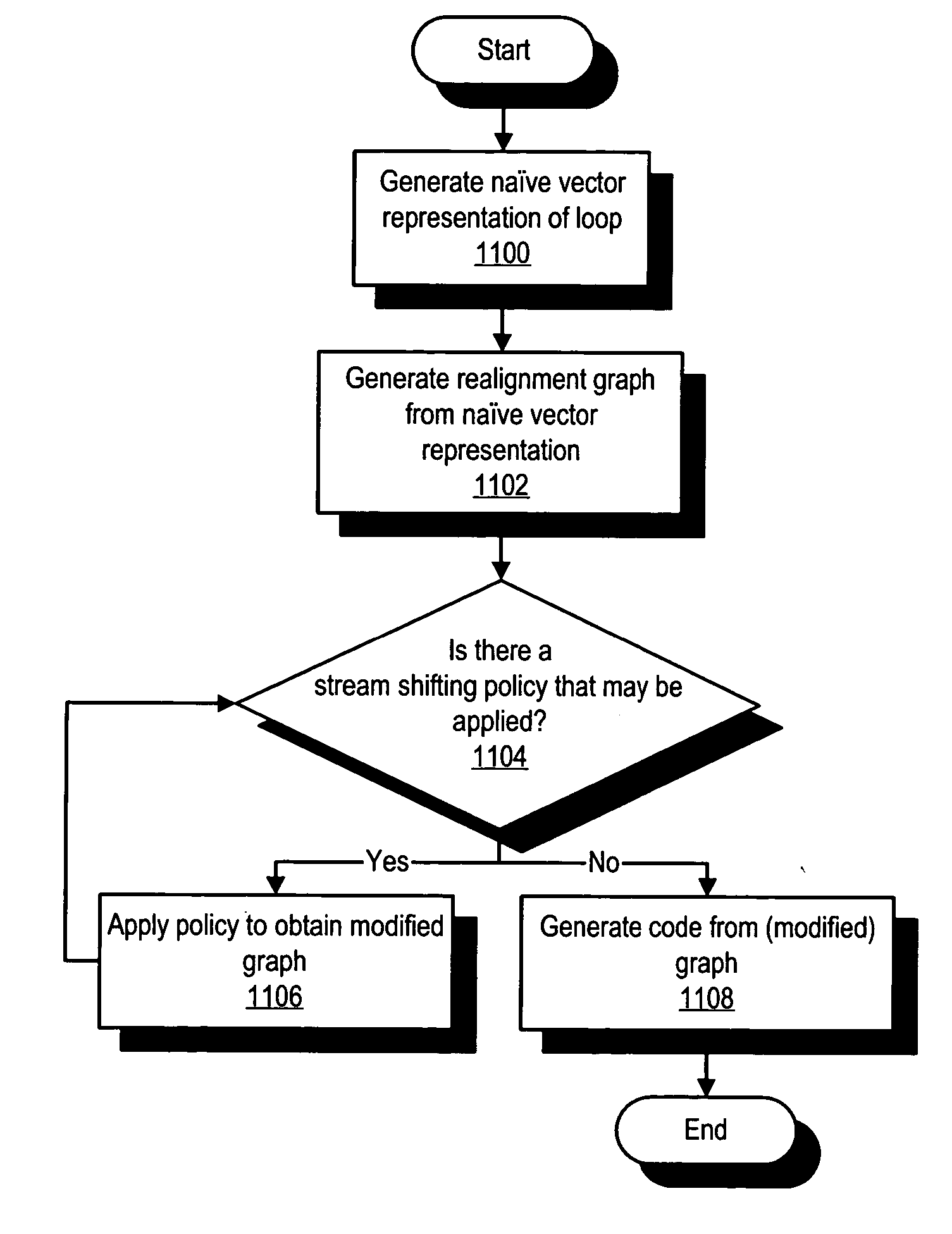

Determining Median Value of an Array on Vector SIMD Architectures

A method for determining a median value of an array of pixels in a vision system may be performed in an efficient manner using the parallel computing capabilities of a SIMD processing engine. Each column of an array may be sorted in ascending (descending) order to form a first sorted array. Each row of the first sorted array may be sorted in ascending (descending) order to form a second sorted array. A pixel may be selected as the median value from a diagonal portion of the second sorted array, wherein the diagonal portion bisects a lower value region and a higher value region of the second sorted array.

Owner:TEXAS INSTR INC
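
For a 3x3 window, the column-sort, row-sort, diagonal-select procedure in the abstract above can be sketched as follows. The 3x3 size, the ascending order, the use of the anti-diagonal (top-right to bottom-left), and the name `median3x3` are choices made for this illustration; the abstract itself describes arrays in general.

```python
def median3x3(window):
    """Median of a 3x3 window given as three rows of three values."""
    # Step 1: sort each column in ascending order.
    cols = [sorted(col) for col in zip(*window)]
    # Step 2: transpose back to rows and sort each row in ascending order.
    rows = [sorted(row) for row in zip(*cols)]
    # Step 3: the anti-diagonal separates the low region (top-left)
    # from the high region (bottom-right); its middle value is the median.
    diagonal = sorted([rows[0][2], rows[1][1], rows[2][0]])
    return diagonal[1]
```

Each sorting step operates on independent columns or rows, which is what makes the procedure map well onto SIMD lanes: several columns can be sorted at once with vector minimum and maximum operations.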

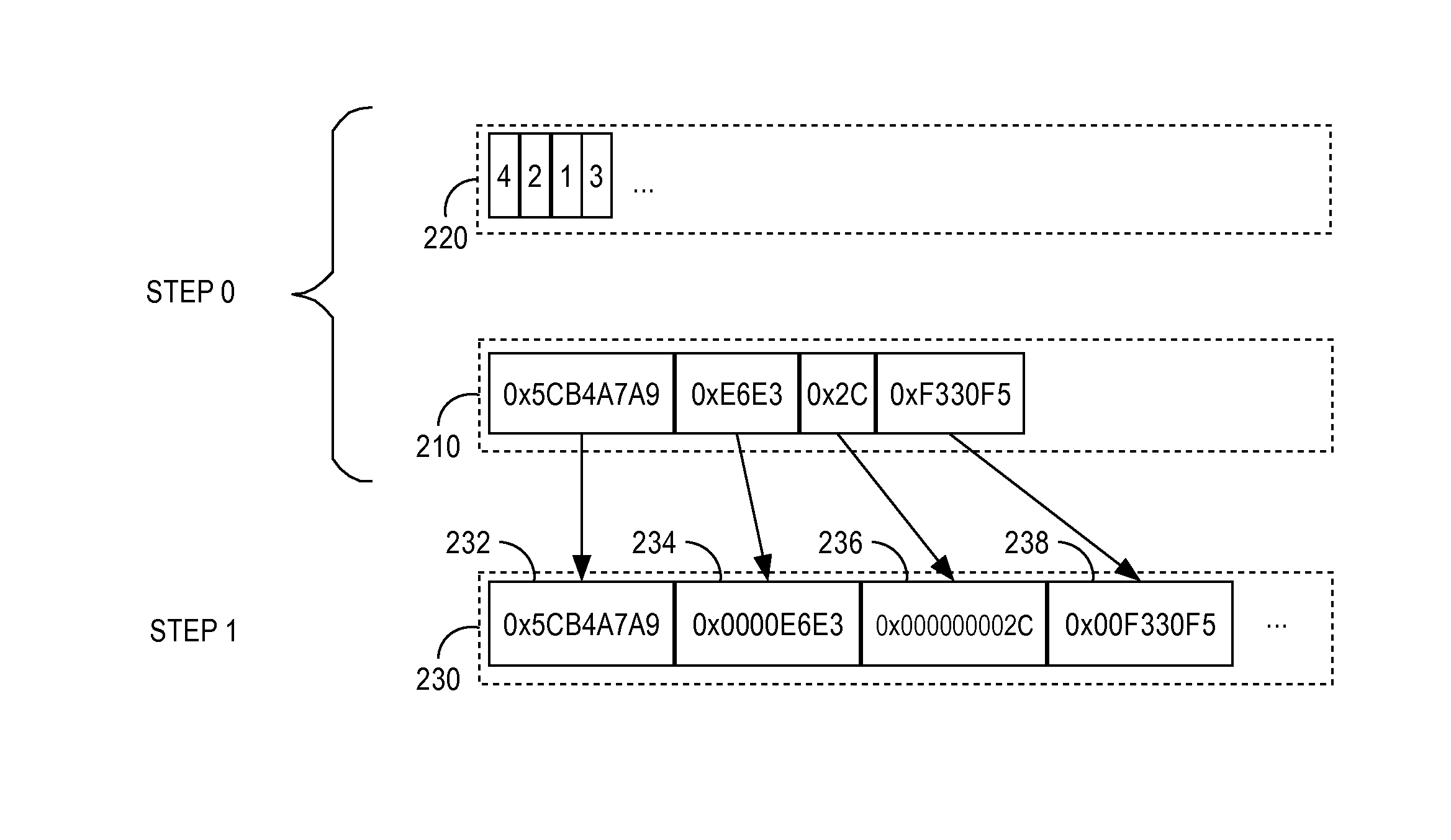

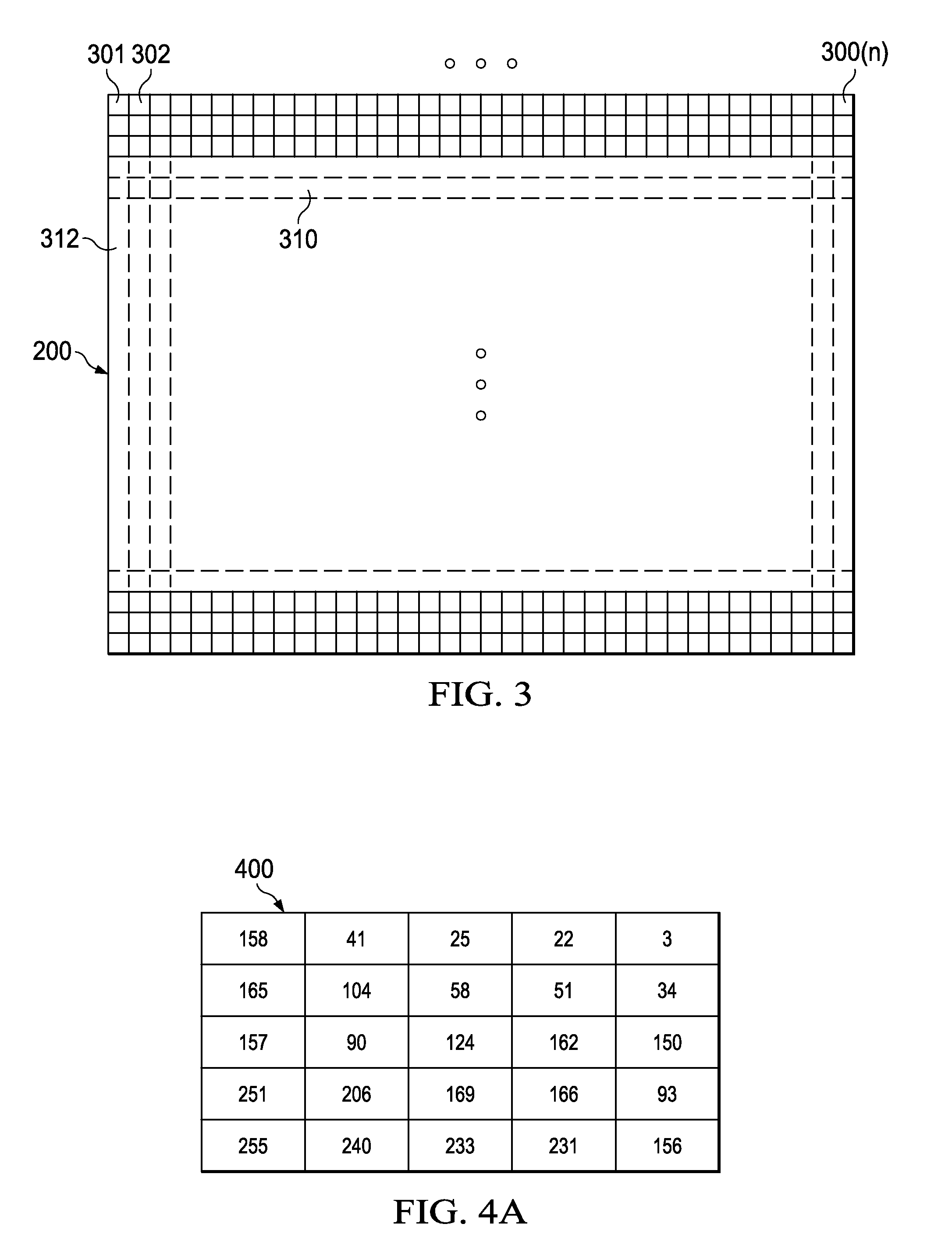

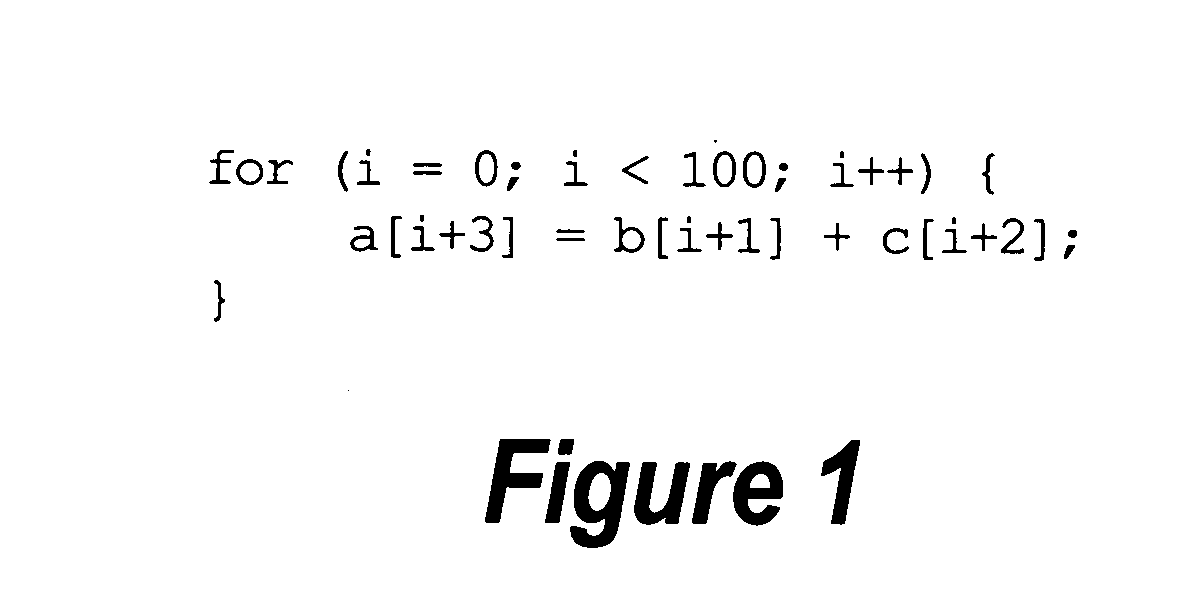

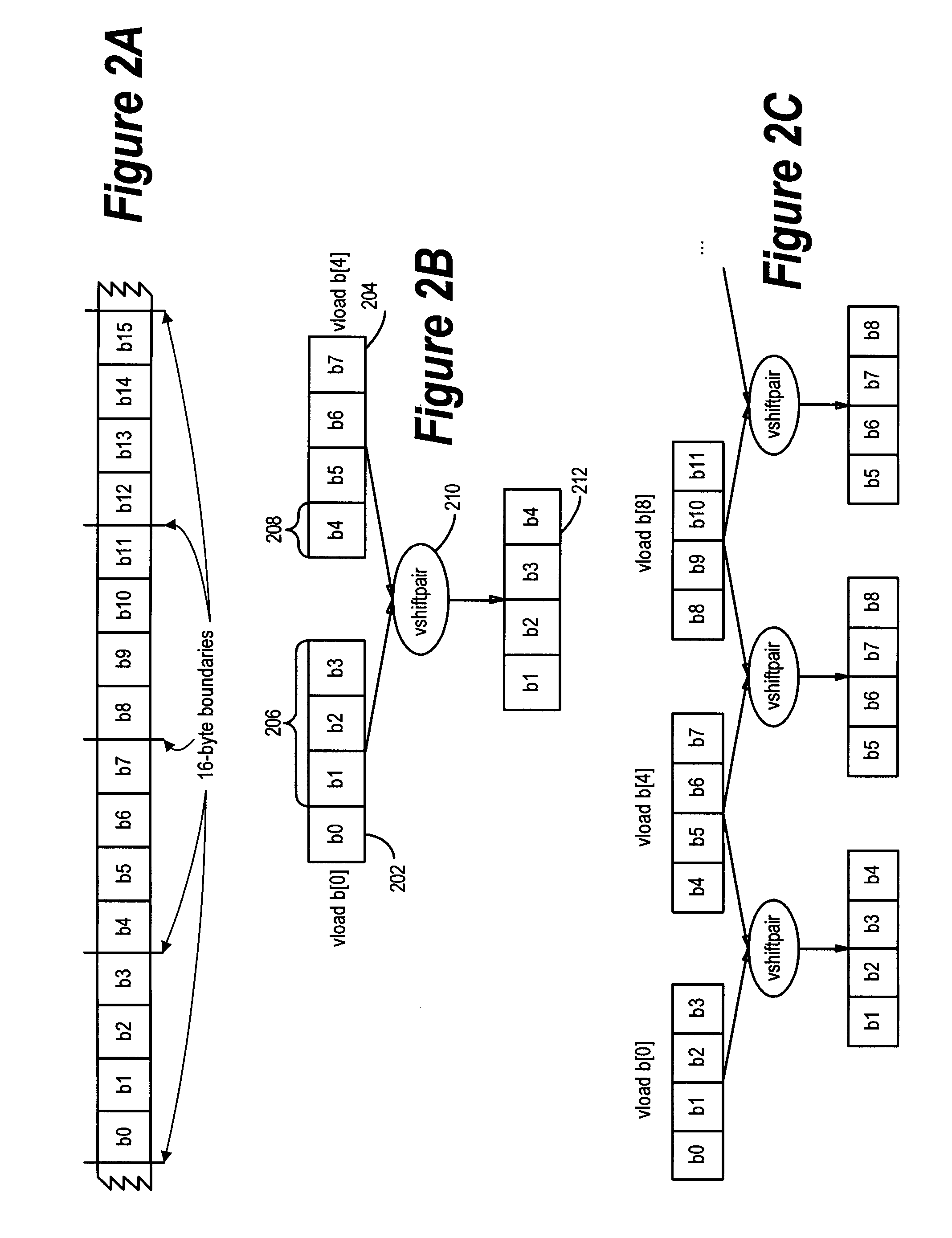

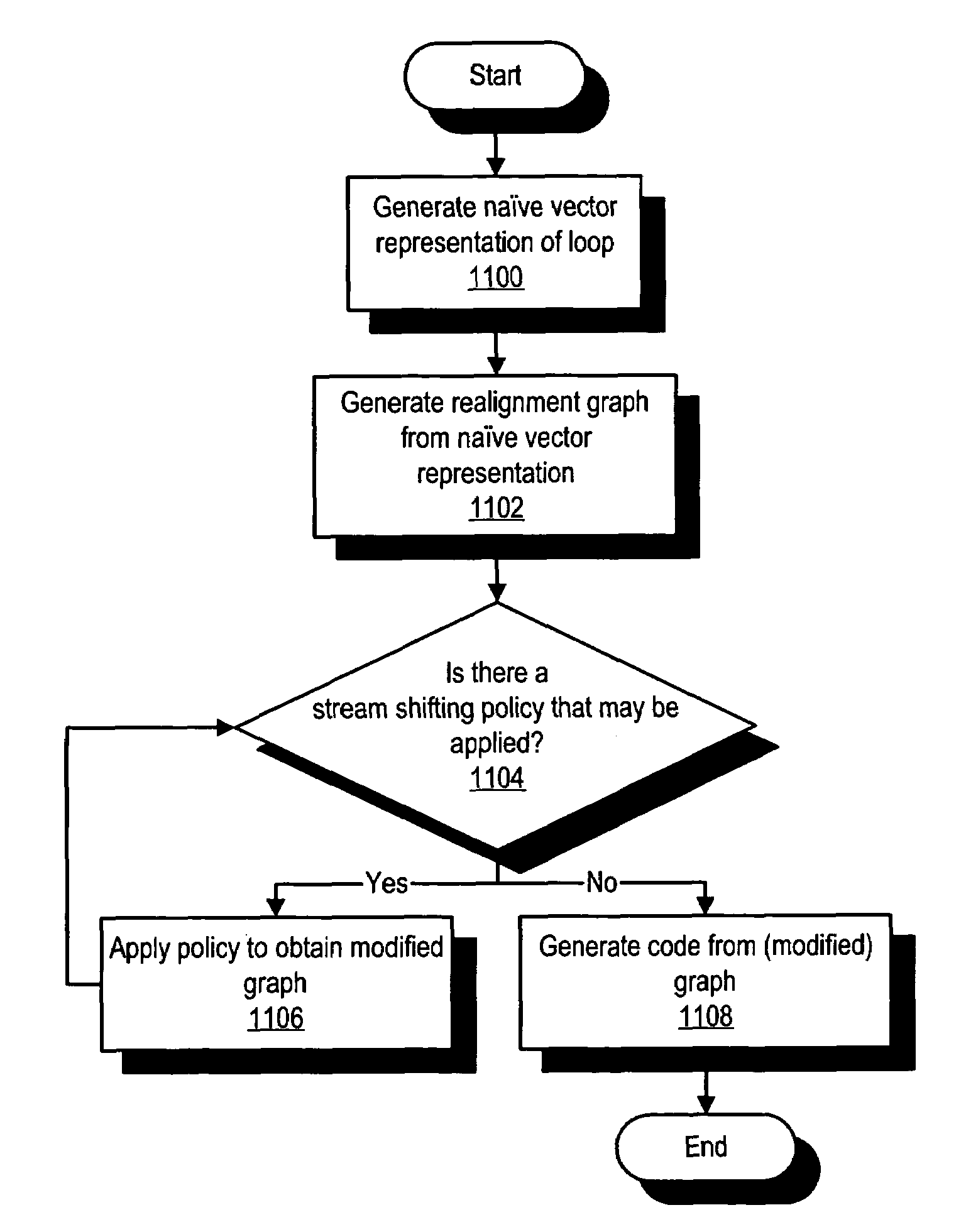

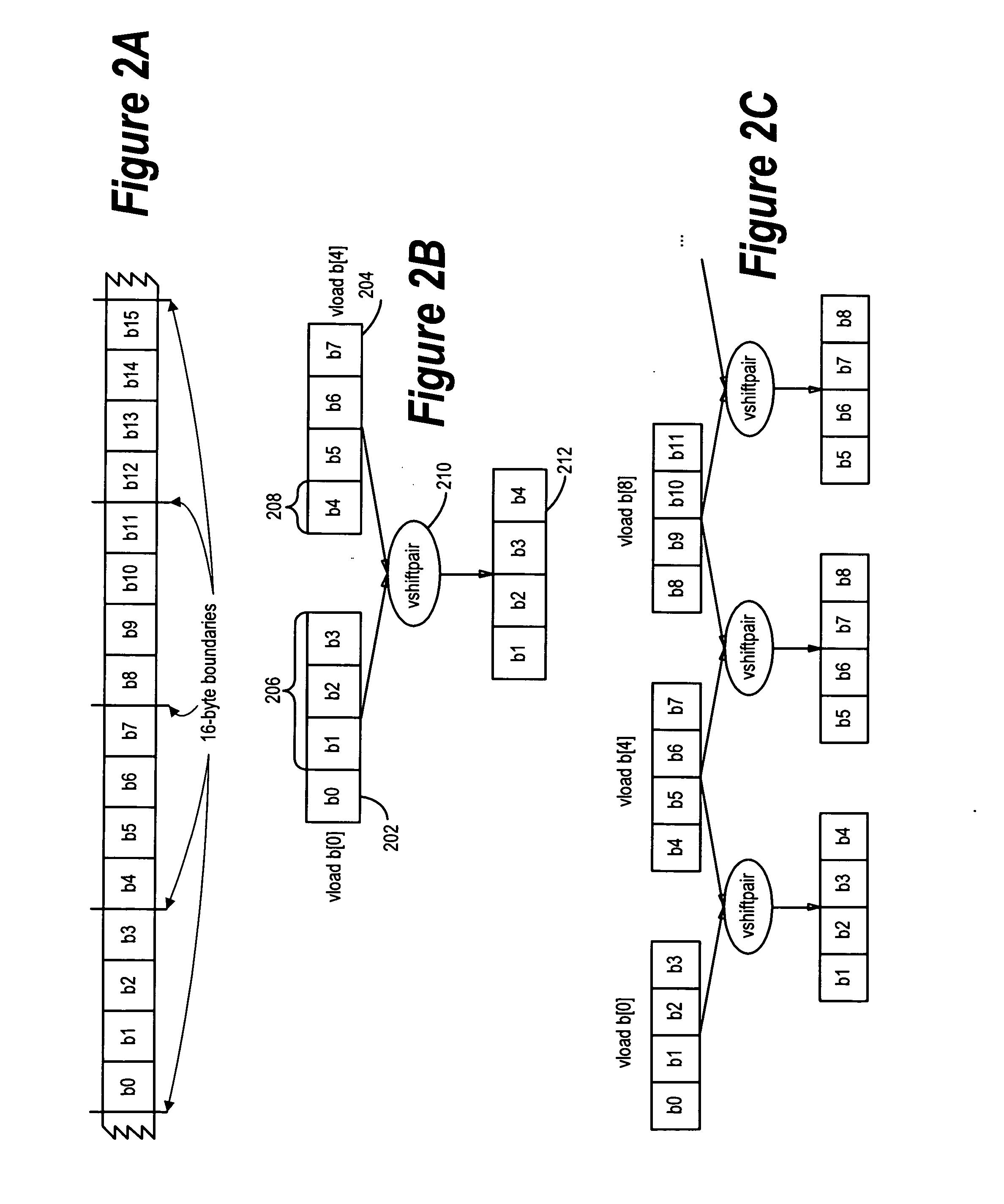

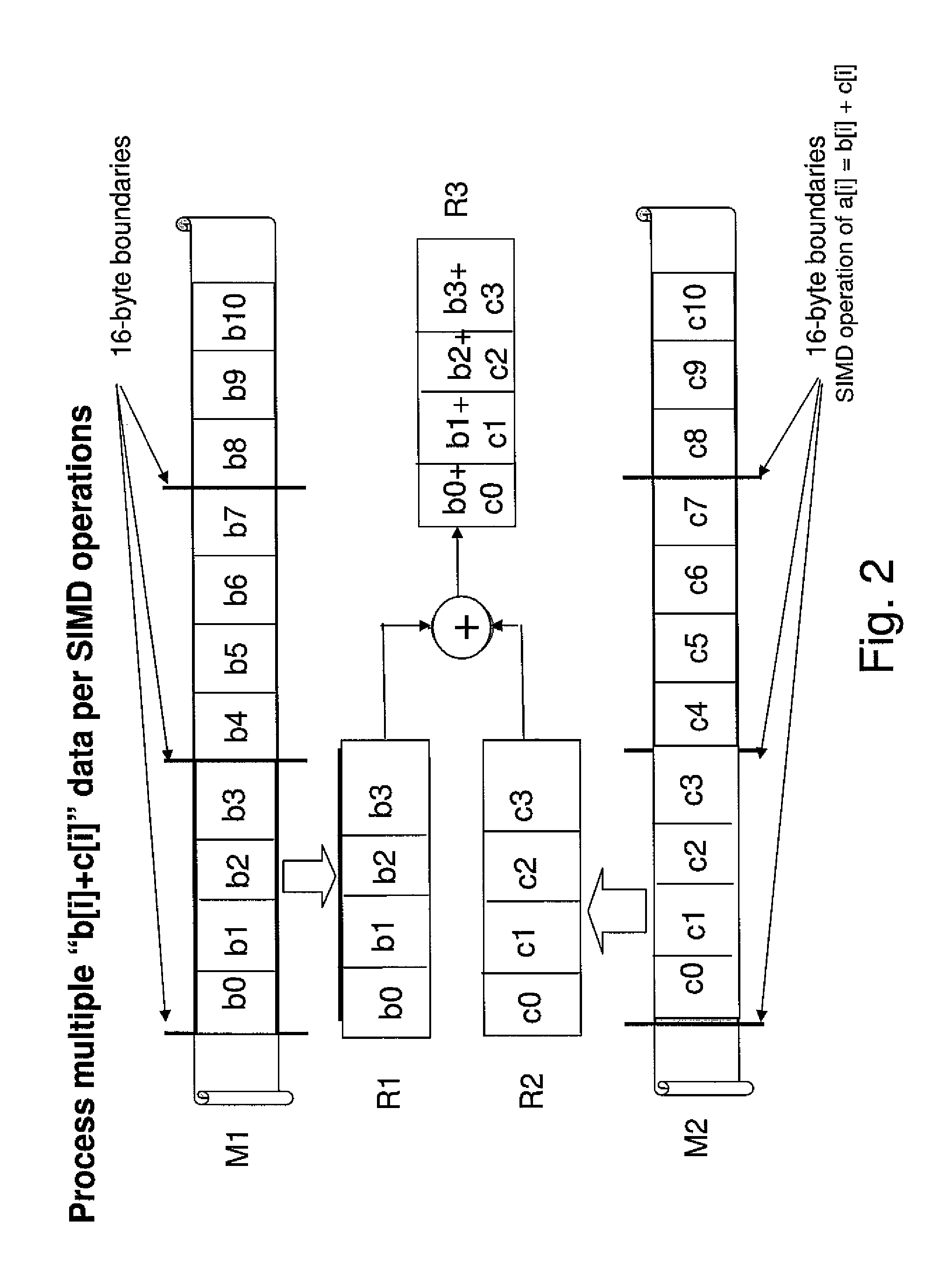

System and method for efficient data reorganization to satisfy data alignment constraints

Status: Inactive | Patent: US20050283769A1 | Topics: Quality improvement; Minimize the number; Software engineering; Program control; Data reorganization; Majorization minimization

A system and method is provided for vectorizing misaligned references in compiled code for SIMD architectures that support only aligned loads and stores. In the framework presented herein, a loop is first simdized as if the memory unit imposes no alignment constraints. The compiler then inserts data reorganization operations to satisfy the actual alignment requirements of the hardware. Finally, the code generation algorithm generates SIMD code based on the data reorganization graph, addressing realistic issues such as runtime alignments, unknown loop bounds, residue iteration counts, and multiple statements with arbitrary alignment combinations. Beyond generating a valid simdization, a preferred embodiment further improves the quality of the generated code. Four stream-shift placement policies are disclosed, which minimize the number of data reorganization operations generated by the alignment handling.

Owner:IBM CORP
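
A data reorganization operation of the kind the abstract above mentions can be illustrated by emulating a misaligned load on hardware that only supports aligned loads. This is a minimal sketch assuming a vector width of four elements; the names `aligned_load` and `shifted_load` are hypothetical, and the placement policies that decide where such shifts go are not modeled.

```python
VLEN = 4  # assumed vector width in elements


def aligned_load(mem, addr):
    """Load VLEN elements; only aligned addresses are legal."""
    if addr % VLEN != 0:
        raise ValueError("misaligned access")
    return mem[addr:addr + VLEN]


def shifted_load(mem, addr):
    """Emulate a misaligned VLEN-element load with two aligned loads
    and one shift (splice) of the concatenated vectors."""
    base = addr - addr % VLEN
    lo = aligned_load(mem, base)
    if addr == base:
        return lo                      # already aligned, no reorganization needed
    hi = aligned_load(mem, base + VLEN)
    offset = addr - base
    return (lo + hi)[offset:offset + VLEN]
```

Every shift costs extra instructions, which is why reducing the number of such operations, as the abstract describes, directly improves the generated code.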

Framework for efficient code generation using loop peeling for SIMD loop code with multiple misaligned statements

Status: Inactive | Patent: US7395531B2 | Topics: No additional computational overhead; Reduce computational overhead; Software engineering; General purpose stored program computer; Data reorganization; Steady state

A system and method is provided for vectorizing misaligned references in compiled code for SIMD architectures that support only aligned loads and stores. In this framework, a loop is first simdized as if the memory unit imposes no alignment constraints. The compiler then inserts data reorganization operations to satisfy the actual alignment requirements of the hardware. Finally, the code generation algorithm generates SIMD codes based on the data reorganization graph, addressing realistic issues such as runtime alignments, unknown loop bounds, residual iteration counts, and multiple statements with arbitrary alignment combinations. Loop peeling is used to reduce the computational overhead associated with misaligned data. A loop prologue and epilogue are peeled from individual iterations in the simdized loop, and vector-splicing instructions are applied to the peeled iterations, while the steady-state loop body incurs no additional computational overhead.

Owner:INT BUSINESS MASCH CORP

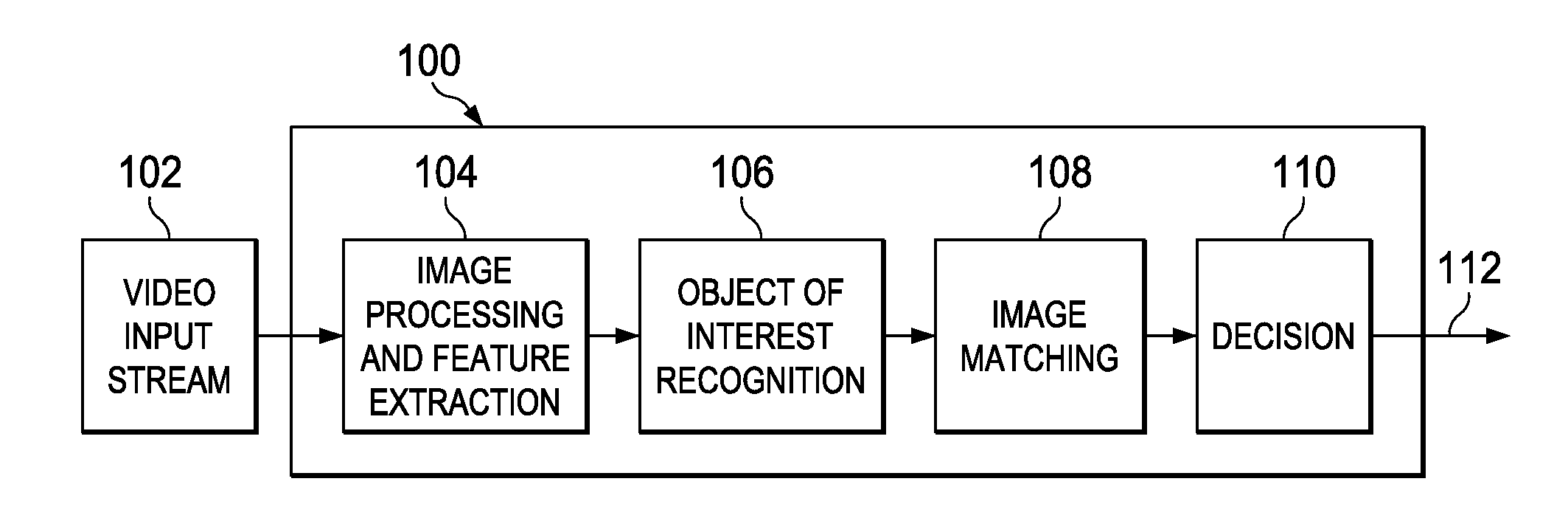

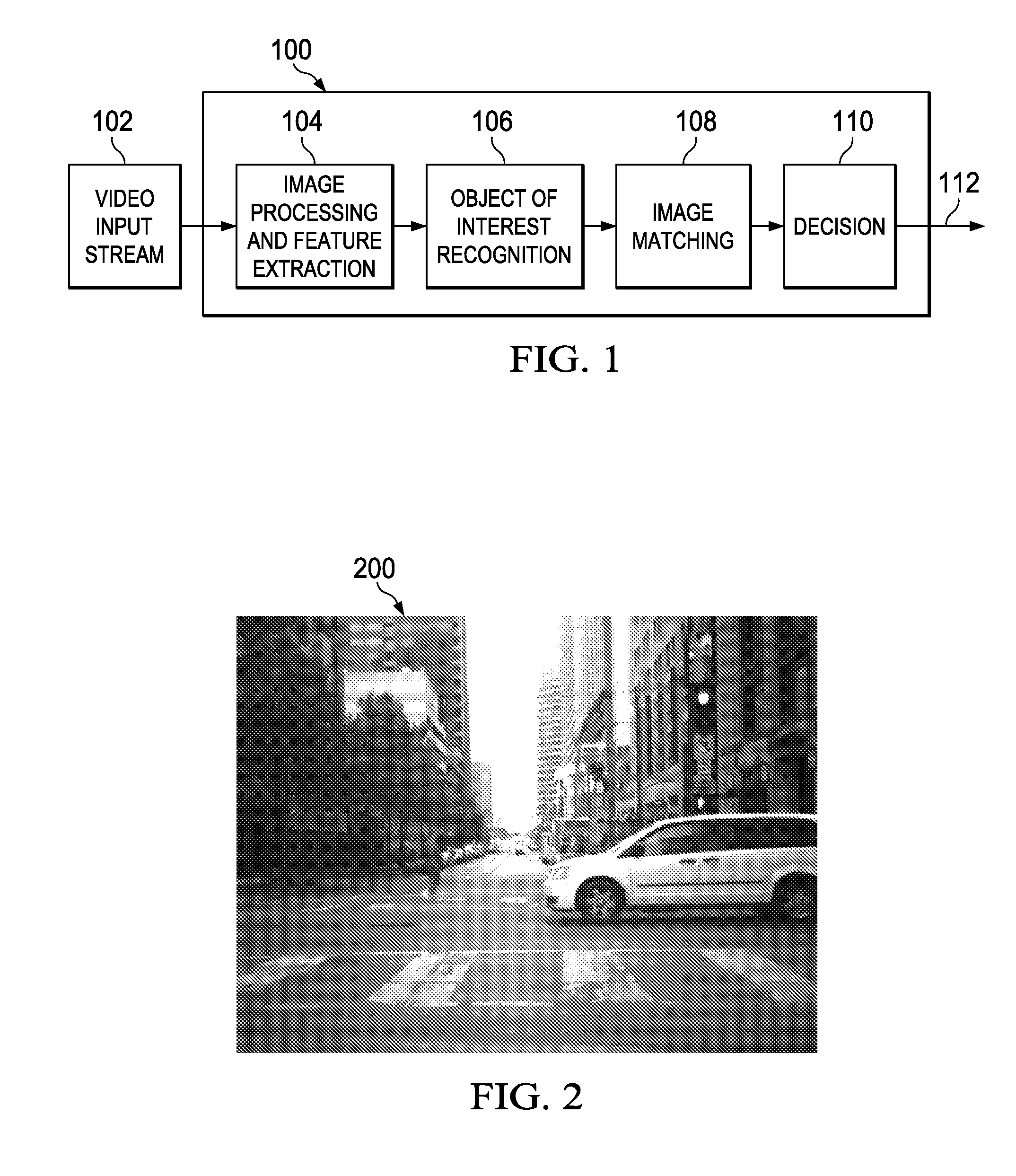

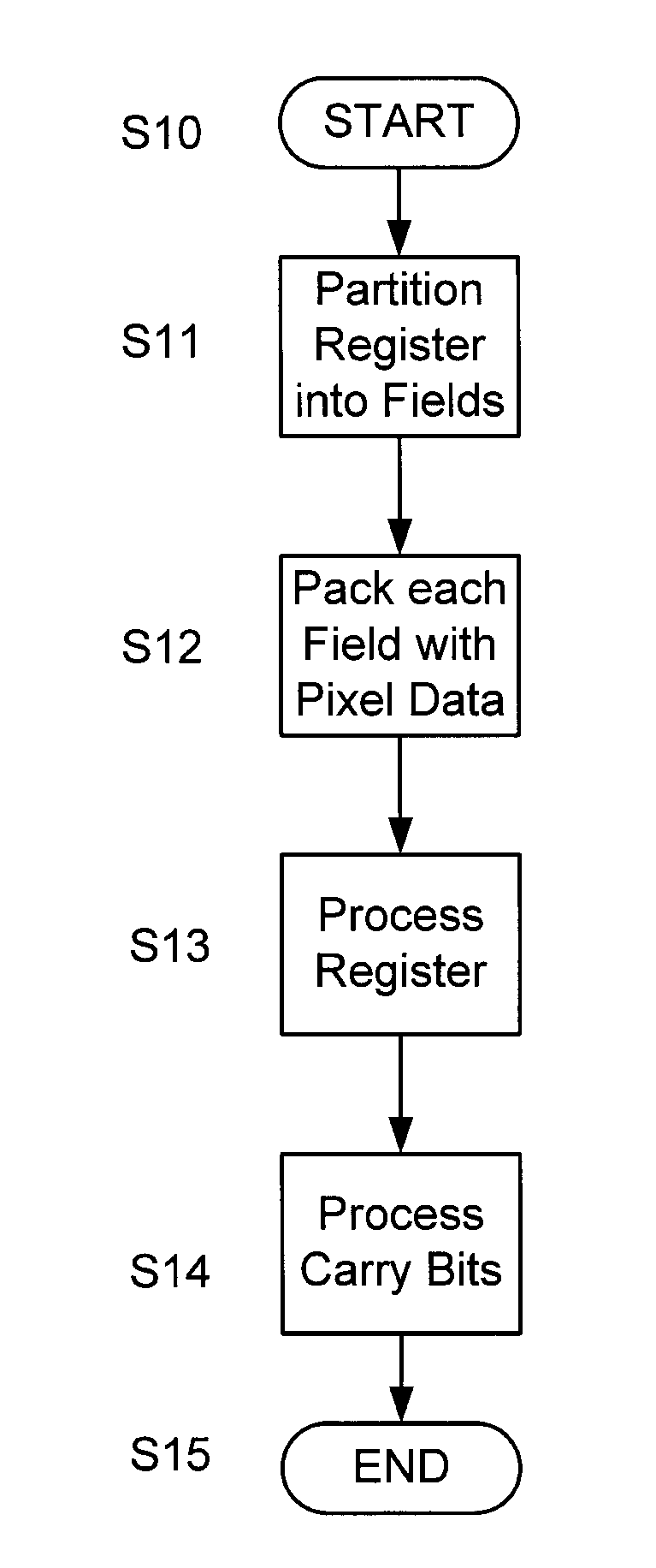

Apparatus, computer program product and associated methodology for video analytics

Status: Active | Patent: US8401327B2 | Topics: Efficient implementation; Digital computer details; Character and pattern recognition; Throughput; Instruction set

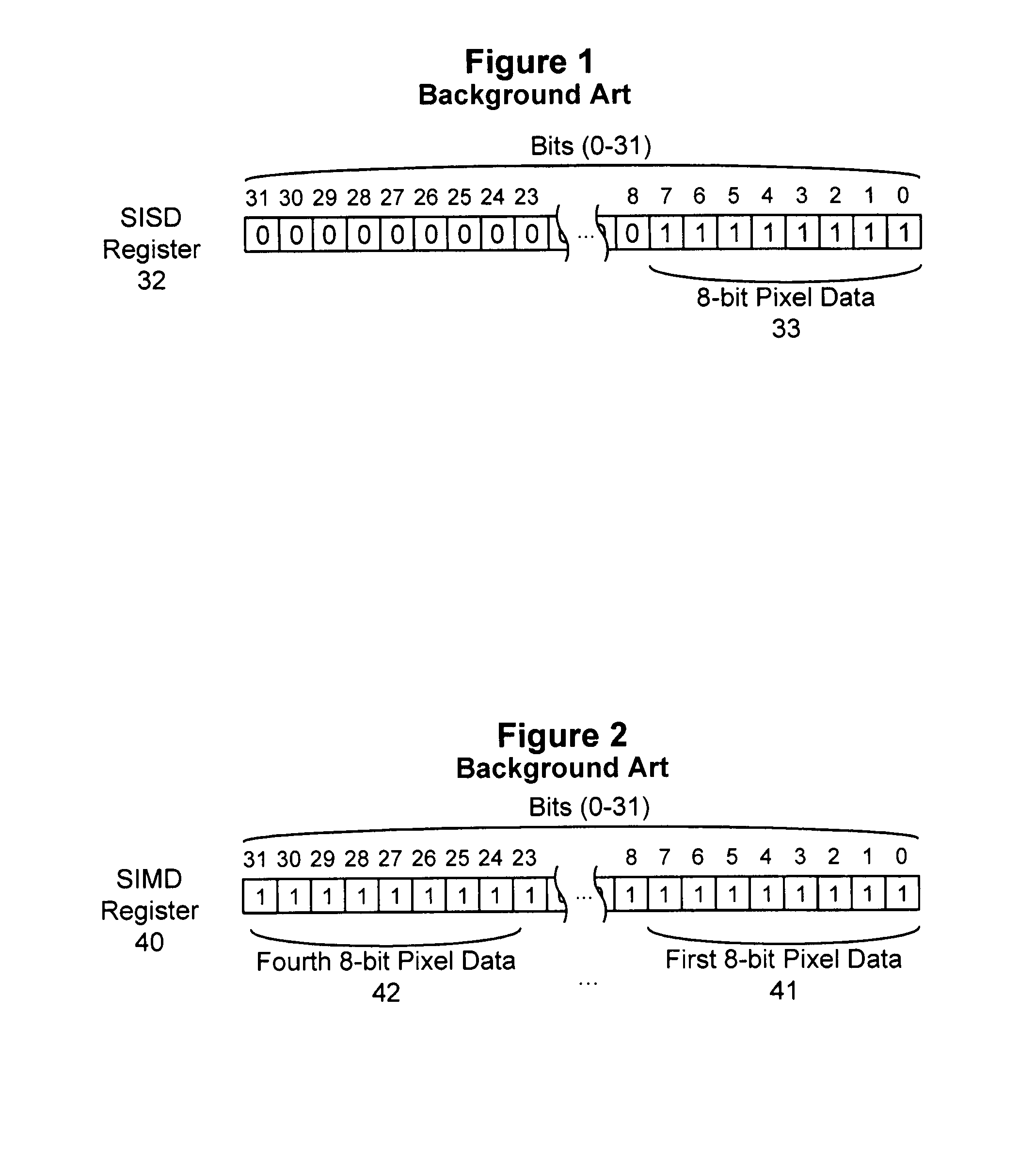

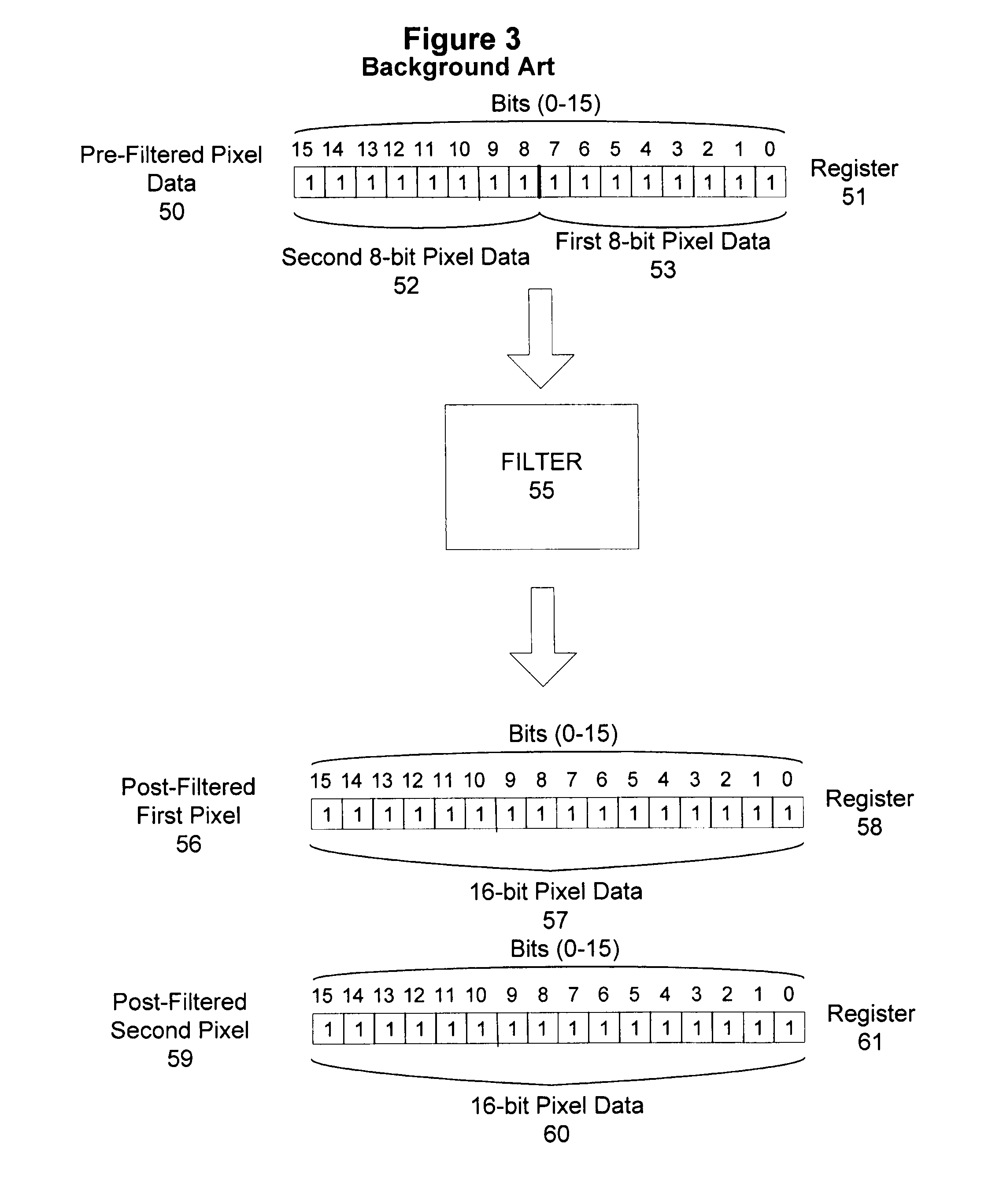

A processor and associated methodology employ a SIMD architecture and instruction set to efficiently perform video analytics operation on images. The processor contains a group of SIMD instructions used by the method to implement video analytic filters that avoid bit expansion of the pixels to be filtered. The filters hold the number of bits representing a pixel constant throughout the entire operation, conserving processor capacity and throughput when performing video analytics.

Owner:AXIS

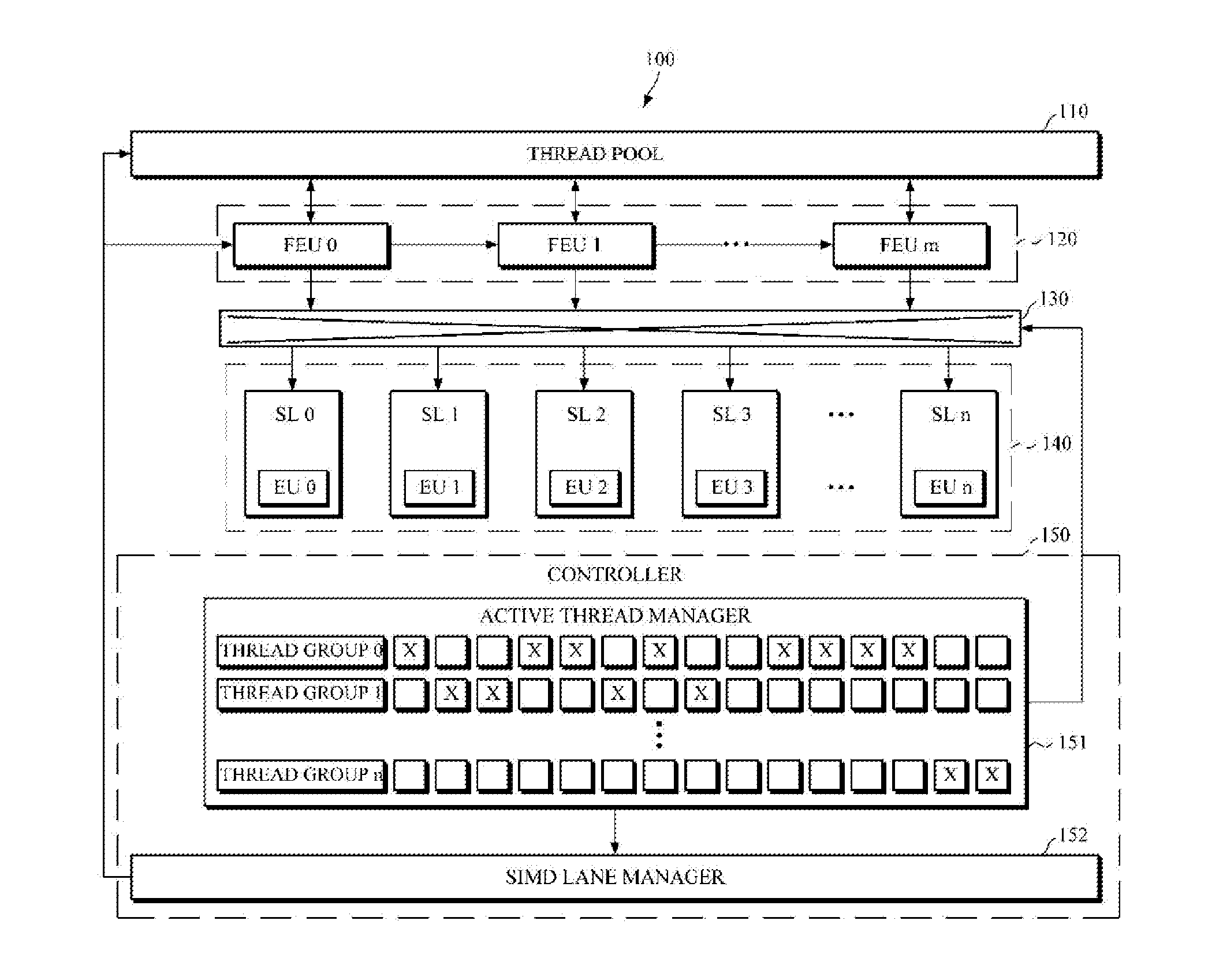

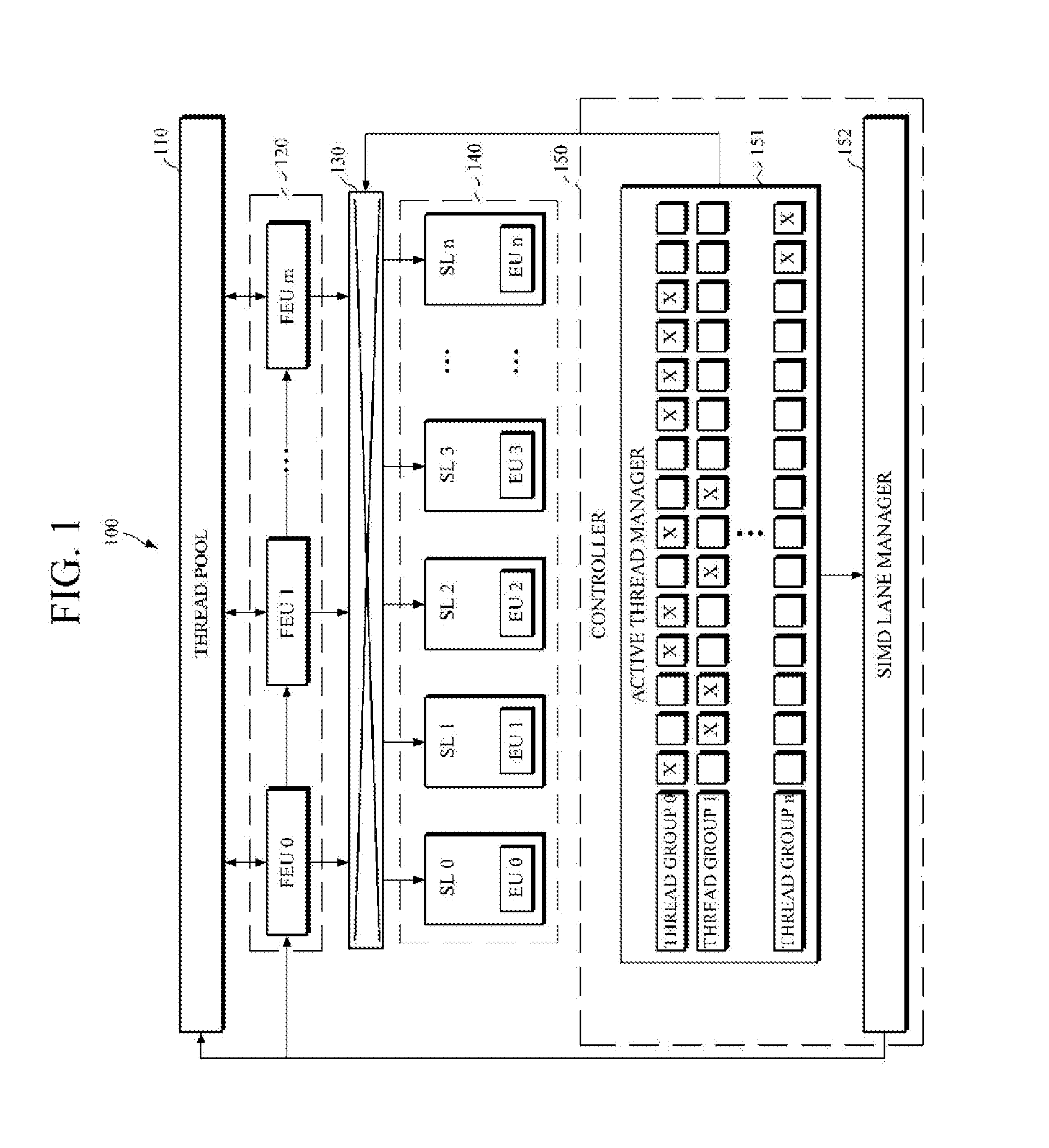

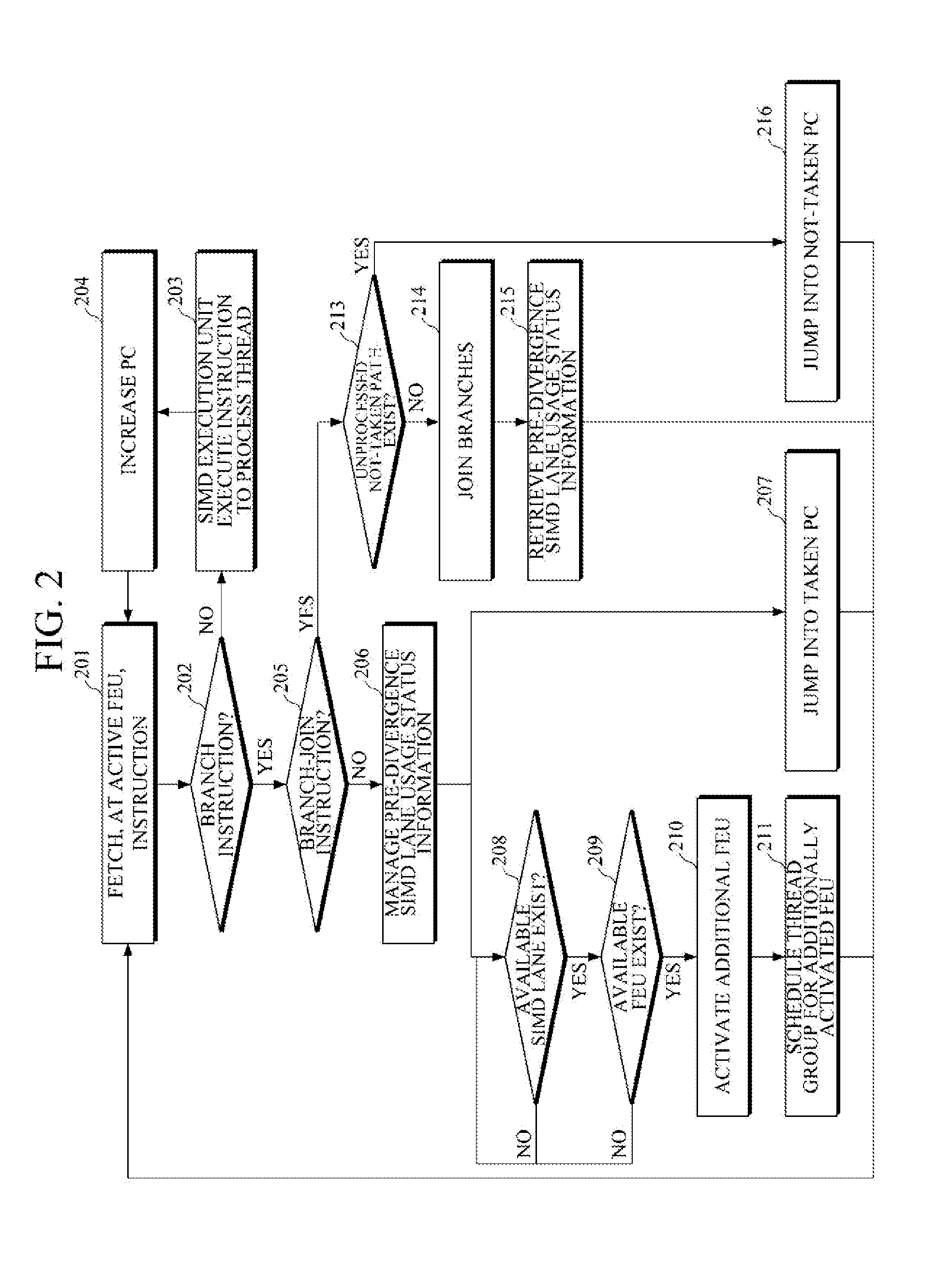

Device and method for managing SIMD architecture based thread divergence

Status: Active | Patent: US20160132338A1 | Topics: Minimize idle SIMD lane; Improve performance; Instruction analysis; Digital computer details; Execution control; Conditional branch

Provided are an apparatus and a method for effectively managing threads diverged by a conditional branch in a Single Instruction Multiple Data (SIMD)-based architecture. The apparatus includes: a plurality of Front End Units (FEUs) configured to fetch, for execution by SIMD lanes, instructions of thread groups of a program flow; and a controller configured to schedule a thread group based on SIMD lane availability information, activate an FEU of the plurality of FEUs, and control the activated FEU to fetch an instruction for processing the scheduled thread group.

Owner:SAMSUNG ELECTRONICS CO LTD

Efficient data reorganization to satisfy data alignment constraints

Status: Inactive | Patent: US7386842B2 | Topics: Quality improvement; Minimize the number; Software engineering; Program control; Theoretical computer science; Data reorganization

An approach is provided for vectorizing misaligned references in compiled code for SIMD architectures that support only aligned loads and stores. In the framework presented herein, a loop is first simdized as if the memory unit imposes no alignment constraints. The compiler then inserts data reorganization operations to satisfy the actual alignment requirements of the hardware. Finally, the code generation algorithm generates SIMD code based on the data reorganization graph, addressing realistic issues such as runtime alignments, unknown loop bounds, residue iteration counts, and multiple statements with arbitrary alignment combinations. Beyond generating a valid simdization, a preferred embodiment further improves the quality of the generated code. Four stream-shift placement policies are disclosed, which minimize the number of data reorganization operations generated by the alignment handling.

Owner:INT BUSINESS MASCH CORP

Translation of SIMD instructions in a data processing system

Status: Active | Patent: US8505002B2 | Topics: Avoid problems; Eases non-recurring engineering costs; Digital computer details; Specific program execution arrangements; Data processing system; Data treatment

A data processing system is provided having a processor and analysing circuitry that identifies a SIMD instruction associated with a first SIMD instruction set, replaces it with a functionally equivalent scalar representation, and marks that representation. When the program executes, translation circuitry dynamically translates the marked scalar representation into one or more translated instructions for an instruction set architecture different from the first SIMD architecture of the identified SIMD instruction.

Owner:RGT UNIV OF MICHIGAN +1

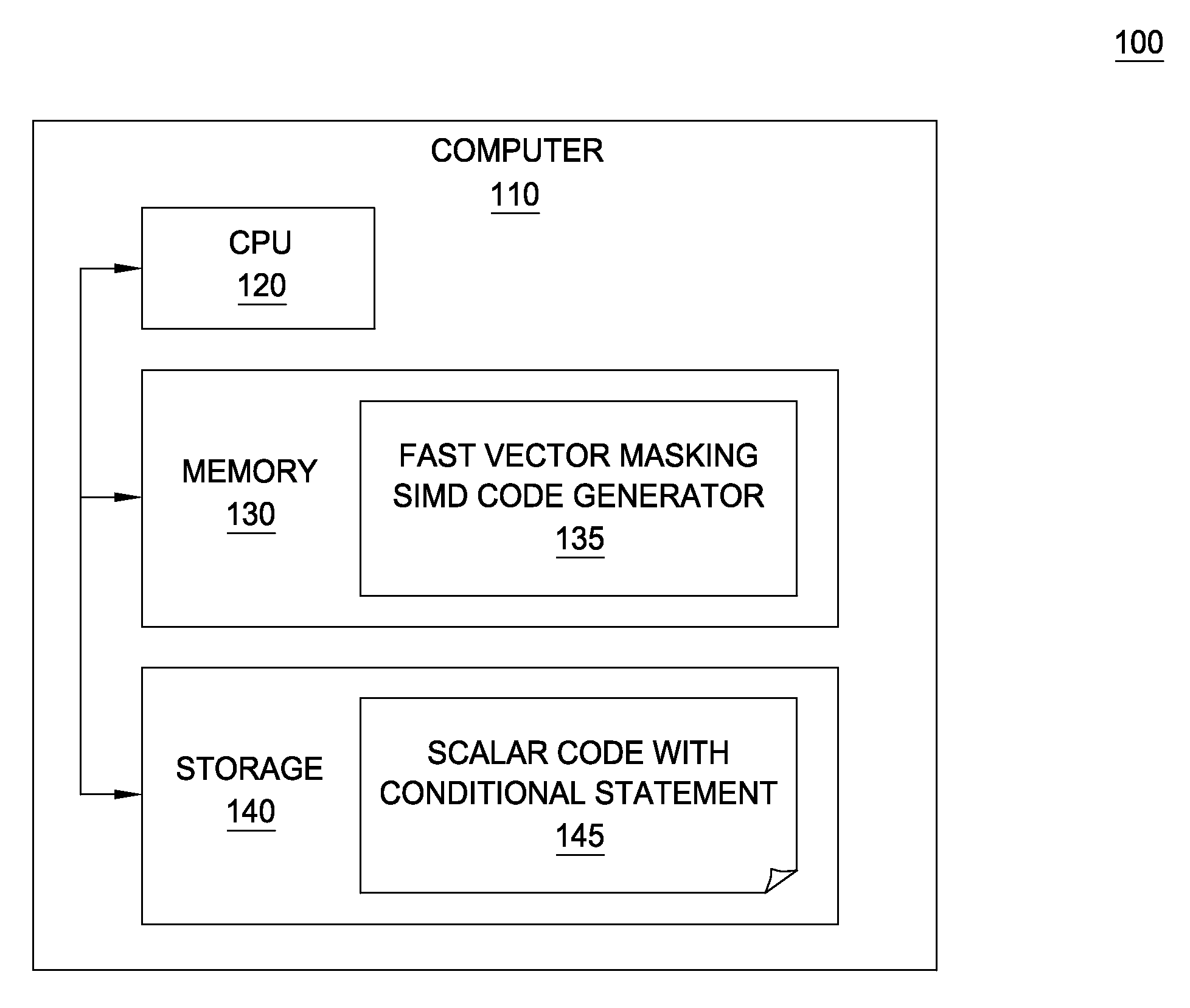

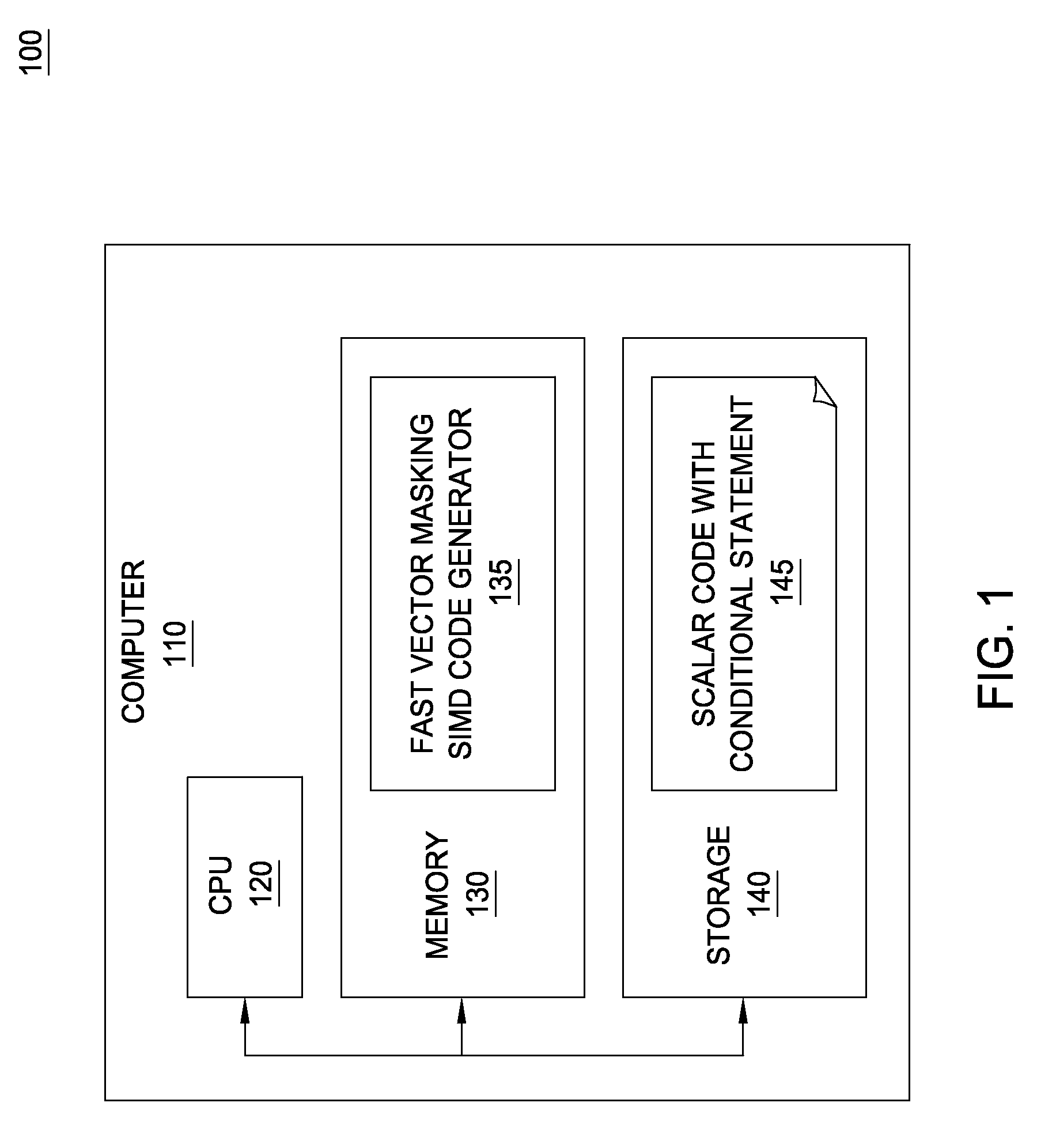

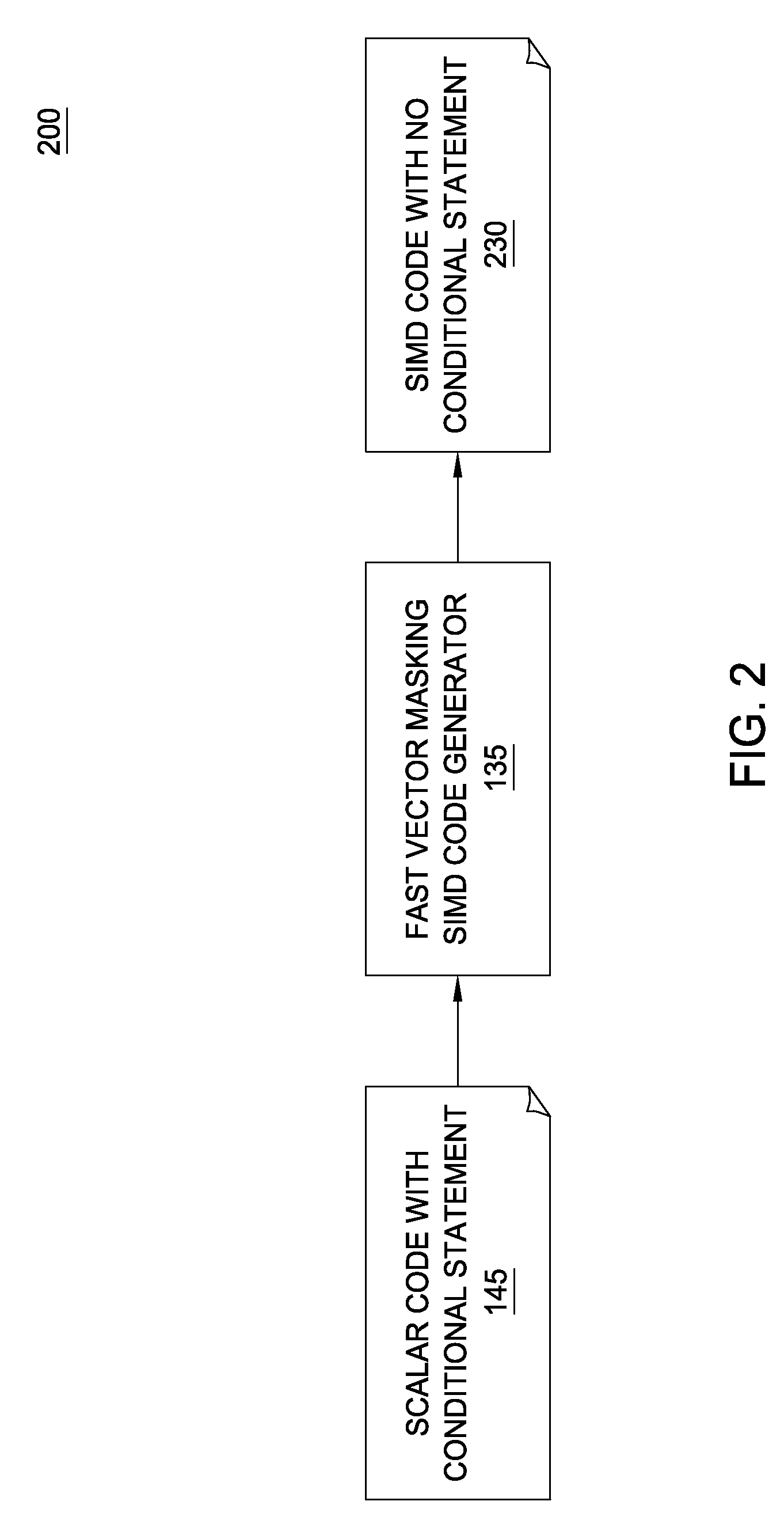

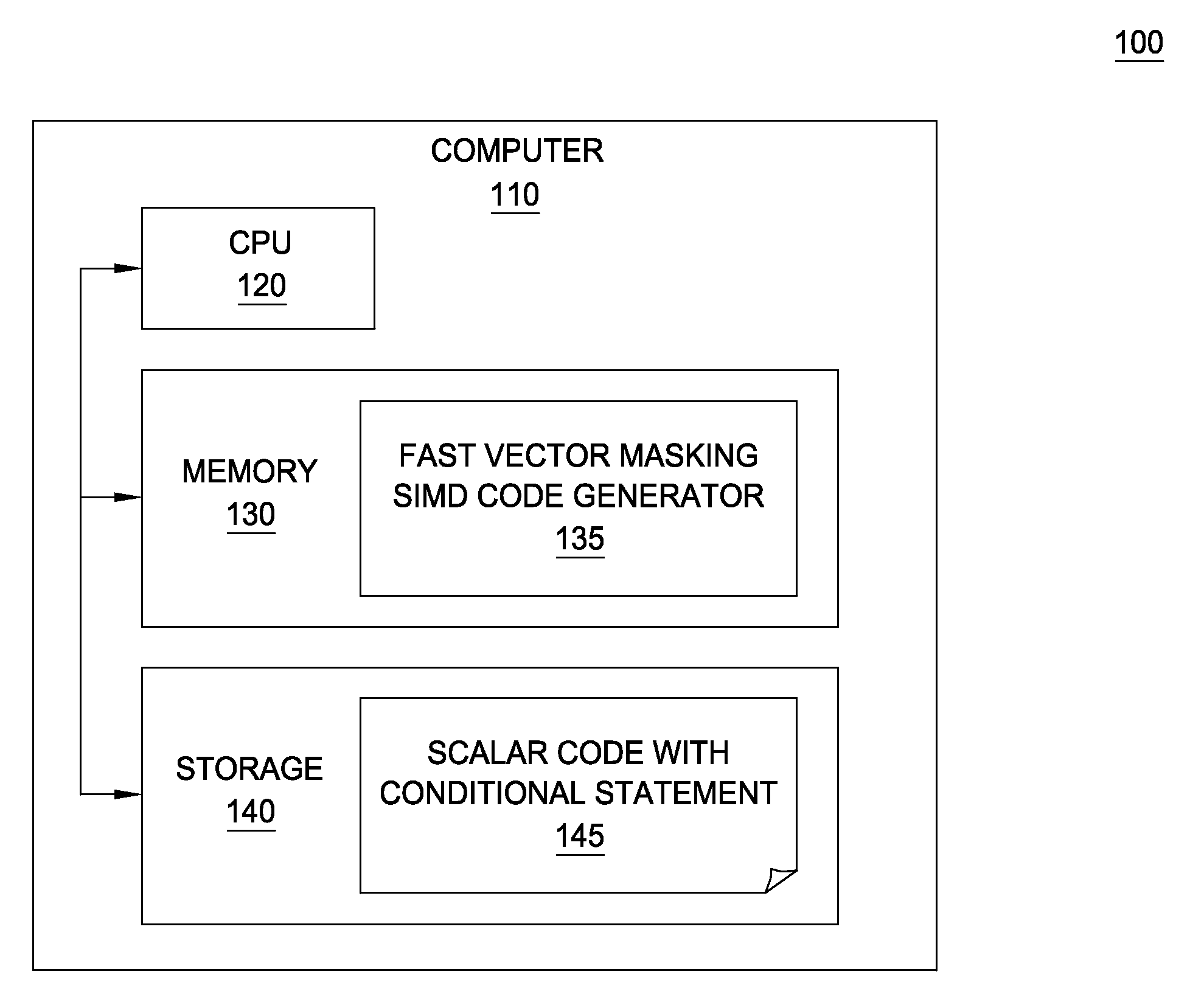

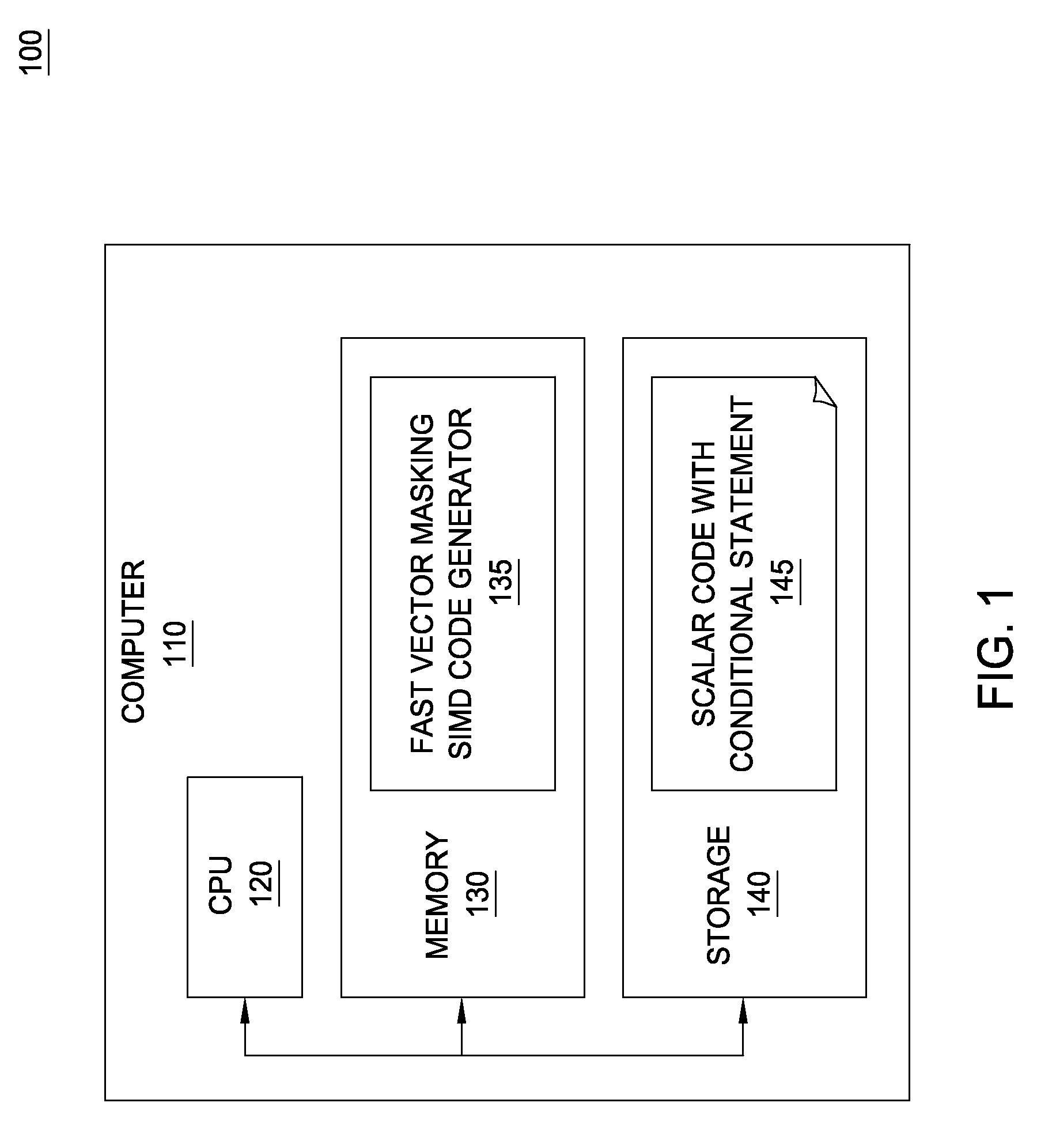

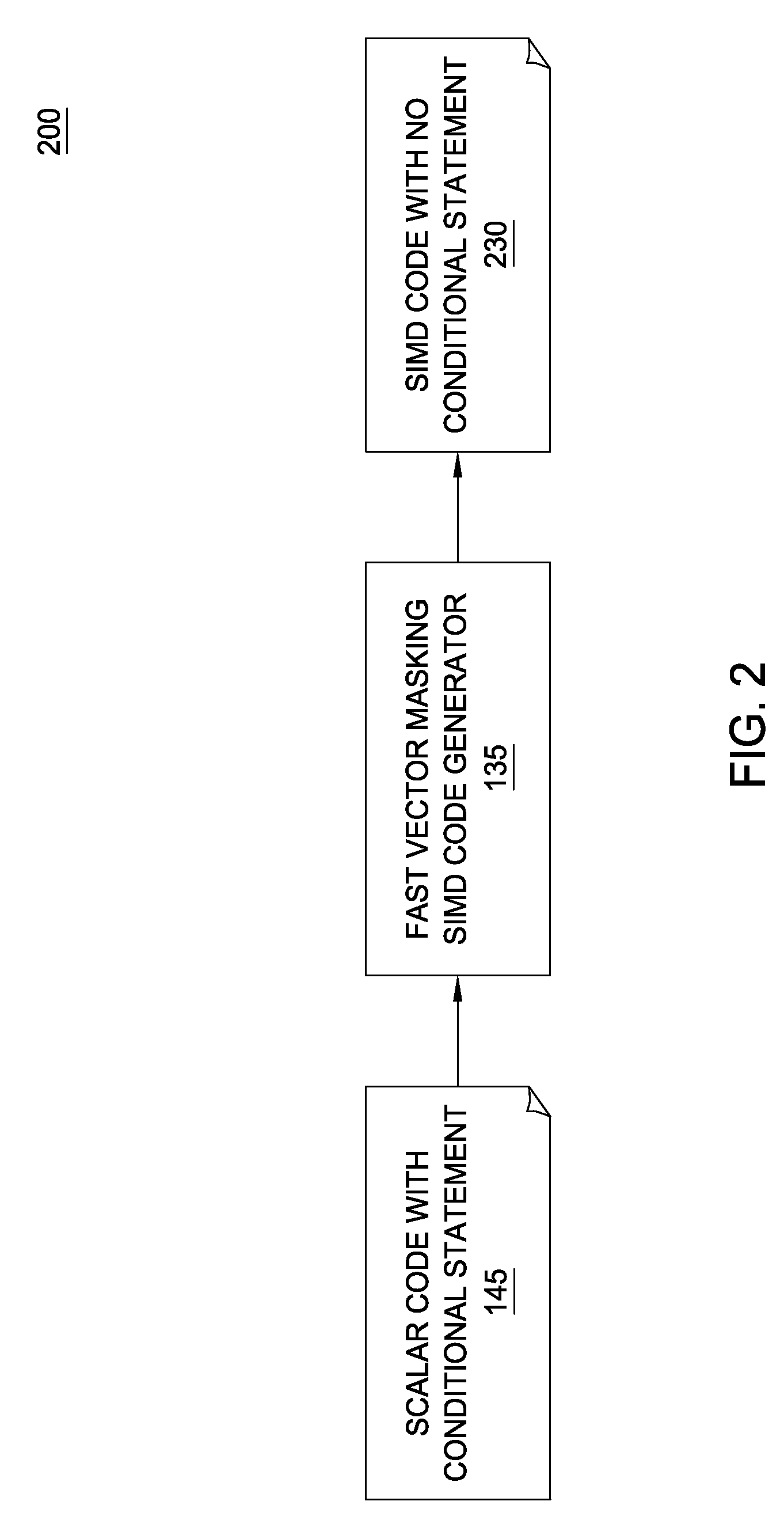

Fast vector masking algorithm for conditional data selection in SIMD architectures

Status: Inactive | Patent: US20100205585A1 | Topics: Transformation of program code; Concurrent instruction execution; Data selection; Logical operations

Techniques are disclosed for generating fast vector masking SIMD code corresponding to source code having a conditional statement, where the SIMD code replaces the conditional statements with vector SIMD operations. One technique includes performing conditional masking using vector operations, bit masking operations, and bitwise logical operations. The need for conditional statements in SIMD code is thereby removed, allowing SIMD hardware to avoid having to use branch prediction. This reduces the number of pipeline stalls and results in increased utilization of the SIMD computational units.

Owner:IBM CORP
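
The masking technique described in the abstract above replaces a conditional statement with bitwise operations on a per-lane mask. A minimal sketch, assuming unsigned 32-bit lanes and a hypothetical function name `select_greater`, looks like this:

```python
MASK32 = 0xFFFFFFFF  # assumed lane width: 32 bits, unsigned values


def select_greater(a, b):
    """Per lane: result = a if a > b else b, computed without a
    data-dependent branch on the selection itself."""
    out = []
    for x, y in zip(a, b):                  # each iteration models one lane
        mask = -(x > y) & MASK32            # all ones if x > y, otherwise all zeros
        out.append((x & mask) | (y & ~mask & MASK32))
    return out
```

The comparison yields a mask instead of steering control flow, so every lane executes the same AND, AND-NOT, and OR sequence regardless of the data.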

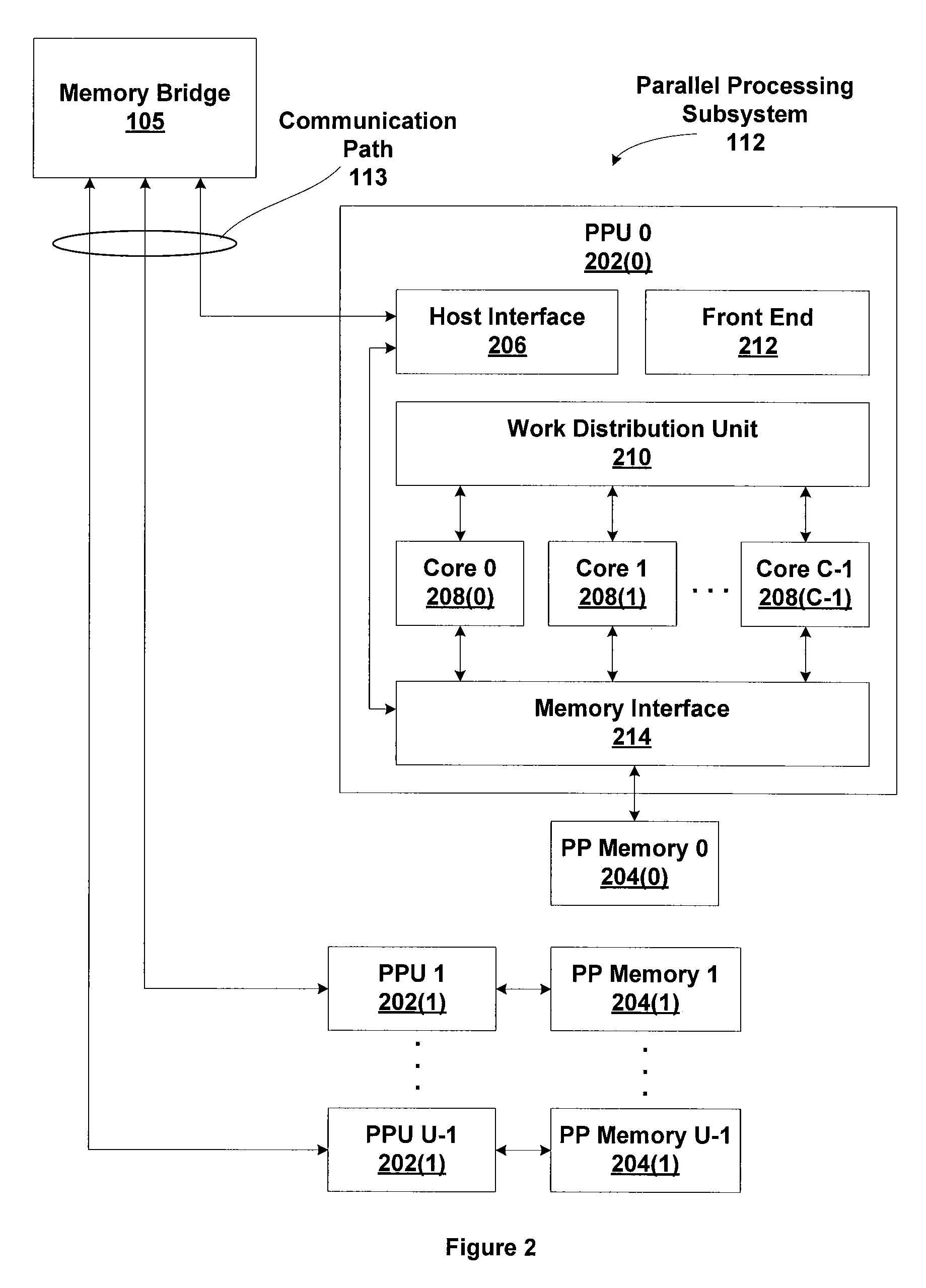

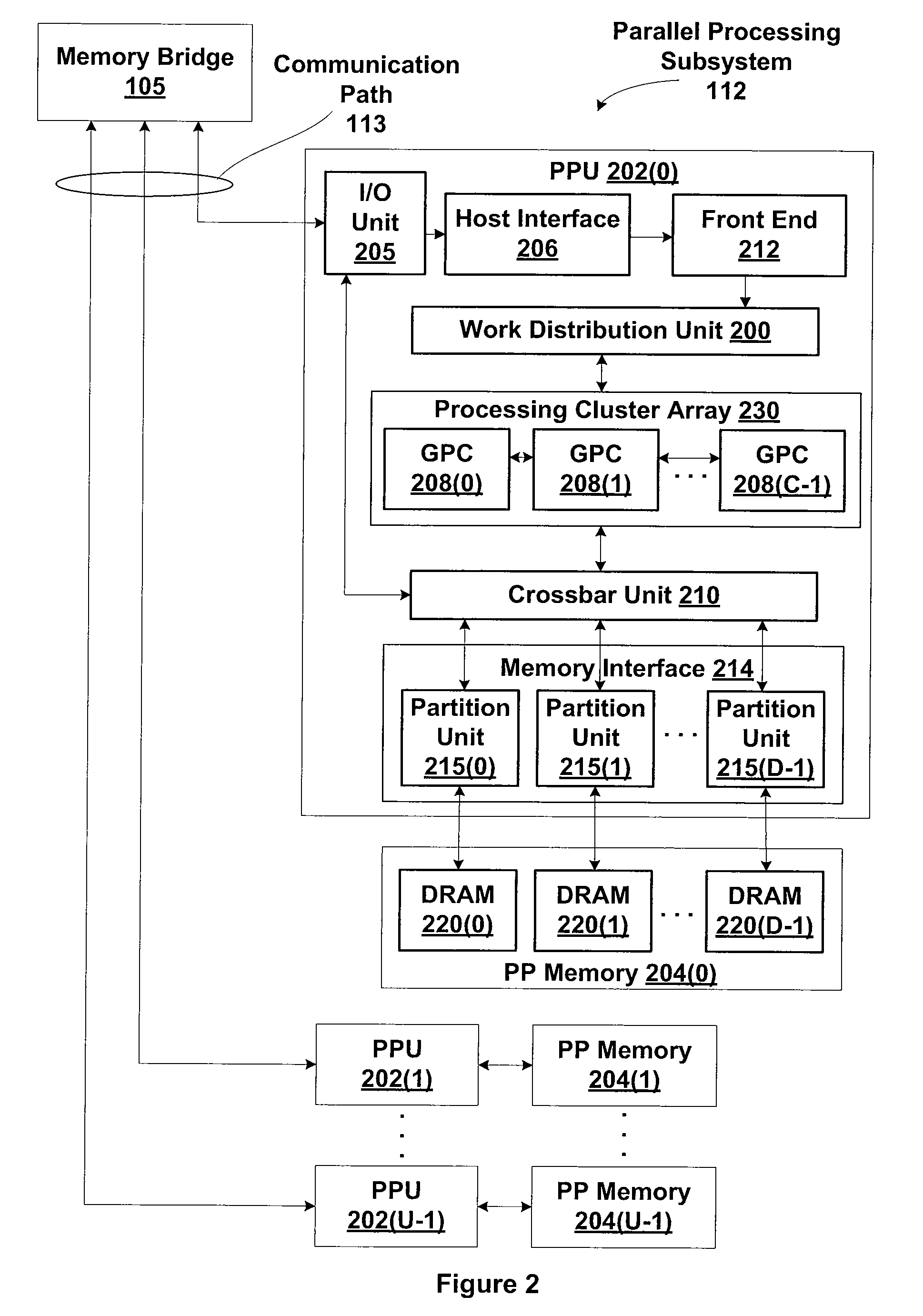

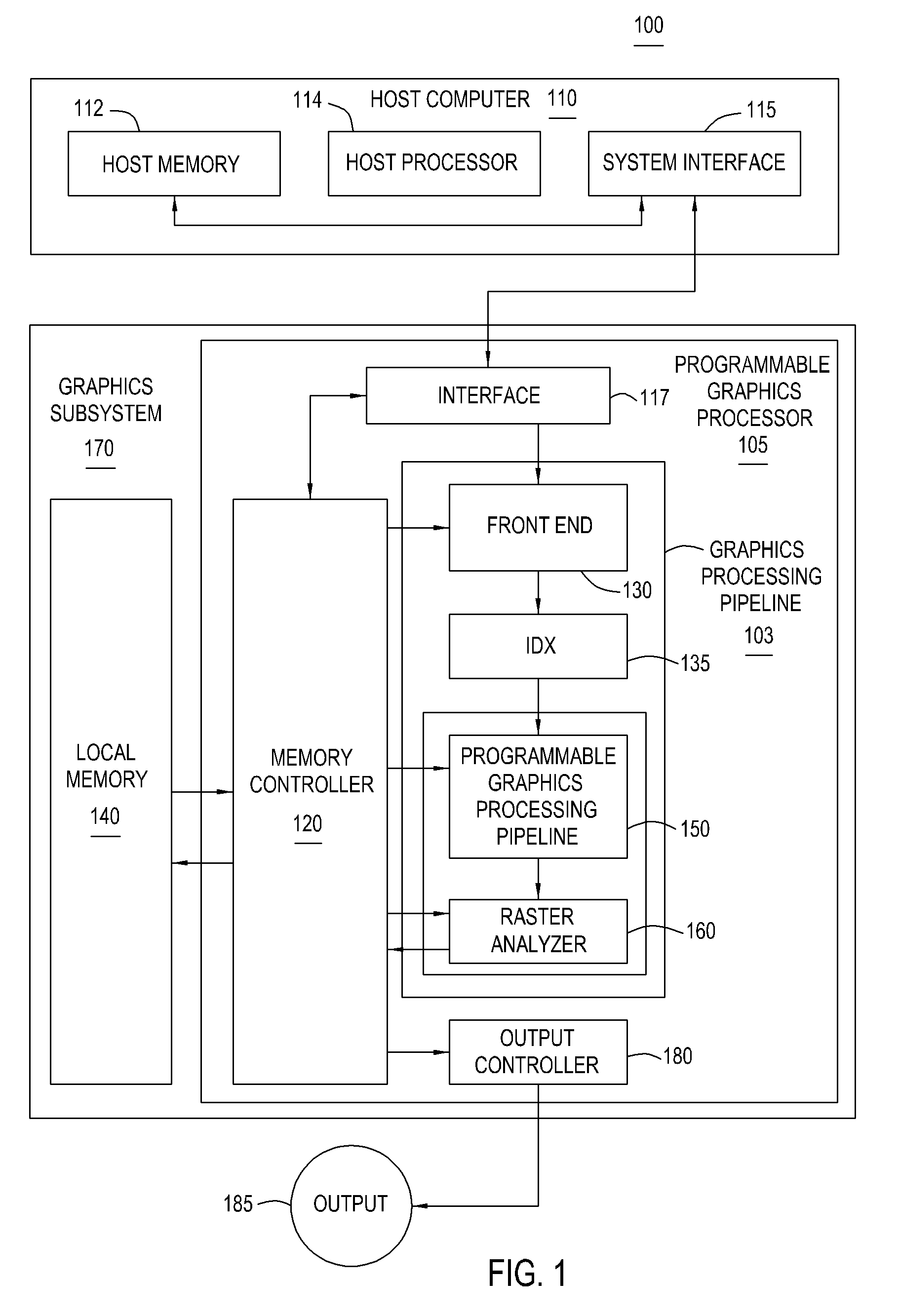

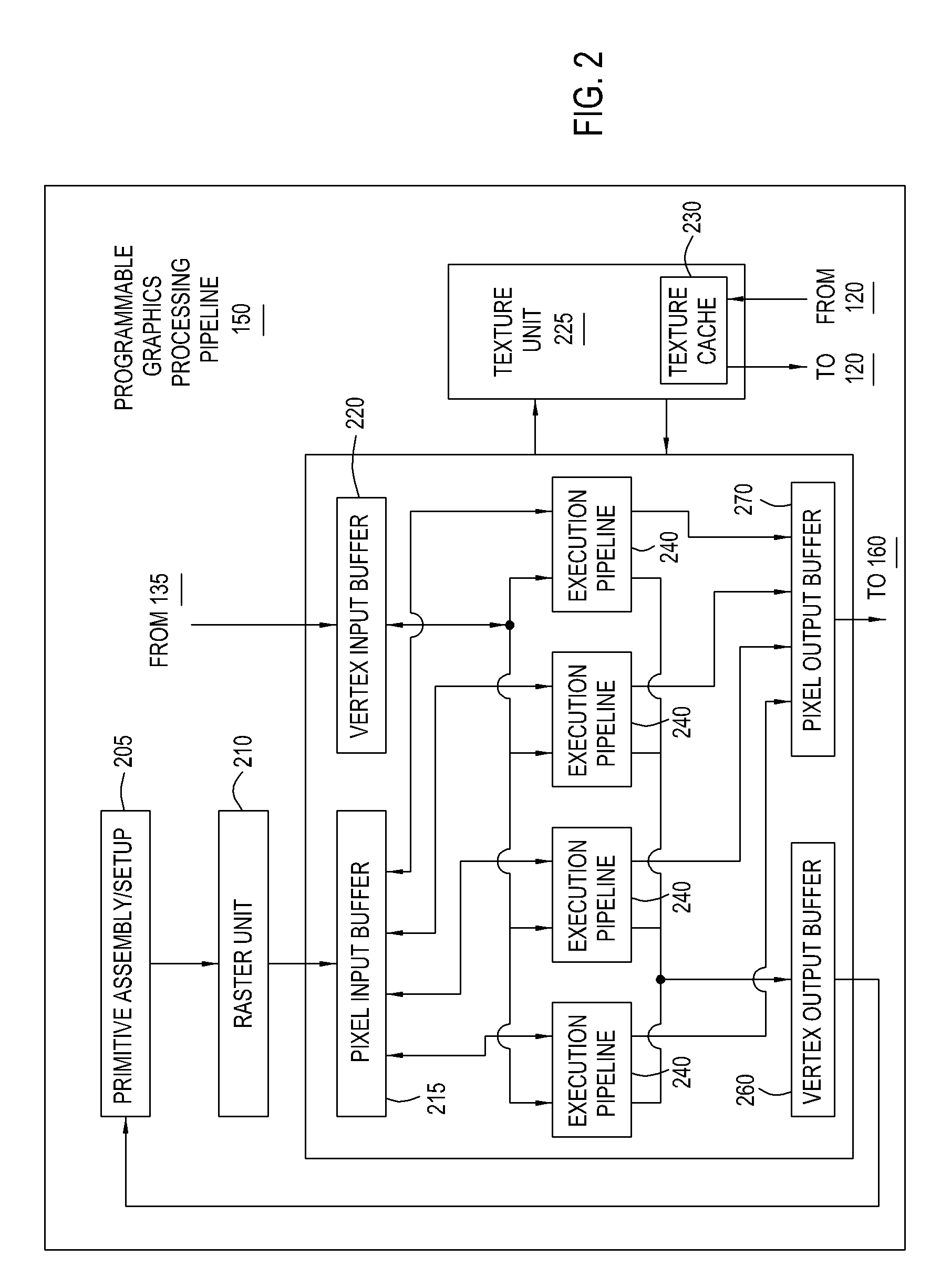

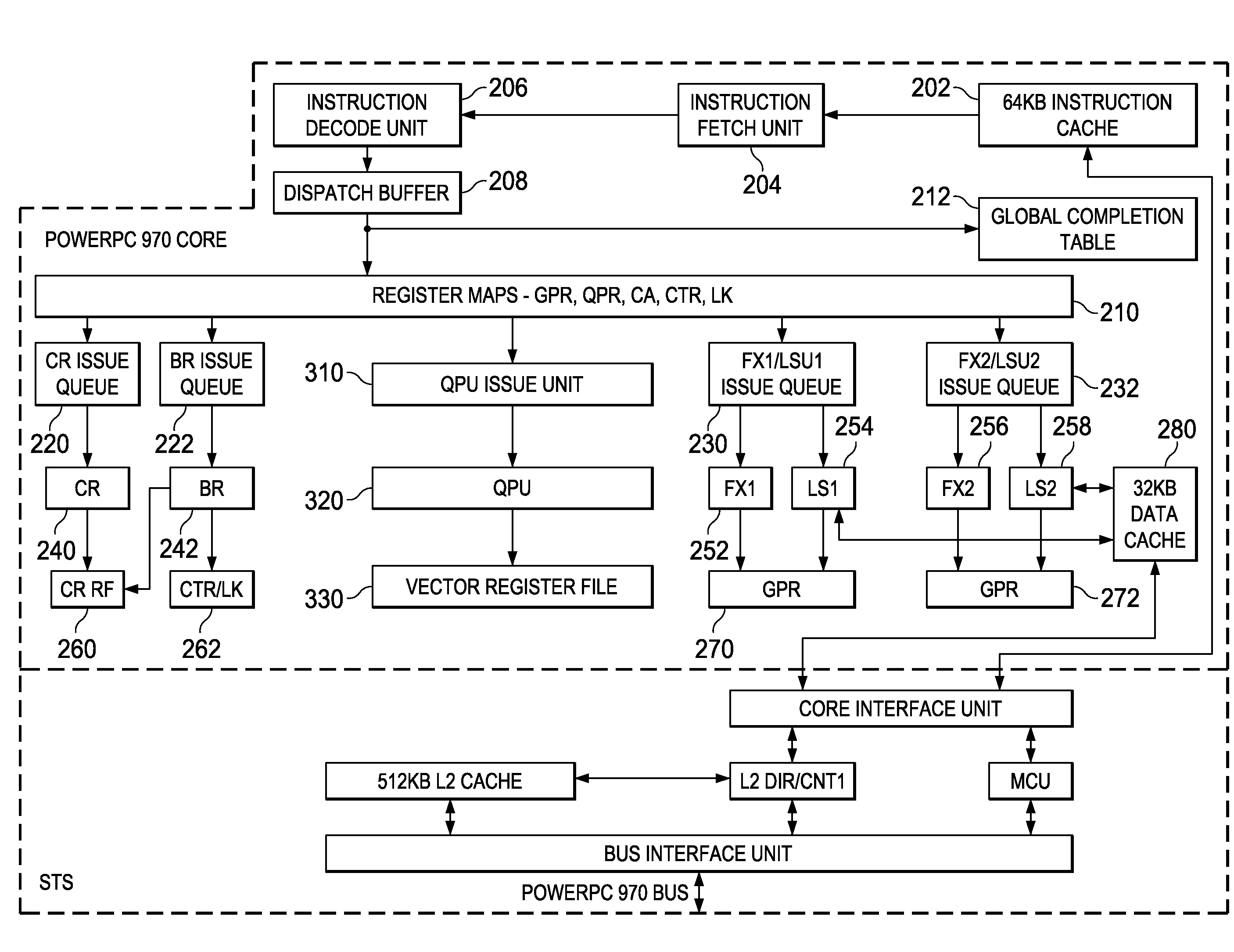

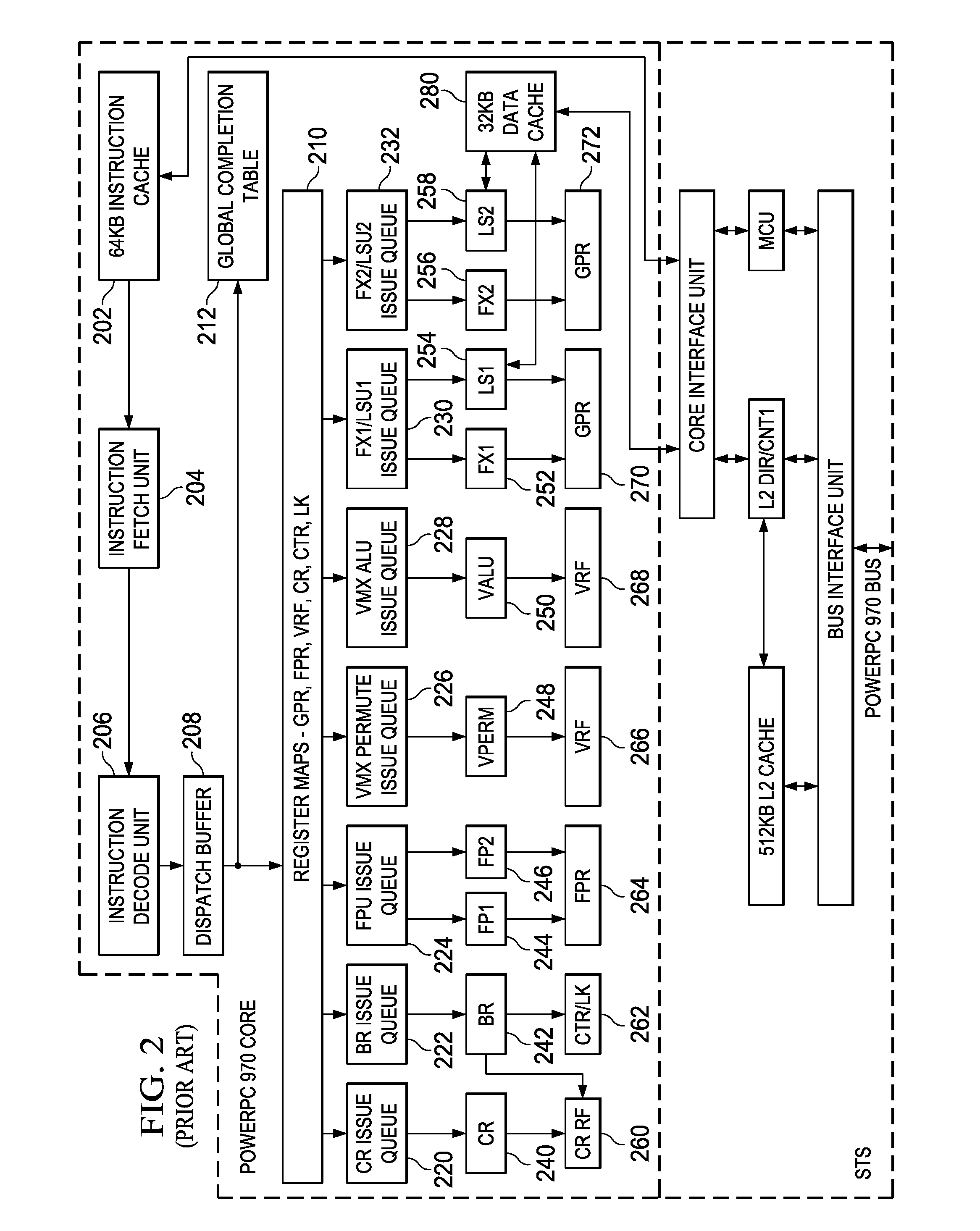

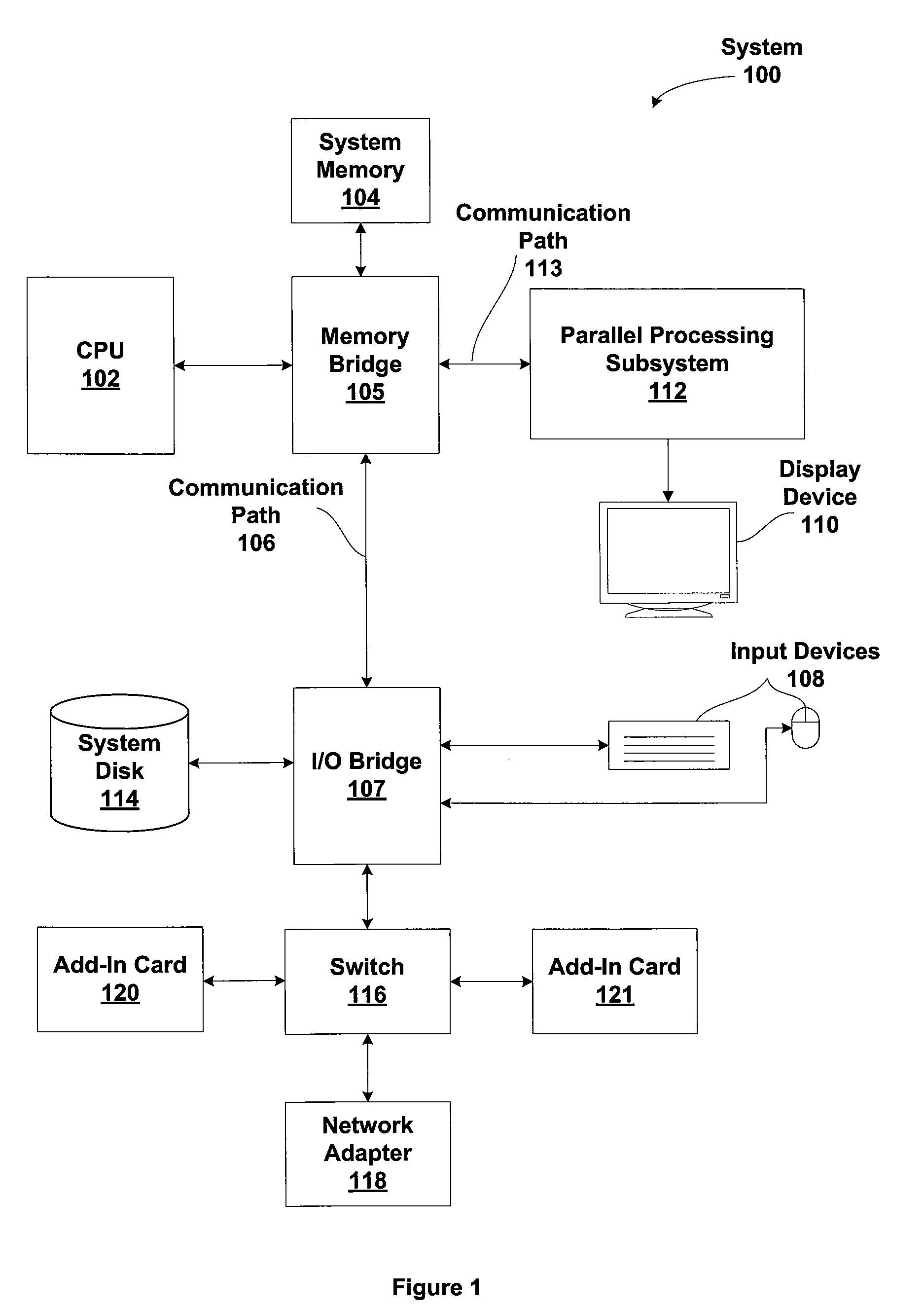

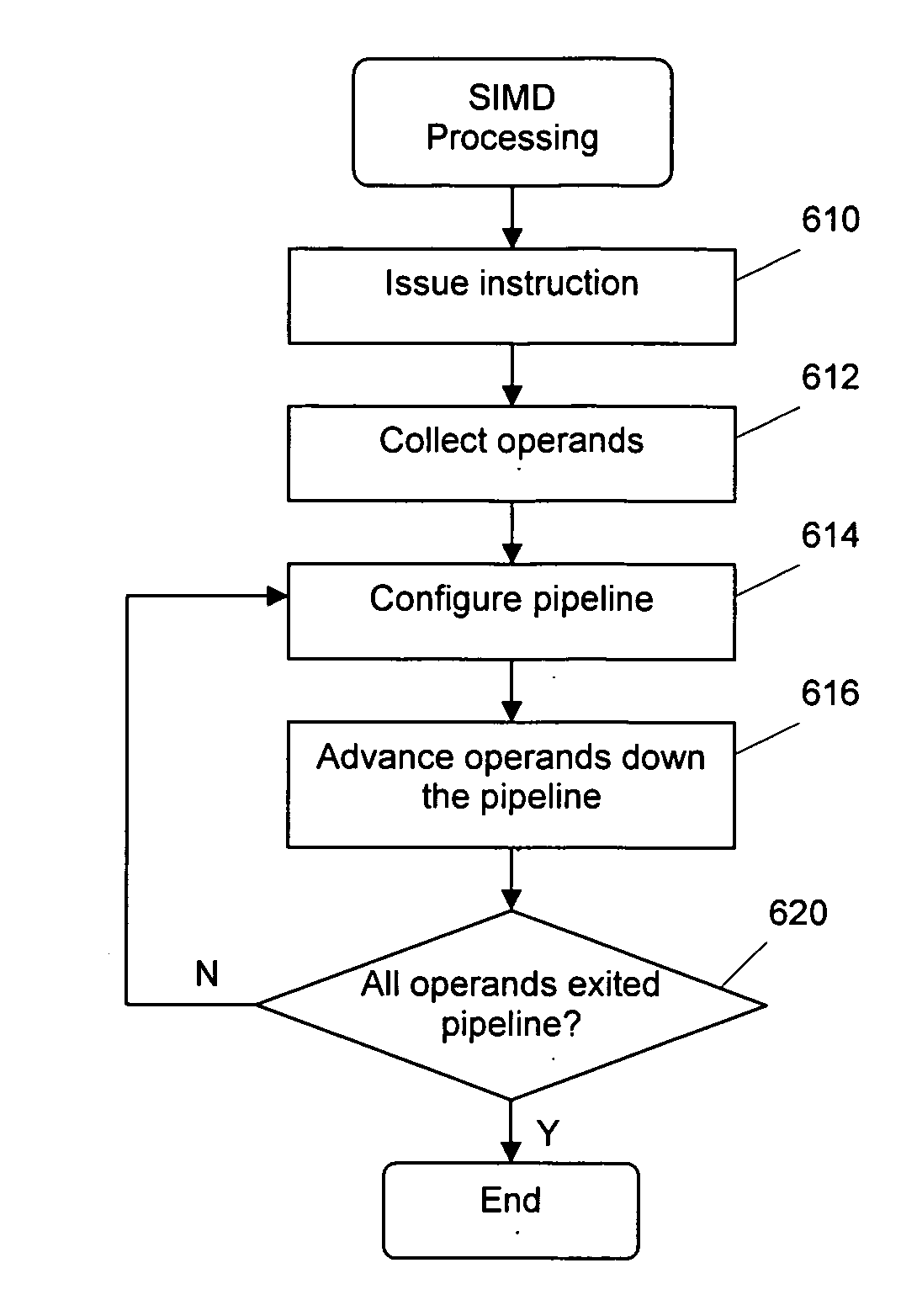

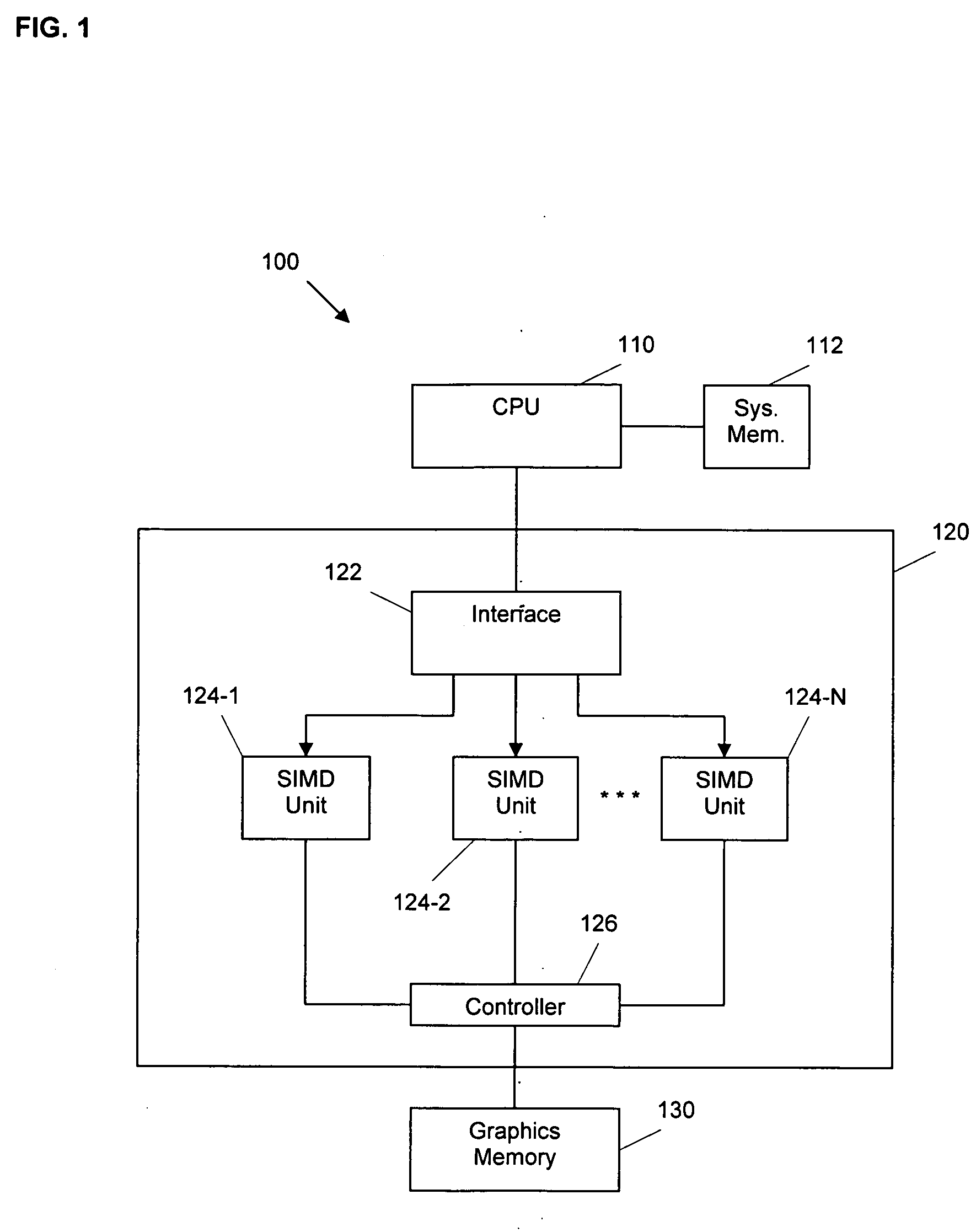

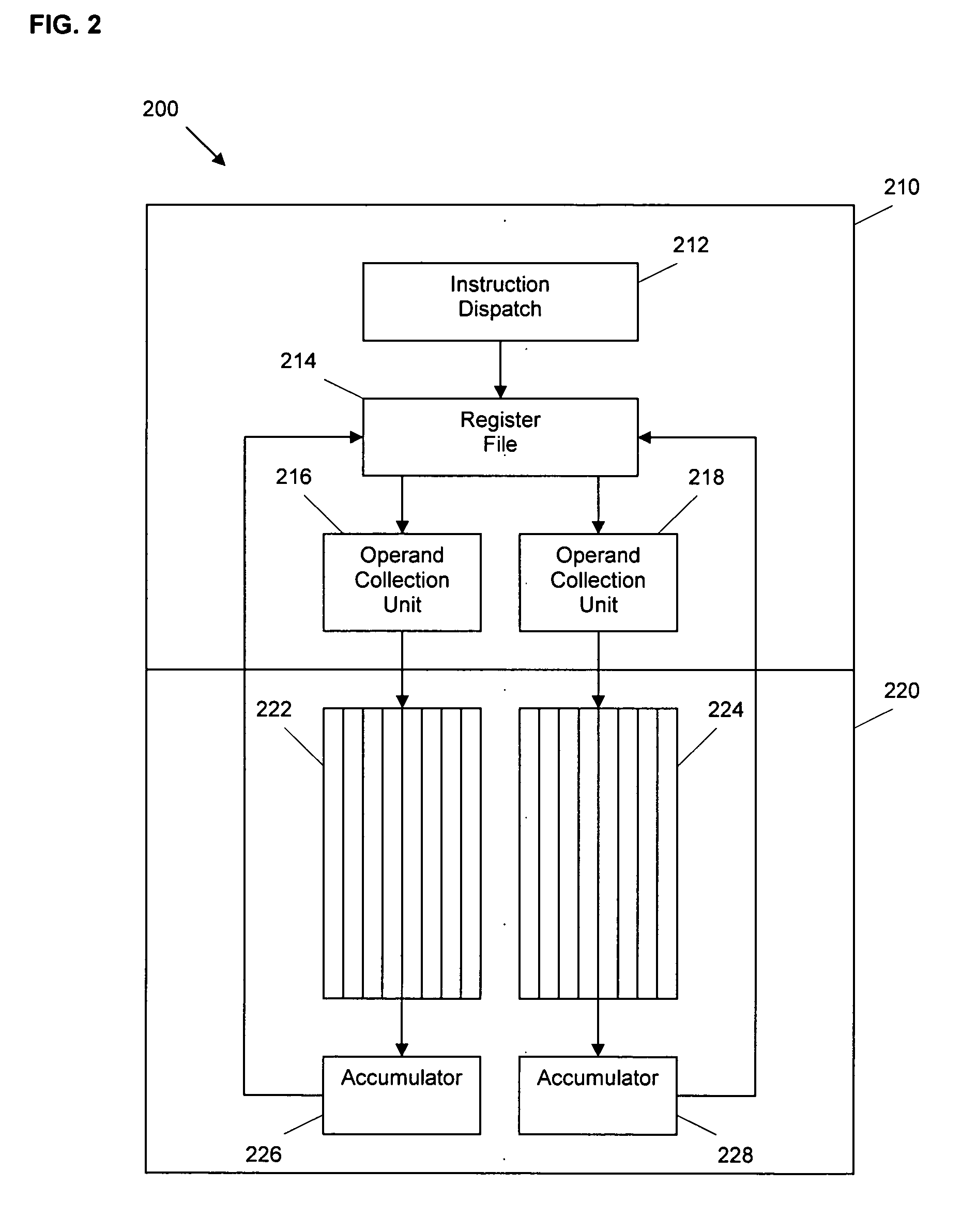

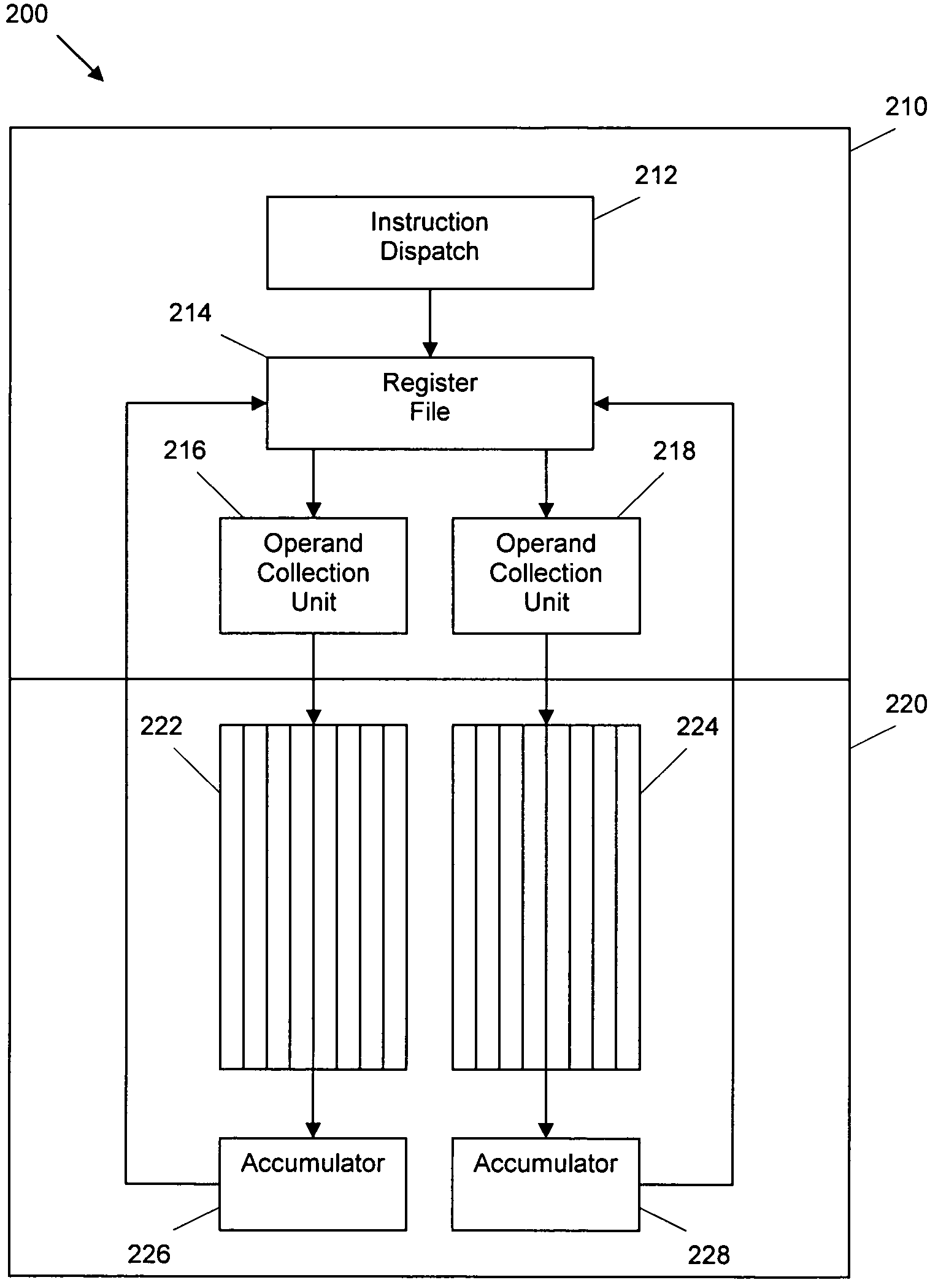

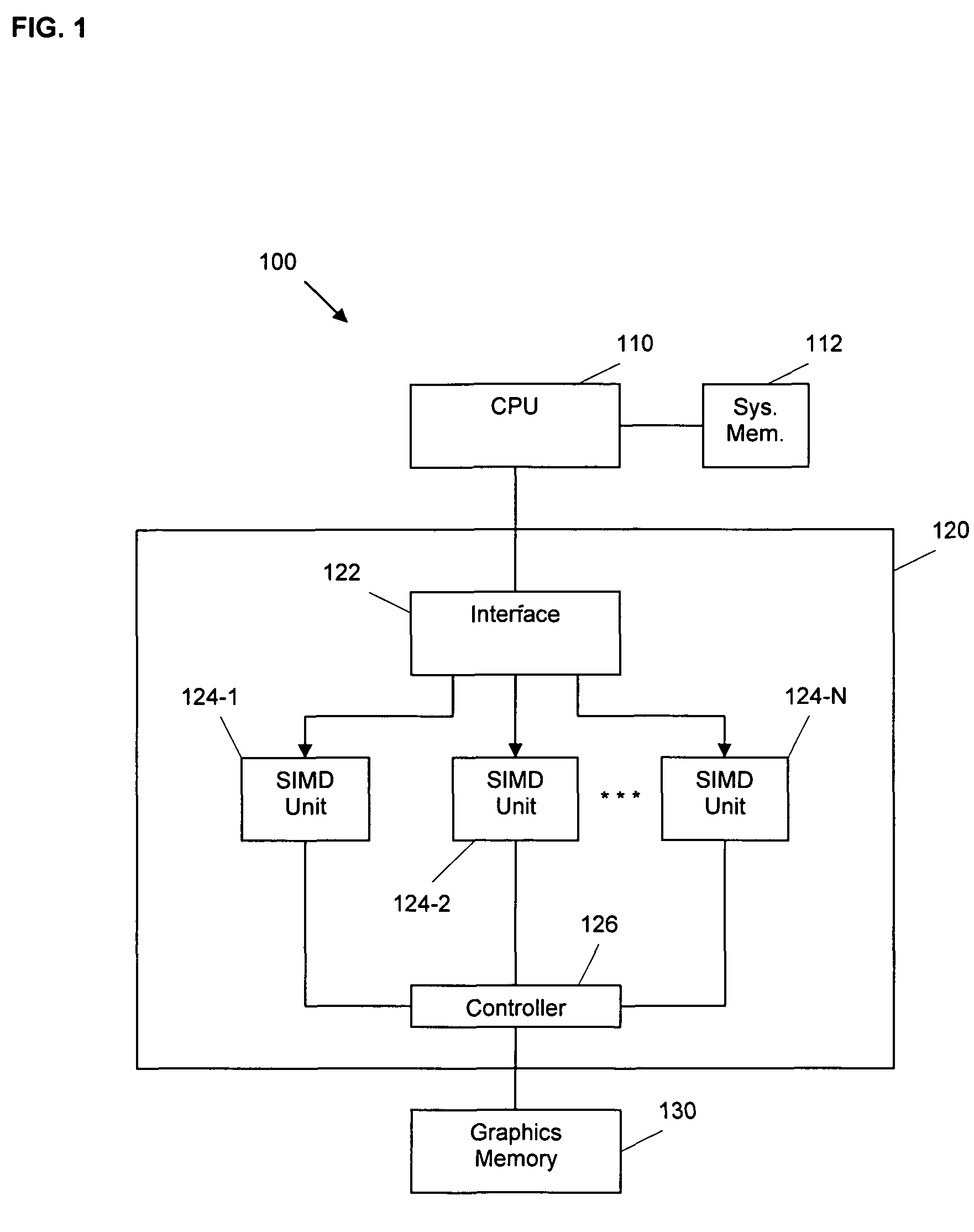

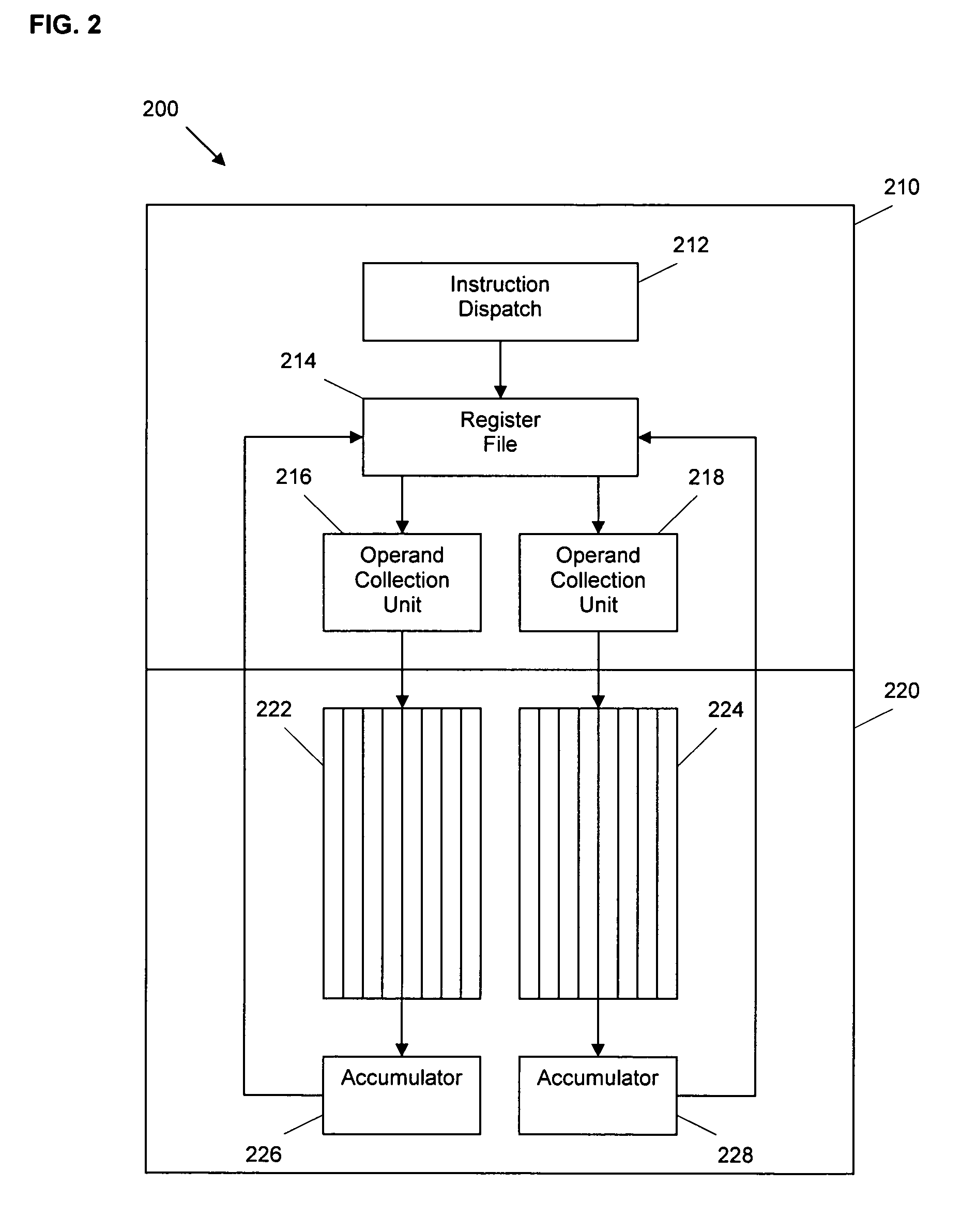

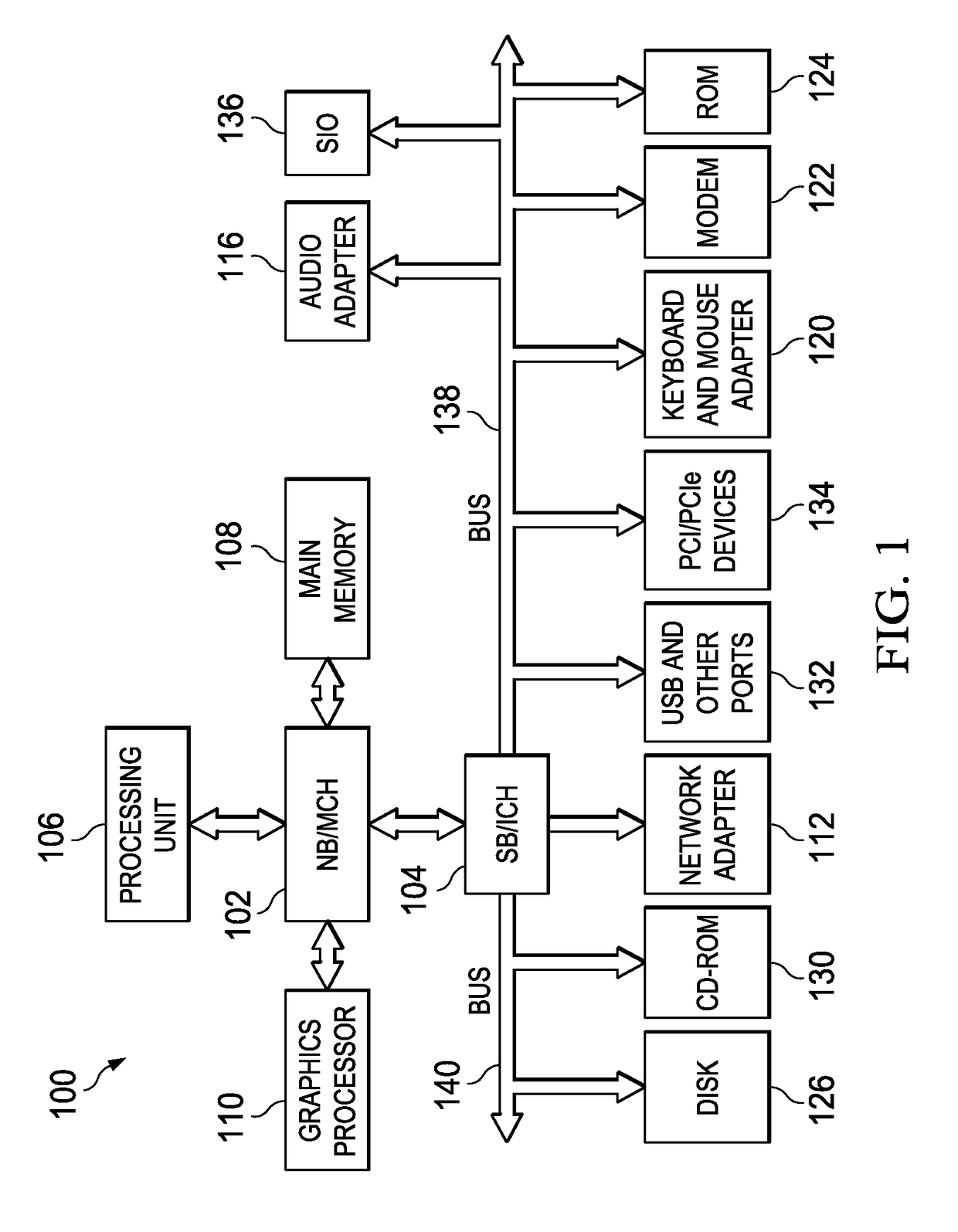

System and method for processing thread groups in a SIMD architecture

Status: Active | Patent: US20070130447A1 | Topics: Reduce clock frequency; Low hardware requirements; General purpose stored program computer; Memory systems; Graphics; Datapath

A SIMD processor efficiently utilizes its hardware resources to achieve higher data processing throughput. The effective width of a SIMD processor is extended by clocking the instruction processing side of the SIMD processor at a fraction of the rate of the data processing side and by providing multiple execution pipelines, each with multiple data paths. As a result, higher data processing throughput is achieved while an instruction is fetched and issued once per clock. This configuration also allows a large group of threads to be clustered and executed together through the SIMD processor so that greater memory efficiency can be achieved for certain types of operations like texture memory accesses performed in connection with graphics processing.

Owner:NVIDIA CORP

Efficient Code Generation Using Loop Peeling for SIMD Loop Code with Multiple Misaligned Statements

Status: Inactive | Patent: US20080222623A1 | Topics: No additional computational overhead; Reduce computational overhead; Software engineering; General purpose stored program computer; Data reorganization; Steady state

An approach is provided for vectorizing misaligned references in compiled code for SIMD architectures that support only aligned loads and stores. In this framework, a loop is first simdized as if the memory unit imposes no alignment constraints. The compiler then inserts data reorganization operations to satisfy the actual alignment requirements of the hardware. Finally, the code generation algorithm generates SIMD codes based on the data reorganization graph, addressing realistic issues such as runtime alignments, unknown loop bounds, residual iteration counts, and multiple statements with arbitrary alignment combinations. Loop peeling is used to reduce the computational overhead associated with misaligned data. A loop prologue and epilogue are peeled from individual iterations in the simdized loop, and vector-splicing instructions are applied to the peeled iterations, while the steady-state loop body incurs no additional computational overhead.

Owner:INT BUSINESS MASCH CORP

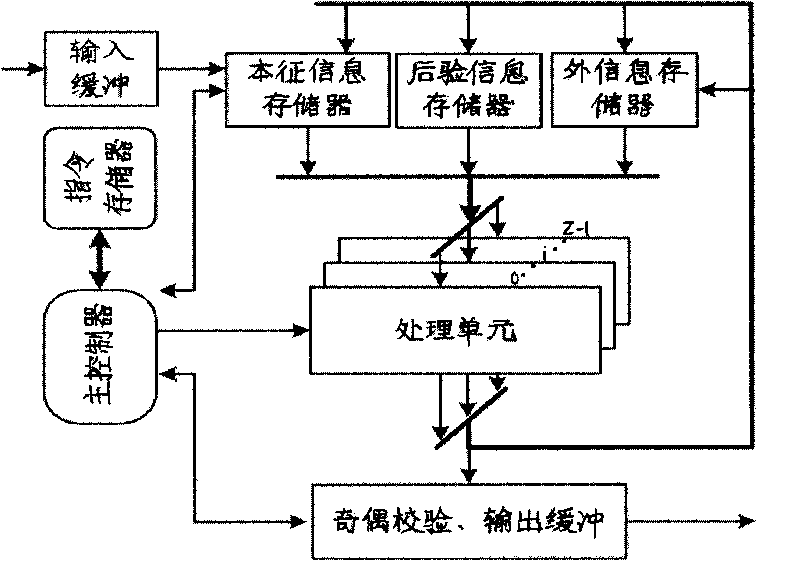

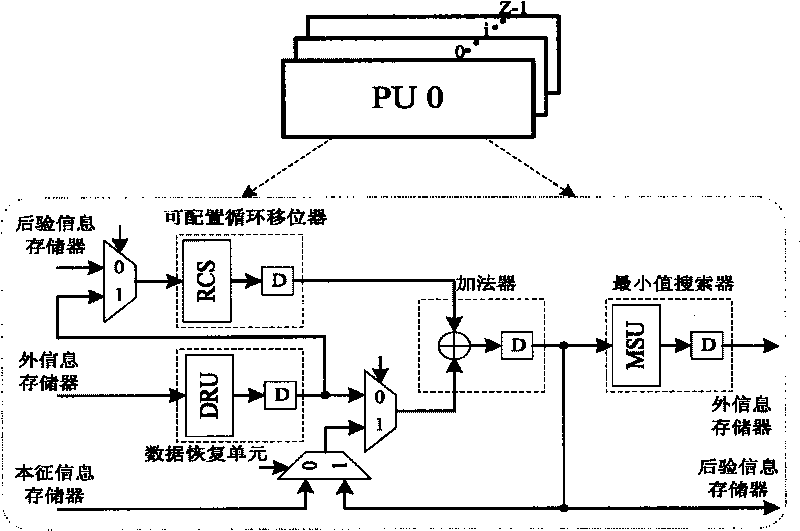

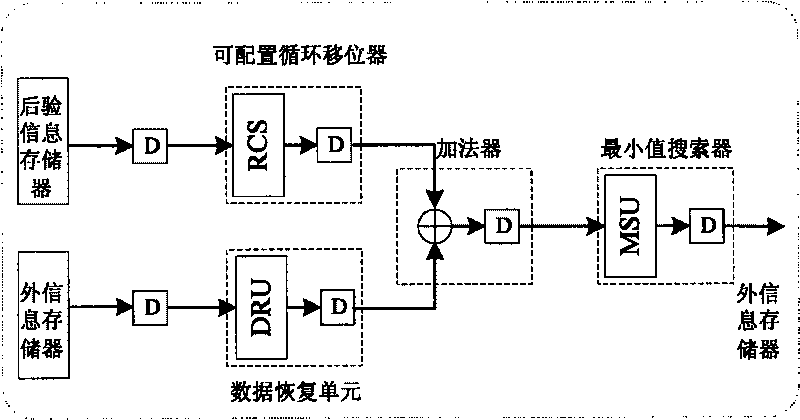

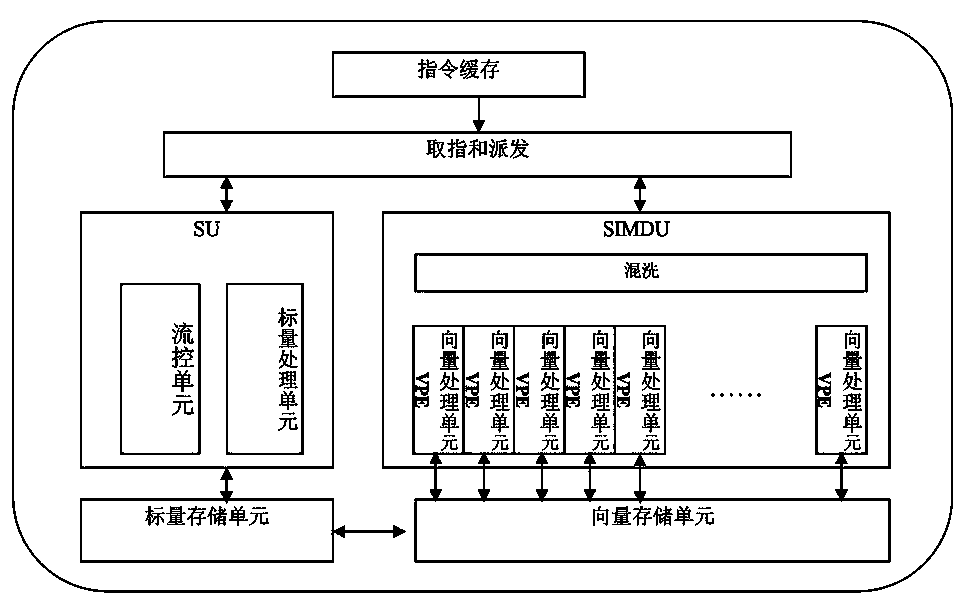

Multi-standard LDPC encoder circuit based on SIMD architecture

Status: Inactive | Patent: CN101692611A | Topics: Meet the needs of multi-standard communication; Reduce design; Error correction/detection using multiple parity bits; Instruction memory; Very large scale integrated circuits

The invention provides a multi-standard low density parity check (LDPC) encoder circuit based on a single instruction multiple data (SIMD) architecture. The LDPC encoder circuit comprises an input buffer unit, a master controller, an instruction memory, an intrinsic information memory, a posterior information memory, an external information memory, a parity check and output buffer unit, and a processing unit array. The processing unit array is composed of a plurality of concurrent processing units, and each processing unit adopts a very large scale integrated circuit (VLSI) hardware architecture. The encoder adopts a novel two-phase message passing (TPMP) decoding algorithm, which ensures that the hardware architecture is not limited by the particular structure of a block matrix and separates the hardware architecture from the structure of the block LDPC code check matrix. The invention provides a flexible and configurable processing unit design that effectively improves hardware utilization and reduces chip design area, together with a dedicated and simplified SIMD instruction set that is suitable for various block LDPC codes and meets the demands of multi-standard communication.

Owner:FUDAN UNIV

Fast vector masking algorithm for conditional data selection in SIMD architectures

Status: Inactive | Patent: US8418154B2 | Topics: Transformation of program code; Concurrent instruction execution; Computational science; Data selection

Owner:IBM CORP

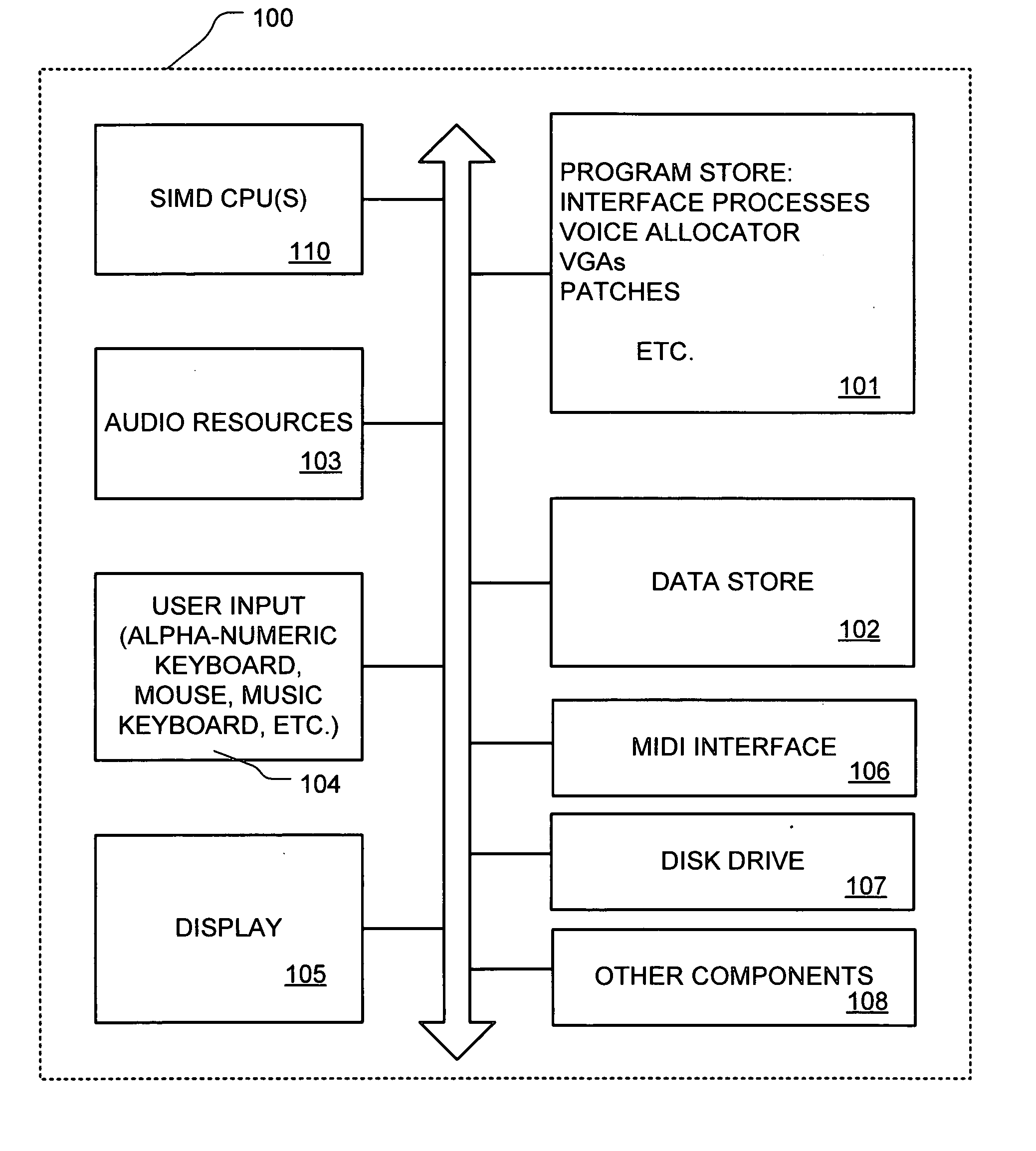

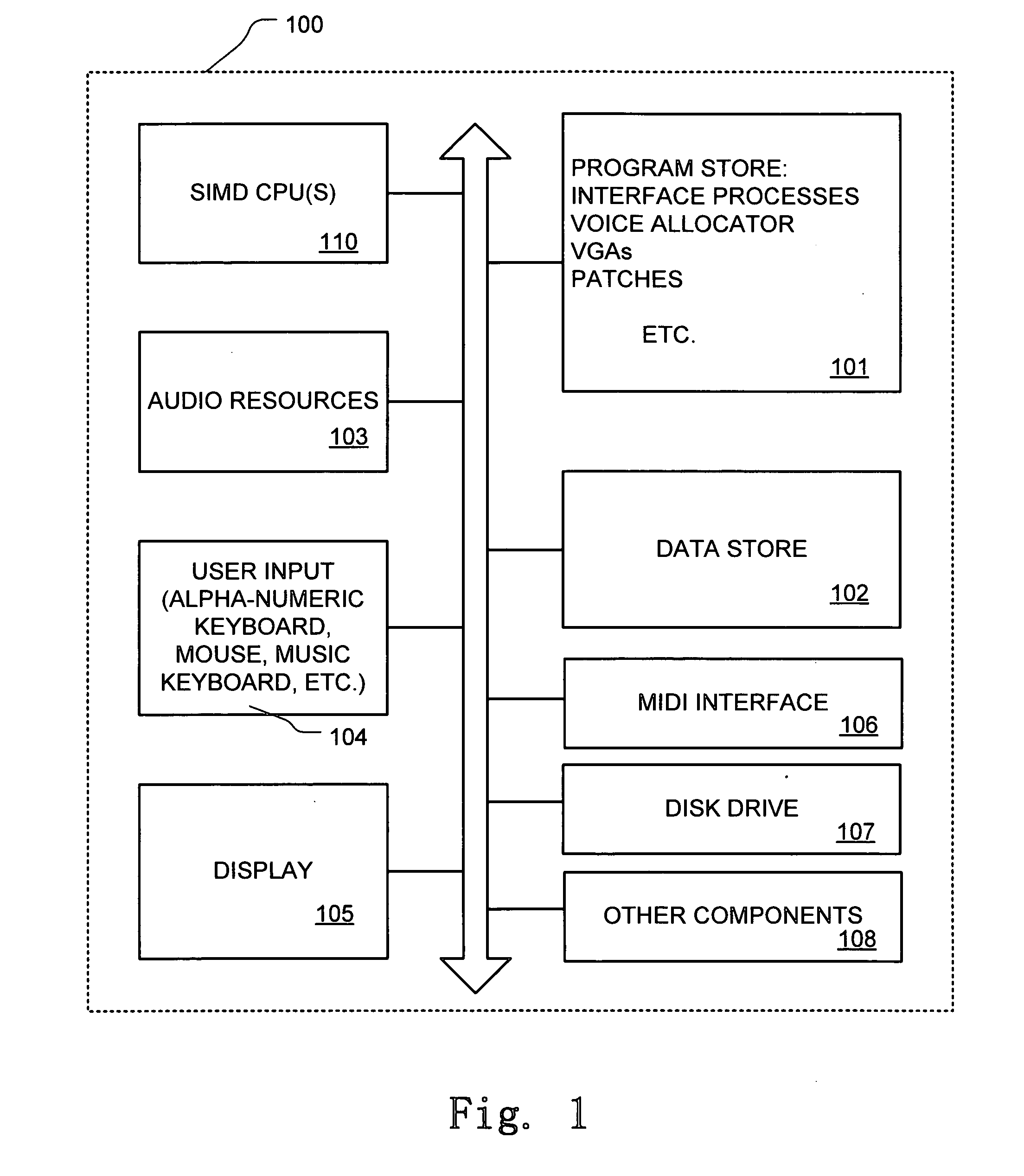

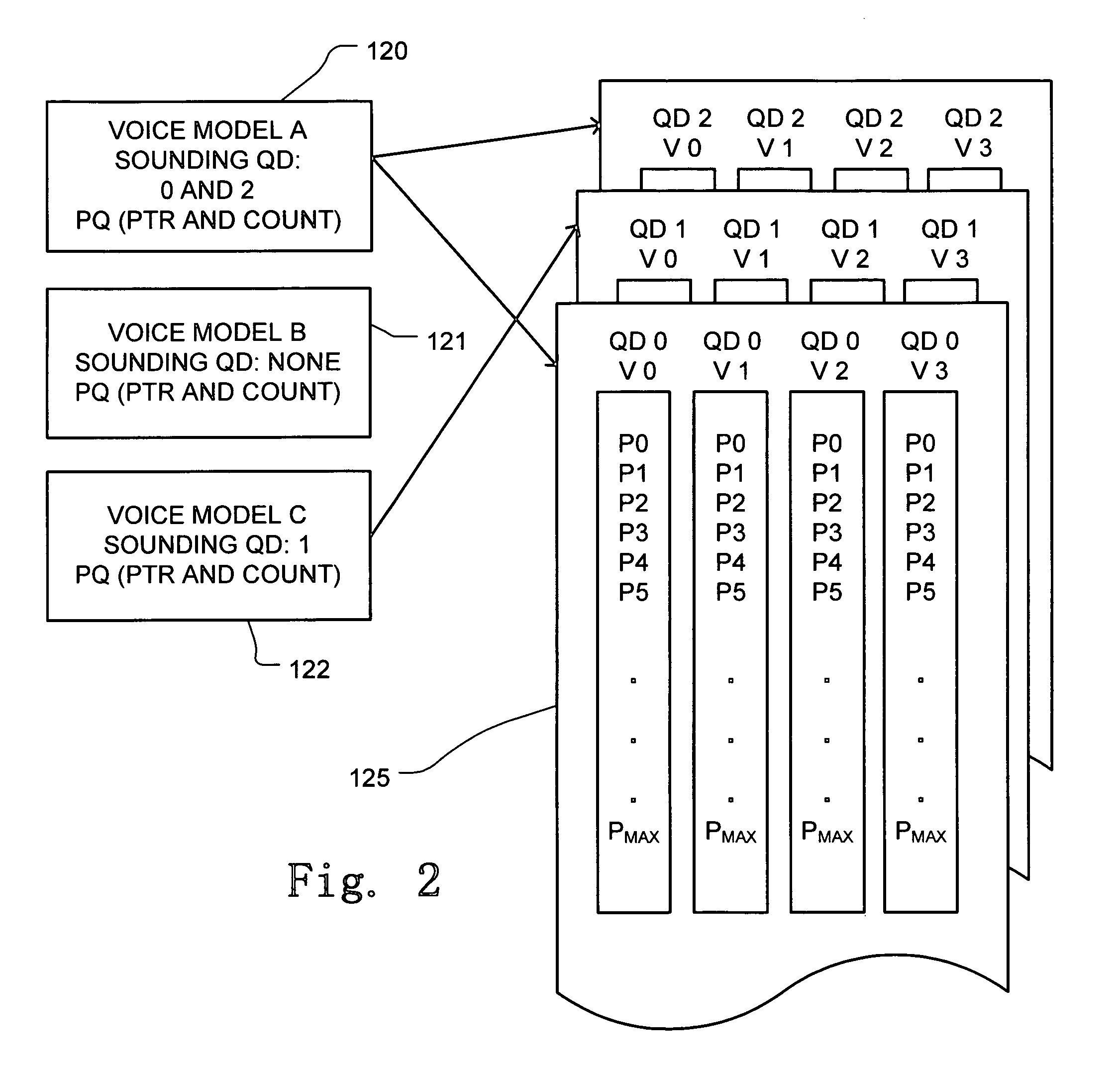

Dynamic voice allocation in a vector processor based audio processor

Status: Inactive | Patent: US20060155543A1 | Topics: Reduced processor resource; Avoid system overages; Speech synthesis; Music synthesis; Speech sound

A method for dynamically allocating voices to processor resources in a music synthesizer or other audio processor includes utilizing processor resources to execute vector-based voice generation algorithms for sounding voices, such as those executed using SIMD architecture processors or other vector processor architectures. The dynamic voice allocation process identifies a new voice to be executed in response to an event. The combined processor resources needed for the new voice and for the currently sounding voices are determined. If the processor resources are available to meet the combined need, then processor resources are allocated to a voice generation algorithm for the new voice; if the processor resources are not available, then voices are stolen. To steal voices, processor resources are de-allocated from at least one sounding voice or sounding voice cluster.

Owner:KORG

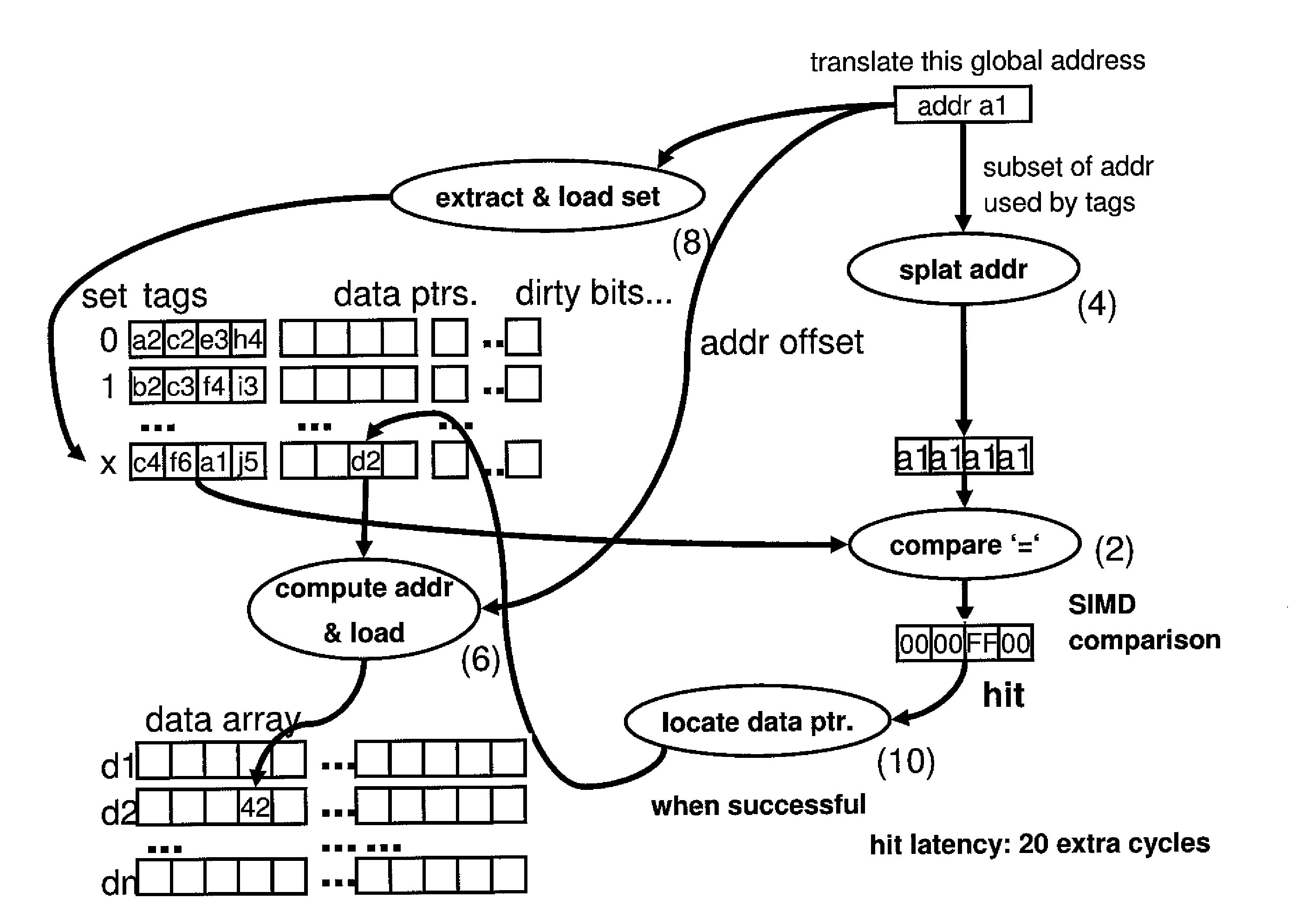

Optimized software cache lookup for SIMD architectures

Status: Inactive | Patent: US8370575B2 | Topics: Improve performance; Big amount of data; Memory addressing/allocation/relocation; Micro-instruction address formation; Position dependent; Software cache

Process, cache memory, computer product and system for loading data associated with a requested address in a software cache. The process includes loading address tags associated with a set in a cache directory using a Single Instruction Multiple Data (SIMD) operation, determining a position of the requested address in the set using a SIMD comparison, and determining an actual data value associated with the position of the requested address in the set.

Owner:IBM CORP
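
The three steps in the abstract above (load the tags of a set, compare them all against the requested address, use the matching position to fetch the data) can be sketched as follows. The 4-way sets, 16-byte lines, the data structures, and the name `cache_lookup` are assumptions made for illustration.

```python
WAYS = 4    # assumed associativity: one tag per SIMD lane
LINE = 16   # assumed cache line size in bytes


def cache_lookup(directory, data, address):
    """directory[set][way] holds a line address tag (or None);
    data[set][way] holds the cached line. Returns the byte or None on a miss."""
    line_addr = address // LINE
    set_index = line_addr % len(directory)
    tags = directory[set_index]               # models one SIMD load of all tags in the set
    hits = [tag == line_addr for tag in tags] # models one SIMD compare against a splatted tag
    if True not in hits:
        return None                           # miss: the caller would fetch the line
    way = hits.index(True)                    # position of the requested address in the set
    return data[set_index][way][address % LINE]
```

The point of the SIMD formulation is that all ways of a set are checked by a single compare, so the lookup cost does not grow with a loop over the ways.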

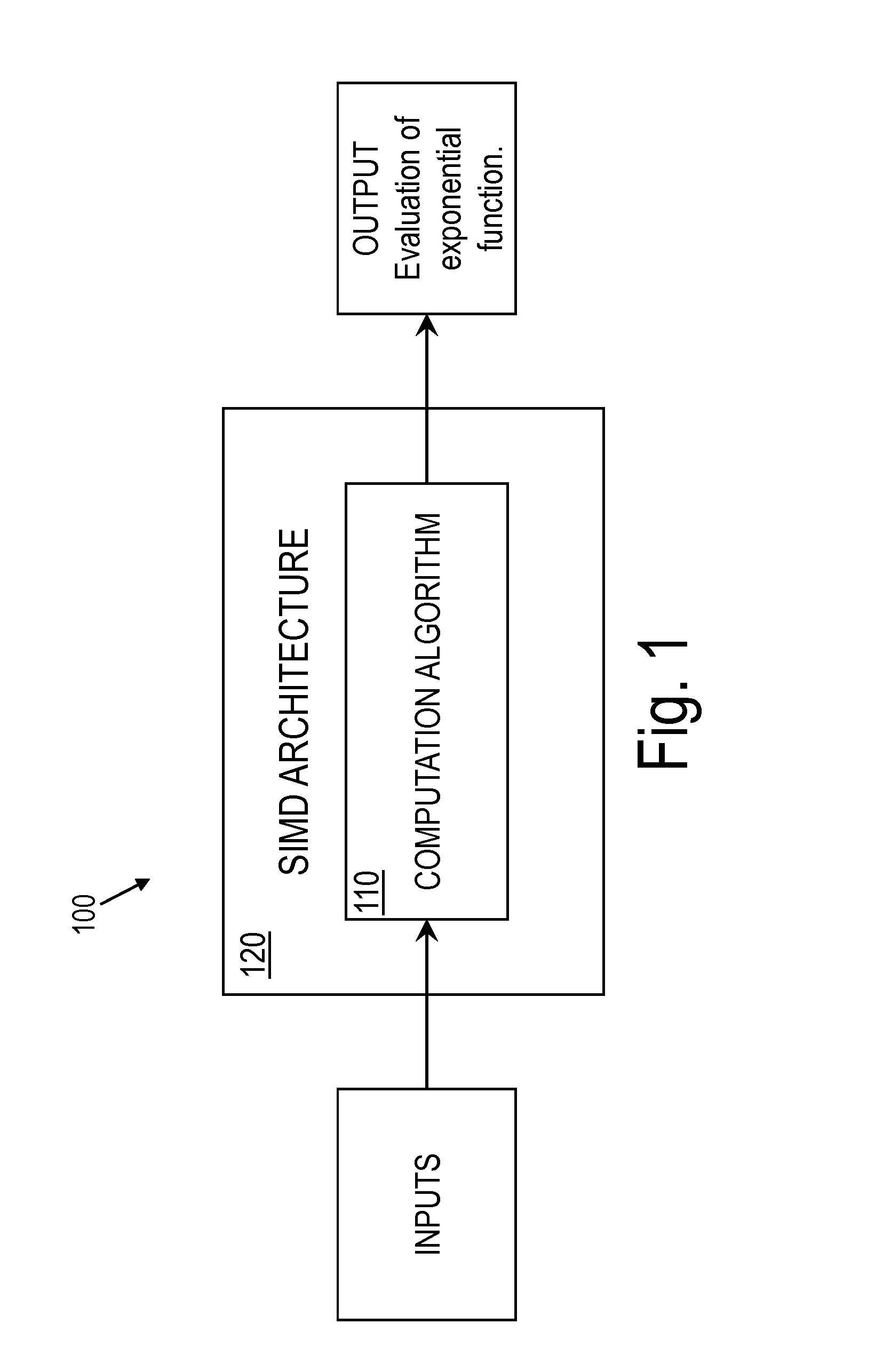

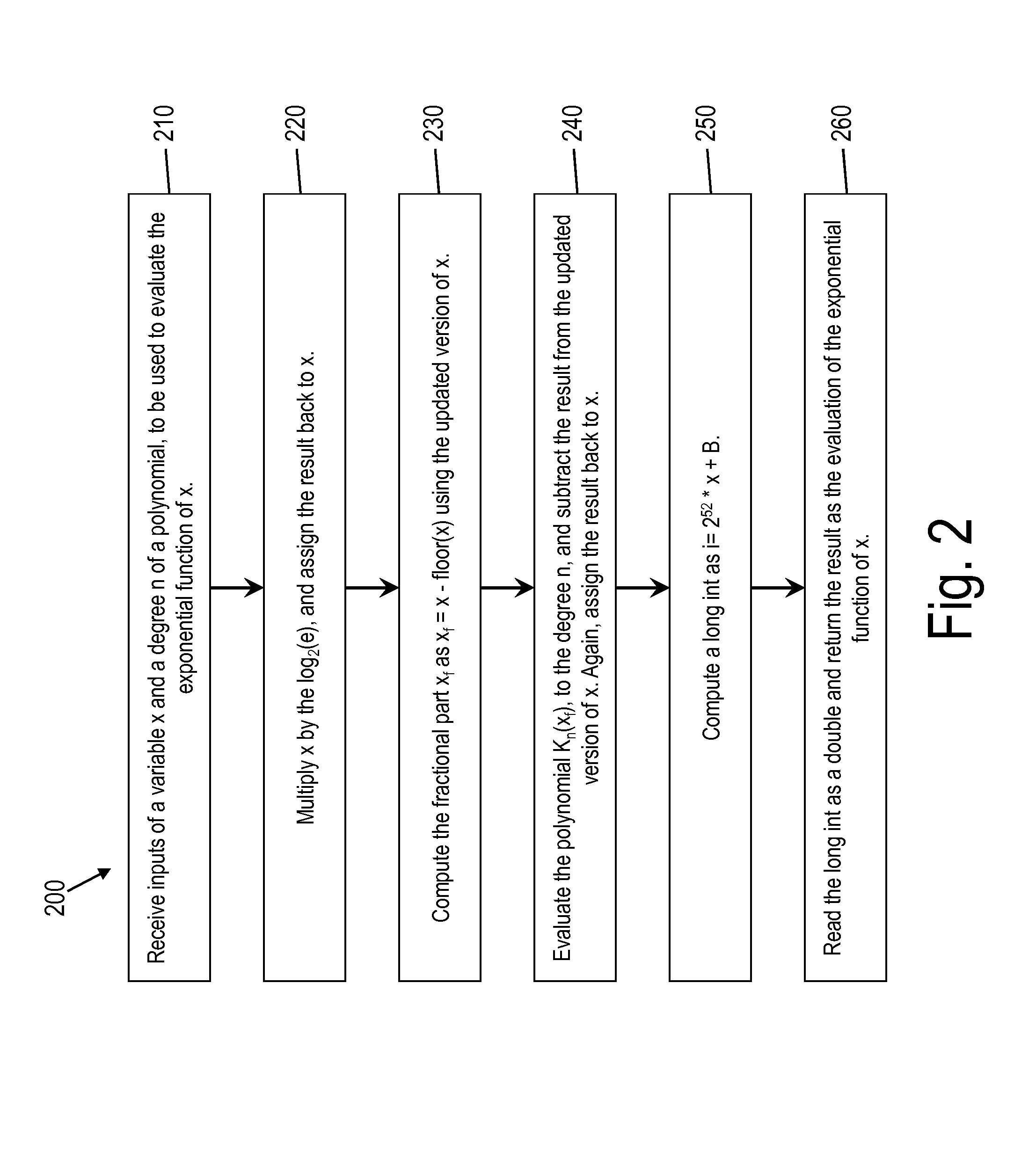

Fast, energy-efficient exponential computations in SIMD architectures

Status: Inactive | Patent: US20160124713A1 | Topics: Digital computer details; Specific program execution arrangements; Theoretical computer science; Assessment data

In one embodiment, a computer-implemented method includes receiving as input a value of a variable x and receiving as input a degree n of a polynomial function being used to evaluate an exponential function e^x. A first expression A*(x − ln(2)*K_n(x_f)) + B is evaluated, by one or more computer processors in a single instruction multiple data (SIMD) architecture, as an integer and is read as a double. In the first expression, K_n(x_f) is a polynomial function of the degree n, x_f is the fractional part of x / ln(2), A = 2^52 / ln(2), and B = 1023 * 2^52. The result of reading the first expression as a double is returned as the value of the exponential function with respect to the variable x.

Owner:IBM CORP
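
The expression in the abstract above can be tried out directly by computing the integer and reinterpreting its bits as an IEEE 754 double. The sketch below is a scalar illustration only. The correction polynomial is not given in the abstract, so `correction` uses a hypothetical degree-2 polynomial chosen here to match 1 + x_f − 2^x_f at x_f = 0, 0.5, and 1; it is not the patent's K_n.

```python
import math
import struct

LN2 = math.log(2.0)
A = 2.0 ** 52 / LN2        # scales x / ln(2) into the exponent field of a double
B = 1023.0 * 2.0 ** 52     # exponent bias of a double, shifted past the 52 mantissa bits


def correction(xf):
    """Hypothetical degree-2 stand-in for K_n(x_f), approximating 1 + xf - 2**xf."""
    return 0.343146 * xf * (1.0 - xf)


def fast_exp(x):
    """Approximate e**x by building the bit pattern of the result as an integer."""
    y = x / LN2
    xf = y - math.floor(y)                         # fractional part of x / ln(2)
    bits = int(A * (x - LN2 * correction(xf)) + B)
    # Reinterpret the 64-bit integer as a double without converting its value.
    return struct.unpack("<d", struct.pack("<q", bits))[0]
```

With this particular polynomial the relative error stays well under one percent over a moderate input range; a higher degree n would trade more arithmetic for better accuracy. The sketch does not handle overflow, underflow, or non-finite inputs.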

System and method for processing thread groups in a SIMD architecture

Status: Active | Patent: US7836276B2 | Topics: Efficient use of; Improve throughput; General purpose stored program computer; Concurrent instruction execution; Graphics; Simd processor

A SIMD processor efficiently utilizes its hardware resources to achieve higher data processing throughput. The effective width of a SIMD processor is extended by clocking the instruction processing side of the SIMD processor at a fraction of the rate of the data processing side and by providing multiple execution pipelines, each with multiple data paths. As a result, higher data processing throughput is achieved while an instruction is fetched and issued once per clock. This configuration also allows a large group of threads to be clustered and executed together through the SIMD processor so that greater memory efficiency can be achieved for certain types of operations like texture memory accesses performed in connection with graphics processing.

Owner:NVIDIA CORP

All-to-all permutation of vector elements based on a permutation pattern encoded in mantissa and exponent bits in a floating-point SIMD architecture

Status: Inactive | Patent: US9652231B2 | Topics: Handling data according to predetermined rules; General purpose stored program computer; Control vector; Processor register

Mechanisms are provided for dynamic data driven alignment and data formatting in a floating point SIMD architecture. At least two operand inputs are input to a permute unit of a processor. Each operand input contains at least one floating point value upon which a permute operation is to be performed by the permute unit. A control vector input, having a plurality of floating point values that together constitute the control vector input, is input to the permute unit of the processor for controlling the permute operation of the permute unit. The permute unit performs a permute operation on the at least two operand inputs according to a permutation pattern specified by the plurality of floating point values that constitute the control vector input. Moreover, a result output of the permute operation is output from the permute unit to a result vector register of the processor.

Owner:INT BUSINESS MASCH CORP

Efficient implementation of arrays of structures on SIMT and SIMD architectures

Status: Active | Patent: US8751771B2 | Topics: Improve processing efficiency; Resource allocation; Image memory management; Access method; Joint Implementation

One embodiment of the present invention sets forth a technique providing an optimized way to allocate and access memory across a plurality of thread / data lanes. Specifically, the device driver receives an instruction targeted to a memory set up as an array of structures of arrays. The device driver computes an address within the memory using information about the number of thread / data lanes and parameters from the instruction itself. The result is a memory allocation and access approach where the device driver properly computes the target address in the memory. Advantageously, processing efficiency is improved where memory in a parallel processing subsystem is internally stored and accessed as an array of structures of arrays, proportional to the SIMT / SIMD group width (the number of threads or lanes per execution group).

Owner:NVIDIA CORP

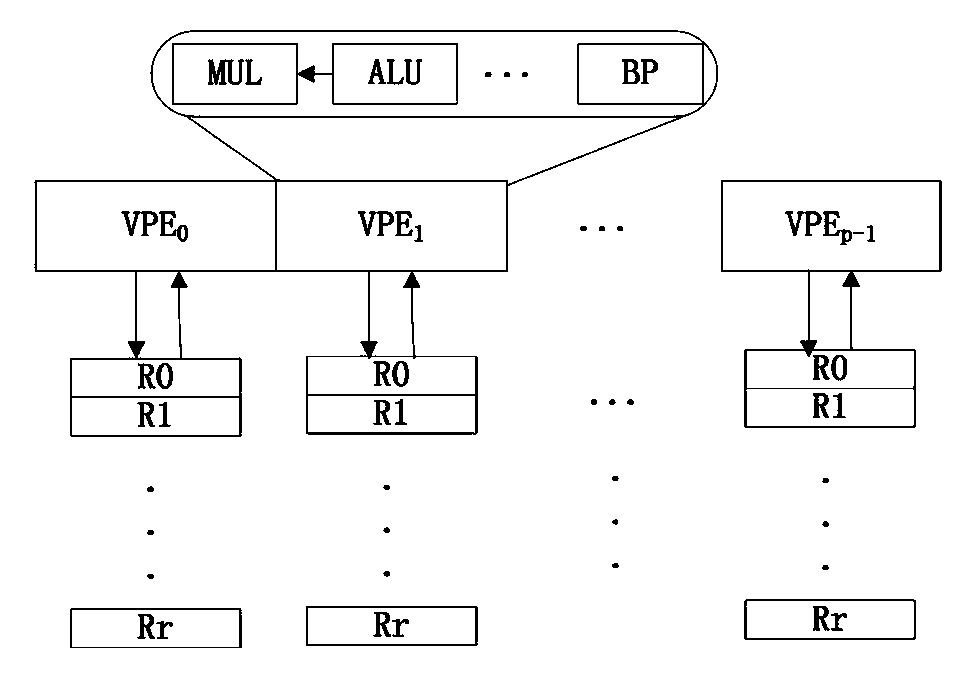

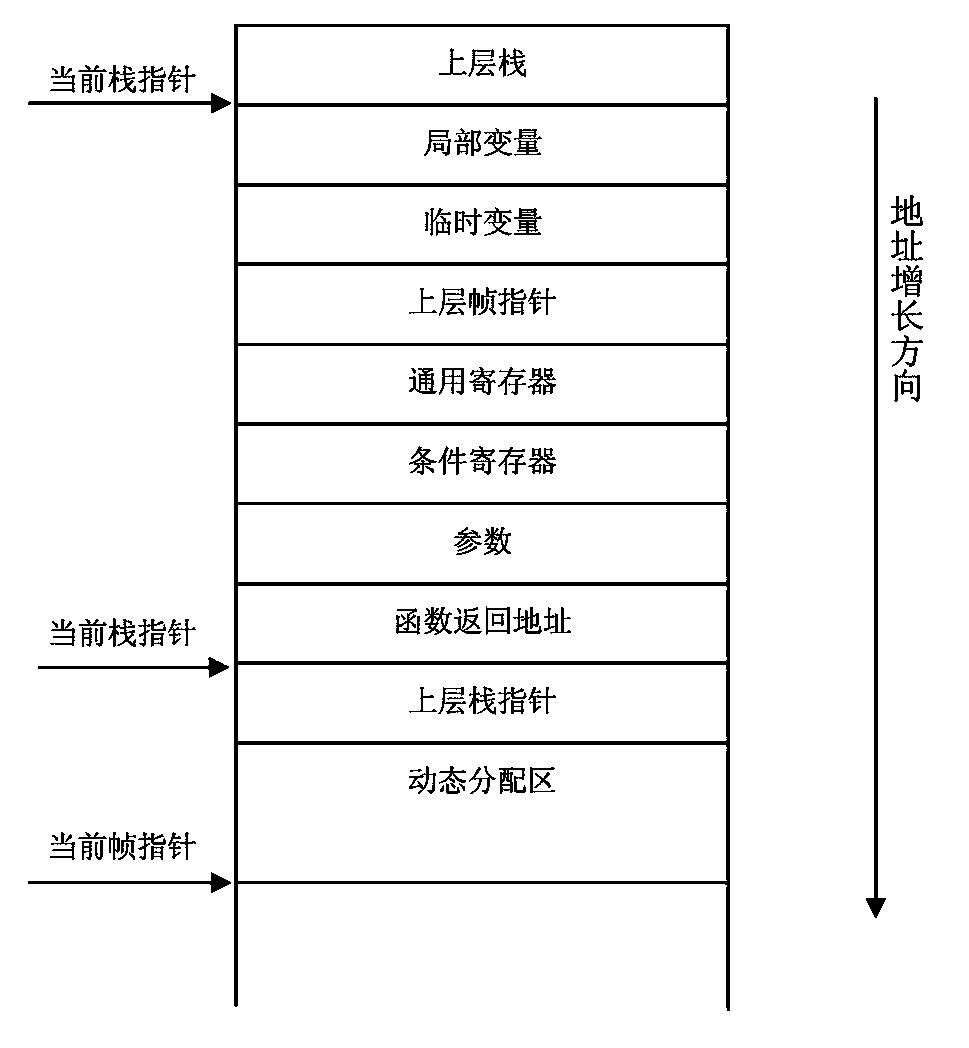

Distributed stacking data storage method supporting SIMD system structure

Status: Active | Patent: CN103942152A | Topics: Improve access performance; Reduce transmission bandwidth requirements; Memory addressing/allocation/relocation; Energy efficient computing; Local variable; Bandwidth requirement

The invention discloses a distributed stack data storage method supporting a SIMD architecture. Stack space is allocated in internal memory in a distributed manner: scalar stacks storing scalar information are allocated in scalar memory, and vector stacks storing vector information are allocated in vector memory. When a program is compiled, local variables that are accessed by scalar units are allocated on the scalar stacks, and local variables that are accessed by vector units are allocated on the vector stacks. When the program runs, scalar information that must be saved at a program switch is stored on the scalar stacks, and vector information that must be saved at a program switch is stored on the vector stacks. When the program returns, the scalar information is read directly from the scalar stacks into the scalar units, and the vector information is read directly from the vector stacks into the vector units. The method offers fast storage of and access to stack data, low bandwidth requirements, high system performance, and low power consumption.

Owner:NAT UNIV OF DEFENSE TECH

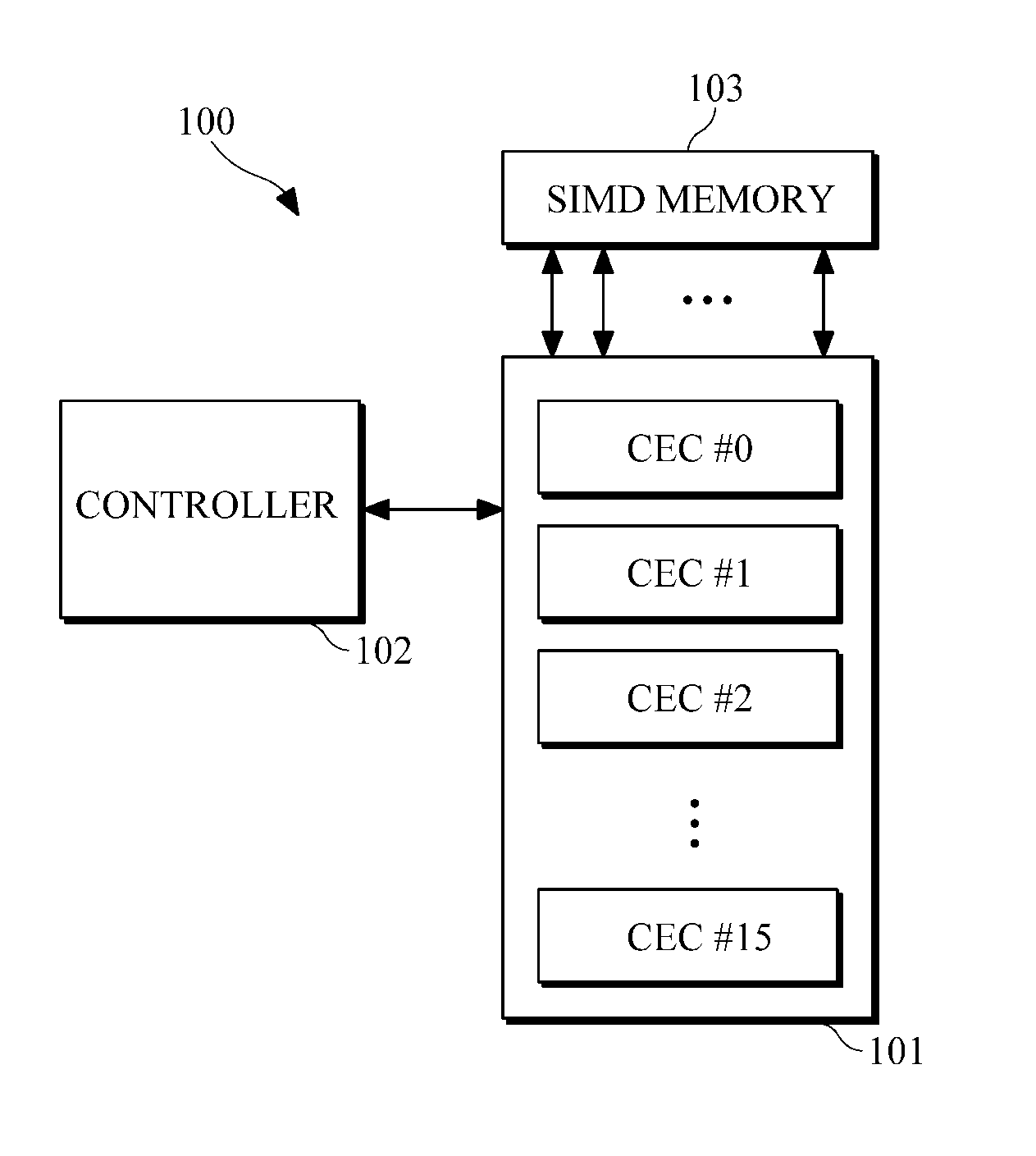

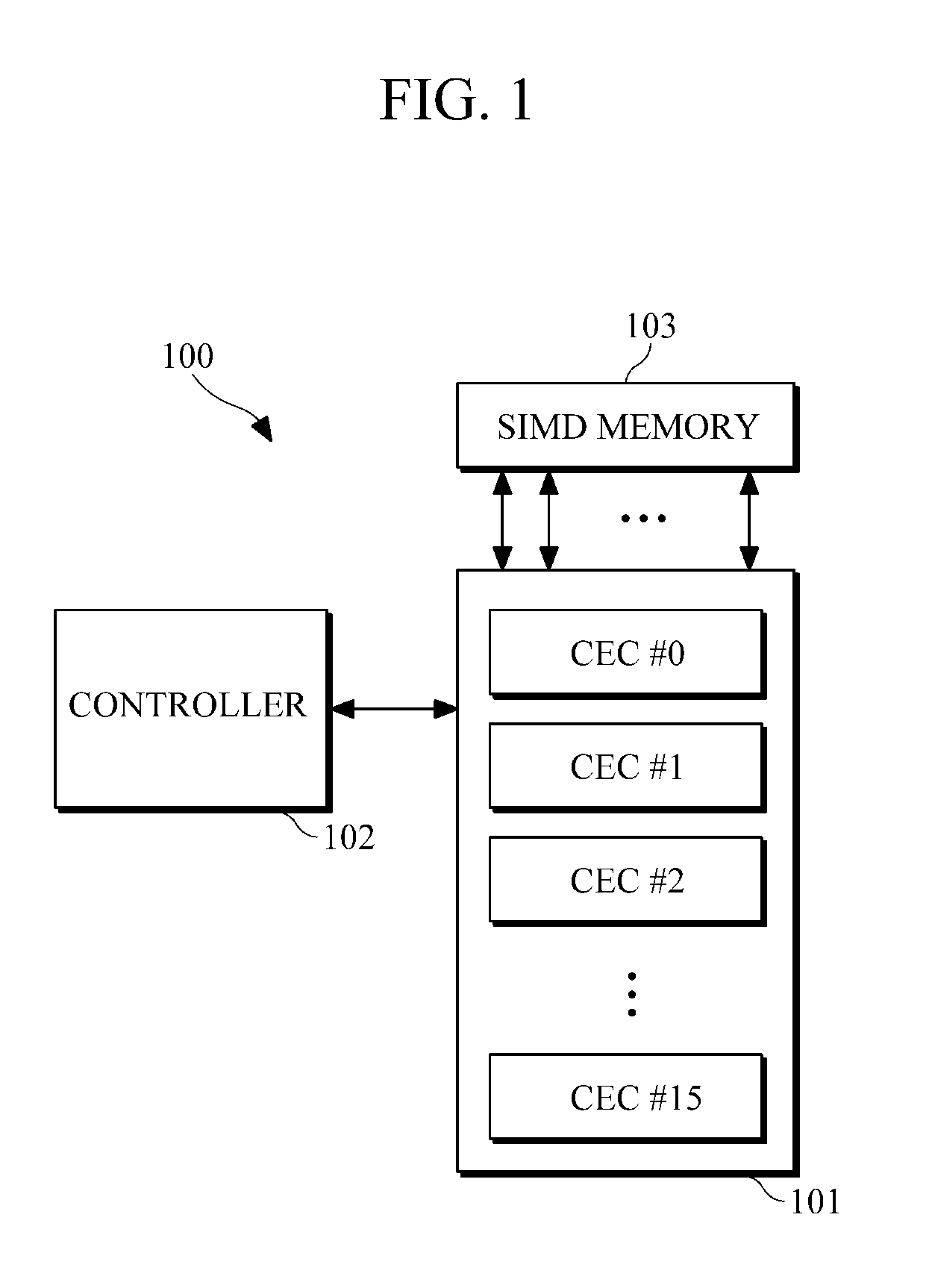

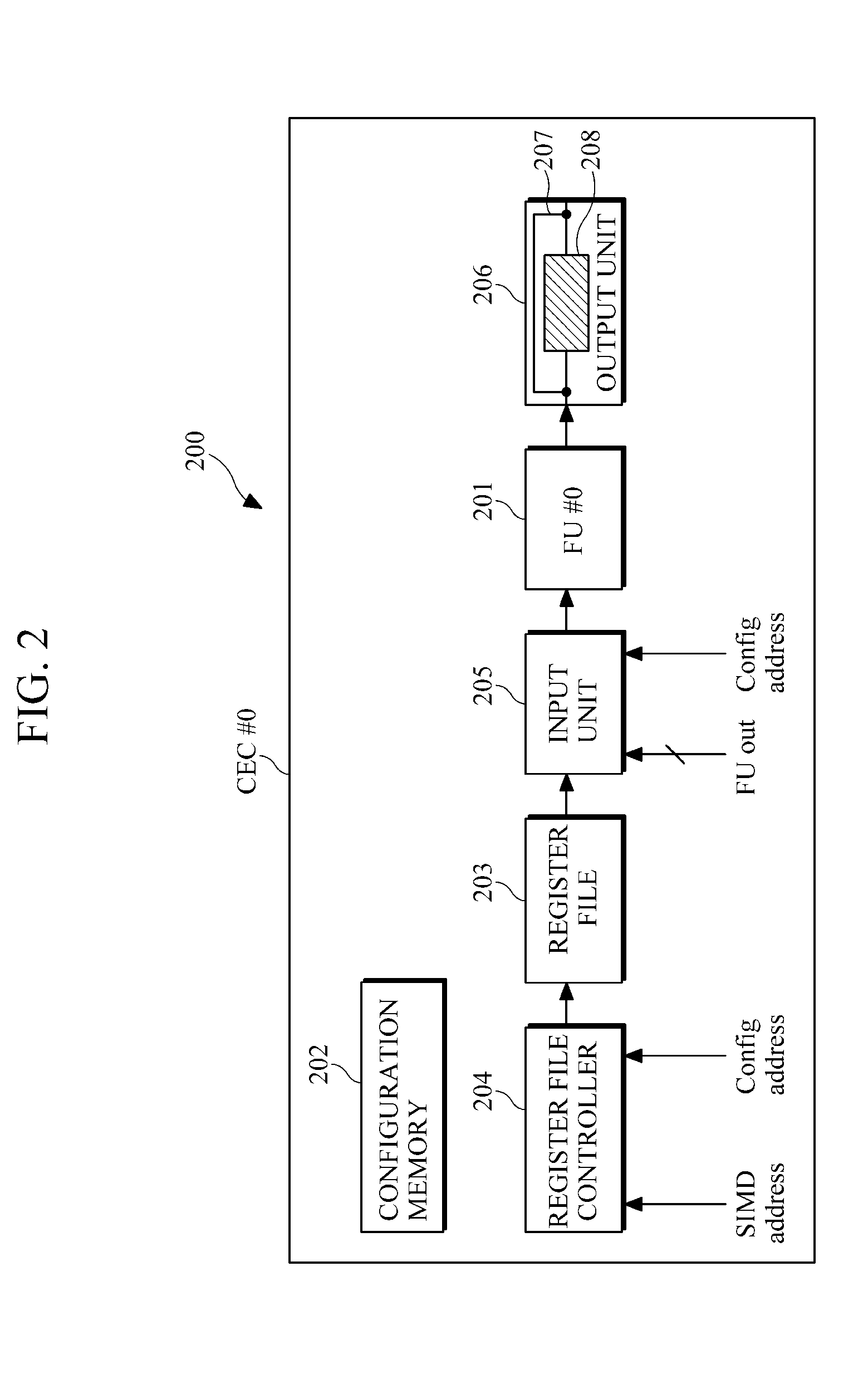

Computing apparatus and method based on a reconfigurable single instruction multiple data (SIMD) architecture

Status: Inactive | Patent: US20120166762A1 | Topics: Achieve processing efficiency; Single instruction multiple data multiprocessors; Program control using wired connections; Simd architecture

Provided are a computing apparatus and method based on SIMD architecture capable of supporting various SIMD widths without wasting resources. The computing apparatus includes a plurality of configurable execution cores (CECs) that have a plurality of execution modes, and a controller for detecting a loop region from a program, determining a Single Instruction Multiple Data (SIMD) width for the detected loop region, and determining an execution mode of the processor according to the determined SIMD width.

Owner:SAMSUNG ELECTRONICS CO LTD

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com