Patents: 86 results for "High dimensional data sets" patented technology

Pattern change discovery between high dimensional data sets

Active | US20140122039A1 | Tags: Cancel noise; Facilitate underlying process; Computation using non-denominational number representation; Design optimisation/simulation; Direct computation; Matrix decomposition

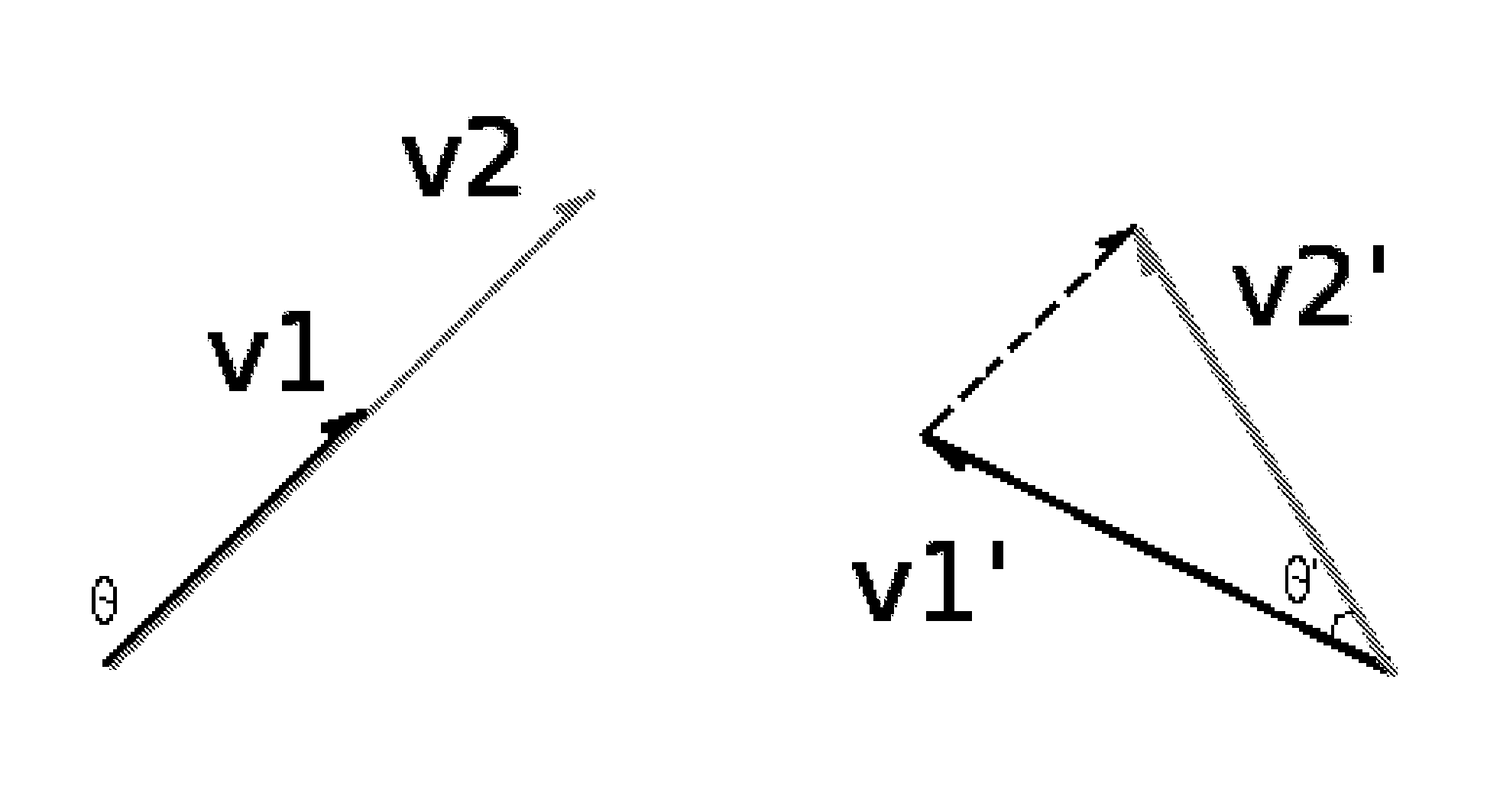

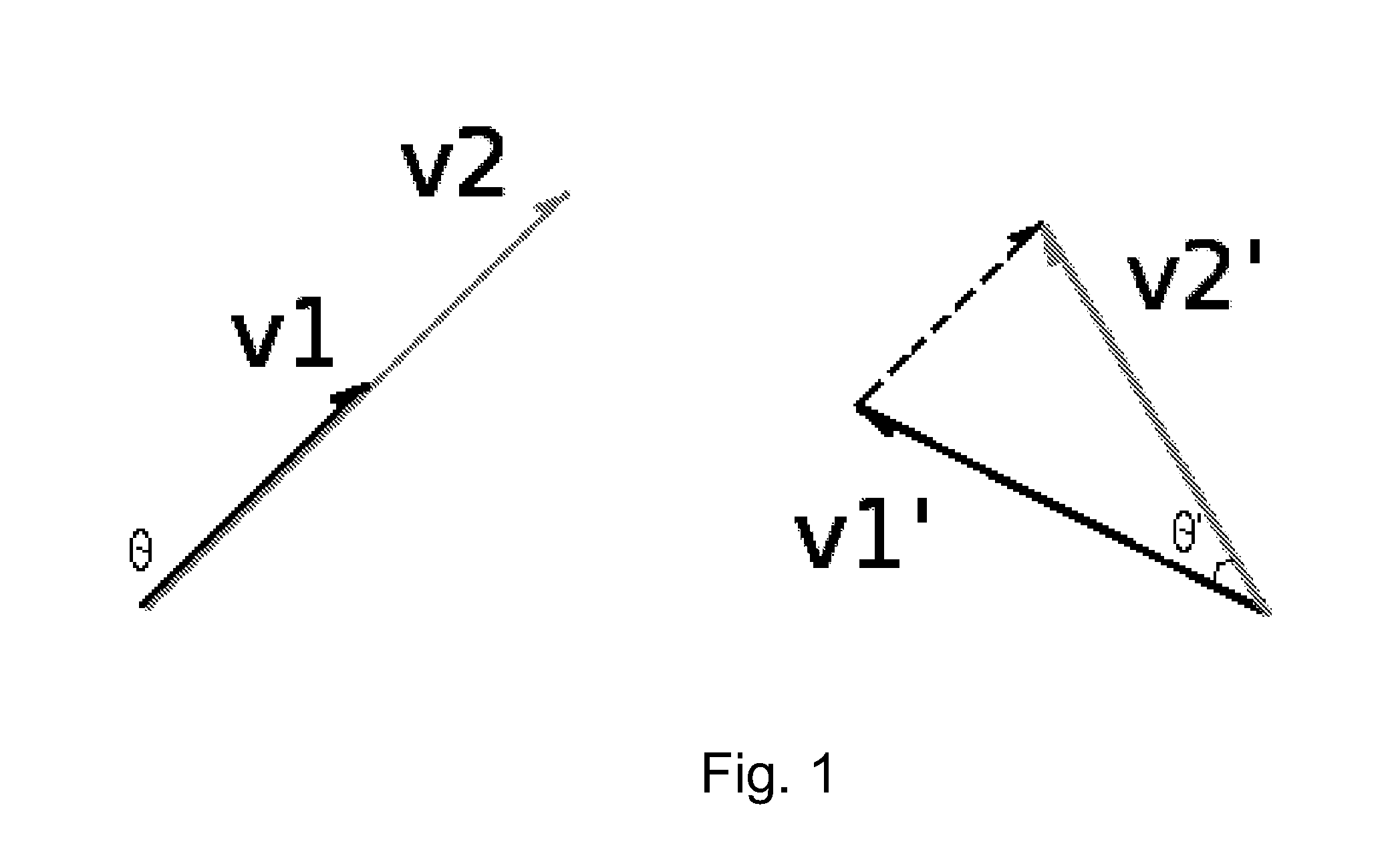

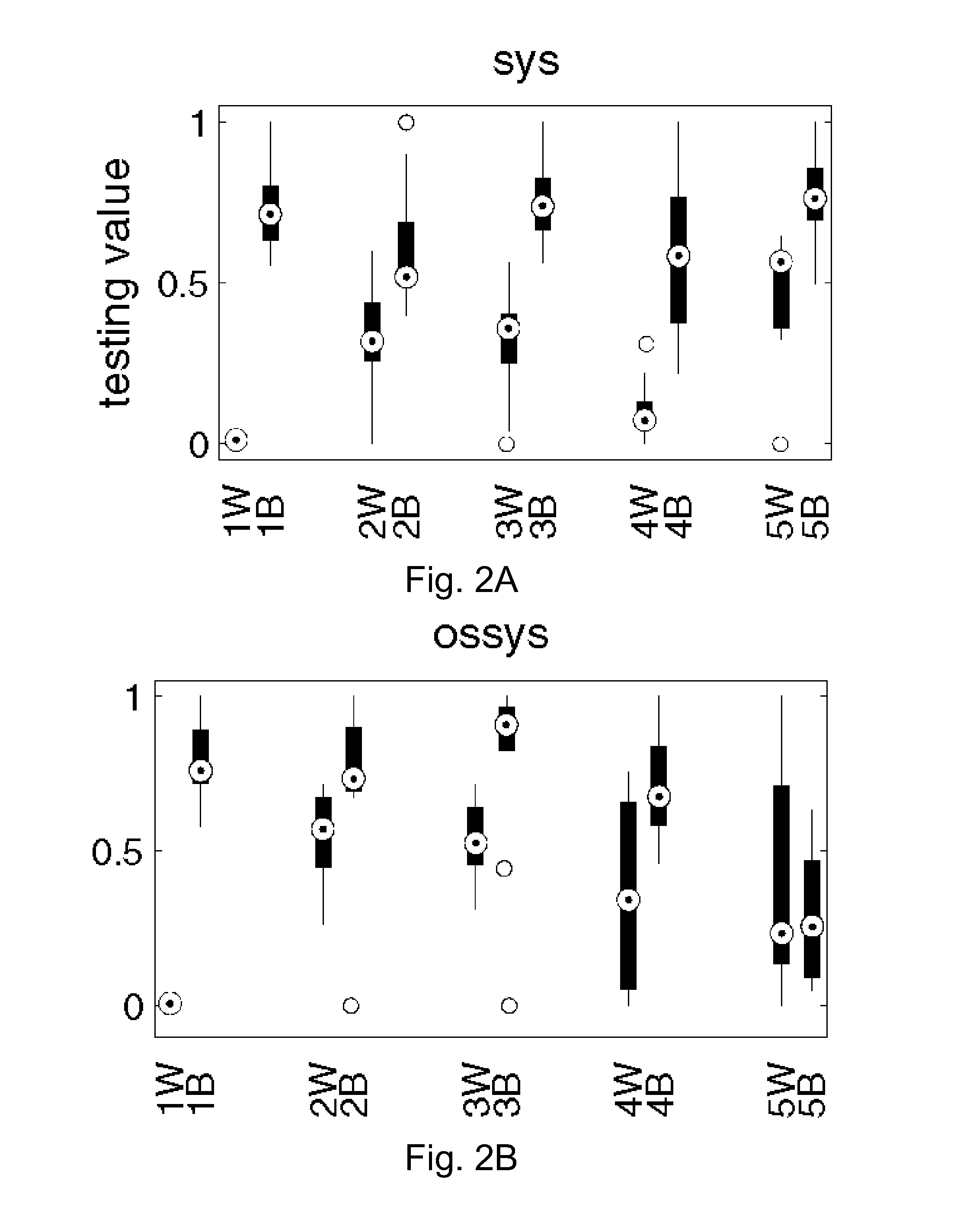

The general problem of pattern change discovery between high-dimensional data sets is addressed by introducing the principal angles between subspaces to measure the subspace difference between two high-dimensional data sets. Current methods mainly focus either on magnitude change detection in low-dimensional data sets or on supervised frameworks. Principal angles have the property of isolating subspace change from magnitude change. To address the challenge of computing the principal angles directly, matrix factorization is used as a statistical framework, and the principle of dominant subspace mapping is developed to transform principal-angle-based detection into a matrix factorization problem. Matrix factorization can be naturally embedded into a likelihood ratio test based on linear models. The method may be unsupervised and addresses the statistical significance of pattern changes between high-dimensional data sets.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK
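
The abstract does not say how principal angles are computed. A standard textbook route, assumed here and not taken from the patent, orthonormalizes a basis for each subspace and reads the cosines of the principal angles off the singular values of Q_A^T Q_B. The pure-Python sketch below does this with Gram-Schmidt and a cyclic Jacobi eigenvalue solver; the helper names are hypothetical, and the patent's dominant-subspace mapping and likelihood ratio test are not reproduced.

```python
import math

def orthonormalize(vectors, tol=1e-12):
    """Gram-Schmidt: orthonormal basis (list of lists) for the span of `vectors`."""
    basis = []
    for v in vectors:
        w = [float(x) for x in v]
        for q in basis:
            proj = sum(a * b for a, b in zip(w, q))
            w = [a - proj * b for a, b in zip(w, q)]
        norm = math.sqrt(sum(a * a for a in w))
        if norm > tol:  # drop vectors that depend linearly on earlier ones
            basis.append([a / norm for a in w])
    return basis

def symmetric_eigenvalues(a, max_sweeps=100, tol=1e-20):
    """Eigenvalues of a small symmetric matrix by cyclic Jacobi rotations."""
    n = len(a)
    a = [[float(x) for x in row] for row in a]
    for _ in range(max_sweeps):
        if sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < tol:
            break
        for p in range(n):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                # A <- J^T A J with J[p][p] = J[q][q] = c, J[p][q] = s, J[q][p] = -s.
                for k in range(n):  # columns p and q (A J)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # rows p and q (J^T A J)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return [a[i][i] for i in range(n)]

def principal_angles(basis_a, basis_b):
    """Principal angles in radians, ascending, between span(basis_a) and span(basis_b)."""
    qa, qb = orthonormalize(basis_a), orthonormalize(basis_b)
    # The singular values of M = Qa^T Qb are the cosines of the principal angles.
    m = [[sum(x * y for x, y in zip(u, v)) for v in qb] for u in qa]
    # Squared singular values are the eigenvalues of M^T M.
    gram = [[sum(m[r][i] * m[r][j] for r in range(len(qa))) for j in range(len(qb))]
            for i in range(len(qb))]
    top = sorted(symmetric_eigenvalues(gram), reverse=True)[: min(len(qa), len(qb))]
    return [math.acos(min(1.0, math.sqrt(max(0.0, s)))) for s in top]
```

For example, the xy-plane and the plane spanned by (1, 1, 1) and (1, -1, 0) share a line (angle 0) and otherwise meet at arccos(2/√6), about 0.615 rad.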

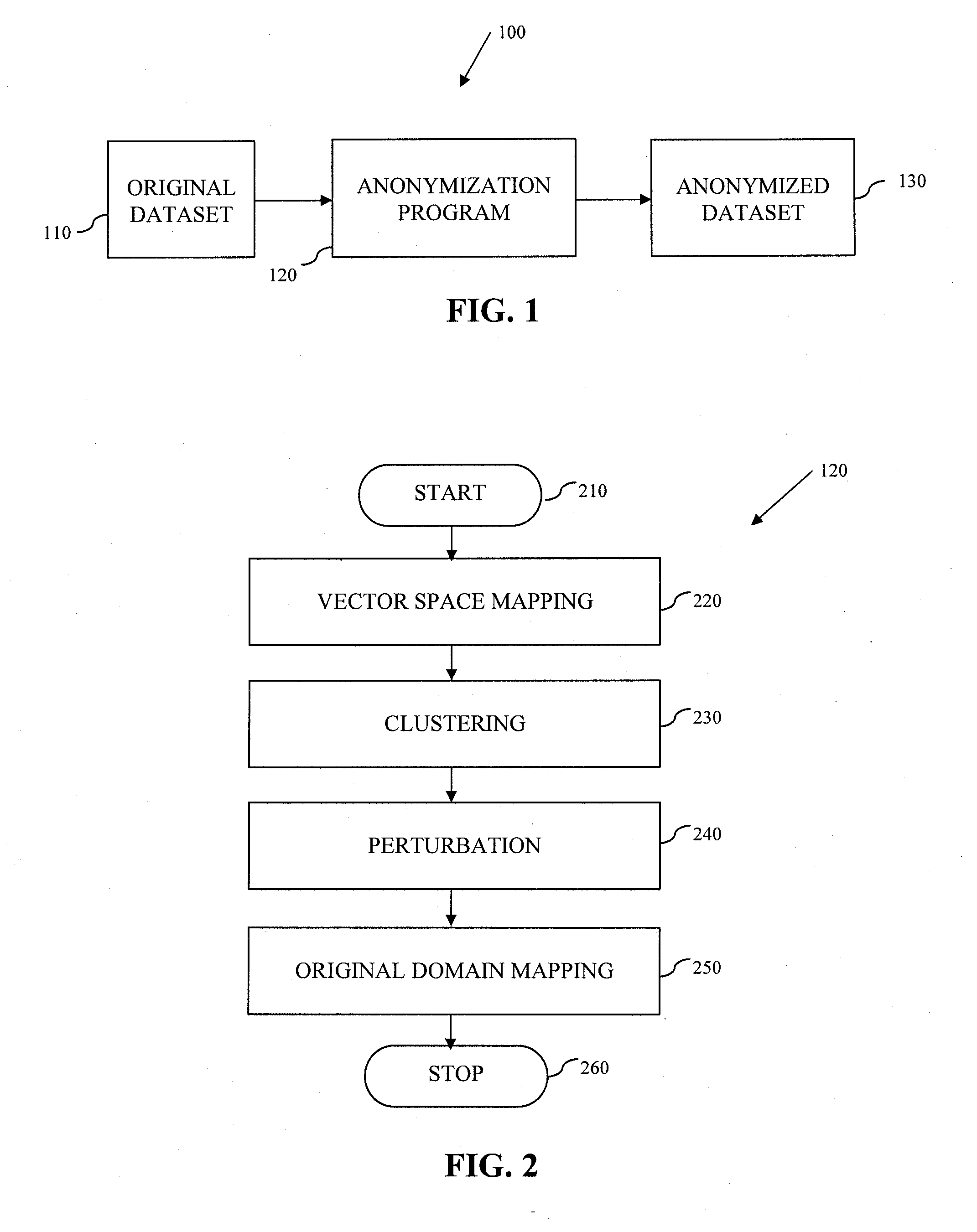

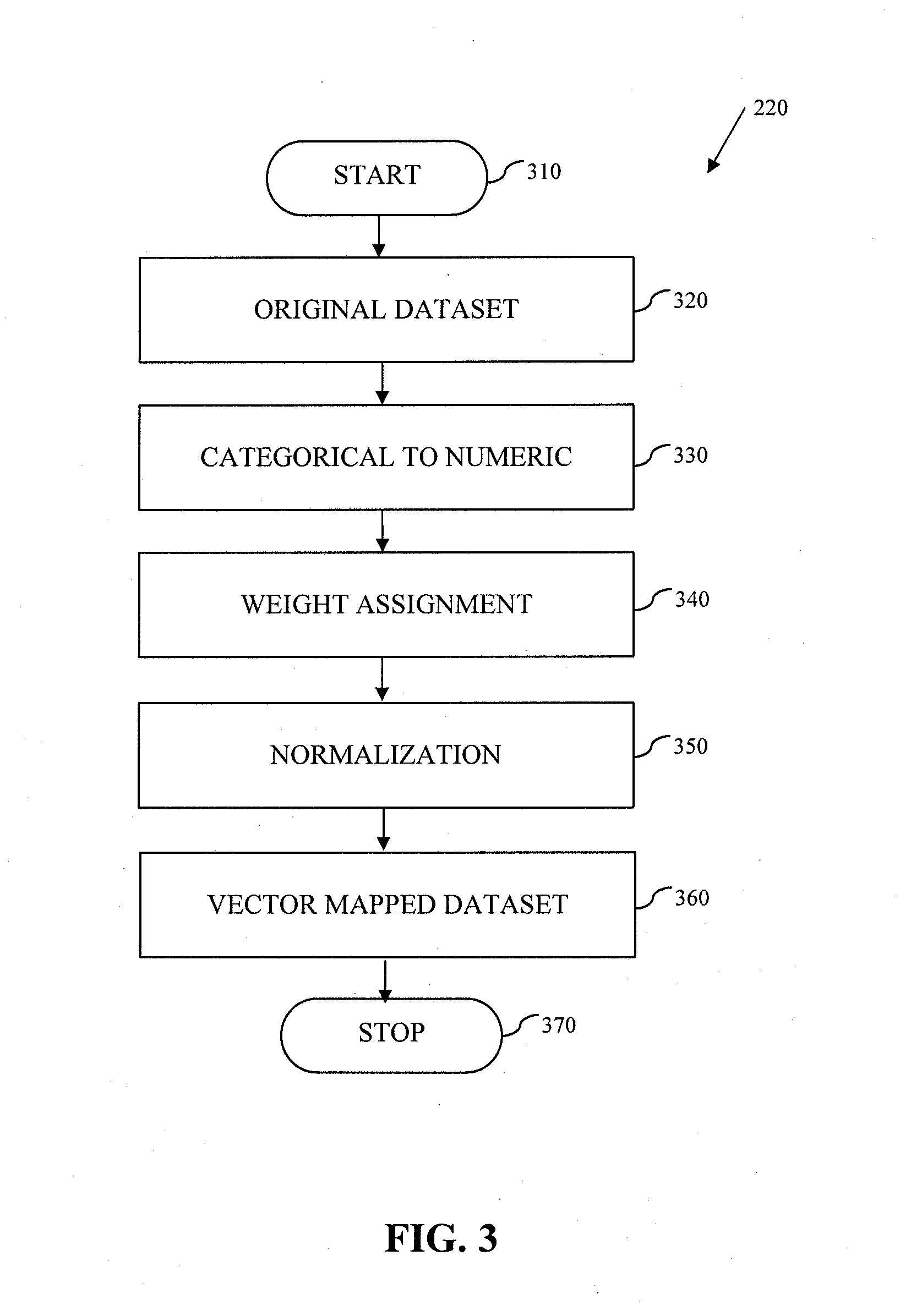

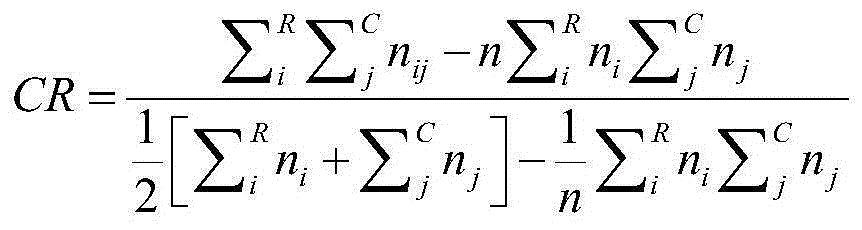

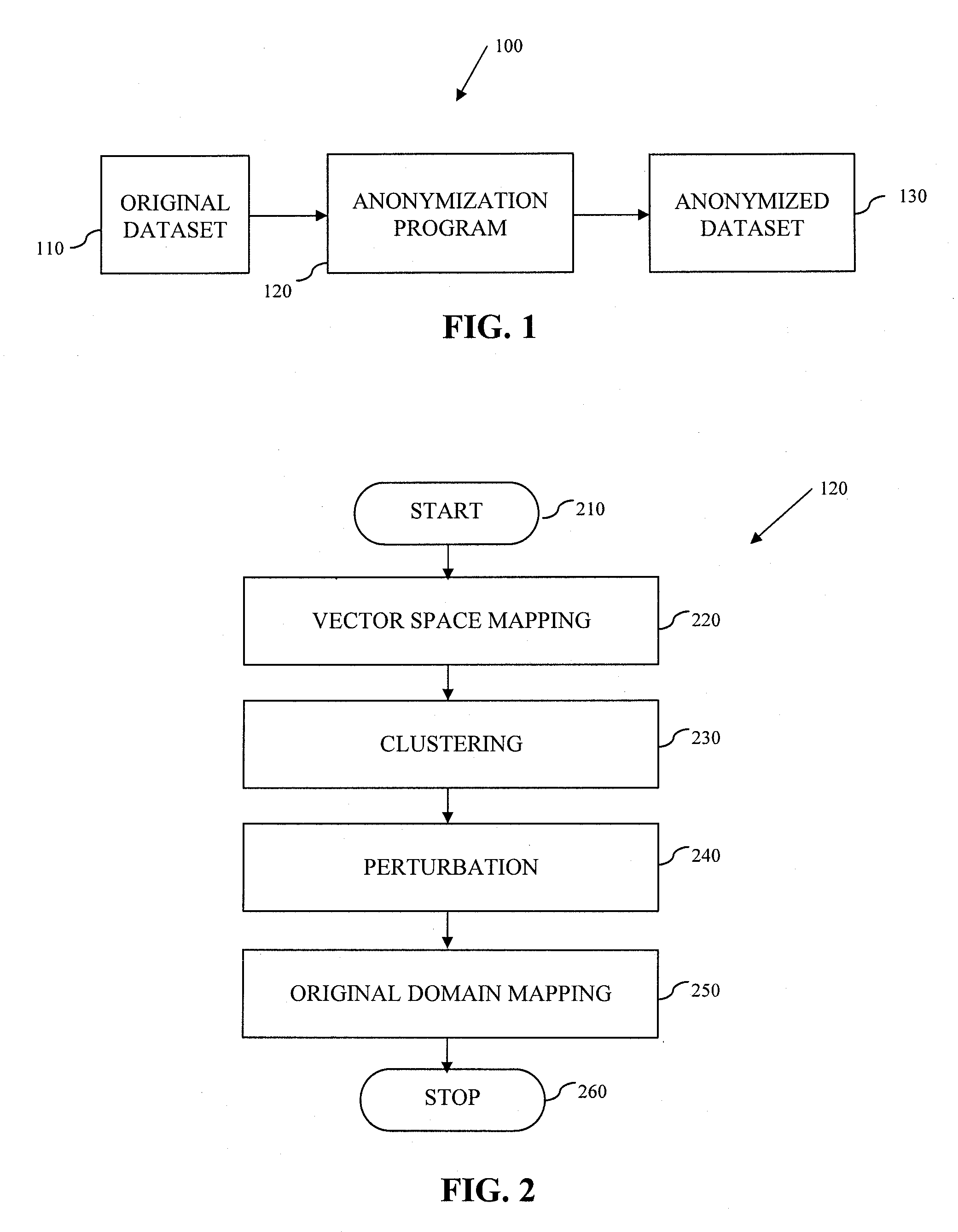

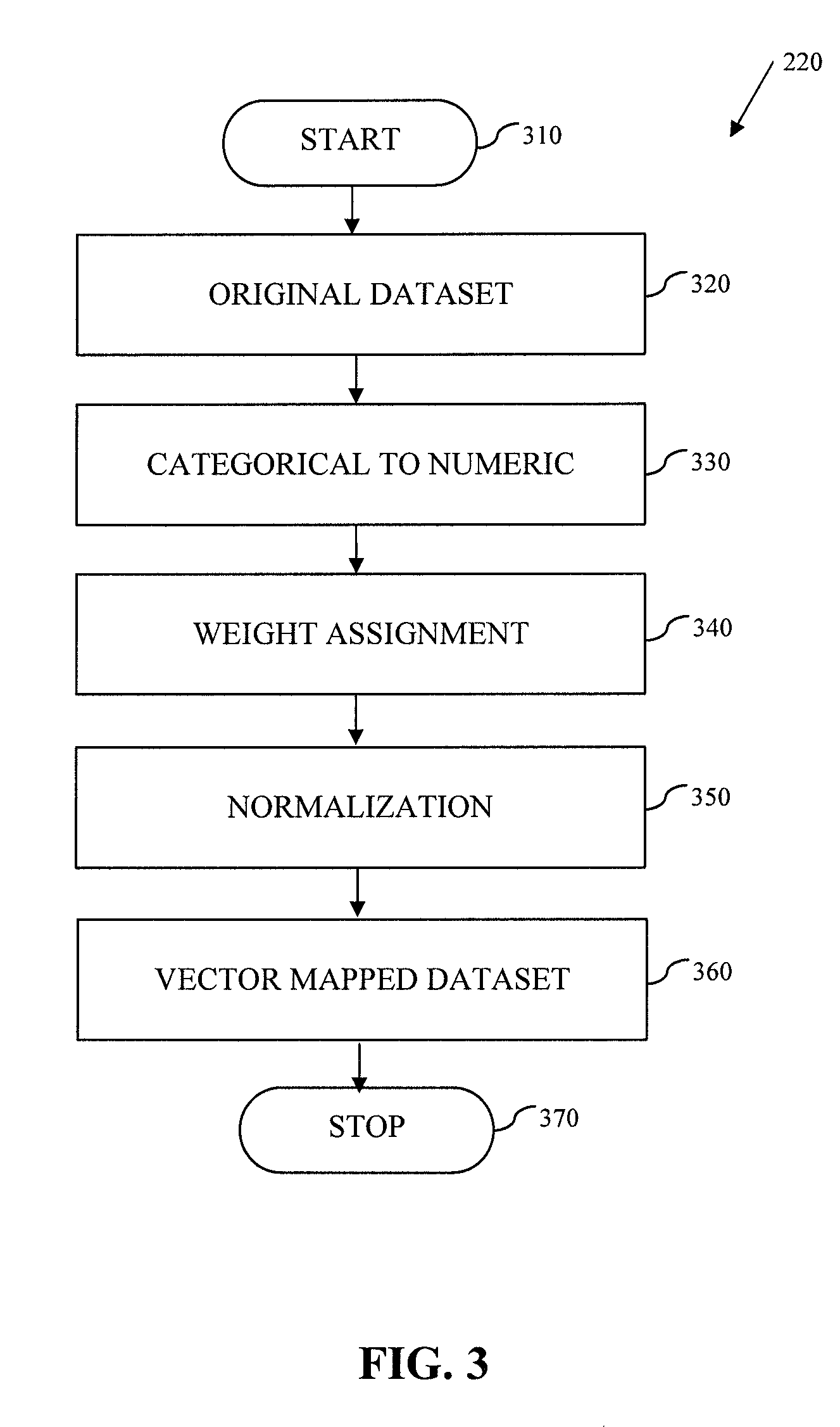

System and Method for Data Anonymization Using Hierarchical Data Clustering and Perturbation

Active | US20130339359A1 | Tags: Digital data information retrieval; Digital data processing details; Data transformation; Computerized system

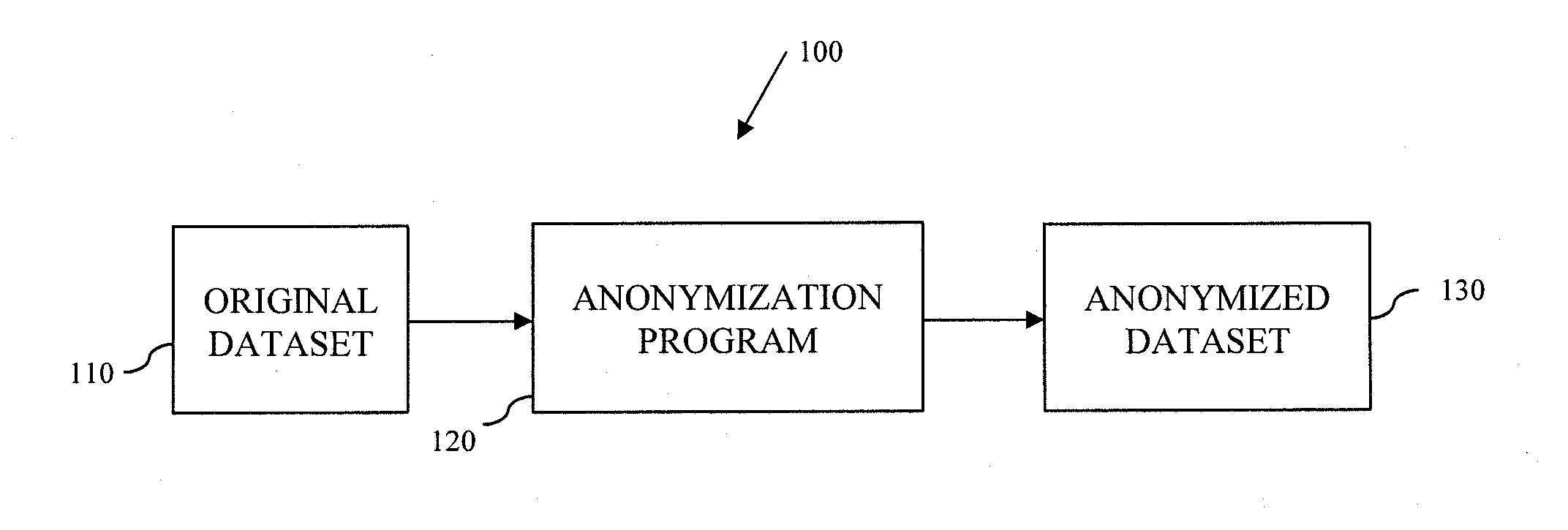

A system and method for data anonymization using hierarchical data clustering and perturbation is provided. The system includes a computer system and an anonymization program executed by the computer system. The system converts the data of a high-dimensional dataset into a normalized vector space and applies clustering and perturbation techniques to anonymize the data. The conversion turns each record of the dataset into a normalized vector that can be compared with other vectors. The vectors are divided into small, disjoint clusters using hierarchical clustering processes. Multi-level clustering can be performed using suitable algorithms at different clustering levels. The records within each cluster are then perturbed such that the statistical properties of the clusters remain unchanged.

Owner:ELECTRIFAI LLC
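
As a rough illustration of the normalize-cluster-perturb pipeline, the sketch below min-max scales each attribute and then swaps attribute values among records of the same cluster. Value swapping is an assumed stand-in for the unspecified perturbation step: it keeps each cluster's per-attribute mean and variance exactly, but it does not preserve correlations between attributes. Cluster labels are taken as given, so the hierarchical clustering itself is not shown, and all function names are hypothetical.

```python
import random

def min_max_normalize(records):
    """Scale every attribute to [0, 1] so records become comparable vectors."""
    cols = list(zip(*records))
    lows = [min(c) for c in cols]
    spans = [(max(c) - min(c)) or 1.0 for c in cols]  # constant column: avoid /0
    return [[(x - lo) / s for x, lo, s in zip(r, lows, spans)] for r in records]

def perturb_within_clusters(vectors, labels, seed=None):
    """Permute each attribute independently among the records of each cluster.

    The multiset of values per (cluster, attribute) is unchanged, so its mean and
    variance are preserved, while individual records no longer match originals.
    """
    rng = random.Random(seed)
    out = [list(v) for v in vectors]
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    for members in clusters.values():
        for j in range(len(vectors[0])):
            values = [vectors[i][j] for i in members]
            rng.shuffle(values)
            for i, val in zip(members, values):
                out[i][j] = val
    return out
```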

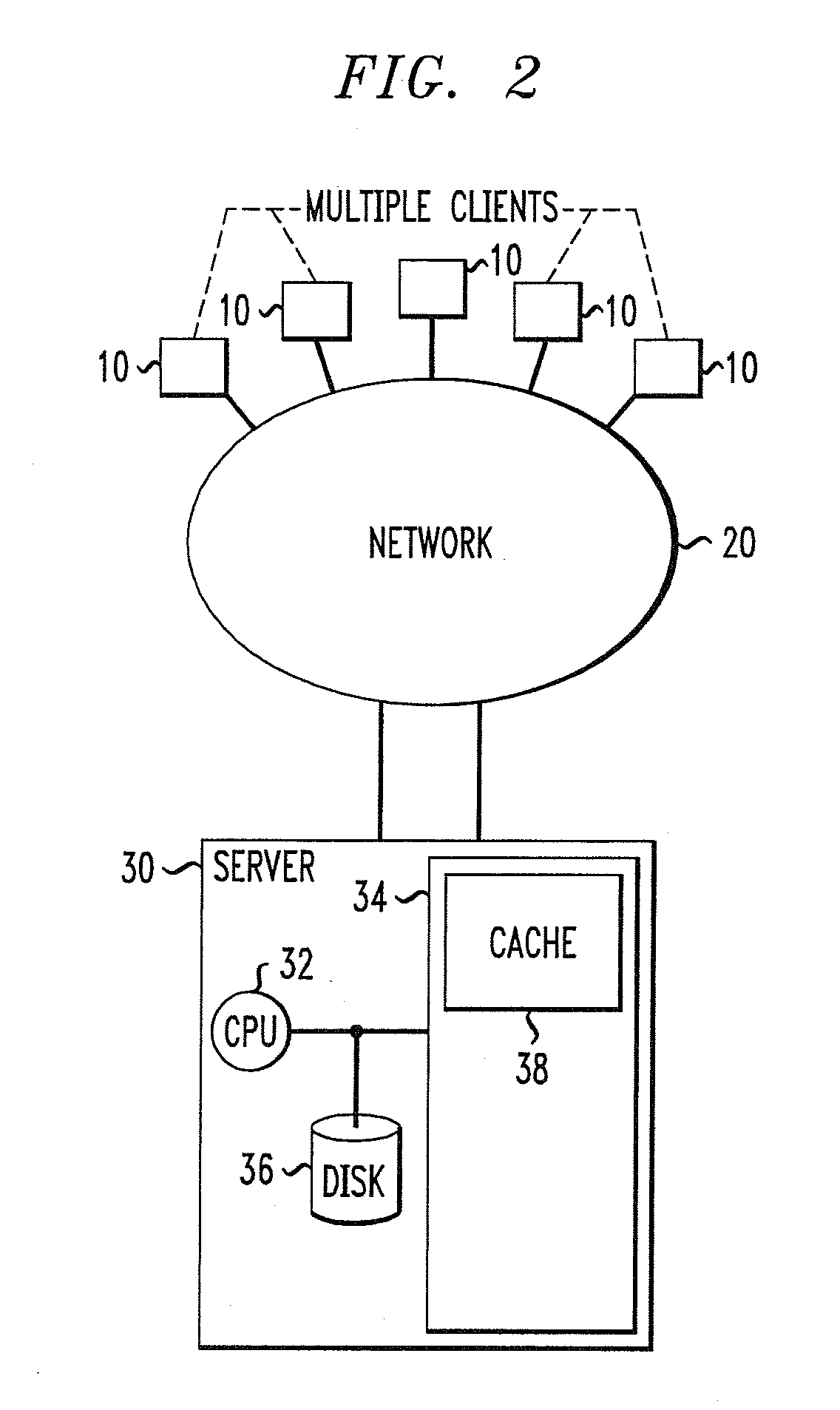

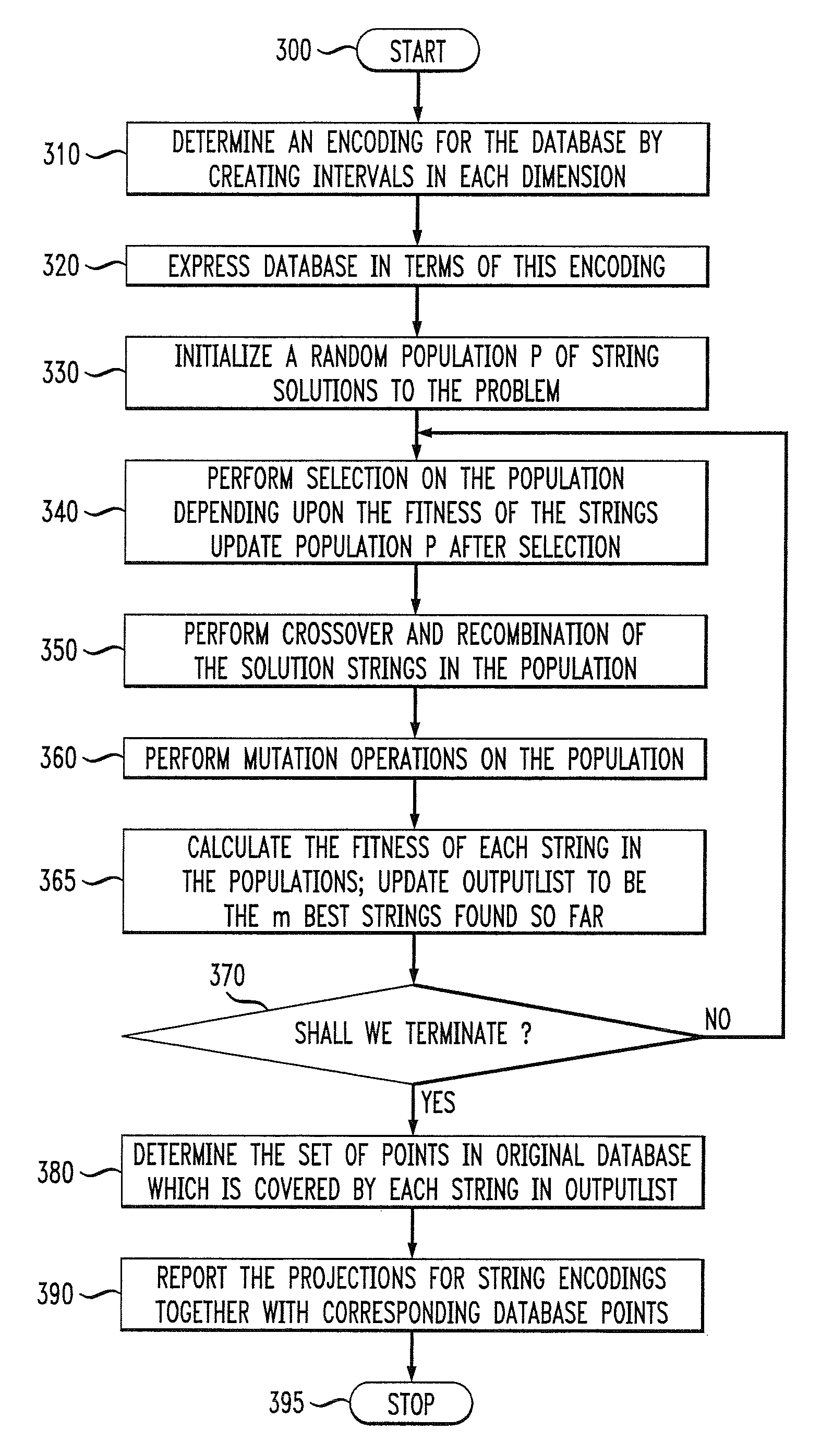

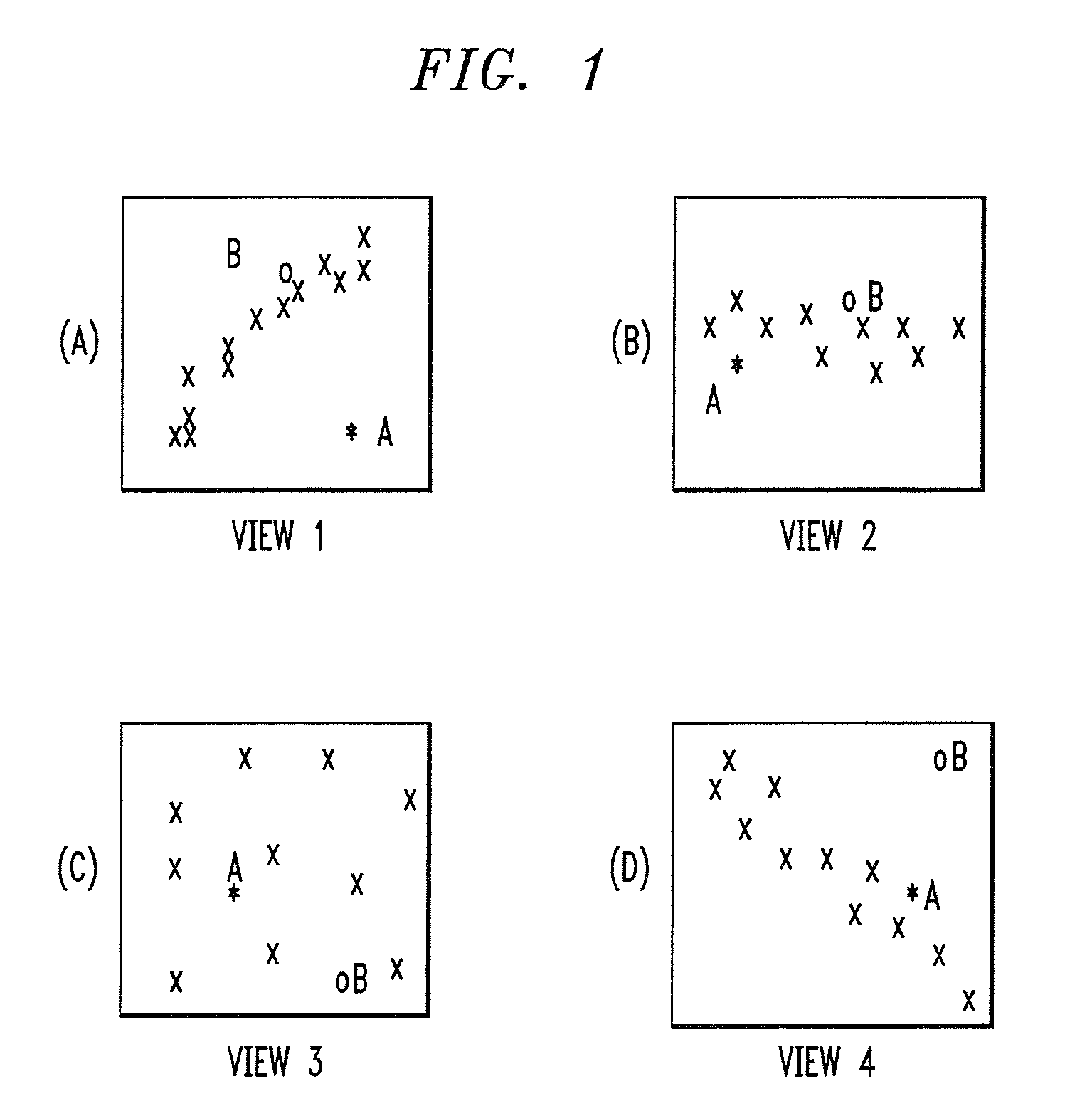

Methods and Apparatus for Outlier Detection for High Dimensional Data Sets

Methods and apparatus are provided for outlier detection in databases by determining sparse low-dimensional projections. These sparse projections are used to determine which points are outliers. The methodologies of the invention provide a novel definition of exceptions, or outliers, suited to the high-dimensional domain.

Owner:TREND MICRO INC
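
The abstract does not define when a projection is "sparse". One formulation from the research literature, assumed here, splits each attribute into φ equi-depth ranges (each holding a fraction f = 1/φ of the N points) and scores a k-dimensional grid cell containing n(D) points by the sparsity coefficient S(D) = (n(D) - N f^k) / sqrt(N f^k (1 - f^k)); strongly negative scores mark abnormally empty regions whose points are outlier candidates. The brute-force sketch below scores every non-empty cell of every k-dimensional projection; names and defaults are hypothetical.

```python
import math
from itertools import combinations

def equi_depth_bins(column, phi):
    """Map each value to one of `phi` ranges holding roughly equal counts."""
    order = sorted(range(len(column)), key=lambda i: column[i])
    bins = [0] * len(column)
    for rank, i in enumerate(order):
        bins[i] = rank * phi // len(column)
    return bins

def sparsest_projections(data, phi=4, k=2):
    """Score every non-empty k-dimensional grid cell by its sparsity coefficient.

    Returns (coefficient, dims, cell, member_indices) tuples, most negative first.
    """
    n_points, n_dims = len(data), len(data[0])
    f_k = (1.0 / phi) ** k                      # expected fraction of points per cell
    expected = n_points * f_k
    stdev = math.sqrt(n_points * f_k * (1 - f_k))
    bins = [equi_depth_bins([row[d] for row in data], phi) for d in range(n_dims)]
    results = []
    for dims in combinations(range(n_dims), k):
        cells = {}
        for i in range(n_points):
            cells.setdefault(tuple(bins[d][i] for d in dims), []).append(i)
        for cell, members in cells.items():
            results.append(((len(members) - expected) / stdev, dims, cell, members))
    results.sort(key=lambda r: r[0])
    return results
```

Enumerating all projections grows combinatorially with k and the number of attributes, so a practical system needs a smarter search; the abstract does not describe the one the patent uses.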

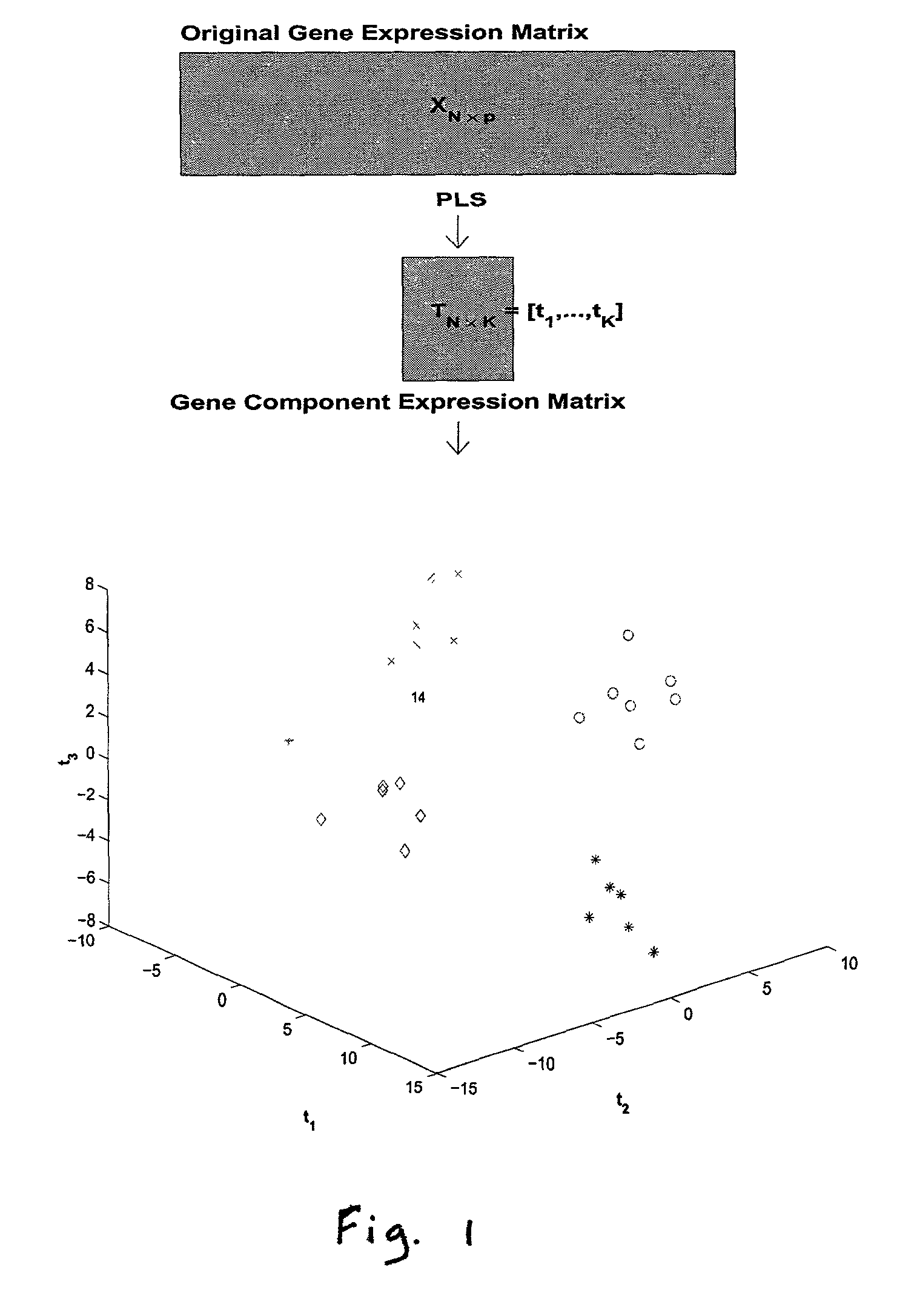

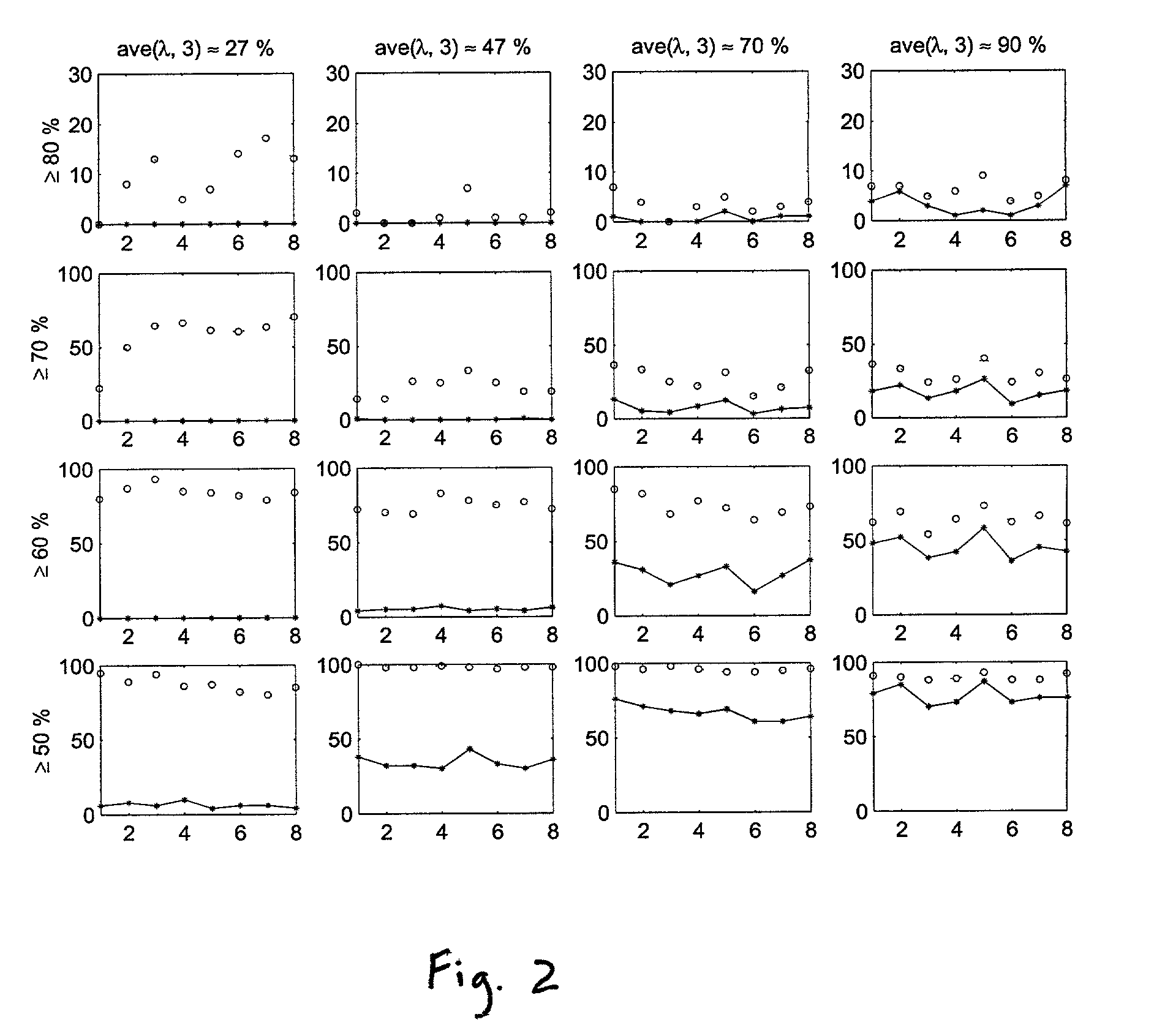

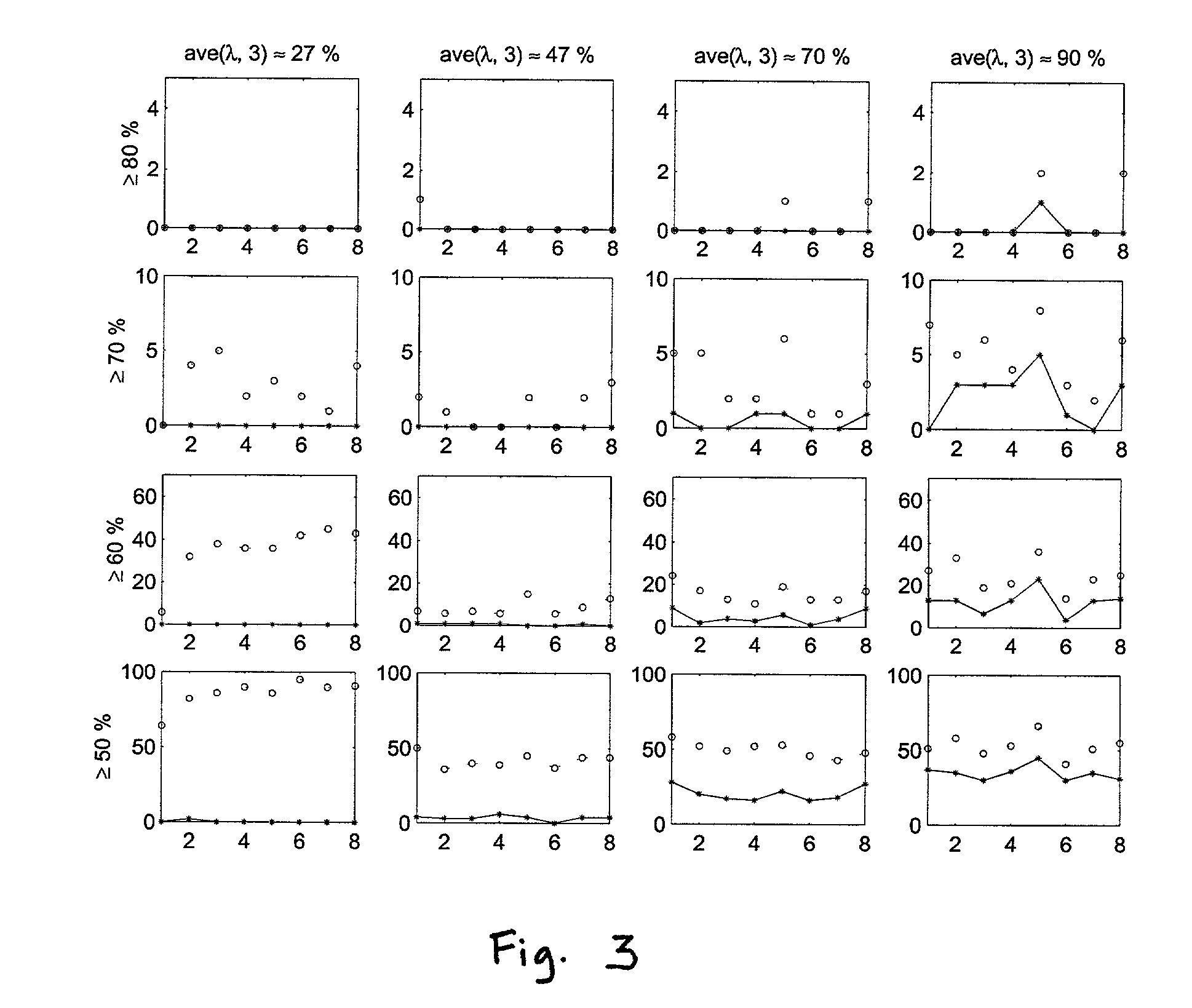

Methods for classifying high-dimensional biological data

Inactive | US7062384B2 | Tags: Useful prediction; Biostatistics; Character and pattern recognition; Data selection; High dimensional

Provided are methods of classifying biological samples based on high-dimensional data obtained from the samples. The methods are especially useful for predicting the class to which a sample belongs when the data are statistically under-determined. Microarray technologies, which can measure many different variables (such as gene expression) en masse at once, have generated high-dimensional data sets whose analysis benefits from the methods of the present invention. High-dimensional data are data in which the number of variables, p, exceeds the number of independent observations (e.g. samples), N. The invention relies on a dimension reduction step followed by a logistic determination step. The methods are applicable to binary (i.e. univariate) classification and to multi-class (i.e. multivariate) classification. Also provided are data selection techniques that can be used with the methods of the invention.

Owner:RGT UNIV OF CALIFORNIA

Efficient near neighbor search (ENN-search) method for high dimensional data sets with noise

Inactive | US6947869B2 | Tags: Quality improvement; Reduce probability; Amplifier modifications to reduce noise influence; Digital computer details; Data compression; High dimensionality

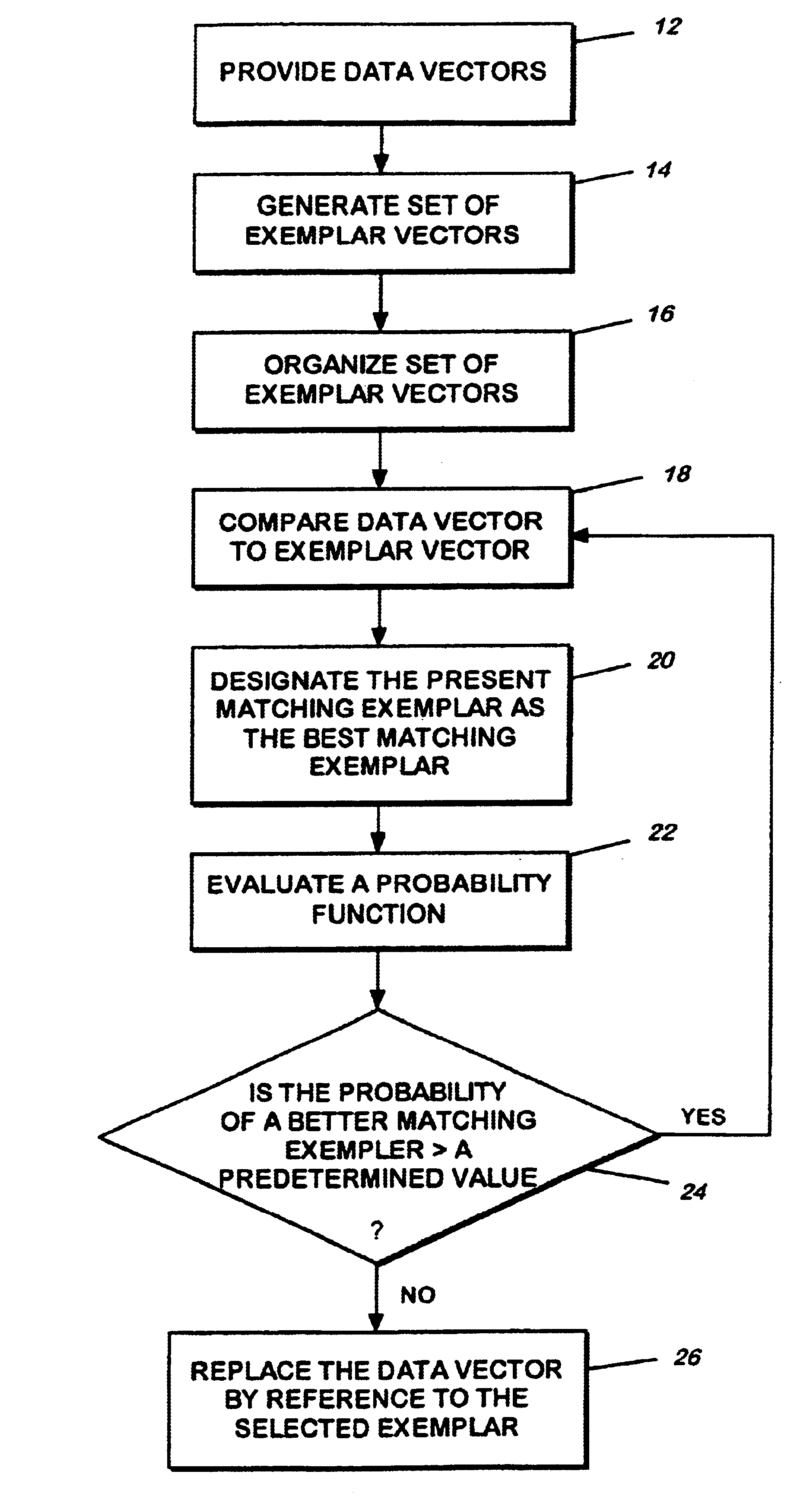

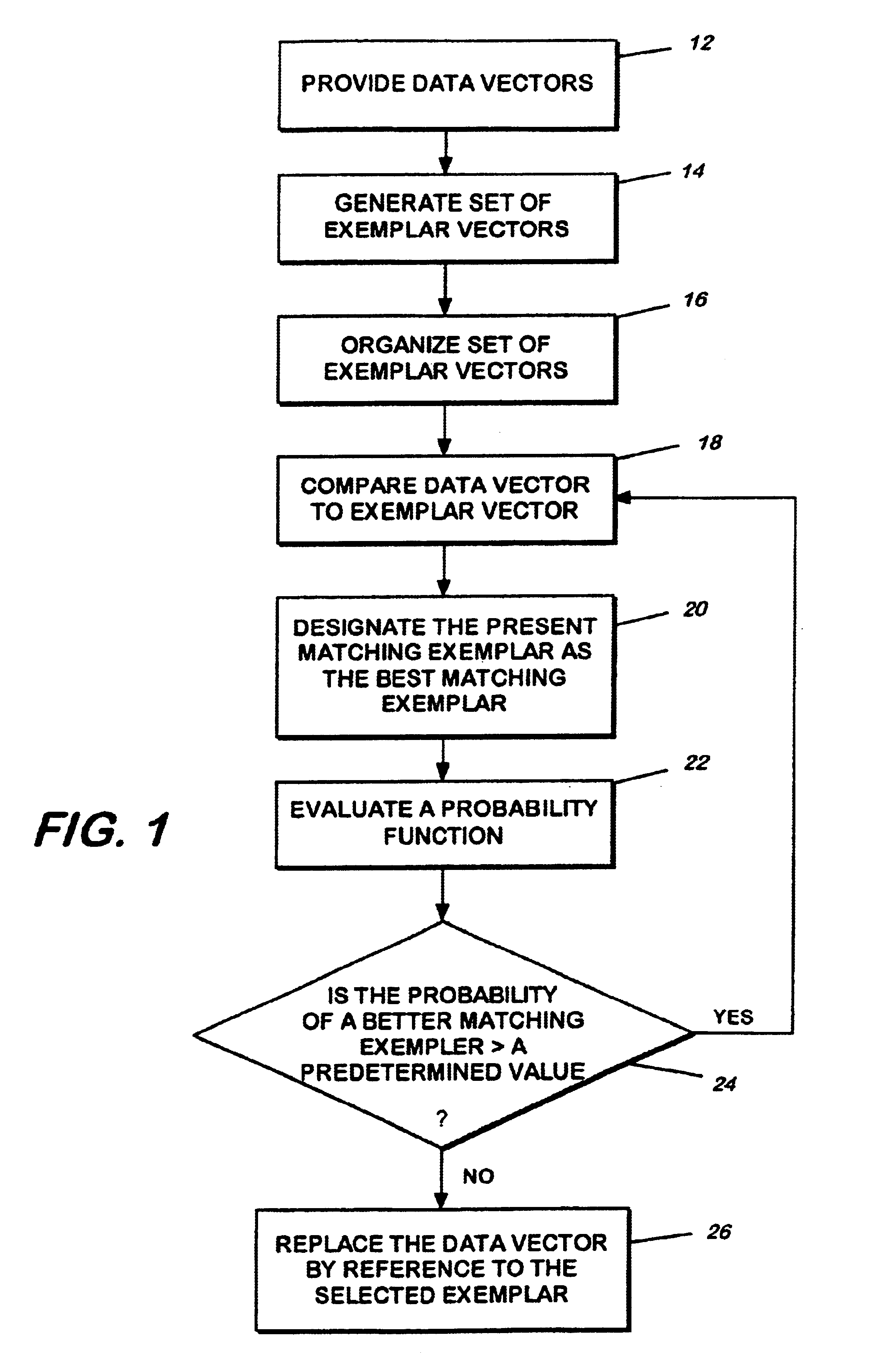

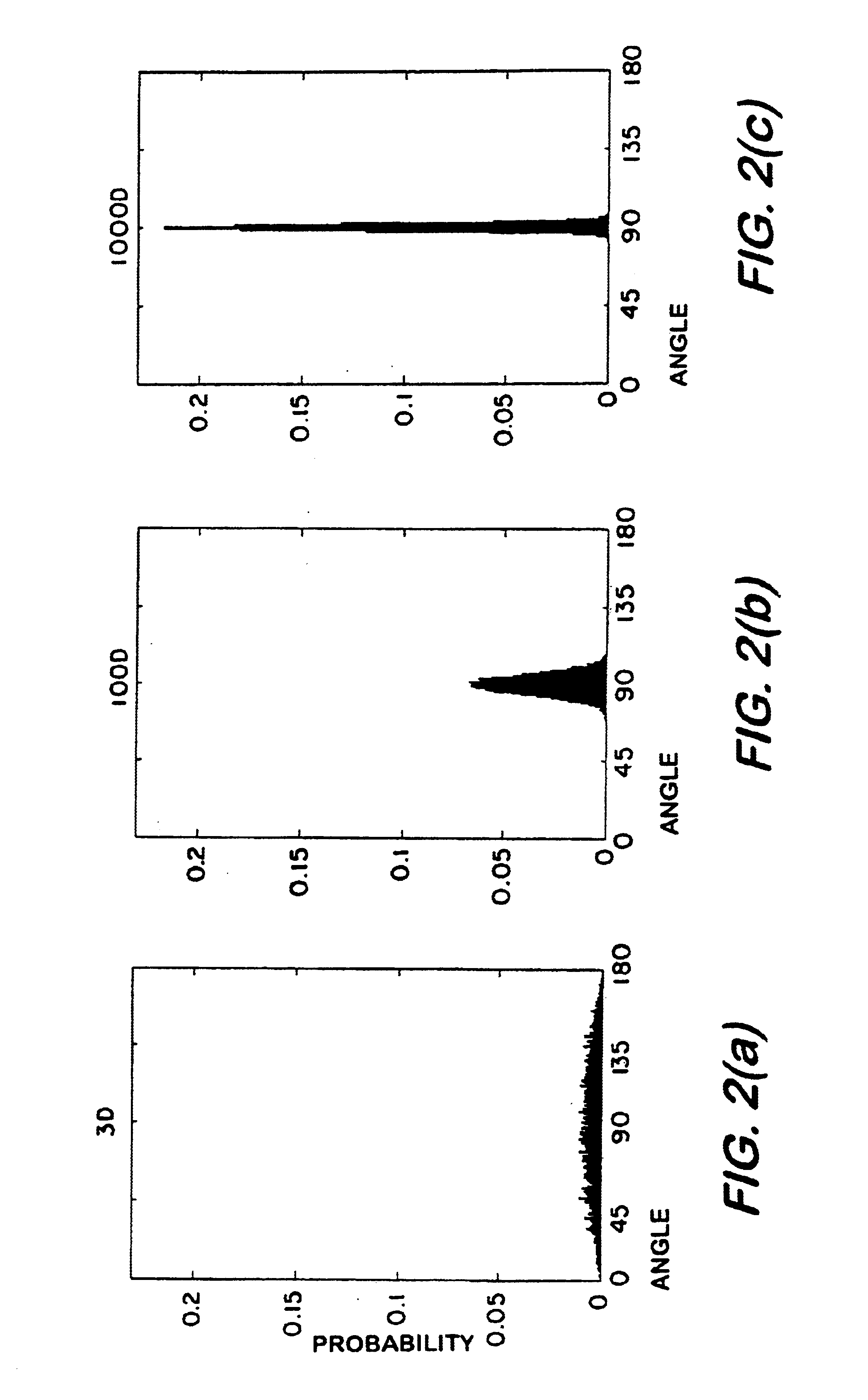

A nearer neighbor matching and compression method and apparatus match data vectors to exemplar vectors. A data vector is compared with the exemplar vectors in a subset of exemplar vectors (the set of possible exemplar vectors) to find a match. After a match is found, a probability function assigns a probability value that a better-matching exemplar vector exists. If this probability exceeds a predetermined probability value, the data vector is compared with an additional exemplar vector. If no match is found, the data vector is added to the set of exemplar vectors. Data compression of a hyperspectral image data vector set may be achieved by replacing each observed data vector, which represents a spatial pixel, with a reference to the member of the exemplar set that “matches” it. As a result, each spatial pixel is assigned to one of the exemplar vectors.

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SECRETARY OF THE NAVY
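
A simplified sketch of exemplar-based compression follows. It assumes the spectral angle as the match criterion and a fixed angular threshold, and it replaces the patent's probability test (which decides whether to keep searching for a better exemplar) with a plain first-match rule, so it illustrates only the matching and codebook growth. Function names are hypothetical.

```python
import math

def spectral_angle(a, b):
    """Angle between two spectra; small angles mean similar spectral shape."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return math.acos(max(-1.0, min(1.0, dot / (na * nb))))

def compress(vectors, threshold):
    """Greedy exemplar coding: each vector is replaced by an exemplar index."""
    exemplars, codes = [], []
    for v in vectors:
        match = None
        for idx, e in enumerate(exemplars):
            if spectral_angle(v, e) <= threshold:
                match = idx            # first acceptable exemplar
                break
        if match is None:              # no match: the vector becomes a new exemplar
            exemplars.append(v)
            match = len(exemplars) - 1
        codes.append(match)
    return exemplars, codes
```

Each pixel is then stored as a small integer index into the exemplar list rather than as a full spectrum.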

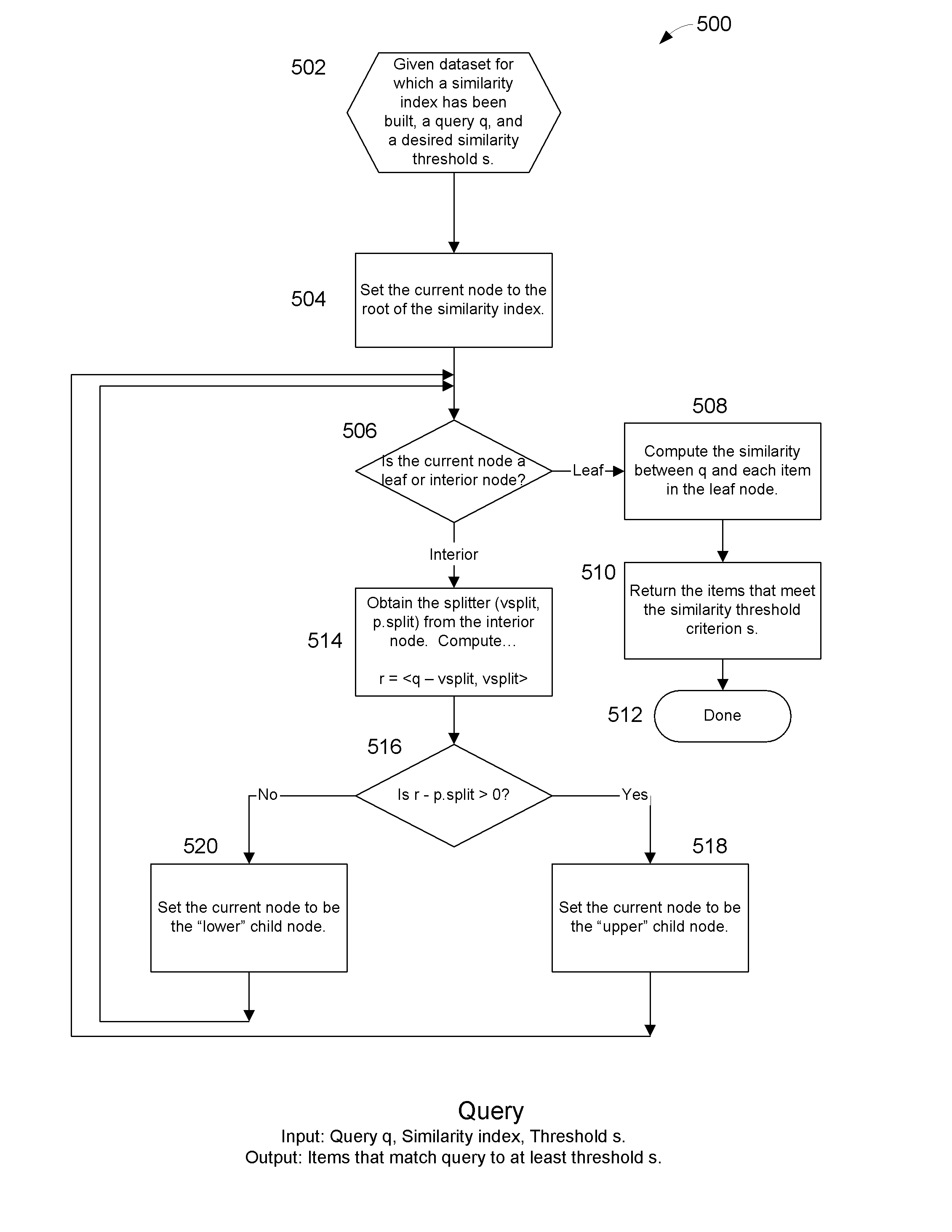

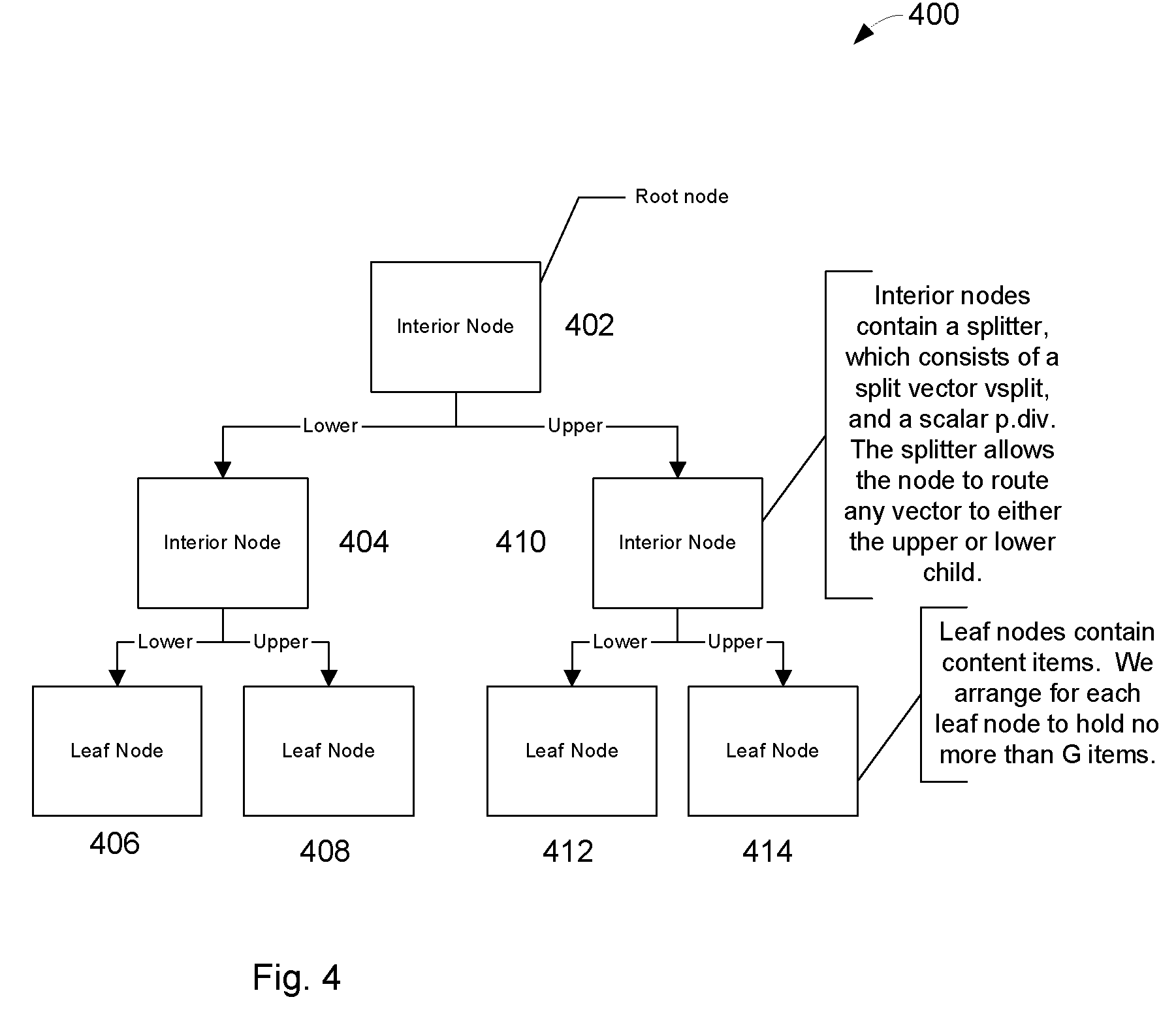

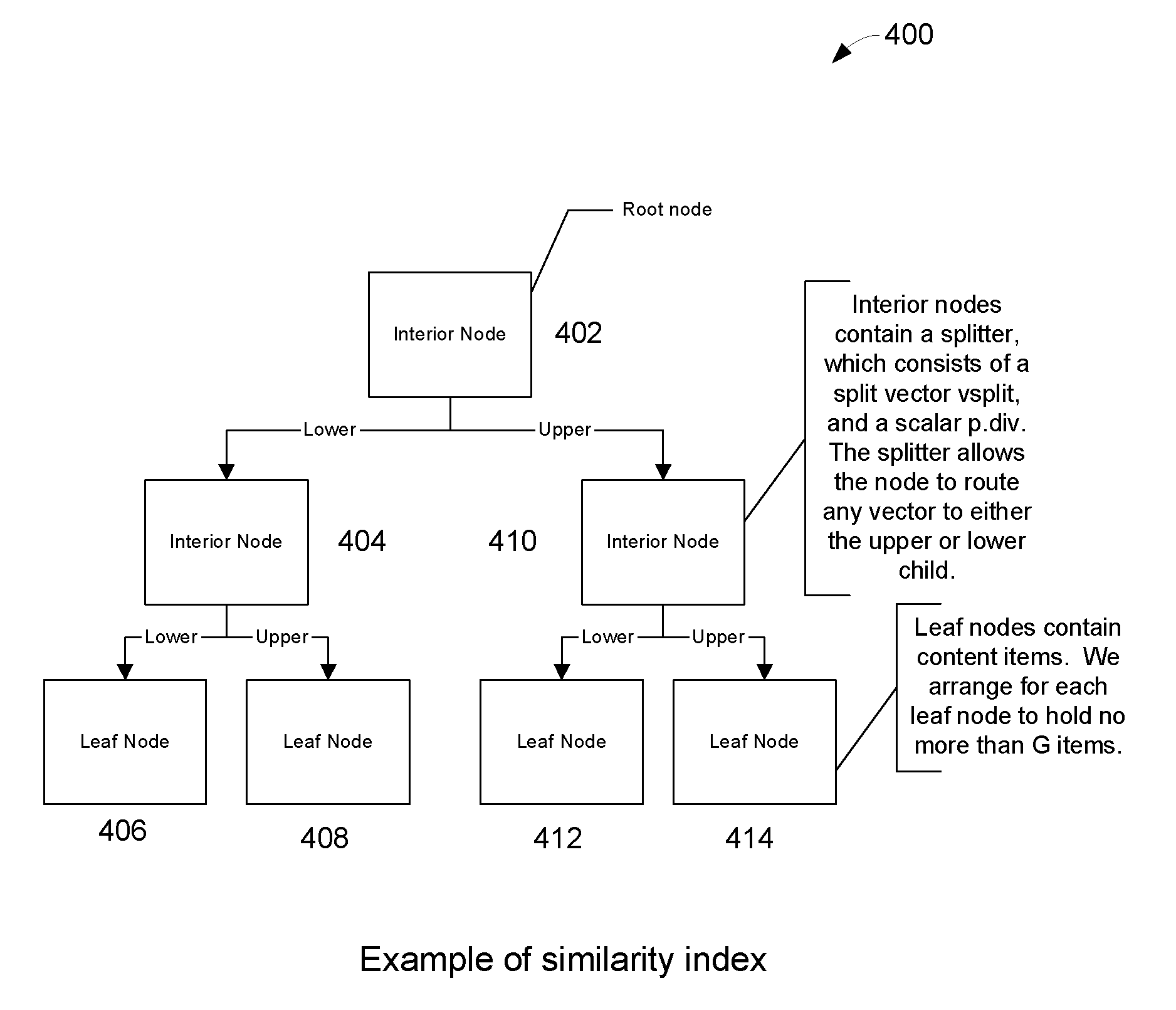

Method and apparatus for fast similarity-based query, self-join, and join for massive, high-dimension datasets

Active | US8117213B1 | Tags: Digital data information retrieval; Digital data processing details; High dimensionality; Degree of similarity

Owner:NAHAVA

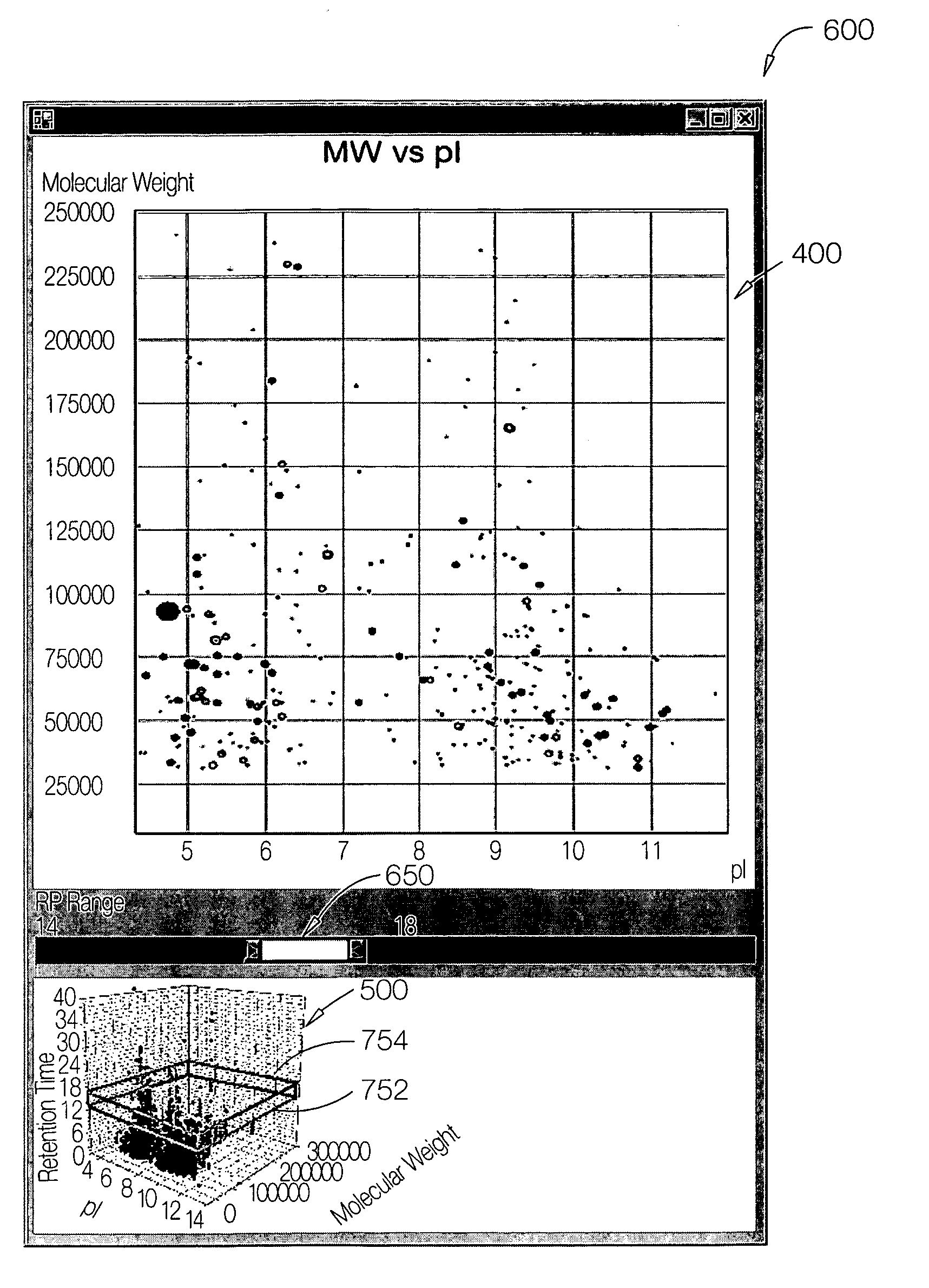

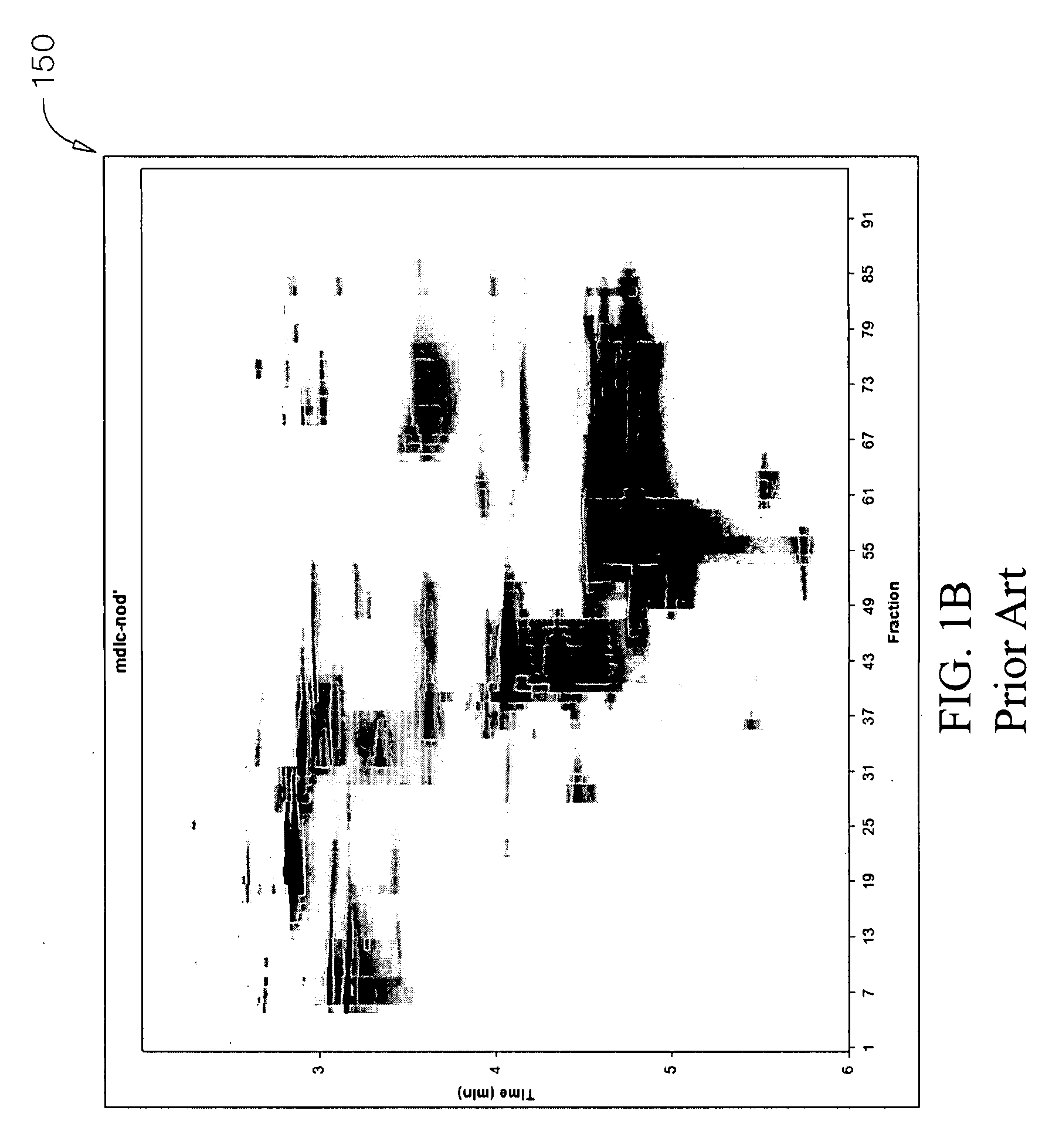

Methods, systems and computer readable media facilitating visualization of higher dimensional datasets in a two-dimensional format

Methods, systems and computer readable media for representing multidimensional data derived from molecular separation processing to resemble a two-dimensional display created from a molecular separation process producing data values for two different properties. Multidimensional data having values for at least three different properties of molecules separated by the molecular separation processing is received. Data values for a first of the at least three different properties are plotted relative to data values for a second of the at least three different properties in a two-dimensional plot. The first and second properties are the same properties as those plotted in the two-dimensional display created from the molecular separation process producing values for two different properties. Data values for a third of the properties are represented by varying the graphic representation of the data values plotted for the first and second of the properties.

Owner:AGILENT TECH INC

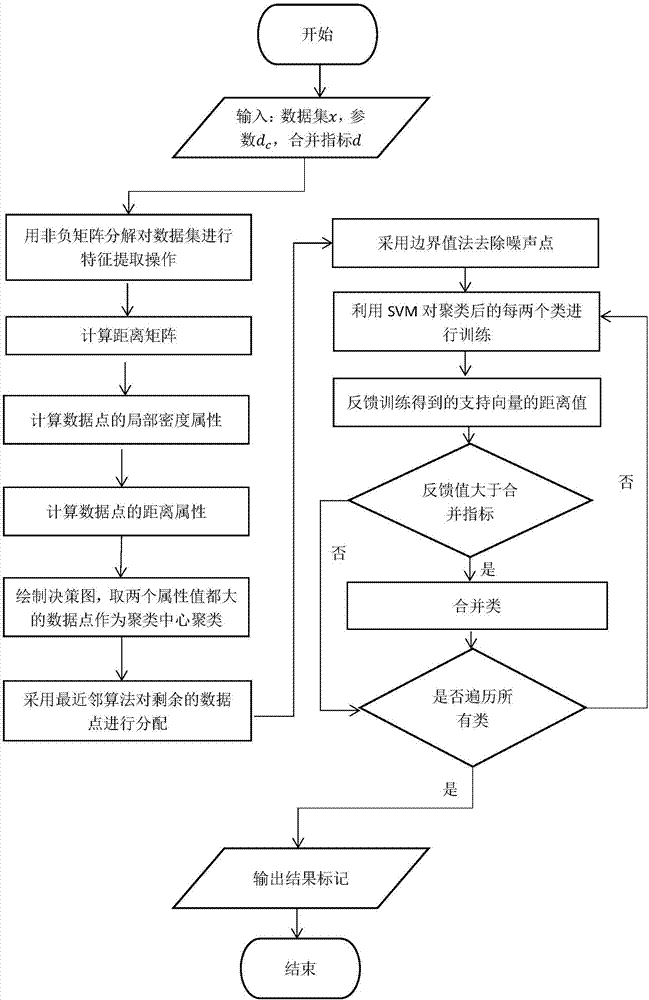

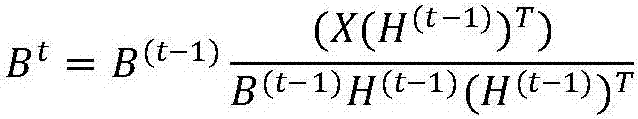

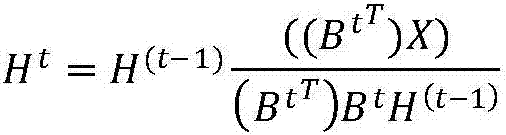

Feedback density peak value clustering method and system thereof

Inactive | CN107016407A | Tags: Accurate clustering; Solve the disadvantages of inaccurate clustering; Character and pattern recognition; Cluster algorithm; Feature extraction

The invention provides a feedback density peak clustering method and system. It solves a problem of the original density peak algorithm: when several density peaks arise within one class, that class is split into several classes. It also improves the accuracy of the original algorithm on high-dimensional data sets. The method comprises the following steps: 1, extracting features from the data set with non-negative matrix factorization; 2, drawing a decision graph according to the original density peak clustering algorithm and selecting several cluster centers; 3, assigning the remaining points with a "nearest neighbor" algorithm and removing noise points; 4, using an SVM to feed back the clustering result between each pair of classes; and 5, merging the classes that can be merged according to the feedback result. The method effectively increases the robustness of the density peak algorithm, discovers clusters of arbitrary shape, handles high-dimensional data effectively, and produces good clustering results.

Owner:CHINA UNIV OF MINING & TECH
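
Steps 2 and 3 follow the standard density peak procedure: each point gets a local density ρ (neighbors within a cutoff d_c) and a distance δ to the nearest denser point, the points with the largest ρ·δ on the decision graph become centers, and every other point inherits the label of its nearest denser neighbor. The sketch below assumes that standard formulation; the NMF feature extraction, noise removal, SVM feedback, and merging steps of the patent are omitted, and the function name is hypothetical.

```python
import math

def density_peaks(points, d_c, n_centers):
    """Density peak clustering: decision graph plus nearest-denser assignment."""
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]
    # Local density rho: number of other points closer than the cutoff d_c.
    rho = [sum(1 for j in range(n) if j != i and dist[i][j] < d_c) for i in range(n)]
    order = sorted(range(n), key=lambda i: -rho[i])  # densest first; ties keep input order
    delta, nearest_denser = [0.0] * n, [-1] * n
    for rank, i in enumerate(order):
        if rank == 0:
            delta[i] = max(dist[i])  # usual convention for the global density peak
            continue
        j = min(order[:rank], key=lambda k: dist[i][k])
        delta[i], nearest_denser[i] = dist[i][j], j
    # Decision graph: the largest gamma = rho * delta become centers. The global peak
    # is always kept as a center because it has no denser neighbour to inherit from.
    by_gamma = sorted(range(n), key=lambda i: -rho[i] * delta[i])
    centers = [order[0]] + [i for i in by_gamma if i != order[0]][: n_centers - 1]
    labels = [-1] * n
    for cluster_id, c in enumerate(centers):
        labels[c] = cluster_id
    for i in order:  # a point's nearest denser neighbour is always labelled first
        if labels[i] == -1:
            labels[i] = labels[nearest_denser[i]]
    return labels, centers
```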

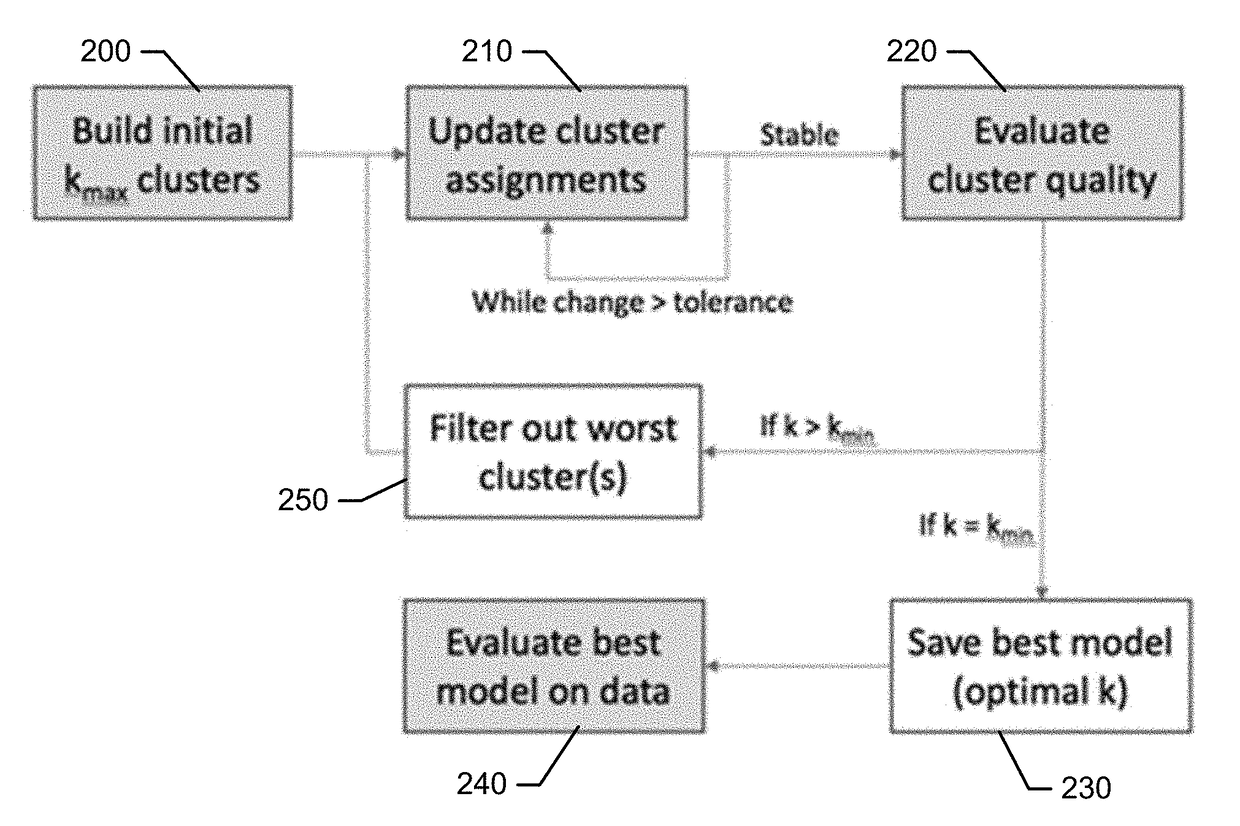

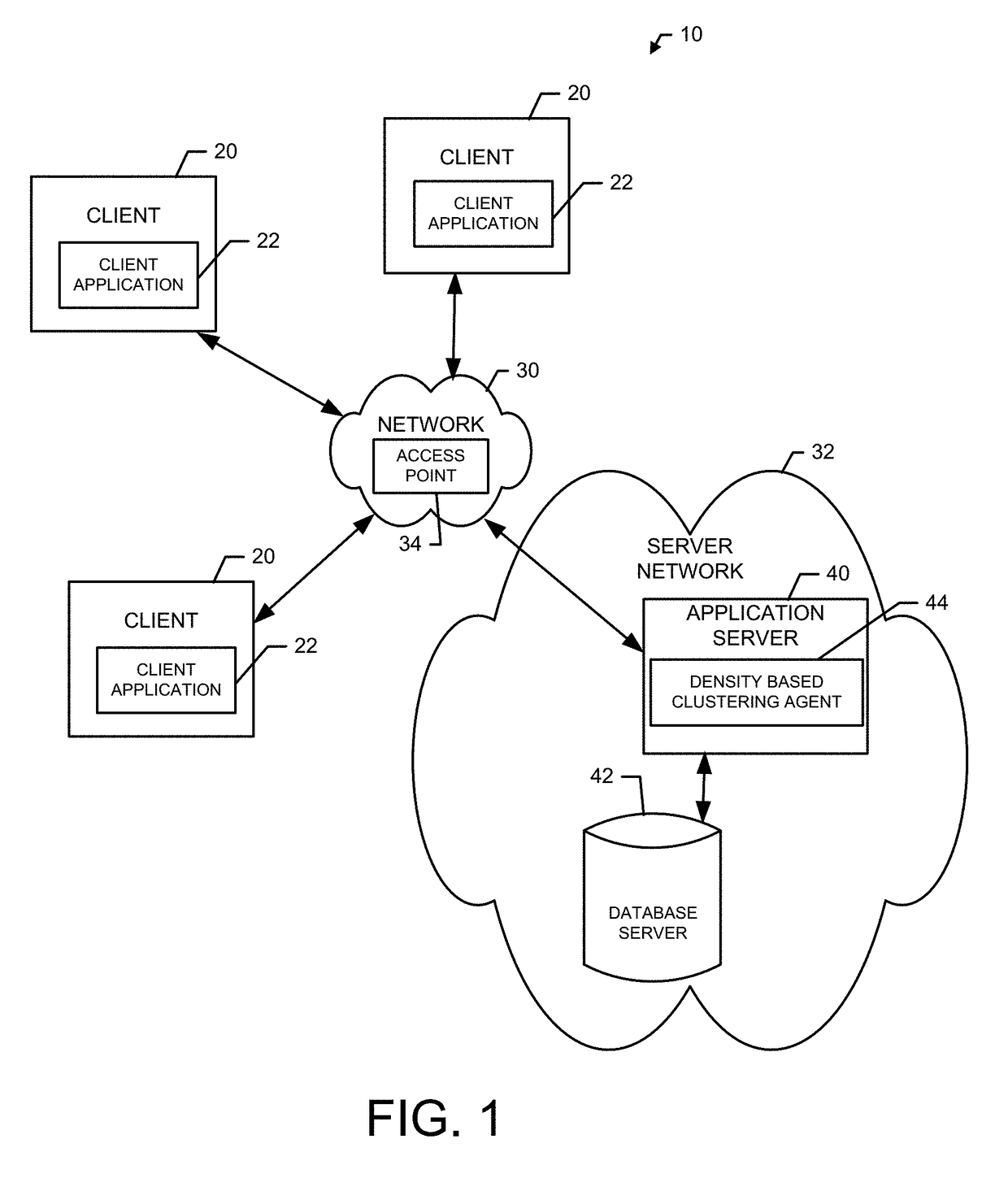

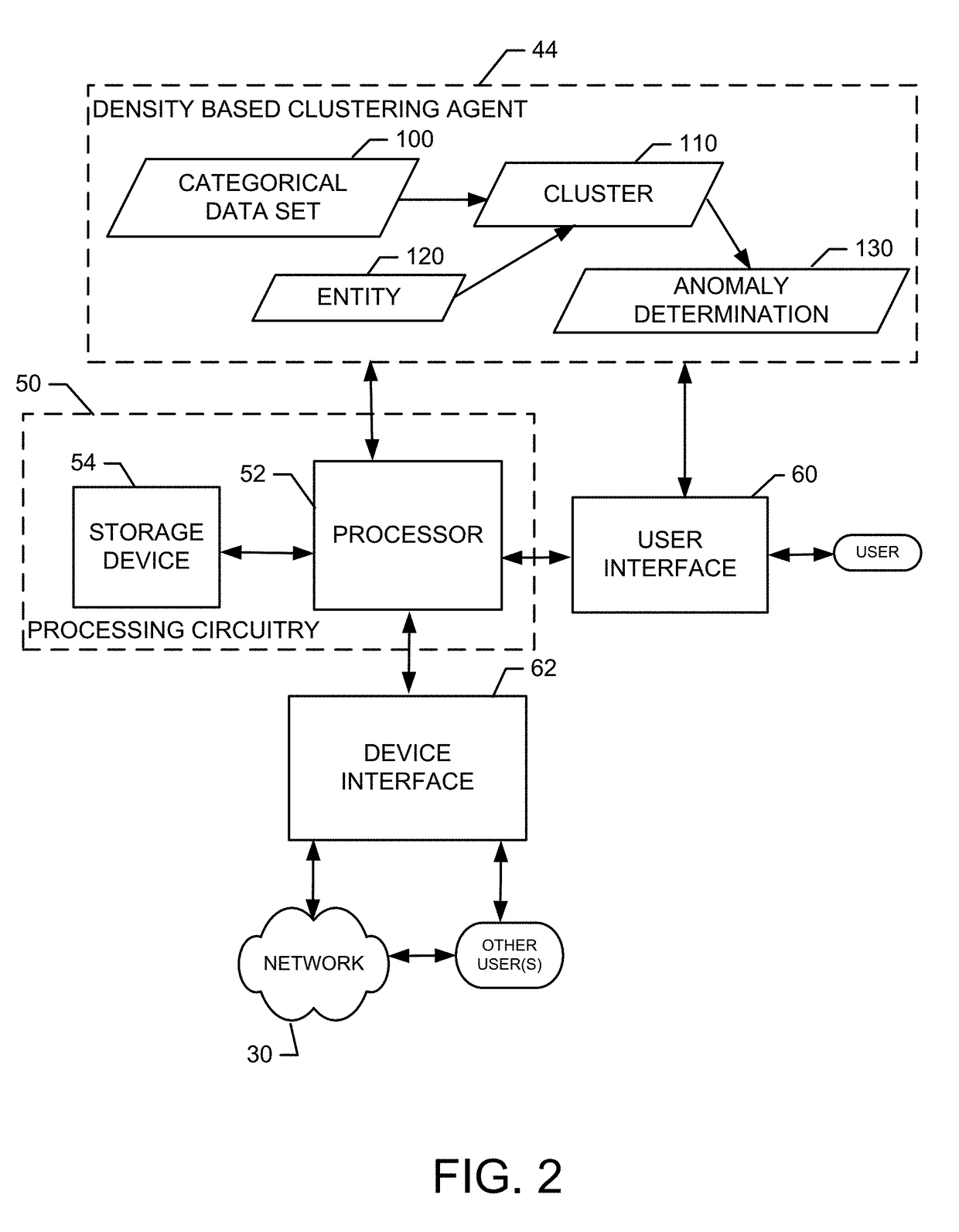

Method and apparatus for clustering, analysis and classification of high dimensional data sets

An apparatus includes processing circuitry configured to execute instructions that, when executed, cause the apparatus to initialize a mixture model having a number of clusters including categorical data, iteratively update cluster assignments, evaluate cluster quality based on categorical density of the clusters, and prune clusters that have low categorical density, and determine an optimal mixture model based on the pruned clusters.

Owner:THE JOHNS HOPKINS UNIV SCHOOL OF MEDICINE

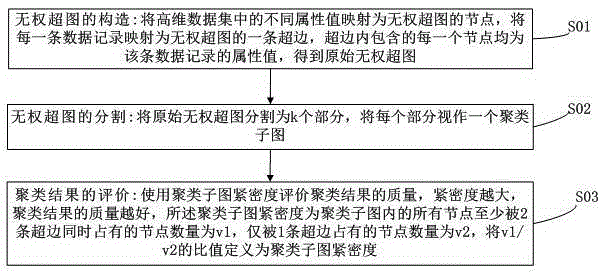

High-dimensional data clustering method based on unweighted hypergraph segmentation

Inactive | CN105279524A | Tags: Comprehensive cluster analysis; Improve computing efficiency; Character and pattern recognition; Cluster algorithm; Algorithm

The invention discloses a high-dimensional data clustering method based on unweighted hypergraph segmentation. The method comprises the following steps: mapping the different attribute values in a high-dimensional data set to nodes of an unweighted hypergraph and mapping each data record to a hyperedge, so that the nodes contained in a hyperedge are the attribute values of that record, which yields the original unweighted hypergraph; segmenting the original unweighted hypergraph into k parts and regarding each part as a clustering subgraph; and evaluating the quality of the clustering result by the compactness of the clustering subgraphs, where greater compactness means a better clustering result. The compactness of a clustering subgraph is defined as the ratio v1/v2, where v1 is the number of nodes in the subgraph shared by at least two hyperedges and v2 is the number of nodes belonging to only one hyperedge. The method supports comprehensive cluster analysis of high-dimensional data sets and can further improve the computational efficiency of high-dimensional data clustering algorithms.

Owner:YANCHENG INST OF TECH
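
The compactness measure is concrete enough to compute directly. The sketch below assumes that a node is an (attribute index, value) pair, so equal values in different attributes stay distinct, and it evaluates v1/v2 for the records of one clustering subgraph; the k-way hypergraph segmentation itself is not shown. Function names are hypothetical.

```python
from collections import Counter

def build_hyperedges(records):
    """Each record becomes a hyperedge over its (attribute index, value) nodes."""
    return [frozenset((attr, val) for attr, val in enumerate(r)) for r in records]

def compactness(hyperedges):
    """v1 / v2 for one clustering subgraph.

    v1: nodes covered by at least two hyperedges; v2: nodes covered by exactly one.
    """
    cover = Counter(node for edge in hyperedges for node in edge)
    v1 = sum(1 for c in cover.values() if c >= 2)
    v2 = sum(1 for c in cover.values() if c == 1)
    return float("inf") if v2 == 0 else v1 / v2
```

For the records (red, small, round), (red, small, square), and (blue, small, round), the shared nodes are red, small, and round (v1 = 3) and the unshared ones are blue and square (v2 = 2), so the compactness is 1.5.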

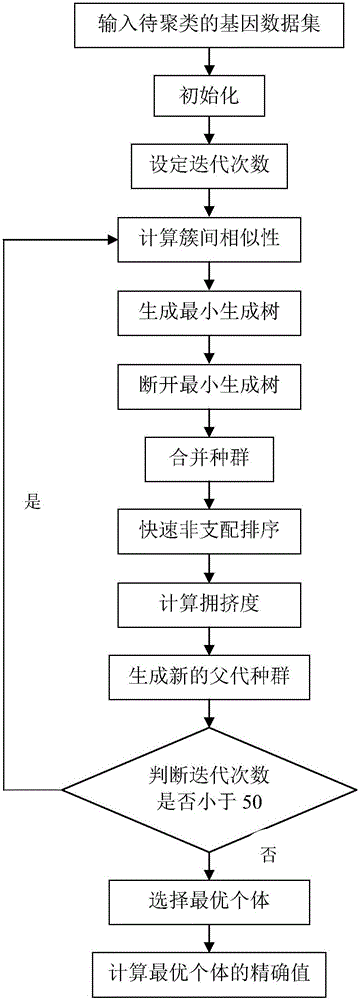

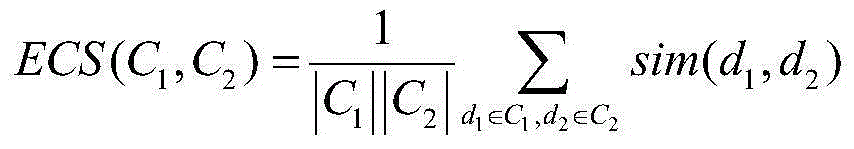

Integrated multi-objective evolutionary automatic clustering method based on minimum spanning tree

Active | CN105139037A | Tags: Improve search capabilities; Overcome the problem of being prone to illegal solutions; Character and pattern recognition; Algorithm; High dimensional

Owner:XIDIAN UNIV

System and method for data anonymization using hierarchical data clustering and perturbation

Active | US9135320B2 | Tags: Digital data information retrieval; Digital data processing details; Computerized system; Euclidean vector

Owner:ELECTRIFAI LLC

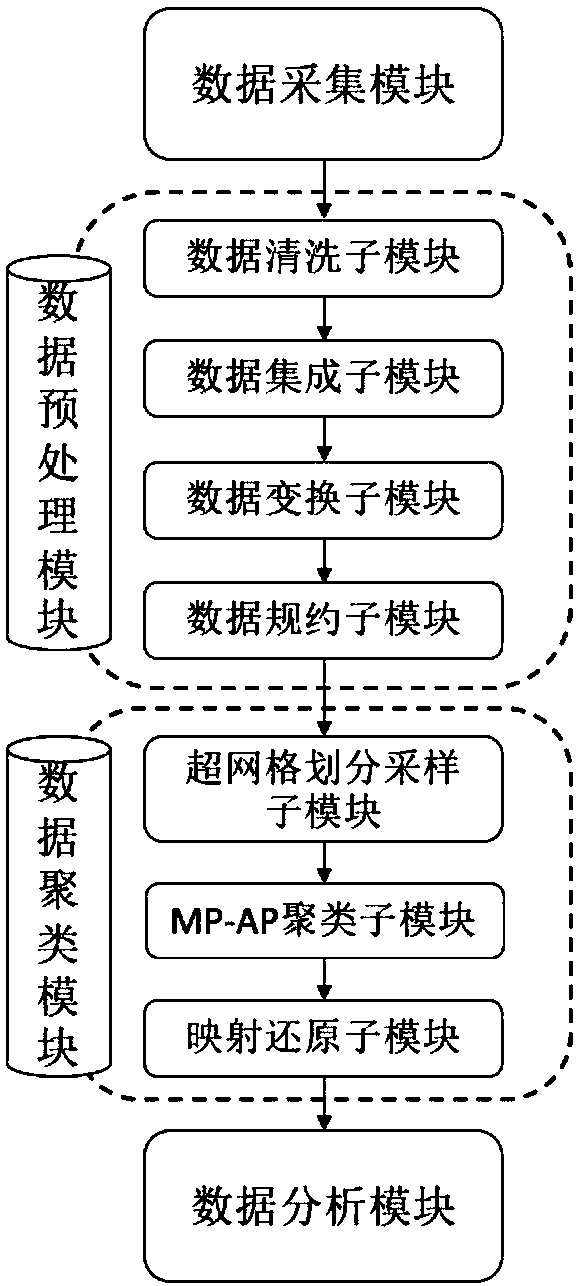

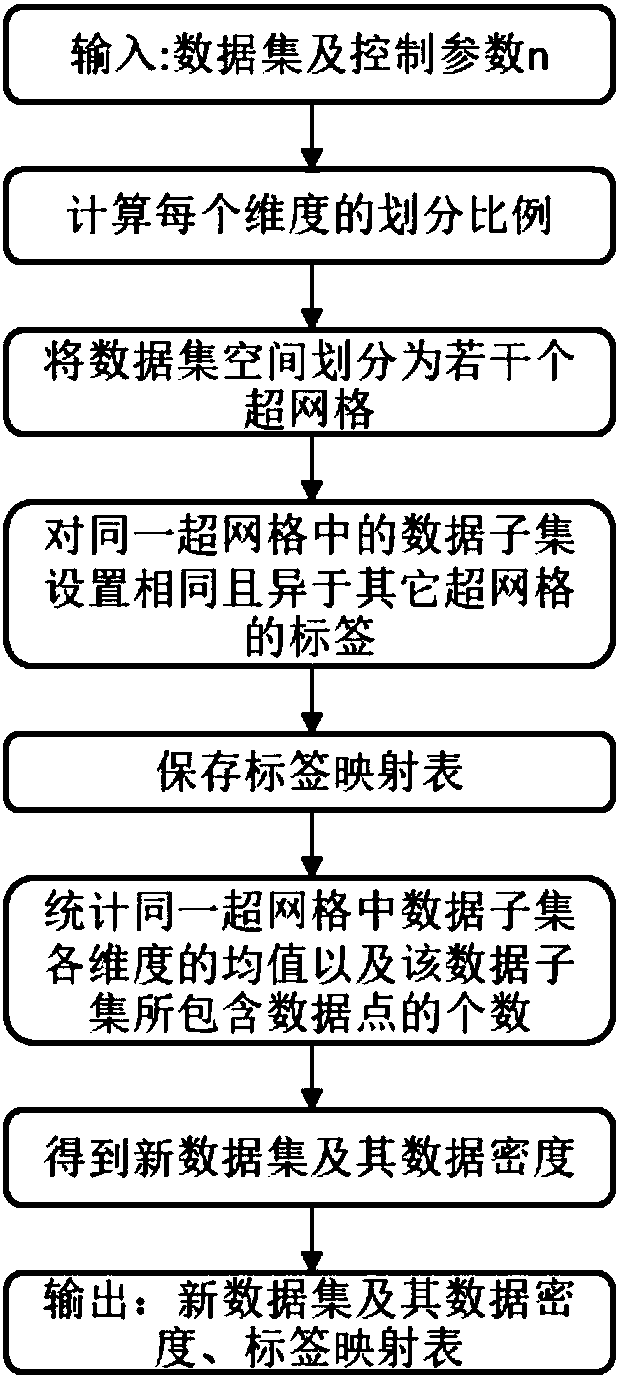

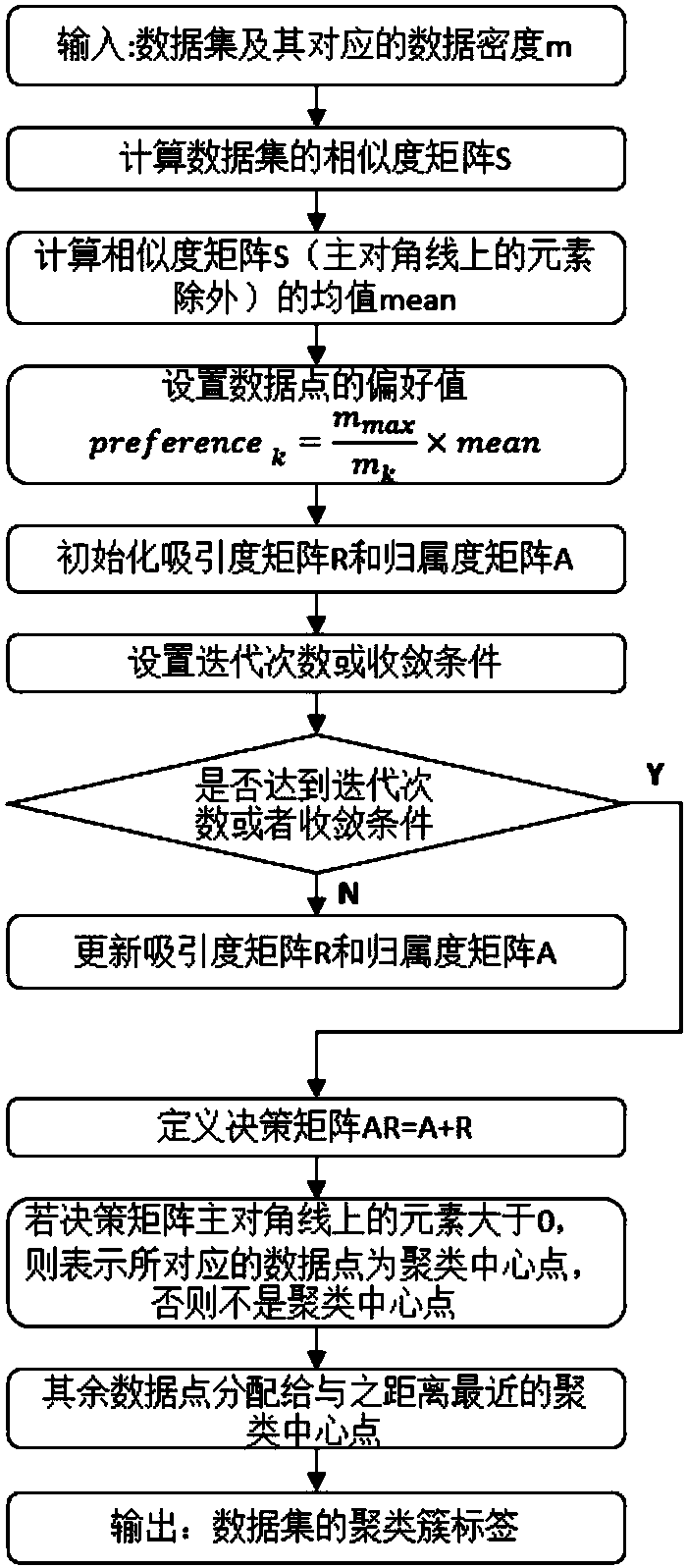

Unsupervised rapid clustering method and system suitable for big data

Inactive | CN107944465A | Tags: Reduce space complexity; Reduce memory overhead; Character and pattern recognition; Special data processing applications; Original data; Analysis working

The invention discloses an unsupervised rapid clustering method and system suitable for big data. The method comprises: dividing a preprocessed large-scale data set into a hyper-grid and sampling it to obtain a new data set; clustering the new data set with an improved neighbor propagation method to obtain a preliminary clustering result; and finally remapping the preliminary clustering result back onto the original data set to obtain the final clustering result, which provides a basis for further analysis. The method is highly robust and suits both low-dimensional and high-dimensional data sets.

Owner:SOUTH CHINA UNIV OF TECH

Method and apparatus for fast similarity-based query, self-join, and join for massive, high-dimension datasets

Active | US7644090B2 | Tags: Digital data information retrieval; Special data processing applications; High dimensionality; High dimensional data sets

Owner:NAHAVA

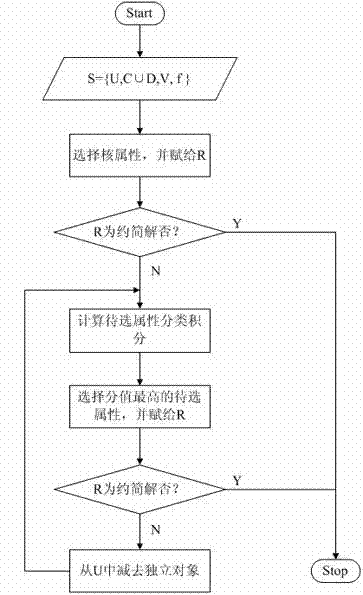

Fast Attribute Reduction Method Based on Rough Classification Knowledge Discovery

Inactive | CN102262682A | Tags: Overcome the problem of large amount of calculation; The principle of reduction is clear; Special data processing applications; Data mining; Knowledge level

A fast attribute reduction method based on rough classification knowledge discovery relates to the technical field of data processing and addresses the technical problem of keeping the reduction principle simple while compressing redundant data as quickly as possible. The method proceeds as follows: 1) find the core attributes in the conditional attribute set to form the core attribute set; the remaining conditional attributes form the candidate attribute set; 2) determine whether the core attribute set is a reduct of the data set, and if so, attribute reduction is complete; 3) using the classification knowledge of the decision attribute set as the standard, evaluate the classification ability of each candidate attribute, select the candidate whose classification knowledge, combined with that of the core attribute set, is most consistent with the classification knowledge of the decision attribute set, and move it into the core attribute set; 4) determine whether the selected attribute set is a reduct of the data set; if so, attribute reduction is complete, otherwise return to step 3. The method is especially suitable for high-dimensional data sets.

Owner:SHANGHAI INST OF TECH
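
The steps map naturally onto classical rough set quantities. The sketch below assumes the usual dependency degree γ_B = |POS_B(D)| / |U| as the measure of "consistency" with the decision classification: core attributes are those whose removal lowers γ, and candidates are then added greedily until the selected set reaches the dependency of the full attribute set. This is a common textbook scheme, not necessarily the patent's exact criterion, and the function names are hypothetical.

```python
from collections import defaultdict

def dependency(rows, decisions, attrs):
    """Fraction of objects whose class under `attrs` has a single decision value."""
    blocks = defaultdict(list)
    for row, d in zip(rows, decisions):
        blocks[tuple(row[a] for a in attrs)].append(d)
    positive = sum(len(ds) for ds in blocks.values() if len(set(ds)) == 1)
    return positive / len(rows)

def reduct(rows, decisions):
    """Core attributes first, then greedily add the most informative candidate."""
    all_attrs = list(range(len(rows[0])))
    full = dependency(rows, decisions, all_attrs)
    # Step 1: an attribute is in the core if dropping it lowers the dependency.
    core = [a for a in all_attrs
            if dependency(rows, decisions, [b for b in all_attrs if b != a]) < full]
    selected = list(core)
    candidates = [a for a in all_attrs if a not in core]
    # Steps 2-4: stop once the selected set classifies as well as all attributes.
    while dependency(rows, decisions, selected) < full:
        best = max(candidates, key=lambda a: dependency(rows, decisions, selected + [a]))
        selected.append(best)
        candidates.remove(best)
    return sorted(selected)
```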

Method and Apparatus for fast similarity-based query, self-join, and join for massive, high-dimension datasets

Active | US20070299865A1 | Tags: Digital data information retrieval; Digital data processing details; High dimensionality; High dimensional data sets

Owner:NAHAVA

Methods and apparatus for outlier detection for high dimensional data sets

Methods and apparatus are provided for outlier detection in databases by determining sparse low dimensional projections. These sparse projections are used for the purpose of determining which points are outliers. The methodologies of the invention are very relevant in providing a novel definition of exceptions or outliers for the high dimensional domain of data.

Owner:TREND MICRO INC

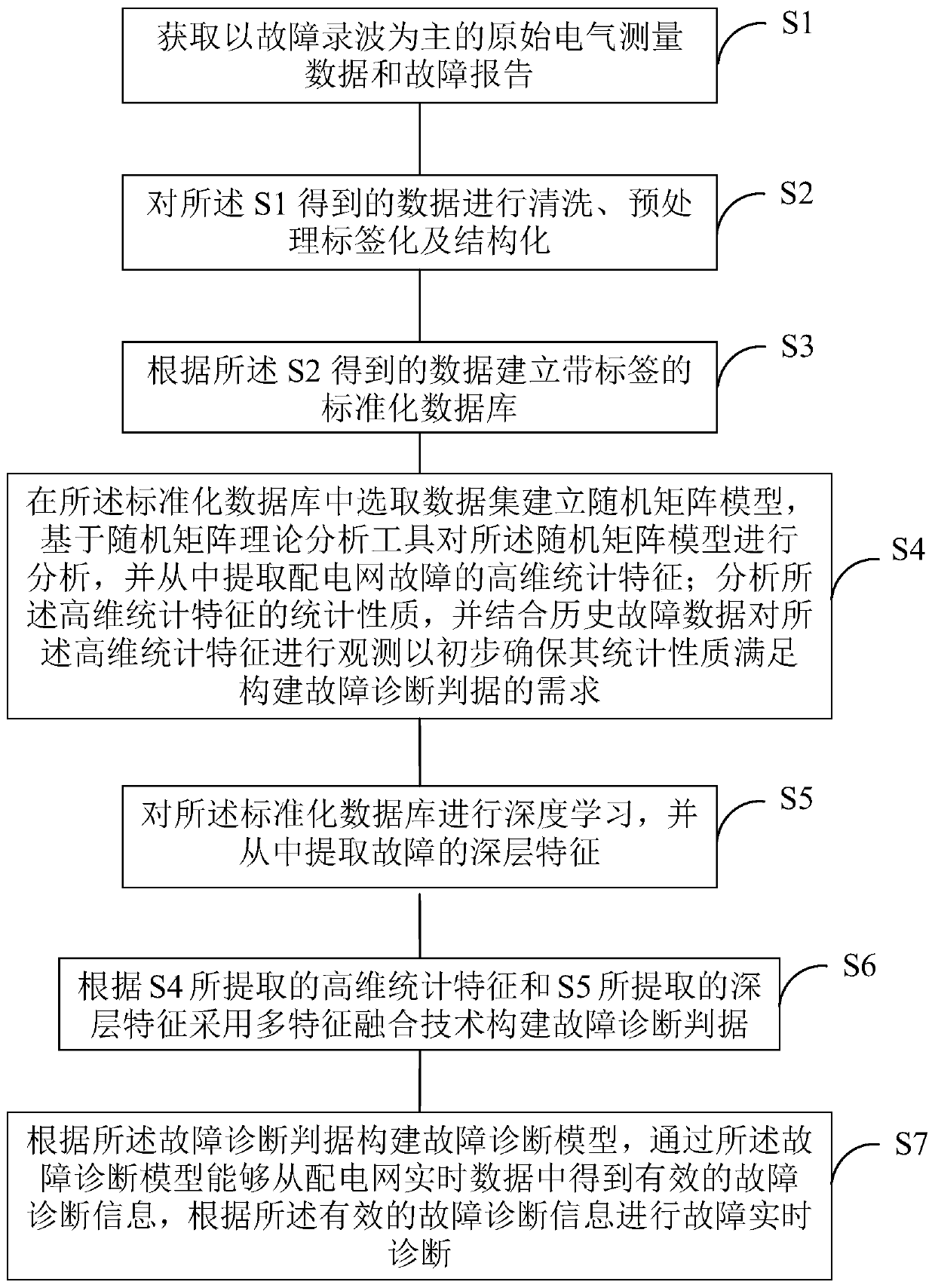

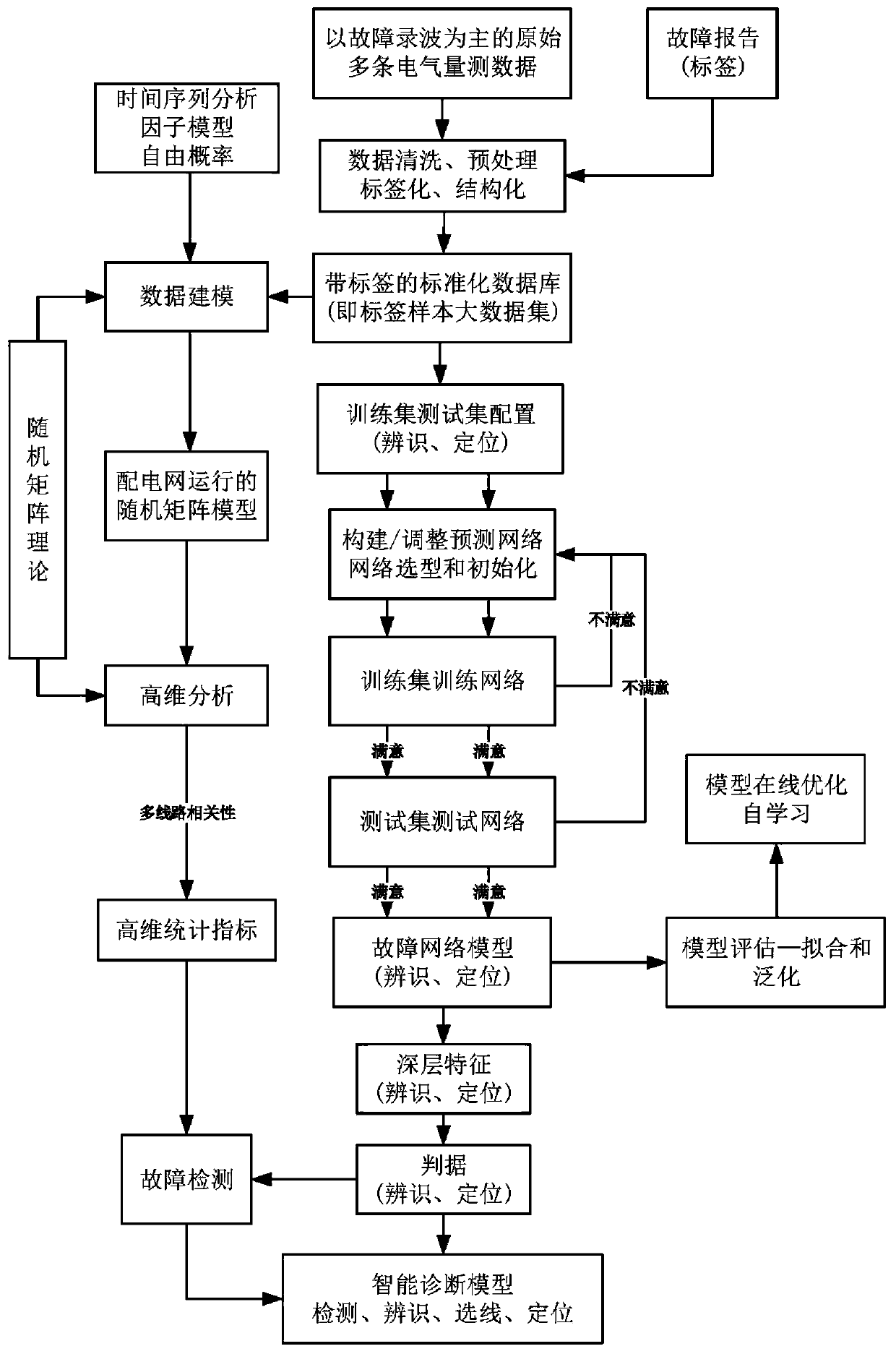

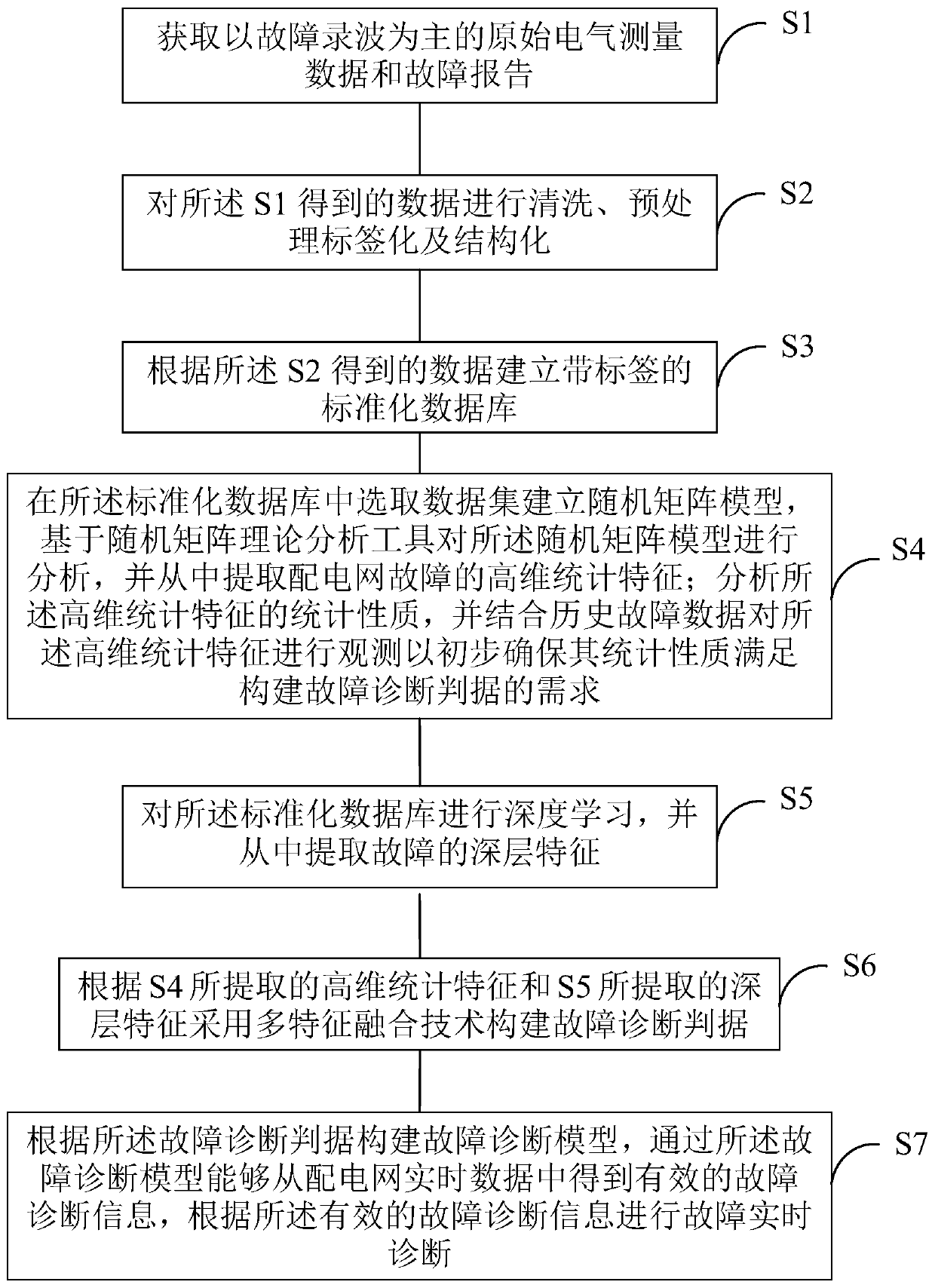

Power distribution network fault diagnosis method based on random matrix and deep learning

Active | CN110045227A | Tags: Improve accuracy; Improve completeness; Fault location by conductor types; Neural learning methods; Real-time data; Data modeling

The invention discloses a power distribution network fault diagnosis method based on random matrix theory and deep learning, in the technical field of power distribution network fault diagnosis. The method introduces two basic tools, random matrix theory and deep learning, to process high-dimensional fault data sets: random matrix theory offers rigorous and flexible mathematical analysis in high-dimensional spaces, and deep learning offers excellent high-dimensional data modeling. The two are used to extract the high-dimensional characteristics of a fault; a fault criterion is then formed from the extracted characteristics by multi-feature fusion, and a fault diagnosis model is constructed. The model obtains effective fault diagnosis information from real-time data of the power distribution network and performs real-time fault diagnosis based on that information, improving the accuracy and intelligence of fault diagnosis in the power distribution network.

Owner:ELECTRIC POWER RES INST OF GUANGXI POWER GRID CO LTD

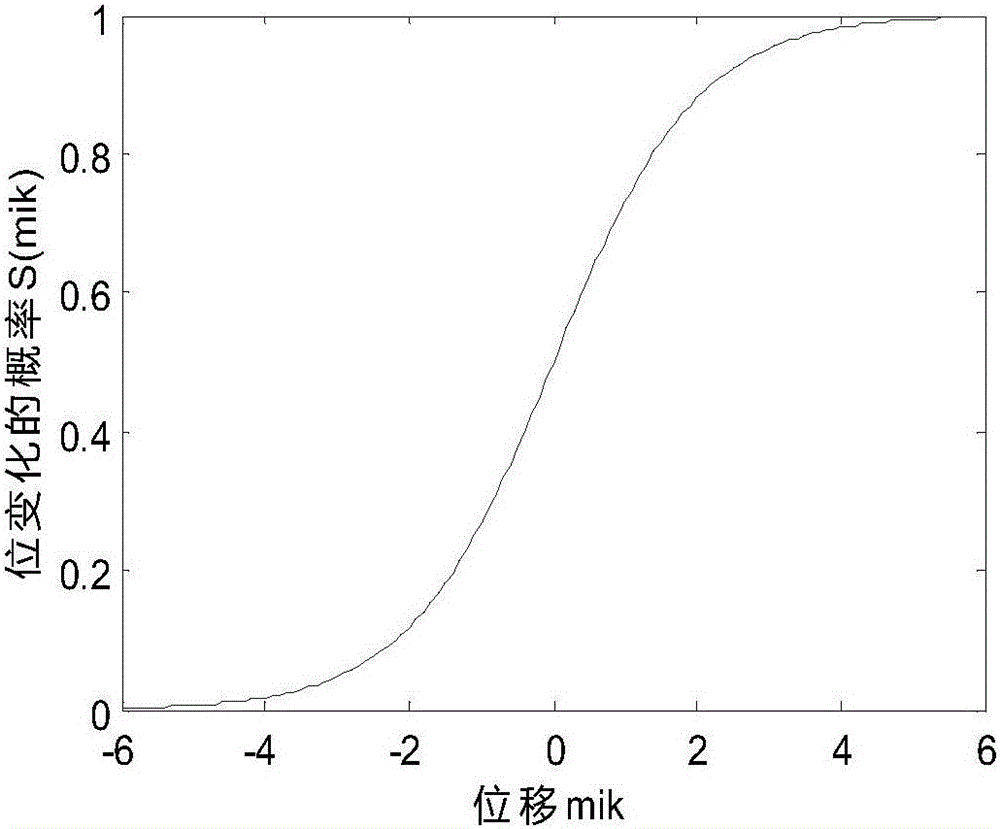

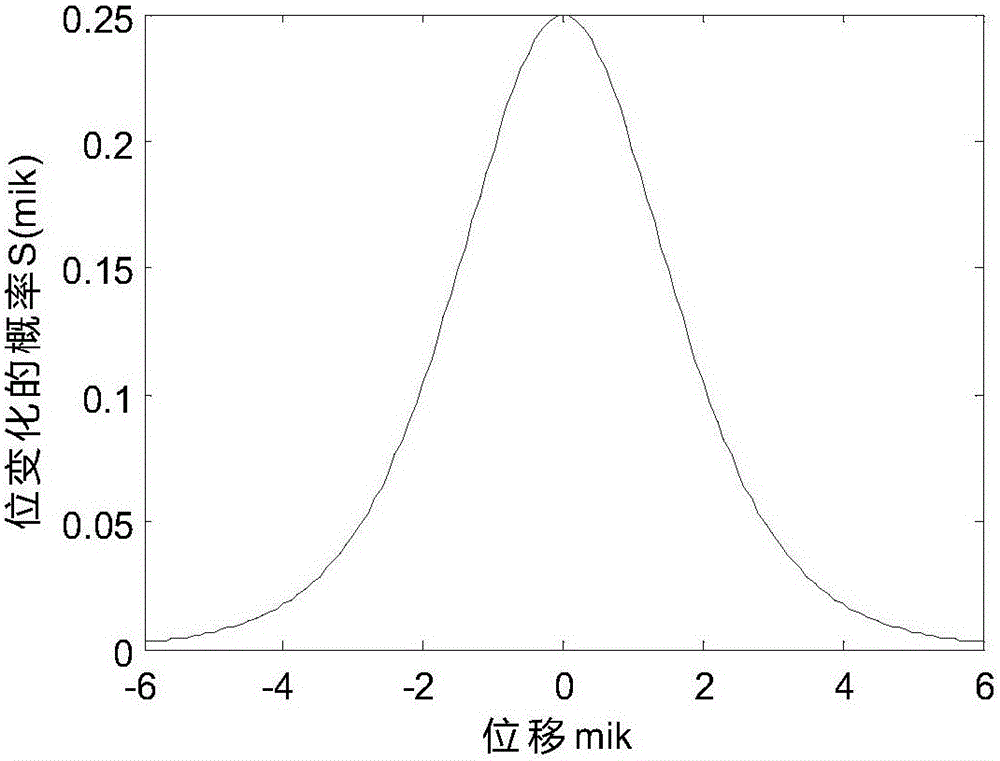

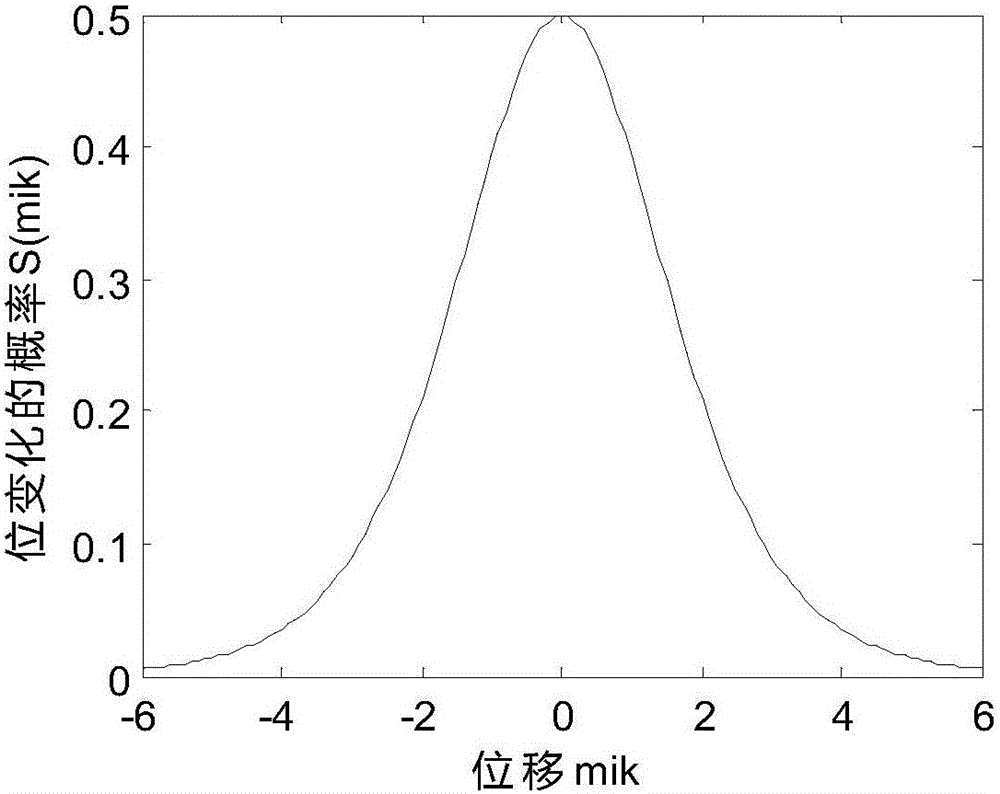

Position difference-based high-precision nearest neighbor search algorithm

Inactive | CN106557780A | Tags: High precision; Guaranteed efficiency advantage; Character and pattern recognition; Sorting algorithm; Partition of unity

The present invention relates to a position difference-based high-precision nearest neighbor search algorithm. The algorithm comprises the following steps: for the i-th reference point among the high-dimensional distance position difference factors, set its i-th component to -1 and all other components to 1; set all unit vectors of length 1 as the reference points; calculate the distances Dis_i from the i-th reference point to all data points; sort the distances Dis_i to generate an ordered sequence; calculate the exact Euclidean distances from a sample point A to the points in a subsequence of length 2k·ε, where ε is a length adjustment factor for the subsequence; apply a partial sorting algorithm to the resulting distance values to obtain the k smallest Euclidean distances; if the nearest neighbors of all data points have been calculated for the current reference point, calculate the high-dimensional distance position difference factors of all data points and a terminal point, otherwise set i = i + 1 and return to the first step. Without increasing time complexity, the algorithm improves precision while retaining its advantages of being independent of indexes on high-dimensional data sets, efficient, and online.

Owner:CHONGQING NANFANG TRANSLATORS COLLEGE OF SICHUAN INTERNATIONAL STUDIES UNIV (四川外国语大学重庆南方翻译学院)
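
The core idea, sorting points by their distance to a reference point and computing exact distances only within a window around the query's position, can be sketched as follows. Assumptions: a single reference point built as described (component i equal to -1, the others 1), a window of about 2kε positions centered on the query, and `heapq.nsmallest` as the partial sort; the multi-reference loop and the position-difference factors are not reproduced. Class and method names are hypothetical.

```python
import bisect
import heapq
import math

def reference_point(i, dim):
    """i-th reference point: component i is -1, all other components are 1."""
    return [-1.0 if j == i else 1.0 for j in range(dim)]

class PivotIndex:
    """Approximate k-NN: points ordered by their distance to one reference point."""

    def __init__(self, points, ref):
        self.points, self.ref = points, ref
        self.sorted_ids = sorted(range(len(points)), key=lambda j: math.dist(ref, points[j]))
        self.keys = [math.dist(ref, points[j]) for j in self.sorted_ids]

    def query(self, a, k, eps):
        # Position of the query in the ordered sequence of reference distances.
        pos = bisect.bisect_left(self.keys, math.dist(self.ref, a))
        half = max(1, int(k * eps))             # window length is about 2 * k * eps
        lo, hi = max(0, pos - half), min(len(self.keys), pos + half)
        window = self.sorted_ids[lo:hi]
        # Exact Euclidean distances only inside the window, then a partial sort.
        return heapq.nsmallest(k, window, key=lambda j: math.dist(a, self.points[j]))
```

With a large ε the window covers every point and the result is exact; smaller ε trades precision for speed.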

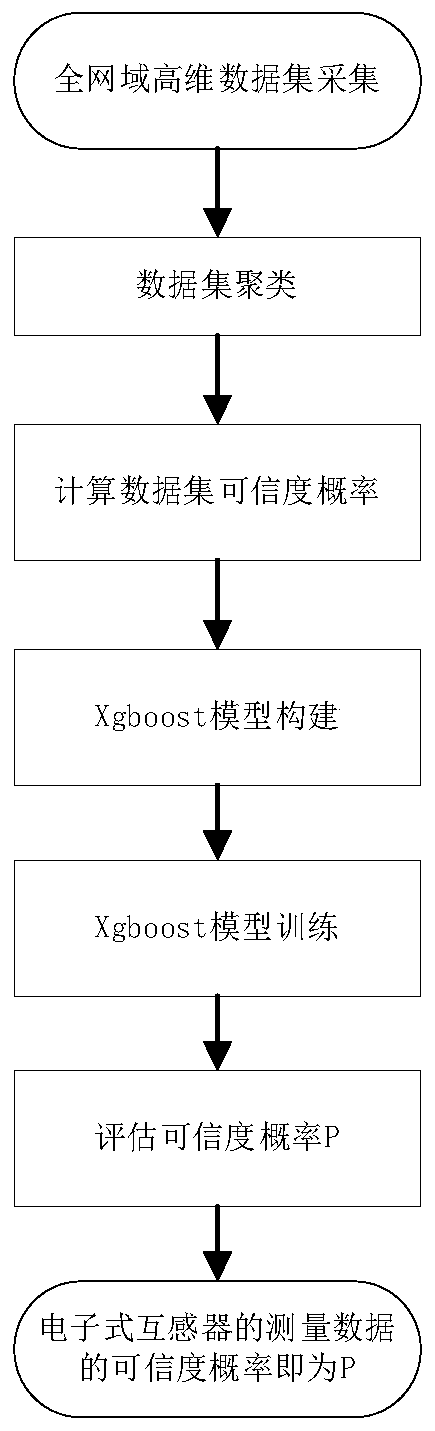

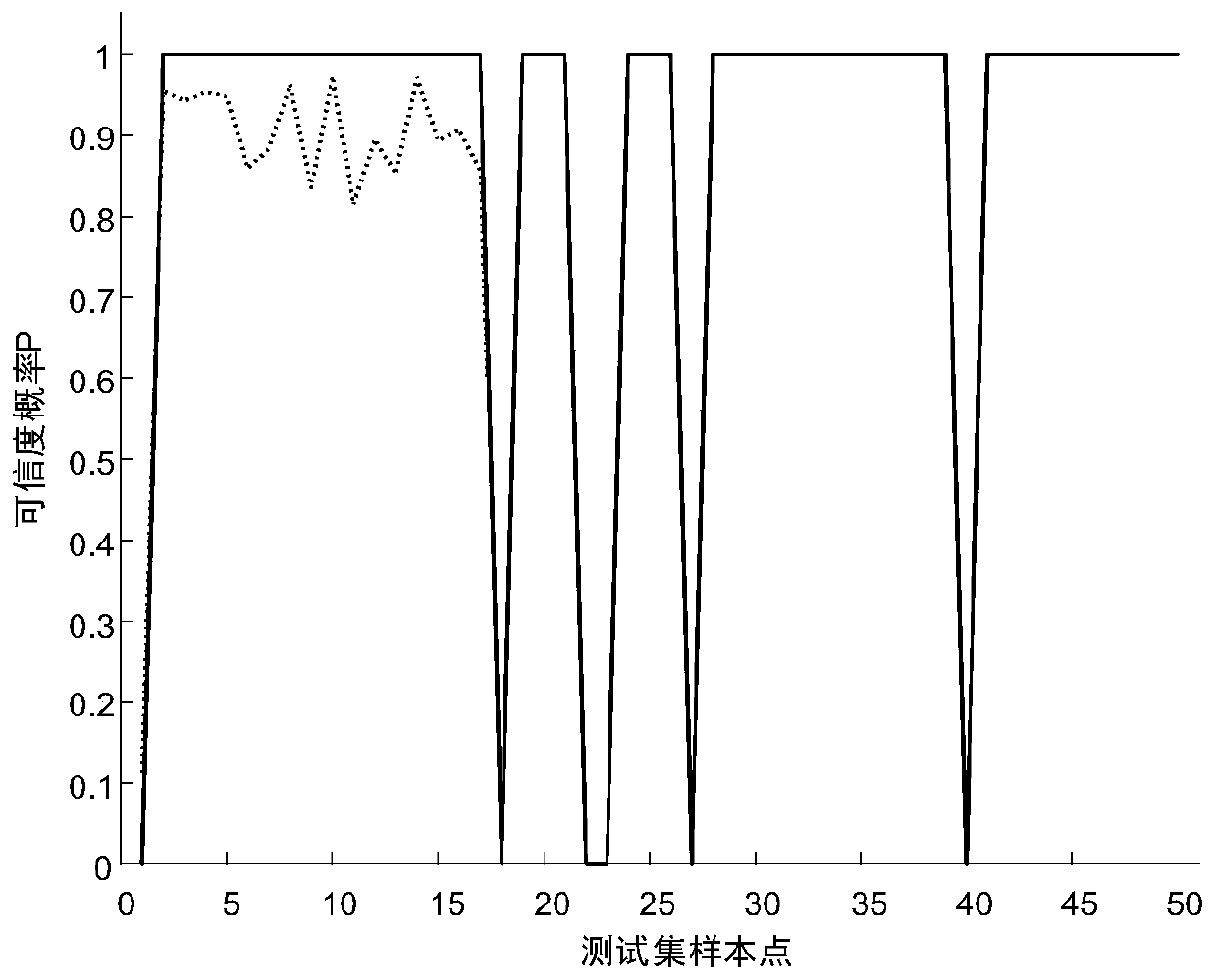

Electronic transformer credibility evaluation method and device based on whole-network domain evidence set

Active | CN110632546A | Tags: Prediction Confidence Probability; Solve the problem that the error state cannot be evaluated; Character and pattern recognition; Electrical measurements; Transformer; High dimensional

The invention discloses an electronic transformer credibility probability evaluation method and device based on a whole-network domain evidence set. The method comprises: clustering the high-dimensional data sets of an electronic transformer to obtain different clusters and removing noise-point data; outputting a predicted credibility probability according to the credibility probabilities of the high-dimensional data sets of the electronic transformer and of their different clusters, and training a pre-established XGBoost model to obtain a trained XGBoost model; and inputting the high-dimensional data set of the electronic transformer to be evaluated into the trained XGBoost model to obtain a predicted credibility probability. This determines whether the electronic transformer has a metering error and evaluates the credibility of its measured data without relying on a standard transformer.

Owner:STATE GRID JIANGSU ELECTRIC POWER CO ELECTRIC POWER RES INST +4

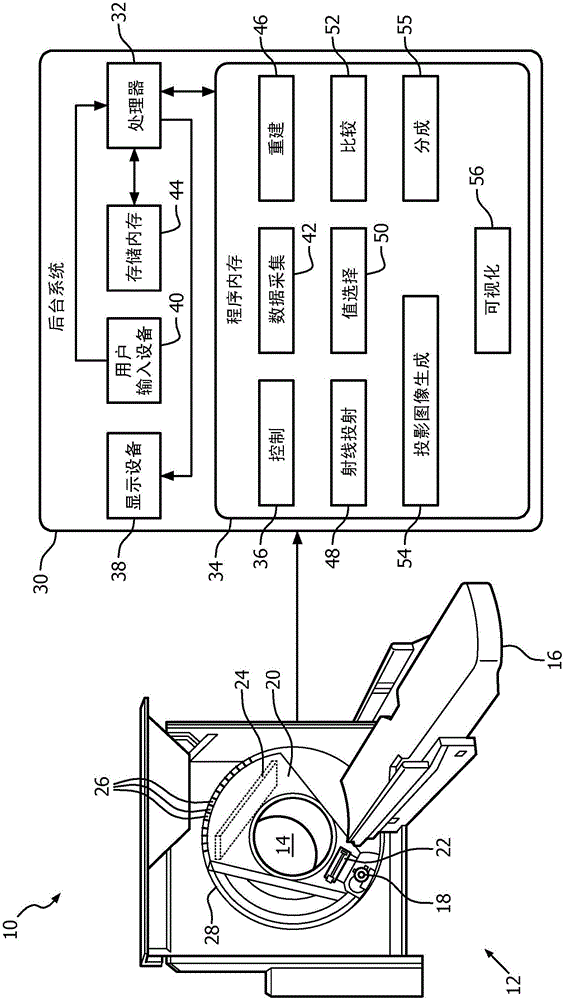

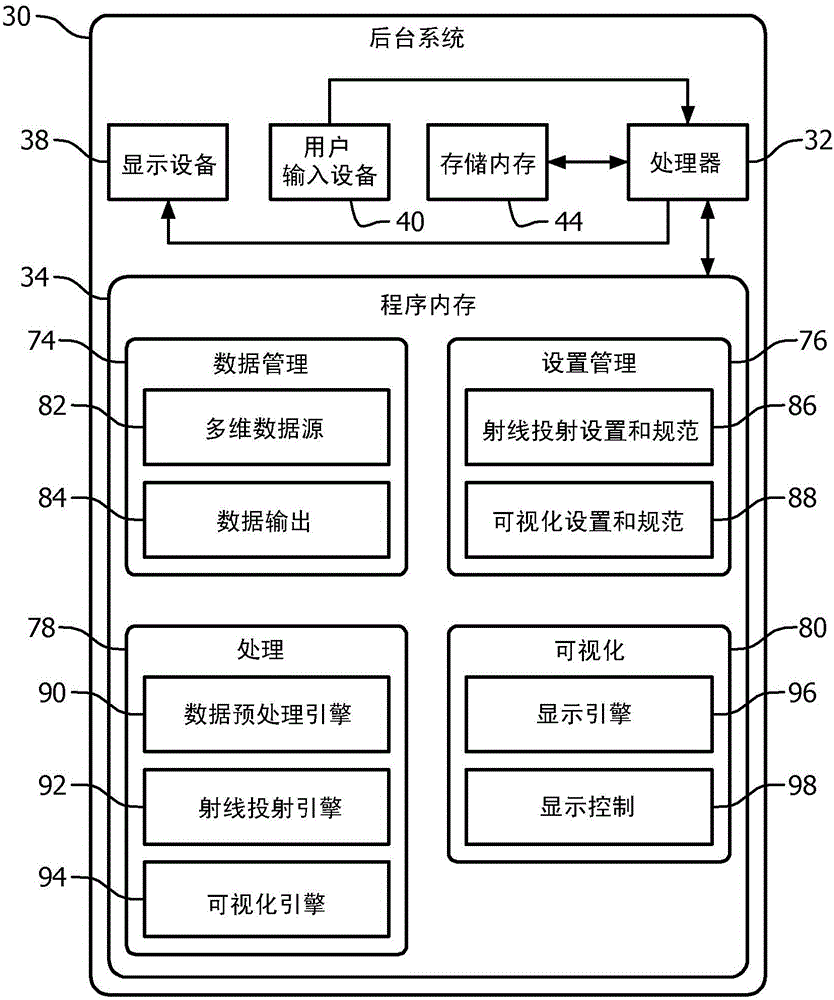

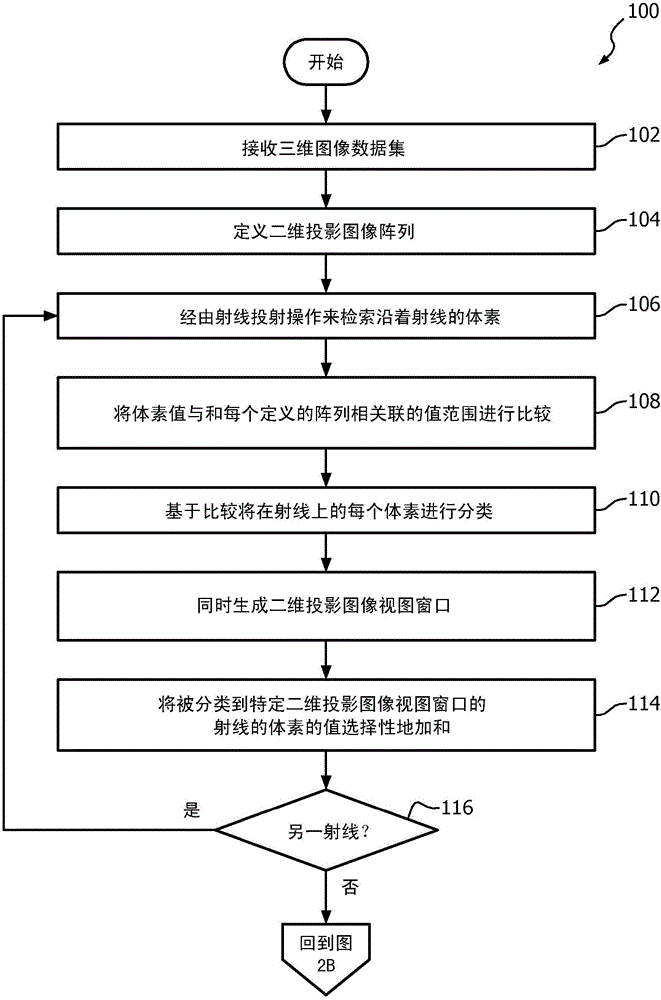

Layered two-dimensional projection generation and display

Active | CN105103193A | Tags: Increase space; High resolution; Image enhancement; Reconstruction from projection; Color image; Voxel

An imaging system (10) generates a layered reconstructed radiograph (LRR) (66) of a subject. The system (10) takes as input a three-dimensional (3D) or higher dimensional data set (68) of the object, e.g. data produced by an image scanner (12). At least one processor (32) is programmed to define a set of two-dimensional (2D) projection images and associated view windows (60, 62, 64) corresponding to a set of voxel value (tissue) types with corresponding voxel value specification (50); determine the contribution of each processed voxel along each of a plurality of rays (72) through the 3D data set (68) to one of the predetermined voxel value (tissue) types in accordance with each voxel value with respect to the voxel value selection specification (50); and concurrently generate a 2D projection image corresponding to each of the voxel value specifications and related image view windows (60, 62, 64) based on the processed voxel values satisfying the corresponding voxel value specification (50). Each image is colorized differently and corresponding pixels in the images are aligned. An LRR (66) is generated by layering the aligned, colorized images and displaying as a multi-channel color image, e.g. an RGB image.

Owner:KONINKLIJKE PHILIPS NV
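
A toy version of the layering step is shown below. It assumes that a voxel "contributes" to a tissue channel by adding its value to the ray sum when it falls inside that channel's value window, that rays run along the z-axis, and that each channel is scaled to 0-255; the patent's actual contribution rule and view-window handling are not specified in the abstract. The function name is hypothetical.

```python
def layered_projection(volume, windows):
    """Project a 3-D volume along z once per value window and stack as RGB.

    volume[z][y][x] holds voxel values; windows holds up to three (low, high)
    ranges, one per colour channel.
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    channels = []
    for low, high in windows:
        # Ray sum along z, counting only voxels whose value lies in this window.
        img = [[sum(volume[z][y][x] for z in range(depth) if low <= volume[z][y][x] <= high)
                for x in range(width)] for y in range(height)]
        peak = max(max(row) for row in img) or 1      # avoid division by zero
        channels.append([[round(255 * v / peak) for v in row] for row in img])
    while len(channels) < 3:                          # pad unused channels with zeros
        channels.append([[0] * width for _ in range(height)])
    # Pixels are aligned across channels, so layering is a per-pixel tuple.
    return [[tuple(ch[y][x] for ch in channels[:3]) for x in range(width)]
            for y in range(height)]
```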

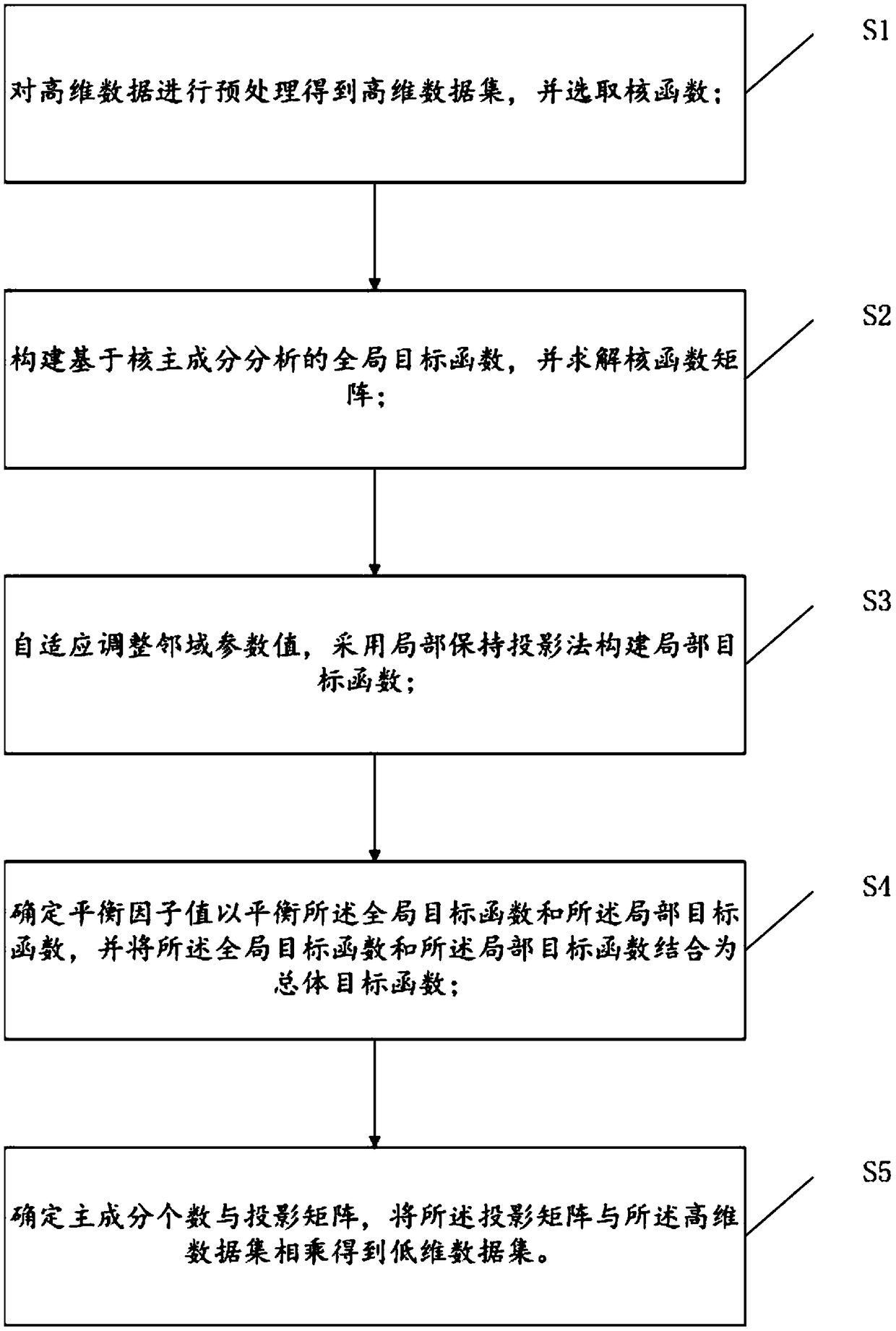

A data dimensionality reduction method

Inactive | CN109189776A | Tags: Improve operational efficiency; Reduce operation; Digital data information retrieval; Special data processing applications; Kernel principal component analysis; Hat matrix

The invention discloses a data dimensionality reduction method comprising the following steps: preprocessing the high-dimensional data to obtain a high-dimensional data set and selecting a kernel function; constructing a global objective function based on kernel principal component analysis and solving for the kernel matrix; adaptively adjusting the neighborhood parameter value and constructing a local objective function with the locality preserving projection method; determining a balance factor to weight the global and local objective functions and combining them into an overall objective function; and determining the number of principal components and the projection matrix, then multiplying the projection matrix by the high-dimensional data set to obtain a low-dimensional data set. Based on kernel principal component analysis and locality preserving projection, the method can remove noise and redundant information from a high-dimensional data set, reduce unnecessary operations in data mining, and improve the operational efficiency of the algorithm.

Owner:GUANGDONG POWER GRID CO LTD +1

Attribute selection method based on binary firefly algorithm

Inactive | CN105824937A | Tags: Reduce complexity; Improve processing efficiency; Artificial life; Special data processing applications; High dimensionality; Algorithm

The invention discloses an attribute selection method based on a binary firefly algorithm, comprising the following steps: 1: calculating the fractal dimension of a high-dimensional data set with the box-counting method to obtain the number of attributes to be selected; 2: initializing a firefly population; 3: selecting attributes of the high-dimensional data set with the binary firefly algorithm to obtain an optimal attribute subset; 4: outputting the optimal solution. The method uses the binary firefly algorithm as the search strategy for attribute selection and the fractal dimension as the evaluation criterion, selecting the optimal attribute subset from the many index attributes of the high-dimensional data set. This lowers data processing complexity and improves data processing efficiency to meet the requirements of practical problems.

Owner:HEFEI UNIV OF TECH
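
Step 1 relies on a box-counting estimate of fractal dimension. The sketch below computes one for points already rescaled to the unit cube, counting occupied boxes of side 2^-k and fitting the slope of log N(r) against log(1/r) by least squares. How the patent turns this estimate into a number of attributes, and the binary firefly search itself, are not shown; the function name and default levels are assumptions.

```python
import math

def box_counting_dimension(points, levels=range(1, 6)):
    """Estimate the box-counting dimension of points scaled to the unit cube.

    For box side r = 2**-k, count occupied boxes N(r) and fit the slope of
    log N(r) against log(1/r) by least squares.
    """
    xs, ys = [], []
    for k in levels:
        scale = 2 ** k
        boxes = {tuple(min(int(c * scale), scale - 1) for c in p) for p in points}
        xs.append(k * math.log(2))            # log(1 / r)
        ys.append(math.log(len(boxes)))       # log N(r)
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    return (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
            / sum((x - mx) ** 2 for x in xs))
```

Points spread along a diagonal line give a slope of 1, and a filled square grid gives 2, which is the behavior the measure is meant to capture.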

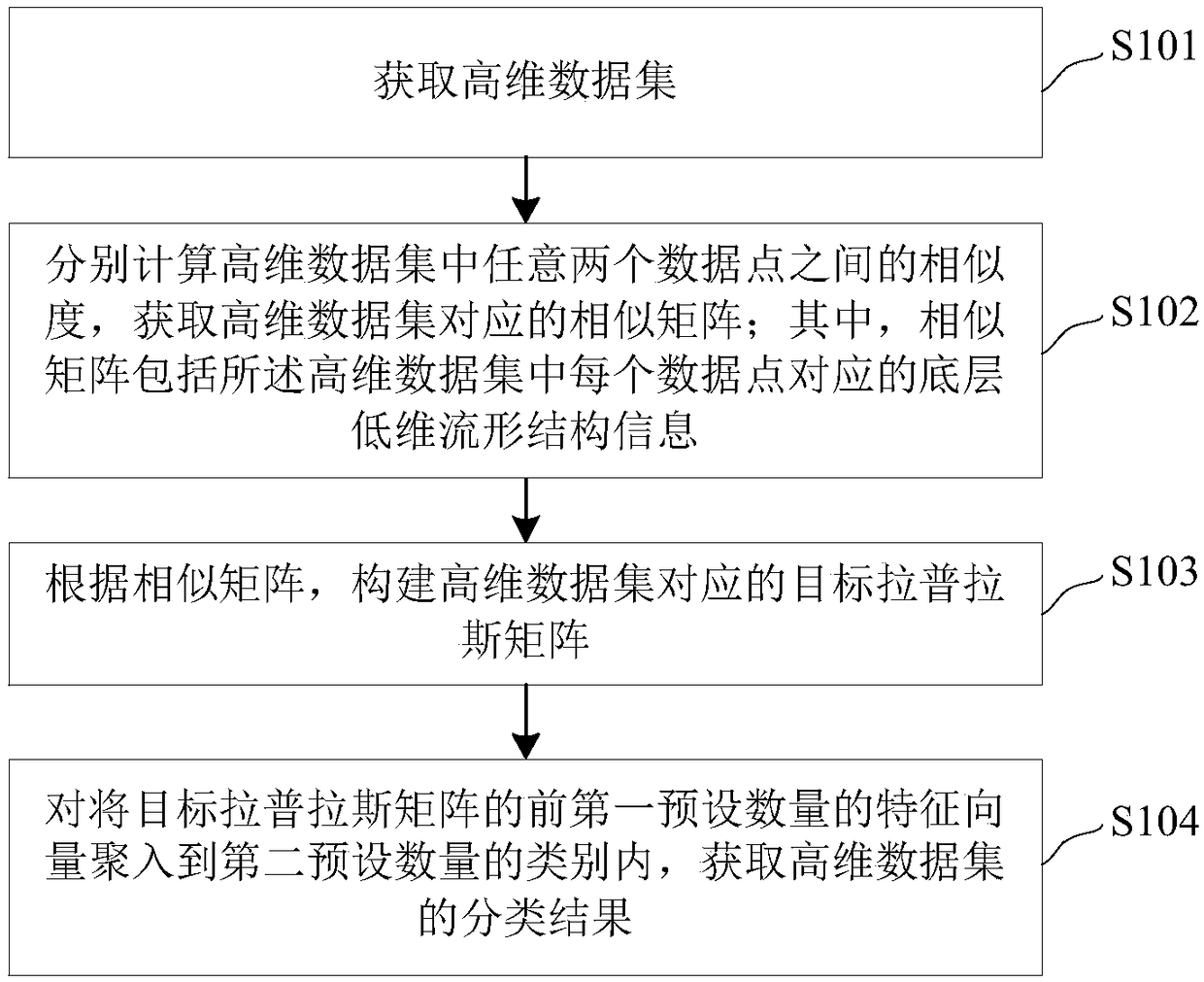

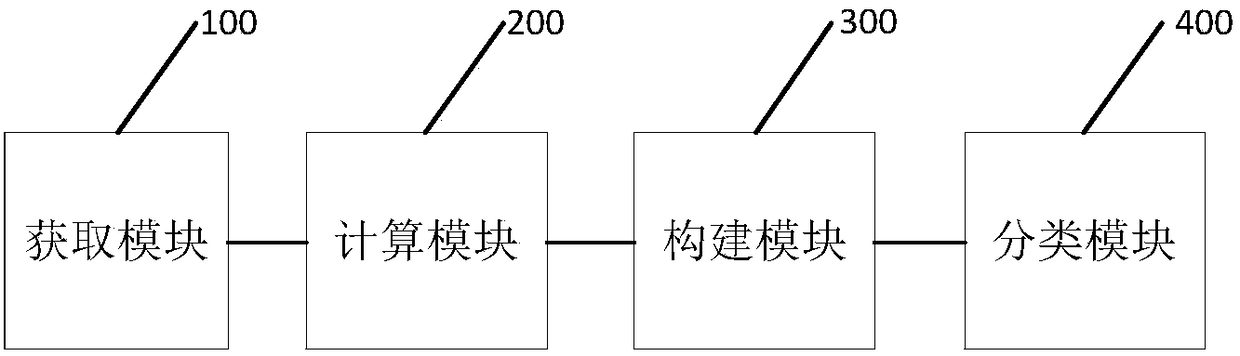

Multi-manifold based handwritten data classification method and system

Active | CN108388869A | Tags: Preserve the original structure; Improve recognition accuracy; Digital ink recognition; Feature vector; High dimensionality

The invention discloses a multi-manifold based handwritten data classification method and system. The method comprises: obtaining a high-dimensional data set; calculating the similarity between every two data points in the data set to obtain the corresponding similarity matrix; constructing a target Laplacian matrix for the data set from the similarity matrix; and clustering the leading eigenvectors of the target Laplacian matrix (a first preset number of them) into a second preset number of classes to obtain the classification result of the data set. The similarity matrix captures the underlying low-dimensional manifold structure of the high-dimensional data, so the constructed Laplacian matrix accounts for both the high-dimensional structure of the data and its underlying low-dimensional mapping structure. Eigen-decomposing the target Laplacian matrix and clustering the resulting eigenvectors yields the clustering result of the high-dimensional data set while preserving the original structure of the data as far as possible.

Owner:SUZHOU UNIV

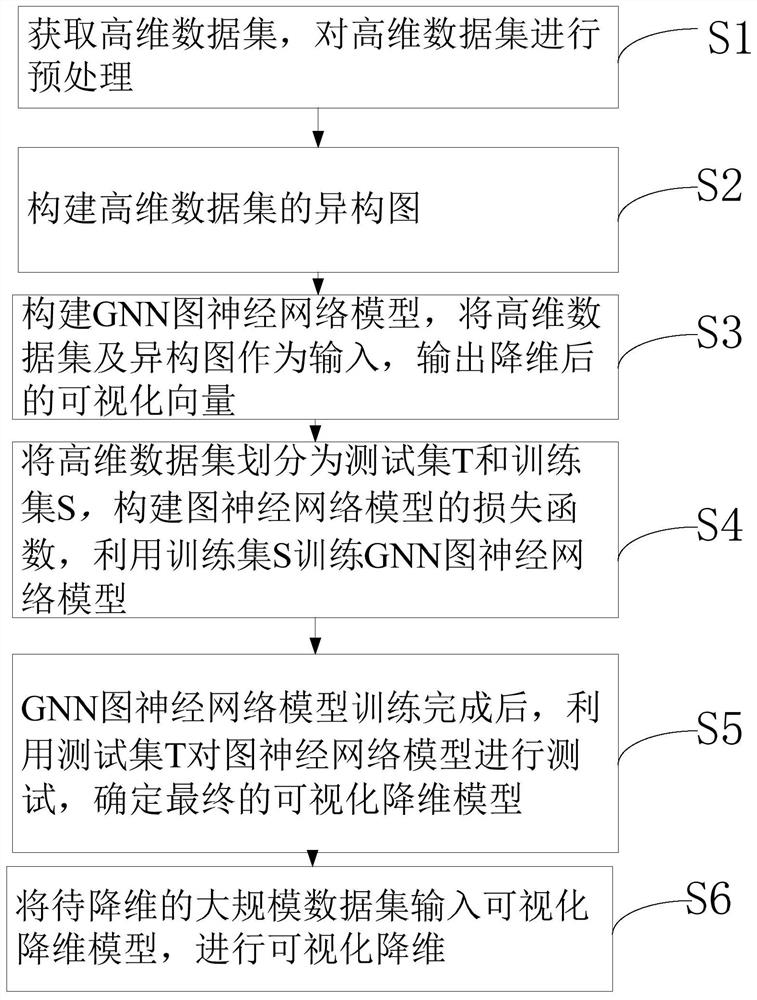

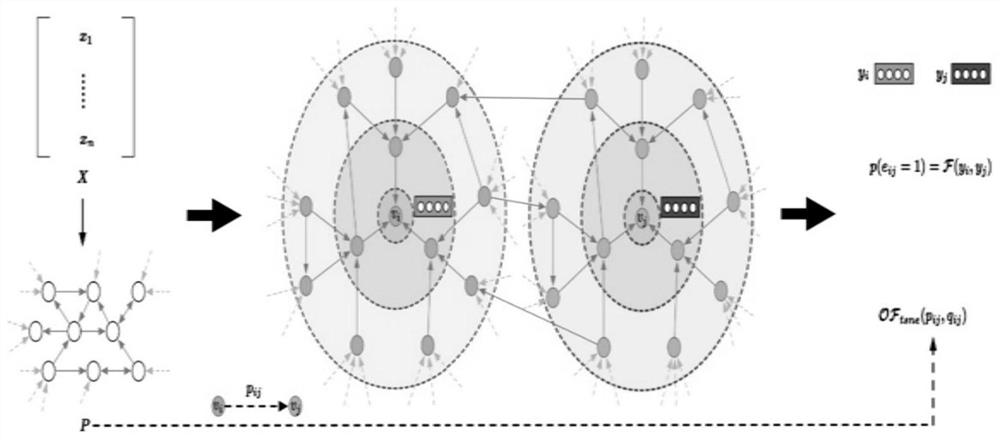

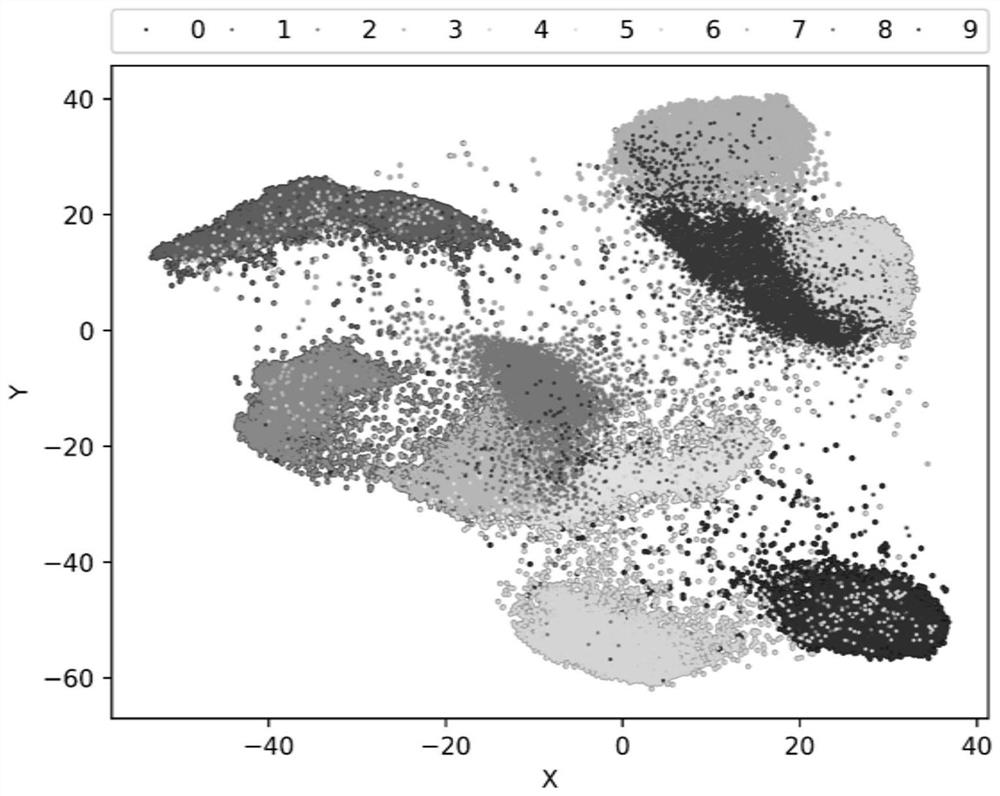

Large-scale data visualization dimension reduction method based on graph neural network

Active | CN112241478A | Tags: Reduce dimensionality; Reduce the cost of training; Neural architectures; Other databases browsing/visualisation; Algorithm; Data information

The invention provides a visualization dimensionality reduction method based on a graph neural network, in the technical field of deep learning and large-scale data processing. It addresses several problems in the prior art: models cannot be trained on large-scale data, non-parametric visualization dimensionality reduction models cannot visualize unseen data points, and parametric visualization dimensionality reduction models produce poor visualizations. An obtained high-dimensional data set is partitioned and preprocessed, a heterogeneous graph is constructed, a GNN model is established, and a loss function is defined; the model is then trained and, once training is complete, tested, with the loss function driving the visual dimensionality reduction of high-dimensional large-scale data. Training uses subgraph negative sampling, which reduces the training cost of the model. The dimensionality of the data is reduced while a considerable part of the high-dimensional information is retained, making subsequent data analysis and processing more meaningful and easier.

Owner:GUANGDONG UNIV OF TECH

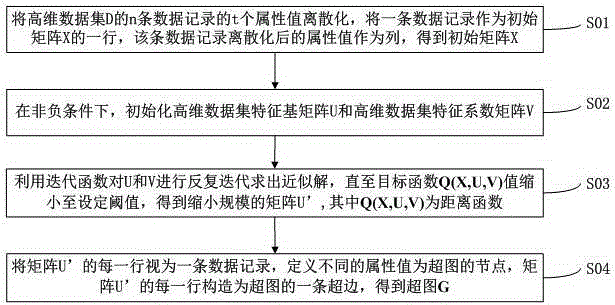

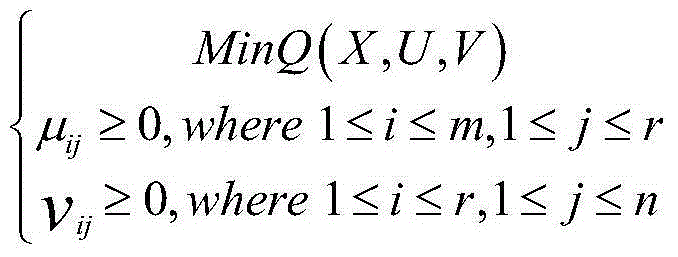

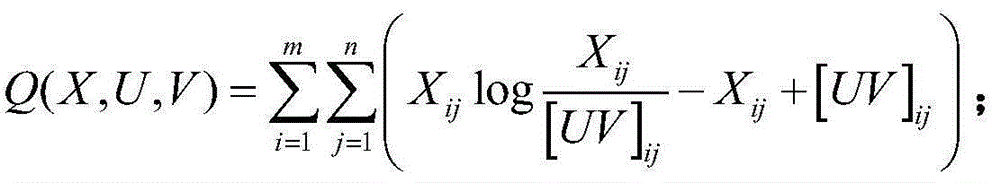

High-dimensional data hypergraph model construction method based on feature induction

Inactive | CN105224508A | Tags: Small scale; Reduce problem size; Character and pattern recognition; Complex mathematical operations; Algorithm; Hypergraph

The present invention discloses a high-dimensional data hypergraph model construction method based on feature induction. The method comprises the following steps: discretizing the t attribute values of the n data records of a high-dimensional data set D and using each data record as one row of an initial matrix X, with its discretized attribute values as the columns, to obtain the initial matrix X; under the non-negativity constraint, initializing a feature basis matrix U and a feature coefficient matrix V of the high-dimensional data set; iterating on U and V with an iteration function to obtain an approximate solution until the value of the objective function Q(X, U, V) falls to a set threshold, yielding a reduced-scale matrix U'; and treating each row of U' as a data record, defining the different attribute values as nodes of a hypergraph, and constructing one hyperedge from each row of U', to obtain the hypergraph G. The method enables complete cluster analysis of high-dimensional data sets and can further improve the operating efficiency of high-dimensional data clustering algorithms.

Owner:YANCHENG INST OF TECH
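
The abstract names an iteration function and an objective Q(X, U, V) without giving their form. A common choice, assumed here, is non-negative matrix factorization with the Lee and Seung multiplicative updates, which do not increase the squared Frobenius error ||X - UV||². The pure-Python sketch below stops once that error falls below a threshold or an iteration cap is reached; names and defaults are hypothetical.

```python
import random

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def frobenius_error(x, u, v):
    """Objective Q(X, U, V) = ||X - UV||_F^2."""
    uv = matmul(u, v)
    return sum((a - b) ** 2 for ra, rb in zip(x, uv) for a, b in zip(ra, rb))

def nmf(x, rank, threshold=1e-4, max_iter=500, seed=0):
    """Multiplicative-update NMF: X (n x t) ~ U (n x rank) V (rank x t), all >= 0."""
    rng = random.Random(seed)
    n, t = len(x), len(x[0])
    u = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    v = [[rng.random() + 0.1 for _ in range(t)] for _ in range(rank)]
    eps = 1e-12                                   # guards against division by zero
    for _ in range(max_iter):
        if frobenius_error(x, u, v) <= threshold:
            break
        # V <- V * (U^T X) / (U^T U V)
        ut = transpose(u)
        num, den = matmul(ut, x), matmul(matmul(ut, u), v)
        v = [[v[i][j] * num[i][j] / (den[i][j] + eps) for j in range(t)] for i in range(rank)]
        # U <- U * (X V^T) / (U V V^T)
        vt = transpose(v)
        num, den = matmul(x, vt), matmul(u, matmul(v, vt))
        u = [[u[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)] for i in range(n)]
    return u, v
```

Each row of the resulting U is a compressed, non-negative description of one record, which is what the later hypergraph construction step consumes.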

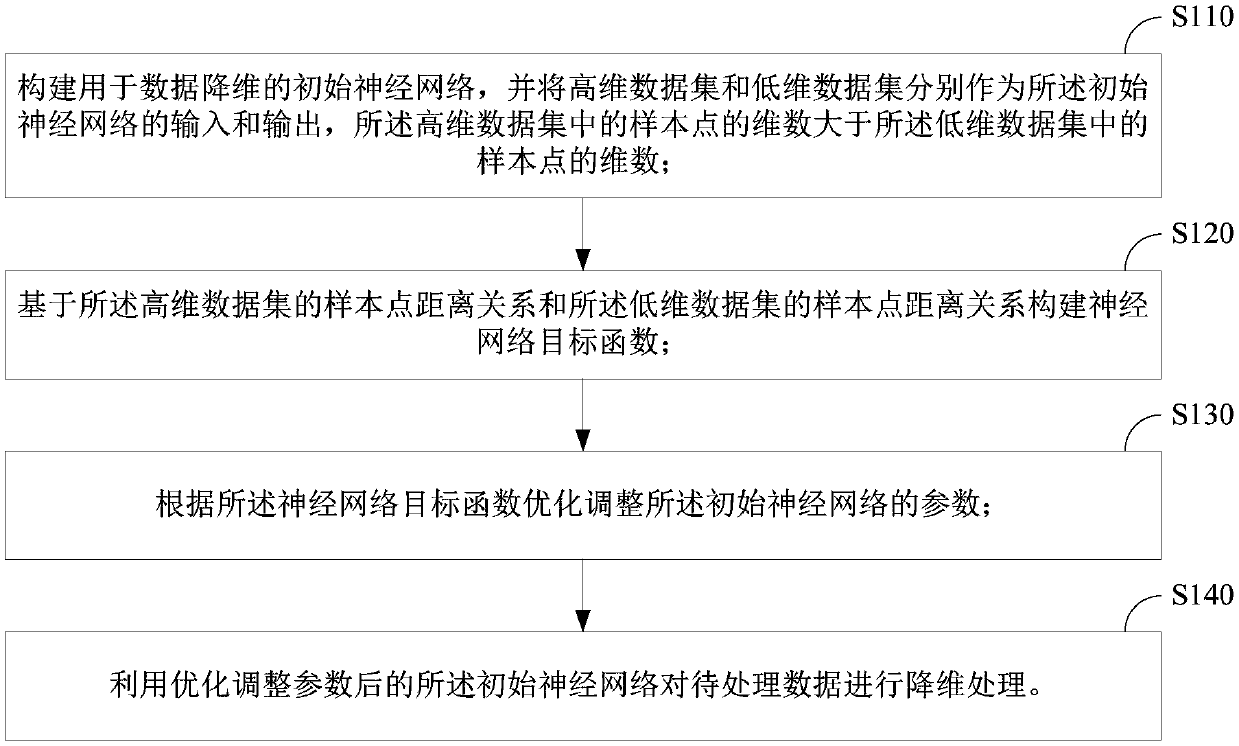

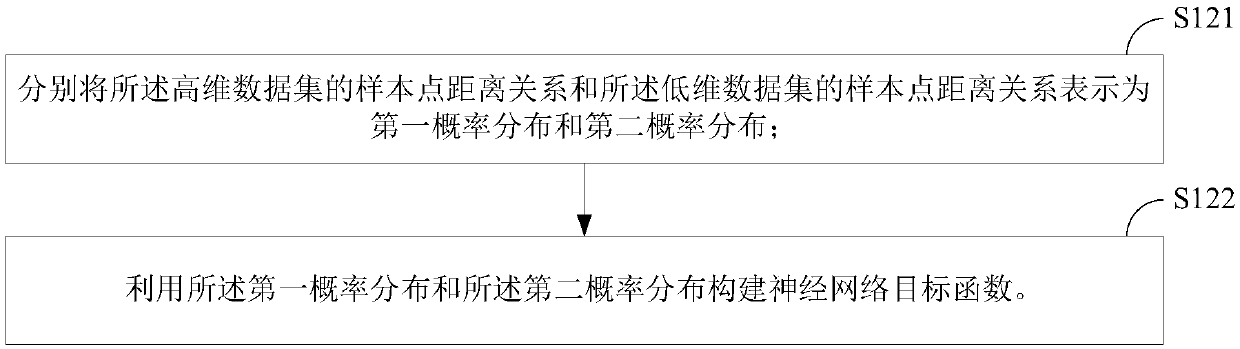

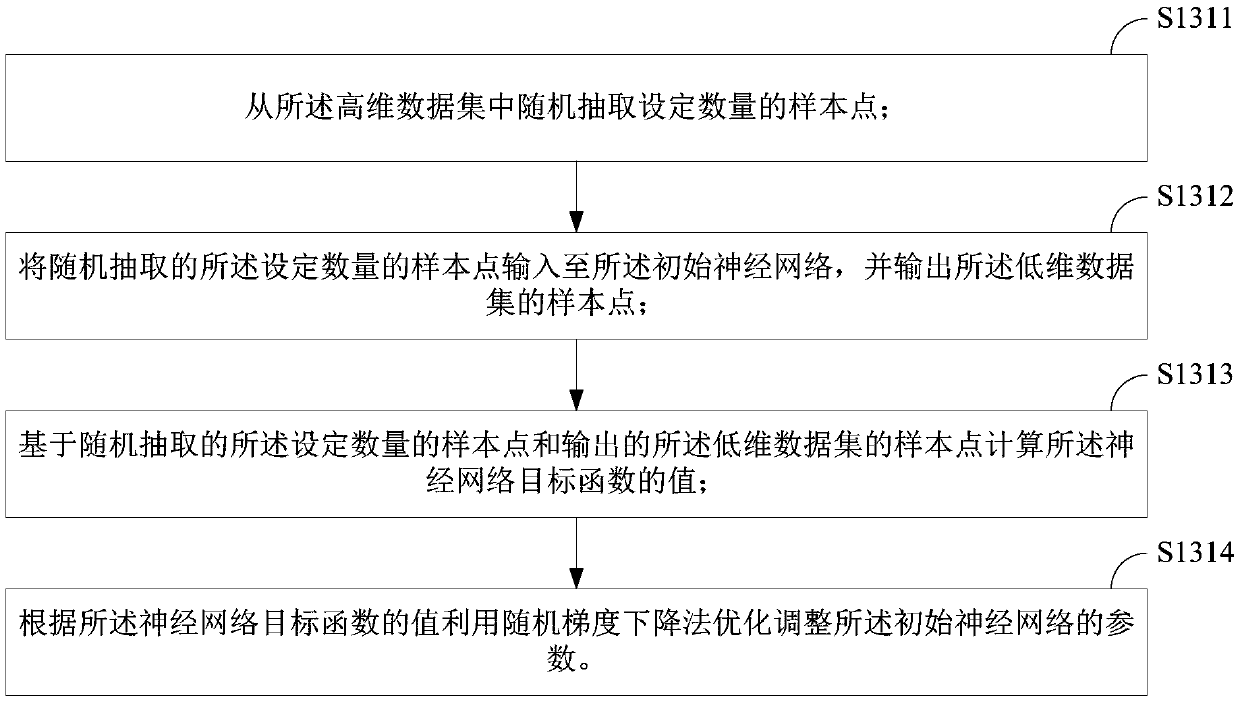

Data dimension reduction method and device

Inactive | CN109558899A | Tags: Maintain the global sample point distance relationship; Easy to remember; Character and pattern recognition; Neural architectures; Dimensionality reduction; High dimensional

The invention provides a data dimension reduction method and device. The method comprises: constructing an initial neural network for data dimension reduction, with a high-dimensional data set as its input and a low-dimensional data set as its output, where the sample points of the high-dimensional data set have more dimensions than those of the low-dimensional data set; constructing a neural network objective function based on the sample point distance relationships of the high-dimensional and low-dimensional data sets; optimizing and adjusting the parameters of the initial neural network according to this objective function; and reducing the dimension of the data to be processed with the optimized network. The low-dimensional data set obtained in this way preserves the global characteristics of the high-dimensional data set.

Owner:PETROCHINA CO LTD
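
The objective compares pairwise distances before and after reduction. A minimal stress-style version, assumed here because the abstract does not give the exact formula, sums the squared differences between high- and low-dimensional pairwise distances; a neural network would be trained to minimize it. The function name is hypothetical, and the network and optimizer are not shown.

```python
import math
from itertools import combinations

def distance_preservation_loss(high, low):
    """Sum over sample pairs of (d_high - d_low)^2, a stress-style objective."""
    if len(high) != len(low):
        raise ValueError("high and low must contain the same samples")
    return sum((math.dist(high[i], high[j]) - math.dist(low[i], low[j])) ** 2
               for i, j in combinations(range(len(high)), 2))
```

Points that already lie in a plane lose nothing when the third coordinate is dropped, so the loss is 0 for that embedding and positive for an embedding that collapses them.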

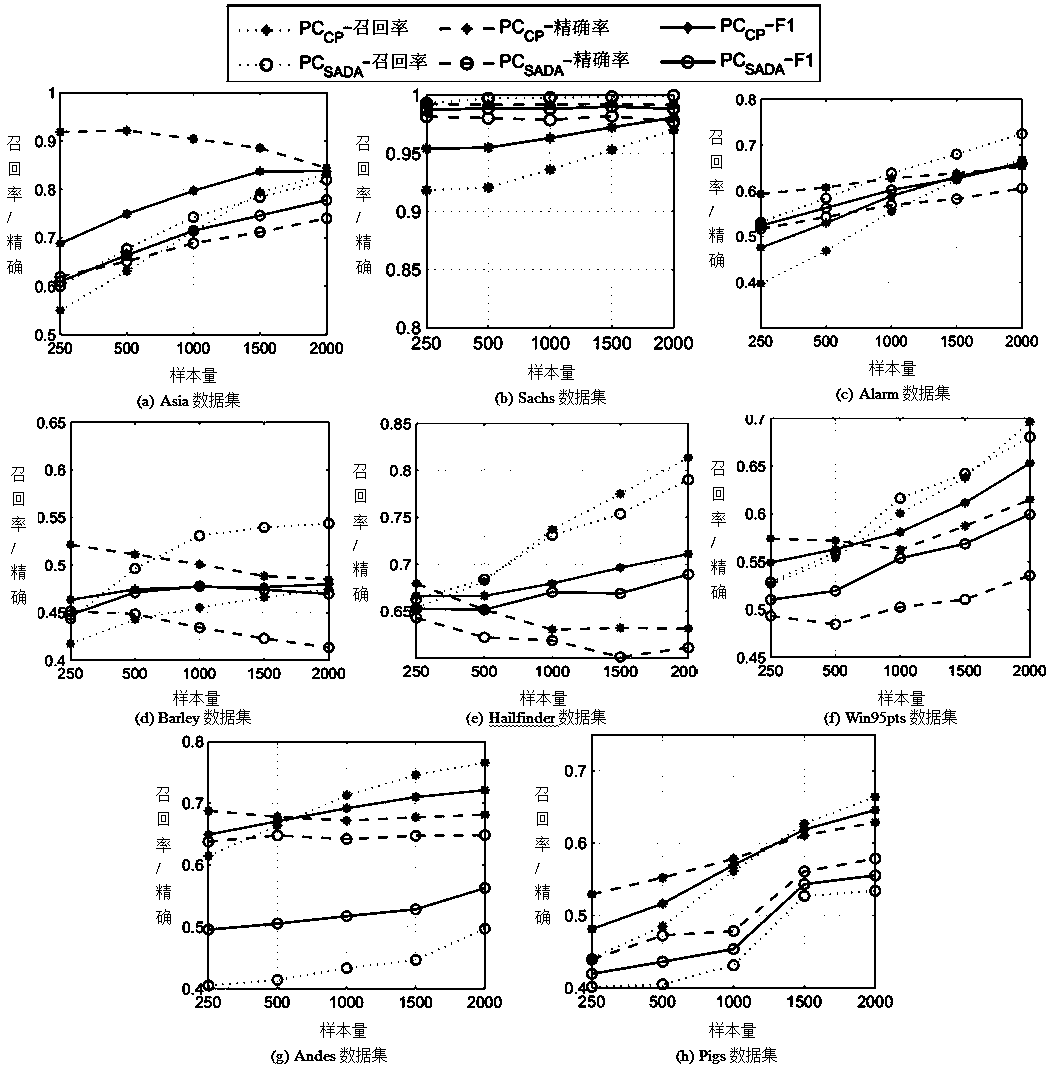

A recursive causal inference method based on causal segmentation

Inactive | CN109542936A | Tags: Improve accuracy; High speed; Digital data information retrieval; Special data processing applications; Data dredging; The Internet

The invention relates to the field of data mining, and in particular to a recursive causal inference method based on causal segmentation. The method adopts a divide-and-conquer strategy: it recursively uses low-order conditional independence tests to split the data set into layers of causality, reconstructs the causal structure of each sub-data set, and finally merges the results to obtain the causal information of the whole data set. The method can be used for causal inference and causal relationship mining in high-dimensional data sets. In the era of big data, causal inference algorithms are widely used in economics, Internet social networks, medical big data, and other fields, and high dimensionality is a common problem in industrial information intelligence, so solving the related problems is urgent. The invention helps mine causal information from ever-increasing volumes of data and plays an important role in extracting valuable causal information from massive data.

Owner:FUDAN UNIV

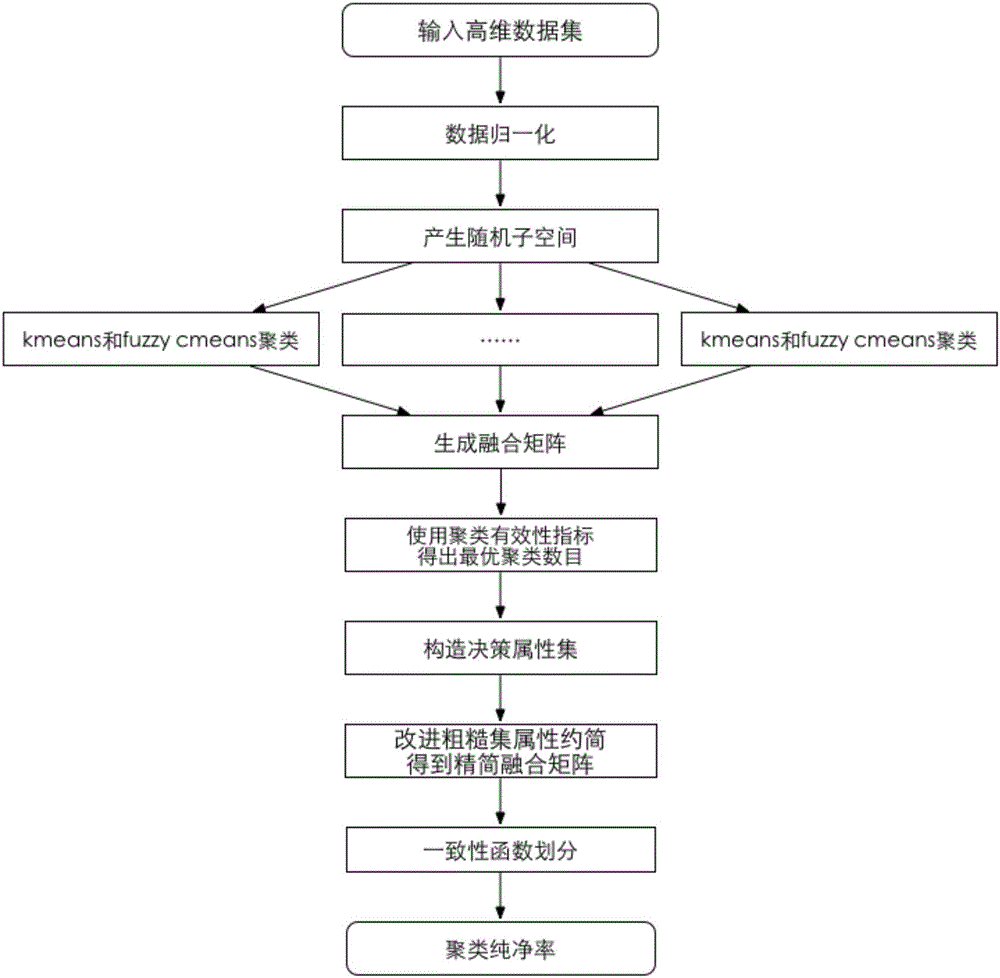

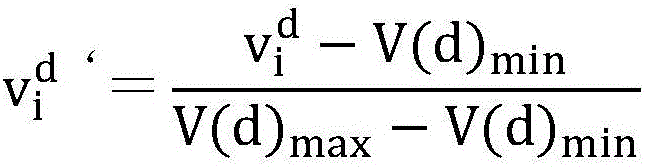

High-dimension data soft and hard clustering integration method based on random subspace

Inactive | CN106446947A | Tags: Clustering is fast; Good variety; Character and pattern recognition; State of art; High dimensionality

The invention discloses a high-dimensional data soft and hard clustering integration method based on random subspaces. The method comprises the following steps: (1) inputting a high-dimensional data set; (2) normalizing the data; (3) generating random subspaces; (4) performing k-means and fuzzy c-means clustering; (5) generating a fusion matrix; (6) using a clustering validity index to obtain the optimal number of clusters; (7) constructing a decision attribute set; (8) applying improved rough set attribute reduction to obtain a simplified fusion matrix; (9) partitioning with a consensus function; (10) obtaining the clustering purity. The method uses random subspaces to ease the difficulty of processing high-dimensional data and combines soft and hard clustering; it makes full use of the original data and intermediate results to reduce redundant attributes in the intermediate results, which improves clustering accuracy while also speeding up clustering. It solves the prior-art problems of failing to make full use of clustering information and to remove redundant information.

Owner:SOUTH CHINA UNIV OF TECH
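
Steps 3 and 5 can be illustrated with random feature subsets and a co-association (fusion) matrix, whose entry (i, j) is the fraction of base clusterings that put points i and j in the same cluster. The sketch assumes hard labels from any base clusterer (for example k-means, or fuzzy c-means memberships hardened by taking the largest value); the patent's exact fusion rule, rough set reduction, and consensus step are not reproduced, and the names are hypothetical.

```python
import random

def random_subspaces(n_dims, n_subspaces, size, seed=0):
    """Draw feature subsets; each base clustering run sees only one subset."""
    rng = random.Random(seed)
    return [sorted(rng.sample(range(n_dims), size)) for _ in range(n_subspaces)]

def co_association(labelings):
    """Fusion matrix: fraction of clusterings that put points i and j together."""
    n, m = len(labelings[0]), len(labelings)
    return [[sum(lab[i] == lab[j] for lab in labelings) / m for j in range(n)]
            for i in range(n)]
```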

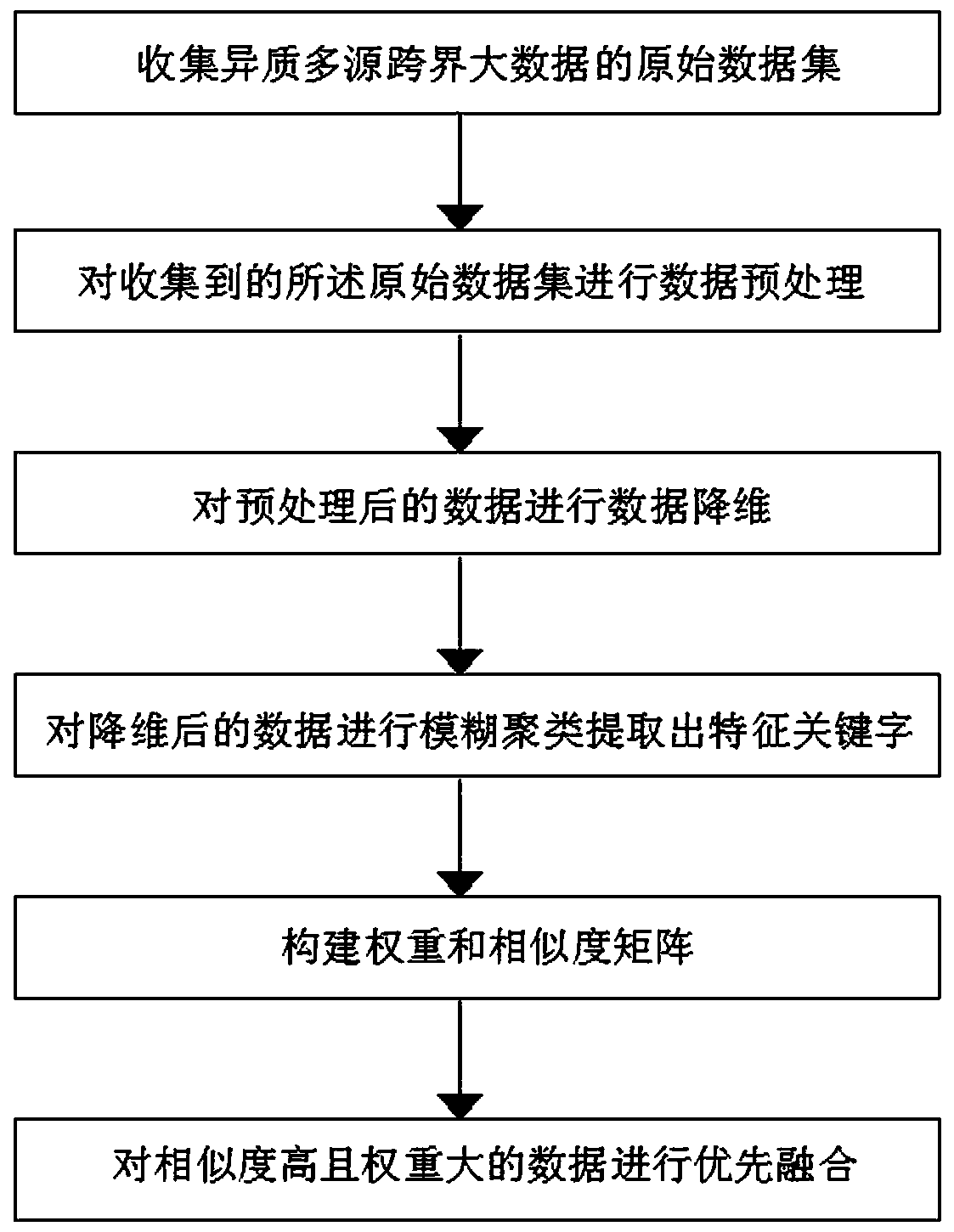

Data fusion method based on FCM algorithm

Inactive | CN110990498A | Tags: Improve effective utilization; Efficient integration; Relational databases; Machine learning; Algorithm; Original data

The invention discloses a data fusion method based on the FCM algorithm. The method comprises the following steps: S1, collecting an original data set of heterogeneous, multi-source, cross-boundary big data; S2, preprocessing the collected original data set; S3, reducing the dimensionality of the preprocessed data; S4, applying the FCM algorithm to perform fuzzy clustering on the reduced data and extract feature keywords; S5, calculating the weights of the feature keywords and the similarity between different feature keywords with the TF-IDF technique, and constructing a weight and similarity matrix; and S6, preferentially fusing data with high similarity and large weight. The method achieves effective fusion of multi-source, heterogeneous, high-dimensional data, solves the problems of low fusion efficiency and insufficiently accurate user feature acquisition caused by high-dimensional data sets with complex data types and numerous features, and improves the effective utilization of data and enterprises' user satisfaction.

Owner:HANGZHOU SUNYARD DIGITAL SCI
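
Step S4 relies on fuzzy c-means. The sketch below implements the standard FCM updates (membership-weighted centers and the inverse-distance membership rule with fuzzifier m) on numeric vectors; it assumes the data have already been preprocessed and reduced as in S2 and S3, and it does not cover keyword extraction, TF-IDF weighting, or the fusion step. The function name and defaults are hypothetical.

```python
import math
import random

def fuzzy_c_means(points, c, m=2.0, iters=200, tol=1e-6, seed=0):
    """Alternate the standard FCM center and membership updates."""
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])
    # Random initial memberships, each row normalized to sum to 1.
    u = []
    for _ in range(n):
        row = [rng.random() for _ in range(c)]
        s = sum(row)
        u.append([x / s for x in row])
    for _ in range(iters):
        # Centers: weighted means with weights u_ik^m.
        centers = []
        for k in range(c):
            w = [u[i][k] ** m for i in range(n)]
            total = sum(w)
            centers.append([sum(w[i] * points[i][d] for i in range(n)) / total
                            for d in range(dim)])
        # Memberships: u_ik = 1 / sum_j (d_ik / d_ij)^(2 / (m - 1)).
        new_u = []
        for i in range(n):
            d = [max(math.dist(points[i], ctr), 1e-12) for ctr in centers]
            new_u.append([1.0 / sum((d[k] / d[j]) ** (2 / (m - 1)) for j in range(c))
                          for k in range(c)])
        shift = max(abs(a - b) for ru, rn in zip(u, new_u) for a, b in zip(ru, rn))
        u = new_u
        if shift < tol:
            break
    return centers, u
```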

© 2025 PatSnap. All rights reserved.