156 results about "Network reduction" patented technology

Network reduction. The network reduction module extracts a sub-part of a network, replacing the border lines, transformers, and HVDC lines with injections. A load-flow computation must be run on the network before reducing it. Network reduction relies on a NetworkPredicate instance to define an area of interest (i.e.

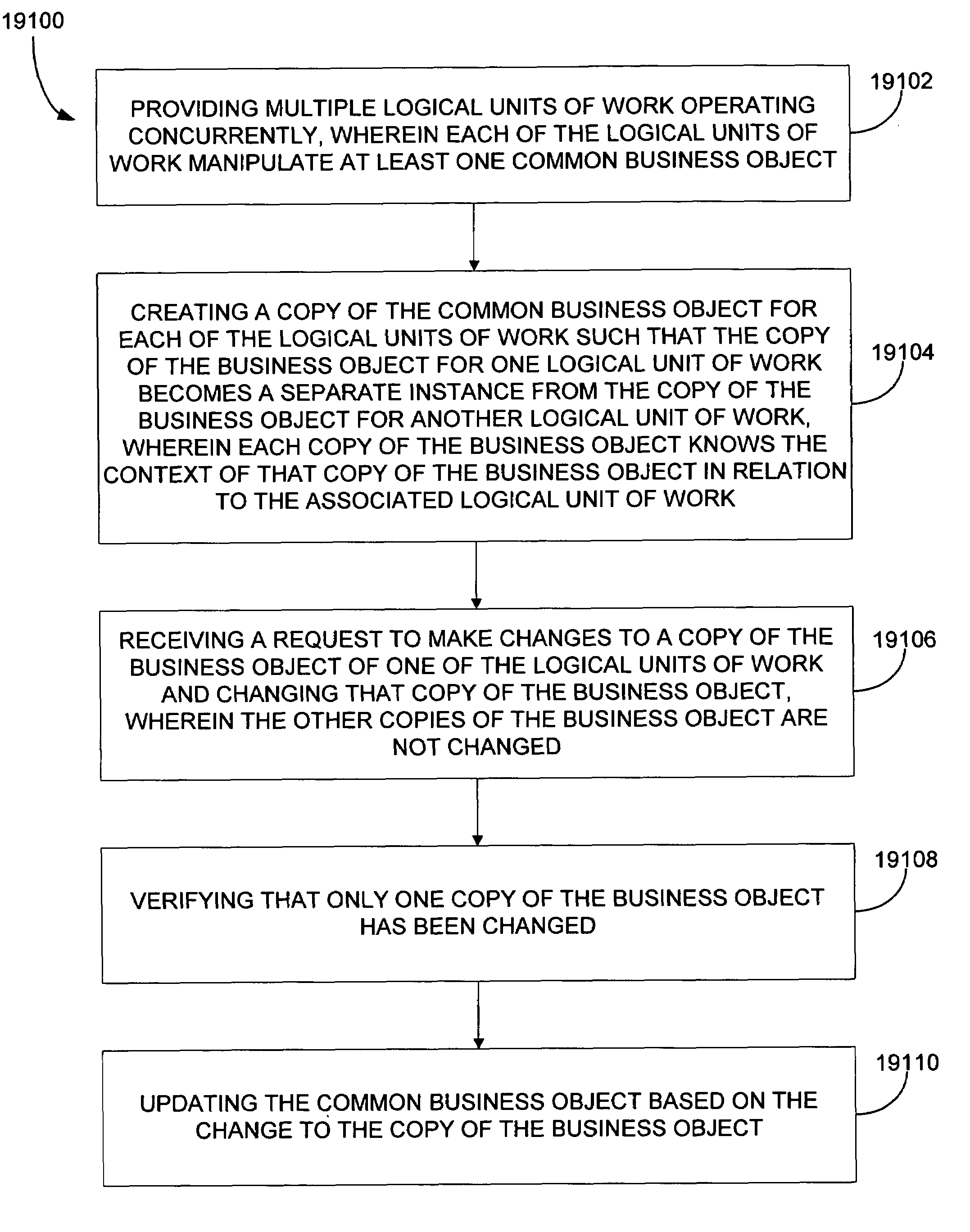

System and method for transaction services patterns in a netcentric environment

Inactive | US7289964B1 | Reduce network traffic, Office automation, Program control, Traffic capacity, Transaction service

The present disclosure provides for implementing transaction services patterns. Logical requests are batched for reducing network traffic. A batched request is allowed to indicate that it depends on the response to another request. A single message is sent to all objects in a logical unit of work. Requests that are being unbatched from a batched message are sorted. Independent copies of business data are assigned to concurrent logical units of work for helping prevent the logical units of work from interfering with each other.

Owner:ACCENTURE GLOBAL SERVICES LTD

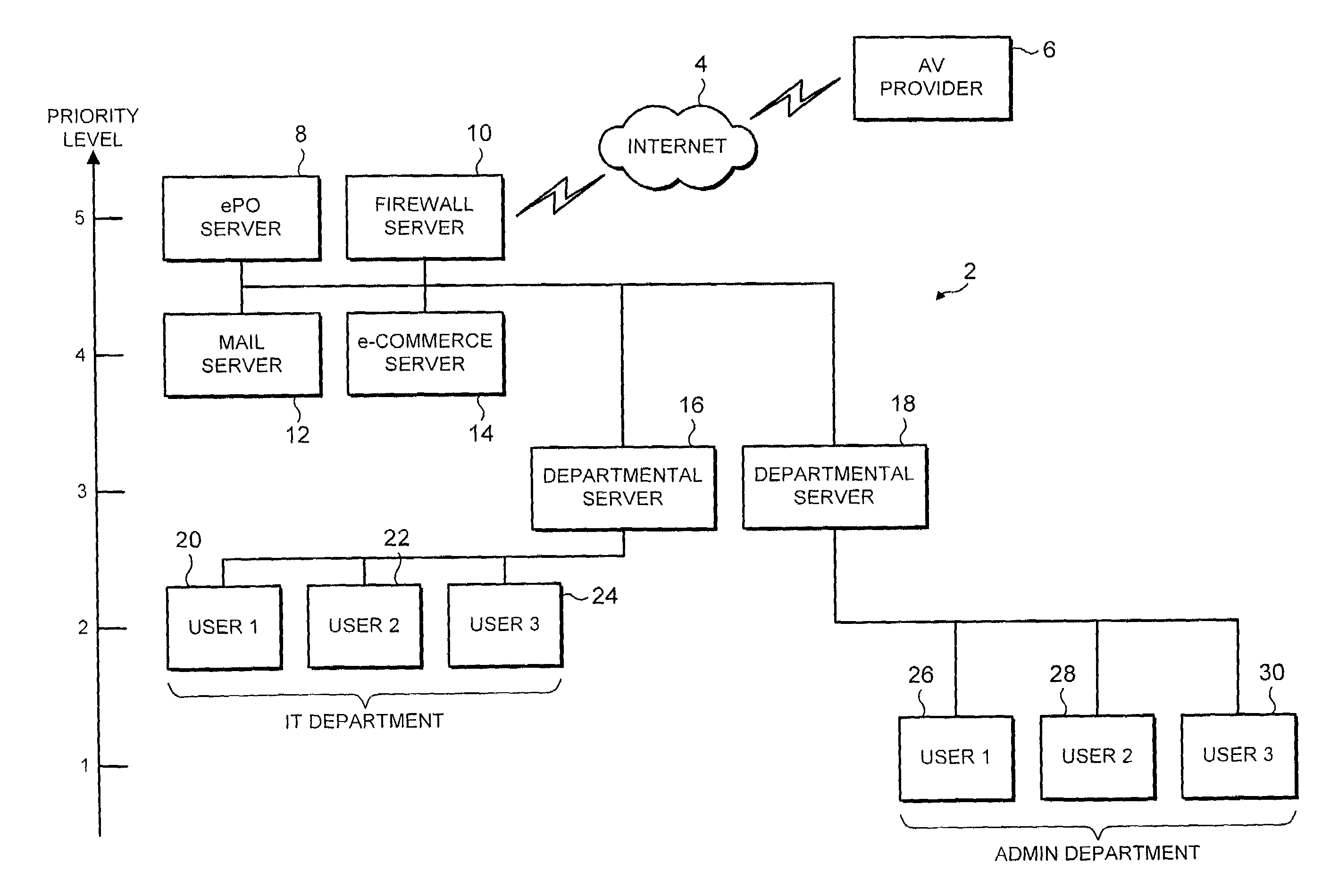

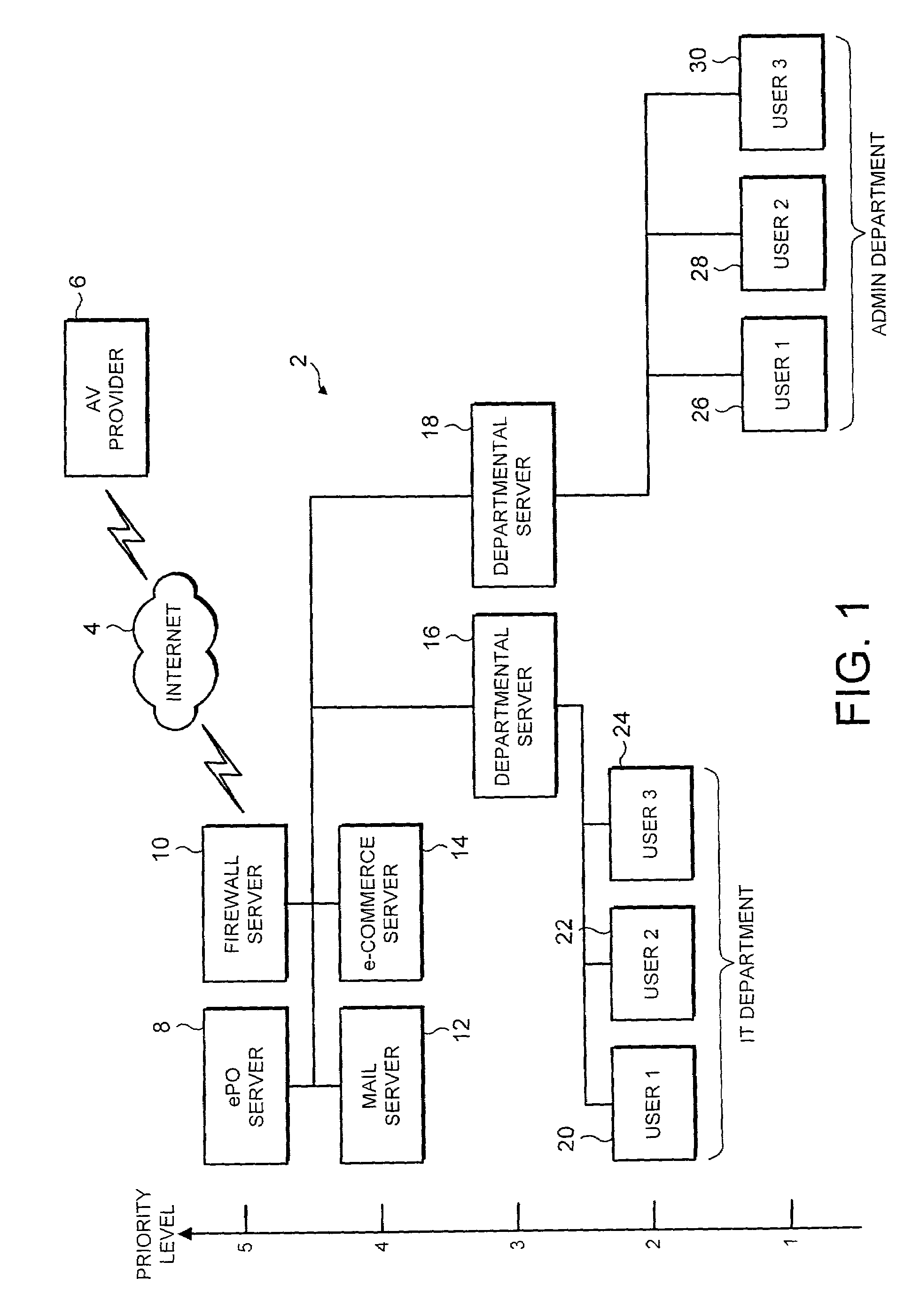

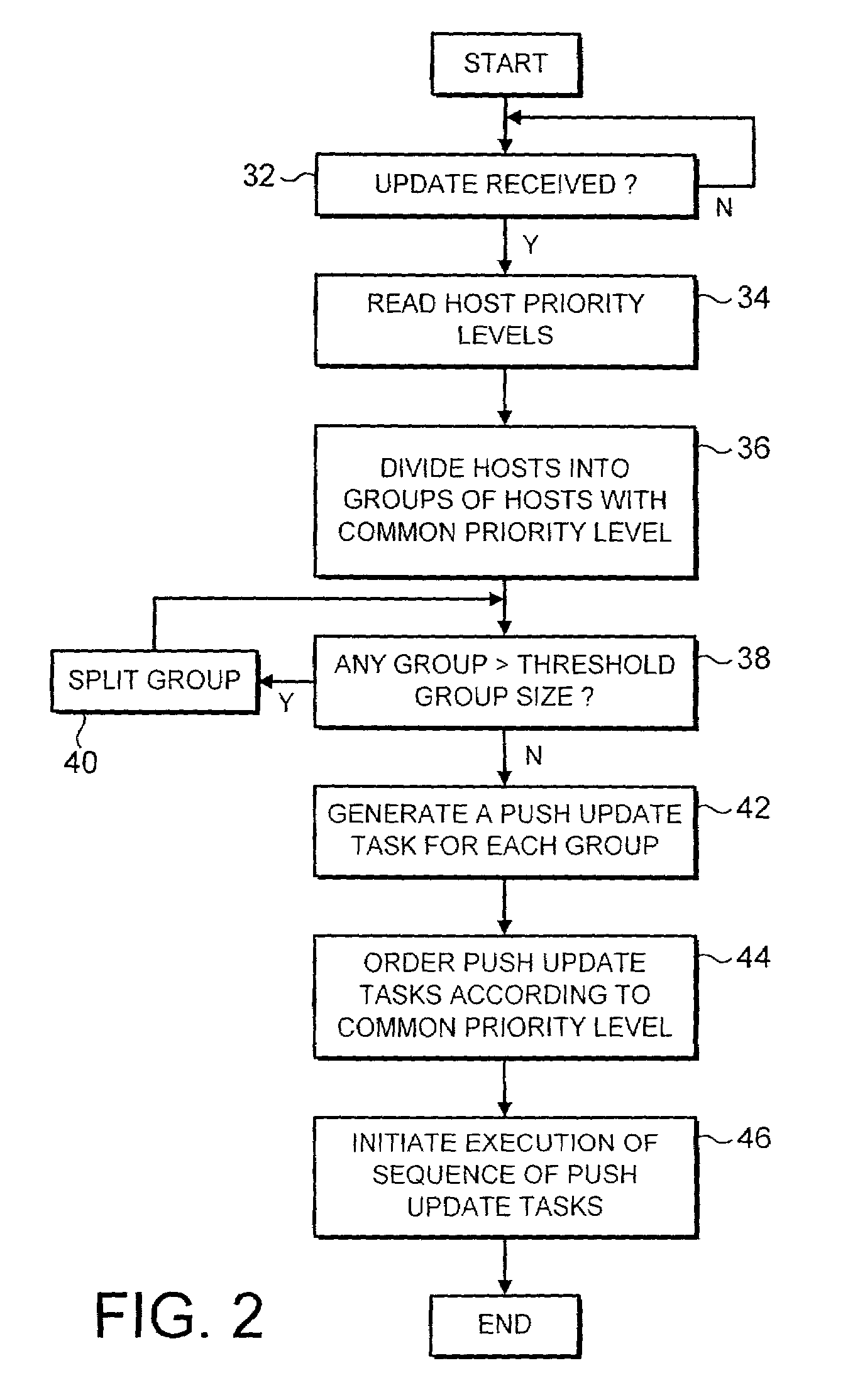

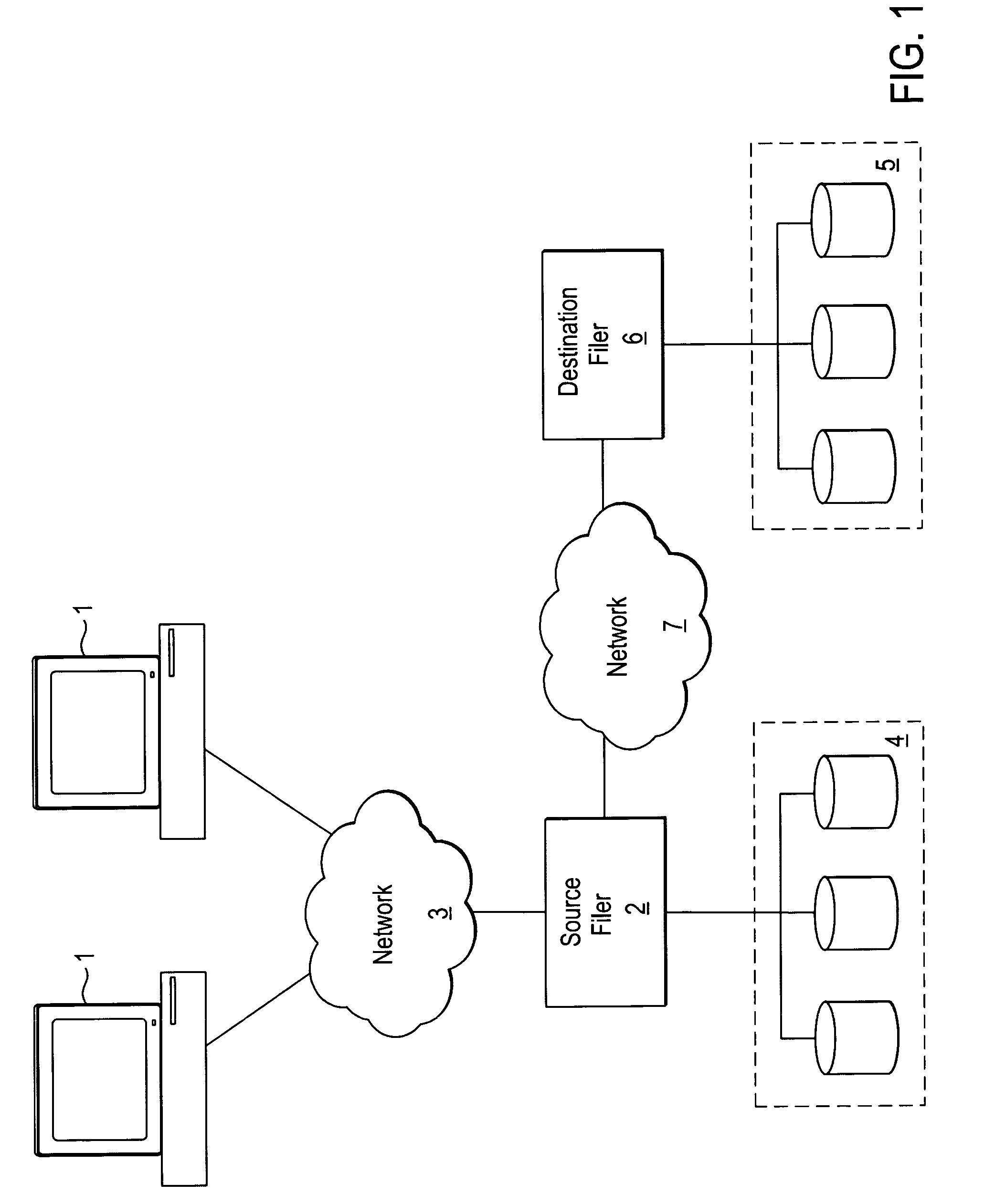

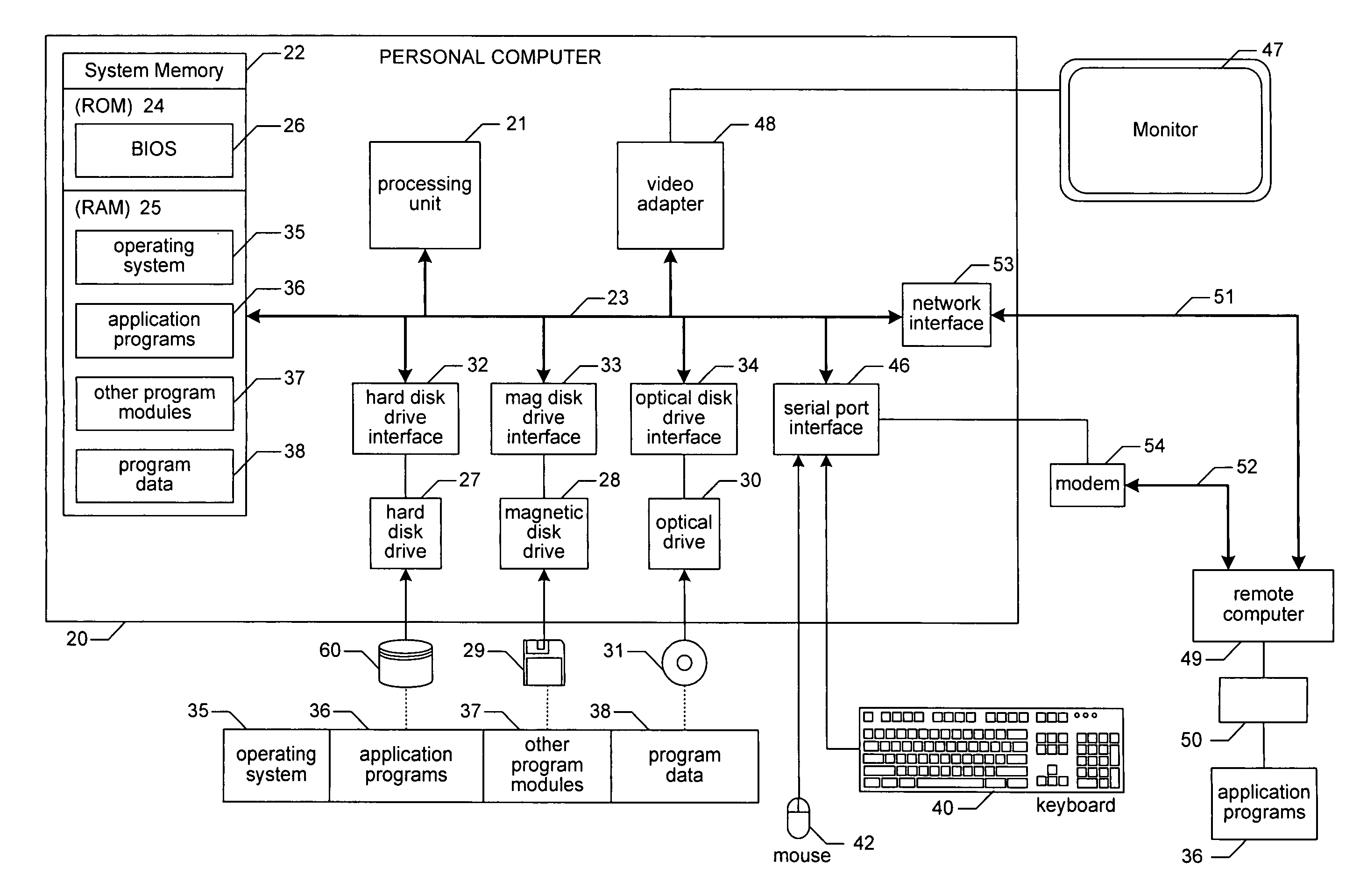
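As a rough illustration of the batching pattern in the transaction-services abstract above, the sketch below orders the requests of one batched message so that a request runs after the request whose response it depends on. The dict layout, the `unbatch_in_order` name, and the choice of a dependency-based sort are assumptions made for illustration; the abstract only states that unbatched requests are sorted.

```python
from graphlib import TopologicalSorter


def unbatch_in_order(batch):
    """Return request ids so that each dependency precedes its dependents.

    `batch` maps a request id to the id it depends on, or None
    (a hypothetical layout, not taken from the patent).
    """
    sorter = TopologicalSorter()
    for request_id, depends_on in batch.items():
        if depends_on is None:
            sorter.add(request_id)
        else:
            sorter.add(request_id, depends_on)
    return list(sorter.static_order())


# One batched message carrying three logical requests.
batch = {
    "update_order": "create_order",  # needs the response to create_order
    "create_order": None,
    "read_stock": None,
}
order = unbatch_in_order(batch)
```

The relative order of independent requests is left unspecified here; only the dependency constraint is enforced.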

Updating data from a source computer to groups of destination computers

Active | US7159036B2 | Improve effectiveness, Improve efficiency, Special service provision for substation, Multiple digital computer combinations, Network reduction, Computer science

Owner:MCAFEE LLC

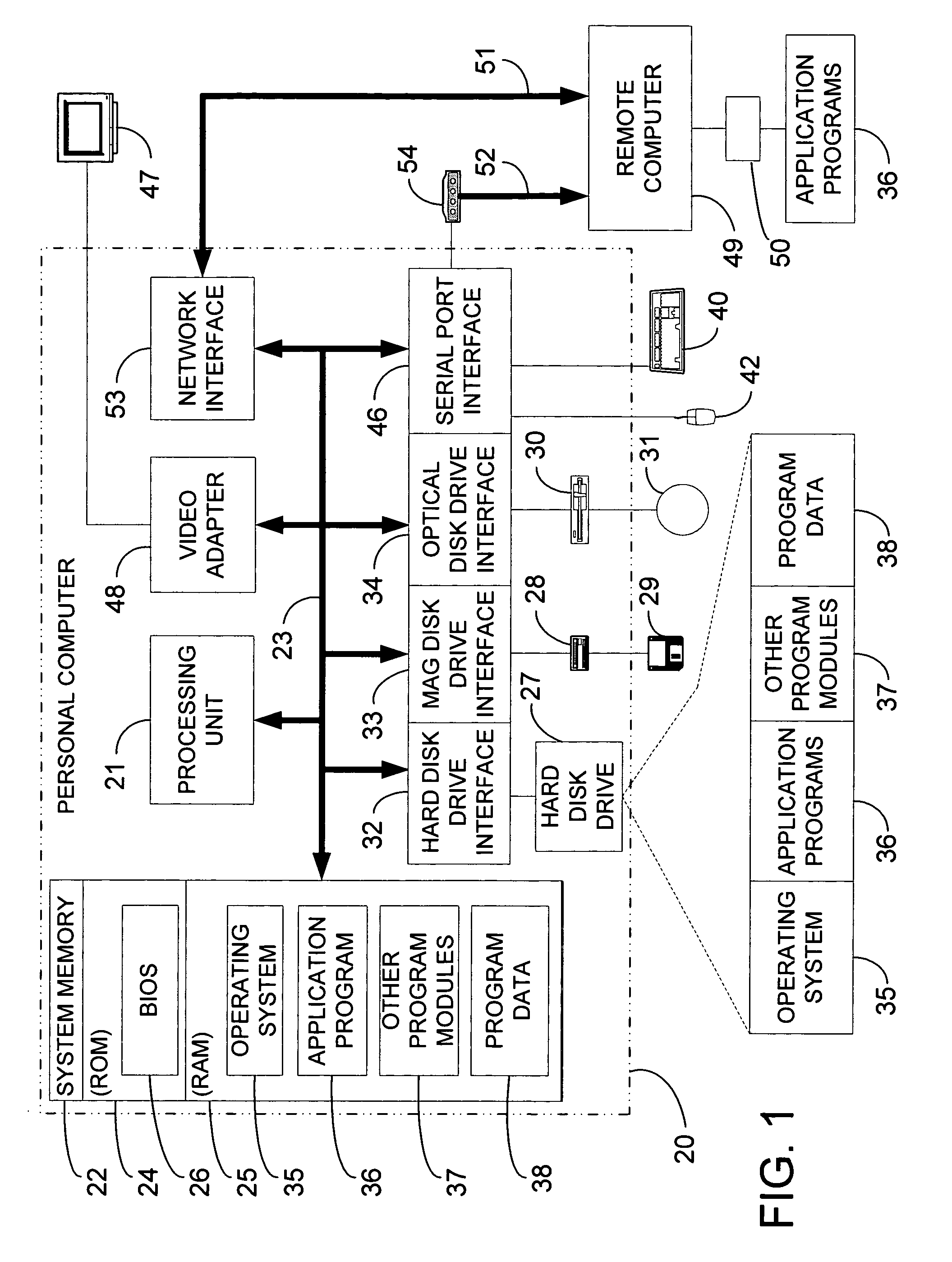

Method and apparatus for conducting crypto-ignition processes between thin client devices and server devices over data networks

Inactive | US6263437B1 | Key distribution for secure communication, Multiple keys/algorithms usage, Traffic capacity, Key-agreement protocol

A crypto-ignition process is needed to establish an encrypted communication protocol between two devices connected by an insecure communication link. The present invention introduces a method of creating an identical secret key for two communicating parties, conducted between a thin device and a server computer over an insecure data network. The thin device generally has limited computing power and working memory, and the server computer may communicate with a plurality of such thin devices. To ensure the security of the secret key on both sides and reduce traffic in the network, only a pair of public values is exchanged between the thin device and the server computer over the data network. Each side generates its own secret key from a self-generated private value along with the counterpart's received public value according to a commonly used key agreement protocol, such as the Diffie-Hellman key agreement protocol. To ensure that the generated secret keys are identical on both sides, a verification process follows in which a message encrypted with one of the two generated secret keys is exchanged. The secret keys are proved to be identical and secret when the encrypted message is successfully decrypted with the other secret key. To reduce network traffic, the verification process is piggybacked on a session request from the thin device to establish a secure and authentic communication session with the server computer. The present invention enables the automatic delivery of the secret keys, without requiring significant computing power and working memory, between each of the thin clients and the server computer.

Owner:UNWIRED PLANET

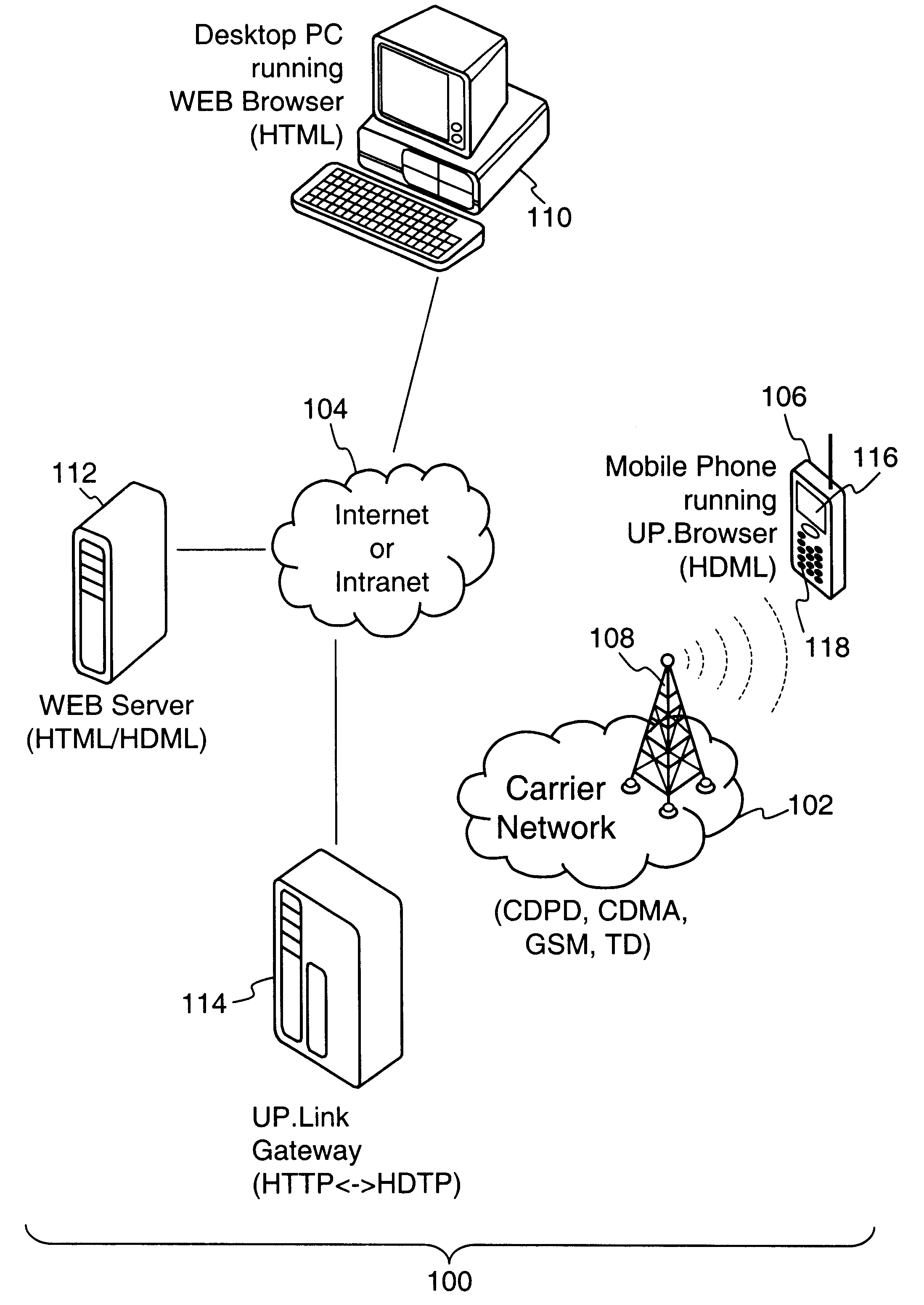

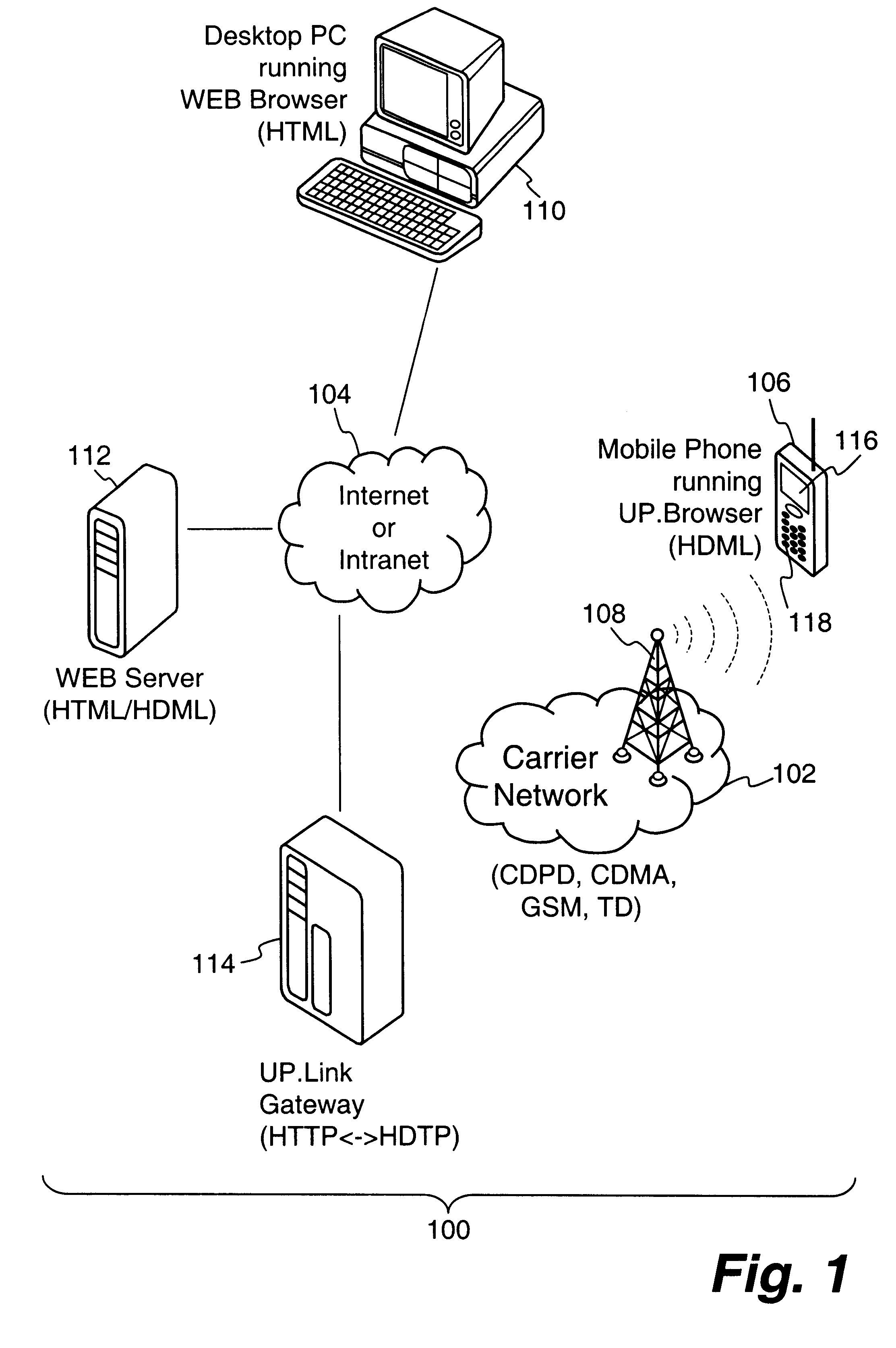

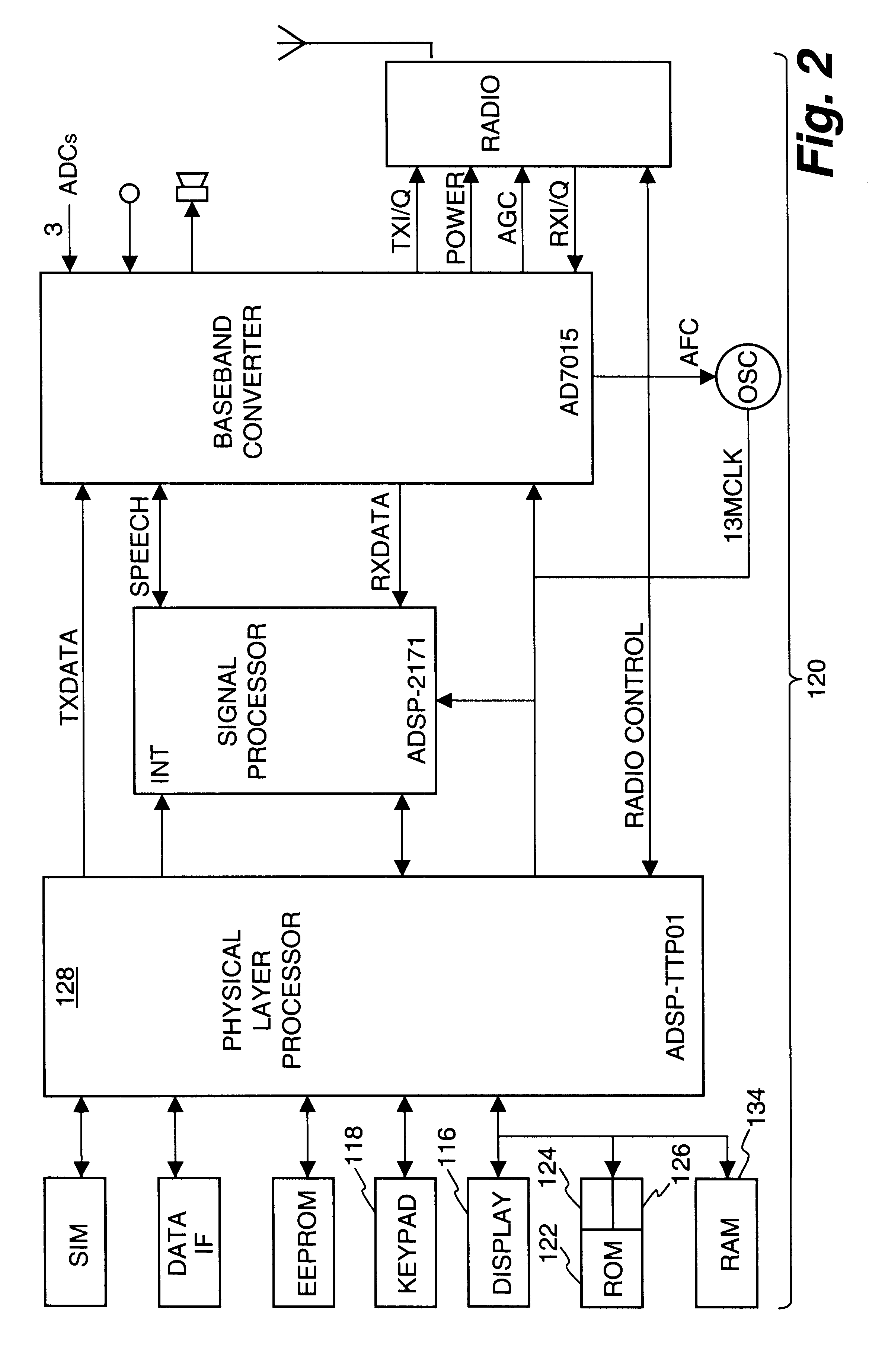

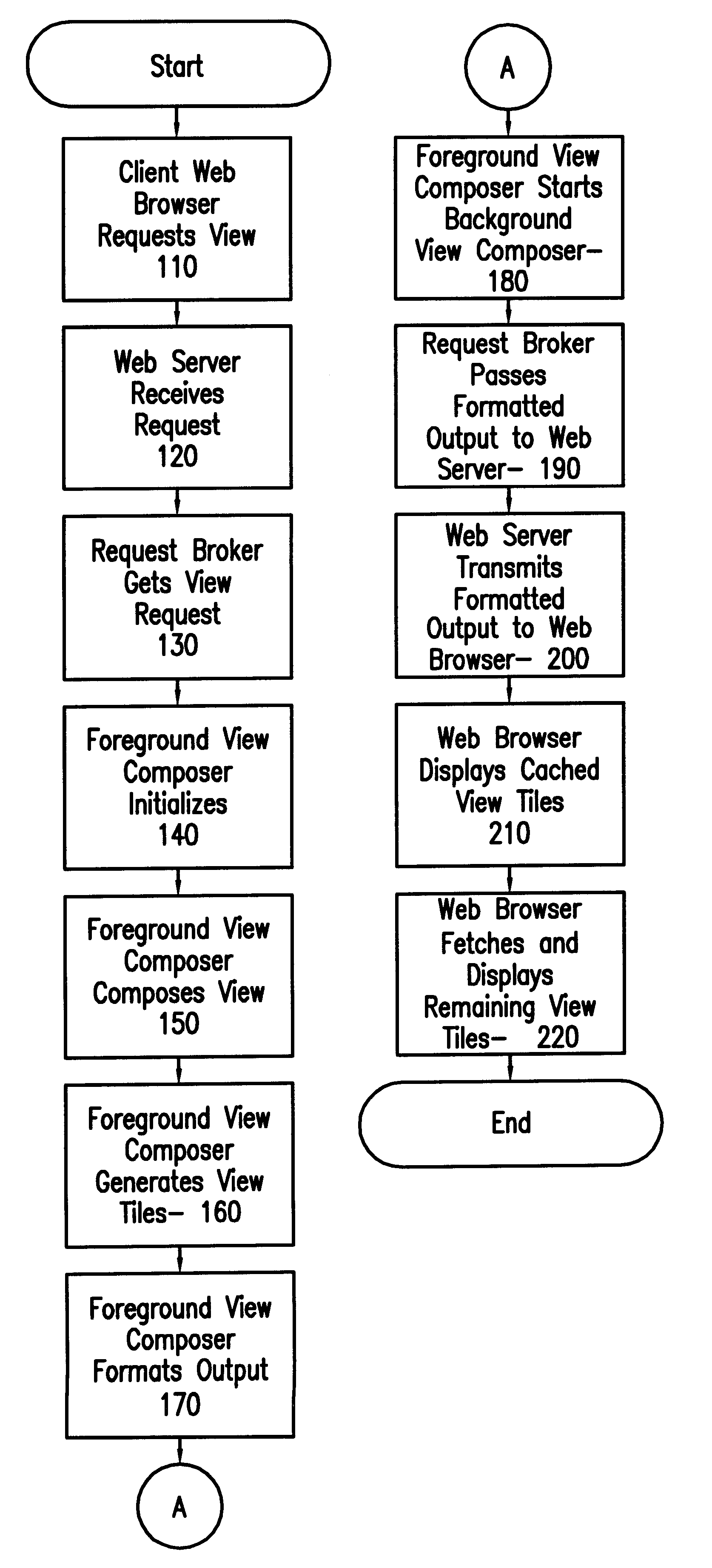

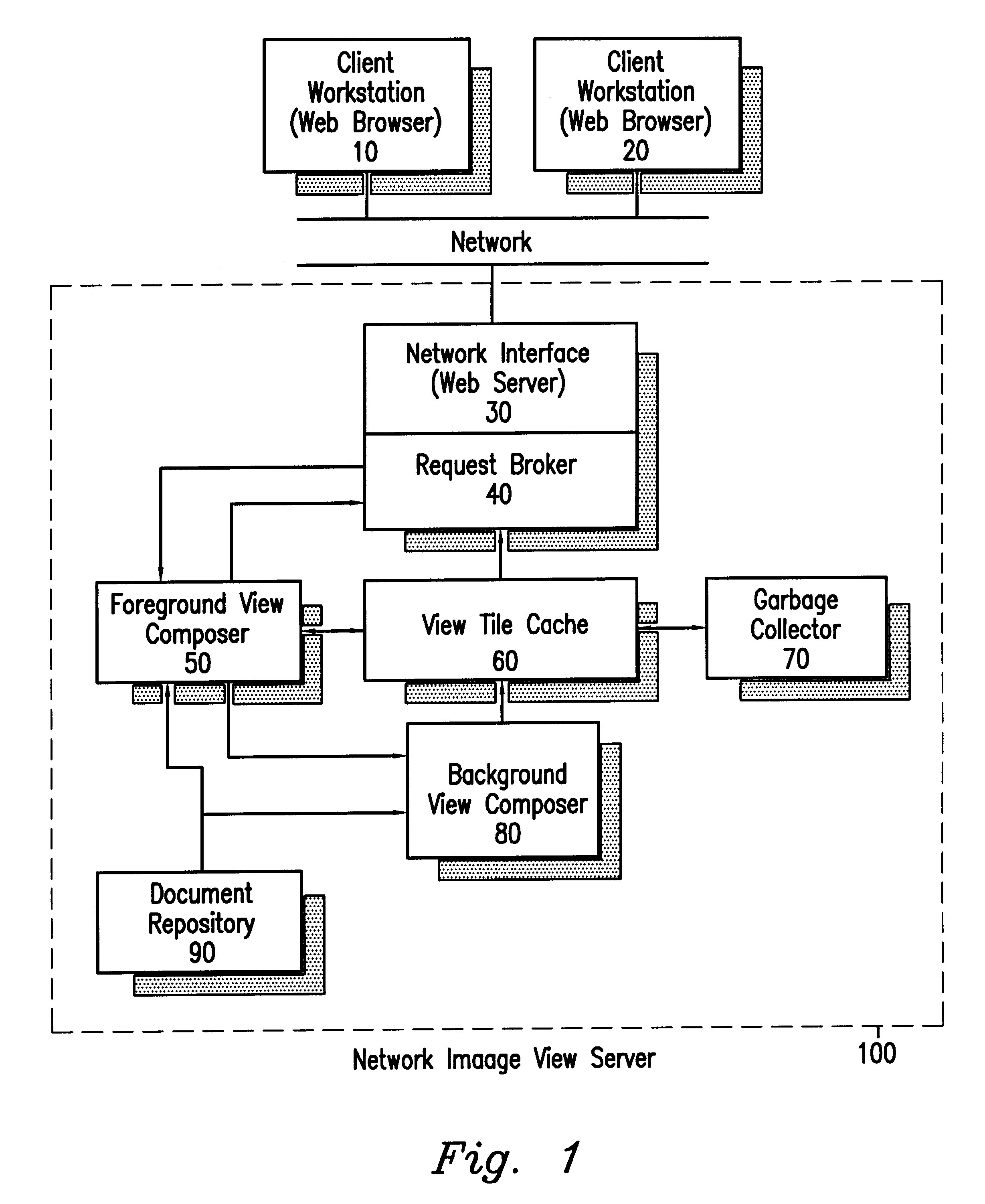

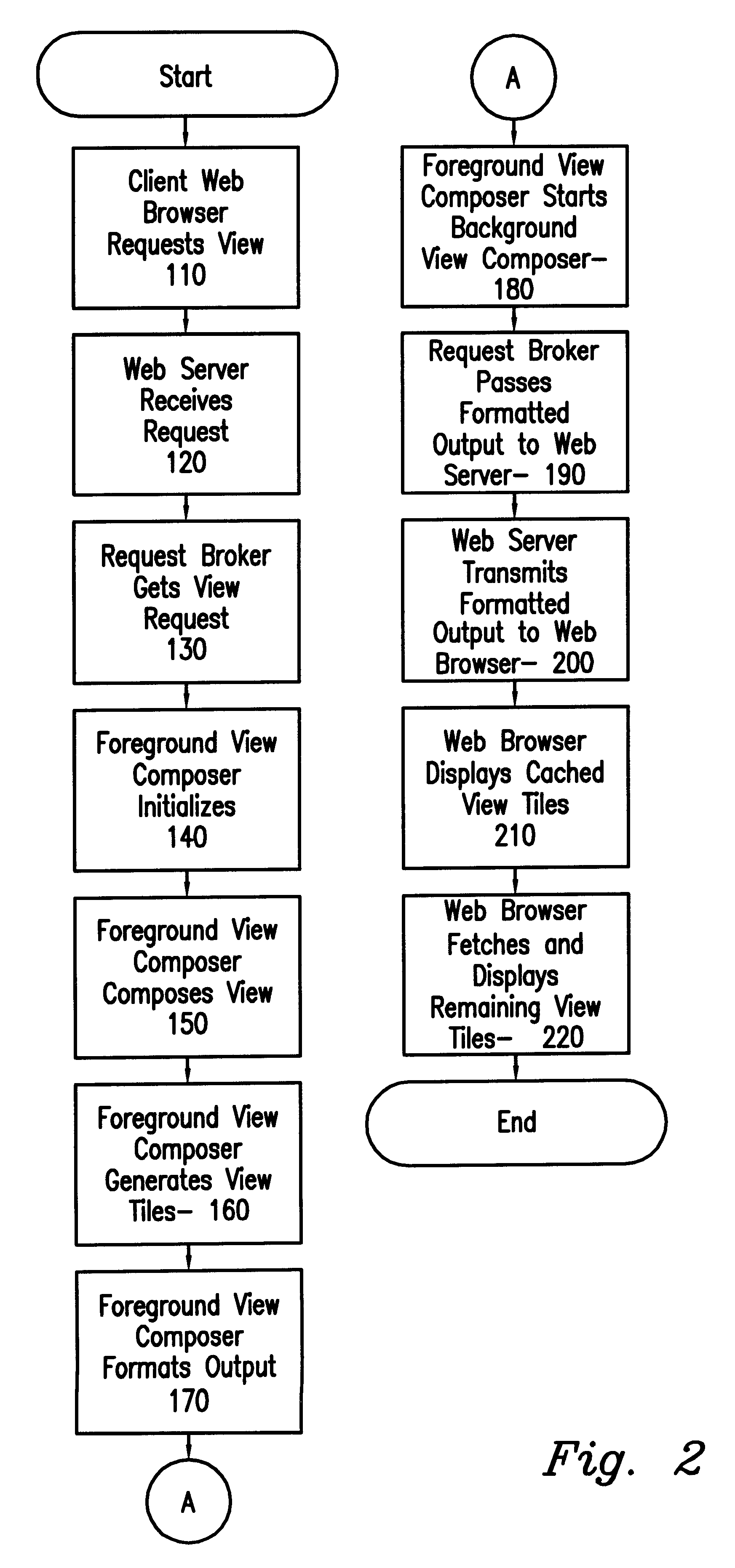

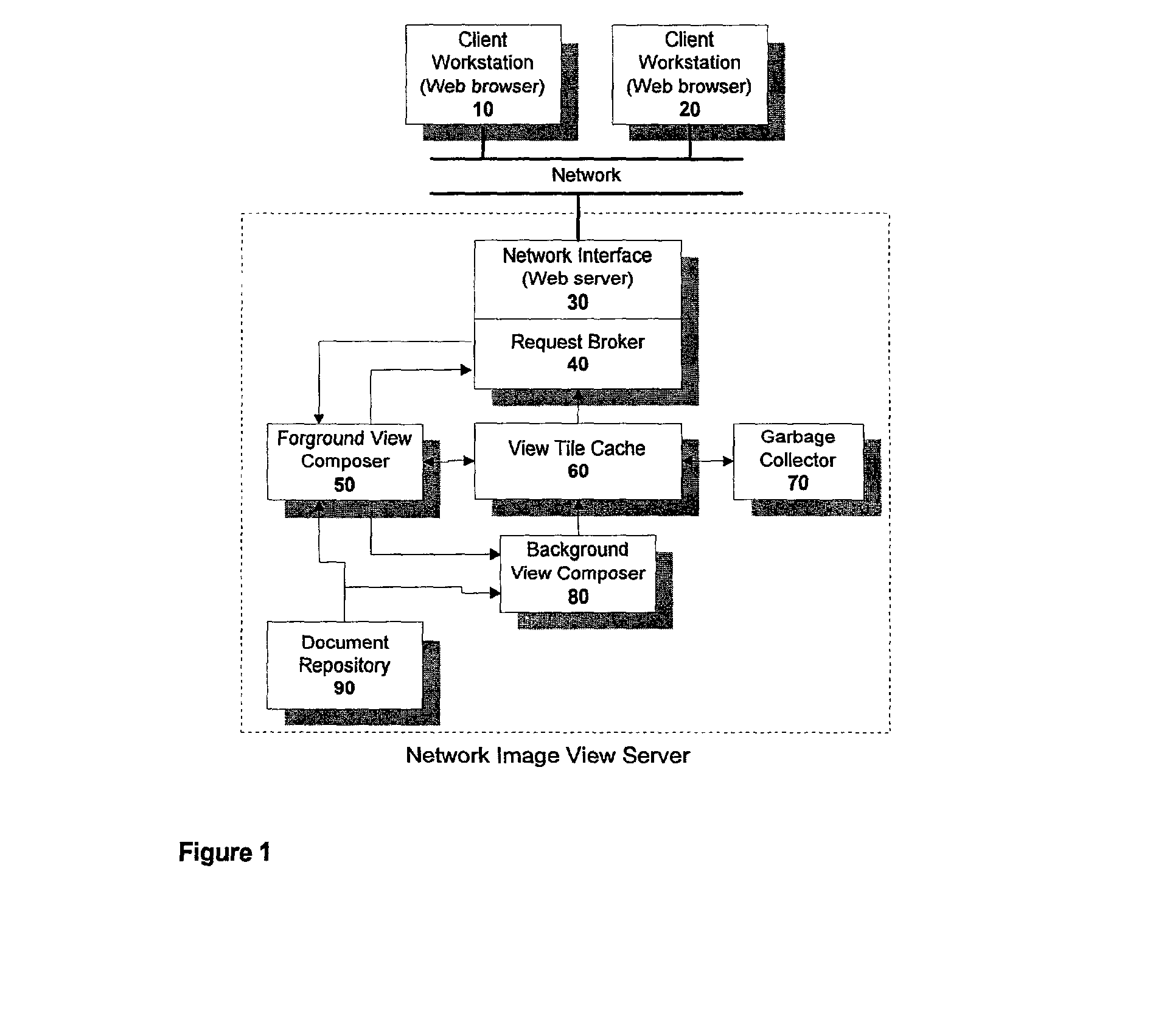

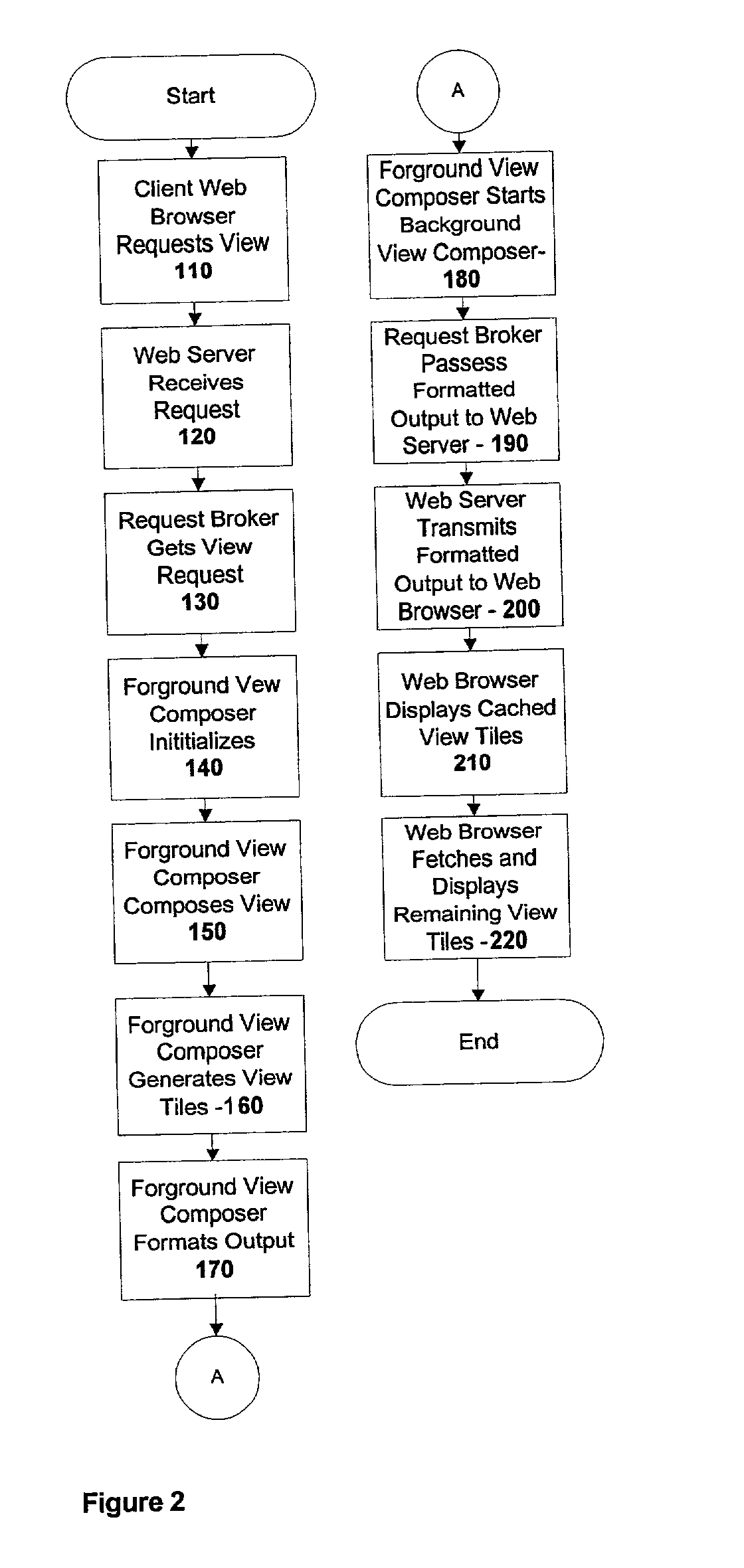

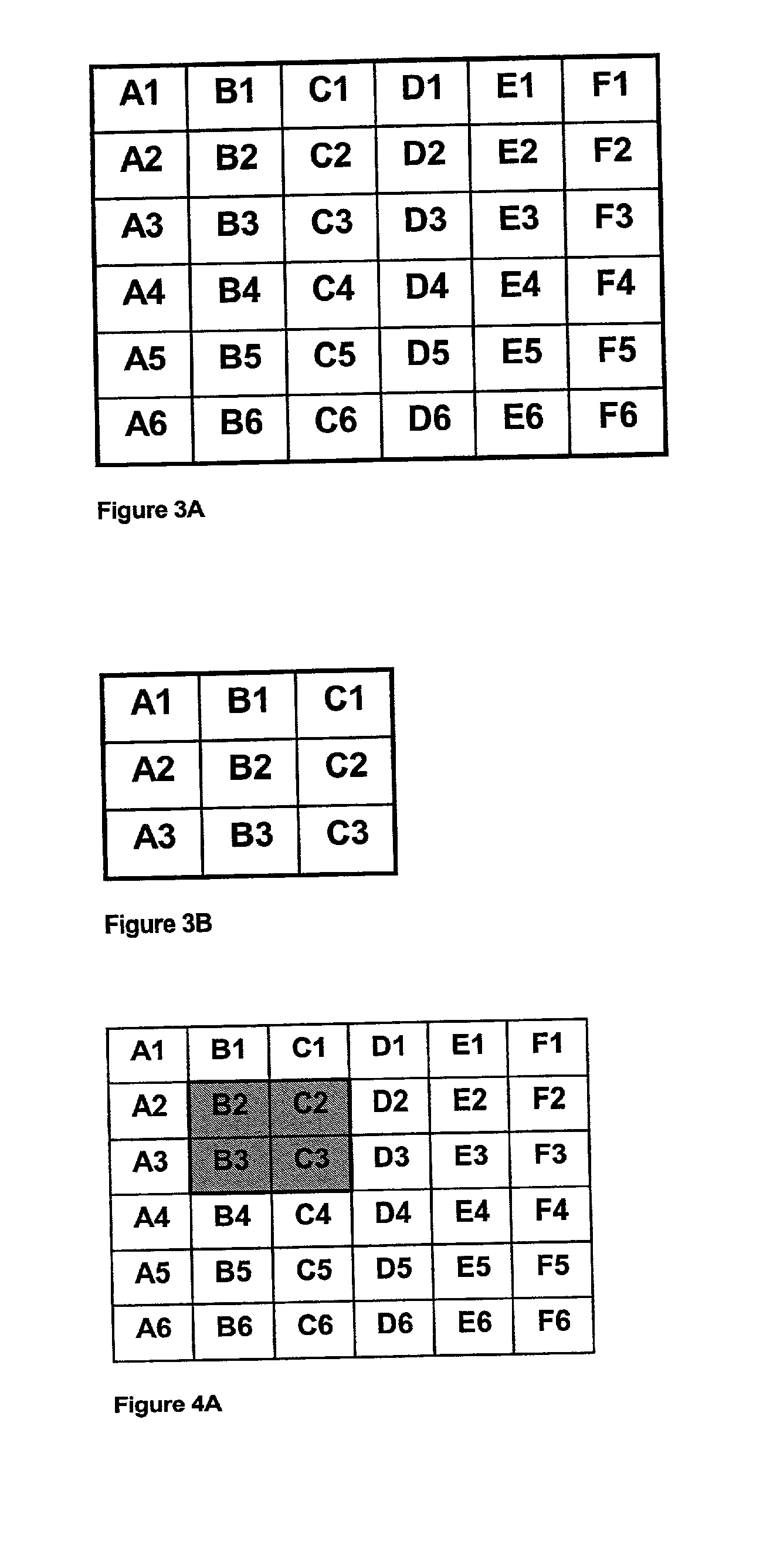

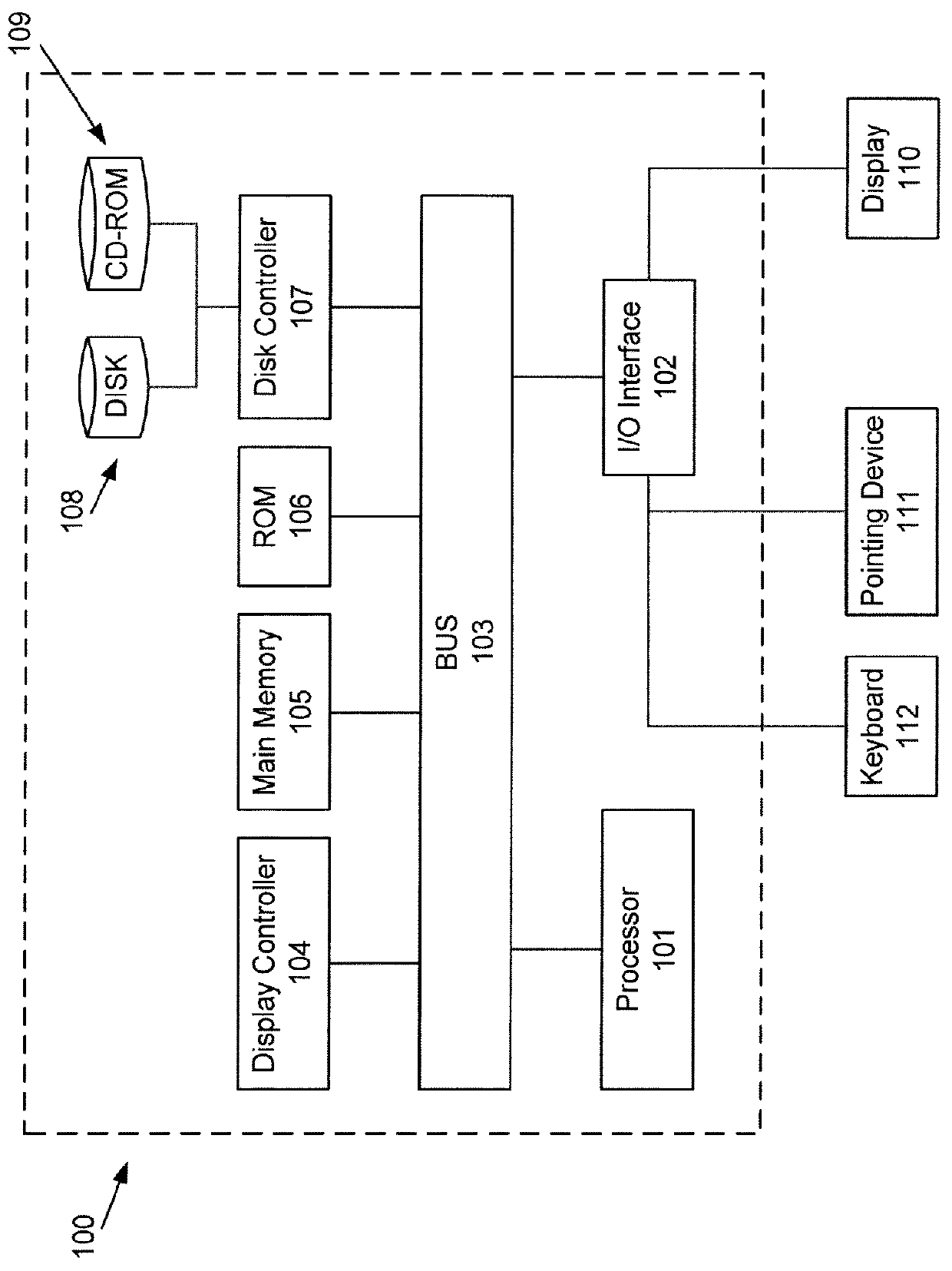
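The key agreement and verification steps in the crypto-ignition abstract above can be sketched roughly as follows. This is a toy Diffie-Hellman exchange, not the patented process: the modulus `P`, generator `G`, the SHA-256 key derivation, and the XOR-based `toy_cipher` are all assumptions chosen to keep the example short, and none of them is suitable for production use.

```python
import hashlib
import secrets

# Toy public parameters (assumption): a Mersenne prime modulus and a small base.
P = 2**127 - 1
G = 3


def make_keypair():
    """Return (private value, public value); only the public value is sent."""
    private = secrets.randbelow(P - 3) + 2  # in the range [2, P - 2]
    return private, pow(G, private, P)


def derive_key(own_private, peer_public):
    """Derive the secret key from our private value and the peer's public value."""
    shared = pow(peer_public, own_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


def toy_cipher(key, data):
    """XOR with a key-derived stream; applying it twice restores the data."""
    stream = hashlib.sha256(key + b"stream").digest()
    return bytes(b ^ stream[i % len(stream)] for i, b in enumerate(data))


# Only client_pub and server_pub would cross the network.
client_priv, client_pub = make_keypair()
server_priv, server_pub = make_keypair()
client_key = derive_key(client_priv, server_pub)
server_key = derive_key(server_priv, client_pub)

# Verification: a message encrypted with one key must decrypt with the other.
token = toy_cipher(client_key, b"session-request")
verified = toy_cipher(server_key, token) == b"session-request"
```

In the abstract, the verification message travels with the session request, so confirming the keys costs no extra round trip.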

Network image view server using efficient client-server tiling and caching architecture

Inactive | US6182127B1 | Easy to use, Increase speed, Data processing applications, Digital data information retrieval, Data display, Computer network server

A computer network server using HTTP (Web) server software combined with foreground view composer software, background view composer software, a view tile cache, view tile cache garbage collector software, and image files provides image view data to client workstations using graphical Web browsers to display the view of an image from the server. Problems with specialized client workstation image view software are eliminated by using the Internet and industry standards-based graphical Web browsers for the client software. Network and system performance problems that previously existed when accessing large image files from a network file server are eliminated by tiling the image view so that computation and transmission of the view data can be done in an incremental fashion. The view tiles are cached on the client workstation to further reduce network traffic. View tiles are cached on the server to reduce the amount of view tile computation and to increase responsiveness of the image view server.

Owner:EPLUS CAPITAL

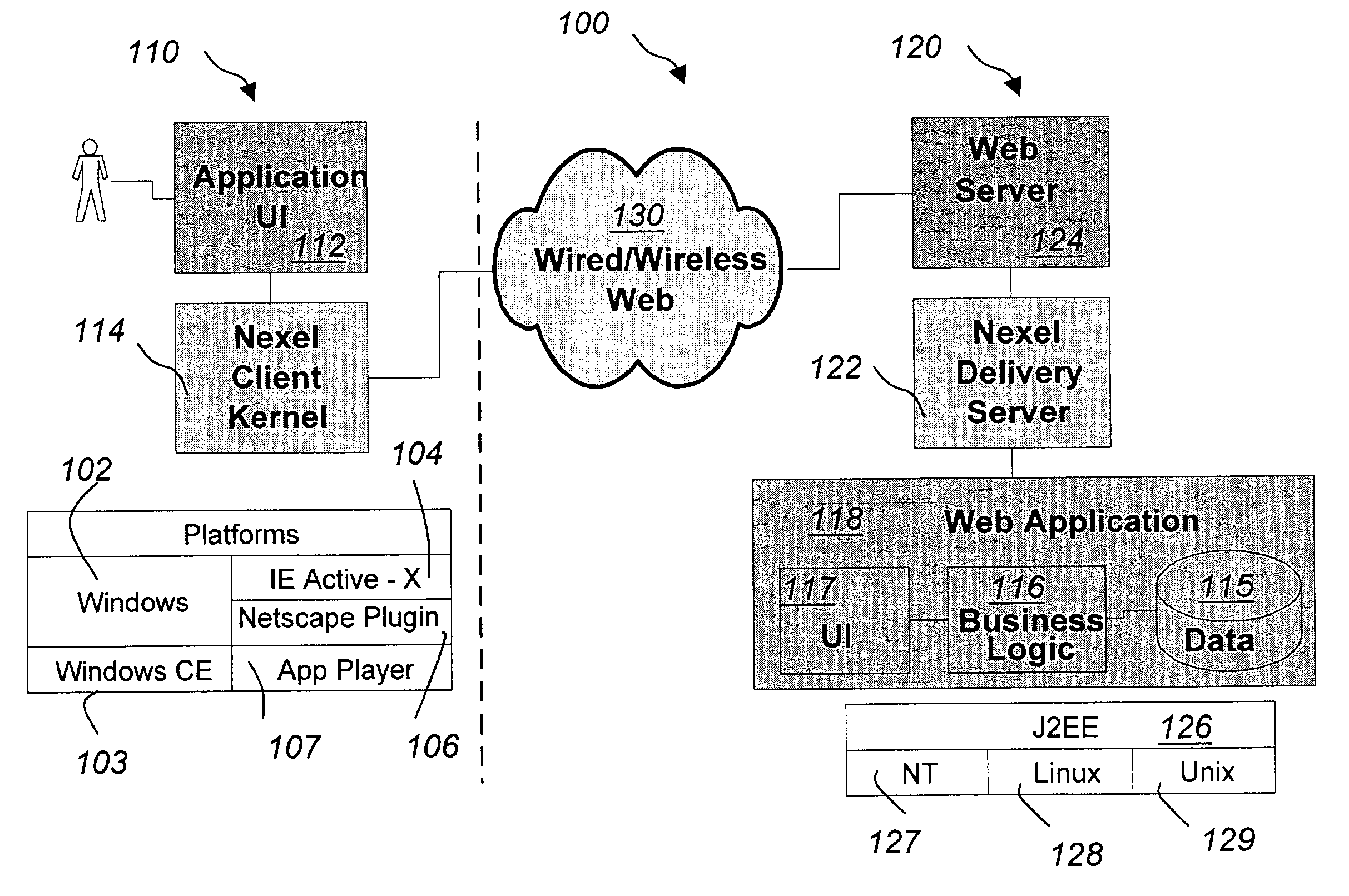

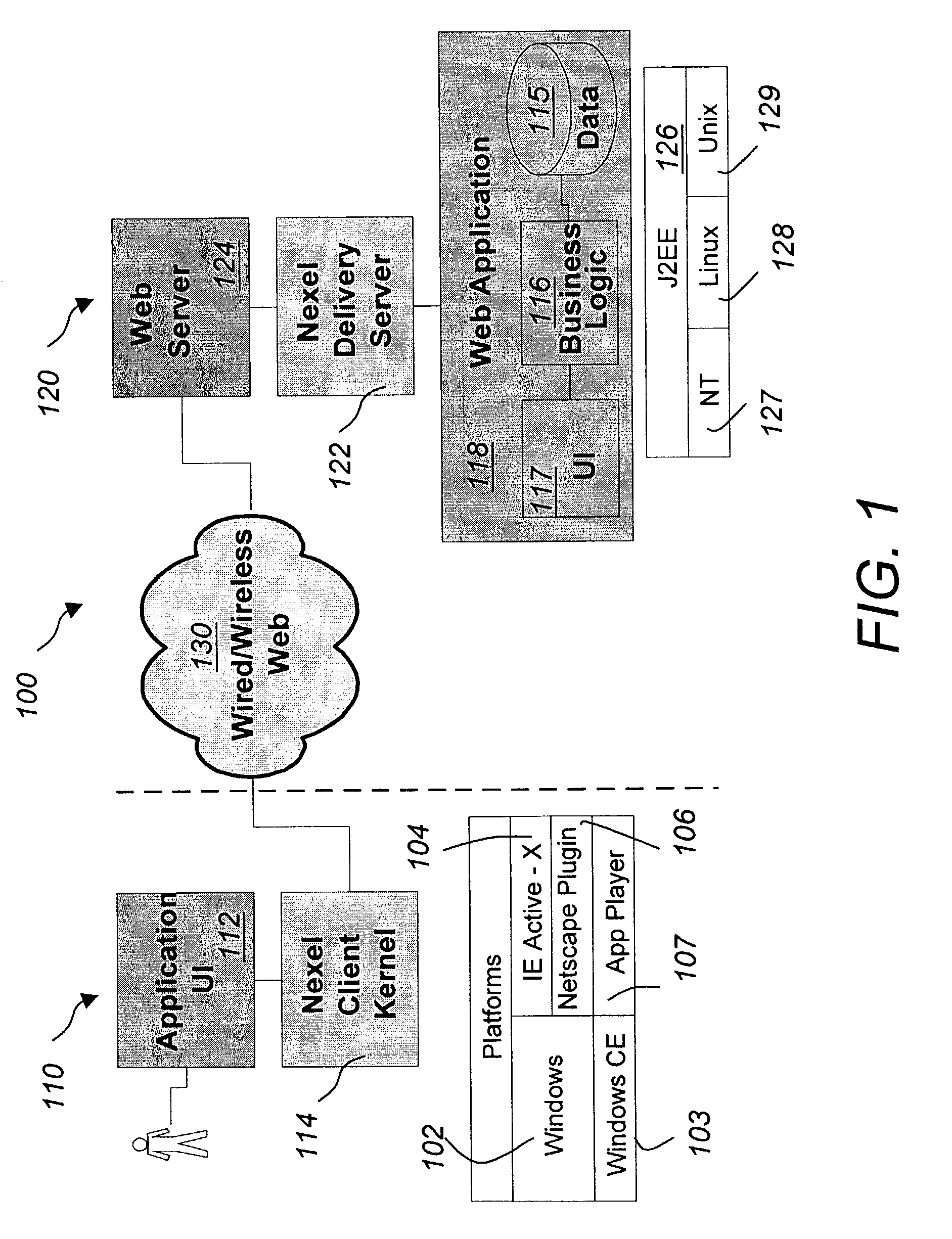

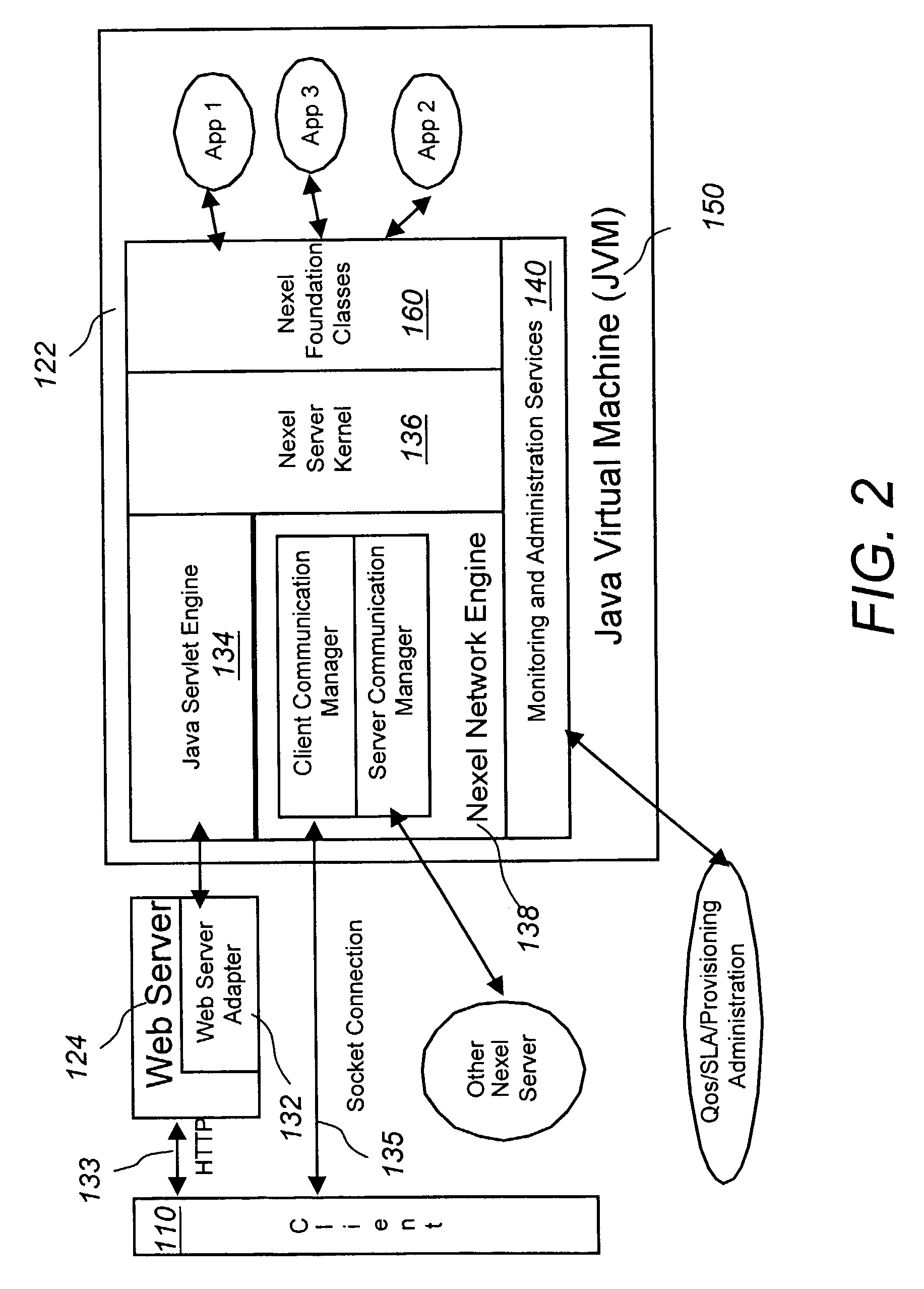

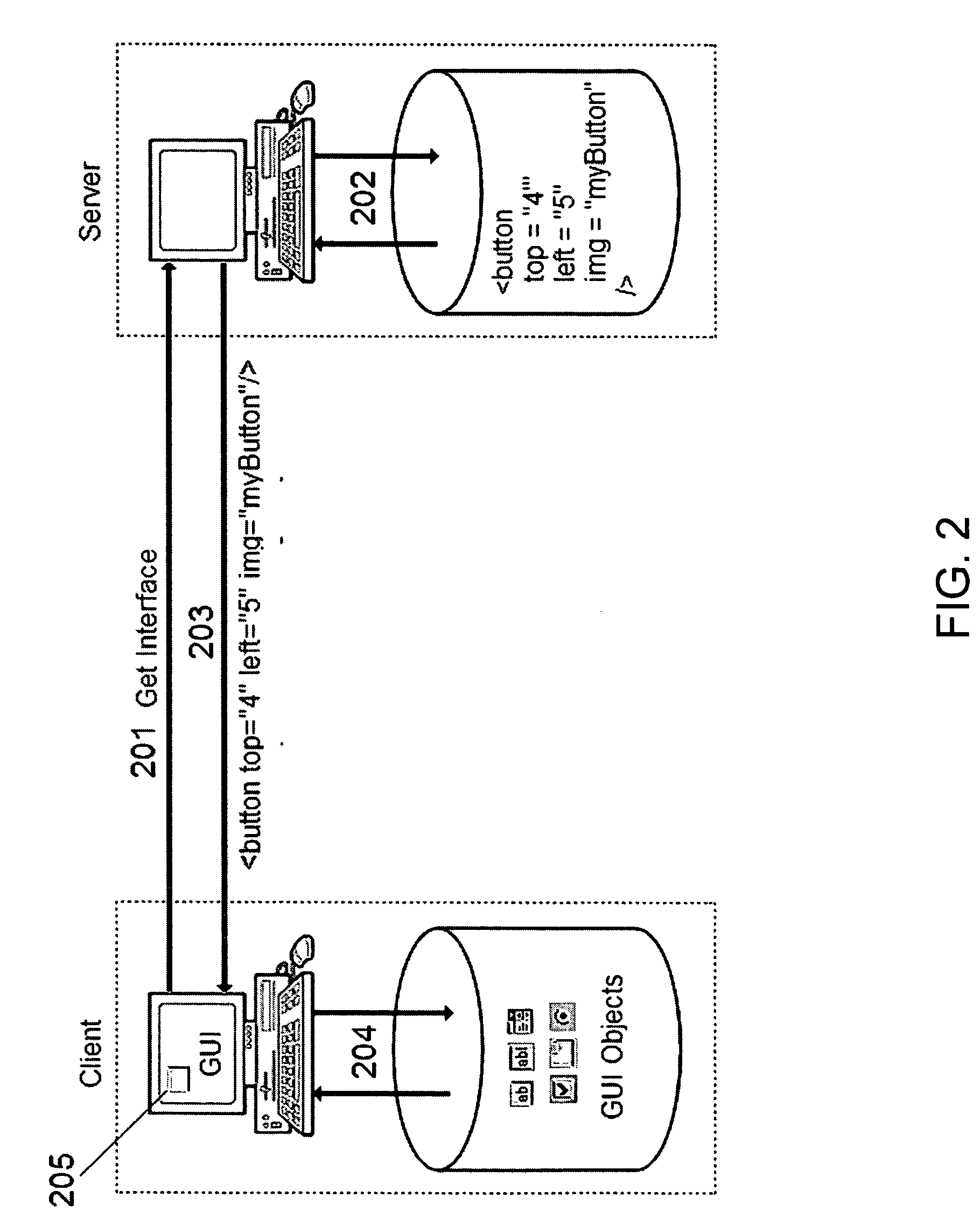

Methods and techniques for delivering rich Java applications over thin-wire connections with high performance and scalability

Active | US7596791B2 | Interprogram communication, Multiple digital computer combinations, Network connection, Application software

A method for delivering applications over a network where the application's logic runs on the backend server and the application's user interface is rendered on a client device, according to its display capabilities, through a network connection with the backend server. The application's GUI API and event processing API are implemented to be network-aware, transmitting the application's presentation layer information, event processing registries, and other related information between client and server. The transmission uses a high-level, object-level format, which minimizes network traffic. Client-side events are transmitted to the server for processing via a predetermined protocol, the server treating such events and inputs as if locally generated. The appropriate response to the input is generated and transmitted to the client device using the format to refresh the GUI on the client.

Owner:EMC IP HLDG CO LLC

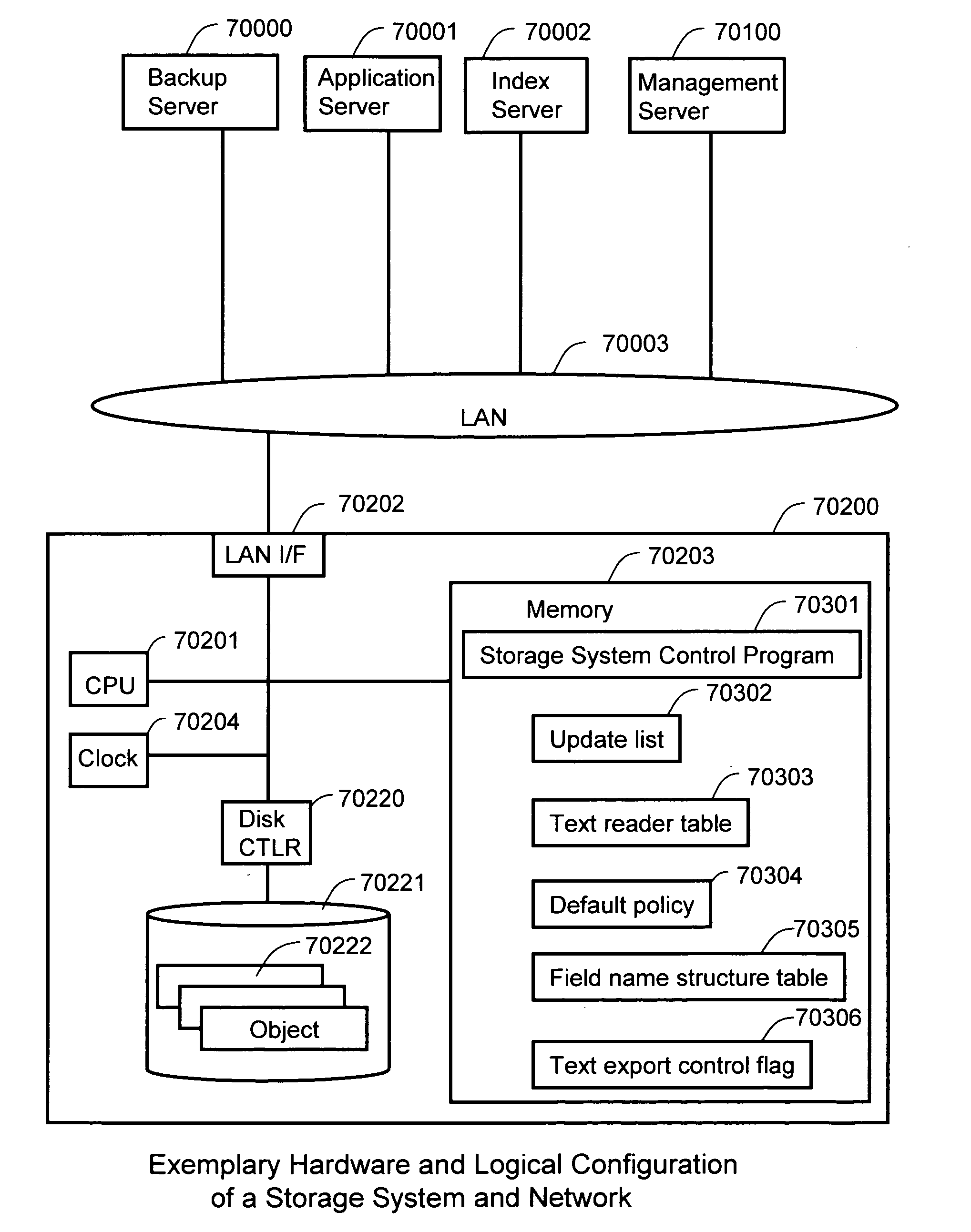

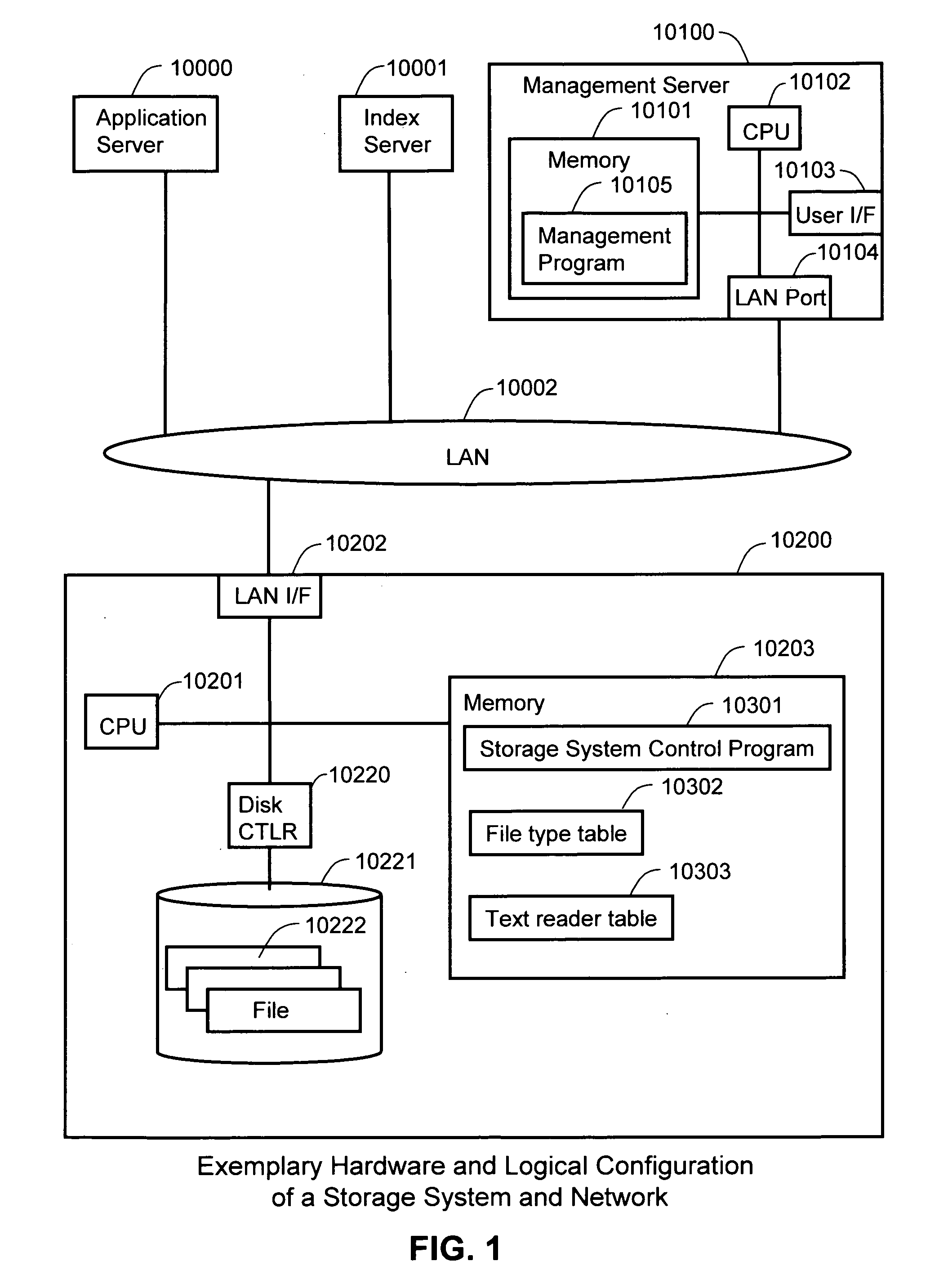

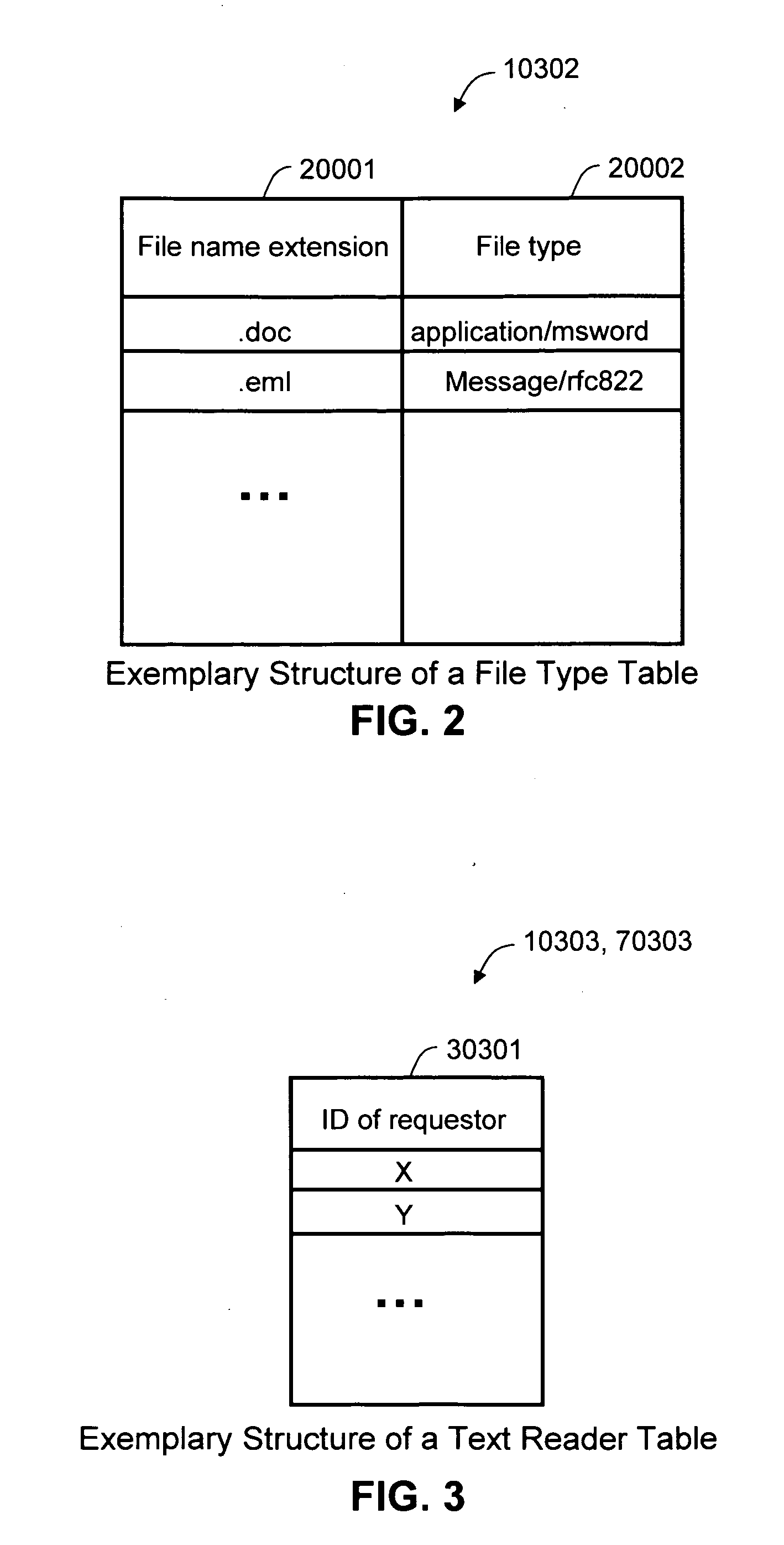

Methods and systems for assisting information processing by using storage system

Inactive | US20090313260A1 | Digital data processing details, Text database indexing, Traffic capacity, Information processing

In a networked information system, a portion of the information processing is offloaded from servers to a storage system to reduce network traffic and conserve server resources. The information system includes a storage system storing files or objects and having a function which automatically extracts portions of text from the files and transmits the extracted text to the servers. The text extraction is responsive to file requests from the servers. The extracted text and files are stored on the storage system, decreasing the need to send entire files across the network. Thus, by transmitting smaller extracted text data instead of entire files over the network, network performance can be increased through the reduction of traffic. Additionally, the processing strain on physical resources of the servers can be reduced by extracting the text at the storage system rather than at the servers.

Owner:HITACHI LTD

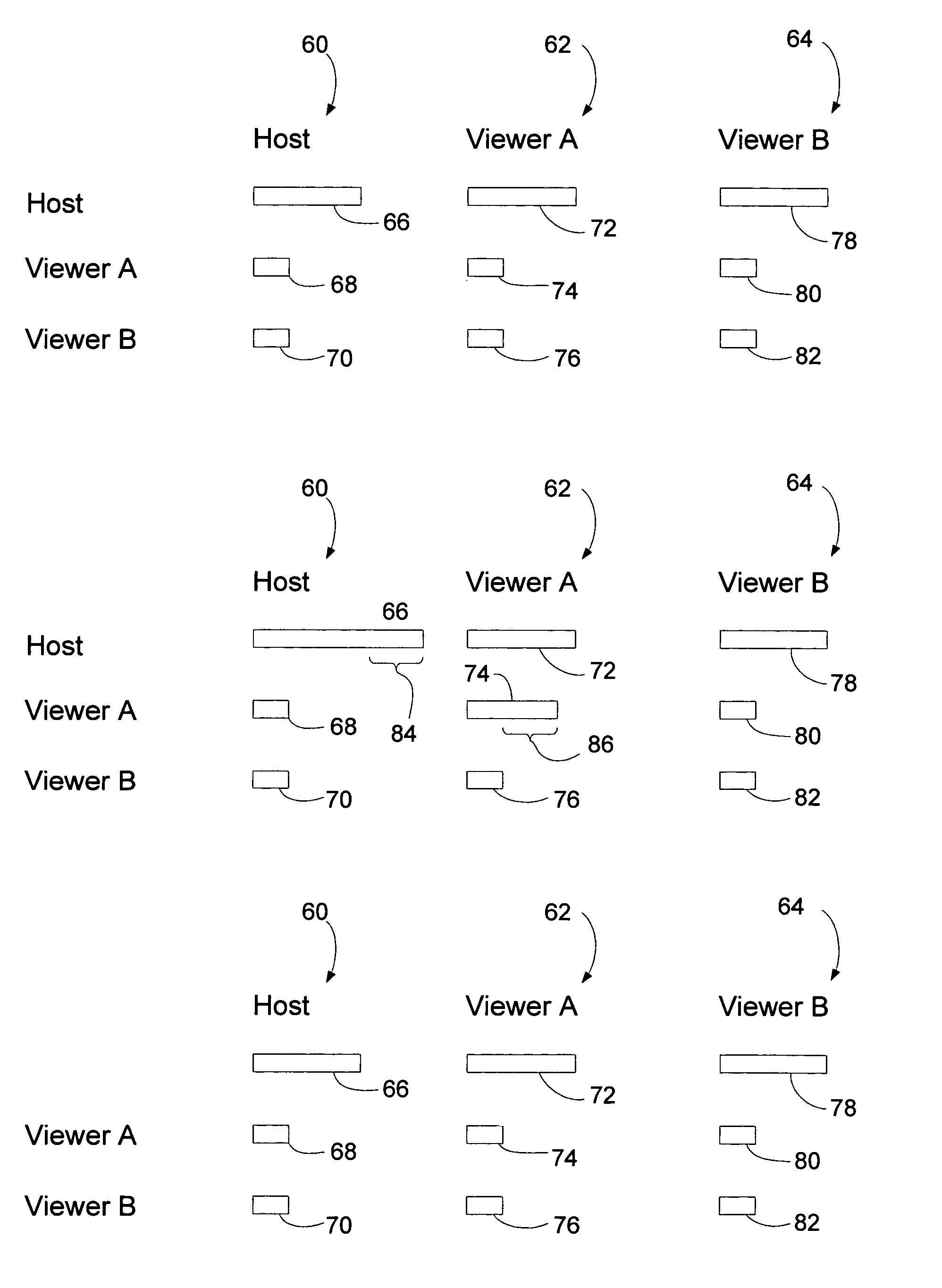

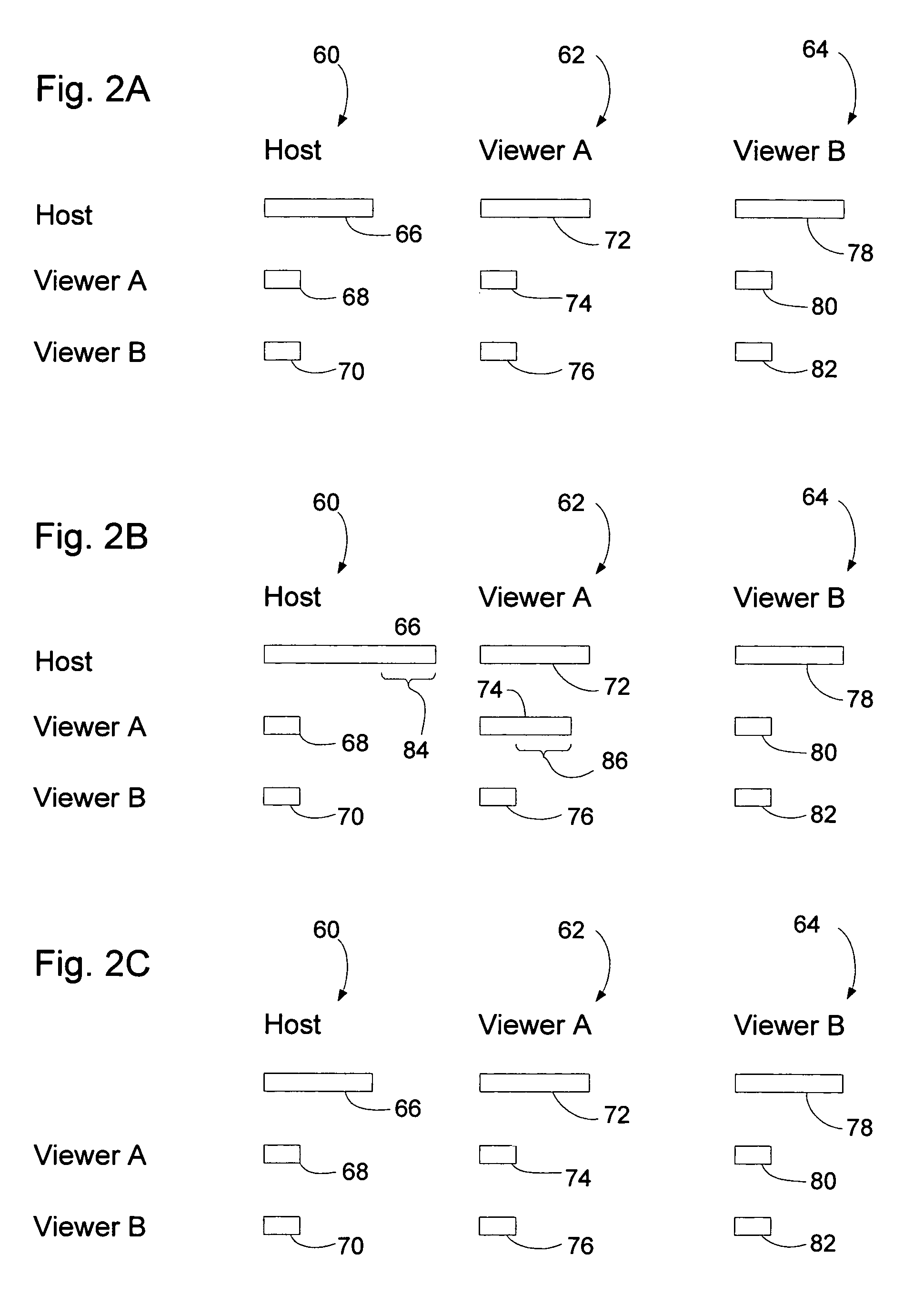

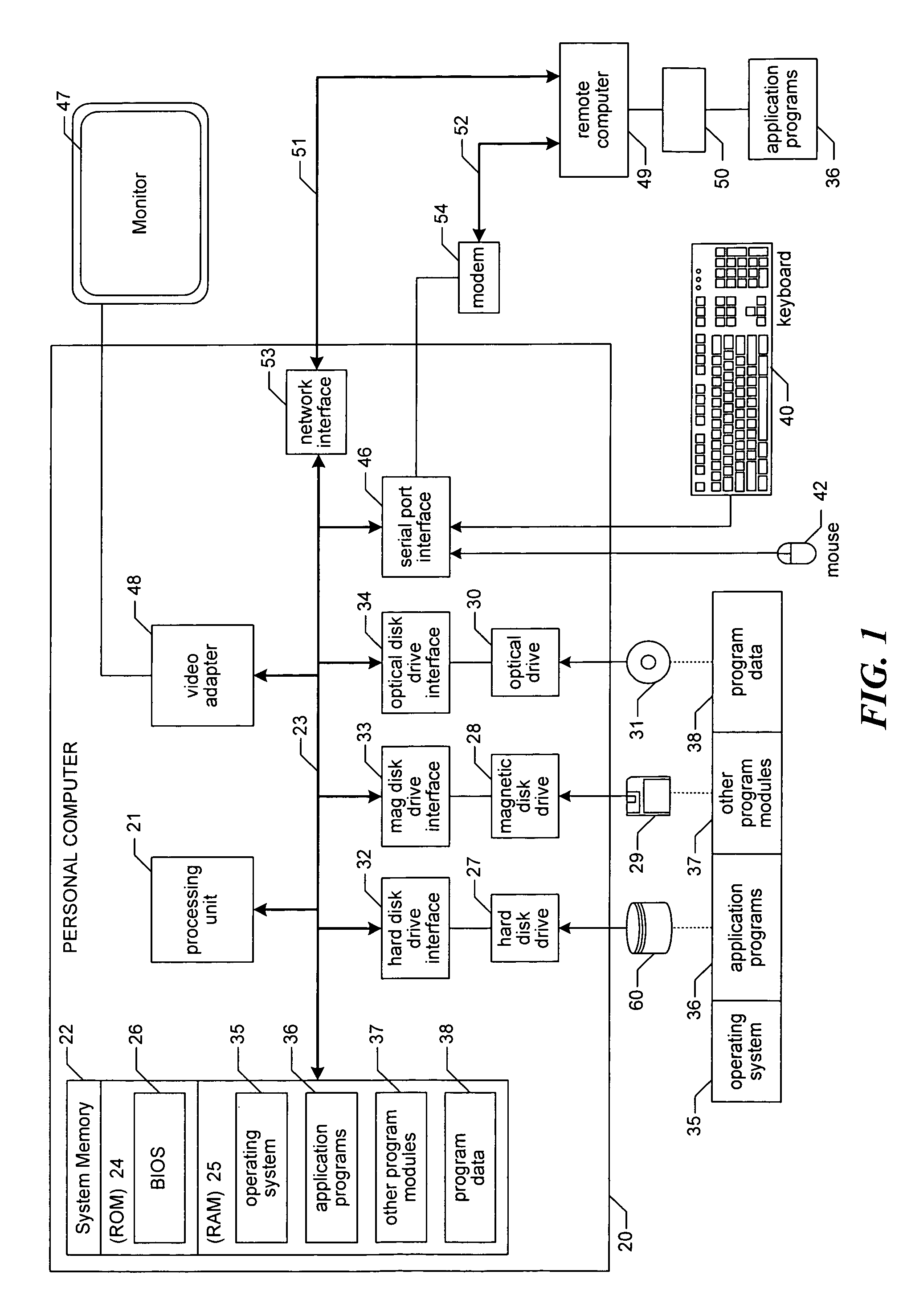

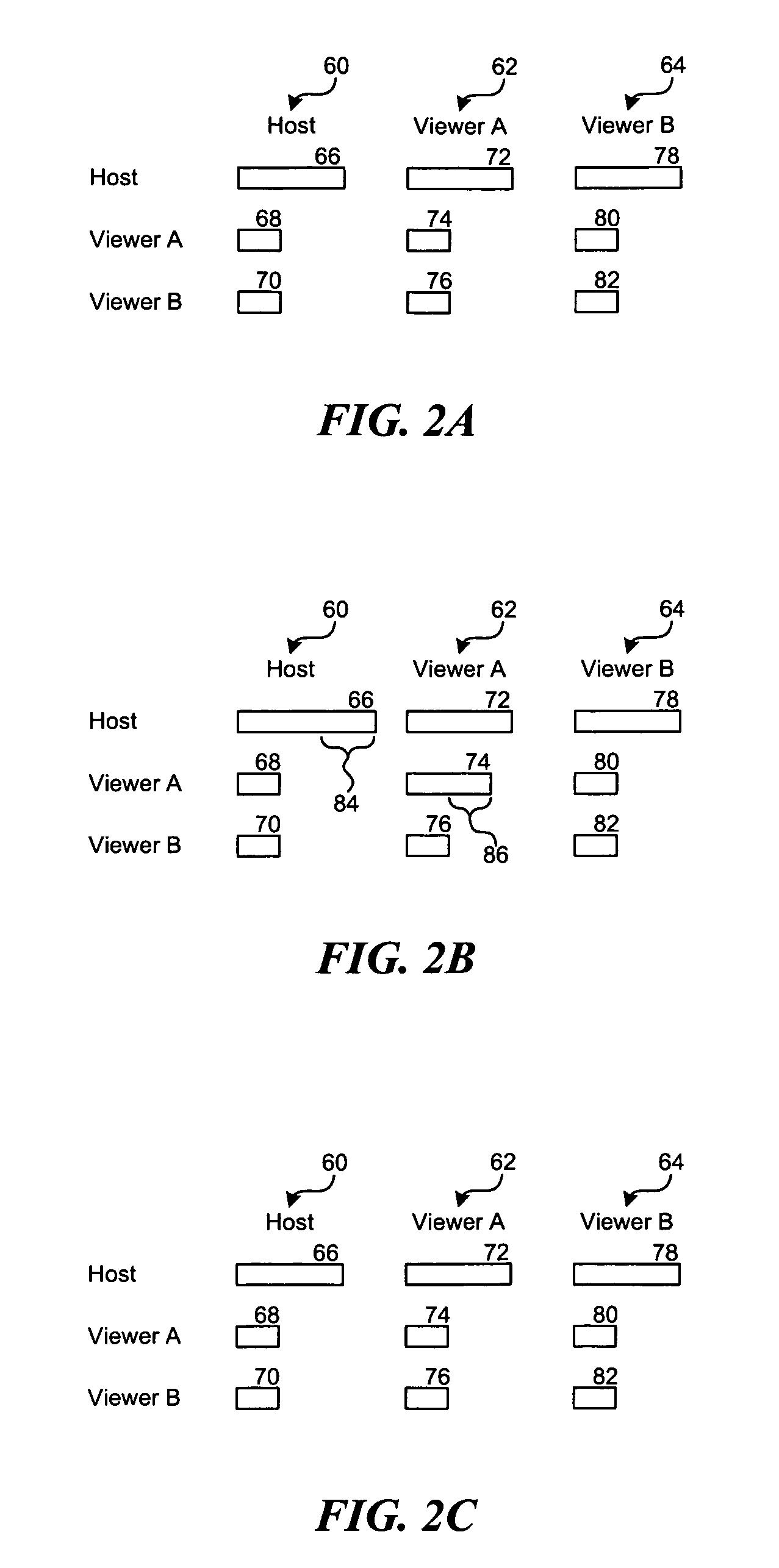

Scalable multiparty conferencing and collaboration system and method of dynamically allocating system resources in same

Inactive | US7167182B2 | Improve ease of use, Improve functionality, Multiplex system selection arrangements, Special service provision for substation, Private communication, Collaboration tool

A networking conferencing and collaboration tool utilizing an enhanced T.128 application sharing protocol. This enhanced protocol is based on a per-host model command, control, and communication structure. This per-host model reduces network traffic, allows greater scalability through dynamic system resource allocation, and allows a single host to establish and maintain a share session with no other members present. The per-host model allows private communication between the host and a remote with periodic broadcasts of updates by the host to the entire share group. This per-host model also allows the host to allow, revoke, pause, and invite control of the shared applications. Subsequent passing of control is provided, also with the host's acceptance. The model contains no fixed limit on the number of participants, and dynamically allocates resources when needed to share or control a shared application. These resources are then freed when no longer needed.

Owner:MICROSOFT TECH LICENSING LLC

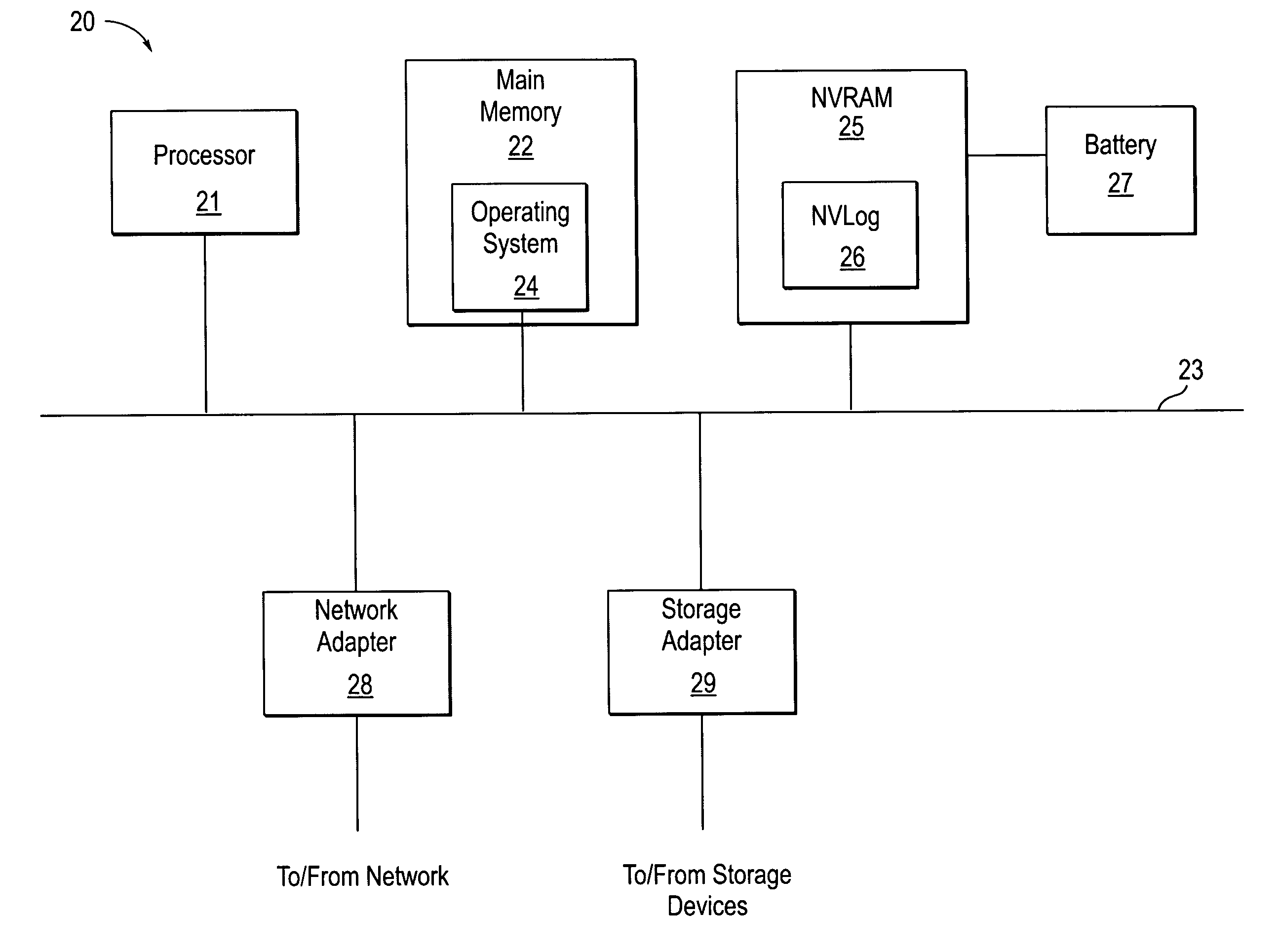

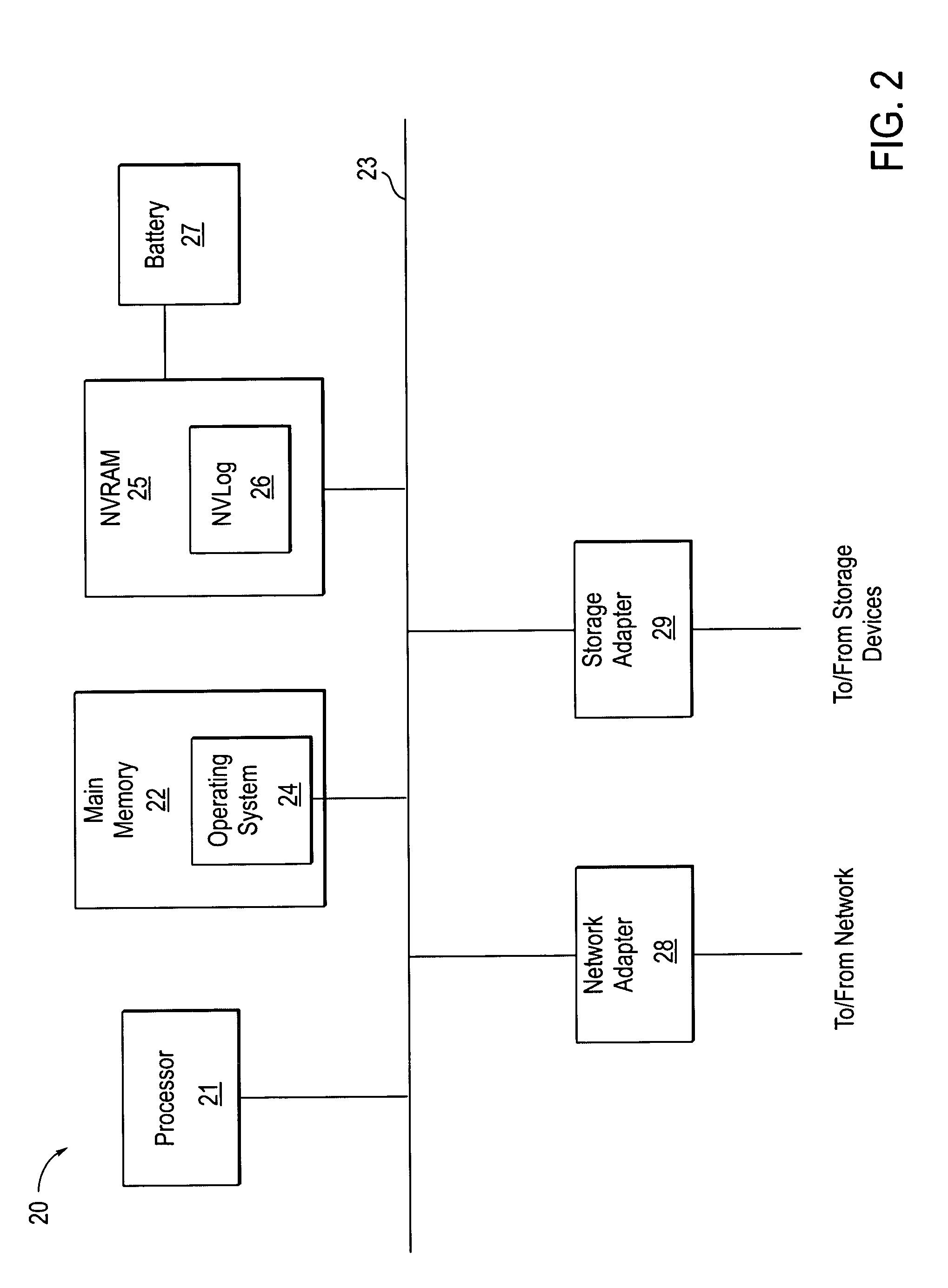

Method and apparatus for reducing network traffic during mass storage synchronization phase of synchronous data mirroring

A source storage server receives various write requests from a set of clients, logs each request in local memory, and forwards each log entry to a destination storage server at a secondary site. At a consistency point, the source storage server saves data, modified per the requests, to a first set of mass storage devices, and also initiates a synchronization phase during which process the modified data is mirrored at the secondary site. The destination storage server uses data in the received log entries to mirror at least a portion of the modified data in a second set of mass storage devices located at the secondary site, such that said portion of the modified data does not have to be sent from the source storage server to the destination storage server at the consistency point.

Owner:NETWORK APPLIANCE INC

Scalable multiparty conferencing and collaboration system and method of dynamically allocating system resources and providing true color support in same

Inactive | US7136062B1 | Improve functionality, Increase usage, Cathode-ray tube indicators, Office automation, Private communication, Collaboration tool

A networking conferencing and collaboration tool utilizing an enhanced T.128 application sharing protocol. This enhanced protocol is based on a per-host model command, control, and communication structure. This per-host model reduces network traffic, allows greater scalability through dynamic system resource allocation, allows a single host to establish and maintain a share session with no other members present, and supports true color graphics. The per-host model allows private communication between the host and a remote with periodic broadcasts of updates by the host to the entire share group. This per-host model also allows the host to allow, revoke, pause, and invite control of the shared applications. Subsequent passing of control is provided, also with the host's acceptance. The model contains no fixed limit on the number of participants, and dynamically allocates resources when needed to share or control a shared application. These resources are then freed when no longer needed. Calculation of minimum capabilities is conducted by the host as the membership of the share changes. The host then transmits these requirements to the share group.

Owner:MICROSOFT TECH LICENSING LLC

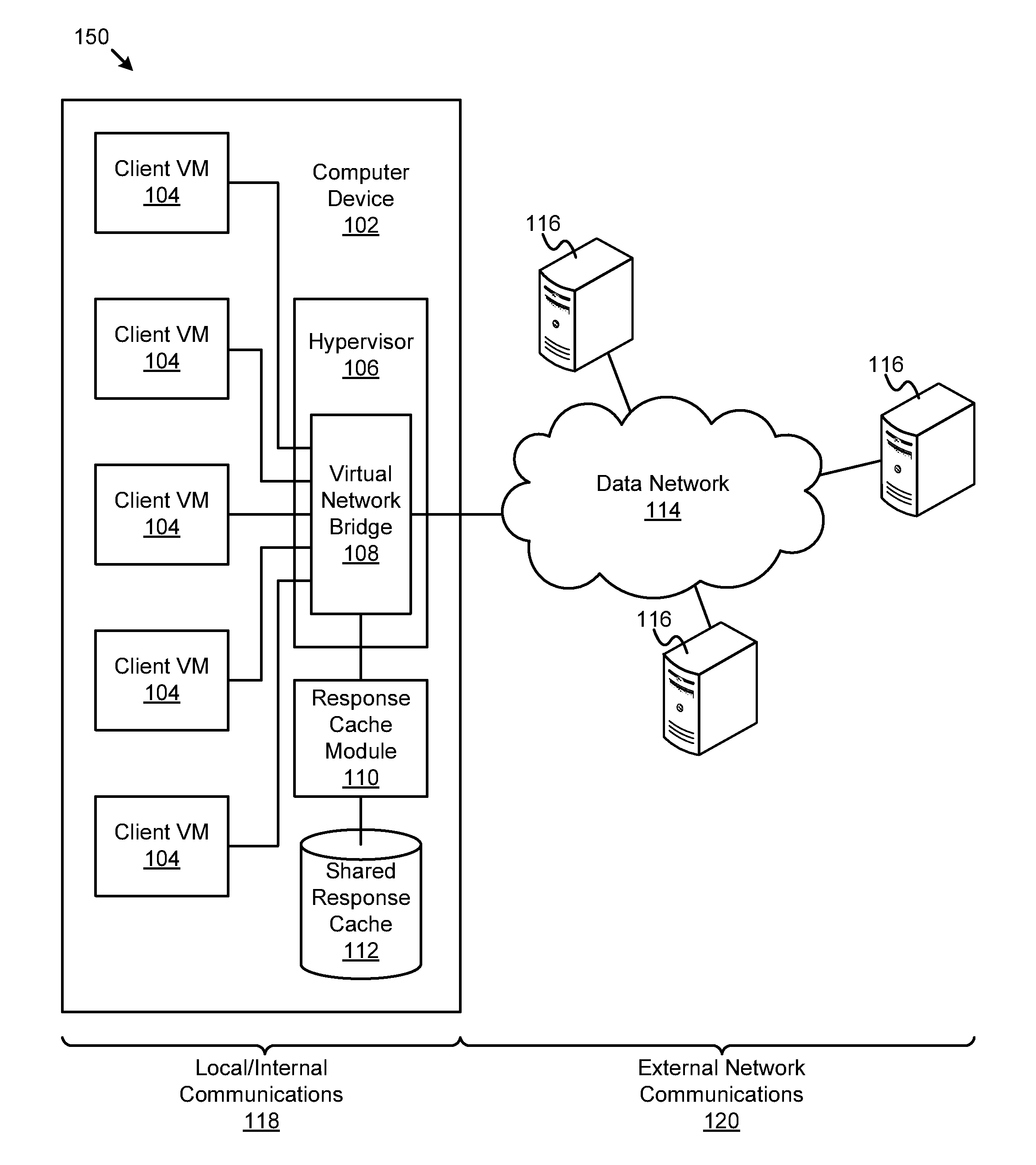

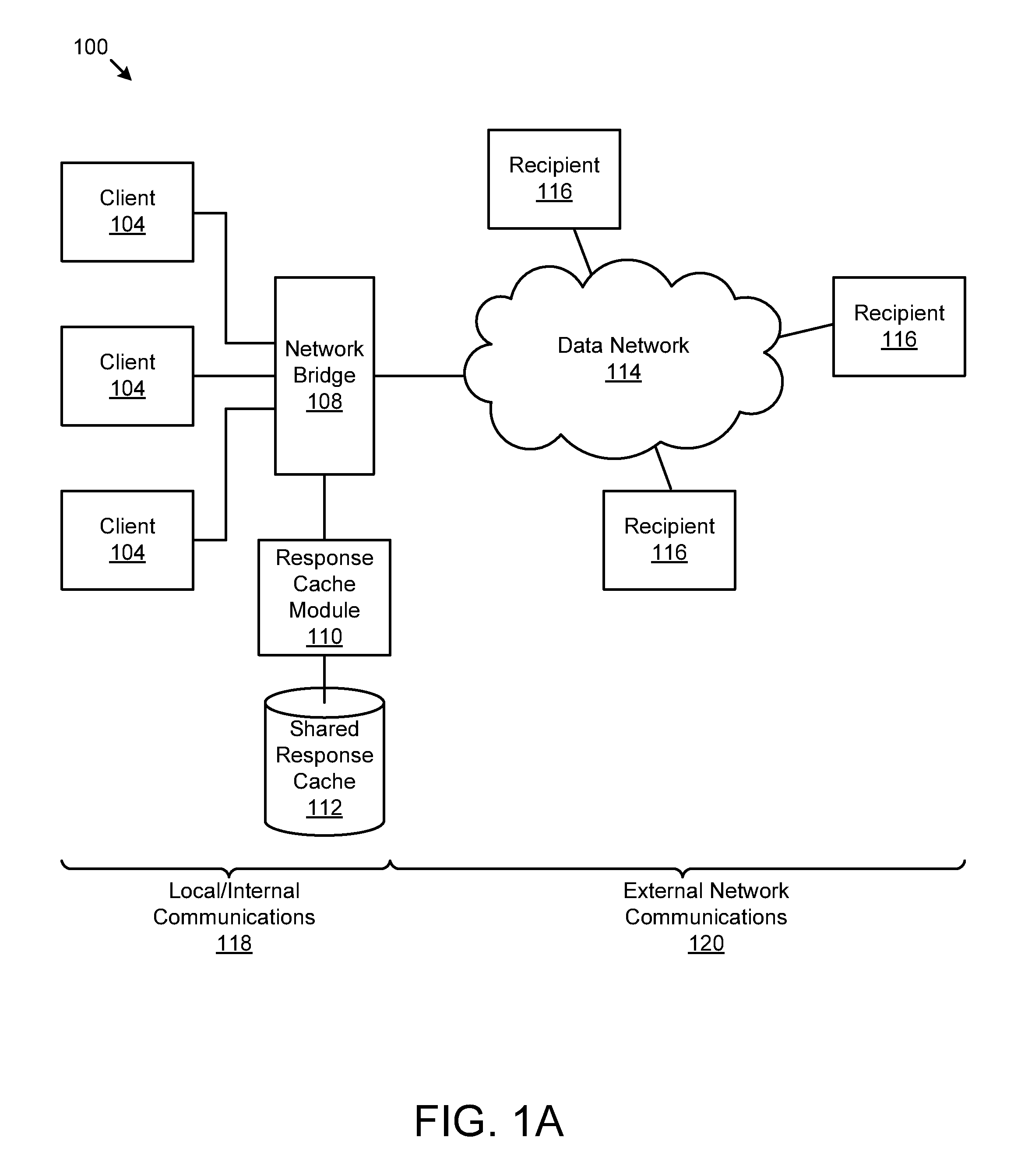

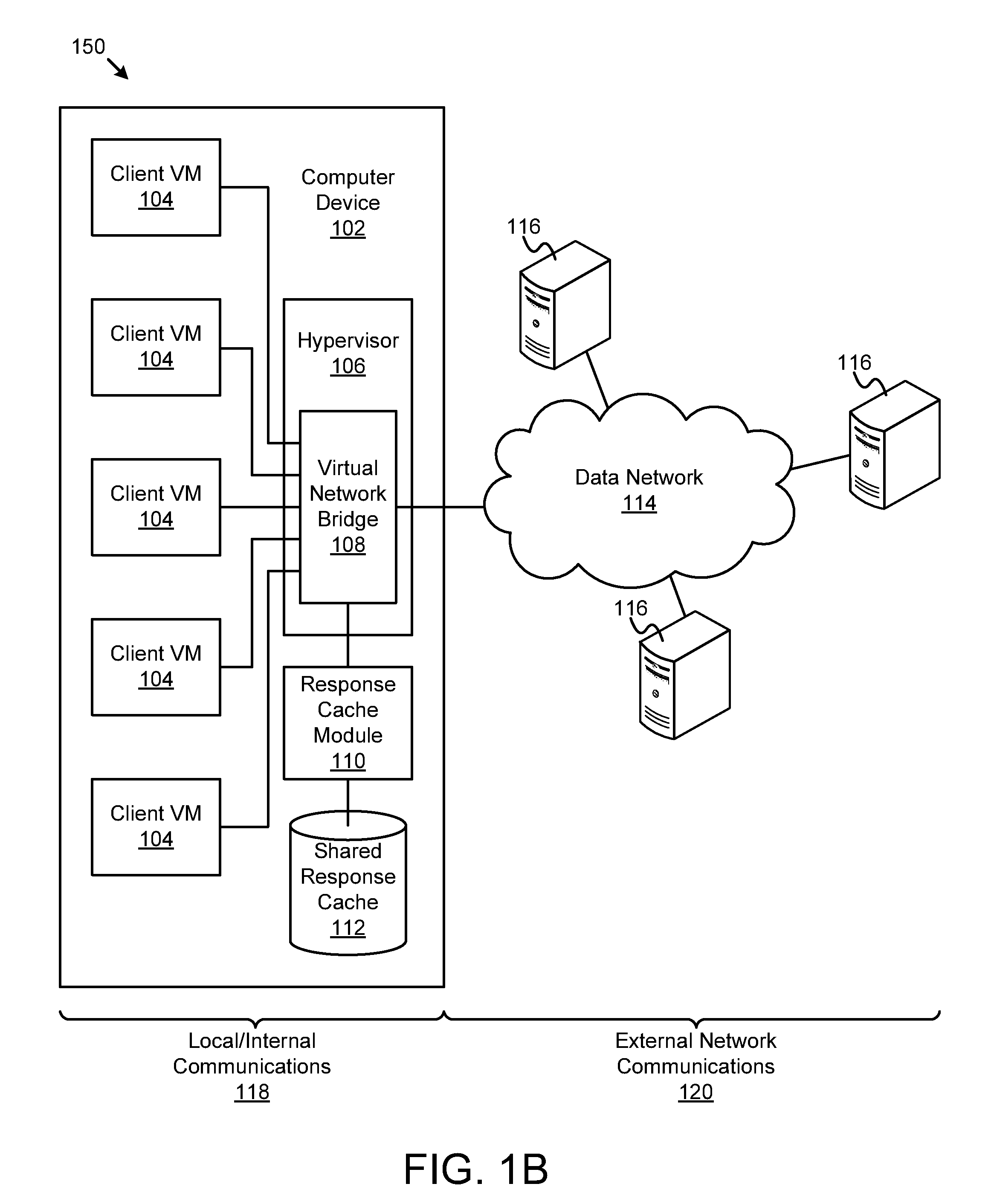

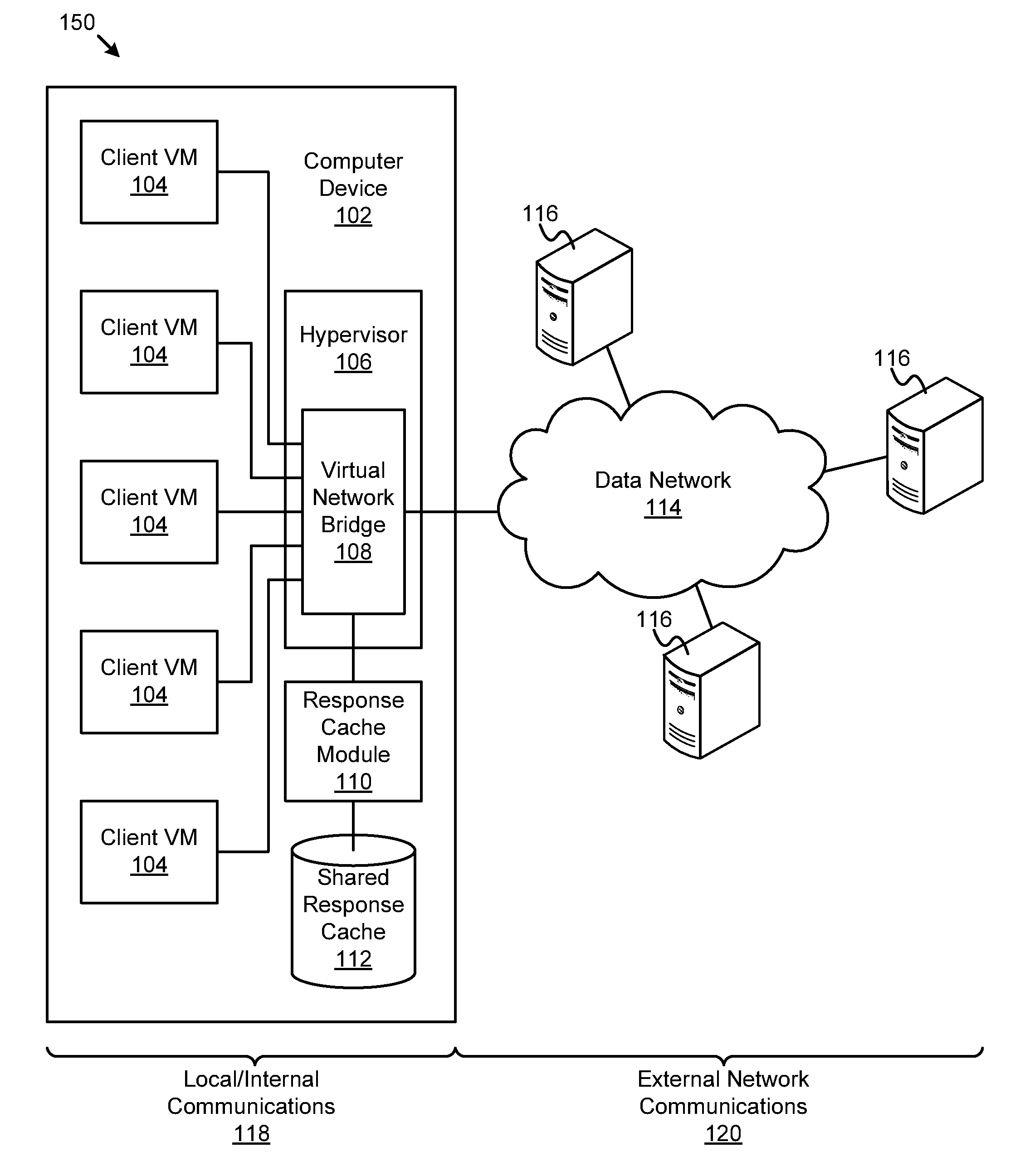

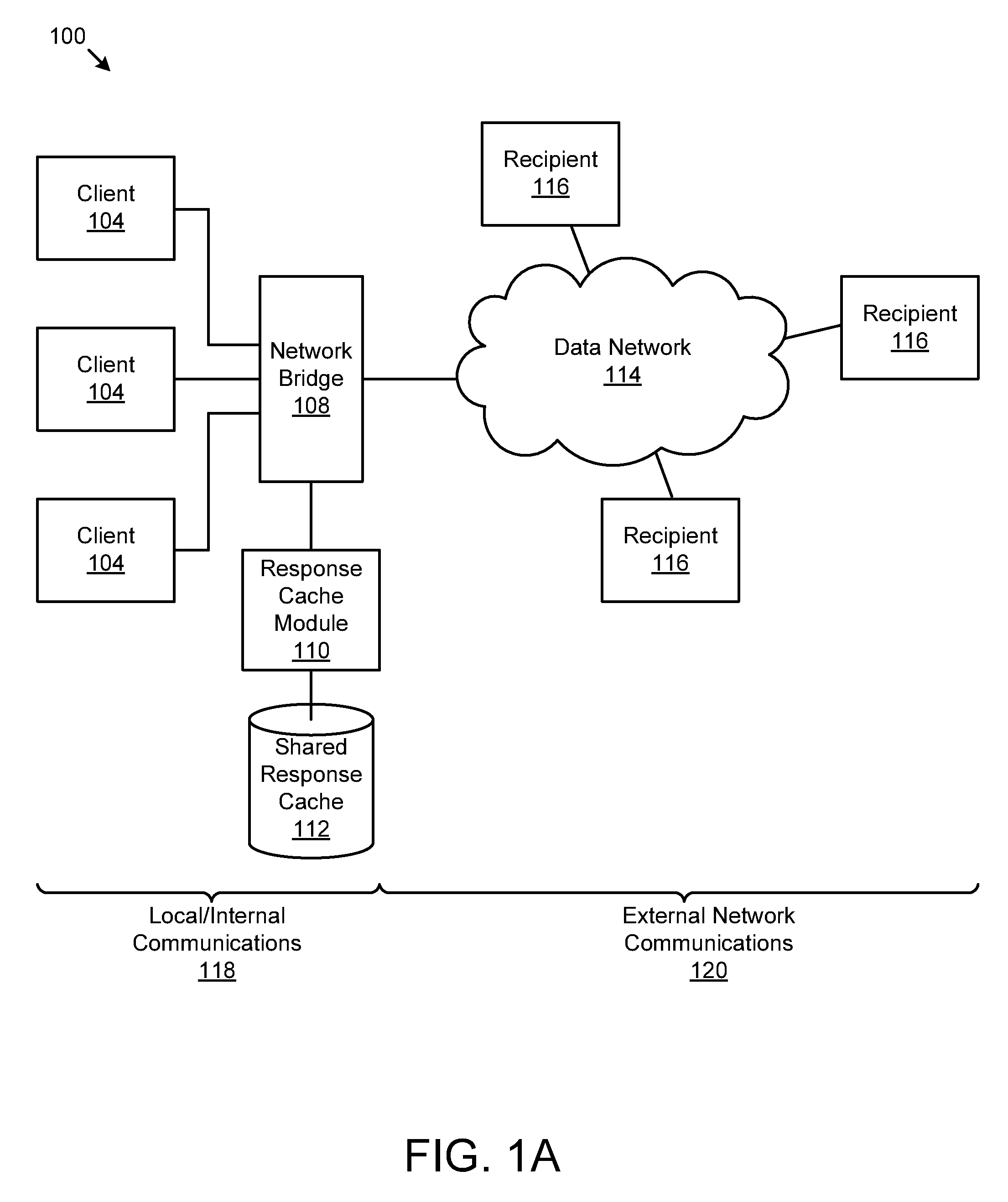

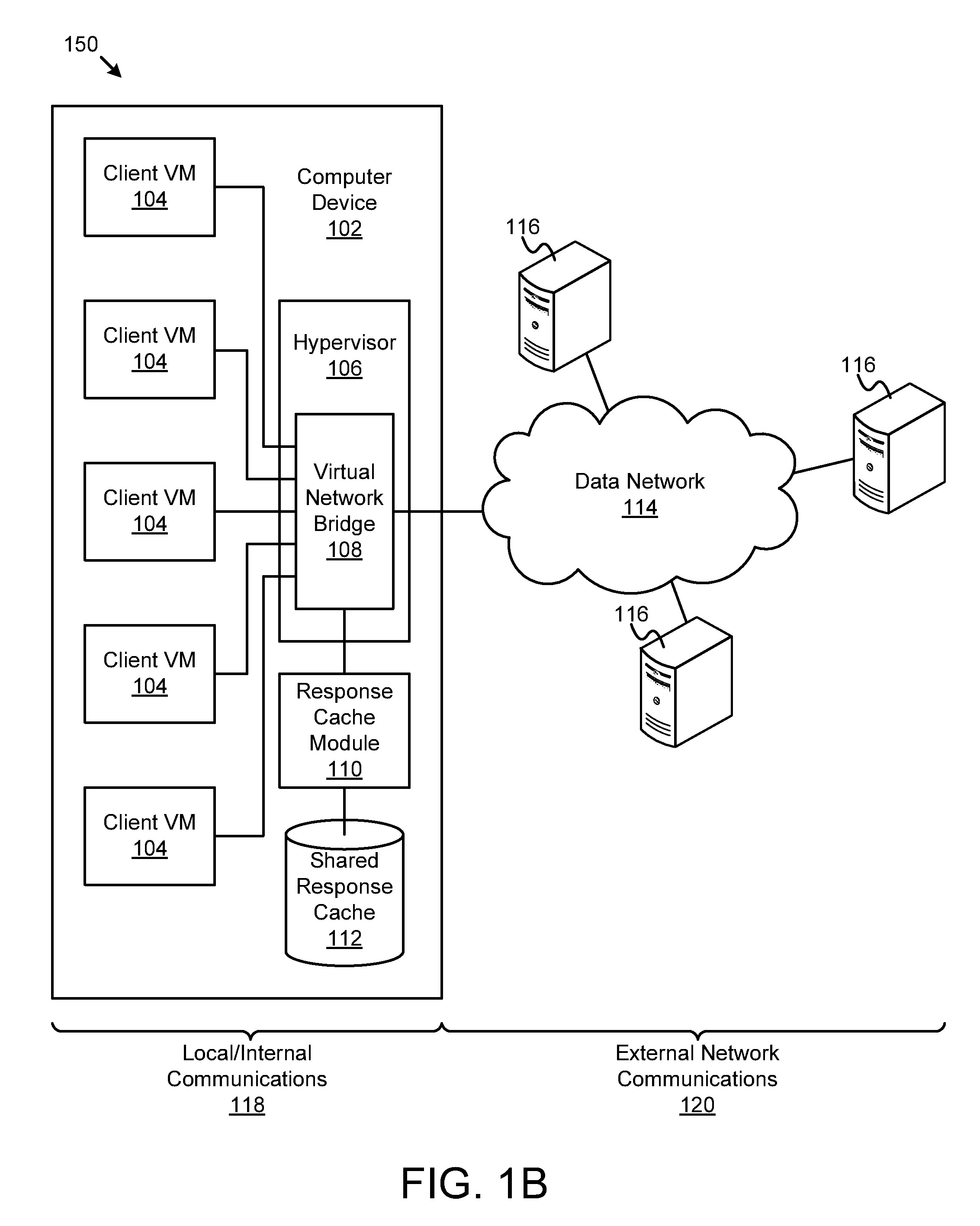

Shared network response cache

An apparatus and system are disclosed for reducing network traffic using a shared network response cache. A request filter module intercepts a network request to prevent the network request from entering a data network. The network request is sent by a client and is intended for one or more recipients on the data network. A cache check module checks a shared response cache for an entry matching the network request. A local response module sends a local response to the client in response to an entry in the shared response cache matching the network request. The local response satisfies the network request based on information from the matching entry in the shared response cache.

Owner:IBM CORP

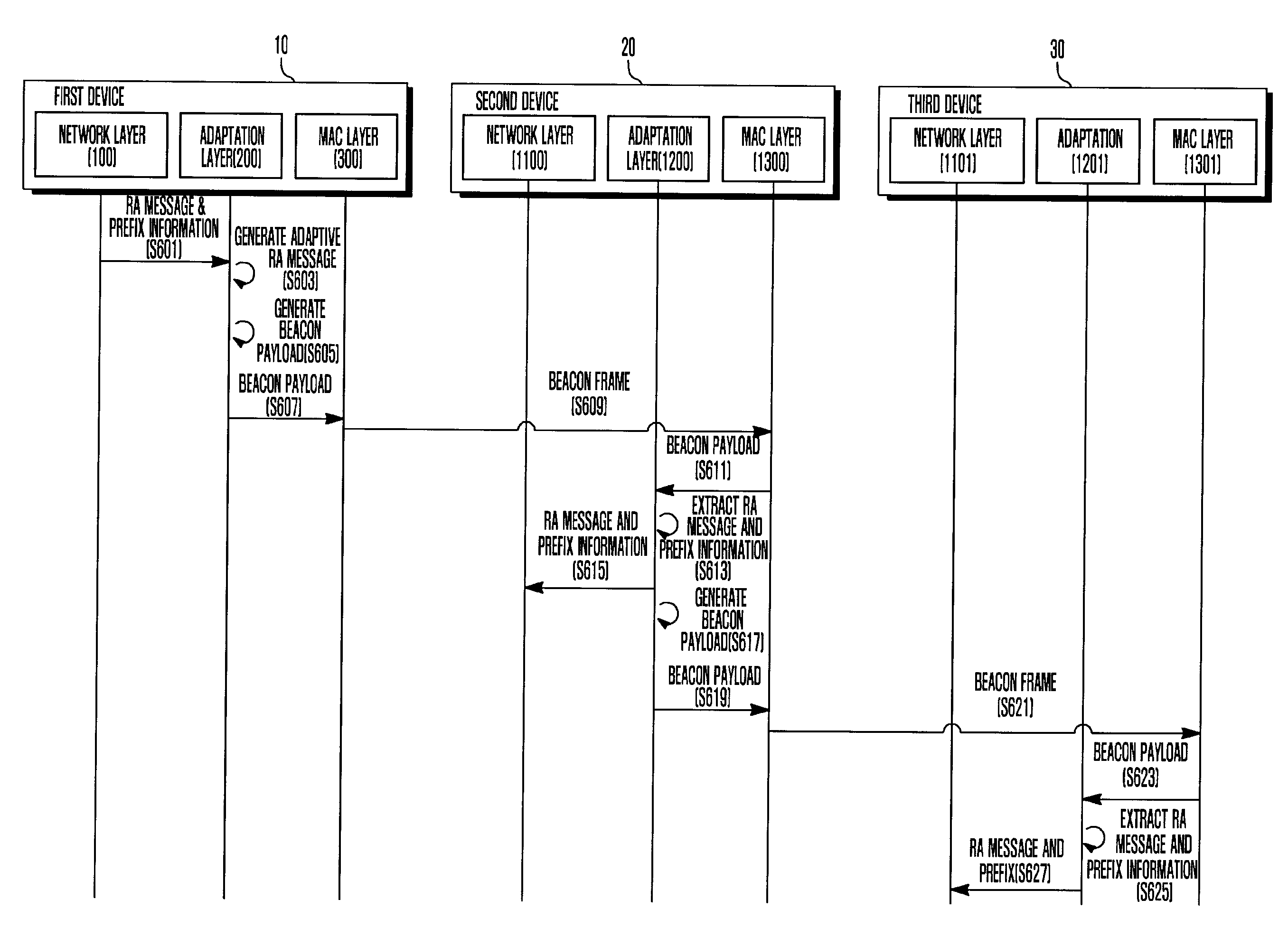

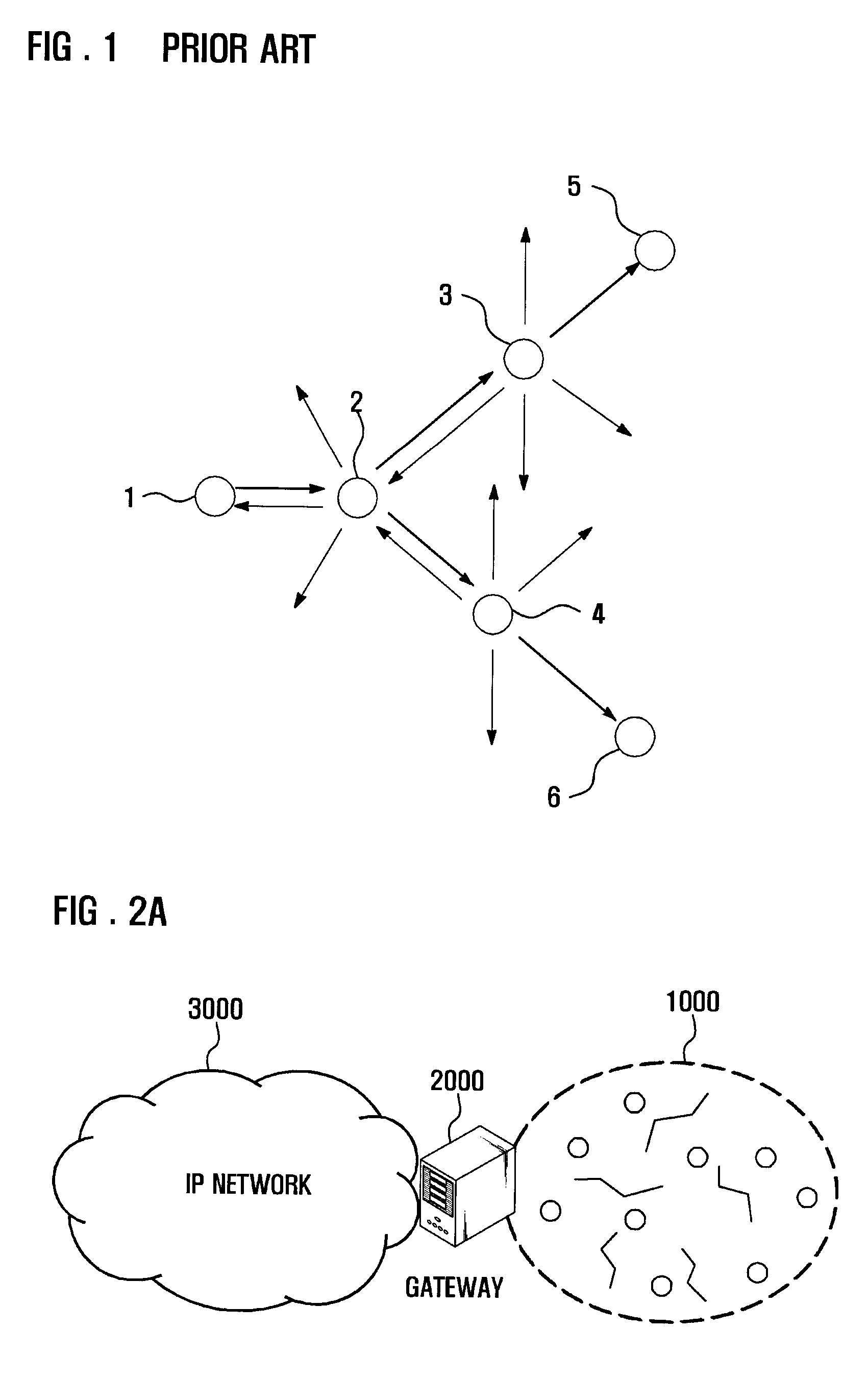

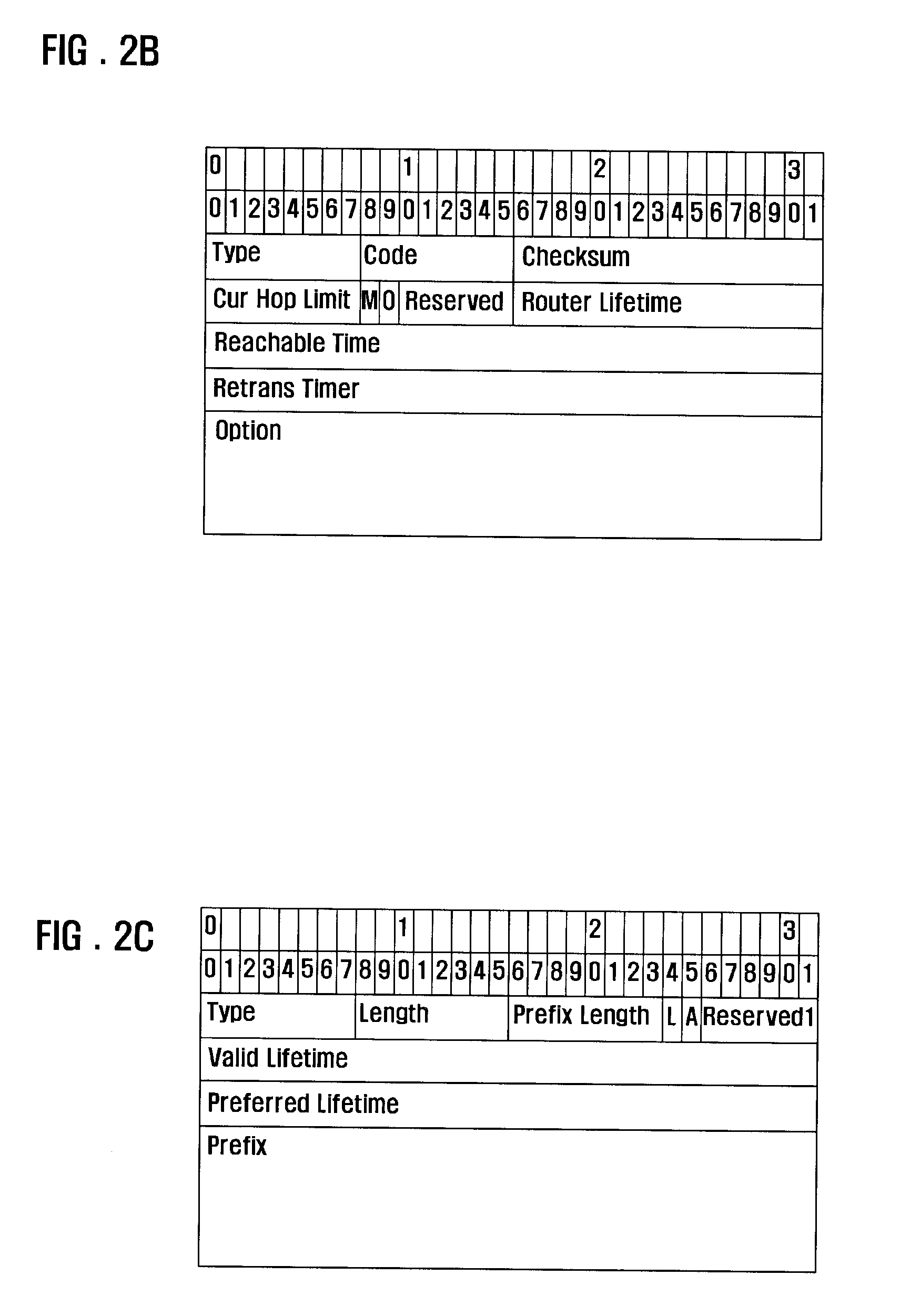
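A minimal sketch of the intercept, check, and local-response flow from the shared response cache abstract above. The class and method names are hypothetical, and forwarding a request to the network on a cache miss (then storing the response) is an assumption; the abstract only describes the cache-hit path.

```python
class SharedResponseCache:
    """Intercepts requests and answers locally when a matching entry exists."""

    def __init__(self, send_to_network):
        self._send_to_network = send_to_network  # callable: request -> response
        self._entries = {}
        self.network_requests = 0

    def handle(self, request):
        # Cache check: a matching entry satisfies the request locally,
        # so the request never enters the data network.
        if request in self._entries:
            return self._entries[request]
        # Assumed miss path: forward the request and remember the response.
        self.network_requests += 1
        response = self._send_to_network(request)
        self._entries[request] = response
        return response


cache = SharedResponseCache(lambda request: f"response-to-{request}")
# Three clients issue the same discovery-style request.
answers = [cache.handle("who-has-printer") for _ in range(3)]
```

Only the first of the three identical requests reaches the network in this sketch; the other two are answered from the shared cache.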

ADDRESS AUTOCONFIGURATION METHOD AND SYSTEM FOR IPv6-BASED LOW-POWER WIRELESS PERSONAL AREA NETWORK

Inactive | US20090161581A1 | Avoid traffic, Reduce network traffic, Power management, Assess restriction, Auto-configuration, Ip address

An IP address autoconfiguration method and system for an IPv6-based low-power WPAN, intended to reduce network traffic, is applicable to an Internet Protocol (IP) based network including a plurality of devices. The address autoconfiguration method generates and broadcasts, at a first device, a beacon frame containing an adaptive router advertisement (RA) message having prefix information, and configures, at a second device that received the beacon frame, an IP address using the prefix information extracted from the adaptive RA message carried by the beacon frame and a physical address of the second device. The system includes a first type device which broadcasts a beacon frame carrying a prefix; at least one second type device which relays the prefix using a beacon frame; and at least one terminal device which configures an IP address using the prefix in the beacon frame and a physical address of the terminal device.

Owner:SAMSUNG ELECTRONICS CO LTD

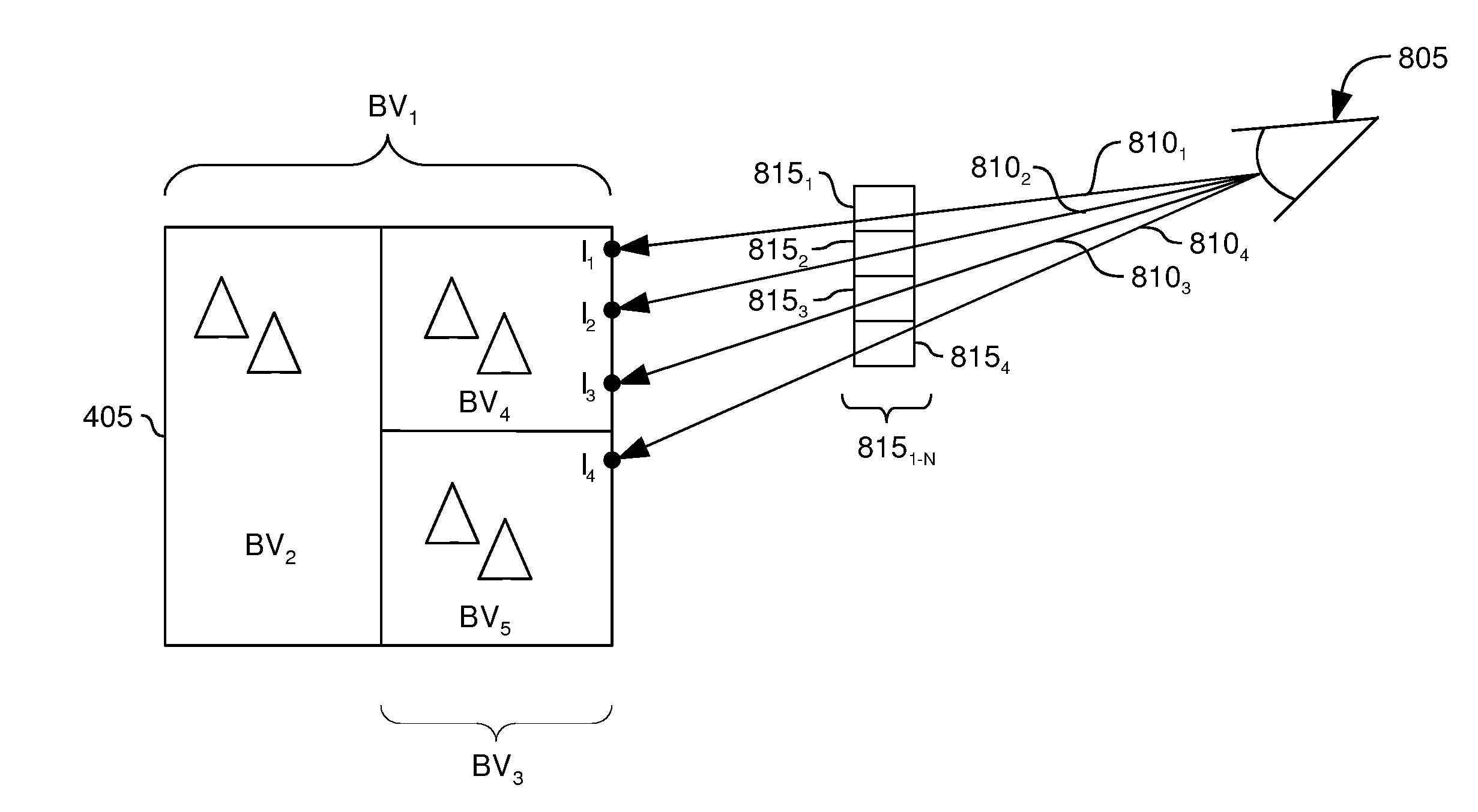

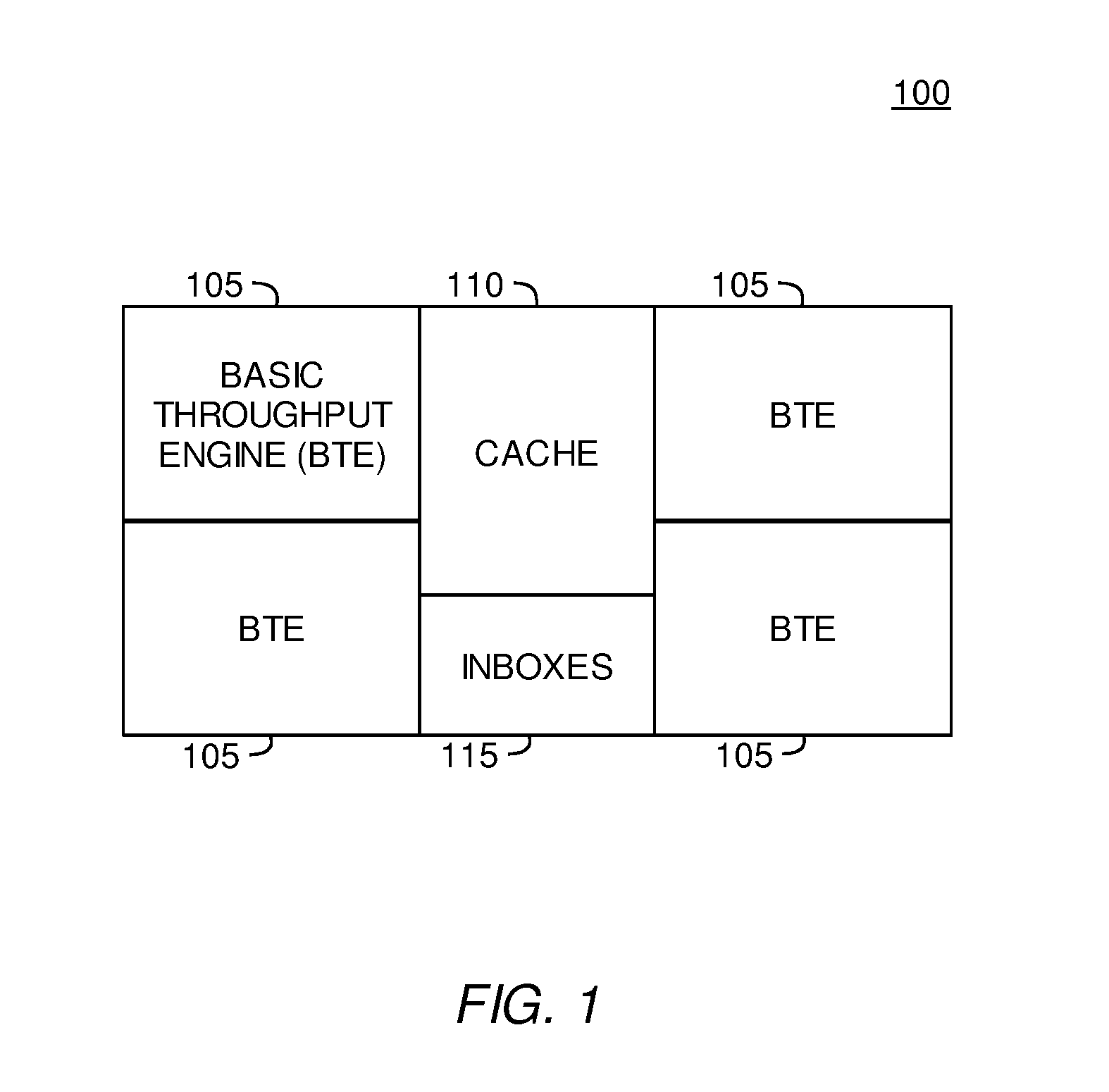

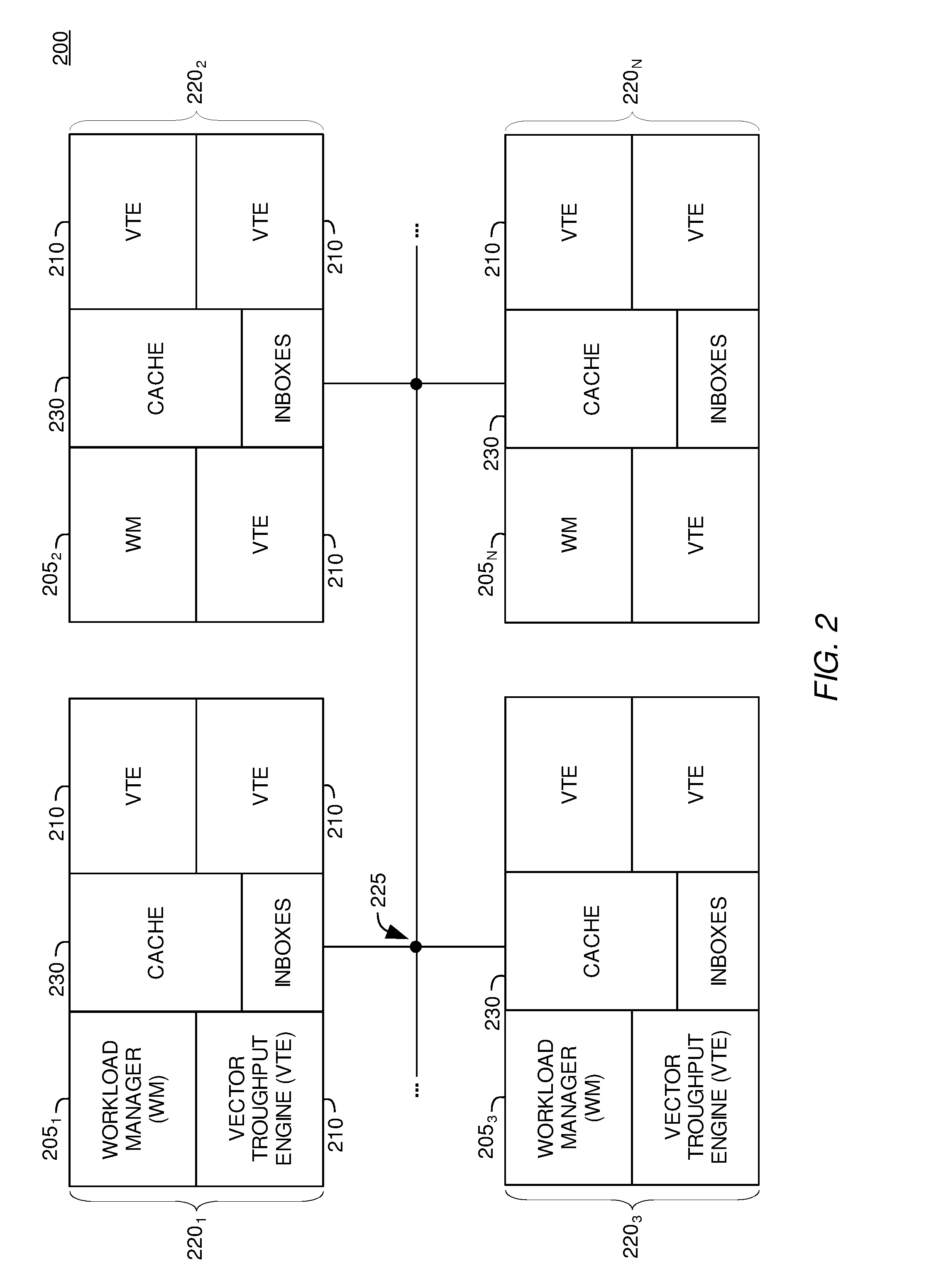
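The address configuration step in the autoconfiguration abstract above (prefix from the beacon plus the device's physical address) might look roughly like the sketch below. It assumes a /64 prefix and a 64-bit physical address, and it forms the interface identifier by flipping the universal/local bit in the modified EUI-64 style; the abstract does not say which derivation the patent uses, and the function name is hypothetical.

```python
import ipaddress


def autoconfigure(prefix, physical_address):
    """Combine a /64 prefix with a 64-bit physical address into an IPv6 address."""
    network = ipaddress.IPv6Network(prefix)
    if network.prefixlen != 64 or len(physical_address) != 8:
        raise ValueError("this sketch expects a /64 prefix and an 8-byte address")
    interface_id = bytearray(physical_address)
    interface_id[0] ^= 0x02  # flip the universal/local bit (assumed derivation)
    # Indexing a network by offset yields the address at that offset.
    return network[int.from_bytes(interface_id, "big")]


# The prefix would arrive in the beacon frame; here it is a documentation prefix.
address = autoconfigure("2001:db8::/64", bytes.fromhex("0011223344556677"))
```

Because the prefix is carried in a beacon the device receives anyway, no separate router solicitation exchange is needed to obtain it.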

Methods and Systems for Reducing the Number of Rays Passed Between Processing Elements in a Distributed Ray Tracing System

Inactive | US20080049017A1 | Reduce traffic problems, Multiple digital computer combinations, Electric digital data processing, Network reduction, Processing element

Embodiments of the invention provide techniques and systems for reducing network traffic in relation to ray-tracing a three dimensional scene. According to one embodiment of the invention, as a ray is traversed through a spatial index, a leaf node may be reached. Subsequent rays that traverse through the spatial index may reach the same leaf node. In contrast to sending information defining a ray issued by the workload manager to a vector throughput engine each time a ray reaches a leaf node, the workload manager may determine if a series of rays reach the same leaf node and send information defining the series of rays to the vector throughput engine. Thus, network traffic may be reduced by sending information which defines a series of rays which are traversed to a common (i.e., the same) leaf node in contrast to sending information each time a ray is traversed to a leaf node.

Owner:IBM CORP

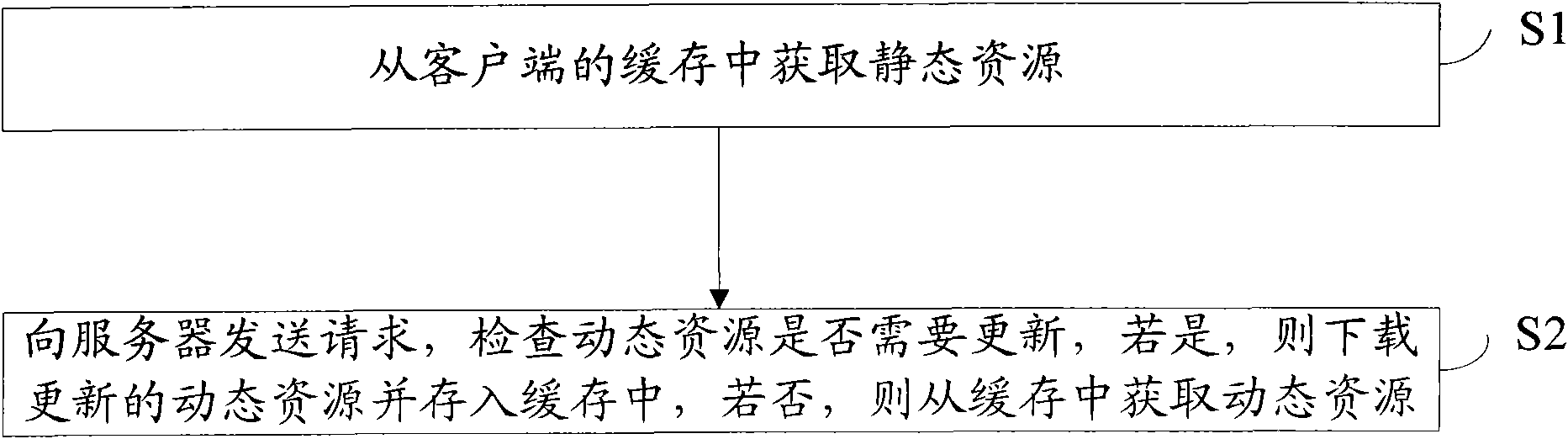

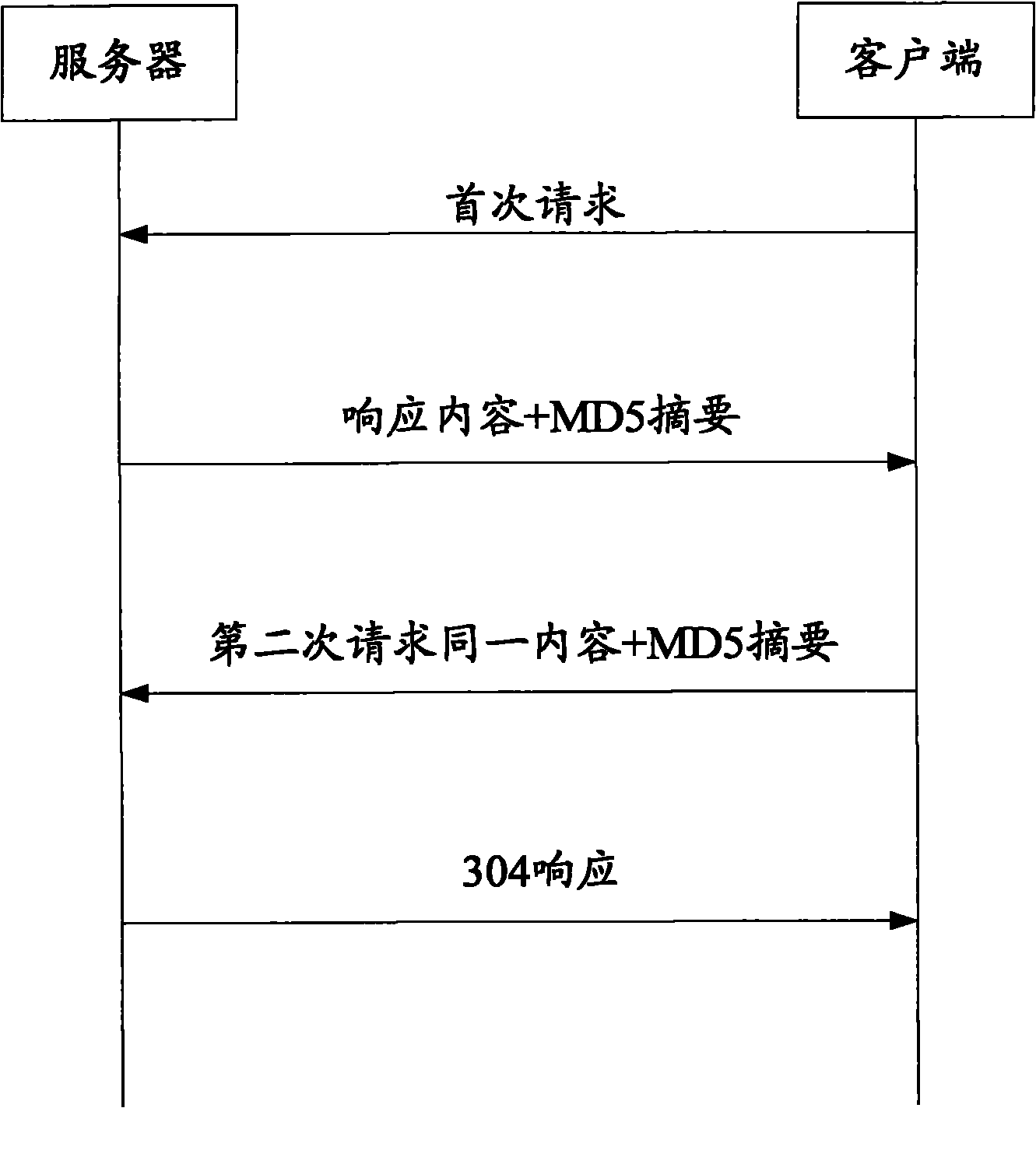

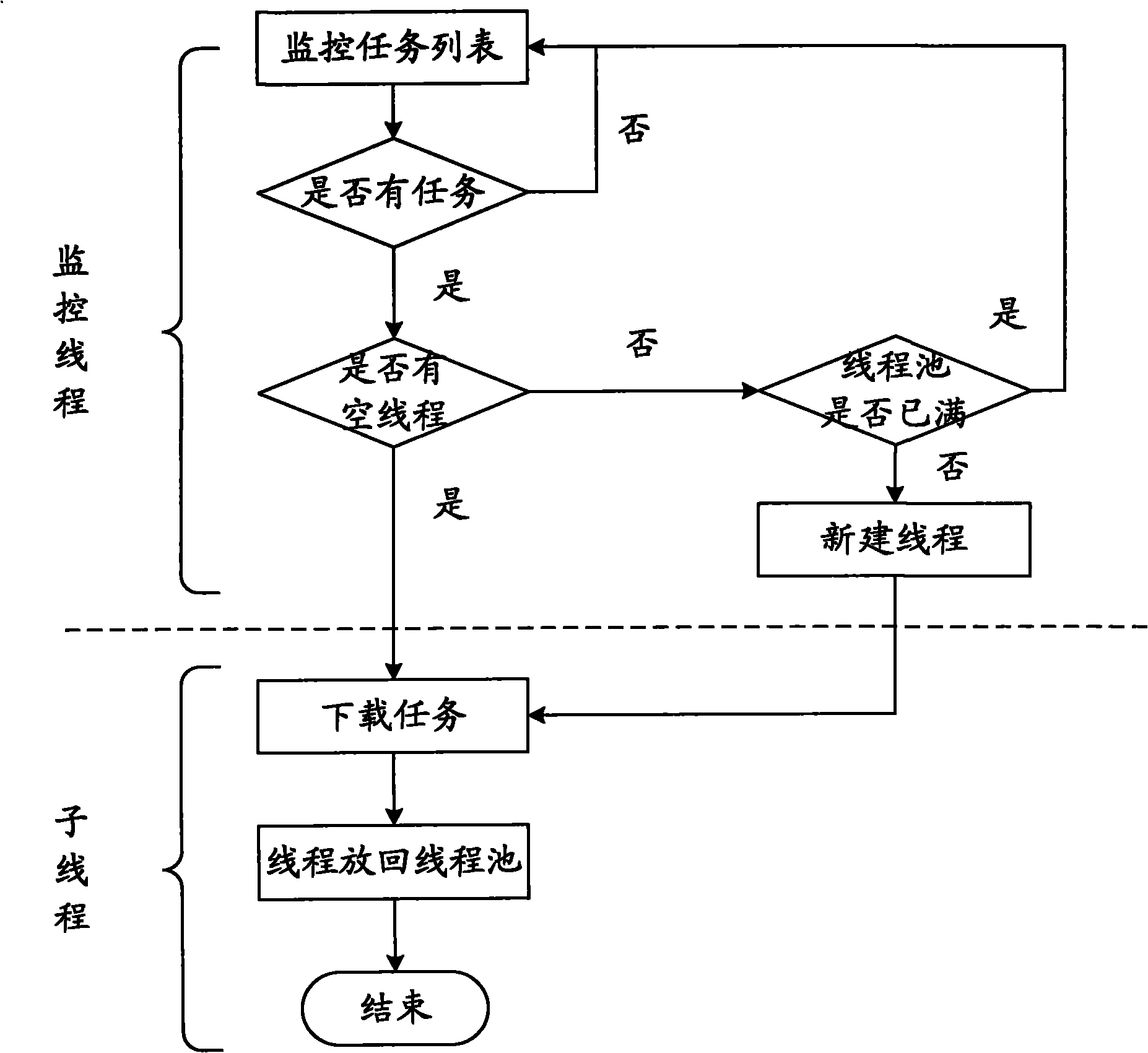
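The batching idea in the ray-tracing abstract above can be sketched as grouping a series of consecutive rays that reach the same leaf node into one message. The `(ray_id, leaf_id)` tuples and the `batch_by_leaf` name are hypothetical; how the workload manager actually detects a series is not described in the abstract.

```python
from itertools import groupby


def batch_by_leaf(ray_hits):
    """Group consecutive rays that reached the same leaf into one message.

    `ray_hits` is a sequence of (ray_id, leaf_id) pairs in traversal order.
    Returns a list of (leaf_id, [ray_id, ...]) messages.
    """
    return [
        (leaf_id, [ray_id for ray_id, _ in series])
        for leaf_id, series in groupby(ray_hits, key=lambda hit: hit[1])
    ]


hits = [(0, "leaf-A"), (1, "leaf-A"), (2, "leaf-A"), (3, "leaf-B"), (4, "leaf-A")]
messages = batch_by_leaf(hits)
```

Five rays produce three messages here instead of five; longer runs of rays hitting a common leaf save proportionally more.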

Method for rapidly displaying user interface of embedded type platform

Inactive | CN102081650A | Open fast, Reduce the number of requests, Special data processing applications, Traffic capacity, Dynamic resource

The invention discloses a method for rapidly displaying a user interface on an embedded platform, which divides the resources of the user interface into static resources and dynamic resources. The method comprises the following steps: acquiring the static resource from the cache of a client; sending a request to a server; and checking whether the dynamic resource needs to be updated. If the dynamic resource needs to be updated, the updated dynamic resource is downloaded and stored in the cache; if not, the dynamic resource is acquired from the cache. Through an effective strategy, the method minimizes the number of network requests and the time taken to open pages, addresses the shortage of cache resources caused by hardware limits in existing embedded environments, speeds up interface display, and reduces network traffic, thereby slowing the accumulation of cached resources.

Owner:上海网达软件股份有限公司

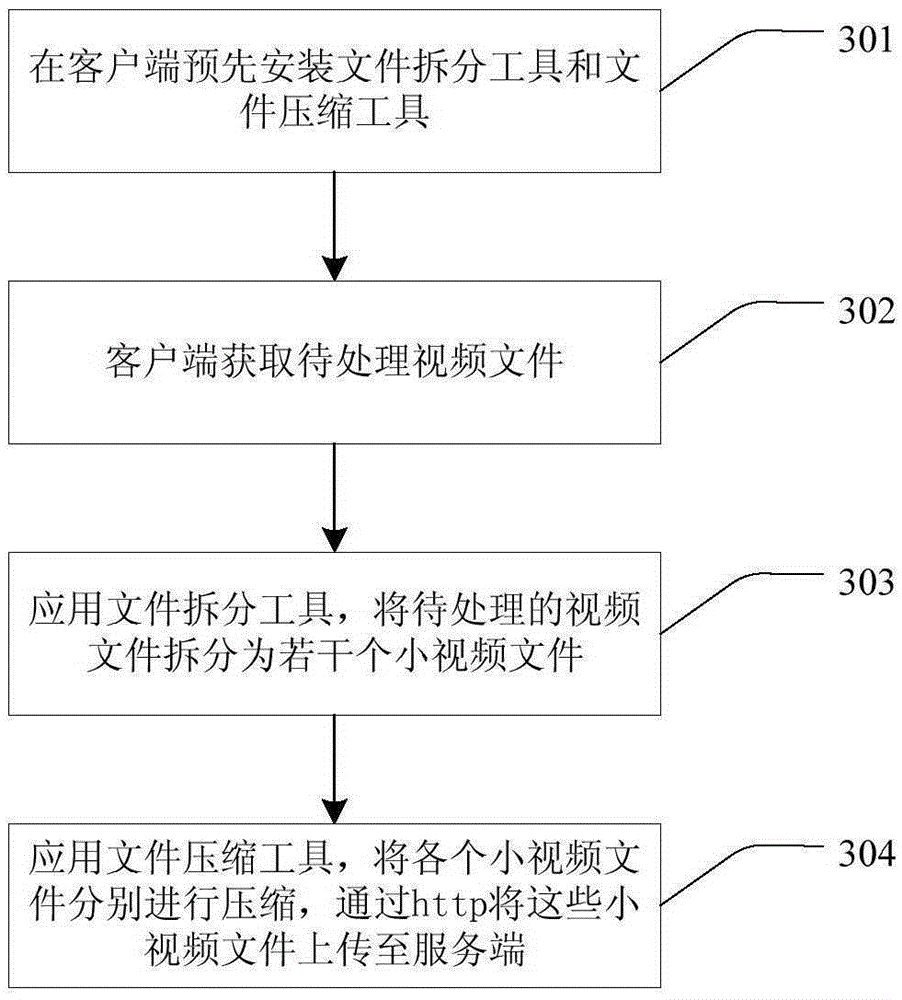
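The static/dynamic split in the embedded user interface abstract above can be illustrated with the sketch below. `FakeServer`, `UiCache`, and the integer version check are assumptions standing in for whatever update check the patent uses; the point is only that a full download happens when the dynamic resource has changed.

```python
class FakeServer:
    """Stand-in server (hypothetical) that counts full resource downloads."""

    def __init__(self):
        self.resources = {"news": (1, "headline v1")}  # name -> (version, body)
        self.downloads = 0

    def version(self, name):
        return self.resources[name][0]  # small "needs update?" request

    def download(self, name):
        self.downloads += 1
        return self.resources[name][1]


class UiCache:
    def __init__(self, static_resources):
        self.static = dict(static_resources)  # never re-requested
        self.dynamic = {}                     # name -> (version, body)

    def load(self, name, server):
        if name in self.static:
            return self.static[name]
        latest = server.version(name)
        cached = self.dynamic.get(name)
        if cached is None or cached[0] != latest:
            # Dynamic resource changed: download it and refresh the cache.
            cached = (latest, server.download(name))
            self.dynamic[name] = cached
        return cached[1]


server = FakeServer()
cache = UiCache({"logo": "logo-bytes"})
logo = cache.load("logo", server)
first = cache.load("news", server)
second = cache.load("news", server)          # served from the cache
server.resources["news"] = (2, "headline v2")
third = cache.load("news", server)           # update detected, downloaded again
```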

Video processing method and system

Active | CN105338424A | Reduce consumption, Improve transmission efficiency, Selective content distribution, Traffic capacity, Distributed File System

The invention discloses a video processing method and system. The method includes the following steps: at the client end, a video file to be processed is obtained and split into a plurality of segment files, and the segment files are compressed and uploaded to a server; at the server end, all the segment files are decompressed after being received and stored in a distributed file system; the segment files of the video file to be processed are downloaded, compressed and decoded, and the new segment files are stored in the distributed file system; and the segment files are merged to form a complete video file. Because the client splits a large video file into small segment files before uploading them, transmission efficiency can be improved and network traffic consumption can be decreased; at the server end, the small files are compressed and decoded on a cluster, so processing time can be decreased. With the video processing method and system, the video file can be played independently or in a blocked and segmented manner, so user experience can be greatly enhanced. The video processing method and system are applicable to video playback in complex environments.

Owner:NUBIA TECHNOLOGY CO LTD

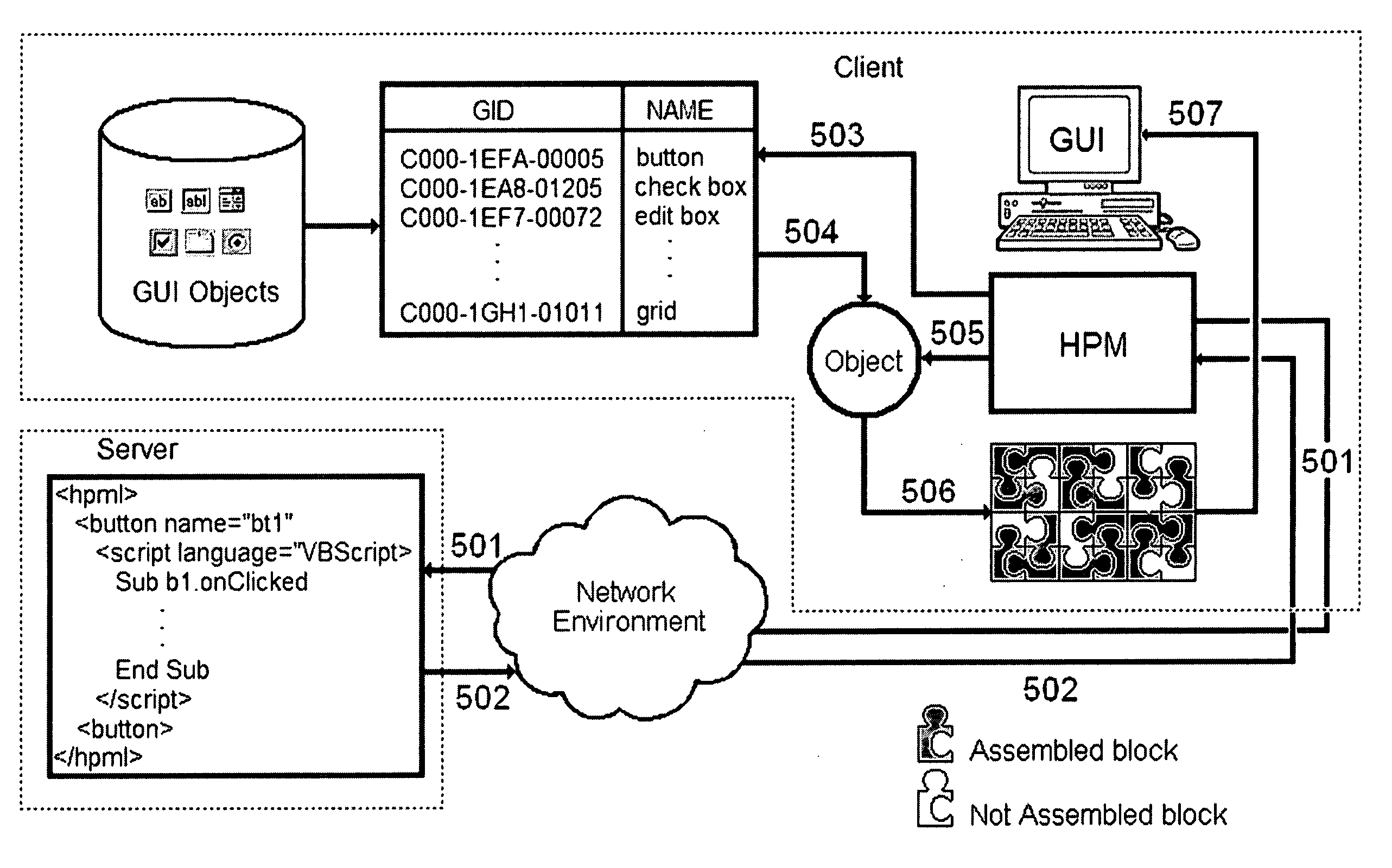

Systems and methods for developing and running applications in a web-based computing environment

Inactive | US20070288644A1 | Improve application performance, Software engineering, Multiple digital computer combinations, Graphics, Graphical user interface

Systems and methods for developing computer applications in a computer network environment by describing graphical user interface components and other application components with a new XML markup language, by coding the components' behavior with a scripting programming language, and by deploying said applications to a client workstation, where they run in a browser containing a new virtual machine that replaces the HTML interpreter with an interpreter of the new markup language. The new virtual machine receives the application split into small modules, parses the XML descriptions and the scripting code, and creates instances of the components to build the application on the client workstation. All the components are held by the new virtual machine, so that no new requests to the server are made when the components are reused, minimizing network traffic.

Owner:ROJAS CESAR AUGUSTO +1

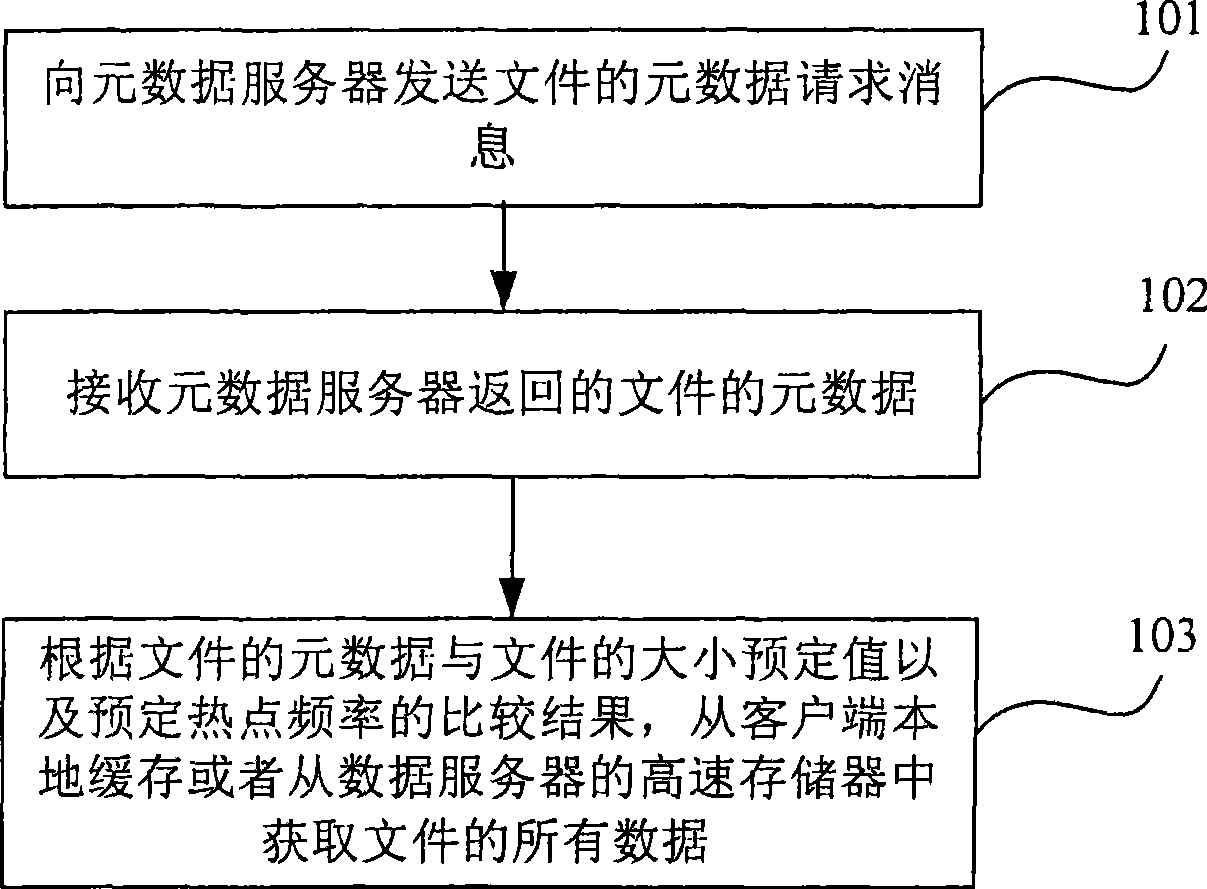

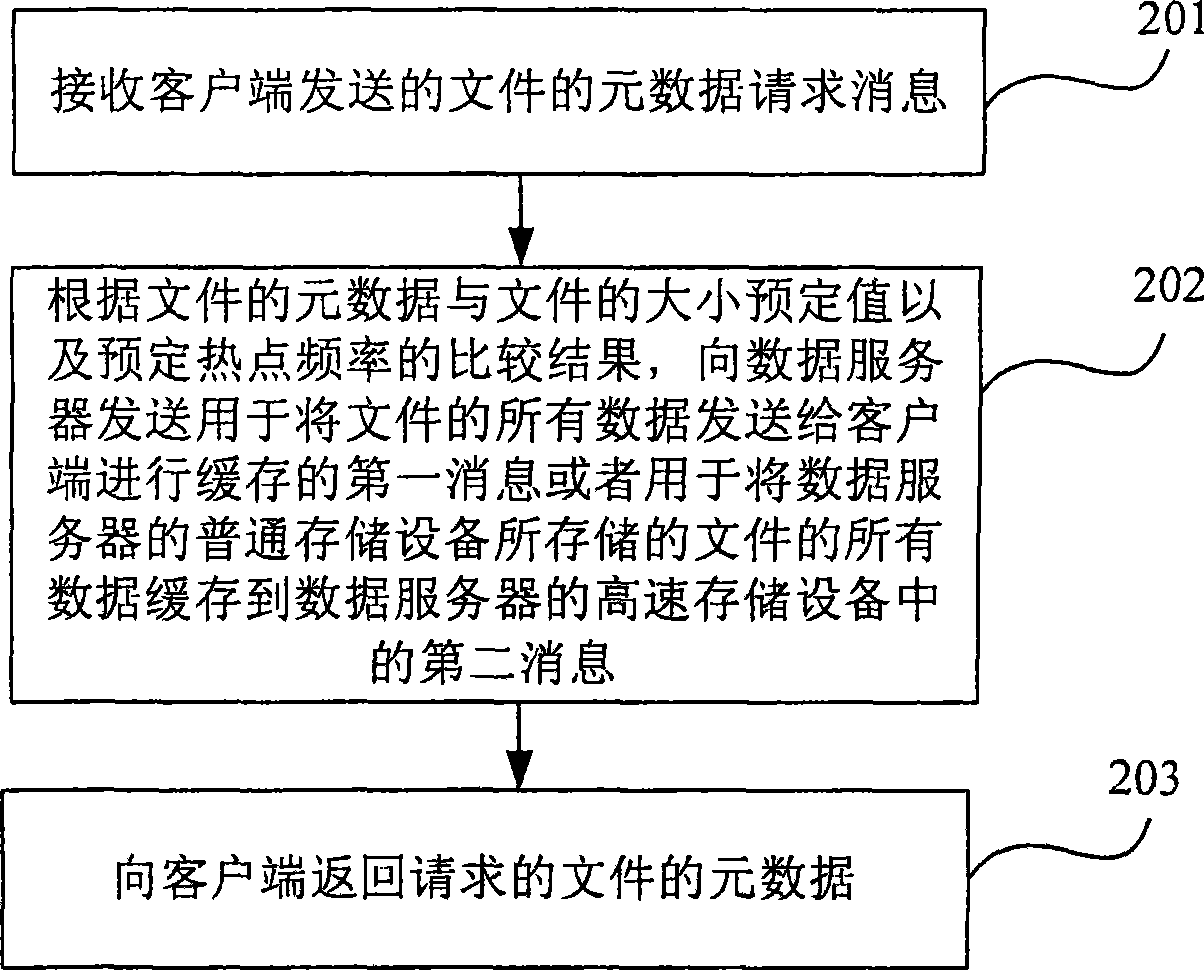

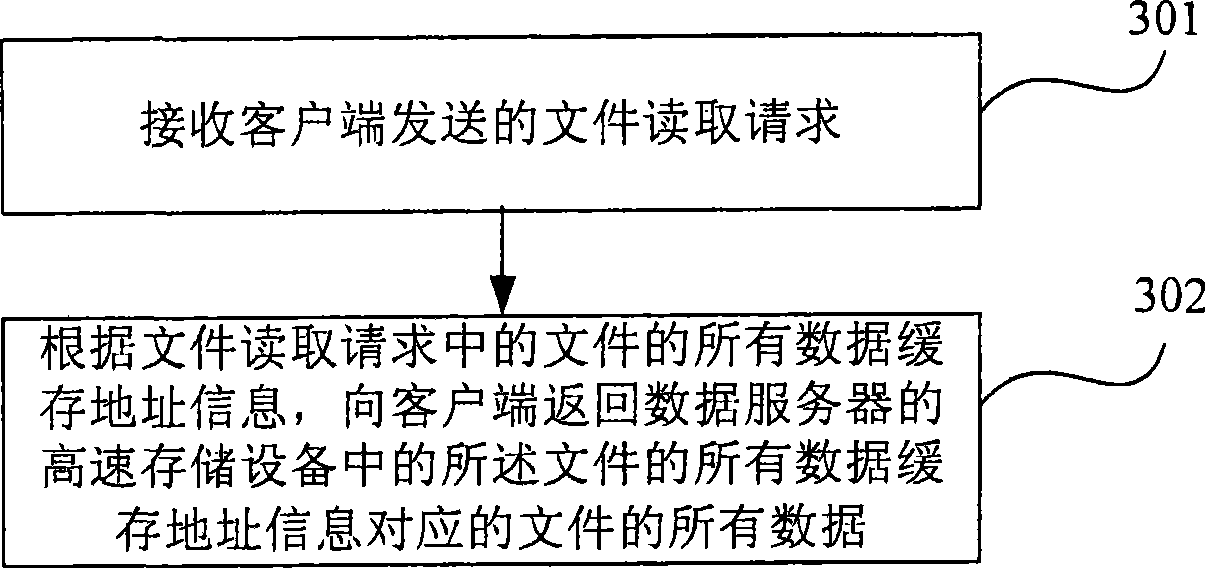

File data accessing method, apparatus and system

Active | CN101510219A | Implement multi-level caching, Improve reading efficiency, Transmission, Special data processing applications, Traffic capacity, High speed memory

The invention provides a method, a device and a system for file data access. The file data access method comprises the following steps: a file metadata request message is sent to a metadata server; the file metadata returned by the metadata server is received; and, according to the result of comparing the file metadata with a preset file size value and a preset hotspot frequency, all the file data is obtained from a local cache of the client terminal or a high-speed memory of a data server. The method, device and system realize multilevel caching of small hotspot files, for which all the file data can be obtained from the local cache or the high-speed cache of the data server, thus improving the reading efficiency of small hotspot files in network storage, raising their access speed, and reducing network traffic.

Owner:CHENGDU HUAWEI TECH

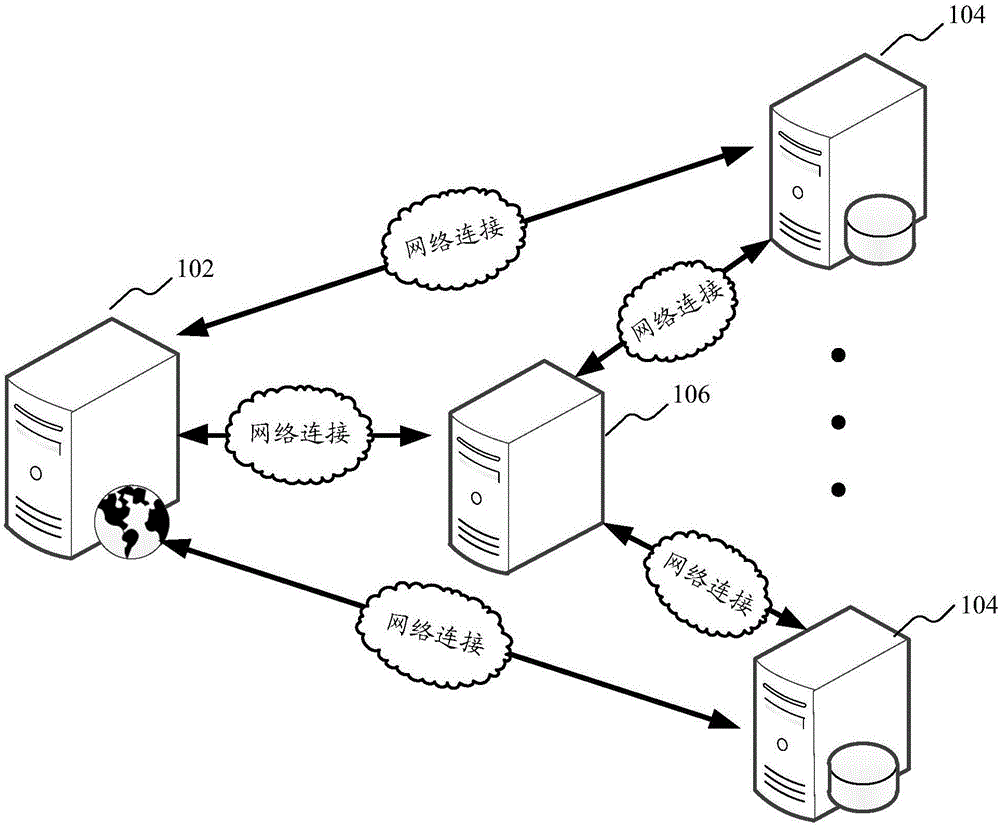

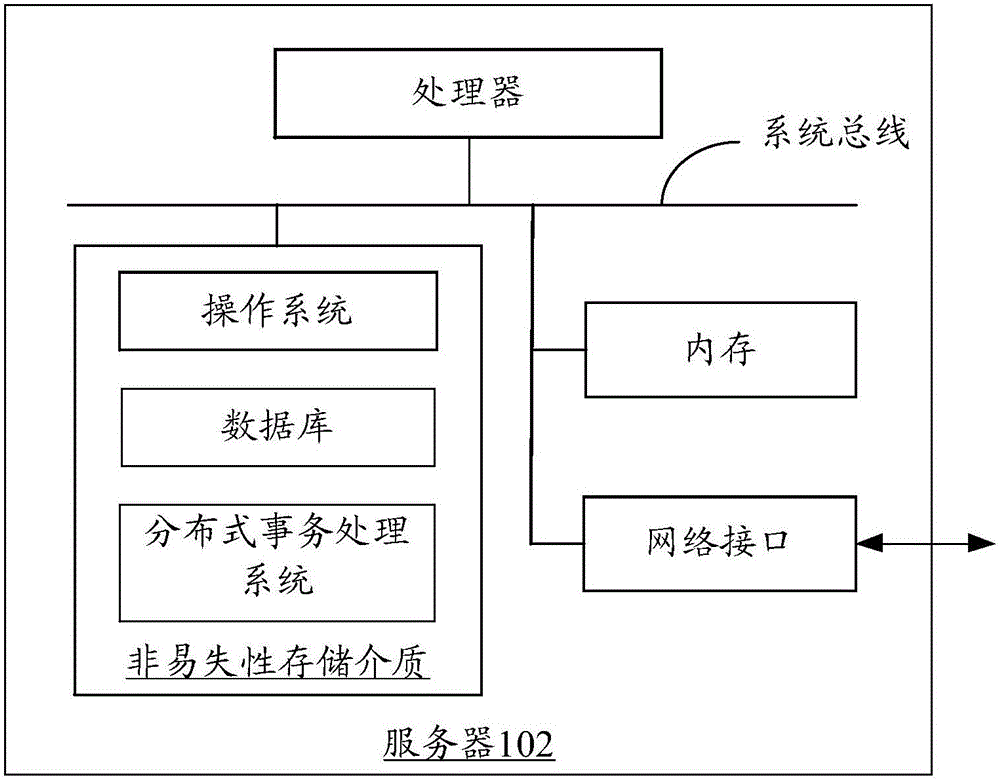

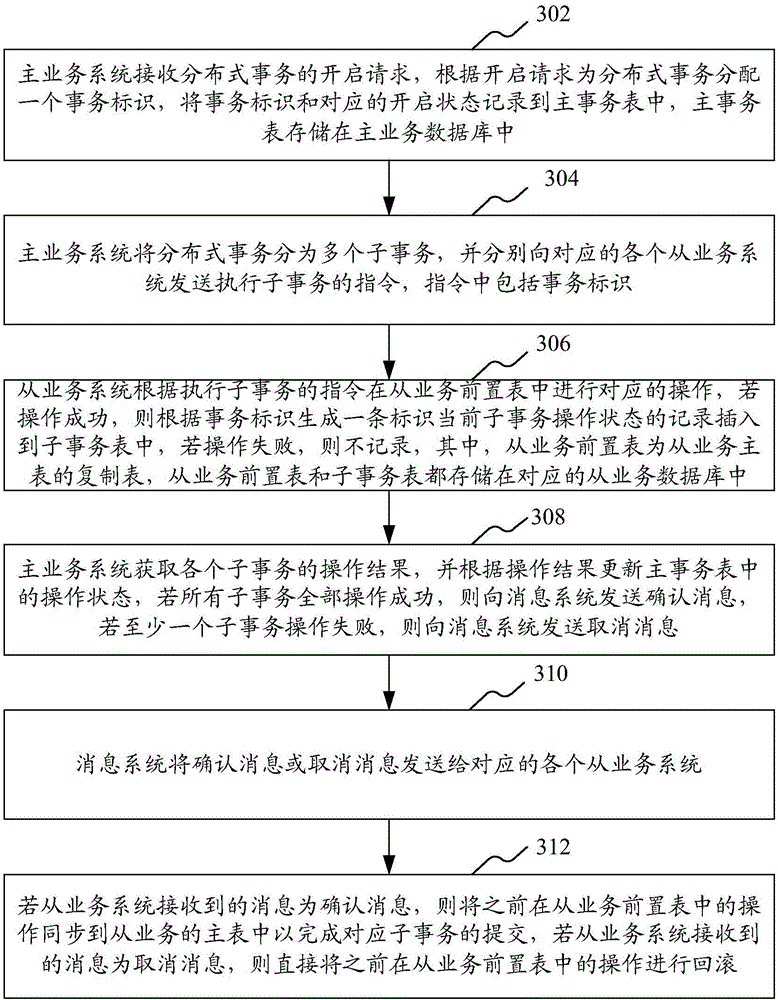

Distributed transaction processing method and system

Active | CN106775959A | Guaranteed ACID, Reduce consumption, Special data processing applications, Distributed object oriented systems, Fault tolerance, Traffic capacity

The invention provides a distributed transaction processing method. The method comprises the following steps: distributing a distributed transaction into a plurality of sub-transactions by a main business system; then respectively transmitting commands for executing the sub-transactions to the various corresponding secondary business systems; carrying out corresponding operations by the secondary business systems from secondary business front tables according to the commands for executing sub-transactions; after all the sub-transactions are executed successfully, simultaneously synchronizing operations in the secondary business front tables to secondary business main tables, so that consistency of the sub-transactions is realized; and when execution of at least one sub-transaction fails, rolling back all the sub-transactions in the secondary business front tables. A transaction table is added in a database of each business system, consumption of network flow can be reduced, transaction processing efficiency can be improved, single point of failure is avoided, and certain fault tolerance is achieved. In addition, the invention provides a distributed transaction processing system.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN

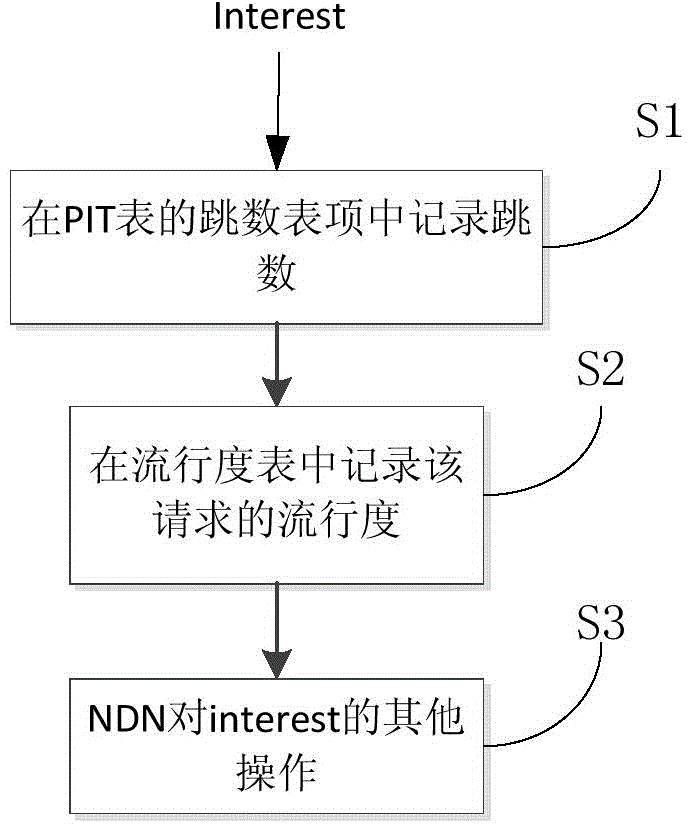

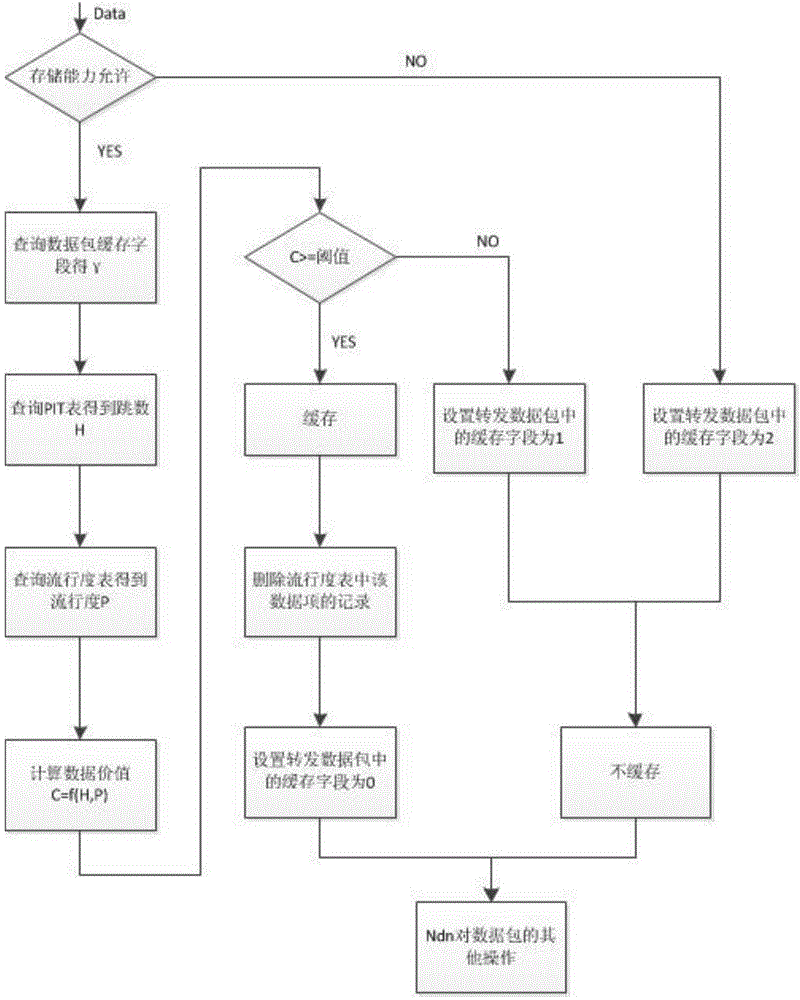

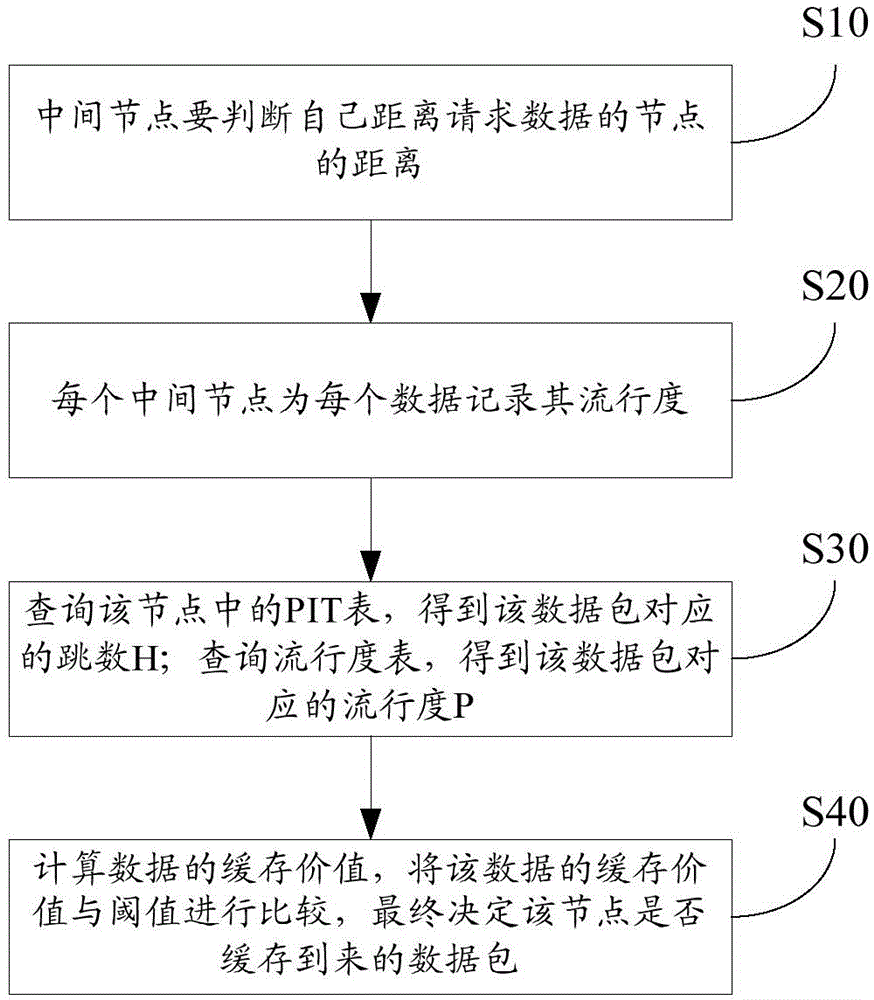
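The front-table and main-table flow in the distributed transaction abstract above can be sketched as follows. The class names, the in-memory lists standing in for database tables, and the `fail` flag used to simulate a failing sub-transaction are all assumptions for illustration.

```python
class SecondarySystem:
    """Secondary business system with a front (staging) table and a main table."""

    def __init__(self, name, fail=False):
        self.name = name
        self.fail = fail
        self.front_table = []
        self.main_table = []

    def execute(self, operation):
        if self.fail:
            raise RuntimeError(f"{self.name} could not execute {operation}")
        self.front_table.append(operation)  # staged only, not yet visible

    def synchronize(self):
        self.main_table.extend(self.front_table)
        self.front_table.clear()

    def roll_back(self):
        self.front_table.clear()


def run_distributed(sub_transactions):
    """Run (system, operation) pairs; commit all of them or none of them."""
    systems = [system for system, _ in sub_transactions]
    try:
        for system, operation in sub_transactions:
            system.execute(operation)
    except RuntimeError:
        for system in systems:
            system.roll_back()
        return False
    for system in systems:
        system.synchronize()
    return True


accounts, stock = SecondarySystem("accounts"), SecondarySystem("stock")
ok = run_distributed([(accounts, "debit 10"), (stock, "reserve item")])

broken = SecondarySystem("shipping", fail=True)
failed = run_distributed([(accounts, "debit 5"), (broken, "book courier")])
```

After the failed run, the staged "debit 5" is discarded and the main tables still reflect only the first transaction.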

Popularity-based equilibrium distribution caching method for named data networking

Inactive | CN104901980A | Reduce redundancy, Quick response, Data switching networks, Traffic capacity, Network packet

The invention provides a popularity-based equilibrium distribution caching method for named data networking and relates to the technical field of caching in named data networking. The method comprises the steps as follows: an intermediate node judging the distance between the intermediate node itself and a node of requested data; recording popularity for each data at each intermediate node; querying a PIT table in the node to obtain a hop count H corresponding to a data package; querying a popularity table to obtain popularity P corresponding to the data package; calculating the caching value of the data, comparing the caching value of the data with a threshold value, and finally determining whether to cache the arrived data package. The popularity-based equilibrium distribution caching method of the invention improves response speed and reduces network flow, simultaneously gives consideration to cache capacity of mobile nodes and reduces the redundancy degree of data in the network.

Owner:BEIJING UNIV OF TECH

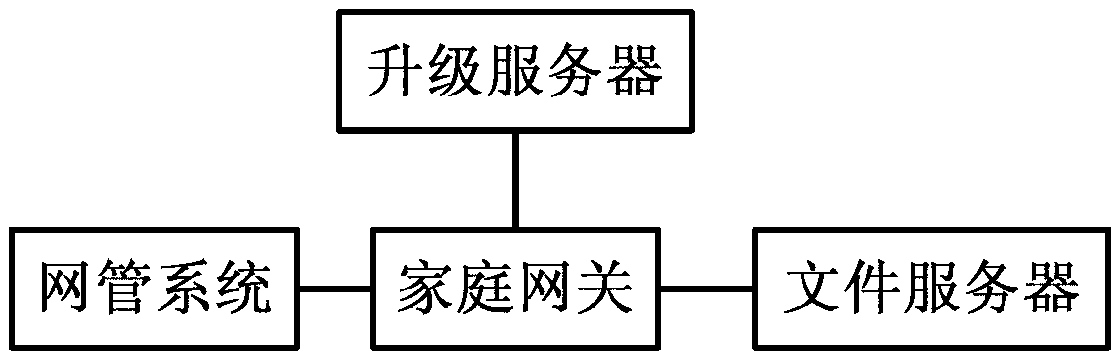

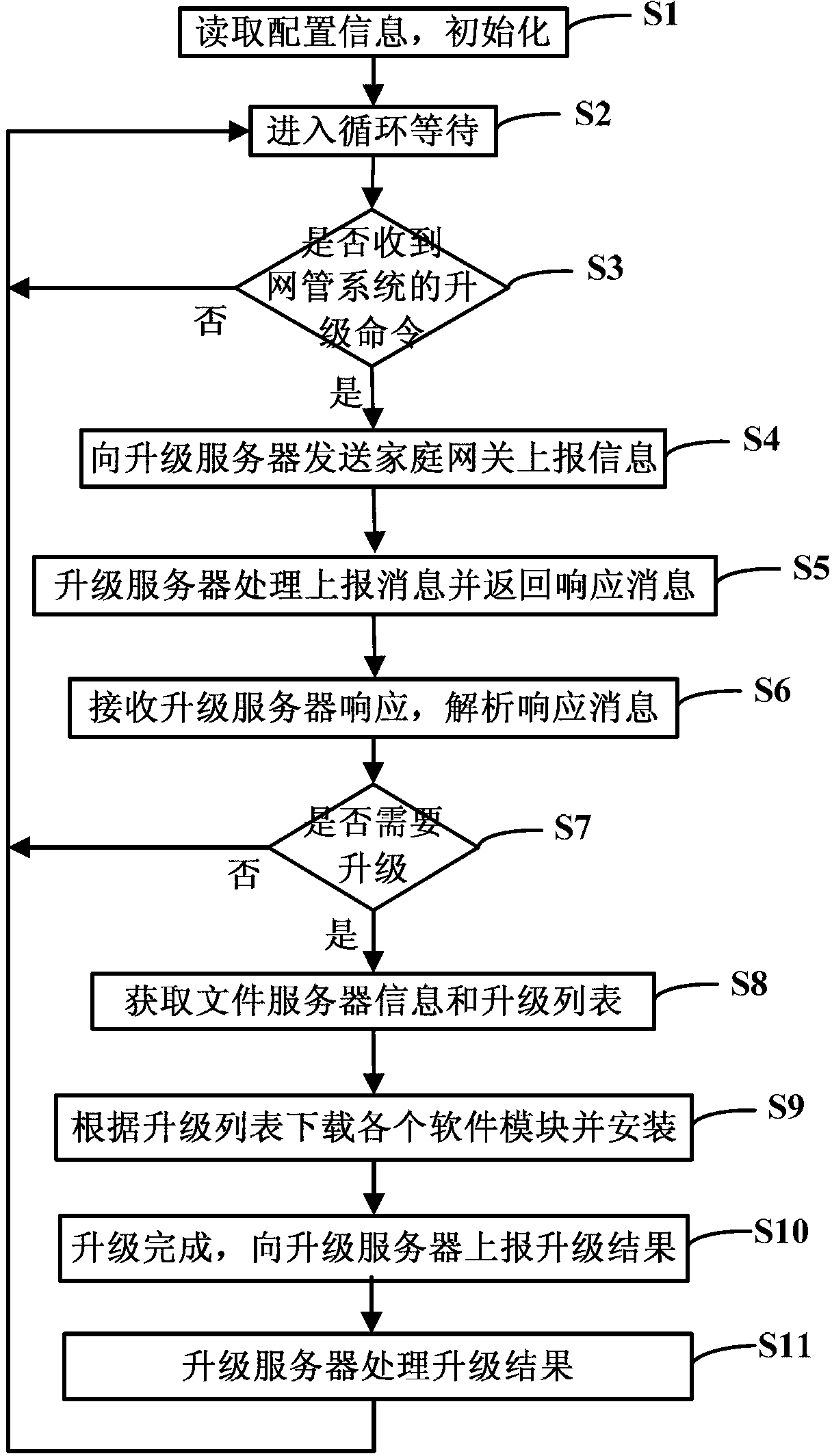

Home gateway intelligent upgrading device and upgrading method

Active | CN103281373A | Solve the problem of low efficiency in the upgrade, Avoid congestion, Data switching networks, Network reduction, File server

The invention discloses a home gateway intelligent upgrading device and upgrading method, and relates to the technical field of home gateways. The home gateway intelligent upgrading device comprises a home gateway, a webmaster system, an upgrading server and a file server, wherein a server-side program of home gateway upgrading programs is deployed in the upgrading server and comprises various upgrading strategy files of the home gateway and relevant upgrading version information, the server-side program processes the information coming from the home gateway, upgrading strategies are recorded in an appointed configuration file, and the configuration file comprises comparison elements, comparison operational characters, comparison conditions and operation directions after the conditions are met. According to the home gateway intelligent upgrading device and upgrading method, upgrading commands are issued in batch through the webmaster system, and the situation that a large amount of upgrading operation is executed in a short period to cause network blocking can be avoided; by the adoption of the modularization upgrading mode, the network flow can be reduced, the upgrading efficiency is improved, user experience is strengthened, and maintenance cost of operators is reduced effectively.

Owner:FENGHUO COMM SCI & TECH CO LTD

Shared network response cache

Inactive | US20120323987A1 | Memory addressing/allocation/relocation, Multiple digital computer combinations, Traffic capacity, Network reduction

A method is disclosed for reducing network traffic using a shared network response cache. The method intercepts a network request to prevent the network request from entering a data network. The network request is sent by a client and is intended for one or more recipients on the data network. The method checks a shared response cache for an entry matching the network request. The method sends a local response to the client in response to an entry in the shared response cache matching the network request. The local response satisfies the network request based on information from the matching entry in the shared response cache.

Owner:IBM CORP

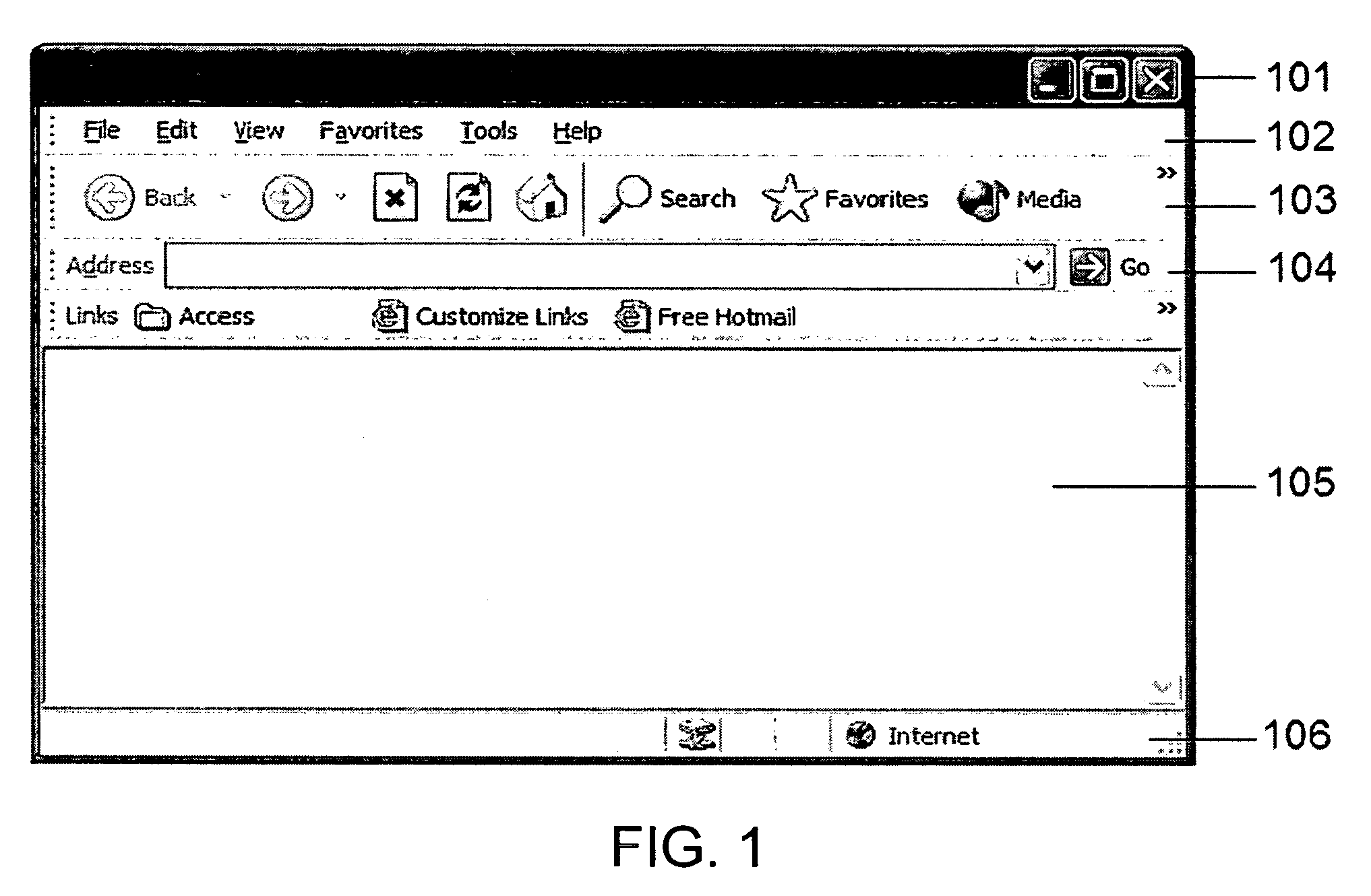

Network image view server using efficient client-server, tiling and caching archtecture

Inactive | US20010032238A1 | Easy to use, Increase speed, Digital data information retrieval, Data processing applications, Data display, Graphics

A computer network server using HTTP (Web) server software combined with foreground view composer software, background view composer software, a view tile cache, view tile cache garbage collector software, and image files provides image view data to client workstations using graphical Web browsers to display the view of an image from the server. Problems with specialized client workstation image view software are eliminated by using the Internet and industry standards-based graphical Web browsers for the client software. Network and system performance problems that previously existed when accessing large image files from a network file server are eliminated by tiling the image view so that computation and transmission of the view data can be done in an incremental fashion. The view tiles are cached on the client workstation to further reduce network traffic. View tiles are cached on the server to reduce the amount of view tile computation and to increase responsiveness of the image view server.

Owner:EPLUS CAPITAL

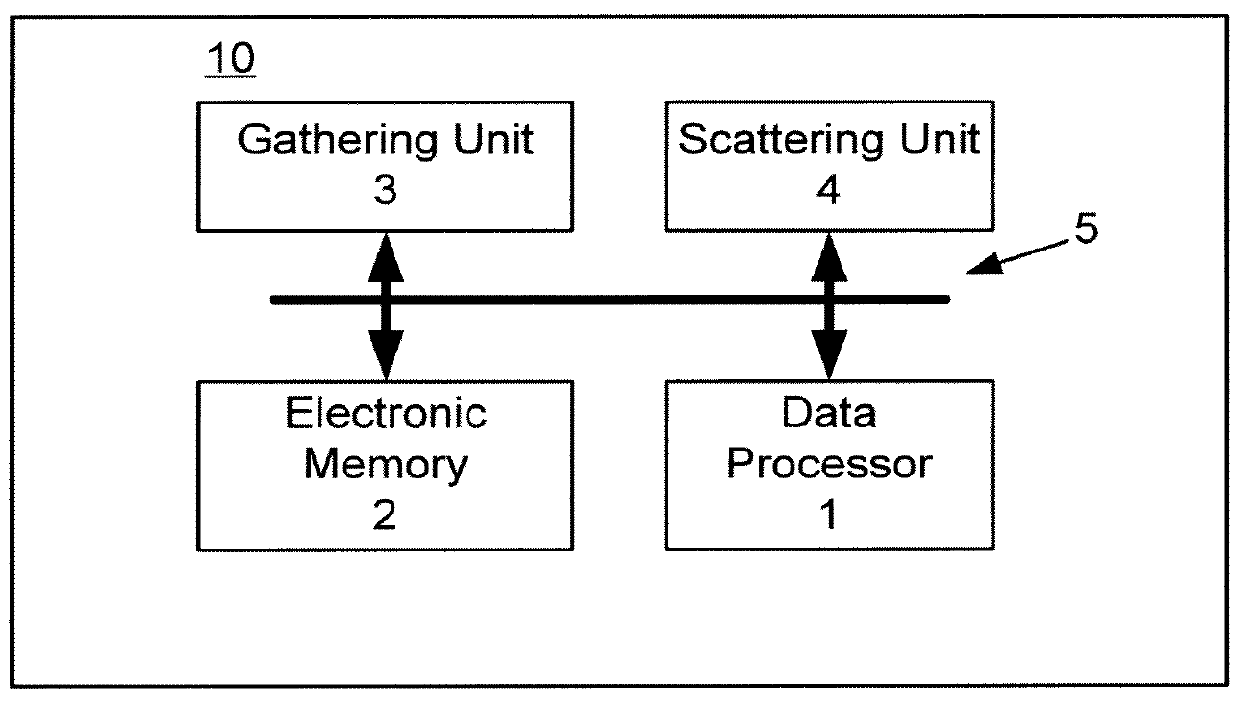

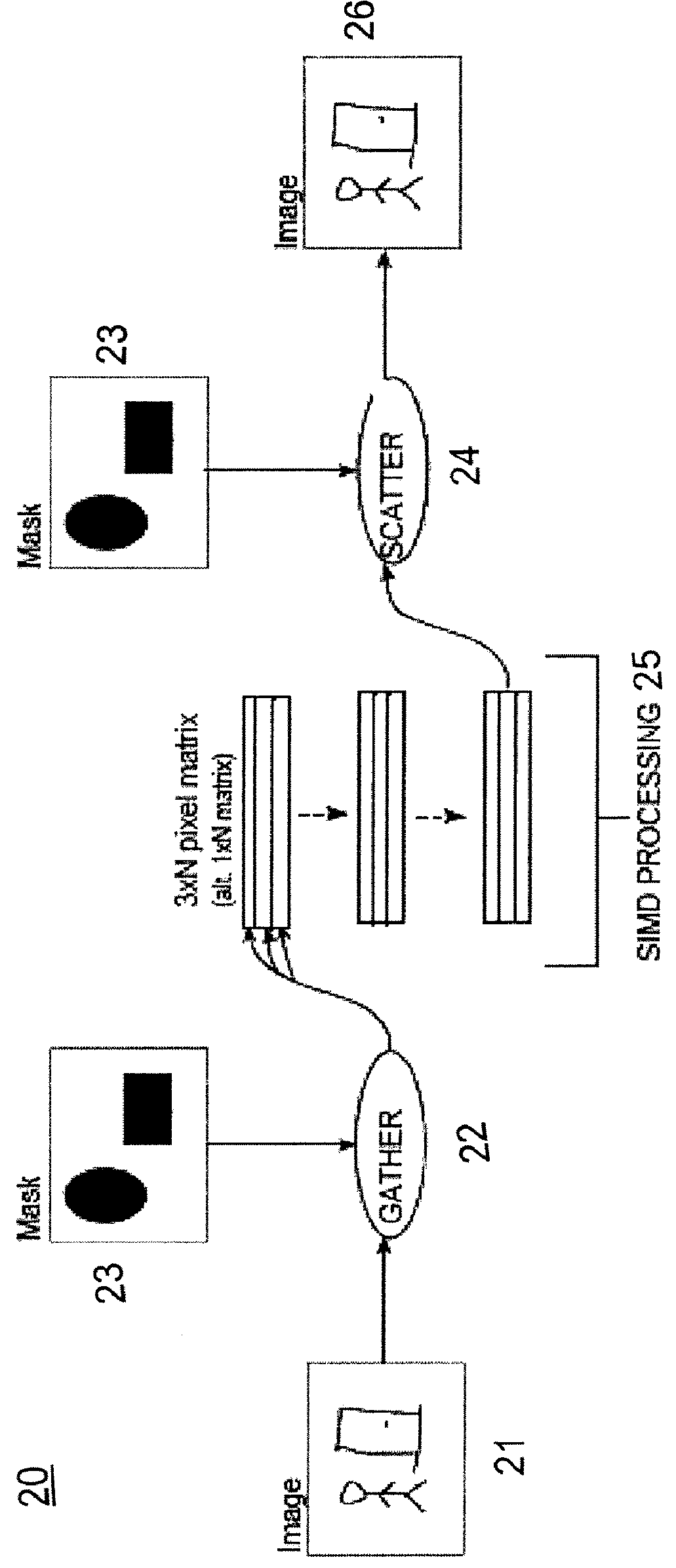

Video analytics system, computer program product, and associated methodology for efficiently using SIMD operations

Active | US8260002B2 | Image analysis, Character and pattern recognition, Traffic capacity, Computer graphics (images)

A video analytics system and associated methodology for performing low-level video analytics processing divides the processing into three phases in order to efficiently use SIMD instructions of many modern data processors. In the first phase, pixels of interest are gathered using a predetermined mask and placed into a pixel matrix. In the second phase, video analytics processing is performed on the pixel matrix, and in the third phase the pixels are scattered using the same predetermined mask. This allows many pixels to be processed simultaneously, increasing overall performance. A DMA unit may also be used to offload the processor during the gathering and scattering of pixels, further increasing performance. A network camera integrates the video analytics system to reduce network traffic.

Owner:AXIS

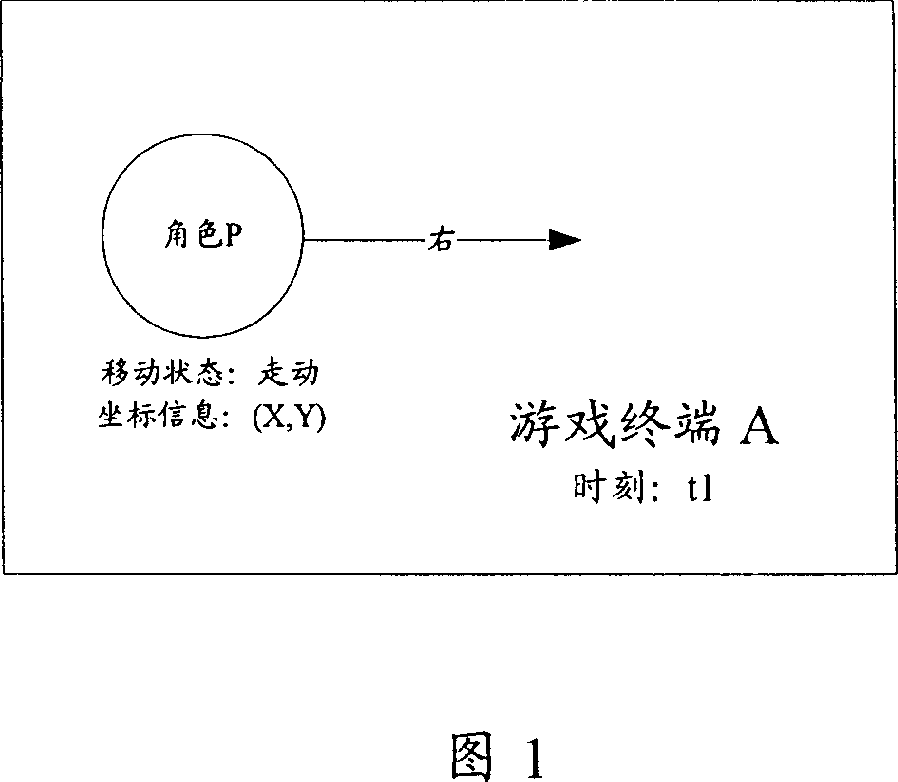

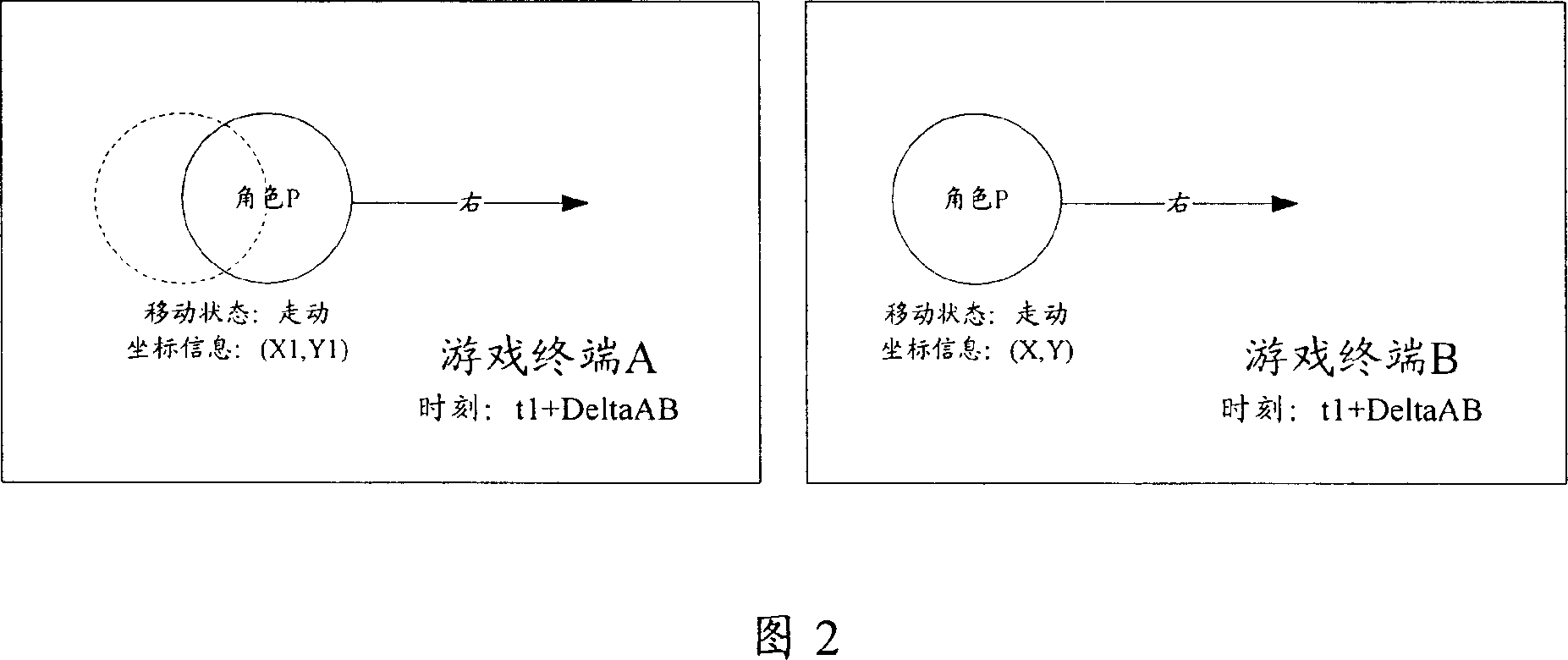

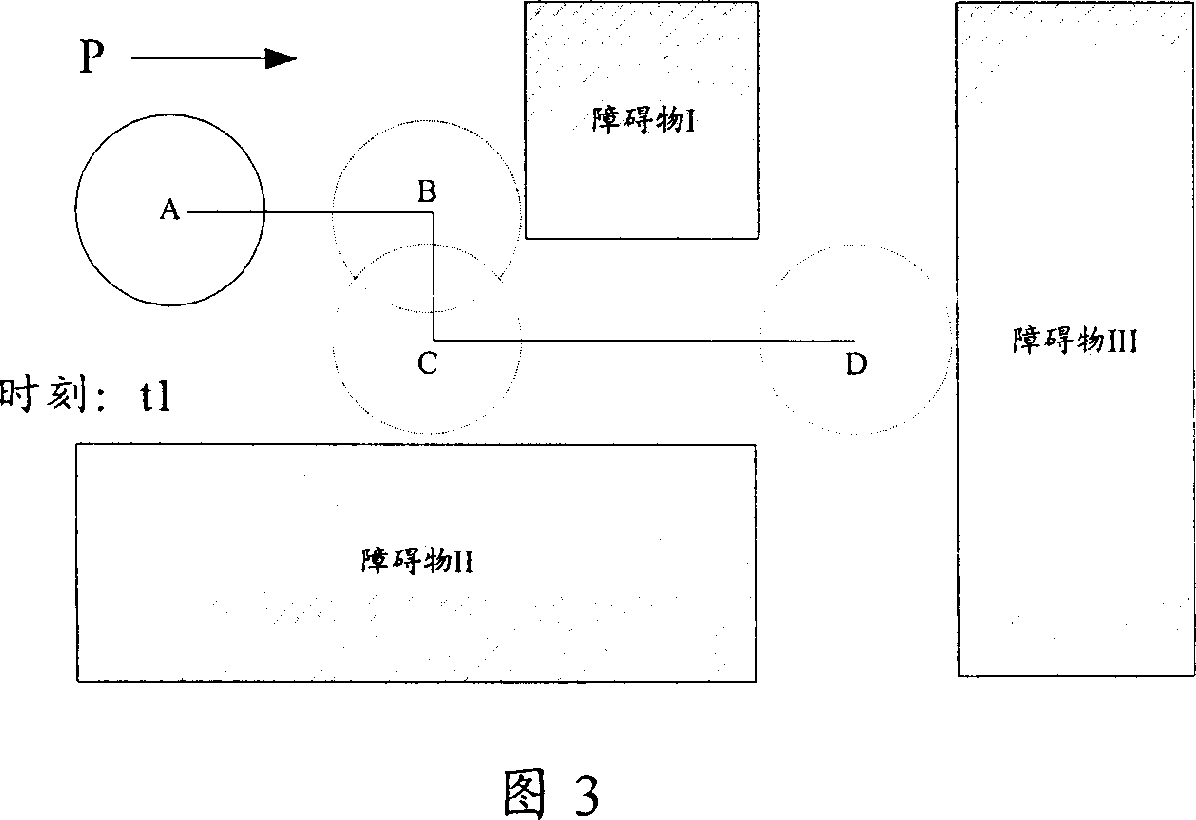

Method for shifting roles in network games

Active | CN1941788A | Send less frequently, Reduce traffic, Data switching networks, Special data processing applications, Traffic capacity, Network reduction

The method comprises: a) the local game end decides in real time whether the moving route of the local role has changed; if so, it determines the moving route of the local role and sends a moving message carrying the moving state and moving route of the local role to the remote game end; b) the remote game end controls the remote role corresponding to the local role to move according to the moving state and moving route in the received moving message. The invention can thereby reduce network traffic for online games.

Owner:TENCENT TECH (SHENZHEN) CO LTD

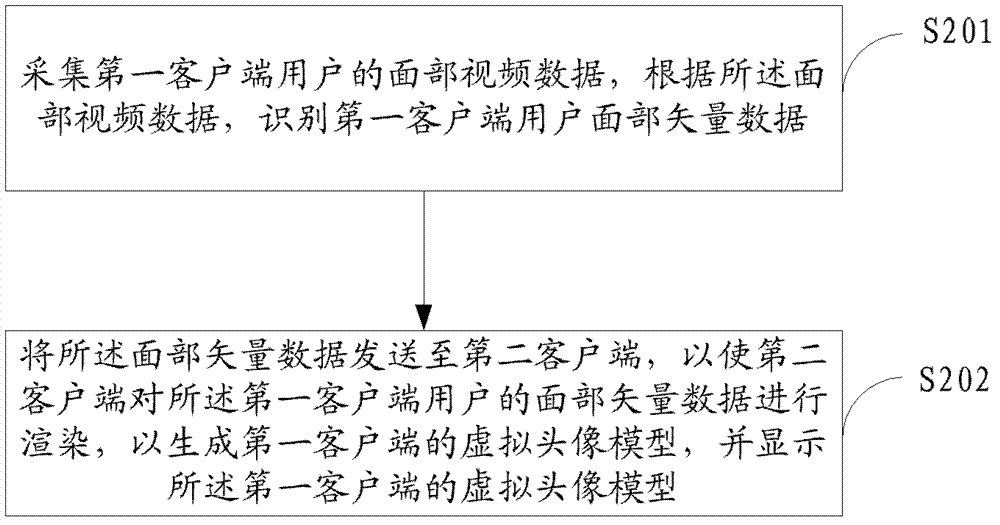

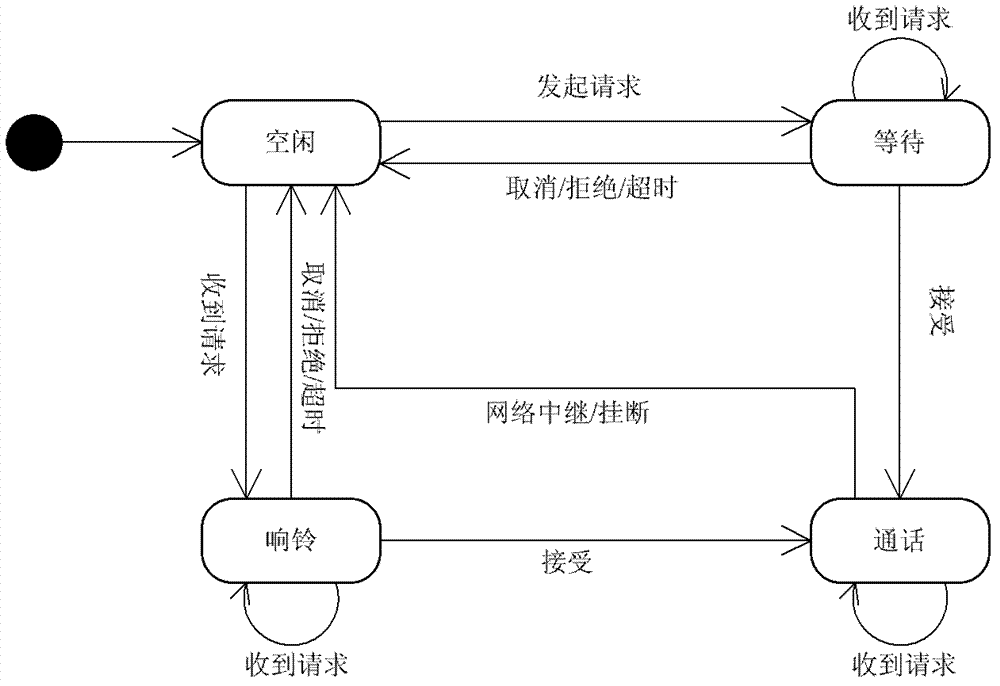
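The send-on-change rule in the network game abstract above can be sketched as follows. The per-tick route list and the message dictionary are hypothetical simplifications; the abstract's moving message also carries a moving state, which is omitted here.

```python
def movement_messages(route_per_tick):
    """Emit a moving message only on ticks where the local role's route changes."""
    messages = []
    last_route = None
    for tick, route in enumerate(route_per_tick):
        if route != last_route:
            messages.append({"tick": tick, "route": route})
            last_route = route
    return messages


# Route of the local role sampled on six consecutive ticks.
ticks = ["north", "north", "north", "east", "east", "north"]
sent = movement_messages(ticks)
```

Six ticks produce three messages; between messages the remote end keeps moving the role along the last route it received.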

Video chatting method and system

Active | CN103368929A | Privacy protection, Low cost, Two-way working systems, Data switching networks, Pattern recognition, Network reduction

The invention is applied to the technical field of computers, and provides a video chatting method and a video chatting system. The method comprises the following steps of acquiring the facial video data of a first client user, and identifying the facial vector data of the first client user according to the facial video data; and transmitting the facial vector data to a second client, so that the second client renders the facial vector data of the first client user to generate a virtual image model of the first client user, and displays the virtual image model of the first client user. According to the method and the system, the facial vector data is transmitted through a network in a video chatting process, so that network traffic is greatly reduced, the method and the system can be smoothly used in an ordinary mobile network, the data transmission speed is high, and the network traffic cost is greatly reduced.

Owner:TENCENT TECH (SHENZHEN) CO LTD

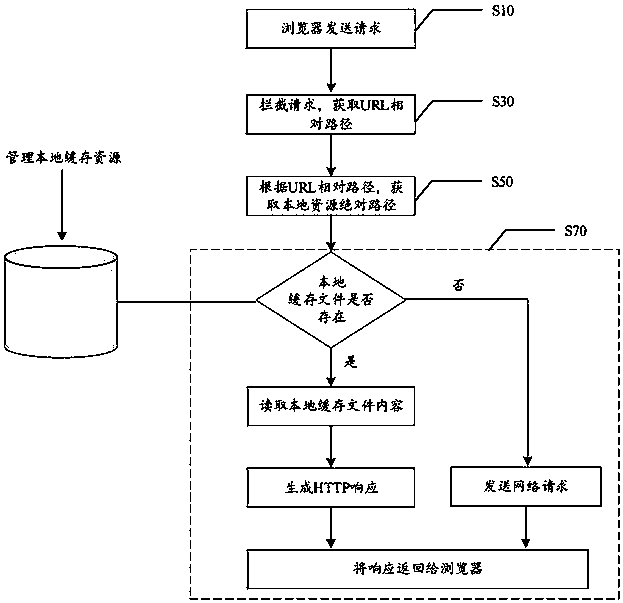

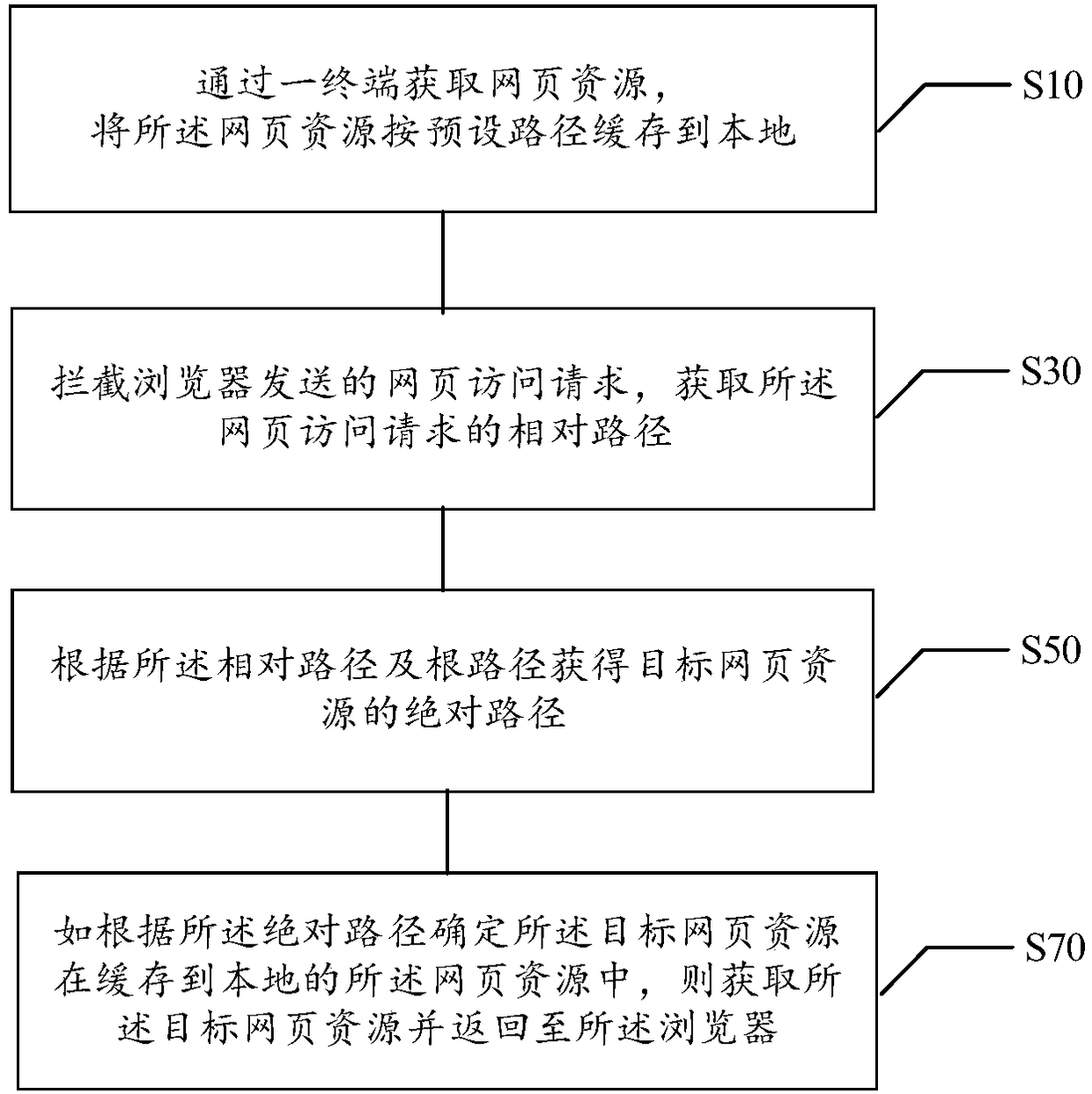

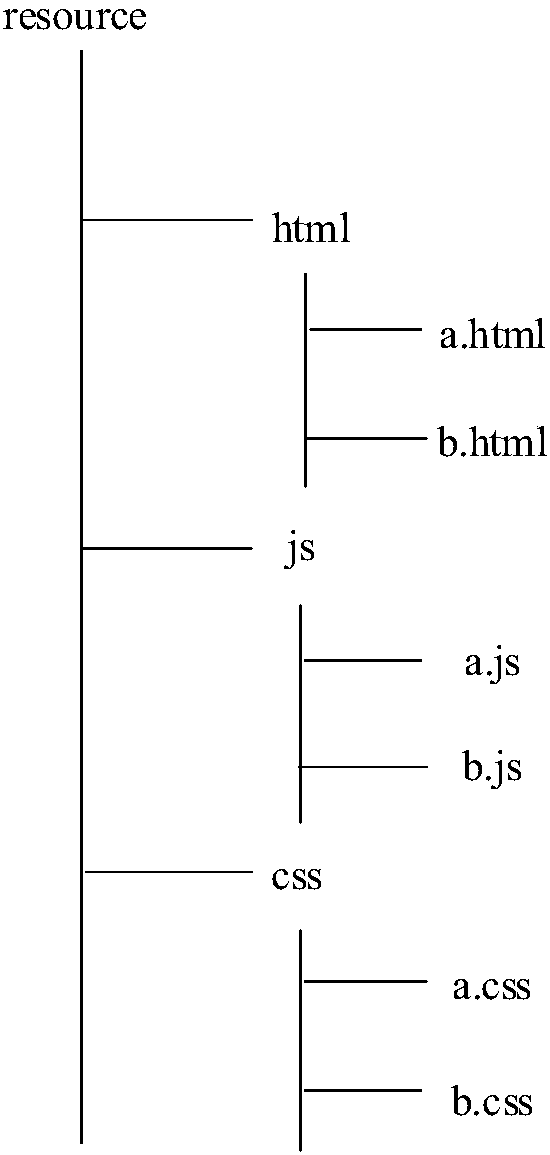

Webpage resource loading method and device, electronic equipment and storage medium

Active | CN108228818A | Improve access speed, Improve first visit speed, Website content management, Program loading/initiating, Traffic capacity, Network reduction

The invention provides a webpage resource loading method and device, electronic equipment and a storage medium, and relates to the technical field of data processing. The webpage resource loading method comprises the steps of obtaining webpage resources, and caching the webpage resources according to a directory organization structure of a relative path of the webpage resources; intercepting a webpage access request sent by a browser, and obtaining a relative path of the webpage access request; obtaining a local resource absolute path of the target webpage resources according to the relative path and a preset path; and, if it is determined from the local resource absolute path that the target webpage resources are cached among the local webpage resources, obtaining the target webpage resources and returning them to the browser. By means of the method, the first-time access and no-network access experience can be improved, and the network data overhead is reduced; developers can accurately control cached resources; and because local resources are looked up by relative path, no storage overhead is incurred for a URL-to-resource mapping.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

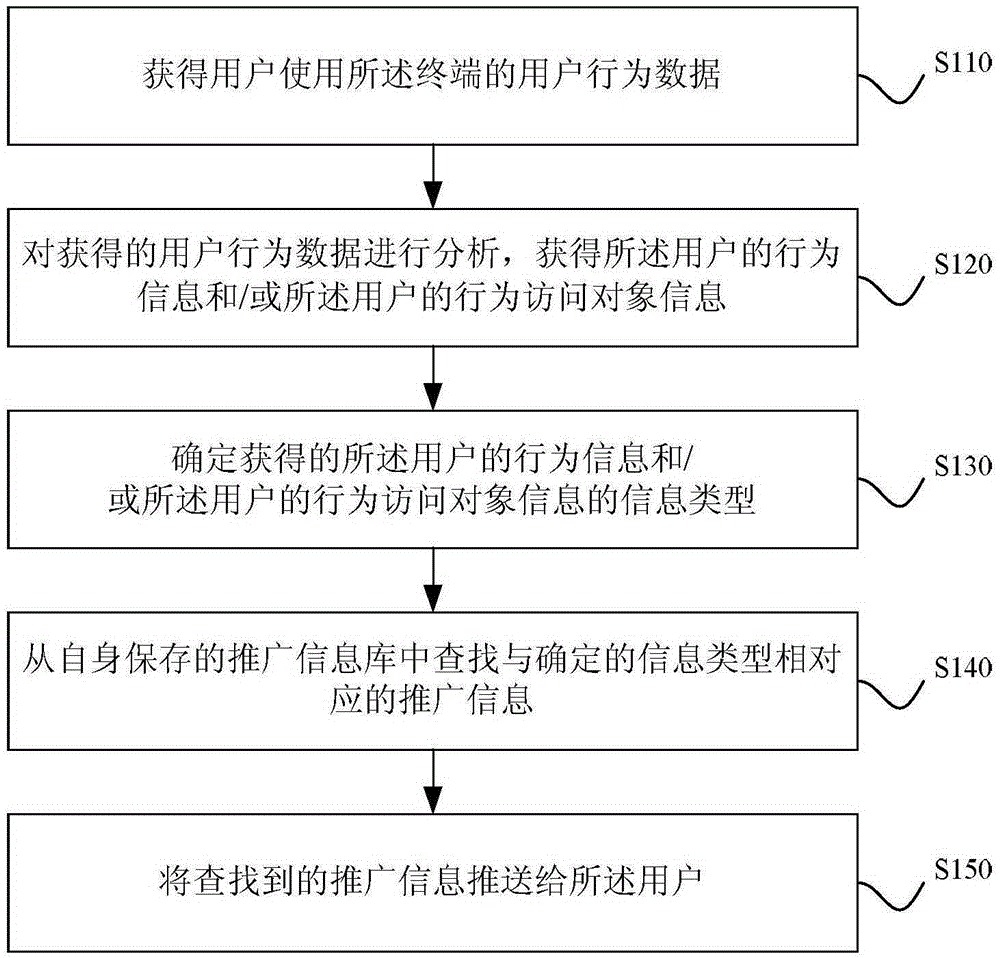

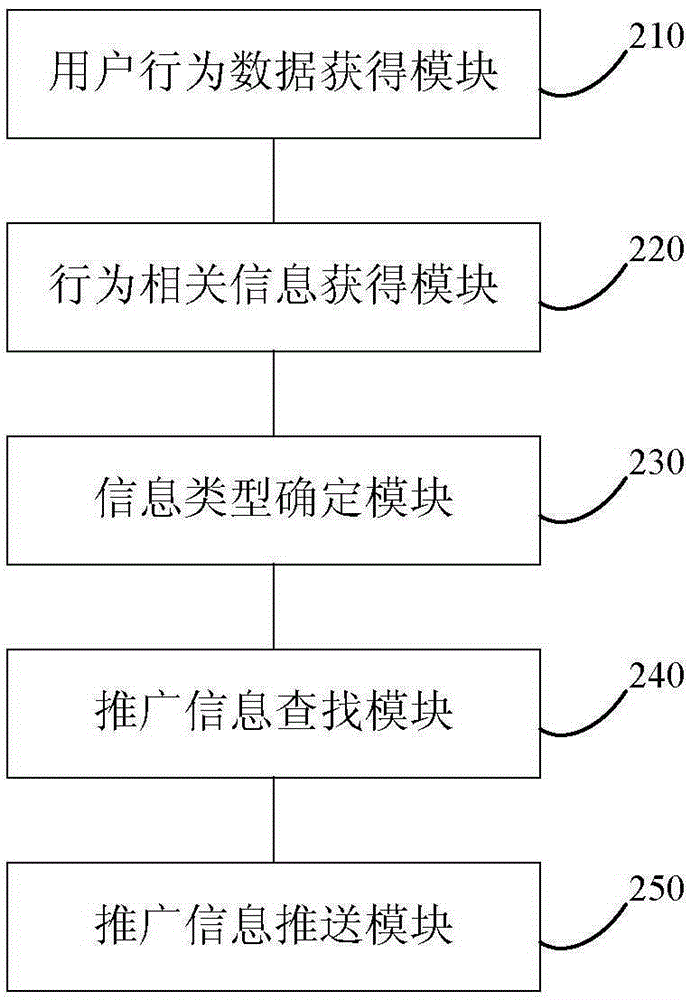

Popularization information pushing method and device

Inactive | CN105245583A | Avoid disturbance, Privacy protection, Transmission, Special data processing applications, Information repository, Information type

The embodiment of the invention discloses a popularization information pushing method and device. The method is applied to a terminal and comprises the following steps: obtaining user behaviour data about a user using the terminal; analyzing the obtained user behaviour data to obtain behaviour information and/or behaviour access object information of the user; determining the information type of the obtained behaviour information and/or behaviour access object information; searching a popularization information base stored in the device for popularization information corresponding to the determined information type; and pushing the popularization information found to the user. With this technical scheme, the popularization information pushed to the user is likely to interest the user, and the user is not disturbed by excessive irrelevant popularization information. Furthermore, because the scheme is executed on the terminal, no information needs to be transferred between the terminal and a server, so the privacy of the user is protected, network bandwidth is saved, and network traffic is reduced.

Owner:KINGSOFT
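
The on-terminal flow in the abstract above (analyze behaviour, determine an information type, look up a locally stored base) might look like the sketch below. The keyword table `TYPE_KEYWORDS`, the function names, and the token-matching classifier are all hypothetical; the abstract does not say how information types are determined.

```python
from collections import Counter

# Hypothetical keyword table: maps a token seen in behaviour data to an information type.
TYPE_KEYWORDS = {
    "flight": "travel",
    "hotel": "travel",
    "recipe": "food",
    "restaurant": "food",
}


def information_types(behaviour_log):
    """Return the information types found in the log, most frequent first."""
    counts = Counter()
    for entry in behaviour_log:
        for token in entry.lower().split():
            info_type = TYPE_KEYWORDS.get(token)
            if info_type:
                counts[info_type] += 1
    return [info_type for info_type, _ in counts.most_common()]


def select_promotions(behaviour_log, local_base, limit=2):
    """Pick items from the locally stored base; nothing is sent to a server."""
    selected = []
    for info_type in information_types(behaviour_log):
        selected.extend(local_base.get(info_type, []))
    return selected[:limit]
```

Everything here runs against data already on the device, which is the property the abstract relies on for its privacy and traffic claims.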

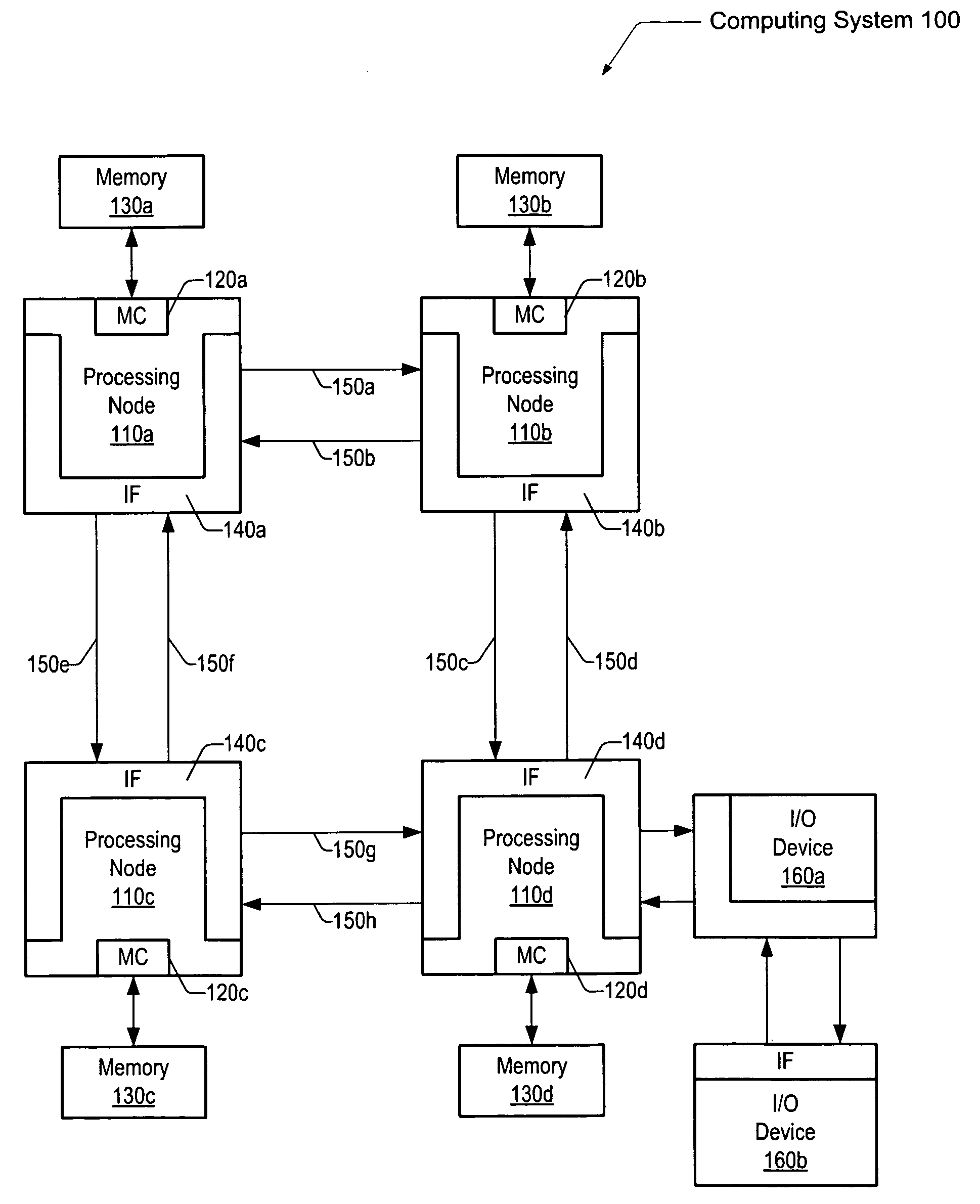

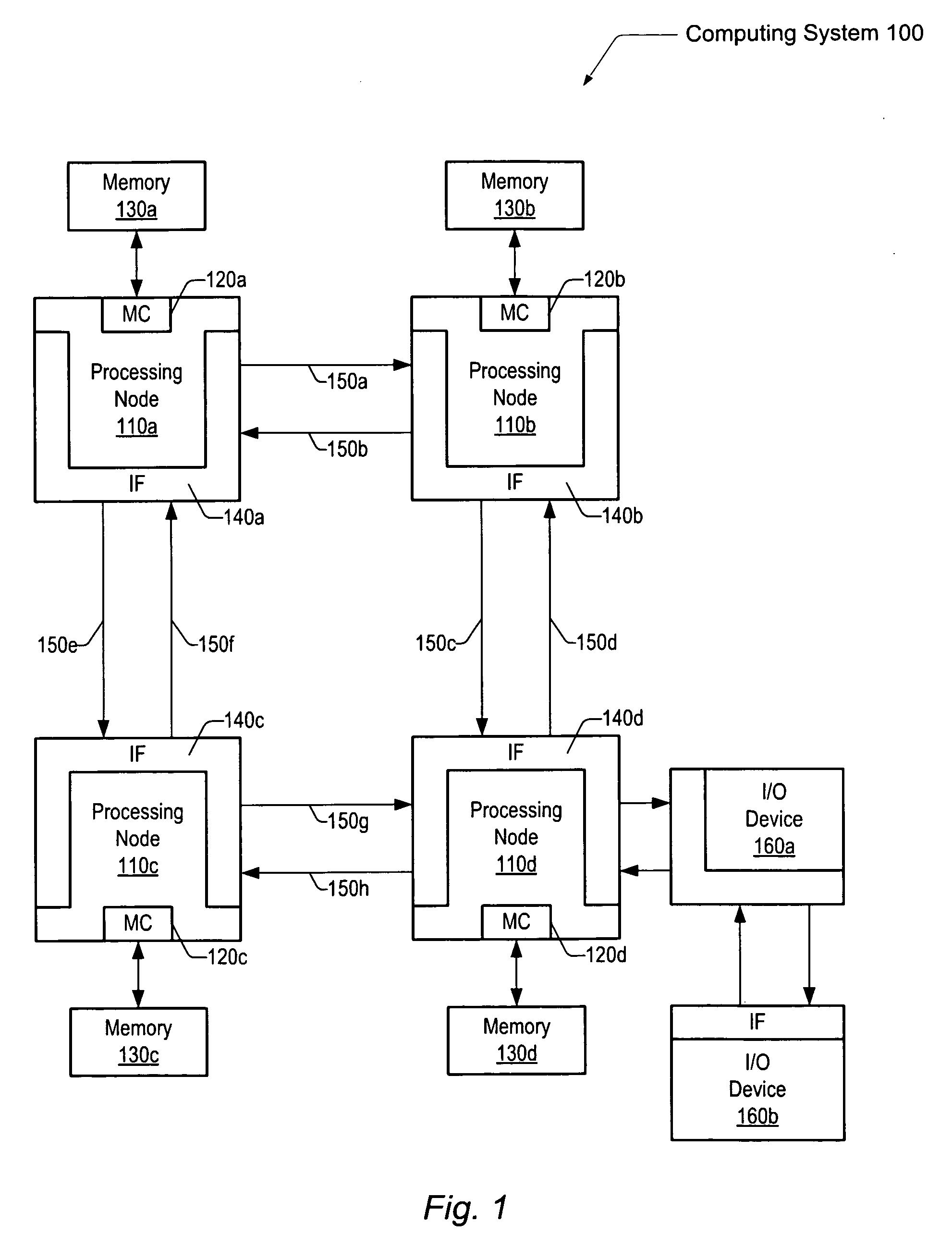

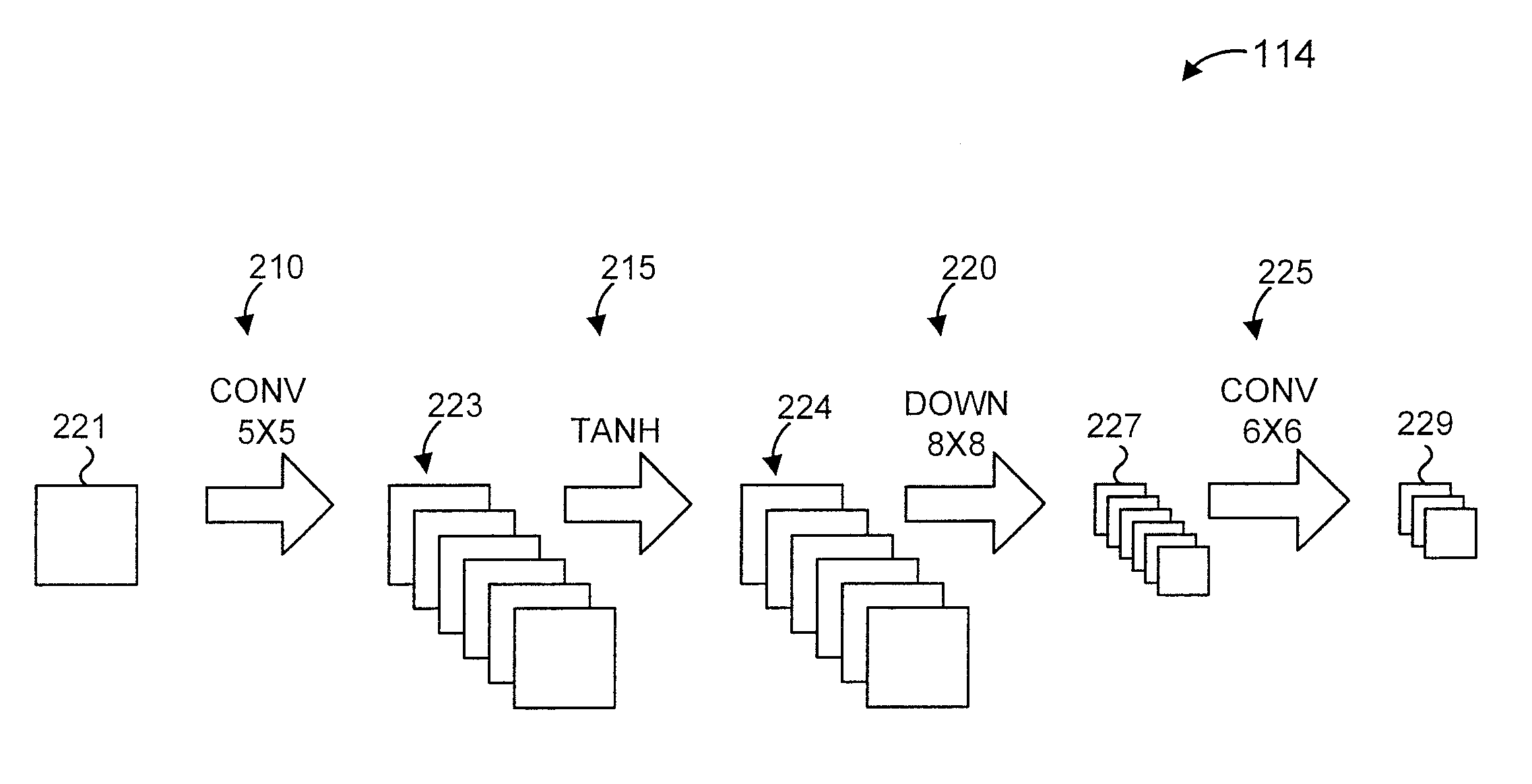

Snoop filtering mechanism

Active | US20090327616A1 | Non-utilization of dedicated directory storage may be avoided | Memory architecture accessing/allocation | Energy efficient ICT | Traffic capacity | Parallel computing

A system and method for selectively transmitting probe commands and reducing network traffic. Directory entries are maintained to filter probe command and response traffic for certain coherent transactions. Rather than storing directory entries in a dedicated directory storage, directory entries may be stored in designated locations of a shared cache memory subsystem, such as an L3 cache. Directory entries are stored within the shared cache memory subsystem to provide indications of lines (or blocks) that may be cached in exclusive-modified, owned, shared, shared-one, or invalid coherency states. The absence of a directory entry for a particular line may imply that the line is not cached anywhere in a computing system.

Owner:ADVANCED MICRO DEVICES INC
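
As a rough software model of the filtering idea in the abstract above, the sketch below keeps a directory keyed by line address and skips probes when no entry exists. It is a simplification under stated assumptions: the class and method names are invented, invalid lines are modelled by removing the entry, and any remaining entry triggers a probe, whereas real hardware would decide per transaction type and coherency state.

```python
from enum import Enum


class State(Enum):
    EXCLUSIVE_MODIFIED = "EM"
    OWNED = "O"
    SHARED = "S"
    SHARED_ONE = "S1"
    INVALID = "I"


class ProbeFilter:
    """Toy directory: line address -> (state, owner node)."""

    def __init__(self):
        self.directory = {}
        self.probes_sent = 0
        self.probes_filtered = 0

    def record(self, line, state, owner):
        # Assumption: an invalid line is modelled by dropping its entry.
        if state is State.INVALID:
            self.directory.pop(line, None)
        else:
            self.directory[line] = (state, owner)

    def needs_probe(self, line):
        """No entry implies the line is not cached anywhere, so no probe is broadcast."""
        if line not in self.directory:
            self.probes_filtered += 1
            return False
        self.probes_sent += 1
        return True
```

The two counters make the traffic saving visible: every filtered probe is a broadcast that never reaches the interconnect.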

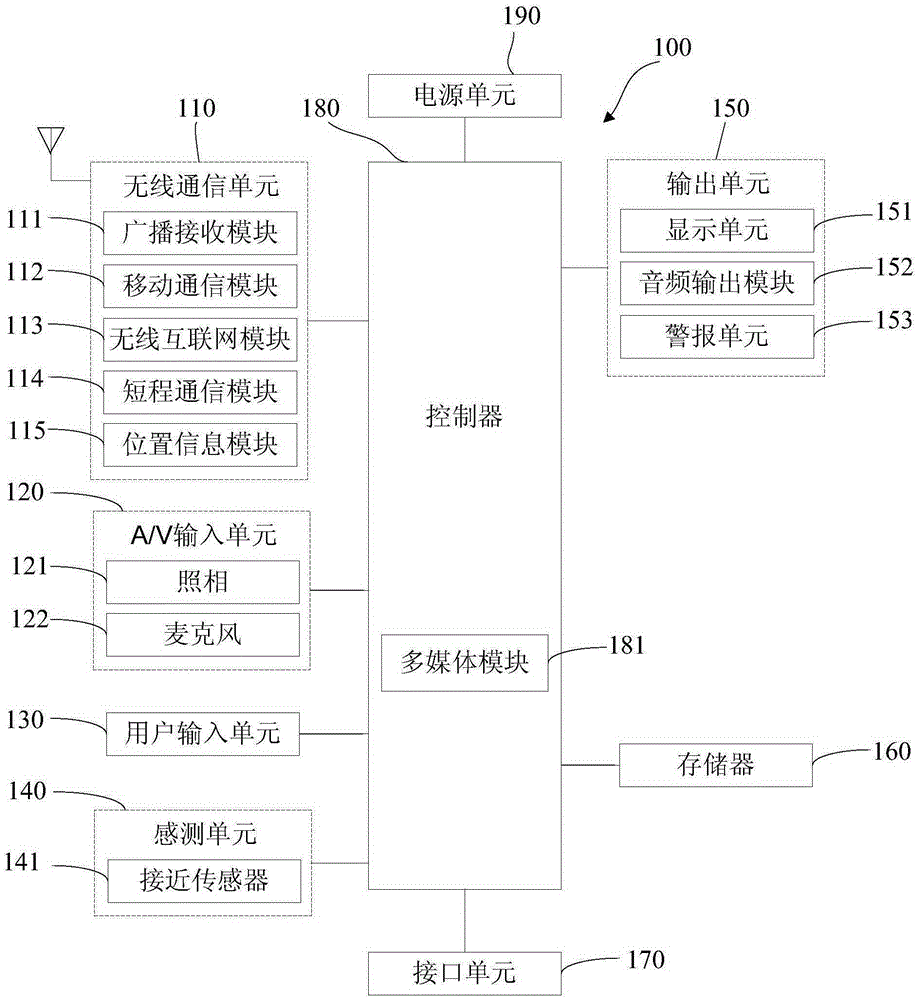

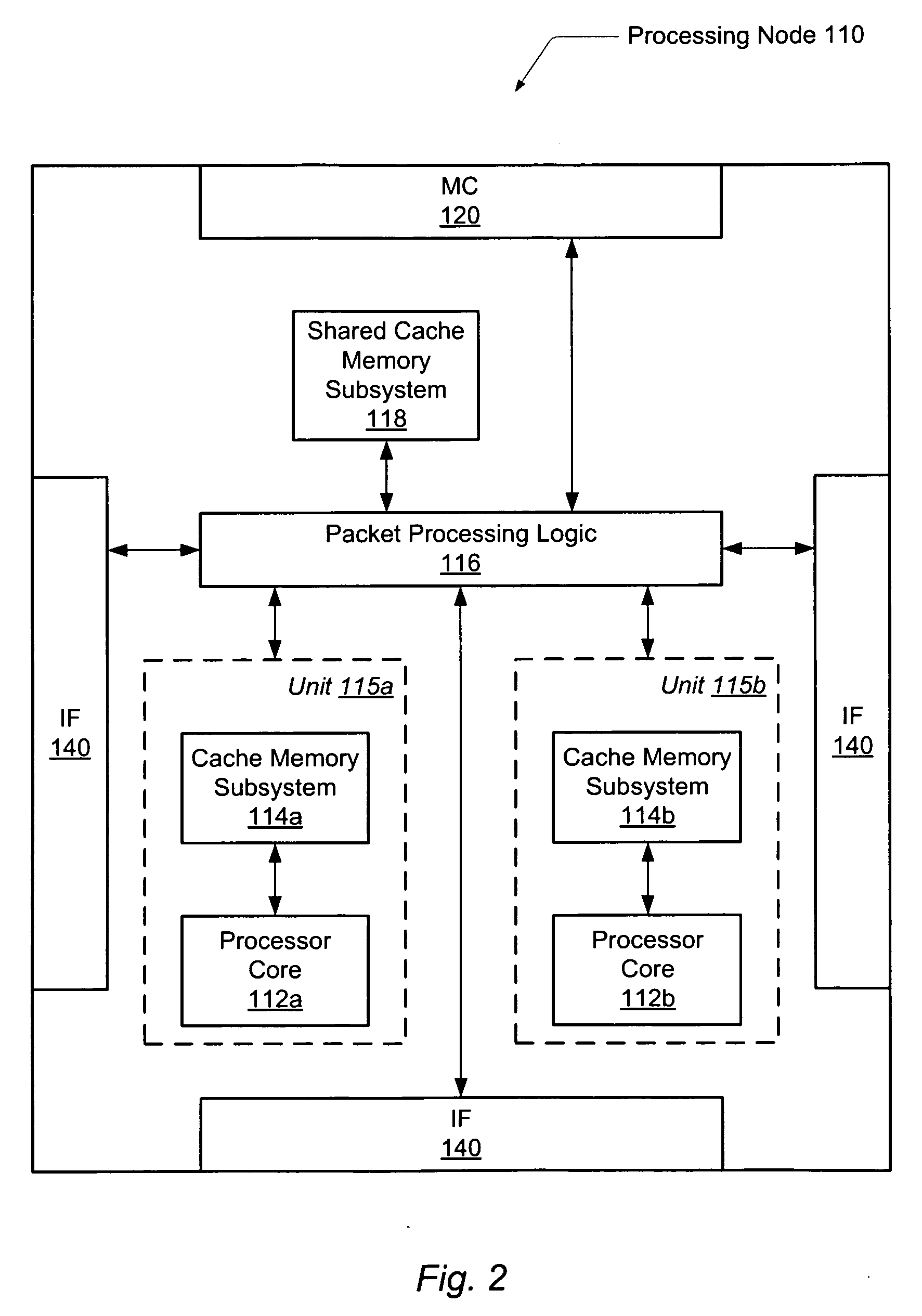

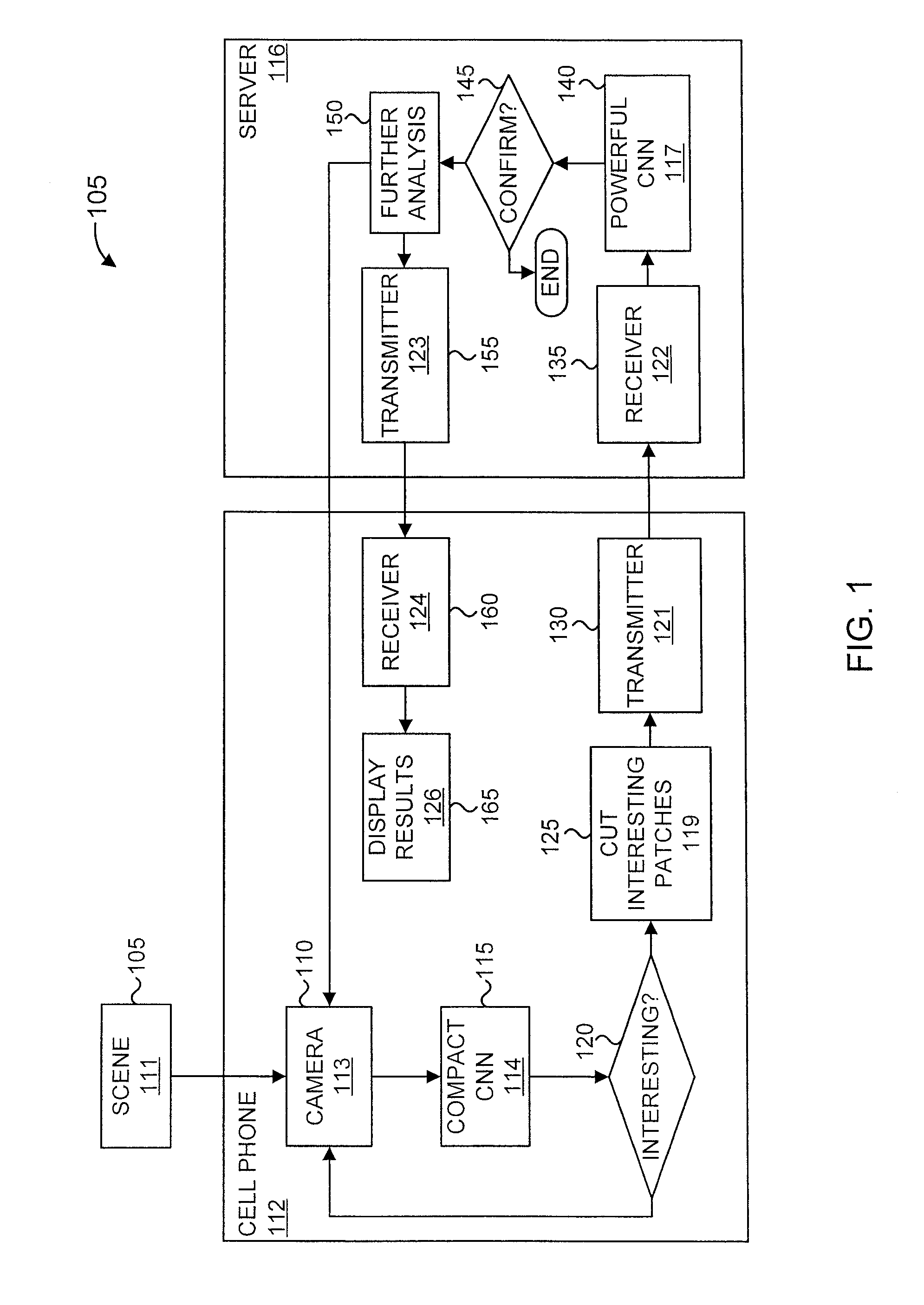

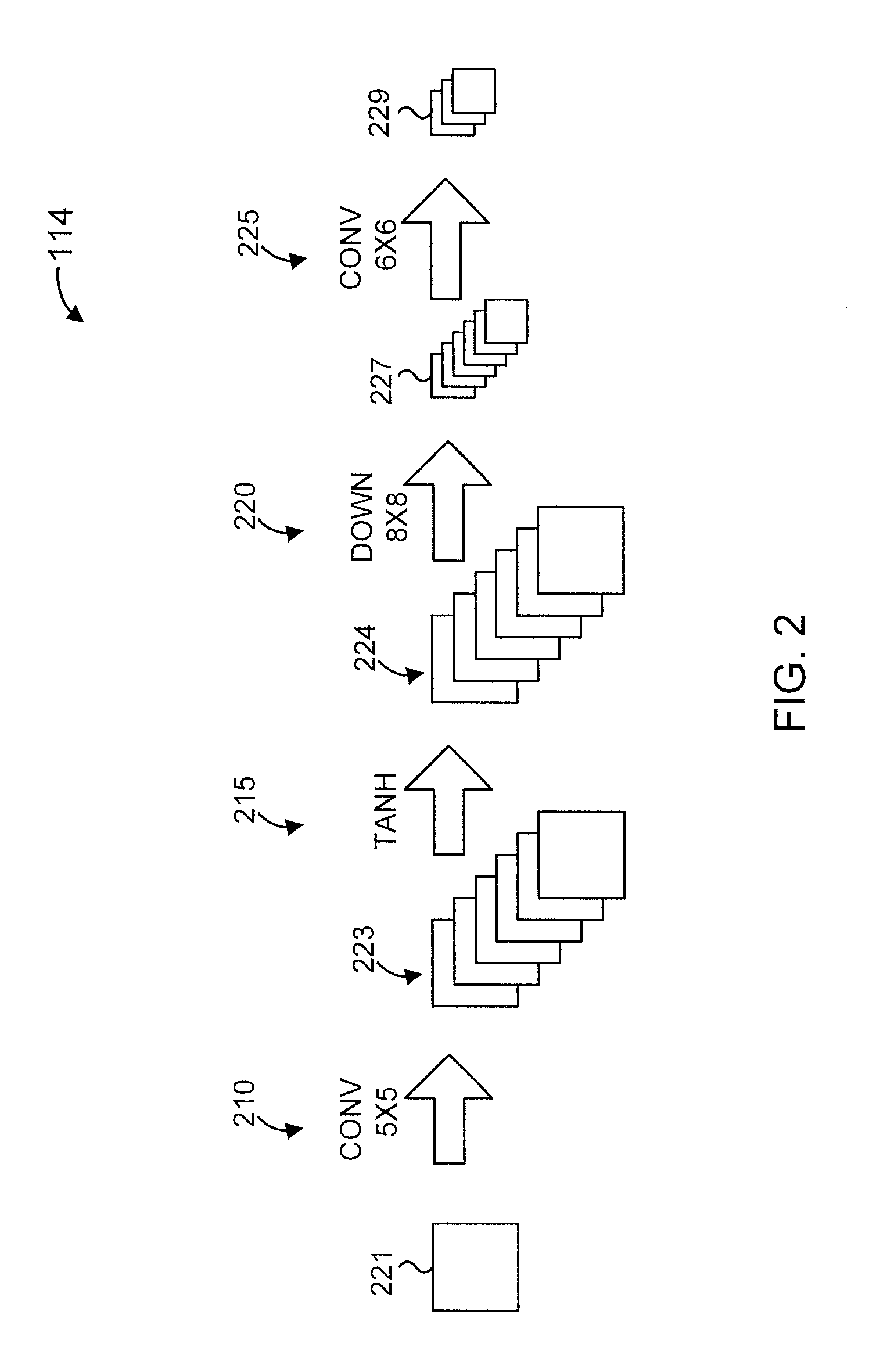

Distributed artificial intelligence services on a cell phone

Active | US8463025B2 | Reduce network traffic | Digital data processing details | Digital computer details | Traffic capacity | Nerve network

A cell phone having distributed artificial intelligence services is provided. The cell phone includes a neural network for performing a first pass of object recognition on an image to identify objects of interest therein based on one or more criteria. The cell phone also includes a patch generator for deriving patches from the objects of interest. Each of the patches includes a portion of a respective one of the objects of interest. The cell phone additionally includes a transmitter for transmitting the patches to a server for further processing in place of an entirety of the image to reduce network traffic.

Owner:NEC CORP
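
The patch step in the abstract above can be illustrated with a small sketch. It assumes the first-pass neural network has already produced bounding boxes (passed in here as plain tuples), and it represents the image as a list of pixel rows; the function names are hypothetical.

```python
def crop(image, box):
    """Return the part of `image` (a list of rows) inside box = (top, left, bottom, right), end-exclusive."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def make_patches(image, boxes):
    """Derive one patch per detected object of interest."""
    return [crop(image, box) for box in boxes]


def pixel_count(grid):
    """Number of pixels in an image or patch, as a stand-in for payload size."""
    return sum(len(row) for row in grid)
```

Sending only the patches is worthwhile when their combined pixel count is well below that of the full image, which is the traffic reduction the abstract describes.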

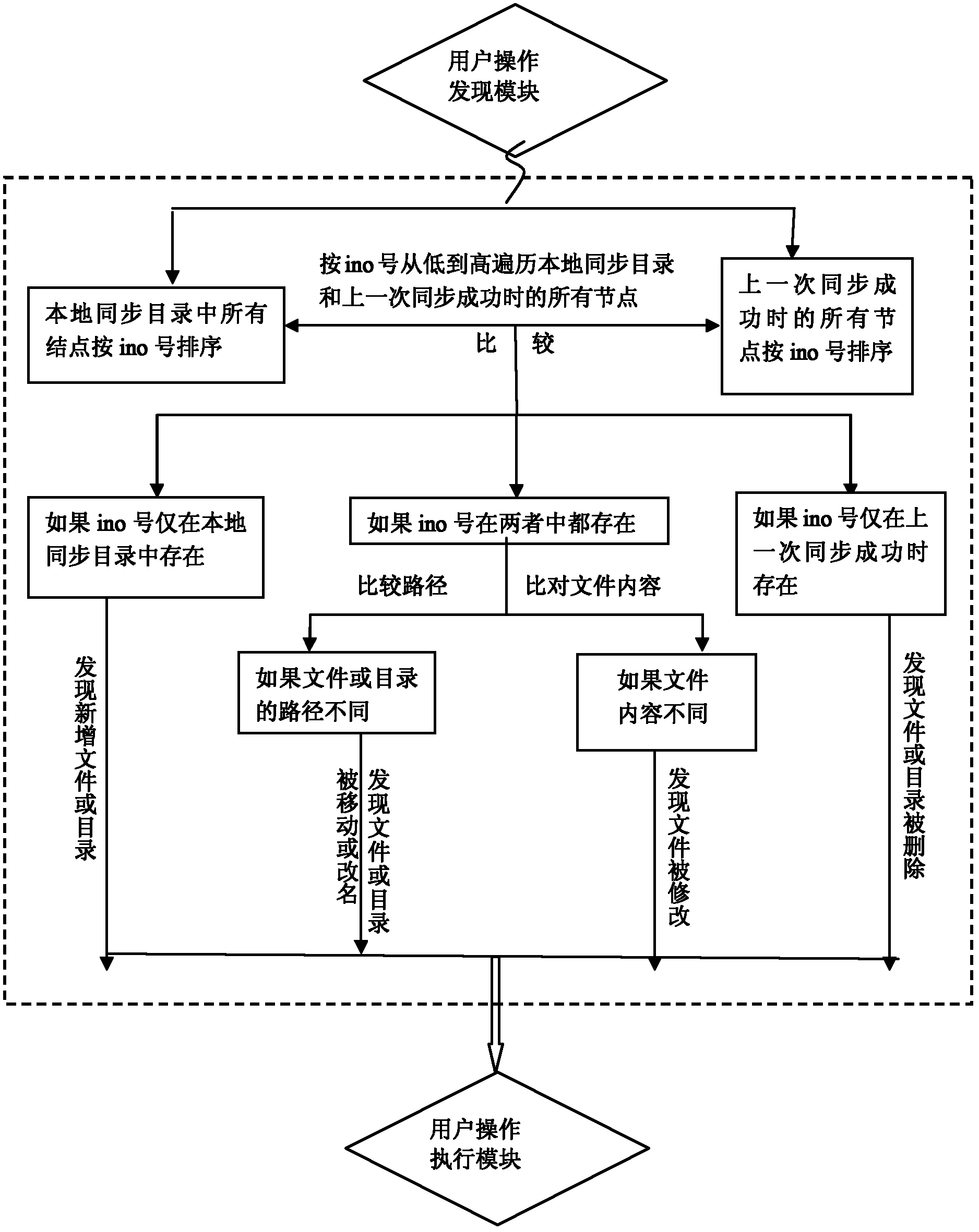

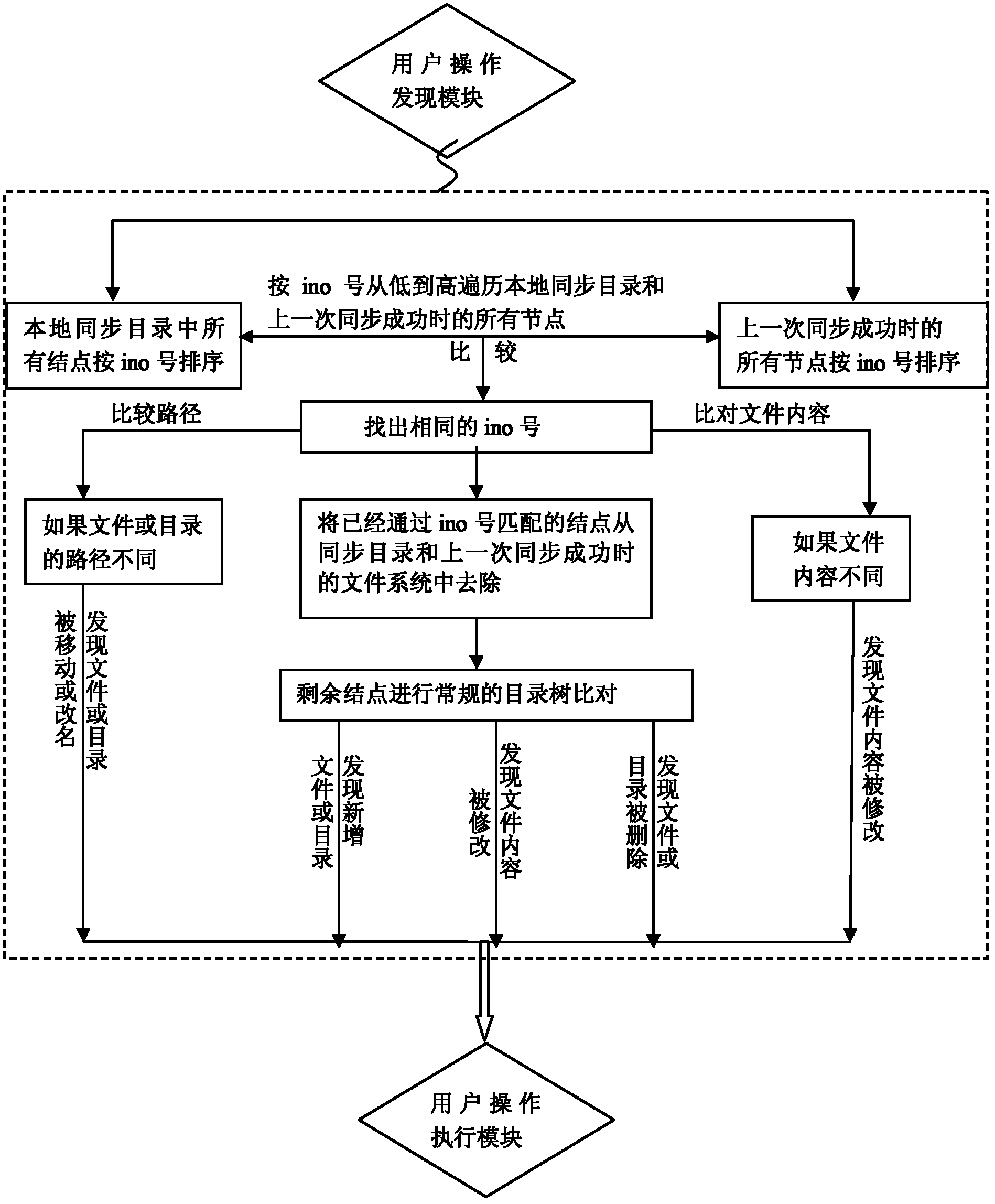

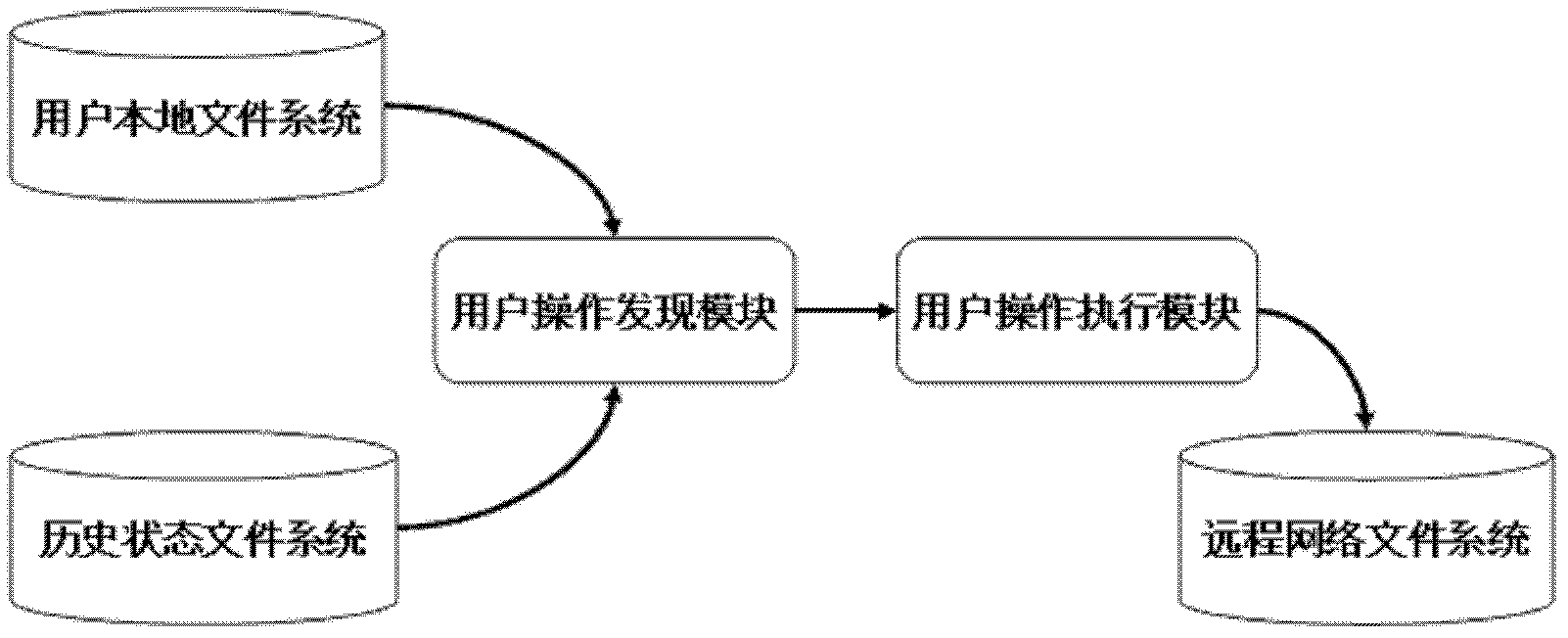

User operation discovery method of file system and synchronous system utilizing the same

Active | CN102360410A | Guaranteed reliability | Reduce consumption | Platform integrity maintenance | Special data processing applications | Traffic capacity | File synchronization

The invention provides an intelligent method for discovering user operations in a file system. The file system's node identifier is used as a unique identifier, and each node of the local synchronization directory is compared with the file system node recorded when the previous synchronization succeeded, so as to identify user operations. Specifically, the inode number (ino) serves as the unique identifier, and changes in the path and content of the node with the same ino are compared to identify user operations such as creating, deleting, renaming, moving, and modifying file content. This comparison by node identifier is combined with a traditional directory tree comparison to discover user operations. The file synchronization system of the invention uses the method to reduce the number of synchronization operations and the consumption of network traffic.

Owner:SHANGHAI QINIU INFORMATION TECH
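
The inode-based comparison in the abstract above can be sketched as a diff between two snapshots keyed by inode number. This is an illustrative sketch only: the snapshot layout (`ino -> (path, content_hash)`), the function names, and the rule that a path change within the same directory counts as a rename are assumptions, and the directory tree comparison that the abstract combines with it is not shown.

```python
import hashlib
import os


def snapshot(root):
    """Record ino -> (relative path, content hash) for every file under root."""
    snap = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            full = os.path.join(dirpath, name)
            with open(full, "rb") as fh:
                digest = hashlib.sha256(fh.read()).hexdigest()
            snap[os.stat(full).st_ino] = (os.path.relpath(full, root), digest)
    return snap


def detect_operations(previous, current):
    """Compare two snapshots and return the user operations they imply."""
    ops = []
    for ino, (old_path, old_hash) in previous.items():
        if ino not in current:
            ops.append(("delete", old_path))
            continue
        new_path, new_hash = current[ino]
        if new_path != old_path:
            same_dir = os.path.dirname(new_path) == os.path.dirname(old_path)
            ops.append(("rename" if same_dir else "move", old_path, new_path))
        if new_hash != old_hash:
            ops.append(("modify", new_path))
    for ino, (path, _digest) in current.items():
        if ino not in previous:
            ops.append(("create", path))
    return ops
```

Recognizing a rename or move as such, instead of as a delete plus a create, is what lets a synchronization client avoid re-uploading unchanged content.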

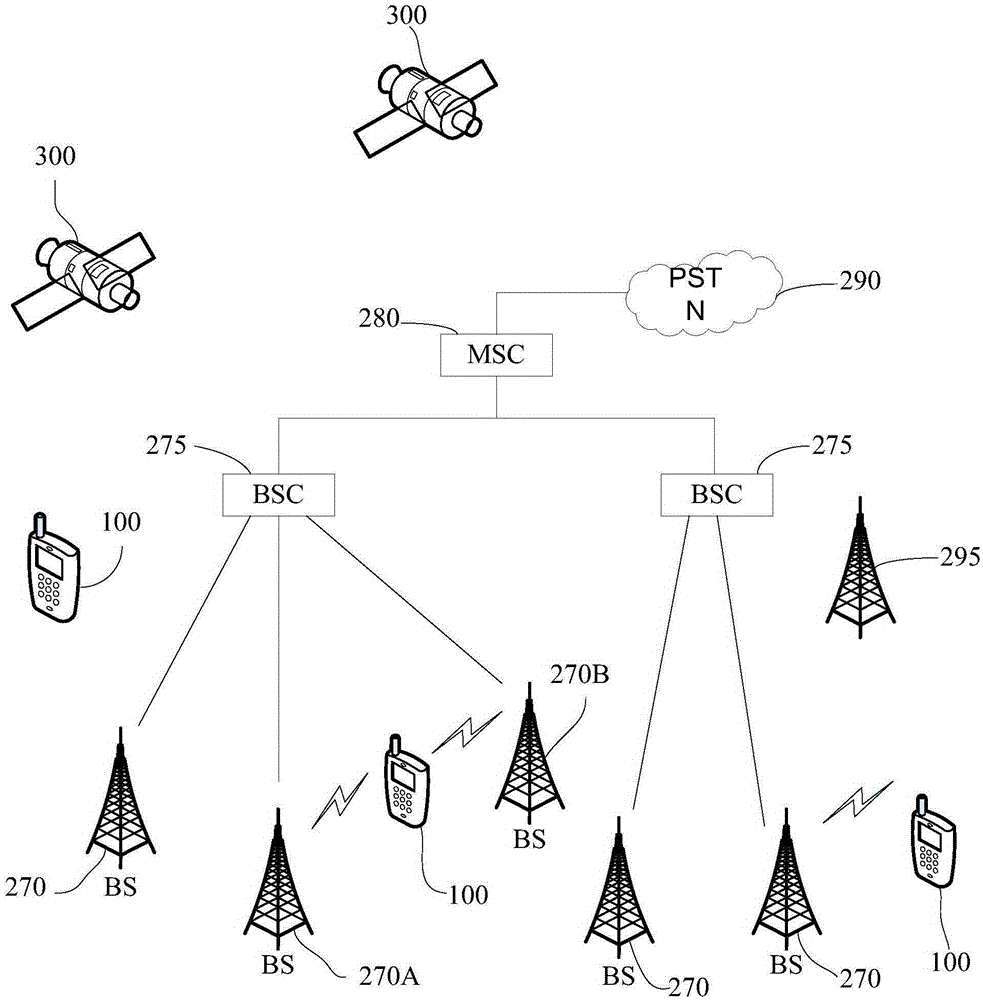

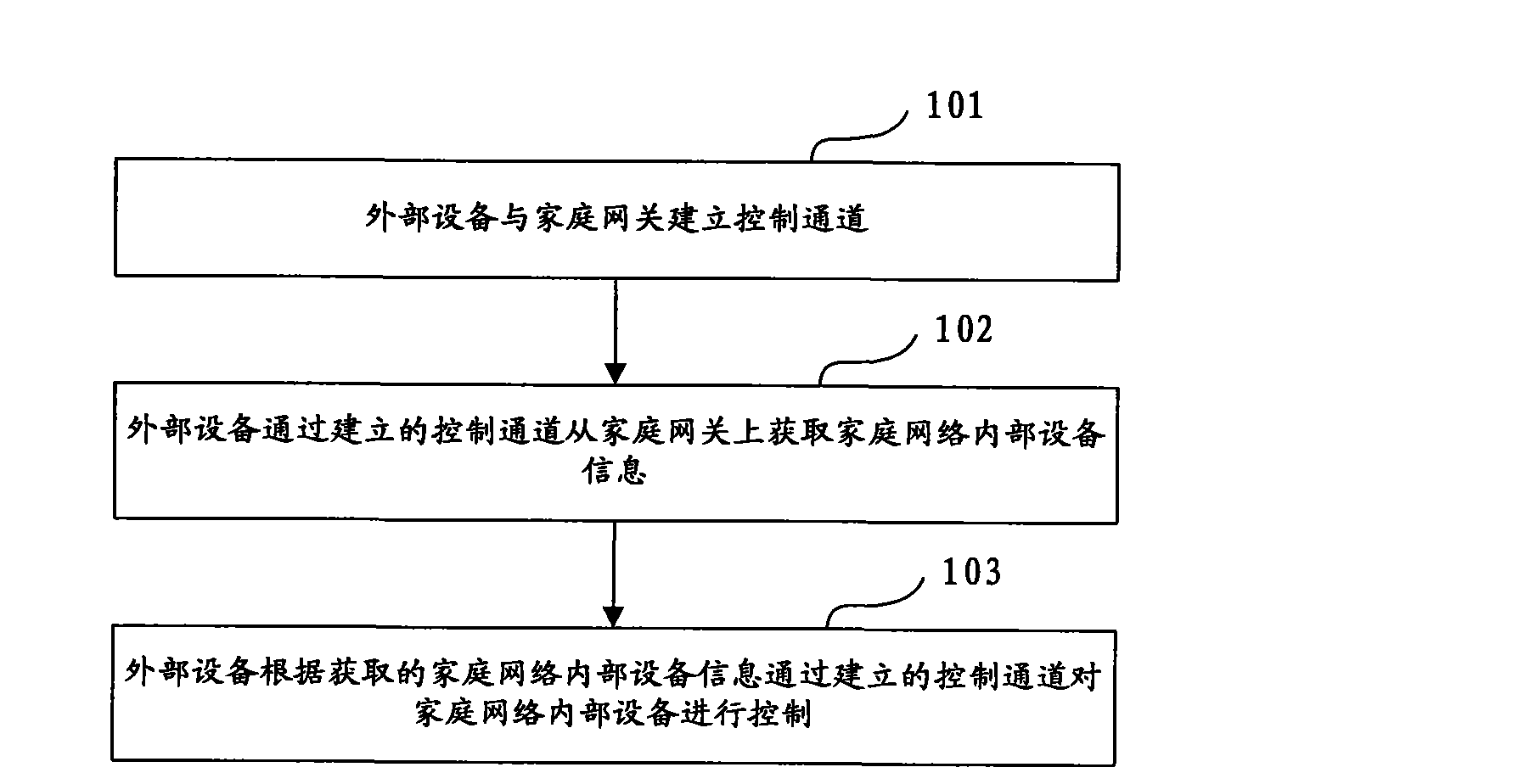

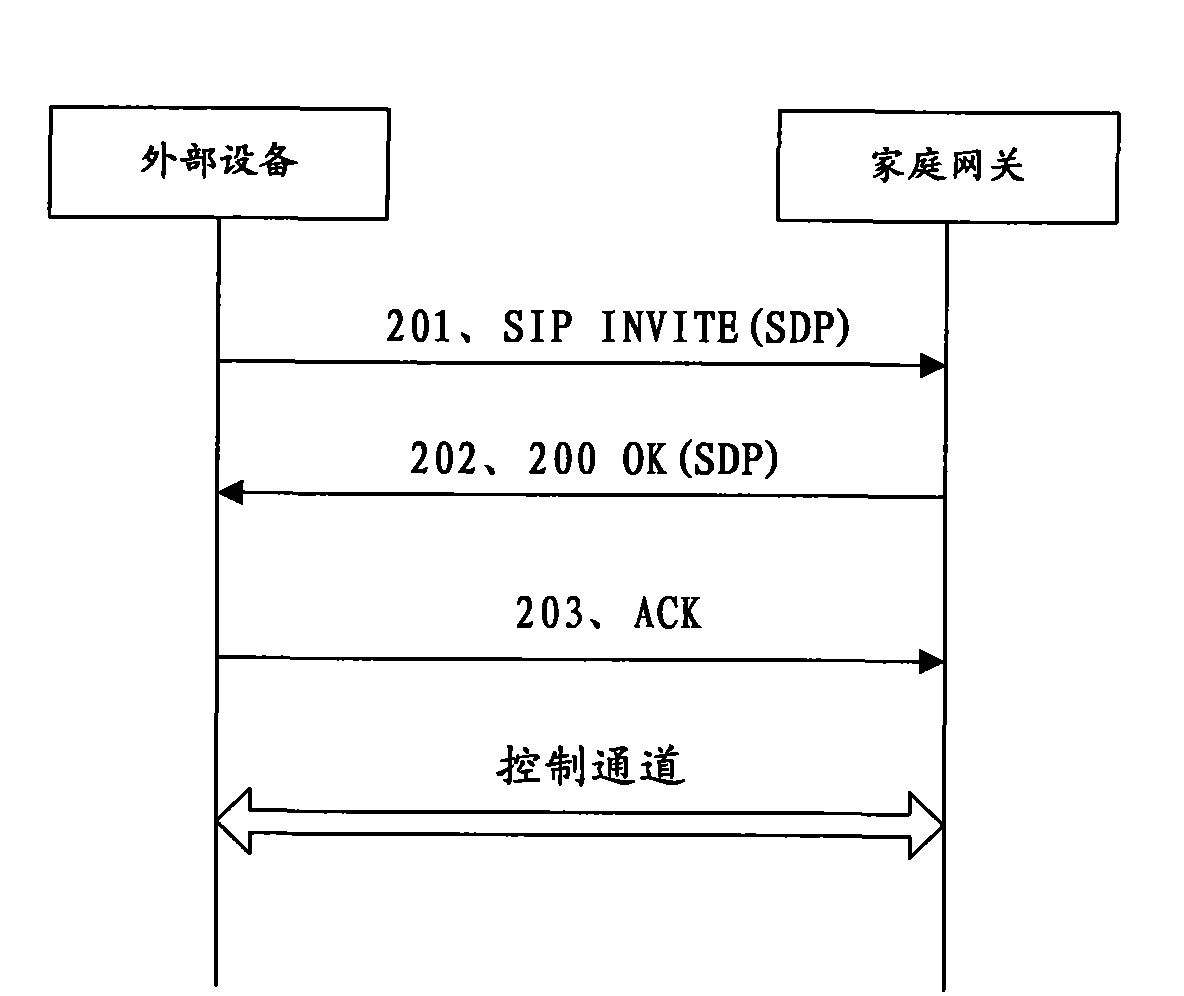

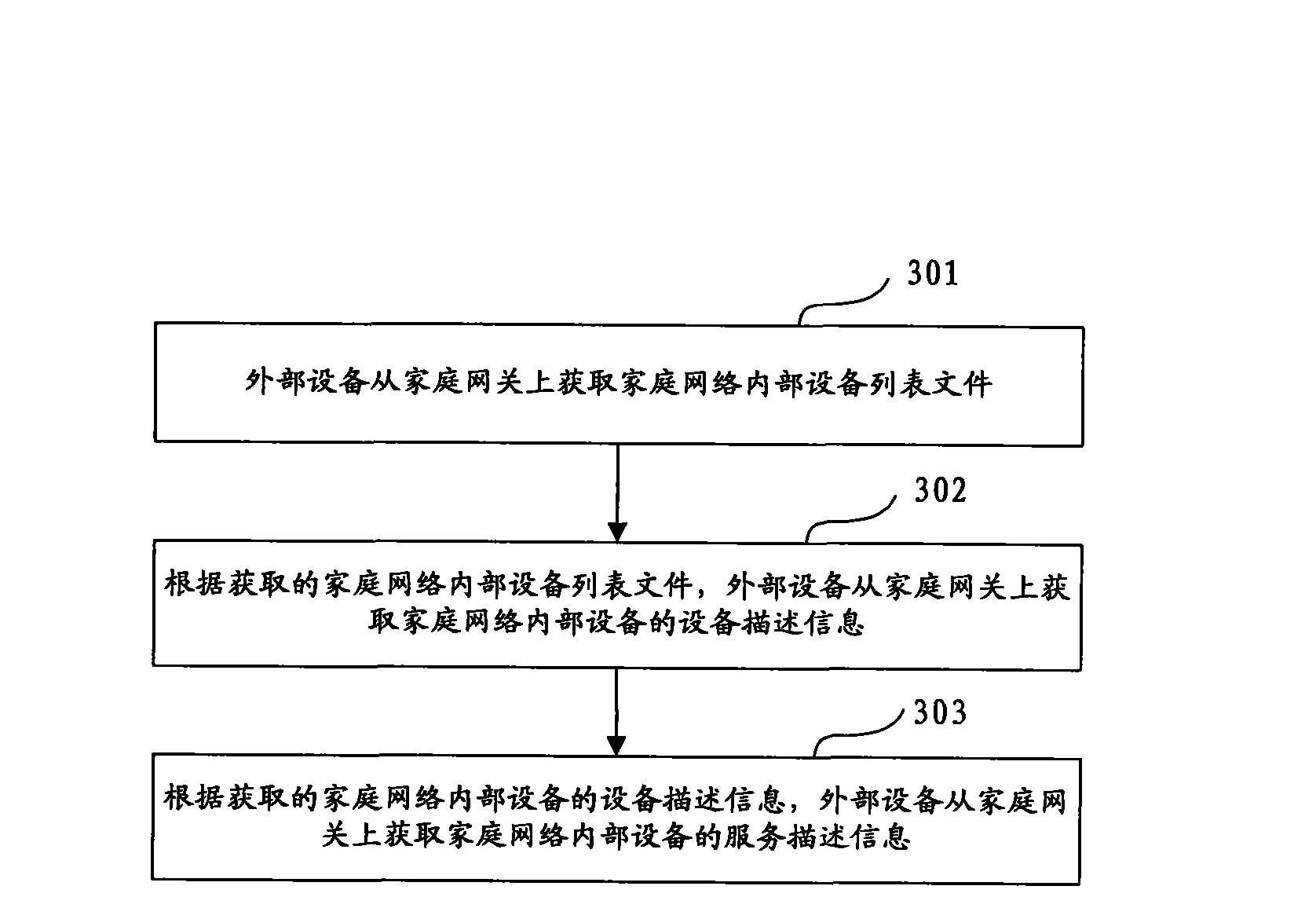

Method, equipment and system for communication between external equipment and internal equipment of home network

Inactive | CN101656645A | Solve data transmission | Reduce network traffic | Data switching by path configuration | Network connections | Network reduction | Data transmission

The embodiment of the invention discloses a method, equipment and a system for communication between external equipment and internal equipment of a home network. It relates to the field of communication, solves the problem of data transmission between the external equipment and the internal equipment of the home network, and reduces network traffic. The method provided by the embodiment comprises the following steps: the external equipment establishes a connection channel with a home gateway; the external equipment acquires information on the internal equipment of the home network from the home gateway through the established connection channel; and the external equipment controls the internal equipment of the home network through the established connection channel according to the acquired information. The embodiment also enables the external equipment to subscribe to state information on the internal equipment of the home network.

Owner:GLOBAL INNOVATION AGGREGATORS LLC


© 2025 PatSnap. All rights reserved.