30 results about "Quick path interconnect" patented technology

Memory extending system and memory extending method

Active | CN103488436A | Large memory capacity; Avoid the problem of redundant processing power; Input/output to record carriers; Computer architecture; Quick path interconnect

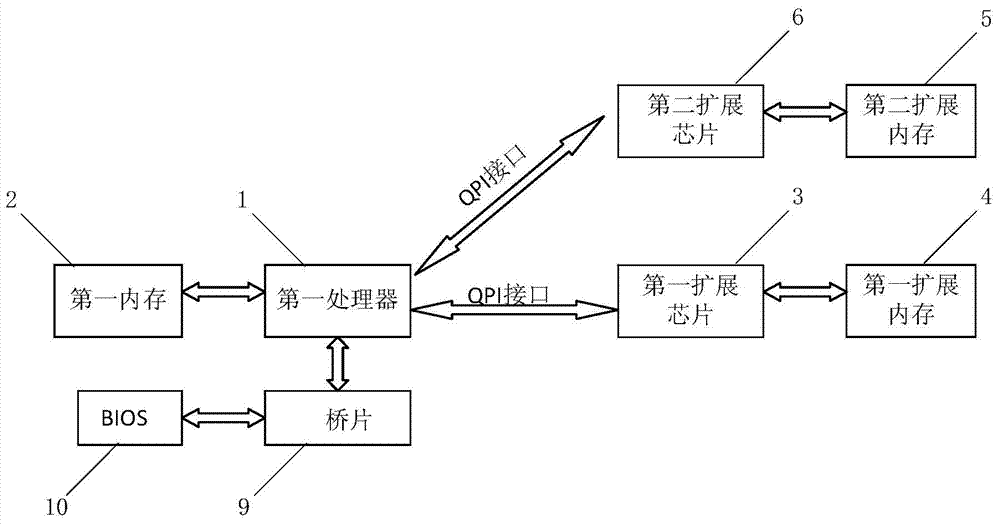

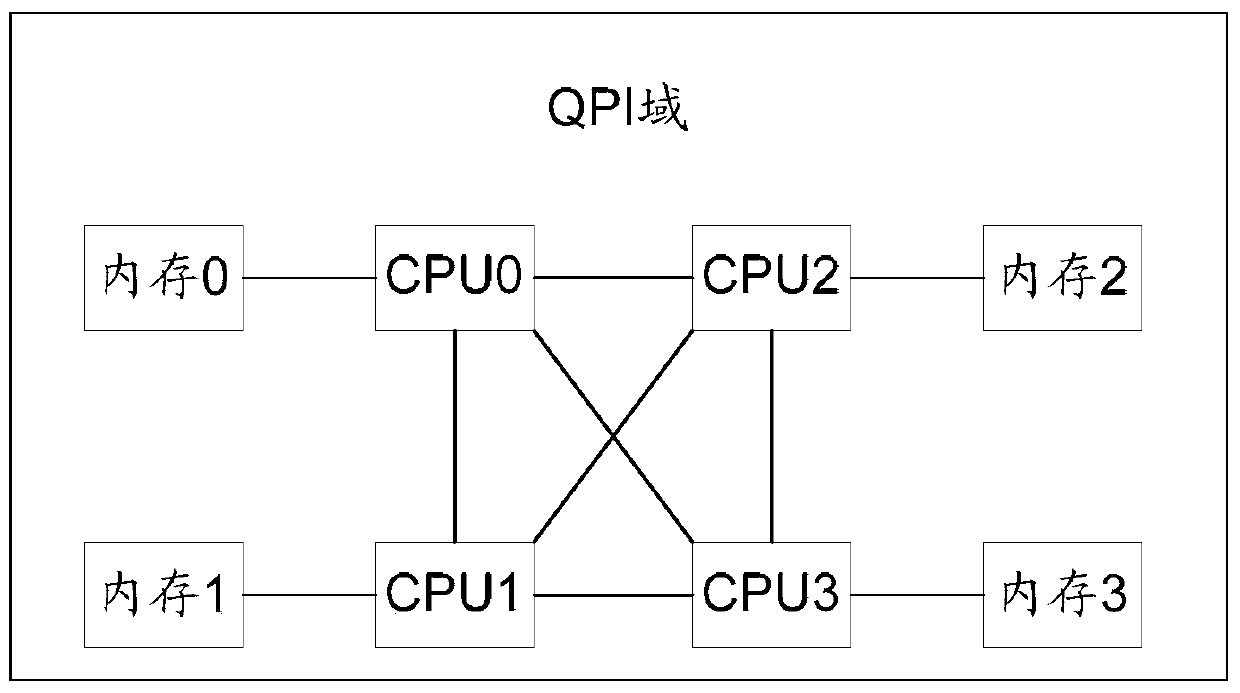

An embodiment of the invention discloses a memory extending system and a memory extending method. The system comprises processors, extended memories, extended chips, and multiple processor installation positions, with a memory installation position arranged at each processor installation position. The processor installation positions are connected to one another through QPI (quick path interconnect) interfaces; at least one processor installation position holds a processor, and at least one of the remaining positions serves as an extended installation position. The extended chips are installed in the extended installation positions, and the extended memories are installed in the memory installation positions connected to the extended chips. Because extended chips occupy the other processor installation positions in place of processors, the existing processors can access the extended memories attached to those chips through the chips. Memory capacity is therefore increased without adding processing capacity, which solves the prior-art problem of redundant processing capacity caused by extending memory through additional processors.

Owner:XFUSION DIGITAL TECH CO LTD

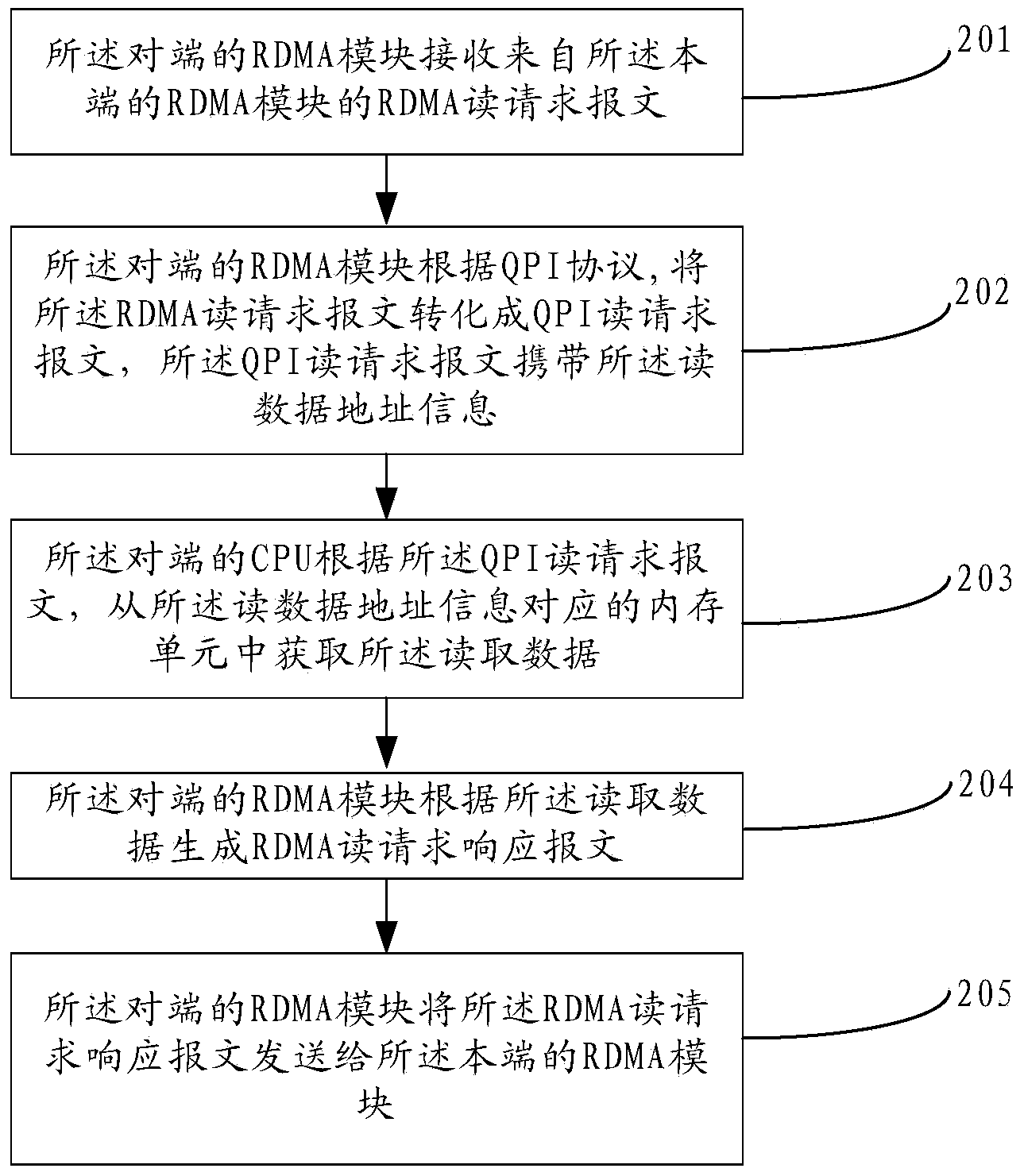

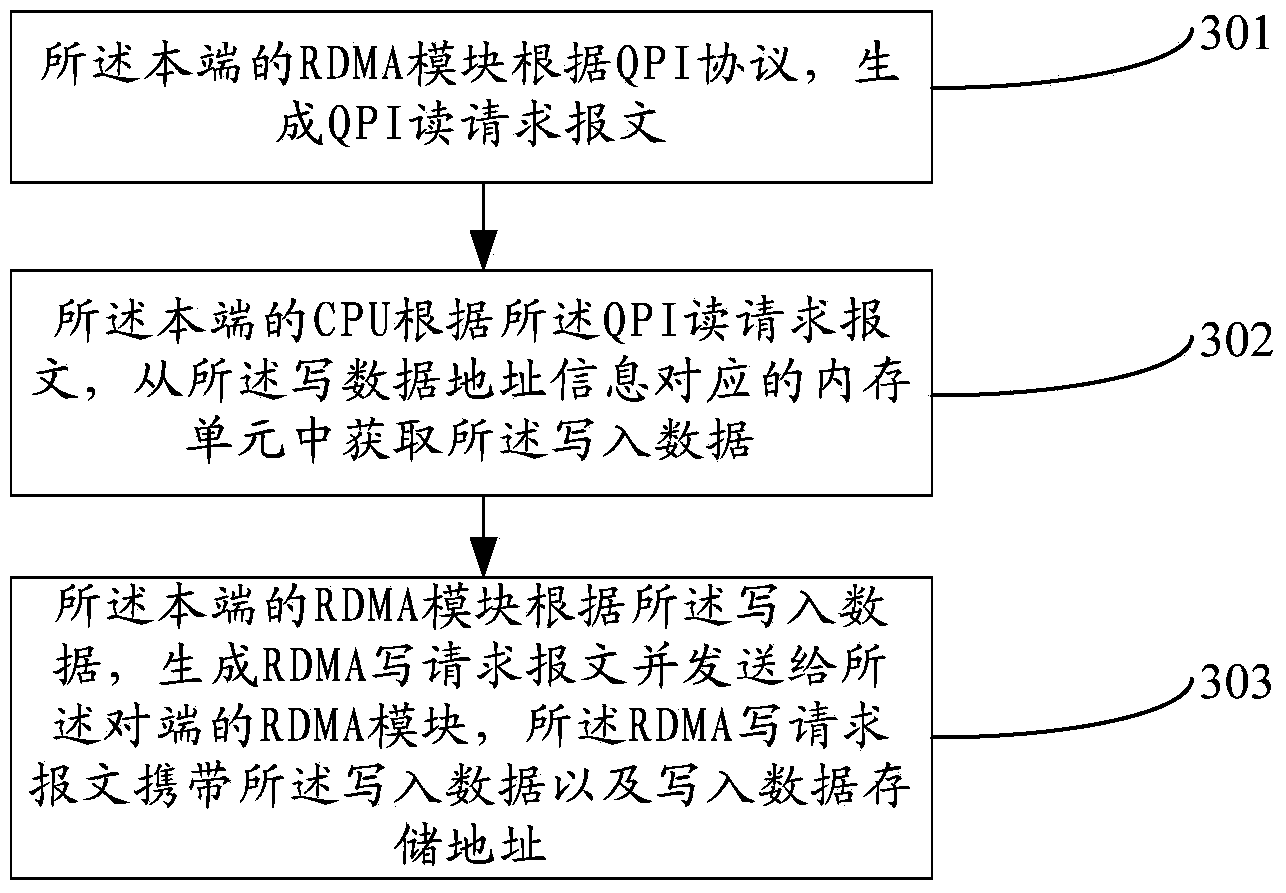

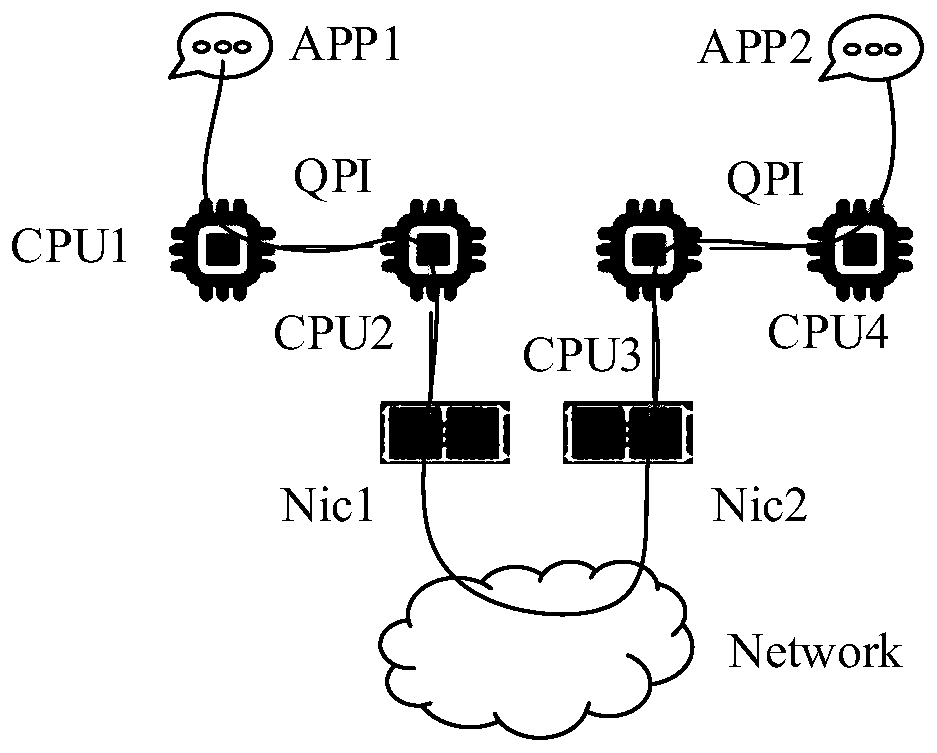

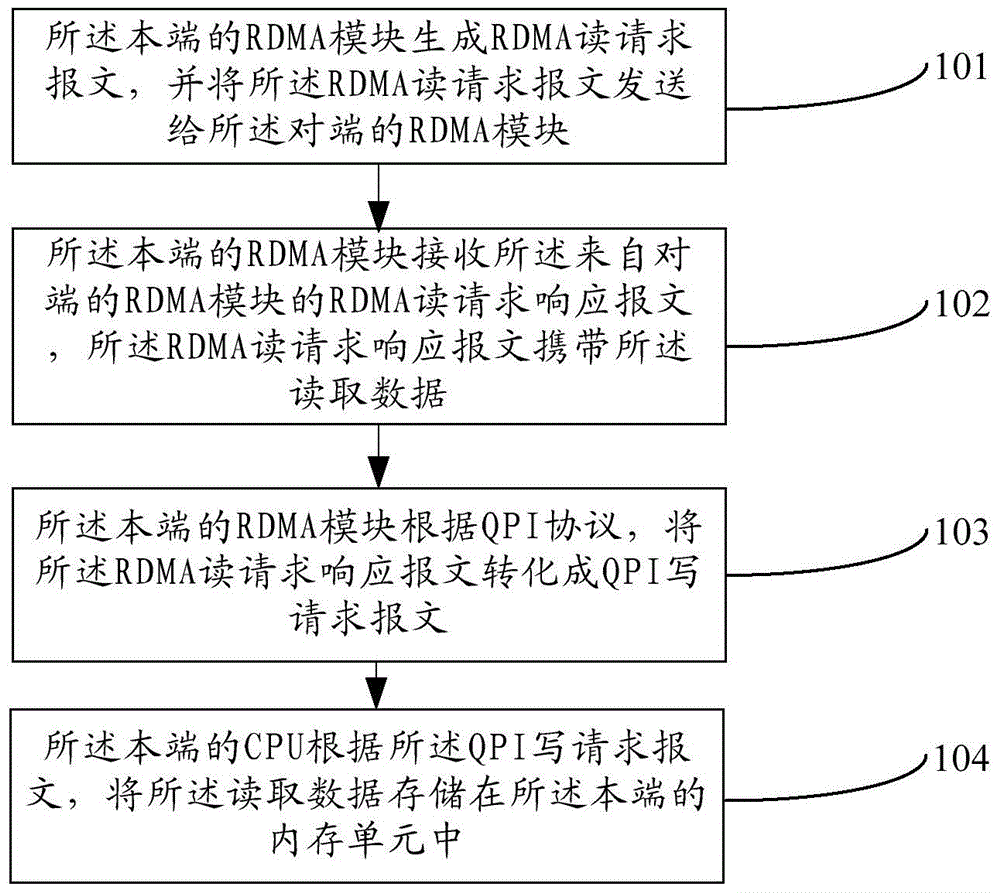

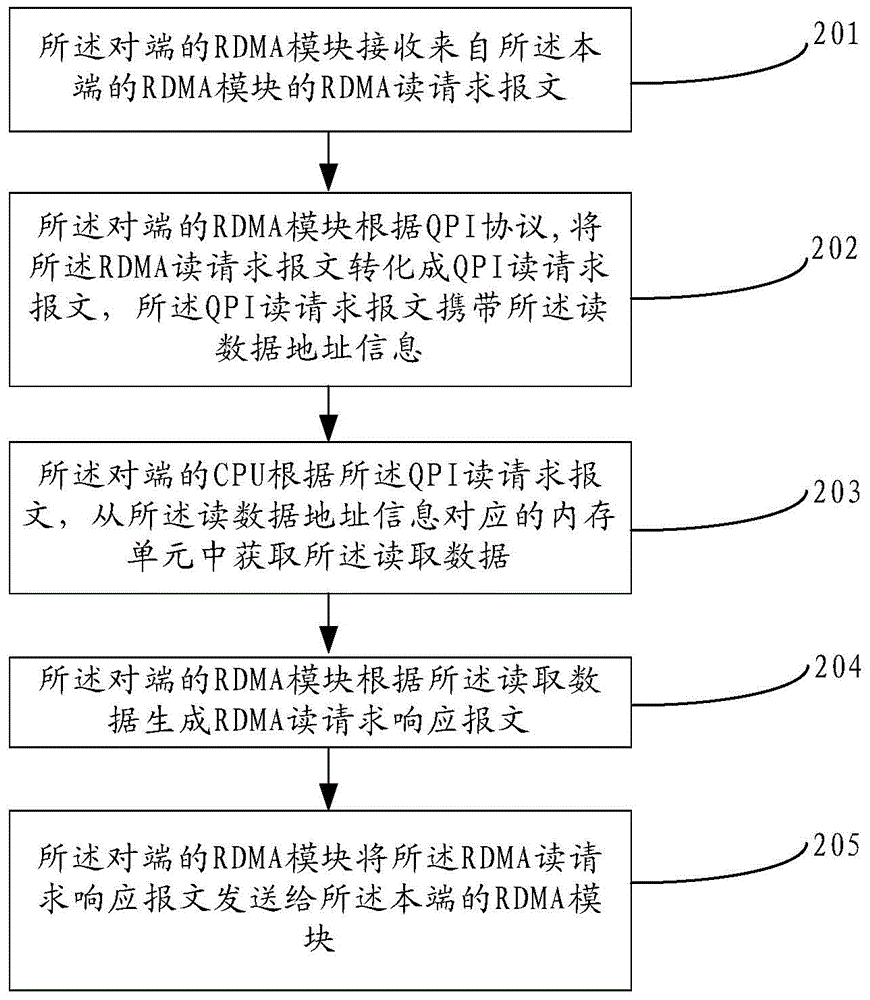

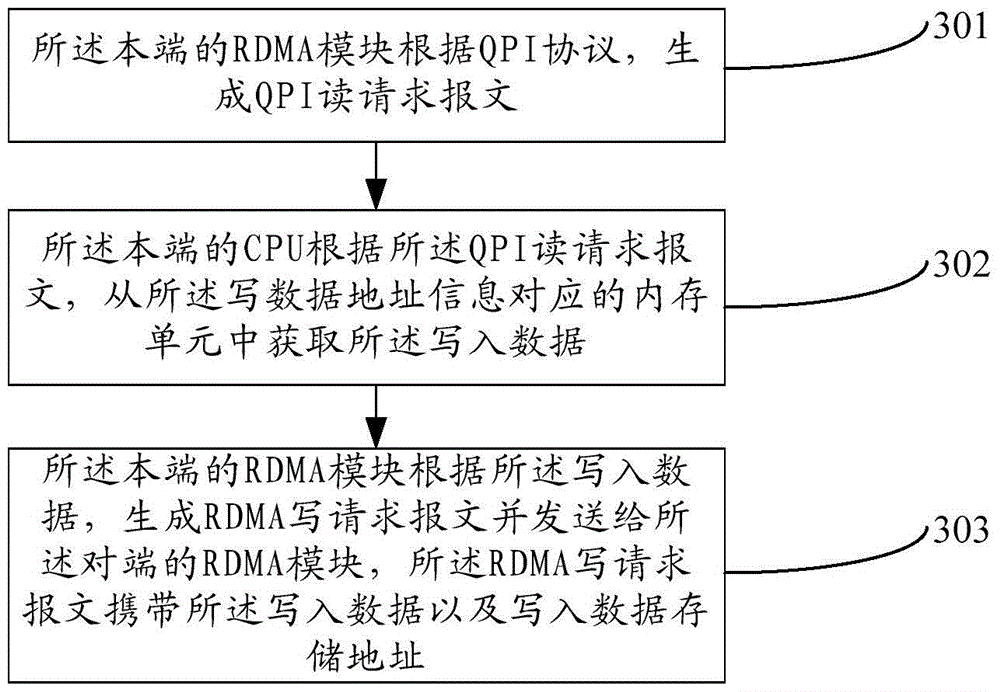

System, device and method for implementation of remote direct memory access

Active | CN103902486A | Resolution delay is large; Quick response; Transmission; Electric digital data processing; Direct memory access; Computer module

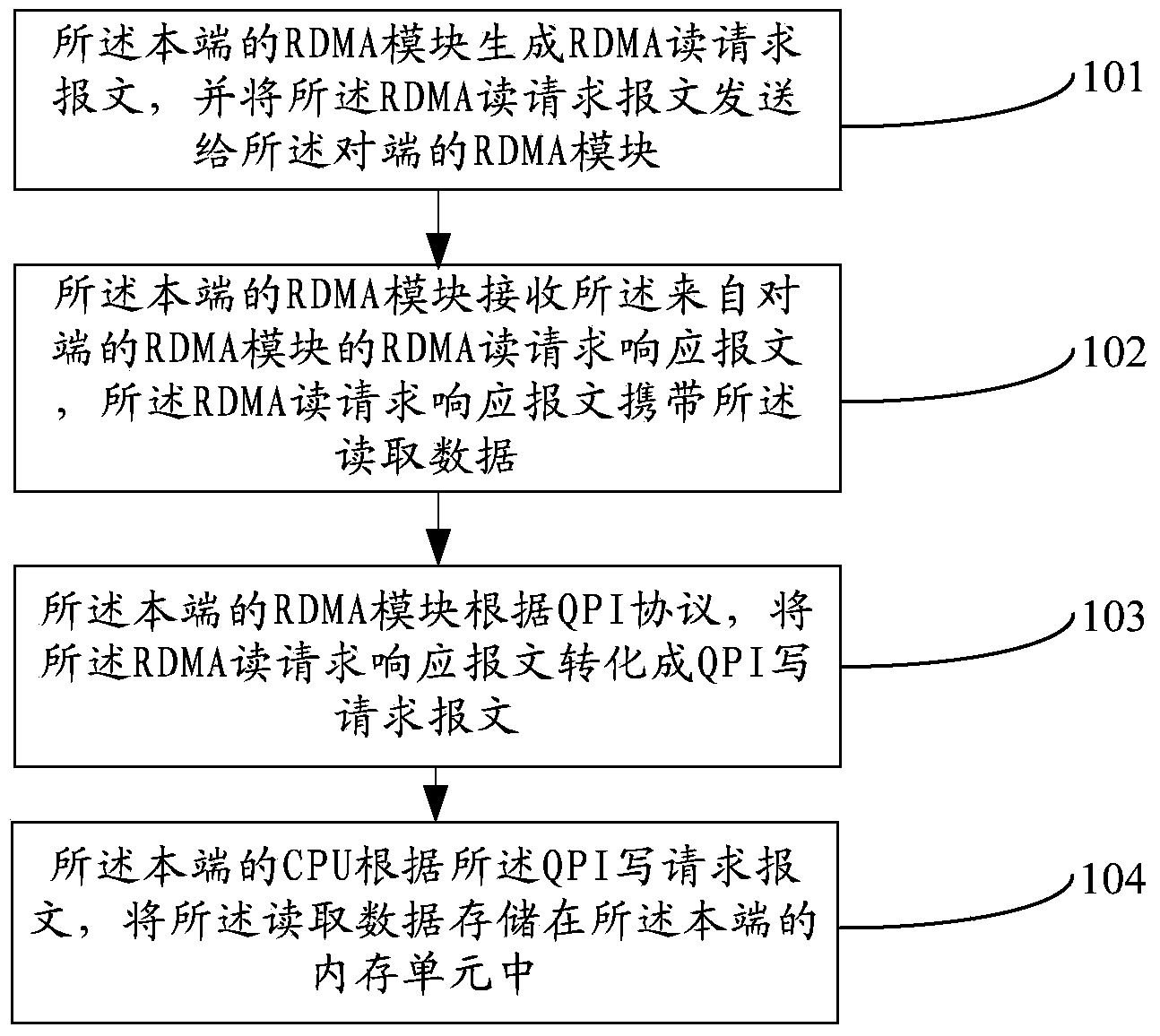

A system, a device, and a method for implementing remote direct memory access are provided for a memory access system with a home terminal and an opposite terminal. Each terminal comprises a CPU (central processing unit) and an RDMA (remote direct memory access) module connected through a QPI (quick path interconnect). When the RDMA module of the opposite terminal receives an RDMA request message from the RDMA module of the home terminal, it converts the message into a QPI data request. The opposite terminal's CPU that participates in the remote direct memory access can therefore quickly obtain the QPI data request from the local RDMA module, avoiding the delay of communicating with the CPU over a PCIE (peripheral component interconnect express) bus, so that the data request is answered quickly.

Owner:XFUSION DIGITAL TECH CO LTD

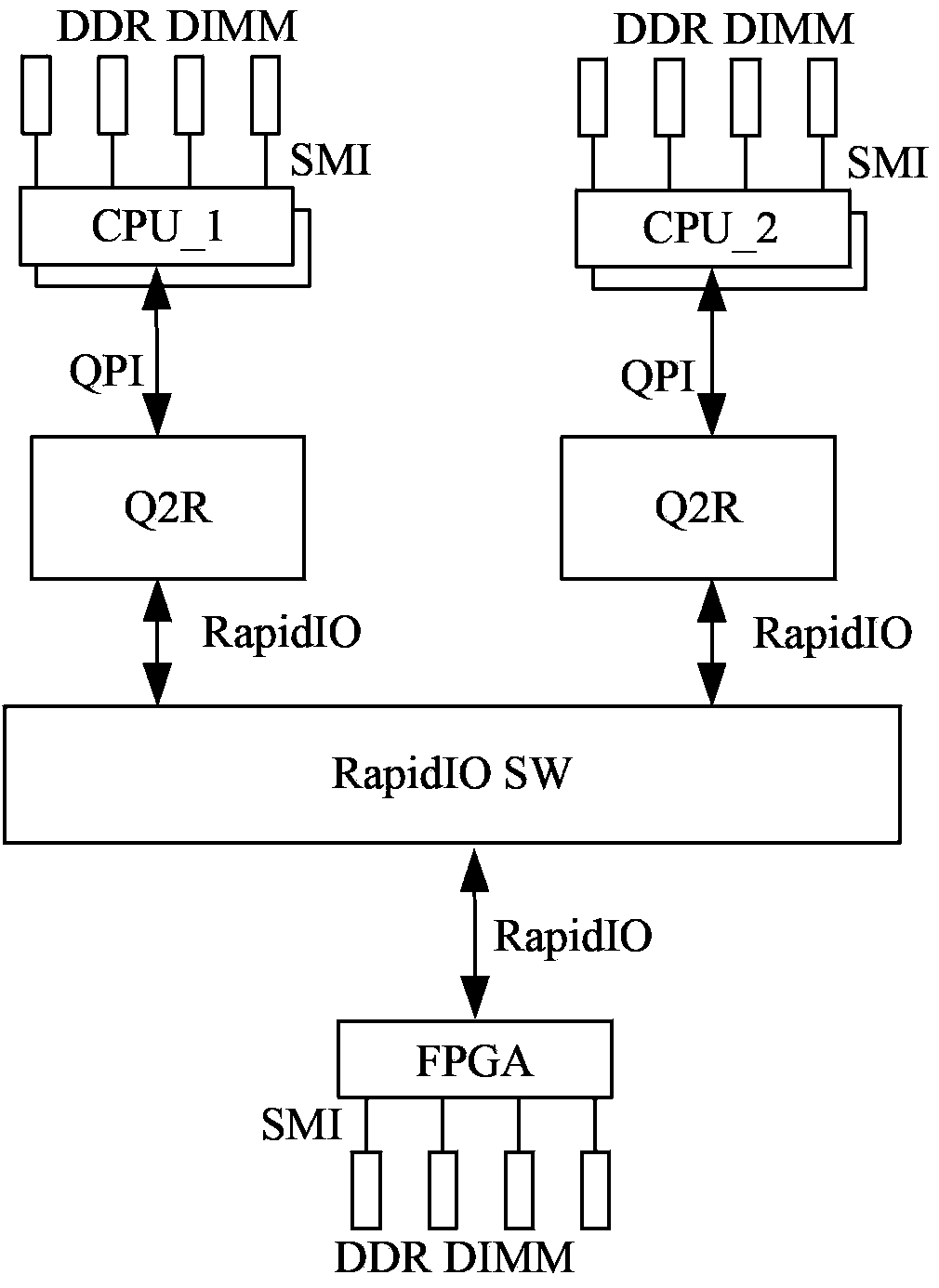

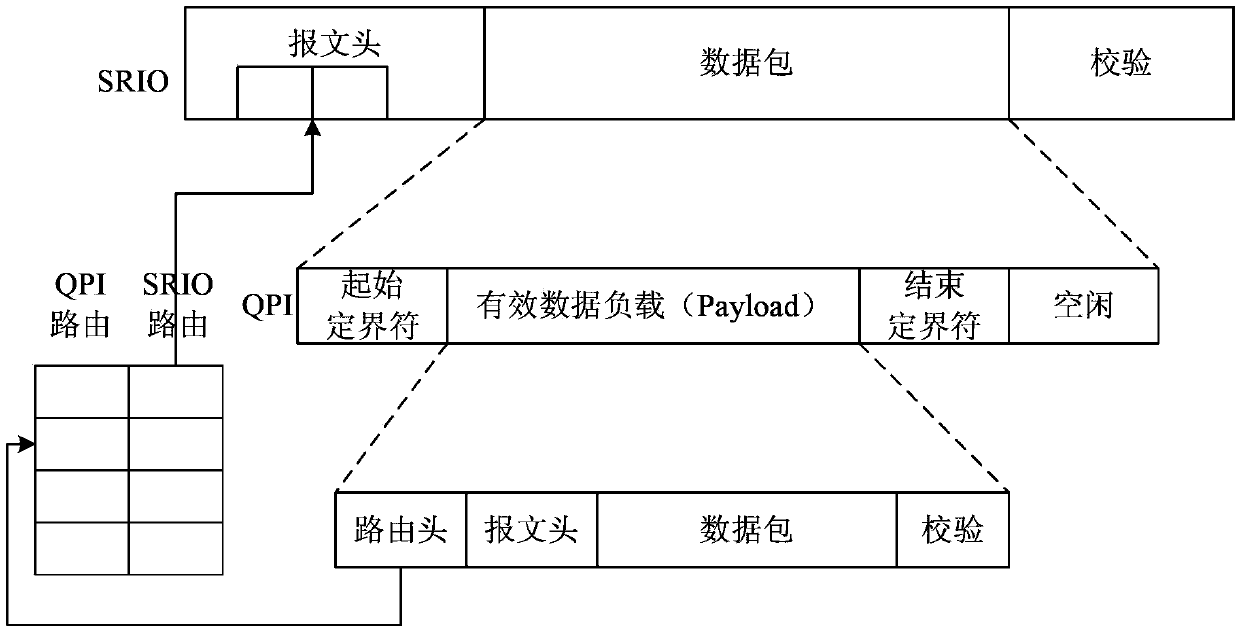

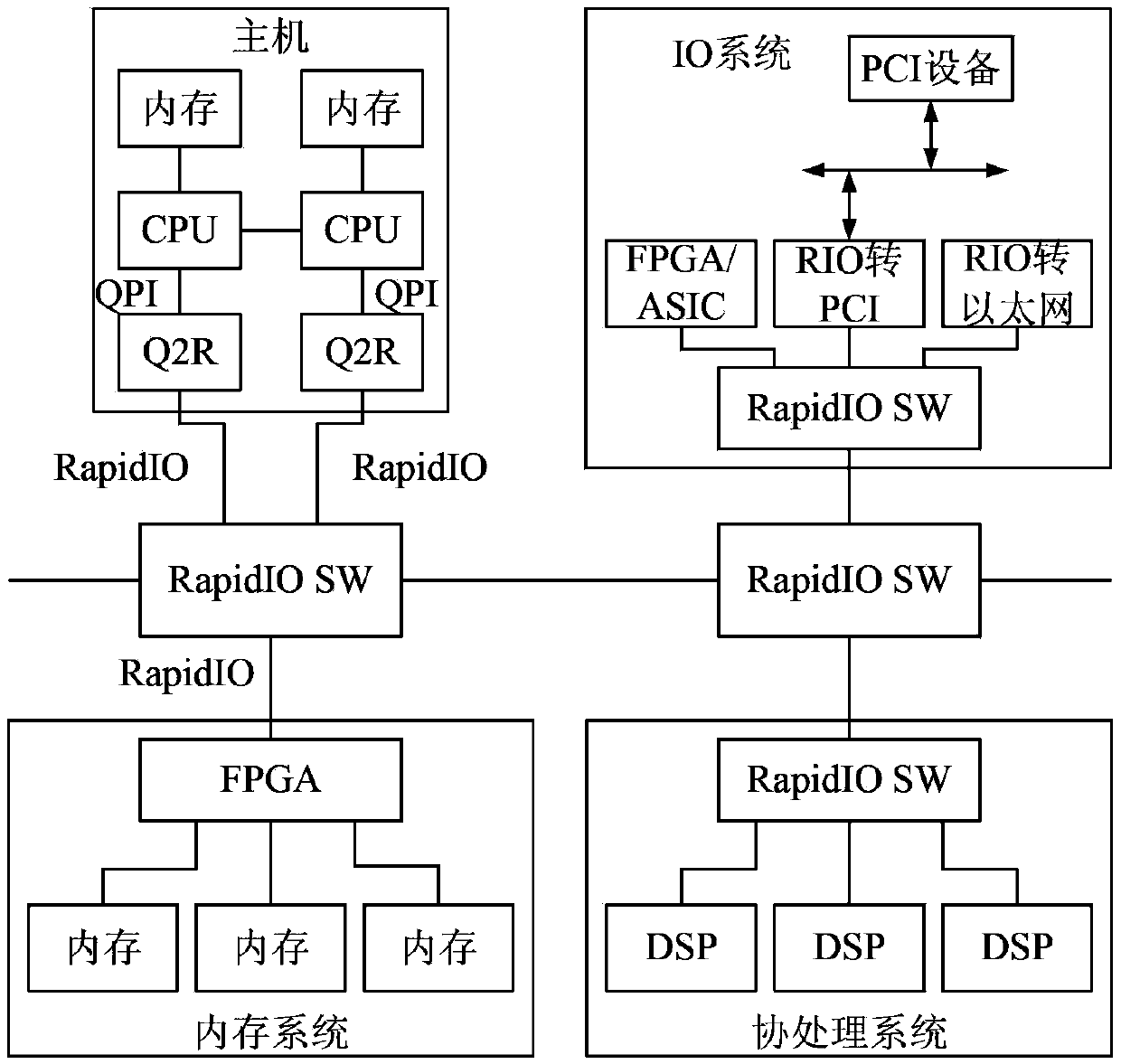

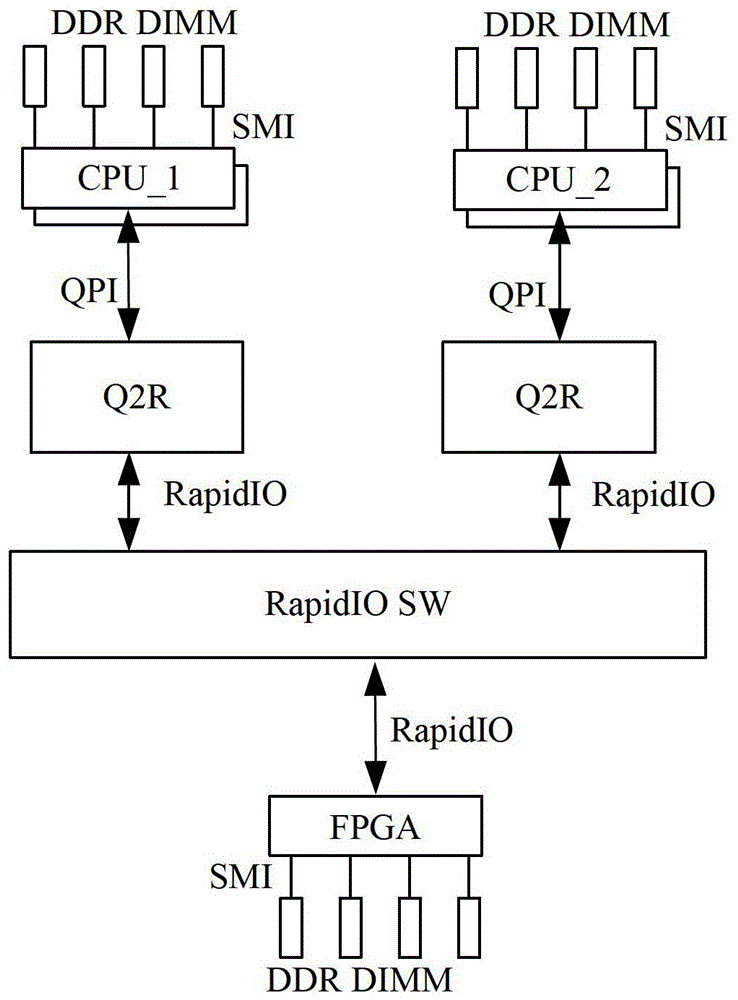

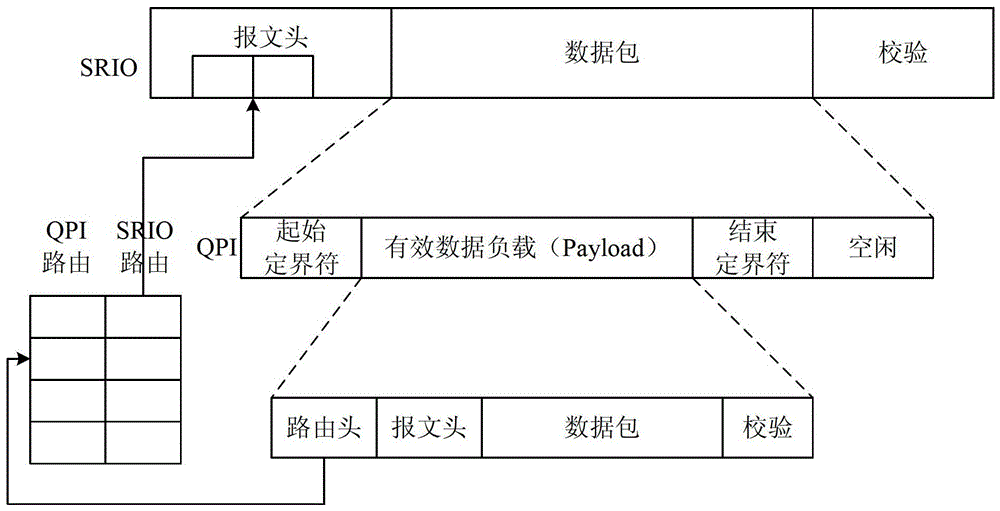

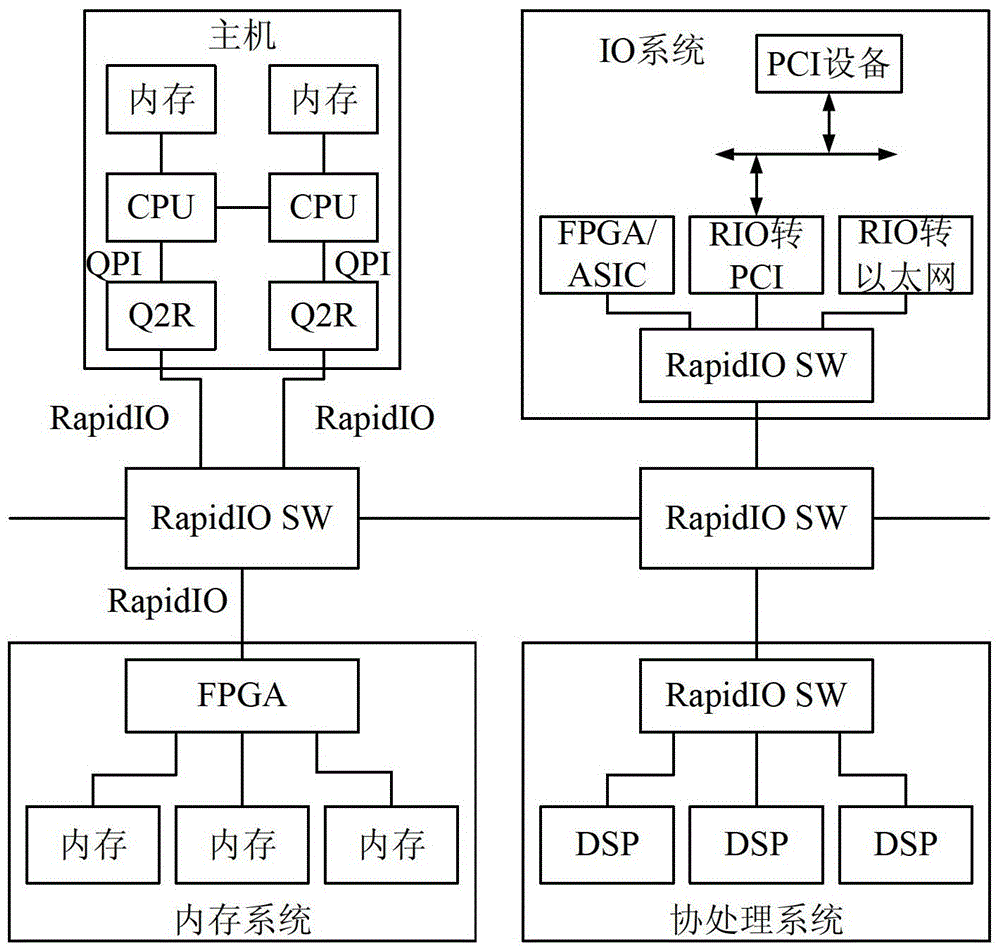

Data processing method, protocol conversion equipment and Internet

Active | CN103401846A | Flexible expansion of system memory; Easy to update; Transmission; Computer hardware; The Internet

The invention relates to a data processing method, protocol conversion equipment, and an Internet. The data processing method comprises the following steps: receiving a QPI (quick path interconnect) message; converting the QPI message according to the RapidIO (rapid input/output) protocol format to obtain a RapidIO message, where the RapidIO message carries allocation information indicating how the QPI message was converted; and sending the RapidIO message. Because messages in the QPI protocol format and messages in the RapidIO protocol format can be converted into each other, a QPI interface can be carried over RapidIO, so the system equipment and system memory of a server can be flexibly expanded and are easy to update and maintain.

Owner:XFUSION DIGITAL TECH CO LTD

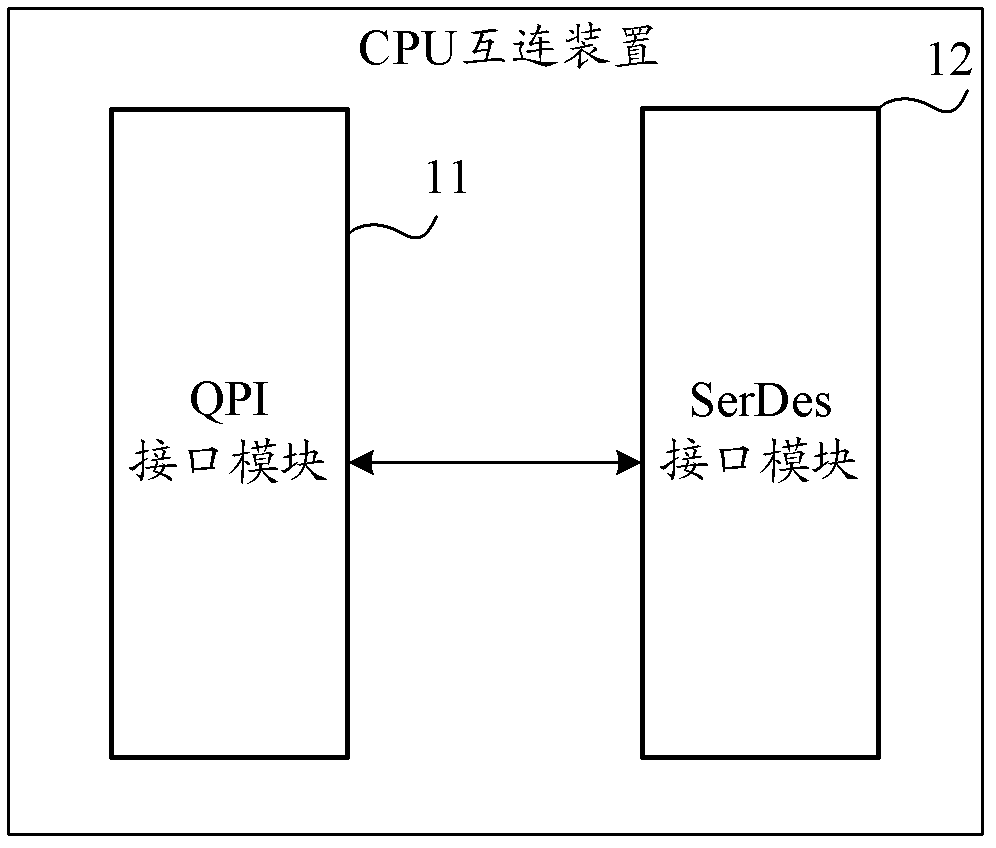

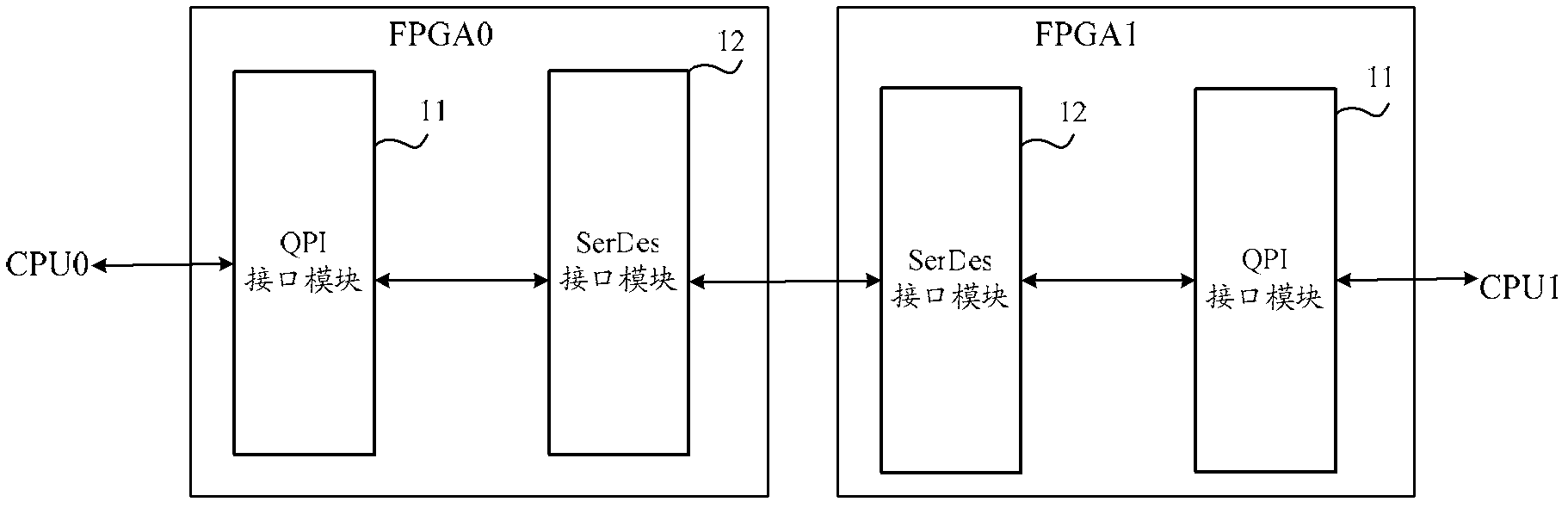

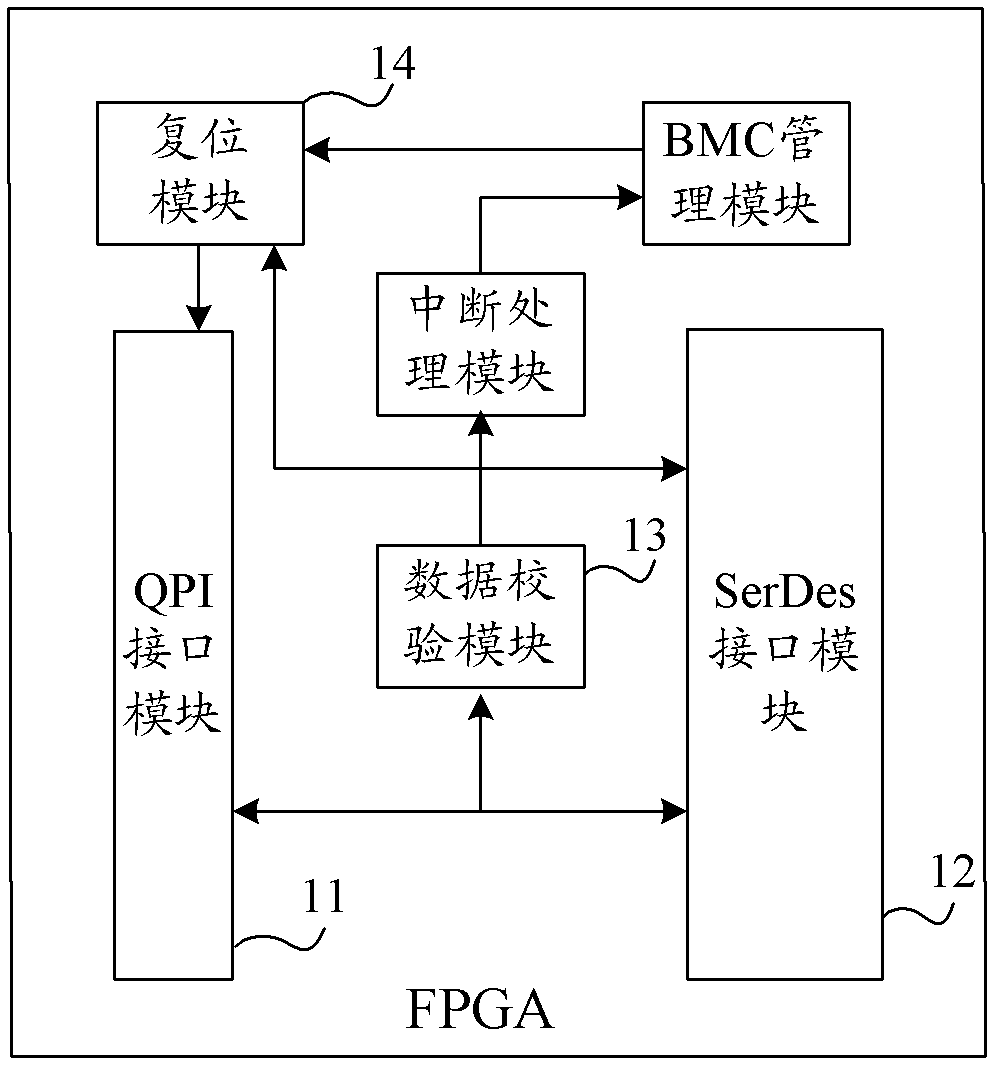

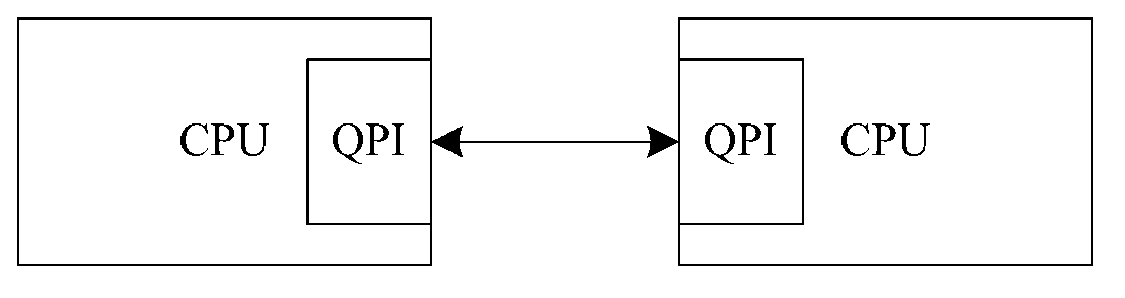

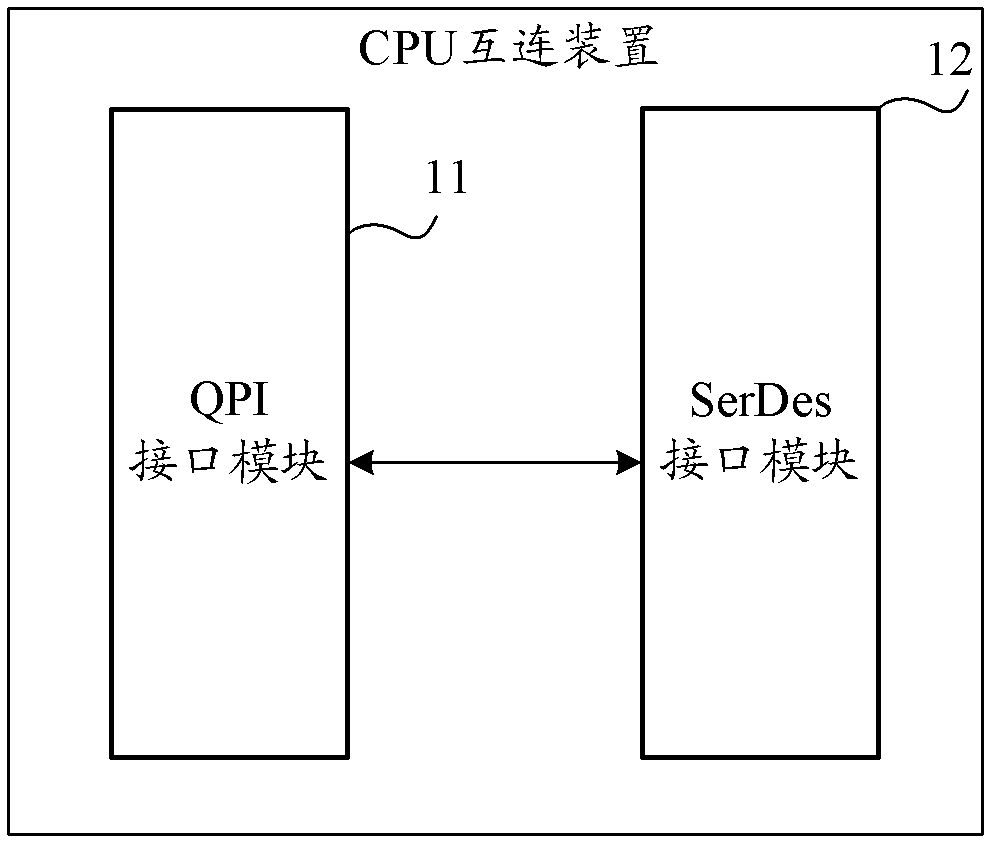

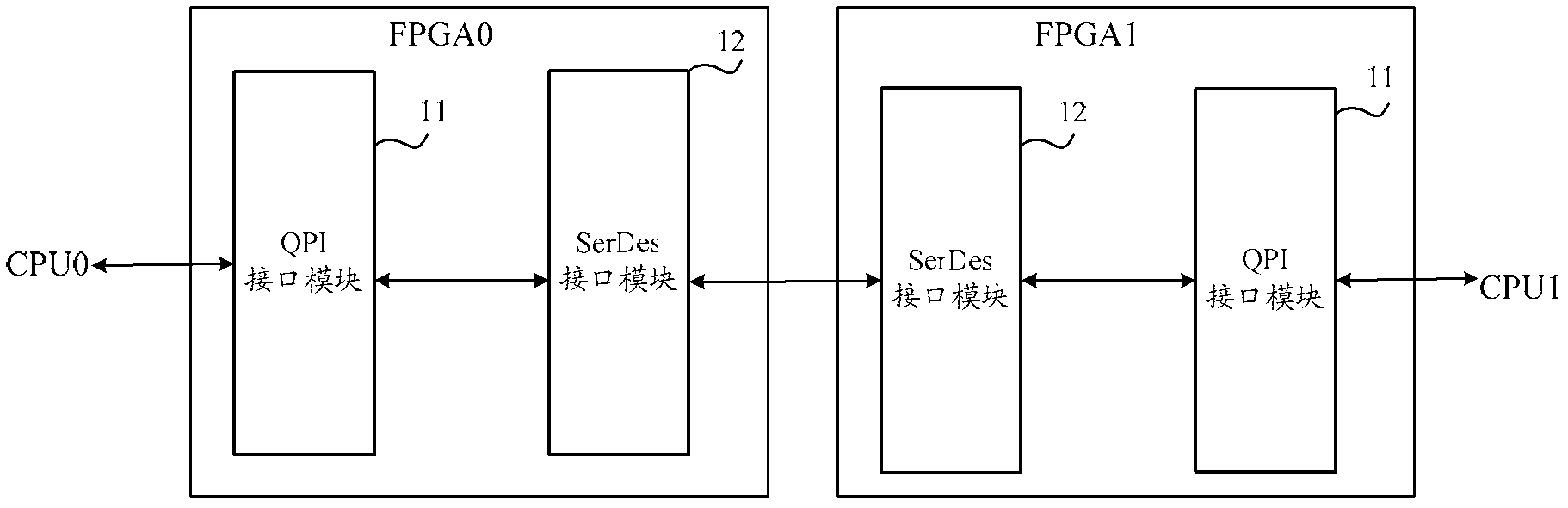

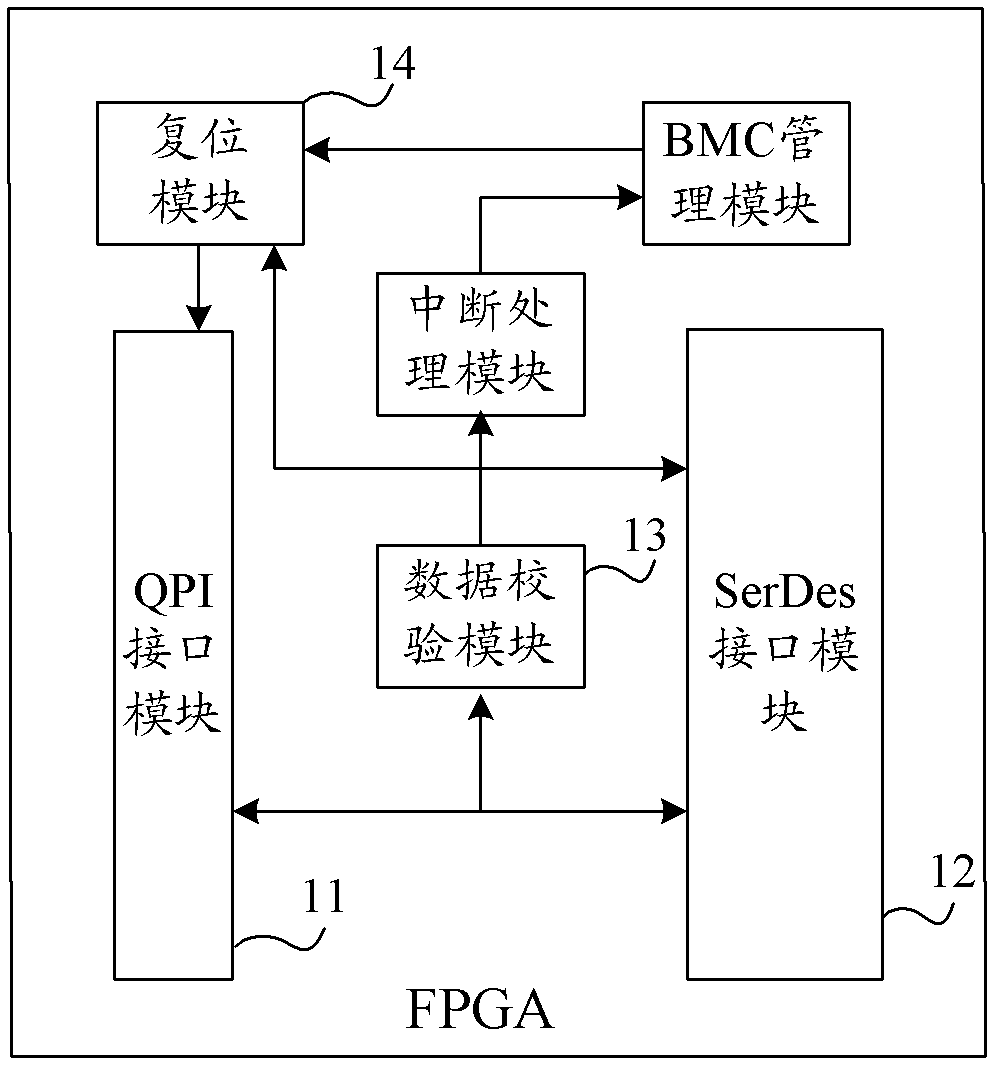

CPU interconnection device

Active | CN102301364A | Increase or decrease the number; Improve acceleration performance; Multiple digital computer combinations; Electric digital data processing; Computer module; Quick path interconnect

The present invention provides a CPU (central processing unit) interconnection device comprising a QPI (quick path interconnect) interface module and a SerDes (serializer/deserializer) interface module. The QPI interface module is connected to a QPI interface of the CPU and converts serial QPI data sent by the CPU into parallel QPI data. The SerDes interface module is connected to the QPI interface module and to the SerDes interface module of another CPU interconnection device; it receives the parallel QPI data output by the QPI interface module, converts it into high-speed serial SerDes data, and sends that data to the other SerDes interface module. The SerDes interface module also receives high-speed serial SerDes data from the other SerDes interface module and converts it into parallel QPI data, and the QPI interface module converts the parallel QPI data output by the SerDes interface module back into serial QPI data and sends it to the CPU.

Owner:HUAWEI TECH CO LTD
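
The parallel/serial conversion performed by the interface modules above can be illustrated at the data level with a minimal sketch. The function names `serialize` and `deserialize` and the `width` parameter are hypothetical; the sketch ignores line coding, clock recovery, and lane striping, which real SerDes hardware must handle, and it does not reflect the actual QPI data format.

```python
from typing import List


def serialize(words: List[int], width: int) -> List[int]:
    """Flatten parallel words into one serial bit stream, most significant bit first."""
    bits: List[int] = []
    for word in words:
        if not 0 <= word < (1 << width):
            raise ValueError(f"word {word:#x} does not fit in {width} bits")
        for i in range(width - 1, -1, -1):
            bits.append((word >> i) & 1)
    return bits


def deserialize(bits: List[int], width: int) -> List[int]:
    """Regroup a serial bit stream into parallel words of the given width."""
    if len(bits) % width:
        raise ValueError("bit stream length is not a multiple of the word width")
    words: List[int] = []
    for start in range(0, len(bits), width):
        word = 0
        for bit in bits[start:start + width]:
            word = (word << 1) | bit
        words.append(word)
    return words
```

A round trip such as `deserialize(serialize(words, w), w)` returns the original words, which mirrors the two devices undoing each other's conversion.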

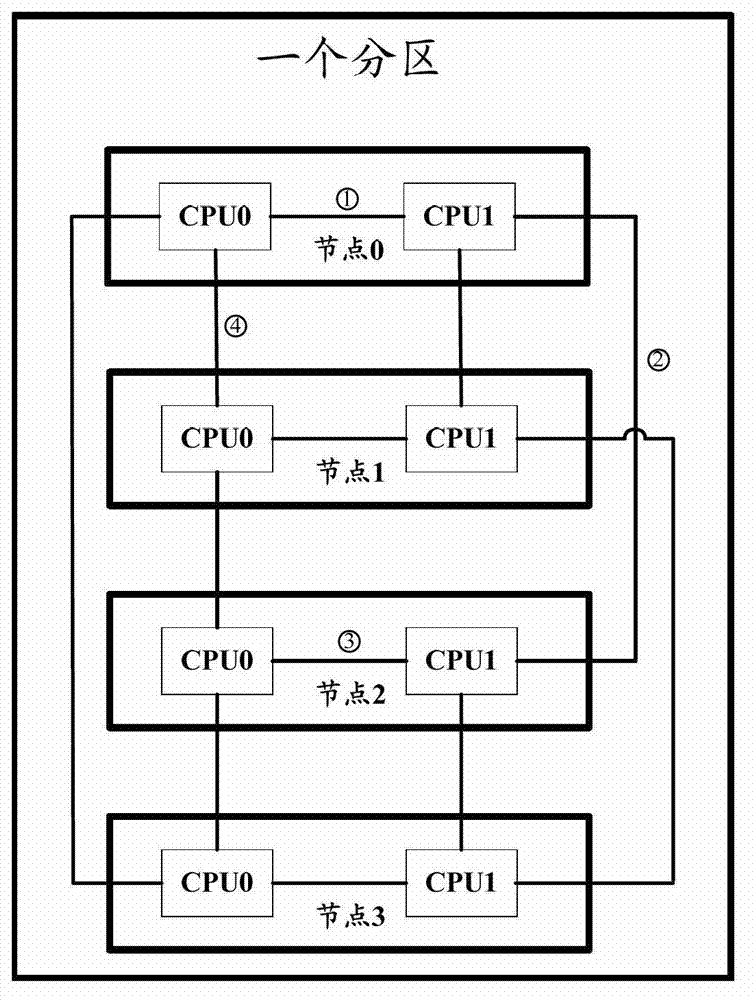

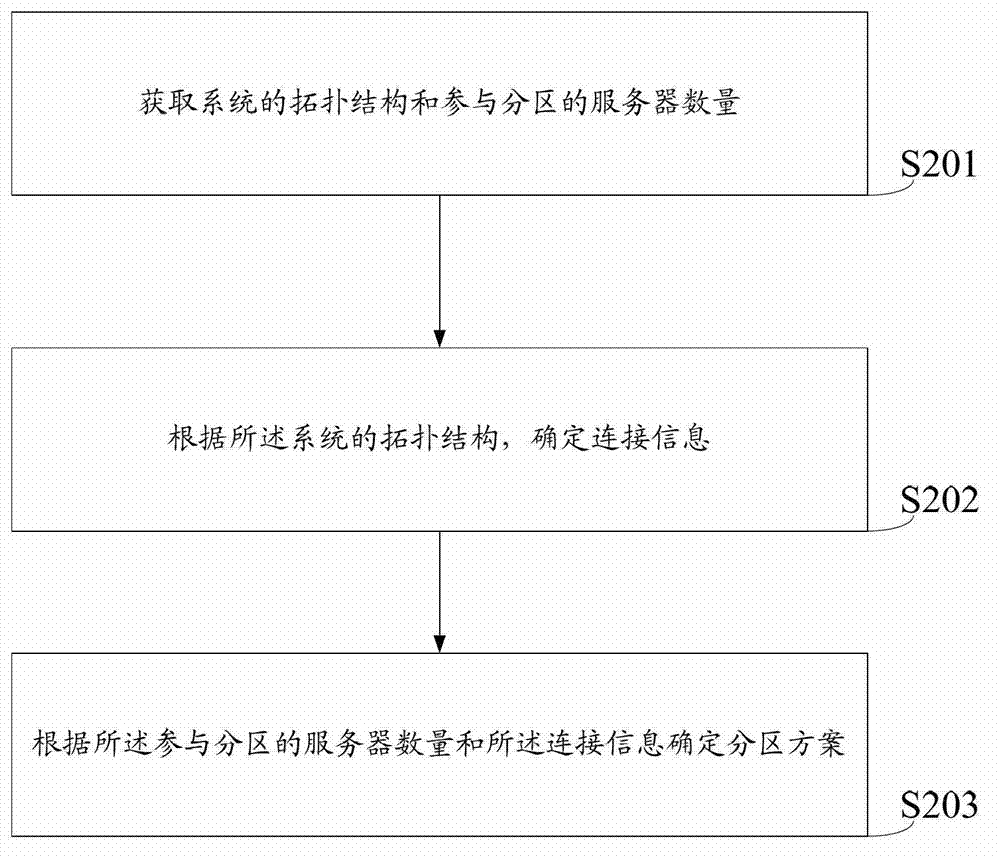

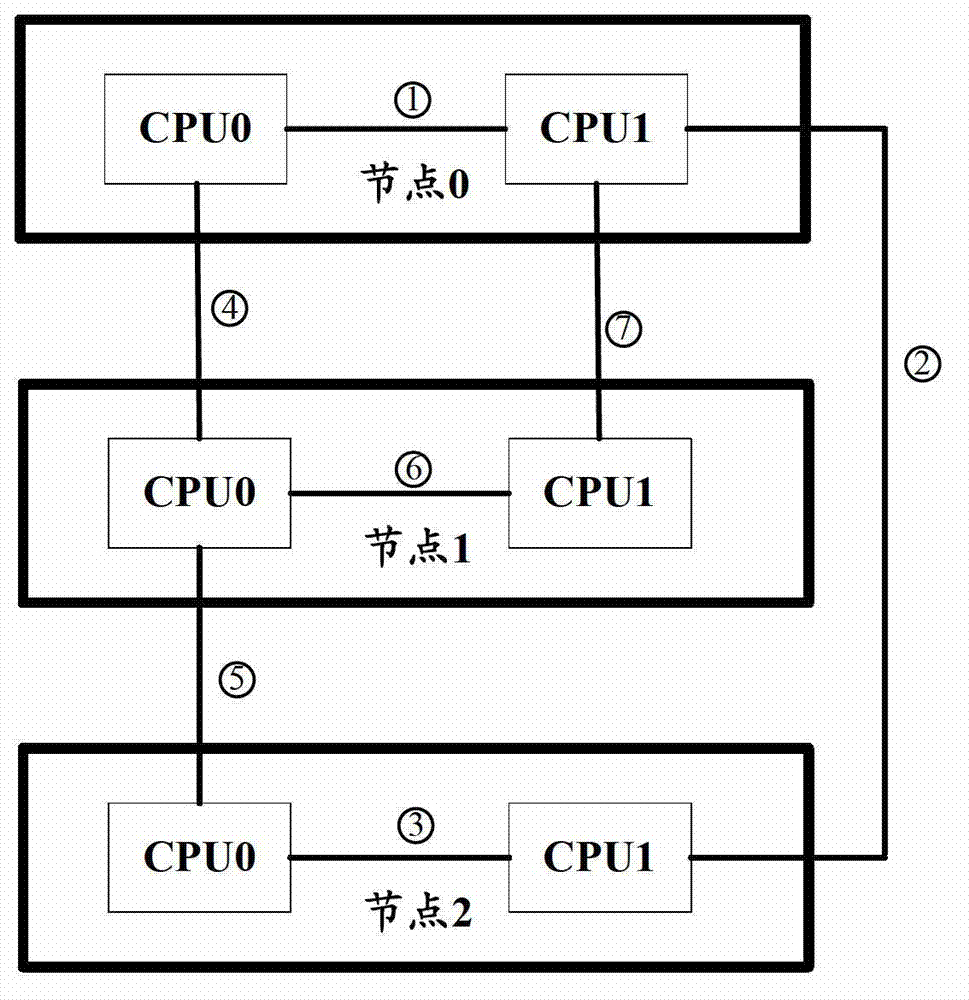

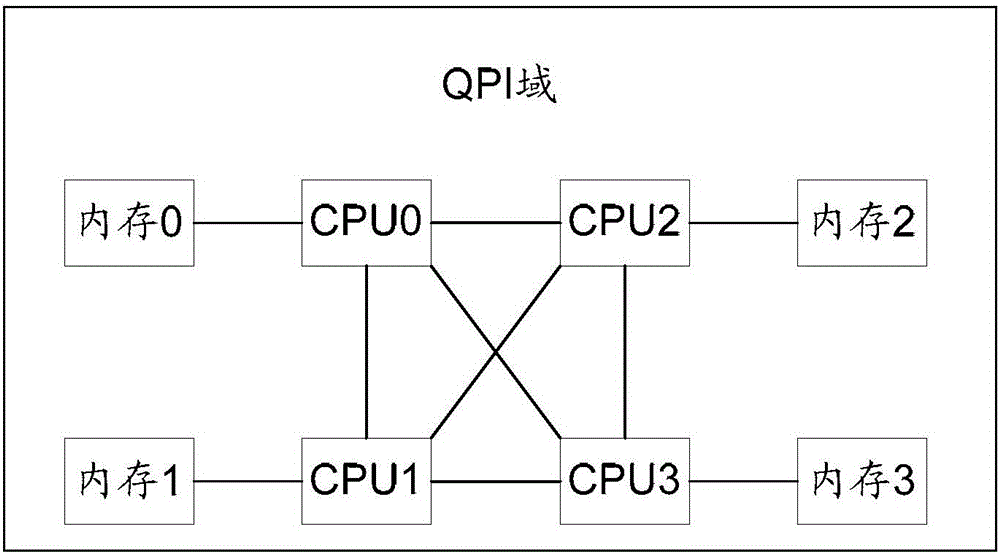

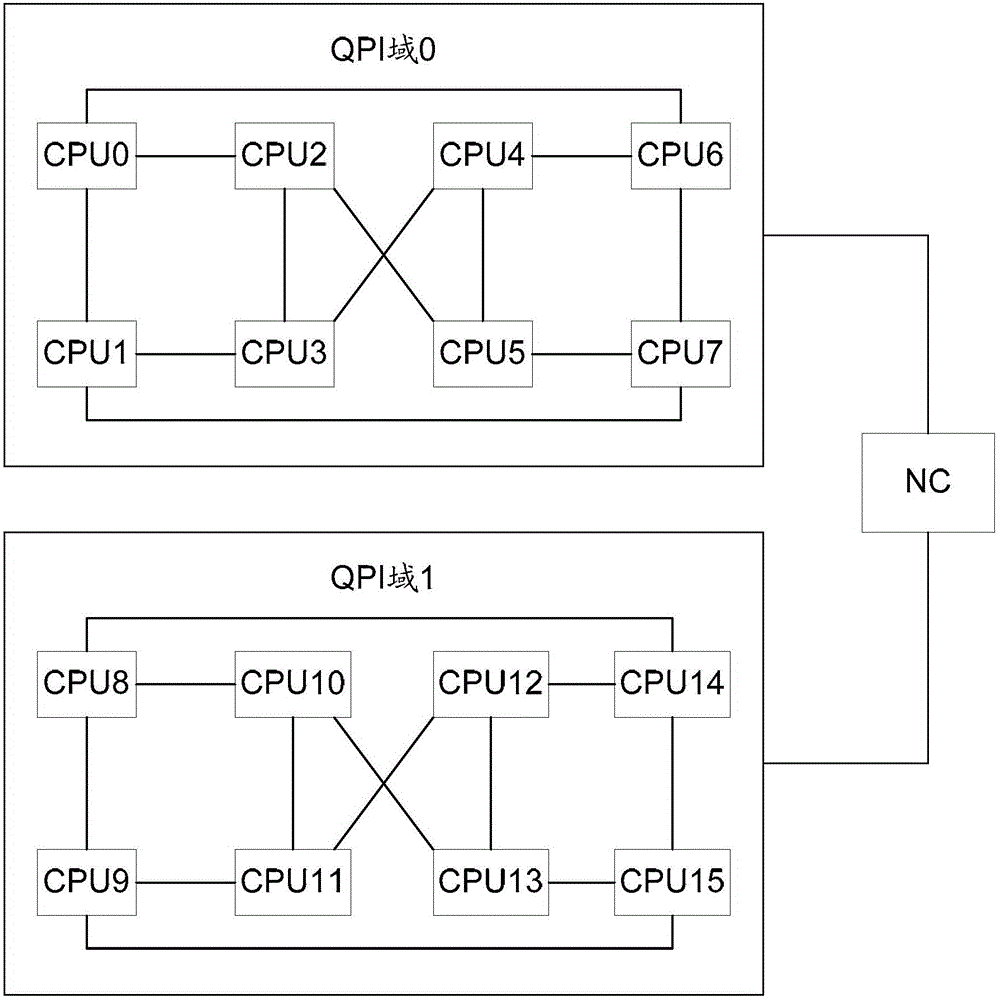

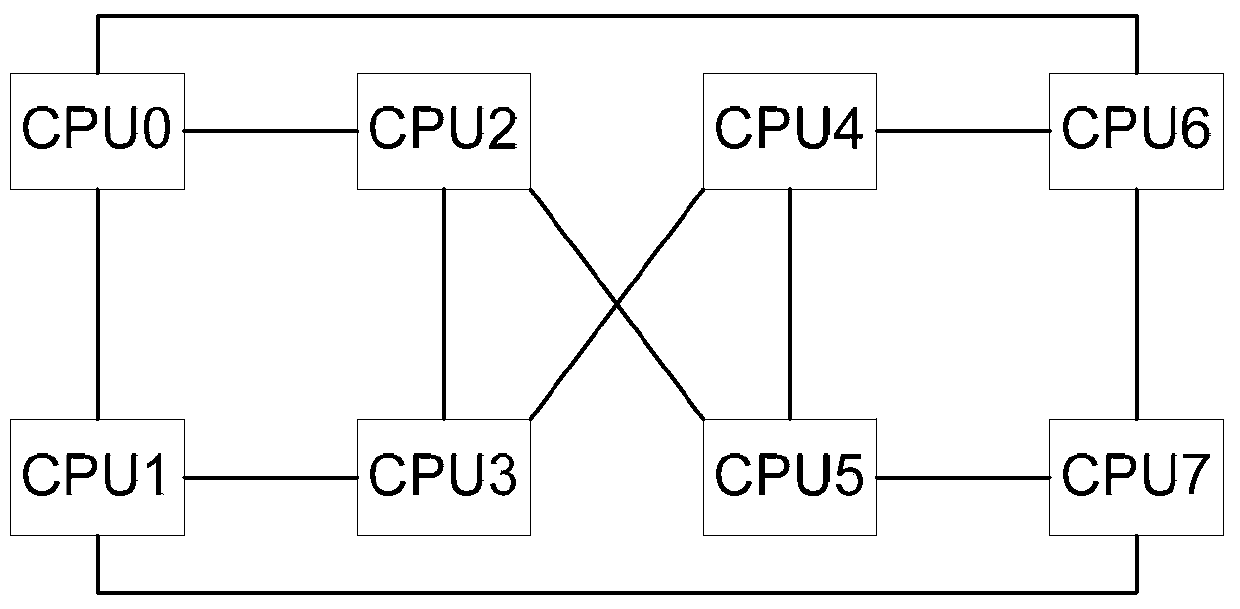

Node partition dividing method, device and server

Inactive | CN102932175A | Reduce workload; Low running cost; Data switching networks; Structure of Management Information; Electronic information

The embodiment of the invention discloses a node partition dividing method, device, and server in the field of electronic information technology. A partition scheme with the minimum QPI (quick path interconnect) hop count is automatically derived from the topology of the system, which avoids the subjective factors of manual partitioning and mitigates the loss of operating speed, and hence of system performance, caused by improper partitioning. The method comprises the following steps: obtaining the topological structure of the system and the number of nodes participating in the partition, where the system comprises at least three nodes and each node comprises at least two CPUs (central processing units); determining connection information from the topology, namely the connection relationship between each CPU and the other CPUs in the system; and determining the partition scheme from the number of participating nodes and the connection information, so that the partition scheme contains exactly that number of nodes. The method, device, and server are applicable to server partitioning.

Owner:HUAWEI TECH CO LTD
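
One way to picture "a partition scheme with a minimum QPI hop count" is an exhaustive search, sketched below under stated assumptions. The cost function (total pairwise hop count between all CPUs in the chosen nodes) and the names `hop_counts` and `best_partition` are hypothetical; the abstract does not say how hop count is scored, and brute force is only practical for small systems.

```python
from collections import deque
from itertools import combinations
from typing import Dict, List, Optional, Tuple


def hop_counts(links: Dict[str, List[str]], src: str) -> Dict[str, int]:
    """Breadth-first search: QPI hop count from src to every reachable CPU."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        cpu = queue.popleft()
        for nxt in links.get(cpu, []):
            if nxt not in dist:
                dist[nxt] = dist[cpu] + 1
                queue.append(nxt)
    return dist


def best_partition(nodes: Dict[str, List[str]], links: Dict[str, List[str]],
                   k: int) -> Tuple[Tuple[str, ...], int]:
    """Try every k-node combination; keep the one with the smallest total pairwise hop count."""
    all_cpus = [cpu for cpus in nodes.values() for cpu in cpus]
    dist = {cpu: hop_counts(links, cpu) for cpu in all_cpus}
    best: Optional[Tuple[str, ...]] = None
    best_cost: Optional[int] = None
    for combo in combinations(sorted(nodes), k):
        cpus = [cpu for node in combo for cpu in nodes[node]]
        cost: Optional[int] = 0
        for a, b in combinations(cpus, 2):
            if b not in dist[a]:
                cost = None  # disconnected CPUs cannot share one partition
                break
            cost += dist[a][b]
        if cost is not None and (best_cost is None or cost < best_cost):
            best, best_cost = combo, cost
    if best is None or best_cost is None:
        raise ValueError("no connected partition of the requested size")
    return best, best_cost
```

Here `nodes` maps each node to its CPUs and `links` is a symmetric adjacency list of QPI links; both input shapes are assumptions made for illustration.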

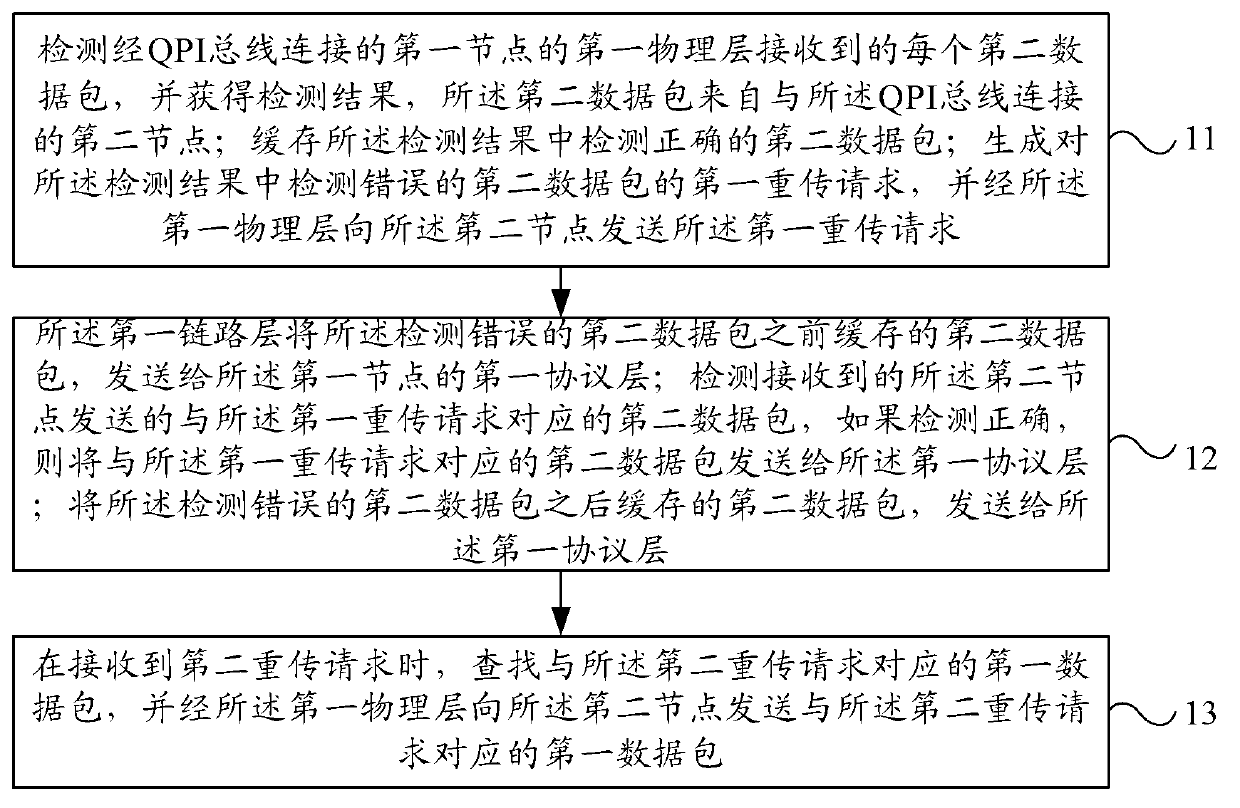

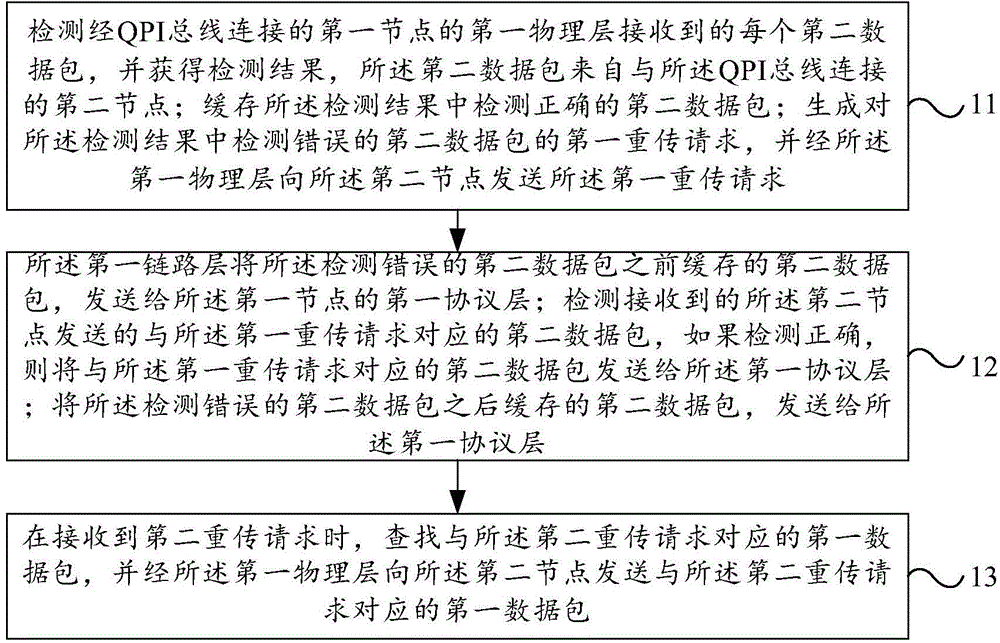

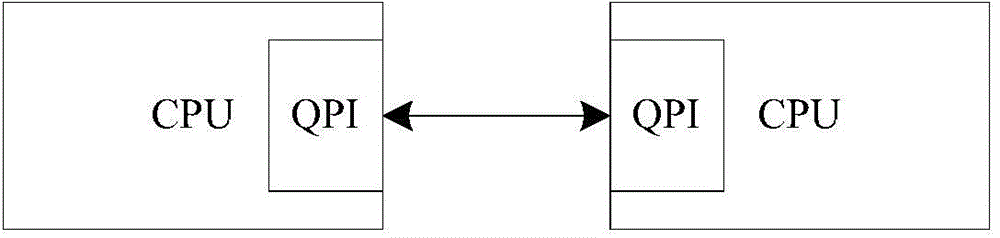

Data packet retransmission method and node in quick path interconnect system

Active | CN103141050A | Reduce occupancy; Increase profit; Error prevention/detection by using return channel; Data switching networks; Quick path interconnect; Link layer

Disclosed are a data packet retransmission method and node in a quick path interconnect system. When a first node acts as the sender, it retransmits to a second node only the first data packet detected to be faulty, rather than resending first data packets that the second node has already received correctly; this saves the system resources occupied by retransmission and increases resource utilization. When the first node acts as the receiver, its first link layer buffers the second data packets correctly received between sending a first retransmission request and receiving a first retransmission response. After correctly receiving the second data packet retransmitted by the second node, the link layer forwards the packets buffered during that period to a first protocol layer. Thus, even though the second node retransmits only the faulty second data packet, the first node loses no packets, which ensures reliable data packet transmission over the QPI bus.

Owner:HUAWEI TECH CO LTD
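
The receiver-side behaviour described above (request only the faulty packet, hold back good packets that arrive in the meantime, then flush them in order) can be sketched as a single-process simulation. This is an illustrative model under assumptions, not Intel's QPI link-layer retry protocol: `ReceiverLinkLayer` and `selective_retransmit` are hypothetical names, and timing, sequence-number wraparound, and buffer limits are ignored.

```python
from typing import Dict, List, Set


class ReceiverLinkLayer:
    """Hypothetical receiver: delivers packets to the protocol layer in order,
    buffering good packets that arrive while a retransmission is outstanding."""

    def __init__(self) -> None:
        self.next_seq = 0                  # next sequence number owed to the protocol layer
        self.buffer: Dict[int, str] = {}   # good packets held back during a retransmission window
        self.delivered: List[str] = []     # what the protocol layer has received, in order
        self.requests: List[int] = []      # retransmission requests sent to the peer

    def receive(self, seq: int, payload: str, crc_ok: bool) -> None:
        if not crc_ok:
            self.requests.append(seq)      # ask for this packet only, not everything after it
            return
        self.buffer[seq] = payload
        # Flush every packet that is now contiguous with what was already delivered.
        while self.next_seq in self.buffer:
            self.delivered.append(self.buffer.pop(self.next_seq))
            self.next_seq += 1


def selective_retransmit(payloads: List[str], corrupted: Set[int]) -> ReceiverLinkLayer:
    """Send every packet once, then resend only the packets the receiver flagged."""
    rx = ReceiverLinkLayer()
    for seq, payload in enumerate(payloads):
        rx.receive(seq, payload, crc_ok=seq not in corrupted)
    for seq in list(rx.requests):          # sender retransmits only the faulty packets
        rx.receive(seq, payloads[seq], crc_ok=True)
    return rx
```

With packet 1 corrupted, packets 2 and 3 wait in the buffer and reach the protocol layer only after packet 1 is retransmitted, so nothing is lost and only one packet is resent.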

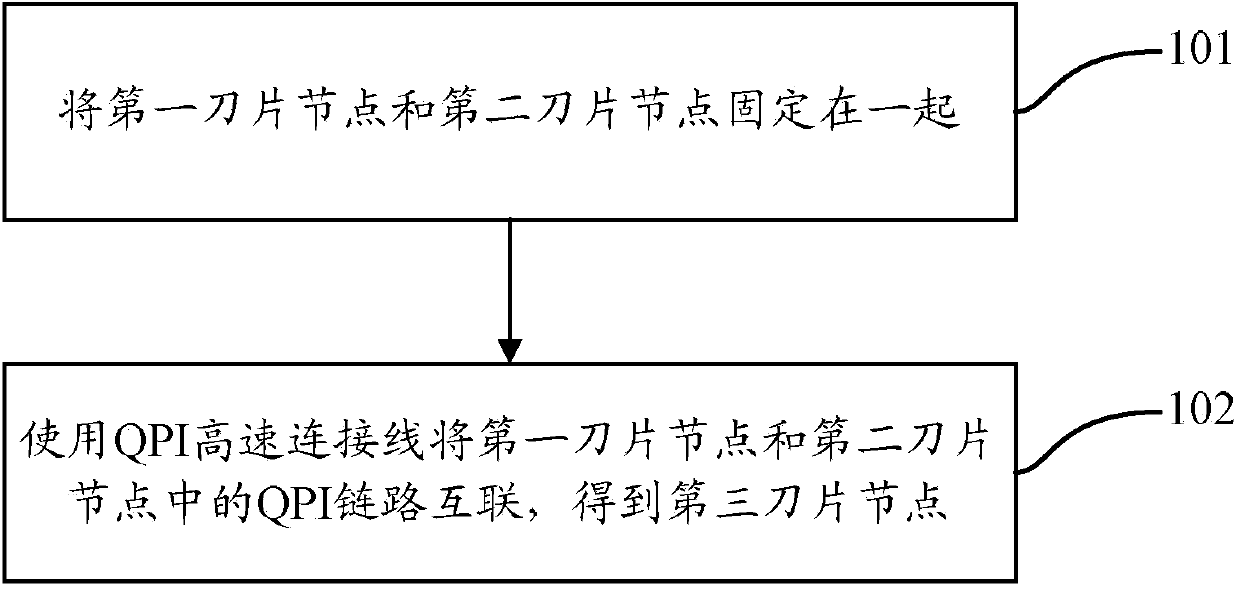

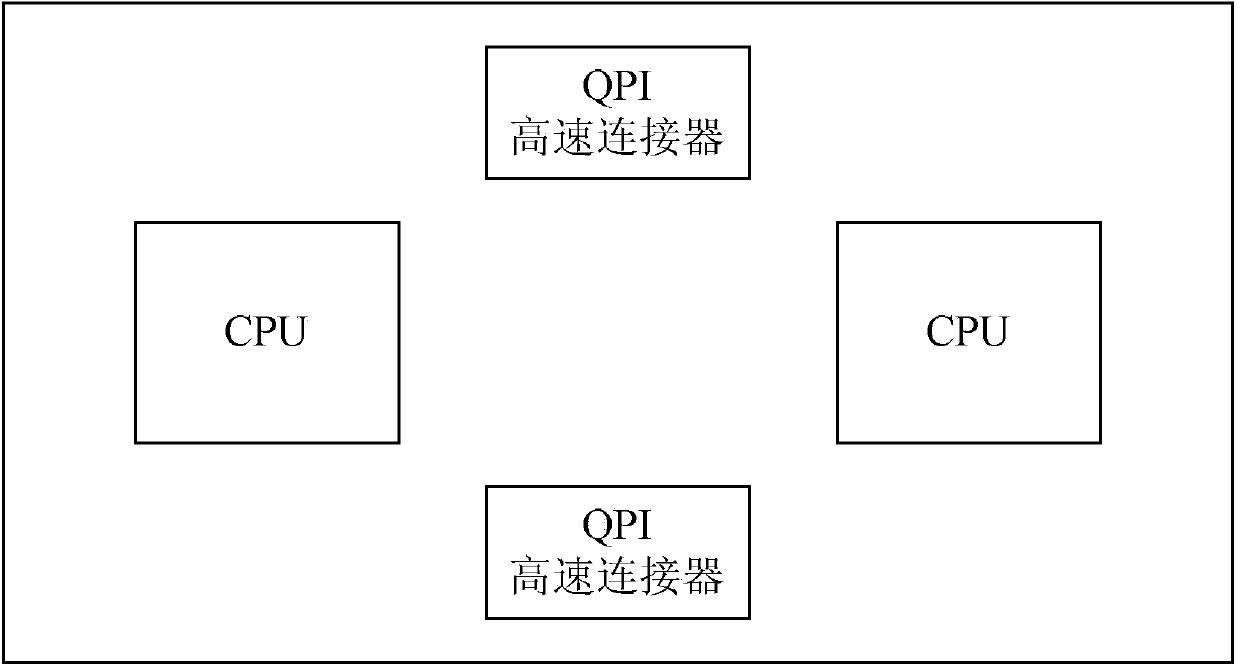

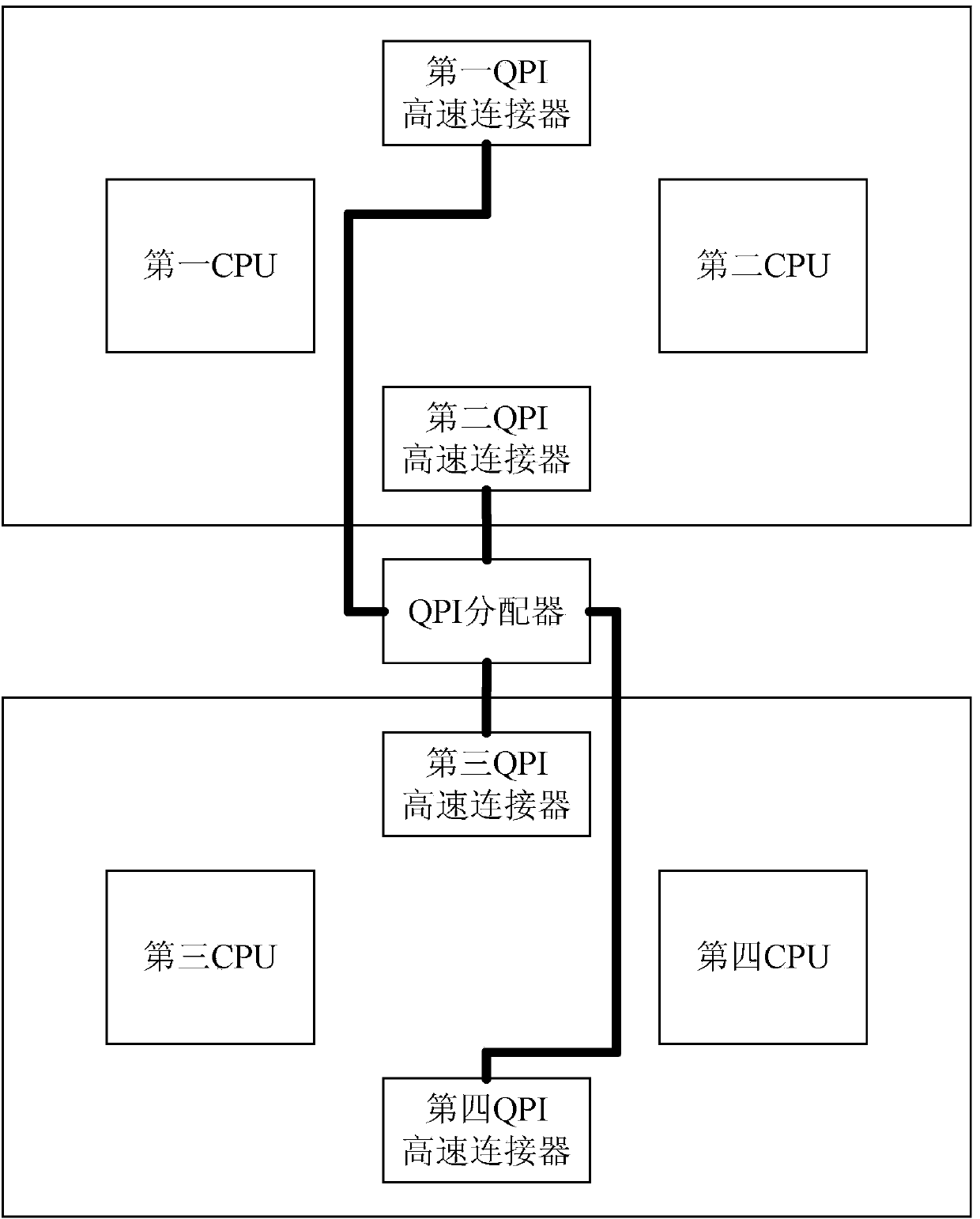

Blade node and extension method thereof

Inactive | CN104199521A | Improve resource sharing; Digital data processing details; Embedded system; Quick path interconnect

The invention discloses a blade node and an extension method thereof. The extension method comprises the following steps: fixing a first blade node and a second blade node together; and interconnecting the QPI links of the first and second blade nodes with a quick path interconnect (QPI) high-speed cable to obtain a third blade node. Because the blade nodes are extended with a QPI high-speed cable, any two CPUs (central processing units) in the extended blade node can access each other over QPI links without passing through any intermediate connection, which improves resource sharing between the blade nodes.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

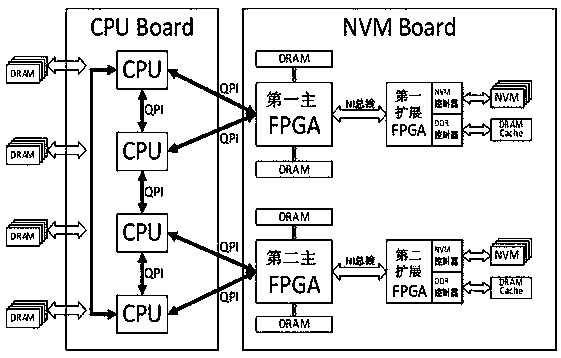

Heterogeneous hybrid memory server architecture

Inactive | CN107844433A | Reduce power consumption; Improve access efficiency; Memory addressing/allocation/relocation; Energy efficient computing; Static random-access memory; Parallel computing

The invention discloses a heterogeneous hybrid memory server architecture comprising a CPU (central processing unit) computation board and an NVM (non-volatile memory) board. CPU chips on the computation board are connected to DRAM (dynamic random access memory) chips; master FPGA (field programmable gate array) chips on the NVM board are connected to DRAM chips and NVM memory modules; and the CPU chips are connected to the master FPGA chips over a QPI (quick path interconnect) bus. The master FPGA chips maintain global cache coherence for the non-volatile memories, so global memory can be shared. Each low-power, high-capacity NVM serves as far memory and each low-capacity, high-speed DRAM serves as near memory, yielding a heterogeneous hybrid memory system with high capacity and low power consumption. The heterogeneous memories are addressed in a unified manner, which solves the coupling and speed-matching problems of heterogeneous memory systems and maintains global data consistency. The architecture offers high memory capacity, high CPU access efficiency, and low power consumption.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

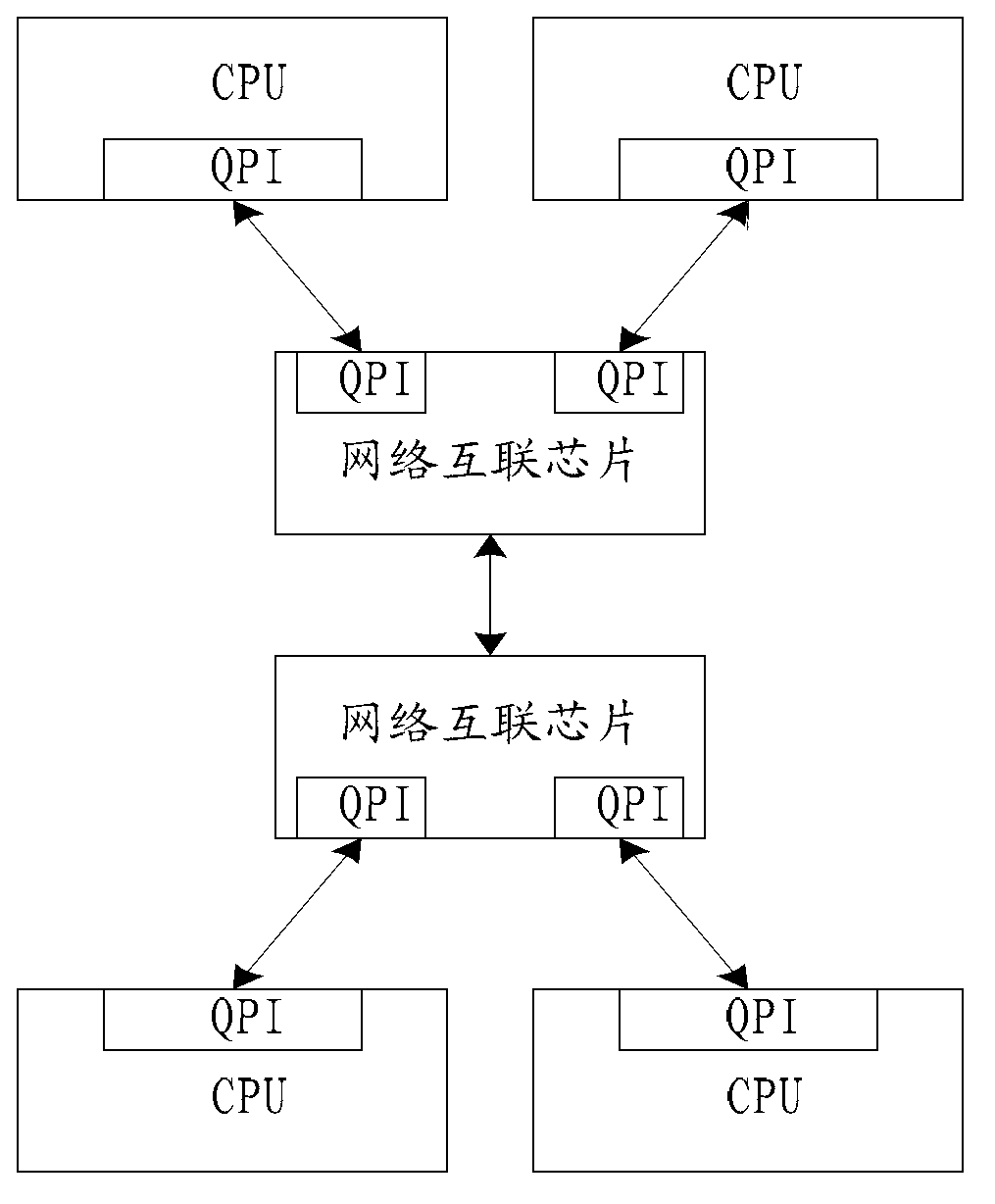

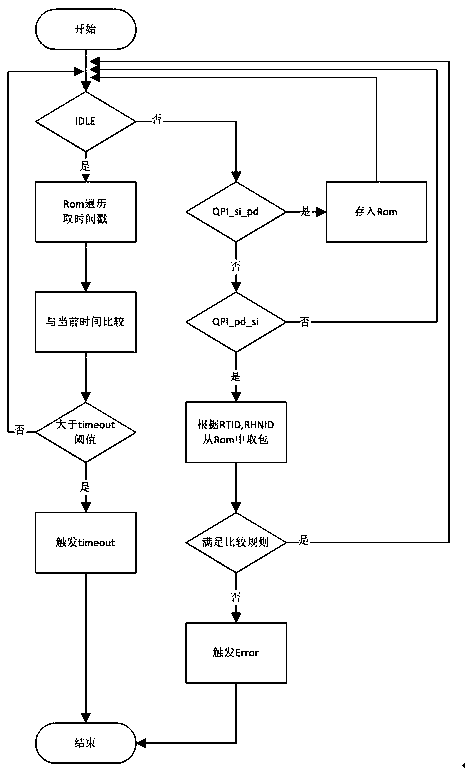

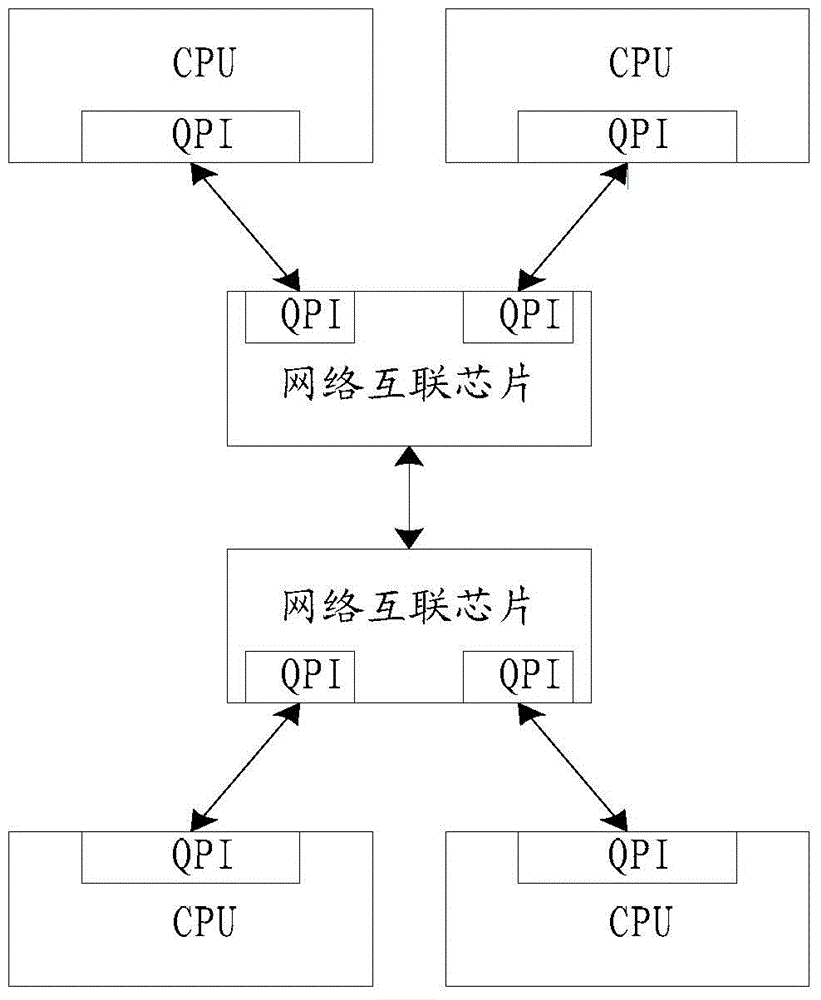

Global detection module method for node interconnection chip verification

Inactive | CN105511992A | Improve work efficiency; Easy to implement; Detecting faulty computer hardware; Electrical testing; Interconnection; Logic analyzer

The invention discloses a global detection module method for node interconnection chip verification. It addresses three problems: logic analyzers are expensive, their limited pins prevent them from capturing many signals, and key information in stored data cannot be found and located quickly and simply. The technical scheme comprises the following steps: (1) a detection module is embedded into the node interconnection chip logic as an independent module that runs alongside the node interconnection logic, monitors the messages exchanged on all channels, performs error detection according to the corresponding protocol rules, and reports timeouts and message errors, all fully automatically; (2) each active QPI (Quick Path Interconnect) message sent by a CPU (central processing unit) is stored, and when a QPI message returns from the NC (node controller) end, it is compared with the corresponding stored source QPI message to determine whether it is the expected message.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
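
Step (2) above resembles a verification scoreboard: remember each outgoing request, pair it with the returning message, and flag mismatches, unexpected responses, and timeouts. The sketch below assumes a hypothetical transaction tag (`txn_id`) for pairing and a made-up request-to-response rule (`EXPECTED_RESPONSE`); neither the message fields nor the opcode names come from the patent or the QPI specification.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical protocol rule: which response opcode each request opcode should produce.
EXPECTED_RESPONSE = {"READ": "DATA", "WRITE": "ACK"}


@dataclass
class QpiMessage:
    txn_id: int      # hypothetical tag used to pair a request with its response
    opcode: str
    address: int


class Scoreboard:
    """Stores each CPU request and checks the matching message returned from the NC side."""

    def __init__(self, timeout: int) -> None:
        self.timeout = timeout
        self.pending: Dict[int, Tuple[QpiMessage, int]] = {}  # txn_id -> (request, send time)
        self.errors: List[str] = []

    def on_request(self, msg: QpiMessage, now: int) -> None:
        self.pending[msg.txn_id] = (msg, now)

    def on_response(self, msg: QpiMessage) -> None:
        entry = self.pending.pop(msg.txn_id, None)
        if entry is None:
            self.errors.append(f"unexpected response txn={msg.txn_id}")
            return
        request, _ = entry
        expected = EXPECTED_RESPONSE.get(request.opcode)
        if msg.opcode != expected or msg.address != request.address:
            self.errors.append(f"mismatch txn={msg.txn_id}")

    def check_timeouts(self, now: int) -> None:
        # Report and retire every request that has waited longer than the timeout.
        for txn_id, (_, sent_at) in list(self.pending.items()):
            if now - sent_at > self.timeout:
                self.errors.append(f"timeout txn={txn_id}")
                del self.pending[txn_id]
```

Keeping the check in software like this is what lets the module run unattended instead of relying on a logic analyzer's limited capture window.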

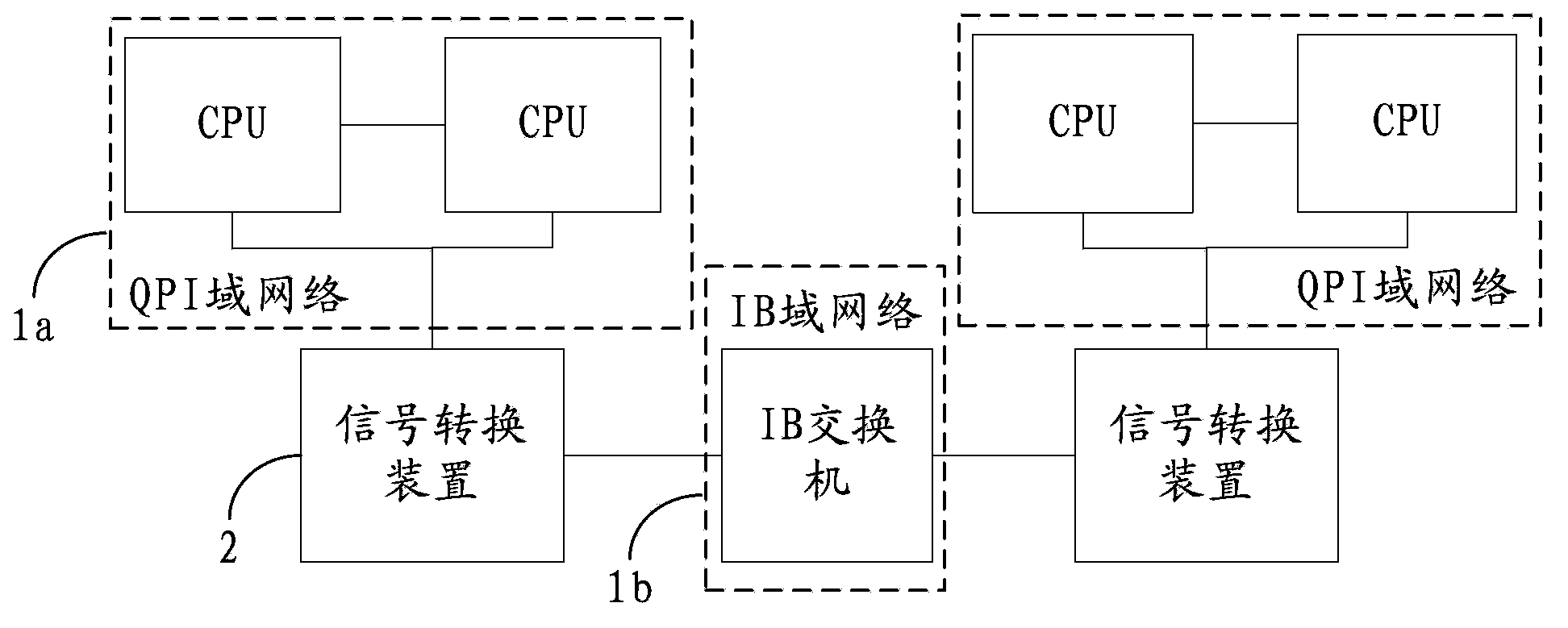

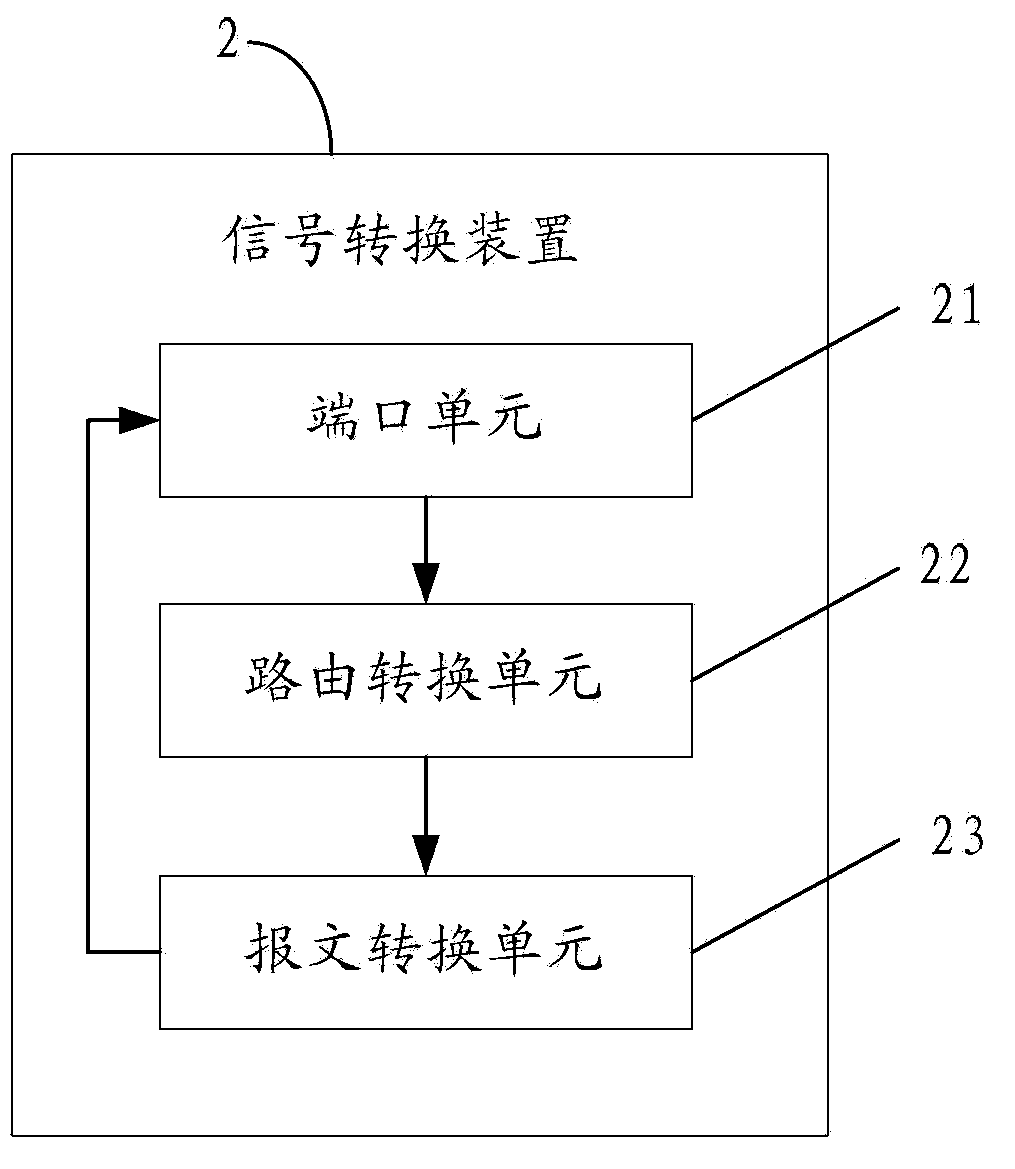

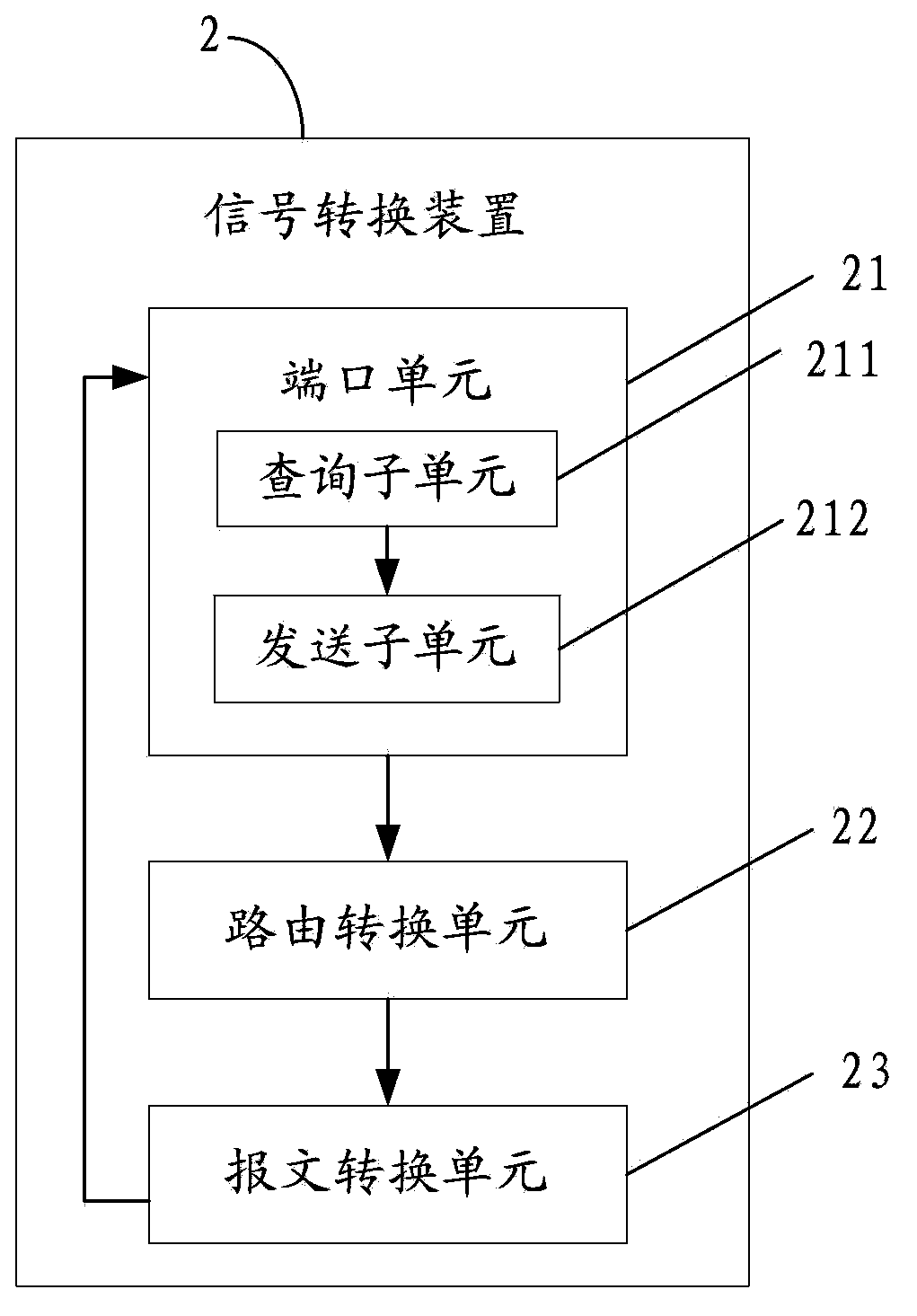

Signal conversion method, device and system

Embodiments of the present invention disclose a signal conversion method, apparatus, and system in the field of communications, which perform direct conversion between a network signal in the quick path interconnect (QPI) domain and a network signal in the InfiniBand (IB) domain. In the QPI-to-IB direction, the method extracts the destination node ID (DNID) field of a QPI packet, obtains the IB local identifier (LID) field corresponding to that DNID, uses the LID field as the packet header of the converted IB packet and the QPI packet as its data field, and packs them into the converted IB packet. In the IB-to-QPI direction, it extracts the data field of an IB packet and packs it into a converted QPI packet.

Owner:XFUSION DIGITAL TECH CO LTD
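
The DNID-to-LID lookup and encapsulation described above can be sketched as follows. The framing is hypothetical: it assumes the DNID sits in the first byte of the QPI packet and uses a bare 2-byte big-endian LID as the IB header, whereas real InfiniBand packets carry a full Local Route Header and other fields. `extract_dnid`, `qpi_to_ib`, and `ib_to_qpi` are illustrative names.

```python
import struct
from typing import Dict


def extract_dnid(qpi_packet: bytes) -> int:
    # Hypothetical layout: the destination node ID is stored in the first byte.
    return qpi_packet[0]


def qpi_to_ib(qpi_packet: bytes, dnid_to_lid: Dict[int, int]) -> bytes:
    """Look up the LID for the packet's DNID and prepend it as a 16-bit header."""
    lid = dnid_to_lid[extract_dnid(qpi_packet)]  # KeyError if the DNID has no IB mapping
    return struct.pack(">H", lid) + qpi_packet   # header = LID, data field = whole QPI packet


def ib_to_qpi(ib_packet: bytes) -> bytes:
    """Drop the 2-byte LID header; the remaining data field is the QPI packet."""
    if len(ib_packet) < 2:
        raise ValueError("packet too short to contain a LID header")
    return ib_packet[2:]
```

Because the whole QPI packet travels as the IB data field, the reverse direction only has to strip the header, which matches the asymmetry in the abstract.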

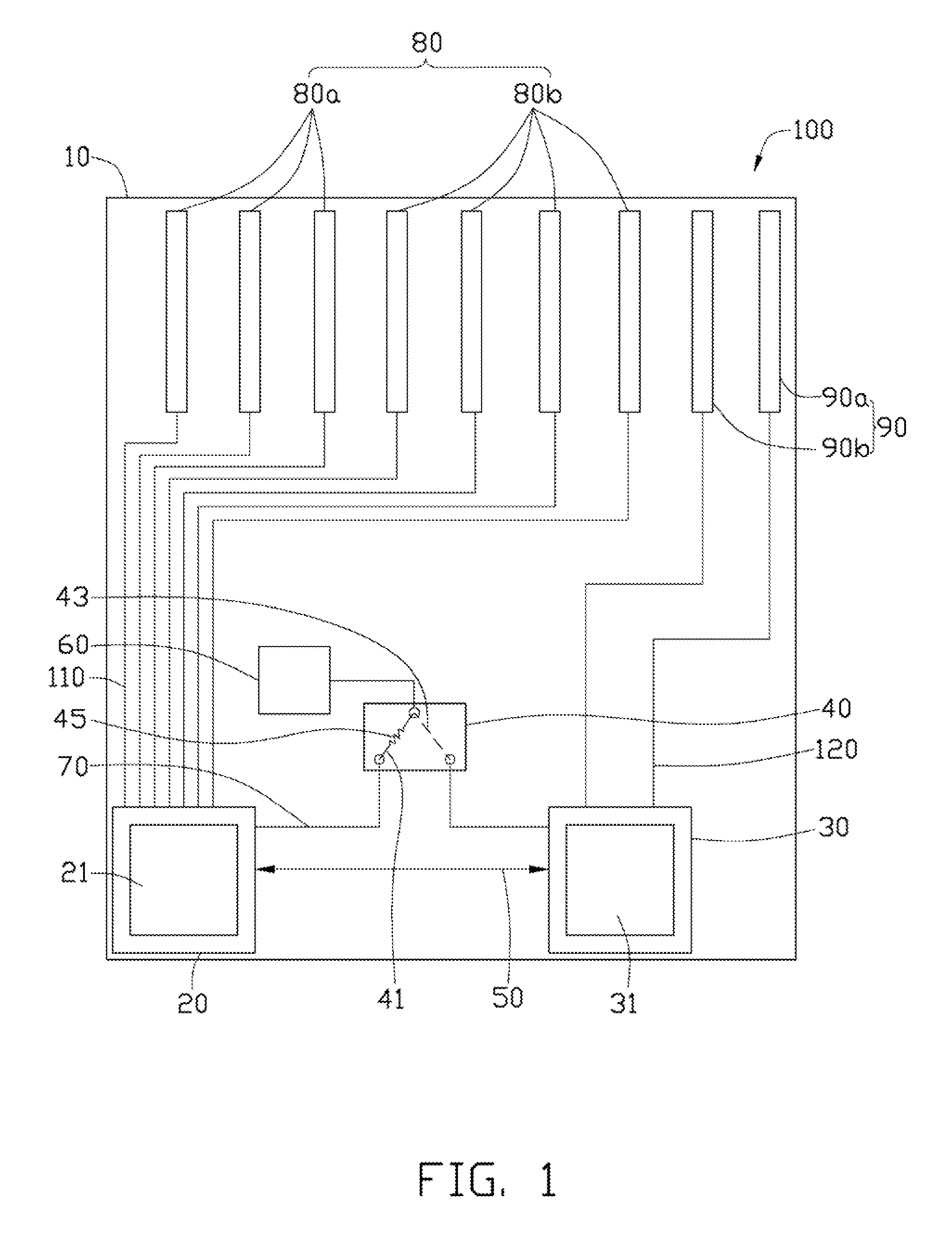

Motherboard used in server computer

Inactive | US8255608B2 | Component plug-in assemblages; Electric digital data processing; CPU socket; Embedded system

Owner:HON HAI PRECISION IND CO LTD

Method for realizing transmission of QPI (Quick Path Interconnect) message through PCI-E (Peripheral Component Interconnect-Express) interface

Inactive | CN104539579A | Reduce Design Complexity; Improve verification efficiency; Error prevention; Physical layer; Structure of Management Information

The invention provides a method for transmitting QPI (Quick Path Interconnect) messages through a PCI-E (Peripheral Component Interconnect Express) interface. A PCI-E interface replaces the QPI physical layer, so that memory expansion, access, and maintenance are achieved over the PCI-E interface. The design for transmitting QPI messages over PCI-E comprises a detection circuit, a PCI-E interface module, a PCI-E/QPI interface conversion configuration module, and a QPI upper-layer logic interface module. By using several data mapping relationships, the method maps each PCI-E link width (x16, x8, and x4) fully onto the QPI transmission mode, which greatly improves system usability. During the development, design, verification, and debugging of chips related to the QPI protocol, it effectively lowers design difficulty and design risk and can greatly shorten the chip development cycle.

Owner:INSPUR GROUP CO LTD

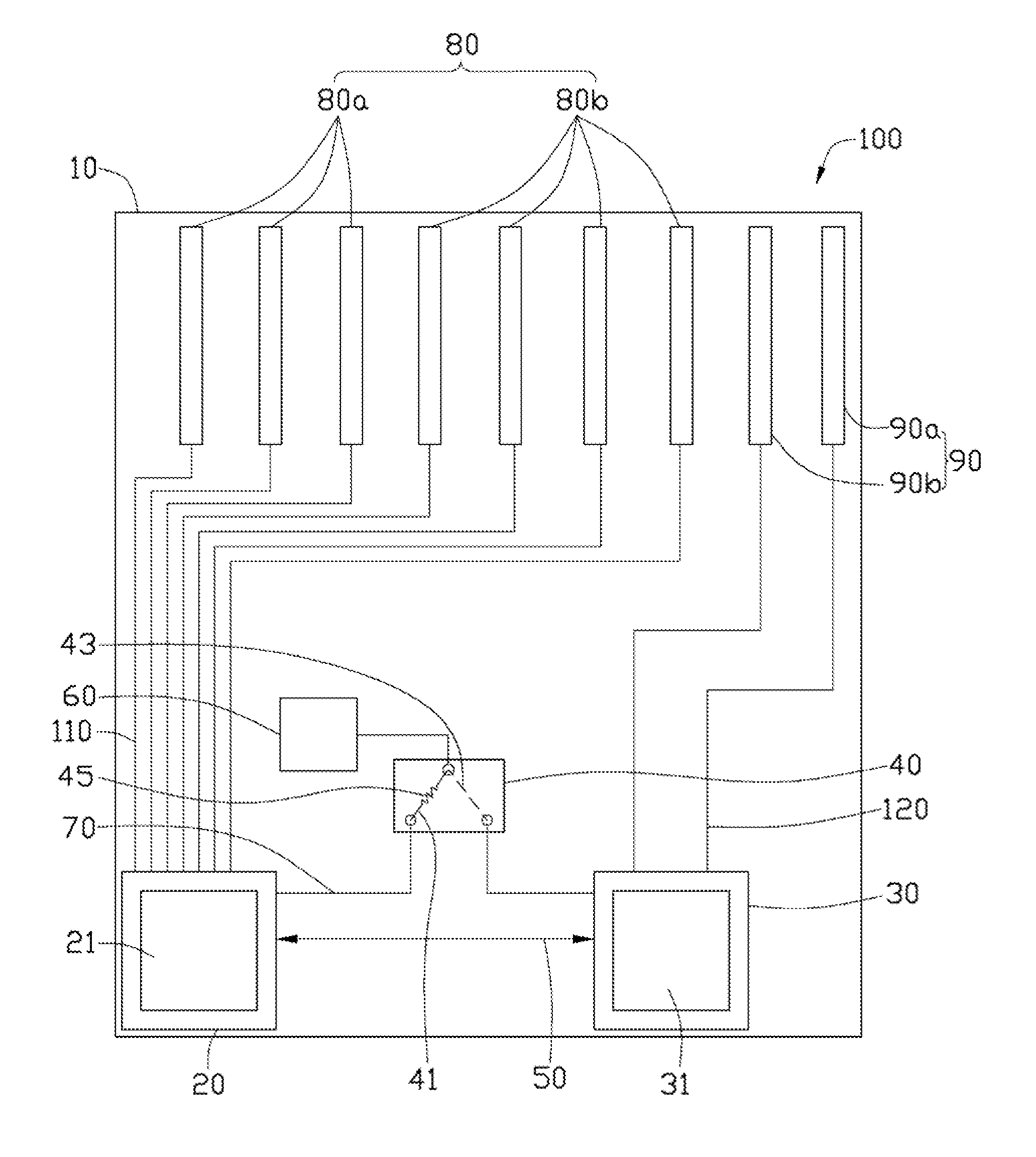

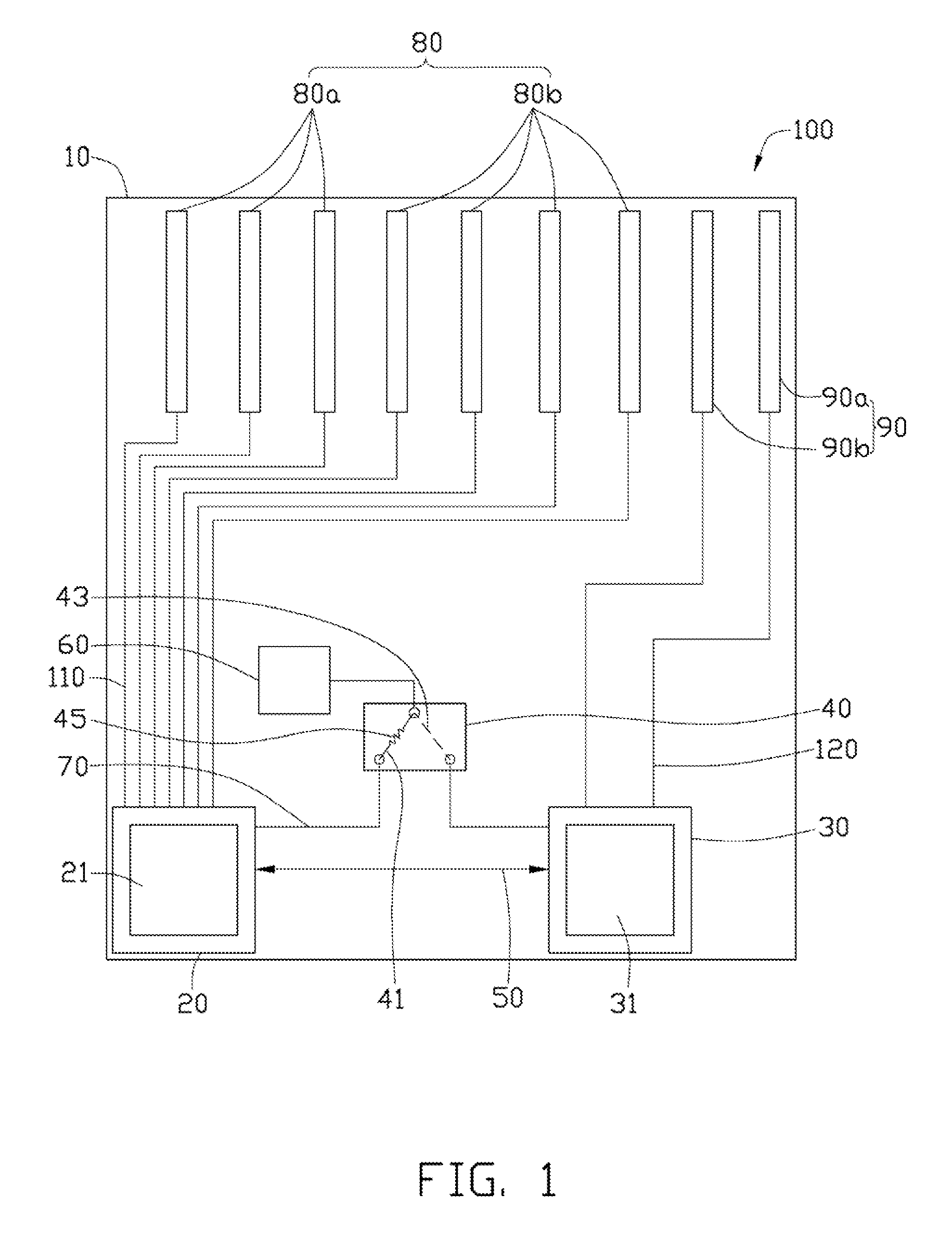

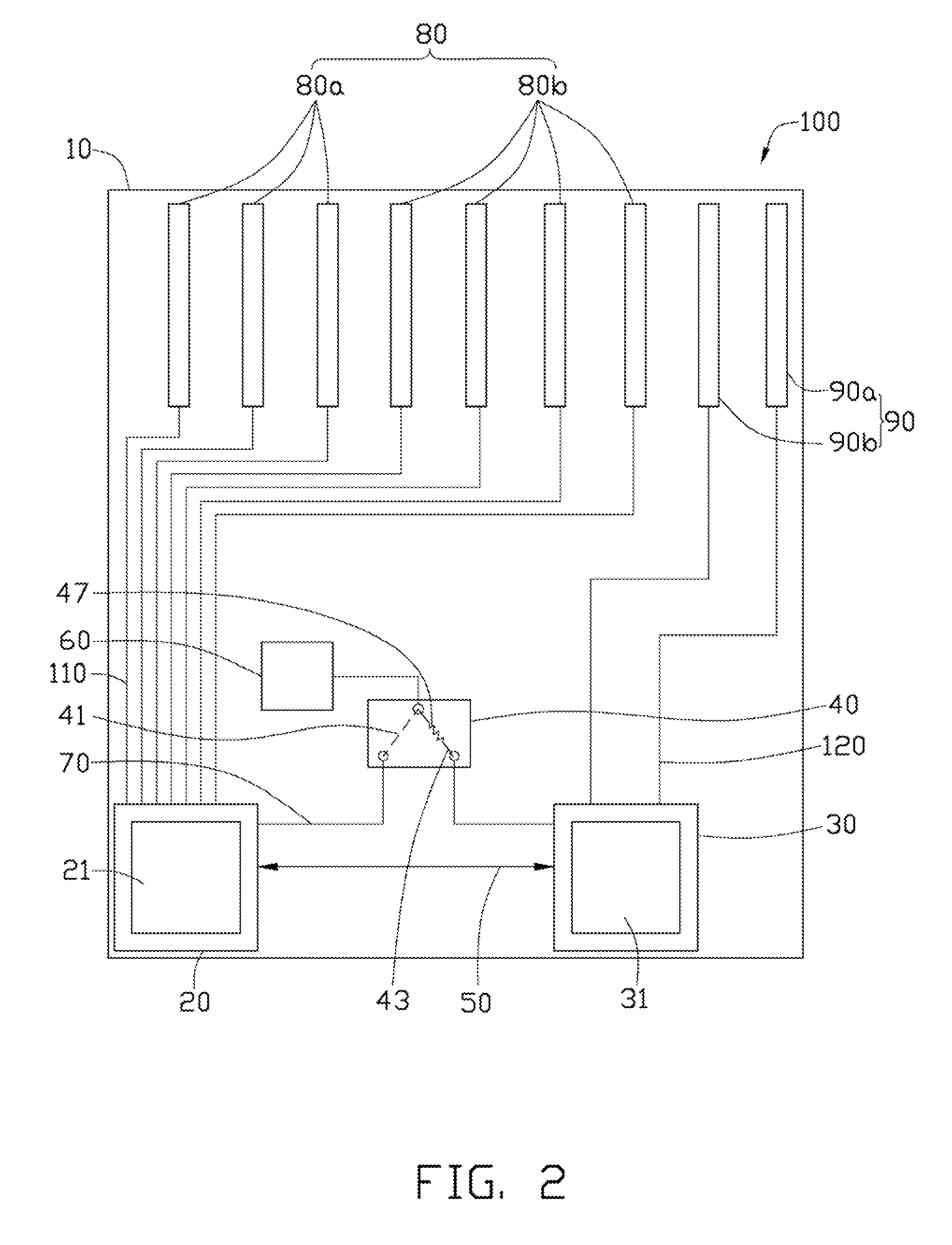

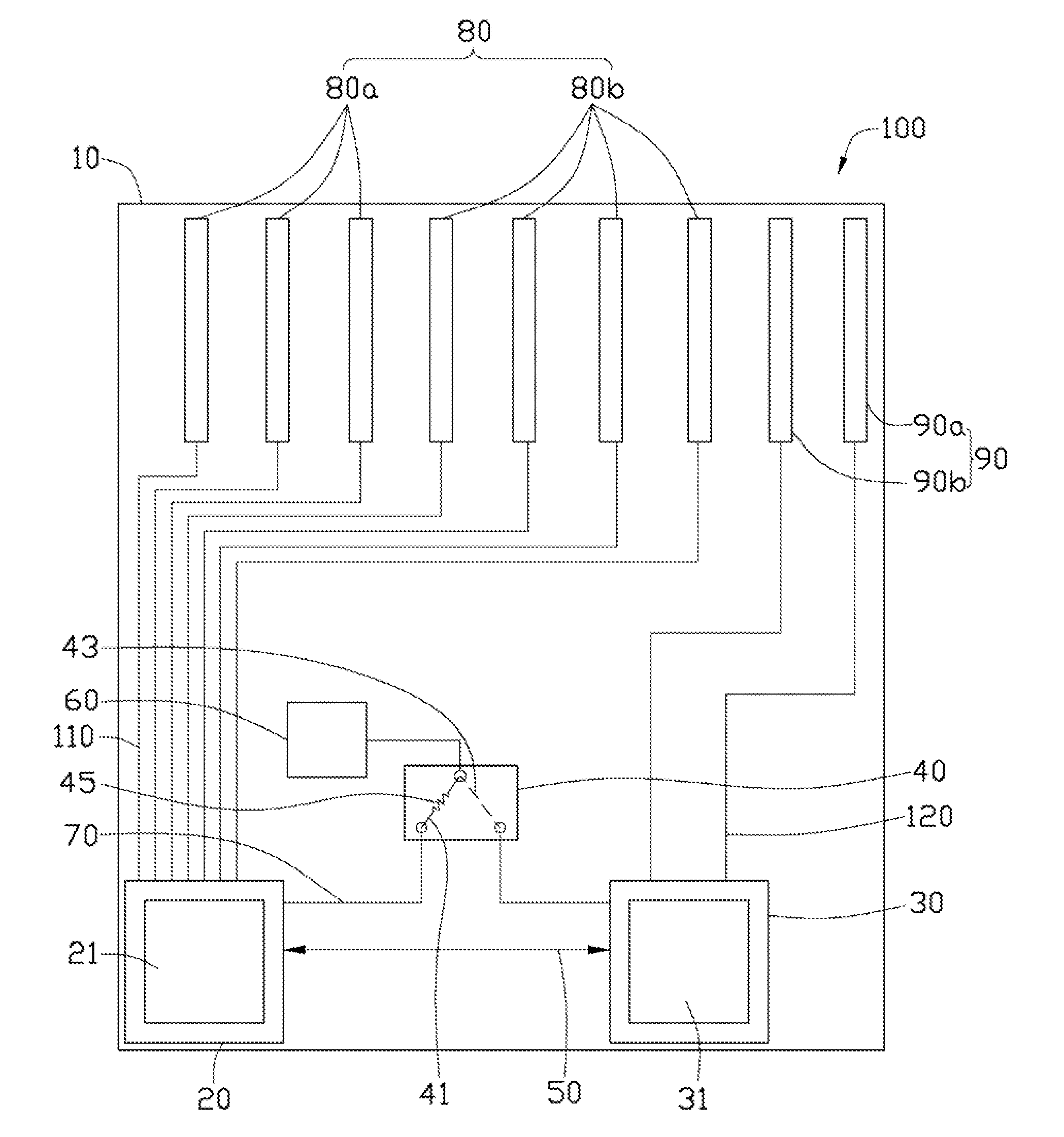

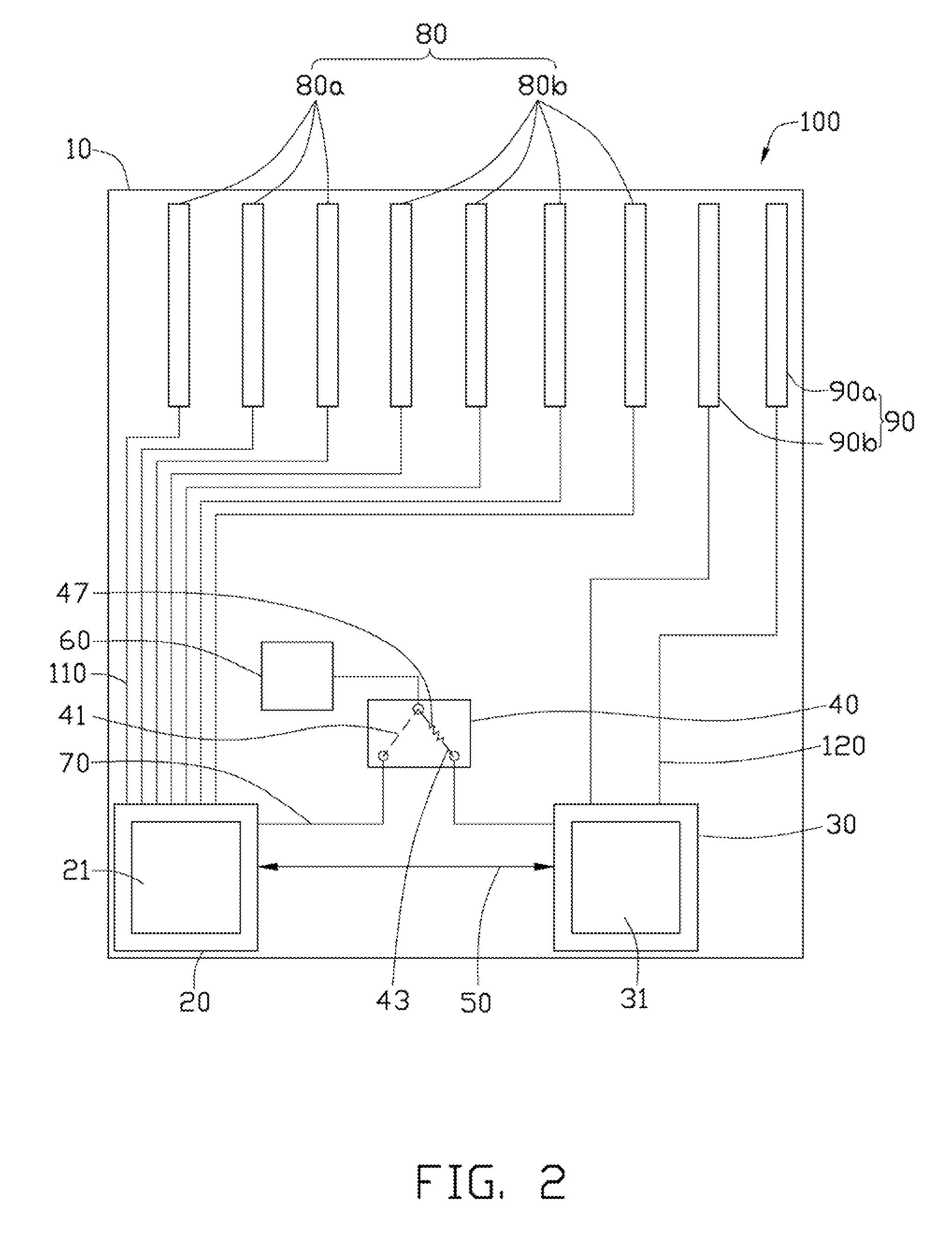

Motherboard used in server computer

Inactive | US20110296075A1 | Component plug-in assemblages; Electric digital data processing; CPU socket; Embedded system

An exemplary motherboard includes a substrate, a first CPU socket provided on the substrate for receiving a first CPU, a second CPU socket provided on the substrate for receiving a second CPU, a switching circuit connected to the first CPU and the second CPU, at least one quick path interconnect (QPI) bus connecting the first CPU to the second CPU, a number of first peripheral component interconnect express (PCI-e) interfaces connected to the first CPU via a number of first wires, a number of second PCI-e interfaces connected to the second CPU via a number of second wires, and an activating chip connected to the first CPU and the second CPU via the switching circuit and configured to start a peripheral device connected to the first PCI-e interfaces or the second PCI-e interfaces.

Owner:HON HAI PRECISION IND CO LTD

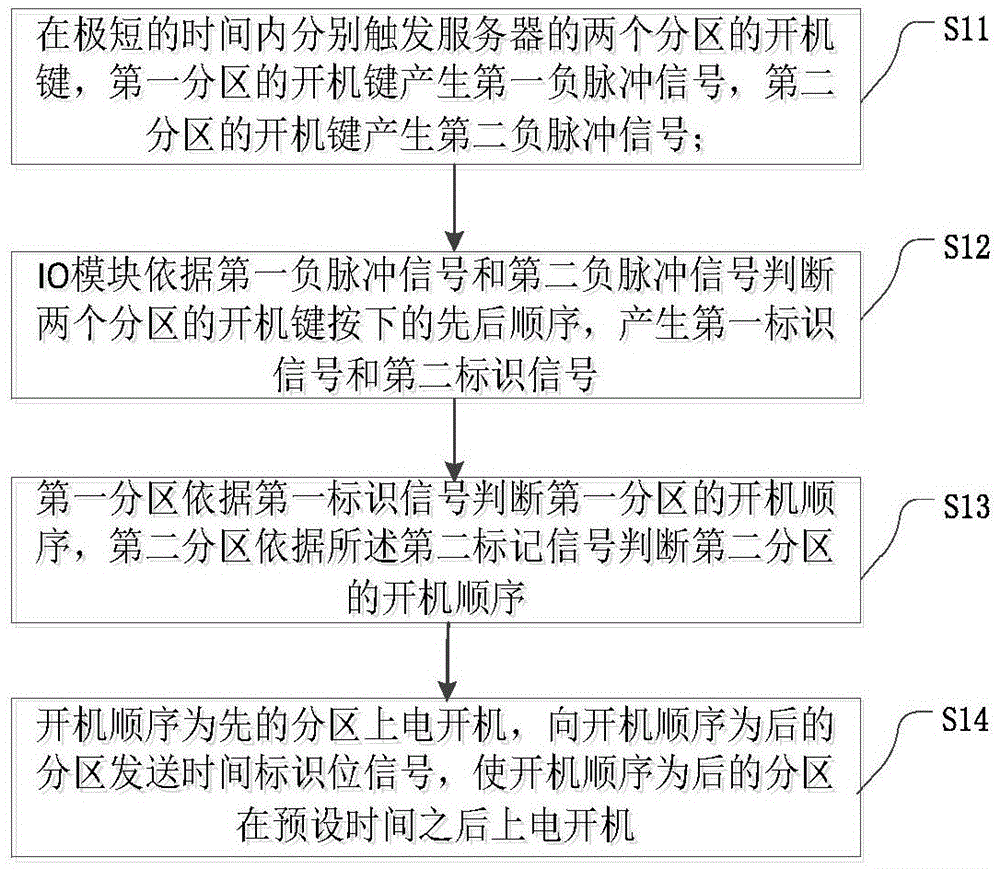

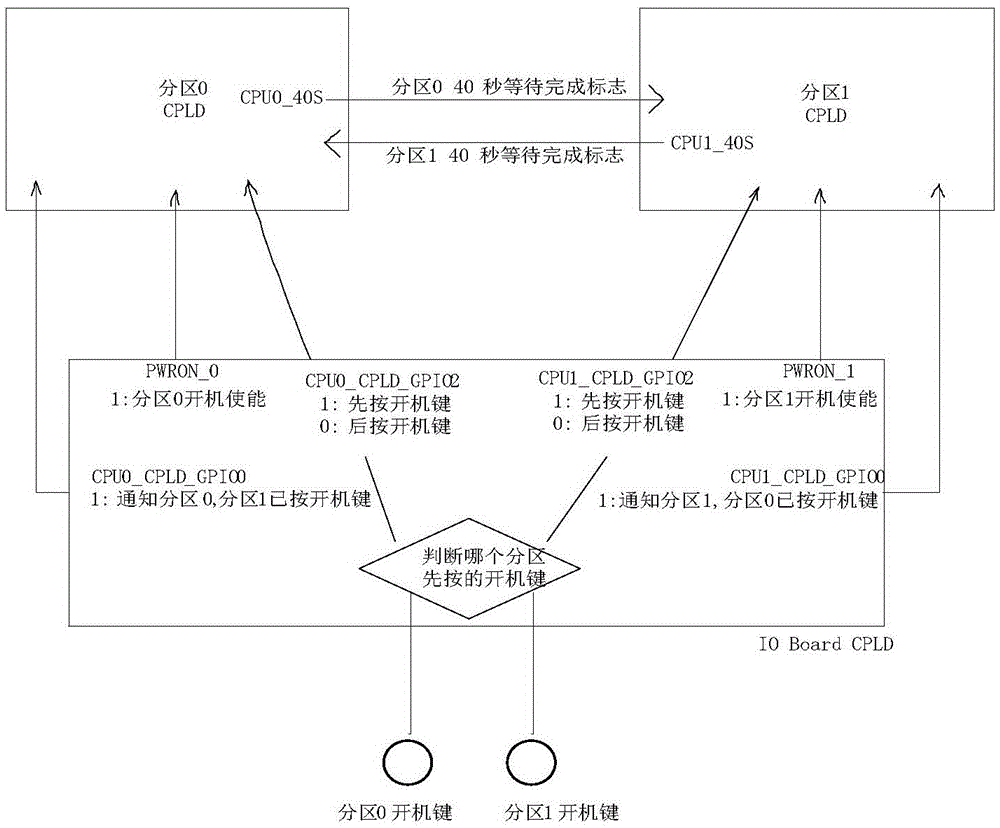

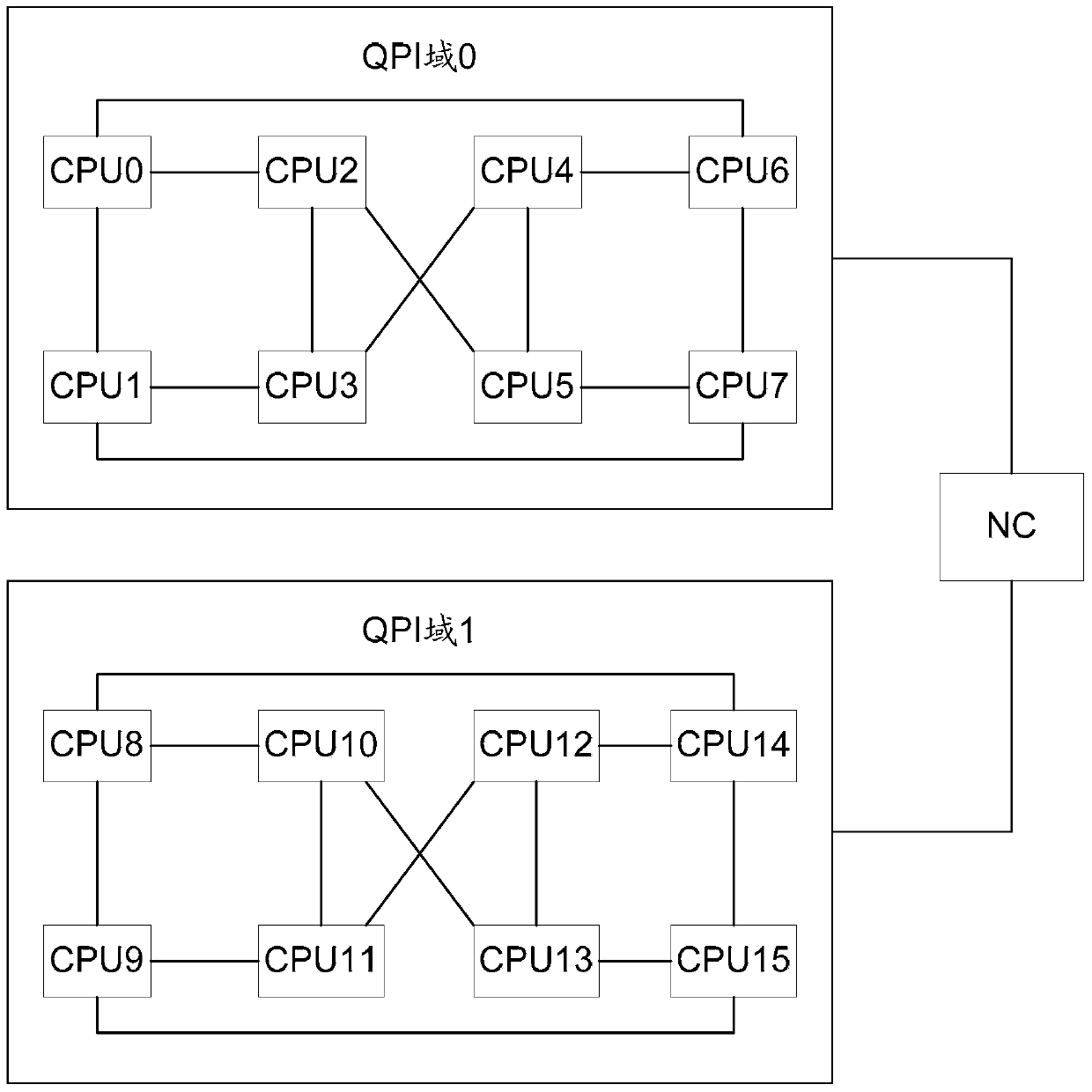

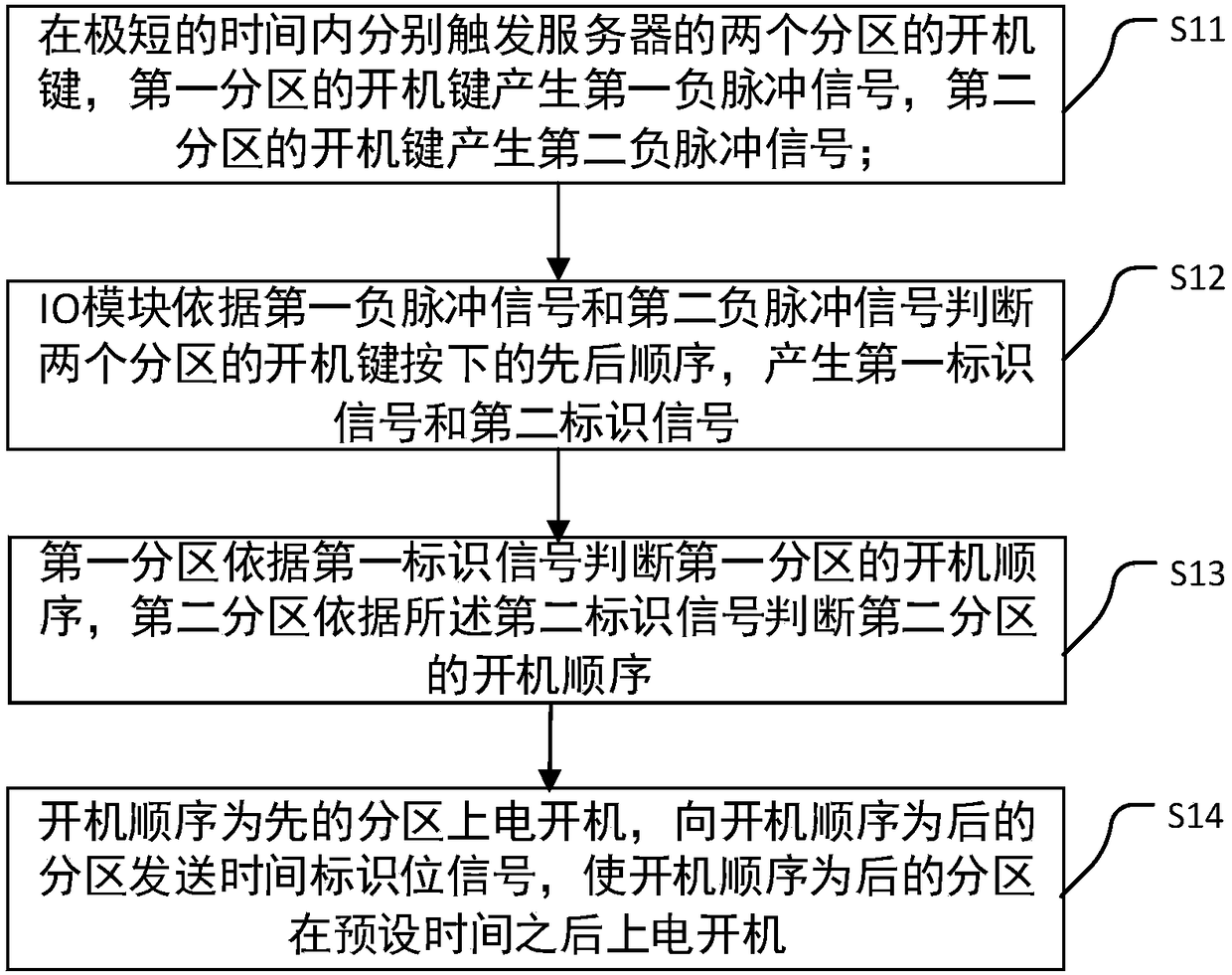

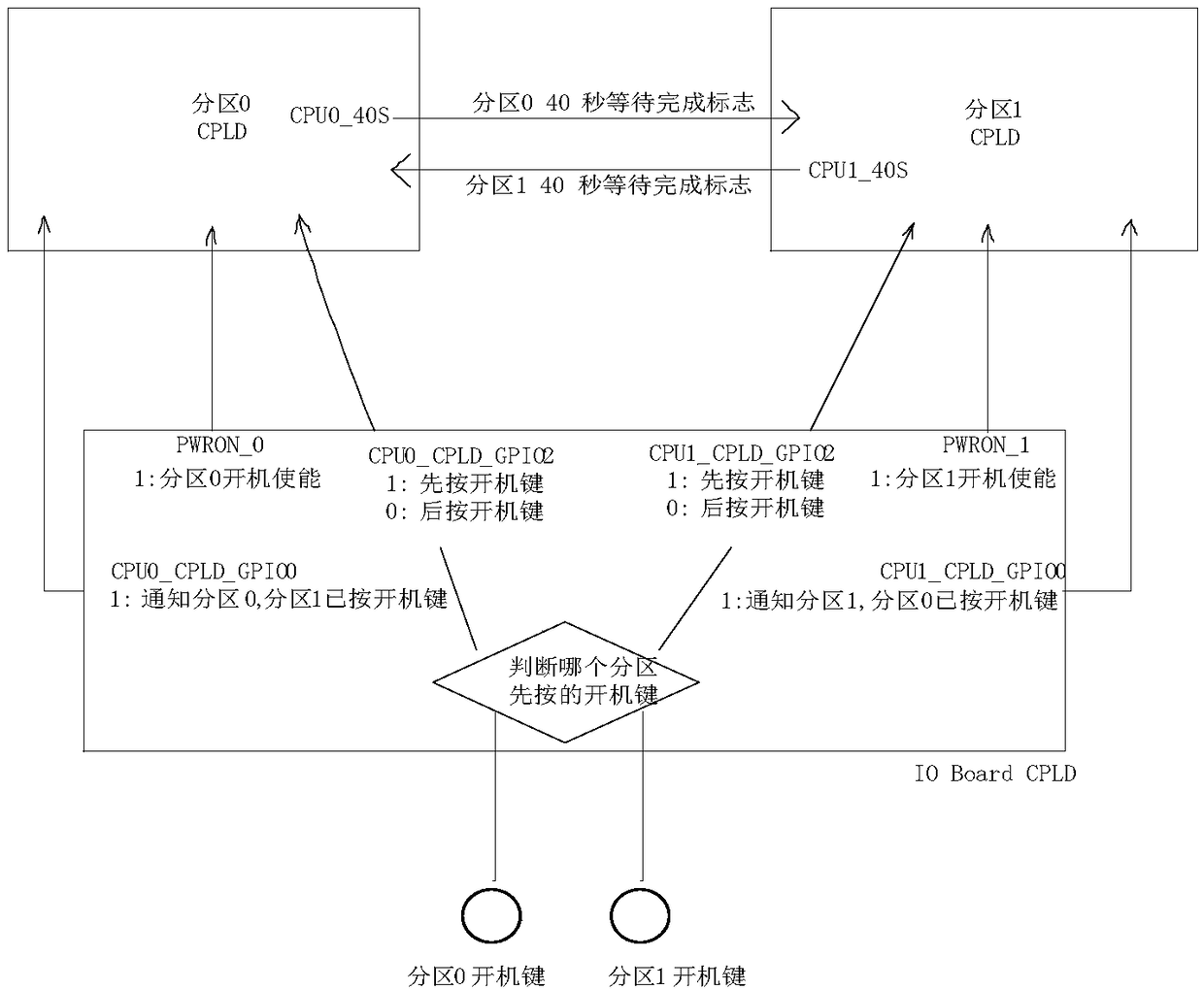

Double-zone power-on control method for server

Active | CN105607915A | Avoid interaction; Volume/mass flow measurement; Power supply for data processing; Electricity; Computer module

The invention discloses a double-zone power-on control method for a server. The method comprises the following steps: the power buttons of the two zones of the server are pressed within a very short interval, the power button of the first zone generating a first undershoot signal and the power button of the second zone generating a second undershoot signal; an IO module determines the order in which the two buttons were pressed from the two undershoot signals and generates a first identification signal and a second identification signal; the first zone determines its power-on order from the first identification signal, and the second zone determines its power-on order from the second identification signal; the zone that is first in the power-on order powers on and sends a time identification signal to the zone that is second, which powers on after a preset period. Because the QPI (Quick Path Interconnect) bus training windows of the two zones are staggered, both zones can power on normally.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND
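
The ordering-and-stagger logic above reduces to sorting the zones by when their button's undershoot signal was seen and offsetting each later zone by the preset delay. In the minimal sketch below, `power_on_schedule`, the millisecond integer timestamps, and the tie-break by zone name are all assumptions; the patent implements this with identification signals between hardware modules, not software.

```python
from typing import Dict, List, Tuple


def power_on_schedule(press_times_ms: Dict[str, int], delay_ms: int) -> List[Tuple[str, int]]:
    """Order zones by when their button's undershoot signal was detected and
    stagger their power-on so the zones' QPI link training does not overlap."""
    # Earliest press powers on first; ties are broken by zone name (an assumption).
    order = sorted(press_times_ms, key=lambda zone: (press_times_ms[zone], zone))
    start = press_times_ms[order[0]]
    # Each later zone waits one more preset delay after the first zone powers on.
    return [(zone, start + i * delay_ms) for i, zone in enumerate(order)]
```

For two zones pressed at 20 ms and 5 ms with a 2000 ms delay, the zone pressed at 5 ms powers on first and the other follows at 2005 ms.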

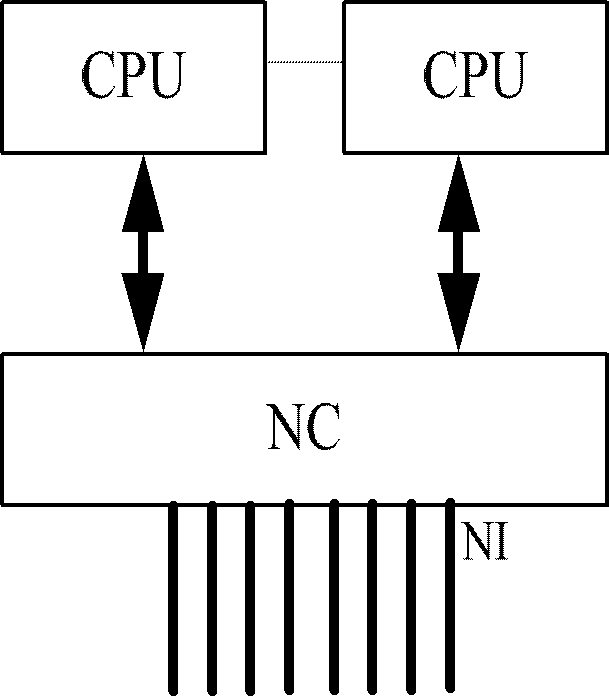

Memory data migration method and node controller

Active | CN106557429A | Achieve migration; Memory addressing/allocation/relocation; Mirror image; Quick path interconnect

Embodiments of the invention disclose a memory data migration method and a node controller (NC) in the technical field of communications, enabling memory data migration across QPI (Quick Path Interconnect) domains. Specifically: the NC sends a first instruction message to a first CPU (central processing unit), instructing the first CPU to read the memory data at a first address; the NC receives first data, the memory data at the first address, from the first CPU; the NC sends a second instruction message instructing the first CPU to write the first data back to the first address; the NC determines a second address corresponding to the first address from a pre-established mirror relationship; and the NC sends a third instruction message to a second CPU, instructing the second CPU to write the first data back to the second address.

Owner:XFUSION DIGITAL TECH CO LTD
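
The three-message sequence above can be traced with a toy model in which each CPU is just a dictionary of memory contents. `Cpu`, `NodeController`, `migrate`, and the mirror map are hypothetical stand-ins; real migration goes through QPI coherence transactions, which this sketch does not model. It only shows the order of operations the NC drives.

```python
from typing import Dict, List, Tuple


class Cpu:
    """Stand-in for a CPU whose memory the NC reaches through read/write requests."""

    def __init__(self, name: str, memory: Dict[int, bytes]) -> None:
        self.name = name
        self.memory = memory

    def read(self, addr: int) -> bytes:
        return self.memory[addr]

    def write(self, addr: int, data: bytes) -> None:
        self.memory[addr] = data


class NodeController:
    def __init__(self, mirror: Dict[int, int]) -> None:
        self.mirror = mirror                      # pre-established first-address -> second-address map
        self.log: List[Tuple[str, str, int]] = [] # record of the instructions issued

    def migrate(self, src: Cpu, dst: Cpu, addr: int) -> None:
        data = src.read(addr)                     # first instruction: read the first address
        self.log.append(("read", src.name, addr))
        src.write(addr, data)                     # second instruction: write back to the first address
        self.log.append(("write", src.name, addr))
        target = self.mirror[addr]                # look up the mirrored second address
        dst.write(target, data)                   # third instruction: write to the second address
        self.log.append(("write", dst.name, target))
```

After `migrate`, the second CPU holds a copy of the data at the mirrored address, and the log records the three instructions in the order the abstract lists them.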

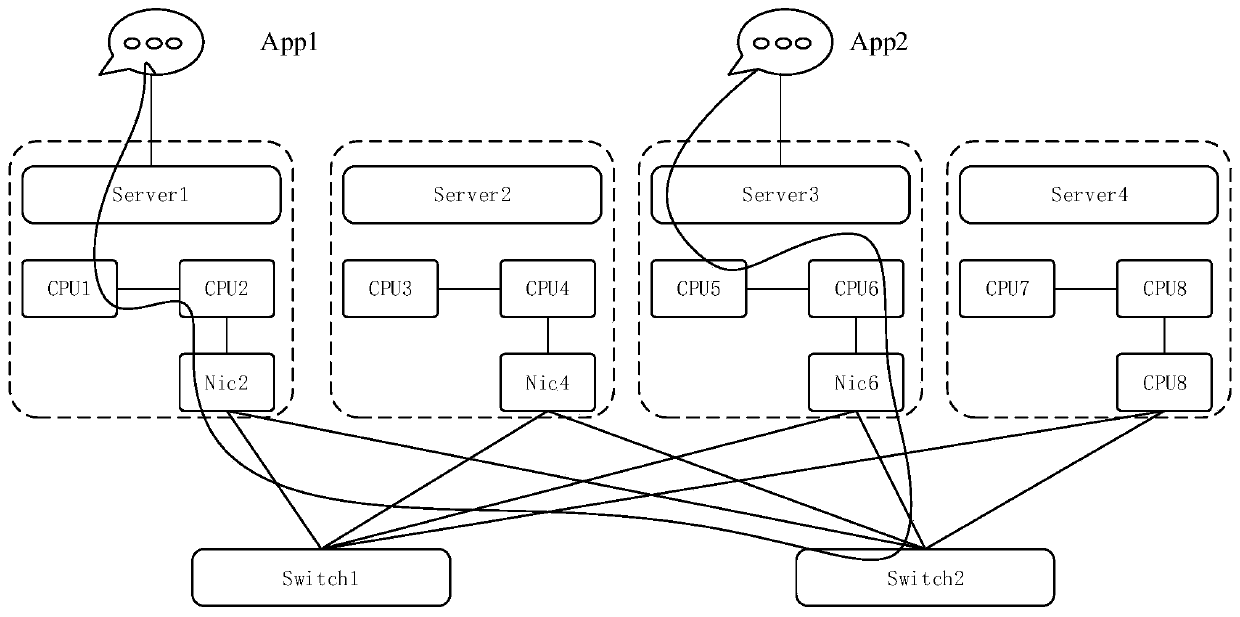

Data processing method and device

Active | CN111400238A | Increase transfer rate; Digital computer details; Energy efficient computing; Computer architecture; Engineering

The invention discloses a data processing method and device. When a first processor among the plurality of processors of a first server is scheduled, the first processor communicates with a second server through the physical network card of the first server. The first processor sends data to and/or receives data from the physical network card over a PCIE (Peripheral Component Interconnect Express) bus, as does the second processor; each processor communicates with the physical network card over the PCIE bus. The processors communicate with one another over a quick path interconnect (QPI) bus and reside in different NUMA (Non-Uniform Memory Access) nodes. The processors fixedly connected to the physical network card are those other than the first processor.

Owner:CHINA MOBILE COMM LTD RES INST +1

A memory data migration method and node controller

Active | CN106557429B | Achieve migration; Memory addressing/allocation/relocation; Mirror image; Quick path interconnect

Owner:XFUSION DIGITAL TECH CO LTD

Server dual-partition start-up control method

Active | CN105607915B | Avoid interaction; Volume/mass flow measurement; Power supply for data processing; Electricity; Computer science

The invention discloses a double-zone power-on control method for a server. The method comprises the following steps: the power buttons of the two zones of the server are pressed within a very short interval, the power button of the first zone generating a first undershoot signal and the power button of the second zone generating a second undershoot signal; an IO module determines the order in which the two buttons were pressed from the two undershoot signals and generates a first identification signal and a second identification signal; the first zone determines its power-on order from the first identification signal, and the second zone determines its power-on order from the second identification signal; the zone that is first in the power-on order powers on and sends a time identification signal to the zone that is second, which powers on after a preset period. Because the QPI (Quick Path Interconnect) bus training windows of the two zones are staggered, both zones can power on normally.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

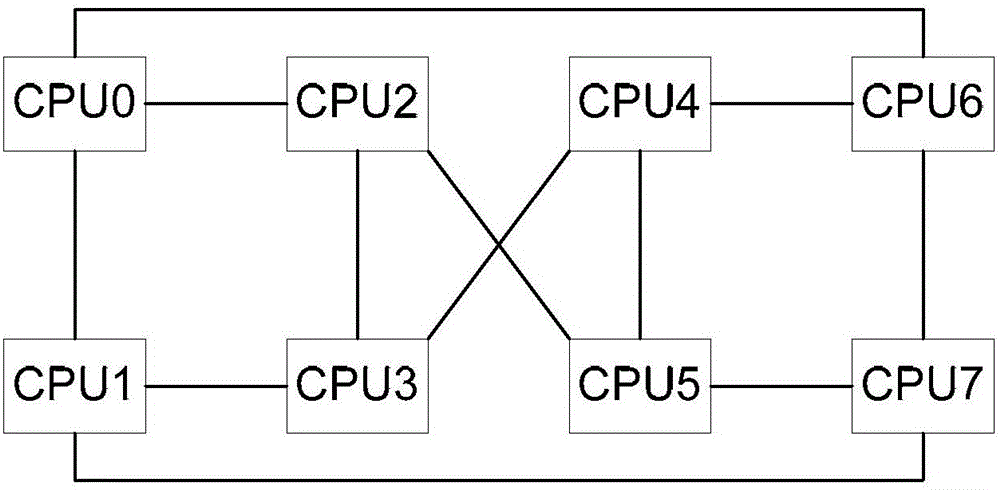

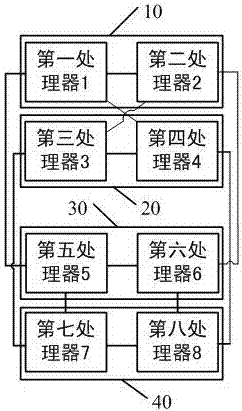

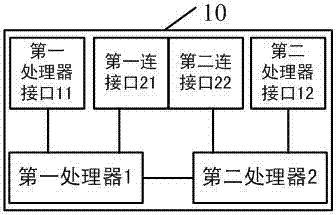

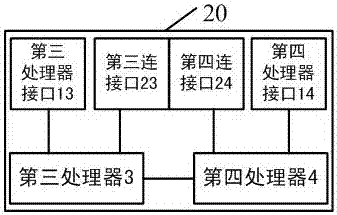

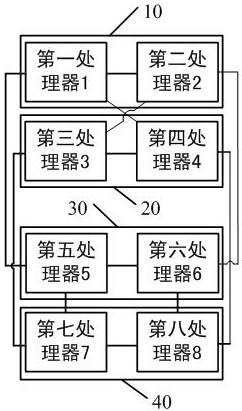

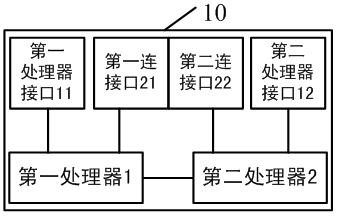

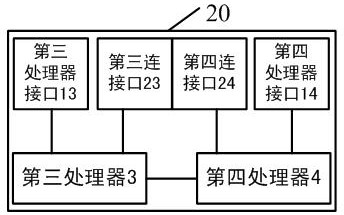

Multipath server interconnection topological structure

Active | CN107423255A | Even heat dissipation; Optimize layout; Digital computer details; Electric digital data processing; Single plate; Interconnection

The invention provides a multipath server interconnection topological structure comprising a first single plate, a second single plate, a third single plate, a fourth single plate, and a back plate. The first single plate comprises a first processor, a second processor, a first processor interface, a second processor interface, a first connection interface, and a second connection interface; the second single plate comprises a third processor, a fourth processor, a third processor interface, a fourth processor interface, a third connection interface, and a fourth connection interface; and the third single plate comprises a fifth processor, a sixth processor, a fifth processor interface, a sixth processor interface, and a fifth connection interface. The QPI (Quick Path Interconnect) buses of the two processors on each single plate are partitioned so that the cross-interconnect sections do not have to cross over one another. While normal operation is guaranteed, the topology achieves even cooling, and the first, second, and third connection areas avoid crossing routes between processors.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

Method, device and system for implementing remote direct memory access

Active | CN103902486B | Resolution delay is large; Quick response; Transmission; Electric digital data processing; Direct memory access; Computer module

A system, a device, and a method for implementing remote direct memory access are provided for a memory access system with a home terminal and an opposite terminal. Each terminal comprises a CPU (central processing unit) and an RDMA (remote direct memory access) module connected through a QPI (quick path interconnect). When the RDMA module of the opposite terminal receives an RDMA request message from the RDMA module of the home terminal, it converts the message into a QPI data request. The opposite terminal's CPU that participates in the remote direct memory access can therefore quickly obtain the QPI data request from the local RDMA module, avoiding the delay of communicating with the CPU over a PCIE (peripheral component interconnect express) bus, so that the data request is answered quickly.

Owner:XFUSION DIGITAL TECH CO LTD

Data processing method, protocol conversion device and Internet

Active | CN103401846B | Flexible expansion of system memory; Easy to update; Transmission; Computer hardware; The Internet

The invention relates to a data processing method, protocol conversion equipment, and an Internet. The data processing method comprises the following steps: receiving a QPI (quick path interconnect) message; converting the QPI message according to the RapidIO (rapid input/output) protocol format to obtain a RapidIO message, where the RapidIO message carries allocation information indicating how the QPI message was converted; and sending the RapidIO message. Because messages in the QPI protocol format and messages in the RapidIO protocol format can be converted into each other, a QPI interface can be carried over RapidIO, so the system equipment and system memory of a server can be flexibly expanded and are easy to update and maintain.

Owner:XFUSION DIGITAL TECH CO LTD

MEZZ card type four framework interconnection method and system

Inactive | CN107894809A | Optimize structure layout; Optimal Design Structure; Digital data processing details; Platform Controller Hub; Computer module

The invention discloses an MEZZ (mezzanine) card type four framework interconnection method and system. The method comprises the following steps: preparing the modules and parts to be installed, including a plurality of CPUs and CPU heat sinks, an MEZZ card type CPU sub-board, an MEZZ connector, a CPU main board, and a QPI (Quick Path Interconnect) bus; mounting the CPUs on the CPU sub-board and the CPU main board and installing the CPU heat sinks, where a PCH (Platform Controller Hub) communicates with the CPUs over a DMI (Direct Media Interface) bus and a BMC (Baseboard Management Controller) communicates with the PCH over an LPC (Low Pin Count) bus; mounting the CPU sub-board upright on the CPU main board through the MEZZ connector; and connecting the CPU sub-board and the CPU main board over the QPI bus. Connecting the two boards over the QPI bus, with the sub-board mounted upright on the main board through the MEZZ connector, simplifies main board layout and structural design, effectively reduces the number of board layers and the board thickness, and eases routing.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

Configuration method and system for hot-adding a central processing unit (CPU)

Active | CN106708551B | Improve user experience; Promote recovery; Digital computer details; Program loading/initiating; Computer architecture; Engineering

Owner:XFUSION DIGITAL TECH CO LTD

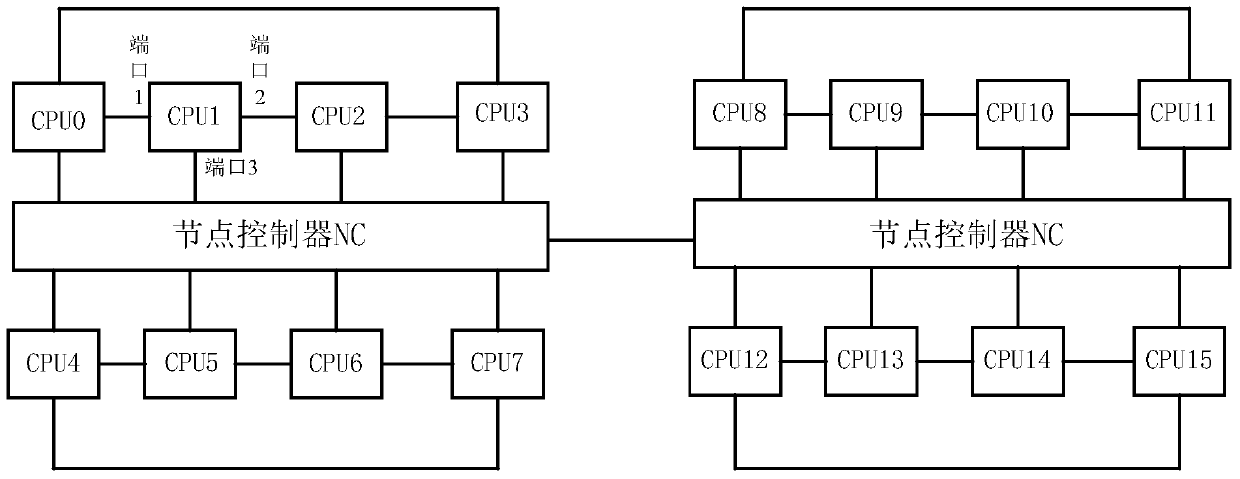

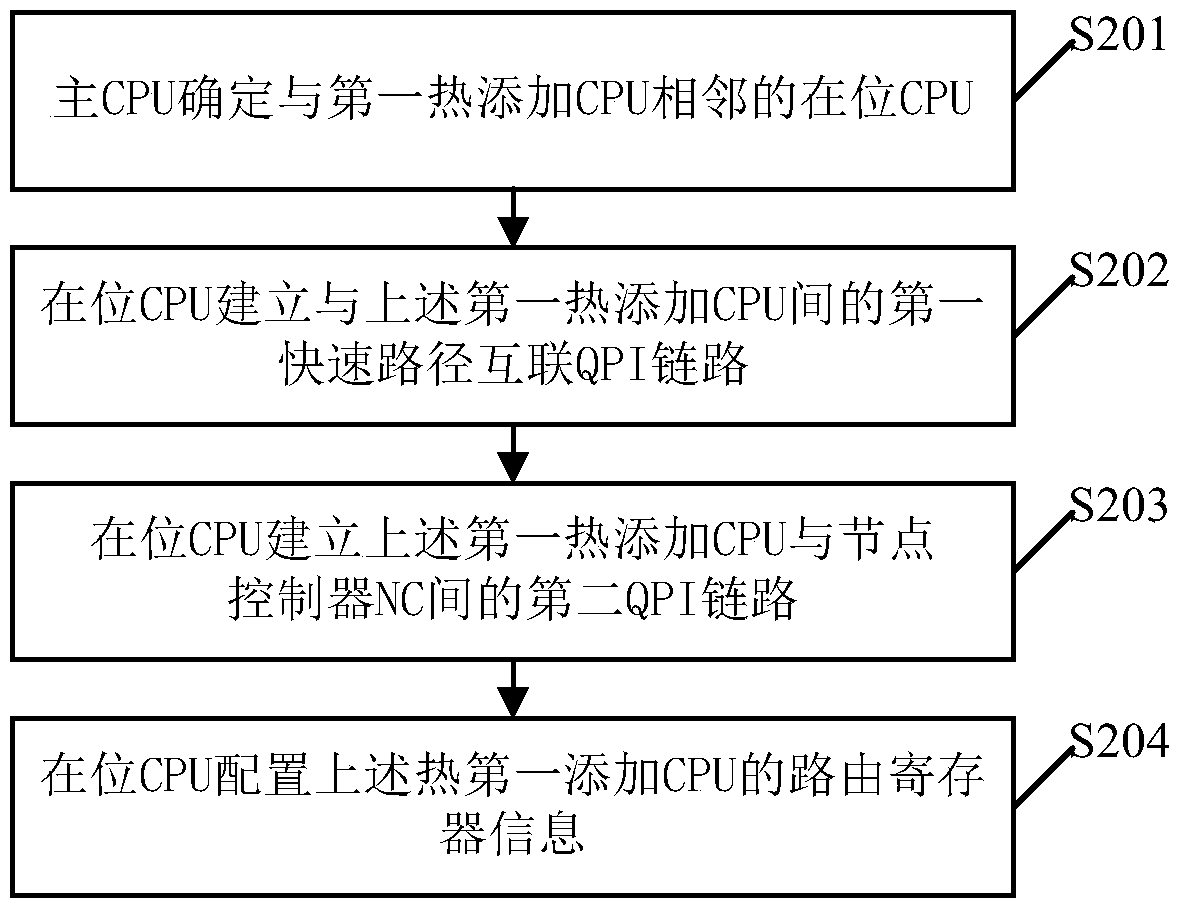

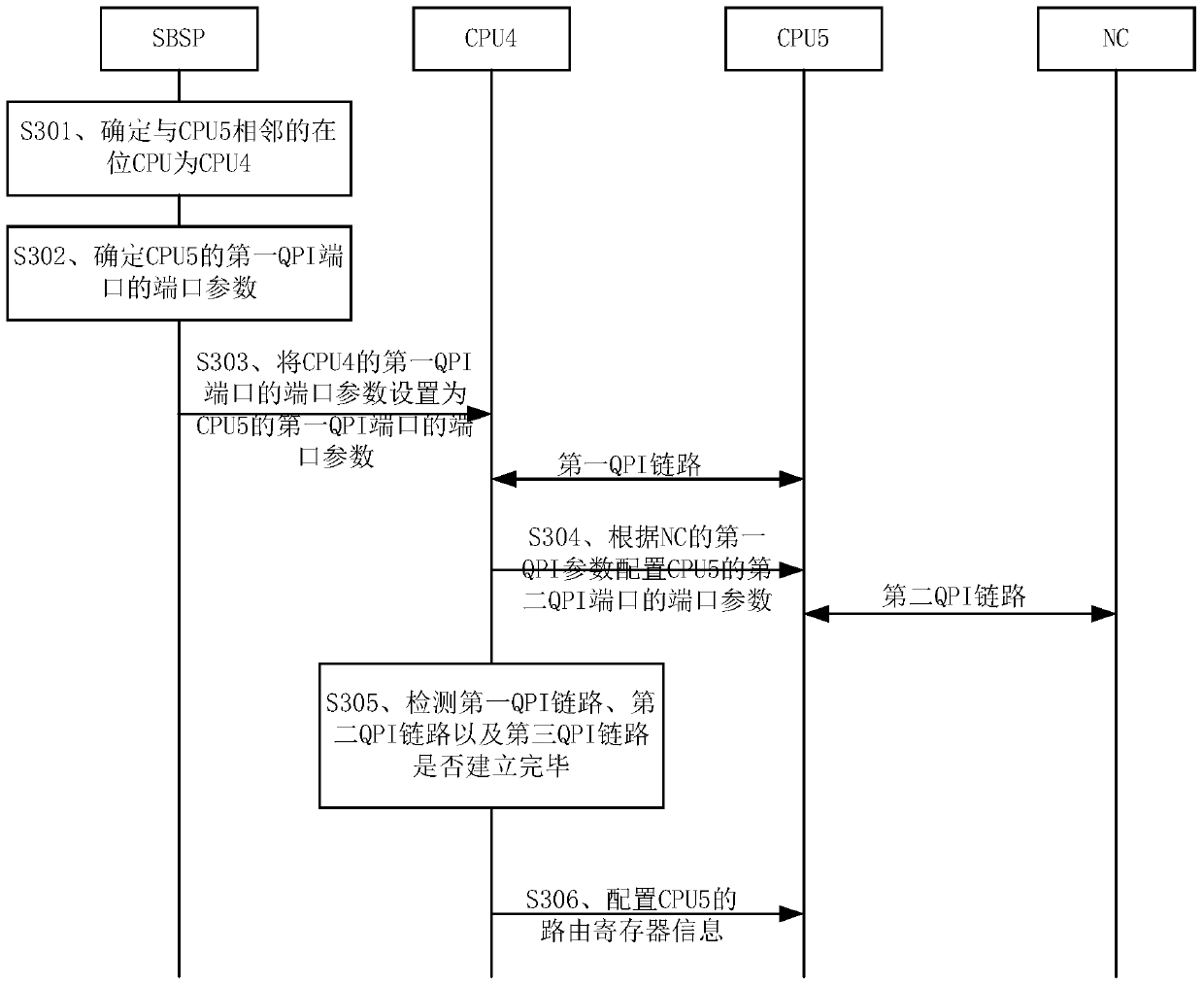

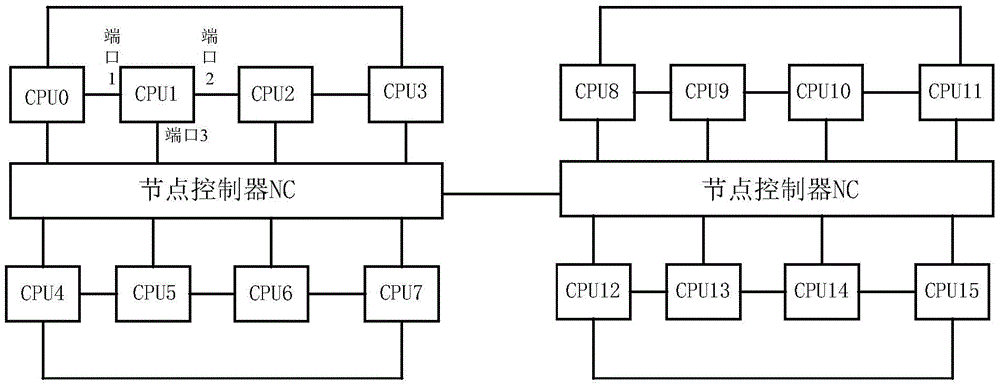

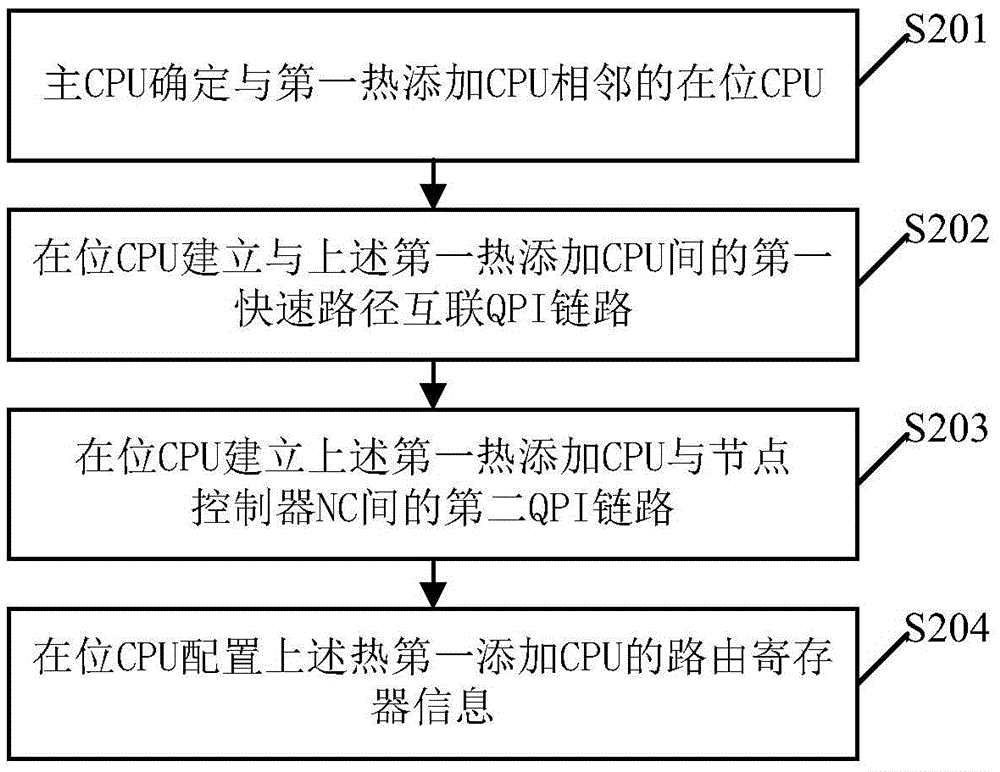

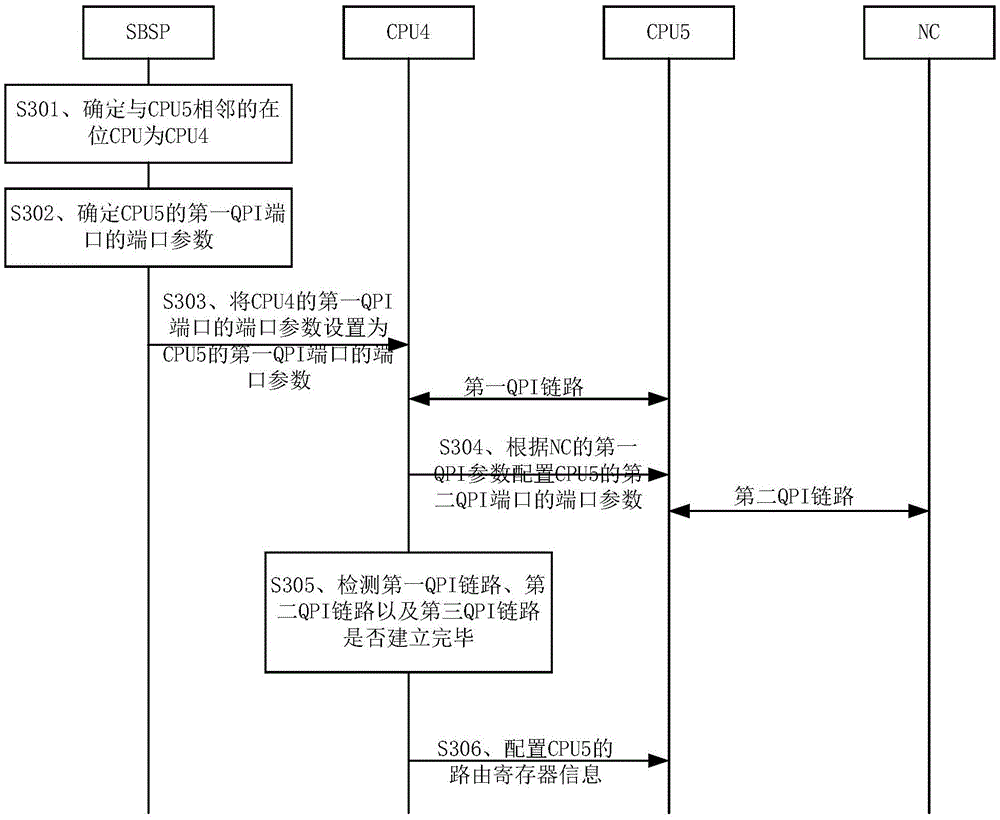

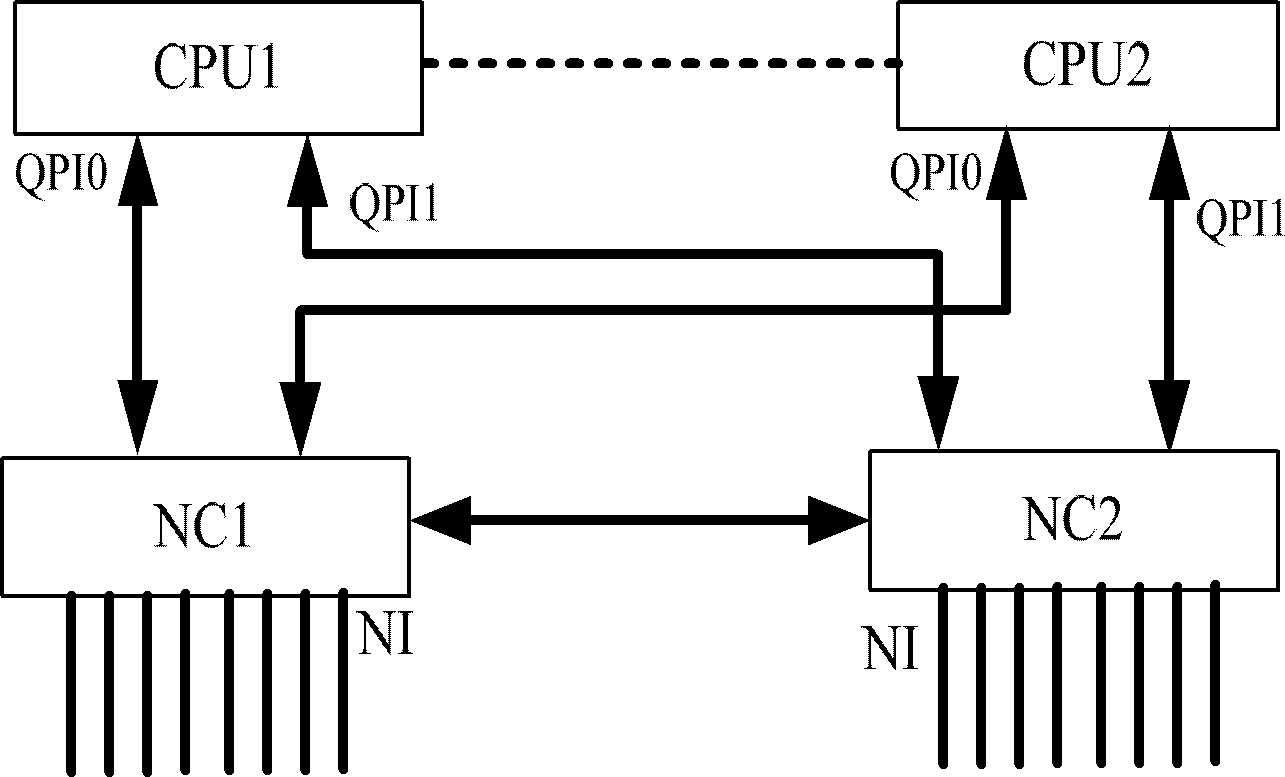

Hot-added CPU configuration method and system

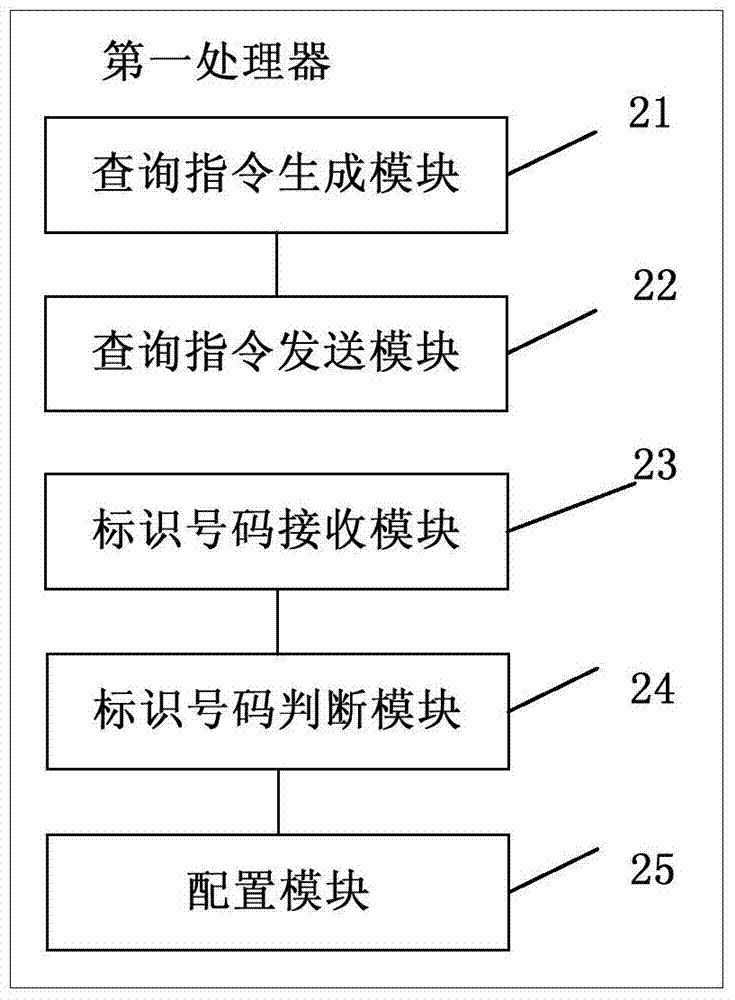

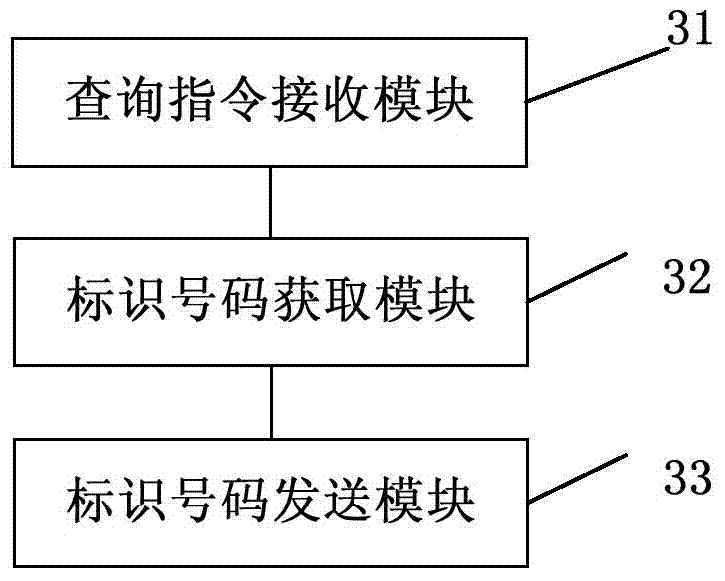

Active | CN106708551A | Improve user experience; Promote recovery; Digital computer details; Program loading/initiating; Usage experience; Quick path interconnect

Embodiments of the invention disclose a hot-added CPU (Central Processing Unit) configuration method and system. In the method, a main CPU determines an in-place CPU adjacent to a first hot-added CPU; the in-place CPU establishes a first QPI (Quick Path Interconnect) link with the first hot-added CPU and establishes a second QPI link between the first hot-added CPU and an NC (Node Controller), the NC being the one connected to the in-place CPU; and the in-place CPU configures the routing register information of the first hot-added CPU. Because the hot-added CPU is configured through an adjacent in-place CPU, configuration does not depend on the distance between the hot-added CPU and the main CPU, which facilitates topology extension. Relaying through the NC and/or other CPUs is no longer needed, so topology discovery is more efficient, services recover quickly, and the user experience improves.

Owner:XFUSION DIGITAL TECH CO LTD
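
The configuration flow above (pick an adjacent in-place CPU, bring up two QPI links, then program the new CPU's routes) can be sketched as a small graph routine. Everything here is hypothetical: `configure_hot_added`, the adjacency map, the `nc_of` lookup, and especially the route table contents, since the abstract does not describe what the routing registers hold. The sketch simply routes existing CPUs through the helper and the NC over the direct second link.

```python
from typing import Dict, List, Optional, Set, Tuple


def pick_in_place_neighbor(hot_cpu: str, neighbors: Dict[str, List[str]],
                           in_place: Set[str]) -> Optional[str]:
    """Return an already-running CPU physically adjacent to the hot-added CPU."""
    for cpu in sorted(neighbors.get(hot_cpu, [])):
        if cpu in in_place:
            return cpu
    return None


def configure_hot_added(hot_cpu: str, neighbors: Dict[str, List[str]], in_place: Set[str],
                        nc_of: Dict[str, str]) -> Tuple[str, List[Tuple[str, str]], Dict[str, str]]:
    helper = pick_in_place_neighbor(hot_cpu, neighbors, in_place)
    if helper is None:
        raise ValueError(f"no in-place CPU is adjacent to {hot_cpu}")
    nc = nc_of[helper]                             # the NC attached to the helper CPU
    links = [(helper, hot_cpu), (hot_cpu, nc)]     # first and second QPI links
    routes = {cpu: helper for cpu in in_place}     # hypothetical: reach existing CPUs via the helper
    routes[nc] = nc                                # the NC is reached over the direct second link
    return helper, links, routes
```

Only the adjacent helper is involved, so the cost of configuration does not grow with the new CPU's distance from the main CPU, which is the property the abstract emphasizes.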

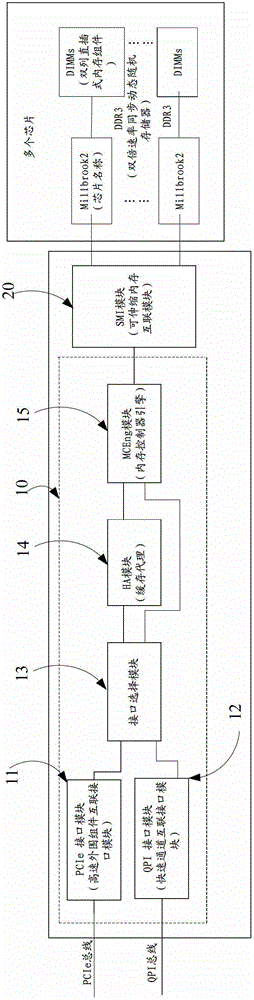

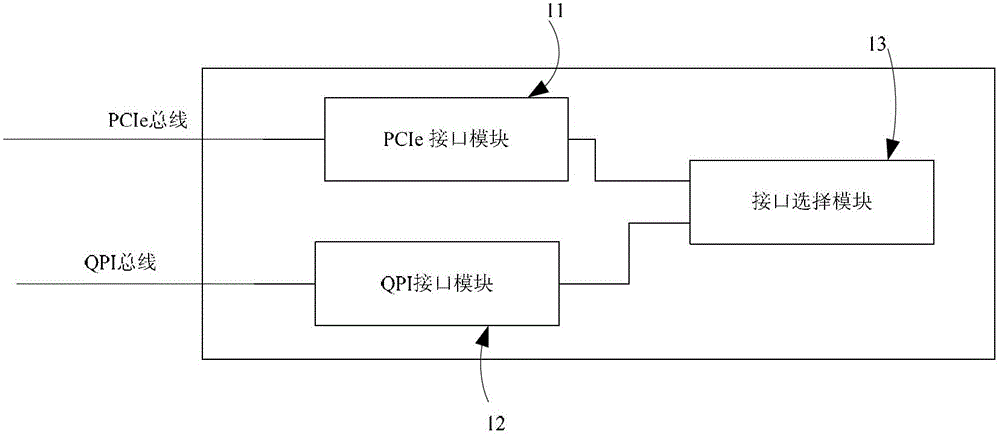

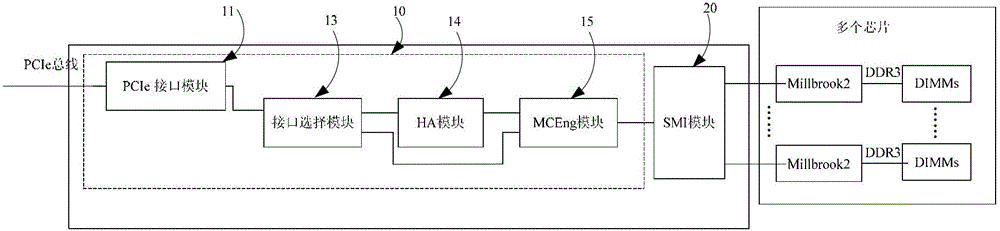

Storage expansion device and server

Active | CN103180817B | Improve performance; Input/output to record carriers; Computer module; Memory controller

The present invention provides a storage expansion apparatus and a server. The storage expansion apparatus includes: a quick path interconnect (QPI) interface module that communicates with a central processing unit (CPU) over a QPI bus; a peripheral component interconnect express (PCIe) interface module that communicates with the CPU over a PCIe bus; an interface selecting module connected to both the QPI interface module and the PCIe interface module; a home agent (HA) module connected to the interface selecting module; and a memory controller engine (MCEng) module connected to both the HA module and the interface selecting module. The apparatus can serve as a device for expanding CPU memory capacity and also as storage expansion hardware for storage IO.

Owner:HUAWEI TECH CO LTD

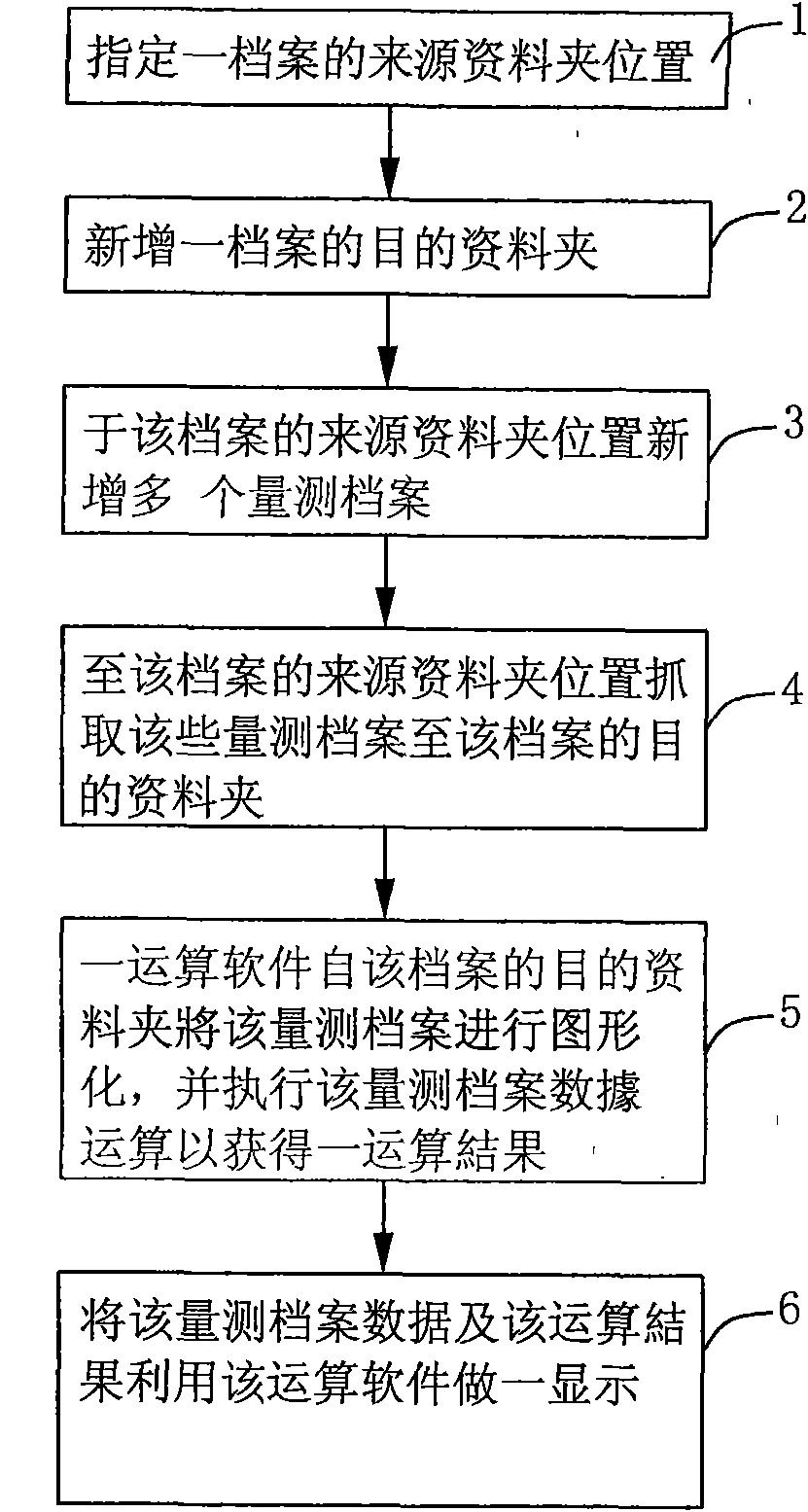

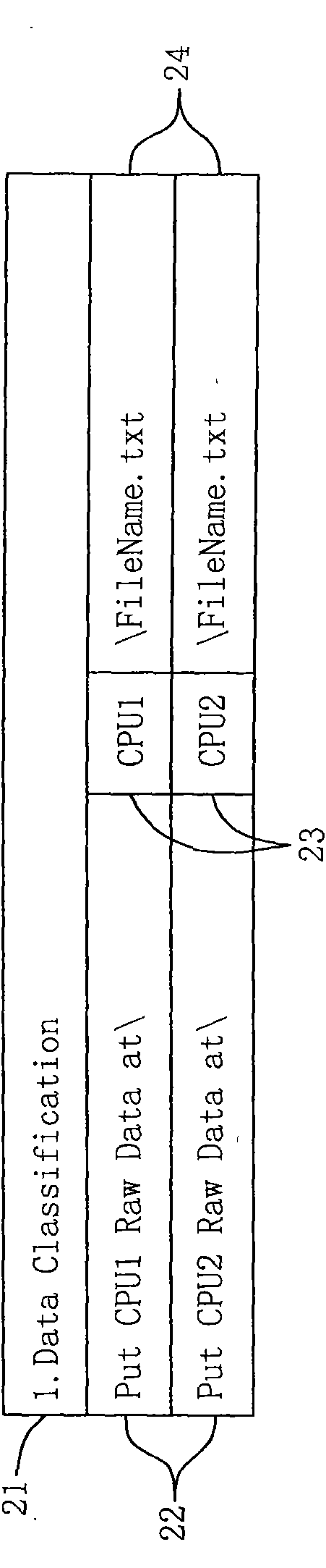

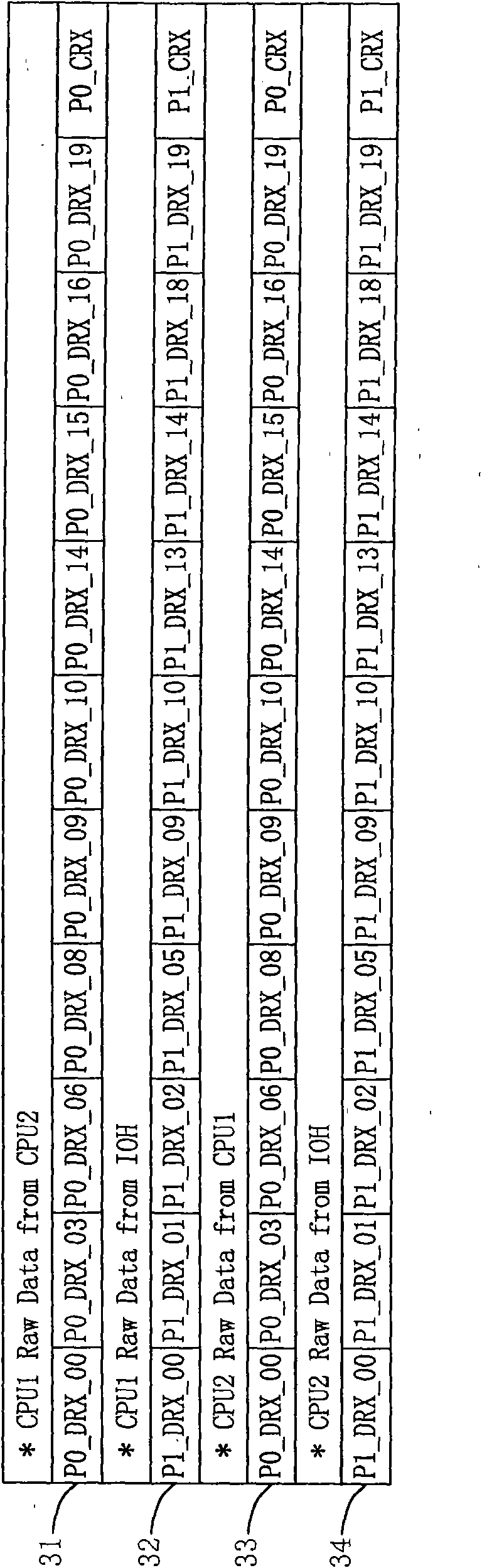

Method for quick path interconnect (QPI) automatic data arranging tool

Inactive | CN102073564A | Shorten the time; Reduce human error; Detecting faulty computer hardware; Data operations; Database

The invention relates to a method for a quick path interconnect (QPI) automatic data arranging tool, which is used to measure the impedance of a transmission line through a QPI interface. The method comprises the following steps: specifying the location of a source data folder; adding a target data folder; adding a plurality of measurement files to the source data folder; copying the measurement files from the source data folder to the target data folder; importing the measurement files from the target data folder into operation software and performing calculations on the measurement data to obtain a result; and displaying the measurement data and the result with the operation software.

Owner:INVENTEC CORP

Data packet retransmission method and node in quick path interconnect system

Active | CN103141050B | Guaranteed reliability | Increase profit | Error prevention/detection by using return channel | Data switching networks | Quick path interconnect | Link layer

Disclosed are a data packet retransmission method and node in a quick path interconnect (QPI) system. When a first node acts as the sender, it retransmits to a second node only the first data packet detected as faulty, so as to avoid resending first data packets that the second node has already received correctly; this reduces the system resources consumed by retransmission and therefore improves resource utilization. When the first node acts as the receiver, its first link layer buffers the second data packets correctly received between sending a first retransmission request and receiving the first retransmission response. Once it correctly receives the second data packet retransmitted by the second node, it passes the buffered second data packets to the first protocol layer. As a result, even though the second node retransmits only the second data packet detected as faulty, the first node loses no packets, which ensures the reliability of QPI-bus-based data packet transmission.
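The receiver-side buffering described above behaves like a selective-repeat receiver with in-order delivery to the protocol layer. The Python sketch below is a simplified model under stated assumptions: `crc_ok` stands in for the link layer's error check, sequence numbers are plain integers, and the `Packet` and `SelectiveRetransmitReceiver` names are hypothetical. It does not model QPI flit formats or the actual link-layer retry messages.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int
    payload: str
    crc_ok: bool = True  # stand-in for the link layer's error check (assumption)


class SelectiveRetransmitReceiver:
    """Hypothetical link-layer receiver: request only faulty packets, buffer the rest."""

    def __init__(self):
        self.next_seq = 0    # next sequence number the protocol layer expects
        self.buffer = {}     # correct packets held while a retransmission is pending
        self.delivered = []  # payloads handed to the protocol layer, in order
        self.requests = []   # sequence numbers sent back as retransmission requests

    def receive(self, pkt):
        if not pkt.crc_ok:
            self.requests.append(pkt.seq)  # ask the sender to resend this packet only
            return
        if pkt.seq < self.next_seq or pkt.seq in self.buffer:
            return  # duplicate of a packet already held or delivered
        self.buffer[pkt.seq] = pkt.payload
        while self.next_seq in self.buffer:  # flush the contiguous in-order run
            self.delivered.append(self.buffer.pop(self.next_seq))
            self.next_seq += 1


packets = [Packet(0, "a"), Packet(1, "b", crc_ok=False), Packet(2, "c"), Packet(3, "d")]
sent = {p.seq: p.payload for p in packets}  # sender keeps copies for retransmission
rx = SelectiveRetransmitReceiver()
for p in packets:
    rx.receive(p)
for seq in list(rx.requests):  # sender resends only the requested packets
    rx.receive(Packet(seq, sent[seq]))
print(rx.delivered)  # ['a', 'b', 'c', 'd']
```

In this run only packet 1 is resent; packets 2 and 3 wait in the buffer and are handed over as soon as the retransmitted packet 1 arrives, so nothing is lost and nothing already received correctly is sent twice.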

Owner:HUAWEI TECH CO LTD

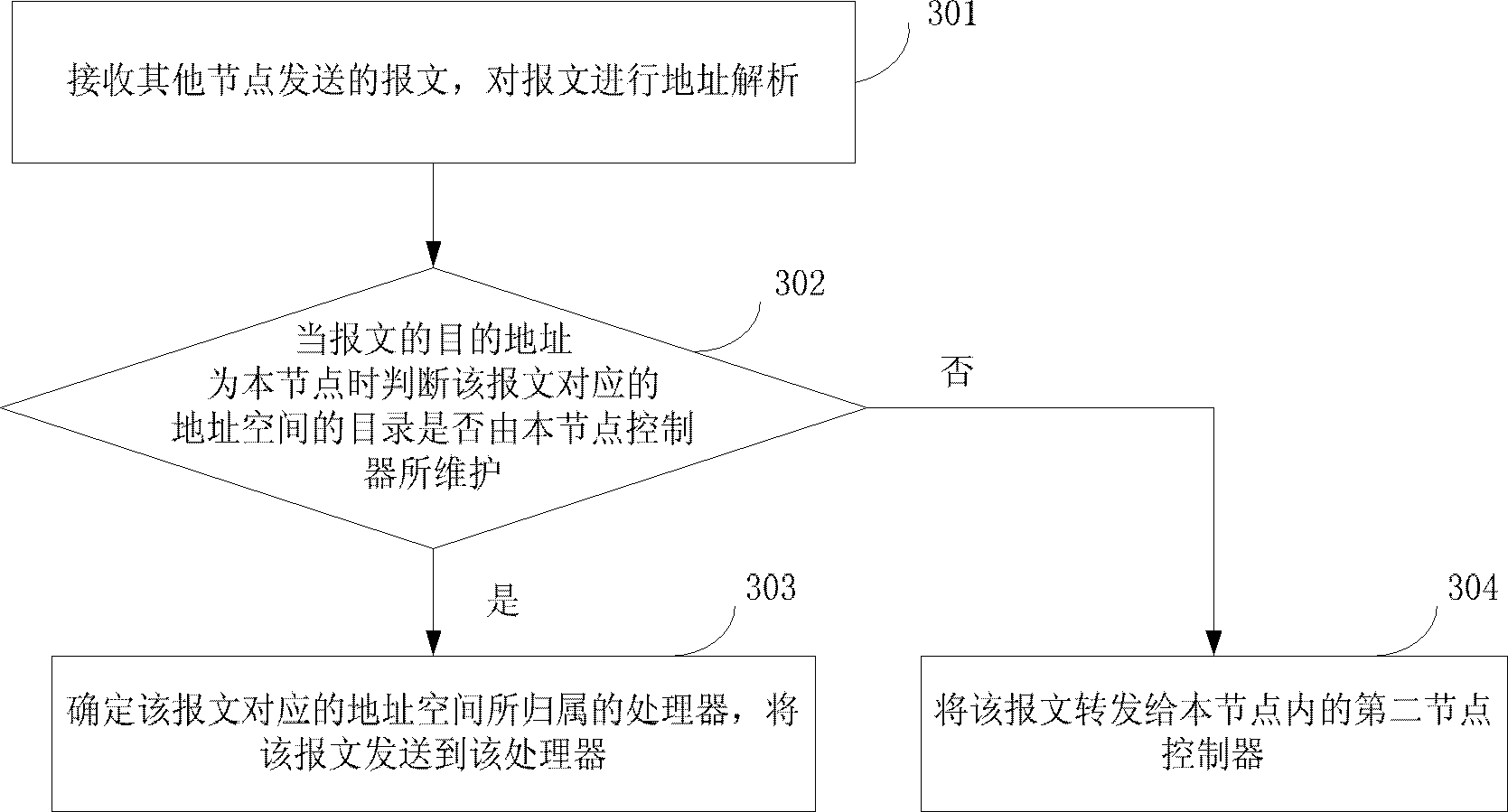

Method, device, and system for processing messages based on CC-NUMA

Active | CN102318275B | High speed | Improve performance | Data switching networks | Memory systems | Address resolution | Message processing

Owner:HUAWEI TECH CO LTD

A Multi-way Server Interconnection Topology

Active | CN107423255B | Even heat dissipation | Optimize layout | Digital computer details | Electric digital data processing | Computer architecture | Engineering

The invention provides a multi-way server interconnection topology comprising a first board, a second board, a third board, a fourth board, and a backplane. The first board comprises a first processor, a second processor, a first processor interface, a second processor interface, a first connection interface, and a second connection interface; the second board comprises a third processor, a fourth processor, a third processor interface, a fourth processor interface, a third connection interface, and a fourth connection interface; and the third board comprises a fifth processor, a sixth processor, a fifth processor interface, a sixth processor interface, and a fifth connection interface. The QPI (Quick Path Interconnect) buses of the two processors on each board are partitioned, so the cross-interconnect portions do not need to cross over one another. While normal operation is maintained, the topology achieves even heat dissipation, and the first, second, and third connection areas avoid crossing routes between processors.

Owner:SUZHOU METABRAIN INTELLIGENT TECH CO LTD

CPU interconnecting device

Active | CN102301364B | Increase or decrease the number | Improve acceleration performance | Multiple digital computer combinations | Electric digital data processing | PCI interface | Computer module

The present disclosure provides a CPU interconnect device that connects to a first CPU and includes a quick path interconnect (QPI) interface and a serializer/deserializer (SerDes) interface. The QPI interface receives serial QPI data sent from the CPU, converts it into parallel QPI data, and outputs the parallel QPI data to the SerDes interface. The SerDes interface converts the parallel QPI data into high-speed serial SerDes data and sends it to another CPU interconnect device connected to another CPU. This addresses the poor scalability, long data transmission latency, and high cost of existing inter-CPU interconnect systems.
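The core of this device is a serial-to-parallel conversion followed by a parallel-to-serial conversion. The Python sketch below models only that bit-level reshaping: the MSB-first bit order, the `deserialize`/`serialize` names, and the 8-bit word width in the example are arbitrary assumptions. A real SerDes typically also handles clock recovery and line encoding, which are not modeled here.

```python
def deserialize(bits, width):
    """Group a serial bit stream (MSB first) into parallel words of `width` bits."""
    if width <= 0 or len(bits) % width:
        raise ValueError("bit stream length must be a positive multiple of width")
    words = []
    for i in range(0, len(bits), width):
        word = 0
        for b in bits[i:i + width]:
            word = (word << 1) | b  # shift in one bit per clock
        words.append(word)
    return words


def serialize(words, width):
    """Flatten parallel words back into a serial bit stream (MSB first)."""
    bits = []
    for word in words:
        for shift in range(width - 1, -1, -1):
            bits.append((word >> shift) & 1)  # shift out one bit per clock
    return bits


bits = [1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0]
words = deserialize(bits, 8)
print([hex(w) for w in words])  # ['0xa1', '0xf0']
assert serialize(words, 8) == bits  # round trip restores the serial stream
```

Converting to a parallel form in the middle is what lets the device hand the data from one serial link format (QPI) to another (SerDes) without the two links sharing a bit clock.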

Owner:HUAWEI TECH CO LTD

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com