37 results for "Improve super-resolution" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

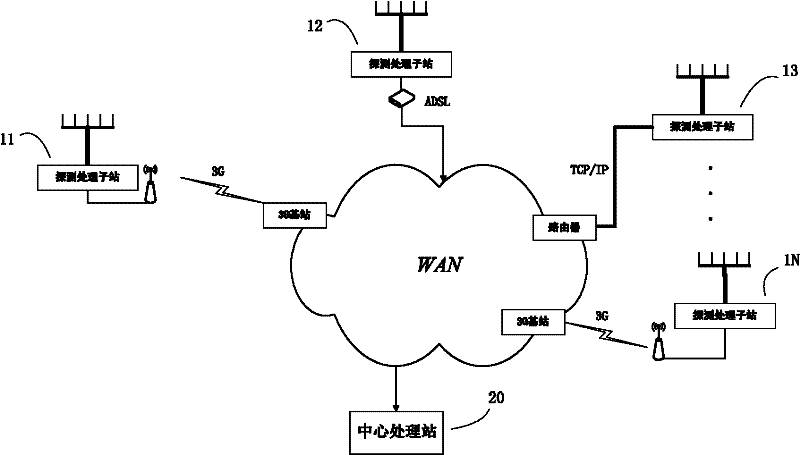

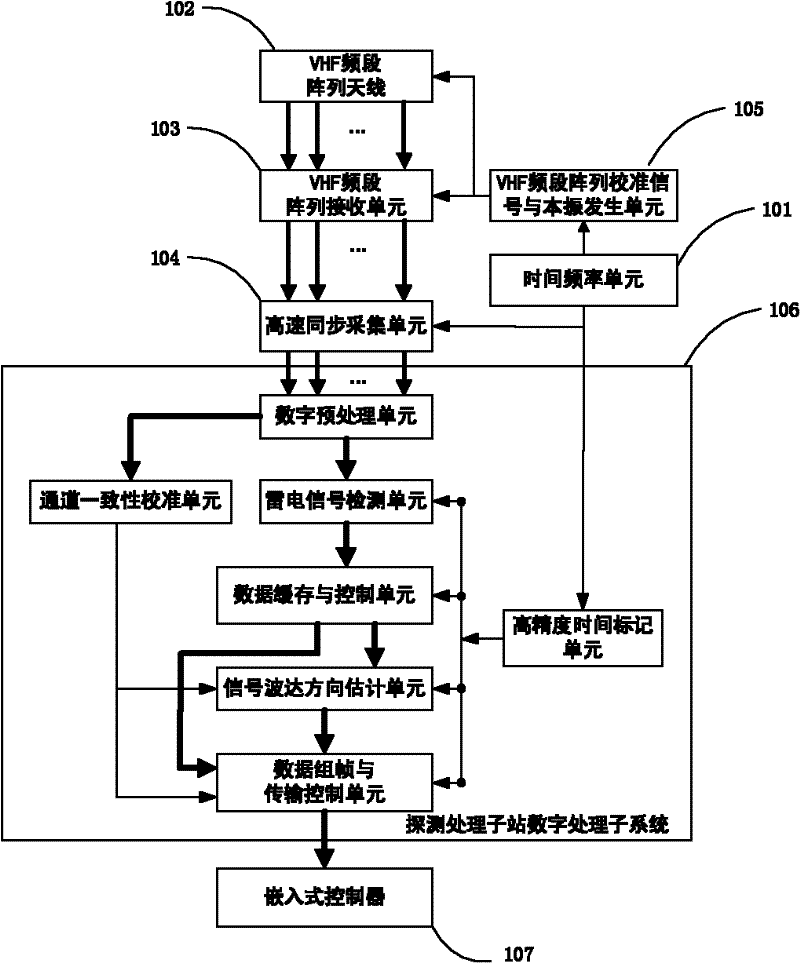

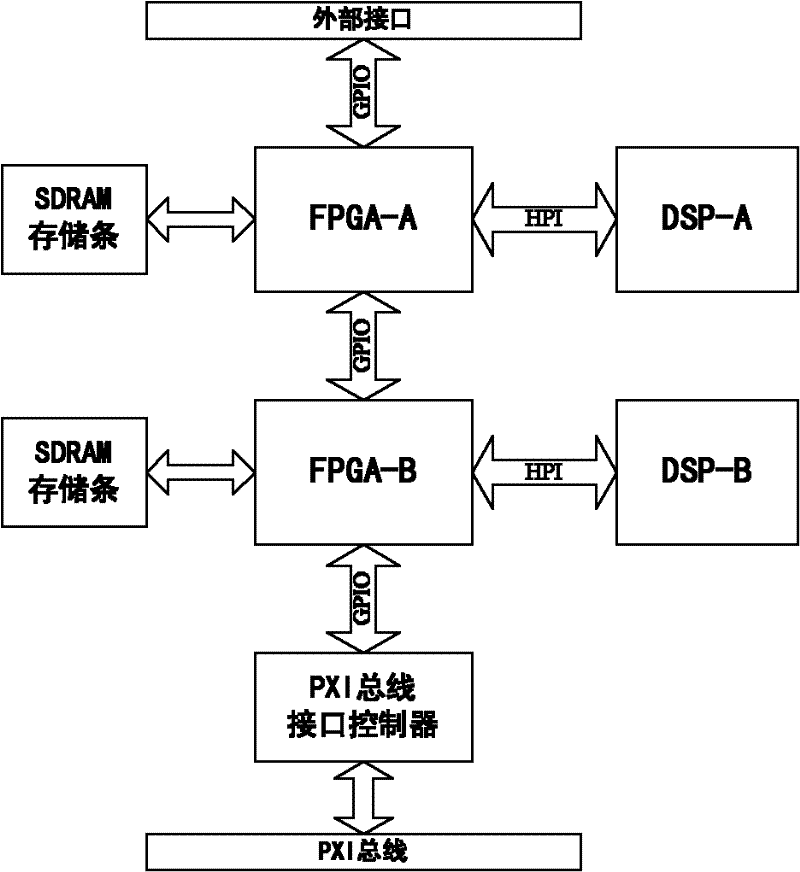

VHF-band intracloud lightning detection and positioning system

Status: Active | Publication: CN102288838A | Tags: Realize spatial positioning; Get rid of dependence; Electromagnetic field characteristics; Engineering; Multilateration

The invention discloses a VHF (very high frequency) intracloud lightning detection and positioning system comprising a central processing station and several detection-and-processing sub-stations at different locations. The sub-stations and the central station connect to a public wide area network by wired or wireless links. Over this network, the sub-stations upload their intracloud lightning detection data to the central station, and the central station remotely monitors the working state of each sub-station. The system precisely estimates the directions of arrival (DOA) of lightning radiation signals, whether they come from several simultaneously occurring radiation sources or from one source whose signal reaches the sub-stations along different propagation paths. The central station computes the time difference of arrival (TDOA) of the lightning signal between any two sub-stations, and the spatial position of the intracloud radiation source is then determined from the DOA and TDOA information.

Owner:HUAZHONG UNIV OF SCI & TECH
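
The TDOA step can be illustrated with a minimal sketch, assuming two sub-stations record the same VHF pulse on a shared sample clock. Time-domain cross-correlation is a standard TDOA estimator, but the abstract does not say which estimator the system uses, and the function names below are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def estimate_lag(sig_a, sig_b, max_lag):
    """Return the delay (in samples) of sig_b relative to sig_a.

    Brute-force cross-correlation: for every candidate lag, sum the
    products of the overlapping samples and keep the lag with the
    largest sum.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, a in enumerate(sig_a):
            j = i + lag
            if 0 <= j < len(sig_b):
                score += a * sig_b[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def range_difference_m(lag_samples, sample_rate_hz):
    """Convert a sample lag into a propagation path difference in metres."""
    return SPEED_OF_LIGHT_M_S * lag_samples / sample_rate_hz


if __name__ == "__main__":
    pulse = [1.0, 3.0, -2.0, 4.0, 1.0]
    station_a = [0.0] * 20 + pulse + [0.0] * 25
    station_b = [0.0] * 27 + pulse + [0.0] * 18  # same pulse, 7 samples later
    lag = estimate_lag(station_a, station_b, max_lag=10)
    print(lag, range_difference_m(lag, sample_rate_hz=1e6))
```

Each path difference constrains the source to one hyperbolic surface; combining several station pairs with the DOA estimates is what narrows it down to a position.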

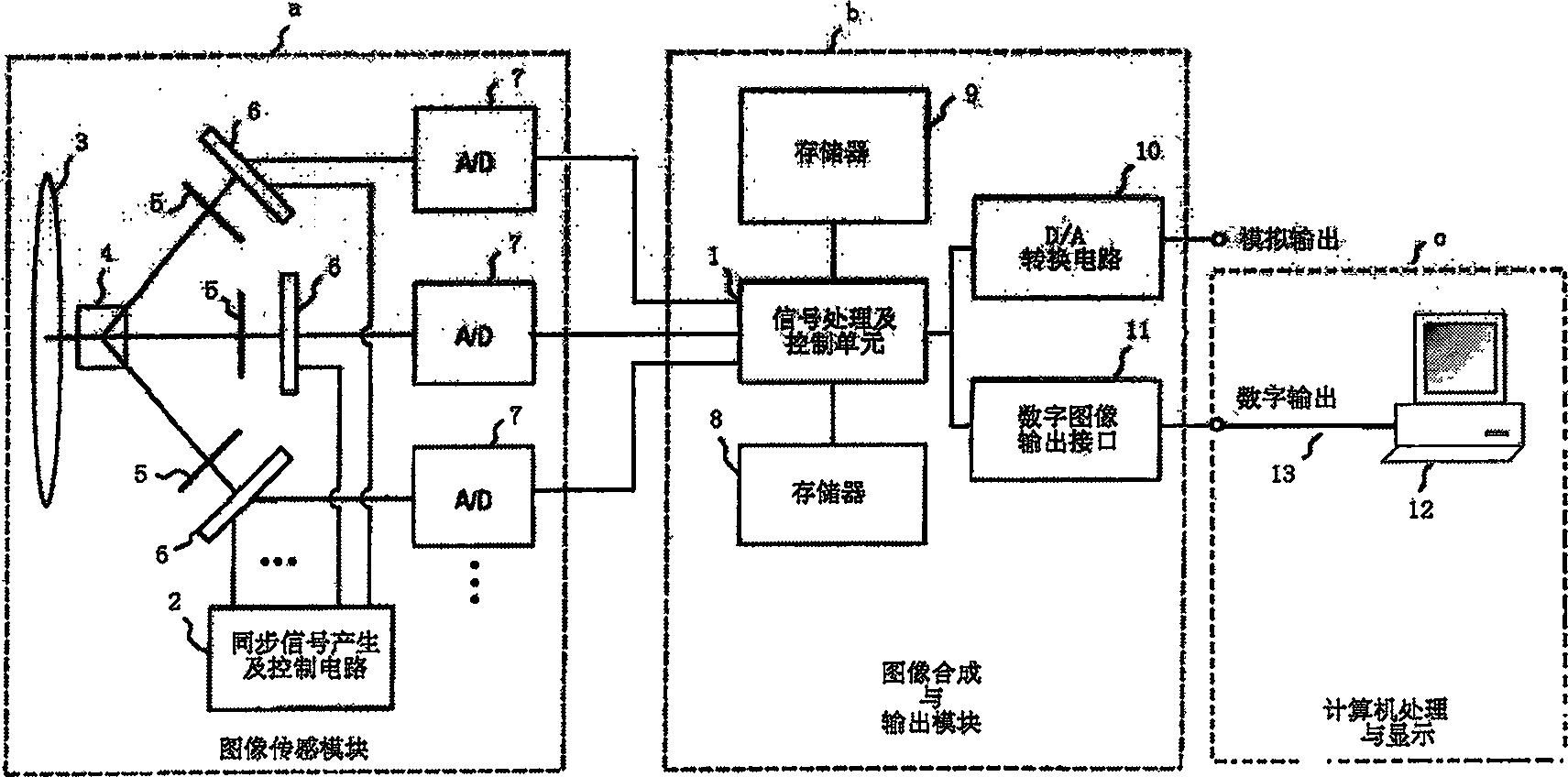

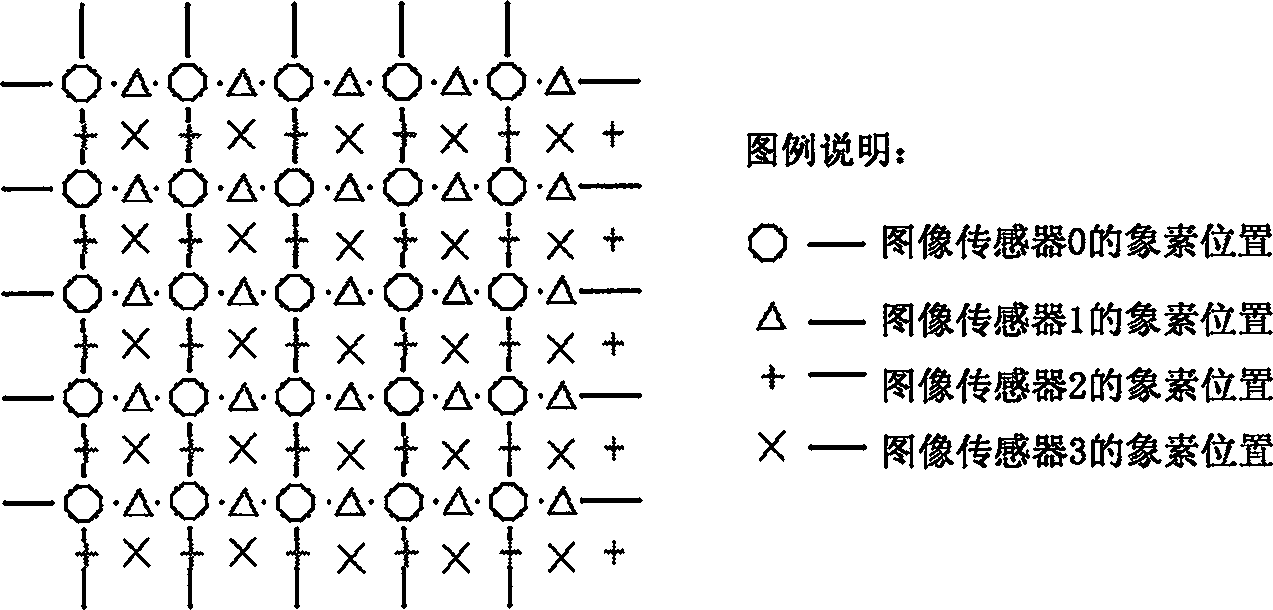

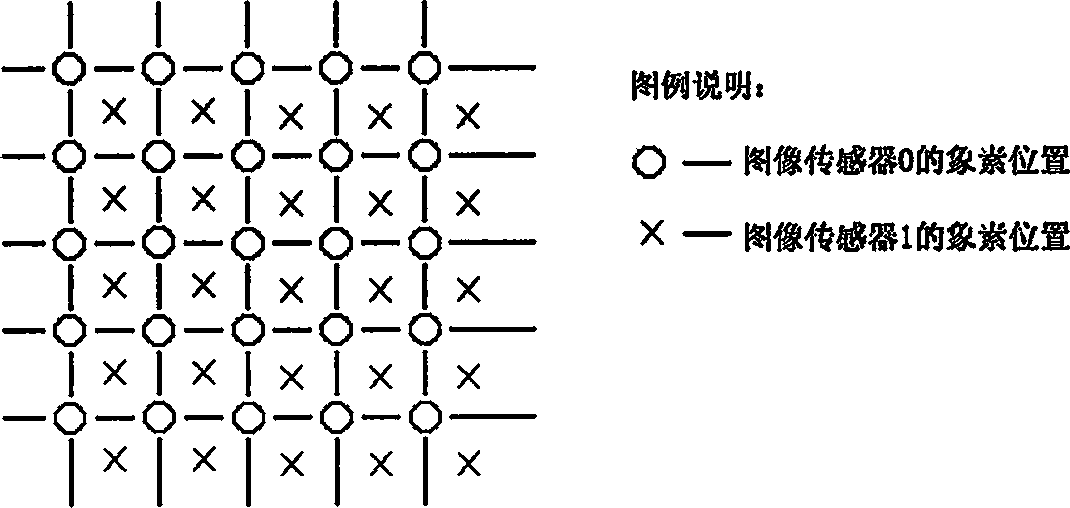

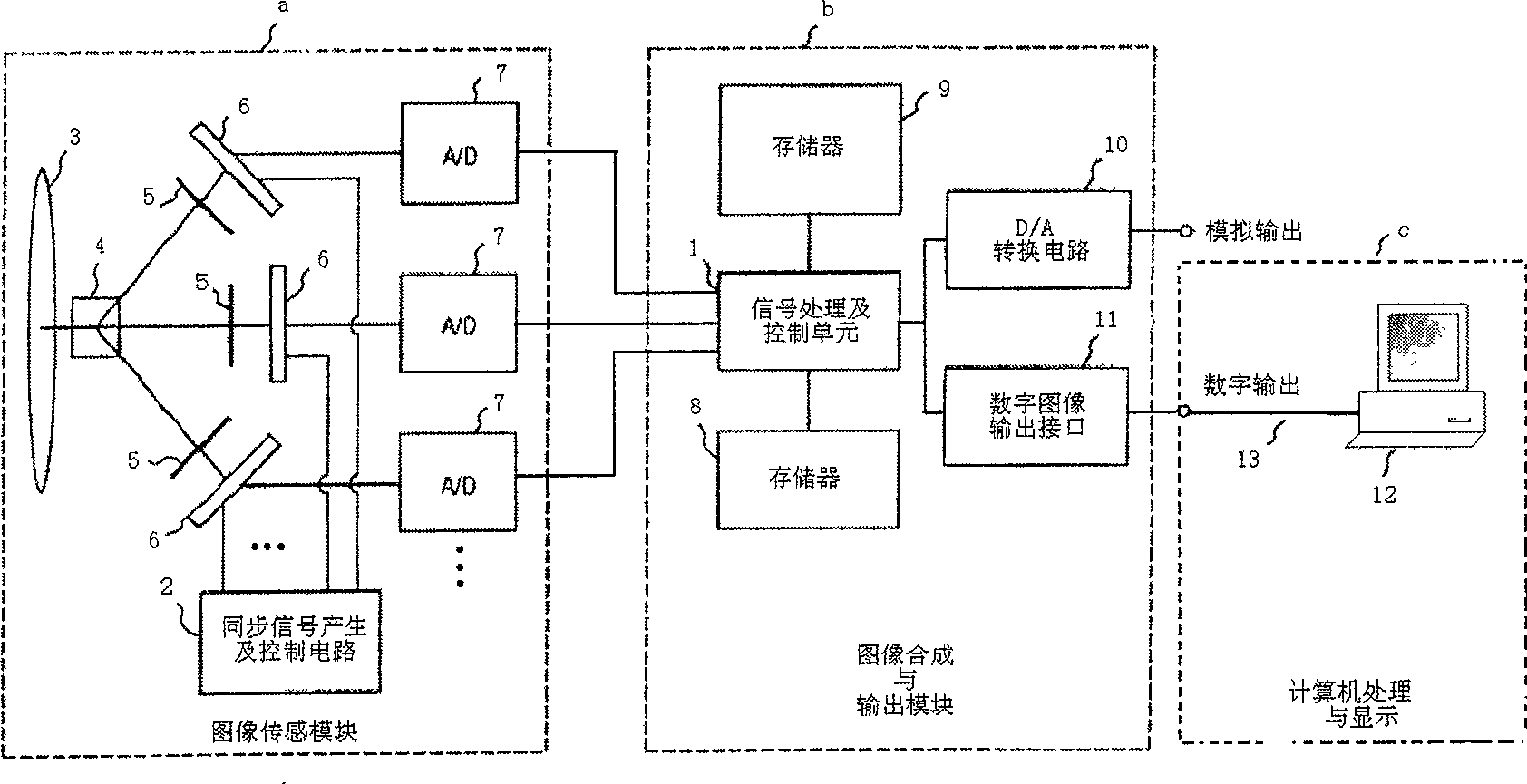

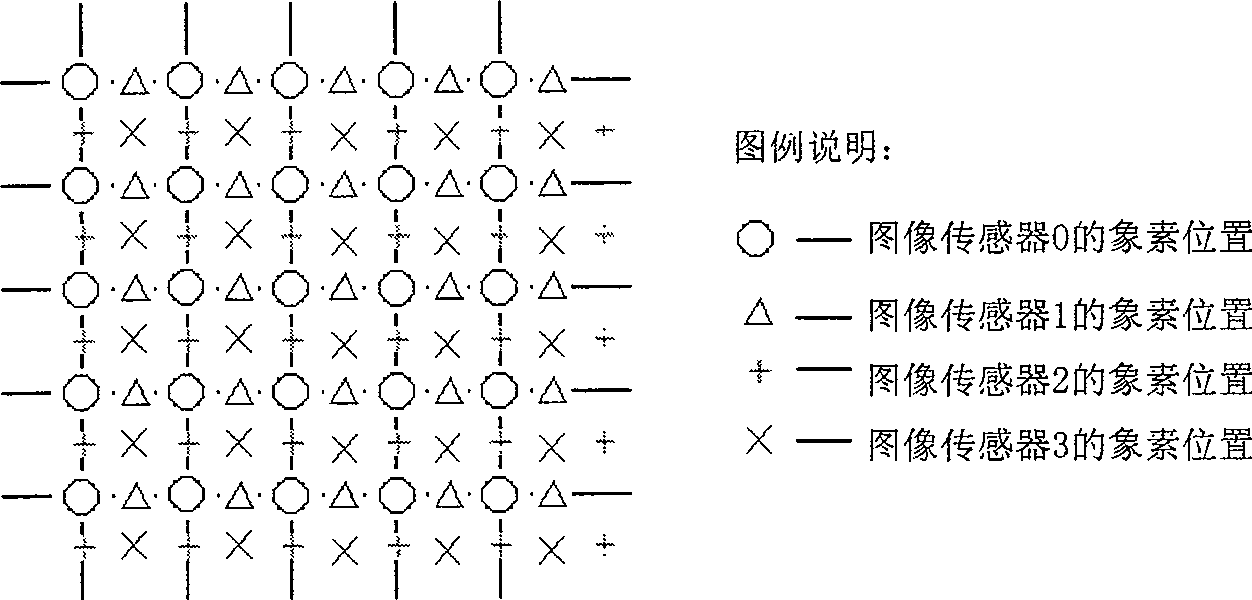

Equipment for reconstructing high-dynamic-range, high-resolution images

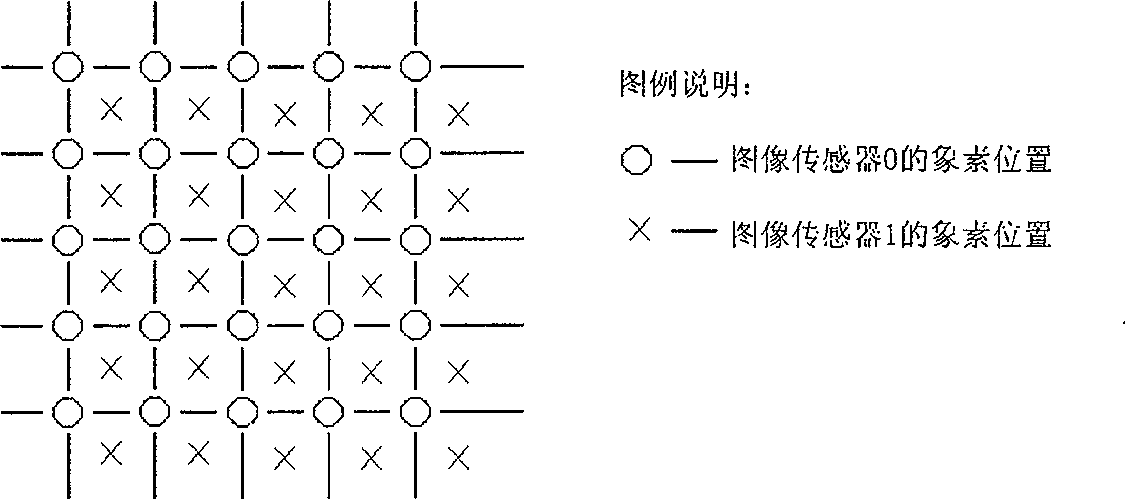

Status: Inactive | Publication: CN1874499A | Tags: Improve super-resolution; Improve performance; 2D-image generation; Closed circuit television systems; High resolution image; Image quality

The method uses several low-resolution, low-dynamic-range imagers and combines sub-pixel dynamic-range imaging with multi-image reconstruction of a high-dynamic-range image. A special placement of the image sensors makes it possible to reconstruct an ultra-high-resolution image, and gray-level interpolation is used to reconstruct the image gray levels, producing an image with both high dynamic range and high resolution.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
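
The sub-pixel idea can be illustrated with a minimal sketch, assuming four ideal point-sampling imagers whose pixel grids are offset by exactly half a low-resolution pixel in each direction. Real sensors integrate over the pixel area and need registration and deblurring; the gray-level interpolation and high-dynamic-range fusion steps are omitted, and the helper names are hypothetical.

```python
def sample_offset(hr, dy, dx):
    """Point-sample every second HR pixel starting at offset (dy, dx).

    Models one low-resolution imager whose pixel grid is shifted by
    (dy, dx) high-resolution pixels, i.e. half a low-resolution pixel.
    """
    return [row[dx::2] for row in hr[dy::2]]


def interleave_subpixel(frames):
    """Rebuild a 2x image from four half-pixel-shifted low-res frames.

    frames maps an offset (dy, dx) in {0, 1} x {0, 1} to an H x W frame.
    """
    h, w = len(frames[(0, 0)]), len(frames[(0, 0)][0])
    hr = [[0.0] * (2 * w) for _ in range(2 * h)]
    for (dy, dx), lr in frames.items():
        for y in range(h):
            for x in range(w):
                # Each LR sample lands on the HR grid position it was taken from.
                hr[2 * y + dy][2 * x + dx] = lr[y][x]
    return hr


if __name__ == "__main__":
    scene = [[float(10 * r + c) for c in range(6)] for r in range(4)]
    frames = {(dy, dx): sample_offset(scene, dy, dx) for dy in (0, 1) for dx in (0, 1)}
    print(interleave_subpixel(frames) == scene)
```

Under these idealized assumptions the interleaving is exact; with real optics it only provides the starting grid that later reconstruction steps refine.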

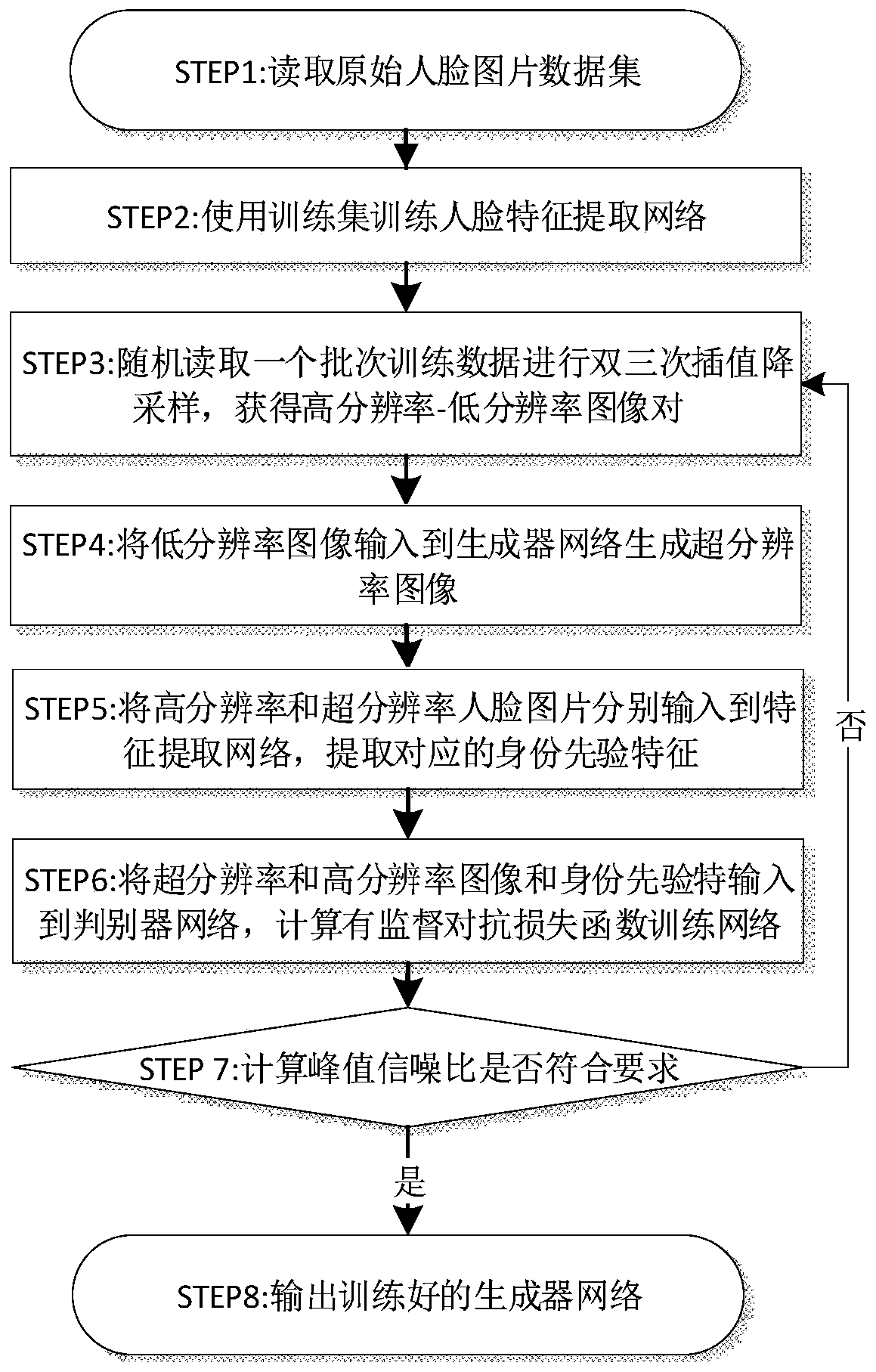

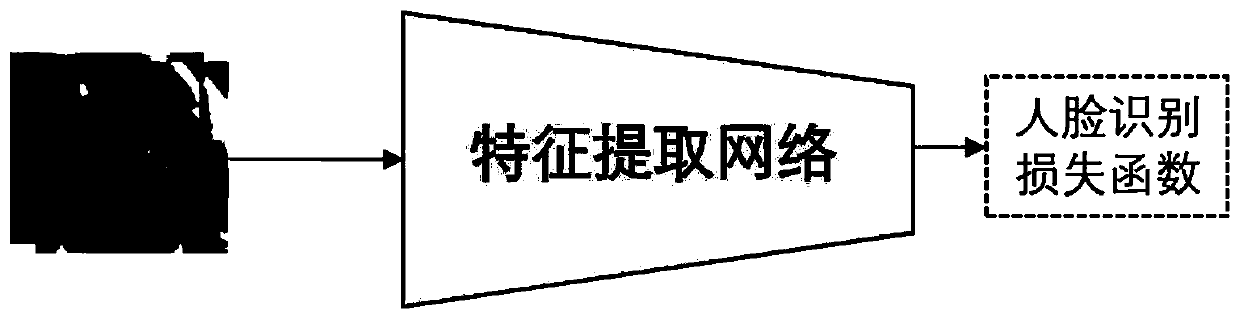

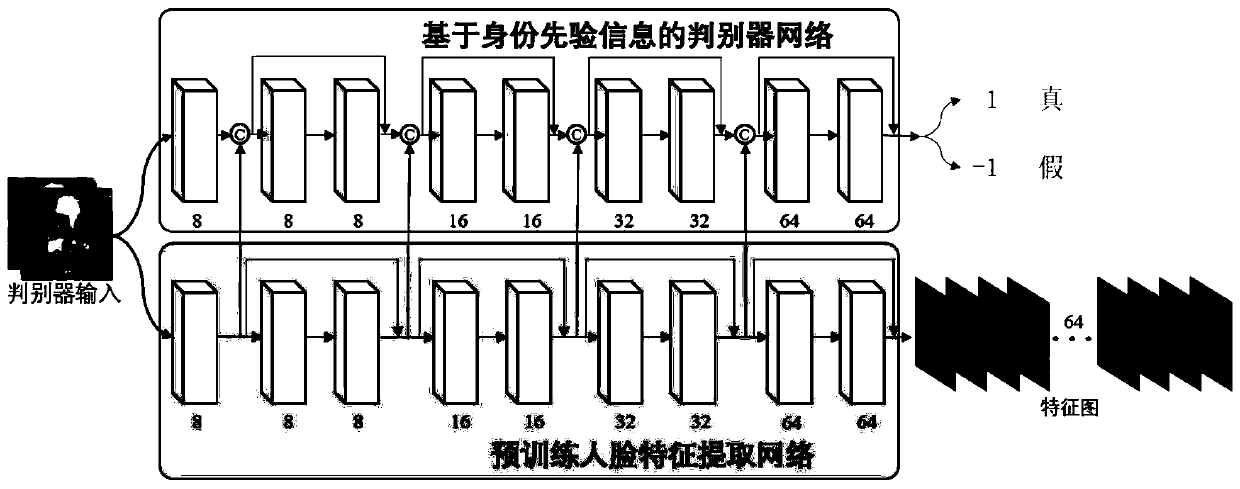

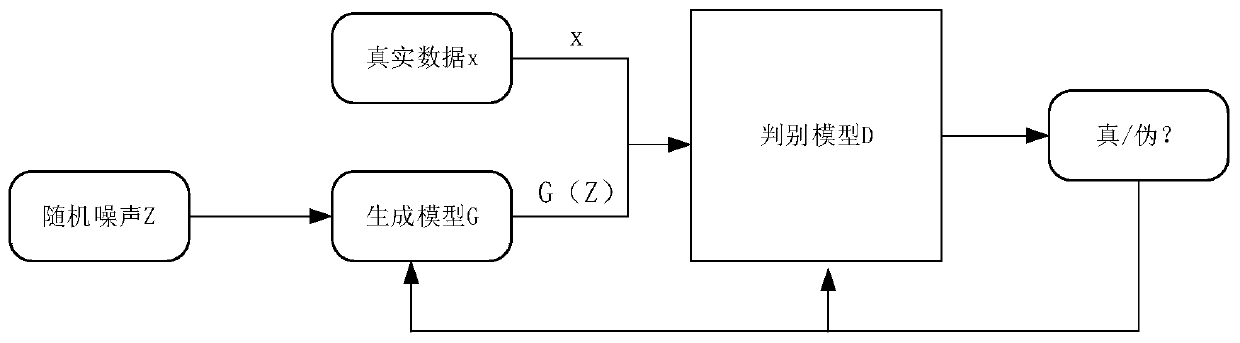

Face super-resolution reconstruction method based on identity prior generative adversarial network

Status: Active | Publication: CN110706157A | Tags: Improve accuracy; Improve super-resolution; Geometric image transformation; Character and pattern recognition; Generative adversarial network; Machine learning

The invention relates to a face super-resolution reconstruction method based on an identity-prior generative adversarial network. The method comprises the following steps: first, reading an original face image dataset; second, training a face feature extraction network on face image/identity label pairs; third, downsampling the high-resolution face images by bicubic interpolation to obtain high-resolution/low-resolution face image pairs for model training; fourth, feeding the low-resolution face image into a generator network to produce a super-resolution face image. The high-resolution and super-resolution face images are each passed through the trained face feature extraction network to extract their identity prior features. Finally, the high-resolution image, the super-resolution image, and their identity prior features are fed into a discriminator network, a supervised adversarial loss is computed from the discriminator output, and the generative adversarial network is trained by error backpropagation.

Owner:UNIV OF SCI & TECH OF CHINA

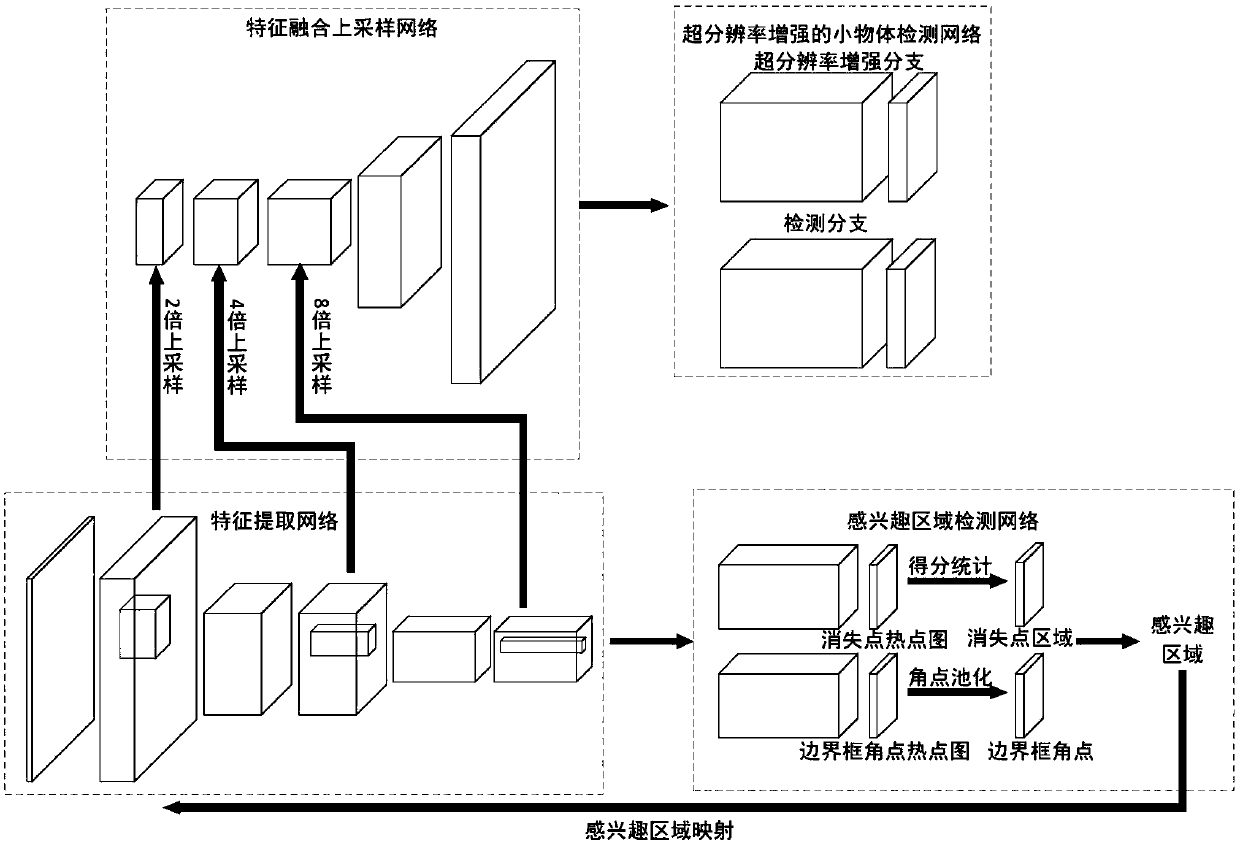

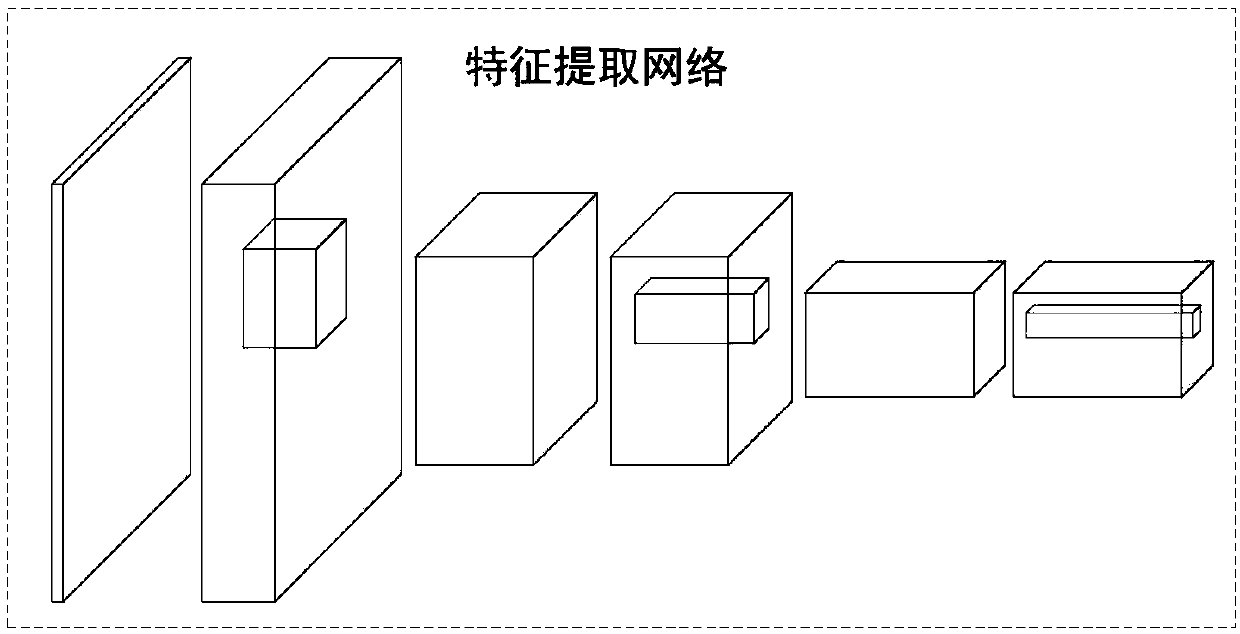

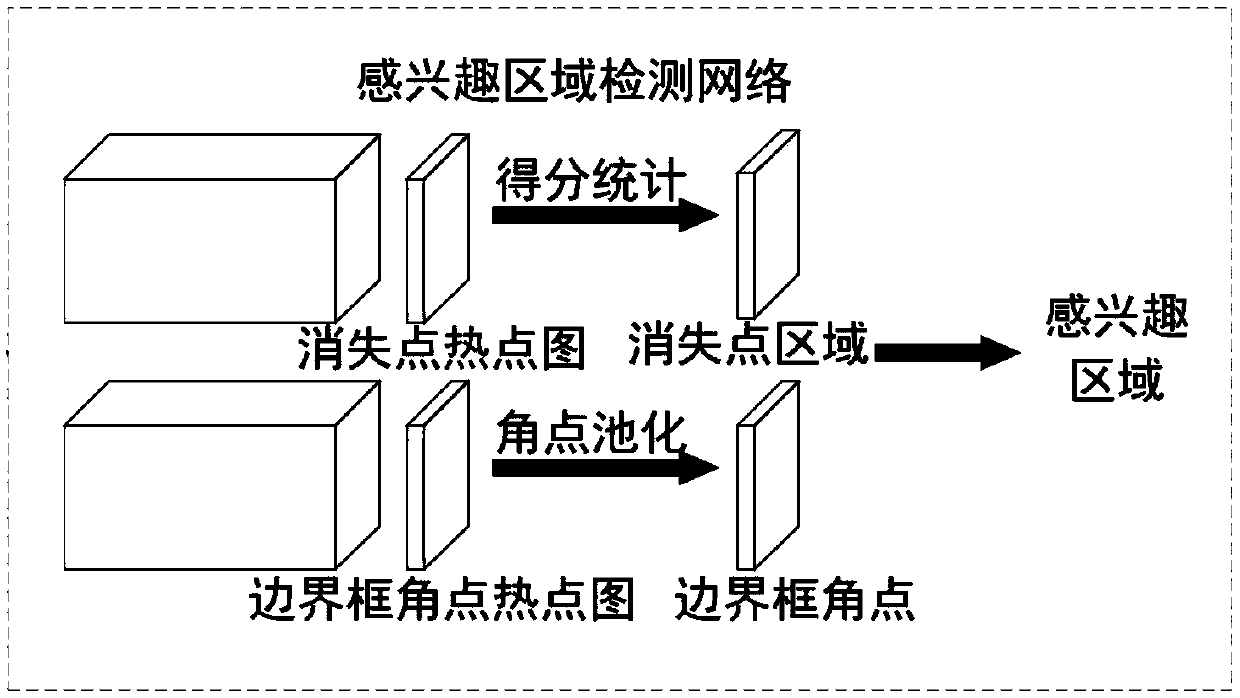

Deep-learning-based method for detecting small and medium objects on structured roads

Status: Inactive | Publication: CN109583321A | Tags: Less input data; Rich feature information; Scene recognition; Neural architectures; Network structure; Engineering

A deep-learning-based method for detecting small objects on structured roads comprises the following steps: collecting real structured-road images that contain small objects and manually labeling the positions and categories of those objects; constructing a deep convolutional neural network suited to small-object detection on structured roads, together with a corresponding loss function; and feeding the collected images and labels into this network, updating the network parameters according to the loss between the output and target values until the desired parameters are obtained. The invention proposes a new network structure to address the poor small-object detection of current neural networks. With essentially no increase in computation, small-object detection performance is greatly improved, and the method can easily be deployed in existing intelligent driving systems, so that an intelligent vehicle can detect dangerous objects on the road at long distances and respond in time, improving driving safety.

Owner:TONGJI UNIV

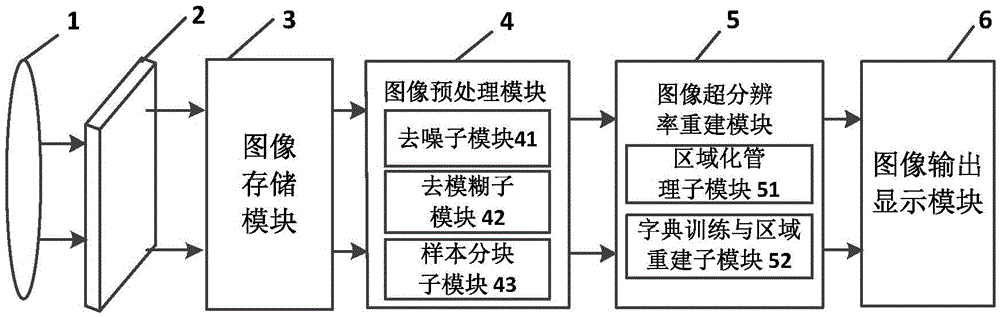

High-efficiency super-resolution imaging device and method with regional management

Status: Inactive | Publication: CN105678728A | Tags: Improve super-resolution; Reduced imaging time; Image enhancement; Image analysis; Image storage; Medical imaging

The invention provides a high-efficiency super-resolution imaging device and method with regional management, aimed at the long imaging time and low resolution of existing super-resolution imaging devices and methods. The imaging device comprises, connected in sequence, an imaging lens group, a high-resolution detector, an image storage module, an image preprocessing module, an image super-resolution reconstruction module, and an image output display module; the image super-resolution reconstruction module consists of an image regional management sub-module and a dictionary training and regional reconstruction sub-module. The imaging method comprises the following steps: acquiring optical signals of the actual scene; obtaining low-resolution images; storing the low-resolution images; preprocessing the images; performing regional image management; training dictionaries and reconstructing the super-resolution regions; and stitching together the reconstructed super-resolution sub-region images. The device and method effectively improve imaging resolution and shorten imaging time, and can be applied to video surveillance, satellite remote-sensing imaging, and medical imaging.

Owner:XIDIAN UNIV

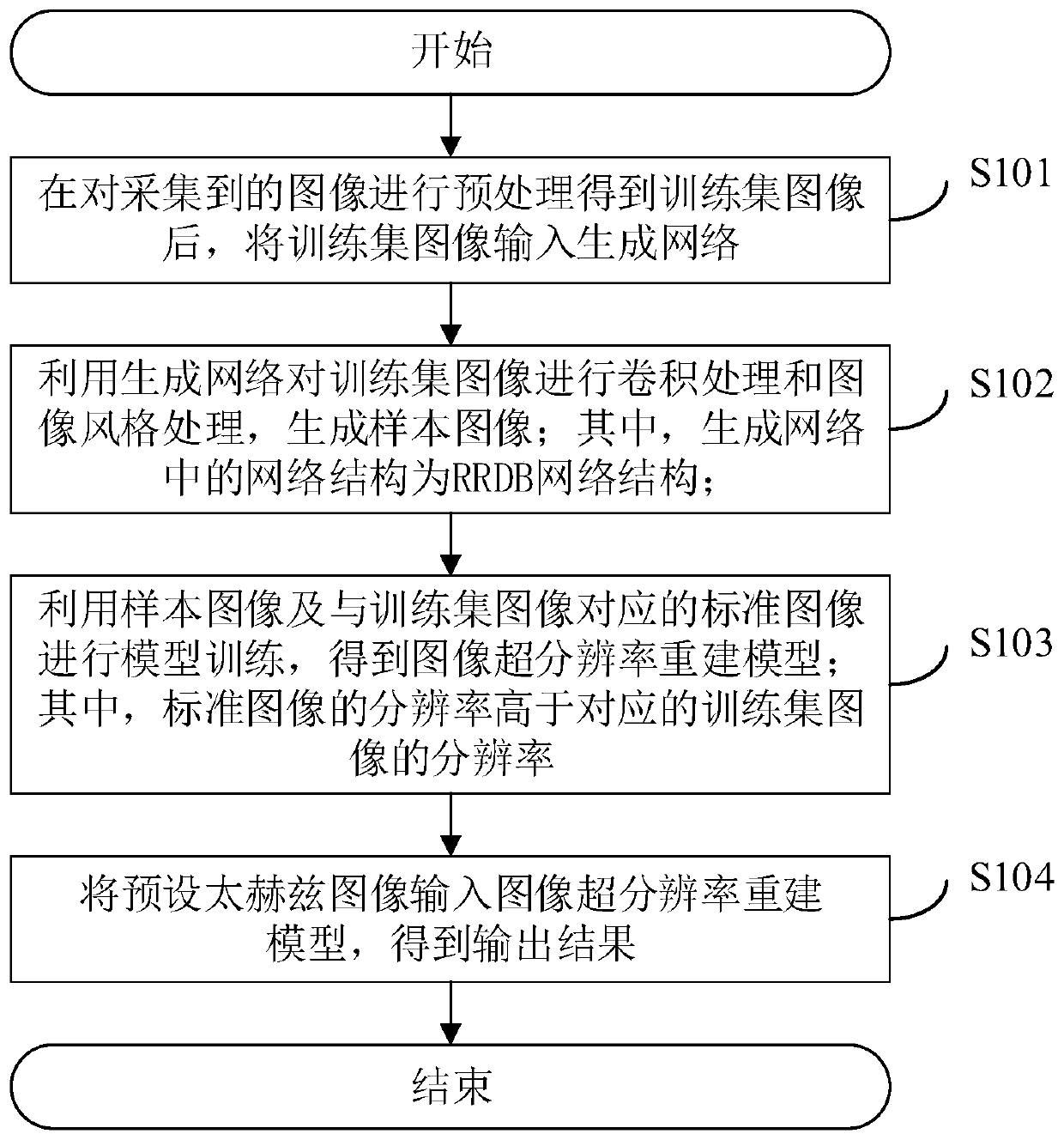

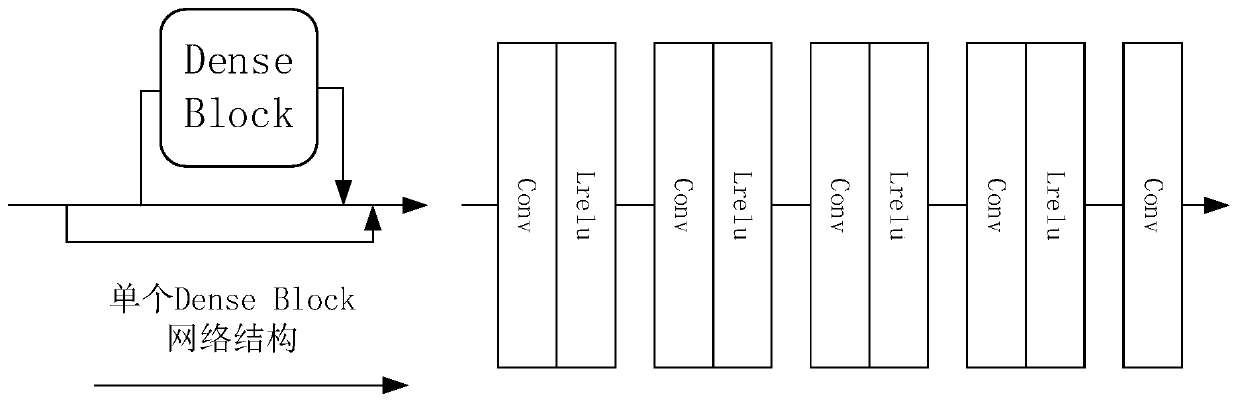

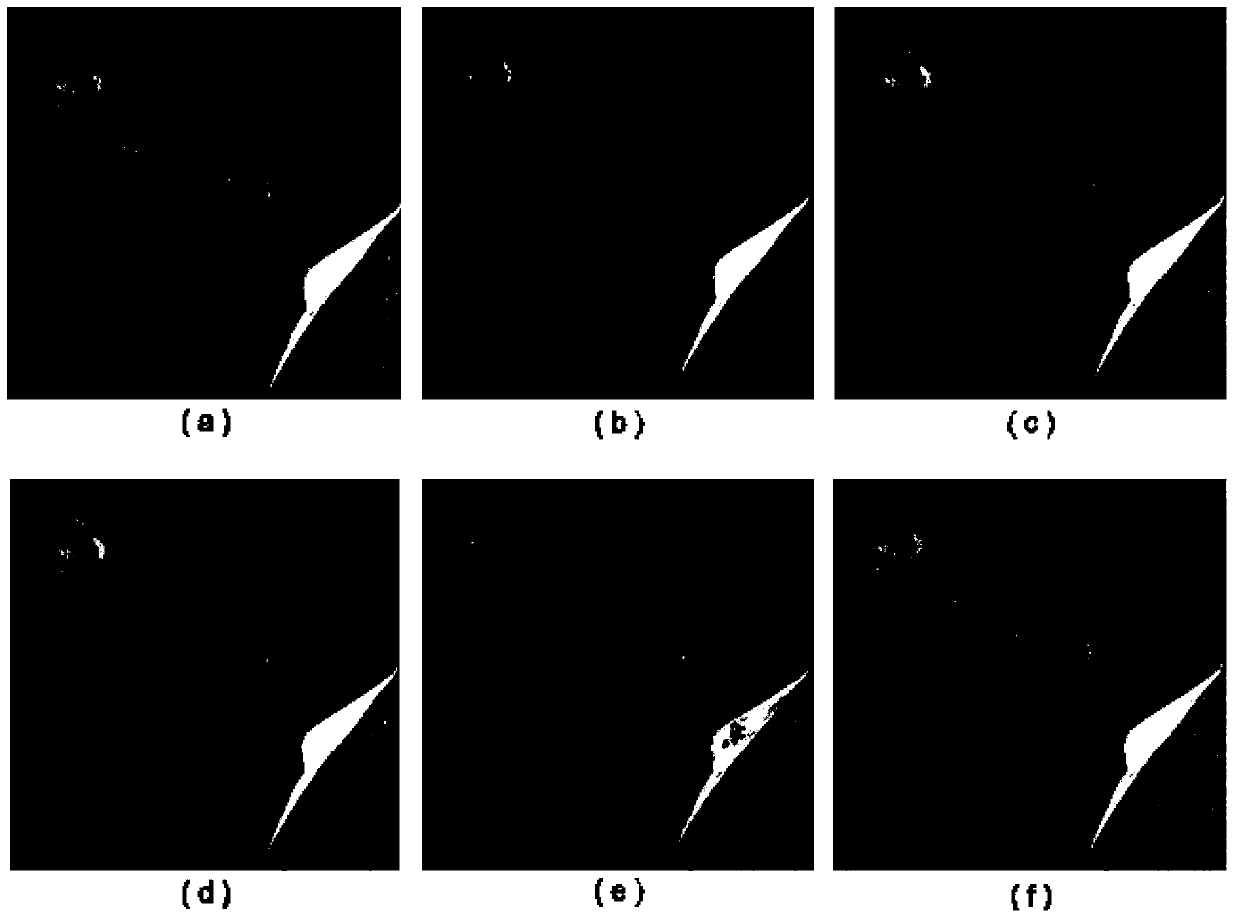

Terahertz image super-resolution reconstruction method, system and related device

Status: Active | Publication: CN109785237A | Tags: Improve super-resolution; Improving the ability to extract terahertz image features; Geometric image transformation; Neural architectures; Reconstruction method; Network structure

The terahertz image super-resolution reconstruction method provided by the invention comprises the following steps: preprocessing acquired images to obtain training-set images and feeding them into a generator network; applying convolution and image style processing to the training-set images with the generator network to produce sample images, where the generator network uses an RRDB (residual-in-residual dense block) structure; training a model on the sample images and the standard images corresponding to the training-set images to obtain an image super-resolution reconstruction model; and feeding a given terahertz image into this model to obtain the output. Because the generator network uses the RRDB structure, its ability to extract terahertz image features is improved, and so is the super-resolution of terahertz images. The invention further provides a terahertz image super-resolution reconstruction system and device and a computer-readable storage medium, all with the above benefits.

Owner:GUANGDONG UNIV OF TECH

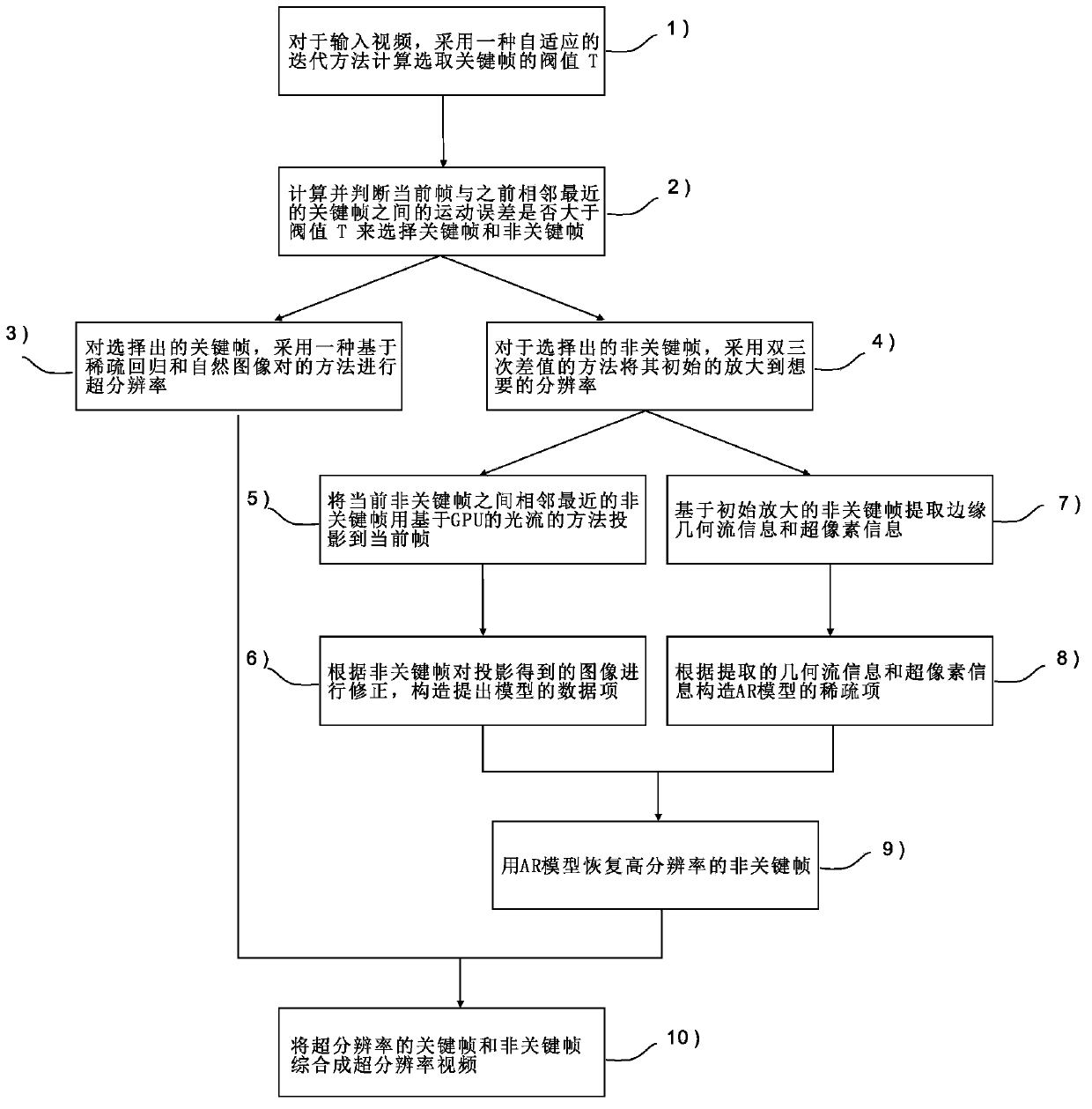

Video super-resolution method based on an adaptive superpixel-oriented autoregressive model

Status: Inactive | Publication: CN103400346A | Tags: Improve super-resolution; Quality improvement; Image enhancement; Geometric image transformation; Computer graphics (images); Video processing

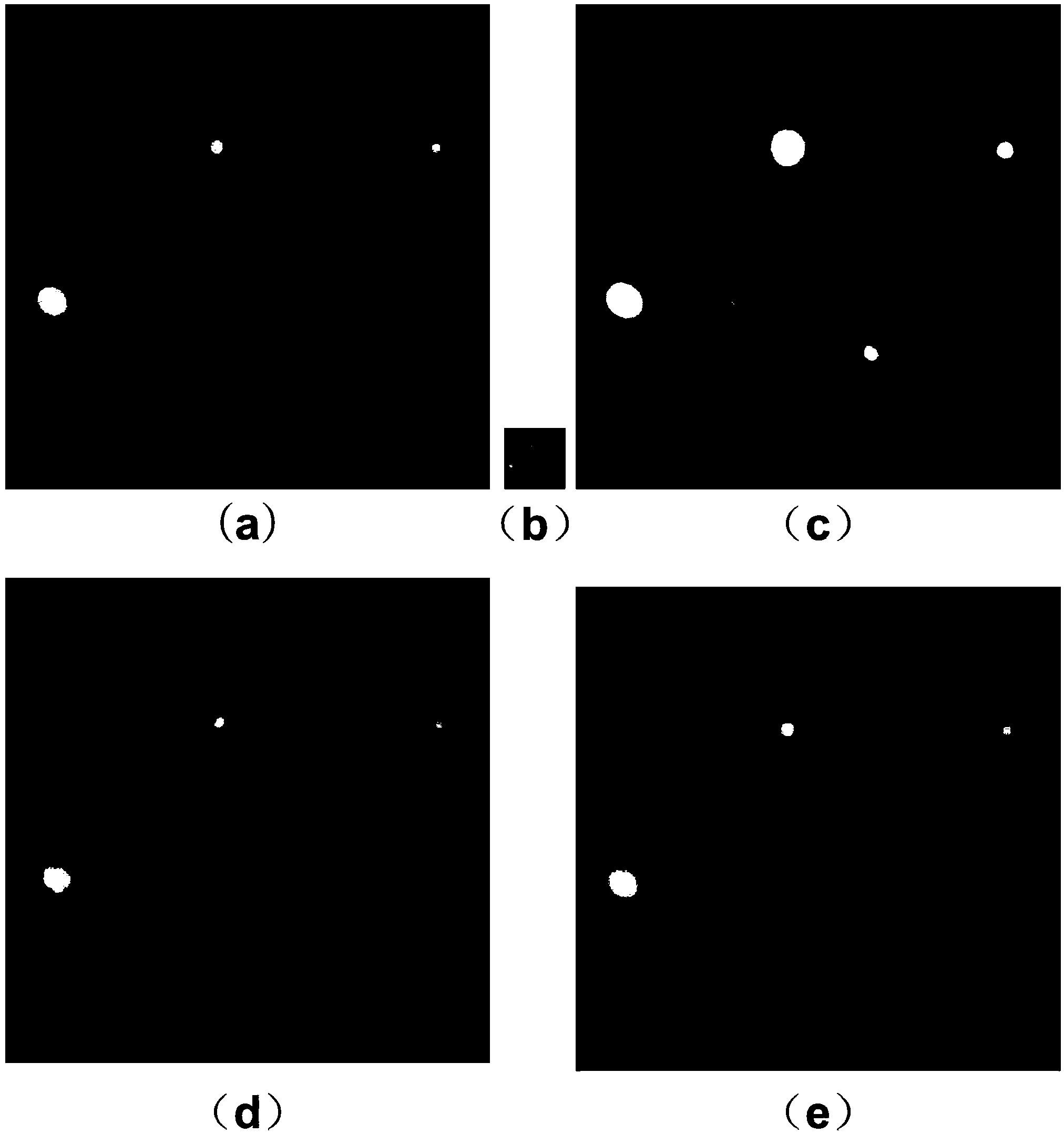

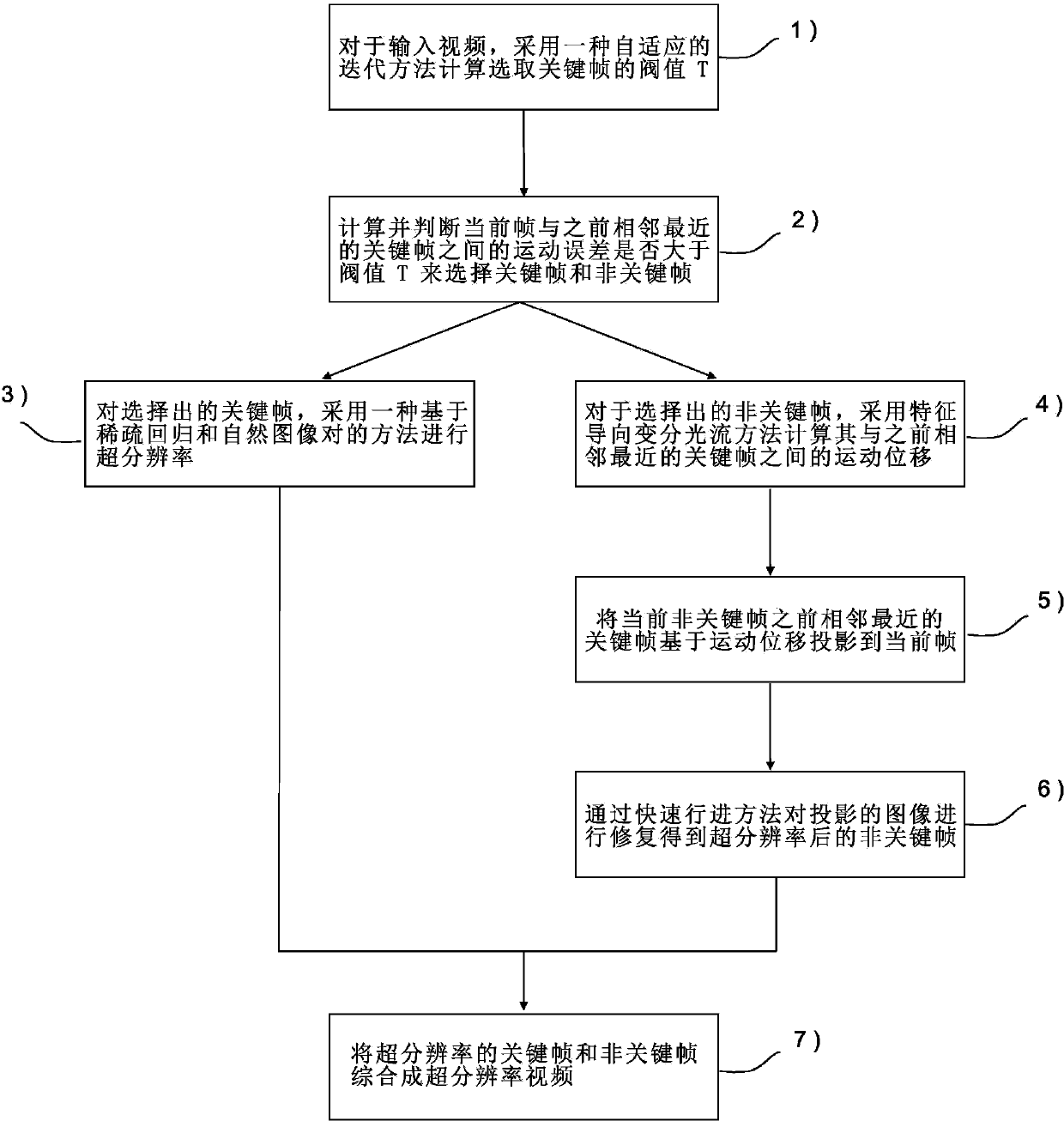

The invention belongs to the technical field of computer vision. To provide a widely applicable video super-resolution method that yields high-quality video, the invention adopts the following scheme: video frames are divided into key frames and non-key frames; key frames are super-resolved with a method based on sparse regression and natural image pairs; non-key frames are super-resolved with the adaptive superpixel-oriented autoregressive model, using the adjacent nearest key frames; and the resulting super-resolved key frames and non-key frames are combined into a super-resolution video. The method is mainly used for video processing.

Owner:北京超放信息技术有限公司

Method for upscaling an image and apparatus for upscaling an image

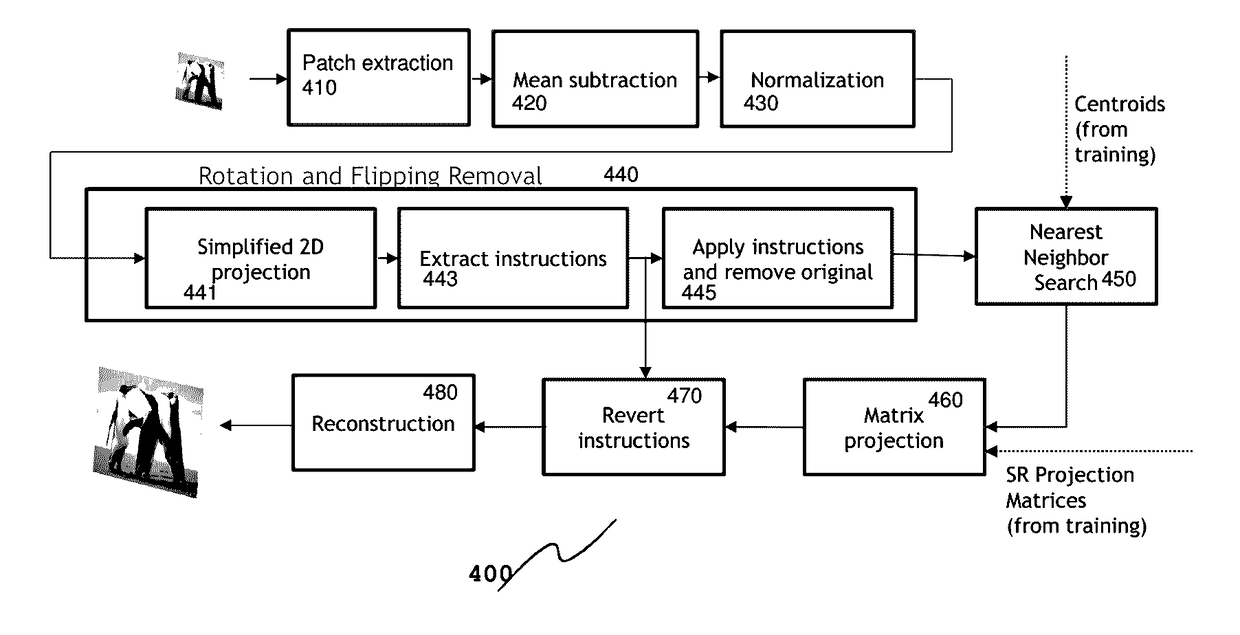

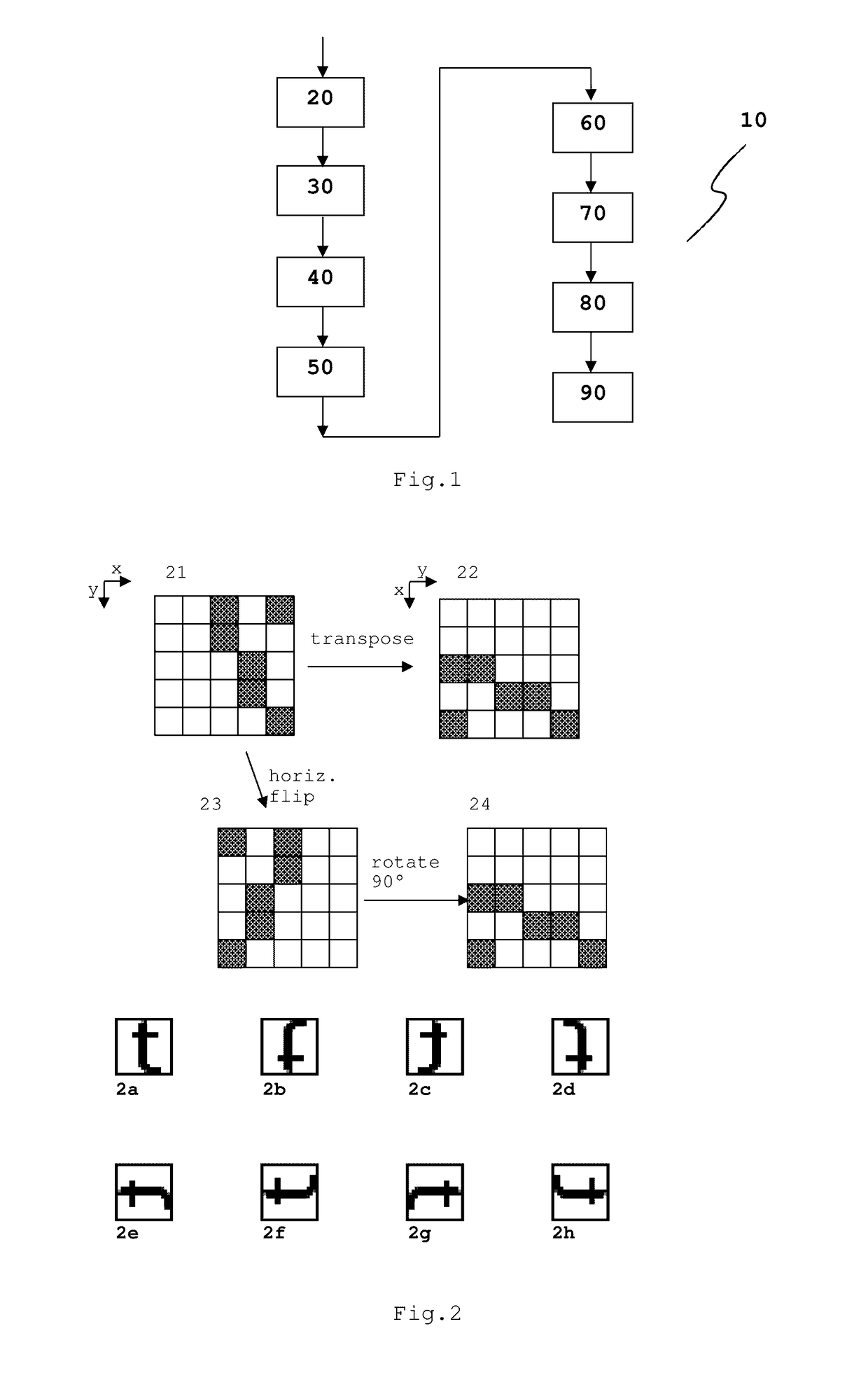

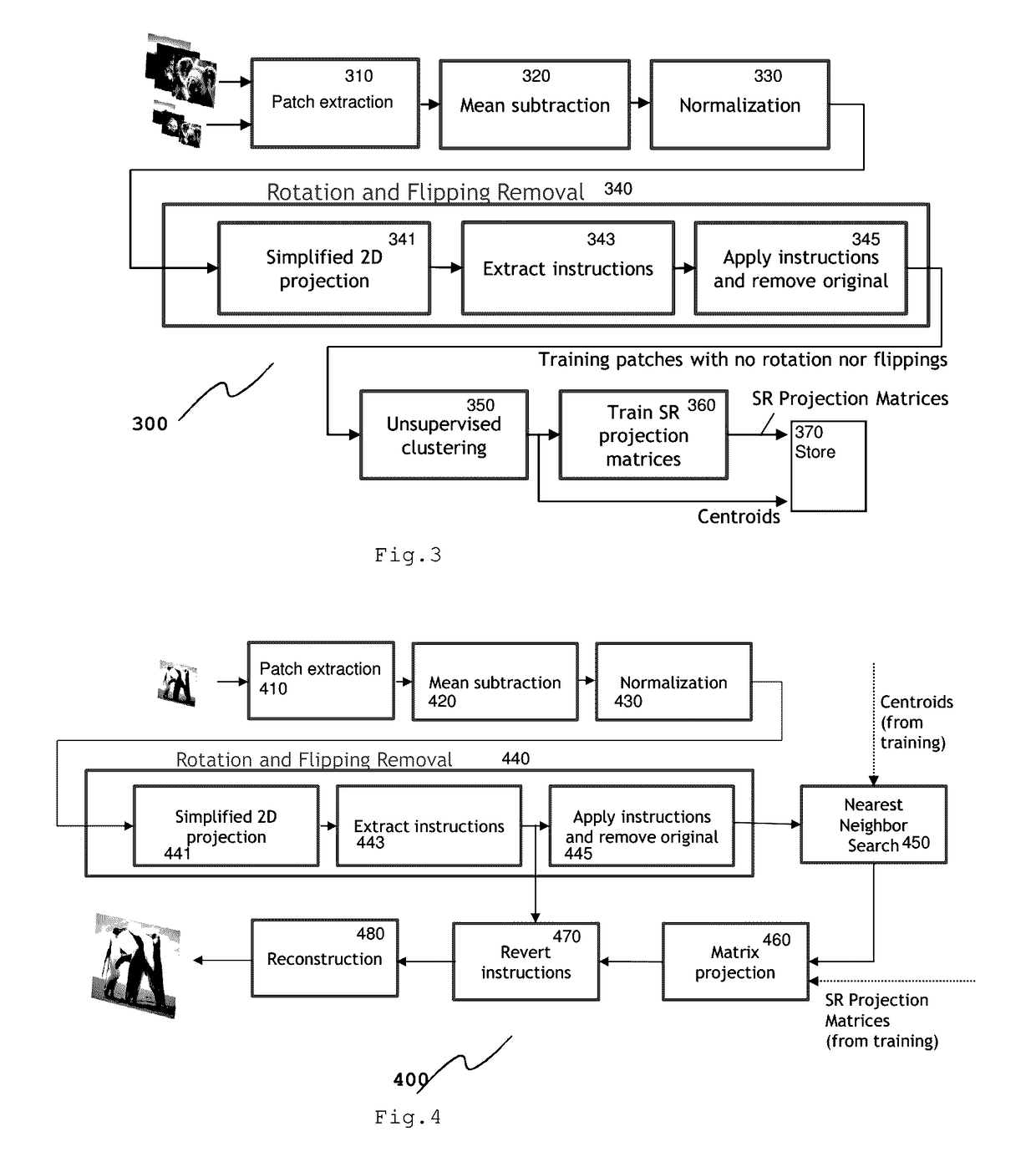

Status: Inactive | Publication: US20170132759A1 | Tags: Improve super-resolution; Suitable solution; Image enhancement; Image analysis; Hat matrix; Image resolution

Image super-resolution (SR) generally enhances the resolution of images. One of the main challenges of SR is discovering mappings between low-resolution (LR) and high-resolution (HR) image patches. The invention learns patch upscaling projection matrices from a training set of images. Input images are divided into overlapping patches, which are normalized and transformed to a defined orientation. Different transformations can be recognized and handled using a simple 2D projection. The transformed patches are clustered, and the cluster-specific upscaling projection matrices and corresponding cluster centroids determined during training are applied to obtain upscaled patches. The upscaled patches are then assembled into an upscaled image.

Owner:MAGNOLIA LICENSING LLC
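
The per-patch step can be sketched as follows, assuming 1-D patches, that orientation normalization has already been applied, and hand-picked centroids and matrices standing in for the ones the invention learns during training. The normalization shown (mean removal and unit L2 norm) is an assumption; the abstract only says that patches are normalized.

```python
import math


def normalize_patch(patch):
    """Remove the mean and scale to unit L2 norm; return (unit, mean, norm)."""
    mean = sum(patch) / len(patch)
    centered = [p - mean for p in patch]
    norm = math.sqrt(sum(c * c for c in centered)) or 1.0  # flat patch: avoid /0
    return [c / norm for c in centered], mean, norm


def nearest_centroid(vec, centroids):
    """Index of the centroid with the smallest squared Euclidean distance."""
    return min(
        range(len(centroids)),
        key=lambda k: sum((v - c) ** 2 for v, c in zip(vec, centroids[k])),
    )


def upscale_patch(patch, centroids, projections):
    """Project a normalized LR patch with its cluster's matrix, then undo the normalization."""
    unit, mean, norm = normalize_patch(patch)
    k = nearest_centroid(unit, centroids)
    hr_unit = [sum(m * u for m, u in zip(row, unit)) for row in projections[k]]
    return [h * norm + mean for h in hr_unit]


# Hypothetical "trained" model: two clusters (rising / falling edges), each
# with a 4x2 matrix mapping a 2-sample LR patch to a 4-sample HR patch.
S = 1 / math.sqrt(2)
CENTROIDS = [[-S, S], [S, -S]]
LINEAR = [[1.0, 0.0], [2 / 3, 1 / 3], [1 / 3, 2 / 3], [0.0, 1.0]]  # smooth ramp
STEP = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]  # sharp edge
PROJECTIONS = [LINEAR, STEP]

if __name__ == "__main__":
    print(upscale_patch([1.0, 3.0], CENTROIDS, PROJECTIONS))
    print(upscale_patch([3.0, 1.0], CENTROIDS, PROJECTIONS))
```

Upscaling a full image would apply this to every overlapping patch and average the overlapping outputs when assembling the result.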

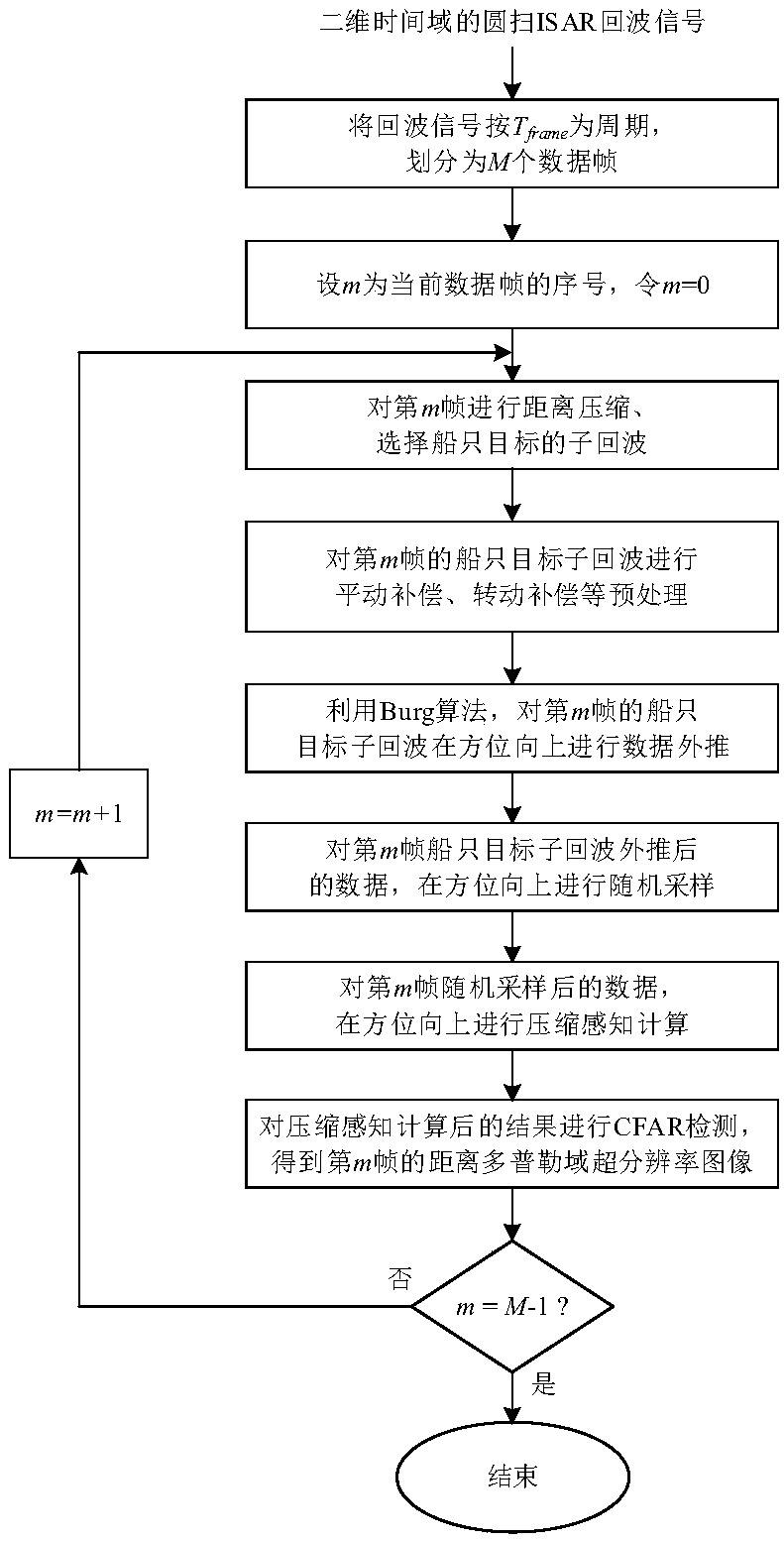

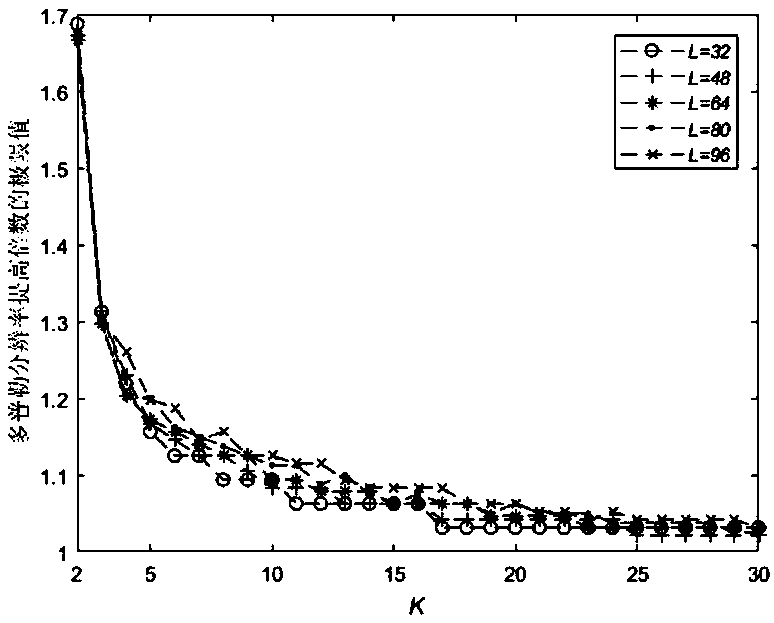

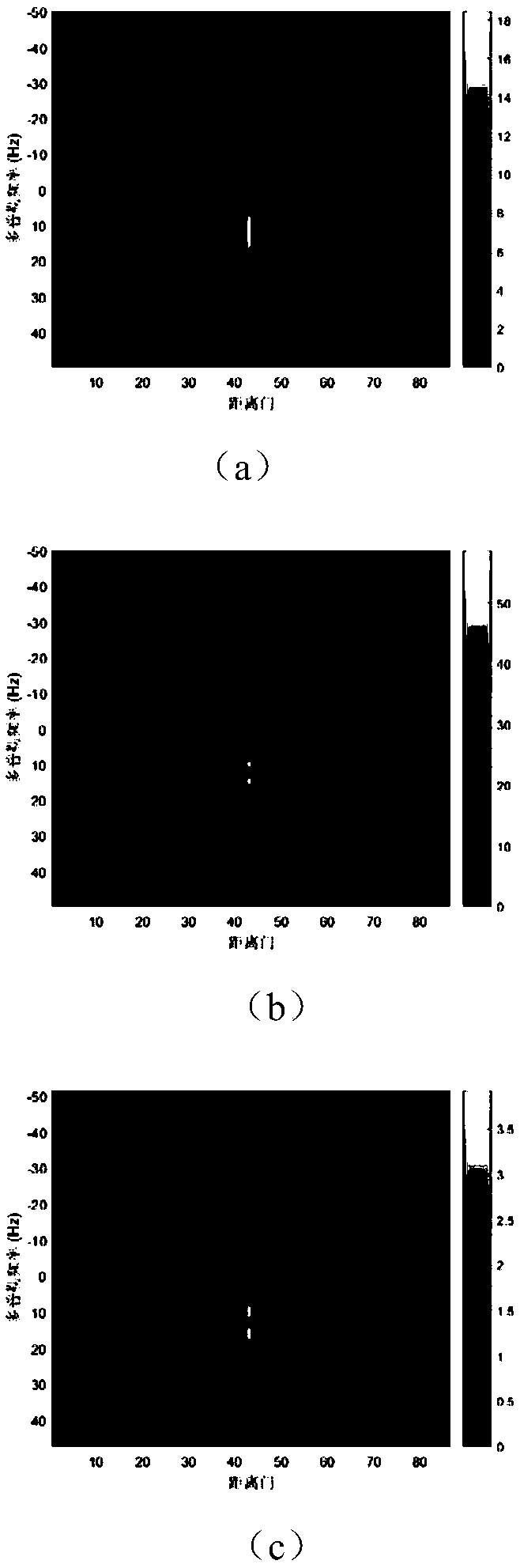

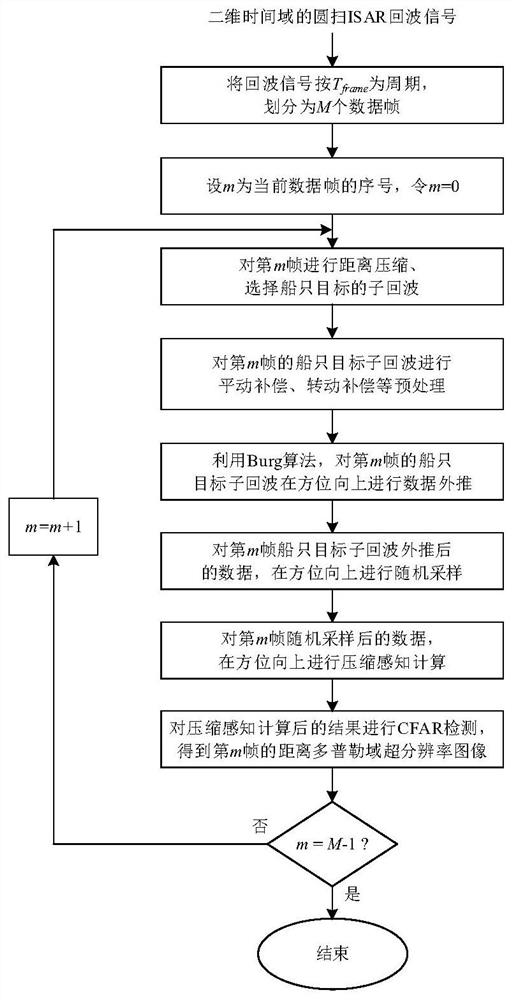

Circular scanning ISAR mode ship super-resolution imaging method

The invention discloses a circular-scanning ISAR (inverse synthetic aperture radar) mode ship super-resolution imaging method in the field of radar technology. The method comprises the following steps: dividing the echo signal in circular-scanning ISAR mode into M data frames and processing them frame by frame; for the m-th data frame, performing range compression and selecting the sub-echo of the ship target; preprocessing this sub-echo with translational and rotational motion compensation; extrapolating the data in the azimuth direction with the Burg algorithm; randomly sampling the extrapolated data in the azimuth direction; applying compressed sensing in the azimuth direction to the sampled data to obtain the sparse coefficient matrix of the current frame; and performing constant false alarm rate (CFAR) detection on that sparse coefficient matrix to obtain the range-Doppler super-resolution image of the m-th frame.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)
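
The azimuth extrapolation step can be sketched with a textbook Burg estimator, assuming real-valued samples (ISAR echoes are complex, which would require conjugates in the sums) and a noise-free test signal. The AR order and the number of extrapolated samples are illustrative choices, not values from the patent.

```python
import math


def burg_ar(x, order):
    """Estimate AR coefficients a[0..order] (a[0] = 1) with Burg's method.

    Model: x[n] + a[1] x[n-1] + ... + a[p] x[n-p] = e[n].
    Returns (a, reflection_coefficients).
    """
    n = len(x)
    f = [float(v) for v in x]  # forward prediction errors
    b = [float(v) for v in x]  # backward prediction errors
    a, ks = [1.0], []
    for m in range(order):
        num = sum(f[i] * b[i - 1] for i in range(m + 1, n))
        den = sum(f[i] ** 2 + b[i - 1] ** 2 for i in range(m + 1, n))
        if den == 0.0:
            break  # signal fully predicted; no further stages needed
        k = -2.0 * num / den  # |k| <= 1 keeps the model stable
        ks.append(k)
        # Levinson update of the coefficient vector.
        a_ext = a + [0.0]
        a = [a_ext[j] + k * a_ext[m + 1 - j] for j in range(m + 2)]
        # Update both error sequences from their previous-stage values.
        f_new, b_new = f[:], b[:]
        for i in range(m + 1, n):
            f_new[i] = f[i] + k * b[i - 1]
            b_new[i] = b[i - 1] + k * f[i]
        f, b = f_new, b_new
    return a, ks


def extrapolate(x, a, steps):
    """Extend x forward by running the AR recursion with zero excitation."""
    out = list(x)
    p = len(a) - 1
    for _ in range(steps):
        out.append(-sum(a[j] * out[-j] for j in range(1, p + 1)))
    return out[len(x):]


if __name__ == "__main__":
    w = math.pi / 8
    x = [math.cos(w * i) for i in range(64)]
    a, ks = burg_ar(x, order=2)
    print(a, extrapolate(x, a, steps=4))
```

Extending the aperture this way lengthens the azimuth record before the random sampling and compressed-sensing steps; prediction error grows with each extrapolated sample, so only a modest extension is usually sensible.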

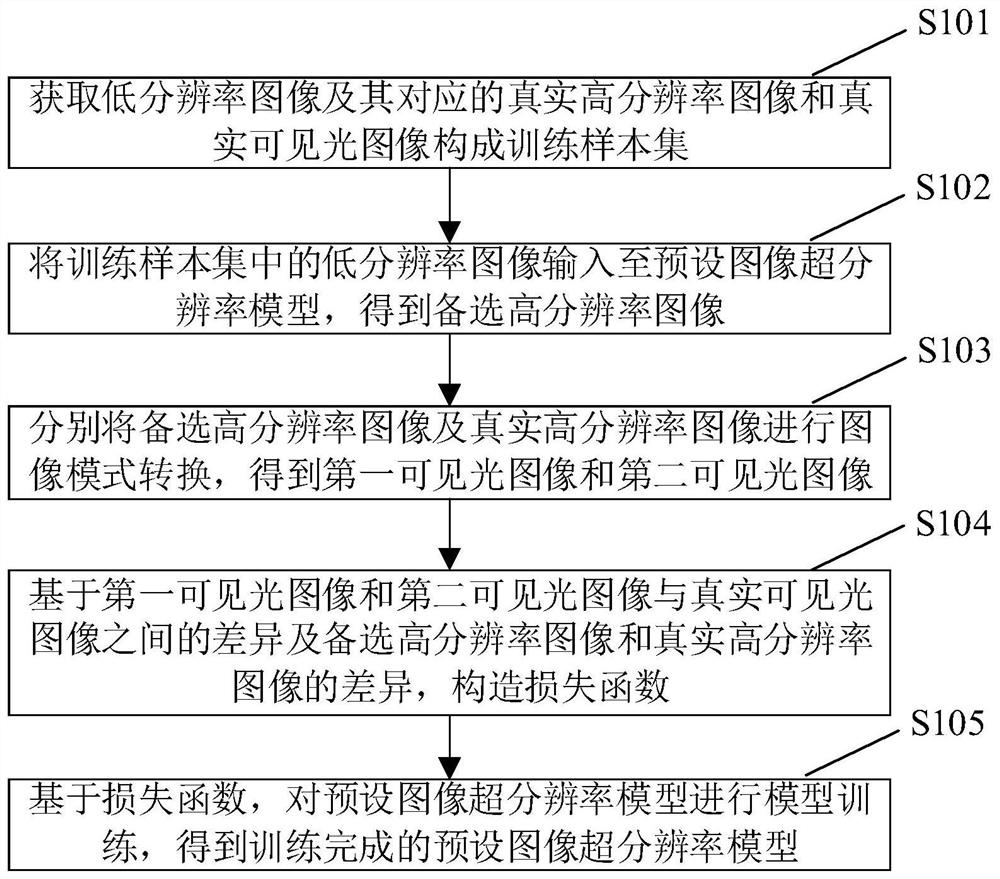

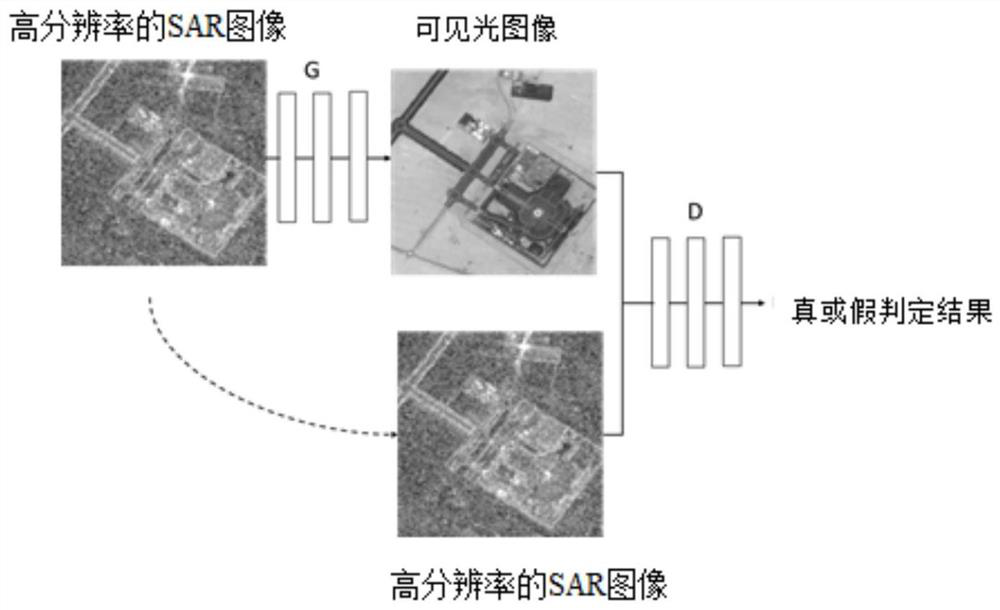

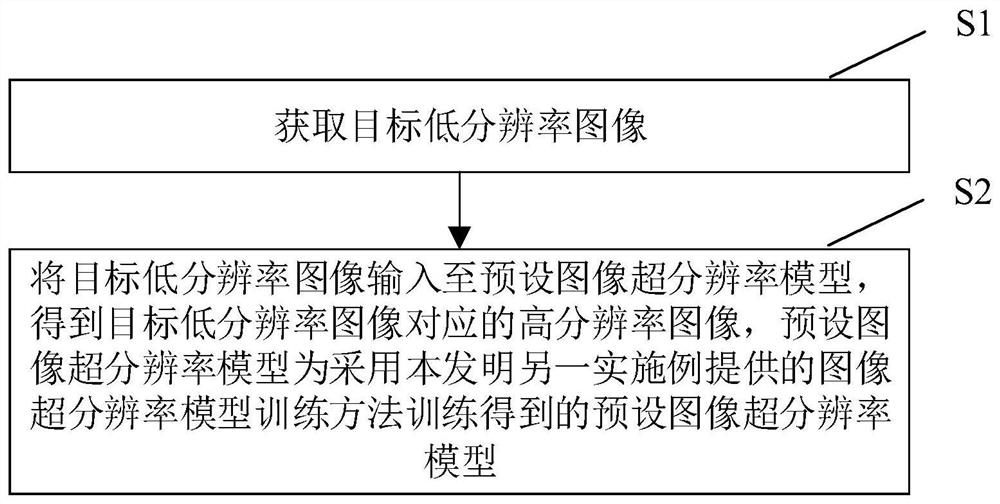

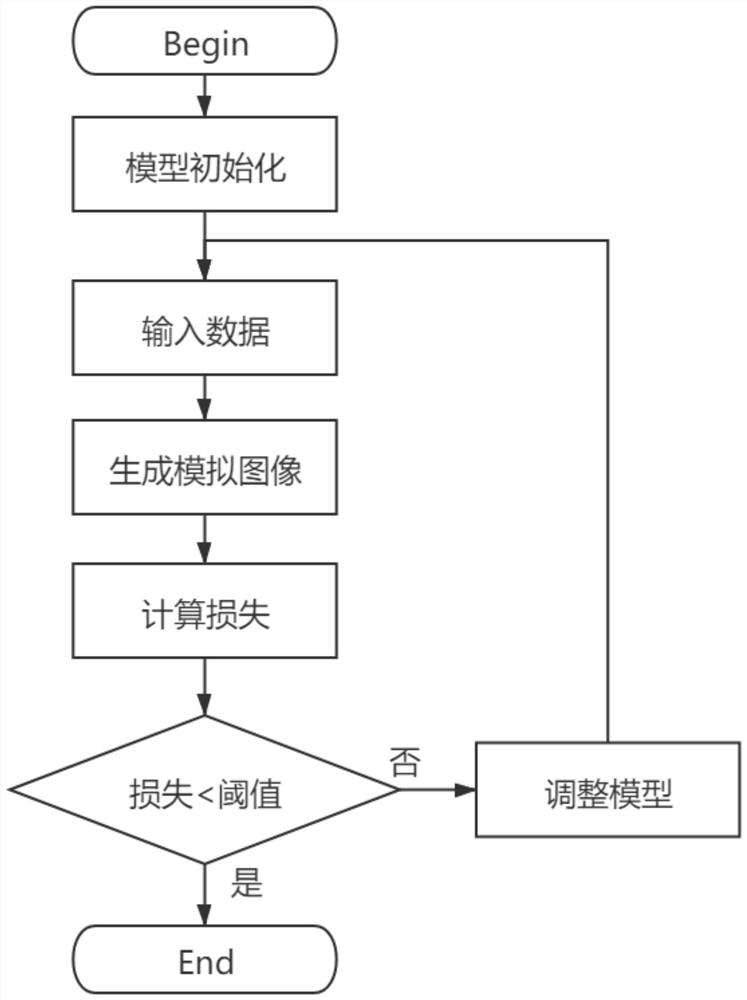

Image super-resolution model training method and device and image super-resolution model reconstruction method and device

Status: Active | Publication: CN112488924A | Tags: Quality improvement; Not limited by magnification; Geometric image transformation; Character and pattern recognition; Image resolution; Magnification

The invention provides an image super-resolution model training method and device and an image super-resolution reconstruction method and device. The training method comprises the following steps: obtaining a training sample set; feeding the low-resolution images in the set into a preset image super-resolution model to obtain candidate high-resolution images; converting both the candidate and the real high-resolution images into the visible-light modality to obtain corresponding visible-light images; and constructing a loss function from the differences between these two groups of visible-light images and the real visible-light image and from the difference between the candidate and real high-resolution images, then training the preset model with it. Because the mapping error between the candidate and real high-resolution images is also computed in visible-light space and fed back into training, the trained model can output high-fidelity high-resolution images even at large magnification factors.

Owner:SHENZHEN UNIV

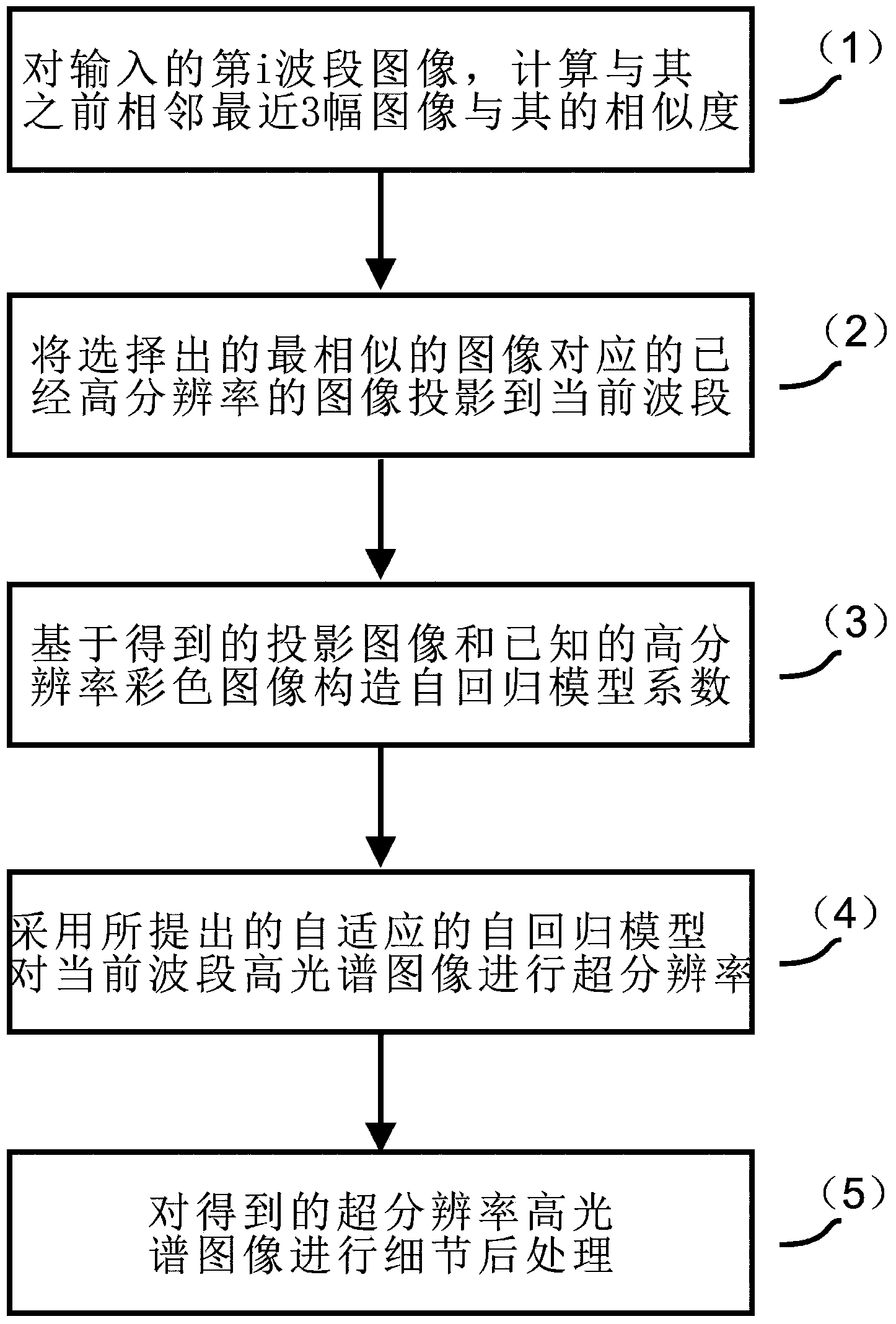

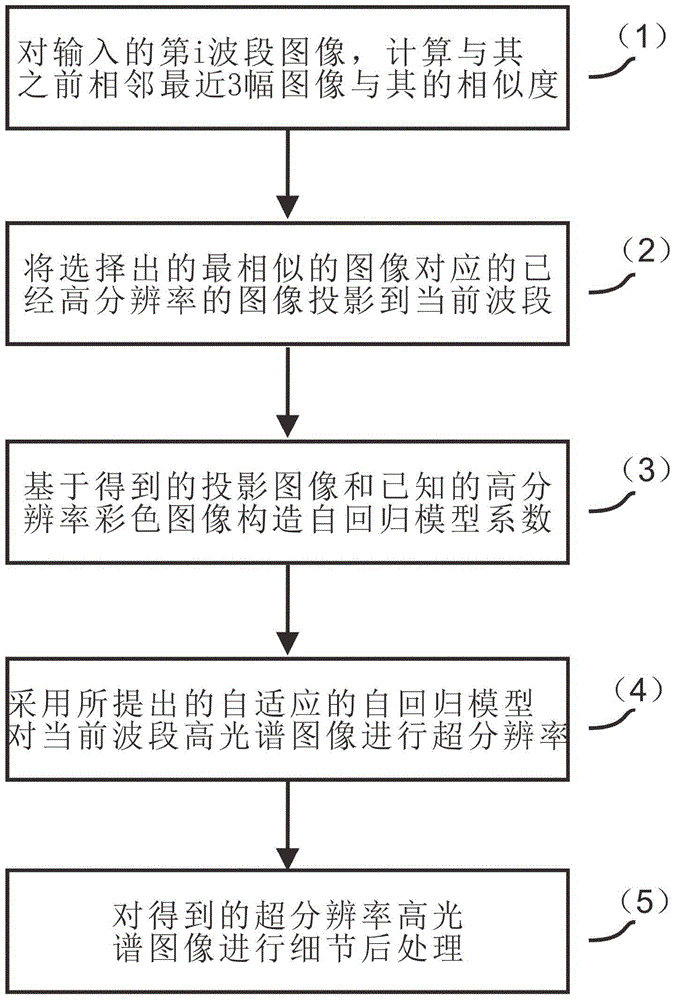

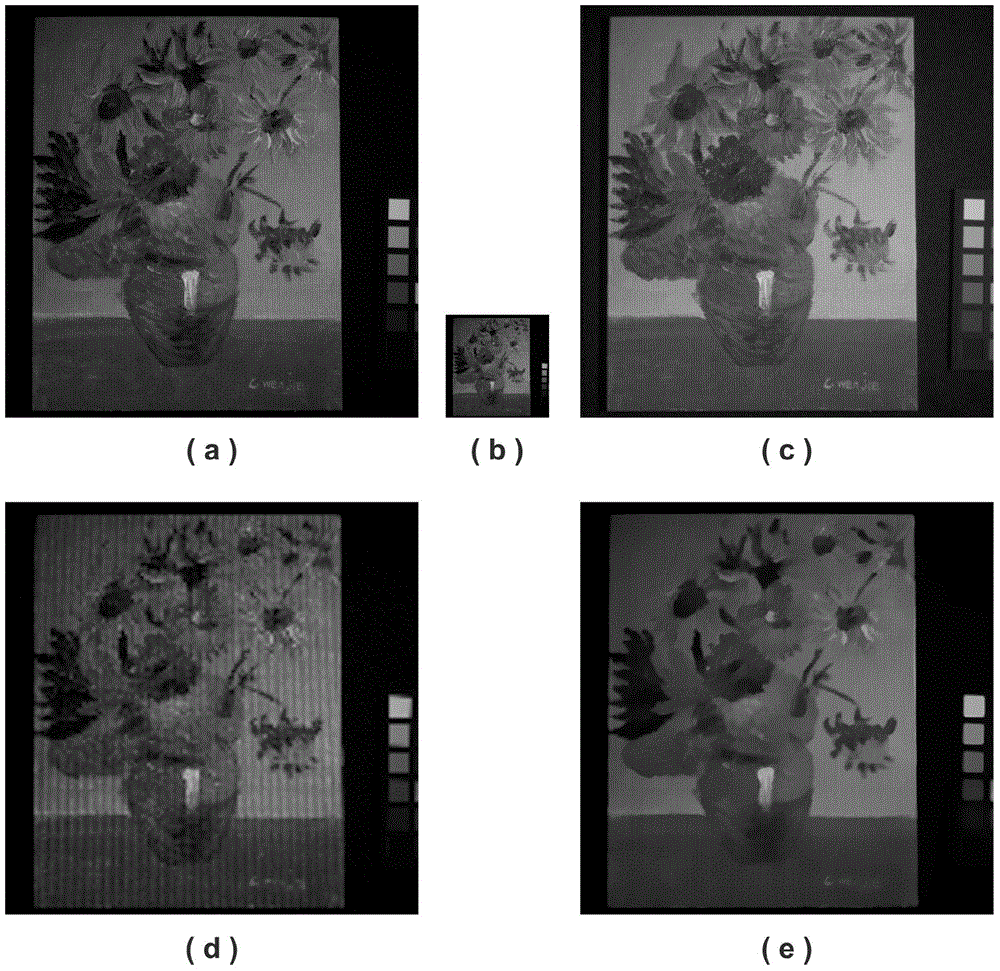

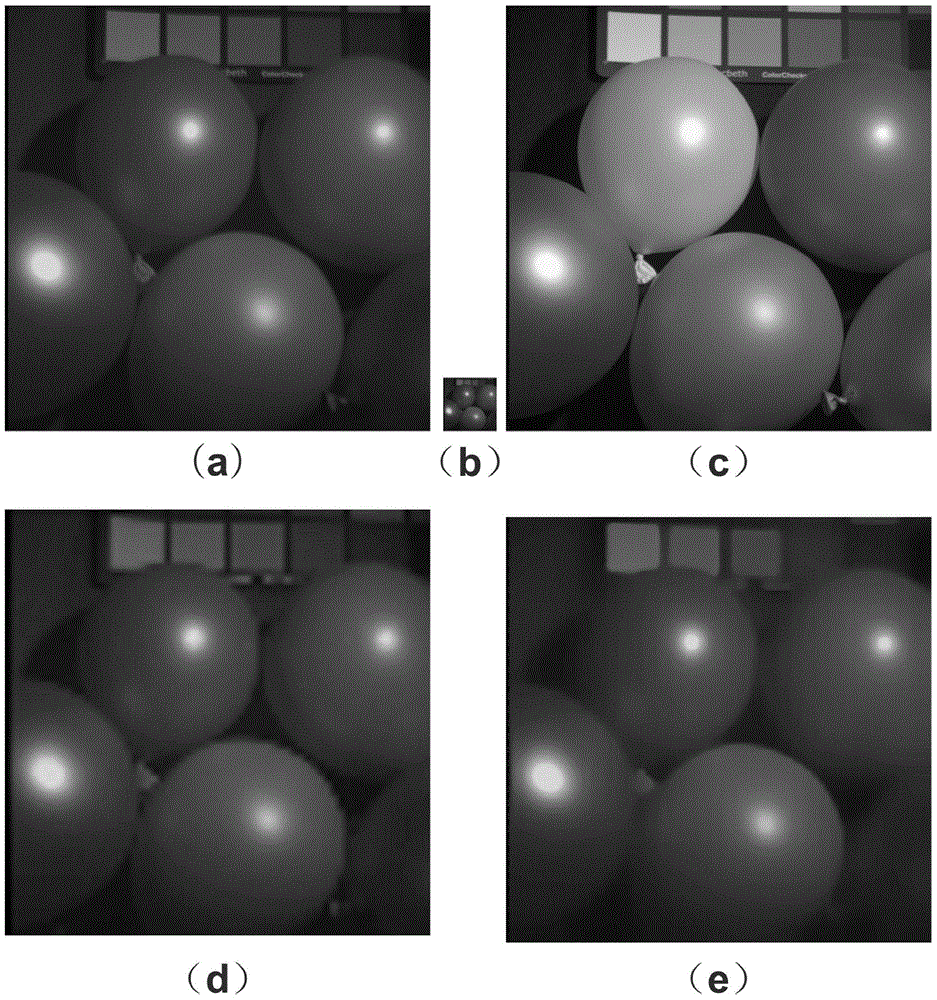

Adaptive autoregressive model-based hyperspectral image super-resolution method

Status: Active | Publication: CN103530860A | Tags: Improve super-resolution; Improved super resolution; Image enhancement; Geometric image transformation; Color image; Visual technology

The invention belongs to the technical field of computer vision and provides a widely applicable hyperspectral image super-resolution method that yields high-quality hyperspectral images. The adopted technical scheme comprises the following steps: starting from the first band of the hyperspectral image, the image of each band is upscaled in sequence with the help of a high-resolution color image; for the input i-th band image, the most similar image is found among the three nearest band images, and the already super-resolved image corresponding to that most similar band is projected onto the current band to obtain a projection image; super-resolution of the current i-th band is then achieved through an adaptive autoregressive model based on the projection image and the high-resolution color image, until all bands have been super-resolved. The method is mainly applied to image processing.

Owner:南京途博科技有限公司
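
The band-selection step can be sketched as follows, assuming each band is a flattened list of pixel values, that "three nearest bands" means the three preceding, already processed bands, and that similarity is measured by normalized cross-correlation. The abstract names neither the similarity measure nor any functions, so both `ncc` and `most_similar_band` are hypothetical.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equally sized pixel lists."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / den if den else 0.0


def most_similar_band(bands, i, window=3):
    """Pick, among up to `window` preceding bands, the one most similar to band i."""
    candidates = [j for j in range(i - window, i) if j >= 0]
    if not candidates:
        raise ValueError("band 0 has no preceding bands to compare with")
    return max(candidates, key=lambda j: ncc(bands[i], bands[j]))


if __name__ == "__main__":
    bands = [
        [1.0, 2.0, 3.0, 4.0],
        [4.0, 3.0, 2.0, 1.0],
        [1.0, 2.0, 3.0, 5.0],
        [1.0, 2.0, 3.0, 4.1],
    ]
    print(most_similar_band(bands, 3))
```

The chosen band's super-resolved image would then be projected onto band i to serve as the guide for the autoregressive model.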

Interactive quantization noise calculation method for compressed-video super-resolution

Status: Inactive | Publication: CN101577825A | Tags: Improve super-resolution; Accurate quantization of noise values; Television systems; Digital video signal modification; High resolution image; Computer science

The invention discloses an interactive method for calculating quantization noise in compressed-video super-resolution. The method comprises the following steps: first, before the encoder quantizes the DCT coefficients of a video frame, it counts the occurrence probabilities of the unquantized DCT coefficients and computes the Laplace parameter that characterizes their distribution; next, it writes this distribution parameter for each image block into the user-data field reserved in the bitstream and sends it to the decoder; finally, the decoder reads the distribution parameter from the bitstream, computes the quantization noise from the probability density of the pre-quantization DCT coefficients and the quantized coefficients, and uses it in the super-resolution algorithm to obtain the final high-resolution image. The method applies to compressed-video super-resolution algorithms in which the encoder and decoder interact, and it improves the accuracy of the quantization noise by obtaining the distribution parameter of the pre-quantization DCT coefficients at the encoder and providing it to the decoder for the noise calculation.

Owner:WUHAN UNIV
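
The encoder-side parameter estimate and the decoder-side noise calculation can be sketched as follows, assuming a zero-mean Laplace model p(x) = (λ/2)·exp(-λ|x|) for the unquantized DCT coefficients and a plain uniform mid-tread quantizer. Real codecs use dead-zone quantizers and the patent may define the noise differently, so the quantizer model and function names are hypothetical.

```python
import math


def laplace_rate_mle(coeffs):
    """Maximum-likelihood lambda for p(x) = (lambda / 2) * exp(-lambda * |x|)."""
    mean_abs = sum(abs(c) for c in coeffs) / len(coeffs)
    return 1.0 / mean_abs


def quantization_noise_variance(lam, step, sub=50):
    """E[(x - Q(x))^2] with Q(x) = step * round(x / step), x ~ Laplace(lam).

    Midpoint-rule integration, bin by bin, out to |x| = 40 / lam, where the
    remaining probability mass (exp(-40)) is negligible.
    """
    bins = int(40.0 / (lam * step)) + 1
    h = step / sub
    total = 0.0
    for k in range(-bins, bins + 1):
        center = k * step  # reconstruction value of bin k
        for s in range(sub):
            x = center - step / 2 + (s + 0.5) * h
            pdf = 0.5 * lam * math.exp(-lam * abs(x))
            total += (x - center) ** 2 * pdf * h
    return total


if __name__ == "__main__":
    lam = laplace_rate_mle([1.0, -1.0, 2.0, -2.0])  # encoder side
    print(lam, quantization_noise_variance(lam, step=0.5))  # decoder side
```

For a fine step the result approaches the familiar step²/12; for coarse steps the Laplace shape matters, which is why sending λ to the decoder can make the noise estimate more accurate.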

Video super-resolution method based on feature-oriented variational optical flow

Status: Inactive | Publication: CN103400394A | Tags: Improve super-resolution; Quality improvement; Image enhancement; Image analysis; Optical flow; Feature based

The invention belongs to the field of computer vision. To provide a widely applicable video super-resolution method that yields high-quality video, the invention adopts the following scheme: video frames are divided into key frames and non-key frames; key frames are super-resolved by a method based on sparse regression and natural image pairs; non-key frames are super-resolved by the feature-oriented variational optical flow method, using the nearest preceding key frame; and the super-resolved key frames and non-key frames are combined into a super-resolution video. The method is mainly applied to video processing.

Owner:TIANJIN UNIV

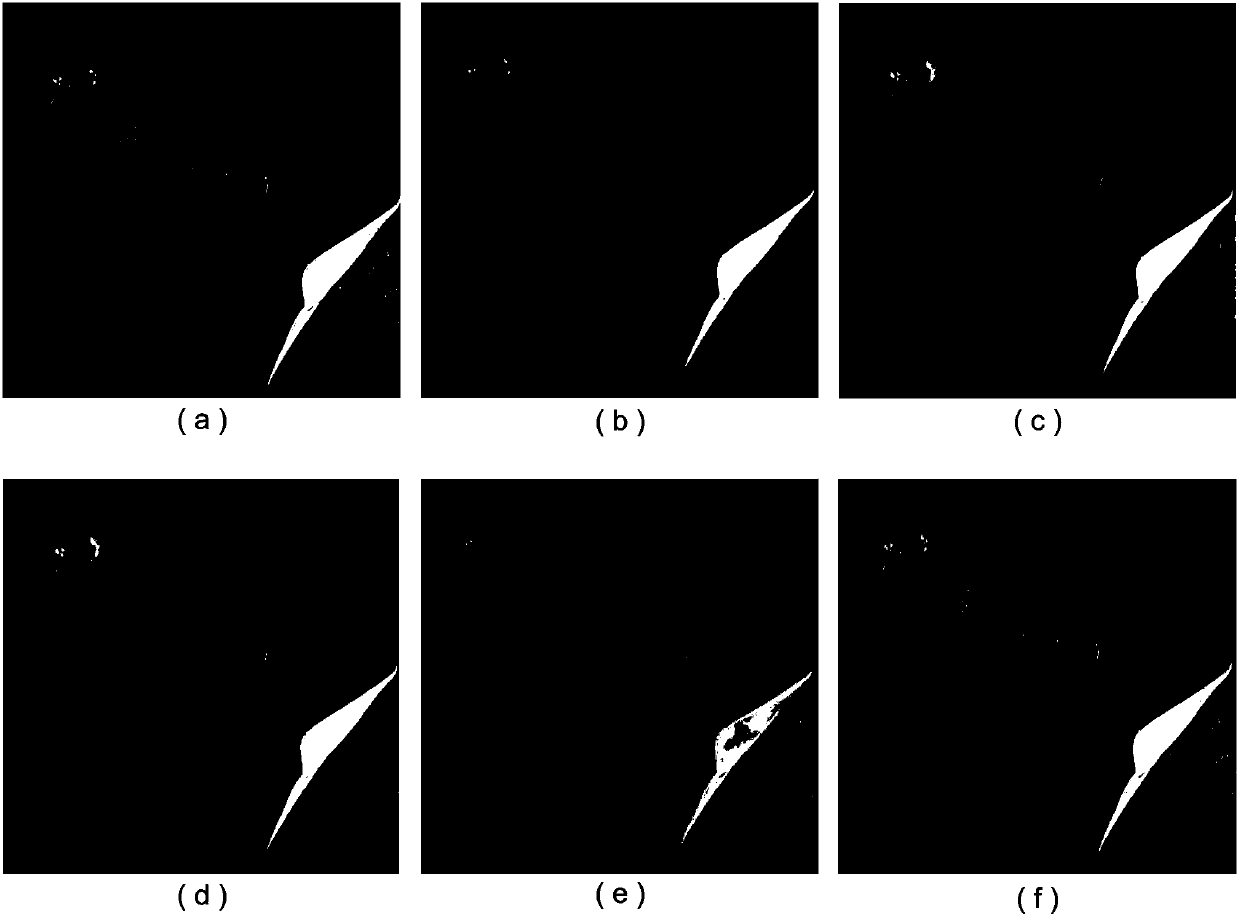

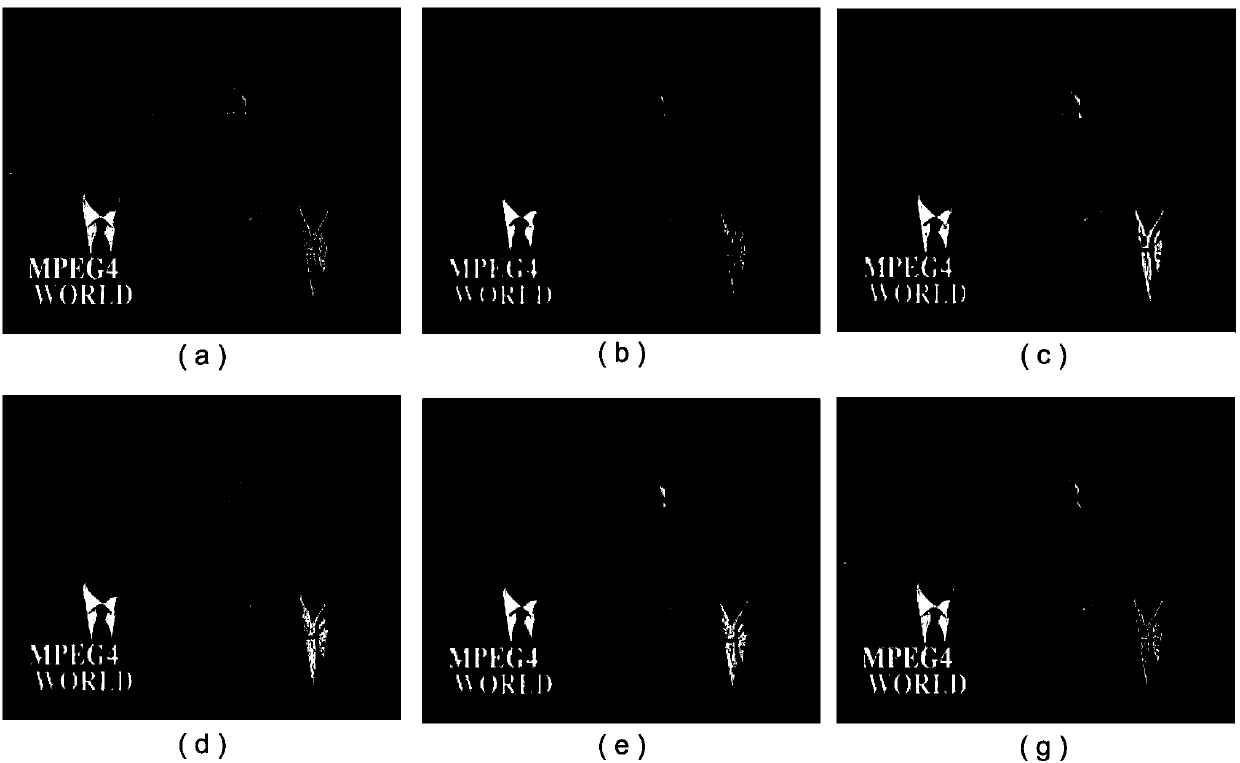

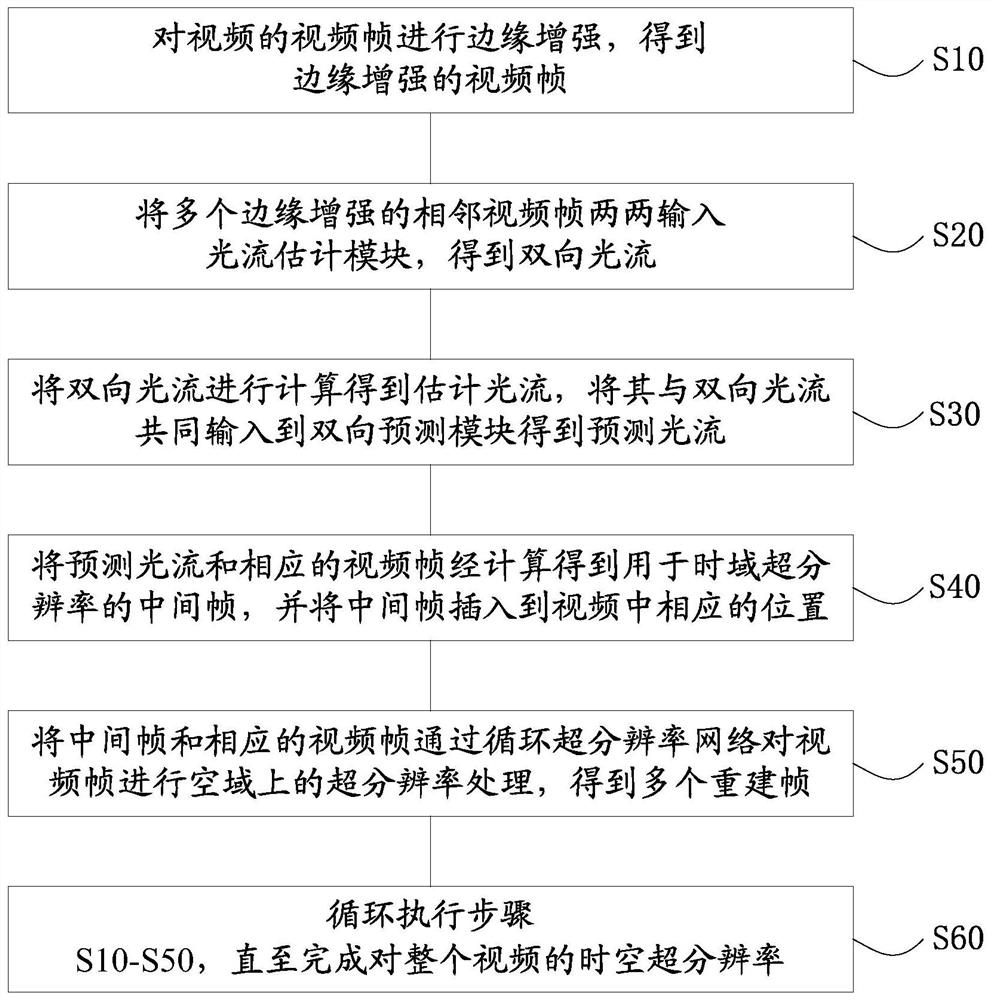

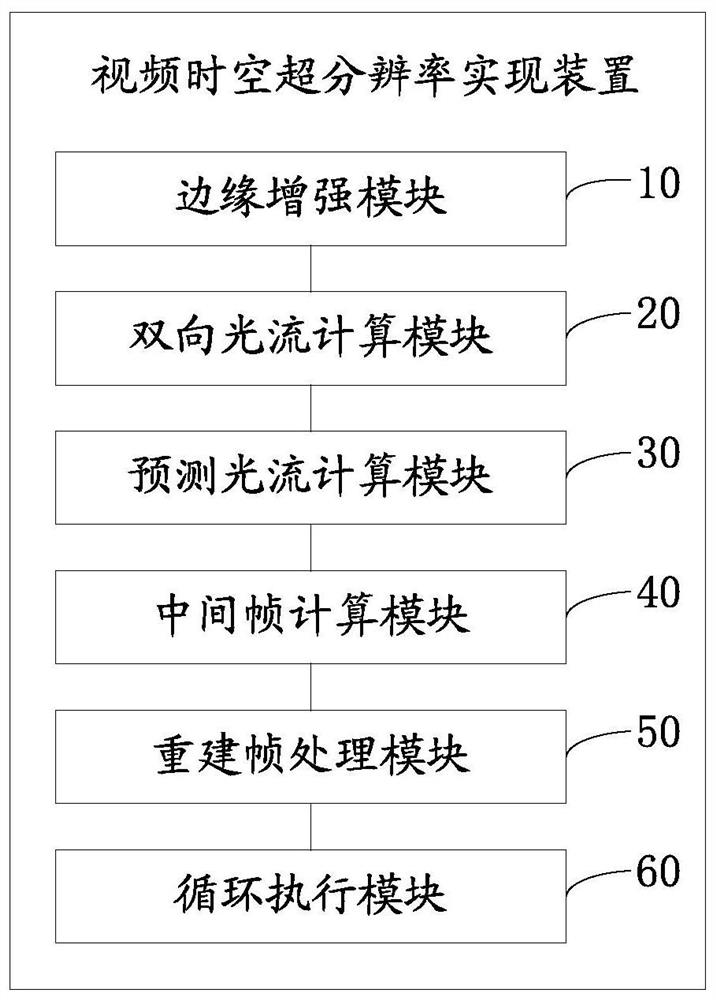

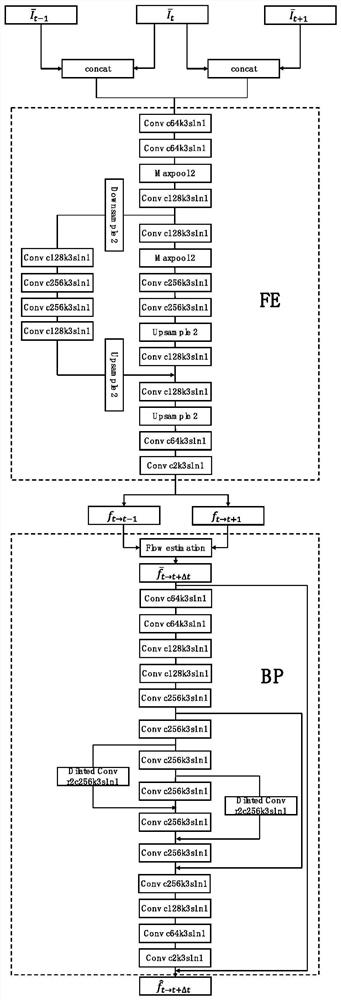

Video space-time super-resolution implementation method and device

Status: Active | Publication: CN112712537A | Tags: Efficient joint spatio-temporal super-resolution; Improve visual quality; Image analysis; Geometric image transformation; Time domain; Image resolution

The invention provides a video space-time super-resolution method and device. The method comprises: performing edge enhancement on the frames of a video to obtain edge-enhanced frames; feeding pairs of adjacent edge-enhanced frames into an optical flow estimation module to obtain bidirectional optical flow; computing an estimated optical flow from the bidirectional flow and feeding both into a bidirectional prediction module to obtain a predicted optical flow; computing, from the predicted optical flow and the corresponding frames, an intermediate frame for temporal super-resolution and inserting it at the corresponding position in the video; performing spatial super-resolution on the intermediate frame and the corresponding frames with a recurrent super-resolution network to obtain reconstructed frames; and repeating these steps until space-time super-resolution of the whole video is complete. The invention performs joint space-time super-resolution effectively and improves the visual quality of the video.

Owner:SHENZHEN UNIV

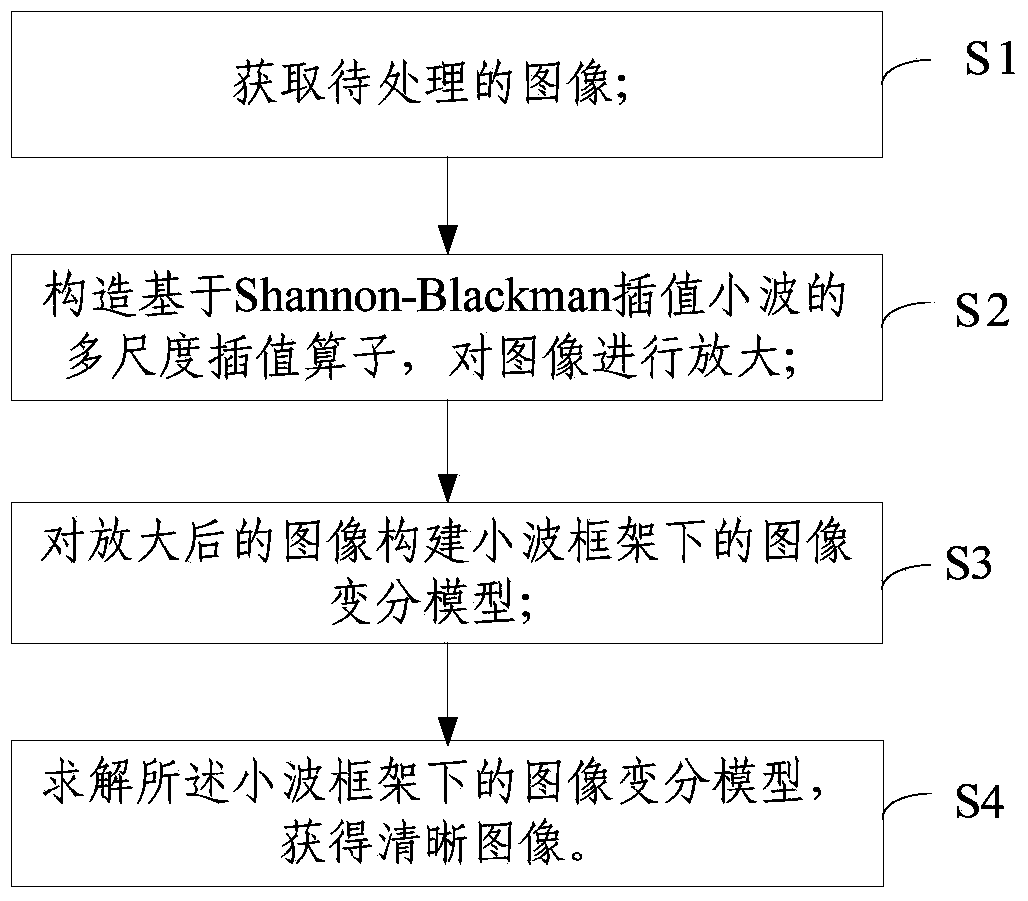

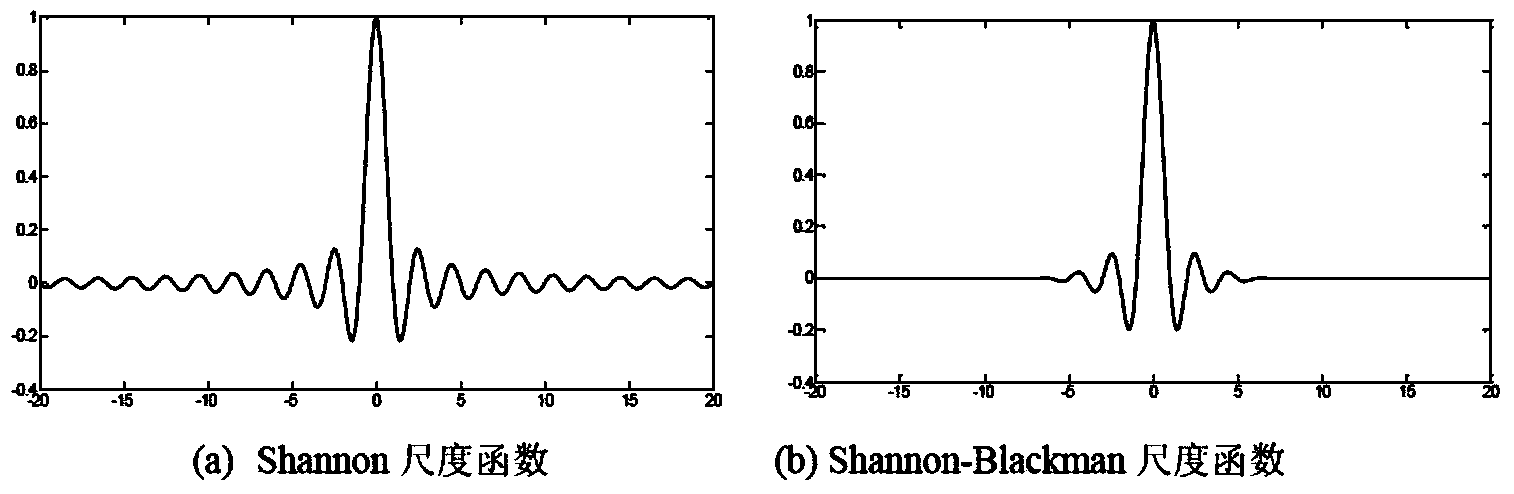

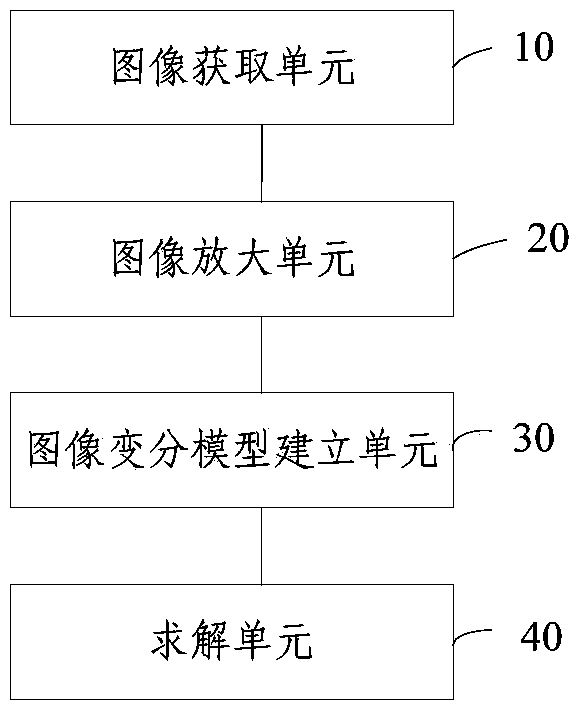

Image processing method and device based on Shannon-Blackman wavelet sparse representation

Status: Active | Publication: CN104392411A | Tags: Improve super resolution; Implement adaptive configuration; Geometric image transformation; Imaging processing; Sparse grid

The invention discloses an image processing method and device based on Shannon-Blackman wavelet sparse representation. The method comprises the following steps: acquiring an image to be processed; constructing a multi-scale interpolation operator based on Shannon-Blackman interpolating wavelets and using it to enlarge the image; establishing a variational image model for the enlarged image in a wavelet frame; and solving this variational model to obtain a sharp image. Because the image is enlarged by the multi-scale interpolation operator and the variational model is solved with a sparse grid algorithm to obtain a super-resolution image, both the efficiency and the accuracy of image processing are improved.

Owner:CHINA AGRI UNIV

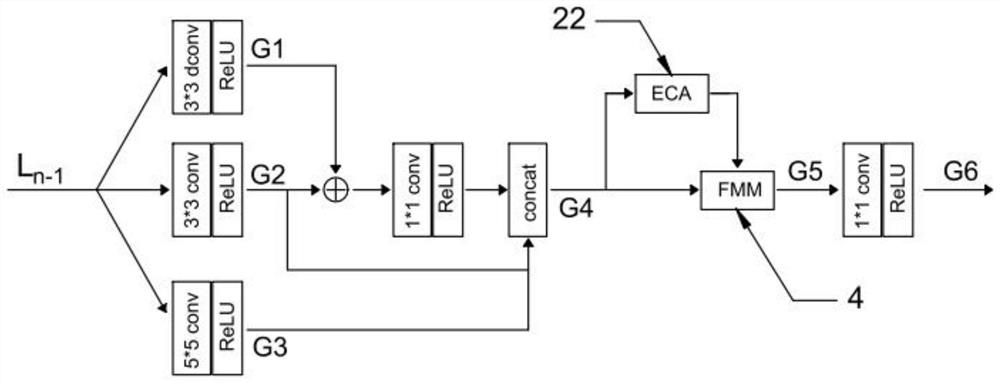

Image enhancement method and device for diabetic renal lesion classification

Status: Pending | Publication: CN114037624A | Tags: Improve image quality; Improve accuracy; Image enhancement; Image analysis; Nuclear medicine; Image resolution

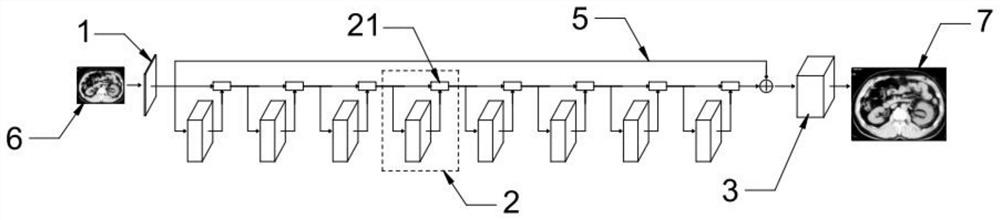

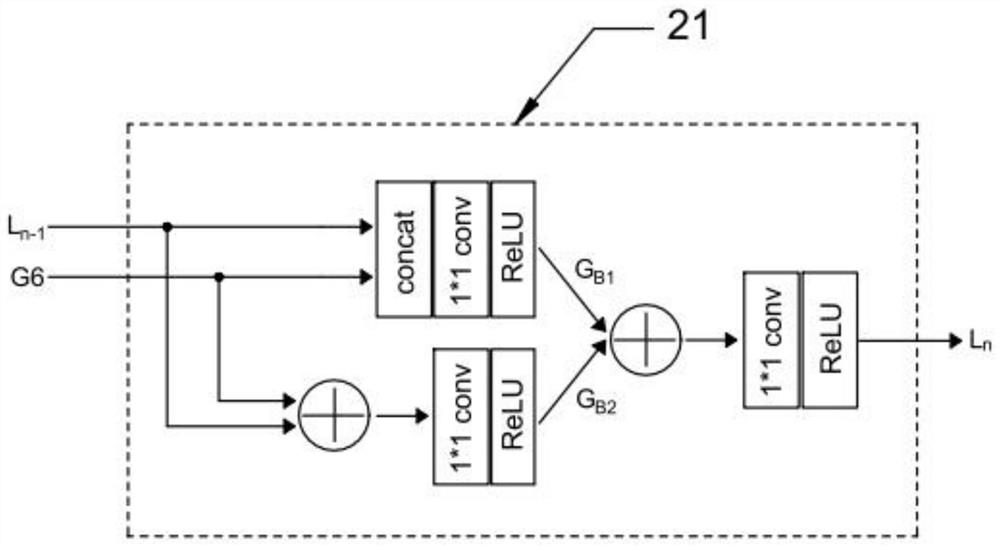

The invention discloses an image enhancement method for diabetic renal lesion classification and corresponding electronic equipment. The enhancement method includes contrast enhancement, denoising, super-resolution reconstruction, and other steps, and improves the accuracy of the image classification network on diabetic renal lesion classification by improving the quality of kidney CT images. The super-resolution reconstruction network comprises a preliminary feature extraction unit, MDC feature extraction units, and an up-sampling unit: the MDC feature extraction units are placed downstream of the preliminary feature extraction unit and connected end to end in sequence, and the up-sampling unit is placed downstream of the MDC feature extraction units and performs super-resolution reconstruction on the second feature map. The super-resolution network reuses features repeatedly, which effectively prevents low-level feature information from gradually vanishing through successive nonlinear operations, so the model achieves a good balance between feature extraction performance and complexity.

Owner:成都市第二人民医院

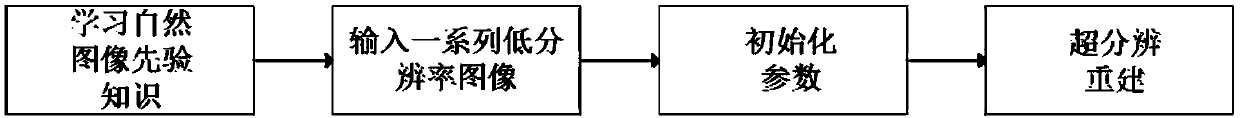

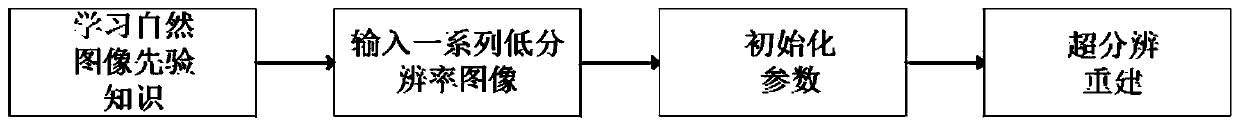

Method of using natural image prior-knowledge for multi-image super-resolution reconstruction

Status: Active | Publication: CN108665412A | Tags: Improve super-resolution; Quality improvement; Geometric image transformation; Multi-image; Image resolution

The invention provides a method that uses natural-image prior knowledge for multi-image super-resolution reconstruction. A learned fields-of-experts model is introduced as the natural-image prior when reconstructing a super-resolution image from a series of low-resolution images, improving the quality of multi-image super-resolution reconstruction. Traditional Bayesian multi-image super-resolution methods use priors such as the L1 norm and total variation; the prior used here is a fields-of-experts model trained on an image database, which better captures the statistics of natural scenes and therefore yields better reconstruction results. The method also simplifies parts of the multi-image super-resolution process and shortens computation time.

Owner:ZHEJIANG UNIV
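
The fields-of-experts prior can be written as an energy that penalizes filter responses with Student-t experts, E(x) = Σ_c Σ_i α_i log(1 + ½(J_i · x_c)²), following the standard fields-of-experts formulation of Roth and Black. The sketch below evaluates that energy on a small image; the two derivative filters and the α values are hand-picked placeholders for learned ones, and a full reconstruction would minimize a data term over the low-resolution observations plus a weighted copy of this energy.

```python
import math


def foe_energy(image, filters, alphas):
    """Negative log of a fields-of-experts prior, up to an additive constant.

    For every filter J_i and every valid filter position (clique) c, the
    filter response r = J_i . x_c contributes alpha_i * log(1 + r^2 / 2).
    """
    h, w = len(image), len(image[0])
    energy = 0.0
    for f, alpha in zip(filters, alphas):
        fh, fw = len(f), len(f[0])
        for y in range(h - fh + 1):
            for x in range(w - fw + 1):
                resp = sum(f[i][j] * image[y + i][x + j] for i in range(fh) for j in range(fw))
                energy += alpha * math.log1p(0.5 * resp * resp)
    return energy


# Placeholder experts: horizontal and vertical first differences.
FILTERS = [[[1.0, -1.0]], [[1.0], [-1.0]]]
ALPHAS = [1.0, 1.0]

if __name__ == "__main__":
    smooth = [[2.0] * 4 for _ in range(4)]
    edgy = [[0.0, 9.0, 0.0, 9.0] for _ in range(4)]
    print(foe_energy(smooth, FILTERS, ALPHAS), foe_energy(edgy, FILTERS, ALPHAS))
```

The heavy-tailed log penalty grows slowly for large responses, so genuine edges cost far less than under a quadratic prior, which is one reason such priors tend to preserve structure better.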

Equipment for reconstructing high-dynamic-range images at high resolution

Status: Inactive | Publication: CN100481937C | Tags: Improve super-resolution; Improve performance; 2D-image generation; Closed circuit television systems; High resolution image; Image quality

Owner:BEIJING INSTITUTE OF TECHNOLOGY

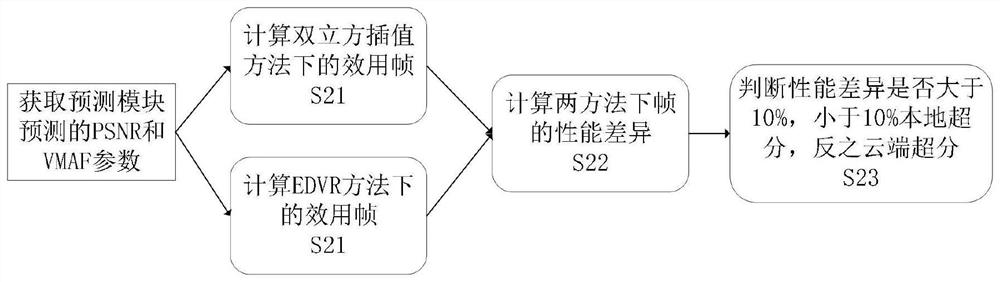

Video super-resolution method and system for cloud fusion

Status: Pending | Publication: CN114202463A | Tags: Restore accurately; Efficient reduction; Geometric image transformation; Neural architectures; Computational science; Video restoration

The invention provides a cloud-fusion-oriented video super-resolution method and system in the field of video processing. The system comprises a restoration effect prediction module, a dynamic task scheduling module, a mobile terminal processing module, a cloud processing module, and a frame fusion module. The method comprises the following steps: extracting features of the current low-resolution video frame and feeding them into the restoration effect prediction module, which predicts the super-resolution quality the frame would achieve with bicubic interpolation and with a video restoration model based on an enhanced deformable convolutional network; deciding, through the dynamic task scheduling module, whether to offload the current low-resolution frame to the cloud processing module for super-resolution restoration; and feeding the cloud-super-resolved frames and the locally processed frames into the frame fusion module to obtain a high-definition super-resolved video. By making use of cloud resources, the method achieves super-resolution of low-resolution video in real time, with fast and accurate restoration and low memory usage.

Owner:SHAANXI NORMAL UNIV

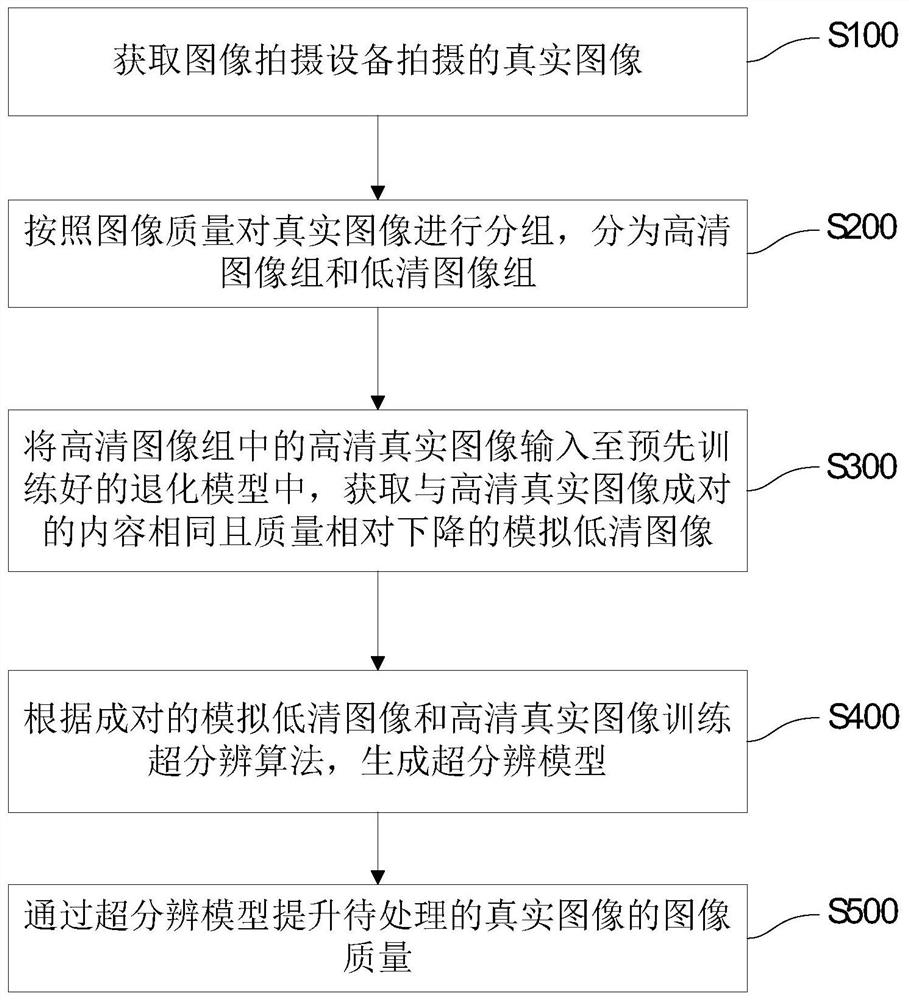

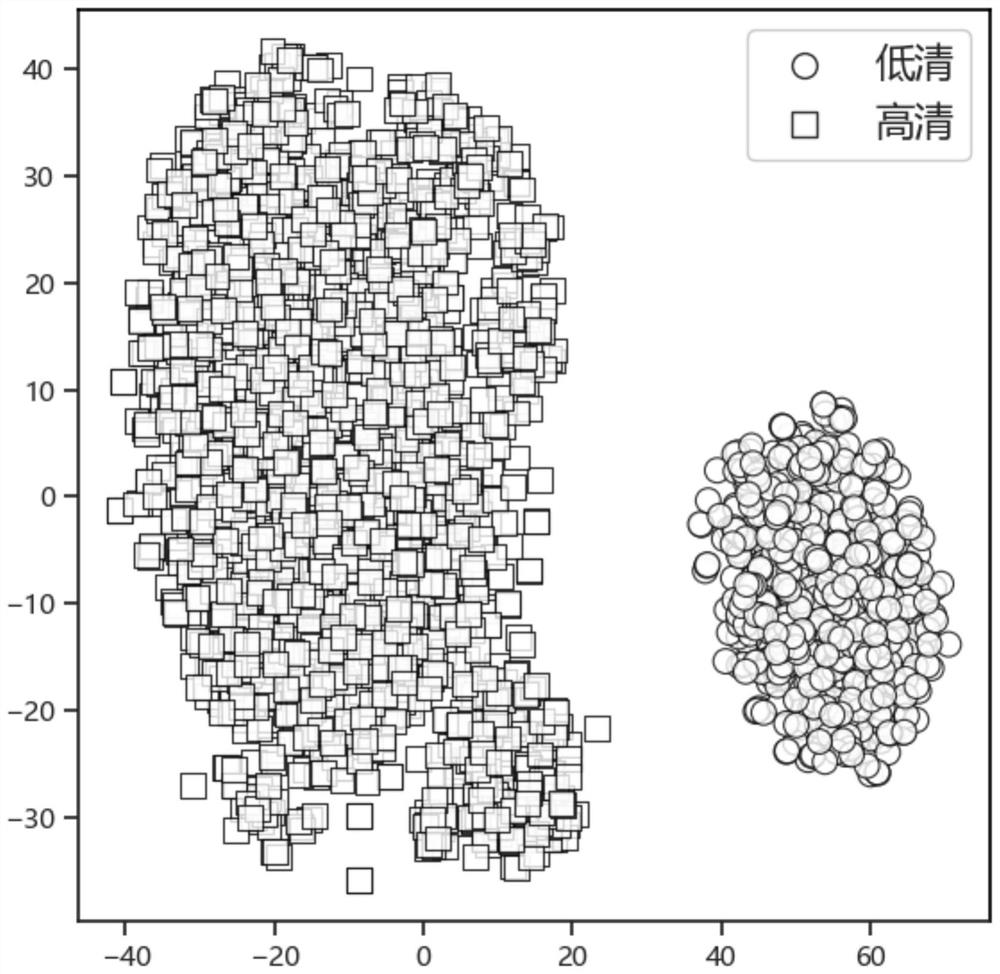

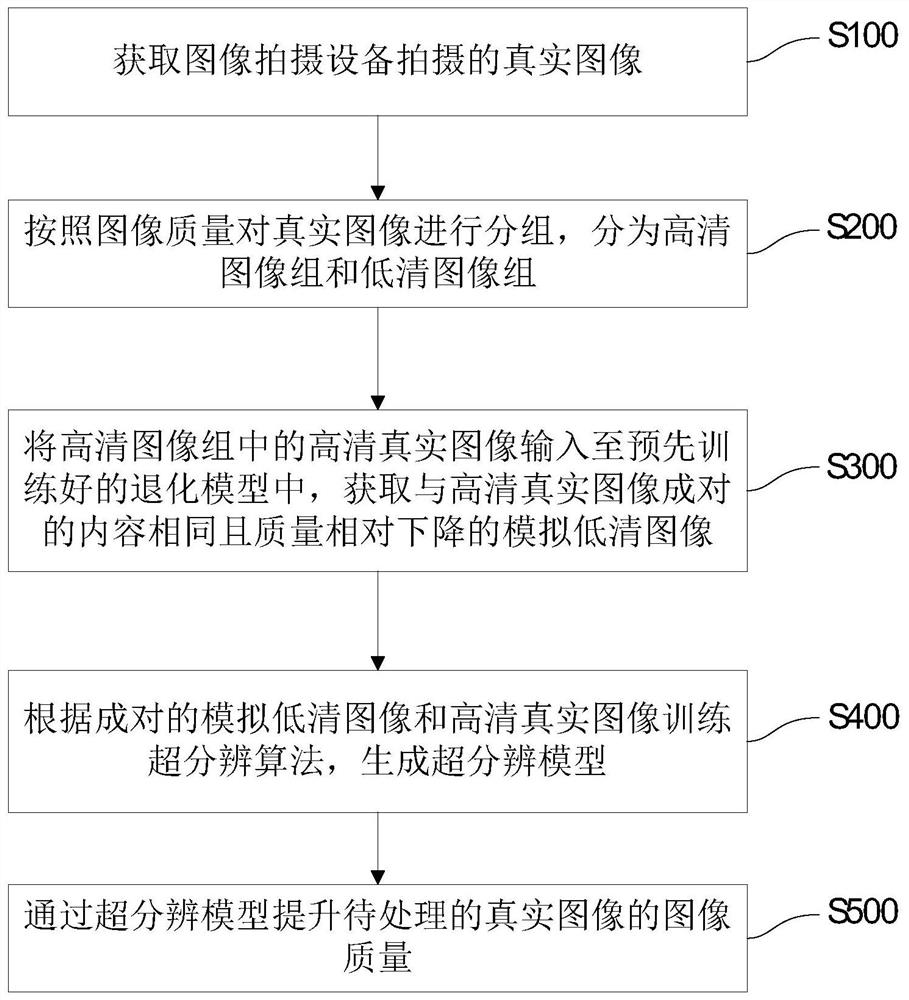

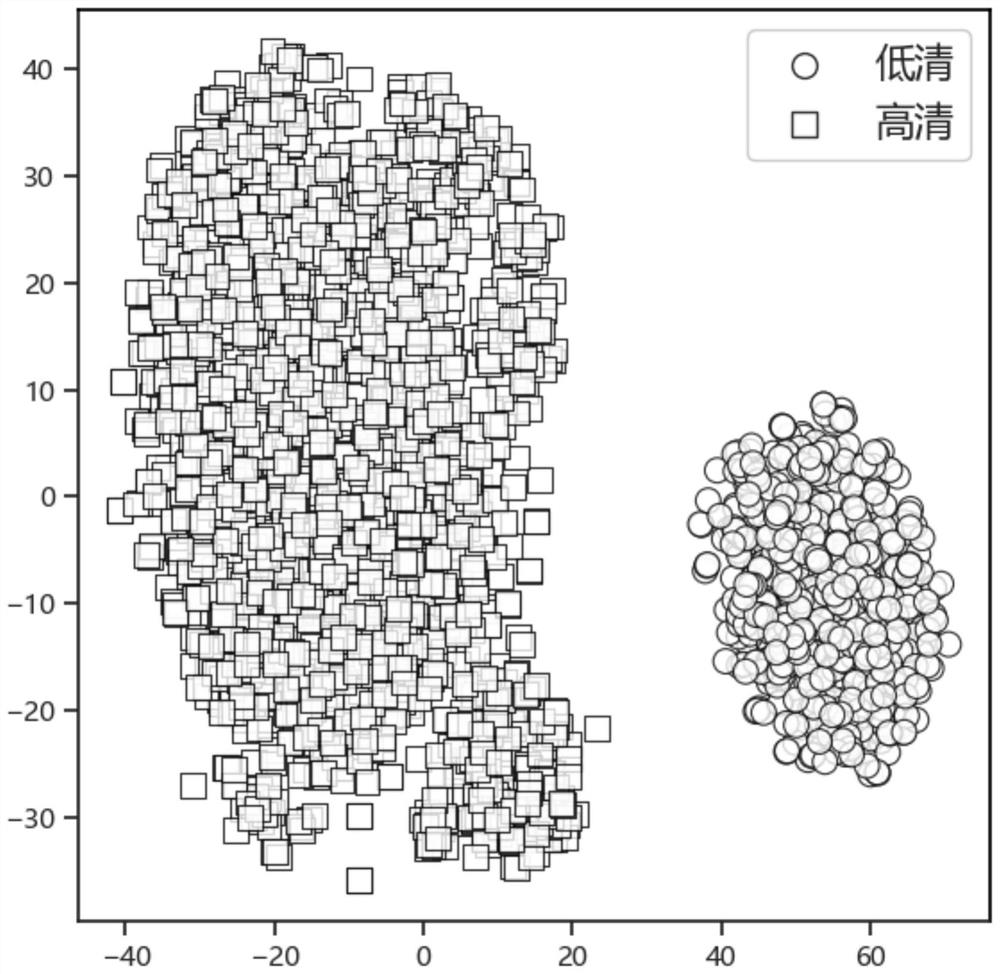

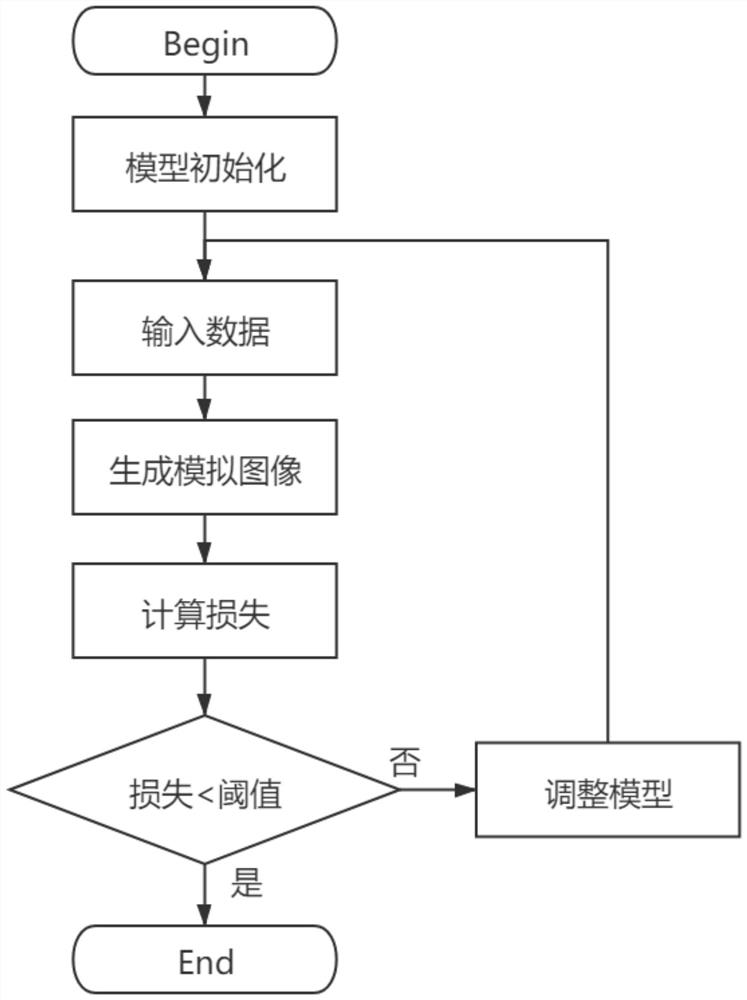

Image processing method, system, device and medium

Status: Active | Publication: CN112200721A | Tags: Super resolution effect is good; Improve super resolution; Television system details; Image enhancement; Pattern recognition; Imaging processing

The image processing method, system, device, and medium provided by the invention address existing problems with an image processing scheme that can separate high-definition and low-definition images from real images. A degradation model is also designed that first learns the characteristics of the low-definition images and then generates simulated low-definition images in one-to-one correspondence with the high-definition images, solving the problem that super-resolution models are difficult to train on real images. In addition, a filter mechanism splits the image into high-frequency and low-frequency parts, which markedly improves super-resolution on real images. The method is general: for a given application scenario, the best super-resolution effect for that scenario can be obtained simply by training on corresponding real images. Images enhanced by the super-resolution model show no color distortion, clear details, sharp edges, and a good overall appearance.

Owner:GUANGZHOU YUNCONG ARTIFICIAL INTELLIGENCE TECH CO LTD
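
The filter mechanism splits an image into high- and low-frequency parts. A minimal sketch, assuming a box blur with edge clamping as the low-pass filter (the abstract does not name the filter) and the residual as the high-frequency part, shows why such a split loses nothing: the two parts always sum back to the input, so each can be processed separately and recombined. The function names are hypothetical.

```python
def box_blur(img, radius=1):
    """Mean over a (2r+1) x (2r+1) window, clamping coordinates at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc, cnt = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += img[yy][xx]
                    cnt += 1
            out[y][x] = acc / cnt
    return out


def split_frequencies(img, radius=1):
    """Return (low, high) with low = blurred image and high = img - low."""
    low = box_blur(img, radius)
    high = [[p - l for p, l in zip(row, lrow)] for row, lrow in zip(img, low)]
    return low, high


if __name__ == "__main__":
    img = [[0.0, 0.0, 9.0], [0.0, 9.0, 0.0], [9.0, 0.0, 0.0]]
    low, high = split_frequencies(img)
    print(low)
    print(high)
```

In a super-resolution pipeline the high-frequency branch carries edges and texture, where most reconstruction errors show up, so it can be given its own network branch or loss weight.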

Hyperspectral image super-resolution method based on adaptive autoregressive model

Status: Active | Publication: CN103530860B | Tags: Improve super-resolution; Super-resolution implementation; Image enhancement; Geometric image transformation; Color image; Imaging processing

Owner:南京途博科技有限公司

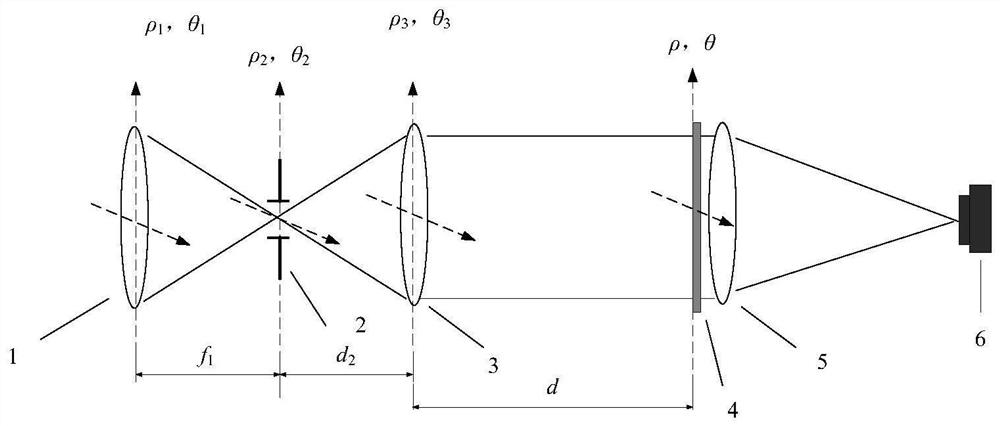

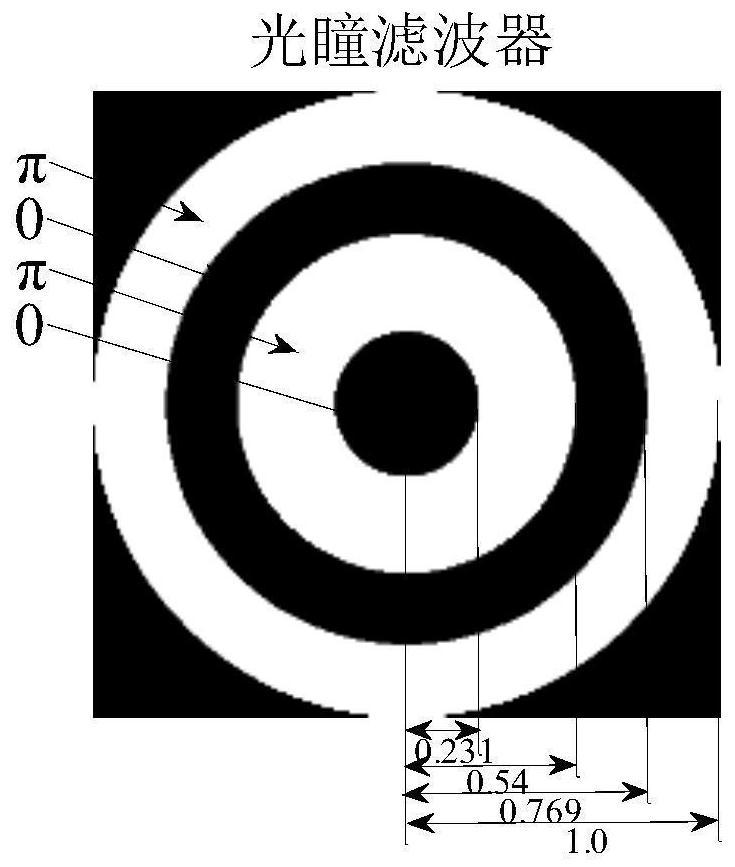

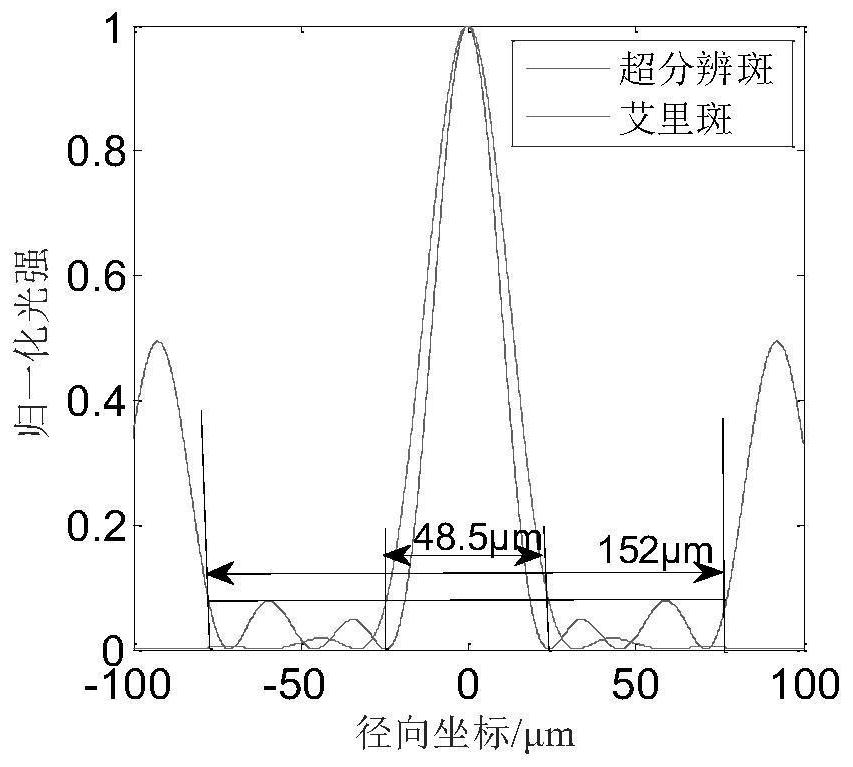

Pupil filter far-field super-resolution imaging system and pupil filter design method

Status: Active | Publication: CN111061063B | Tags: Solving diffraction effects; Improve super-resolution; Optical elements; Target surface; Intermediate image

The pupil-filter far-field super-resolution imaging system and pupil filter design method belong to the field of super-resolution imaging. They address the problem that, in existing field-diaphragm scanning far-field super-resolution imaging systems, the quality of the final super-resolution image is seriously degraded. Along the direction of the incident light, the system comprises in sequence a front optical objective lens, a field diaphragm, a collimating lens group, a pupil filter, an imaging lens, and a CCD detector. The front objective images distant scenes onto the system's intermediate image plane; the field diaphragm is placed at the rear focal plane of the front objective, that is, at the intermediate image plane of the whole system; and the front focus of the collimating lens group also lies at this intermediate image plane. The pupil filter is placed at the exit pupil of the combined front objective and collimating lens group, with the position and size of its effective aperture matching the exit pupil. The imaging lens re-images the light passing through the pupil filter, and the target surface of the CCD detector coincides with this secondary image plane.

Owner:CHANGCHUN UNIV OF SCI & TECH

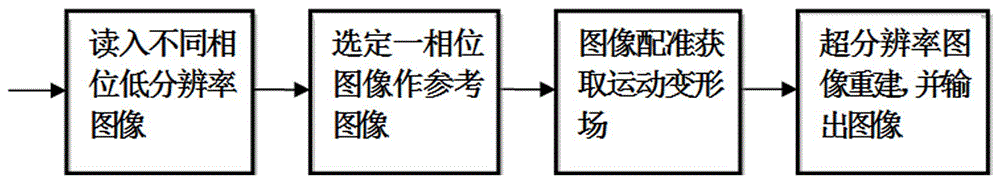

Super-resolution reconstruction method of lung 4D-CT images based on registration

Status: Inactive | Publication: CN103886568B | Tags: Quality improvement; Simple structure; Image enhancement; Geometric image transformation; Image resolution; Reference image

A registration-based super-resolution reconstruction method for lung 4D-CT images comprises the following steps in sequence: (1) obtaining a sequence of low-resolution images at different phases from lung 4D-CT data; (2) selecting the image at one phase of the sequence as the reference image, enlarging it by interpolation, and using the interpolated result as the initial estimate f^(0) of the reconstruction; (3) using the low-resolution images at the other phases as floating images, enlarging them by interpolation, and estimating the motion deformation field between each interpolated floating image and the initial estimate f^(0); and (4) reconstructing a high-resolution lung 4D-CT image from the motion deformation fields obtained in step (3). Multi-planar display images of lung 4D-CT data obtained with this method are sharp, with clearly improved structural detail and higher resolution, so the quality of multi-planar display of lung 4D-CT data is effectively improved.

Owner:SOUTHERN MEDICAL UNIVERSITY
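Steps (2) to (4) of the registration-based method above can be sketched in one dimension. This is a simplified, hypothetical illustration: each floating image is registered with a single integer translation found by exhaustive search (standing in for the deformable motion fields of step (3)), and step (4) is reduced to averaging the registered, interpolated frames (shift-and-add). `f0` corresponds to the interpolated reference image used as the initial estimate. Real lung 4D-CT reconstruction would need 3-D deformable registration and a proper reconstruction model.

```python
def upsample_linear(x, s):
    """Linear interpolation of a 1-D signal by an integer factor s."""
    out = []
    for i in range(len(x) - 1):
        for k in range(s):
            t = k / s
            out.append((1 - t) * x[i] + t * x[i + 1])
    out.append(x[-1])
    return out  # length (len(x) - 1) * s + 1


def estimate_shift(moving, fixed, max_shift):
    """Integer d minimizing the mean squared difference of moving[i + d] and fixed[i].

    Hypothetical rigid, integer-valued stand-in for a deformable motion field.
    """
    best_d, best_err = 0, float("inf")
    for d in range(-max_shift, max_shift + 1):
        pairs = [(moving[i + d], fixed[i]) for i in range(len(fixed))
                 if 0 <= i + d < len(moving)]
        err = sum((a - b) ** 2 for a, b in pairs) / len(pairs)
        if err < best_err:
            best_d, best_err = d, err
    return best_d


def warp(moving, d):
    """Resample so that out[i] = moving[i + d], clamping indices at the borders."""
    n = len(moving)
    return [moving[min(max(i + d, 0), n - 1)] for i in range(n)]


def reconstruct(frames, ref_index, scale, max_shift):
    """Interpolate the reference (f0), register the other phases to it, then average."""
    f0 = upsample_linear(frames[ref_index], scale)  # initial estimate
    aligned, shifts = [f0], {}
    for j, frame in enumerate(frames):
        if j == ref_index:
            continue
        floating = upsample_linear(frame, scale)
        shifts[j] = estimate_shift(floating, f0, max_shift)
        aligned.append(warp(floating, shifts[j]))
    # simplified fusion: per-sample mean of the registered frames
    fused = [sum(col) / len(aligned) for col in zip(*aligned)]
    return fused, shifts
```

With a reference `[0, 0, 1, 4, 9, 4, 1, 0, 0, 0]` and a floating frame displaced by one low-resolution sample, `reconstruct(frames, 0, 2, 4)` reports a shift of 2 high-resolution samples for the floating frame. Averaging only helps when frames carry genuinely different sub-pixel samples; a full method would also model blur and decimation.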

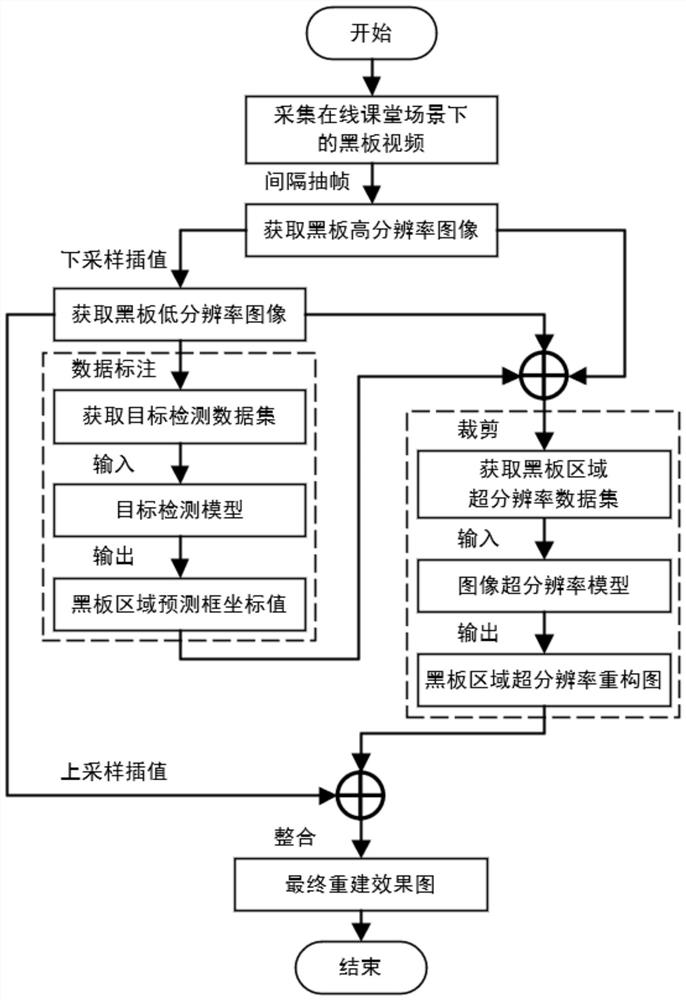

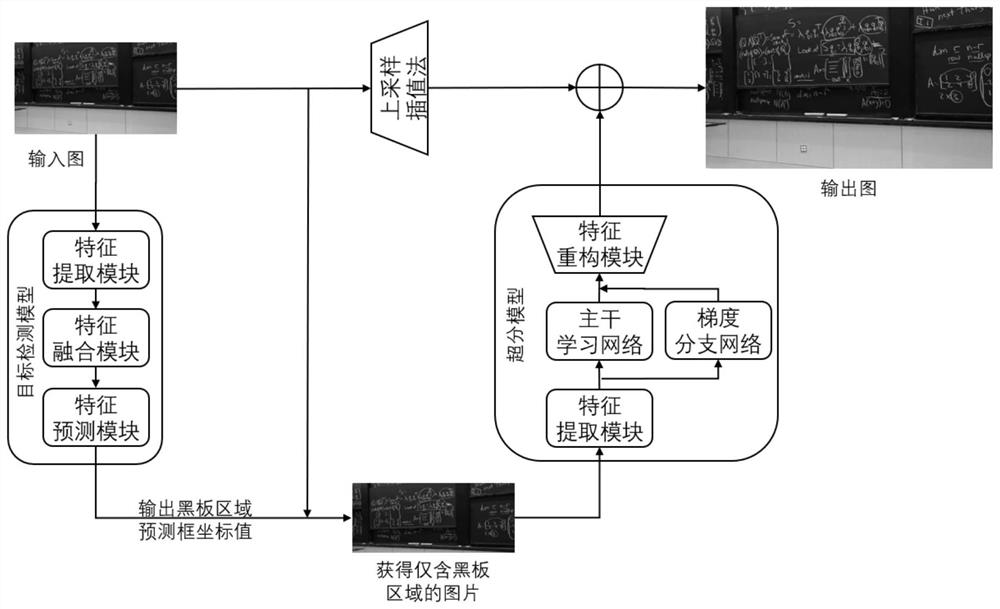

Super-resolution reconstruction method based on blackboard features in online classroom scene

Pending | CN114529451A | Improve super-resolution | High-resolution | Image enhancement | Image analysis | Computer graphics (images) | Feature learning

The invention discloses a super-resolution reconstruction method based on blackboard features in online classroom scenes. The method comprises: capturing the blackboard with a camera installed in the classroom, accurately extracting the blackboard-writing region with a target detection model, and performing super-resolution reconstruction of the image. Because blackboard writing has pronounced edge features, a gradient constraint is introduced into the super-resolution model to strengthen feature learning; this effectively raises the resolution of the written content and thereby improves students' learning efficiency in class. The method runs fast, is low-cost and easy to use, and helps live online teaching from campus classrooms proceed smoothly.

Owner:SOUTH CHINA UNIV OF TECH
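The blackboard method above does not give the exact form of its gradient constraint. One common way to add such a term, assumed here, is an L1 penalty on the difference between the image gradients of the super-resolved output and of the high-resolution target, added to an ordinary pixel-wise L1 loss with a hypothetical weight `lam`. The sketch computes that loss on plain 2-D lists; in practice it would be written in a deep-learning framework so that it can be back-propagated.

```python
def gradients(img):
    """Forward differences of a 2-D image (list of rows) along x and y."""
    h, w = len(img), len(img[0])
    gx = [[img[r][c + 1] - img[r][c] for c in range(w - 1)] for r in range(h)]
    gy = [[img[r + 1][c] - img[r][c] for c in range(w)] for r in range(h - 1)]
    return gx, gy


def mean_abs_diff(a, b):
    """Mean absolute difference between two equally shaped 2-D lists."""
    vals = [abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(vals) / len(vals)


def sr_loss(pred, target, lam=0.1):
    """Pixel L1 loss plus an assumed L1 gradient-consistency term weighted by lam."""
    pixel = mean_abs_diff(pred, target)
    pgx, pgy = gradients(pred)
    tgx, tgy = gradients(target)
    # penalize blurred or misplaced strokes: their gradients differ from the target's
    grad = mean_abs_diff(pgx, tgx) + mean_abs_diff(pgy, tgy)
    return pixel + lam * grad
```

A uniform brightness offset costs only the pixel term, whereas a blurred stroke edge is penalized by both terms, which is why a gradient term suits writing with sharp edges.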

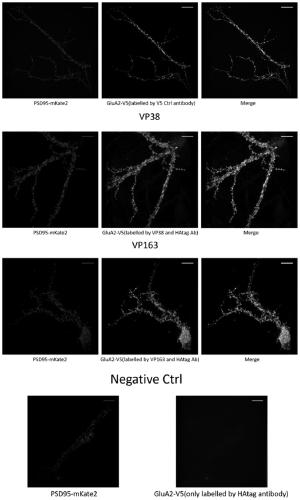

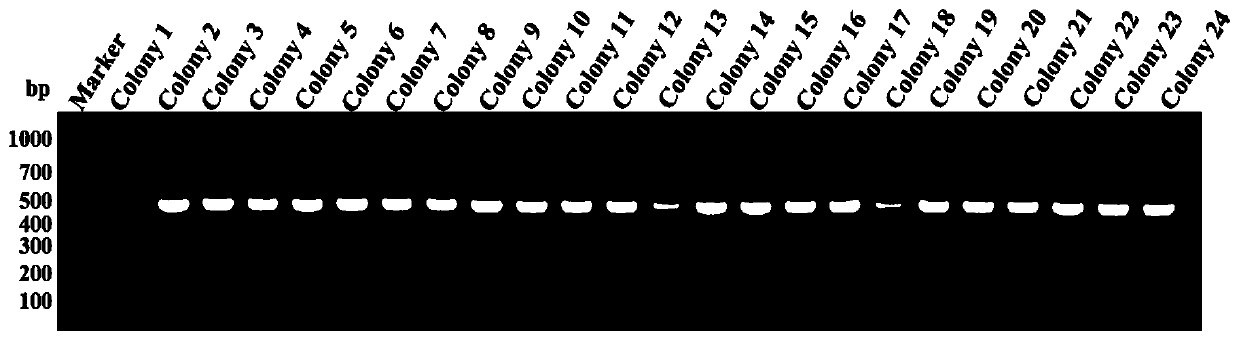

Application of single domain antibody specific for V5 tag protein

Active | CN109336978B | Prokaryotic expression is simple | Increase production | Fluorescence/phosphorescence | Immunoglobulins against enzymes | Optical reconstruction | Paralemmin

Owner:REGENECORE BIOTECH CO LTD

An image processing method, system, device and medium

Active | CN112200721B | Super resolution effect is good | Improve super resolution | Image enhancement | Television system details | Pattern recognition | Imaging processing

The present invention provides an image processing method, system, device and medium. To address problems in current approaches, it designs an image processing method that can distinguish high-definition images from low-definition images among real images. It also designs a degradation model that first learns the characteristics of low-definition images and then generates, for each high-definition image, a corresponding simulated low-definition image, which solves the difficulty of training a super-resolution model on real images. In addition, the invention designs a filter mechanism that splits the image into high-frequency and low-frequency parts, significantly improving the super-resolution effect on real images. The invention is also general: for each application scenario, the best super-resolution effect for that scenario can be obtained simply by training on the corresponding real images. Images enhanced by the super-resolution model of the invention show no color distortion, clear details, sharp edges and a good overall appearance.

Owner:GUANGZHOU YUNCONG ARTIFICIAL INTELLIGENCE TECH CO LTD
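A minimal sketch of the frequency split described above, assuming a simple box (mean) filter as the low-pass stage; the patent does not specify its filter, and the function names are hypothetical. The low-frequency part is the blurred image and the high-frequency part is the residual, so the two always sum back to the input, which lets each part be processed separately and recombined.

```python
def box_blur(img, radius=1):
    """Mean filter over a (2r+1)x(2r+1) window, shrinking the window at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            total, count = 0.0, 0
            for rr in range(max(r - radius, 0), min(r + radius + 1, h)):
                for cc in range(max(c - radius, 0), min(c + radius + 1, w)):
                    total += img[rr][cc]
                    count += 1
            out[r][c] = total / count
    return out


def split_frequencies(img, radius=1):
    """Return (low, high): low is the blurred image (assumed low-pass), high = img - low."""
    low = box_blur(img, radius)
    high = [[p - q for p, q in zip(row_i, row_l)] for row_i, row_l in zip(img, low)]
    return low, high
```

A flat region has an all-zero high-frequency part, so detail enhancement applied to `high` leaves smooth areas untouched.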

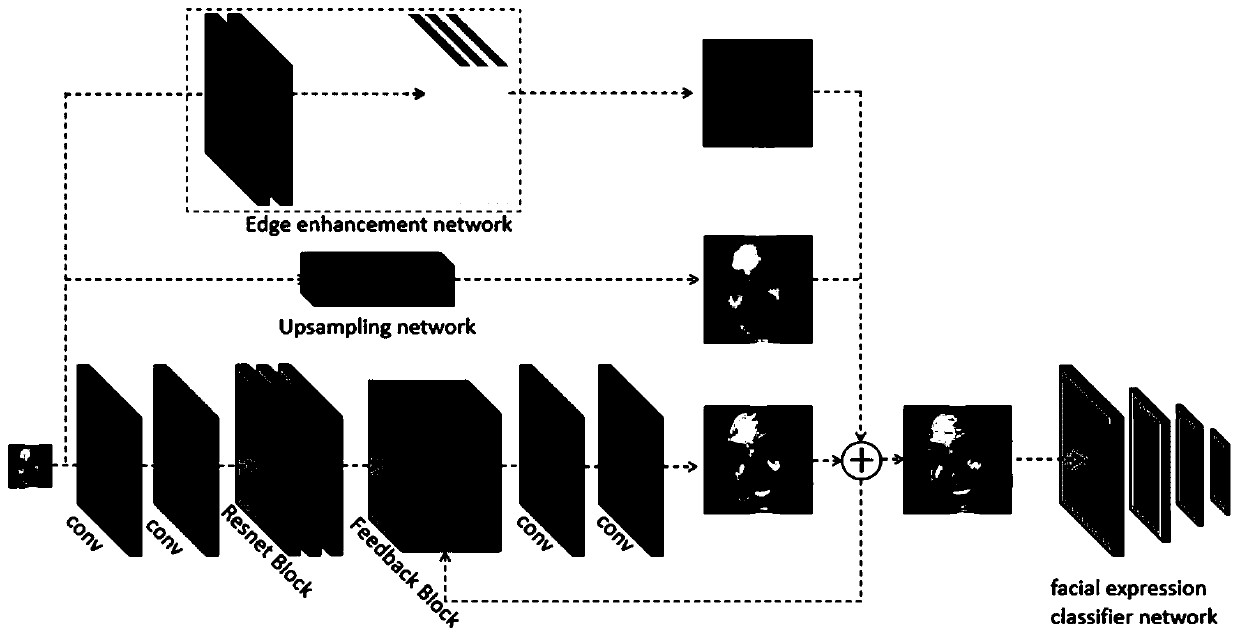

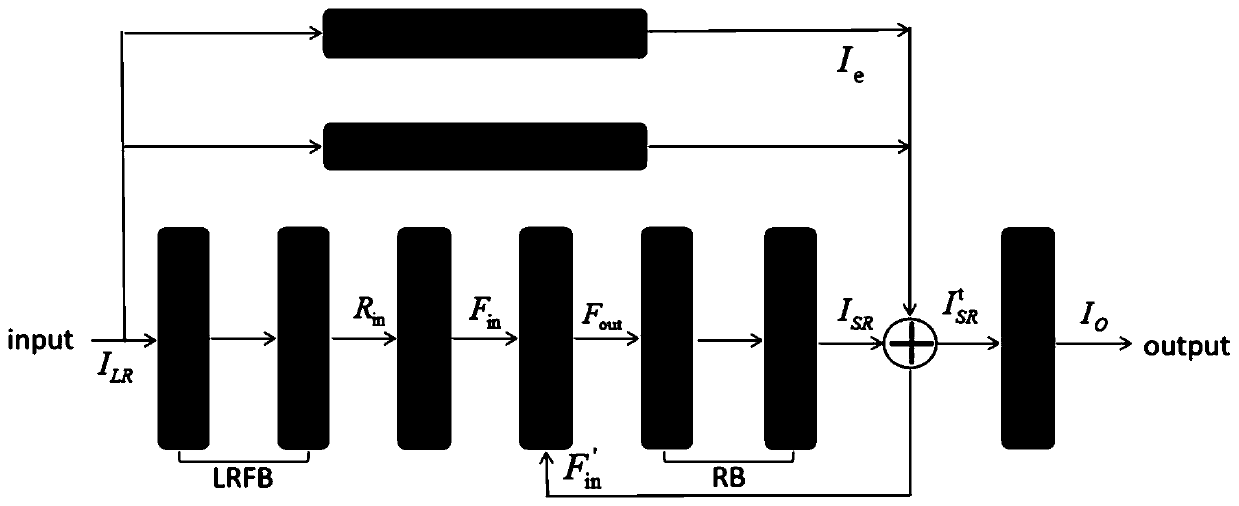

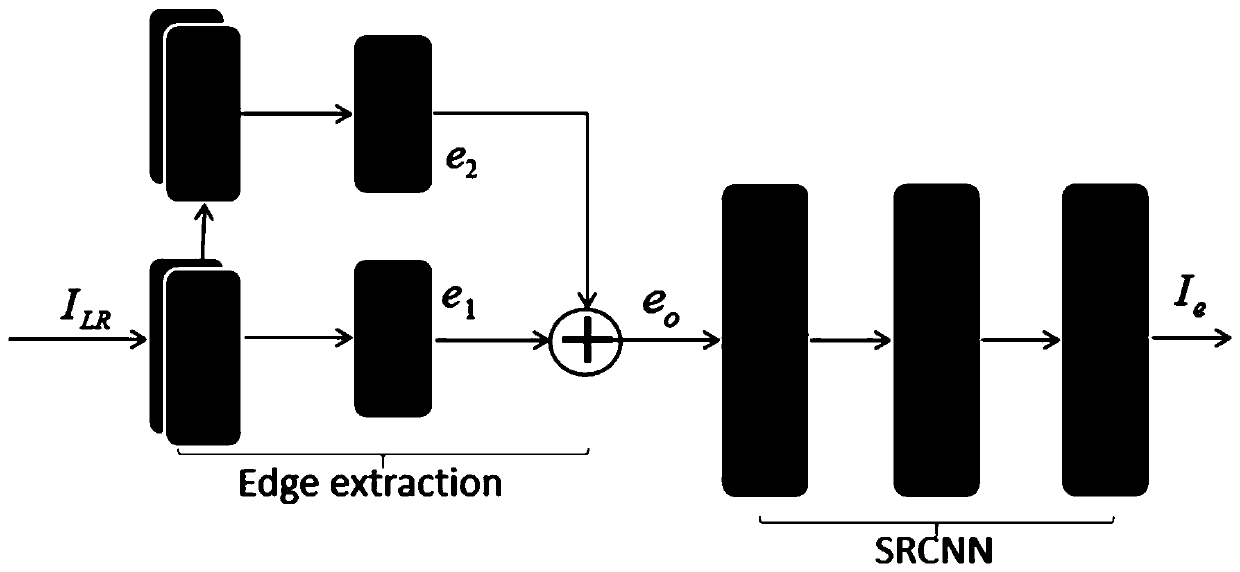

Tiny facial expression recognition method based on E-FCNN

Active | CN111325098A | Improve accuracy | High precision | Image analysis | Geometric image transformation | Pattern recognition | Image resolution

The invention relates to a tiny facial expression recognition method based on an E-FCNN. The method comprises the following steps: (1) establish a marginalized (edge-based) face enhancement module, an up-sampling network module and a feedback-based super-resolution network module, where the marginalized face enhancement module extracts and enhances the edges of an input face, the up-sampling network module up-samples the input image by interpolation, and the feedback-based super-resolution network module performs super-resolution processing on the input image; (2) build the E-FCNN network model by combining the marginalized face enhancement module, the up-sampling network module and the feedback-based super-resolution network module; (3) input facial expression images of a specified size into the E-FCNN network model to obtain the corresponding facial expression recognition results. Compared with the prior art, the method offers high recognition accuracy and fast recognition.

Owner:SHANGHAI UNIVERSITY OF ELECTRIC POWER
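The two non-learned modules of the E-FCNN step (1) can be illustrated as follows, under assumptions not stated in the abstract: edge extraction uses Sobel filters, "enhancement" adds a weighted edge magnitude back to the face image, and the interpolation up-sampling is bilinear. The feedback super-resolution network and the classifier are omitted; `alpha` and all function names are hypothetical.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def correlate3x3(img, kernel):
    """3x3 correlation with replicated borders; img is a 2-D list."""
    h, w = len(img), len(img[0])

    def px(r, c):
        return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    return [[sum(kernel[i][j] * px(r + i - 1, c + j - 1)
                 for i in range(3) for j in range(3))
             for c in range(w)] for r in range(h)]


def edge_enhance(img, alpha=0.5):
    """Assumed edge enhancement: add alpha times the Sobel gradient magnitude."""
    gx = correlate3x3(img, SOBEL_X)
    gy = correlate3x3(img, SOBEL_Y)
    return [[img[r][c] + alpha * math.hypot(gx[r][c], gy[r][c])
             for c in range(len(img[0]))] for r in range(len(img))]


def bilinear_upsample(img, scale):
    """Bilinear up-sampling by an integer factor, aligning pixel centres."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(h * scale):
        y = min(max((i + 0.5) / scale - 0.5, 0.0), h - 1)  # source row coordinate
        r0, fy = int(y), y - int(y)
        r1 = min(r0 + 1, h - 1)
        row = []
        for j in range(w * scale):
            x = min(max((j + 0.5) / scale - 0.5, 0.0), w - 1)  # source column coordinate
            c0, fx = int(x), x - int(x)
            c1 = min(c0 + 1, w - 1)
            top = img[r0][c0] * (1 - fx) + img[r0][c1] * fx
            bottom = img[r1][c0] * (1 - fx) + img[r1][c1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out
```

Applying `edge_enhance` before `bilinear_upsample` mirrors the order in which the abstract lists the modules, so facial contours are emphasized before the image is enlarged for the super-resolution network.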

Single-domain antibodies specific for V5-tagged proteins and their derivatives

Active | CN109438577B | Prokaryotic expression is simple | Increase production | Fluorescence/phosphorescence | Immunoglobulins against enzymes | Protein target | Optical reconstruction

The invention discloses a single-domain antibody that specifically targets the V5-tag protein, and proteins derived from it. The antibody has the amino acid sequences shown in SEQ ID NO: 1, SEQ ID NO: 9, SEQ ID NO: 17, SEQ ID NO: 25, SEQ ID NO: 33, SEQ ID NO: 41, SEQ ID NO: 49, SEQ ID NO: 57 and SEQ ID NO: 65-72. Using the disclosed gene sequences and host cells, the antibody can be expressed efficiently and can be applied in stochastic optical reconstruction microscopy (STORM) and photo-activated localization microscopy (PALM) to track and detect target proteins in biological samples.

Owner:REGENECORE BIOTECH CO LTD

A method for multi-frame image super-resolution reconstruction using prior knowledge of natural images

Active | CN108665412B | Improve super-resolution | Quality improvement | Geometric image transformation | Image resolution | Image database

The invention provides a method that uses natural-image prior knowledge for multi-frame image super-resolution reconstruction. A learned fields-of-experts model is introduced as the natural-image prior when a series of low-resolution images is used for super-resolution reconstruction, which improves the quality of multi-frame super-resolution reconstruction. Traditional Bayesian multi-frame super-resolution methods use priors such as the L1 norm or total variation; the fields-of-experts model used here is instead trained on an image database, captures the statistical features of natural scenes better, and therefore yields better reconstructions. The method also simplifies parts of the multi-frame super-resolution pipeline and shortens computation time.

Owner:ZHEJIANG UNIV
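In the fields-of-experts (FoE) model, the prior energy of an image x is a sum, over all overlapping cliques k and filters J_i, of α_i · log(1 + ½ (J_i · x_(k))²), i.e. Student-t experts. The sketch below evaluates this energy and its gradient; in a Bayesian MAP reconstruction the gradient would be added, with some weight, to the gradient of the data term that ties x to the registered low-resolution frames. The two derivative filters and α values are hypothetical stand-ins for the filters the method learns from an image database.

```python
import math

# Hypothetical stand-ins for learned FoE filters and expert weights.
HYPOTHETICAL_FILTERS = [[[1.0, -1.0]], [[1.0], [-1.0]]]
HYPOTHETICAL_ALPHAS = [0.5, 0.5]


def foe_energy_and_grad(img, filters, alphas):
    """FoE energy sum_k sum_i alpha_i * log(1 + 0.5 * (J_i . x_k)^2) and its gradient.

    img: 2-D list of pixels; filters: list of 2-D kernels J_i; alphas: weights alpha_i.
    Only cliques lying fully inside the image are used.
    """
    h, w = len(img), len(img[0])
    energy = 0.0
    grad = [[0.0] * w for _ in range(h)]
    for kernel, alpha in zip(filters, alphas):
        kh, kw = len(kernel), len(kernel[0])
        for r in range(h - kh + 1):
            for c in range(w - kw + 1):
                # filter response of this clique (correlation of J_i with the patch)
                z = sum(kernel[i][j] * img[r + i][c + j]
                        for i in range(kh) for j in range(kw))
                energy += alpha * math.log1p(0.5 * z * z)
                # d/dz [alpha * log(1 + z^2 / 2)] = alpha * z / (1 + z^2 / 2)
                dz = alpha * z / (1.0 + 0.5 * z * z)
                for i in range(kh):
                    for j in range(kw):
                        grad[r + i][c + j] += dz * kernel[i][j]
    return energy, grad
```

Because log(1 + z²/2) grows only logarithmically, large filter responses at genuine edges are penalized far less than under a quadratic prior, which is the heavy-tailed behavior that distinguishes FoE from simpler smoothness priors.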

A Super-resolution Imaging Method for Vessels in Circular Scanning ISAR Mode

Active | CN109116352B | Improve SNR | Improve sidelobe | Radio wave reradiation/reflection | Medicine | Image resolution

The invention discloses a method for super-resolution imaging of ships in circular-scan ISAR mode, which belongs to the field of radar technology and comprises the following steps: divide the echo signals acquired in circular-scan ISAR mode into M data frames and process them frame by frame; in the m-th data frame, perform range compression and select the sub-echo of the ship target; preprocess this sub-echo, including translational and rotational motion compensation; use the Burg algorithm to extrapolate the sub-echo data in the azimuth direction; randomly sample the extrapolated data in the azimuth direction; perform compressed-sensing reconstruction in the azimuth direction on the sampled data to obtain the sparse coefficient matrix of the current data frame; and apply constant false alarm rate (CFAR) detection to this sparse coefficient matrix to obtain the range-Doppler-domain super-resolution image of the m-th frame.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)
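The Burg extrapolation step of the ISAR method above can be sketched as follows. Burg's method builds autoregressive (AR) coefficients from reflection coefficients that minimize the summed forward and backward prediction-error power, and the resulting linear predictor extends the azimuth samples beyond the measured aperture. This is a generic textbook version operating on one complex azimuth sequence (for example, one range cell); the model order, the number of extrapolated samples and the test signal helper `tone` are assumptions, and the random sampling, compressed-sensing and CFAR stages are not shown.

```python
import cmath


def burg(x, order):
    """Burg estimate of AR coefficients a (a[0] = 1) for a complex sequence x.

    Convention: prediction error e[t] = sum_i a[i] * x[t - i].
    Stops early if the prediction-error power vanishes.
    """
    n = len(x)
    f = [complex(v) for v in x]  # forward prediction errors
    b = list(f)                  # backward prediction errors
    a = [1.0 + 0.0j]
    for m in range(order):
        num = sum(f[t] * b[t - 1].conjugate() for t in range(m + 1, n))
        den = sum(abs(f[t]) ** 2 + abs(b[t - 1]) ** 2 for t in range(m + 1, n))
        if den == 0.0:
            break
        k = -2.0 * num / den  # reflection coefficient, |k| <= 1
        new_f, new_b = list(f), list(b)
        for t in range(m + 1, n):
            new_f[t] = f[t] + k * b[t - 1]
            new_b[t] = b[t - 1] + k.conjugate() * f[t]
        f, b = new_f, new_b
        ext = a + [0.0j]
        # Levinson recursion for the predictor polynomial
        a = [ext[i] + k * ext[m + 1 - i].conjugate() for i in range(m + 2)]
    return a


def extrapolate(x, a, n_extra):
    """Extend x forward by n_extra samples with the AR predictor defined by a."""
    y = [complex(v) for v in x]
    p = len(a) - 1
    for _ in range(n_extra):
        y.append(-sum(a[i] * y[-i] for i in range(1, p + 1)))
    return y


def tone(omega, n, start=0):
    """Test signal: exp(j * omega * t) for t = start .. start + n - 1."""
    return [cmath.exp(1j * omega * t) for t in range(start, start + n)]
```

For a single undamped complex tone, an order-1 model already predicts the continuation: `extrapolate(tone(0.3, 32), burg(tone(0.3, 32), 1), 8)` reproduces `tone(0.3, 8, start=32)` to rounding error. Real ship echoes contain several scatterers and noise, so the order would have to be chosen larger and the extrapolation length kept modest.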

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com