403 results for "Physical context" patented technology

There is a time and place for everything, and that is where physical context comes in. Physical context covers the actual location, the time of day, the lighting, the noise level, and related factors.

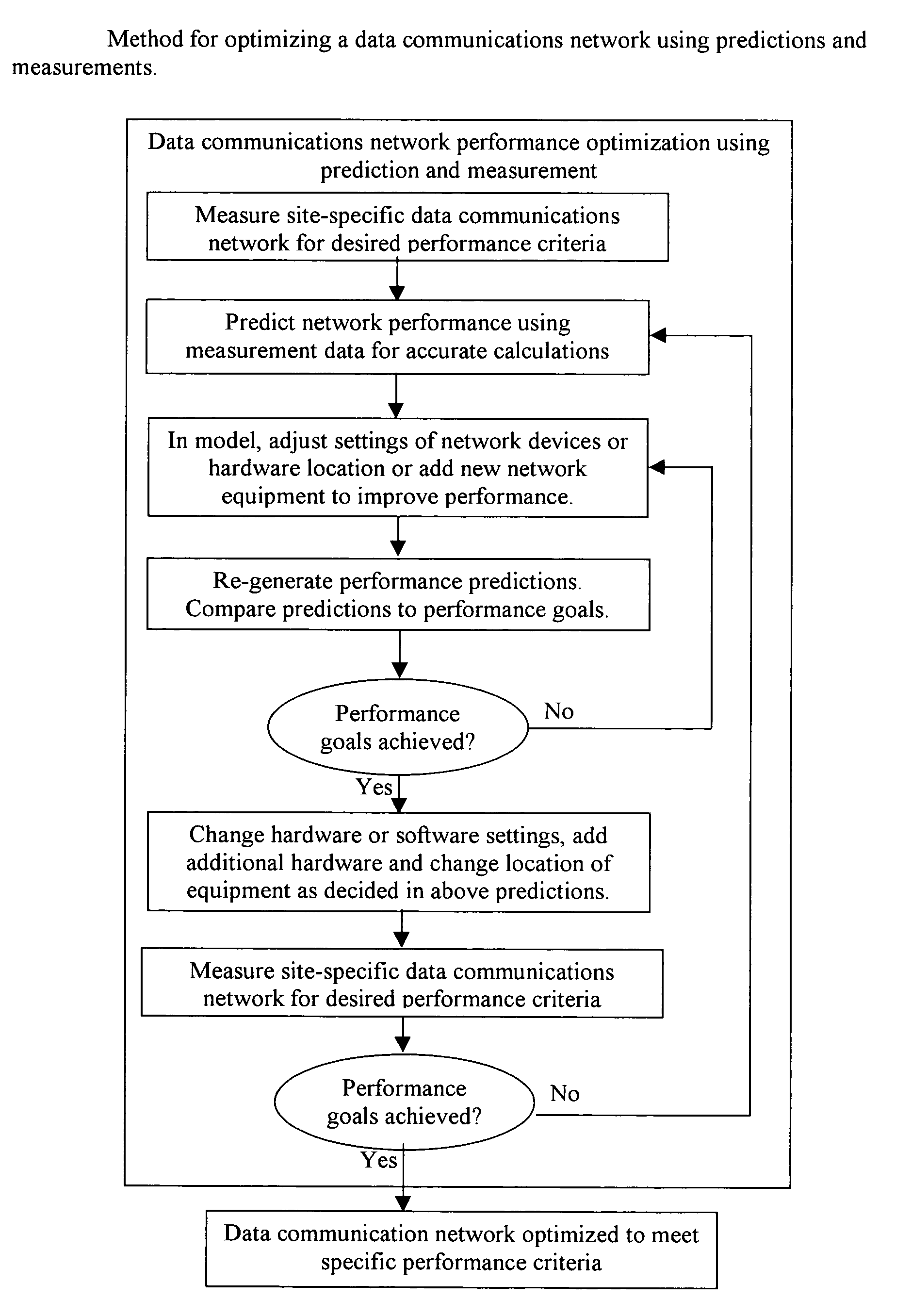

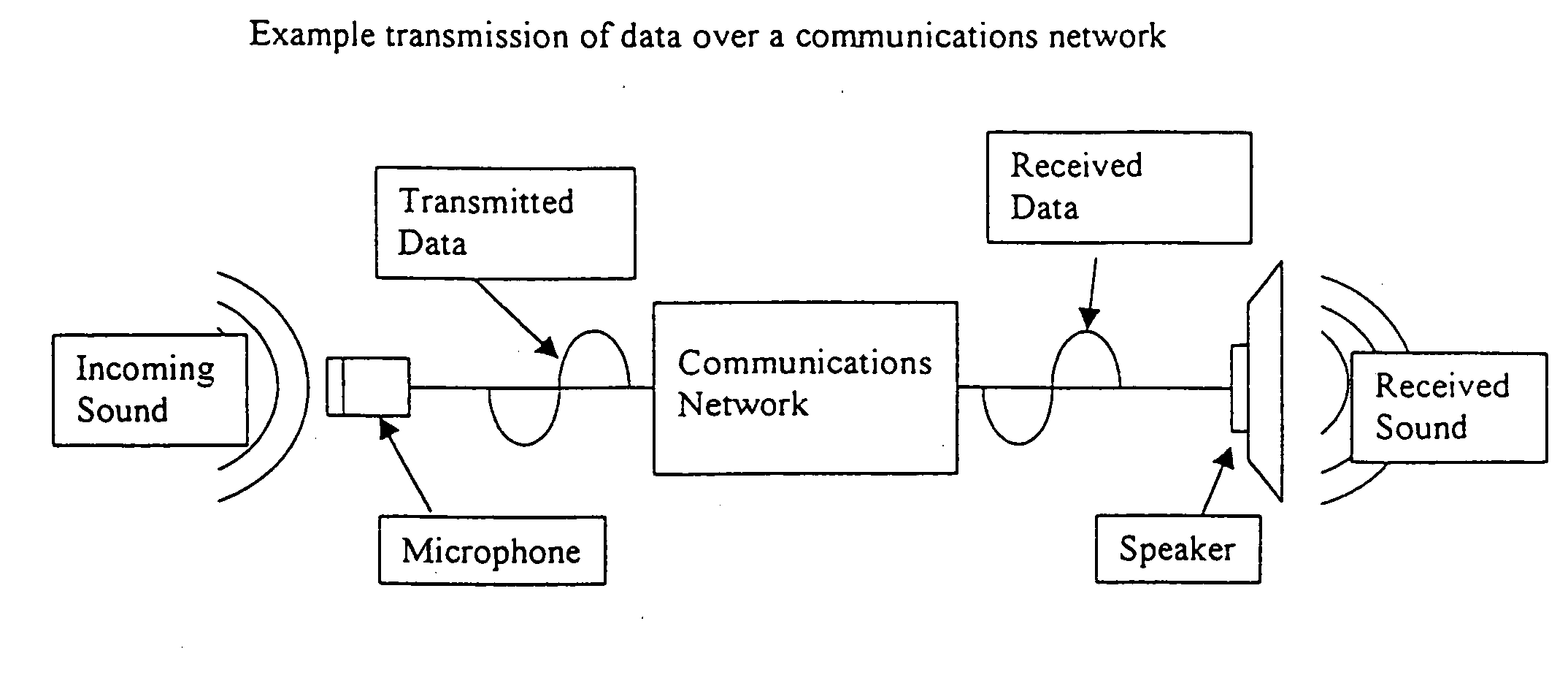

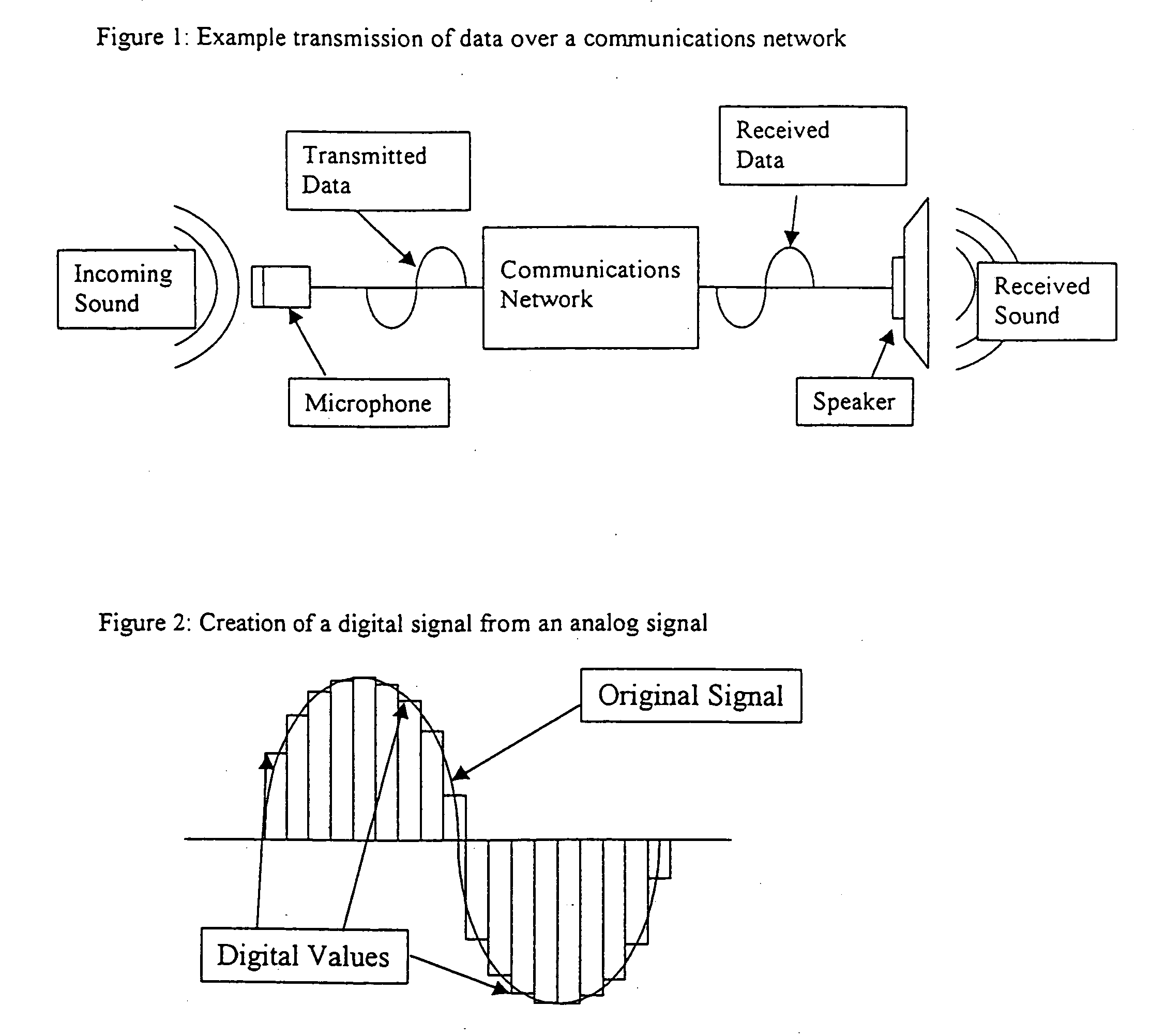

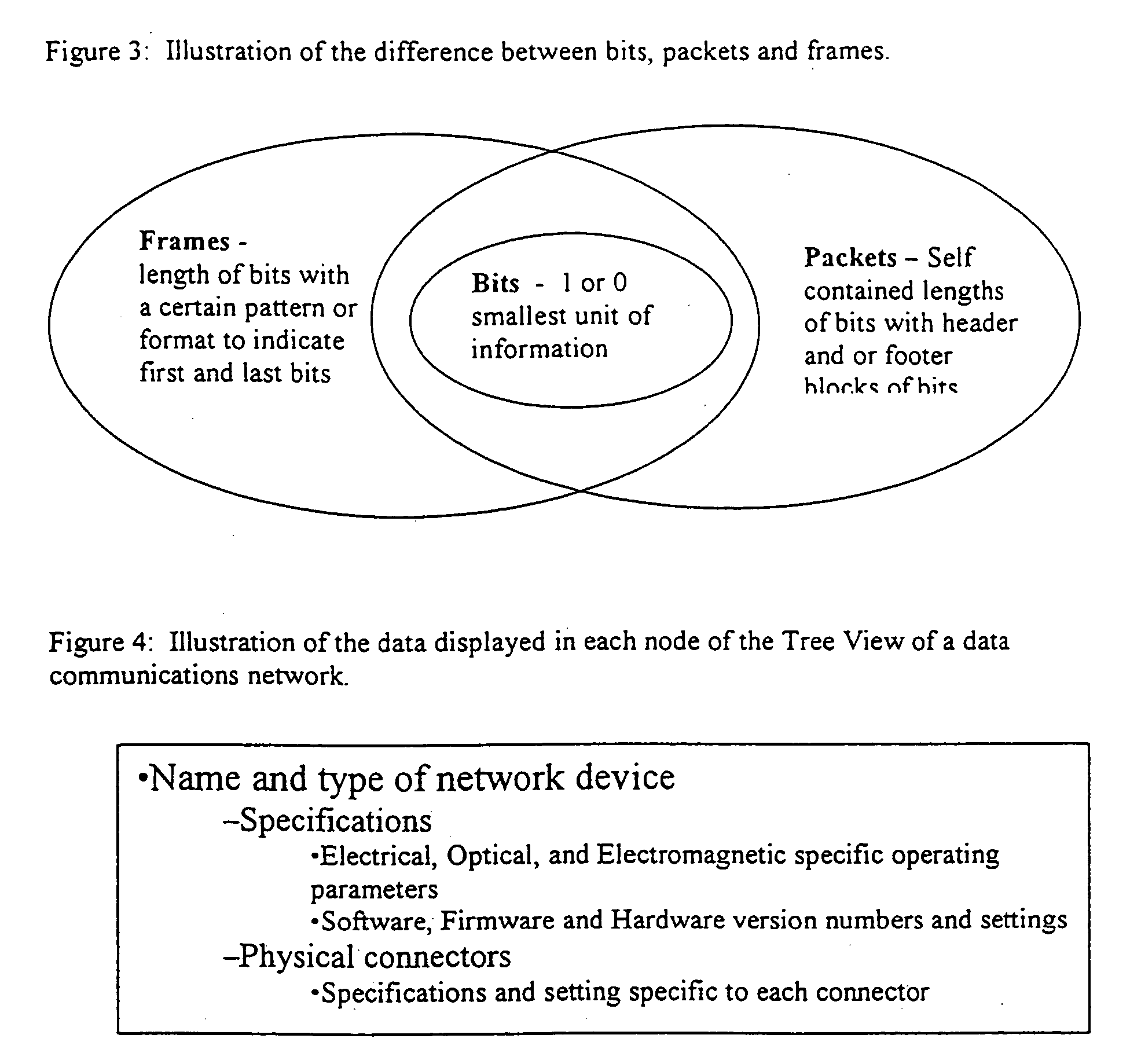

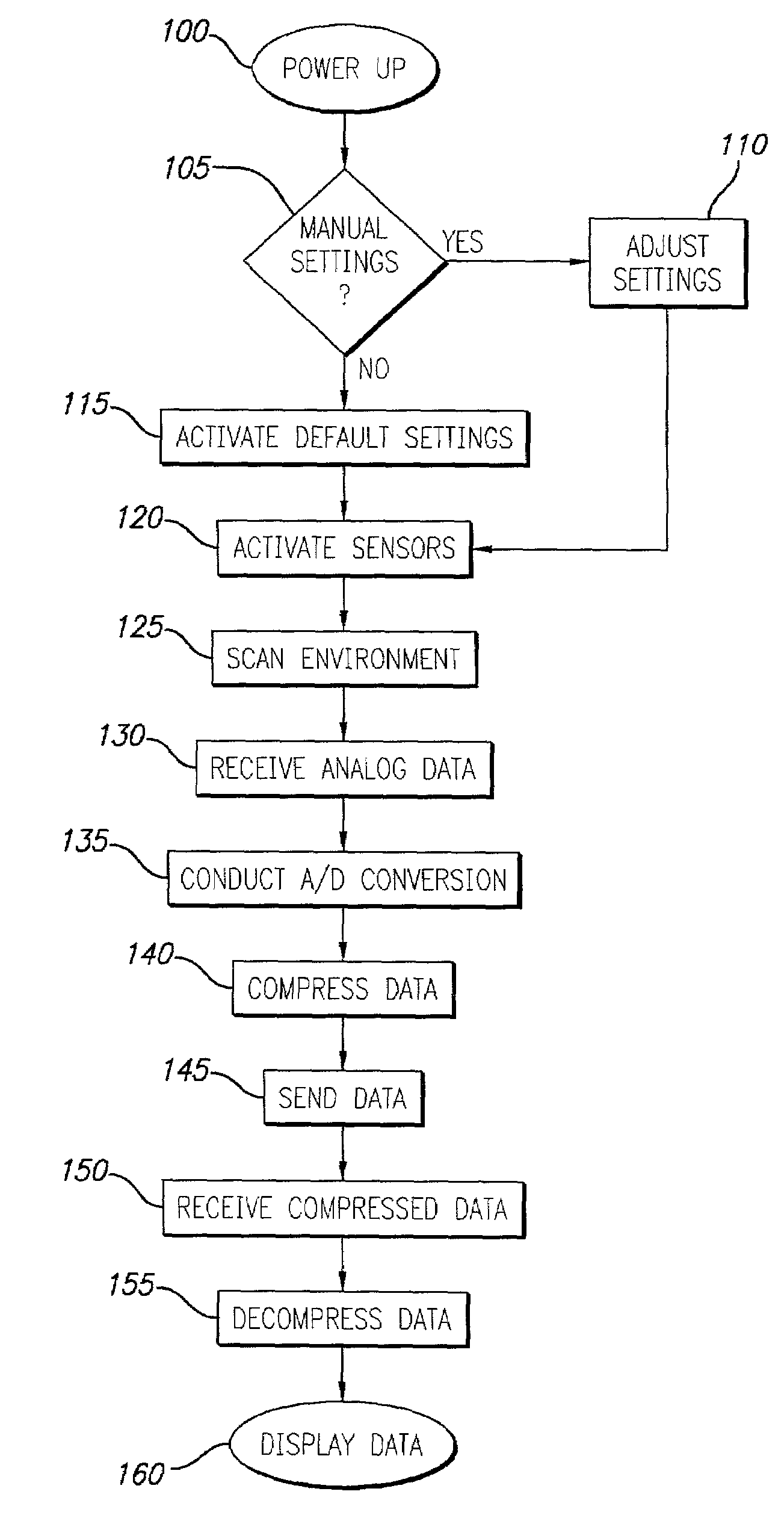

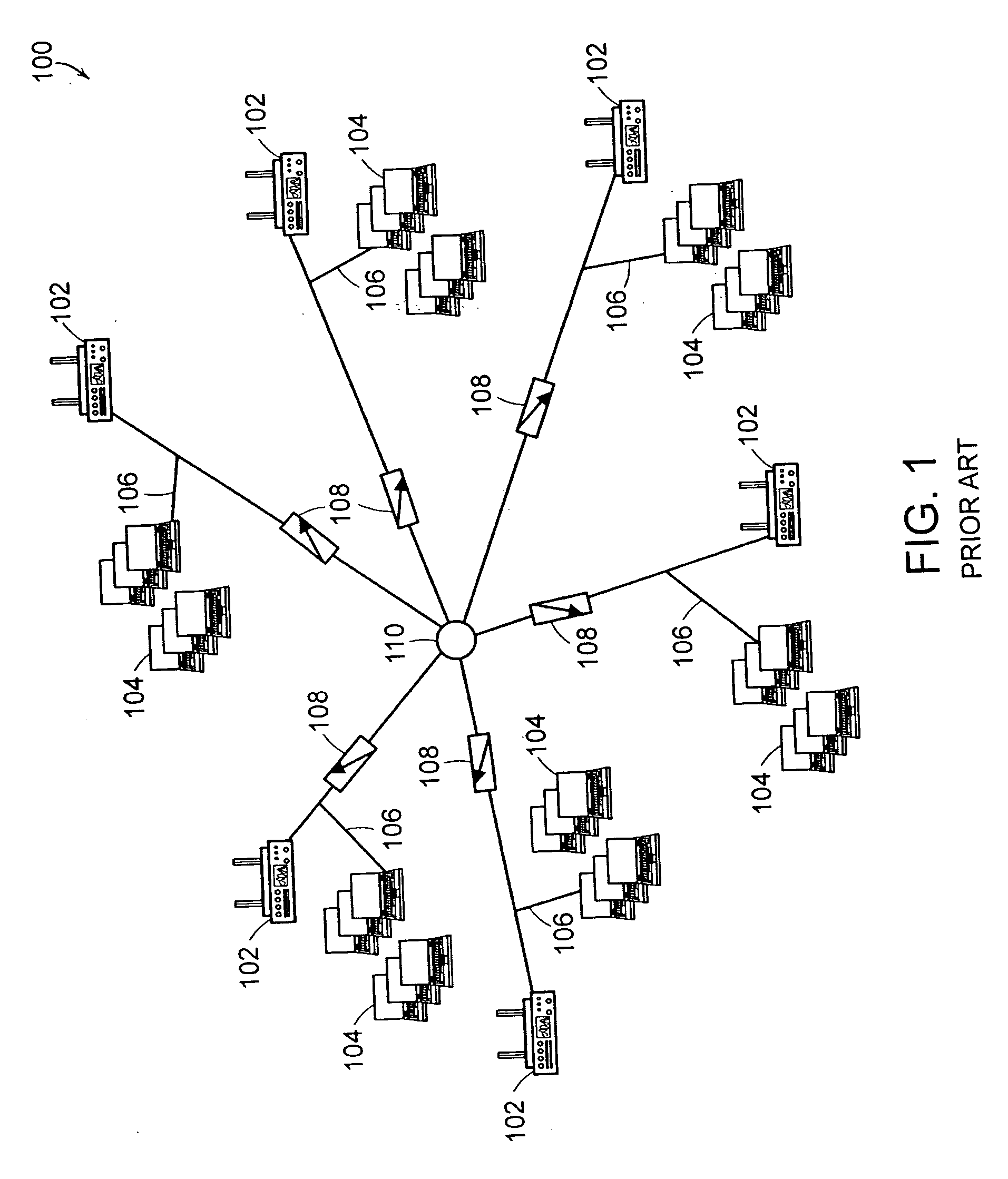

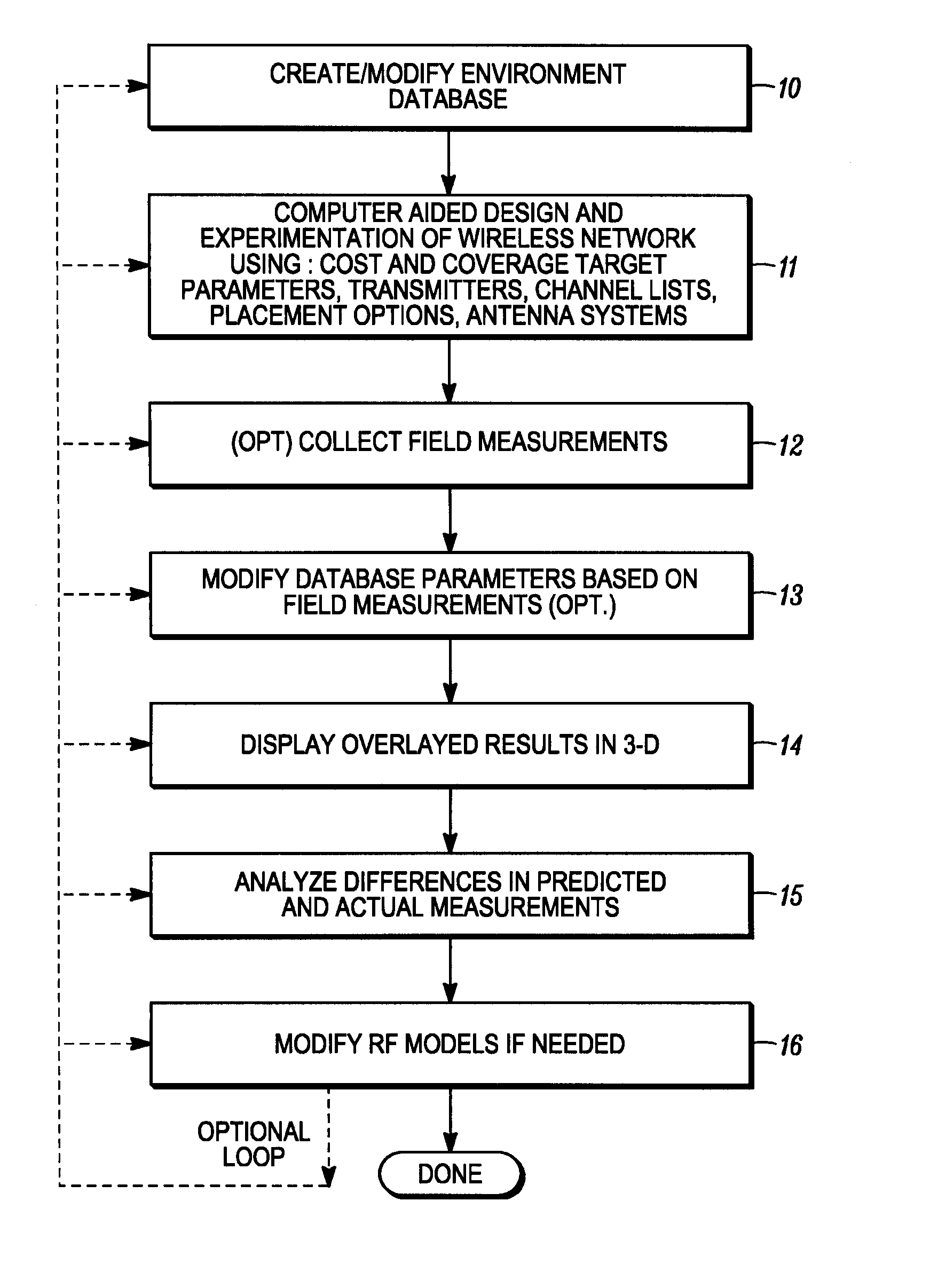

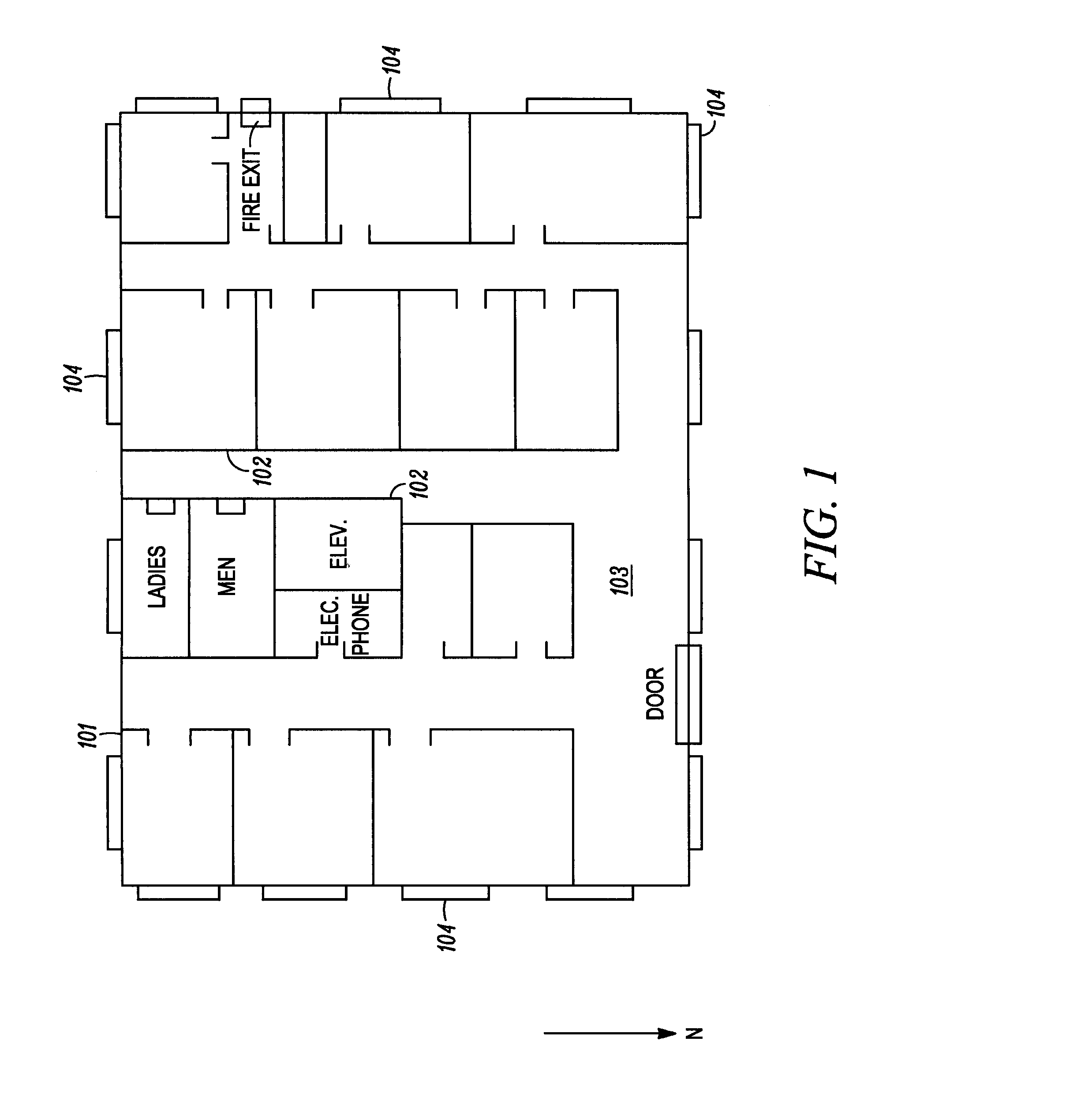

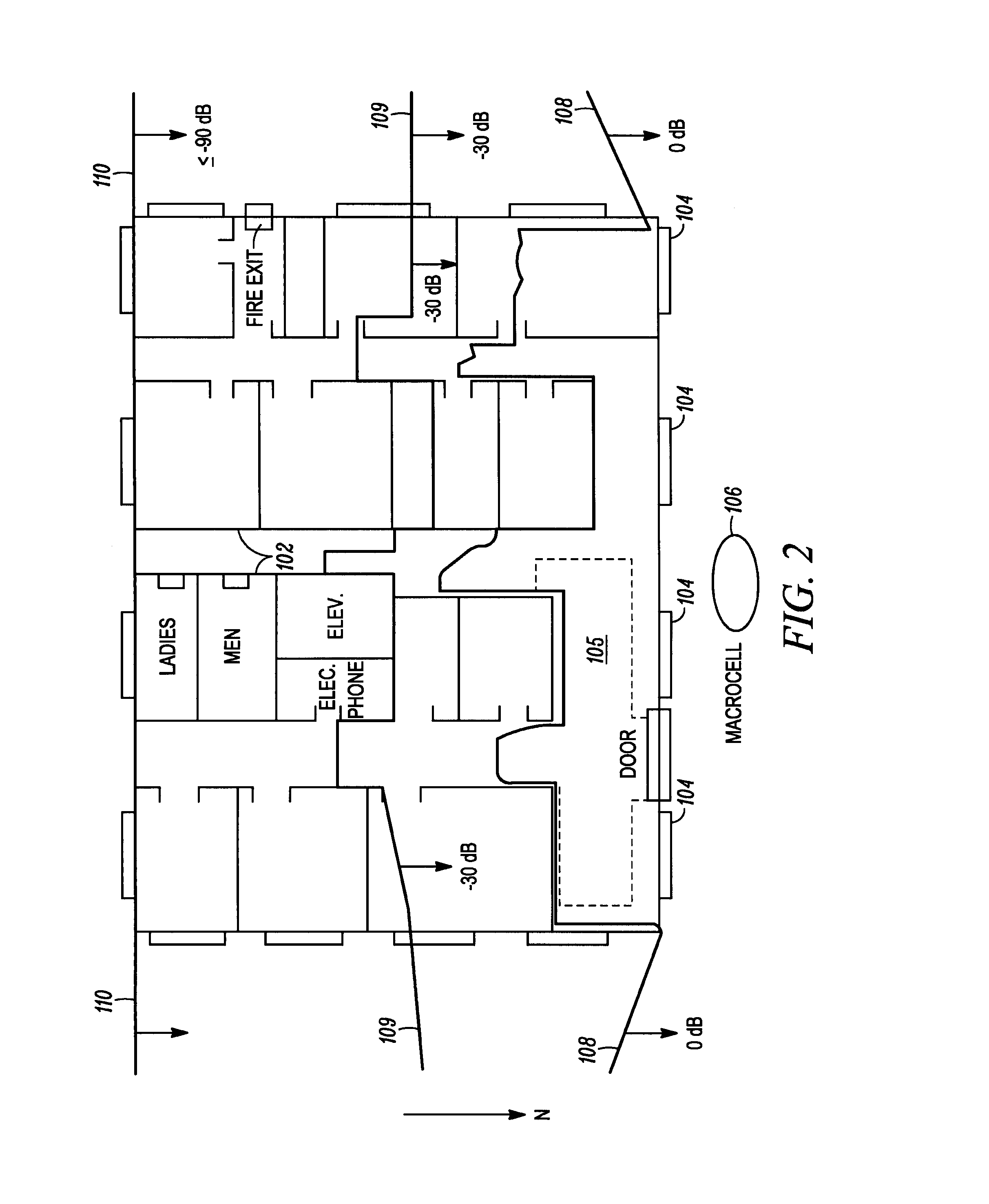

System and method for design, tracking, measurement, prediction and optimization of data communication networks

Inactive | US6973622B1 | Measuring; Improve performance; Analogue computers for electric apparatus; Data switching by path configuration; Radio propagation; Specific model

Owner:EXTREME NETWORKS INC

System for real-time economic optimizing of manufacturing process control

Inactive | US6038540A | Easy to operate; Easy to deploy; Market predictions; Complex mathematical operations; Process measurement; Self adaptive

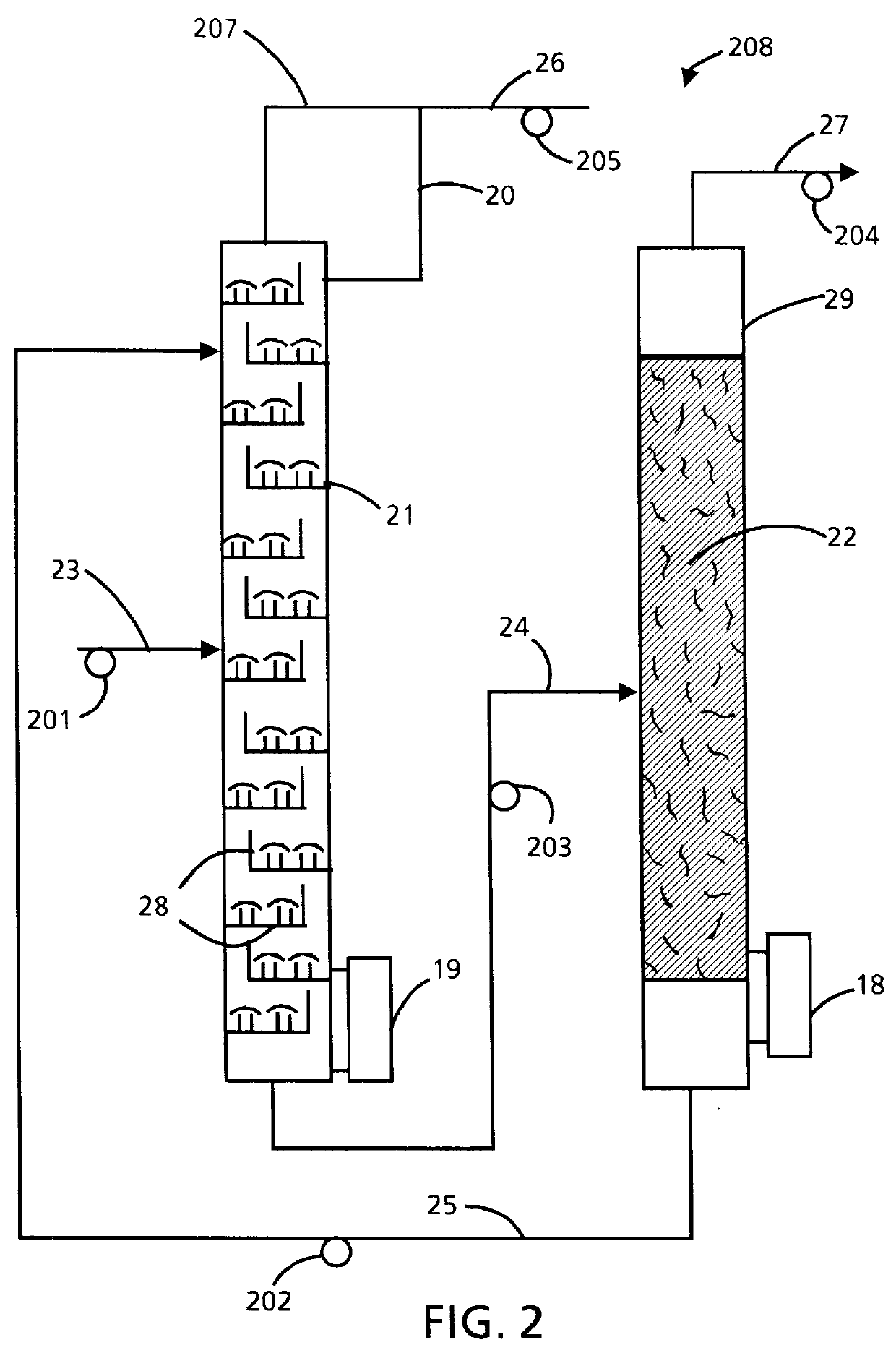

The present invention provides an adaptive process control and profit depiction system which is responsive to process measurement input signals, economic inputs, and physical environment inputs. The process control system features an interactive optimization modeling system for determining manipulated process variables (also known as setpoints). These manipulated process variables are used to position mechanisms which control attributes of a manufacturing system, such as a valve controlling the temperature of a coolant or a valve controlling the flow rate in a steam line.

Owner:DOW GLOBAL TECH LLC

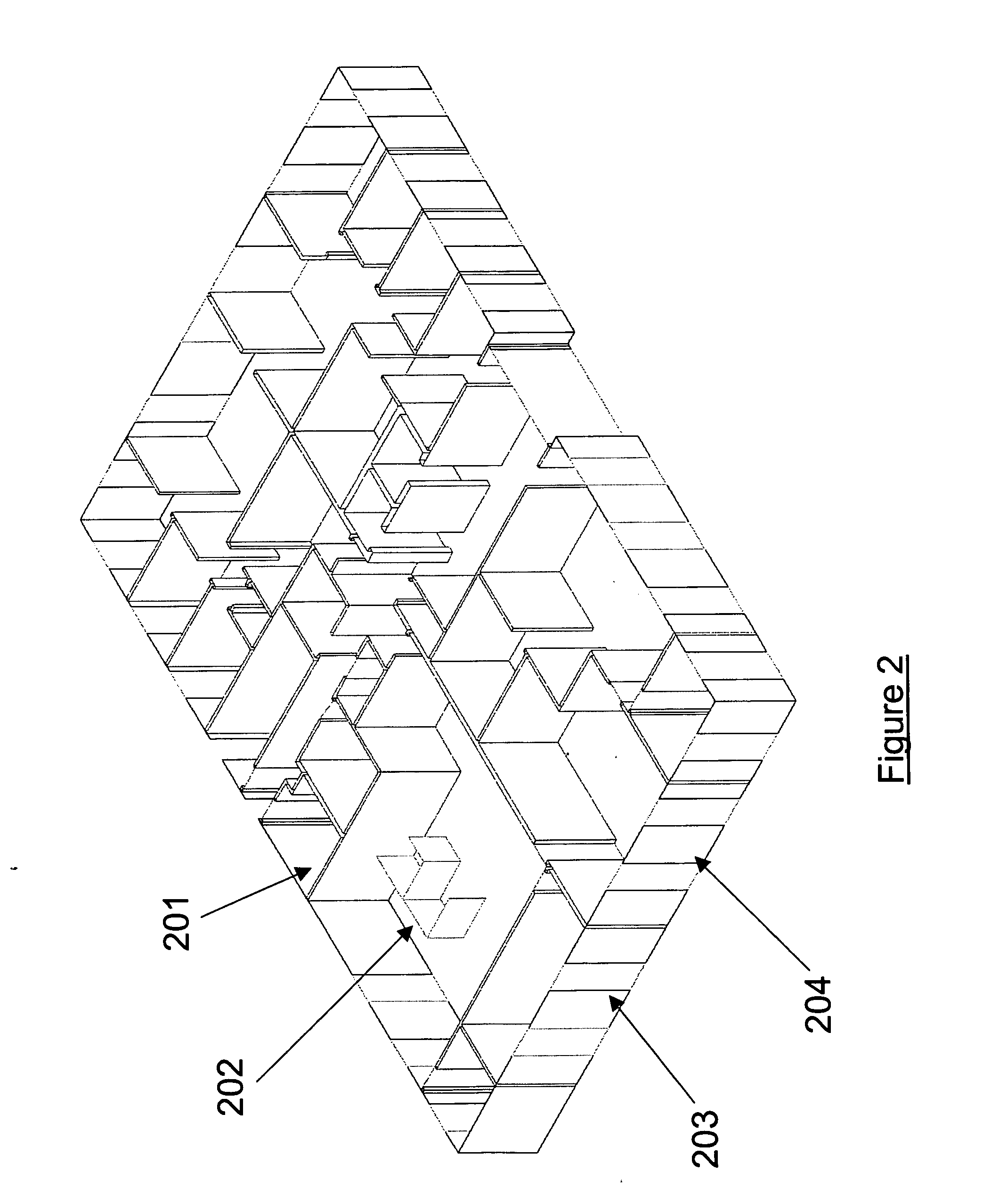

Method and system for modeling and managing terrain, buildings, and infrastructure

Active | US7164883B2 | Enhance on-going management; Broadcast transmission systems; Radio/inductive link selection arrangements; Specific model; Engineering

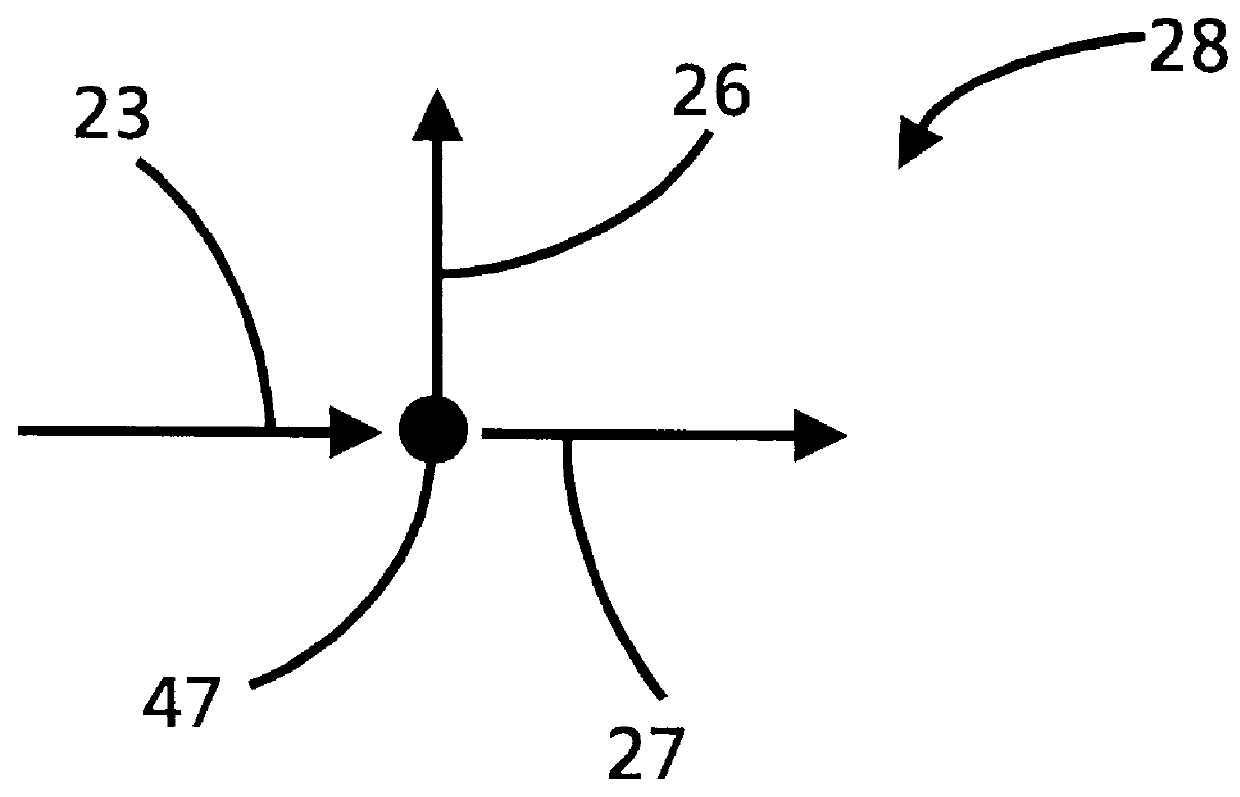

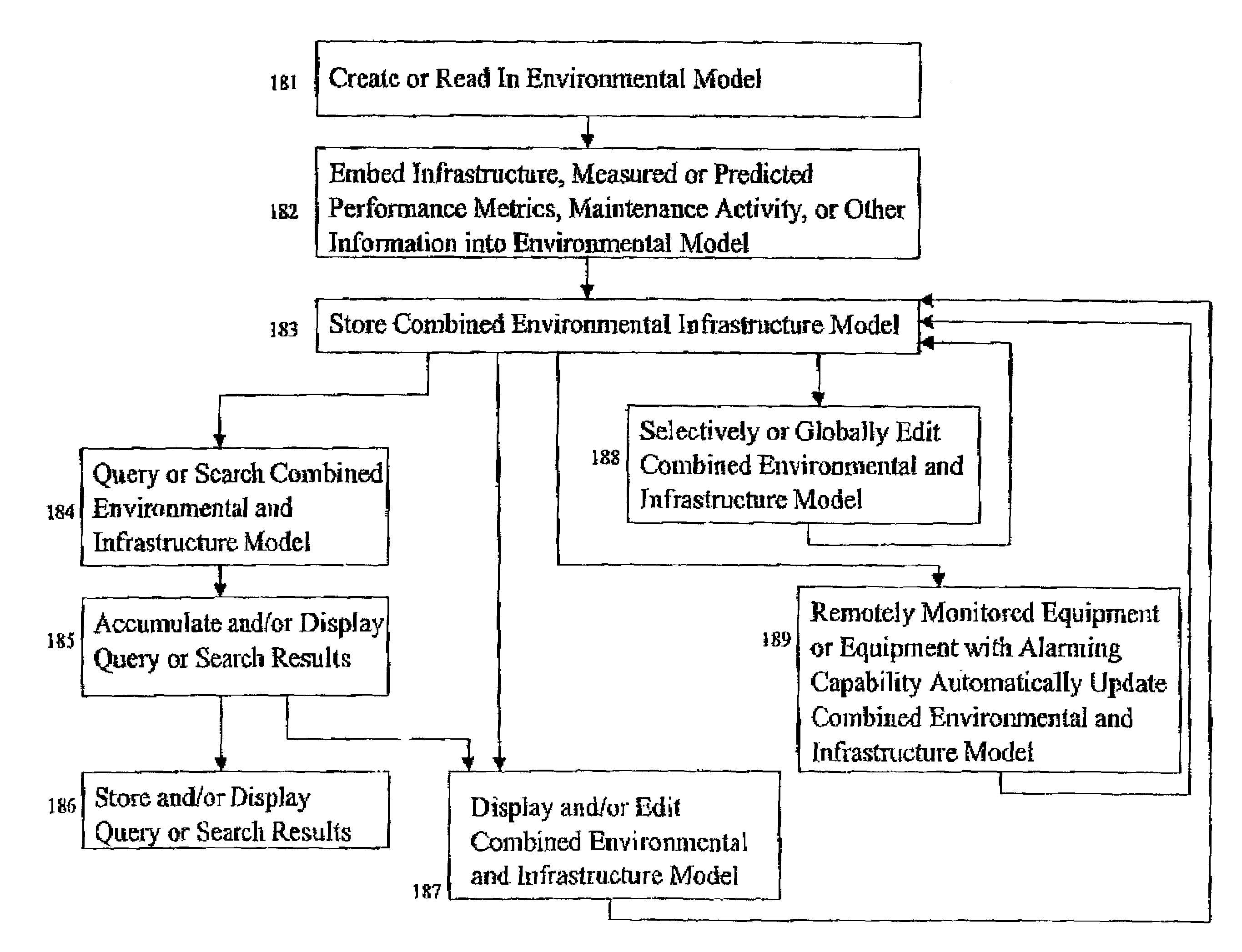

A method and system for creating, using, and managing a three-dimensional digital model of the physical environment combines outdoor terrain elevation and land-use information, building placements, heights and geometries of the interior structure of buildings, along with site-specific models of components that are distributed spatially within a physical environment. The present invention separately provides an asset management system that allows the integrated three-dimensional model of the outdoor, indoor, and distributed infrastructure equipment to communicate with and aggregate the information pertaining to actual physical components of the actual network, thereby providing a management system that can track the on-going performance, cost, maintenance history, and depreciation of multiple networks using the site-specific unified digital format.

Owner:EXTREME NETWORKS INC

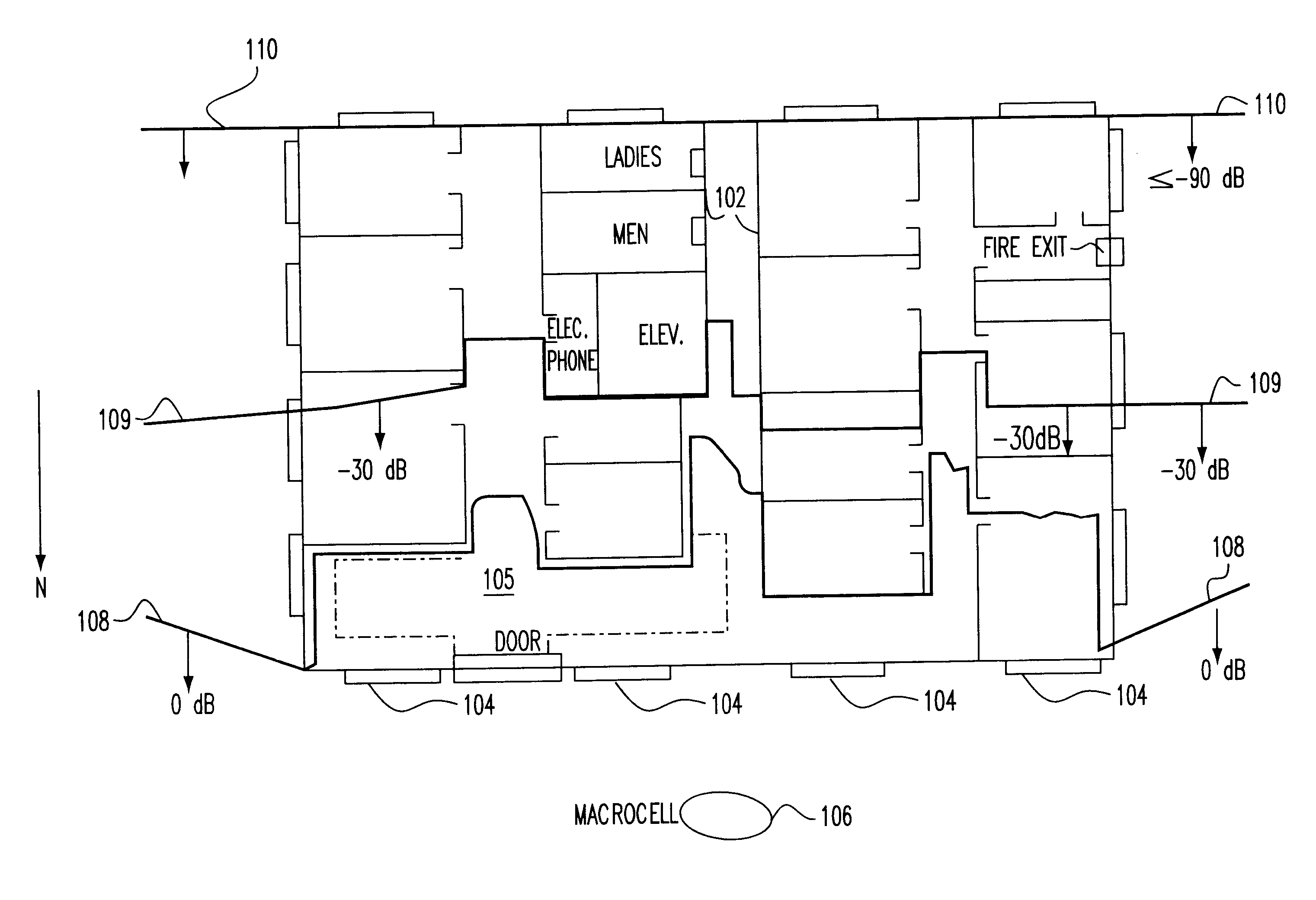

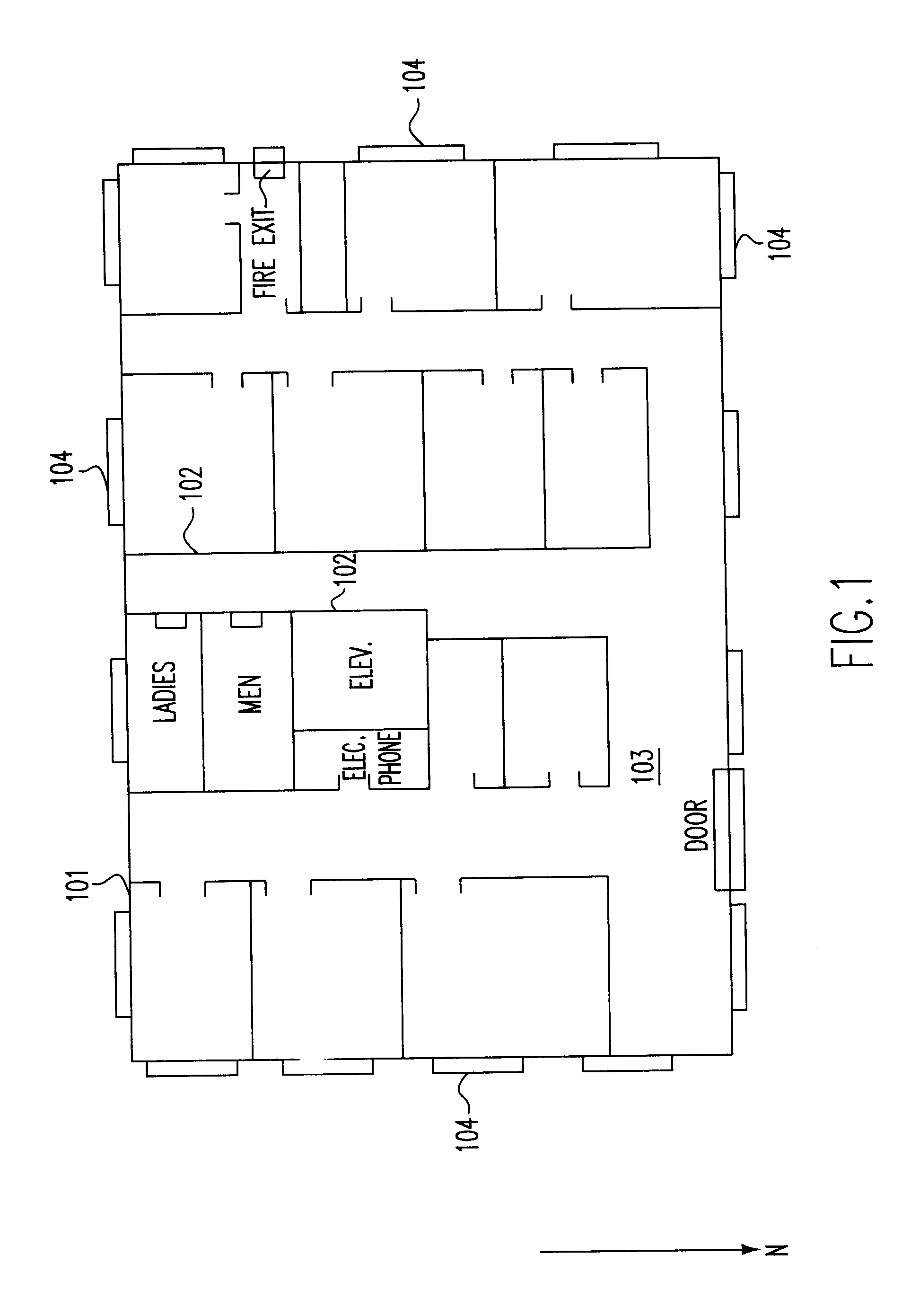

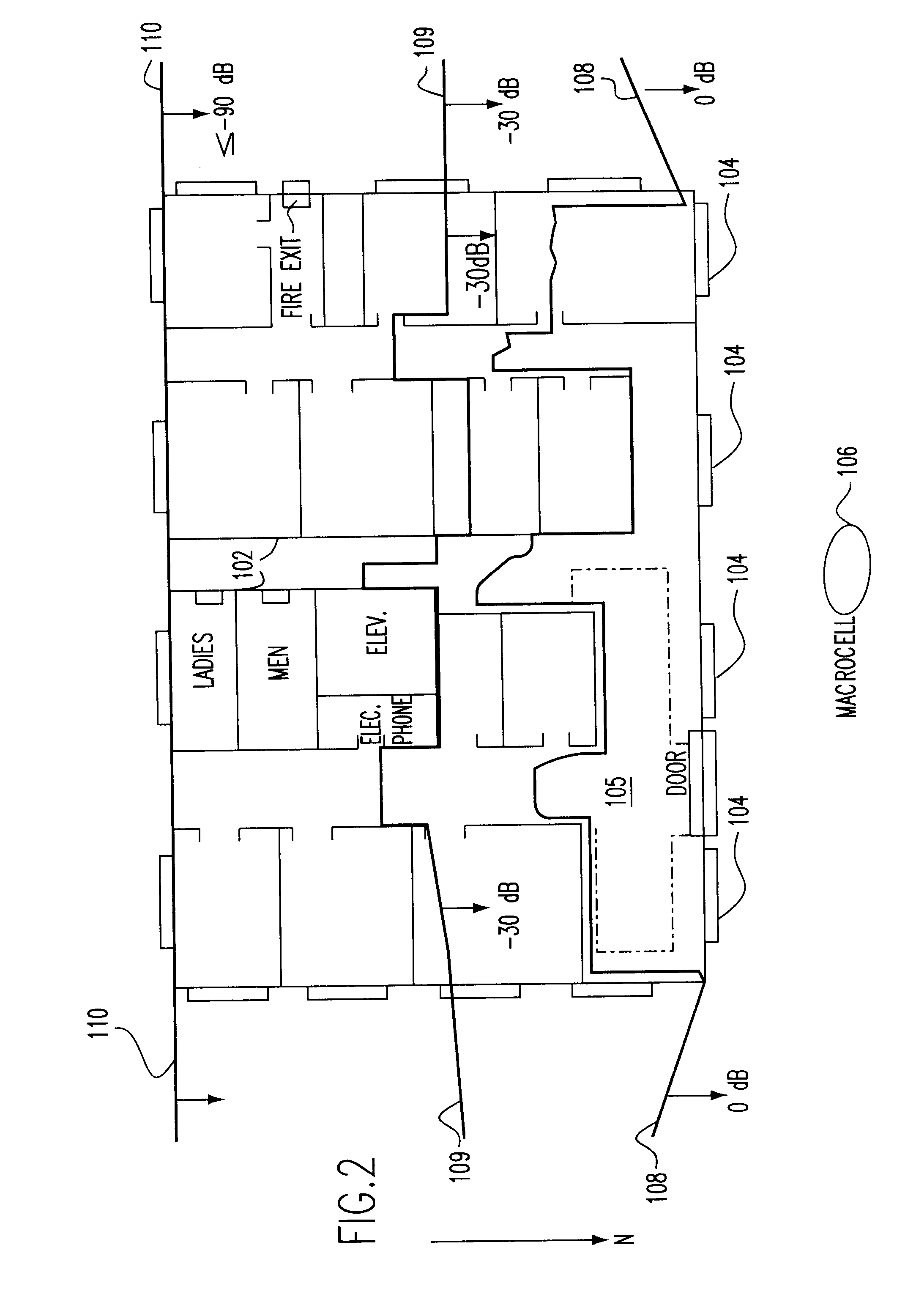

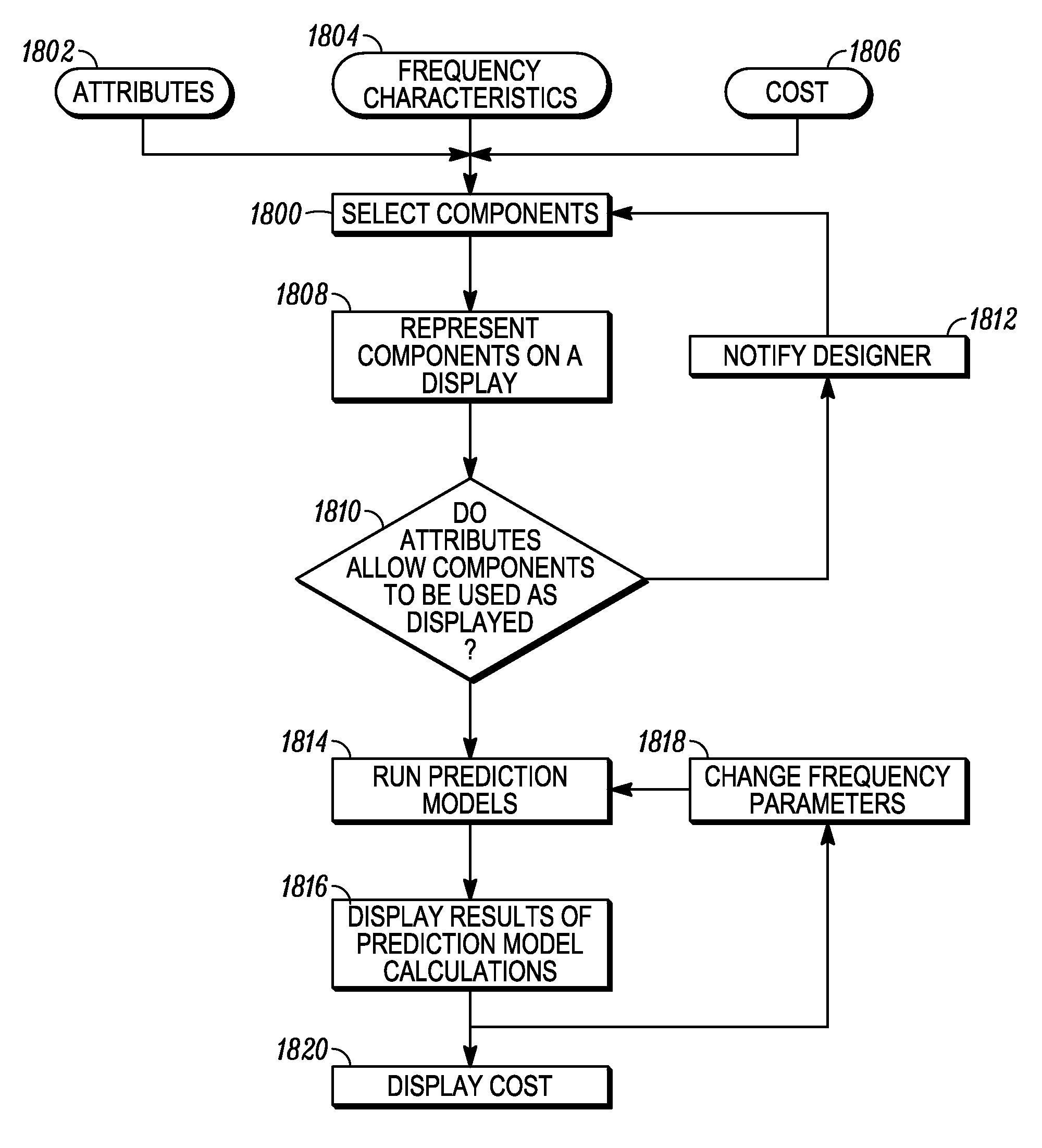

Method and system for designing or deploying a communications network which considers frequency dependent effects

Inactive | US6625454B1 | Simply and quickly prepare; Optimize timing; Receivers monitoring; Radio/inductive link selection arrangements; Bill of materials; Design engineer

A computerized model provides a display of a physical environment in which a communications network is or will be installed. The communications network is composed of several components, each of which is selected by the design engineer and represented in the display. Errors in the selection of certain components for the communications network are identified by their attributes or frequency characteristics as well as by their interconnection compatibility for a particular design. The effects of changes in frequency on component performance are modeled and the results are displayed to the design engineer. A bill of materials is automatically checked for faults, generated for the design system, and provided to the design engineer. For ease of design, the design engineer can cluster several different preferred components into component kits, and then select these component kits for use in the design or deployment process.

Owner:EXTREME NETWORKS INC

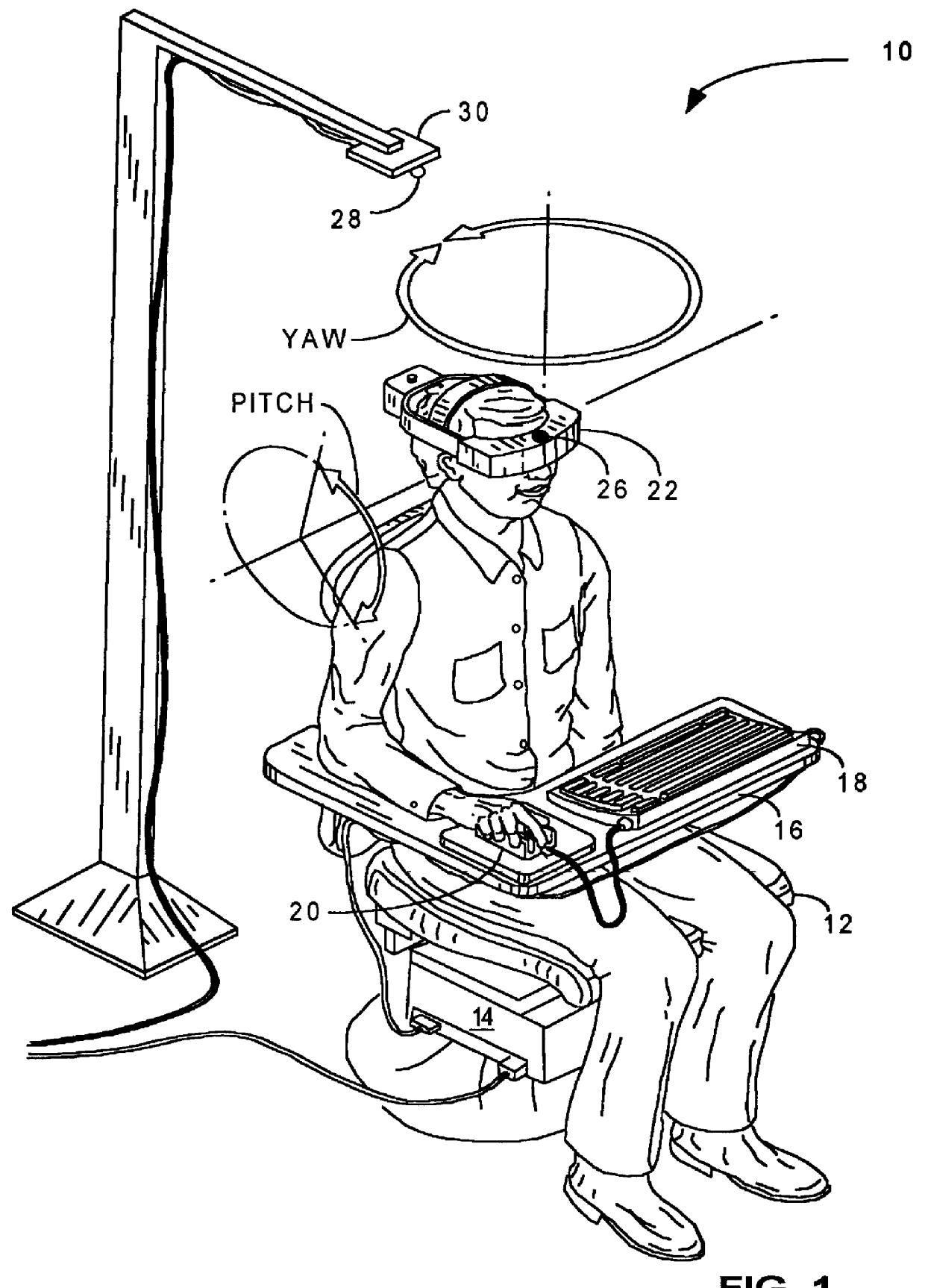

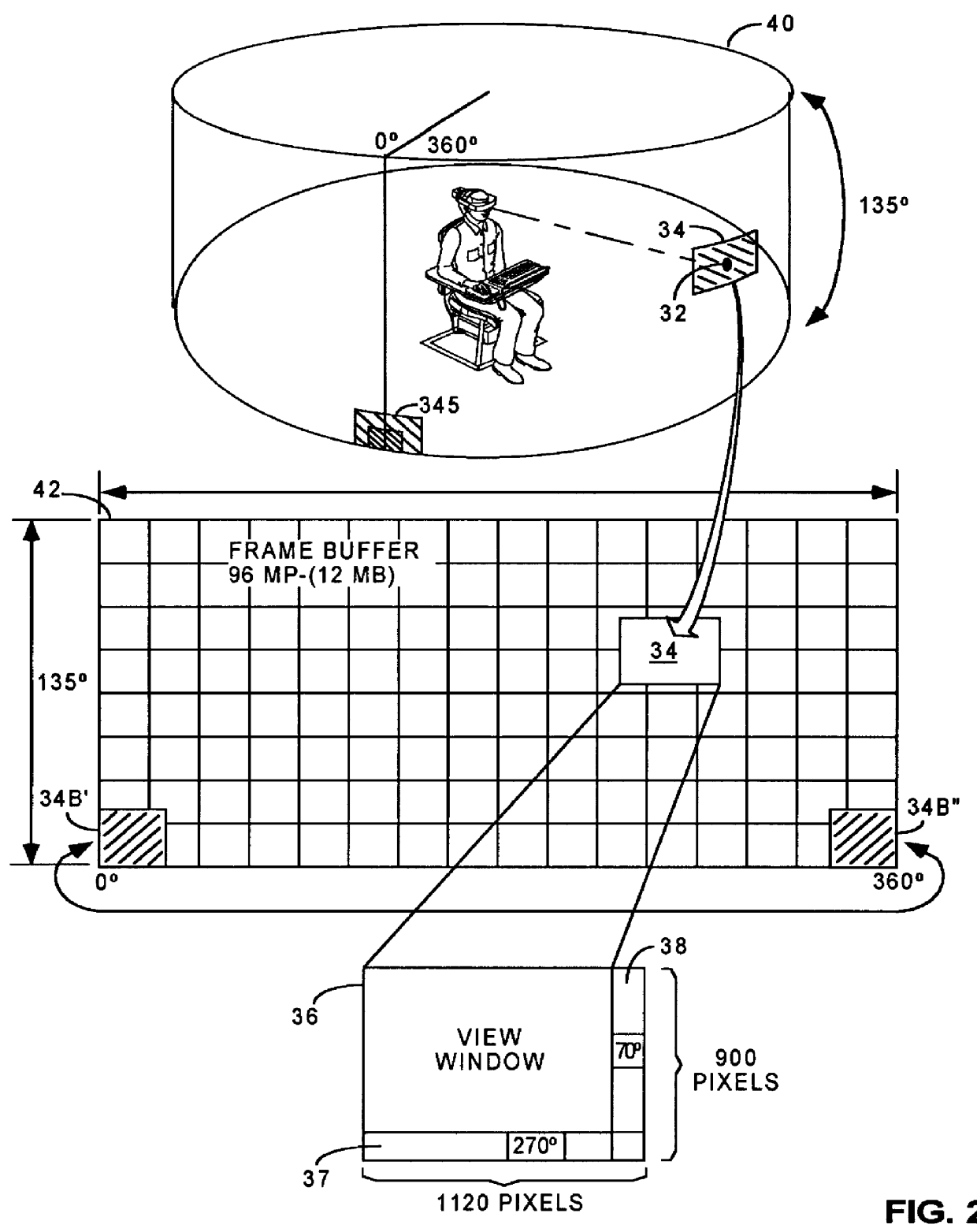

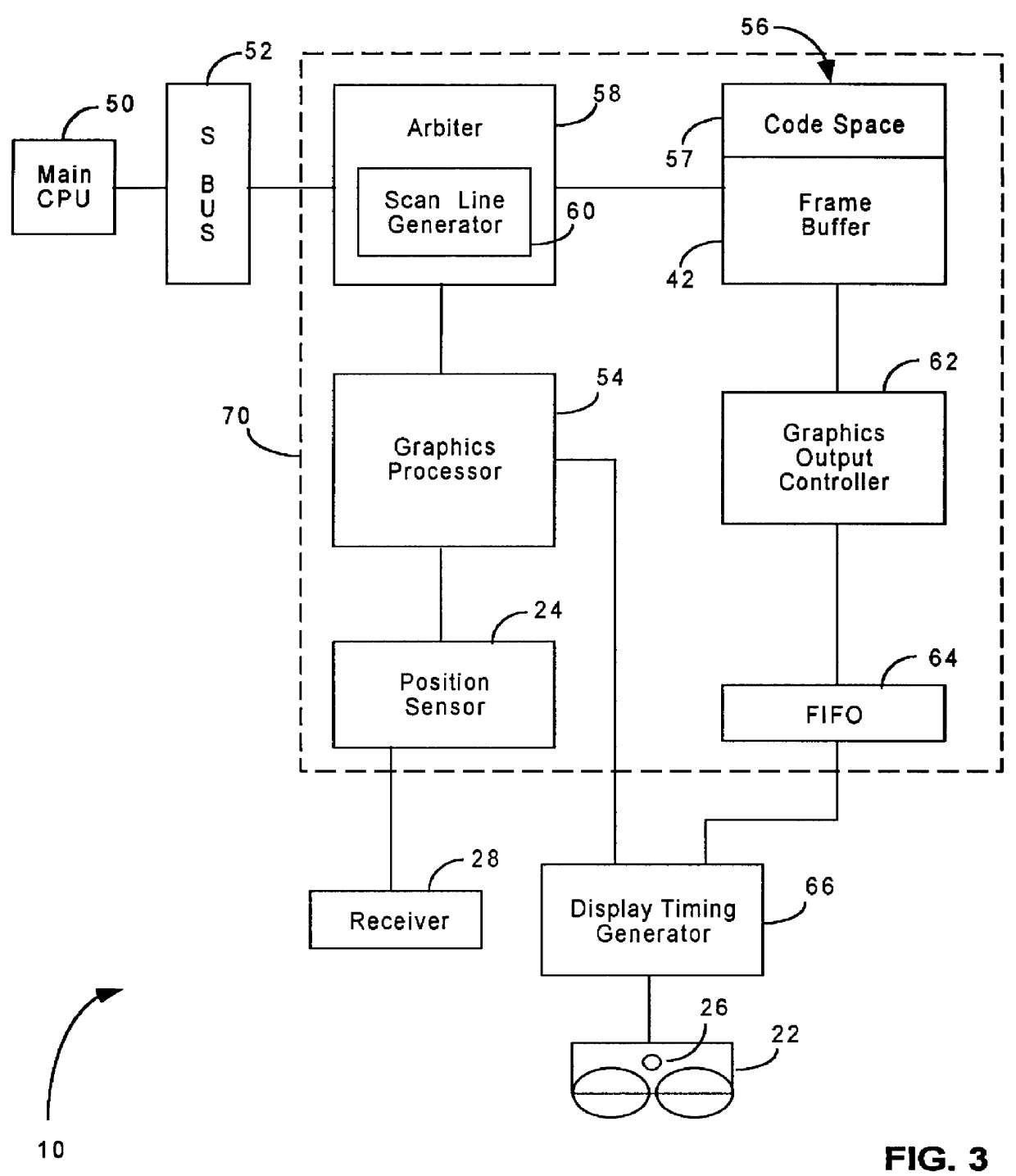

System and method for providing and using a computer user interface with a view space having discrete portions

Inactive | US6061064A | Easy access; Rapidly navigate through window; Input/output for user-computer interaction; Cathode-ray tube indicators; Display device; Application software

A system and method associate each of a plurality of computer applications with a corresponding physical location external to the computer and display a given one of the applications when the user focuses attention on the physical location associated with that application. Preferably, the application is displayed in a view window in a graphical user interface, and the user has means for moving that window relative to the given application. The computer can be portable, and the display device can be head mounted. Preferably, an input device enables the user to interact with the given application, and preferably the physical locations bring their associated applications to mind. In some embodiments, an identifier, such as a bar code or a coded transmitter, is placed near each of the physical locations to help detect when the user focuses attention on that particular location. The invention also provides a head-mounted unit which projects a visual image to the user wearing it. The unit also includes an object detector for generating a signal when pointed at certain objects. The object detector is mounted so that a user wearing the unit can point the detector by moving his or her head. Preferably, the unit includes a see-through window so the user can see his or her physical surroundings. The invention also provides a computer which includes a display, a device for generating a display object on the display, such as a cursor, and a motion sensor for causing the display object to move within the display in response to motion of the display.

Owner:SUN MICROSYSTEMS INC

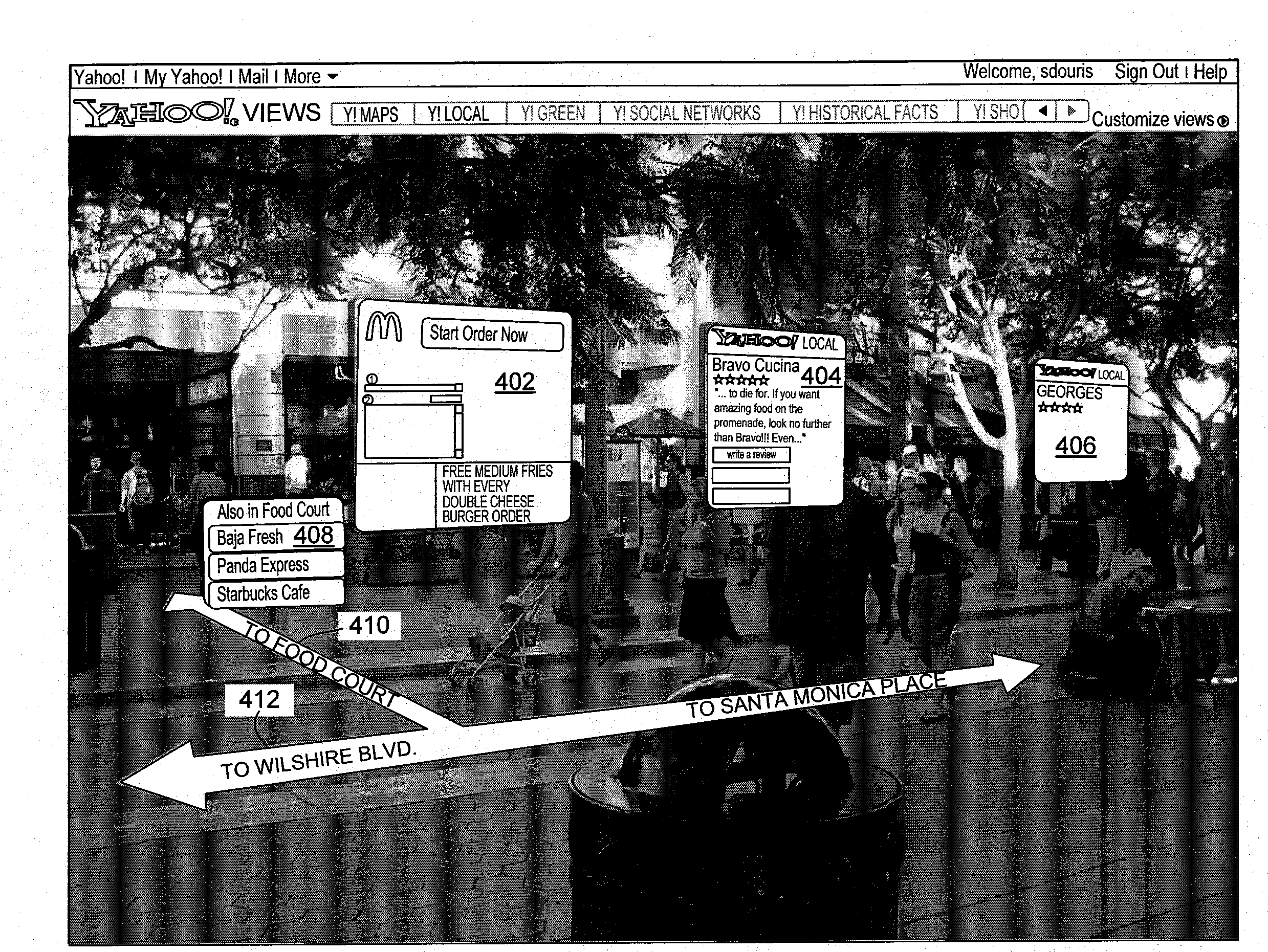

Virtual billboards

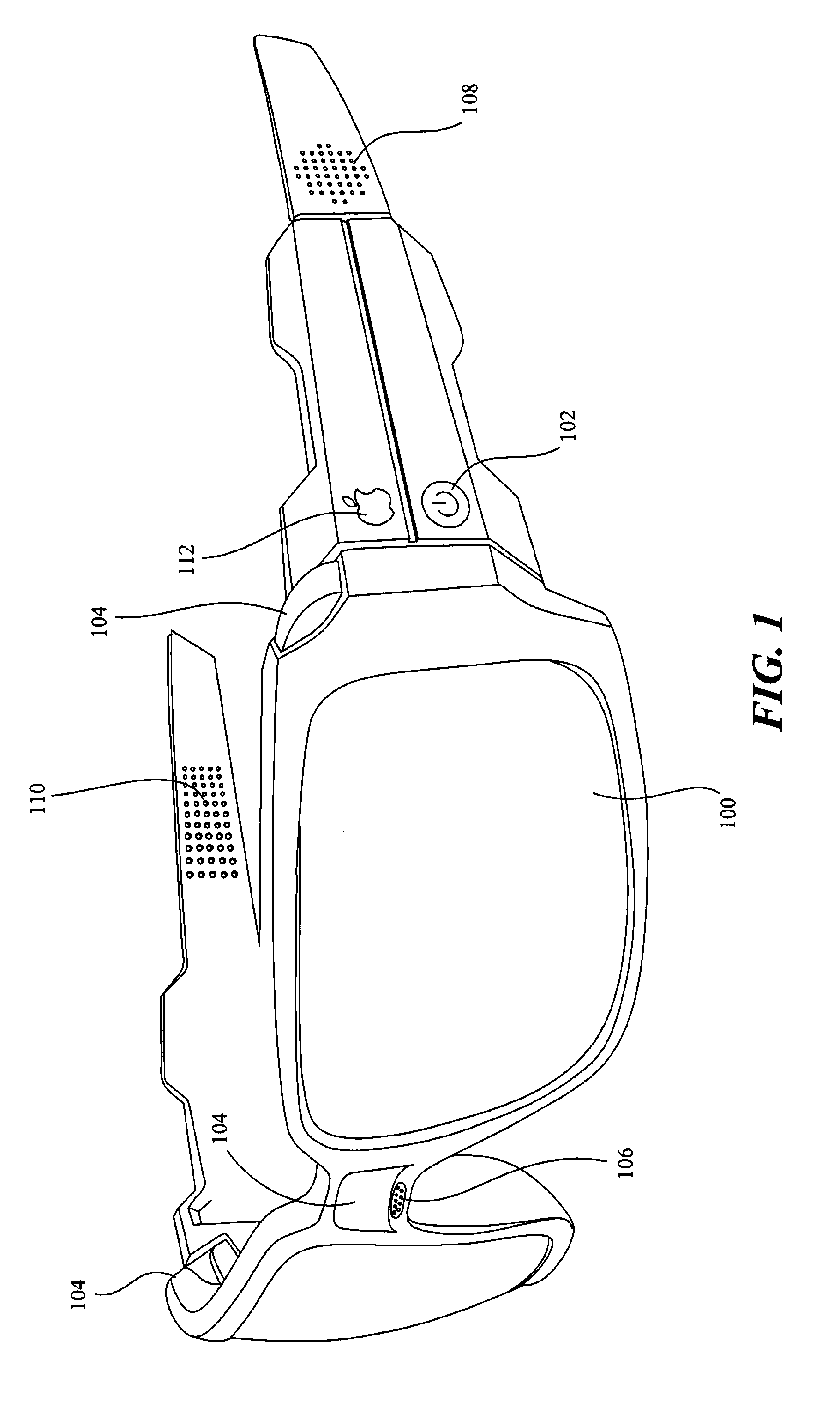

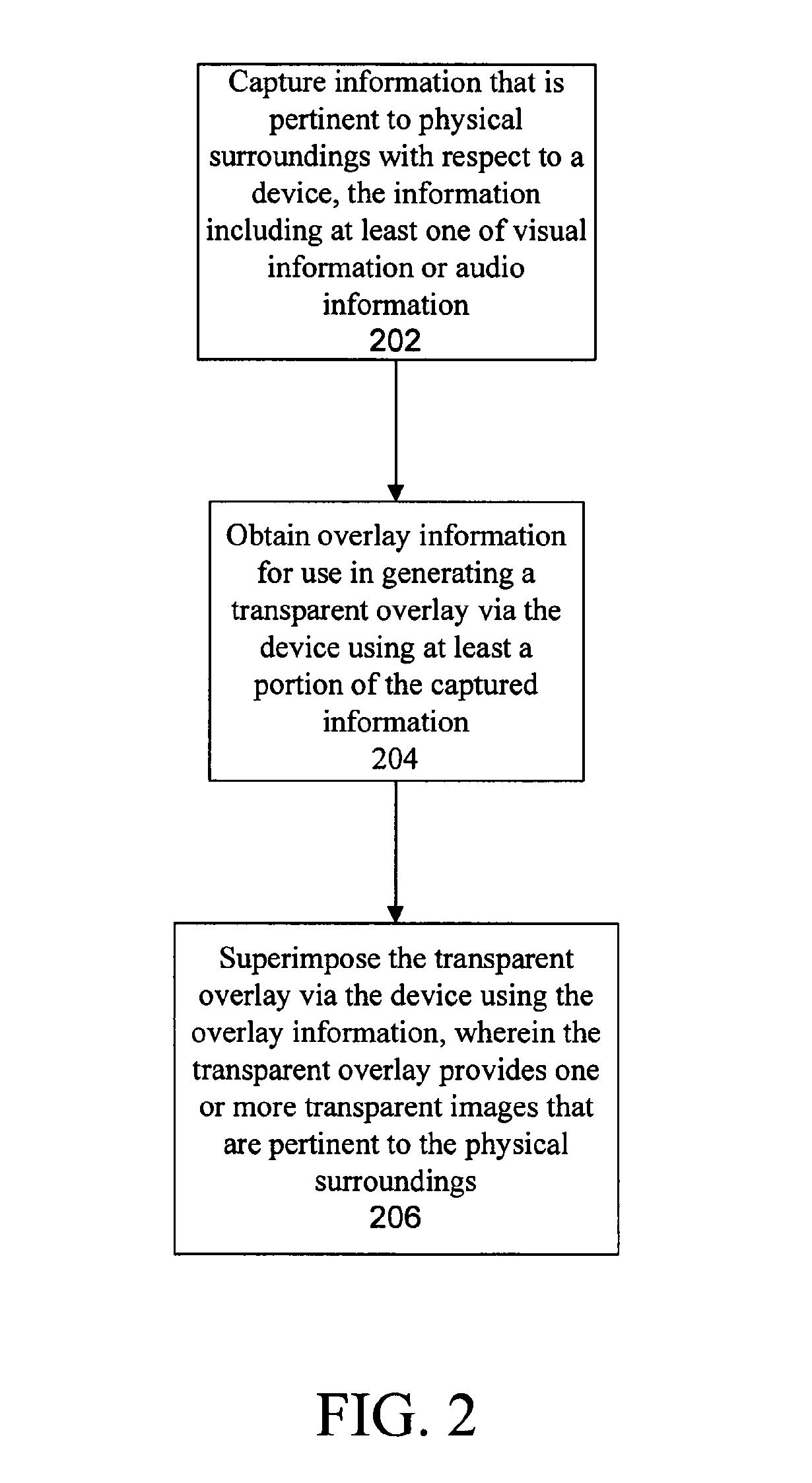

Disclosed are methods and apparatus for implementing a reality overlay device. A reality overlay device captures information that is pertinent to physical surroundings with respect to a device, the information including at least one of visual information or audio information. The reality overlay device may transmit at least a portion of the captured information to a second device. For instance, the reality overlay device may transmit at least a portion of the captured information to a server via the Internet, where the server is capable of identifying an appropriate virtual billboard. The reality overlay device may then receive overlay information for use in generating a transparent overlay via the reality overlay device. The transparent overlay is then superimposed via the device using the overlay information, wherein the transparent overlay provides one or more transparent images that are pertinent to the physical surroundings. Specifically, one or more of the transparent images may operate as “virtual billboards.” Similarly, a portable device such as a cell phone may automatically receive a virtual billboard when the portable device enters an area within a specified distance from an associated establishment.

Owner:SAMSUNG ELECTRONICS CO LTD
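
The proximity trigger described above (a portable device receiving a virtual billboard once it is within a specified distance of an establishment) can be illustrated with a minimal sketch. It is not the patented method: it assumes positions are latitude/longitude pairs in degrees, uses the haversine great-circle distance on a spherical Earth, and the `establishments` records with their `radius_m` field are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical-Earth assumption


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def billboards_in_range(device_lat: float, device_lon: float,
                        establishments: list[dict]) -> list[str]:
    """Names of establishments whose (hypothetical) trigger radius contains the device."""
    return [
        e["name"]
        for e in establishments
        if haversine_m(device_lat, device_lon, e["lat"], e["lon"]) <= e["radius_m"]
    ]


shops = [
    {"name": "cafe", "lat": 37.0, "lon": -122.0, "radius_m": 200.0},
    {"name": "bookstore", "lat": 38.0, "lon": -122.0, "radius_m": 200.0},
]
print(billboards_in_range(37.0005, -122.0, shops))  # ['cafe']
```

A server-side version would typically index establishments spatially instead of scanning the whole list, but the distance test is the same.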

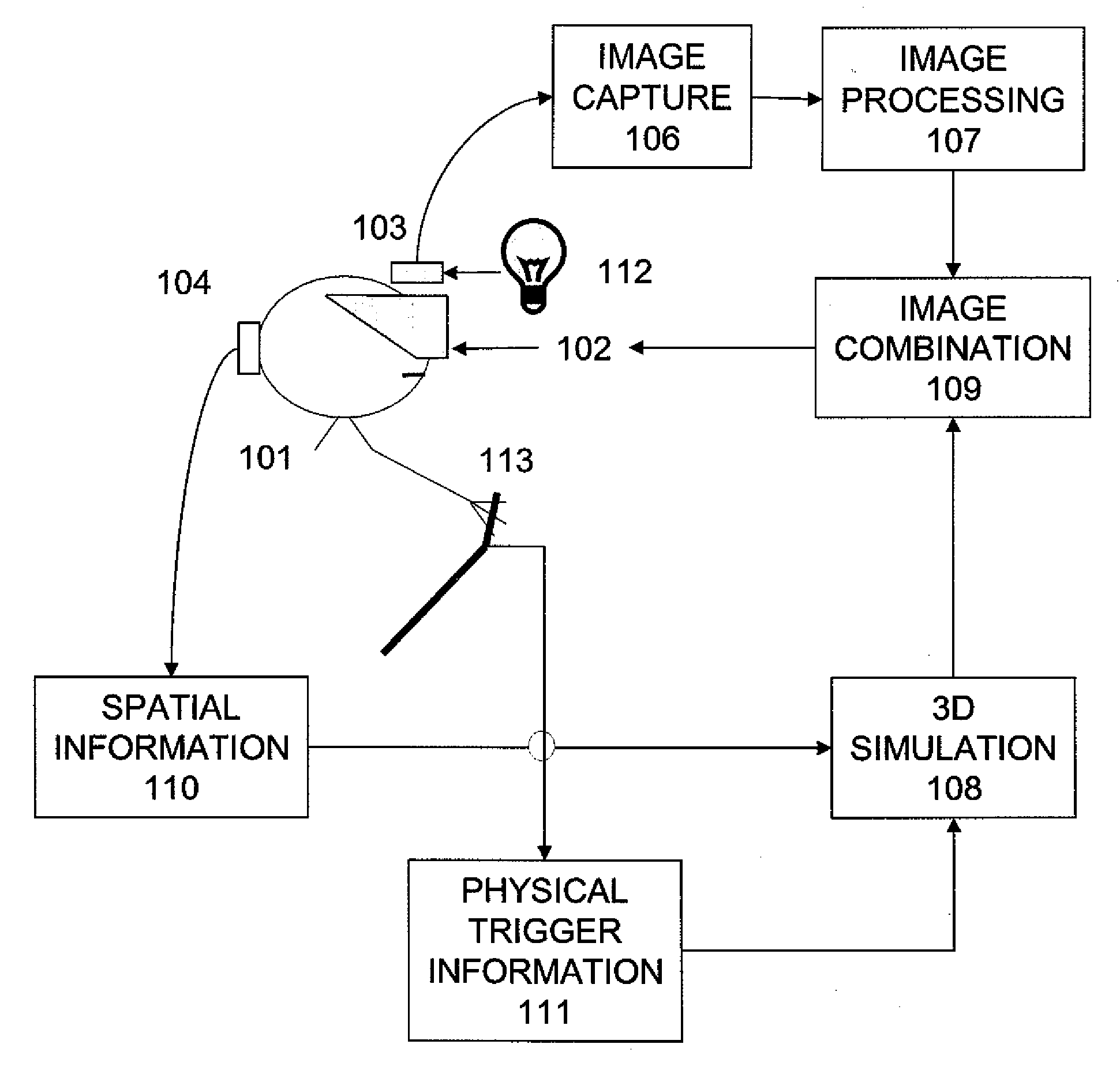

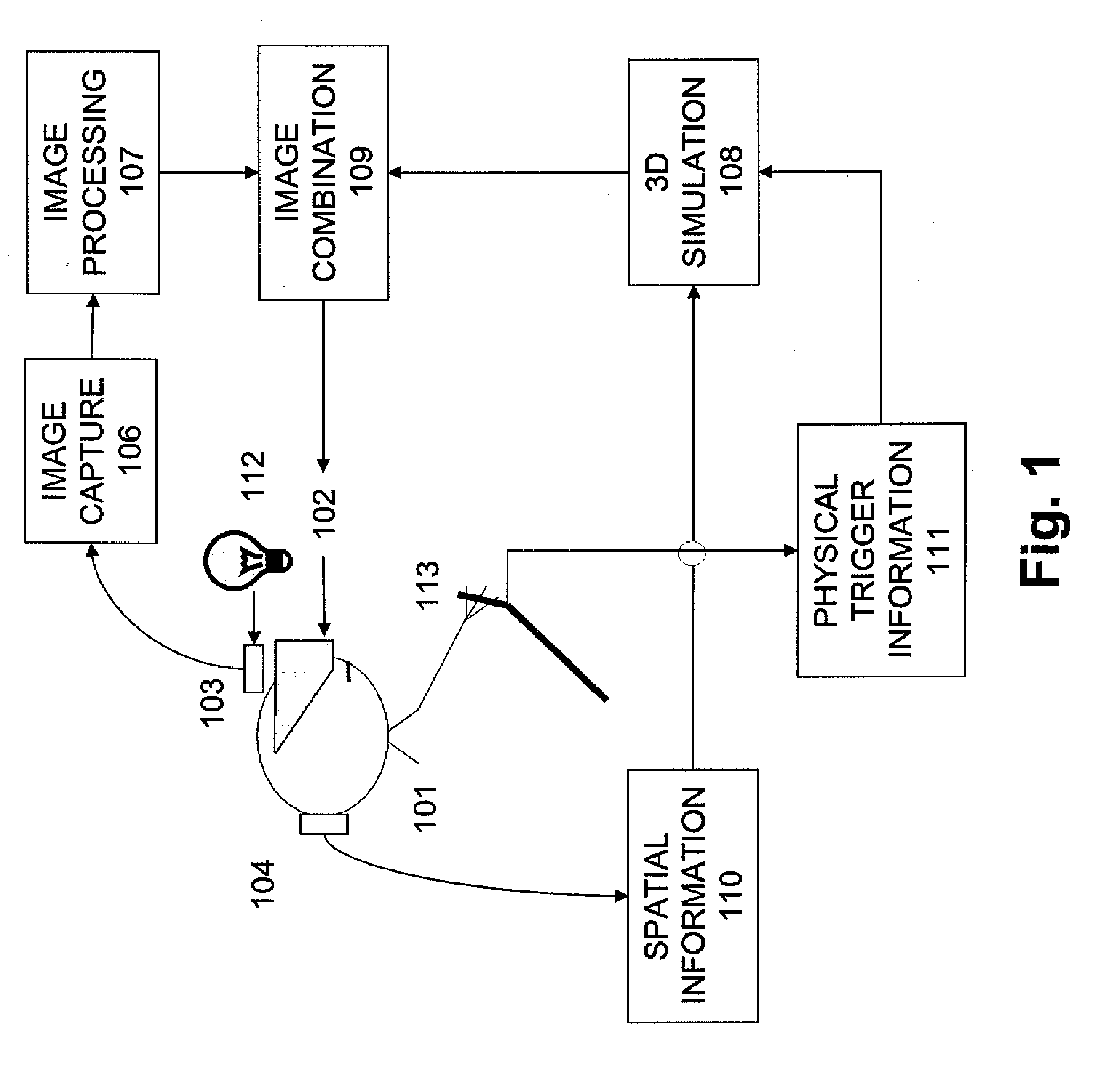

Systems and methods for combining virtual and real-time physical environments

Systems, methods and structures combine virtual reality and a real-time environment by merging captured real-time video data with real-time 3D environment renderings to create a fused, that is, combined, environment. Video imagery is captured in the RGB or HSV color coordinate systems and processed to determine which areas should be made transparent, or should have other color modifications made, based on sensed cultural features, electromagnetic spectrum values, and/or sensor line-of-sight. The sensed features can include electromagnetic radiation characteristics such as color, infrared, and ultraviolet light values, and cultural features can include patterns of these characteristics, such as objects recognized using edge detection. The processed image is then overlaid on and fused into the 3D environment, combining the two data sources into a single scene so that a user can look through predesignated areas or “windows” in the video image into a 3D simulated world, and/or see other enhanced or reprocessed features of the captured image.

Owner:BACHELDER EDWARD N +1
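
The step of deciding which areas of the captured video become transparent "windows" can be sketched as an HSV chroma key. This is a minimal illustration under stated assumptions, not the patented method: frames are hypothetical nested lists of 8-bit `(r, g, b)` tuples, the keyed hue band and saturation/value thresholds are arbitrary, and only the color criterion (not cultural features or line-of-sight) is modelled. It relies on the standard-library `colorsys.rgb_to_hsv`, which takes components in [0, 1] and returns hue in [0, 1).

```python
import colorsys

Pixel = tuple[int, int, int]  # 8-bit (r, g, b)


def window_mask(frame: list[list[Pixel]], hue_lo: float, hue_hi: float,
                min_sat: float = 0.4, min_val: float = 0.2) -> list[list[bool]]:
    """True where a pixel's HSV color falls inside the keyed band (a 'window')."""
    mask = []
    for row in frame:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            mask_row.append(hue_lo <= h <= hue_hi and s >= min_sat and v >= min_val)
        mask.append(mask_row)
    return mask


def fuse(video: list[list[Pixel]], rendered: list[list[Pixel]],
         mask: list[list[bool]]) -> list[list[Pixel]]:
    """Show the rendered 3D scene through the windows and the live video elsewhere."""
    return [
        [rp if m else vp for vp, rp, m in zip(vrow, rrow, mrow)]
        for vrow, rrow, mrow in zip(video, rendered, mask)
    ]


video = [[(0, 255, 0), (255, 0, 0)]]      # a green "window" pixel next to a red one
rendered = [[(10, 20, 30), (40, 50, 60)]]  # the simulated world at the same pixels
mask = window_mask(video, hue_lo=0.25, hue_hi=0.42)  # pure green has hue 1/3
print(fuse(video, rendered, mask))  # [[(10, 20, 30), (255, 0, 0)]]
```

Working in HSV rather than RGB makes the key less sensitive to brightness changes, since hue stays roughly stable as lighting varies.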

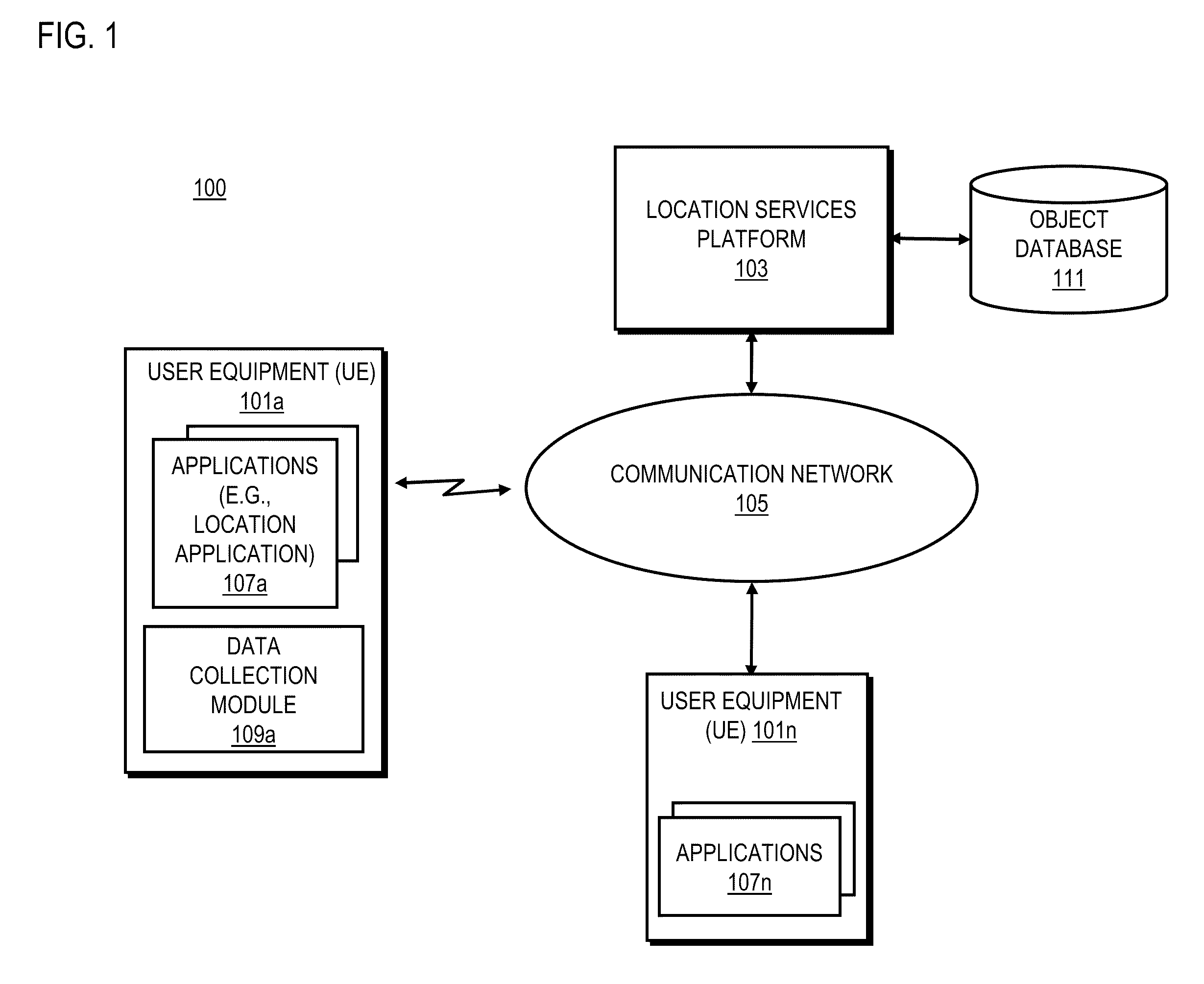

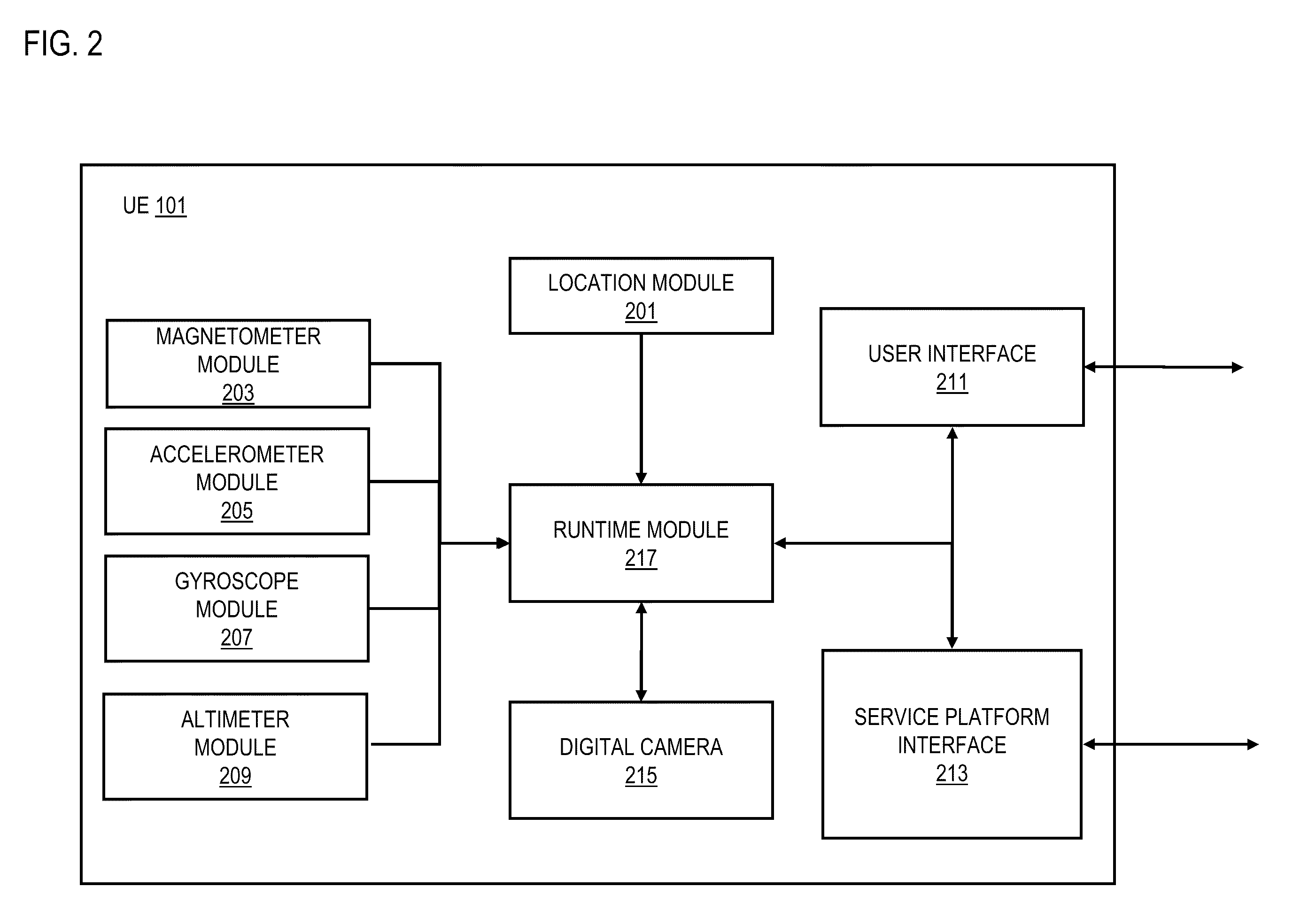

Method and apparatus for an augmented reality user interface

Active | US20100328344A1 | Digital data processing details; Cathode-ray tube indicators; Horizon; User equipment

An approach is provided for an augmented reality user interface. An image representing a physical environment is received. Data relating to a horizon within the physical environment is retrieved. A section of the image to overlay location information based on the horizon data is determined. Presenting of the location information within the determined section to a user equipment is initiated.

Owner:NOKIA TECHNOLOGIES OY
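
One simple way to choose the overlay section from horizon data is to place location labels in the band of the image above the horizon. The sketch below is an assumption-laden illustration, not the patented approach: it assumes an ideal pinhole camera with a known vertical field of view, treats camera pitch (positive when tilted up) as the only horizon data, ignores roll, and the `margin` parameter is hypothetical.

```python
import math
from typing import NamedTuple


class Rect(NamedTuple):
    x: int
    y: int
    width: int
    height: int


def horizon_row(img_height: int, vfov_deg: float, pitch_deg: float) -> float:
    """Image row (y grows downward) where the horizon appears for a pinhole camera."""
    focal_px = (img_height / 2) / math.tan(math.radians(vfov_deg) / 2)
    # Tilting the camera up pushes the horizon toward the bottom of the frame.
    return img_height / 2 + focal_px * math.tan(math.radians(pitch_deg))


def overlay_section(img_width: int, img_height: int, vfov_deg: float,
                    pitch_deg: float, margin: int = 10) -> Rect:
    """Band above the horizon, shrunk by a margin, for drawing location labels."""
    y = min(max(horizon_row(img_height, vfov_deg, pitch_deg), 0.0), float(img_height))
    return Rect(0, 0, img_width, max(0, int(y) - margin))


print(overlay_section(640, 480, vfov_deg=60, pitch_deg=0))
# Rect(x=0, y=0, width=640, height=230)
```

When the camera points steeply down, the horizon leaves the frame and the section collapses to zero height, which a caller could treat as "no room for labels".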

Method and system for modeling and managing terrain, buildings, and infrastructure

Active | US20030023412A1 | Enhance on-going management; Broadcast with distribution; Office automation; Specific model; Land use

A method and system for creating, using, and managing a three-dimensional digital model of the physical environment combines outdoor terrain elevation and land-use information, building placements, heights and geometries of the interior structure of buildings, along with site-specific models of components that are distributed spatially within a physical environment. The present invention separately provides an asset management system that allows the integrated three-dimensional model of the outdoor, indoor, and distributed infrastructure equipment to communicate with and aggregate the information pertaining to actual physical components of the actual network, thereby providing a management system that can track the on-going performance, cost, maintenance history, and depreciation of multiple networks using the site-specific unified digital format.

Owner:EXTREME NETWORKS INC

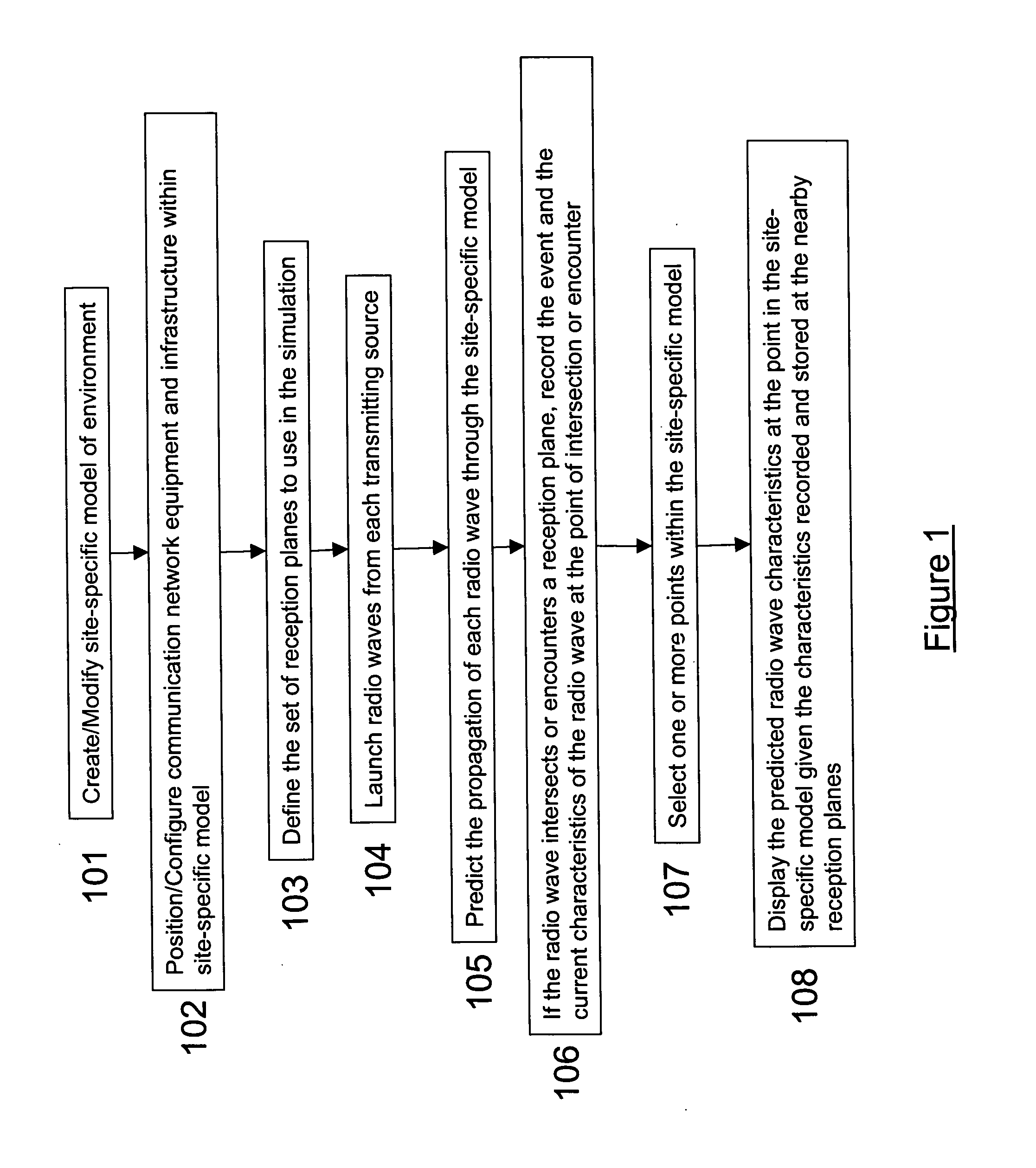

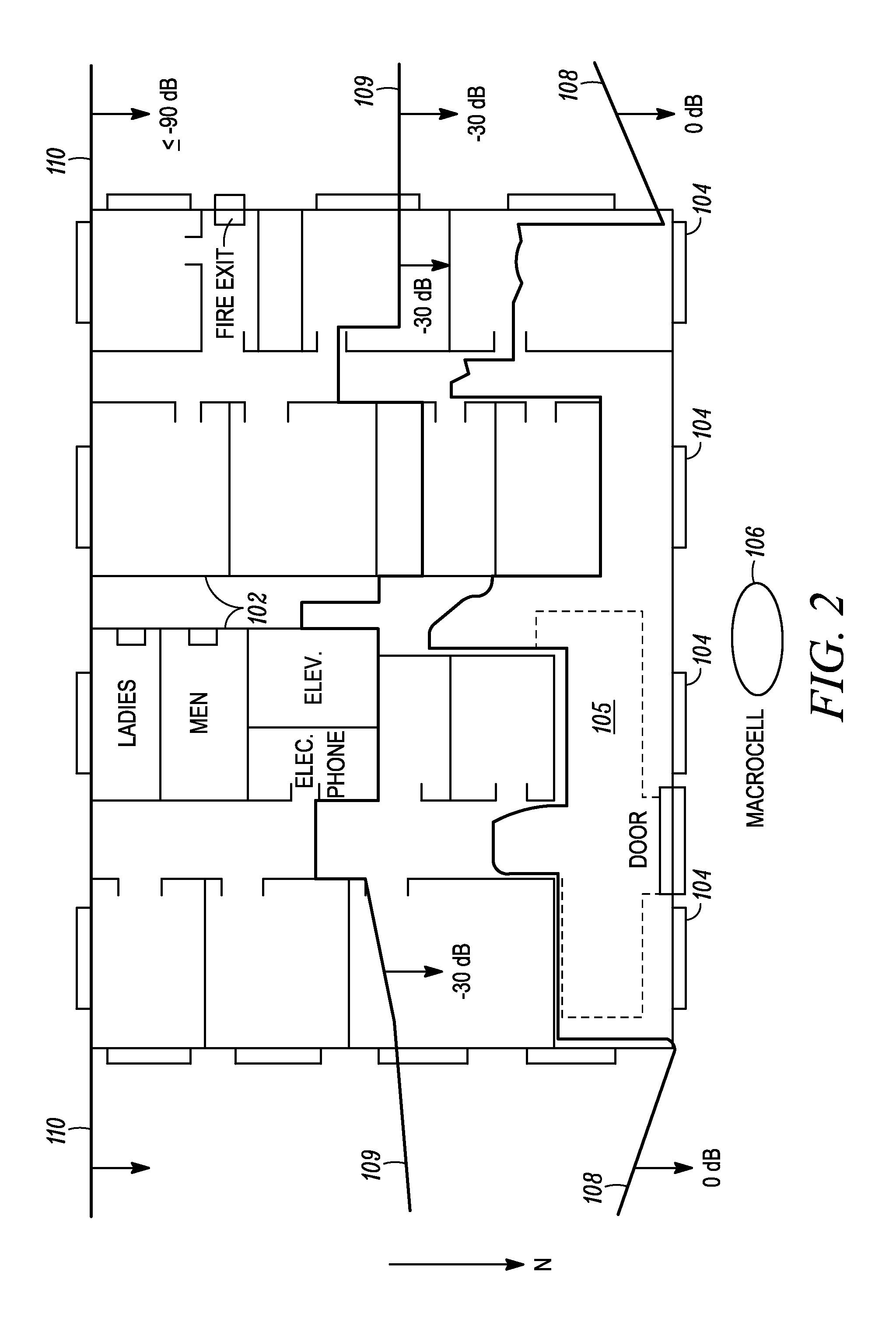

System and method for ray tracing using reception surfaces

Inactive | US20040259554A1 | Easy to explain; Quickly and easily and inexpensively; Antenna supports/mountings; Network planning; Specific model; Radio frequency

This invention provides a system and method for efficient ray tracing propagation prediction and analysis. Given a site-specific model of a physical environment, the present invention places virtual obstructions known as reception surfaces within the environment. As radio waves are predicted to propagate through the environment and intersect with or encounter reception surfaces, the characteristics of the radio wave are captured and stored relative to the location of the interaction with the reception surface. The radio frequency channel environment at any point within the site-specific model can be derived through analysis of the radio wave characteristics captured at nearby reception surfaces.

Owner:WIRELESS VALLEY COMM

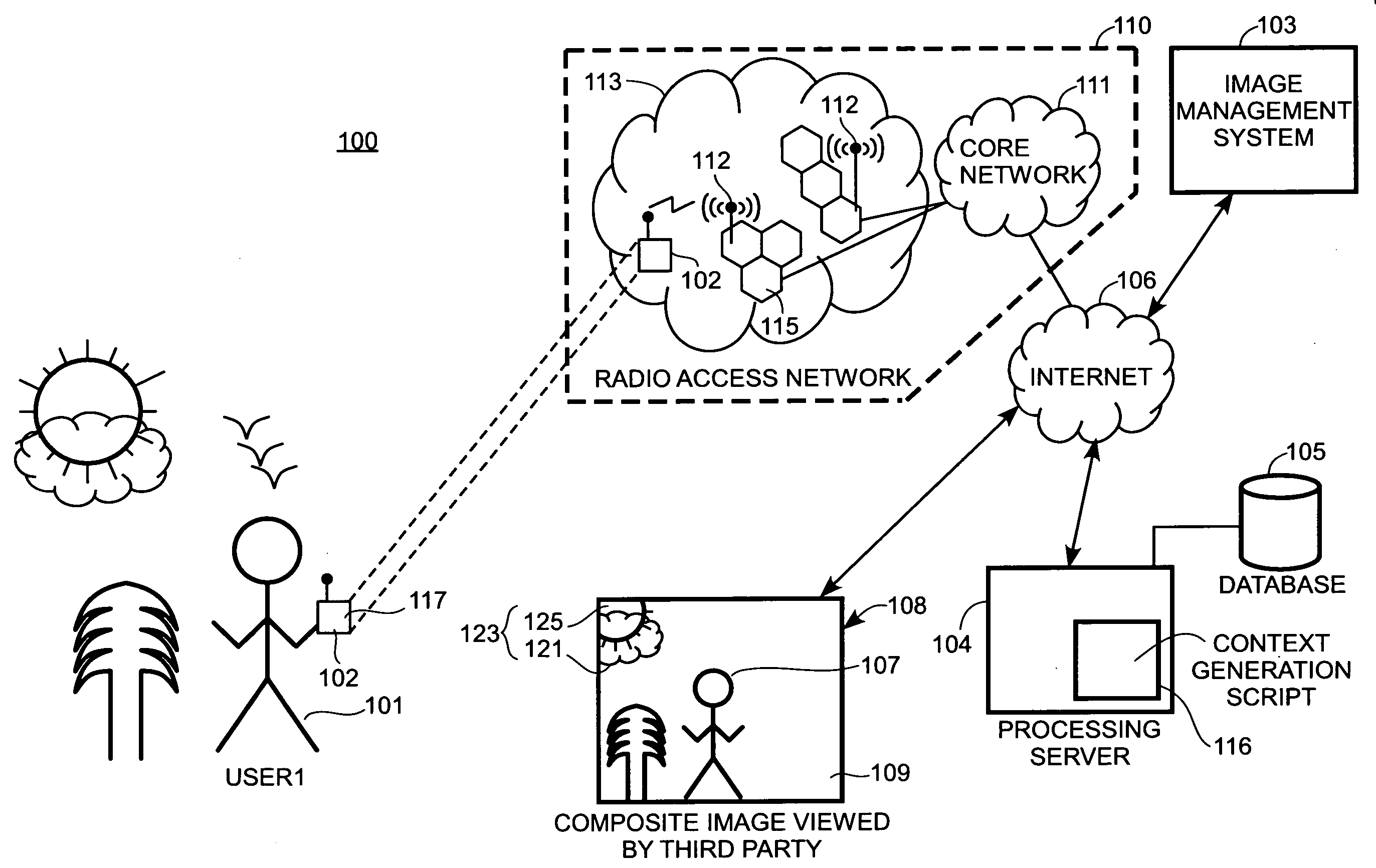

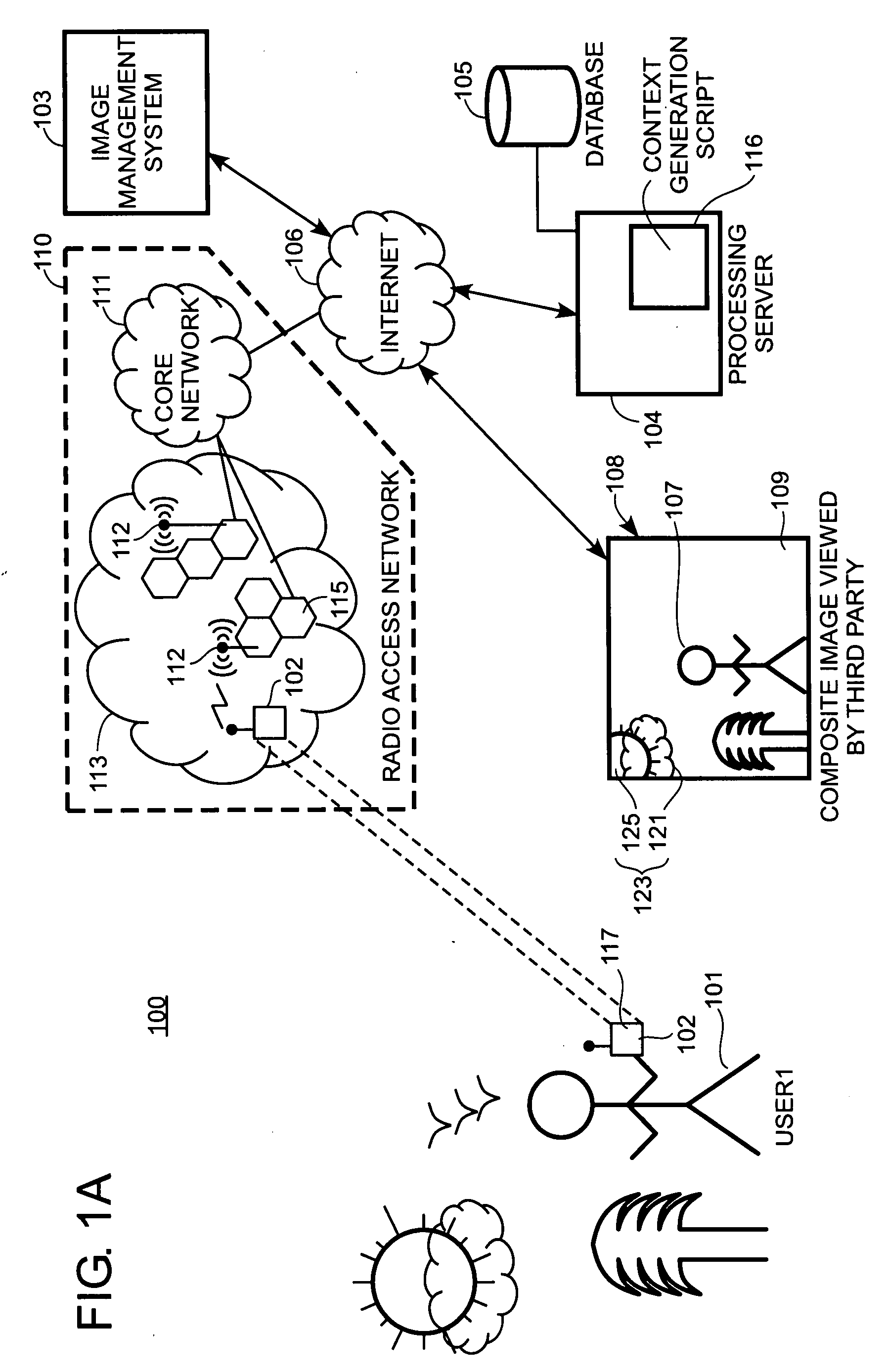

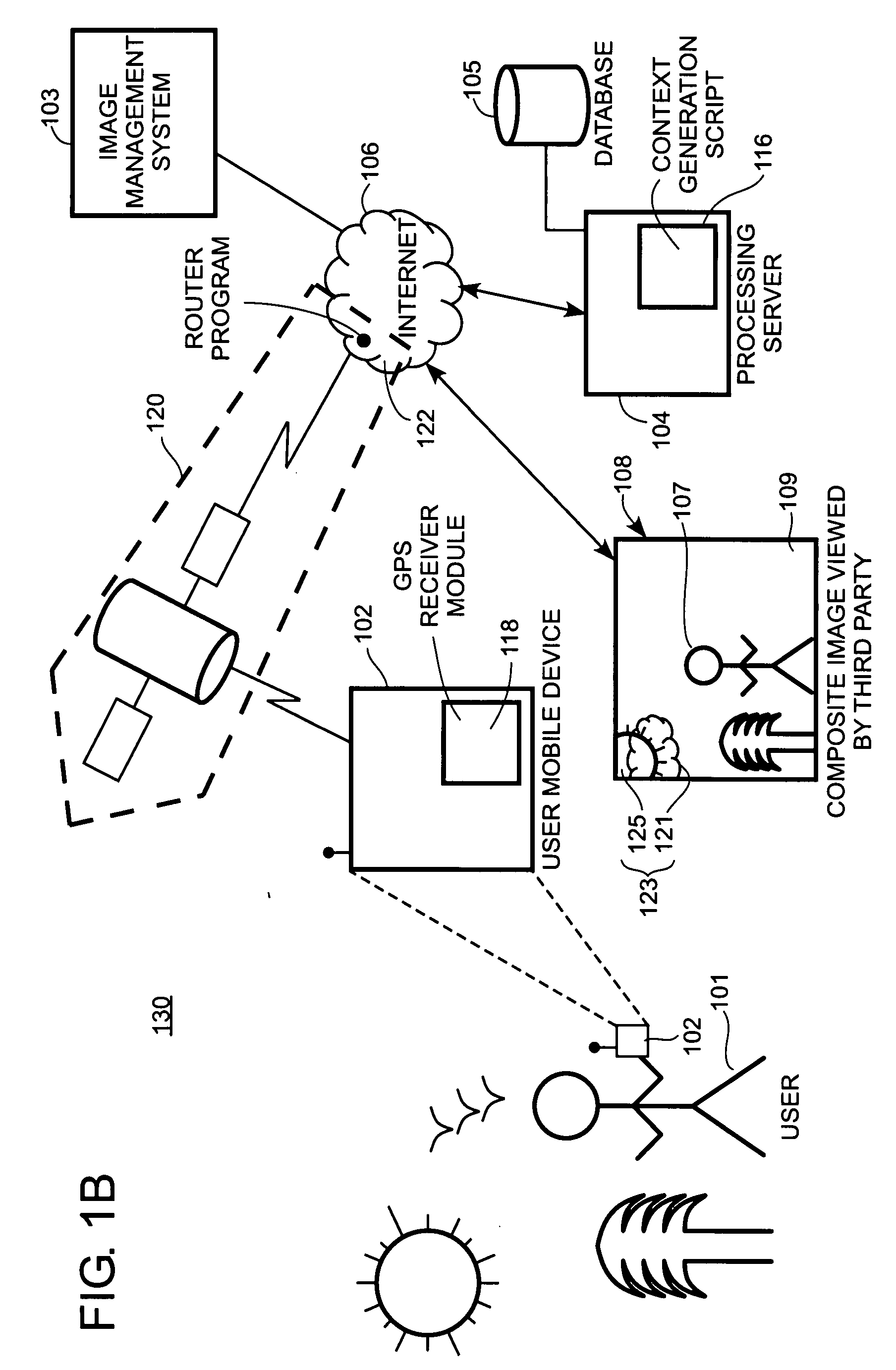

Context avatar

Methods and systems for generating information about a physical context of a user are provided. These methods and systems provide the capability to render a context avatar associated with the user as a composite image that can be broadcast in virtual environments to provide information about the physical context of the user. The composite image can be automatically updated without user intervention to include, among other things, a virtual person image of the user and a background image defined by encoded image data associated with the current geographic location of the user.

Owner:STARBOARD VALUE INTERMEDIATE FUND LP AS COLLATERAL AGENT

Assisted Guidance and Navigation Method in Intraoral Surgery

Inactive | US20140234804A1 | Shorten the construction period; Good curative effect; Radiation diagnostic image/data processing; Teeth filling; Visual positioning; Surgical department

An assisted guidance and navigation method for intraoral surgery uses computerized tomography (CT) imaging and an optical positioning system to track medical appliances. The method includes: first providing an optical positioning treatment instrument and an optical positioning device; then obtaining image data of the intraoral tissue receiving treatment through CT imaging, precisely displaying the movements of the treatment instrument in the image data, and checking the image, guidance, and navigation in real time. As a result, the physicians' existing working habits are not disrupted during surgery, accurate and convenient auxiliary information is provided, and the treatment instrument can be tracked in physical environments such as a patient's tooth or a dental model.

Owner:HUANG JERRY T +1

System and method for design, tracking, measurement, prediction and optimization of data communication networks

Inactive | US20050265321A1 | Quickly and easily design; Quick and easy measurement; Error prevention; Frequency-division multiplex details; Radio propagation; Specific model

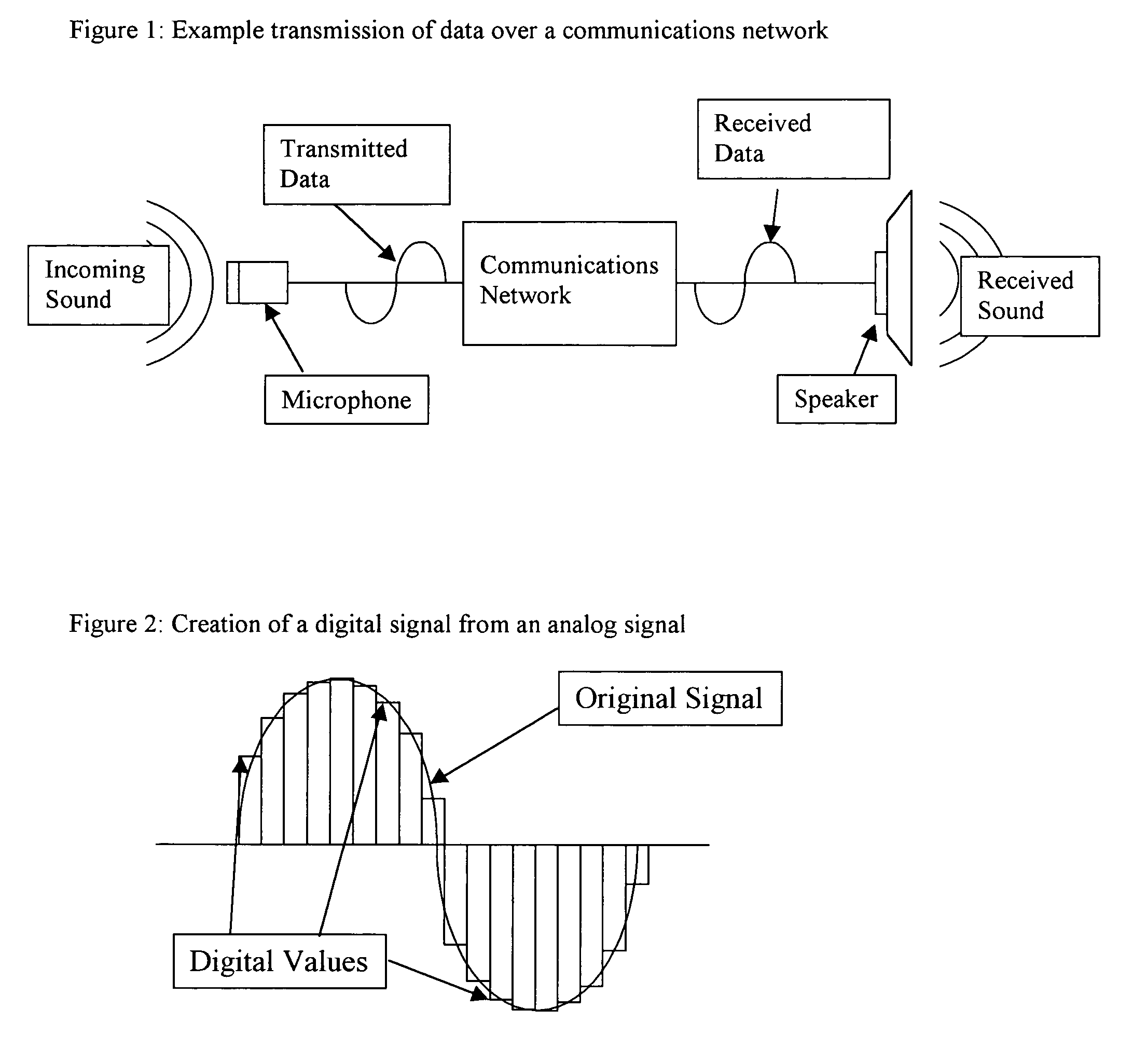

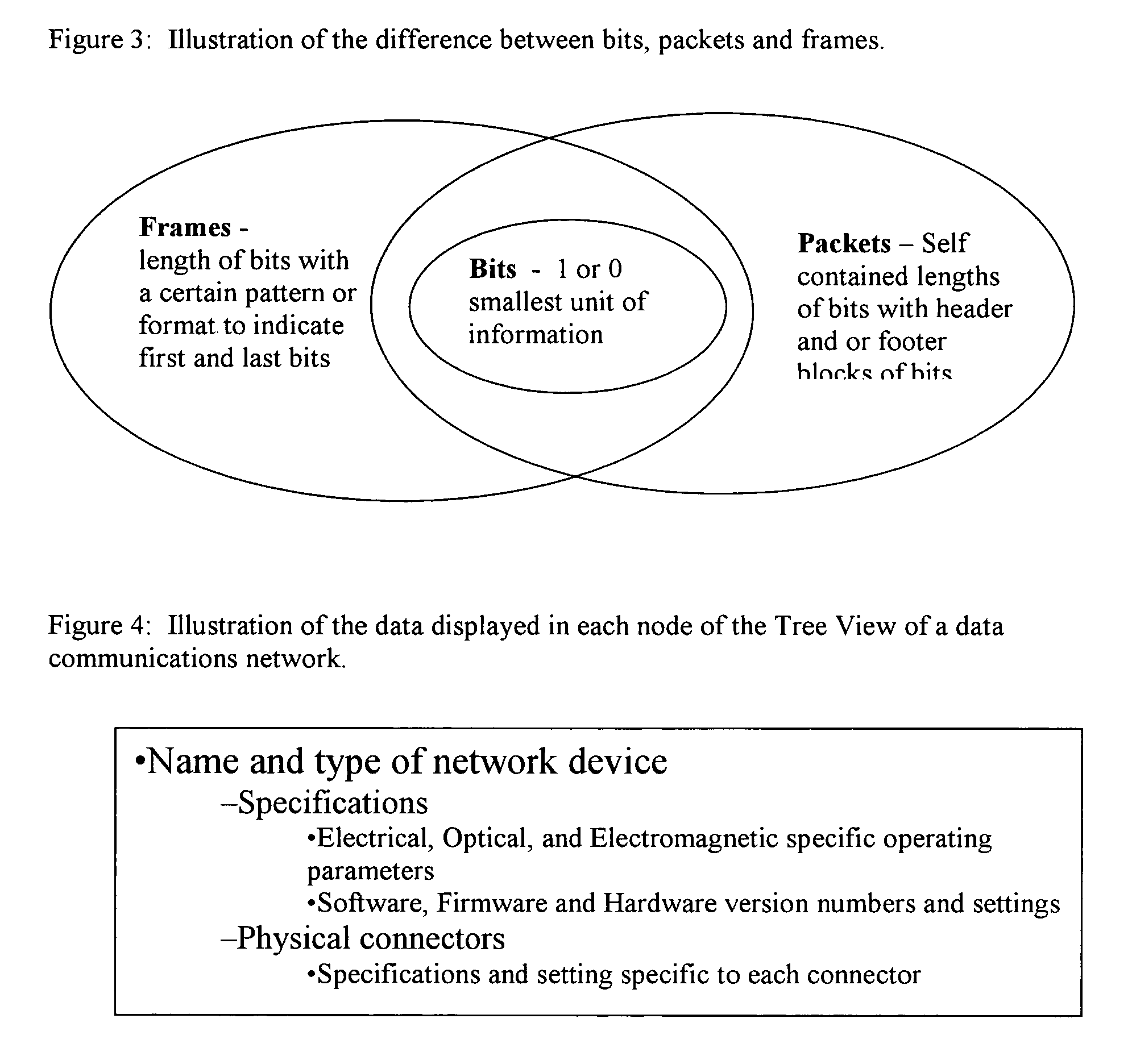

A system and method for design, tracking, measurement, prediction and optimization of data communications networks includes a site specific model of the physical environment, and performs a wide variety of different calculations for predicting network performance using a combination of prediction modes and measurement data based on the components used in the communications networks, the physical environment, and radio propagation characteristics.

Owner:EXTREME NETWORKS INC

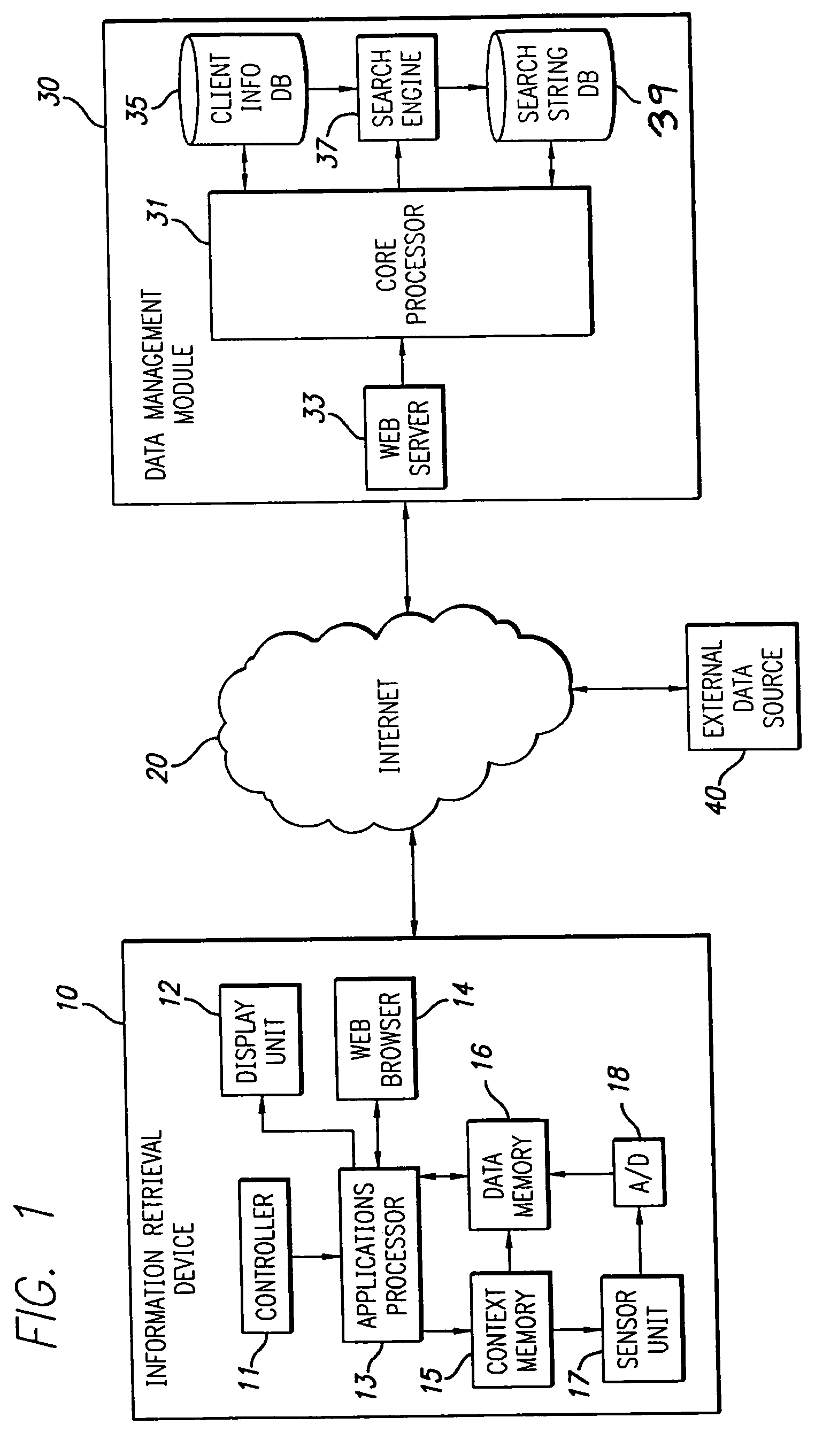

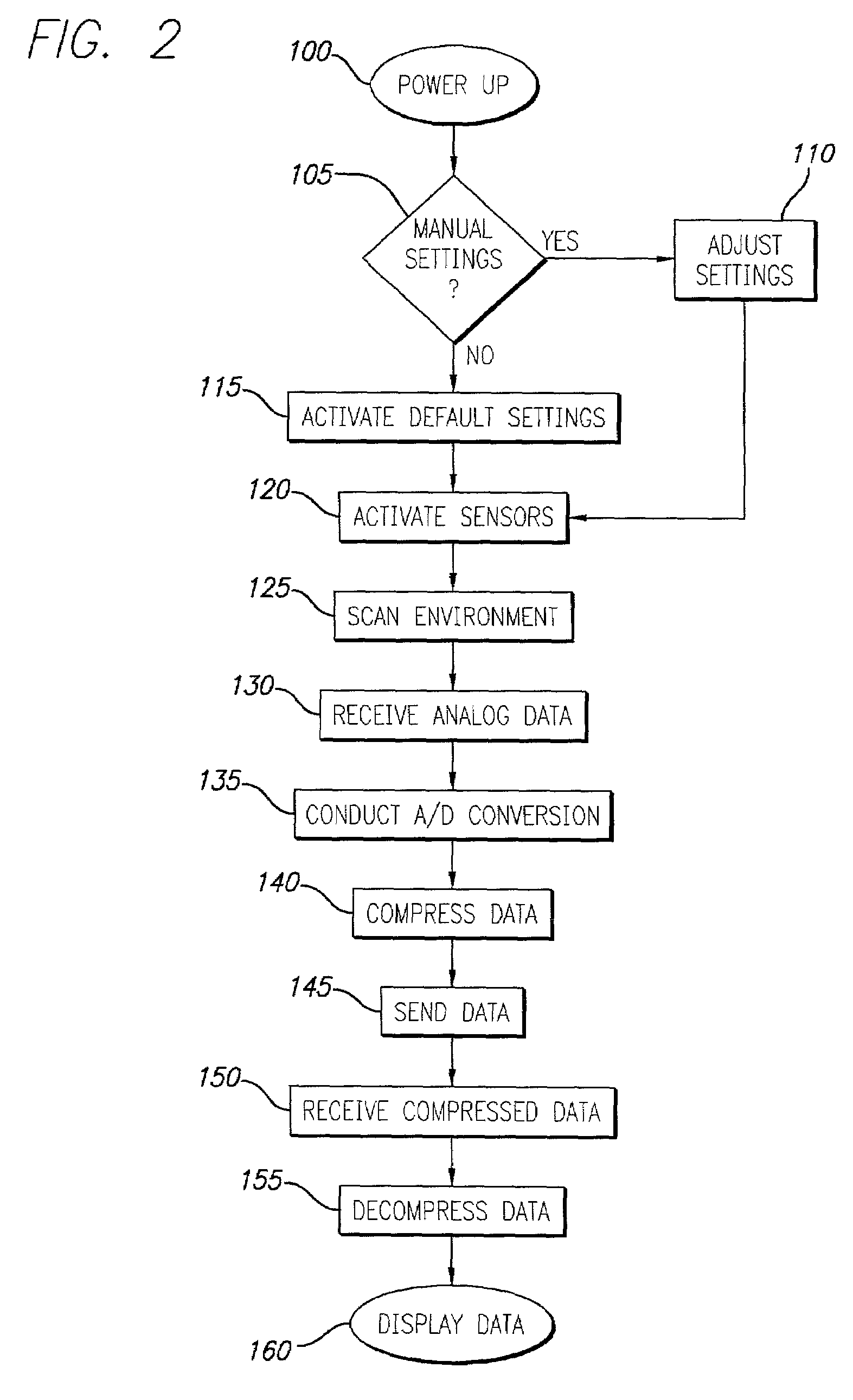

Method and apparatus for delivering content via information retrieval devices

Inactive | US7228327B2 | Digital data information retrieval; Electrical apparatus; Information type; Real-time data

A method for anticipating a user's desired information using a PDA device connected to a computer network is provided. The method comprises maintaining a database of user tendencies within the computer network, receiving sensor data from the user's physical environment via the PDA device, generating query strings using both tendency data and sensor data, retrieving data from external data sources using these generated query strings, organizing the retrieved data into electronic folders, and delivering this organized data to the user via the PDA device. In particular, a data management module anticipates the type of information a user desires by combining real-time data taken from a sensor unit within a PDA with data regarding the history of that particular user's tendencies stored within the data management module.

Owner:INTELLECTUAL VENTURES I LLC

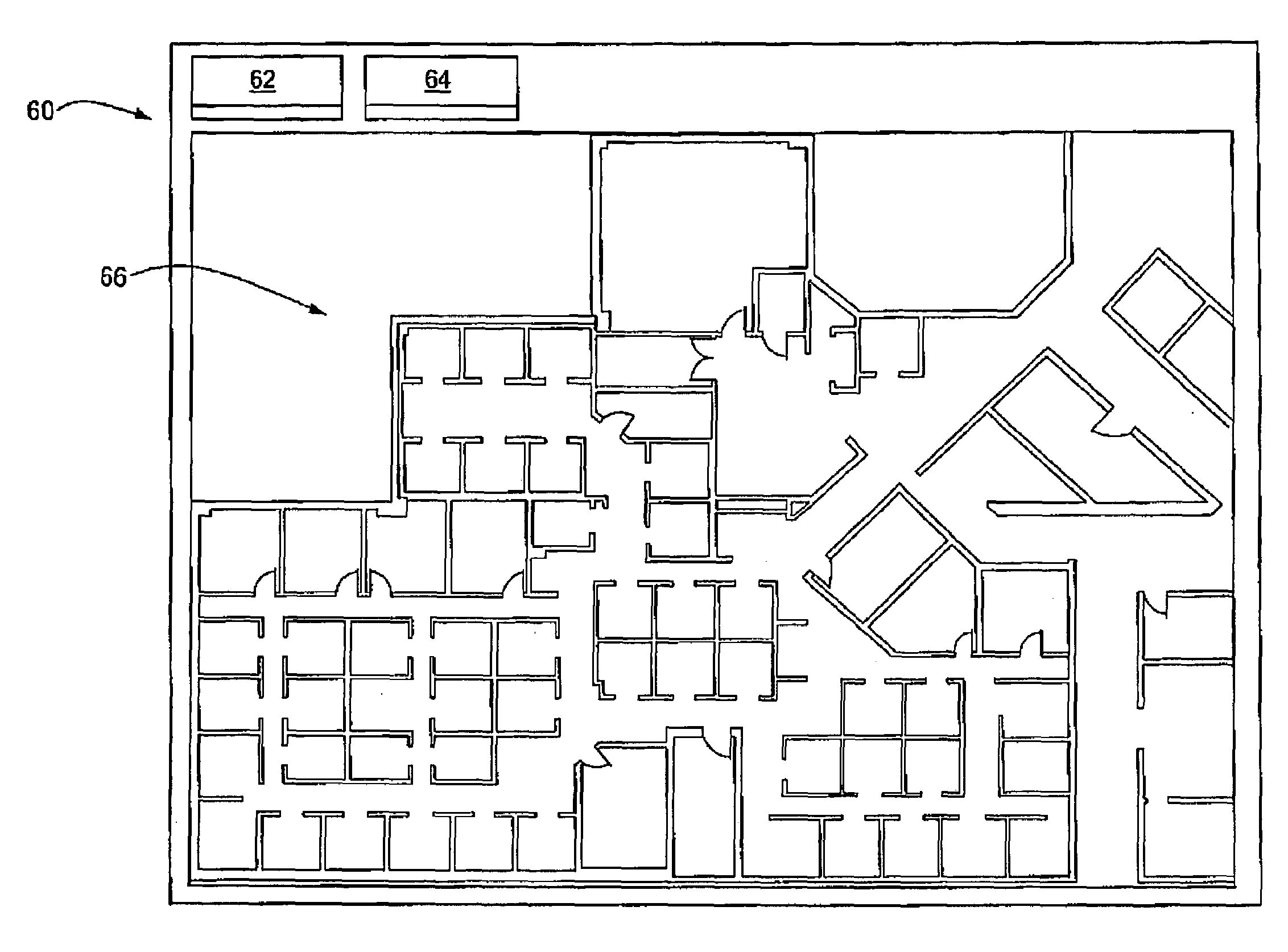

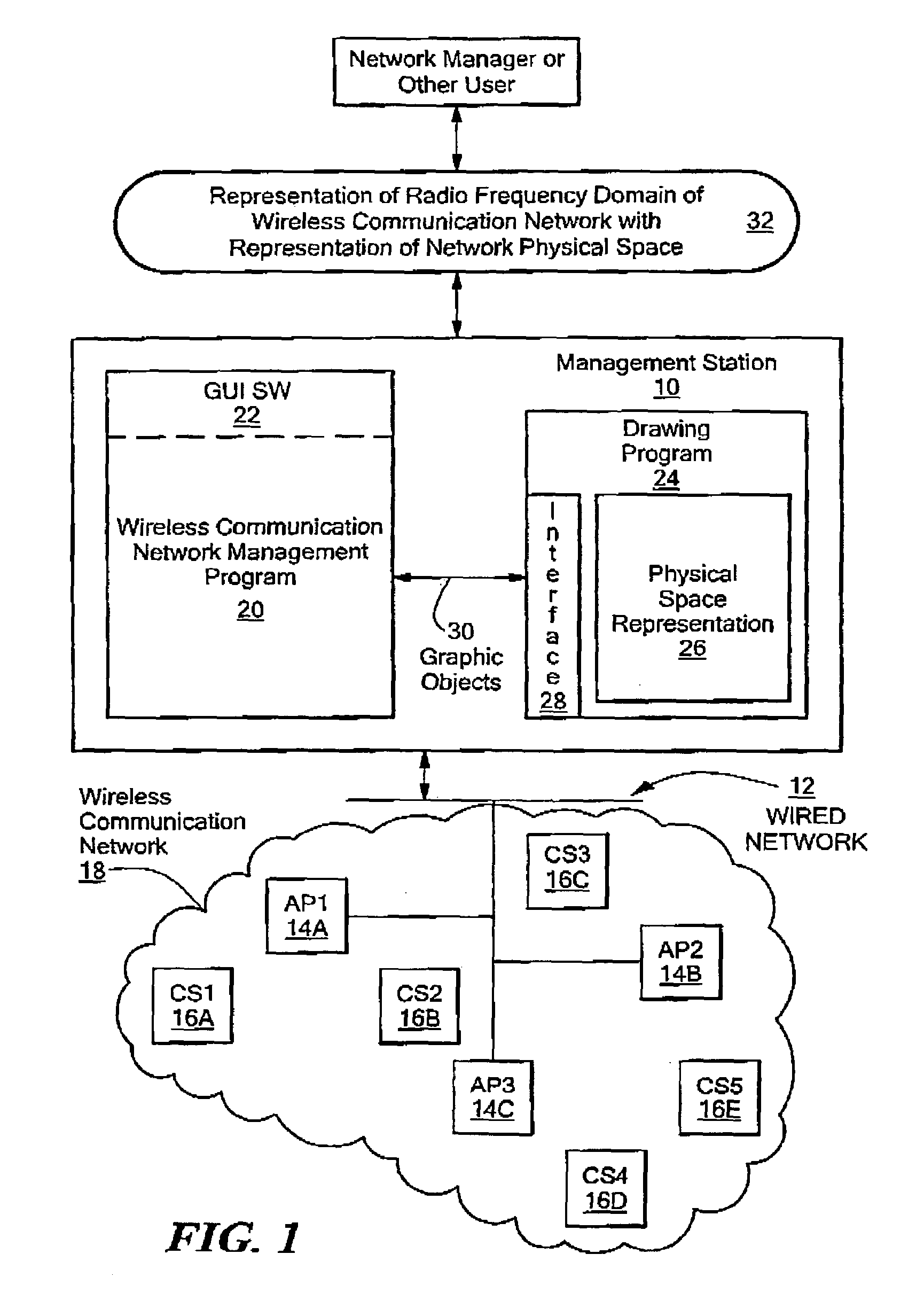

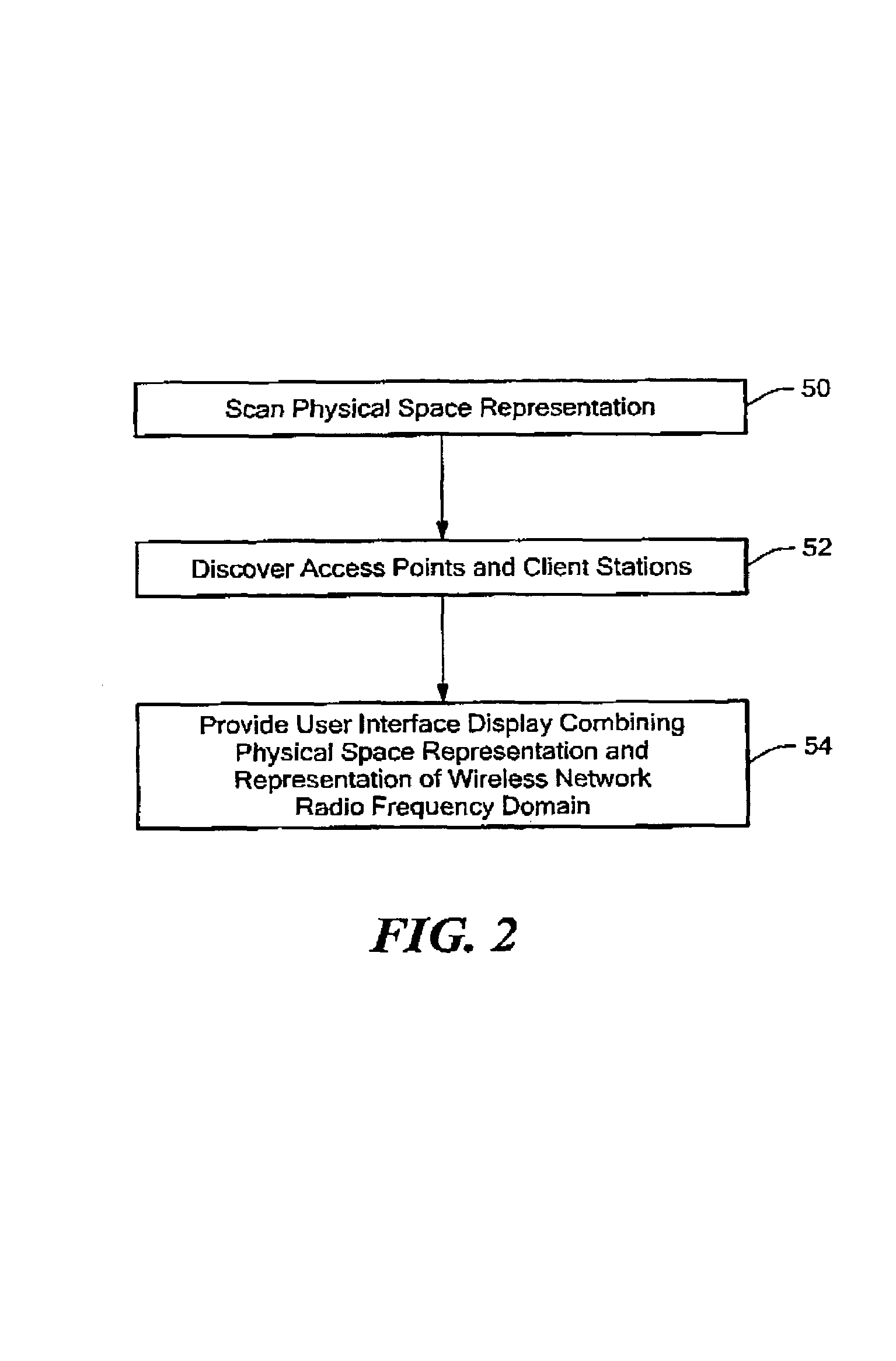

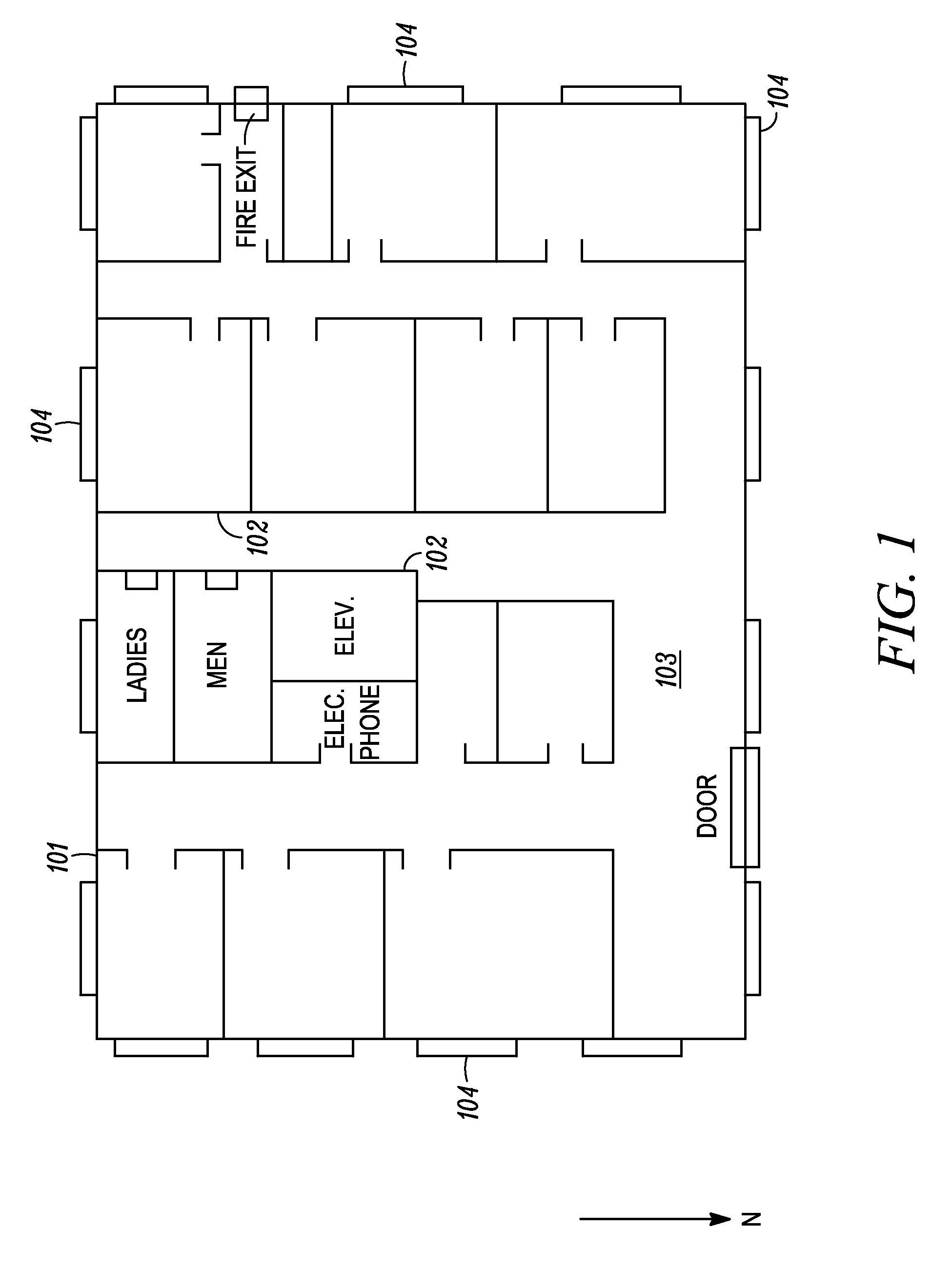

Automatically populated display regions for discovered access points and stations in a user interface representing a wireless communication network deployed in a physical environment

Active | US7043277B1 | Easy to place; Radio/inductive link selection arrangements; Substation equipment; Drag and drop; Telecommunications

A system for providing automatically populated display regions showing discovered access points and stations in a user interface representing a wireless communication network deployed in a physical environment. A generated user interface includes a first display region in which access point representations are displayed as corresponding access points are automatically discovered within the wireless network, and a second display region in which station representations are displayed as corresponding stations are automatically discovered within the wireless network. The first and second display regions are provided external to another display region, referred to as the “physical space” display region, in which is shown a representation of the physical space in which the wireless network is deployed. As the access point and station representations appear in the first display region and second display region, the user can manipulate them, for example by drag and drop operations using a computer mouse, in order to place them appropriately in the physical space display region. In response, the disclosed system superimposes the access point and station representations over the physical space representation. The access point and station representations can subsequently be similarly repositioned by the user within the physical space representation.

Owner:AI-CORE TECH LLC

Method and system for designing or deploying a communications network which considers component attributes

Inactive | US7085697B1 | Simply and quickly prepare; Optimize timing; Analogue computers for electric apparatus; Radio/inductive link selection arrangements; Bill of materials; Display device

A computerized model provides a display of a physical environment in which a communications network is or will be installed. The communications network is composed of several components, each of which is selected by the design engineer and represented in the display. Errors in the selection of certain components for the communications network are identified by their attributes or frequency characteristics as well as by their interconnection compatibility for a particular design. The effects of changes in frequency on component performance are modeled and the results are displayed to the design engineer. A bill of materials is automatically checked for faults, generated for the design system, and provided to the design engineer. For ease of design, the design engineer can cluster several different preferred components into component kits, and then select these component kits for use in the design or deployment process.

Owner:EXTREME NETWORKS INC

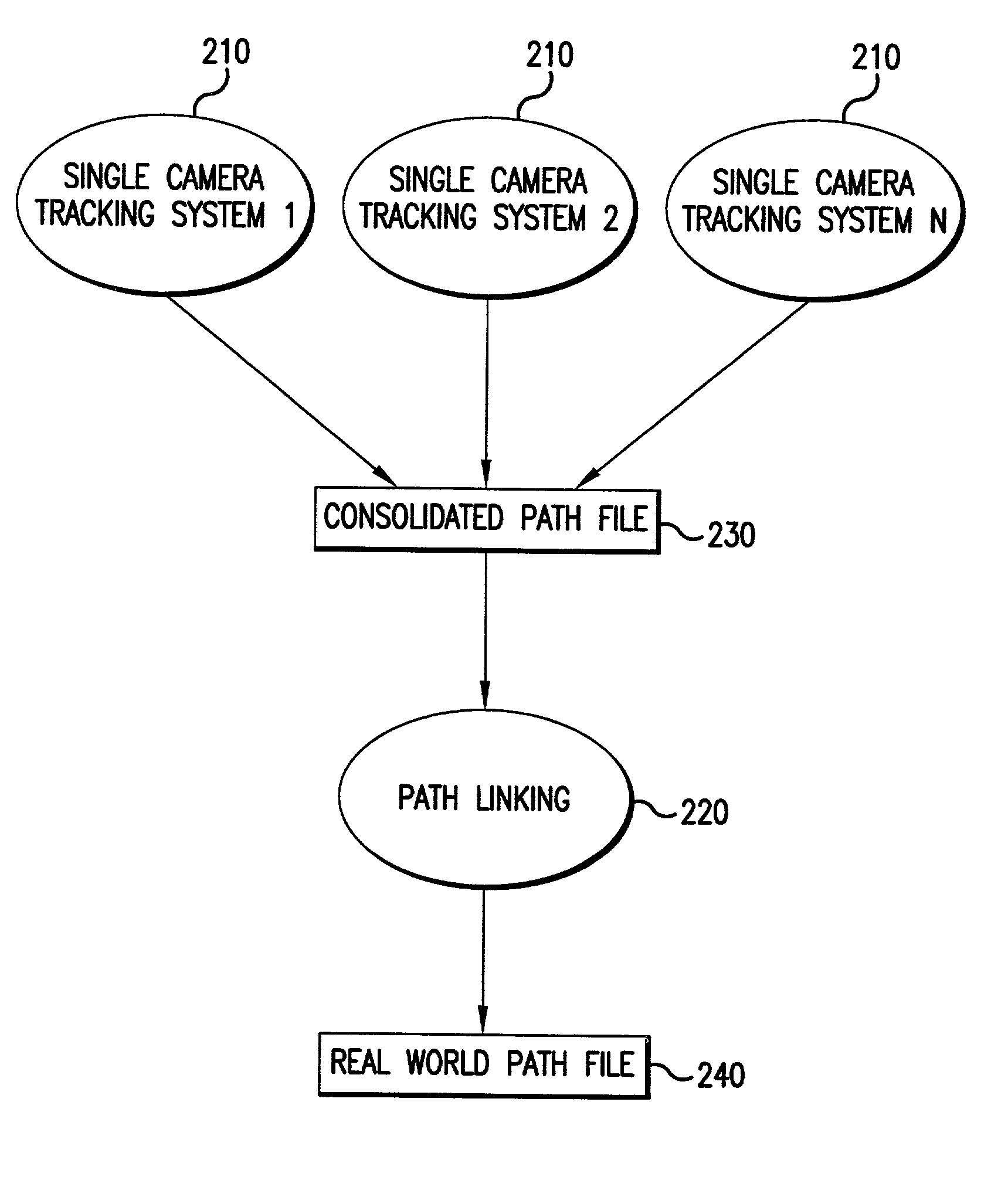

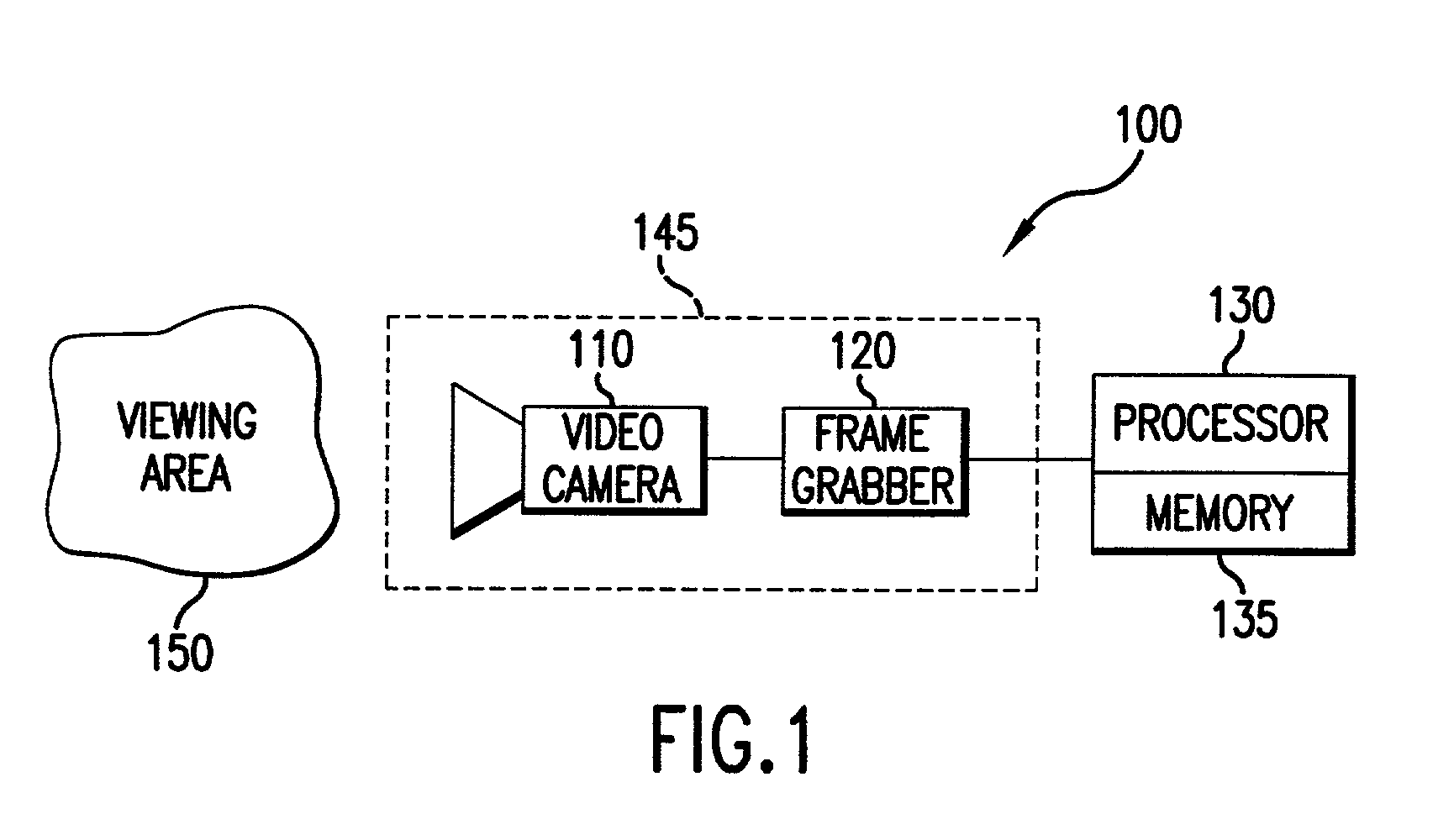

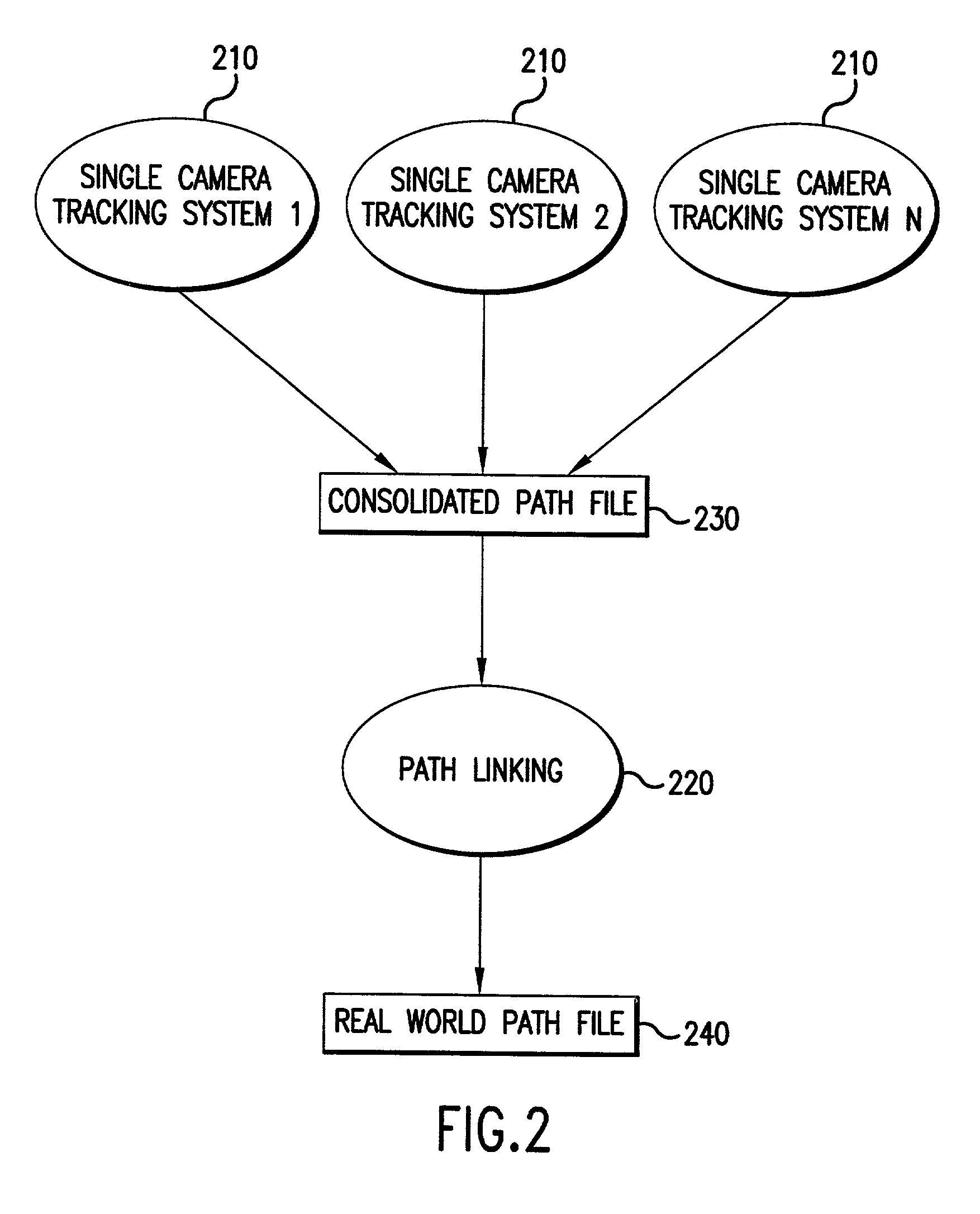

System and method for multi-camera linking and analysis

The present invention is directed to a system and method for tracking objects, such as customers in a retail environment. The invention is divided into two distinct software subsystems, the Customer Tracking system and the Track Analysis system. The Customer Tracking System tracks individuals through multiple cameras and reports various types of information regarding the tracking function, such as location by time and real world coordinates. The Track Analysis system converts individual tracks to reportable actions. Inputs to the Customer Tracking System are a set of analog or digital cameras that are positioned to view some physical environment. The output of the Customer Tracking System is a set of customer tracks that contain time and position information that describe the path that an individual took through the environment being monitored. The Track Analysis System reads the set of customer tracks produced by the tracking system and interprets the activities of individuals based on user defined regions of interest (ROI). Examples of a region of interest are: (1) a teller station in a bank branch, (2) a check-out lane in a retail store, (3) an aisle in a retail store, and the like.

Owner:FLIR COMML SYST
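
The Track Analysis step, turning time-stamped positions into actions inside user-defined regions of interest, might look like the following minimal sketch. It assumes axis-aligned rectangular ROIs in real-world floor coordinates and tracks given as time-sorted `(t, x, y)` tuples; the `Roi` class and the `min_dwell_s` threshold are hypothetical, not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class Roi:
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


def roi_visits(track: list[tuple[float, float, float]], rois: list[Roi],
               min_dwell_s: float = 0.0) -> list[tuple[str, float, float]]:
    """Convert a track into (roi_name, t_enter, t_exit) visits lasting >= min_dwell_s."""
    visits = []
    for roi in rois:
        start = prev_t = None
        for t, x, y in track:
            if roi.contains(x, y):
                if start is None:
                    start = t          # entered the region
                prev_t = t             # last time seen inside
            elif start is not None:
                if prev_t - start >= min_dwell_s:
                    visits.append((roi.name, start, prev_t))
                start = None           # left the region
        if start is not None and prev_t - start >= min_dwell_s:
            visits.append((roi.name, start, prev_t))  # still inside at track end
    return sorted(visits, key=lambda v: v[1])


teller = Roi("teller station", 0, 0, 2, 2)
track = [(0, 5, 5), (1, 1, 1), (2, 1.5, 1), (3, 5, 5)]
print(roi_visits(track, [teller]))  # [('teller station', 1, 2)]
```

A dwell threshold like `min_dwell_s` is one way to separate "stopped at the teller station" from "walked past it".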

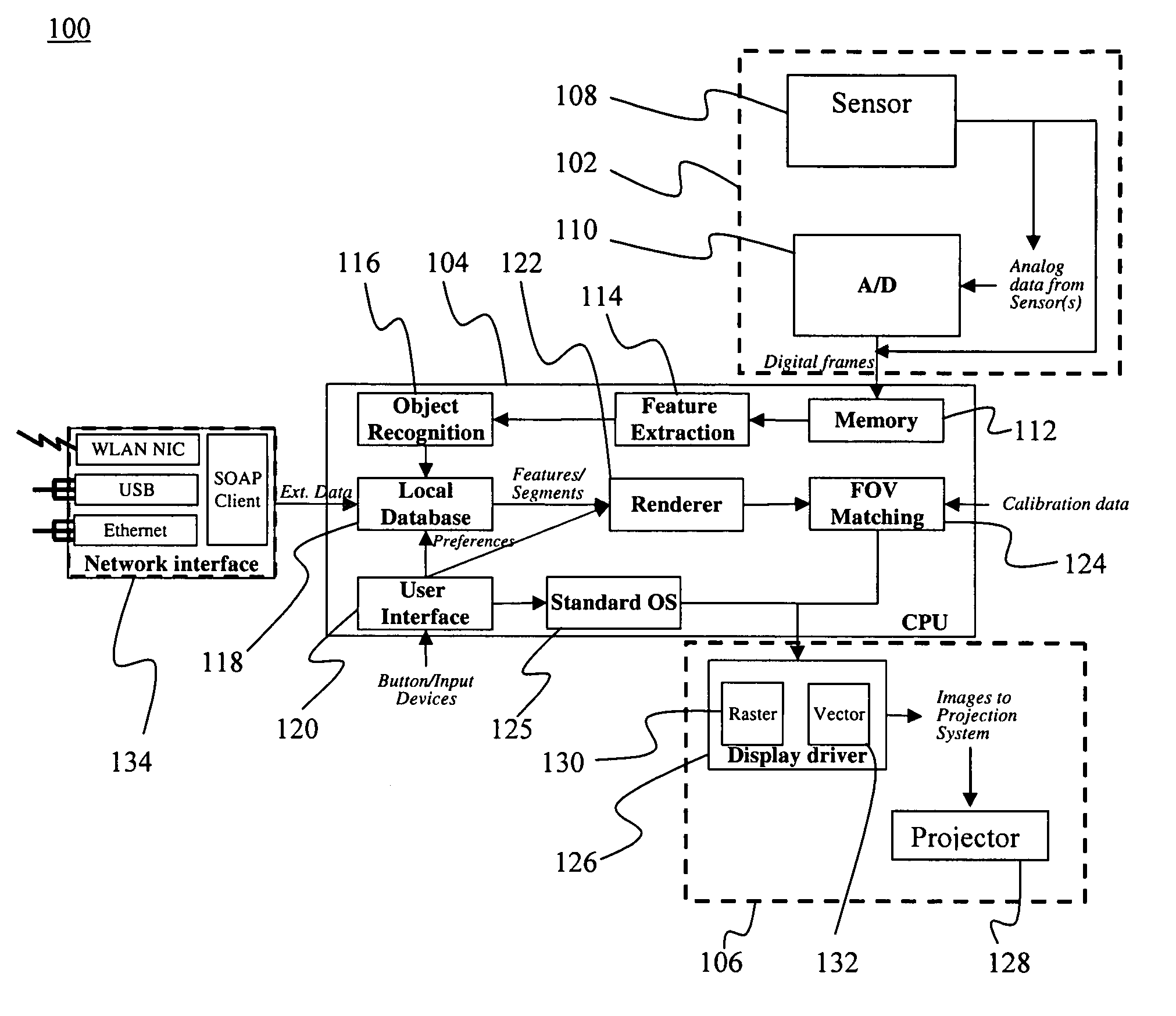

Enhanced perception lighting

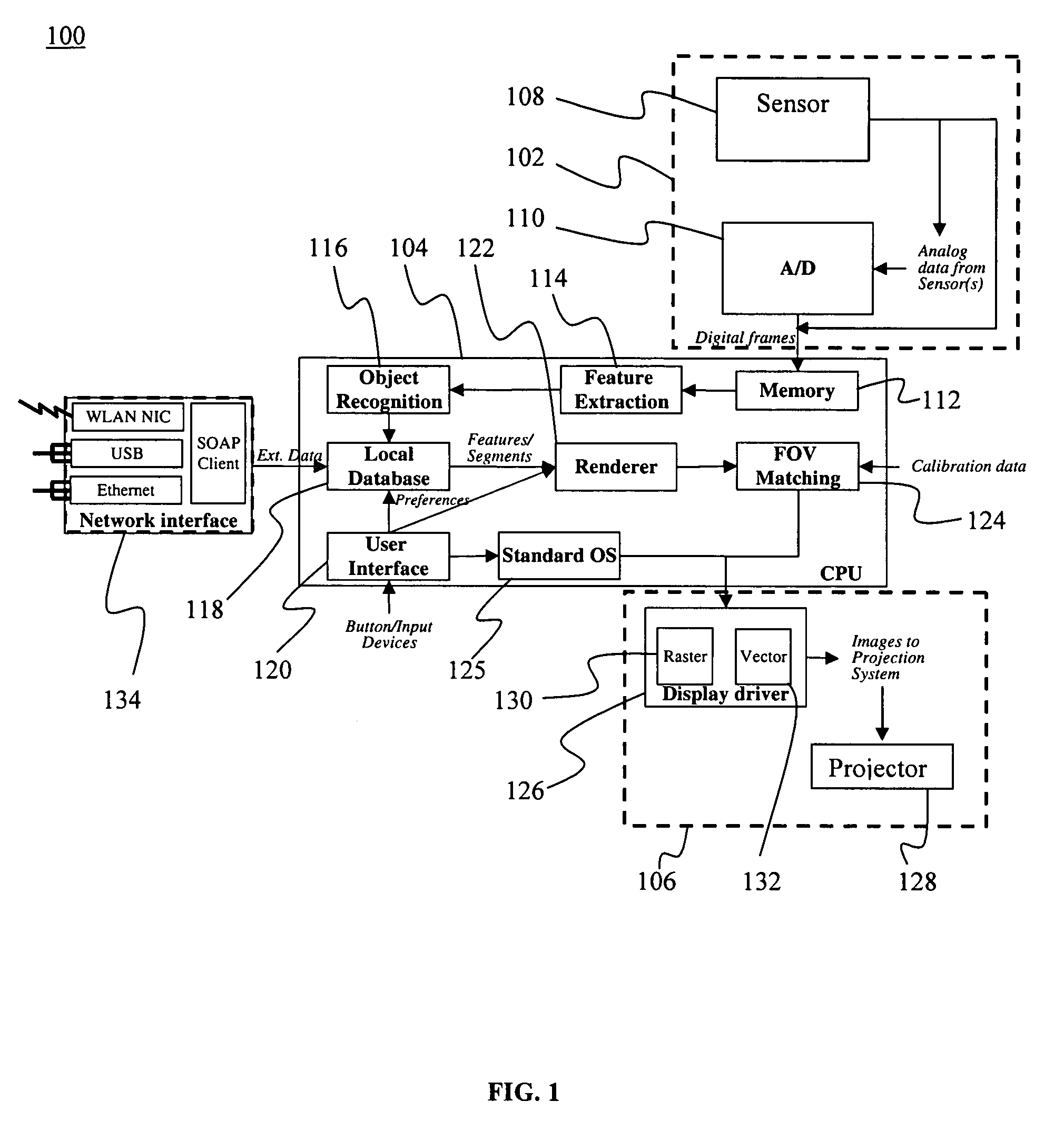

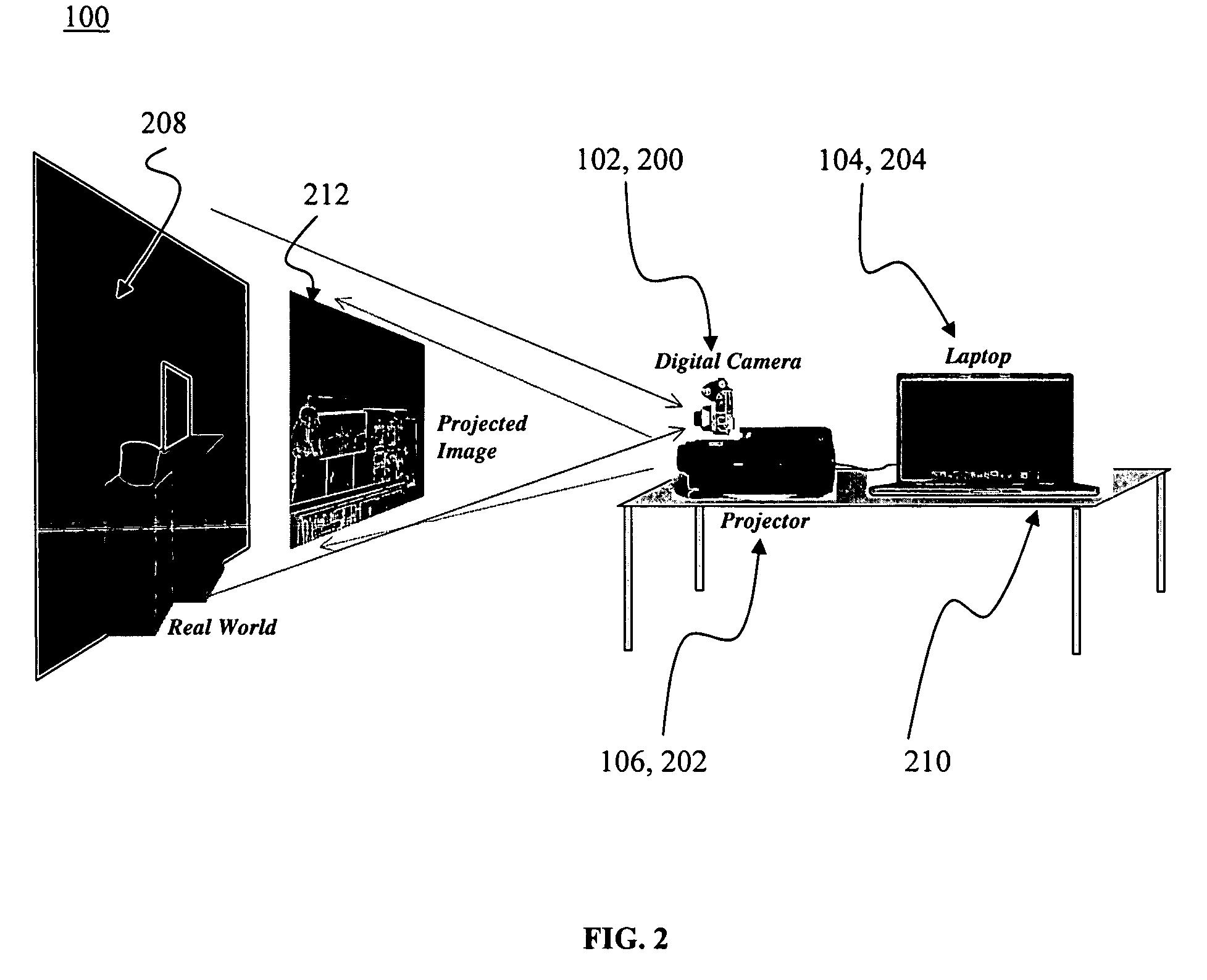

Inactive | US7315241B1 | Facilitate cognition; Improve user perception; Electrical apparatus; Electric lighting sources; Display device; Effect light

The present invention relates to an enhanced perception lighting (EPL) system for providing enhanced perception of a user's physical environment. The EPL system comprises a sensor module for detecting and sampling a physical aspect from at least one point in a physical environment and for generating an observation signal based on the physical aspect; a processor module coupled with the sensor module for receiving the observation signal, processing the observation signal, and generating an output signal based on the observation signal; and a projection display module located proximate the sensor module and communicatively connected with the processor module for projecting a display onto the at least one point in the physical environment based upon the output signal. The system allows a user to gather information from the physical environment and project that information onto the physical environment to provide the user with an enhanced perception of the physical environment.

Owner:HRL LAB

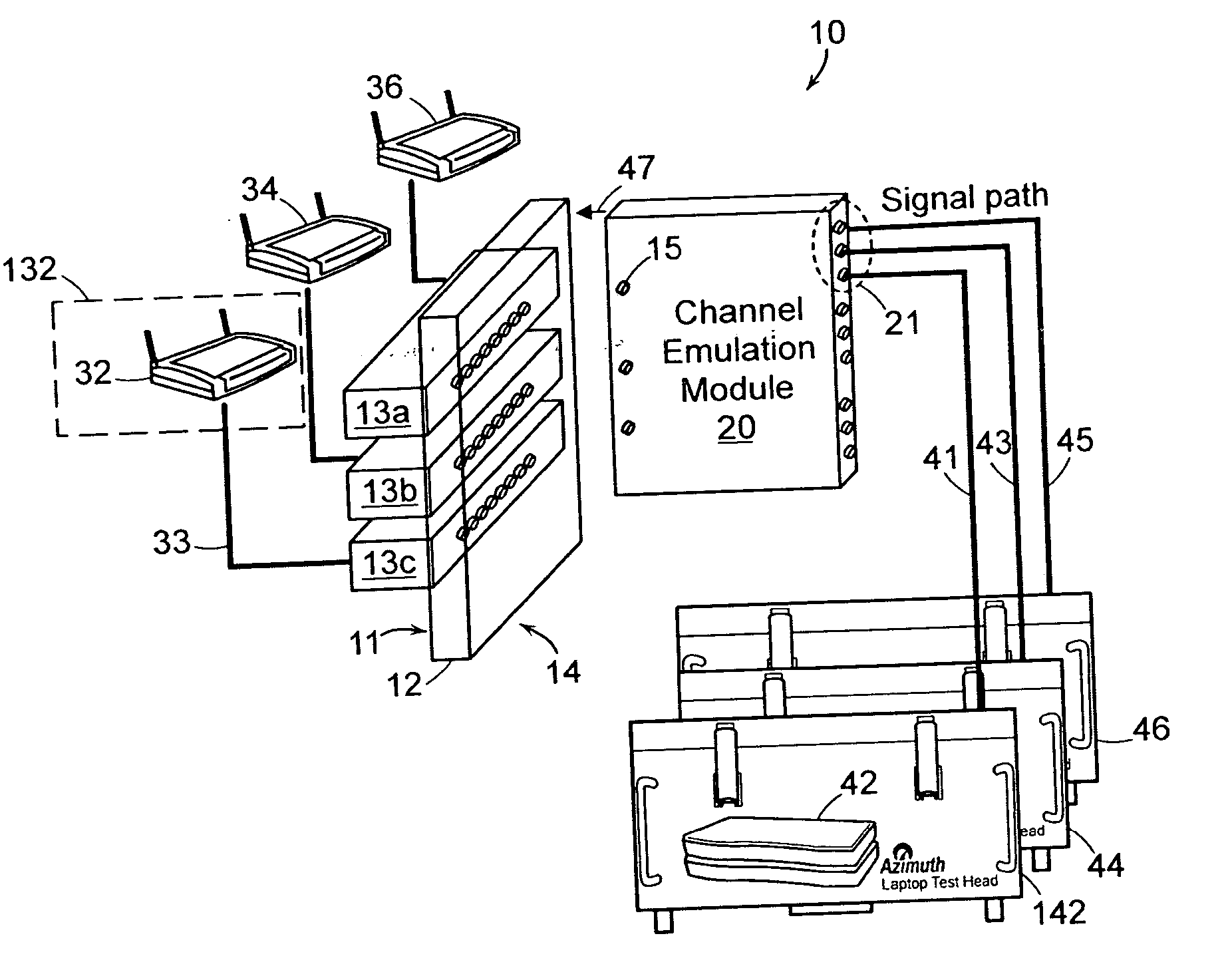

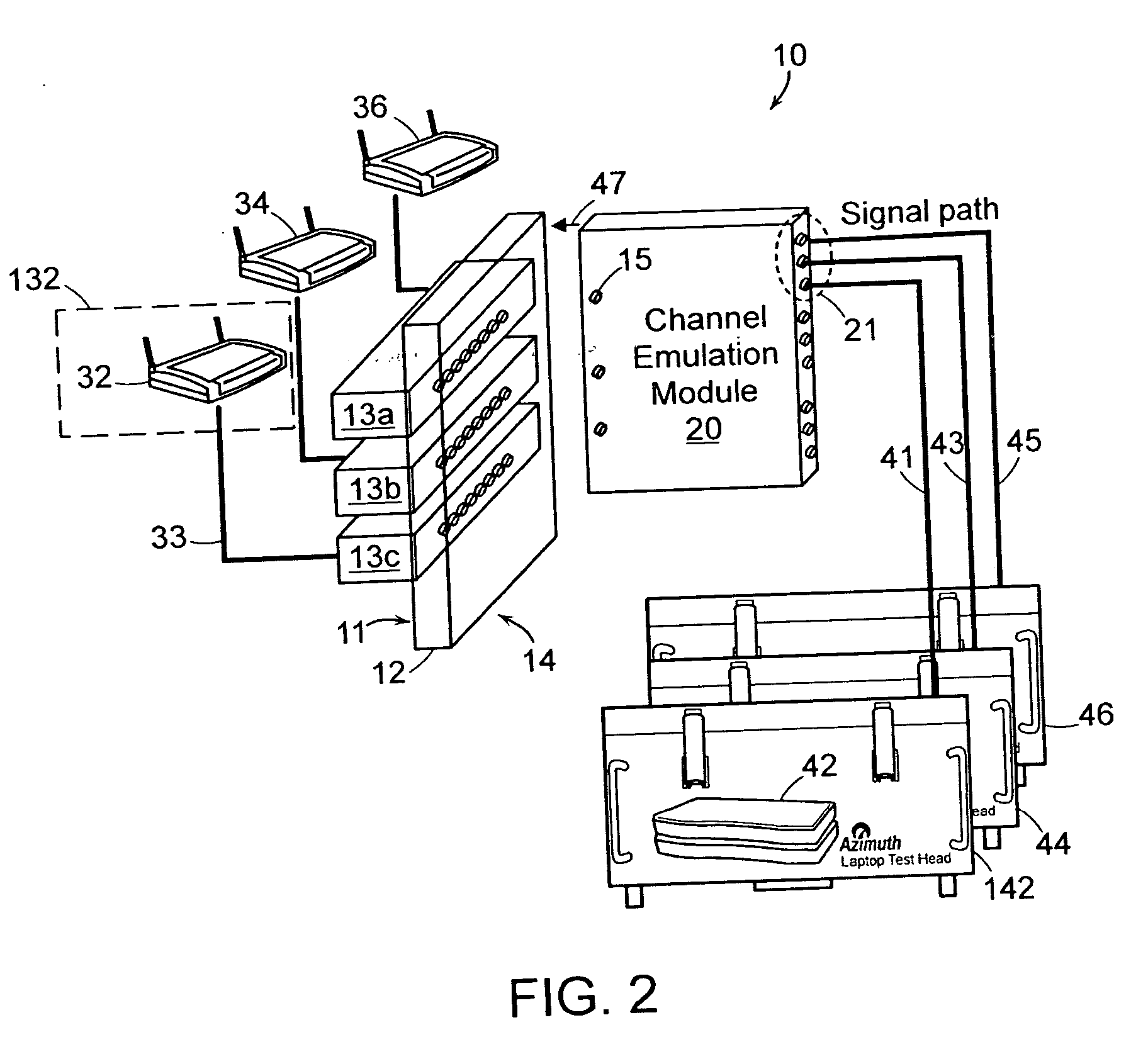

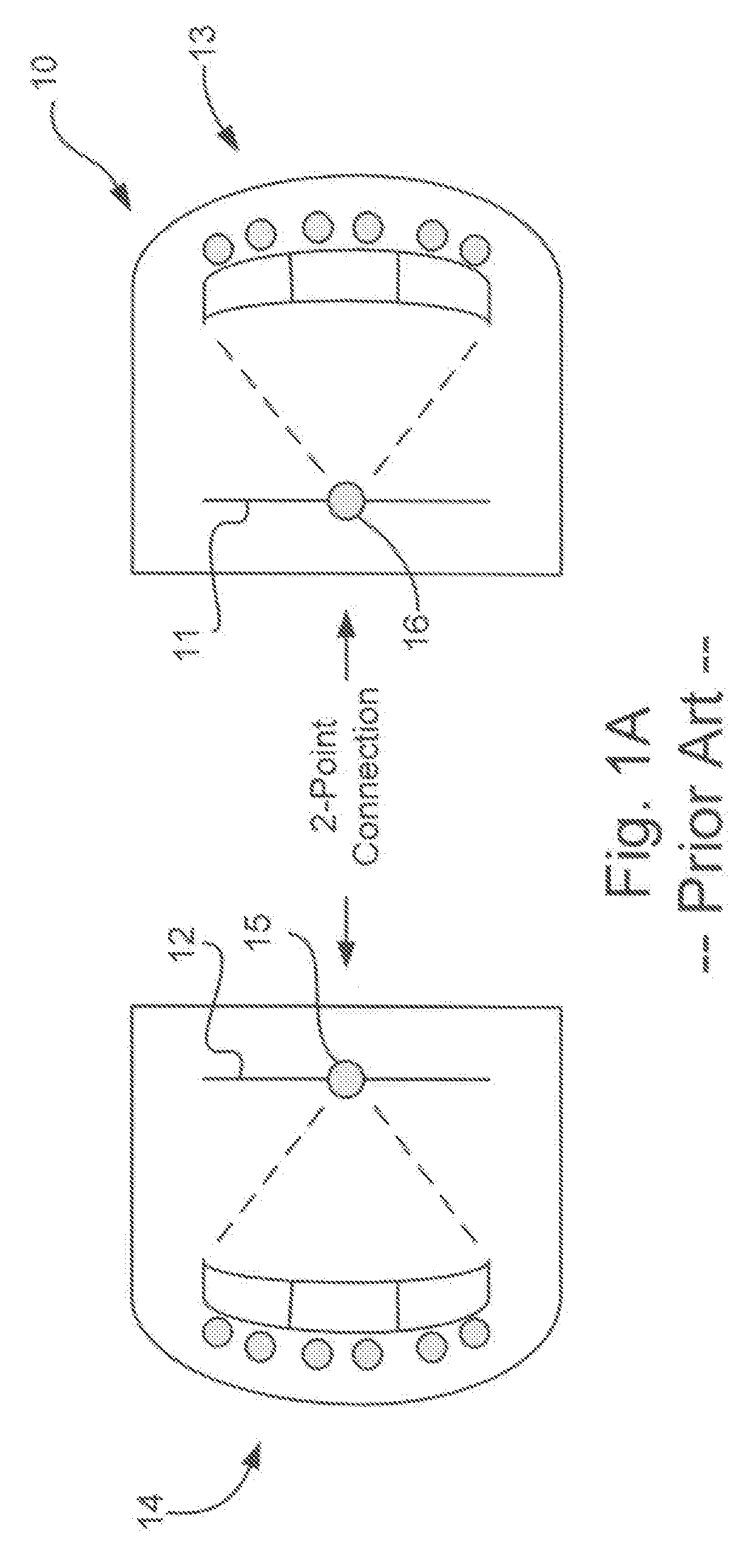

Apparatus and method for use in testing wireless devices

Inactive | US20060229018A1 | Reduces nondeterministic delay; Improve accuracy; Transmission monitoring; Radio transmission; Timestamp; Transmission channel

A modular test chassis for use in testing wireless devices includes a backplane and a channel emulation module coupled to the backplane. The channel emulation module comprises circuitry for emulating the effects of a dynamic physical environment (including air, interfering signals, interfering structures, movement, etc.) on signals in the transmission channel shared by the first and second devices. Different channel emulation modules may be included in the test system depending upon the protocol, network topology or capability under test. A test module may be provided to generate traffic at multiple interfaces of SISO or MIMO DUTs to enable thorough testing of device and system behavior in the presence of emulated network traffic and fault conditions. A latency measurement system and method applies timestamps to frames as they are transmitted and received at the test module for improved latency measurement accuracy.

Owner:AZIMUTH SYSTEMS
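
Timestamping frames at transmit and receive, as described above, reduces latency measurement to matching frames and subtracting. A minimal sketch, assuming both timestamps come from the same test-module clock in integer nanoseconds and that each frame carries a hypothetical unique ID; frames that never arrive are counted as lost rather than assigned a latency.

```python
from statistics import mean


def frame_latencies(tx_ns: dict[int, int], rx_ns: dict[int, int]) -> dict[int, int]:
    """Latency per frame ID; frames missing from the receive log are skipped."""
    return {fid: rx_ns[fid] - t for fid, t in tx_ns.items() if fid in rx_ns}


def latency_summary(tx_ns: dict[int, int], rx_ns: dict[int, int]) -> dict[str, float]:
    """Min/mean/max latency in ns plus the number of lost frames."""
    lat = list(frame_latencies(tx_ns, rx_ns).values())
    if not lat:
        raise ValueError("no frames were received")
    return {
        "min_ns": min(lat),
        "mean_ns": mean(lat),
        "max_ns": max(lat),
        "lost": len(tx_ns) - len(lat),
    }


tx = {1: 1_000, 2: 2_000, 3: 3_000}  # frame id -> transmit timestamp (ns)
rx = {1: 1_500, 2: 2_700}            # frame 3 was lost in the emulated channel
print(frame_latencies(tx, rx))  # {1: 500, 2: 700}
```

Using a single clock for both timestamps avoids clock-offset error between sender and receiver, which is the main reason to timestamp at one test module.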

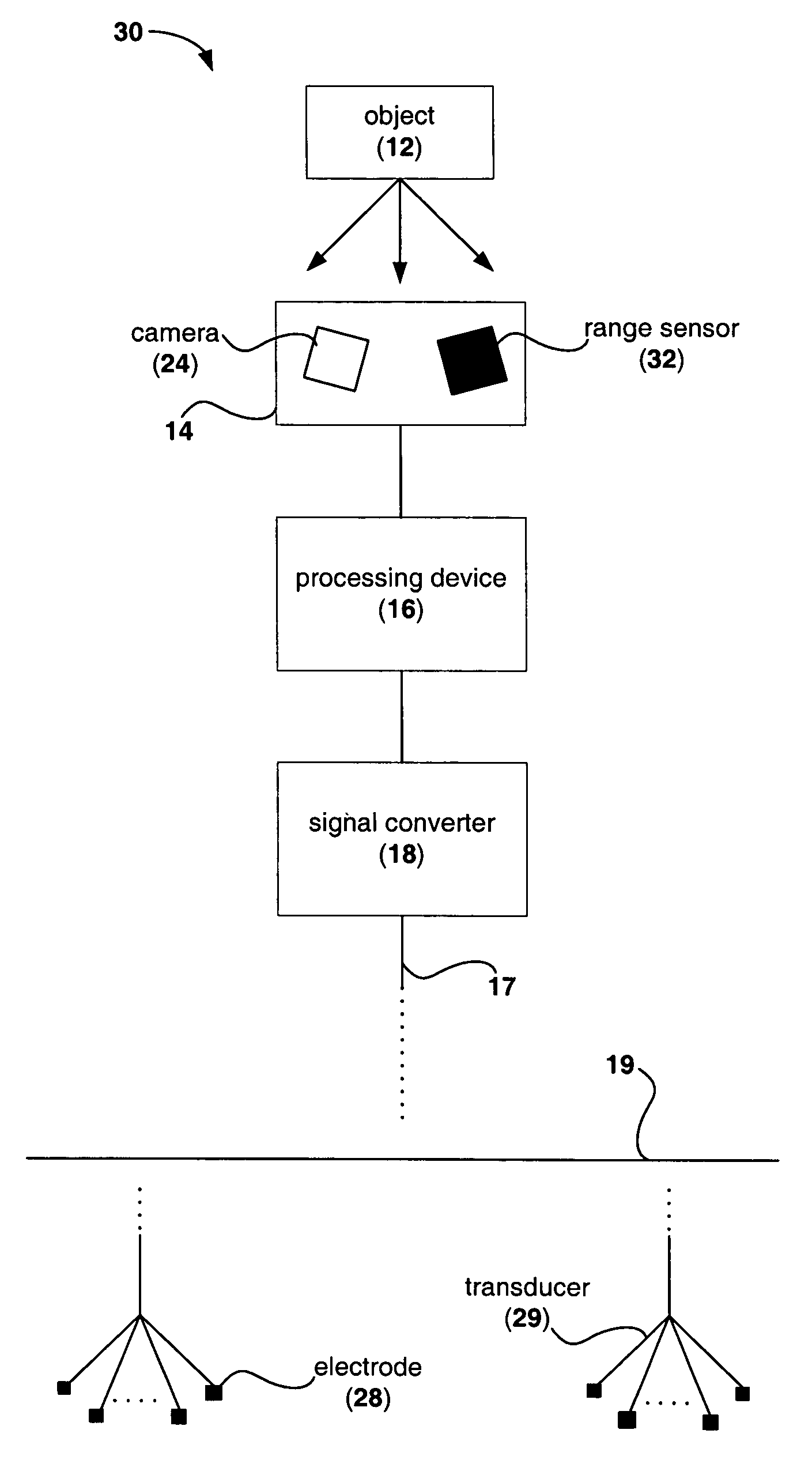

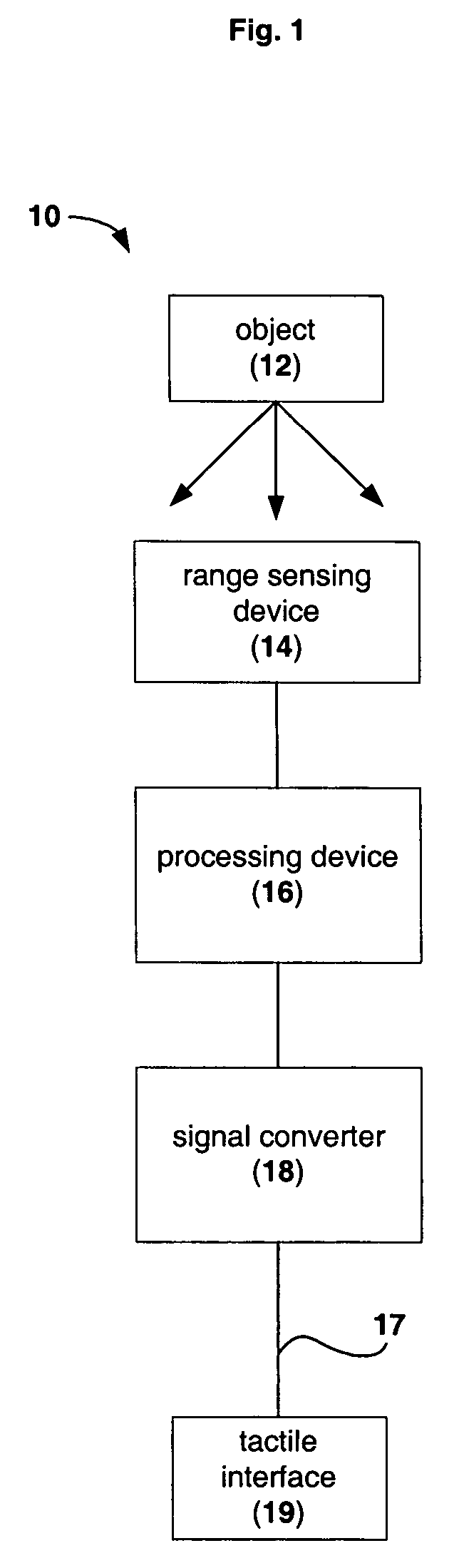

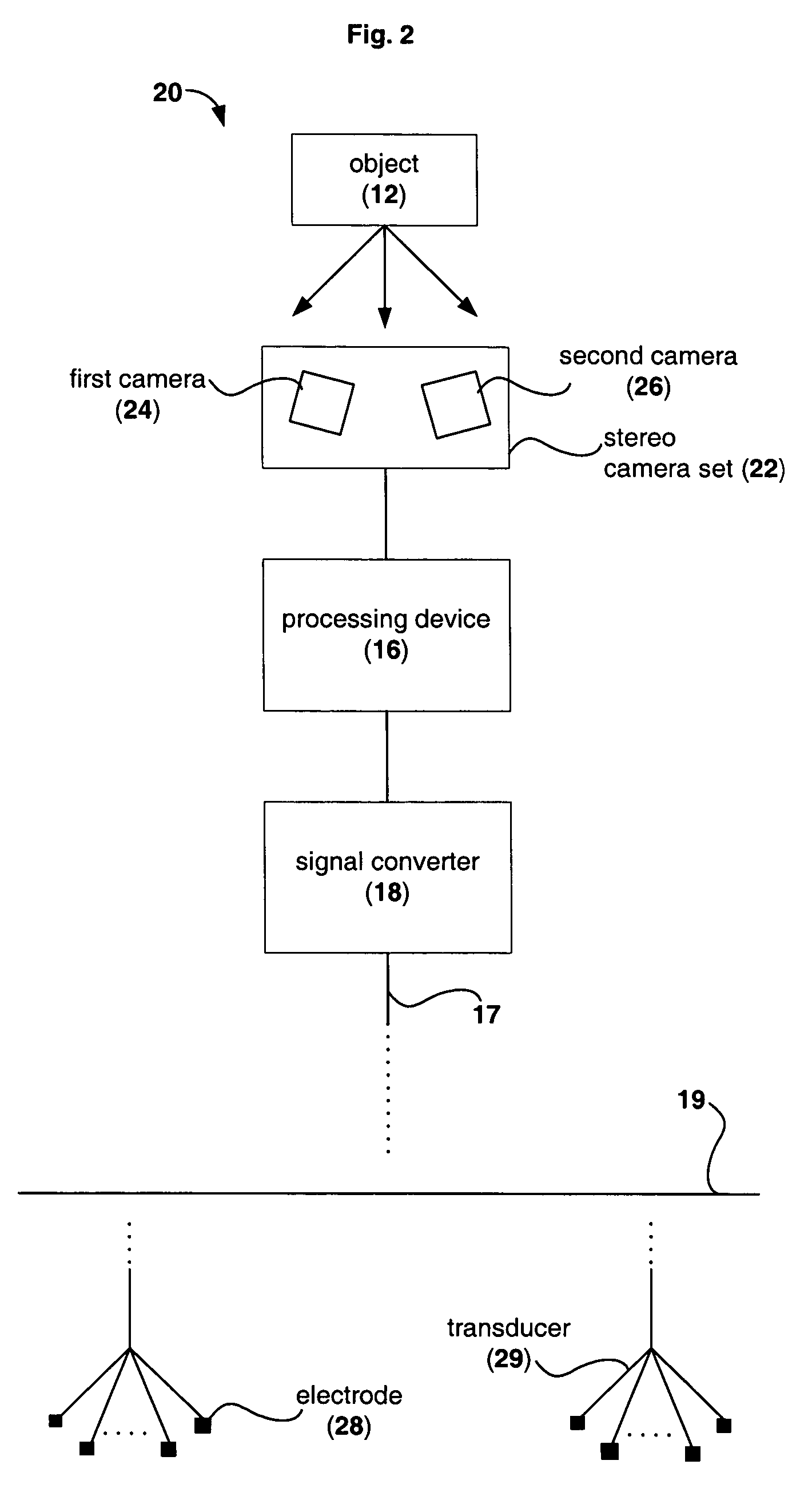

Device for providing perception of the physical environment

Owner:UNIV OF WOLLONGONG

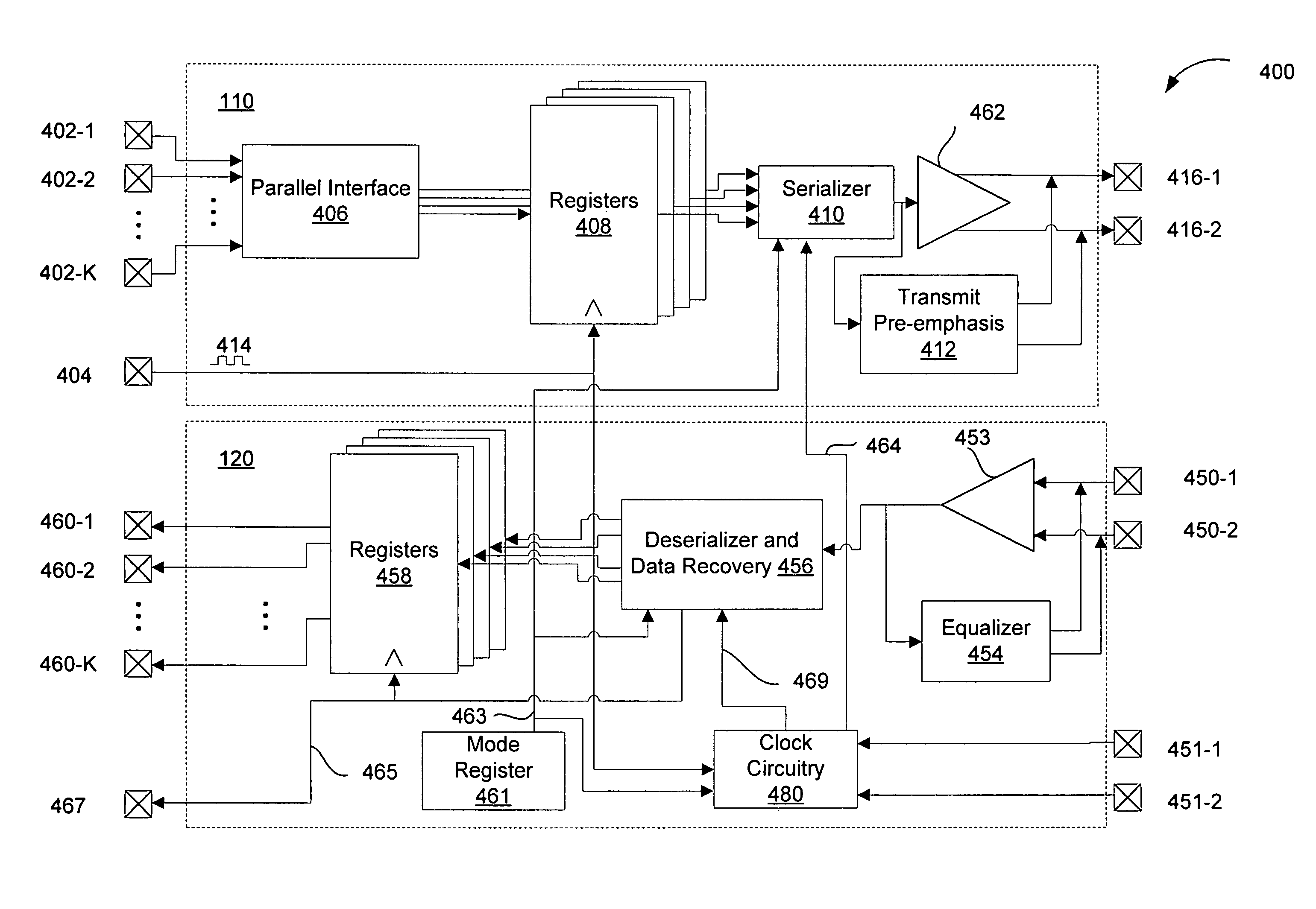

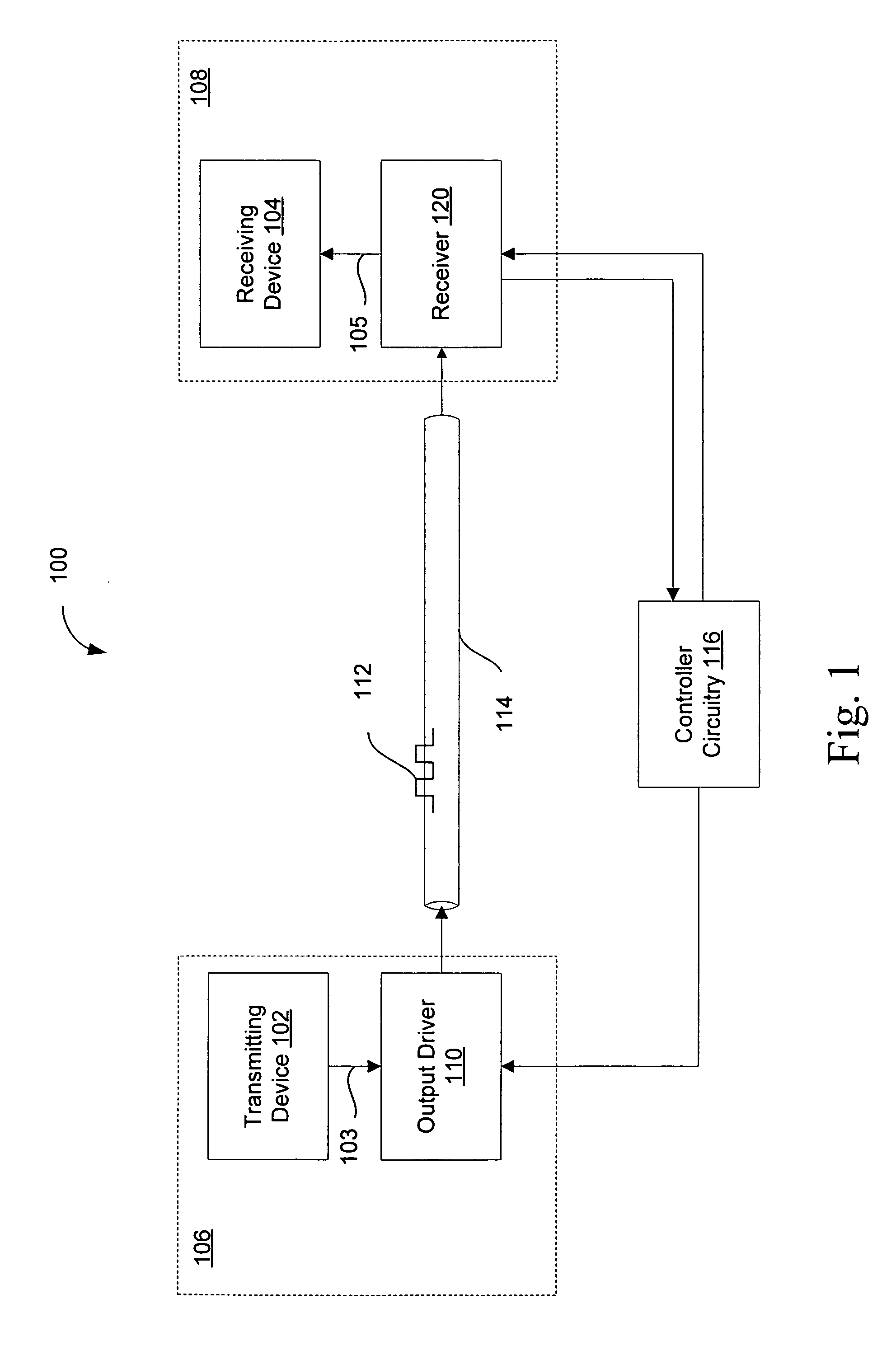

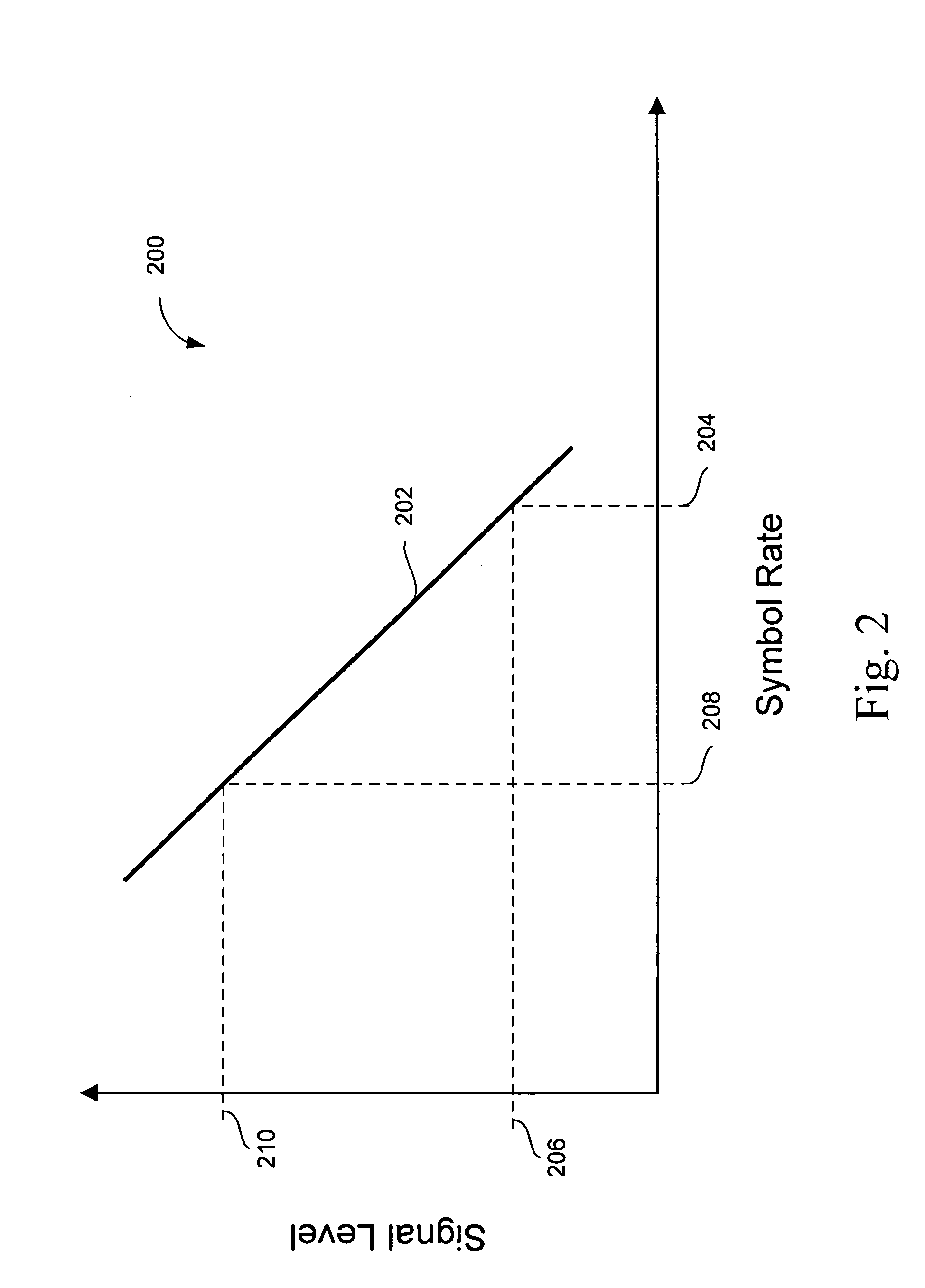

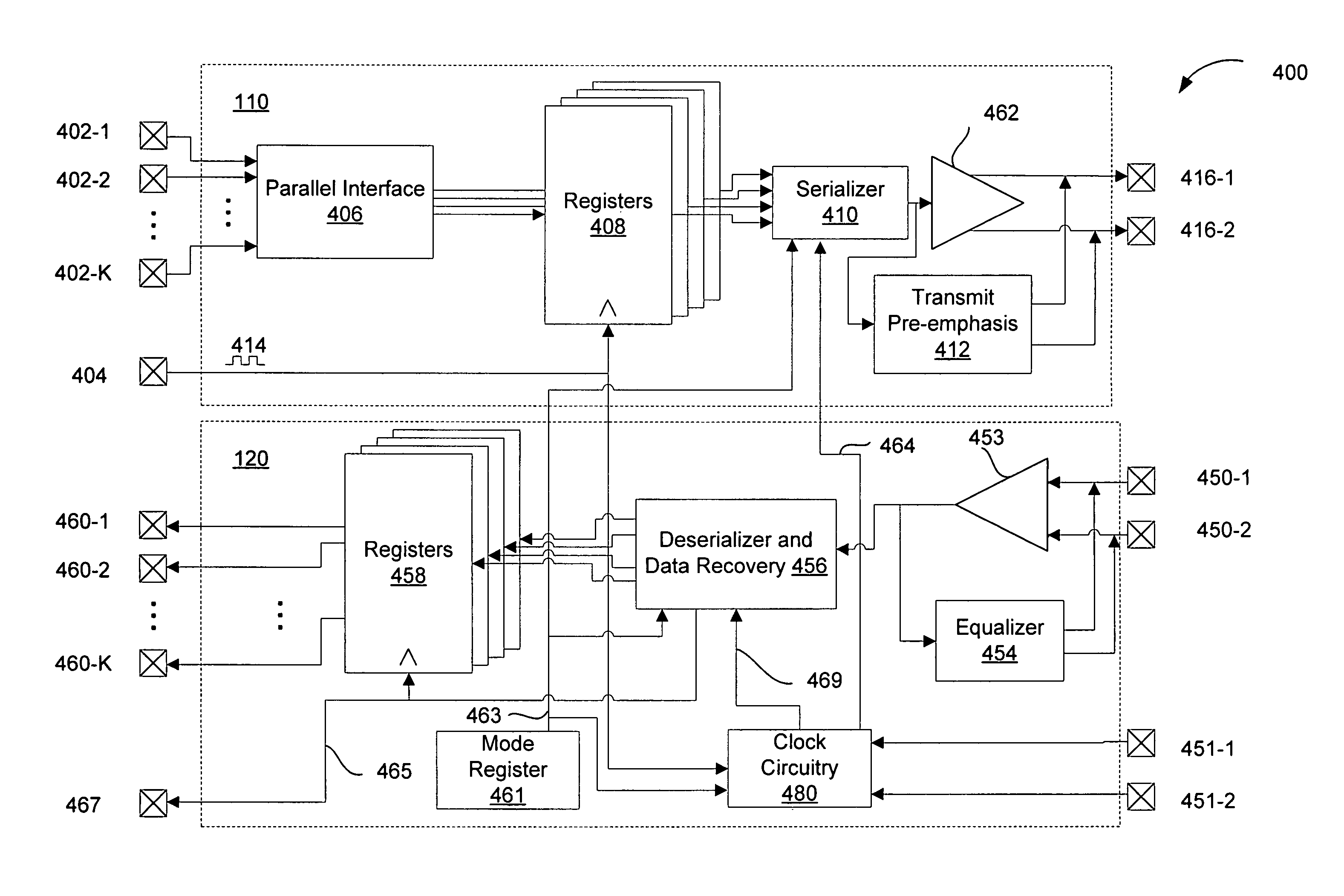

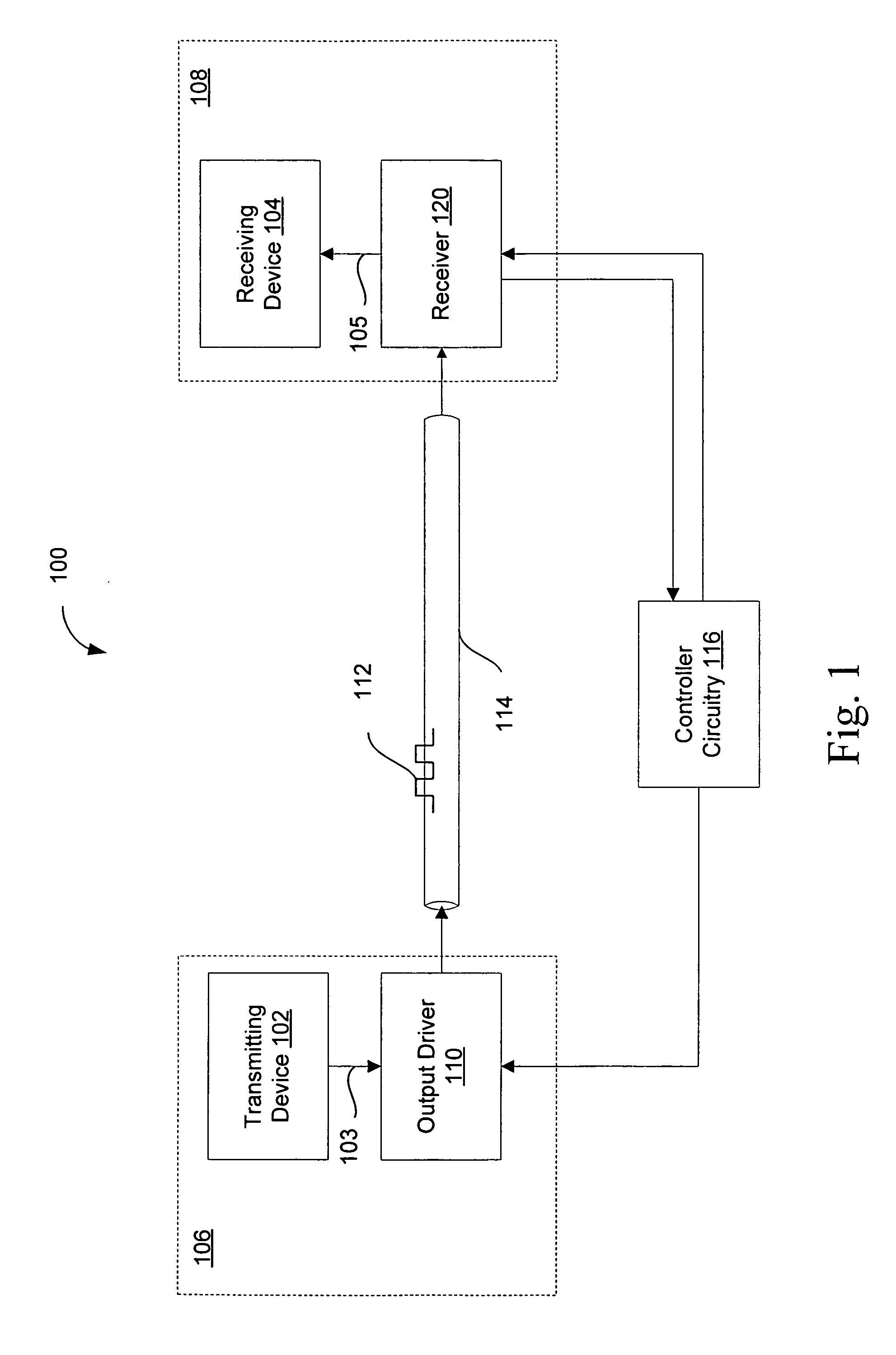

Transparent multi-mode PAM interface

Active | US20050089126A1 | Amplitude-modulated carrier systems; Amplitude-modulated pulse demodulation; State variation; Data rate

Provided are a method and apparatus for high-speed, multi-mode PAM symbol transmission. A multi-mode PAM output driver drives one or more symbols, the number of levels used in the PAM modulation of the one or more symbols depending on the state of a PAM mode signal. Additionally, the one or more symbols are driven at a symbol rate, the symbol rate selected in accordance with the PAM mode signal so that a data rate of the driven symbols is constant with respect to changes in the state of the PAM mode signal. Further provided are methods for determining the optimal number of PAM levels for symbol transmission and reception in a given physical environment.

Owner:RAMBUS INC
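
The constant-data-rate rule above follows from the fact that a PAM symbol with M levels carries log2(M) bits, so data rate = symbol rate × log2(M). Holding the data rate fixed, each mode's symbol rate is the data rate divided by log2(M). The sketch only illustrates that arithmetic; the mode names and the 10 Gb/s figure are assumptions, not values from the patent.

```python
import math

PAM_LEVELS = {"PAM2": 2, "PAM4": 4, "PAM8": 8}  # illustrative mode -> number of levels


def symbol_rate_for(mode: str, data_rate_bps: float) -> float:
    """Symbol rate (baud) that keeps the data rate fixed for the given PAM mode."""
    bits_per_symbol = math.log2(PAM_LEVELS[mode])
    return data_rate_bps / bits_per_symbol


for mode in PAM_LEVELS:
    baud = symbol_rate_for(mode, 10e9)
    print(f"{mode}: {baud / 1e9:.3f} GBd")
# PAM2: 10.000 GBd
# PAM4: 5.000 GBd
# PAM8: 3.333 GBd
```

The trade-off the patent's level-selection methods address is visible here: more levels lower the symbol rate (easing channel loss) but shrink the spacing between levels.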

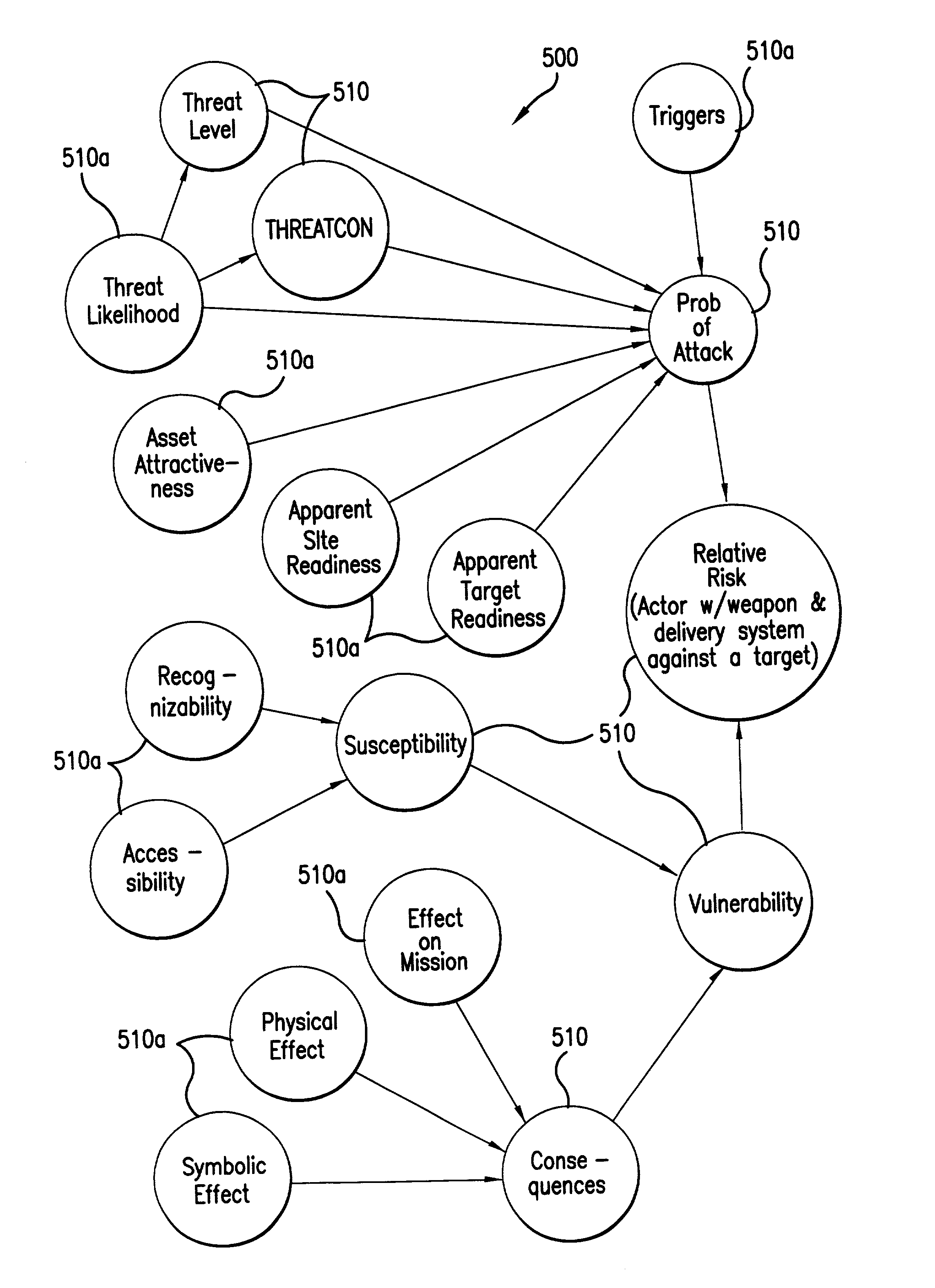

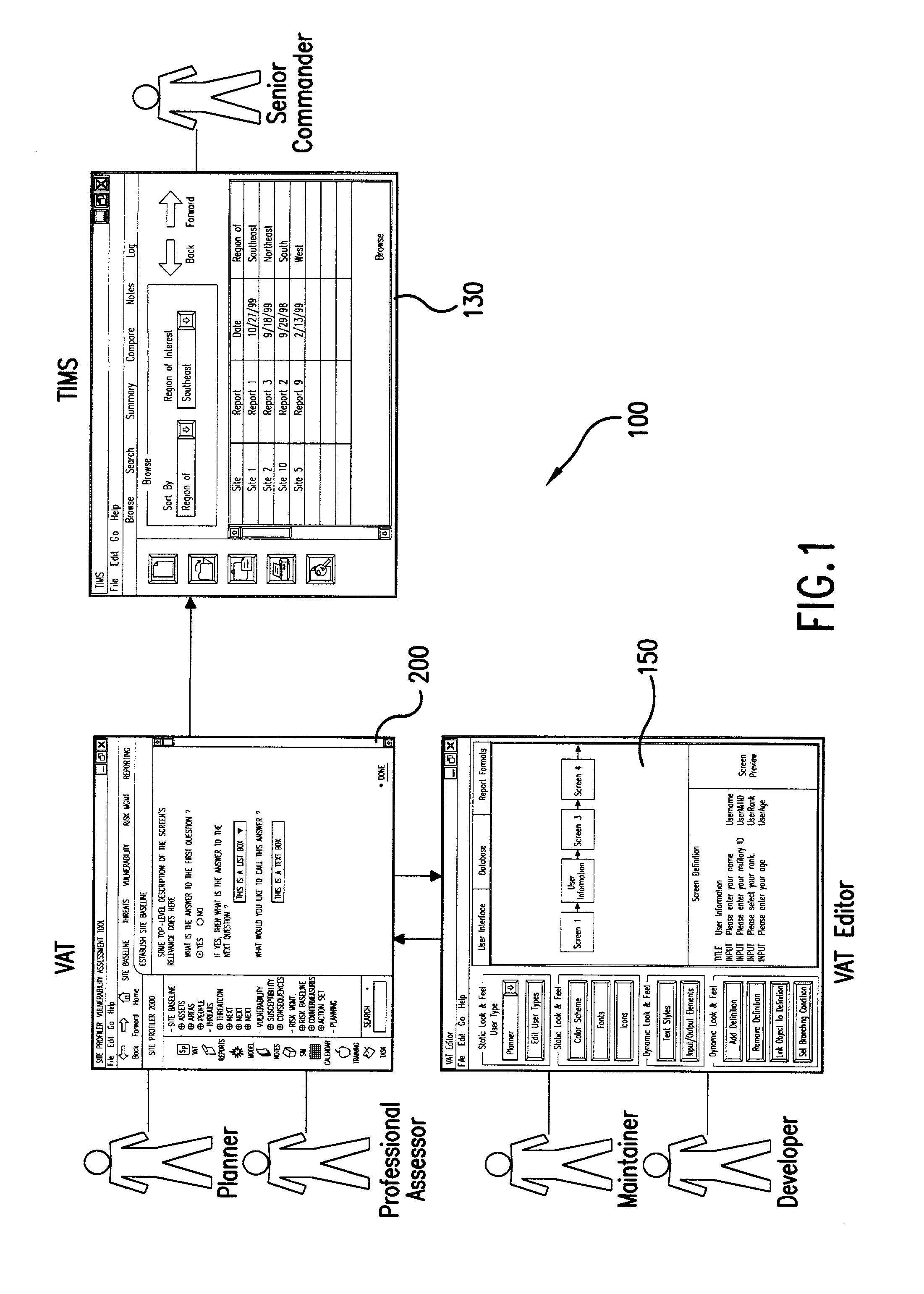

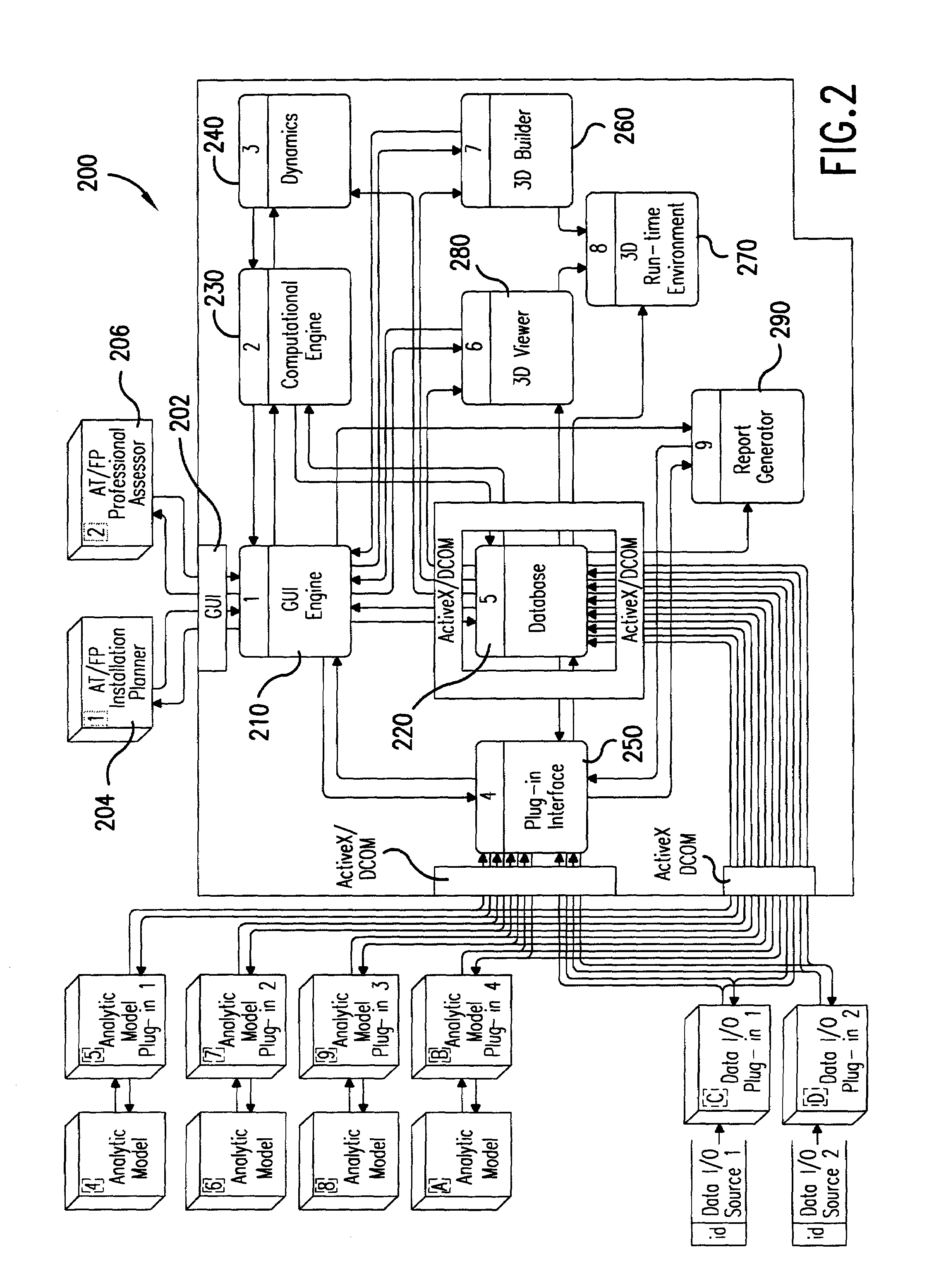

Method and apparatus for risk management

Inactive | US7130779B2 | Quickly and easily build; Determine effectiveness; Hand manipulated computer devices; Finance; IT risk management; Persistent object

An integrated risk management tool includes a persistent object database to store information about actors (individuals and / or groups), physical surroundings, historical events and other information. The risk management tool also includes a decision support system that uses data objects from the database and advanced decision theory techniques, such as Bayesian Networks, to infer the relative risk of an undesirable event. As part of the relative risk calculation, the tool uses a simulation and gaming environment in which artificially intelligent actors interact with the environment to determine susceptibility to the undesired event. Preferred embodiments of the tool also include an open “plug-in” architecture that allows the tool to interface with existing consequence calculators. The tool also provides facilities for presenting data in a user-friendly manner as well as report generation facilities.

Owner:DIGITAL SANDBOX
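
To make the Bayesian-network inference concrete, here is a toy sketch: a three-node network in which an undesirable event depends on whether a threat is present and whether a site is vulnerable, with the event probability computed by exact enumeration. The network structure and every probability are invented for illustration; the patent does not specify them.

```python
from itertools import product

P_THREAT = {True: 0.1, False: 0.9}  # P(threat present), hypothetical
P_VULN = {True: 0.3, False: 0.7}    # P(site vulnerable), hypothetical
P_EVENT = {                         # P(event | threat, vulnerable), hypothetical
    (True, True): 0.8, (True, False): 0.2,
    (False, True): 0.05, (False, False): 0.01,
}


def p_event(p_threat=P_THREAT, p_vuln=P_VULN, cpt=P_EVENT) -> float:
    """Marginal P(event), summing over all threat/vulnerability combinations."""
    return sum(
        p_threat[t] * p_vuln[v] * cpt[(t, v)]
        for t, v in product([True, False], repeat=2)
    )


baseline = p_event()
hardened = p_event(p_vuln={True: 0.05, False: 0.95})  # e.g. after a mitigation
print(f"baseline={baseline:.4f} hardened={hardened:.4f} "
      f"relative risk={hardened / baseline:.2f}")
# baseline=0.0578 hardened=0.0338 relative risk=0.58
```

Exact enumeration is only practical for tiny networks; larger models would use a dedicated inference library.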

Transparent multi-mode PAM interface

Active | US7308058B2 | Amplitude-modulated carrier systems; Amplitude-modulated pulse demodulation; Data rate; Engineering

Provided are a method and apparatus for high-speed, multi-mode PAM symbol transmission. A multi-mode PAM output driver drives one or more symbols, the number of levels used in the PAM modulation of the one or more symbols depending on the state of a PAM mode signal. Additionally, the one or more symbols are driven at a symbol rate, the symbol rate selected in accordance with the PAM mode signal so that a data rate of the driven symbols is constant with respect to changes in the state of the PAM mode signal. Further provided are methods for determining the optimal number of PAM levels for symbol transmission and reception in a given physical environment.

Owner:RAMBUS INC

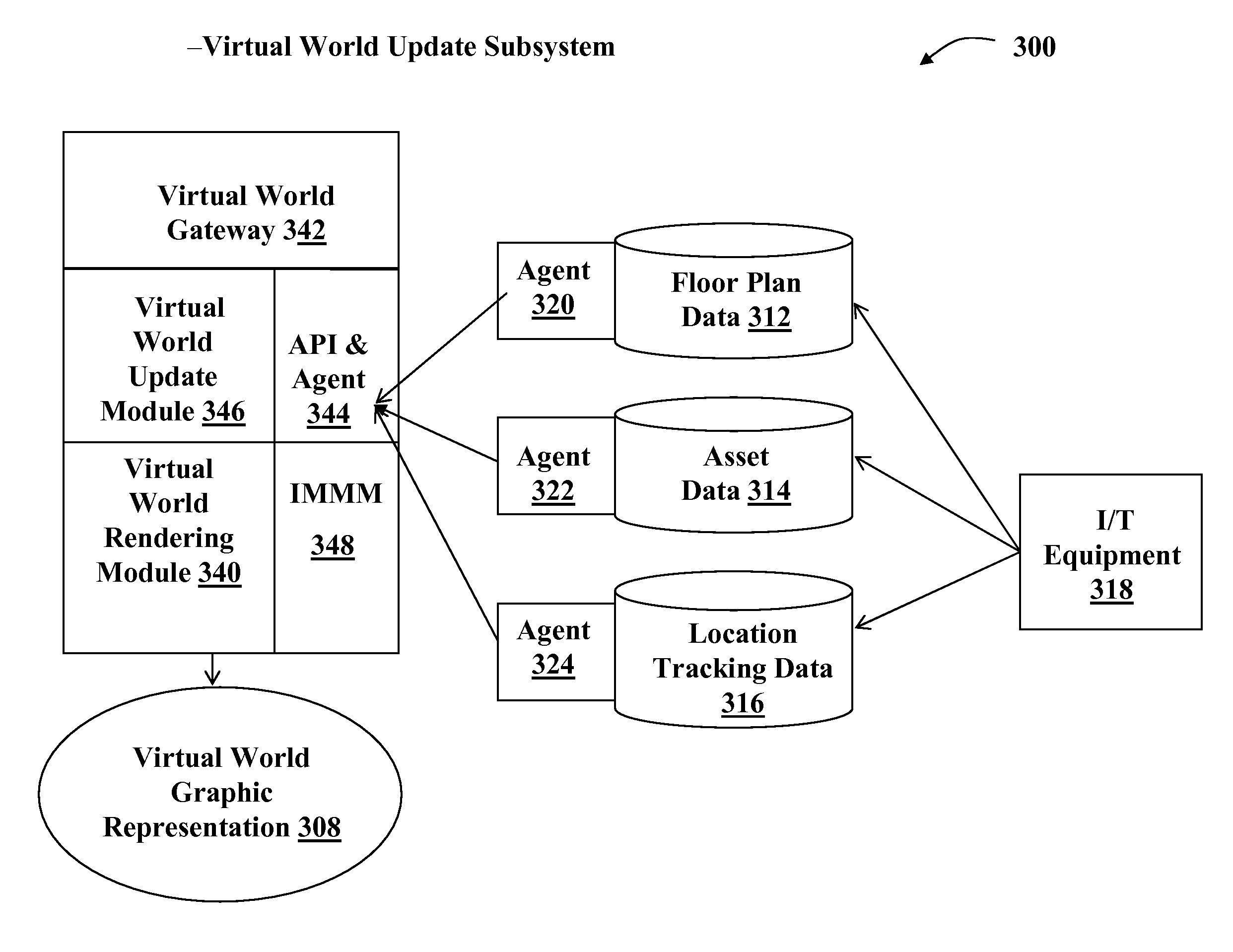

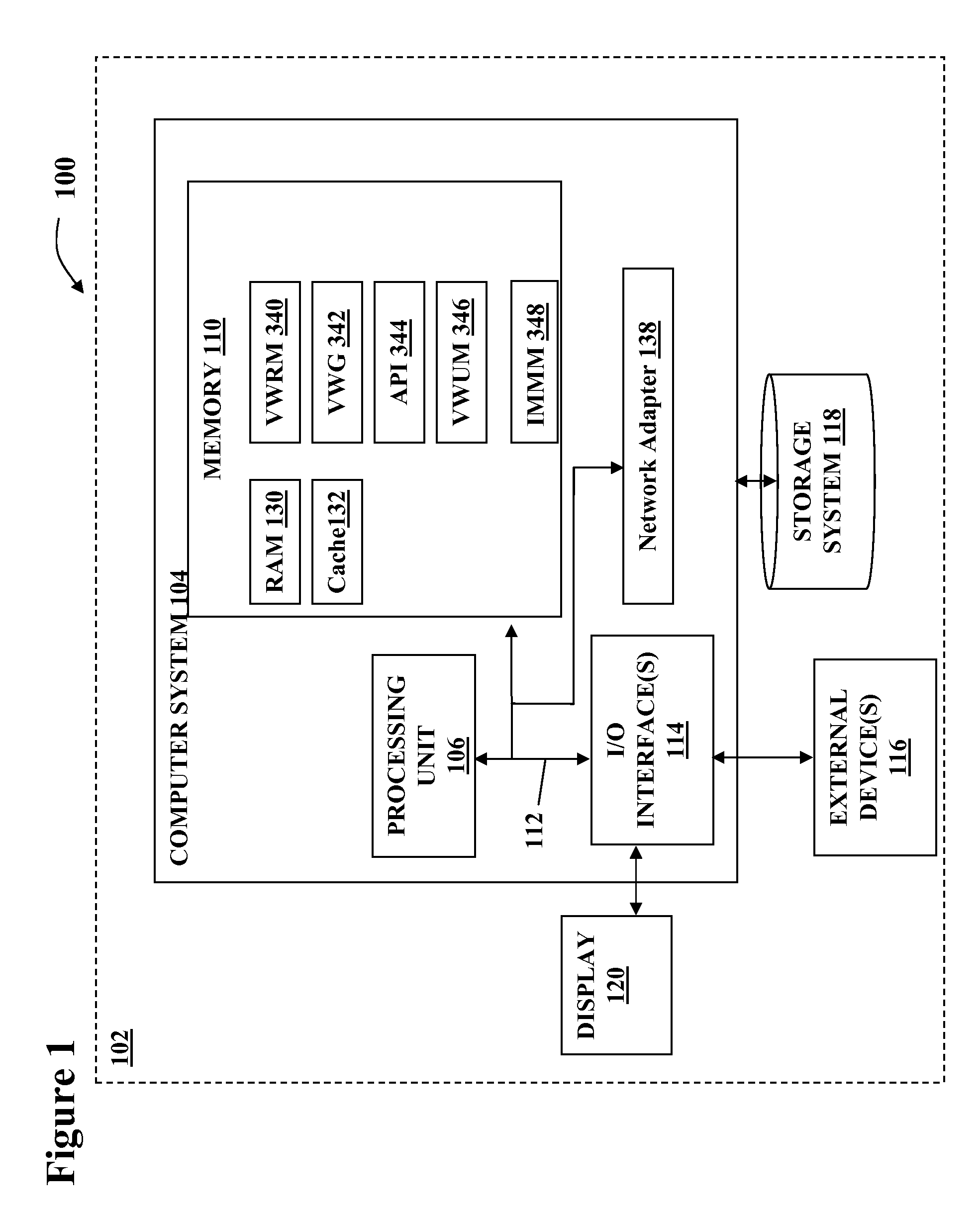

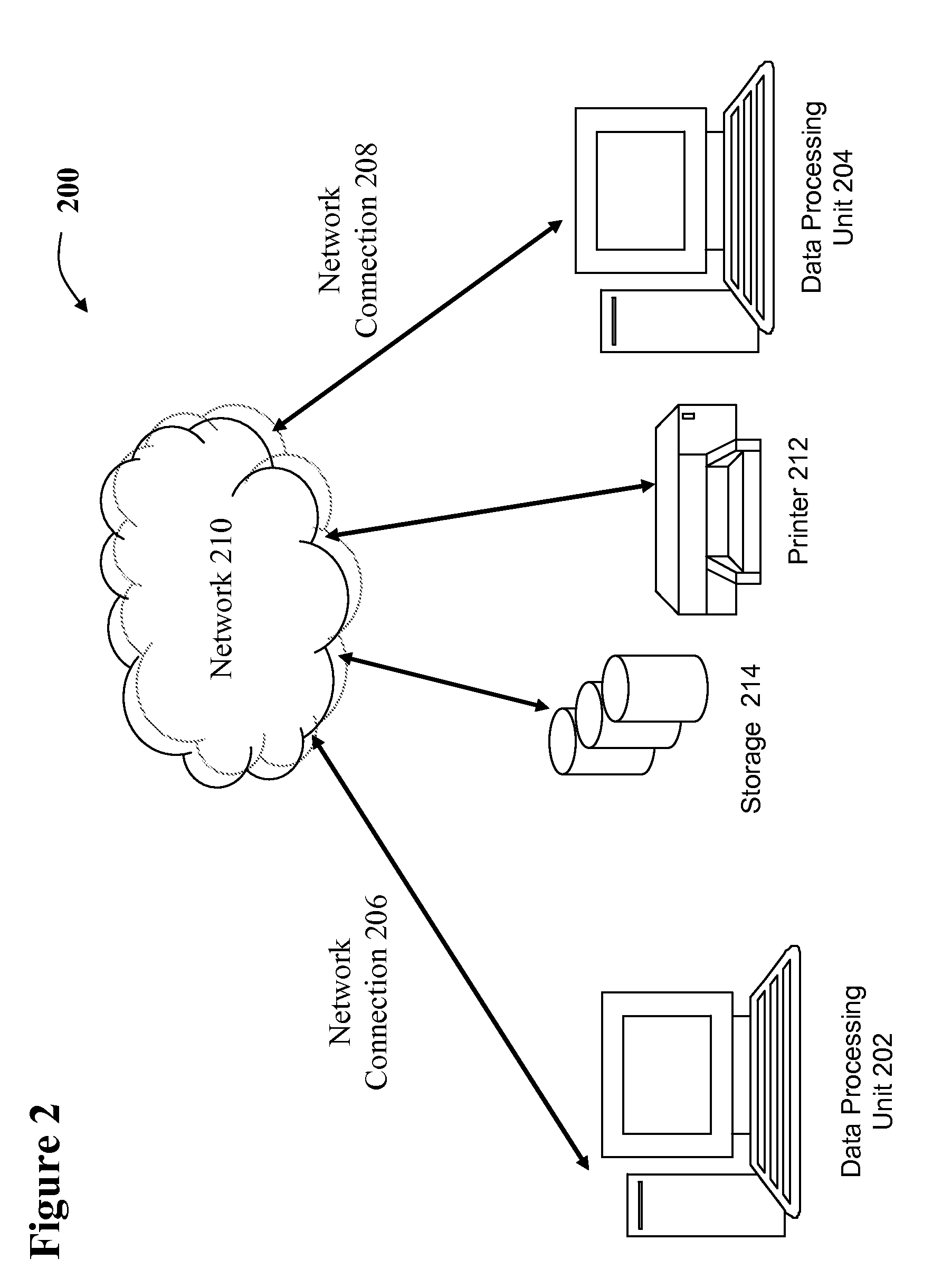

System and method for managing virtual world environments based upon existing physical environments

In general, the present invention provides a system and method for creating, managing and utilizing virtual worlds for enhanced management of an Information Technology (IT) environment. Two dimensional (2D) and three dimensional (3D) virtual world renditions are automatically created to replicate the associated real-life IT environment. Such virtual environments can then be used to familiarize staff with remote locations and to securely provide virtual data center tours to others. The virtual environments are managed through an information monitor and management module that generates a work order sent to the physical location for reconfiguration of the real-life environment.

Owner:IBM CORP

Method and system for designing or deploying a communications network which allows simultaneous selection of multiple components

Inactive | US7096173B1 | Simply and quickly prepare; Optimize timing; Receivers monitoring; Analogue computers for electric apparatus; Interconnectivity; Physical context

A method and apparatus for designing or deploying a communications network is disclosed. A computerized model which represents a physical environment in which a communications network is or will be installed is provided. System components that may be used as part of the physical network, and that have electrical characteristics or frequency-specific information, are identified. At least one component kit composed of at least two system components, whose fault-free interconnectivity is assured, is also identified. Using the computerized model, at least one performance characteristic for a part of the communications network is determined. At least one component kit in a part of the communications network is represented together with a representation of a part of the physical environment.

Owner:EXTREME NETWORKS INC
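
The fault check on component kits can be pictured as two tests: every link joins matching connectors, and the operating band lies inside each component's rated frequency range. The following is a minimal sketch under those assumptions; the `Component` fields, connector names, and frequency ranges are hypothetical and much simpler than the attributes the patent describes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    in_connector: str    # connector type on the input side (hypothetical attribute)
    out_connector: str   # connector type on the output side
    f_min_mhz: float
    f_max_mhz: float


def kit_faults(kit: list[Component], links: list[tuple[str, str]],
               band_mhz: tuple[float, float]) -> list[str]:
    """Fault messages for a kit; an empty list means no fault was found."""
    faults = []
    lo, hi = band_mhz
    for c in kit:
        if not (c.f_min_mhz <= lo and hi <= c.f_max_mhz):
            faults.append(f"{c.name}: band {lo}-{hi} MHz outside rating "
                          f"{c.f_min_mhz}-{c.f_max_mhz} MHz")
    parts = {c.name: c for c in kit}
    for src, dst in links:  # signal flows from src's output into dst's input
        if parts[src].out_connector != parts[dst].in_connector:
            faults.append(f"{src} -> {dst}: {parts[src].out_connector} does not mate "
                          f"with {parts[dst].in_connector}")
    return faults


kit = [
    Component("access point", "-", "SMA", 2400, 2500),
    Component("jumper cable", "SMA", "N", 0, 6000),
    Component("antenna", "N", "-", 700, 2700),
]
links = [("access point", "jumper cable"), ("jumper cable", "antenna")]
print(kit_faults(kit, links, (2400, 2483.5)))     # []
print(len(kit_faults(kit, links, (5150, 5850))))  # 2
```

Once a kit passes these checks it can be reused as a unit, which is the convenience the component-kit idea is meant to provide.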

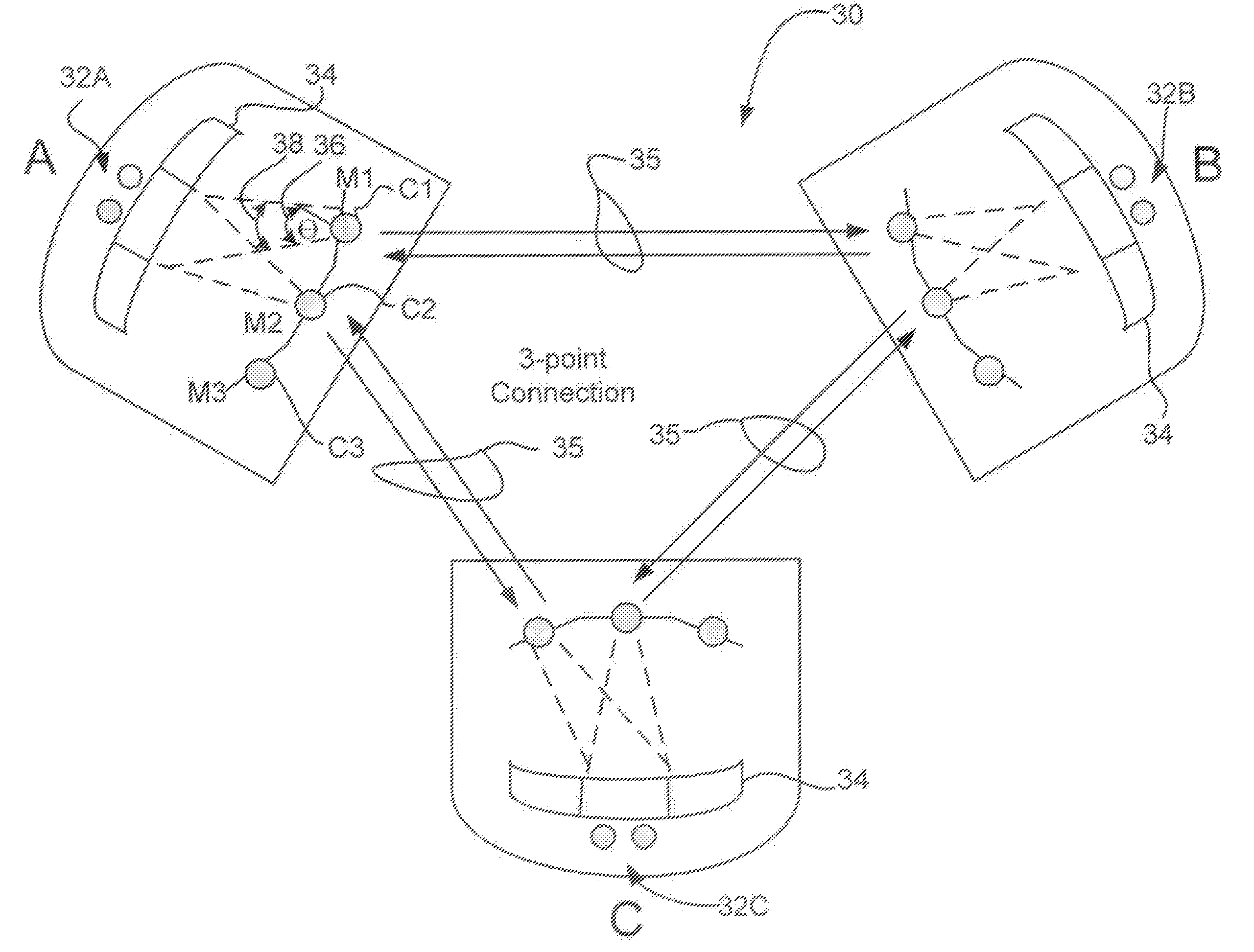

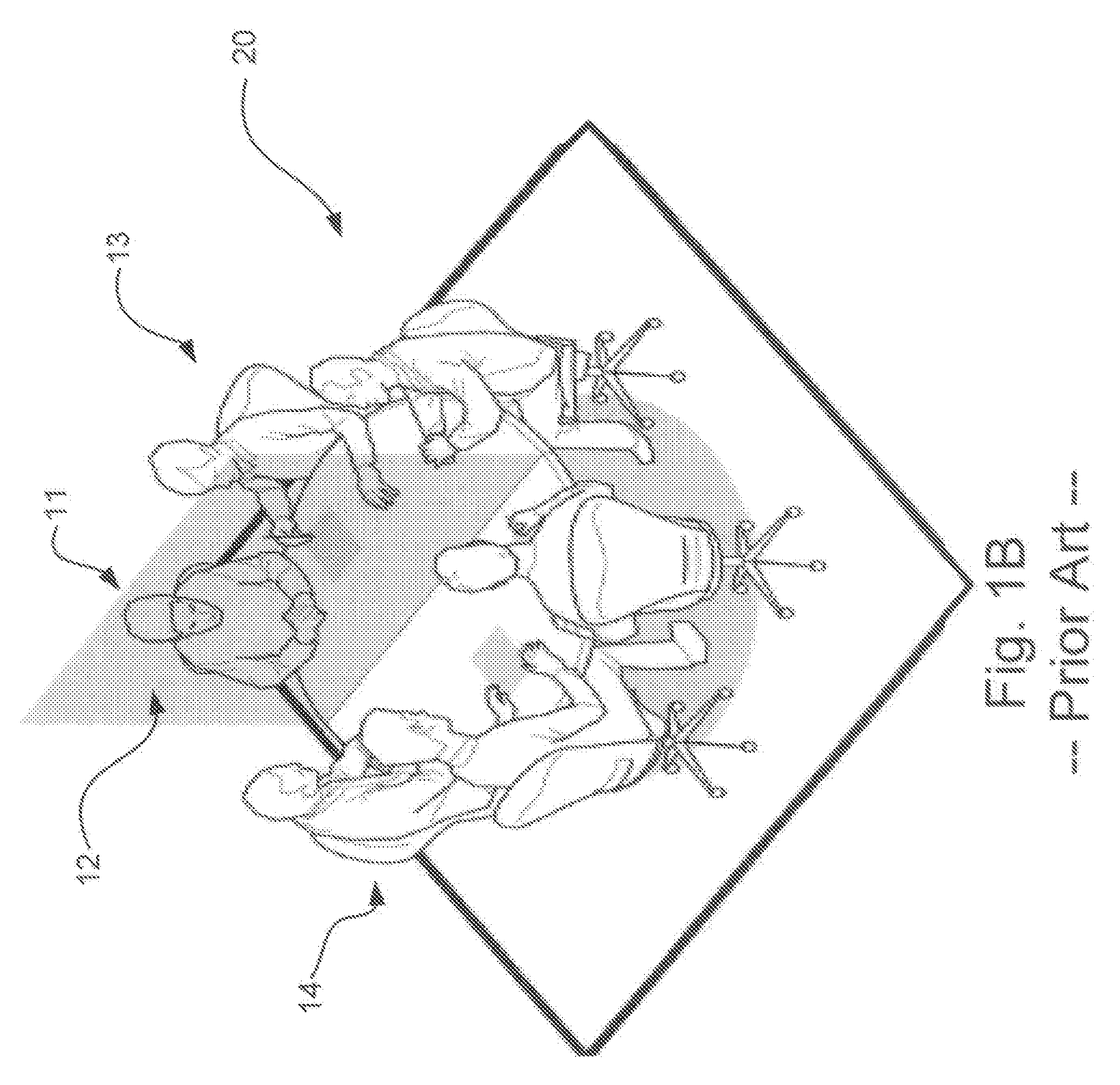

Blended Space For Aligning Video Streams

Inactive | US20070279483A1 | Television conference systems; Data switching by path configuration; Environment of Albania; Blended spaces

A method is described for aligning video streams and positioning cameras in a collaboration event to create a blended space. A local physical environment of one set of attendees is combined with respective apparent spaces of other sets of attendees that are transmitted from two or more remote environments. A geometrically consistent shared space is created that maintains natural collaboration cues of eye contact and directional awareness. The remote environments are represented in the local physical environment in a fashion that is geometrically consistent with the local physical environment. The local physical environment extends naturally and consistently with the way the remote environments may be similarly extended with their own blended spaces. Therefore, an apparent shared space that is sufficiently similar for all sets of attendees is presented in both the local and remote physical environments.

Owner:HEWLETT PACKARD DEV CO LP

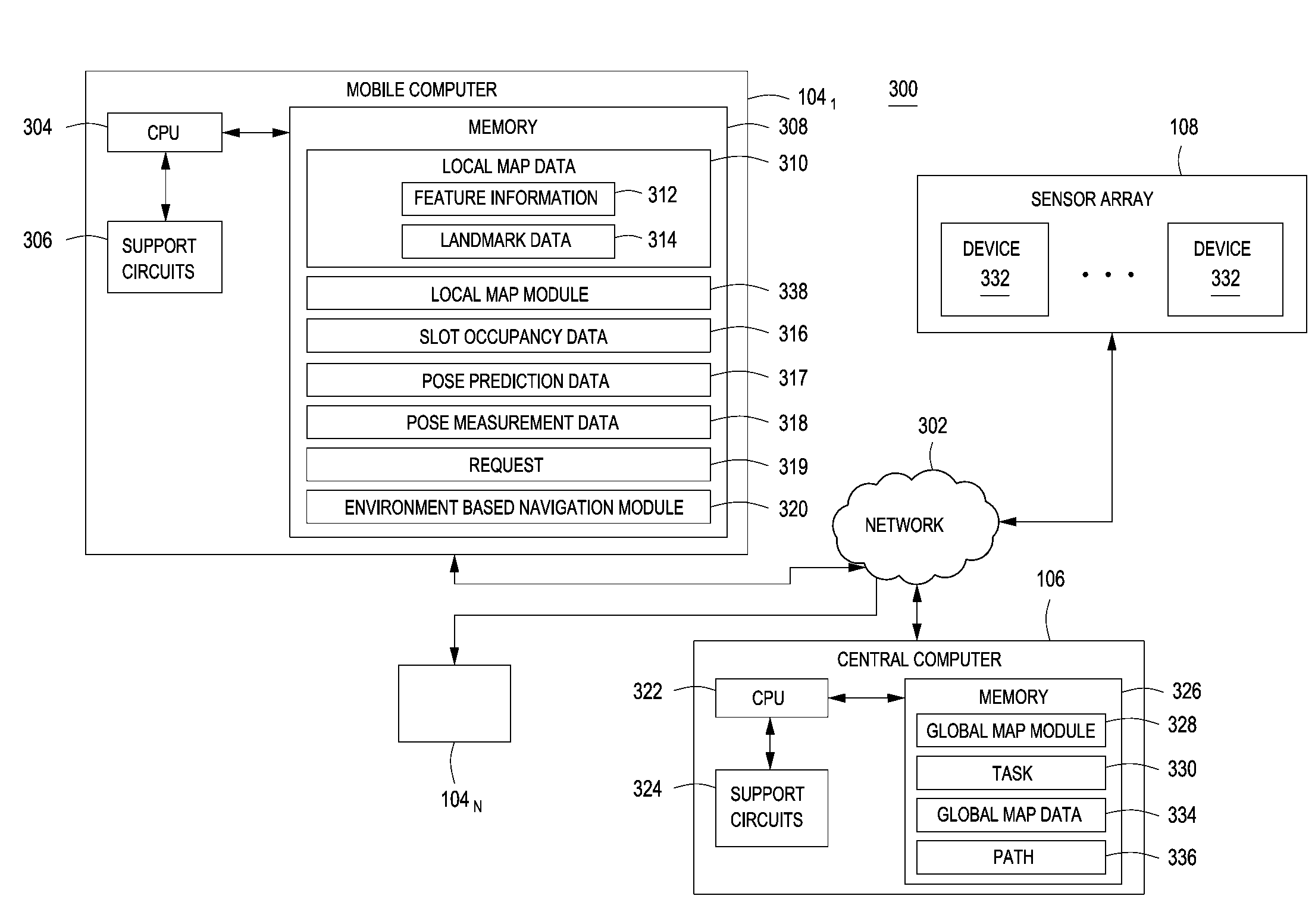

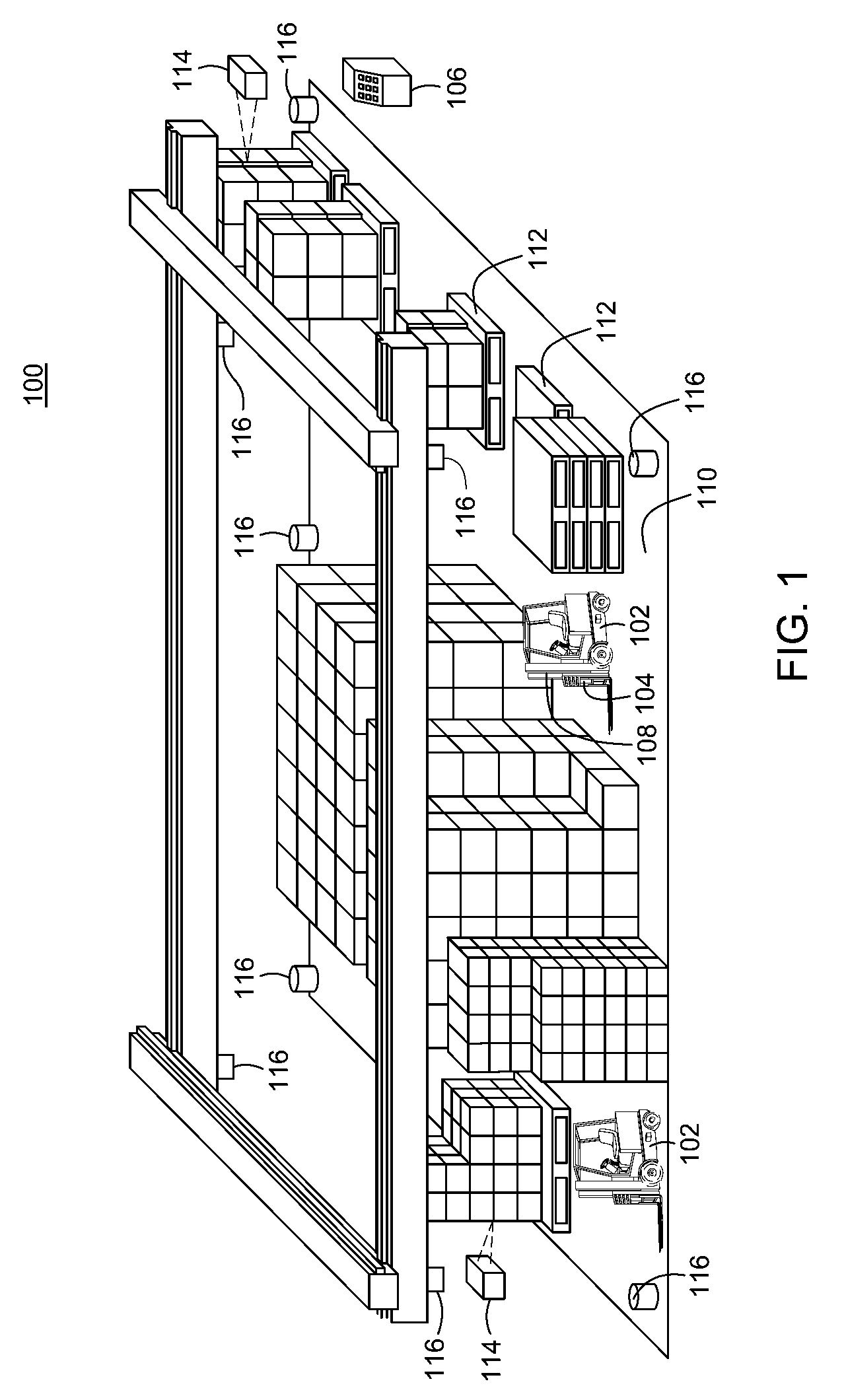

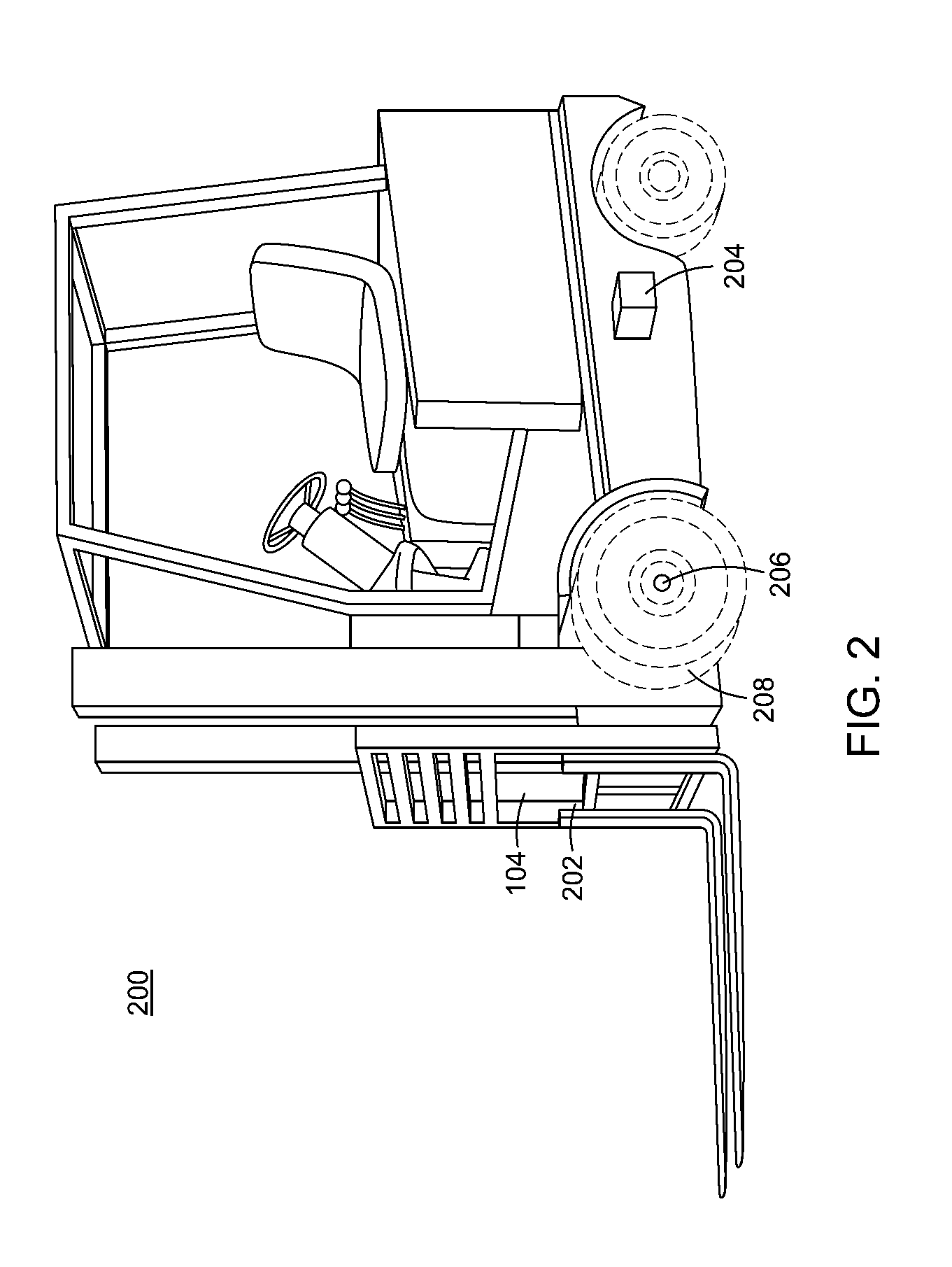

Method and apparatus for sharing map data associated with automated industrial vehicles

Active | US20120323431A1 | Instruments for road network navigation; Lifting devices; Computer science; Physical context

A method and apparatus for sharing map data between industrial vehicles in a physical environment is described. In one embodiment, the method includes processing local map data associated with a plurality of industrial vehicles, wherein the local map data comprises feature information generated by the plurality of industrial vehicles regarding features observed by industrial vehicles in the plurality of vehicles; combining the feature information associated with local map data to generate global map data for the physical environment; and navigating an industrial vehicle of the plurality of industrial vehicles using at least a portion of the global map data.

Owner:CROWN EQUIP CORP
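
The step of combining feature information from several vehicles into global map data can be sketched as averaging repeated observations of the same feature. This is a deliberately simple stand-in, not the claimed method: it assumes every local map is already expressed in one shared site coordinate frame and that features are matched by a hypothetical ID; a real system would also need data association and uncertainty weighting.

```python
from collections import defaultdict

Point = tuple[float, float]


def merge_local_maps(local_maps: list[dict[str, Point]]) -> dict[str, Point]:
    """Global map: each feature's position is the mean of all vehicles' observations."""
    # Running totals per feature: [x_sum, y_sum, count]
    sums: dict[str, list[float]] = defaultdict(lambda: [0.0, 0.0, 0.0])
    for local in local_maps:
        for feature_id, (x, y) in local.items():
            acc = sums[feature_id]
            acc[0] += x
            acc[1] += y
            acc[2] += 1
    return {fid: (sx / n, sy / n) for fid, (sx, sy, n) in sums.items()}


truck_a = {"rack-12": (1.0, 2.0)}
truck_b = {"rack-12": (3.0, 4.0), "pillar-3": (10.0, 0.0)}
print(merge_local_maps([truck_a, truck_b]))
# {'rack-12': (2.0, 3.0), 'pillar-3': (10.0, 0.0)}
```

Any vehicle can then localize against features it has never seen itself, such as `pillar-3` for `truck_a`.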

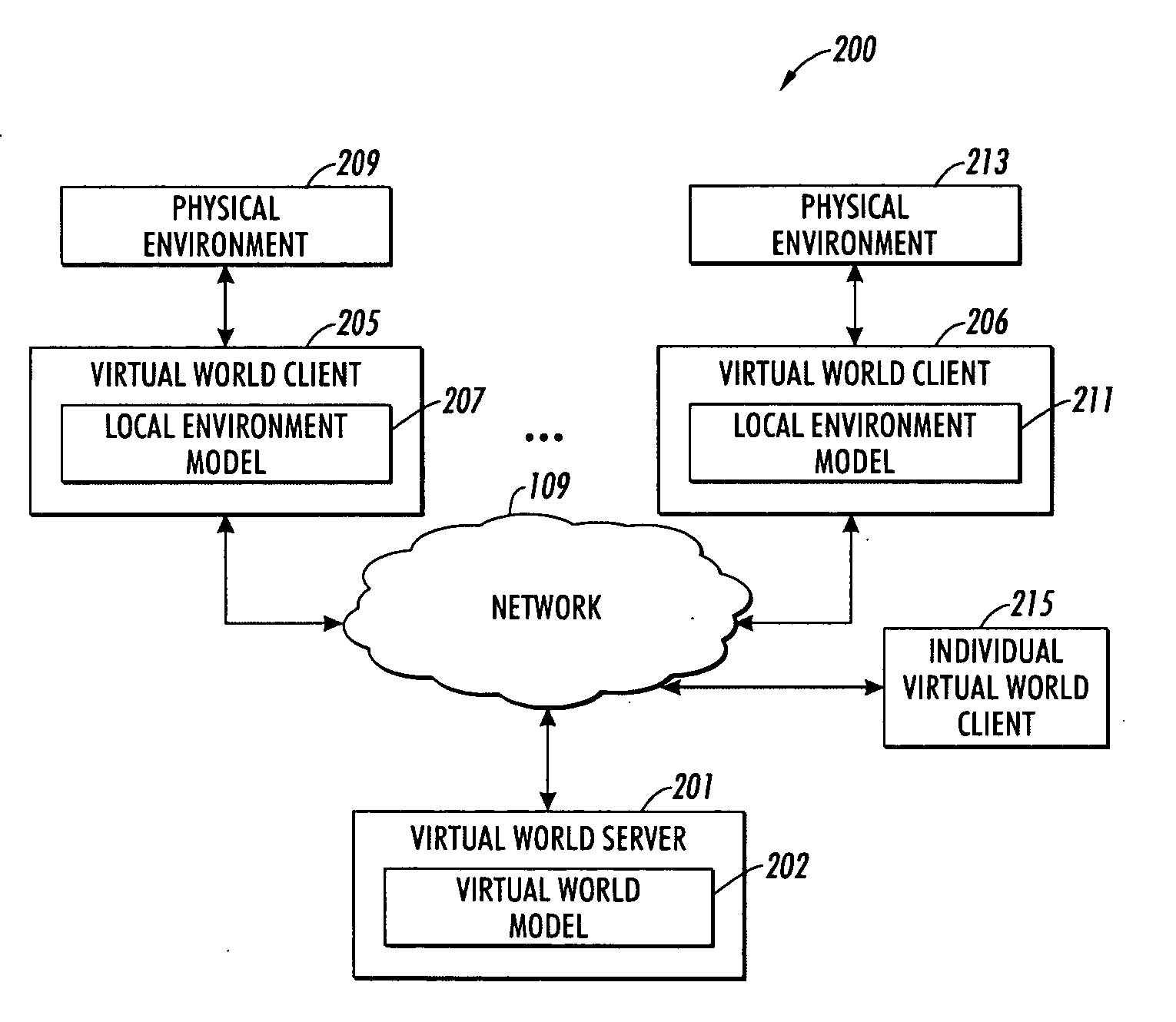

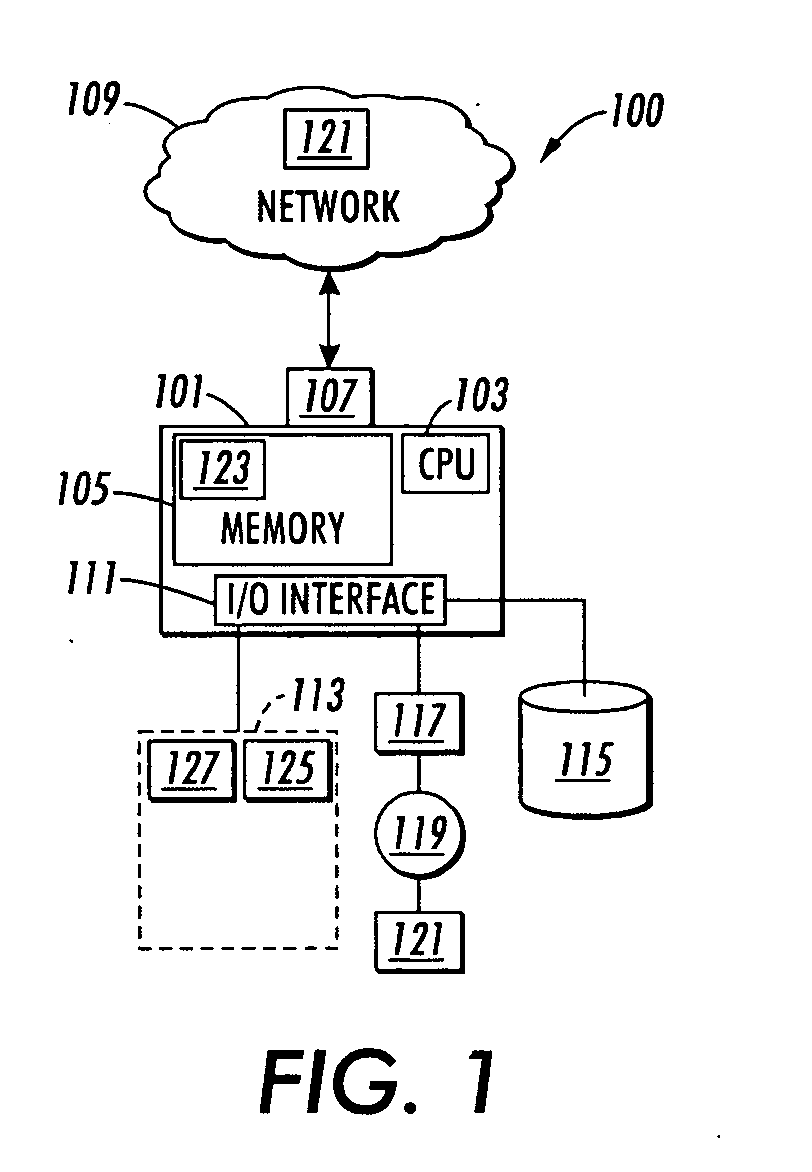

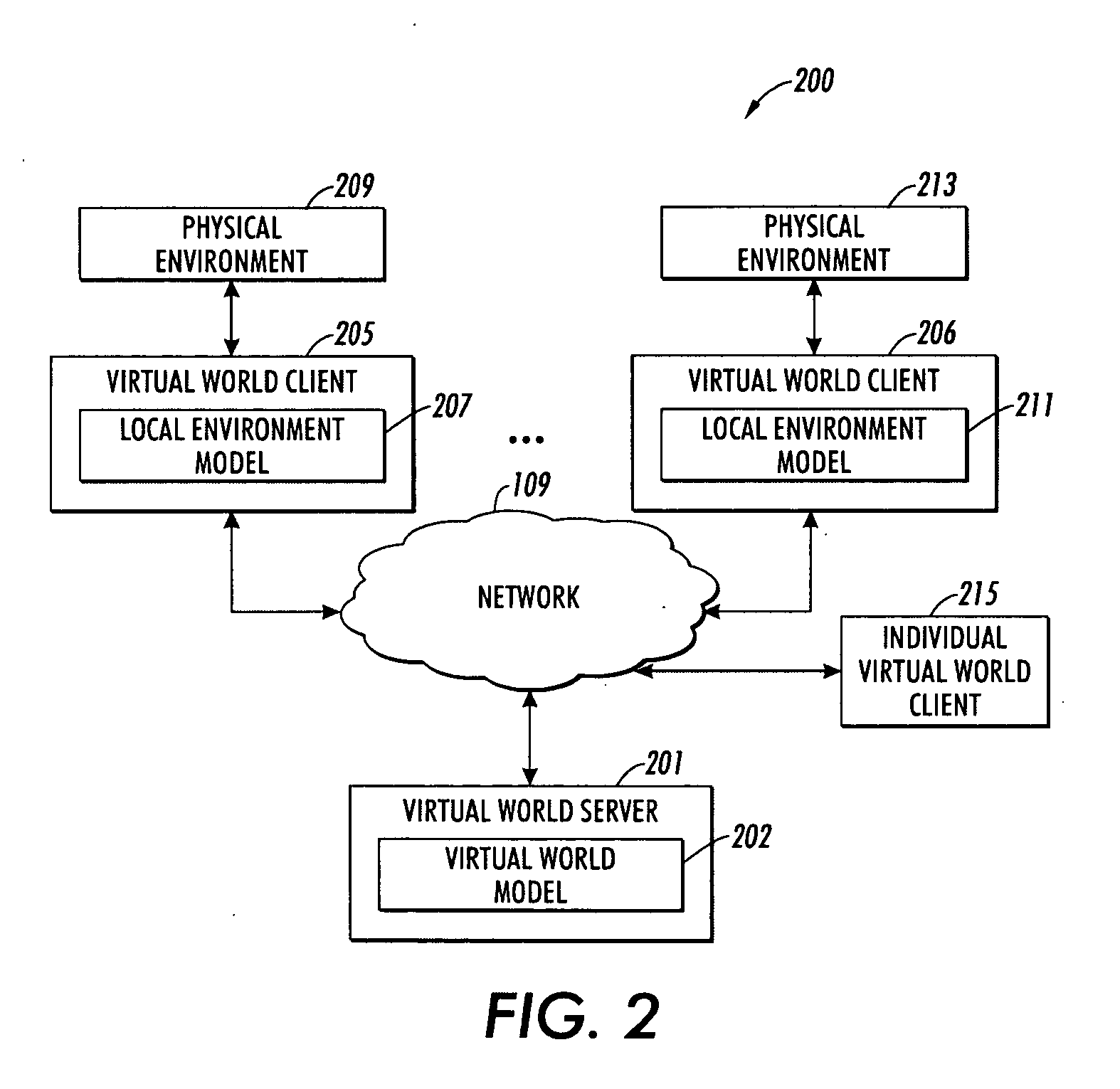

Physical-virtual environment interface

Active | US20100125799A1 | Multiple digital computer combinations; Input/output processes for data processing; Virtual world; Client-side

Apparatus, methods, and computer-program products are disclosed for automatically interfacing a first physical environment and a second physical environment to a virtual world model where some avatar controller piloting commands are automatically issued by a virtual world client to a virtual world server responsive to the focus of the avatar controller.

Owner:XEROX CORP

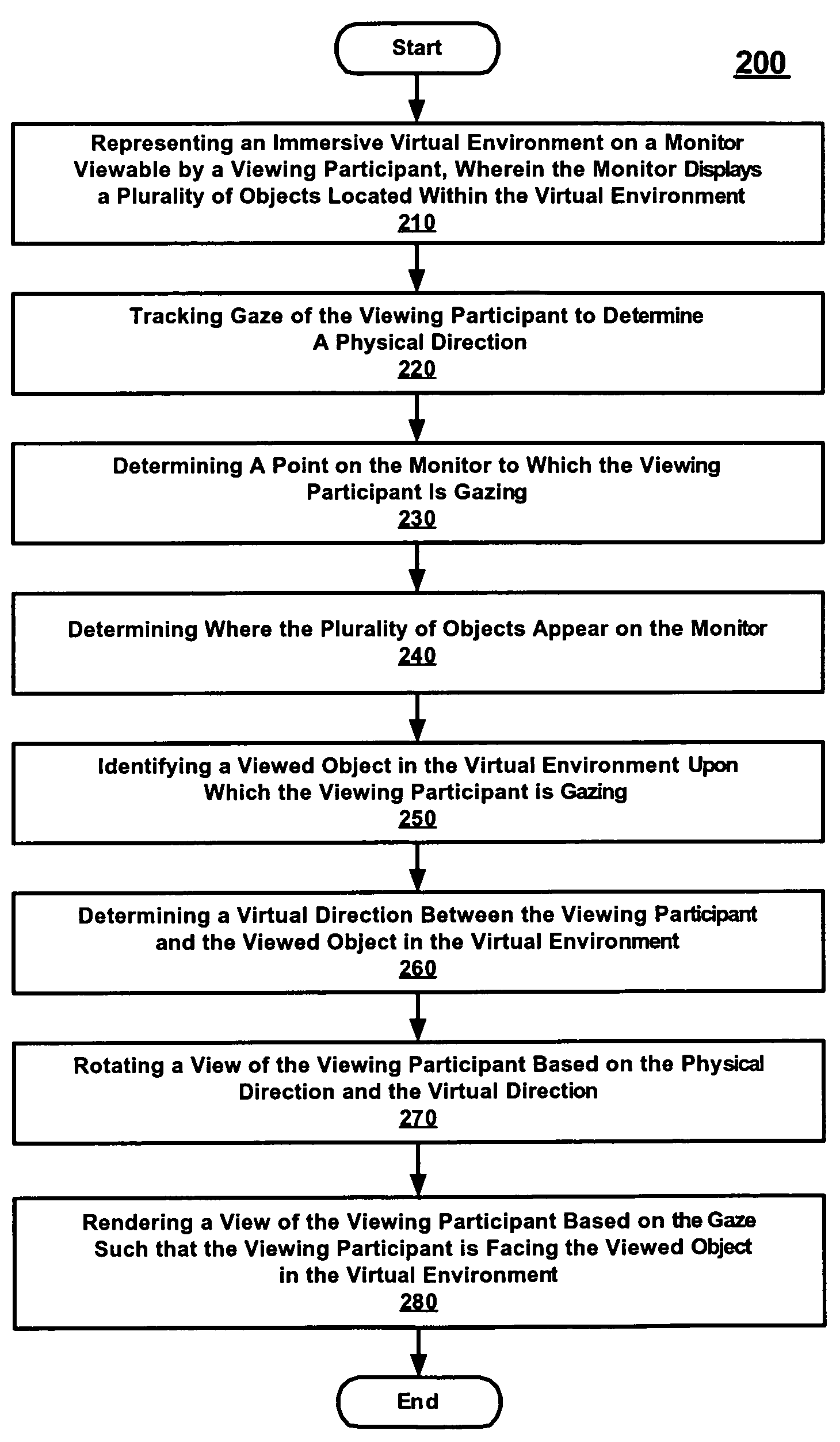

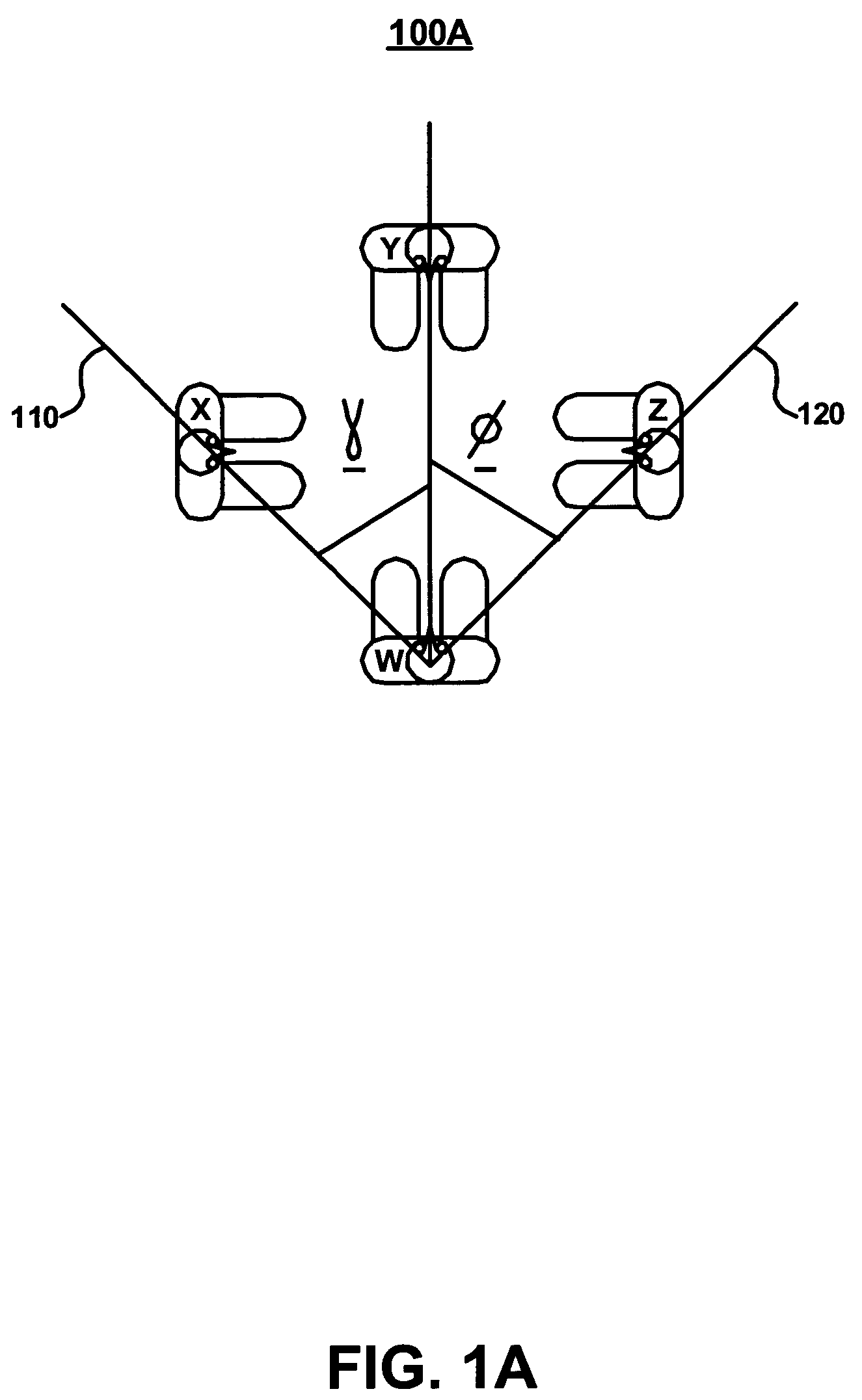

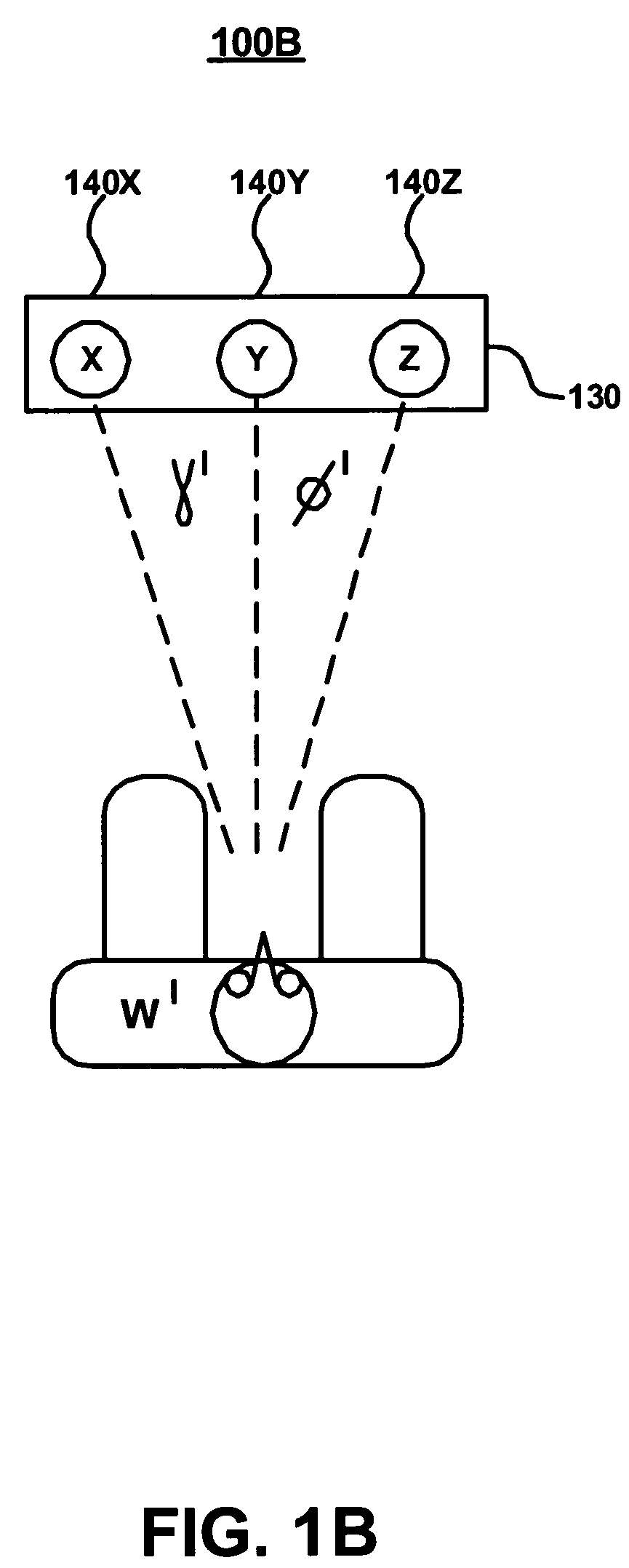

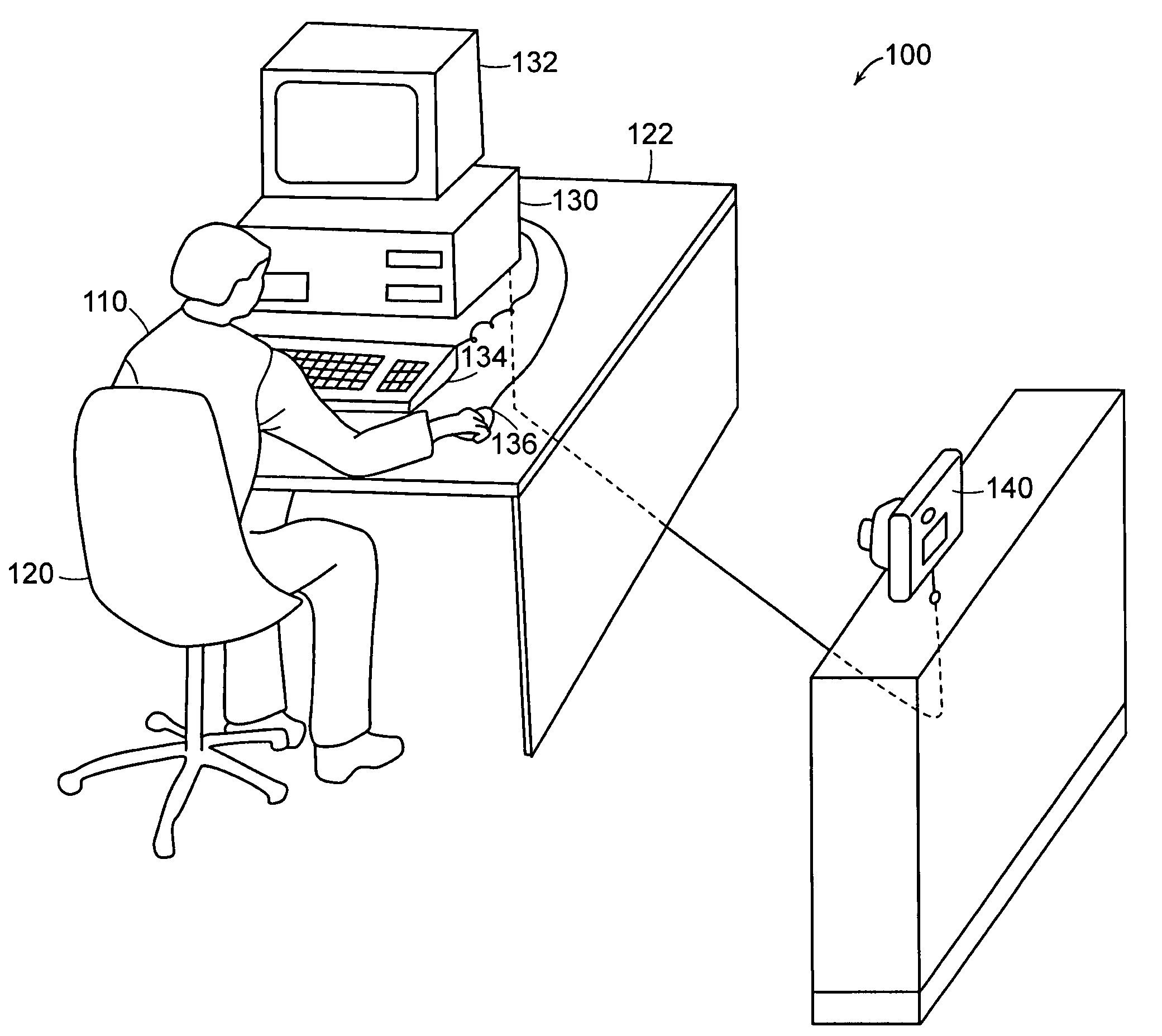

Method and system for communicating gaze in an immersive virtual environment

Inactive | US7532230B2 | Cathode-ray tube indicators; Two-way working systems; Human–computer interaction; Immersive virtual environment

A method of communicating gaze. Specifically, one embodiment of the present invention discloses a method of communicating gaze in an immersive virtual environment. The method begins by representing an immersive virtual environment on a monitor that is viewable by a viewing participant. The monitor displays a plurality of objects in the virtual environment. The physical gaze of the viewing participant is tracked to determine a physical direction of the physical gaze within a physical environment including the viewing participant. Thereafter, a viewed object is determined at which the viewing participant is gazing. Then, a virtual direction is determined between the viewing participant and the viewed object in the immersive virtual environment. A model of the viewing participant is rotated based on the physical and virtual directions to render a view of the viewing participant such that the viewing participant is facing the viewed object in the immersive virtual environment.

Owner:HEWLETT PACKARD DEV CO LP
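
The final rotation step can be illustrated in two dimensions: compute the virtual bearing from the participant's avatar to the viewed object, subtract the participant's physical gaze direction, and rotate the model by the difference. A minimal sketch, assuming yaw-only rotation, angles in degrees measured counter-clockwise, and a physical gaze angle already supplied by a hypothetical gaze tracker.

```python
import math


def bearing_deg(src: tuple[float, float], dst: tuple[float, float]) -> float:
    """Counter-clockwise angle from the +x axis to the vector src -> dst."""
    return math.degrees(math.atan2(dst[1] - src[1], dst[0] - src[0]))


def wrap_deg(angle: float) -> float:
    """Wrap an angle into [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def model_yaw(physical_gaze_deg: float, avatar_pos: tuple[float, float],
              object_pos: tuple[float, float]) -> float:
    """Yaw to apply to the participant's model so it faces the viewed object."""
    return wrap_deg(bearing_deg(avatar_pos, object_pos) - physical_gaze_deg)


# Participant looks straight ahead (0 deg); the viewed object sits to the avatar's left.
print(model_yaw(0.0, (0.0, 0.0), (0.0, 2.0)))  # 90.0
```

Wrapping the difference keeps the model turning the short way round instead of spinning nearly a full circle.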

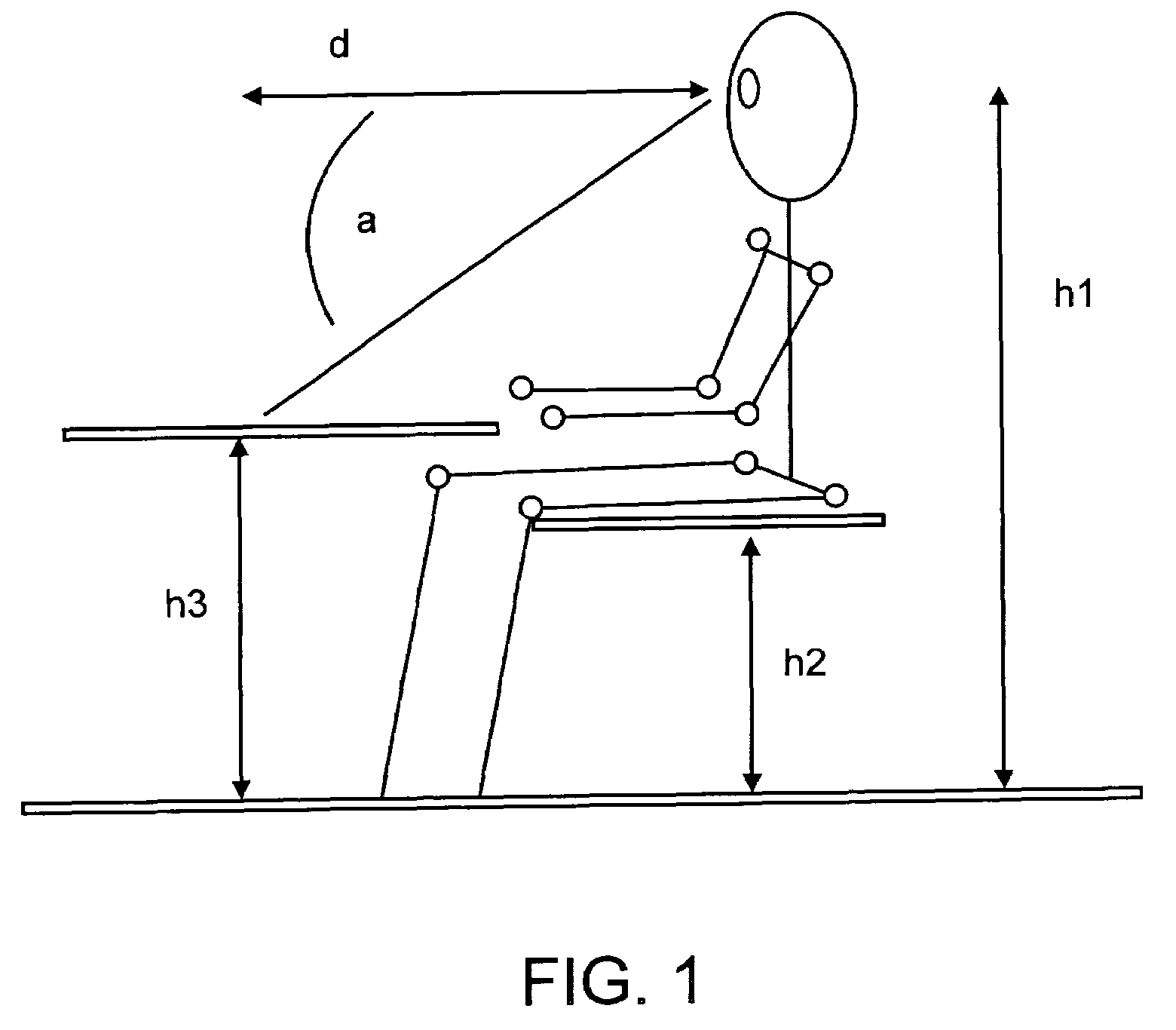

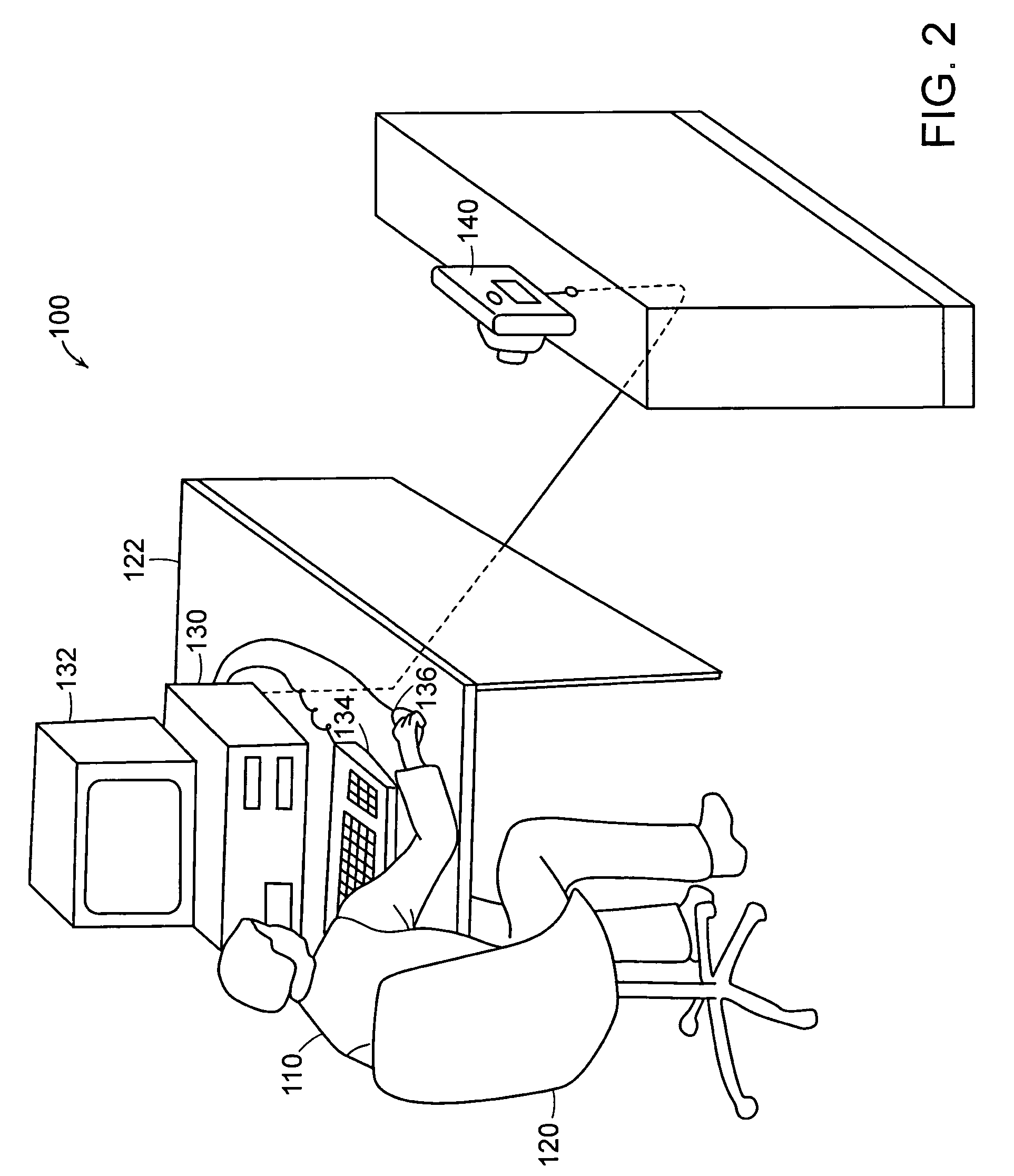

System and method for ergonomic tracking for individual physical exertion

Active | US7315249B2 | Low cost; Preventing various kinds of incapacitating trauma; Surgery; Diagnostic recording/measuring; Biomechanics; Computer module

A system and method are provided for tracking a user's posture. The system and method include an environmental module for determining the user's physical environment, a biomechanical module for determining at least a user's posture in the user's physical environment, and an output module for outputting to the user an indication of at least the user's posture relative to at least a target correct posture. The physical environment can include a computer workstation environment, a manufacturing environment, a gaming environment, and / or a keypadding environment.

Owner:LITTELL STEPHANIE
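
The output module's comparison of the user's posture against a target correct posture could be as simple as checking joint angles against target ranges. The joint names and ranges below are placeholders, not ergonomic guidance and not values from the patent; the sketch only shows the comparison.

```python
TARGET_RANGES_DEG = {  # hypothetical target ranges, for illustration only
    "neck_flexion": (0.0, 15.0),
    "elbow_angle": (90.0, 110.0),
    "trunk_lean": (-5.0, 10.0),
}


def posture_deviation(measured_deg: dict[str, float],
                      targets: dict[str, tuple[float, float]] = TARGET_RANGES_DEG
                      ) -> dict[str, float]:
    """Degrees outside each target range: 0 inside, positive above, negative below."""
    deviation = {}
    for joint, angle in measured_deg.items():
        lo, hi = targets[joint]
        if angle > hi:
            deviation[joint] = angle - hi
        elif angle < lo:
            deviation[joint] = angle - lo
        else:
            deviation[joint] = 0.0
    return deviation


print(posture_deviation({"neck_flexion": 25.0, "elbow_angle": 100.0, "trunk_lean": -8.0}))
# {'neck_flexion': 10.0, 'elbow_angle': 0.0, 'trunk_lean': -3.0}
```

Reporting a signed deviation rather than a pass/fail flag lets the output module tell the user which way to adjust.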

© 2025 PatSnap. All rights reserved.