52 results about how to "Reduce switching overhead": patented technology

Multithreaded computer system and multithread execution control method

Active | US20070266387A1 | Improve usability; Enhancing substantial availability; Energy efficient ICT; Digital computer details; Processor element; Processor register

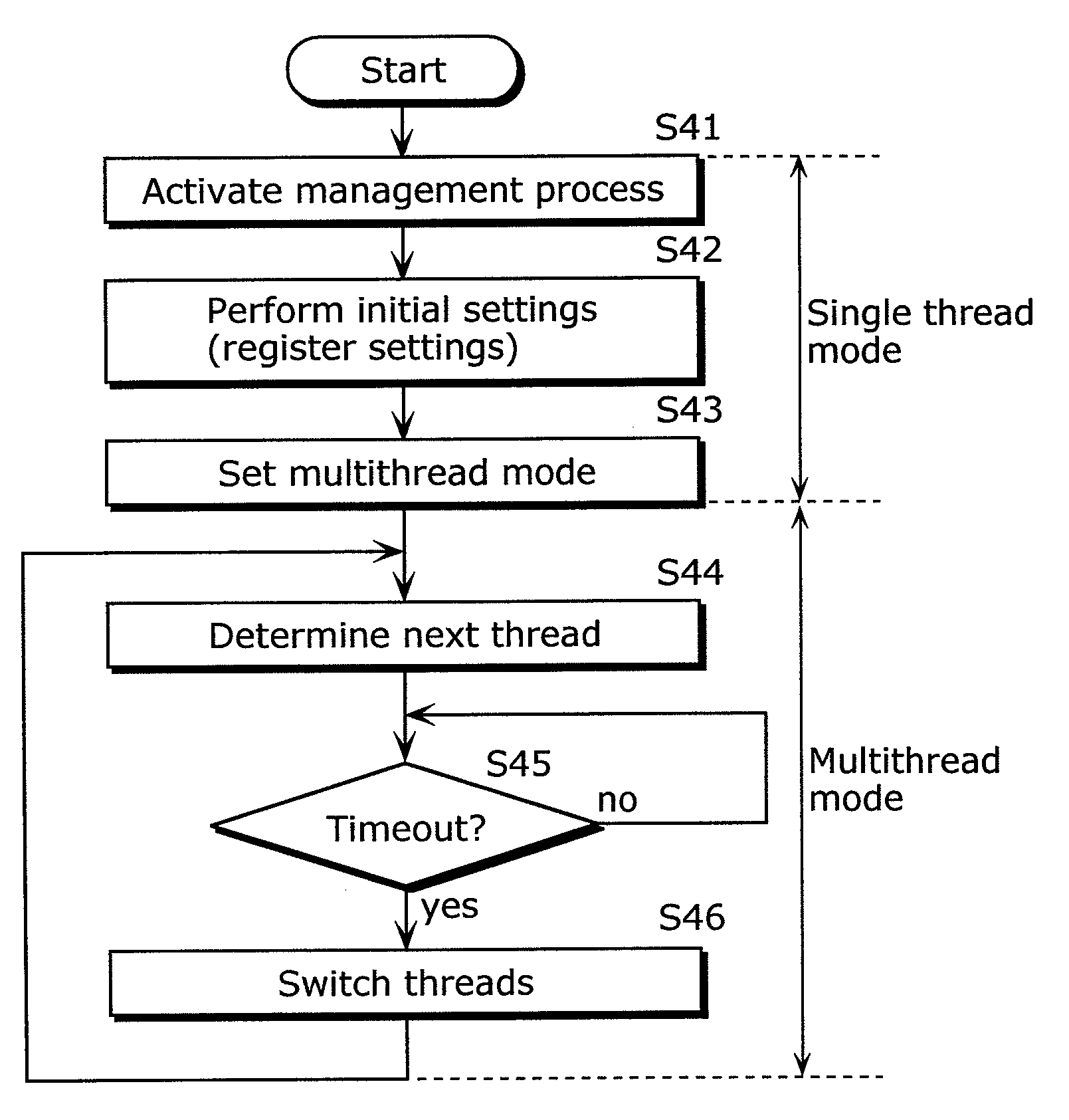

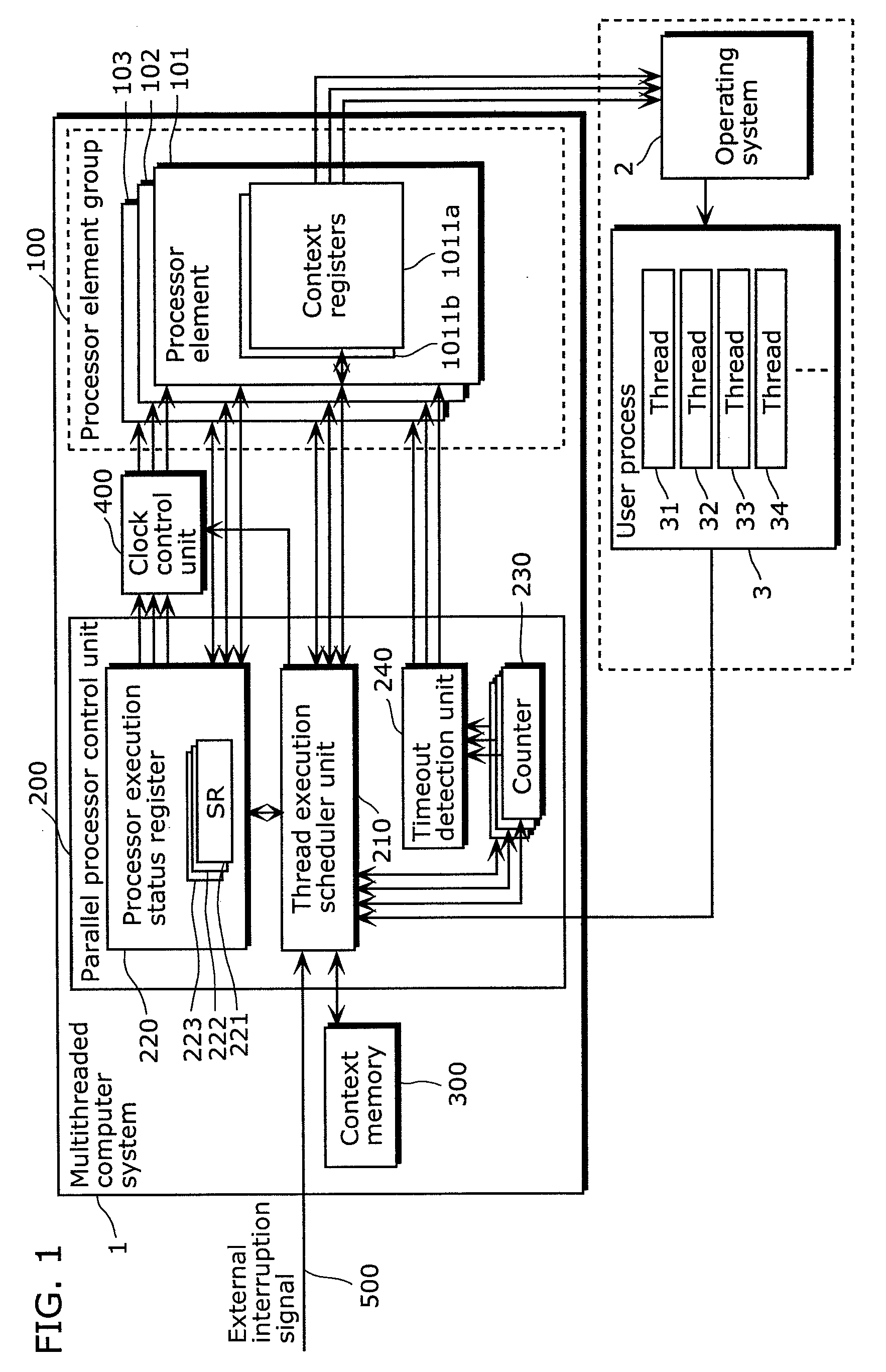

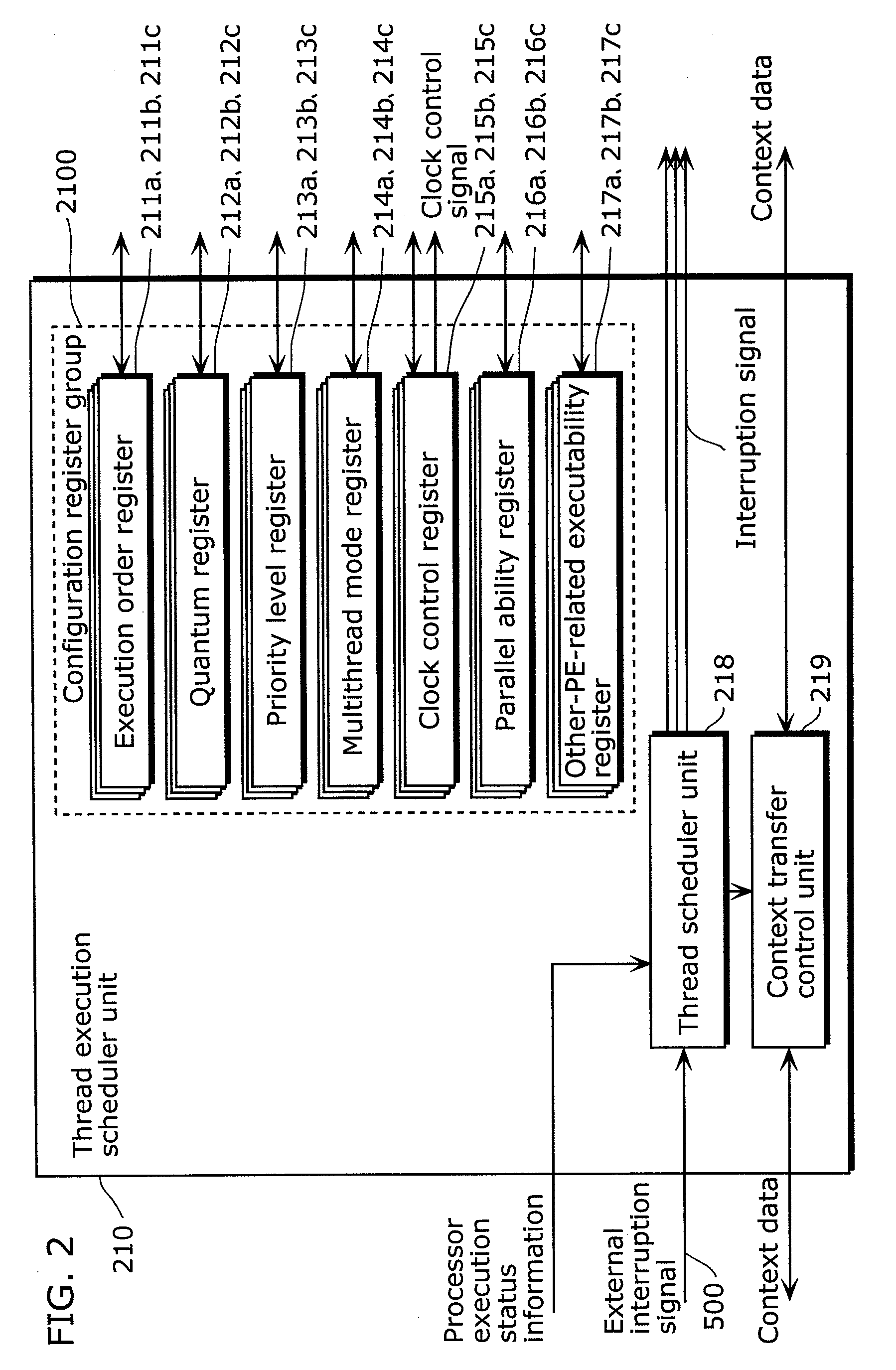

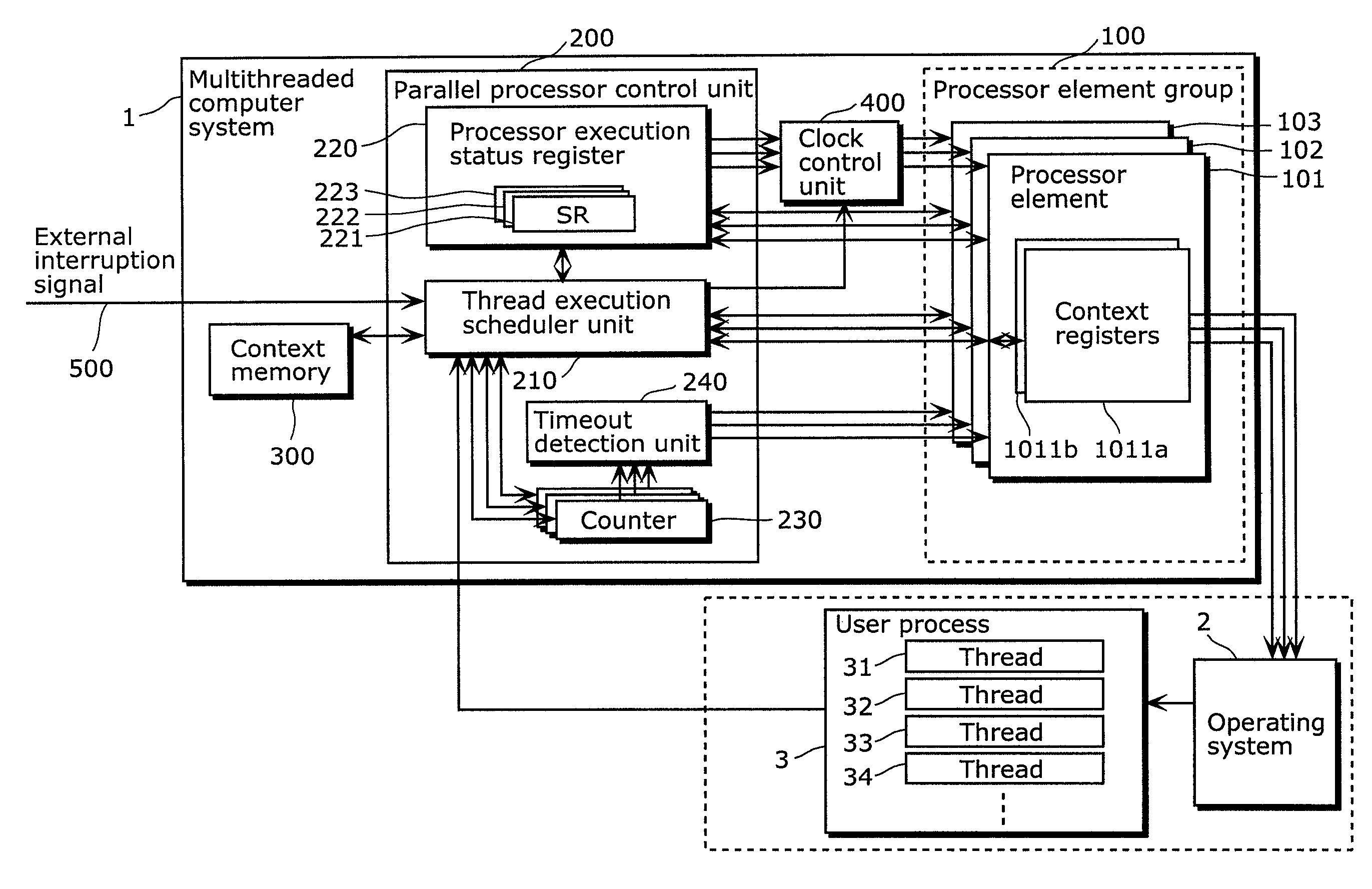

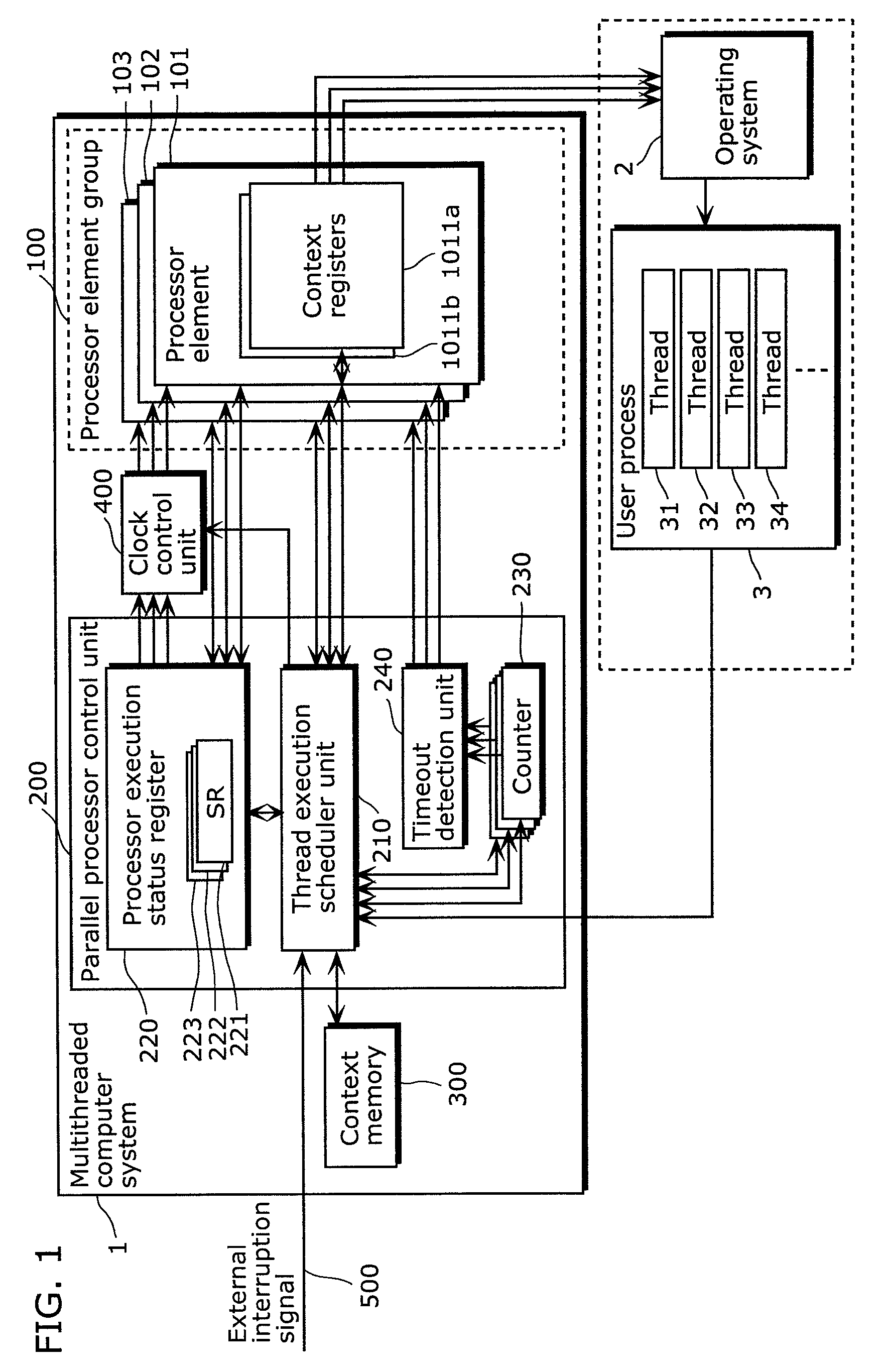

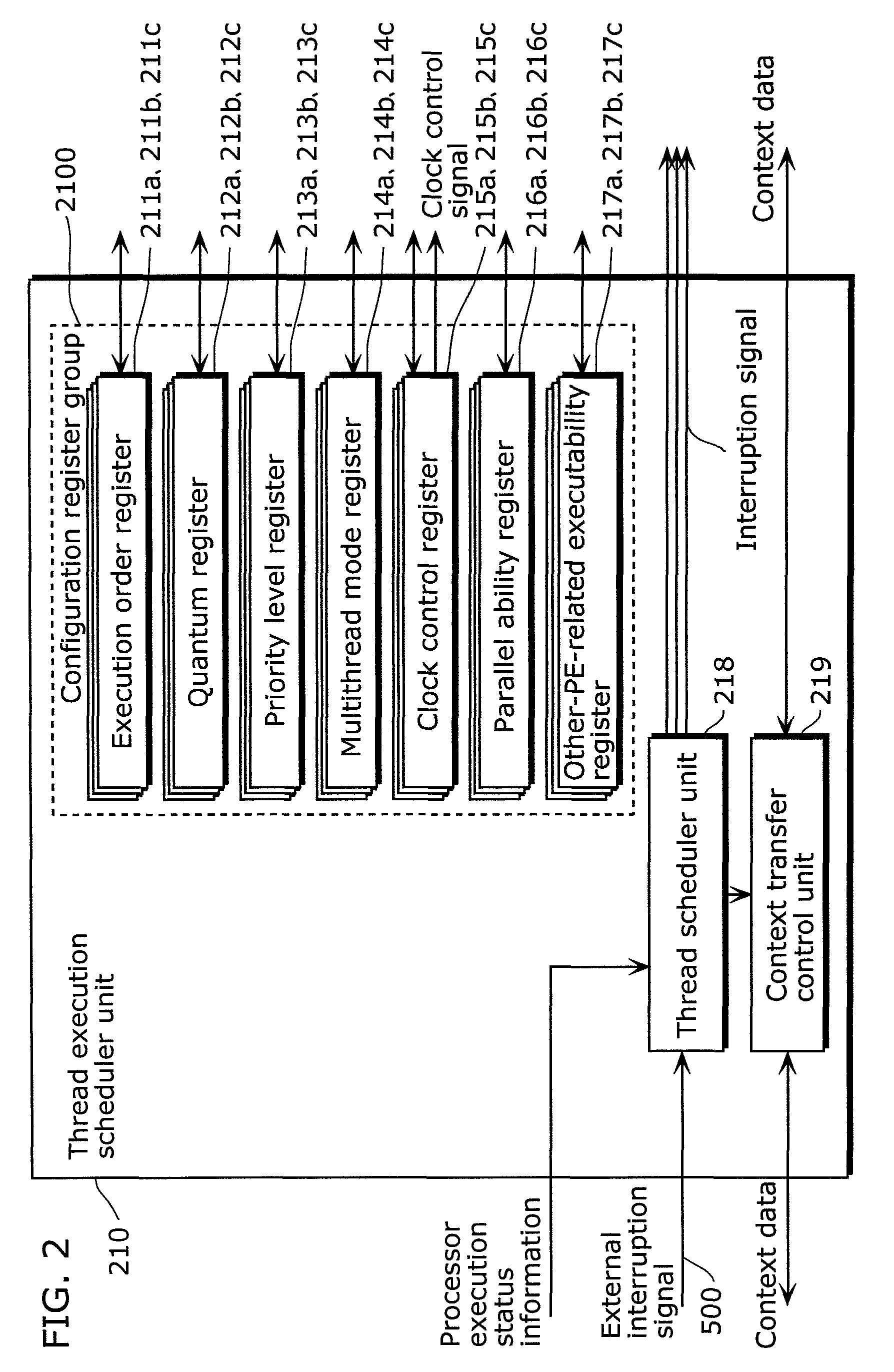

A multithreaded computer system of the present invention includes a plurality of processor elements (PEs) 101 to 103 and a parallel processor control unit 200, which switches threads in each PE. The parallel processor control unit 200 includes: a plurality of execution order registers, which hold, for each processor element, the execution order of the threads to be executed; a plurality of counters 230, which count the execution time of the thread being executed by each processor element and generate a timeout signal when the counted time reaches the limit assigned to that thread; and a thread execution scheduler unit 210, which, based on the execution order held in the execution order registers and on the timeout signal, switches each processor element from the thread being executed to the next thread to be executed.

Owner:BEIJING ESWIN COMPUTING TECH CO LTD

Multithreaded computer system and multithread execution control method

Active | US8001549B2 | Enhancing substantial availability; Reduce switching overhead; Energy efficient ICT; Digital computer details; Processor element; Processor register

A multithreaded computer system of the present invention includes a plurality of processor elements (PEs) and a parallel processor controller, which switches threads in each PE. The parallel processor controller includes: a plurality of execution order registers, which hold, for each processor element, the execution order of the threads to be executed; a plurality of counters, which count the execution time of the thread being executed by each processor element and generate a timeout signal when the counted time reaches the limit assigned to that thread; and a thread execution scheduler, which, based on the execution order held in the execution order registers and on the timeout signal, switches each processor element from the thread being executed to the next thread to be executed.

Owner:BEIJING ESWIN COMPUTING TECH CO LTD
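The timeout-driven switching described in the two entries above can be illustrated with a small simulation of one processor element. This is a sketch under stated assumptions, not the patented circuit: time is counted in abstract ticks, each thread's remaining work is known to the simulator, and a thread that times out is assumed to be re-queued at the end of the execution order (round-robin). `SimThread` and `simulate_pe` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class SimThread:
    name: str
    limit: int  # per-dispatch time limit assigned to the thread, in ticks
    work: int   # remaining work in ticks (known only because this is a simulation)


def simulate_pe(order: list[SimThread]) -> list[tuple[int, str]]:
    """Simulate one processor element; return (tick, thread name) dispatch events."""
    queue = list(order)  # stands in for this PE's execution order register
    dispatches = []
    tick = 0
    while queue:
        current = queue.pop(0)
        dispatches.append((tick, current.name))
        counter = 0  # the PE's counter restarts on every switch
        while current.work > 0 and counter < current.limit:
            tick += 1
            counter += 1
            current.work -= 1
        if current.work > 0:
            # Counter reached the limit: the timeout signal makes the scheduler
            # switch to the next thread (assumed round-robin re-queueing).
            queue.append(current)
    return dispatches
```

For example, `simulate_pe([SimThread("A", limit=2, work=3), SimThread("B", limit=1, work=1)])` dispatches A at tick 0, B at tick 2 (after A times out), and A again at tick 3. Because each PE has its own register and counter, several PEs can be modeled by calling `simulate_pe` once per PE.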

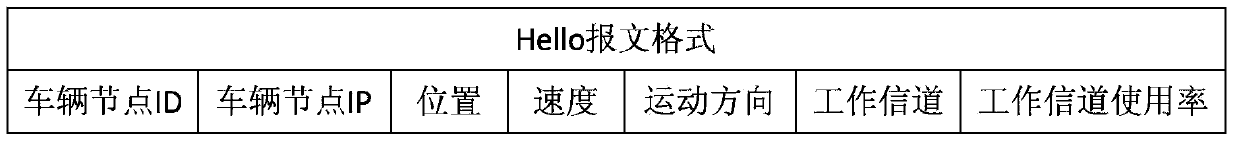

Multi-channel multi-path routing protocol for vehicle team ad-hoc networks

Inactive | CN104093185A | Reduce competition; Reduce conflict; Network topologies; Team communication; Algorithm Selection

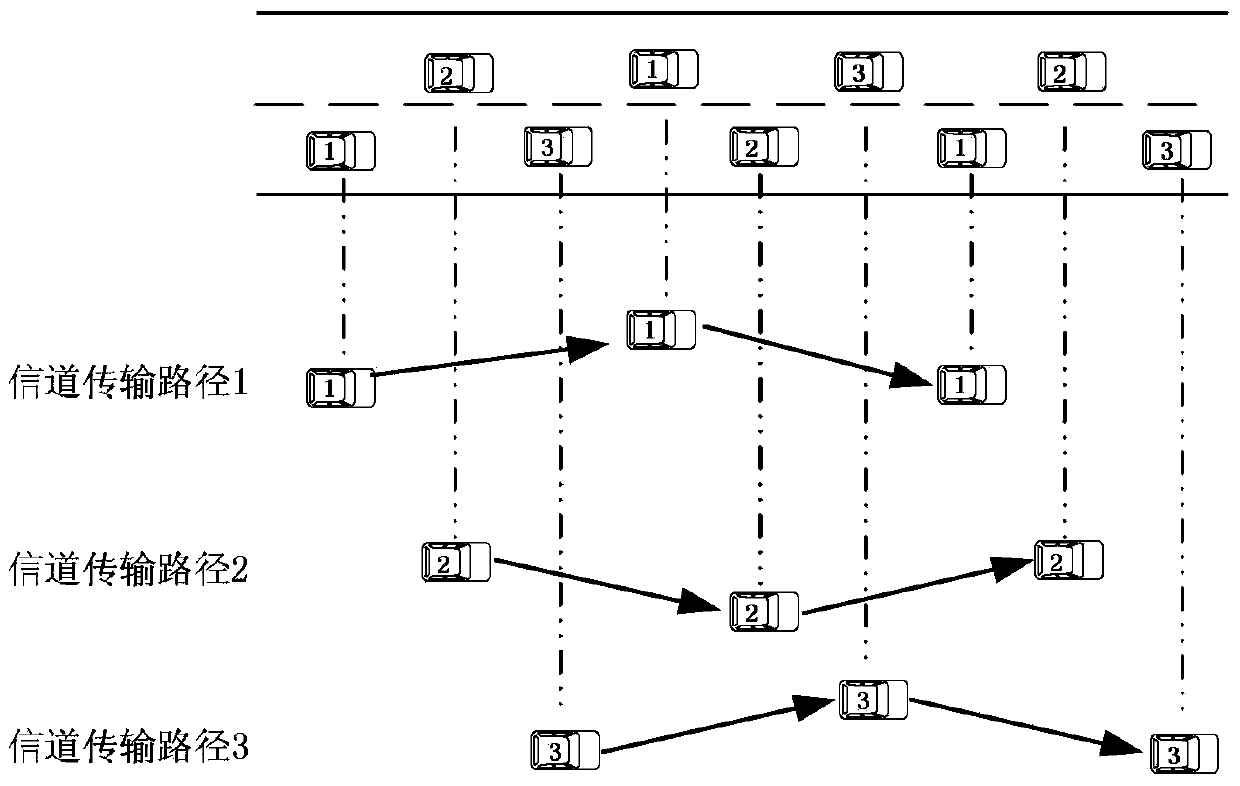

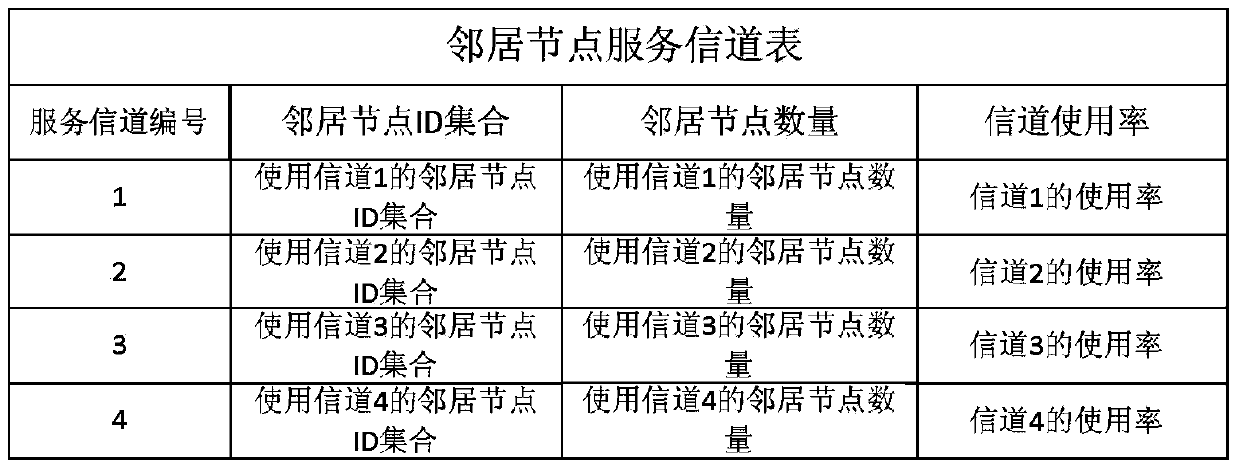

The invention discloses a multi-channel multi-path routing protocol for vehicle team ad-hoc networks. The protocol mainly comprises: (1) each vehicle node works on one service channel; vehicle nodes that use the same service channel form a channel transmission path within the vehicle team, so that multiple transmission paths on multiple channels are formed in the team; (2) each vehicle node acquires the position, speed, and direction of motion of other vehicle nodes through an adaptive distributed location service; and (3) a multi-channel greedy forwarding algorithm is used: when a vehicle node sends or forwards a data message, it selects the next-hop neighbor according to the position of the destination node and the utilization of each neighbor's working channel, hop by hop, until the message reaches the destination node. With this protocol, vehicle team communication is fully self-organized and does not rely on any infrastructure, adjacent vehicle nodes can communicate on different channel transmission paths at the same time, network throughput is improved, and multi-hop transmission of large data volumes is supported, which gives the protocol practical application prospects.

Owner:SOUTH CHINA UNIV OF TECH
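Step (3), the multi-channel greedy forwarding choice, can be sketched as follows. The abstract does not say how destination distance and channel utilization are combined, so the scoring rule here (remaining distance multiplied by `1 + alpha * utilization`) and the `alpha` weight are assumptions, as are the names `Neighbor` and `next_hop`.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Neighbor:
    node_id: str
    x: float
    y: float
    channel: int         # service channel the neighbor works on
    channel_util: float  # assumed busy fraction of that channel, 0.0 to 1.0


def next_hop(self_pos: tuple[float, float],
             dest_pos: tuple[float, float],
             neighbors: list[Neighbor],
             alpha: float = 1.0) -> Optional[Neighbor]:
    """Pick the neighbor that gets closest to the destination, penalizing busy channels."""
    my_dist = math.dist(self_pos, dest_pos)
    best, best_score = None, math.inf
    for n in neighbors:
        d = math.dist((n.x, n.y), dest_pos)
        if d >= my_dist:
            continue  # greedy rule: only forward to a node that makes progress
        score = d * (1.0 + alpha * n.channel_util)  # hypothetical weighting
        if score < best_score:
            best, best_score = n, score
    return best  # None means a local maximum: no neighbor is closer
```

With `alpha=0` this reduces to plain geographic greedy forwarding; a positive `alpha` lets a slightly farther neighbor on a quieter channel win, which is the trade-off the protocol describes.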

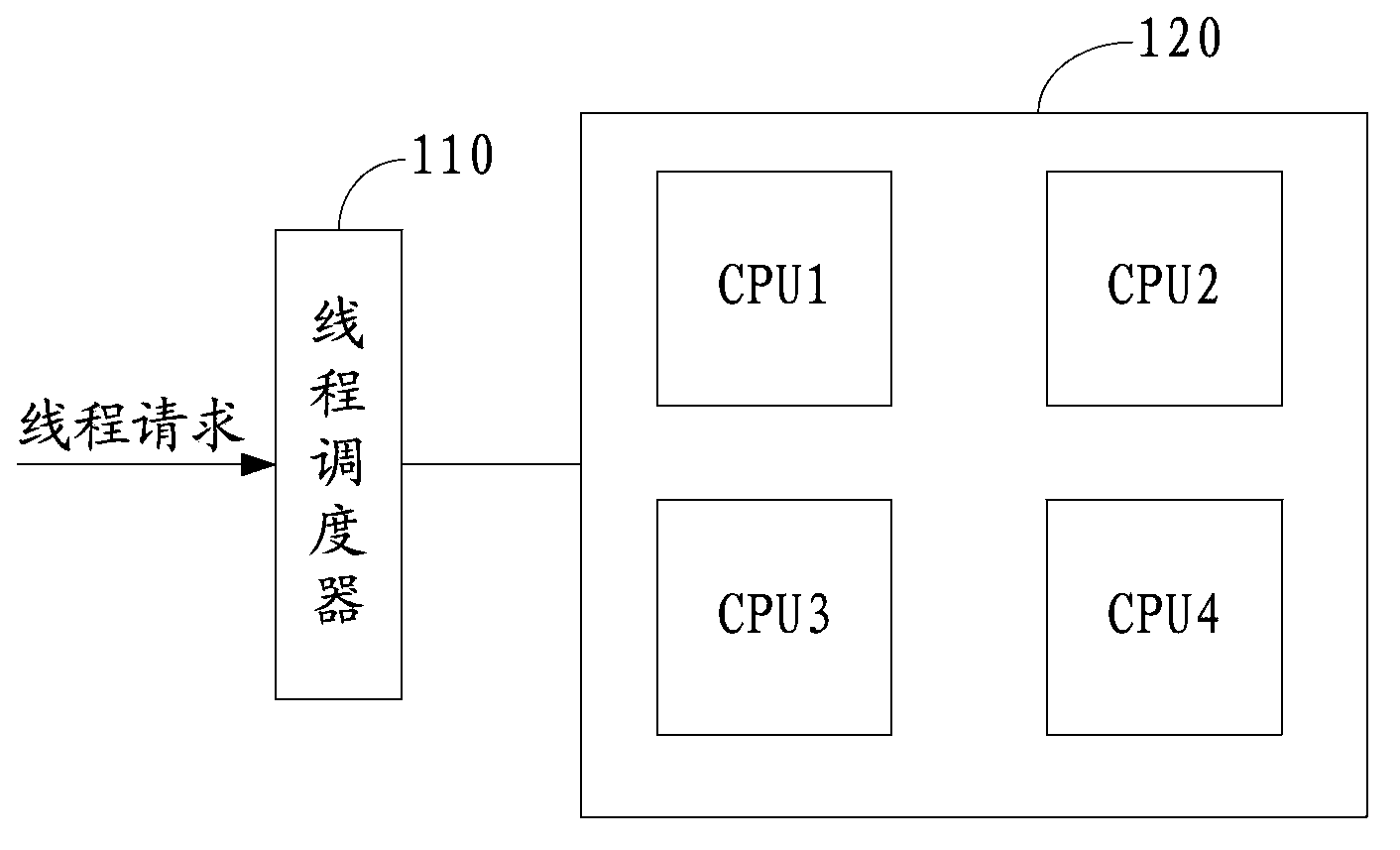

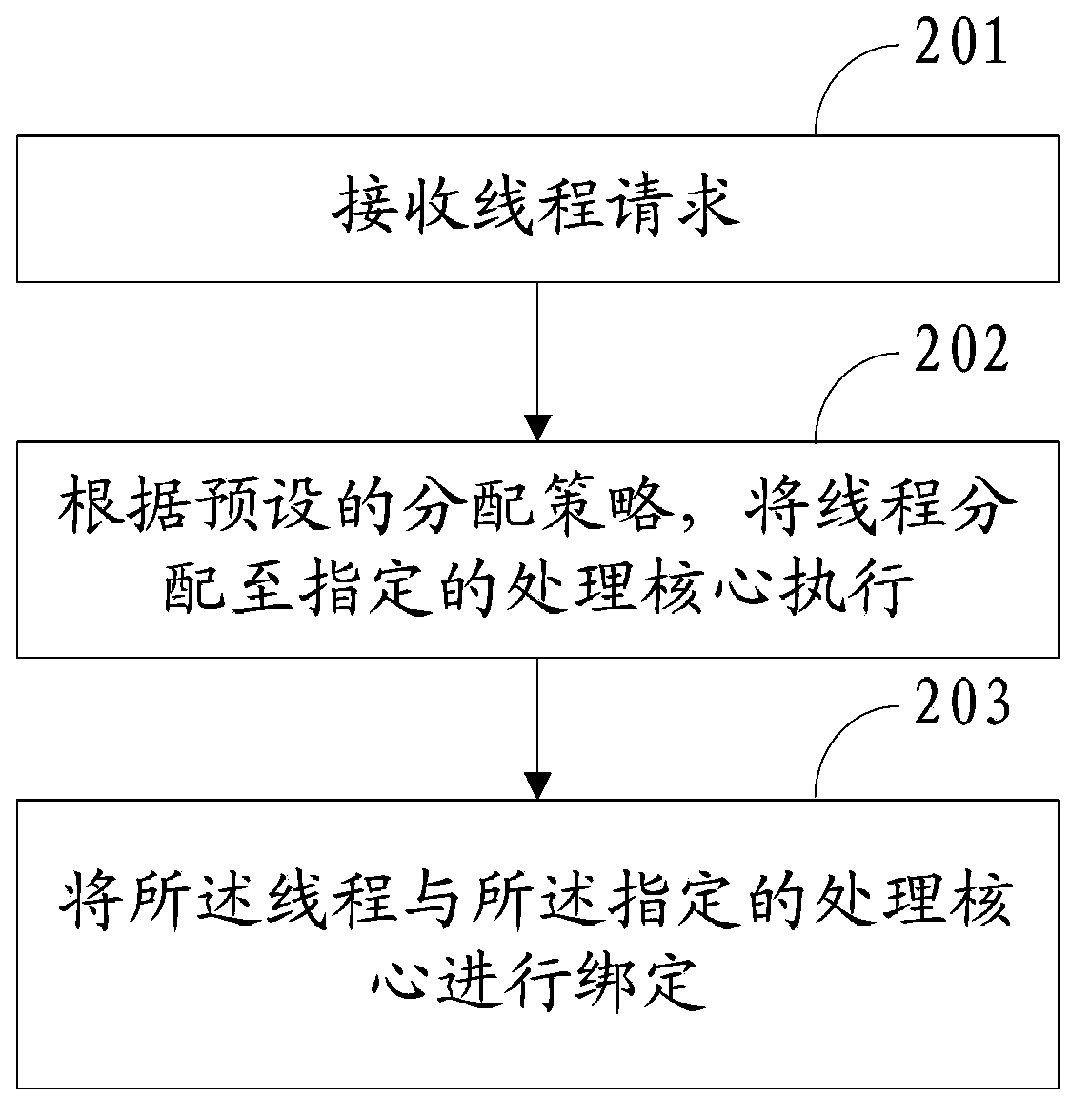

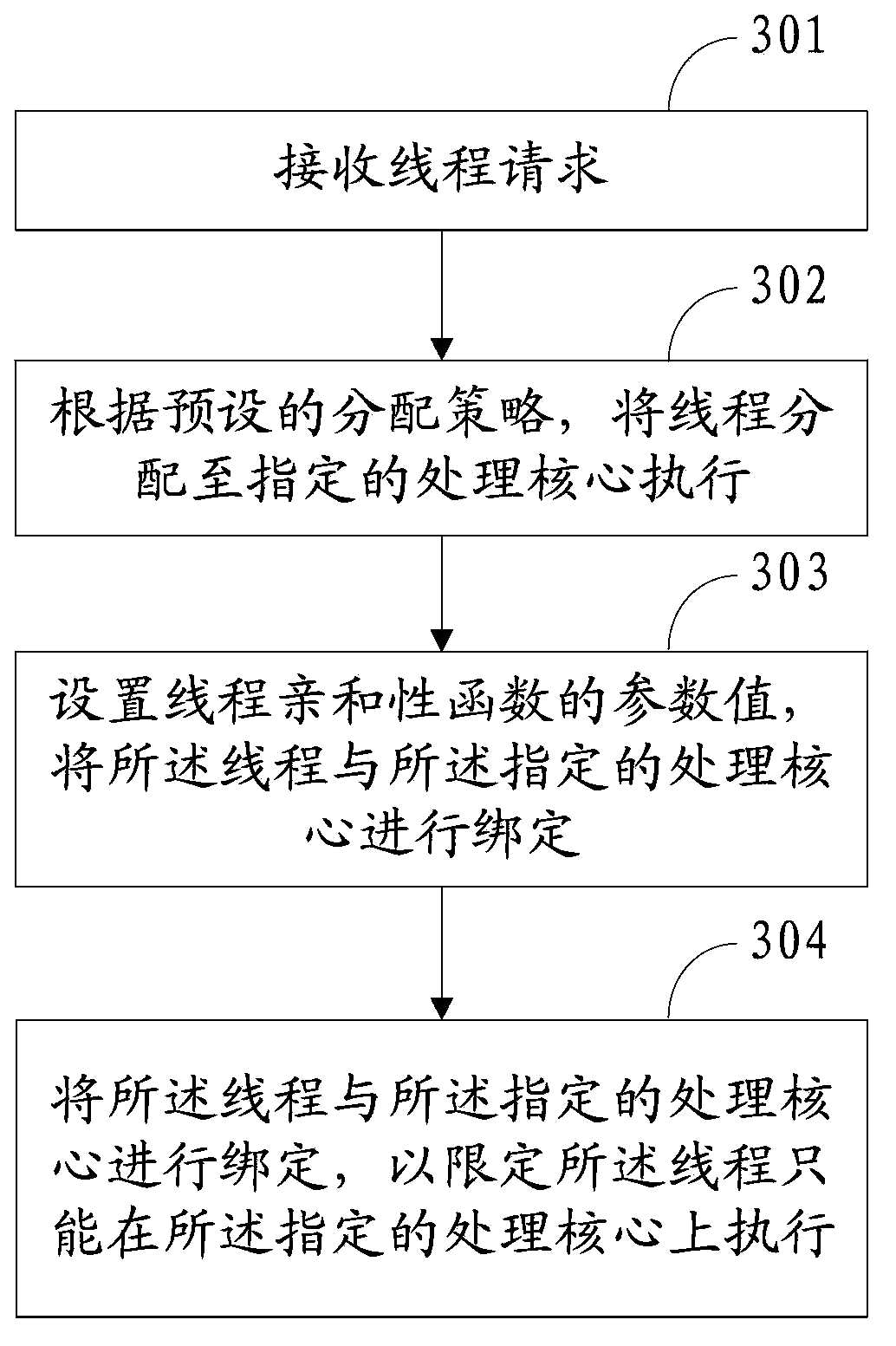

Thread scheduling method, thread scheduling device and multi-core processor system

Inactive | CN103365718A | Avoid switching between cores; Reduce switching overhead; Program initiation/switching; Processing core; Thread scheduling

The embodiments of the invention provide a thread scheduling method, a thread scheduling device, and a multi-core processor system. The thread scheduling method comprises the following steps: receiving a thread request and, according to a preset allocation strategy, allocating the thread to a designated processing core for execution; setting the parameter values of a thread affinity function; and binding the thread to the designated processing core so that the thread executes only on that core. By avoiding inter-core migration of threads, the method reduces thread switching overhead, shortens thread switching time, and improves multithreaded execution efficiency.

Owner:GUIYANG LONGMASTER INFORMATION & TECHNOLOGY CO LTD
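The allocate-then-bind steps above can be sketched with Python's `os.sched_setaffinity`, which exists only on Linux and some Unix systems. The "preset allocation strategy" is not specified in the abstract; least-loaded core with lowest id on ties is an assumption, and `PinningScheduler` is a hypothetical name.

```python
import os
import threading
from typing import Callable


class PinningScheduler:
    """Hypothetical sketch: assign each thread request to one core and pin it there."""

    def __init__(self, cores: list[int]) -> None:
        self.load = {core: 0 for core in cores}  # live threads per core
        self.lock = threading.Lock()

    def allocate(self) -> int:
        # Assumed allocation strategy: least-loaded core, lowest id on ties.
        with self.lock:
            core = min(self.load, key=lambda c: (self.load[c], c))
            self.load[core] += 1
            return core

    def release(self, core: int) -> None:
        with self.lock:
            self.load[core] -= 1

    def submit(self, work: Callable[[], None]) -> tuple[threading.Thread, int]:
        core = self.allocate()

        def pinned() -> None:
            try:
                # The underlying Linux syscall treats pid 0 as the calling thread,
                # so only this worker is confined to `core`. Platforms without
                # sched_setaffinity skip pinning in this sketch.
                if hasattr(os, "sched_setaffinity"):
                    os.sched_setaffinity(0, {core})
                work()
            finally:
                self.release(core)

        thread = threading.Thread(target=pinned)
        thread.start()
        return thread, core
```

Usage: build the scheduler from the cores the process may use, for example `PinningScheduler(sorted(os.sched_getaffinity(0)))`, then `thread, core = scheduler.submit(job)` and `thread.join()`.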

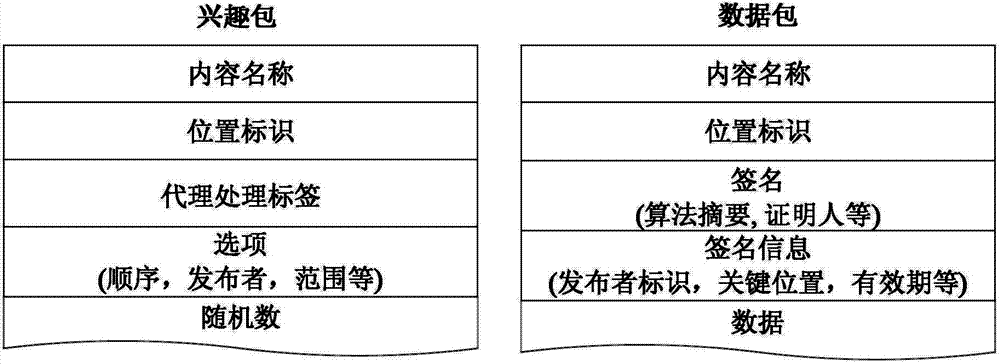

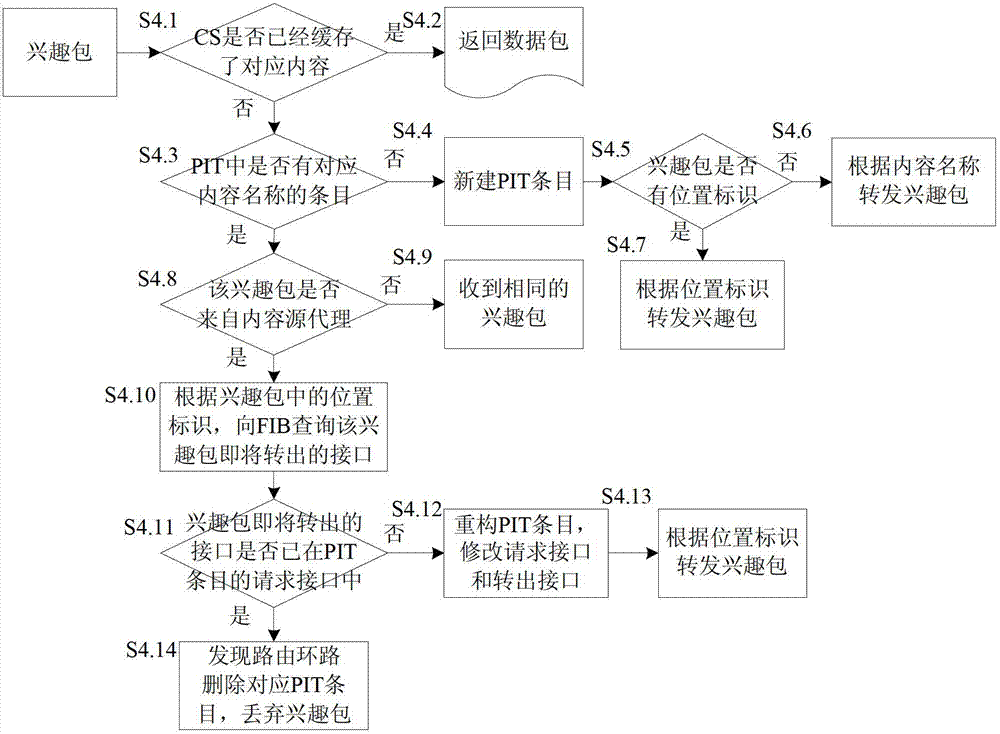

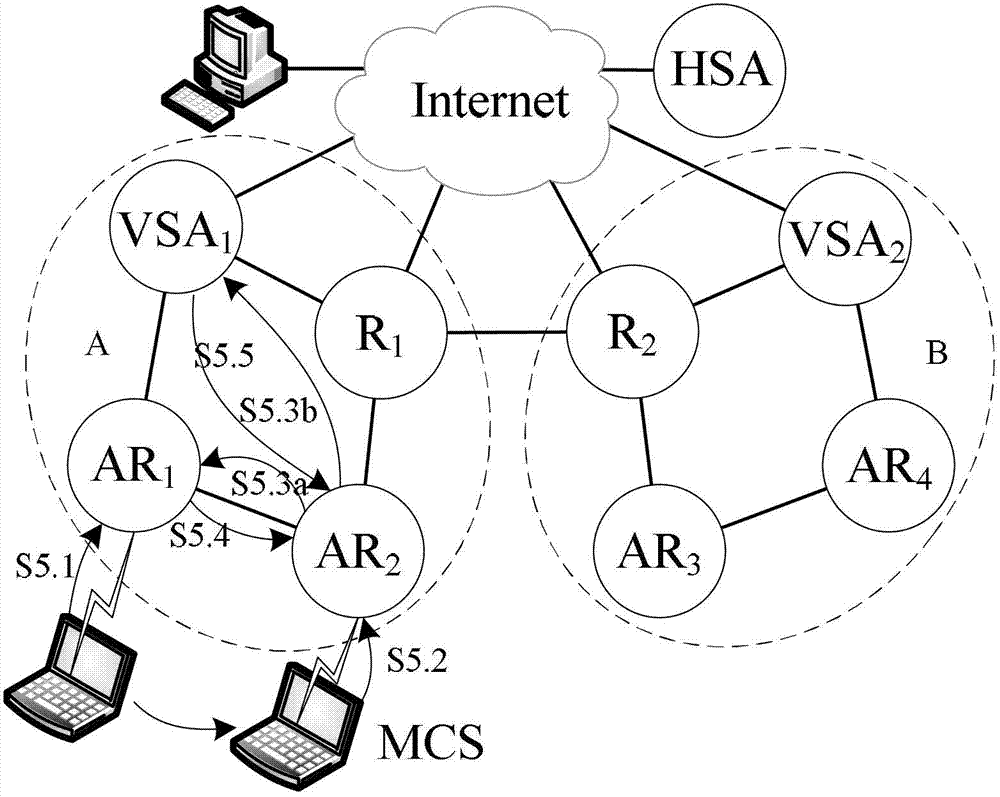

Packet structure, and interest packet forwarding, source switching, and source agency selection method

Inactive | CN103200640A | Reduce the probability of packet loss; Reduce switching overhead; Wireless communication; Network packet; Mobile content

The invention discloses a packet structure and a method for interest packet forwarding, source switching, and source agency selection. The packet structure is used in a content-centric network, which is divided into a plurality of mobility management regions; each region has at least one content source agency, which may be a designated router in the region. When the content source agency receives an interest packet, it adds a position marker to the interest packet according to the location information of the mobile content source, and sets an agency-handled label so that the interest packet is forwarded to the mobile content source according to the position marker. The interest packet format is thus extended with one position marker and the agency-handled label; the data packet is extended with one position marker indicating the location of the content; and the agency-handled label indicates that the position marker in the interest packet was set by the content source agency.

Owner:BEIJING JIAOTONG UNIV
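The packet extensions described above can be modeled as plain data structures. This is an illustrative sketch only: the field names, the longest-prefix lookup in the agency, and the rule that routers follow the marker only when the agency-handled label is set are assumptions, and `Interest`, `Data`, `SourceAgency`, and `forwarding_key` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interest:
    name: str
    position_marker: Optional[str] = None  # extension: current locator of the mobile source
    agency_handled: bool = False           # extension: marker was set by the source agency


@dataclass
class Data:
    name: str
    payload: bytes
    position_marker: Optional[str] = None  # extension: where the content was served from


class SourceAgency:
    """Hypothetical content source agency for one mobility management region."""

    def __init__(self) -> None:
        self.locations: dict[str, str] = {}  # content prefix -> locator of mobile source

    def update_location(self, prefix: str, locator: str) -> None:
        self.locations[prefix] = locator  # e.g. after the source moves to another region

    def handle(self, interest: Interest) -> Interest:
        # Component-wise prefix match, so "/cam1" does not match "/cam10/...".
        matches = [p for p in self.locations
                   if interest.name == p or interest.name.startswith(p.rstrip("/") + "/")]
        if matches:
            prefix = max(matches, key=len)  # longest-prefix match
            interest.position_marker = self.locations[prefix]
            interest.agency_handled = True
        return interest


def forwarding_key(interest: Interest) -> str:
    """Routers follow the marker only when the agency set it; otherwise the name."""
    if interest.agency_handled and interest.position_marker:
        return interest.position_marker
    return interest.name
```

Keeping the content name unchanged and adding the locator as a separate field means the data packet can still be matched by name on the way back, while the marker only steers the interest toward the mobile source.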

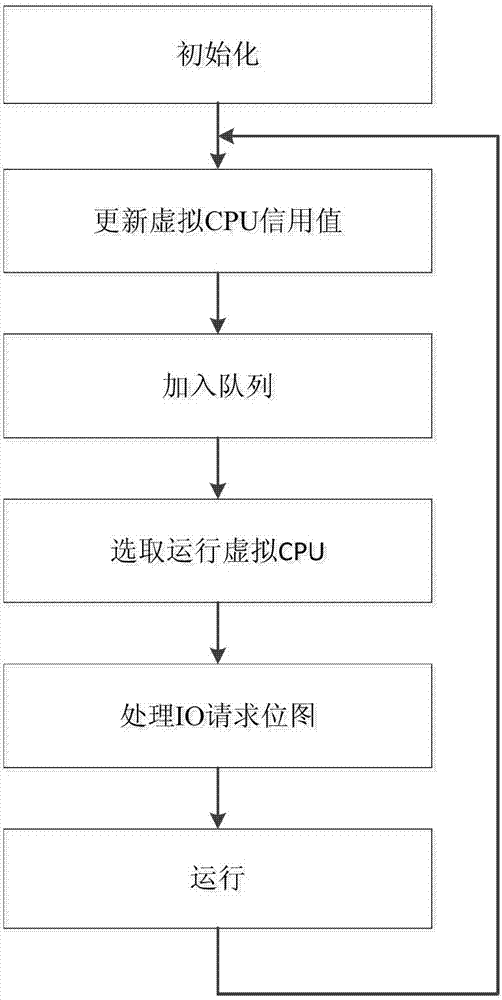

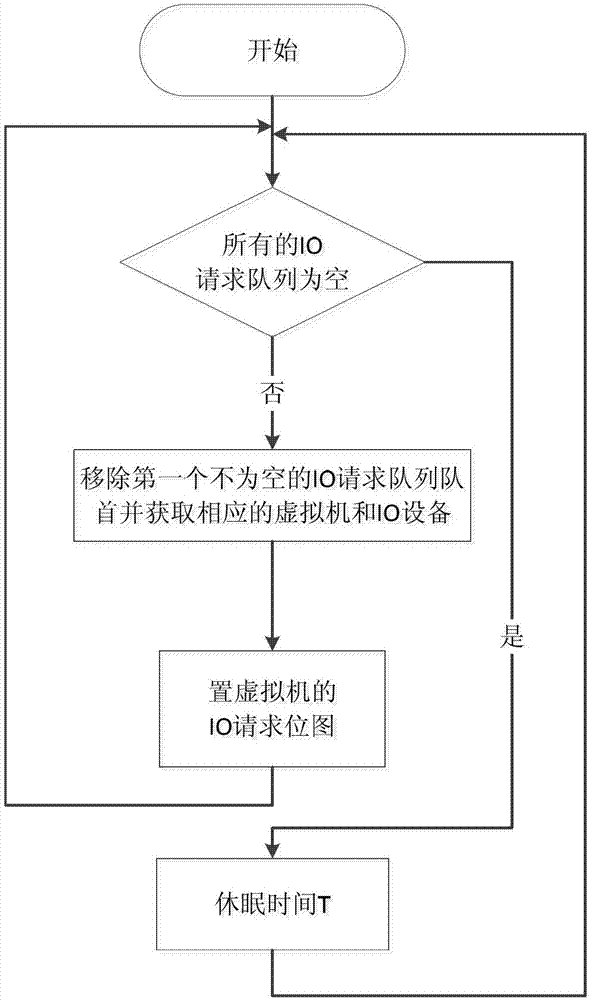

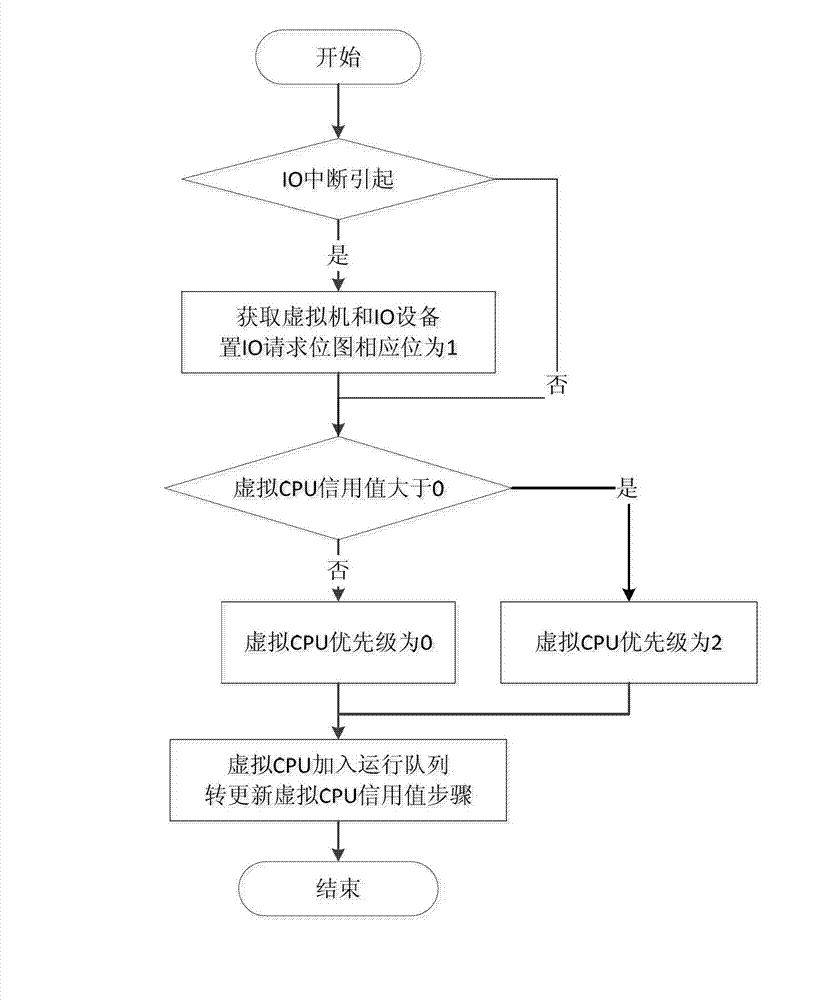

Virtual CPU scheduling method

Active | CN103049332A | Reduce switching overhead; Short response delay; Resource allocation; Software simulation/interpretation/emulation; Virtualization; Response delay

The invention relates to a virtual central processing unit (CPU) scheduling method in the technical field of computer virtualization. It addresses the problem that existing virtual CPU scheduling methods schedule all virtual CPUs with fixed-length time slices, so that virtual machine performance suffers from resource limits. The method comprises the steps of initialization, updating virtual CPU credit values, joining the run queue, selecting the virtual CPU to run, processing the I/O request bitmap, and running. During virtual CPU scheduling, the method sets the scheduling time slice dynamically according to the running state of the virtual CPU, using the I/O request bitmap and the scheduling time slice table of the virtual machine to which the virtual CPU belongs. Because the I/O request bitmap reflects the running characteristics of each virtual machine, CPU-bound virtual machines incur small switching overhead and I/O-bound virtual machines get short response delays, so the method suits a variety of application environments and service-type requirements.

Owner:HUAZHONG UNIV OF SCI & TECH
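The "select, then size the time slice" step can be sketched as below. The bitmap layout (one bit per vCPU, set when that vCPU has pending I/O requests), the two-entry per-VM time slice table, and the highest-credit selection rule are assumptions for illustration; `VCpu`, `time_slice_ms`, and `pick_next` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class VCpu:
    vcpu_id: int  # bit position of this vCPU in the I/O request bitmap (assumed)
    vm: str       # virtual machine the vCPU belongs to
    credit: int


def time_slice_ms(vcpu: VCpu, io_bitmap: int,
                  slice_table: dict[str, dict[str, int]]) -> int:
    """Short slice if the vCPU has pending I/O requests, long slice otherwise."""
    io_pending = (io_bitmap >> vcpu.vcpu_id) & 1
    return slice_table[vcpu.vm]["io" if io_pending else "cpu"]


def pick_next(run_queue: list[VCpu], io_bitmap: int,
              slice_table: dict[str, dict[str, int]]) -> tuple[VCpu, int]:
    vcpu = max(run_queue, key=lambda v: v.credit)  # highest remaining credit runs
    return vcpu, time_slice_ms(vcpu, io_bitmap, slice_table)
```

With a hypothetical table such as `{"vm1": {"io": 1, "cpu": 30}}`, an I/O-bound vCPU is rescheduled quickly (short response delay) while a CPU-bound one keeps the processor longer (fewer switches).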

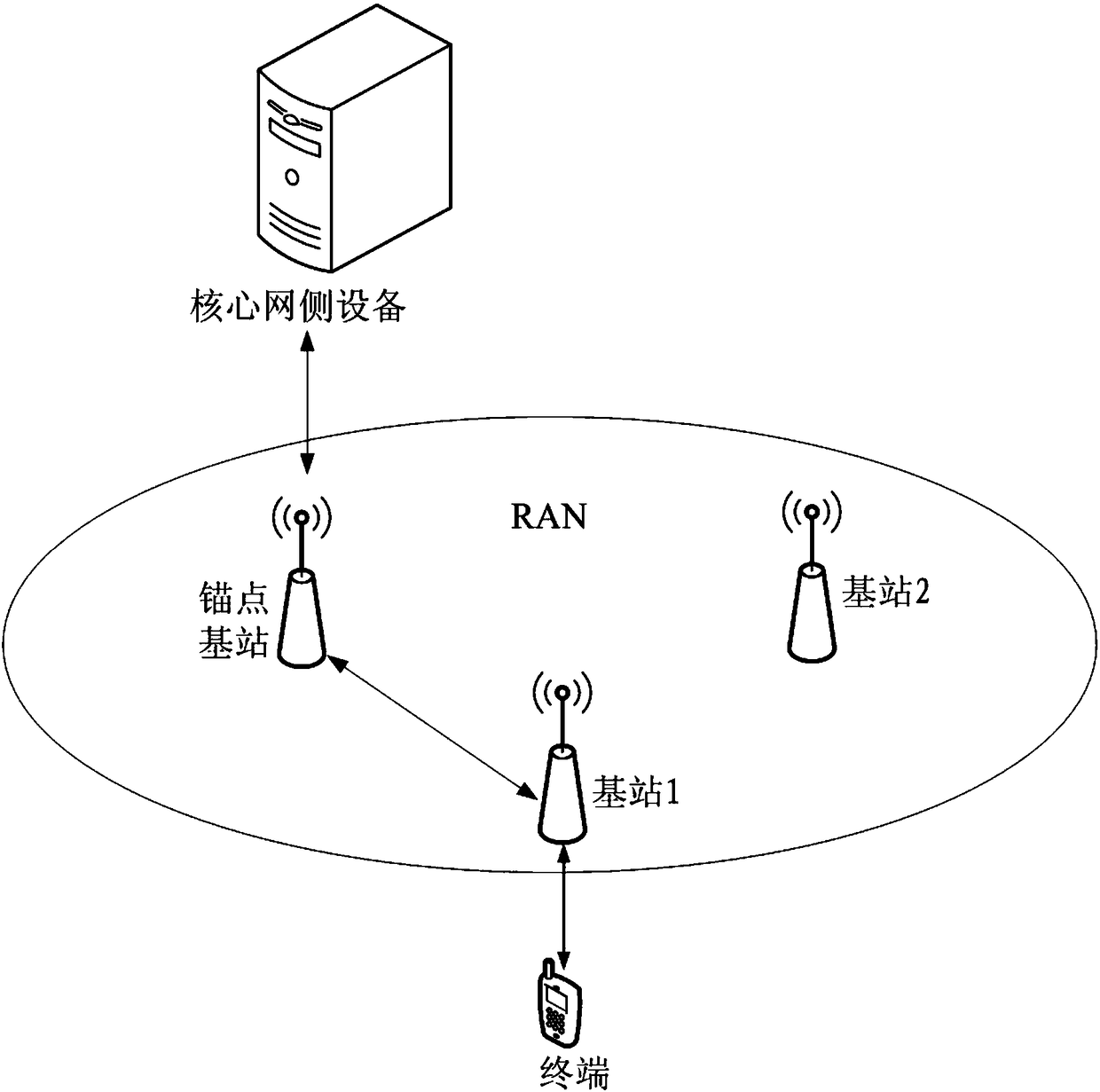

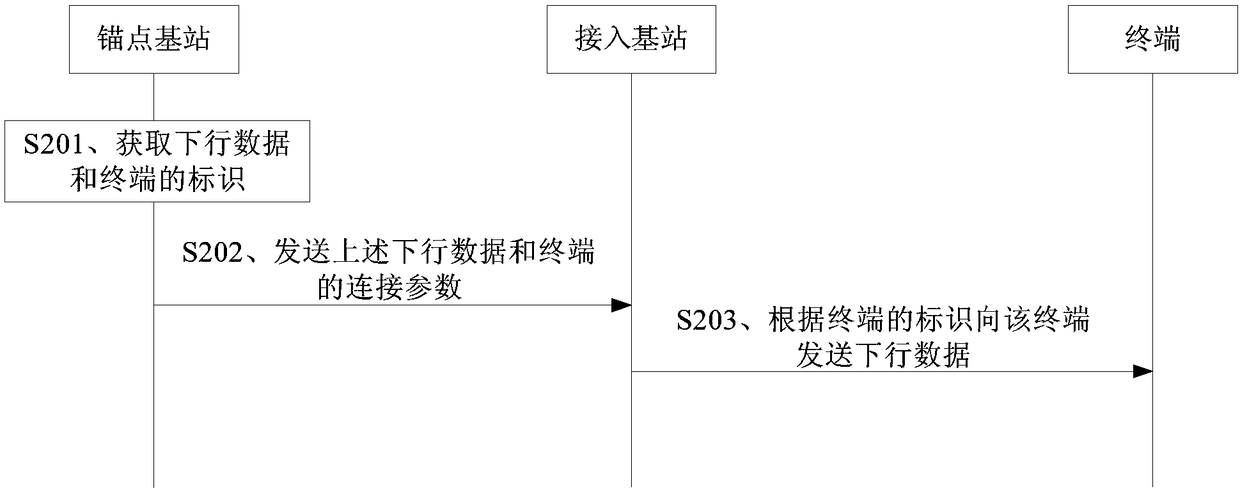

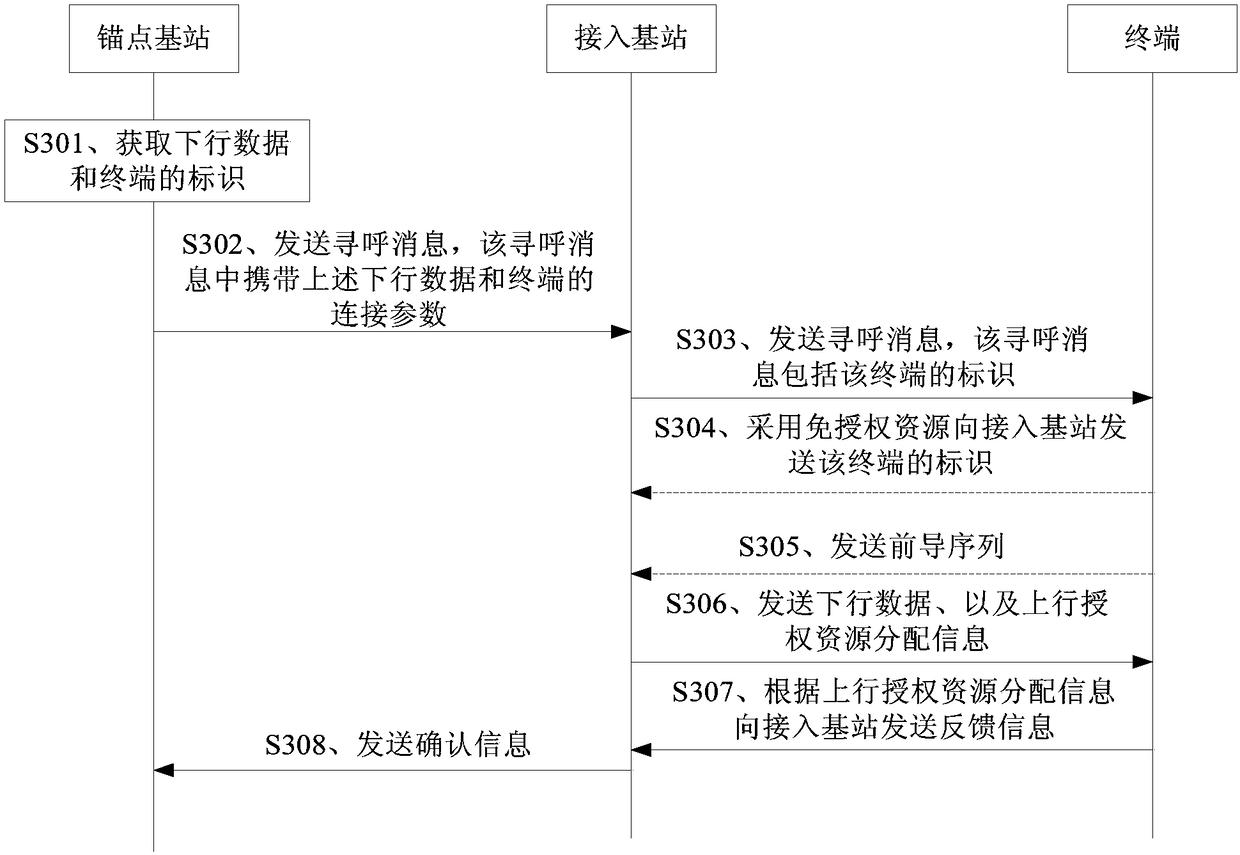

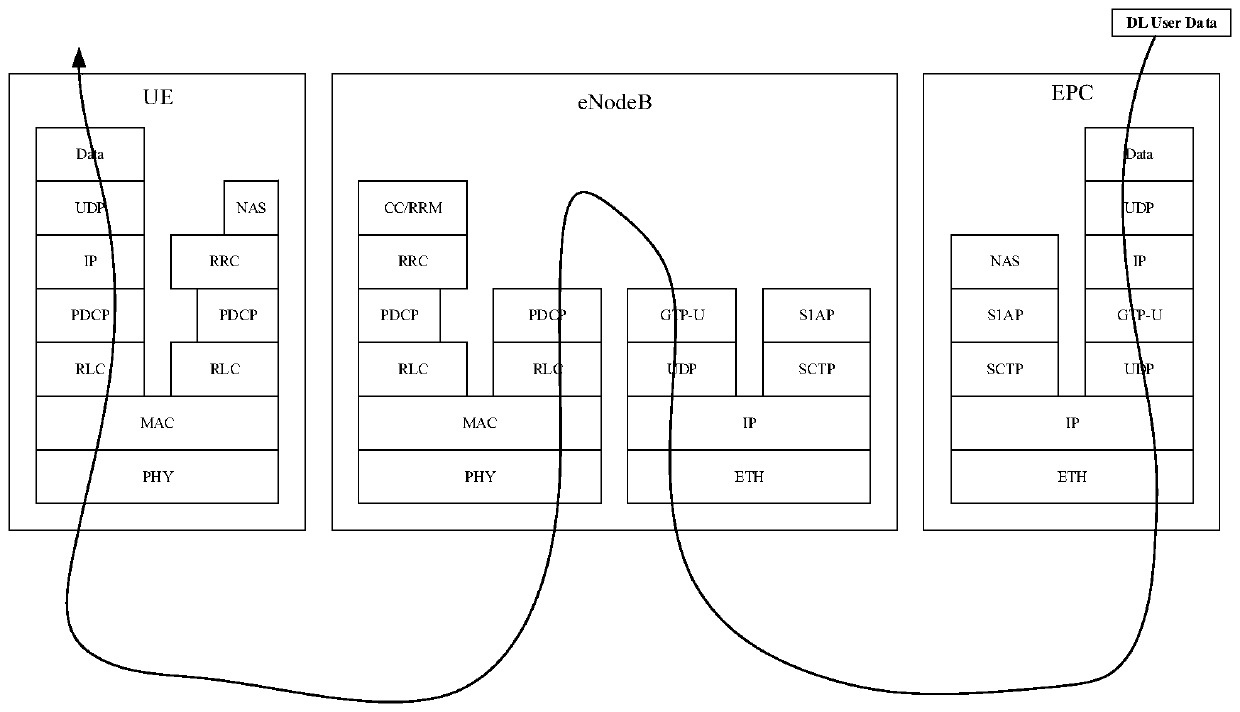

Downlink data transmission method and device

Active | CN108738139A | Minus send delay; Reduce complexity; Wireless communication; Data transmission; Radio Resource Control

The invention provides a downlink data transmission method and device. In the method, an access base station receives downlink data sent by an anchor base station together with the connection parameters of a terminal. The access base station is the base station that the terminal currently accesses; the connection parameters of the terminal are stored in the anchor base station and contain an identifier of the terminal; and the terminal is in the radio resource control (RRC) inactive state. The access base station then sends the downlink data to the terminal according to the terminal's identifier. This enables downlink data transmission in the RRC_INACTIVE state, which shortens the sending delay of downlink data. In addition, no RRC signaling procedure need be involved, which reduces the complexity of maintaining the terminal's state, or the switching procedure can be omitted, which reduces switching overhead.

Owner:HUAWEI TECH CO LTD

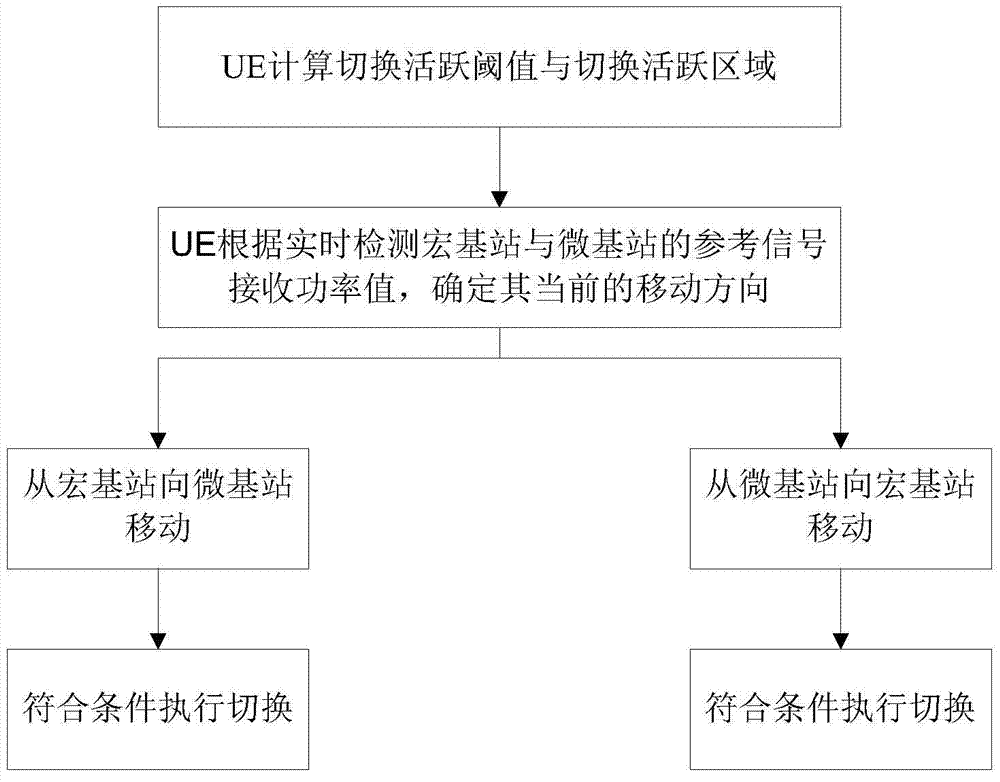

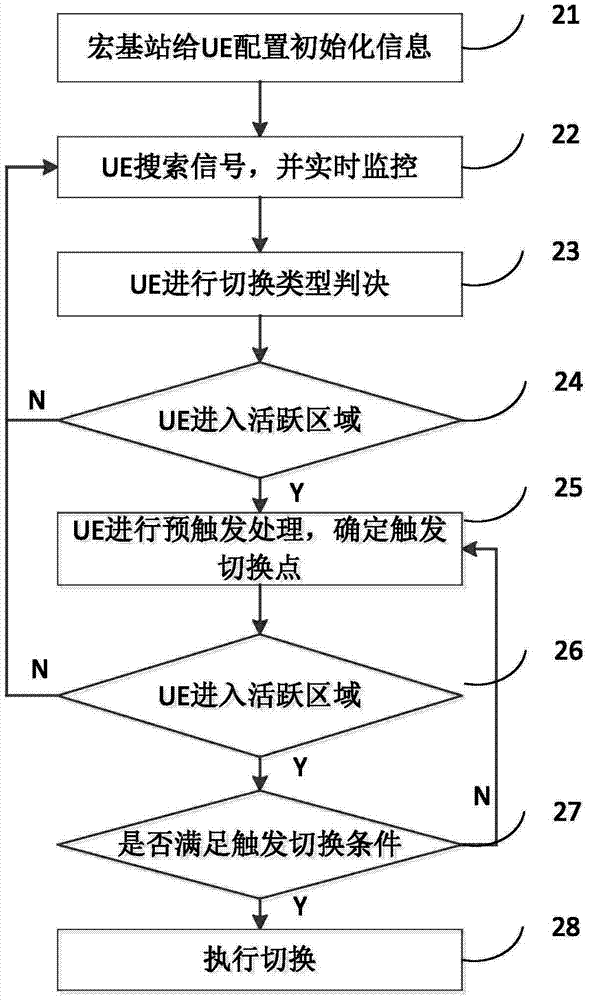

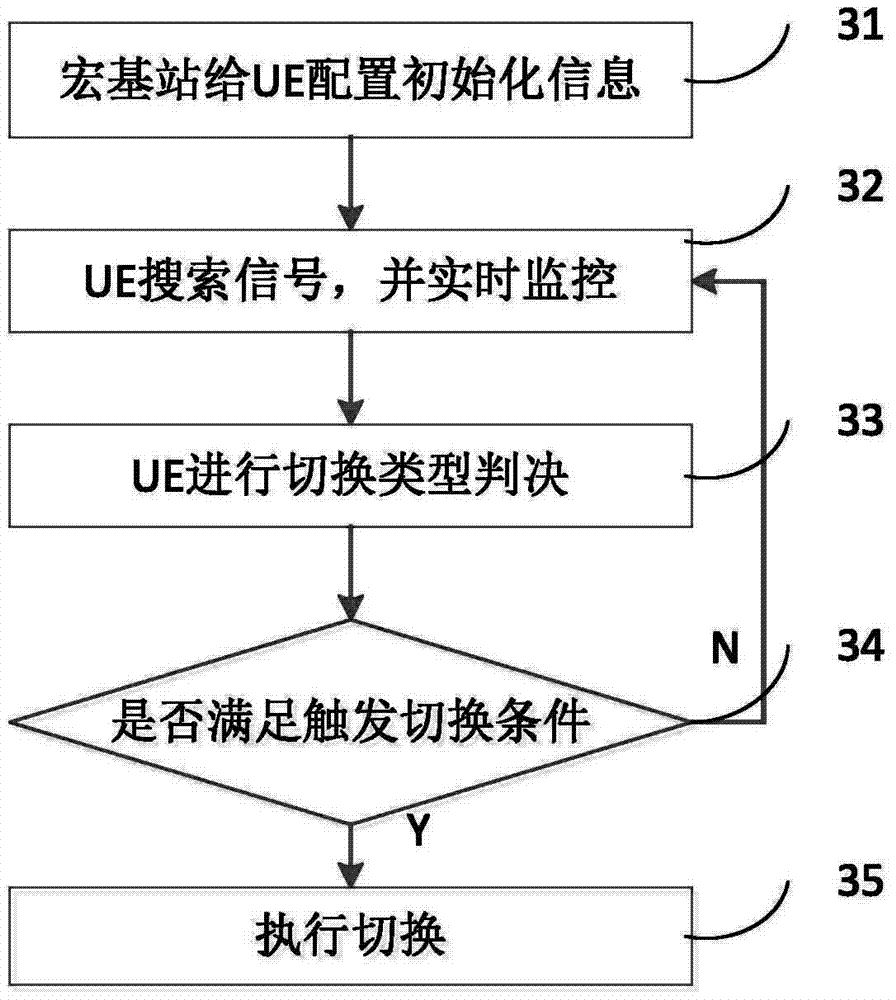

Switching method under heterogeneous cellular network

Active | CN103781133A | Reduce complexity; Improve switching performance; Wireless communication; Macro base stations; Resource utilization

The invention discloses a handover (switching) method for a heterogeneous cellular network. User equipment (UE) calculates a handover active threshold (HAT) from an effective handover threshold (EHT) and an active index (AH) transmitted by the macro base station, and uses the resulting HAT value to determine the coverage of the macro base station's handover active area (HAA). The UE determines its direction of movement from the reference signal received power (RSRP) values of the macro base station and a micro base station. If it is moving from the macro base station toward the micro base station, the UE uses the macro base station's RSRP value and the HAT value to decide whether it has entered the macro base station's HAA, and hence whether to hand over from the macro base station to the micro base station. If it is moving from the micro base station toward the macro base station, the UE uses the macro base station's RSRP value to decide whether it has entered the macro base station's non-HAA area, and hence whether to hand over from the micro base station to the macro base station. The method improves resource utilization and reduces both the system performance degradation and the ping-pong effect caused by handover delay.

Owner:BEIJING UNIV OF POSTS & TELECOMM +1

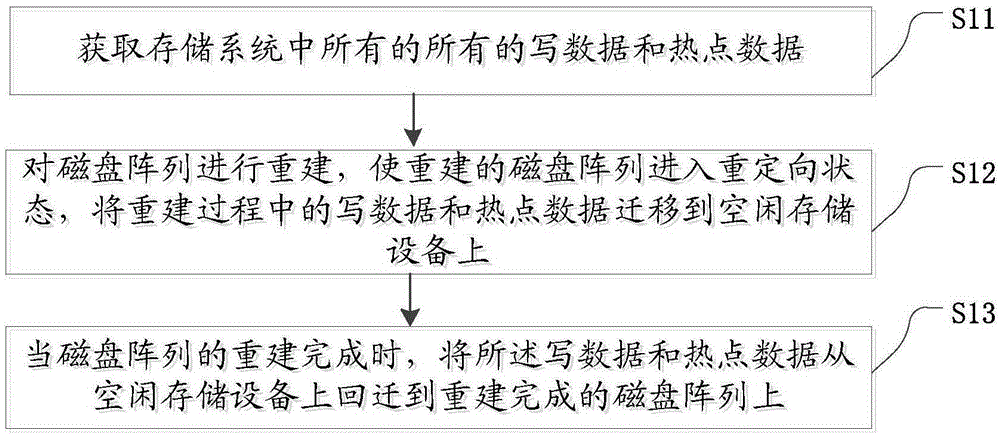

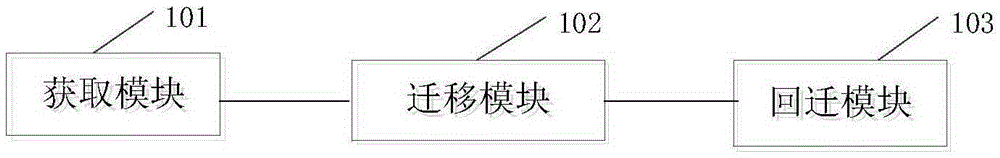

Disk array reconstruction optimization method and device

Inactive | CN105353991A | Reduce workload; Improve stability; Input/output to record carriers; Disk array; Computer engineering

The invention discloses a disk array reconstruction optimization method and device. The method comprises: acquiring all write data and hot data in the storage system, where hot data is data read at least twice during the whole reconstruction period of the disk array; during reconstruction, placing the disk array in a redirected state and migrating the write data and hot data to an idle storage device that serves as a storage proxy device; and, when reconstruction of the disk array is complete, migrating the write data and hot data from the idle storage device back to the reconstructed disk array. The method improves the stability of the storage system.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND
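The redirect-during-rebuild flow above can be sketched at block level. This is a simplified model under assumptions: the array and the idle (proxy) device are dictionaries of blocks, and hot data is detected online by counting reads during the rebuild rather than identified in advance as the abstract describes. `RebuildRedirector` is a hypothetical name.

```python
from collections import Counter


class RebuildRedirector:
    """Hypothetical block-level model of redirection during a RAID rebuild."""

    HOT_READS = 2  # "hot" = read at least twice during the rebuild window

    def __init__(self, array: dict[int, bytes]) -> None:
        self.array = array                 # stands in for the disk array
        self.proxy: dict[int, bytes] = {}  # idle storage device acting as proxy
        self.reads = Counter()
        self.rebuilding = False

    def start_rebuild(self) -> None:
        self.rebuilding = True             # array enters the redirected state

    def write(self, block: int, data: bytes) -> None:
        if self.rebuilding:
            self.proxy[block] = data       # keep write load off the rebuilding array
        else:
            self.array[block] = data

    def read(self, block: int) -> bytes:
        if block in self.proxy:
            return self.proxy[block]       # served by the proxy device
        data = self.array[block]
        if self.rebuilding:
            self.reads[block] += 1
            if self.reads[block] >= self.HOT_READS:
                self.proxy[block] = data   # migrate hot block to the proxy
        return data

    def finish_rebuild(self) -> None:
        self.array.update(self.proxy)      # migrate write and hot data back
        self.proxy.clear()
        self.reads.clear()
        self.rebuilding = False
```

While the rebuild runs, new writes and repeated reads no longer compete with reconstruction I/O on the array, which is where the stability gain comes from.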

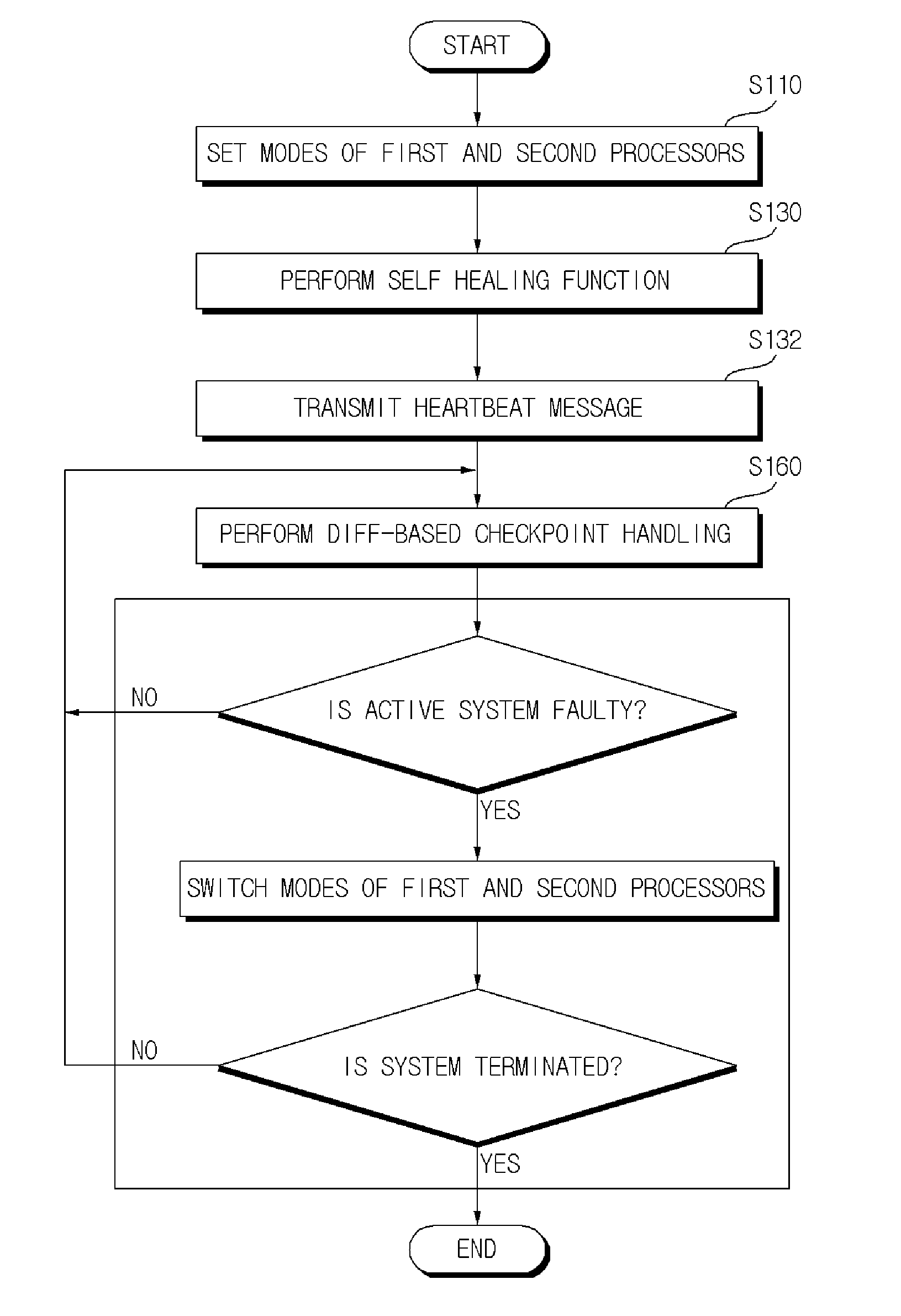

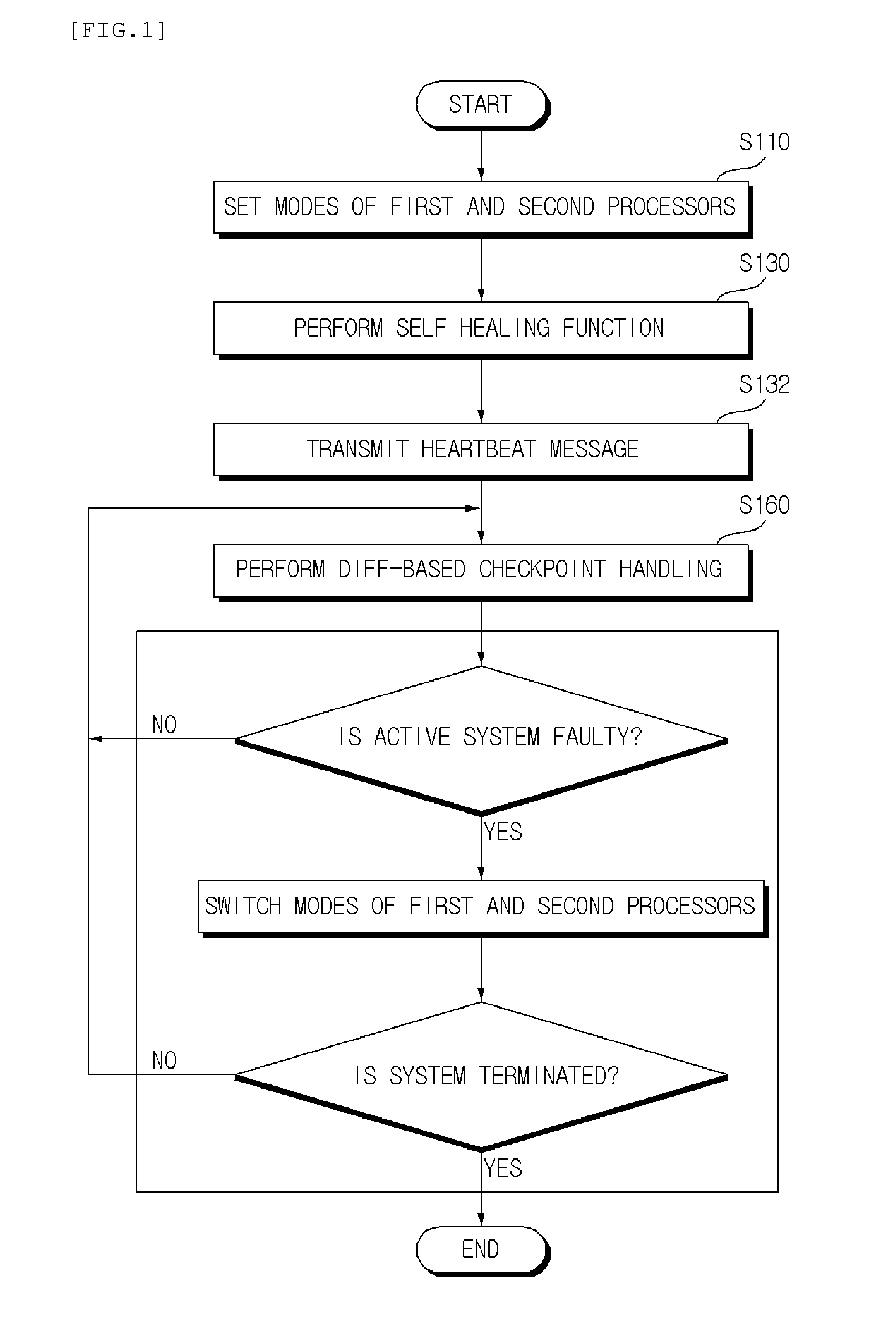

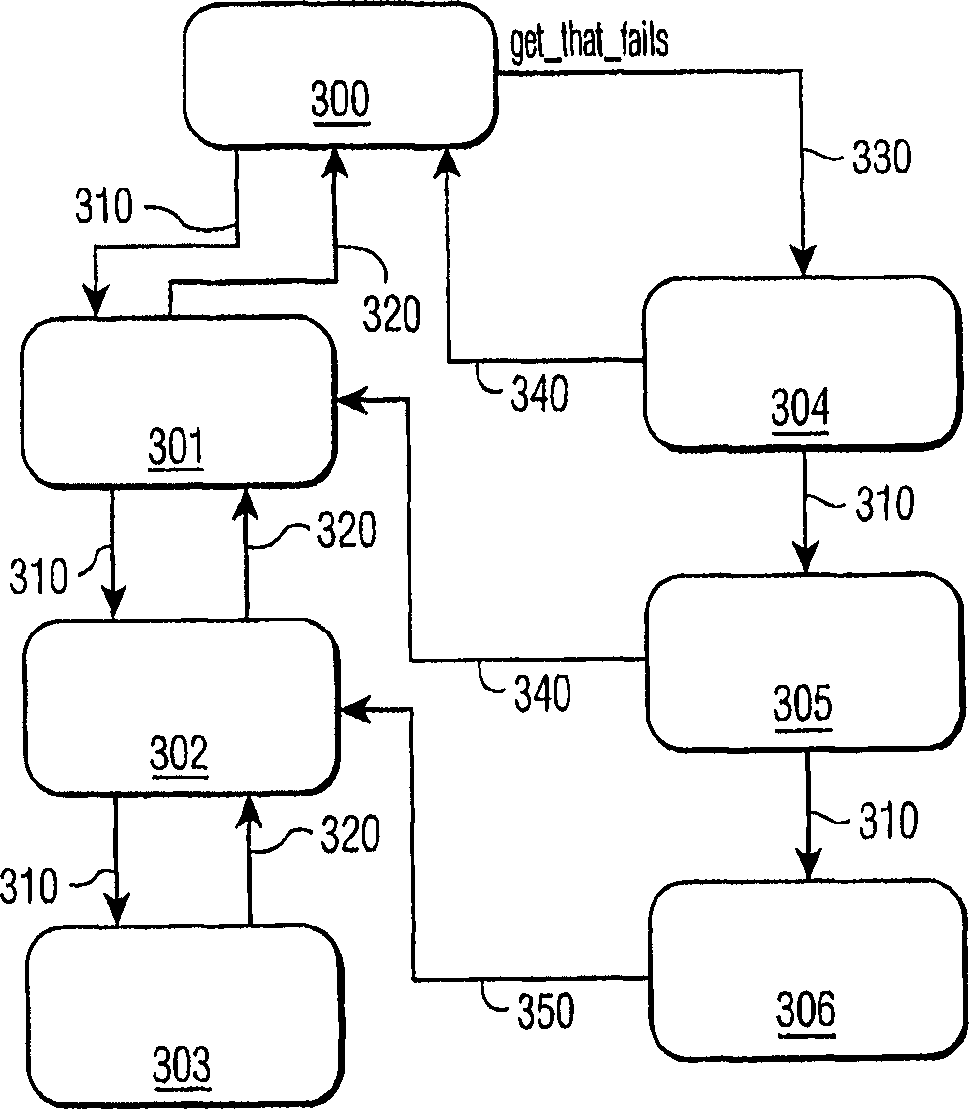

Operating method of software fault-tolerant handling system

Inactive | US20140089731A1 | Promote recovery; Shorten the time interval; Redundant hardware error correction; Software fault; Software fault tolerance

An exemplary embodiment provides an operating method of a software fault-tolerant handling system, and more particularly, an operating method of a fault-tolerant handling system that makes fault recovery easy, within fault-tolerance techniques for coping with the various faults that can occur in a computing device.

Owner:ELECTRONICS & TELECOMM RES INST

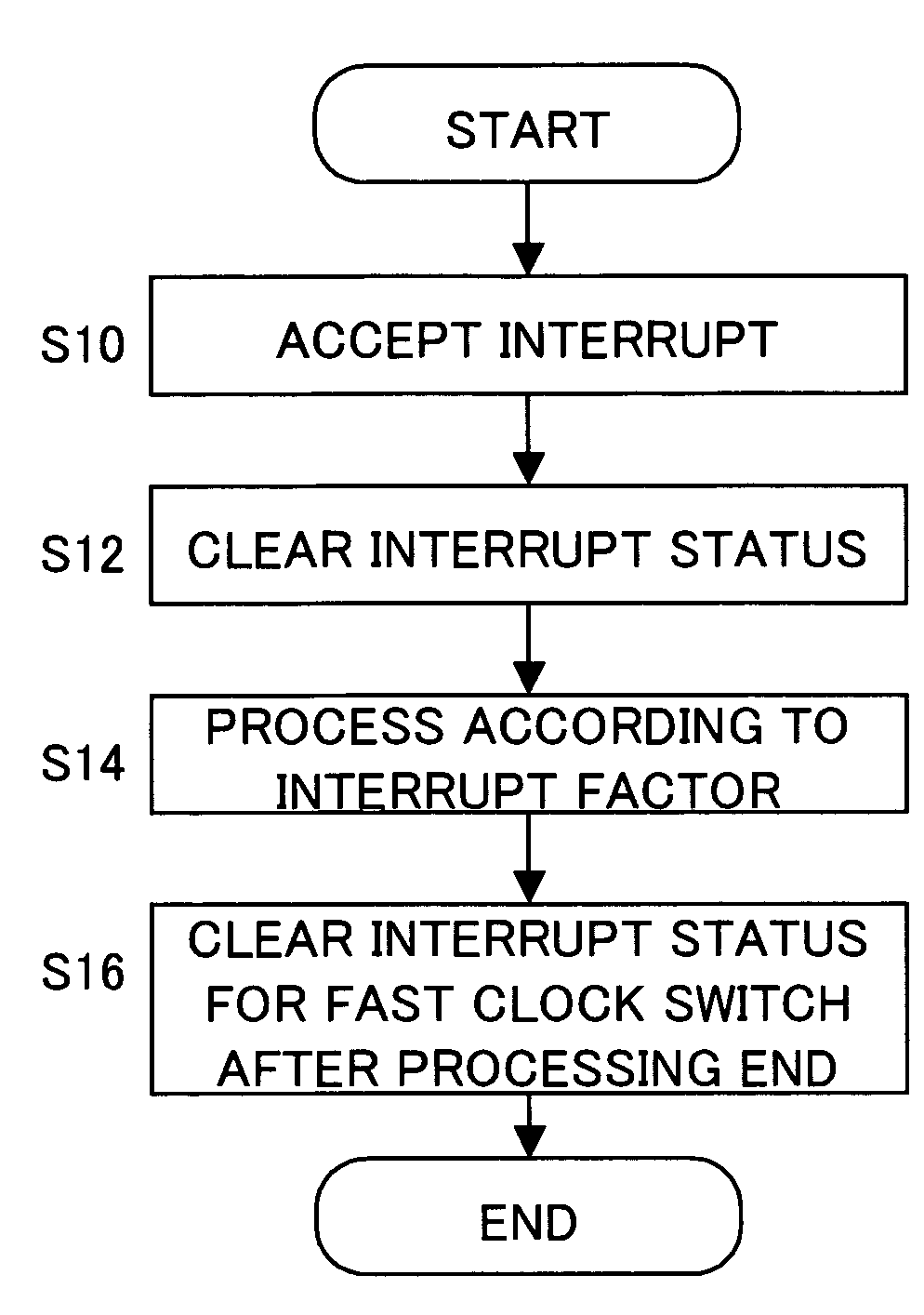

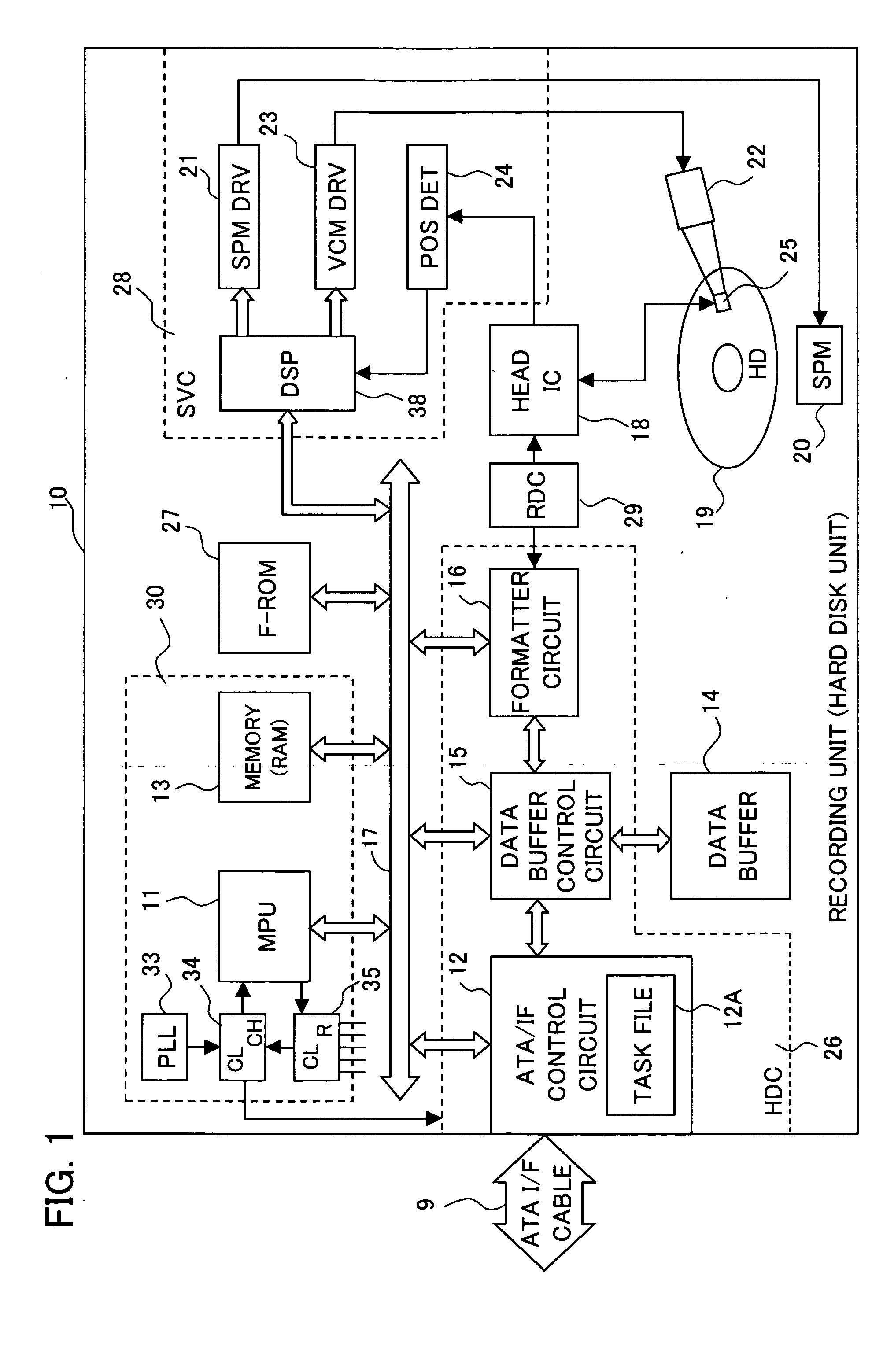

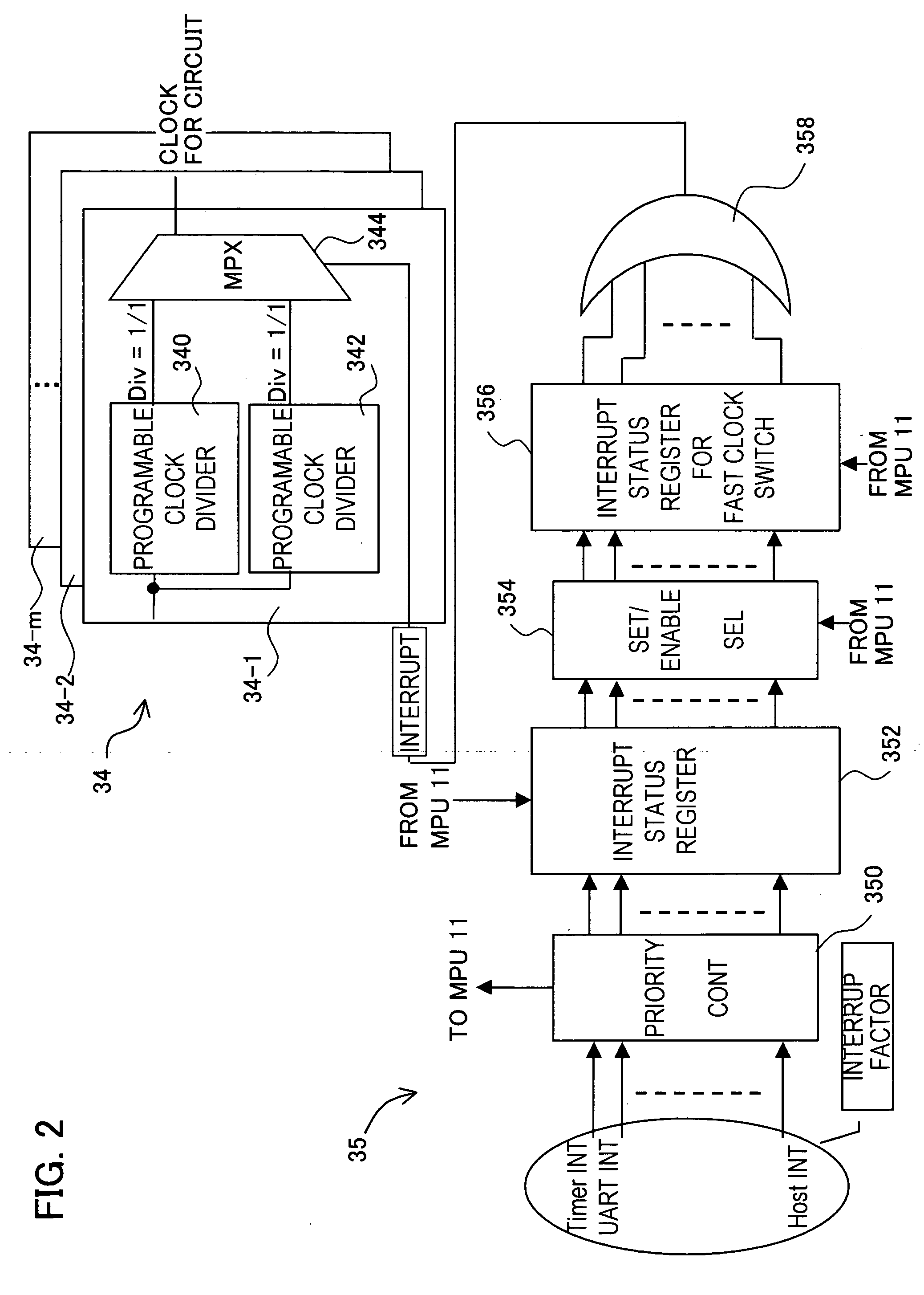

Information processing apparatus and media storage apparatus using the same

Inactive | US20050262374A1 | Reduce switching overhead; Reduce power consumption; Energy efficient ICT; Volume/mass flow measurement; Information processing; Temporal information

An information processing apparatus switches the clock of an information processing unit to reduce its power consumption. To reduce the overhead time of switching, the apparatus includes an interrupt controller, which generates a clock switch signal on accepting an interrupt to each information processing unit, and a clock switch circuit, which switches the clock supplied to the information processing unit. Because a hardware interrupt signal is used to switch the clock supplied to the circuits, the circuit clock can be switched in real time and power consumption can be reduced.

Owner:TOSHIBA STORAGE DEVICE CORP

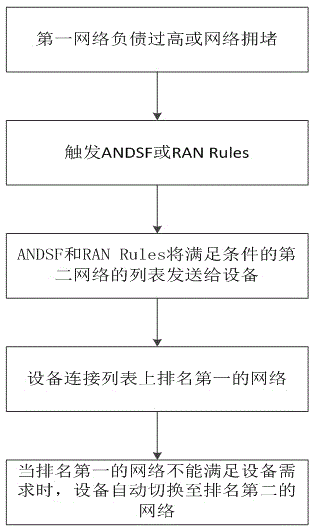

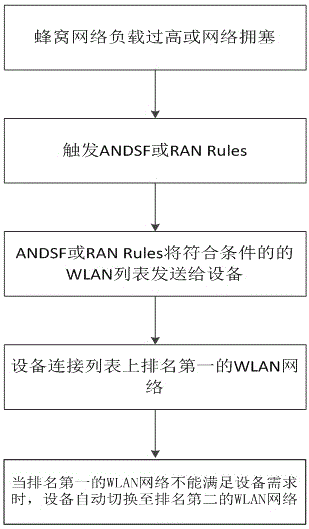

Network selection optimization method for heterogeneous network

Inactive | CN105491627A | Reduce switching overhead; Reduce switching delay; Wireless communication; Traffic volume; Cellular network

The invention provides a method for selecting an access network according to real-time network conditions, and relates to a network selection optimization method for a heterogeneous network. The heterogeneous network comprises a cellular network (the first network) and a WLAN (the second network). When the service of the first network that the equipment has joined is unsatisfactory, the equipment offloads the traffic from a top network according to the network list provided by the ANDSF (Access Network Discovery and Selection Function) and the RAN rules; when the load of the first network is too high or the first network is congested, the equipment can switch to the second network in time. The method addresses the shortcoming that a better network cannot be selected in time, improves network performance, and maximizes resource utilization.

Owner:NANJING UNIV OF SCI & TECH

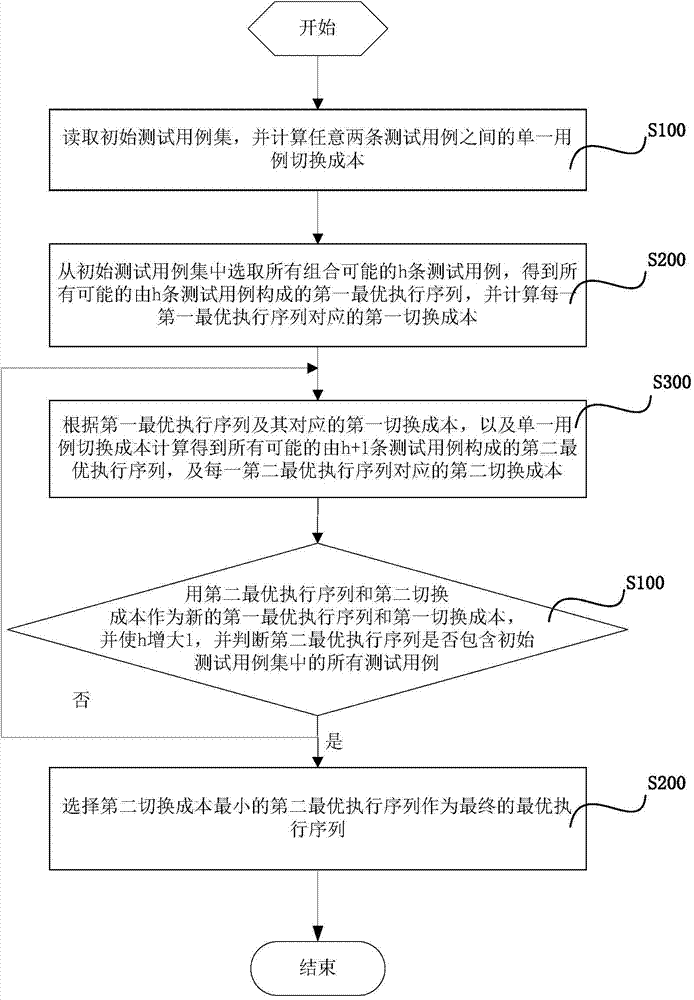

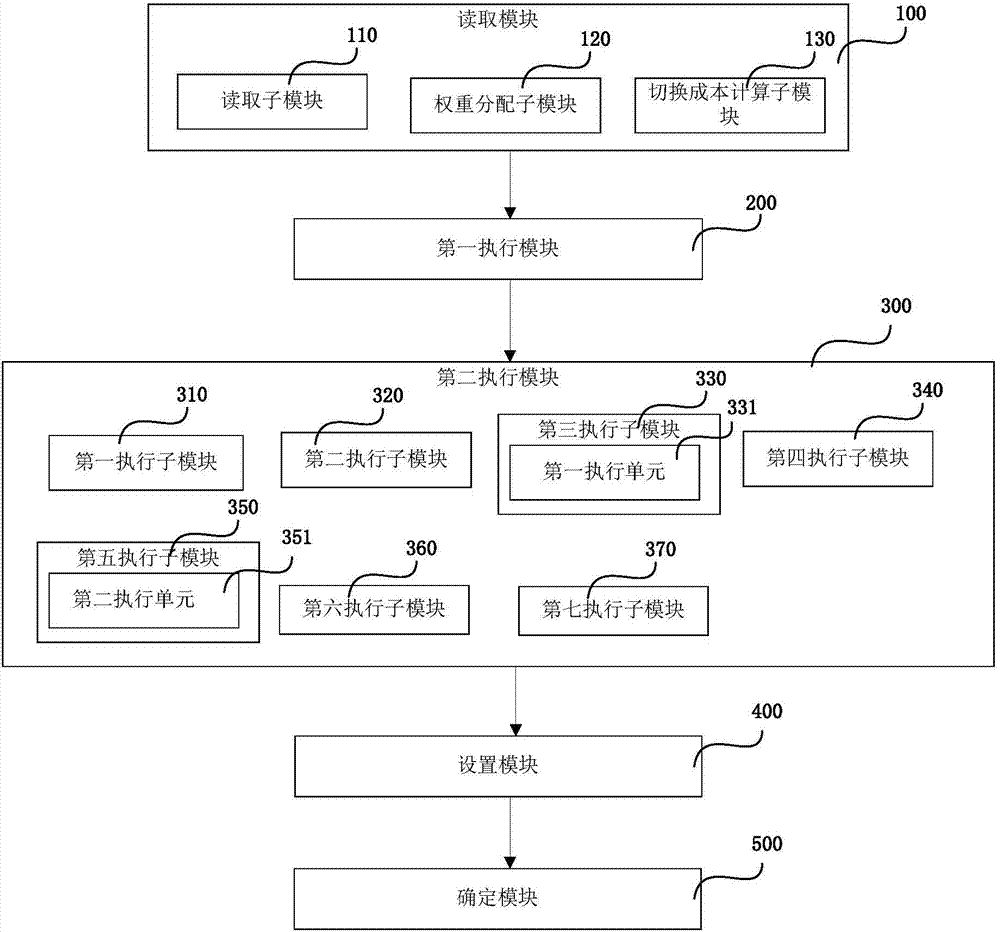

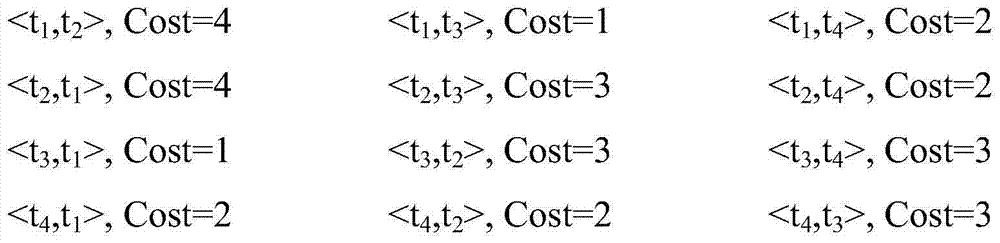

Method and system for determining execution sequence of test case suite

Inactive | CN103577325A | Minimal reconfiguration times; Small test switching costs; Software testing/debugging; Computer architecture; Test suite

The invention discloses a method and a system for determining the execution sequence of a test case suite. The method comprises the following steps: calculating the single-case switching cost between every two test cases in an initial test case suite; selecting every combination of h test cases from the initial suite and obtaining all possible first optimal execution sequences formed by those h test cases, together with their first switching costs; from the first switching costs and the single-case switching costs, calculating all possible second optimal execution sequences formed by h+1 test cases and their second switching costs; taking the second optimal execution sequences and second switching costs as the new first optimal execution sequences and first switching costs, increasing h by 1, and returning to the previous step until the second optimal execution sequences include all test cases; and taking the second optimal execution sequence with the smallest second switching cost as the optimal execution sequence. This minimizes the number of parameter reconfigurations during testing and reduces cost.

Owner:NANJING UNIV
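The step of extending optimal sequences from h to h+1 test cases closely resembles the Held-Karp dynamic program for a shortest Hamiltonian path, which is used below as a stand-in for the patented procedure (the swap is an assumption, not the patent's exact method). `cost[a][b]` is taken to be the switching cost of running case `b` immediately after case `a`; `best_order` is a hypothetical name. Running time grows as O(2^n · n^2), so this suits small suites only.

```python
from itertools import combinations


def best_order(cost: list[list[float]]) -> tuple[list[int], float]:
    """Cheapest execution order of all test cases, given pairwise switching costs."""
    n = len(cost)
    if n == 0:
        return [], 0.0
    # dp[(subset, last)] = (cheapest cost of an order covering `subset` and ending
    # at `last`, predecessor of `last` in that order); `subset` is a bitmask.
    dp = {(1 << i, i): (0.0, None) for i in range(n)}
    for size in range(2, n + 1):  # grow orders from h to h + 1 cases
        for subset in combinations(range(n), size):
            mask = sum(1 << i for i in subset)
            for last in subset:
                prev_mask = mask & ~(1 << last)
                dp[(mask, last)] = min(
                    (dp[(prev_mask, p)][0] + cost[p][last], p)
                    for p in subset if p != last
                )
    full = (1 << n) - 1
    last = min(range(n), key=lambda i: dp[(full, i)][0])
    total = dp[(full, last)][0]
    order, mask = [], full
    while last is not None:  # walk predecessors back to the first case
        order.append(last)
        prev = dp[(mask, last)][1]
        mask &= ~(1 << last)
        last = prev
    return order[::-1], total
```

For a three-case suite with costs `[[0, 1, 10], [1, 0, 2], [10, 2, 0]]`, the cheapest order visits case 1 between cases 0 and 2 for a total switching cost of 3.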

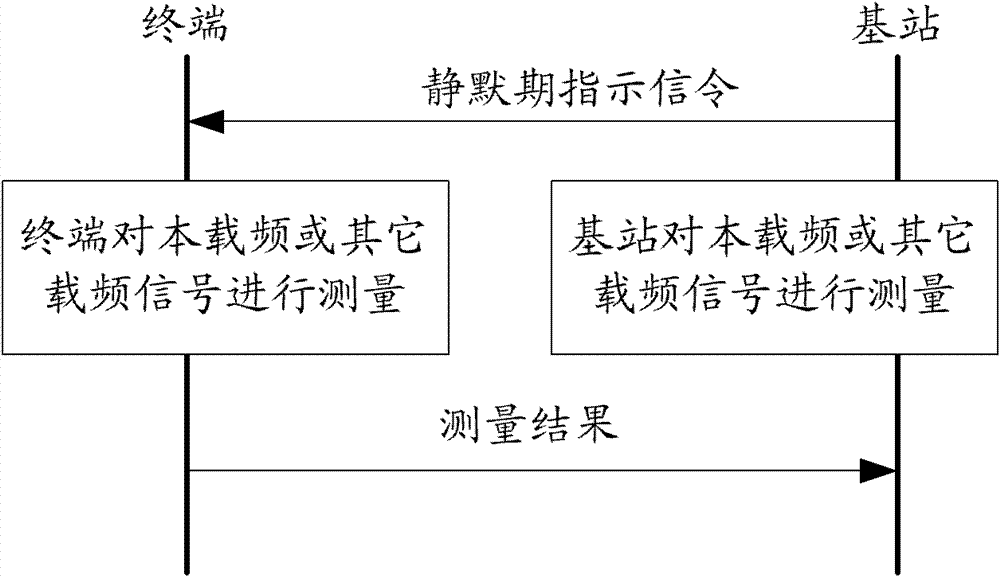

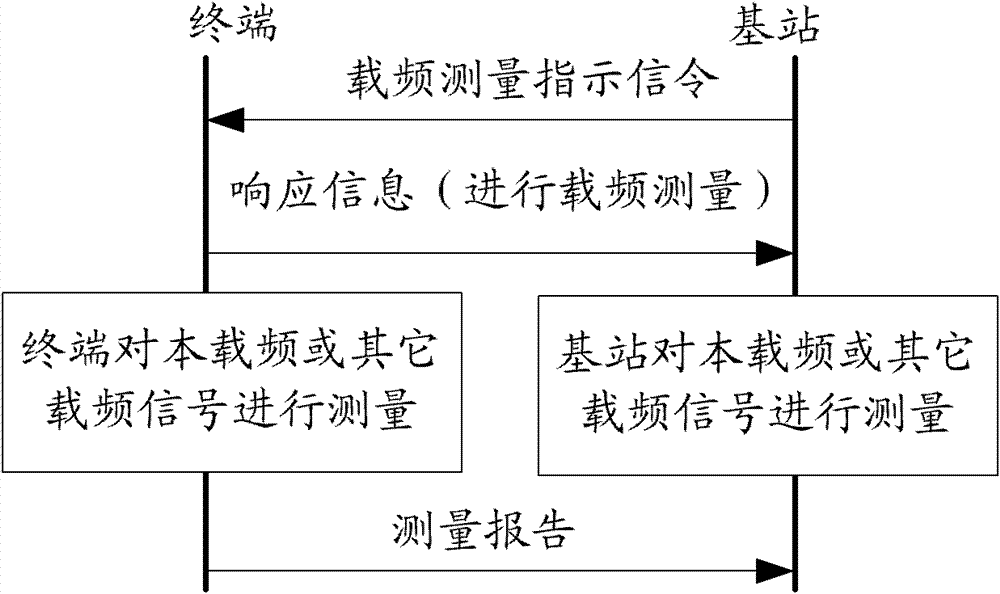

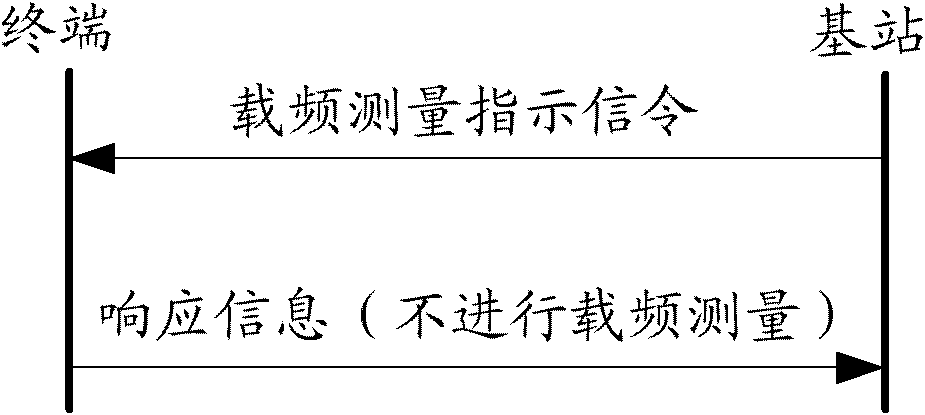

Method and system for measuring carrier frequency information in wireless communication system

Inactive | CN102781030A | Improve communication quality; Reduce switching overhead; Wireless communication; Quiet period; Base station

The invention discloses three schemes for a method of measuring carrier frequency information in a wireless communication system. In one of them, a base station sends quiet period indication signaling on a downlink channel; a terminal that successfully receives this signaling measures the carrier frequency information on a first time-frequency resource and feeds the measurement result back to the base station on a second time-frequency resource. The invention also discloses three schemes for a system that measures carrier frequency information in a wireless communication system. In one of them, a carrier frequency information measurement and feedback unit is used: when the base station sends the quiet period indication signaling on the downlink channel, the terminal that successfully receives it measures the carrier frequency information on the first time-frequency resource and feeds the result back to the base station on the second time-frequency resource. With the method and the system, the base station can learn the channel quality of all terminals on the current carrier frequency.

Owner:ZTE CORP

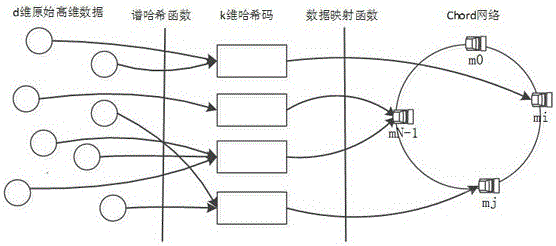

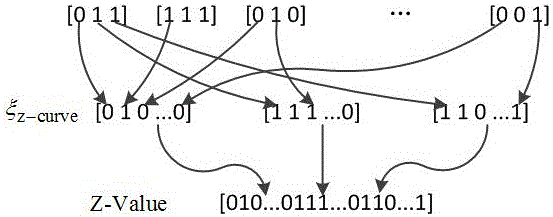

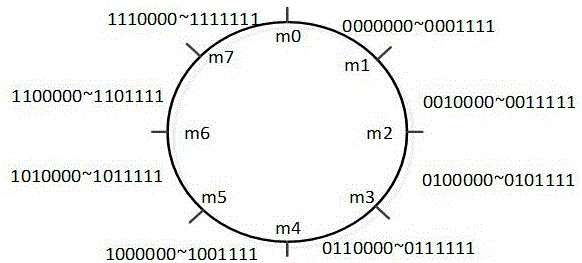

Neighbor storage method based on data mapping algorithm

Inactive | CN106020724A | Avoid memory-intensive problems; Neighbor storage implementation; Input/output to record carriers; Routing table; Near neighbor

The invention discloses a neighbor storage method based on a data mapping algorithm. The method comprises the following steps: using a spectral hashing algorithm to hash-map the high-dimensional data samples, obtaining a k-dimensional binary hash code for each high-dimensional data item; using a Z-curve method to convert each item's k-dimensional binary hash code into its Z-value; using the Chord method to build a distributed node network consisting of a Chord ring and m node servers distributed on it; mapping and storing each item's Z-value on the node servers and updating the routing tables of the node servers that store it; and, for a high-dimensional data item to be queried, determining its Z-value and searching the node server routing tables clockwise, node by node, to find the node server holding the item. The method realizes neighbor storage and can also reduce node server switching overhead, improve query accuracy, improve the efficiency of the whole system, and reduce the network bandwidth consumed by dependent queries.

Owner:NANJING UNIV OF POSTS & TELECOMM
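The Z-curve and Chord placement steps above can be sketched as follows. Spectral hashing itself is omitted; the sketch assumes the k-bit hash code has already been split into a few small integer coordinates. Z-values are assumed to be placed on the ring directly, without re-hashing, so that nearby Z-values tend to land on the same node, and the lookup scans a sorted node list instead of using Chord finger tables. `z_value` and `Ring` are hypothetical names.

```python
import bisect
from collections.abc import Sequence


def z_value(coords: Sequence[int], bits: int) -> int:
    """Morton (Z-order) code: interleave the bits of each coordinate."""
    z = 0
    dims = len(coords)
    for b in range(bits):
        for d, c in enumerate(coords):
            z |= ((c >> b) & 1) << (b * dims + d)
    return z


class Ring:
    """Chord-style identifier ring; each key is stored on its successor node."""

    def __init__(self, node_ids: list[int], space_bits: int) -> None:
        self.size = 1 << space_bits
        self.nodes = sorted(n % self.size for n in node_ids)

    def successor(self, key: int) -> int:
        """First node clockwise from key, wrapping past the top of the ring."""
        i = bisect.bisect_left(self.nodes, key % self.size)
        return self.nodes[i % len(self.nodes)]
```

For example, the 2-D point (1, 2) with 2 bits per coordinate has Z-value 9, and on a 16-slot ring with nodes 4, 10, and 14 it is stored on node 10.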

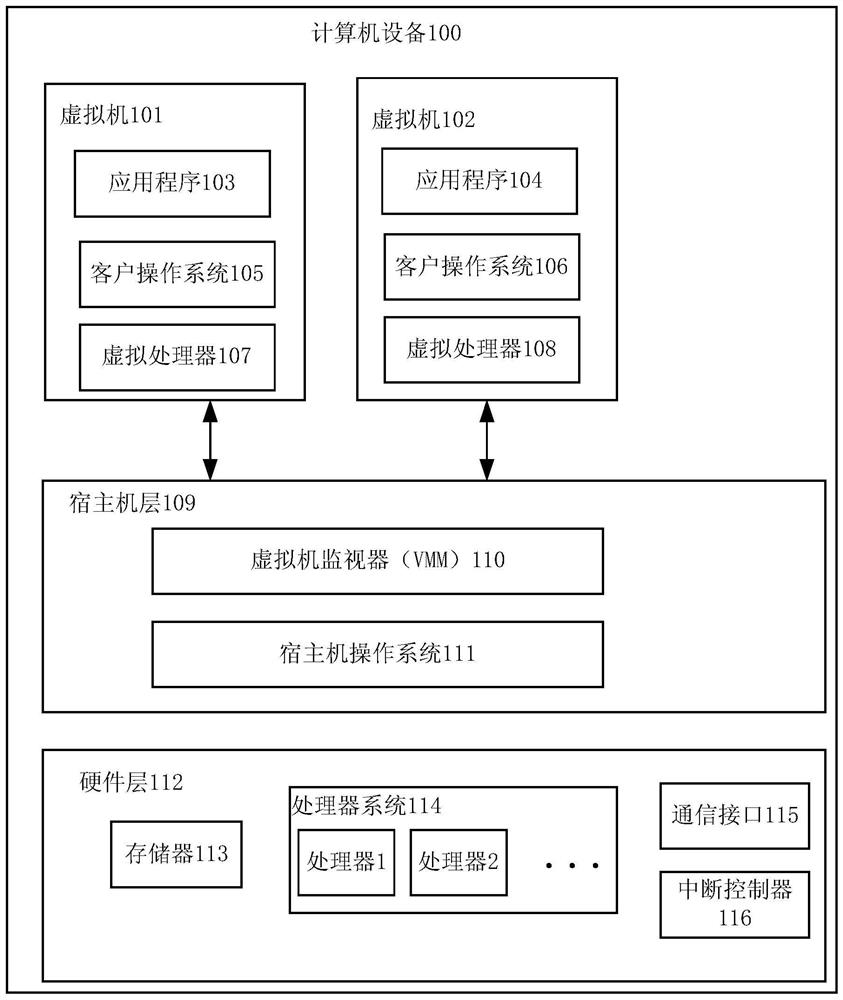

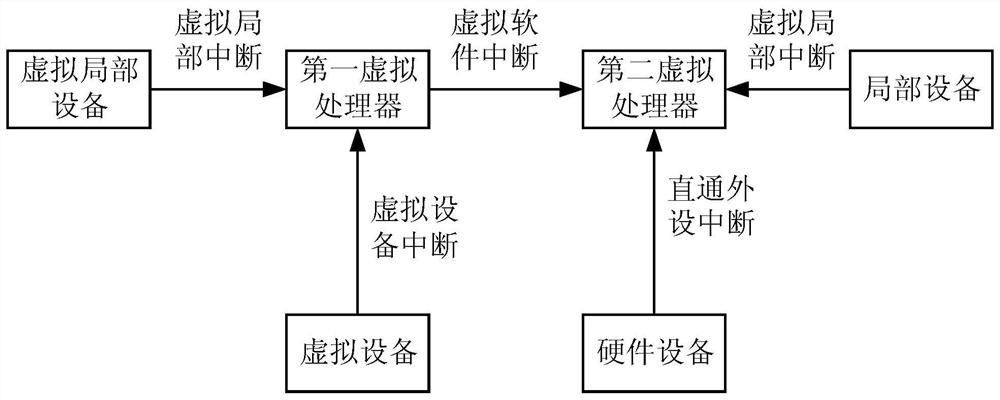

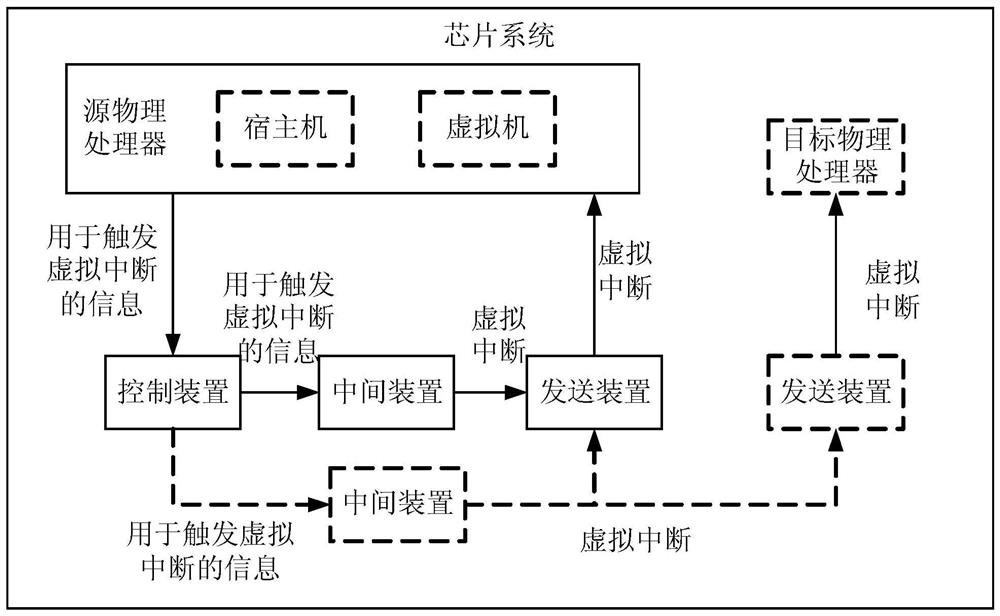

Chip system, method for processing virtual interrupt and corresponding device

Pending | CN114371907A | Improve performance; Reduce switching overhead; Software simulation/interpretation/emulation; Virtualization; Information control

The invention discloses a chip system applied in the technical field of virtualization. The chip system comprises a source physical processor, a control device, an intermediate device, a sending device, and a target physical processor. A host machine or a virtual machine runs on the source physical processor, and a register in the control device receives information, written by the host machine or the virtual machine, for triggering a virtual interrupt. The control device sends the triggering information in the register to the intermediate device; the intermediate device triggers the virtual interrupt according to that information and sends the virtual interrupt to the sending device; and the sending device sends the virtual interrupt to the target physical processor. The scheme reduces the overhead, caused by virtual interrupts, of switching from the virtual machine to the host machine or from the host machine's user mode to its kernel mode.

Owner:HUAWEI TECH CO LTD

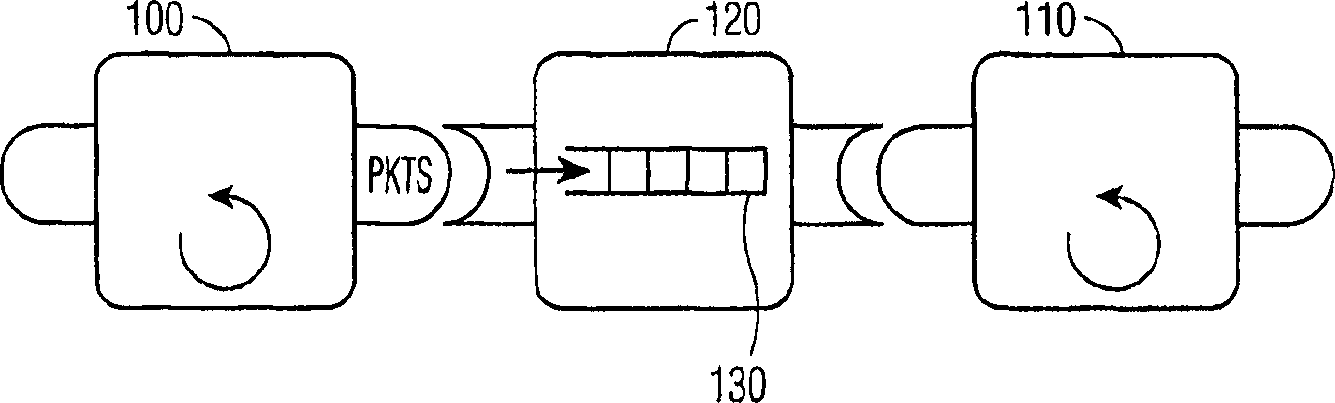

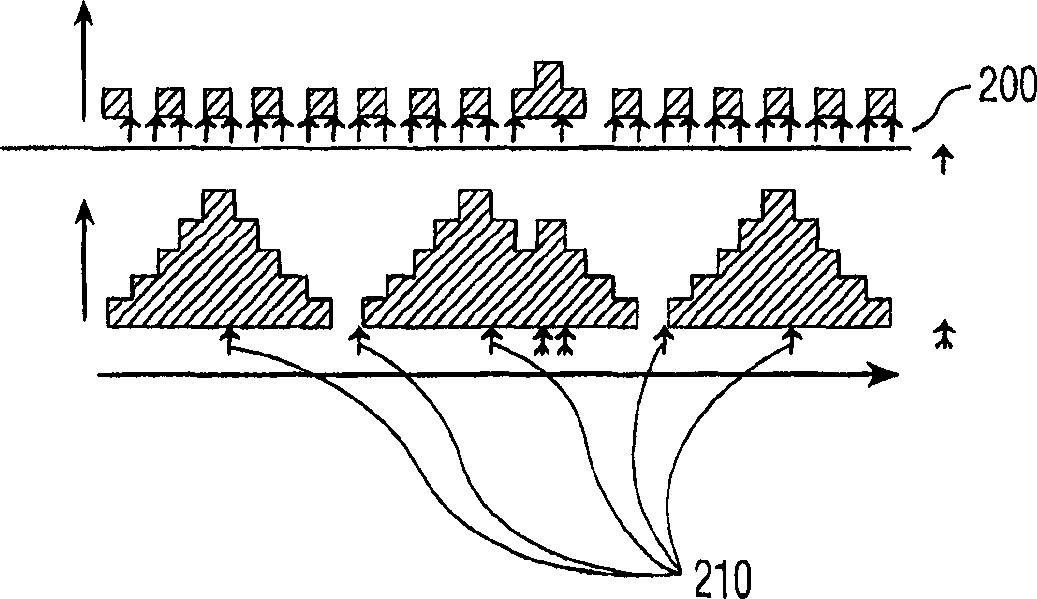

Threshold on unblocking a processing node that is blocked due to data packet passing

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

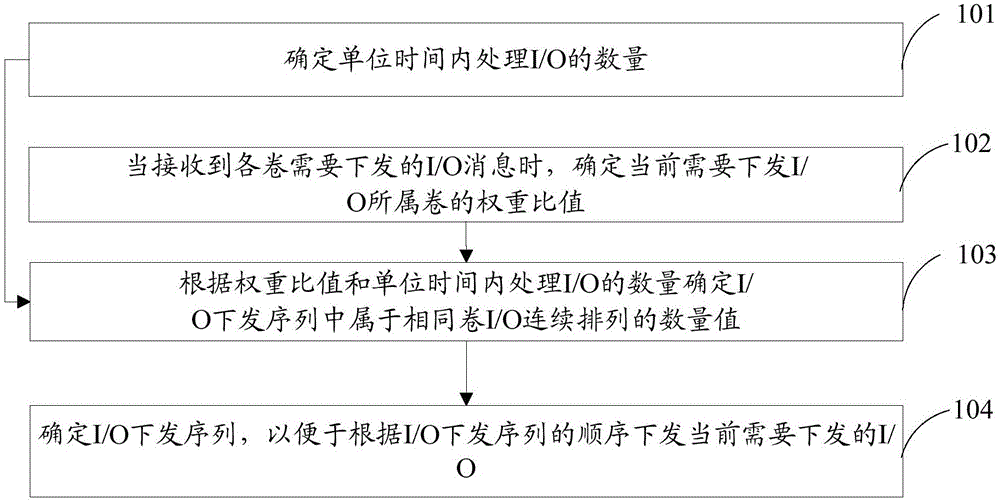

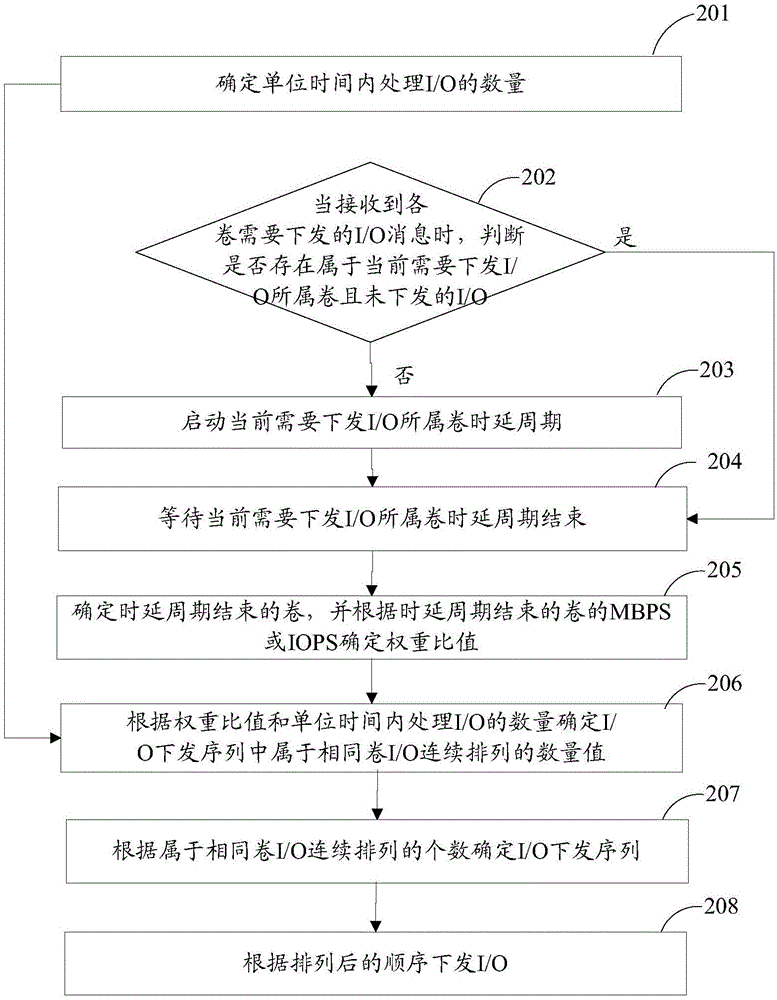

Realization method and device of storage QoS (Quality of Service) control strategy

Active | CN105159620A | Avoid crossing; Reduce switching times; Input/output to record carriers; Quality of service; Computer architecture

The embodiments of the invention disclose a method and device for implementing a storage QoS (Quality of Service) control strategy. They relate to the technical field of storage and address the problem that prior-art QoS control strategies, which order and issue I/O (input/output) requests at the granularity of a single I/O per volume, lower the processing efficiency of the storage system. The method comprises the following steps: determining the amount of I/O processed per unit time; when volumes have I/O to issue, determining the weight ratio of the volume to which the currently pending I/O belongs; determining, from the weight ratio and the amount of I/O processed per unit time, how many consecutive I/Os belonging to the same volume are placed in the I/O issuing sequence; and determining the I/O issuing sequence so that pending I/O is issued in that order, where the number of consecutive I/Os of the same volume in the sequence equals the determined amount.

Owner:CHENGDU HUAWEI TECH
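The run-length computation above can be sketched as follows: each volume gets a run of consecutive I/Os equal to its weight share of the I/O processed per unit time, and the issuing sequence cycles through volumes run by run. Rounding to the nearest integer (Python's `round`, which rounds halves to even) with a minimum of one I/O is an assumption; `run_lengths` and `issue_sequence` are hypothetical names.

```python
from collections import deque


def run_lengths(weights: dict[str, int], ios_per_unit: int) -> dict[str, int]:
    """Consecutive I/Os per volume: its weight share of one unit of time (min 1)."""
    total = sum(weights.values())
    return {vol: max(1, round(ios_per_unit * w / total)) for vol, w in weights.items()}


def issue_sequence(queues: dict[str, list[str]], weights: dict[str, int],
                   ios_per_unit: int) -> list[tuple[str, str]]:
    lengths = run_lengths(weights, ios_per_unit)
    pending = {vol: deque(q) for vol, q in queues.items()}
    order = []
    while any(pending.values()):
        for vol, q in pending.items():
            # Issue up to lengths[vol] I/Os of this volume back to back.
            for _ in range(min(lengths[vol], len(q))):
                order.append((vol, q.popleft()))
    return order
```

With weights A:B = 3:1 and 8 I/Os per unit time, volume A issues runs of 6 and volume B runs of 2, instead of alternating one I/O at a time, which is what cuts the number of switches between volumes.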

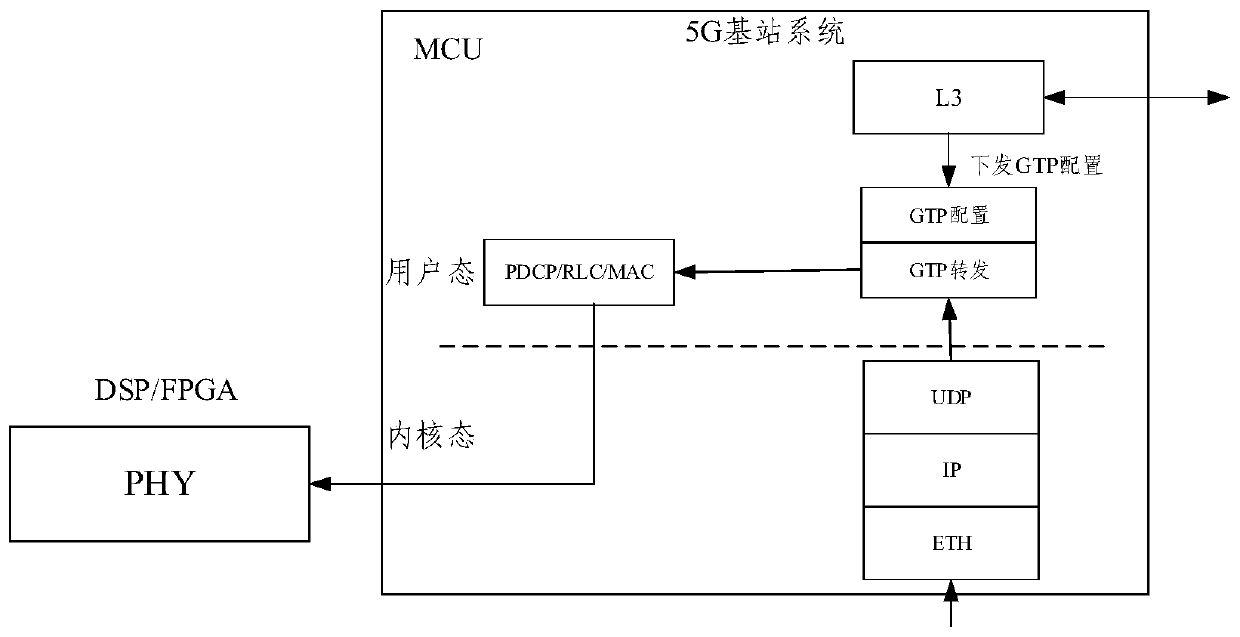

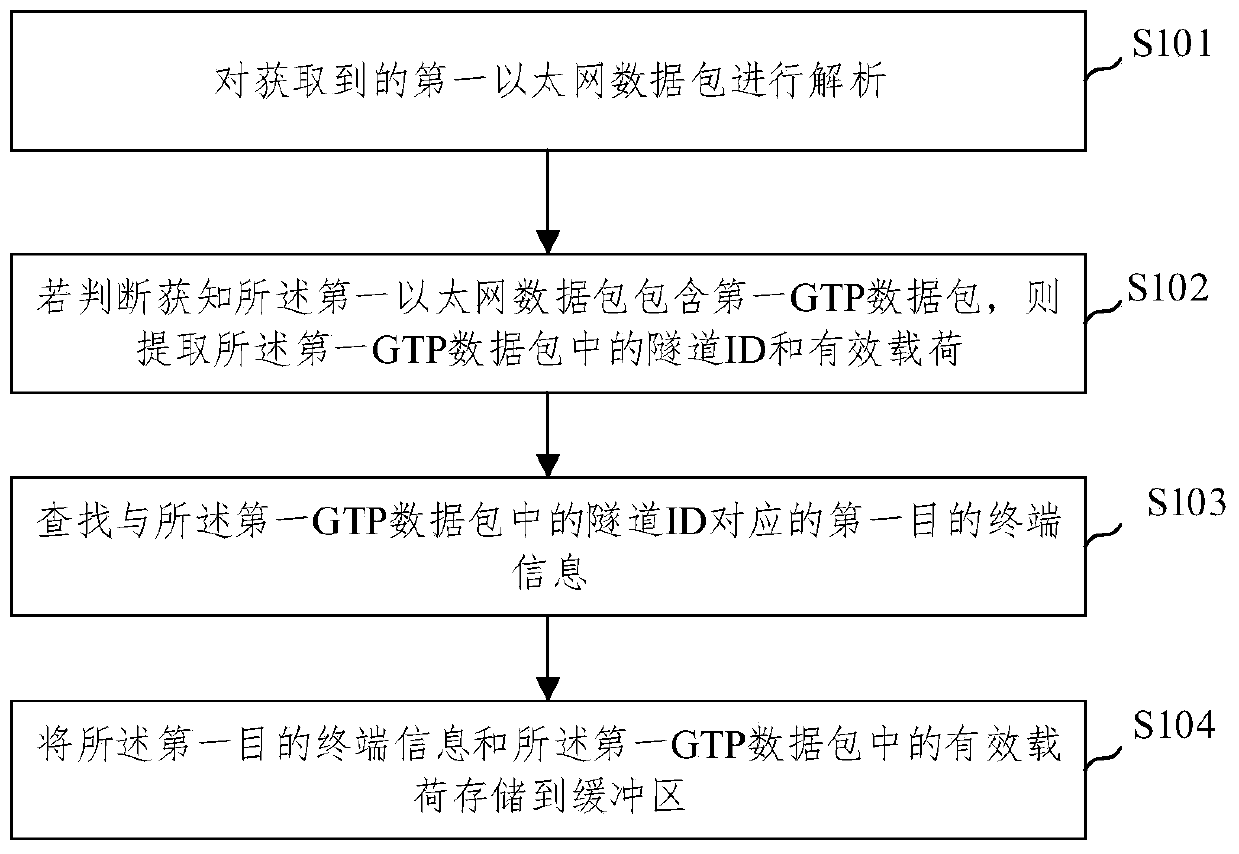

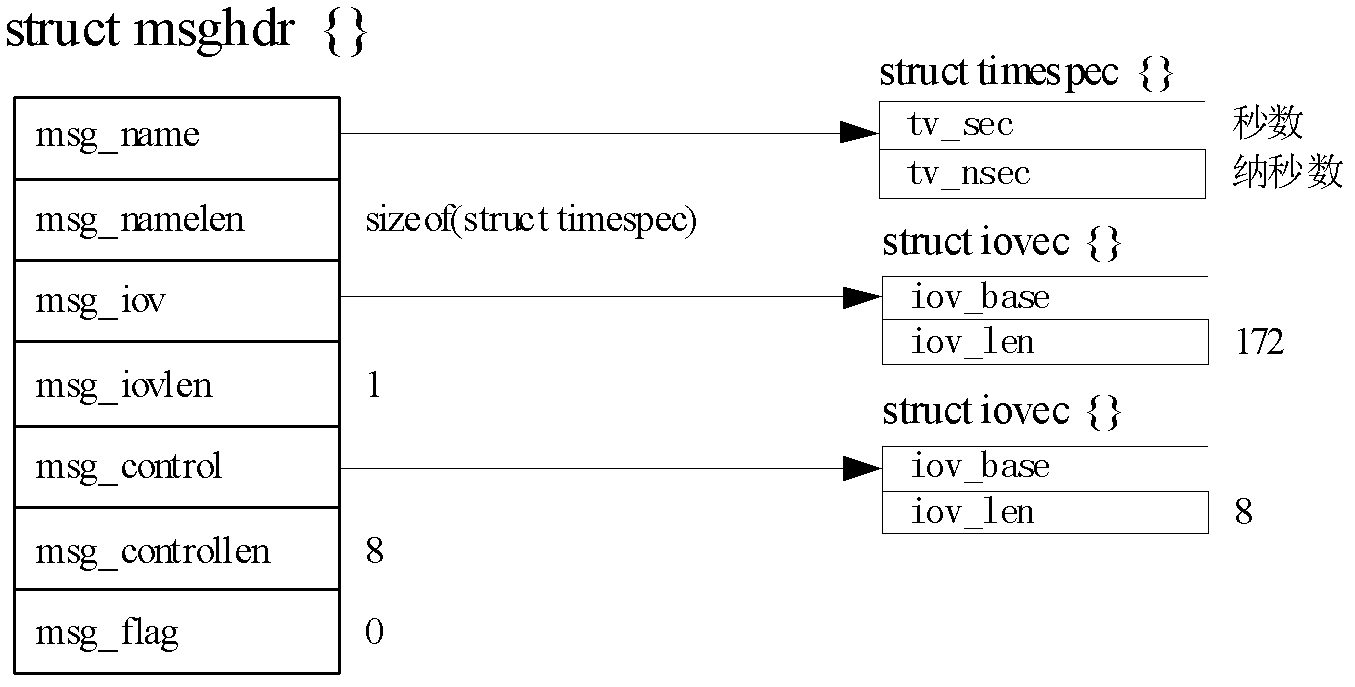

GTP downlink data transmission optimization method and device

Active | CN110167197A | Improve processing efficiency; Reduce switching overhead; Connection management; Data switching networks; Network packet; GNU/Linux

The embodiments of the invention provide a GTP downlink data transmission optimization method and device. The method comprises: parsing a received first Ethernet data packet; if it is determined that the first Ethernet data packet contains a first GTP data packet, extracting the tunnel ID and the payload of the first GTP data packet; looking up the first destination terminal information corresponding to that tunnel ID; and storing the first destination terminal information and the payload of the first GTP data packet in a buffer. The method logically bypasses the IP protocol stack of the Linux kernel and reduces the overhead of switching between kernel mode and user mode. After an Ethernet data packet is received, the GTP data is processed directly in Linux kernel mode, which greatly simplifies the GTP processing flow, improves data processing efficiency, and in turn increases the data transmission rate.

Owner:WUHAN HONGXIN TELECOMM TECH CO LTD
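The parse, extract, and look-up steps above can be illustrated in user-space Python; the patent performs them inside the Linux kernel, which this sketch does not attempt. Header layouts follow IPv4, UDP (GTP-U uses port 2152), and the GTPv1-U header of 3GPP TS 29.281. VLAN tags, IPv6, and truncated frames are not handled, and `parse_gtpu`, `handle_frame`, and the `tunnels` table are hypothetical names.

```python
import struct
from typing import Optional

GTPU_PORT = 2152  # registered UDP port for GTP-U
G_PDU = 0xFF      # GTP-U message type carrying a user-plane packet


def parse_gtpu(frame: bytes) -> Optional[tuple[int, bytes]]:
    """Return (TEID, inner packet) for Ethernet/IPv4/UDP/GTP-U G-PDU frames, else None."""
    if len(frame) < 14 or struct.unpack_from("!H", frame, 12)[0] != 0x0800:
        return None  # not IPv4 (VLAN-tagged frames are not handled in this sketch)
    ip = 14
    ihl = (frame[ip] & 0x0F) * 4
    if frame[ip + 9] != 17:
        return None  # IP protocol is not UDP
    udp = ip + ihl
    if struct.unpack_from("!H", frame, udp + 2)[0] != GTPU_PORT:
        return None
    gtp = udp + 8
    flags, msg_type, length, teid = struct.unpack_from("!BBHI", frame, gtp)
    if flags >> 5 != 1 or msg_type != G_PDU:
        return None  # only GTPv1-U G-PDUs carry downlink user data
    pos = gtp + 8
    end = gtp + 8 + length  # Length counts everything after the 8-byte mandatory header
    if flags & 0x07:  # E, S or PN set: sequence number, N-PDU, next-ext-type follow
        next_ext = frame[gtp + 11] if flags & 0x04 else 0
        pos += 4
        while next_ext:  # skip extension headers; their length unit is 4 octets
            ext_len = frame[pos] * 4
            if ext_len == 0:
                return None  # malformed extension header
            next_ext = frame[pos + ext_len - 1]
            pos += ext_len
    return teid, frame[pos:end]


def handle_frame(frame: bytes, tunnels: dict[int, str],
                 buffer: list[tuple[str, bytes]]) -> bool:
    """Look up the destination terminal by TEID and buffer (terminal, payload)."""
    parsed = parse_gtpu(frame)
    if parsed is None or parsed[0] not in tunnels:
        return False
    teid, payload = parsed
    buffer.append((tunnels[teid], payload))
    return True
```

The tunnel ID (TEID) is the only key needed to find the destination terminal, which is why the inner IP packet can be buffered without passing through the host IP stack.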

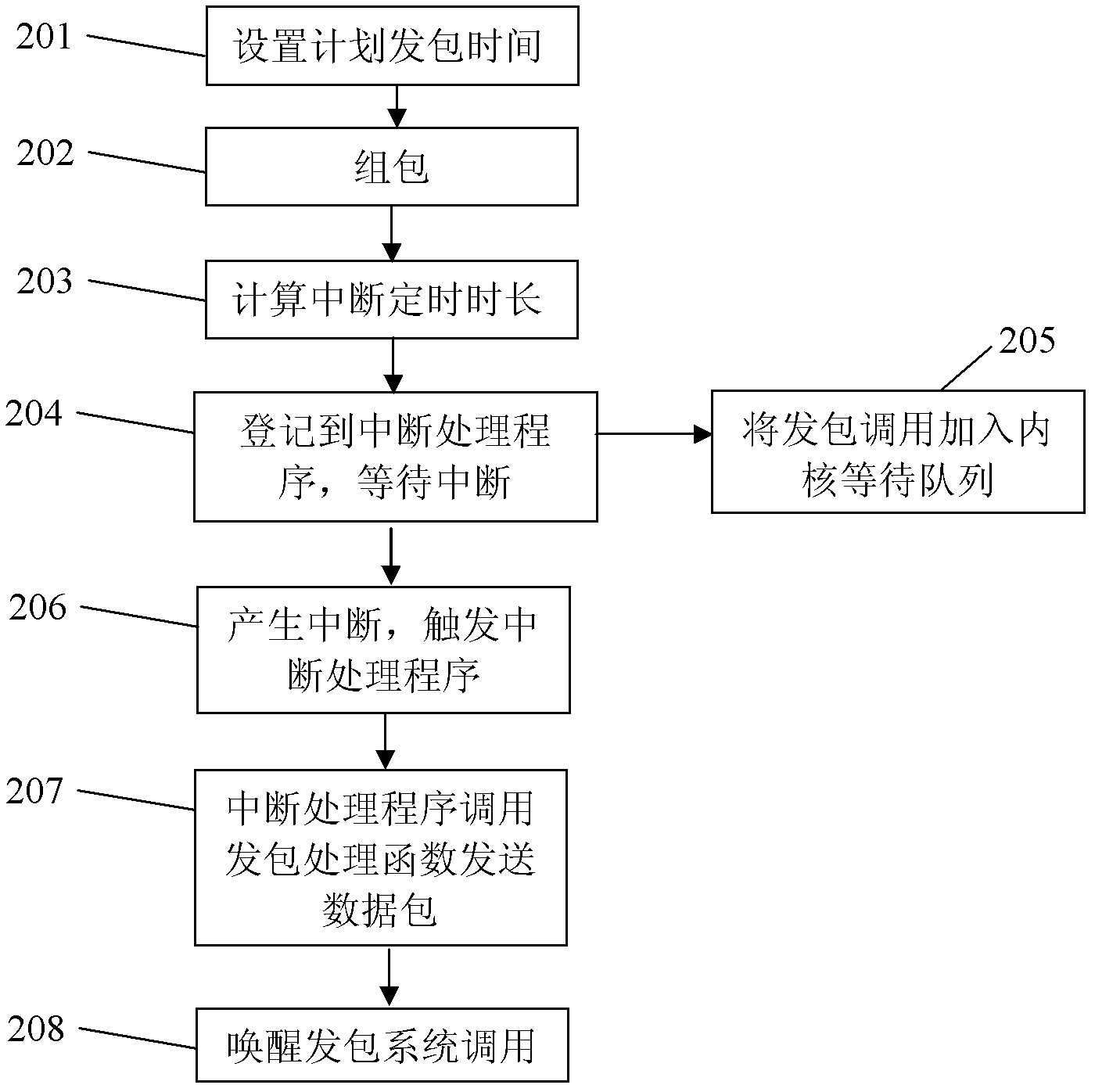

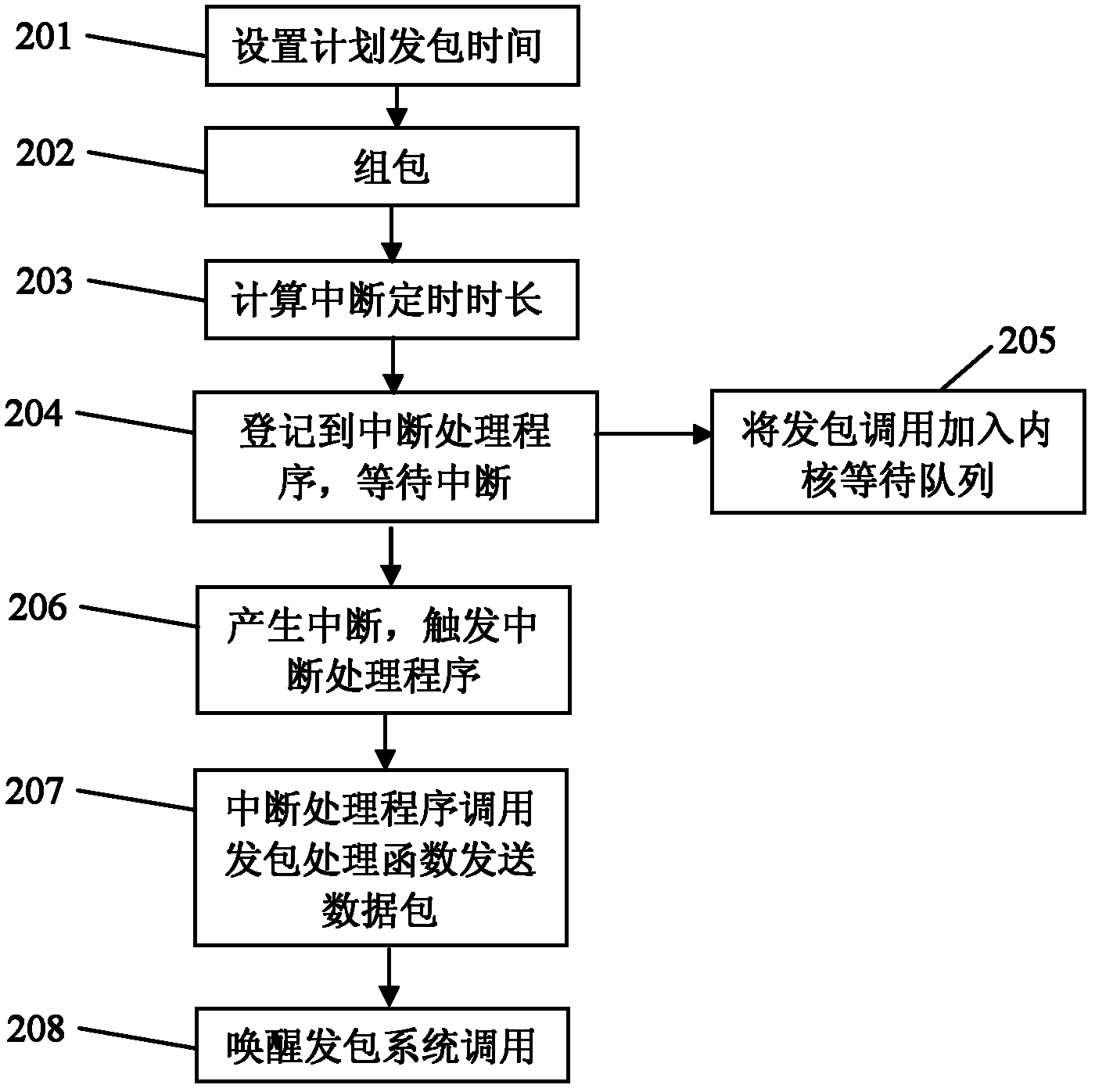

Method for realizing quasi-real-time transmission of media data

Inactive | CN102325136A | Achieve timing; Reduce context switching overhead; Program initiation/switching; Transmission; Time delays; Program planning

The invention relates to a method for quasi-real-time transmission of media data. The method comprises the following steps: first, setting a planned packet sending time and assembling the media data to obtain a data packet to be sent; then calculating a fixed interrupt interval from the planned sending time, registering the packet sending function and the data packet with the interrupt handler, and placing the sending system call in a kernel wait queue to wait for the interrupt; when the fixed interval expires, an interrupt is generated and the interrupt handler is triggered to send the data packet; and after the packet has been sent, the corresponding sending system call in the kernel wait queue is woken up. The method reduces the context switching cost caused by working-space switching, lessens the delay introduced by system scheduling, and achieves quasi-real-time transmission of data packets.

Owner:ZTE CORP

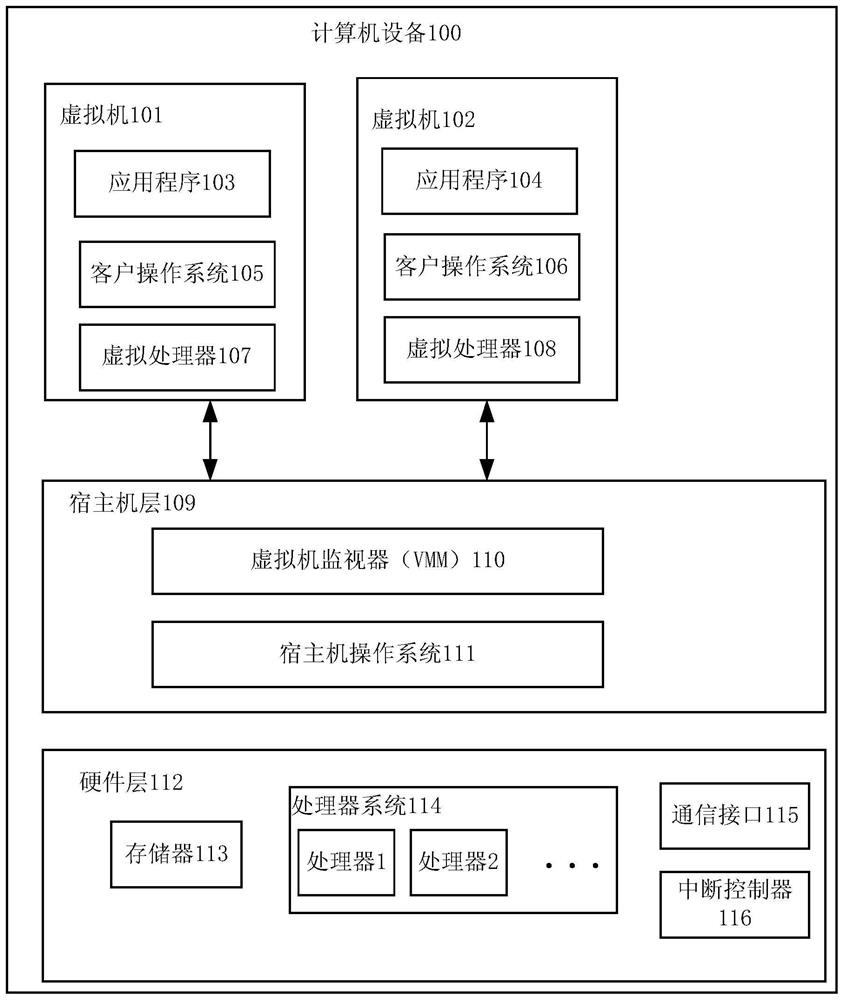

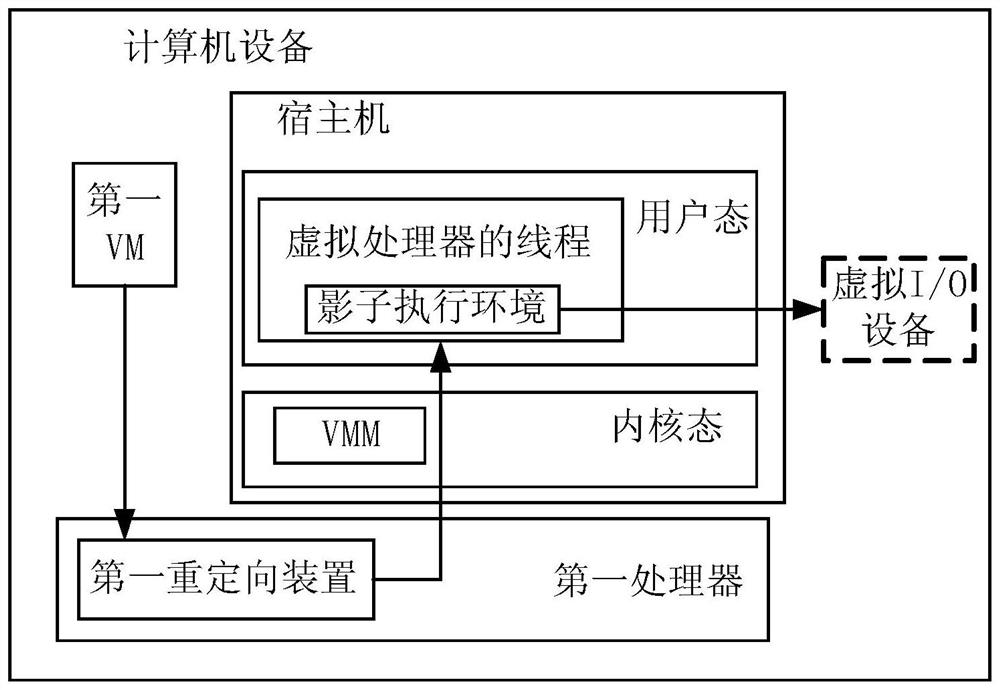

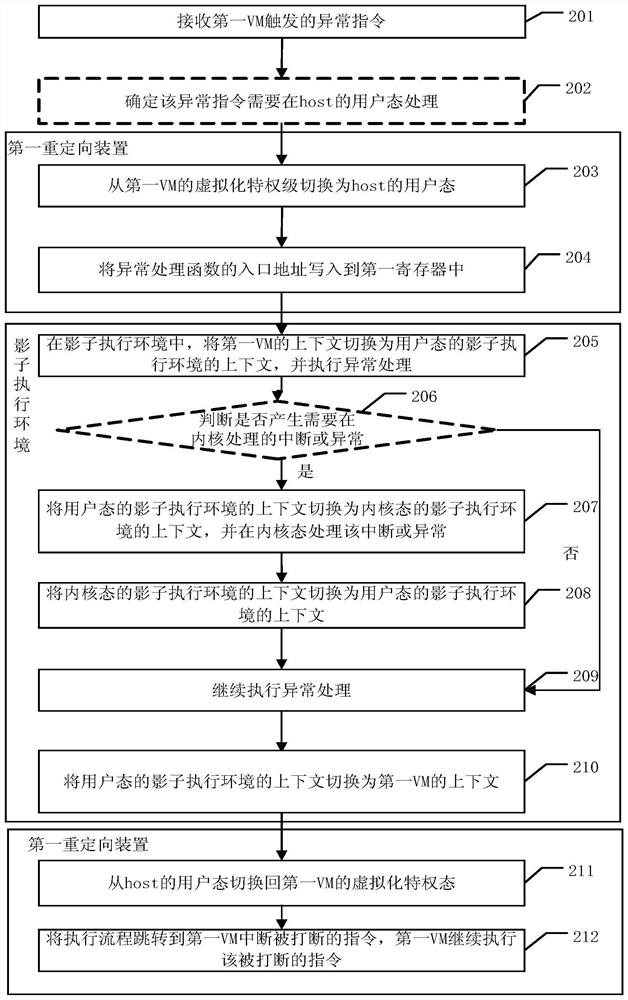

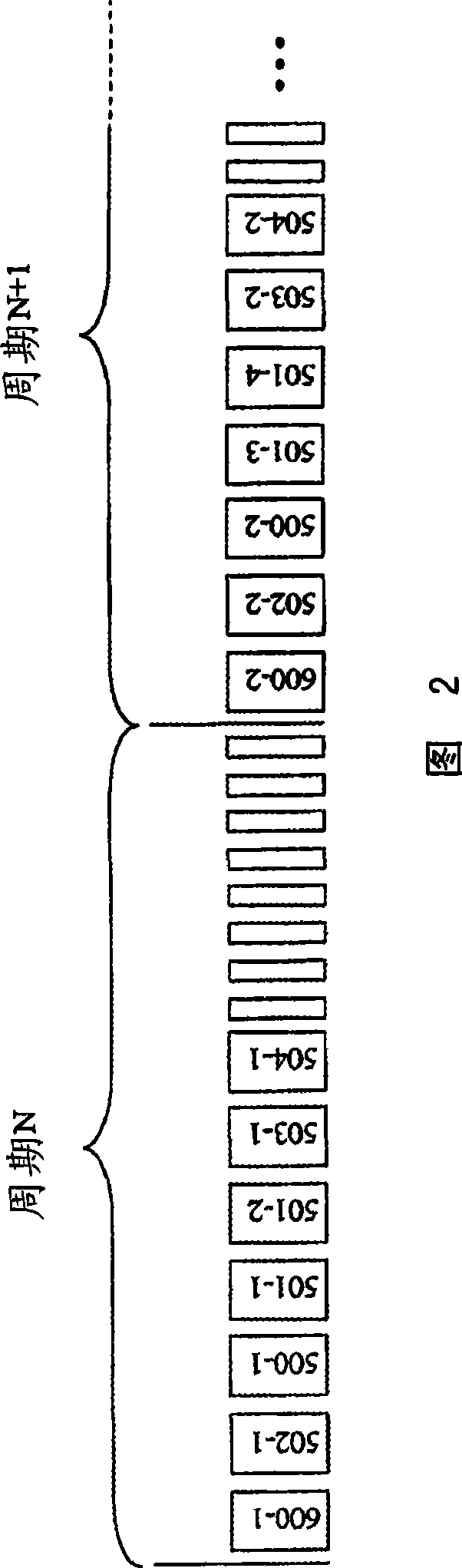

Computer equipment, exception processing method and interrupt processing method

Pending | CN114077379A | Improve performance; Reduce switching overhead; Input/output to record carriers; Resource allocation; Virtualization; Processing

In the disclosed computer equipment, during virtualized I/O, privilege level switching in synchronous processing is performed by a redirection device in the processor: the VM's virtualized privilege level is switched directly to the host's user mode, where the corresponding exception processing is executed. In asynchronous processing, asynchronous interrupt requests can be delivered through the virtual event notification device and the interrupt controller in the processor without passing through the host's kernel mode. The method reduces switching overhead in synchronous or asynchronous processing and improves the performance of the computer equipment.

Owner:HUAWEI TECH CO LTD

Method and system for the dynamic allocation of resources

Inactive | CN101370691A | Increase usage intensity; Connection limit; Network traffic/resource management; Hybrid switching systems; Communications system; Station

Owner:ROBERT BOSCH GMBH

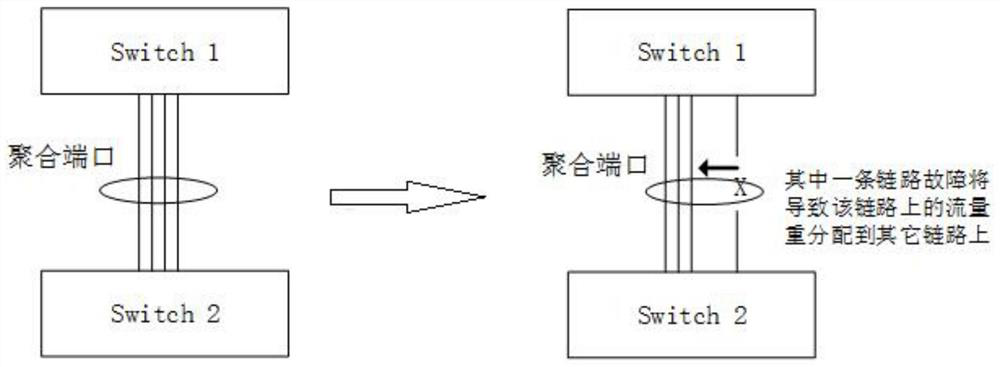

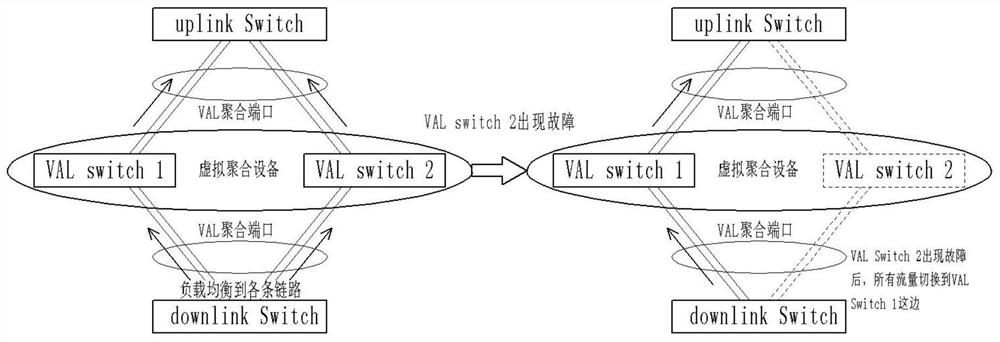

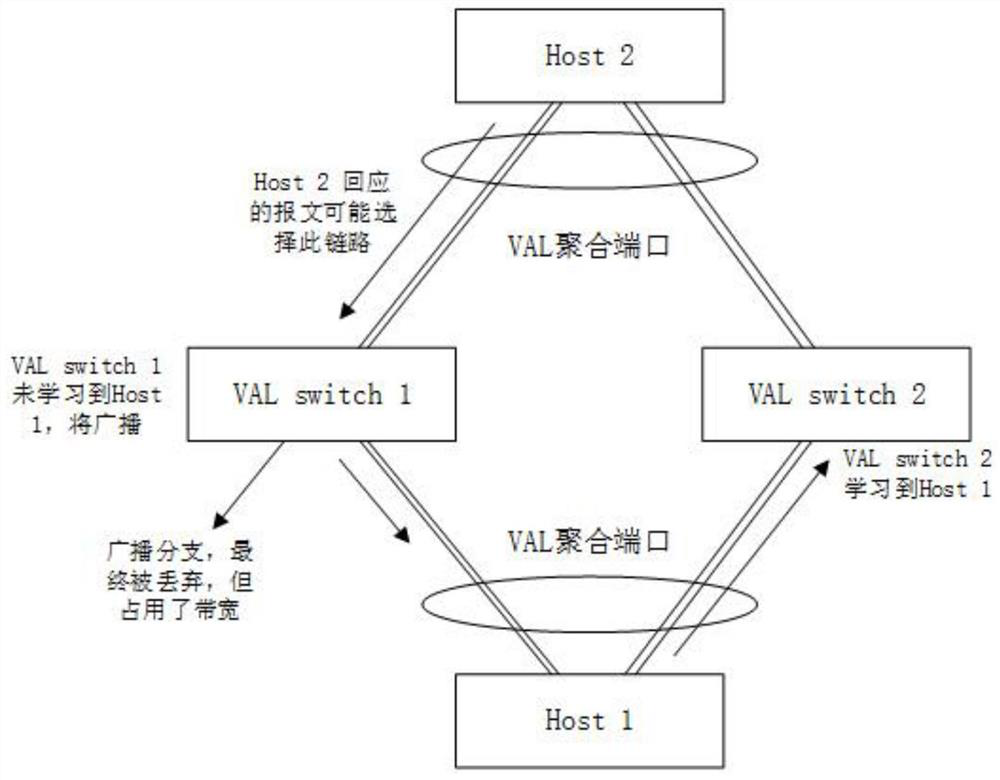

Local forwarding system for cross-device link aggregation

Active | CN111884922A | Simplify processing logic; Improve development; Data switching networks; High level techniques; Local learning; Link aggregation

The invention discloses a local forwarding system for cross-device link aggregation, in the technical field of link aggregation. It comprises a local forwarding system establishment stage and a local forwarding stage. In the establishment stage, a VP mapping table is first established; a message sent by the host then reaches any VAL device, which performs local learning; after local learning, the message is redirected to an INT port and sent to the peer device; learning is then carried out based on a VP port converted from the VLAN ID field in the VAL TAG. Once the forwarding table entries have been learned synchronously, messages that subsequently arrive at any VAL device are forwarded by local table lookup. The software processing logic is simple, development is easy and quick, platform and resource requirements are low, the impact is small, and no software maintenance of MAC address forwarding tables, encapsulation/decapsulation, or similar operations is needed.

Owner:芯河半导体科技(无锡)有限公司 (Xinhe Semiconductor Technology (Wuxi) Co., Ltd.)

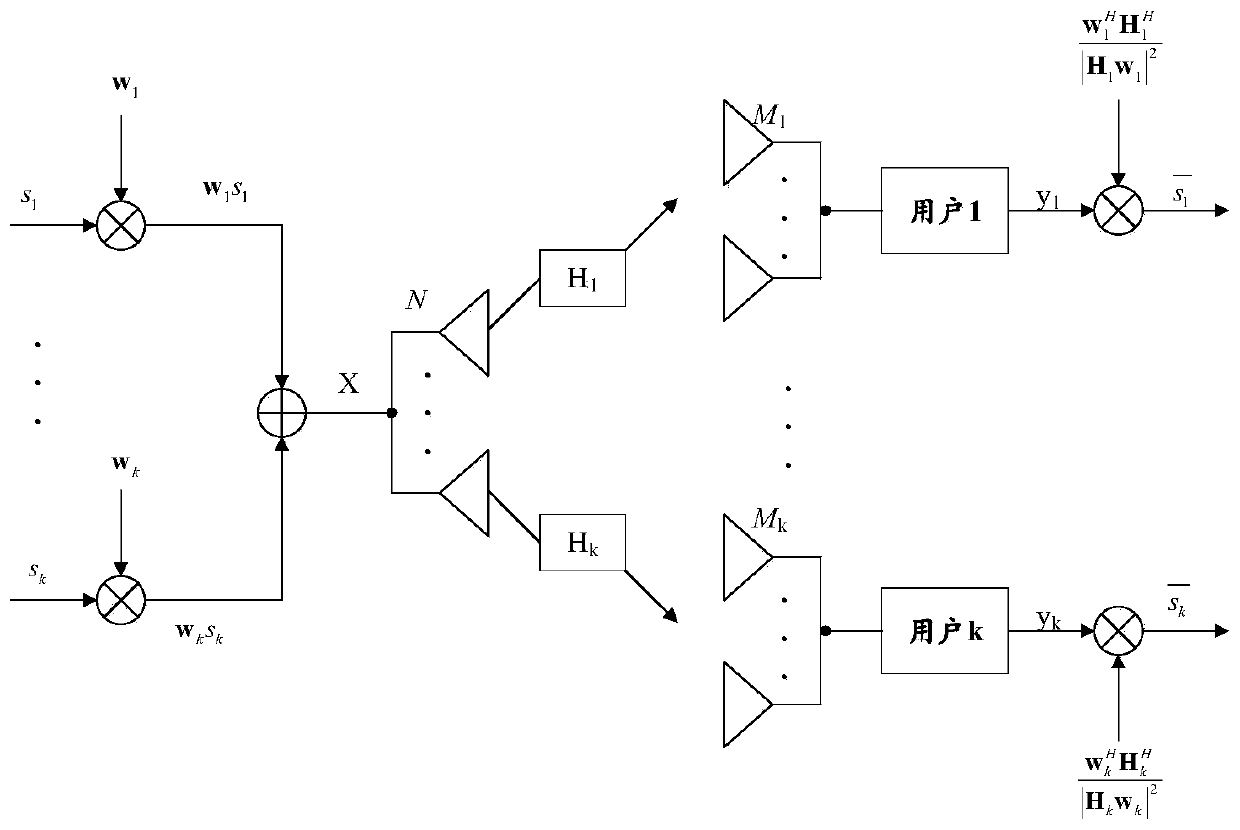

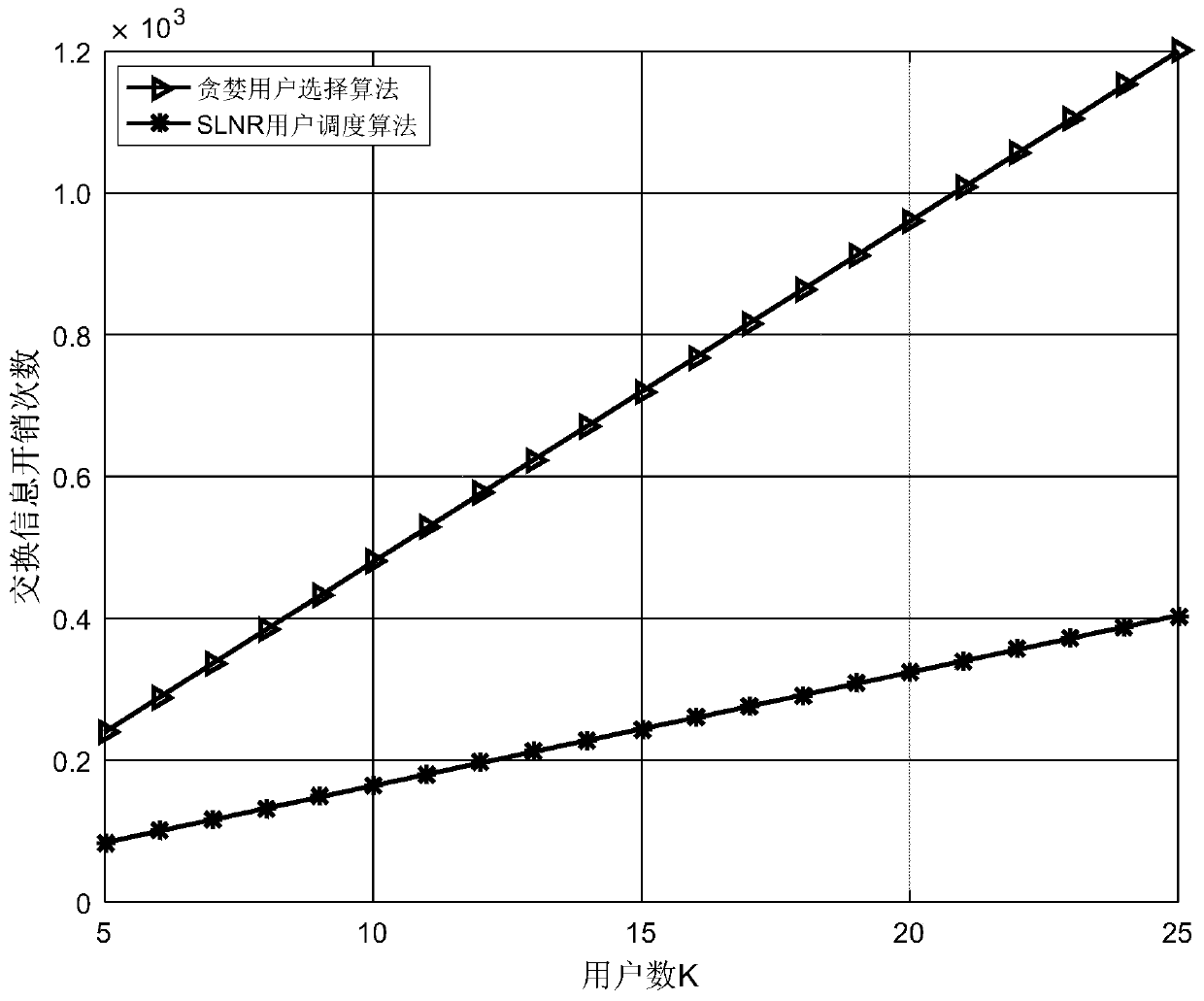

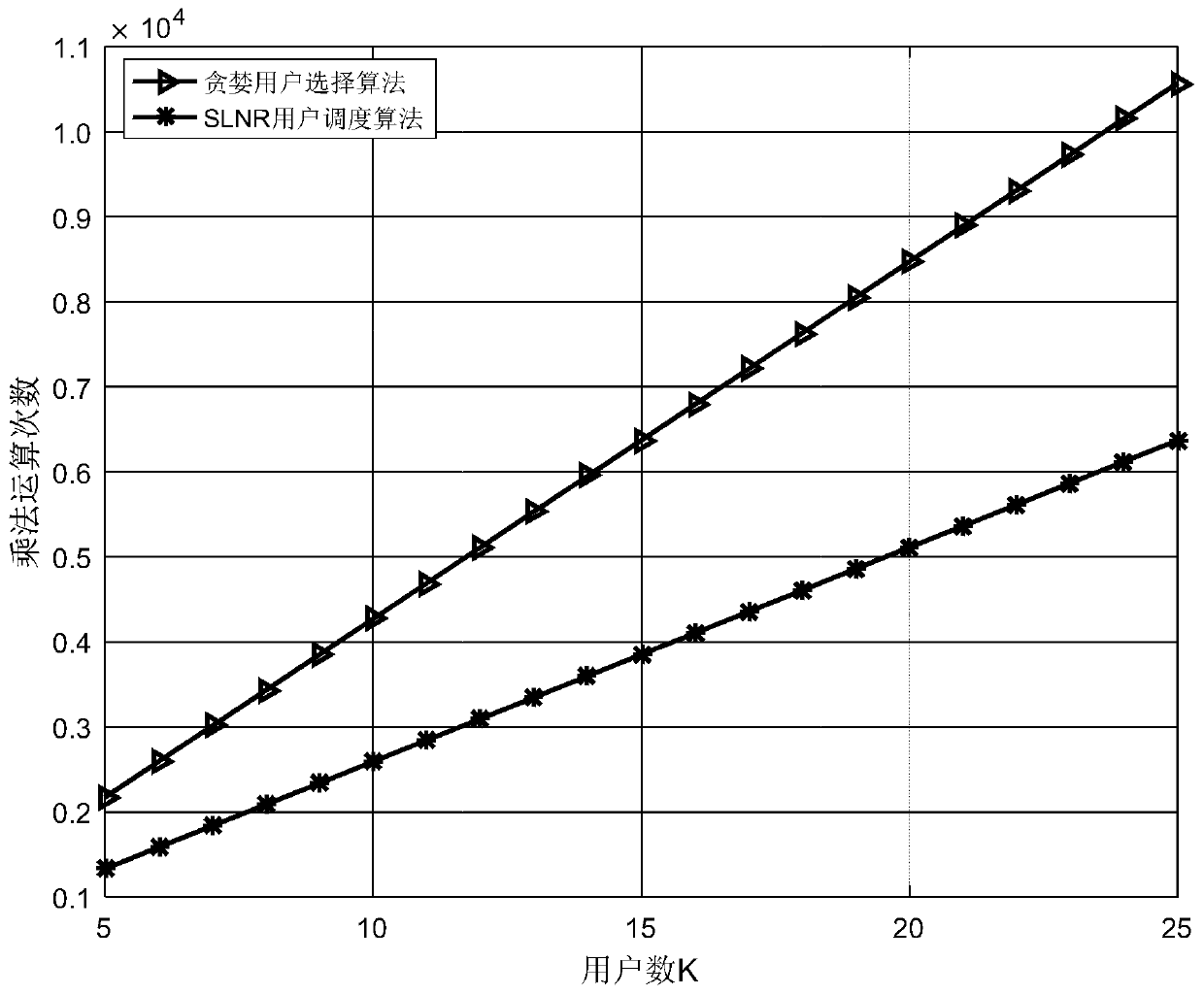

MU-MIMO system user scheduling method based on signal-to-leakage-and-noise ratio

Active | CN110212957A | Reduce complexity; Reduce switching overhead; Radio transmission; Channel state information; Computation complexity

The invention discloses a user scheduling method for MU-MIMO systems based on the signal-to-leakage-and-noise ratio (SLNR), in the field of mobile communication. An SLNR-based user scheduling algorithm is used to maximize the SLNR of a system with single-antenna receivers. Each base station calculates a weighted sum rate and transmits the value to a central unit, which then determines the optimal user by comparing the scalar feedback from the base stations. The method improves the greedy user search algorithm, reduces channel state information (CSI) exchange overhead, and effectively reduces computational complexity, with an almost negligible loss in signal-to-noise ratio performance.

Owner:GUANGXI UNIV

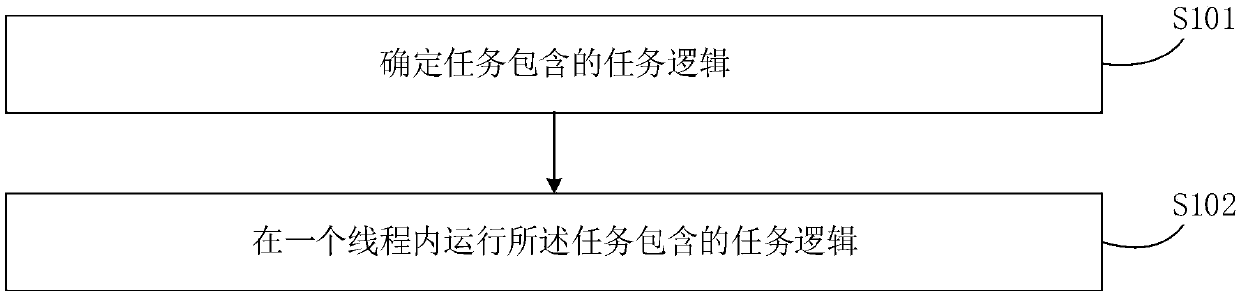

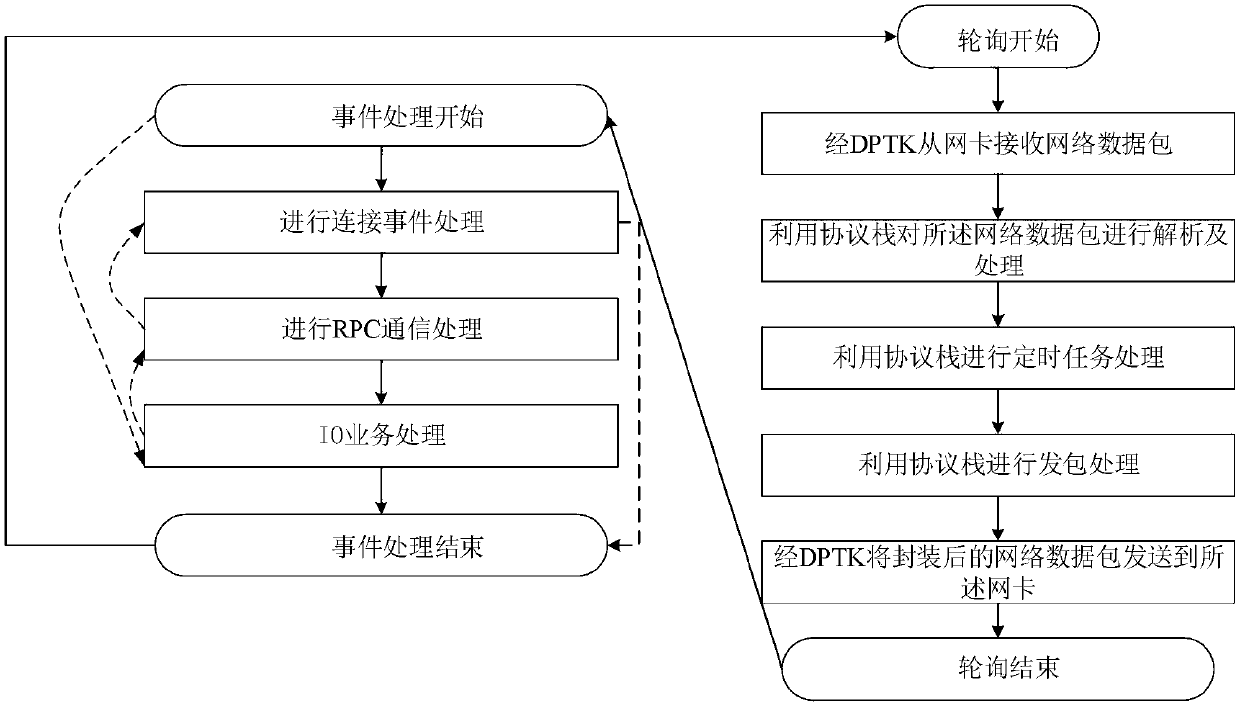

Task logic processing method, device and equipment

Active | CN110798366A | Reduce switching overhead; Reduce processing delay; Program initiation/switching; Data switching networks; Computer hardware; Computer architecture

The invention discloses a task logic processing method. The method comprises: determining the task logic contained in a task; and running all of that task logic in one thread. This addresses the increased thread switching overhead and processing delay of prior-art approaches.

Owner:ALIBABA CLOUD COMPUTING LTD

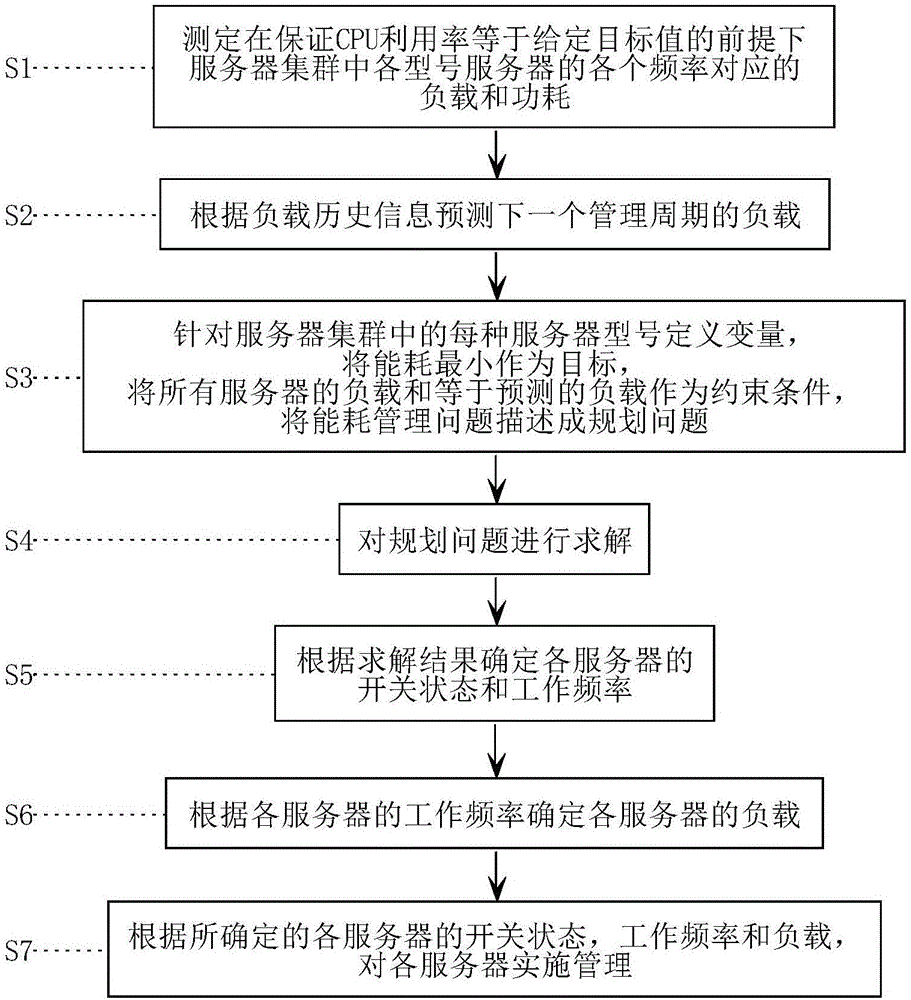

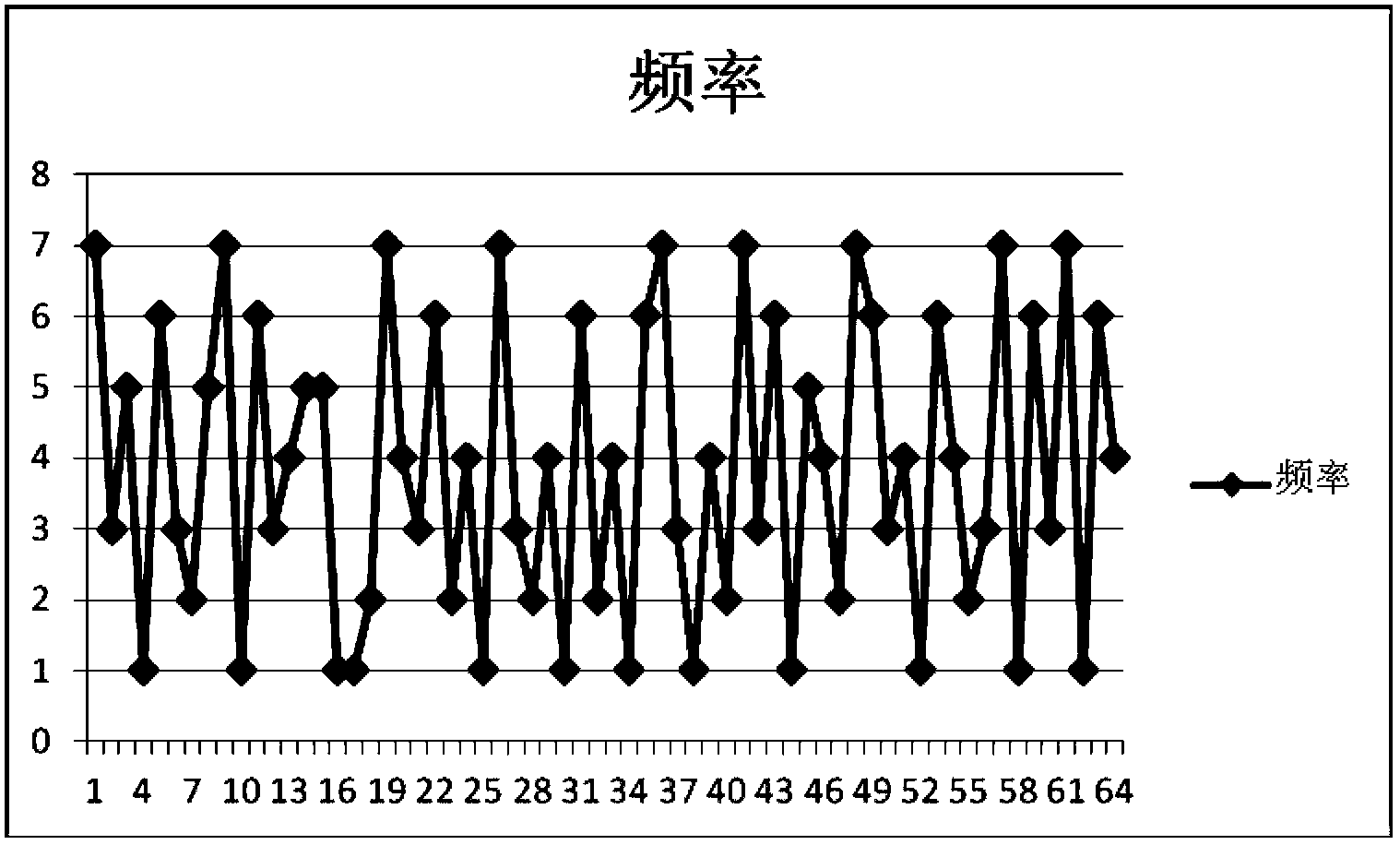

Online energy consumption management method and apparatus of large-scale server cluster

Inactive | CN106452822A | Reduce in quantity; Avoid wasting; Hardware monitoring; Power supply for data processing; Dynamic management; Computer science

The embodiments of the invention disclose an online energy consumption management method for a large-scale server cluster. While keeping the CPU utilization of powered-on servers equal to a given target value, the method dynamically manages the servers in the cluster according to load so as to minimize the cluster's energy consumption. Variables are defined for each server model in the cluster and the energy management problem is formulated as a mathematical programming problem; the on/off state, operating frequency, and load of each server are then determined from its solution. The way the programming variables are defined greatly reduces their number, so the problem can still be solved online even for a large-scale cluster. The method lets a server's frequency switch between two adjacent discrete frequencies to avoid wasting performance, while keeping the number of servers that must switch between the two frequencies as small as possible to reduce switching cost. The invention further discloses an online energy consumption management apparatus for a large-scale server cluster.

Owner:SHANTOU UNIV

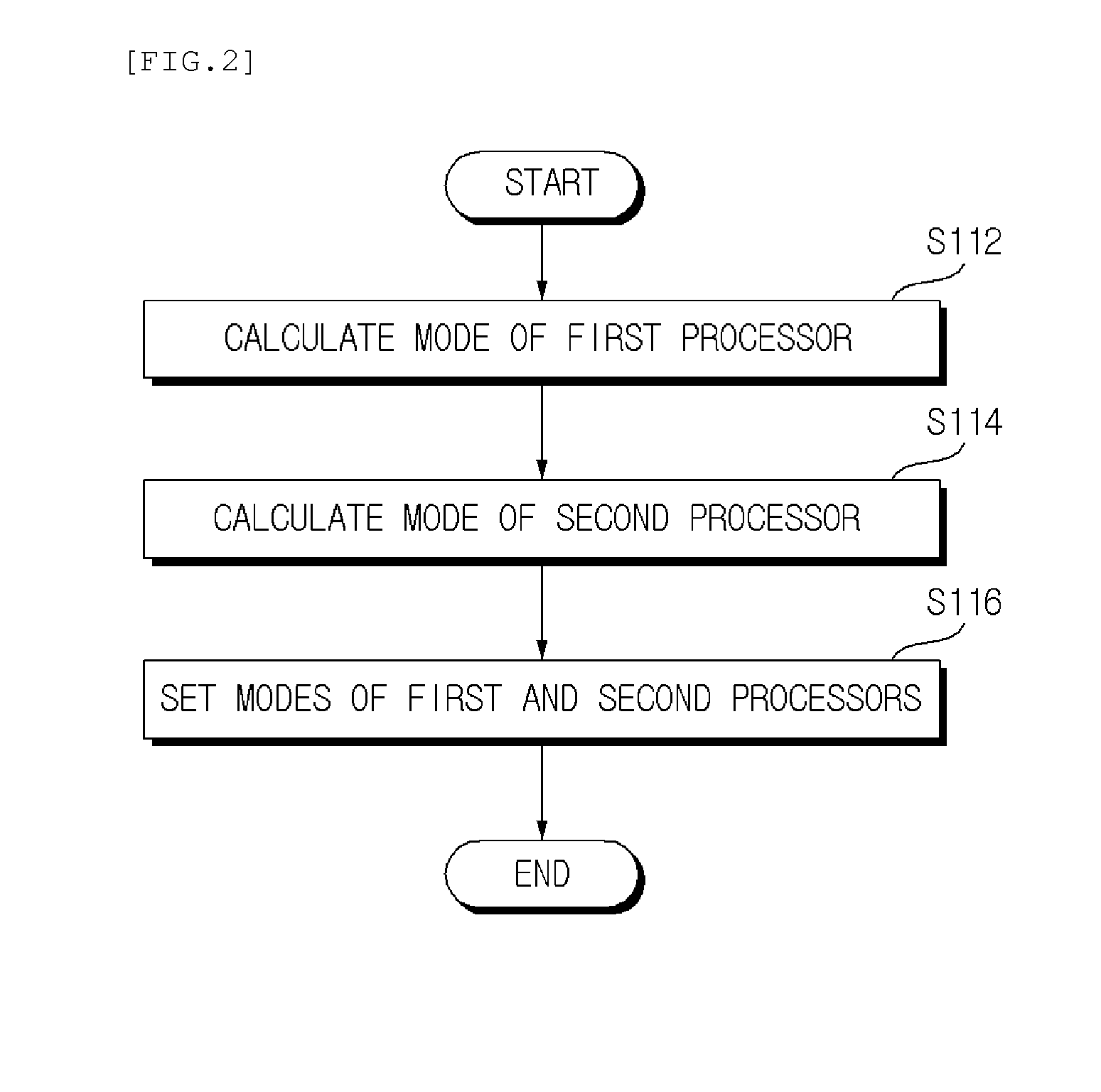

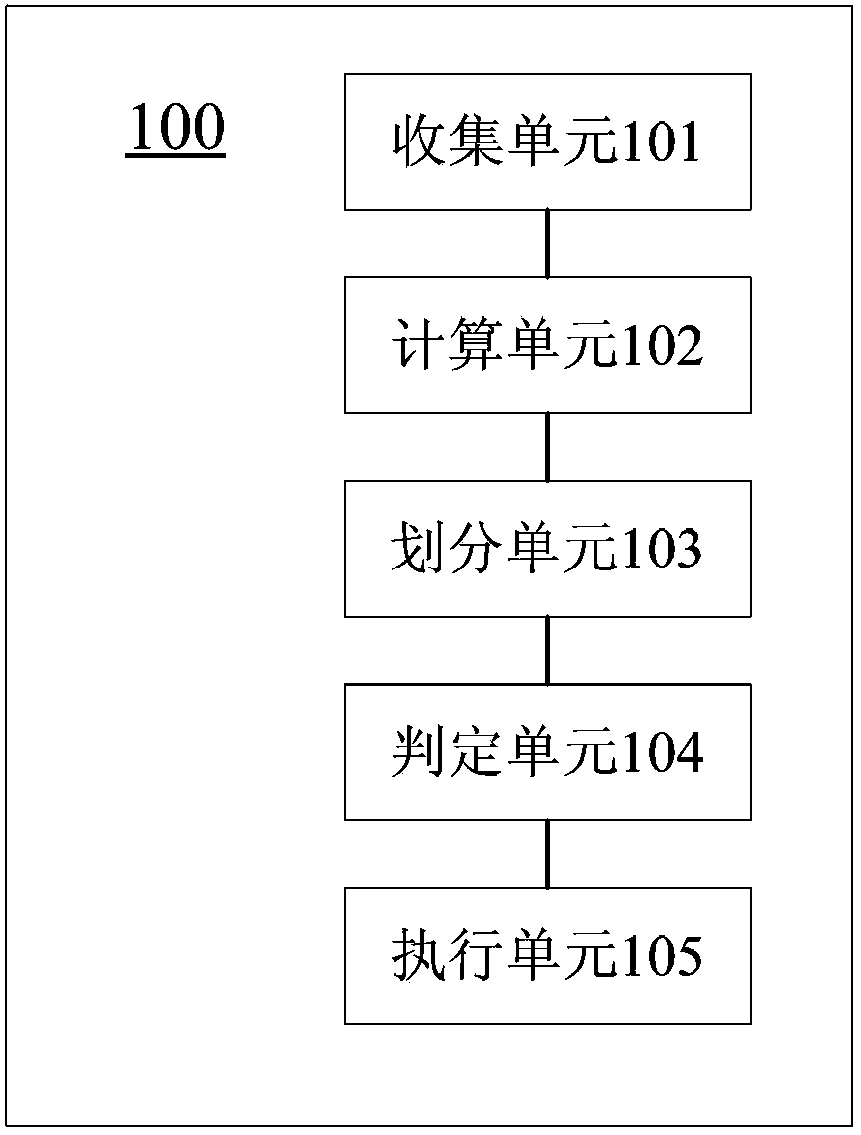

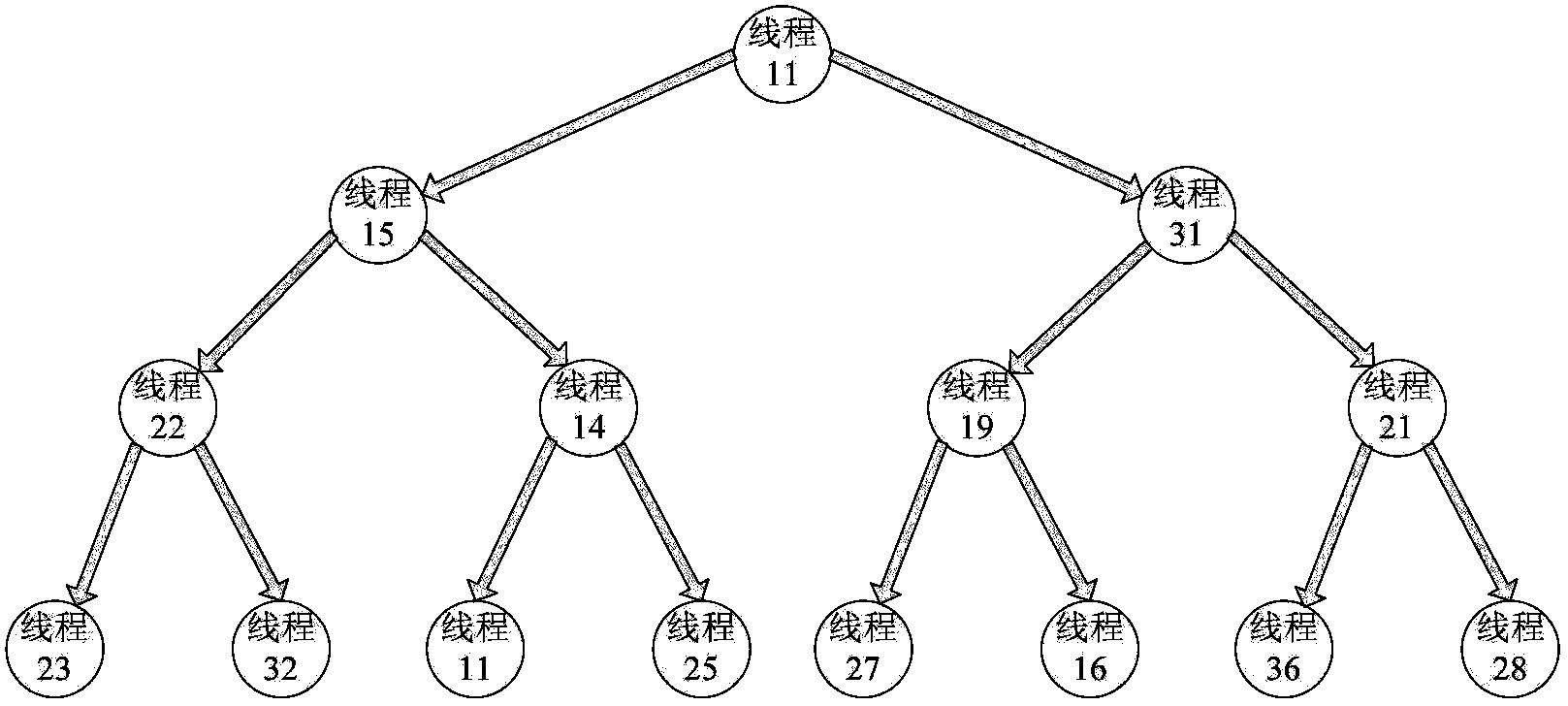

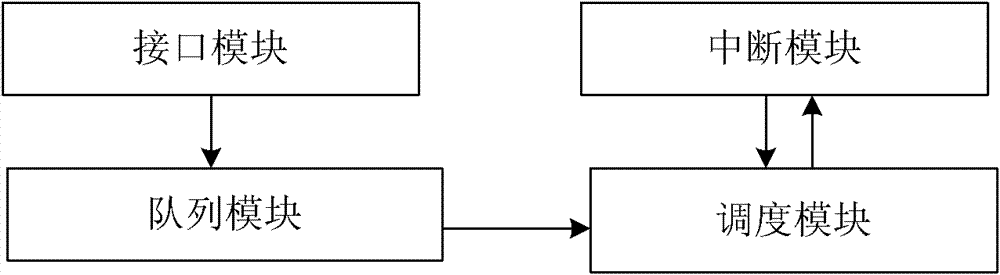

Thread processing device and method and computer system

Inactive | CN103514029A | Reduce the frequency of switching back and forth | Reduce switching overhead | Multiprogramming arrangements | Power consumption | Computerized system

The invention provides a thread processing device and method and a computer system. The thread processing device comprises a collection unit, a calculation unit, a partition unit, a judgment unit, and an execution unit. The collection unit collects the threads to be executed within a scheduled time period. The calculation unit calculates the memory access proportion of each of these threads during execution. The partition unit divides the threads into n groups based on each thread's memory access proportion. The judgment unit determines whether all threads in a given group have finished executing. If they have not, the execution unit continues executing the threads in that group; once they have, it executes the threads of the other groups. The device, method, and computer system effectively reduce how often the frequency is switched back and forth, which lowers switching overhead and power consumption and improves efficiency.
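The grouping-and-drain loop described above can be sketched as a small simulation. The abstract does not say how the n groups are formed or how the memory access proportion is measured, so this sketch assumes the ratio is already known, sorts threads by it and cuts the list into contiguous chunks, and models execution as abstract time slices. `SimThread`, `partition`, `run_grouped`, and `group_switches` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class SimThread:
    name: str
    mem_ratio: float  # measured share of execution spent on memory access (assumed input)
    remaining: int    # remaining work in abstract time slices (hypothetical unit)


def partition(threads, n):
    """Sort by memory-access ratio and cut into at most n contiguous groups."""
    if not threads:
        return []
    ordered = sorted(threads, key=lambda t: t.mem_ratio)
    size = -(-len(ordered) // n)  # ceiling division
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]


def run_grouped(threads, n):
    """Finish every thread of one group before starting the next group."""
    trace = []
    for group in partition(threads, n):
        # Judgment step: keep running this group while any thread is unfinished.
        while any(t.remaining > 0 for t in group):
            for t in group:
                if t.remaining > 0:
                    t.remaining -= 1
                    trace.append(t.name)
    return trace


def group_switches(trace, groups):
    """Count transitions between consecutive slices from different groups."""
    group_of = {t.name: i for i, g in enumerate(groups) for t in g}
    return sum(1 for a, b in zip(trace, trace[1:]) if group_of[a] != group_of[b])
```

If each group is assumed to run at one frequency setting, the number of group switches bounds the number of frequency switches. With four threads split into two groups, the grouped schedule switches groups exactly once, whereas a plain round-robin over all four threads could alternate between memory-bound and compute-bound threads on nearly every slice.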

Owner:SONY CORP

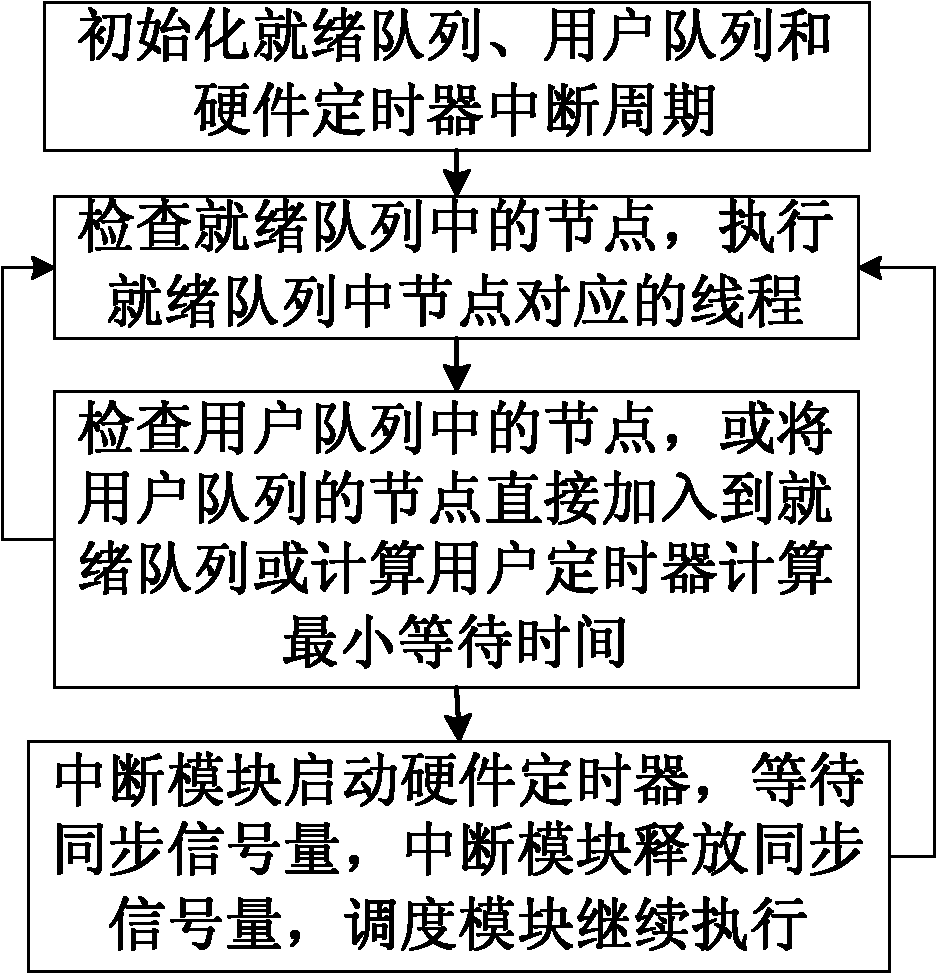

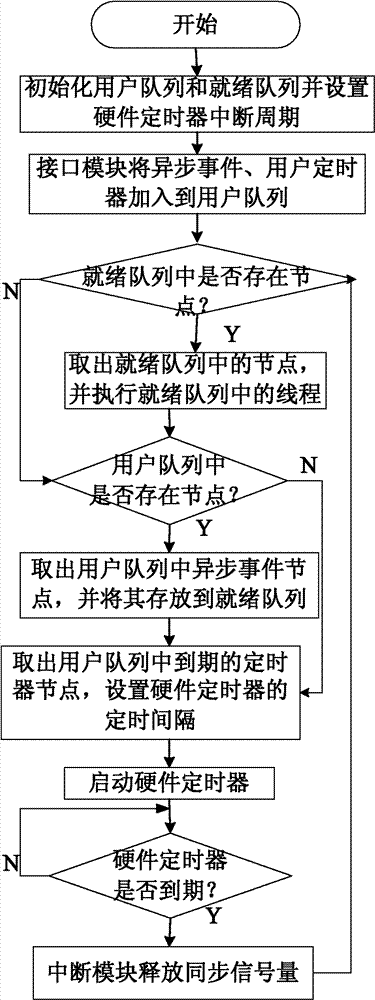

Processing method and system of timers and asynchronous events

Active | CN102455940B | Accurate timing | Reduce switching overhead | Program initiation/switching | Order control | Isochronous signal

The invention provides a method that ensures ordered control of multiple user timers and asynchronous events in an embedded system while improving the execution efficiency of existing user timers and asynchronous events; it also provides a system implementing the method. The method can start multiple user timers and process multiple asynchronous events at the same time, which makes the scheme broadly applicable. Because the user timers and asynchronous events run concurrently in multiple threads of a single task (the scheduler task of the invention), the overhead of switching between tasks is reduced and execution efficiency improves. The threads that execute the asynchronous events or user timers are kept in order by releasing synchronization signals.
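A minimal sketch of the ordering idea, using Python's `threading` in place of an embedded RTOS's task and semaphore primitives (an assumption; the patent targets an embedded system and does not name an API). Every timer or event handler gets its own thread, all living inside one scheduler object, and each thread blocks on its own semaphore; the scheduler releases those semaphores one at a time in due-time order. A logical tick counter replaces real time so the order is deterministic. `SchedulerTask`, `add_timer`, `post_event`, and `run_until` are hypothetical names.

```python
import heapq
import threading


class SchedulerTask:
    """Hypothetical single 'scheduler task' whose worker threads run user timers
    and asynchronous events; order is enforced by releasing one signal at a time."""

    def __init__(self):
        self._pending = []  # heap of (due_tick, seq, go_signal, done_event, thread)
        self._seq = 0       # tie-breaker: equal due ticks run in registration order
        self.log = []       # names in the order their handlers actually ran

    def _spawn(self, due_tick, name, callback):
        go = threading.Semaphore(0)  # synchronization signal for this handler
        done = threading.Event()

        def body():
            go.acquire()             # wait until the scheduler releases this handler
            try:
                callback()
                self.log.append(name)
            finally:
                done.set()           # never leave the scheduler waiting

        thread = threading.Thread(target=body, daemon=True)
        thread.start()               # all handler threads exist concurrently
        heapq.heappush(self._pending, (due_tick, self._seq, go, done, thread))
        self._seq += 1

    def add_timer(self, due_tick, name, callback):
        self._spawn(due_tick, name, callback)

    def post_event(self, now_tick, name, callback):
        self._spawn(now_tick, name, callback)  # an event is due immediately

    def run_until(self, end_tick):
        for tick in range(end_tick + 1):       # logical clock instead of wall time
            while self._pending and self._pending[0][0] <= tick:
                _, _, go, done, thread = heapq.heappop(self._pending)
                go.release()                   # signal exactly one handler
                done.wait()                    # next release only after it finishes
                thread.join()
```

Waiting for each handler before releasing the next gives strict ordering; a variant that releases several independent handlers at once would trade some ordering for parallelism.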

Owner:MAIPU COMM TECH CO LTD

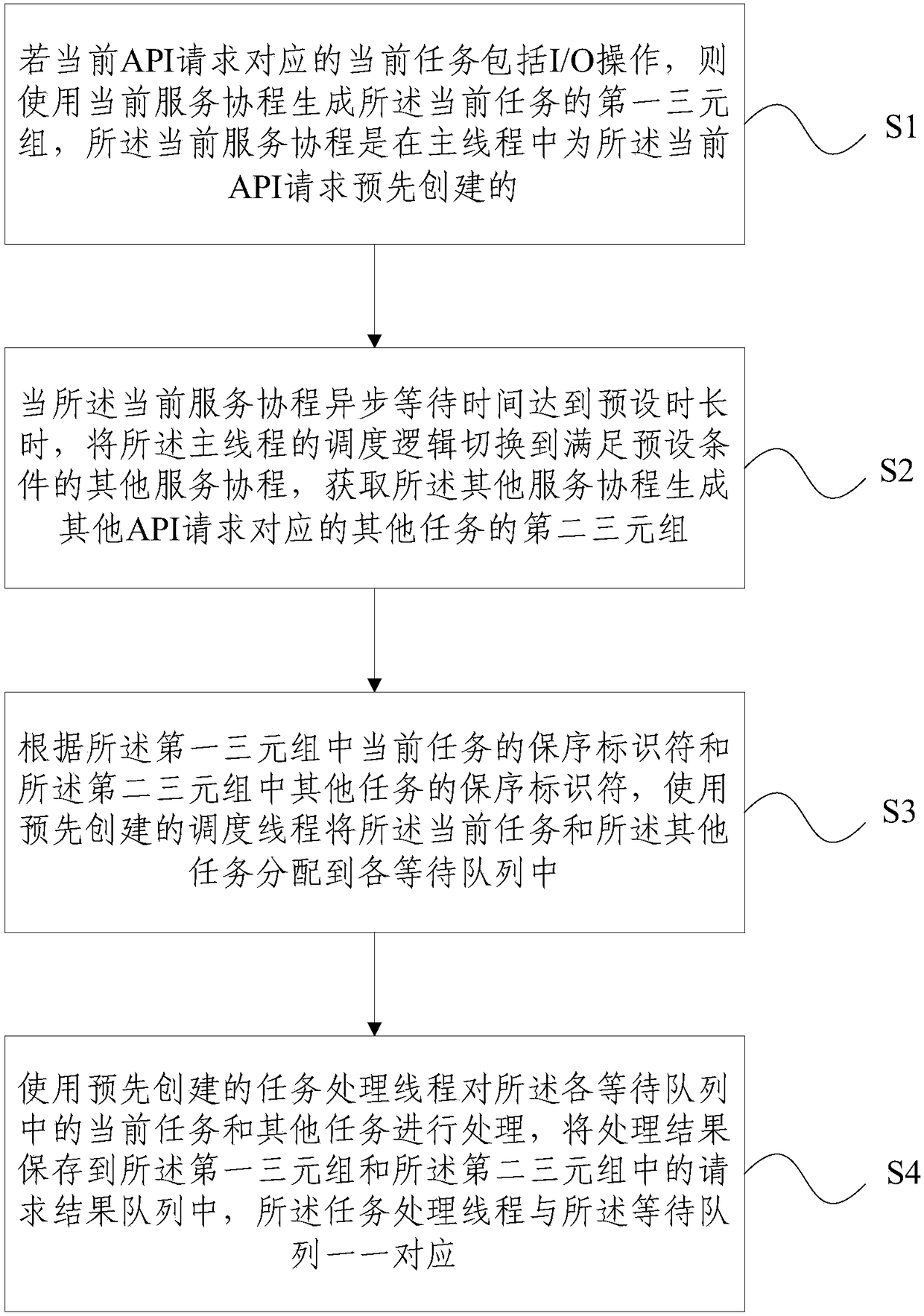

Method and system for concurrent processing of API request

Active | CN108089919A | Avoid disorder | Reduce switching overhead | Program initiation/switching | Resource allocation | Distributed computing | Waiting time

The invention provides a method and system for concurrent processing of API requests. The method comprises the following steps. S1: if the current task corresponding to the current API request includes an I/O operation, a first triple for the current task is generated using the current service coroutine. S2: when the asynchronous waiting time of the current service coroutine reaches a preset duration, the main thread's scheduling logic switches to other service coroutines that meet preset conditions, and the second triples generated by those coroutines are obtained. S3: a scheduling thread distributes the current task and the other tasks to waiting queues according to the order-preserving identifier of the current task in the first triple and the order-preserving identifiers of the other tasks in the second triples. S4: a task processing thread processes the current task and the other tasks, and the processing results are stored in the request result queues of the first and second triples. The method and system achieve order-preserving, highly concurrent processing of API requests.
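Steps S3 and S4 can be illustrated with a small dispatcher; the coroutine scheduling in S1 and S2 is not modelled. This sketch assumes each triple carries an order-preserving key, the task, and a result queue, and that tasks are routed to waiting queues by a stable hash of the key (an assumed routing rule; the abstract does not say how queues are chosen). Because all tasks with the same key land in the same FIFO queue served by one worker thread, their relative order is preserved, while different keys can proceed in parallel. `Triple` and `OrderPreservingDispatcher` are hypothetical names.

```python
import queue
import threading
import zlib
from typing import Any, NamedTuple


class Triple(NamedTuple):
    order_key: str        # order-preserving identifier
    task: Any             # the work to perform
    results: queue.Queue  # request result queue


class OrderPreservingDispatcher:
    """Hypothetical sketch of steps S3-S4: tasks that share an order_key always
    land in the same FIFO waiting queue, so a single worker handles them in
    submission order, while tasks with different keys can run in parallel."""

    def __init__(self, n_workers, handler):
        self._queues = [queue.Queue() for _ in range(n_workers)]
        self._handler = handler
        self._workers = [threading.Thread(target=self._loop, args=(q,), daemon=True)
                         for q in self._queues]
        for w in self._workers:
            w.start()

    def submit(self, triple):
        # crc32 is stable across runs, unlike Python's salted str hash.
        idx = zlib.crc32(triple.order_key.encode()) % len(self._queues)
        self._queues[idx].put(triple)

    def _loop(self, q):
        while True:
            triple = q.get()
            if triple is None:  # shutdown sentinel
                return
            triple.results.put(self._handler(triple.task))

    def close(self):
        for q in self._queues:
            q.put(None)
        for w in self._workers:
            w.join()
```

For instance, submitting tasks 1 to 5 under key `"user-1"` with a handler that multiplies by 10 yields results 10, 20, 30, 40, 50 in that order, regardless of how tasks under other keys interleave.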

Owner:北京云杉世纪网络科技有限公司 (Beijing Yunshan Shiji Network Technology Co., Ltd.)

© 2025 PatSnap. All rights reserved.