58 results about "Chip multi processor" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

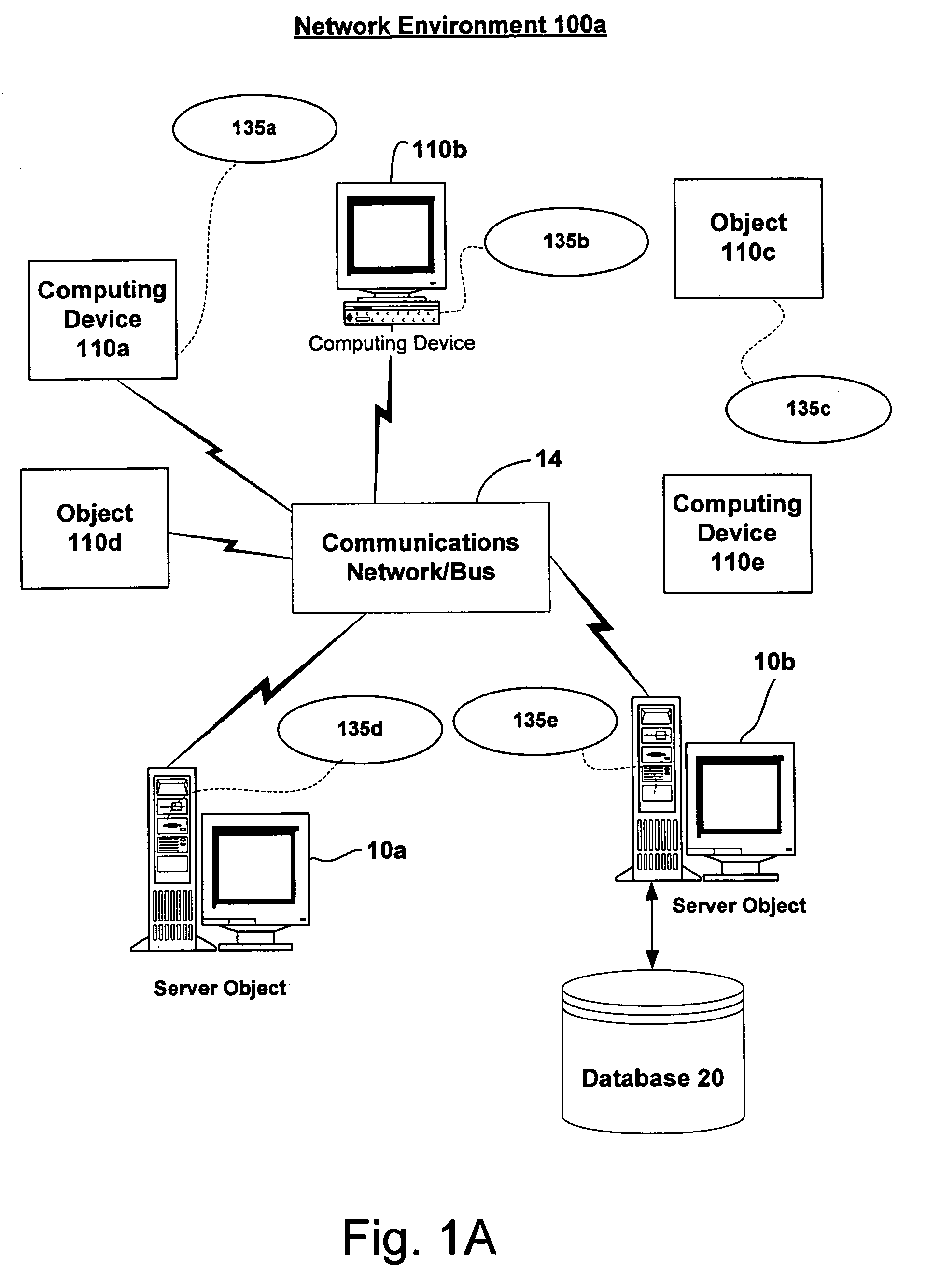

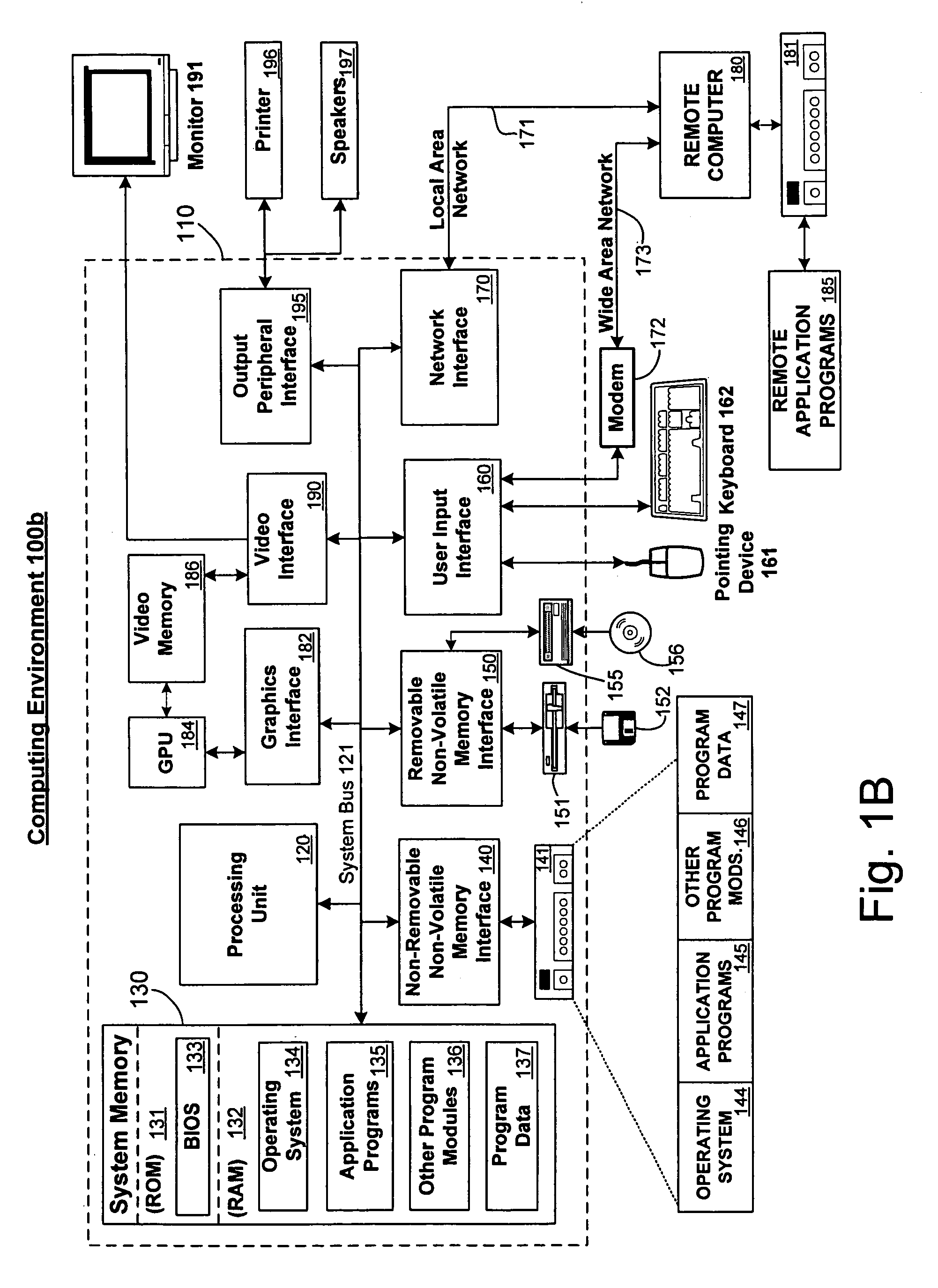

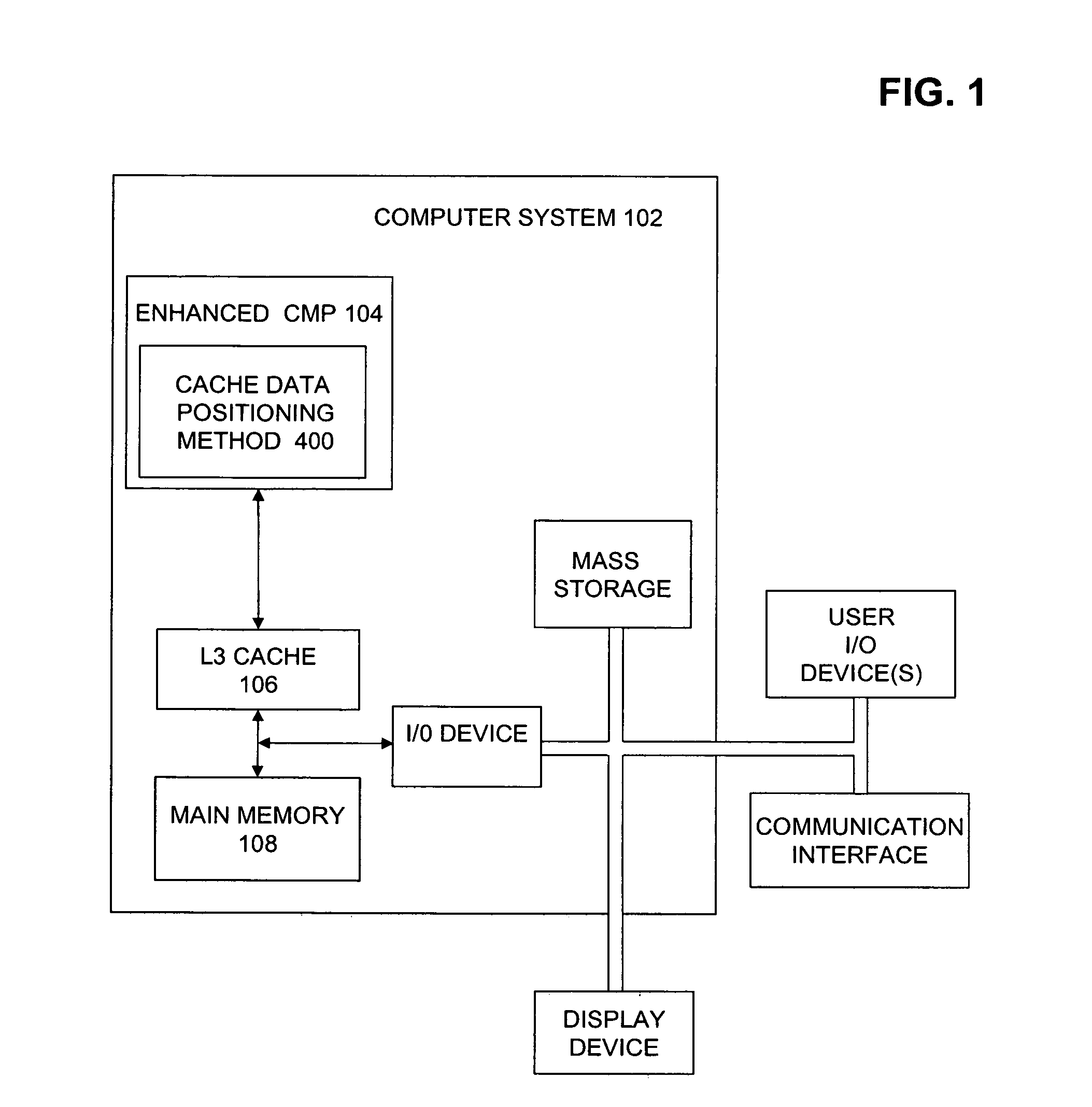

Cache sharing for a chip multiprocessor or multiprocessing system

Status: Inactive | Patent: US20040059875A1 | Topics: Energy efficient ICT; Memory addressing/allocation/relocation; Multiprocessing; Chip multi processor

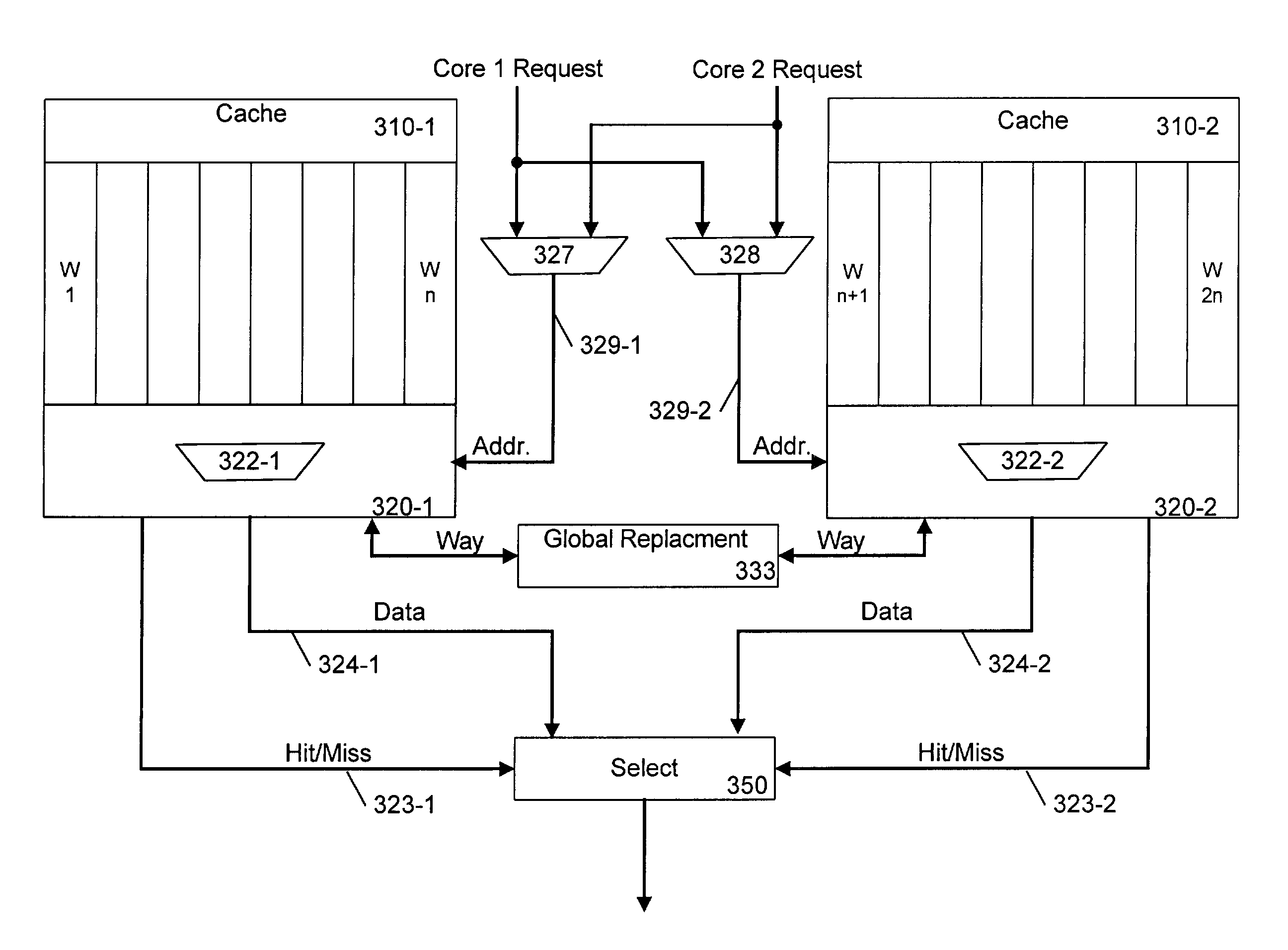

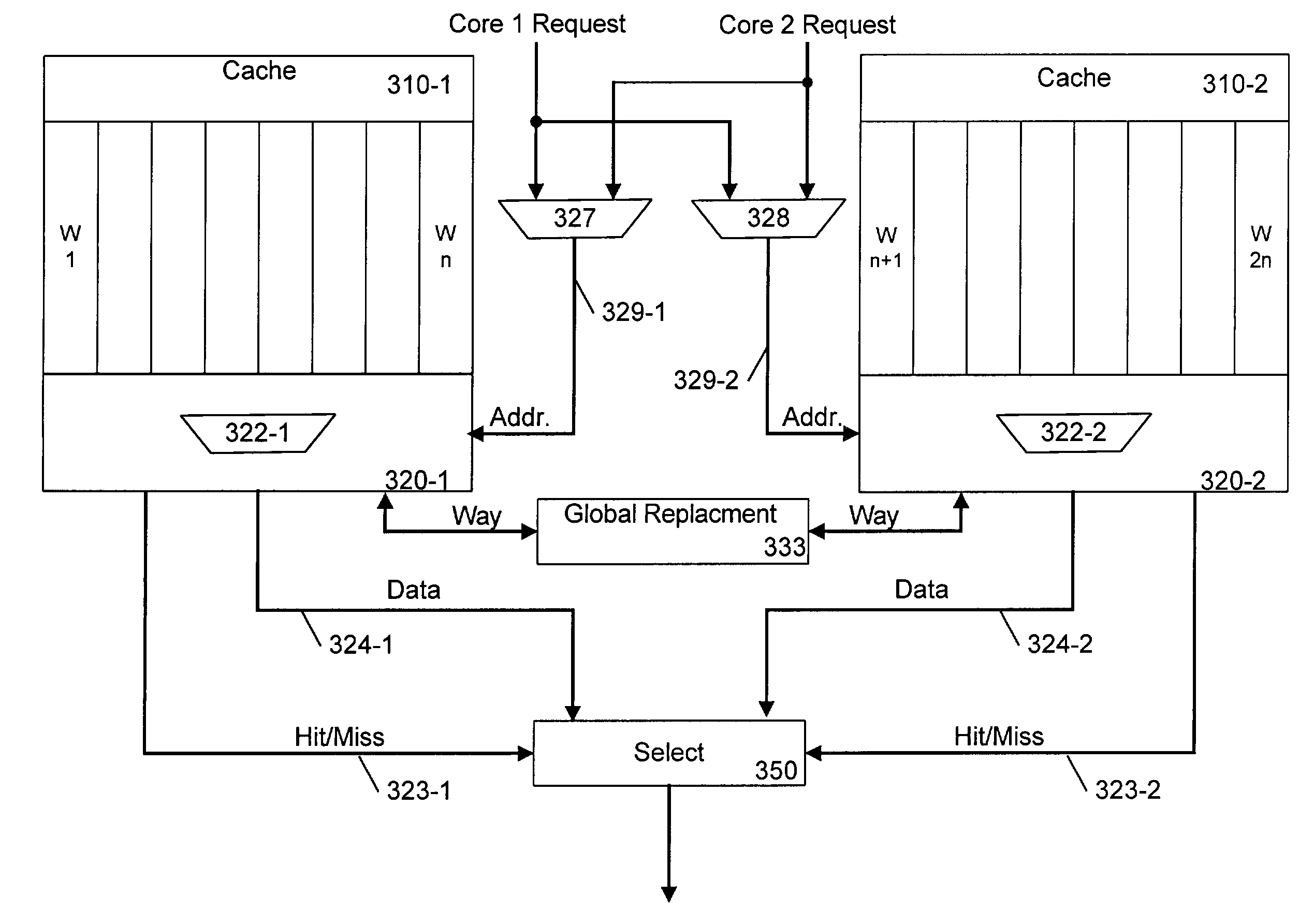

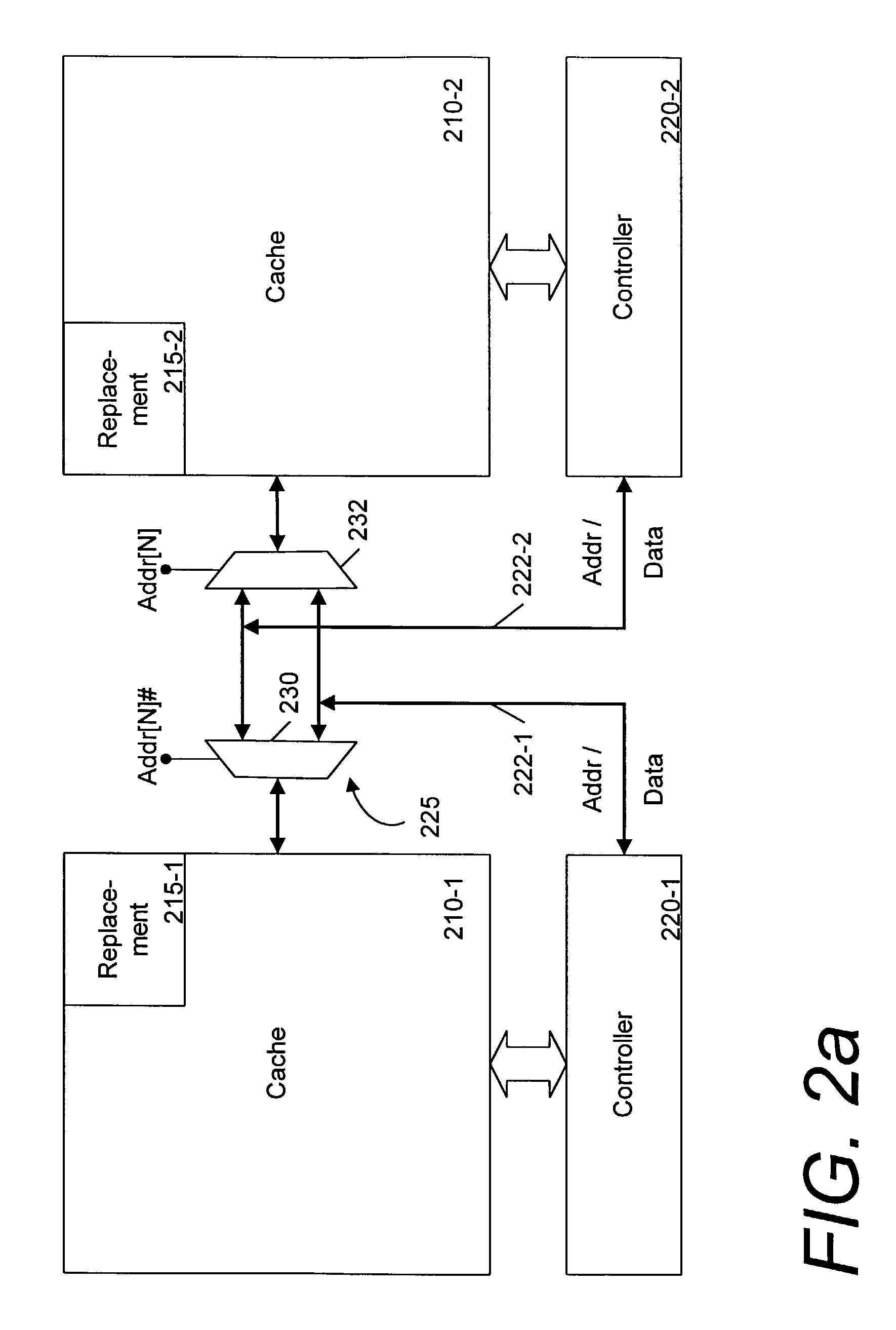

Cache sharing for a chip multiprocessor. In one embodiment, a disclosed apparatus includes multiple processor cores, each having an associated cache. A control mechanism is provided to allow sharing between caches that are associated with individual processor cores.

Owner:INTEL CORP

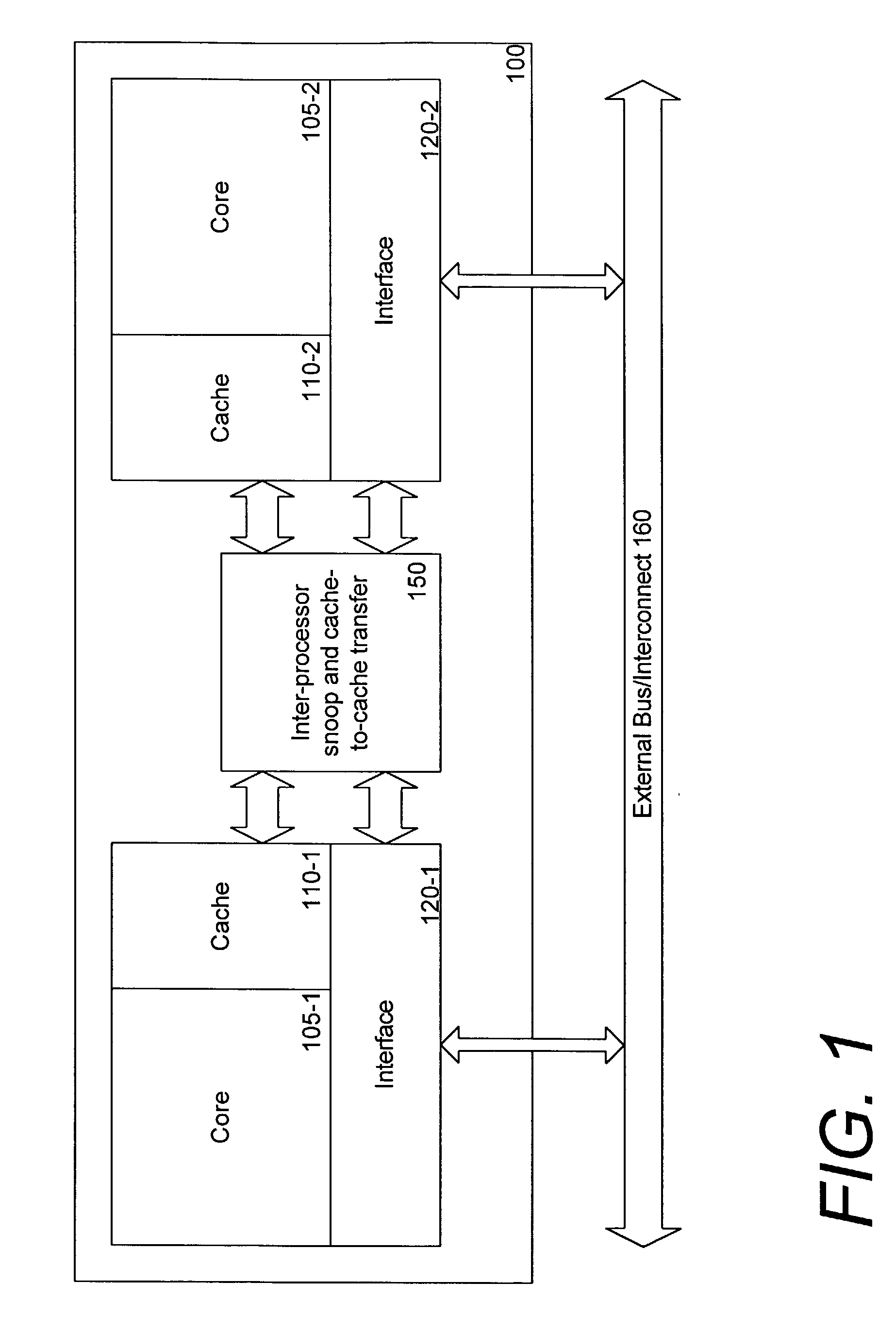

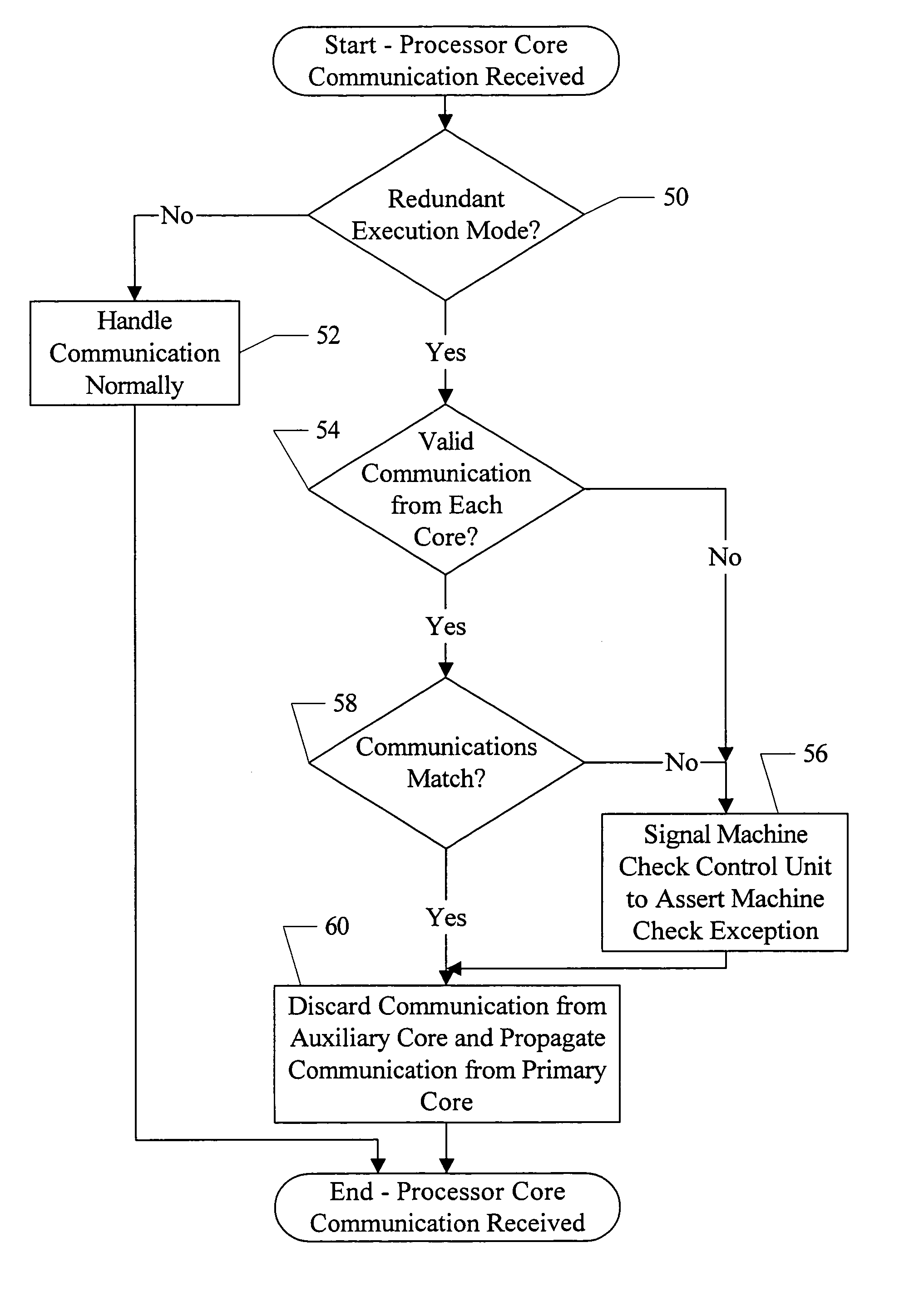

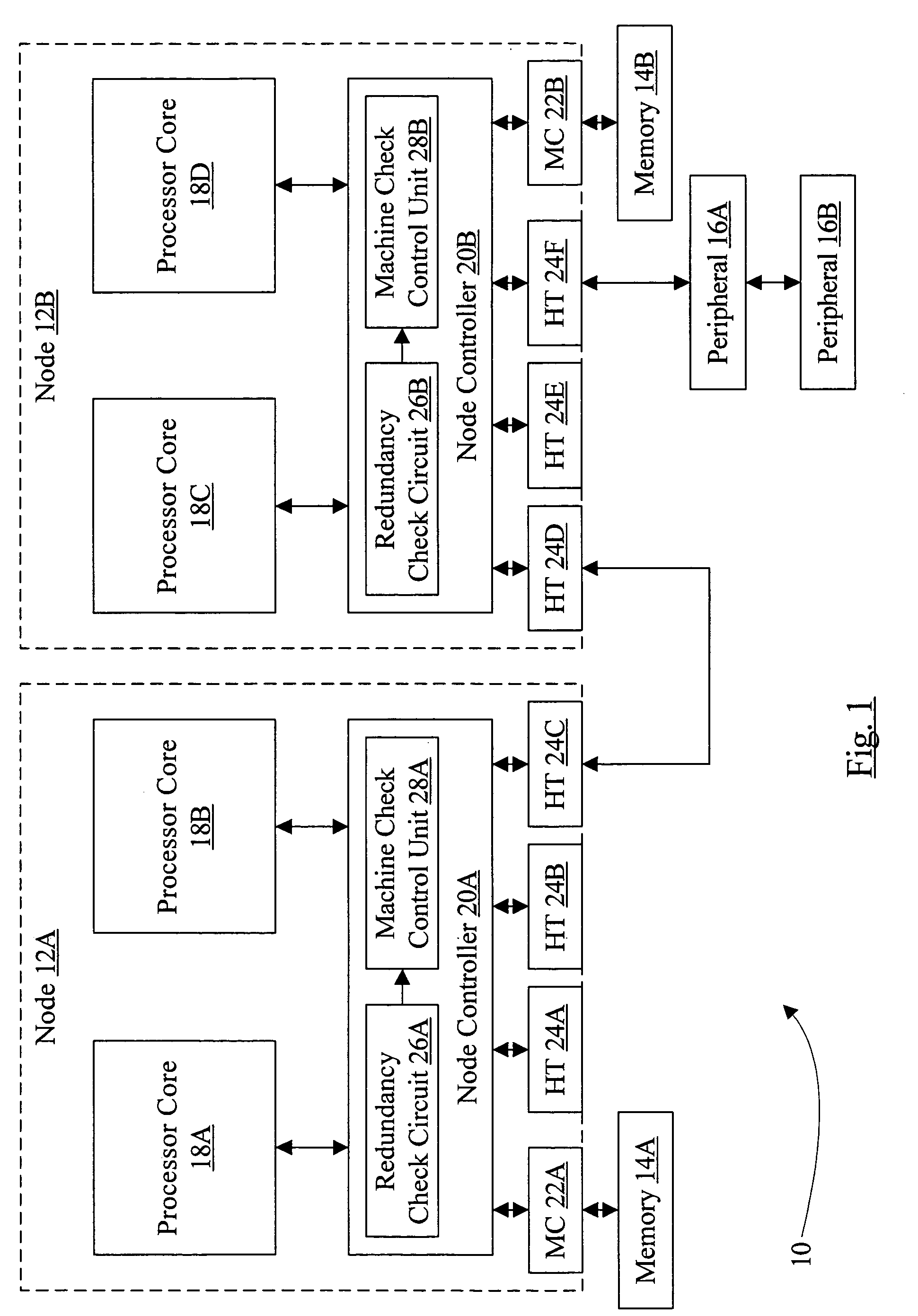

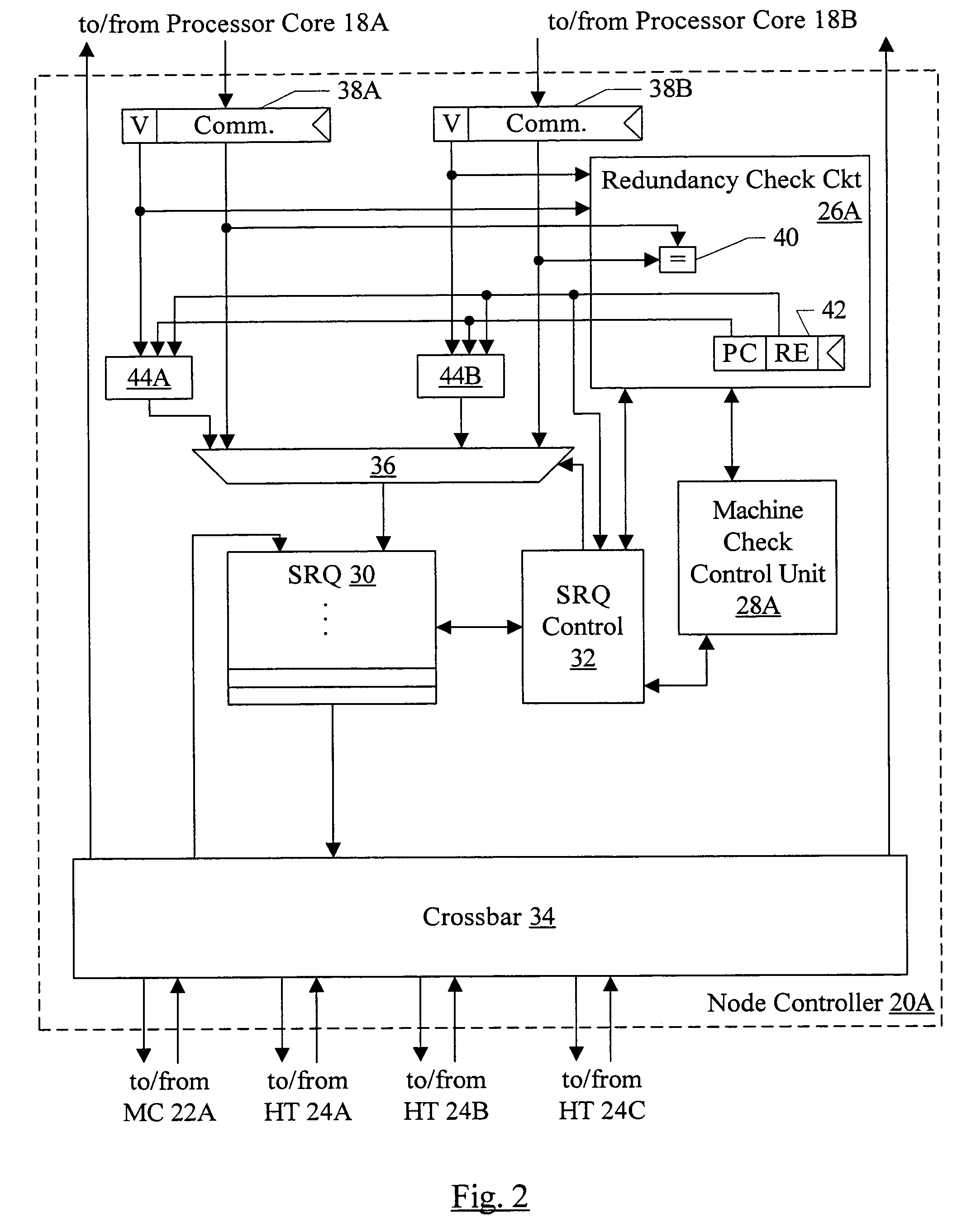

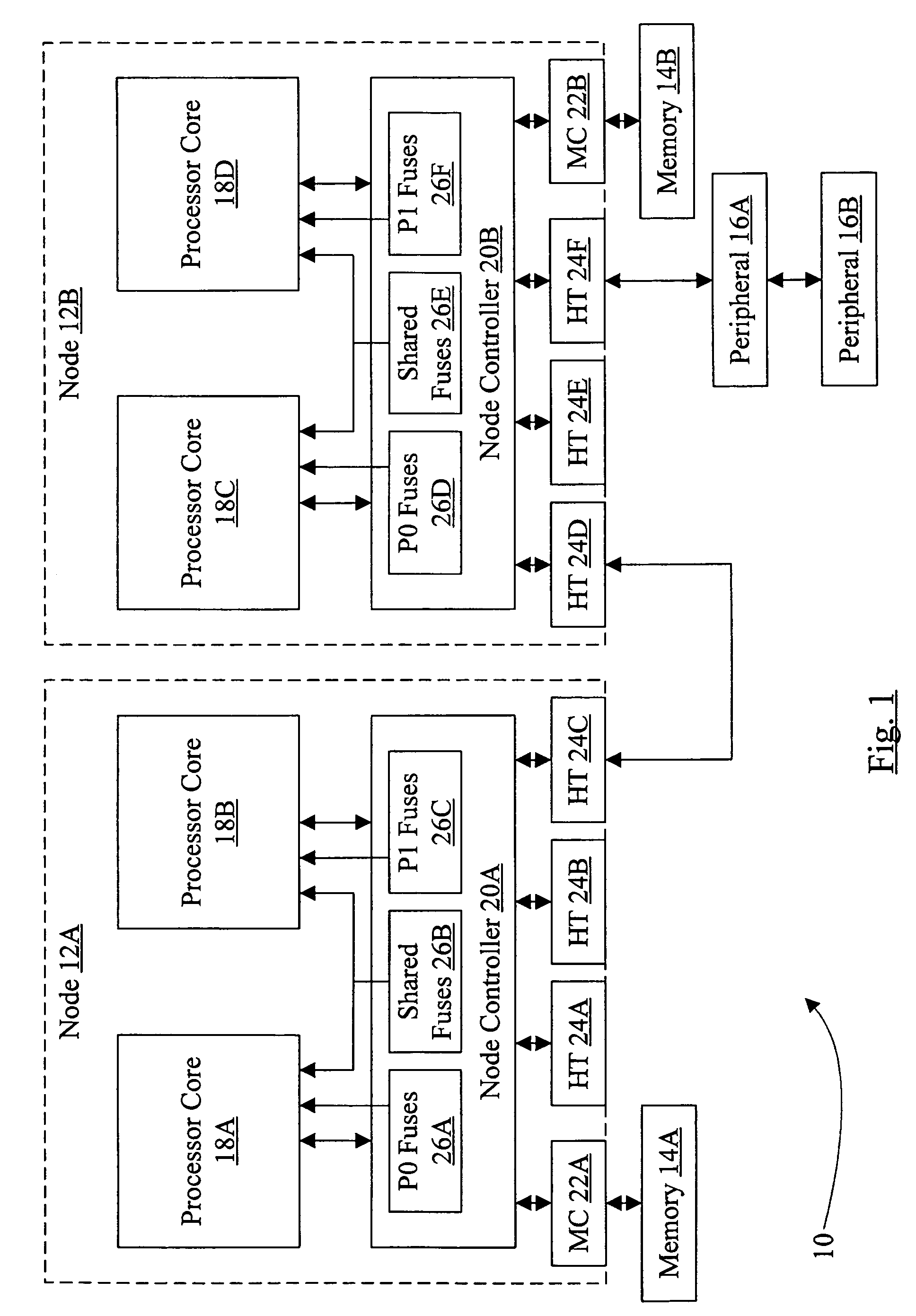

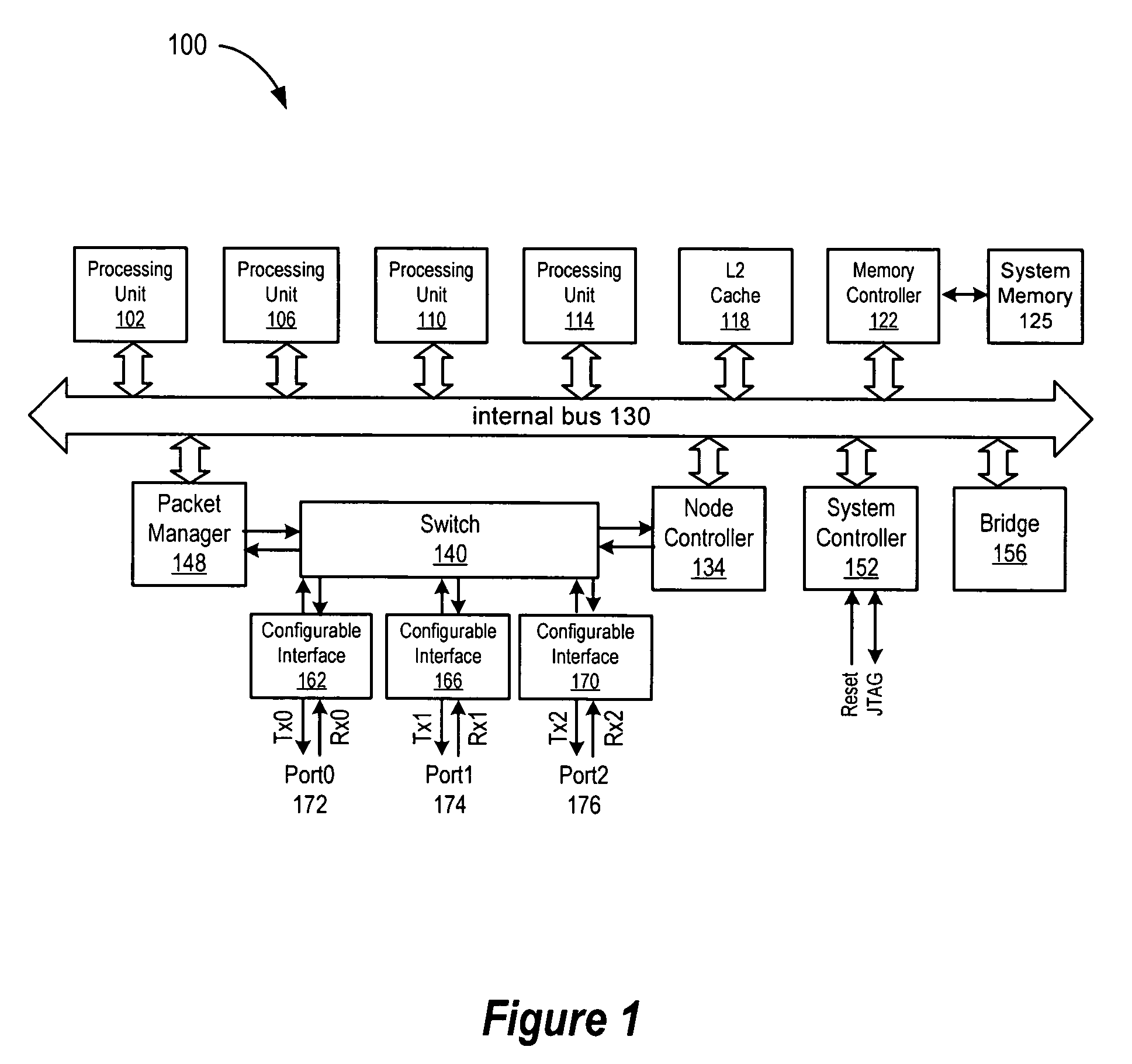

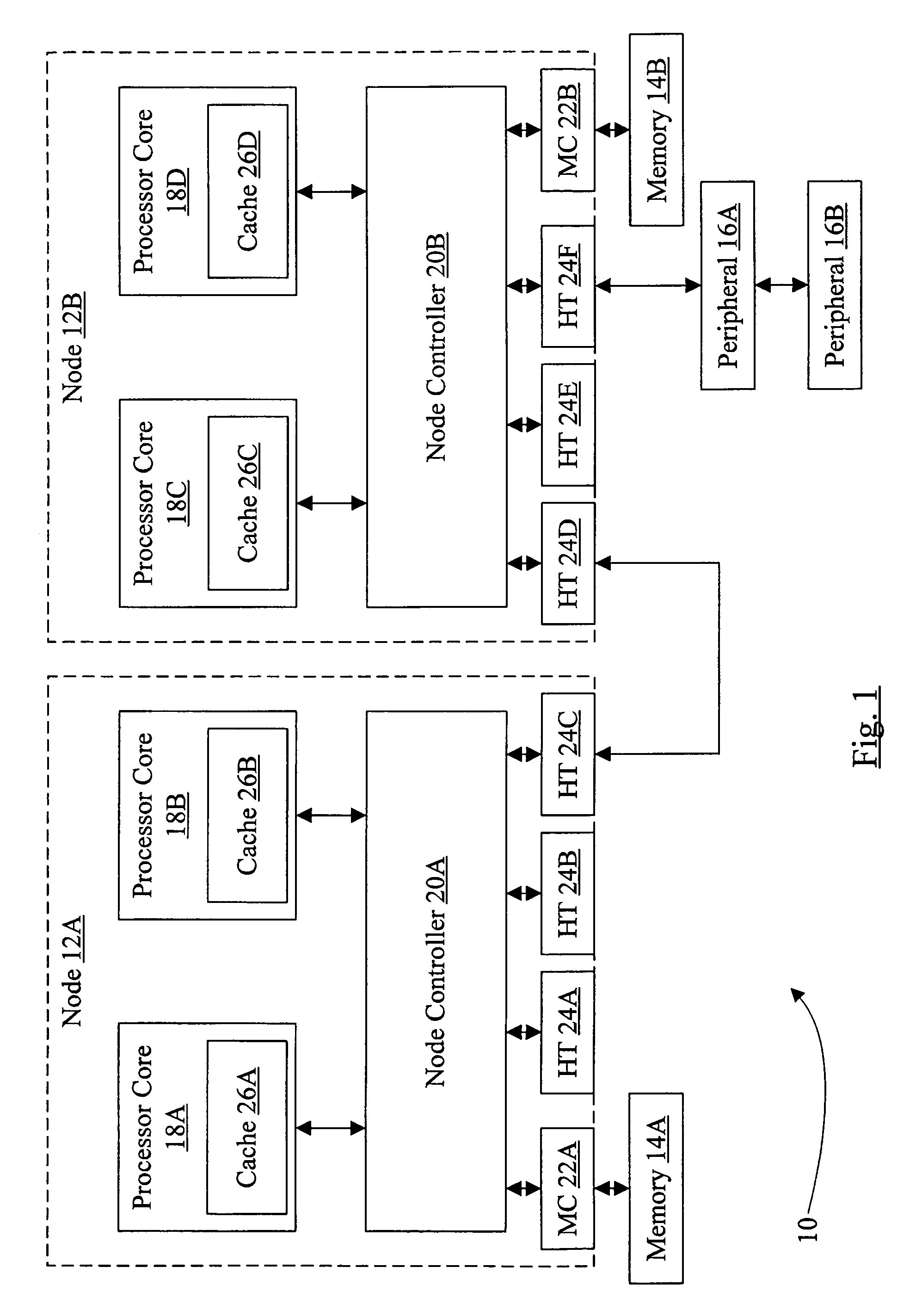

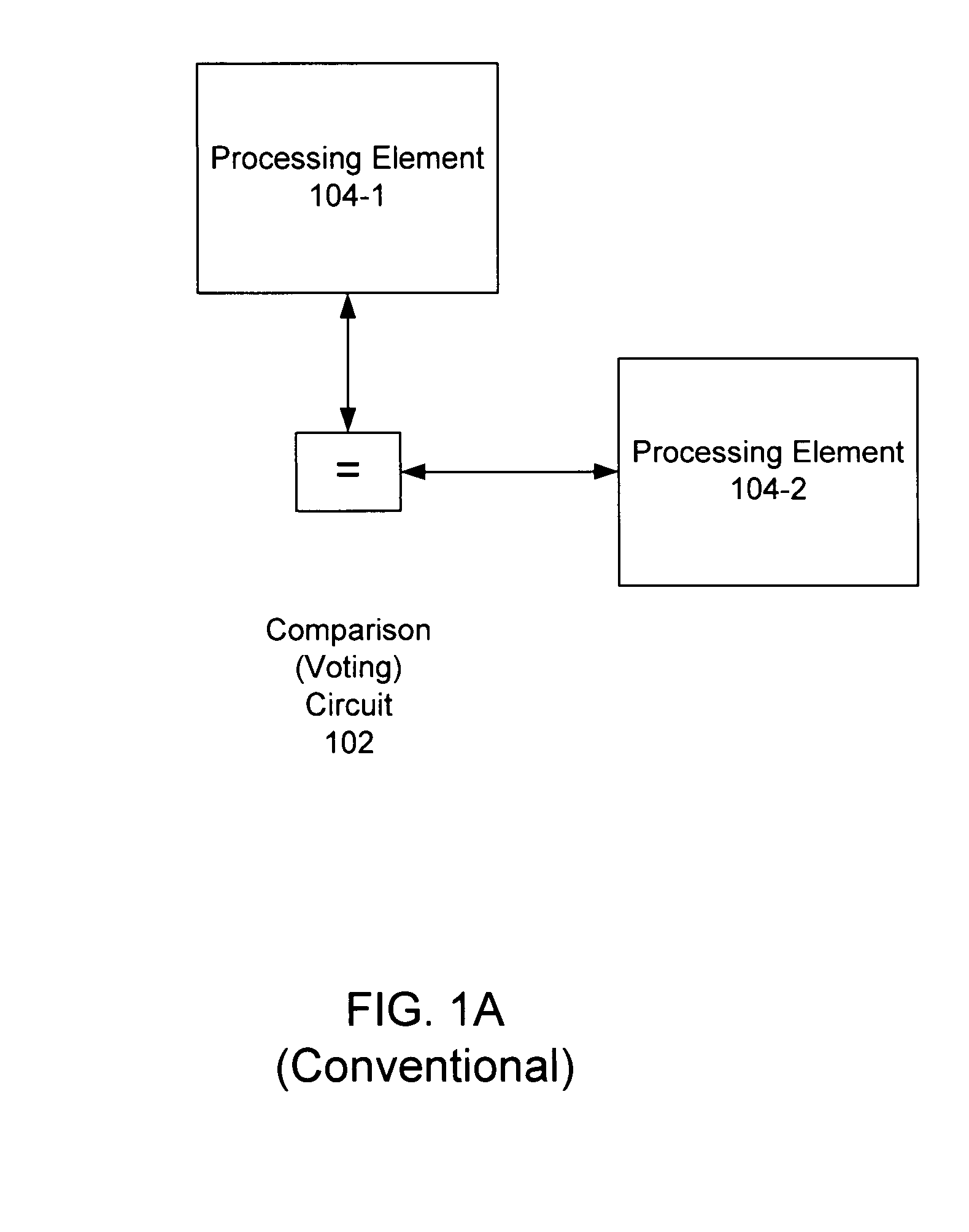

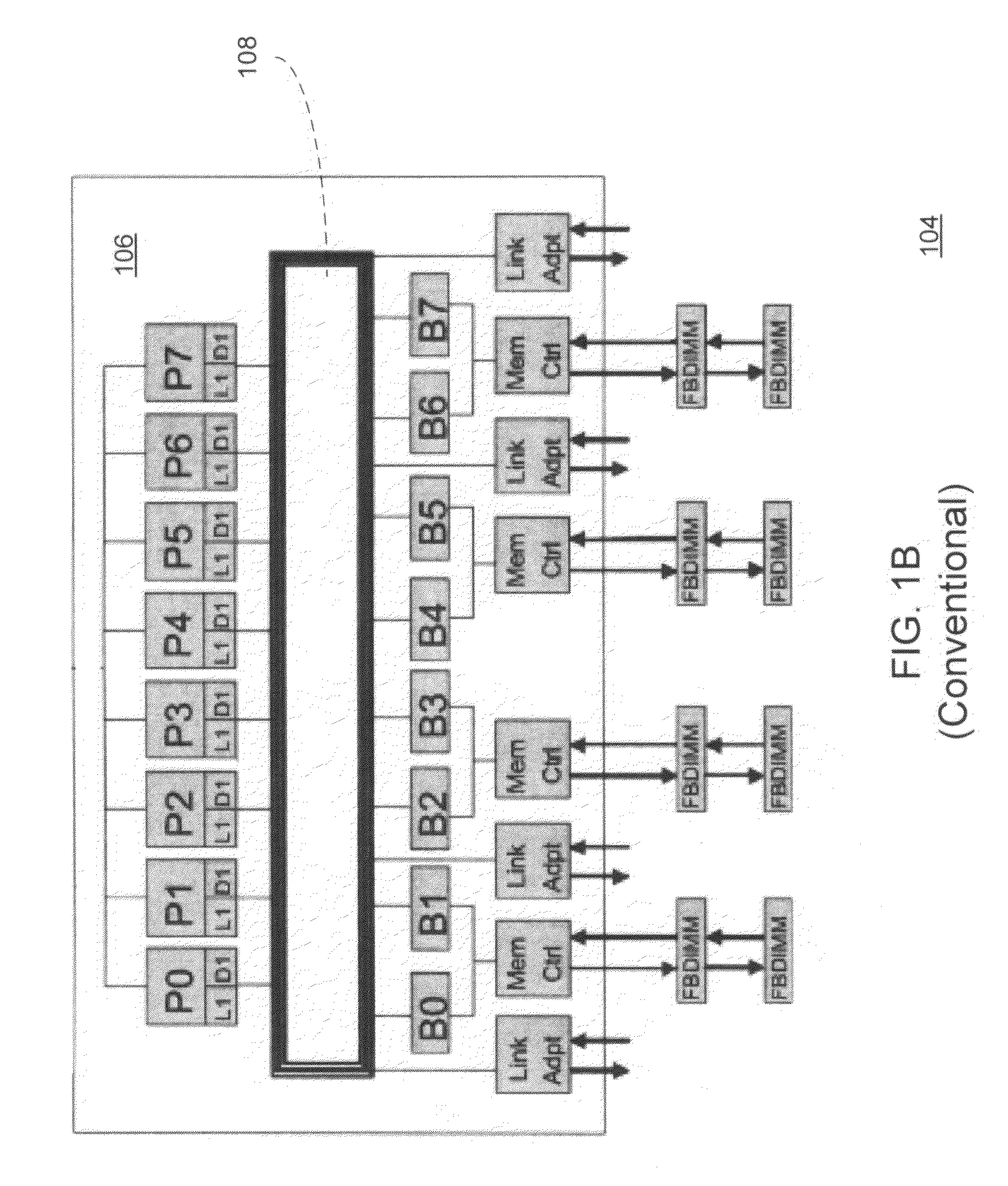

Core redundancy in a chip multiprocessor for highly reliable systems

Status: Active | Patent: US7328371B1 | Topics: Error detection/correction; General purpose stored program computer; Parallel computing; Computerized system

In one embodiment, a node comprises a plurality of processor cores and a node controller coupled to the processor cores. The node controller is configured to route communications from the processor cores to other devices in a computer system. The node controller comprises a circuit coupled to receive the communications from the processor cores. In a redundant execution mode in which at least a first processor core is redundantly executing code that a second processor core is also executing, the circuit is configured to compare communications from the first processor core to communications from the second processor core to verify correct execution of the code. In some embodiments, the processor cores and the node controller may be integrated onto a single integrated circuit chip as a CMP. A similar method is also contemplated.

Owner:ADVANCED MICRO DEVICES INC

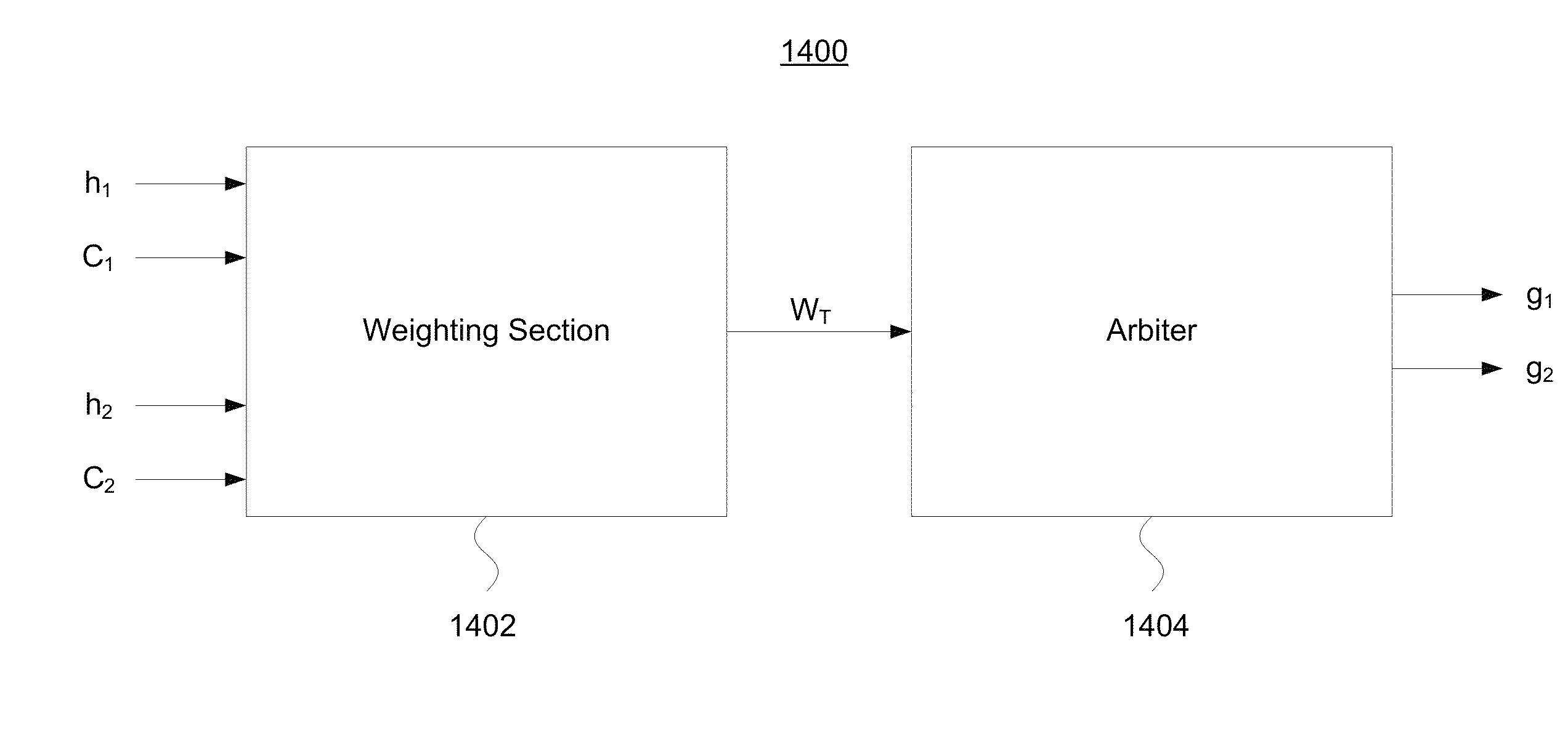

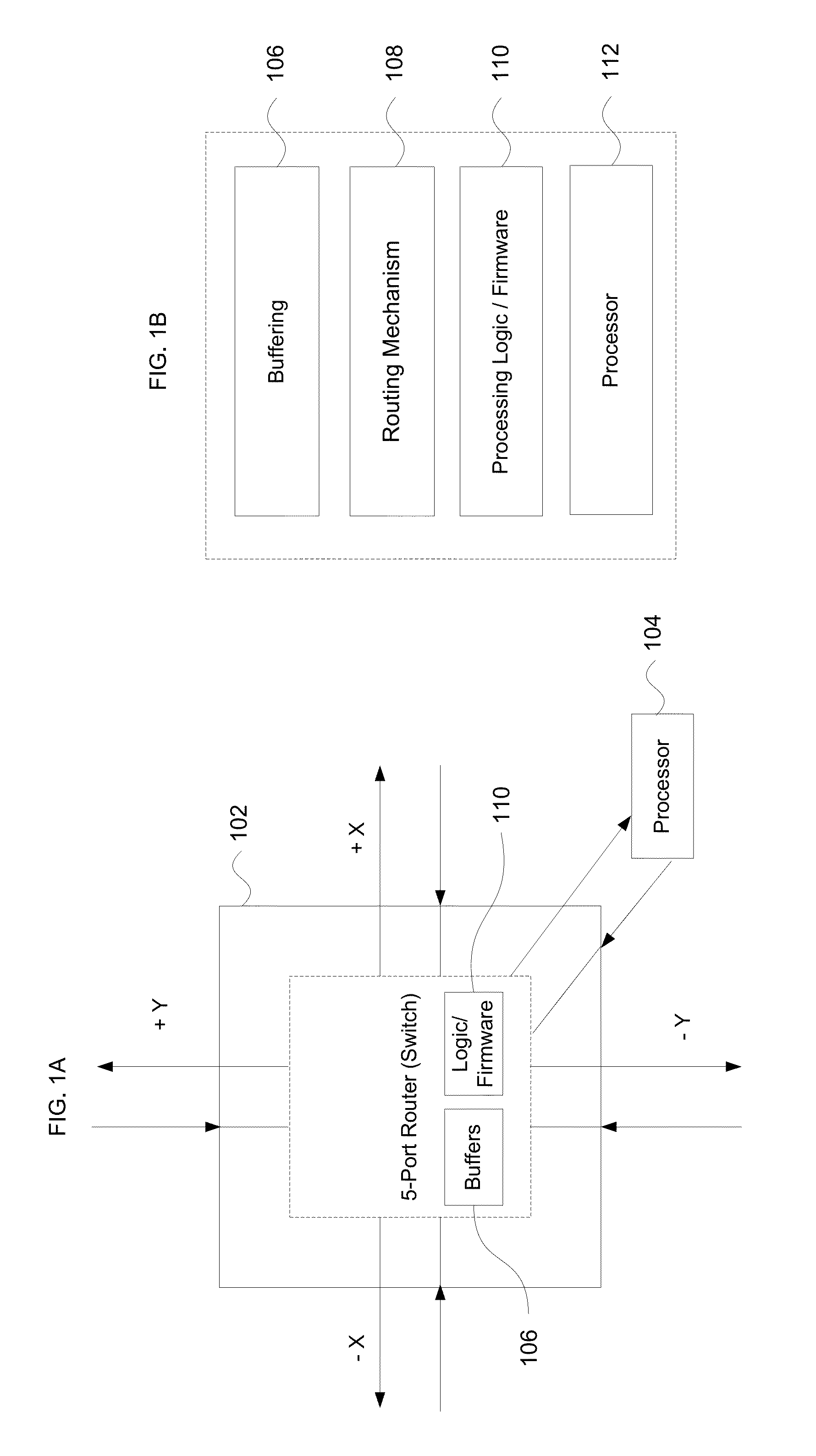

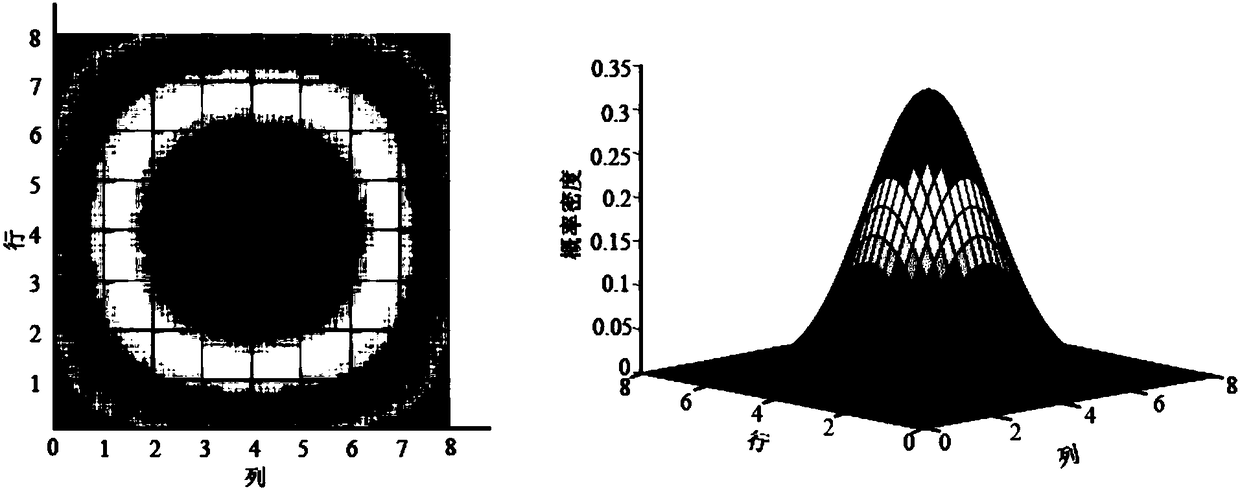

Probabilistic distance-based arbitration

Status: Active | Patent: US8705368B1 | Topics: Reduce variance; Effective synchronization; Multiplex system selection arrangements; Error prevention; Quality of service; Interconnection

Probabilistic arbitration is combined with distance-based weights to achieve equality of service in interconnection networks, such as those used with chip multiprocessors. The arbitration desirably incorporates nonlinear weights that are assigned to requests. The nonlinear weights incorporate different arbitration weight metrics, namely fixed weight, constantly increasing weight, and variably increasing weight. Probabilistic arbitration for an on-chip router avoids the need for additional buffers or virtual channels, creating a simple, low-cost mechanism for achieving equality of service. The nonlinearly weighted probabilistic arbitration offers additional benefits, such as quality-of-service features and fairness in both throughput and latency that approaches the global fairness achieved with age-based arbitration. This provides a more stable network by achieving high sustained throughput beyond saturation. Each router or switch in the network may include an arbiter to apply the weighted probabilistic arbitration.

Owner:GOOGLE LLC
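
A minimal sketch of how distance-weighted probabilistic arbitration might be modeled in software, for illustration only. The names `distance_weight` and `pick_winner`, the `(port, hops)` request tuple, and the power-law weight are assumptions; the abstract names fixed, constantly increasing, and variably increasing weights but gives no formulas.

```python
import random

def distance_weight(hops, exponent=2.0):
    """Hypothetical nonlinear weight: grows with the hops a request has travelled."""
    return float(hops + 1) ** exponent

def pick_winner(requests, rng=random):
    """Pick one request at random, with probability proportional to its weight.

    `requests` is a list of (port, hops) tuples competing for one output.
    """
    if not requests:
        return None
    weights = [distance_weight(hops) for _, hops in requests]
    return rng.choices(requests, weights=weights, k=1)[0]
```

With an exponent of 2, a request that has travelled 3 hops is 16 times as likely to win as one that has travelled 0 hops, so far-away sources are favored without ever starving nearby ones.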

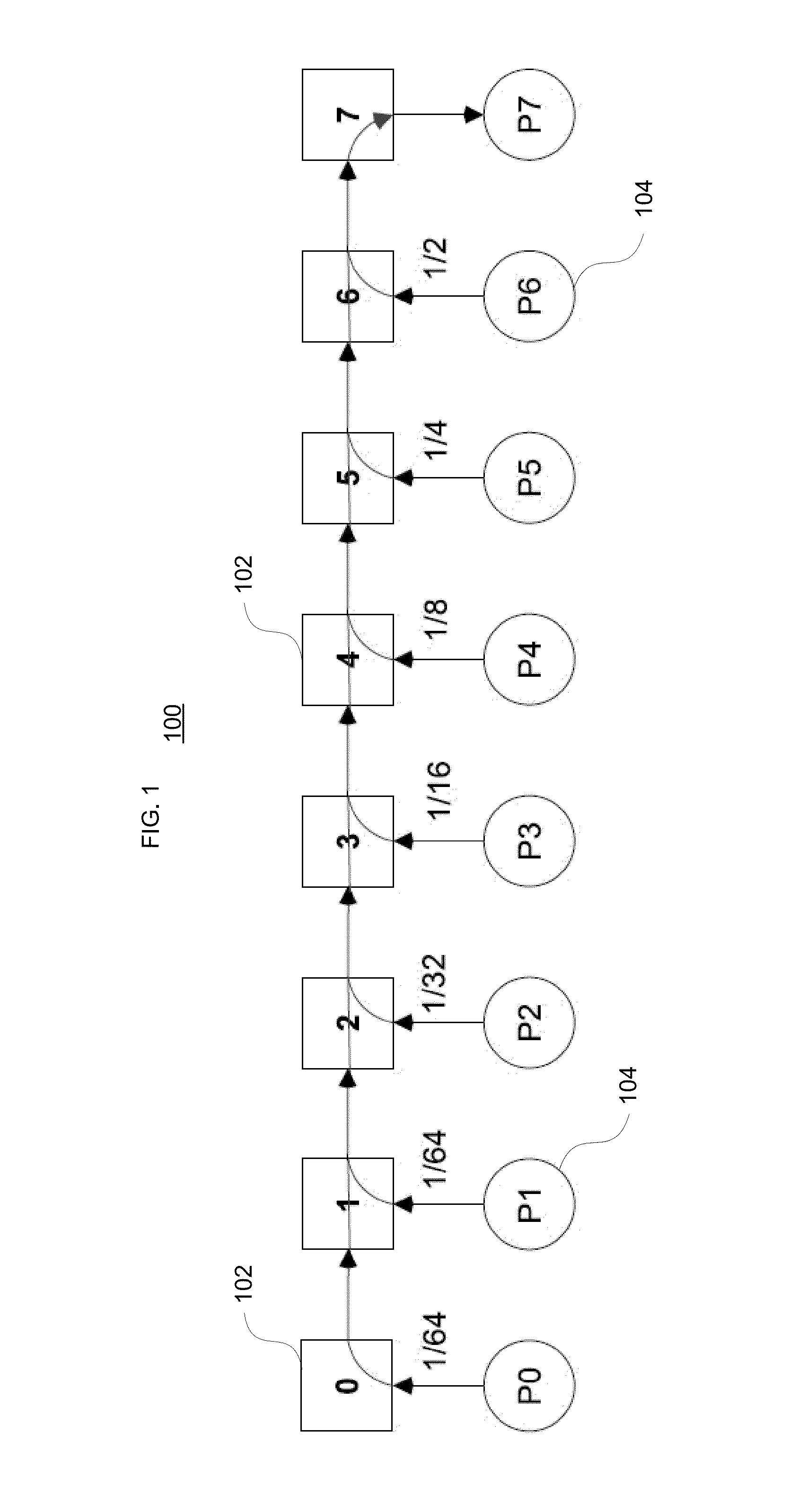

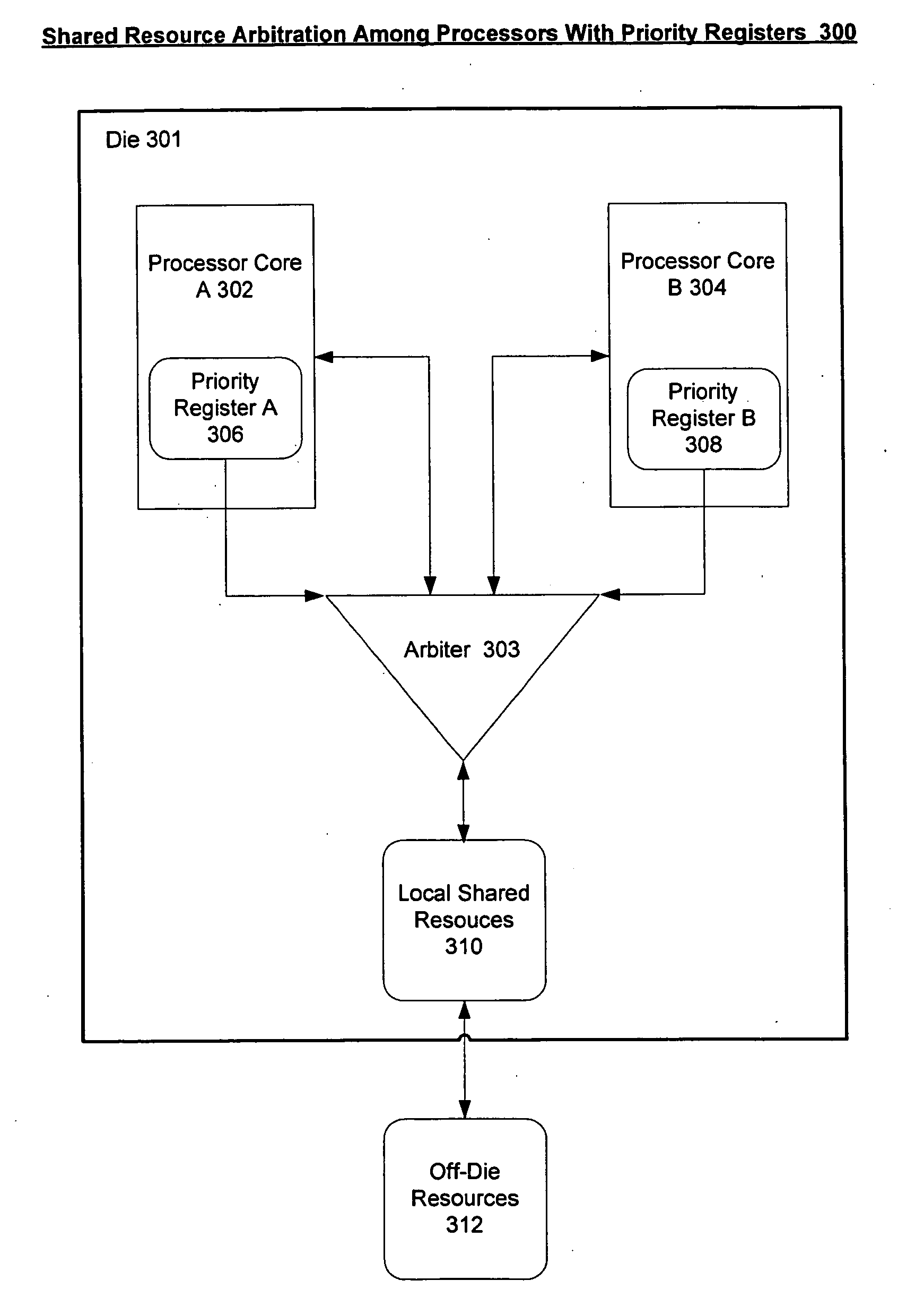

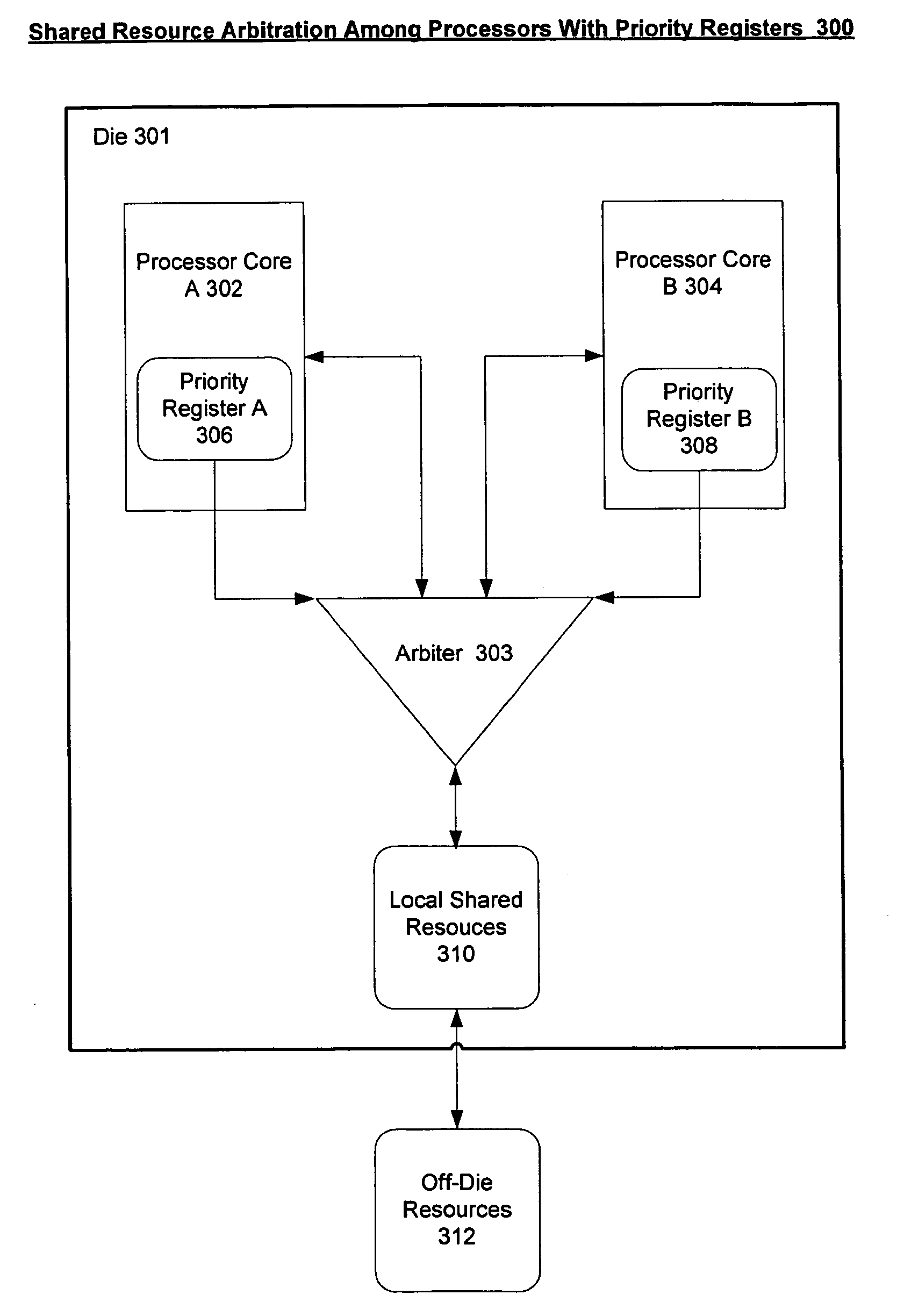

Priority registers for biasing access to shared resources

Status: Inactive | Patent: US20060179196A1 | Topics: High perceived system performance; Improve system performance; Multiprogramming arrangements; Memory systems; Resource based; Shared resource

A priority register is provided for each of multiple processor cores of a chip multiprocessor, where the priority register stores values that are used to bias resources available to the multiple processor cores. Even though such multiple processor cores have their own local resources, they must compete for shared resources. These shared resources may be stored on the chip or off the chip. The priority register biases the arbitration process that arbitrates access to or ongoing use of the shared resources based on the values stored in the priority registers. This biasing is accomplished by tagging operations issued from the multiple processor cores with the priority values, and then comparing the values within each arbiter of the shared resources.

Owner:MICROSOFT TECH LICENSING LLC
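
The tagging-and-comparing scheme in the abstract can be sketched roughly as follows. This is an illustrative model only: the `Operation` and `Chip` classes, the "larger value wins" rule, and the tie-break on lowest core id are assumptions not stated in the source.

```python
from dataclasses import dataclass

@dataclass
class Operation:
    core_id: int
    priority: int  # tag copied from the issuing core's priority register
    name: str

class Chip:
    def __init__(self, priorities):
        # One hypothetical priority register per core.
        self.priority_regs = list(priorities)

    def issue(self, core_id, name):
        """Tag an operation with the issuing core's current priority value."""
        return Operation(core_id, self.priority_regs[core_id], name)

def arbitrate_by_priority(pending):
    """Grant the operation with the highest tag; ties go to the lowest core id."""
    return max(pending, key=lambda op: (op.priority, -op.core_id))
```

For example, with registers `[1, 3, 3]`, operations from cores 1 and 2 outrank core 0, and core 1 wins the tie under the assumed tie-break.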

Priority registers for biasing access to shared resources

Status: Inactive | Patent: US7380038B2 | Topics: Improve system performance; Multiprogramming arrangements; Memory systems; Processor register; Resource based

A priority register is provided for each of multiple processor cores of a chip multiprocessor, where the priority register stores values that are used to bias resources available to the multiple processor cores. Even though such multiple processor cores have their own local resources, they must compete for shared resources. These shared resources may be stored on the chip or off the chip. The priority register biases the arbitration process that arbitrates access to or ongoing use of the shared resources based on the values stored in the priority registers. This biasing is accomplished by tagging operations issued from the multiple processor cores with the priority values, and then comparing the values within each arbiter of the shared resources.

Owner:MICROSOFT TECH LICENSING LLC

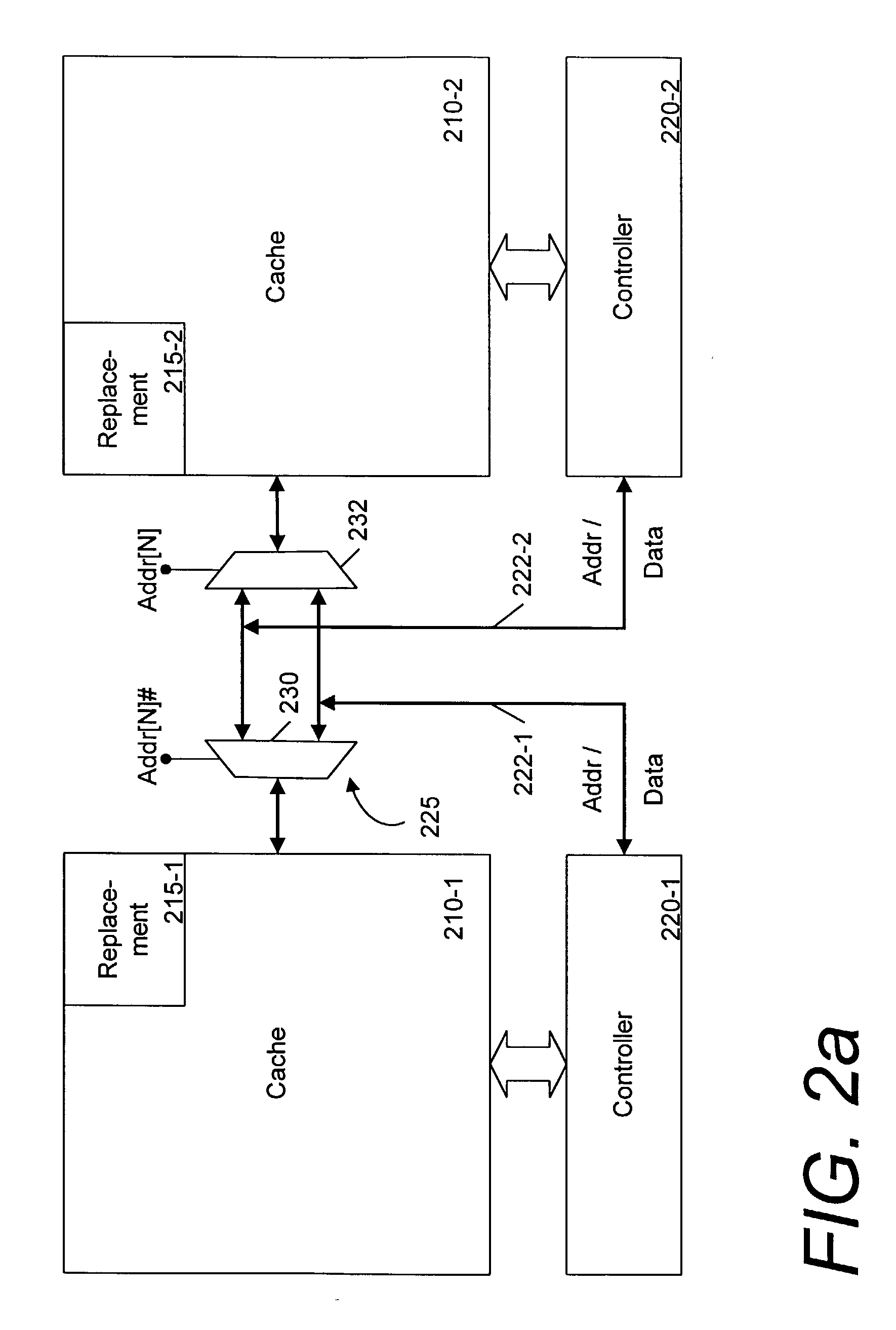

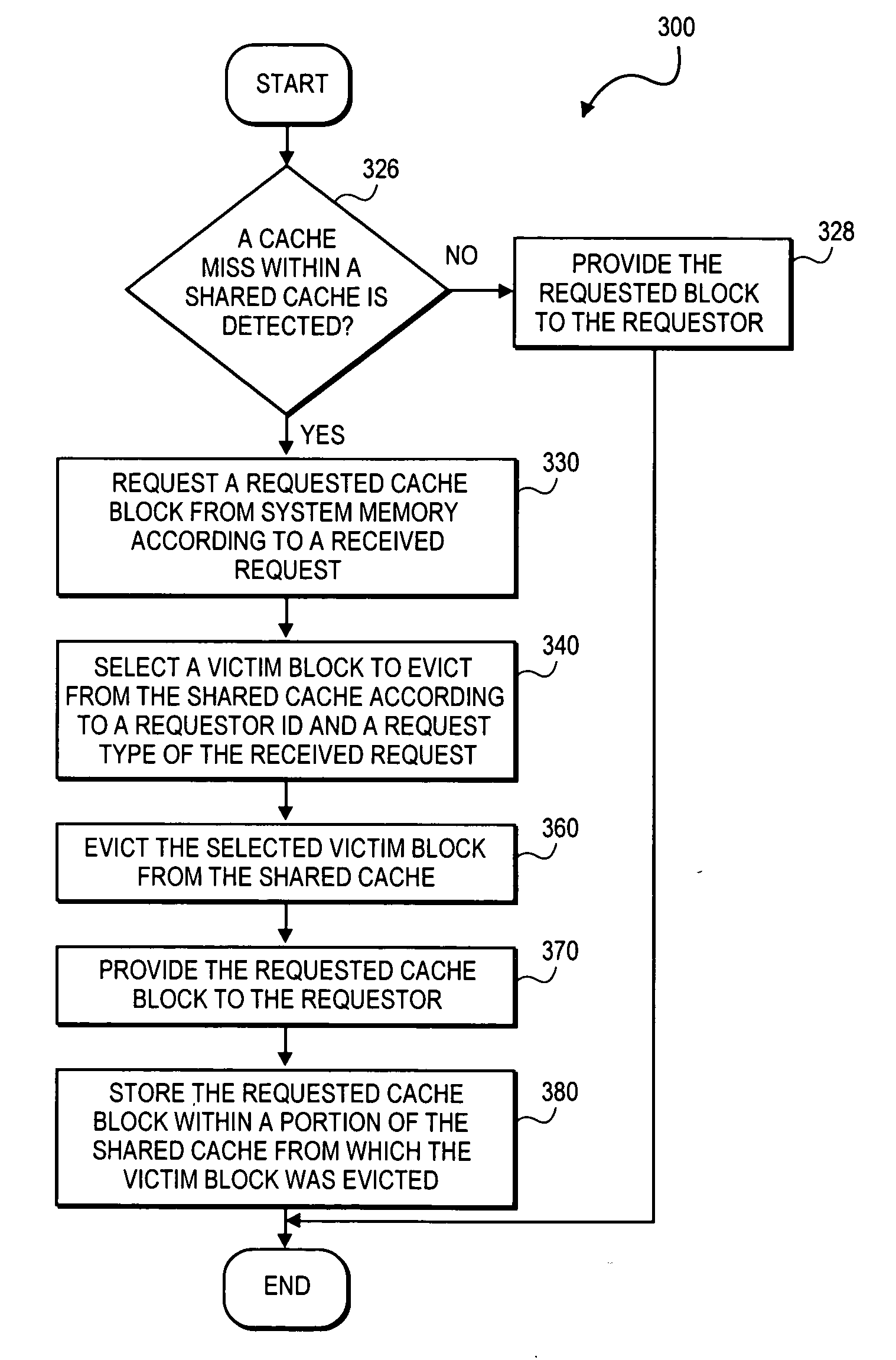

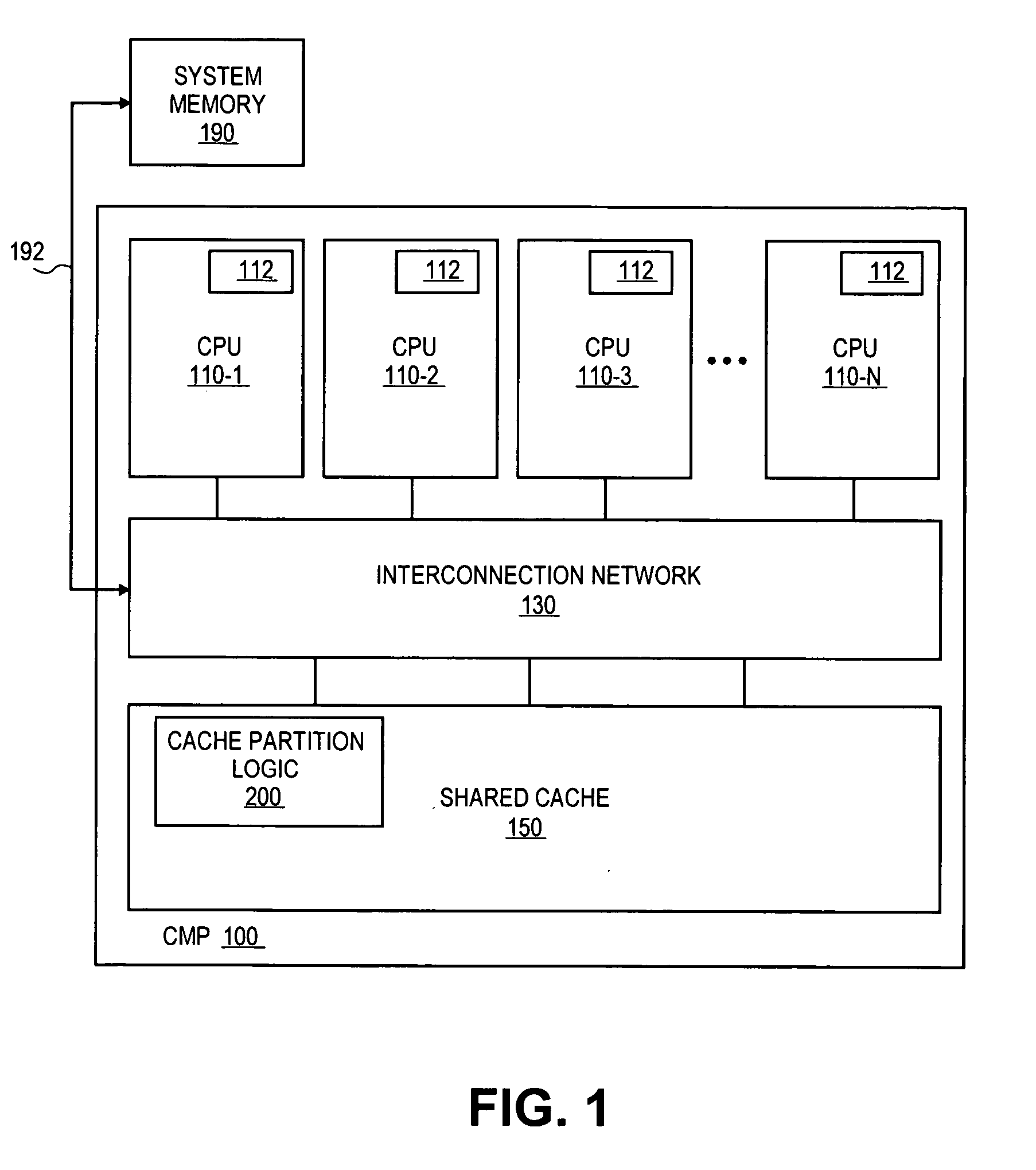

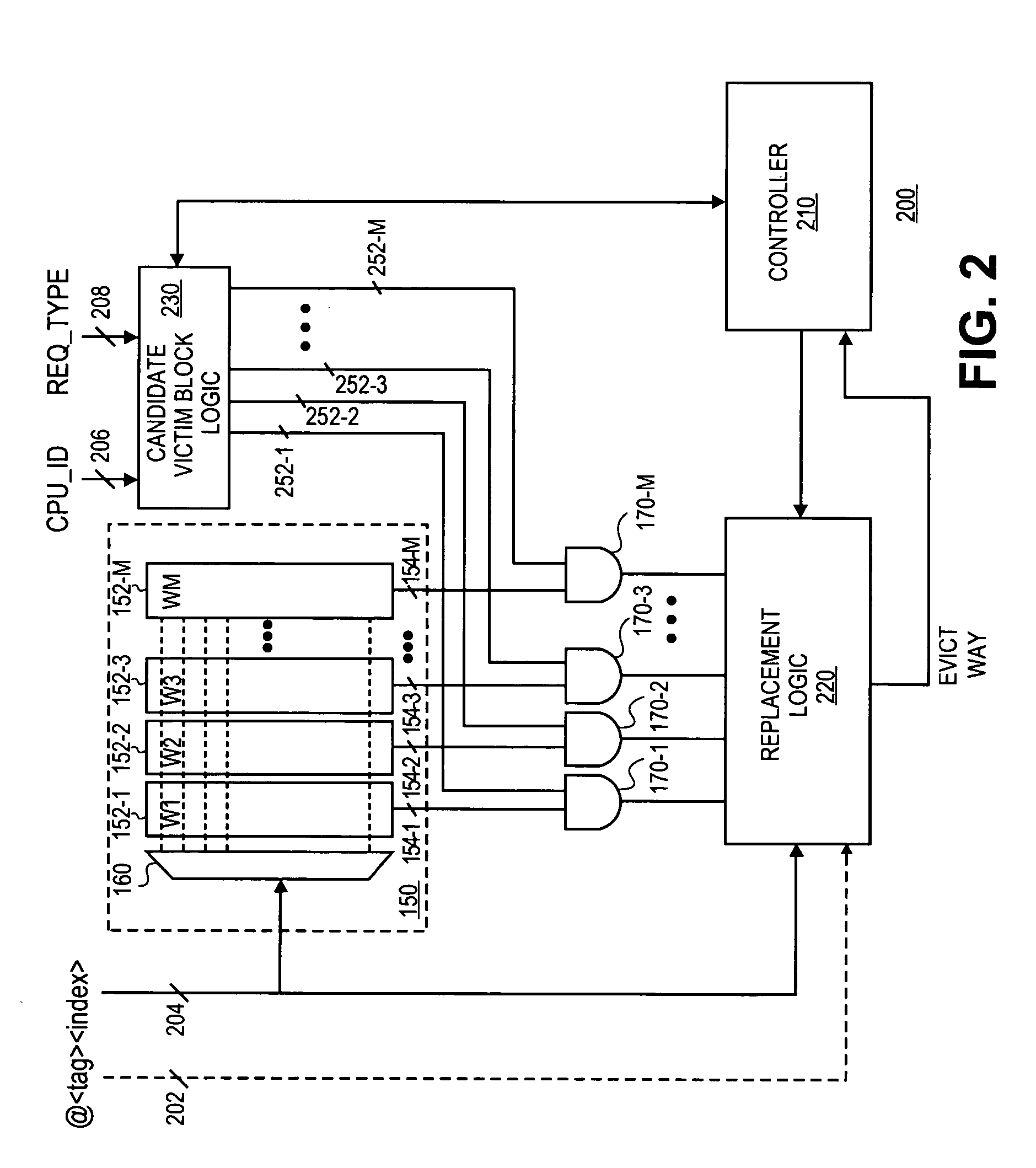

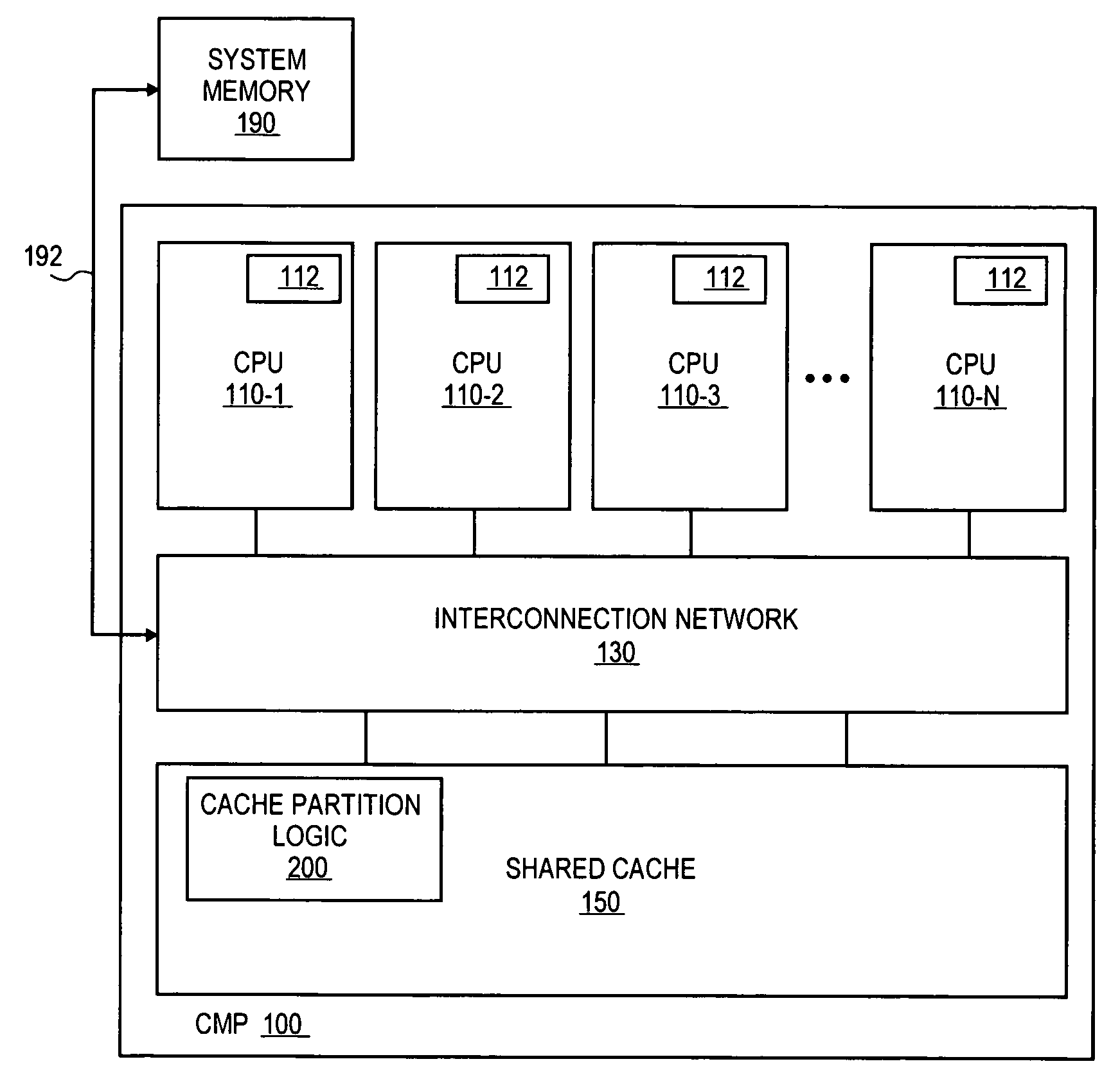

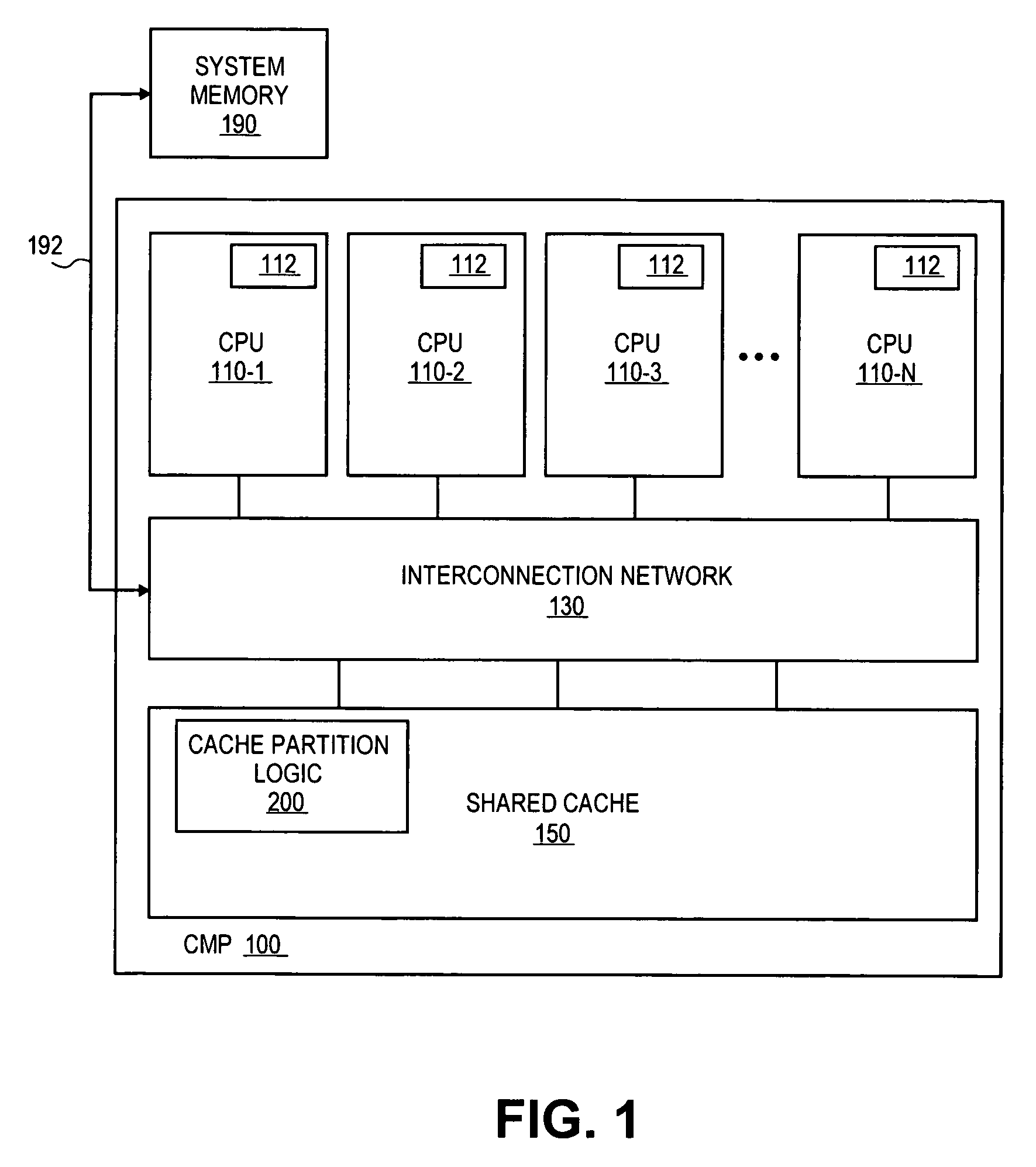

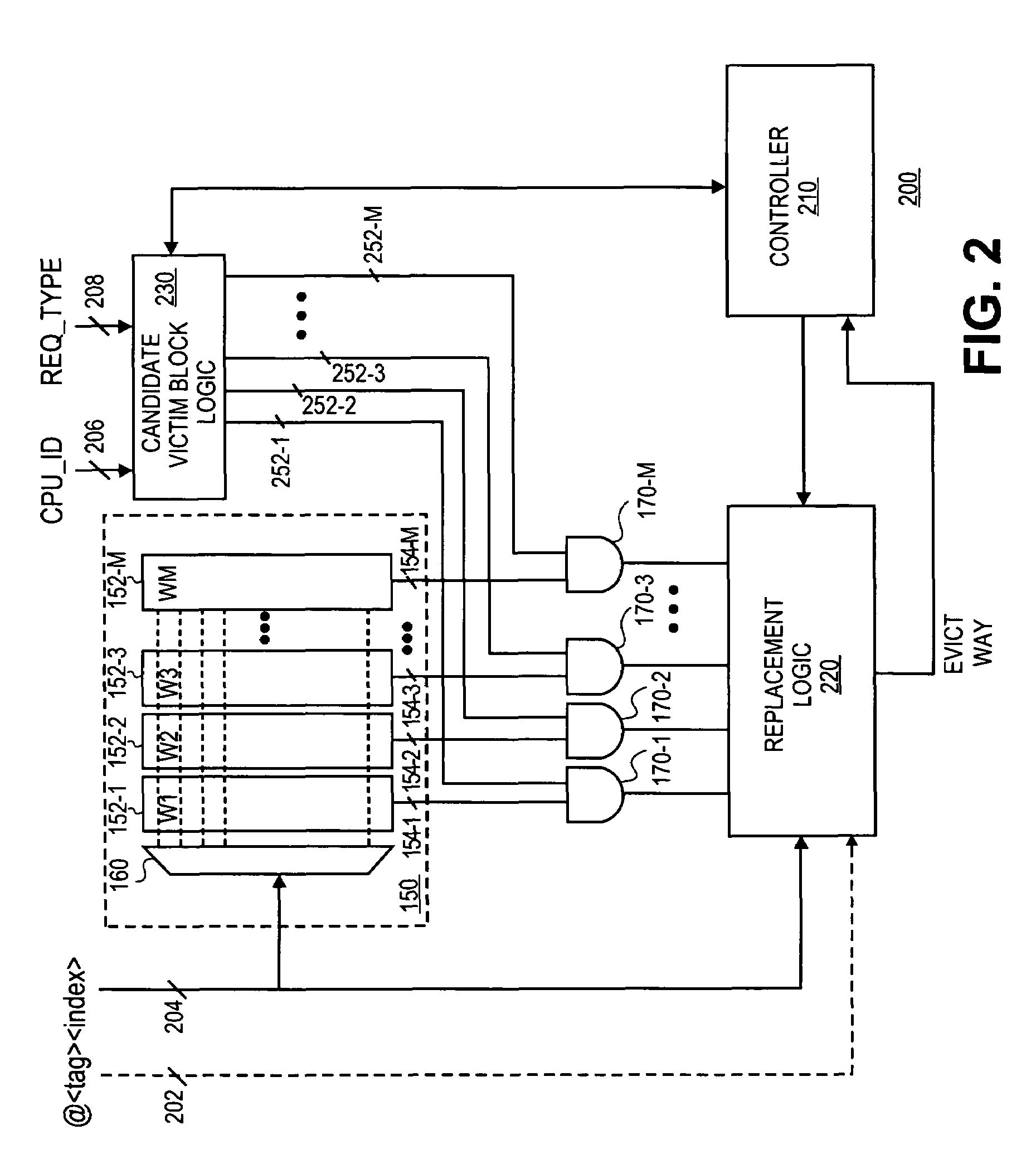

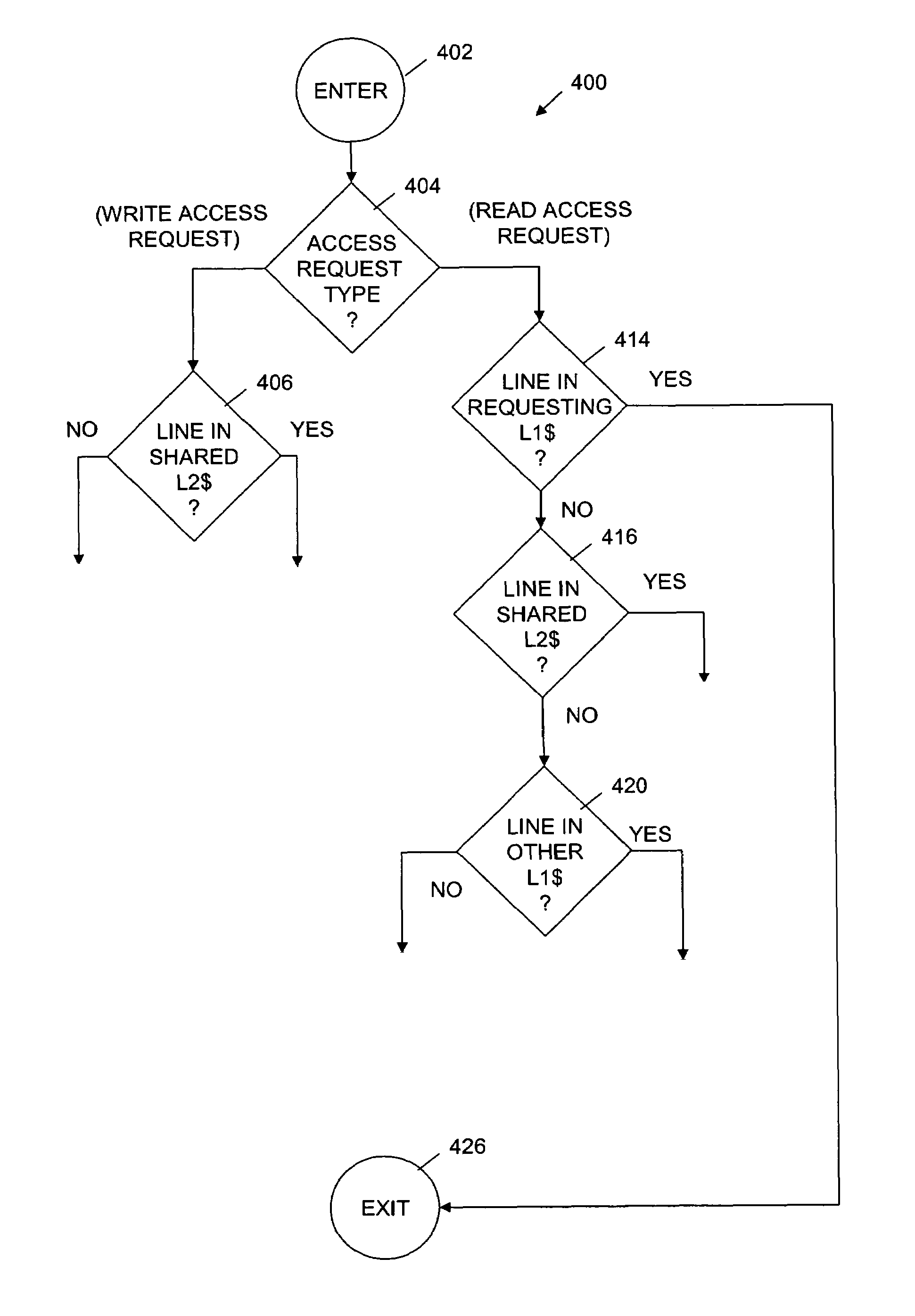

Apparatus and method for partitioning a shared cache of a chip multi-processor

Status: Inactive | Patent: US20060004963A1 | Topics: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Type selection

A method and apparatus for partitioning a shared cache of a chip multi-processor are described. In one embodiment, the method includes a request of a cache block from system memory if a cache miss within a shared cache is detected according to a received request from a processor. Once the cache block is requested, a victim block within the shared cache is selected according to a processor identifier and a request type of the received request. In one embodiment, selection of the victim block according to a processor identifier and request type is based on a partition of a set-associative, shared cache to limit the selection of the victim block from a subset of available cache ways according to the cache partition. Other embodiments are described and claimed.

Owner:INTEL CORP
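
As a rough illustration of victim selection restricted to a subset of cache ways, the sketch below keys a partition table on `(cpu_id, req_type)`. The table layout, the function names, and the use of least-recently-used ordering inside the allowed ways are all assumptions; the abstract only says the victim is chosen according to a processor identifier and request type.

```python
def allowed_ways(partition, cpu_id, req_type, num_ways):
    """Ways this requester may evict from; unpartitioned requesters may use all."""
    return partition.get((cpu_id, req_type), range(num_ways))

def select_victim(last_used, partition, cpu_id, req_type):
    """Pick the least recently used way among those the partition allows.

    `last_used[w]` is a timestamp of the last access to way `w` in this set.
    """
    ways = allowed_ways(partition, cpu_id, req_type, len(last_used))
    return min(ways, key=lambda w: last_used[w])
```

Because each requester only evicts from its own ways, one core's misses cannot push another core's blocks out of the shared cache.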

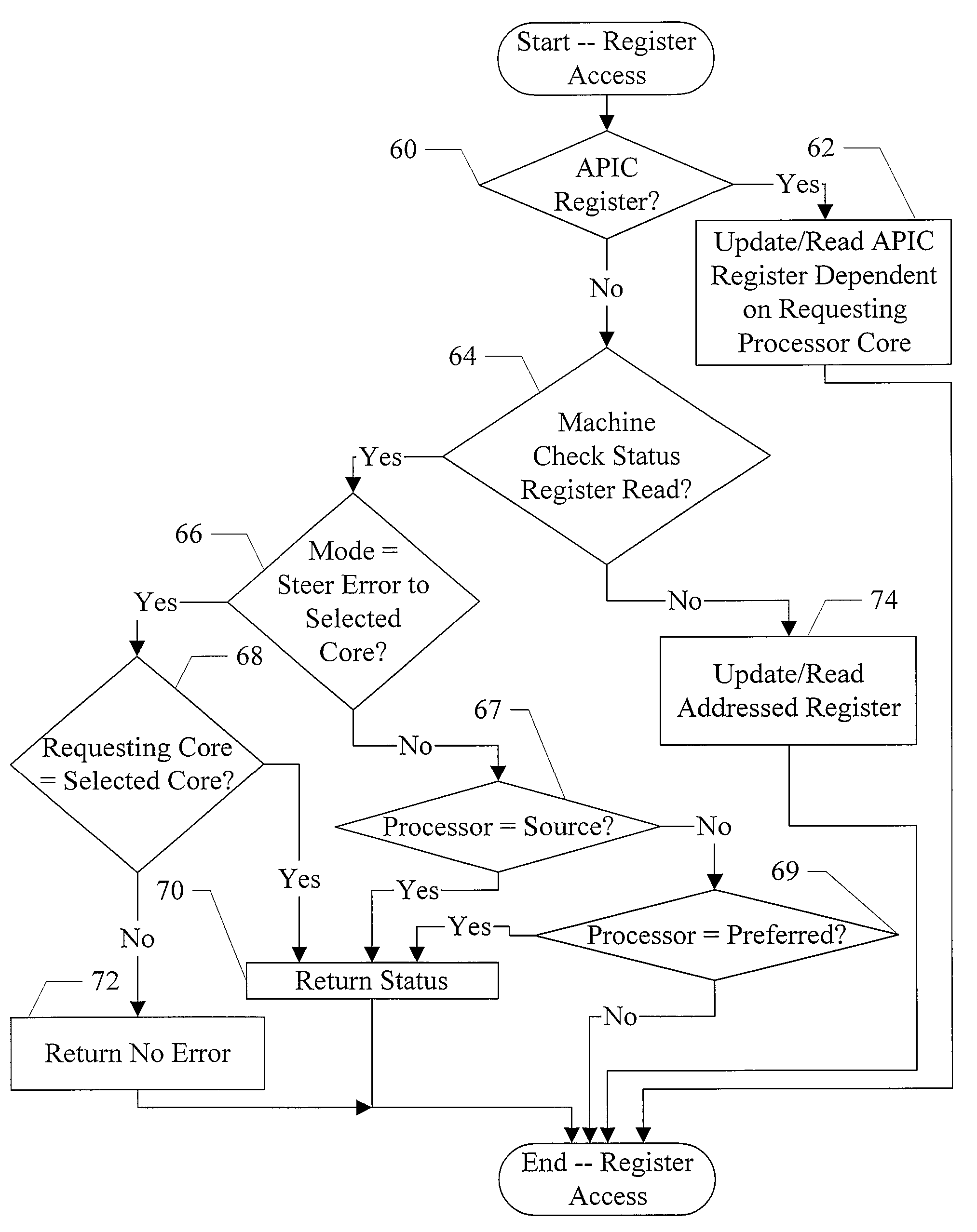

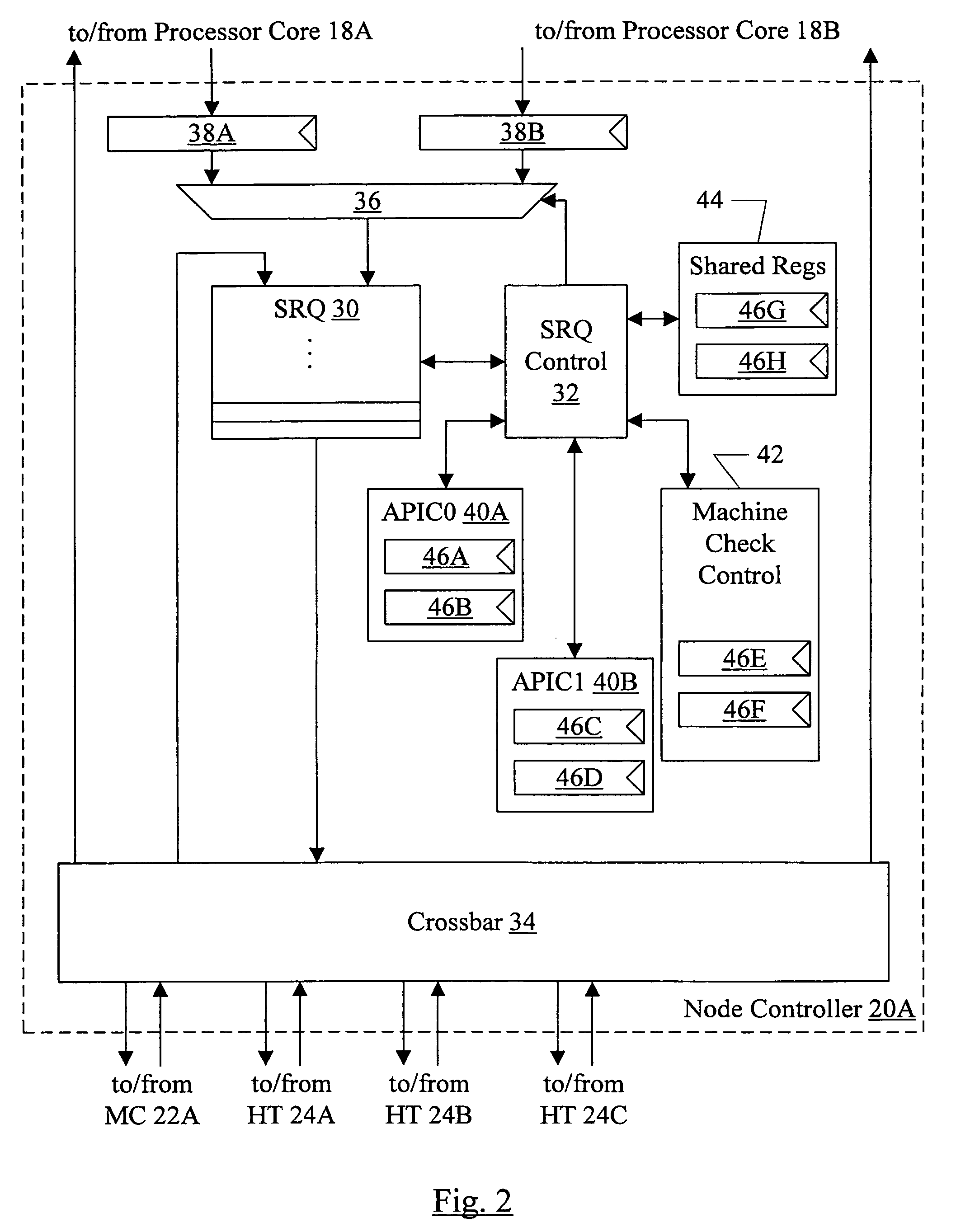

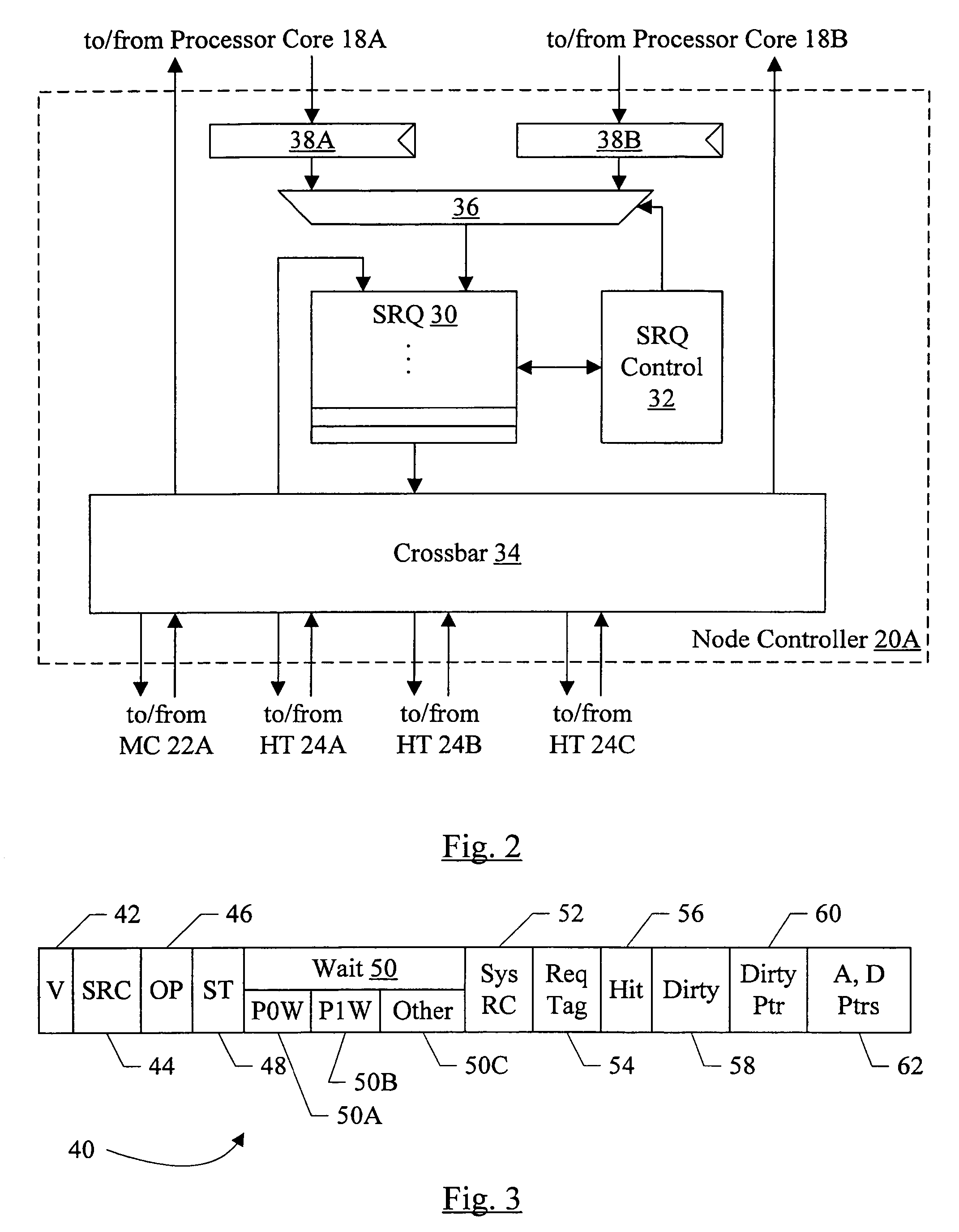

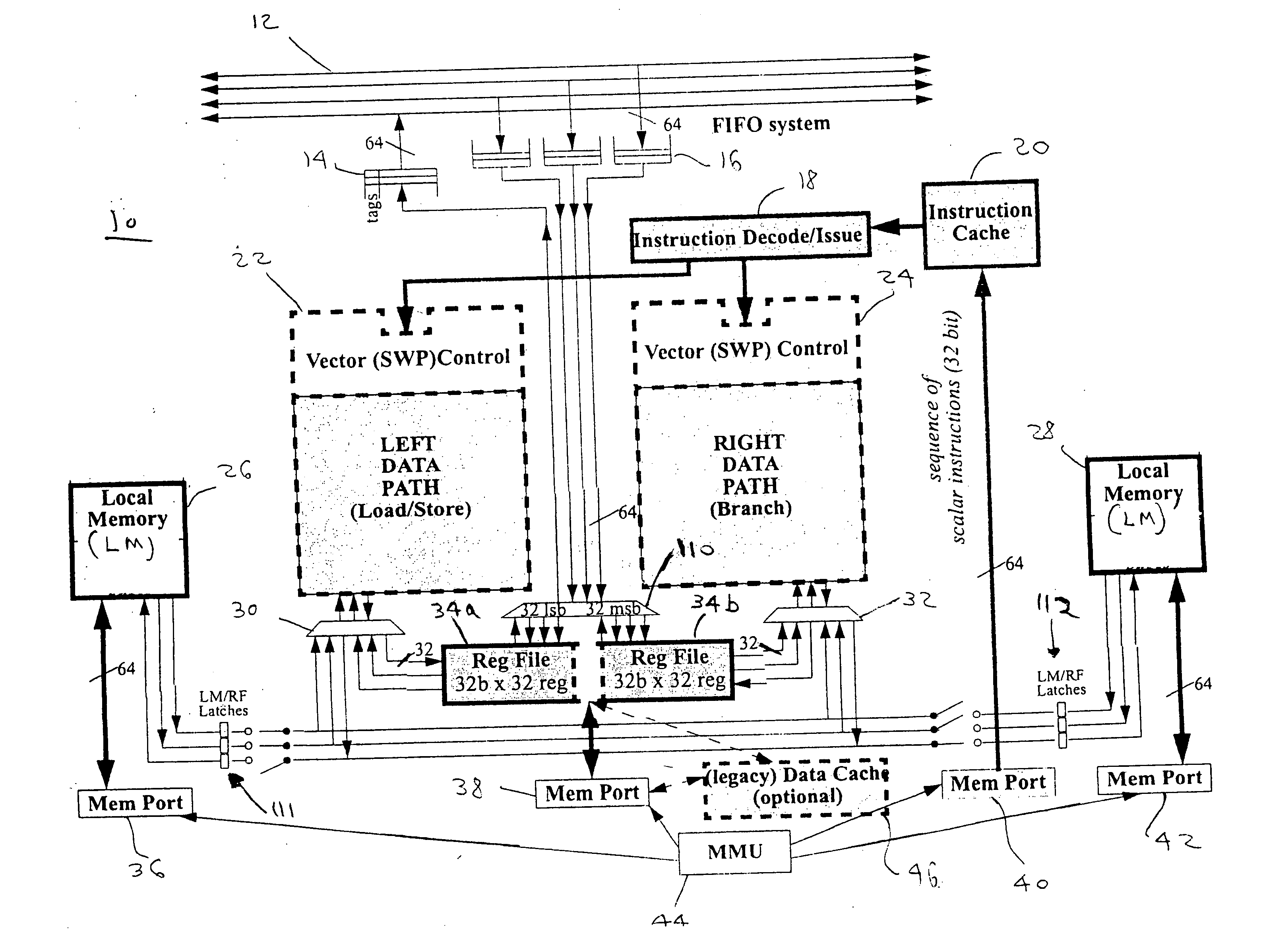

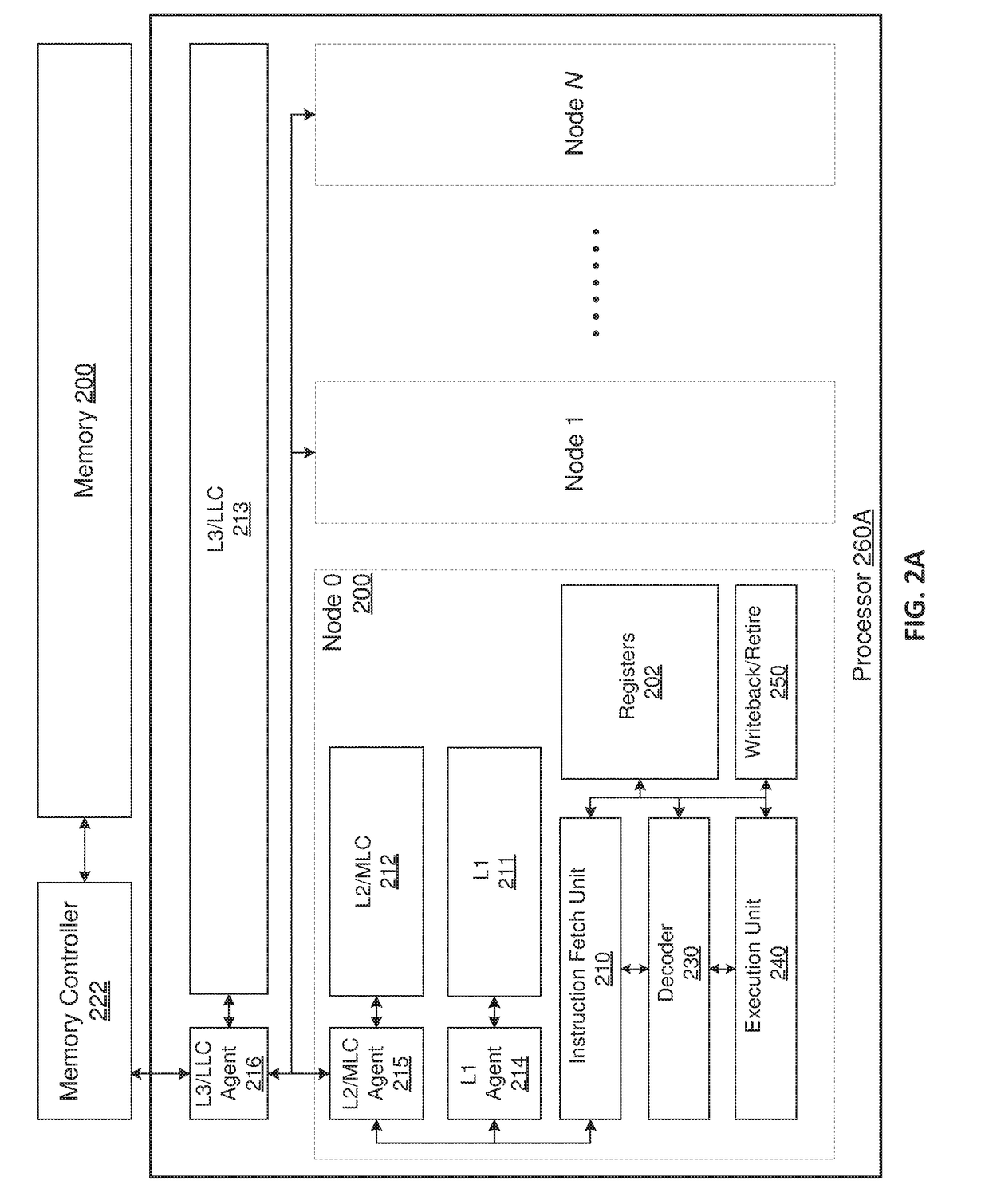

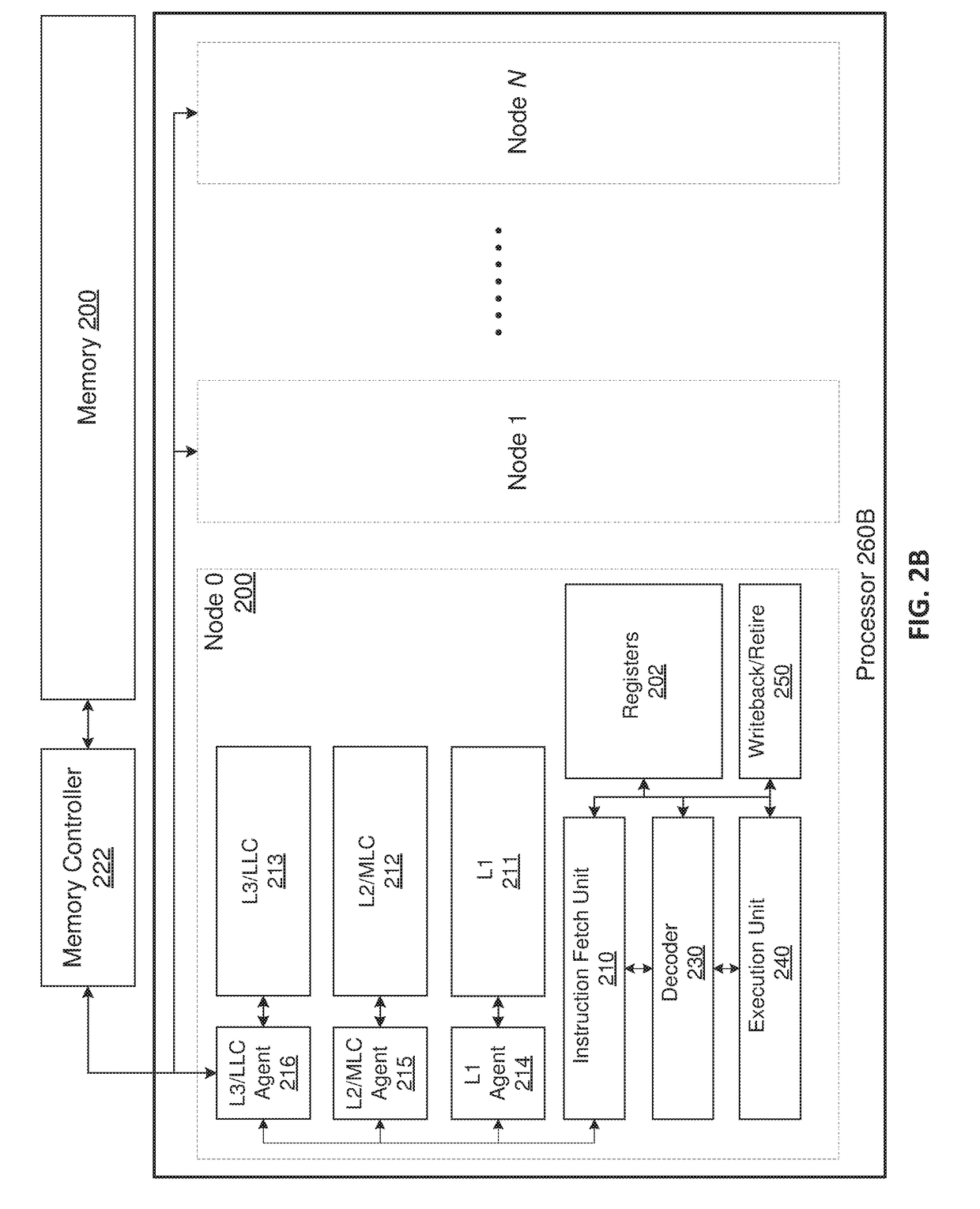

Shared resources in a chip multiprocessor

Status: Active | Patent: US7383423B1 | Topics: Single instruction multiple data multiprocessors; Electric digital data processing; Processor register; Parallel computing

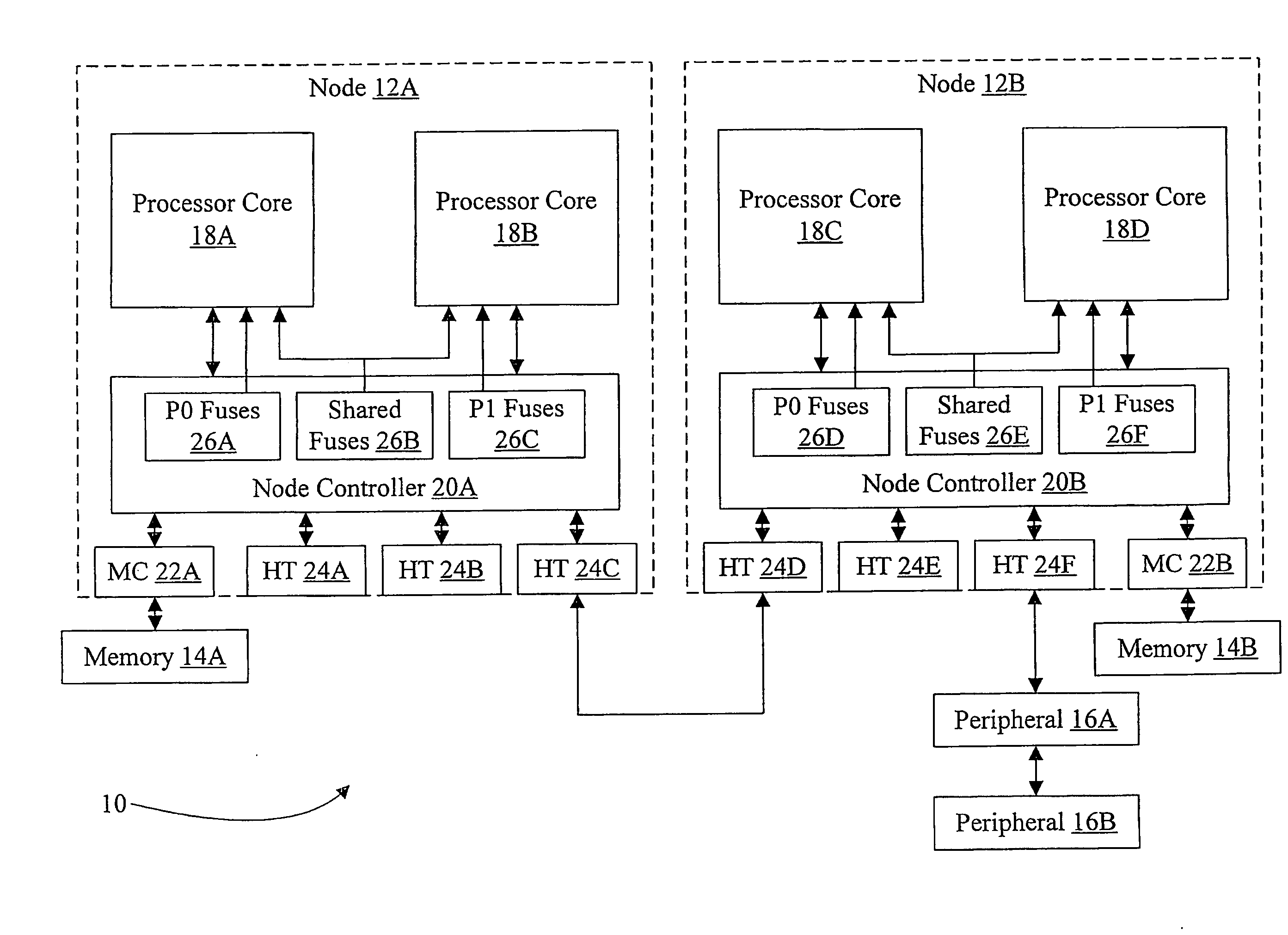

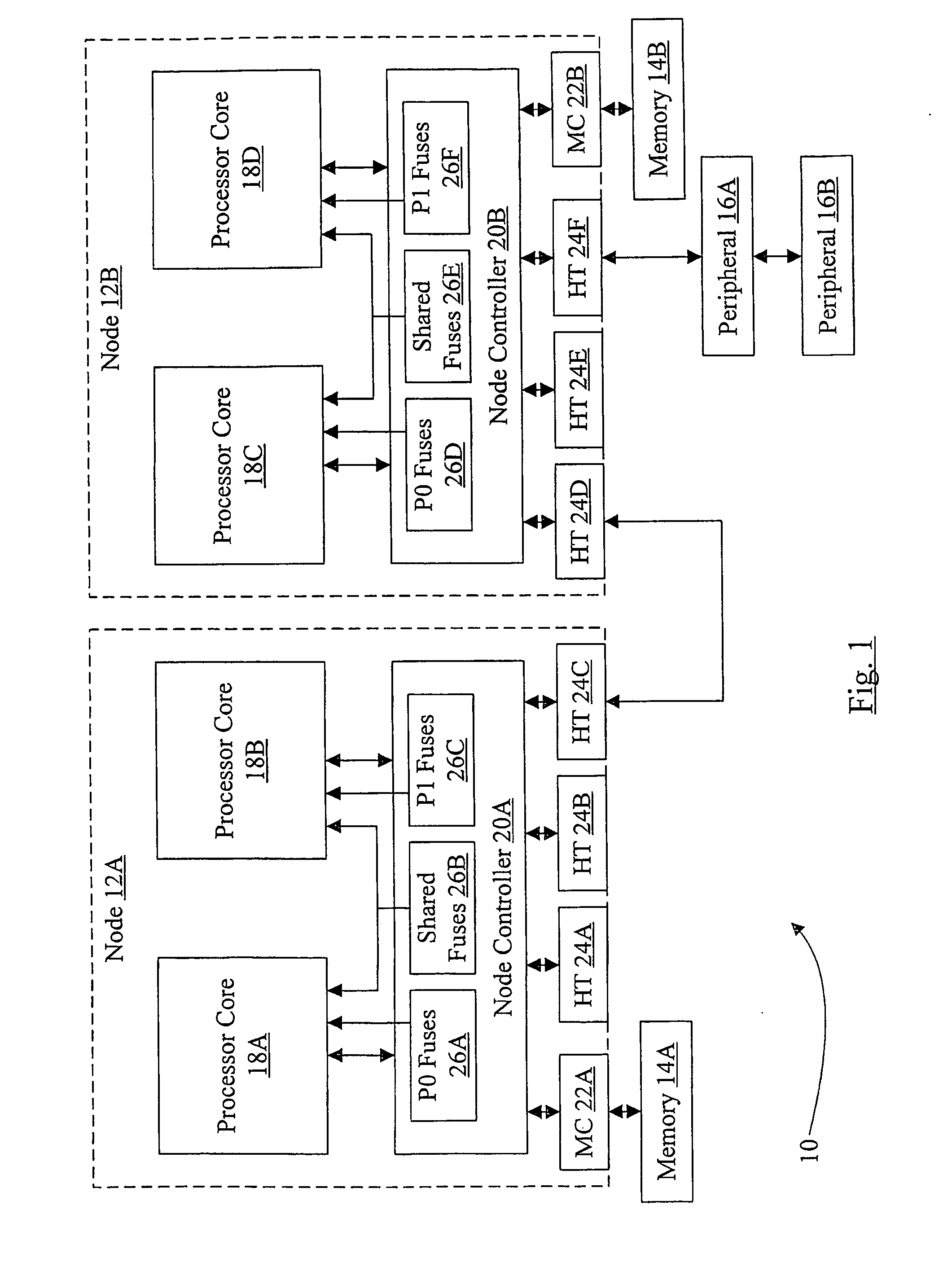

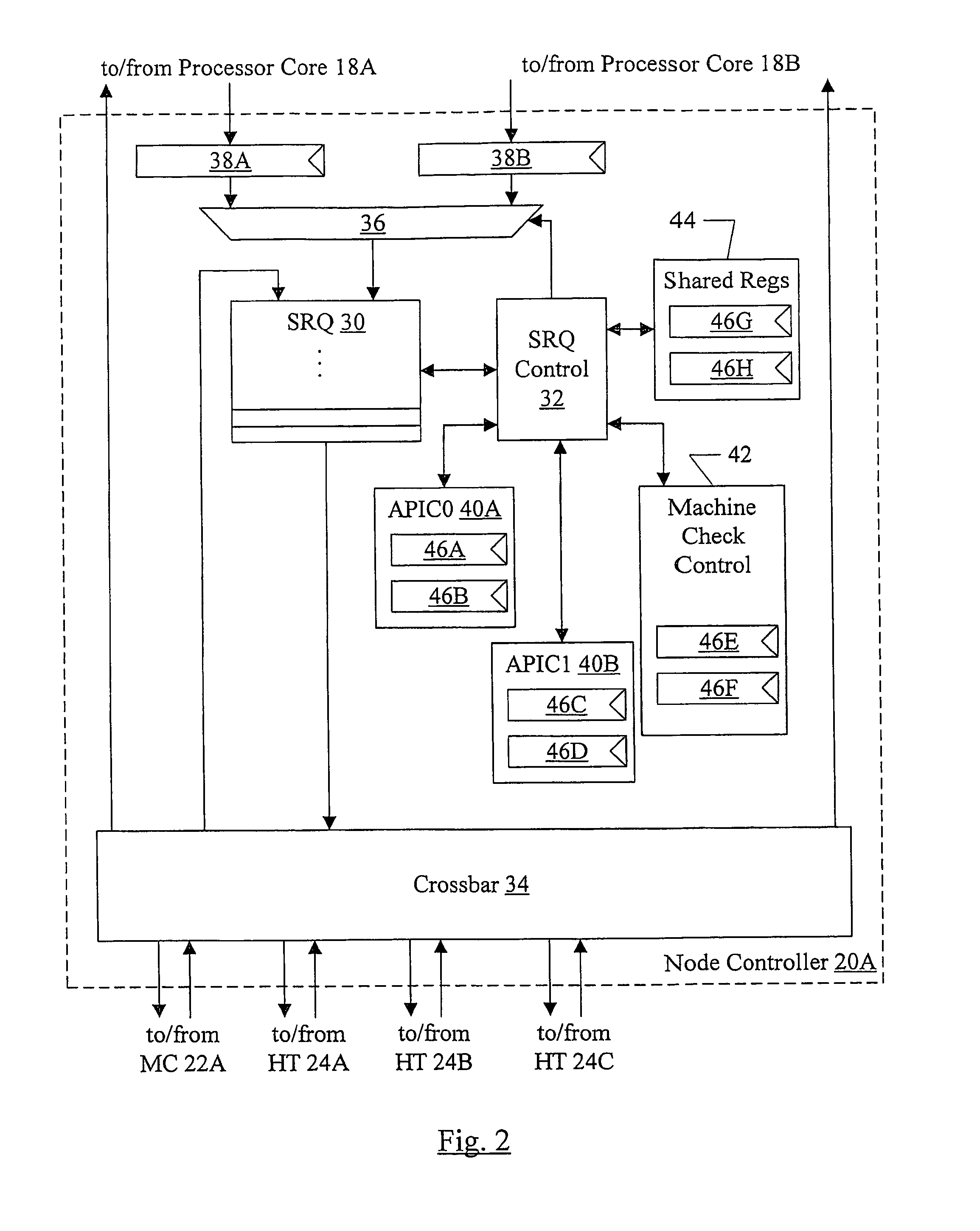

In one embodiment, a node comprises a plurality of processor cores and a node controller configured to receive a first read operation addressing a first register. The node controller is configured to return a first value in response to the first read operation, dependent on which processor core transmitted the first read operation. In another embodiment, the node comprises the processor cores and the node controller. The node controller comprises a queue shared by the processor cores. The processor cores are configured to transmit communications at a maximum rate of one every N clock cycles, where N is an integer equal to a number of the processor cores. In still another embodiment, a node comprises the processor cores and a plurality of fuses shared by the processor cores. In some embodiments, the node components are integrated onto a single integrated circuit chip (e.g. a chip multiprocessor).

Owner:GLOBALFOUNDRIES US INC

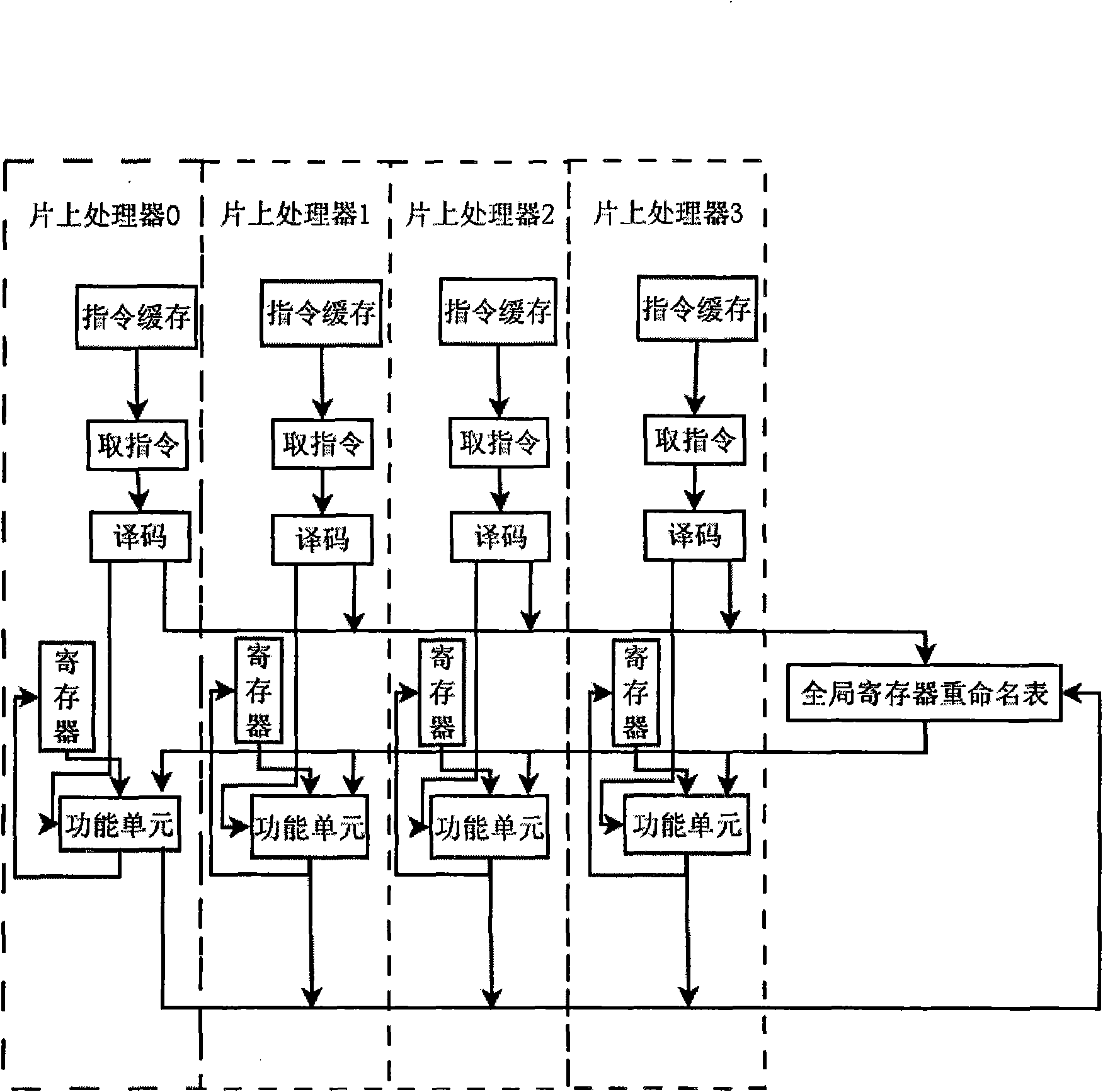

Implementation method of rename table of global register under on-chip multi-processor system framework

Status: Inactive | Patent: CN101582025A | Topics: Resolve dependencies; Reduce traffic; Concurrent instruction execution; Hardware structure; Processing Instruction

The invention relates to on-chip multiprocessor system architecture and provides a method for implementing a global register rename table under an on-chip multiprocessor architecture. The method comprises the steps of: designing the global register rename table; handling write-after-write (WAW) dependencies between instructions; handling write-after-read (WAR) dependencies between instructions; fetching operands; writing back results; and committing instructions. Because the method uses a global register rename table, dependencies between instructions on different processors can be recorded, and WAR and WAW dependencies can be resolved. Operands of instructions on different processors can be maintained and passed through the global register rename table, which reduces inter-core communication traffic. Because the hardware used by the global register rename table is a logical table, the structure is simple and hardware complexity is low; the area of the global register rename table is only 1% of that of one on-chip processor, so the area cost is small.

Owner:ZHEJIANG UNIV

Cache sharing for a chip multiprocessor or multiprocessing system

Status: Inactive | Patent: US7076609B2 | Topics: Energy efficient ICT; Memory addressing/allocation/relocation; Multiprocessing; Chip multi processor

Owner:INTEL CORP

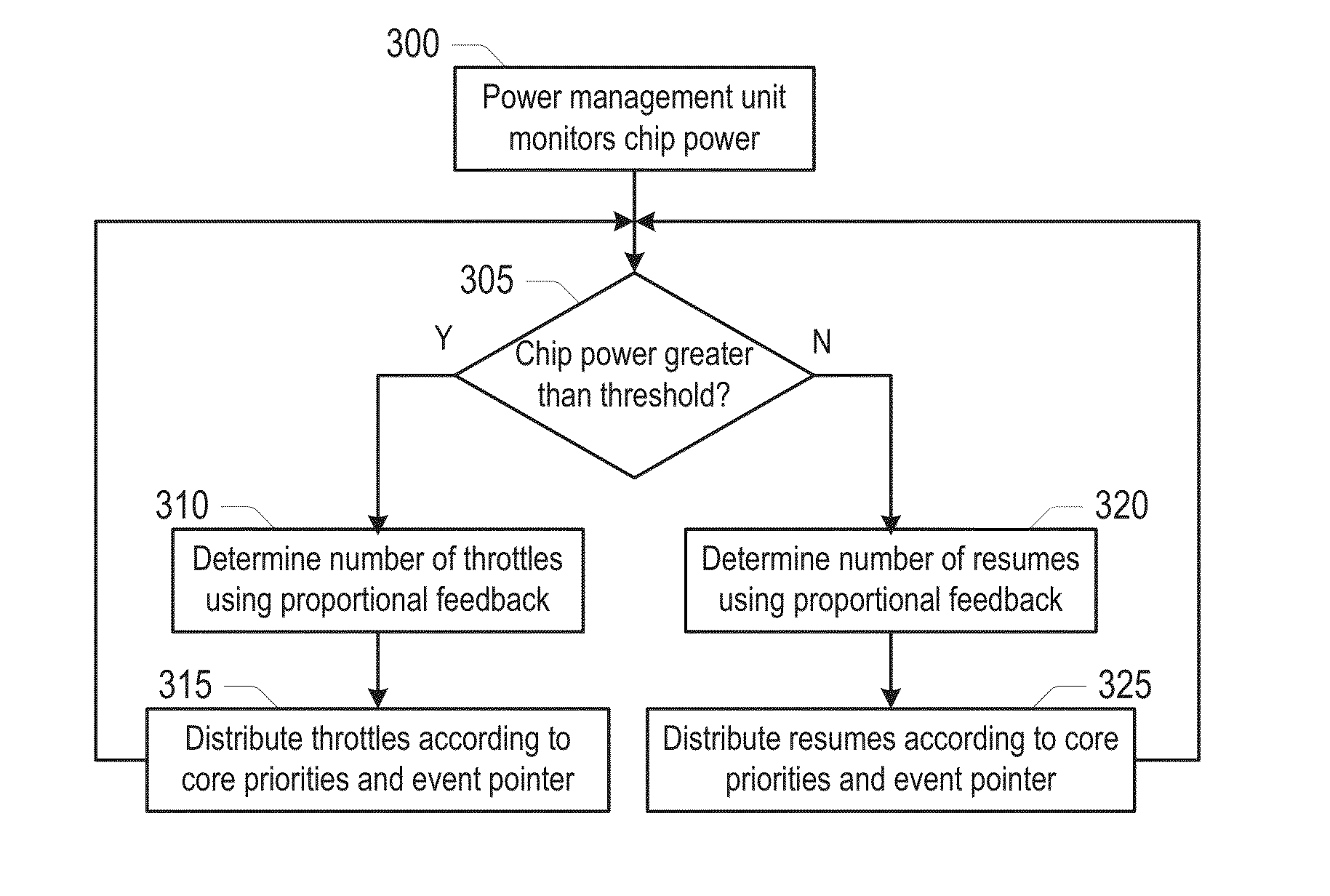

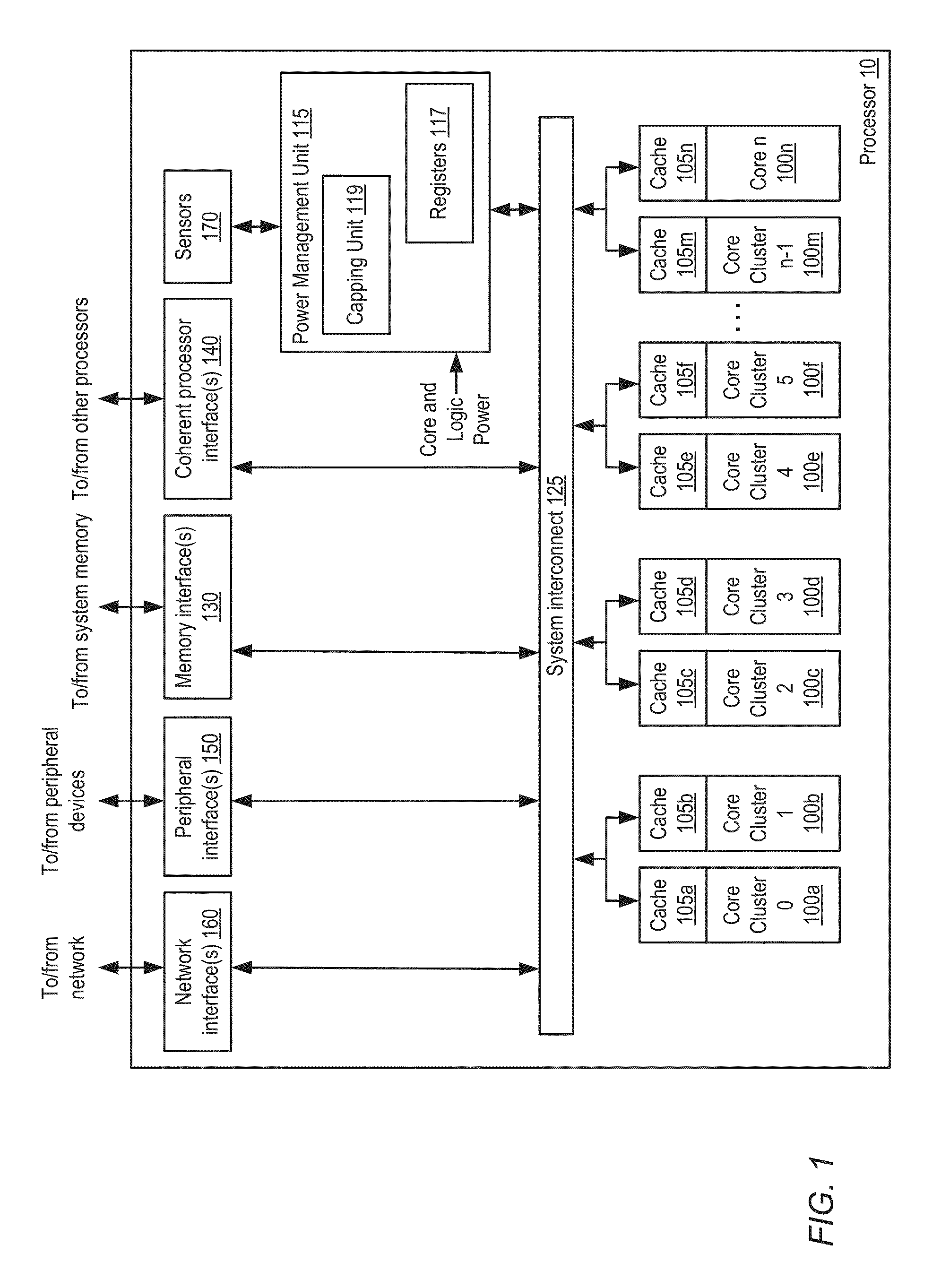

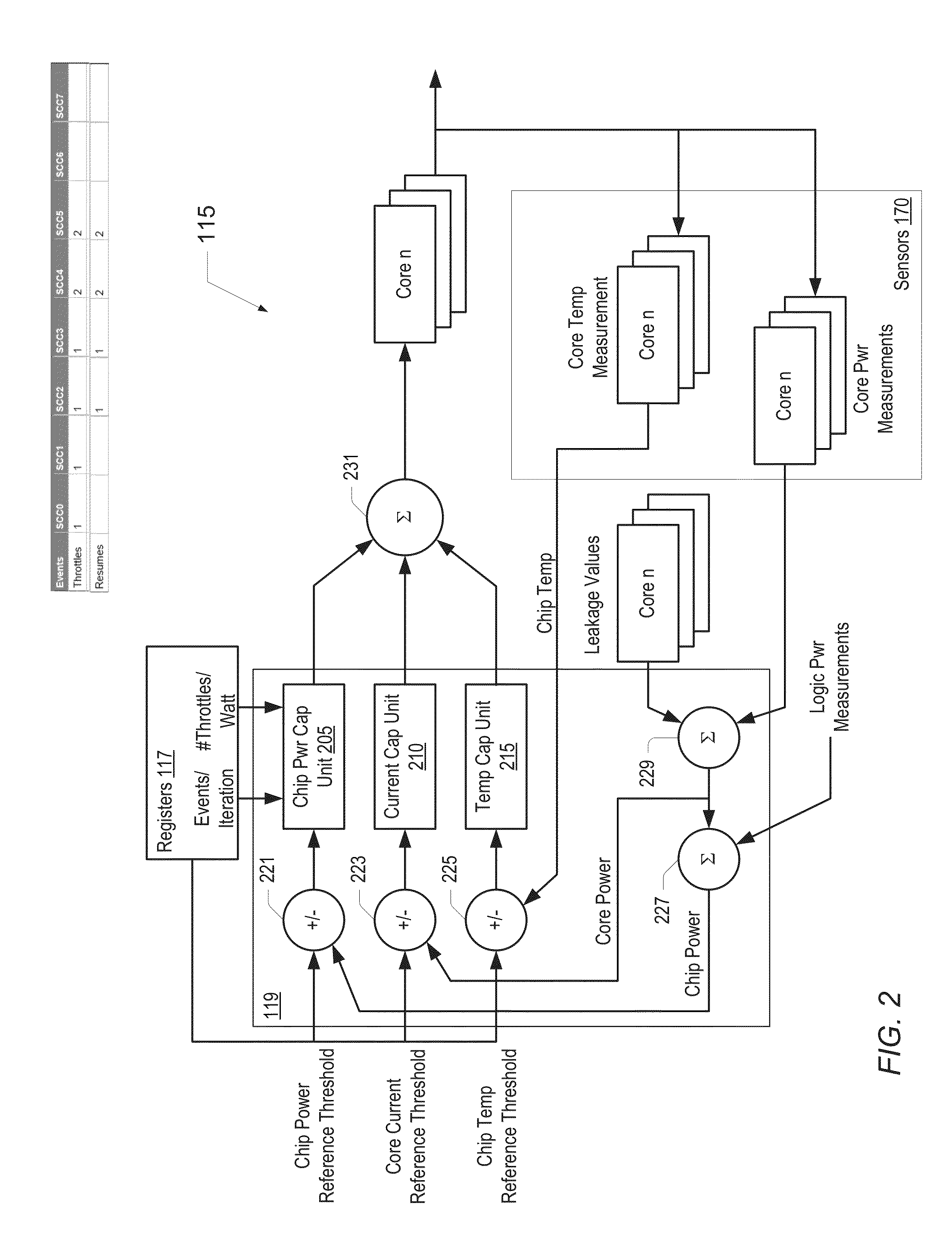

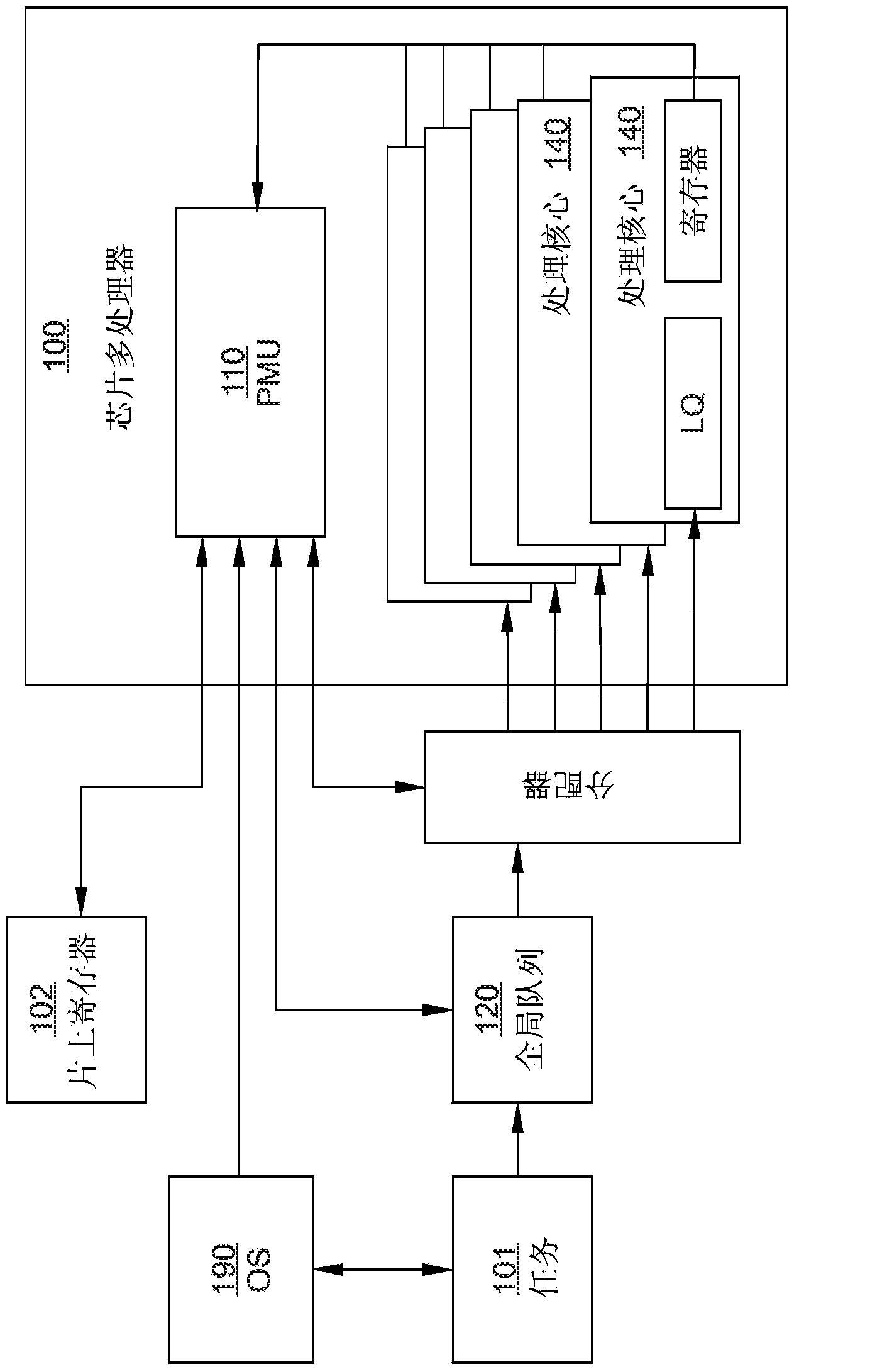

System and method for managing power in a chip multiprocessor using a proportional feedback mechanism

Status: Active | Patent: US20150370303A1 | Topics: Lower processor frequency; Power supply for data processing; Energy efficient computing; Power Management Unit; Engineering

A system includes a power management unit that may monitor the power consumed by a processor including a plurality of processor cores. The power management unit may throttle or reduce the operating frequency of the processor cores by applying a number of throttle events in response to determining that the plurality of cores is operating above a predetermined power threshold during a given monitoring cycle. The number of throttle events may be based upon a relative priority of each of the plurality of processor cores to one another and an amount that the processor is operating above the predetermined power threshold. The number of throttle events may correspond to a portion of a total number of throttle events, and which may be dynamically determined during operation based upon a proportionality constant and the difference between the total power consumed by the processor and a predetermined power threshold.

Owner:ORACLE INT CORP
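
The proportional feedback idea can be sketched as below, under stated assumptions: the total number of throttle events is a proportionality constant `k` times the power excess, and that total is then split across cores. The function names, the truncation to whole events, and the inverse-priority split (lower-priority cores absorb more throttling) are illustrative choices, not details taken from the patent.

```python
def total_throttle_events(power_w, threshold_w, k):
    """Events for this monitoring cycle: k times the excess over the threshold."""
    excess = power_w - threshold_w
    if excess <= 0:
        return 0
    return int(k * excess)

def split_by_priority(total, priorities):
    """Share `total` events so that lower-priority cores receive more of them.

    `priorities` holds one positive number per core; larger means more important.
    """
    inverse = [1.0 / p for p in priorities]
    scale = sum(inverse)
    return [int(total * share / scale) for share in inverse]
```

For instance, at 120 W against a 100 W threshold with `k = 0.5`, the sketch yields 10 events, which priorities `[1, 1, 2]` split as 4, 4, and 2.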

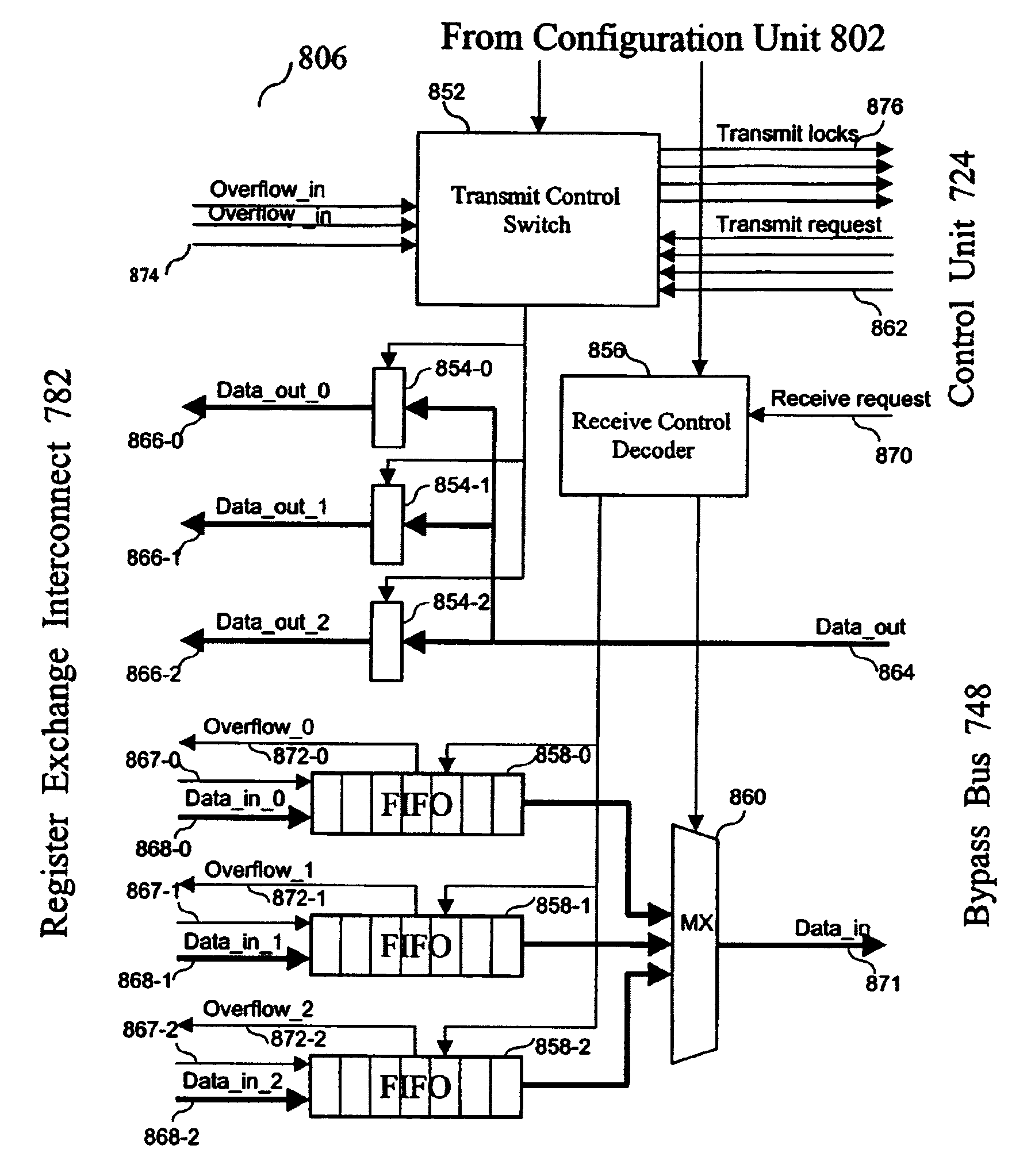

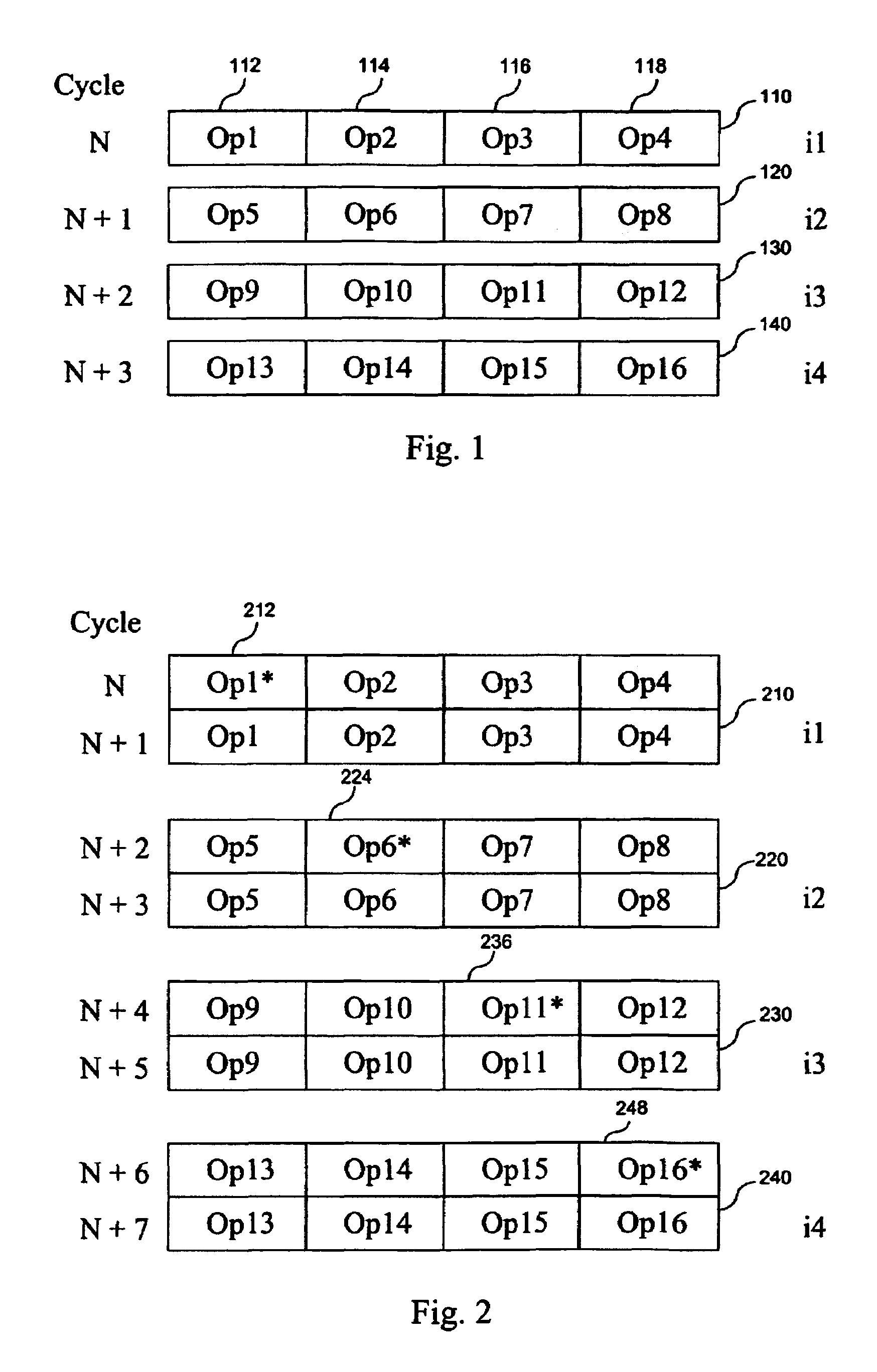

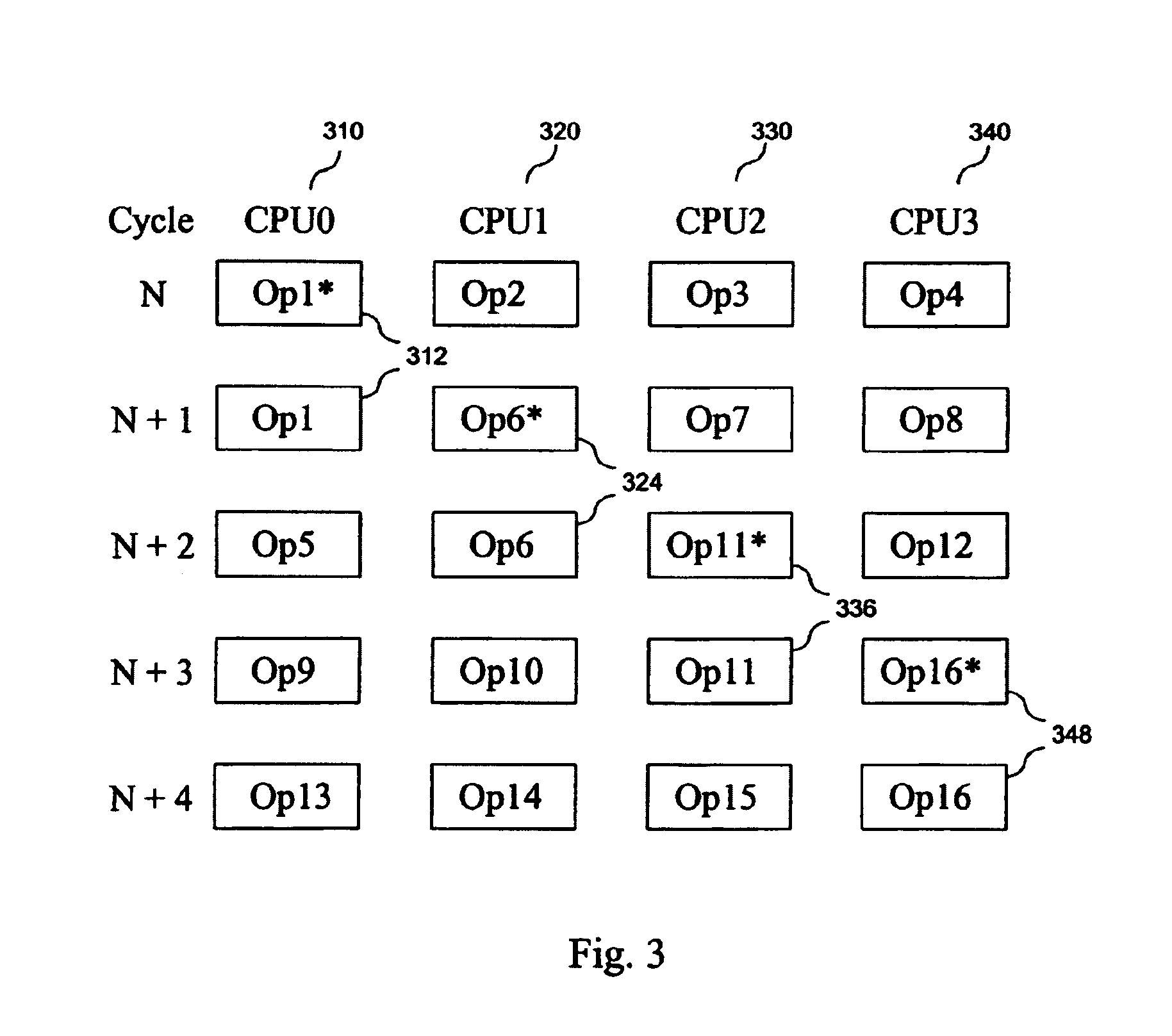

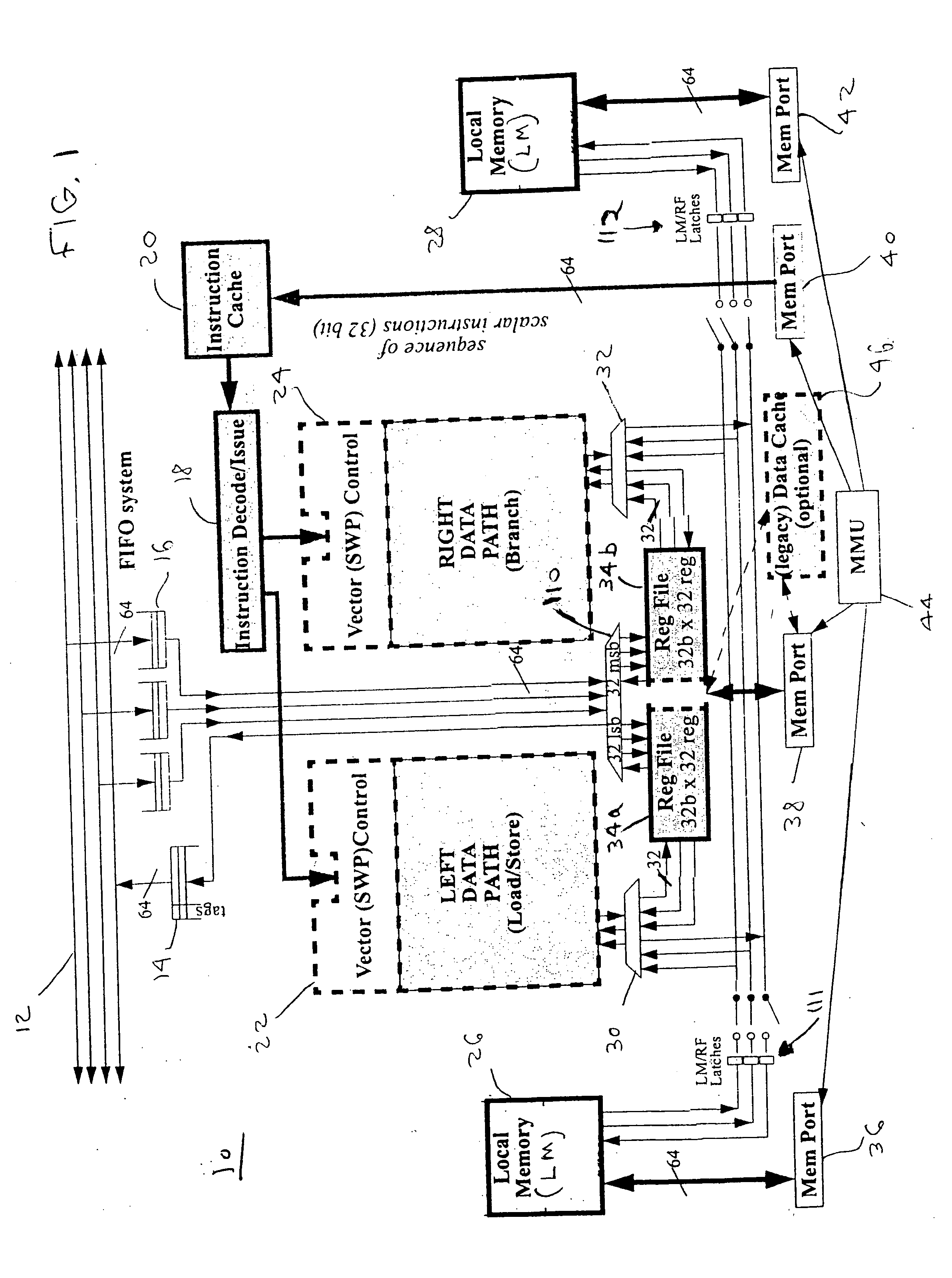

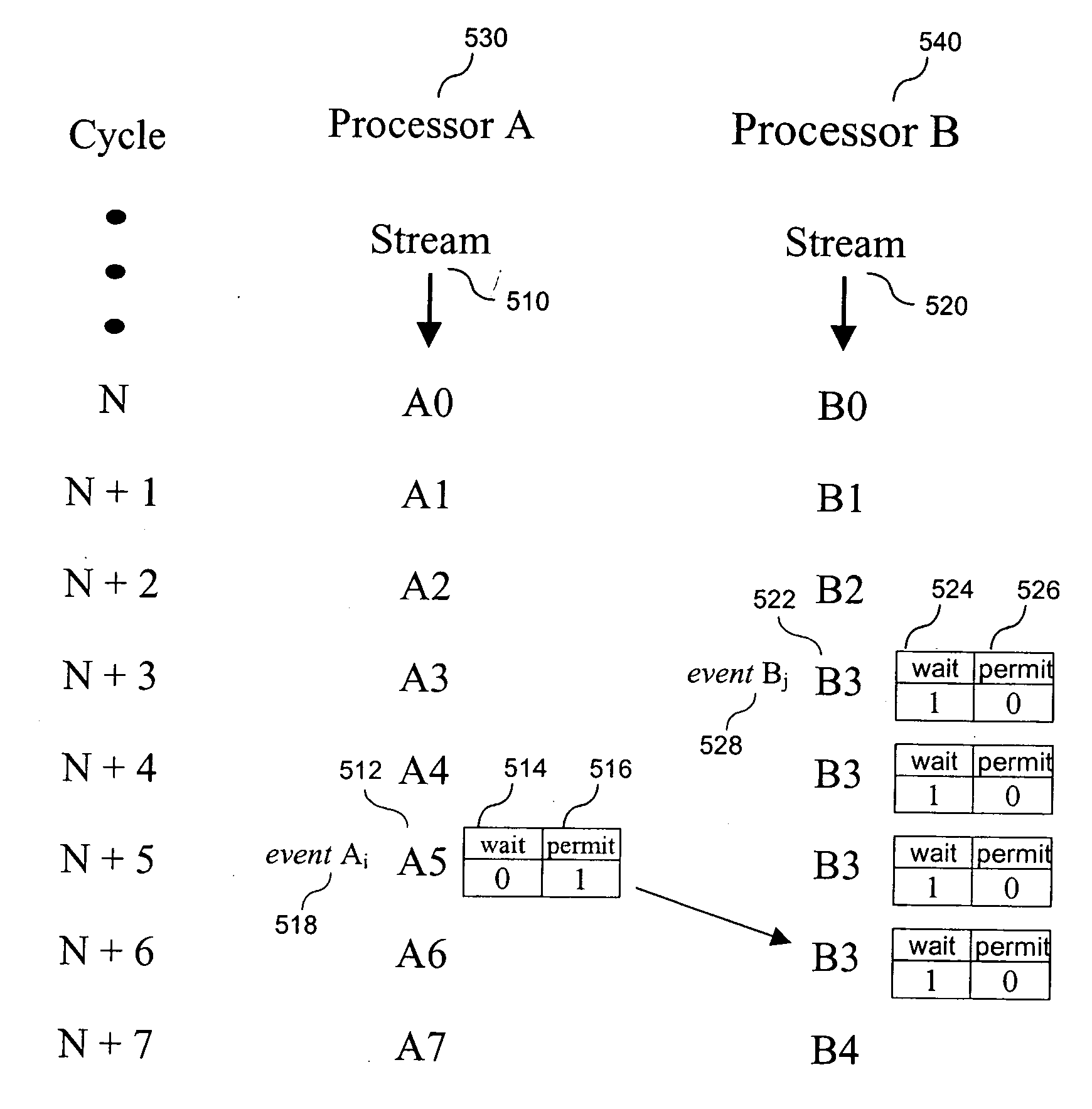

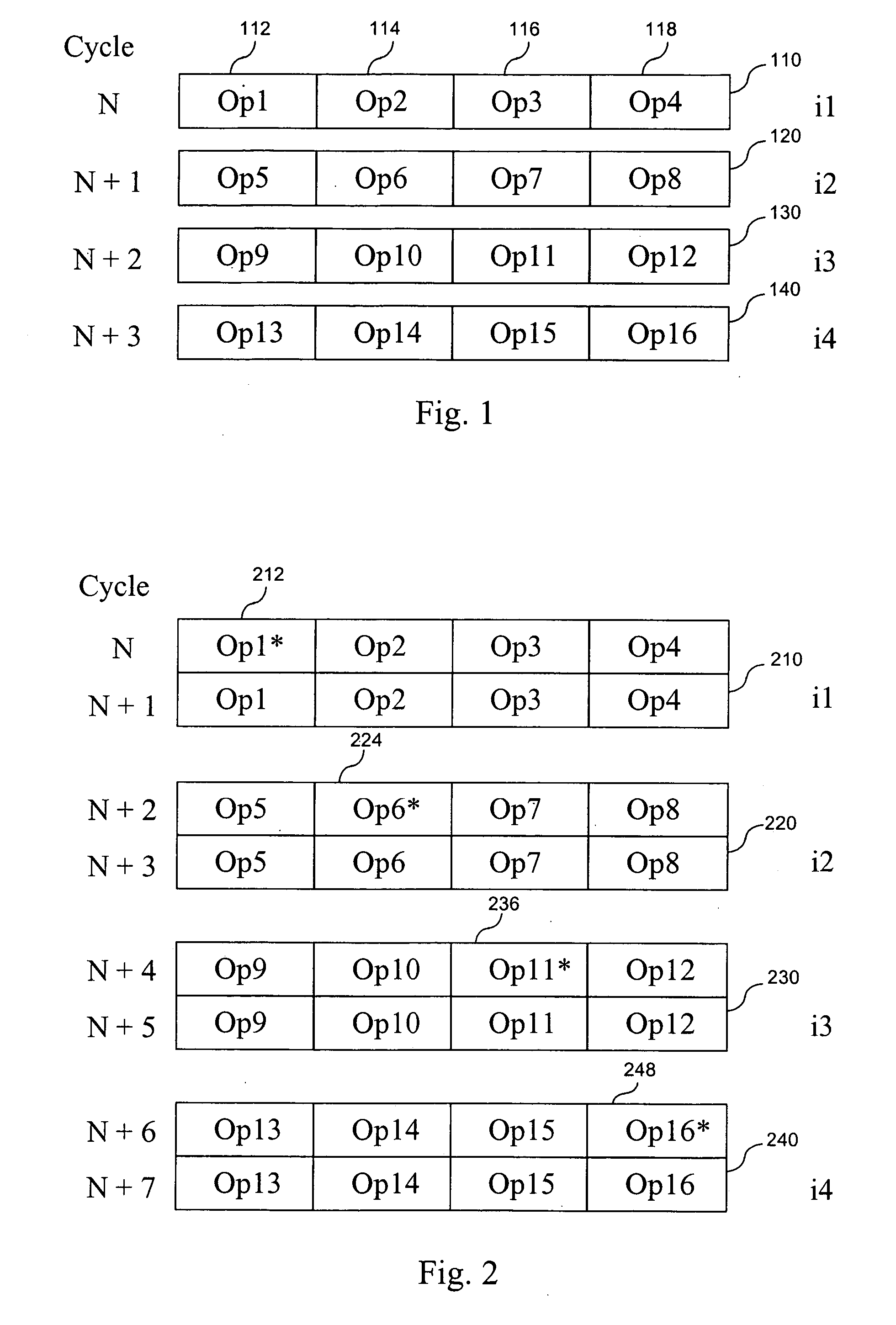

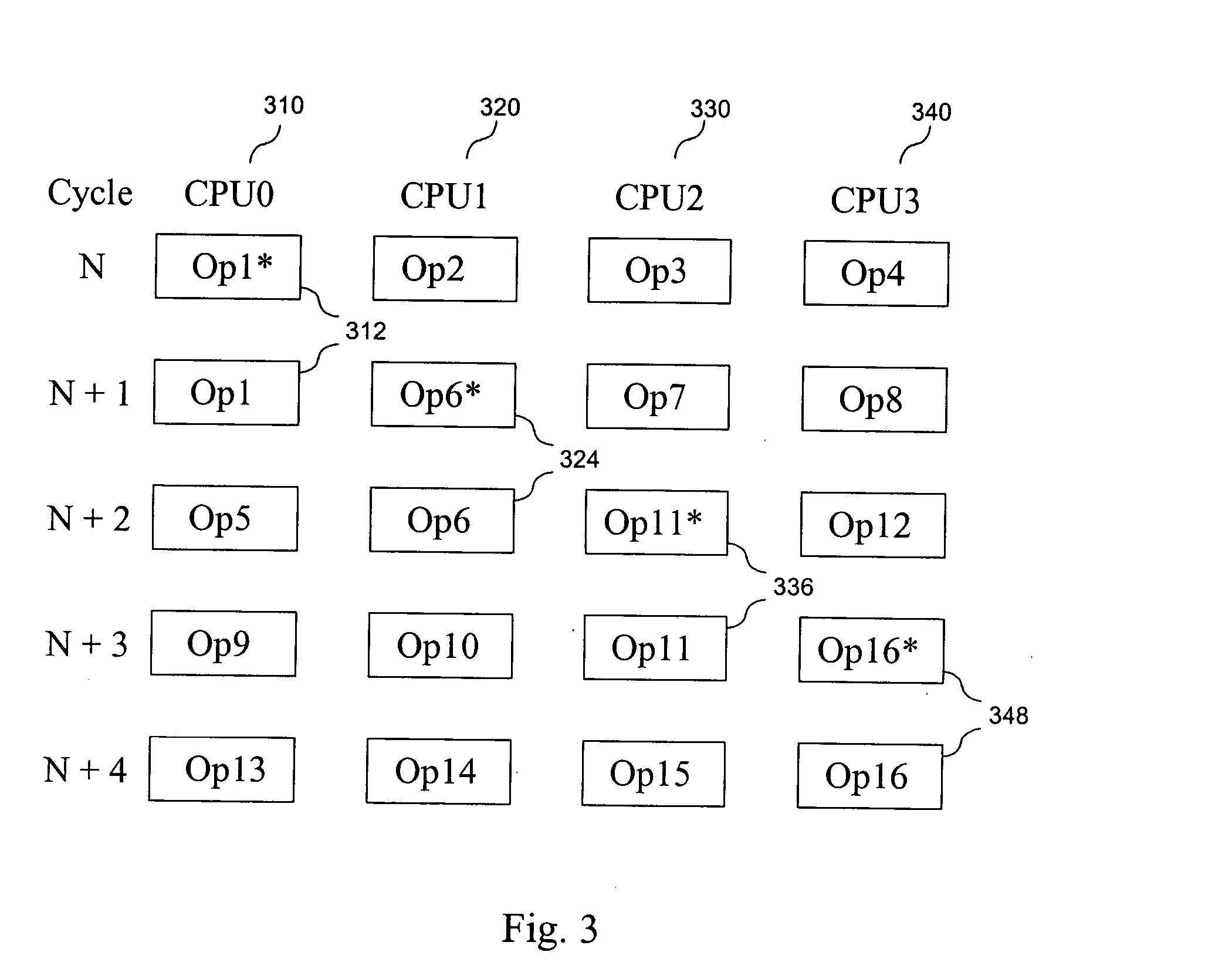

Single-chip multiprocessor with cycle-precise program scheduling of parallel execution

Status: Inactive | Patent: US7143401B2 | Topics: High level; Minimize the interstream dependencies; Resource allocation; Program synchronisation; Microcontroller; Processor register

A single-chip multiprocessor system and a method of operating the system, based on static macro-scheduling of parallel streams for multiprocessor parallel execution. The single-chip multiprocessor system has buses for direct exchange between the processor register files and access to their store addresses and data. Each explicit-parallelism-architecture processor of the system has an interprocessor interface that provides synchronization signal exchange, data exchange at the register-file level, and access to the store addresses and data of other processors. The single-chip multiprocessor system uses instruction-level parallelism (ILP) to increase performance. Synchronization of the parallel execution of streams is ensured by special operations that set the order of execution of streams and stream fragments prescribed by the program algorithm.

Owner:ELBRUS INT

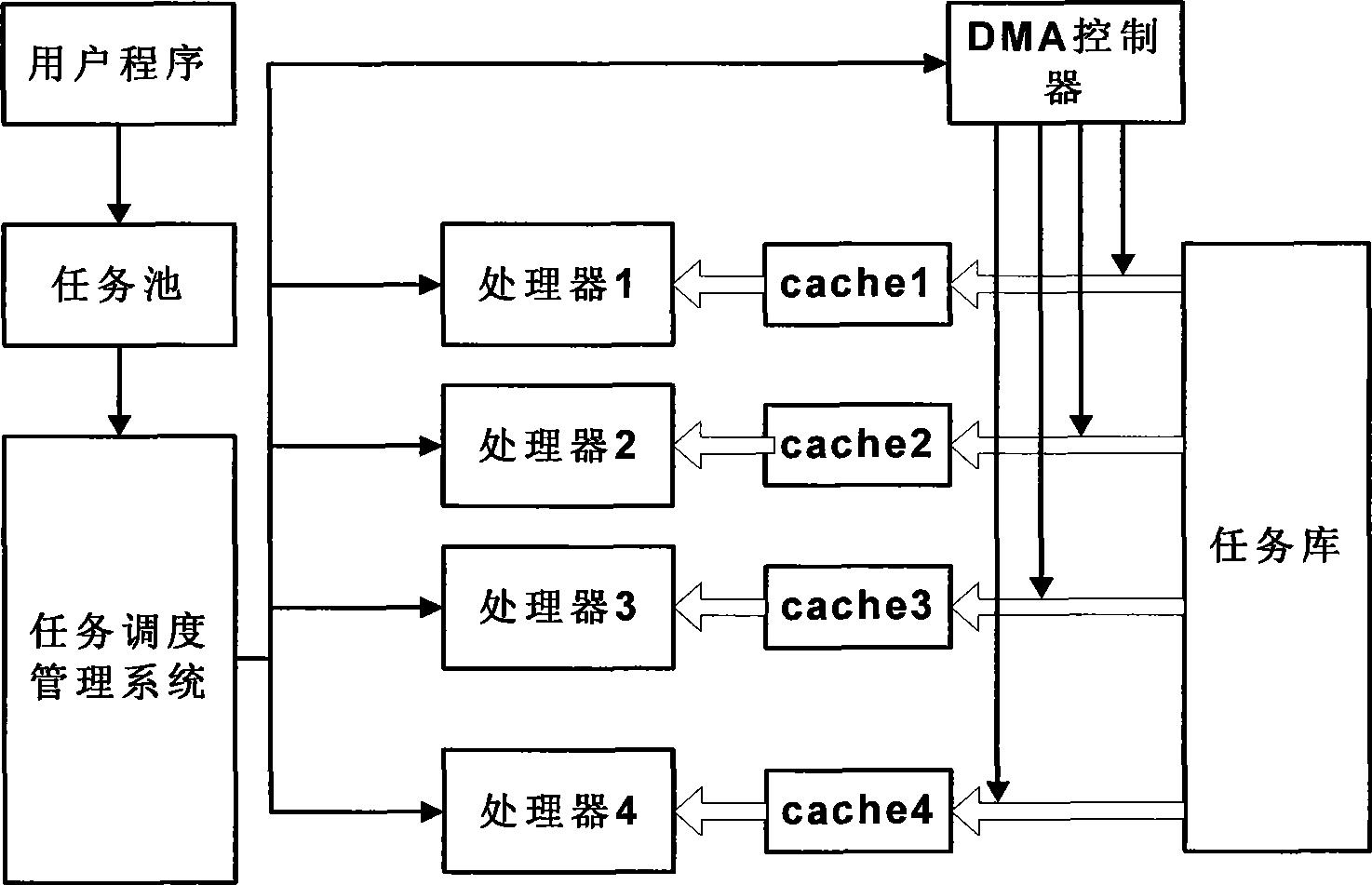

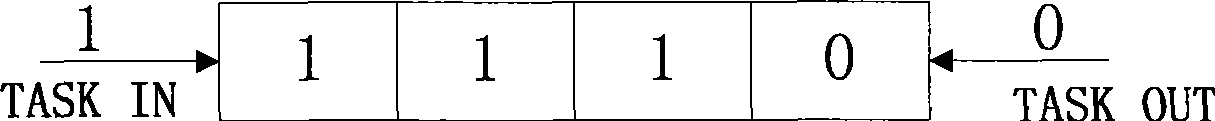

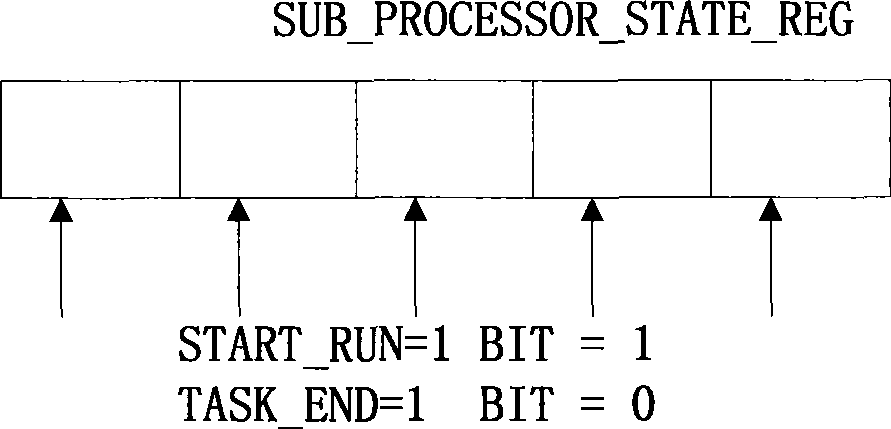

Single-chip multi-processor task scheduling and managing method

Status: Inactive | Patent: CN101387952A | Topics: Implement parallel processing; Improve processing speed; Resource allocation; Concurrent instruction execution; MIMD; Data stream

The invention relates to a task scheduling and management method for a single-chip multiprocessor, based on a multiple-instruction, multiple-data (MIMD) single-chip multiprocessor architecture. The method performs task scheduling, task distribution, task management and sub-processor management for parallel tasks running in the system, and thereby achieves parallel processing on the single-chip multiprocessor. The method can be applied to a single-chip multiprocessor system composed of sub-processors that each have independent local ROM, such as MCUs of the 8051 architecture. The sub-processors may be homogeneous or heterogeneous.

Owner:SHANGHAI UNIV

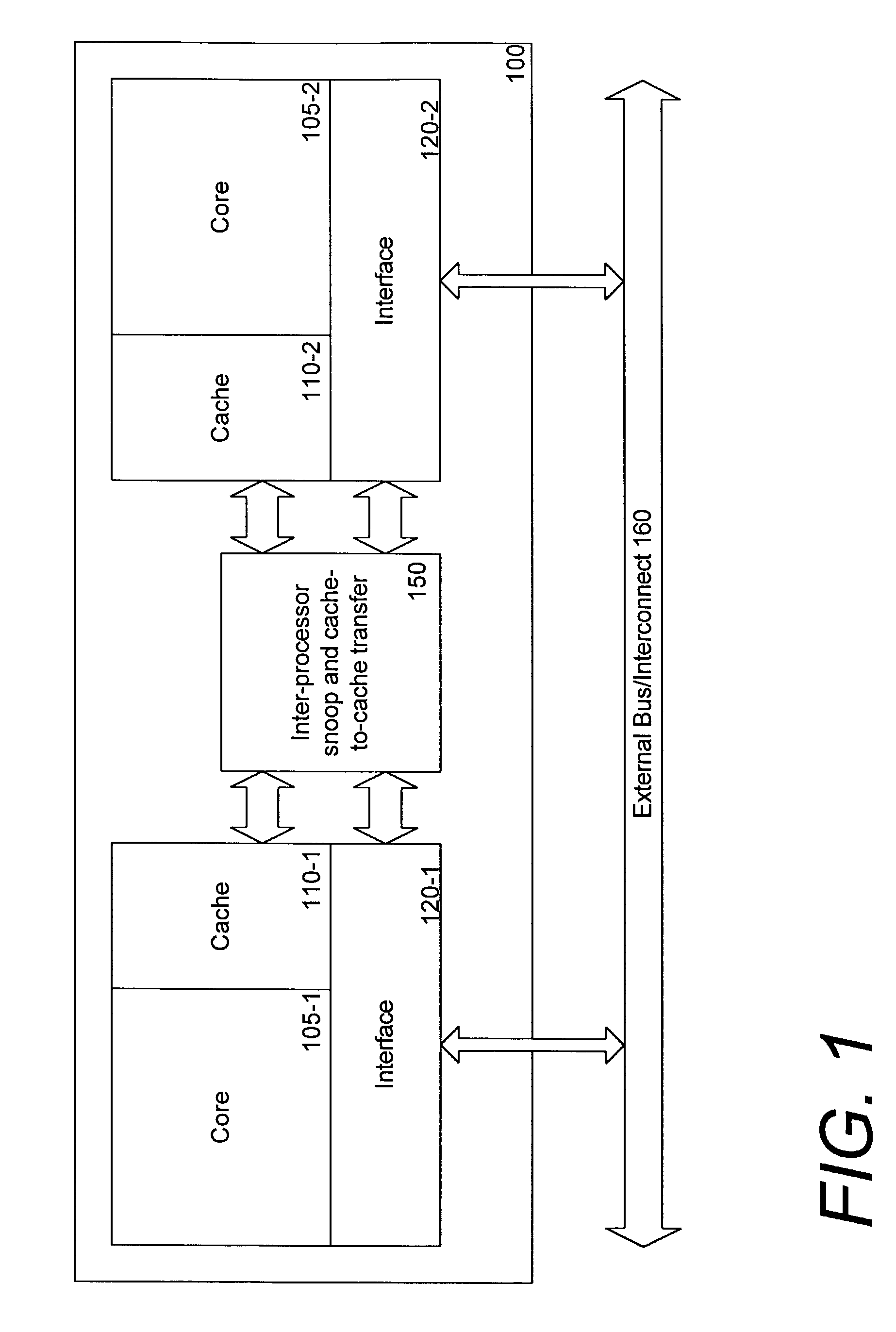

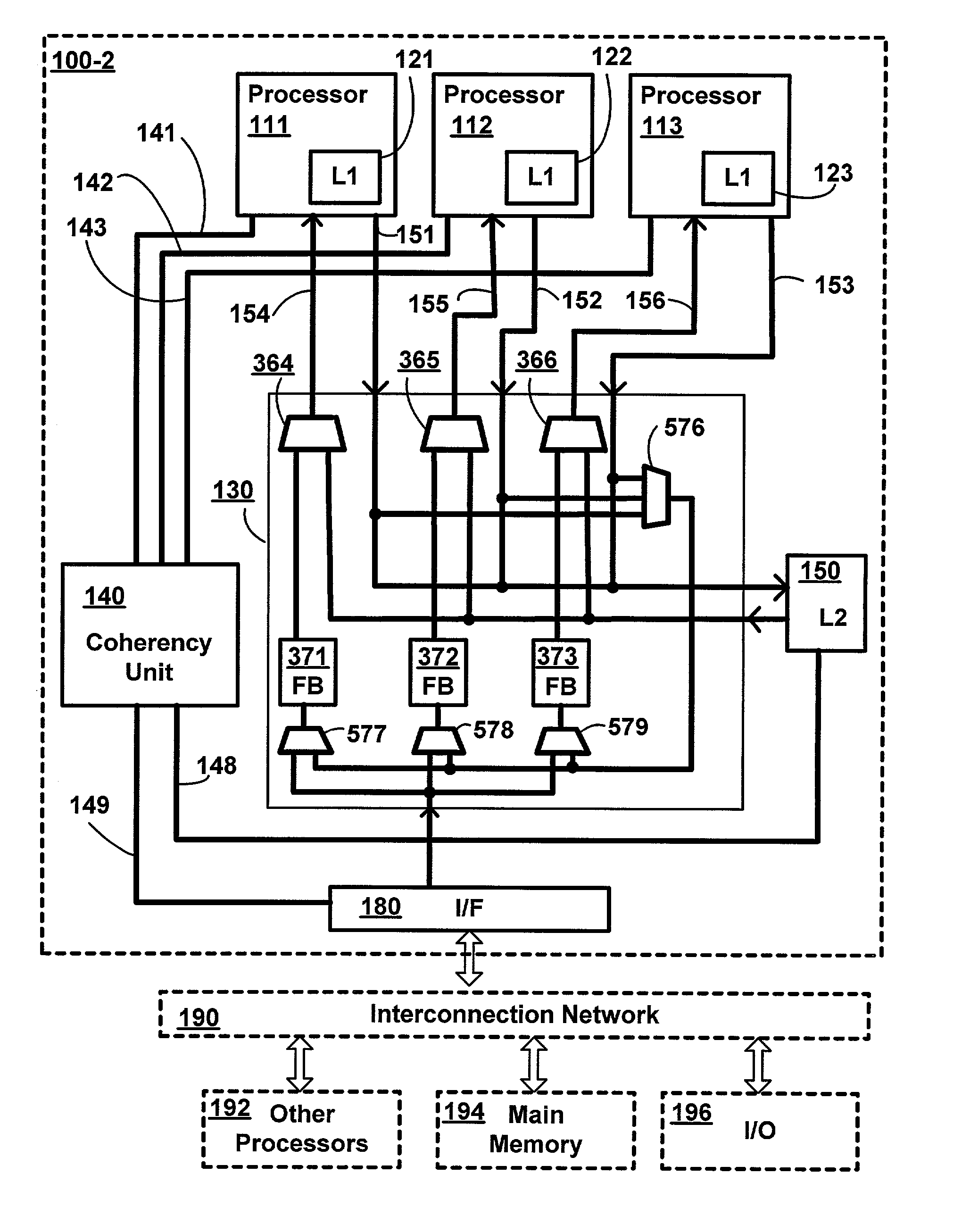

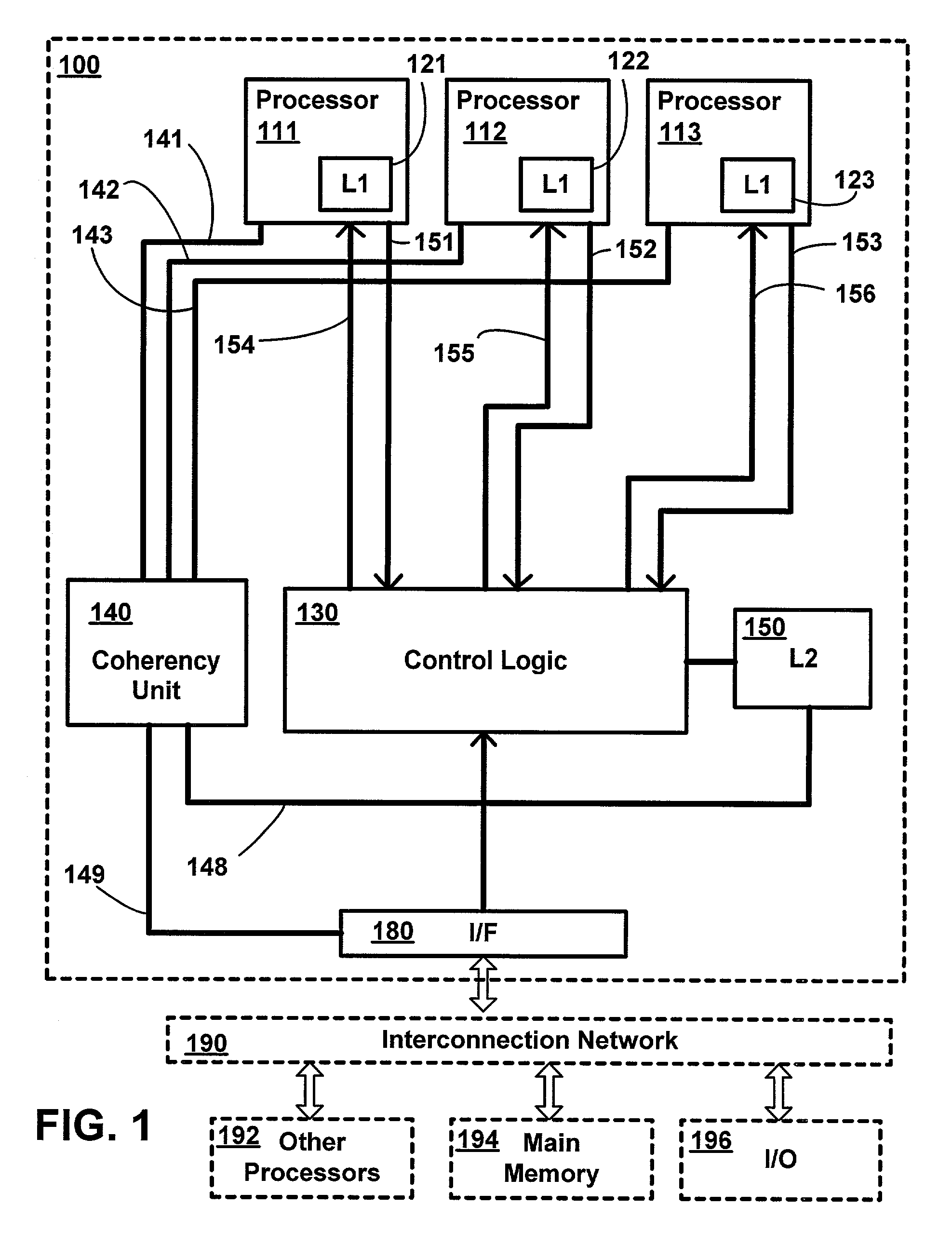

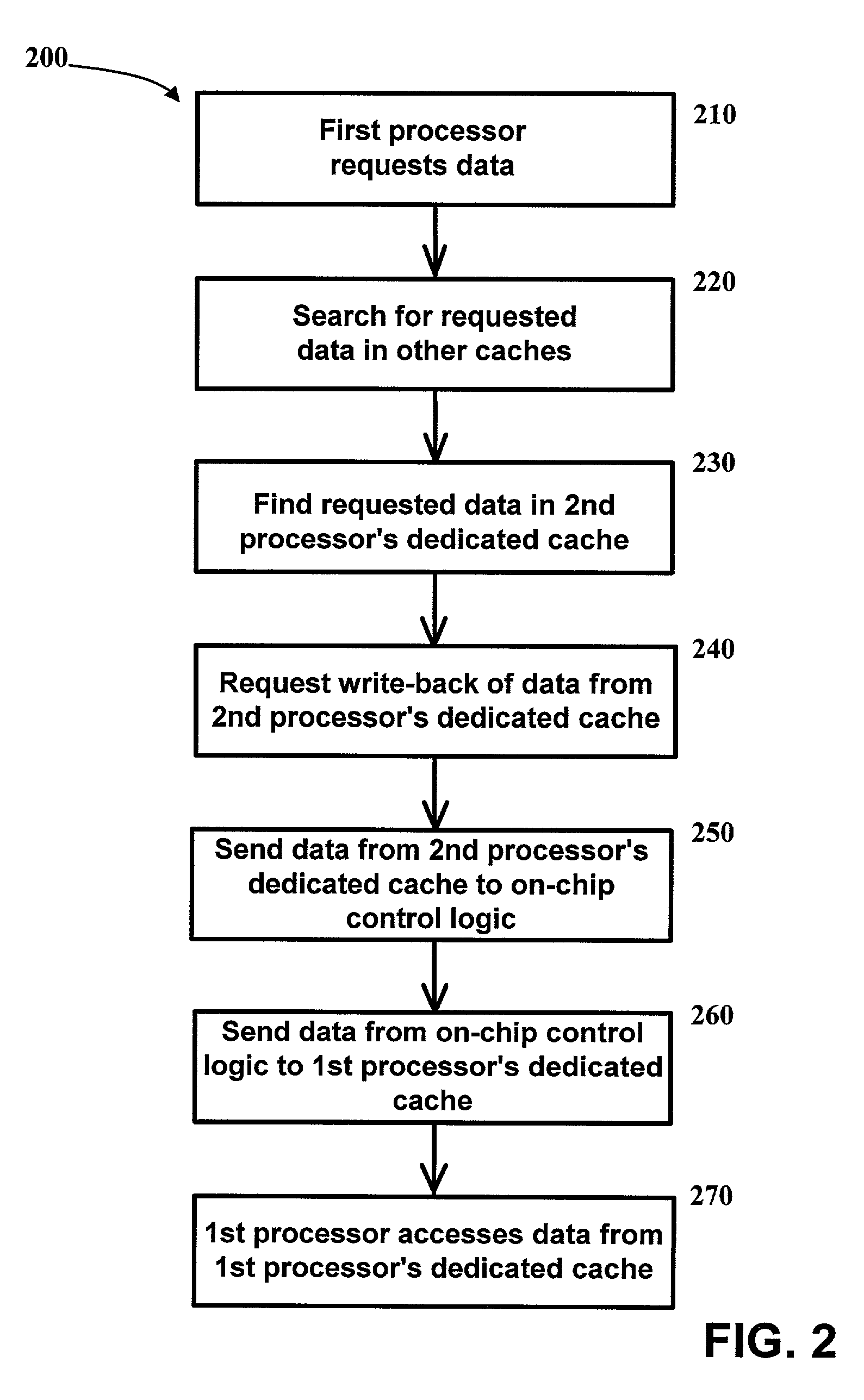

Transfer of cache lines on-chip between processing cores in a multi-core system

Cache coherency is maintained between the dedicated caches of a chip multiprocessor by writing back data from one dedicated cache to another without routing the data off-chip. Various specific embodiments are described, using write buffers, fill buffers, and multiplexers, respectively, to achieve the on-chip transfer of data between dedicated caches.

Owner:INTEL CORP

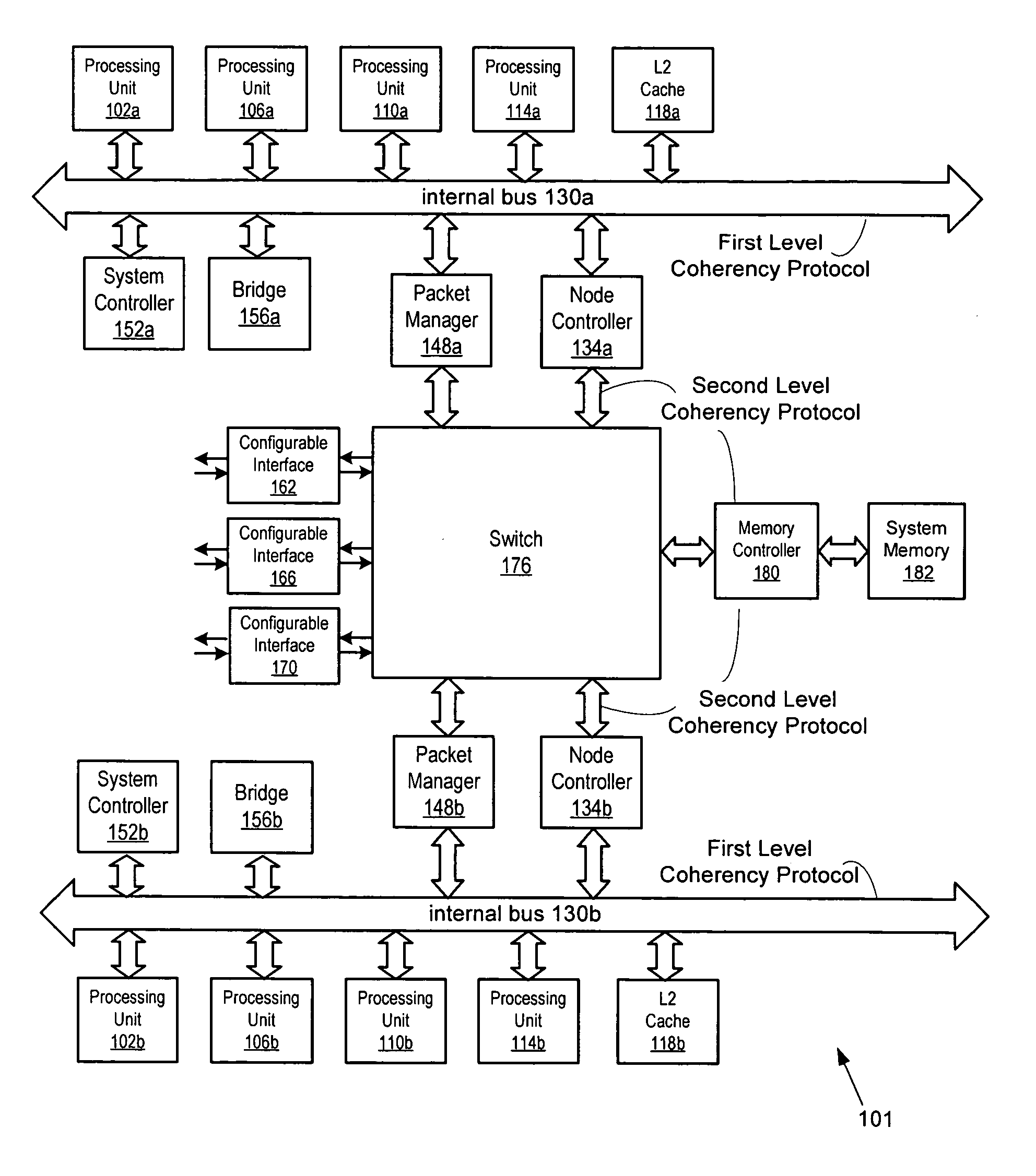

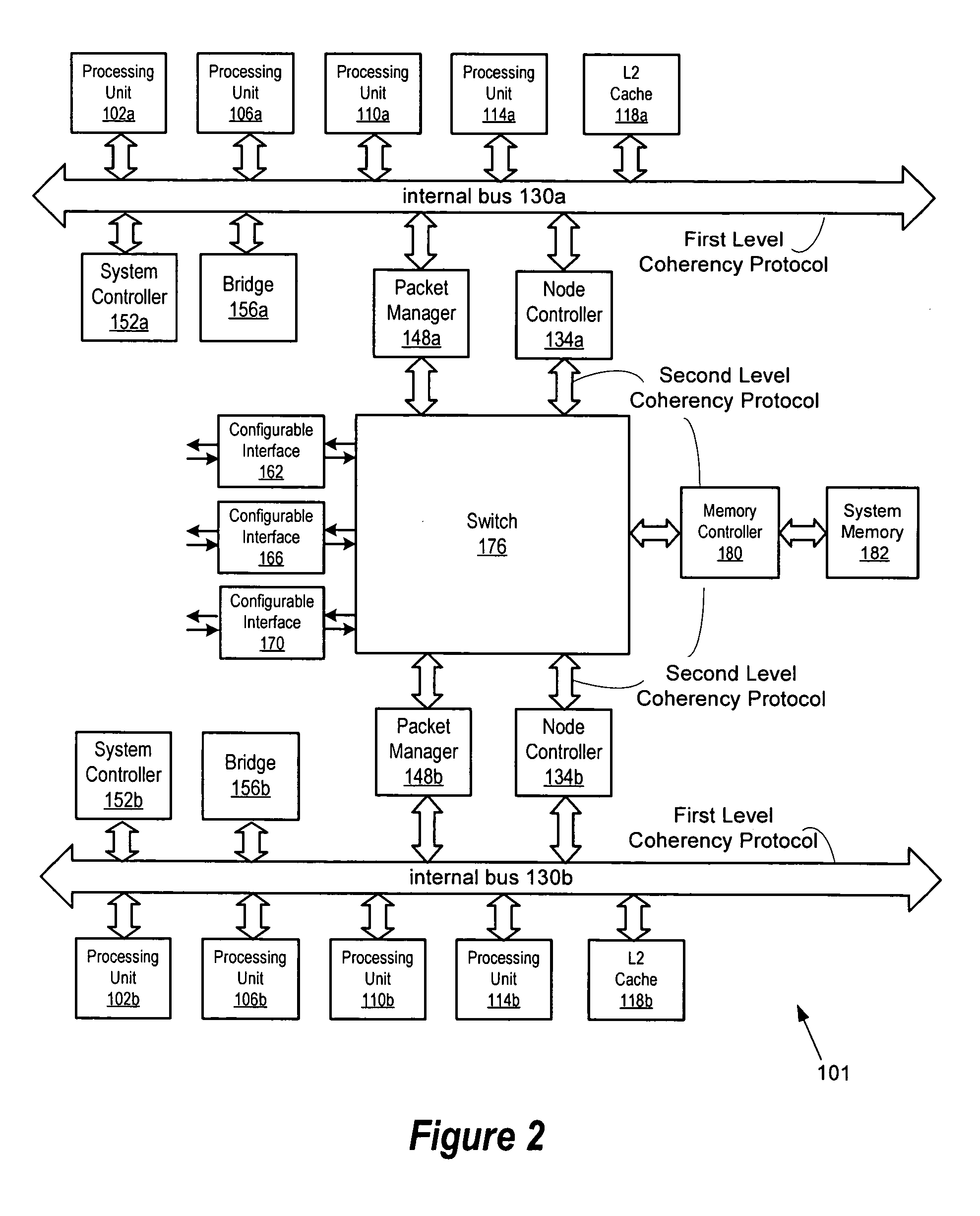

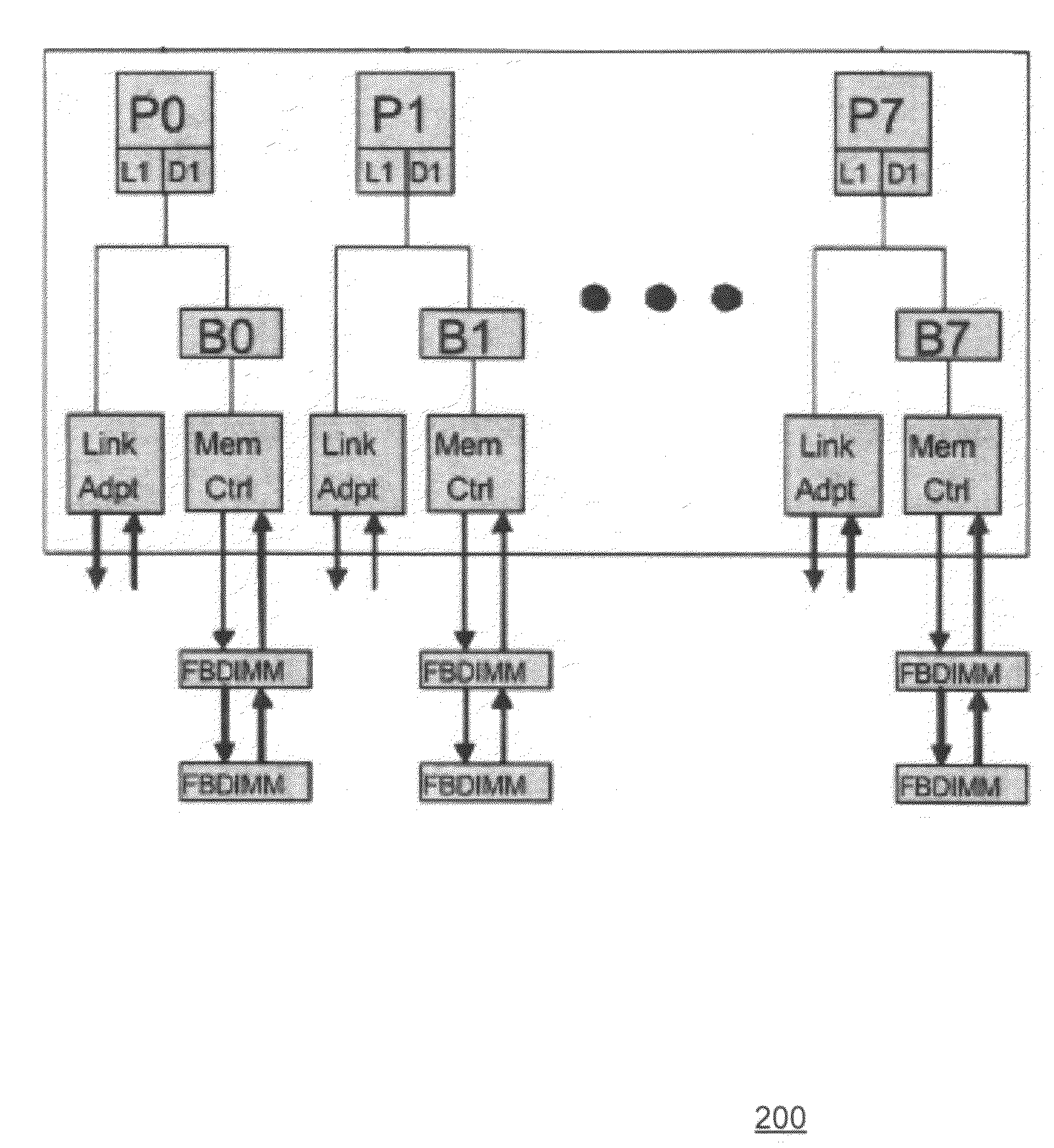

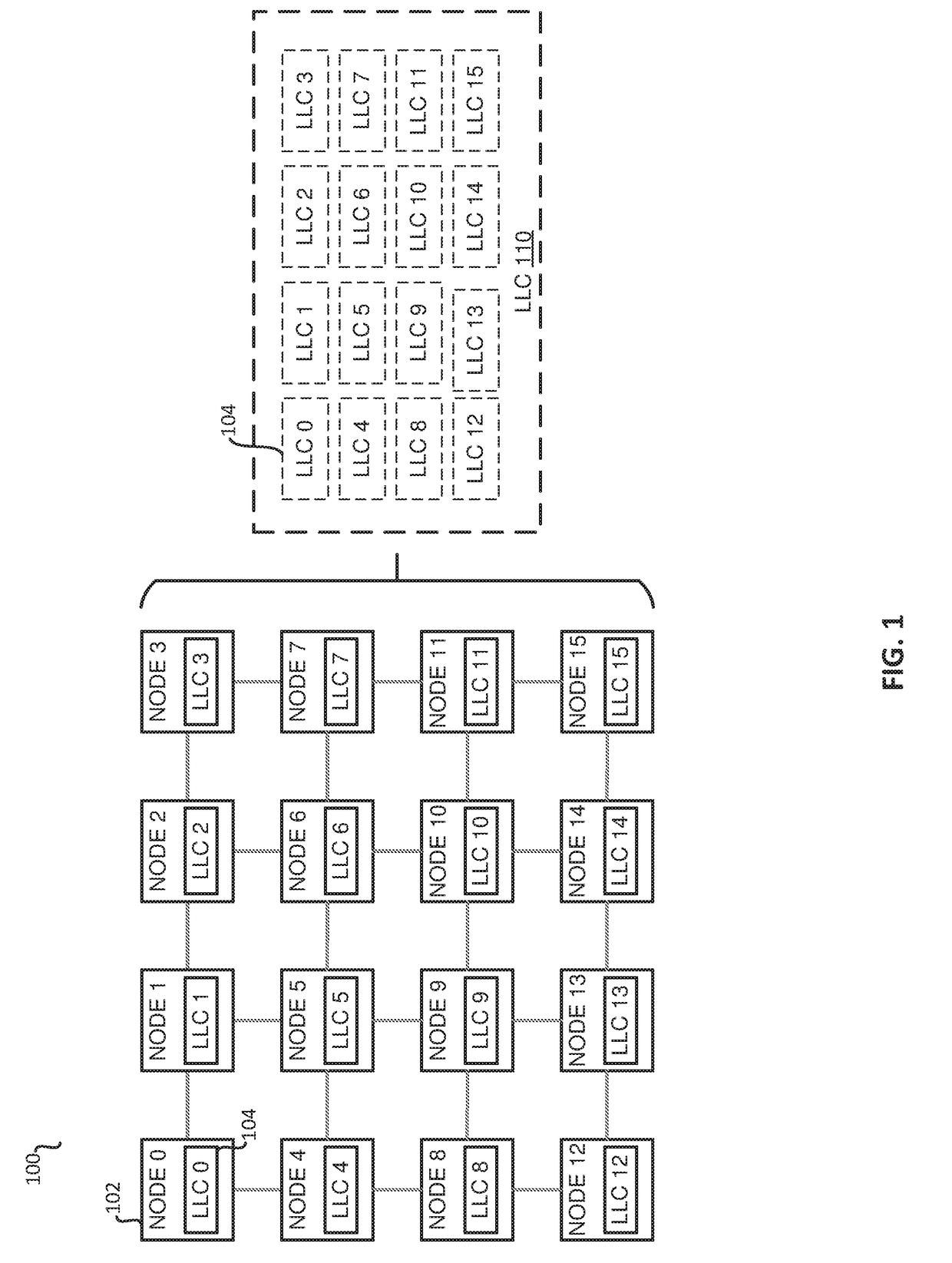

Nonuniform chip multiprocessor

Status: Active | Patent: US20060059315A1 | Topics: Increase the number of; Memory addressing/allocation/relocation; Memory systems; Distributed memory; Parallel computing

In accordance with the present invention, an integrated circuit system and method are provided for increasing the number of processors on a single integrated circuit to a number that is larger than would typically be possible to coordinate on a single bus. In the present invention a two-level memory coherency scheme is implemented for use by multiple processors operably connected to multiple buses in the same integrated circuit. A control device, such as a node controller, is used to control traffic between the two coherency levels. In one embodiment of the invention the first level of coherency is implemented using a “snoopy” protocol and the second level of coherency is a directory-based coherency scheme. In some embodiments of the invention, the directory-based coherency scheme is implemented using a centralized memory and directory architecture. In other embodiments of the invention, the second level of coherency is implemented using distributed memory and a distributed directory. In another alternate embodiment of the invention, a third level of coherency is implemented for transfer of data externally from the integrated circuit.

Owner:AVAGO TECH INT SALES PTE LTD

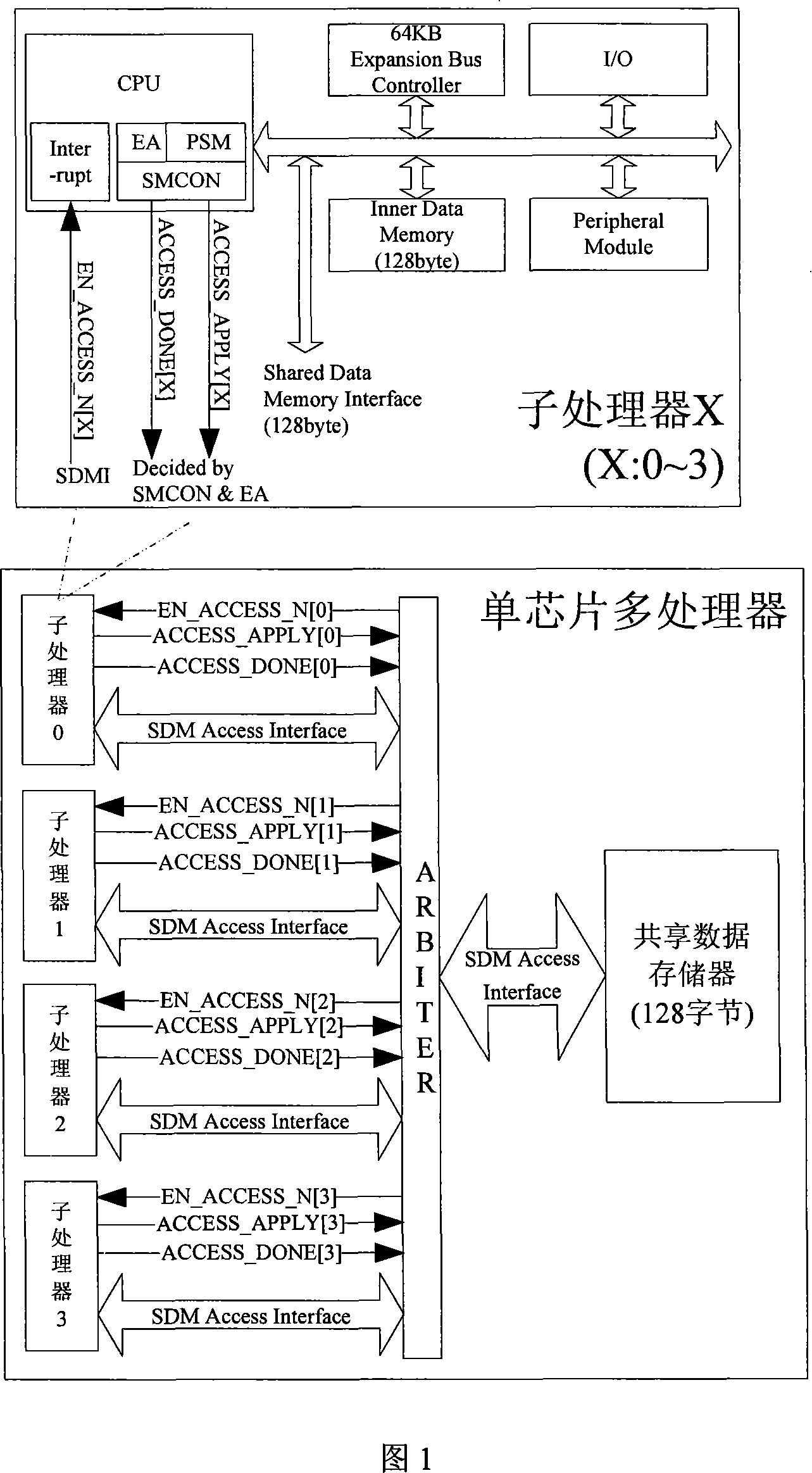

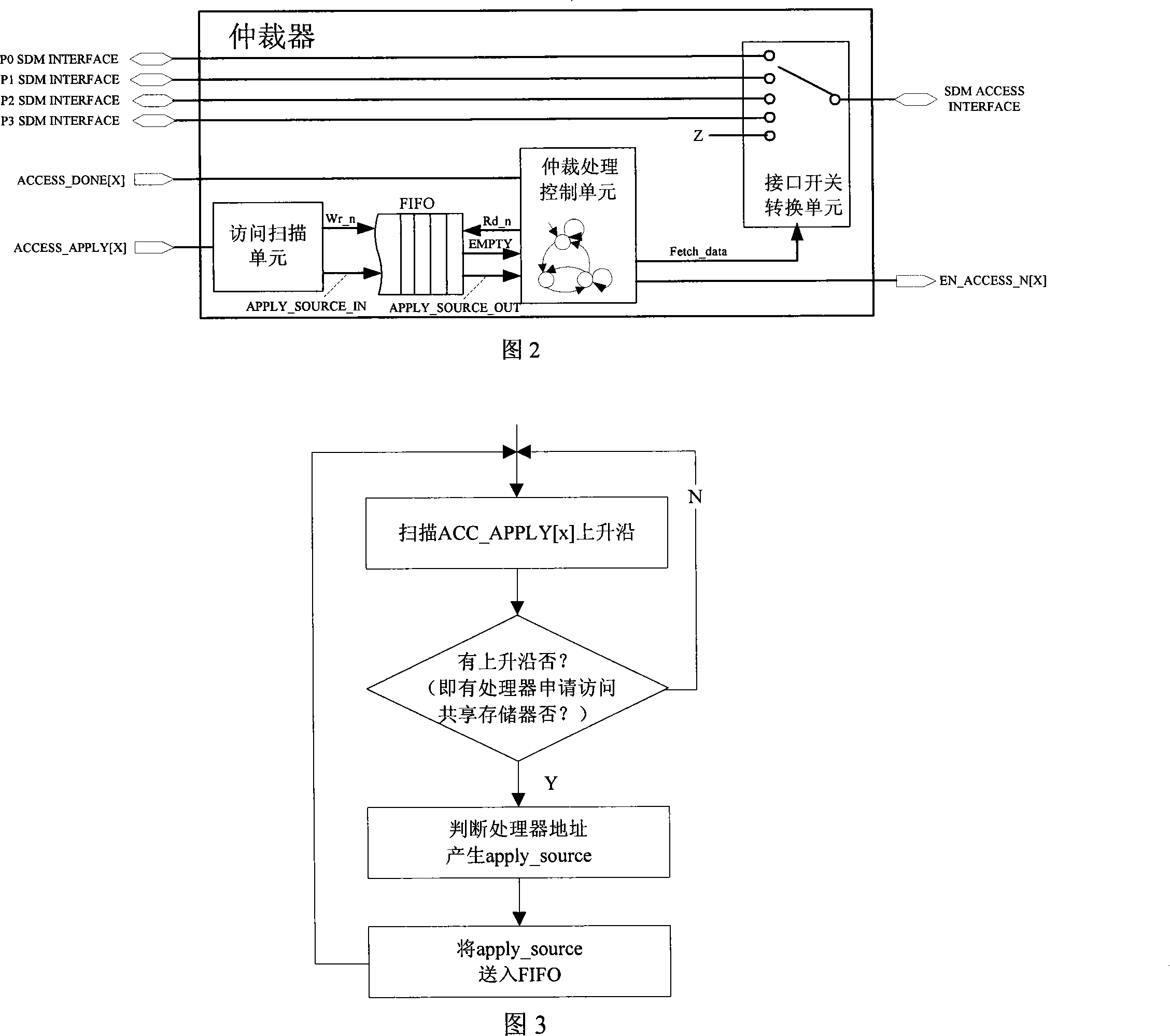

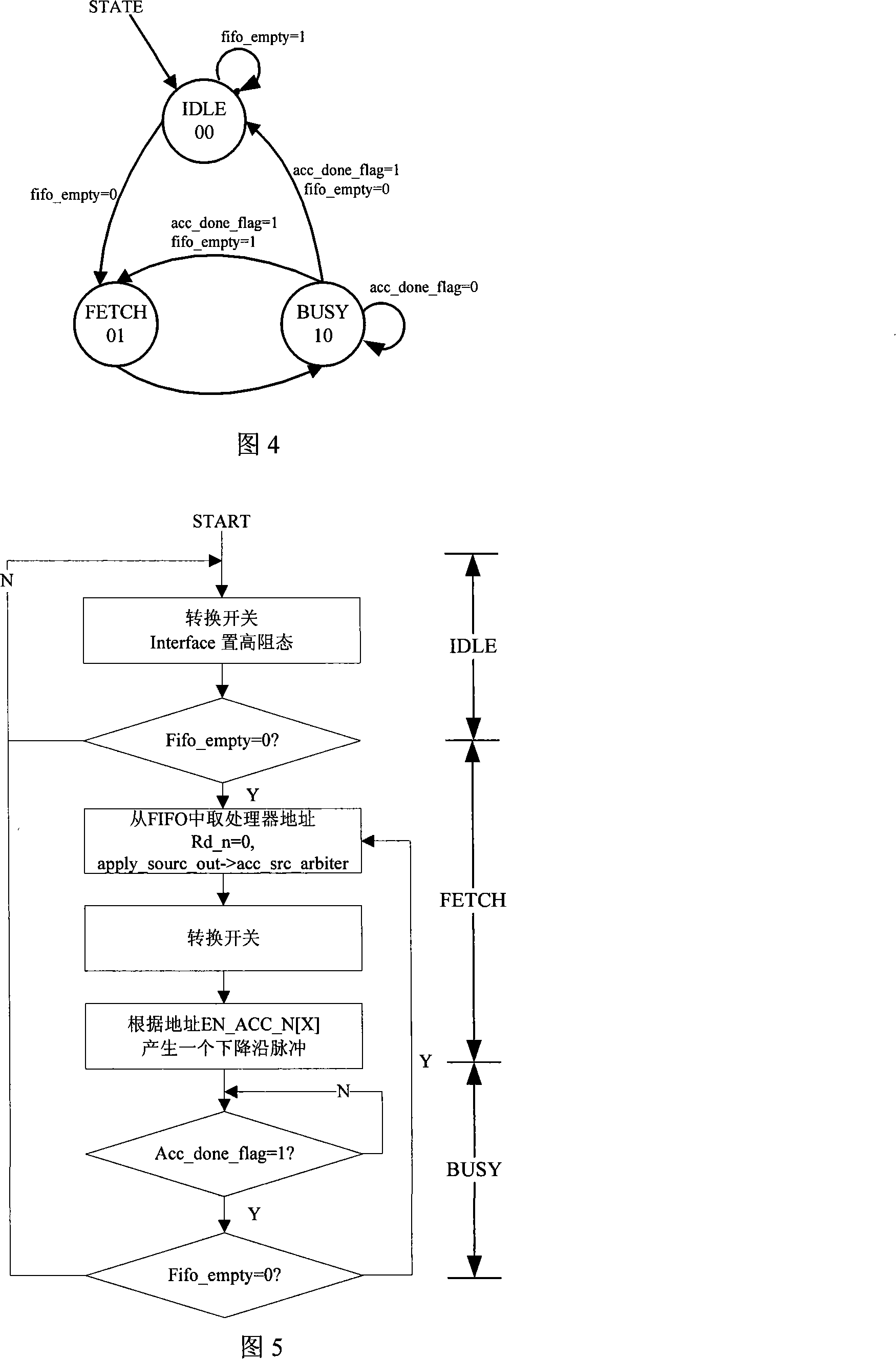

Single chip multi-processor shared data storage space access method

Status: Inactive | Patent: CN101187908A | Topics: Digital computer details; Electric digital data processing; Microcontroller; Data space

The invention relates to a method for accessing the shared data storage space of a single-chip multiprocessor. The upper 128 bytes of the data space in each sub-processor are shared and used to transfer instructions and data among the processors, and a shared data memory interrupt (SDMI) is added to each sub-processor. The SDMI and an arbiter together form the access mechanism for the shared data memory, resolving the contention that arises when sub-processors exchange data. All accesses by the single-chip multiprocessor to the shared data memory are decided by the arbiter alone and are carried out through interrupts. The handshake signals between the arbiter and each sub-processor are on-chip, so transmission is fast and few resources are occupied, which is convenient for integration and control. Each sub-processor can work independently or cooperatively with other processors. Each microprocessor is essentially a relatively simple single-threaded microprocessor, and multiple sub-processors execute program code in parallel, giving relatively high instruction-level parallelism. The method can be applied to multiprocessor systems based on the MCS-51 instruction set, and to other multi-microcontroller and multi-microprocessor applications.

Owner:SHANGHAI UNIV +2

Apparatus and method for partitioning a shared cache of a chip multi-processor

Status: Inactive | Patent: US7558920B2 | Topics: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Chip multi processor

A method and apparatus for partitioning a shared cache of a chip multi-processor are described. In one embodiment, the method includes a request of a cache block from system memory if a cache miss within a shared cache is detected according to a received request from a processor. Once the cache block is requested, a victim block within the shared cache is selected according to a processor identifier and a request type of the received request. In one embodiment, selection of the victim block according to a processor identifier and request type is based on a partition of a set-associative, shared cache to limit the selection of the victim block from a subset of available cache ways according to the cache partition. Other embodiments are described and claimed.

Owner:INTEL CORP

Shared Resources in a Chip Multiprocessor

Status: Active | Patent: US20080184009A1 | Topics: Single instruction multiple data multiprocessors; Machine execution arrangements; Processor register; Parallel computing

In one embodiment, a node comprises a plurality of processor cores and a node controller configured to receive a first read operation addressing a first register. The node controller is configured to return a first value in response to the first read operation, dependent on which processor core transmitted the first read operation. In another embodiment, the node comprises the processor cores and the node controller. The node controller comprises a queue shared by the processor cores. The processor cores are configured to transmit communications at a maximum rate of one every N clock cycles, where N is an integer equal to a number of the processor cores. In still another embodiment, a node comprises the processor cores and a plurality of fuses shared by the processor cores. In some embodiments, the node components are integrated onto a single integrated circuit chip (e.g. a chip multiprocessor).

Owner:GLOBALFOUNDRIES US INC

Combined system responses in a chip multiprocessor

Status: Active | Patent: US7296167B1 | Topics: Volume/mass flow measurement; Memory addressing/allocation/relocation; Integrated circuit; Real-time computing

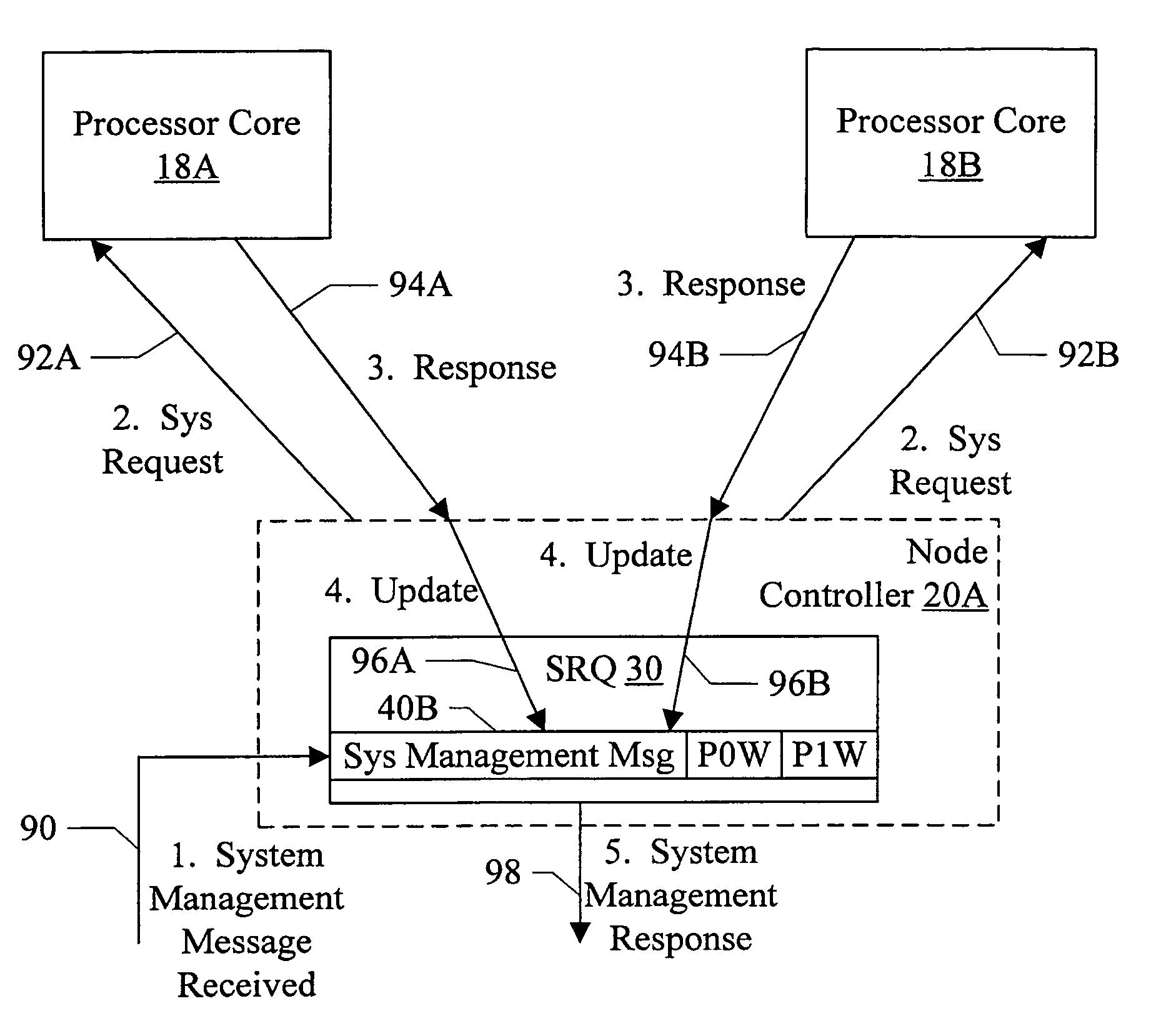

In one embodiment, a node comprises, integrated onto a single integrated circuit chip (in some embodiments), a plurality of processor cores and a node controller coupled to the plurality of processor cores. The node controller is coupled to receive an external request transmitted to the node, and is configured to transmit a corresponding request to at least a subset of the plurality of processor cores responsive to the external request. The node controller is configured to receive respective responses from each processor core of the subset. Each processor core transmits the respective response independently in response to servicing the corresponding request and is capable of transmitting the response on different clock cycles than other processor cores. The node controller is configured to transmit an external response to the external request responsive to receiving each of the respective responses.

Owner:MEDIATEK INC

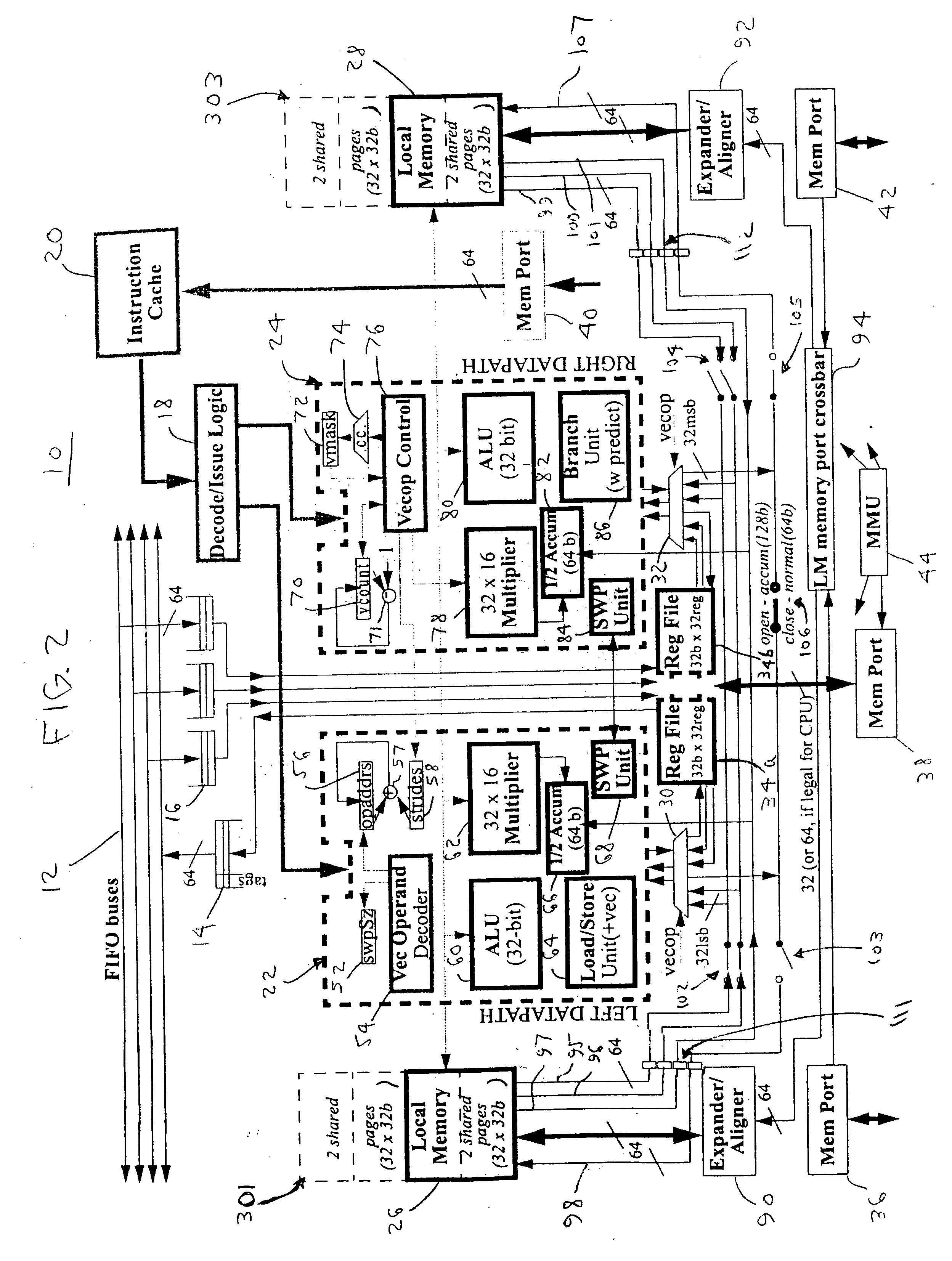

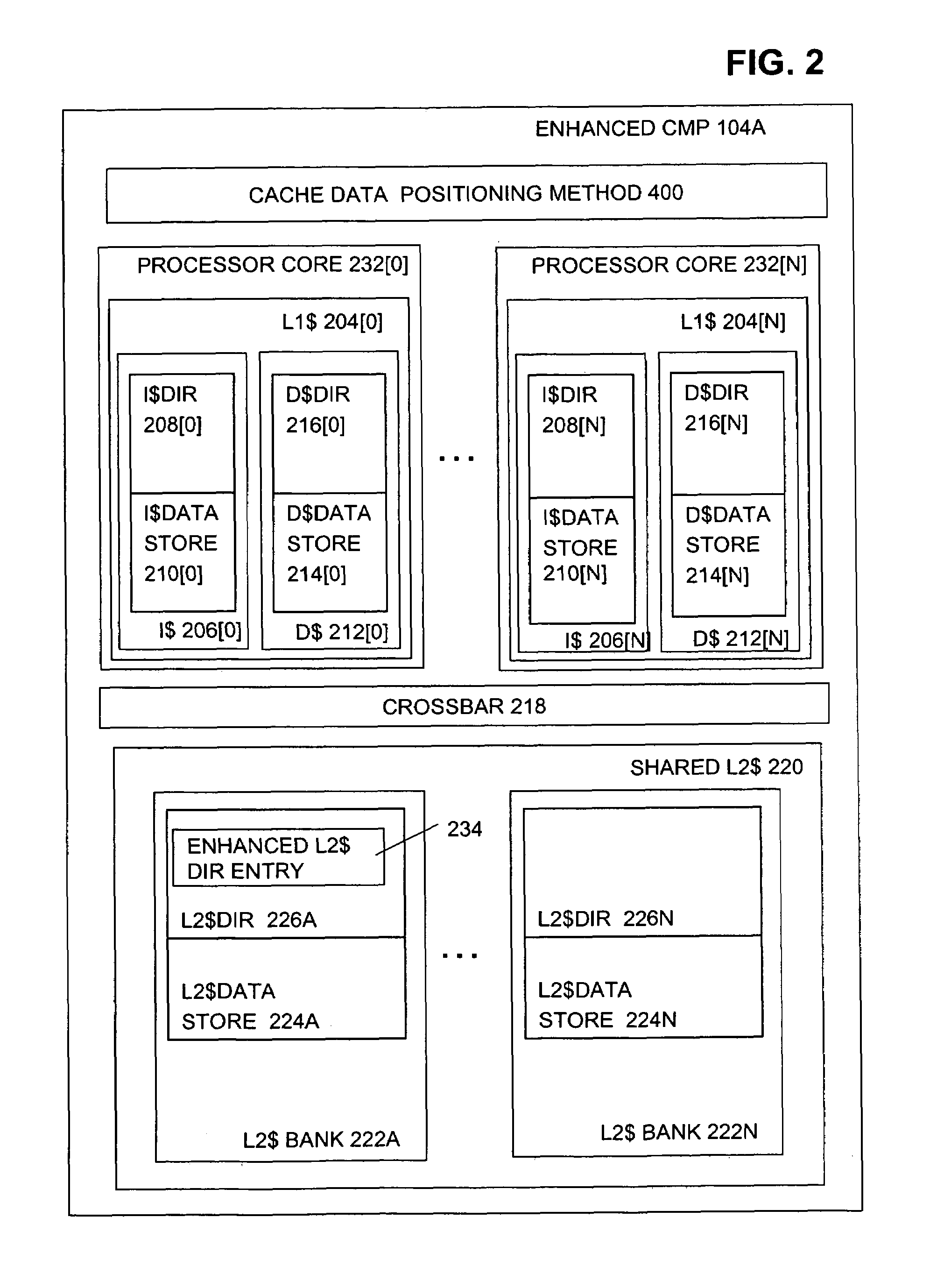

Chip multiprocessor for media applications

Status: Inactive | Patent: US20050182915A1 | Topics: Single instruction multiple data multiprocessors; Register arrangements; Control register; Operand

A chip multiprocessor (CMP) includes a plurality of processors disposed on a peripheral region of a chip. Each processor has (a) a dual datapath for executing instructions, (b) a compiler controlled register file (RF), coupled to the dual datapath, for loading / storing operands of an instruction, and (c) a compiler controlled local memory (LM), a portion of the LM disposed to a left of the dual datapath and another portion of the LM disposed to a right of the dual datapath, for loading / storing operands of an instruction. The CMP also has a shared main memory disposed at a central region of the chip, a crossbar system for coupling the shared main memory to each of the processors, and a first-in-first-out (FIFO) system for transferring operands of an instruction among multiple processors.

Owner:DEVANEY PATRICK +2

Chip multiprocessor with configurable fault isolation

One embodiment relates to a high-availability computation apparatus including a chip multiprocessor. Multiple fault zones are configurable in the chip multiprocessor, each fault zone being logically independent from other fault zones. Comparison circuitry is configured to compare outputs from redundant processes run in parallel on the multiple fault zones. Another embodiment relates to a method of operating a high-availability system using a chip multiprocessor. A redundant computation is performed in parallel on multiple fault zones of the chip multiprocessor and outputs from the multiple fault zones are compared. When a miscompare is detected, an error recovery process is performed. Other embodiments, aspects and features are also disclosed.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

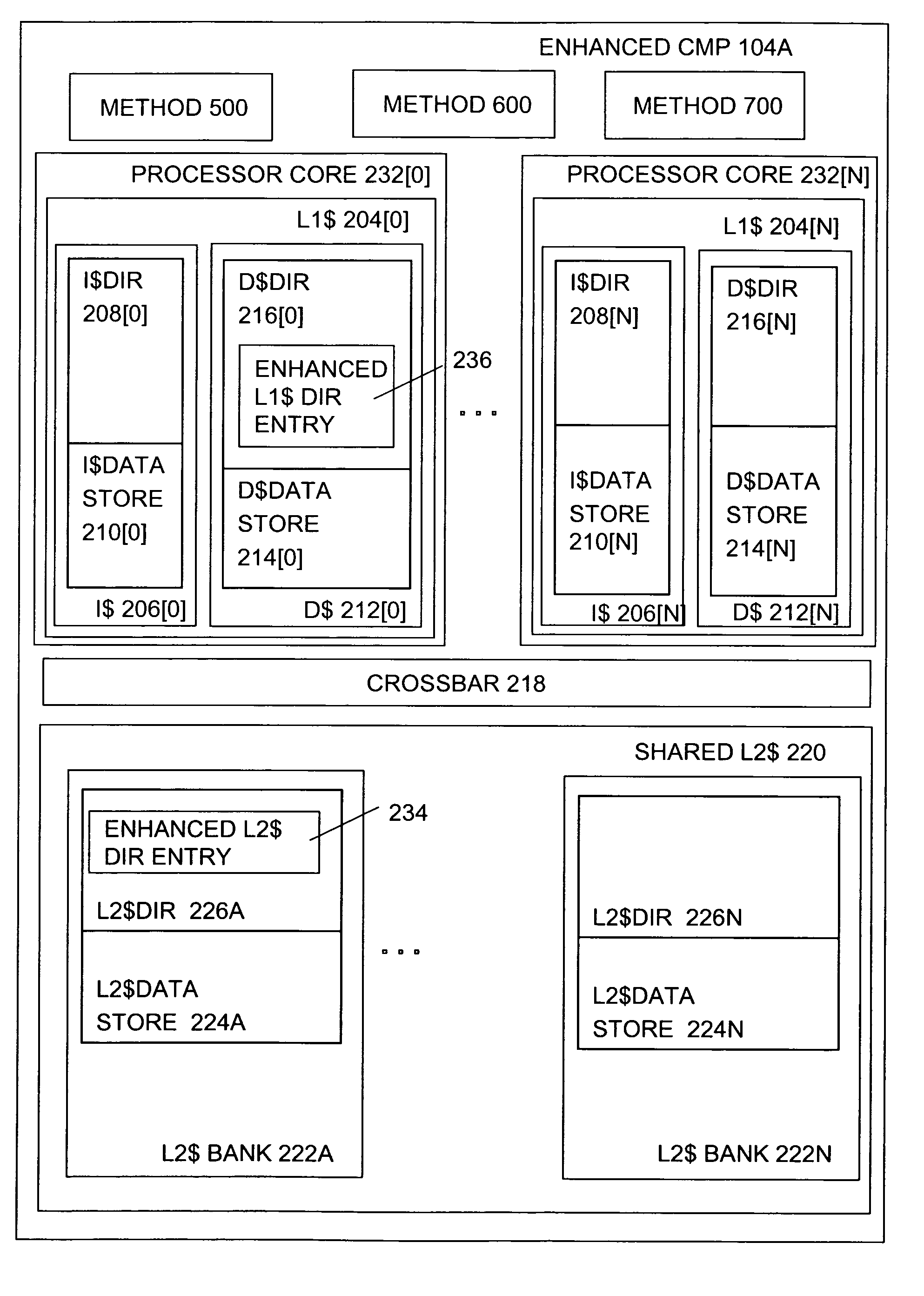

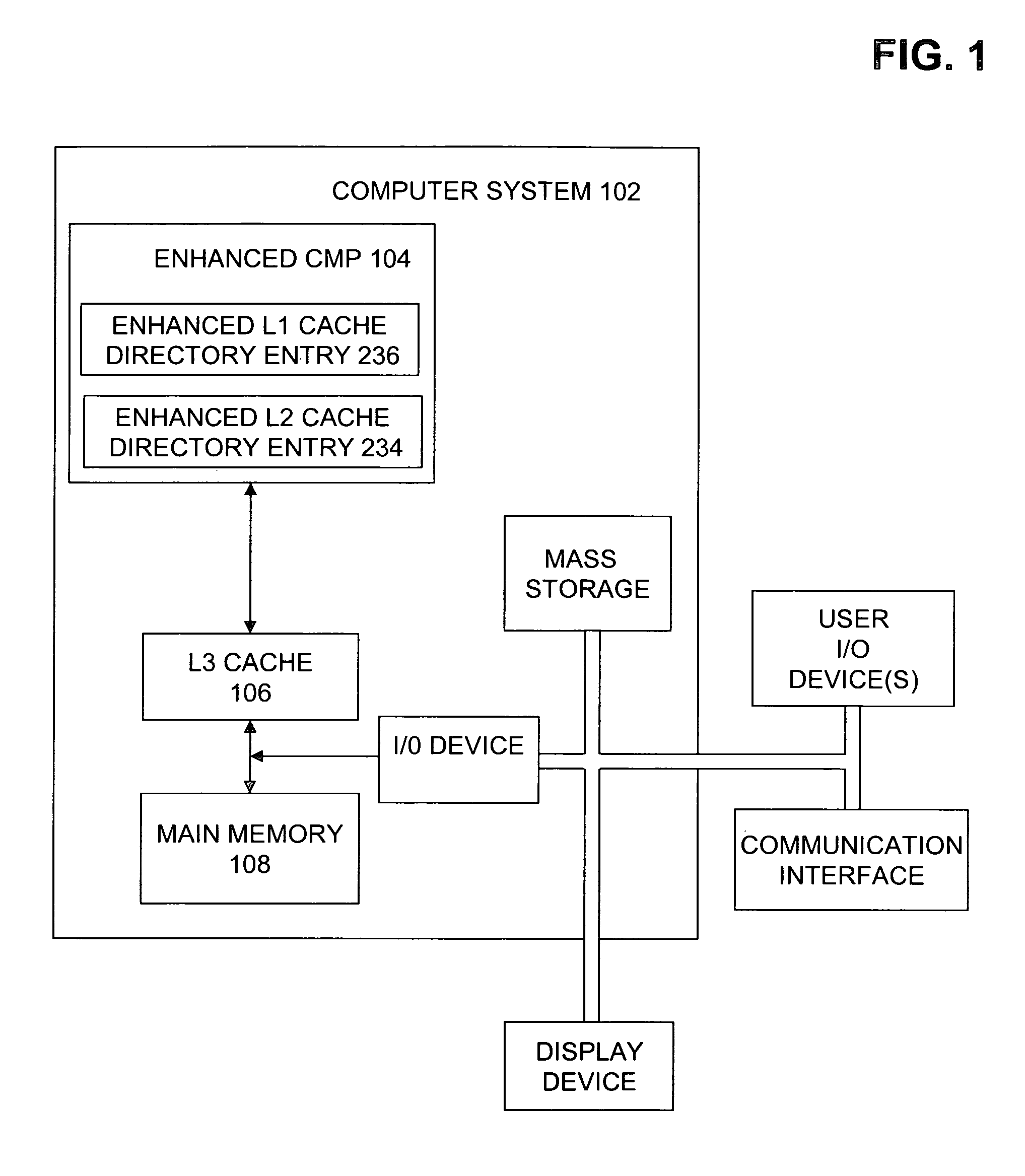

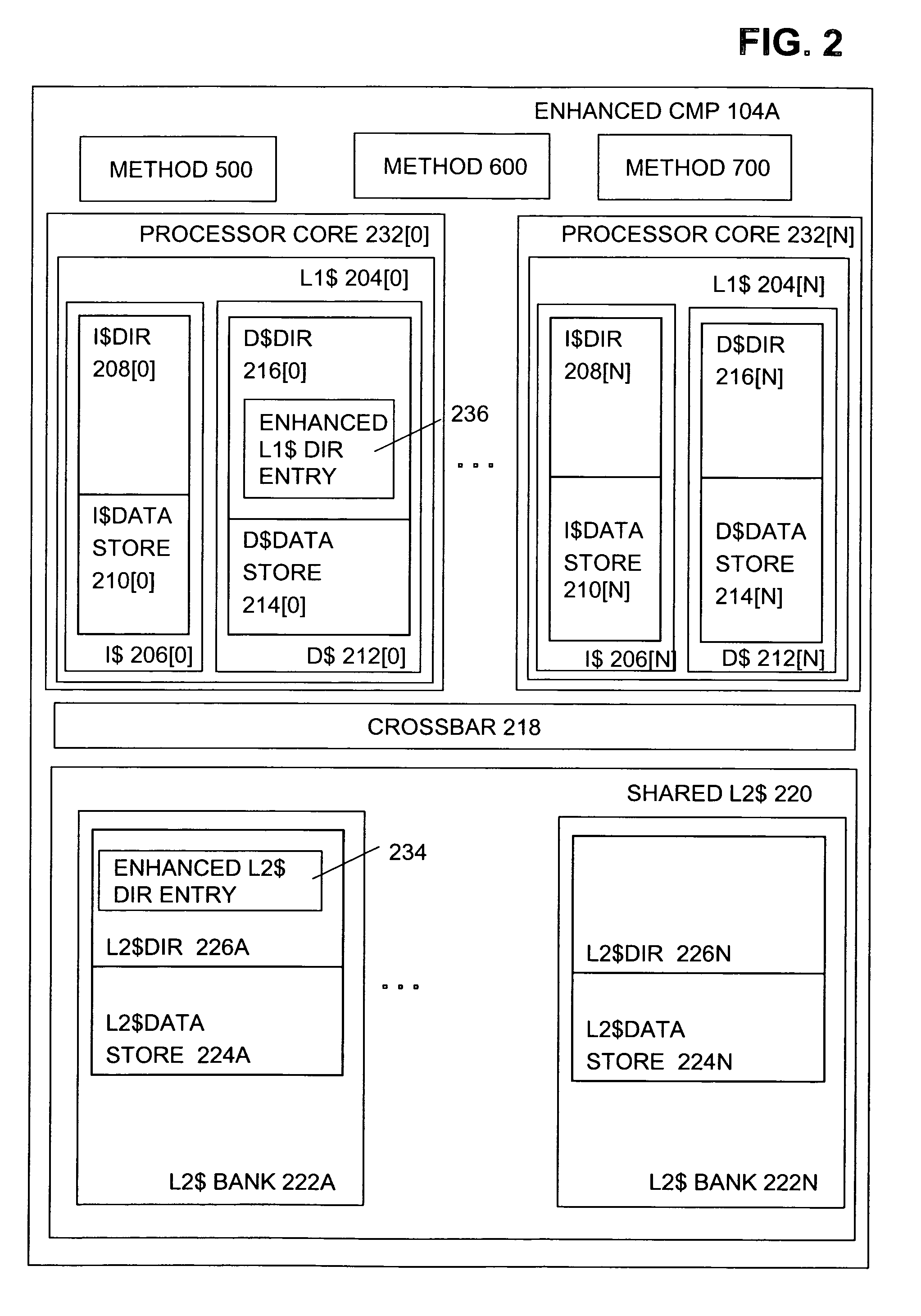

Efficient caching of stores in scalable chip multi-threaded systems

In accordance with one embodiment, an enhanced chip multiprocessor permits an L1 cache to request ownership of a data line from a shared L2 cache. A determination is made whether to deny or grant the request for ownership based on the sharing of the data line. In one embodiment, the sharing of the data line is determined from an enhanced L2 cache directory entry associated with the data line. If ownership of the data line is granted, the current data line is passed from the shared L2 to the requesting L1 cache and an associated enhanced L1 cache directory entry and the enhanced L2 cache directory entry are updated to reflect the L1 cache ownership of the data line. Consequently, updates of the data line by the L1 cache do not go through the shared L2 cache, thus reducing transaction pressure on the shared L2 cache.

Owner:ORACLE INT CORP
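
A toy model of the ownership decision described above, offered as a sketch only. The `L2Directory` class, its fields, and the specific rule "grant only when no other L1 cache holds or owns the line" are assumptions; the abstract says only that the decision is based on the sharing of the data line.

```python
class L2Directory:
    """Hypothetical shared-L2 directory tracking sharers and owners per line."""

    def __init__(self):
        self.sharers = {}  # line address -> set of L1 ids holding a copy
        self.owner = {}    # line address -> L1 id that owns the line

    def read(self, line, l1_id):
        """Record that an L1 cache has fetched a copy of the line."""
        self.sharers.setdefault(line, set()).add(l1_id)

    def request_ownership(self, line, l1_id):
        """Grant ownership only if no other L1 shares or owns the line."""
        others = self.sharers.get(line, set()) - {l1_id}
        if others or self.owner.get(line) not in (None, l1_id):
            return False
        self.owner[line] = l1_id
        return True
```

Once ownership is granted in this model, later stores by the owning L1 would not need to consult the directory, which is the source of the reduced pressure on the shared L2.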

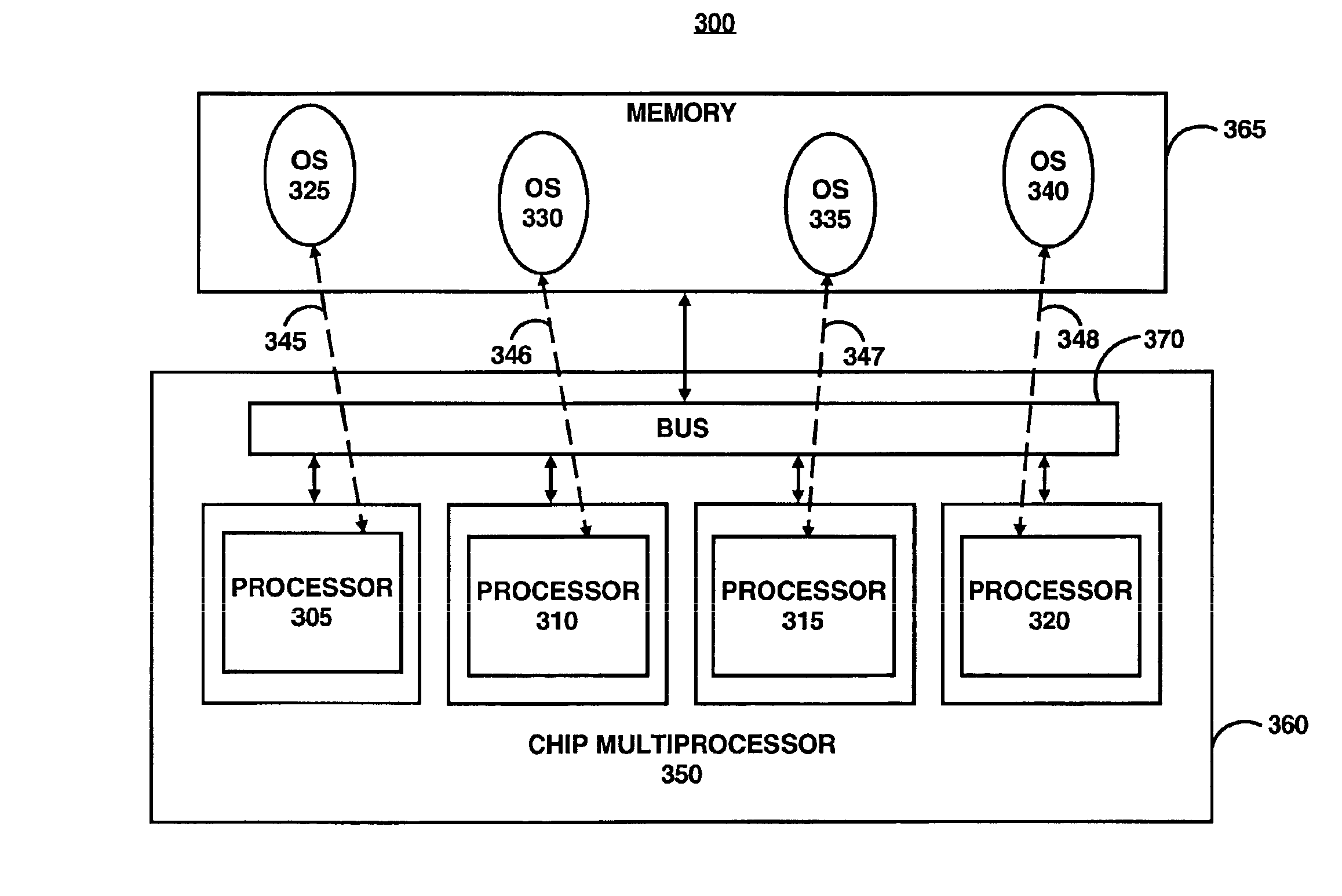

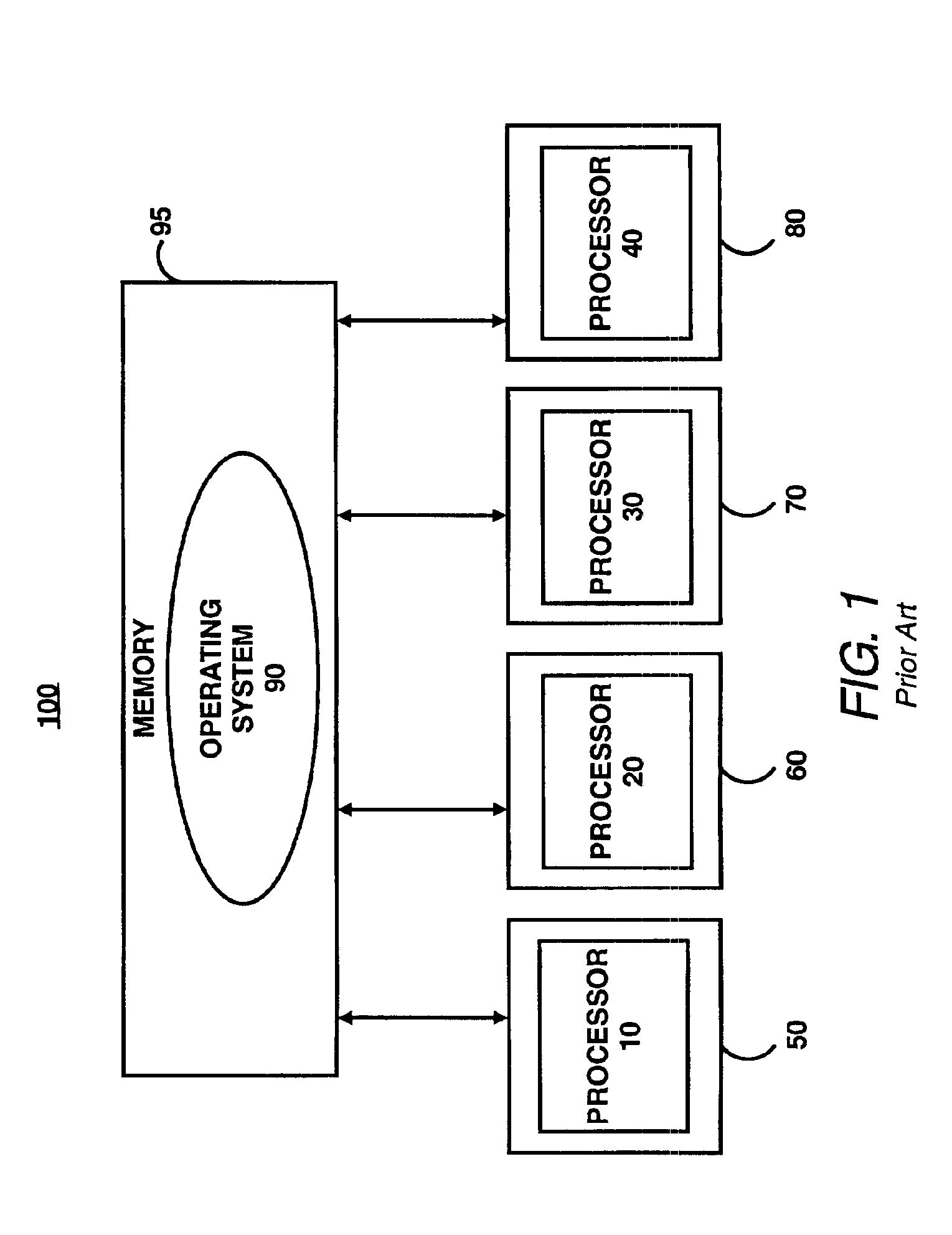

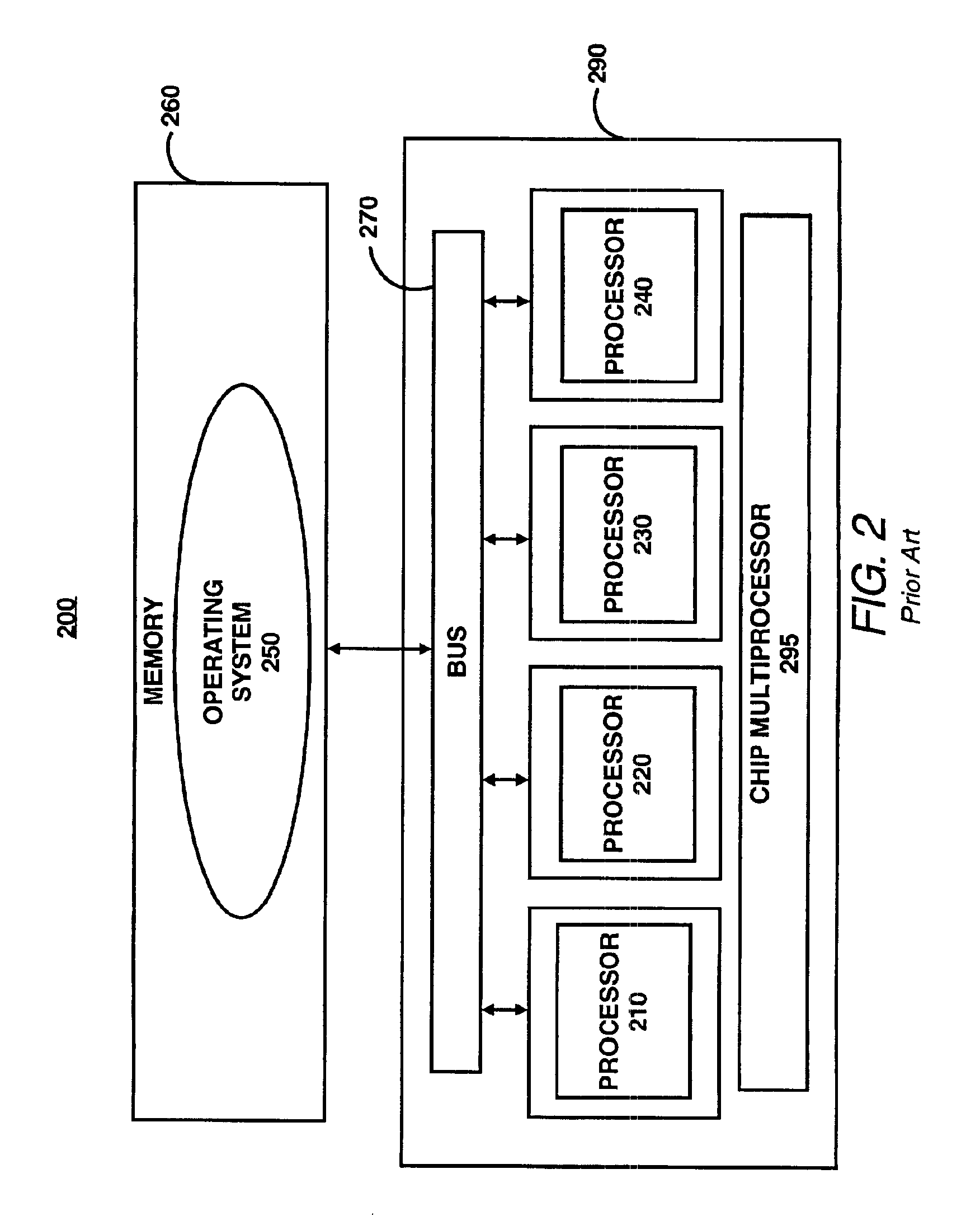

Chip multiprocessor with multiple operating systems

Status: Inactive | Patent: US6874014B2 | Topics: Improve efficiency; Improve scalability; Energy efficient ICT; Resource allocation; Operational system; Operating system

Multiple processors are mounted on a single die. The die is connected to a memory storing multiple operating systems or images of multiple operating systems. Each of the processors or a group of one or more of the processors is operable to execute a distinct one of the multiple operating systems. Therefore, resources for a single operating system may be dedicated to one processor or a group of processors. Consequently, a large number of processors mounted on a single die can operate efficiently.

Owner:SONRAI MEMORY LTD

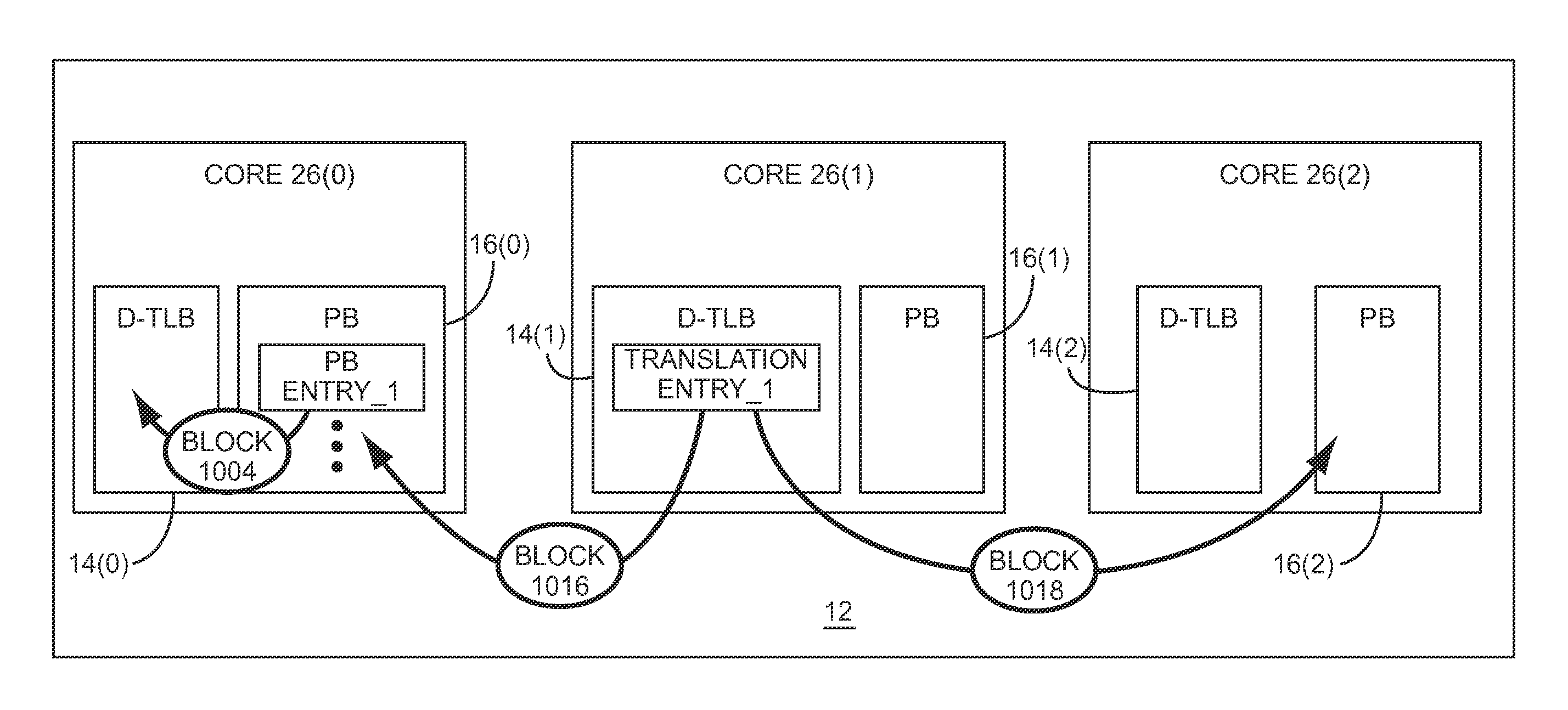

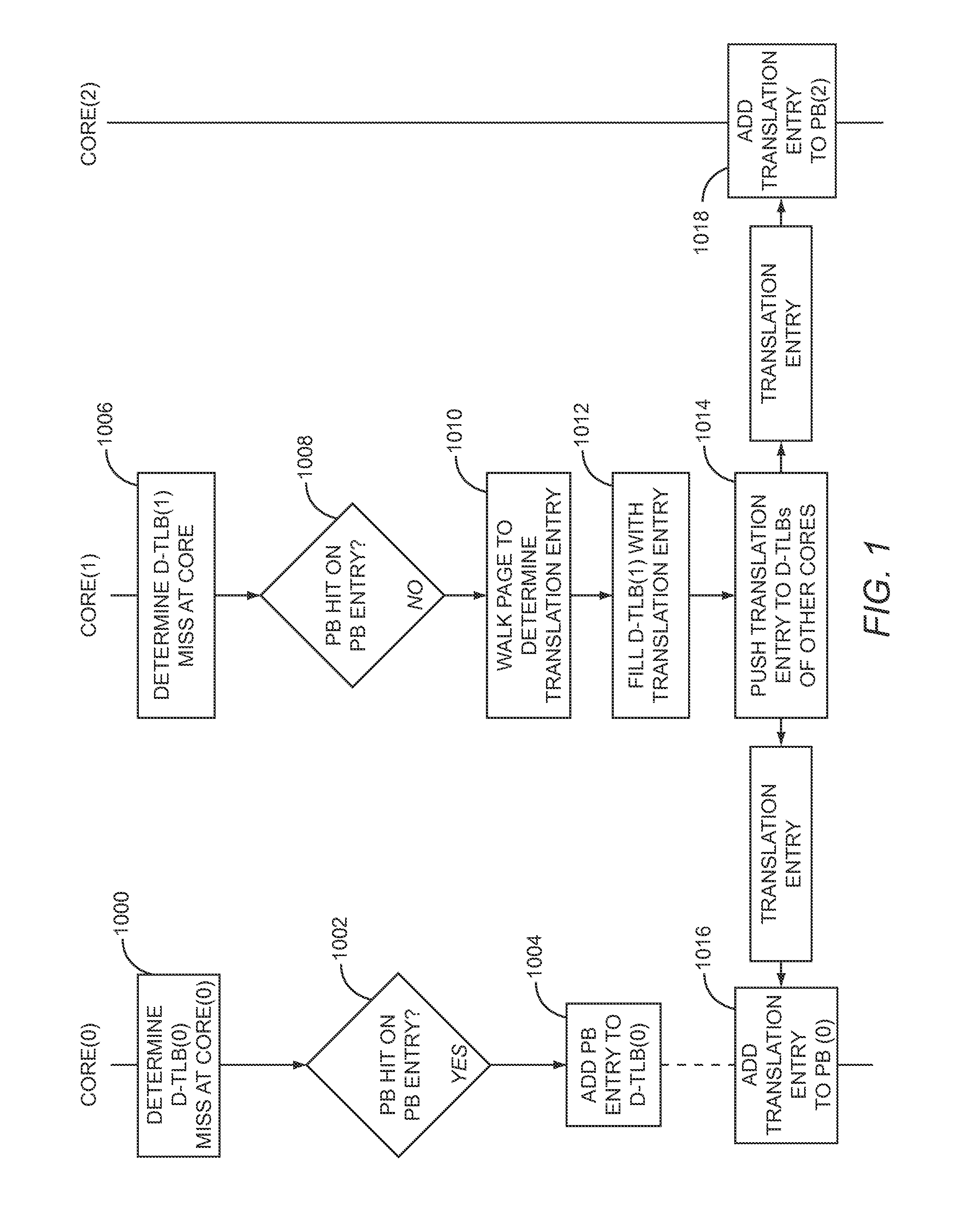

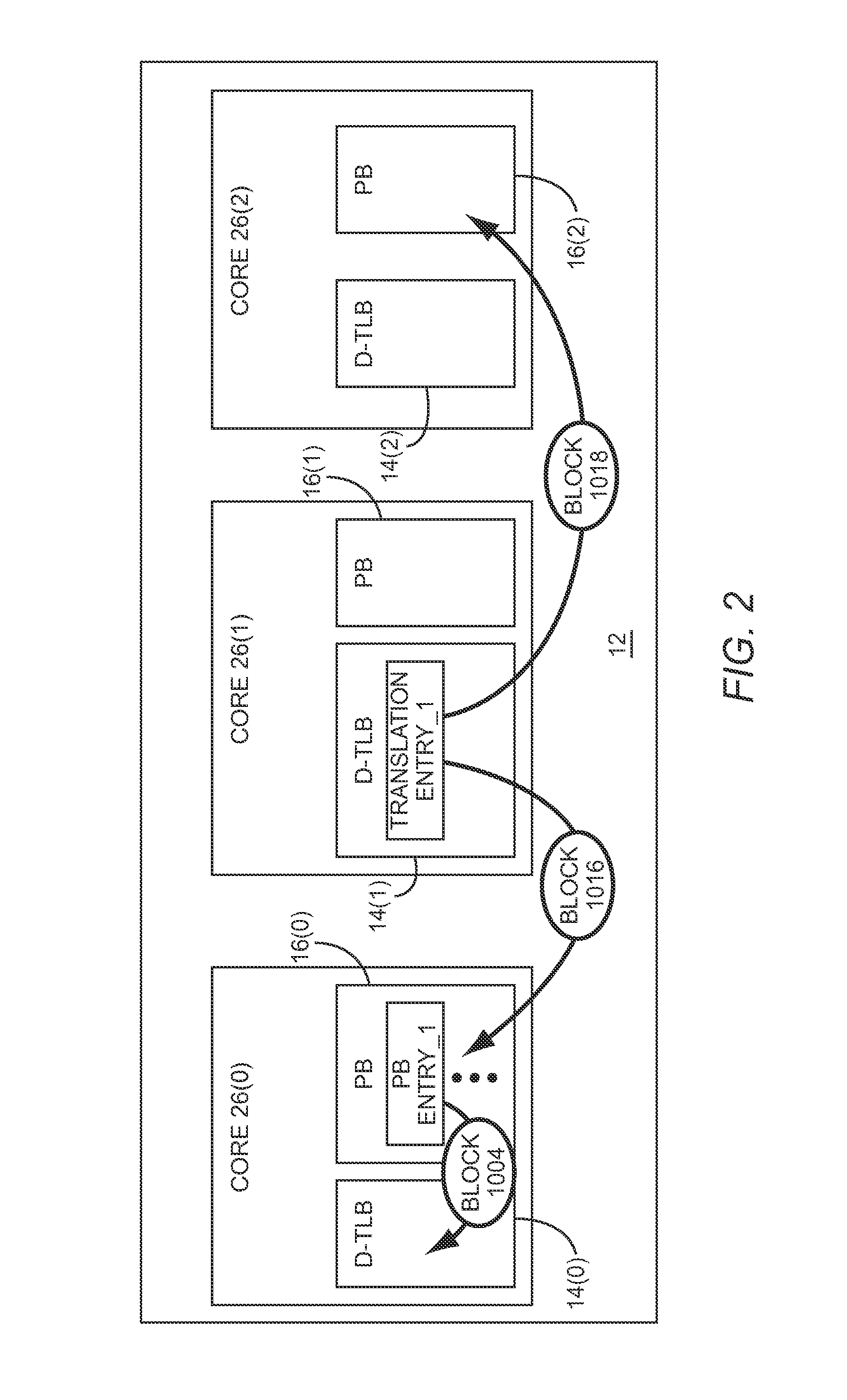

Inter-core cooperative TLB prefetchers

Status: Inactive | Patent: US8880844B1 | Topics: Increase confidence value; Increase value; Memory architecture accessing/allocation; Digital data processing details; Translation lookaside buffer; Operating system

A chip multiprocessor includes a plurality of cores each having a translation lookaside buffer (TLB) and a prefetch buffer (PB). Each core is configured to determine a TLB miss on the core's TLB for a virtual page address and determine whether or not there is a PB hit on a PB entry in the PB for the virtual page address. If it is determined that there is a PB hit, the PB entry is added to the TLB. If it is determined that there is not a PB hit, the virtual page address is used to perform a page walk to determine a translation entry, the translation entry is added to the TLB and the translation entry is prefetched to each other one of the plurality of cores.

Owner:THE TRUSTEES FOR PRINCETON UNIV
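
The miss-handling flow in the abstract can be sketched as follows. The `Core` class, the unbounded dictionaries, the dictionary lookup standing in for the page walk, and the choice to move (rather than copy) a prefetch buffer entry into the TLB are simplifying assumptions.

```python
class Core:
    def __init__(self):
        self.tlb = {}  # virtual page -> physical frame
        self.pb = {}   # prefetch buffer: virtual page -> physical frame

def translate(cores, core_id, vpage, page_table):
    """Return (frame, event) for a lookup of `vpage` on core `core_id`."""
    core = cores[core_id]
    if vpage in core.tlb:
        return core.tlb[vpage], "tlb_hit"
    if vpage in core.pb:
        # TLB miss, PB hit: promote the prefetched entry into the TLB.
        core.tlb[vpage] = core.pb.pop(vpage)
        return core.tlb[vpage], "pb_hit"
    # TLB miss, PB miss: walk the page table (modeled as a dict lookup).
    frame = page_table[vpage]
    core.tlb[vpage] = frame
    # Prefetch the new translation into every other core's prefetch buffer.
    for i, other in enumerate(cores):
        if i != core_id:
            other.pb[vpage] = frame
    return frame, "page_walk"
```

A page walk on one core therefore turns a later miss on the same page by another core into a prefetch buffer hit.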

Method and system for leveraging non-uniform miss penality in cache replacement policy to improve processor performance and power

Status: Active | Patent: US20190004970A1 | Topics: Memory architecture accessing/allocation; Input/output to record carriers; Parallel computing; Chip multi processor

Method, system, and apparatus for leveraging non-uniform miss penalty in cache replacement policy to improve performance and power in a chip multiprocessor platform are described herein. One embodiment of a method includes: determining a first set of cache line candidates for eviction from a first memory in accordance with a cache line replacement policy, the first set comprising a plurality of cache line candidates; determining a second set of cache line candidates from the first set based on replacement penalties associated with each respective cache line candidate in the first set; selecting a target cache line from the second set of cache line candidates; and responsively causing the selected target cache line to be moved from the first memory to a second memory.

Owner:INTEL CORP
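
The two-stage selection in the abstract might be sketched like this. The dictionary fields, the use of the `k` least recently used lines as the first set, and keeping only the lowest-penalty lines in the second set are assumptions made for illustration; the abstract does not fix the replacement policy or how penalties are compared.

```python
def choose_victim(lines, k=2):
    """Pick an eviction target from a non-empty list of cache-line records.

    Each record is a dict with 'tag', 'last_used', and 'penalty' keys.
    """
    # First set: the k least recently used lines (assumed baseline policy).
    first = sorted(lines, key=lambda ln: ln["last_used"])[:k]
    # Second set: members of the first set with the lowest replacement penalty.
    lowest = min(ln["penalty"] for ln in first)
    second = [ln for ln in first if ln["penalty"] == lowest]
    # Target: the least recently used line in the second set.
    return second[0]
```

With `k = 1` the sketch degenerates to plain LRU; larger `k` trades recency for a cheaper refill if the evicted line is needed again.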

Single-chip multiprocessor with clock cycle-precise program scheduling of parallel execution

Status: Inactive | Patent: US20070006193A1 | Topics: Guaranteed usage; Minimize the interstream dependencies; Resource allocation; Program synchronisation; Microcontroller; Multi processor

A single-chip multiprocessor system and a method of operating the system, based on static macro-scheduling of parallel streams for multiprocessor parallel execution. The single-chip multiprocessor system has buses for direct exchange between the processor register files and access to their store addresses and data. Each explicit-parallelism-architecture processor of the system has an interprocessor interface that provides synchronization signal exchange, data exchange at the register-file level, and access to the store addresses and data of other processors. The single-chip multiprocessor system uses instruction-level parallelism (ILP) to increase performance. Synchronization of the parallel execution of streams is ensured by special operations that set the order of execution of streams and stream fragments prescribed by the program algorithm.

Owner:ELBRUS INT

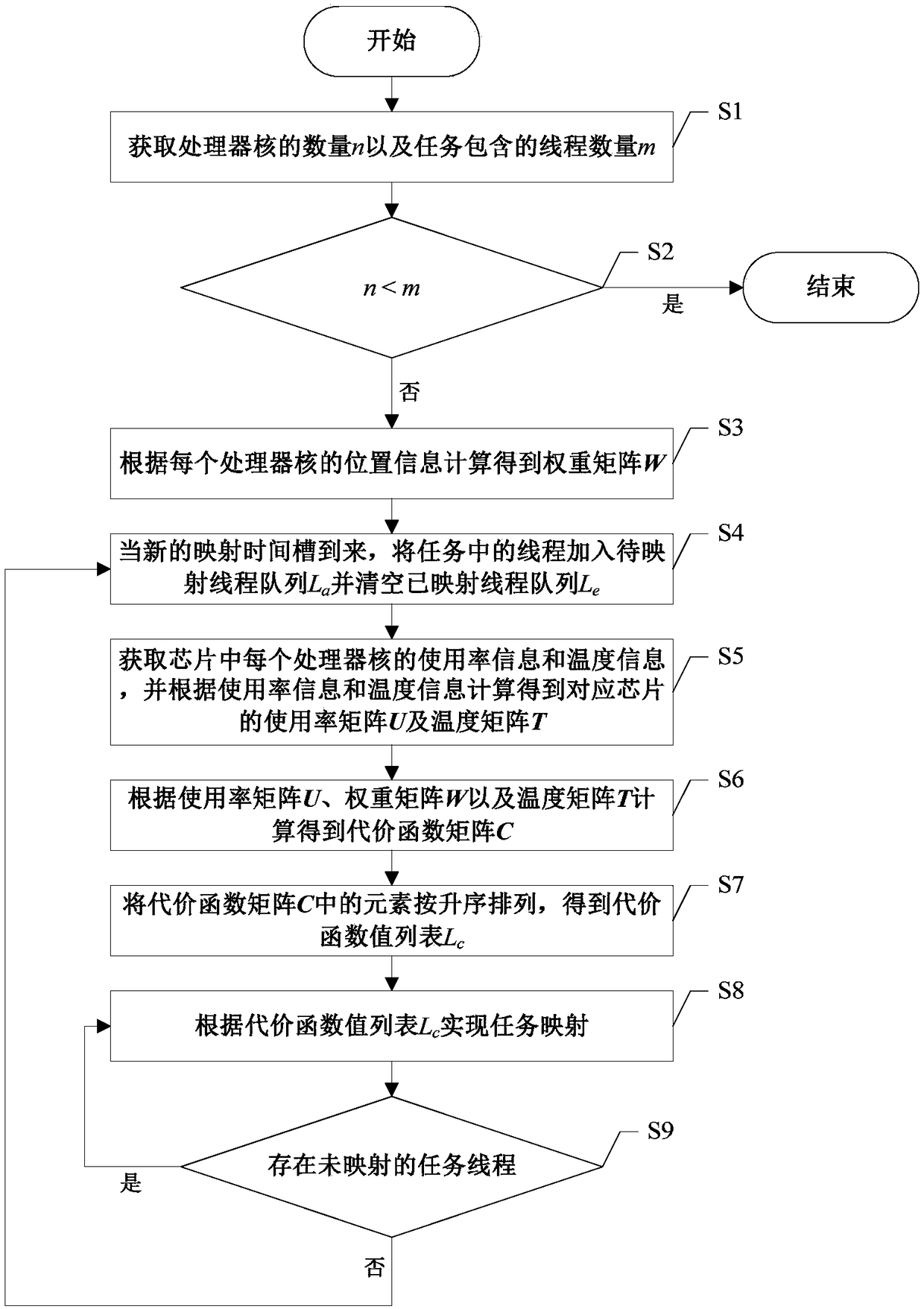

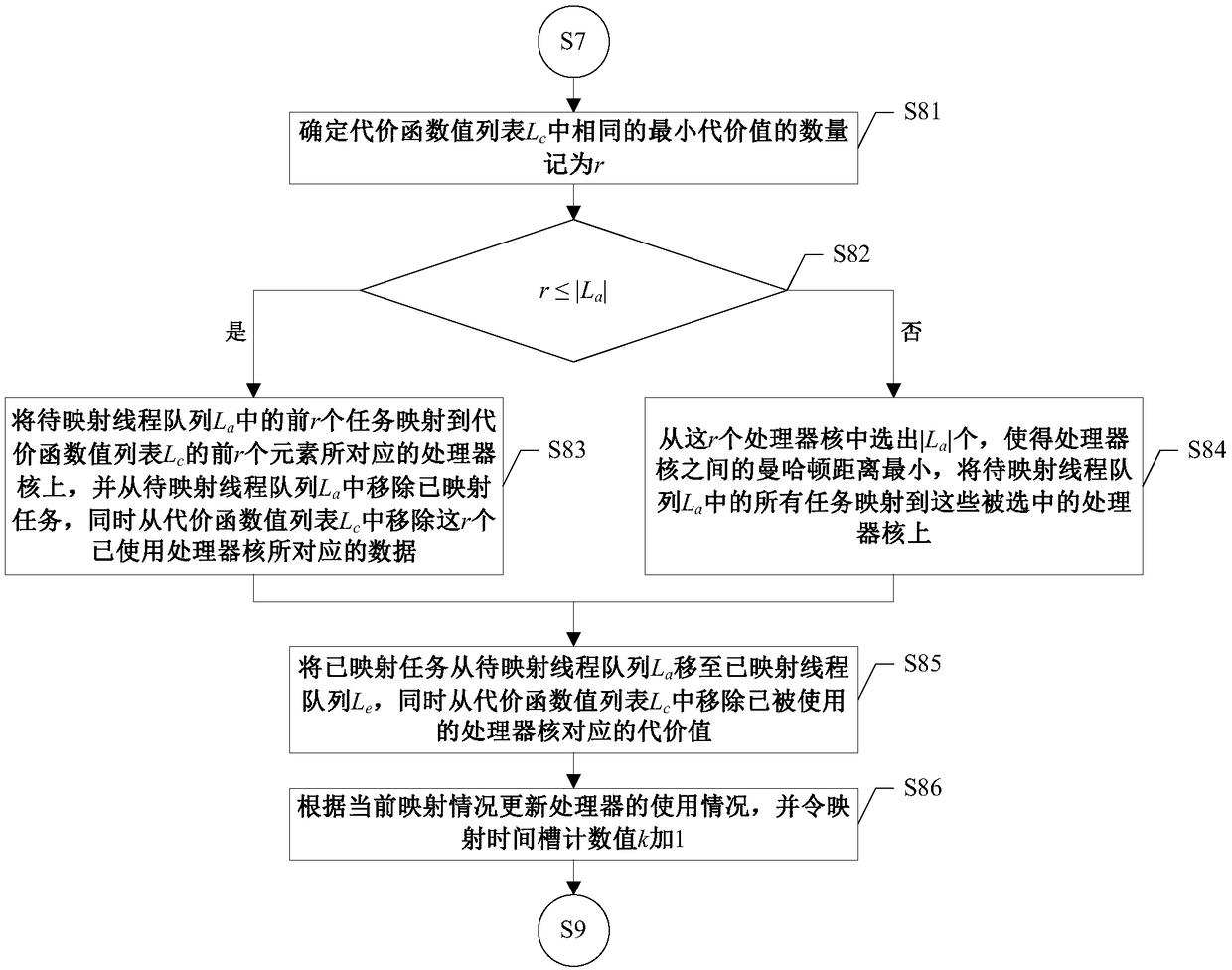

Task mapping method for information security of multi-core processor

Status: Active | Patent: CN108563949A | Topics: Reduce correlation; Lower success rate; Platform integrity maintenance; Computer architecture; Side information

The invention discloses a task mapping method for the information security of a multi-core processor, and belongs to the technical field of on-chip multiprocessor task mapping algorithms and hardware security. To address leakage through the thermal side channel of a multi-core processor, a dynamic secure task mapping method is provided: a task thread is mapped to the optimal combination selected from a set of processor cores with the same cost function value, which reduces the correlation between the instruction information of the processor during execution and the transient and spatial temperature. The success rate of an attacker stealing on-chip information through the thermal side channel is thereby reduced.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

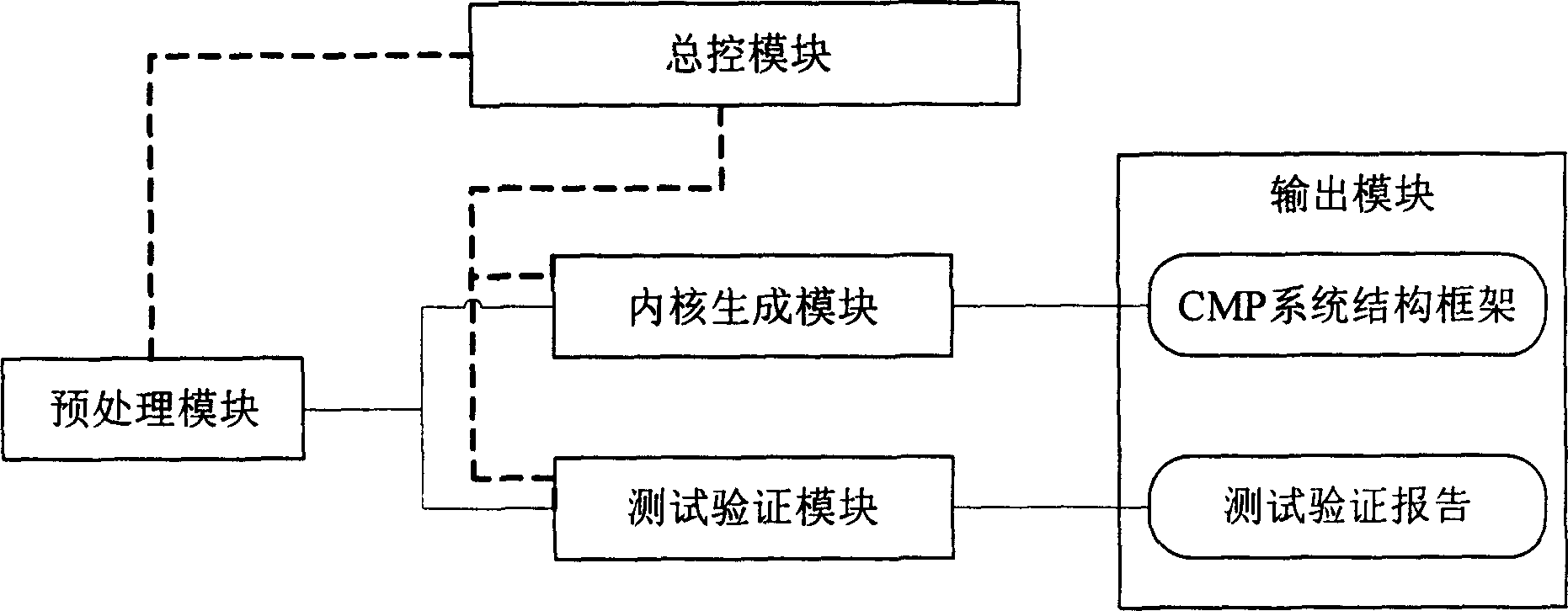

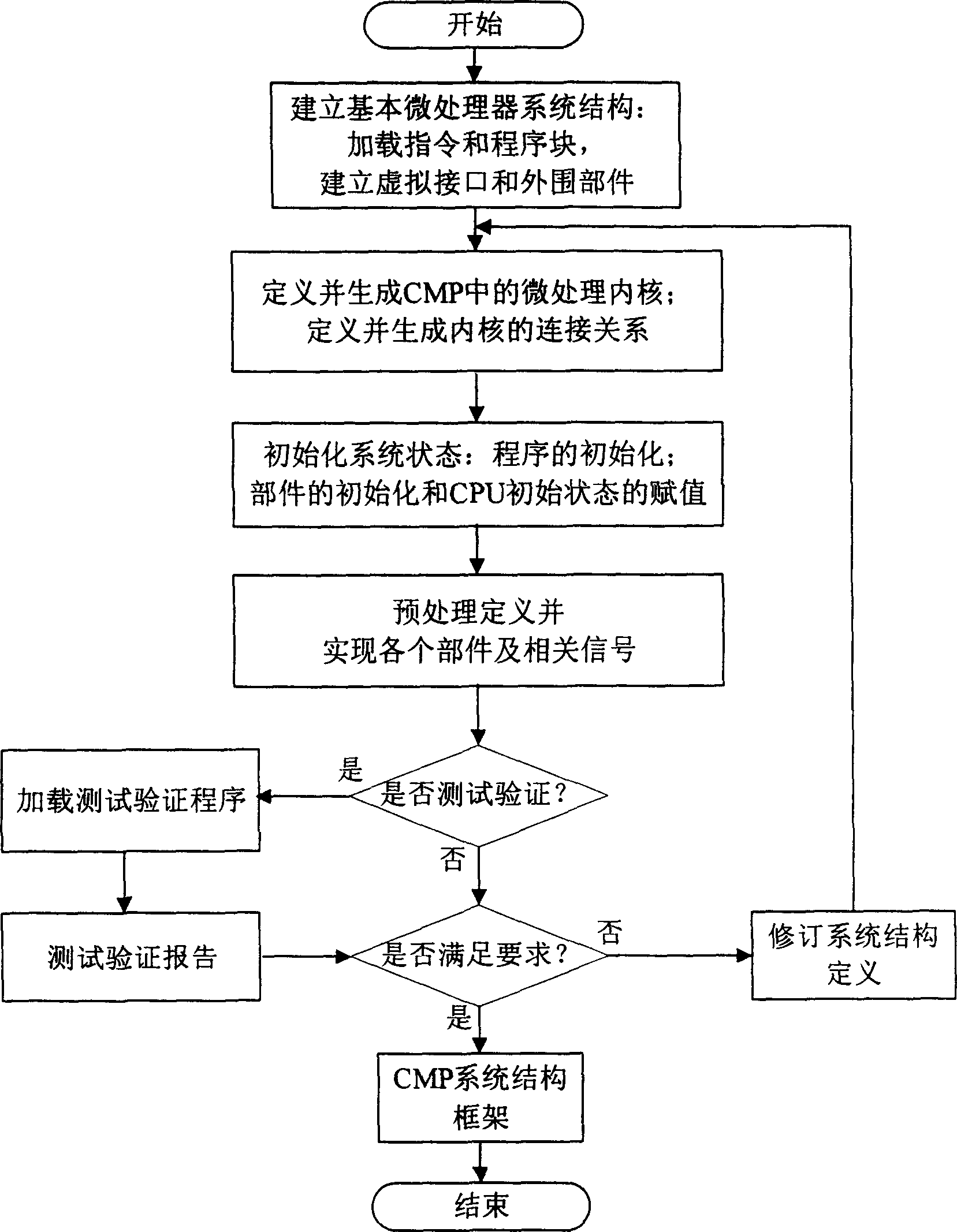

Single-chip analog system with multi-processor structure

Status: Inactive | Patent: CN1645338A | Topics: Achieve modularity; Improve readability; Software testing/debugging; Computer architecture; Multi processor

A simulation system for a single-chip multiprocessor architecture, in which a general control module is connected separately to a preprocessing module, a core generating module and a test verification module. The preprocessing module is connected to both the core generating module and the test verification module. The core generating module is connected to the CMP system framework output of an output module, and the test verification module is connected to the test verification report output of the output module.

Owner:TSINGHUA UNIV

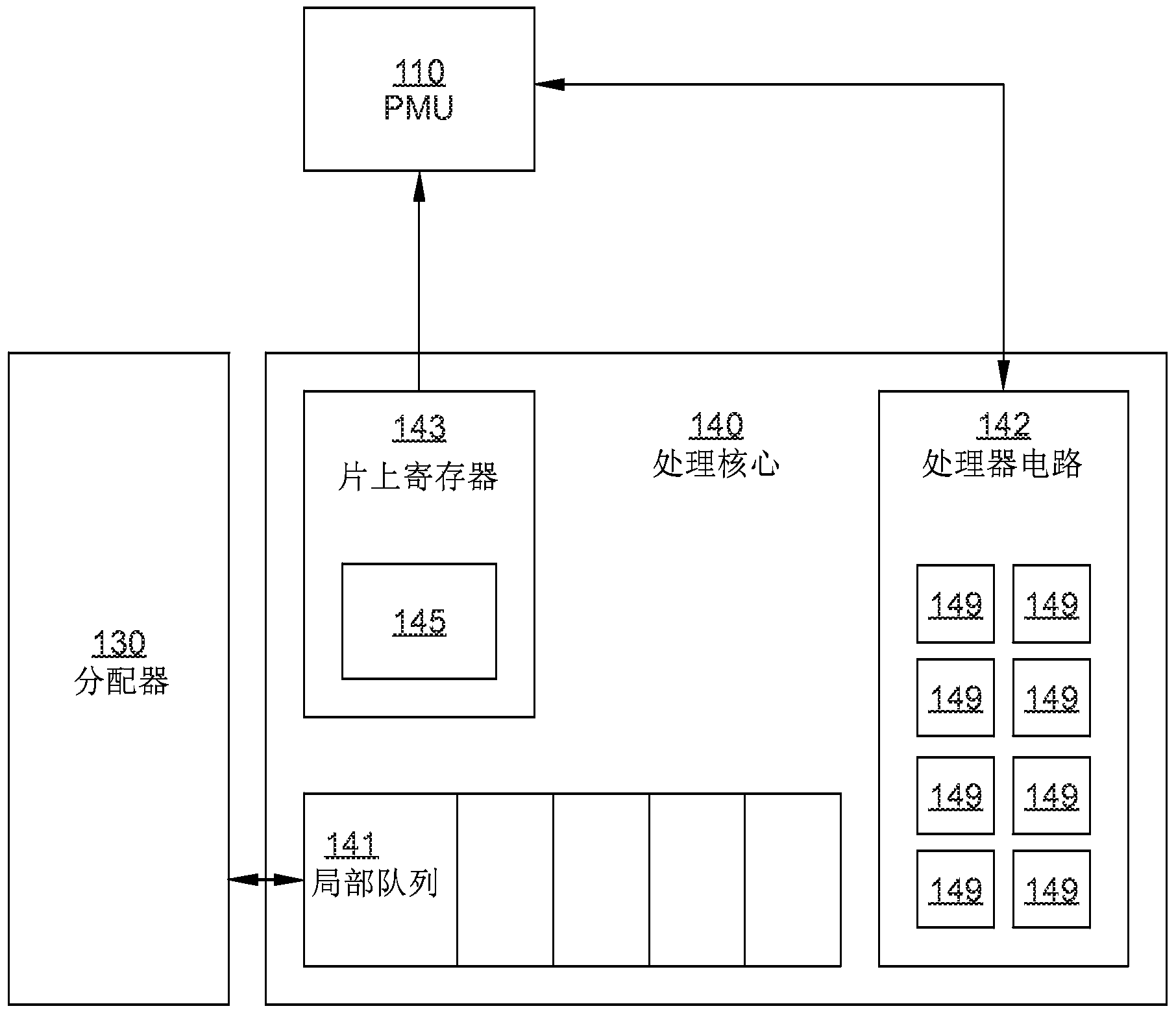

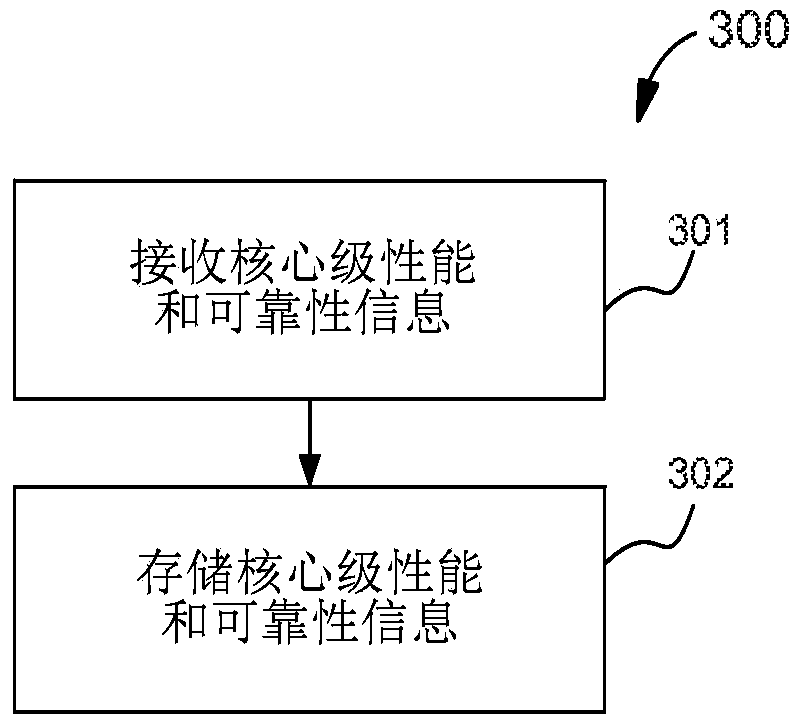

Core-level dynamic voltage and frequency scaling in a chip multiprocessor

Status: Inactive | Patent: CN104205087A | Topics: Digital data processing details; General purpose stored program computer; Dependability; Frequency regulation

Techniques described herein generally include methods and systems related to manufacturing a chip multiprocessor having multiple processor cores. An example method may include receiving performance or reliability information associated with each of the multiple processor cores, wherein the received performance or reliability information is determined prior to packaging of the chip multiprocessor, and storing the received performance or reliability information such that stored performance or reliability information is used to adjust an operating parameter of at least one of the multiple processor cores of the chip multiprocessor.

Owner:EMPIRE TECH DEV LLC

Efficient on-chip instruction and data caching for chip multiprocessors

The storage of a data line in one or more L1 caches and/or a shared L2 cache of a chip multiprocessor is dynamically optimized based on the sharing of the data line. In one embodiment, an enhanced L2 cache directory entry associated with the data line is generated in an L2 cache directory of the shared L2 cache. The enhanced L2 cache directory entry includes a cache mask indicating a storage state of the data line in the one or more L1 caches and the shared L2 cache. In some embodiments, where the data line is stored in the shared L2 cache only, a portion of the cache mask indicates a storage history of the data line in the one or more L2 caches.

Owner:ORACLE INT CORP
