45 results about "Vision processing unit" patented technology

A vision processing unit (VPU) is (as of 2018) an emerging class of microprocessor; it is a specific type of AI accelerator, designed to accelerate machine vision tasks.

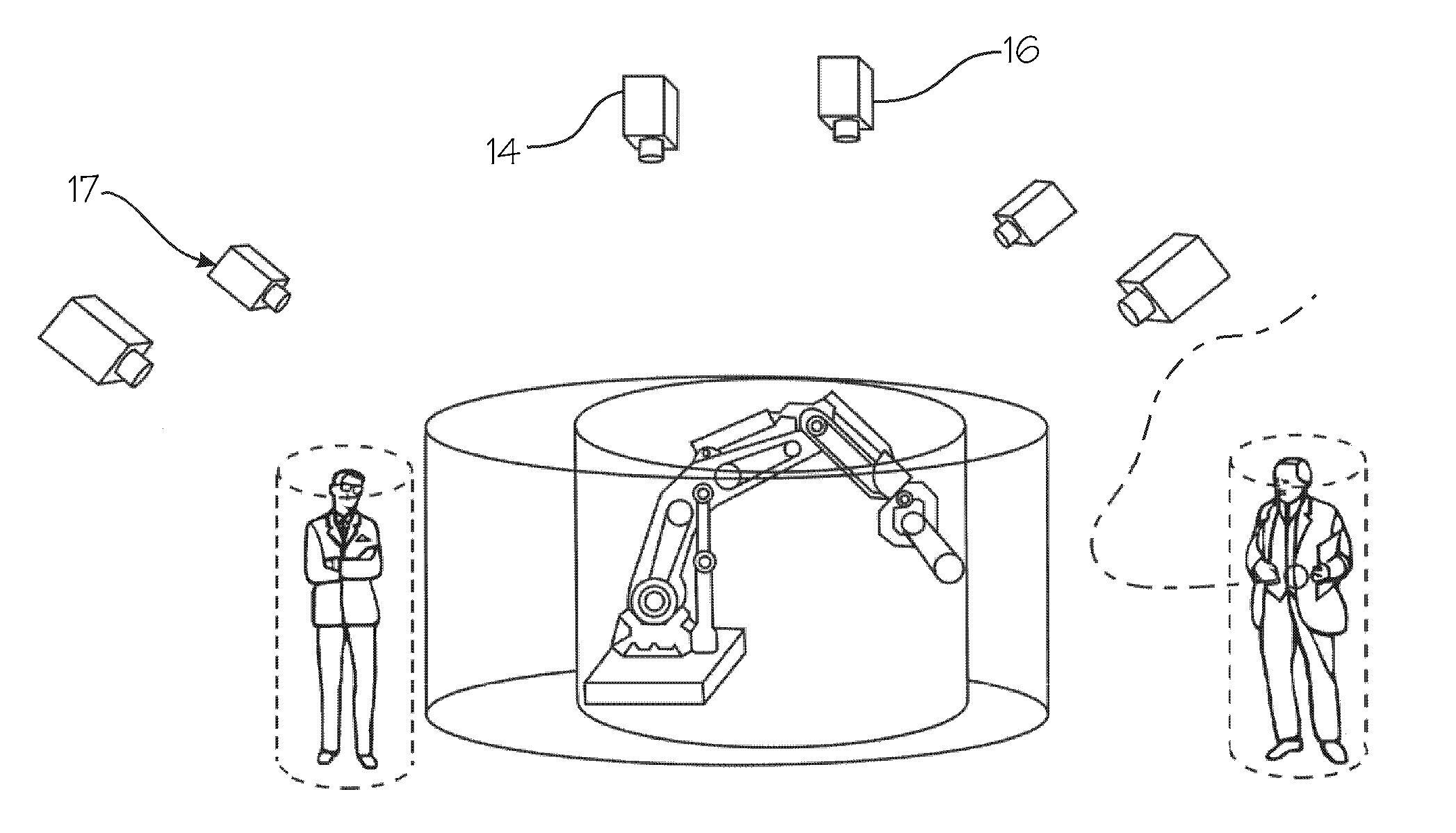

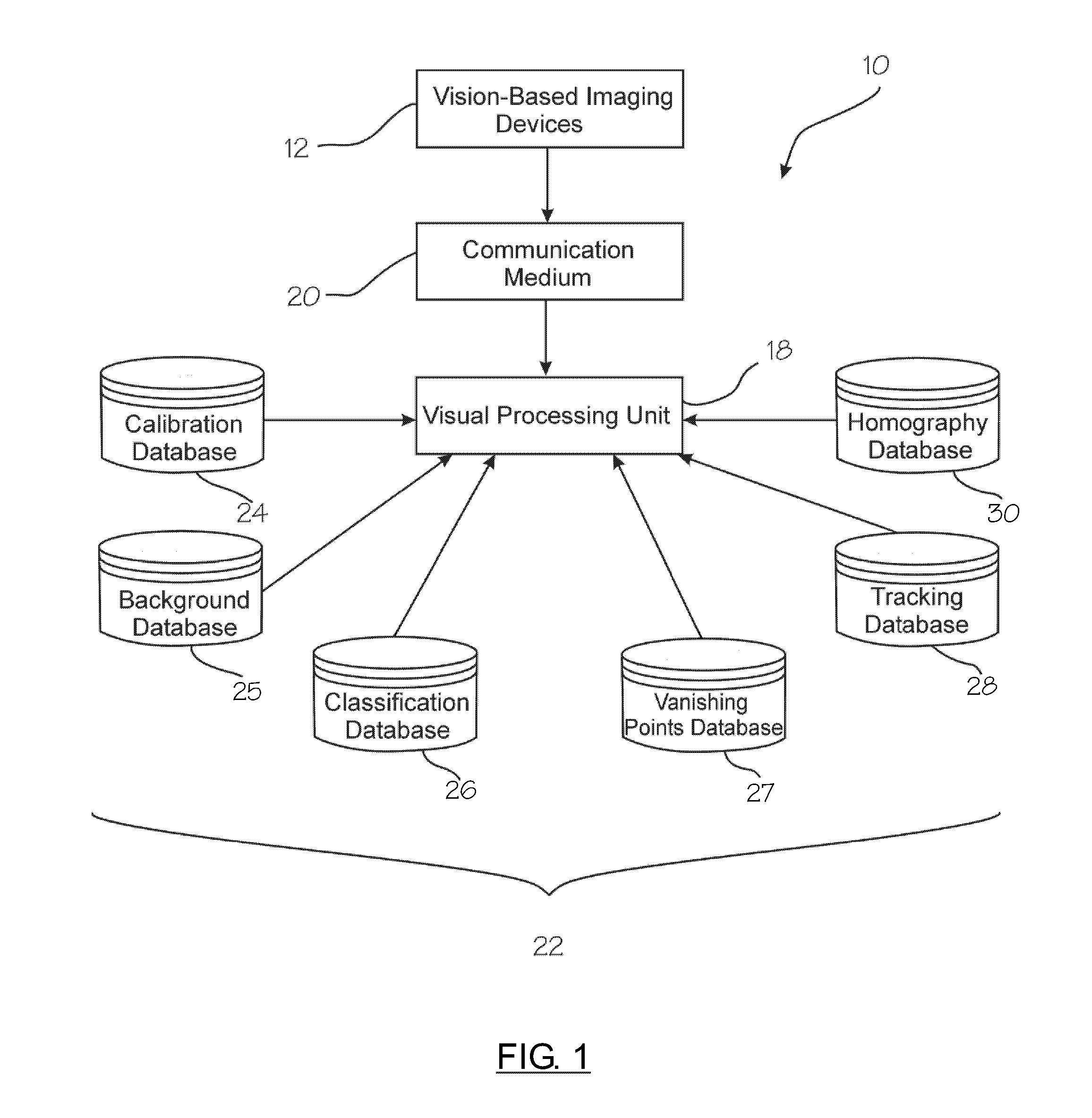

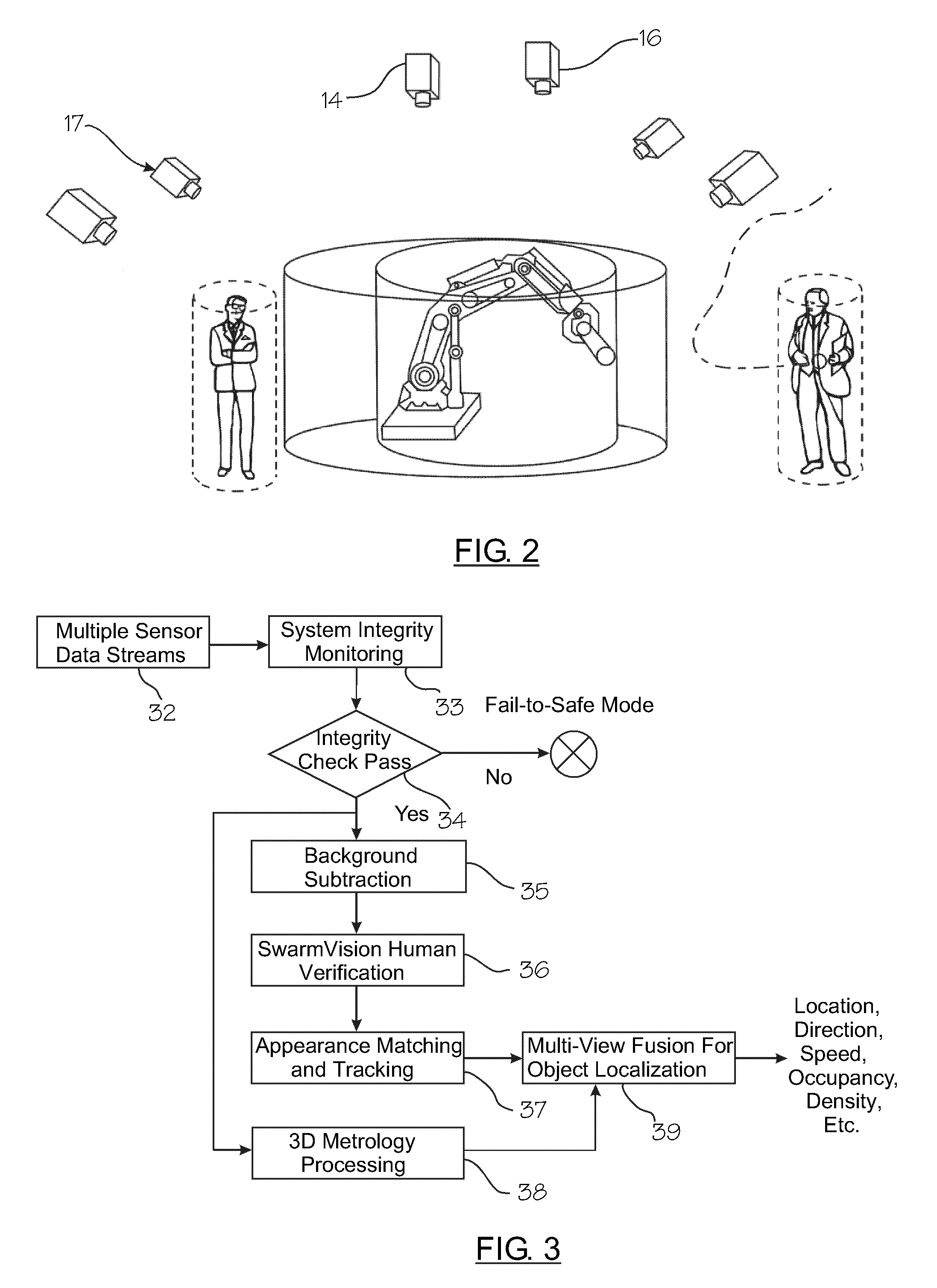

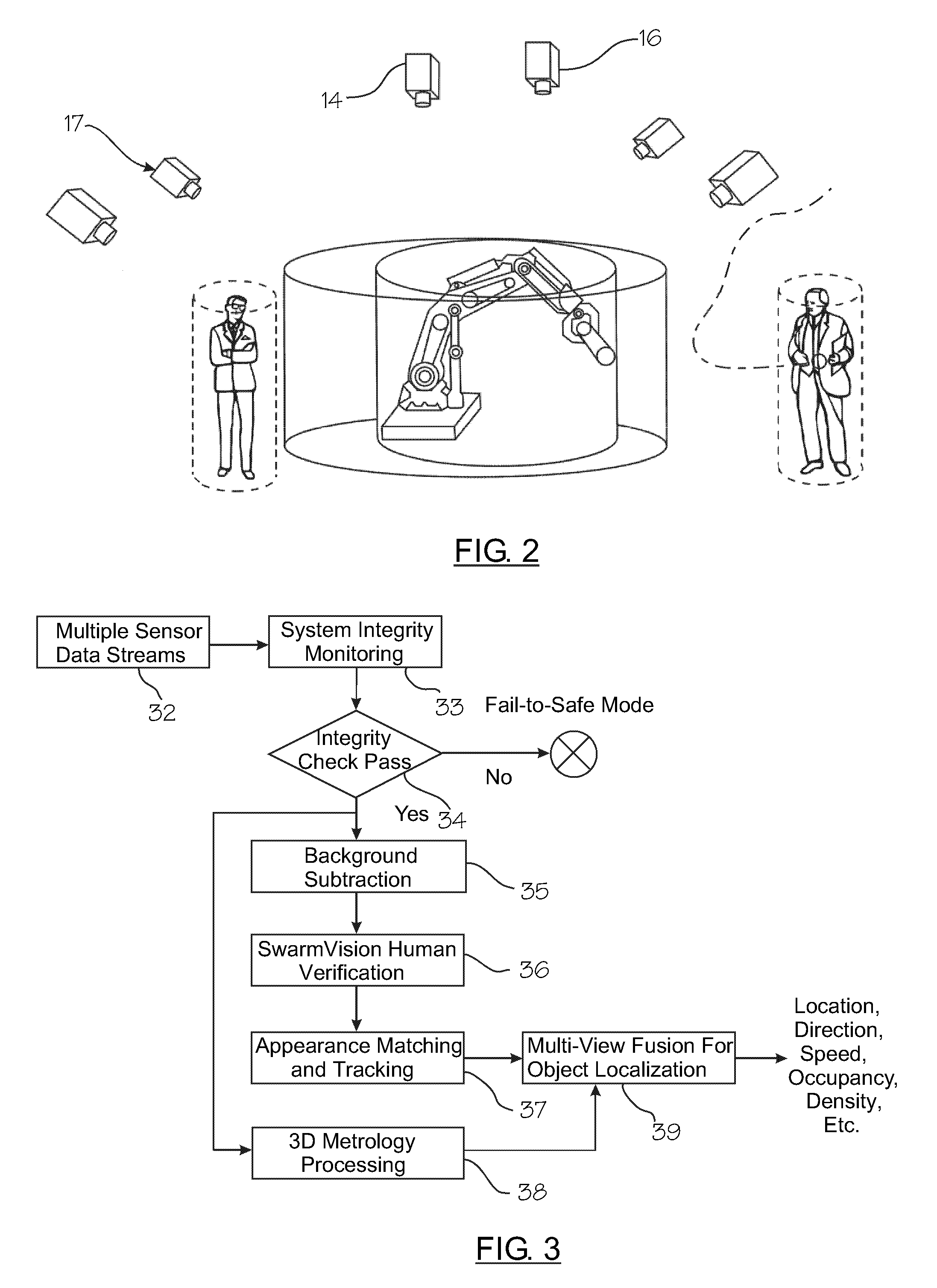

Vision System for Monitoring Humans in Dynamic Environments

Active | US20110050878A1 | Improve productivity | Improving work cell activity efficiency | Television system details | Character and pattern recognition | Viewpoints | Control signal

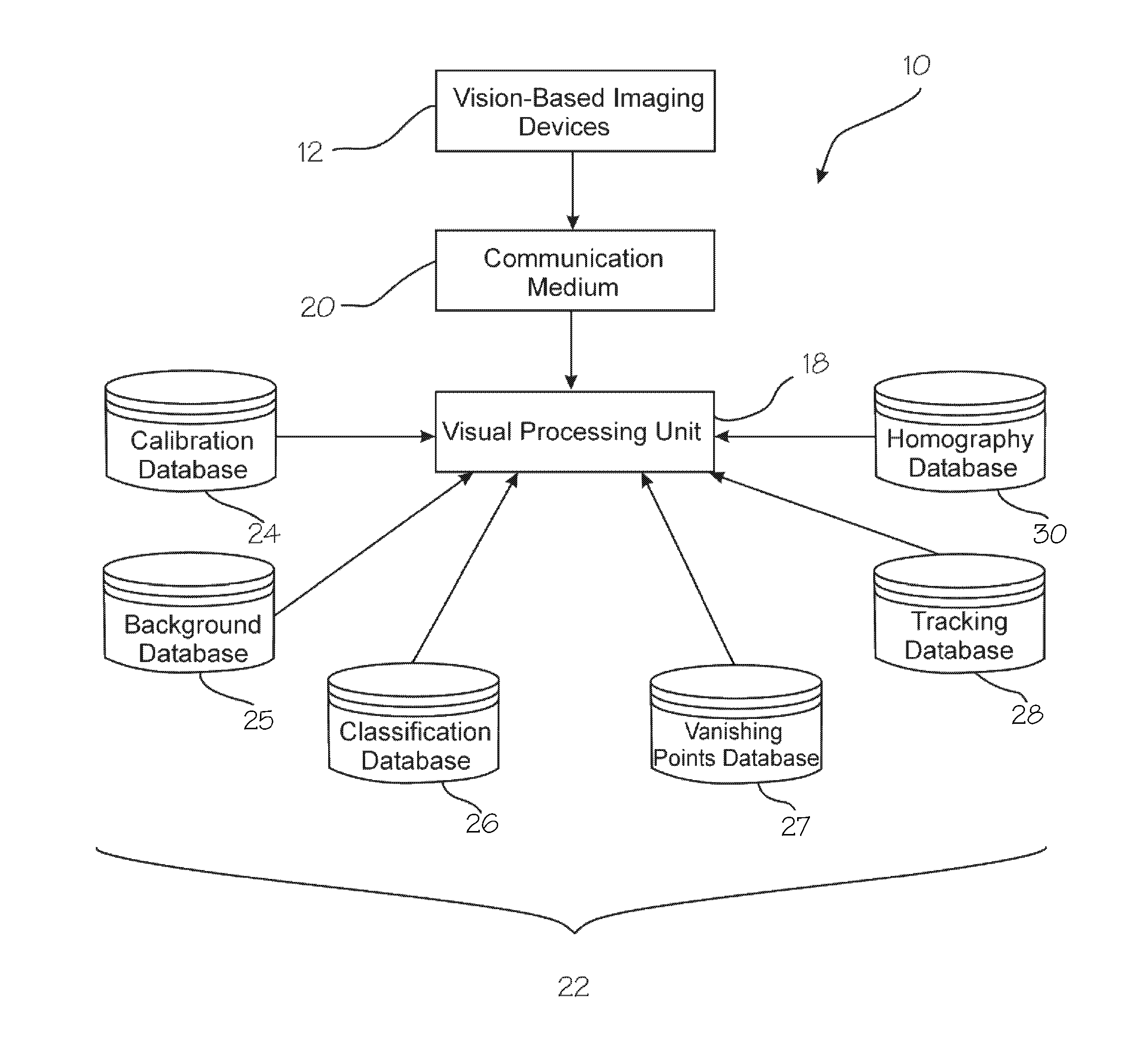

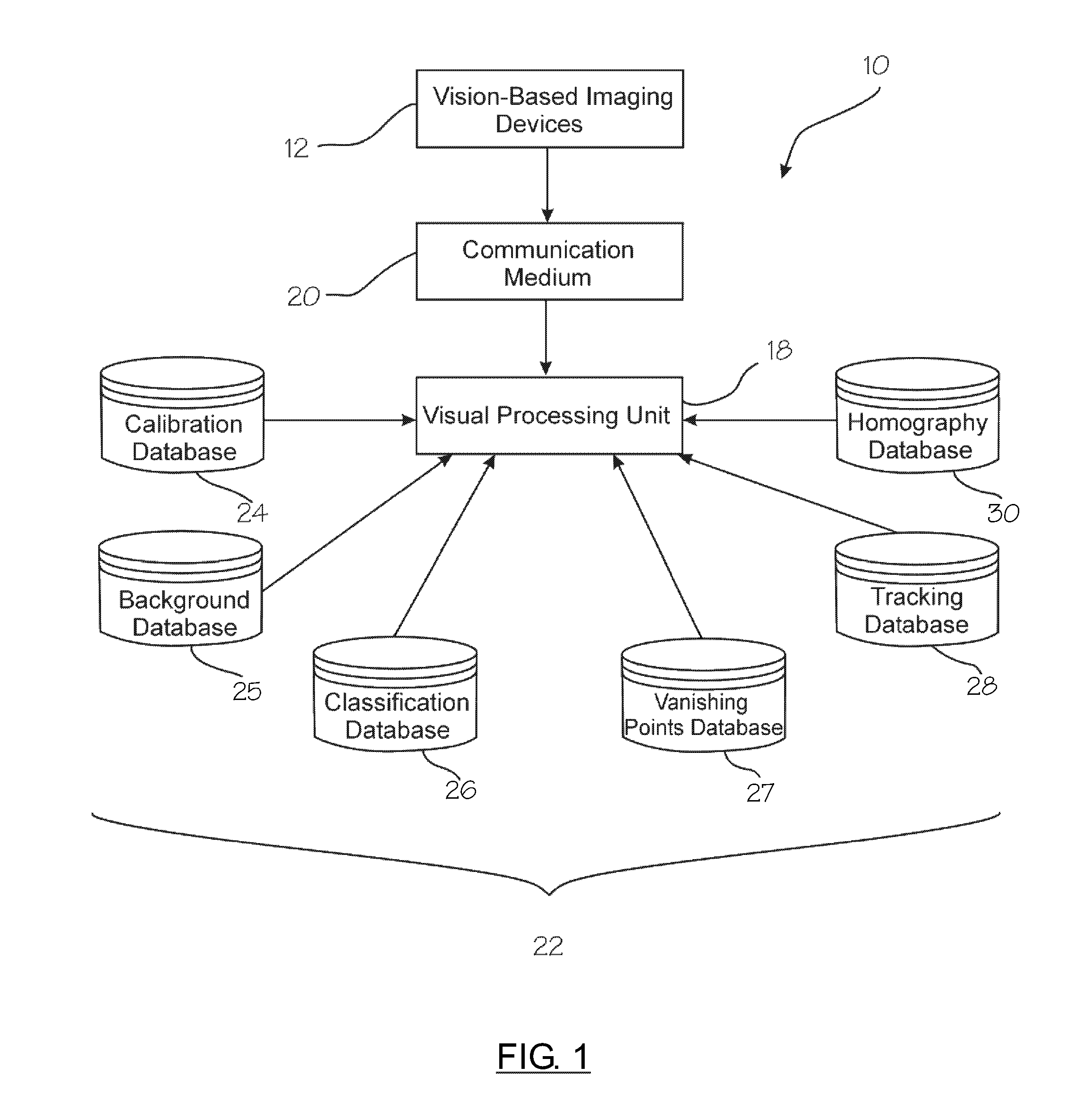

A safety monitoring system for a workspace area. The workspace area related to a region having automated moveable equipment. A plurality of vision-based imaging devices capturing time-synchronized image data of the workspace area. Each vision-based imaging device repeatedly capturing a time synchronized image of the workspace area from a respective viewpoint that is substantially different from the other respective vision-based imaging devices. A visual processing unit for analyzing the time-synchronized image data. The visual processing unit processes the captured image data for identifying a human from a non-human object within the workspace area. The visual processing unit further determining potential interactions between a human and the automated moveable equipment. The visual processing unit further generating control signals for enabling dynamic reconfiguration of the automated moveable equipment based on the potential interactions between the human and the automated moveable equipment in the workspace area.

Owner:GM GLOBAL TECH OPERATIONS LLC

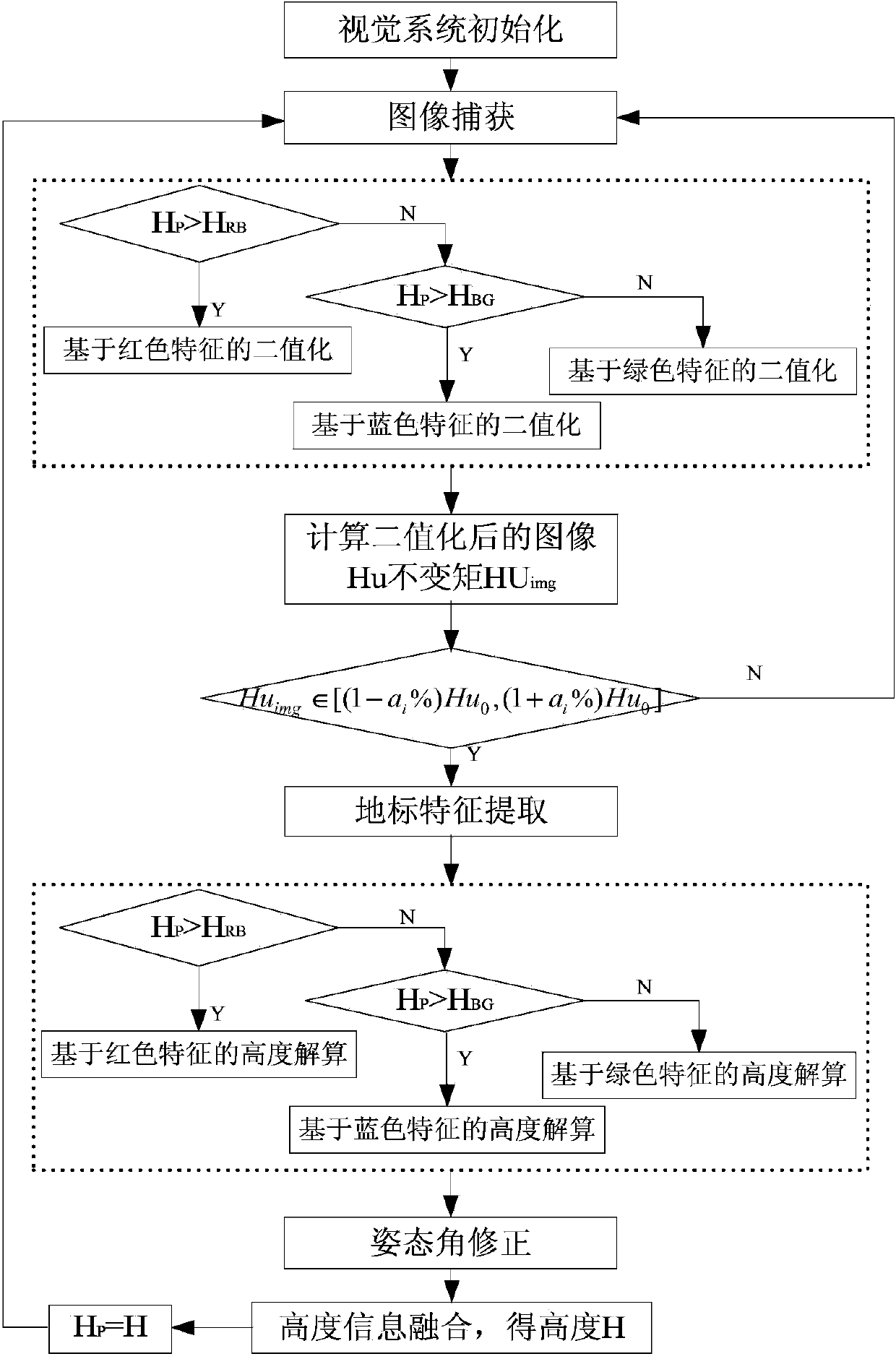

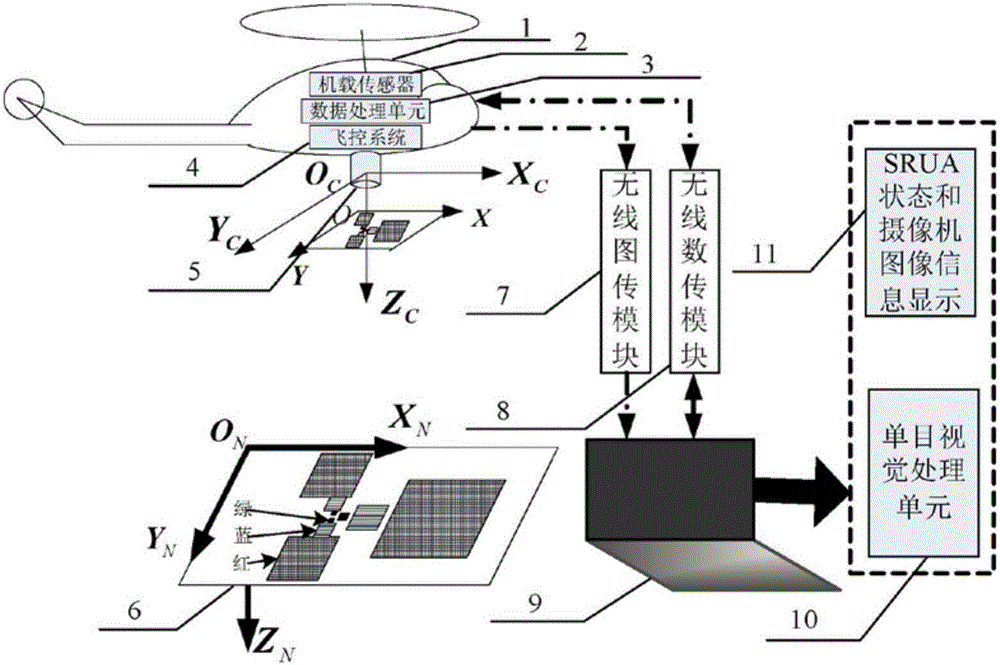

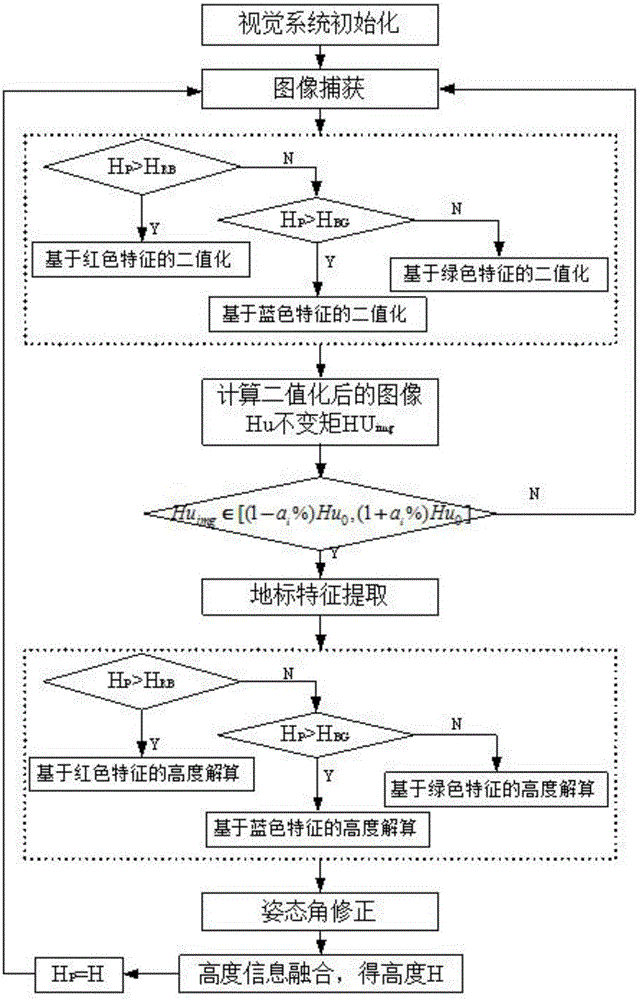

Rotor unmanned aircraft independent take-off and landing system based on three-layer triangle multi-color landing ground

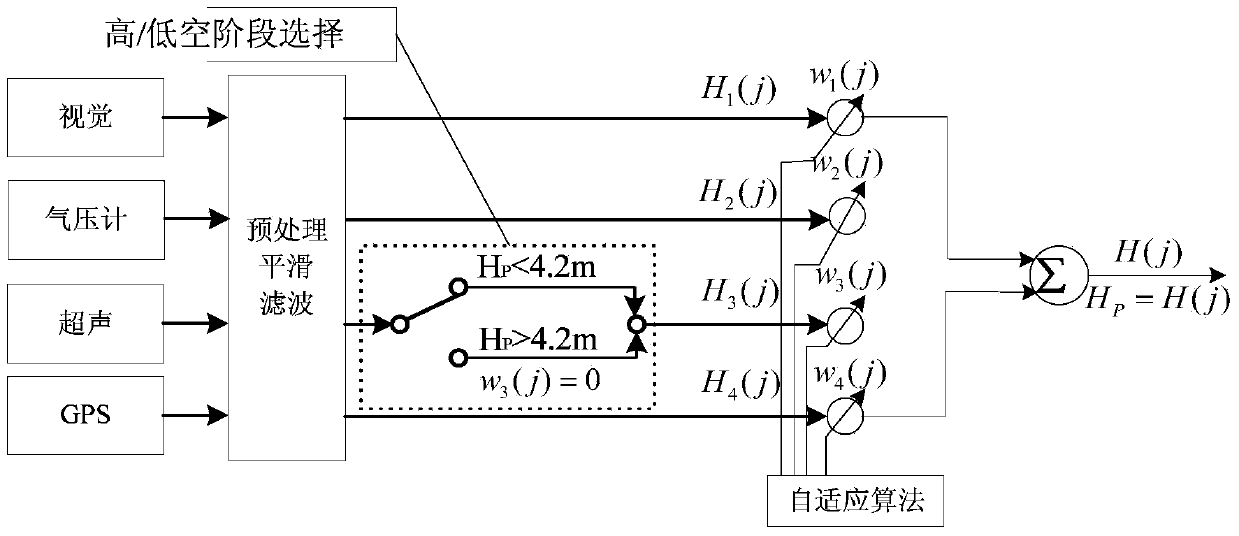

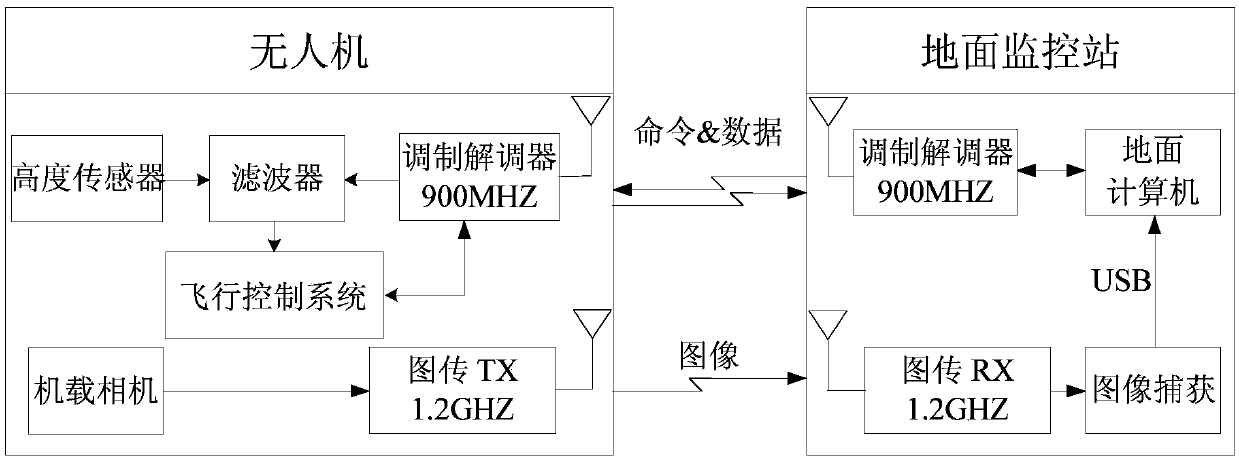

Active | CN103809598A | Improve recognition rate | Bright color | Position/course control in three dimensions | Wireless image transmission | Vision processing unit

Disclosed is a rotor unmanned aircraft independent take-off and landing system based on three-layer triangle multi-color landing ground. The system comprises a small rotor unmanned aircraft (SRUA), an onboard sensor, a data processing unit, a flight control system, an onboard camera, landing ground, a wireless image transmission module, a wireless data transmission module and a ground monitor station, wherein the onboard sensor comprises an inertial measurement unit, a global positioning system (GPS) receiver, a barometer, an ultrasound device and the like, the data processing unit is used for integrating sensor data, the flight control system finishes route planning to achieve high accuracy control over the SRUA, the onboard camera is used for collecting images of the landing ground, the landing ground is a specially designed landing point for an unmanned aircraft, the wireless image transmission module can transmit the images to a ground station, the wireless data transmission module can achieve communication of data and instructions between the unmanned aircraft and the ground station, and the ground station is composed of a visual processing unit and a display terminal. According to the rotor unmanned aircraft independent take-off and landing system based on the three-layer triangle multi-color landing ground, the reliability of SRUA navigation messages is guaranteed, the control accuracy of the SRUA is increased, costs are low, the application is convenient, and important engineering values can be achieved.

Owner:BEIHANG UNIV

Vision system for monitoring humans in dynamic environments

Active | US8253792B2 | Improve efficiency | Improve security | Television system details | Character and pattern recognition | Viewpoints | Control signal

A safety monitoring system for a workspace area. The workspace area related to a region having automated moveable equipment. A plurality of vision-based imaging devices capturing time-synchronized image data of the workspace area. Each vision-based imaging device repeatedly capturing a time synchronized image of the workspace area from a respective viewpoint that is substantially different from the other respective vision-based imaging devices. A visual processing unit for analyzing the time-synchronized image data. The visual processing unit processes the captured image data for identifying a human from a non-human object within the workspace area. The visual processing unit further determining potential interactions between a human and the automated moveable equipment. The visual processing unit further generating control signals for enabling dynamic reconfiguration of the automated moveable equipment based on the potential interactions between the human and the automated moveable equipment in the workspace area.

Owner:GM GLOBAL TECH OPERATIONS LLC

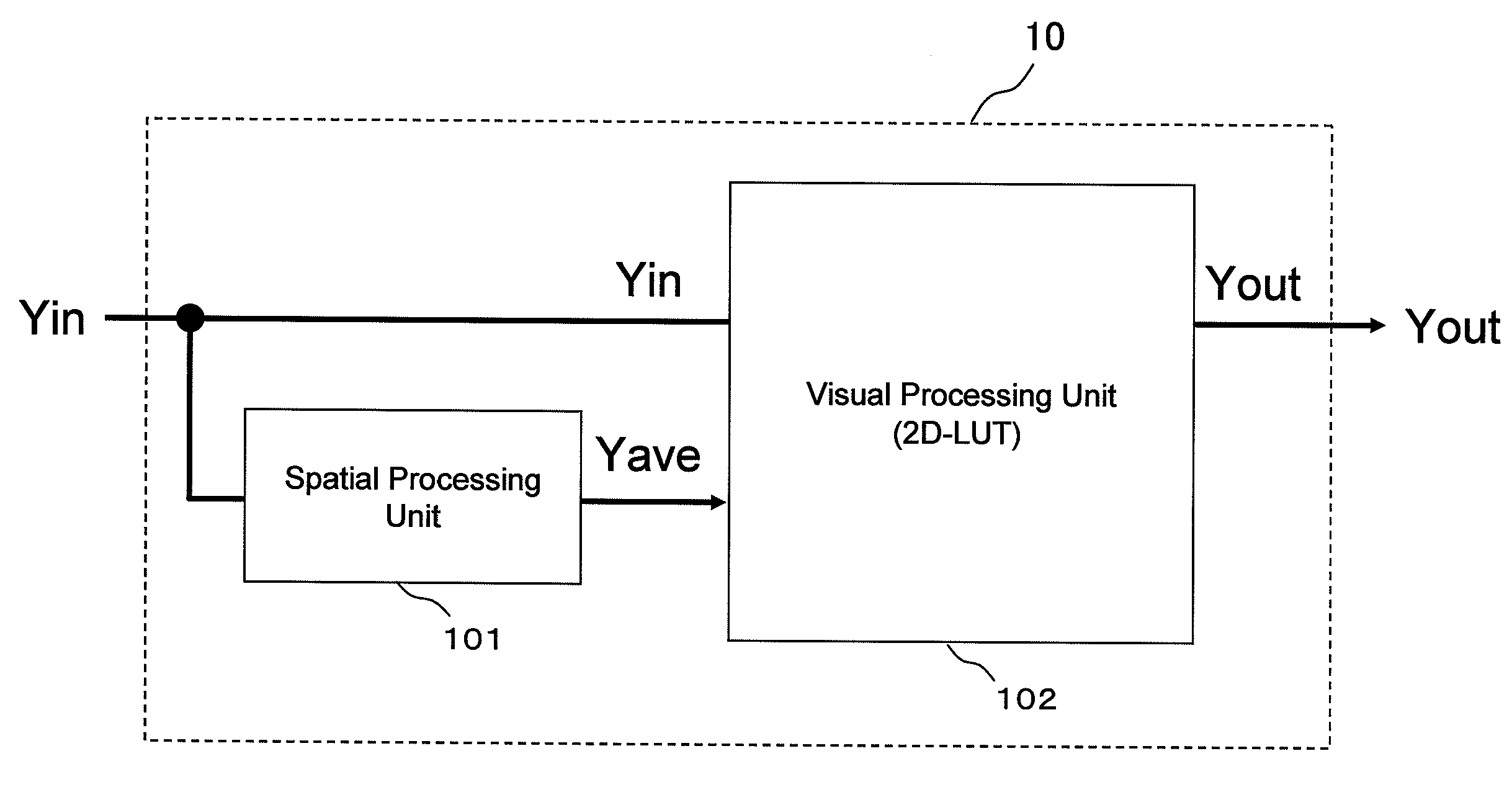

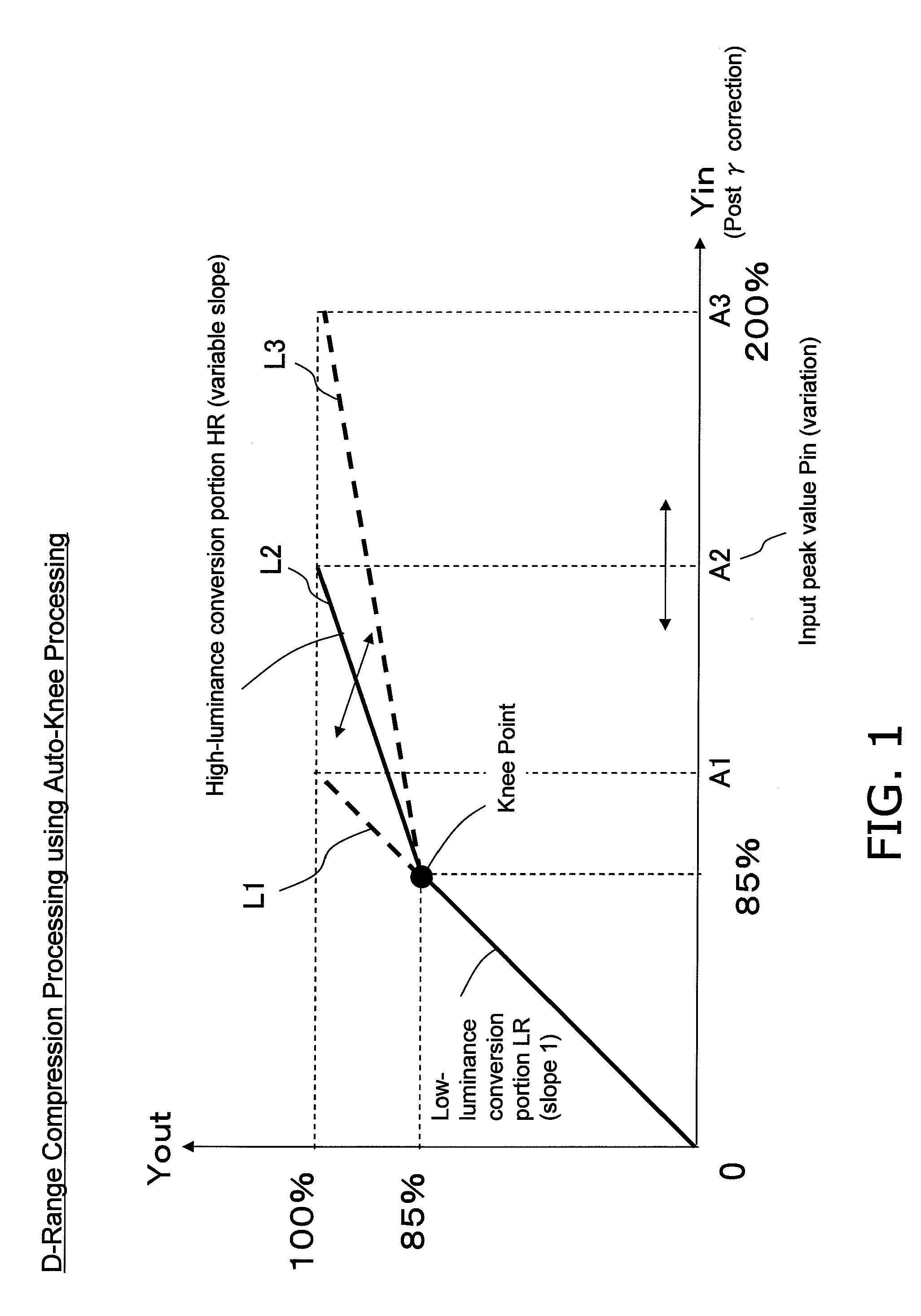

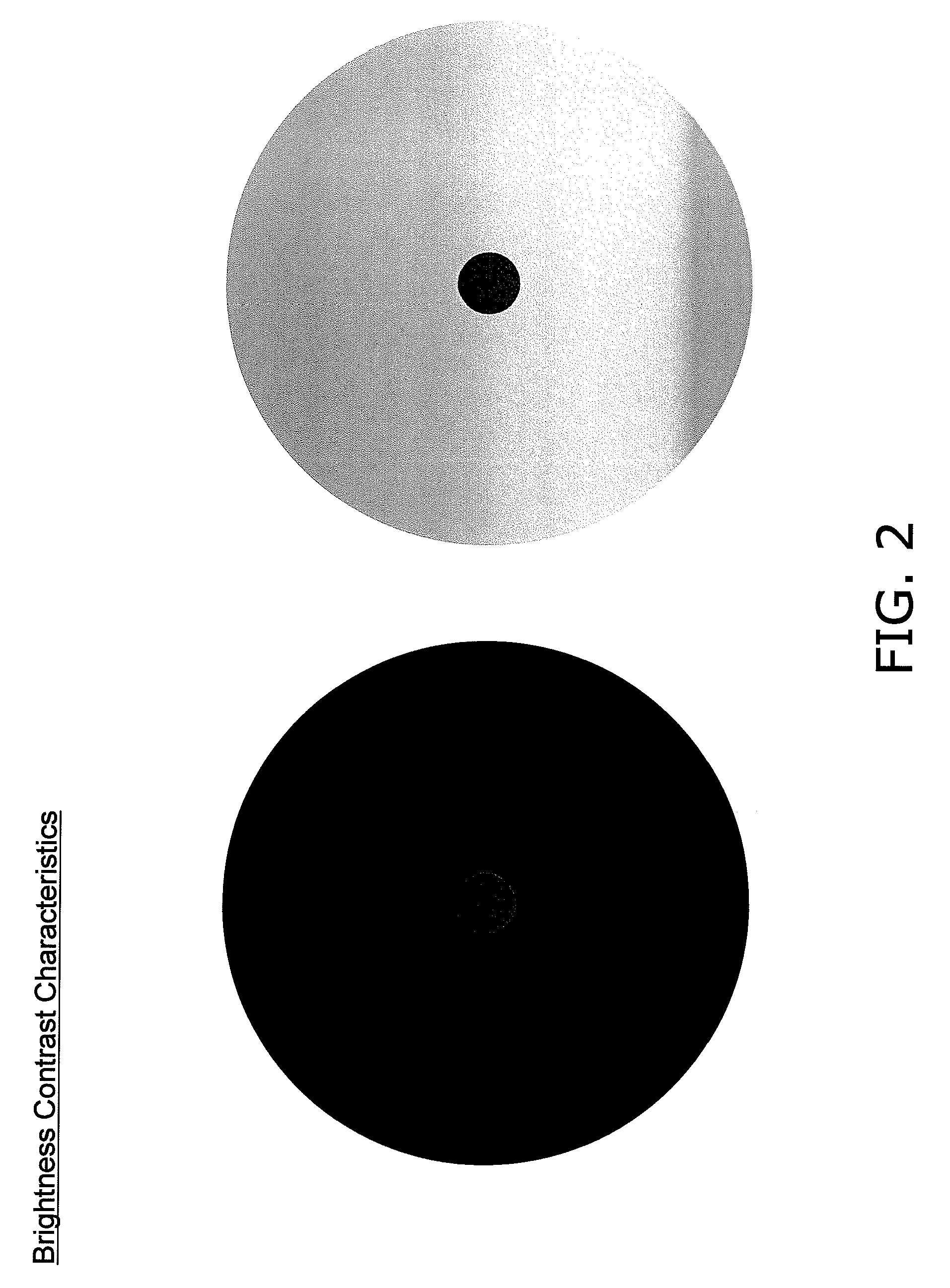

Dynamic range compression apparatus, dynamic range compression method, computer-readable recording medium, integrated circuit, and imaging apparatus

Inactive | US20090295937A1 | Maintain contrast | The implementation process is simple | Television system details | Character and pattern recognition | Peak value | Vision processing unit

A dynamic range (D-range) compression apparatus that uses a look-up table (LUT) is capable of dynamic compression that compresses the peak input value to the full output range, even when image signals with variable D-ranges are input. According to this D-range compression apparatus, D-range compression processing that places the D-range of the image signal within a predetermined output D-range is performed by the visual processing unit converting the tone of the image signal in accordance with the surrounding average luminance signal. Furthermore, with this D-range compression apparatus, the image signal is amplified in accordance with amplification input/output conversion characteristics determined based on the peak value in the image detected by the peak detection unit, and therefore it is possible to perform dynamic amplification processing in accordance with the peak value so that the D-range of the image signal output from the visual processing unit becomes a predetermined output D-range.

Owner:PANASONIC CORP
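The two stages named in this abstract (peak-dependent amplification, then tone conversion driven by the surrounding average luminance) can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and not taken from the patent: the signal is a 1-D list of luminance values, the surround is a simple moving average, and a power curve stands in for the look-up table. The function names `local_average` and `compress_d_range` are hypothetical.

```python
def local_average(signal, radius):
    """Moving average over a window of +/- radius samples (window clipped at the edges)."""
    averaged = []
    for i in range(len(signal)):
        lo, hi = max(0, i - radius), min(len(signal), i + radius + 1)
        window = signal[lo:hi]
        averaged.append(sum(window) / len(window))
    return averaged


def compress_d_range(signal, output_max=1.0, radius=1):
    """Map a signal with an arbitrary peak into the range [0, output_max].

    Stage 1: scale by the detected peak so the peak lands on 1.0.
    Stage 2: apply a tone curve whose exponent depends on the local average
             (an assumed stand-in for the LUT): darker surroundings get a
             smaller exponent and are therefore lifted more.
    """
    peak = max(signal)
    if peak <= 0:
        return [0.0 for _ in signal]
    normalized = [value / peak for value in signal]
    surround = local_average(normalized, radius)
    compressed = []
    for value, average in zip(normalized, surround):
        gamma = 0.5 + 0.5 * average  # assumed rule: gamma in [0.5, 1.0]
        compressed.append(output_max * value ** gamma)
    return compressed


result = compress_d_range([0.0, 2.0, 4.0])
print(result)
```

Because the signal is divided by its own peak before the tone curve is applied, the brightest sample always maps to `output_max`, whatever the input range was.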

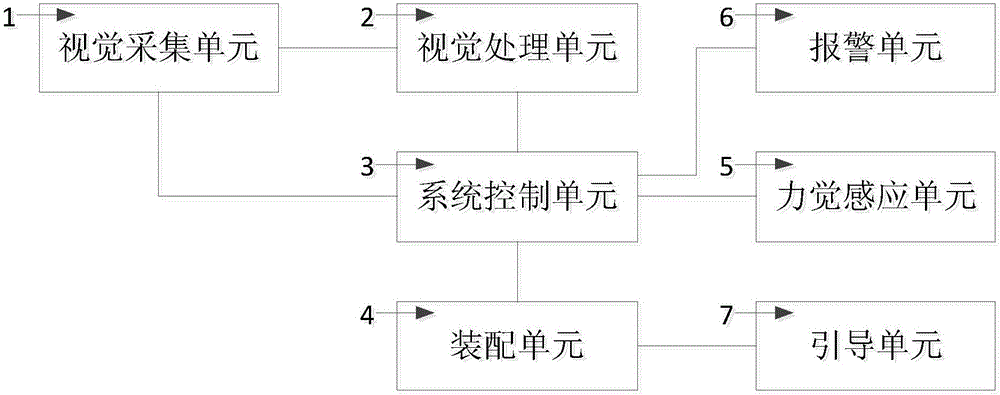

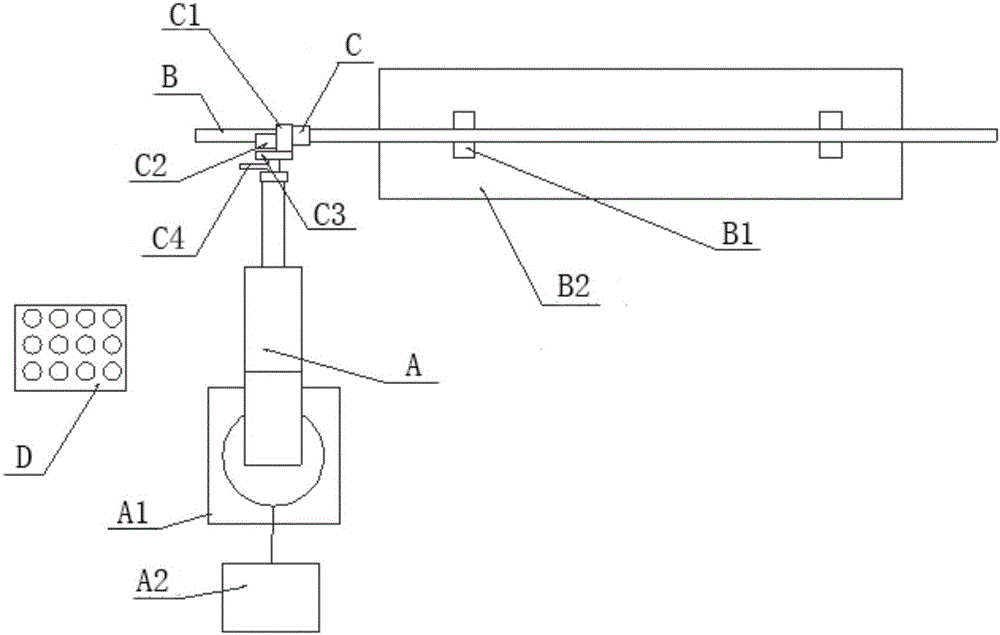

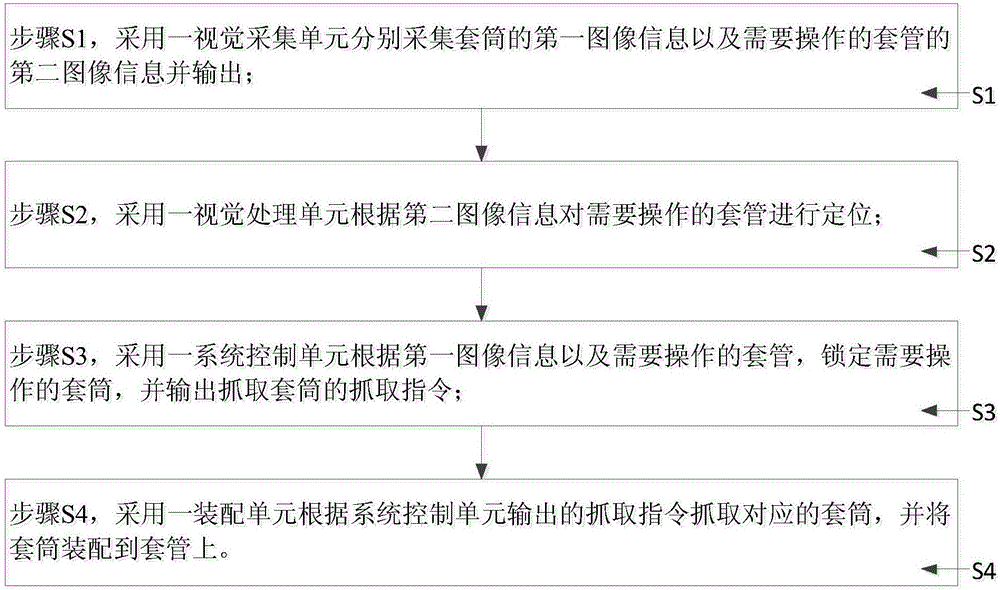

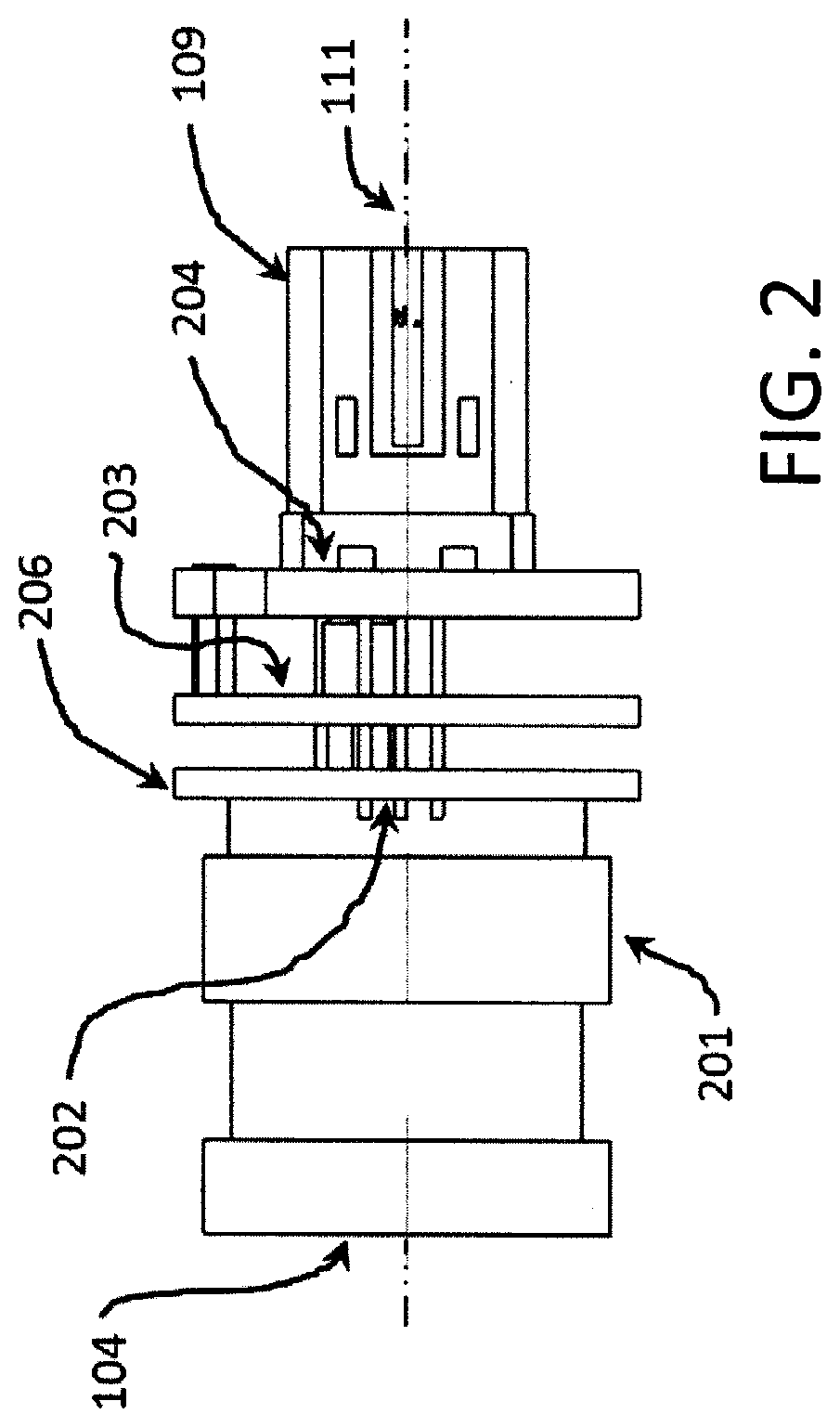

Automatic assembling system and method

Active | CN106141645A | Reduce labor costs | Improve assembly progress | Metal working apparatus | Vision processing unit | Computer science

The invention discloses an automatic assembling system and method and belongs to the technical field of sleeve assembling. The system comprises a visual collecting unit, a visual processing unit, a system control unit and an assembling unit. The method includes the following steps that firstly, the visual collecting unit is used for collecting and outputting first image information of a sleeve and second image information of a casing pipe to be operated; secondly, the visual processing unit is used for positioning the casing pipe to be operated according to the second image information; thirdly, the system control unit is used for locking the sleeve to be operated according to the first image information and the casing pipe to be operated, and outputting a grabbing instruction for sleeve grabbing; and fourthly, the assembling unit is used for grabbing the corresponding sleeve according to the grabbing instruction output by the system control unit and assembling the sleeve on the casing pipe. According to the technical scheme, the automatic assembling system and method have the beneficial effects of reducing labor cost in the assembling process, accelerating assembling progress and guaranteeing the automatic assembling accuracy rate.

Owner:SHANGHAI FANUC ROBOTICS

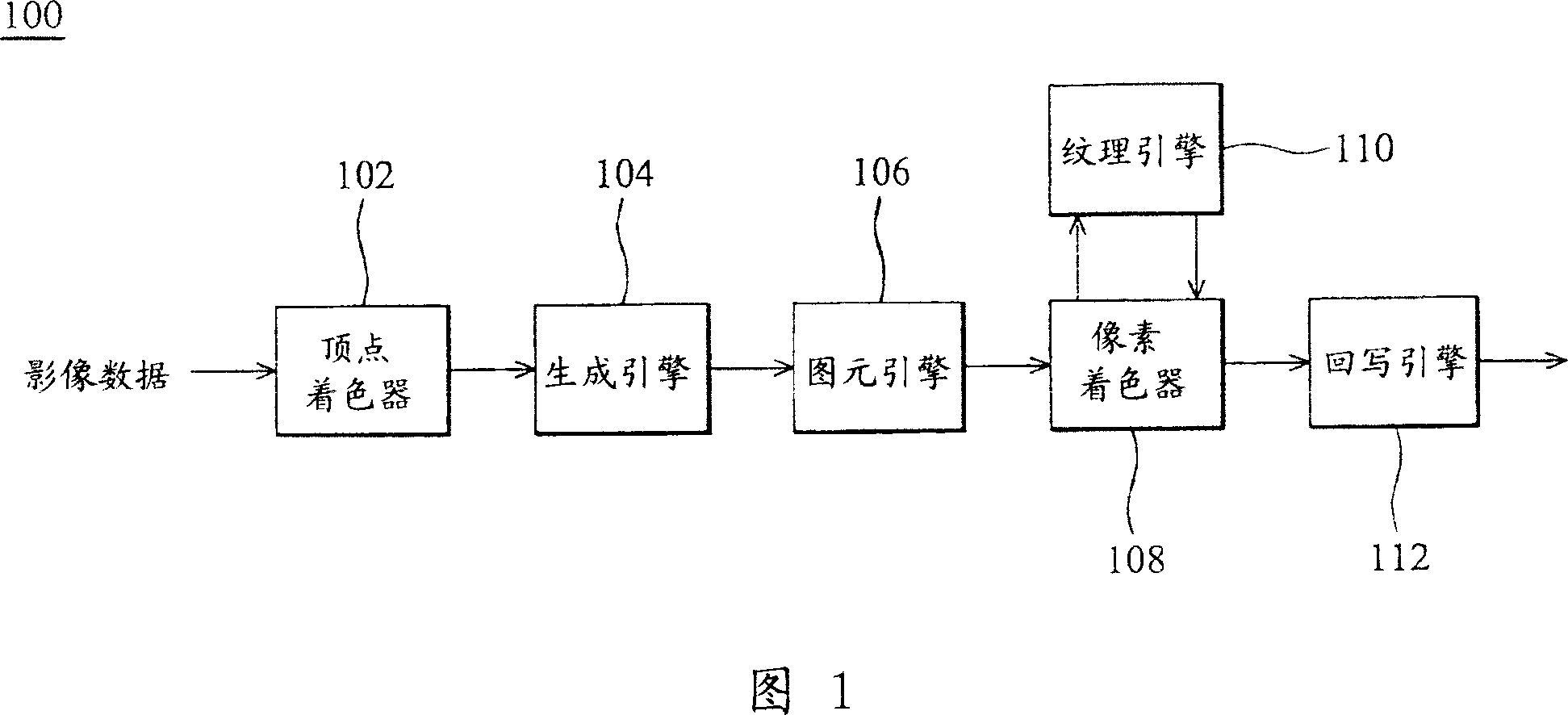

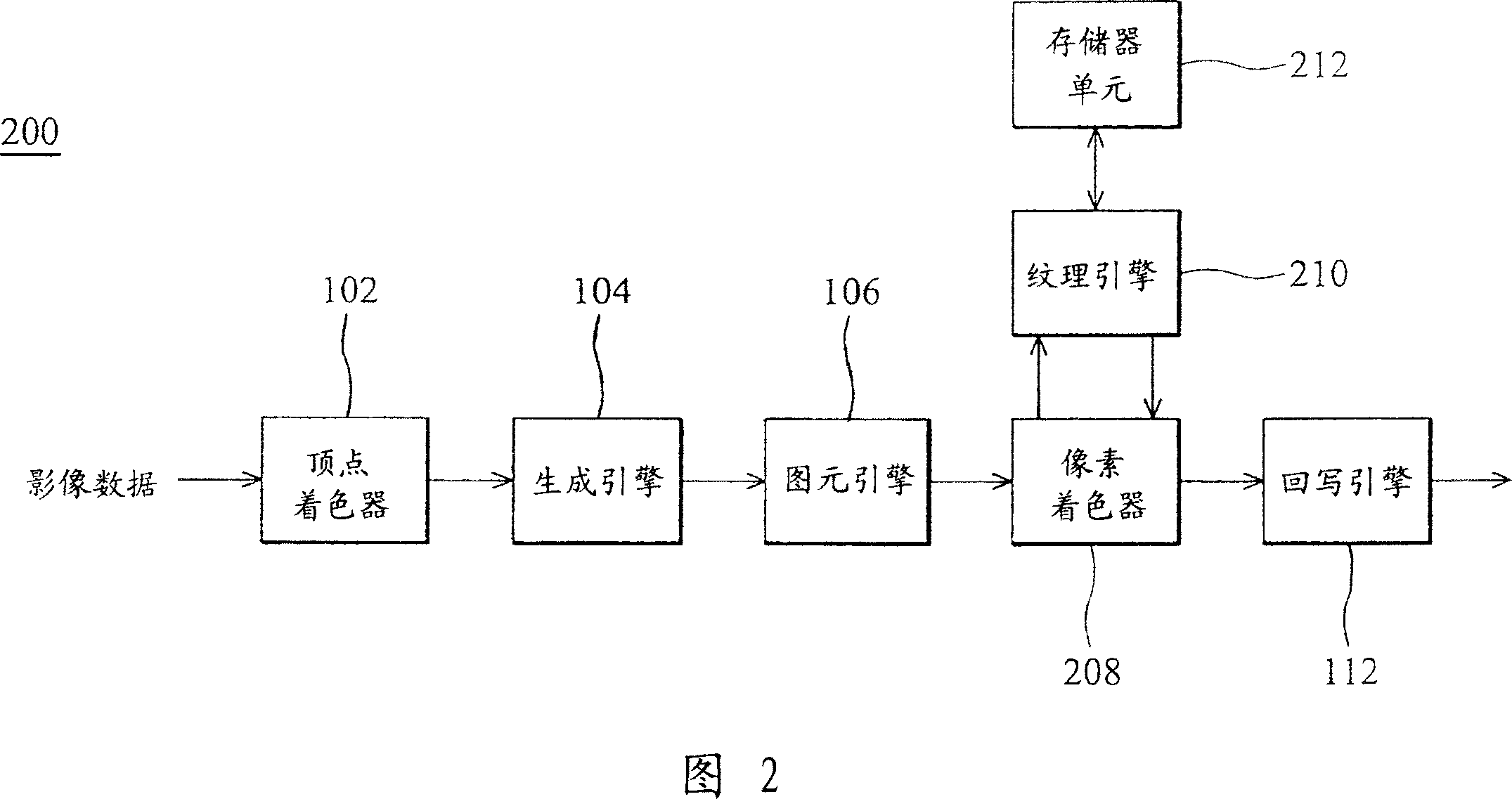

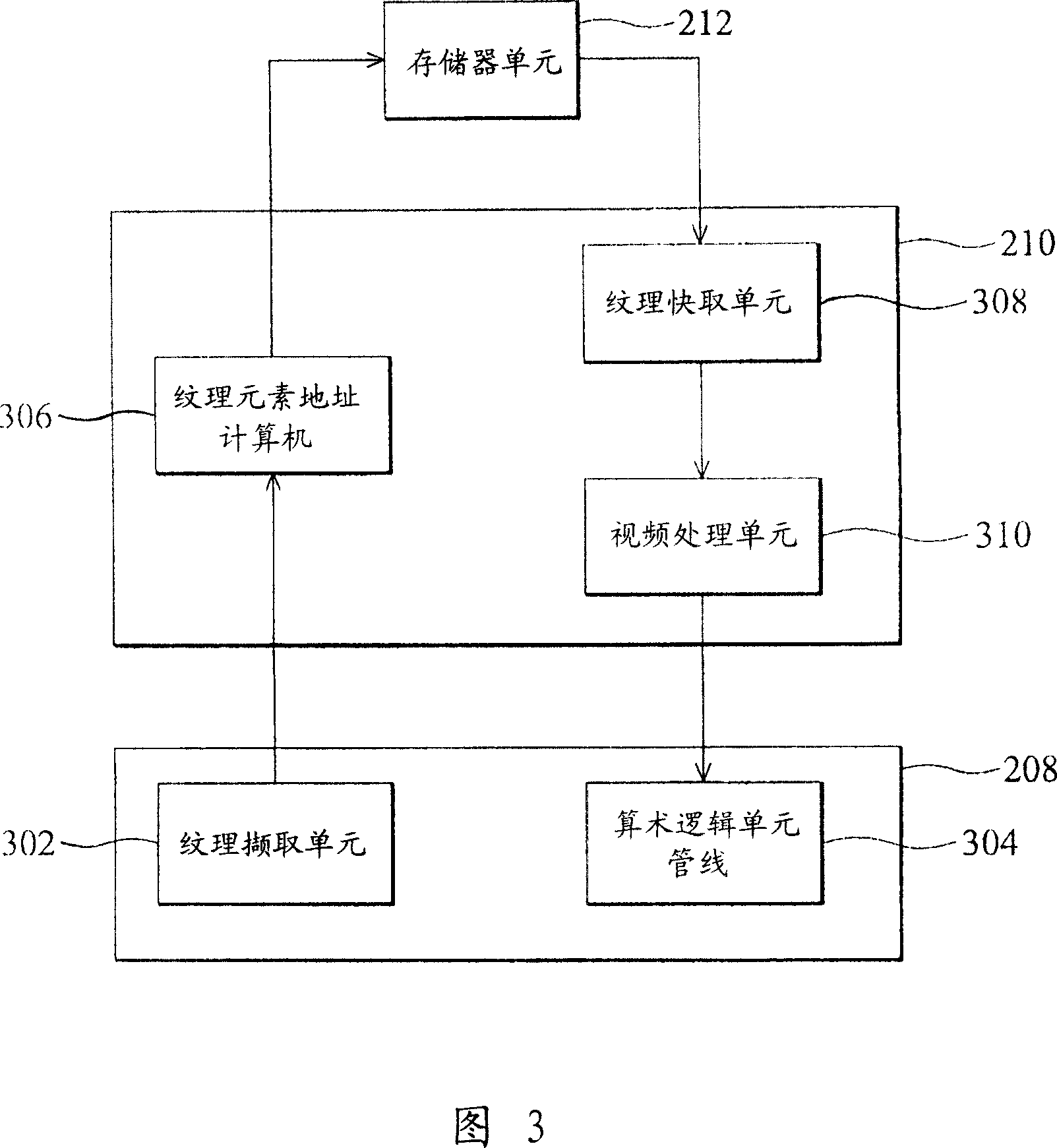

Texture engine for texture video signal processing, graphics processor and method

Active | CN101004833A | Processor architectures/configuration | Details involving image processing hardware | Graphics | Memory address

The embodiment of the present invention discloses a texture engine which includes a texture element address calculator, a texture quick capturing unit and a vision processing unit. The texture element address calculator receives one texture and vision requirement of a pixel, the texture and vision requirement include the position information for the texture data in a texture mapping design and vision operating information required by the pixel, the texture mapping design is stored in a memory unit. When executing the vision operating defined by the texture and vision requirement, the texture element address calculator calculates the texture data in the memory unit for a pixel and the image data required by the pixel. The texture quick capturing unit captures a copy for the image data and the texture data based on the memory address in the memory unit, the memory address is calculated by the texture element address calculator. The vision processing unit receives the image data, and executes the vision process based on the texture and vision requirement.

Owner:VIA TECH INC

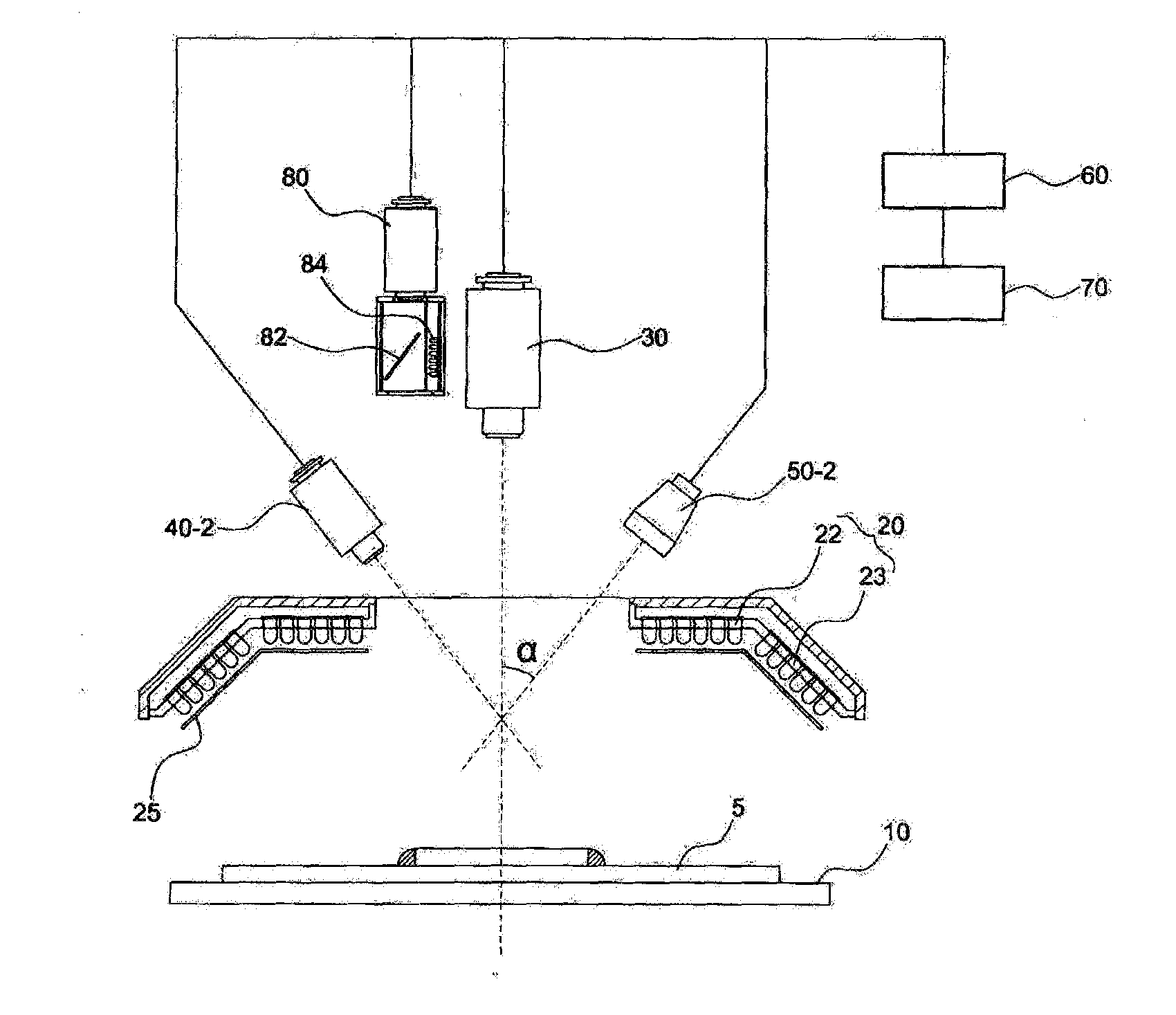

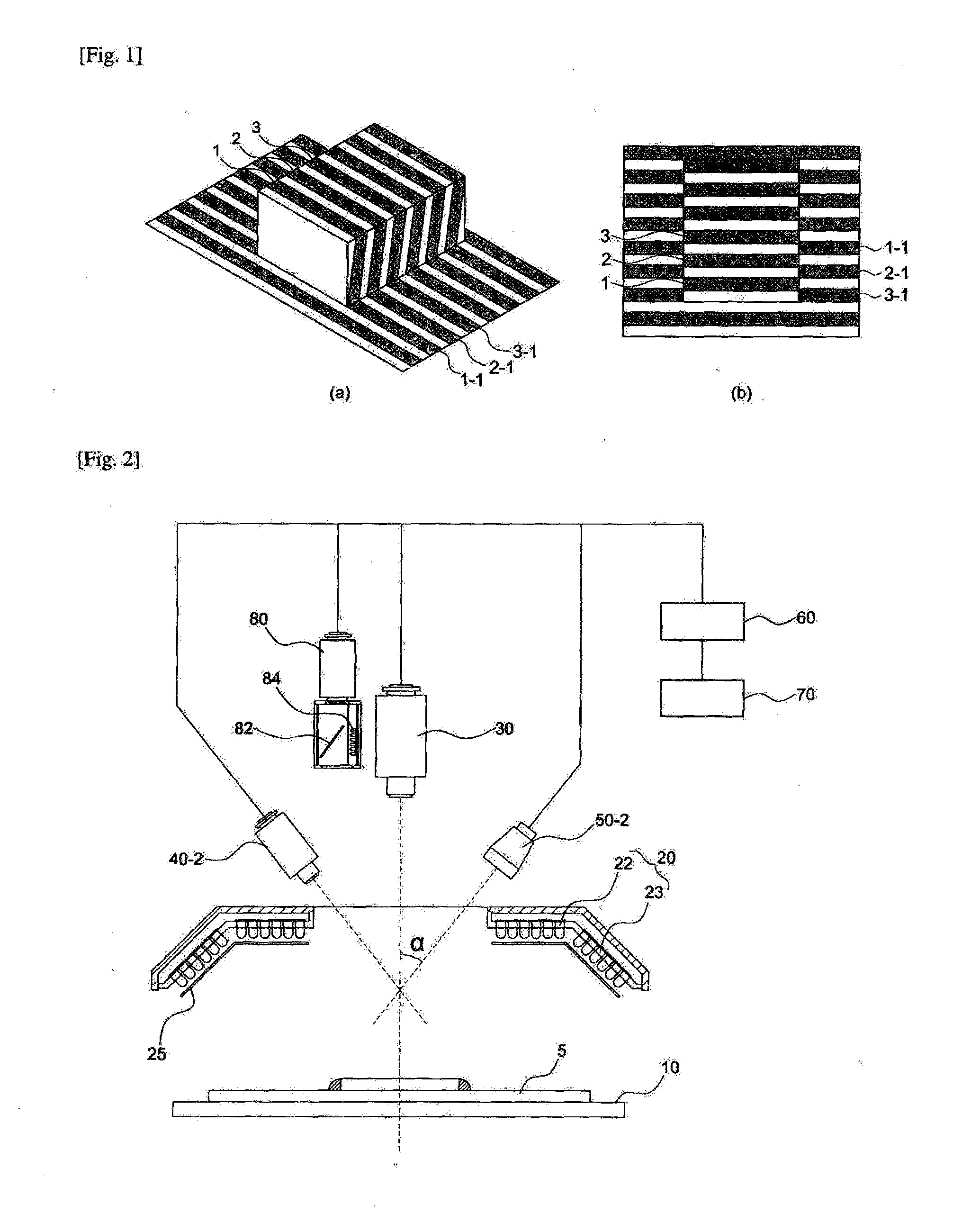

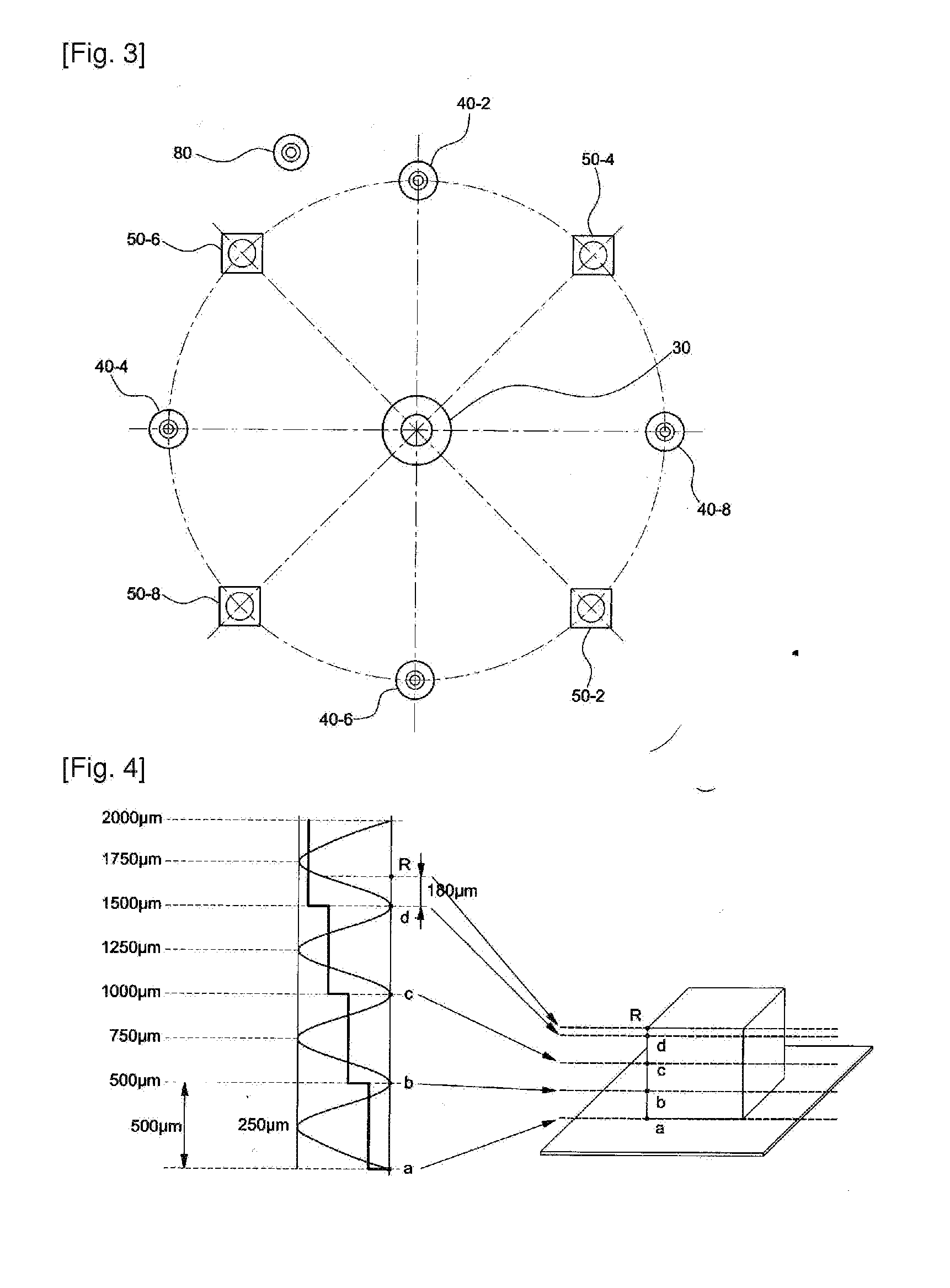

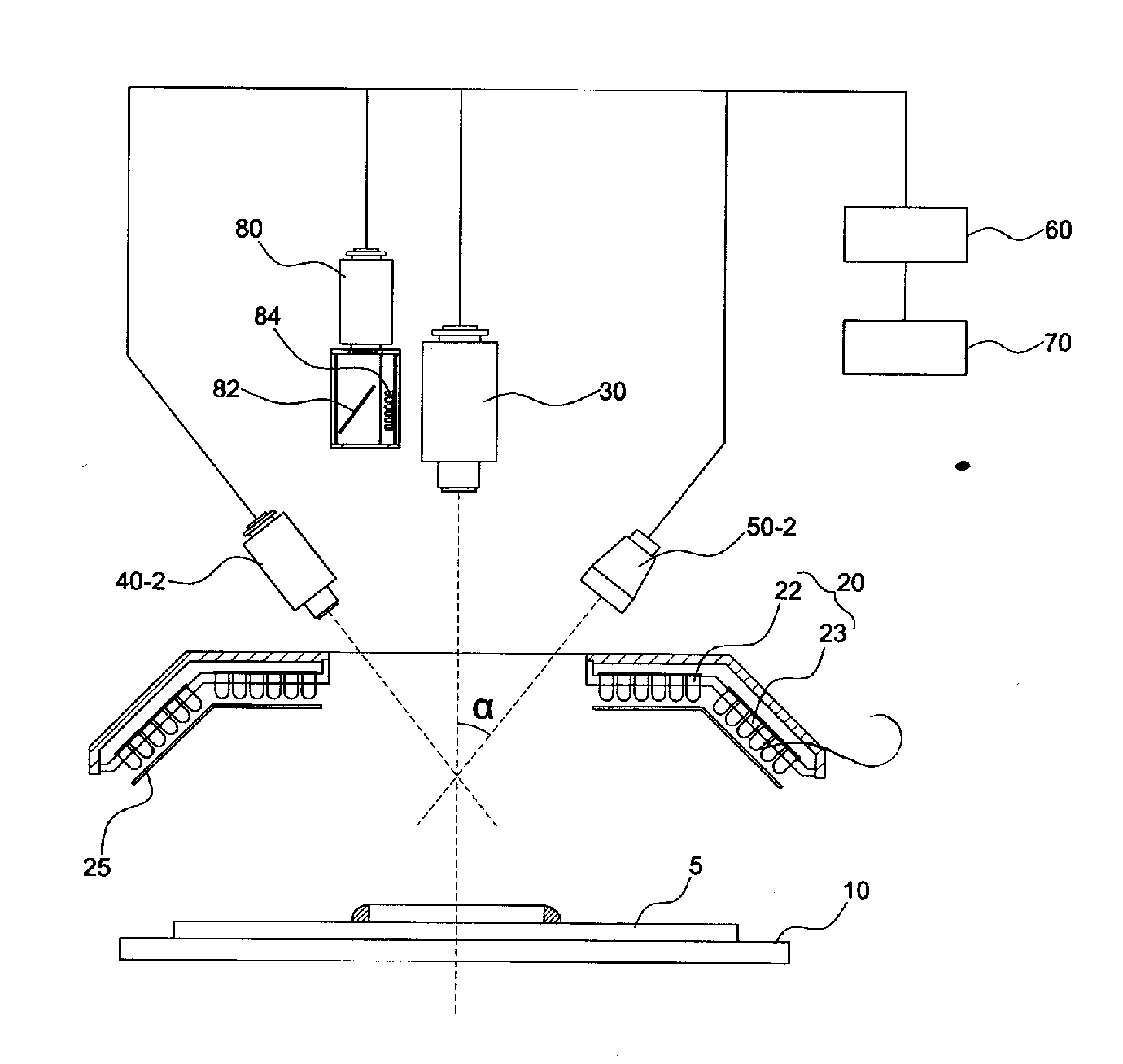

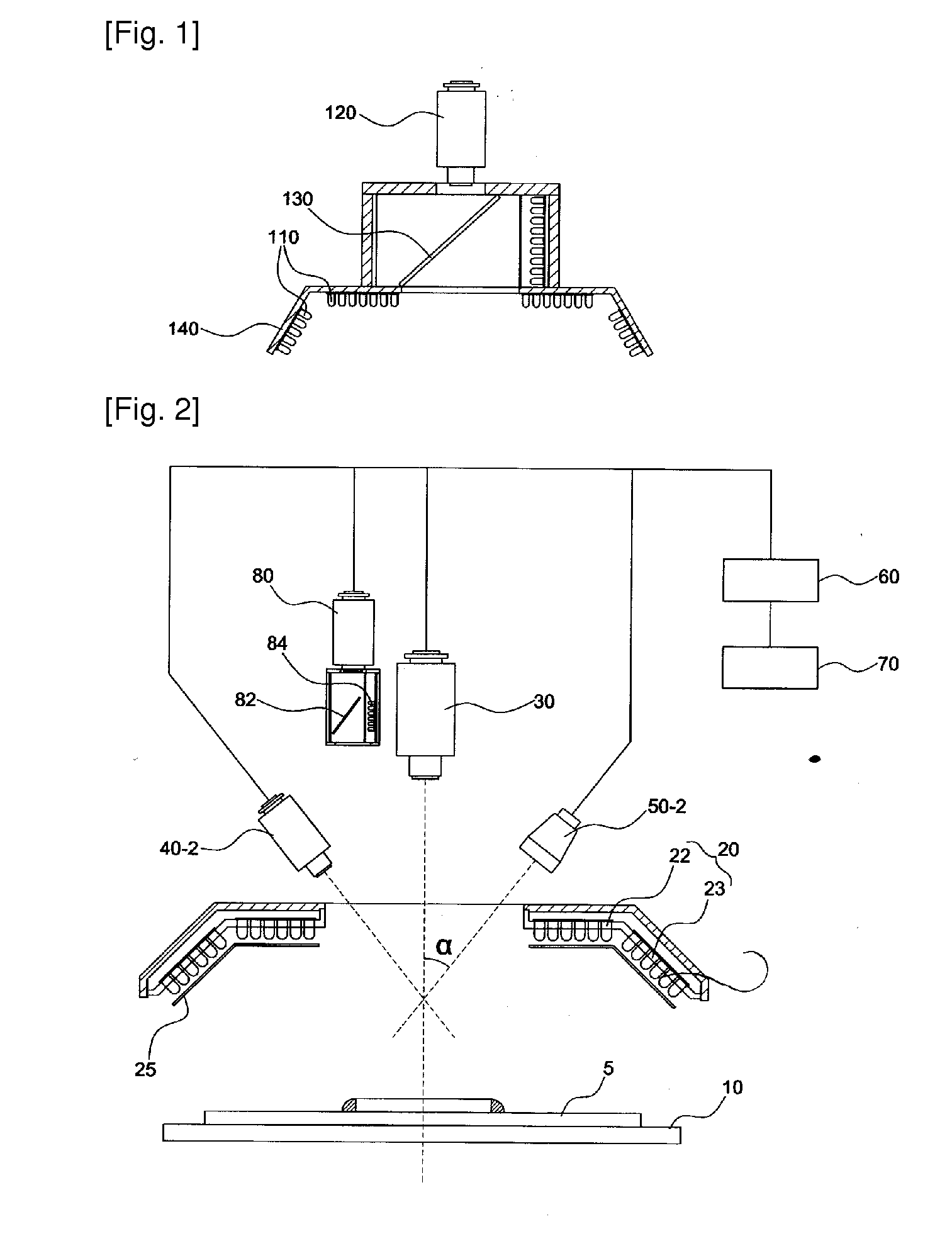

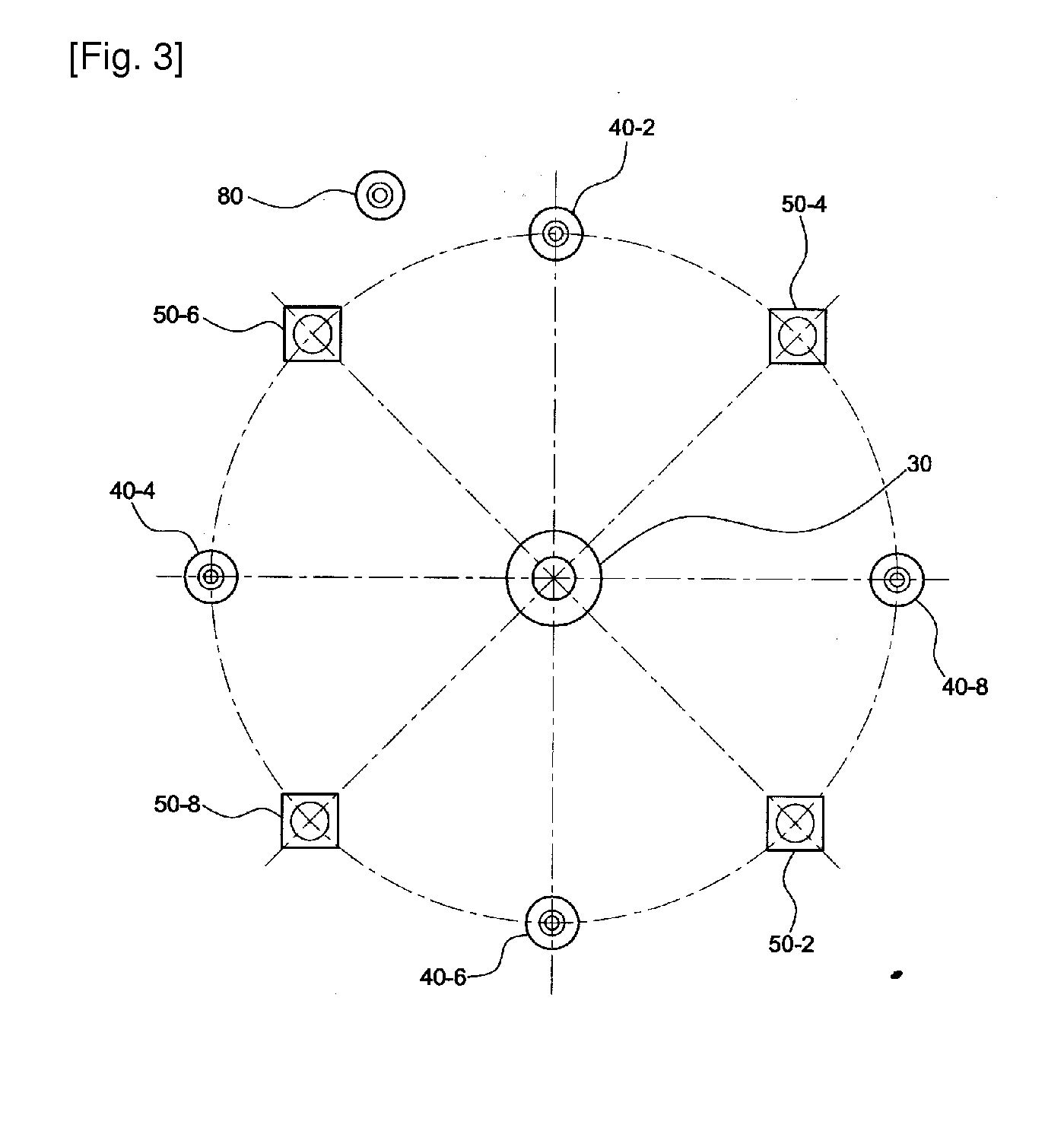

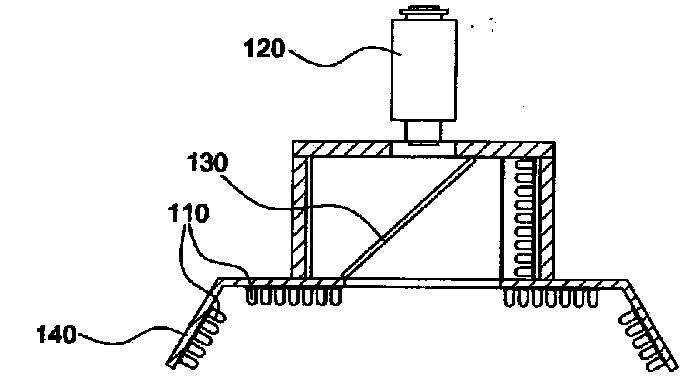

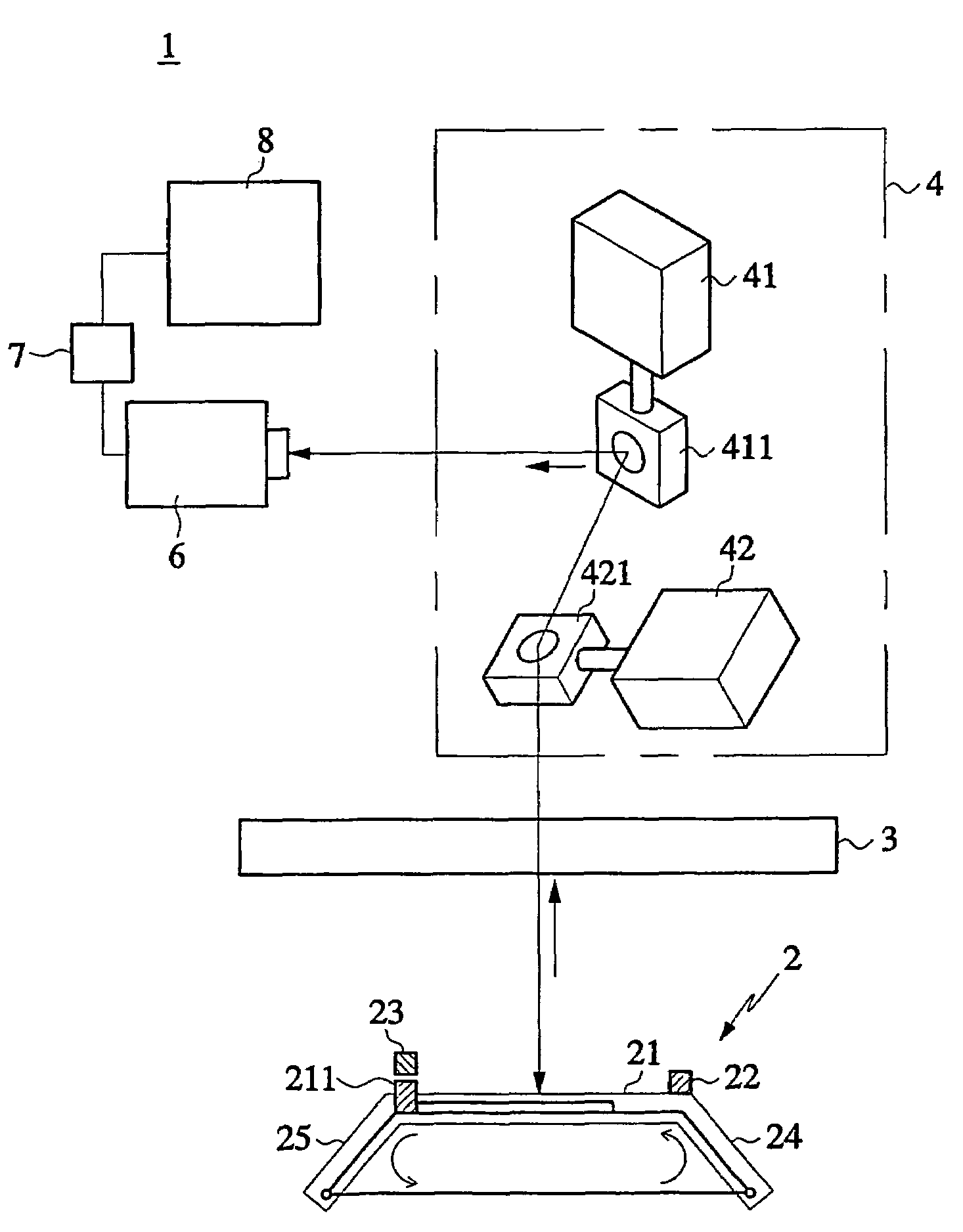

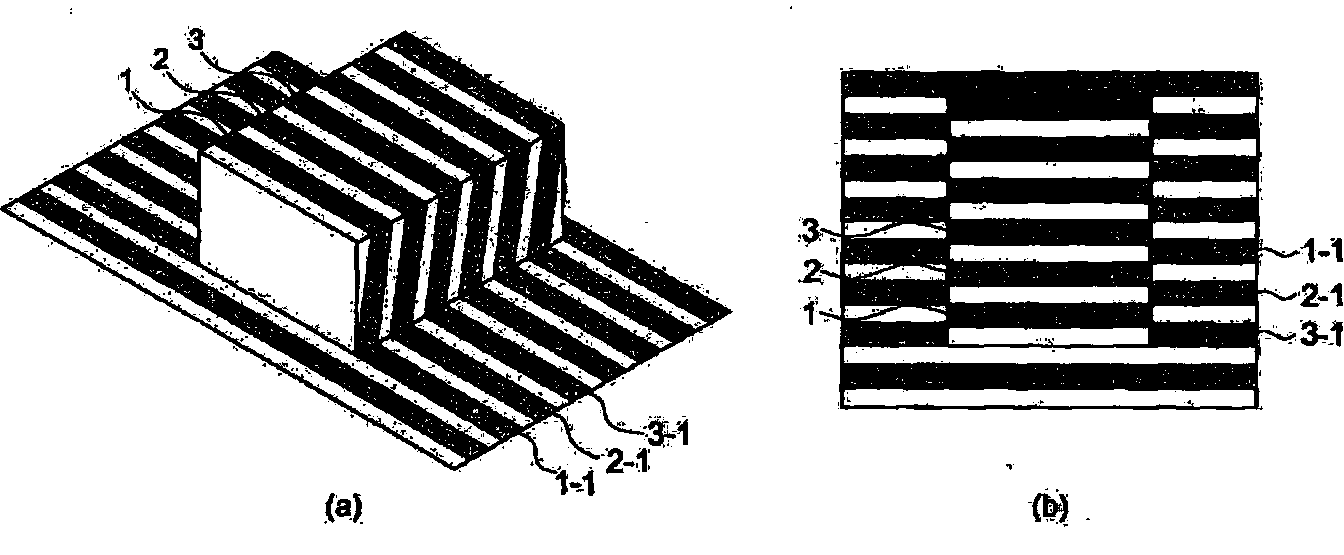

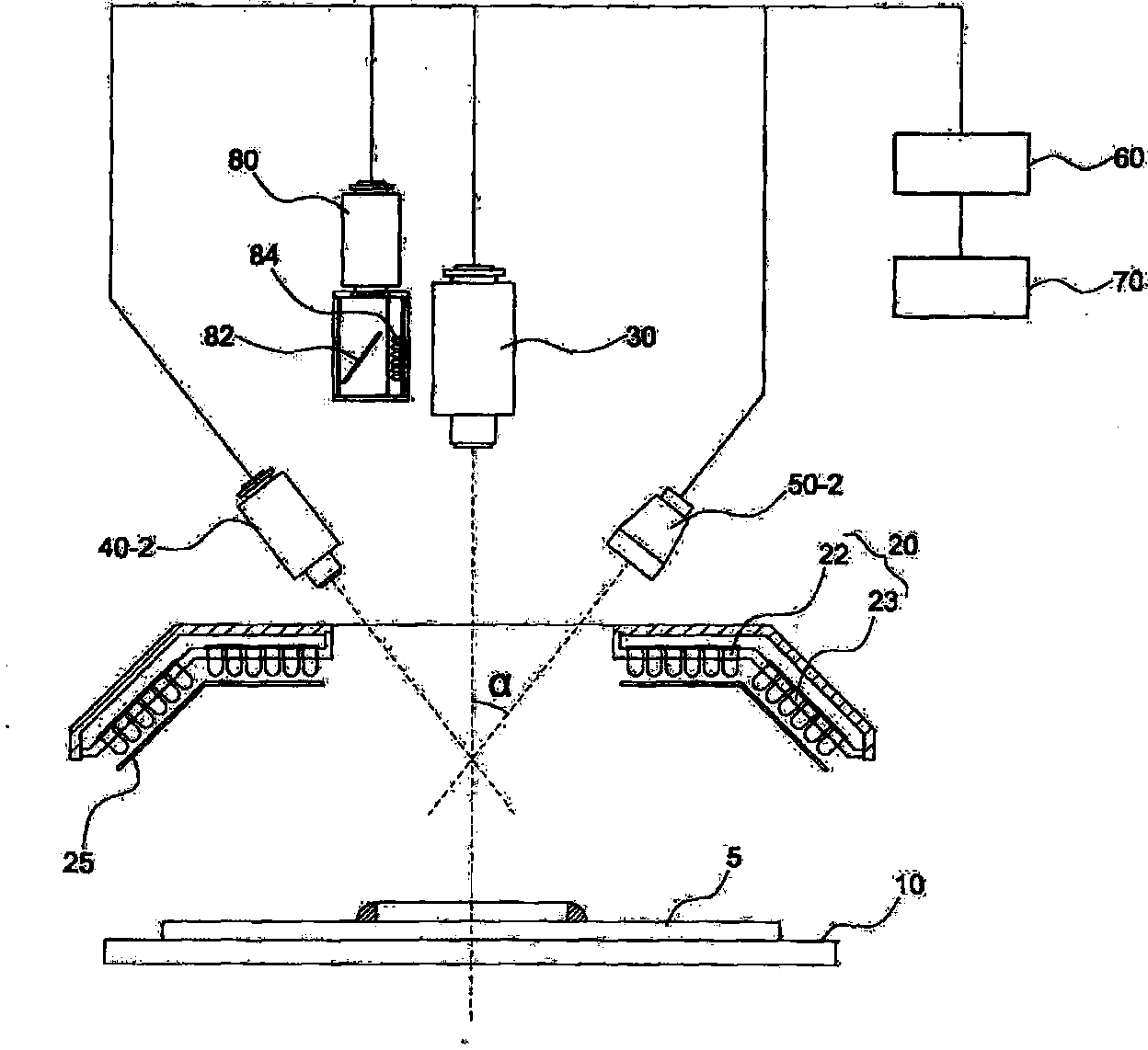

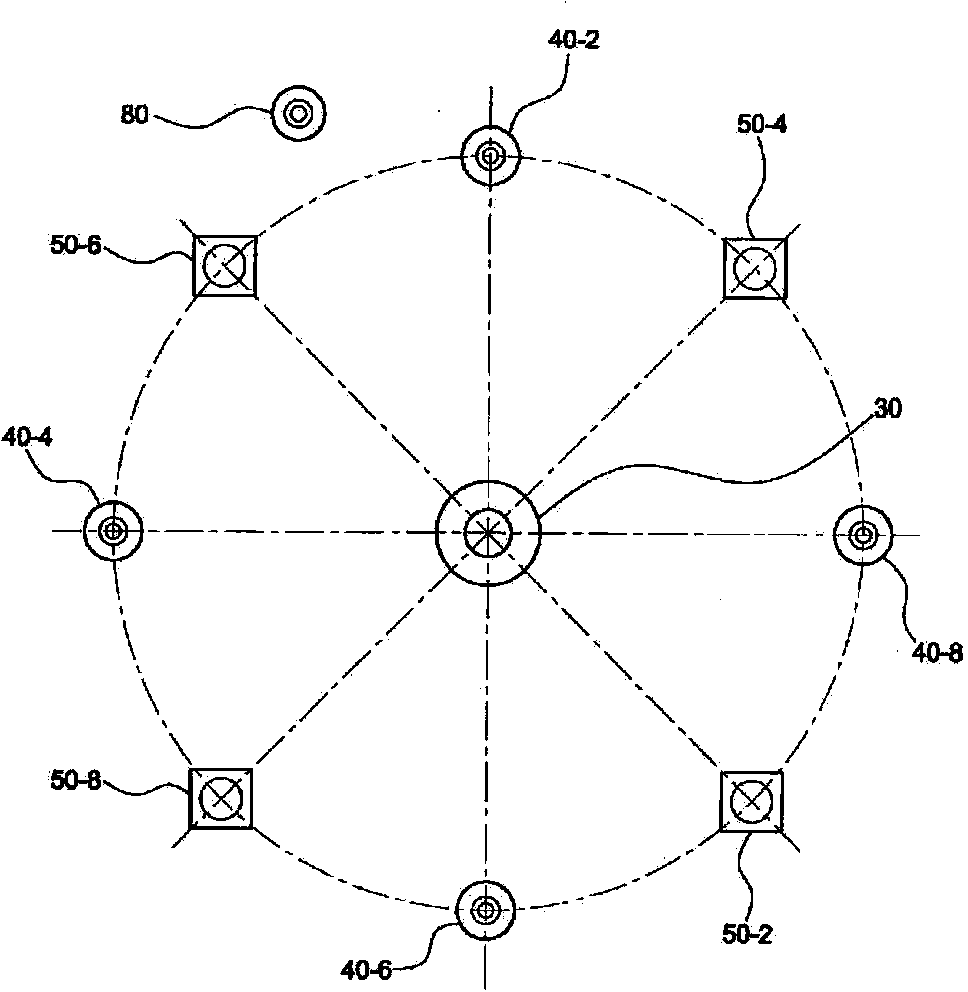

Vision testing device using multigrid pattern

Inactive | US20130342677A1 | Increase speed | Improve uniformity | Material analysis by optical means | Color television details | Grid pattern | Visual test

Provided is a vision testing device using a multigrid pattern for determining whether a testing object is good or bad by photographing the testing object assembled or mounted during the component assembly process and comparing the photographed image with a previously inputted target image, comprising: a stage part for fixing or transferring the testing object to the testing location; a lighting part for providing lighting to the testing object located on an upper portion of the stage part; a center camera part for obtaining a 2-dimensional image of the testing object located in a center of the lighting part; a grid pattern irradiating part placed on a side section of the center camera part; a vision processing unit for reading the image photographed by the center camera part and determining whether the testing object is good or bad; and a control unit for controlling the stage part, the grid pattern irradiating part, and the center camera part, wherein the grid pattern irradiating part irradiates grid patterns having periods of different intervals.

Owner:MIRTEC

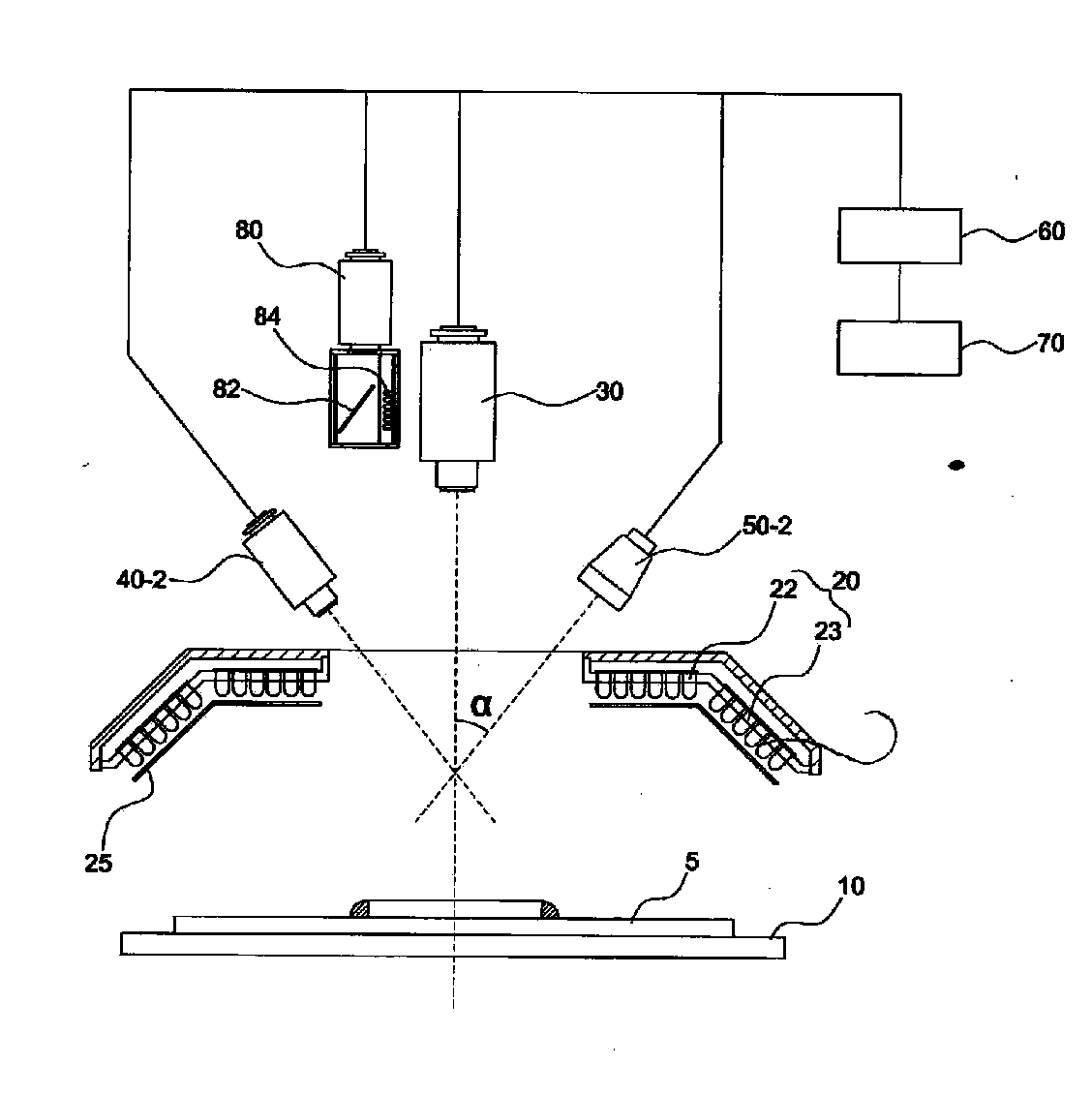

Vision testing device with enhanced image clarity

Inactive | US20140232850A1 | Improve light uniformity | Clear imaging | Image enhancement | Image analysis | Grid pattern | Visual test

The vision testing device with an enhanced image clarity for determining good or bad of a testing object by photographing a testing object assembled or mounted during the component assembly process and comparing the photographed image with a previously inputted target image, comprising: a stage part for fixing or transferring the testing object to a testing location; a lighting part for providing lighting to the testing object located on an upper portion of the stage part; a first camera part for obtaining a 2-dimensional image of the testing object located in a center of the lighting part; a plurality of second camera parts placed on a side section of the first camera part; a plurality of grid pattern irradiating parts placed between cameras of the second camera parts; a vision processing unit for reading the image photographed by the first camera part and the second camera parts and determining good or bad of the testing object; a control unit for controlling the stage part, the grid pattern irradiating parts, and the first and second camera parts; and a light diffusion part. The present invention enhances the uniformity of light being irradiated on the surface of the testing object. In addition, the present invention enables a more clear shooting of an image by removing a half mirror placed on the front of a camera part in the center, and enables convenient maintenance by miniaturizing the size of the device and dispersing the configuration.

Owner:MIRTEC

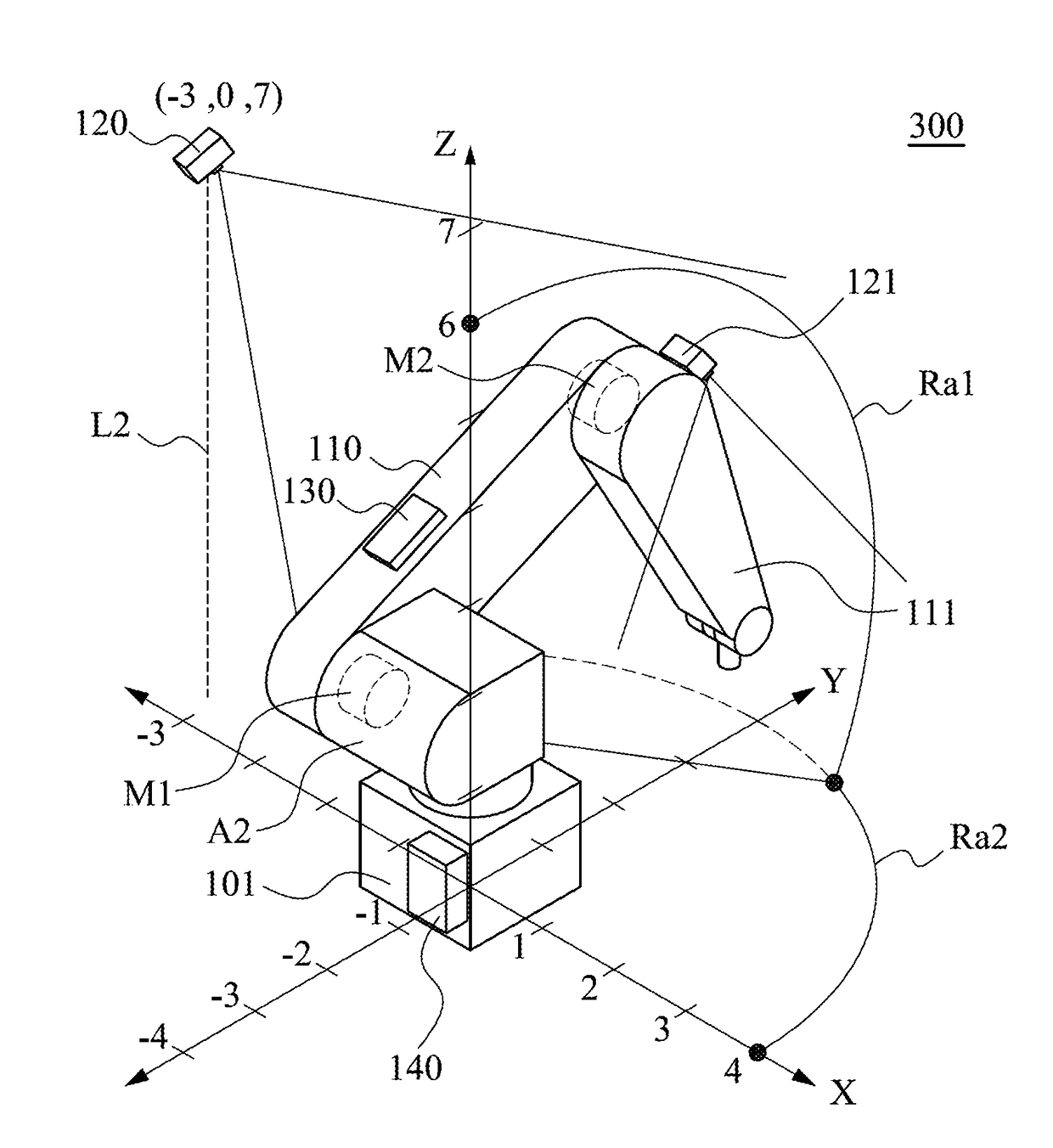

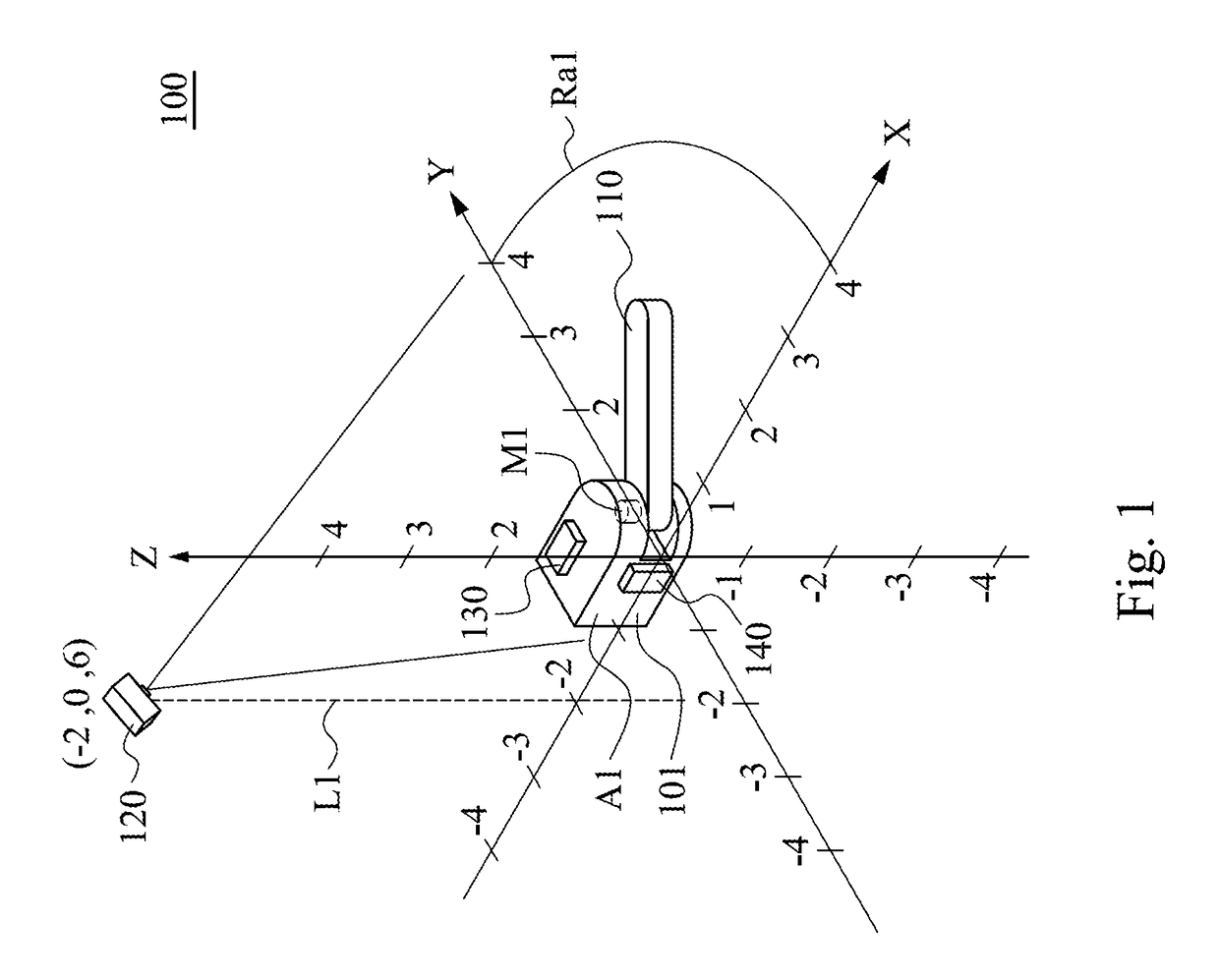

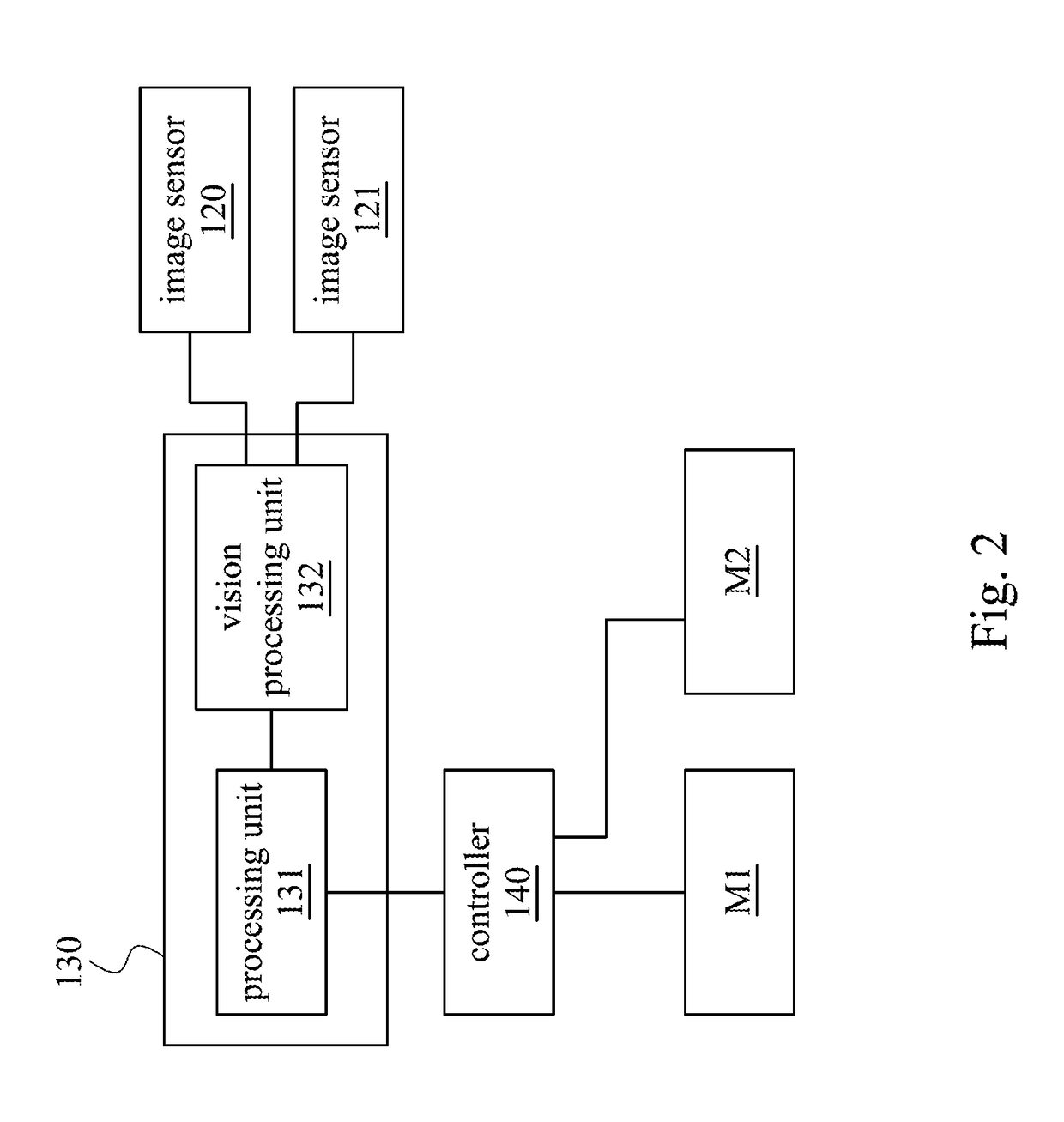

Anti-collision system and Anti-collision method

Inactive | US20180141213A1 | Preventing the servo motor from breakdown | Avoid failure | Programme control | Programme-controlled manipulator | Robotic arm | Vision processing unit

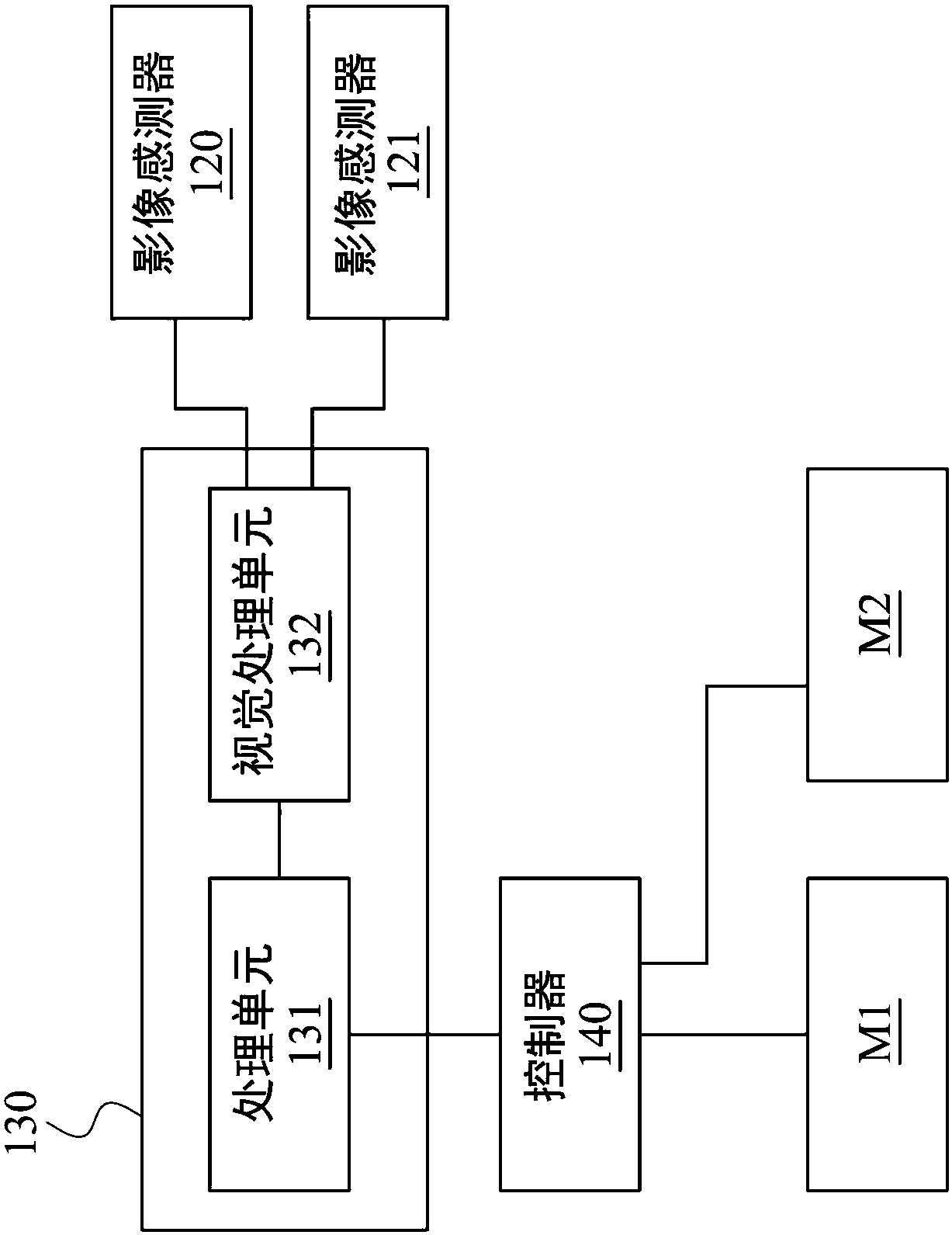

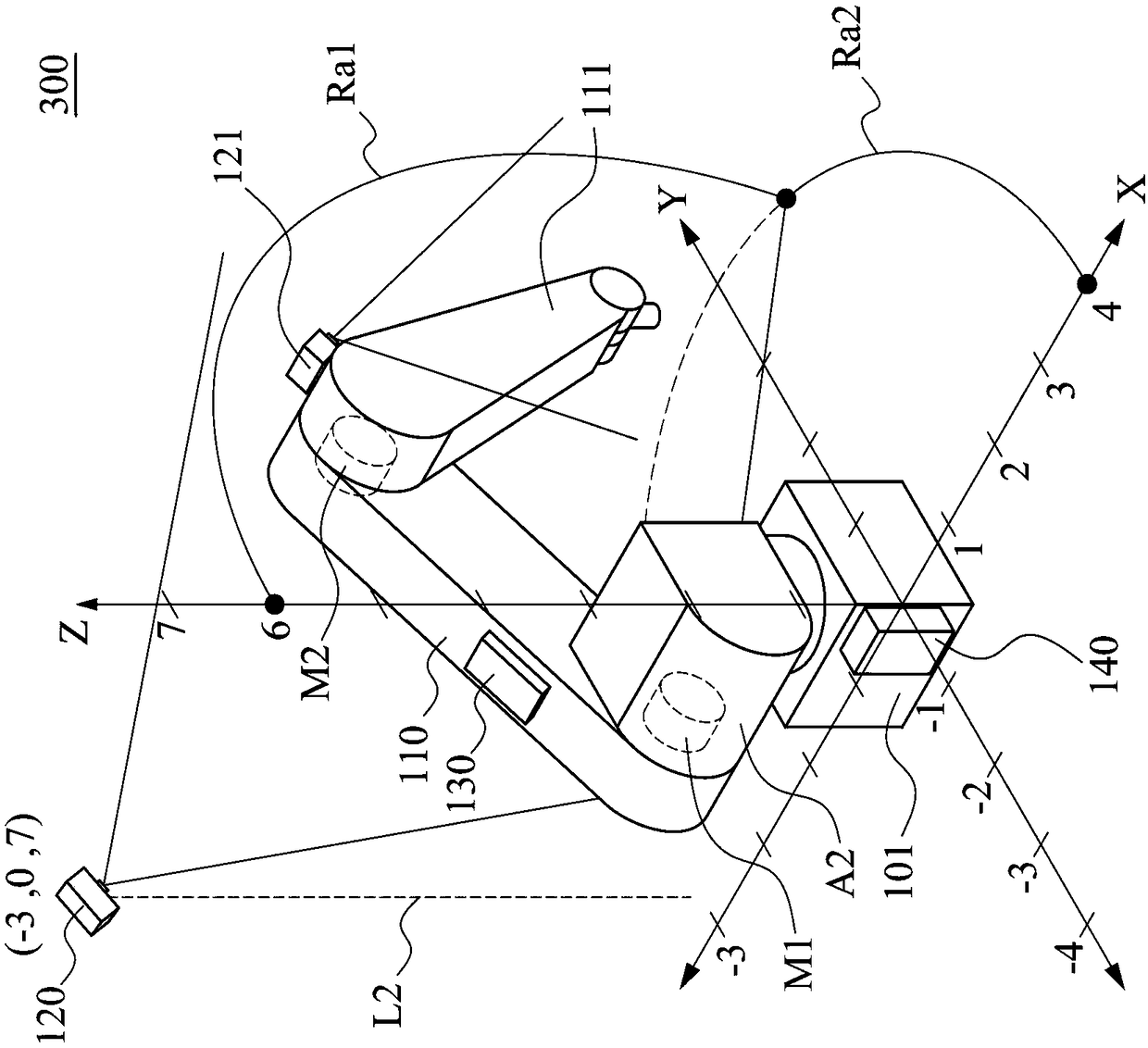

An anti-collision system is used for preventing an object from colliding with an automatic robotic arm, wherein the automatic robotic arm includes a controller. The anti-collision system includes a first image sensor, a vision processing unit and a processing unit. The first image sensor captures a first image. The vision processing unit receives the first image, recognizes the object in the first image and estimates an object movement estimation path of the object. The processing unit is coupled to the controller to access an arm movement path. The processing unit estimates an arm estimation path of the automatic robotic arm, analyzes the first image to establish a coordinate system, and determines whether the object will collide with the automatic robotic arm according to the arm estimation path of the automatic robotic arm and the object movement estimation path of the object.

Owner:INSTITUTE FOR INFORMATION INDUSTRY
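The collision decision described in this abstract, comparing an estimated object path with an estimated arm path in a shared coordinate system, can be sketched as follows. This is only an illustration under stated assumptions, not the patented method: both paths are lists of 2-D points sampled at the same time steps, the object is assumed to move at constant velocity, and a collision is declared when the two come within a chosen distance. The names `predict_path`, `will_collide` and `safety_distance` are hypothetical.

```python
from math import hypot


def predict_path(p0, p1, steps):
    """Linearly extrapolate future positions from two consecutive observations.

    p0 and p1 are (x, y) positions from two successive frames; the
    constant-velocity model is an assumption made for this sketch.
    """
    vx, vy = p1[0] - p0[0], p1[1] - p0[1]
    return [(p1[0] + vx * k, p1[1] + vy * k) for k in range(1, steps + 1)]


def will_collide(object_path, arm_path, safety_distance):
    """Return True if the paths come within safety_distance at the same time step."""
    return any(
        hypot(ox - ax, oy - ay) <= safety_distance
        for (ox, oy), (ax, ay) in zip(object_path, arm_path)
    )


# Object seen at (0, 0) and then (1, 0); arm path as reported by its controller.
object_path = predict_path((0.0, 0.0), (1.0, 0.0), steps=3)
arm_path = [(5.0, 0.0), (4.0, 0.0), (4.0, 0.0)]
print(will_collide(object_path, arm_path, safety_distance=0.5))
```

Comparing positions step by step, instead of checking whether the two curves cross anywhere, avoids flagging paths that pass through the same point at different times.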

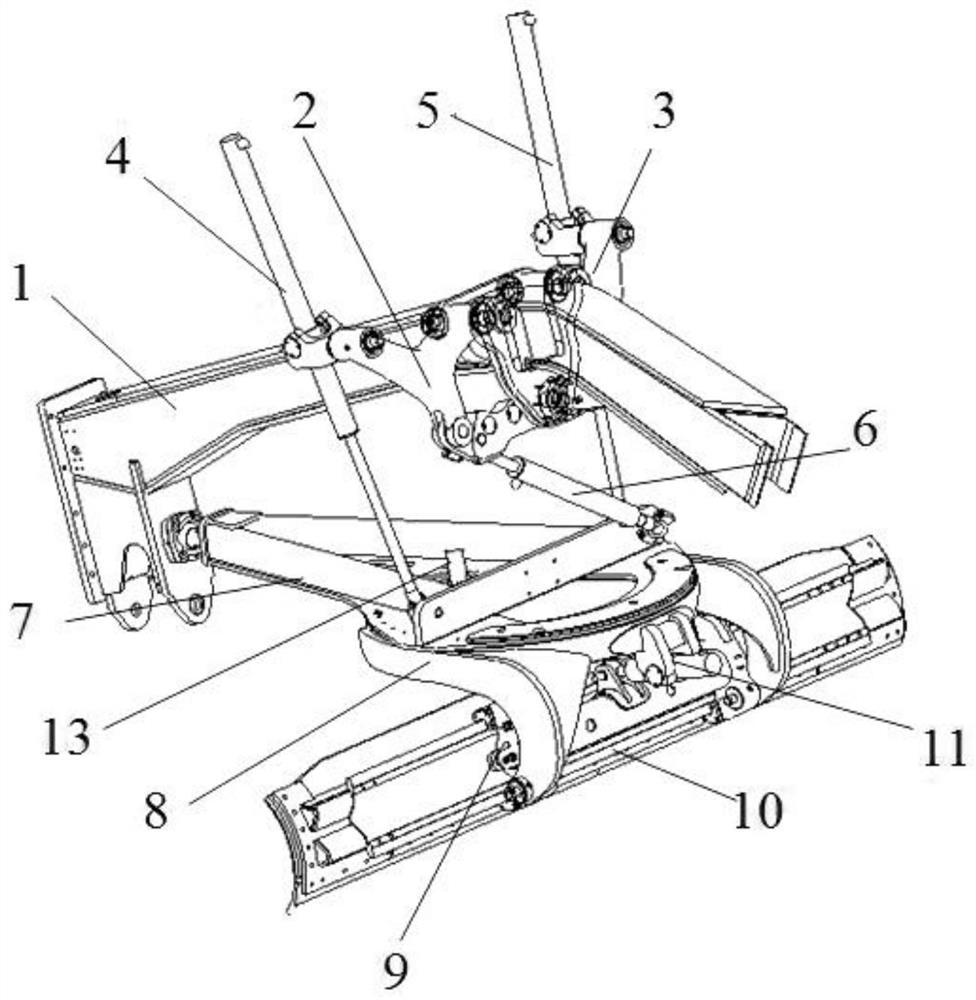

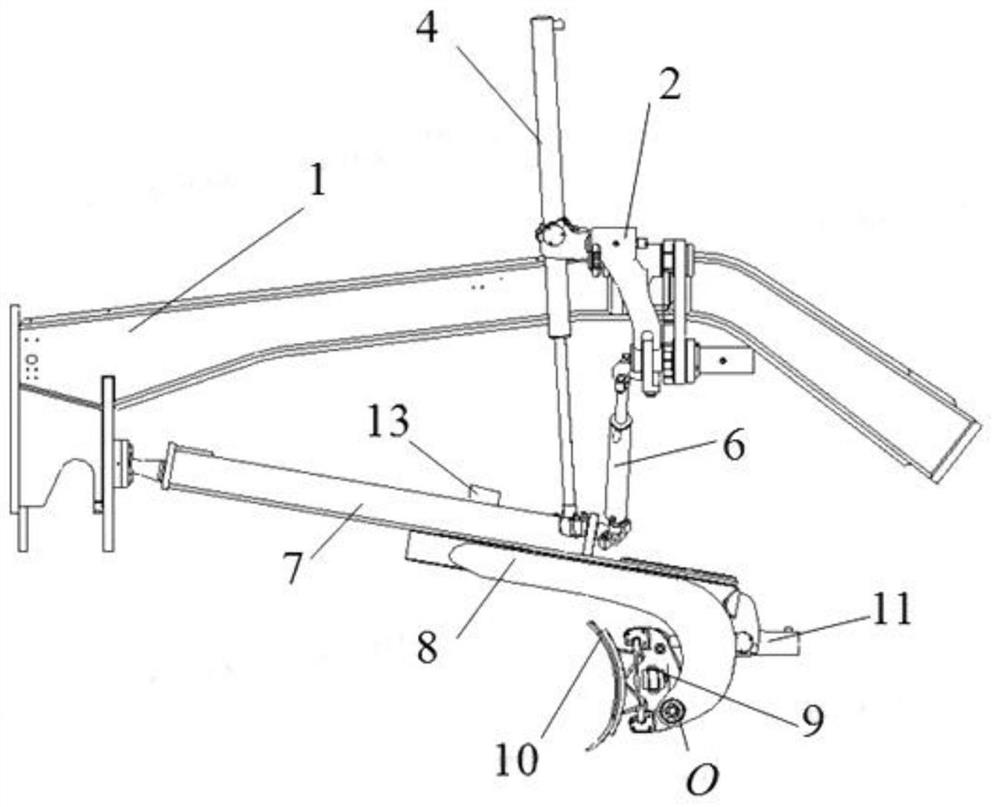

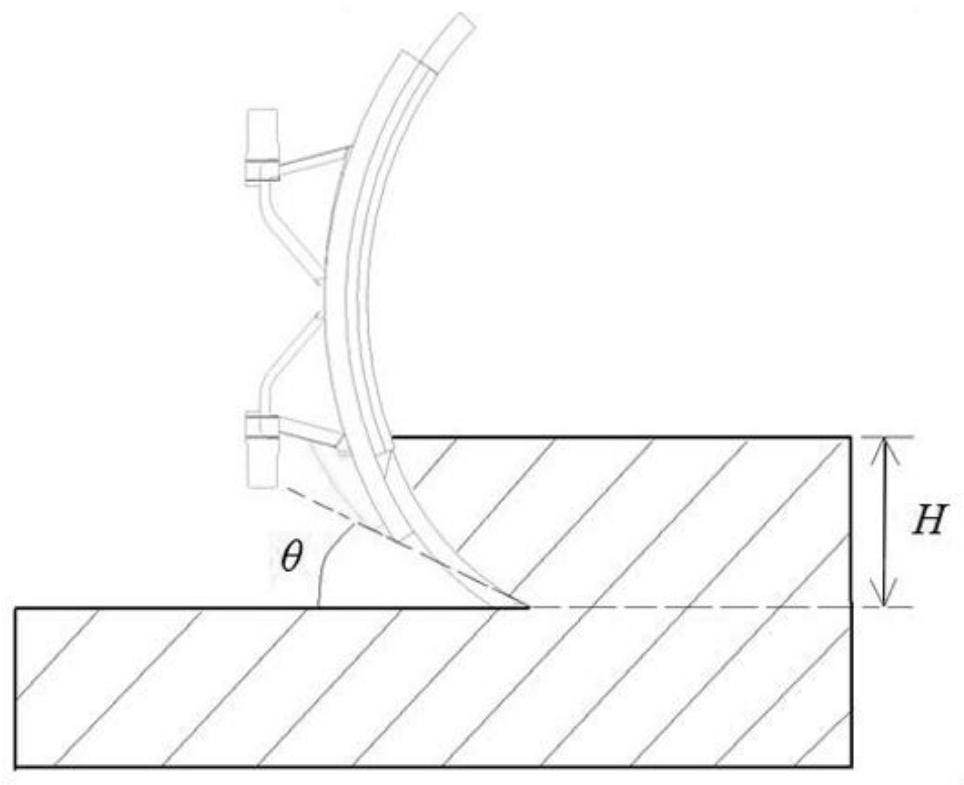

Scraper knife posture control system and method based on machine vision and land leveler

Active | CN112627261A | Reduce the difficulty of manipulation | Reduce labor intensity | Mechanical machines/dredgers | Driver/operator | Physical medicine and rehabilitation

The invention discloses a scraper knife posture control system and method based on machine vision and a land leveler, and belongs to the technical field of land levelers. A scraper knife can be automatically adjusted to a set posture according to an operation instruction of a driver, and the control difficulty and labor intensity of the driver are reduced. The scraper knife posture control system comprises a plurality of cameras, a visual processing unit and a vehicle-mounted controller, wherein the cameras are installed at set positions of the land leveler and used for collecting real-time position information of a plurality of visual perception tracking points installed at the set positions of the land leveler; the visual processing unit is used for acquiring the current posture of a scraper knife according to the received real-time position information of each visual perception tracking point, and generating a target state of a scraper knife posture control mechanism in combination with the target posture, sent by a man-machine display, of the scraper knife; and the vehicle-mounted controller is used for controlling the scraper knife posture control mechanism through an electric proportional control valve according to the target state and the current state of the scraper knife posture control mechanism, so that the scraper knife posture control mechanism drives the scraper knife to a target posture.

Owner:XUZHOU XUGONG ROAD CONSTR MACHINERY +1

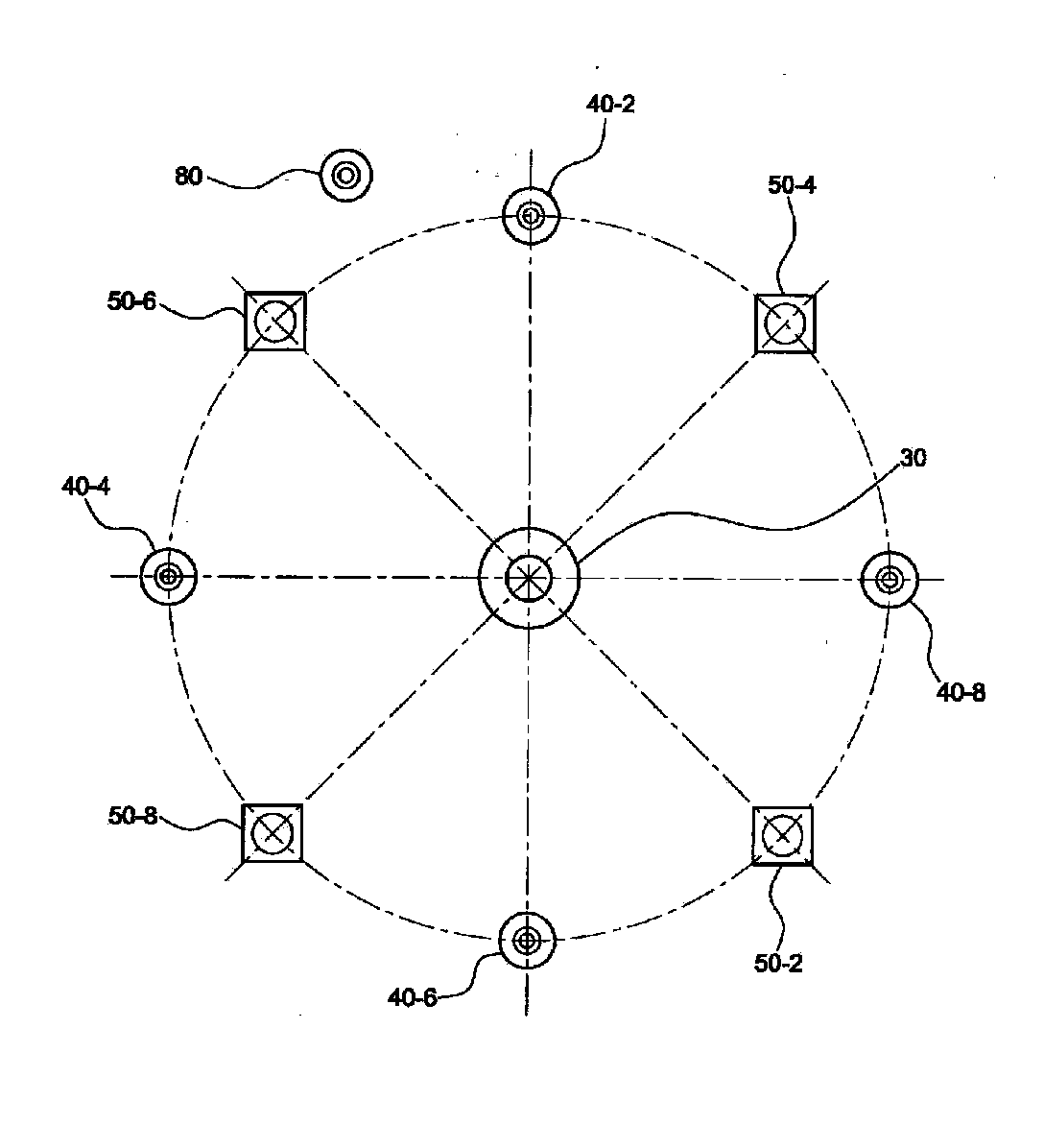

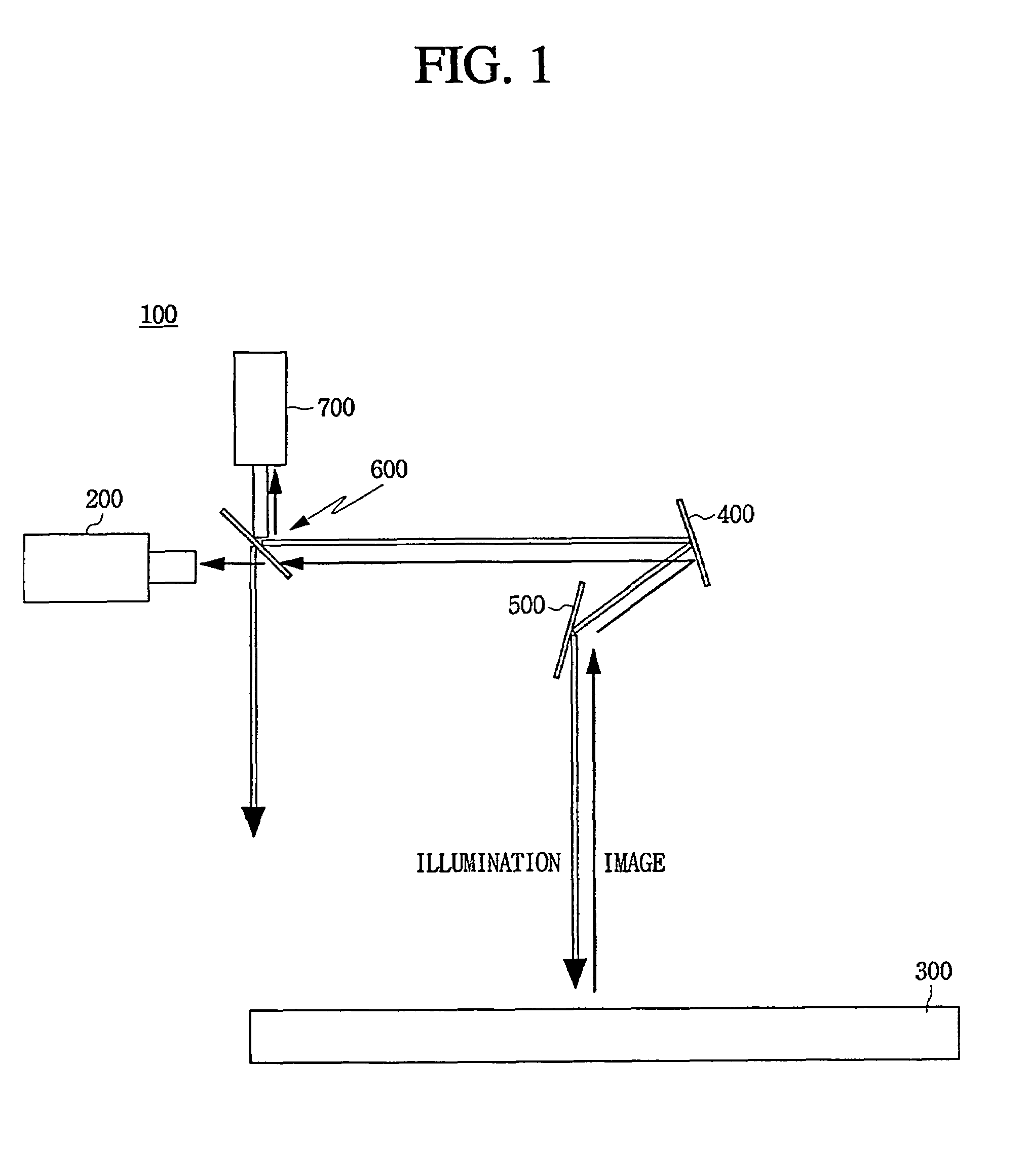

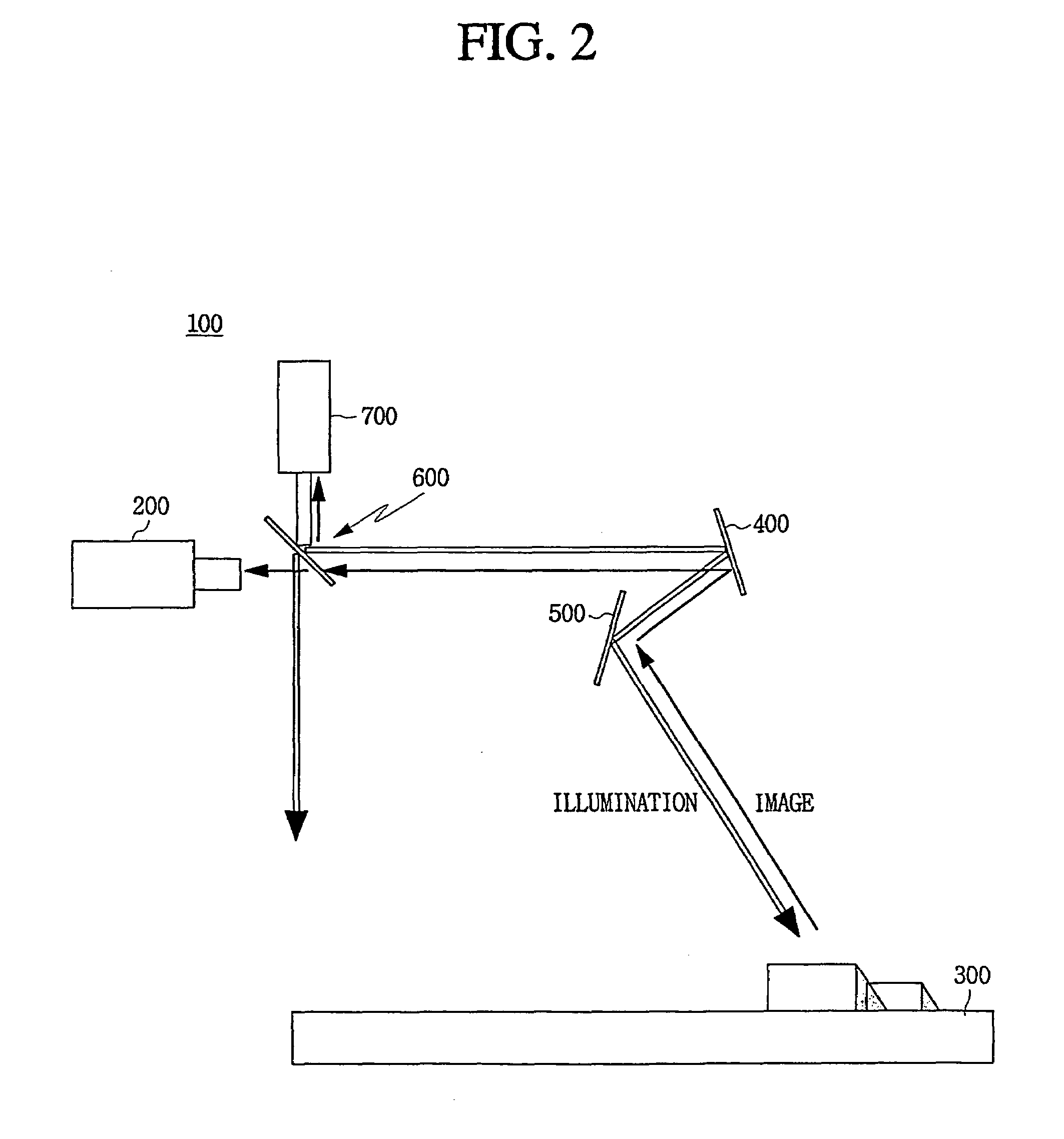

Vision inspection apparatus using a full reflection mirror

Active | US7365837B2 | Improve inspection effect | Drive torque | Material analysis by optical means | Electrical components | Effect light | Computer module

The present invention relates to a vision inspection apparatus and method using total reflection mirrors. The present invention provides a vision inspection apparatus using the total reflection mirrors comprising: a board position control module for fixing a printed circuit board; an independent lighting unit for primarily illuminating the printed circuit board; a photographing position control module for changing a reflection angle to required location coordinates of the printed circuit board; a camera for obtaining an image of the printed circuit board; a control unit including a motion controller, a lighting controller, and an image processor to control the components; and a vision processing unit for reading the image obtained through the camera and judging whether the image is good or bad. The present invention obtains a clear image of inspection objects by increasing the quantity of light entering the camera through a stationary, direct-illumination independent lighting unit, and achieves a precise inspection by preventing shadows from forming during vision inspection of an object such as a printed circuit board on which components of different sizes are mounted.

Owner:MIRTEC

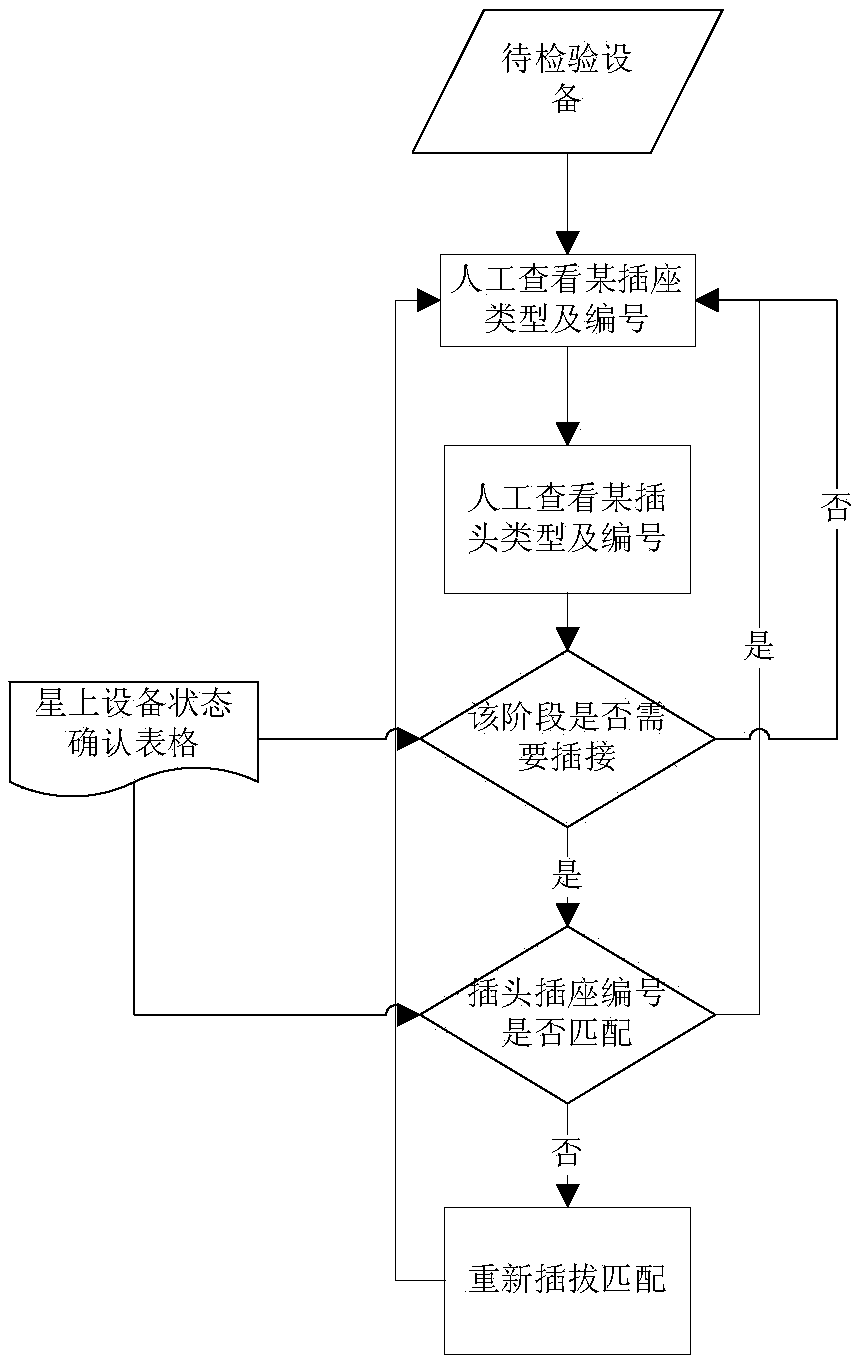

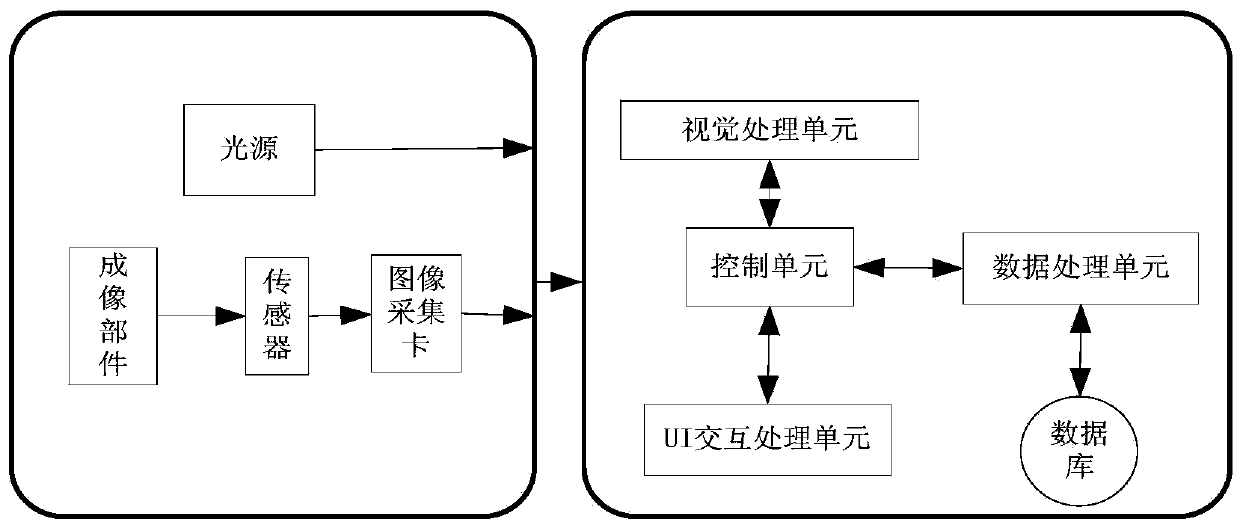

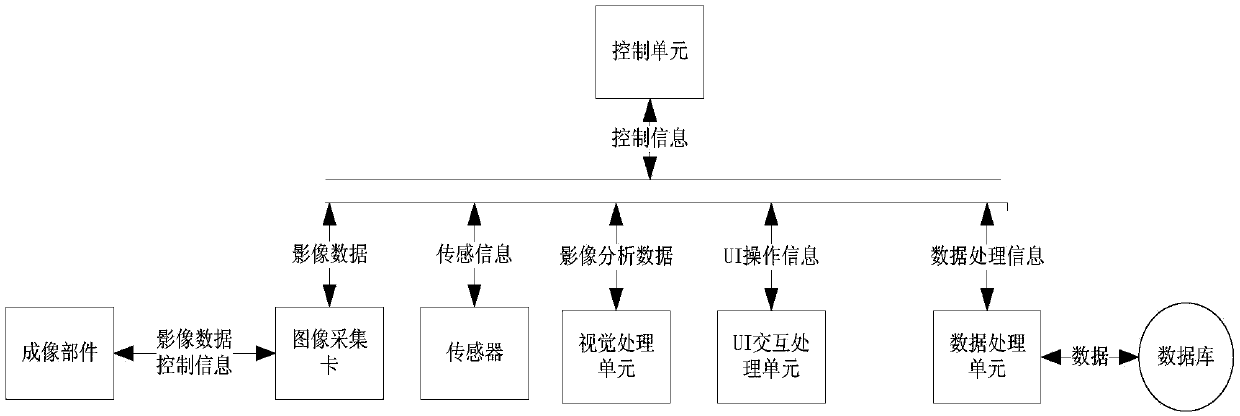

Satellite electric connector plugging examination system and method based on machine vision

Active | CN105371888A | Simplify the process of mating inspection | Will not be affected by subjective factors | Measurement devices | Electricity | Vision processing unit

The invention relates to a satellite electric connector plugging examination system and method based on machine vision. The satellite electric connector plugging examination system based on the machine vision comprises a light source, an imaging part, a sensor, an image acquisition card, a visual processing unit, a control unit, a data processing unit, a database and a UI interactive processing unit. The problem of implementation of satellite electric connector plugging examination by adopting a machine vision system is solved so that reliability, real-time performance and safety of the satellite electric connector plugging examination link can be enhanced, and complexity and difficulty of the satellite electric connector plugging examination link can be reduced.

Owner:AEROSPACE DONGFANGHONG SATELLITE

Anti-collision system and anti-collision method

Active | CN108098768A | Avoid force | No damage | Programme control | Programme-controlled manipulator | Robotic arm | Vision processing unit

Owner:INSTITUTE FOR INFORMATION INDUSTRY

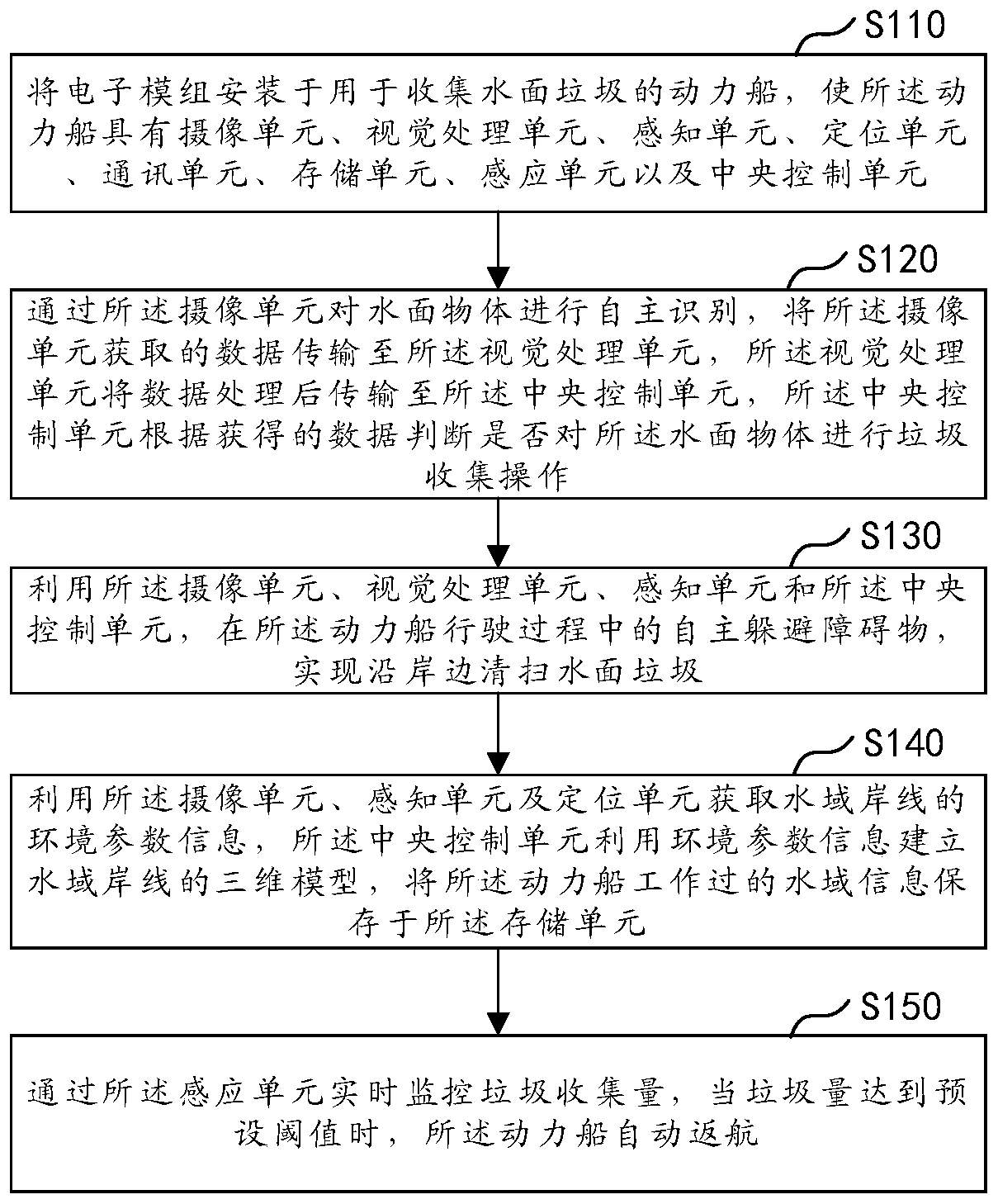

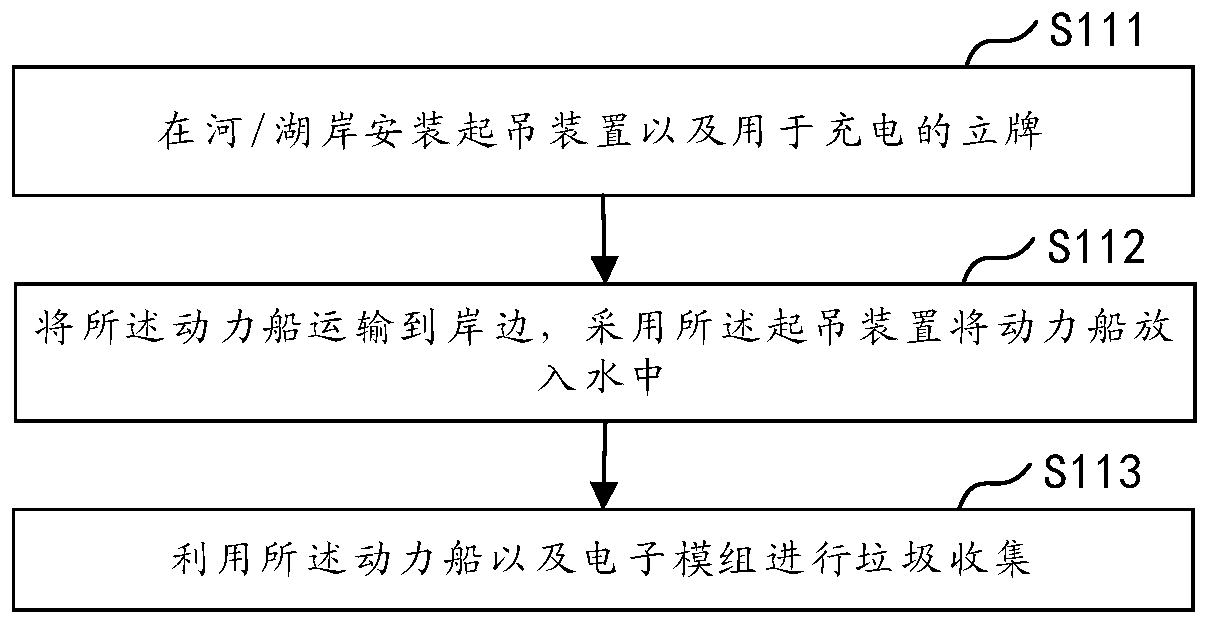

Water surface garbage autonomous collection method and system thereof

Inactive | CN111591400A | Achieve cleaning | Avoid navigation problems | Water cleaning | Waterborne vessels | Refuse collection | Control cell

The invention discloses a water surface garbage autonomous collection method and a system thereof. The method comprises the steps of enabling an electronic module to be installed on a power ship for collecting water surface garbage, carrying out the autonomous recognition of a water surface object through a camera unit, and judging whether the garbage collection operation is carried out on the water surface object or not, using the camera unit, a visual processing unit, a sensing unit and a central control unit for autonomously avoiding obstacles and sweeping garbage on the water surface along the shore, establishing a three-dimensional model of a water area shoreline, and storing the information of the water area where the power ship works in the storage unit, monitoring the garbage collection amount in real time through the sensing unit, and when the garbage amount reaches a preset threshold value, enabling the power ship to automatically return. By the adoption of the water surface garbage autonomous collection method, autonomous recognition is conducted on the objects on the water surface through the camera unit, and garbage collection, autonomous obstacle avoidance, real-time monitoring and automatic return are conducted on the objects on the water surface through the central control unit.

Owner:陕西欧卡电子智能科技有限公司

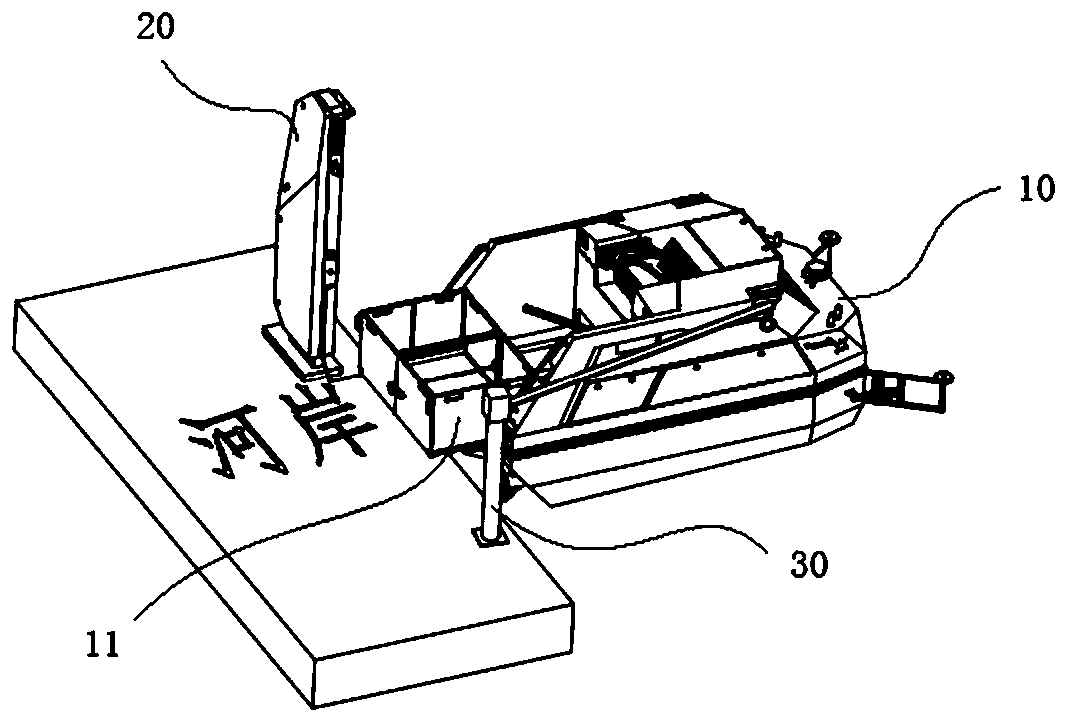

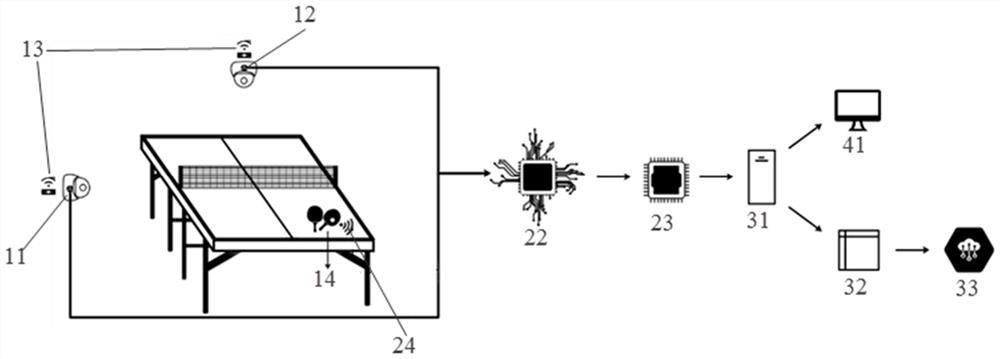

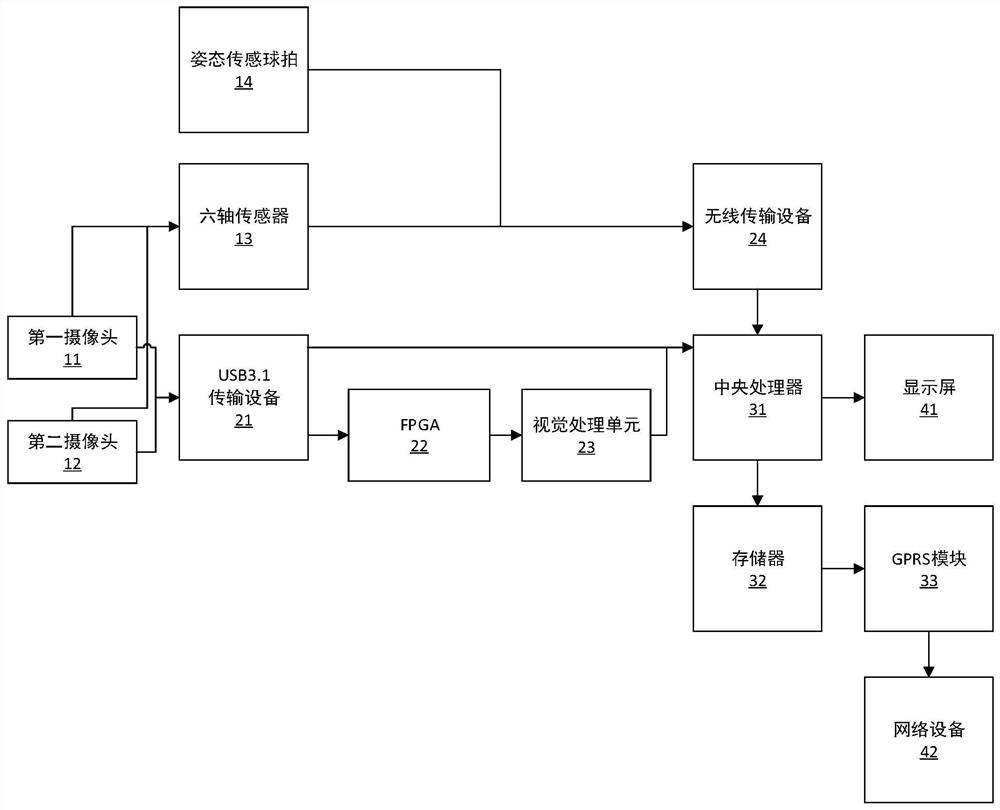

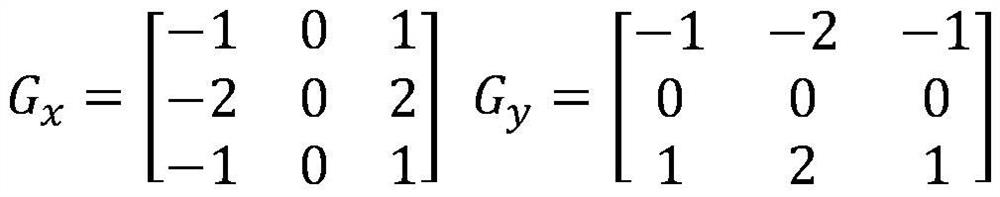

Ping-pong ball trajectory tracking device and method based on deep learning

Pending | CN112702481A | Reduce manufacturing cost | Easy to deploy | Television system details | Character and pattern recognition | Wireless transmission | Simulation

The invention discloses a ping-pong ball trajectory tracking device and method based on deep learning. The device comprises a posture sensing racket, a six-axis sensor, wireless transmission equipment, a first camera, a second camera, USB 3.1 transmission equipment, an FPGA, a visual processing unit, a central processing unit, a display screen, a memory, a GPRS module and network equipment. Two cameras capture table tennis pictures, the FPGA, the visual processing unit and the central processing unit are combined with the posture sensing racket to obtain a table tennis track, track analysis is conducted, the ball hitting condition of a player is obtained, the memorizer is used for storing data, the data is uploaded to the cloud through the GPRS module, and real-time checking is achieved. The three-dimensional position of the ping-pong ball is obtained in real time, the motion trail of the ping-pong ball is drawn, ball hitting conditions of players are analyzed, equipment is arranged in an embedded mode, utilized resources are small, and operation is easy and convenient.

Owner:HANGZHOU DIANZI UNIV

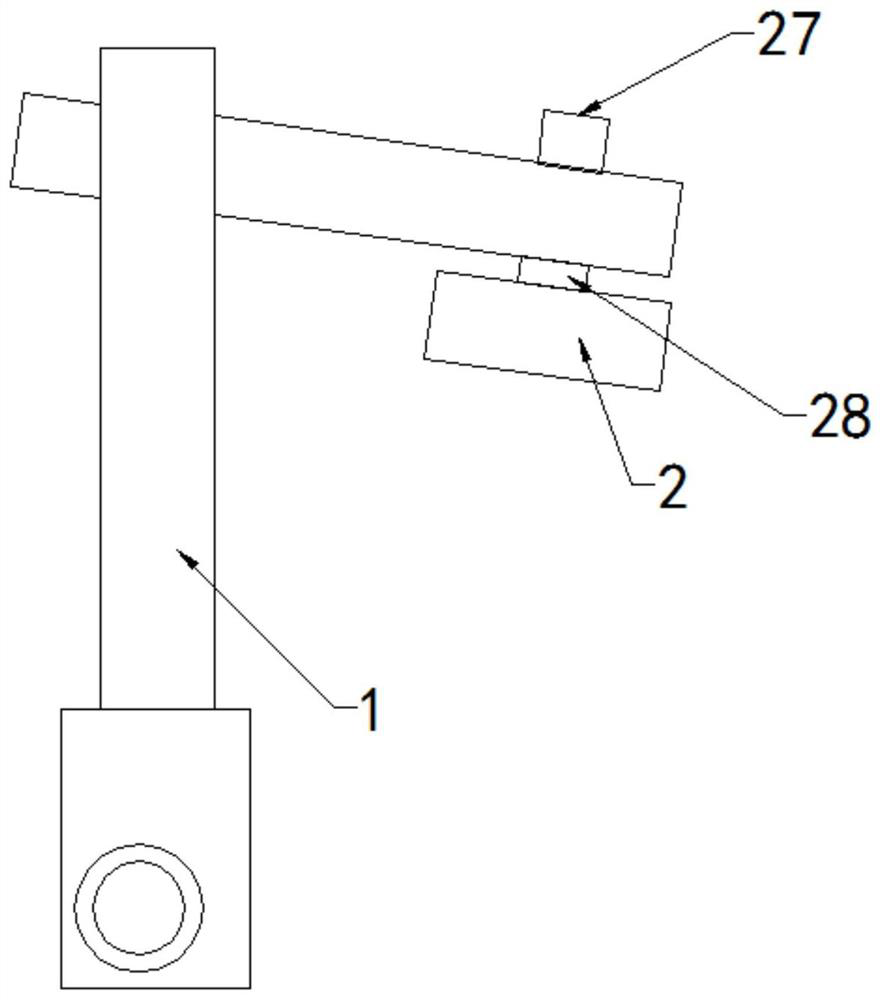

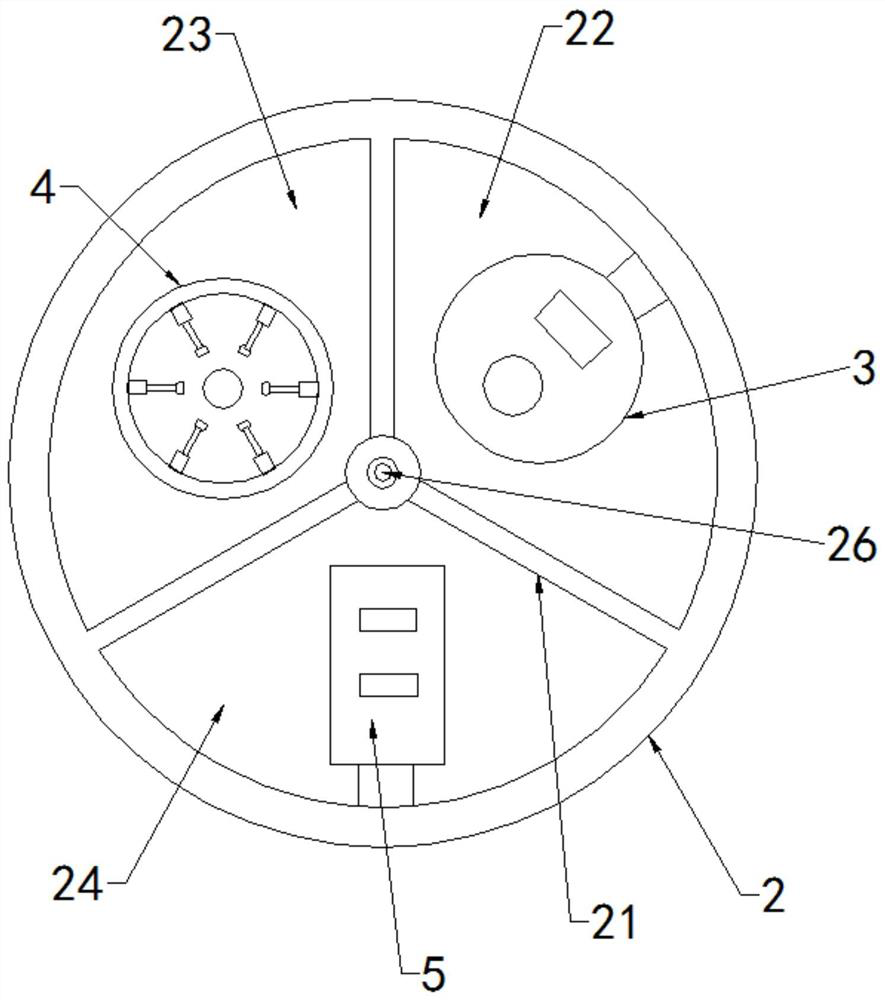

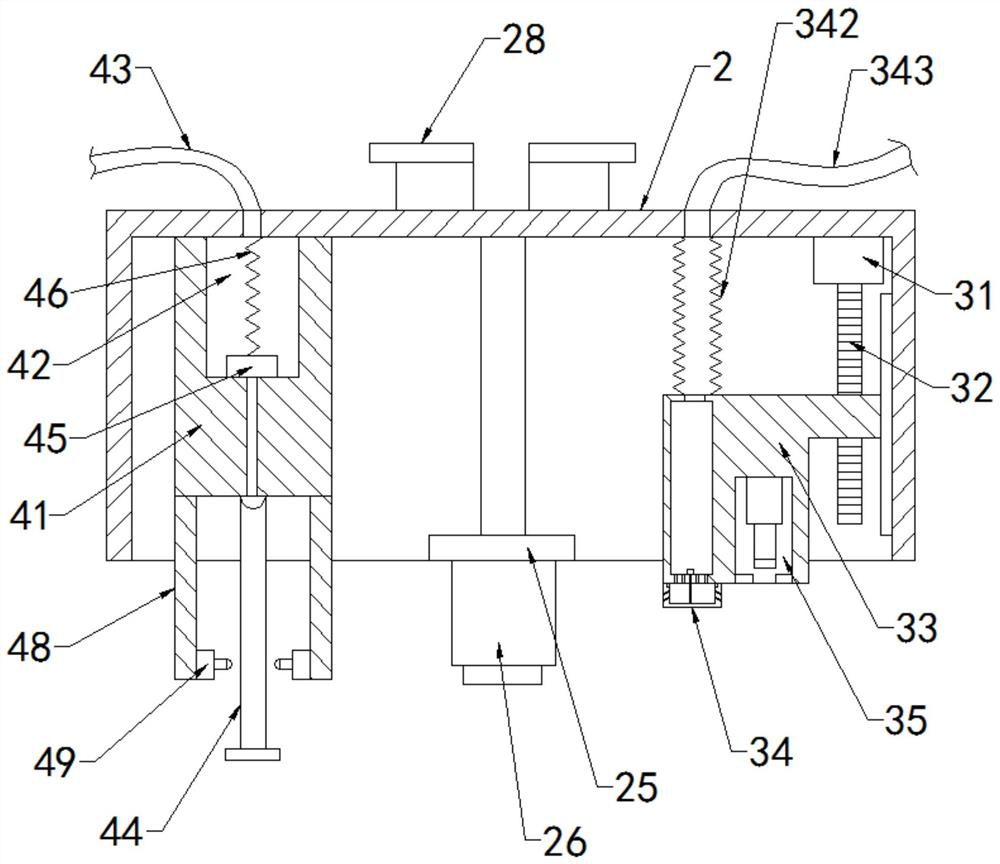

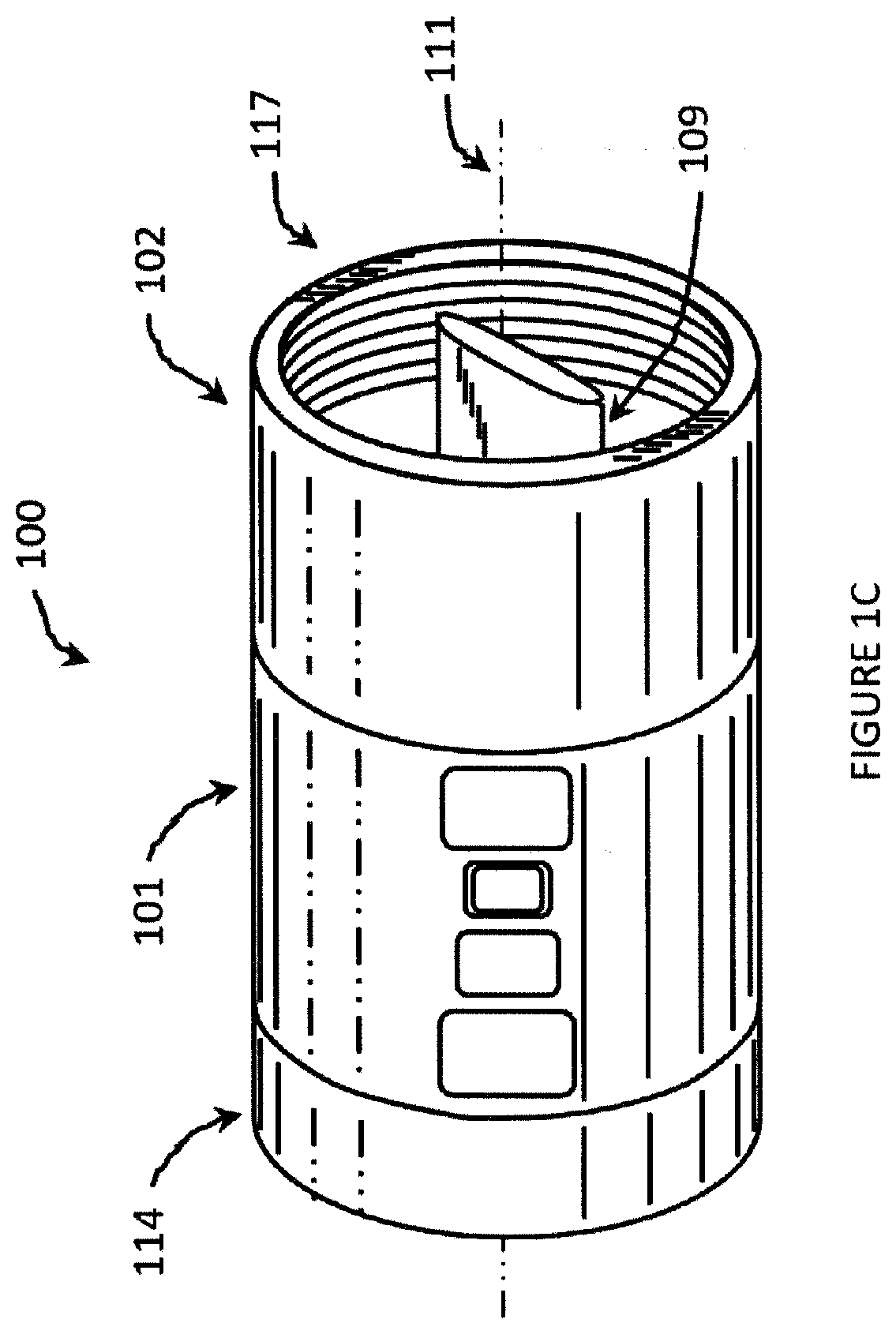

Mechanical arm visual control method and device

Active | CN113733141A | Improve grip | Avoid the unstable phenomenon of adsorption and grasping | Gripping heads | Engineering | Vision processing unit

The invention discloses a mechanical arm visual control device. According to the technical scheme, the mechanical arm visual control device is characterized by comprising a mechanical arm body, partition plates, a mounting base, a shifting mechanism, a first grabbing mechanism and a second grabbing mechanism, and a mounting cylinder with a mounting cavity is assembled at the tail end of the mechanical arm body; the partition plates are arranged in the mounting cylinder and divide the mounting cavity of the mounting cylinder into a first cavity, a second cavity and a third cavity, all of which are fan-shaped; the mounting base is arranged in the center of the bottom of the mounting cylinder and located at the joints of the partition plates, a visual processing unit is mounted on the mounting base, and the visual processing unit comprises a visual sensor, a visual processor and a distance measuring sensor; the shifting mechanism is arranged in the first cavity and used for shifting parts into the grabbing range of the mechanical arm; the first grabbing mechanism is arranged in the second cavity and used for adsorbing and grabbing the parts; and the second grabbing mechanism is arranged in the third cavity and used for clamping and grabbing the parts. The mechanical arm visual control device has the beneficial effects of being stable in grabbing and high in working effect.

Owner:QUZHOU COLLEGE OF TECH

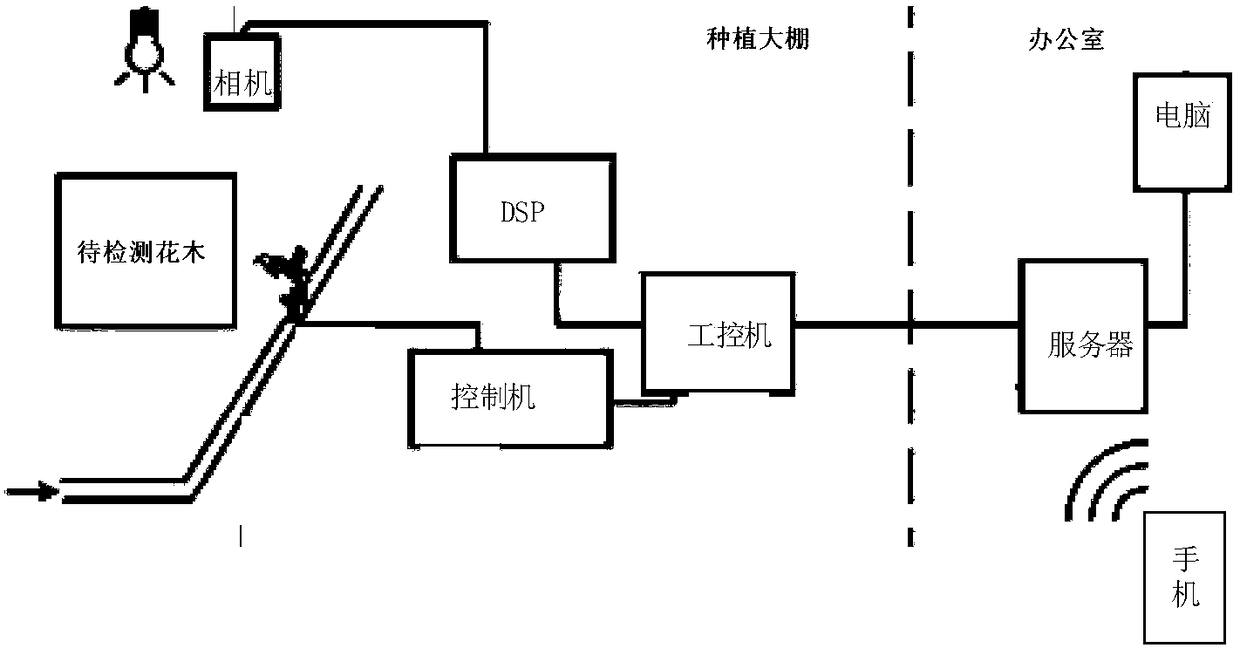

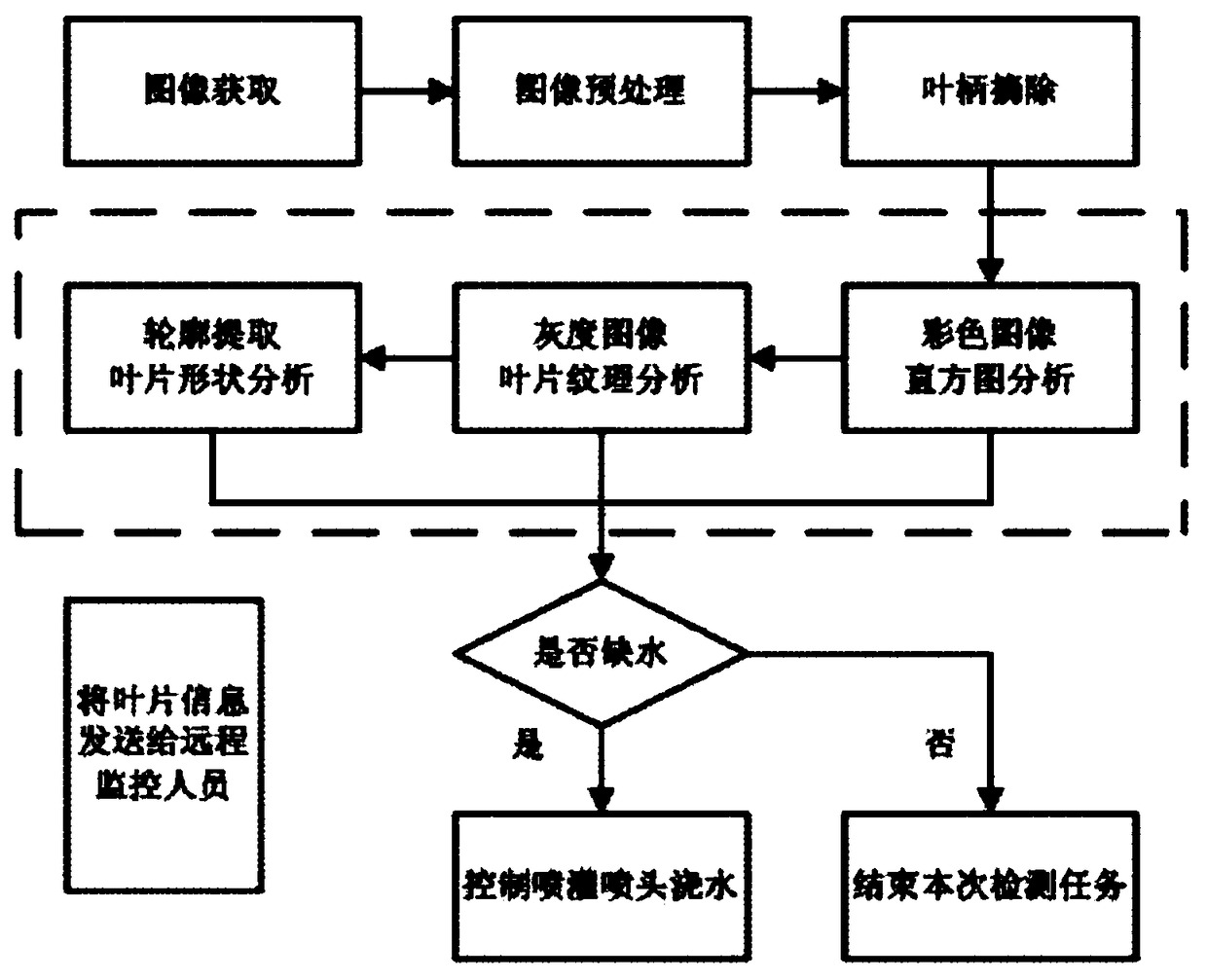

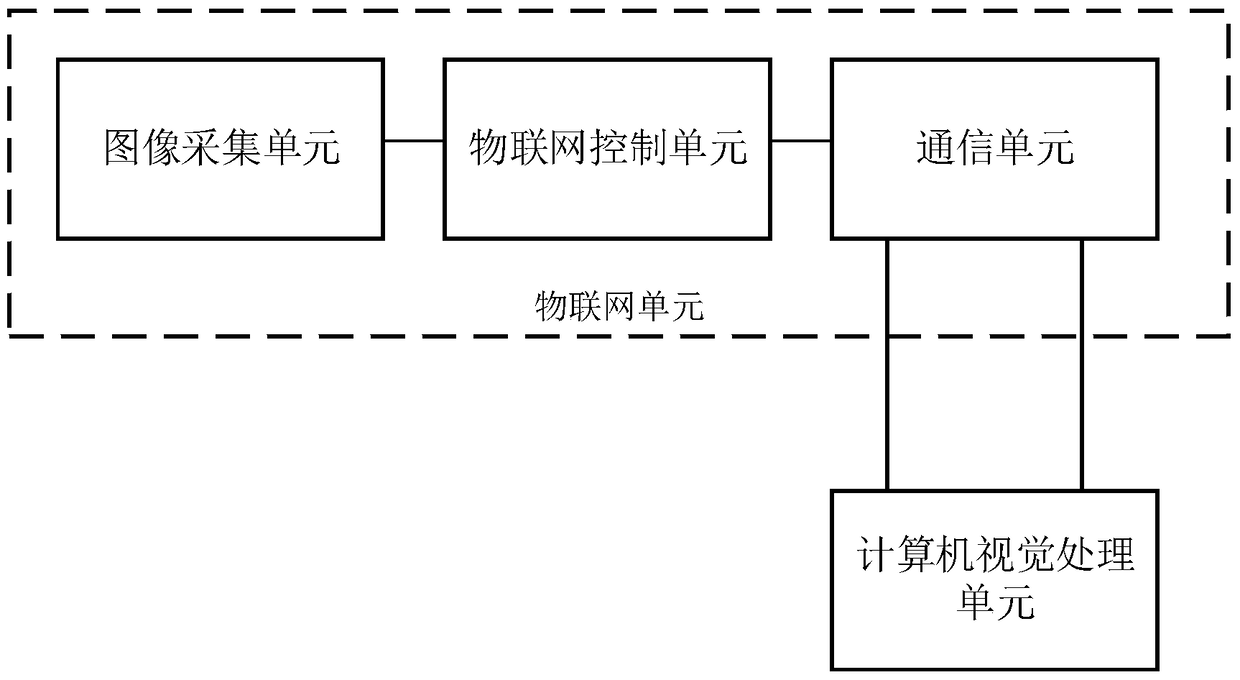

A Grassland Drought Monitoring System and Method Based on Computer Vision and Internet of Things

Active | CN105427279B | Realize free design | Reduce development costs | Image enhancement | Data processing applications | Imaging processing | Communication unit

The invention discloses a grassland drought status monitoring system based on computer vision and the Internet of things and a grassland drought status monitoring method. The system comprises an image acquisition unit, an Internet of things control unit, a communication unit and a computer vision processing unit, wherein the image acquisition unit and the communication unit are respectively in communication connection with the Internet of things control unit, the communication unit communicates with the computer vision processing unit through the network, the Internet of things control unit controls the image acquisition unit for acquiring images of plants from different angles, the images are transmitted through the communication unit to the computer vision processing unit, and the images acquired from different angles are analyzed and processed by the computer vision processing unit to acquire a drought status of the plants. Through the system and the method, the image processing speed and accuracy can be improved, under the common action of the Internet of things module and the computer vision module, grassland degradation and the drought status can be warned in advance, and thereby remote drought monitoring can be realized.

Owner:CHINA AGRI UNIV

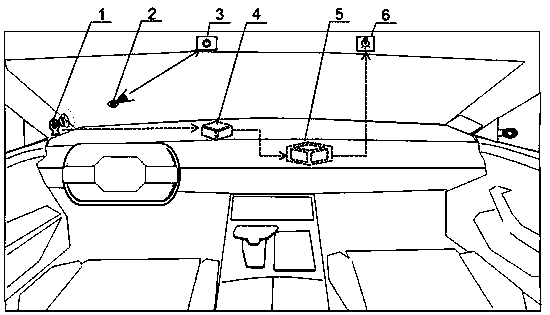

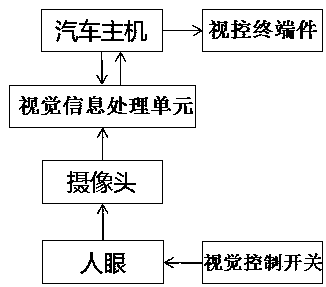

Automobile vision switch control system

The invention discloses an automobile vision switch control system. The system comprises an automobile main engine, a camera, a vision processing unit, and a vision control switch. During running, the camera carries out real-time monitoring to obtain binocular dynamic features of a driver, and the features are output to the vision processing unit. The vision processing unit generates a human eye dynamic digital model and, on the basis of the model, carries out high-frequency calculation of the binocular focal point. The determined spatial position of the human eye focal point is compared with the spatial positions of the vision control switches stored in the vision processing unit to judge whether the equipment operator is watching a certain vision control switch. The judging result is output to the automobile main engine, which issues a control instruction to the equipment, so that vision control over the automobile function can be achieved. The blinking frequency or fixation duration serves as the instruction signal for function control.

Owner:FAW CAR CO LTD
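The comparison step in this abstract, matching a gaze focal point against stored switch positions and using fixation duration as the trigger, can be sketched roughly as below. The details are assumptions for illustration only: gaze samples arrive as `(timestamp, (x, y, z))` pairs, a switch counts as "watched" when the focal point lies within a fixed radius of it, and activation requires an uninterrupted fixation of `dwell_seconds`. The function names and switch names are hypothetical.

```python
from math import dist


def find_gazed_switch(gaze_point, switches, radius):
    """Return the name of the first switch within radius of the gaze point, else None."""
    for name, position in switches.items():
        if dist(gaze_point, position) <= radius:
            return name
    return None


def detect_activation(samples, switches, radius, dwell_seconds):
    """Return the first switch fixated continuously for dwell_seconds, else None.

    samples is a list of (timestamp_seconds, (x, y, z)) gaze focal points
    in increasing time order.
    """
    current, start = None, None
    for timestamp, point in samples:
        target = find_gazed_switch(point, switches, radius)
        if target != current:
            # Gaze moved to a different switch (or away): restart the dwell timer.
            current, start = target, timestamp
        if current is not None and timestamp - start >= dwell_seconds:
            return current
    return None


switches = {"wiper": (0.0, 0.0, 0.0), "lights": (1.0, 0.0, 0.0)}
samples = [
    (0.0, (0.0, 0.0, 0.0)),
    (0.5, (0.01, 0.0, 0.0)),
    (1.0, (0.0, 0.01, 0.0)),
]
print(detect_activation(samples, switches, radius=0.05, dwell_seconds=1.0))
```

Requiring a minimum dwell time is one simple way to keep a passing glance from triggering a function; the abstract also mentions blinking frequency as an alternative signal, which this sketch does not model.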

Vision testing device using multigrid pattern

Inactive | CN103547884A | Accurate calculation | Quickly Calculate Accurate Altitude | Optically investigating flaws/contamination | Using optical means | Grid pattern | Vision testing

The vision testing device of the present invention is a vision testing device for discriminating a satisfactory or a nonsatisfactory testing object by shooting a testing object assembled or mounted during the component assembly process, and comparing the image shot with a previously inputted target image, and comprises: a stage part for fixing or transferring the testing object to the testing location; a lighting part located on the upper portion of the stage part, for providing lighting to the testing object; a center camera part located in the center of the lighting part, for obtaining a 2-dimensional image of the testing object; a grid pattern irradiating part placed on the side section of the center camera part; a vision processing unit for reading the image shot at the center camera part and discriminating satisfactory or non-satisfactory testing objects; and a control unit for controlling the stage part, the grid pattern irradiating part, and the camera part, wherein the grid pattern irradiating part irradiates grid patterns each having periods of different intervals.

Owner:MIRTEC

Interactive image processing system Using Infrared Cameras

Active | US20200336729A1 | Improve depth quality | Improve user experience | Image enhancement | Television system details | Imaging processing | Computer graphics (images)

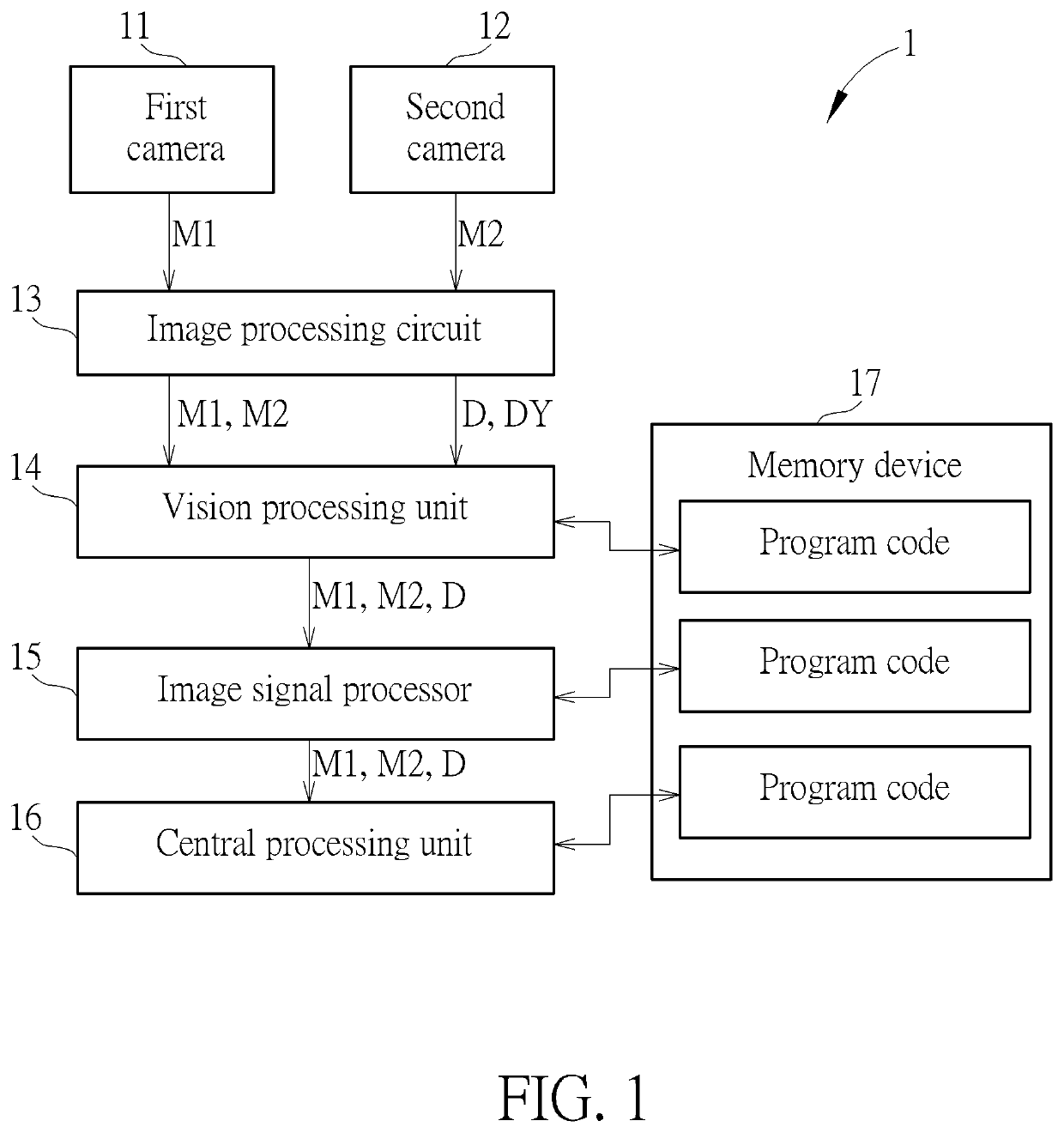

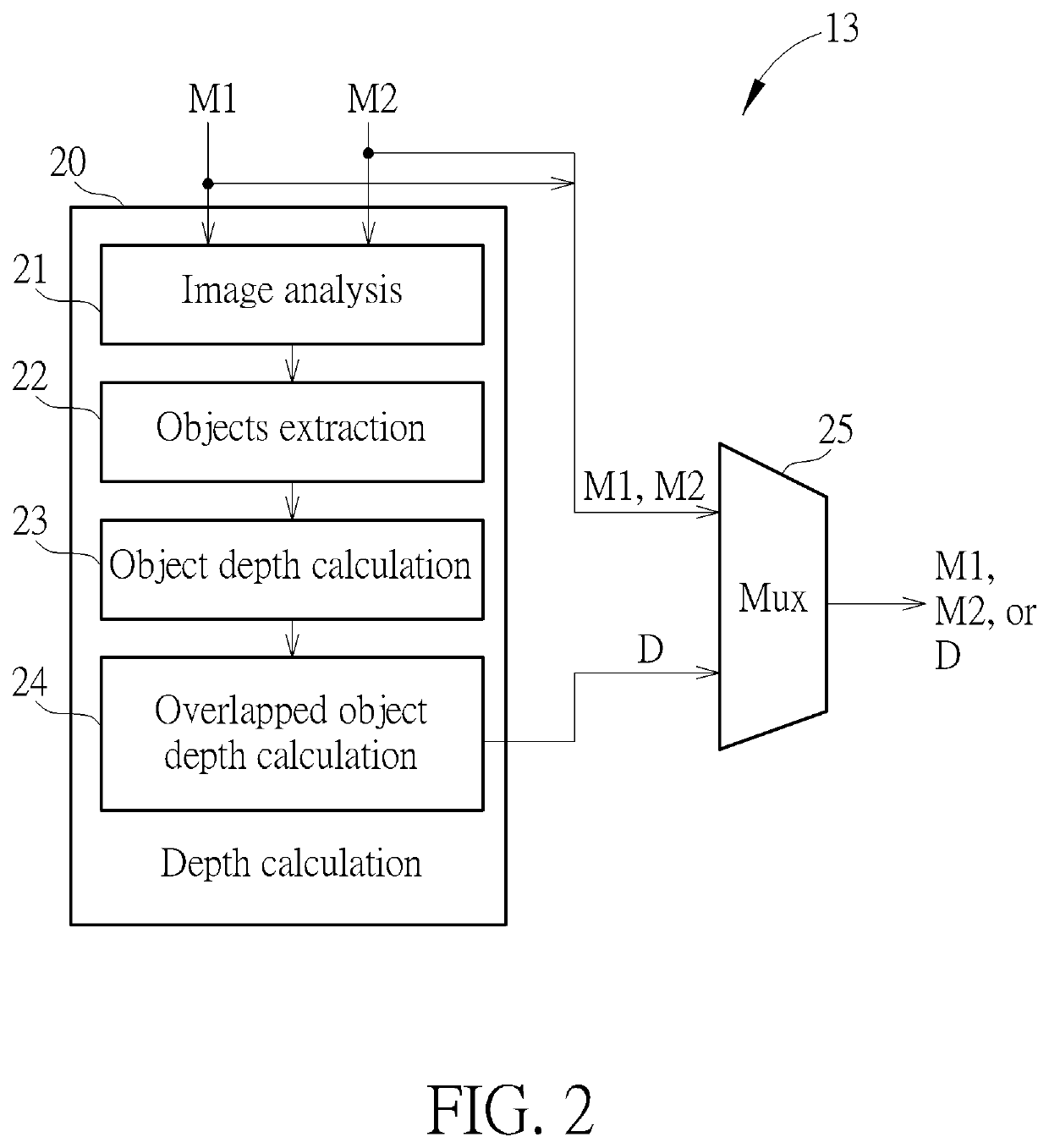

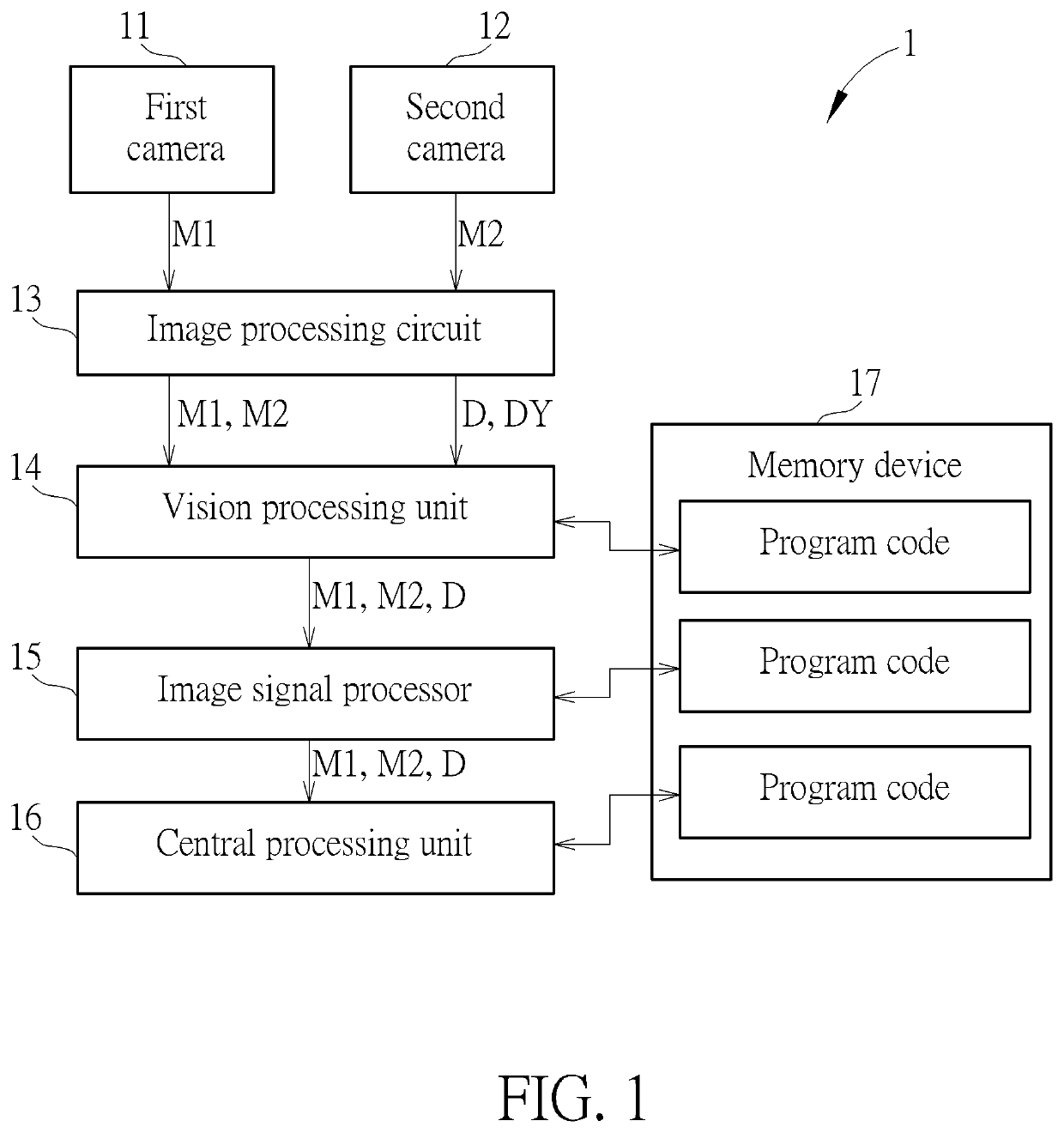

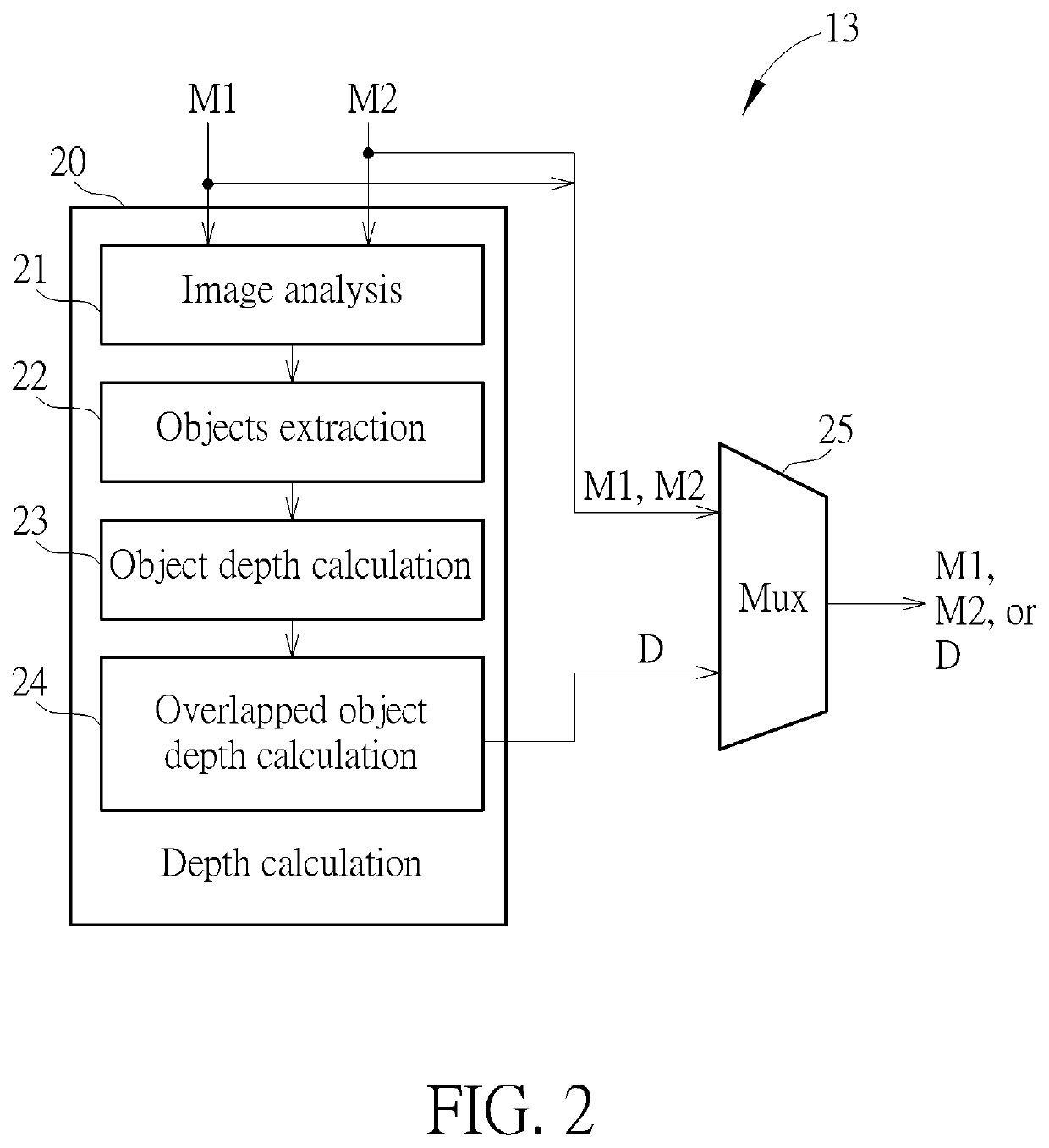

An interactive image processing system including a first infrared camera, a second infrared camera, an image processing circuit, a vision processing unit, an image signal processor, a central processing unit, and a memory device is disclosed. The present disclosure calculates depth data according to infrared images generated by the first and second infrared cameras to improve depth quality.

Owner:XRSPACE CO LTD
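The abstract does not say how depth is computed from the two infrared images. One common approach for a rectified camera pair, shown here purely as an assumed illustration, is stereo triangulation: depth equals focal length times baseline divided by disparity. The function and parameter names below are hypothetical.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for one pixel of a rectified stereo pair.

    Returns None where the disparity is zero or negative, i.e. where no
    valid match between the two images exists.
    """
    if disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparities, focal_length_px, baseline_m):
    """Apply depth_from_disparity to every entry of a 2-D disparity grid."""
    return [
        [depth_from_disparity(d, focal_length_px, baseline_m) for d in row]
        for row in disparities
    ]


# Assumed example values: 100 px focal length, 0.5 m baseline.
print(depth_map([[10, 20], [0, 50]], focal_length_px=100, baseline_m=0.5))
```

Since depth is inversely proportional to disparity, nearby objects (large disparity) are resolved much more finely than distant ones.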

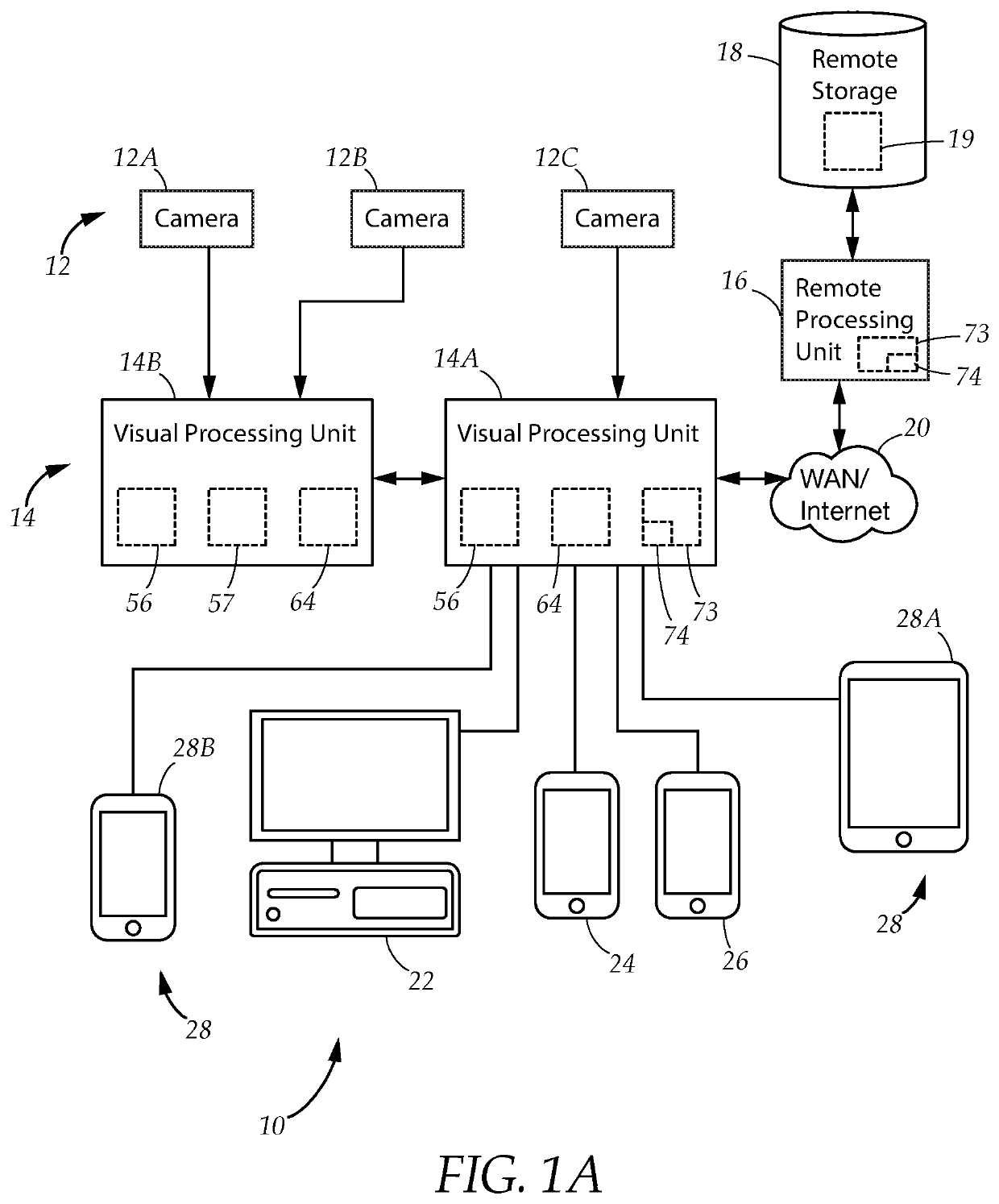

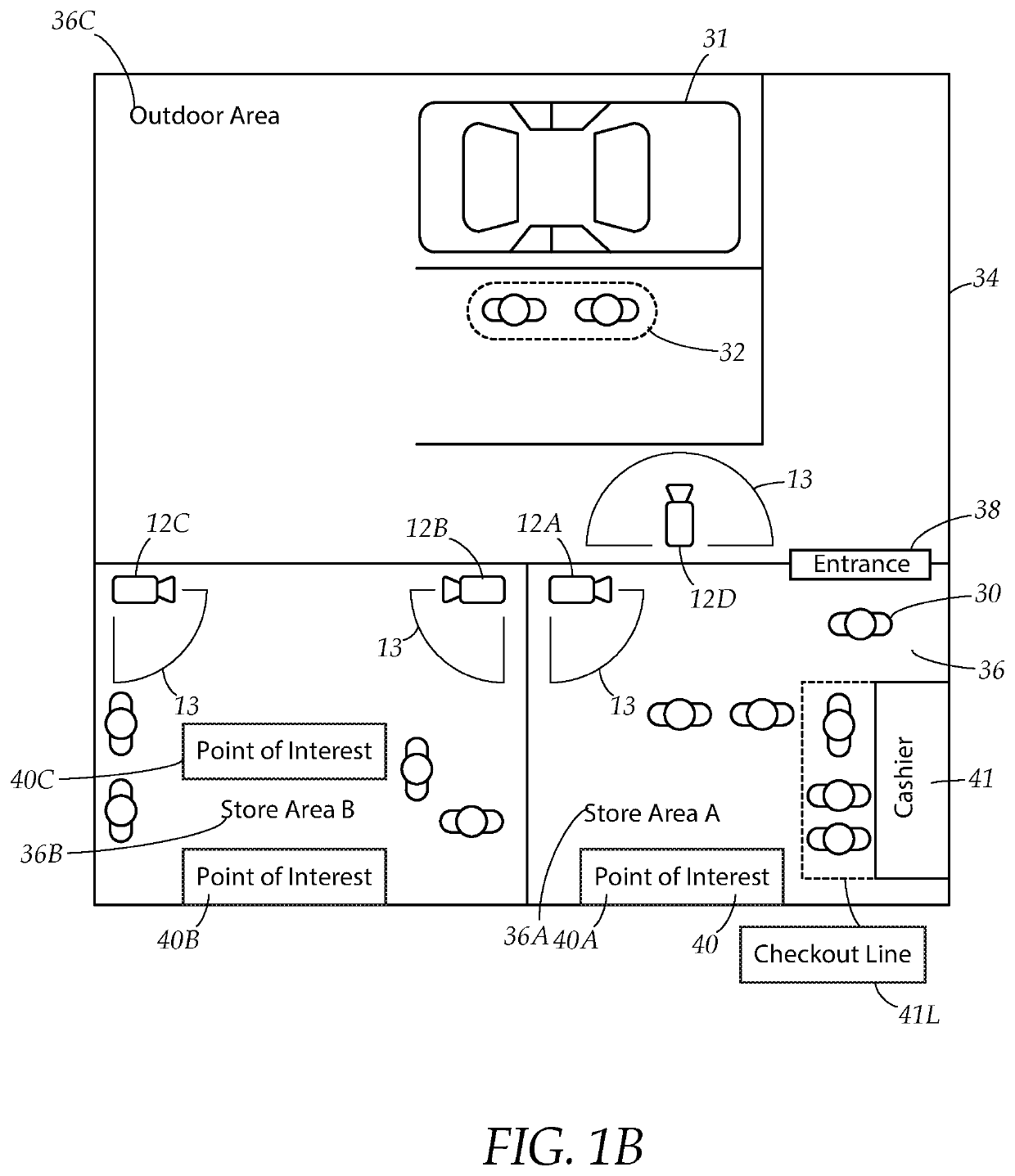

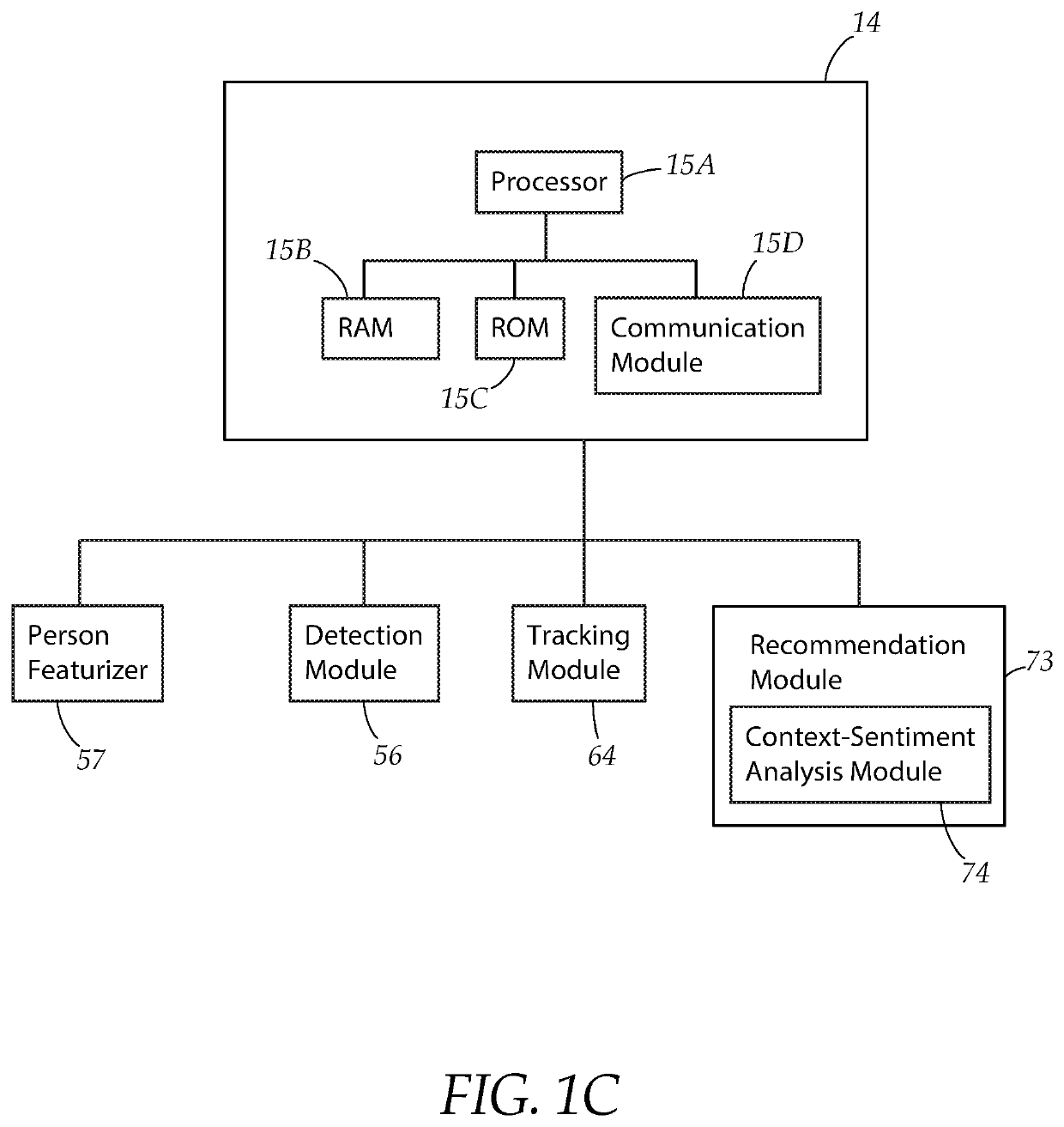

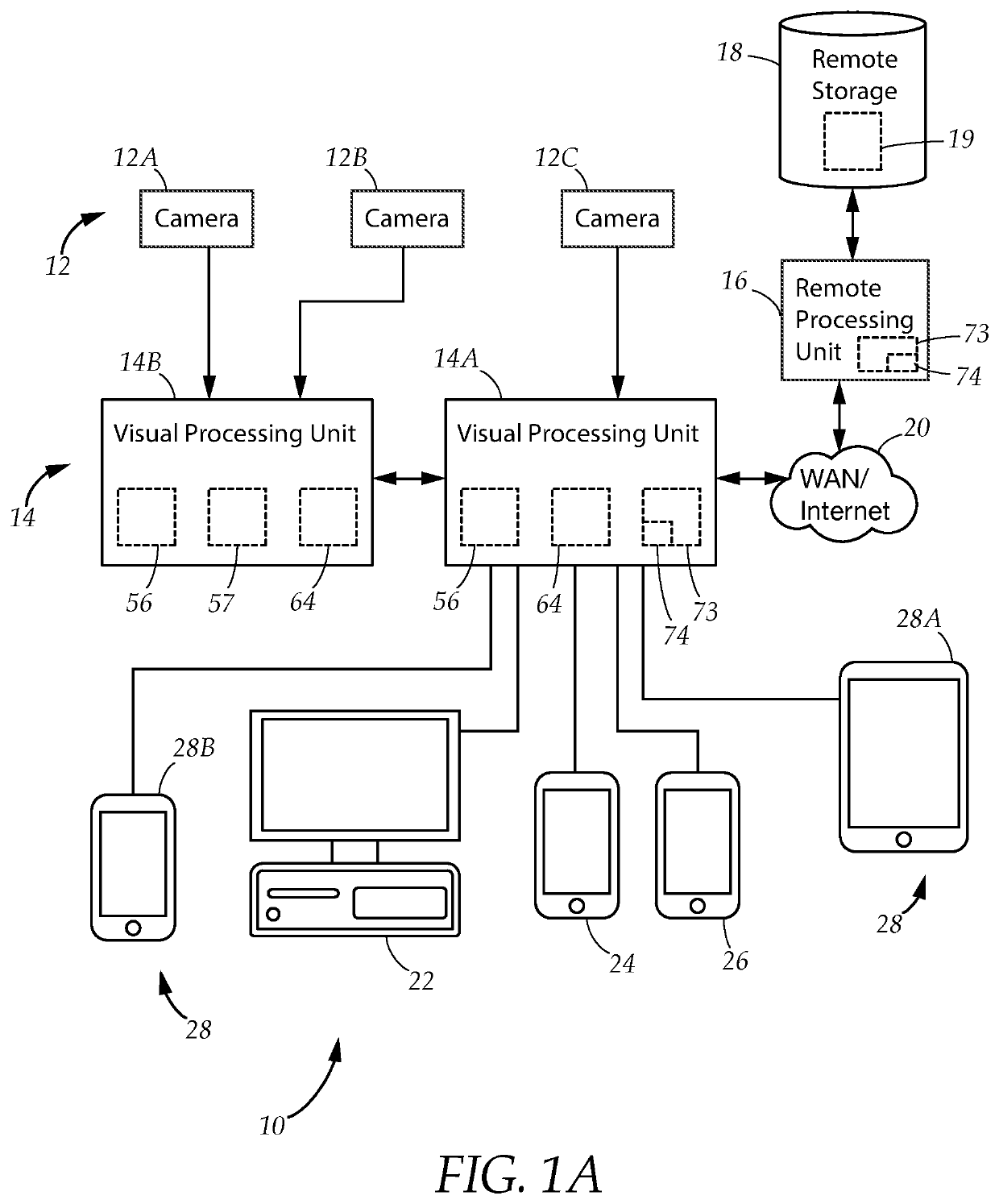

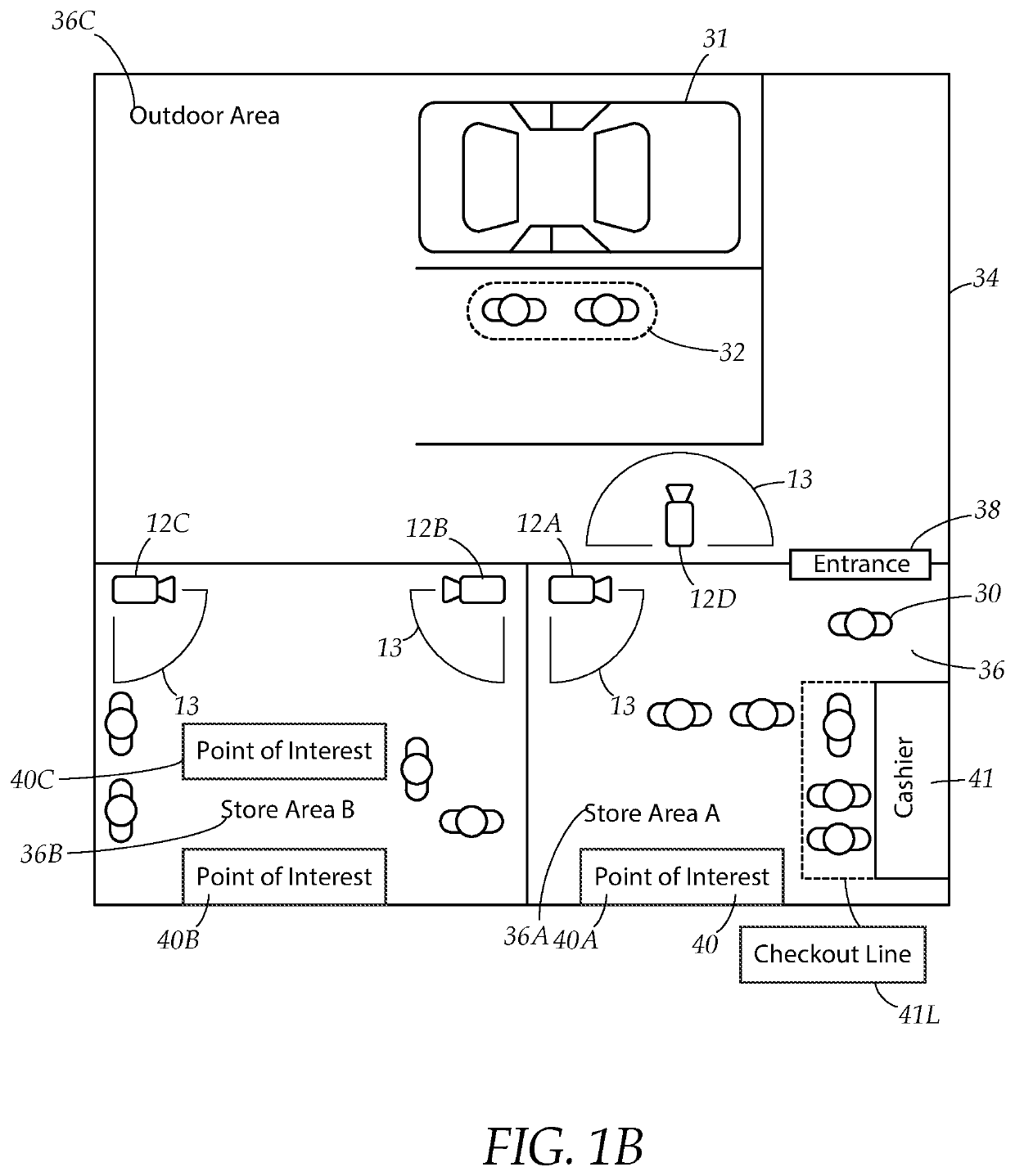

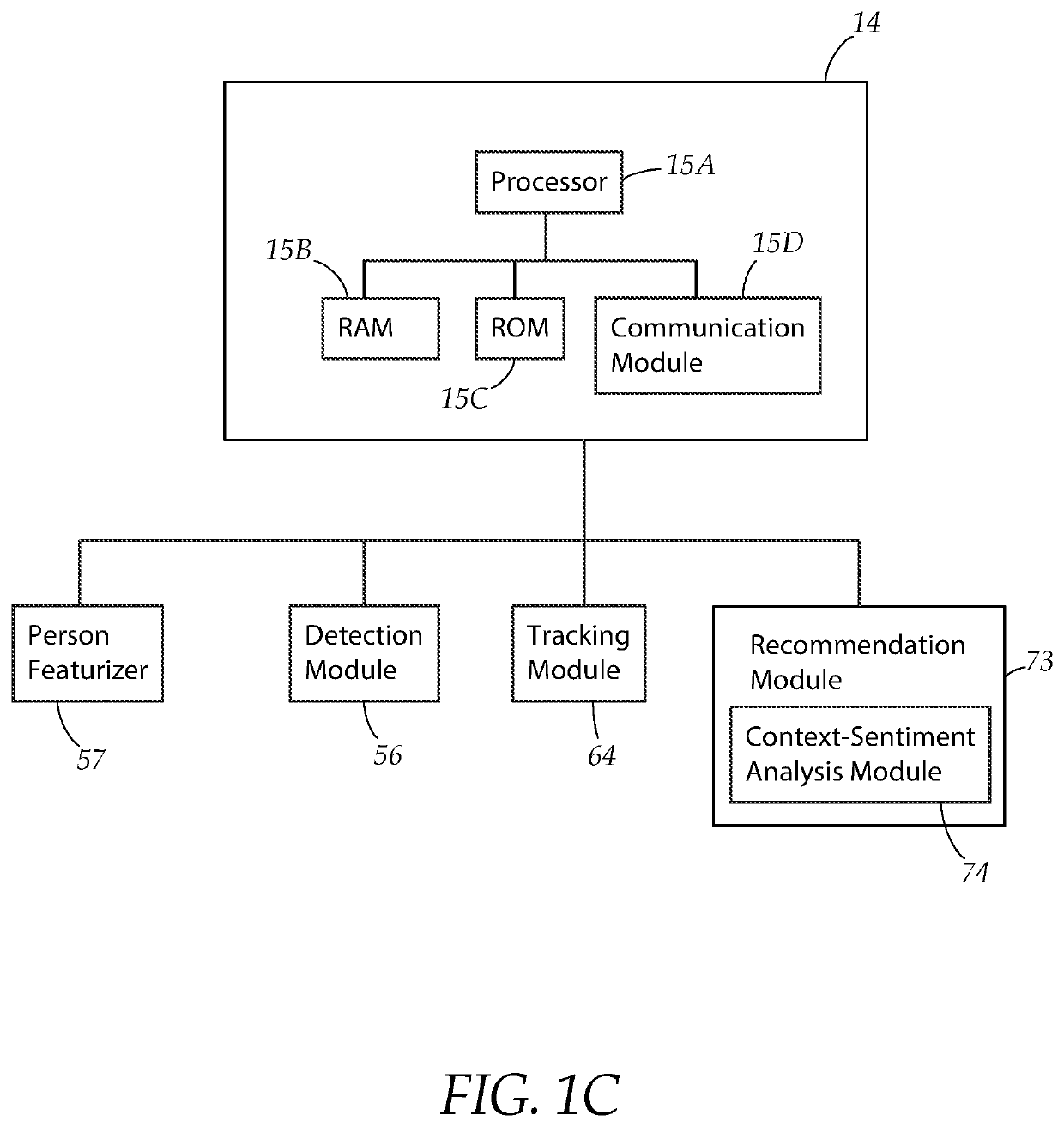

System and method for visually tracking persons and imputing demographic and sentiment data

Active | US11024043B1 | Maximizes overall likelihood value | Avoid duplication | Image enhancement | Image analysis | Customer requirements | Radiology

A visual tracking system for tracking and identifying persons within a monitored location, comprising a plurality of cameras and a visual processing unit, each camera produces a sequence of video frames depicting one or more of the persons, the visual processing unit is adapted to maintain a coherent track identity for each person across the plurality of cameras using a combination of motion data and visual featurization data, and further determine demographic data and sentiment data using the visual featurization data, the visual tracking system further having a recommendation module adapted to identify a customer need for each person using the sentiment data of the person in addition to context data, and generate an action recommendation for addressing the customer need, the visual tracking system is operably connected to a customer-oriented device configured to perform a customer-oriented action in accordance with the action recommendation.

Owner:RADIUSAI INC

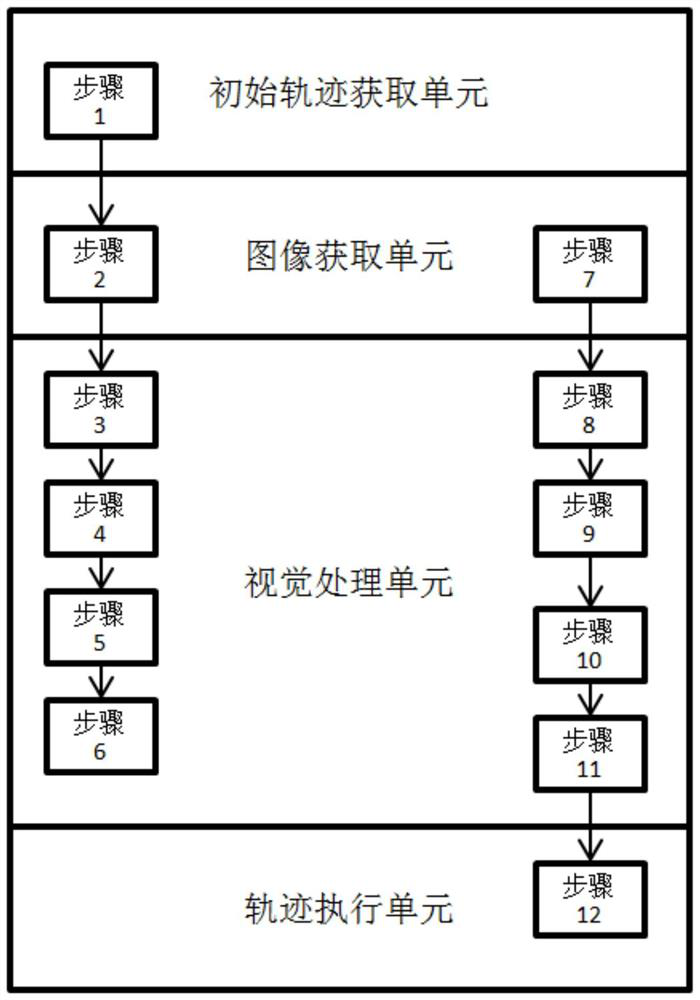

Machining track deformation compensation method and system

Pending | CN112435350A | Easy to edit later | Flexible deformation compensation capability | Image enhancement | Image analysis | Computer graphics (images) | Engineering

The invention provides a machining track deformation compensation method and system, and the method comprises the following steps: obtaining an initial track; acquiring an image; performing preprocessing operations such as splicing and calibration on the acquired images; setting a plurality of anchoring point measuring boxes, and calculating in a corresponding range to obtain anchoring points; generating track point measurement boxes at the initial track point positions, and setting track point coordinate calculation modes corresponding to the track point measurement boxes; obtaining an anchoring point; updating the position of the corresponding anchored track point measurement box according to the obtained coordinates of the anchoring point; calculating coordinates in the range of the track point measuring box in a set mode and updated to the corresponding track points, and obtaining a correction track; directly executing track correction; completing the steps through an initial track acquisition unit, an image acquisition unit, a visual processing unit and a track execution unit of the machining track deformation compensation system, and deviation rectification or positioning of a large complex track can be achieved in a simple mode.

Owner:深圳群宾精密工业有限公司

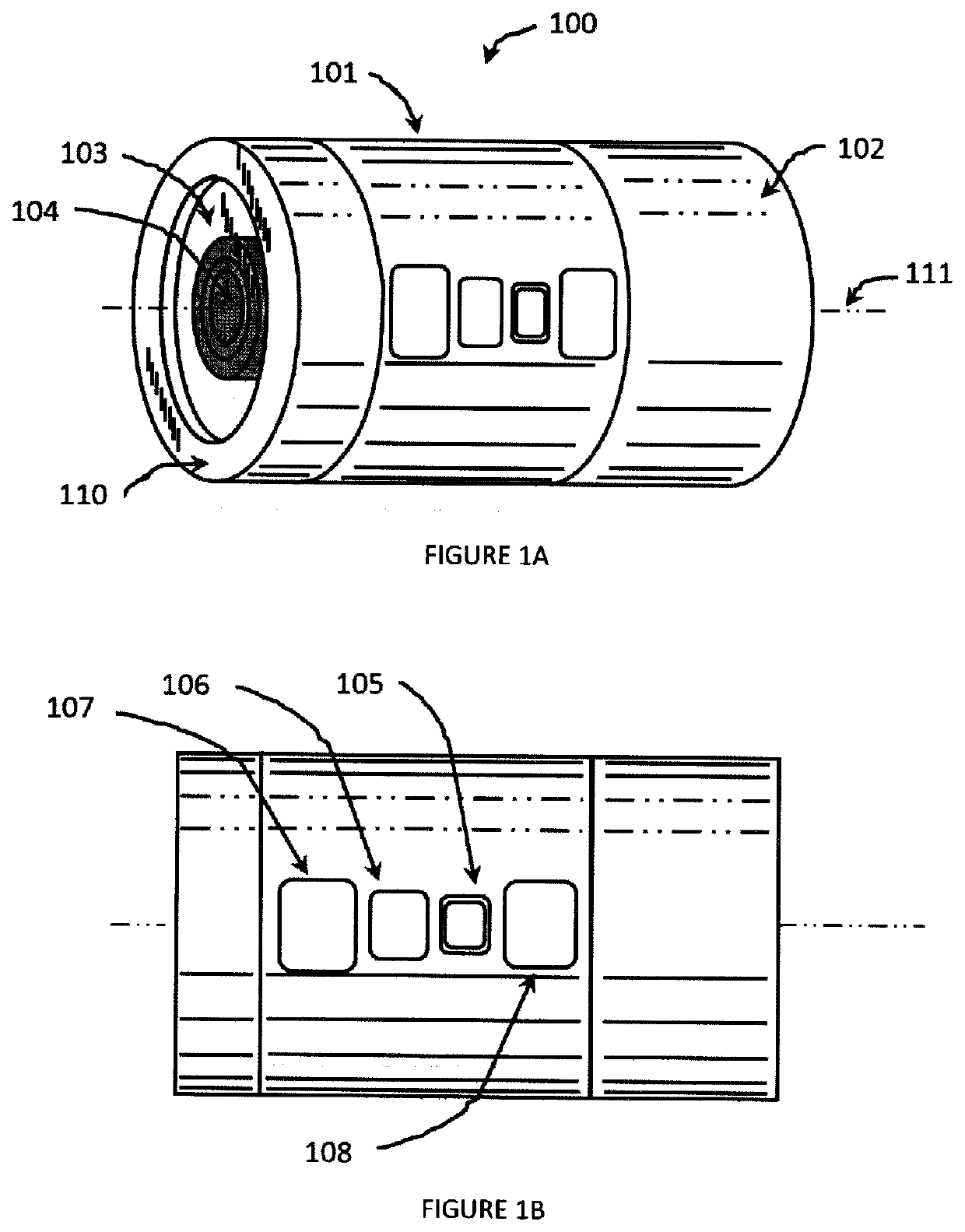

Multifunctional Device For Use in Augmented/Virtual/Mixed Reality, Law Enforcement, Medical, Military, Self Defense, Industrial, and Other Applications

Active | US20200358931A1 | Low fatigue | Reduce wear | Television system details | Color television details | Vision processing | Triage

A variable configuration sensing, emitting, processing, and analysis system for various uses including but not limited to Virtual / Augmented / Mixed / Actual Reality imaging and tracking; Machine Vision; Object Identification and Characterization; First Responder Tracking, Diagnostics, and Triage; Environmental / Condition Monitoring and Assessment; Guidance, Navigation & Control; Communications; Logistics; and Recording. The variable configuration sensor, emitter, processor, and analysis system contains a housing and a mounting component adaptable to a variety of applications. The housing may include one or more sensors and / or emitters, vision processing units, micro-processing units, connectors, and power supplies. The sensors may include but are not limited to electromagnetic and / or ionizing radiation, distance, motion, acceleration, pressure, position, humidity, temperature, wind, sound, toxins, and magnetic. The emitters may include but are not limited to electromagnetic and / or ionizing radiation, sound, and fluids. The device may be ruggedized for use in extreme environments.

Owner:JENKINSON GLENN MICHAEL

System and method for visually tracking persons and imputing demographic and sentiment data

Active | US20210304421A1 | Maximizes overall likelihood value | Avoid duplication | Image enhancement | Image analysis | Customer requirements | Radiology

A visual tracking system for tracking and identifying persons within a monitored location comprises a plurality of cameras and a visual processing unit. Each camera produces a sequence of video frames depicting one or more of the persons. The visual processing unit is adapted to maintain a coherent track identity for each person across the plurality of cameras using a combination of motion data and visual featurization data, and to further determine demographic data and sentiment data using the visual featurization data. The visual tracking system further has a recommendation module adapted to identify a customer need for each person using the sentiment data of the person in addition to context data, and to generate an action recommendation for addressing the customer need. The visual tracking system is operably connected to a customer-oriented device configured to perform a customer-oriented action in accordance with the action recommendation.

Owner:RADIUSAI INC
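
As a rough illustration of how motion data and visual featurization data might be combined to keep a track identity consistent, the sketch below scores each track/detection pair with a weighted sum of a normalised position distance and an appearance (cosine) distance, then assigns pairs greedily. The cost formula, the weights, the dictionary fields, and the function names are all assumptions for illustration; the abstract does not disclose the actual association method.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two feature vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return dot / (na * nb) if na and nb else 0.0


def match_cost(track, detection, motion_weight=0.5, max_dist=100.0):
    """Assumed cost: weighted mix of motion distance and appearance distance."""
    motion = min(math.dist(track["predicted_pos"], detection["pos"]) / max_dist, 1.0)
    appearance = 1.0 - cosine_similarity(track["feature"], detection["feature"])
    return motion_weight * motion + (1.0 - motion_weight) * appearance


def associate(tracks, detections, max_cost=0.5):
    """Greedy assignment: cheapest pairs first, each track and detection used once."""
    pairs = sorted(
        (match_cost(t, d), ti, di)
        for ti, t in enumerate(tracks)
        for di, d in enumerate(detections)
    )
    assigned, used = {}, set()
    for cost, ti, di in pairs:
        if cost > max_cost:
            break  # remaining pairs are even more expensive
        if ti in assigned or di in used:
            continue
        assigned[ti] = di
        used.add(di)
    # Map track identity -> index of the matched detection.
    return {tracks[ti]["id"]: di for ti, di in assigned.items()}


tracks = [
    {"id": "A", "predicted_pos": (0.0, 0.0), "feature": (1.0, 0.0)},
    {"id": "B", "predicted_pos": (50.0, 0.0), "feature": (0.0, 1.0)},
]
detections = [
    {"pos": (52.0, 0.0), "feature": (0.0, 1.0)},
    {"pos": (1.0, 0.0), "feature": (1.0, 0.0)},
]
matches = associate(tracks, detections)
```

A production tracker would more likely use an optimal assignment (for example the Hungarian algorithm) rather than this greedy pass; the greedy version is used here only to keep the sketch dependency-free.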

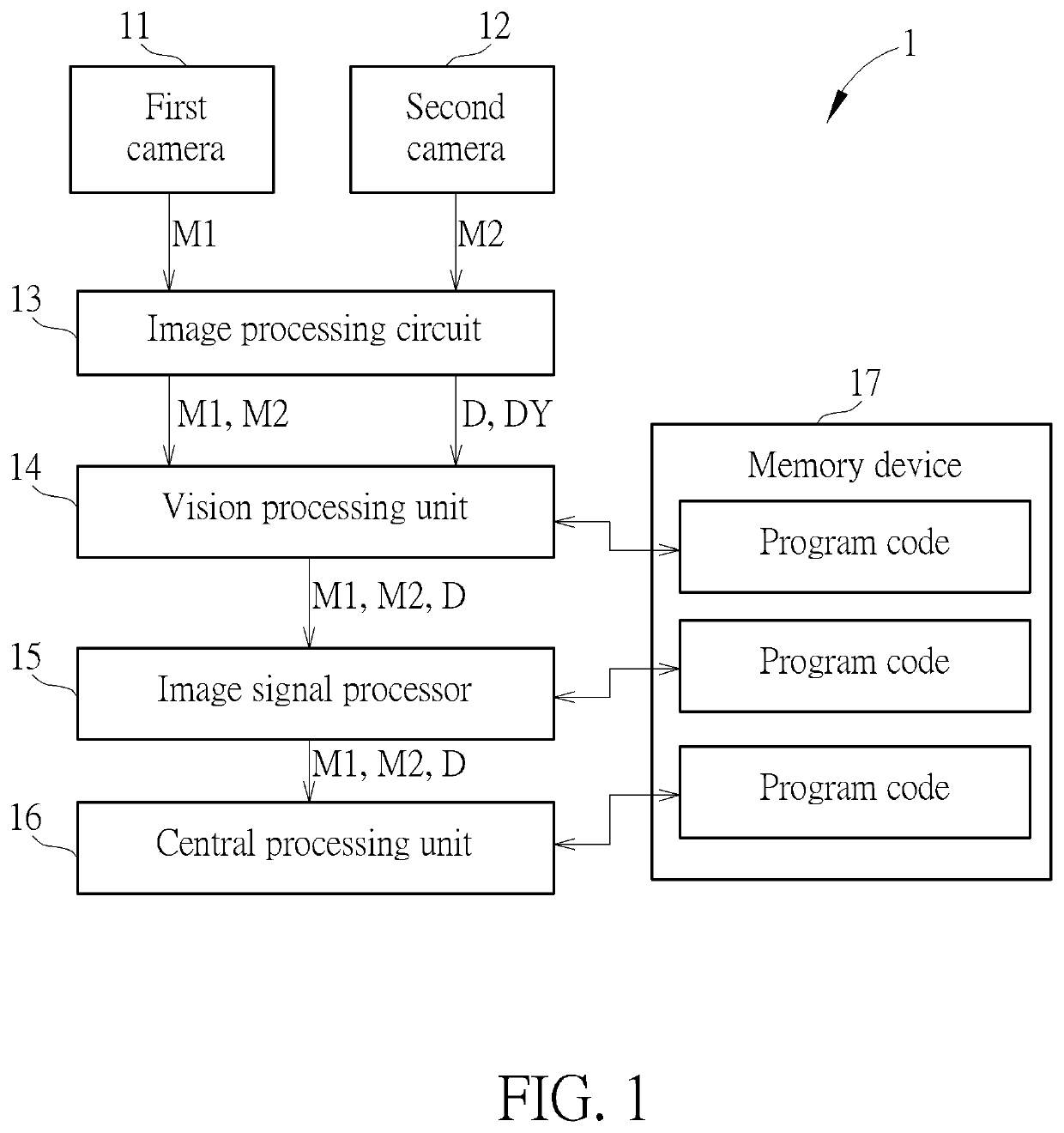

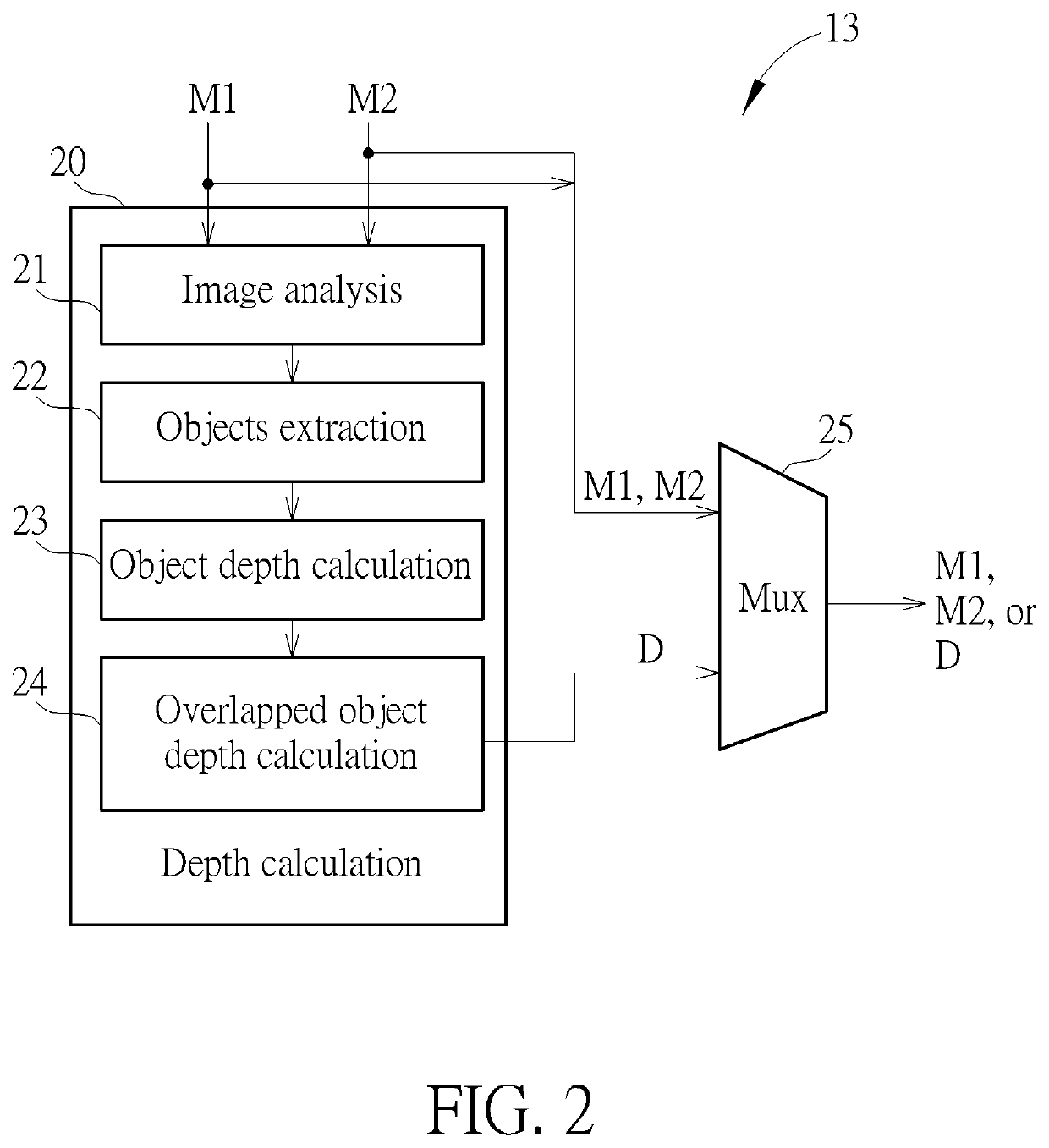

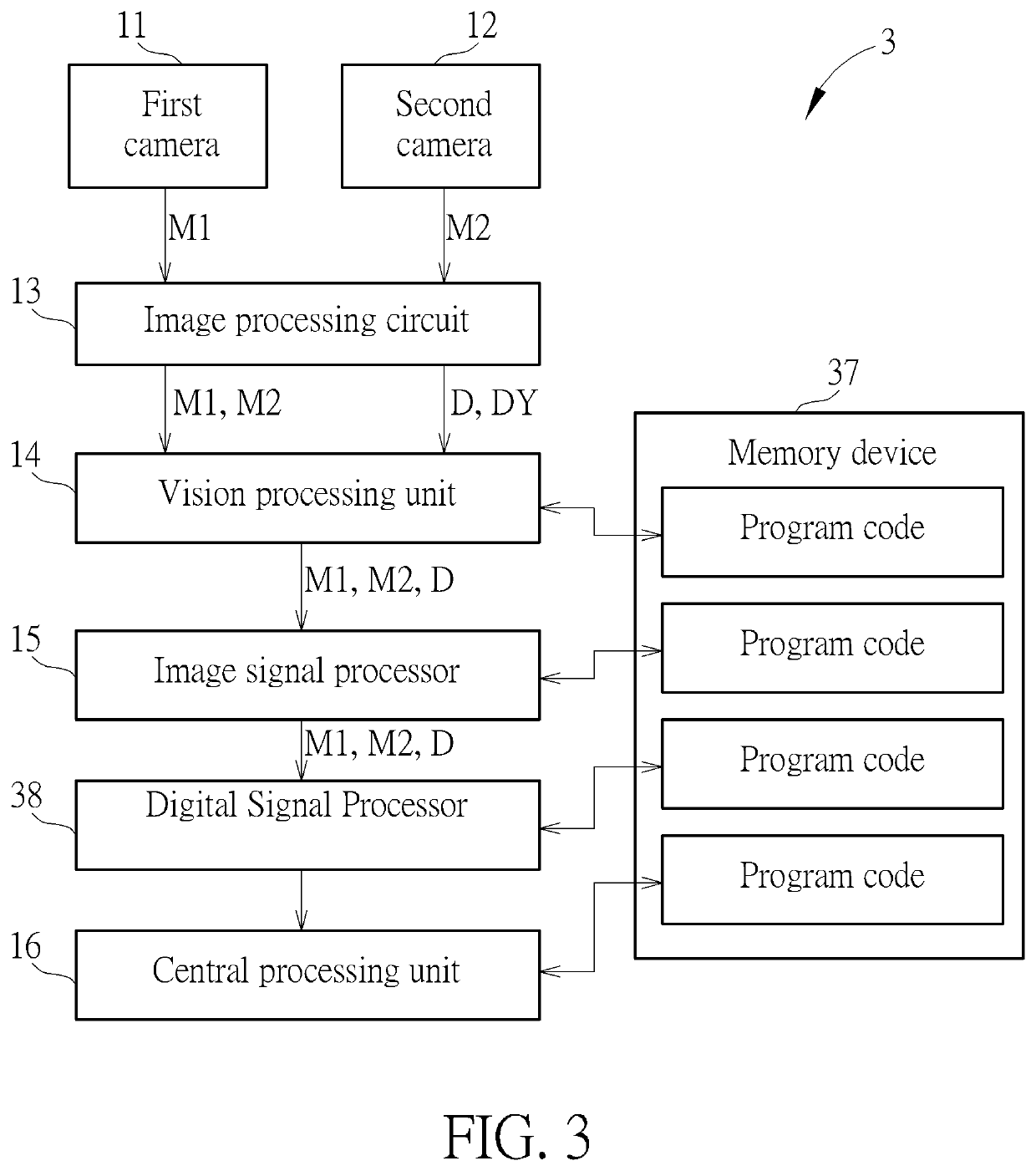

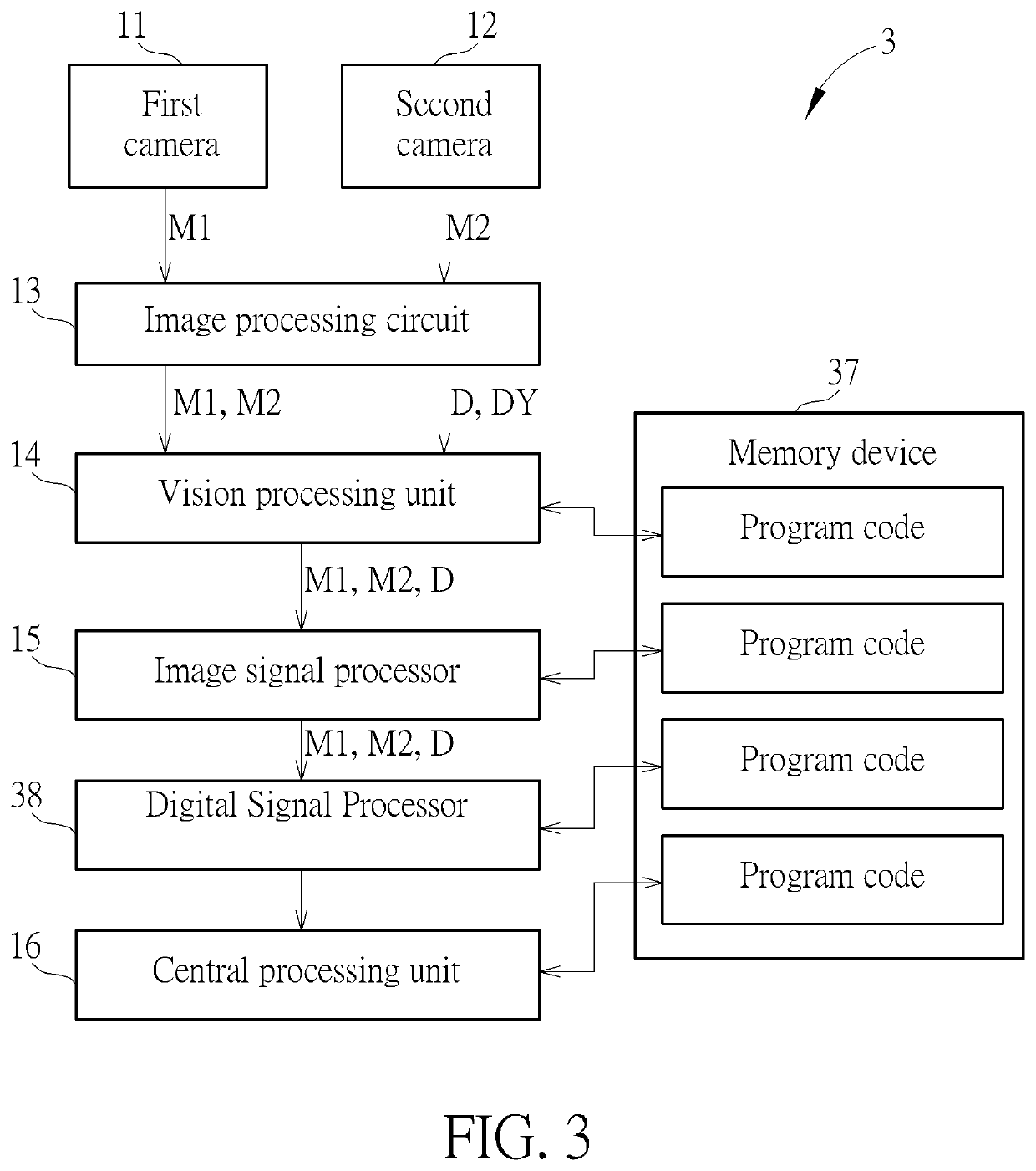

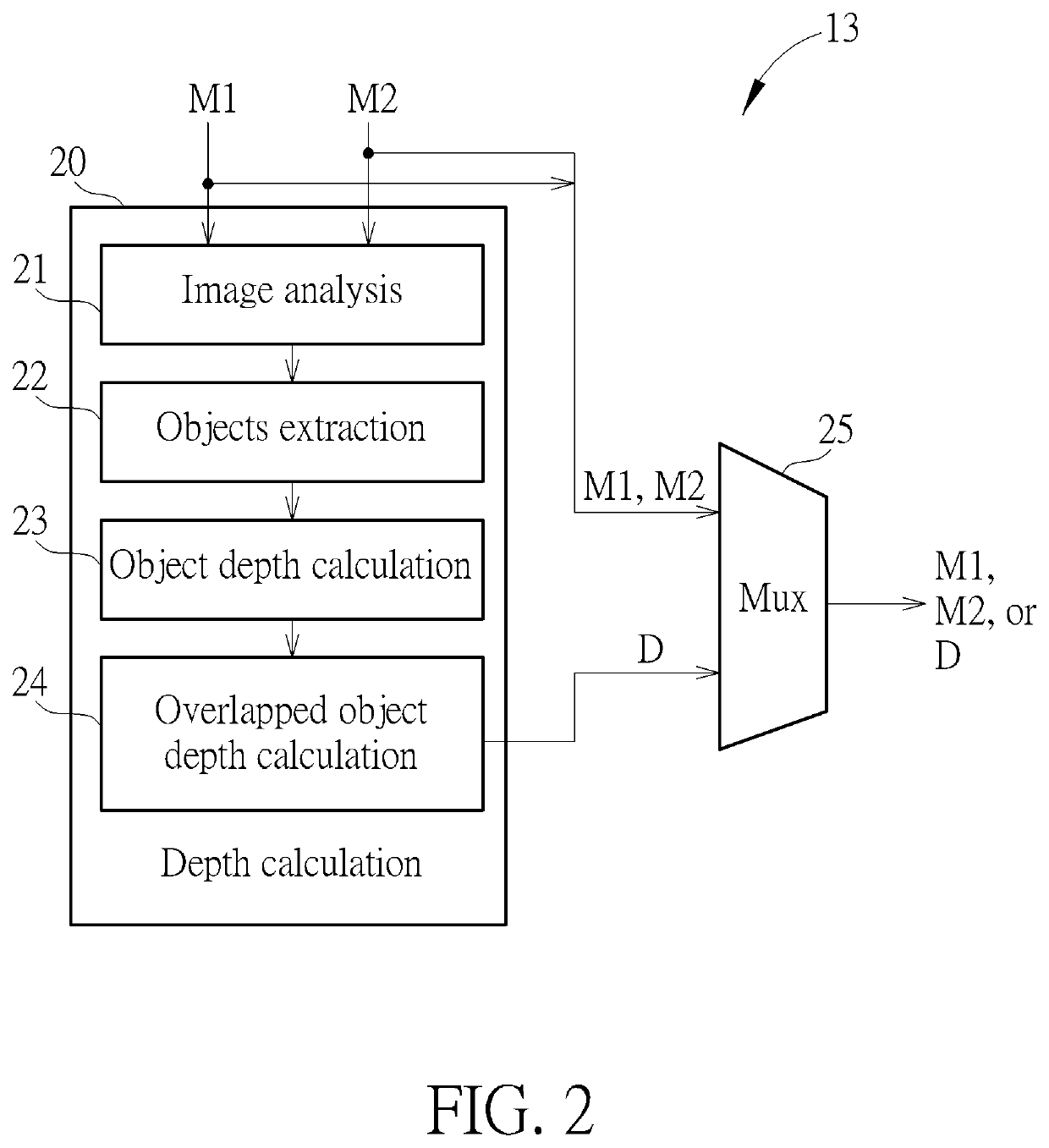

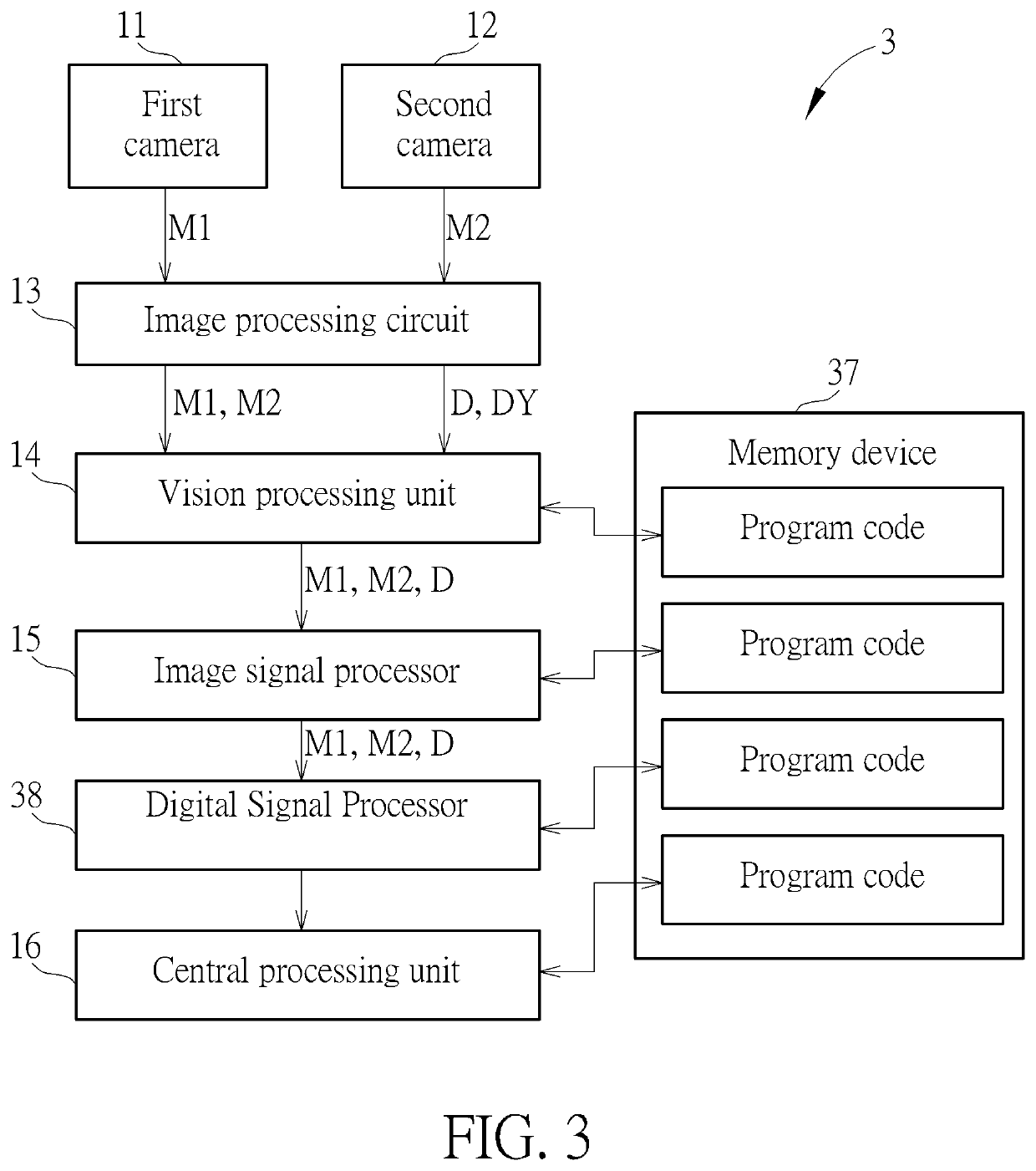

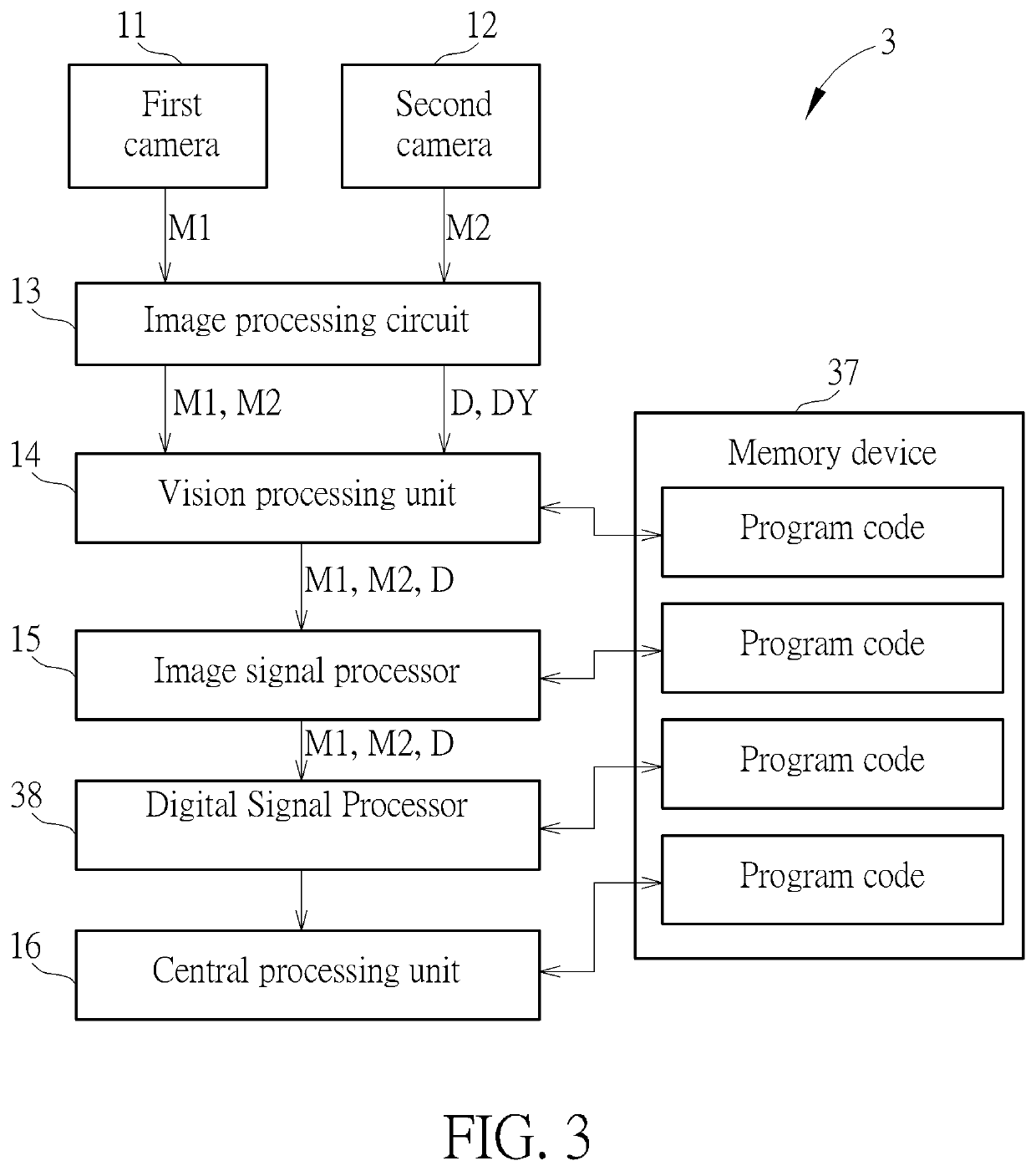

Method, apparatus, and non-transitory computer-readable medium for interactive image processing using depth engine and digital signal processor

Active | US10885671B2 | Reduce the burden on | Image enhancement | Television system details | Imaging processing | Computer graphics (images)

An apparatus for interactive image processing including a first camera, a second camera, an image processing circuit, a vision processing unit, an image signal processor, a central processing unit, and a memory device is disclosed. The present disclosure utilizes the image processing circuit to calculate depth data according to raw images generated by the first and second cameras at the front-end of the interactive image processing system, so as to ease the burden of depth calculation by the digital signal processor in the prior art.

Owner:XRSPACE CO LTD
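
The abstract above does not say how the image processing circuit derives depth from the two camera images. For orientation only, the standard relation for a rectified stereo pair is depth = focal length × baseline / disparity, sketched below. The function name, parameter names, and example values are assumptions, and the patented circuit may work differently.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for a rectified stereo pair (pinhole camera model).

    disparity_px: horizontal pixel shift of a point between the two images
    focal_length_px: focal length expressed in pixels
    baseline_m: distance between the two camera centres in metres
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical numbers: 700 px focal length, 6 cm baseline, 21 px disparity.
depth_m = depth_from_disparity(21.0, 700.0, 0.06)
```

Because depth is inversely proportional to disparity, nearby objects produce large disparities and are resolved more finely than distant ones.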

Method, Apparatus, Medium for Interactive Image Processing Using Depth Engine and Digital Signal Processor

Active | US20200334860A1 | Reduce the burden on | Image enhancement | Television system details | Imaging processing | Computer graphics (images)

An apparatus for interactive image processing including a first camera, a second camera, an image processing circuit, a vision processing unit, an image signal processor, a central processing unit, and a memory device is disclosed. The present disclosure utilizes the image processing circuit to calculate depth data according to raw images generated by the first and second cameras at the front-end of the interactive image processing system, so as to ease the burden of depth calculation by the digital signal processor in the prior art.

Owner:XRSPACE CO LTD

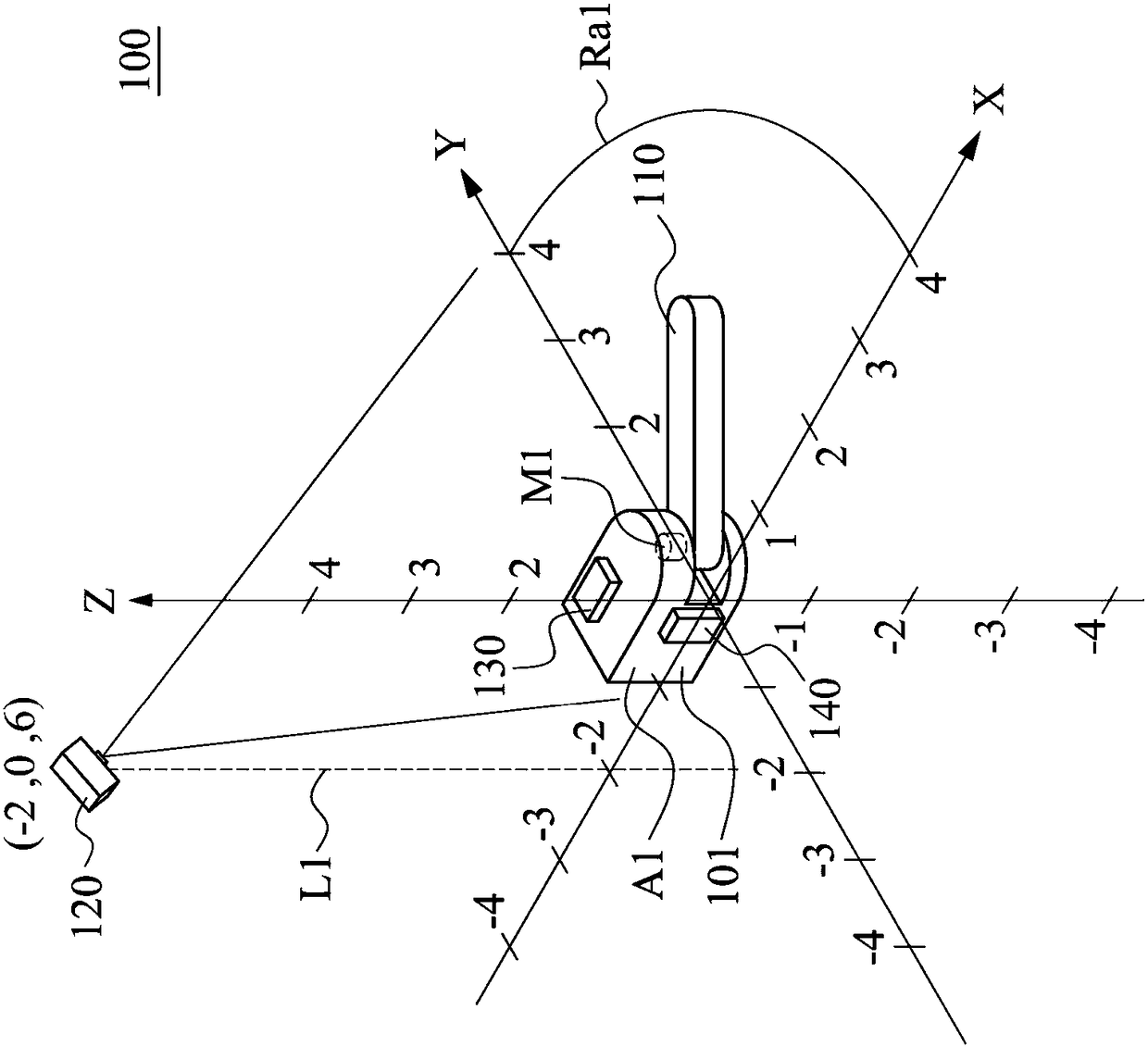

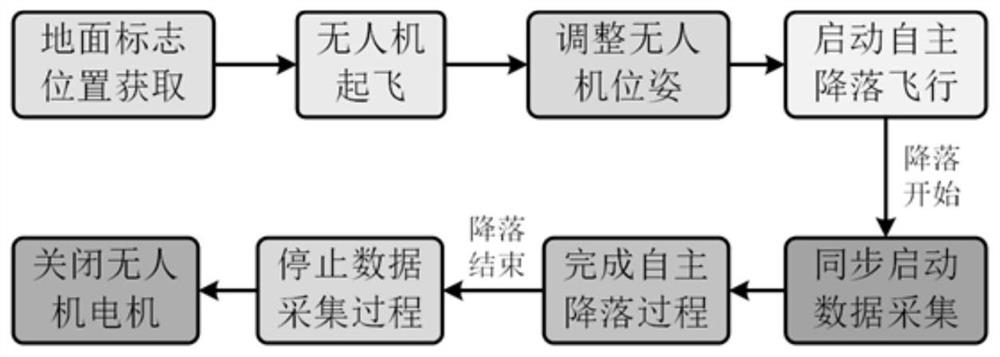

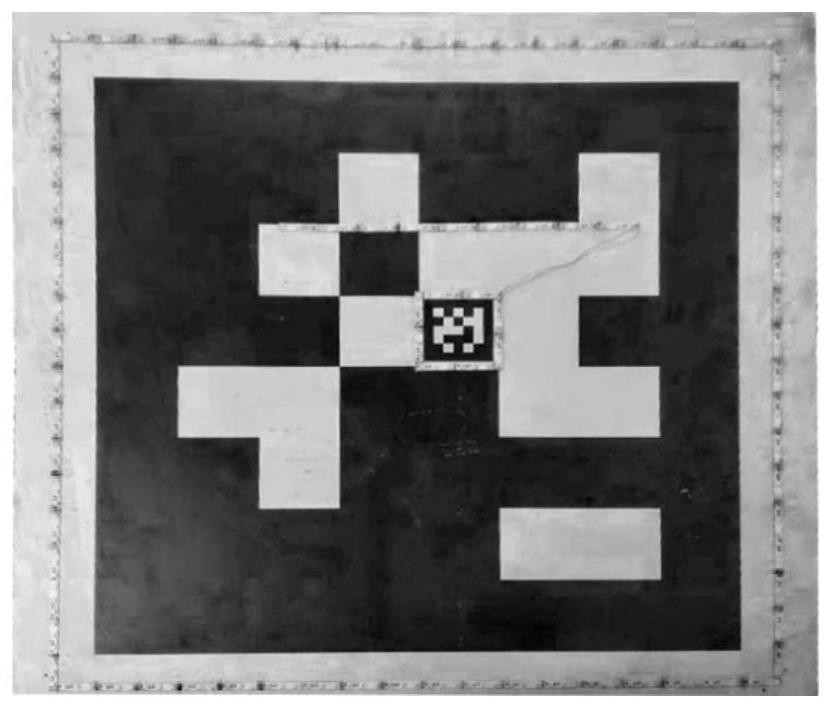

An autonomous take-off and landing system for rotary-wing unmanned aerial vehicles based on a three-layer character-shaped multi-color landing pad

Active | CN103809598B | Guaranteed reliability | Guaranteed measurement accuracy | Position/course control in three dimensions | Wireless image transmission | Engineering

Disclosed is a rotor unmanned aircraft independent take-off and landing system based on a three-layer triangle multi-color landing ground. The system comprises a small rotor unmanned aircraft (SRUA), an onboard sensor, a data processing unit, a flight control system, an onboard camera, a landing ground, a wireless image transmission module, a wireless data transmission module, and a ground monitor station. The onboard sensor comprises an inertial measurement unit, a global positioning system (GPS) receiver, a barometer, an ultrasound device, and the like. The data processing unit integrates the sensor data. The flight control system performs route planning to achieve high-accuracy control of the SRUA. The onboard camera collects images of the landing ground, which is a specially designed landing point for the unmanned aircraft. The wireless image transmission module transmits the images to the ground station, and the wireless data transmission module carries data and instructions between the unmanned aircraft and the ground station. The ground station is composed of a visual processing unit and a display terminal. The system guarantees the reliability of SRUA navigation messages, increases the control accuracy of the SRUA, is low in cost and convenient to apply, and has significant engineering value.

Owner:BEIHANG UNIV

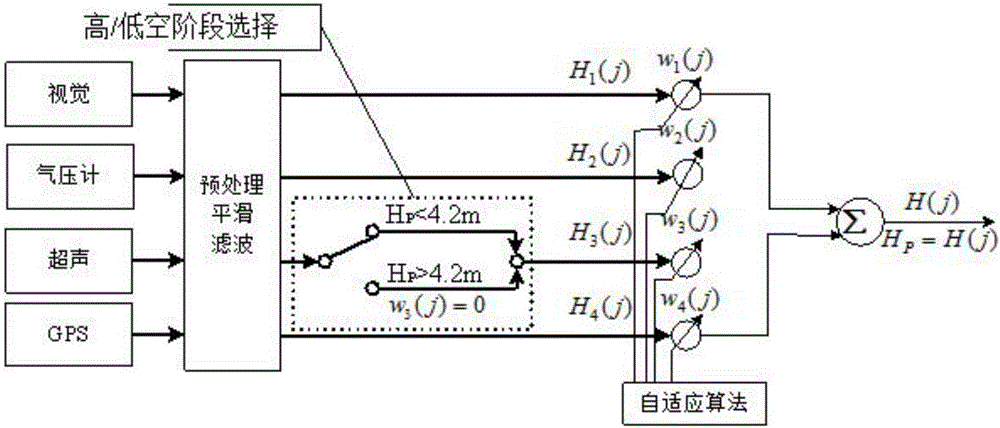

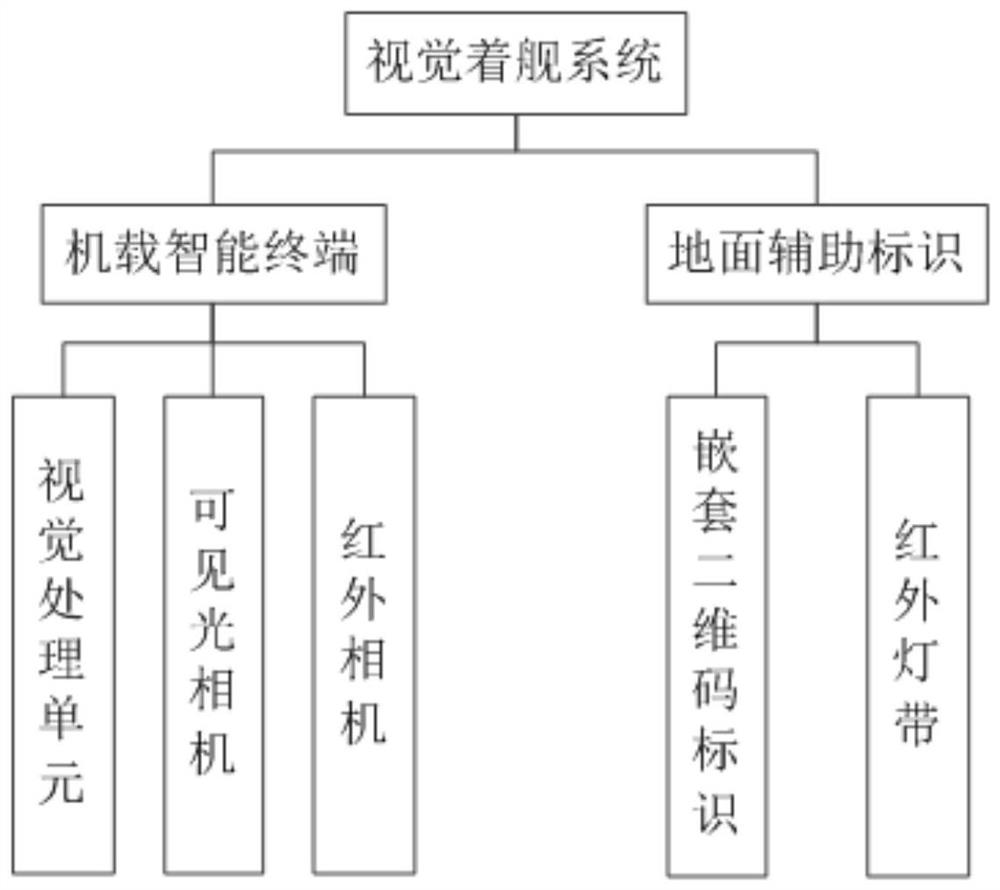

Visual carrier landing guiding method and system for unmanned rotorcraft

Pending | CN114115345A | Avoid influence | Solve the problem of day and night use | Position/course control in three dimensions | Vision processing unit | Visual perception

The invention belongs to the technical field of aerospace, and particularly relates to a visual carrier landing guiding method and system for rotor unmanned aerial vehicles. The system comprises an airborne intelligent terminal and a ground auxiliary identifier. The airborne intelligent terminal comprises a visual processing unit, a visible light camera, and an infrared camera. The visible light camera or the infrared camera obtains image information of the ground auxiliary identifier in real time and sends it to the visual processing unit. The visual processing unit compares the received image information with a target image library held in the visual processing unit, obtains the speed quantity required for landing the unmanned aerial vehicle at the current image acquisition moment, and feeds that speed quantity back to the flight control system. The flight control system judges whether the current speed of the unmanned aerial vehicle meets the speed quantity required for landing. Based on image-based visual servo control, the invention realizes autonomous landing of the unmanned aerial vehicle under supervisory control.

Owner:CHINA HELICOPTER RES & DEV INST
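
The loop described in the landing guidance abstract above (match the current image against a target library, look up the required speed, and let the flight controller check the current speed against it) can be sketched as follows. The library layout, the use of a short numeric descriptor per image, the nearest-neighbour match, and the tolerance value are all hypothetical; the abstract does not specify how images are compared or how the speed check is performed.

```python
import math


def required_velocity(descriptor, library):
    """Return the velocity stored with the library entry closest to the descriptor.

    library: list of (descriptor, velocity) pairs, where velocity is (vx, vy, vz).
    """
    _, velocity = min(library, key=lambda entry: math.dist(entry[0], descriptor))
    return velocity


def velocity_check(current, required, tolerance=0.1):
    """Return (meets_requirement, per-axis correction) for the flight controller."""
    correction = tuple(r - c for r, c in zip(required, current))
    error_norm = math.sqrt(sum(e * e for e in correction))
    return error_norm <= tolerance, correction


# Hypothetical two-entry library: image descriptor -> required velocity in m/s.
library = [
    ((0.0, 0.0), (0.0, 0.0, -0.2)),
    ((1.0, 0.0), (0.5, 0.0, -0.5)),
]
target = required_velocity((0.9, 0.1), library)
ok, correction = velocity_check((0.5, 0.0, -0.45), target)
```

When the check fails, the returned correction gives the flight controller a per-axis velocity change to apply before the next image is processed.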

Interactive image processing system using infrared cameras

Active | US11039118B2 | Quality improvement | Accuracy efficiency | Image enhancement | Television system details | Imaging processing | Computer graphics (images)

An interactive image processing system including a first infrared camera, a second infrared camera, an image processing circuit, a vision processing unit, an image signal processor, a central processing unit, and a memory device is disclosed. The present disclosure calculates depth data according to infrared images generated by the first and second infrared cameras to improve depth quality.

Owner:XRSPACE CO LTD

© 2025 PatSnap. All rights reserved.