76 results about how to "Improve memory usage" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

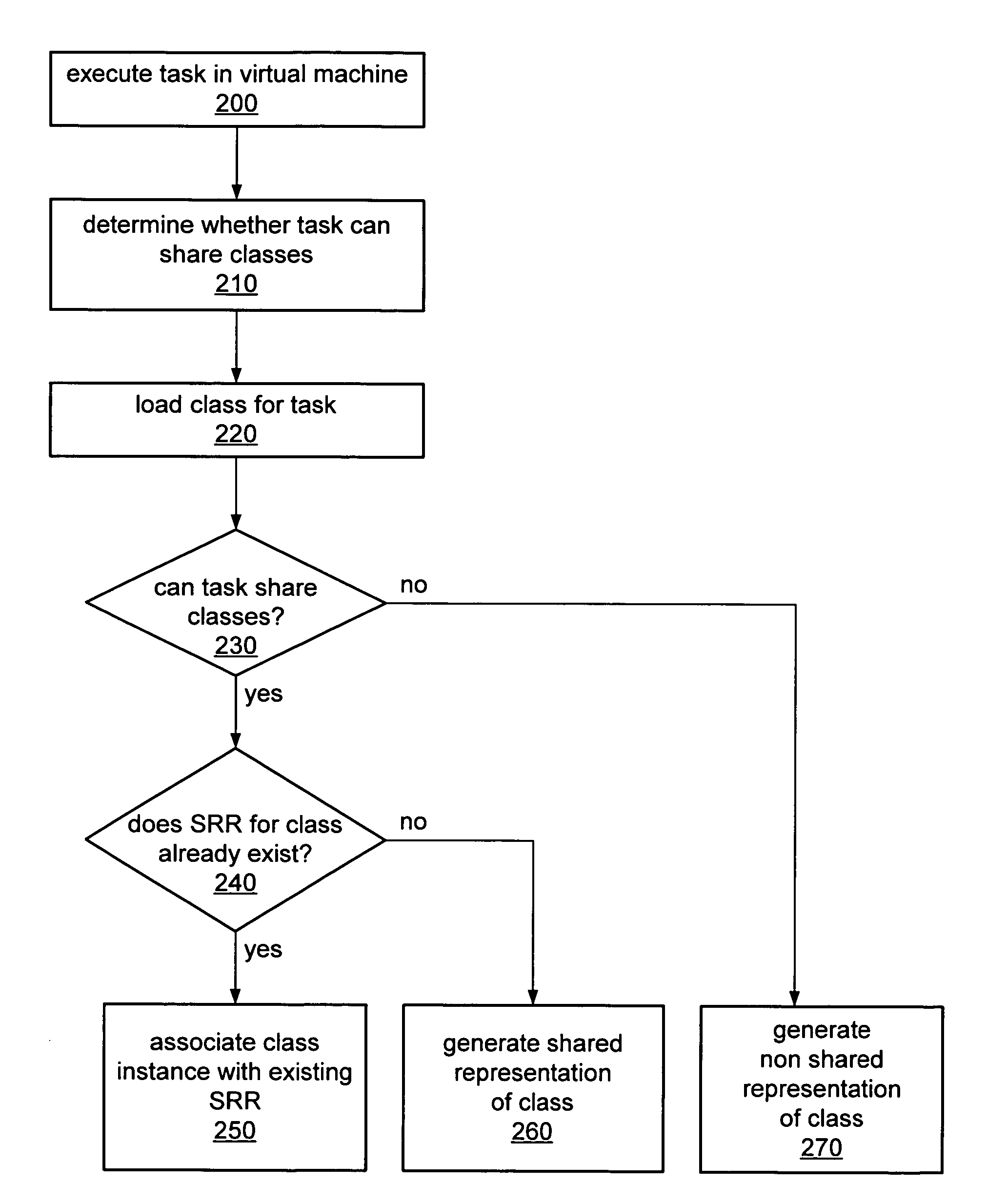

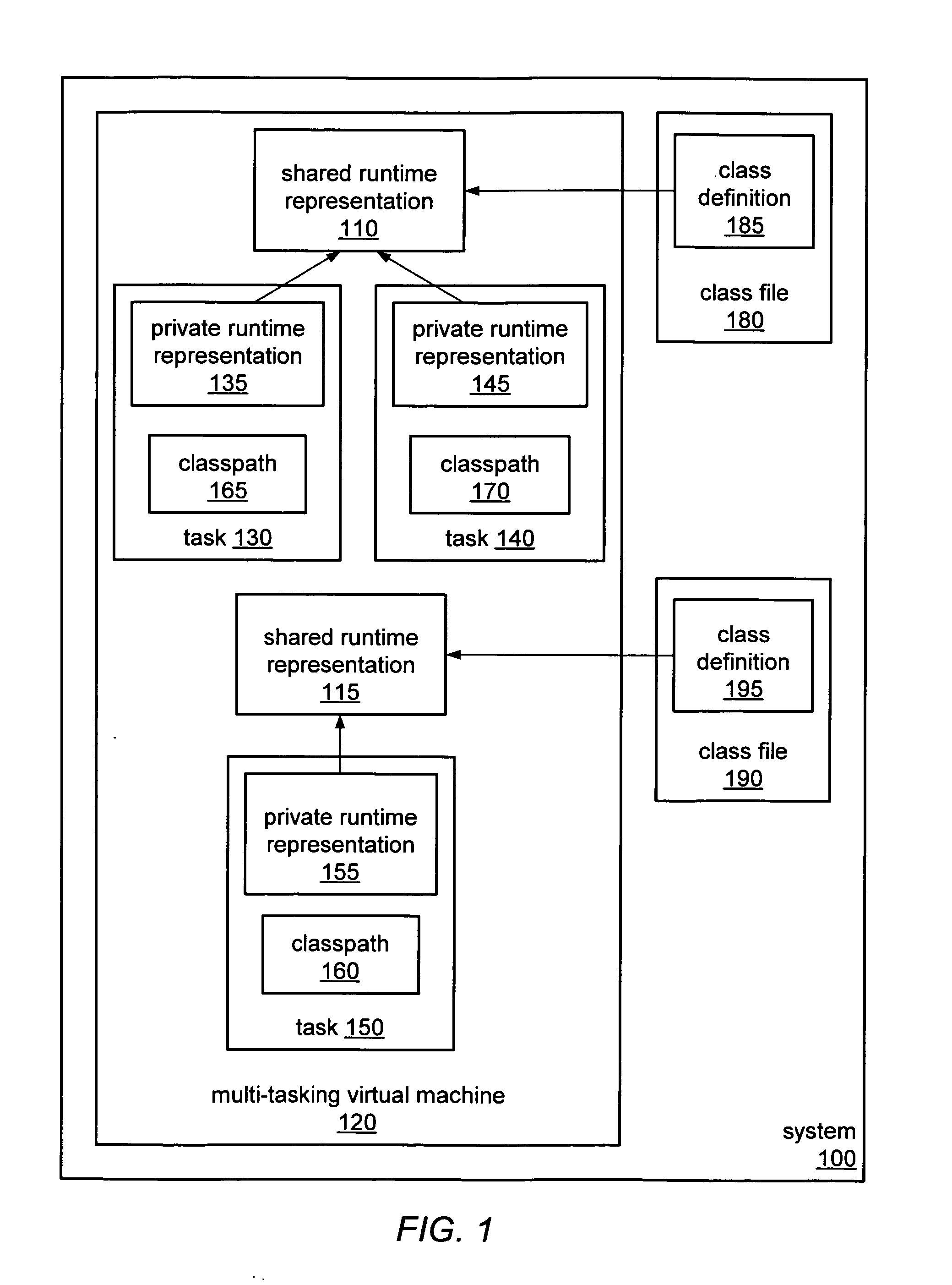

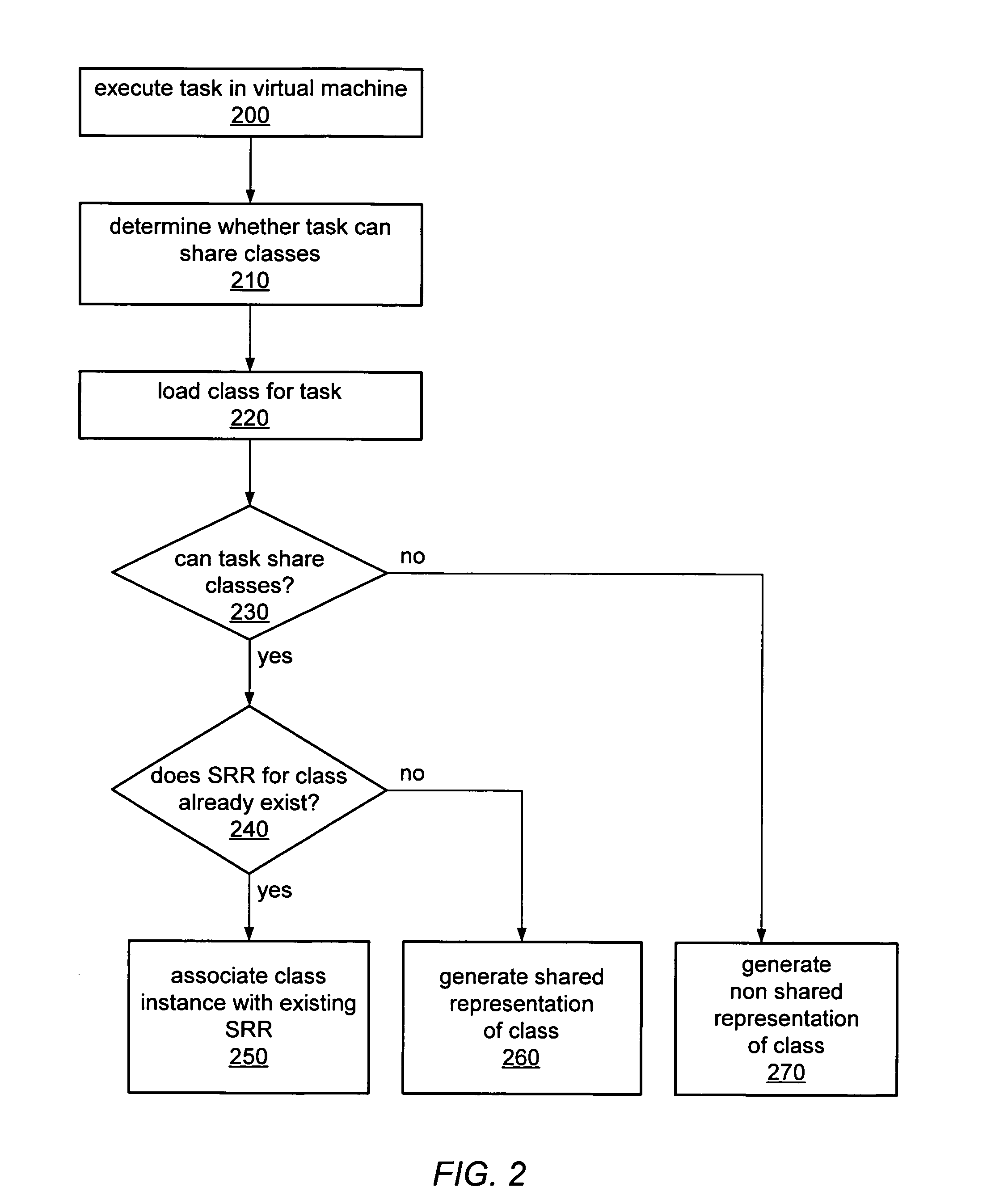

Supporting per-program classpaths with class sharing in a multi-tasking virtual machine

Active | US20070245331A1 | Improve memory usage; Improve performance; Software simulation/interpretation/emulation; Memory systems; Virtual machine

System and method for supporting per-program classpath and class sharing in a multi-tasking virtual machine. A virtual machine may allow each program to specify its classpath independently of other programs' classpaths. Tasks that specify identical classpaths for their respective class loaders may share the runtime representation of classes. A multi-tasking virtual machine may generate and compare canonical forms of classpaths to determine which programs may share classes with each other. The runtime representation of a class may be split between shared and private portions of the runtime representation. A shared runtime representation may be associated with multiple private runtime representations. In one embodiment, unique class loader keys and a system dictionary may be used to associate tasks, class loaders and the shared representations of classes.

Owner:ORACLE INT CORP
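The classpath-canonicalization and class-sharing idea in US20070245331A1 can be illustrated with a small Python sketch. It assumes a canonical form of "normalized absolute entries, in order, later duplicates dropped" and models the system dictionary as a plain dict keyed by a per-canonical-classpath loader key; every name here (`canonical_classpath`, `ClassSharingRegistry`, the `statics` field) is hypothetical and not taken from the patent or from any JVM.

```python
import os


def canonical_classpath(classpath: str, sep: str = os.pathsep) -> tuple:
    """Hypothetical canonical form: normalized absolute entries, in order,
    with later duplicates dropped (a later duplicate can never win a lookup)."""
    entries = []
    for entry in classpath.split(sep):
        if not entry:
            continue
        norm = os.path.normpath(os.path.abspath(entry))
        if norm not in entries:
            entries.append(norm)
    return tuple(entries)


class ClassSharingRegistry:
    """Toy system dictionary keyed by (class loader key, class name)."""

    def __init__(self):
        self._loader_keys = {}   # canonical classpath -> unique loader key
        self._shared = {}        # (loader key, class name) -> shared runtime part
        self._private = {}       # (task id, loader key, class name) -> private part

    def loader_key(self, classpath: str) -> int:
        canon = canonical_classpath(classpath)
        return self._loader_keys.setdefault(canon, len(self._loader_keys))

    def shared_class(self, classpath: str, name: str) -> dict:
        key = (self.loader_key(classpath), name)
        # Tasks whose classpaths canonicalize identically get the same object.
        return self._shared.setdefault(key, {"name": name, "loader_key": key[0]})

    def private_class(self, task_id: int, classpath: str, name: str) -> dict:
        shared = self.shared_class(classpath, name)
        key = (task_id, shared["loader_key"], name)
        # Per-task state (e.g. static fields) points at the shared part.
        return self._private.setdefault(key, {"shared": shared, "statics": {}})
```

Order is preserved rather than sorted because classpath order decides which entry wins a lookup, so two classpaths that differ only in order are deliberately treated as not shareable.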

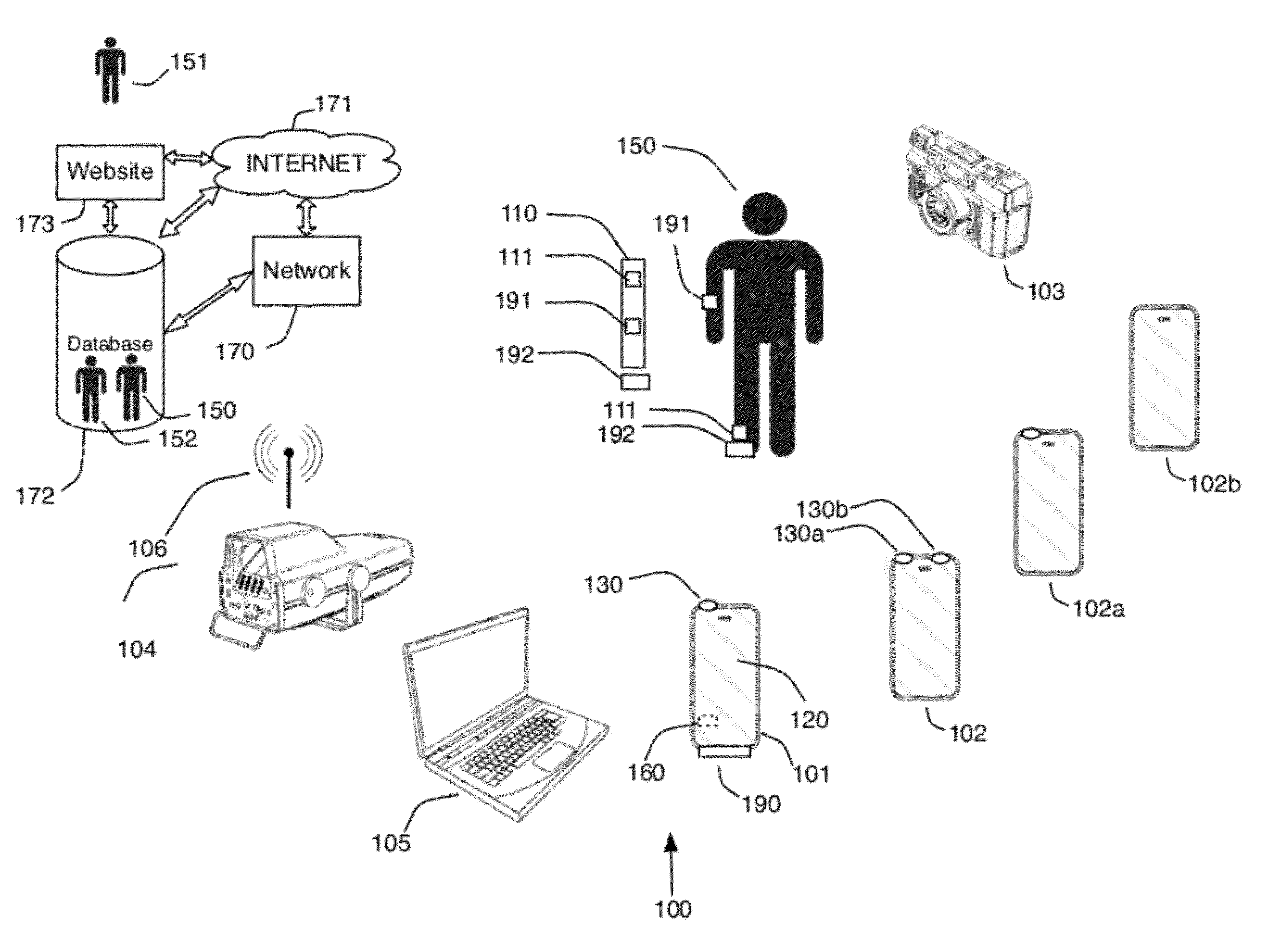

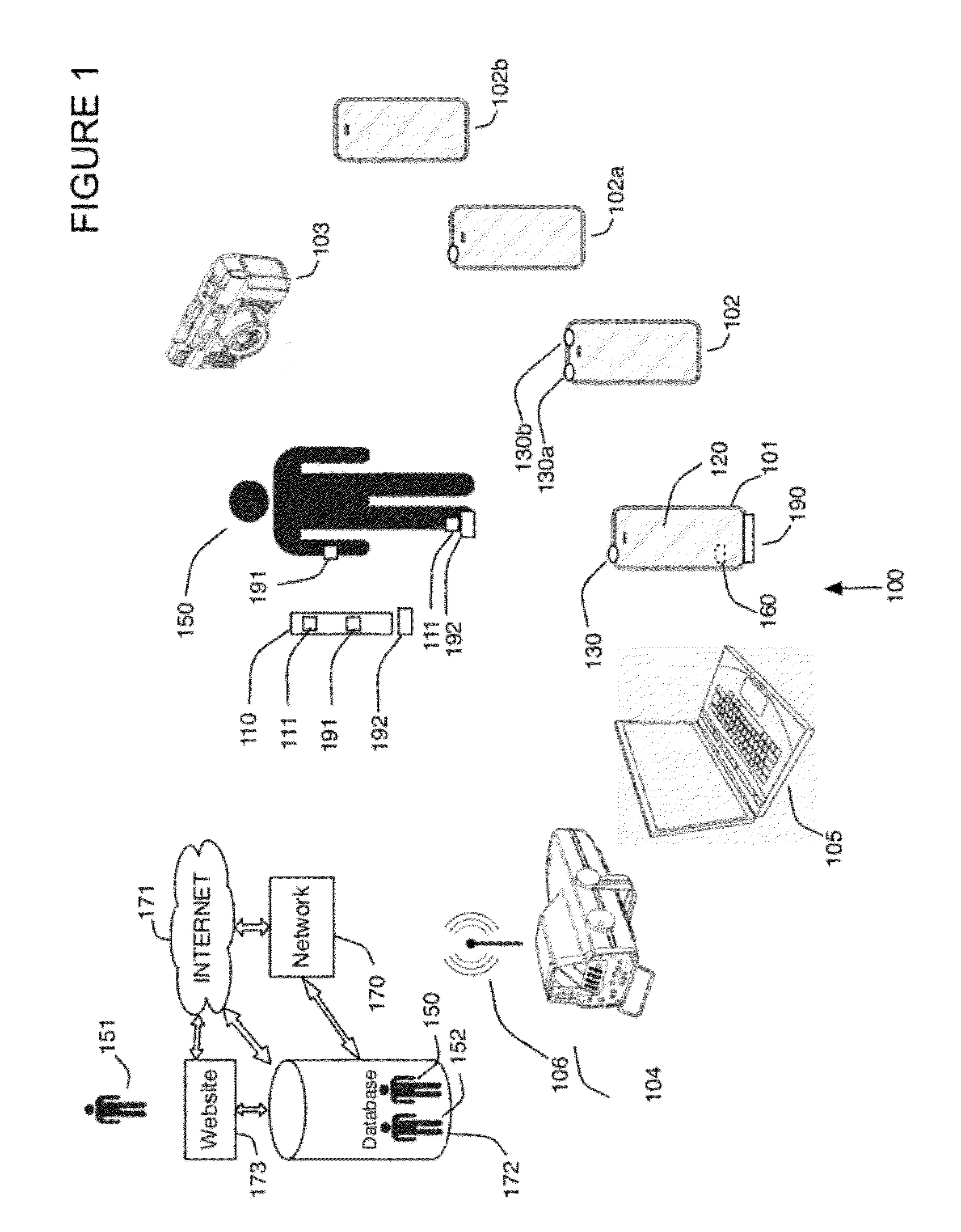

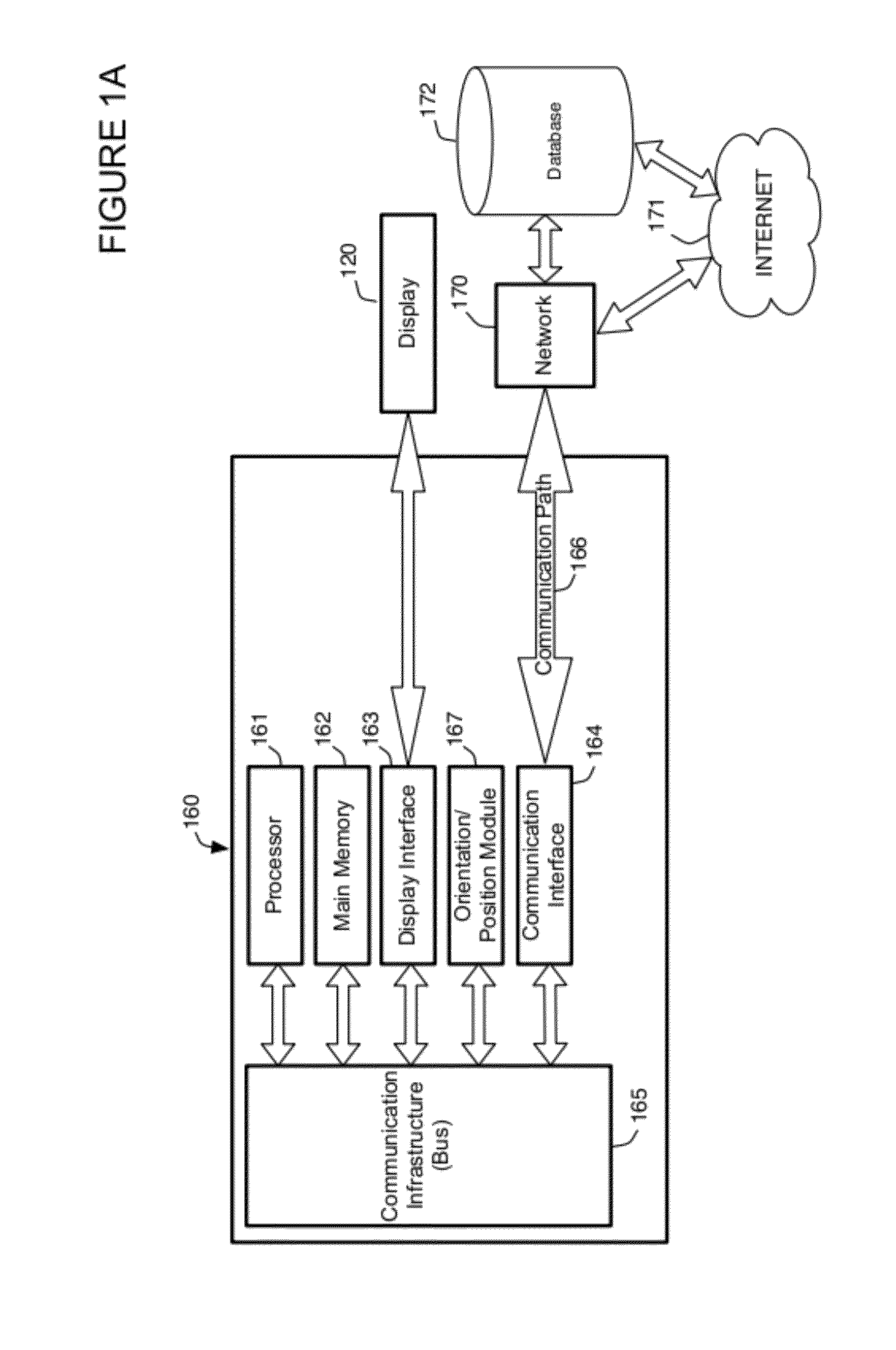

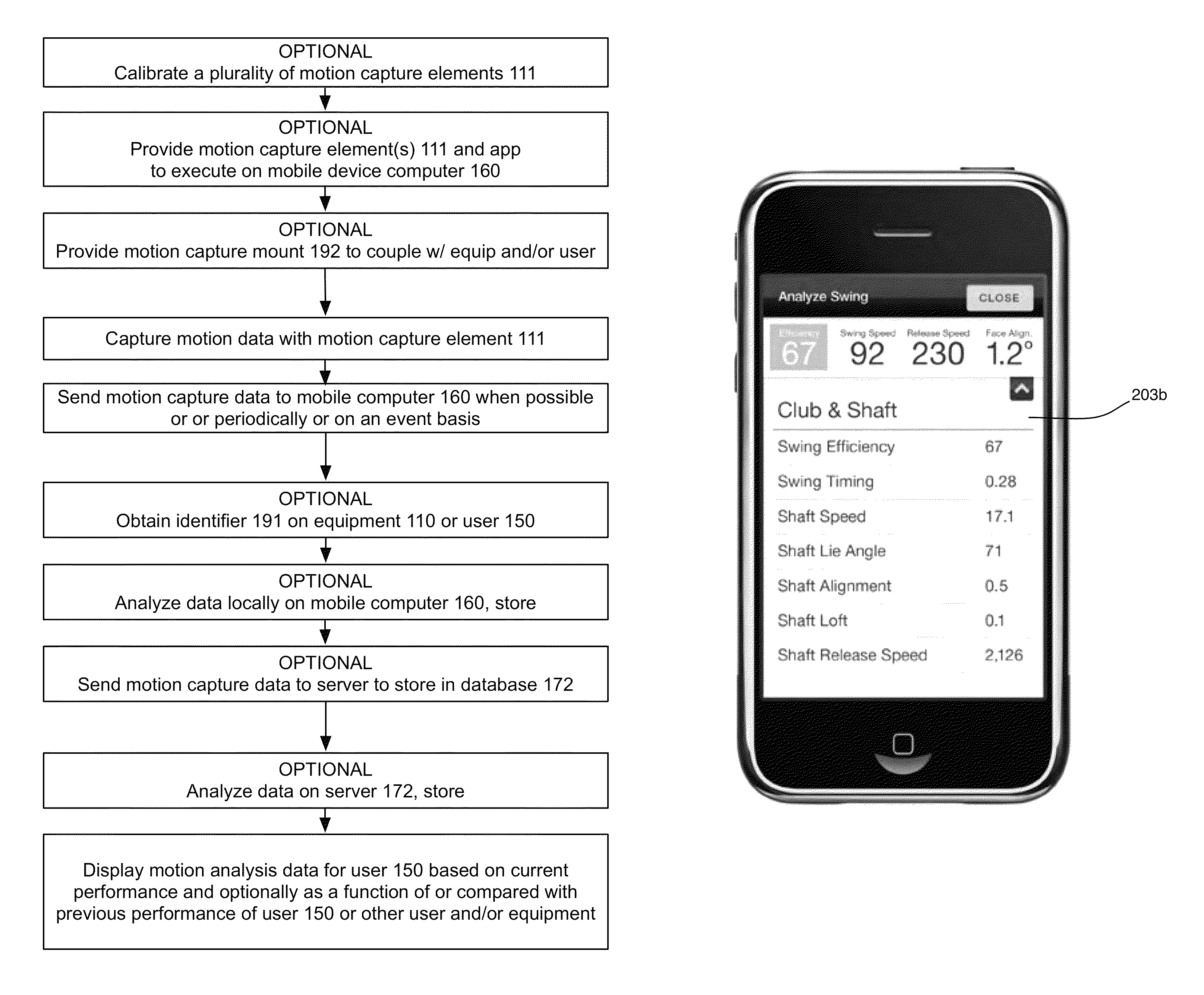

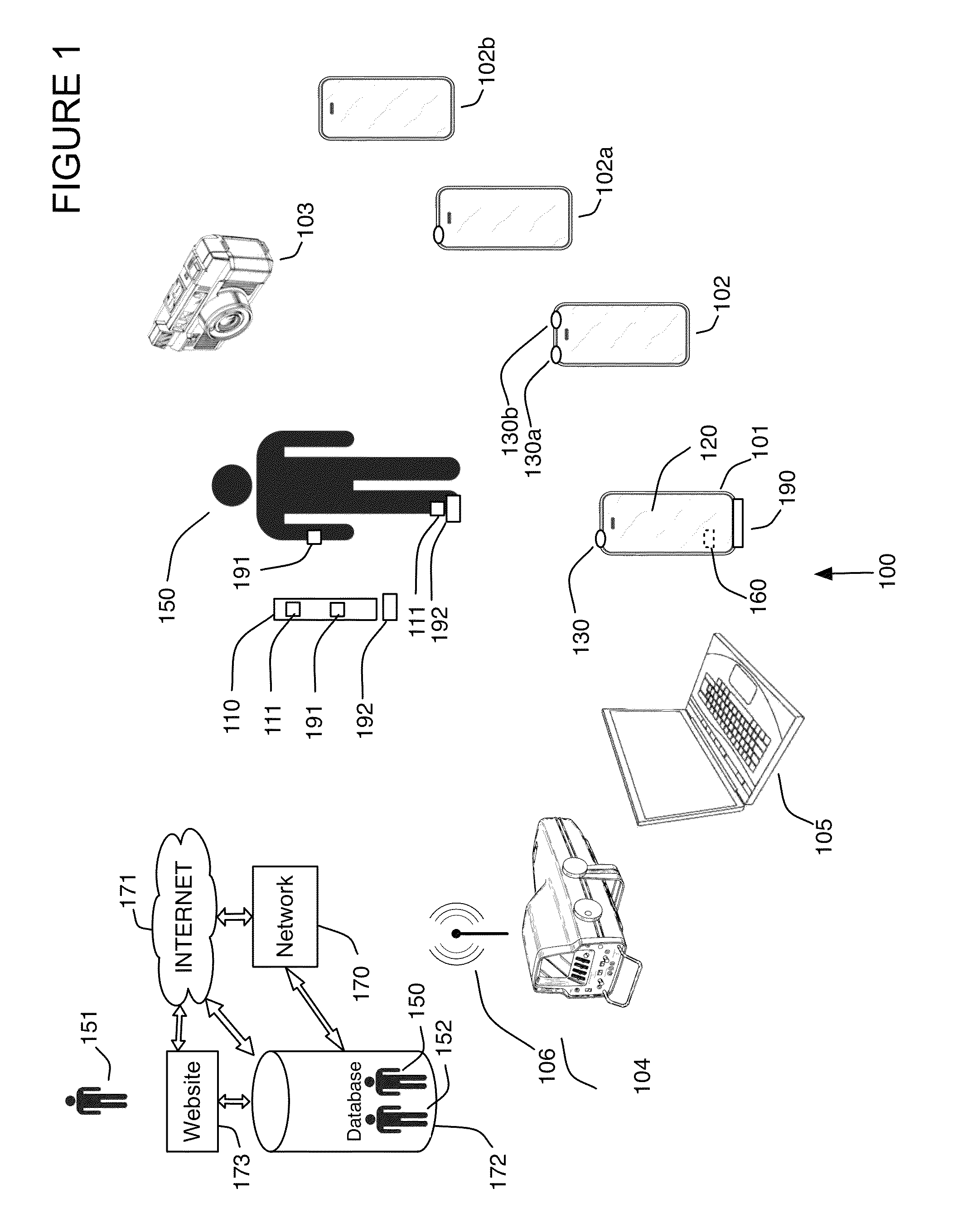

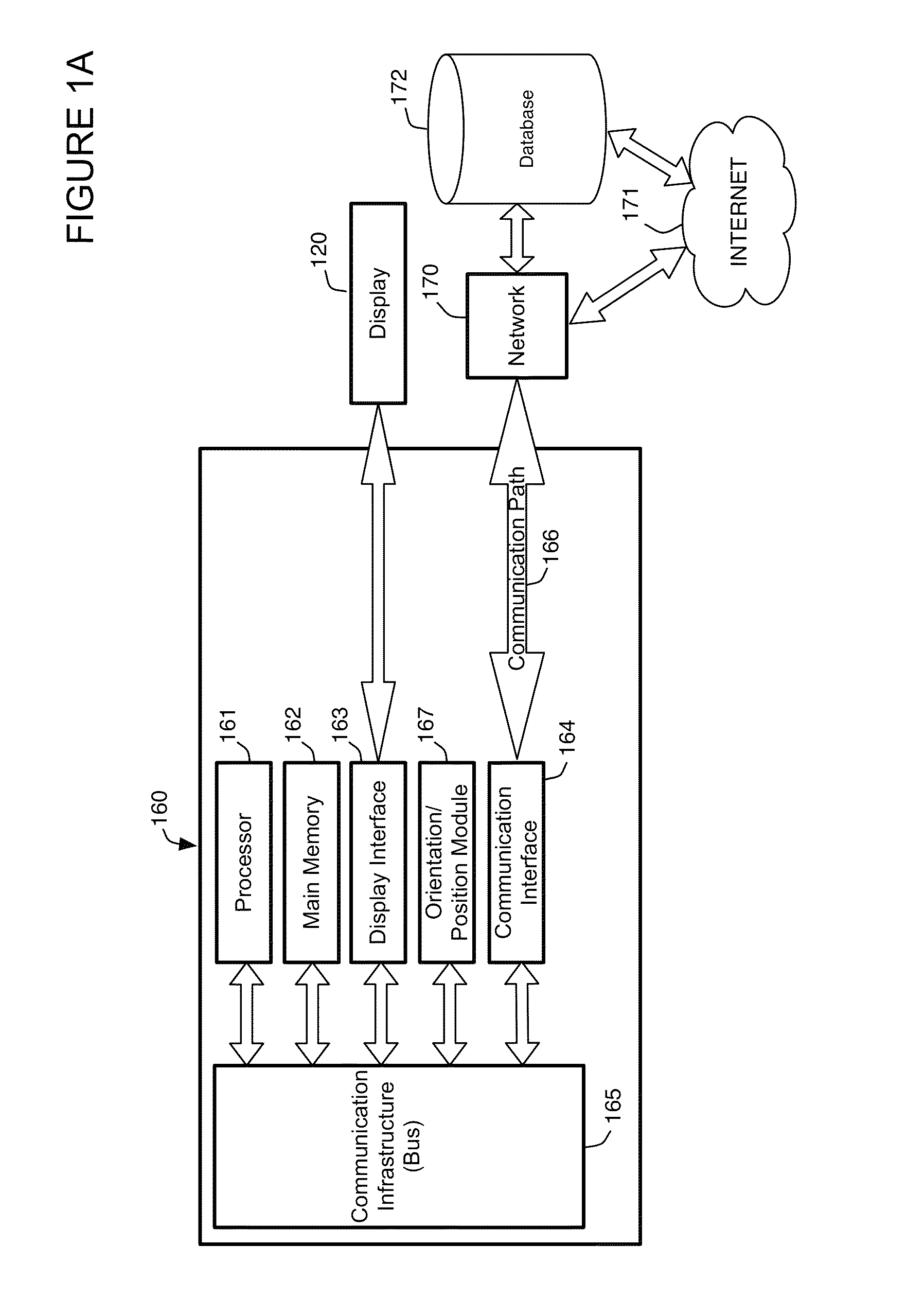

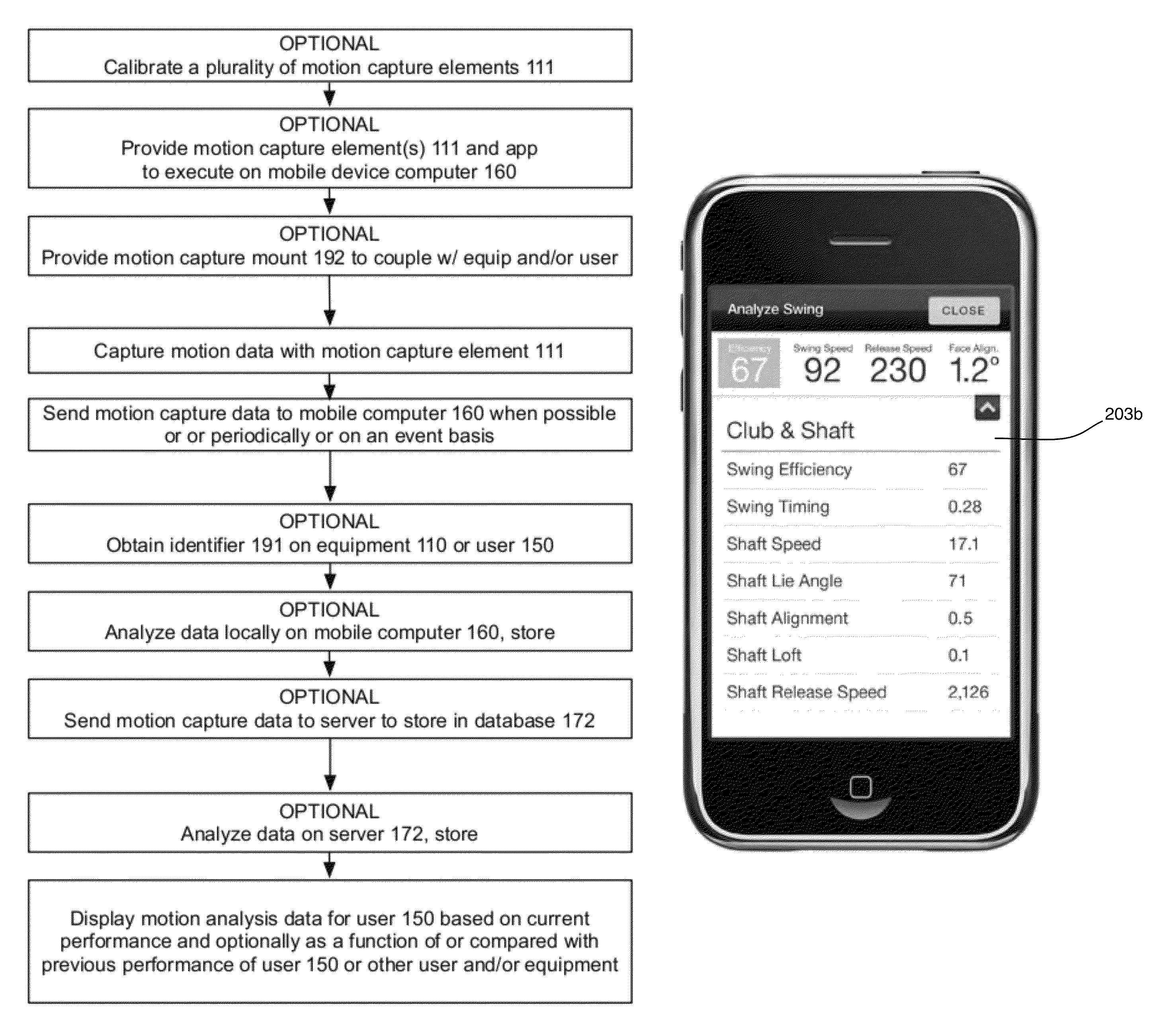

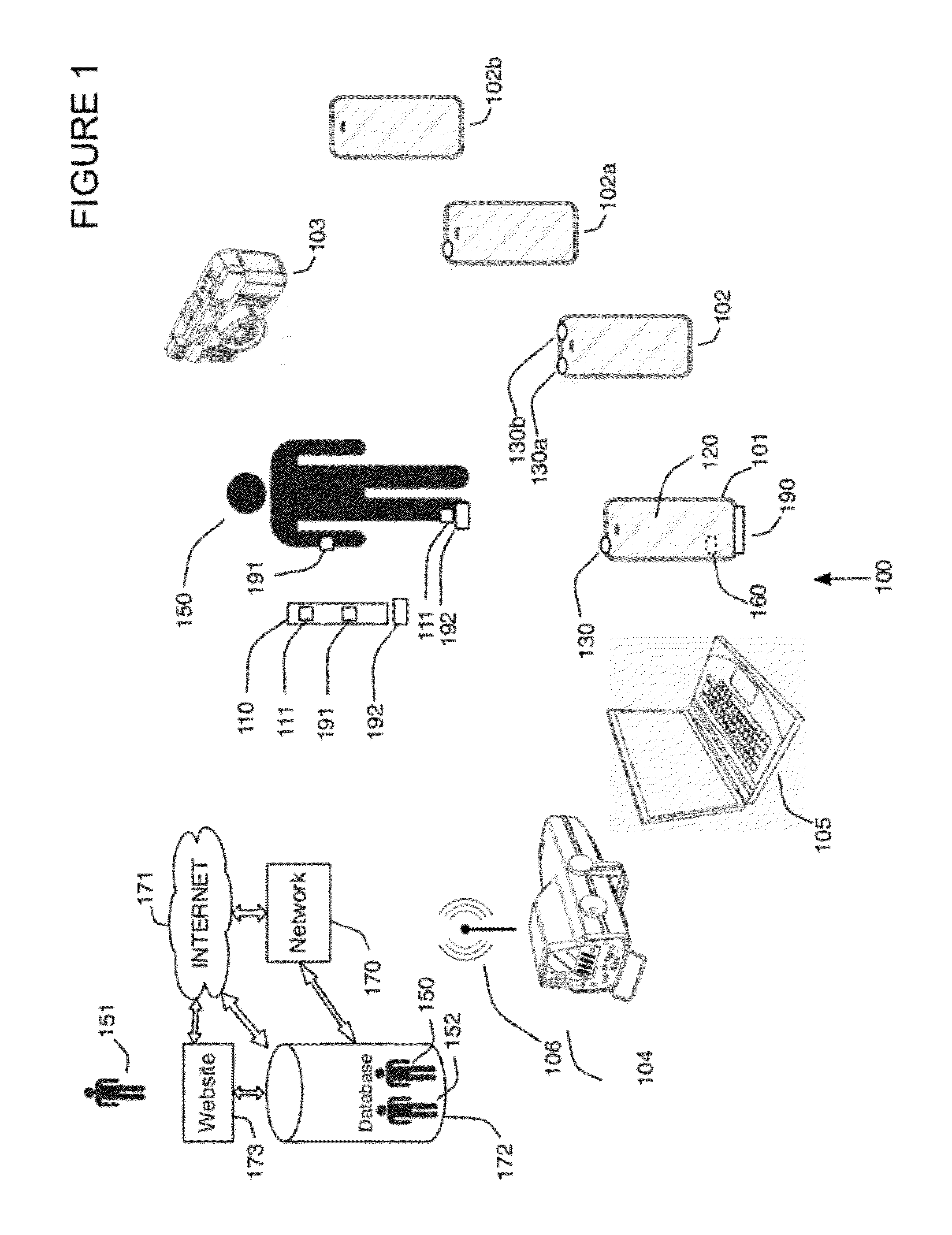

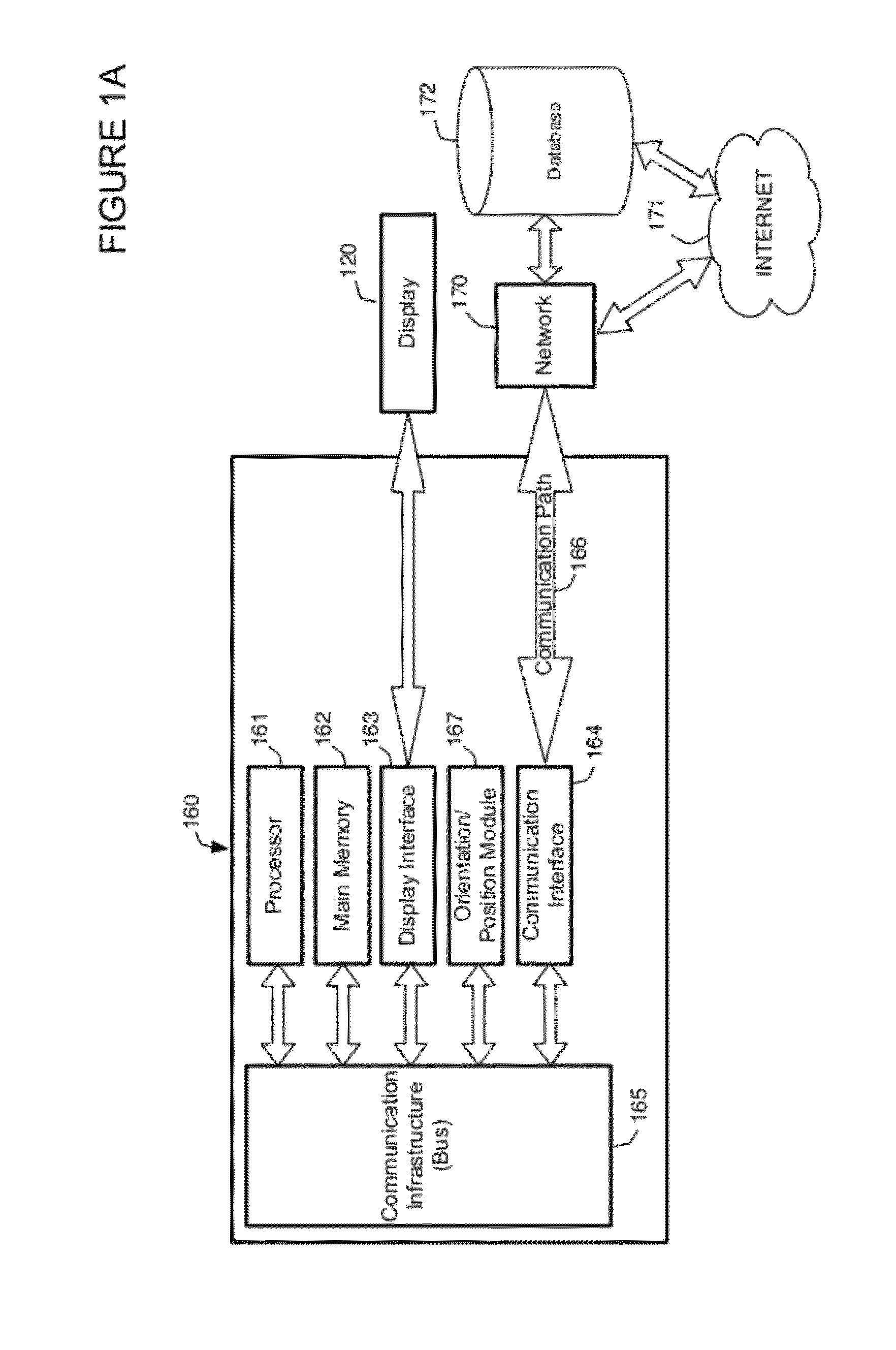

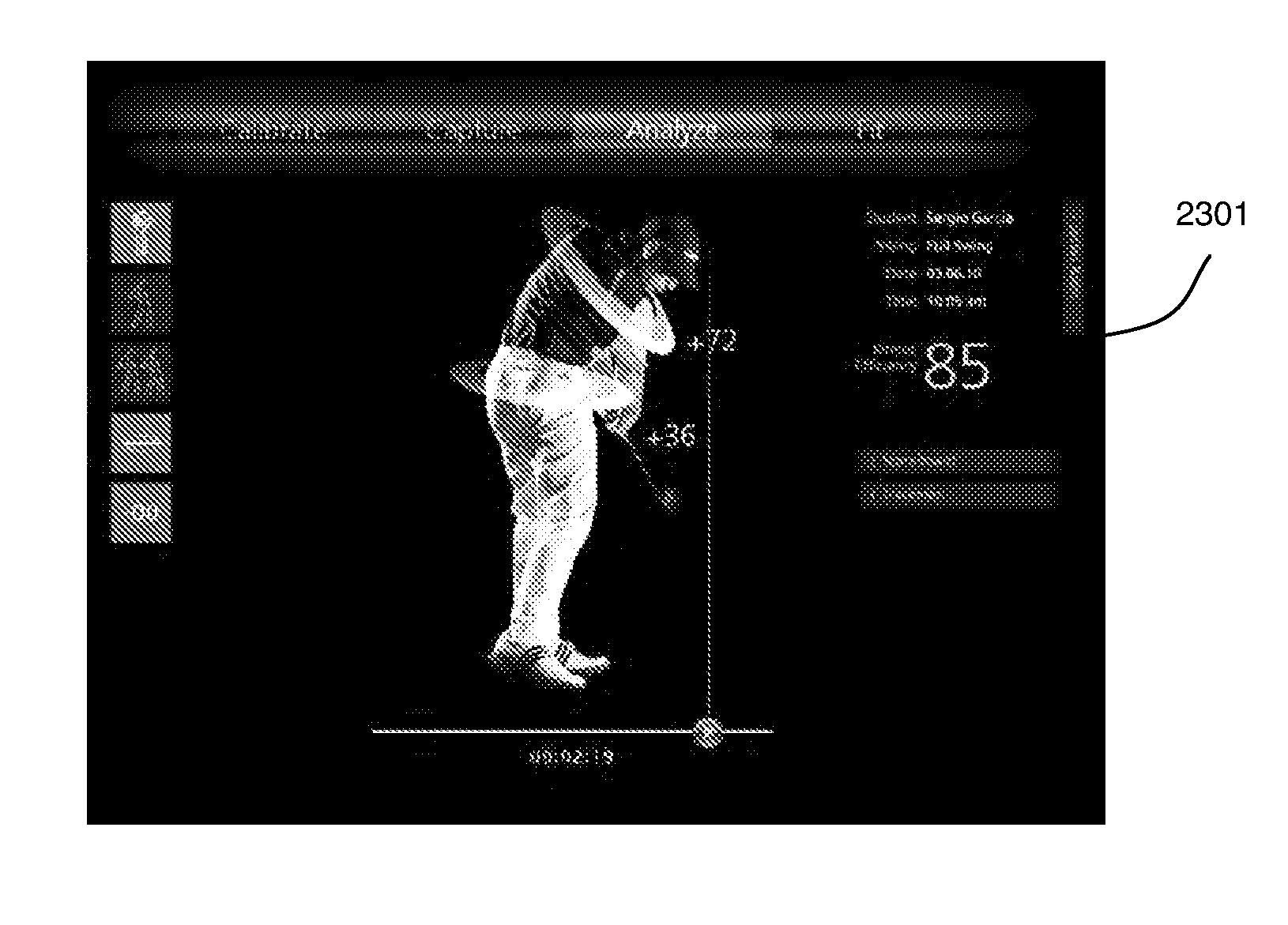

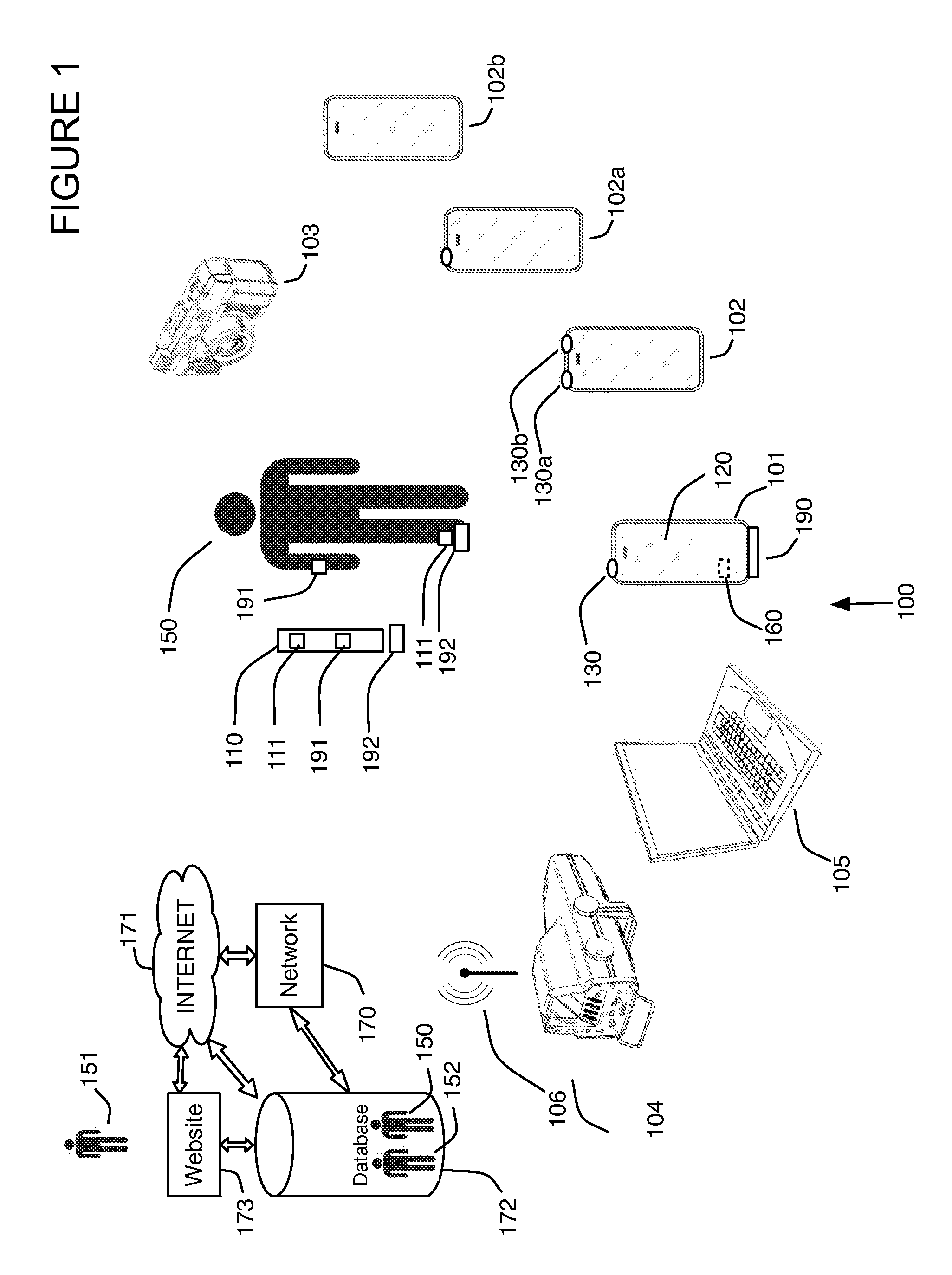

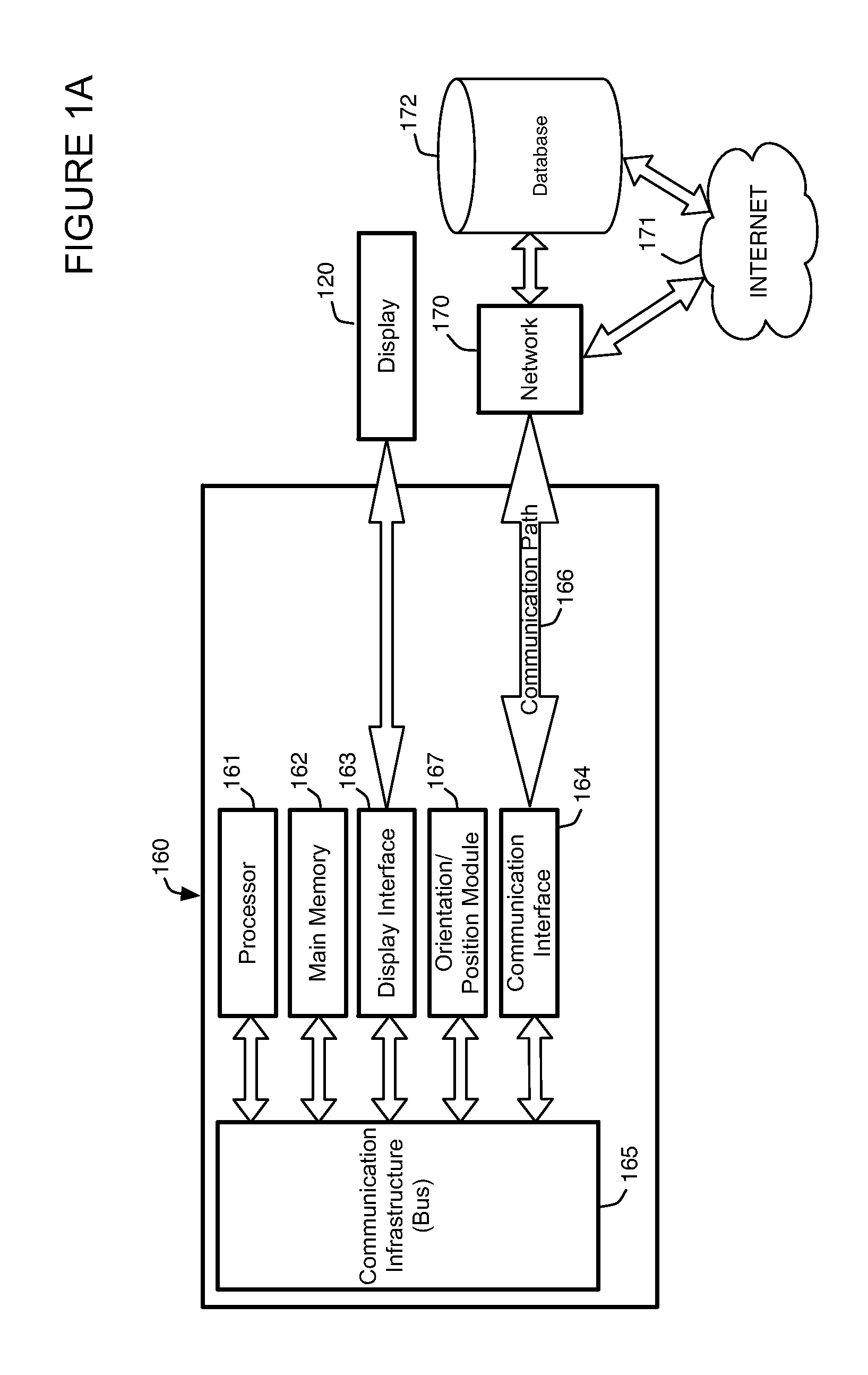

System and method for utilizing motion capture data

Active | US20120122574A1 | Increase in entertainment level; Improve performance; Cathode-ray tube indicators; Animation; Data system; Mobile phone

System and method for utilizing motion capture data for healthcare compliance, sporting, gaming, military, virtual reality, industrial, retail loss tracking, security, baby and elderly monitoring and other applications for example obtained from a motion capture element and relayed to a database via a mobile phone. System obtains data from motion capture elements, analyzes data and stores data in database for use in these applications and / or data mining, which may be charged for. Enables unique displays associated with the user, such as 3D overlays onto images of the user to visually depict the captured motion data. Ratings, compliance, ball flight path data can be calculated and displayed, for example on a map or timeline or both. Enables performance related equipment fitting and purchase. Includes active and passive identifier capabilities.

Owner:NEWLIGHT CAPITAL LLC

Virtual reality system for viewing current and previously stored or calculated motion data

Active | US8944928B2 | Easy to understand; Extension of time; Cosmonautic condition simulations; Animation; Analysis data; Simulation

Virtual reality system for viewing current and previously stored or calculated motion data. System obtains data from motion capture elements, analyzes data and stores data in database for use in virtual reality applications and / or data mining, which may be charged for. Enables unique displays associated with the user, such as 3D overlays onto images of the user to visually depict the captured motion data. Ratings, compliance, ball flight path data can be calculated and displayed, for example on a map or timeline or both. Enables performance related equipment fitting and purchase. Includes active and passive identifier capabilities.

Owner:NEWLIGHT CAPITAL LLC

System and method for utilizing motion capture data

Active | US8905855B2 | Easy to understand; Extension of time; Cathode-ray tube indicators; Animation; Data dredging; Analysis data

System and method for utilizing motion capture data for healthcare compliance, sporting, gaming, military, virtual reality, industrial, retail loss tracking, security, baby and elderly monitoring and other applications for example obtained from a motion capture element and relayed to a database via a mobile phone. System obtains data from motion capture elements, analyzes data and stores data in database for use in these applications and / or data mining, which may be charged for. Enables unique displays associated with the user, such as 3D overlays onto images of the user to visually depict the captured motion data. Ratings, compliance, ball flight path data can be calculated and displayed, for example on a map or timeline or both. Enables performance related equipment fitting and purchase. Includes active and passive identifier capabilities.

Owner:NEWLIGHT CAPITAL LLC

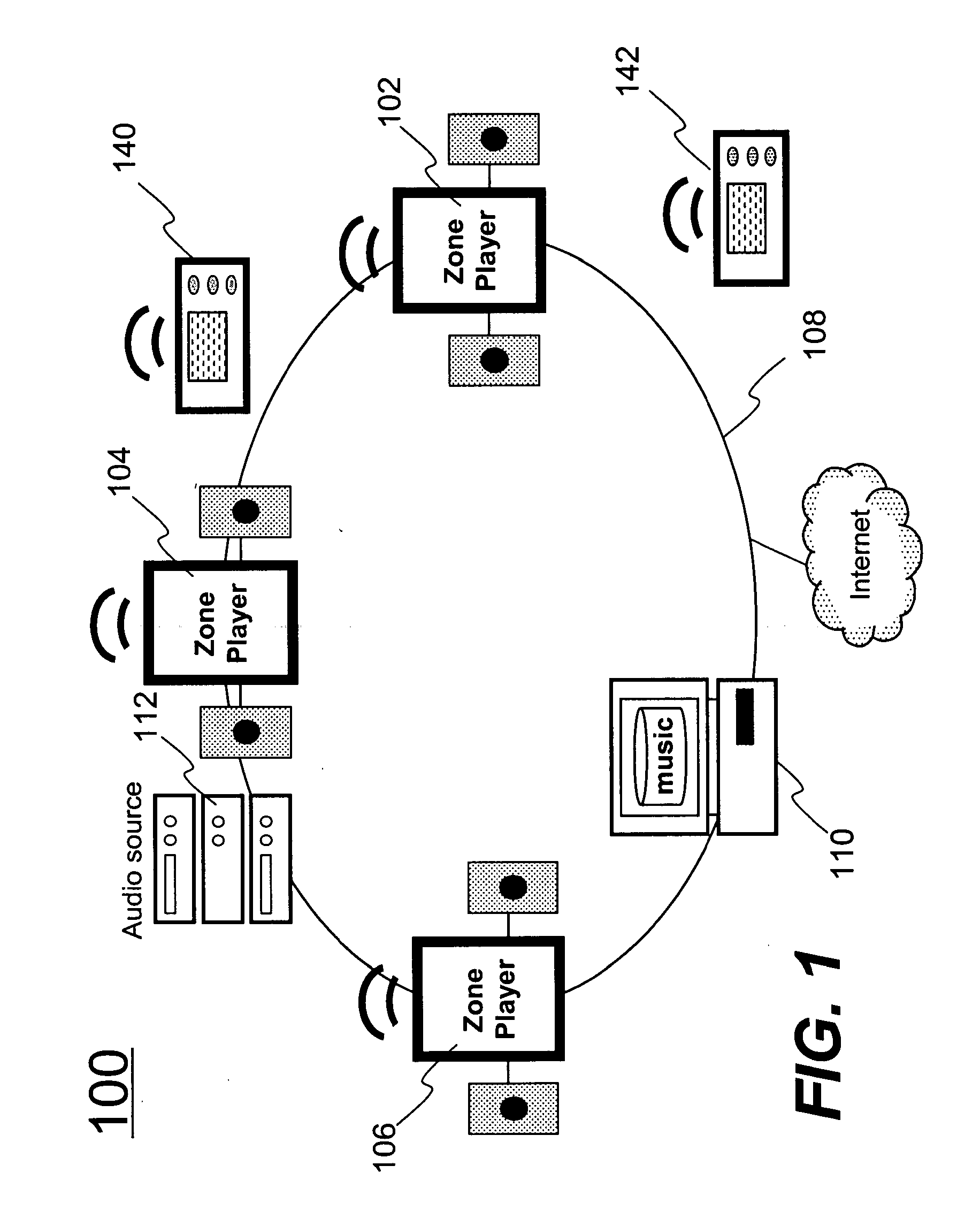

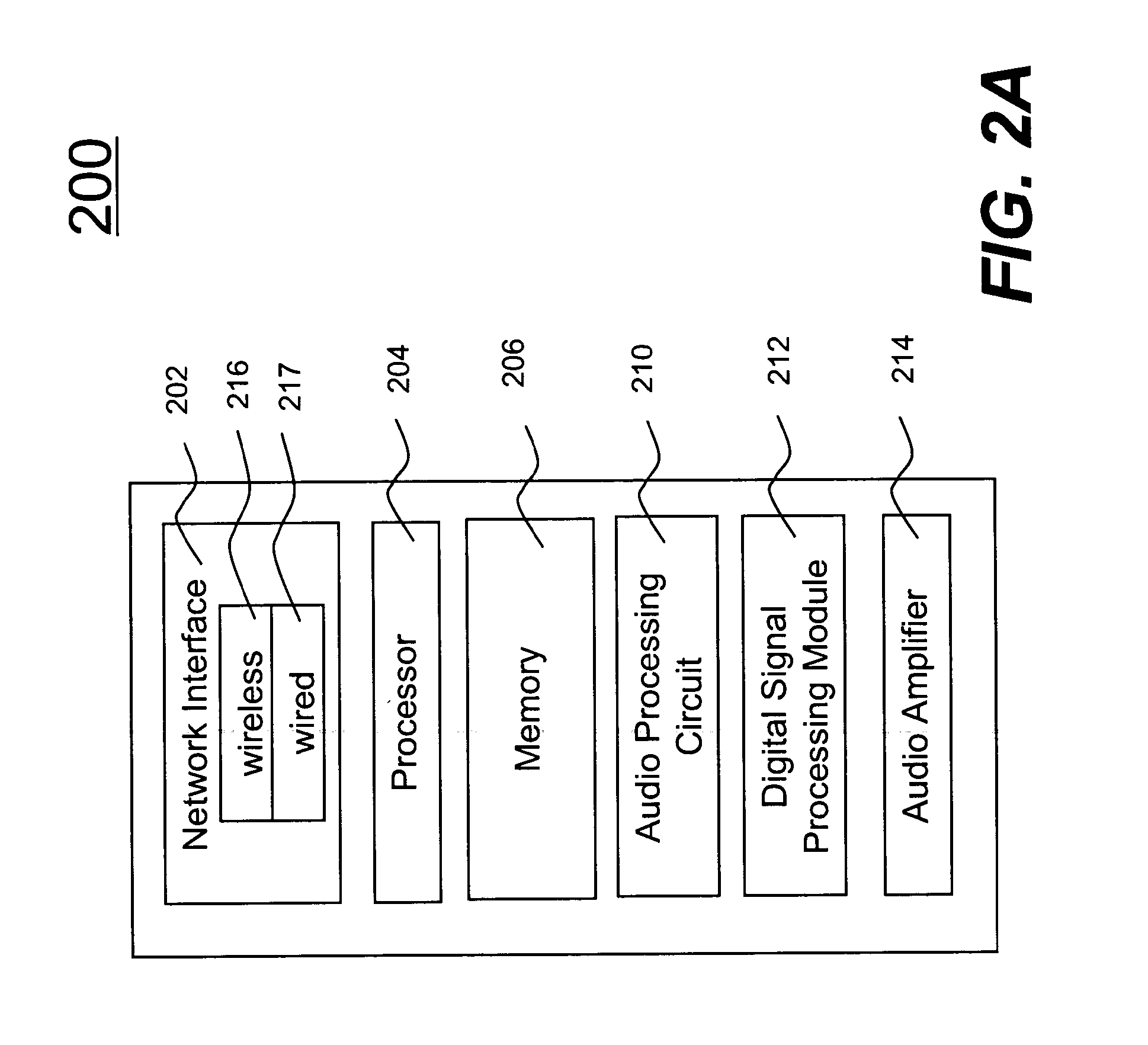

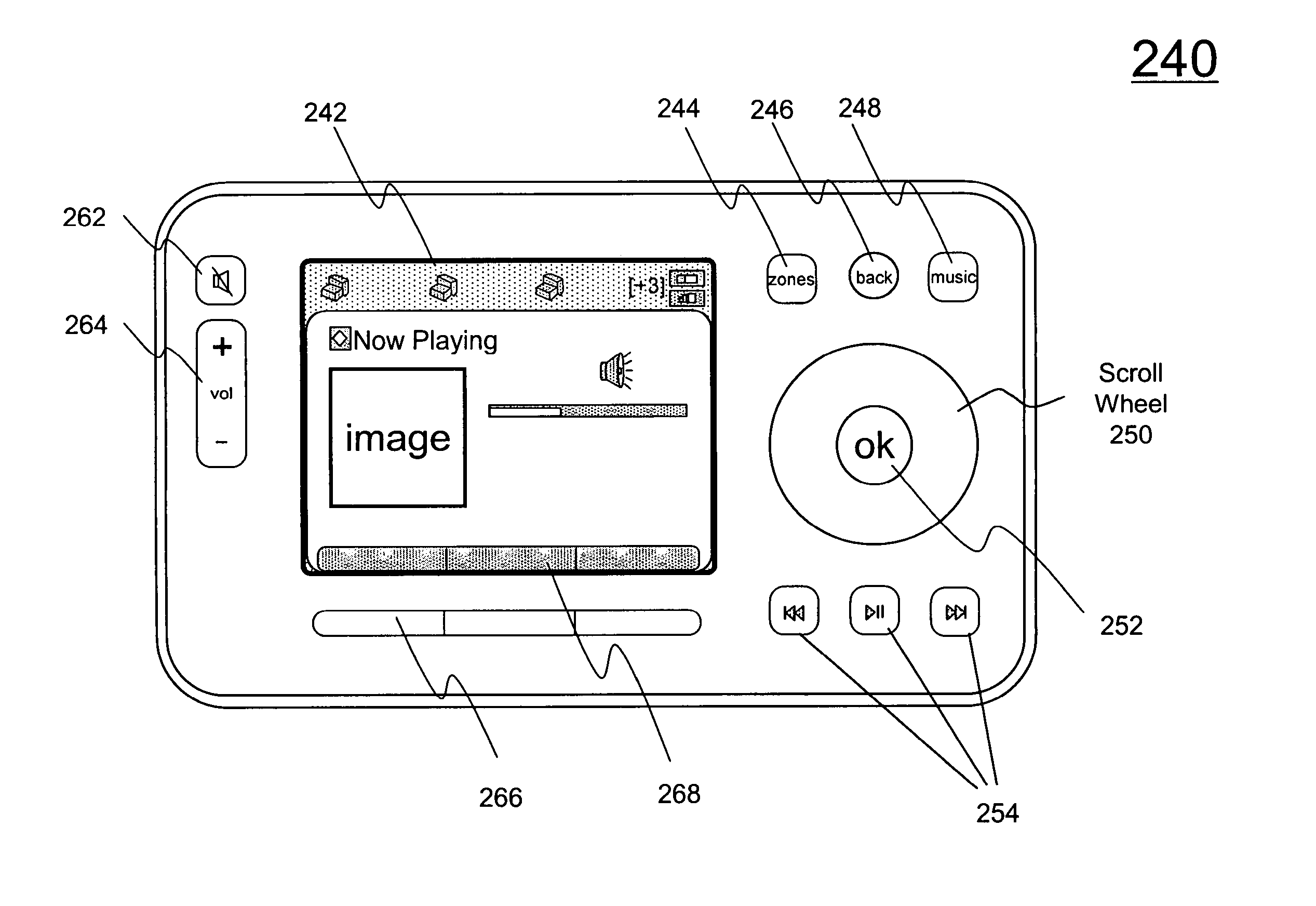

Method and apparatus for managing a playlist by metadata

Active | US20130191749A1 | Sacrificing manageability; Improve memory usage; Input/output for user-computer interaction; Television system details; Metadata management; Single item

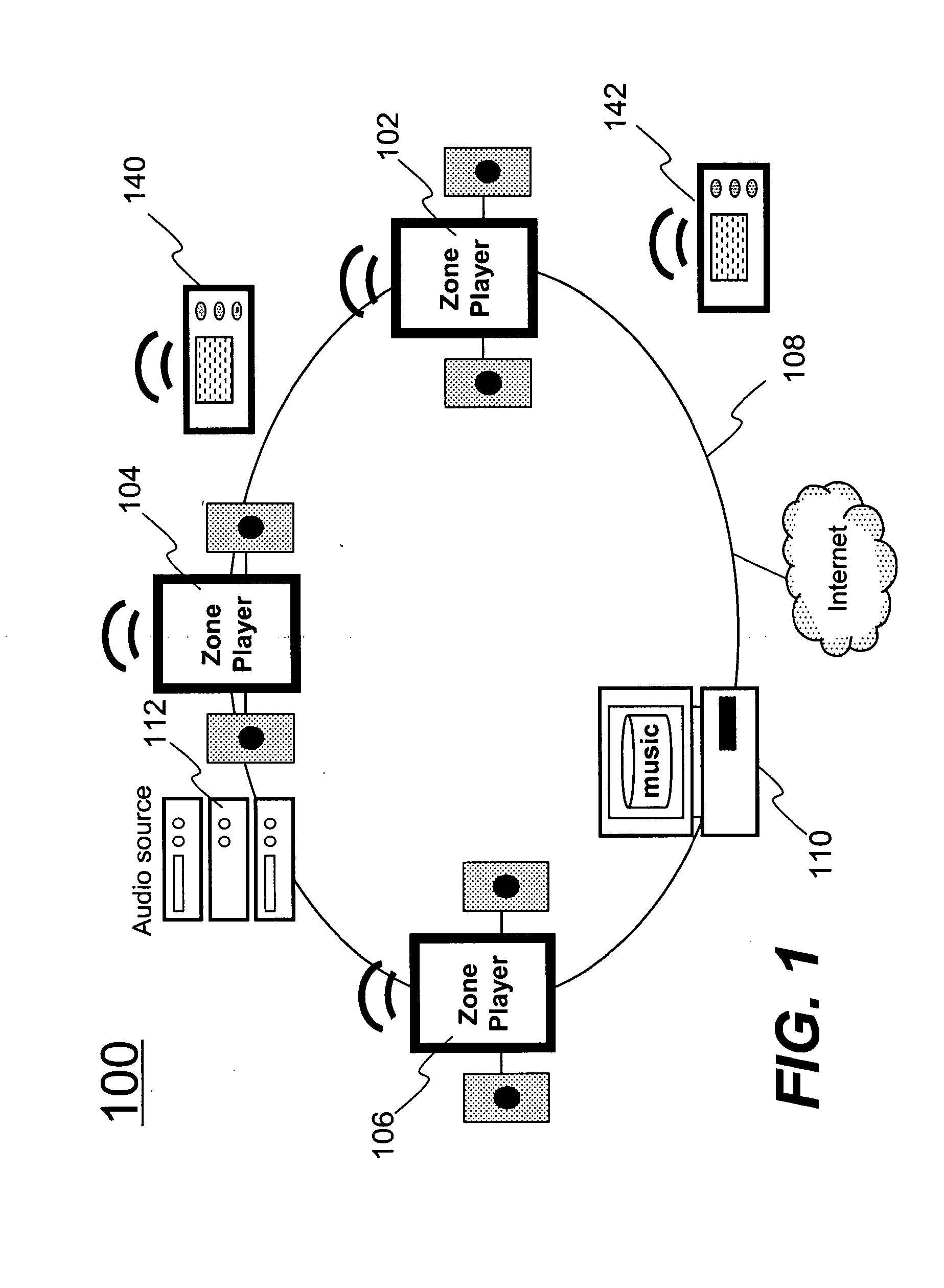

Techniques for managing a playlist in a multimedia system are disclosed. According to one aspect of the techniques, the playlist is structured to be able to include as many items as desired. To facilitate the manageability of such a playlist, the playlist is built with a plurality of items. Each of the items is associated with metadata that includes information related to, for example, artist, album, genre, composer, and track number. The metadata for each item may be parsed, updated or logically operated upon to facilitate the management of the playlist. In another embodiment, each of the items is either a single item or a group item. A single item contains metadata of a corresponding source. A group item contains metadata for accessing other constituent items, which again may be single items or group items. As a result, the playlist can accommodate as many items as desired in a limited memory space without compromising the manageability of the playlist. Each of the items can be removed from, added to, or moved around in the playlist without concern that an item may itself contain many items.

Owner:SONOS
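The single-item/group-item structure described for US20130191749A1 is essentially a composite tree. The Python sketch below is an illustration under that assumption only: the class and function names (`SingleItem`, `GroupItem`, `flatten`, `select`) are hypothetical, and metadata is modeled as a plain dict.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Union


@dataclass
class SingleItem:
    metadata: dict                      # e.g. artist, album, genre, track number


@dataclass
class GroupItem:
    metadata: dict                      # metadata describing the group itself
    children: List["Item"] = field(default_factory=list)


Item = Union[SingleItem, GroupItem]


def flatten(item: Item) -> Iterator[SingleItem]:
    """Yield the playable single items under `item`, depth first."""
    if isinstance(item, SingleItem):
        yield item
    else:
        for child in item.children:
            yield from flatten(child)


def select(playlist: List[Item], key: str, value) -> List[SingleItem]:
    """Logical operation over metadata: all single items where key == value."""
    return [s for top in playlist for s in flatten(top) if s.metadata.get(key) == value]
```

Because a whole album is one top-level `GroupItem`, moving or removing it touches a single playlist entry, which is the manageability property the abstract claims; expansion into individual tracks only happens when `flatten` is called.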

Virtual reality system for viewing current and previously stored or calculated motion data

Active | US20130063432A1 | Increase in entertainment level; Improve performance; Animation; Simulators; Analysis data; Data library

Virtual reality system for viewing current and previously stored or calculated motion data. System obtains data from motion capture elements, analyzes data and stores data in database for use in virtual reality applications and / or data mining, which may be charged for. Enables unique displays associated with the user, such as 3D overlays onto images of the user to visually depict the captured motion data. Ratings, compliance, ball flight path data can be calculated and displayed, for example on a map or timeline or both. Enables performance related equipment fitting and purchase. Includes active and passive identifier capabilities.

Owner:NEWLIGHT CAPITAL LLC

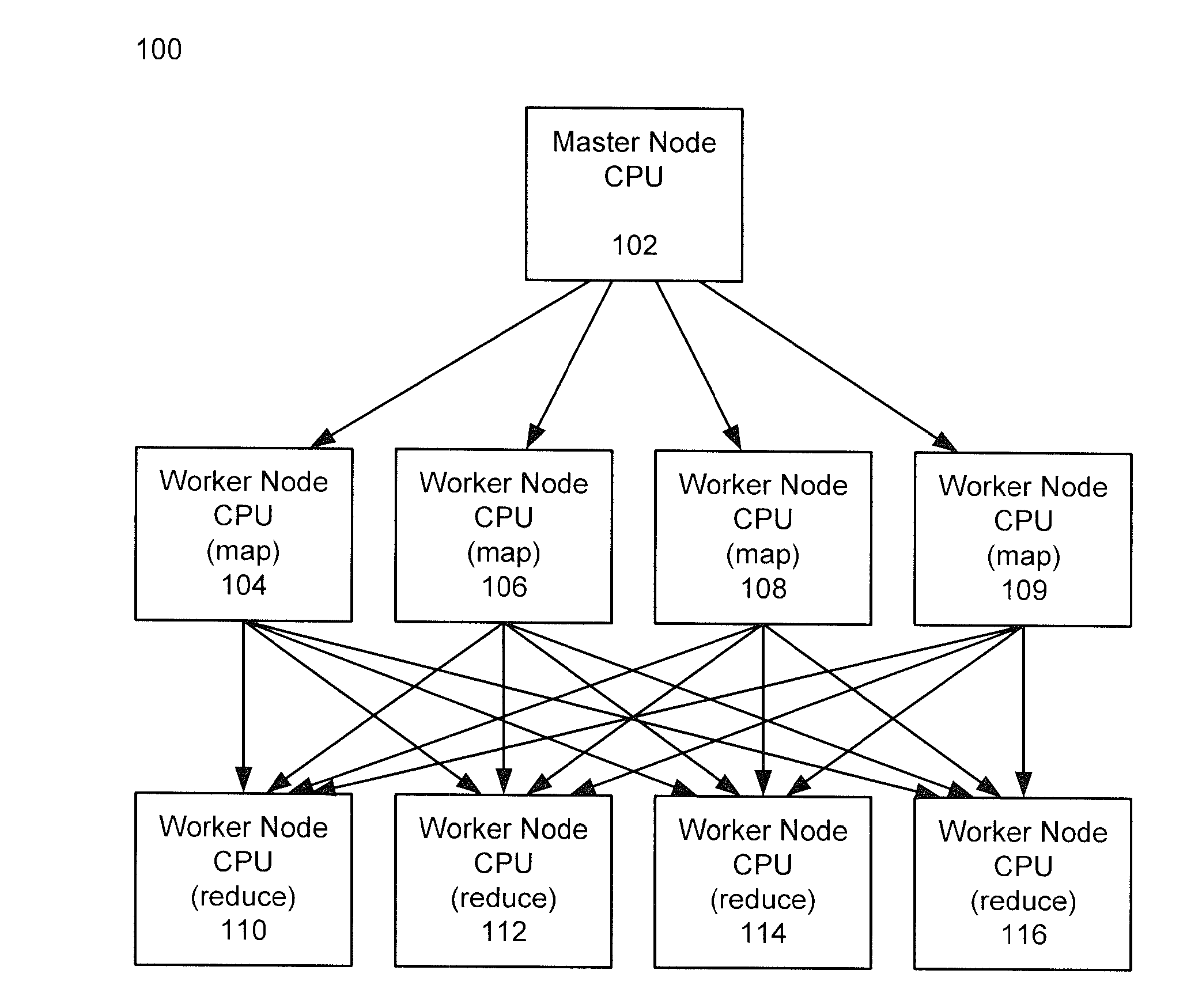

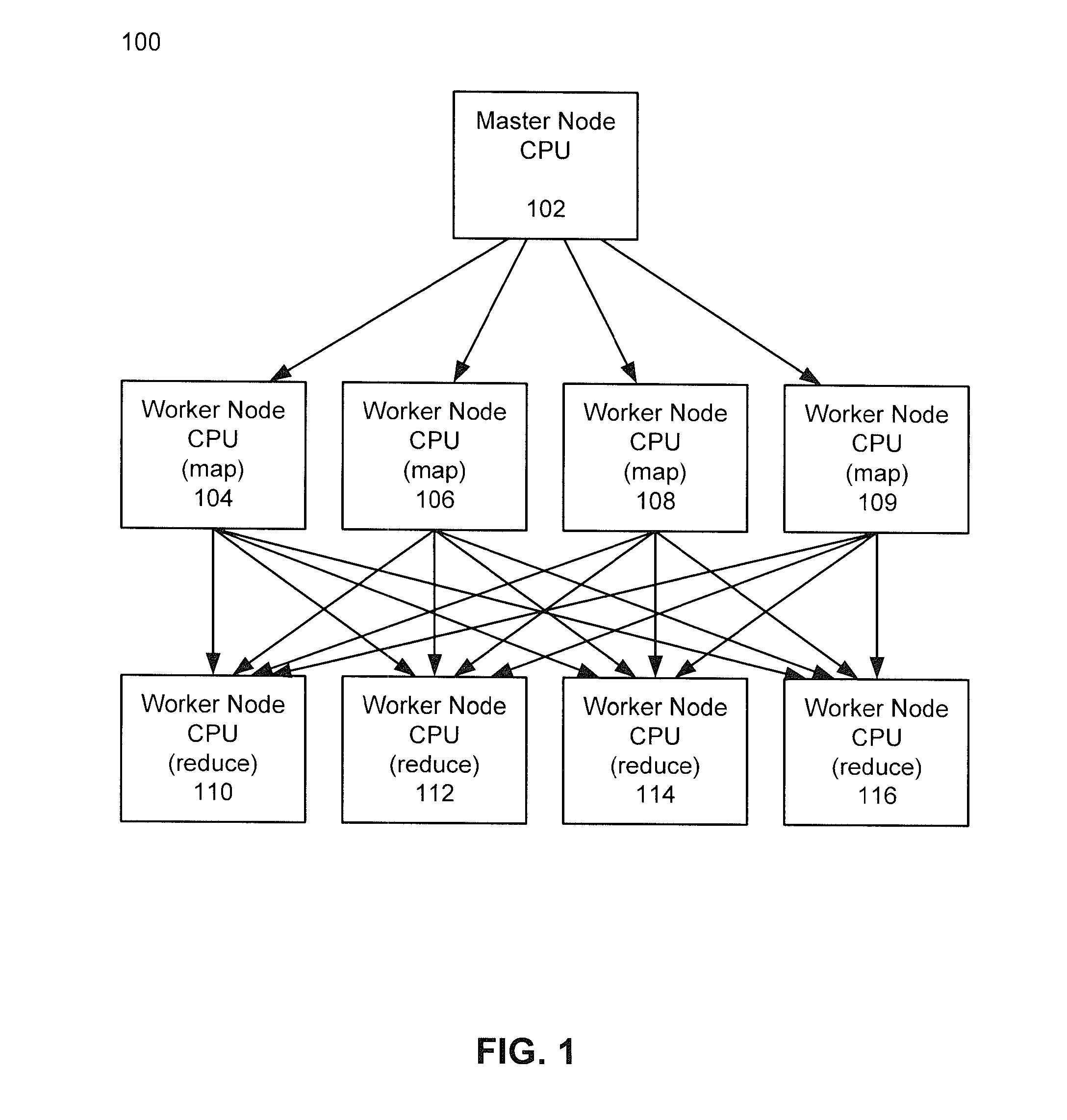

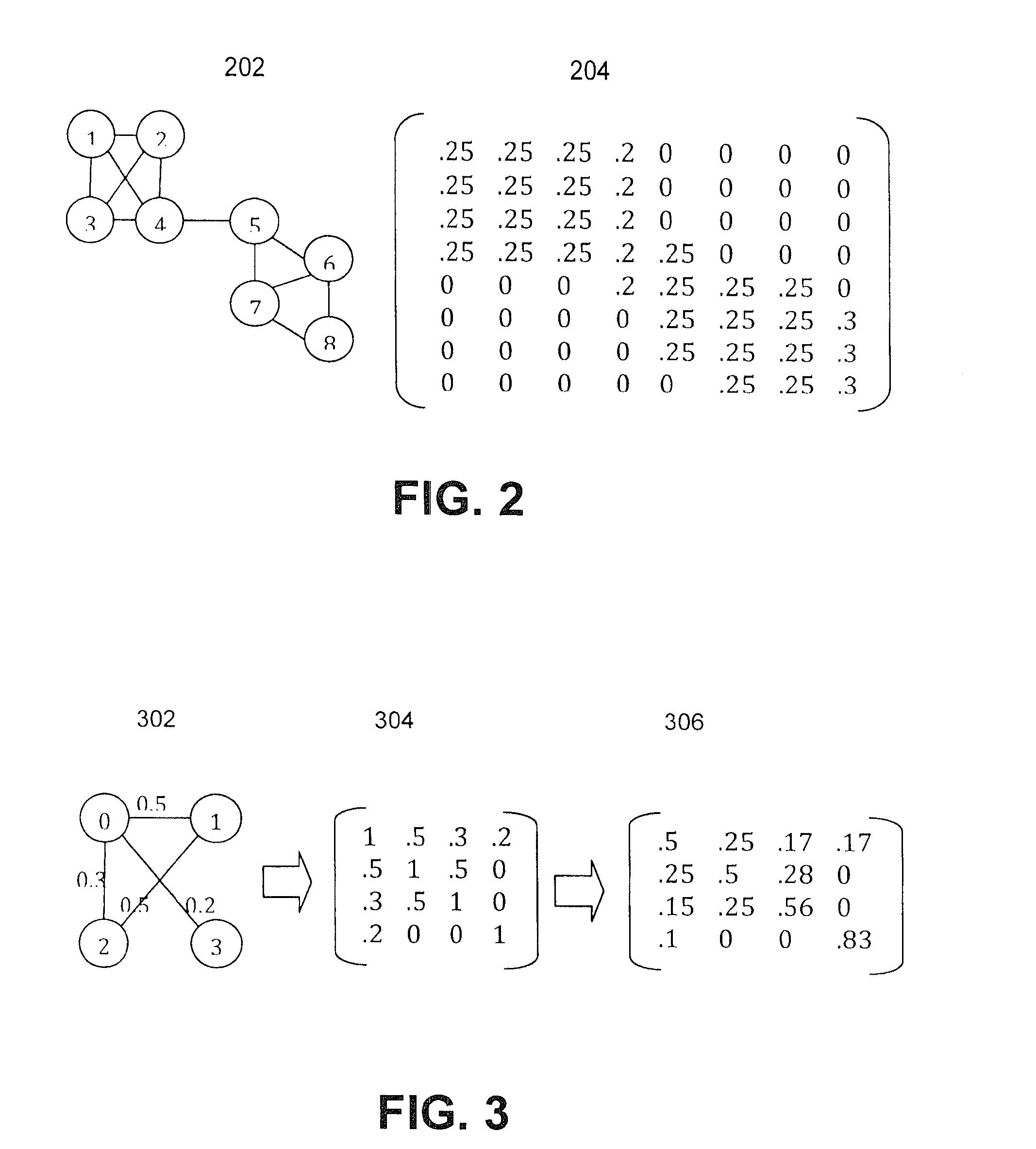

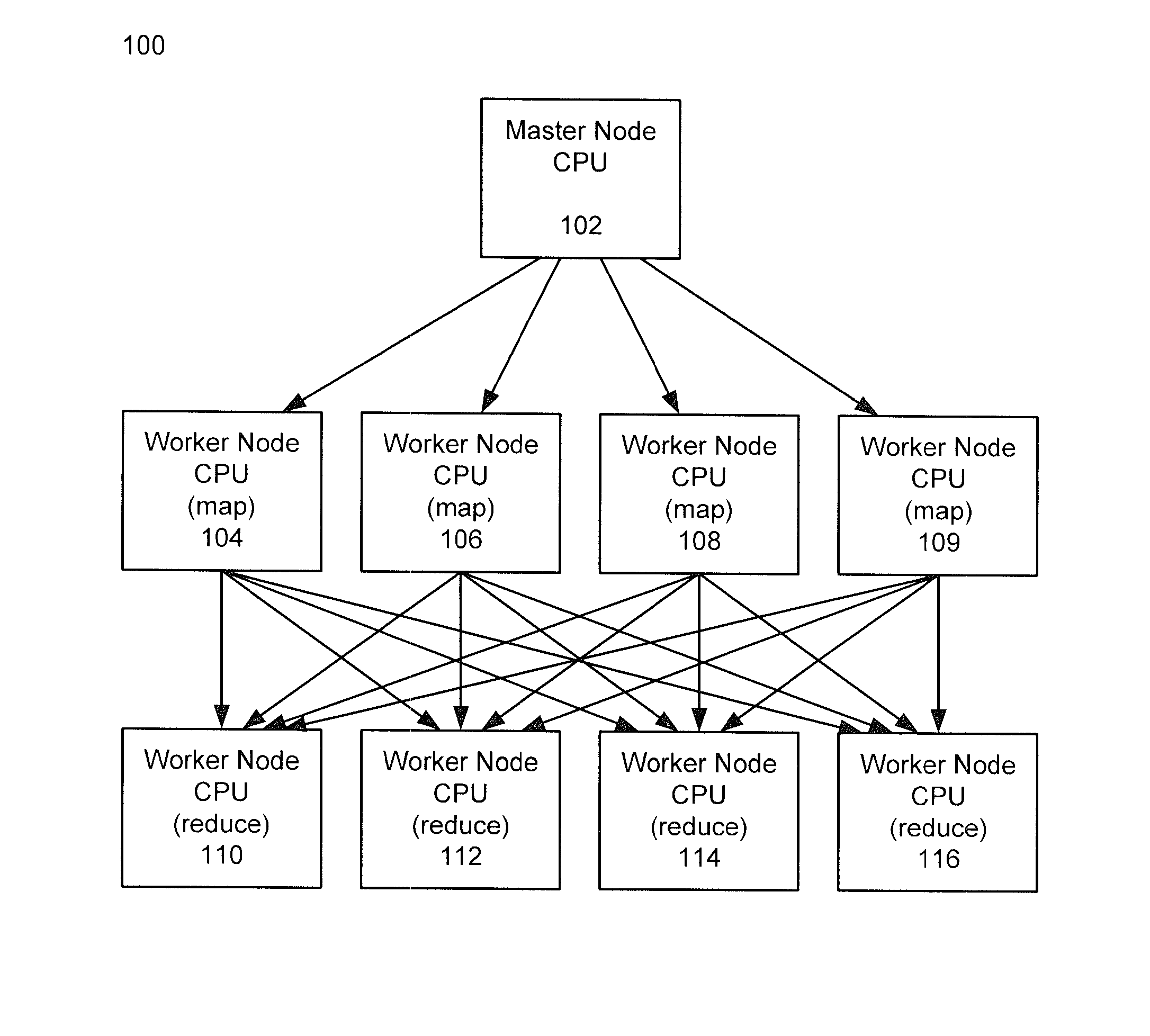

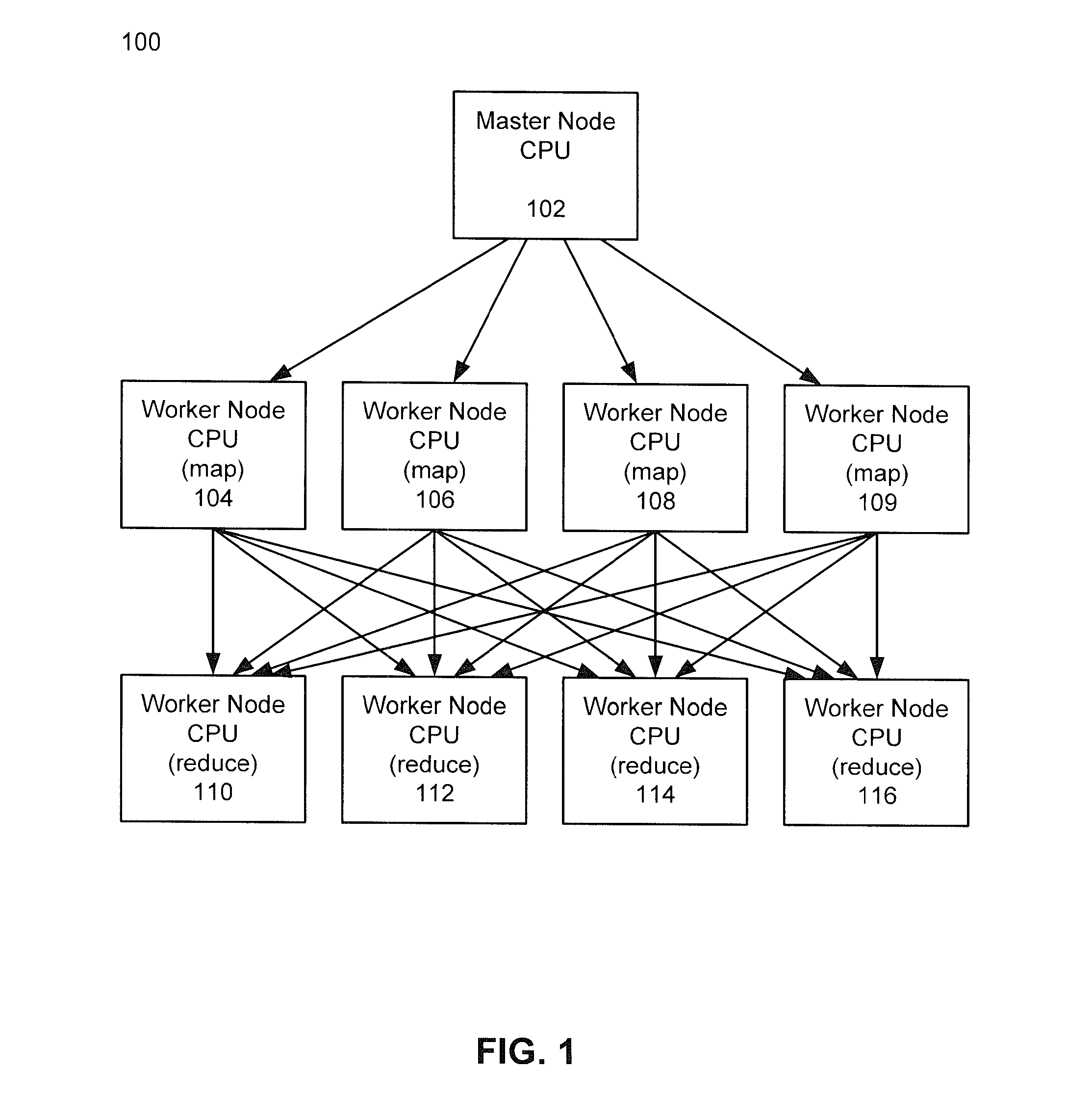

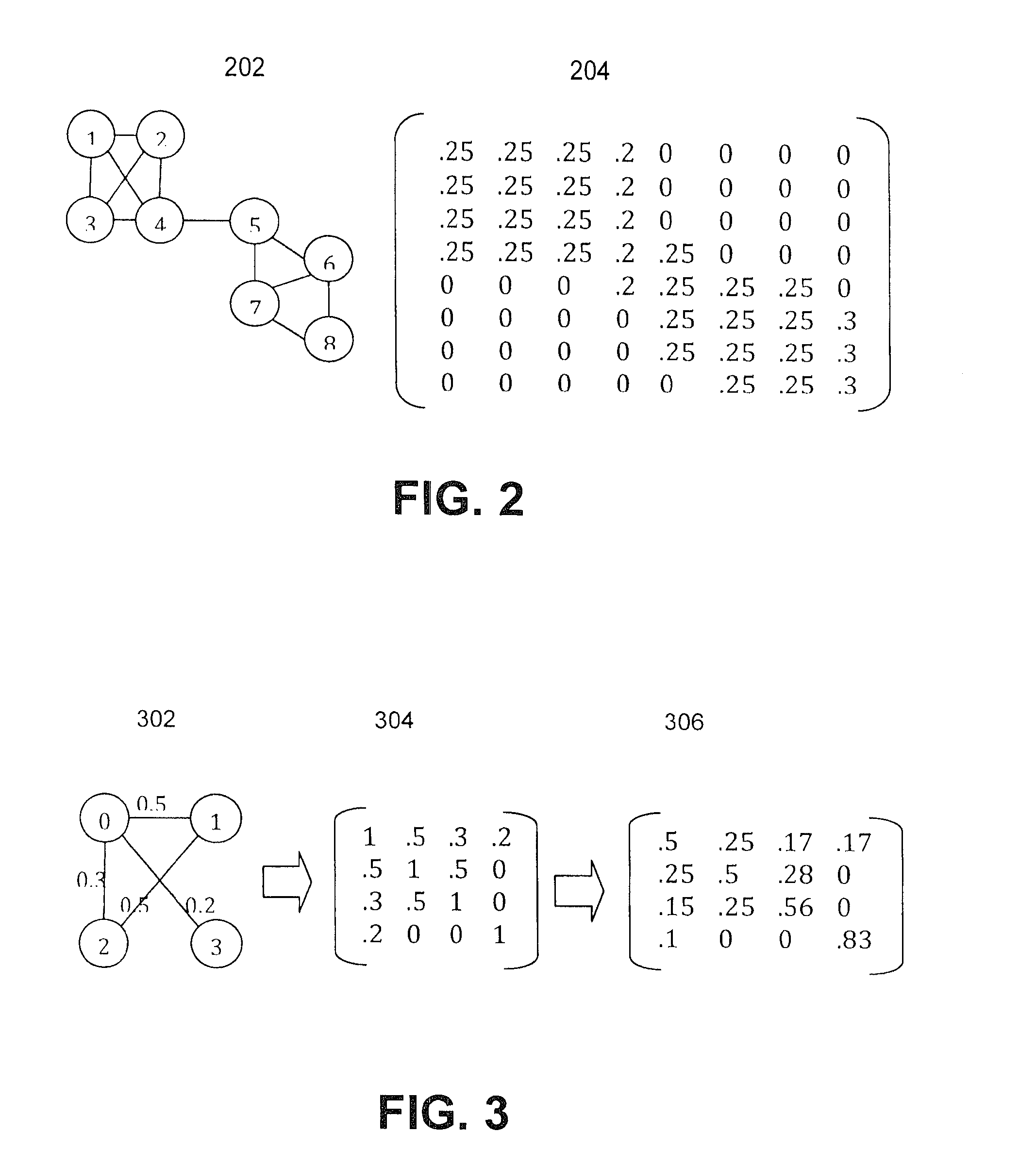

Methods and systems for using map-reduce for large-scale analysis of graph-based data

Active | US20130024412A1 | Improve memory usage; Easy to implement; Knowledge representation; Special data processing applications; Cluster algorithm; Markov clustering

Embodiments are described for a method for processing graph data by executing a Markov Clustering algorithm (MCL) to find clusters of vertices of the graph data, organizing the graph data by column by calculating a probability percentage for each column of a similarity matrix of the graph data to produce column data, generating a probability matrix of states of the column data, performing an expansion of the probability matrix by computing a power of the matrix using a Map-Reduce model executed in a processor-based computing device; and organizing the probability matrix into a set of sub-matrices to find the least amount of data needed for the Map-Reduce model given that two lines of data in the matrix are required to compute a single value for the power of the matrix. One of at least two strategies may be used to compute the power of the matrix (the matrix square, M²) based on simplicity of execution or improved memory usage.

Owner:SALESFORCE COM INC
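The MCL expansion step in US20130024412A1 squares a column-stochastic matrix with Map-Reduce. As a rough illustration, the Python sketch below simulates the textbook join-based matrix multiplication in a single process: entries are keyed by the shared index k, and the reducer multiplies matching pairs. This is a generic technique, not the patent's sub-matrix partitioning strategy; the in-reducer summation stands in for what would normally be a second aggregation job, and all function names are hypothetical.

```python
from collections import defaultdict


def normalize_columns(m):
    """m: sparse matrix as {(row, col): value}. Scale each column to sum to 1."""
    col_sums = defaultdict(float)
    for (_, j), v in m.items():
        col_sums[j] += v
    return {(i, j): v / col_sums[j] for (i, j), v in m.items() if col_sums[j]}


def map_phase(m):
    # M2[i, j] = sum_k M[i, k] * M[k, j], so key every entry by the shared index k.
    for (i, k), v in m.items():
        yield k, ("left", i, v)    # M[i, k] used as a left operand
    for (k, j), v in m.items():
        yield k, ("right", j, v)   # M[k, j] used as a right operand


def reduce_phase(grouped):
    out = defaultdict(float)
    for values in grouped.values():
        lefts = [(i, v) for tag, i, v in values if tag == "left"]
        rights = [(j, v) for tag, j, v in values if tag == "right"]
        for i, a in lefts:
            for j, b in rights:
                out[(i, j)] += a * b   # partial products summed per output cell
    return dict(out)


def expand(m):
    """One MCL expansion step (matrix square) via a simulated shuffle."""
    grouped = defaultdict(list)
    for key, value in map_phase(m):
        grouped[key].append(value)
    return reduce_phase(grouped)
```

Each reducer needs one column and one row of the matrix (the "two lines of data" the abstract mentions) to produce its partial products, which is why partitioning the matrix matters for memory.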

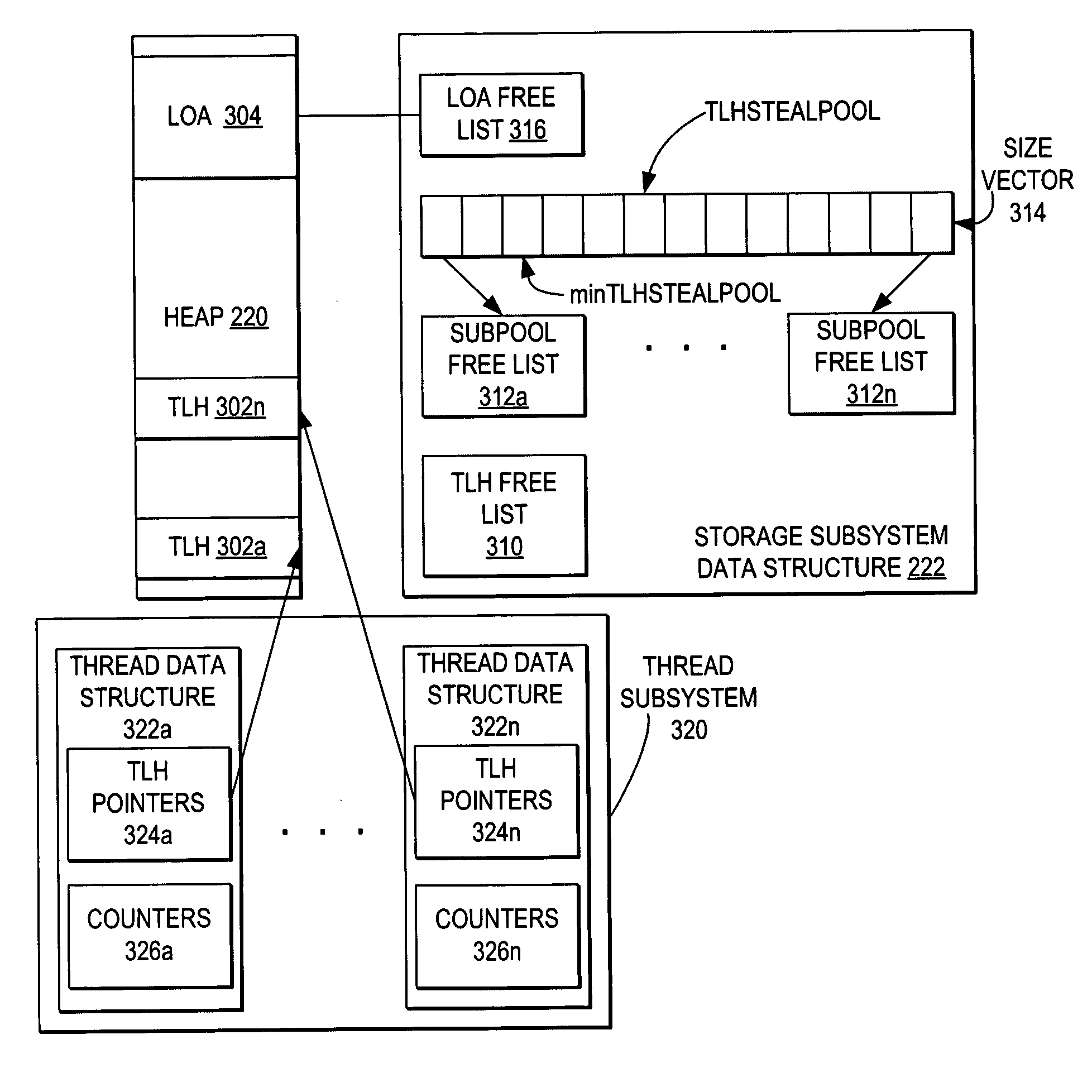

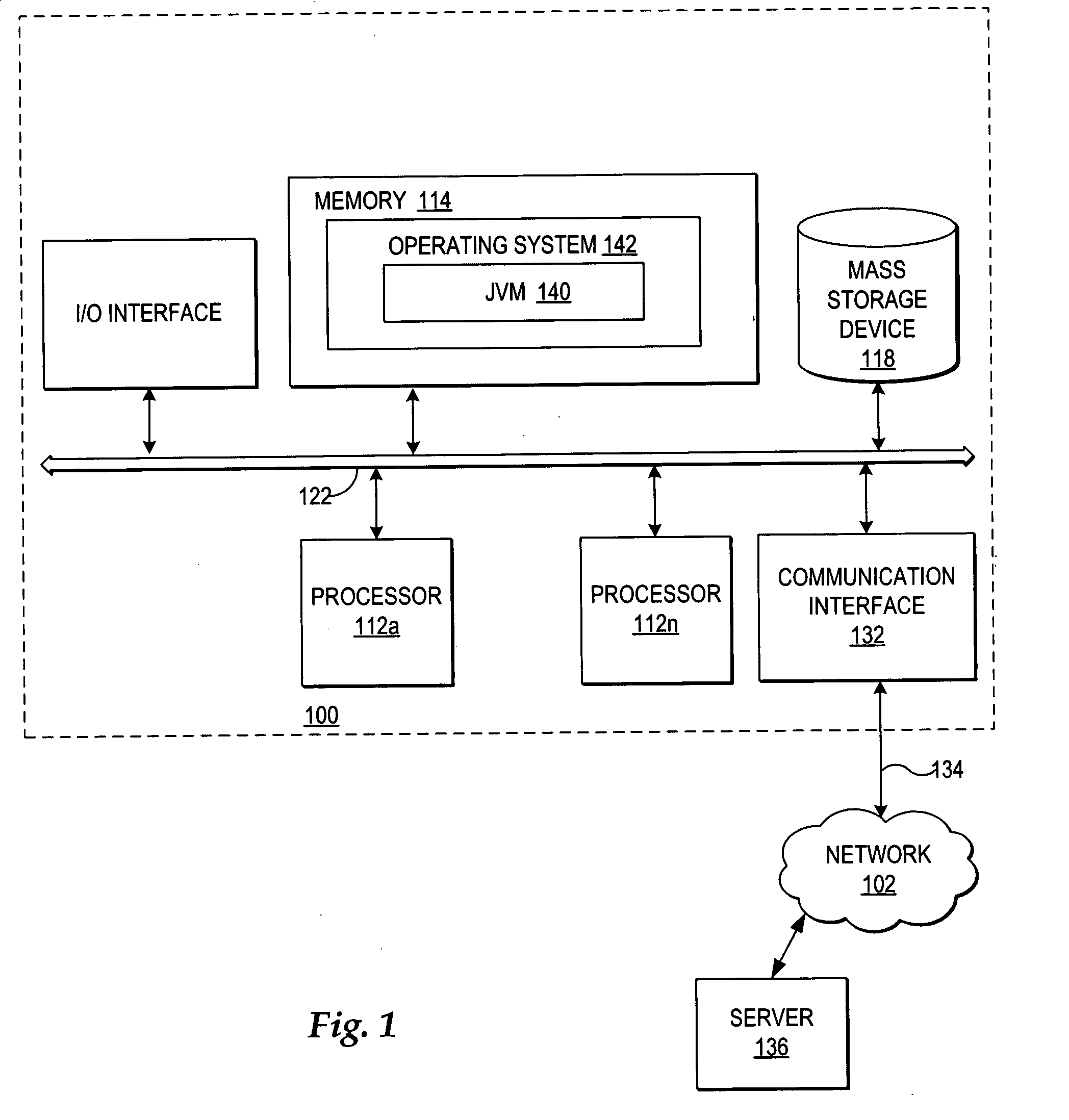

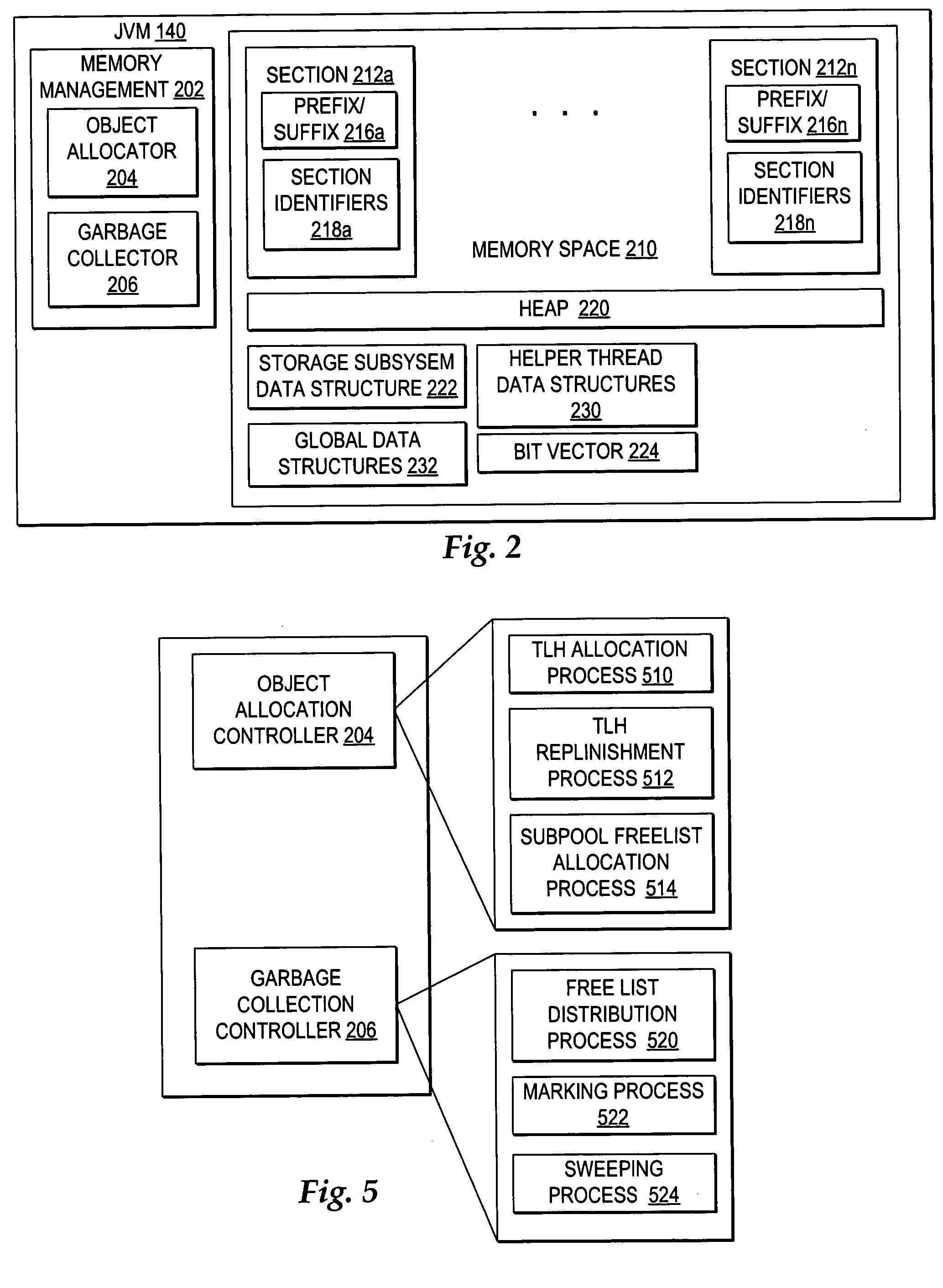

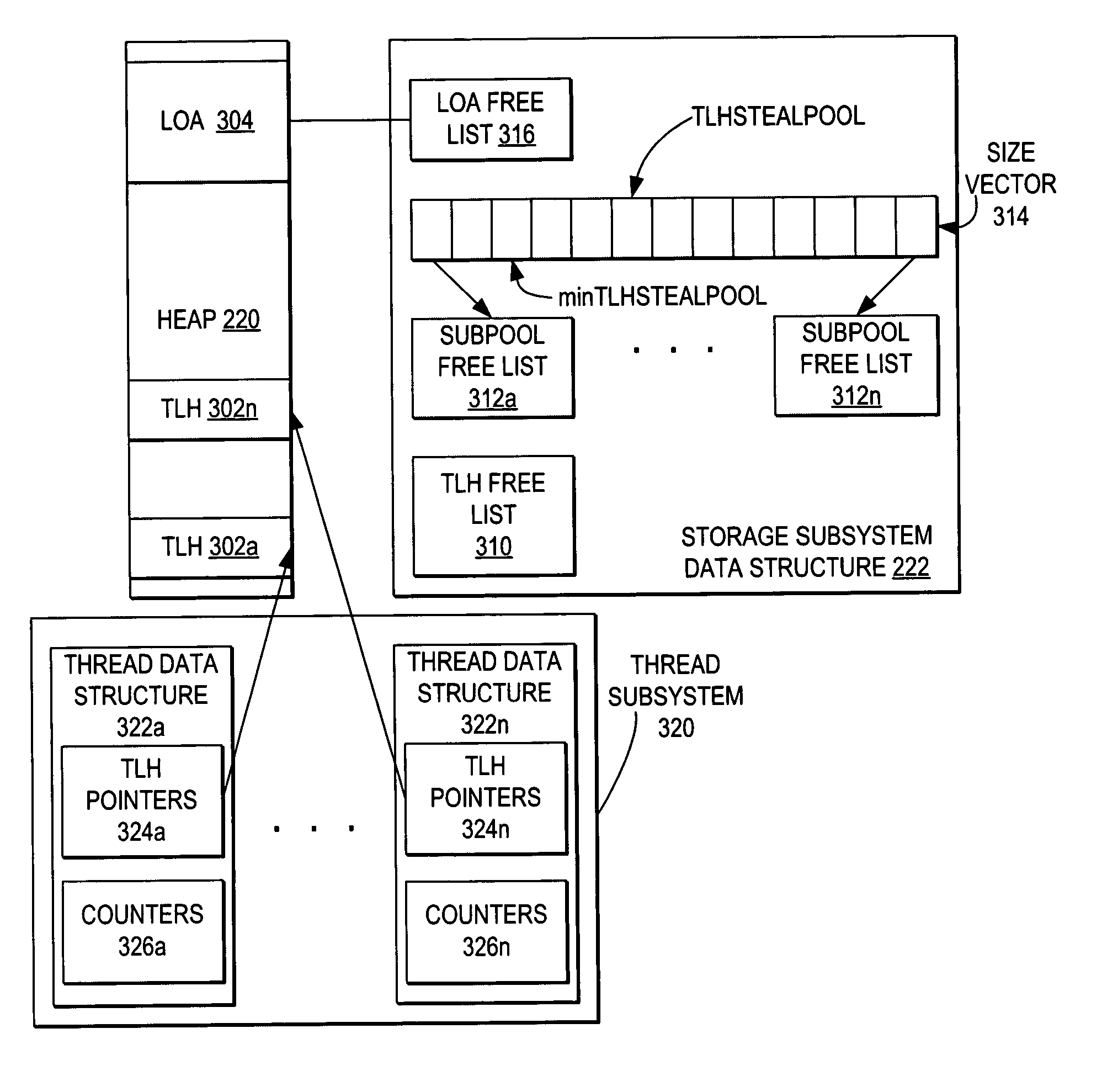

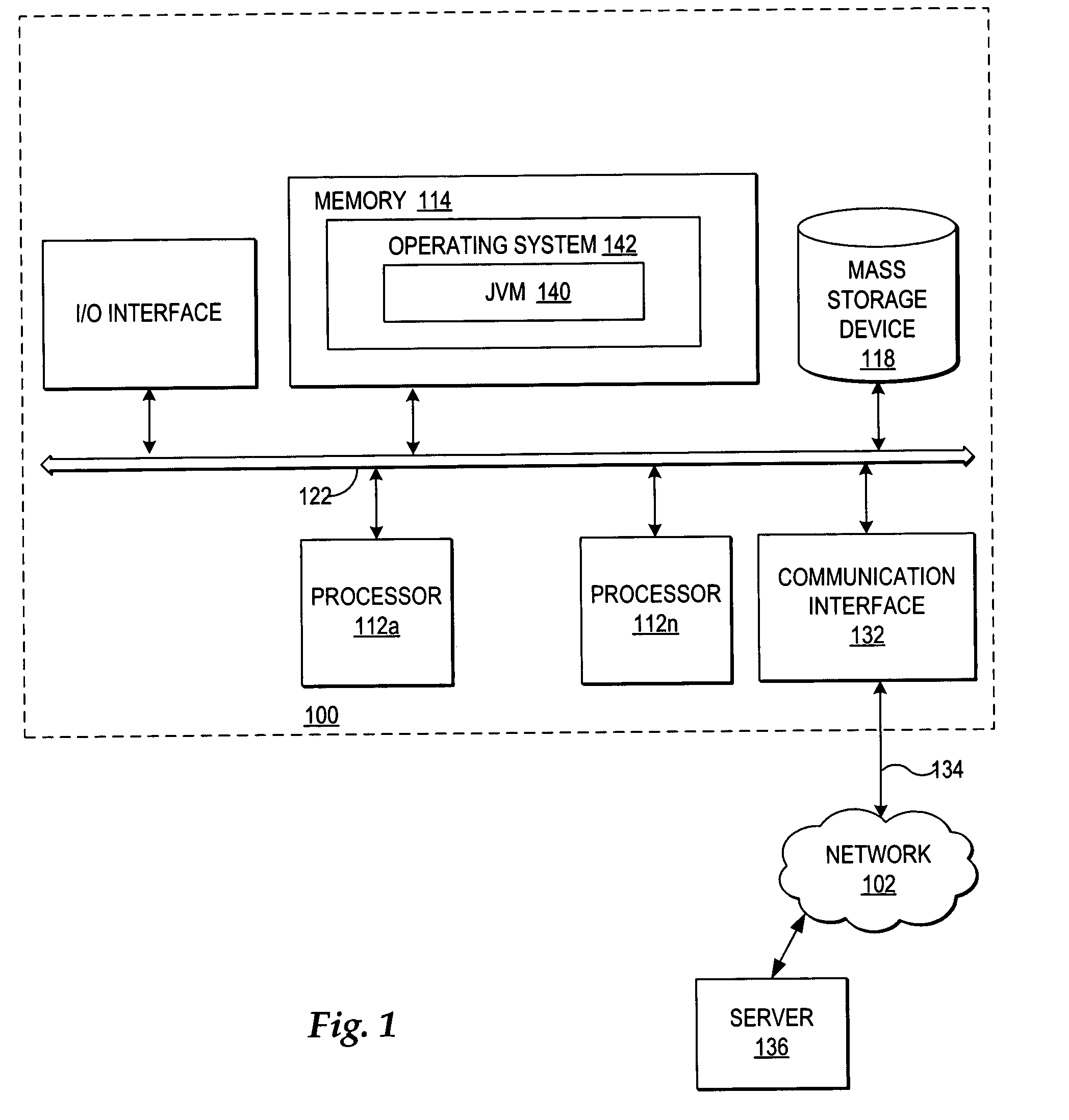

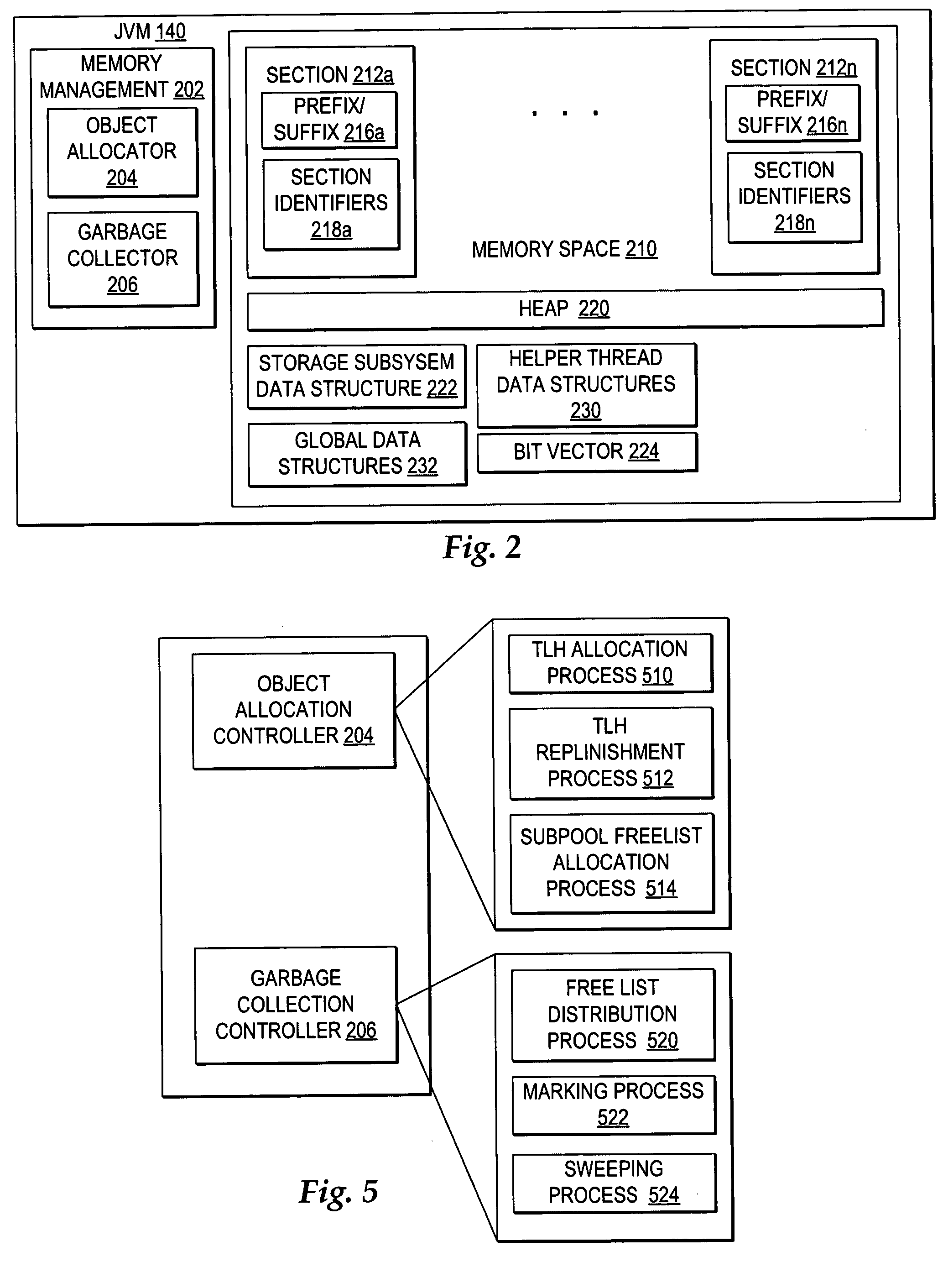

Free item distribution among multiple free lists during garbage collection for more efficient object allocation

Inactive | US20050273568A1 | Easy to manage; Efficient management; Data processing applications; Memory addressing/allocation/relocation; Parallel computing; Computer science

A method, system, and program for improving free item distribution among multiple free lists during garbage collection for more efficient object allocation are provided. A garbage collector predicts future allocation requirements and then distributes free items to multiple subpool free lists and a TLH (thread-local heap) free list during the sweep phase according to the future allocation requirements. The sizes of subpools and number of free items in subpools are predicted as the most likely to match future allocation requests. In particular, once a subpool free list is filled with the number of free items needed according to the future allocation requirements, any additional free items designated for the subpool free list can be divided into multiple TLH-sized free items and placed on the TLH free list. Allocation threads are enabled to acquire free items from the TLH free list and to replenish a current TLH without acquiring the heap lock.

Owner:IBM CORP
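The sweep-time distribution rule in US20050273568A1 can be sketched in Python as follows. Assumptions, all mine: free items are represented only by their sizes (no addresses), an item's "designated" subpool is the largest subpool size it can satisfy, and leftover bytes smaller than a TLH are simply reported. The function name `distribute_free_items` is hypothetical.

```python
def distribute_free_items(free_sizes, predicted_demand, tlh_size):
    """Sketch of sweep-time distribution of free items.

    free_sizes: sizes (bytes) of free items found by the sweep.
    predicted_demand: {subpool item size: number of items predicted to be needed}.
    tlh_size: size of one thread-local heap (TLH) chunk.
    Returns (subpool free lists, TLH free list, unusable remainders).
    """
    pools = sorted(predicted_demand)
    subpools = {size: [] for size in pools}
    tlh_list, remainders = [], []
    for item in free_sizes:
        # Designated subpool: the largest subpool size the item can satisfy.
        fitting = [s for s in pools if s <= item]
        pool = fitting[-1] if fitting else None
        if pool is not None and len(subpools[pool]) < predicted_demand[pool]:
            subpools[pool].append(item)
            continue
        # Subpool already full (or none fits): carve TLH-sized free items instead.
        count, rest = divmod(item, tlh_size)
        tlh_list.extend([tlh_size] * count)
        if rest:
            remainders.append(rest)
    return subpools, tlh_list, remainders
```

The point of the design is that surplus items are not wasted on a subpool that is already stocked for predicted demand; they become TLH refills that allocation threads can take without the heap lock.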

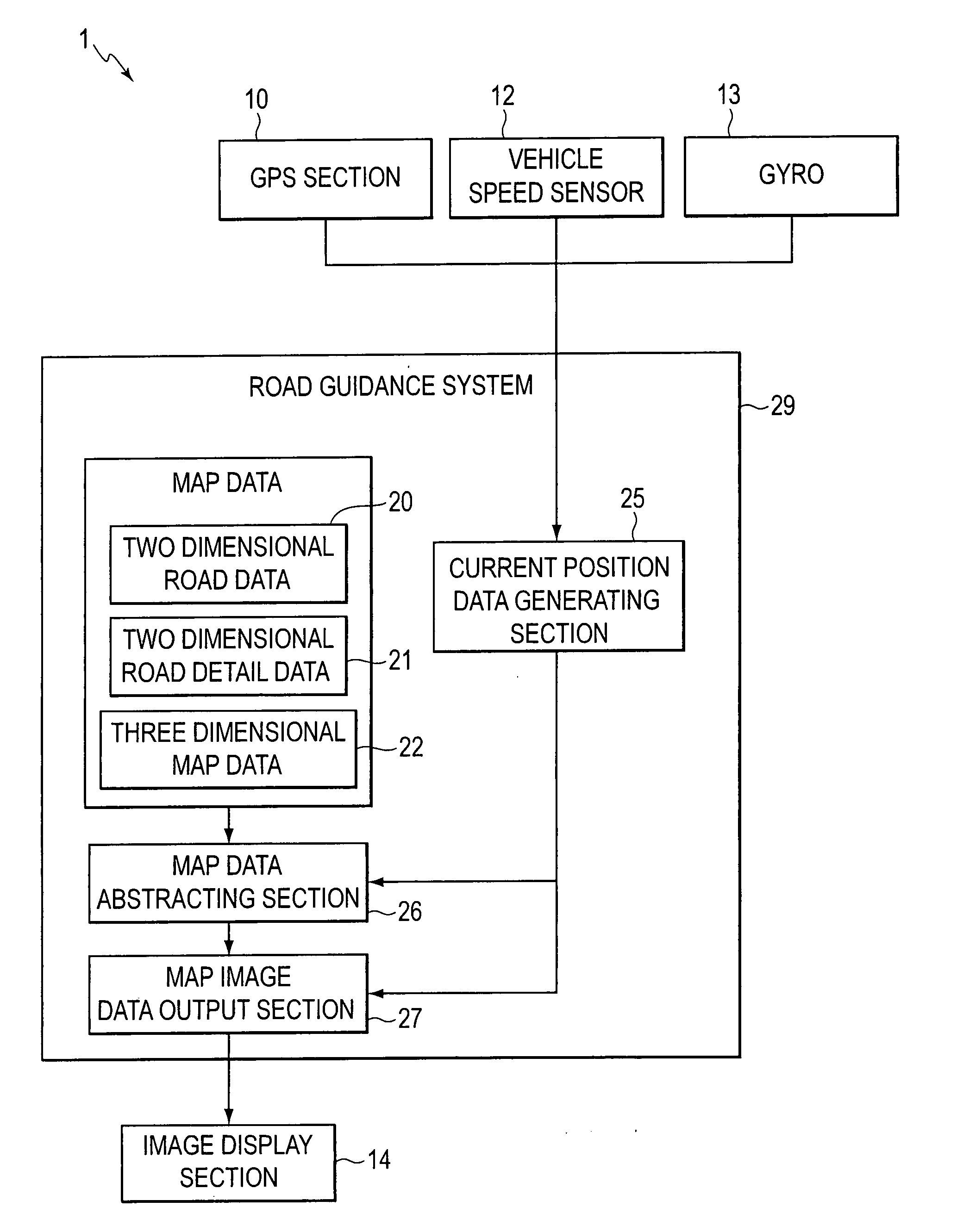

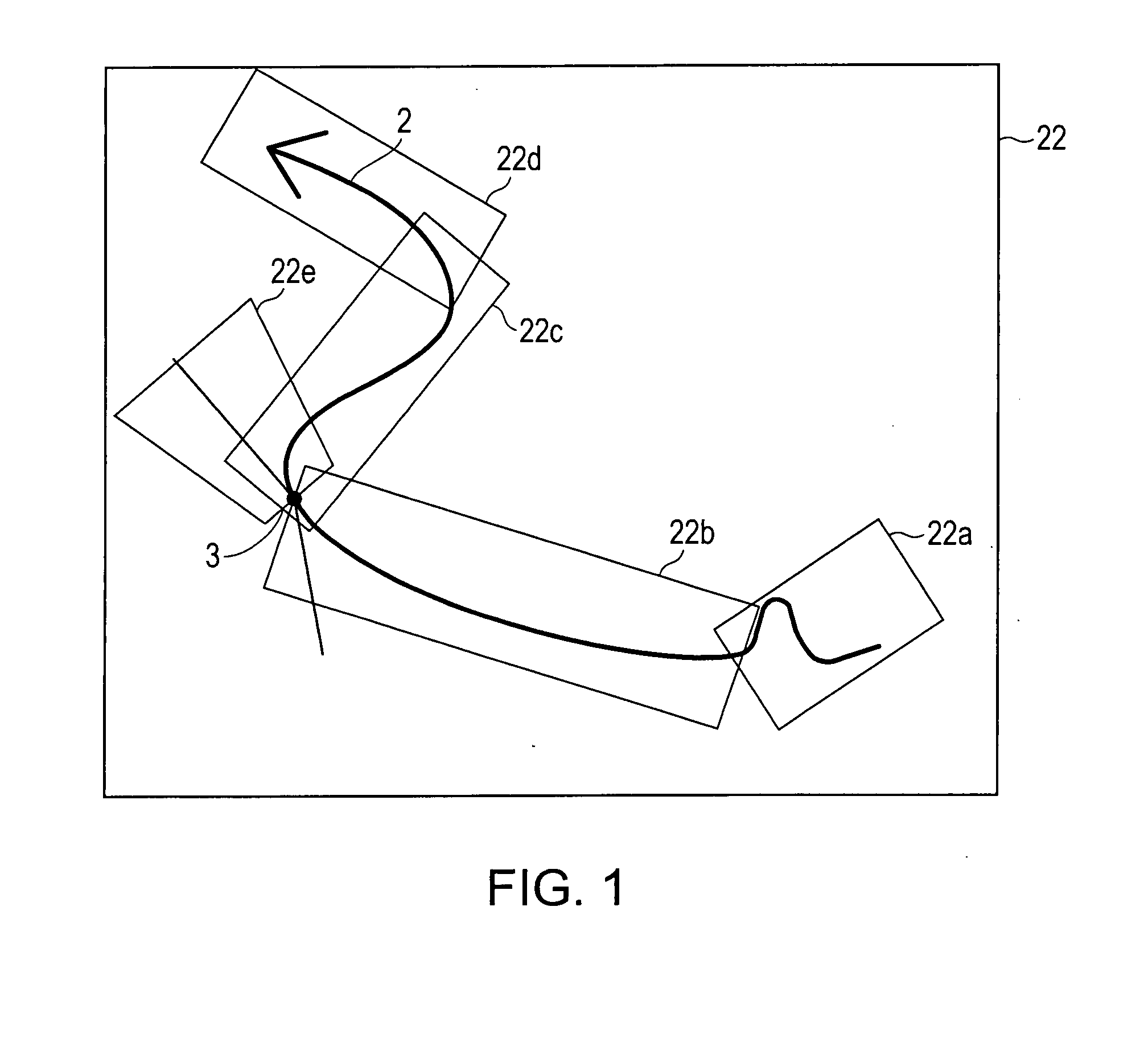

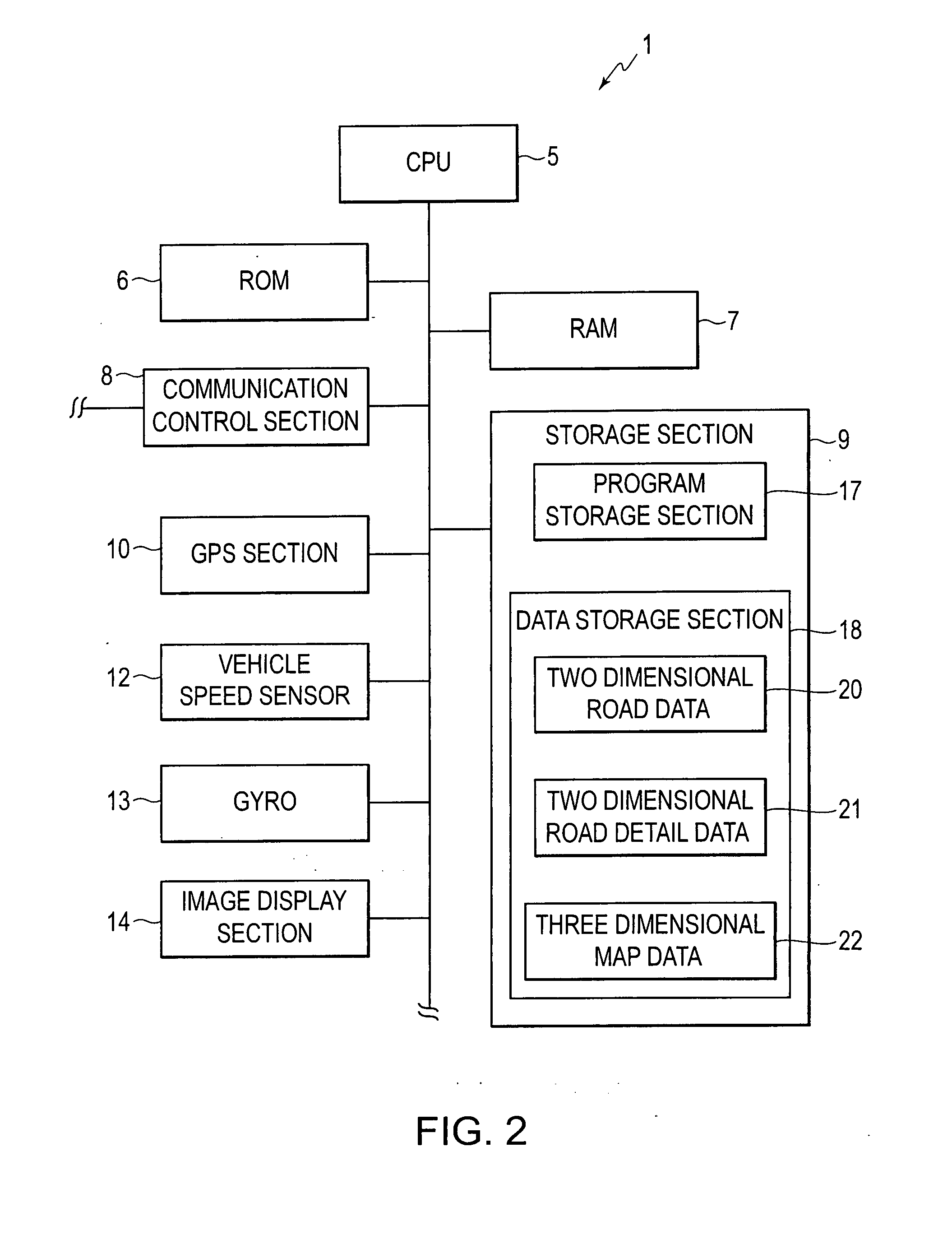

Navigation apparatuses, methods, and programs

Inactive | US20080162043A1 | Improve memory usage; Instruments for road network navigation; Road vehicles traffic control; Viewpoints; Computer vision

Owner:AISIN AW CO LTD

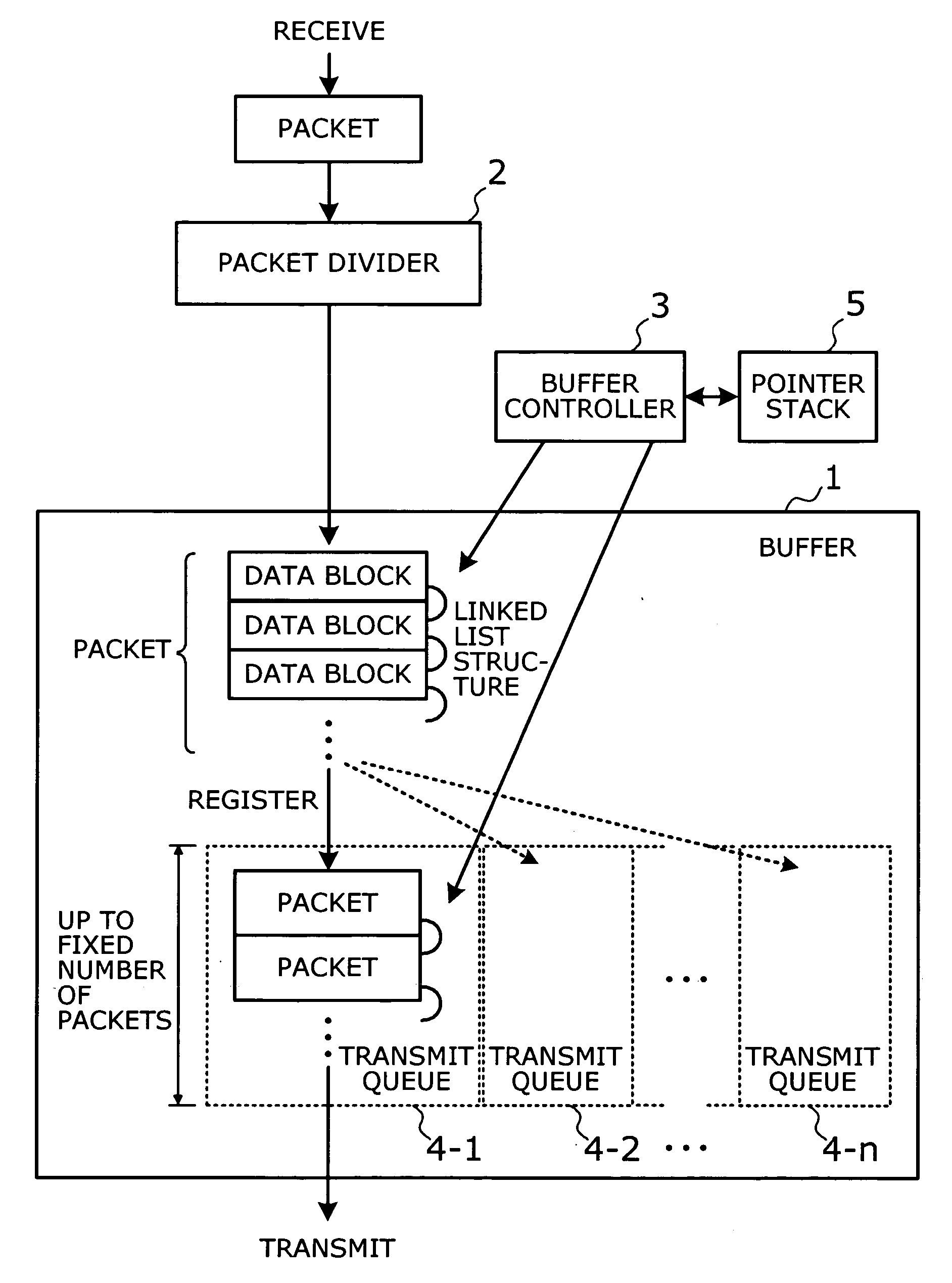

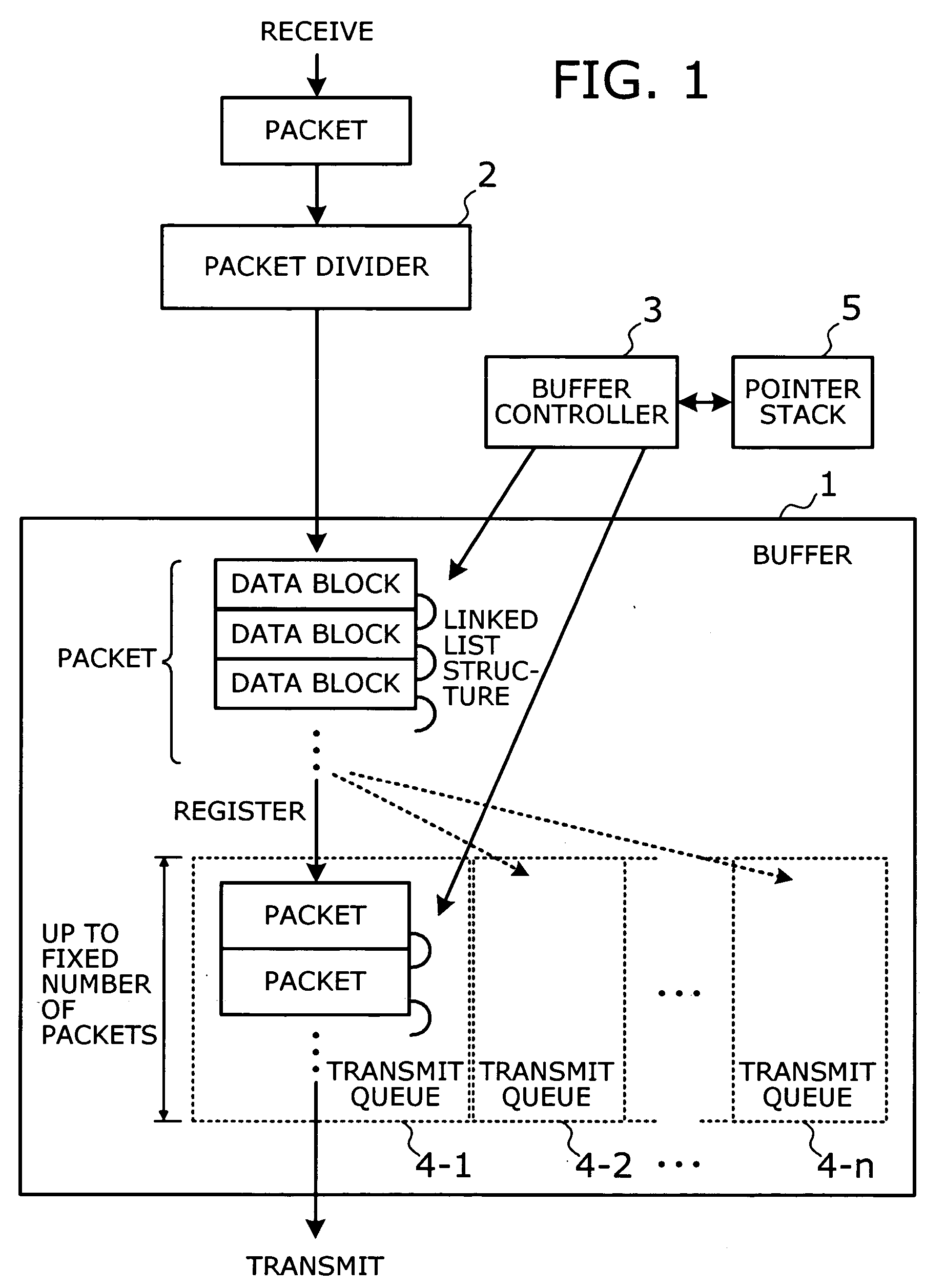

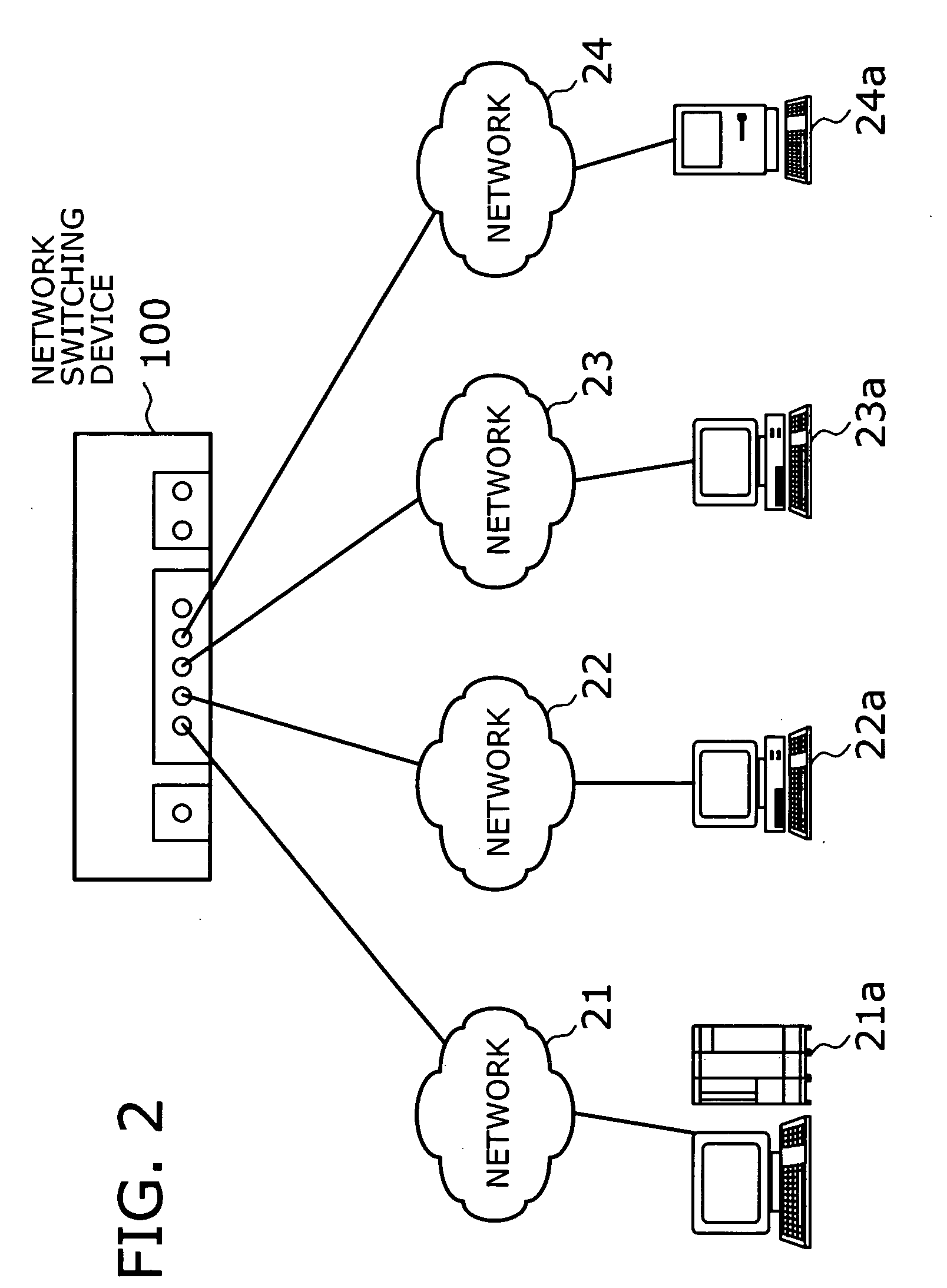

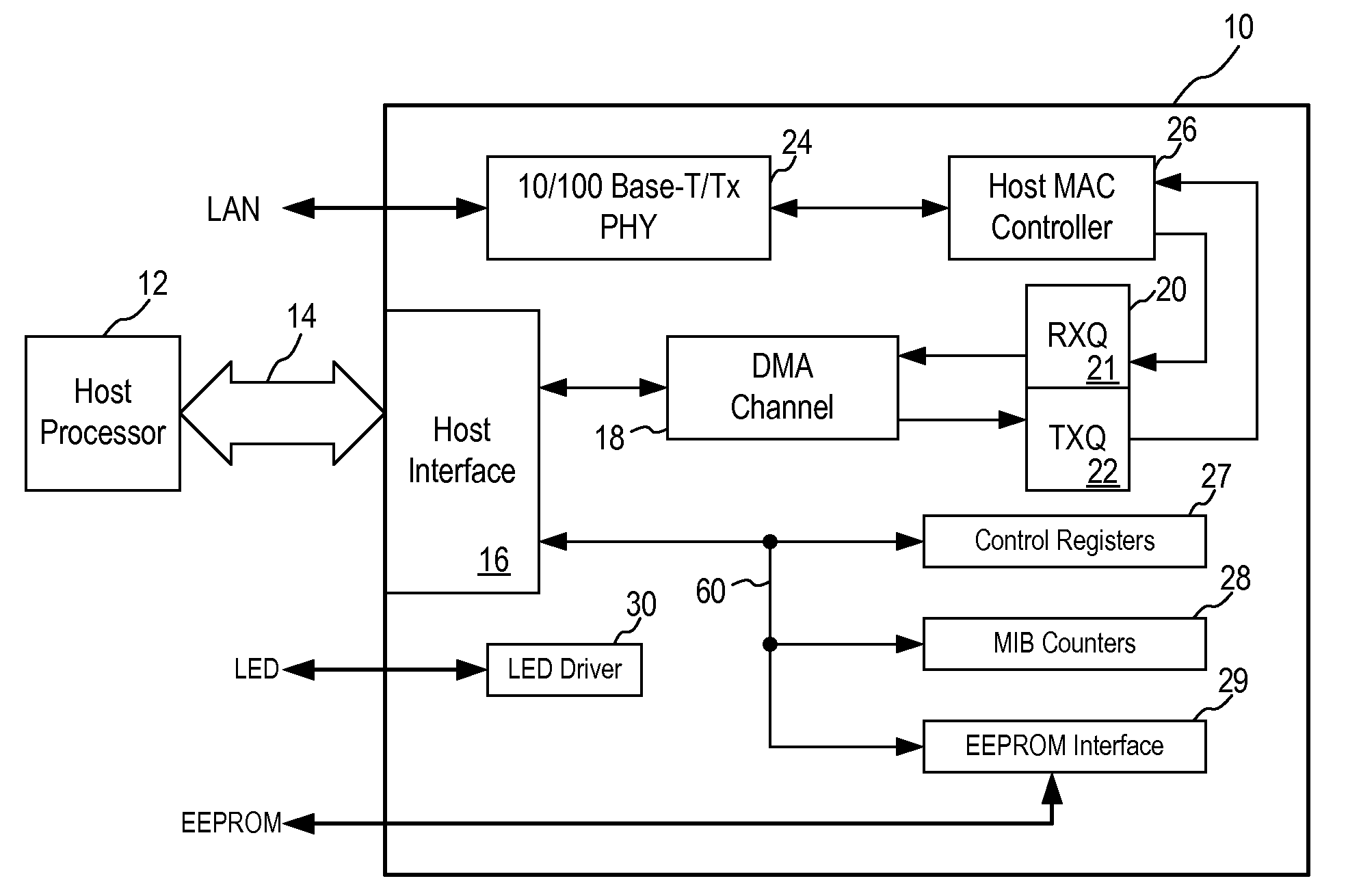

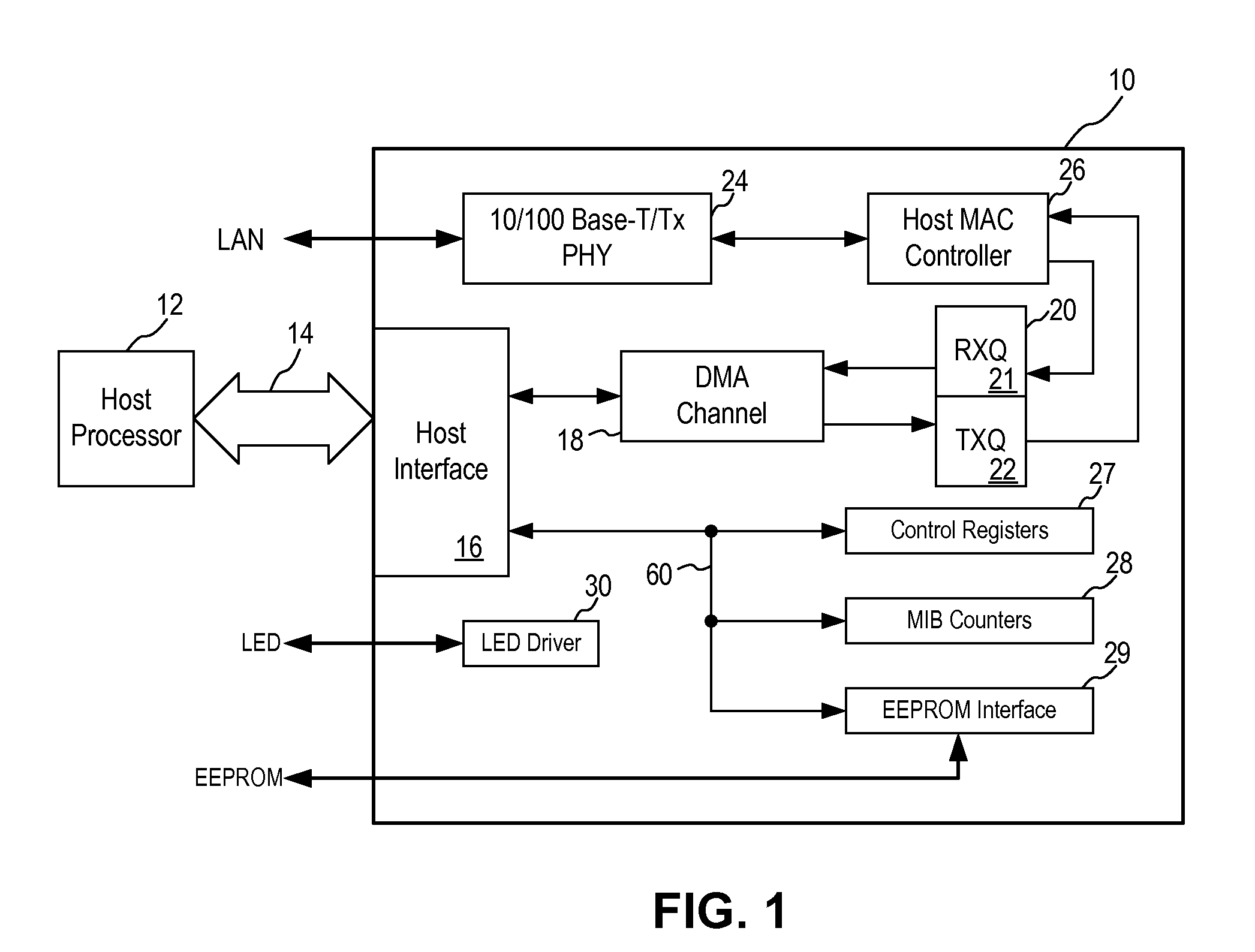

Network switching device and method using shared buffer

Inactive | US20050163141A1 | Improve efficiency; Improve memory usage; Data switching by path configuration; Electric digital data processing; Computer hardware; Network packet

A network switching device that prevents its shared buffer from suffering a blocking problem, while achieving a higher memory use efficiency in buffering variable-length packets. Every received packet is divided into one or more fixed-length data blocks and supplied to the buffer. Under the control of a buffer controller, a transmit queue is created to store up to a fixed number of data blocks for each different destination network, and the data blocks written in the buffer are registered with a transmit queue corresponding to a given destination. The linkage between data blocks in each packet, as well as the linkage between packets in each transmit queue, is managed as a linked list structure based on the locations of data blocks in the buffer.

Owner:SOCIONEXT INC
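The shared-buffer scheme in US20050163141A1 (fixed-length blocks, a per-destination cap on queued blocks, and linked lists indexed by block location) might look roughly like the Python sketch below. It is a simplification under stated assumptions: the class name and parameters are hypothetical, the packet-to-packet linkage of each transmit queue is modeled with a `deque` rather than a second linked list, and an over-limit packet is simply dropped.

```python
from collections import deque


class SharedBufferSwitch:
    def __init__(self, num_blocks, block_size, max_queue_blocks):
        self.block_size = block_size
        self.max_queue_blocks = max_queue_blocks      # per-destination cap
        self.blocks = [b""] * num_blocks              # the shared buffer
        self.next = [None] * num_blocks               # link to next block of same packet
        self.free = deque(range(num_blocks))          # free block locations
        self.queues = {}                              # destination -> deque of head blocks
        self.queued_blocks = {}                       # destination -> blocks held

    def enqueue(self, dest, packet: bytes) -> bool:
        n = max(1, -(-len(packet) // self.block_size))   # ceil division
        held = self.queued_blocks.get(dest, 0)
        if n > len(self.free) or held + n > self.max_queue_blocks:
            return False    # drop rather than let one destination fill the buffer
        locs = [self.free.popleft() for _ in range(n)]
        for k, loc in enumerate(locs):
            self.blocks[loc] = packet[k * self.block_size:(k + 1) * self.block_size]
            self.next[loc] = locs[k + 1] if k + 1 < n else None
        self.queues.setdefault(dest, deque()).append(locs[0])
        self.queued_blocks[dest] = held + n
        return True

    def dequeue(self, dest) -> bytes:
        loc = self.queues[dest].popleft()
        parts, n = [], 0
        while loc is not None:
            parts.append(self.blocks[loc])
            nxt, self.next[loc] = self.next[loc], None
            self.free.append(loc)          # block returns to the free pool
            loc, n = nxt, n + 1
        self.queued_blocks[dest] -= n
        return b"".join(parts)
```

The per-destination cap is what addresses the blocking problem: a congested output can hold at most `max_queue_blocks` blocks, so other destinations still find free blocks in the shared buffer.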

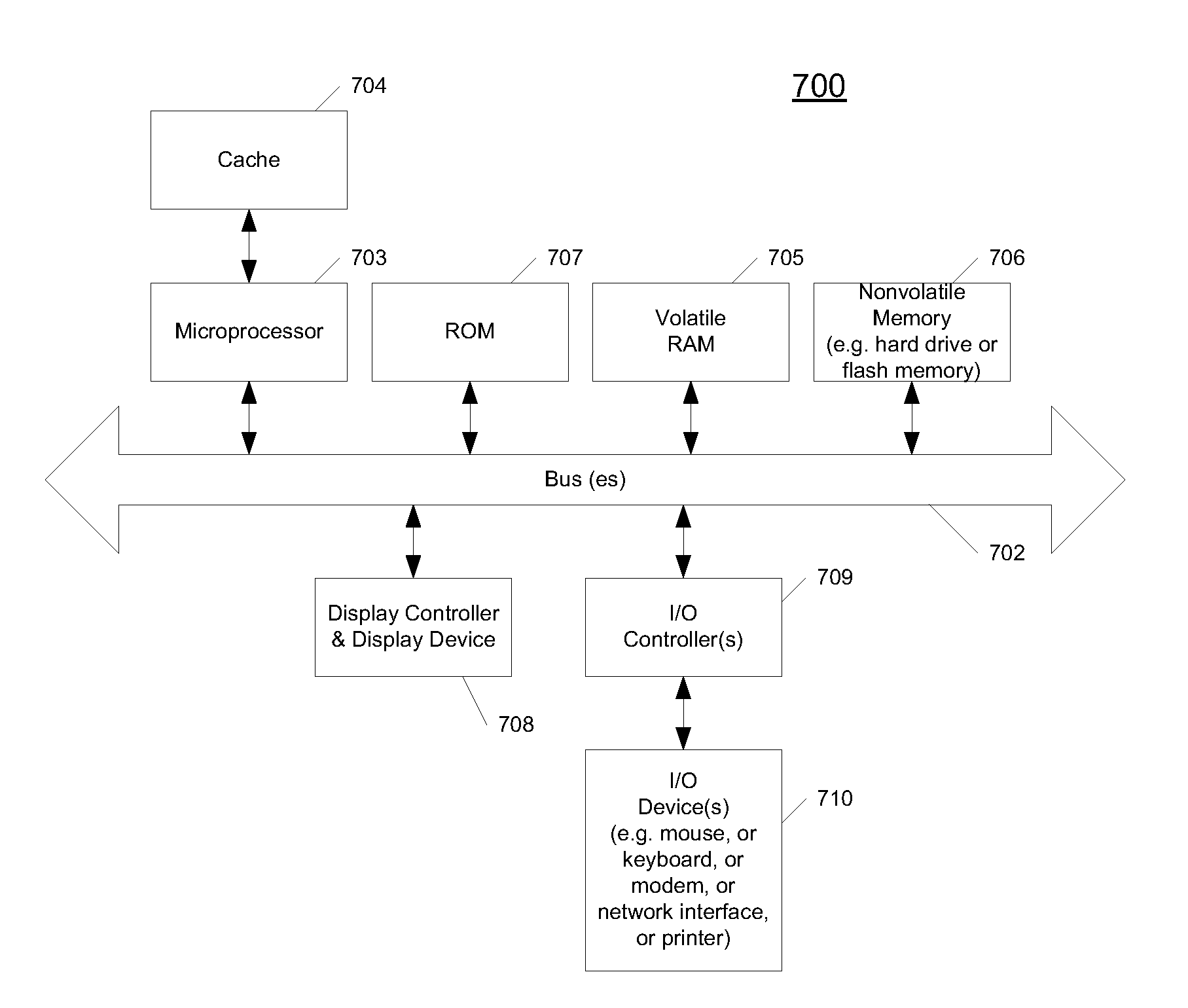

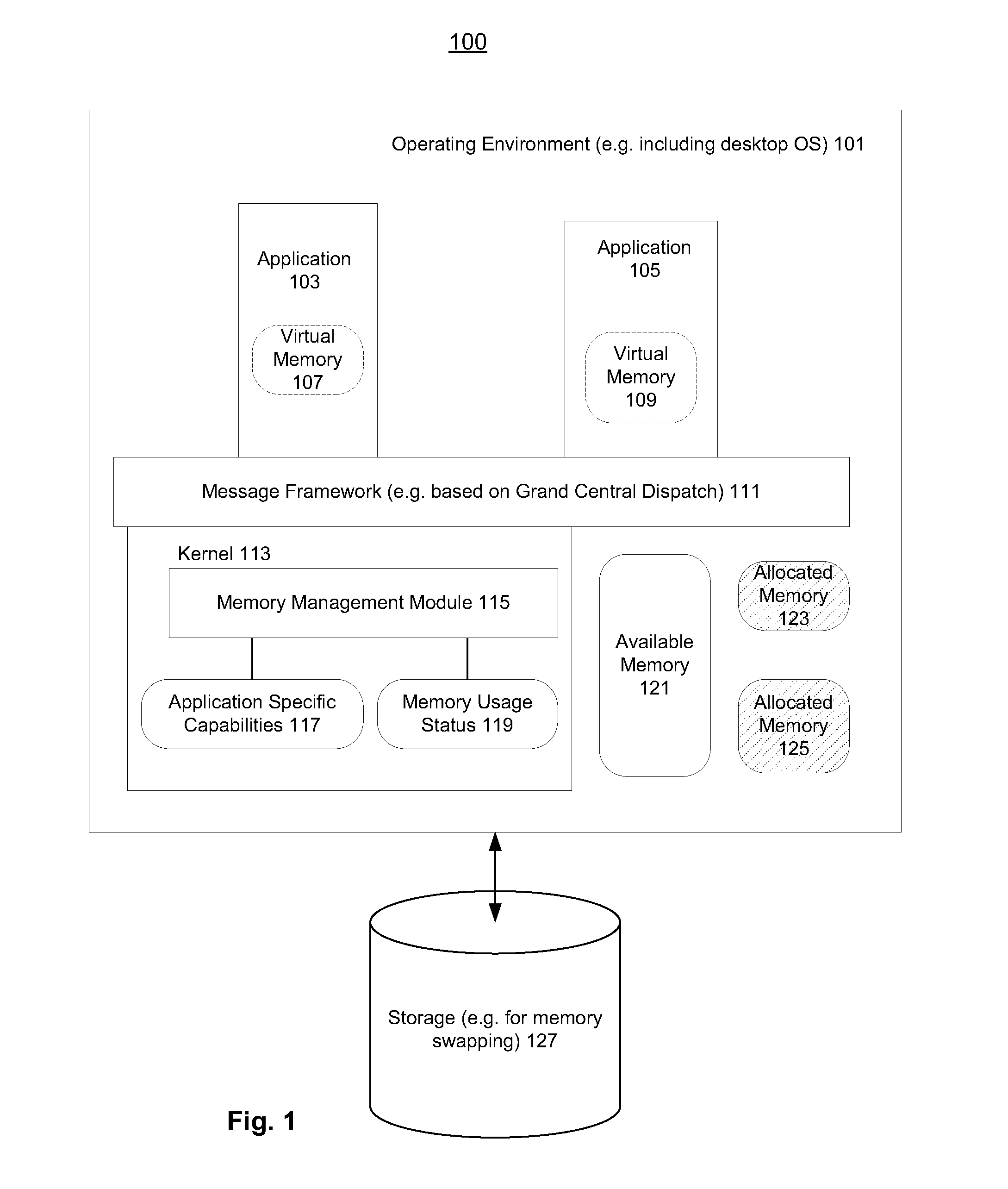

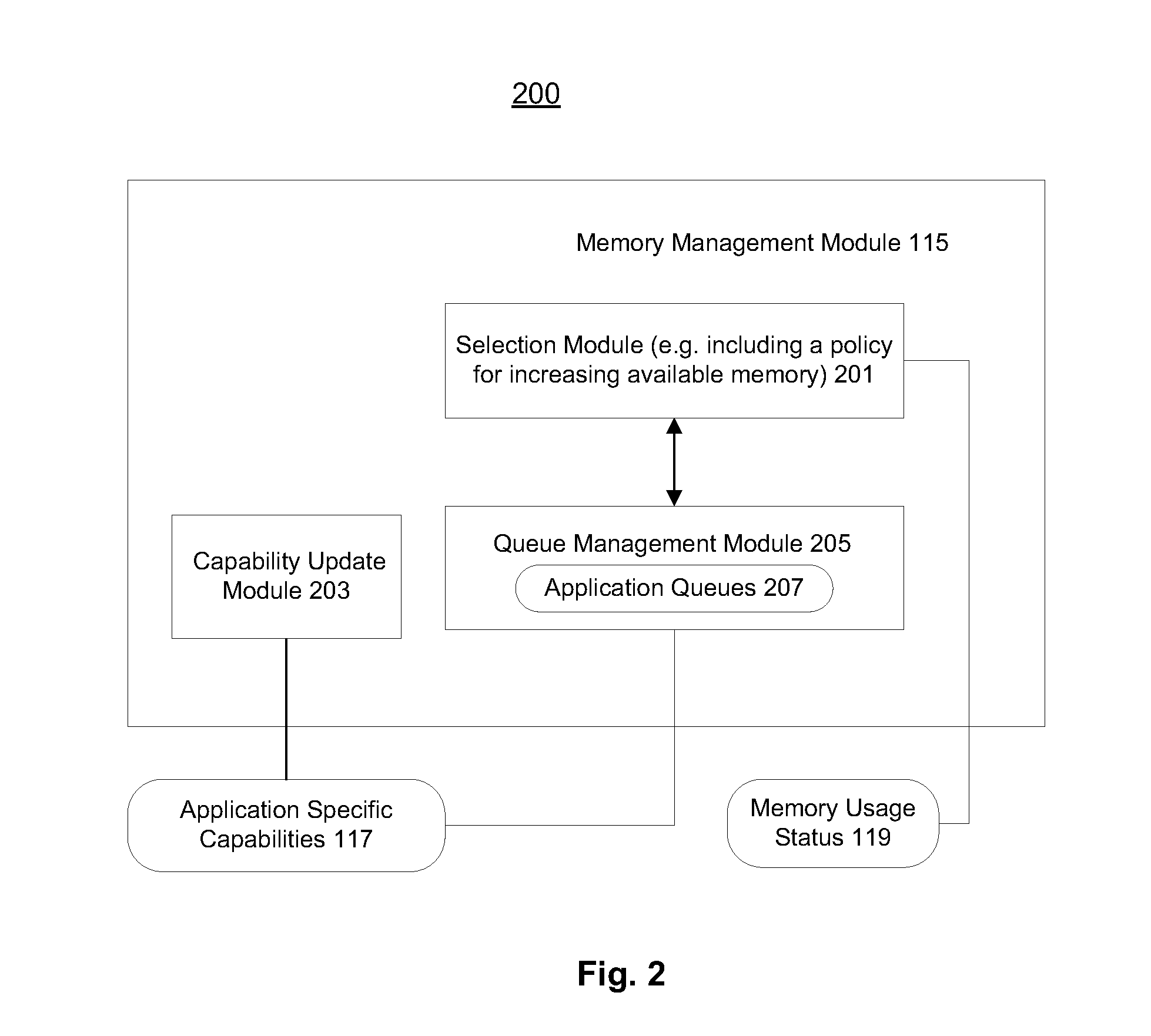

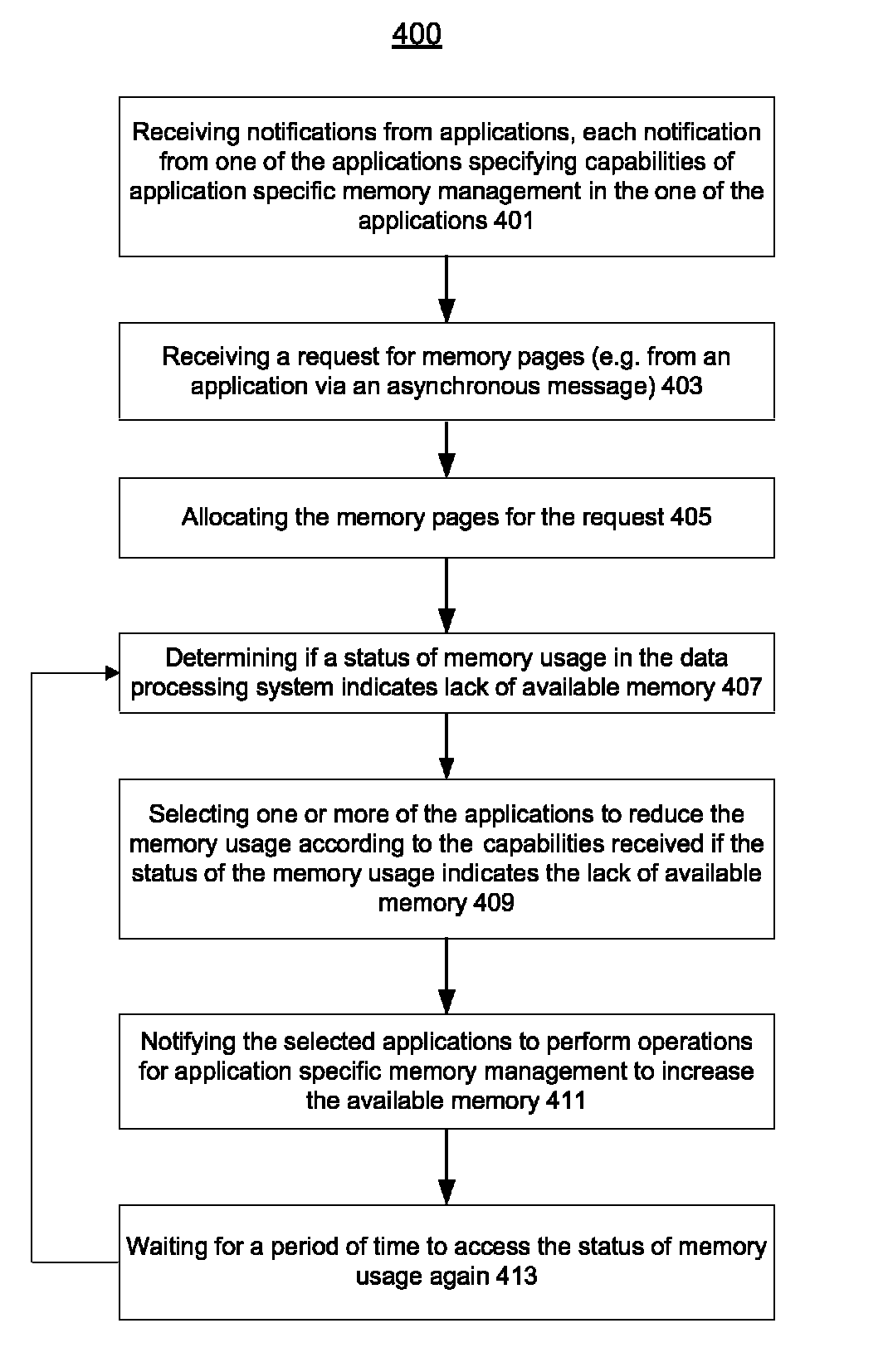

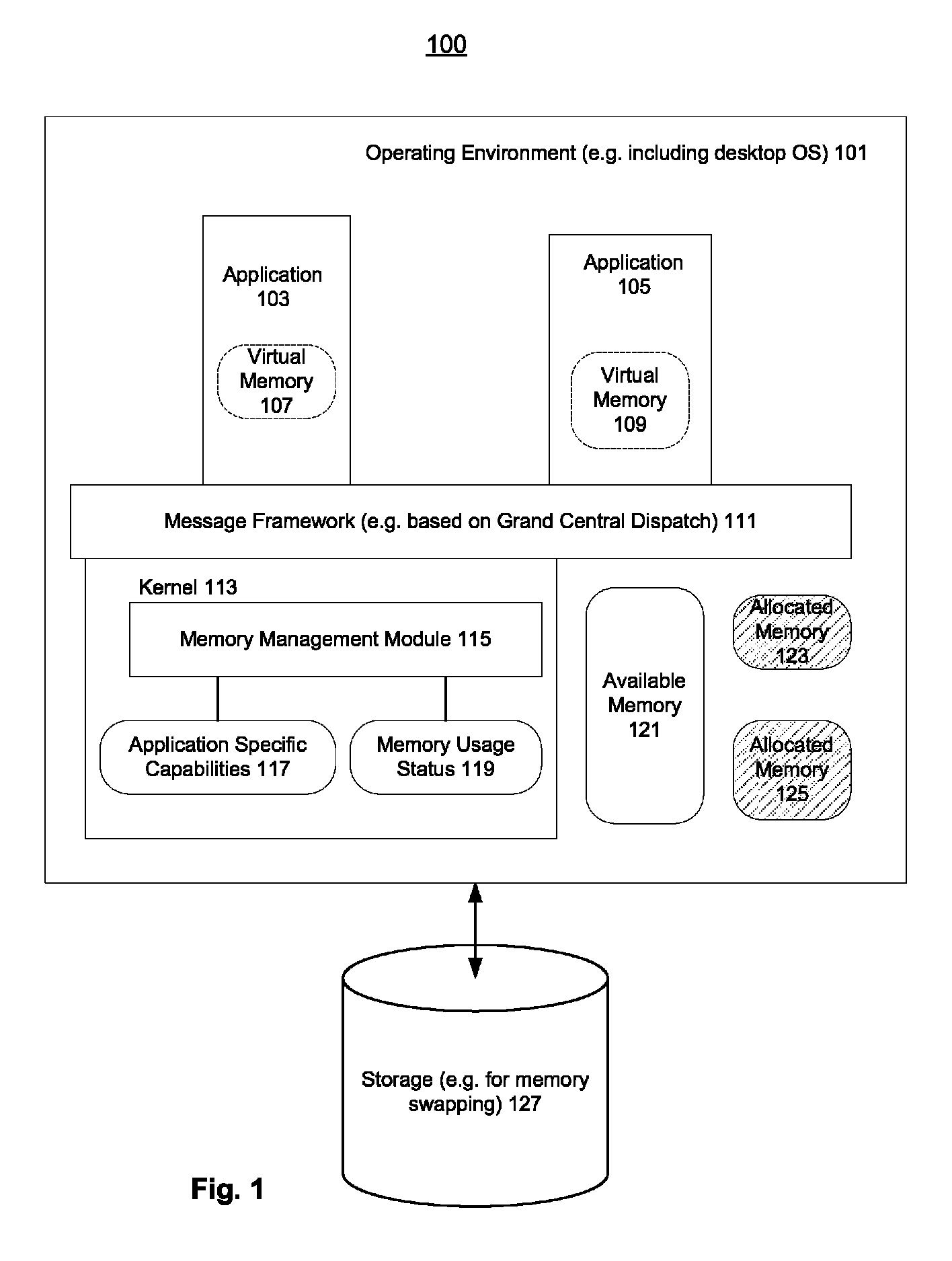

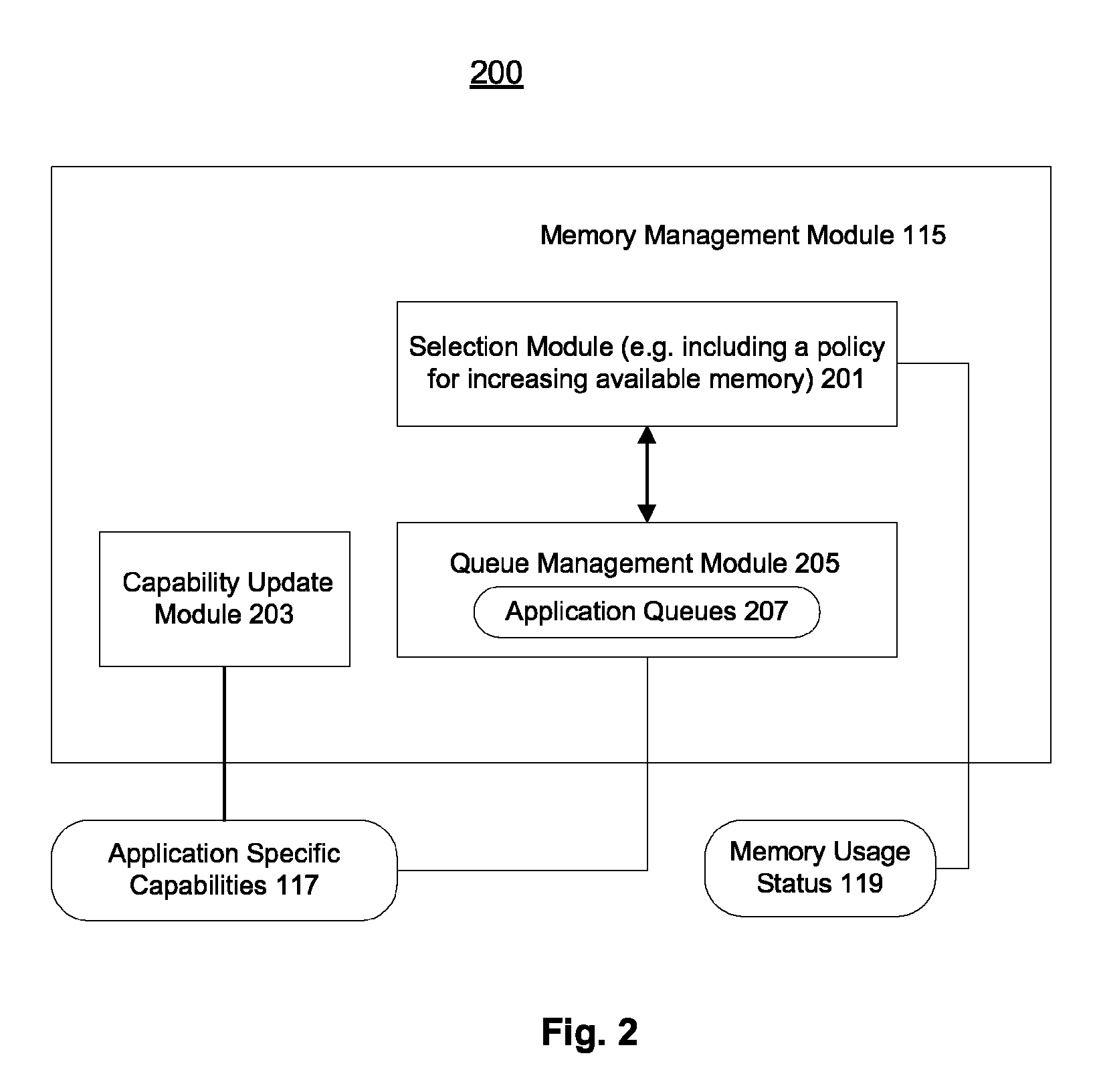

Cooperative memory management

Active | US20120179882A1 | Comprehensive performance is small; Reduce memory usage; Memory addressing/allocation/relocation; Specific program execution arrangements; Multiple applications; Data processing

A method and an apparatus for selecting one or more applications running in a data processing system to reduce memory usage according to information received from the applications are described. Notifications specifying the information including application specific memory management capabilities may be received from the applications. A status of memory usage indicating lack of available memory may be determined to notify the selected applications. Accordingly, the notified applications may perform operations for application specific memory management to increase available memory.

Owner:APPLE INC
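The cooperative scheme in US20120179882A1 (applications advertise memory-management capabilities, and the system notifies selected ones when memory runs low) can be sketched in Python. This is an illustration only: the low-watermark trigger, the "largest advertised savings first" selection policy, and the names `Registration` and `MemoryCoordinator` are all assumptions, not the patented method or any operating system API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Registration:
    name: str
    reclaimable_bytes: int          # capability the app advertises when registering
    purge: Callable[[], int]        # app-specific cleanup; returns bytes actually freed


class MemoryCoordinator:
    def __init__(self, low_watermark: int):
        self.low_watermark = low_watermark
        self.apps: List[Registration] = []

    def register(self, reg: Registration) -> None:
        self.apps.append(reg)

    def check(self, available: int) -> List[str]:
        """Notify apps (largest advertised savings first) until the deficit is covered."""
        if available >= self.low_watermark:
            return []
        deficit = self.low_watermark - available
        notified = []
        for app in sorted(self.apps, key=lambda a: a.reclaimable_bytes, reverse=True):
            if deficit <= 0:
                break
            deficit -= app.purge()
            notified.append(app.name)
        return notified
```

Notifying only as many applications as needed, rather than broadcasting a low-memory warning to all of them, is one plausible reading of "selecting one or more applications".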

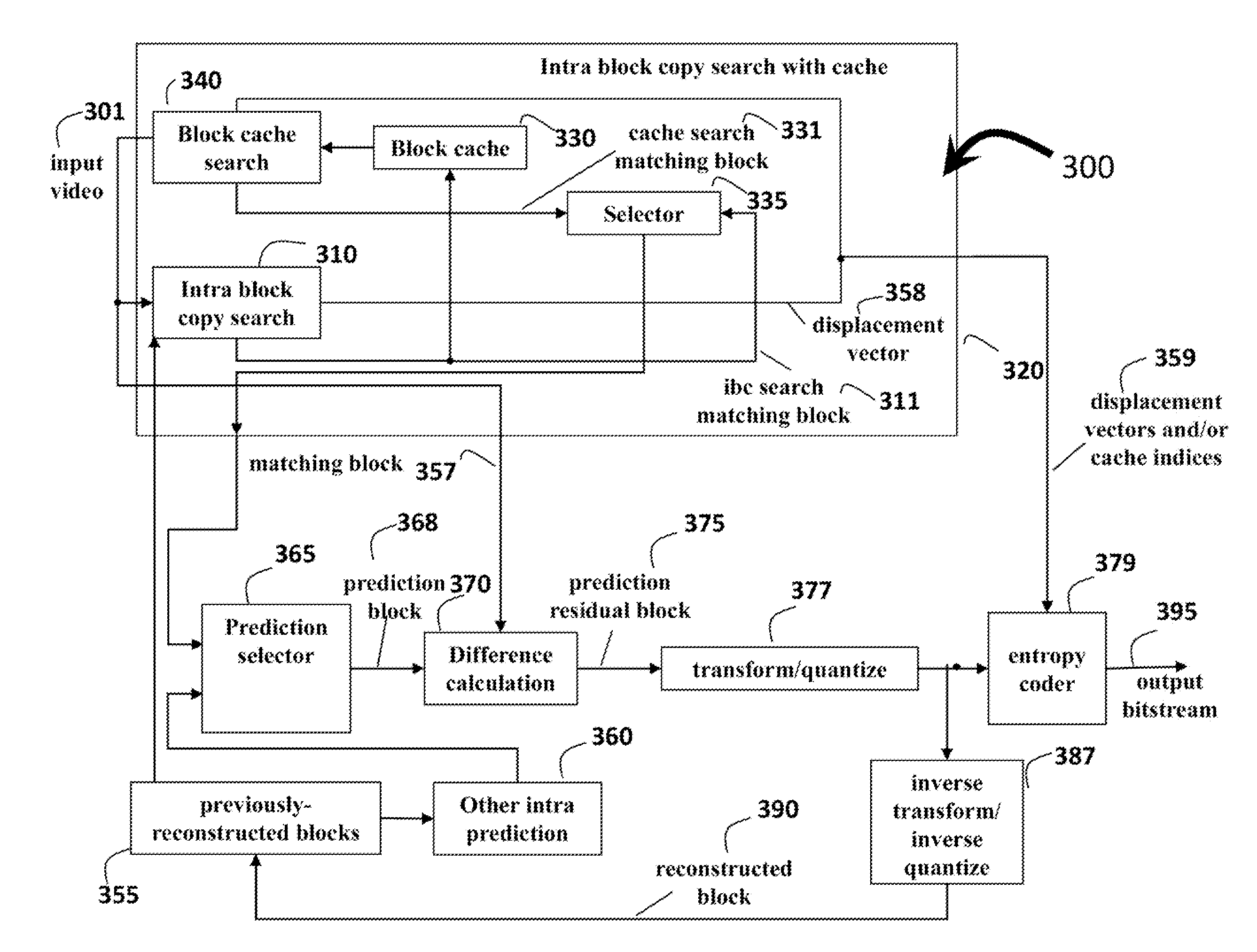

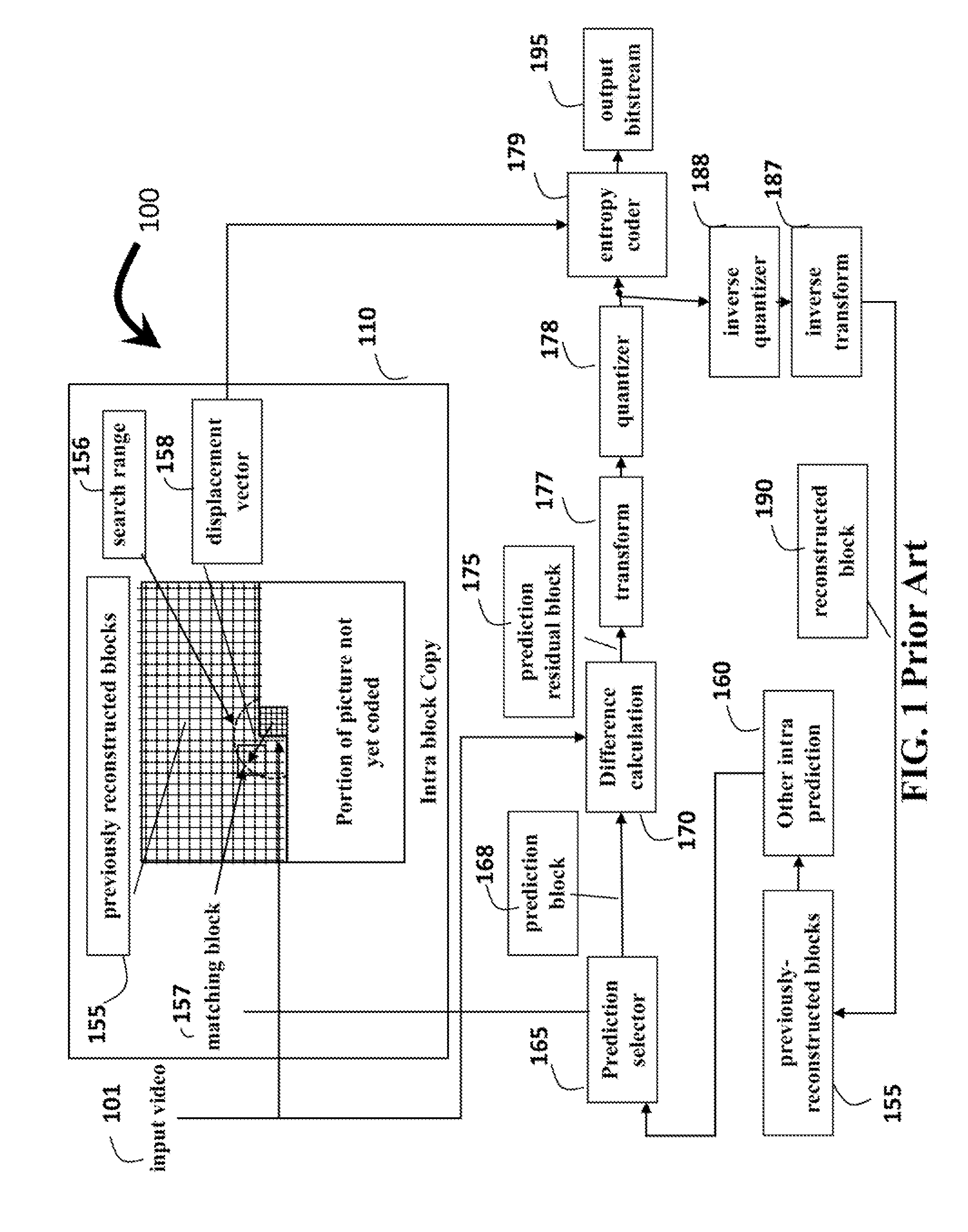

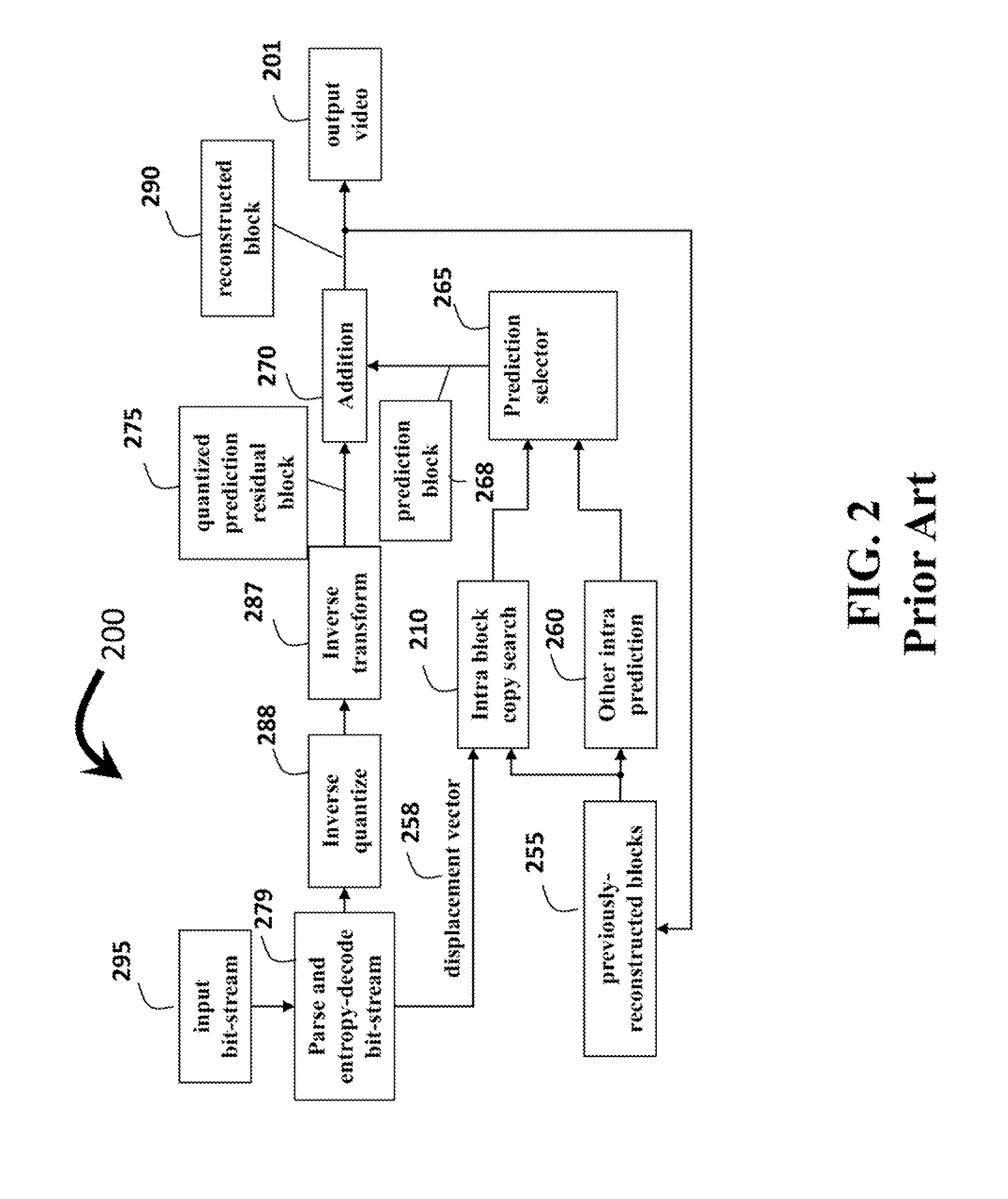

Block Copy Modes for Image and Video Coding

Inactive | US20150264383A1 | Increase the search range; Improve memory usage; Color television with pulse code modulation; Color television with bandwidth reduction; Video encoding; Parallel computing

A method decodes blocks in pictures of a video in an encoded bitstream by storing previously decoded blocks in a buffer. The previously decoded blocks are displaced less than a predetermined range relative to a current block being decoded. Cached blocks are maintained in a cache. The cached blocks include a set of best matching previously decoded blocks that are displaced greater than the predetermined range relative to the current block. The bitstream is parsed to obtain a prediction indicator that determines whether the current block is predicted from the previously decoded blocks in the buffer or the cached blocks in the cache. Based on the prediction indicator, a prediction residual block is generated, and in a summation process, the prediction residual block is added to a reconstructed residual block to form a decoded block as output.

Owner:MITSUBISHI ELECTRIC RES LAB INC

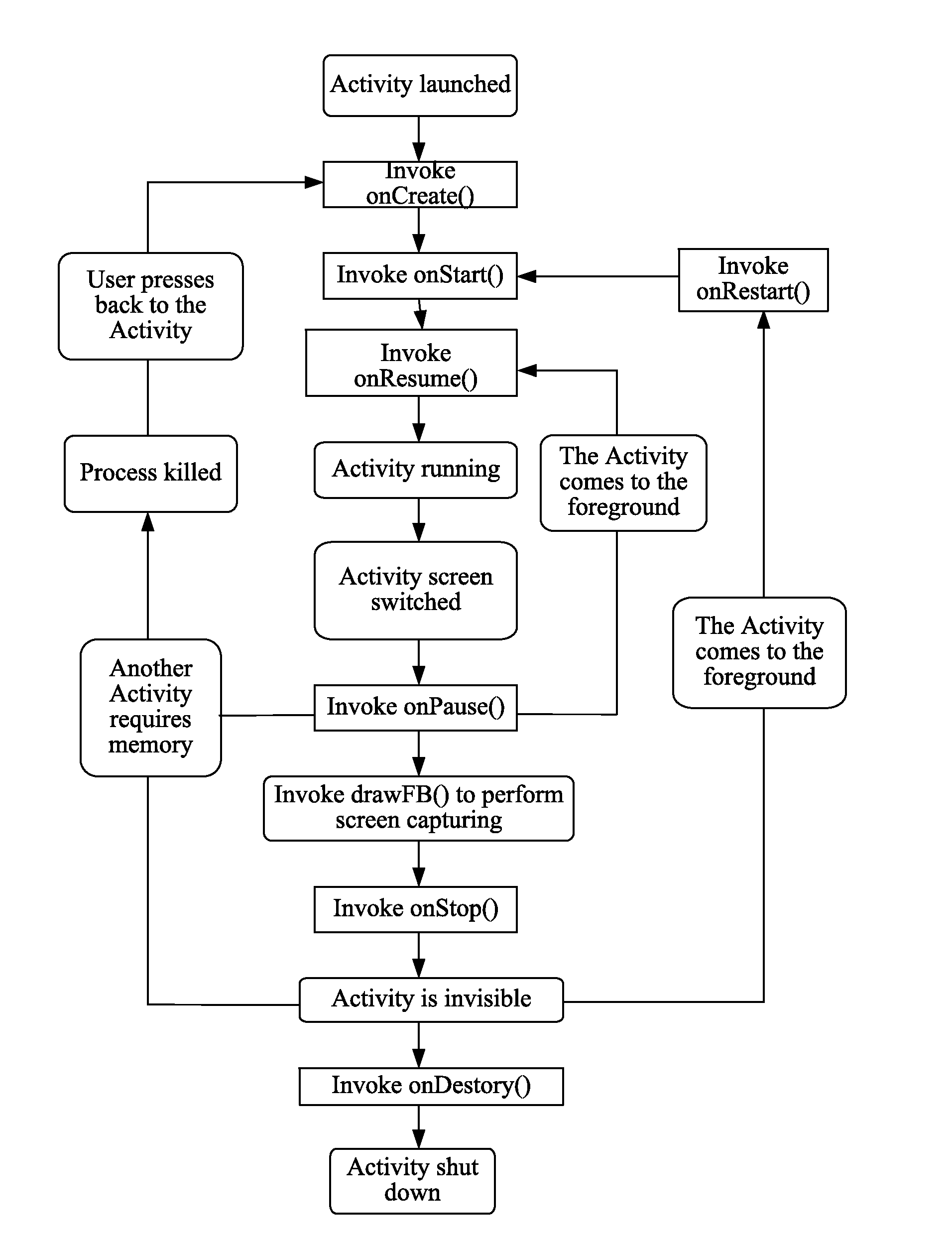

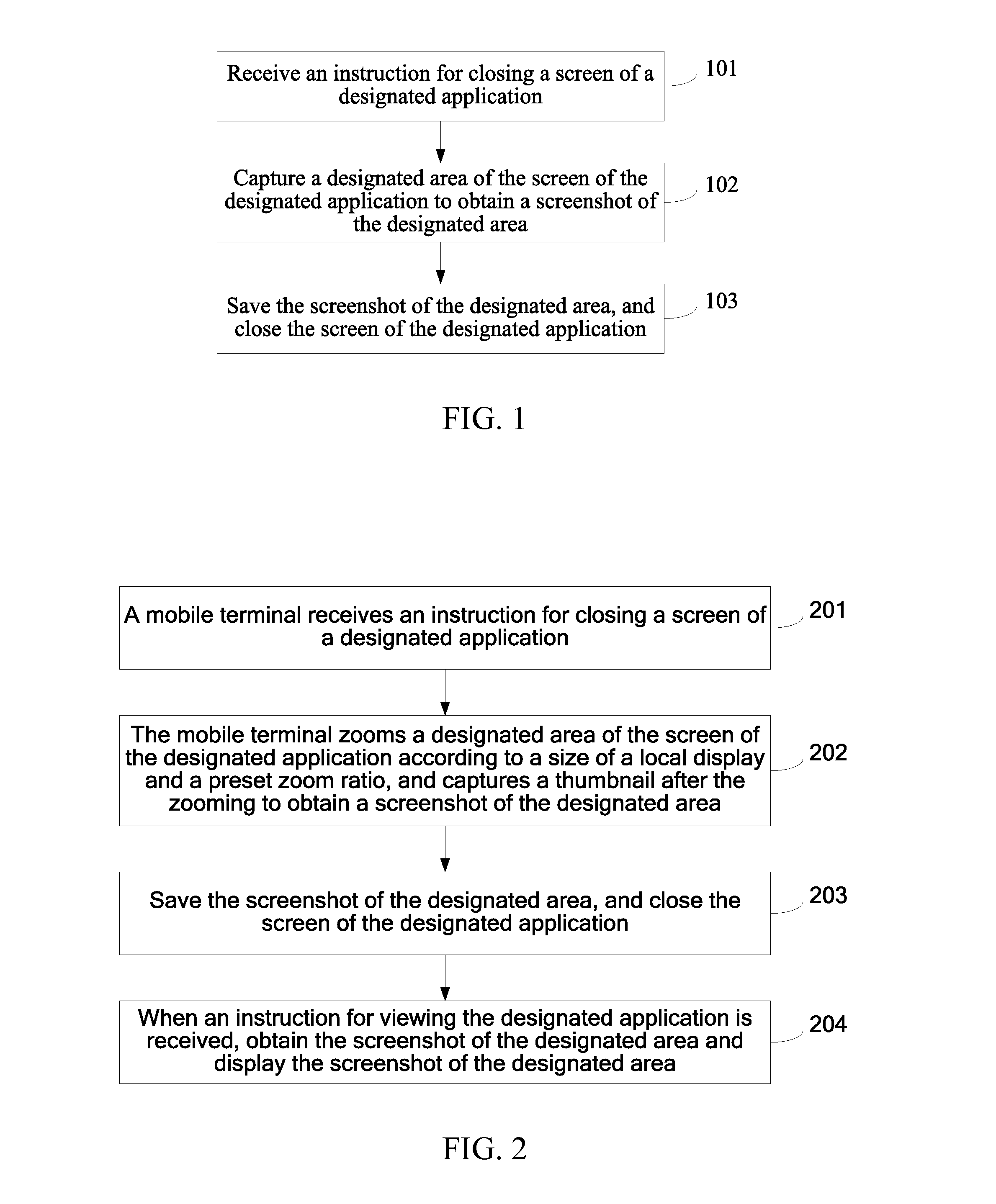

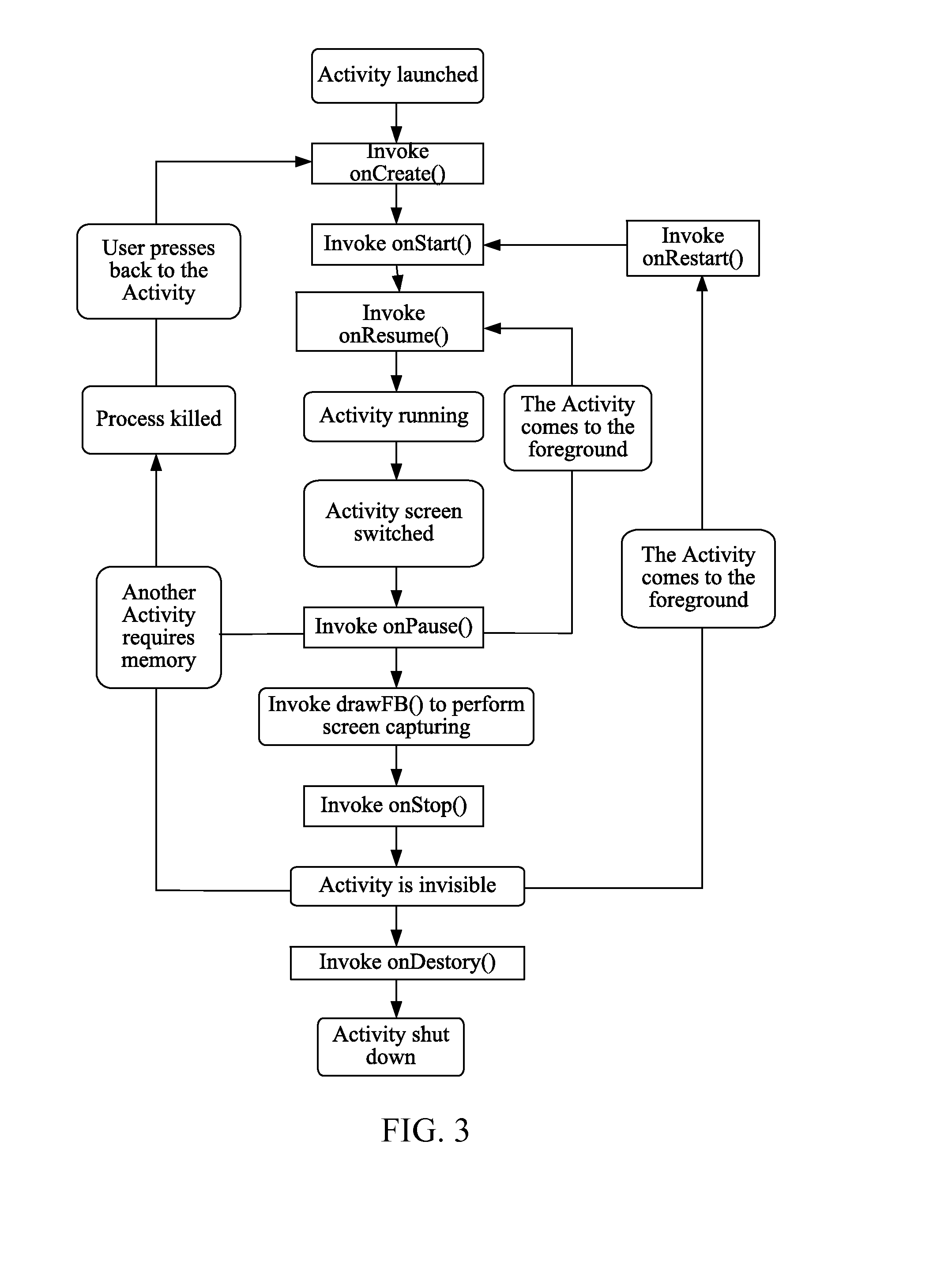

Method and Apparatus for Taking Screenshot of Screen of Application in Mobile Terminal

Active | US20140237405A1 | Improve memory usage; Improve capture efficiency; Error detection/correction; Execution for user interfaces; Application software; Computer engineering

Owner:HONOR DEVICE CO LTD

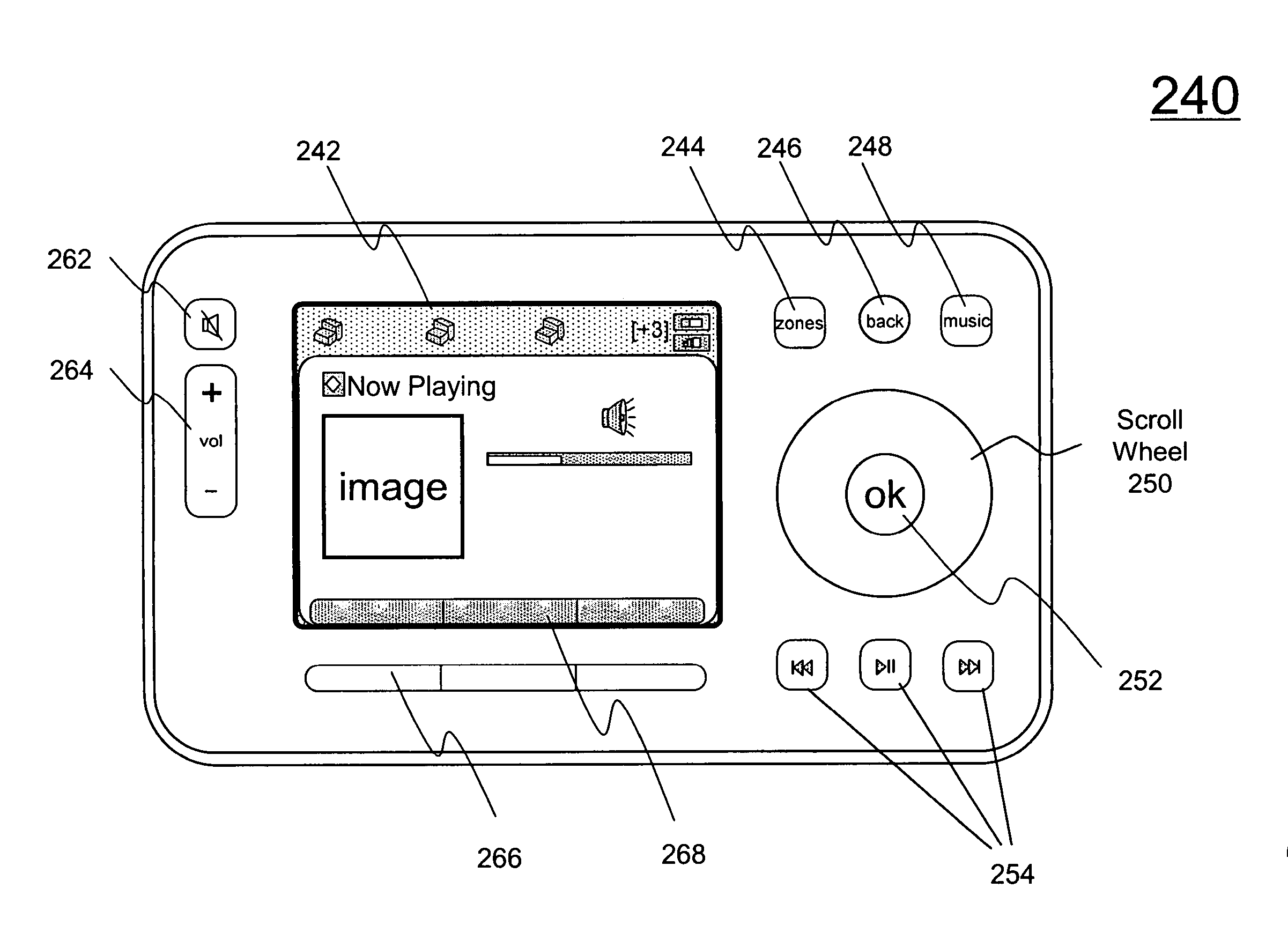

Method and apparatus for displaying single and internet radio items in a play queue

Active | US20130219273A1 | Improve memory usage; Input/output for user-computer interaction; Television system details; Internet radio; Single item

Owner:SONOS

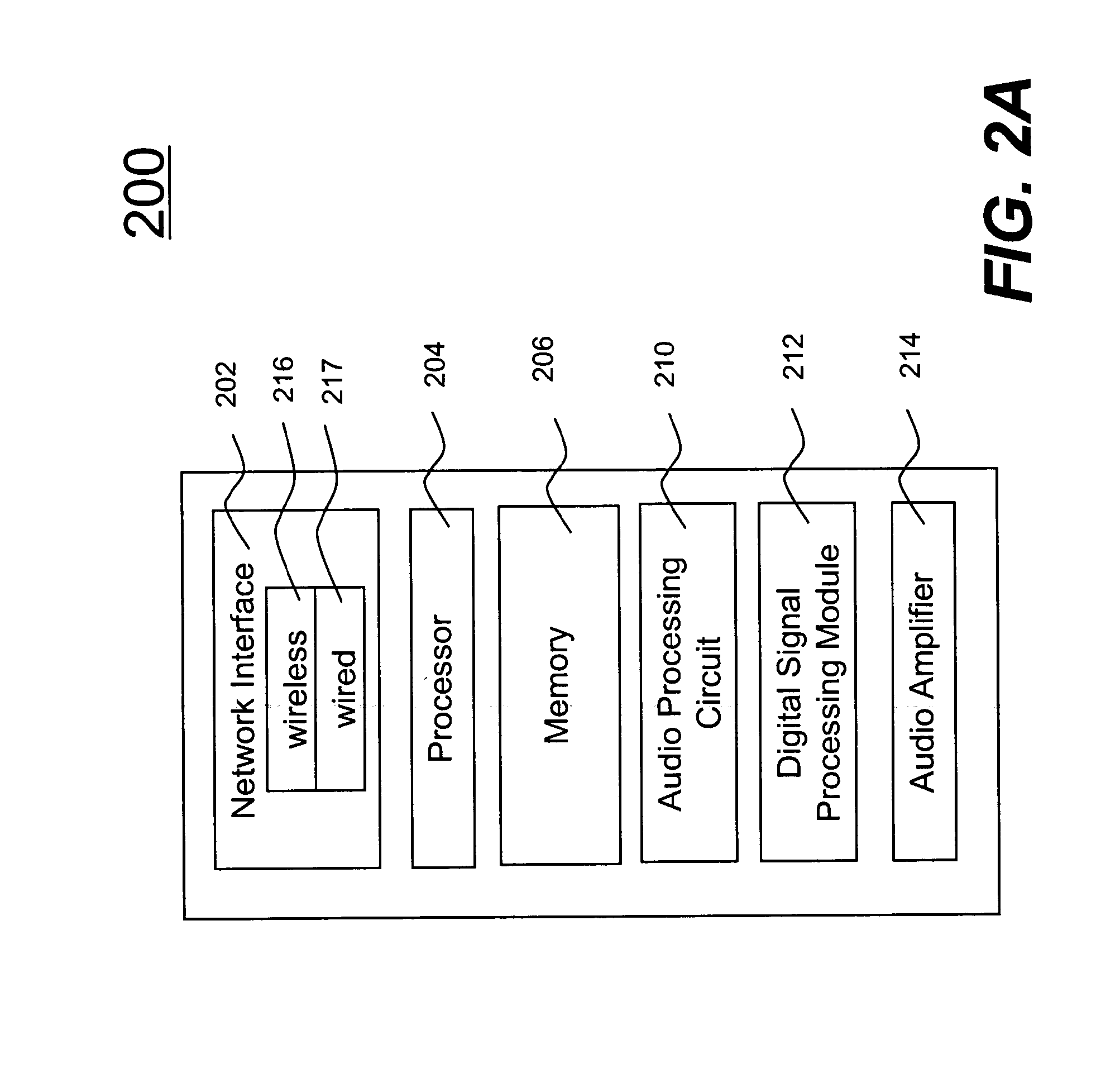

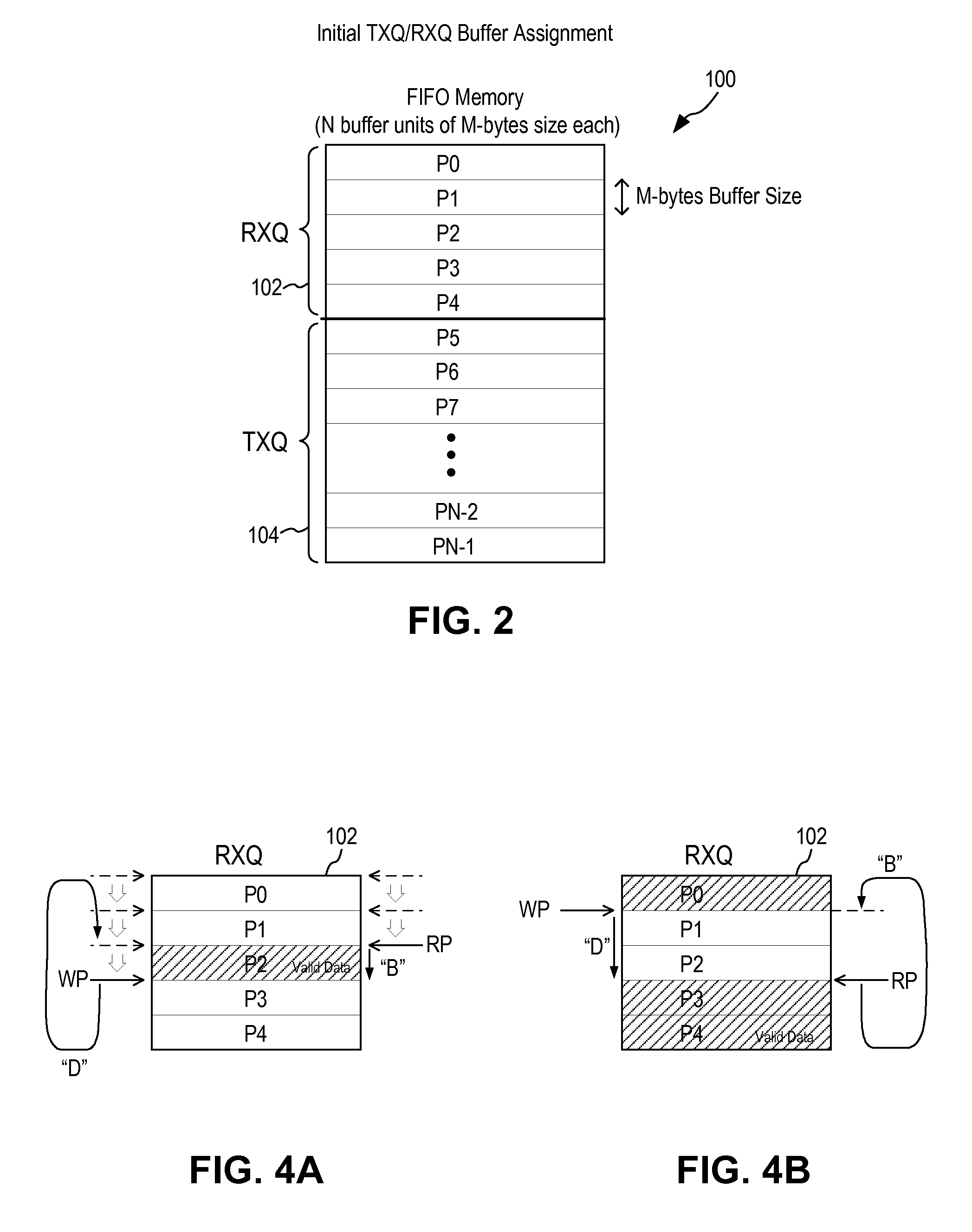

Dynamic Queue Memory Allocation With Flow Control

Inactive | US20100202470A1 | Improve memory usage; Solve high memory usage; Data switching by path configuration; Spatial allocation; Computer science

A method in an Ethernet controller for allocating memory space in a buffer memory between a transmit queue (TXQ) and a receive queue (RXQ) includes allocating initial memory space in the buffer memory to the RXQ and the TXQ; defining a RXQ high watermark and a RXQ low watermark; receiving an ingress data frame; determining if a memory usage in the RXQ exceeds the RXQ high watermark; if the RXQ high watermark is not exceeded, storing the ingress data frame in the RXQ; if the RXQ high watermark is exceeded, determining if there is unused memory space in the TXQ; if there is no unused memory space in the TXQ, transmitting a pause frame to halt further ingress data frames; if there is unused memory space in the TXQ, allocating unused memory space in the TXQ to the RXQ; and storing the ingress data frame in the RXQ.

Owner:MICREL
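The decision sequence in US20100202470A1 translates almost directly into code. The Python sketch below follows the claimed steps under a few assumptions of my own: sizes are in bytes, the high watermark is an absolute RXQ usage no larger than the initial RXQ allocation, exactly one frame's worth of TXQ space is borrowed per overflow, and "no unused TXQ space" means not enough to hold the frame. The class name is hypothetical; an actual controller would emit an IEEE 802.3x PAUSE frame where the comment indicates.

```python
class EthernetBufferMemory:
    """Toy TXQ/RXQ split of one buffer memory (sizes in bytes).

    Assumes rxq_high_wm <= rxq_initial, so a frame under the watermark always fits.
    """

    def __init__(self, total, rxq_initial, rxq_high_wm, rxq_low_wm):
        self.rxq_capacity = rxq_initial
        self.txq_capacity = total - rxq_initial
        self.rxq_used = 0
        self.txq_used = 0
        self.high_wm = rxq_high_wm
        self.low_wm = rxq_low_wm
        self.paused = False

    def receive(self, frame_len):
        if self.rxq_used + frame_len > self.high_wm:
            unused_tx = self.txq_capacity - self.txq_used
            if unused_tx < frame_len:
                self.paused = True     # would transmit an IEEE 802.3x pause frame here
                return "pause"
            # Move unused TXQ space to the RXQ (here: just enough for this frame).
            self.txq_capacity -= frame_len
            self.rxq_capacity += frame_len
        self.rxq_used += frame_len
        return "stored"

    def drain(self, nbytes):
        self.rxq_used -= nbytes
        if self.paused and self.rxq_used <= self.low_wm:
            self.paused = False        # hypothetical resume once below the low watermark
```

The low-watermark resume is included only to show where the second threshold plausibly fits; the abstract defines it but does not describe its use.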

Free item distribution among multiple free lists during garbage collection for more efficient object allocation

Inactive | US7149866B2 | Easy to manage; Efficient management; Data processing applications; Memory addressing/allocation/relocation; Parallel computing; Free list

A method, system, and program for improving free item distribution among multiple free lists during garbage collection for more efficient object allocation are provided. A garbage collector predicts future allocation requirements and then distributes free items to multiple subpool free lists and a TLH (thread-local heap) free list during the sweep phase according to the future allocation requirements. The sizes of subpools and number of free items in subpools are predicted as the most likely to match future allocation requests. In particular, once a subpool free list is filled with the number of free items needed according to the future allocation requirements, any additional free items designated for the subpool free list can be divided into multiple TLH-sized free items and placed on the TLH free list. Allocation threads are enabled to acquire free items from the TLH free list and to replenish a current TLH without acquiring the heap lock.

Owner:INT BUSINESS MASCH CORP

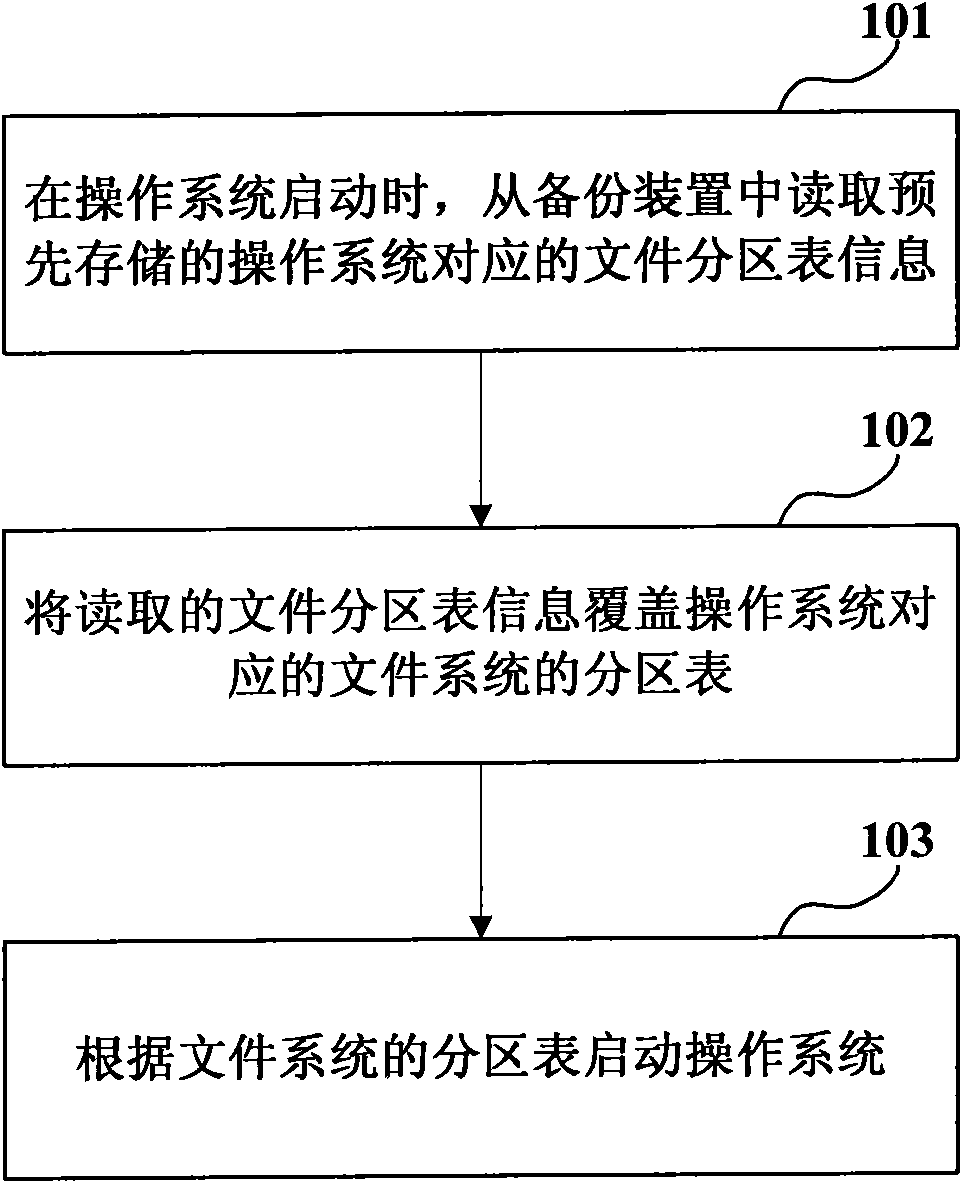

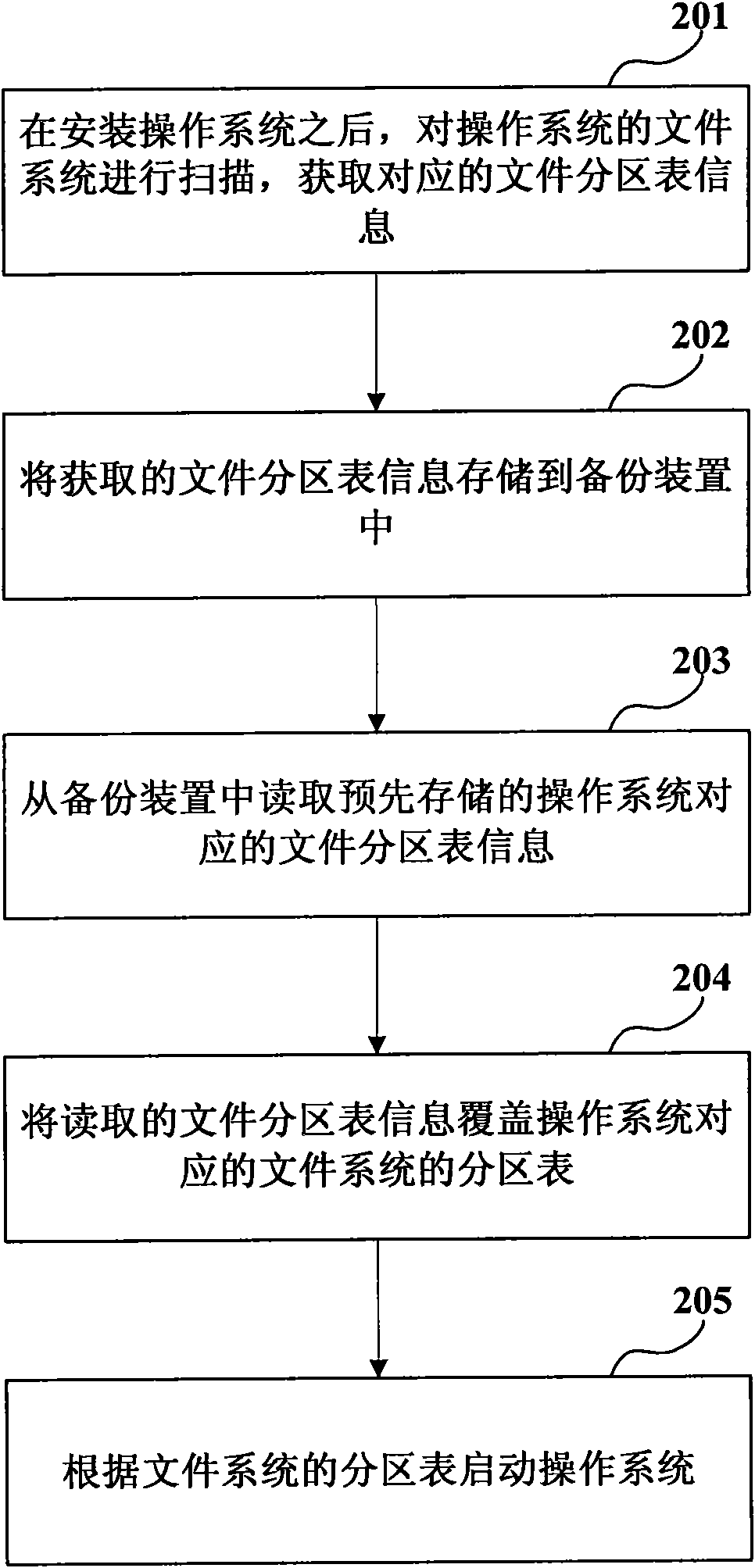

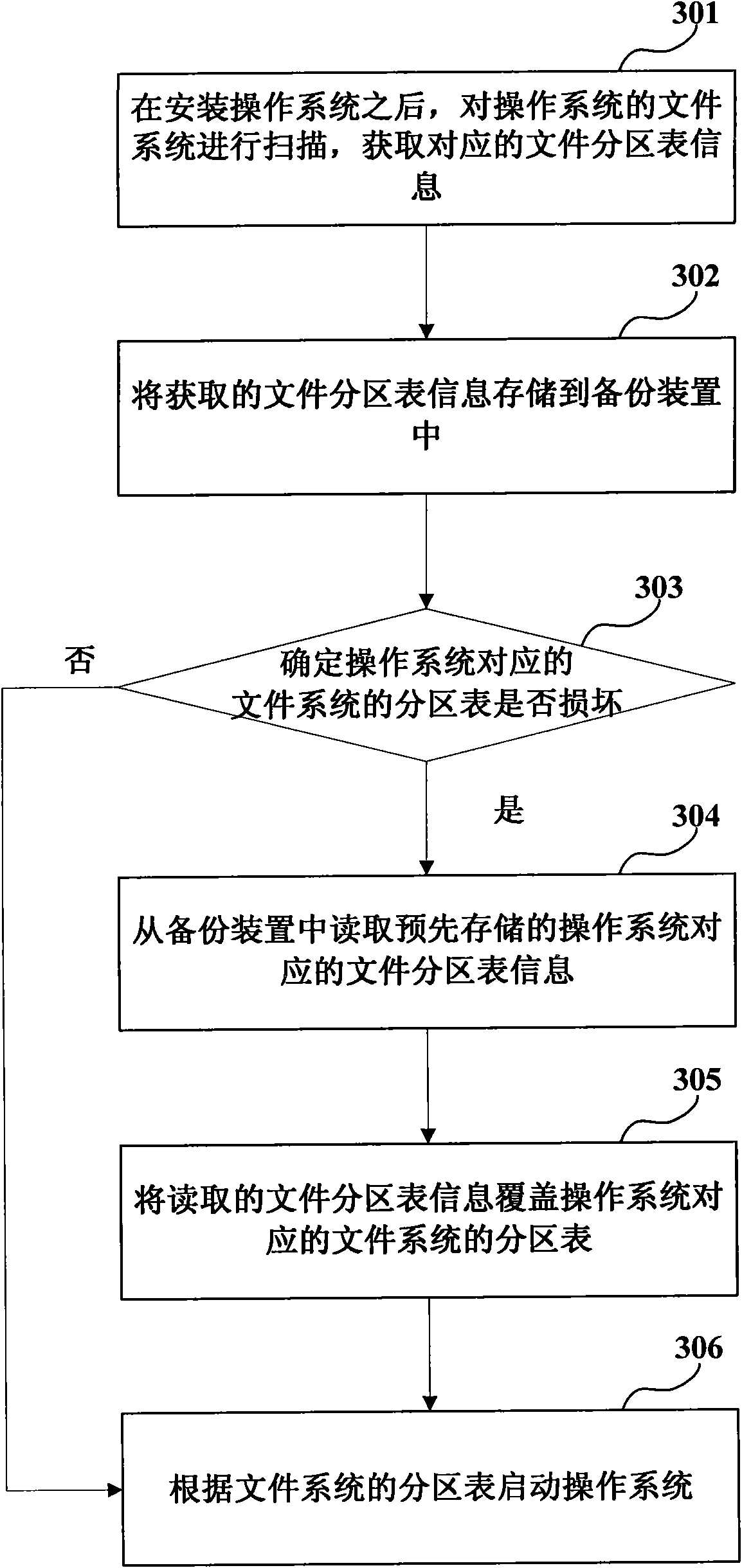

Fault tolerance method and device for file system

Inactive | CN101930384A | Shorten the time; Start fast; Redundant operation error correction; Fault tolerance; Repair time

The embodiment of the invention provides a fault tolerance method and a fault tolerance device for a file system. The method comprises the following steps: when an operating system is started, reading pre-stored file partition table information corresponding to the operating system from a backup device; overwriting the partition table of the file system with the partition table information that was read; and starting the operating system according to the partition table of the file system. The embodiment of the invention has the advantages of little backup information, short repair time, fast system startup, and high utilization of internal storage.

Owner:BEIJING CHINESE ACAD OF SCI SOFTWARE CENT CO LTD

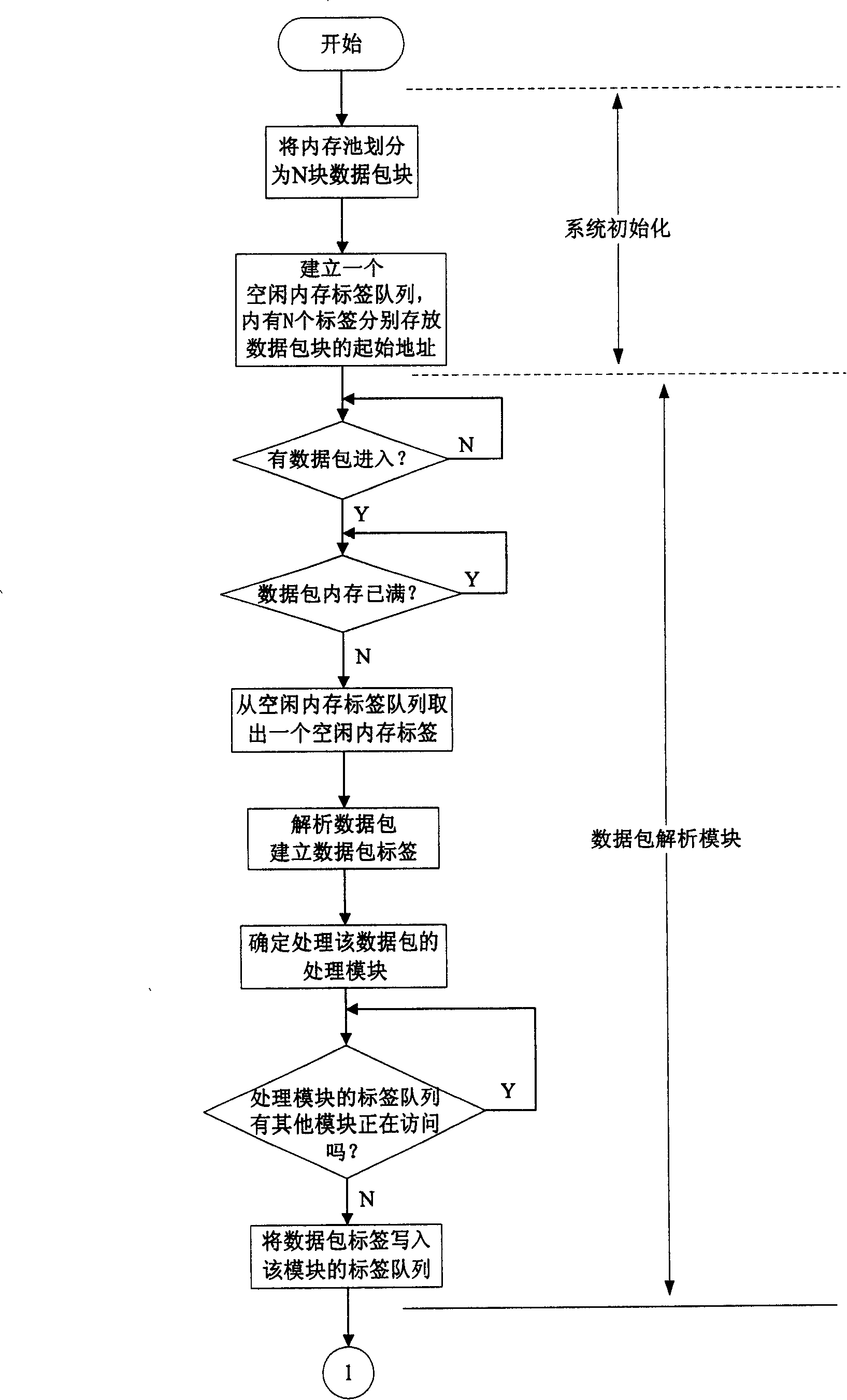

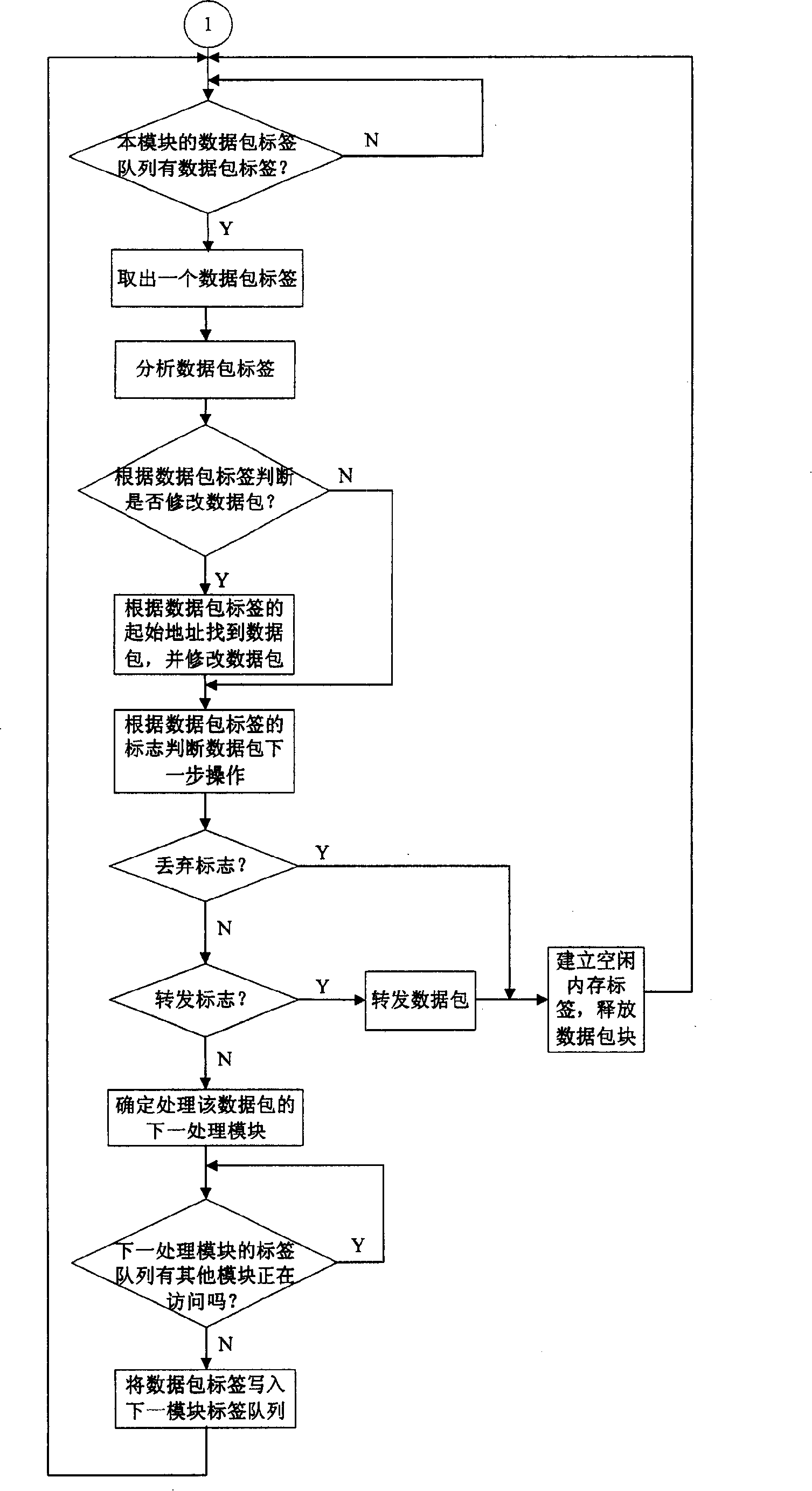

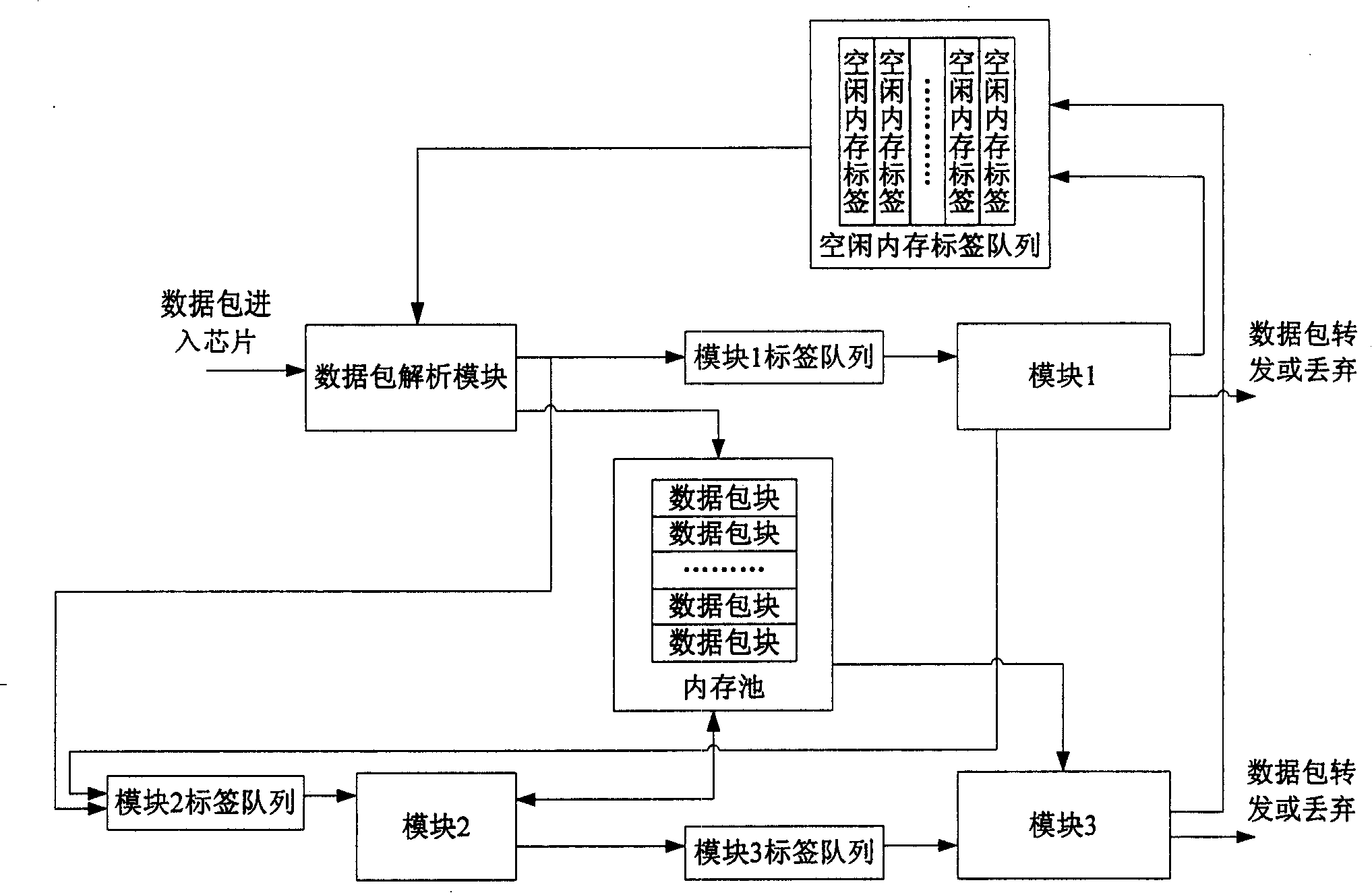

Firewall chip data packet buffer management method

Active | CN101212451A | Reduce development costs; Reduce manufacturing cost; Data switching networks; Analysis data; Process module

The invention discloses a method for managing data packet buffering in a firewall chip, which comprises the following steps: a memory is allocated inside the chip and divided into a plurality of equal-sized blocks, each of which can store one data packet. A free-memory tag queue is built, and each tag stores the start address of one packet block. When a data packet arrives, a tag is taken from the free-memory tag queue, and the packet is stored in the block at the start address indicated by the tag. The packet is parsed to build a packet tag; a processing module that decides to process the packet writes the packet tag into its own packet tag queue; the module then takes the packet tag out of that queue and performs the corresponding processing according to the tag's contents, implementing the functions of a common firewall. The invention avoids frequently moving data packets inside the chip, thereby improving packet processing efficiency.

Owner:BEIJING TOPSEC NETWORK SECURITY TECH
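The buffer-management scheme in CN101212451A (fixed-size packet blocks, a queue of free-block tags, and per-module queues of packet tags) can be modeled in Python as below. This is a hedged software analogy of an on-chip design: `PacketBufferPool` and its methods are hypothetical names, a packet tag is reduced to a dict holding the block address and length, and the block is freed as soon as the module has read it.

```python
from collections import deque


class PacketBufferPool:
    def __init__(self, num_blocks, block_size):
        self.memory = bytearray(num_blocks * block_size)   # on-chip packet memory
        self.block_size = block_size
        # Free-memory tag queue: each tag is the start address of one block.
        self.free_tags = deque(i * block_size for i in range(num_blocks))
        self.module_queues = {}                            # module -> deque of packet tags

    def store(self, packet: bytes):
        """Take a free tag, copy the packet into its block once, return a packet tag."""
        if len(packet) > self.block_size or not self.free_tags:
            return None
        addr = self.free_tags.popleft()
        self.memory[addr:addr + len(packet)] = packet
        return {"addr": addr, "length": len(packet)}

    def dispatch(self, module, packet_tag):
        # Only the small tag moves between modules; the packet stays in place.
        self.module_queues.setdefault(module, deque()).append(packet_tag)

    def process_next(self, module) -> bytes:
        tag = self.module_queues[module].popleft()
        data = bytes(self.memory[tag["addr"]:tag["addr"] + tag["length"]])
        self.free_tags.append(tag["addr"])                 # block becomes free again
        return data
```

Passing tags instead of packets between processing modules is the efficiency argument of the abstract: each packet is written into the chip memory once and never copied between modules.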

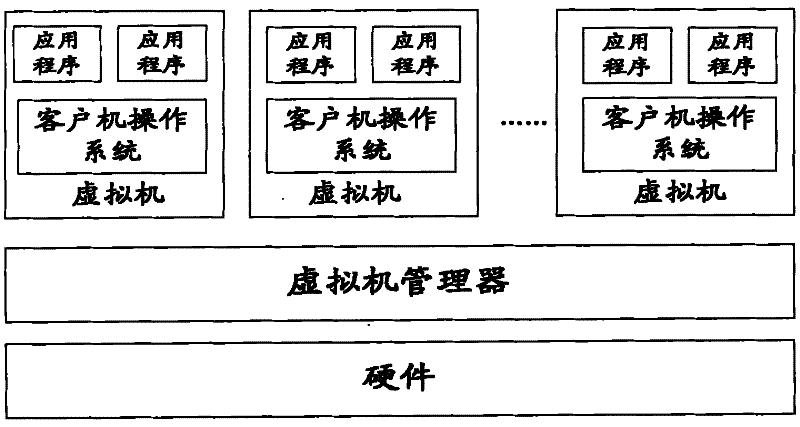

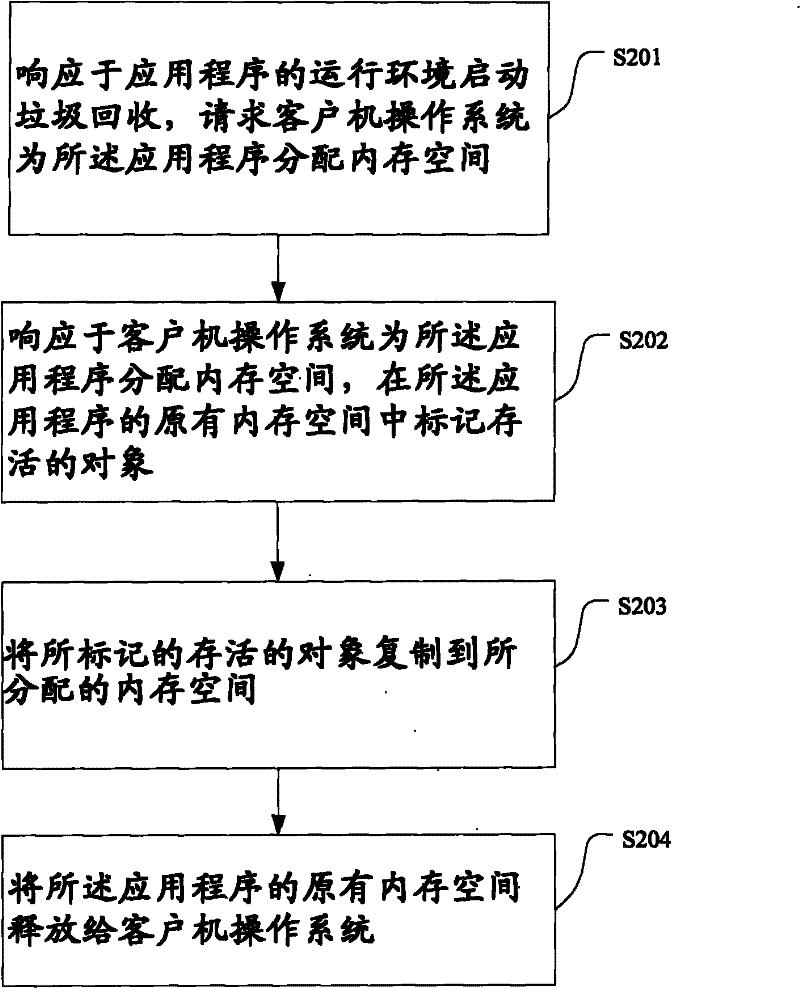

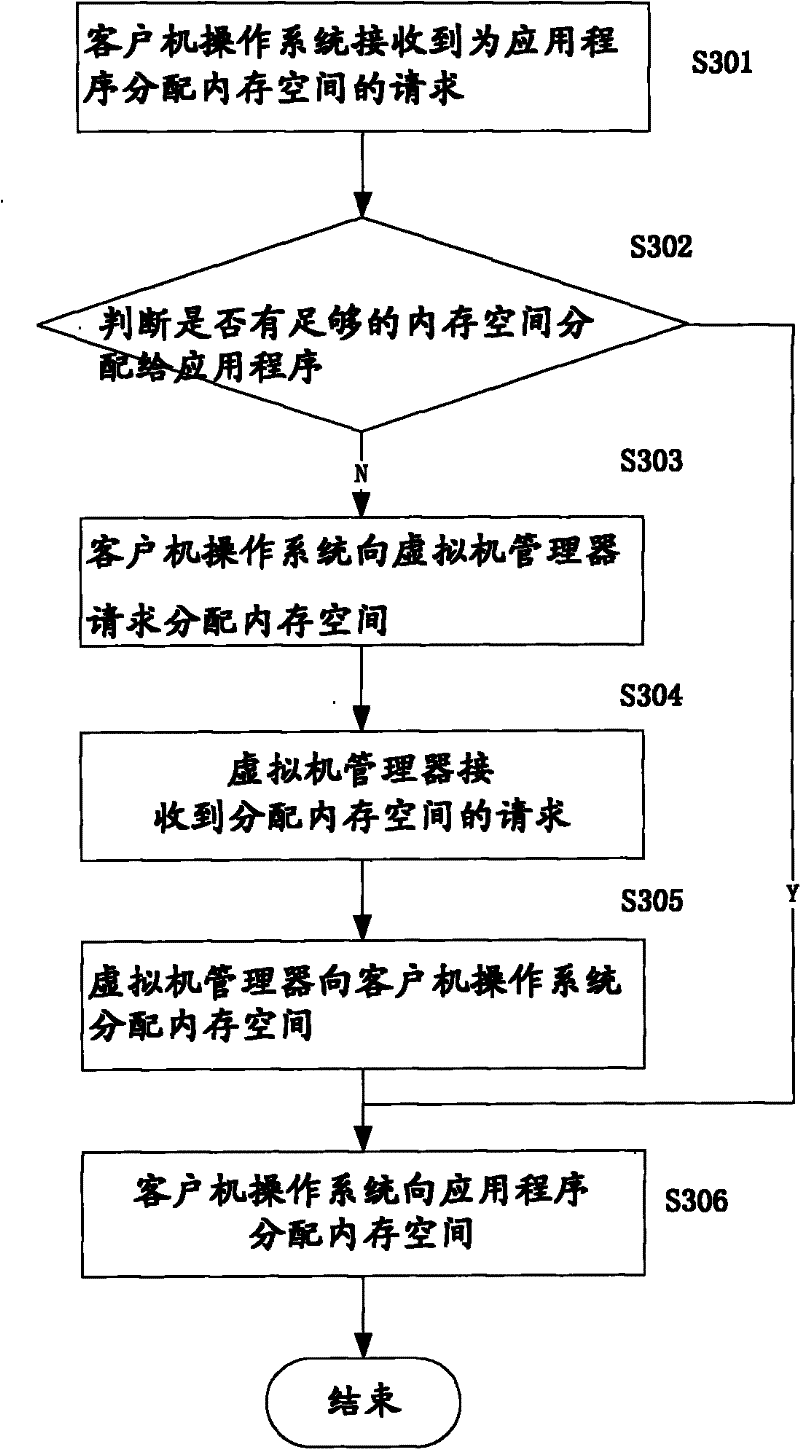

Garbage collection method and system in a virtual environment

Active | CN102236603A | Improve memory usage; Memory addressing/allocation/relocation; Software simulation/interpretation/emulation; Virtualization; Operating environment

The invention discloses a garbage collection method and system in a virtual environment. The virtual environment comprises a virtual machine manager, at least one guest operating system, and at least one application program running in each guest operating system, where the application program manages memory using a garbage collection mechanism. The method comprises the following steps: in response to the runtime environment of the application program, a garbage collector is started and requests memory space from the guest operating system; in response to receiving the request, the guest operating system allocates the memory space to the garbage collector; the garbage collector marks live objects in the original memory space of the application program and copies the marked live objects to the allocated memory space; and the garbage collector releases the original memory space of the application program to the guest operating system.

Owner:IBM CORP
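The mark-copy-release cycle in CN102236603A can be sketched in Python as a small copying collector. Assumptions: the heap is a dict from object id to referenced ids, memory is counted in objects rather than bytes, and `GuestOS` is a hypothetical stand-in for the guest operating system's allocator, not a real interface.

```python
class GuestOS:
    """Stand-in for the guest OS allocator; counts memory in objects."""

    def __init__(self, in_use=0):
        self.in_use = in_use

    def allocate(self, size):
        self.in_use += size
        return {}

    def release(self, space):
        self.in_use -= len(space)


def copying_collect(heap, roots, guest_os):
    """heap: {object id: [referenced ids]}. Returns (new space, forwarding map)."""
    # 1. Mark: everything reachable from the roots is live.
    live, stack = set(), list(roots)
    while stack:
        obj = stack.pop()
        if obj not in live:
            live.add(obj)
            stack.extend(heap[obj])
    # 2. Ask the guest OS for a fresh space sized for the live objects only.
    new_space = guest_os.allocate(len(live))
    forwarding = {old: new for new, old in enumerate(sorted(live))}
    # 3. Copy live objects, rewriting references to their new addresses.
    for old in live:
        new_space[forwarding[old]] = [forwarding[ref] for ref in heap[old]]
    # 4. Hand the entire original space back to the guest OS.
    guest_os.release(heap)
    return new_space, forwarding
```

Returning the whole old space to the guest OS, instead of keeping it as the next to-space, is what lets the guest (and ultimately the hypervisor) reuse that memory for other workloads.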

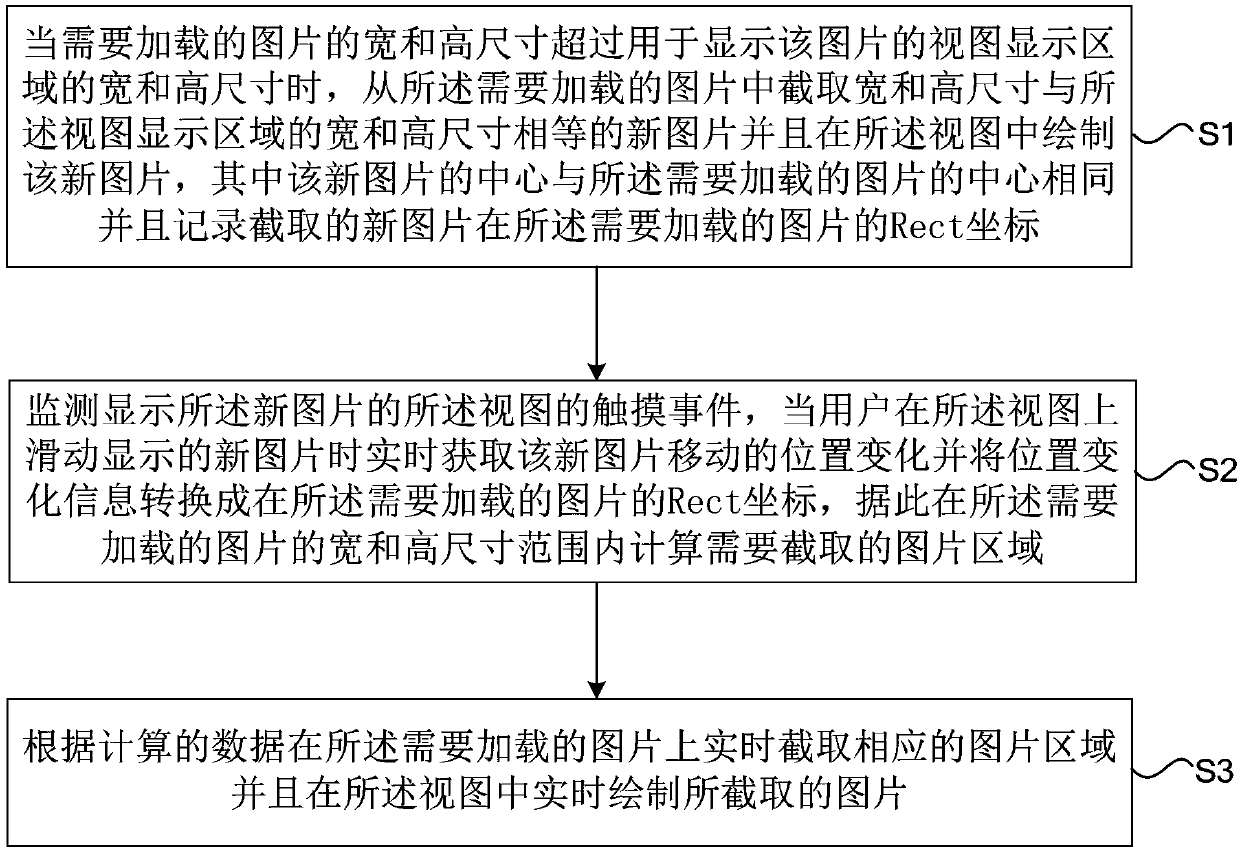

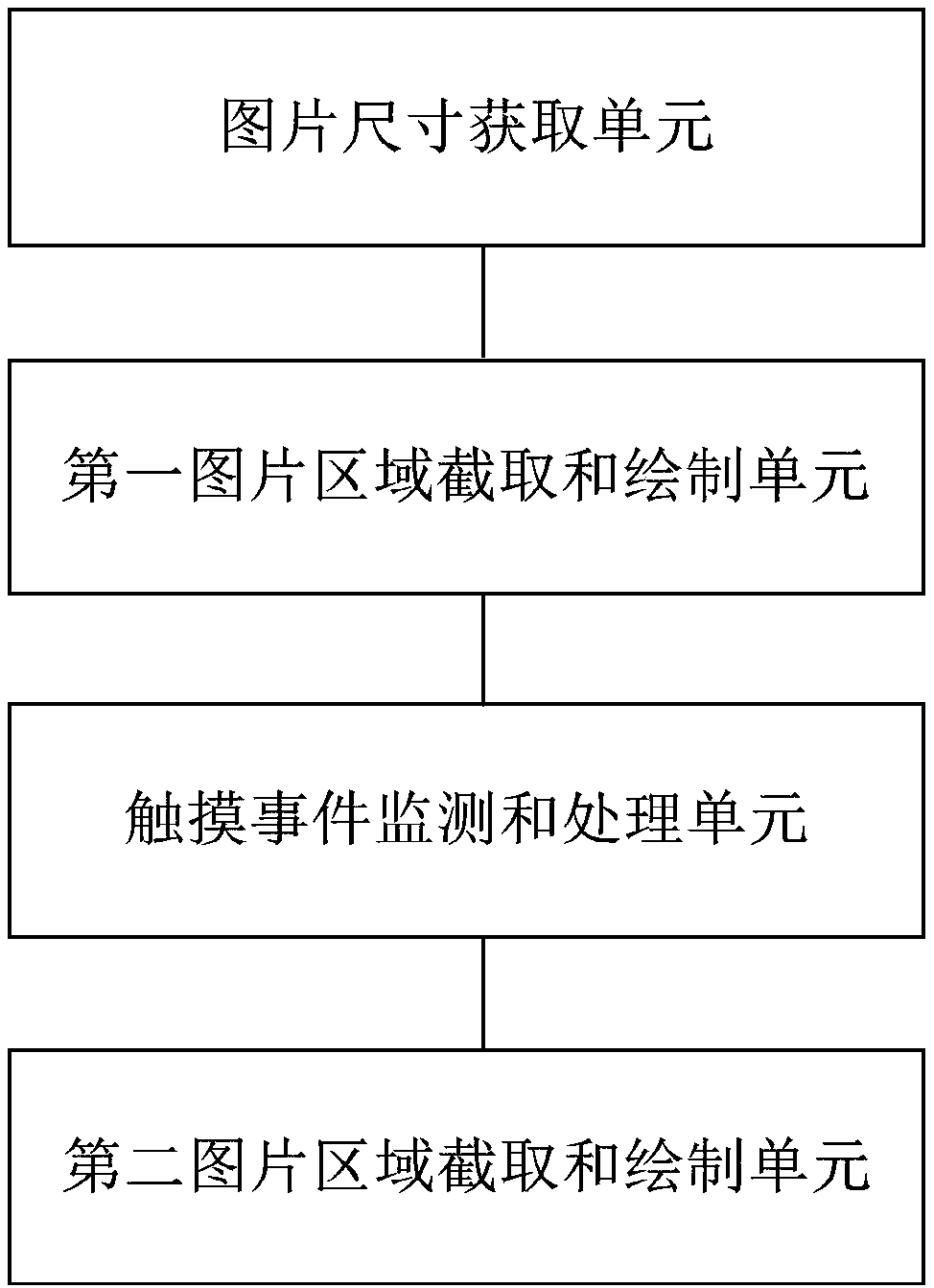

Picture loading method and device

Inactive | CN107608731A | Reduce consumption and occupancy; Improve memory usage; Specific program execution arrangements; Input/output processes for data processing; Calculated data; Computer graphics (images)

The invention provides a picture loading method and device. The method comprises the following steps: when the width and height of a picture to be loaded exceed the width and height of the view display area used to display it, a new picture whose width and height equal those of the view display area is cropped from the picture to be loaded and drawn in the view; touch events on the view displaying the new picture are monitored; when the user slides the new picture in the view, the position change of its movement is obtained in real time and converted into Rect coordinates on the picture to be loaded, from which the picture area to be cropped, within the width and height of the picture to be loaded, is calculated; and, according to the calculated data, the corresponding area of the picture to be loaded is cropped and drawn in the view in real time.

Owner:ALIBABA (CHINA) CO LTD
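The coordinate arithmetic behind CN107608731A (keep a view-sized window on the large picture and shift it as the user drags) is sketched below in Python. The function names are hypothetical, and the convention that dragging content by (dx, dy) moves the window by (-dx, -dy) is an assumption. On Android, the resulting rectangle is the kind of input `BitmapRegionDecoder.decodeRegion` accepts, though the patent does not say which API it uses.

```python
def visible_region(img_w, img_h, view_w, view_h, left, top):
    """Rect (left, top, right, bottom) of the source picture to draw, clamped."""
    w, h = min(view_w, img_w), min(view_h, img_h)
    left = max(0, min(left, img_w - w))
    top = max(0, min(top, img_h - h))
    return (left, top, left + w, top + h)


def on_drag(rect, dx, dy, img_w, img_h, view_w, view_h):
    """Dragging the content by (dx, dy) moves the window the opposite way."""
    left, top, _, _ = rect
    return visible_region(img_w, img_h, view_w, view_h, left - dx, top - dy)
```

Clamping keeps the window inside the source picture, so only a view-sized region ever needs to be decoded and held in memory.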

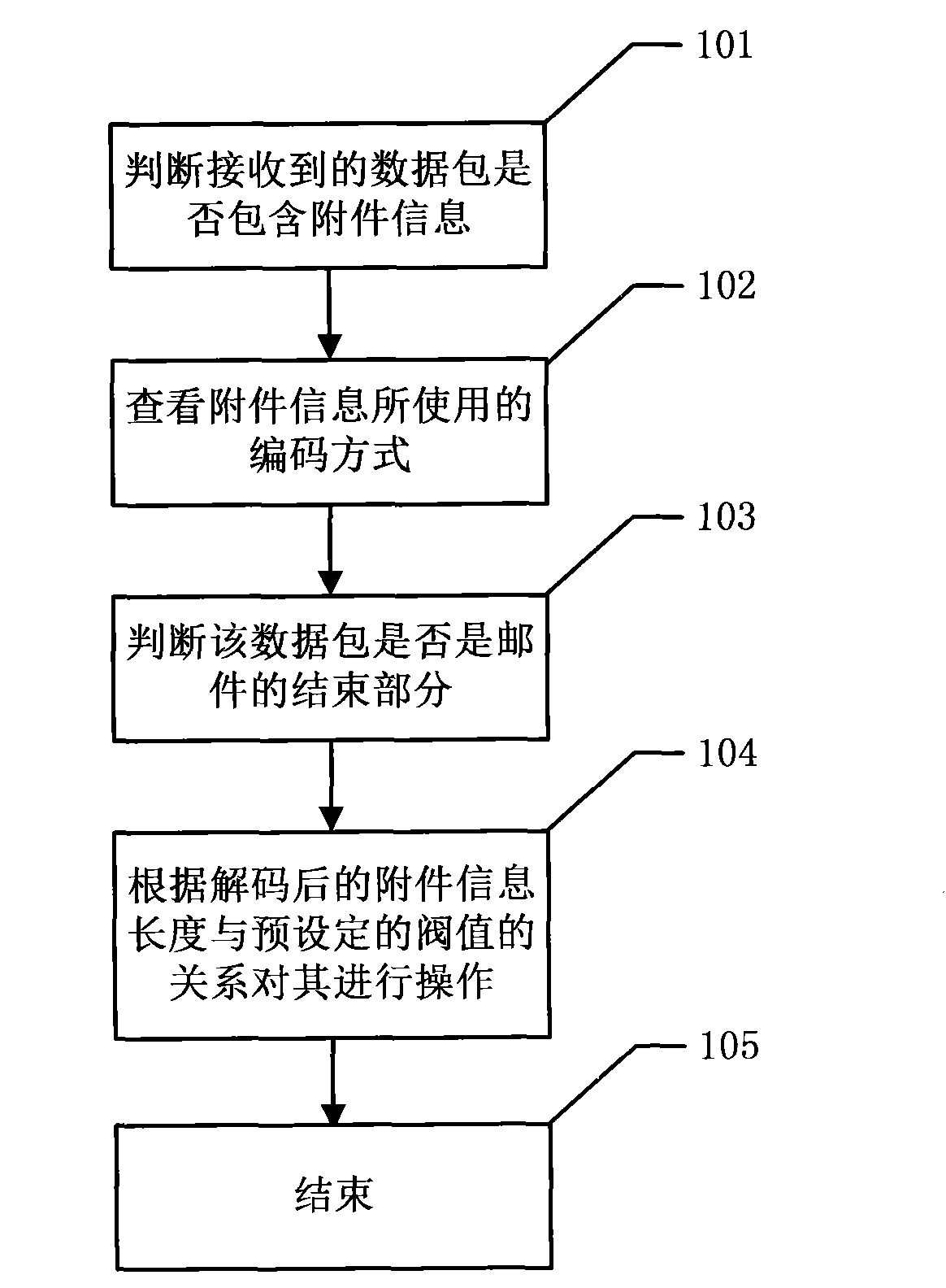

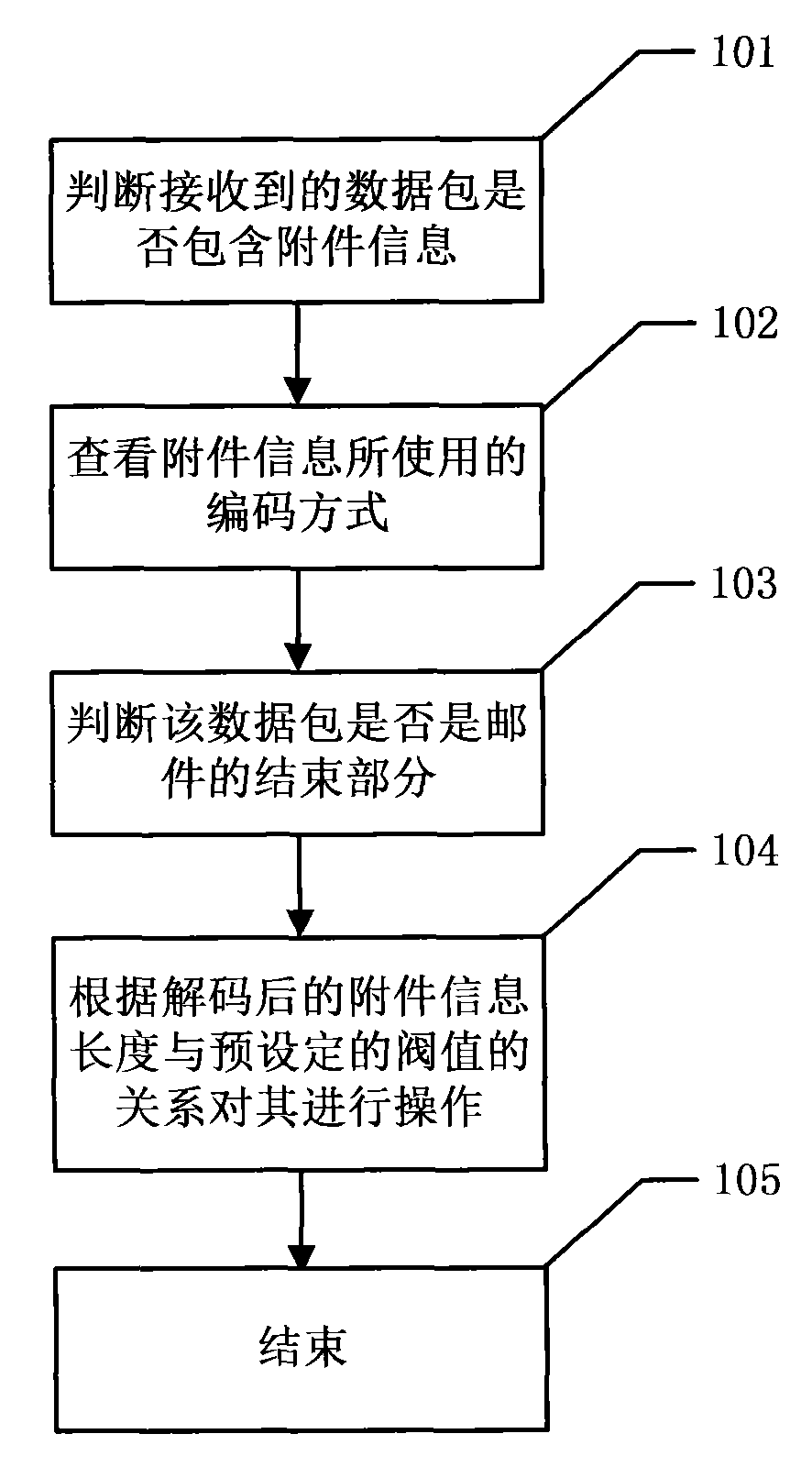

Packet-level dynamic mail attachment virus detection method

Active | CN101789105A | Reduce size; Improve memory usage; Office automation; Data switching networks; Internal memory; Network packet

The invention discloses a packet-level dynamic mail attachment virus detection method and relates to the technical field of website security. The method includes: determining whether a received packet contains attachment information; checking the encoding mode of the attachment information; determining whether the packet is the end of the mail; and processing the packet according to the relationship between the length of the decoded attachment information and a preset threshold. The method can improve internal memory utilization and virus detection efficiency.

Owner:BEIJING ANTIY NETWORK SAFETY TECH CO LTD

Methods and systems for using map-reduce for large-scale analysis of graph-based data

Active | US8943011B2 | Improve memory usage; Digital data processing details; Biological testing; Cluster algorithm; Graphics

Embodiments are described for a method for processing graph data by executing a Markov Clustering algorithm (MCL) to find clusters of vertices of the graph data, organizing the graph data by column by calculating a probability percentage for each column of a similarity matrix of the graph data to produce column data, generating a probability matrix of states of the column data, performing an expansion of the probability matrix by computing a power of the matrix using a Map-Reduce model executed in a processor-based computing device; and organizing the probability matrix into a set of sub-matrices to find the least amount of data needed for the Map-Reduce model given that two lines of data in the matrix are required to compute a single value for the power of the matrix. One of at least two strategies may be used to compute the power of the matrix (the matrix square, M²) based on simplicity of execution or improved memory usage.

Owner:SALESFORCE COM INC

Cooperative memory management

Active | US8892827B2 | Comprehensive performance is small; Increase pressure; Resource allocation; Memory addressing/allocation/relocation; Data processing system; Application specific

A method and an apparatus for selecting one or more applications running in a data processing system to reduce memory usage according to information received from the applications are described. Notifications specifying the information including application specific memory management capabilities may be received from the applications. A status of memory usage indicating lack of available memory may be determined to notify the selected applications. Accordingly, the notified applications may perform operations for application specific memory management to increase available memory.

Owner:APPLE INC

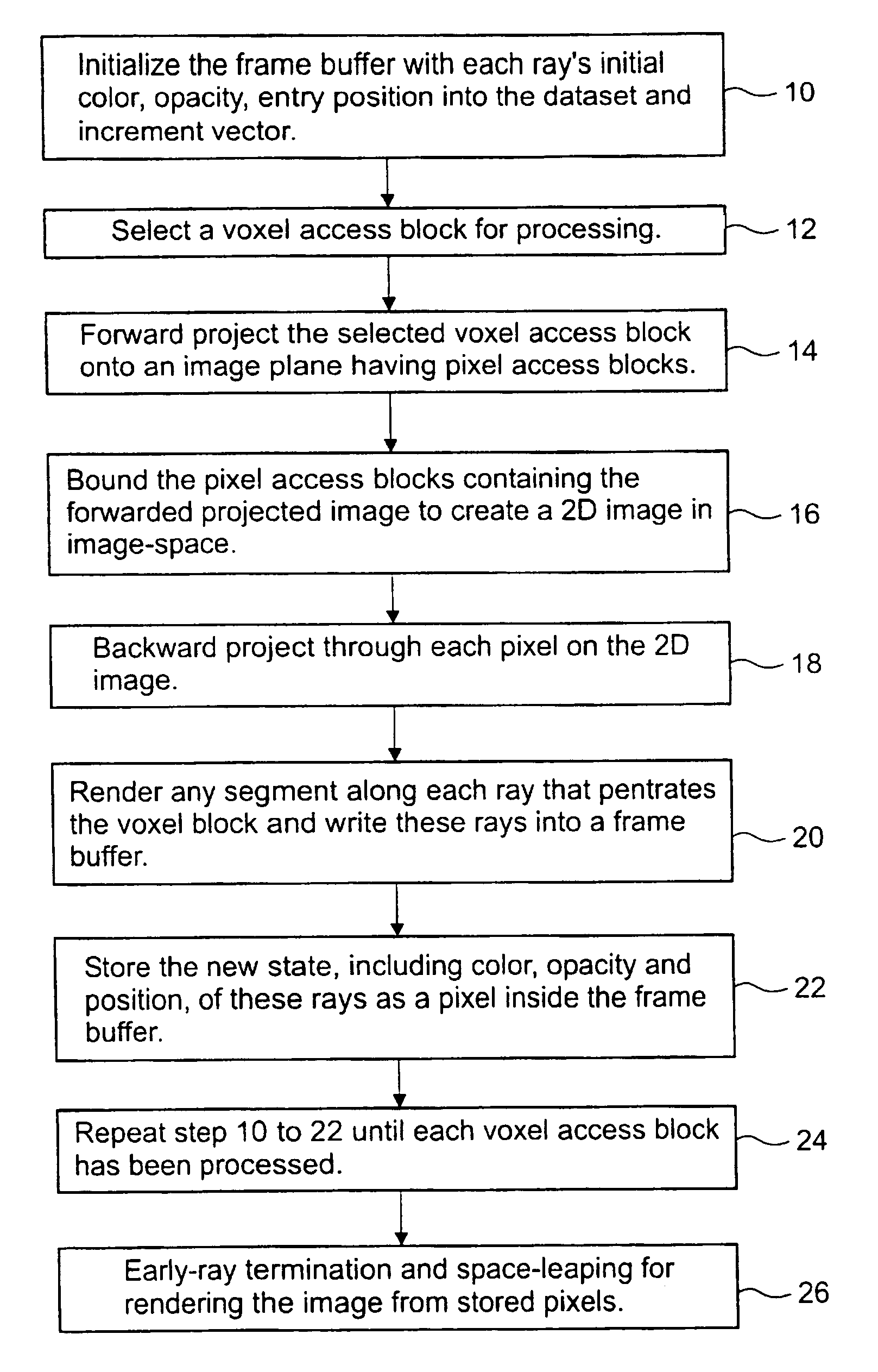

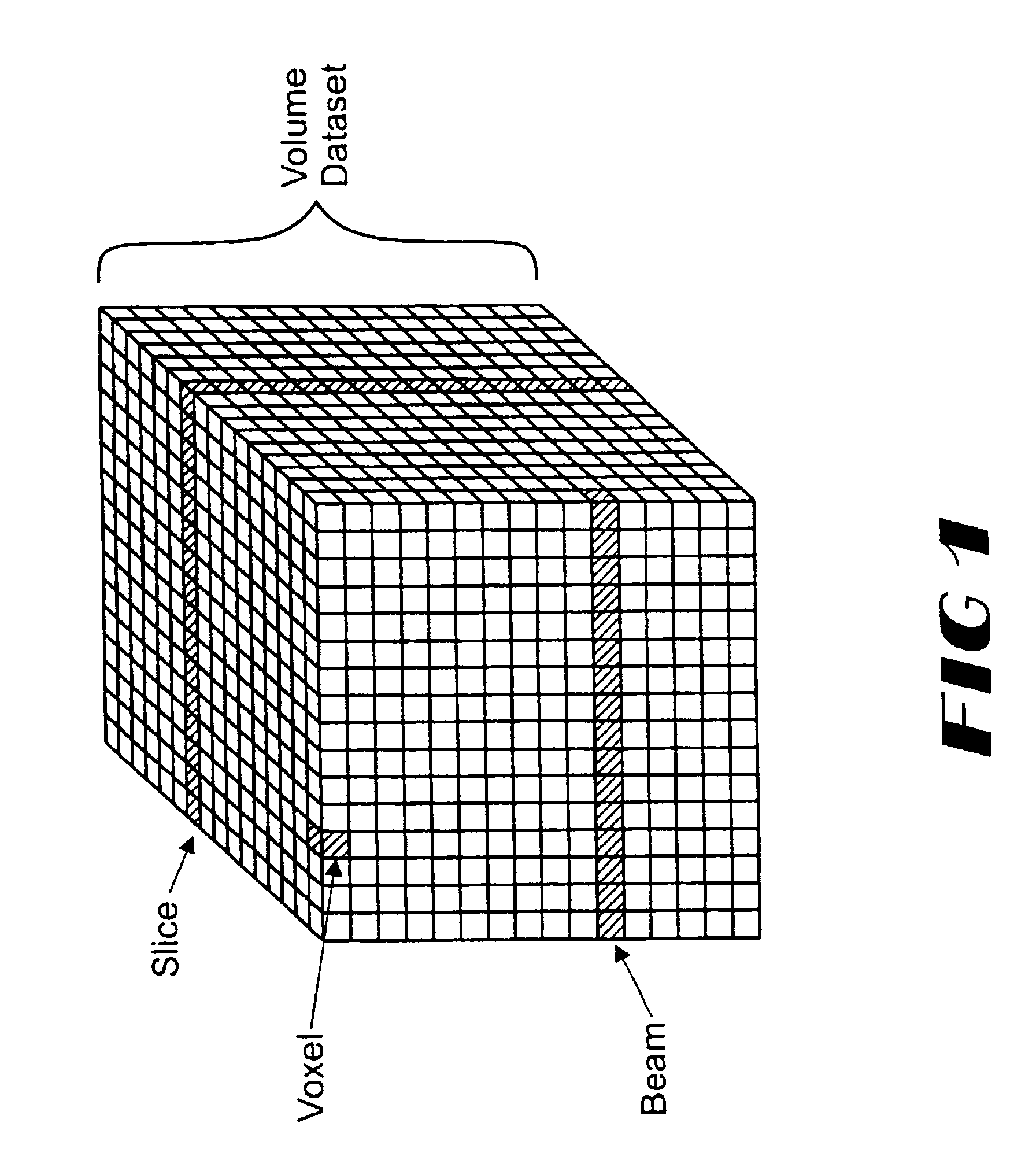

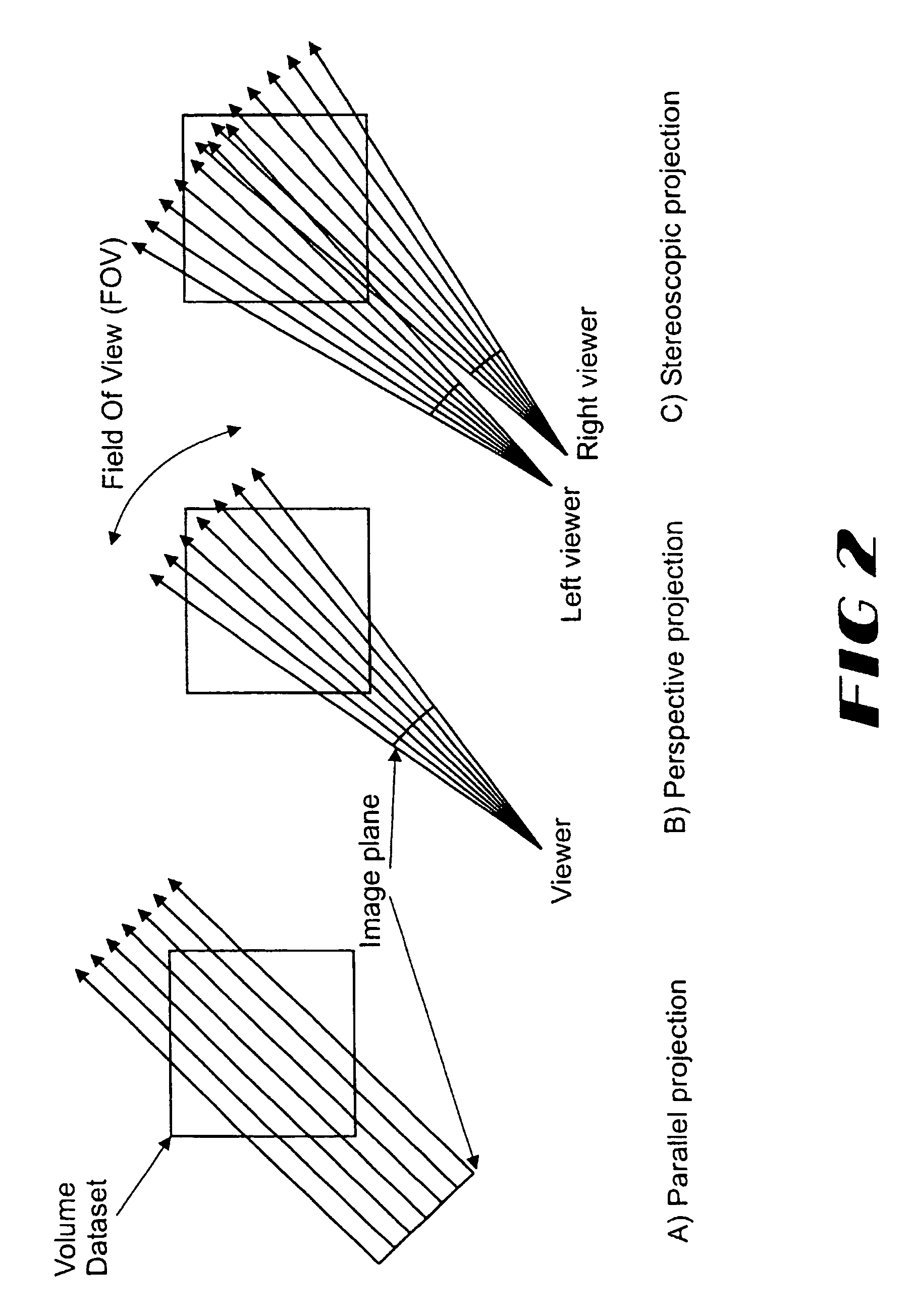

Resample and composite engine for real-time volume rendering

Inactive | USRE42638E1 | Speed up the process; Reduce in quantity; Details involving image processing hardware; Stereoscopic systems; Internal memory; Voxel

The present invention is a digital electronic system for rendering a volume image in real time. The system accelerates the processing of voxels through early ray termination and space leaping techniques in the projection-guided ray casting of the voxels. Predictable and regular voxel access from high-speed internal memory further accelerates the volume rendering. Through the acceleration techniques and devices of the present invention, real-time rendering of parallel and perspective views, including those for stereoscopic viewing, is achieved.

Owner:RUTGERS THE STATE UNIV
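Early ray termination, one of the two acceleration techniques named in USRE42638E1, is easy to show with standard front-to-back alpha compositing. The Python sketch below is that generic technique, not the patented hardware: samples are assumed to be pre-classified (color, opacity) pairs along one ray, the 0.95 opacity cutoff is an arbitrary example value, and space leaping is not modeled.

```python
def composite_ray(samples, opacity_cutoff=0.95):
    """Front-to-back compositing of (color, alpha) samples along one ray.

    Returns (accumulated color, accumulated alpha, samples visited).
    """
    color, alpha, visited = 0.0, 0.0, 0
    for c, a in samples:
        visited += 1
        # Each new sample is attenuated by the opacity already accumulated in front of it.
        color += (1.0 - alpha) * a * c
        alpha += (1.0 - alpha) * a
        if alpha >= opacity_cutoff:
            break   # early ray termination: later samples would be nearly invisible
    return color, alpha, visited
```

Front-to-back order is what makes termination possible: once accumulated opacity is near 1, everything behind contributes almost nothing, so those voxels are never fetched.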

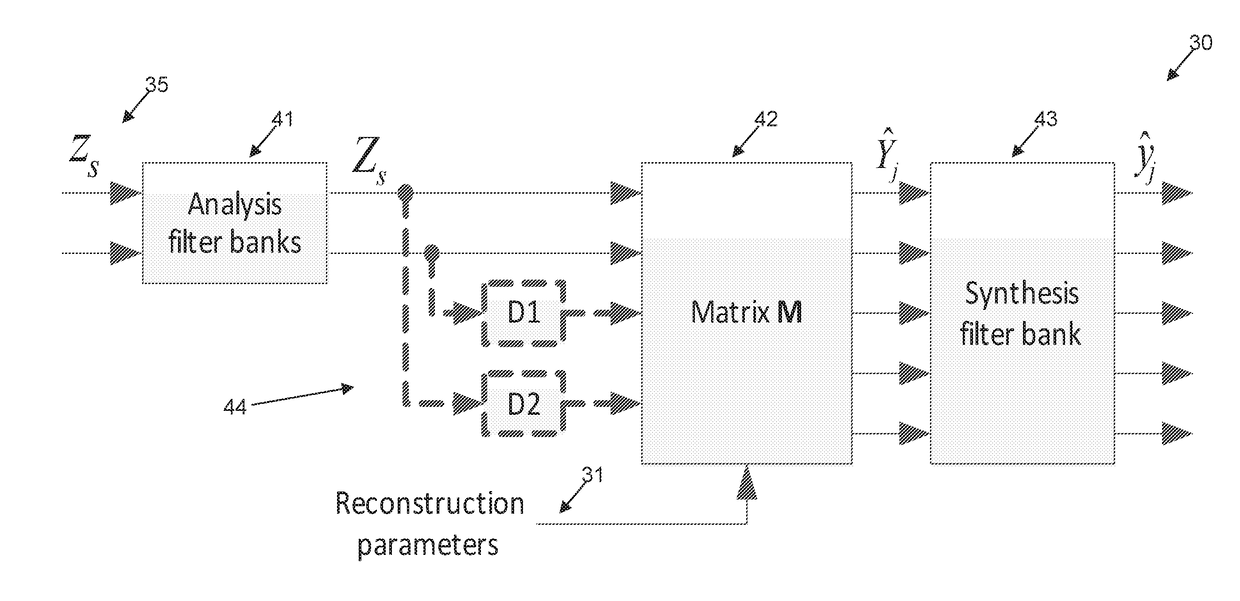

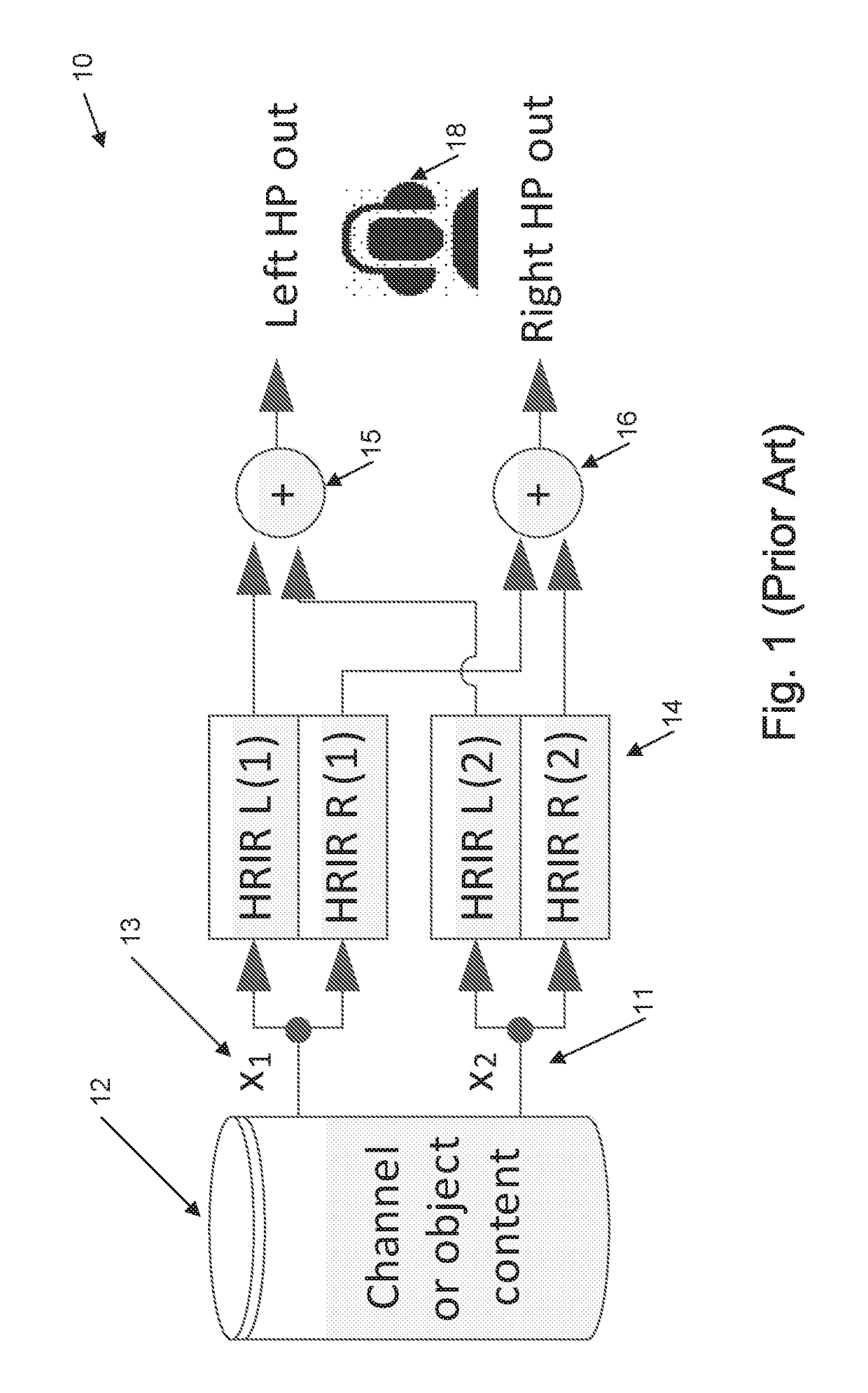

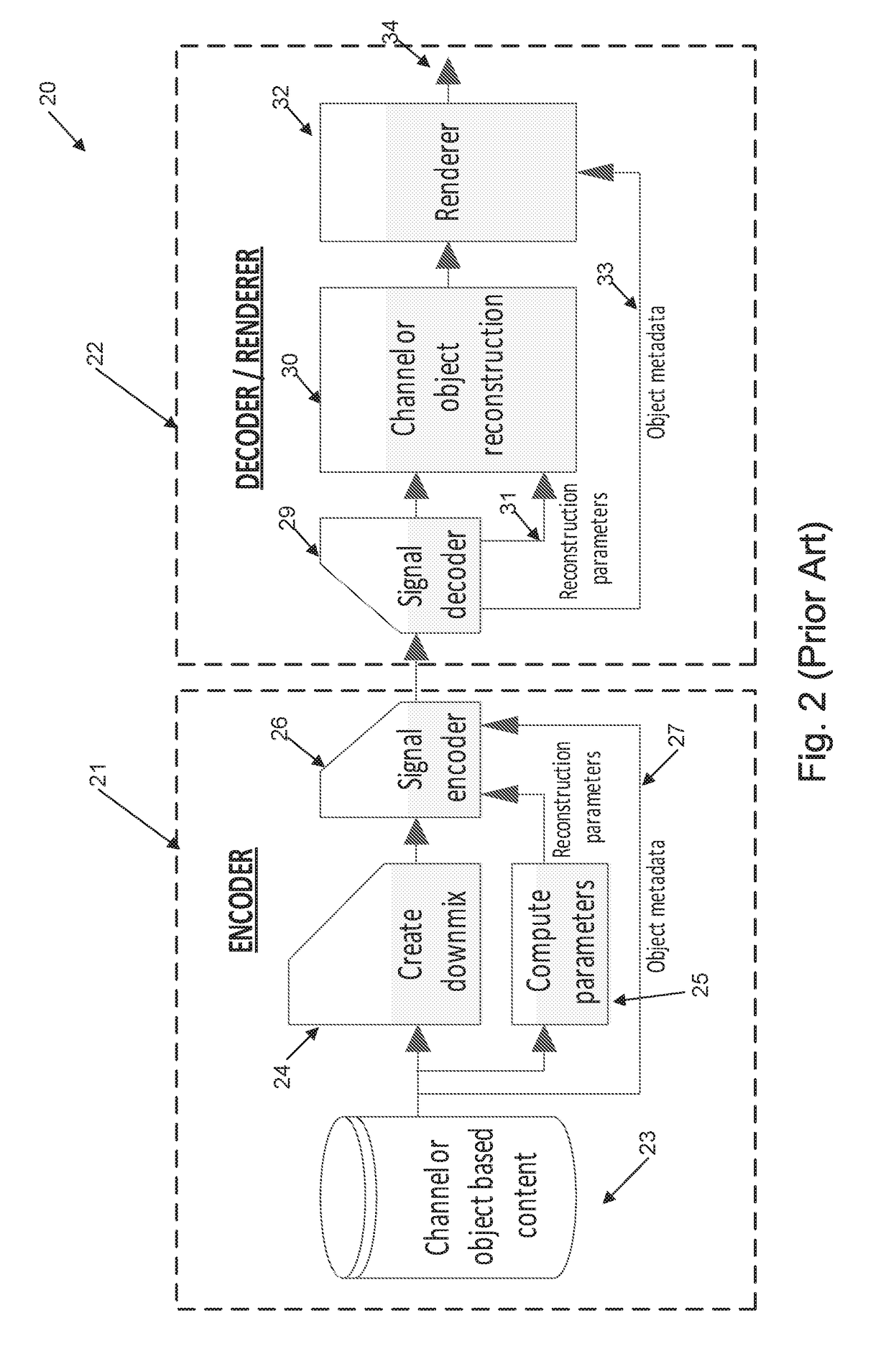

Audio Decoder and Decoding Method

Active | US20180233156A1 | Efficient implementation; High resolution; Hearing device energy consumption reduction; Speech analysis; Decoding methods; Data stream

A method for representing a second presentation of audio channels or objects as a data stream, the method comprising the steps of: (a) providing a set of base signals, the base signals representing a first presentation of the audio channels or objects; (b) providing a set of transformation parameters, the transformation parameters intended to transform the first presentation into the second presentation; the transformation parameters further being specified for at least two frequency bands and including a set of multi-tap convolution matrix parameters for at least one of the frequency bands.

Owner:DOLBY LAB LICENSING CORP +1

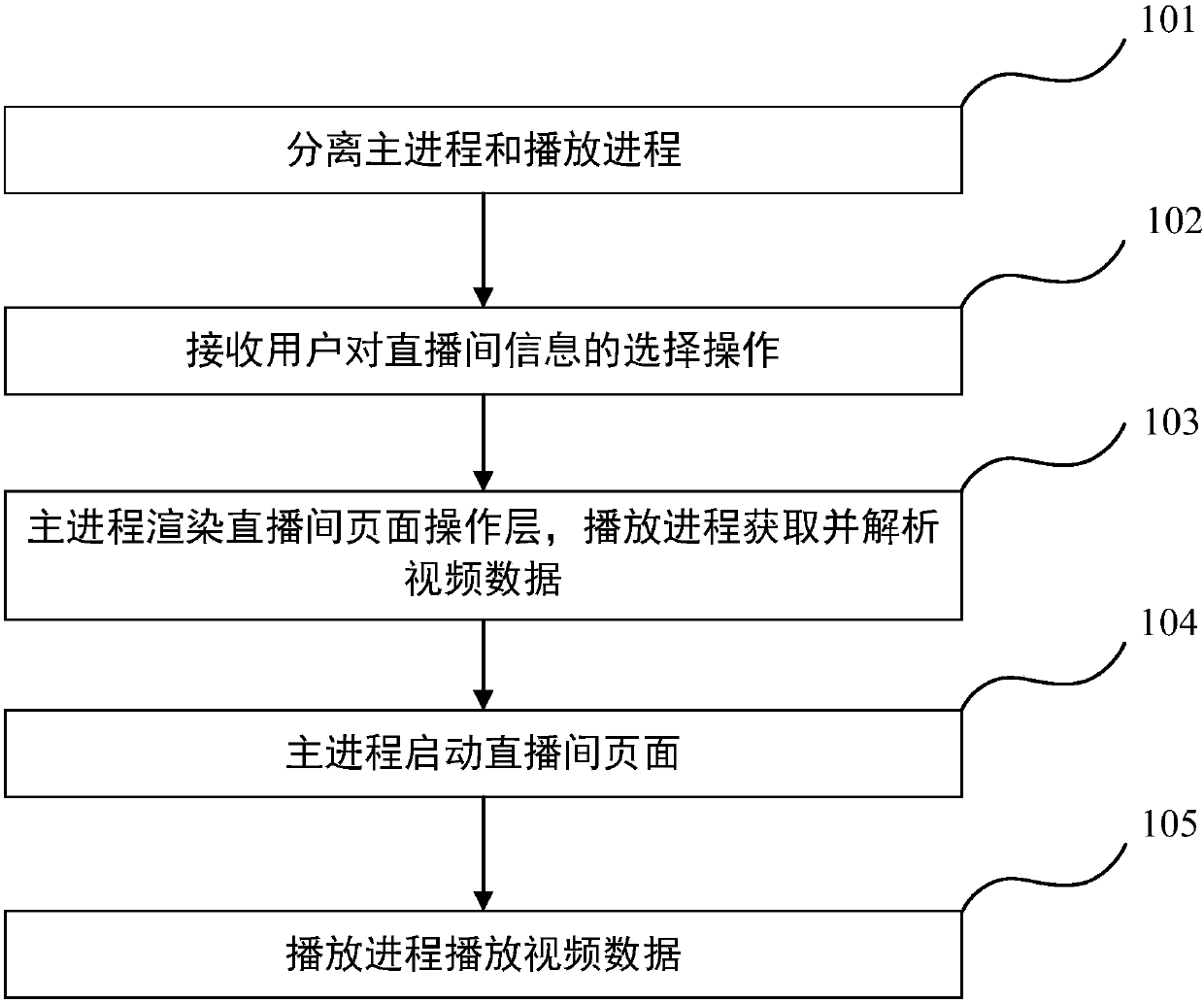

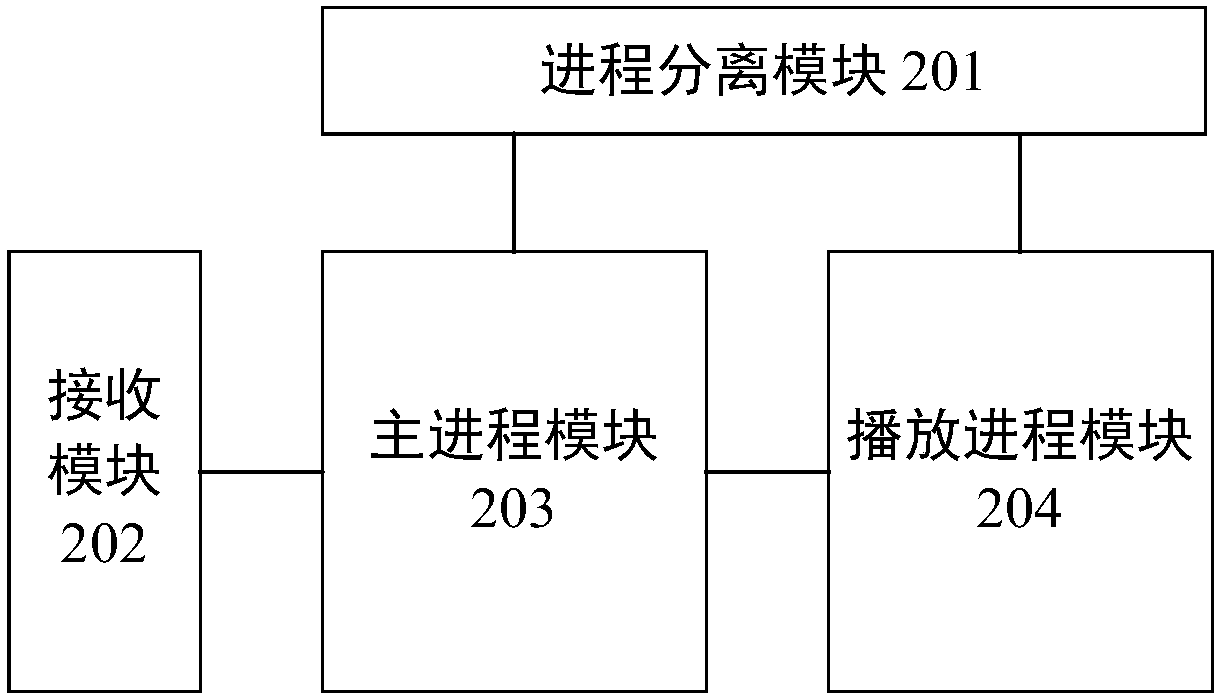

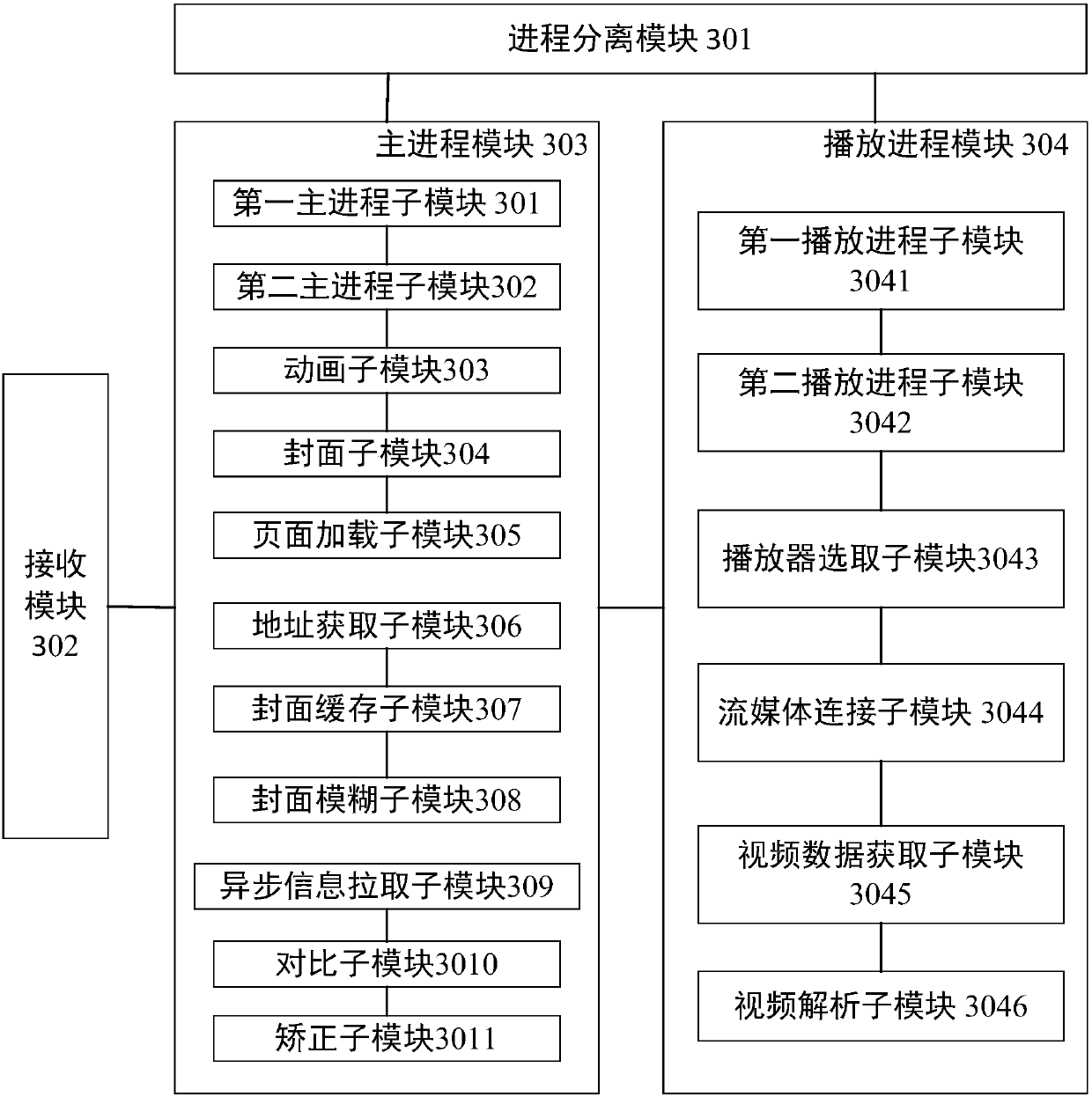

Network live-streaming video loading method and terminal

Inactive | CN107920281A | Improve device memory usage; Improve throughput; Selective content distribution; Computer hardware; Utilization rate

The invention provides a network live-streaming video loading method and terminal. The method comprises the following steps: separating a host process from a playback process; receiving a selection of live-streaming room information; obtaining and parsing video data via the playback process while the host process renders the operation layer of the live-streaming room page; and starting the live-streaming room page via the host process while the playback process plays the video data. The method can improve the memory utilization of the device and the throughput of the processor.

Owner: Beijing Xiaochang Technology Co., Ltd.

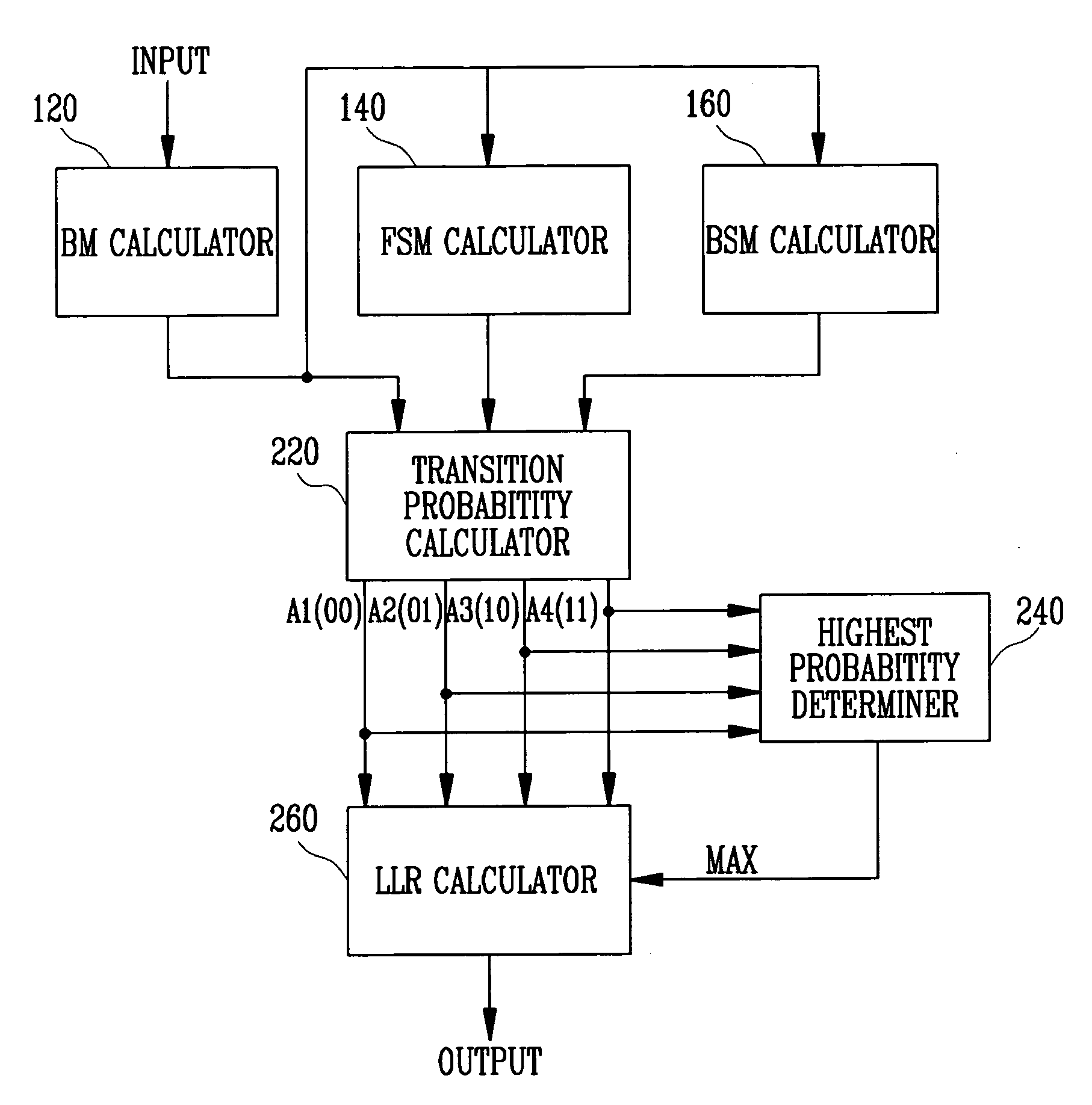

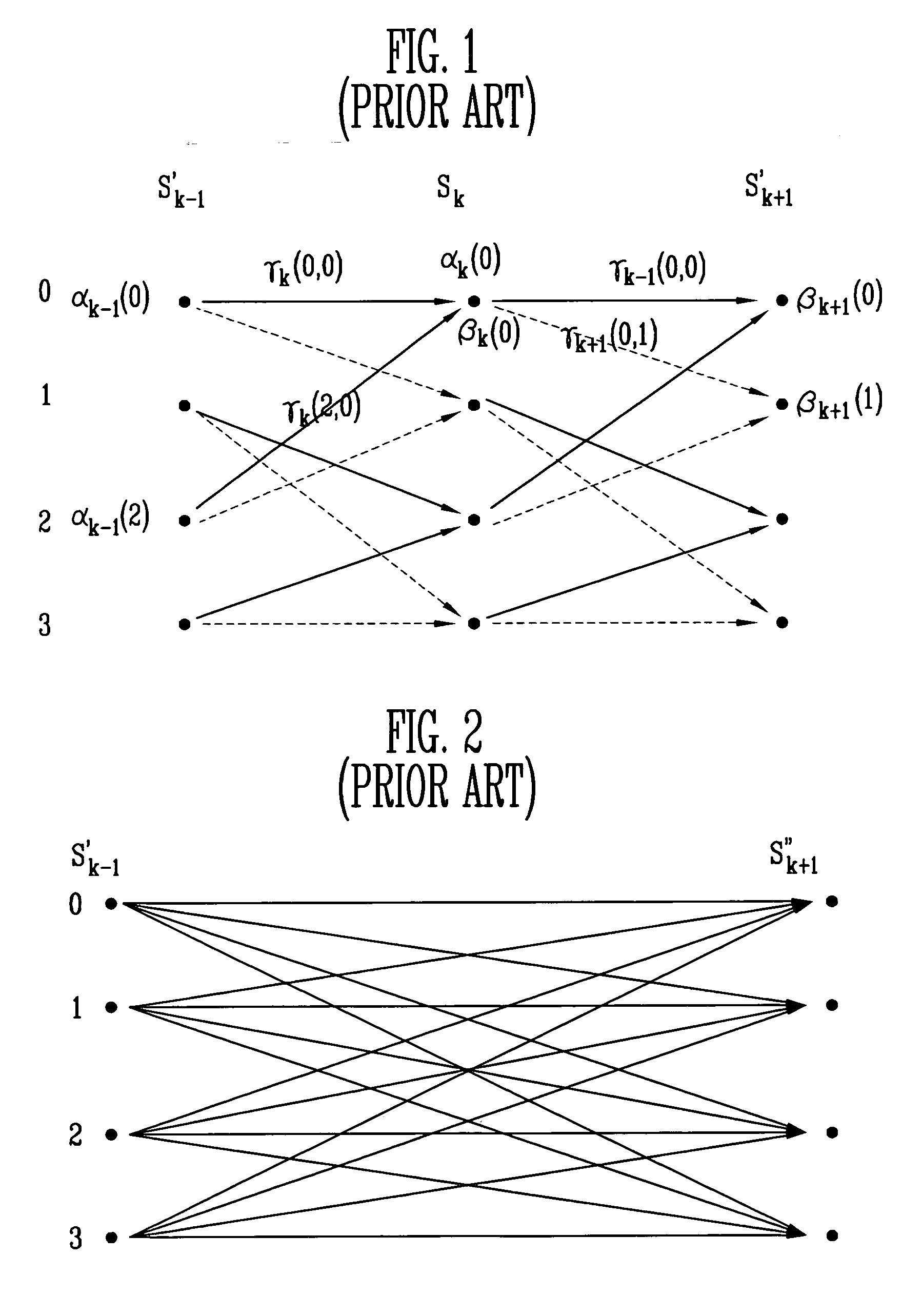

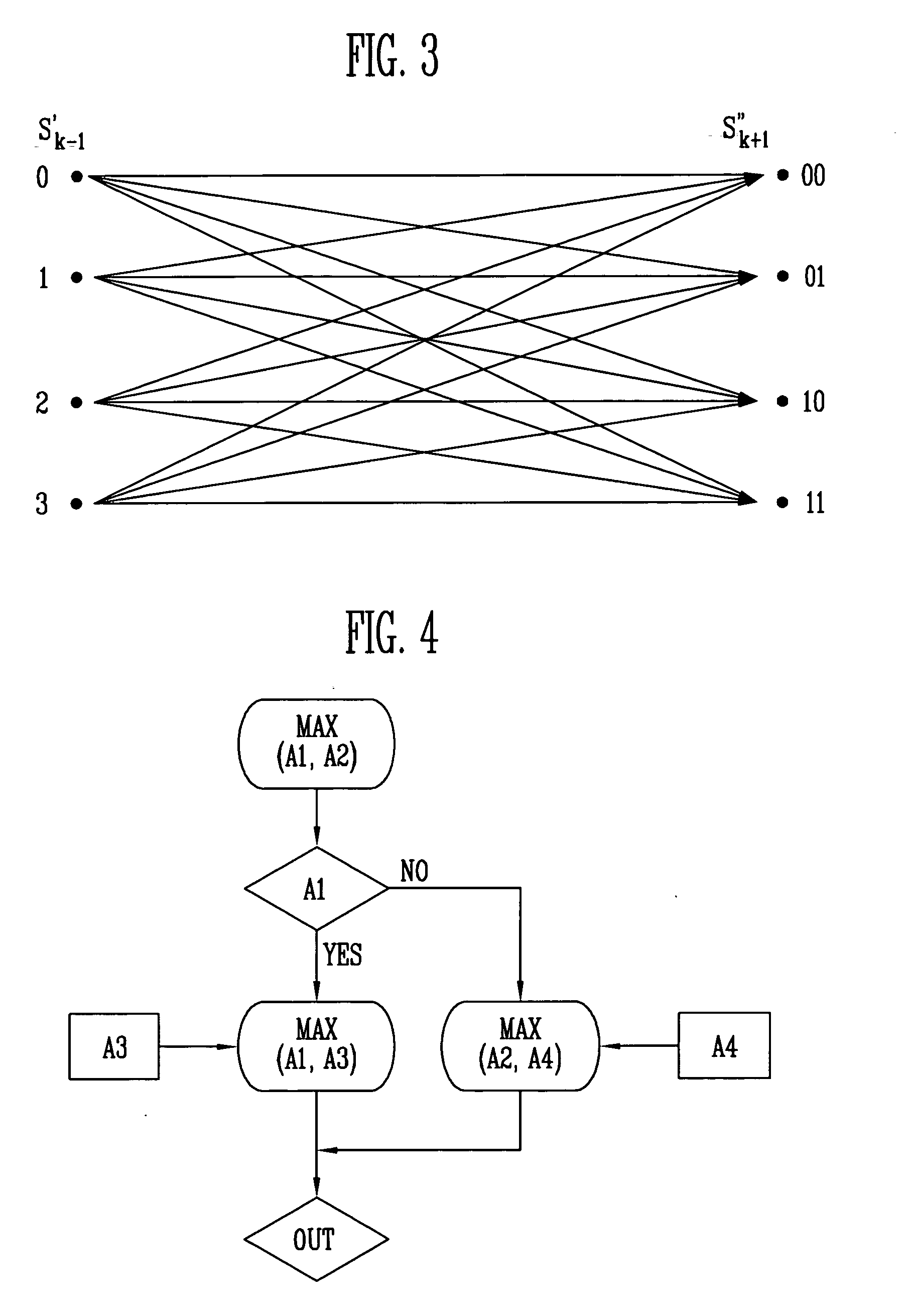

Apparatus and method for computing LLR

Inactive | US20070136649A1 | Improve decoding speed; Improve memory usage; Data representation error detection/correction; Code conversion; Time segment; Logit

Provided are an apparatus and method for efficiently computing a log likelihood ratio (LLR) using the maximum a posteriori (MAP) algorithm known as block combining. The method includes the steps of: calculating alpha values, beta values and gamma values of at least two time sections; calculating transition probabilities of respective states in the at least two time sections; performing a comparison operation for some of the transition probabilities to determine the highest value, selecting one of the other transition probabilities according to the determined highest value, comparing the determined value with the selected value to select the higher value, and thereby obtaining the highest of the transition probabilities; and determining an operation to apply according to the highest transition probability and calculating an LLR.

Owner:ELECTRONICS & TELECOMM RES INST
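For context on what the comparison steps in US20070136649A1 are computing, the standard max-log-MAP LLR takes, for each input bit value, the best log-domain metric alpha + gamma + beta over the trellis transitions carrying that bit, and subtracts the bit-0 maximum from the bit-1 maximum. The Python sketch below shows that textbook formula only; it does not reproduce the patent's block-combining or its particular comparison tree, and the trellis and metric values in the usage check are made-up toy numbers.

```python
import math


def max_log_llr(alpha, gamma, beta, trellis):
    """Max-log-MAP LLR for one trellis step.

    alpha, beta: log-domain forward/backward state metrics, indexed by state.
    gamma: {(from_state, to_state): log-domain branch metric}.
    trellis: list of (from_state, to_state, input_bit) transitions.
    """
    best = {0: -math.inf, 1: -math.inf}
    for s, s_next, bit in trellis:
        metric = alpha[s] + gamma[(s, s_next)] + beta[s_next]
        if metric > best[bit]:        # running comparison keeps the highest metric per bit
            best[bit] = metric
    return best[1] - best[0]
```

The patent's contribution, per the abstract, is organizing these comparisons across two time sections so the maximum is found with fewer operations; the plain loop above is only the baseline it speeds up.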

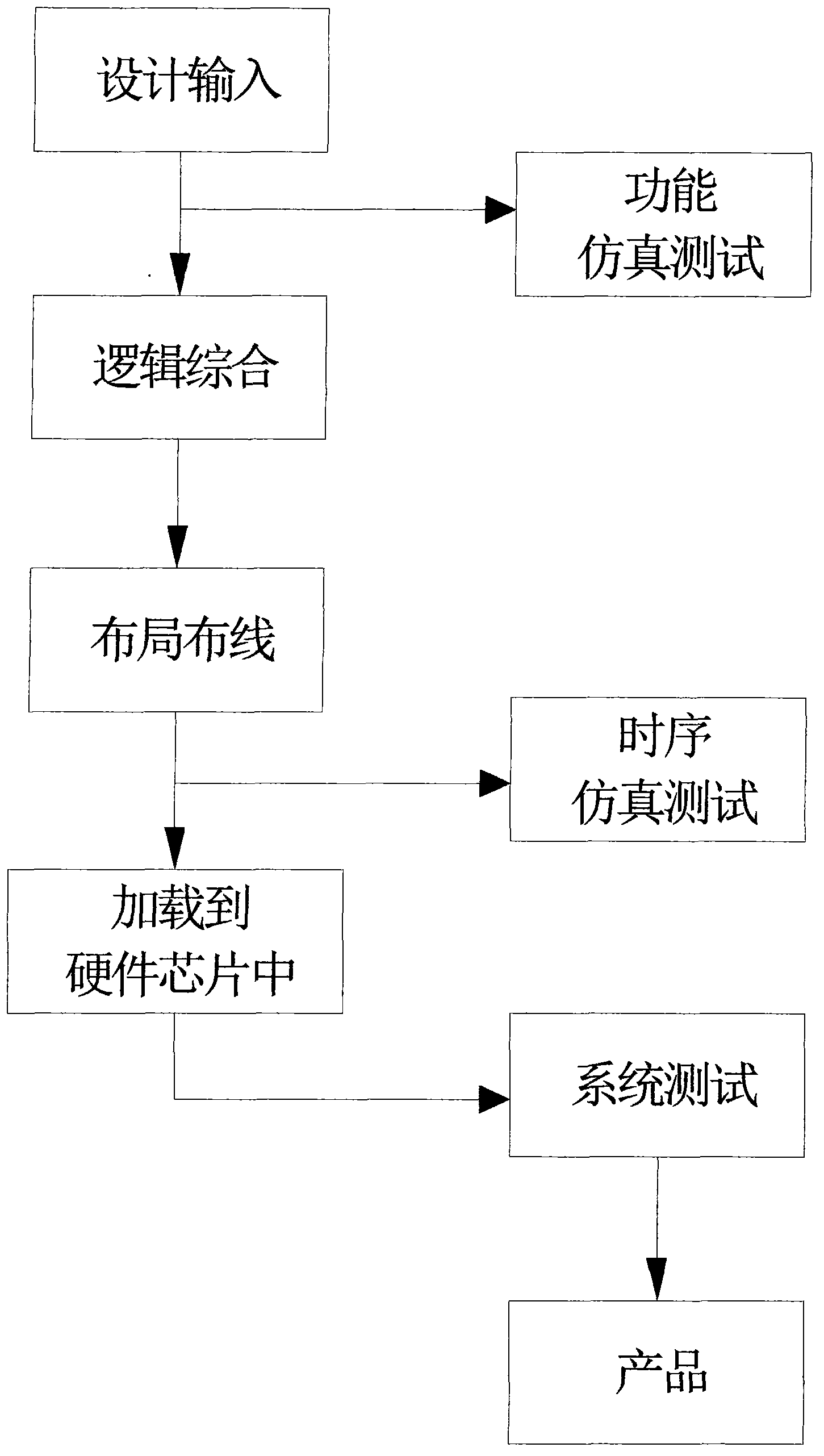

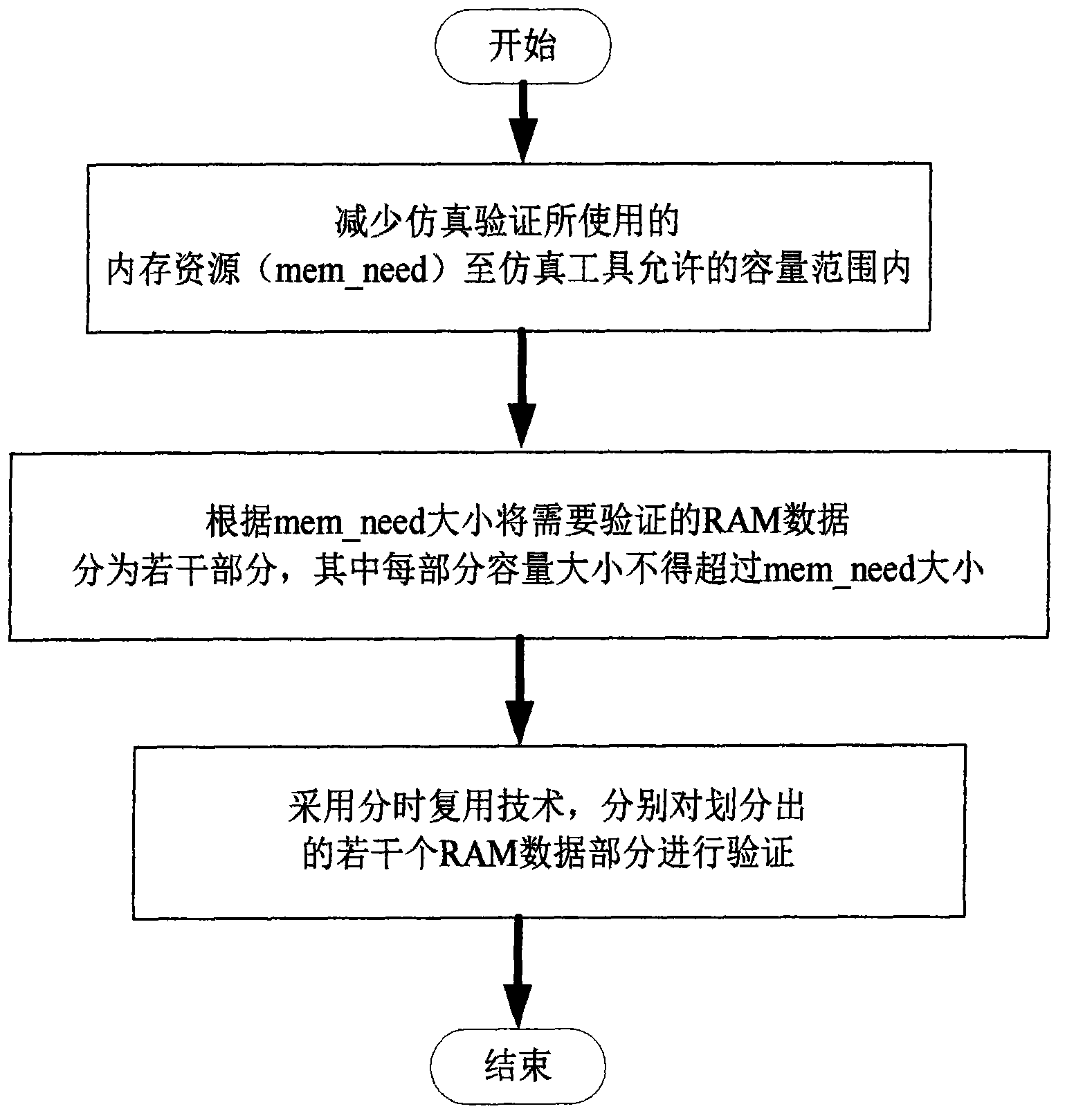

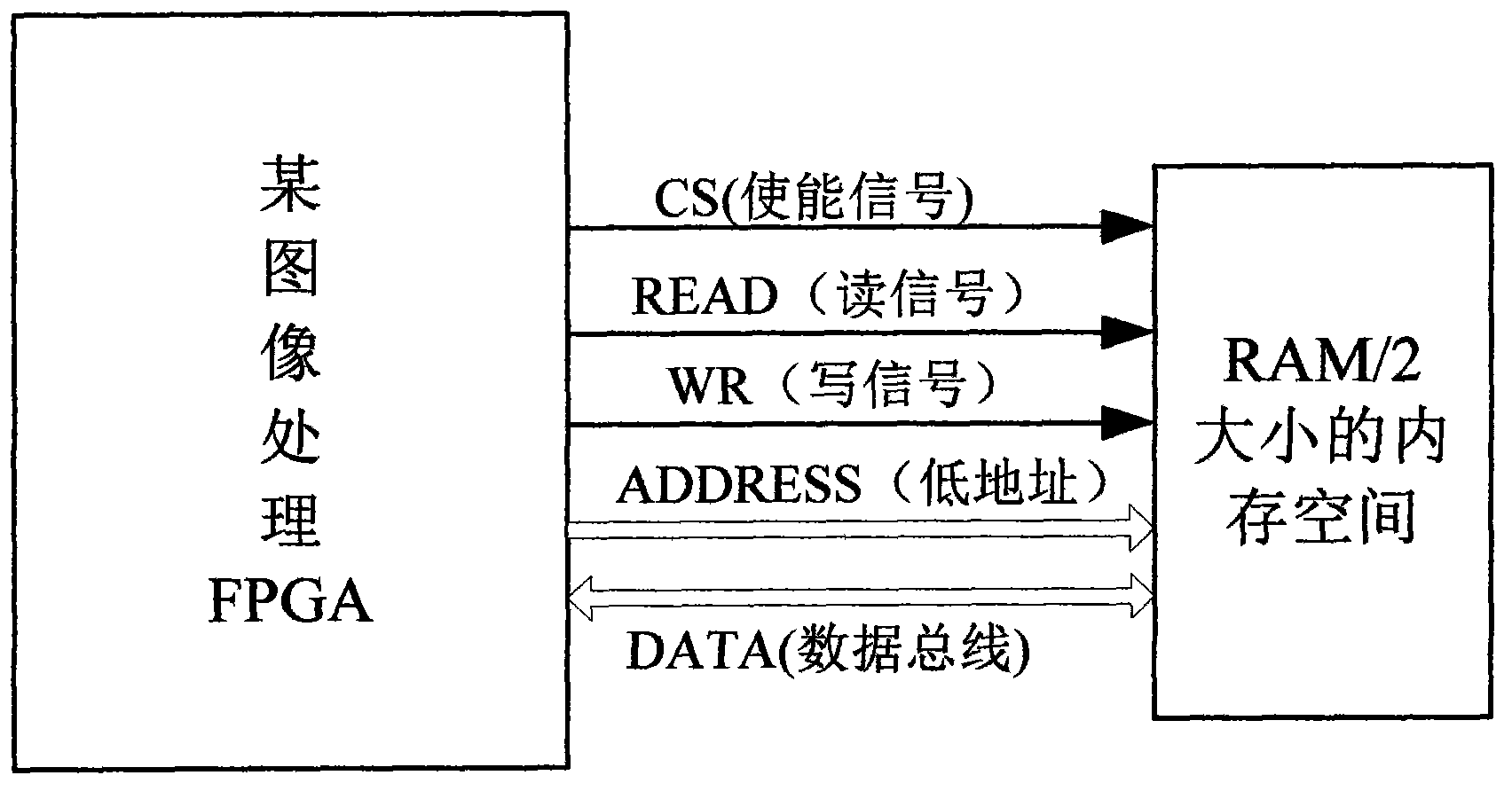

High data volume FPGA (Field Programmable Gate Array) simulation testing method based on time-division multiplexing

Inactive | CN102854801A | Reduce dynamic memory allocation; Reduce the number of instantiations; Simulator control; Electric testing/monitoring; Internal memory; FPGA emulation

The invention belongs to the field of testing technology for programmable logic elements and particularly relates to a high data volume FPGA simulation testing method based on time-division multiplexing. It aims to solve the problem of insufficient memory in high data volume FPGA simulation tests and improves the adequacy of such tests. The method comprises: reducing the memory resources used for the simulation test to within the allowable capacity of the simulation tool; dividing the RAM (Random Access Memory) data to be tested into a plurality of parts based on the size of the memory resources; testing the divided RAM data by time-division multiplexing; and, through a dynamic memory management method, dynamically calculating the system memory space required by the current simulation and allocating and releasing memory accordingly.

Owner: Beijing Jinghang Computing and Communication Research Institute

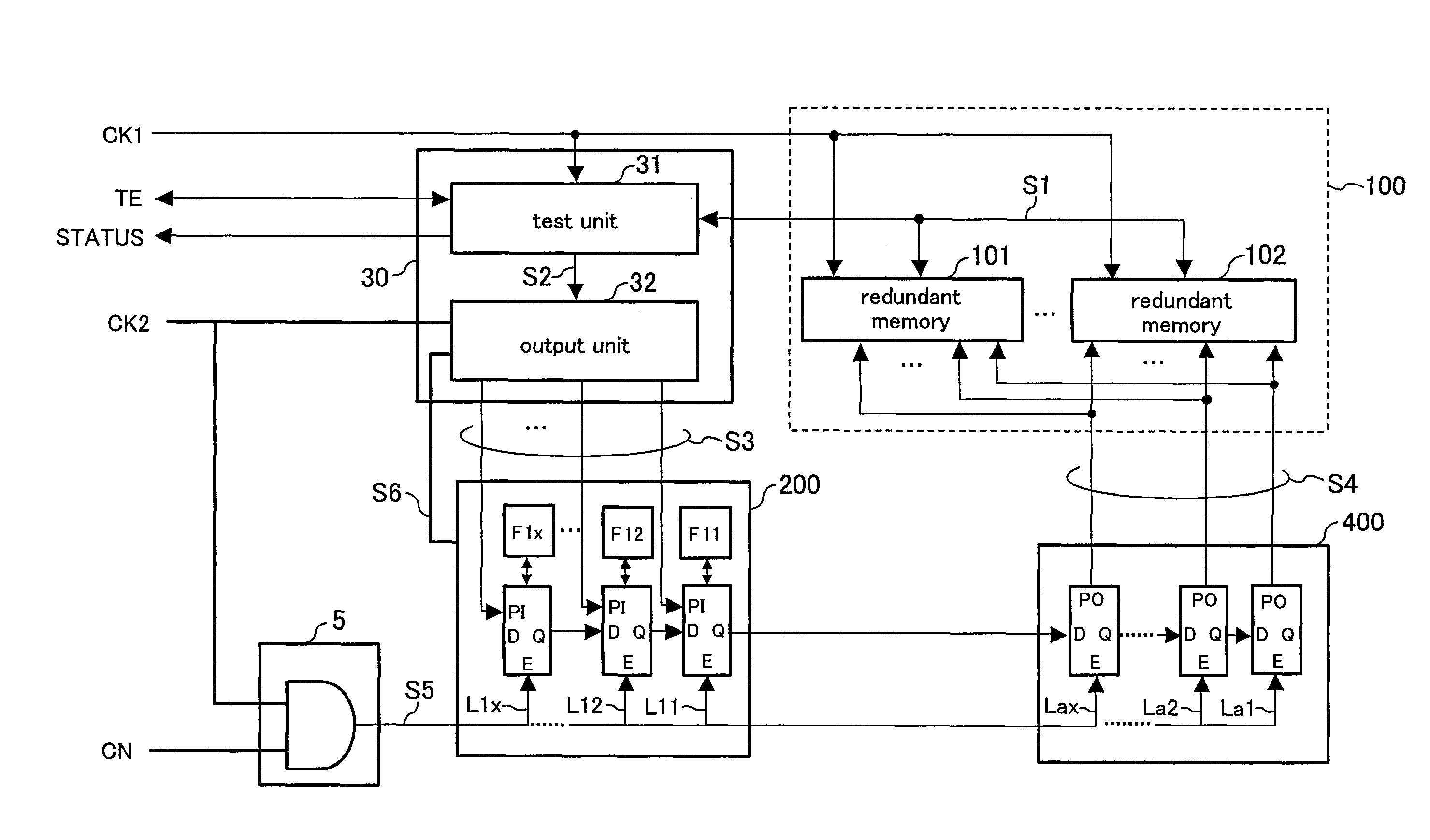

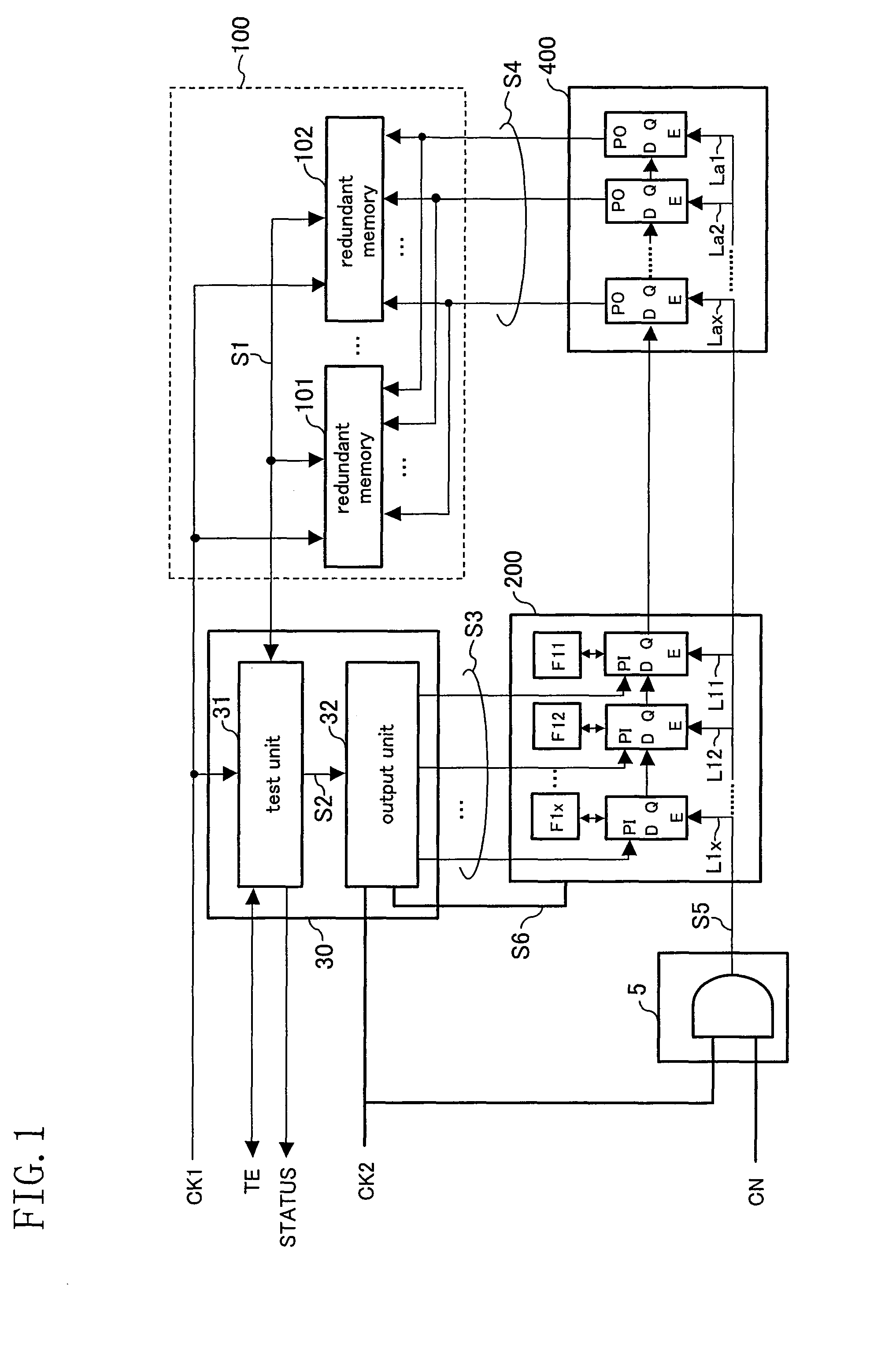

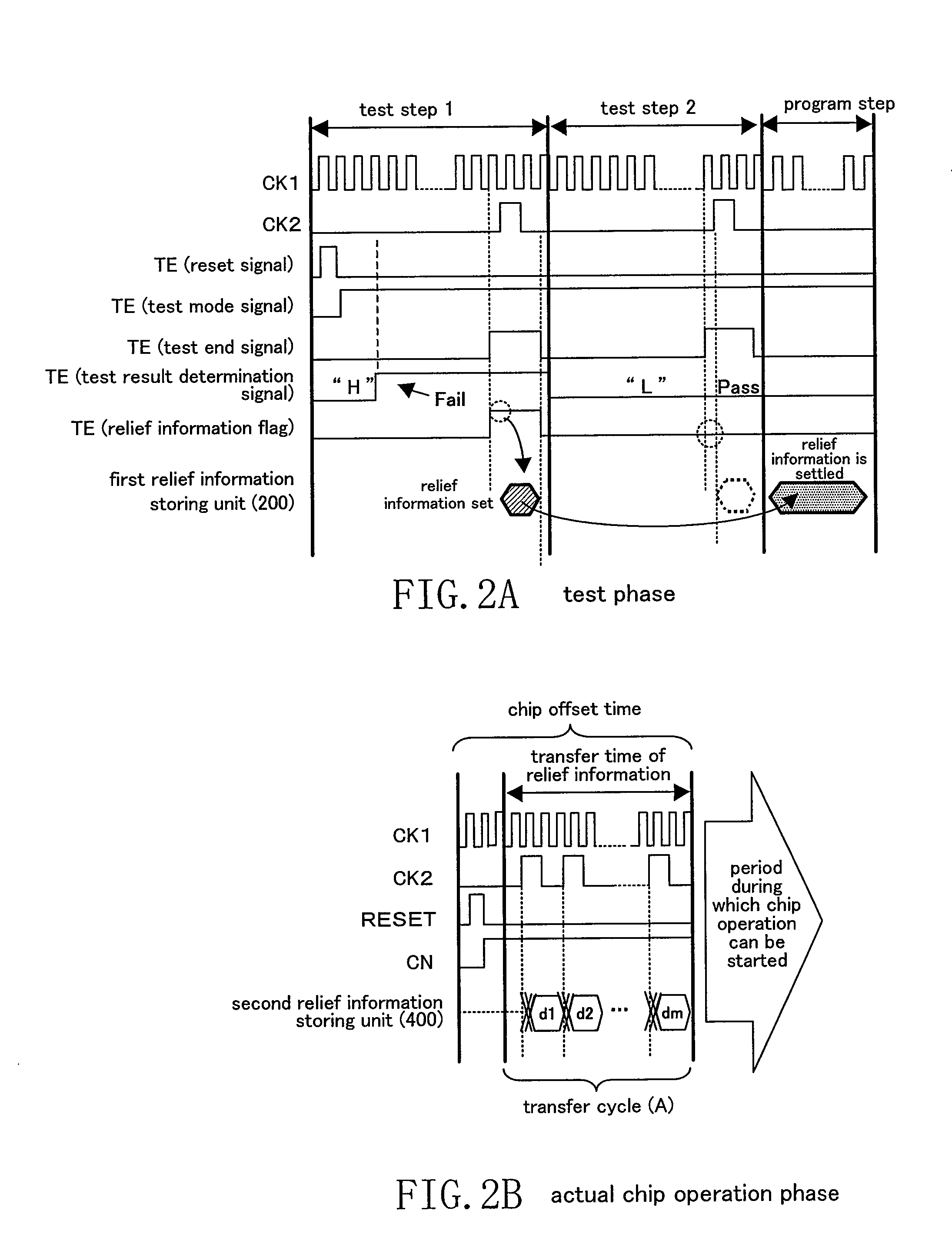

Semiconductor device

In a semiconductor device having a redundant memory, the area of the device is reduced and a time required to transfer relief information is reduced. Moreover, a transfer control of relief information is facilitated. A first relief information storing unit stores relief information for relieving a redundant memory having a defective cell. A plurality of redundant memories share a second relief information storing unit. The second relief information storing unit is connected in series to the first relief information storing unit. The relief information is transferred from the first relief information storing unit to the second relief information storing unit.

Owner:CETUS TECH INC

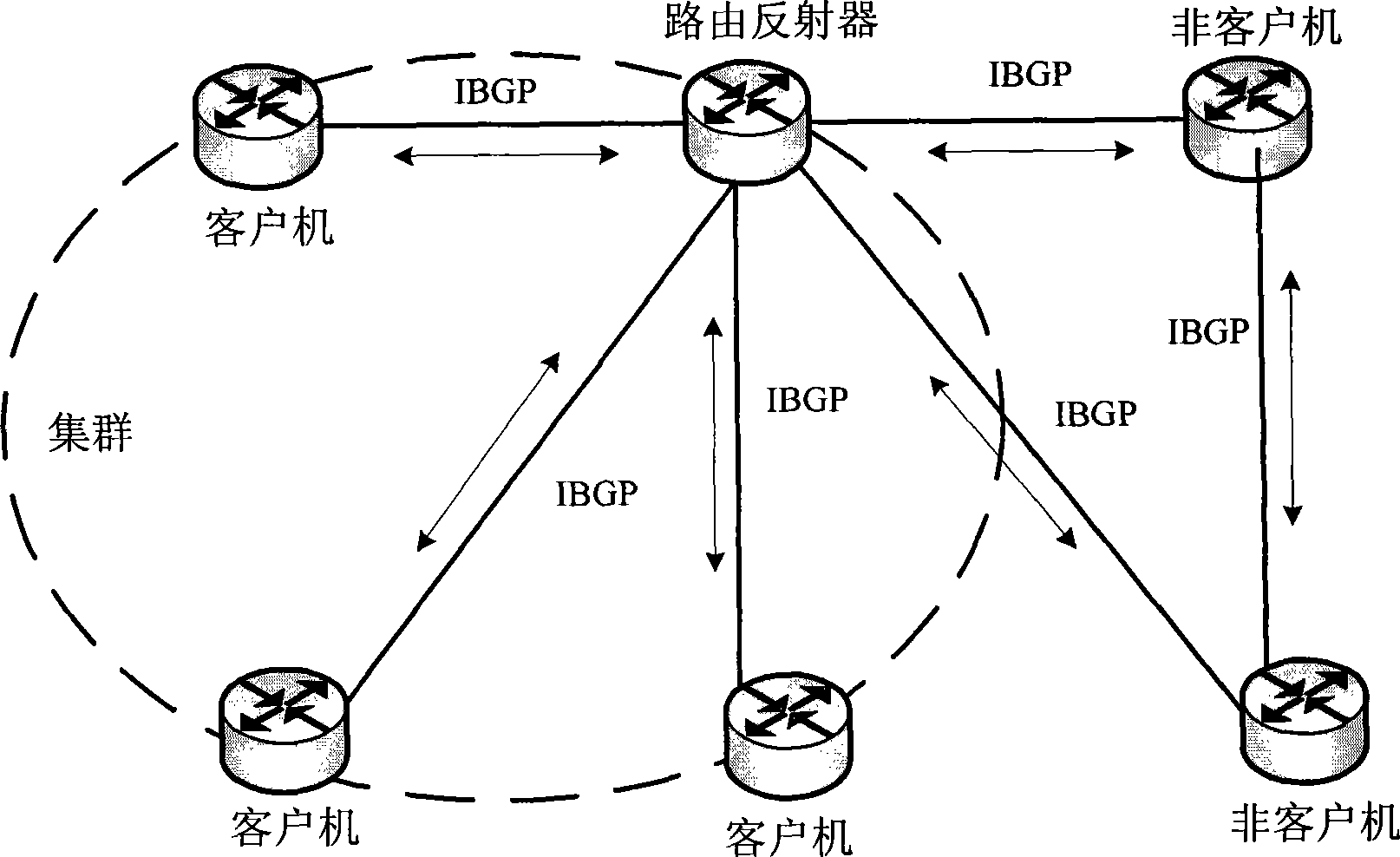

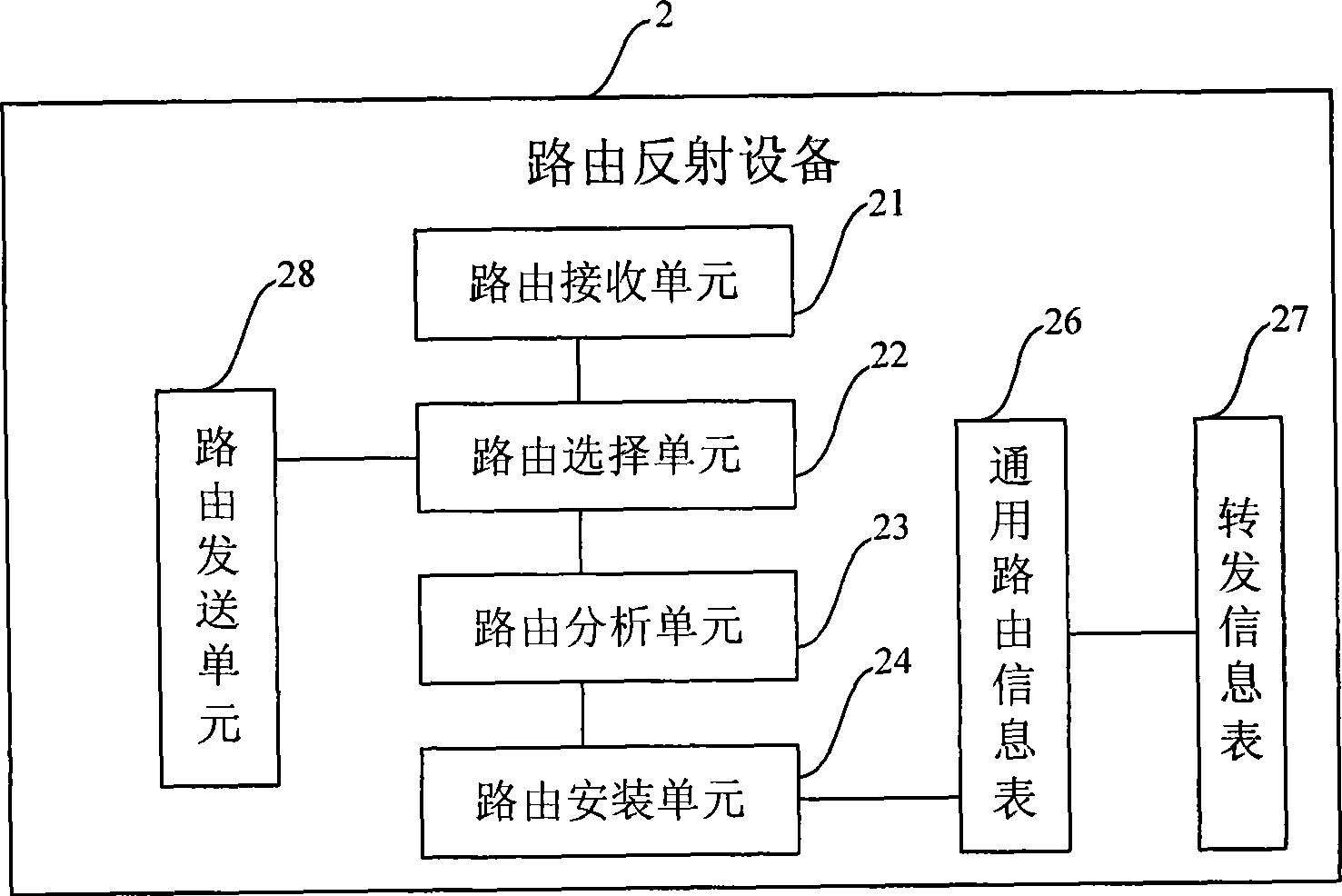

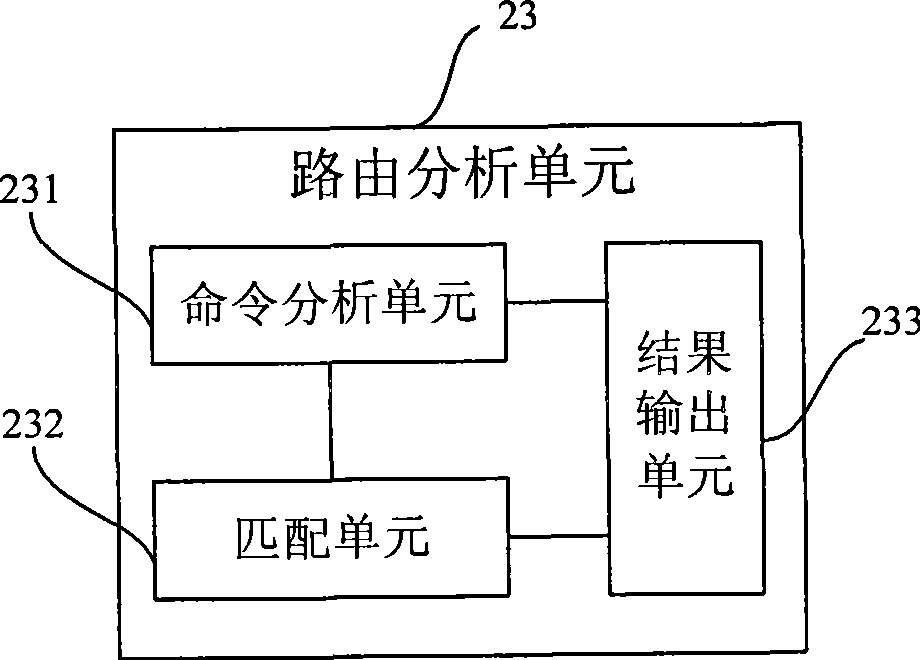

Reflected route processing method and route reflecting device

Active | CN101420357A | Easy to handle; Reduce down brush handling; Network connections; Optimum route; Computer science

The present invention discloses a method of processing reflected routes. The method comprises the steps of: selecting a route as the optimum route after receiving reflected route information; sending the optimum route; determining, according to a control command, whether the optimum route is restricted from being added to a general route information table, and if so, not adding the optimum route to the general route information table, or else proceeding to the next step; and adding the optimum route to the general route information table. Correspondingly, the present invention also discloses a route reflection device. According to the technical solution provided by the present invention, the memory utilization of the device is improved by using control commands to limit which reflected routes are added to the general route information table.

Owner:HUAWEI TECH CO LTD
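The memory saving in CN101420357A comes from decoupling two things a route reflector does: reflecting the optimum route to clients, and installing it in its own general route table. The Python sketch below illustrates that separation under loud assumptions: routes are dicts, best-path selection is reduced to "higher local preference, then shorter AS path" (real BGP best-path selection has many more steps), and the control command is a single boolean. All names are hypothetical.

```python
def reflect_routes(candidates, global_table, install_reflected):
    """candidates: {prefix: [route dicts]}; install_reflected: the control command."""
    advertised = {}
    for prefix, routes in candidates.items():
        # Simplified best-path choice: higher local preference, then shorter AS path.
        best = max(routes, key=lambda r: (r["local_pref"], -r["as_path_len"]))
        advertised[prefix] = best          # still reflected to clients
        if install_reflected:
            global_table[prefix] = best    # only installed when the command allows it
    return advertised
```

A reflector that only relays routes, and never forwards traffic along them, can run with installation disabled and keep its route table small.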

© 2025 PatSnap. All rights reserved.