68 results about "Queuing network" patented technology

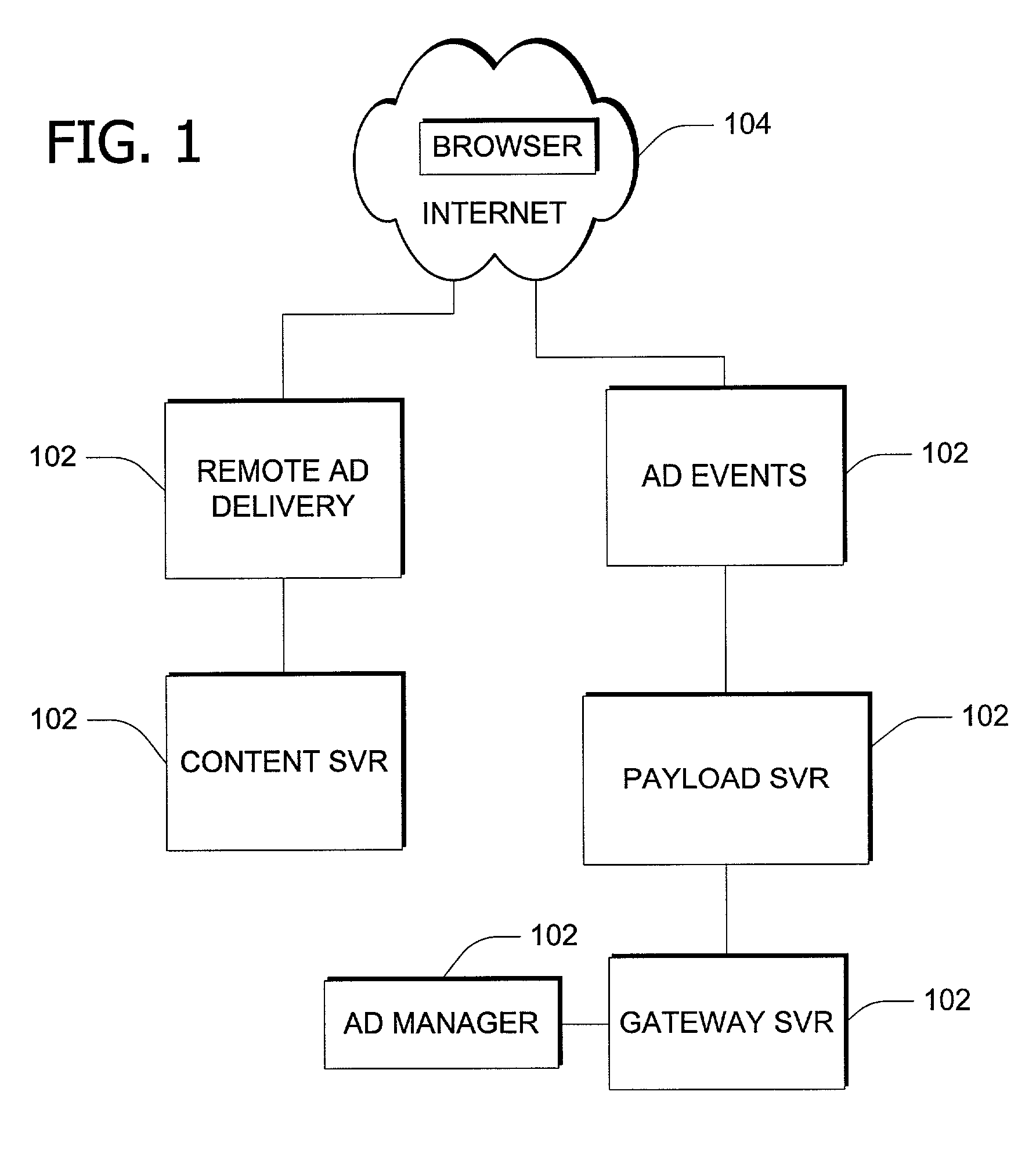

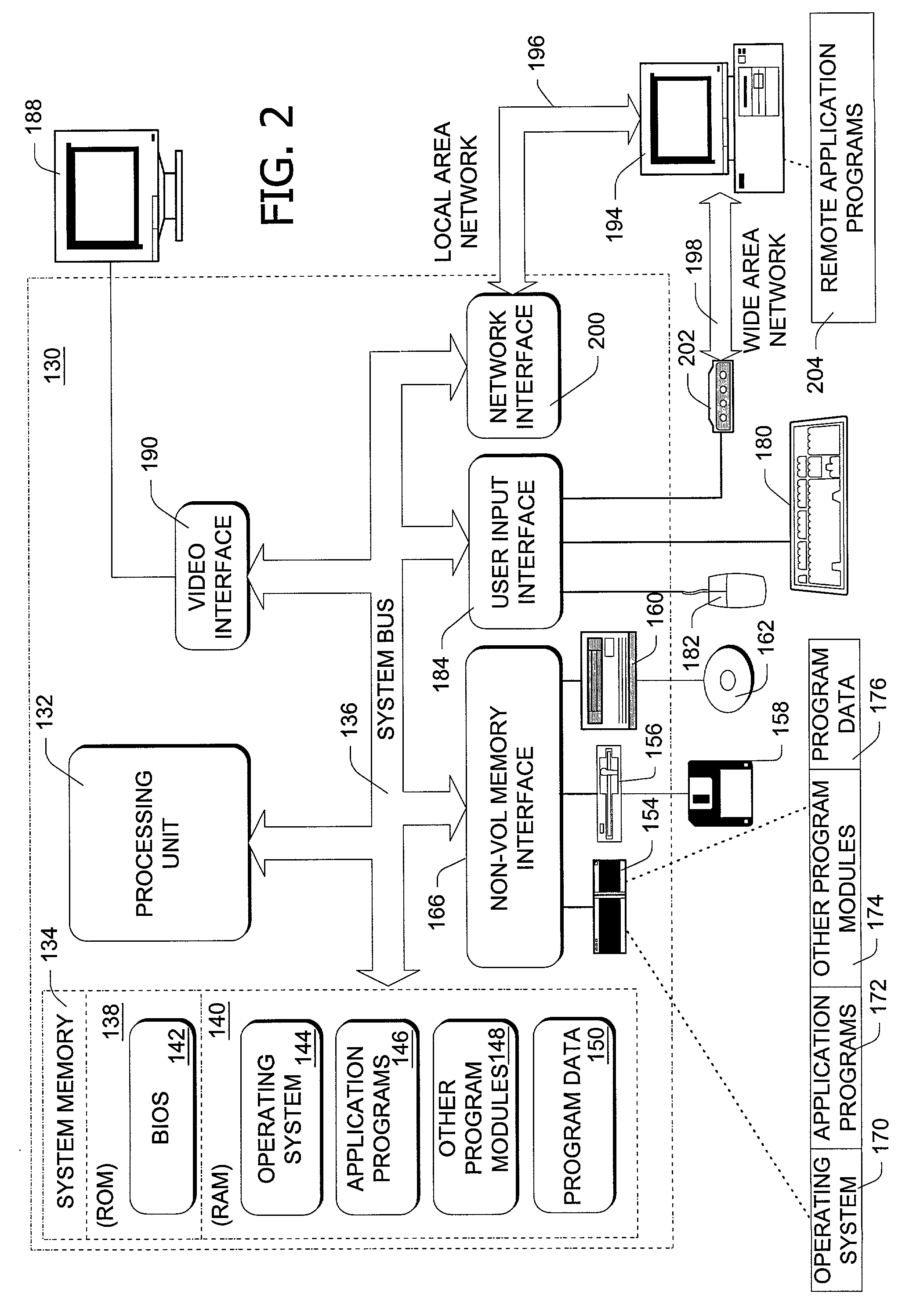

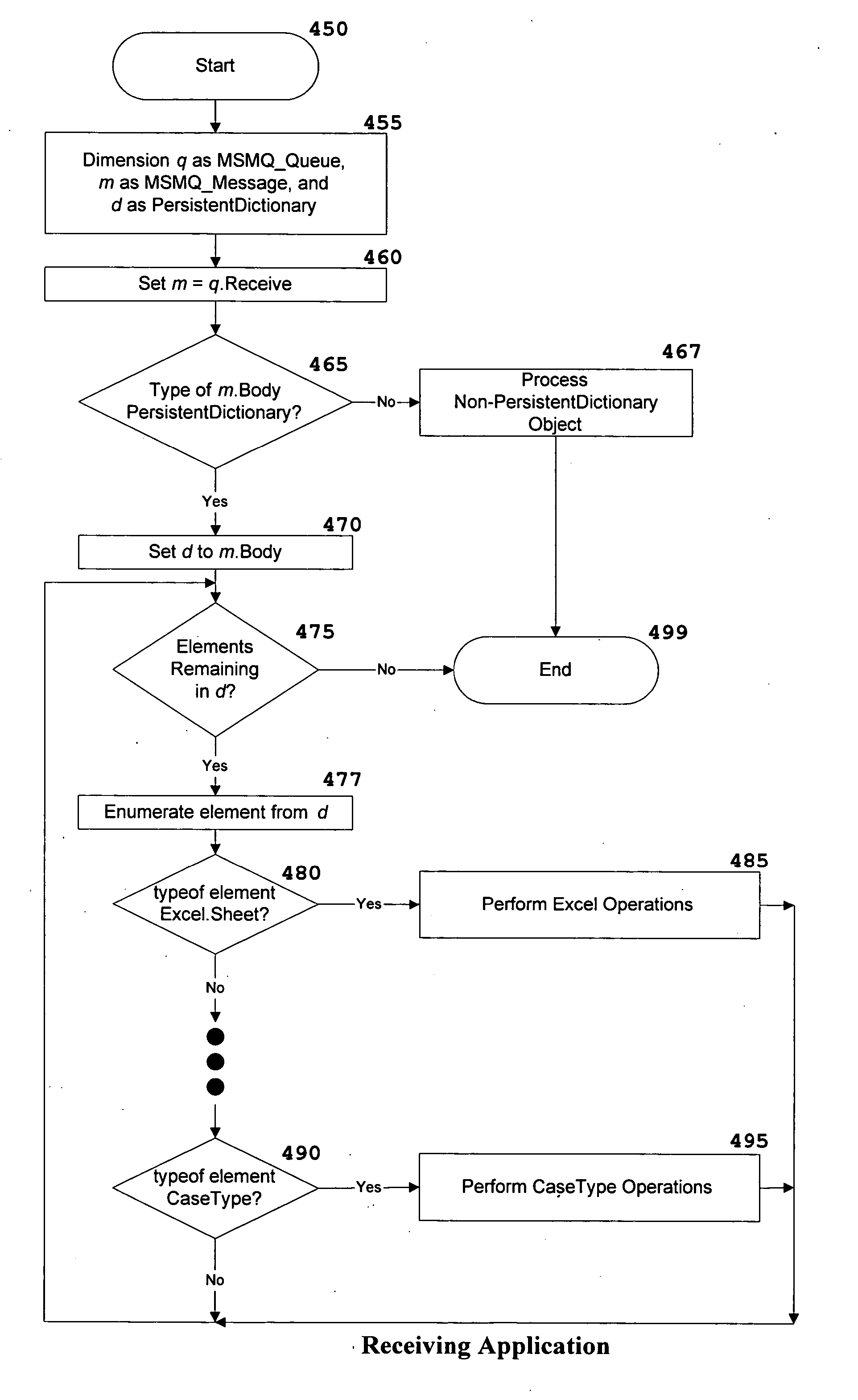

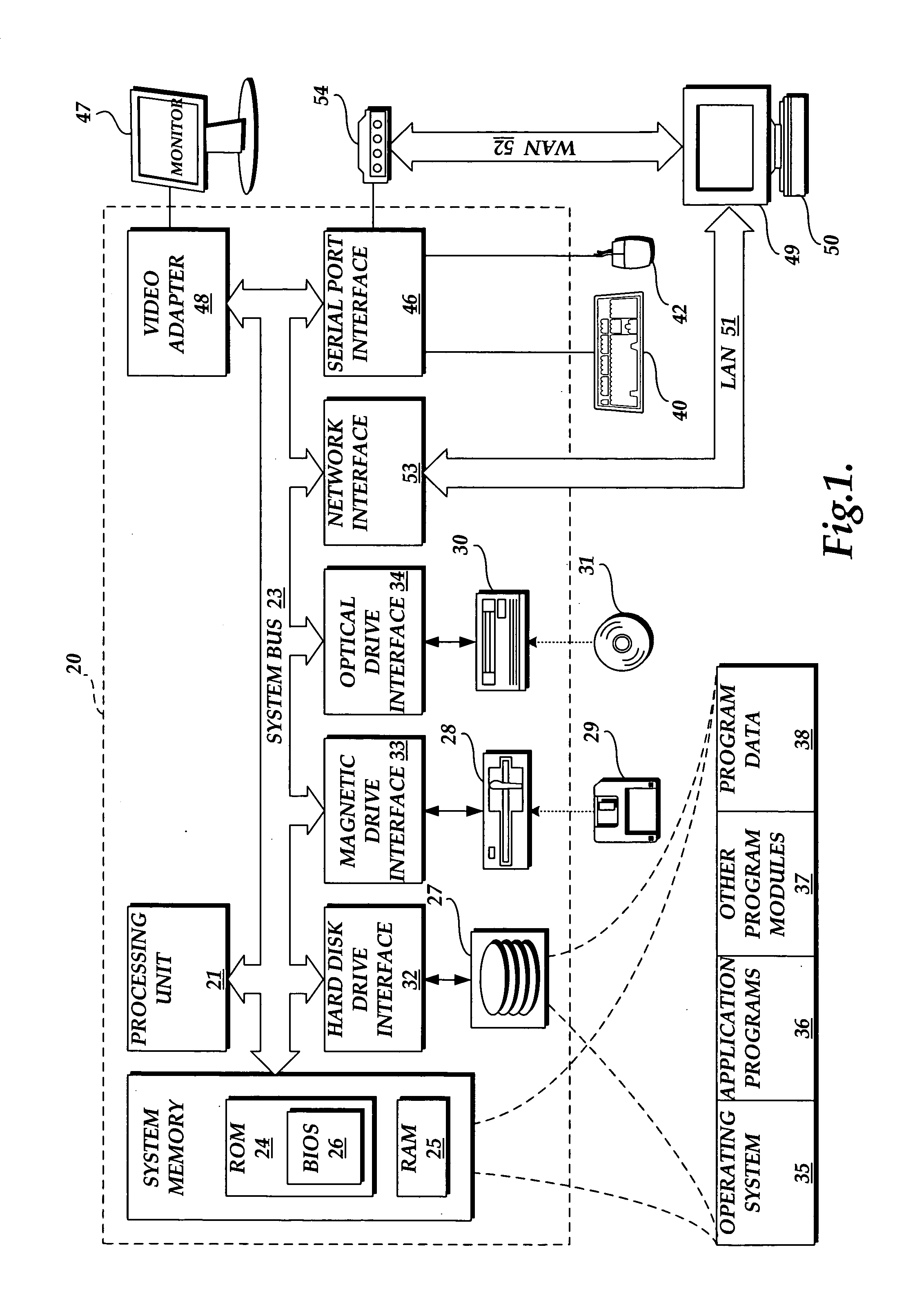

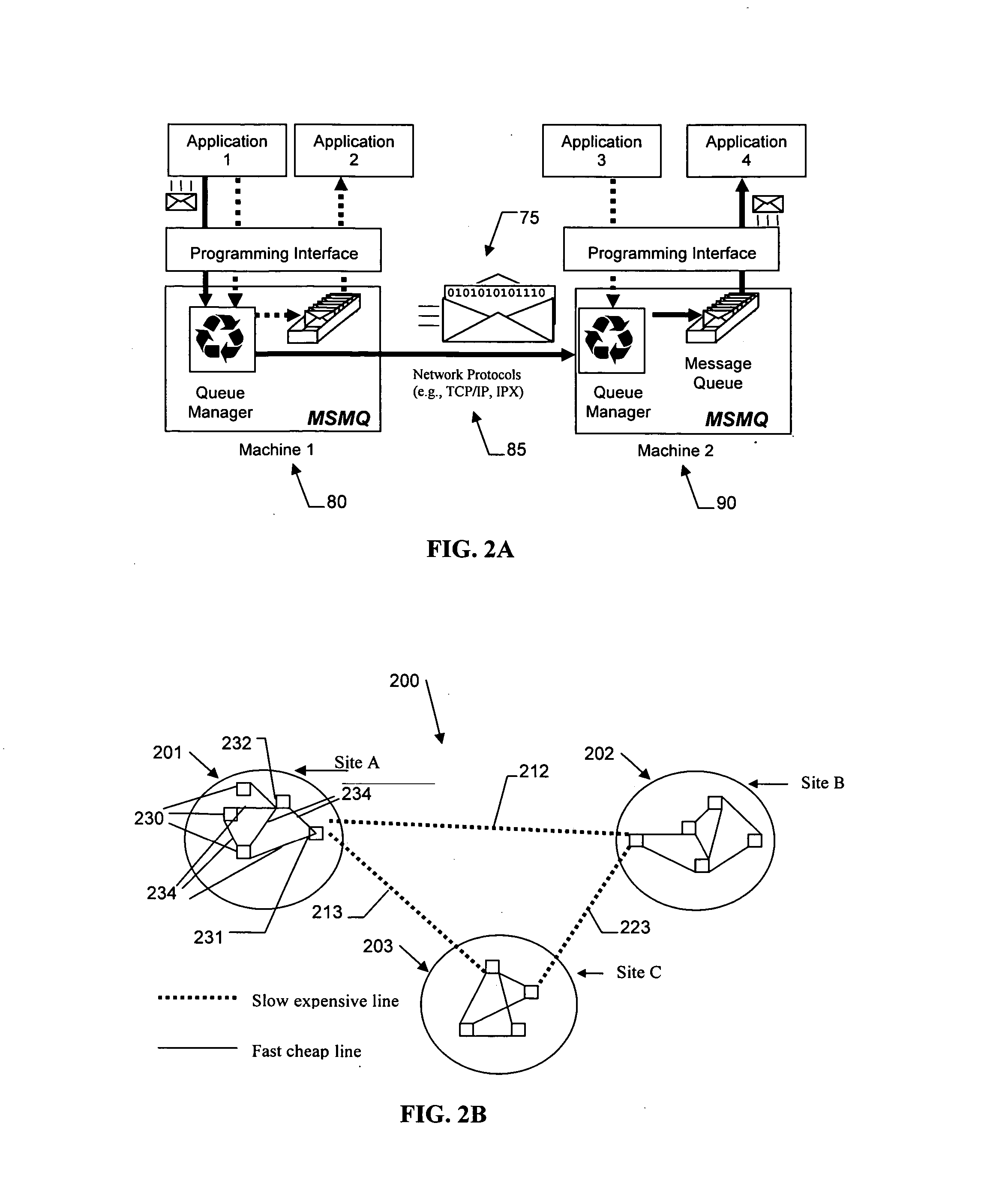

Method and apparatus for creating, sending, and using self-descriptive objects as messages over a message queuing network

Inactive | US6848108B1 | Easy to adapt | More robust | Digital data processing details | Multiprogramming arrangements | Semantics | Queuing network

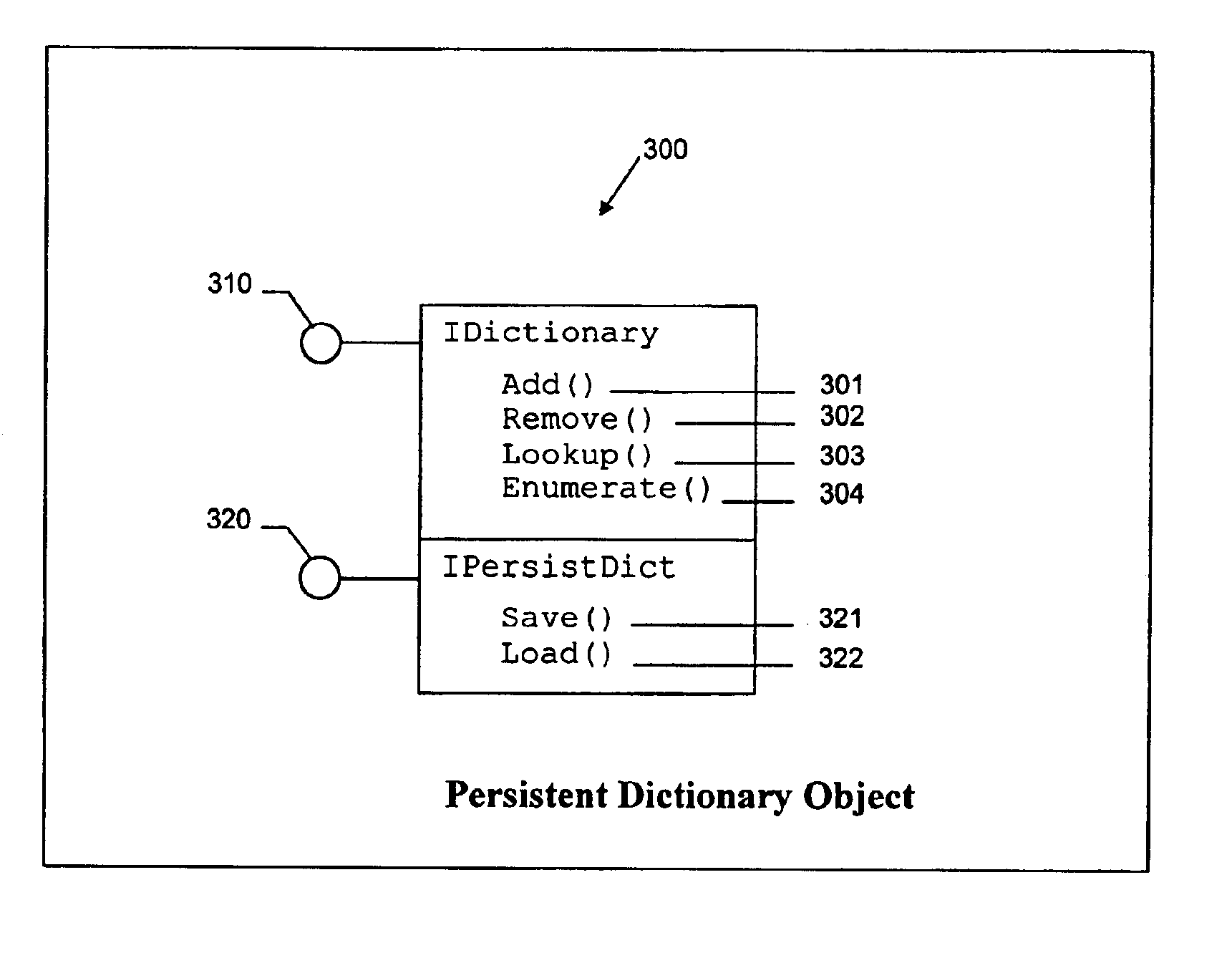

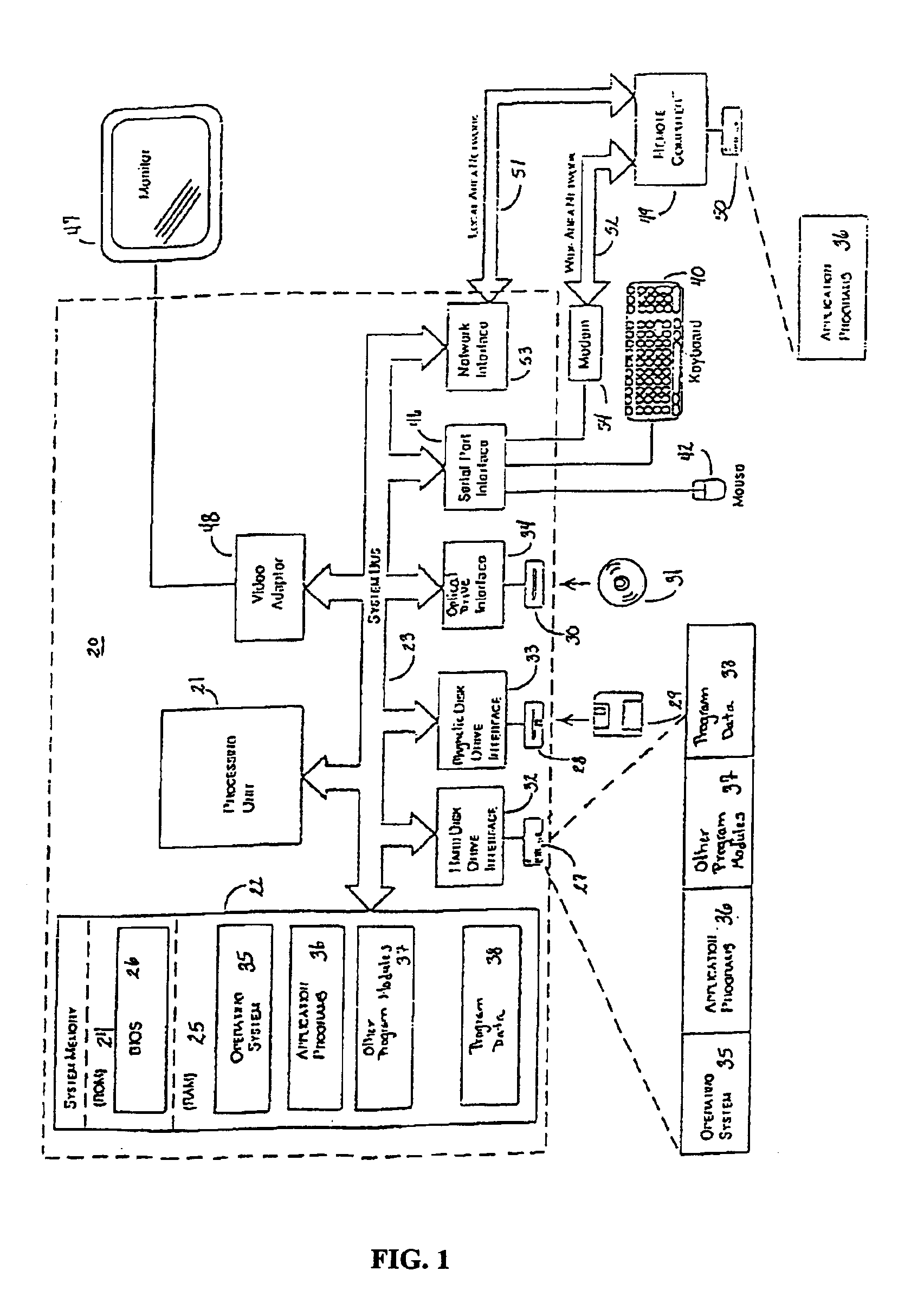

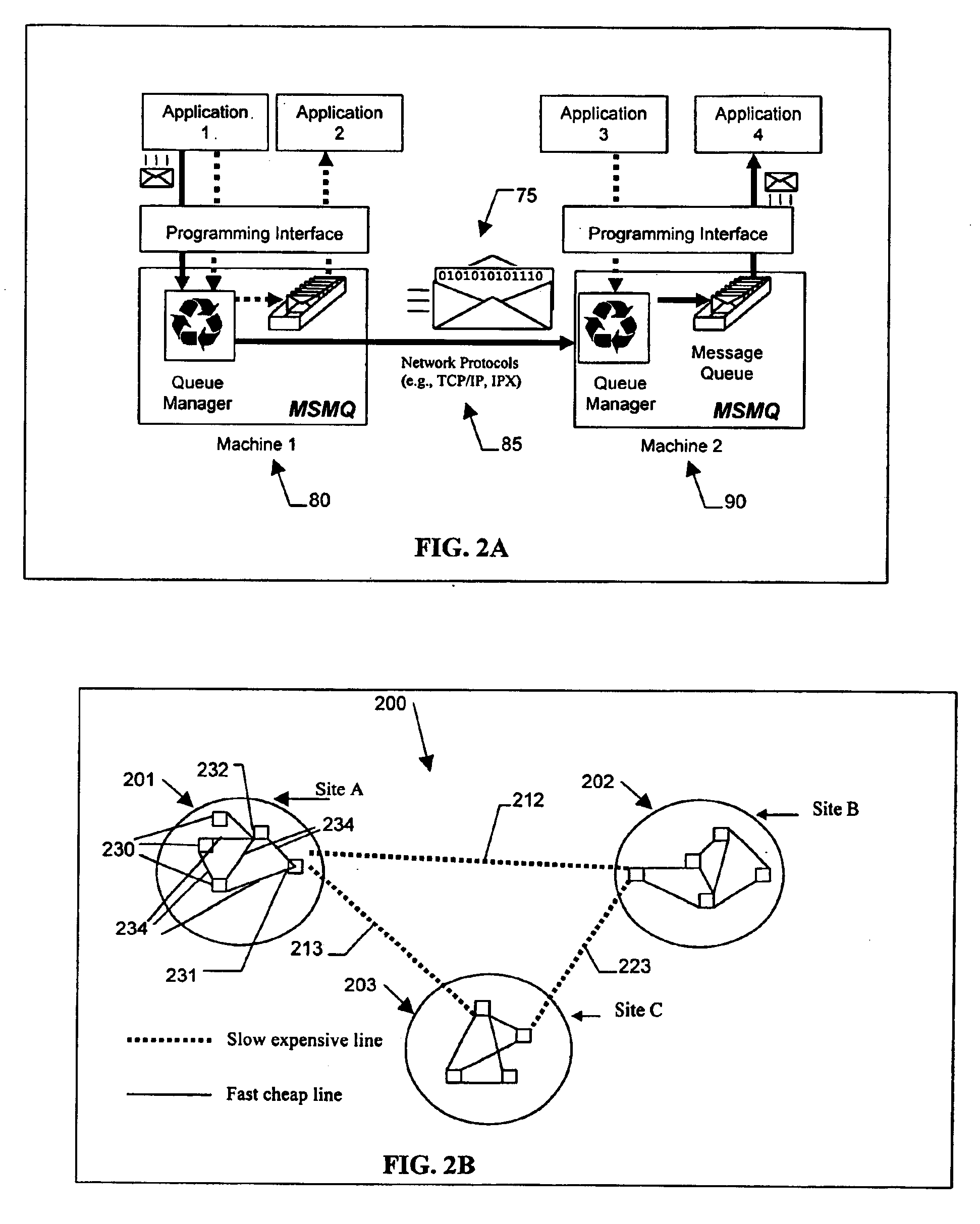

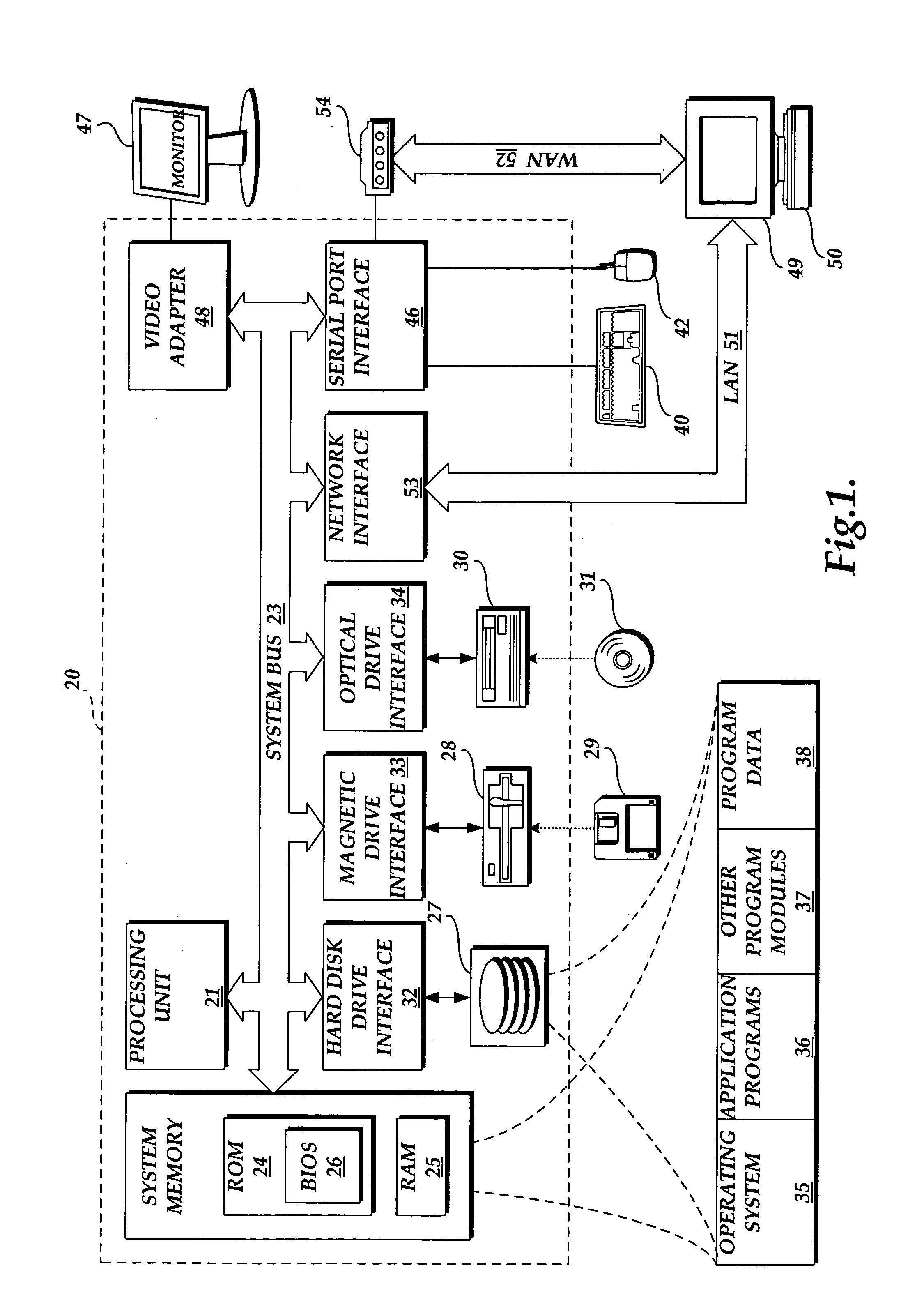

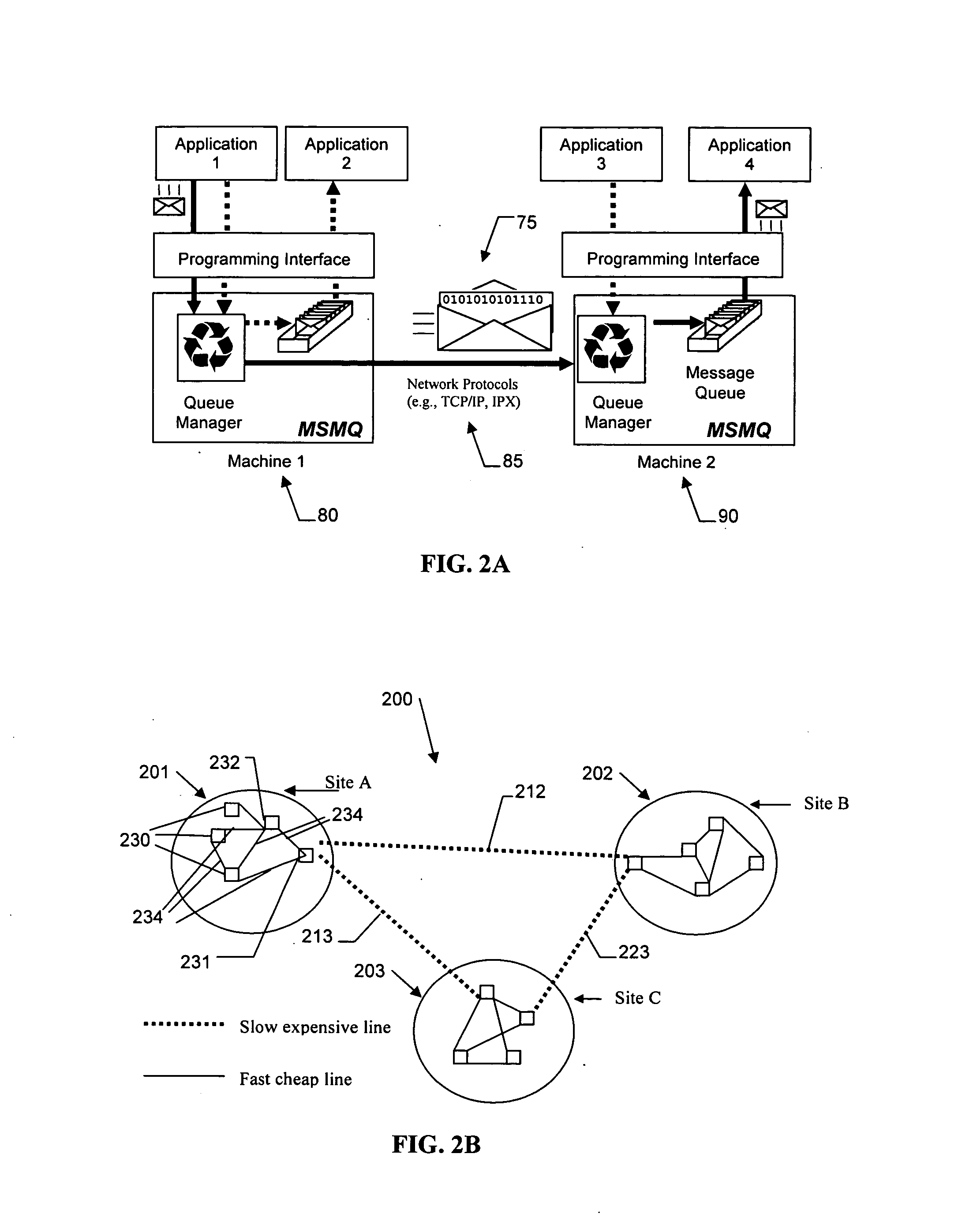

An invention for creating, sending, and using self-descriptive objects as messages over a network is disclosed. In an embodiment of the present invention, self-descriptive persistent dictionary objects are serialized and sent as messages across a message queuing network. The receiving messaging system unserializes the message object, and passes the object to the destination application. The application then queries or enumerates message elements from the instantiated persistent dictionary, and performs the programmed response. Using these self-descriptive objects as messages, the sending and receiving applications no longer rely on an a priori convention or a special-coding serialization scheme. Rather, messaging applications can communicate arbitrary objects in a standard way with no prior agreement as to the nature and semantics of message contents.

Owner:MICROSOFT TECH LICENSING LLC
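The idea in the abstract above, a message that carries the names and types of its own elements so the receiver needs no prior agreement on layout, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: JSON stands in for the patent's serialization scheme, a plain `dict` stands in for the persistent dictionary object, and an in-process `queue.Queue` stands in for the message queuing network. None of these choices comes from the patent itself.

```python
import json
import queue


def serialize(message: dict) -> bytes:
    """Encode each element with its name, type name, and value,
    so the payload describes its own contents (illustrative format)."""
    elements = [
        {"name": key, "type": type(value).__name__, "value": value}
        for key, value in message.items()
    ]
    return json.dumps(elements).encode("utf-8")


def deserialize(payload: bytes) -> dict:
    """Rebuild the dictionary on the receiving side."""
    elements = json.loads(payload.decode("utf-8"))
    return {element["name"]: element["value"] for element in elements}


# Hypothetical stand-in for the message queuing network.
channel = queue.Queue()
channel.put(serialize({"order_id": 42, "item": "widget", "rush": True}))

# The receiver enumerates elements without knowing the schema in advance.
received = deserialize(channel.get())
for name, value in received.items():
    print(name, type(value).__name__, value)
```

The receiver only iterates over whatever elements arrive, which is the property the abstract emphasizes; a real system would also need to handle nested objects and types that JSON cannot represent.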

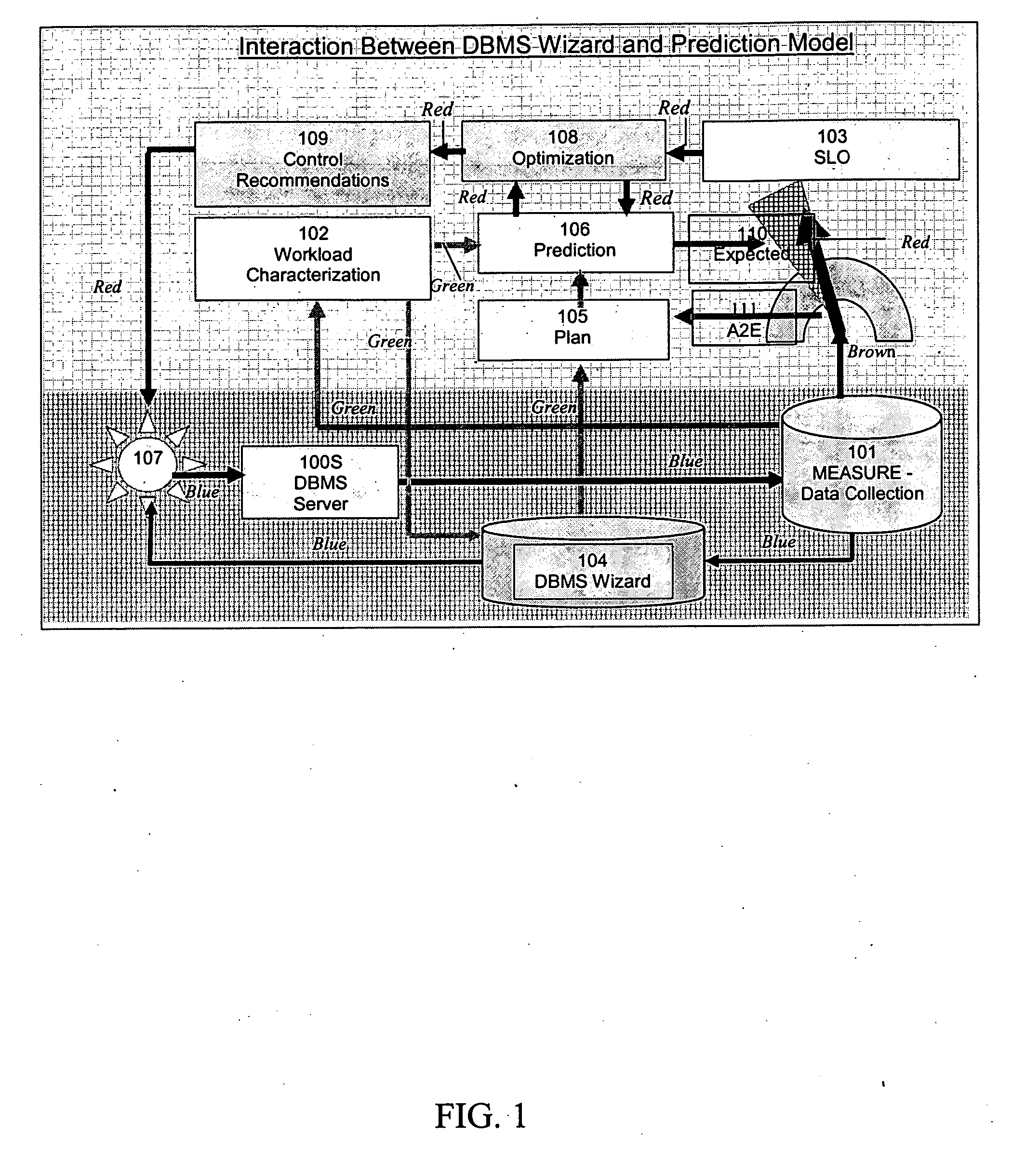

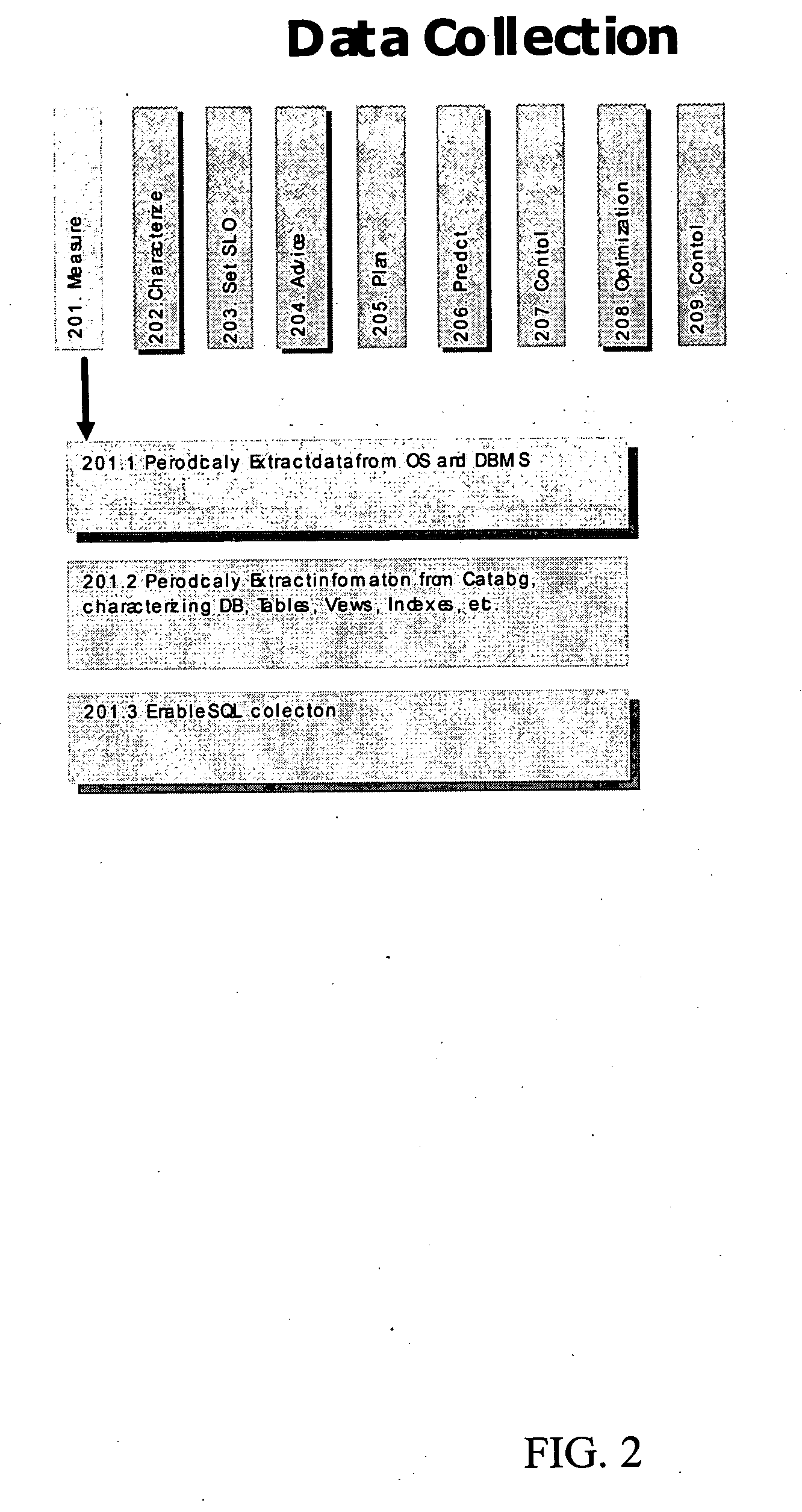

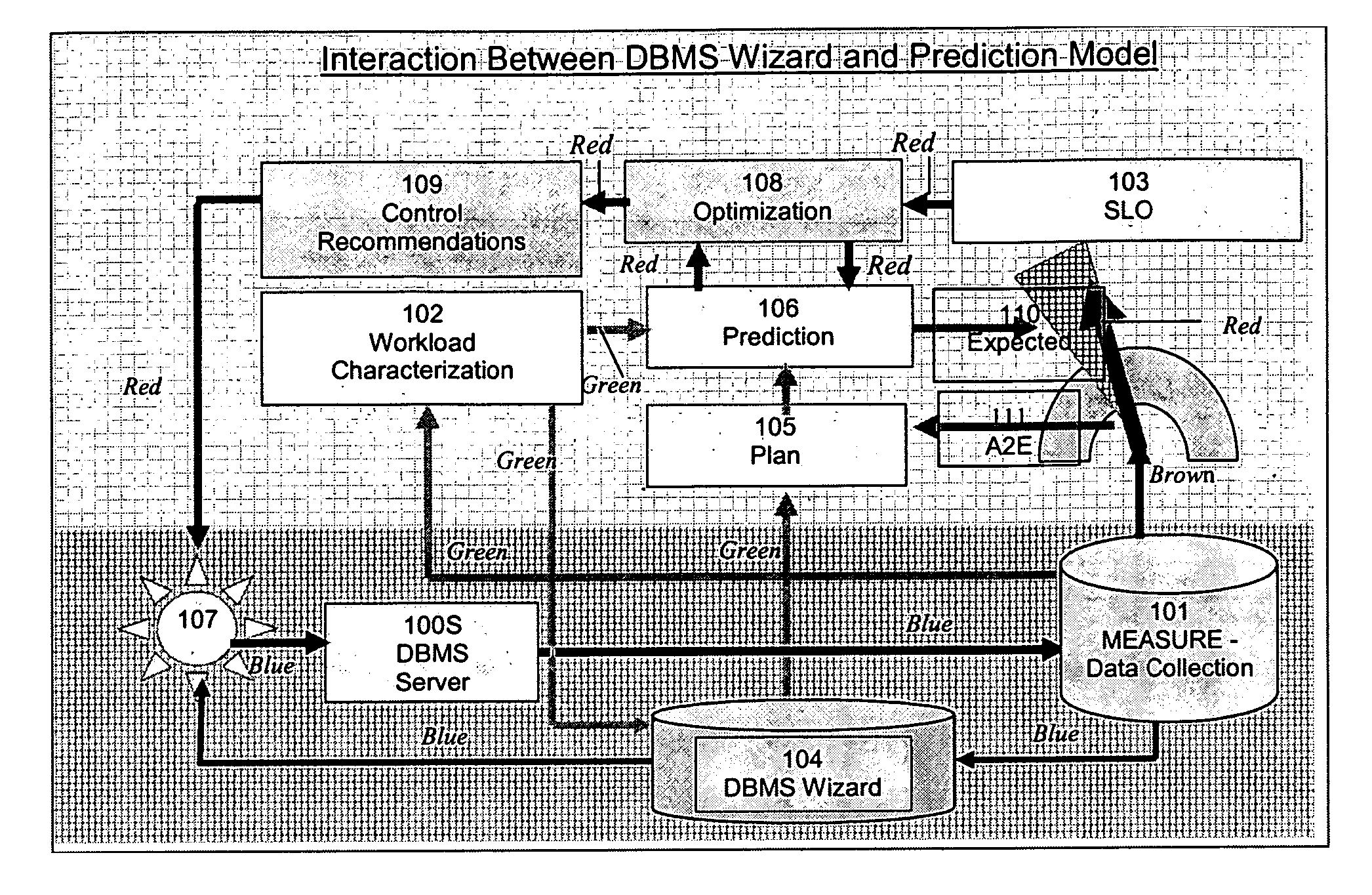

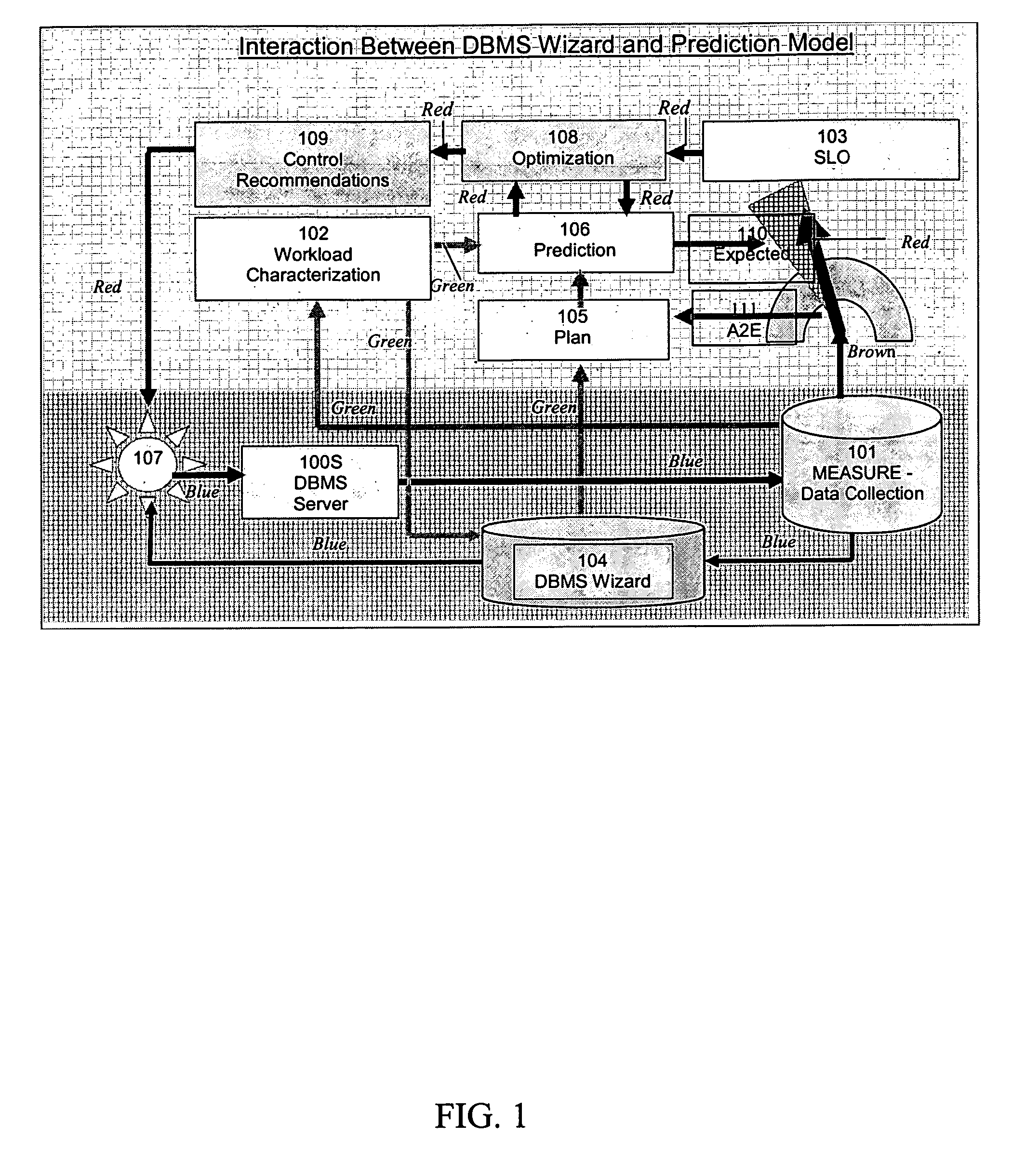

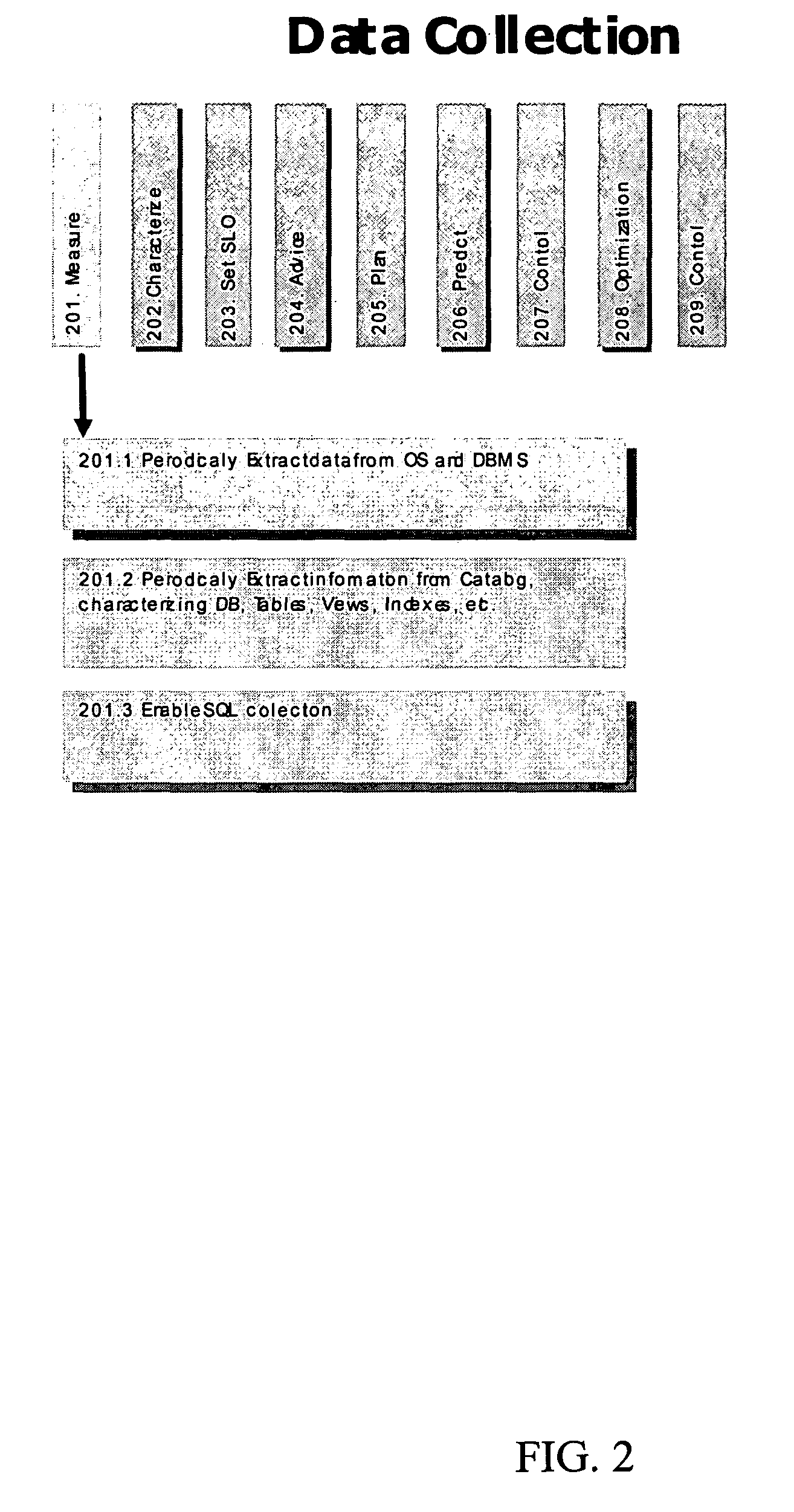

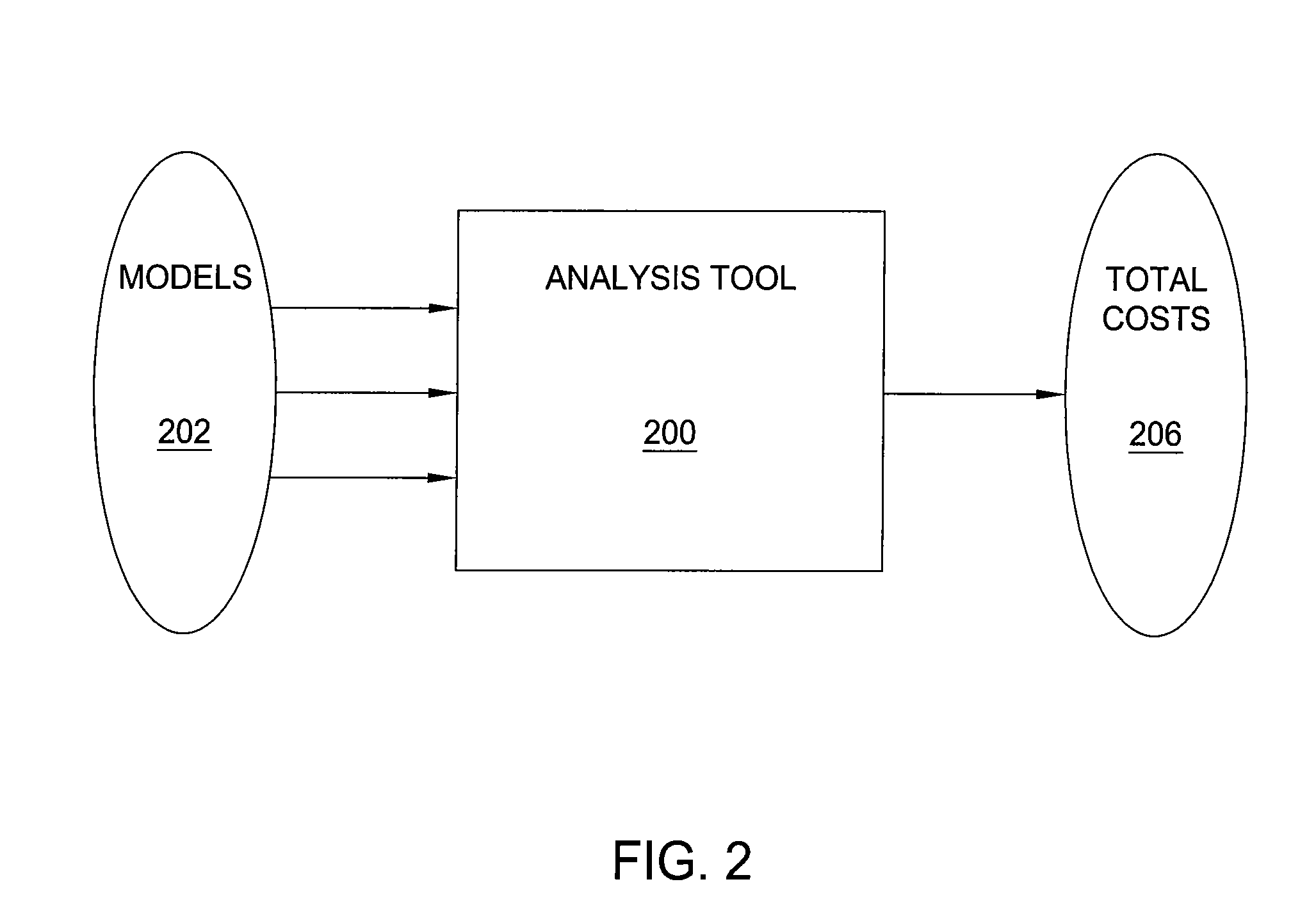

Method of incorporating DBMS wizards with analytical models for DBMS servers performance optimization

Active | US20070083500A1 | Enhancing autonomic computing | Improve balance | Digital data information retrieval | Digital data processing details | Analytic model | Resource utilization

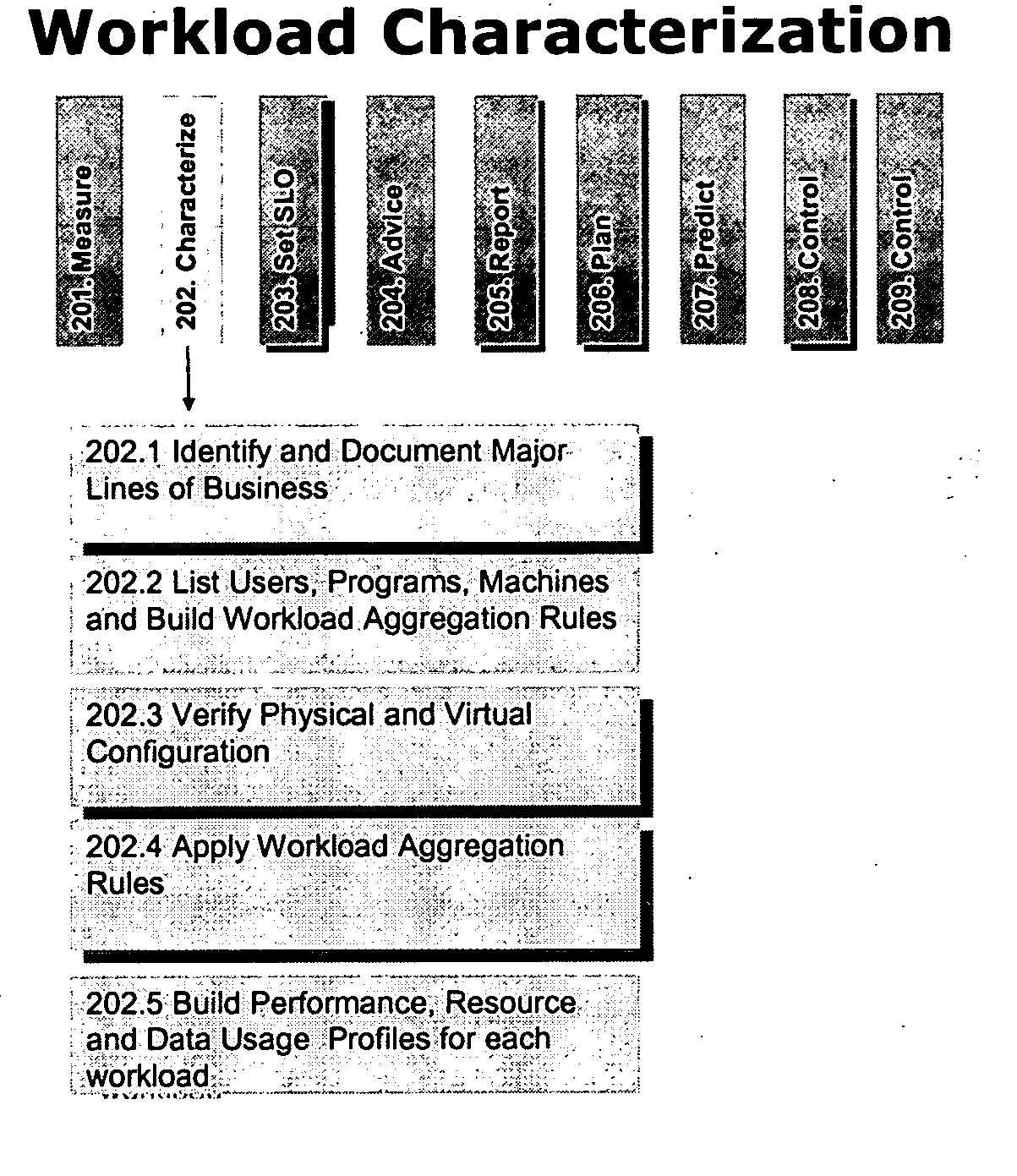

Disclosed is an improved method and system for implementing DBMS server performance optimization. According to some approaches, the method and system incorporate DBMS wizard recommendations with analytical queuing network models for the purpose of evaluating different alternatives and selecting the optimum performance management solution with a set of expectations. This enhances autonomic computing by generating periodic control measures, which include recommendations to add or remove indexes and materialized views, change the level of concurrency and workload priorities, and improve the balance of resource utilization. The result is a framework for continuous workload management: measuring the difference between actual and expected results, understanding the cause of the difference, finding a new corrective solution, and setting new expectations.

Owner:DYNATRACE

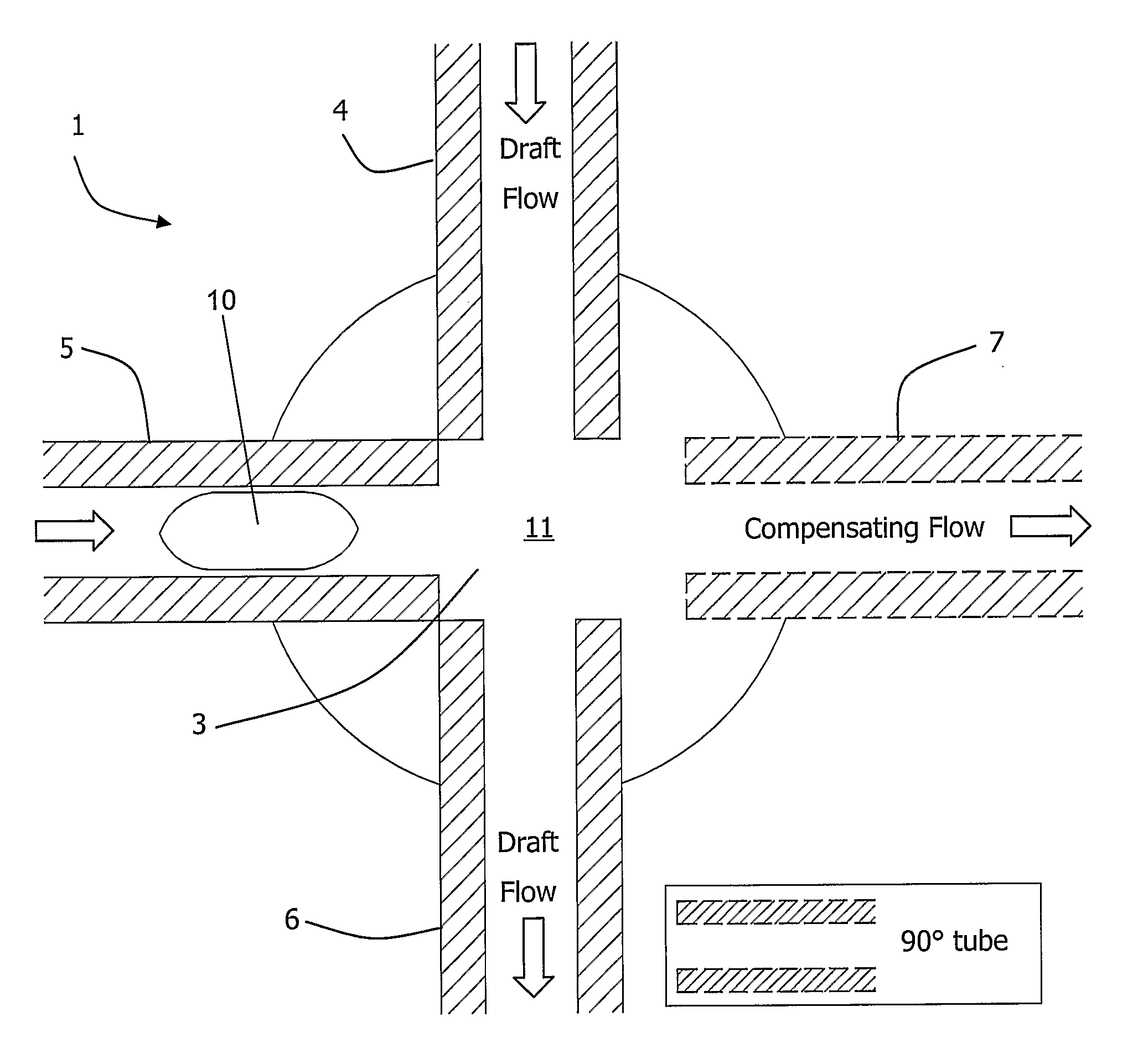

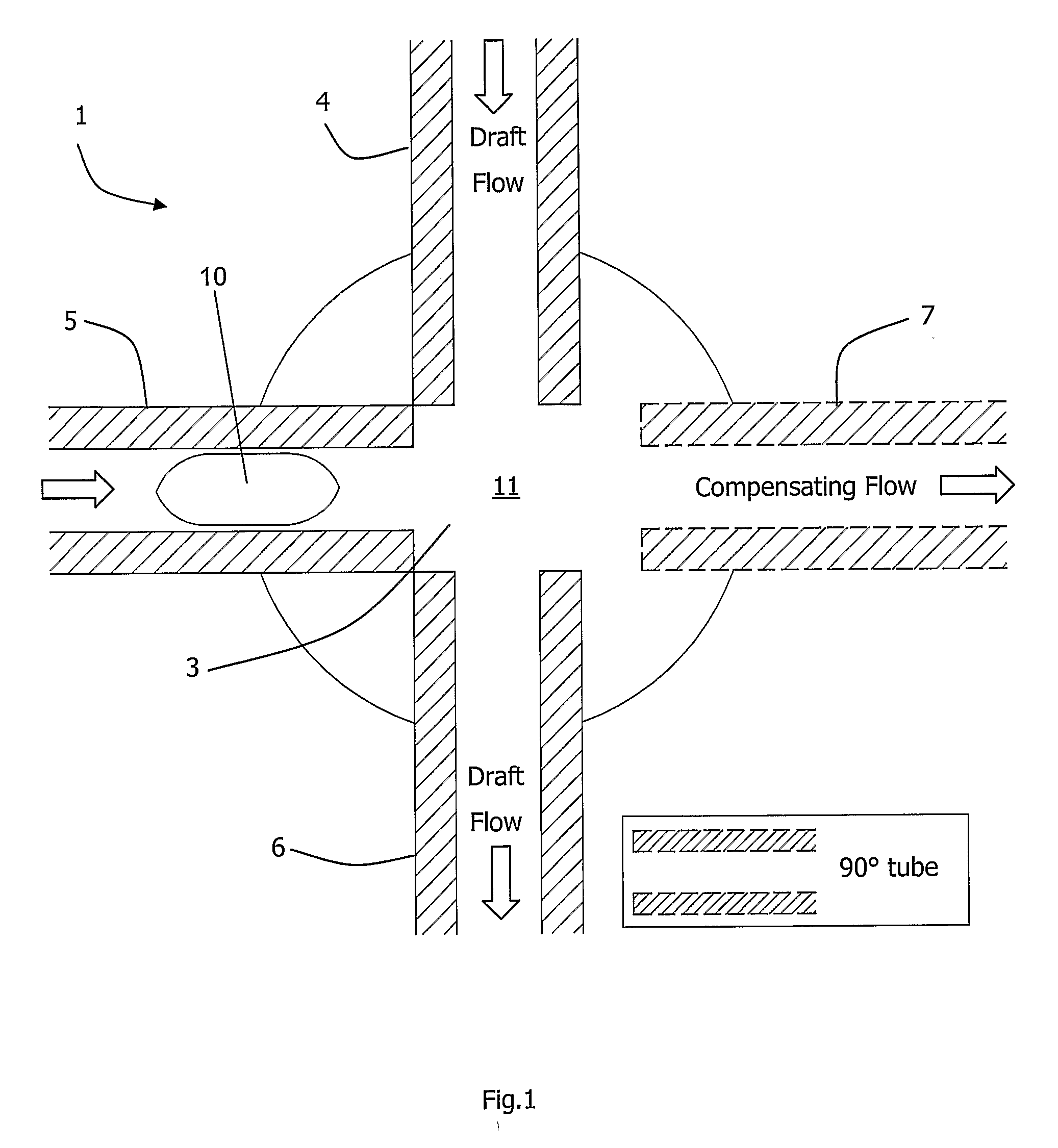

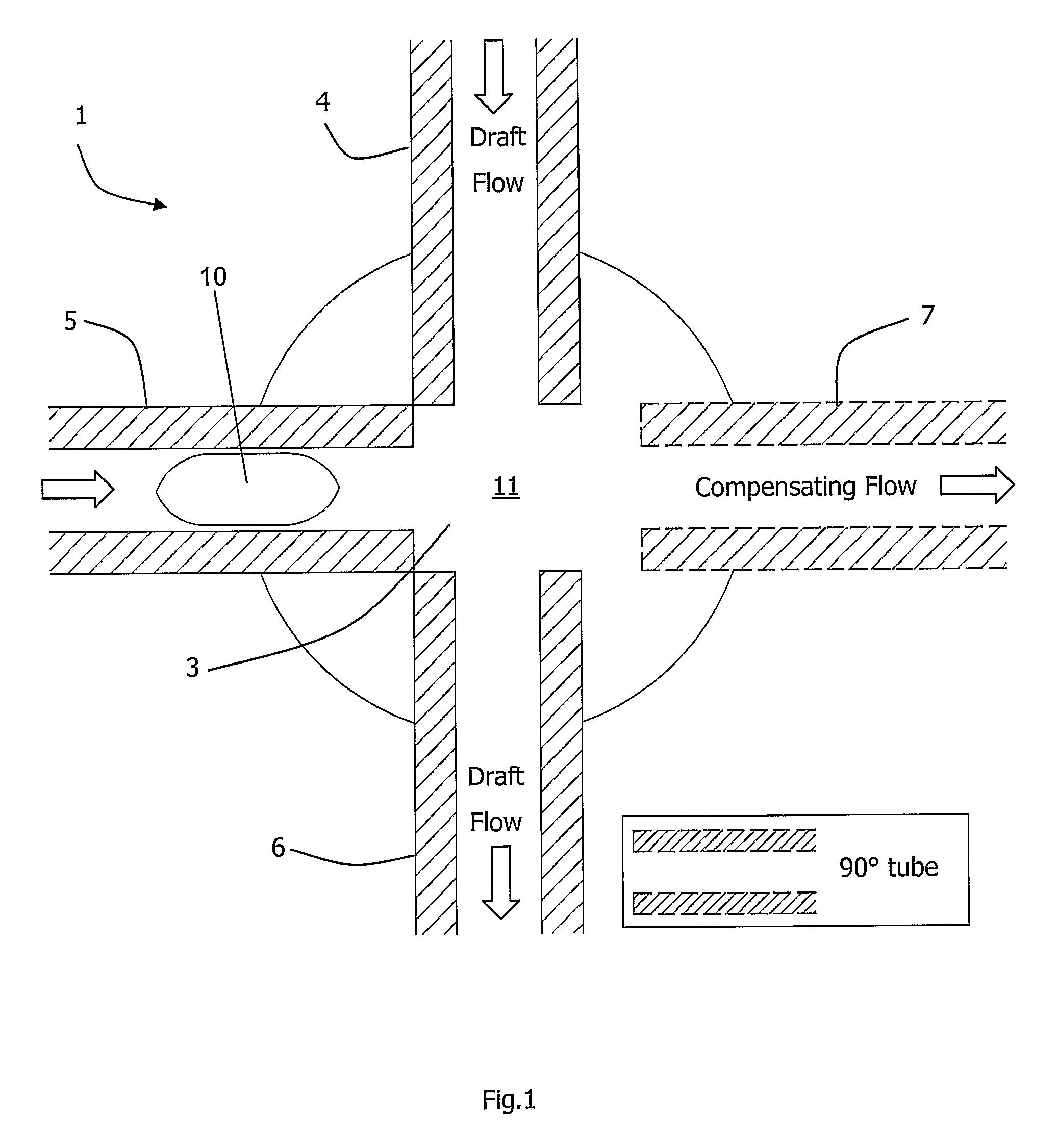

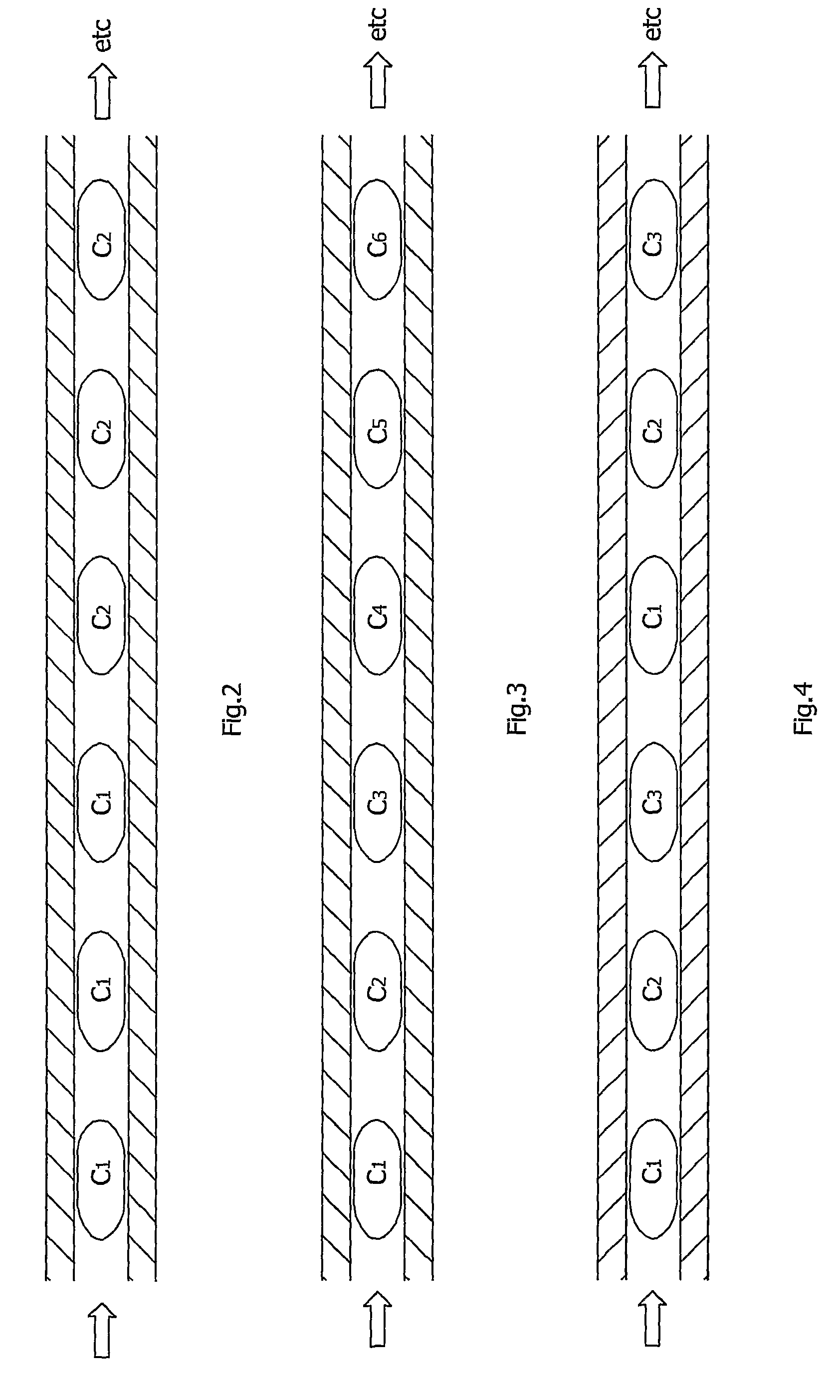

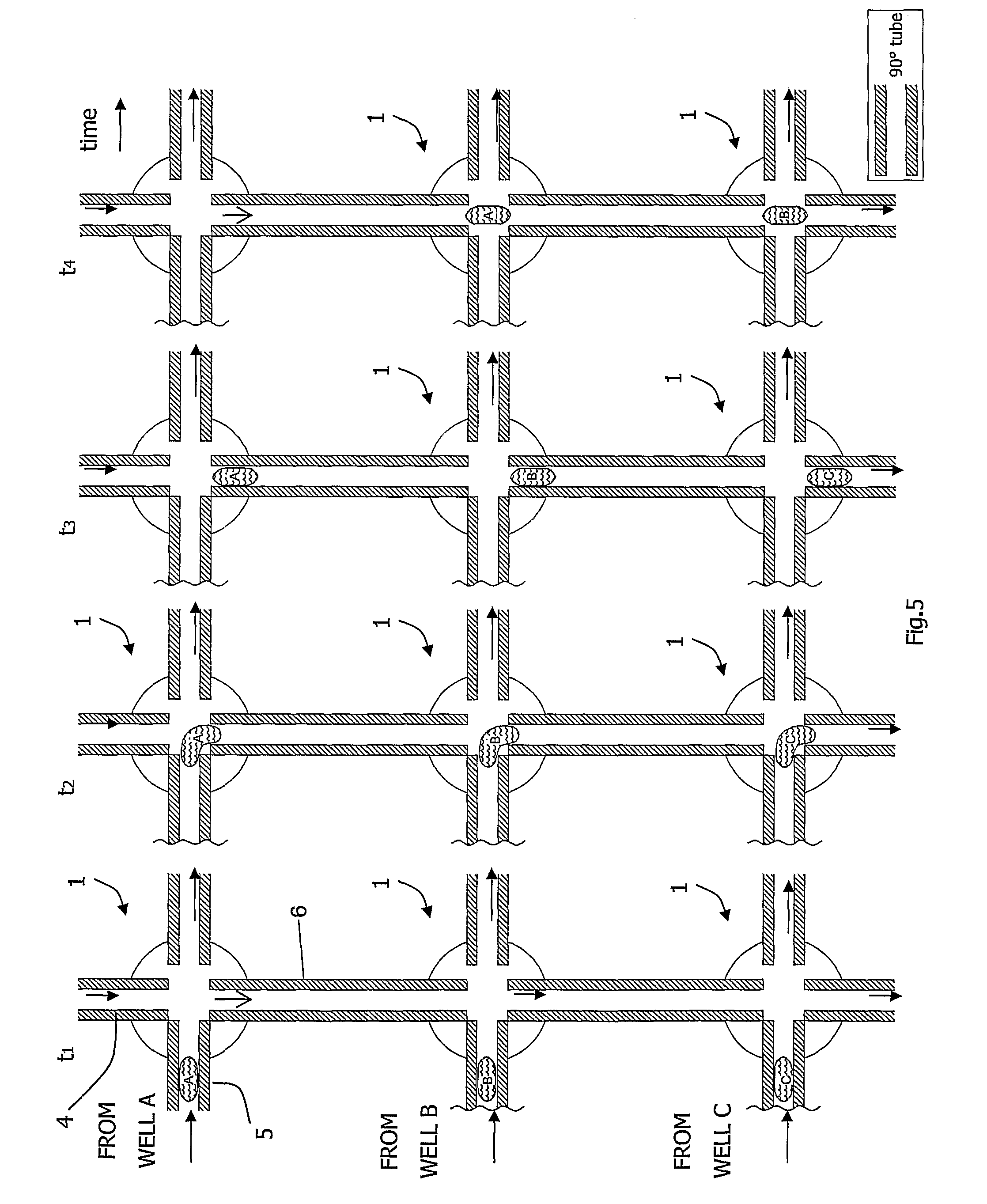

Microfluidic droplet queuing network

Active | US20100015606A1 | Bioreactor/fermenter combinations | Sequential/parallel process reactions | Gravitational force | Queuing network

A multi-port liquid bridge (1) adds aqueous phase droplets (10) in an enveloping oil phase carrier liquid (11) to a draft channel (4, 6). A chamber (3) links four ports, and it is permanently full of oil (11) when in use. Oil phase is fed in a draft flow from an inlet port (4) and exits through a draft exit port (6) and a compensating flow port (7). The oil carrier and the sample droplets (3) (“aqueous phase”) flow through the inlet port (5) with an equivalent fluid flow subtracted through the compensating port (7). The ports of the bridge (1) are formed by the ends of capillaries held in position in plastics housings. The phases are density matched to create an environment where gravitational forces are negligible. This results in droplets (10) adopting spherical forms when suspended from capillary tube tips. Furthermore, the equality of mass flow is equal to the equality of volume flow. The phase of the inlet flow (from the droplet inlet port (5) and the draft inlet port (4)) is used to determine the outlet port (6) flow phase.

Owner:STOKES BIO LTD

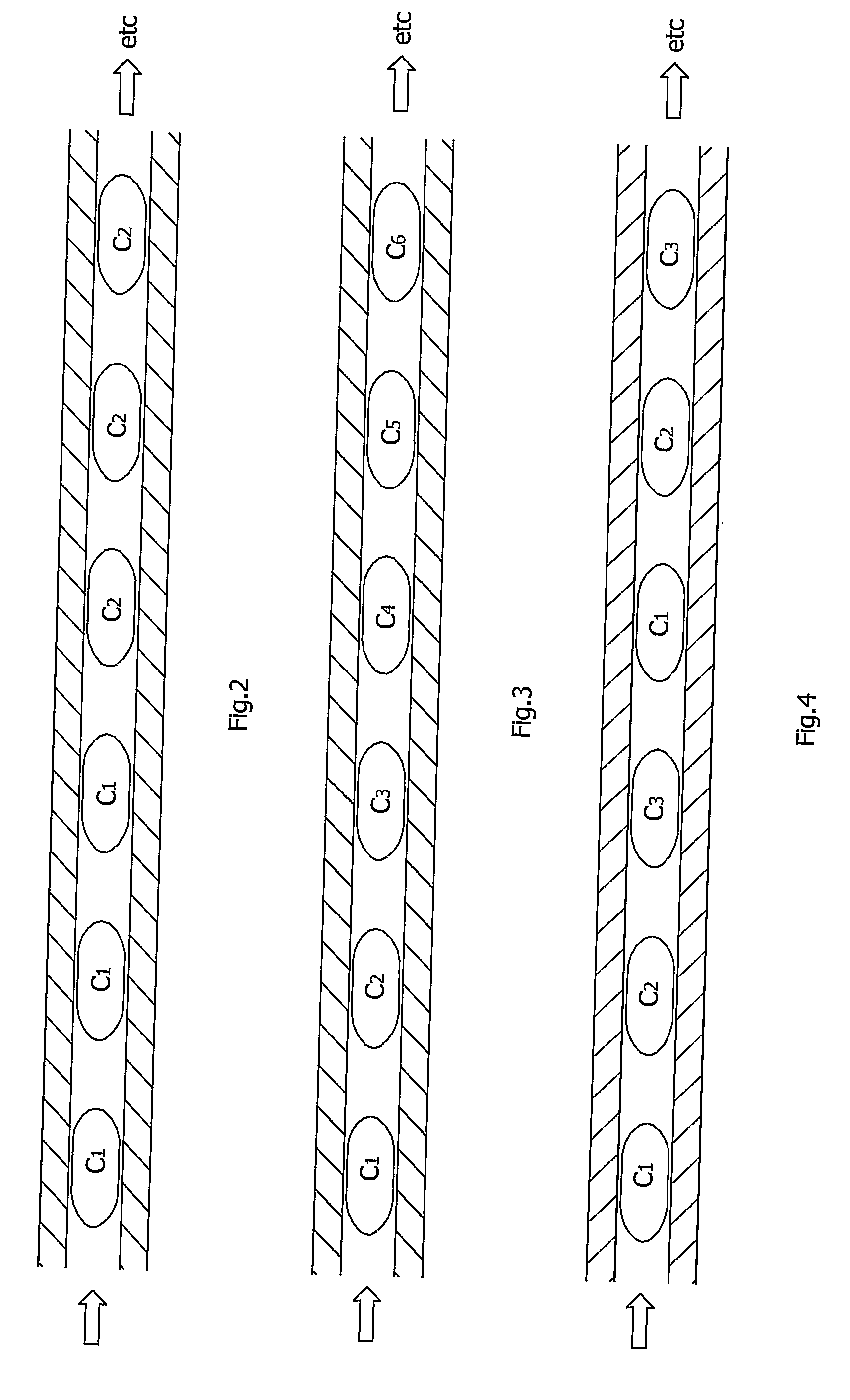

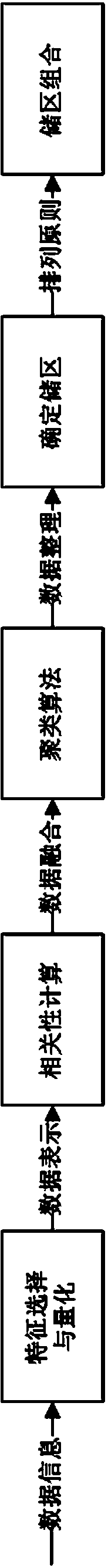

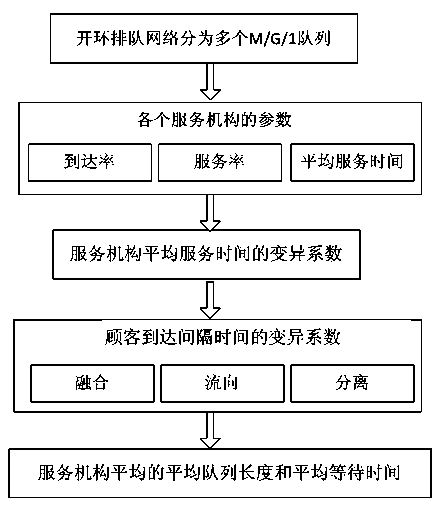

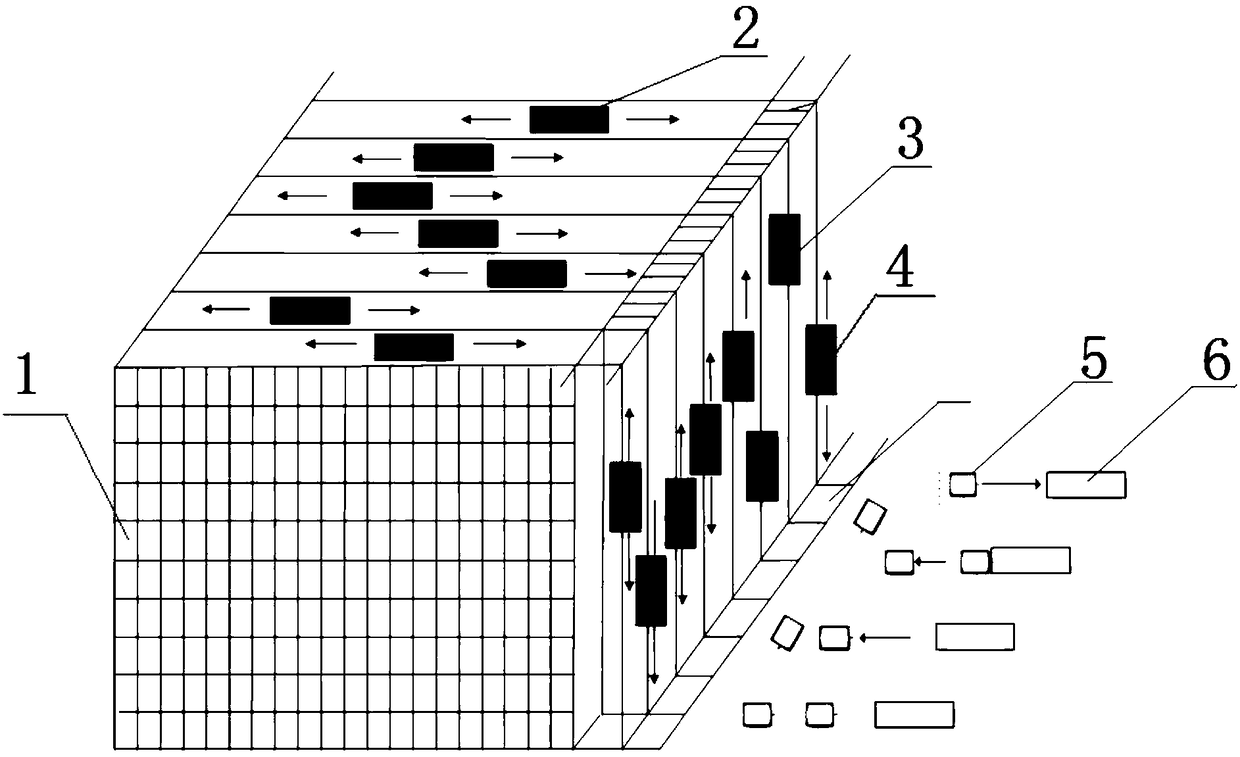

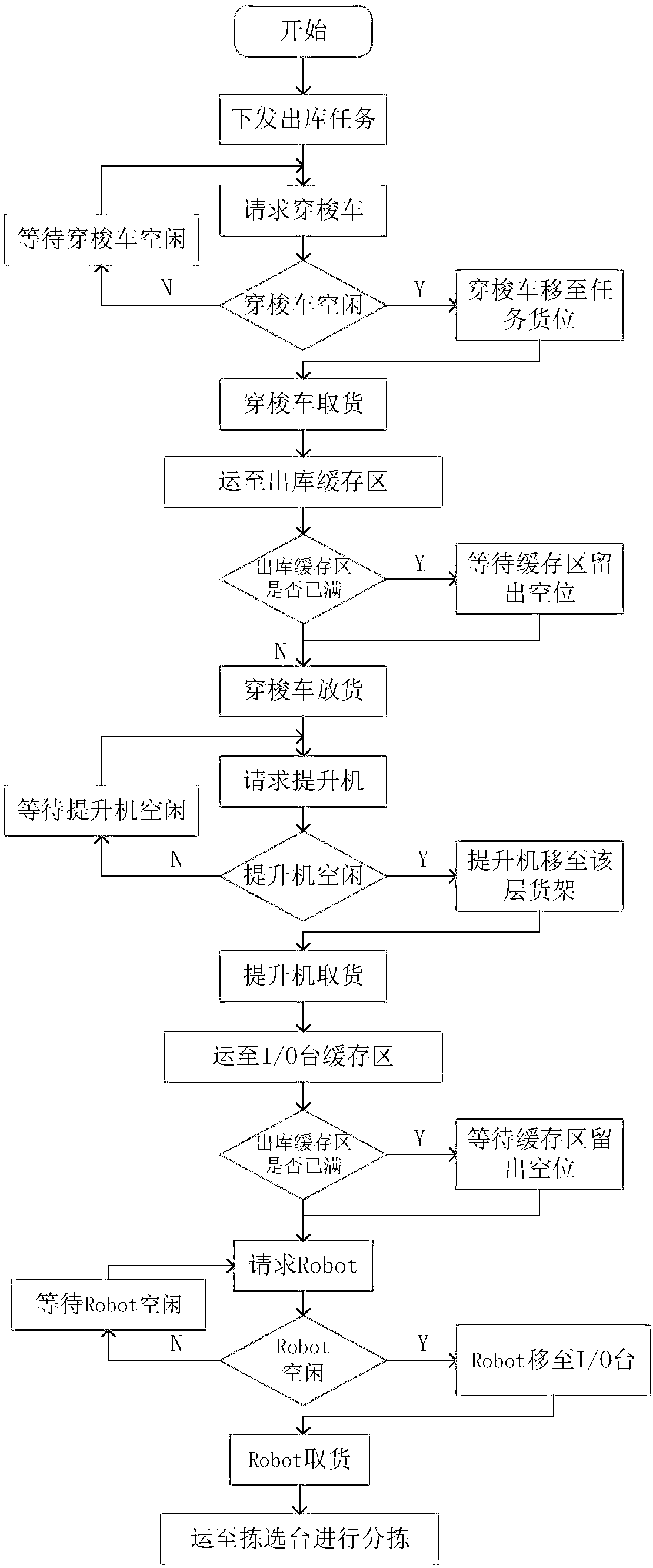

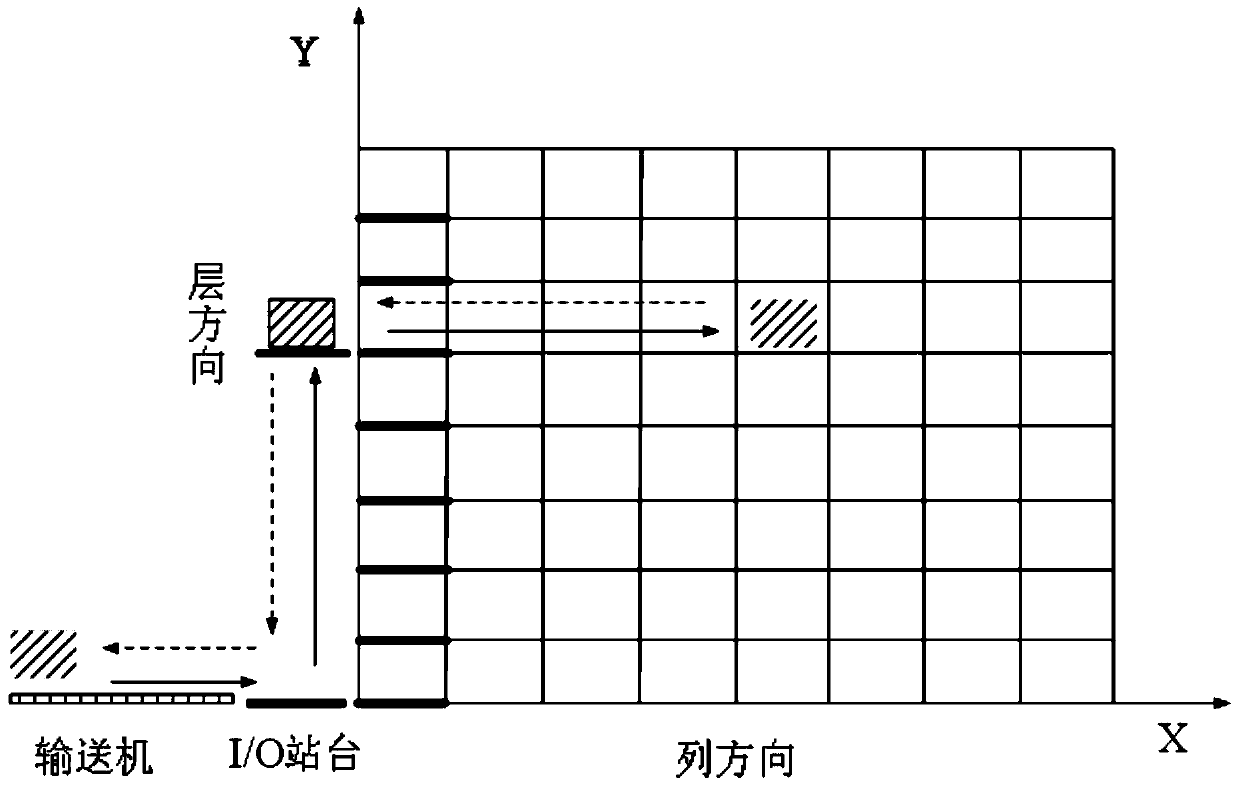

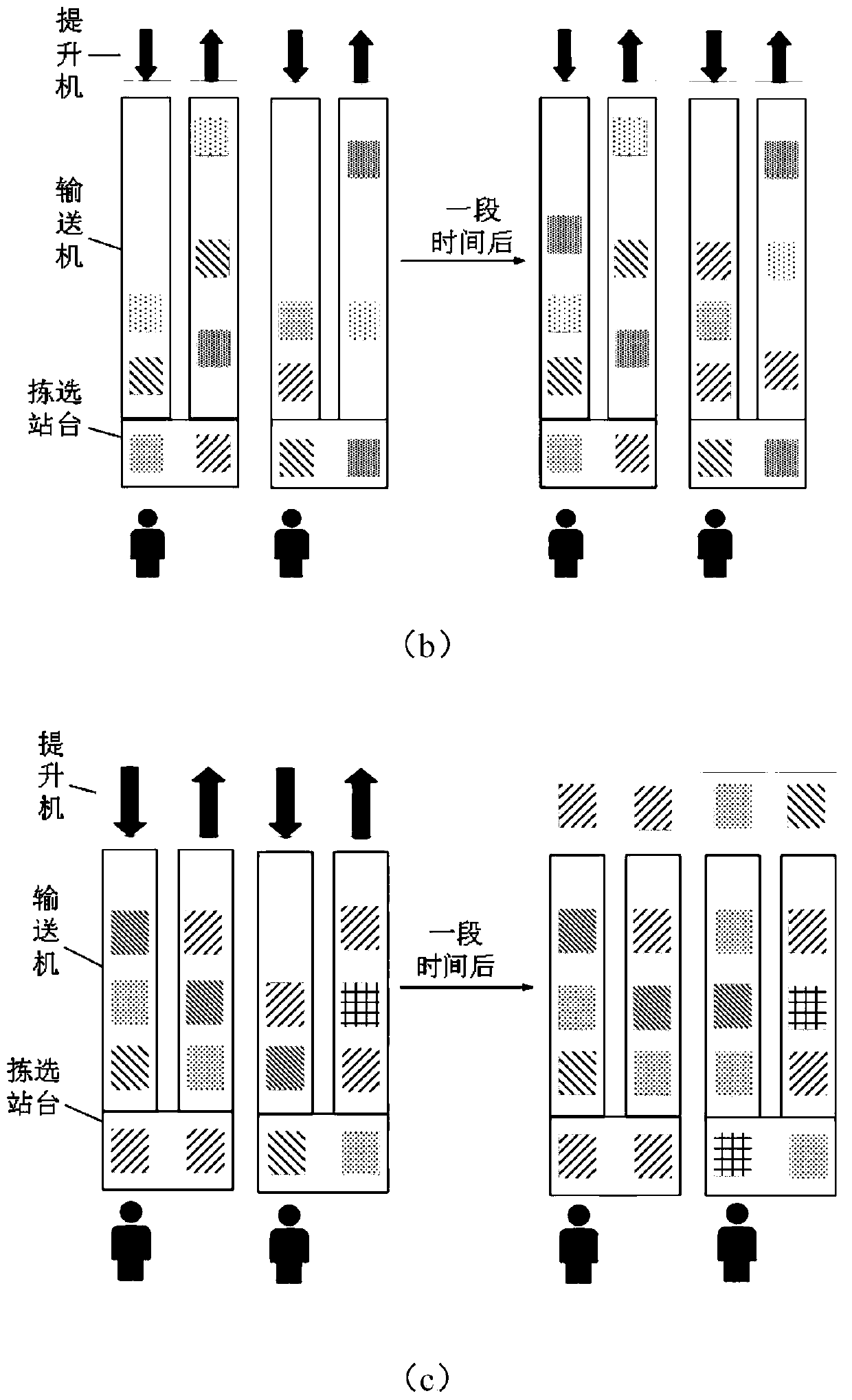

Goods location allocating method applied to automatic warehousing system of multi-layer shuttle vehicle

The invention discloses a goods location allocating method applied to an automatic warehousing system of a multi-layer shuttle vehicle. The method comprises the following steps: firstly, according to the quantities of goods shelves and tunnels, generating plane layout structure data of the system; then analyzing the waiting time of the shuttle vehicle executing an outbound task as well as the idle time of a hoister; establishing an open queuing network model for describing the system; analyzing the relationship among the waiting time of the shuttle vehicle, the idle time of the hoister and the time of inbound and outbound works by a decomposition process; determining that the higher the arrival rate of a task serviced by the shuttle vehicle is, the lower the goods location of the task is, and that the goods with the highest correlation are allocated to different layers, so that multiple shuttle vehicles can provide service simultaneously; finally putting forward a principle of dividing storage zones in light of item correlation, establishing a correlation matrix of outbound items, clustering the items by an ant colony algorithm, and combining and arranging the storage zones in a two-dimensional plane according to the analysis result of the queuing network model, thereby realizing the allocation of goods location. By the method, the waiting time of the shuttle vehicle and the idle time of the hoister can be effectively shortened, so that the rate of equipment utilization and the throughput capacity of a distribution center are increased.

Owner:SHANDONG UNIV

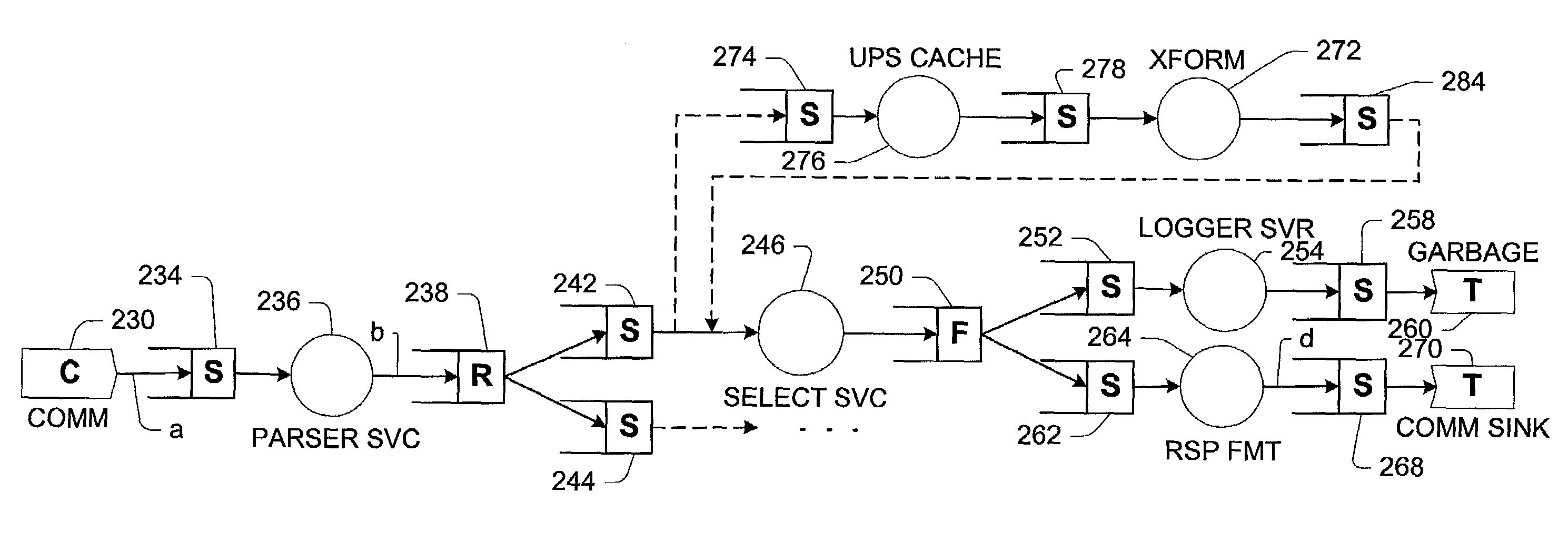

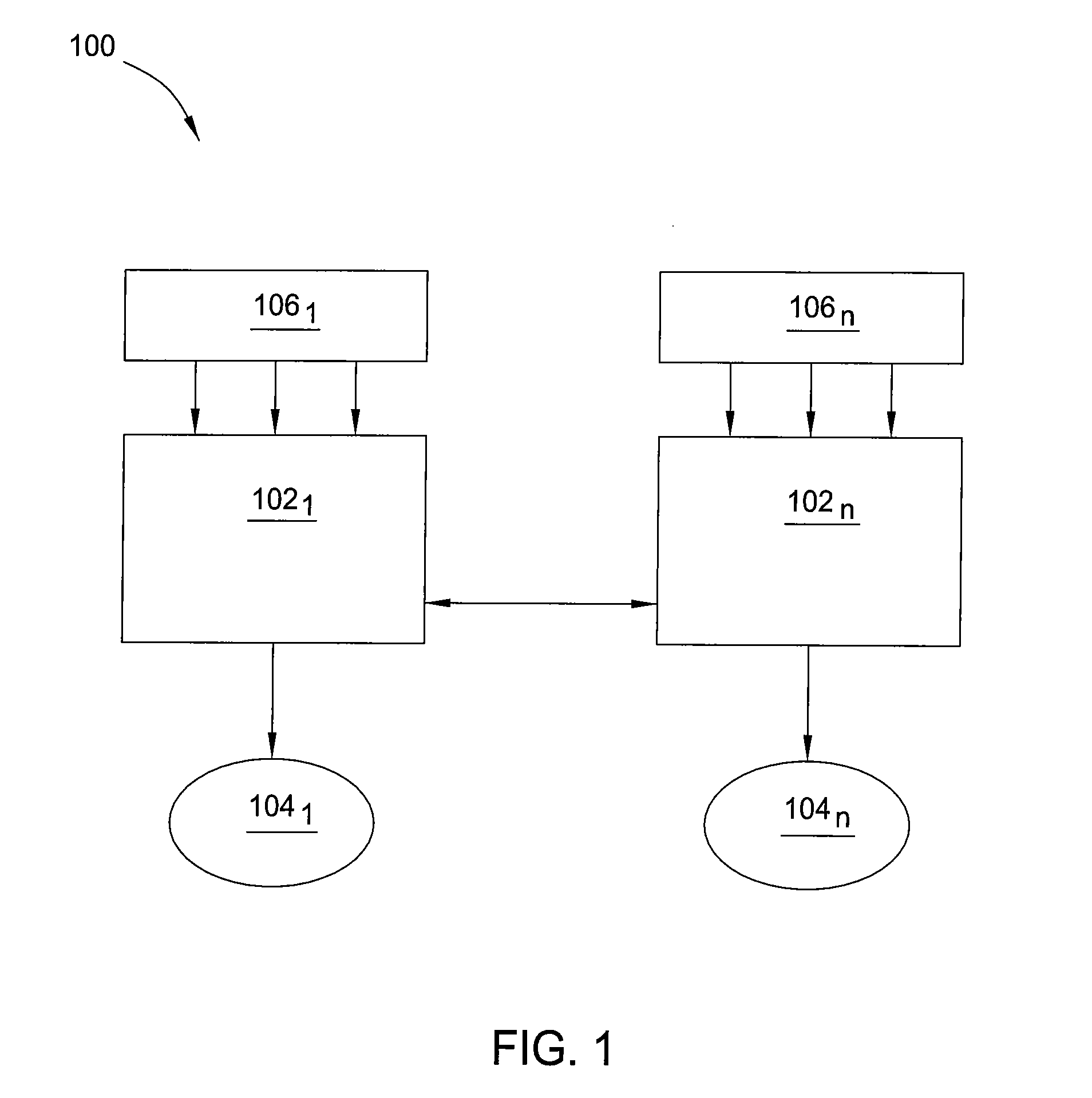

Programming framework including queueing network

Inactive | US7114158B1 | Increase the number of | Maximize throughput | Data processing applications | Digital data processing details | Multi processor | Queuing network

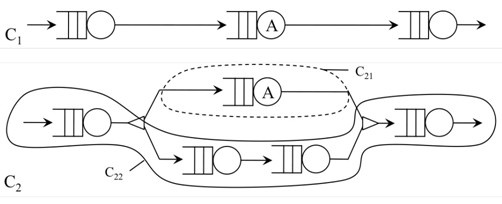

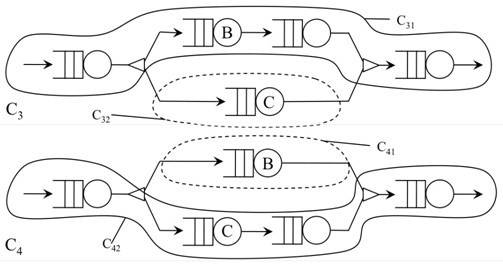

A queueing network framework for processing messages in stages in a multi-processor environment. An event source generates work packets that have information relating to the messages to be processed. The work packets are queued before processing by a plurality of application services. Each application service follows a queue and defines a processing stage. At each processing stage, the application service operates on a batch of the work packets queued for it by the respective queue.

Owner:MICROSOFT TECH LICENSING LLC
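The staged structure described in the abstract above (a queue in front of each application service, with each service working on a batch of queued work packets) can be sketched as follows. This is a single-threaded illustration under stated assumptions, not the patented framework: the function name `run_pipeline`, the `batch_size` parameter, and the two example stages are hypothetical, and the multi-processor aspect is deliberately left out.

```python
from collections import deque


def run_pipeline(packets, stages, batch_size=2):
    """Move work packets through one queue per stage, handing each
    stage a batch of at most `batch_size` packets at a time."""
    queues = [deque() for _ in stages]
    queues[0].extend(packets)  # the event source fills the first queue
    finished = []
    for index, stage in enumerate(stages):
        while queues[index]:
            size = min(batch_size, len(queues[index]))
            batch = [queues[index].popleft() for _ in range(size)]
            results = stage(batch)
            if index + 1 < len(stages):
                queues[index + 1].extend(results)  # queue for the next stage
            else:
                finished.extend(results)
    return finished


# Hypothetical stages: each takes a batch (list) and returns a list.
def strip_stage(batch):
    return [packet.strip() for packet in batch]


def upper_stage(batch):
    return [packet.upper() for packet in batch]


output = run_pipeline([" a ", " b ", " c "], [strip_stage, upper_stage])
print(output)
```

Batching lets a stage amortize per-call overhead across several packets; in a concurrent version each stage would typically run on its own worker and the queues would need to be thread-safe.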

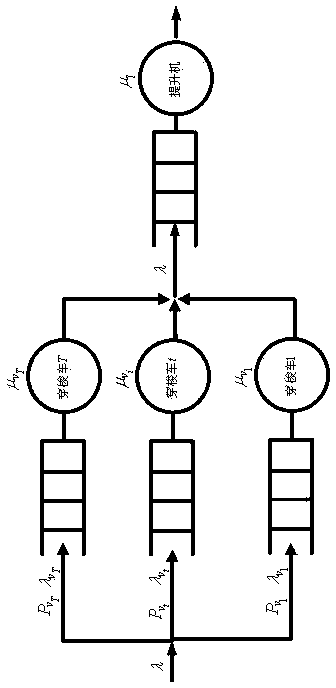

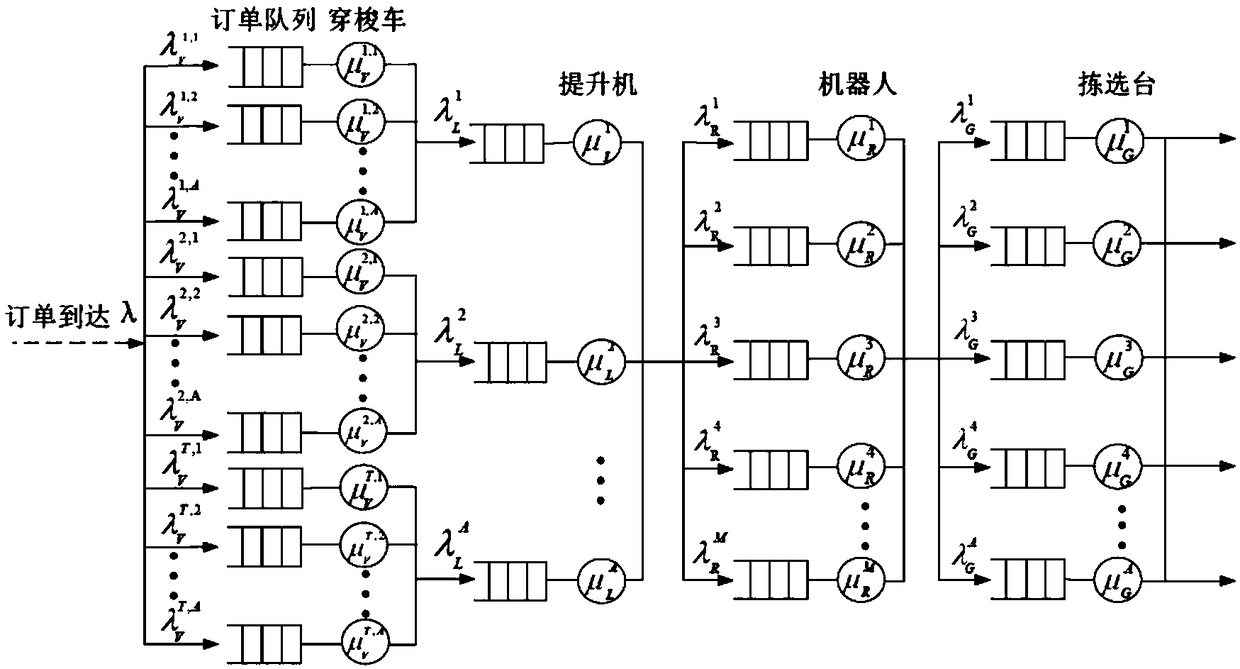

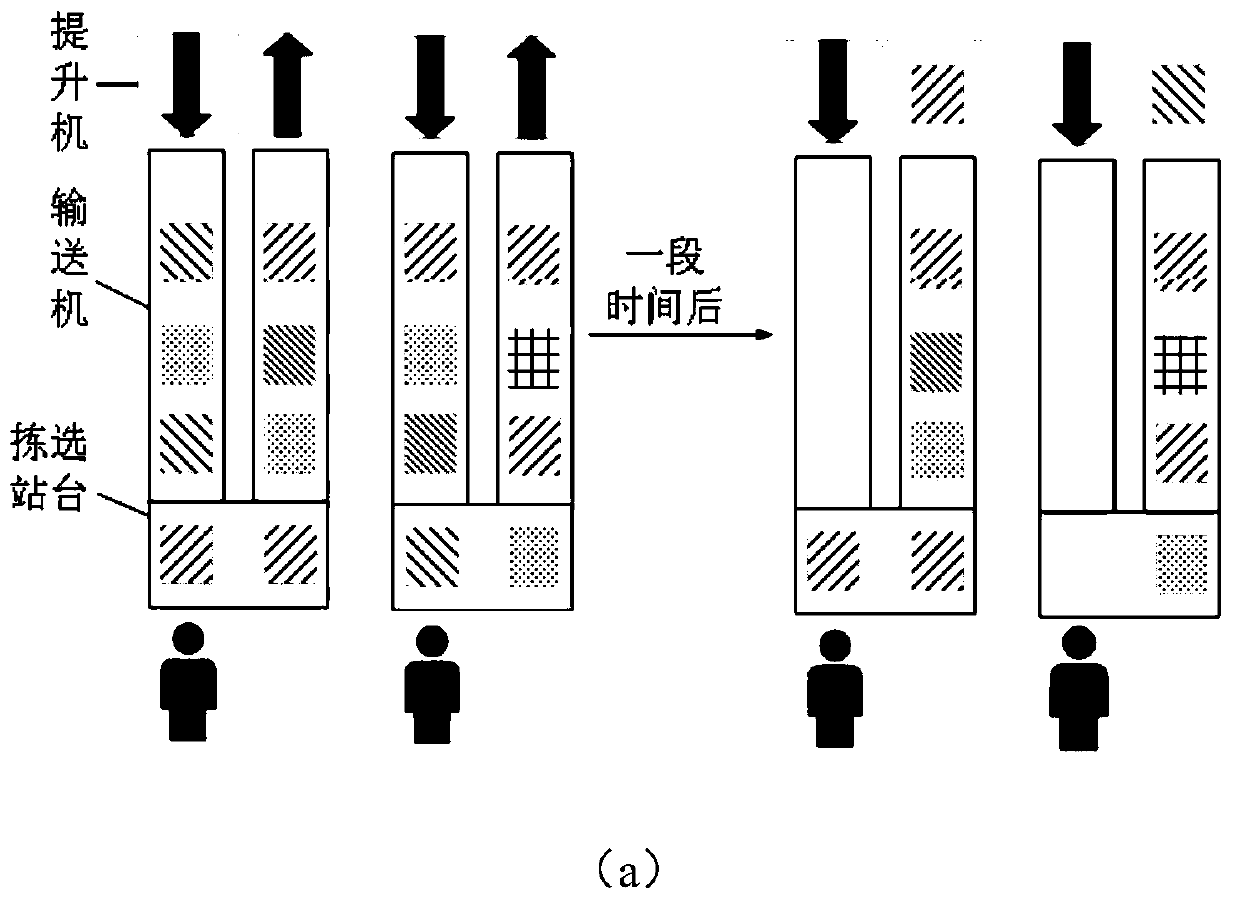

Multilayer shuttling car warehousing conveying system based on warehousing robot and conveying method

Inactive | CN109292343A | Increase the number of | Increase flexibility | Storage devices | Simulation | Network model

The invention discloses a multilayer shuttling car warehousing conveying system based on a warehousing robot and a conveying method. The system comprises a stereoscopic warehouse for storing goods, the warehousing robot for transporting the goods, and a warehousing intelligent control system for realizing the goods storage control. An open-loop queuing network model is built to analyze the performance of the system, to analyze the equipment factors that influence order timeliness, to calculate the order service time, the waiting time of the warehousing robot and the utilization rate of the warehousing robot, and to analyze sensitivity to the order arrival rate and the number of warehousing robots. The advantages of the system are more obvious when the storage capacity is higher, the order arrival rate is higher, or its change amplitude is higher.

Owner:SHANDONG UNIV

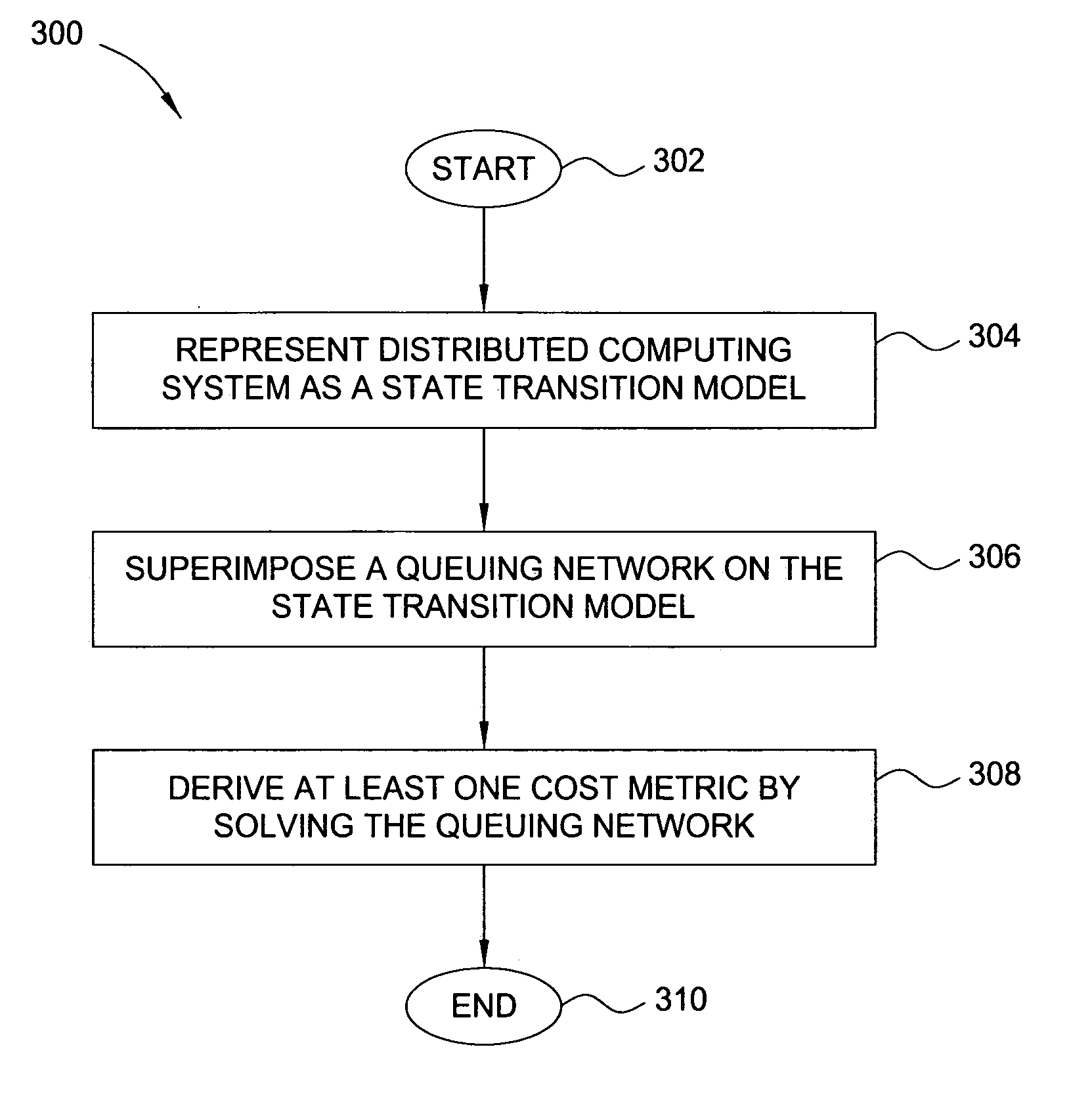

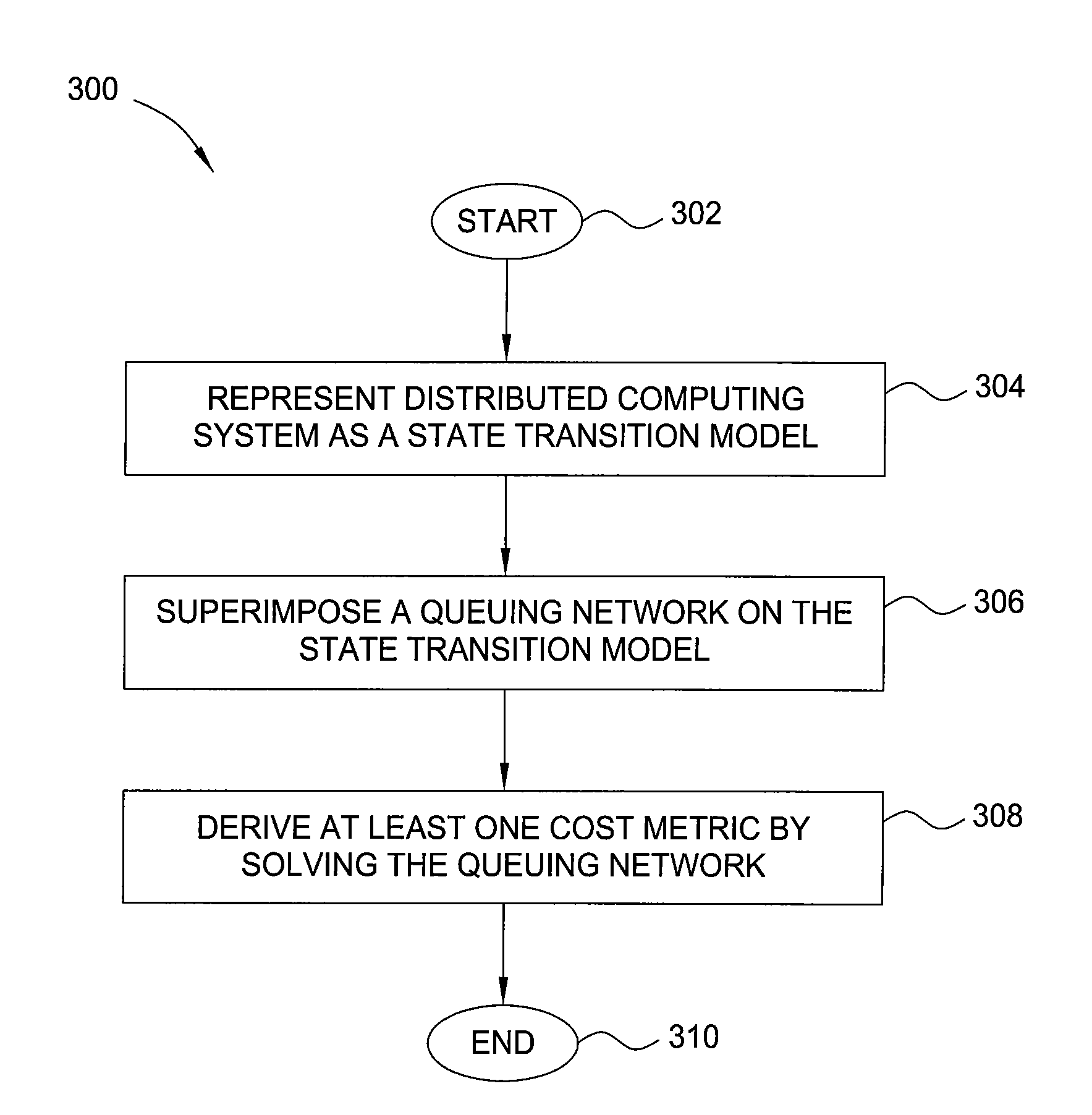

Method and apparatus for performance and policy analysis in distributed computing systems

One embodiment of the present method and apparatus for performance and policy analysis in distributed computing systems includes representing a distributed computing system as a state transition model. A queuing network is then superimposed upon the state transition model, and the effects of one or more policies on the distributed computing system performance are identified in accordance with a solution to the queuing network.

Owner:IBM CORP

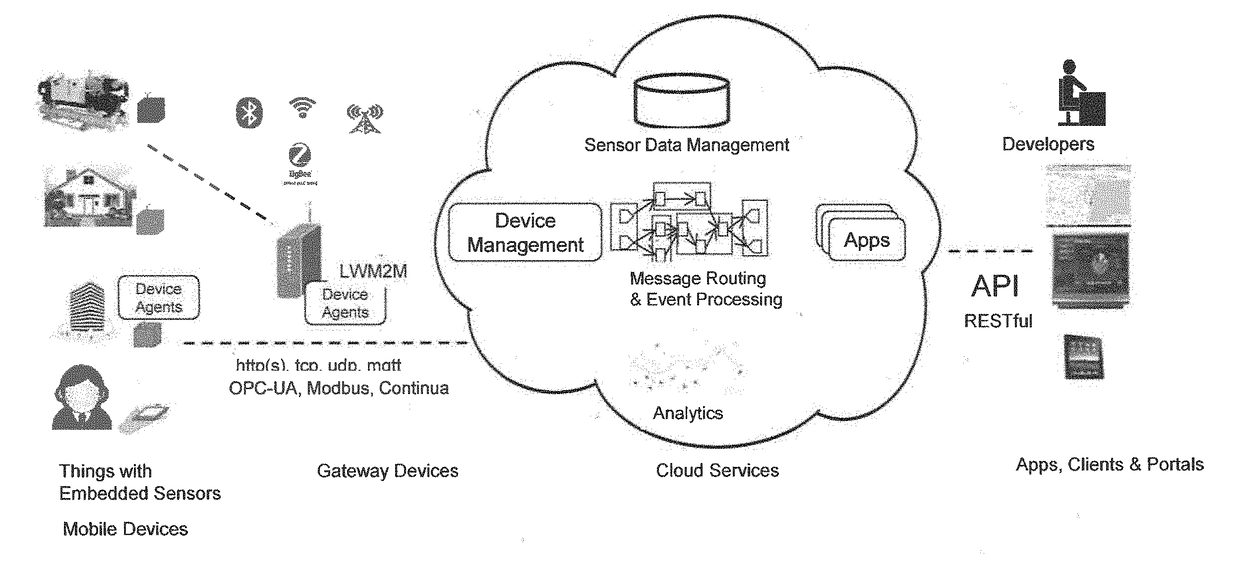

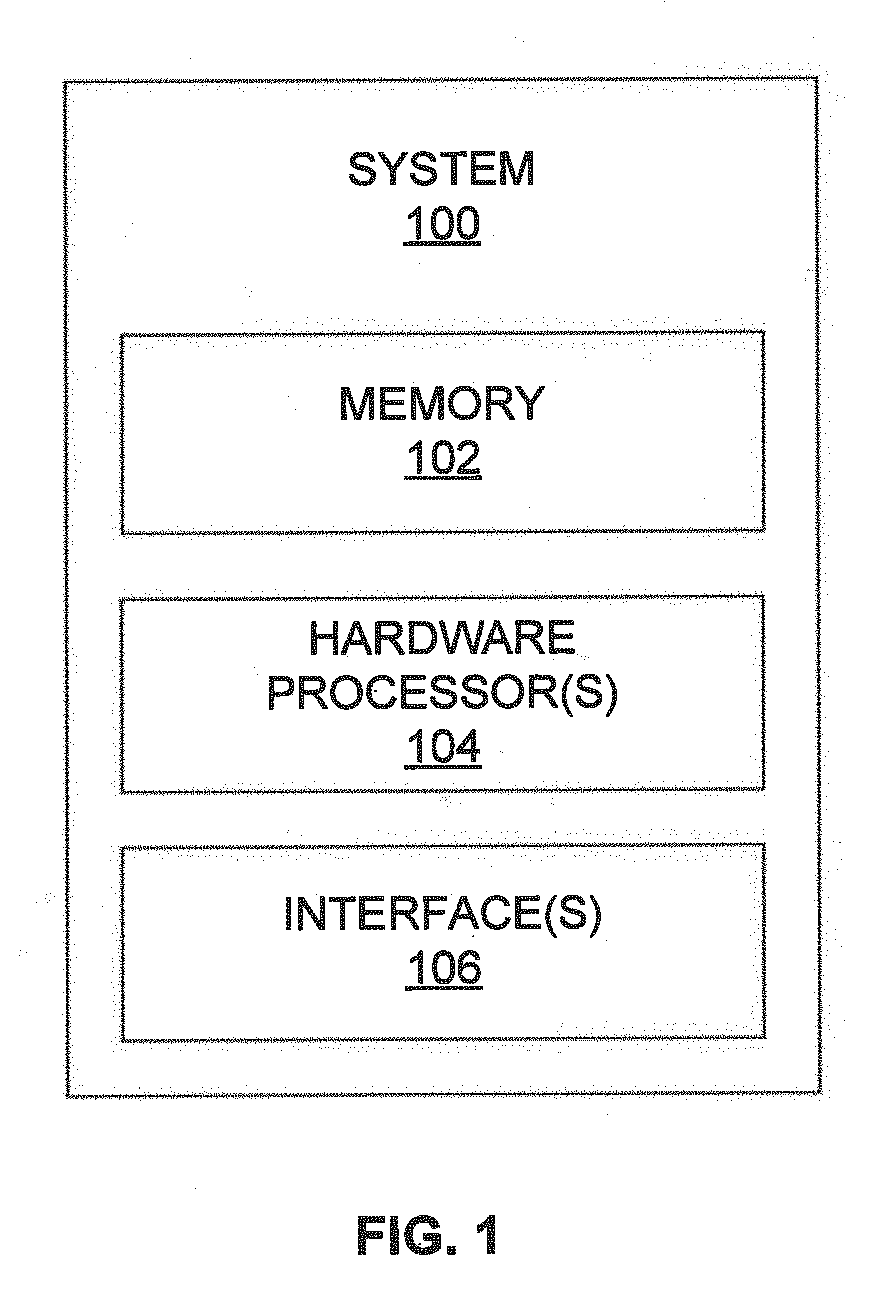

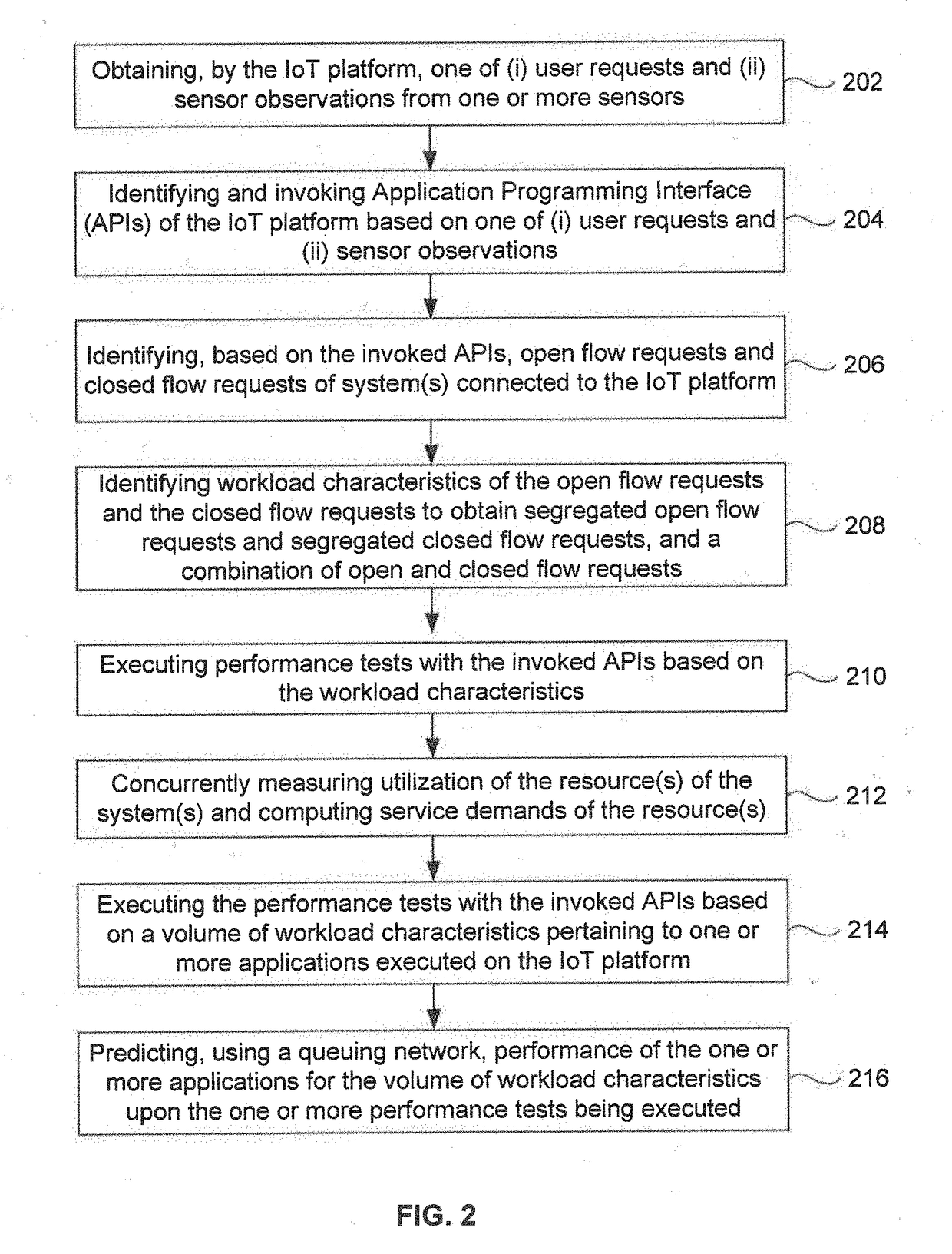

SYSTEMS AND METHODS FOR PREDICTING PERFORMANCE OF APPLICATIONS ON AN INTERNET OF THINGS (IoT) PLATFORM

Active | US20180081730A1 | Predictive performance | Resource allocation | Error detection/correction | Resource utilization | Application programming interface

A performance prediction system and method for an Internet of Things (IoT) platform and its applications include: obtaining input(s) comprising one of (i) user requests and (ii) sensor observations from sensor(s); invoking Application Programming Interfaces (APIs) of the platform based on the input(s); identifying open flow (OF) and closed flow (CF) requests of system(s) connected to the platform; identifying workload characteristics of the OF and CF requests to obtain segregated OF and segregated CF requests, and a combination of open and closed flow requests; executing performance tests with the APIs based on the workload characteristics; measuring resource utilization of the system(s) and computing service demands of resource(s) from the measured utilization and the user requests processed by the platform per unit time; executing the performance tests with the invoked APIs based on the volume of workload characteristics pertaining to the application(s); and predicting, using a queuing network, the performance of the application(s) for that volume of workload characteristics.

Owner:TATA CONSULTANCY SERVICES LTD
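The step of computing service demands from measured utilization and throughput corresponds to a standard operational law, the Service Demand Law, D = U / X. The sketch below applies it and then predicts response time at a higher load using the textbook formula for an open network of independent queueing stations. The measurements are hypothetical, the function names are illustrative, and the sketch covers only open flow requests; it is not the patent's prediction procedure, and closed flow requests would need a different solution technique such as mean value analysis.

```python
def service_demand(utilization: float, throughput: float) -> float:
    """Service Demand Law: D = U / X, the resource time used per request."""
    return utilization / throughput


def open_response_time(demands, arrival_rate: float) -> float:
    """Predicted response time for an open network of queueing stations:
    sum over resources of D / (1 - arrival_rate * D)."""
    total = 0.0
    for demand in demands:
        utilization = arrival_rate * demand
        if utilization >= 1.0:
            raise ValueError("a resource is saturated at this arrival rate")
        total += demand / (1.0 - utilization)
    return total


# Hypothetical test measurements: CPU 60% busy and disk 30% busy
# while the platform processes 30 requests per second.
demands = [service_demand(0.60, 30.0), service_demand(0.30, 30.0)]

# Predict the response time if the load rises to 40 requests per second.
predicted = open_response_time(demands, arrival_rate=40.0)
print(f"predicted response time: {predicted:.4f} s")
```

The saturation check matters in practice: once any resource's predicted utilization reaches 100%, the open-network formula no longer yields a finite response time.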

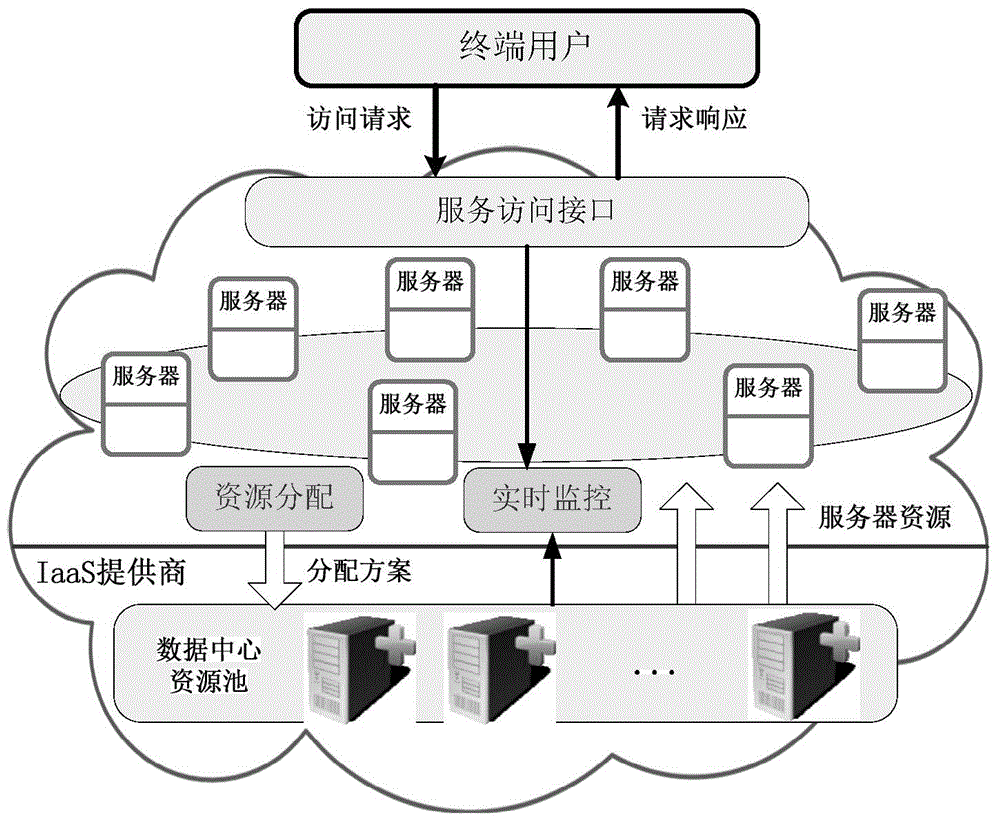

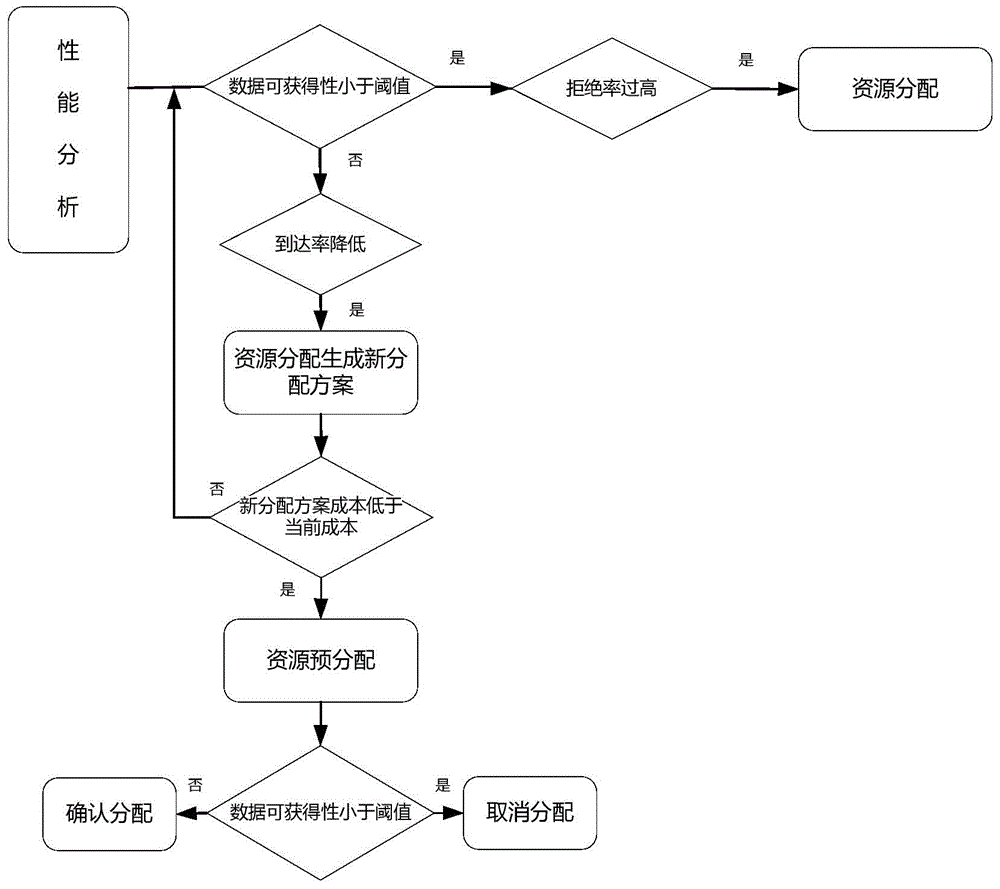

Cloud storage system resource dynamic allocation method based on DHT mechanism

Active | CN104092756A | Reduce use cost | Guaranteed access performance | Transmission | Queuing network | Computer terminal

The invention discloses a cloud storage system resource dynamic allocation method based on a DHT mechanism. The method includes the following steps that first, according to the conditions for a terminal user to have access to a cloud storage system, the user access request volume, the access request state, the access performance and server state data in the system are monitored in real time by the cloud storage system, and monitoring data are acquired; second, the data acquired in the first step in real time are analyzed, and whether resource supply reaches the target performance level or not in the running process of the system is judged; third, a resource allocation model is established, and resources are allocated according to the resource allocation model. According to the method, the service performance level and the resource usage conditions are analyzed through a queuing network, so that the service performance of a server is guaranteed, and resource usage cost is reduced.

Owner:SOUTHEAST UNIV +1

Method and apparatus for creating, sending, and using self-descriptive objects as messages over a message queuing network

Inactive | US20050071316A1 | Easy to adapt | More robust | Digital data processing details | Multiprogramming arrangements | Message queue | Semantics

An invention for creating, sending, and using self-descriptive objects as messages over a network is disclosed. In an embodiment of the present invention, self-descriptive persistent dictionary objects are serialized and sent as messages across a message queuing network. The receiving messaging system unserializes the message object, and passes the object to the destination application. The application then queries or enumerates message elements from the instantiated persistent dictionary, and performs the programmed response. Using these self-descriptive objects as messages, the sending and receiving applications no longer rely on an a priori convention or a special-coding serialization scheme. Rather, messaging applications can communicate arbitrary objects in a standard way with no prior agreement as to the nature and semantics of message contents.

Owner:MICROSOFT TECH LICENSING LLC

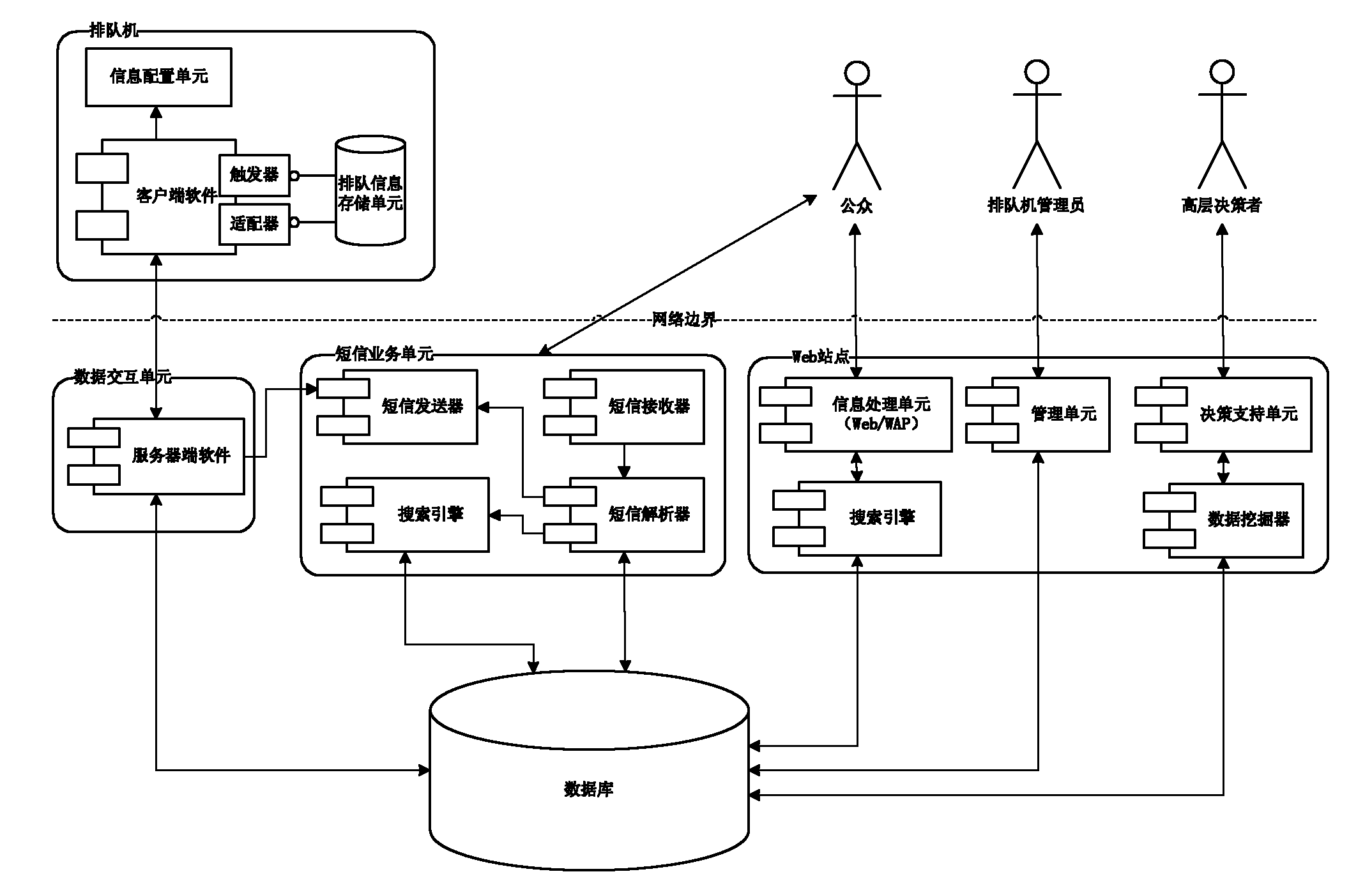

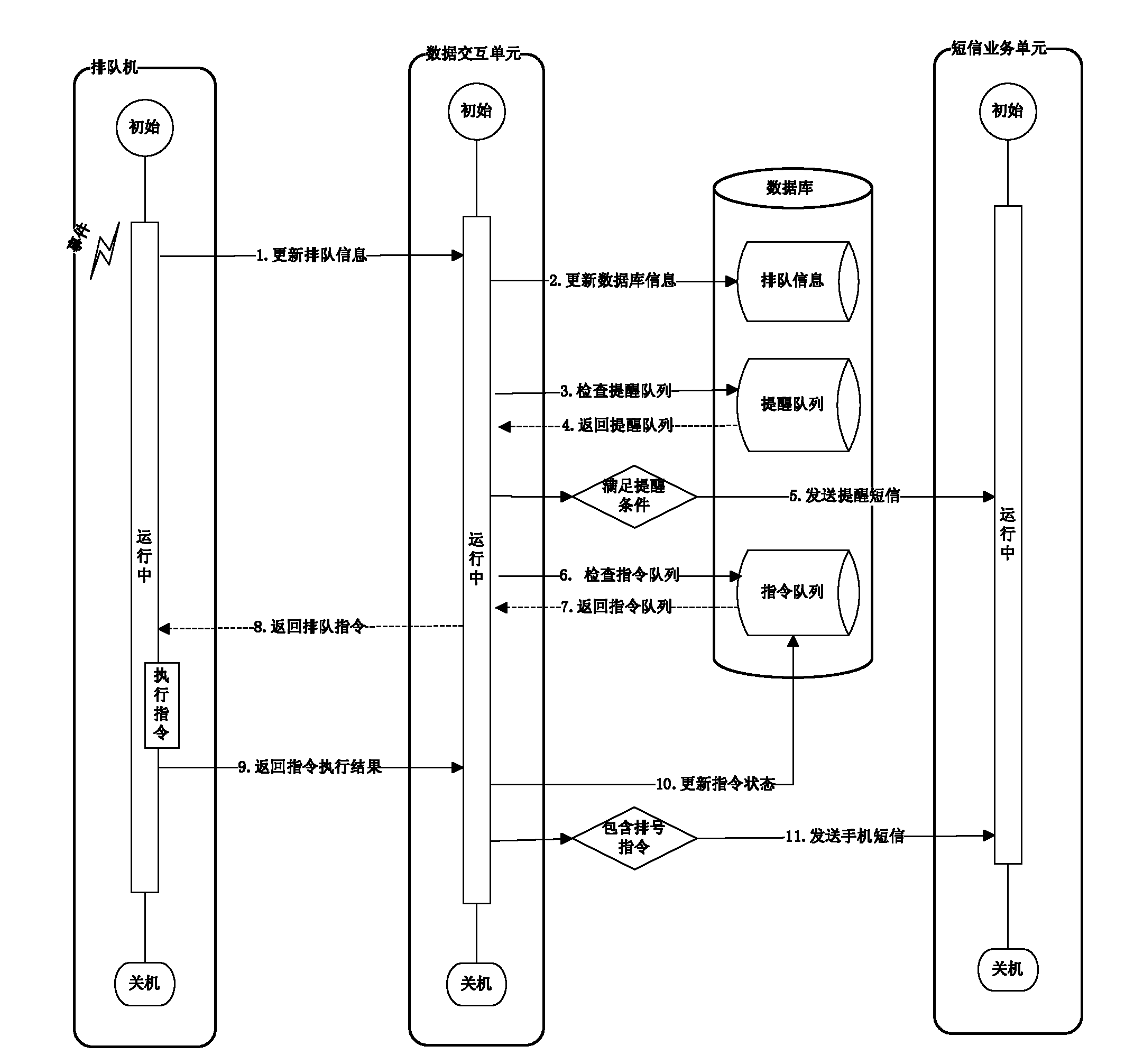

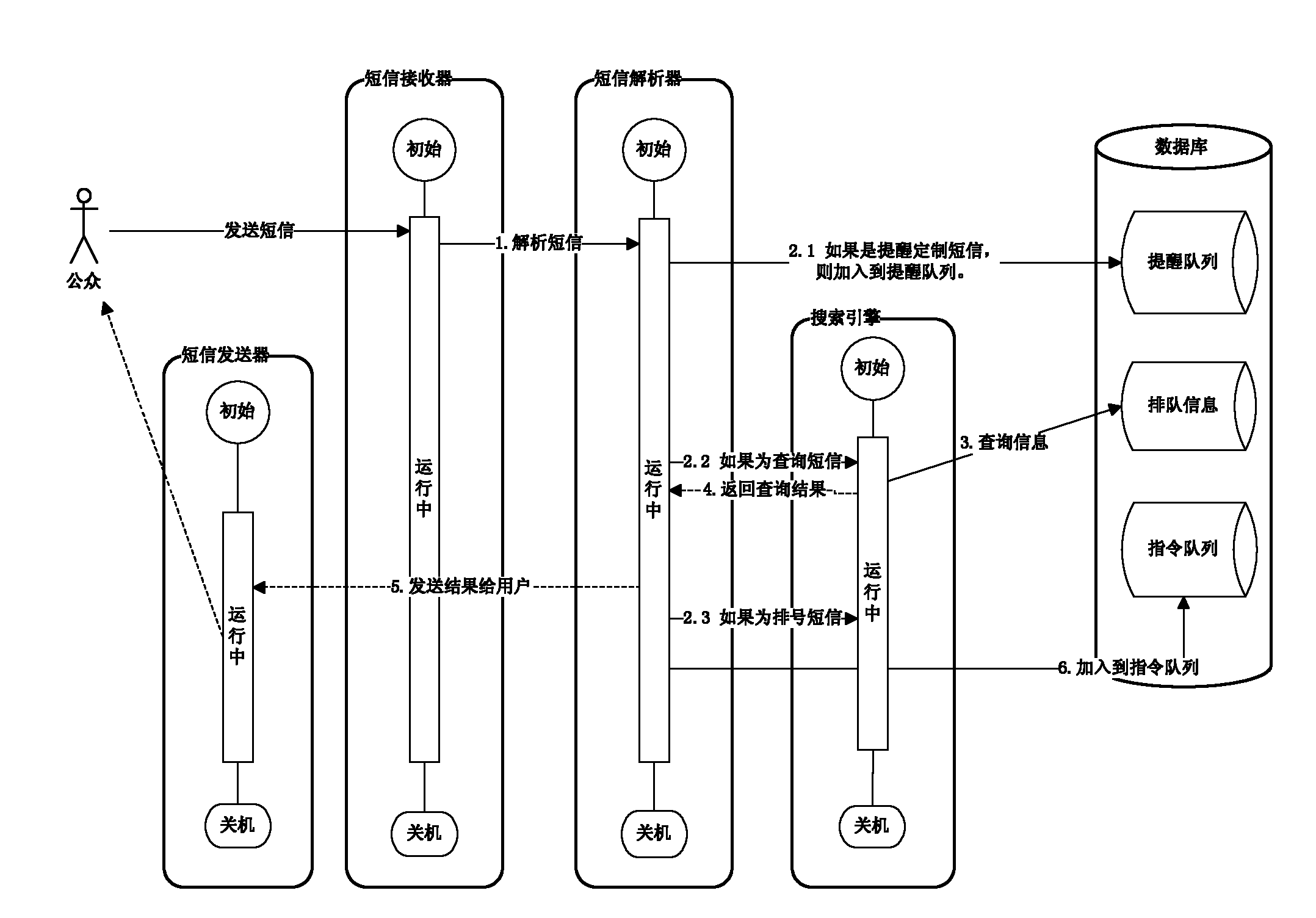

Heterogeneous real-time queuing machine system, devices of system and remote queuing reservation method

The invention discloses a heterogeneous real-time queuing machine system, devices of the system and a remote queuing reservation method. The heterogeneous real-time queuing machine system comprises a queuing machine with a client side, and a server in network connection with the queuing machine. According to a user-sent request of querying the specified service type of the target queuing machine in a specified area, the server searches out the address of the target queuing machine and the current queuing information of a specified service type, and the address and the current queuing information of a second queuing machine which provides services same as or similar to services of the target queuing machine in the specified region and currently has the least amount of people queuing for the corresponding service type, and feeds back the searched information to the user. The system is formed by upgrading the existing queuing equipment of a service providing mechanism and organizing the upgraded queuing equipment into a network system, thus, the queuing time of people is saved, great convenience is provided for service transaction of people, and the load balance of the service resource is realized; and simultaneously, a queuing machine administrator can execute daily maintenance for the queuing machine remotely, and data support also can be provided for senior decision makers.

Owner:冯东伟 +1

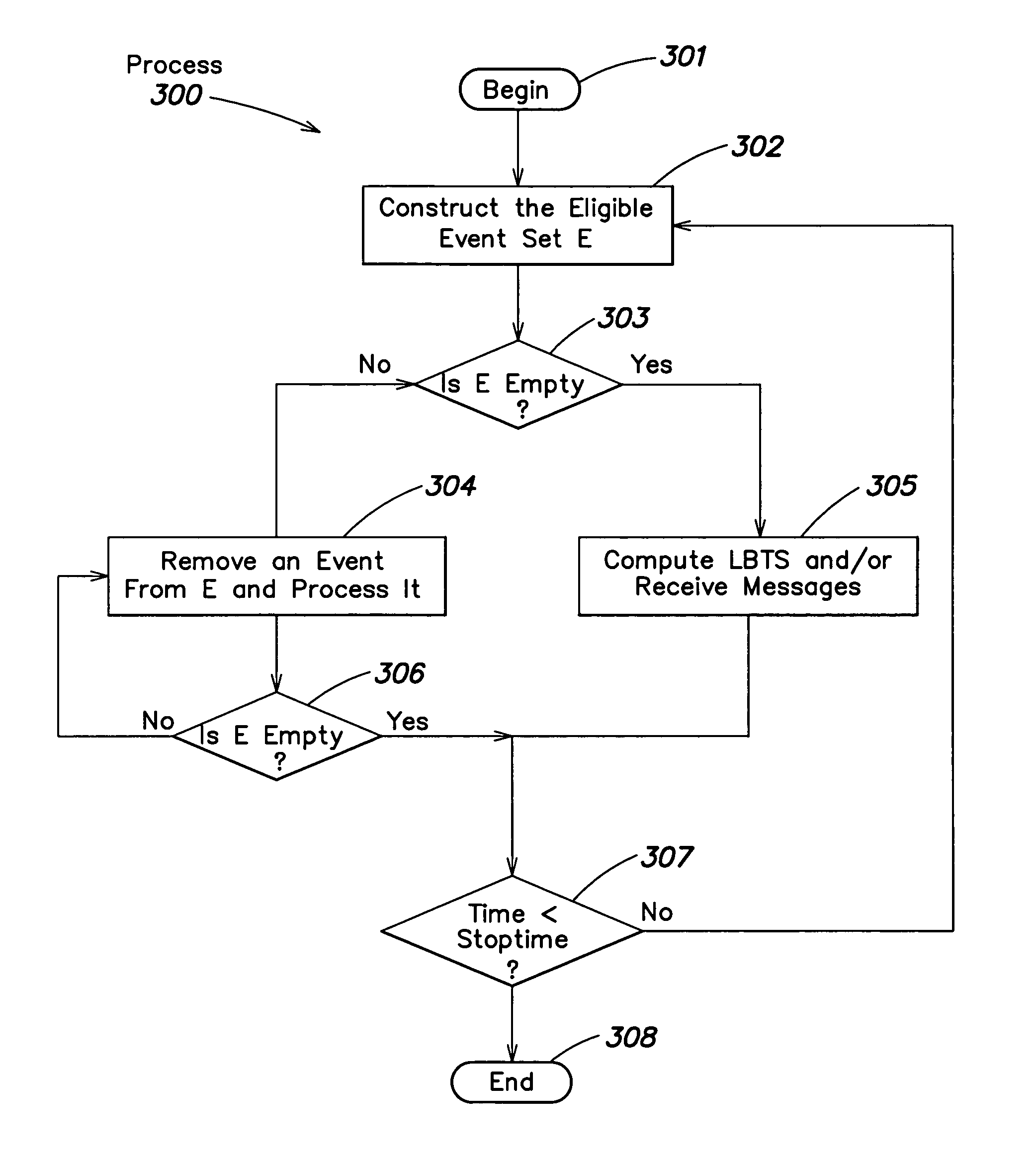

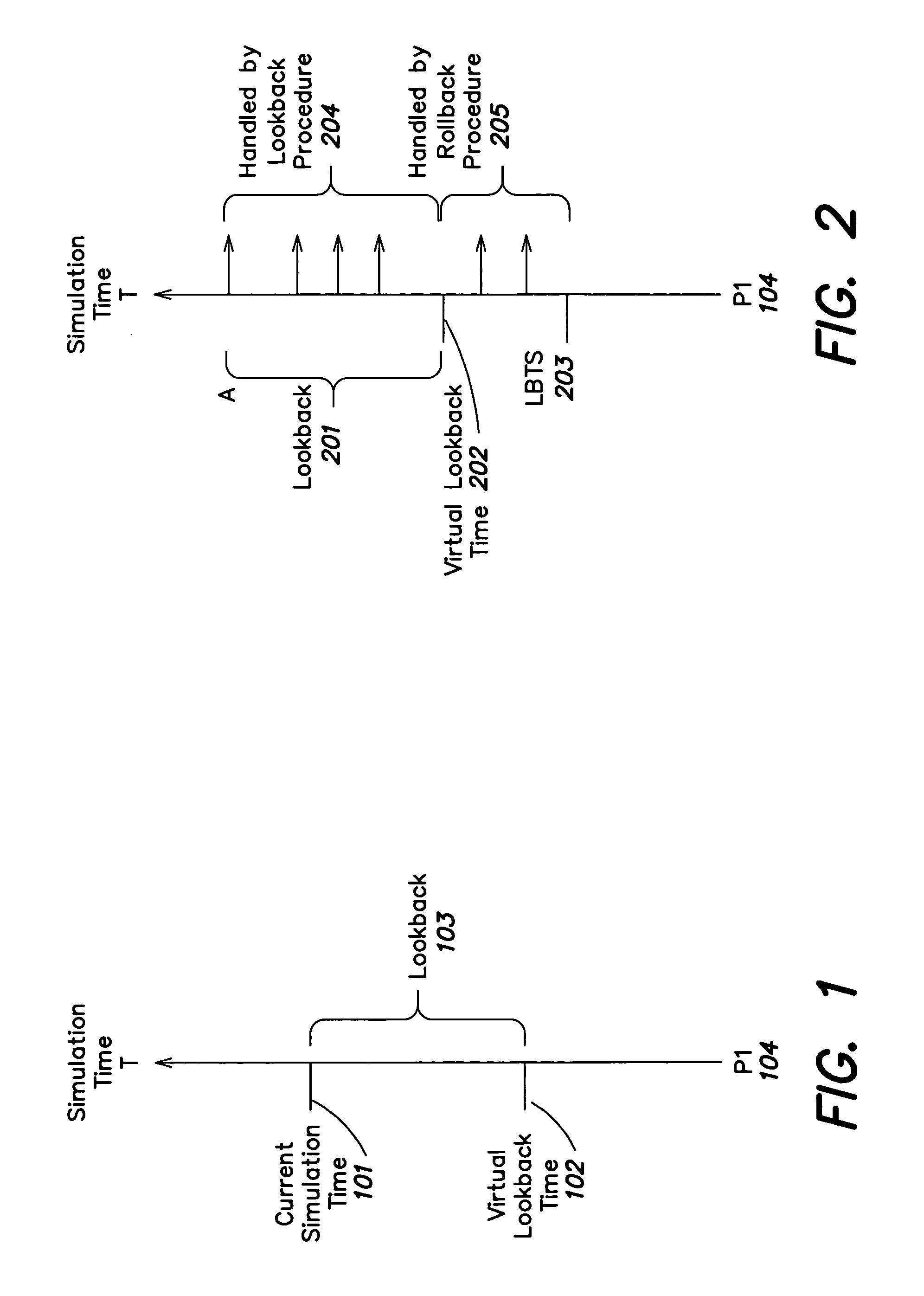

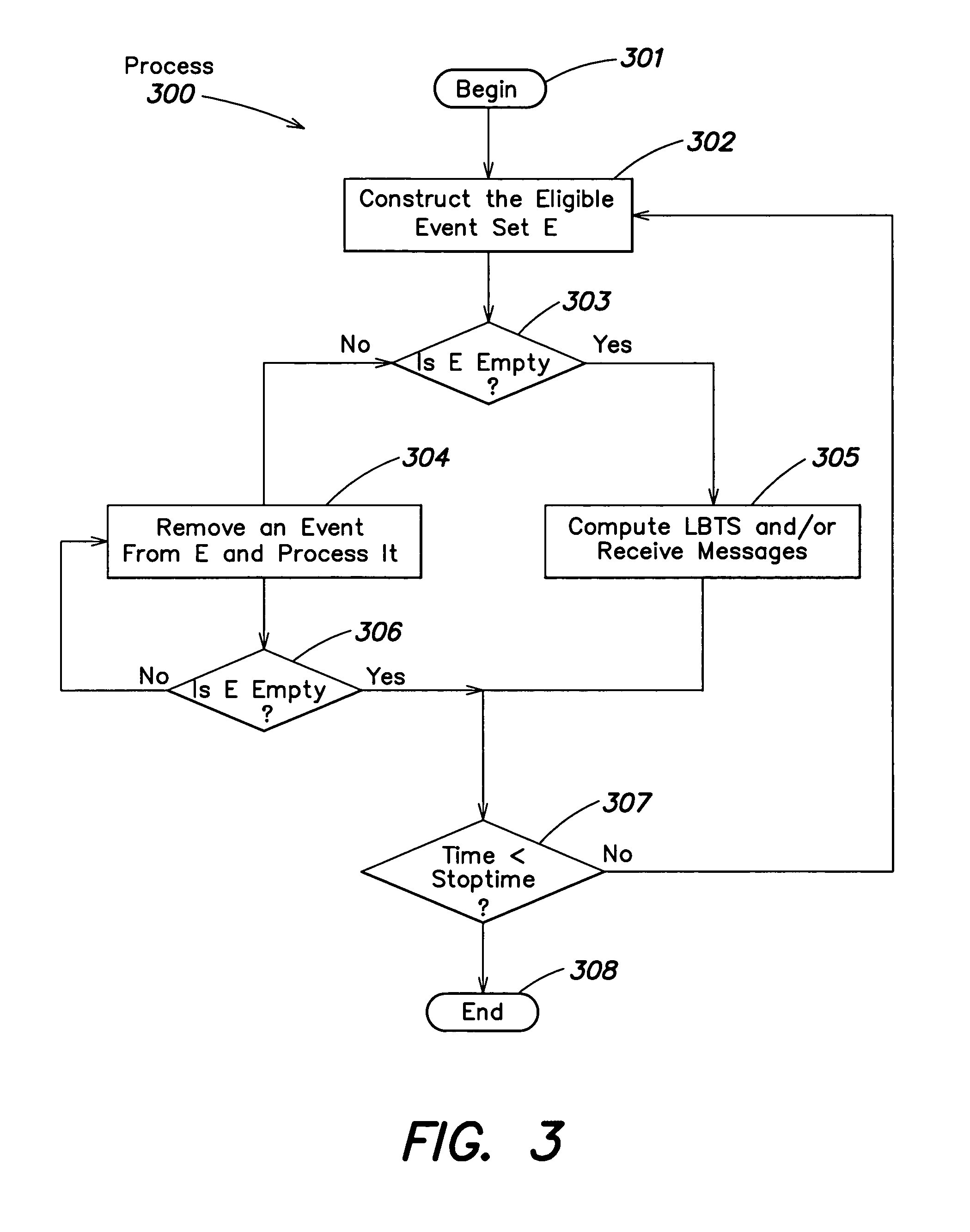

Discrete event simulation system and method

Lookback is defined as the ability of a logical process to change its past locally (without involving other logical processes). Logical processes with lookback are able to process out-of-timestamp order events, enabling new synchronization protocols for the parallel discrete event simulation. Two of such protocols, LB-GVT (LookBack-Global Virtual Time) and LB-EIT (LookBack-Earliest Input Time), are presented and their performances on the Closed Queuing Network (CQN) simulation are compared with each other. Lookback can be used to reduce the rollback frequency in optimistic simulations. The relation between lookahead and lookback is also discussed in detail. Finally, it is shown that lookback allows conservative simulations to circumvent the speedup limit imposed by the critical path.

Owner:RENSSELAER POLYTECHNIC INST

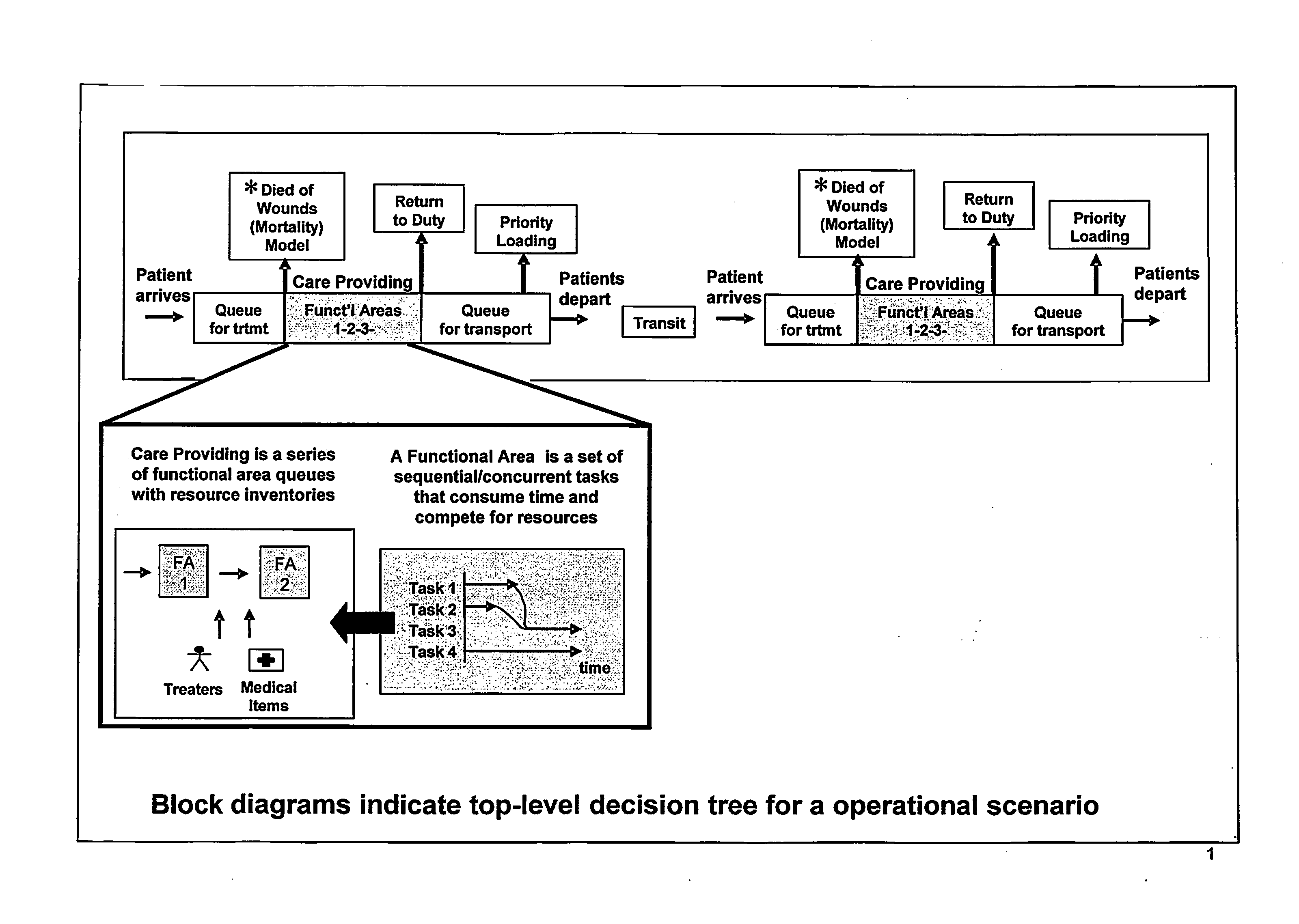

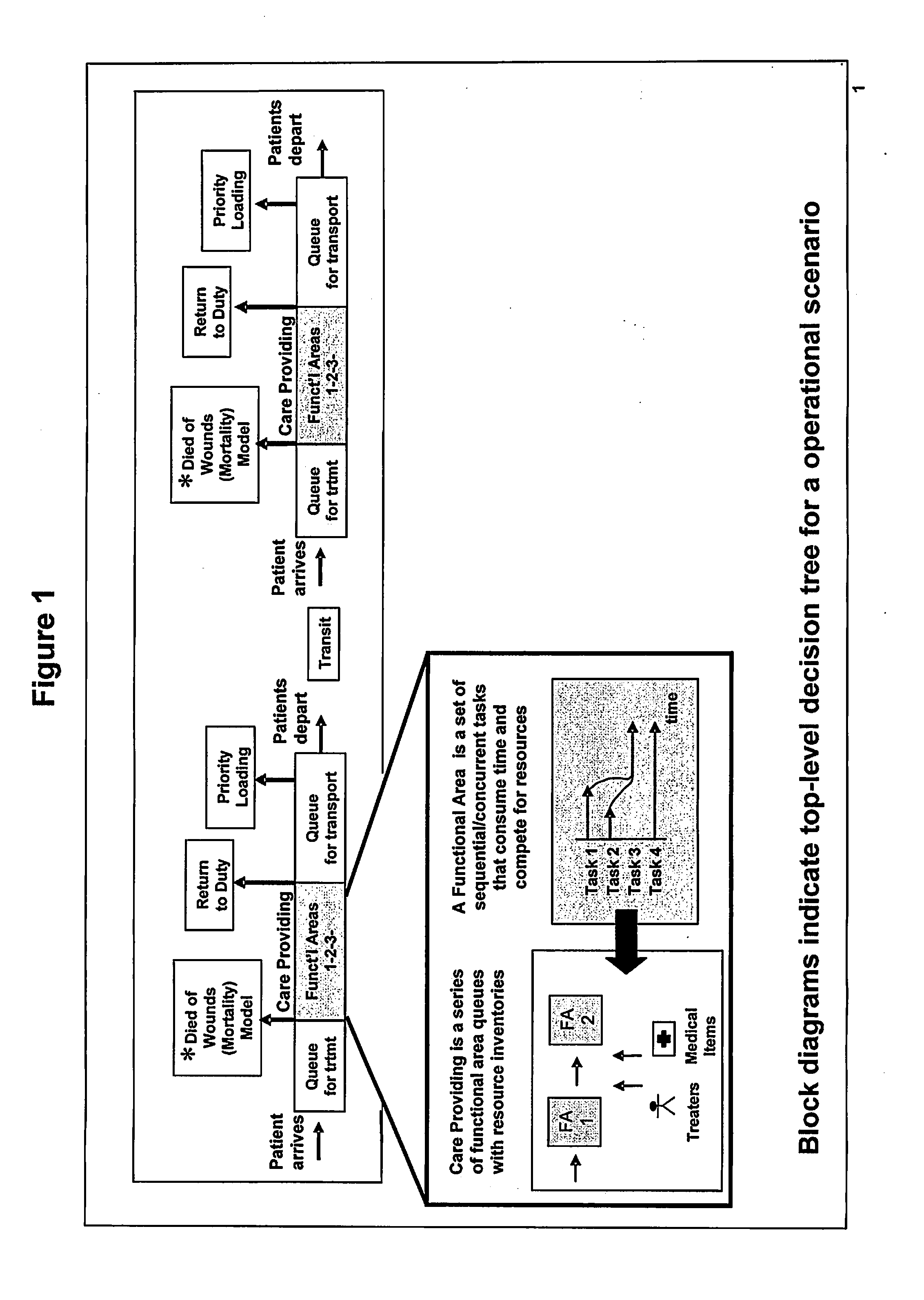

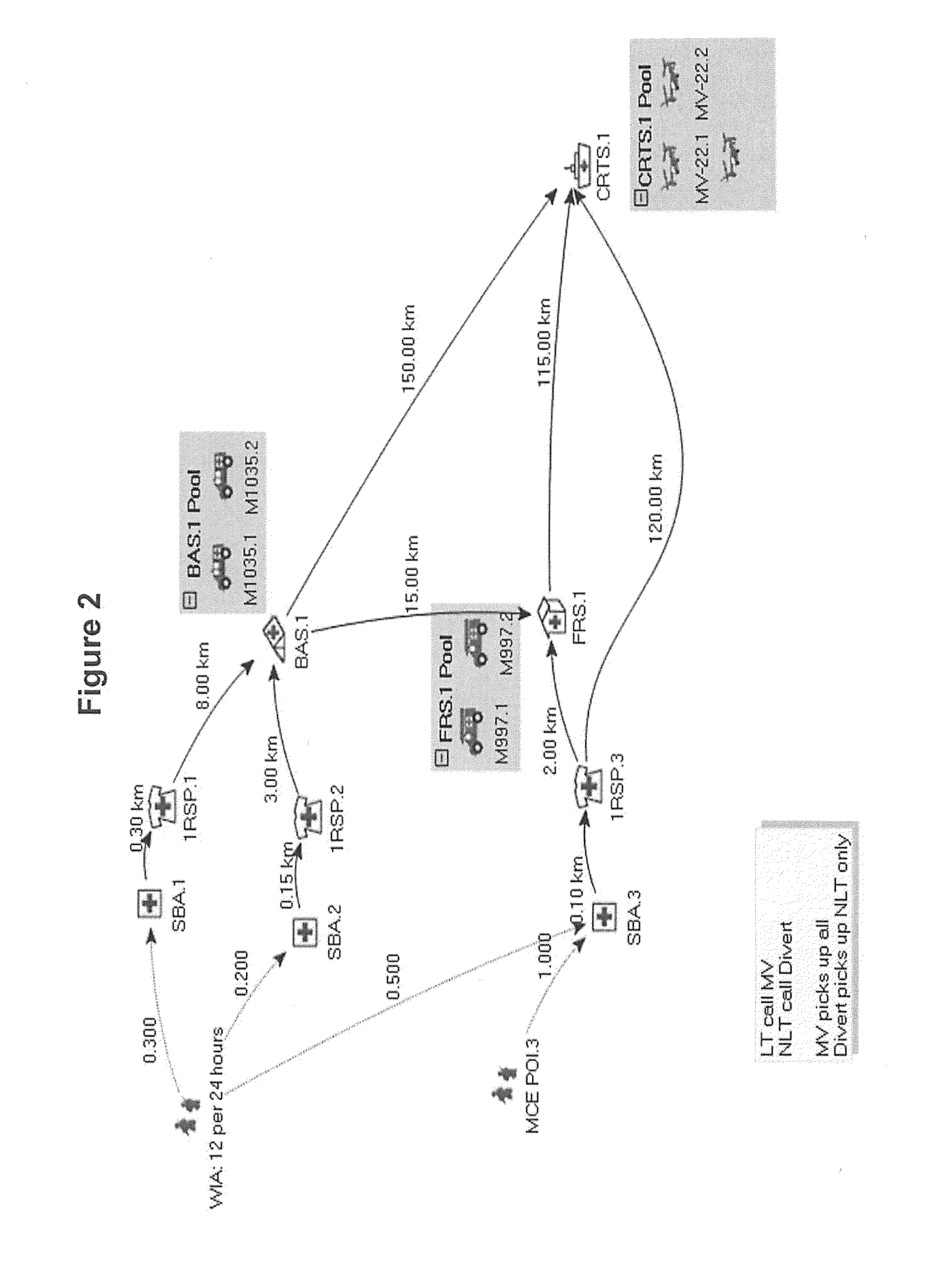

Medical Logistics Management Program

Active | US20150242577A1 | Medical simulation | Mechanical/radiation/invasive therapies | Disease | Risk evaluation

This invention comprises computer-implementable programs and a method designed as a medical planning tool that (1) models the patient flow from the point of injury through more definitive care, and (2) supports operations research and systems analysis studies, operational risk assessment, and field medical services planning. The computer program of this invention comprises six individual modules, including the casualty generation module, which uses an exponential distribution to stochastically generate wounded in action, disease, and nonbattle injuries; a care providing module, which uses generic task sequences, simulated treatment times, and personnel, consumable supply, and equipment requirements to model patient treatment and queuing within a functional area; a network / transportation module, which simulates the evacuation (including queuing) and routing of patients through the network of care via transportation assets; and a reporting module, which produces a database detailing various metrics, such as patient disposition, time-in-system data, and consumable, equipment, personnel, and transportation utilization rates, which can be filtered according to the user's needs.

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SECRETARY OF THE NAVY

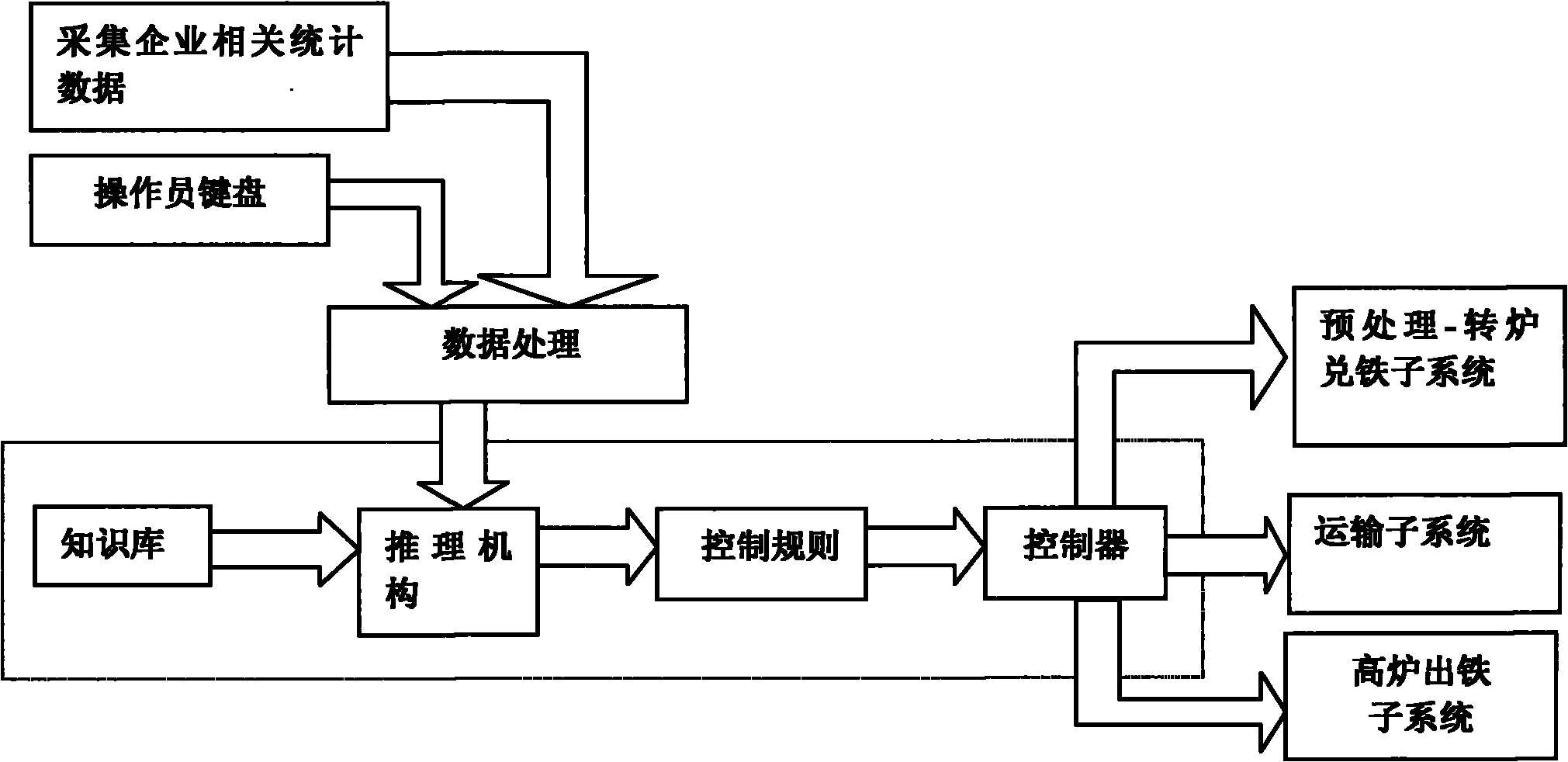

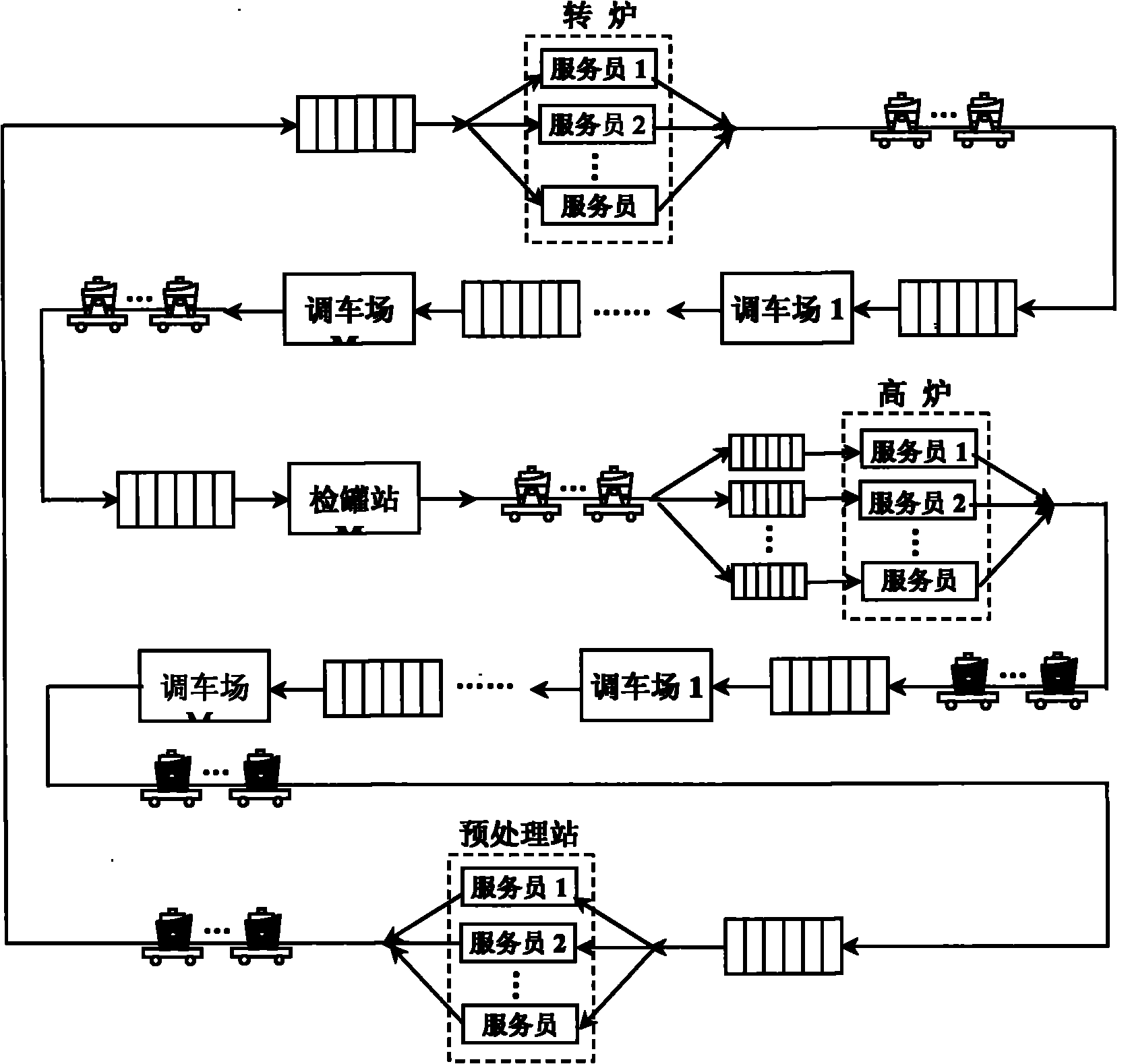

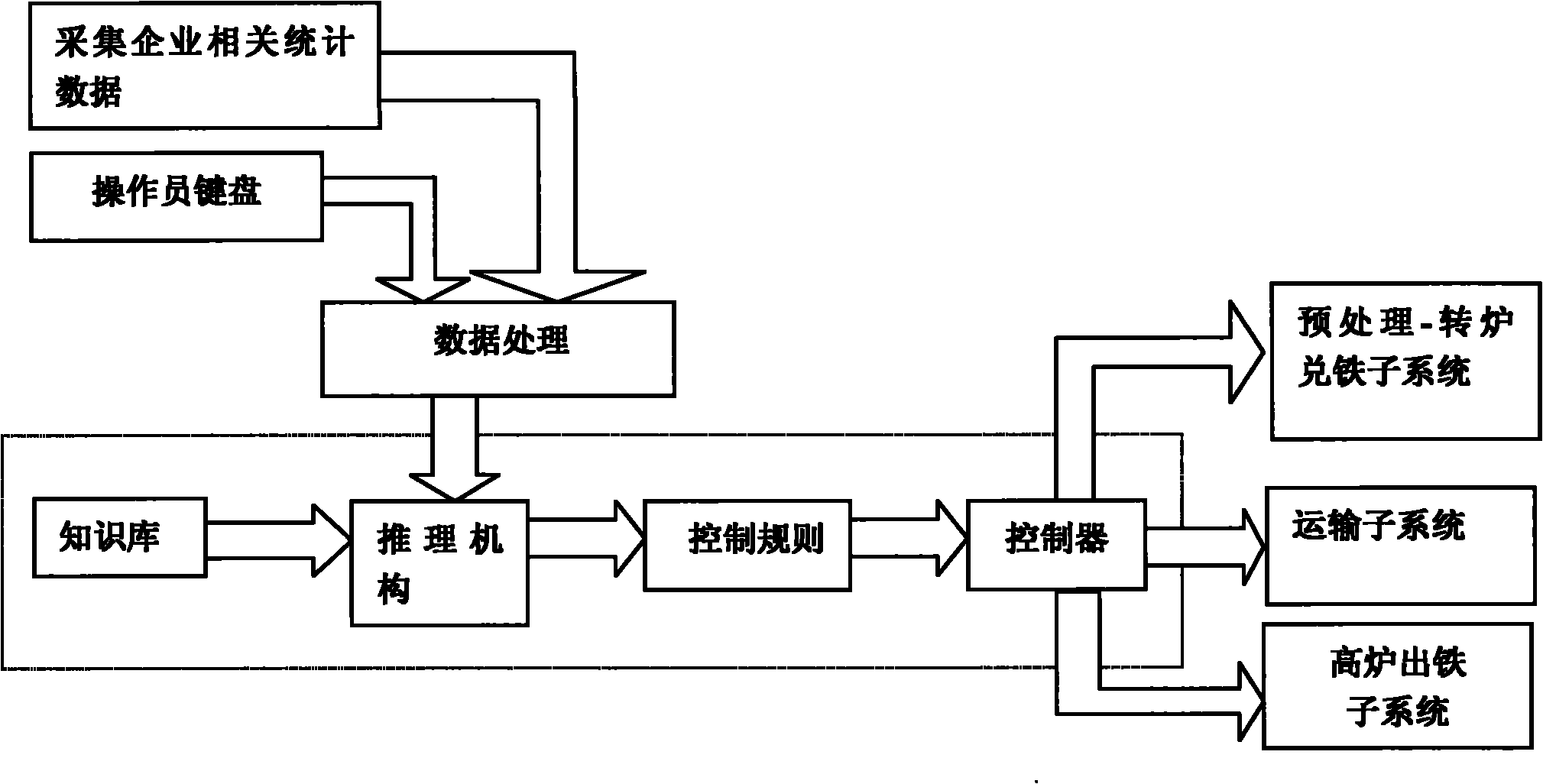

Expert system and control method applied to blast furnace-converter sector production scheduling process control of steel enterprises

Inactive | CN101833322A | Adaptable | Simple control system | Total factory control | Programme total factory control | Information processing | Direct effects

The invention discloses an expert system and a method which are applied to the blast furnace-converter sector production scheduling process control of steel enterprises. The expert system comprises a knowledge base, a control rule set, an inference engine and an information processing part, the operation experience of workers is integrated to carry out reasoning discrimination and make necessary decision to realize reasonable scheduling on the basis of a queuing theory method and a system and simulation theory. A computer is controlled by a programmable logic controller suitable for process control to reasonably design a batch-arrival closed type repairable queuing network system which is composed of stations in a blast furnace-converter sector and has multiple service counters in parallel-series connection. The control system has simpleness and strong adaptability, can directly influence productivity of related equipment and running cost of the system, can satisfy actual production requirement, can save capital and can be widely applied to blast furnace-converter sector production scheduling system control of large-scale integrated iron and steel works.

Owner:KUNMING UNIV OF SCI & TECH

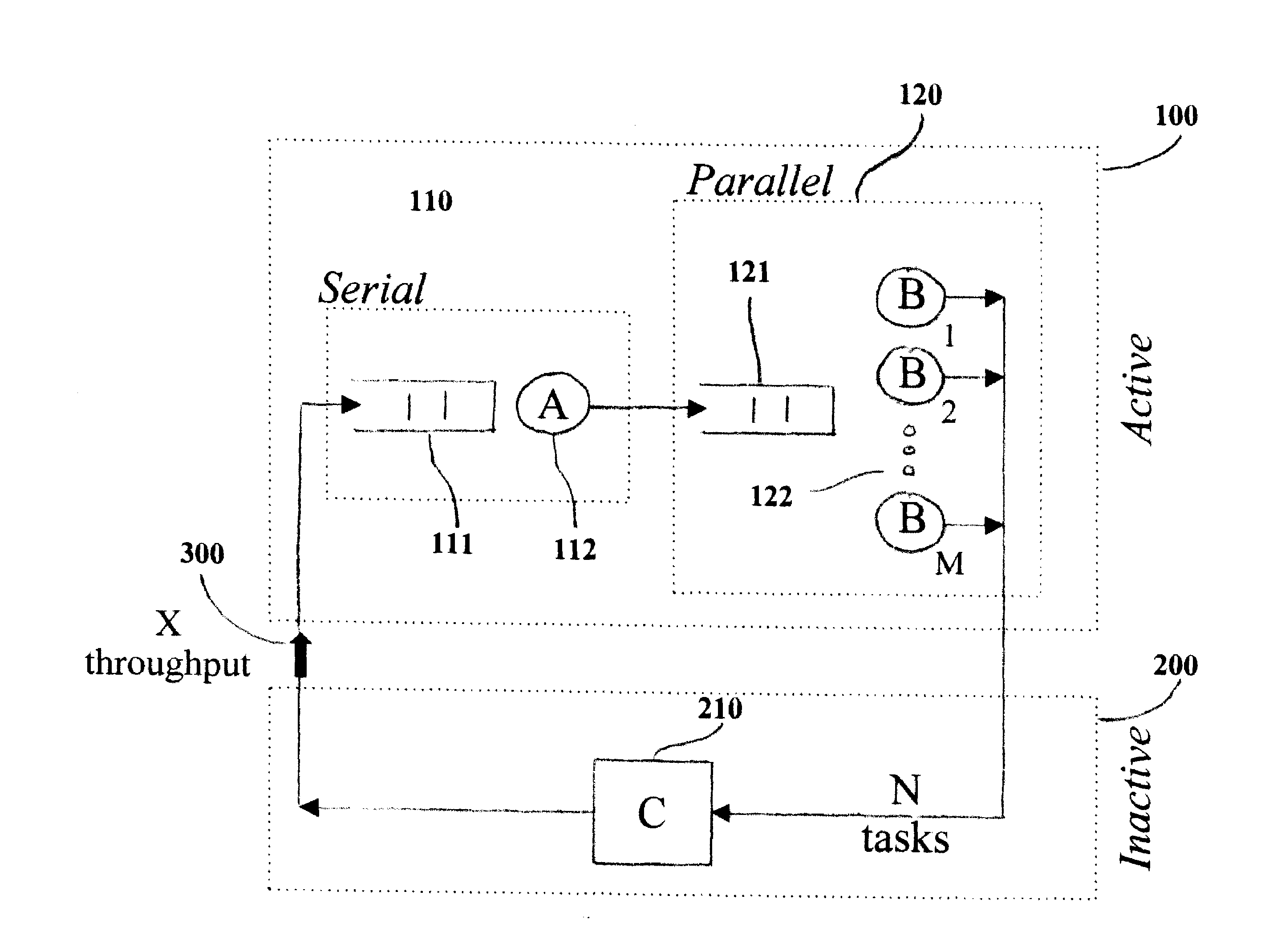

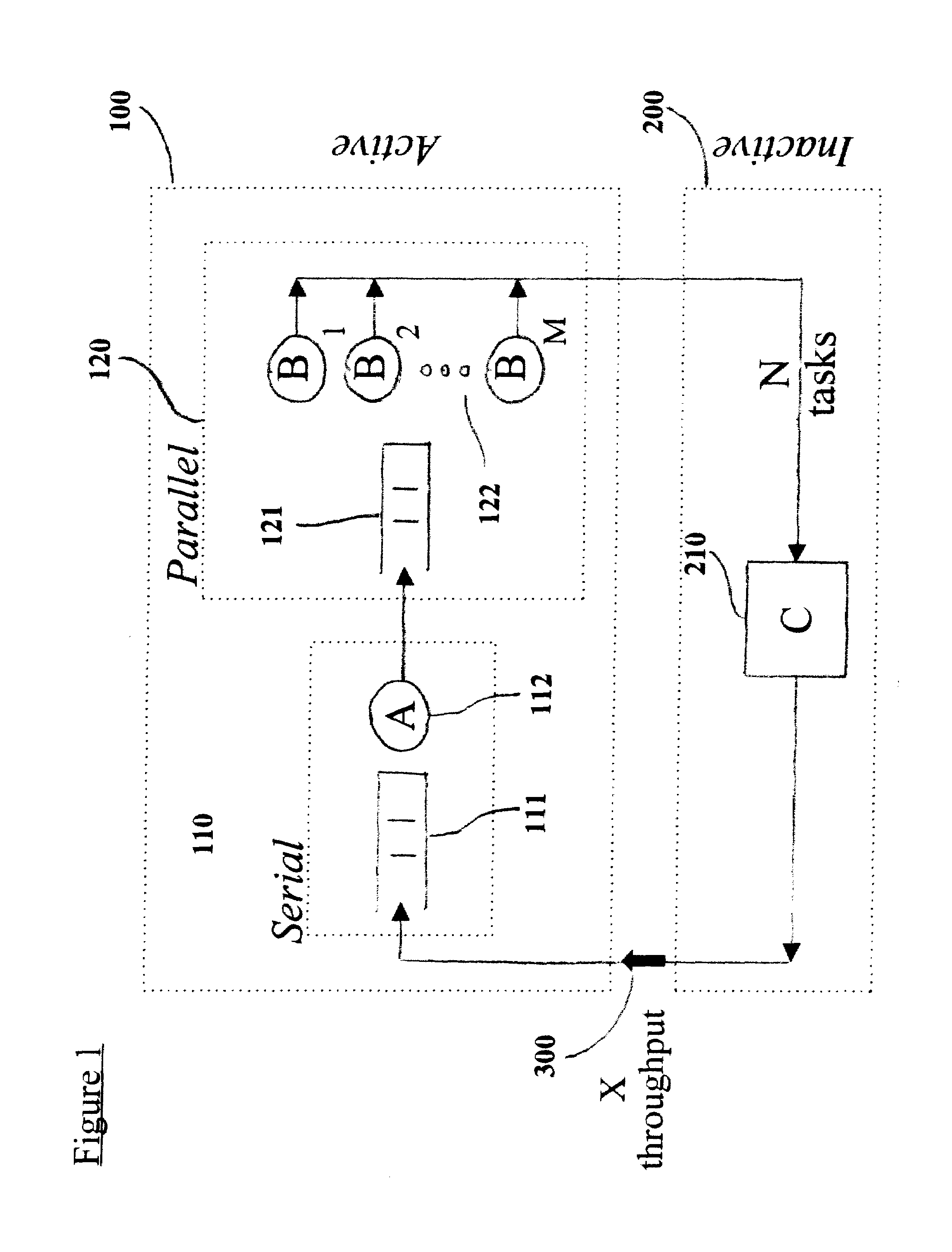

Method and system for dynamic performance modeling of computer application services

Inactive | US20070299638A1 | Simple and efficient to solve | Accurate prediction | Error detection/correction | Multiple digital computer combinations | Queueing network models | Web service

A generic queueing network model of a Web services environment is introduced. The behavior of a service is abstracted in three phases: serial, parallel and dormant, thus yielding a Serial Parallel Queueing Network (SPQN) model with a small number of parameters. A method is provided for estimating the parameters of the model that is based on stochastic approximation techniques for solving stochastic optimization problems. The parameter estimation method is shown to perform well in a noisy environment, where performance data is obtained through measurements or using approximate model simulations.

Owner:IBM CORP

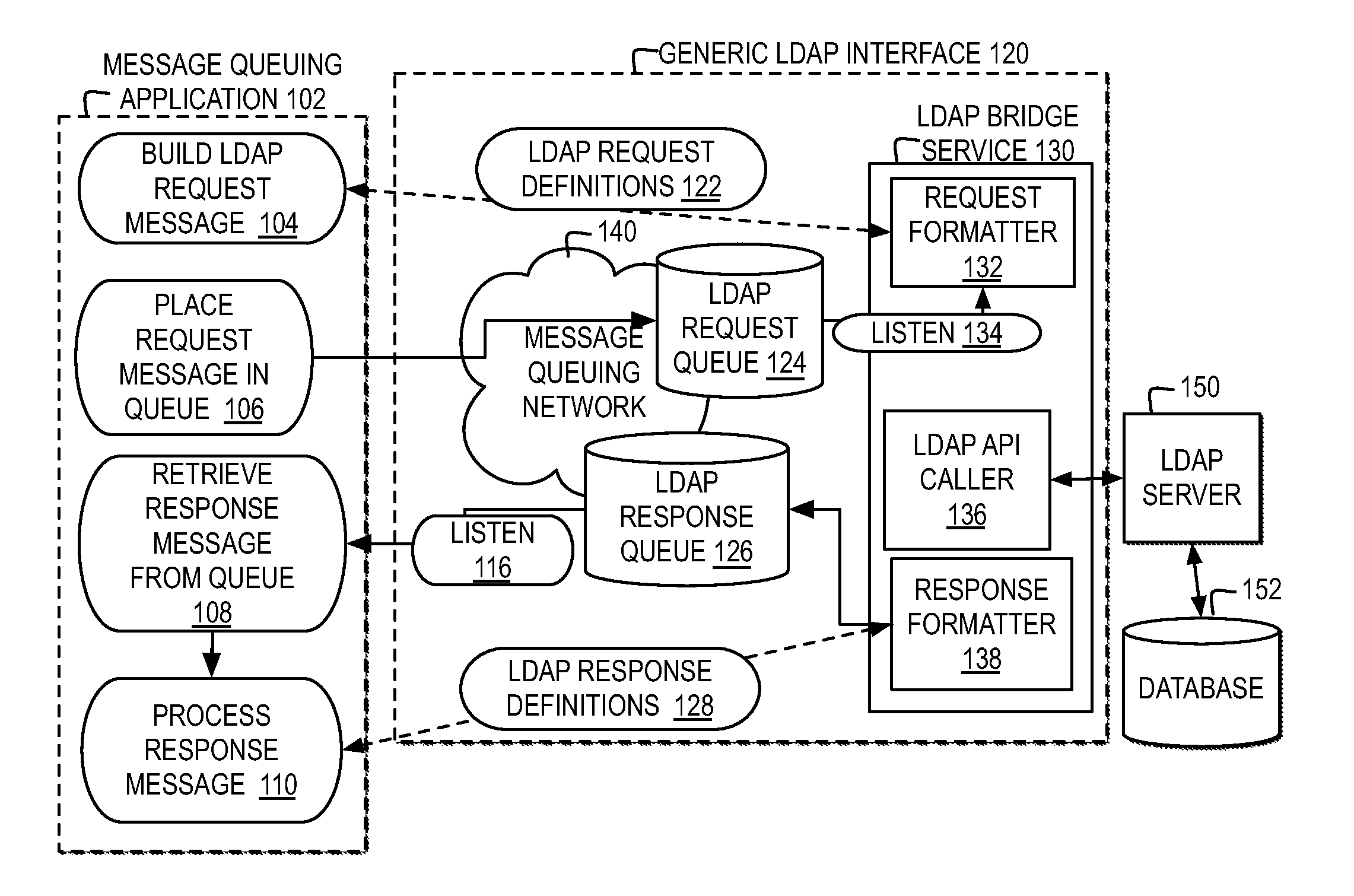

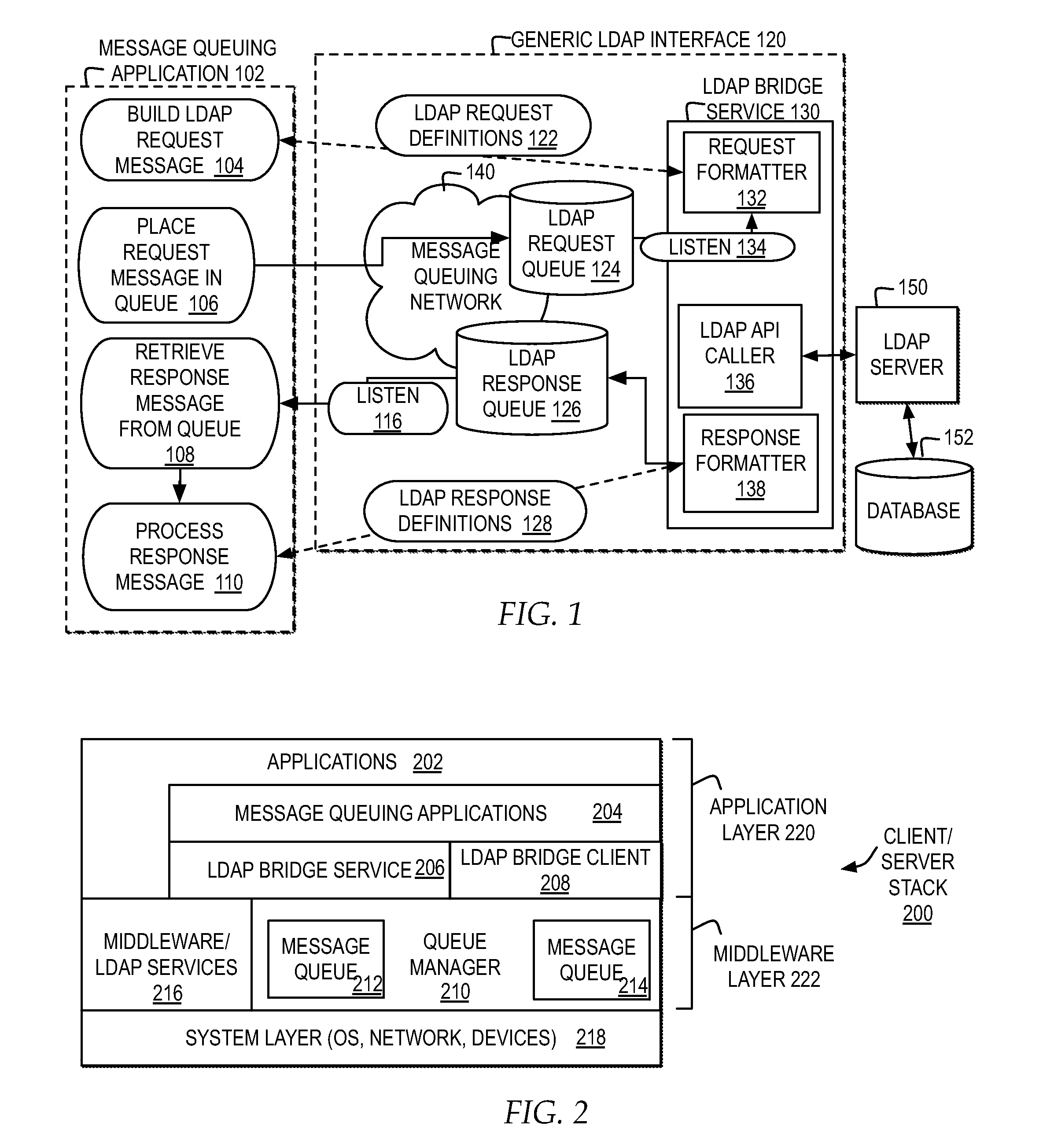

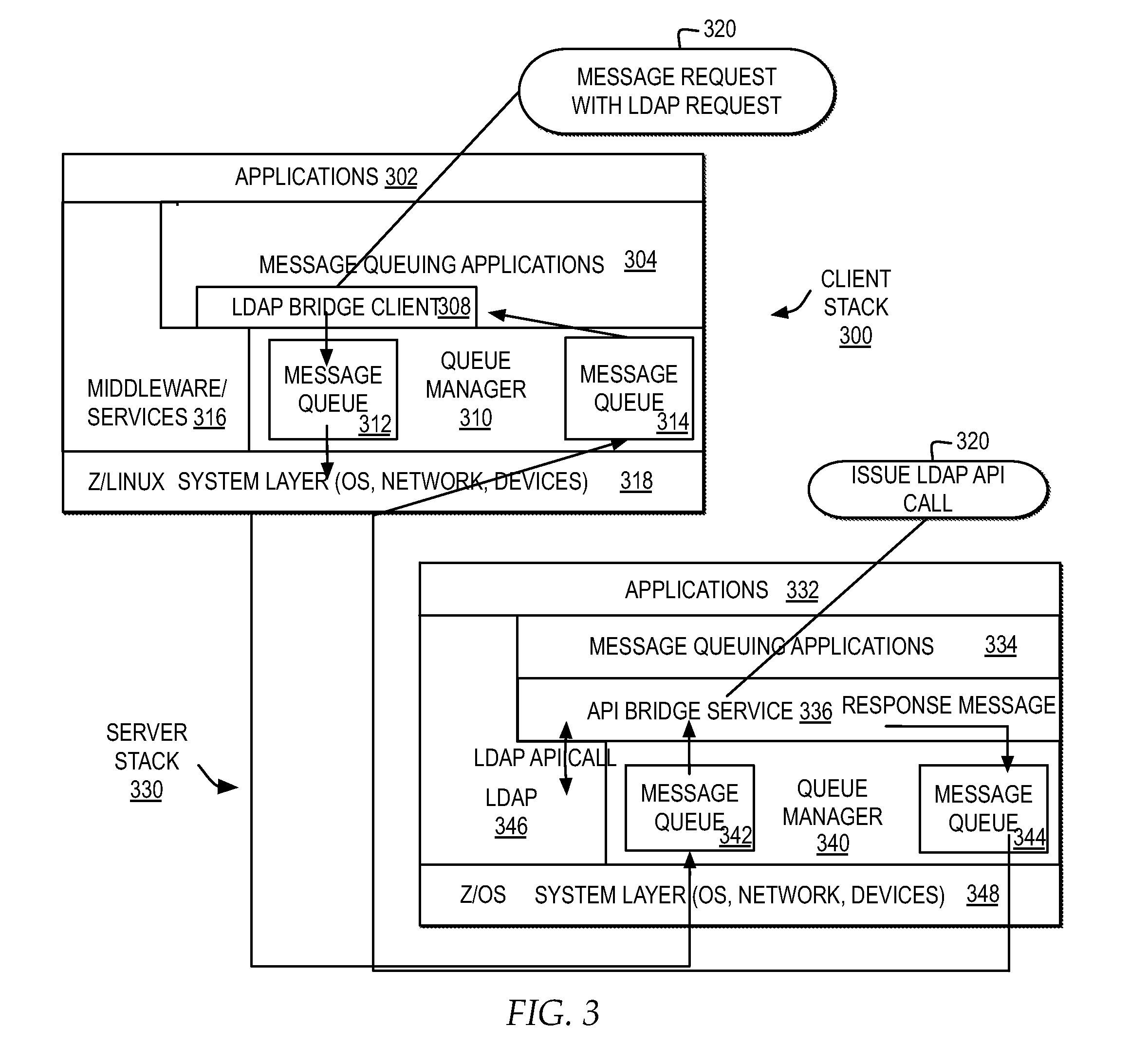

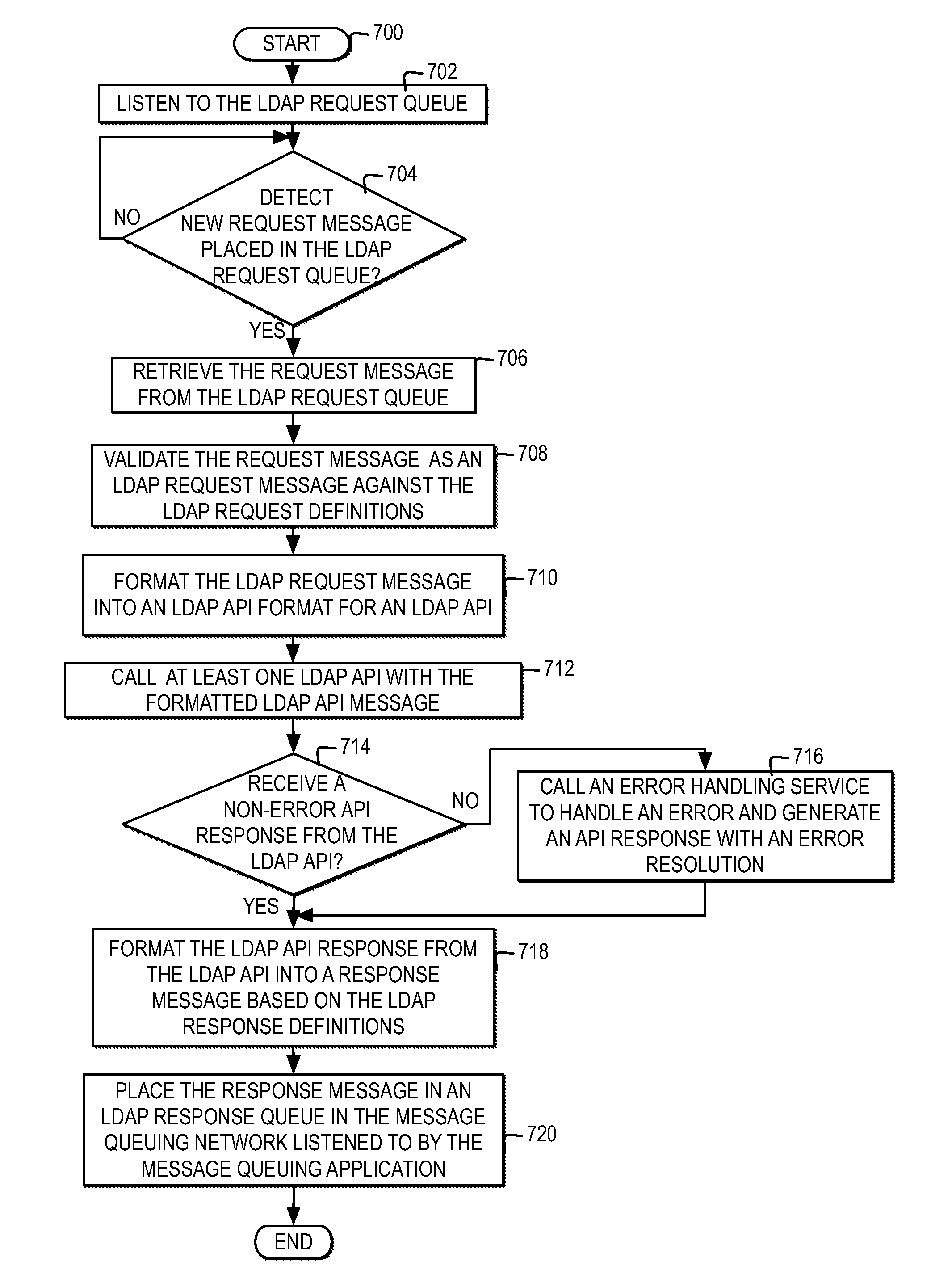

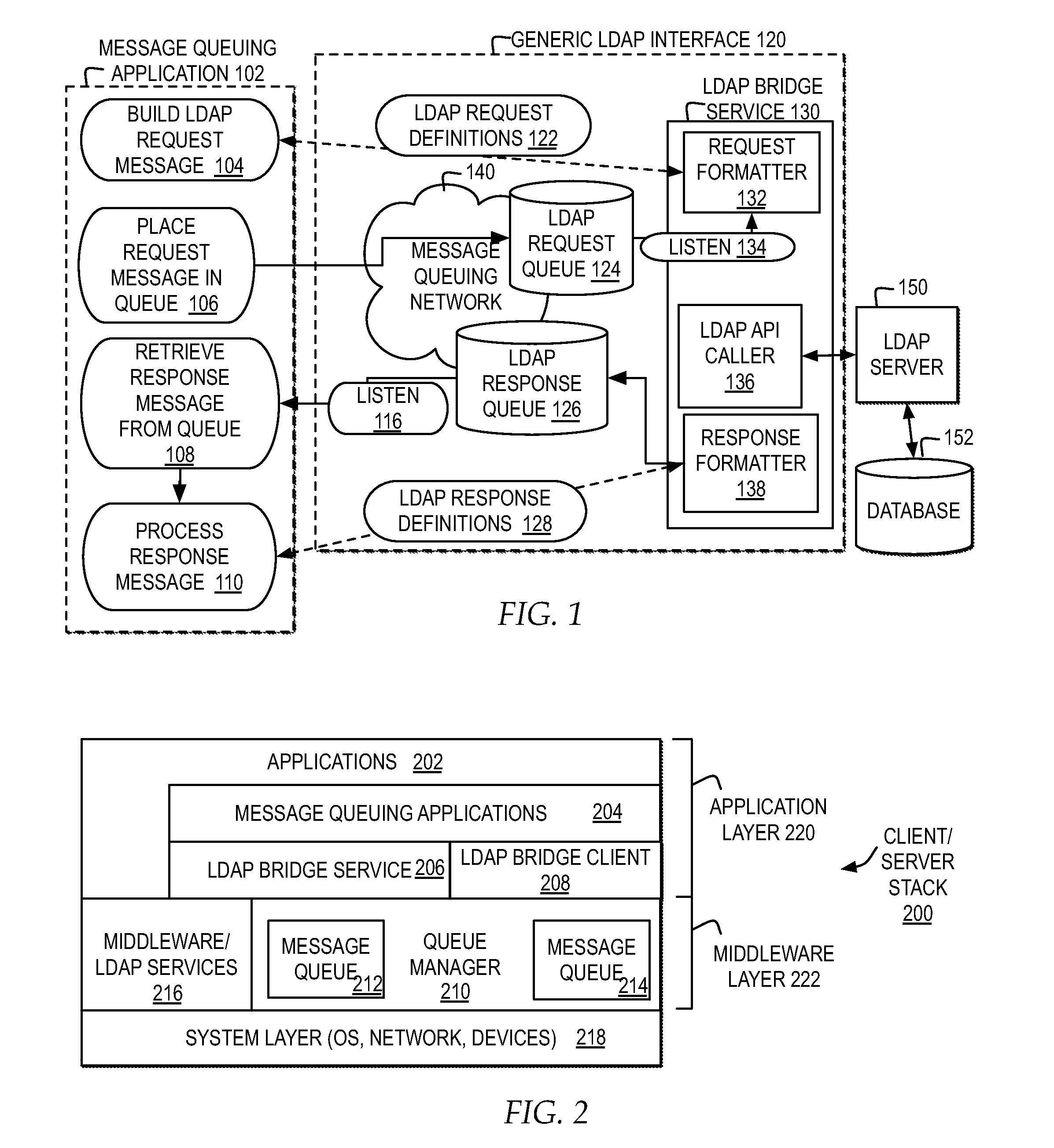

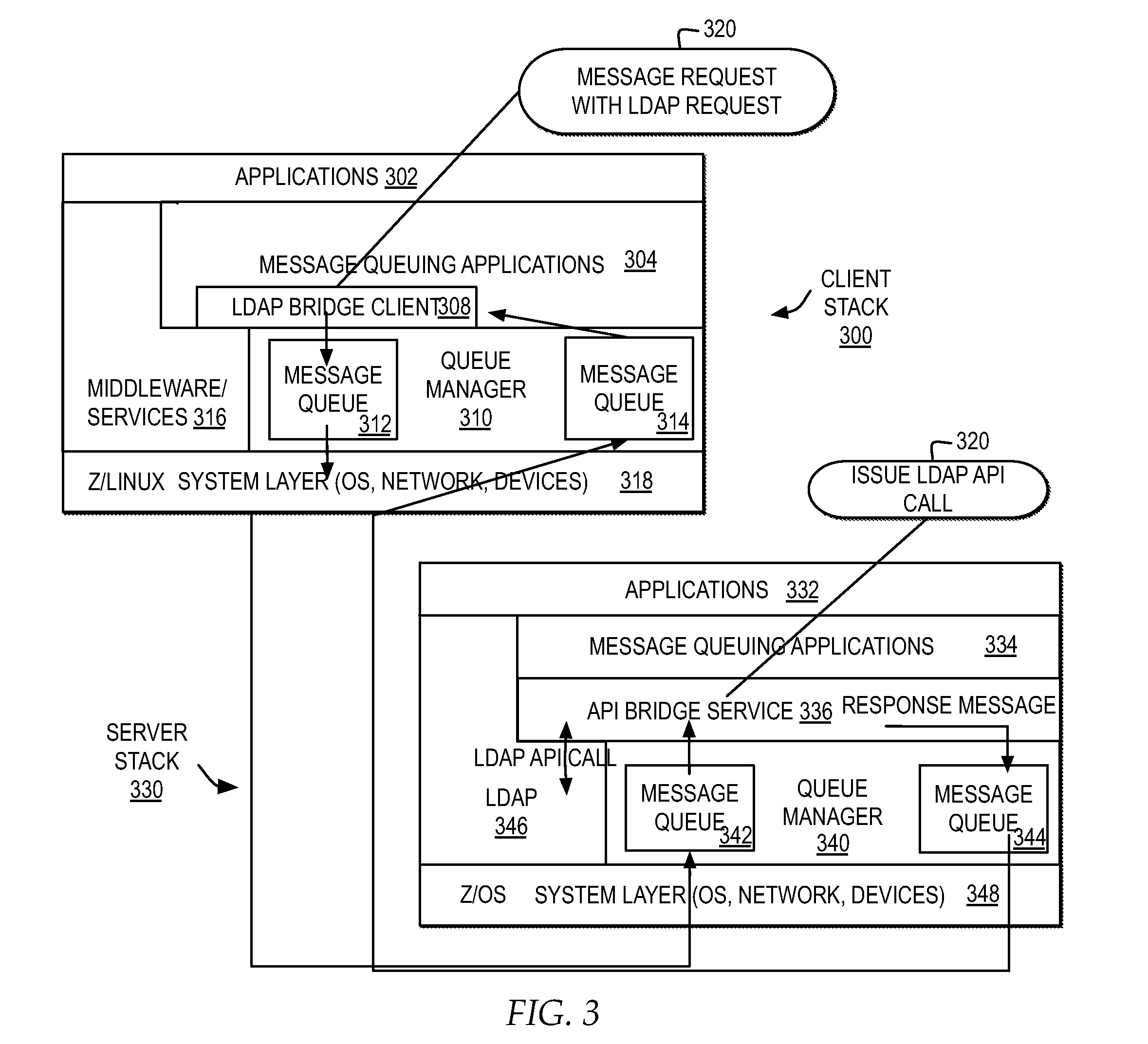

Application access to ldap services through a generic ldap interface integrating a message queue

Inactive | US20130111499A1 | Multiprogramming arrangements | Specific program execution arrangements | Message queue | Queuing network

An LDAP bridge service retrieves a generic LDAP message request, placed in a request queue by a message queuing application, from the request queue. The LDAP bridge service formats the generic LDAP request into a particular API call for at least one LDAP API. The LDAP bridge service calls at least one LDAP API with the particular API call for requesting at least one LDAP service from at least one LDAP server managing a distributed directory. Responsive to the LDAP bridge service receiving at least one LDAP specific response from at least one LDAP API, the LDAP bridge service translates the LDAP specific response into a response message comprising a generic LDAP response. The API bridge service places the response message in a response queue of the message queuing network, wherein the message queuing application listens to the response queue for the response message.

Owner:IBM CORP

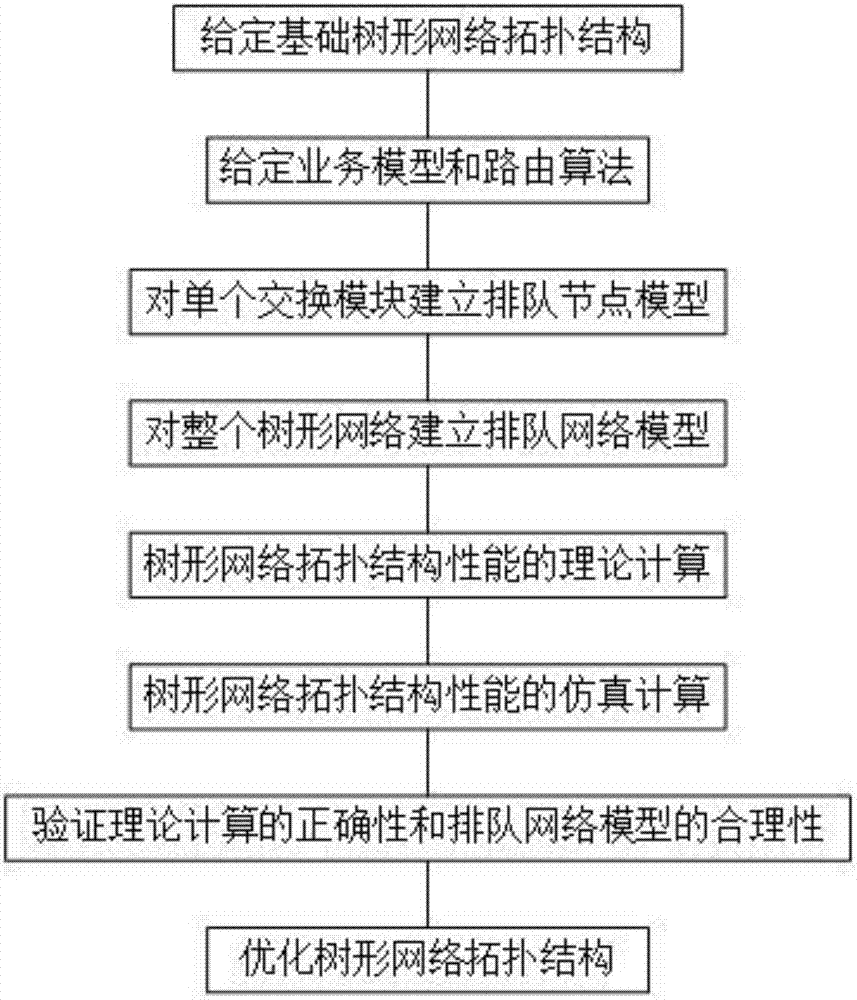

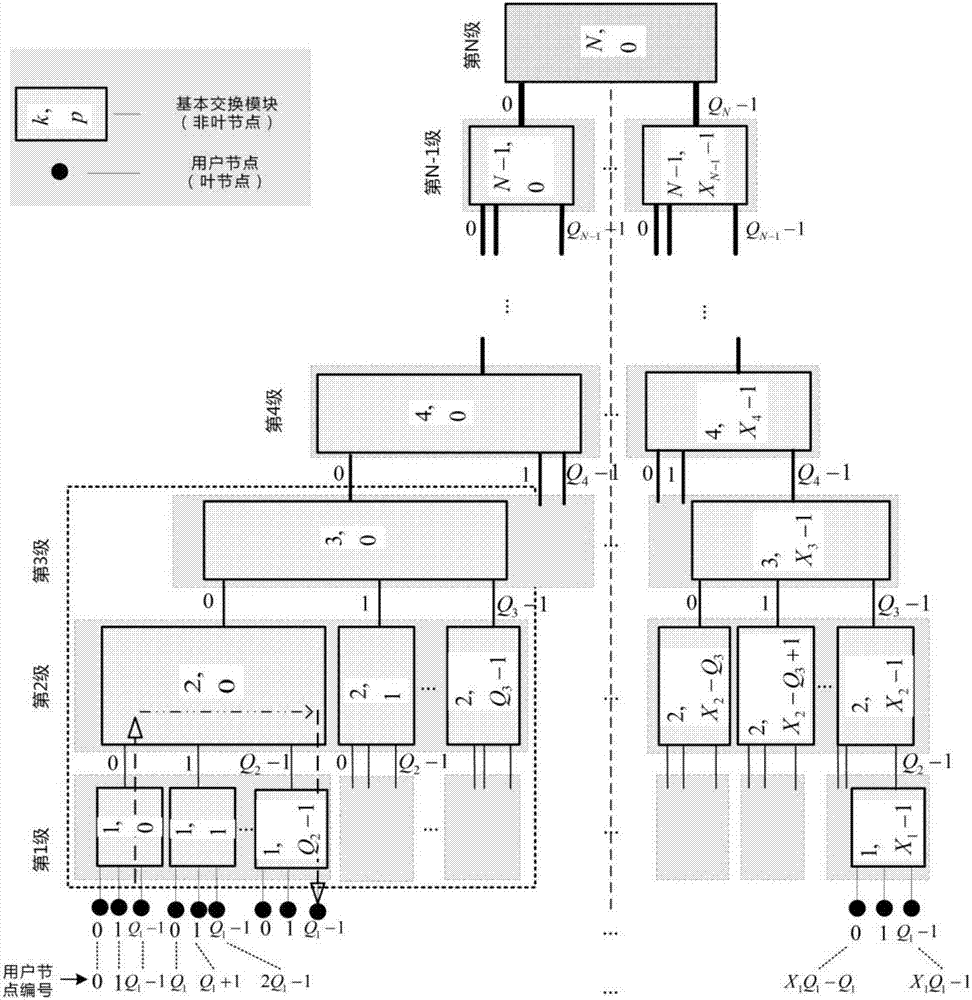

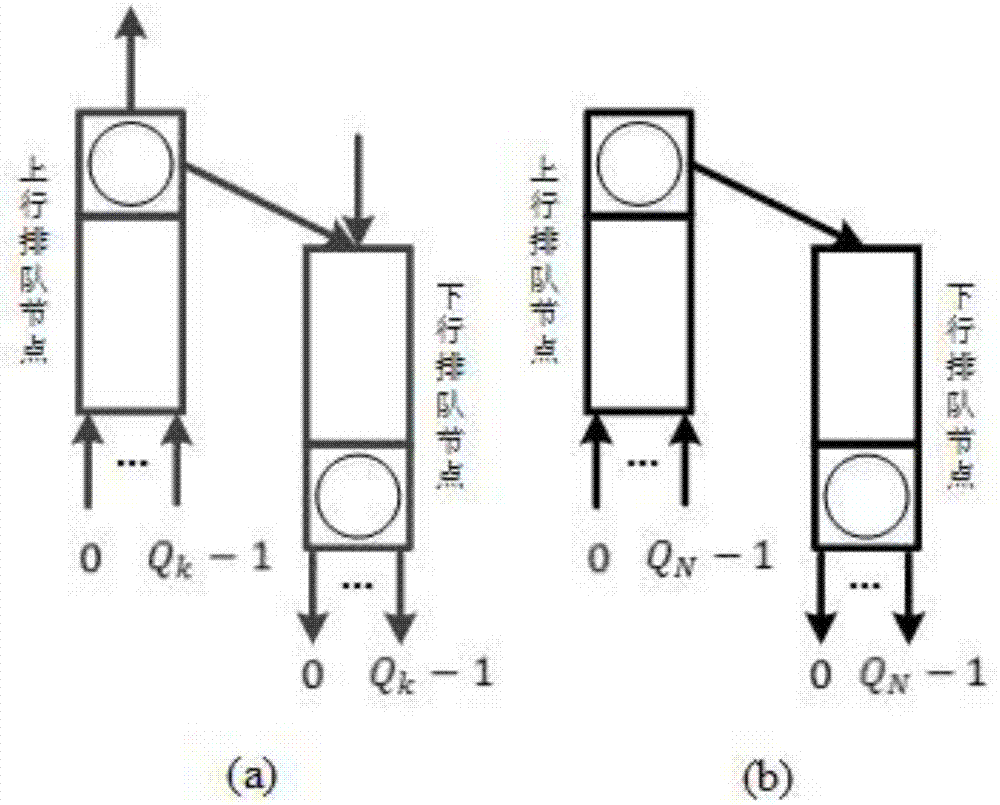

Optimization method for tree network topology structure based on queuing theory

Active | CN106936645A | Realize quantitative analysis | Reflect performance | Data switching networks | Service model | Limited resources

The invention provides an optimization method for a tree network topology structure based on queuing theory, used for solving the optimization design problem of large-scale user node interconnection under limited resources and a preset service. The method comprises the steps of presetting a basic tree network topology structure, a service model and a routing algorithm; establishing a queuing node model of a single basic switching module and a queuing network model of the whole tree network; carrying out theoretical calculation and simulation calculation on the performance of the tree network topology structure; verifying the accuracy of the theoretical calculation and the reasonability of the queuing network model; and optimizing the tree network topology structure and parameters. According to the method, the queuing network model is established, the tree network is analyzed quantitatively, and the influences of the service strength, the cache, the switching modules and the network topology structure on the network performance are taken into consideration. The method is applicable to the establishment of the optimum tree network topology structure under preset service demands.

Owner:XIDIAN UNIV

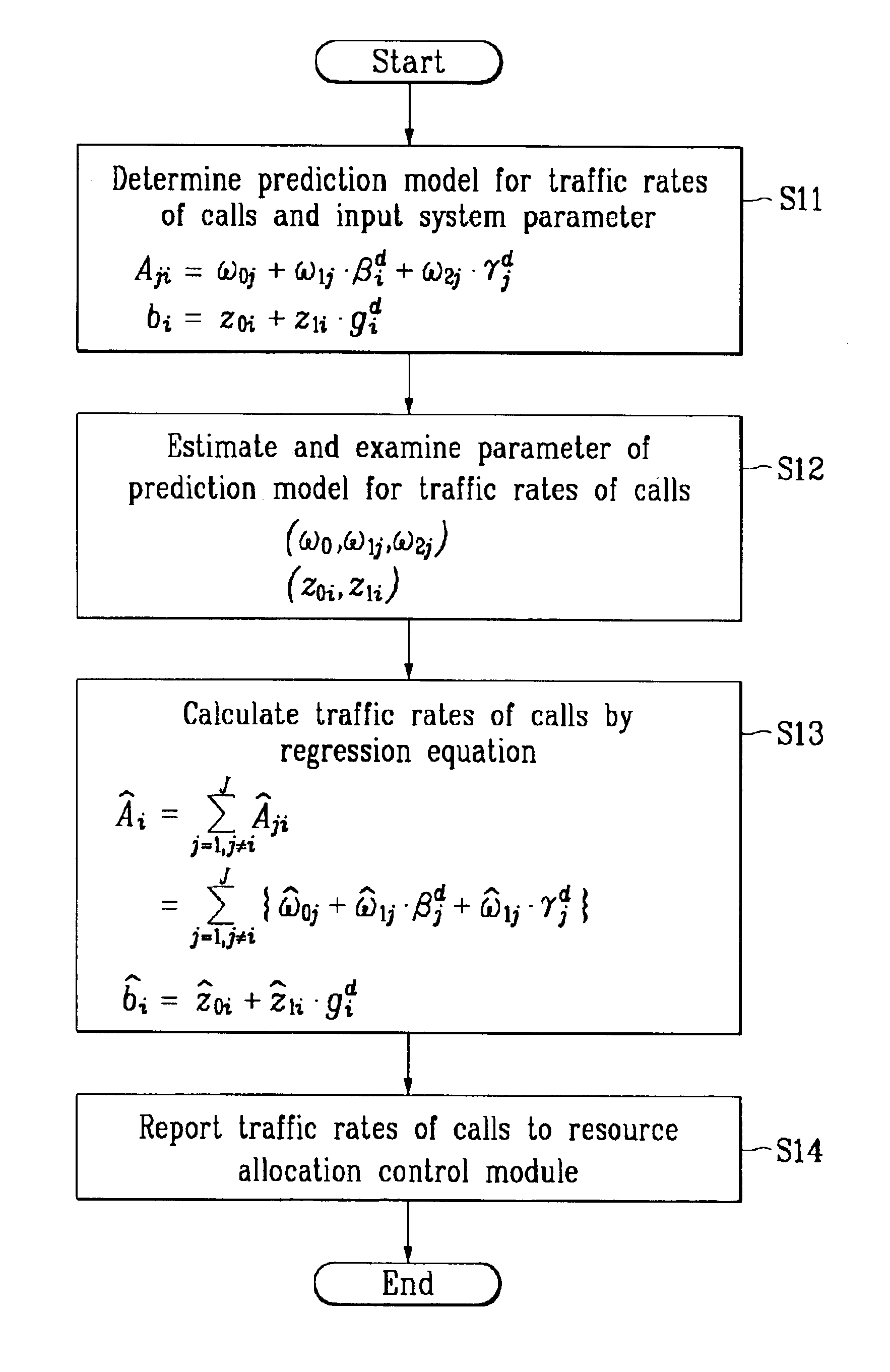

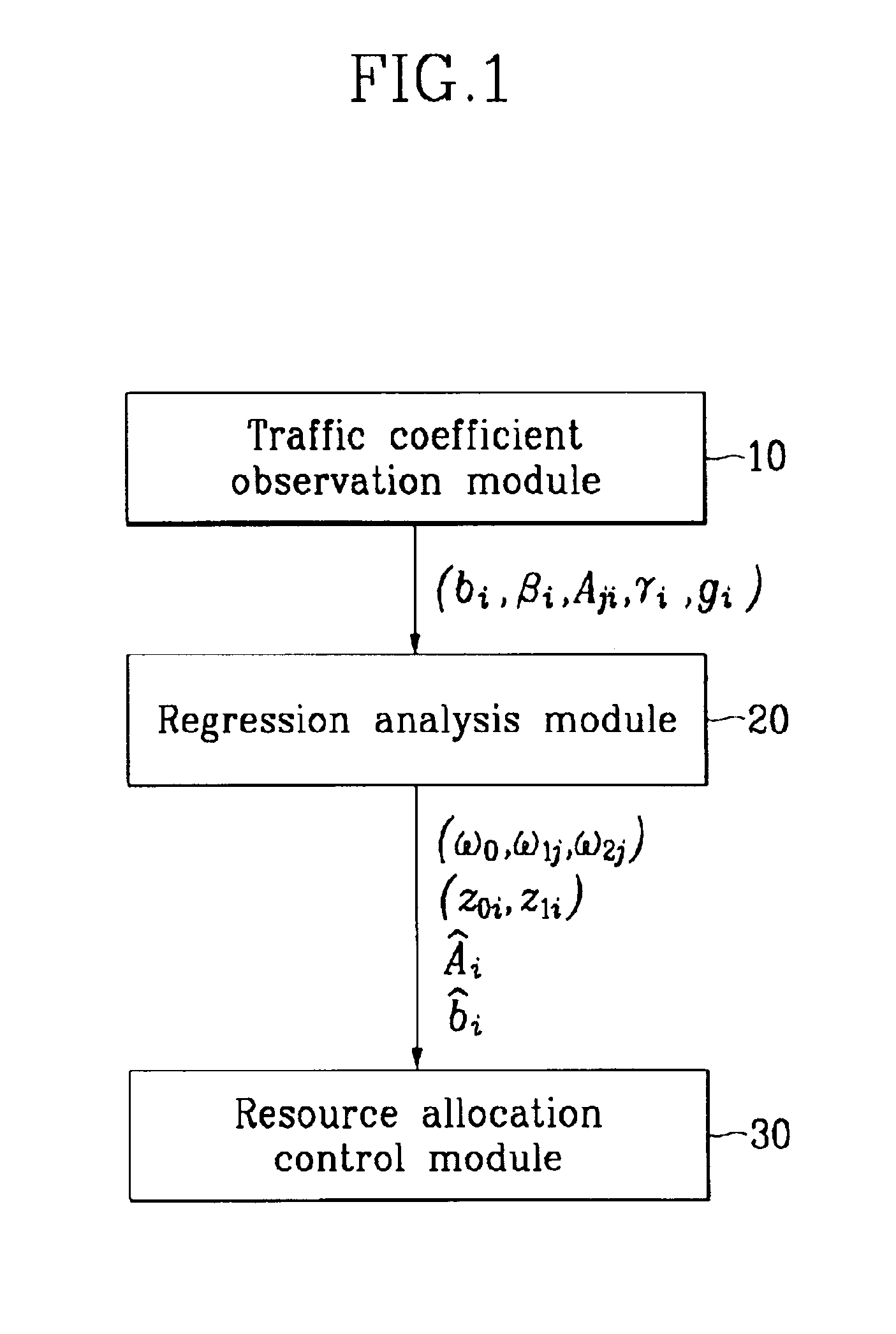

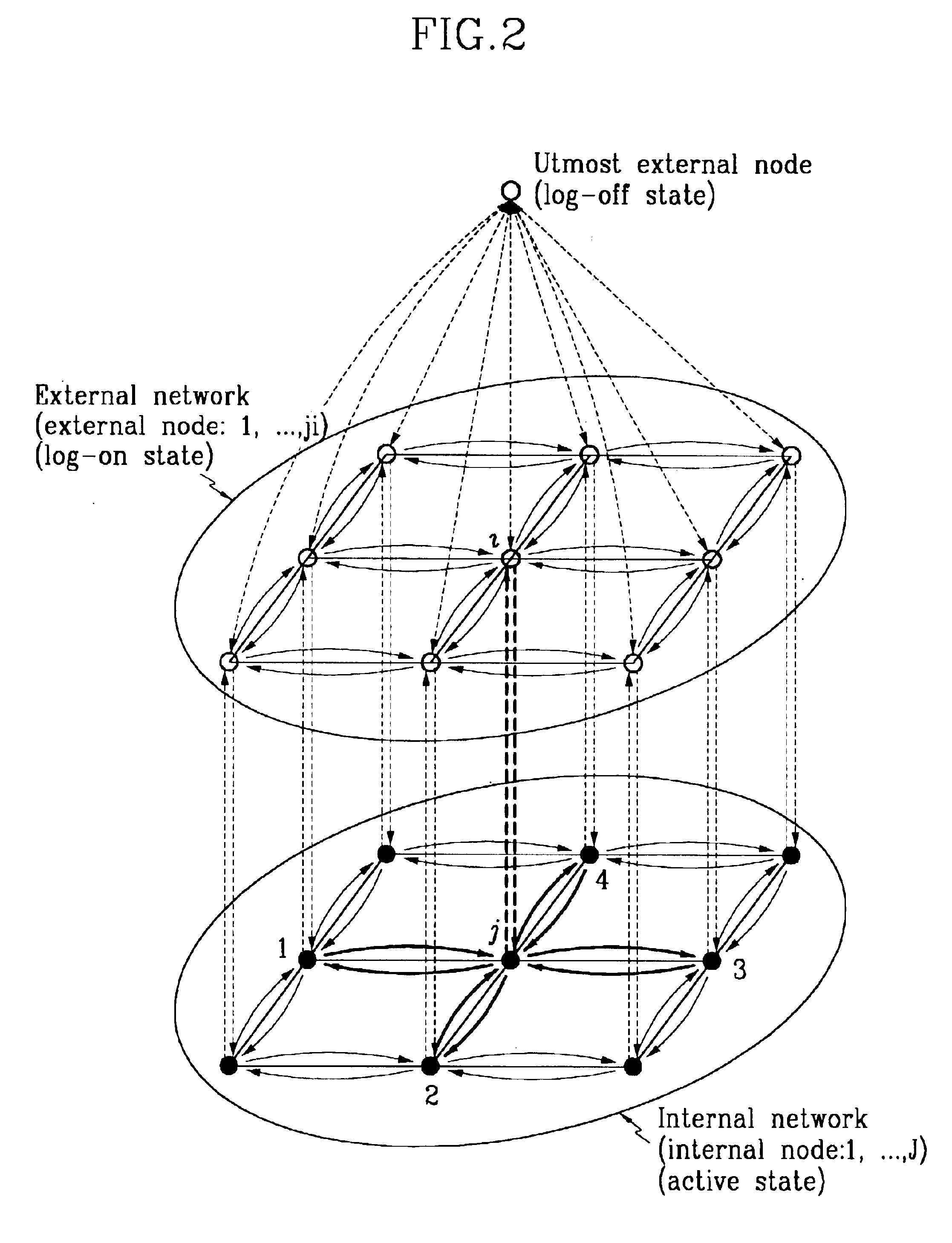

System for estimating traffic rate of calls in wireless personal communication environment and method for the same

Active | US6909888B2 | Efficient use of | Error prevention | Transmission systems | Traffic capacity | Regression analysis

A system for estimating a traffic rate of calls in system environments providing wireless personal communication services on an open queuing network includes modules that have functions of making three sets of nodes, “log_off”, “log_on” and “active” according to the status of communication terminal equipment, observing the number of “log_on” and “active” terminals by minimum areas of each wireless personal communication service, and predicting traffic probability by minimum areas. More specifically, the present invention includes: a traffic parameter observation module for making a set of nodes and collecting observations measured in real time on the respective nodes; a regression analysis module for performing a regression analysis of the observations to assume a prediction model for traffic rates of calls and to estimate the traffic rates of internal-to-internal or external-to-internal calls; and a resource allocation control module for determining whether to allocate resources and how much of the resources to allocate according to the traffic rates of internal-to-internal or external-to-internal calls.

Owner:ELECTRONICS & TELECOMM RES INST

Method of incorporating DBMS wizards with analytical models for DBMS servers performance optimization

Active | US8200659B2 | Improve balance | Enhancing autonomic computing | Digital data information retrieval | Digital data processing details | Analytic model | Resource utilization

Disclosed is an improved method and system for implementing DBMS server performance optimization. According to some approaches, the method and system incorporate DBMS wizard recommendations with analytical queuing network models for the purpose of evaluating different alternatives and selecting the optimum performance management solution with a set of expectations. This enhances autonomic computing by generating periodic control measures, which include recommendations to add or remove indexes and materialized views, change the level of concurrency and workload priorities, and improve the balance of resource utilization. The result is a framework for continuous workload management: measuring the difference between actual and expected results, understanding the cause of the difference, finding a new corrective solution, and setting new expectations.

Owner:DYNATRACE

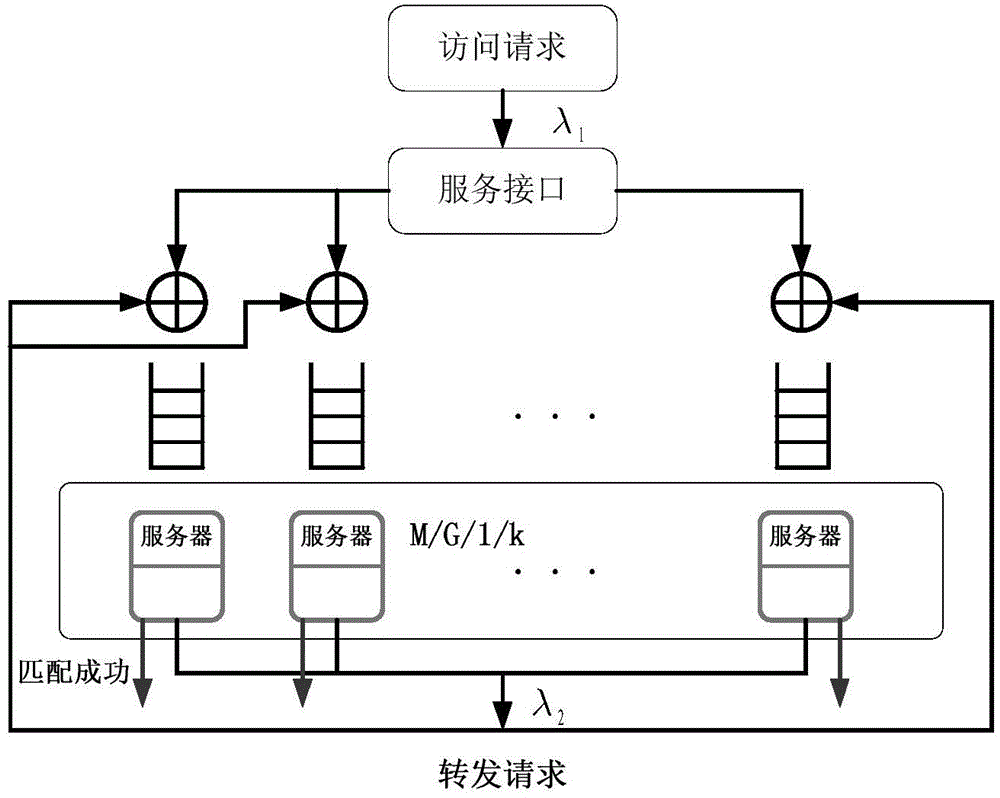

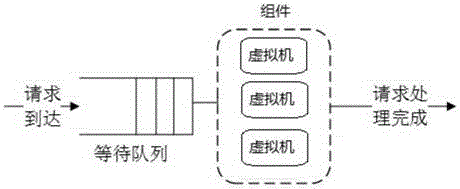

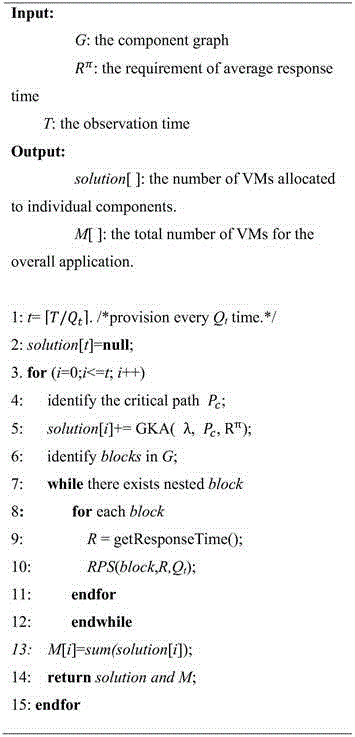

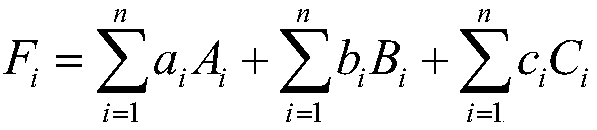

Elasticity analysis process oriented cloud resource allocation optimization method

Active | CN106407007A | Response Time Estimation | Resource allocation | Software simulation/interpretation/emulation | Process oriented | Elastic analysis

The invention provides an elasticity analysis process oriented cloud resource allocation optimization method. The method comprises the steps of S1, carrying out performance modeling on an elasticity analysis process through adoption of an open queuing network theory, namely modeling the whole analysis process to form an open queuing network, wherein each component in the process corresponds to sub-queues in a queuing network system, and the output of one component is the input of another component; and S2, estimating average response time of the whole open queuing network through a queuing theory, carrying out cloud resource allocation on each component according to the estimated average response time, thereby enabling the total number of resources to be the least on the premise of satisfying the average response time required by a user. According to the method, the resource allocation can be carried out on the analysis process with requests arriving continuously, the average response time of the system is estimated by employing a queuing theory; the response time is estimated relatively accurately, an allocable server solution set of each component is estimated according to the queuing theory, and a quasi-optimal solution is obtained by employing a heuristic algorithm.

Owner:SHANGHAI JIAO TONG UNIV
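Steps S1 and S2 in the abstract above (model the components as an open queuing network, estimate the average response time, then allocate as few resources as possible within a response-time requirement) can be sketched under simplifying assumptions. The assumptions here are illustrative and not taken from the patent: the components form a simple chain that every request traverses, each server behaves as an M/M/1 queue receiving an equal share of the component's arrivals, and a greedy rule stands in for the heuristic algorithm, so the result is not guaranteed to be the minimum.

```python
import math


def response_time(arrival_rate, service_rate, servers):
    """Mean response time of one component, treating its servers as
    parallel M/M/1 queues that split arrivals evenly (a simplification)."""
    return 1.0 / (service_rate - arrival_rate / servers)


def allocate(arrival_rate, service_rates, target):
    """Greedy heuristic: start from the fewest servers that keep every
    component stable, then add one server at a time where it cuts the
    total response time the most, until the target is met."""
    if target <= sum(1.0 / mu for mu in service_rates):
        raise ValueError("target is below the pure service time; unreachable")
    servers = [math.floor(arrival_rate / mu) + 1 for mu in service_rates]

    def total(allocation):
        return sum(
            response_time(arrival_rate, mu, count)
            for mu, count in zip(service_rates, allocation)
        )

    while total(servers) > target:
        gains = [
            response_time(arrival_rate, mu, count)
            - response_time(arrival_rate, mu, count + 1)
            for mu, count in zip(service_rates, servers)
        ]
        best = max(range(len(servers)), key=gains.__getitem__)
        servers[best] += 1
    return servers


# Hypothetical workload: 8 requests/s through two components whose
# servers each handle 5 and 10 requests/s; required mean response 0.6 s.
plan = allocate(8.0, [5.0, 10.0], target=0.6)
print(plan)
```

The guard at the top of `allocate` prevents an endless loop: no number of servers can push the response time below the sum of the pure service times.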

Microfluidic droplet queuing network

Active | US7993911B2 | Bioreactor/fermenter combinations | Sequential/parallel process reactions | Oil phase | Engineering

A multi-port liquid bridge (1) adds aqueous phase droplets (10) in an enveloping oil phase carrier liquid (11) to a draft channel (4, 6). A chamber (3) links four ports, and it is permanently full of oil (11) when in use. Oil phase is fed in a draft flow from an inlet port (4) and exits through a draft exit port (6) and a compensating flow port (7). The oil carrier and the sample droplets (3) (“aqueous phase”) flow through the inlet port (5) with an equivalent fluid flow subtracted through the compensating port (7). The ports of the bridge (1) are formed by the ends of capillaries held in position in plastics housings. The phases are density matched to create an environment where gravitational forces are negligible. This results in droplets (10) adopting spherical forms when suspended from capillary tube tips. Furthermore, the equality of mass flow is equal to the equality of volume flow. The phase of the inlet flow (from the droplet inlet port (5) and the draft inlet port (4)) is used to determine the outlet port (6) flow phase.

Owner:STOKES BIO LTD

Method and apparatus for creating, sending, and using self-descriptive objects as messages over a message queuing network

Inactive | US20050071314A1 | Easy to adapt | More robust | Digital data processing details | Multiprogramming arrangements | Message queue | Semantics

An invention for creating, sending, and using self-descriptive objects as messages over a network is disclosed. In an embodiment of the present invention, self-descriptive persistent dictionary objects are serialized and sent as messages across a message queuing network. The receiving messaging system unserializes the message object, and passes the object to the destination application. The application then queries or enumerates message elements from the instantiated persistent dictionary, and performs the programmed response. Using these self-descriptive objects as messages, the sending and receiving applications no longer rely on an a priori convention or a special-coding serialization scheme. Rather, messaging applications can communicate arbitrary objects in a standard way with no prior agreement as to the nature and semantics of message contents.

Owner:MICROSOFT TECH LICENSING LLC

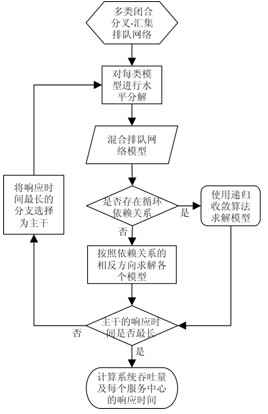

Method for analyzing performance of multi-class closed fork-join queuing network based on horizontal decomposition

Active | CN102123053A | Improve the efficiency of performance analysis | Efficient solution | Data switching networks | Decomposition | Queuing network

The invention discloses a method for analyzing performance of a multi-class closed fork-join queuing network based on horizontal decomposition. According to the method, each class of model including fork-join operation in the models is subjected to horizontal decomposition so that a computer can implement rapid and accurate analysis on the performance of the queuing network models to obtain analyzable parameters of actual system performance, thereby improving the efficiency of the computer in analyzing the system performance.

Owner:ZHEJIANG UNIV

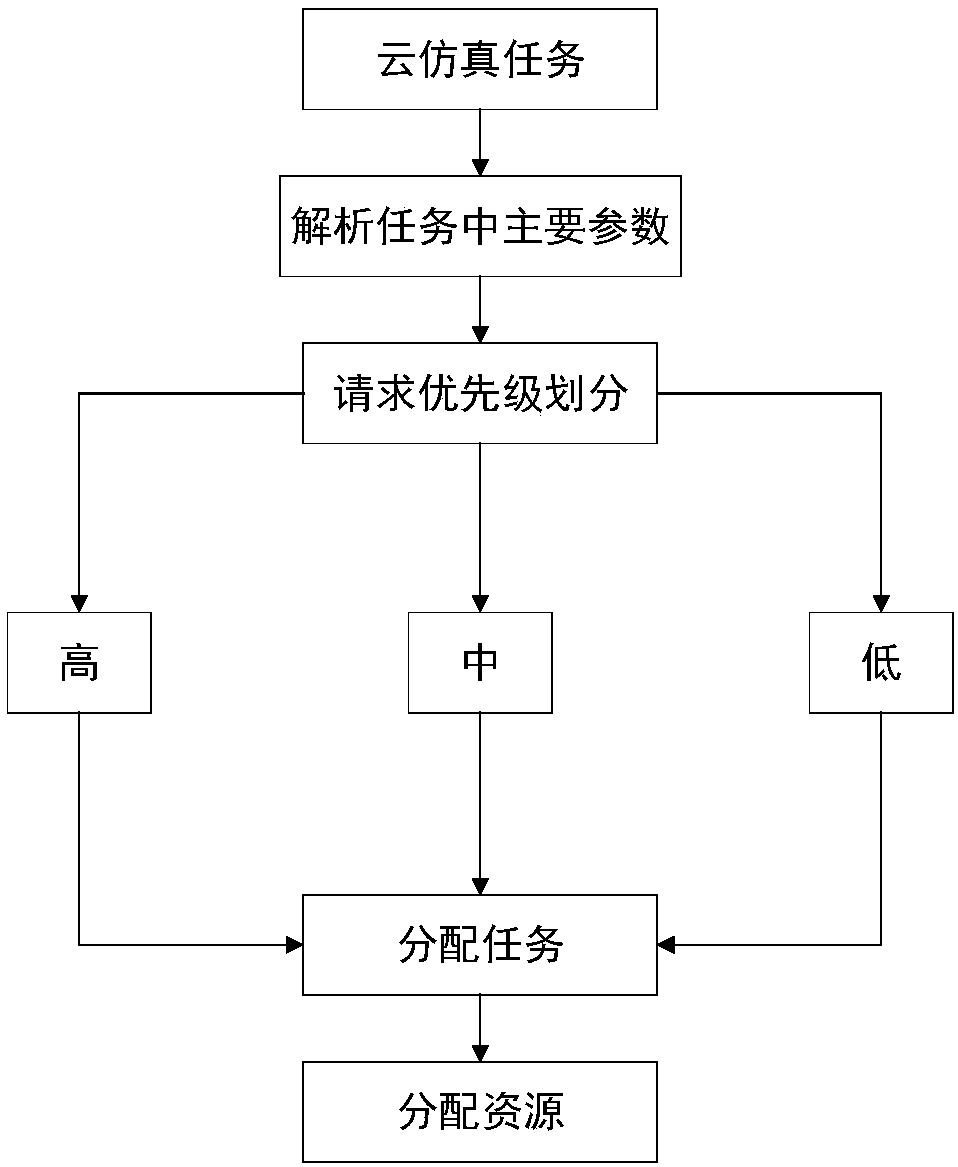

A cloud simulation task scheduling method based on a hybrid queuing network

Active | CN109542608A | Implement dynamic allocation | Global effect maximization | Program initiation/switching | Entry time | Queuing network

The invention discloses a cloud simulation task scheduling method based on a hybrid queuing network. The method includes calculating the resource quantity required by each of a plurality of cloud simulation tasks; prioritizing the plurality of cloud simulation tasks according to the resource amount of each cloud simulation task; and constructing a plurality of sub-queues and allocating the plurality of cloud simulation tasks to the sub-queues according to the request entry time of the tasks and the number of cloud simulation tasks that each sub-queue can bear. The method thereby provides optimized cloud simulation task scheduling and improves the processing efficiency of the cloud simulation tasks.

Owner:BEIJING SIMULATION CENT

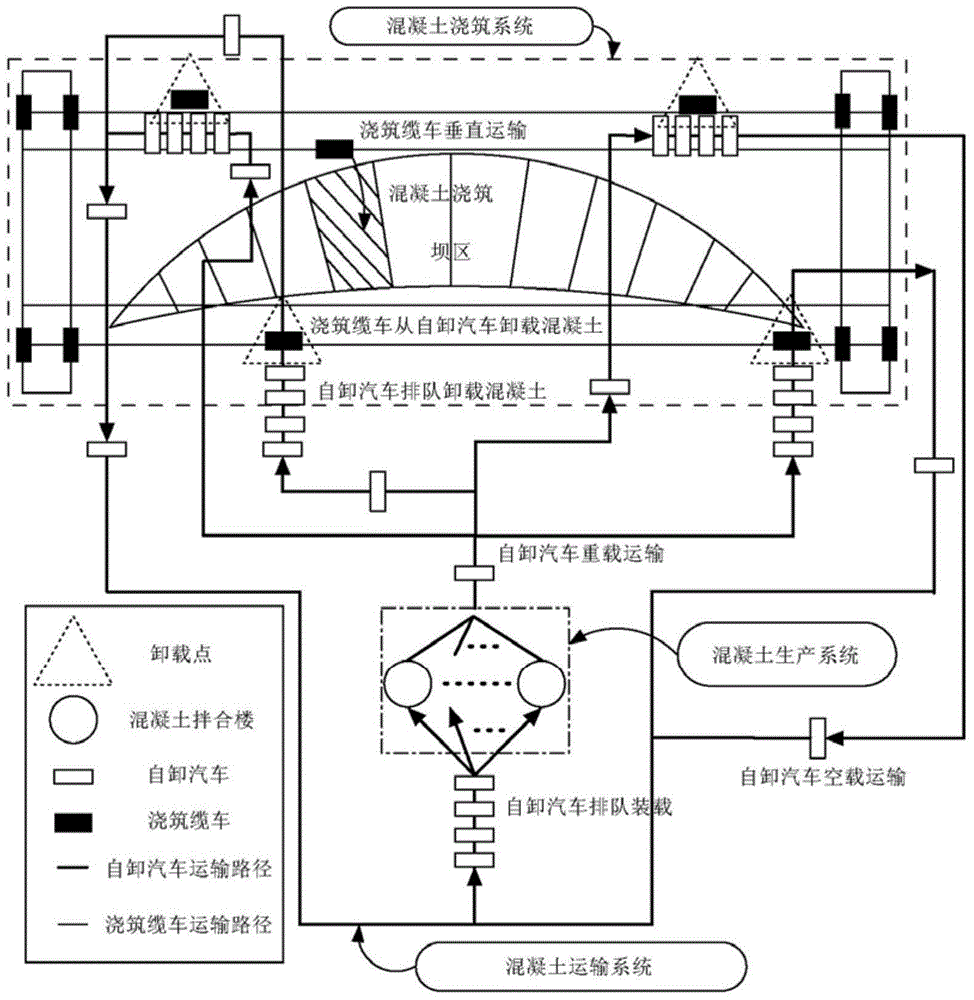

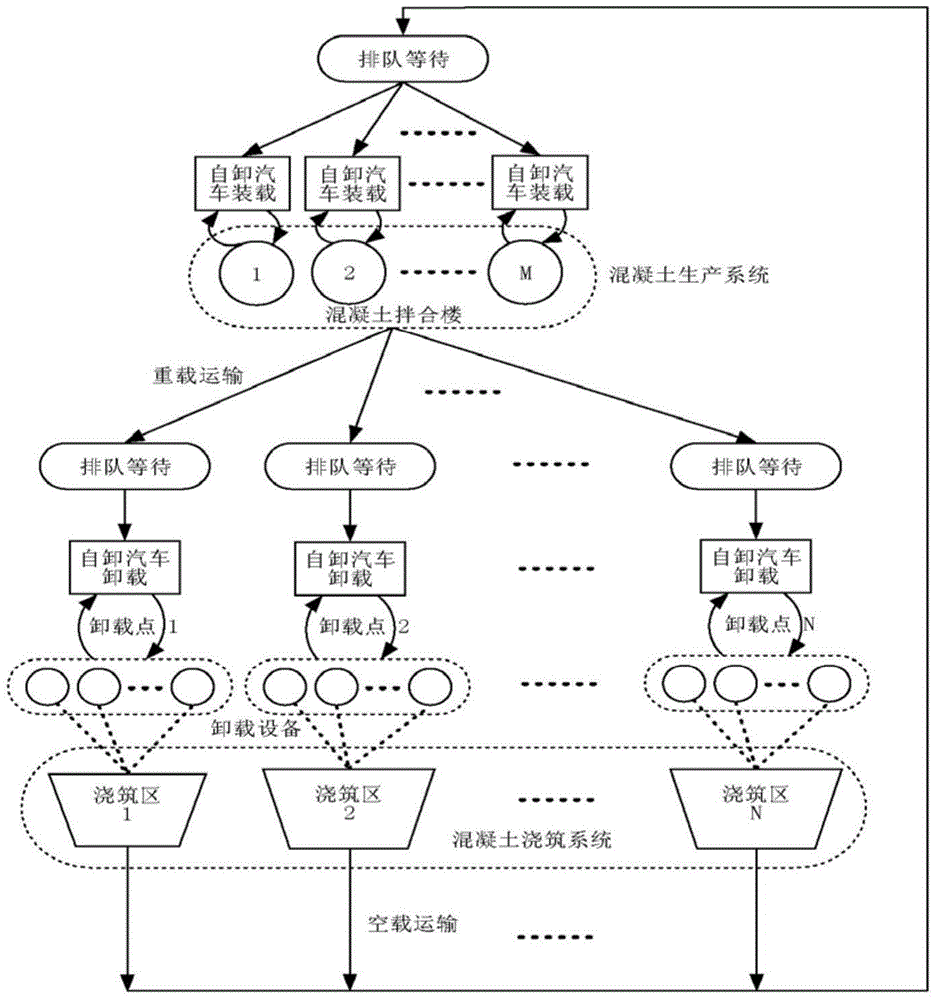

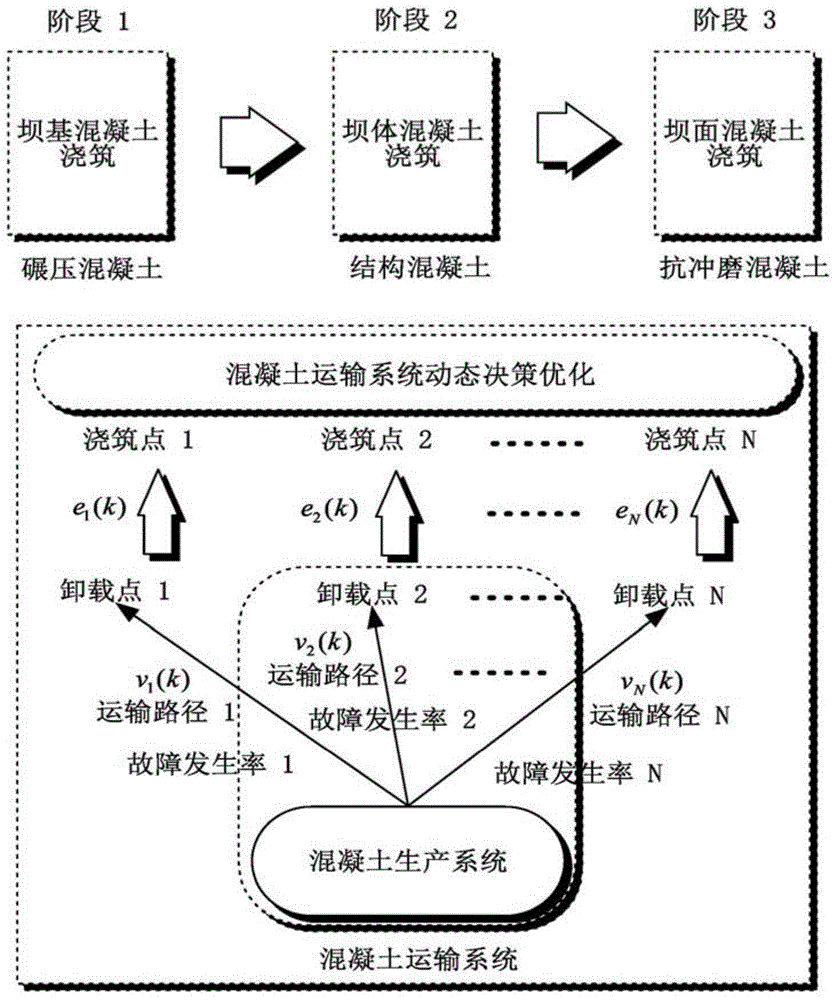

Optimization decision-making method for concrete transportation queuing networks during high arch dam engineering construction

Inactive | CN104376387A | Solving Dynamic Optimization Problems | Improve construction efficiency | Forecasting | Logistics | High arches | Queuing network

The invention belongs to the technical field of engineering management, and discloses an optimization decision-making method for concrete transportation queuing networks during high arch dam engineering construction. The concrete transportation queuing networks can be dynamically optimized by the aid of the optimization decision-making method during high arch dam construction. The method includes steps of A, describing relevant uncertain parameters by the aid of fuzzy random numbers; B, computing system state transition probabilities of the transportation networks; C, building multi-objective and multistage decision-making optimization models; D, equivalently transforming the models to form solvable multistage decision-making optimization models; E, solving the models by the aid of particle swarm optimization algorithms on the basis of paired processes. The optimization decision-making method has the advantage of applicability to improving the construction efficiency and reducing the running cost during high arch dam engineering construction.

Owner:SICHUAN UNIV

Method and apparatus for performance and policy analysis in distributed computing systems

InactiveUS20080262817A1Analogue computers for electric apparatusTransmissionPresent methodQueuing network

One embodiment of the present method and apparatus for performance and policy analysis in distributed computing systems includes representing a distributed computing system as a state transition model. A queuing network is then superimposed upon the state transition model, and the effects of one or more policies on the distributed computing system performance are identified in accordance with a solution to the queuing network.

Owner:INT BUSINESS MASCH CORP
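To make "identifying the effects of a policy from a solution to the queuing network" concrete, here is a minimal sketch that assumes the simplest case: an open Jackson network of single-server exponential (M/M/1) nodes. The abstract does not say which network class or solver is used, so the model choice and the function names `solve_traffic`, `node_metrics`, and `mean_response_time` are assumptions.

```python
def solve_traffic(external, routing, iters=1000):
    """Solve lambda_j = external_j + sum_i lambda_i * routing[i][j].

    Uses fixed-point iteration, which converges for an open network in
    which every job eventually leaves.
    """
    n = len(external)
    lam = list(external)
    for _ in range(iters):
        lam = [external[j] + sum(lam[i] * routing[i][j] for i in range(n))
               for j in range(n)]
    return lam


def node_metrics(lam, mu):
    """Per-node utilization and mean number of jobs under M/M/1 assumptions."""
    metrics = []
    for arrival_rate, service_rate in zip(lam, mu):
        rho = arrival_rate / service_rate
        if rho >= 1.0:
            raise ValueError("node is unstable: utilization >= 1")
        metrics.append({"utilization": rho, "mean_jobs": rho / (1.0 - rho)})
    return metrics


def mean_response_time(external, routing, mu):
    """End-to-end mean response time via Little's law: T = L / lambda."""
    lam = solve_traffic(external, routing)
    total_jobs = sum(m["mean_jobs"] for m in node_metrics(lam, mu))
    return total_jobs / sum(external)
```

A policy can then be expressed as a change in the routing matrix. With `external = [1.0, 0.0]` and `mu = [2.0, 1.0]`, sending half of the jobs from node 0 to node 1 (`routing = [[0.0, 0.5], [0.0, 0.0]]`) gives a mean response time of 2.0, while sending only 20% gives about 1.25.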

Multi-layer shuttle vehicle system conveyor cache length modeling and optimizing method

InactiveCN110134985AShorten the cache lengthShorten delivery timeDesign optimisation/simulationLogisticsCritical conditionSimulation

The invention discloses a method for modeling and optimizing the conveyor cache (buffer) length of a multi-layer shuttle vehicle system. Based on a detailed analysis of the warehouse-out operation process of the multi-layer shuttle vehicle system, an input and output balance model of the conveyor buffer is built, and an optimal condition and a critical condition for the cache length are given under the requirement that the conveyor cache supports normal sorting operation. The material box warehouse-out process is analyzed with an open-loop queuing network modeling method, a model of the average material box warehouse-out time is established, and an optimization model of the conveyor buffer length is established from the relation between the material box warehouse-out flow distribution and the manual picking efficiency. To shorten the average warehouse-out time of the material boxes, the k-means algorithm and a storage area arrangement rule are used to optimize storage positions, and the buffer length of the conveyor is thereby reduced.

Owner:SHANDONG UNIV

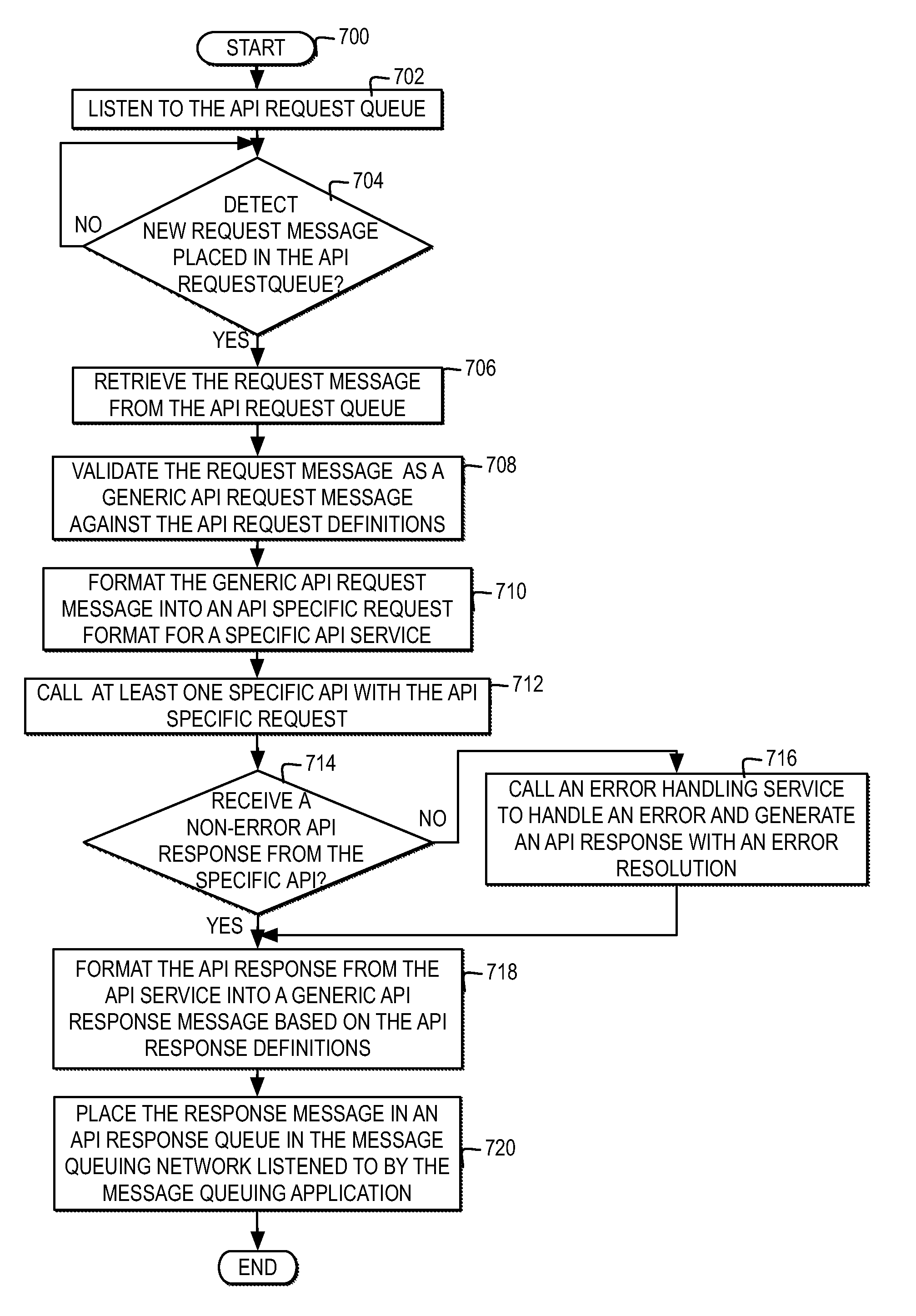

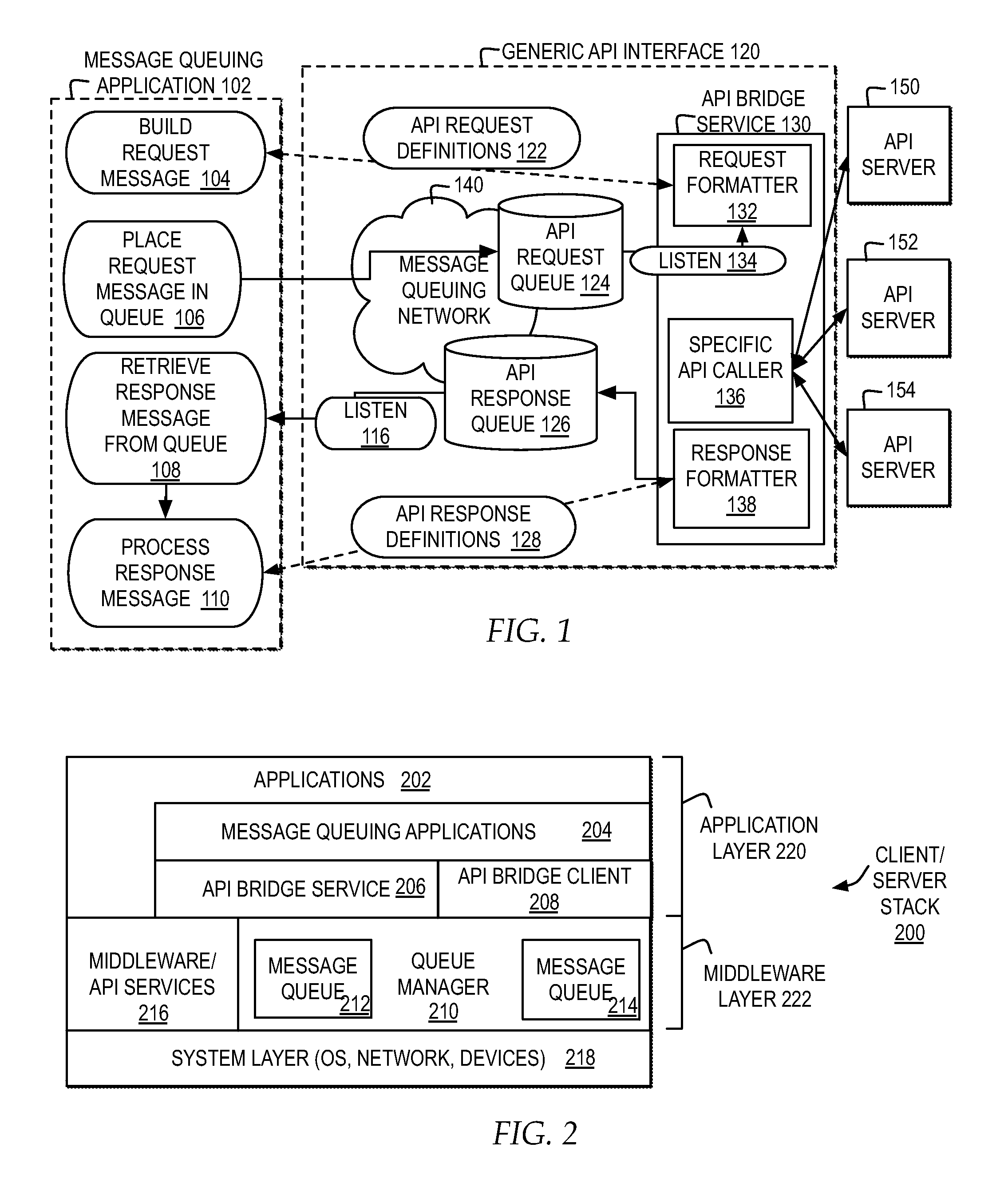

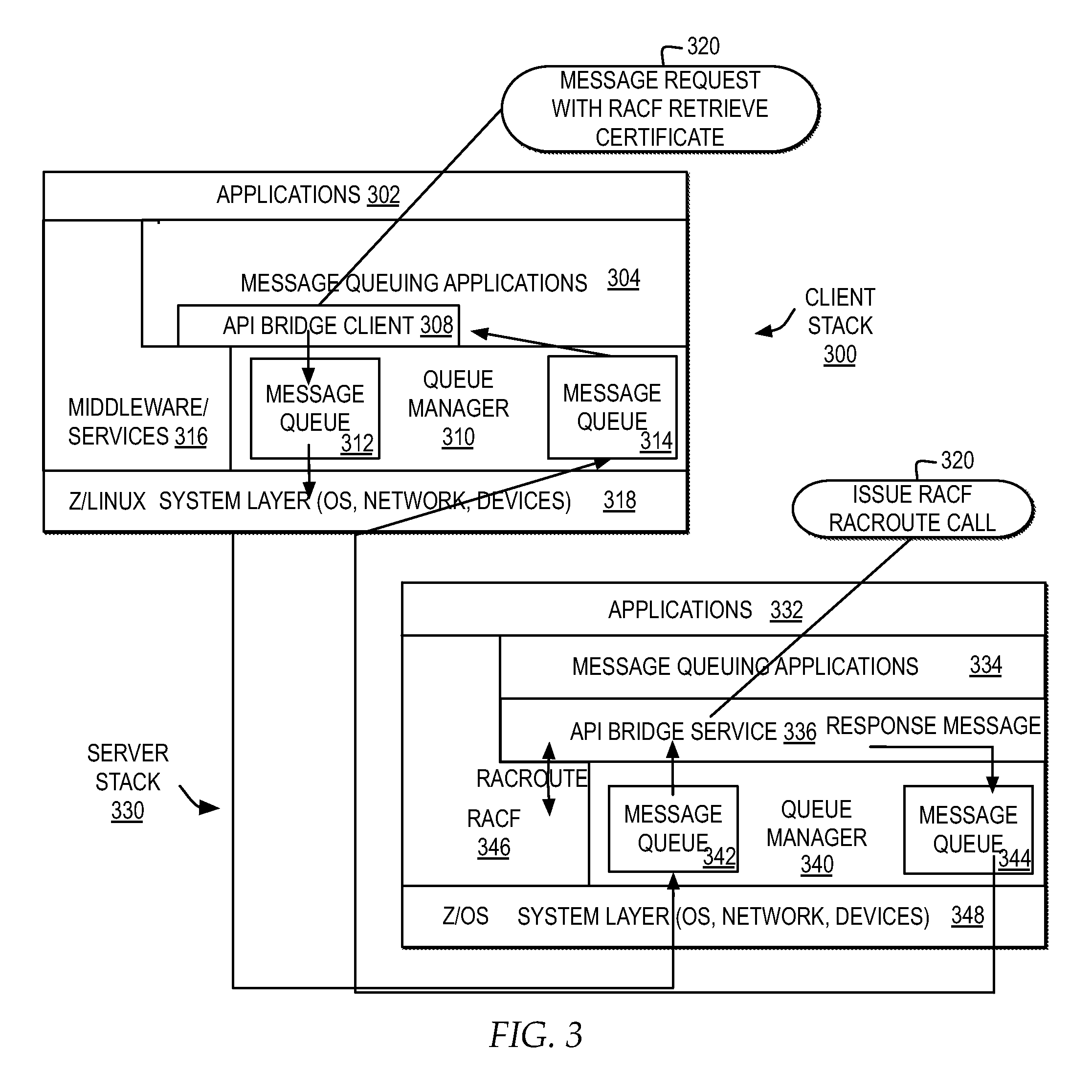

Message queuing application access to specific API services through a generic API interface integrating a message queue

InactiveUS8910185B2Multiprogramming arrangementsSpecific program execution arrangementsMessage queueUse of services

Owner:INT BUSINESS MASCH CORP

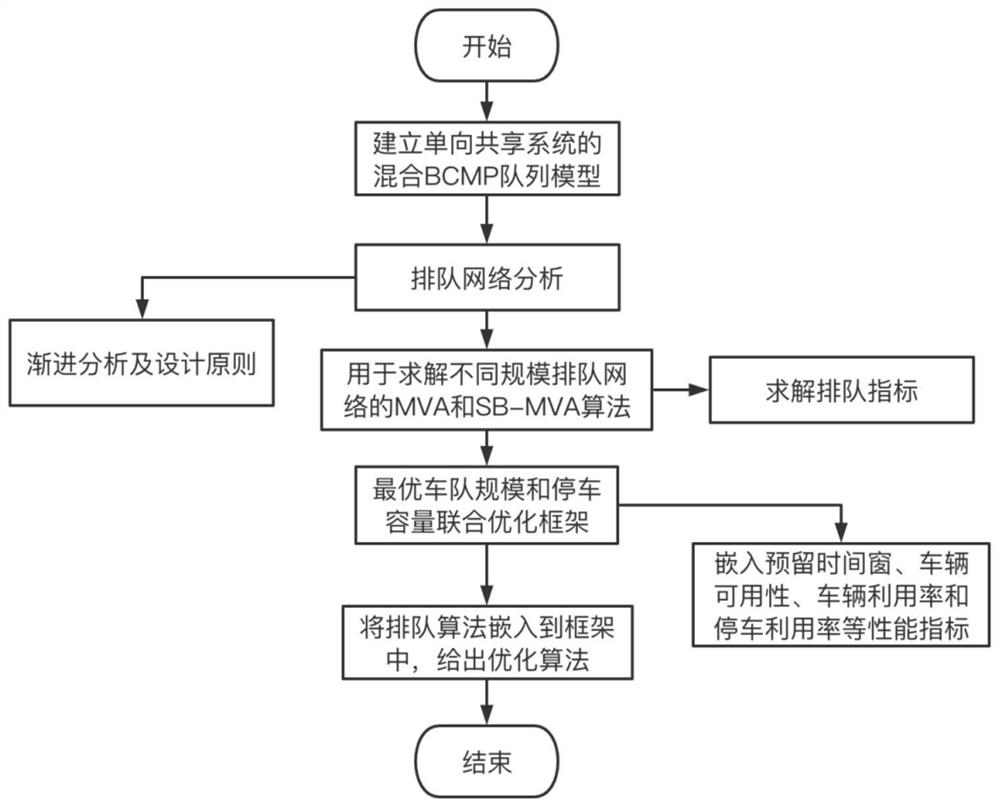

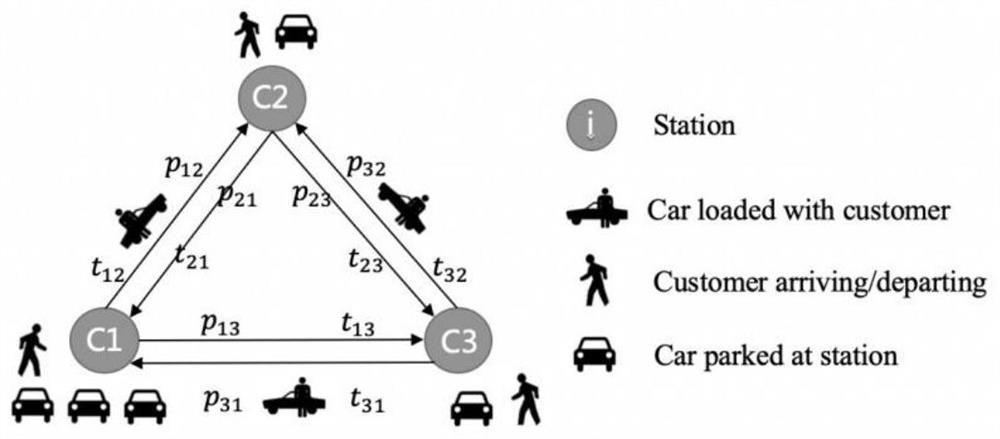

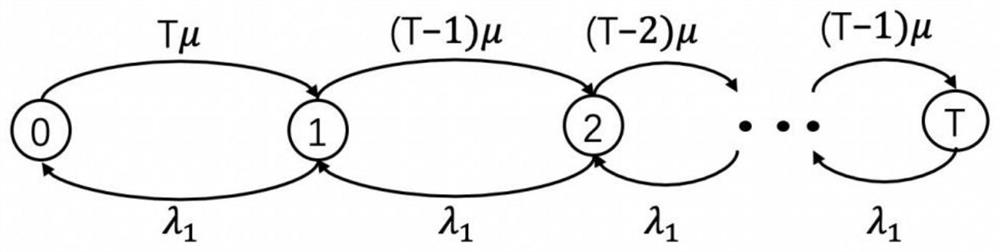

One-way vehicle sharing system scale optimization method based on queuing theory

ActiveCN112419601AProof of uniquenessIndication of parking free spacesChecking apparatusOccupancy rateParking space

The invention provides a scale optimization method for one-way vehicle sharing systems based on queuing theory, and relates to the technical field of planning and design of one-way vehicle sharing systems. A hybrid BCMP queuing network model describes vehicle circulation in the one-way sharing system; realistic factors such as reservation strategies, road congestion and limited parking spaces are modeled explicitly; and the asymptotic relationship between the system scale and both the vehicle availability Vi and the parking occupancy rate Ui is derived and incorporated into a joint optimization framework. A joint optimization problem for the system scale is then formulated according to a profit maximization principle, and the optimal fleet size and parking station capacity that meet a given performance index are determined. Road congestion is introduced explicitly by mapping the remaining capacity of a physical road to the number of servers of a queuing network node, and each parking station is modeled as an interdependent open network so that the capacity of the parking lot is limited.

Owner:NORTHEASTERN UNIV AT QINHUANGDAO (东北大学秦皇岛分校)

Application access to LDAP services through a generic LDAP interface integrating a message queue

InactiveUS8910184B2Interprogram communicationSpecific program execution arrangementsMessage queueQueuing network

An LDAP bridge service retrieves a generic LDAP message request, placed in a request queue by a message queuing application, from the request queue. The LDAP bridge service formats the generic LDAP request into a particular API call for at least one LDAP API. The LDAP bridge service calls the at least one LDAP API with the particular API call to request at least one LDAP service from at least one LDAP server managing a distributed directory. Responsive to receiving at least one LDAP-specific response from the at least one LDAP API, the LDAP bridge service translates the LDAP-specific response into a response message comprising a generic LDAP response. The API bridge service places the response message in a response queue of the message queuing network, wherein the message queuing application listens to the response queue for the response message.

Owner:IBM CORP
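The request and response flow in this abstract can be sketched with two in-process queues. This is only an illustration of the pattern: `queue.Queue` stands in for the message queuing network, `fake_ldap_search` is a hypothetical stub rather than a real LDAP client API, and the dictionary message layout (`id`, `operation`, `base_dn`, `filter`) is an assumed "generic" format that the abstract does not specify.

```python
import queue


def fake_ldap_search(base_dn, filter_str):
    """Hypothetical stand-in for an LDAP API call; returns canned entries."""
    directory = {"uid=alice": {"dn": "uid=alice," + base_dn, "cn": "Alice"}}
    key = filter_str.strip("()")
    return [directory[key]] if key in directory else []


def bridge_step(request_q, response_q, ldap_search):
    """Handle one generic request: dequeue, call the API, enqueue the reply."""
    msg = request_q.get()  # retrieve the generic request from the request queue
    if msg["operation"] == "search":
        # Format the generic request into a specific API call.
        entries = ldap_search(msg["base_dn"], msg["filter"])
        reply = {"id": msg["id"], "status": "ok", "entries": entries}
    else:
        reply = {"id": msg["id"], "status": "unsupported", "entries": []}
    # Translate the API-specific result into a generic response message.
    response_q.put(reply)


# The application side: put a request, let the bridge run once, read the reply.
requests, responses = queue.Queue(), queue.Queue()
requests.put({"id": 1, "operation": "search",
              "base_dn": "dc=example,dc=com", "filter": "(uid=alice)"})
bridge_step(requests, responses, fake_ldap_search)
result = responses.get()
```

The application never touches the LDAP API directly; it only needs to know the queue names and the generic message layout, which is the decoupling the abstract describes.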

© 2025 PatSnap. All rights reserved.