77 results about "Multiprocessor based energy consumption reduction" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

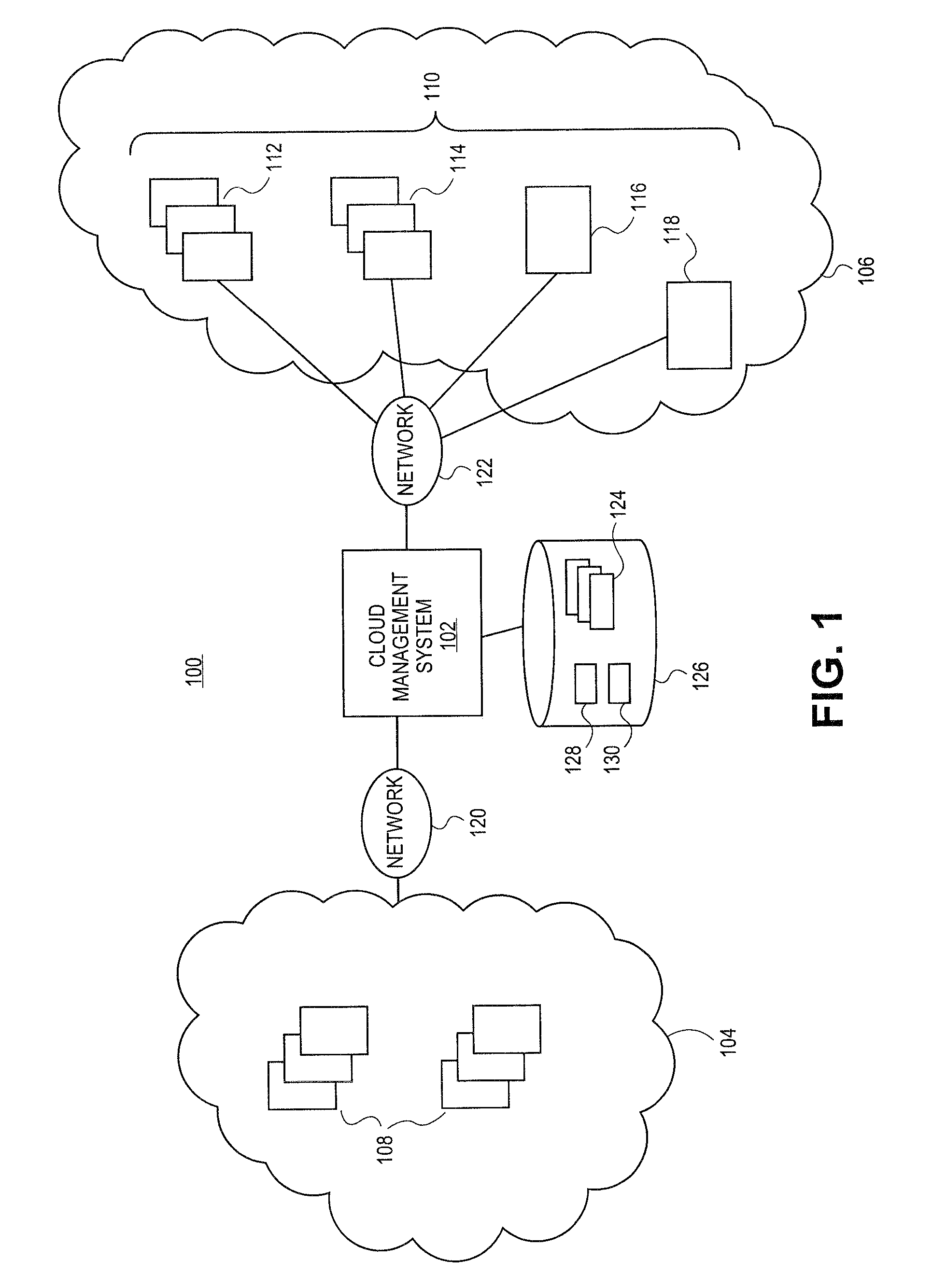

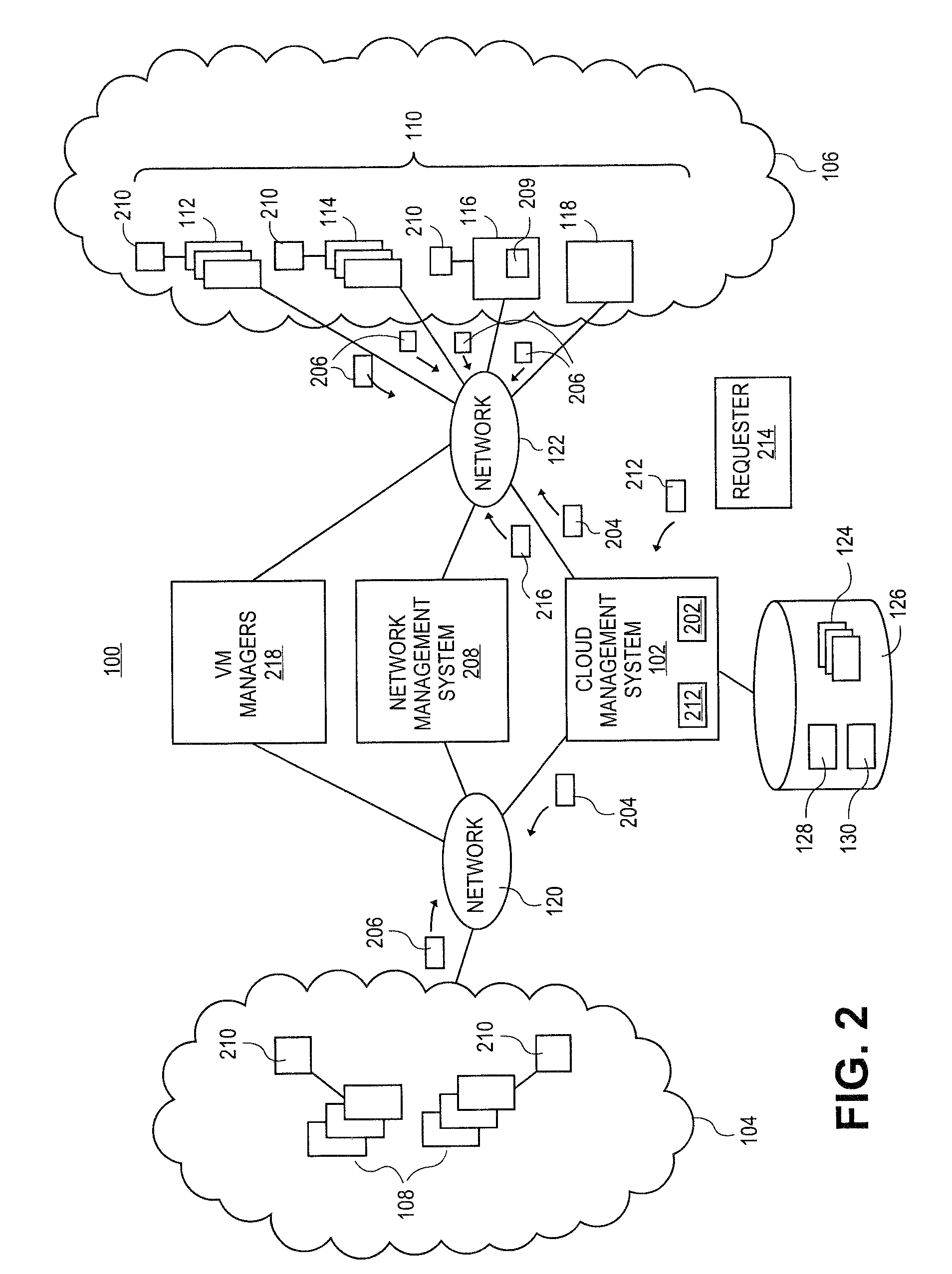

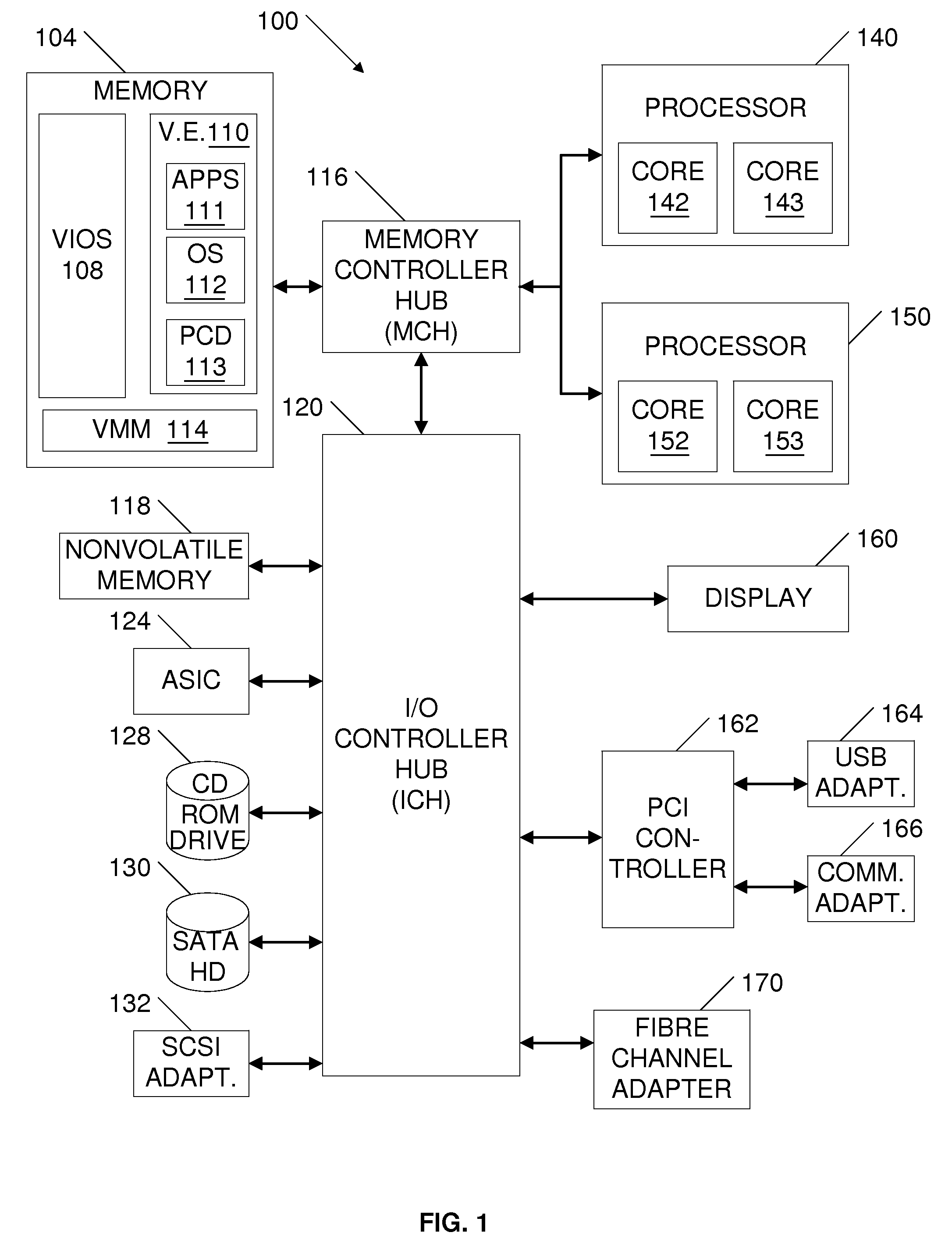

Methods and systems for flexible cloud management with power management support

A cloud management system can determine if the operational state of the virtual machines and/or the computing systems needs to be altered in order to instantiate virtual machines. If the operational state of the computing systems needs to be altered, the cloud management system retrieves an identification of the power management systems supporting the computing systems. The cloud management system can utilize the identification of the power management systems to instruct the power management systems to alter the power state of the computing system in order to instantiate the virtual machines.

Owner:RED HAT

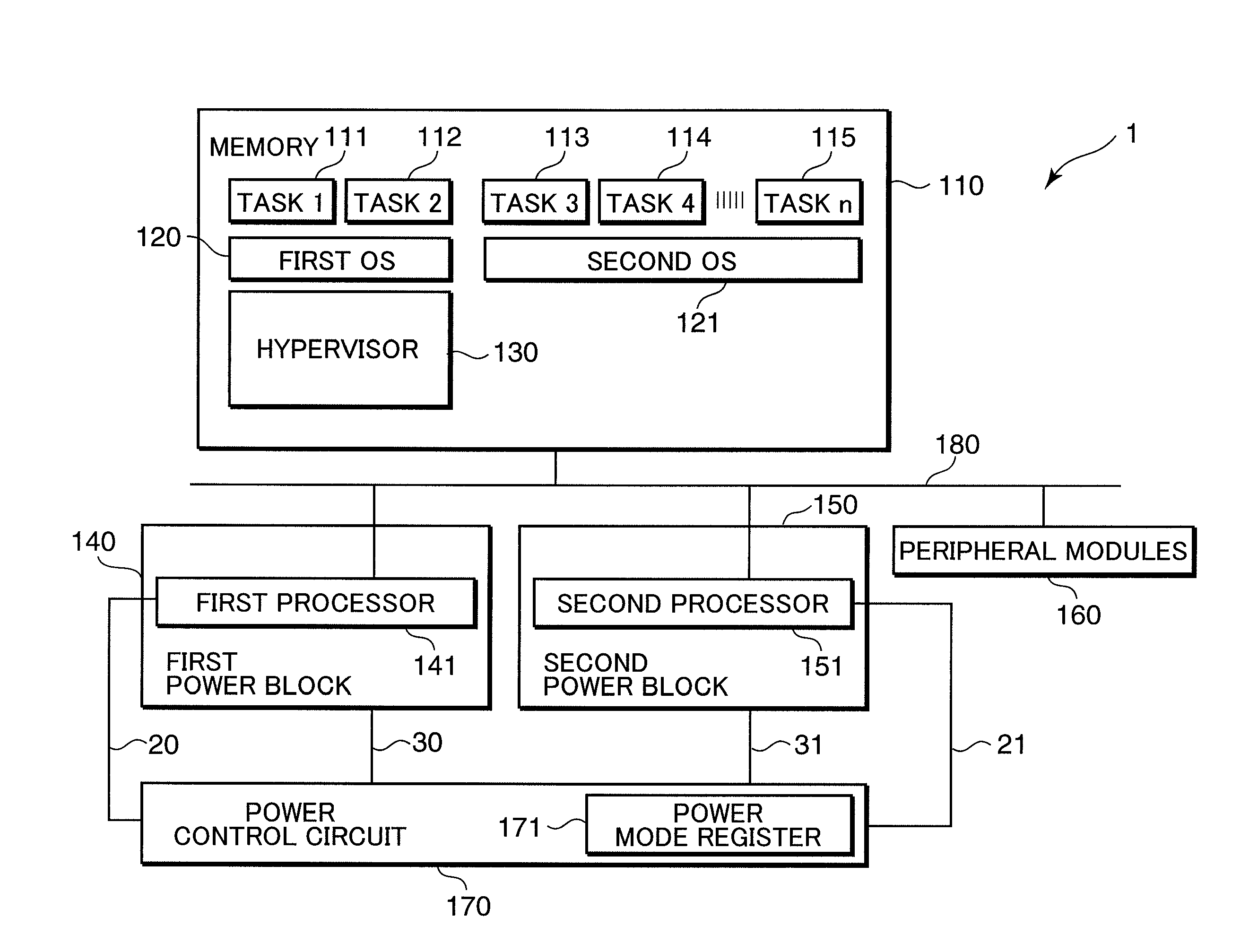

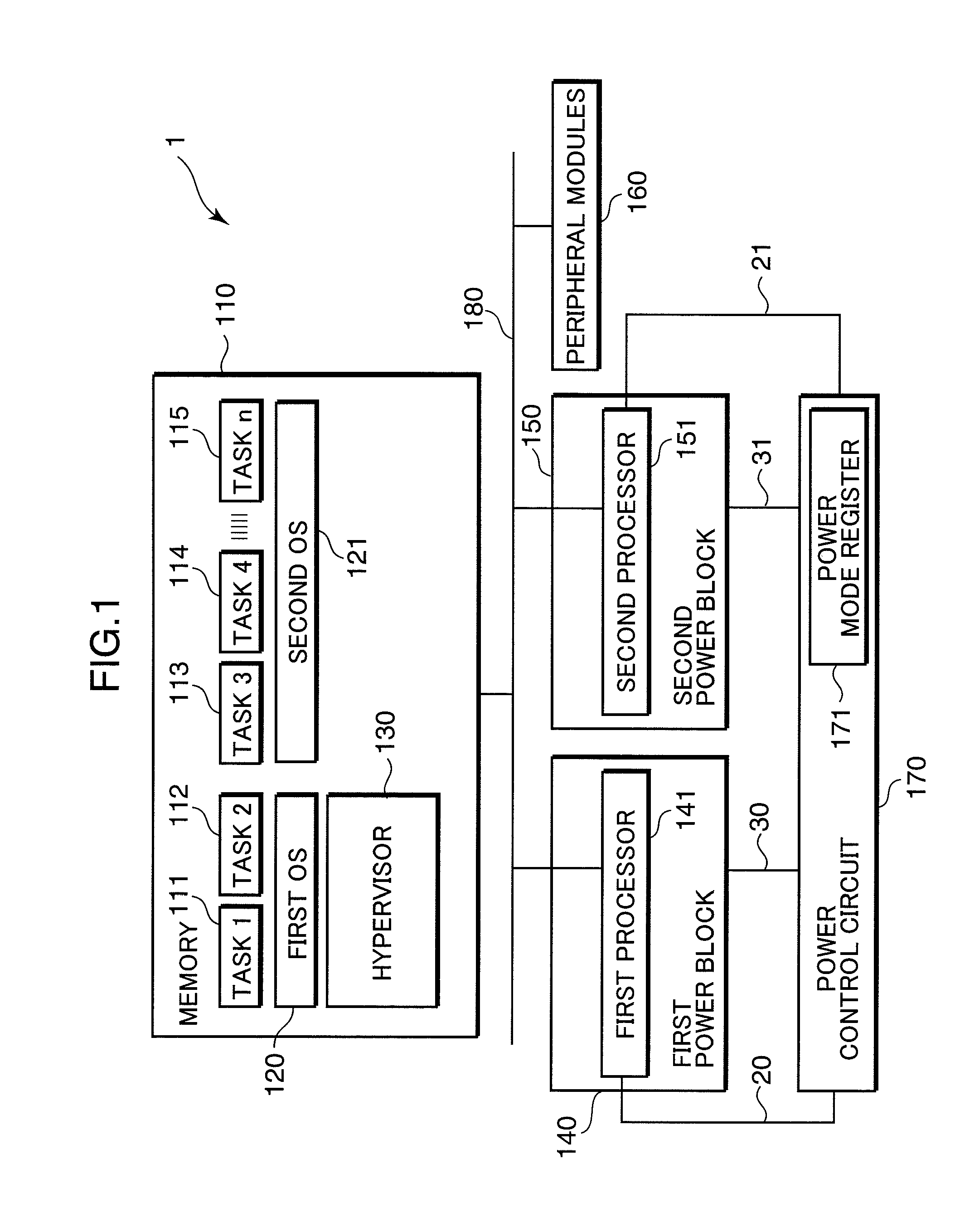

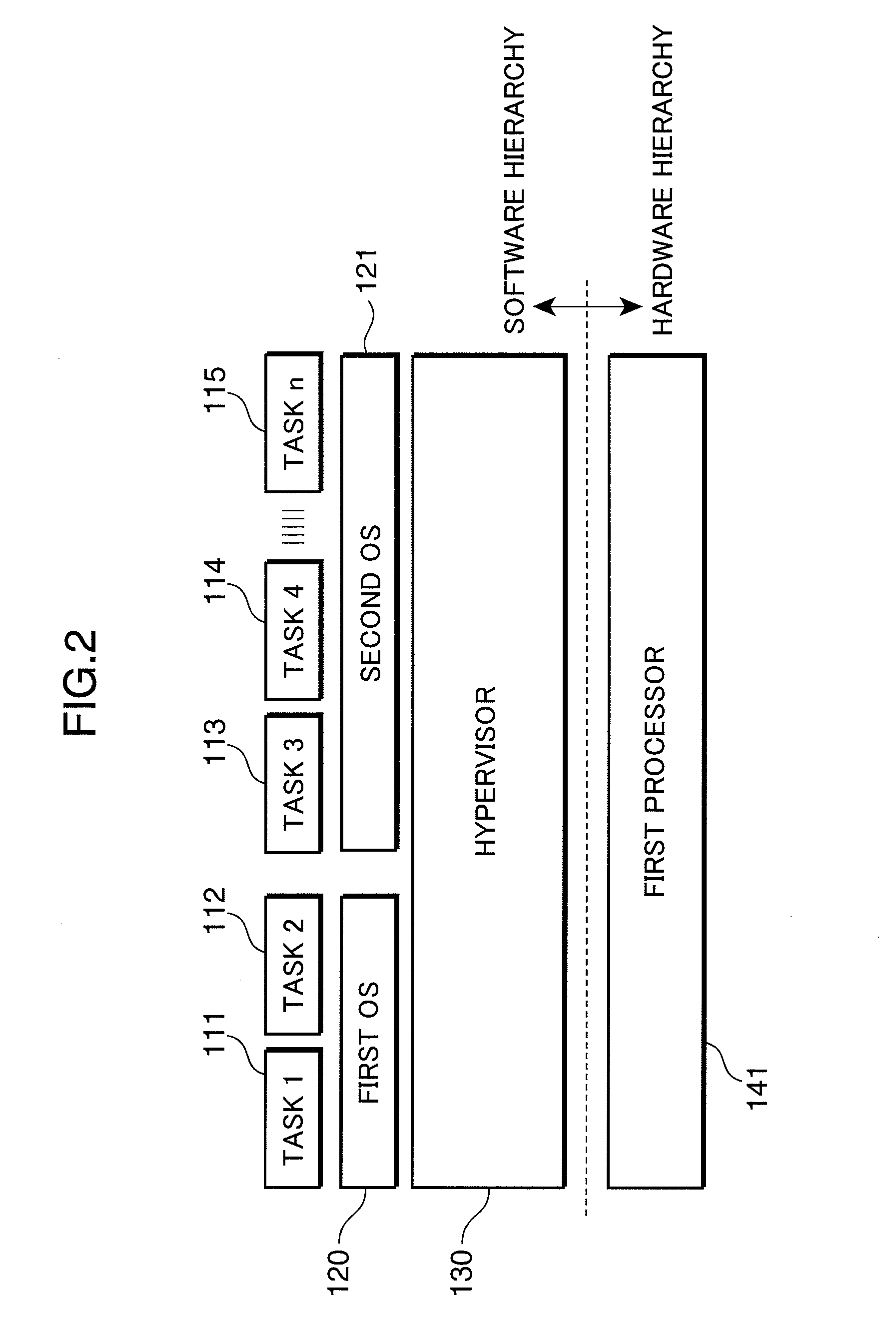

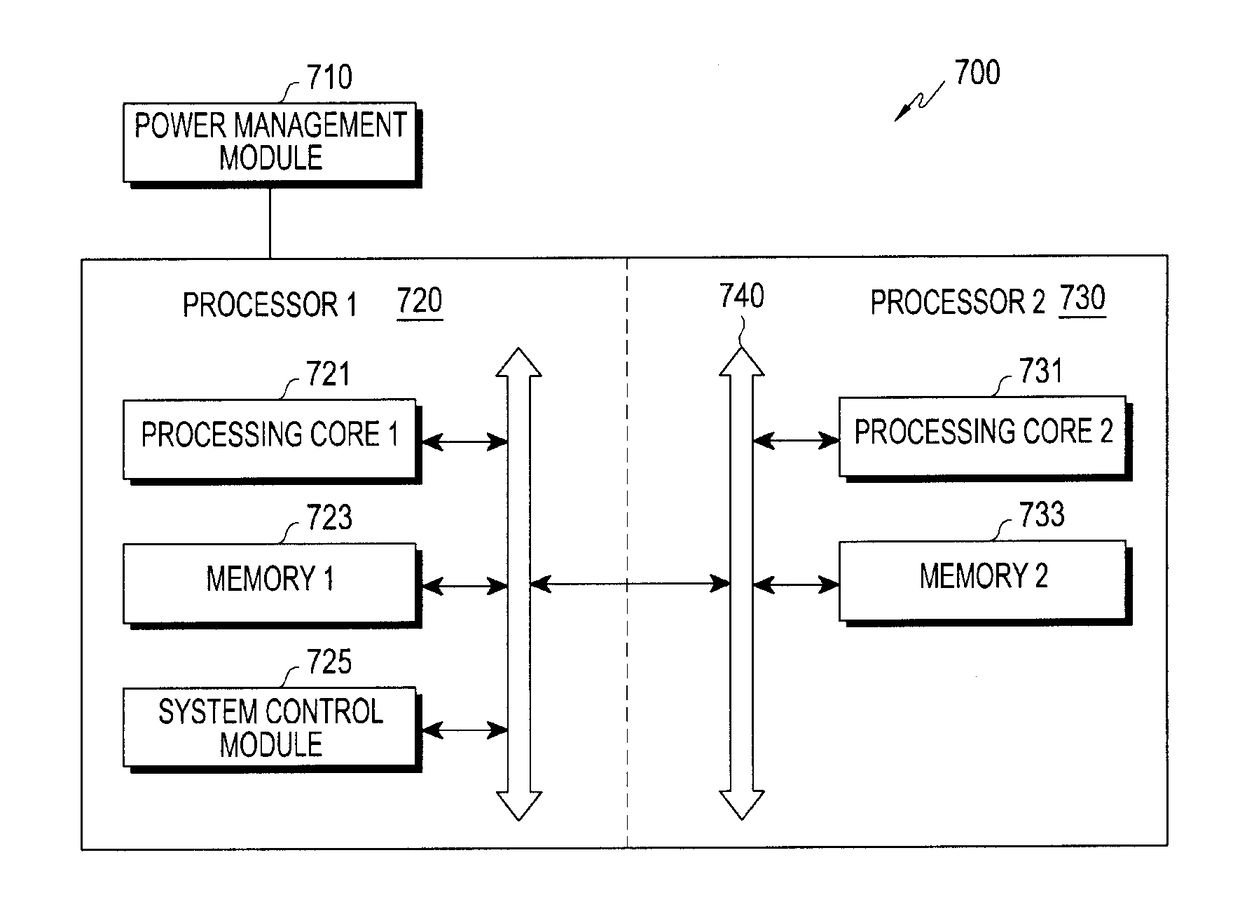

Multiprocessor control apparatus, multiprocessor control method, and multiprocessor control circuit

Active | US20100185833A1 | Reduce power consumption; Reduce the number of times; Memory architecture accessing/allocation; Subset functionality use; Multi processor; Parallel computing

The invention aims to reduce the power consumed when a processor with high power consumption, one of a plurality of processors, must be activated temporarily. A multiprocessor system (1) includes: a first processor (141) that executes a first instruction code; a second processor (151) that executes a second instruction code; a hypervisor (130) that converts the second instruction code into an instruction code executable by the first processor (141); and a power control circuit (170) that controls the operation of at least one of the first processor (141) and the second processor (151). When the power control circuit (170) suppresses the operation of the second processor (151), the hypervisor (130) converts the second instruction code into instruction code executable by the first processor (141), which then executes the converted code.

Owner:GK BRIDGE 1

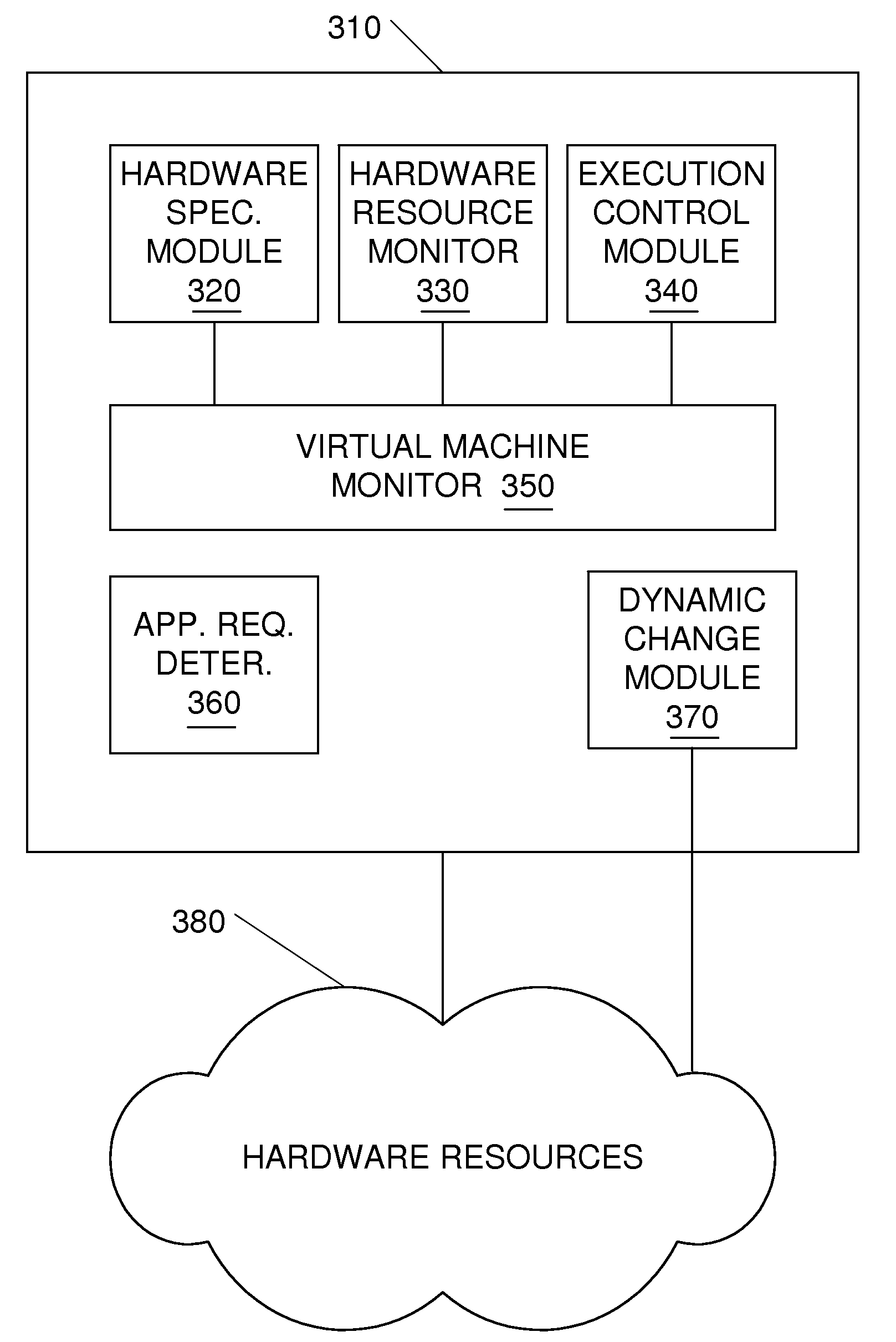

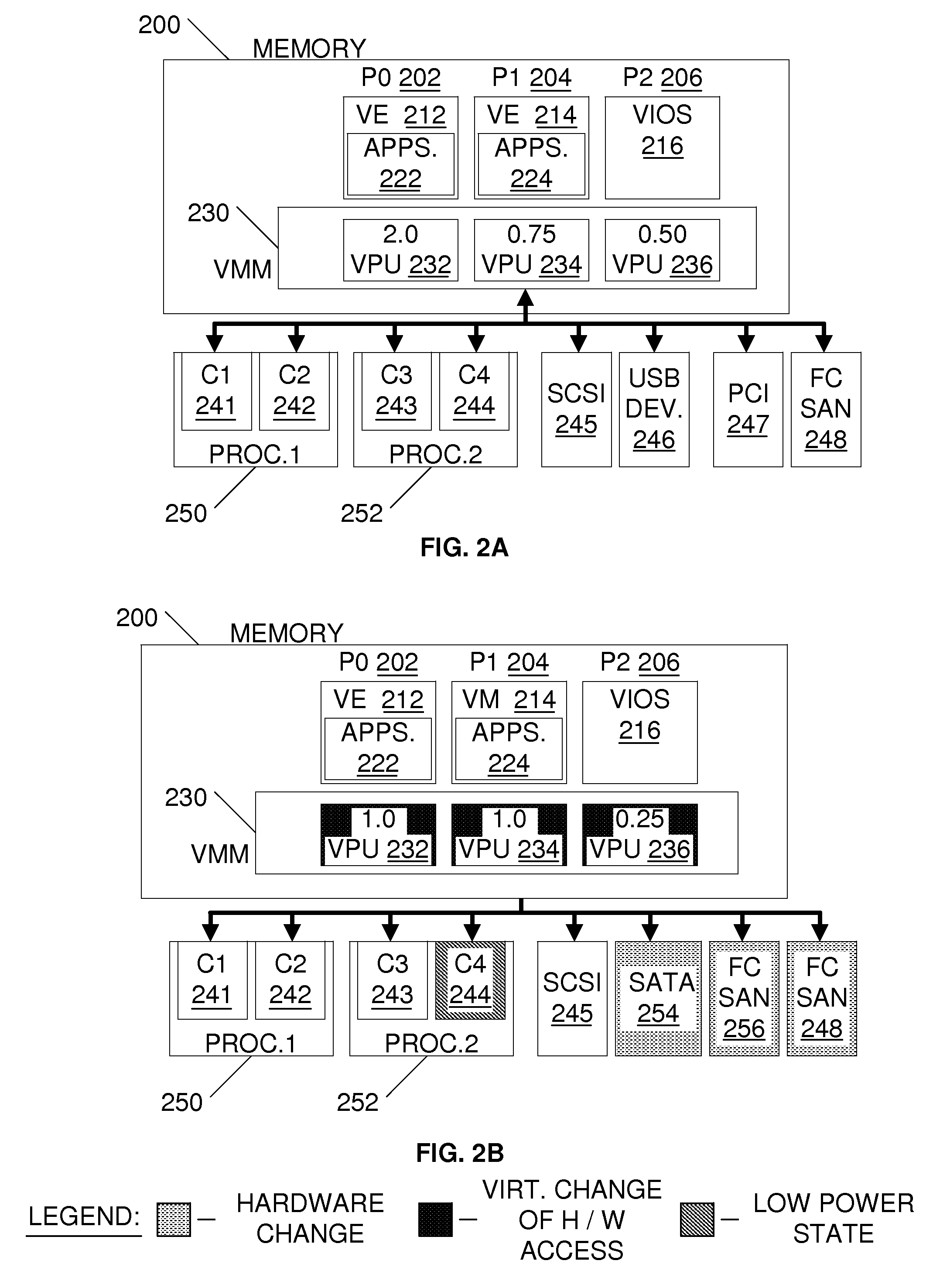

Dynamic Checking of Hardware Resources for Virtual Environments

Active | US20100186010A1 | Preventing execution; Resource allocation; Software simulation/interpretation/emulation; Computer hardware; Application software

Embodiments that dynamically check the availability of hardware resources for applications of virtual environments are contemplated. Various embodiments comprise one or more computing devices having various hardware resources available to applications of a virtual environment. Hardware resources may comprise, for example, amounts of memory, amounts or units of processing capability of one or more processors, and various types of peripheral devices. The embodiments may store hardware data specifying the amount of hardware recommended or required for an application to execute within the virtual environment. The embodiments may generally monitor for changes to the hardware configuration that may affect the amount of hardware available to the virtual environment and/or application. If the changes would reduce the available hardware below the specified amount, the embodiments may prevent the application from executing or prevent the changes to the hardware configuration.

Owner:IBM CORP
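The check described in this abstract reduces to comparing each application's stored hardware requirement with what a proposed configuration would leave available. A minimal Python sketch, assuming requirements are plain per-resource quantities (memory in MiB, processing in CPU units); `HardwareSpec`, `satisfies`, and `violated_requirements` are hypothetical names, not IBM's implementation:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareSpec:
    """Hypothetical resource amounts: memory in MiB, processing in CPU units."""
    memory_mib: int
    cpu_units: int


def satisfies(available: HardwareSpec, required: HardwareSpec) -> bool:
    # Every tracked resource must meet or exceed the stored requirement.
    return (available.memory_mib >= required.memory_mib
            and available.cpu_units >= required.cpu_units)


def violated_requirements(proposed: HardwareSpec,
                          requirements: dict[str, HardwareSpec]) -> list[str]:
    """List applications whose stored requirement `proposed` would not meet.

    An empty list means the configuration change can proceed. Otherwise the
    caller may reject the change or block the listed applications, the two
    responses the abstract describes.
    """
    return [app for app, req in requirements.items()
            if not satisfies(proposed, req)]


reqs = {"db": HardwareSpec(memory_mib=4096, cpu_units=2),
        "web": HardwareSpec(memory_mib=1024, cpu_units=1)}
print(violated_requirements(HardwareSpec(memory_mib=2048, cpu_units=4), reqs))  # ['db']
```

Returning the list of affected applications, rather than a single boolean, leaves the choice between blocking execution and refusing the change to the caller.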

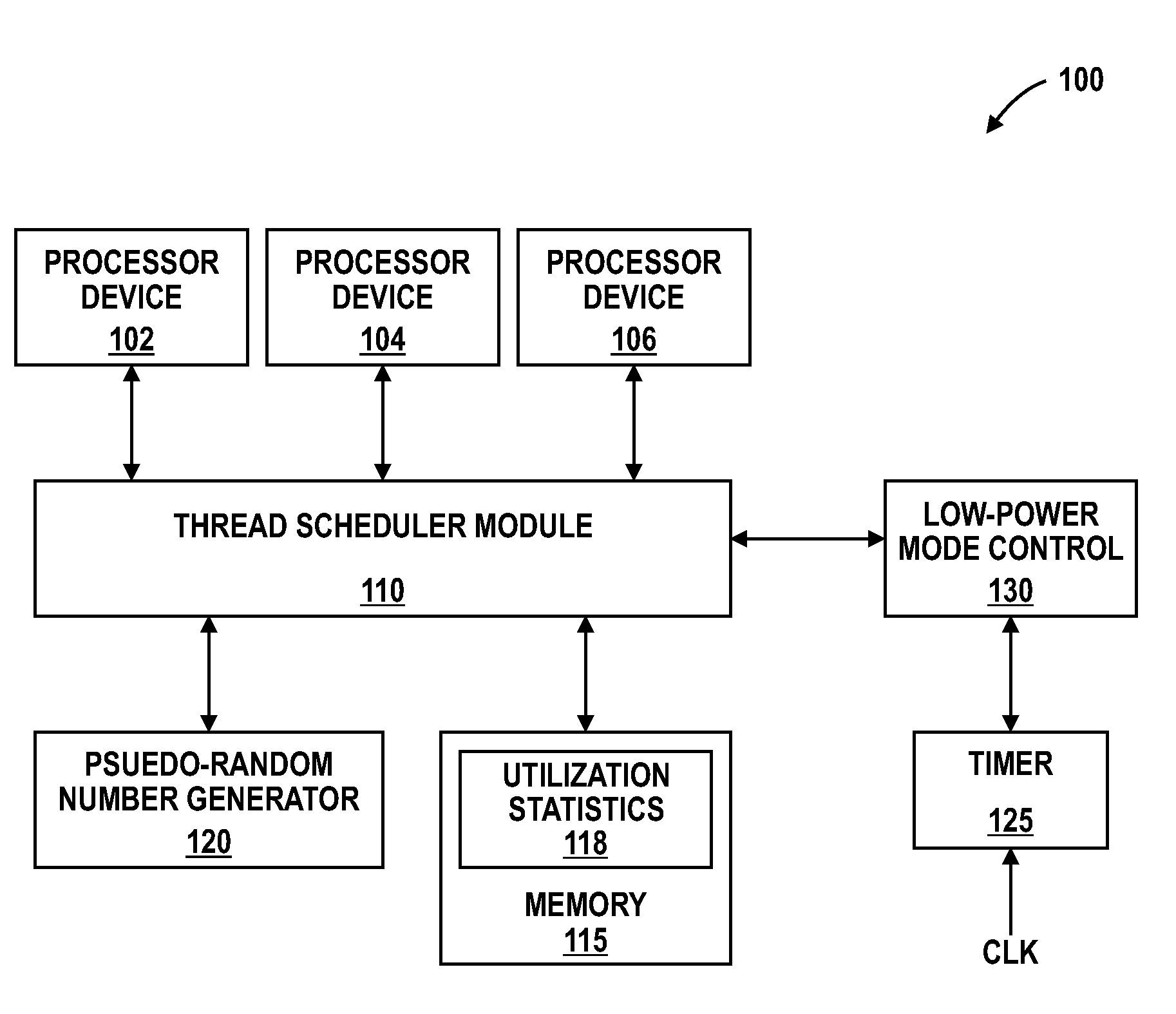

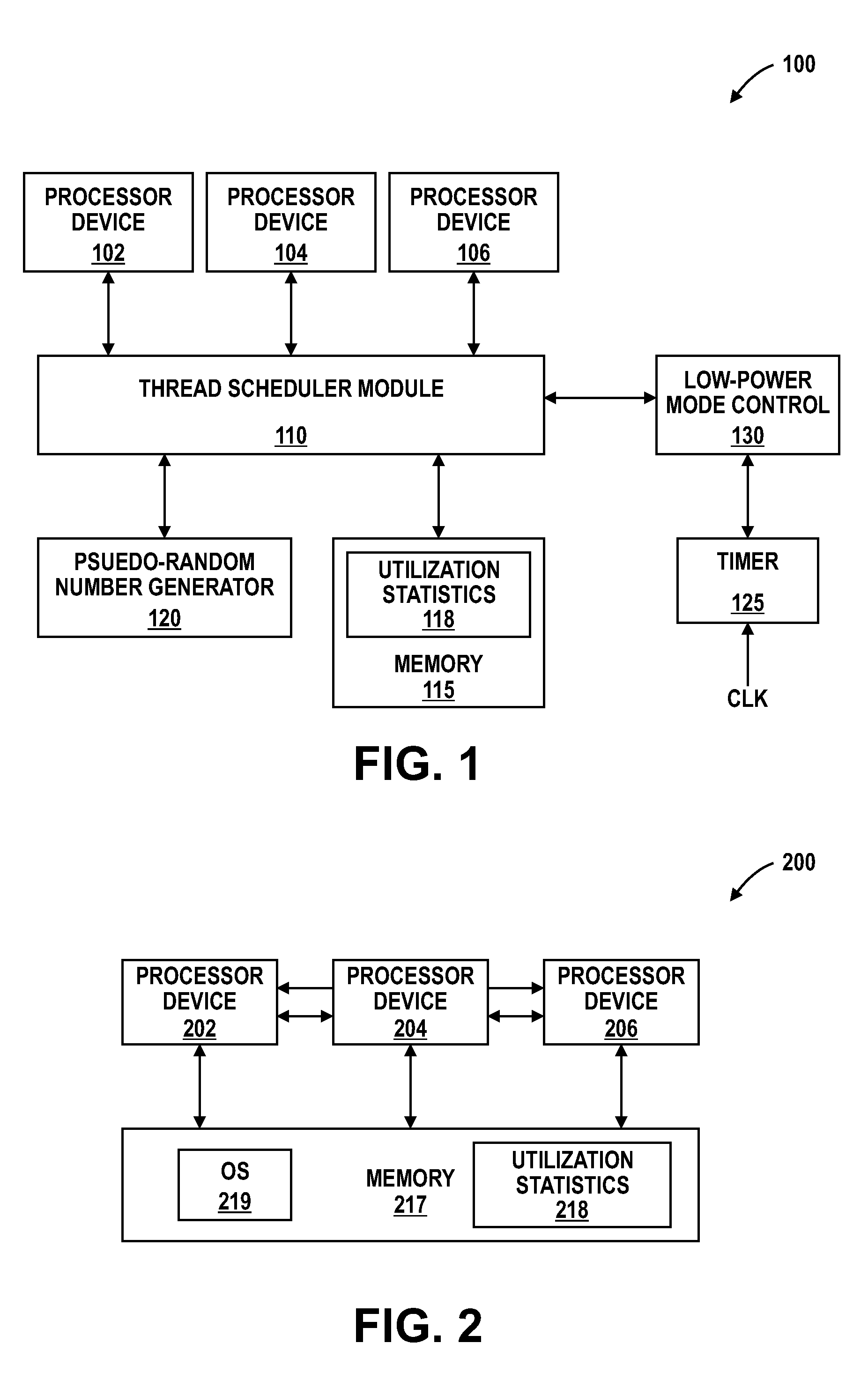

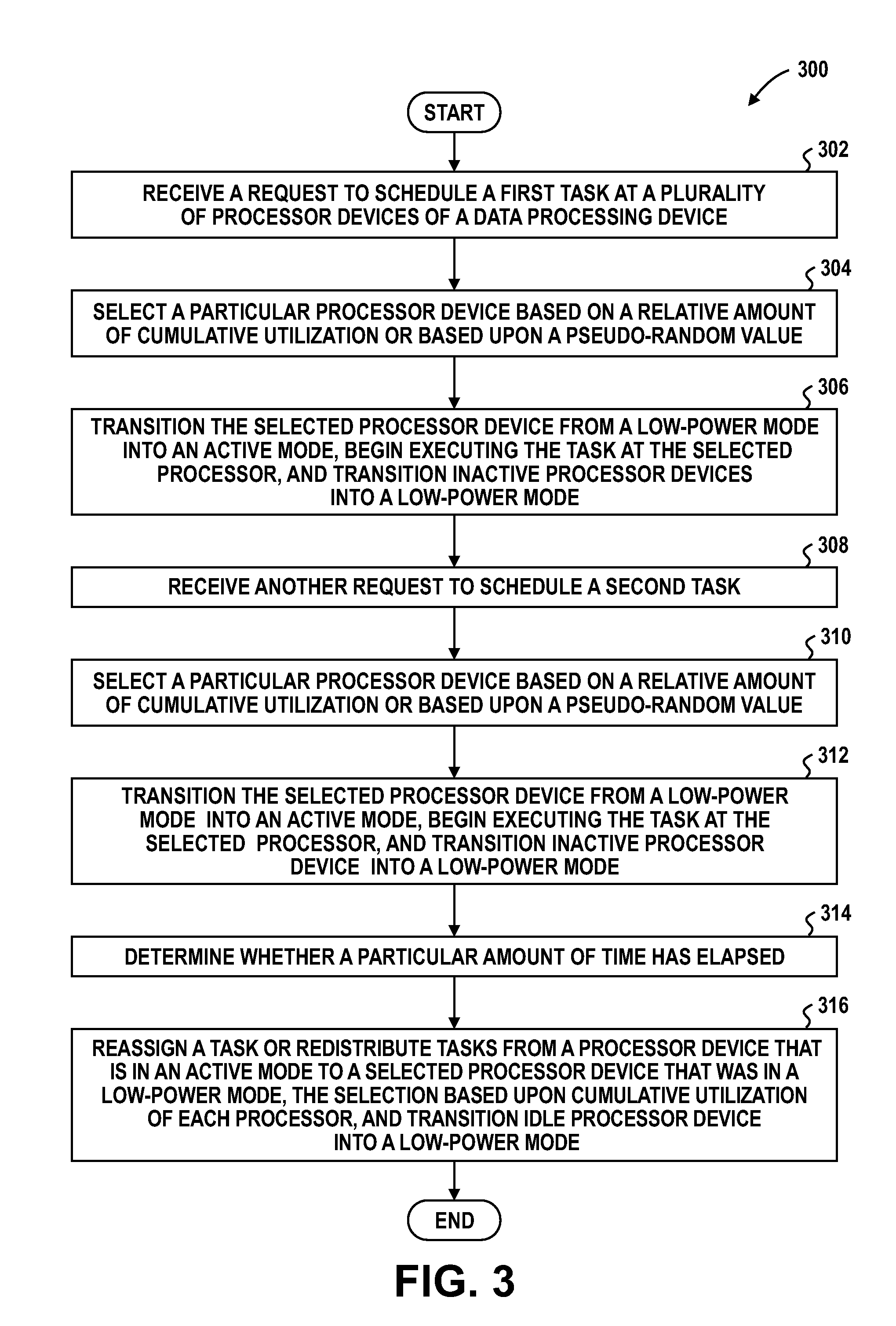

Scheduler for processor cores and methods thereof

Active | US20100107166A1 | Digital computer details; Multiprogramming arrangements; Parallel computing; Fixed sequence

A data processing device assigns tasks to processor cores in a more distributed fashion. In one embodiment, the data processing device can schedule tasks for execution amongst the processor cores in a pseudo-random fashion. In another embodiment, the processor core can schedule tasks for execution amongst the processor cores based on the relative amount of historical utilization of each processor core. In either case, the effects of bias temperature instability (BTI) resulting from task execution are distributed among the processor cores in a more equal fashion than if tasks are scheduled according to a fixed order. Accordingly, the useful lifetime of the processor unit can be extended.

Owner:MEDIATEK INC
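The two policies in this abstract (pseudo-random placement and placement by historical utilization) can be contrasted with a fixed order that always starts at core 0 and so concentrates wear there. A minimal Python sketch, assuming utilization history is tracked as accumulated busy time per core; `CoreScheduler` and its methods are hypothetical, not MediaTek's design:

```python
import random
from typing import Optional


class CoreScheduler:
    """Spreads tasks over cores so aging effects such as BTI accrue evenly.

    Utilization history is modeled as accumulated busy time per core; this
    bookkeeping is an illustrative assumption.
    """

    def __init__(self, num_cores: int, seed: Optional[int] = None) -> None:
        self.busy_time = [0.0] * num_cores
        self._rng = random.Random(seed)

    def pick_random(self) -> int:
        # Pseudo-random policy: each core is equally likely to be chosen.
        return self._rng.randrange(len(self.busy_time))

    def pick_least_used(self) -> int:
        # History-based policy: the core with the least accumulated busy time
        # (ties go to the lowest index).
        return min(range(len(self.busy_time)), key=self.busy_time.__getitem__)

    def record(self, core: int, duration: float) -> None:
        # Charge the task's execution time to the core that ran it.
        self.busy_time[core] += duration


sched = CoreScheduler(num_cores=4)
for _ in range(8):
    sched.record(sched.pick_least_used(), 1.0)
print(sched.busy_time)  # [2.0, 2.0, 2.0, 2.0]
```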

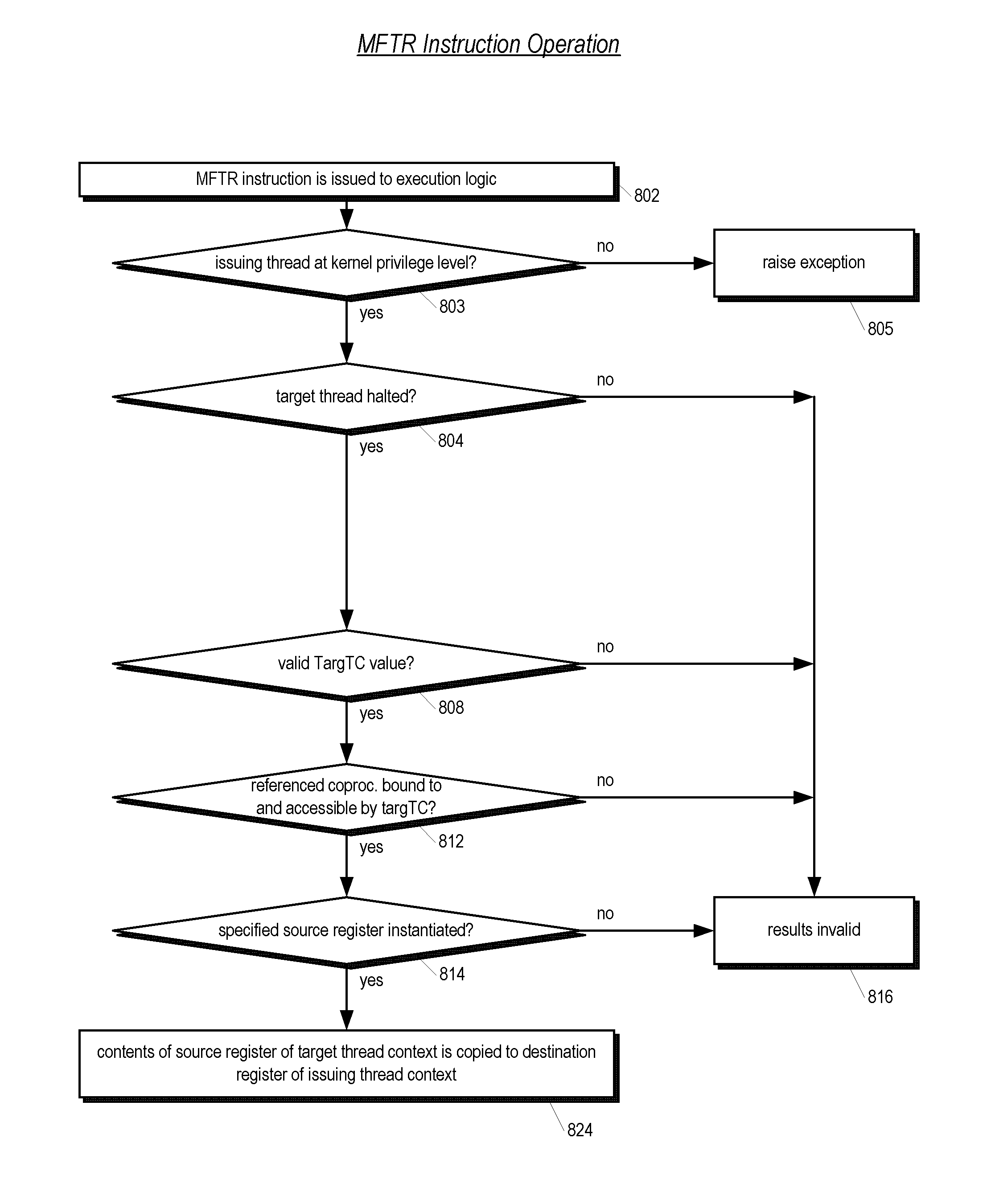

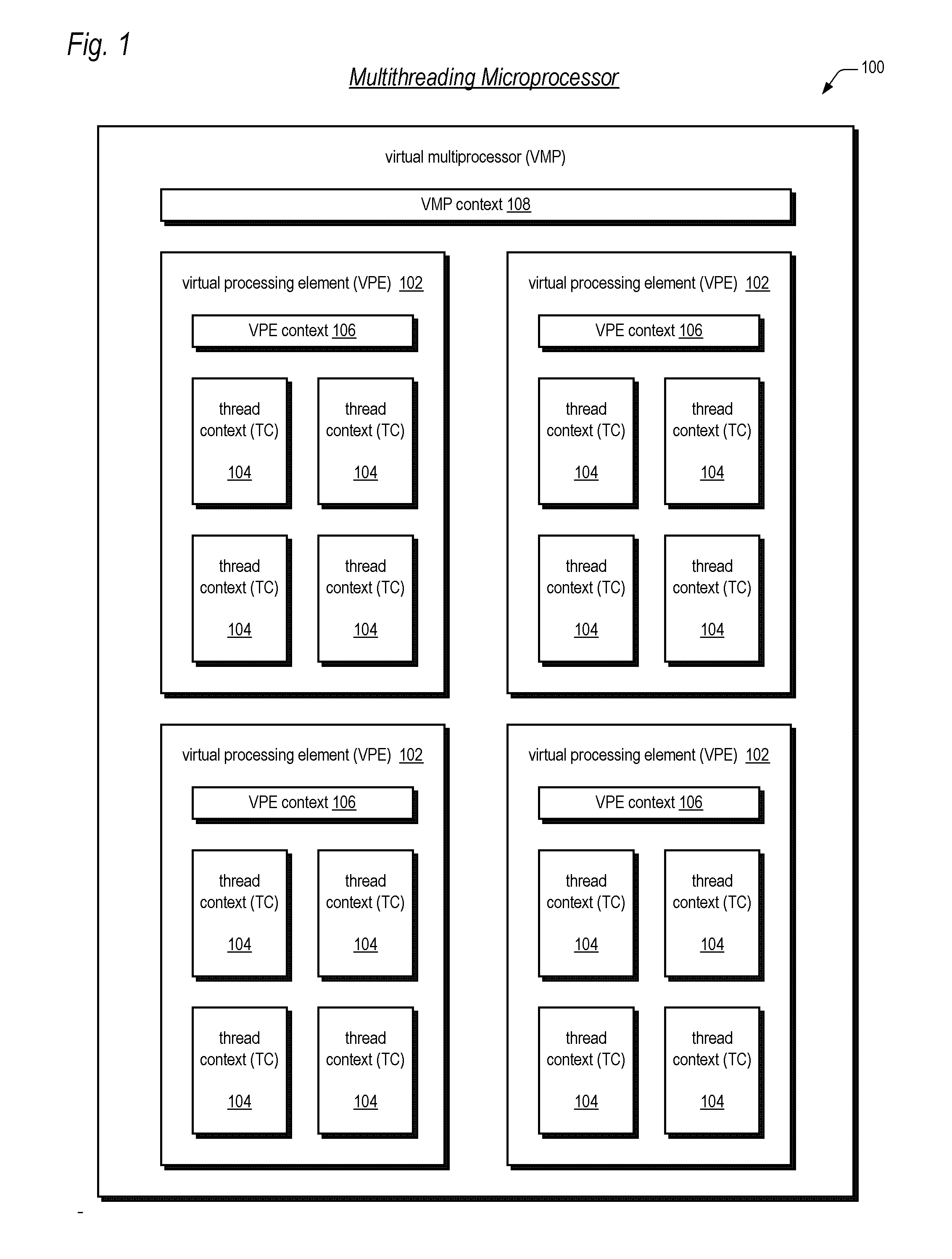

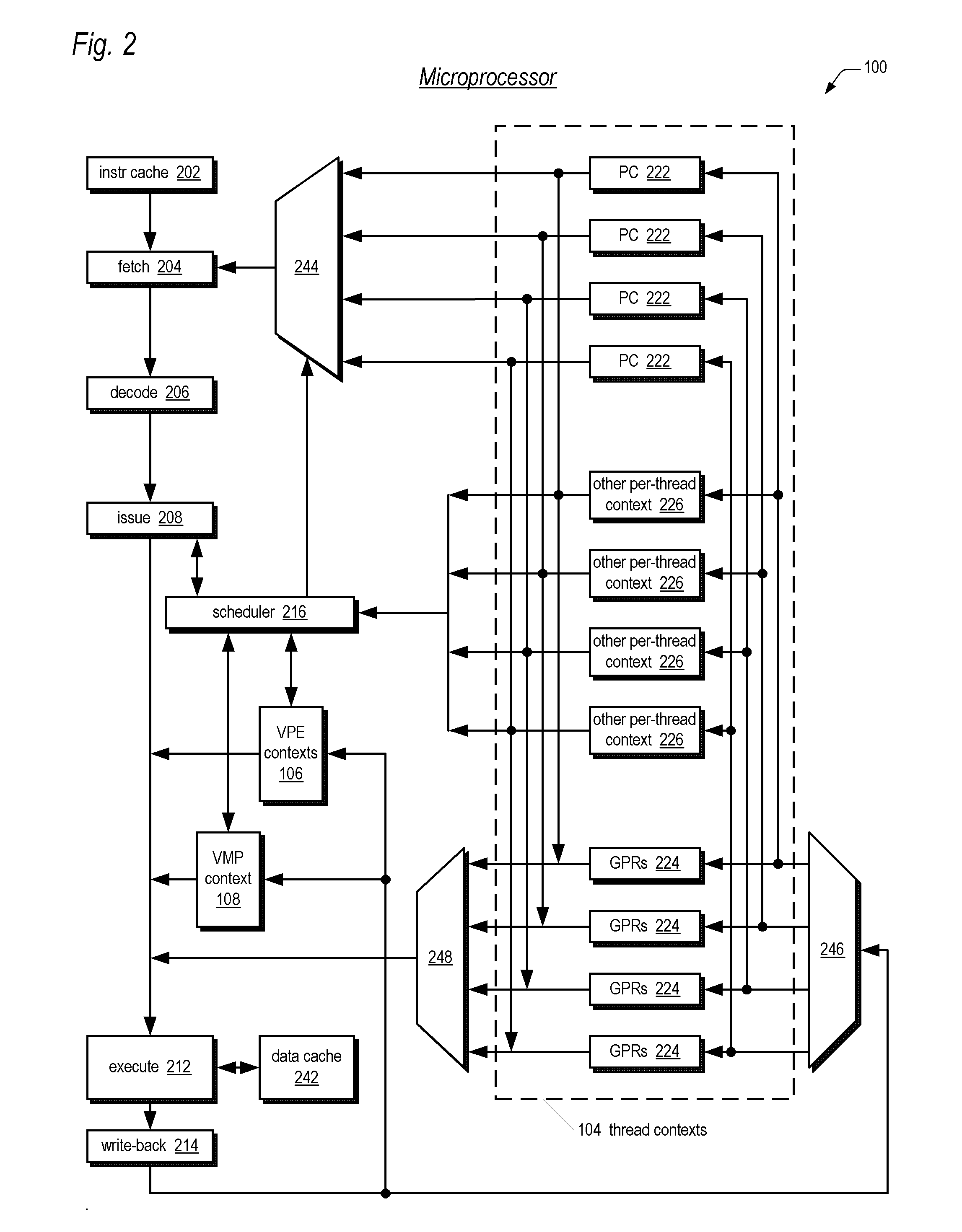

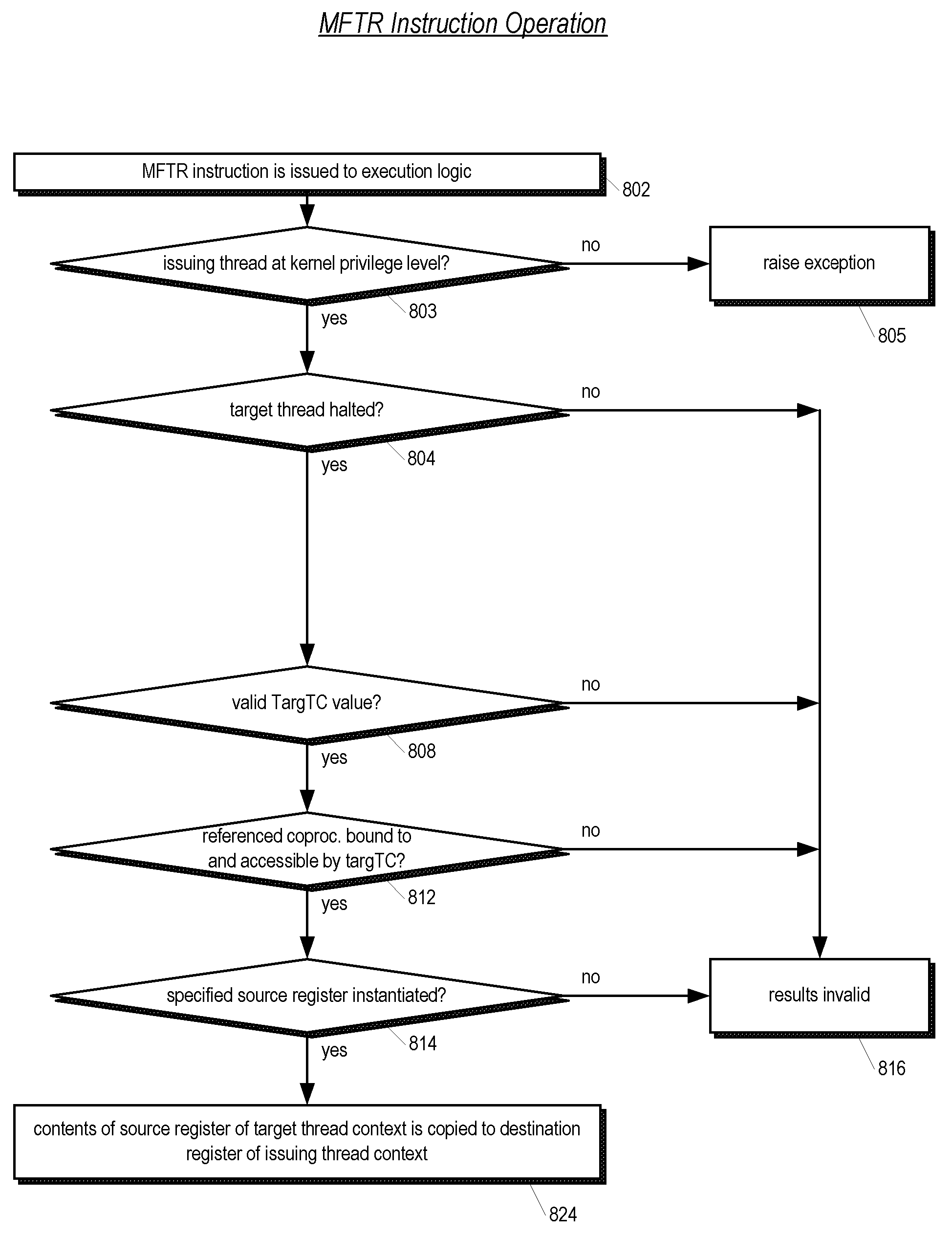

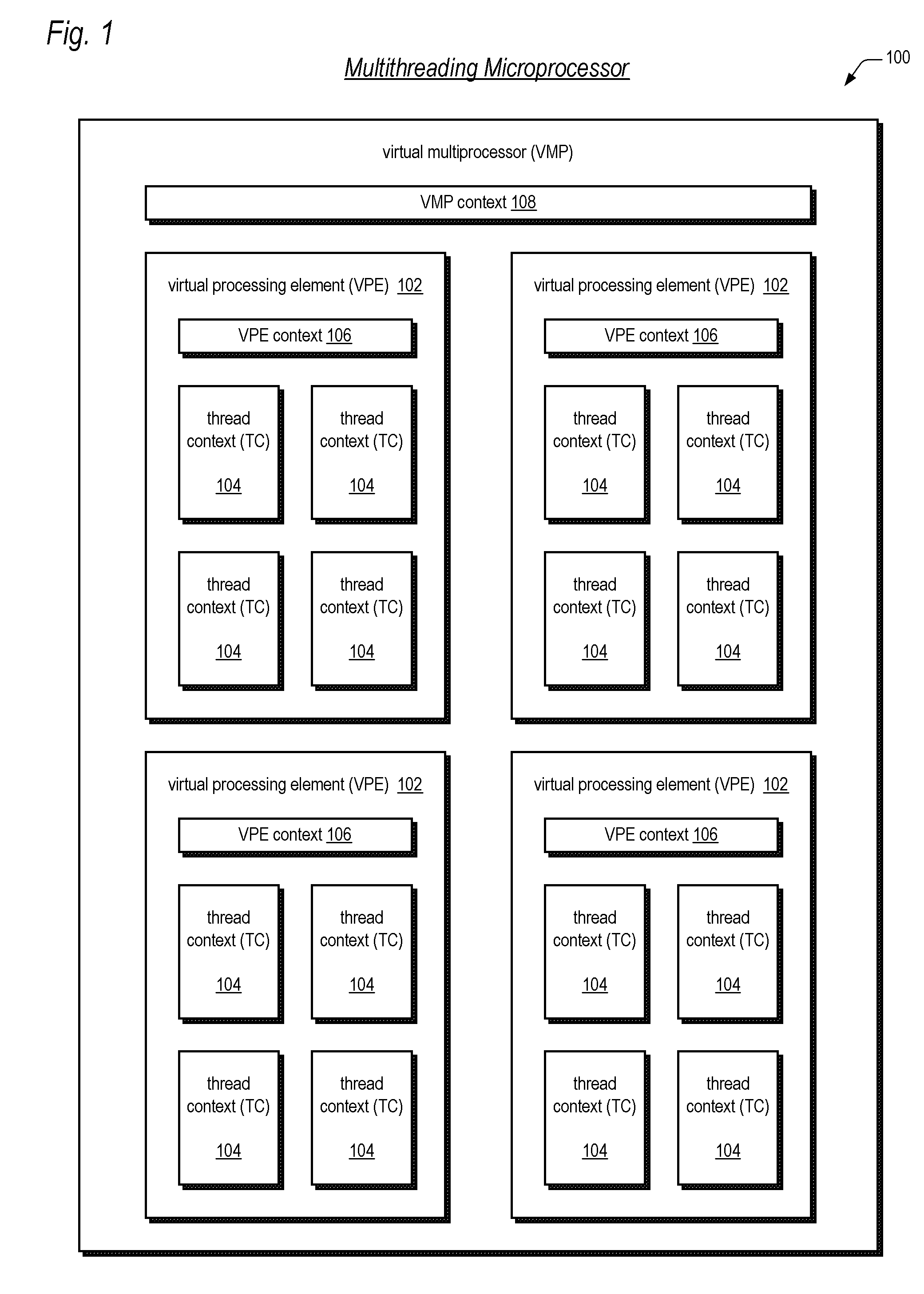

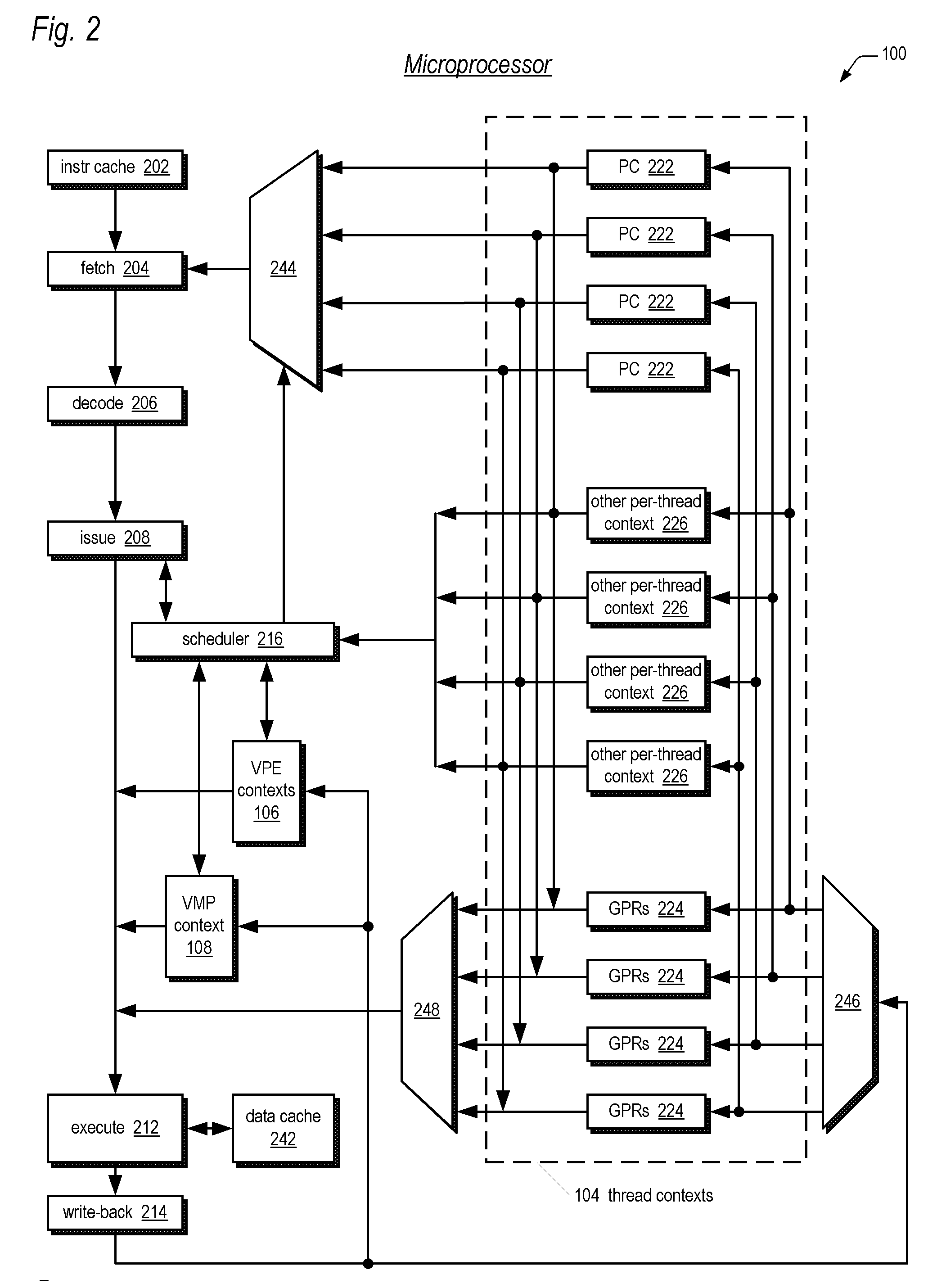

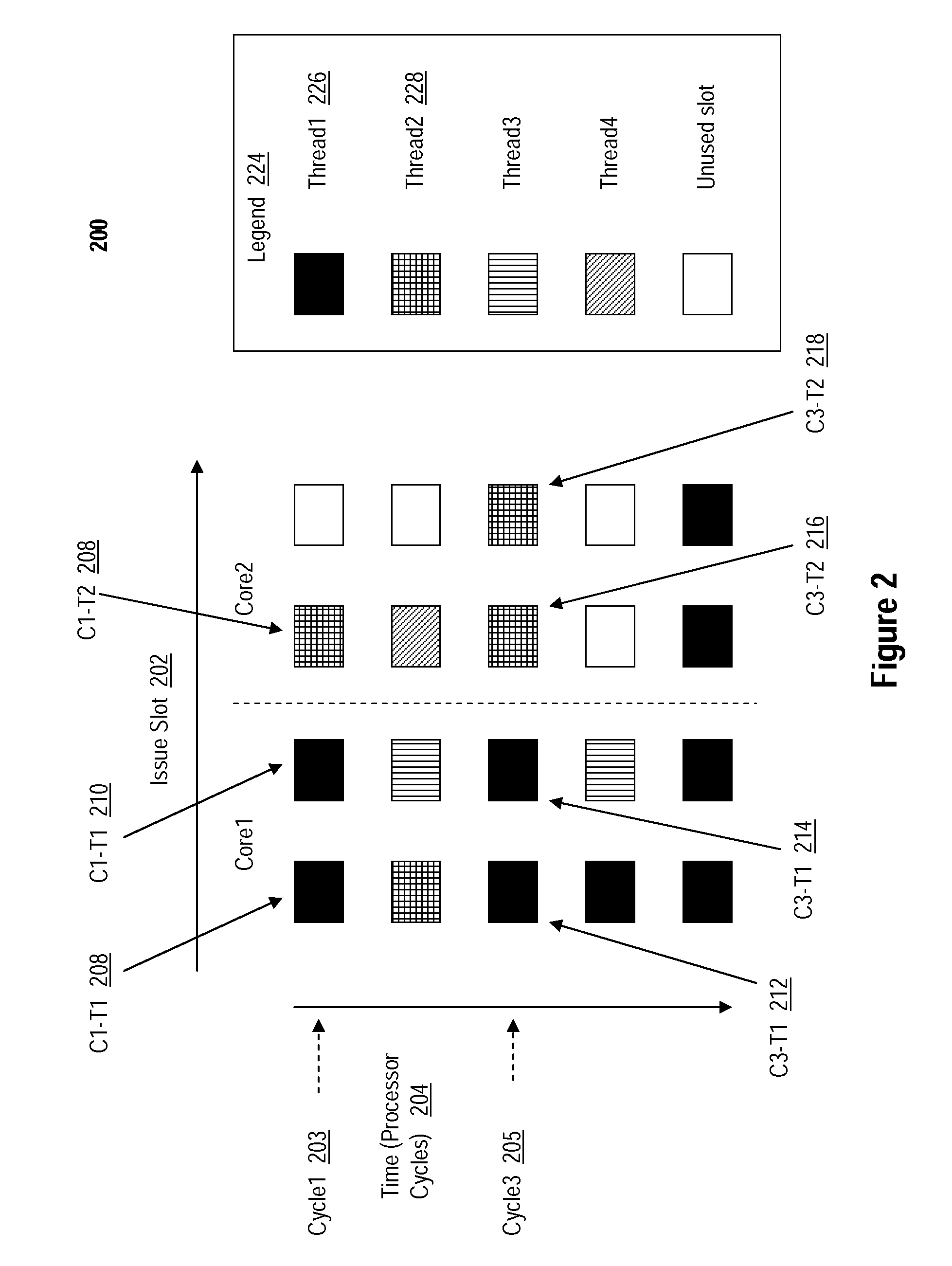

Symmetric multiprocessor operating system for execution on non-independent lightweight thread contexts

Active | US20070044106A2 | Lightweight in of area; Lightweight in of power; Multiprogramming arrangements; Memory systems; Operational system; Multi processor

A multiprocessing system is disclosed. The system includes a multithreading microprocessor having a plurality of thread contexts (TCs), a translation lookaside buffer (TLB) shared by the TCs, and an instruction scheduler, coupled to the TCs, configured to dispatch instructions of threads executing on the TCs to execution units in a multithreaded fashion. The system also includes a multiprocessor operating system (OS) configured to schedule execution of the threads on the TCs. A thread executing on one of the TCs is configured to update the shared TLB and, before doing so, to disable interrupts, preventing the OS from unscheduling the TLB-updating thread, and to disable the instruction scheduler from dispatching instructions from any TC other than the one on which the TLB-updating thread is executing.

Owner:MIPS TECH INC

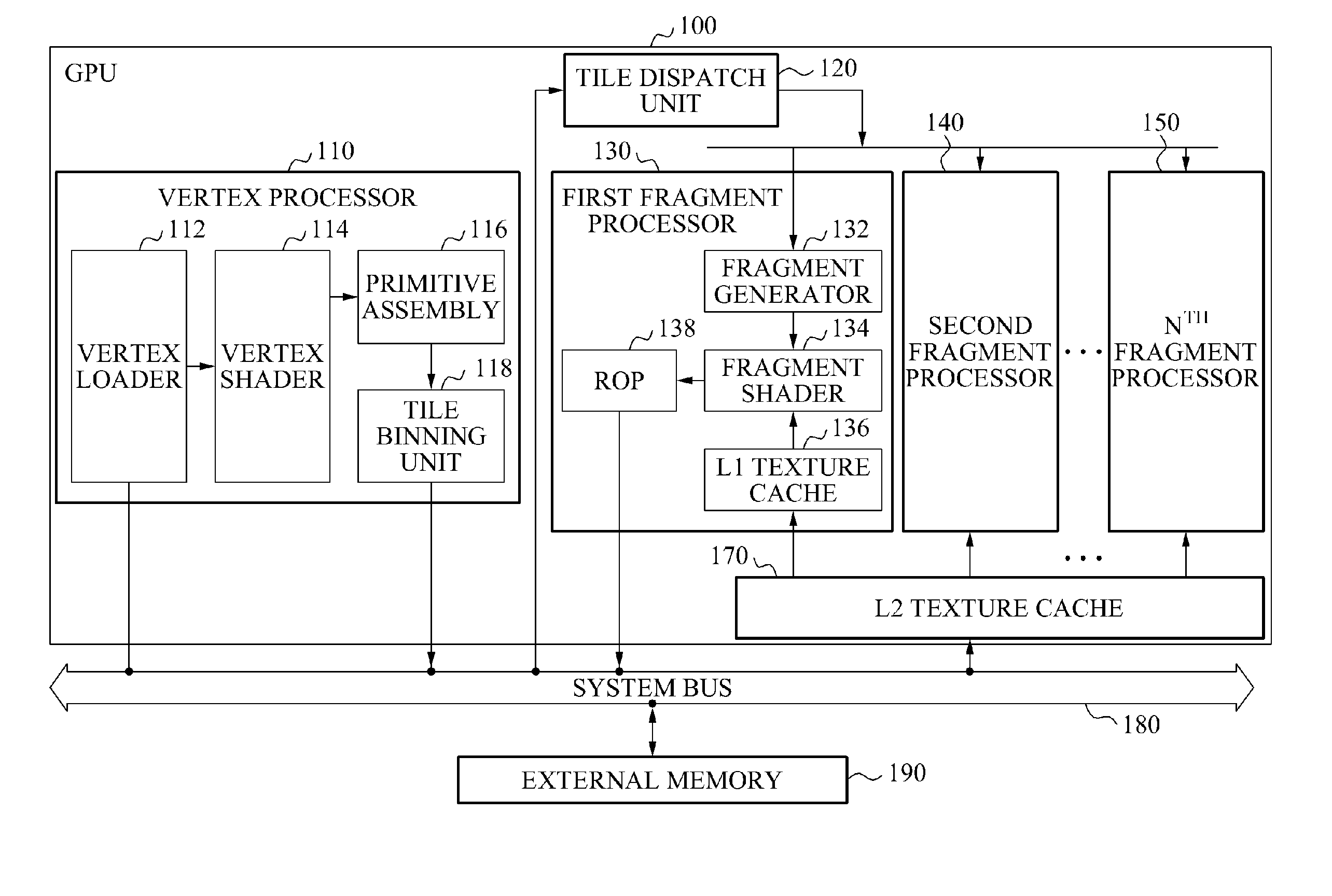

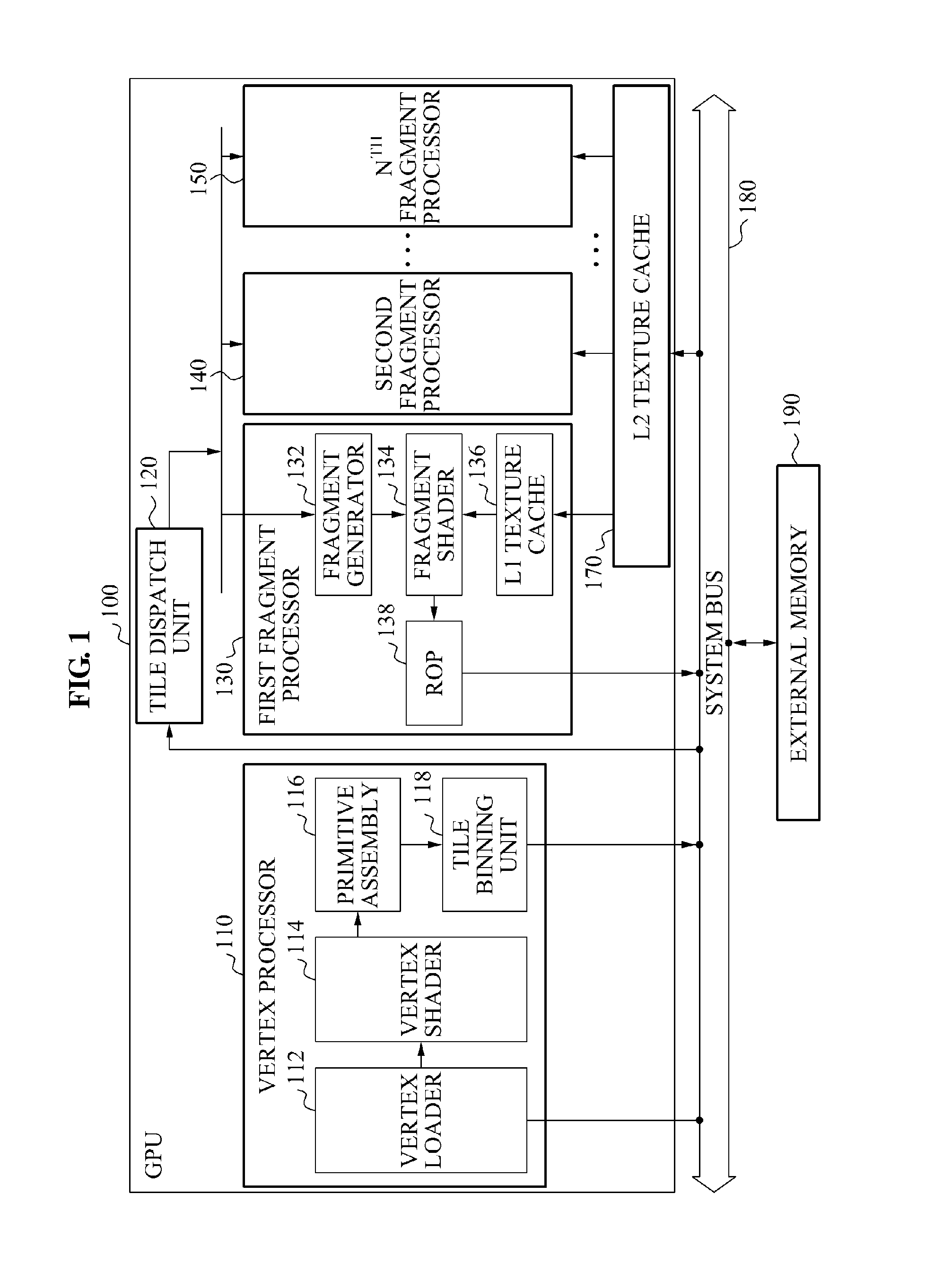

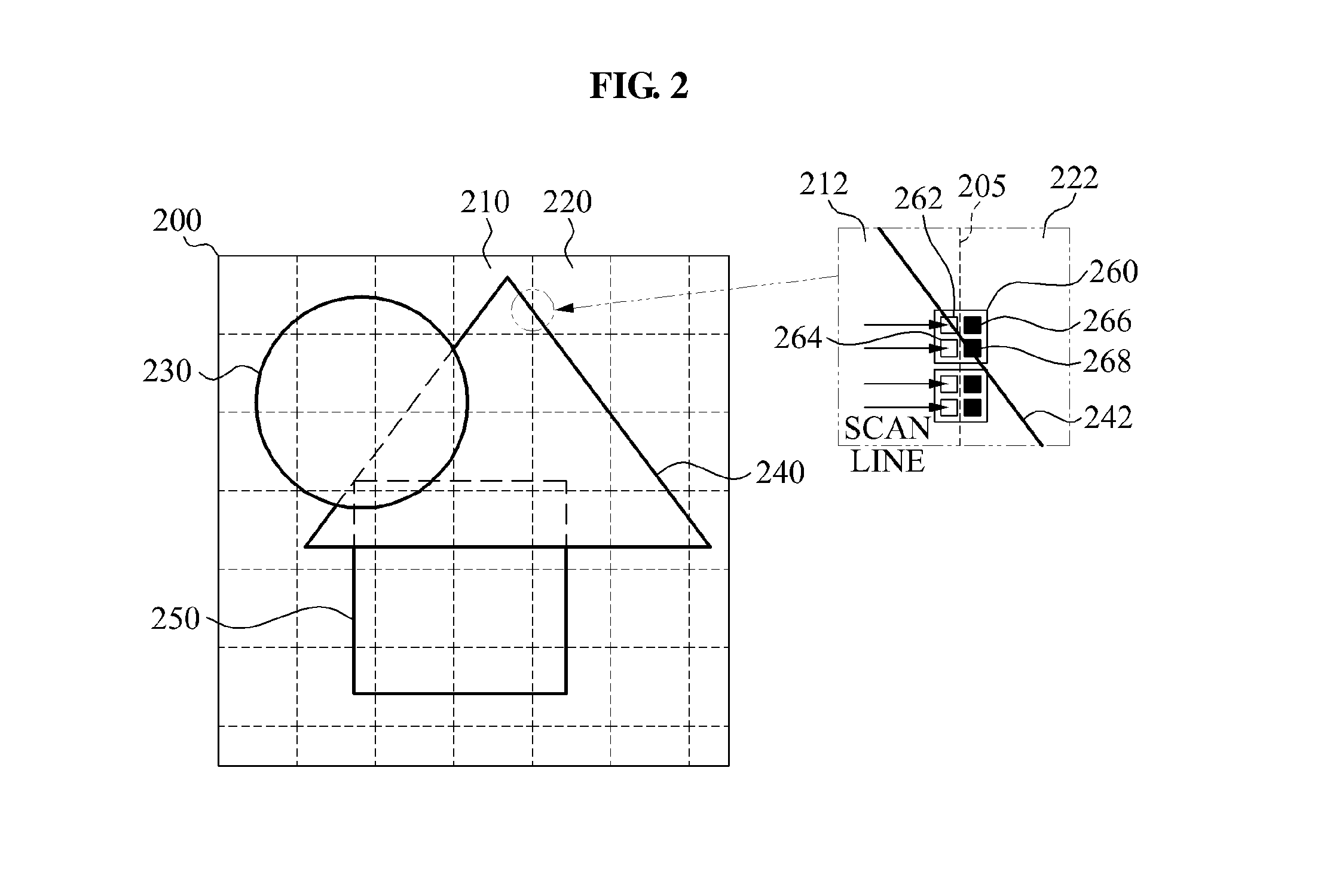

Method and apparatus for tile based rendering using tile-to-tile locality

Active | US20120320069A1 | Multiple digital computer combinations; Processor architectures/configuration; Fragment processing; Computer graphics (images)

Owner:SAMSUNG ELECTRONICS CO LTD

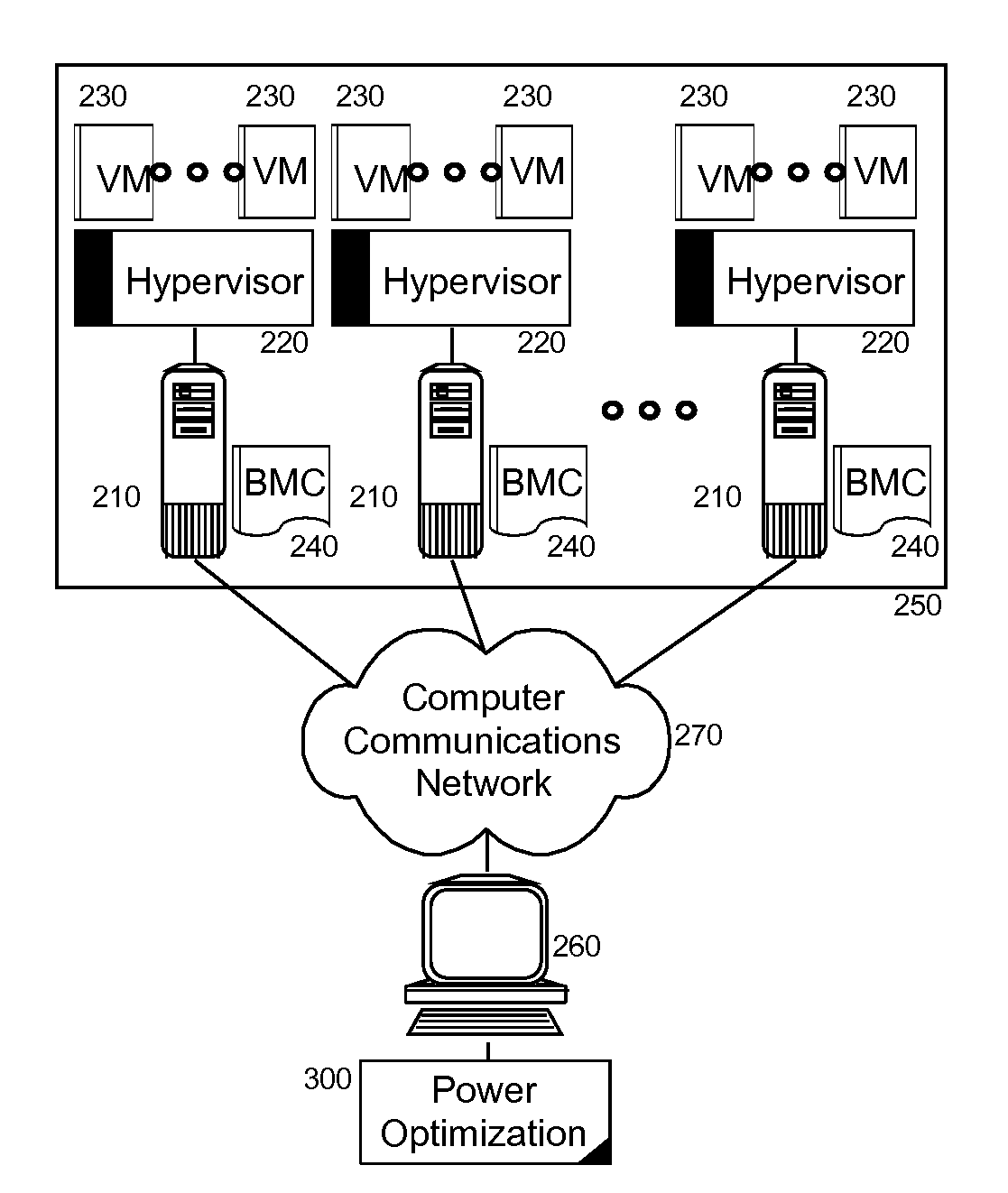

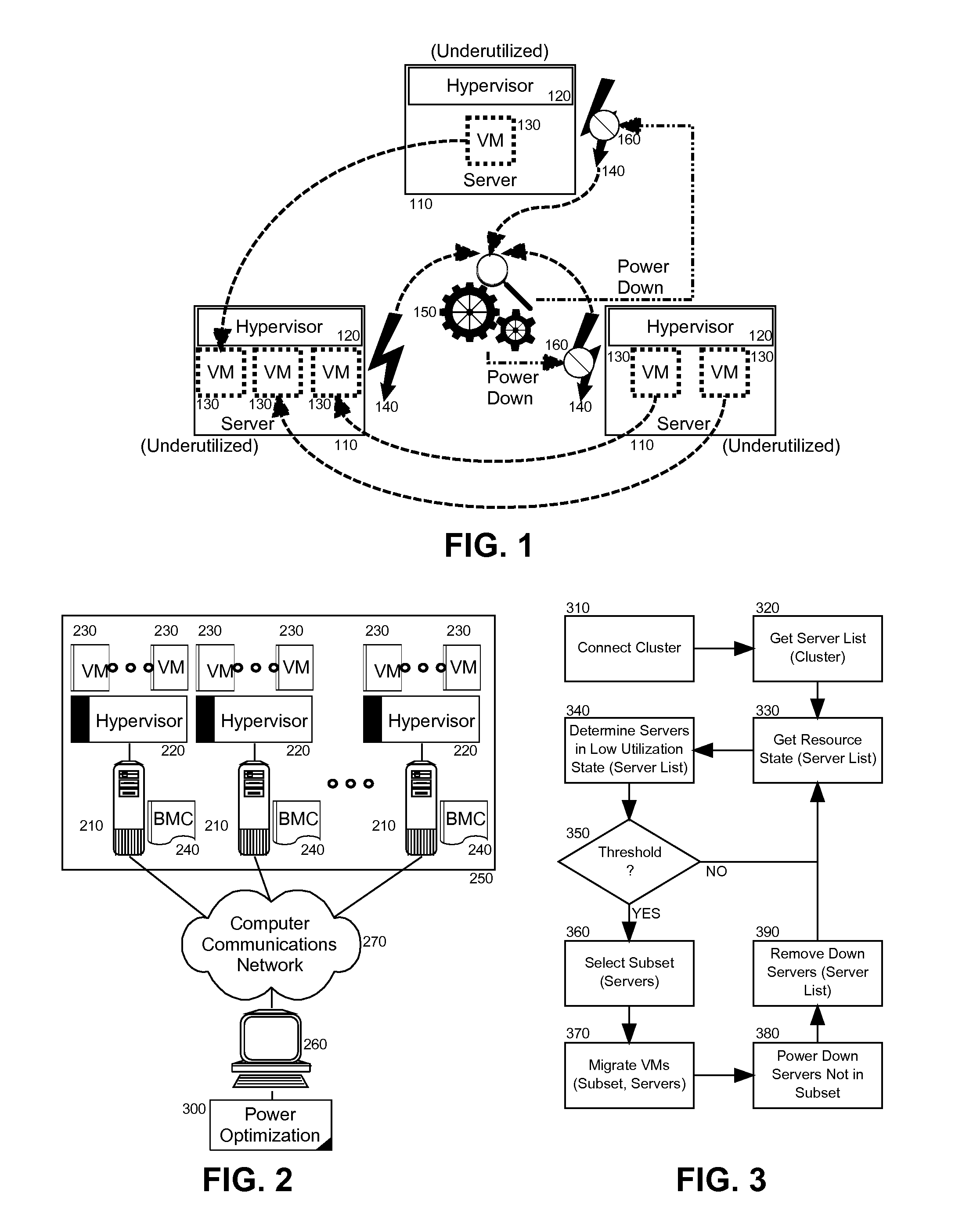

Power optimization via virtualization opportunity

Inactive | US20100115509A1 | Reduce power consumption; Distributed system energy consumption reduction; Volume/mass flow measurement; Virtualization; Computer program

Embodiments of the present invention provide a method, system, and computer program product for power optimization via virtualization opportunity determination. In one embodiment, the method includes monitoring power utilization in individual server hosts in a cluster and determining a set of server hosts in the cluster that demonstrate low power utilization. The method also can include selecting a subset of the server hosts in the set and migrating each VM on the non-selected server hosts in the set to the selected subset. Finally, the method can include powering down the non-selected server hosts.

Owner:LENOVO ENTERPRISE SOLUTIONS SINGAPORE
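The consolidation steps in this abstract (find low-utilization hosts, keep a subset, migrate the rest, power them down) can be sketched as follows. This is a minimal illustration under stated assumptions: "low utilization" is a fixed threshold, the busiest `keep` low-utilization hosts form the selected subset, and VMs are placed round-robin with no capacity check. `Host` and `consolidate` are hypothetical names:

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    power_util: float                    # fraction of rated power in use (0.0-1.0)
    vms: list[str] = field(default_factory=list)


def consolidate(hosts: list[Host], low_threshold: float, keep: int) -> list[str]:
    """Migrate VMs off lightly loaded hosts; return the hosts to power down.

    Assumptions not taken from the abstract: "low utilization" means
    power_util < low_threshold, the `keep` busiest low-utilization hosts are
    the selected subset, and VMs are placed round-robin with no capacity check.
    """
    low = [h for h in hosts if h.power_util < low_threshold]
    if keep < 1 or len(low) <= keep:
        return []                        # nothing worth consolidating
    low.sort(key=lambda h: h.power_util, reverse=True)
    targets, donors = low[:keep], low[keep:]
    slot = 0
    for donor in donors:
        for vm in donor.vms:             # "migrate" each VM to a selected host
            targets[slot % keep].vms.append(vm)
            slot += 1
        donor.vms.clear()
    return [d.name for d in donors]


hosts = [Host("a", 0.90, ["v1"]), Host("b", 0.20, ["v2"]),
         Host("c", 0.10, ["v3", "v4"]), Host("d", 0.15)]
print(consolidate(hosts, low_threshold=0.3, keep=1))  # ['d', 'c']
print(hosts[1].vms)                                   # ['v2', 'v3', 'v4']
```

A production placement step would also check memory and CPU capacity on each target before migrating.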

Symmetric multiprocessor operating system for execution on non-independent lightweight thread contexts

Active | US20070044105A2 | Lightweight in of area; Lightweight in of power; Multiprogramming arrangements; Memory systems; Operational system; Multi processor

A multiprocessing system is disclosed. The system includes a multithreading microprocessor, including a plurality of thread contexts (TCs), each comprising a first control indicator for controlling whether the TC is exempt from servicing interrupt requests to an exception domain for the plurality of TCs, and a virtual processing element (VPE), comprising the exception domain, configured to receive the interrupt requests, wherein the interrupt requests are non-specific to the plurality of TCs, wherein the VPE is configured to select a non-exempt one of the plurality of TCs to service each of the interrupt requests, the VPE further comprising a second control indicator for controlling whether the VPE is enabled to select one of the plurality of TCs to service the interrupt requests. The system also includes a multiprocessor operating system (OS), configured to initially set the second control indicator to enable the VPE to service the interrupts, and further configured to schedule execution of threads on the plurality of TCs, wherein each of the threads is configured to individually disable itself from servicing the interrupts by setting the first control indicator, rather than by clearing the second control indicator.

Owner:MIPS TECH INC

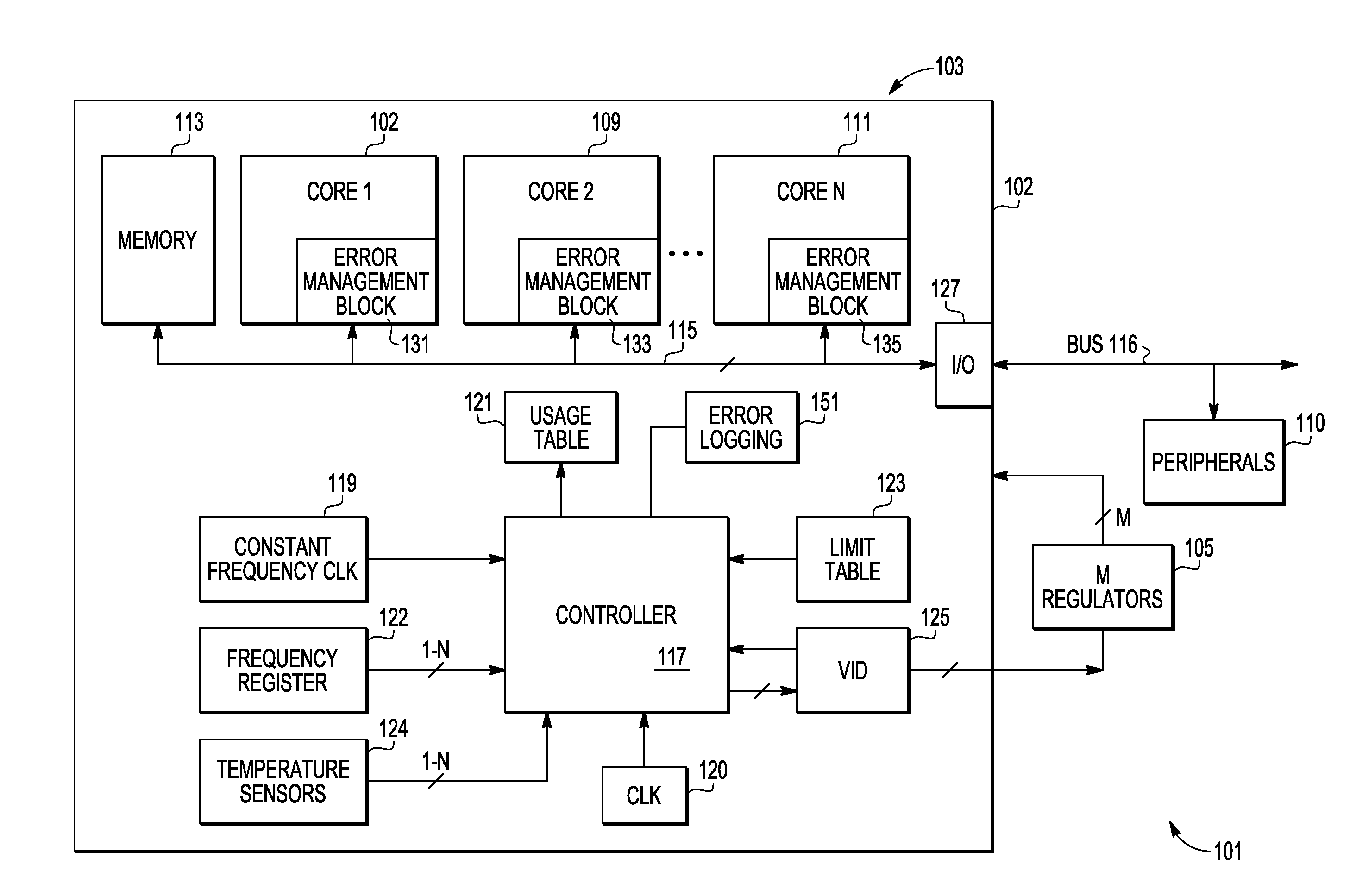

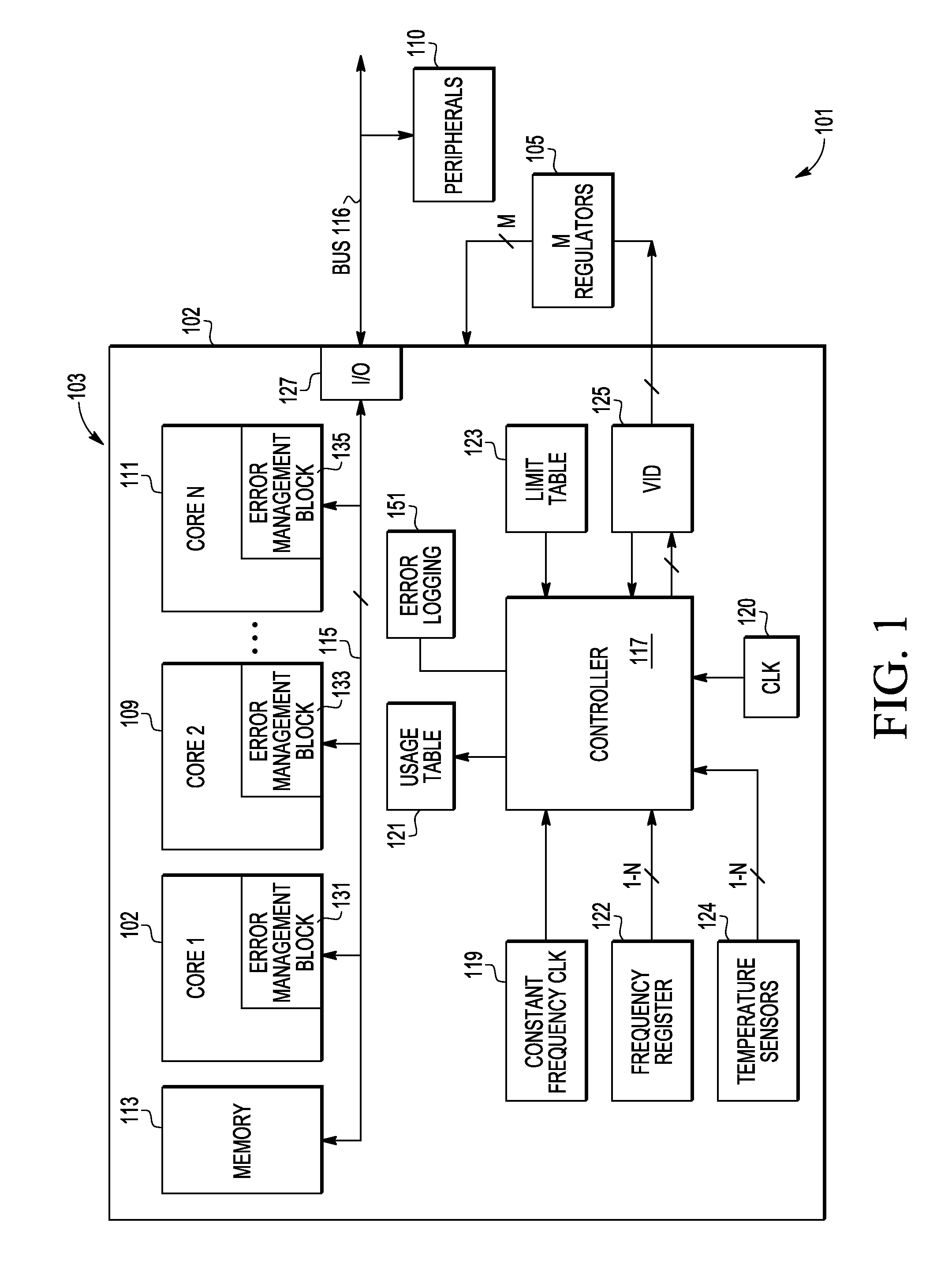

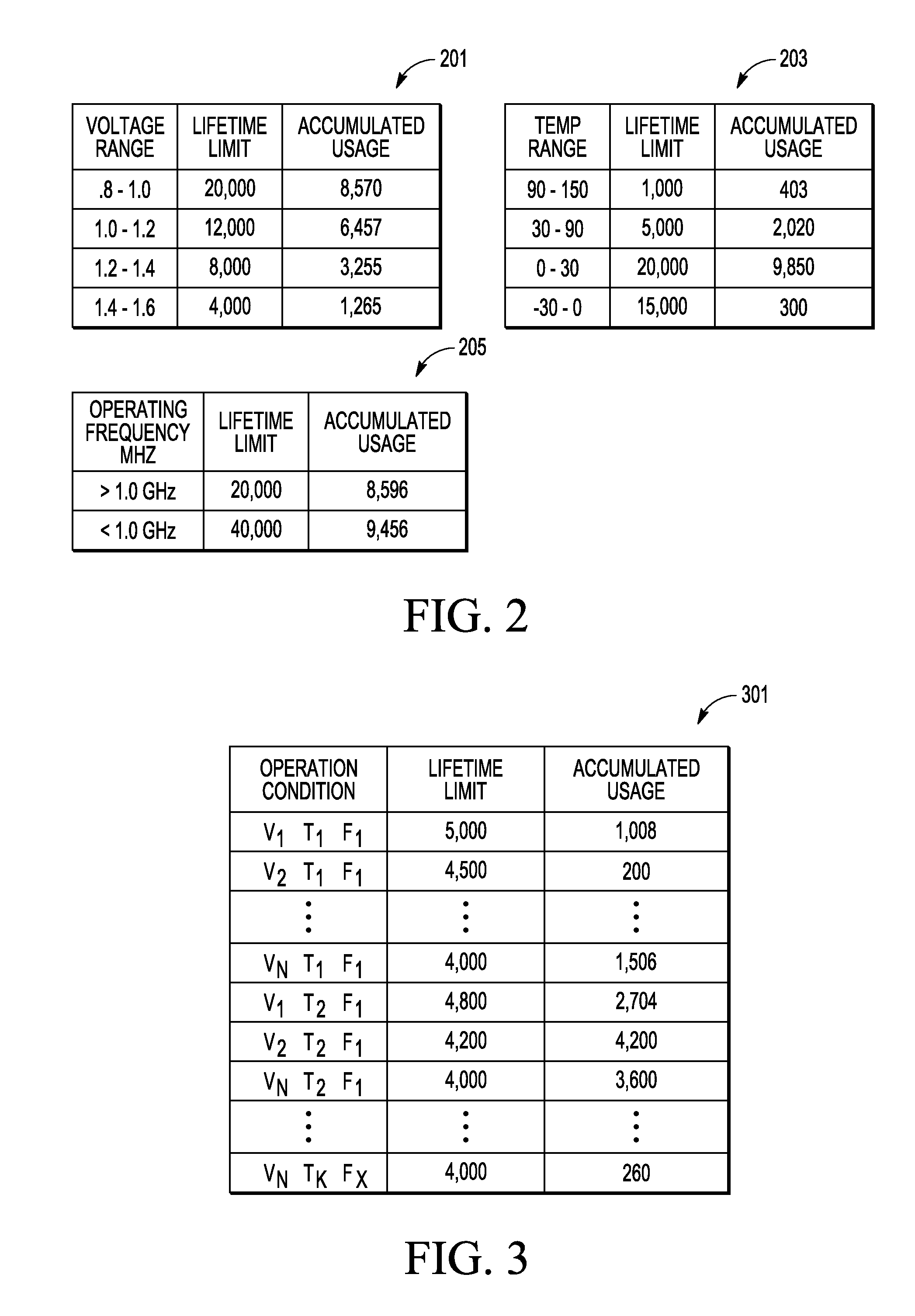

Multiple core data processor with usage monitoring

Inactive | US20120036398A1 | Digital computer details; Redundant operation error correction; Multi-core processor; Data processing

Owner:FREESCALE SEMICON INC

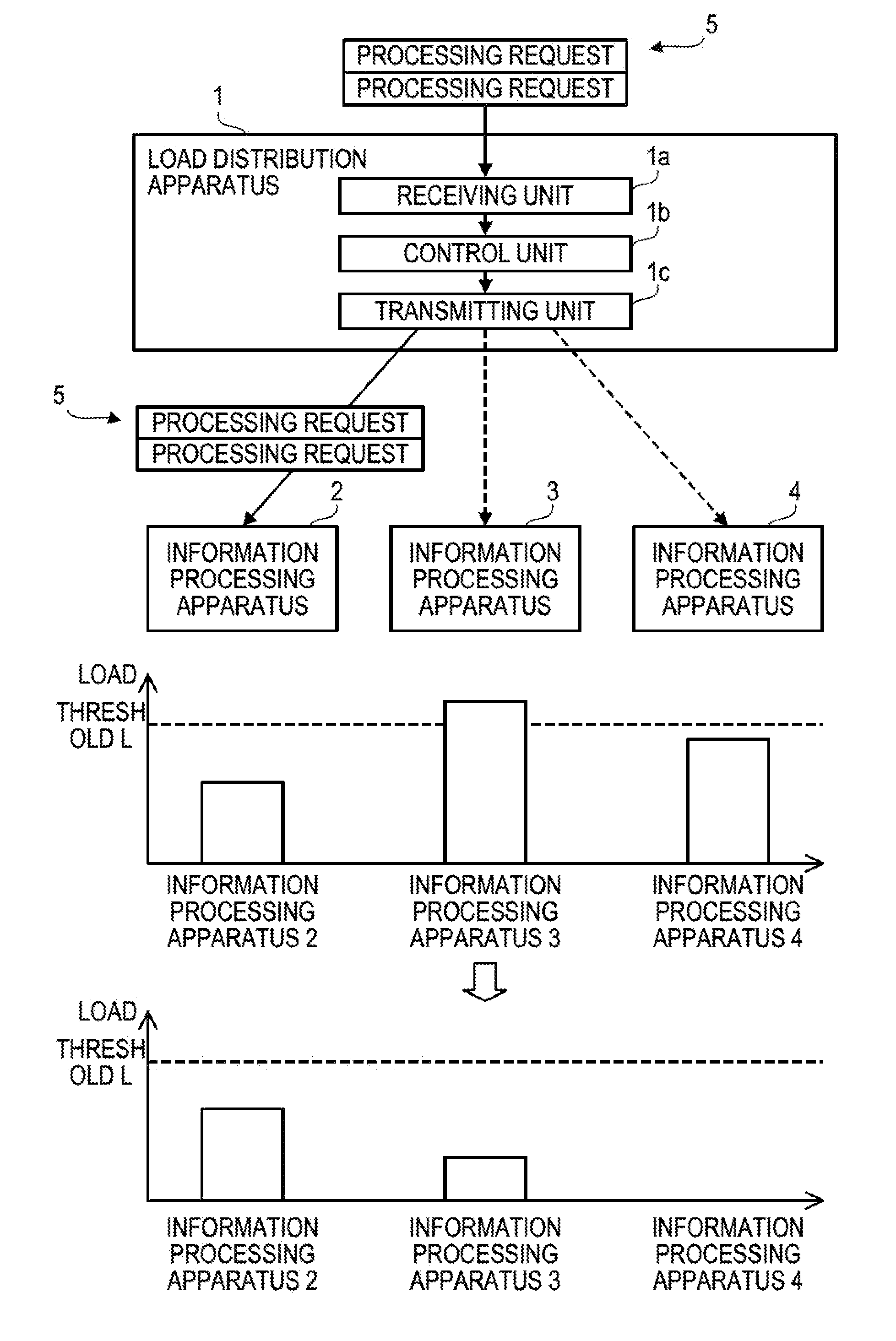

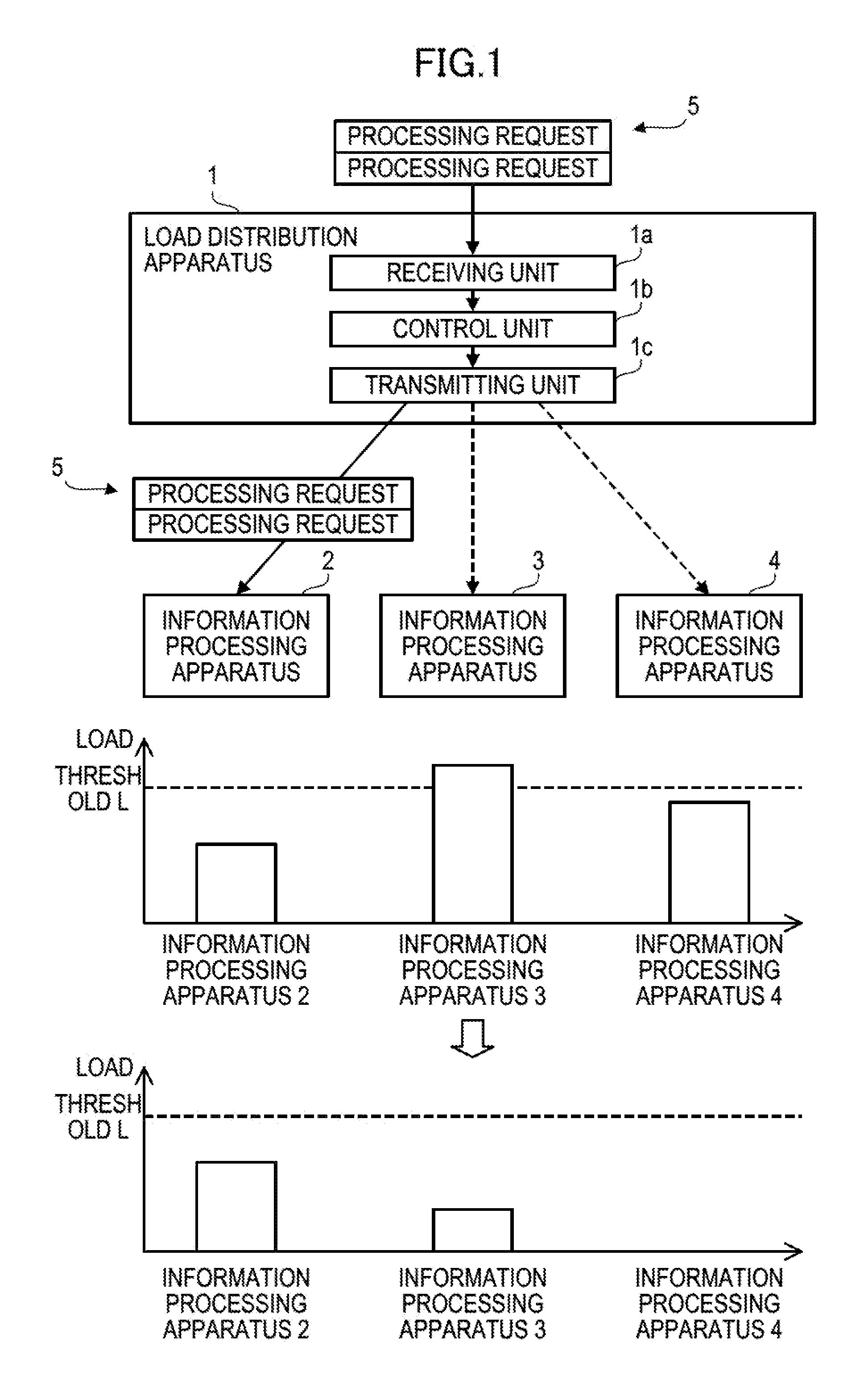

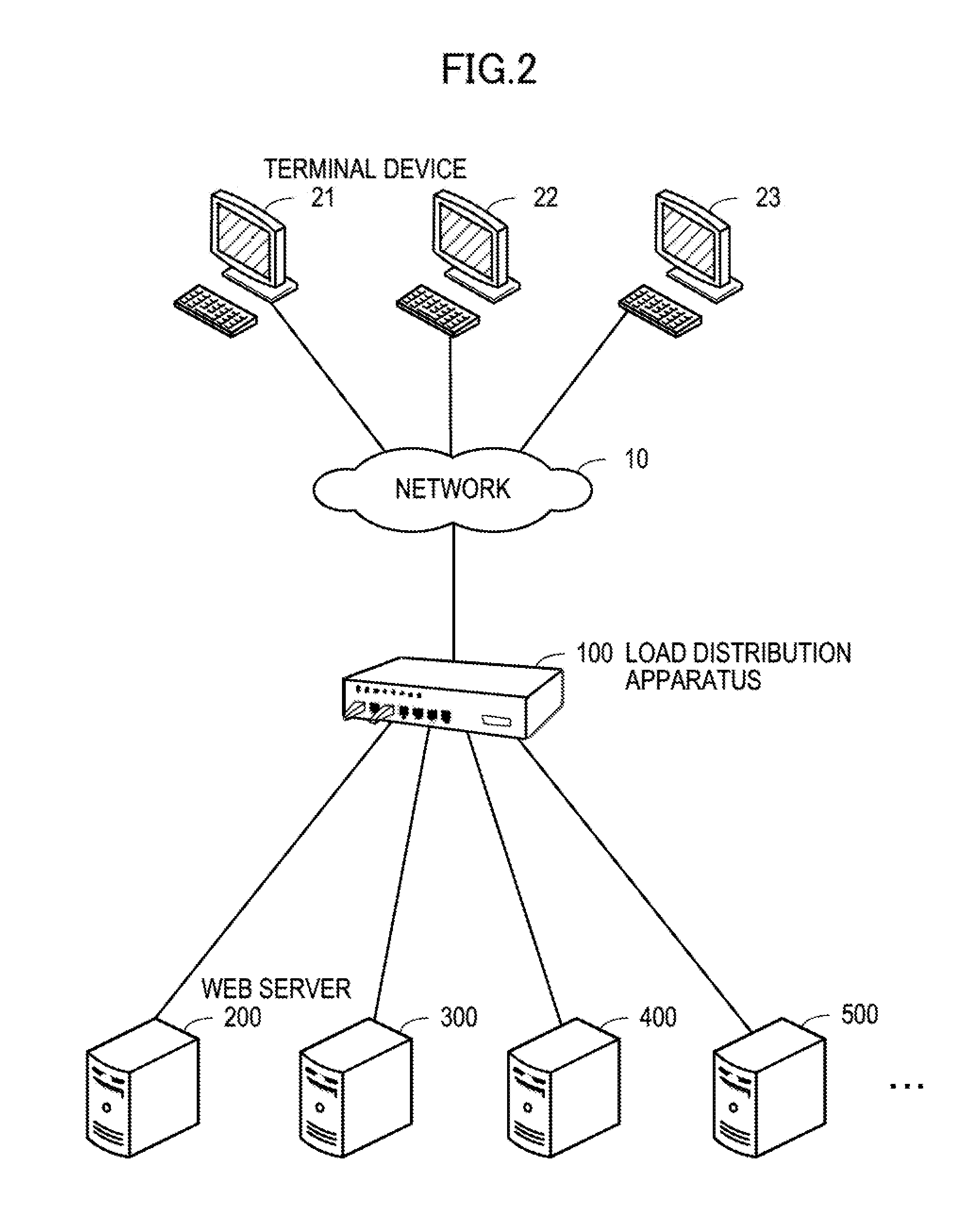

Load distribution apparatus, load distribution method, and storage medium

Inactive | US20100185766A1 | Volume/mass flow measurement; Digital computer details; Information processing; Load distribution

A load distribution apparatus includes: a control unit that refers to a load information storage unit that stores load values of a plurality of information processing apparatuses, selects an information processing apparatus with the load value smaller than a predetermined threshold from the plurality of information processing apparatuses, and determines the information processing apparatus with the load value smaller than a predetermined threshold as an allocation destination of processing requests until the load value of the information processing apparatus reaches the predetermined threshold; and a transmitting unit that transmits the processing requests to the allocation destination determined by the control unit.

Owner:FUJITSU LTD
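The allocation rule in this abstract is "sticky": requests keep going to one below-threshold apparatus until its load reaches the threshold, and only then is another chosen. A minimal Python sketch, assuming load is a count of outstanding requests and that the first eligible apparatus in insertion order is chosen; `LoadDistributor` is a hypothetical name:

```python
from typing import Optional


class LoadDistributor:
    """Threshold-based dispatch sketched from the abstract.

    `loads` stands in for the load information storage unit. Treating load
    as a count of outstanding requests, and picking the first eligible
    apparatus in insertion order, are assumptions.
    """

    def __init__(self, loads: dict[str, float], threshold: float) -> None:
        self.loads = loads
        self.threshold = threshold
        self.current: Optional[str] = None

    def destination(self) -> Optional[str]:
        # Stay with the current destination until its load reaches the threshold.
        if self.current is not None and self.loads[self.current] < self.threshold:
            return self.current
        # Otherwise choose any apparatus whose load is still below the threshold.
        self.current = next(
            (name for name, load in self.loads.items() if load < self.threshold),
            None,
        )
        return self.current

    def send(self, cost: float = 1.0) -> Optional[str]:
        # Stand-in for the transmitting unit: dispatch and record the added load.
        dest = self.destination()
        if dest is not None:
            self.loads[dest] += cost
        return dest


dist = LoadDistributor({"s1": 8.0, "s2": 2.0}, threshold=10.0)
print([dist.send() for _ in range(3)])  # ['s1', 's1', 's2']
```

Staying with one destination until it fills trades perfect balance for fewer selection decisions; `None` signals that every apparatus is saturated.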

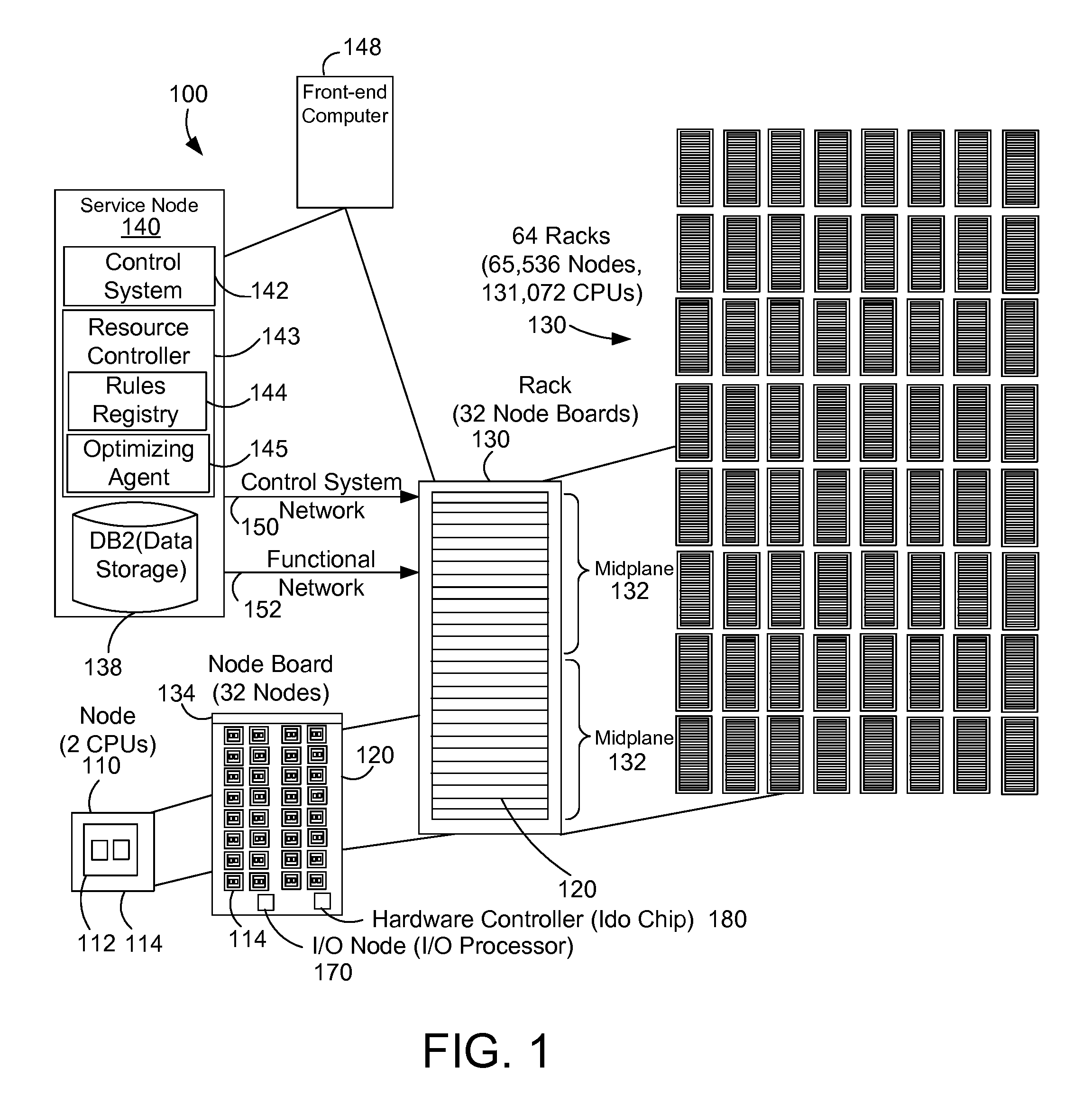

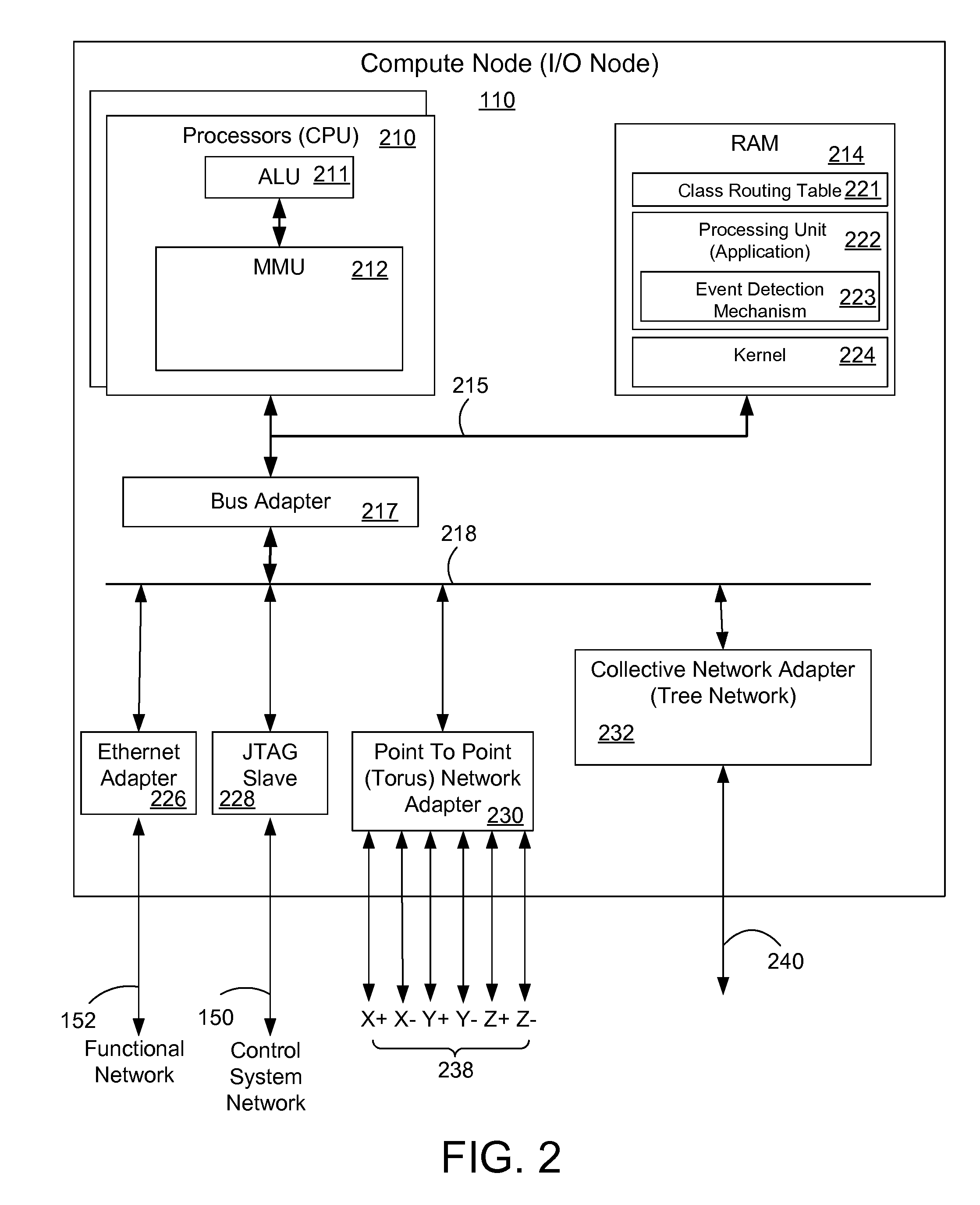

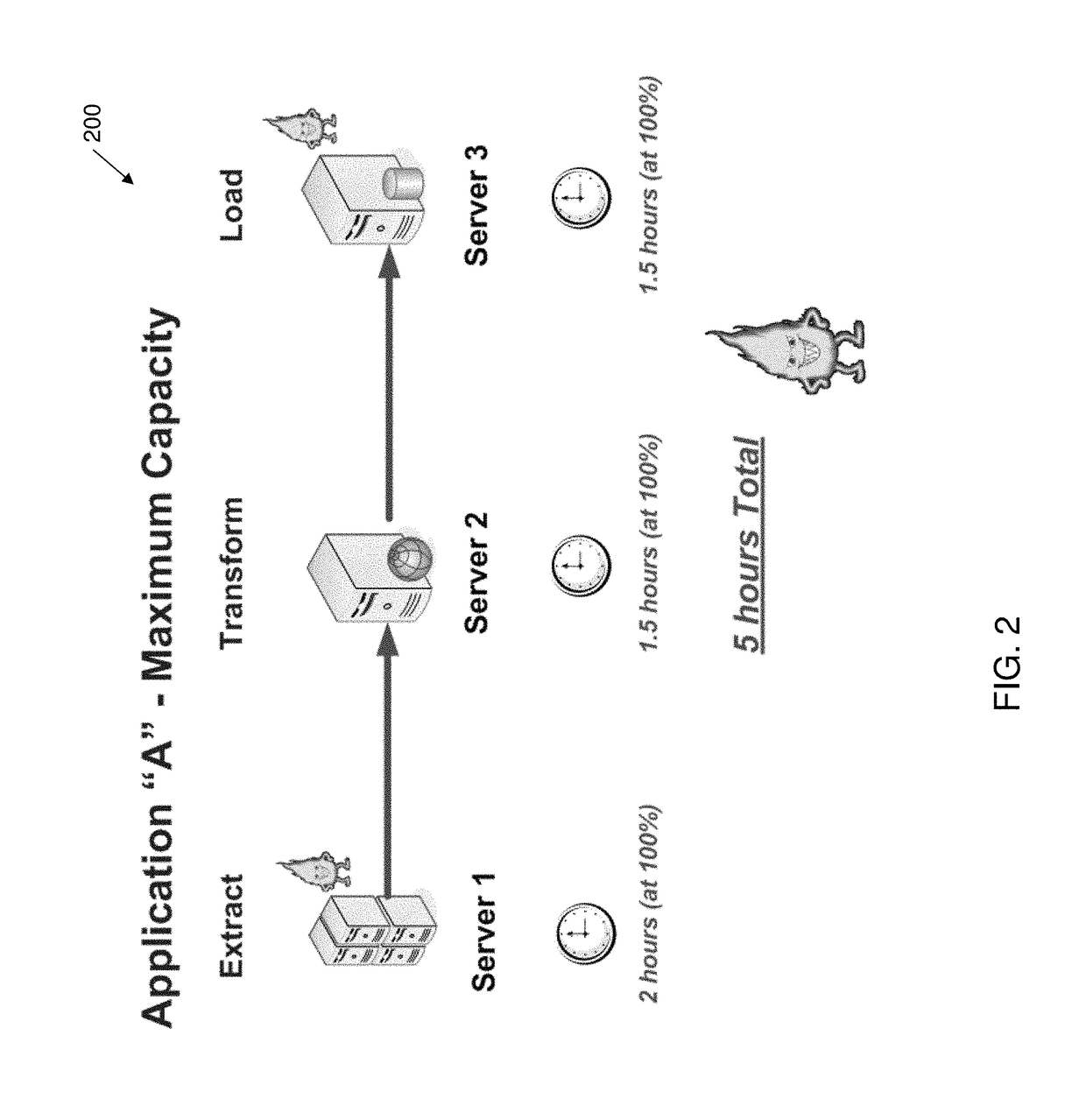

Dynamic resource adjustment for a distributed process on a multi-node computer system

Active | US20100186019A1 | Resource allocation; Efficient power electronics conversion; Downstream processing; Dynamic resource

A method dynamically adjusts the resources available to a processing unit of a distributed computer process executing on a multi-node computer system. The resources for the processing unit are adjusted based on the data other processing units handle or the execution path of code in an upstream or downstream processing unit in the distributed process or application.

Owner:IBM CORP

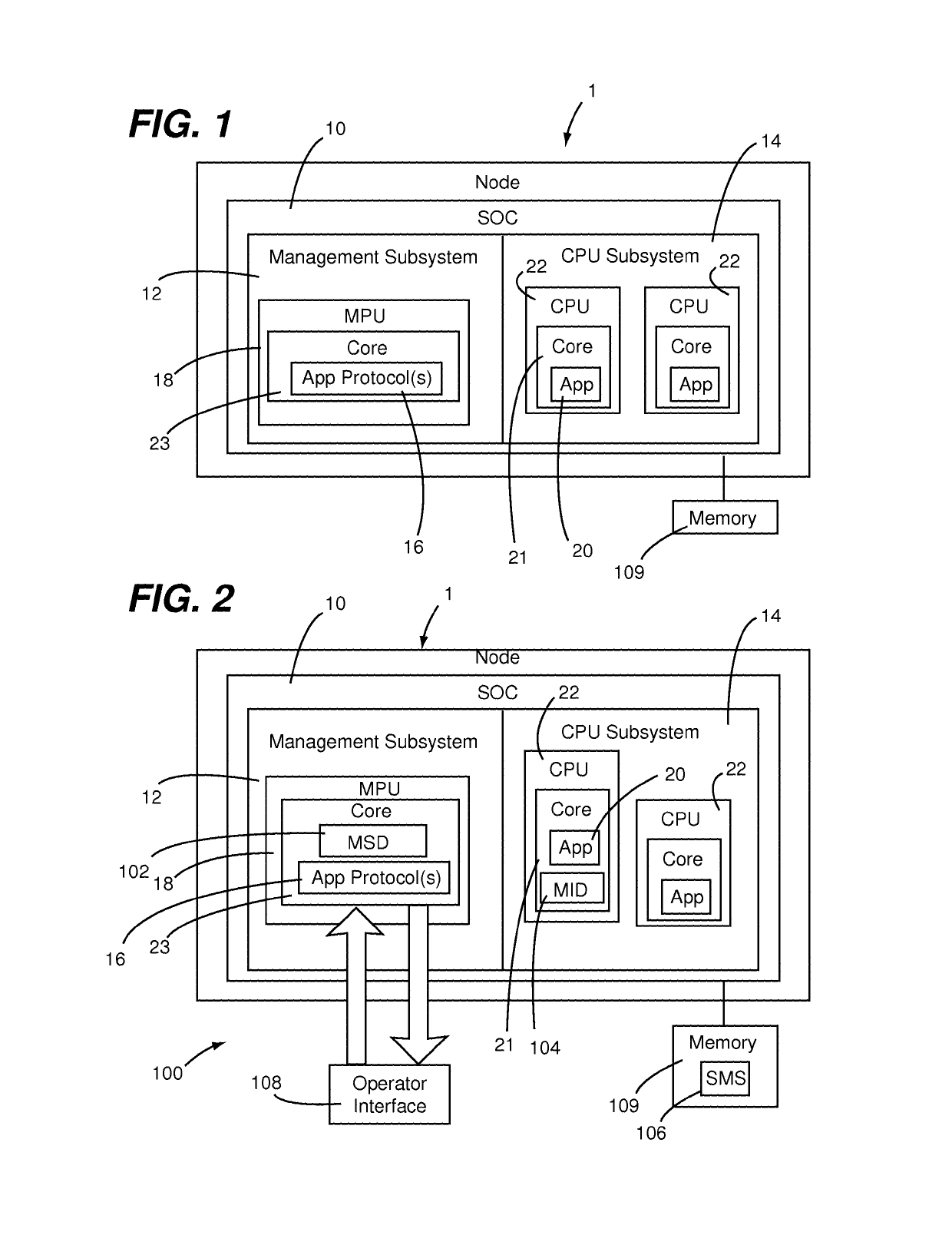

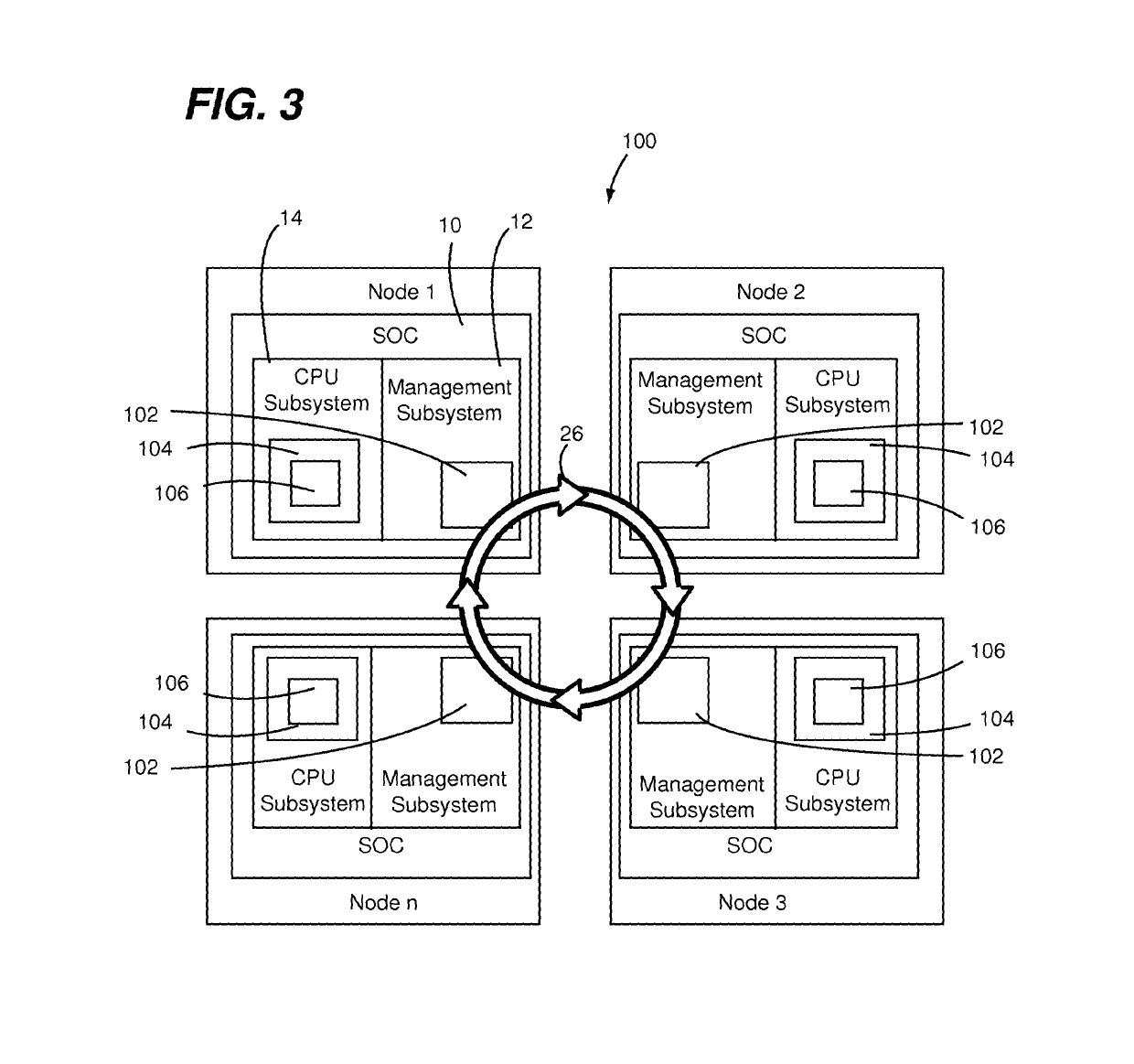

System, method and computer readable medium for offloaded computation of distributed application protocols within a cluster of data processing nodes

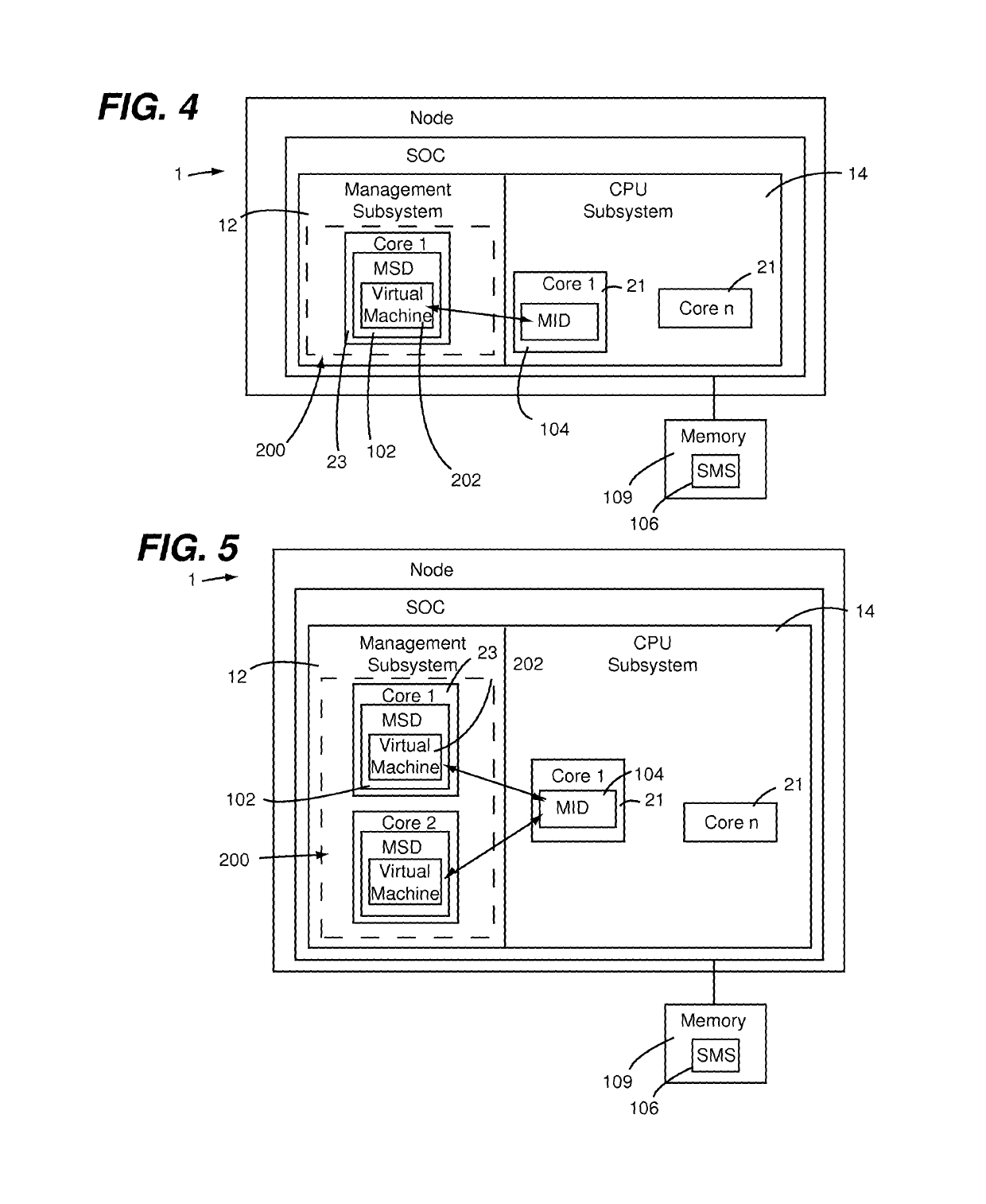

Inactive | US10311014B2 | Avoid disturbance; Reduce latency; Resource allocation; Distributed system energy consumption reduction; Wireless Application Protocol; Application software

A data processing node includes a management environment, an application environment, and a shared memory segment (SMS). The management environment includes at least one management services daemon (MSD) running on one or more dedicated management processors thereof. One or more application protocols are executed by the at least one MSD on at least one of the dedicated management processors. The application environment has a management interface daemon (MID) running on one or more application central processing unit (CPU) processors thereof. The SMS is accessible by the at least one MSD and the MID, enabling information for the one or more application protocols to be communicated between the at least one MSD and the MID. The MID provides at least one of management services to processes running within the application environment and local resource access to one or more processes running on another data processing node.

Owner:III HLDG 2

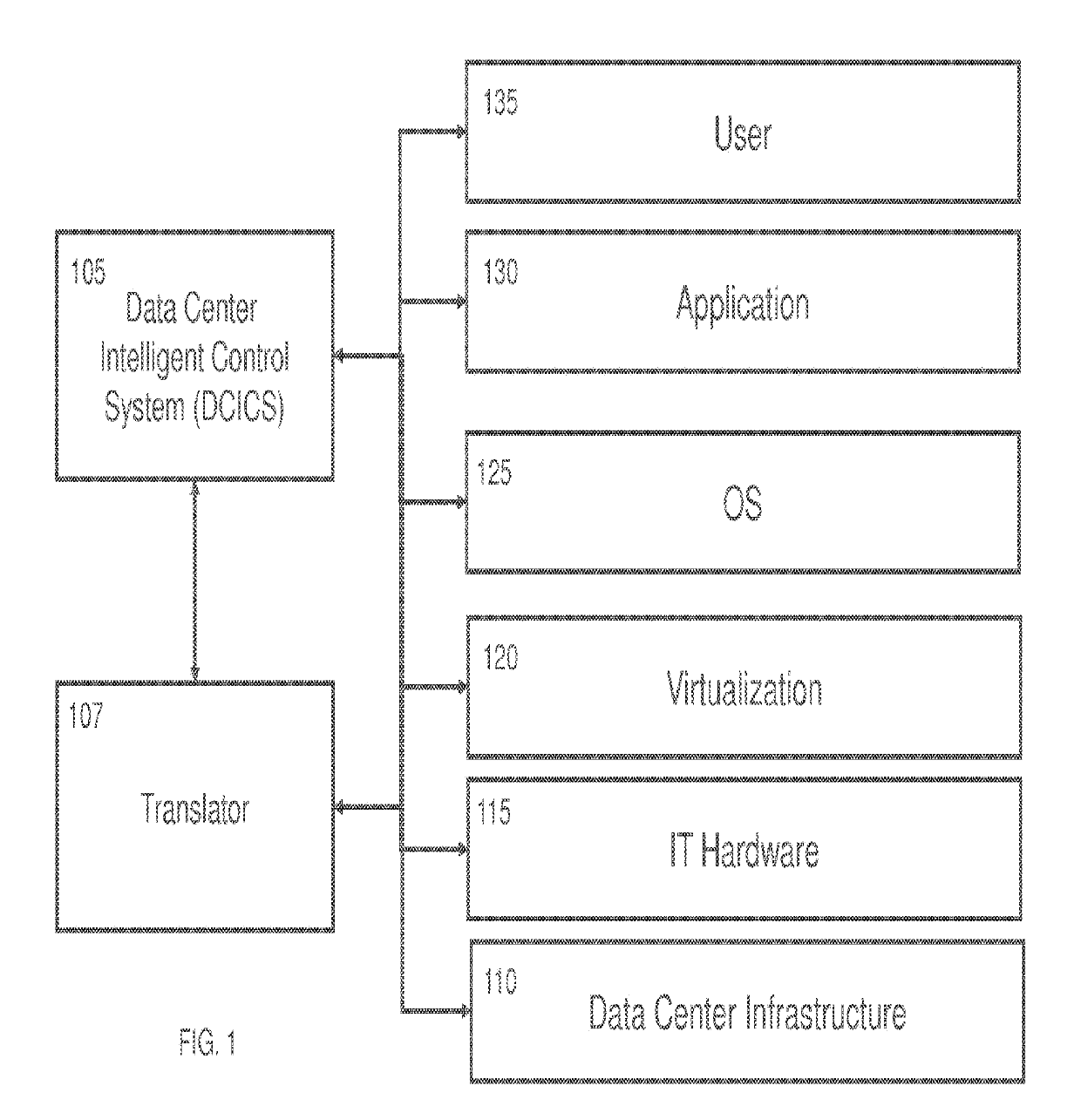

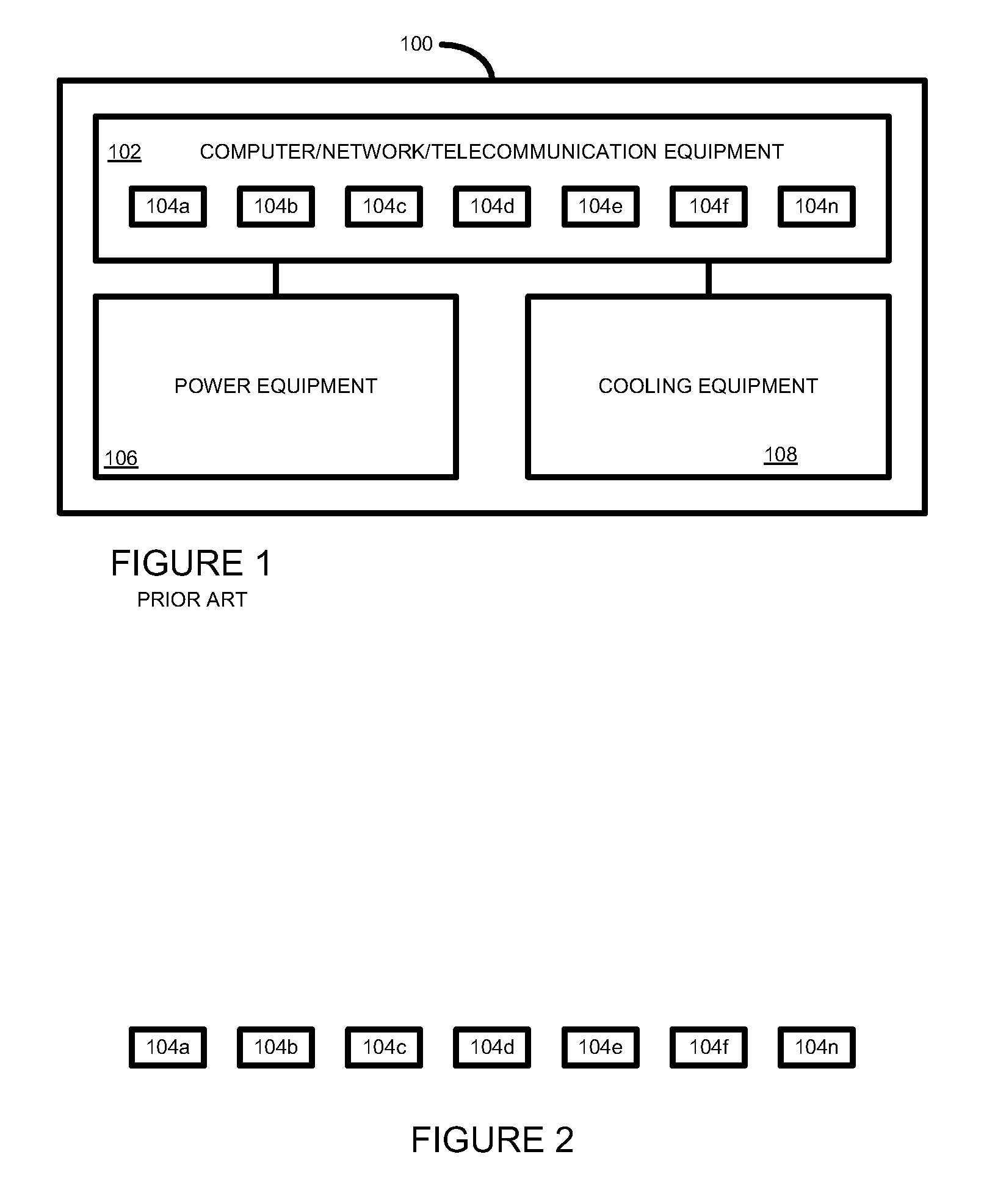

Data Center Optimization and Control

Inactive | US20190235449A1 | Easy to optimize; Easy to operate; Servers; Space heating and ventilation safety systems; Data center; Control system

Systems and methods of monitoring, analyzing, optimizing and controlling data centers and data center operations are disclosed. The system includes data collection and storage hardware and software for harvesting operational data from data center assets and operations. Intelligent analysis and optimization software enables identification of optimization and/or control actions. Control software and hardware enables enacting a change in the operational state of data centers.

Owner:BASELAYER TECH

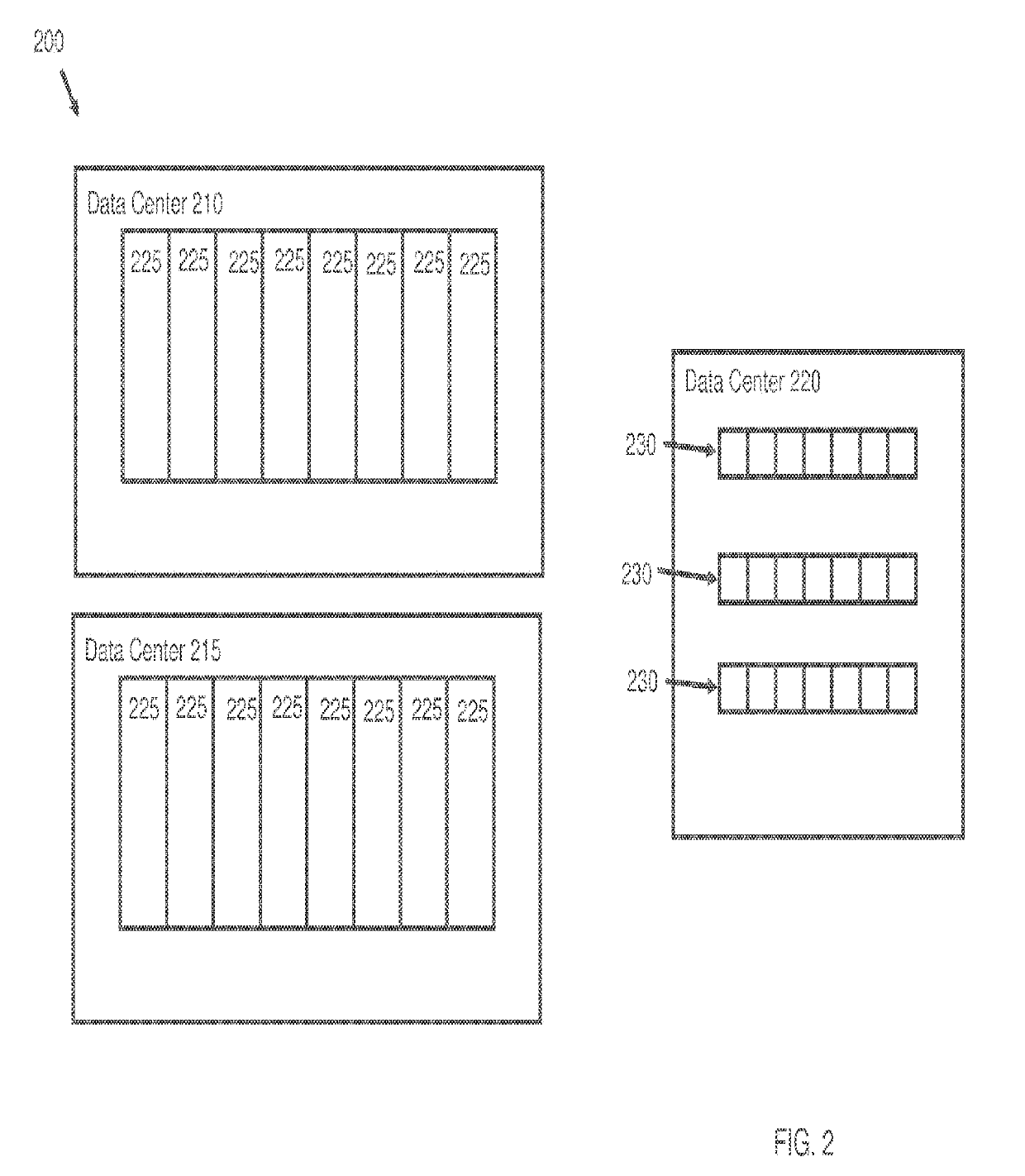

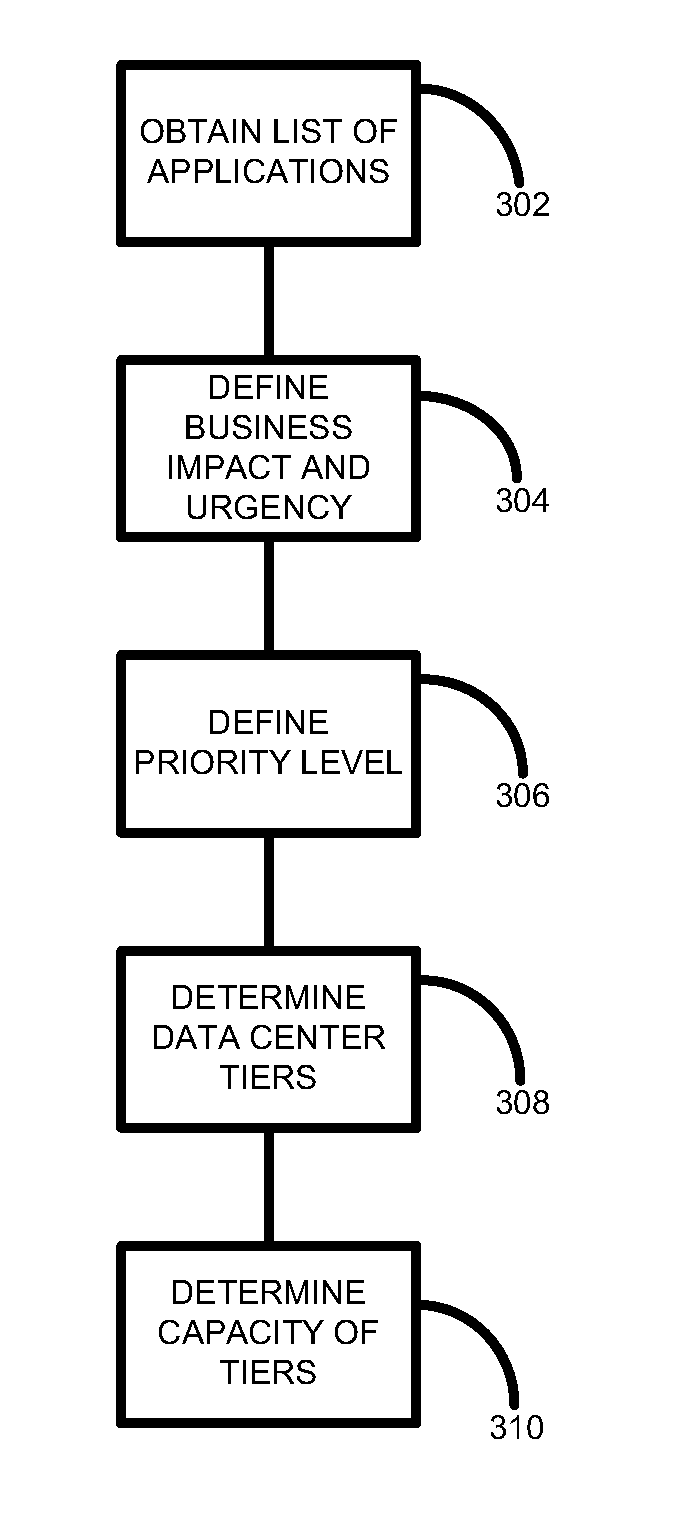

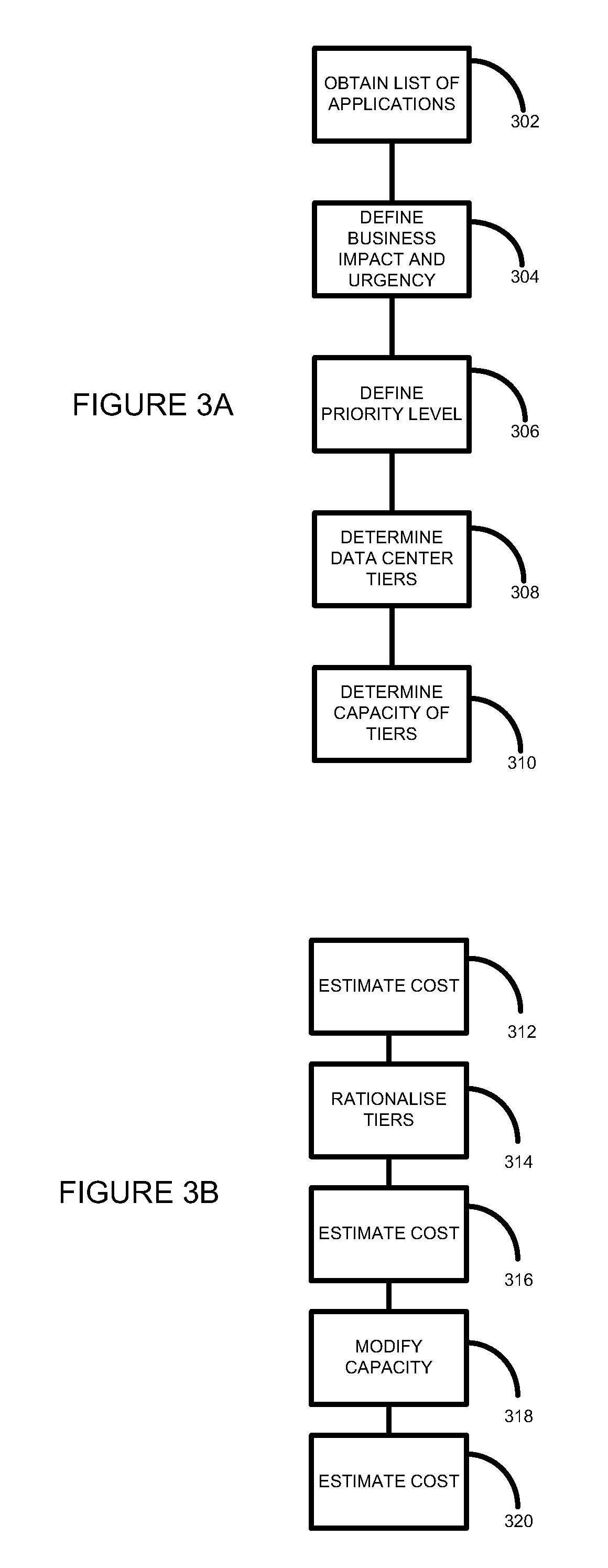

Data center and data center design

Inactive | US20100111105A1 | Time-division multiplex; Reliability/availability analysis; Data center; Distributed computing

According to one embodiment of the present invention, there is provided a data center comprising: a plurality of data center sections, each section having a different predefined level of reliability; and a plurality of sets of applications, each set of applications being populated on one of the plurality of data center sections.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

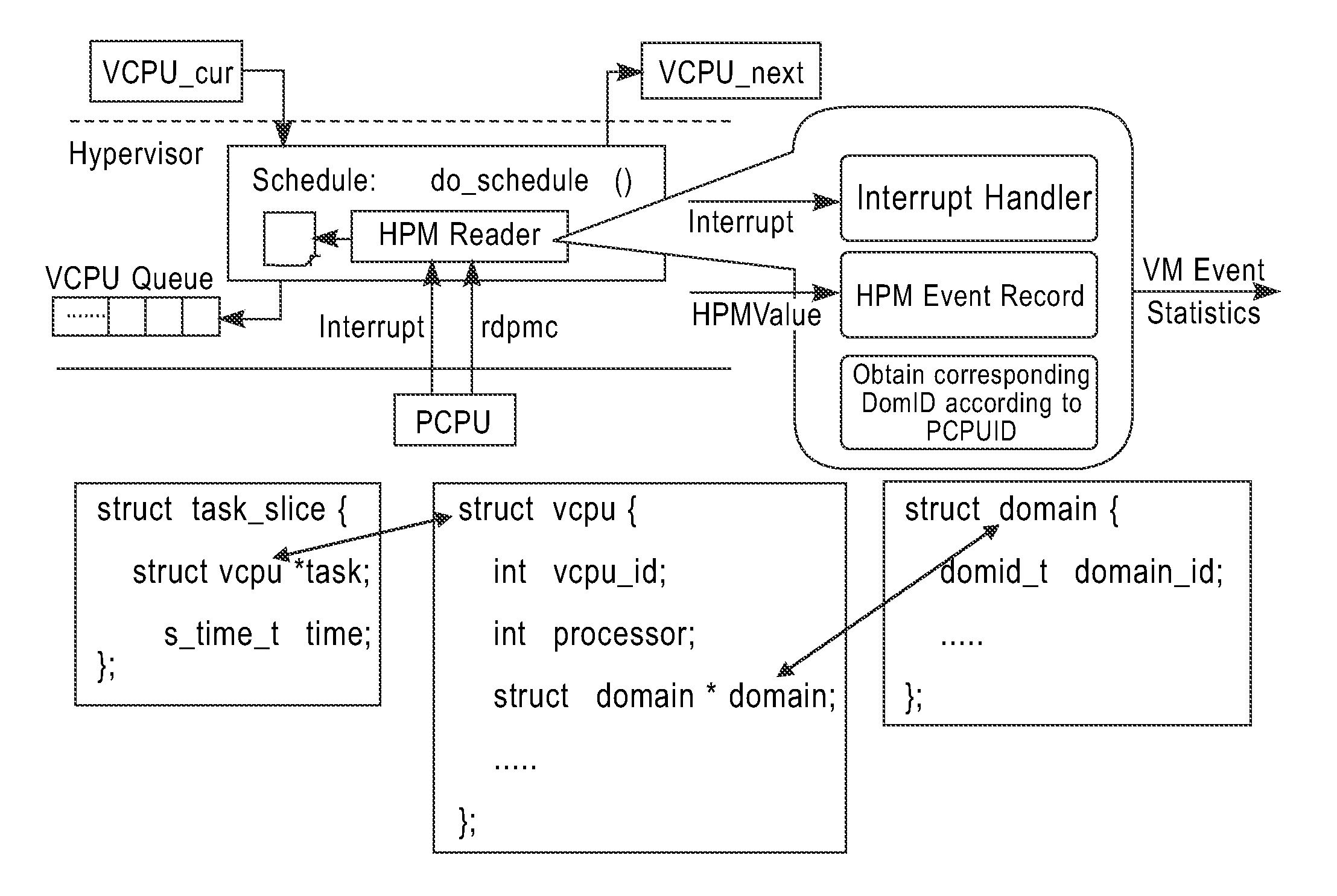

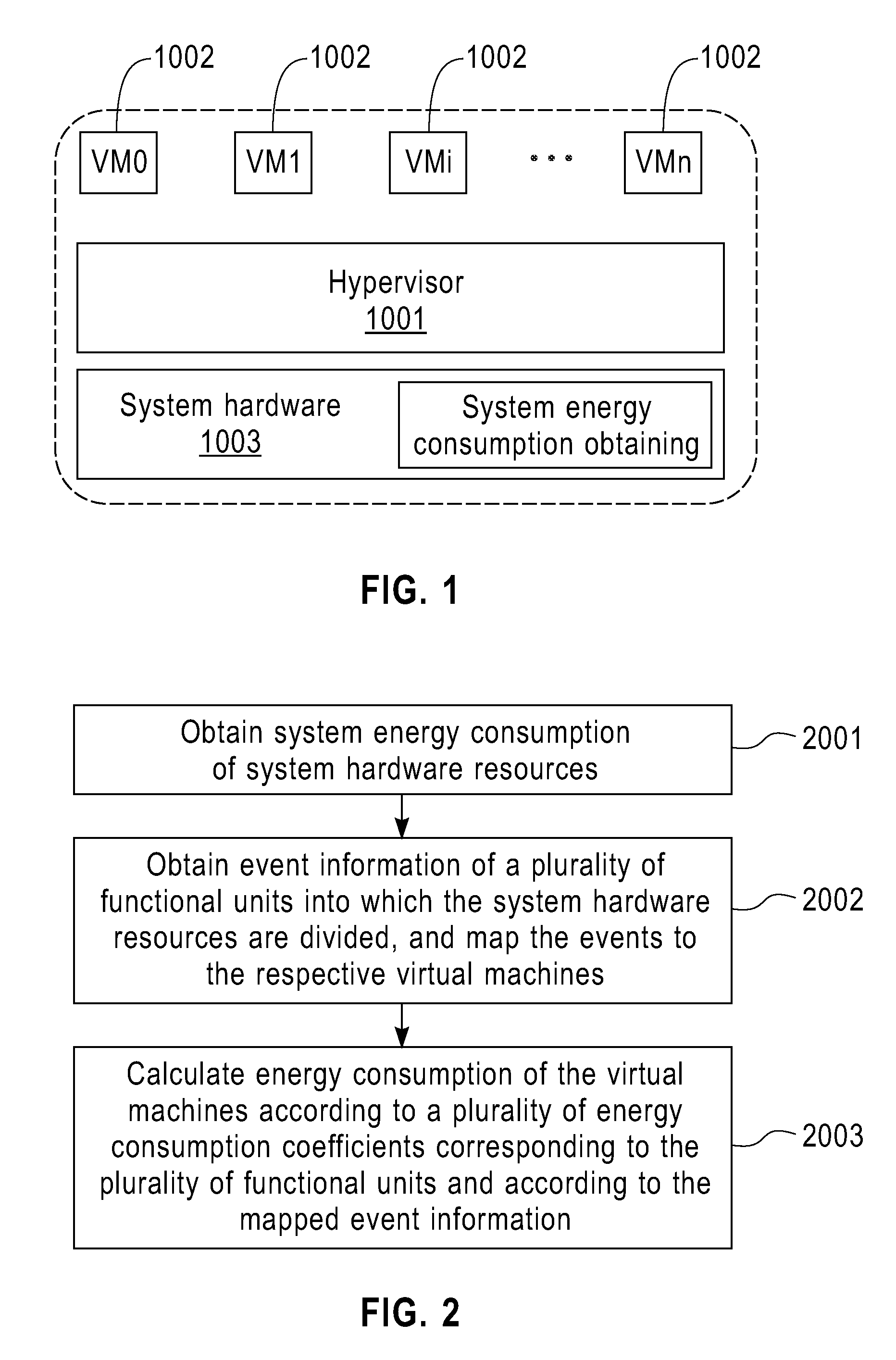

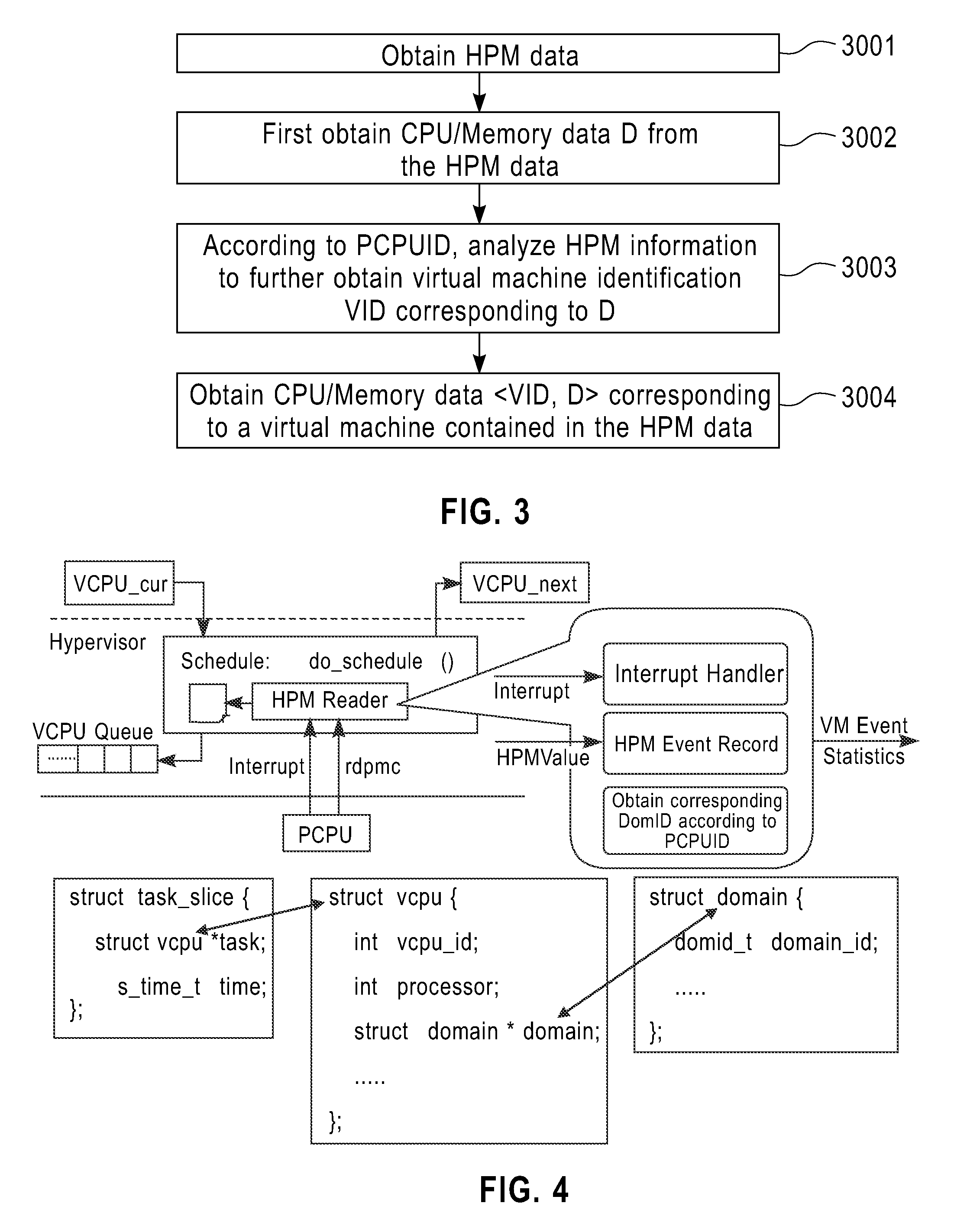

Method and apparatus for estimating virtual machine energy consumption

Active | US20120323509A1 | Improve accuracy; Electric devices; Power supply for data processing; Energy depletion; System hardware

A method and apparatus for estimating the energy consumption of virtual machines in a computer system. The method includes: obtaining the system energy consumption of the system hardware resources; obtaining event information for a plurality of functional units into which the system hardware resources are divided, and mapping the event information to the respective virtual machines; and calculating the energy consumption of each virtual machine from a plurality of energy consumption coefficients corresponding to the functional units and from the event information mapped to that virtual machine's functional units.

Owner:INT BUSINESS MASCH CORP
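The calculation step in this abstract is a weighted sum: for each virtual machine, multiply each functional unit's energy coefficient by the events mapped to that VM and add the products. A minimal Python sketch, assuming the coefficients (joules per event) are already known from calibration; the optional rescaling to the measured system energy is an added assumption, not something the abstract states, and `estimate_vm_energy` is a hypothetical name:

```python
from typing import Optional


def estimate_vm_energy(
    coefficients: dict[str, float],
    events: dict[str, dict[str, int]],
    measured_system_joules: Optional[float] = None,
) -> dict[str, float]:
    """Per-VM energy = sum over functional units of coefficient * mapped events.

    coefficients: joules per event for each functional unit (assumed to come
    from prior calibration). events: event counts already mapped to each VM.
    Rescaling so the estimates sum to the measured system energy is an
    optional assumption.
    """
    raw = {
        vm: sum(coefficients[unit] * count for unit, count in per_unit.items())
        for vm, per_unit in events.items()
    }
    total = sum(raw.values())
    if measured_system_joules is None or total == 0:
        return raw
    scale = measured_system_joules / total
    return {vm: energy * scale for vm, energy in raw.items()}


coeffs = {"cpu": 2.0, "mem": 0.5}
events = {"vm1": {"cpu": 10, "mem": 4}, "vm2": {"cpu": 5}}
print(estimate_vm_energy(coeffs, events))                               # {'vm1': 22.0, 'vm2': 10.0}
print(estimate_vm_energy(coeffs, events, measured_system_joules=64.0))  # {'vm1': 44.0, 'vm2': 20.0}
```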

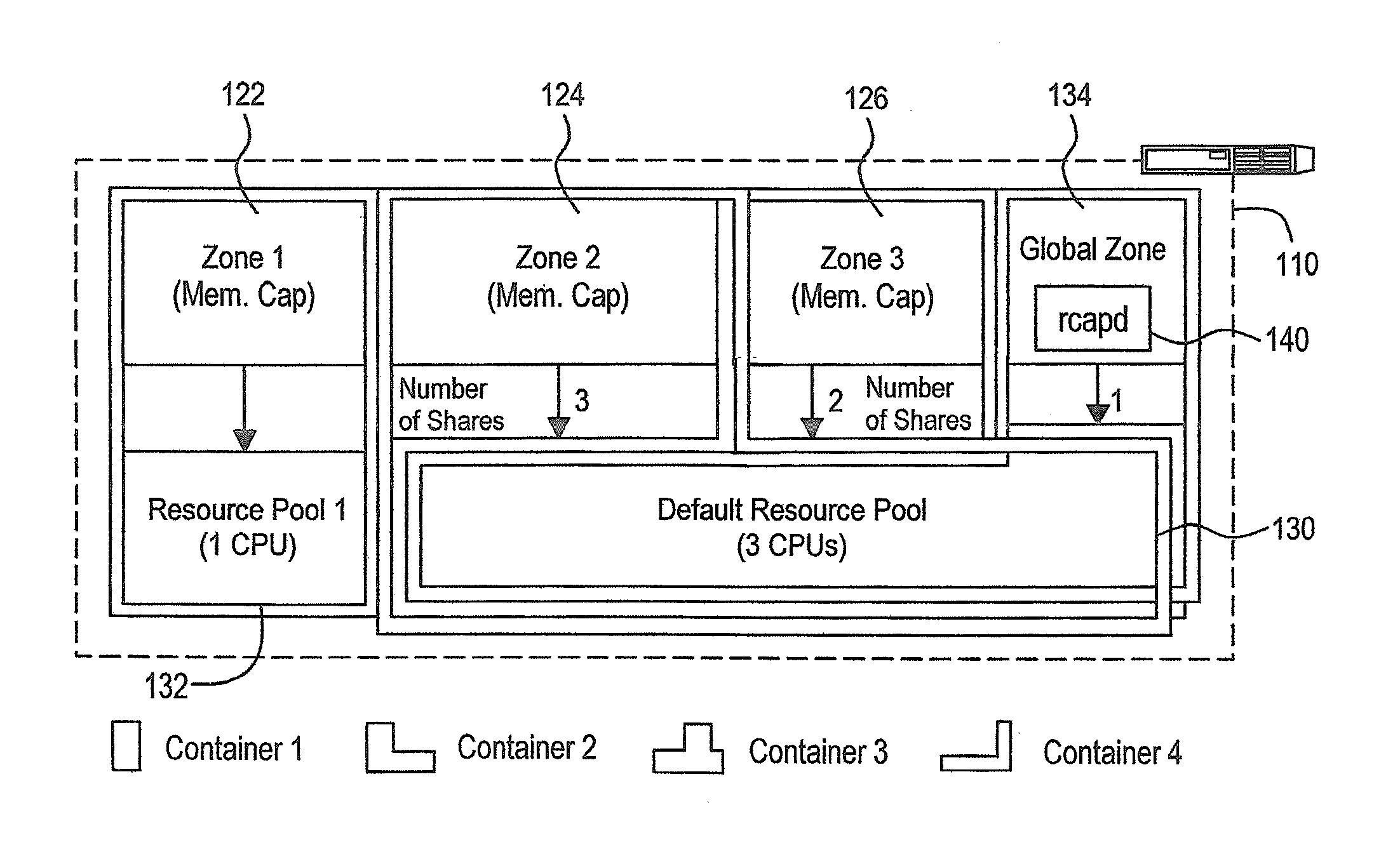

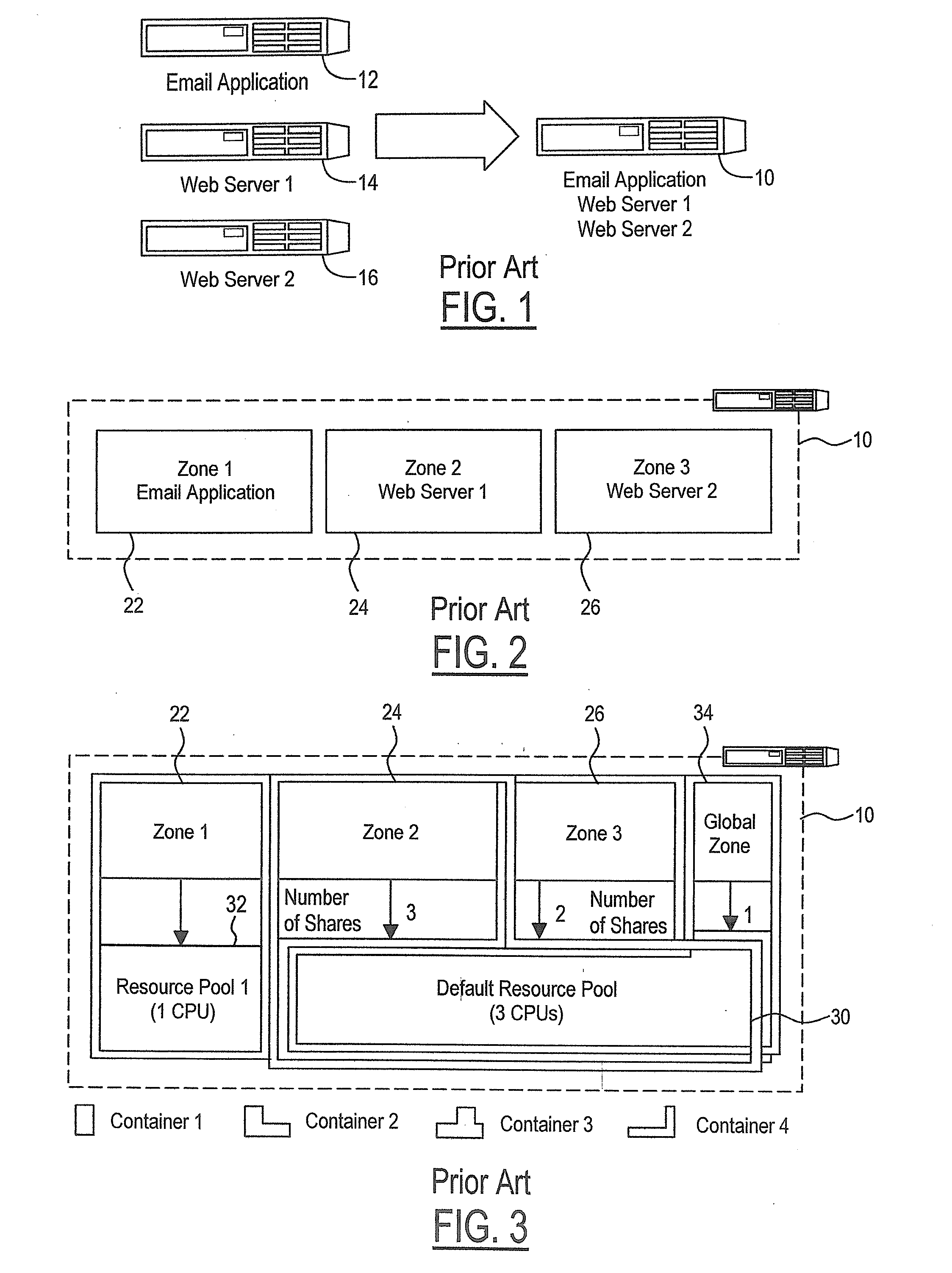

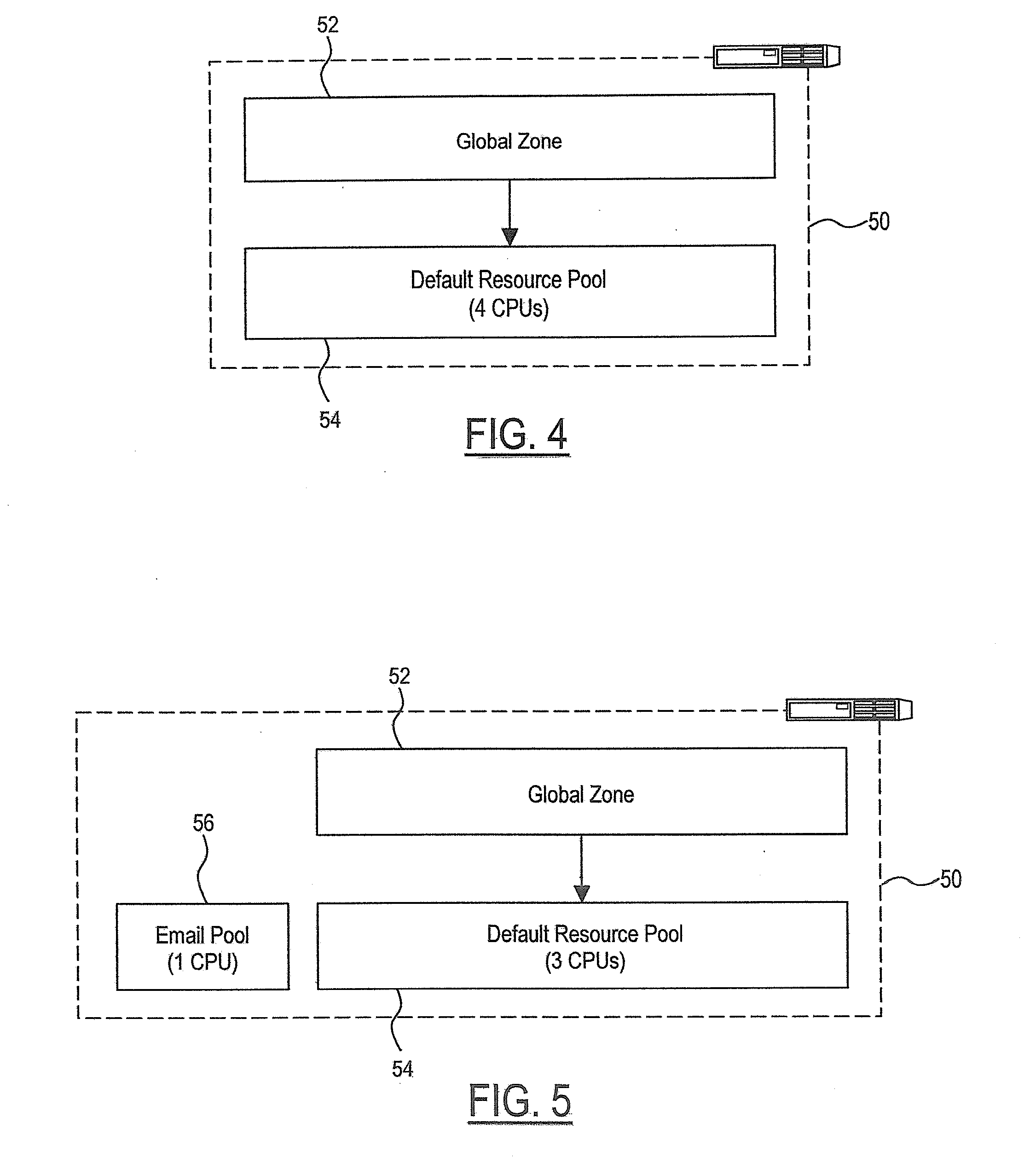

Physical memory capping for use in virtualization

Active | US20080320242A1 | Control consumption; Increase resources; Program control; Memory systems; Computer hardware; Virtualization

A method of implementing virtualization involves an improved approach to resource management. A virtualizing subsystem is capable of creating separate environments that logically isolate applications from each other. Some of the separate environments share physical resources including physical memory. When a separate environment is configured, properties for the separate environment are defined. Configuring a separate environment may include specifying a physical memory usage cap for the separate environment. A global resource capping background service enforces physical memory caps on any separate environments that have specified physical memory caps.

Owner:SUN MICROSYSTEMS INC

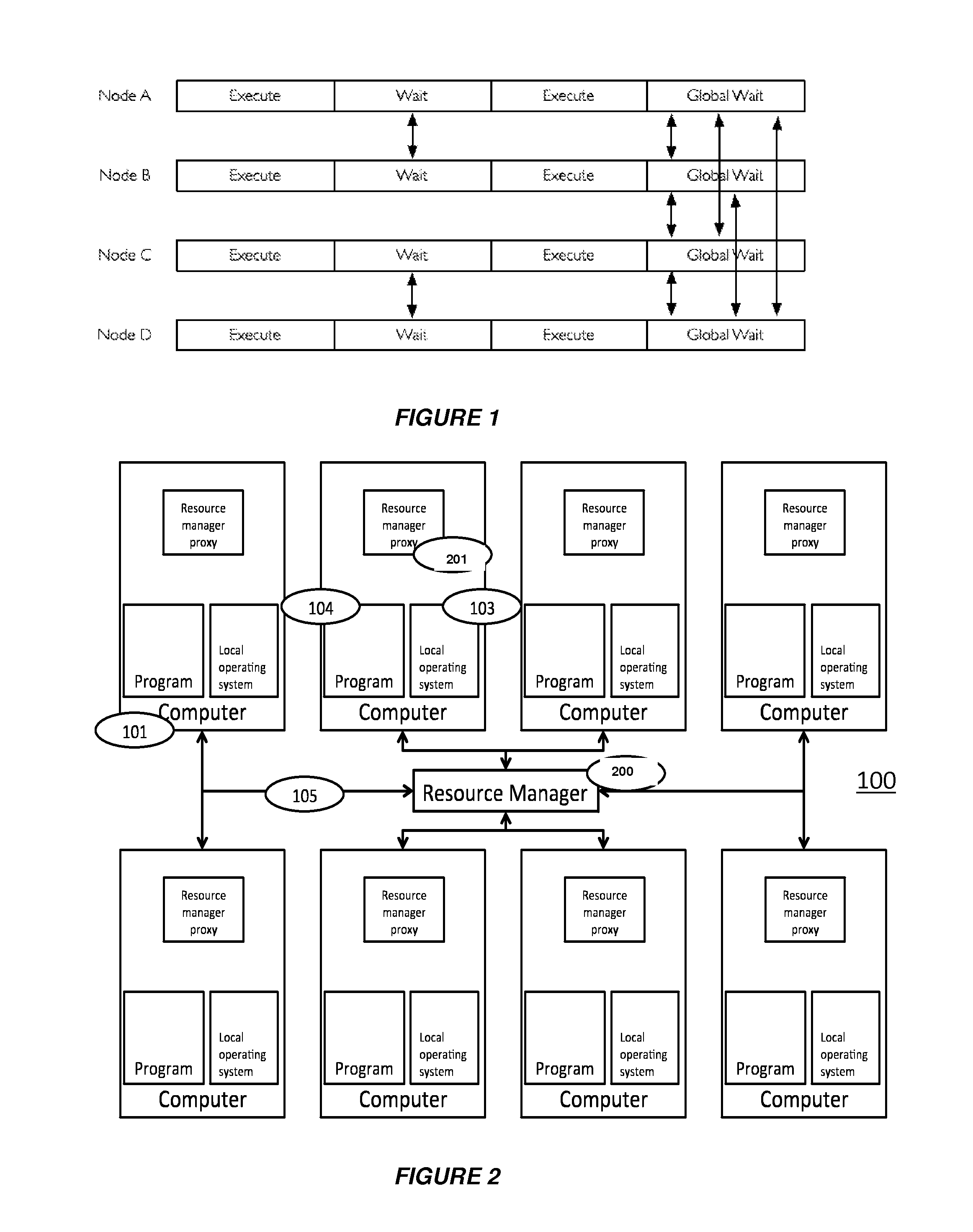

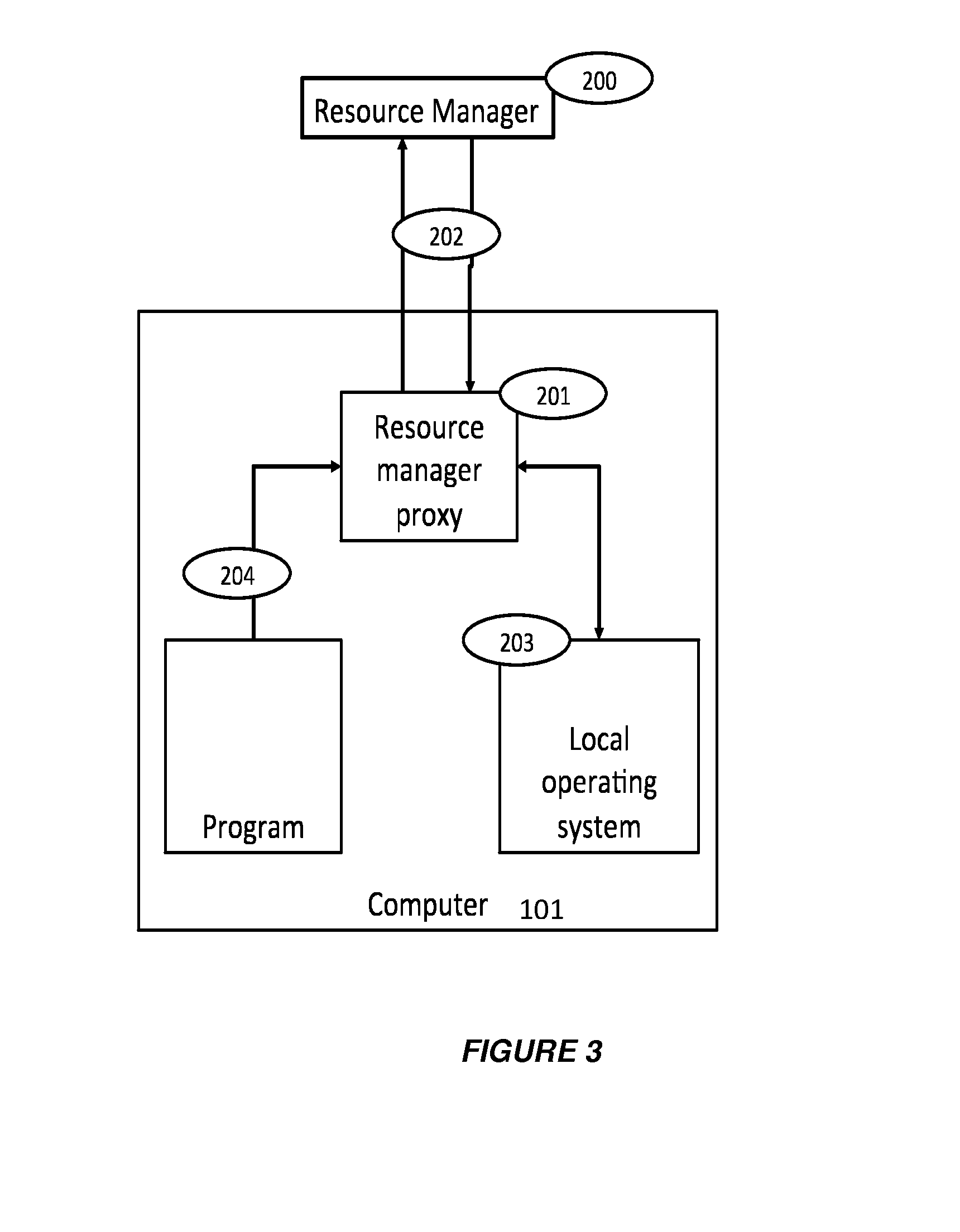

Method of executing an application on a computer system, a resource manager and a high performance computer system

Active | US20150378419A1 | Small impact run-time; Efficient use of energy; Subset functionality use; Volume/mass flow measurement; Computerized system; Resource management

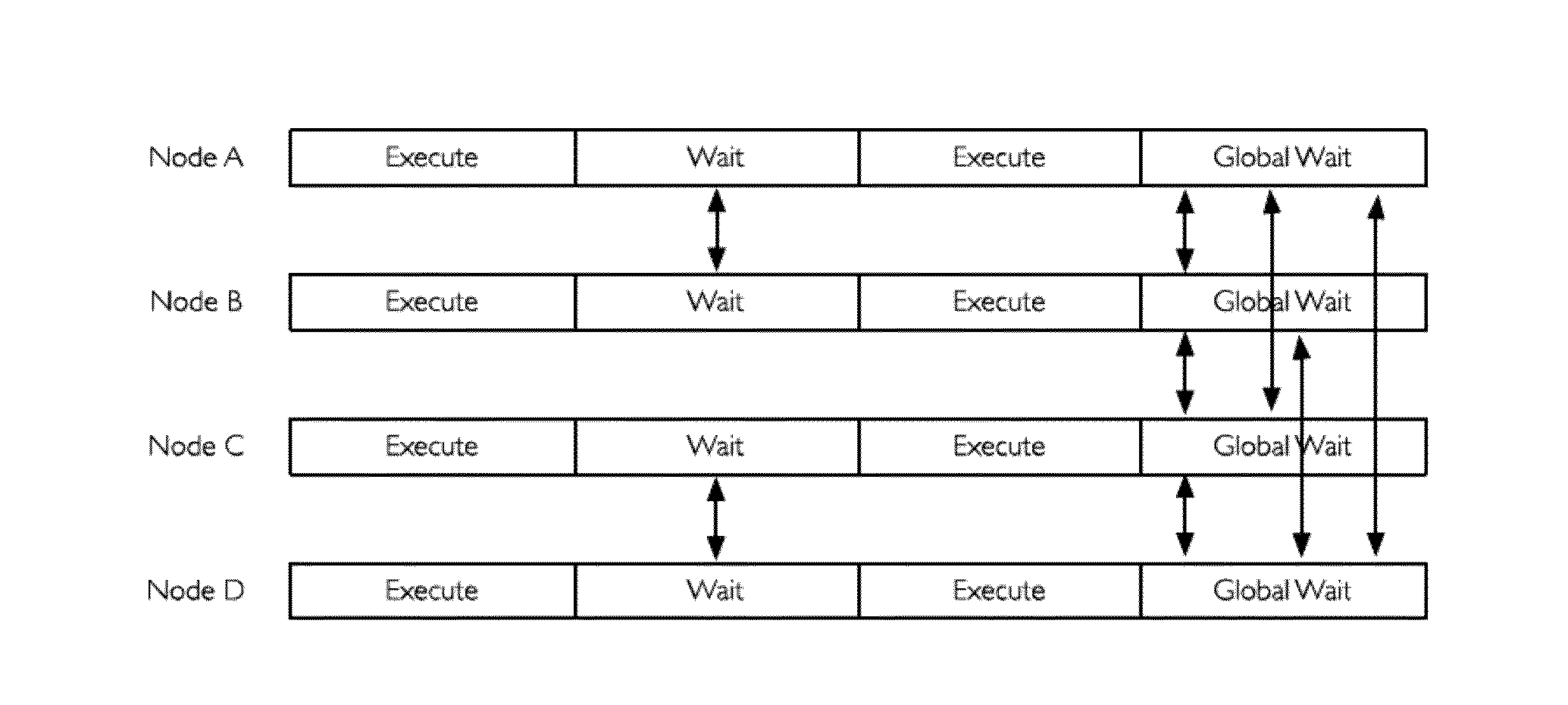

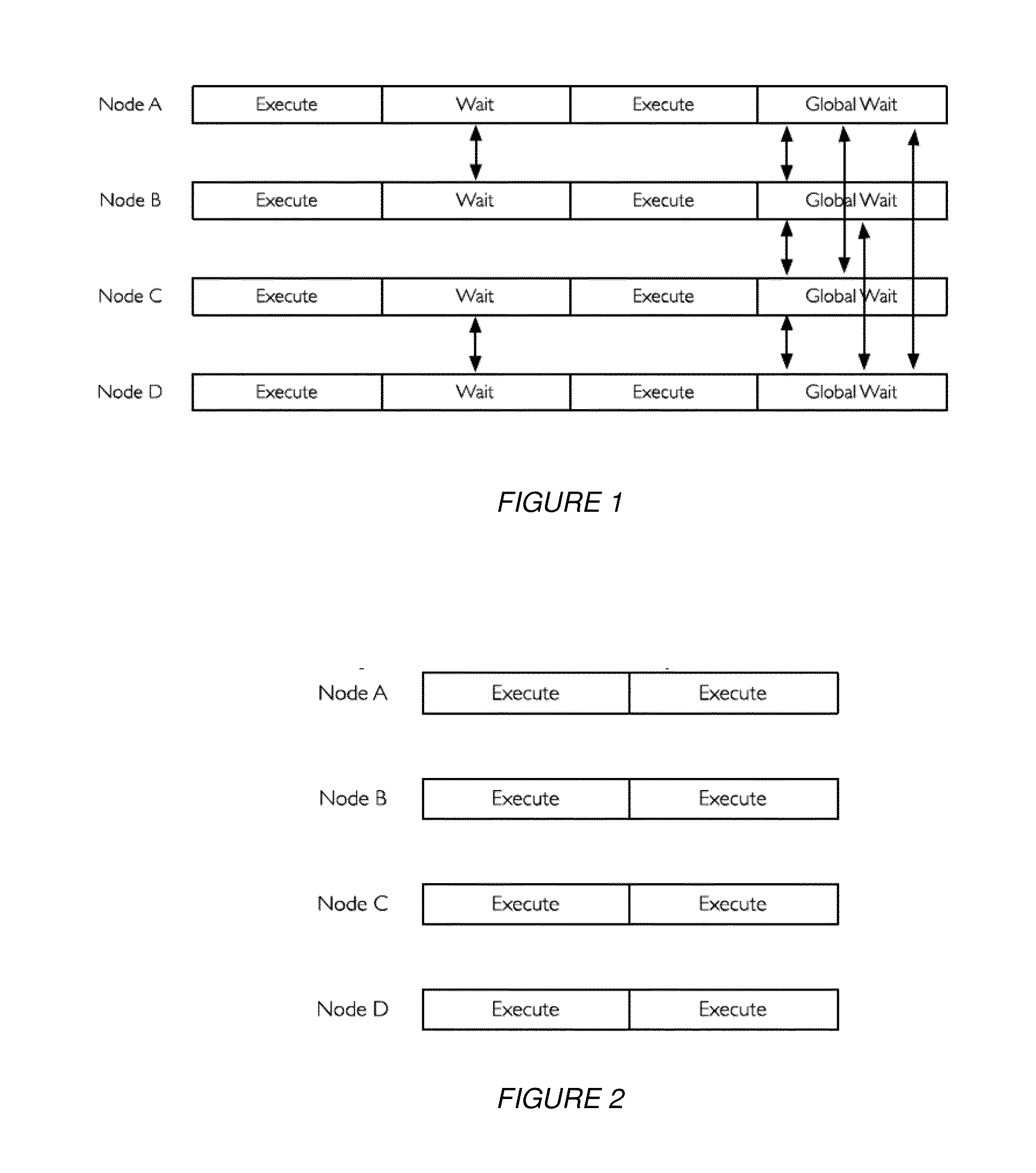

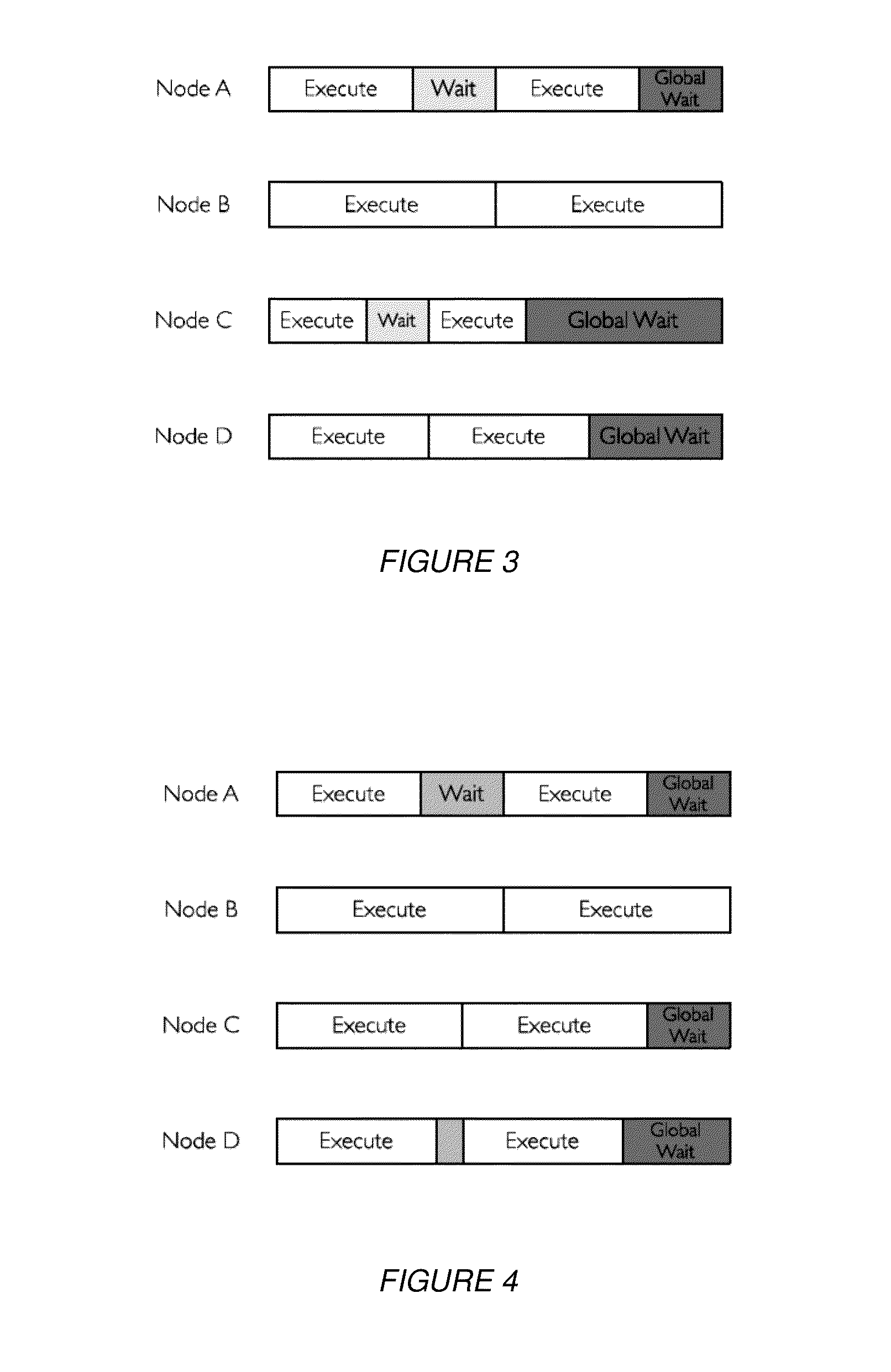

In an HPC system, a Resource Manager deliberately introduces heterogeneity into the execution speeds of some, but not all, of the nodes allocated to an application while the application runs. This heterogeneity may change the amount of time spent waiting at coordination points: computation-intensive applications will be most affected, and I/O-bound applications less so. By monitoring wait-time reports received from a Communications library, the Manager can distinguish between these two types of application and apply suitable power states to the nodes allocated to the application. If the application is I/O-bound, nodes can be switched to a lower-power state to save energy. This can be done at any point during execution, so the hardware configuration can be adjusted to keep efficiency optimal as the application passes through phases with different energy-use and performance characteristics.

Owner:FUJITSU LTD

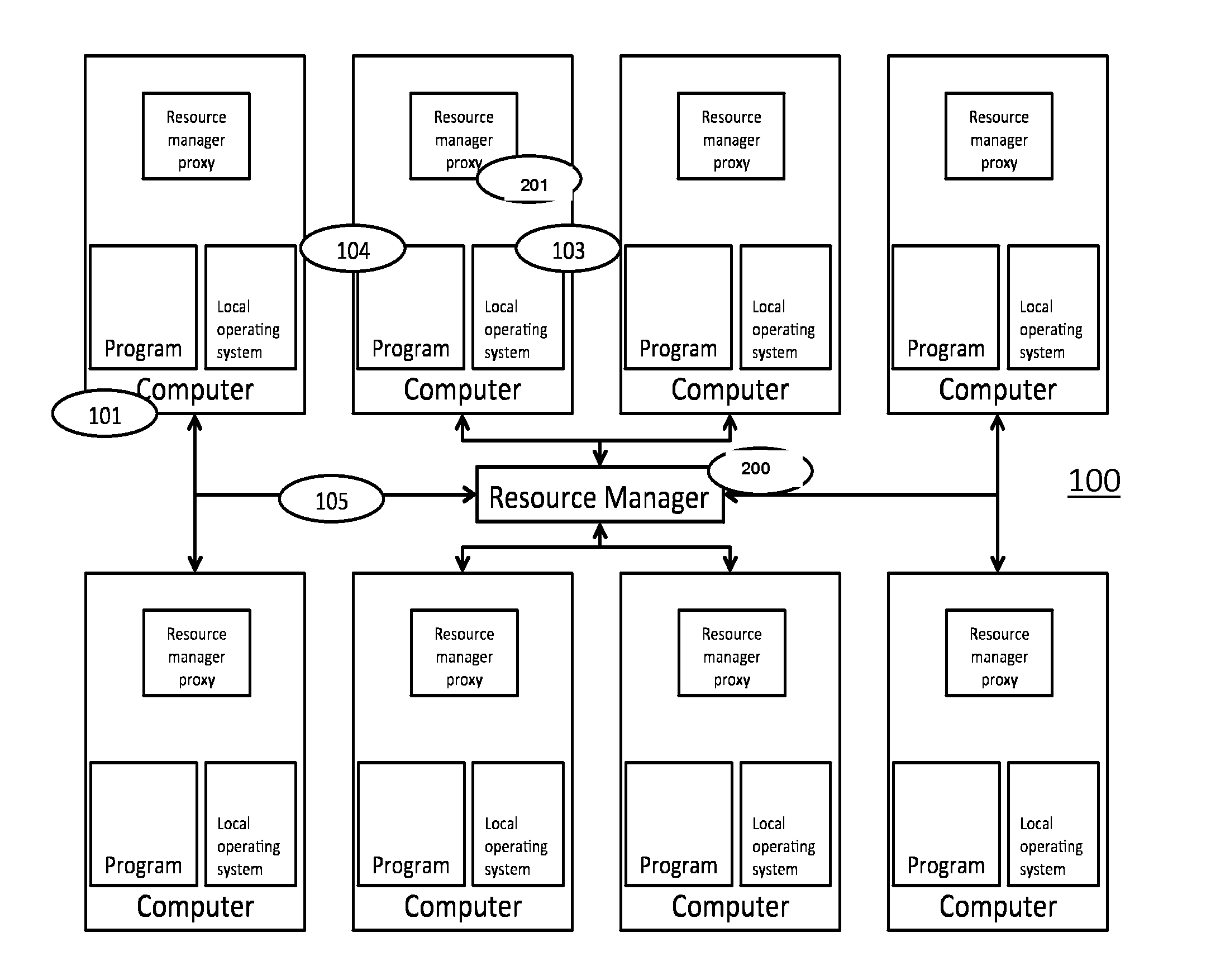

Method of executing an application on a distributed computer system, a resource manager and a distributed computer system

Active | US20150378406A1 | Load minimization; Save energy; Resource allocation; Error detection/correction; Resource management; Operating system

Owner:FUJITSU LTD

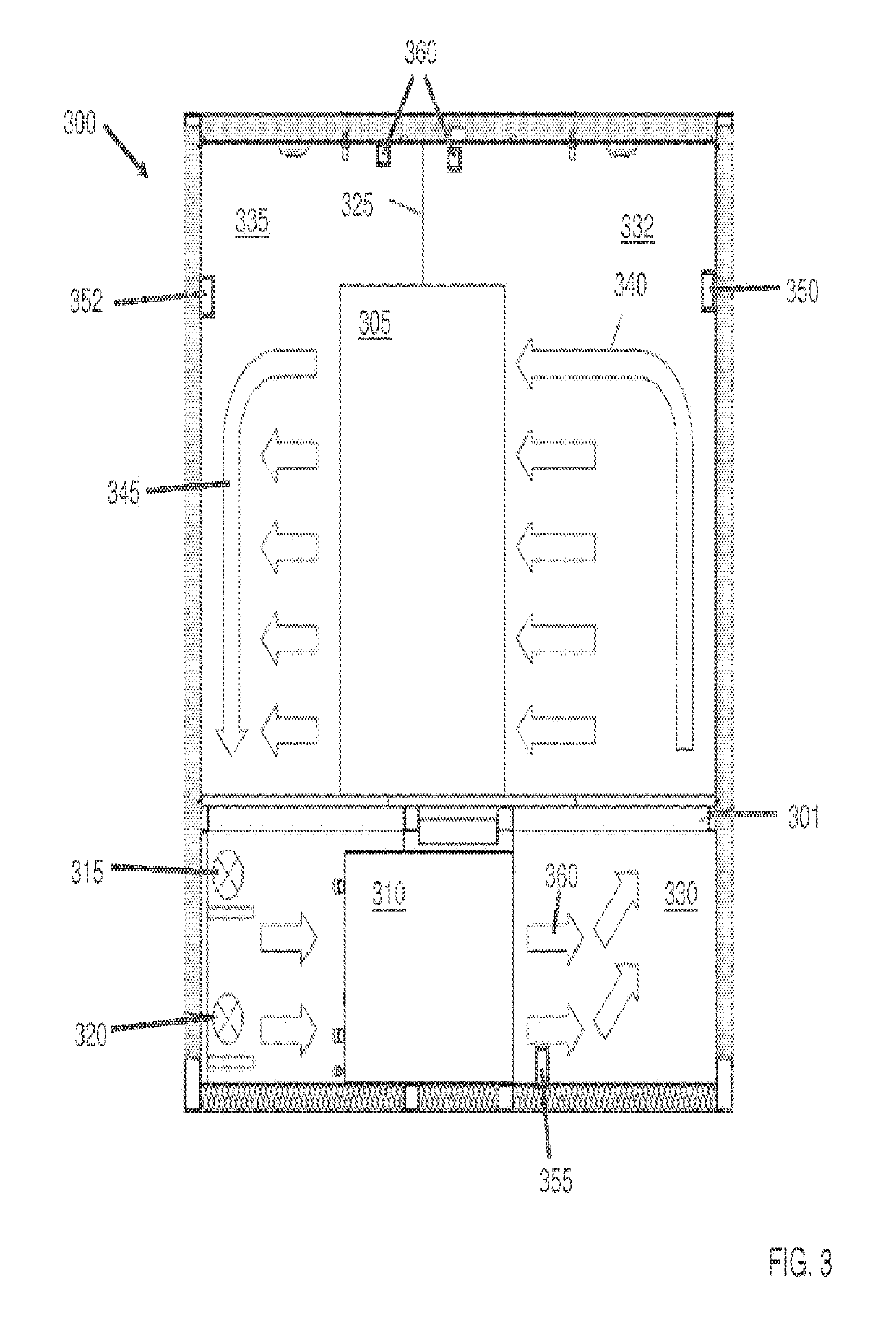

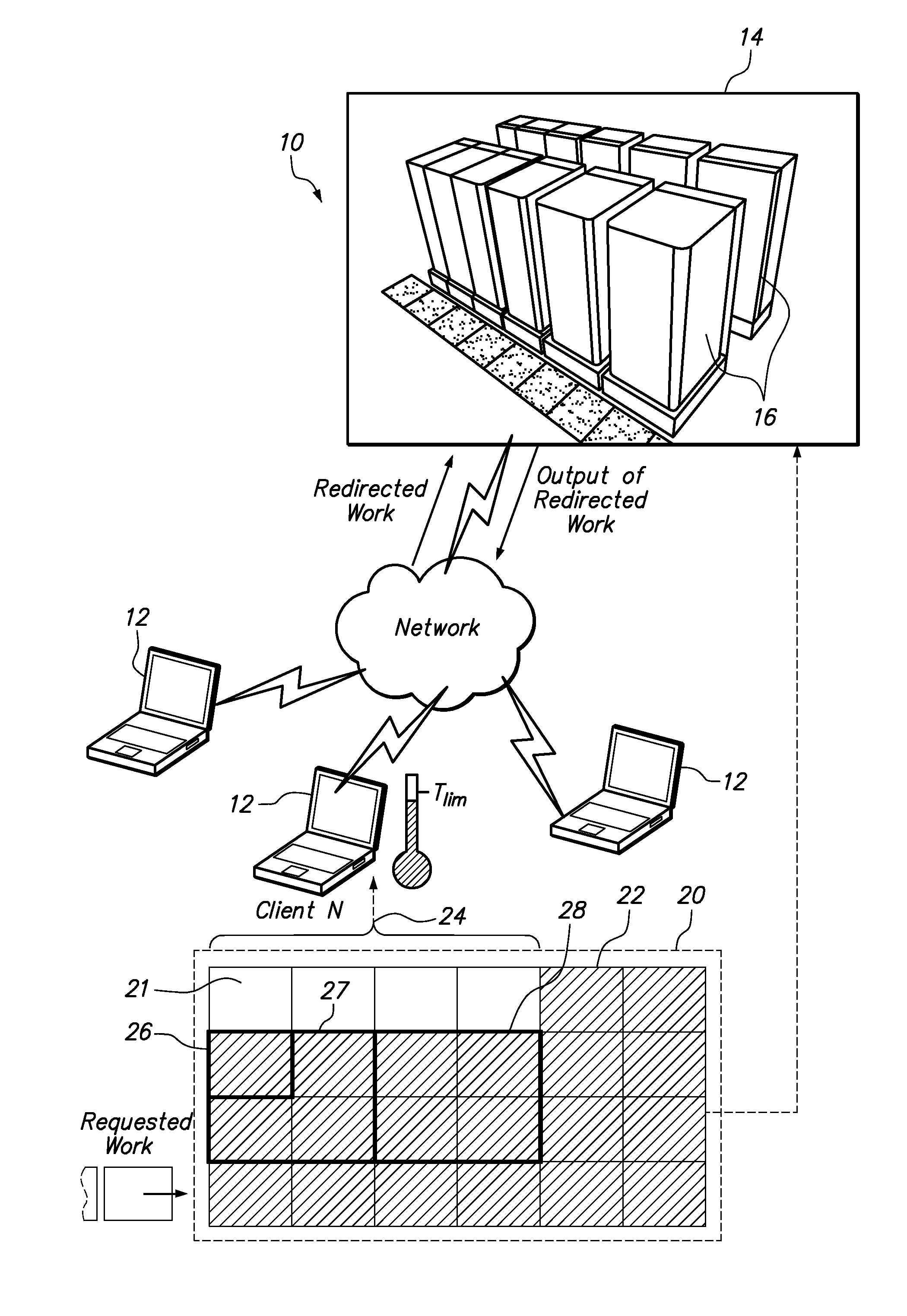

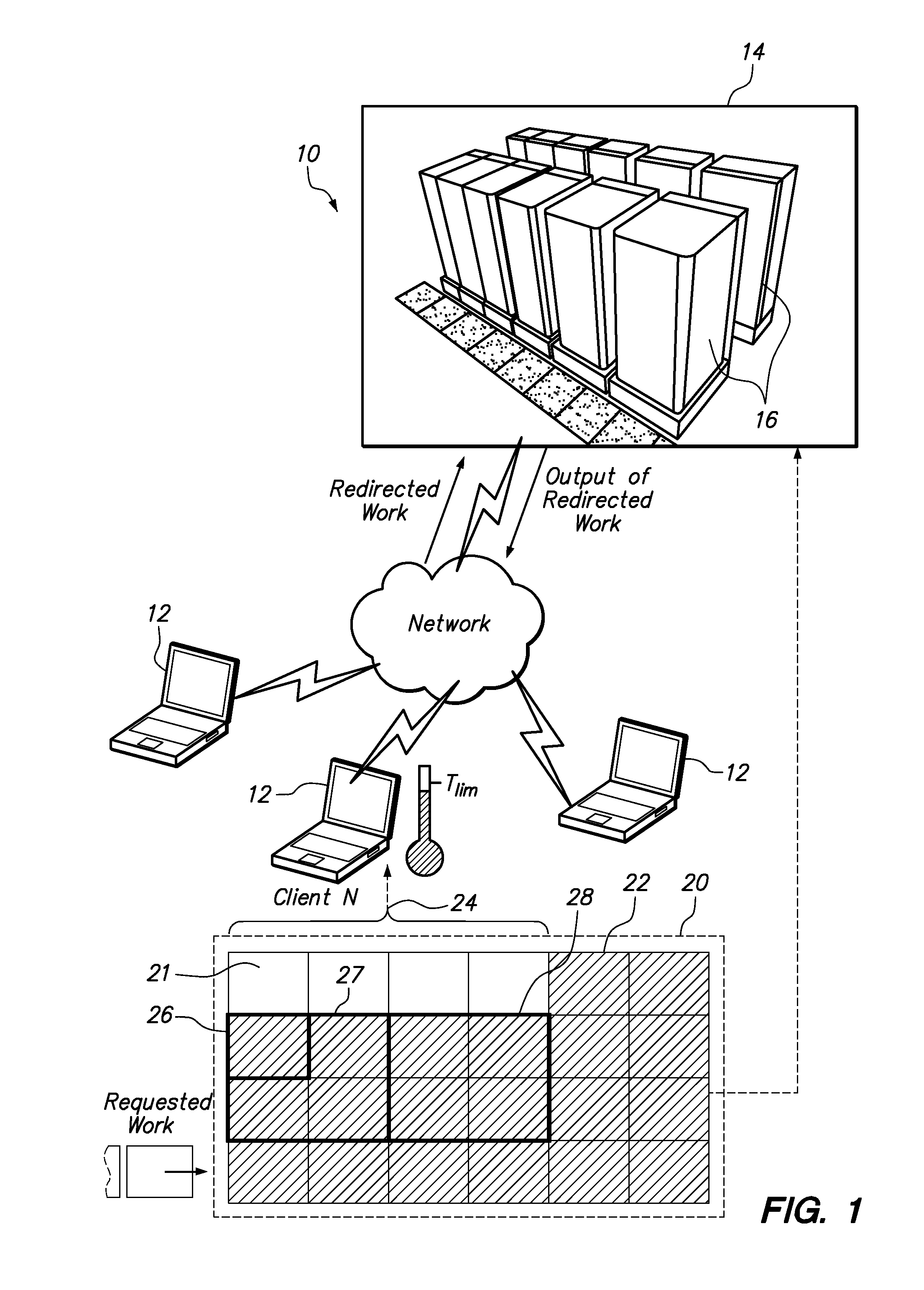

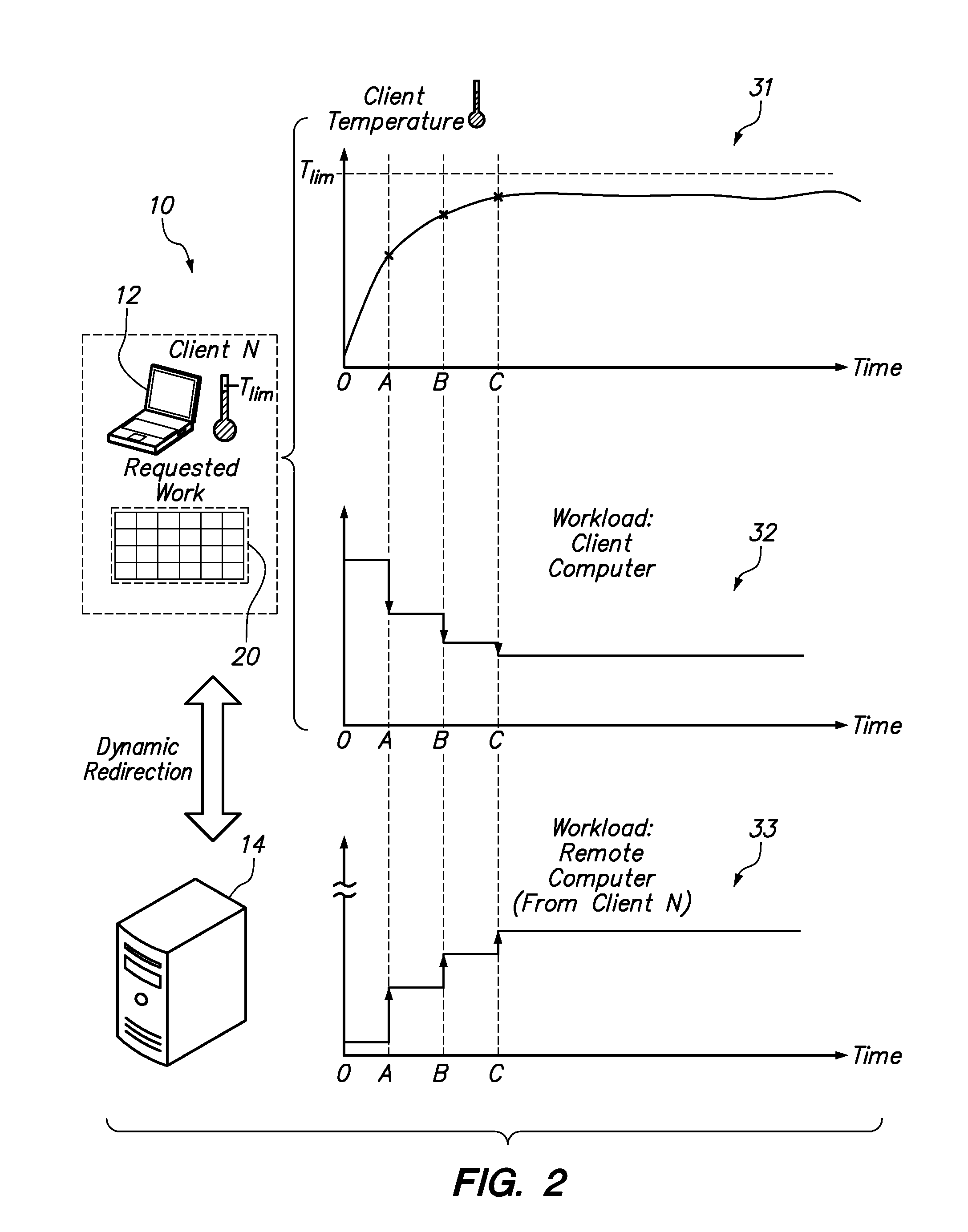

Thermal management using distributed computing systems

Active | US20100313204A1 | Distributed system energy consumption reduction; Temperature control; Remote computer; Client-side

One embodiment provides a computer-implemented method for enforcing a temperature limit of a client computer. The method includes receiving a request for computer-executable work to be executed on a client computer. If executing all of the requested work on the client computer would cause the client computer to exceed the temperature limit, a portion of the requested work may be selectively redirected to a remote computer in an amount selected to enforce the temperature limit of the client computer. Simultaneously, the remainder of the requested work may be executed on the client computer. The output of the redirected work may be communicated from the remote computer back to the client computer over a network.

Owner:LENOVO GLOBAL TECH INT LTD
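The abstract leaves open how the redirected portion is sized. One simple way to "select an amount to enforce the temperature limit" is to assume a linear thermal model, run as much work locally as the remaining headroom allows, and send the rest to the remote computer. A minimal Python sketch under that assumption; `split_work` and `degrees_per_unit` are hypothetical:

```python
def split_work(work_units: float, current_temp_c: float, limit_c: float,
               degrees_per_unit: float) -> tuple[float, float]:
    """Return (local, remote) amounts so the client stays within its limit.

    Assumes a linear thermal model in which each unit of work raises the
    client temperature by `degrees_per_unit`; the abstract does not specify
    how the redirected amount is computed.
    """
    if degrees_per_unit <= 0:
        return float(work_units), 0.0          # work does not heat the client
    headroom = max(0.0, limit_c - current_temp_c)
    local = min(float(work_units), headroom / degrees_per_unit)
    return local, work_units - local           # remainder goes to the remote computer


print(split_work(100, current_temp_c=60.0, limit_c=80.0, degrees_per_unit=0.5))  # (40.0, 60.0)
```

Real thermal behavior is nonlinear and time-dependent, so a deployed version would more likely re-evaluate the split periodically from measured temperatures.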

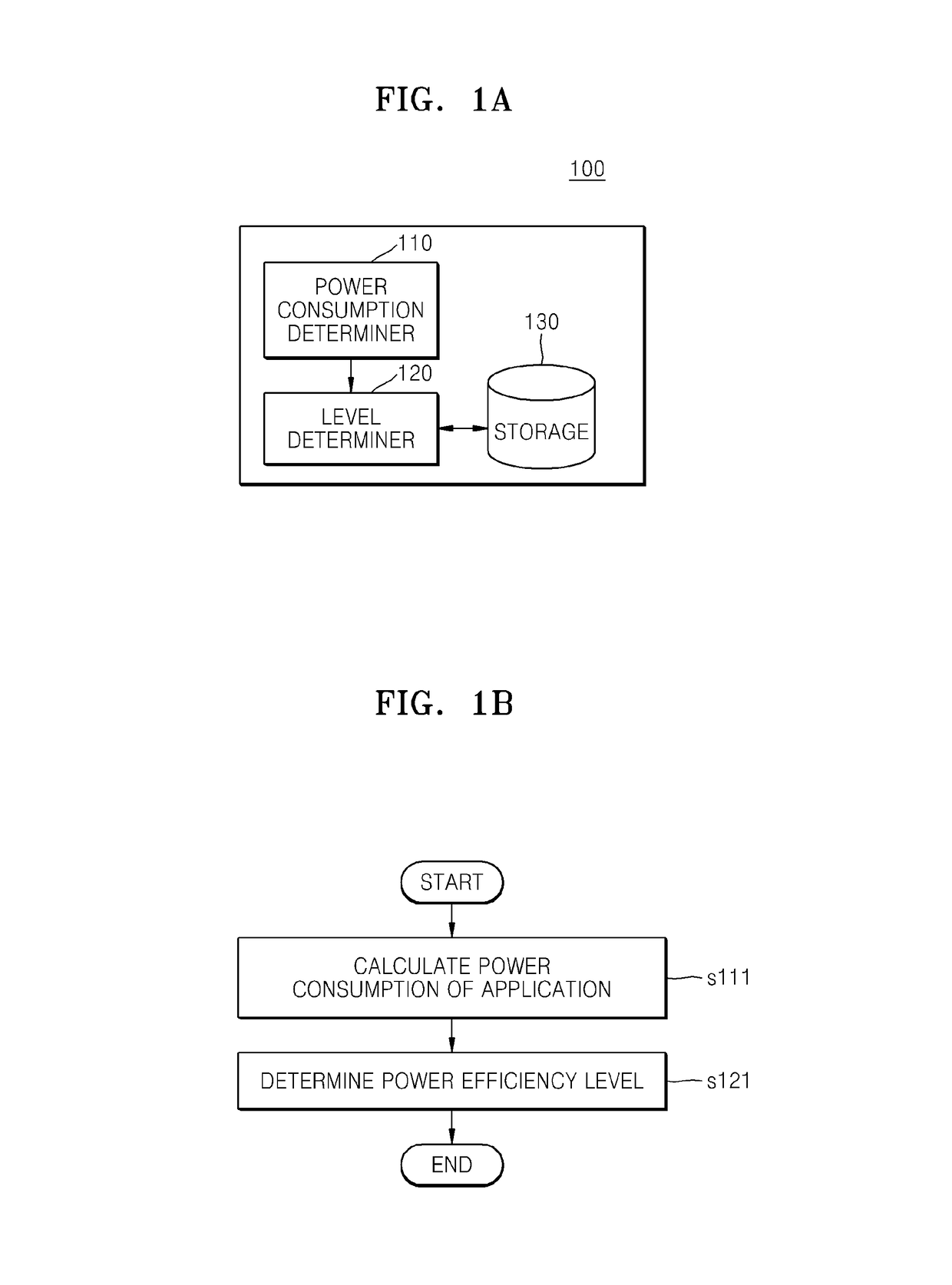

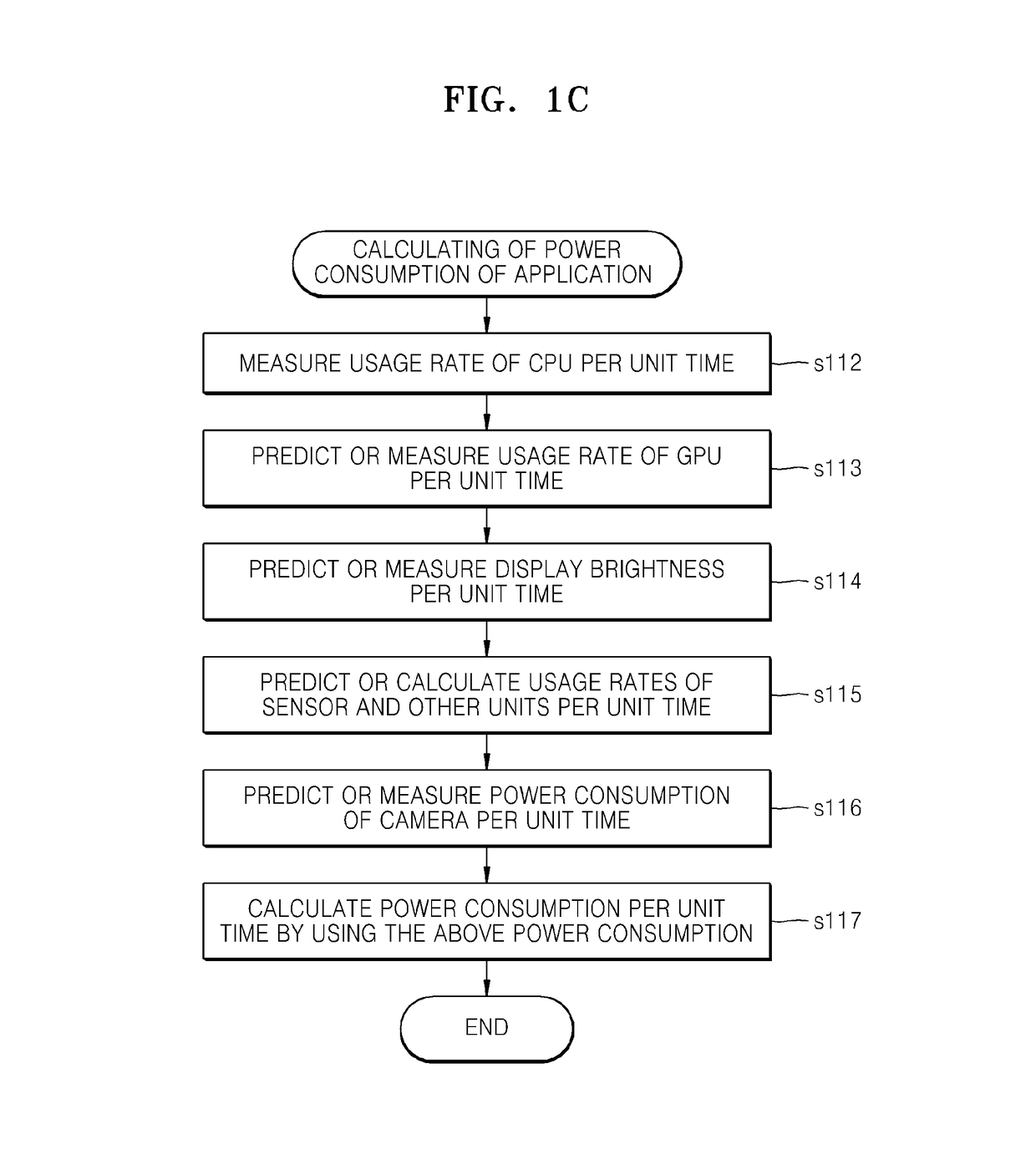

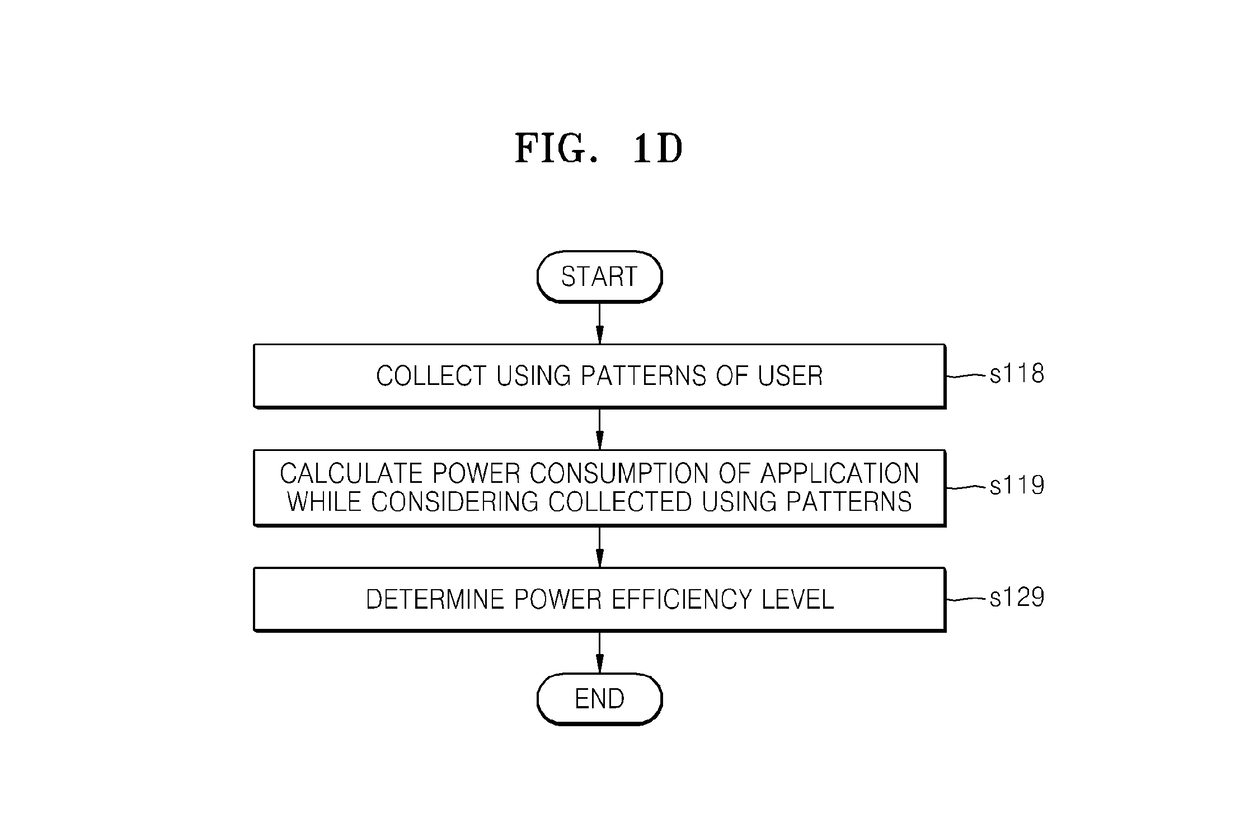

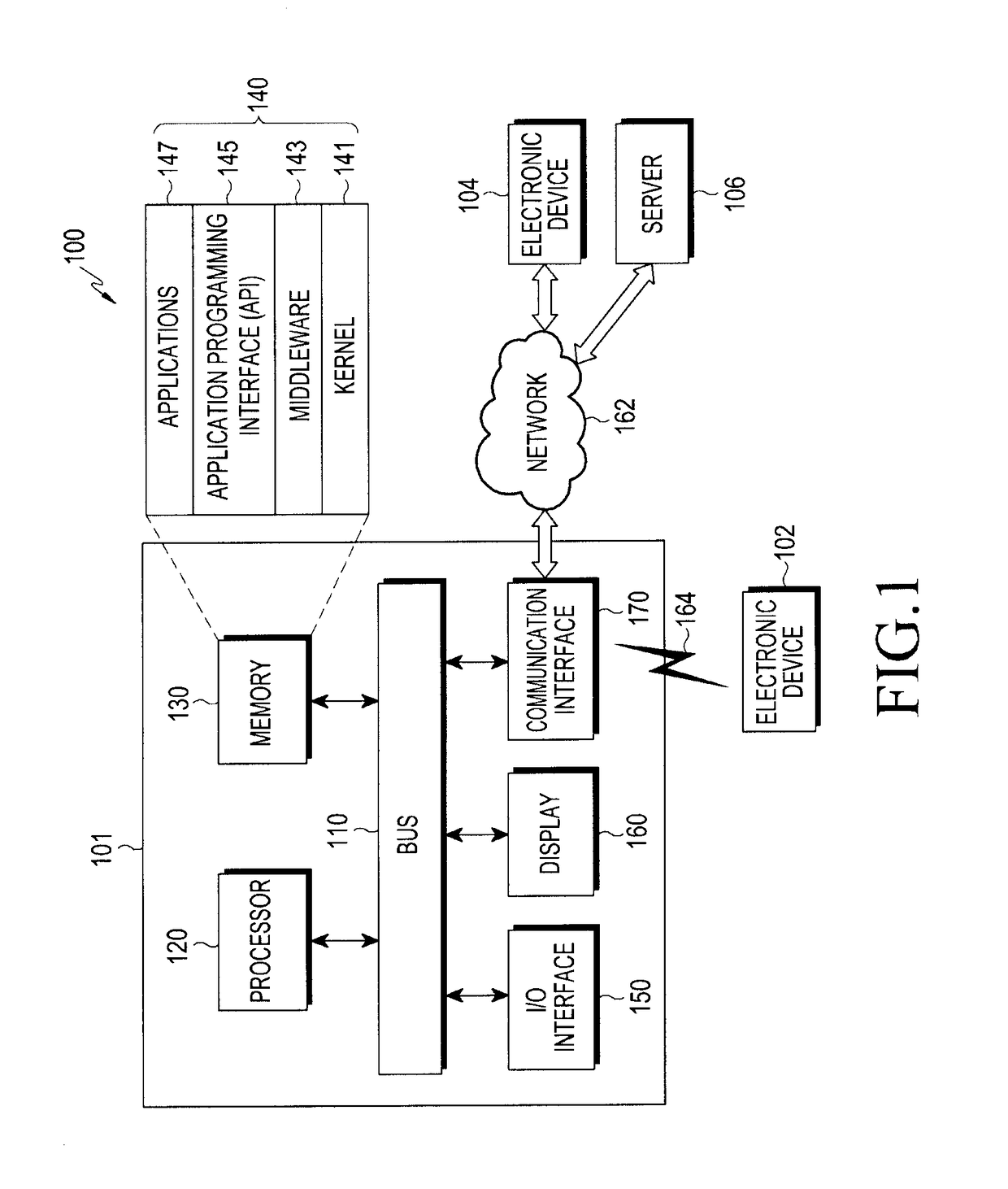

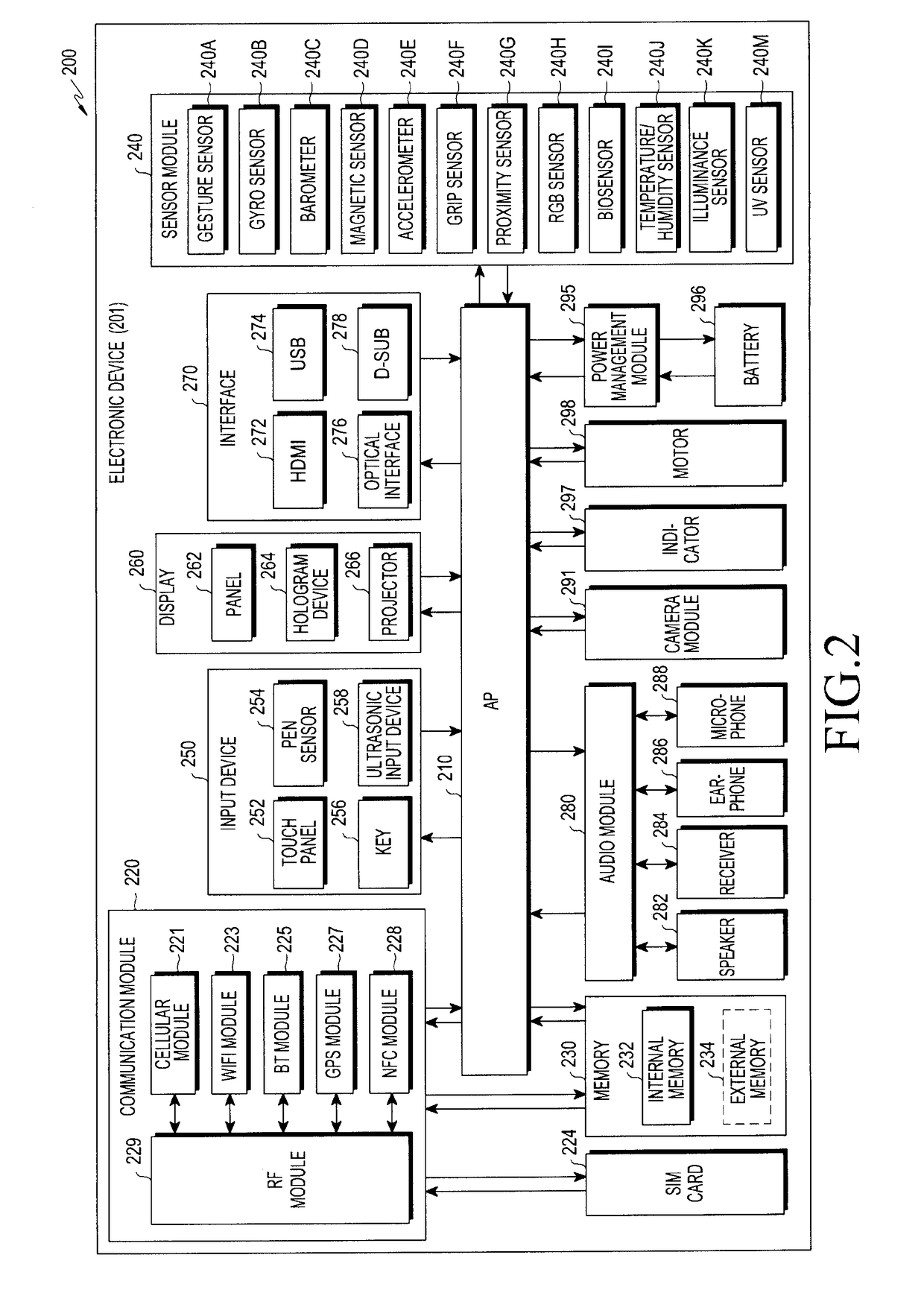

Mobile terminal and method of determining and displaying power efficiency of an application

Active | US10180857B2 | Program initiation/switching; Multiple digital computer combinations; Power consumption; Embedded system

A method and mobile terminal for determining a power efficiency of an application installed in and executed by a mobile terminal. The method includes: determining power consumption per unit time according to units of the installed and executed application; and determining a power efficiency level of the installed and executed application based on the determined power consumption per unit time. The mobile terminal includes: a power consumption determiner configured to determine power consumption per unit time according to units of the installed and executed application; and a level determiner configured to determine a power efficiency level of the installed and executed application based on the determined power consumption per unit time.

Owner:SAMSUNG ELECTRONICS CO LTD
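The two determiners in this abstract can be illustrated as "compute average power, then bucket it into a level". A minimal Python sketch; the level boundaries and letter names below are invented for illustration, since the abstract does not publish any, and the function names are hypothetical:

```python
from bisect import bisect_right

# Hypothetical level boundaries in milliwatts; the patent does not publish any.
LEVEL_BOUNDS_MW = [50.0, 150.0, 400.0]
LEVEL_NAMES = ["A", "B", "C", "D"]  # "A" is the most power-efficient level


def power_per_unit_time(energy_mj: float, seconds: float) -> float:
    # Millijoules per second equal milliwatts.
    return energy_mj / seconds


def efficiency_level(avg_power_mw: float) -> str:
    # bisect_right counts the boundaries <= avg_power_mw, which is the level index.
    return LEVEL_NAMES[bisect_right(LEVEL_BOUNDS_MW, avg_power_mw)]


print(efficiency_level(power_per_unit_time(energy_mj=1800.0, seconds=60.0)))  # A
```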

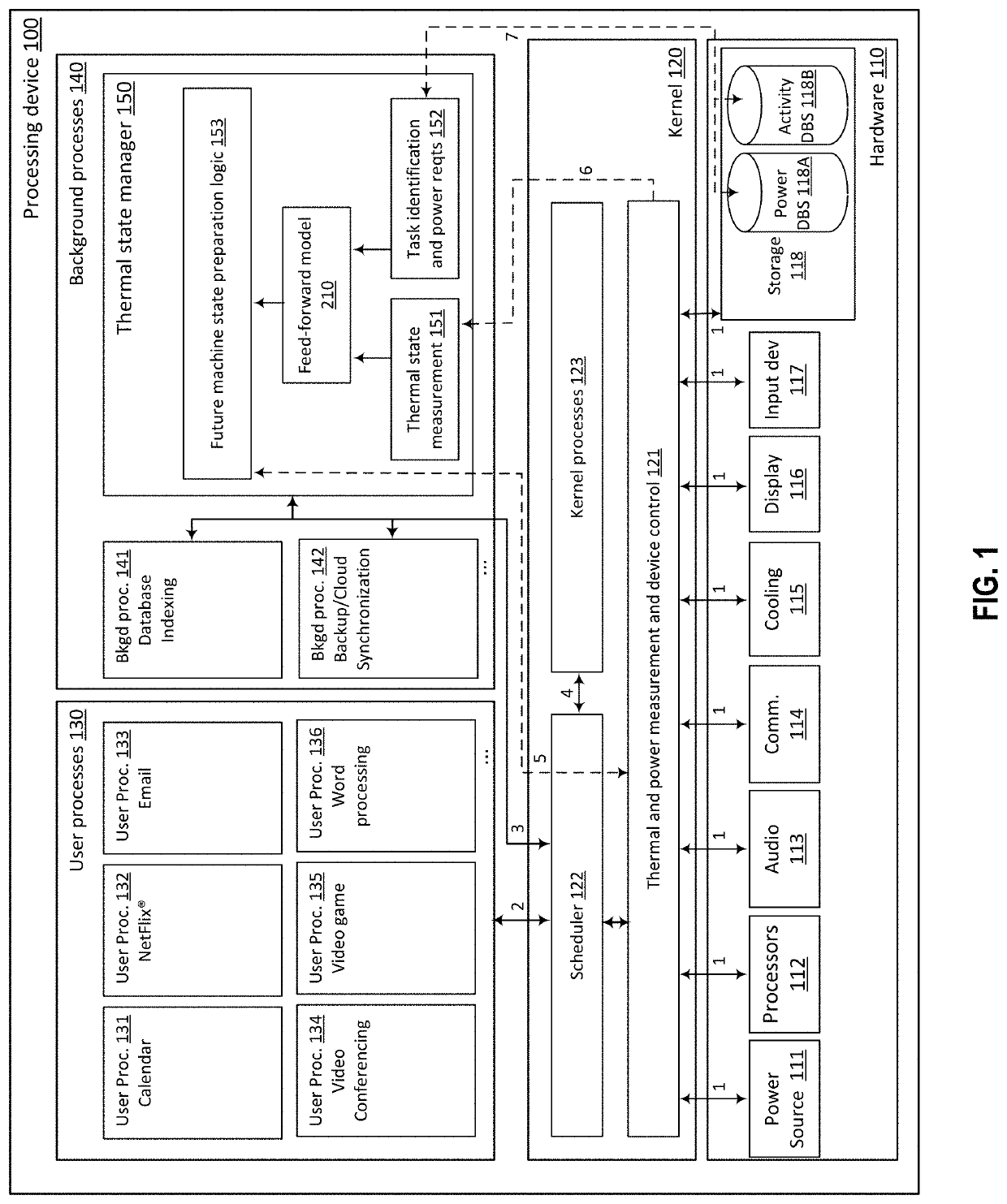

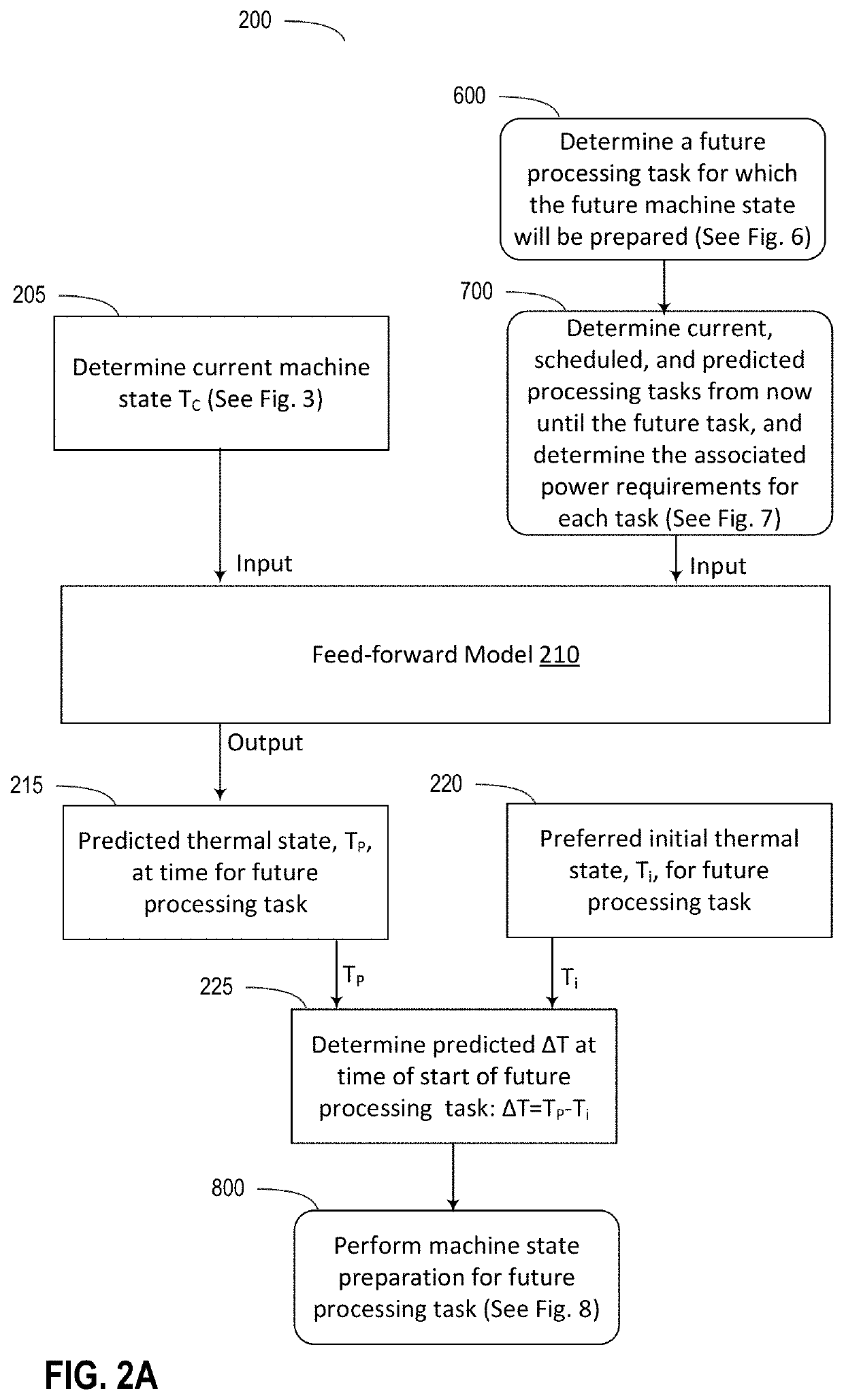

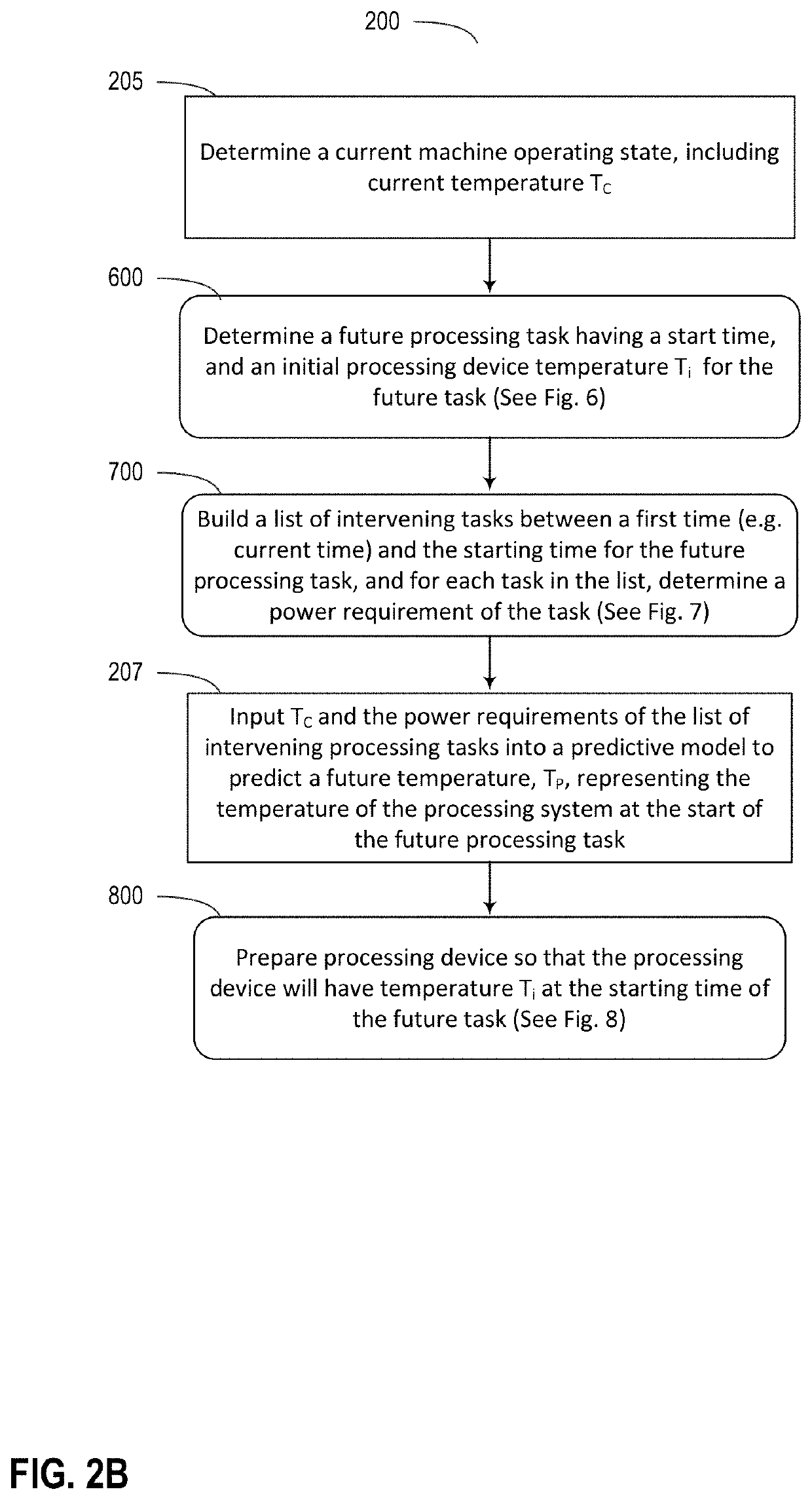

Predictive control systems and methods

Active | US10671131B2 | Maximizes experience; Continuously-optimized computing; Digital data processing details; Multiprocessor based energy consumption reduction; Control system; Data mining

Systems and methods are disclosed for determining a current machine state of a processing device, predicting a future processing task to be performed by the processing device at a future time, and predicting a list of intervening processing tasks to be performed between a first time (e.g., a current time) and the start of the future processing task. The future processing task has an associated initial state. A feed-forward thermal prediction model determines a predicted future machine state at the time the future processing task is to start. Heat mitigation processes can be applied in advance of the start of the future processing task so that the machine reaches the required initial state for that task.

Owner:APPLE INC

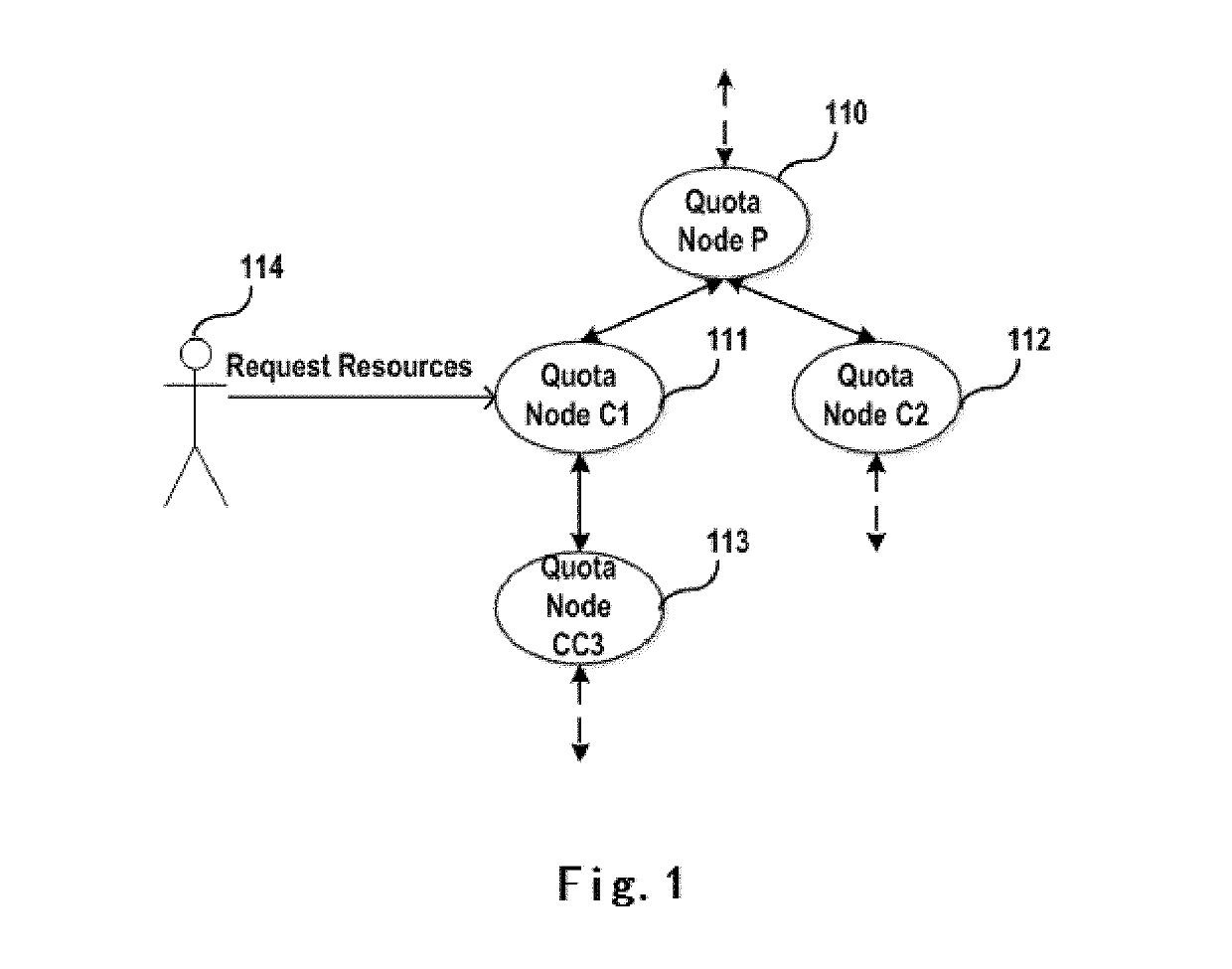

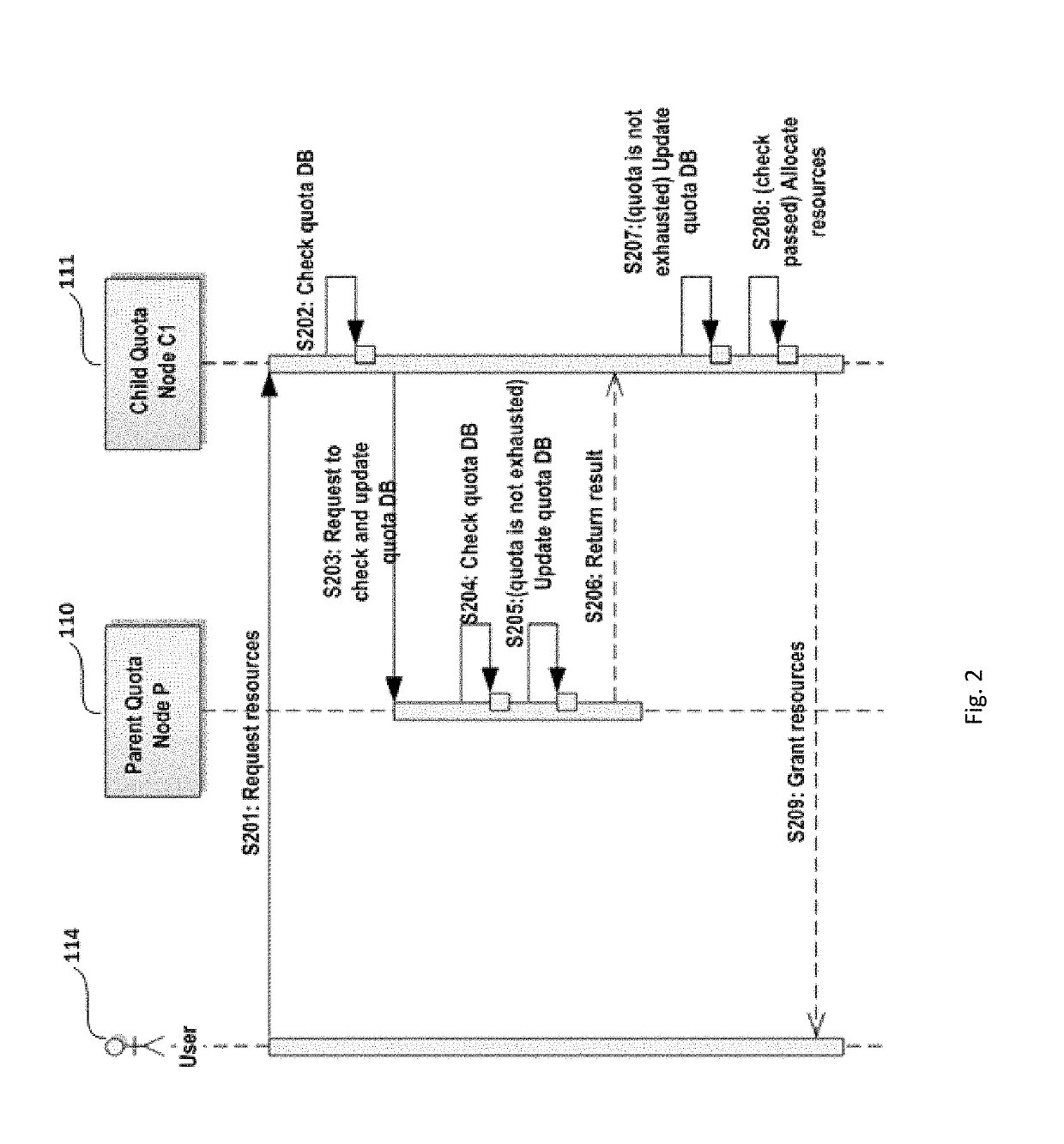

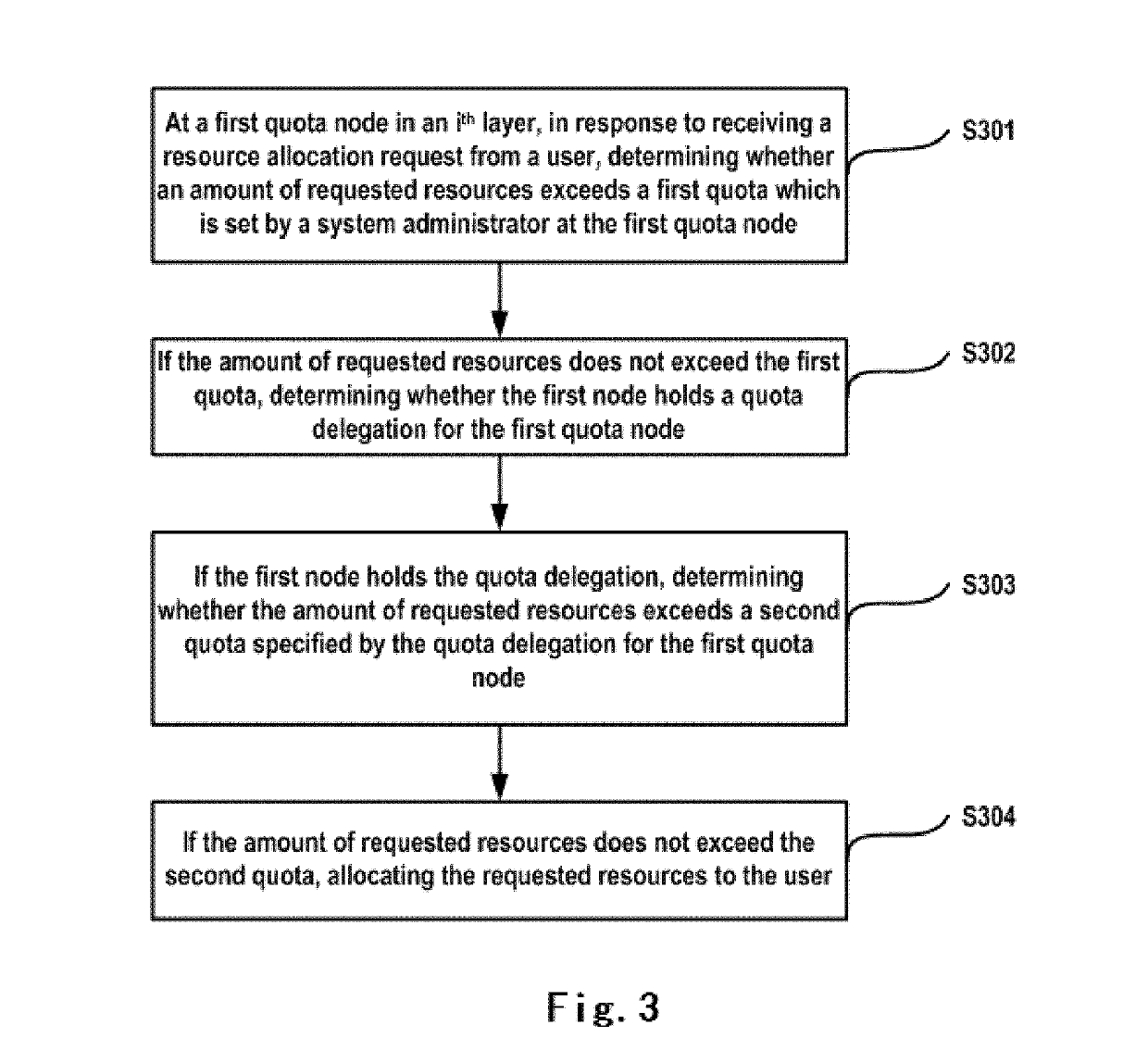

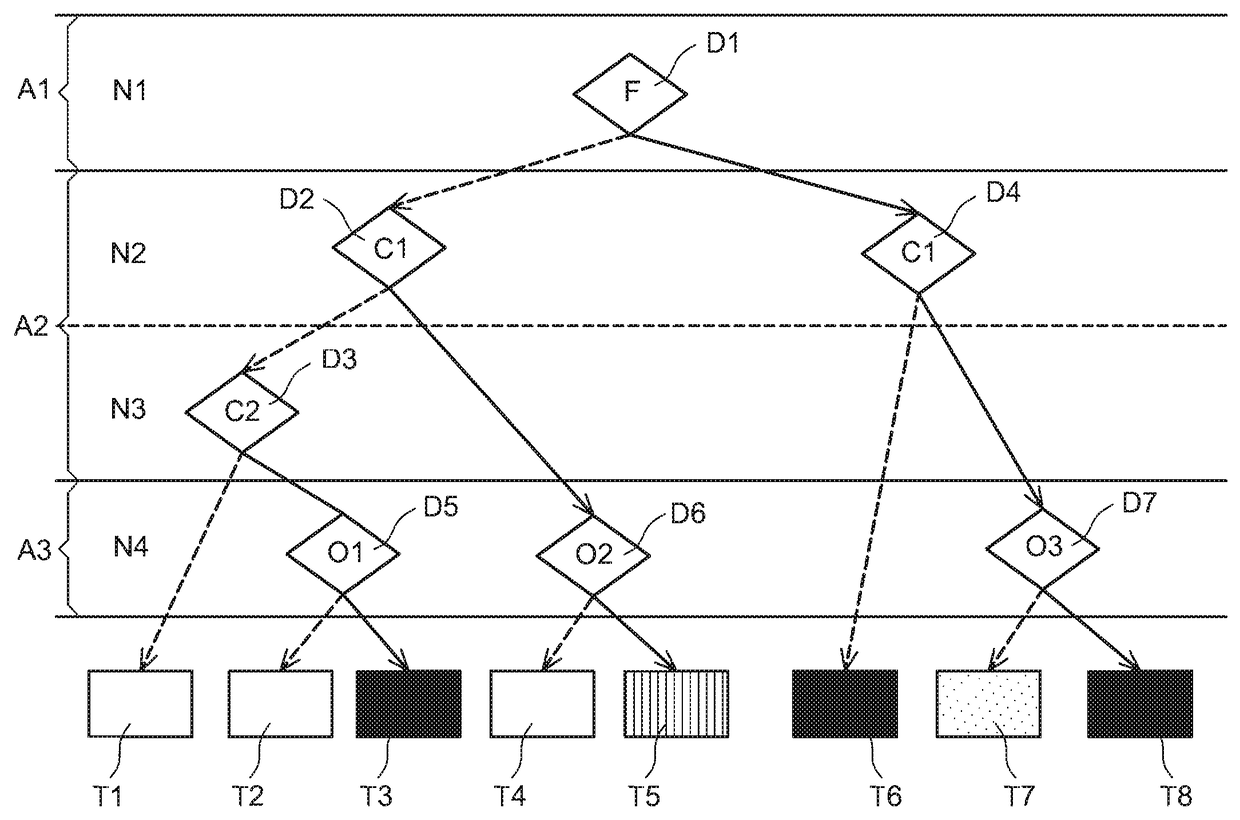

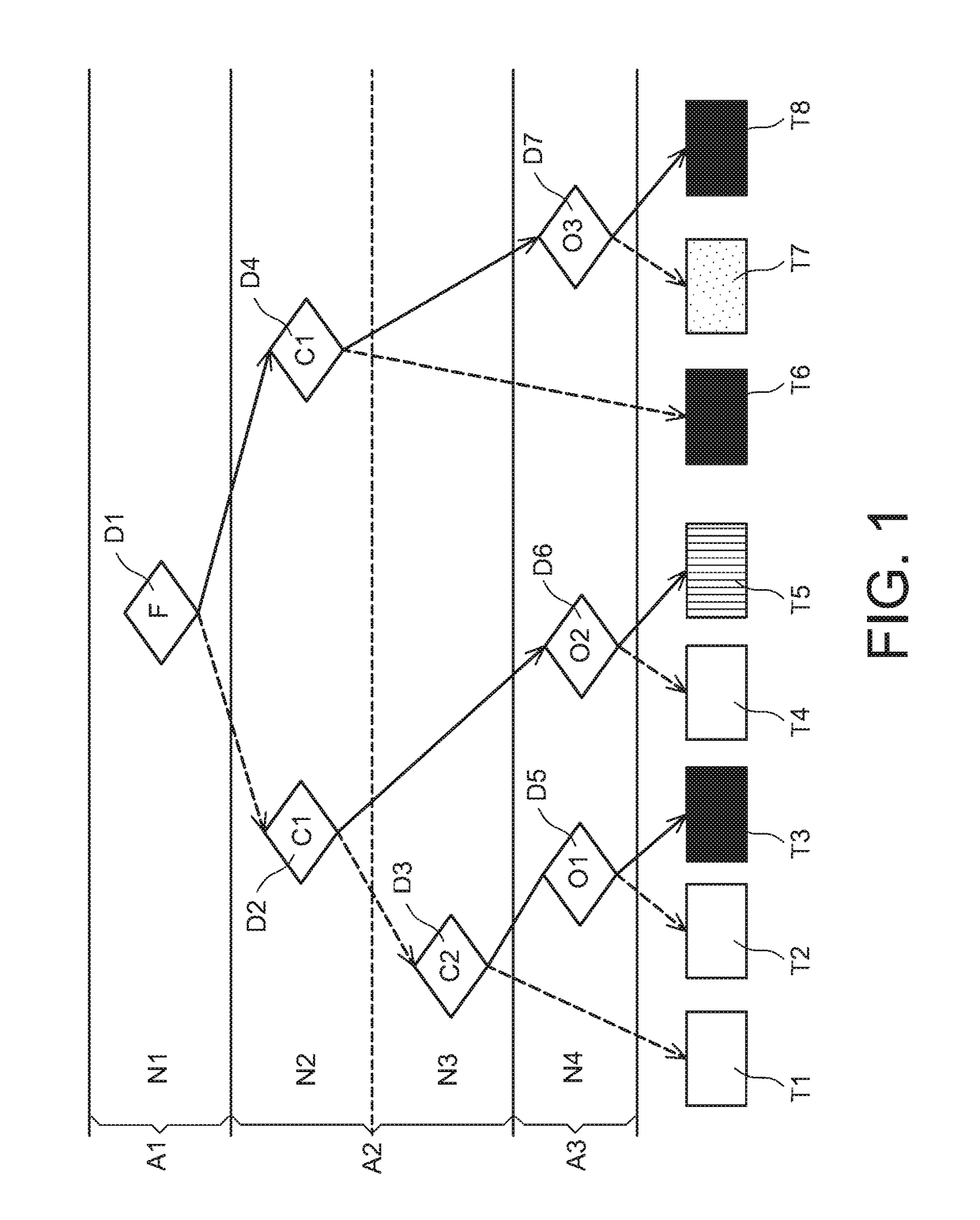

Managing resource allocation in hierarchical quota system

Active | US11003497B2 | Reduce communication; Improve system throughput; Resource allocation; Digital computer details; Resource assignment; Distributed computing

A method for managing resource allocation in a hierarchical quota system comprising n layers of quota nodes, n being a positive integer greater than 1. At a first quota node in the i-th layer of quota nodes, in response to receiving a resource allocation request from a user, the method determines whether the amount of requested resources exceeds a first quota. If it does not, the method determines whether the first node holds a quota delegation for the first quota node. If the first node holds the quota delegation, the method determines whether the amount of requested resources exceeds a second quota specified by that delegation; if it does not, the requested resources are allocated to the user.

Owner:EMC IP HLDG CO LLC
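The decision sequence in this abstract (first quota, then presence of a delegation, then the delegated second quota) can be written as a short chain of checks at a single node. A minimal Python sketch; two behaviors are assumptions not stated in the abstract: a node without a delegation declines so the caller could escalate to the parent layer, and a successful allocation draws down the delegated amount. `QuotaNode` and `try_allocate` are hypothetical:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QuotaNode:
    name: str
    quota: float                              # first quota enforced at this node
    delegated_quota: Optional[float] = None   # second quota, if a delegation is held
    allocated: float = 0.0


def try_allocate(node: QuotaNode, amount: float) -> bool:
    """Apply the checks in the order the abstract lists them.

    Assumptions: with no delegation the node declines (so a caller could
    escalate to the parent layer), and a successful allocation draws down
    the delegated amount.
    """
    if amount > node.quota:
        return False                          # exceeds the first quota
    if node.delegated_quota is None:
        return False                          # no local delegation to use
    if amount > node.delegated_quota:
        return False                          # exceeds the second quota
    node.delegated_quota -= amount
    node.allocated += amount
    return True


team = QuotaNode("team", quota=100.0, delegated_quota=30.0)
print(try_allocate(team, 20.0), try_allocate(team, 20.0))  # True False
```

Answering locally from a delegated budget is what lets a node avoid a round trip to its parent for most requests.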

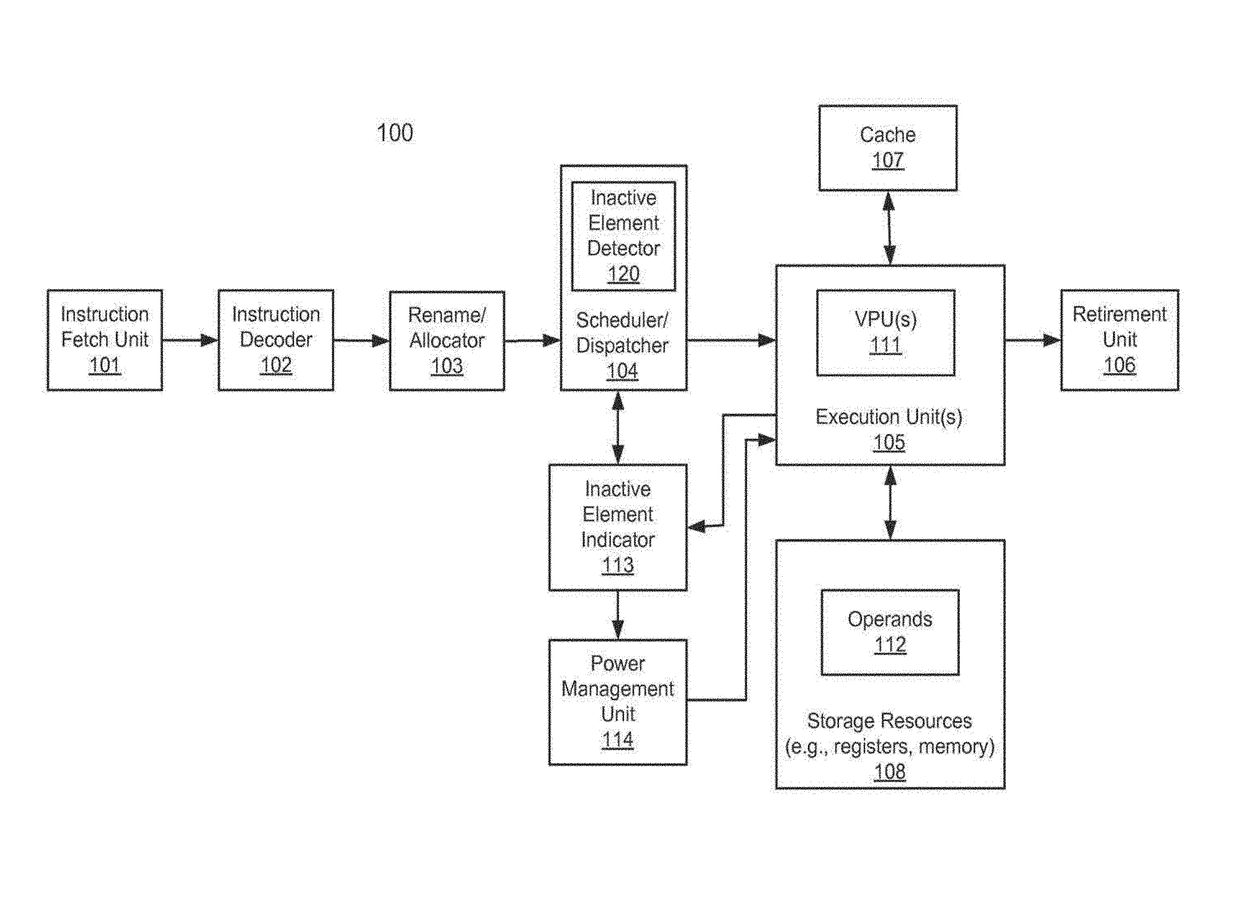

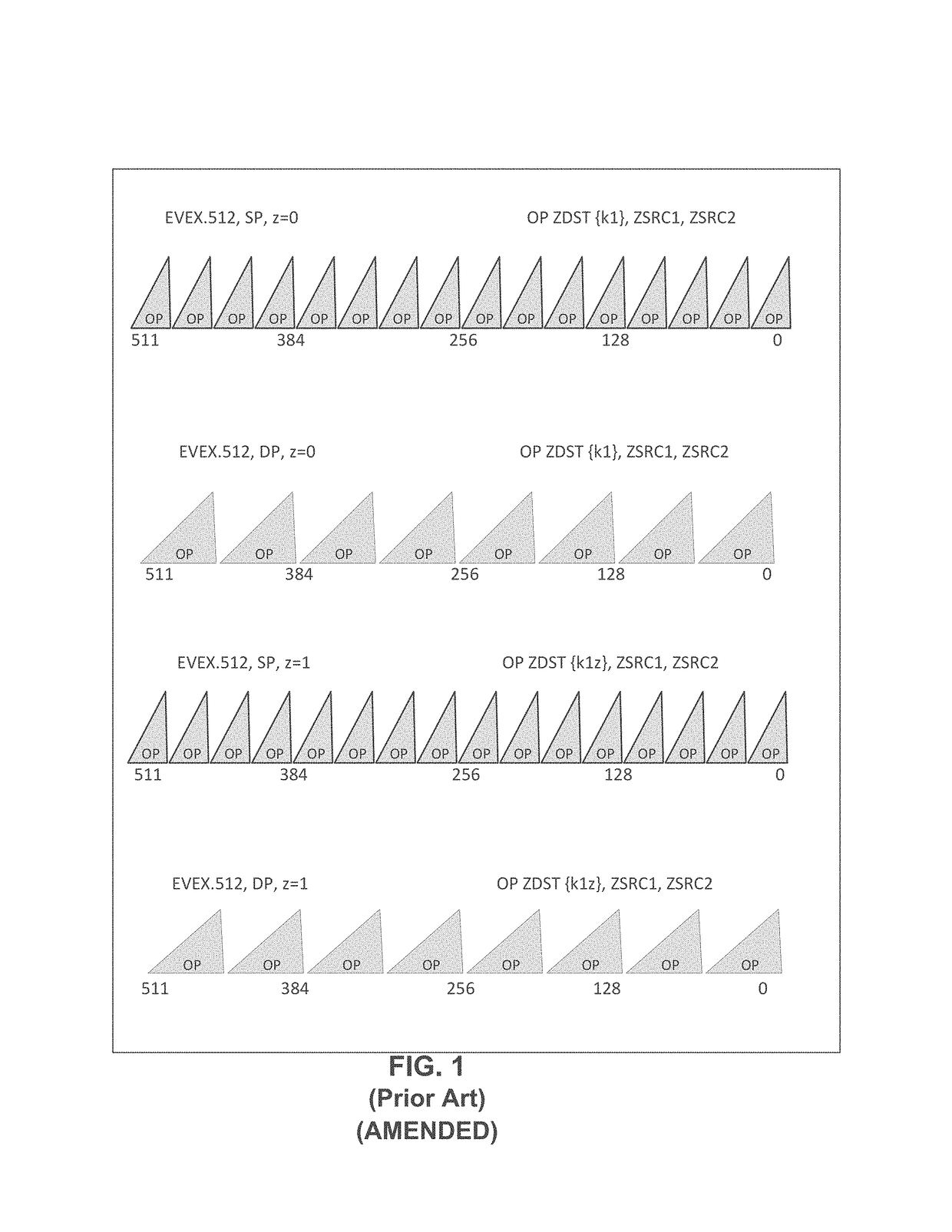

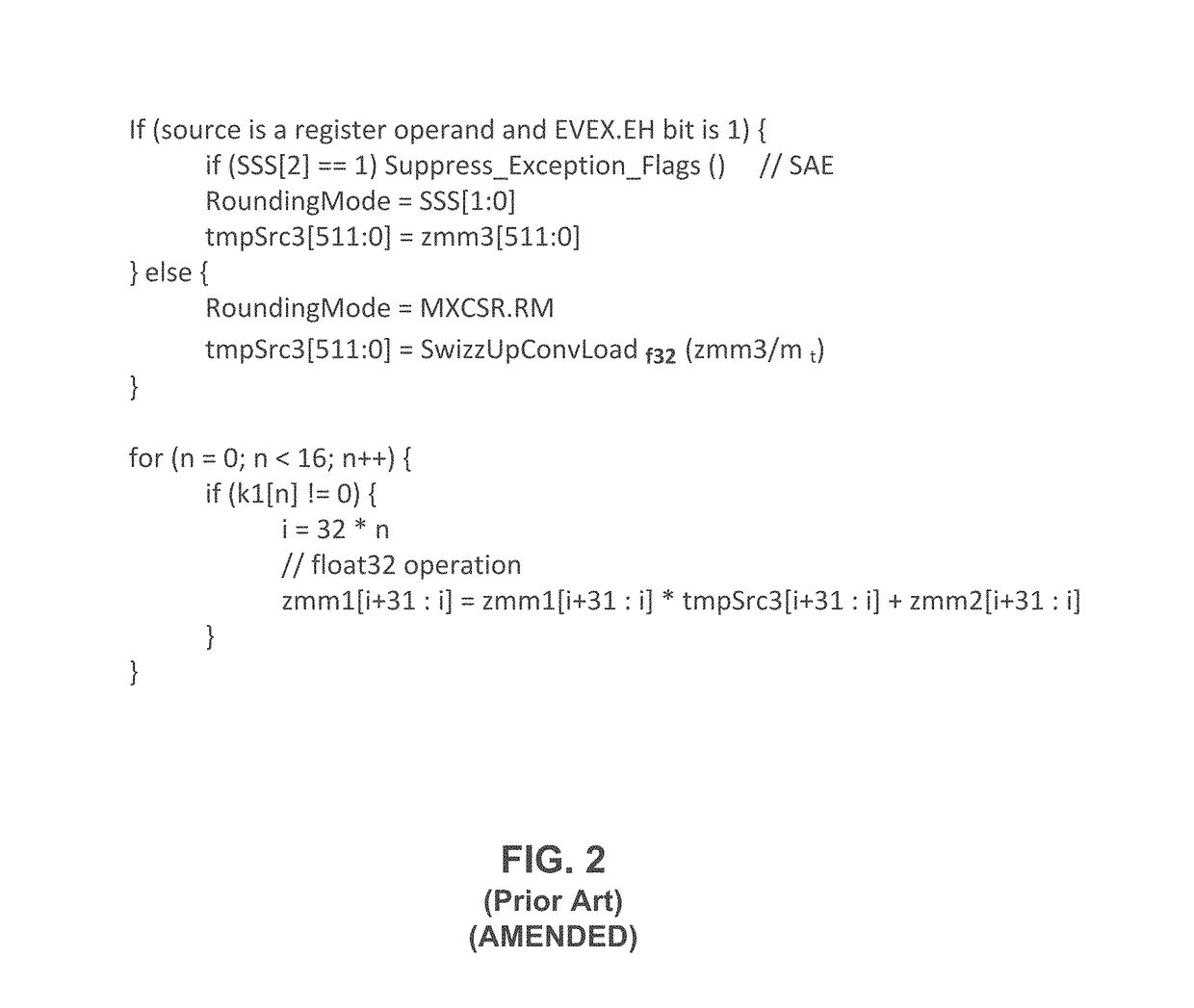

Vector mask driven clock gating for power efficiency of a processor

Active | US10133577B2 | Concurrent instruction execution; Power supply for data processing; Scheduling instructions; Power Management Unit

A processor includes an instruction schedule and dispatch (schedule/dispatch) unit that receives a single instruction, multiple data (SIMD) instruction to perform an operation on multiple data elements stored in a storage location indicated by a first source operand. Based on a second source operand, the schedule/dispatch unit determines a first data element that will not be operated on to generate a result written to a destination operand. The processor further includes multiple processing elements, coupled to the schedule/dispatch unit, that process the data elements of the SIMD instruction in a vector manner, and a power management unit, coupled to the schedule/dispatch unit, that reduces the power consumption of the processing element configured to process that first data element.

Owner:INTEL CORP
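The idea in this abstract is that the mask operand already tells the hardware which lanes will produce no result, so those lanes' processing elements can be gated. A minimal Python model, assuming the second source operand is a bit mask (bit i set means element i is written) and assuming merge-masking semantics for inactive lanes; `lanes_to_gate` and `masked_add` are hypothetical:

```python
def lanes_to_gate(mask: int, num_lanes: int) -> list[int]:
    """Indices of processing elements whose clocks could be gated.

    Models the second source operand as a bit mask in which bit i set means
    element i produces a result for the destination. Lanes with a clear bit
    do no useful work, so a power management unit could gate them.
    """
    return [lane for lane in range(num_lanes) if not (mask >> lane) & 1]


def masked_add(a: list[int], b: list[int], dest: list[int], mask: int) -> list[int]:
    # Active lanes compute a + b; gated lanes keep the old destination value
    # (merge-masking semantics, assumed here for illustration).
    return [x + y if (mask >> i) & 1 else d
            for i, (x, y, d) in enumerate(zip(a, b, dest))]


print(lanes_to_gate(0b1011, 4))                                          # [2]
print(masked_add([1, 2, 3, 4], [10, 20, 30, 40], [0, 0, 0, 0], 0b1011))  # [11, 22, 0, 44]
```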

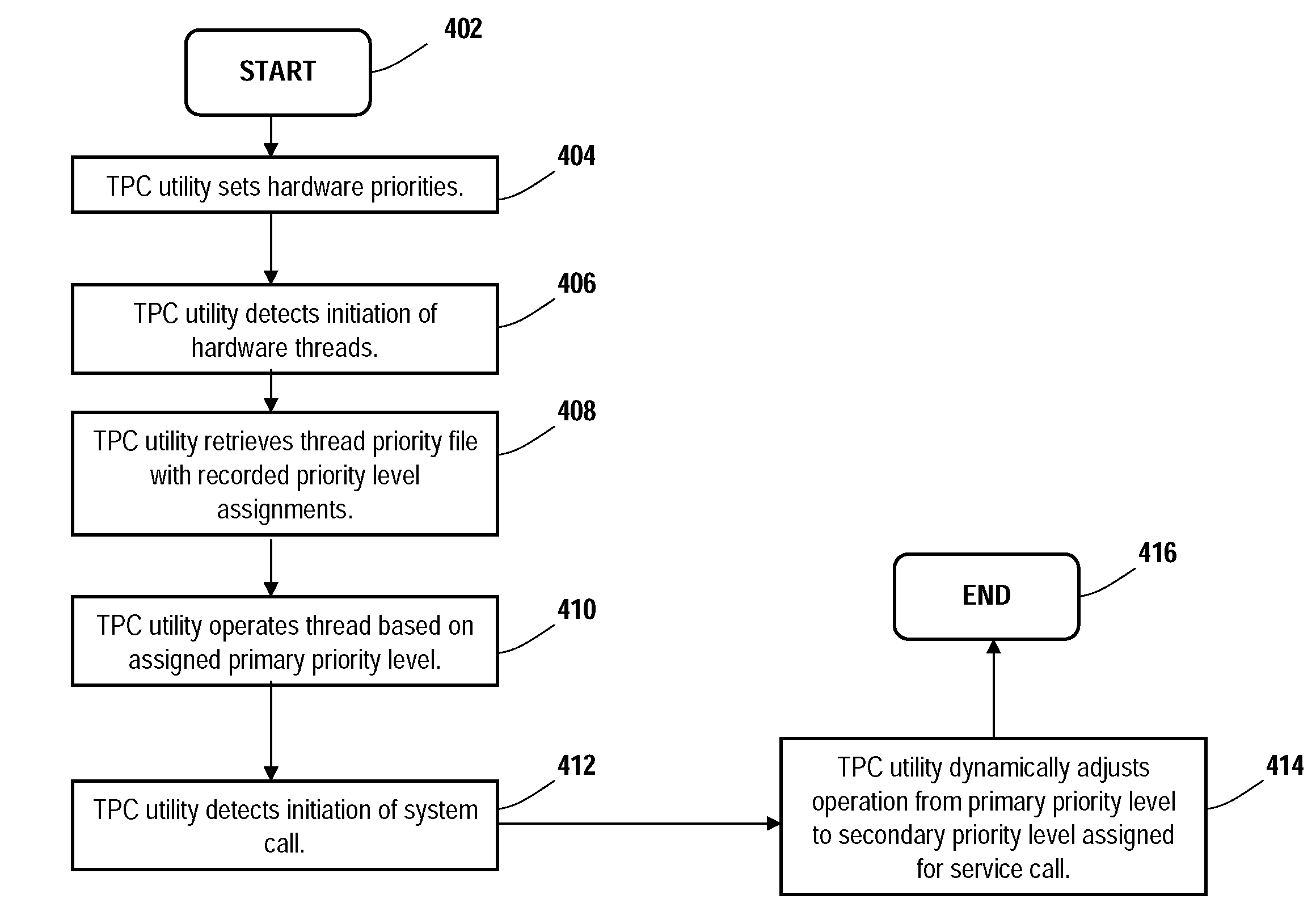

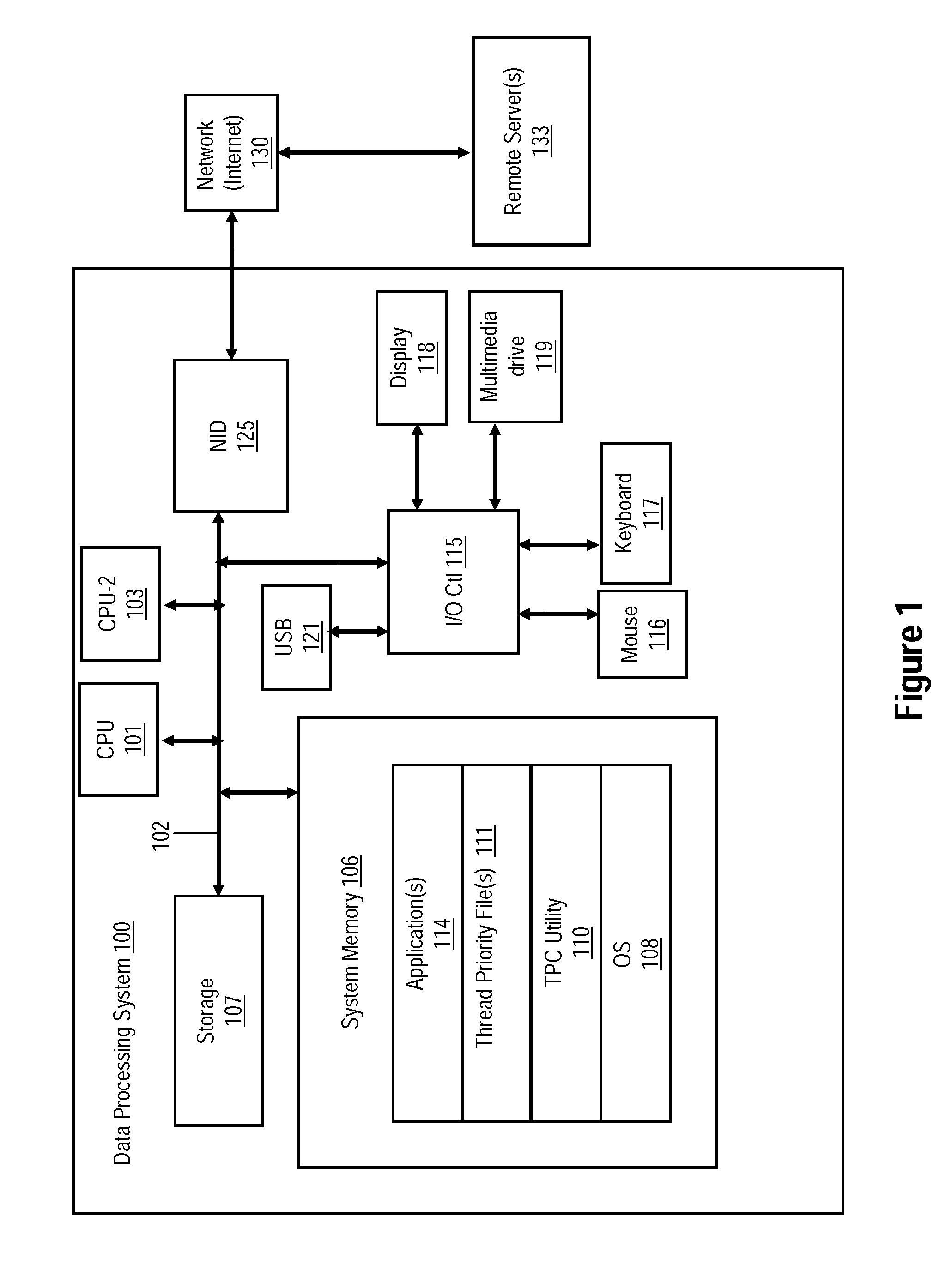

Mechanism to control hardware multi-threaded priority by system call

Inactive | US20100115522A1 | Reduce energy consumption; Raise priority; Digital computer details; Multiprogramming arrangements; Data processing system; Hardware thread

A method, a system and a computer program product for controlling the hardware priority of hardware threads in a data processing system. A Thread Priority Control (TPC) utility assigns a primary level and one or more secondary levels of hardware priority to a hardware thread. When a hardware thread initiates execution in the absence of a system call, the TPC utility enables execution based on the primary level. When the hardware thread initiates execution within a system call, the TPC utility dynamically adjusts execution from the primary level to the secondary level associated with the system call. The TPC utility adjusts hardware priority levels in order to: (a) raise the hardware priority of one hardware thread relative to another; (b) reduce energy consumed by the hardware thread; and (c) fulfill requirements of time critical hardware sections.

Owner:IBM CORP

Multi-processor device

Owner:SAMSUNG ELECTRONICS CO LTD

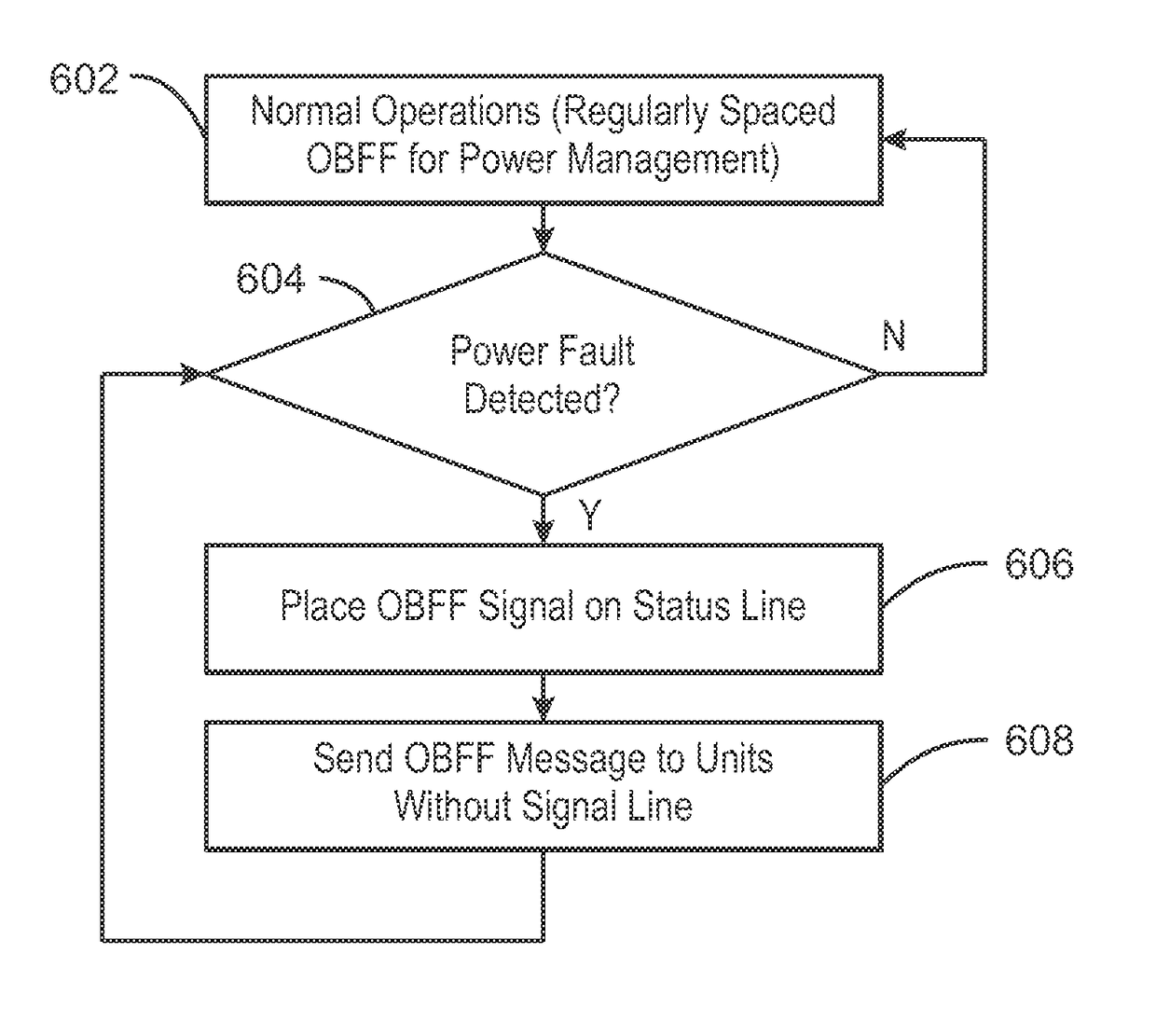

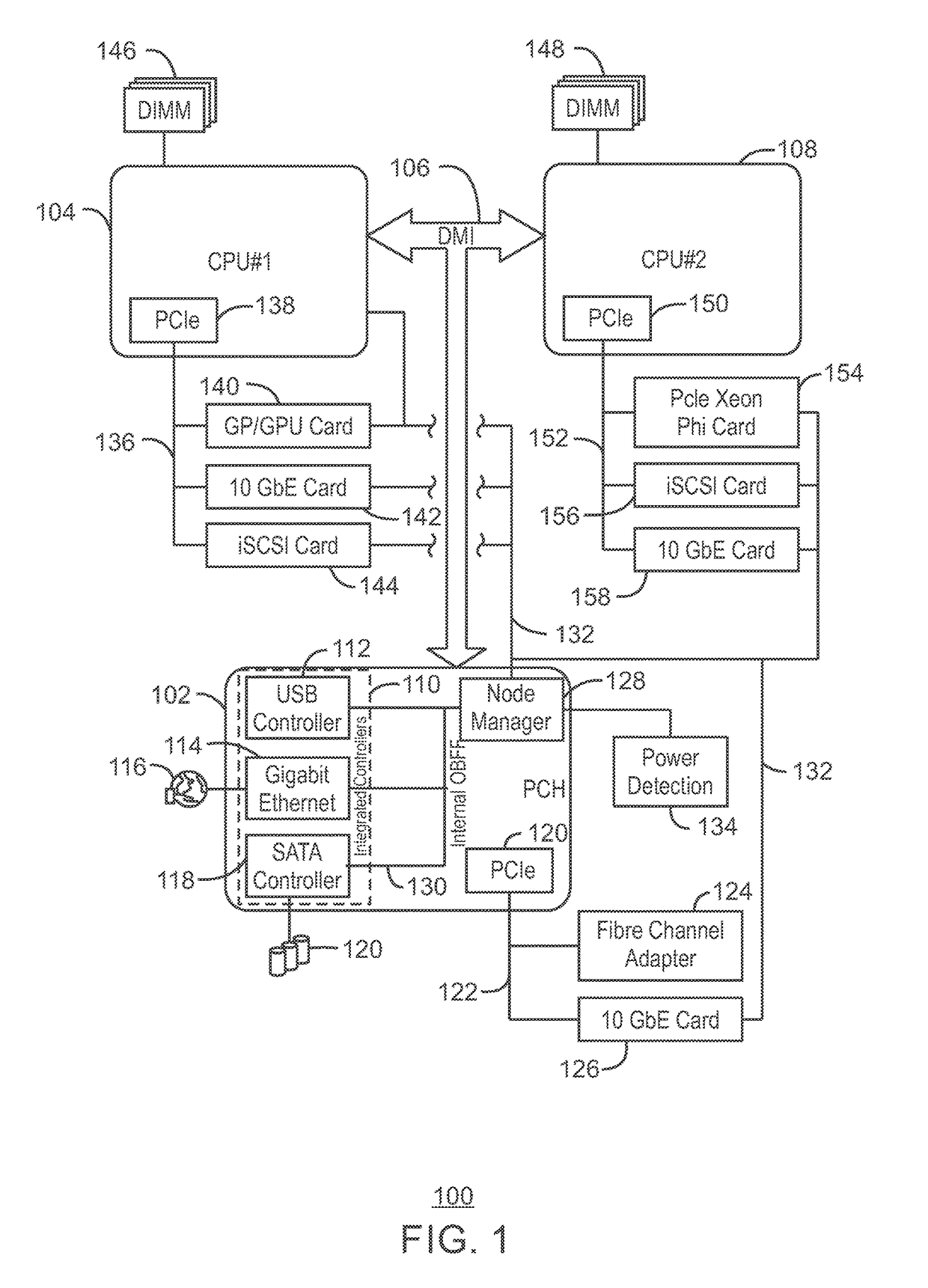

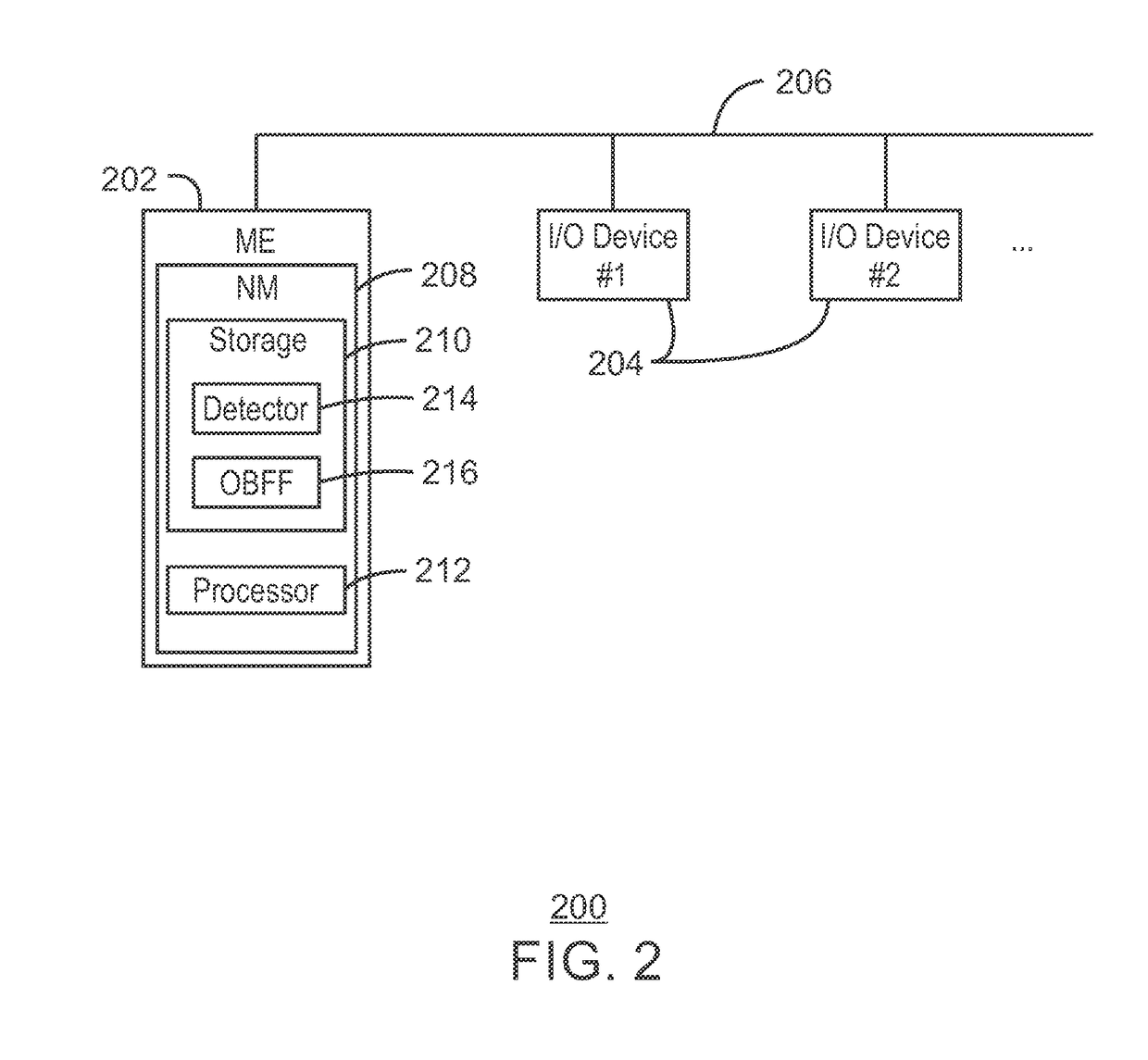

Throttling device power

Active | US9891686B2 | Power supply for data processing; Multiprocessor based energy consumption reduction; Computerized system; Electric power

An apparatus and system for throttling I/O devices in a computer system are provided. In one example, a method for throttling device power demand during critical power events includes detecting a critical power event and issuing a signal to system devices to defer optional transactions during the event.

Owner:INTEL CORP
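The throttling method in this abstract separates transactions that must proceed from optional ones that can wait while a critical power event is signalled. A minimal Python sketch; the class and method names are hypothetical, and releasing deferred work once the event ends is an assumption, since the abstract only describes the deferral:

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Transaction:
    name: str
    optional: bool      # True for work that can safely wait (e.g., prefetches)


class DeviceThrottle:
    """Defers optional I/O transactions while a critical power event is active."""

    def __init__(self) -> None:
        self.critical = False
        self.deferred: deque = deque()

    def set_critical(self, active: bool) -> list[Transaction]:
        # Entering the event starts deferral; leaving it releases deferred work
        # (the release step is an assumption).
        self.critical = active
        if active:
            return []
        released = list(self.deferred)
        self.deferred.clear()
        return released

    def submit(self, tx: Transaction) -> bool:
        # True if the transaction may proceed now, False if it was deferred.
        if self.critical and tx.optional:
            self.deferred.append(tx)
            return False
        return True


throttle = DeviceThrottle()
throttle.set_critical(True)
print(throttle.submit(Transaction("prefetch", optional=True)))   # False
print(throttle.submit(Transaction("flush", optional=False)))     # True
print([t.name for t in throttle.set_critical(False)])            # ['prefetch']
```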

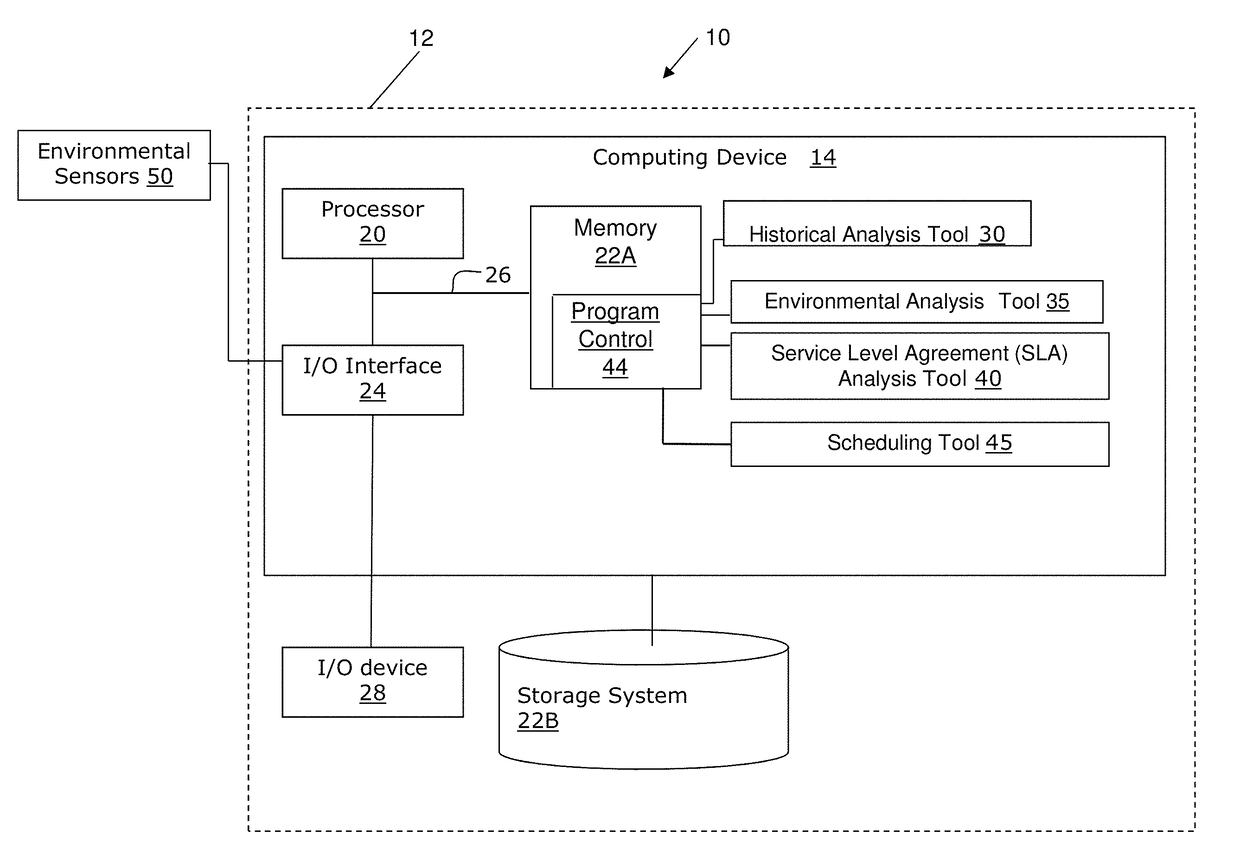

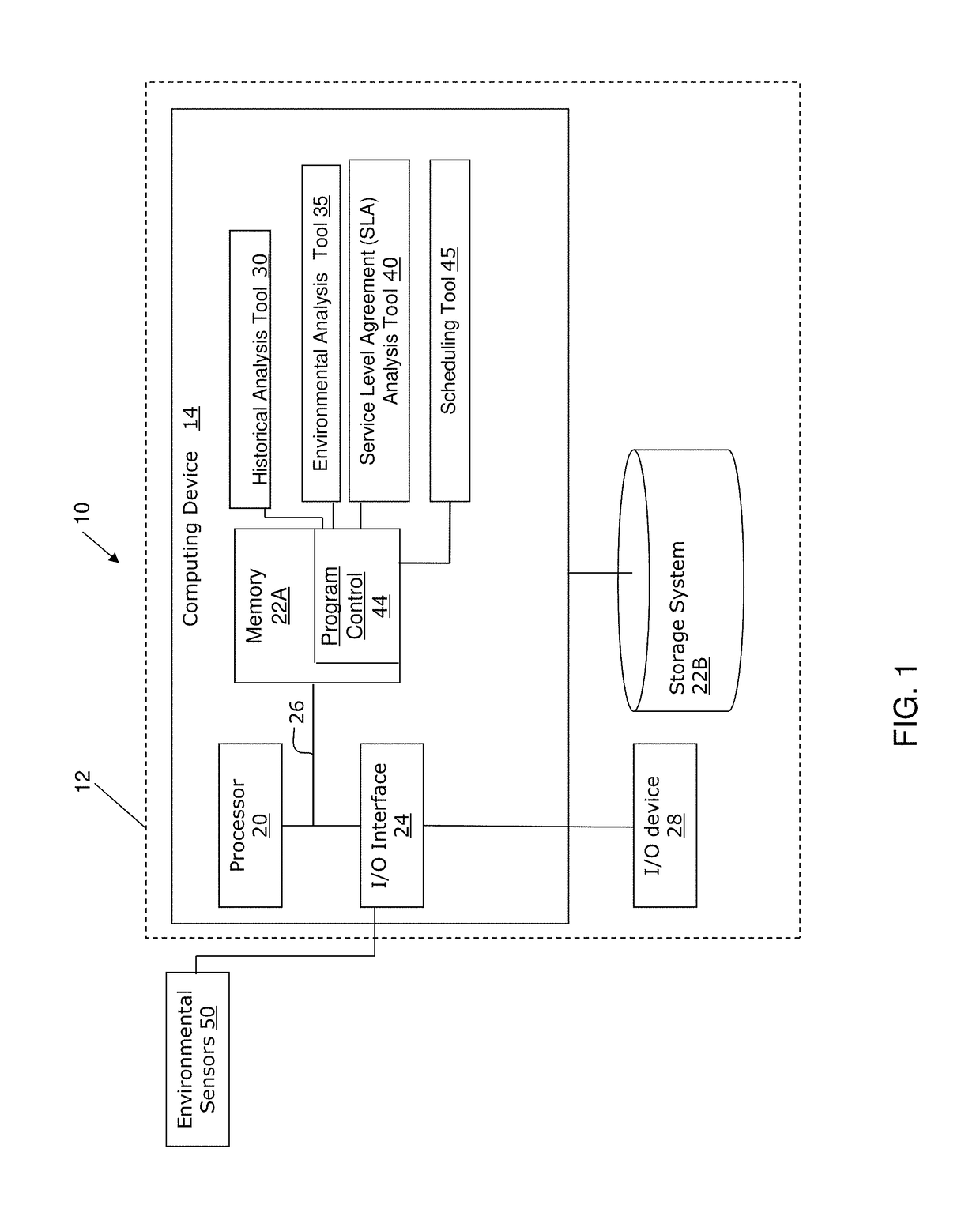

System and method to control heat dissipation through service level analysis

Active | US10073717B2 | Easy to process; Program initiation/switching; Resource allocation; Service-level agreement; Data center

The system and method generally relate to reducing heat dissipated within a data center, and more particularly, to a system and method for reducing heat dissipated within a data center through service level agreement analysis, and resultant reprioritization of jobs to maximize energy efficiency. A computer implemented method includes performing a service level agreement (SLA) analysis for one or more currently processing or scheduled processing jobs of a data center using a processor of a computer device. Additionally, the method includes identifying one or more candidate processing jobs for a schedule modification from amongst the one or more currently processing or scheduled processing jobs using the processor of the computer device. Further, the method includes performing the schedule modification for at least one of the one or more candidate processing jobs using the processor of the computer device.

Owner:KYNDRYL INC

Method and computer program for offloading execution of computing tasks of a wireless equipment

Owner:COMMISSARIAT A LENERGIE ATOMIQUE ET AUX ENERGIES ALTERNATIVES +1

Relay user equipment device and capability discovery method thereof

A relay user equipment (UE) executes a capability discovery method. The relay UE receives a trigger for delivery of a UE capability message and, in response, reports its UE capabilities by transmitting them to the request source in the UE capability message. The UE capability message may carry information addressing at least network slicing or advanced power saving. For example, it may include a field for network-controlled power saving capability, which indicates whether the power saving state of the relay UE can be controlled by the network.

Owner:CLOUD NETWORK TECH SINGAPORE PTE LTD

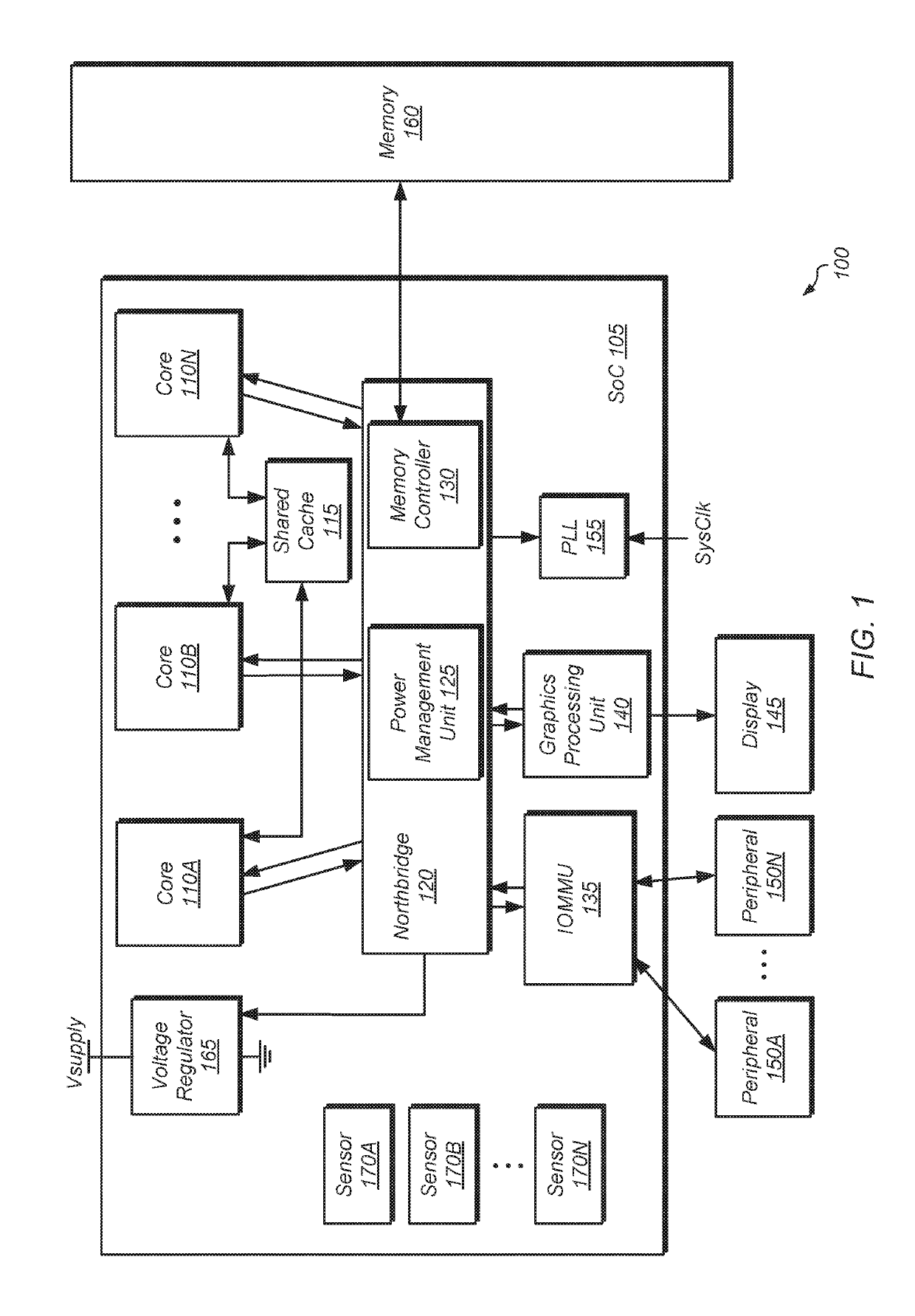

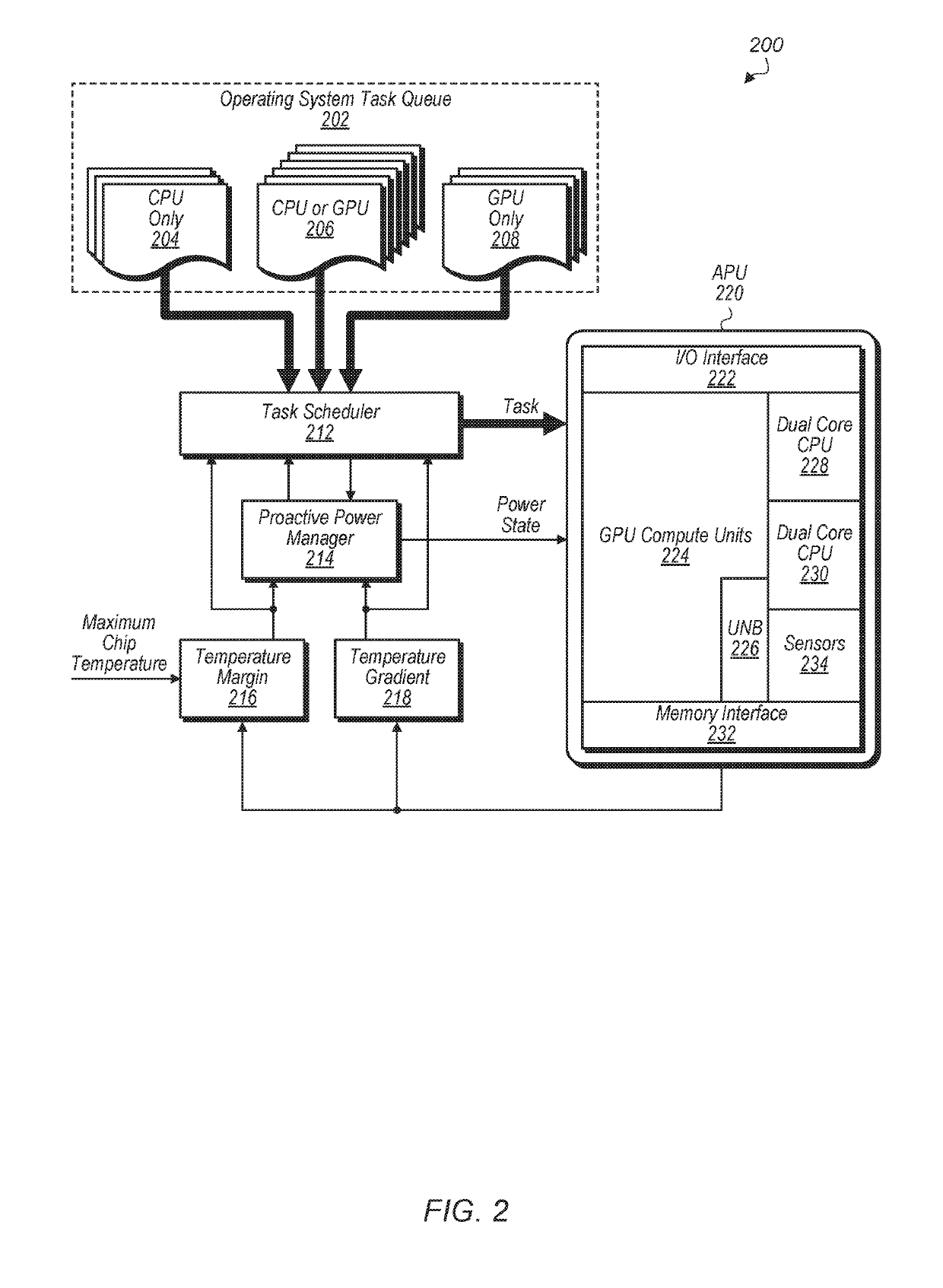

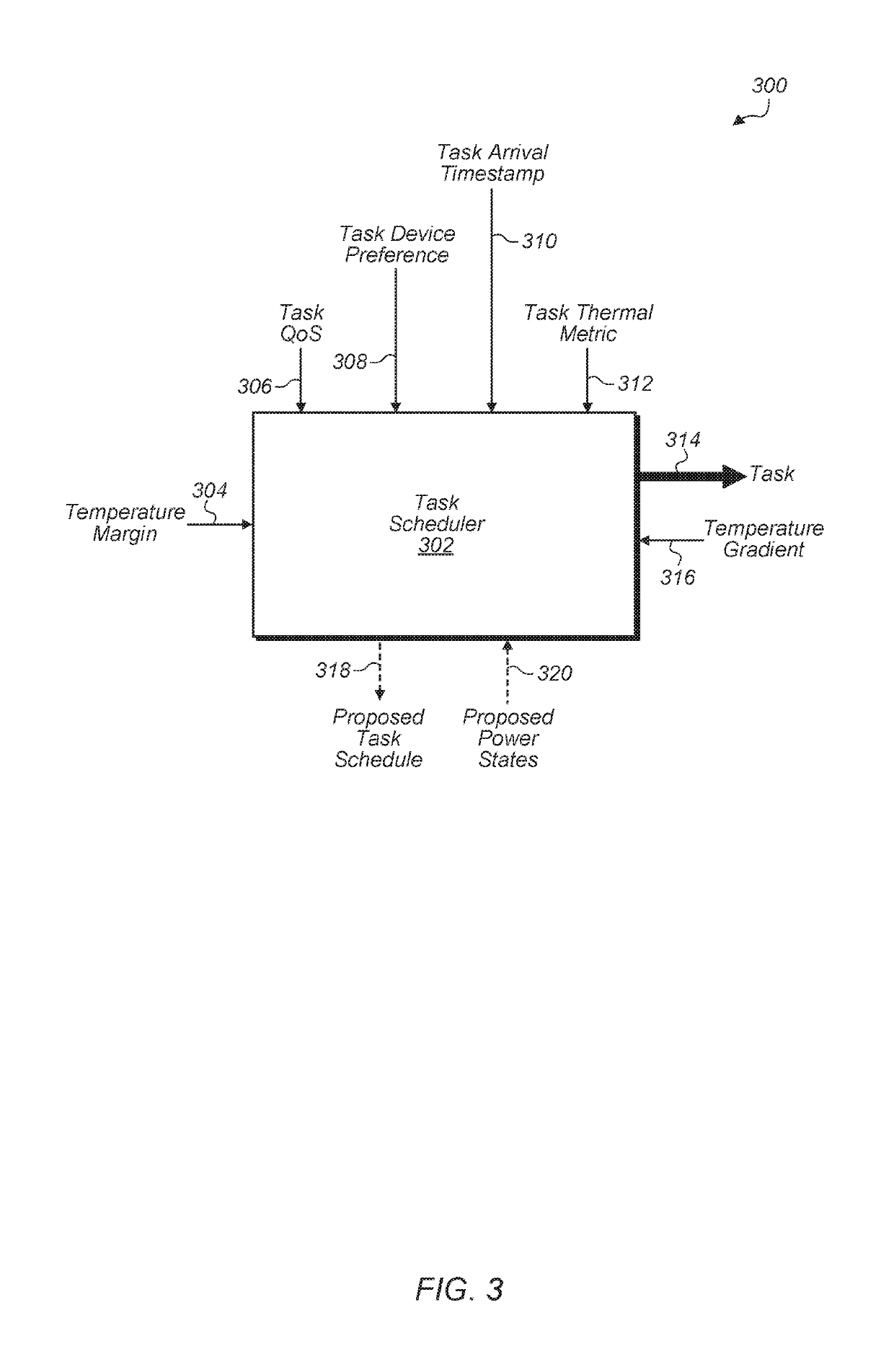

Temperature-aware task scheduling and proactive power management

Systems, apparatuses, and methods for performing temperature-aware task scheduling and proactive power management. A SoC includes a plurality of processing units and a task queue storing pending tasks. The SoC calculates a thermal metric for each pending task to predict an amount of heat the pending task will generate. The SoC also determines a thermal gradient for each processing unit to predict a rate at which the processing unit's temperature will change when executing a task. The SoC also monitors a thermal margin of how far each processing unit is from reaching its thermal limit. The SoC minimizes non-uniform heat generation on the SoC by scheduling pending tasks from the task queue to the processing units based on the thermal metrics for the pending tasks, the thermal gradients of each processing unit, and the thermal margin available on each processing unit.

Owner:ADVANCED MICRO DEVICES INC
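The three quantities in this abstract (a per-task thermal metric, a per-unit thermal gradient, and a per-unit thermal margin) can be combined in many ways. One simple illustration is a greedy rule: place the hottest tasks first, each on the unit that keeps the most margin after its predicted temperature rise. A minimal Python sketch; the greedy heuristic and the linear "heat times gradient" prediction are assumptions, not AMD's method, and `ProcessingUnit` and `schedule` are hypothetical:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProcessingUnit:
    name: str
    gradient: float   # predicted temperature rise (deg C) per unit of task heat
    margin: float     # deg C remaining before the unit's thermal limit


def schedule(task_heat: dict[str, float],
             units: list[ProcessingUnit]) -> dict[str, Optional[str]]:
    """Greedy temperature-aware assignment; the heuristic is an assumption.

    Tasks with the highest thermal metric are placed first, each on the unit
    that retains the most thermal margin after its predicted rise. Tasks that
    fit on no unit stay queued (None).
    """
    plan: dict[str, Optional[str]] = {}
    for task in sorted(task_heat, key=lambda t: task_heat[t], reverse=True):
        best: Optional[ProcessingUnit] = None
        best_left = float("-inf")
        for unit in units:
            left = unit.margin - task_heat[task] * unit.gradient
            if left >= 0 and left > best_left:
                best, best_left = unit, left
        if best is not None:
            best.margin = best_left       # consume the predicted headroom
            plan[task] = best.name
        else:
            plan[task] = None
    return plan


units = [ProcessingUnit("cpu0", gradient=1.0, margin=10.0),
         ProcessingUnit("gpu", gradient=0.5, margin=6.0)]
print(schedule({"t1": 8.0, "t2": 4.0, "t3": 20.0}, units))
# {'t3': None, 't1': 'cpu0', 't2': 'gpu'}
```

Preferring the unit with the most remaining margin spreads heat across the SoC rather than filling one unit to its limit, which is the non-uniformity the abstract aims to minimize.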

© 2025 PatSnap. All rights reserved.