30 results about how to "Reduce data exchange" in patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

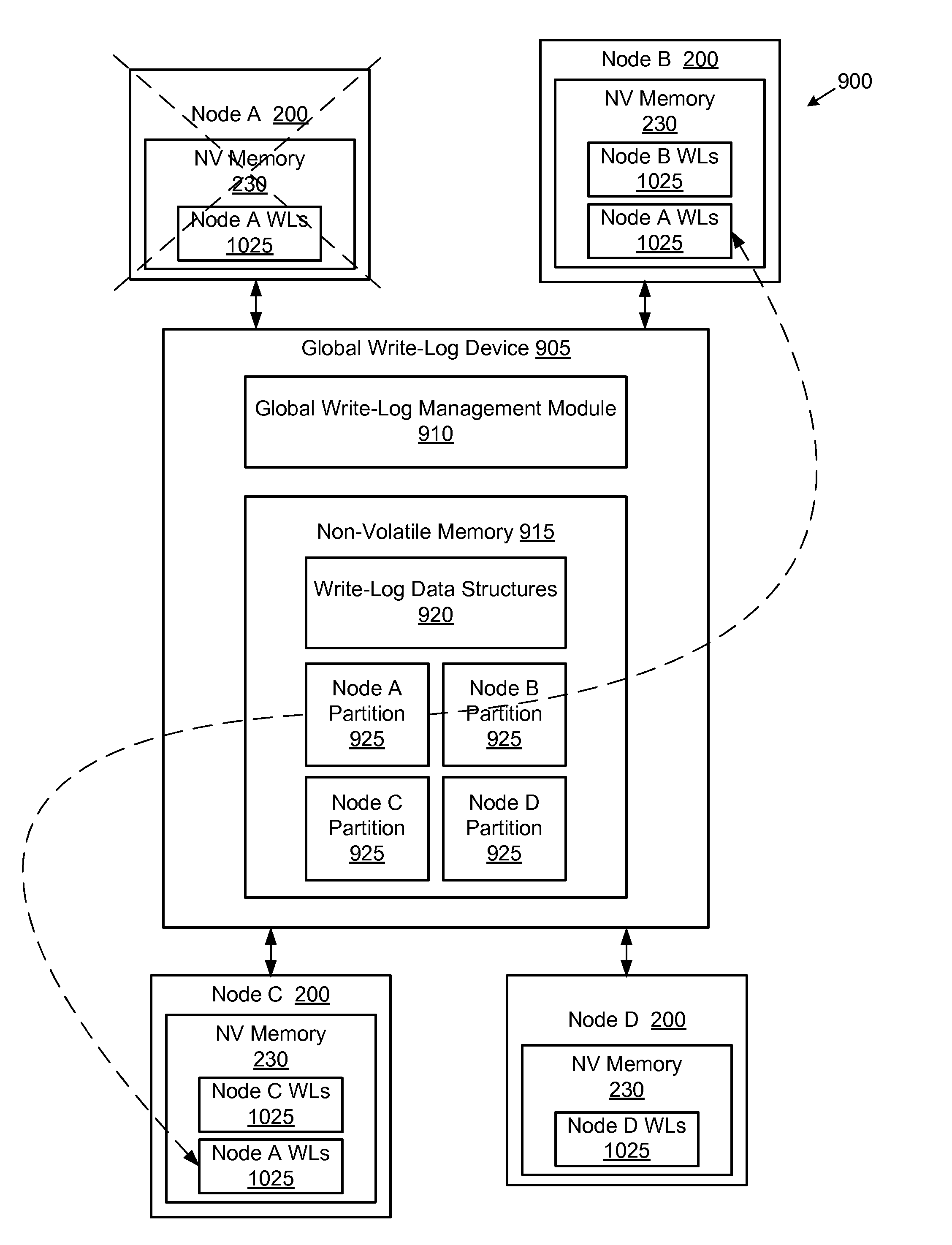

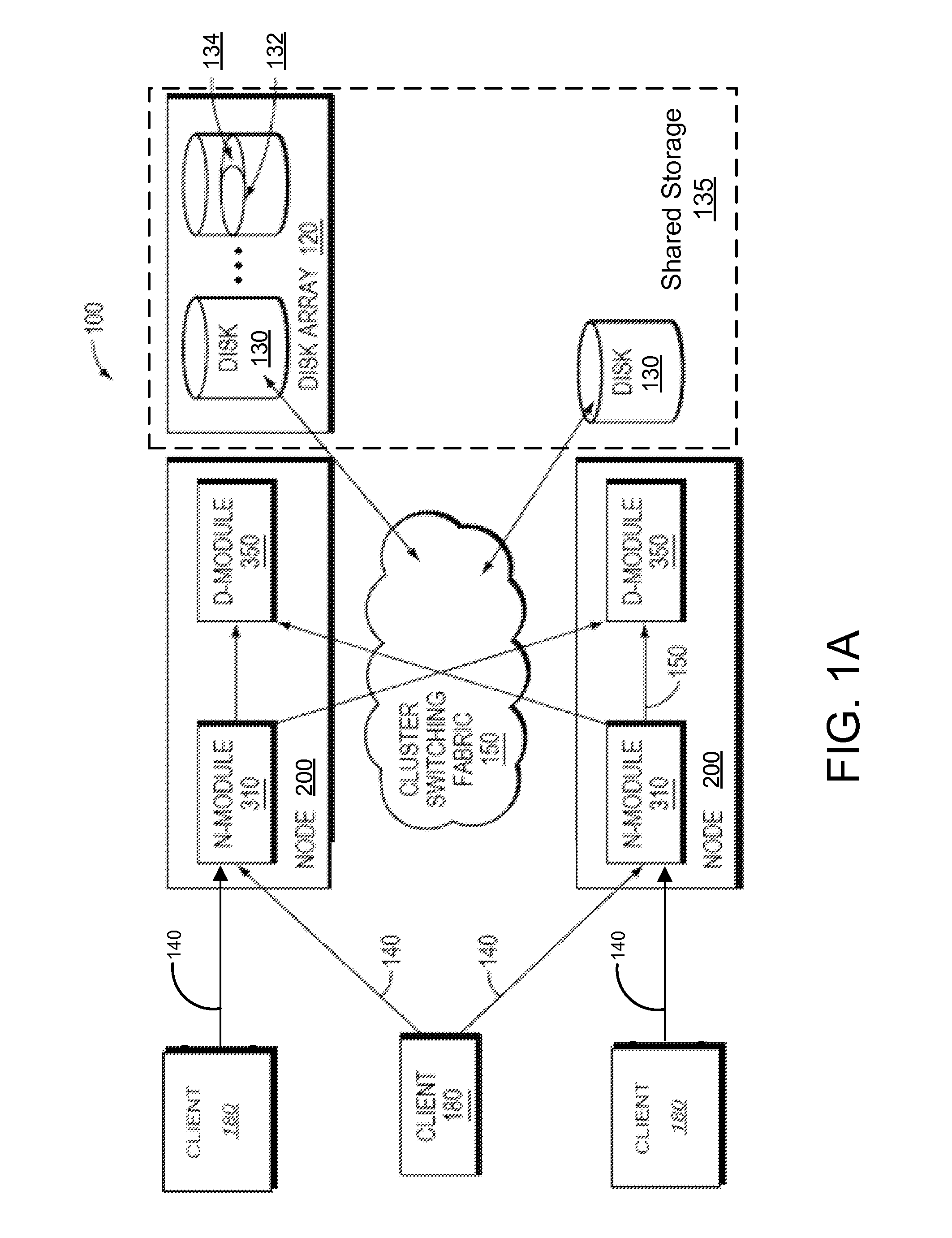

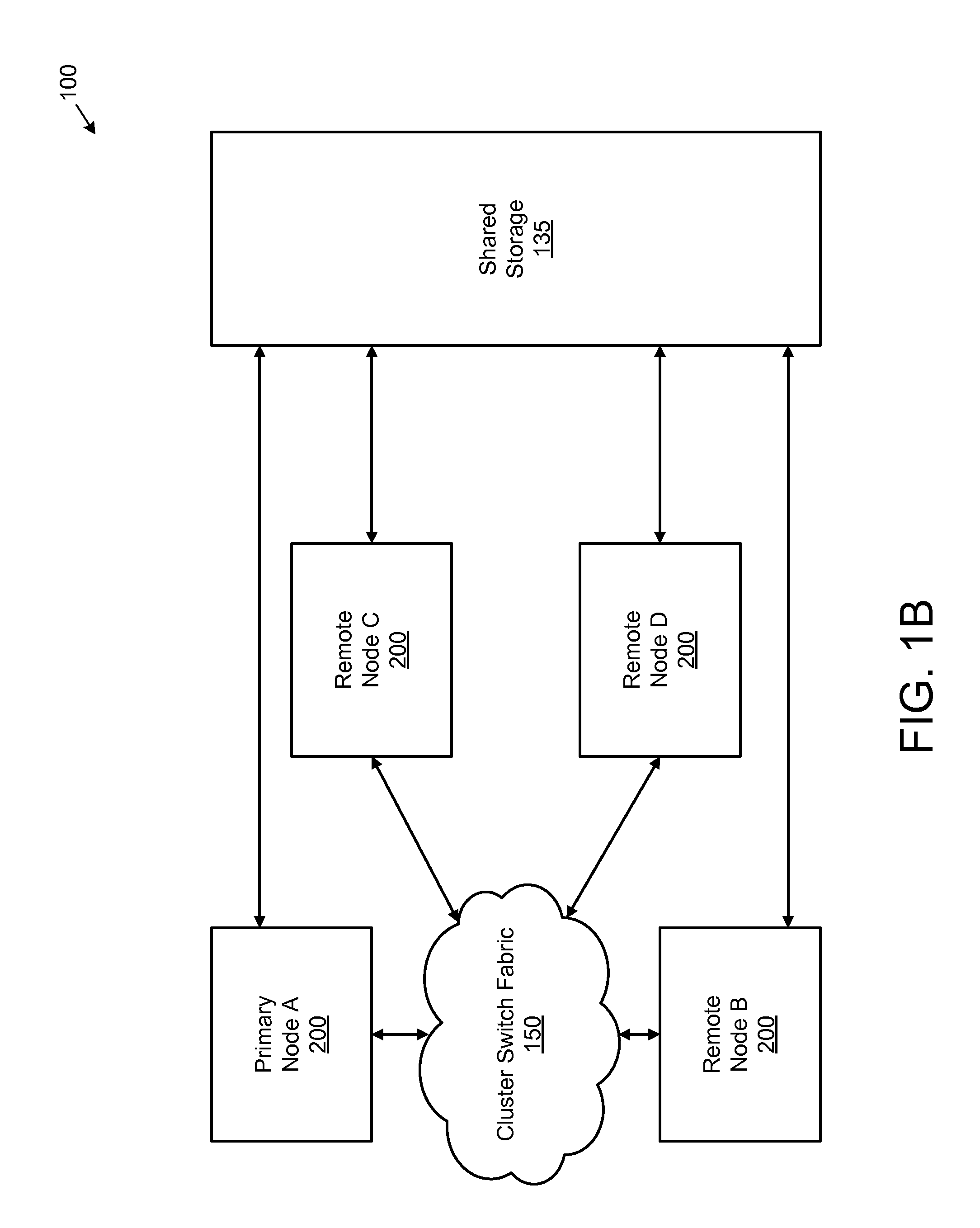

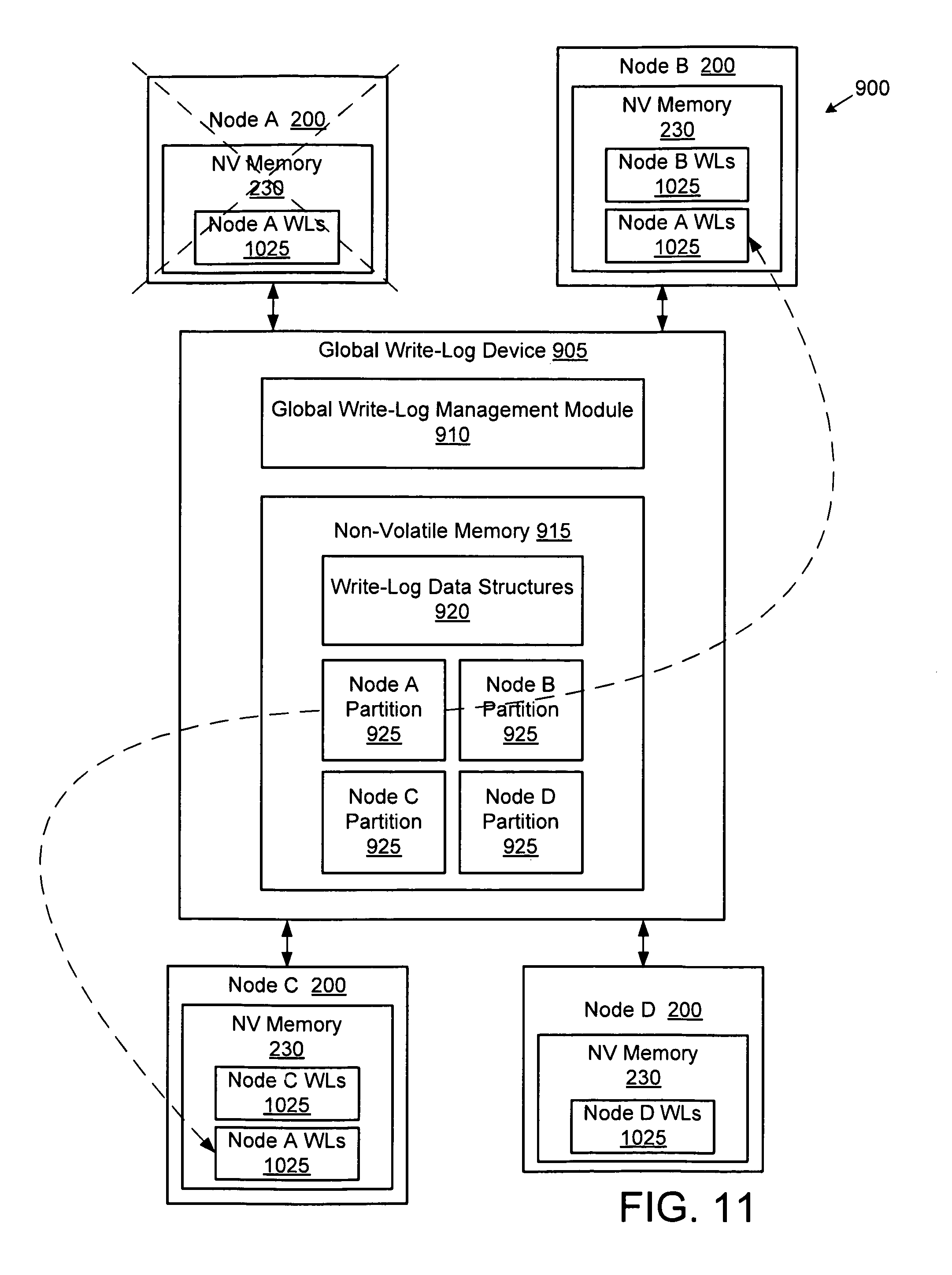

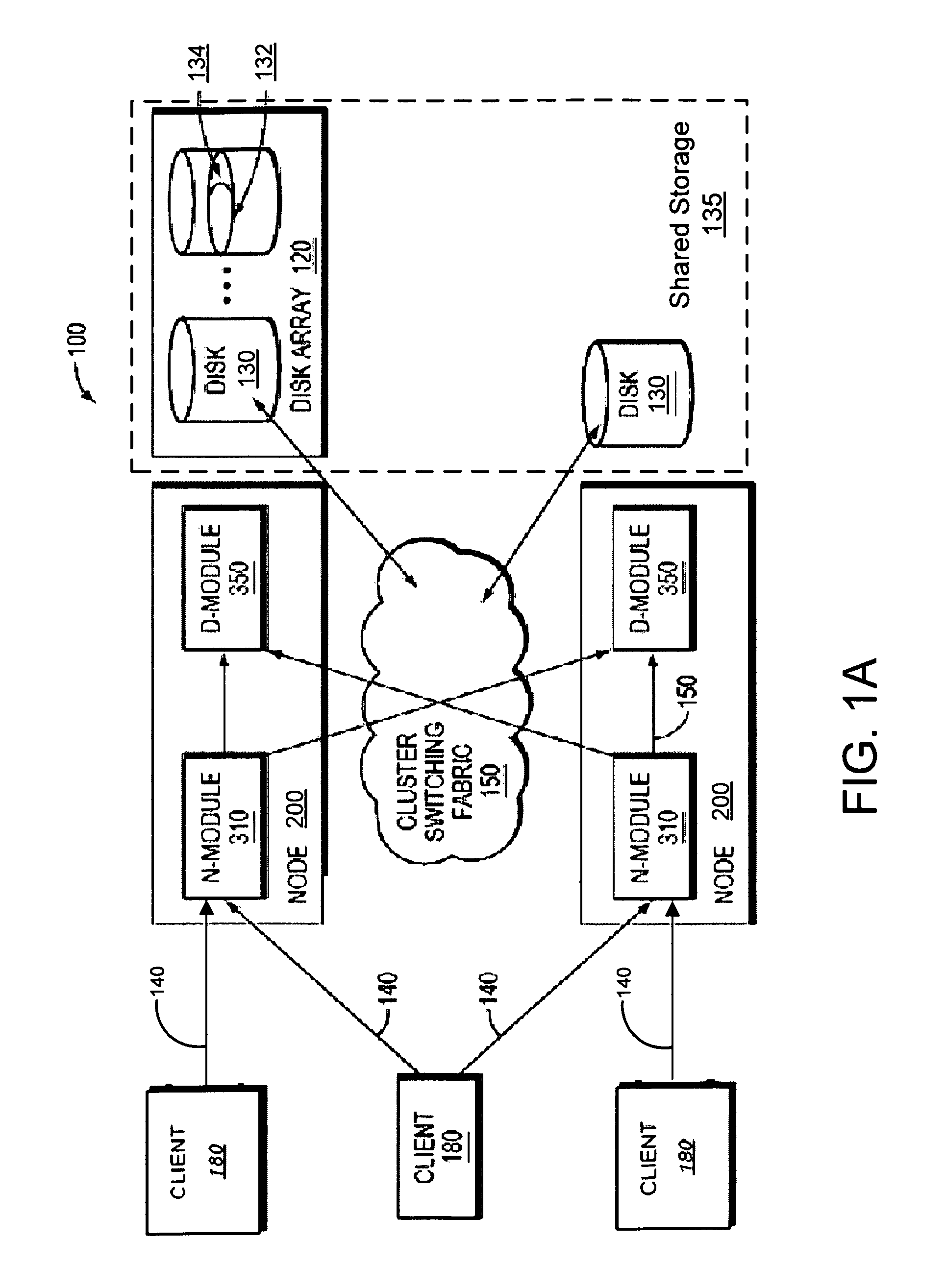

Global write-log device for managing write logs of nodes of a cluster storage system

Active | US20120042202A1 | Reduce data exchange, Save resources, Transmission, Redundant hardware error correction, Failover, Cluster systems

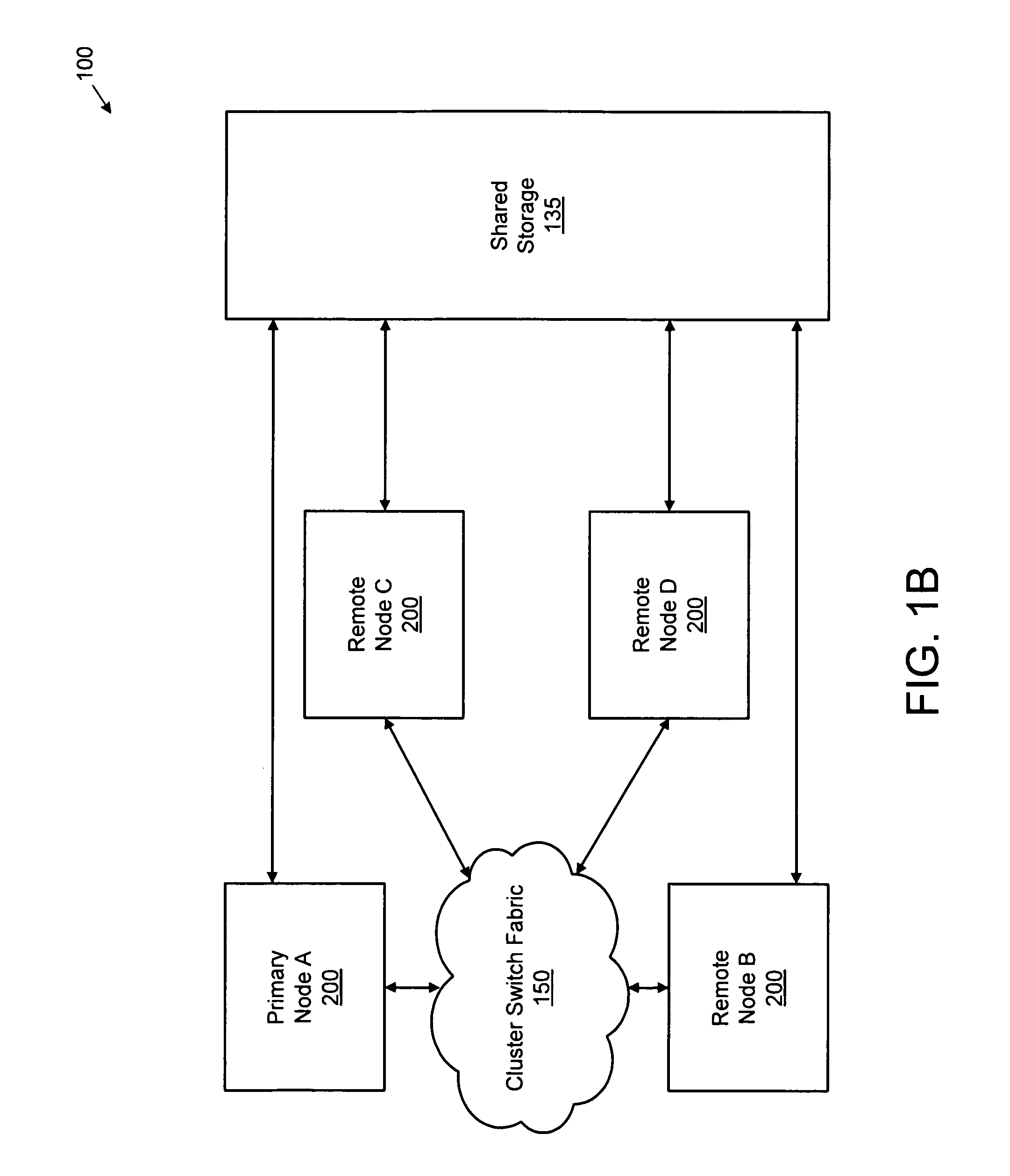

A cluster system comprises a plurality of nodes that provide data-access service to a shared storage, each node having at least one failover partner node that takes over the node's services if the node fails. Each node may produce write logs for the shared storage and periodically send them, at predetermined time intervals, to a global device that stores the write logs from every node. The global device may detect the failure of a node by monitoring the intervals at which write logs are received from it. Upon detecting a node failure, the global device may provide the failed node's write logs to one or more partner nodes, which then perform the logged writes on the shared storage. Because write logs are transmitted only between the nodes and the global device, data exchange between nodes is reduced and the nodes' I/O resources are conserved.

Owner:NETWORK APPLIANCE INC
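
To make the failure-detection step above more concrete, here is a minimal Python sketch. It assumes a node is presumed failed once no write-log batch has arrived for `tolerance` expected intervals; the class `GlobalLogDevice`, its methods and the tolerance rule are hypothetical illustrations, not the patented design.

```python
from dataclasses import dataclass, field


@dataclass
class GlobalLogDevice:
    """Hypothetical global write-log device; names and rules are illustrative."""
    interval: float                     # expected seconds between log batches
    partners: dict[str, list[str]]      # node -> its failover partner nodes
    tolerance: int = 2                  # missed intervals before presuming failure
    logs: dict[str, list[str]] = field(default_factory=dict)
    last_seen: dict[str, float] = field(default_factory=dict)

    def receive(self, node: str, entries: list[str], now: float) -> None:
        # Store the batch and remember when this node was last heard from.
        self.logs.setdefault(node, []).extend(entries)
        self.last_seen[node] = now

    def failed_nodes(self, now: float) -> list[str]:
        # A node is presumed failed if no batch arrived within tolerance * interval.
        limit = self.tolerance * self.interval
        return sorted(n for n, t in self.last_seen.items() if now - t > limit)

    def hand_off(self, node: str) -> dict[str, list[str]]:
        # Give each partner a copy of the failed node's logs to replay on shared storage.
        pending = self.logs.pop(node, [])
        self.last_seen.pop(node, None)
        return {p: list(pending) for p in self.partners.get(node, [])}
```

For example, with `interval=1.0`, a node whose last batch arrived at t=0.0 is reported by `failed_nodes(3.0)`, while a node heard from at t=2.5 is not. Nodes never exchange logs with each other directly; only the device forwards them, which mirrors the saving the abstract describes.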

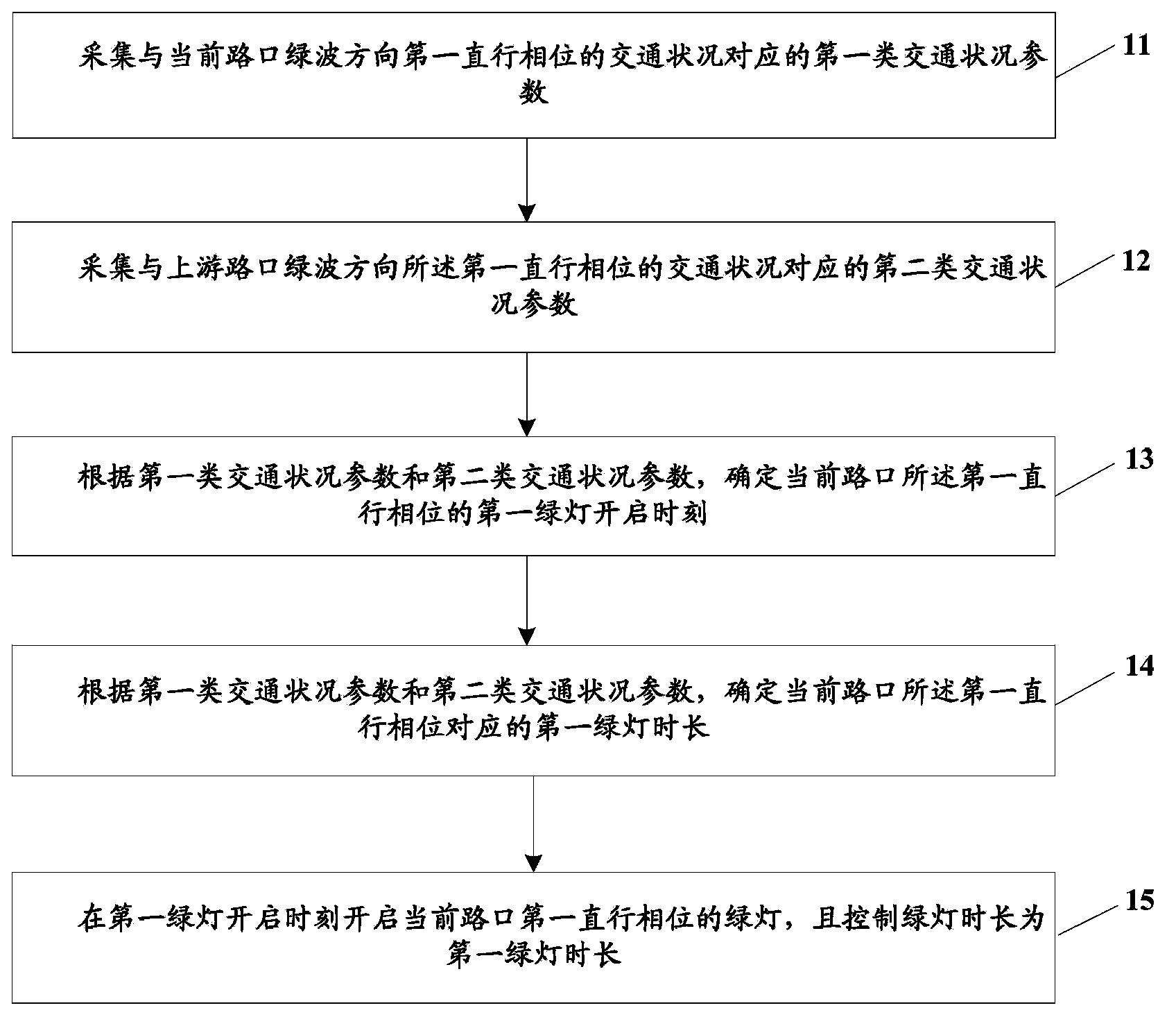

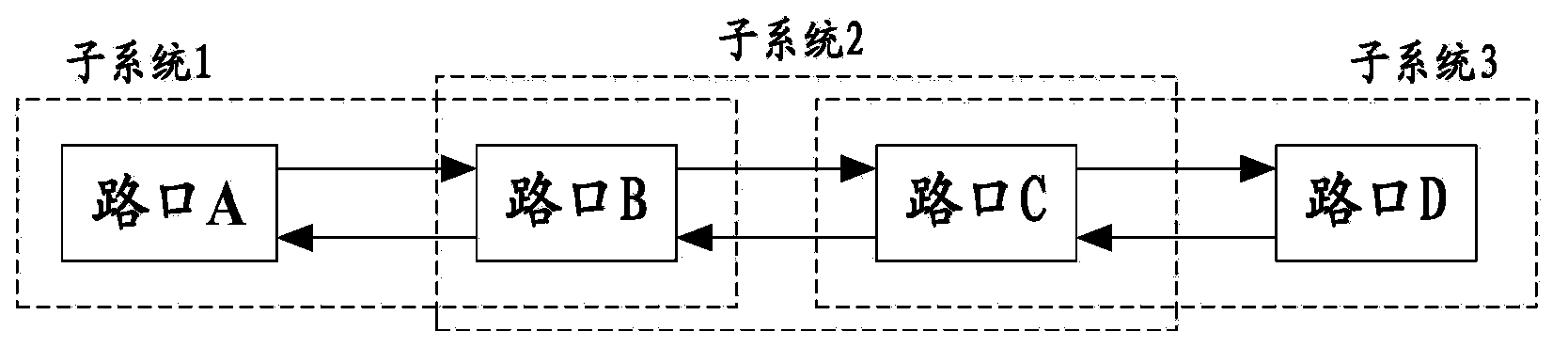

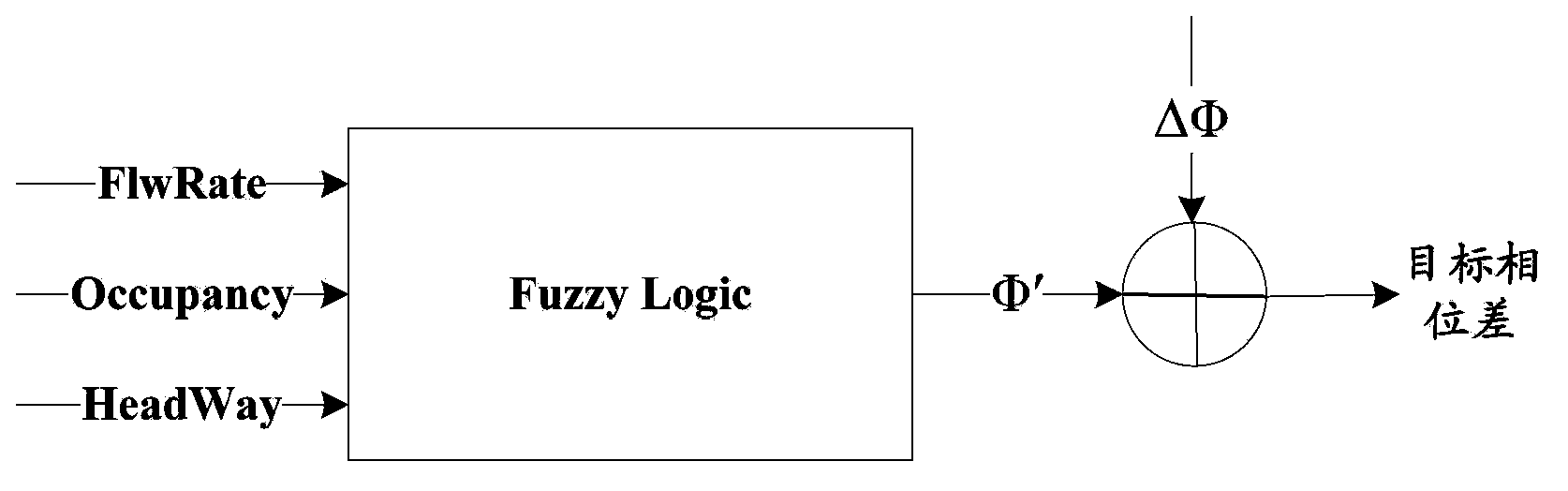

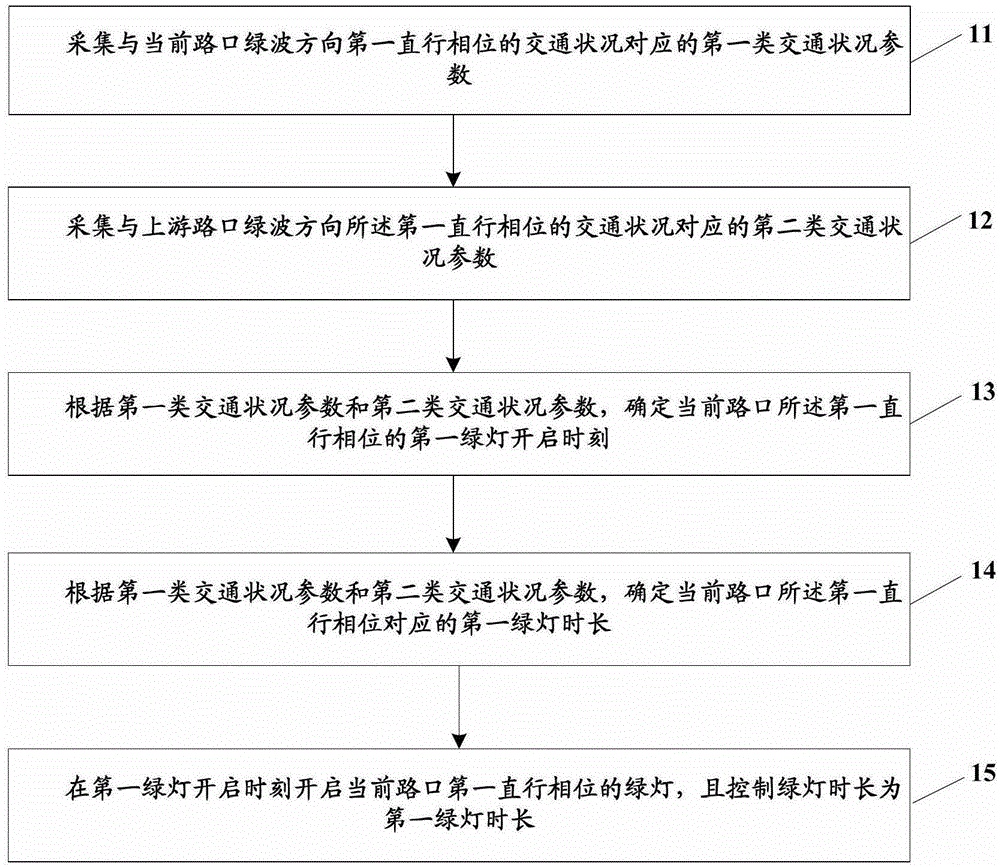

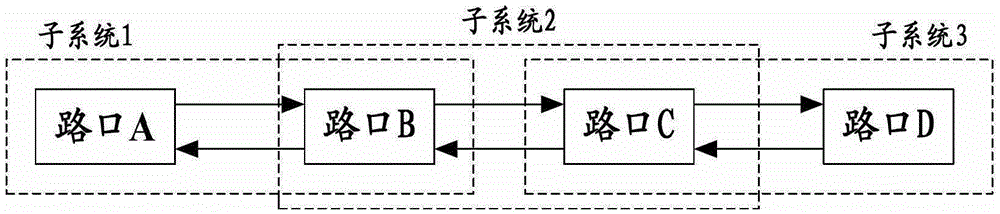

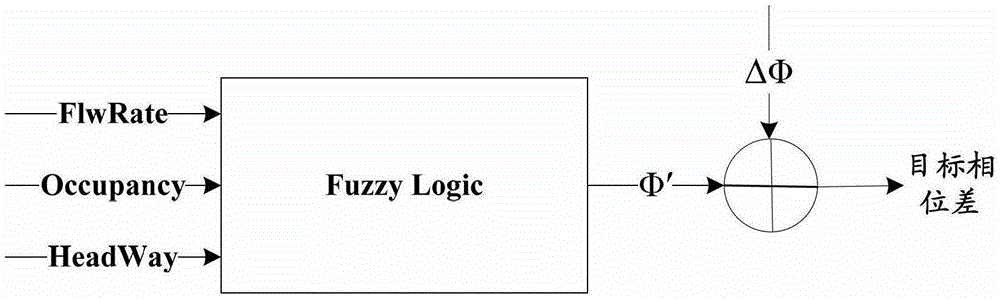

Traffic adaptive control method and traffic adaptive control device

Active | CN103778791A | Shorten the time, Reduce the number of stops, Controlling traffic signals, Engineering, Green-light

The embodiments of the present invention provide a traffic adaptive control method and a traffic adaptive control device. The method comprises the steps of: acquiring first-type traffic status parameters corresponding to the traffic status of a first straight-ahead phase in the green wave direction of the current intersection; acquiring second-type traffic status parameters corresponding to the traffic status of the first straight-ahead phase in the green wave direction of an upstream intersection; determining, according to the first-type and second-type traffic status parameters, a first green light turn-on moment of the first straight-ahead phase of the current intersection; determining, according to the same parameters, a first green light duration for that phase; and turning on the green light of the first straight-ahead phase of the current intersection at the first green light turn-on moment and holding it for the first green light duration. By adjusting the turn-on moment and duration of the green light, the method and device allow as many vehicles as possible to pass through the successive signalized intersections of a green wave band without stopping.

Owner:ZTE CORP

Global write-log device for managing write logs of nodes of a cluster storage system

A cluster system comprises a plurality of nodes that provides data-access service to a shared storage, each node having at least one failover partner node for taking over services of a node if the node fails. Each node may produce write logs for the shared storage and periodically send write logs at predetermined time intervals to a global device which stores write logs from each node. The global device may detect failure of a node by monitoring time intervals of when write logs are received from each node. Upon detection of a node failure, the global device may provide the write logs of the failed node to one or more partner nodes for performing the write logs on the shared storage. Write logs may be transmitted only between nodes and the global device to reduce data exchanges between nodes and conserving I / O resources of the nodes.

Owner:NETWORK APPLIANCE INC

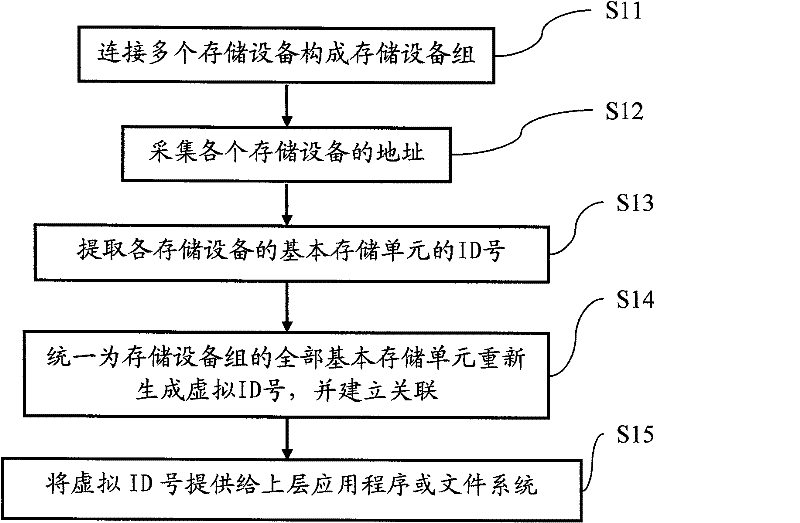

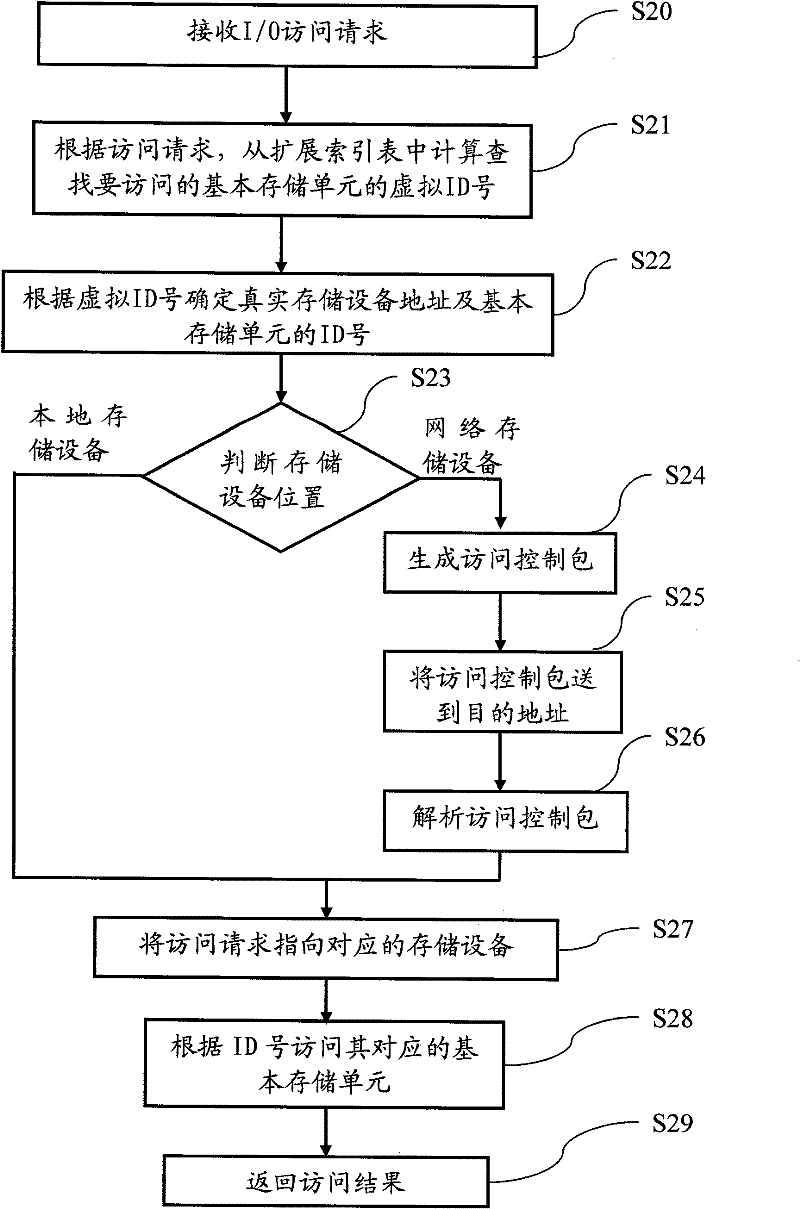

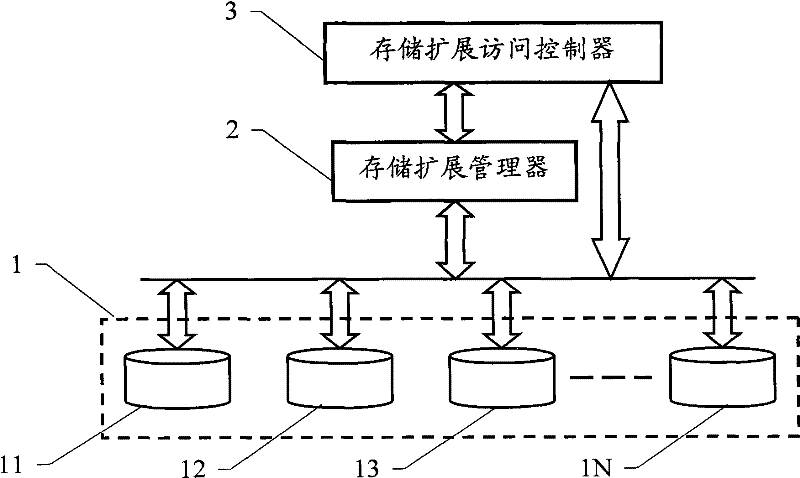

Method and system for expanding capacity of memory device

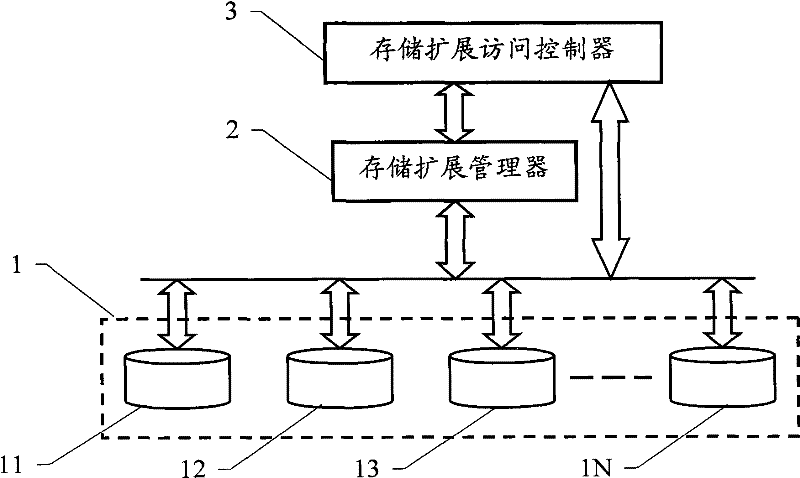

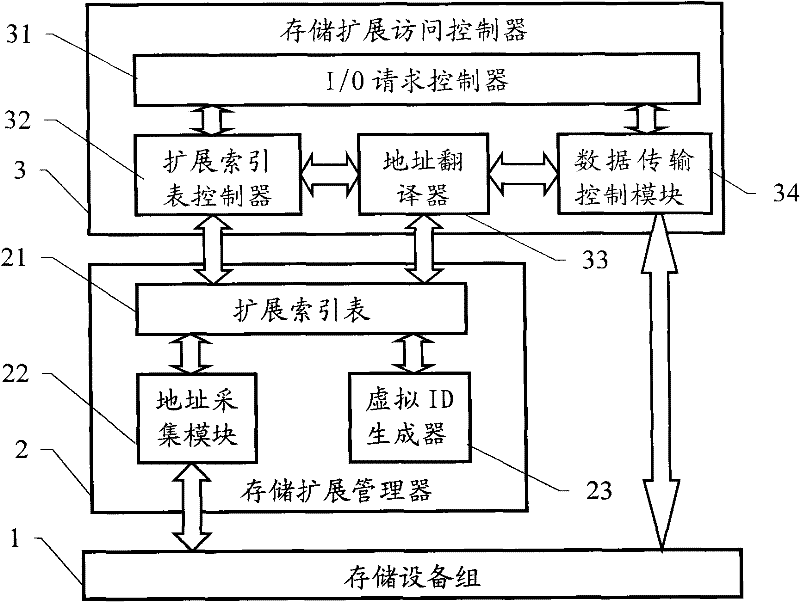

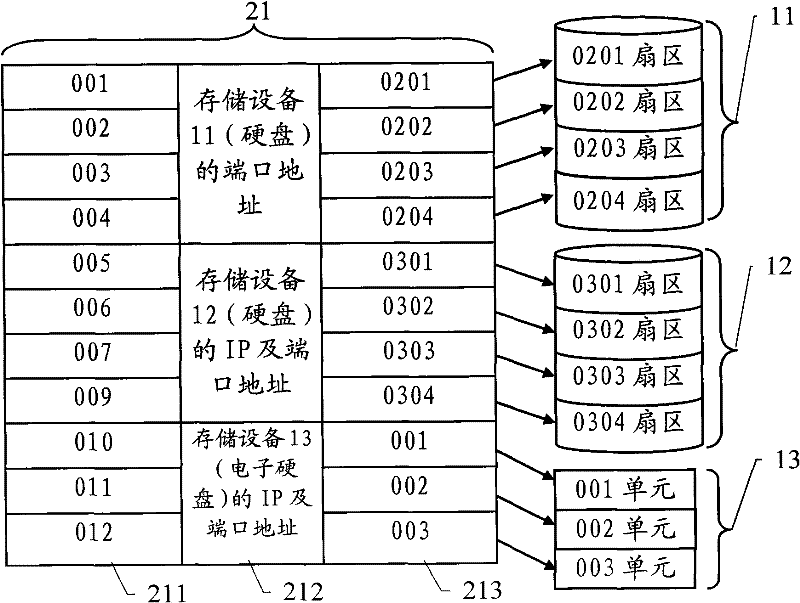

Active | CN101751233A | Increase storage capacity, Increase profit, Input/output to record carriers, Extension method, Storage cell

The invention relates to a method and a system for expanding the capacity of a memory device. The method comprises connecting a plurality of memory devices to form a memory device group, and is characterized by further comprising the steps of: recording the connection address of each memory device and all available ID numbers of the basic memory cells of that device; allocating and recording a unique virtual ID number for each basic memory cell; associating each virtual ID number with the connection address of the corresponding memory device and the ID number of the basic memory cell; and accessing the corresponding basic memory cell via its virtual ID number. The invention also discloses a system for implementing the method. The invention virtualizes a plurality of memory devices into one large hard disk whose capacity equals the total capacity of the devices, thereby allowing the storage capacity to be expanded without limit, greatly increasing the available capacity, and realizing networked storage.

Owner:CHENGDU SOBEY DIGITAL TECH CO LTD
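
The virtual-ID mapping described above amounts to a lookup table from a global ID to a (device address, local cell ID) pair. The sketch below assumes IDs are assigned sequentially as devices are added; `VirtualDeviceGroup`, `Location` and the example addresses are hypothetical names, not taken from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    device_address: str  # connection address of a physical memory device
    cell_id: int         # ID of the basic memory cell on that device


class VirtualDeviceGroup:
    """Hypothetical sketch: expose several devices as one virtual ID space."""

    def __init__(self) -> None:
        self._table: list[Location] = []  # list index == virtual ID

    def add_device(self, address: str, cell_ids: list[int]) -> range:
        # Record the device's available cells and give each a unique virtual ID.
        start = len(self._table)
        for cid in cell_ids:
            self._table.append(Location(address, cid))
        return range(start, len(self._table))

    def resolve(self, virtual_id: int) -> Location:
        # Translate a virtual ID back to (device address, local cell ID).
        if not 0 <= virtual_id < len(self._table):
            raise KeyError(virtual_id)
        return self._table[virtual_id]

    @property
    def capacity(self) -> int:
        # Total number of cells across all devices in the group.
        return len(self._table)
```

Adding a device simply extends the ID range, so the group's capacity grows with each device while callers keep addressing cells through one flat ID space.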

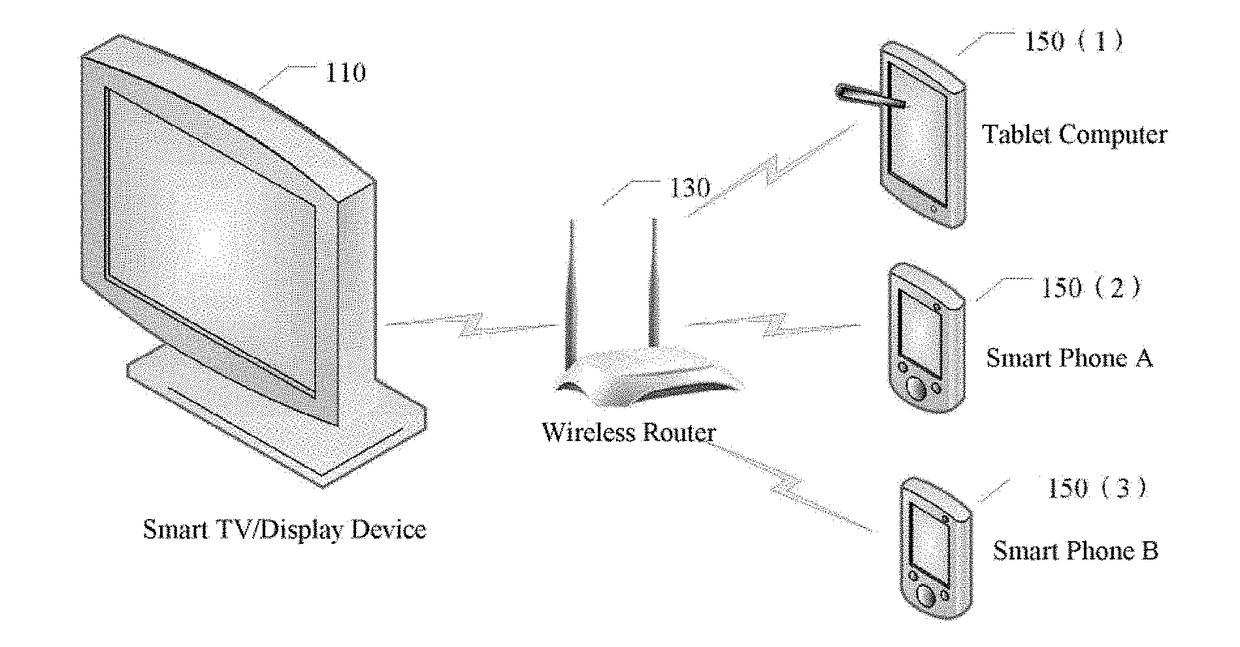

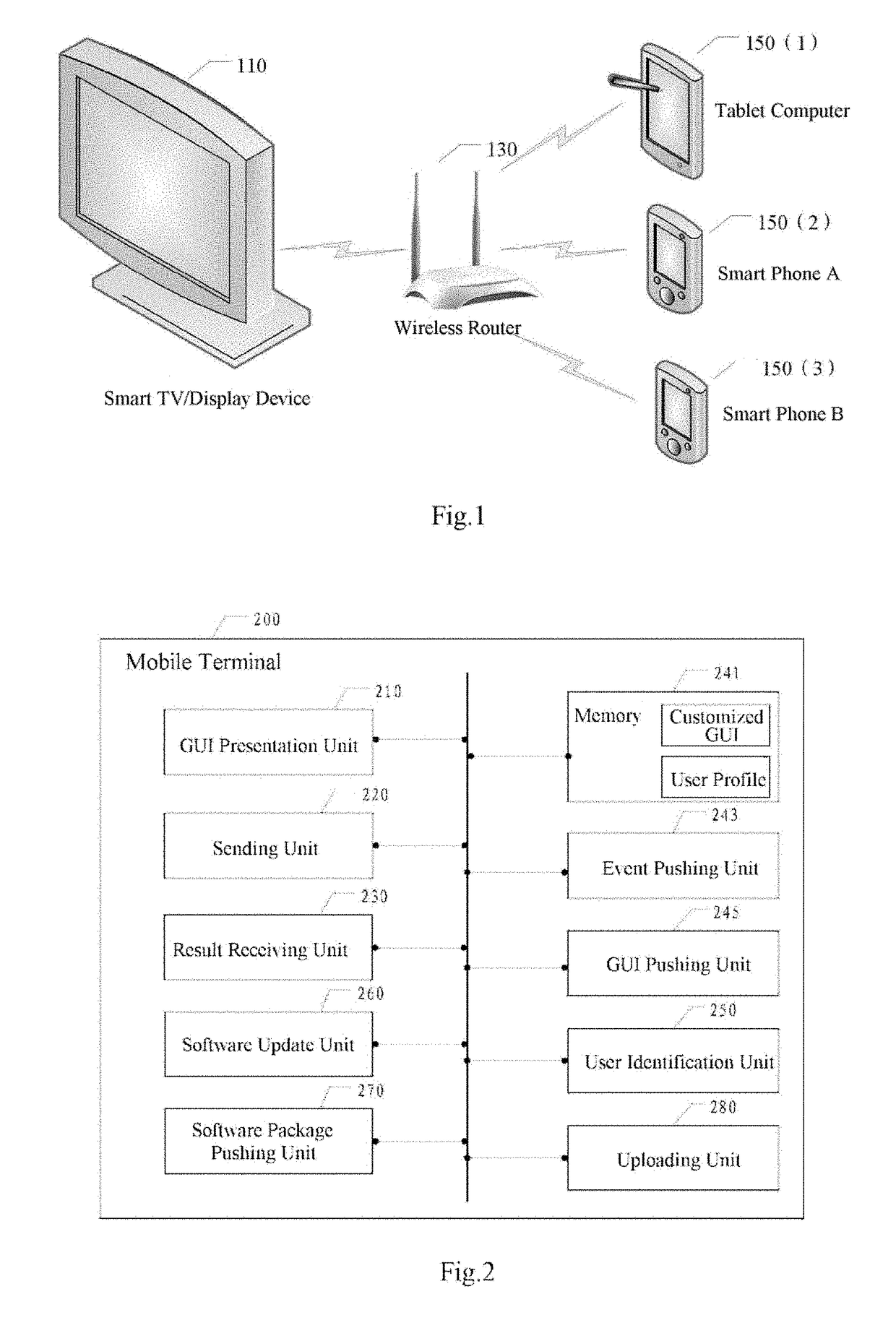

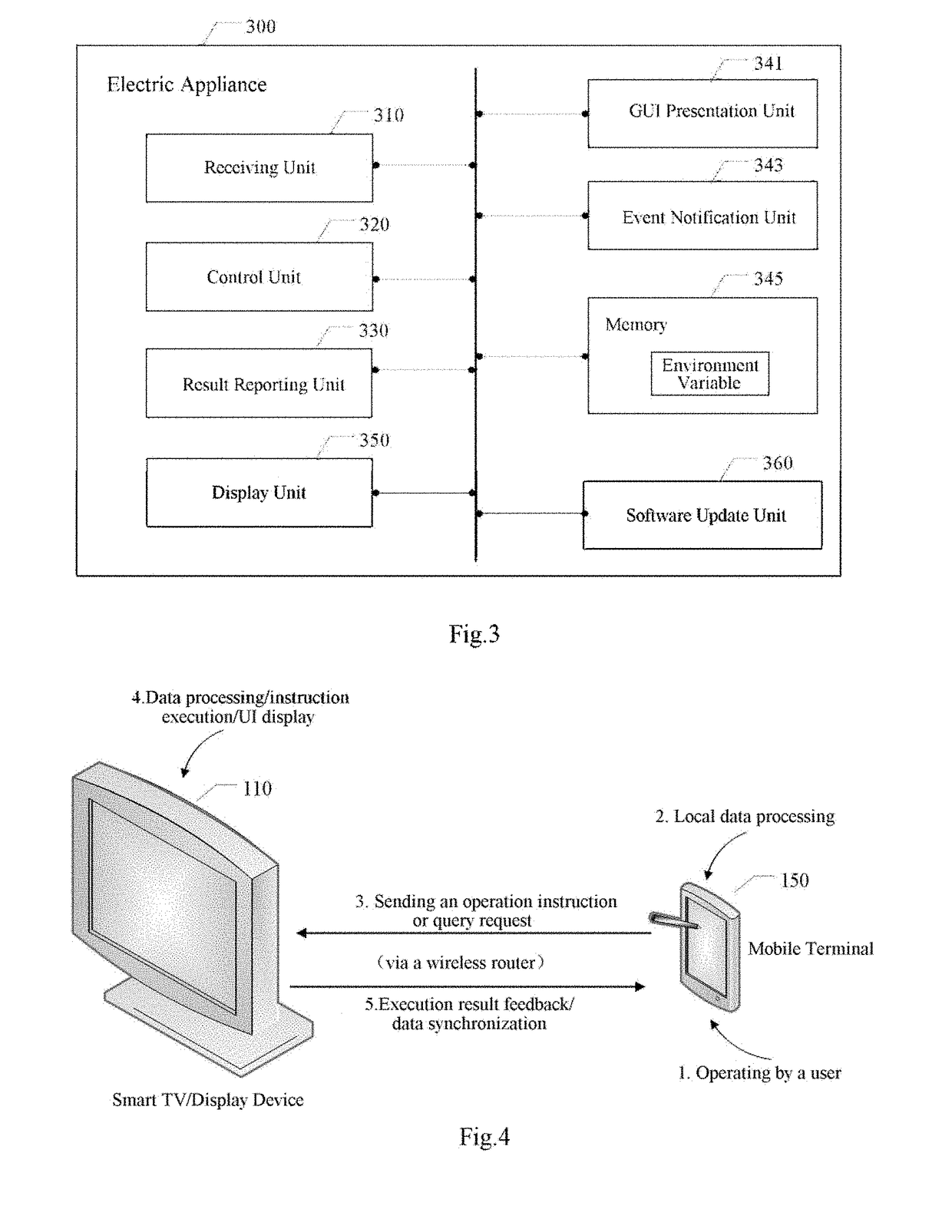

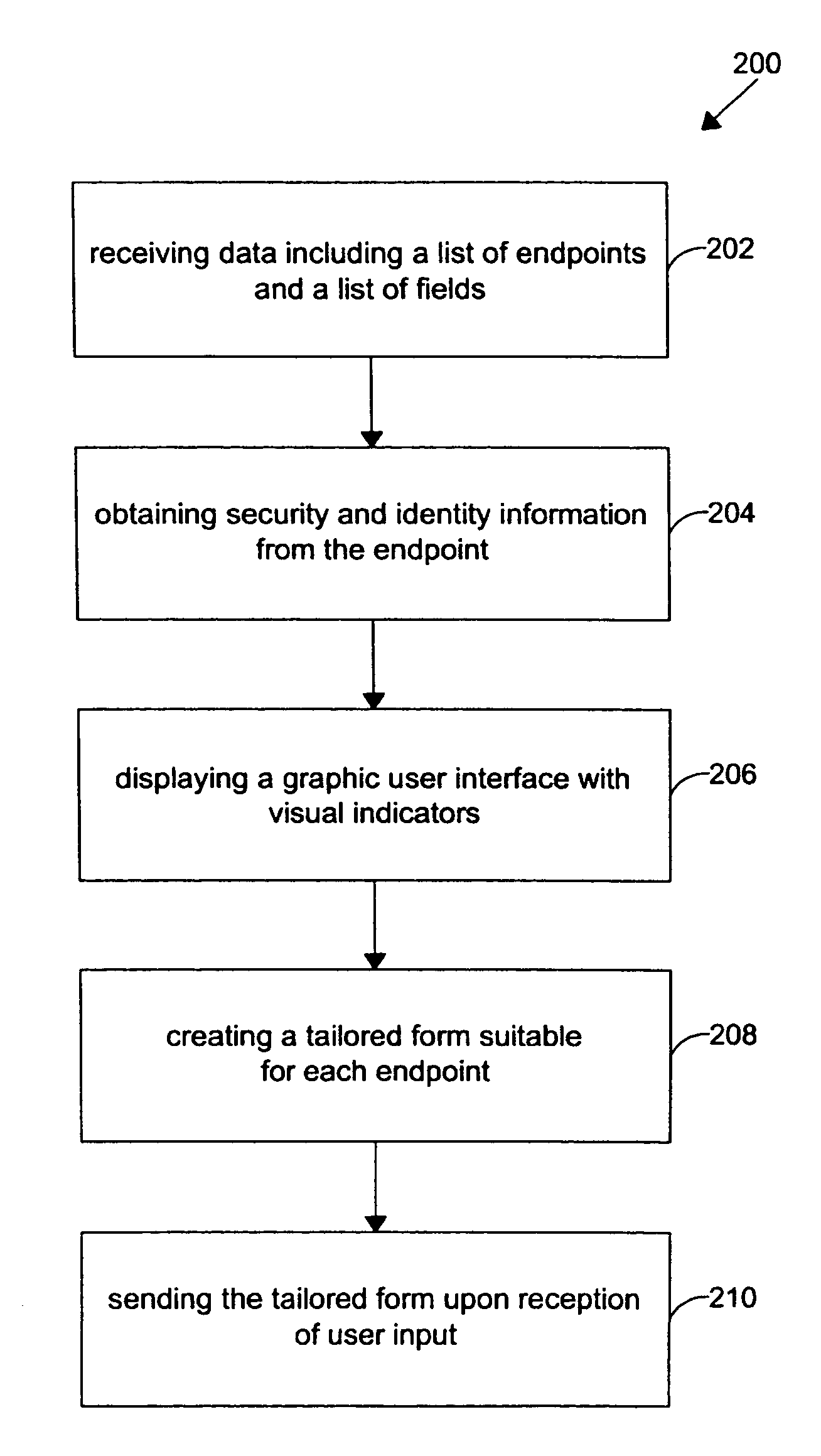

Device, system and method for operating electric appliance by mobile terminal

Inactive | US20180220099A1 | Easy maintenance, Reduce complexity, Television system details, Color television details, Terminal operation, Control unit

A system for operating an electric appliance by a mobile terminal is disclosed. The mobile terminal includes a platform-independent GUI presentation unit; a sending unit configured to send, to the electric appliance, an operation instruction and/or query request based on user input received via a GUI element; and a result receiving unit configured to receive the instruction execution result and/or query result. The electric appliance includes platform-dependent components, including: a receiving unit configured to receive the operation instruction and/or query request; a control unit configured to execute the instruction and/or query; a display unit configured to display contents; and a result report unit configured to report the instruction execution result and/or query result to the mobile terminal. The GUI presentation unit changes at least one of the presented GUI elements to reflect the instruction execution result and/or query result.

Owner:BOE TECH GRP CO LTD

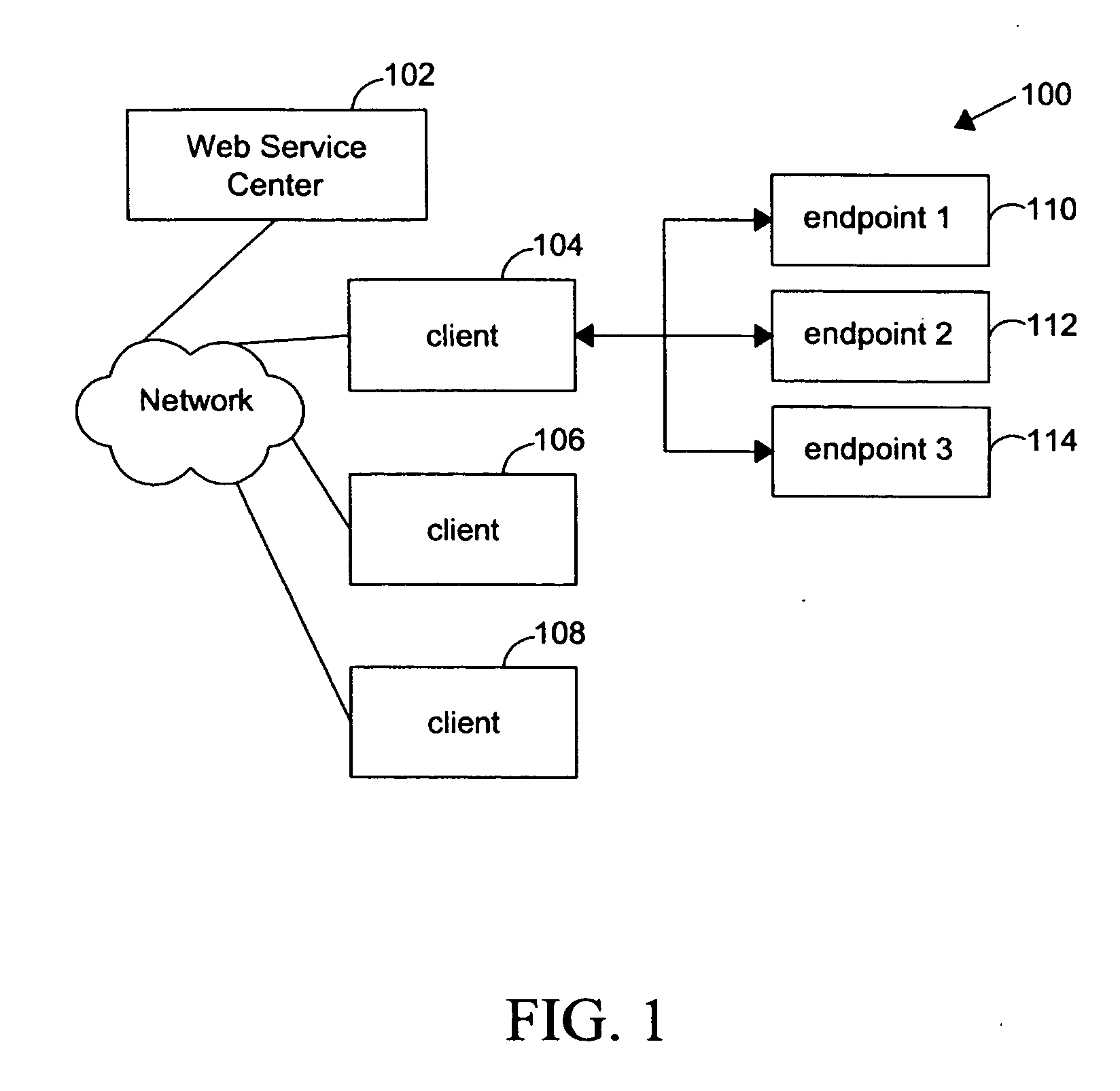

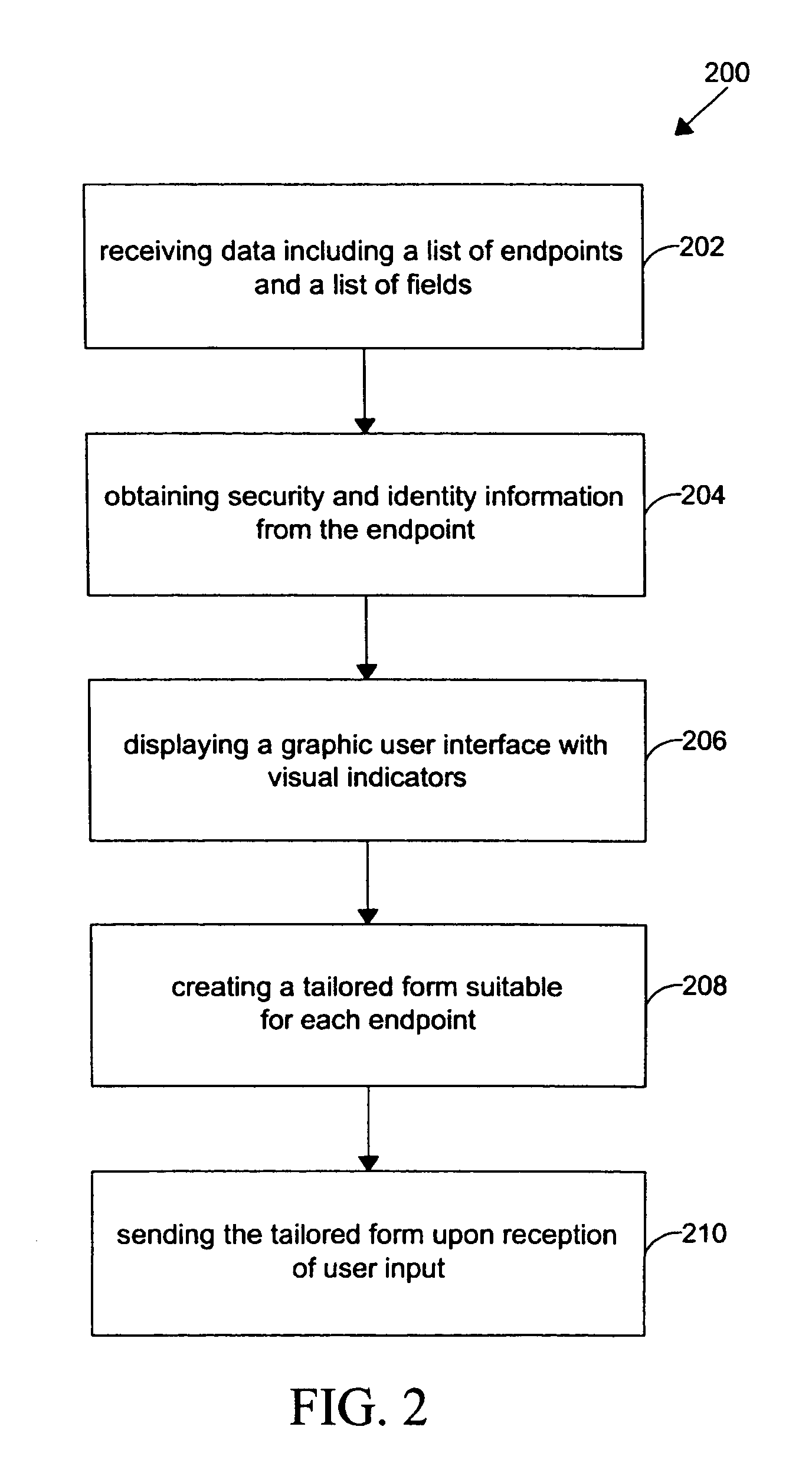

Method and system for selective information delivery in a rich client form

Inactive | US20060212696A1 | Good user interface, Selective information delivery, Natural language data processing, Office automation, Web service, Client-side

The present invention is directed to a method and system for delivering user-selected information from a rich client to multiple parties through a single GUI. Rich client interfaces may be designed to submit information to multiple destinations in order to access multiple web services. In the present invention, users of the client interface may be informed of the parties to whom they are submitting information. An enhanced user interface may include visual indicators on each input field that show which party will receive that field. The rich client may validate each party before the information is sent. Information selected through user interaction on the client side may then be delivered from the client to the multiple parties.

Owner:IBM CORP

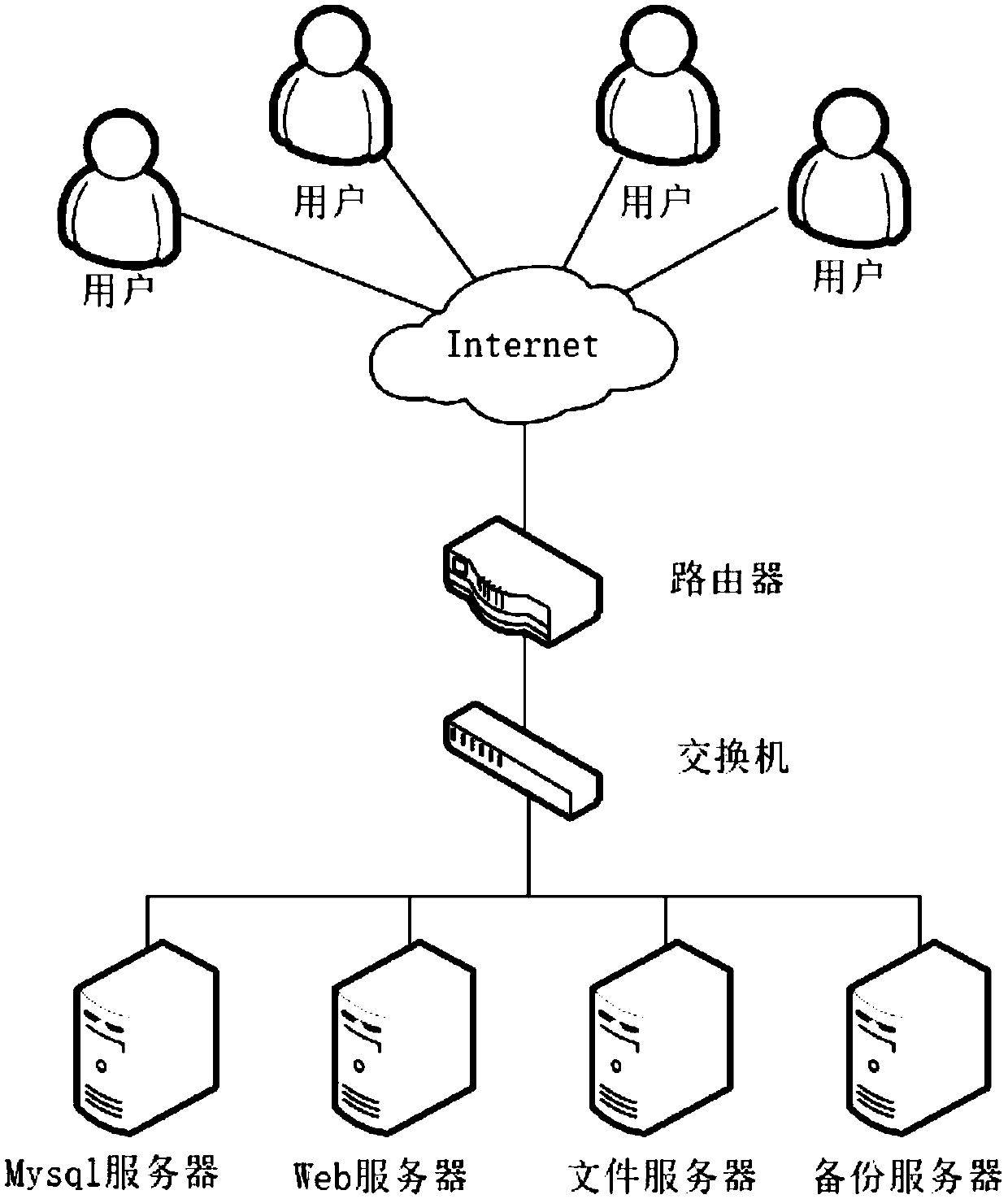

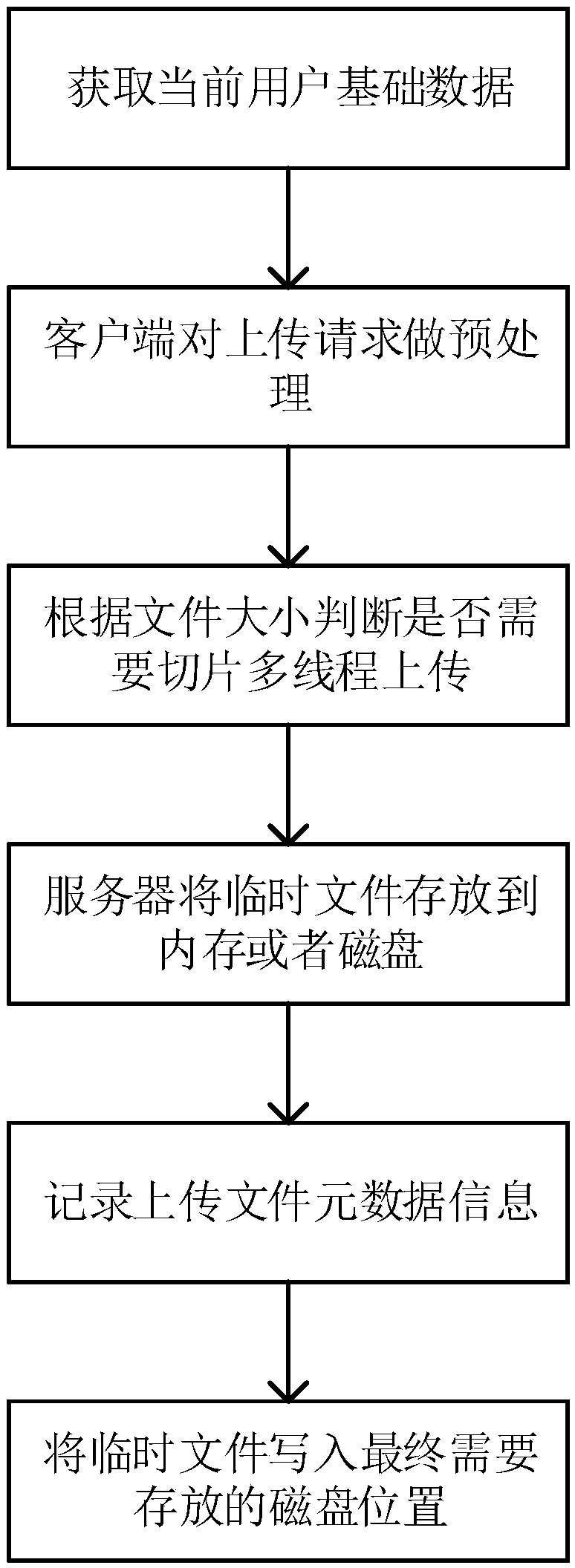

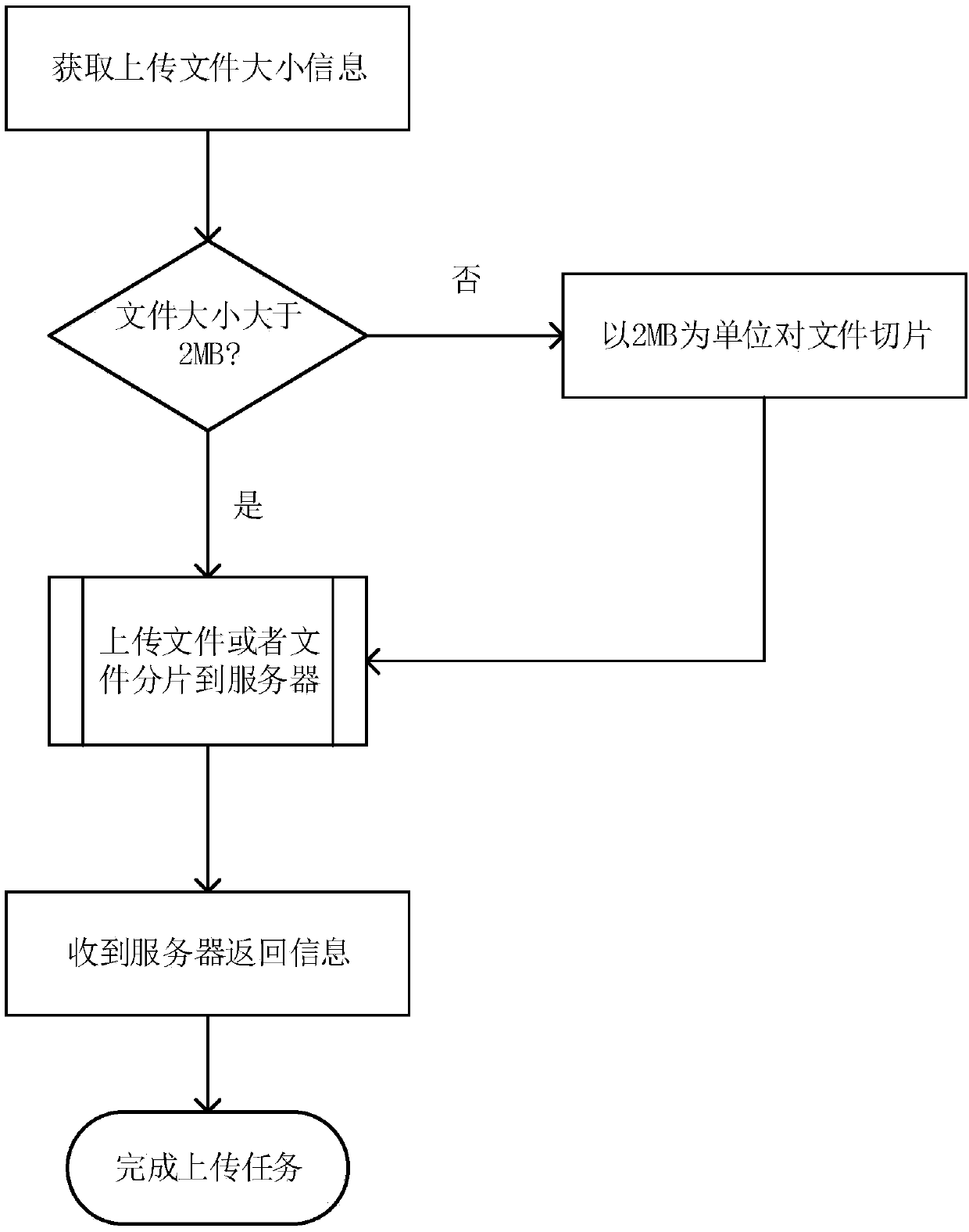

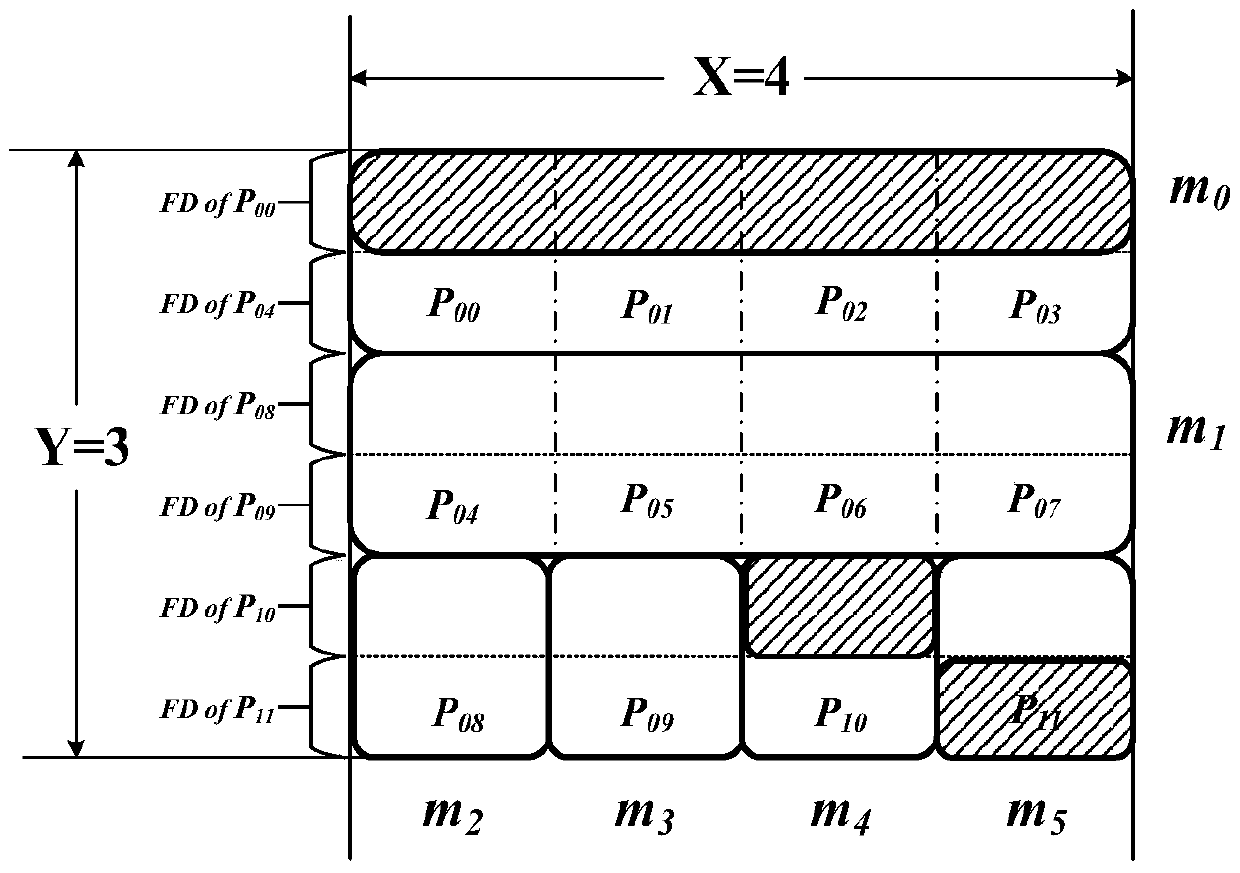

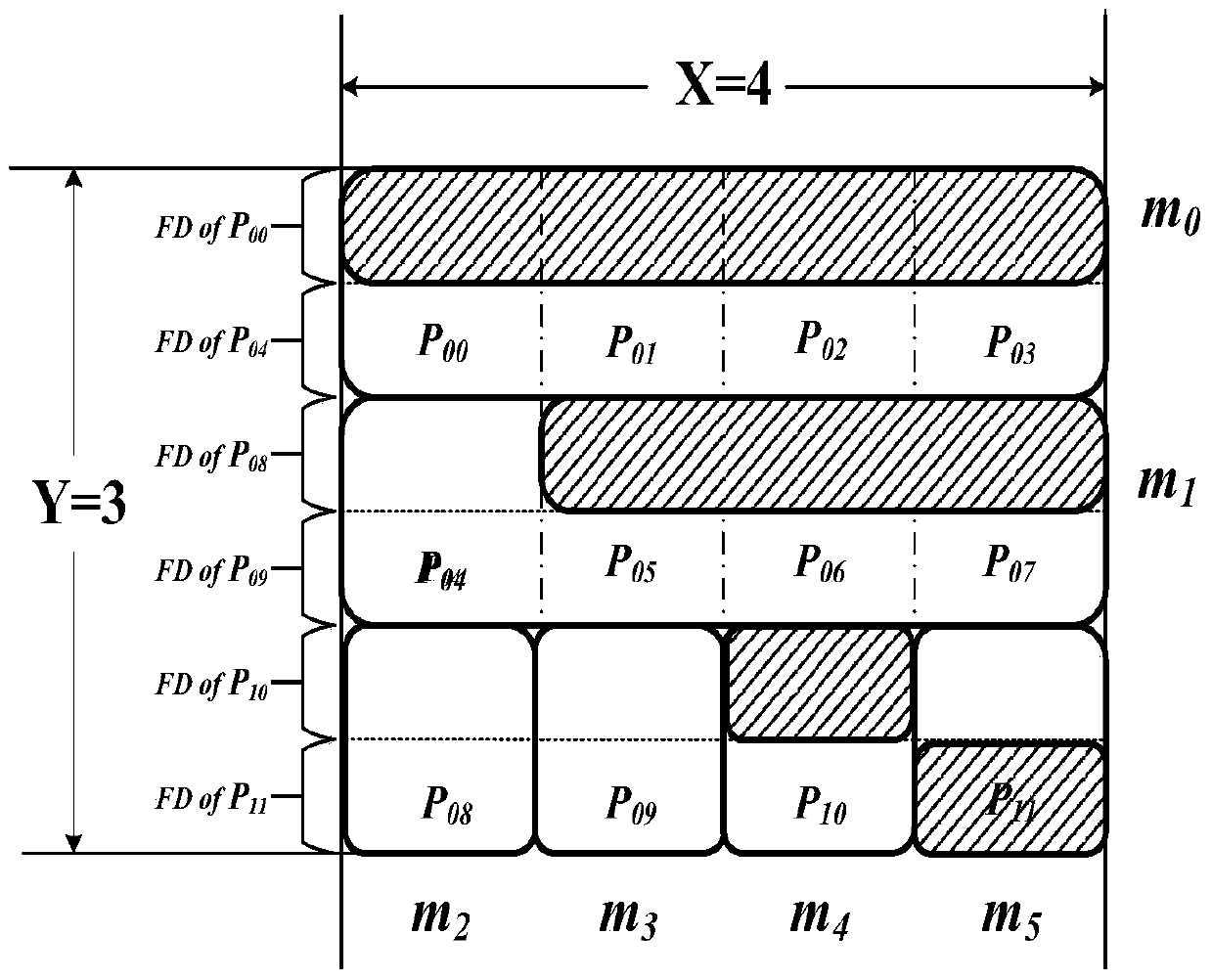

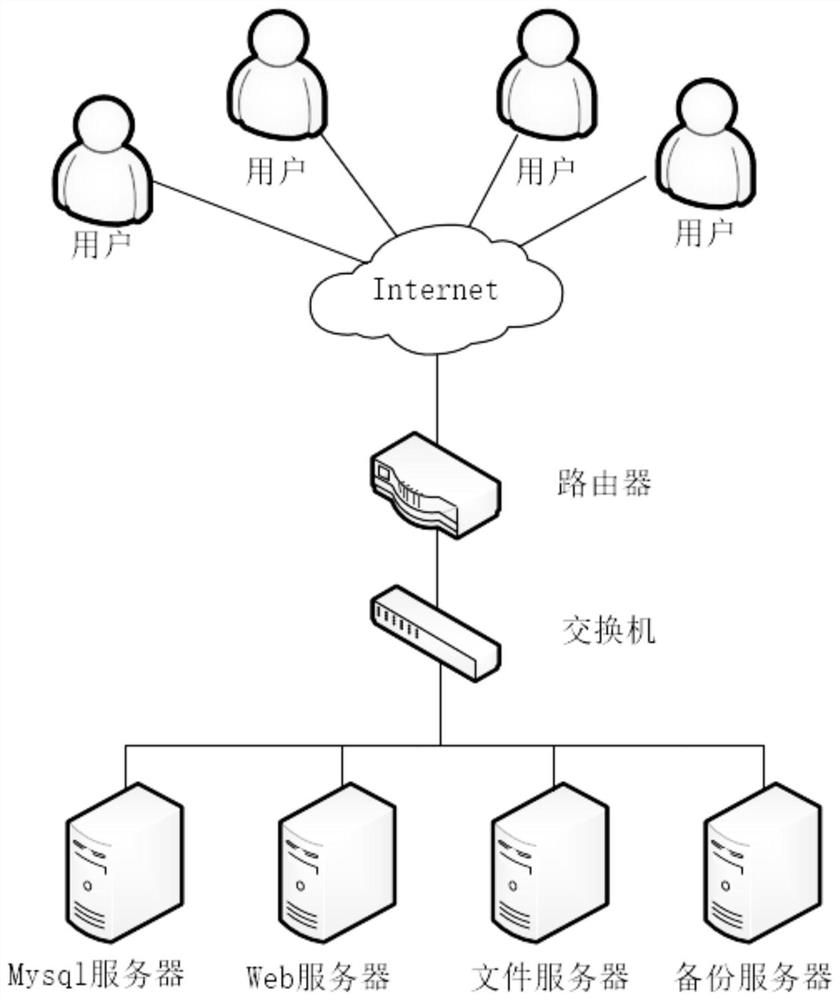

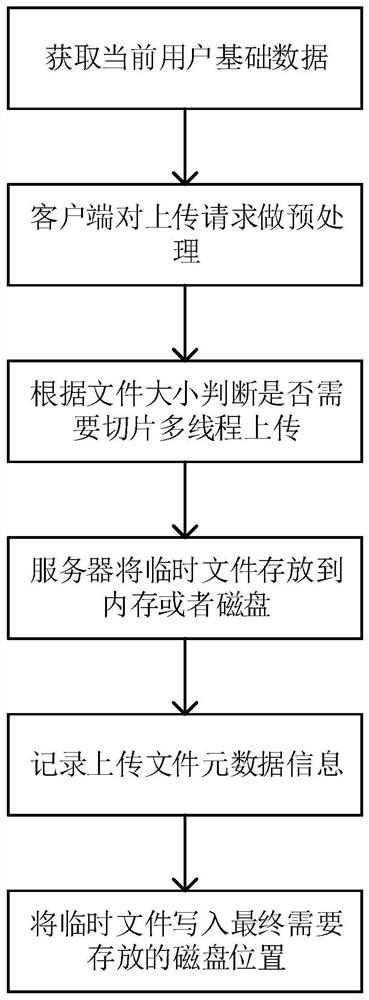

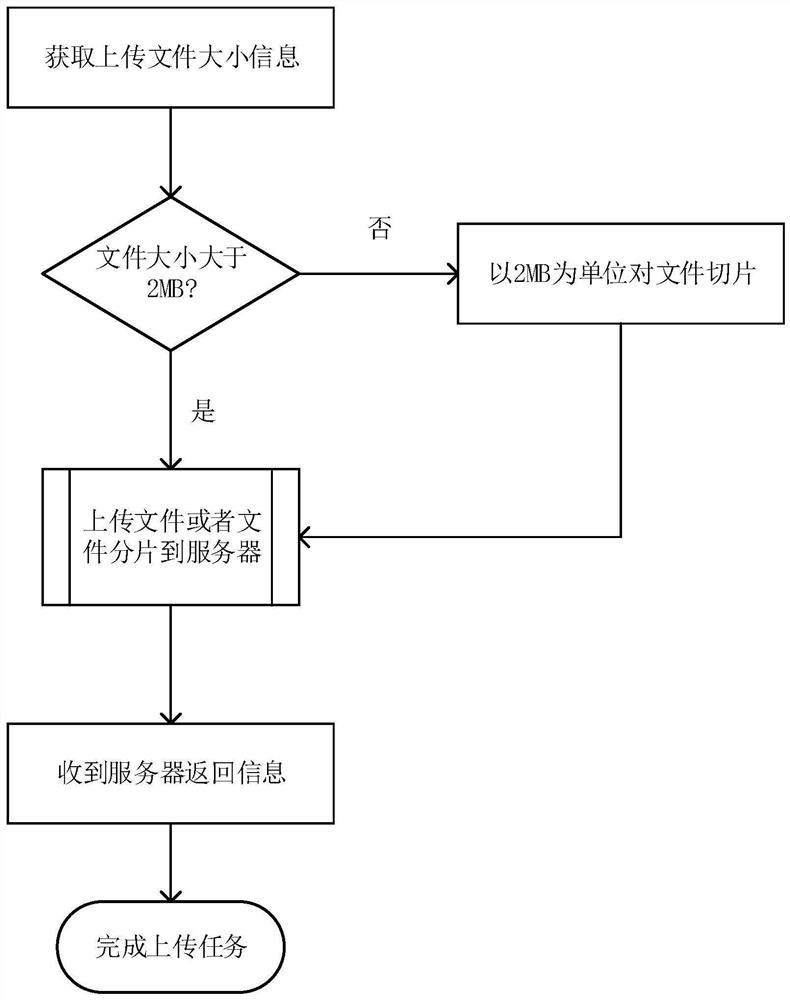

Multi-thread uploading optimization method based on memory allocation

Active | CN109547566A | Reduce waste, Implement control transfer, Error prevention, File access structures, Network conditions, Cache locality

The invention discloses a multi-thread uploading optimization method based on memory allocation. The method comprises the steps of: 1) acquiring basic data of the current user; 2) preprocessing the upload request using the obtained information; 3) judging, according to the size of the uploaded file, whether slicing and multi-thread uploading are needed; 4) storing a temporary file of the uploaded file in a designated cache location according to the server's current free memory; 5) recording metadata of the uploaded file in a database; and 6) writing the temporary file from the cache to the disk location where the file is finally to be stored. By addressing both the service logic and the server's memory management, the client and the server cooperate to achieve a relatively high upload success rate even under relatively poor network conditions; a breakpoint-resume function is also supported, improving the client's upload speed and the server's bandwidth utilization.

Owner:SOUTH CHINA UNIV OF TECH
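
Step 3 and the breakpoint-resume feature can be sketched as follows. The 8 MiB slicing threshold, the 4 MiB chunk size, the `send` callback and all function names are assumptions chosen for illustration; the abstract gives no such values, and the memory-aware server caching of step 4 is not modeled.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

MIB = 1024 * 1024
SLICE_THRESHOLD = 8 * MIB  # assumed: files above this size are sliced
CHUNK_SIZE = 4 * MIB       # assumed chunk size for multi-thread upload


def plan_chunks(file_size: int) -> list[tuple[int, int]]:
    """Return (offset, length) pairs; a single pair means no slicing."""
    if file_size <= SLICE_THRESHOLD:
        return [(0, file_size)]
    count = -(-file_size // CHUNK_SIZE)  # ceiling division
    return [(i * CHUNK_SIZE, min(CHUNK_SIZE, file_size - i * CHUNK_SIZE))
            for i in range(count)]


def remaining_chunks(plan: list[tuple[int, int]], done: set[int]) -> list[tuple[int, int]]:
    """Breakpoint resume: keep only chunks whose offset the server has not recorded."""
    return [c for c in plan if c[0] not in done]


def upload(plan: list[tuple[int, int]], send: Callable[[int, int], int],
           done: set[int], workers: int = 4) -> set[int]:
    """Send the remaining chunks on a thread pool; send() returns the chunk offset."""
    todo = remaining_chunks(plan, done)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for offset in pool.map(lambda chunk: send(*chunk), todo):
            done.add(offset)  # record each completed chunk for later resumes
    return done
```

If a connection drops, calling `upload` again with the offsets already recorded by the server re-sends only the missing chunks, which is the essence of breakpoint resume.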

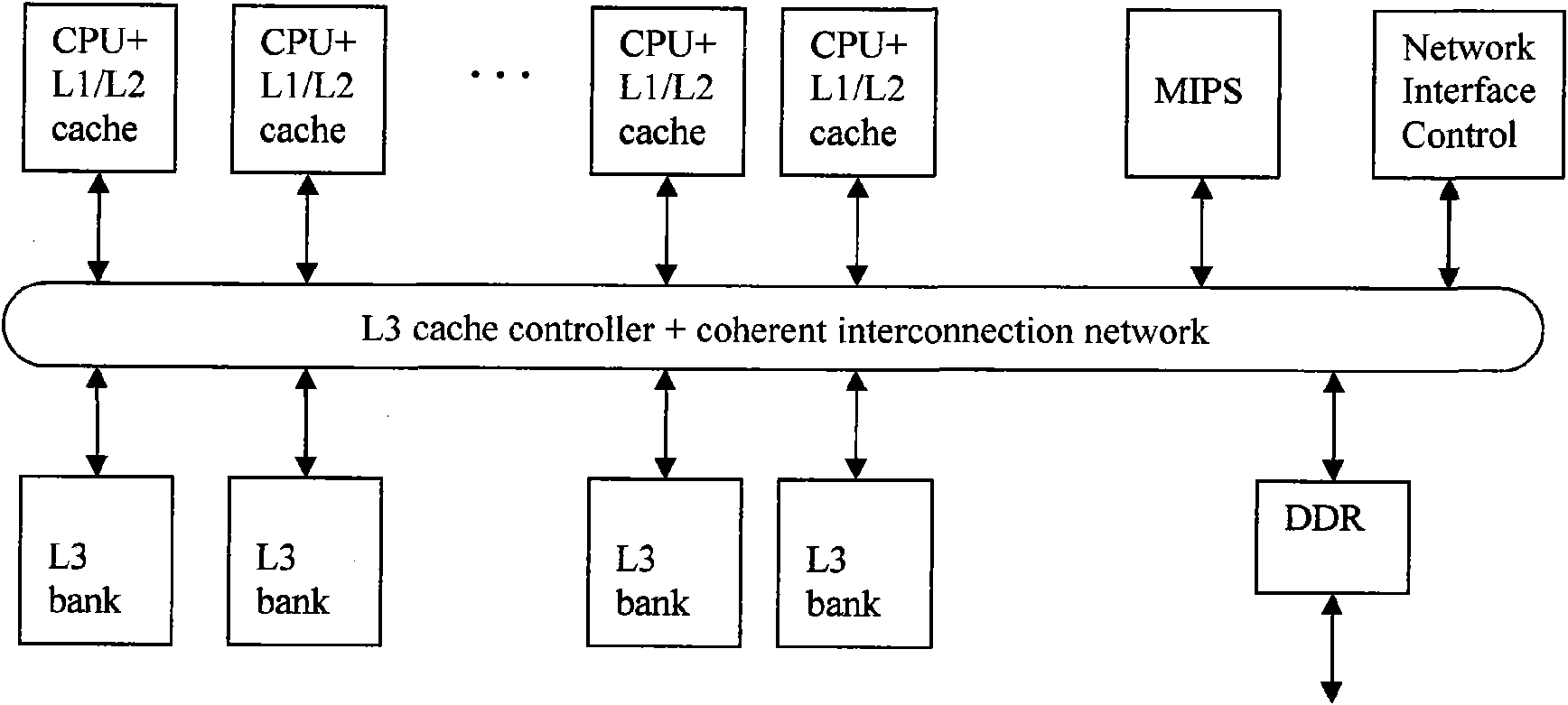

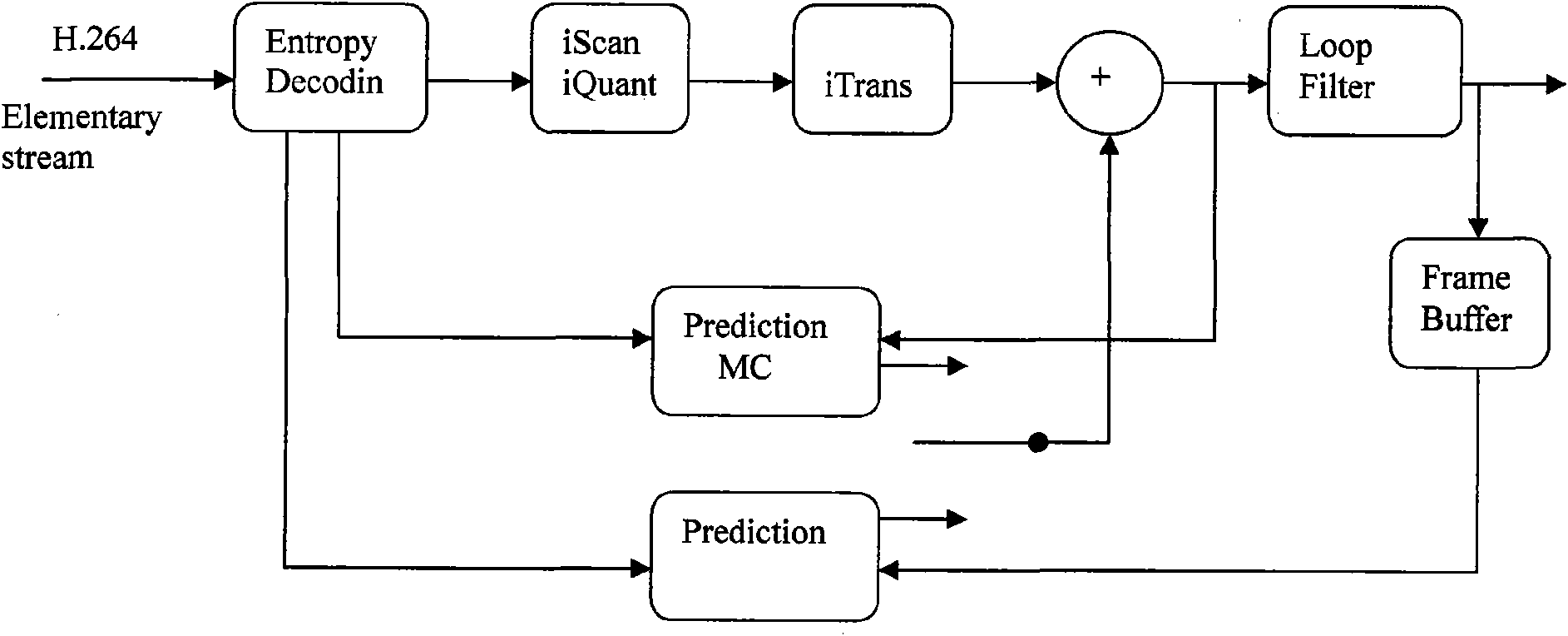

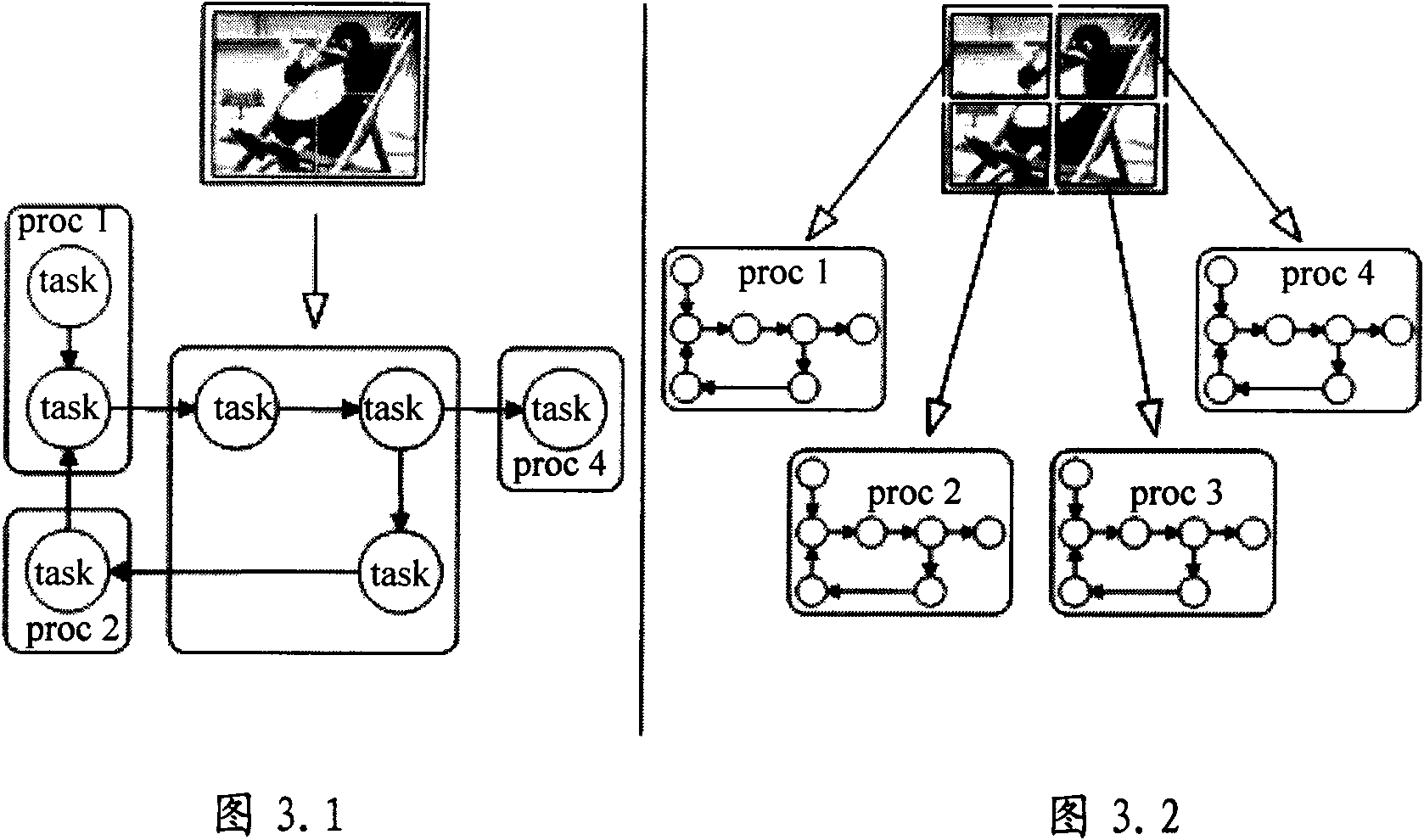

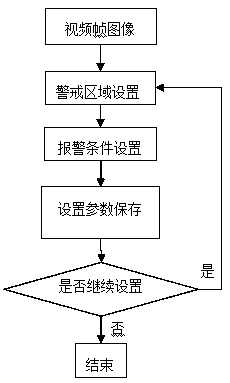

Multi-DSP core framework and method for rapidly processing parallel video signal

Inactive | CN101616327A | Reduce data exchange, Television systems, Digital video signal modification, Data needs, Data exchange

The invention discloses a multi-DSP core framework and a method for rapidly processing parallel video signals, aiming to solve the slow processing speed of the prior art. The method comprises the following steps: receiving a video stream image; performing serial-parallel processing on the video stream image according to preset steps; and performing parallel processing on at least one of the steps according to a preset program. With this method, each DSP core transfers its allocated data to fast on-chip memory (L1/L2) for processing. Because the data that must be transferred in or out is only a small part of an image frame, data exchange in the system is greatly reduced; and because each core can perform multi-step processing on its allocated data, data exchange is reduced further.

Owner:RAYNET TECH

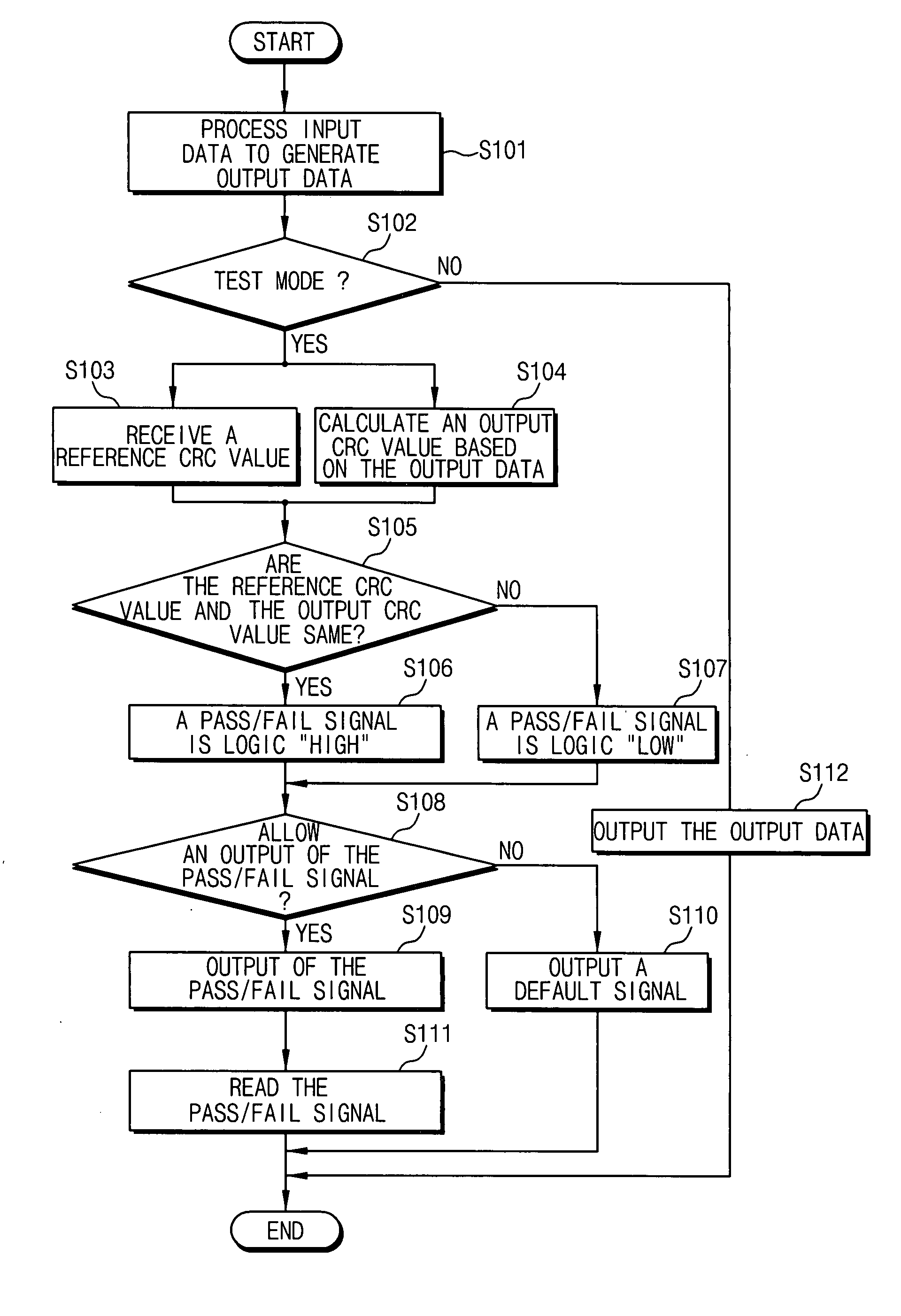

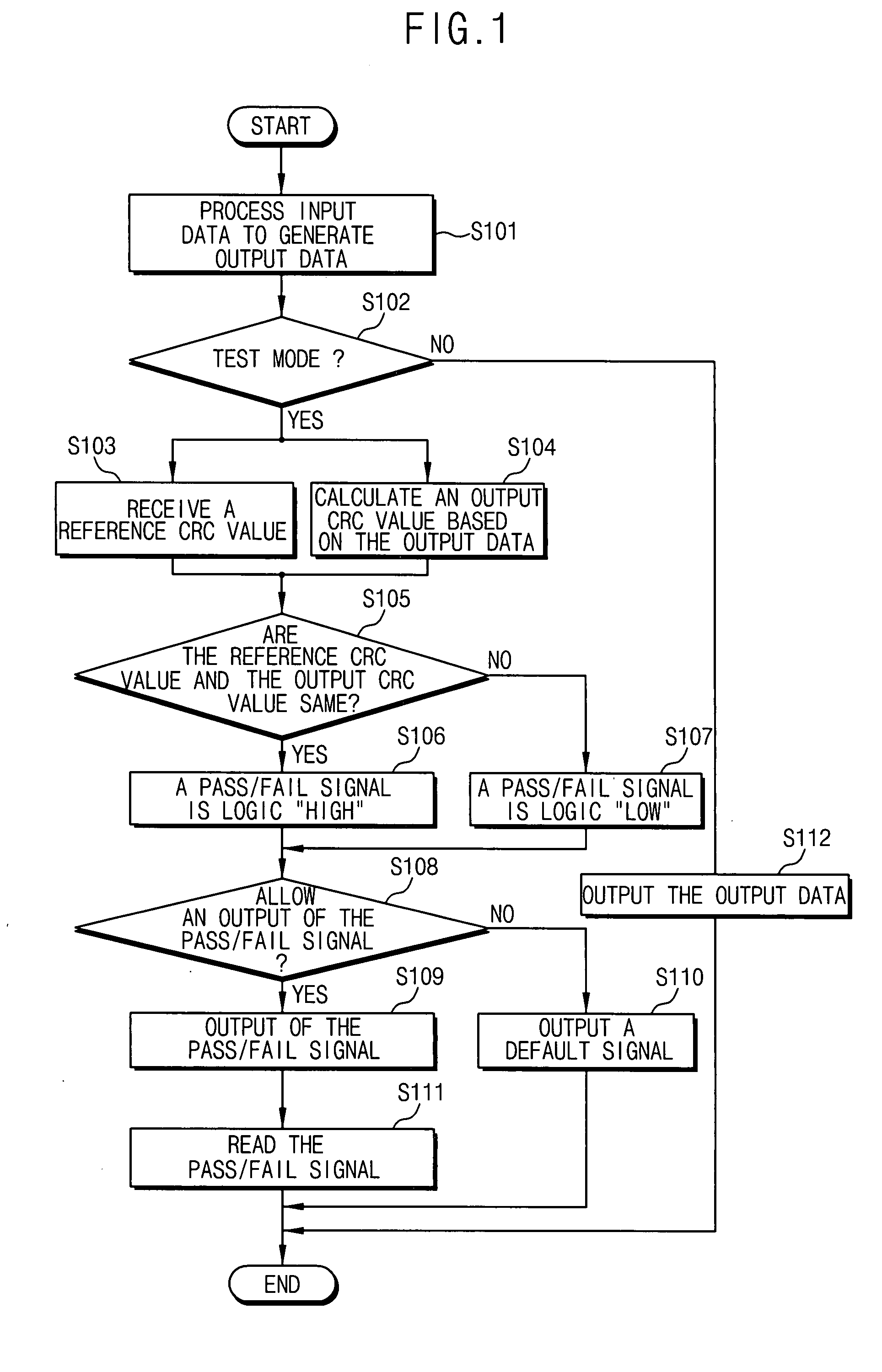

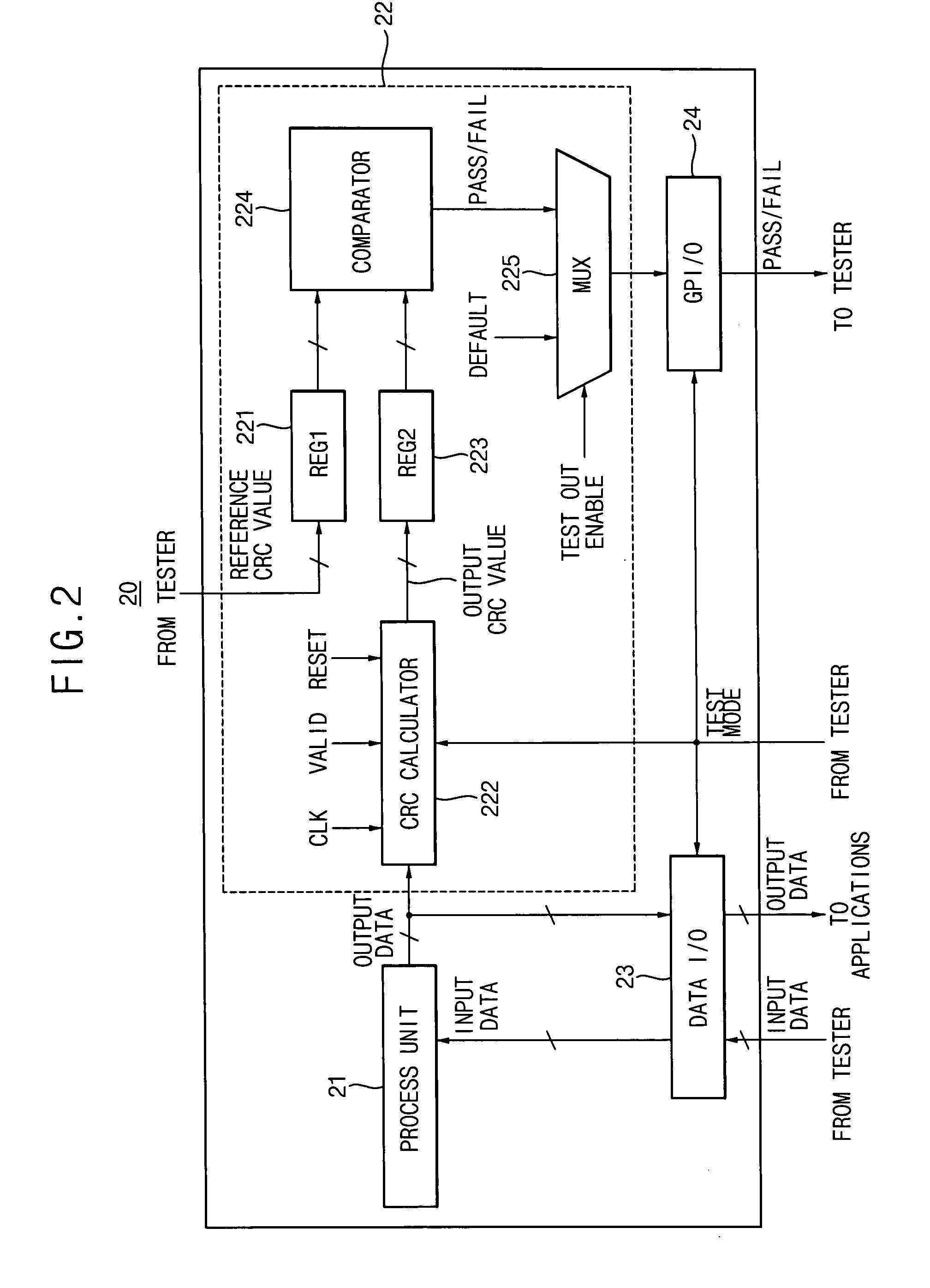

Digital apparatus and method of testing the same

Inactive | US20080052575A1 | Small spacing, The testing process is simple, Electronic circuit testing, Functional testing, Computer science, Digital device

A digital apparatus and a method of testing the same according to example embodiments may reduce the amount of data exchanged with the tester, because the digital apparatus may provide a pass/fail signal to the tester.

Owner:SAMSUNG ELECTRONICS CO LTD

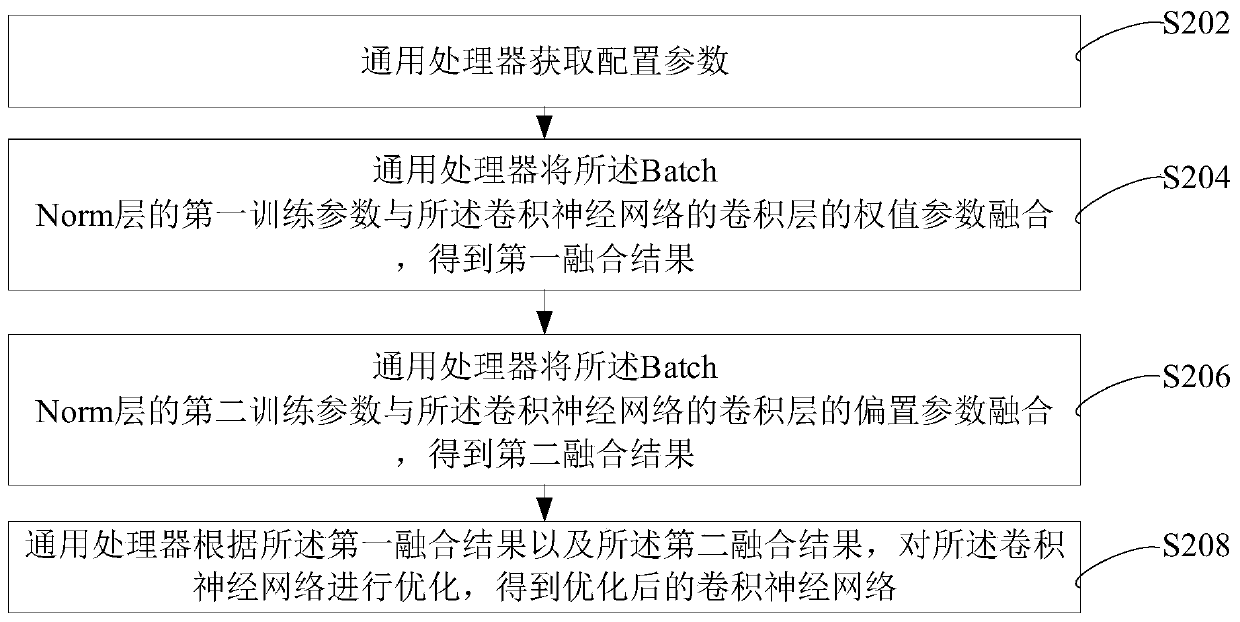

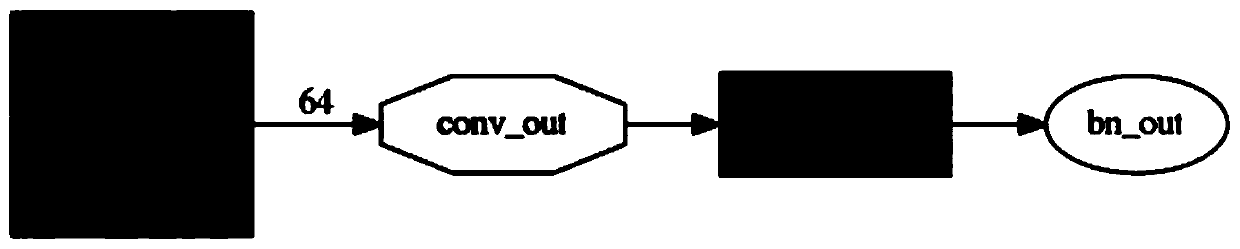

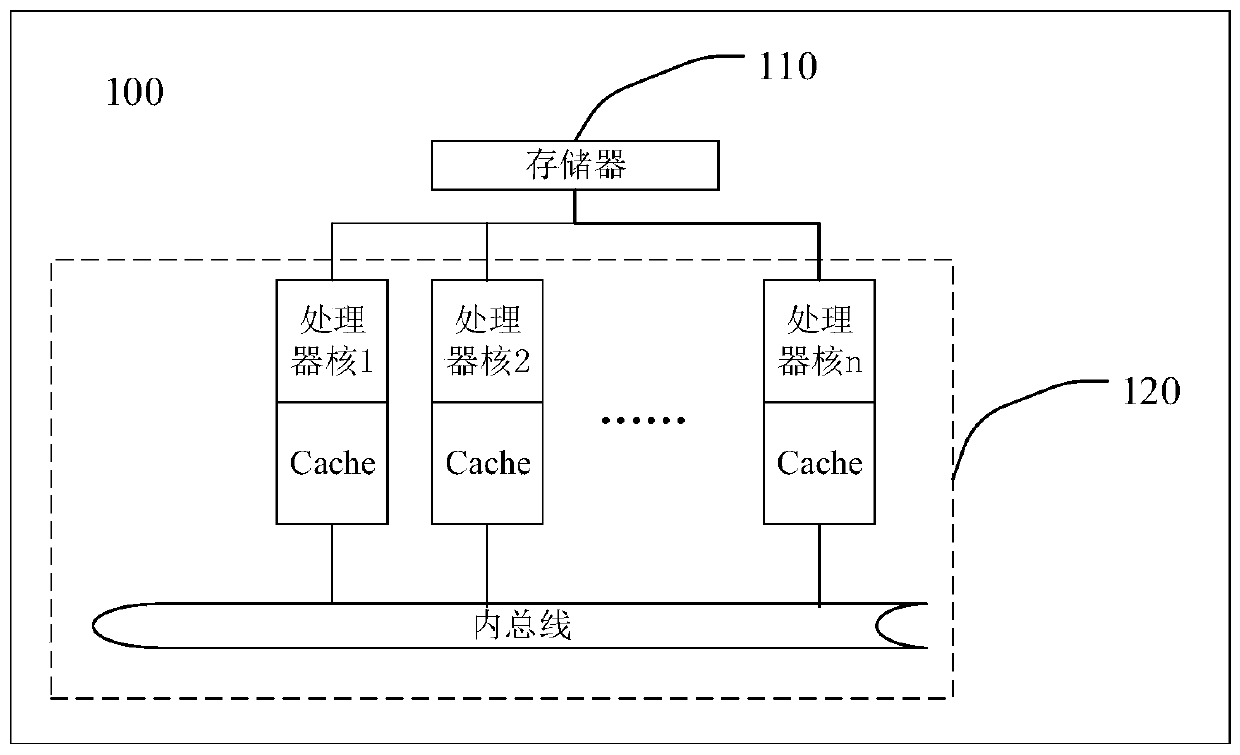

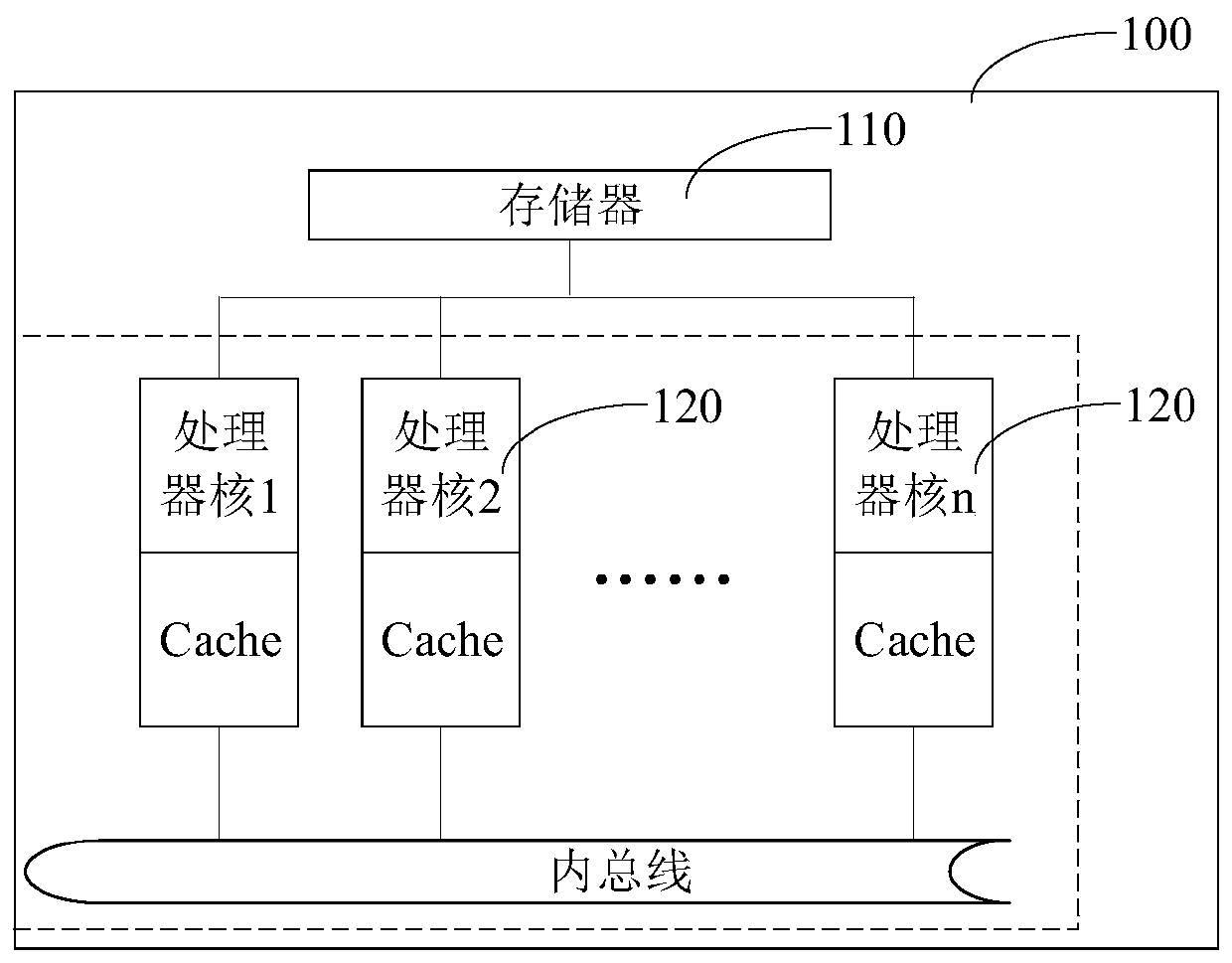

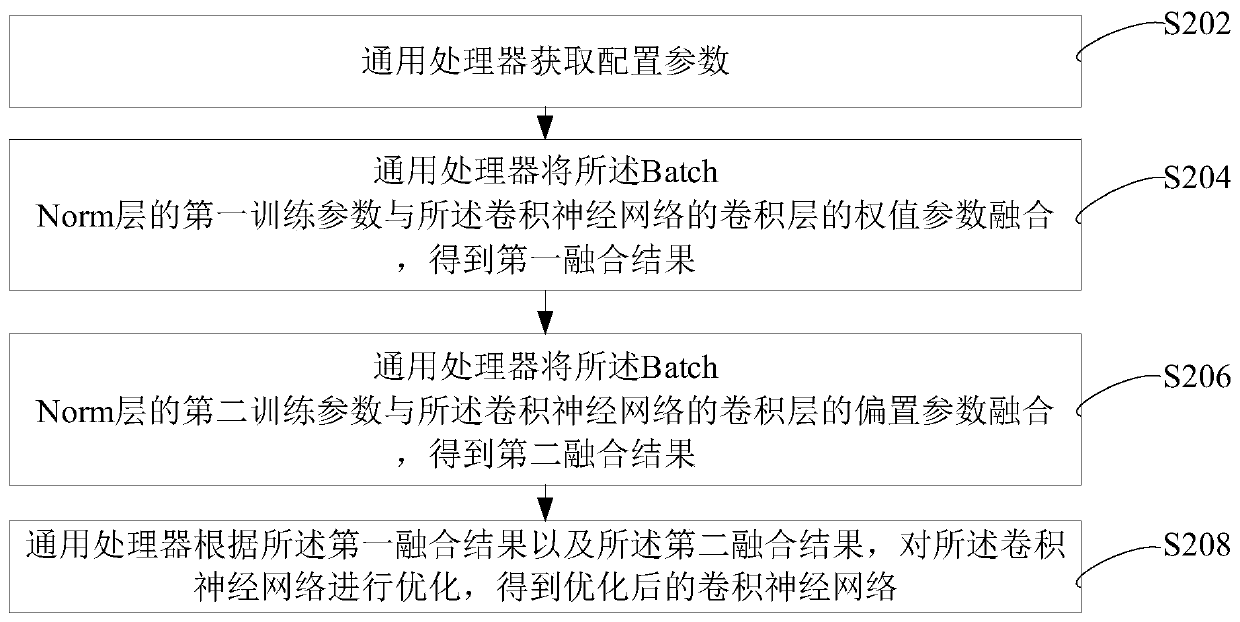

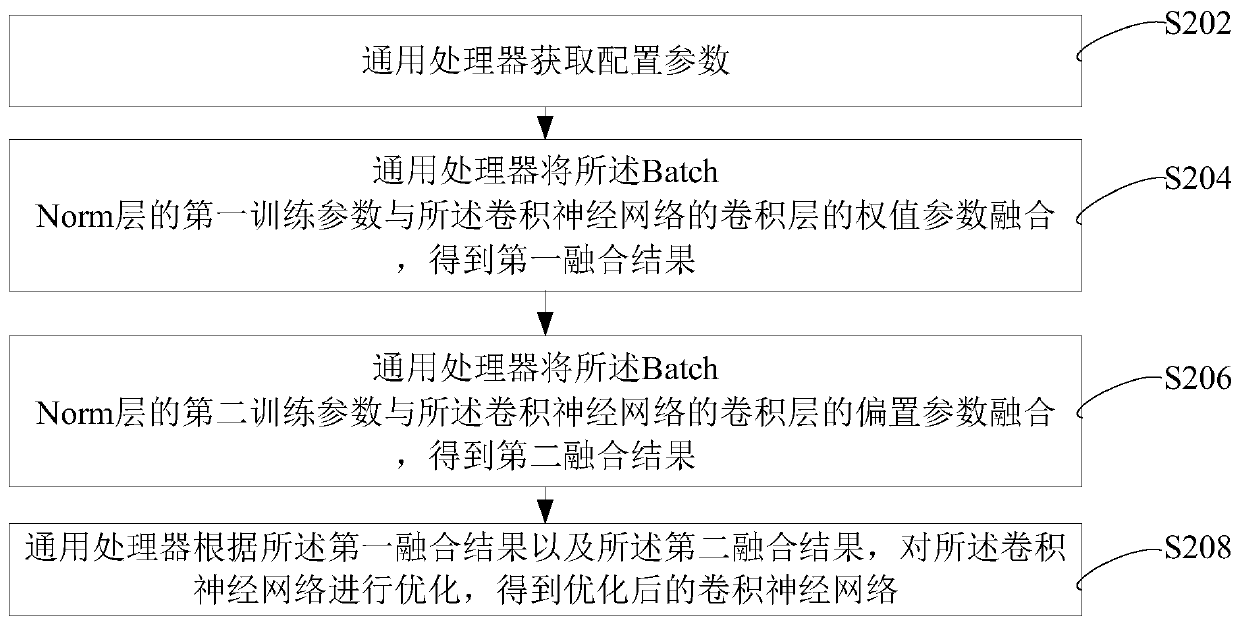

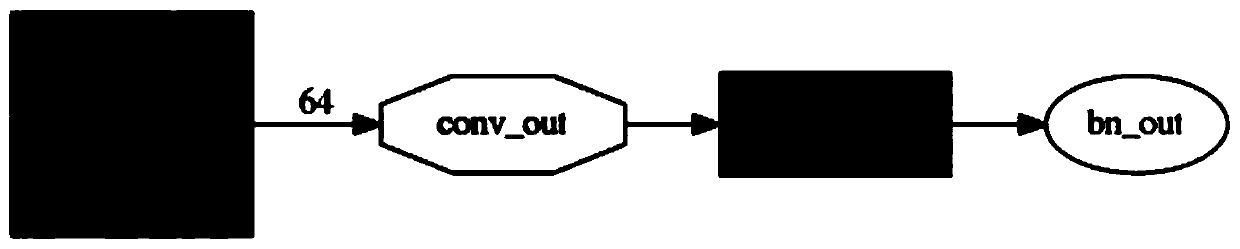

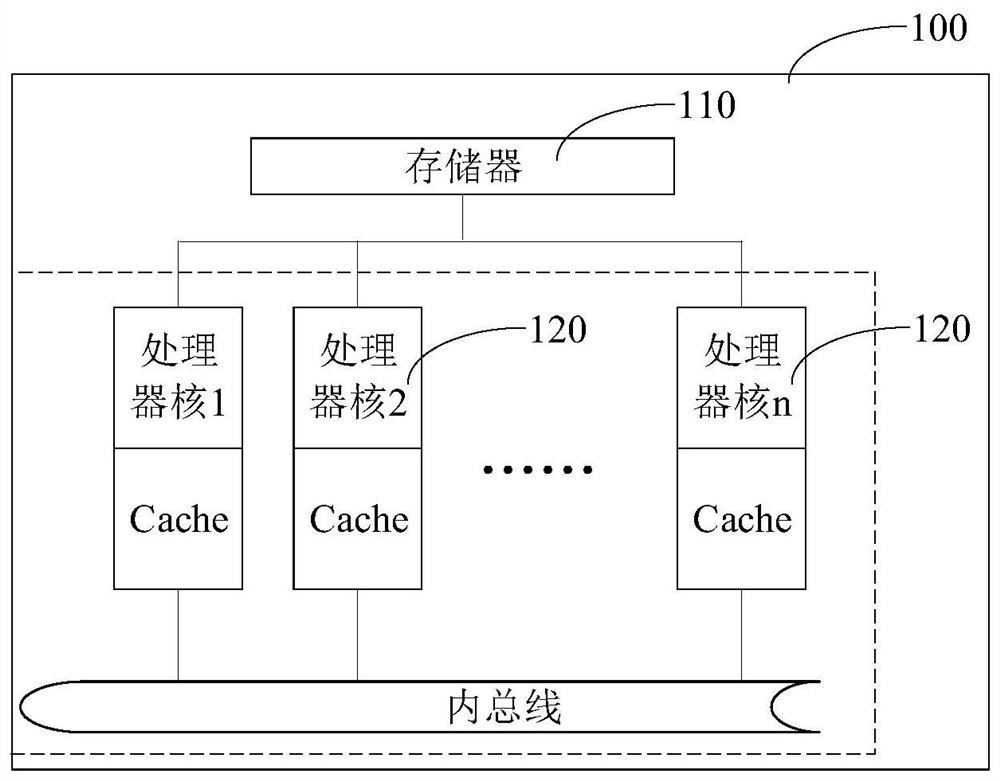

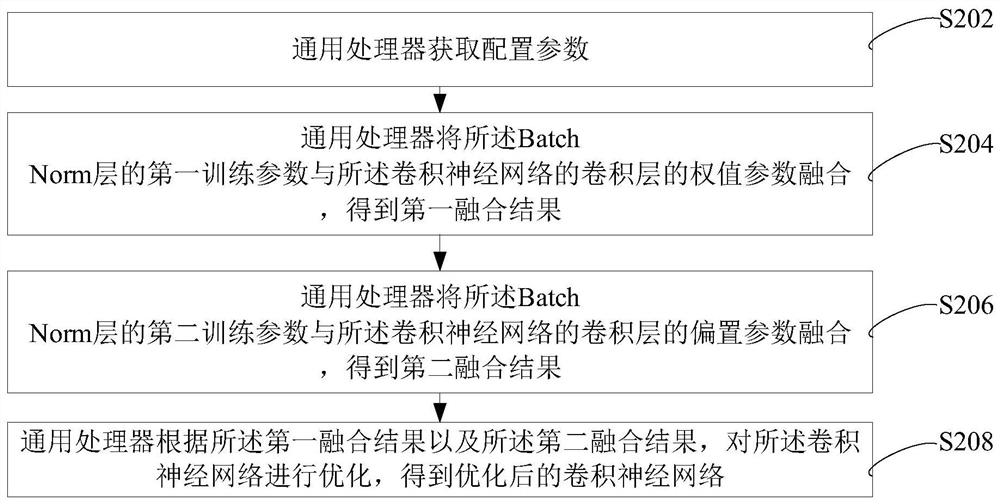

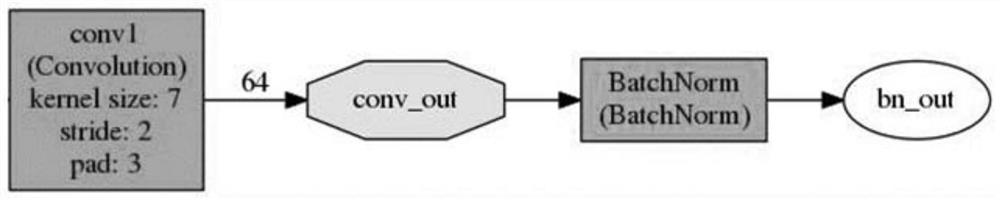

Learning task compiling method of artificial intelligence processor and related product

The invention relates to a method, a device, a storage medium and a system for compiling a learning task of an artificial intelligence processor. The method comprises: first, fusing a redundant neural network layer into a convolutional layer to optimize the convolutional neural network structure; and then compiling the learning task of the artificial intelligence processor based on the optimized convolutional neural network. Compiling learning tasks with this method is efficient, and data exchange during processing can be reduced when the learning task is executed on a device.

Owner:CAMBRICON TECH CO LTD
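
The abstract does not say which redundant layers are fused. One widely used example of this kind of optimization is folding a batch-normalization layer into the preceding convolution's weights and bias; the sketch below shows that technique under this assumption (per-output-channel parameters, plain Python lists), not Cambricon's actual compiler pass. The function name `fold_batchnorm` is hypothetical.

```python
import math


def fold_batchnorm(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold a batch-norm layer into the preceding convolution.

    weights[c] is the flat list of kernel weights for output channel c;
    bias, gamma, beta, mean and var are per-output-channel lists.
    Returns (new_weights, new_bias) such that conv'(x) == bn(conv(x)).
    """
    new_w, new_b = [], []
    for c, w in enumerate(weights):
        scale = gamma[c] / math.sqrt(var[c] + eps)
        new_w.append([x * scale for x in w])                 # rescale the kernel
        new_b.append((bias[c] - mean[c]) * scale + beta[c])  # shift the bias
    return new_w, new_b
```

Because the folded layer disappears from the graph, its intermediate output no longer has to be written out and read back, which is one plausible way such fusion reduces data exchange at run time.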

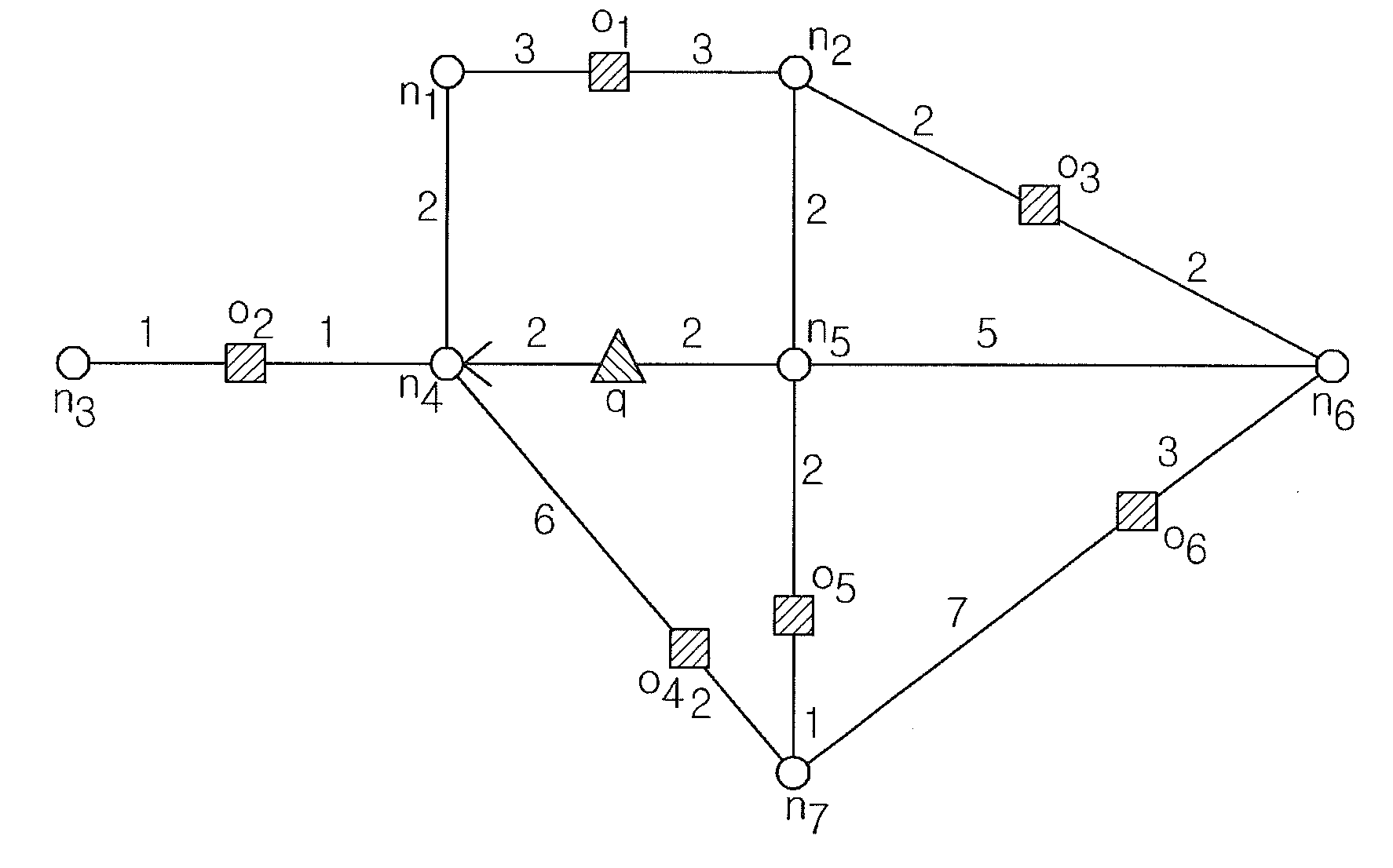

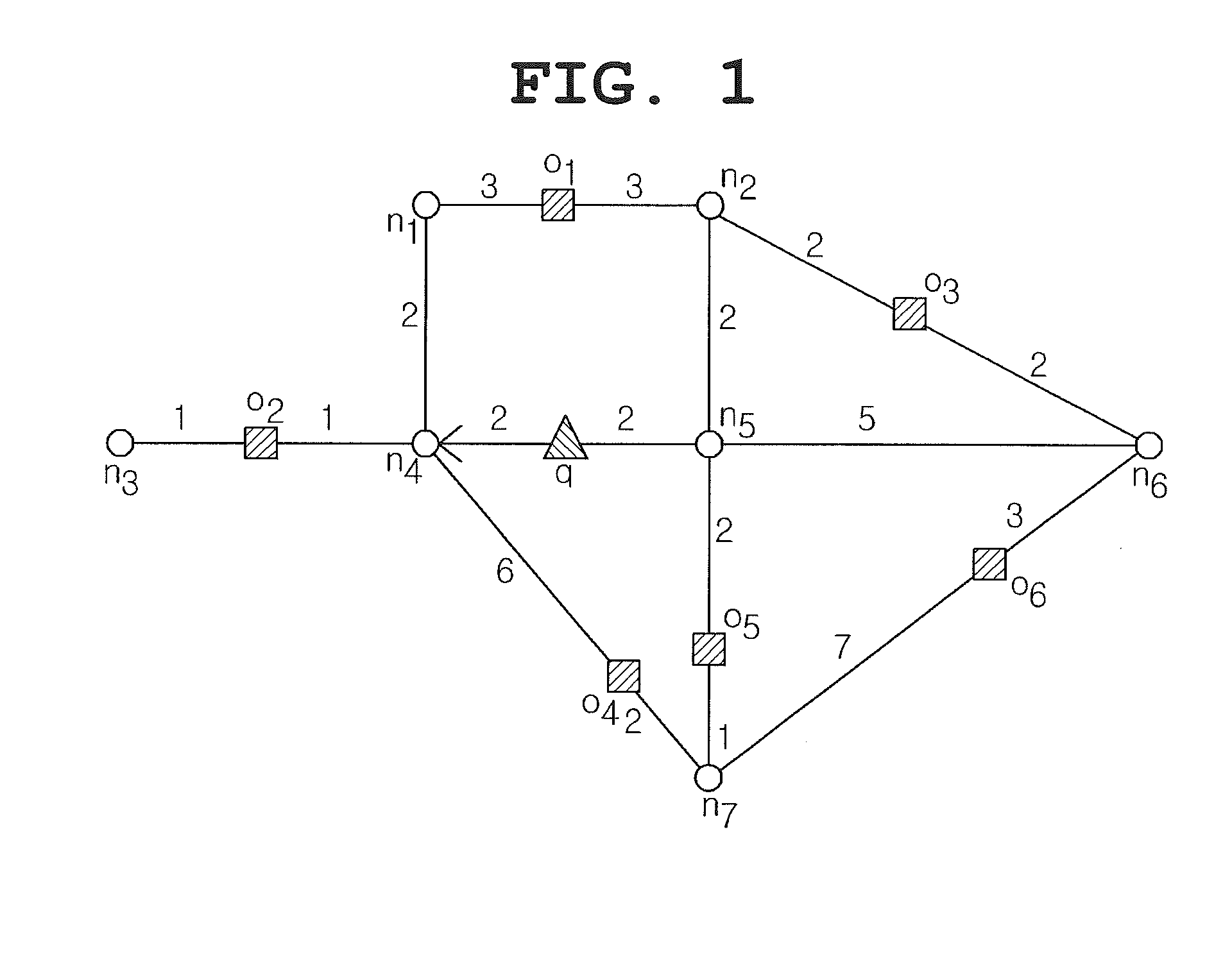

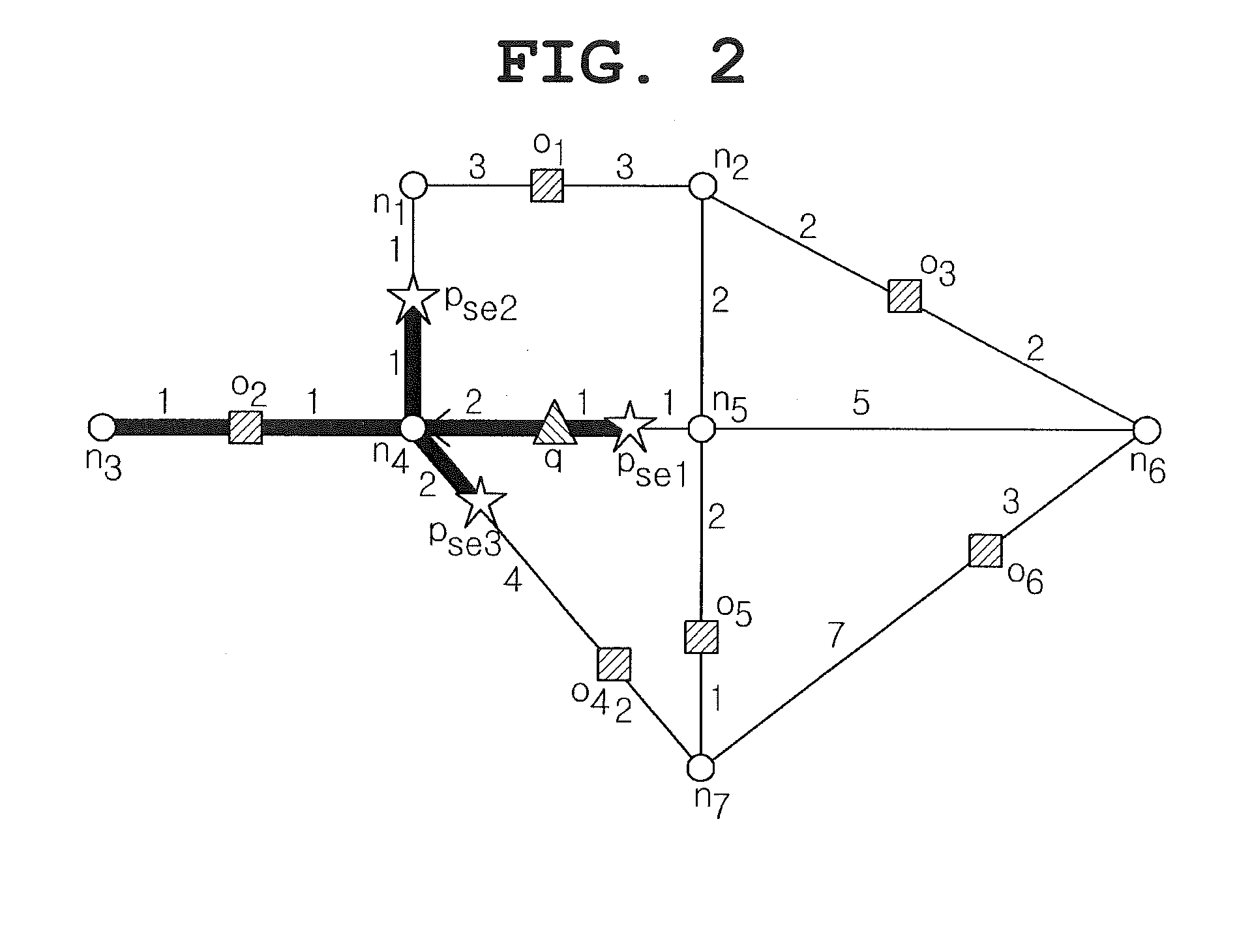

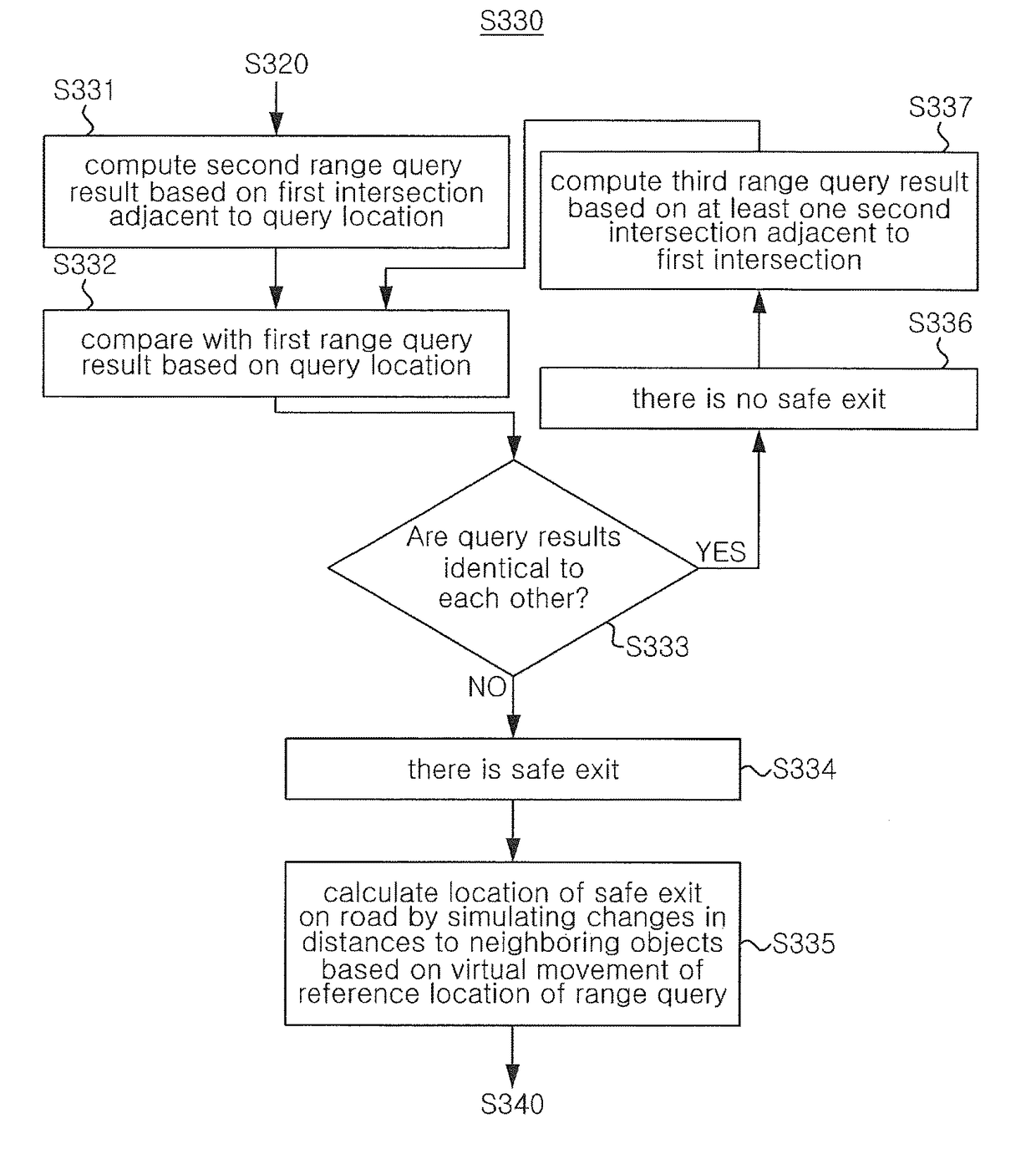

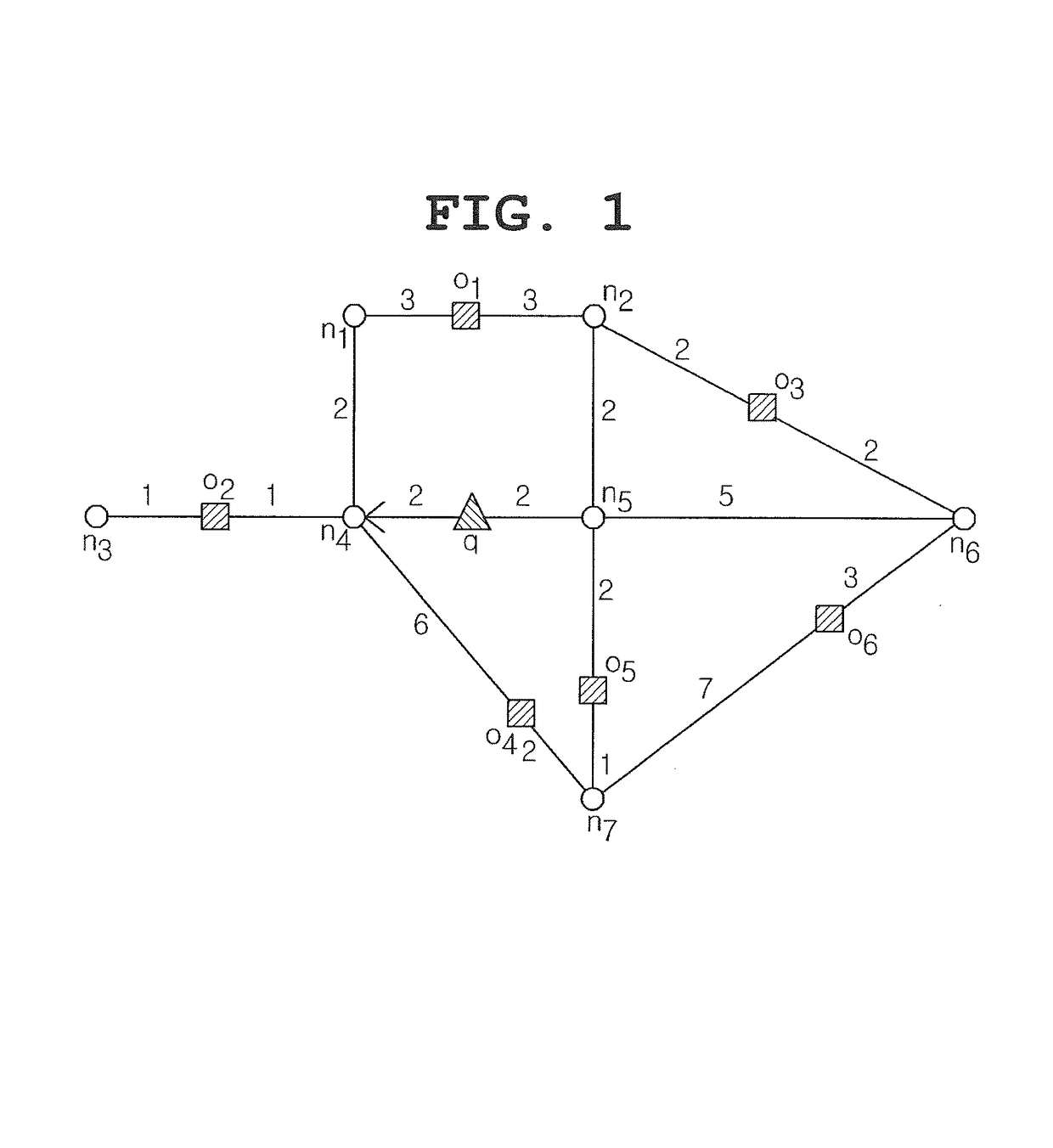

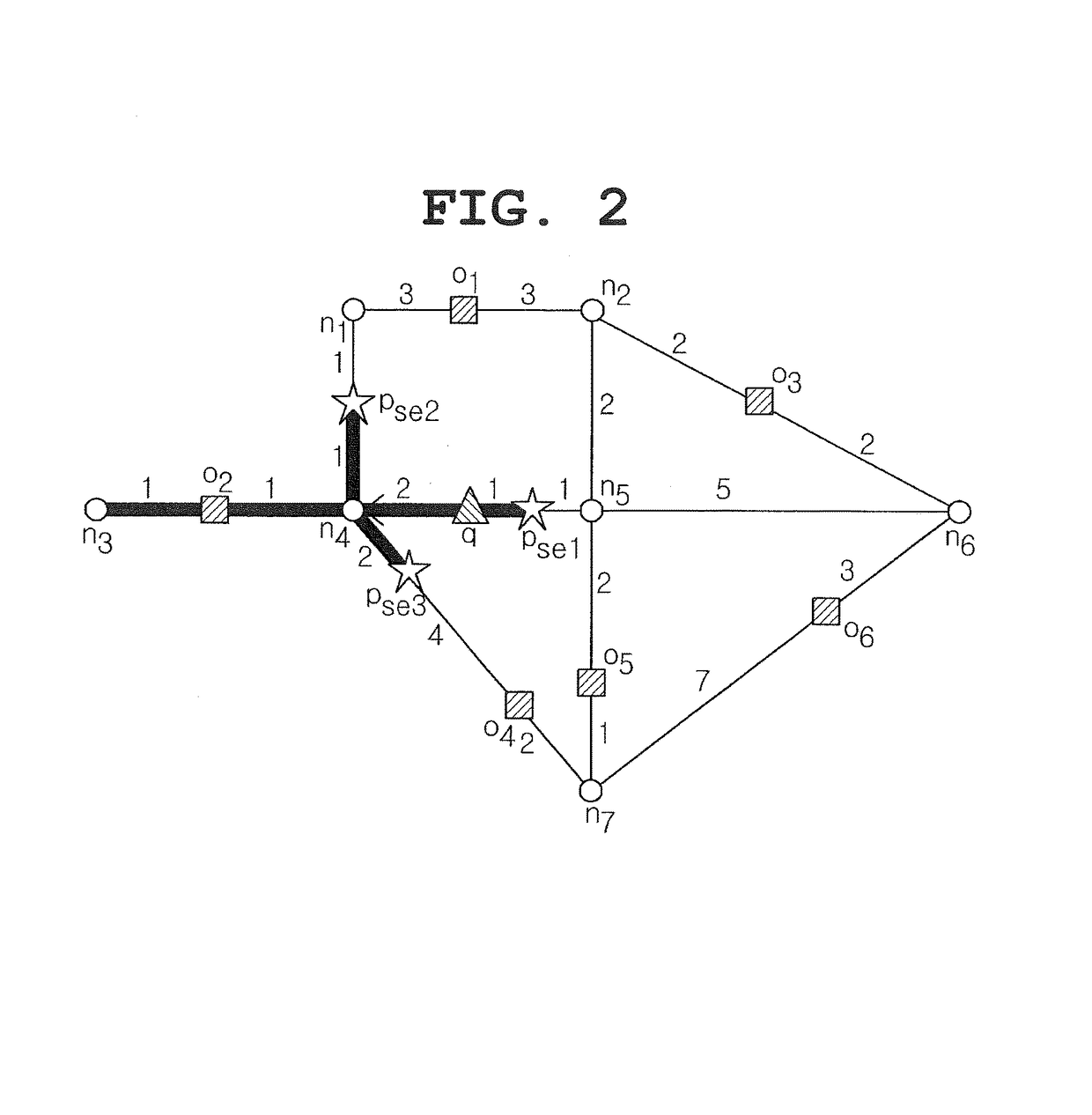

Method and apparatus of computing location of safe exit for moving range query in road network

Active | US20160076901A1 | Minimize communication, Low cost, Instruments for road network navigation, Road vehicles traffic control, Road networks, Real-time computing

The present invention relates to a method of computing the result of a moving range query and the locations of safe exits in a road network and, more particularly, to a method and apparatus that receive a range query request from a moving client terminal and provide a range query result, a safe region in which the range query result remains valid, and the locations of safe exits. In the method, a server provides a first query processing result together with information about a safe region within which the range query result stays identical to the first result, thereby minimizing communication between the server and the client terminal while the client terminal remains in the safe region.

Owner:AJOU UNIV IND ACADEMIC COOP FOUND
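
The communication saving comes from the client reusing its cached result while it stays inside the safe region. The sketch below models only that client-side rule, assuming a safe region represented as offset intervals on road-network edges whose endpoints play the role of safe exits; `MovingRangeClient` and the server callback are hypothetical, and computing the safe region itself is left to the server.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Position = tuple[str, float]             # (road edge id, offset along the edge)
Region = dict[str, tuple[float, float]]  # edge id -> (start, end); ends act as safe exits


@dataclass
class MovingRangeClient:
    """Hypothetical client that contacts the server only when leaving its safe region."""
    query_server: Callable[[Position], tuple[set, Region]]
    result: Optional[set] = None
    safe_region: Optional[Region] = None
    requests: int = 0

    def _inside(self, pos: Position) -> bool:
        # True if the position lies on a covered edge within the allowed offsets.
        if self.safe_region is None:
            return False
        span = self.safe_region.get(pos[0])
        return span is not None and span[0] <= pos[1] <= span[1]

    def update(self, pos: Position) -> set:
        # Reuse the cached result inside the safe region; otherwise ask the server.
        if self.result is None or not self._inside(pos):
            self.result, self.safe_region = self.query_server(pos)
            self.requests += 1
        return self.result
```

Every position update inside the region is answered locally, so the number of server requests depends on how often the client crosses a safe exit rather than on how often it reports its location.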

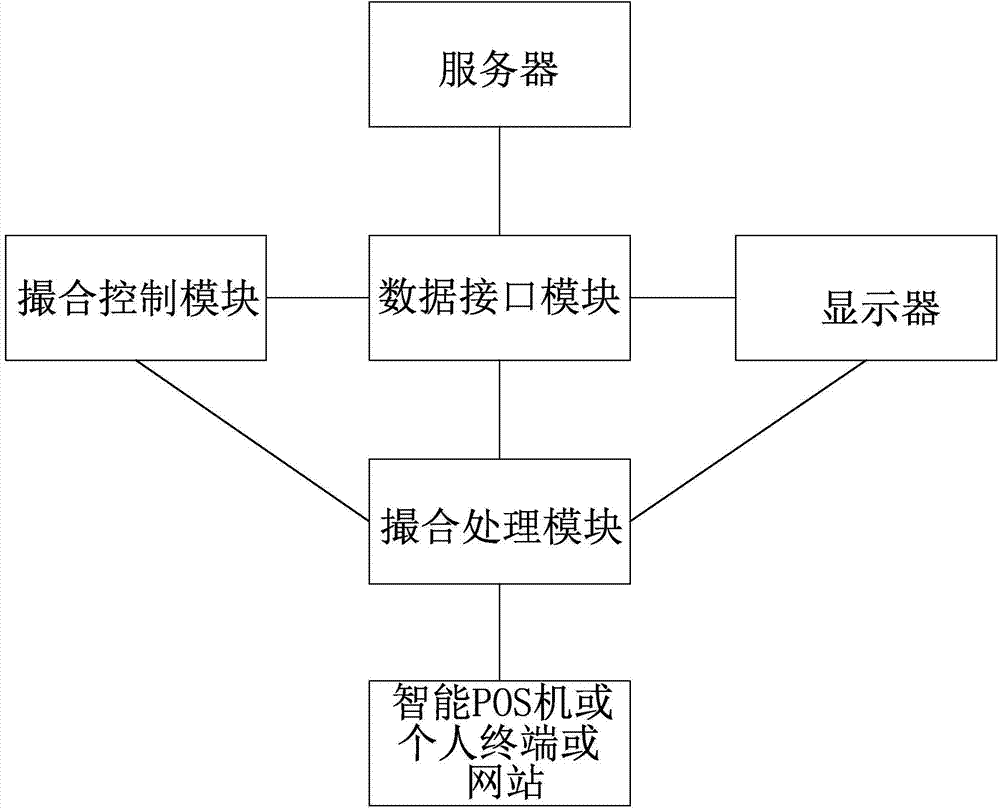

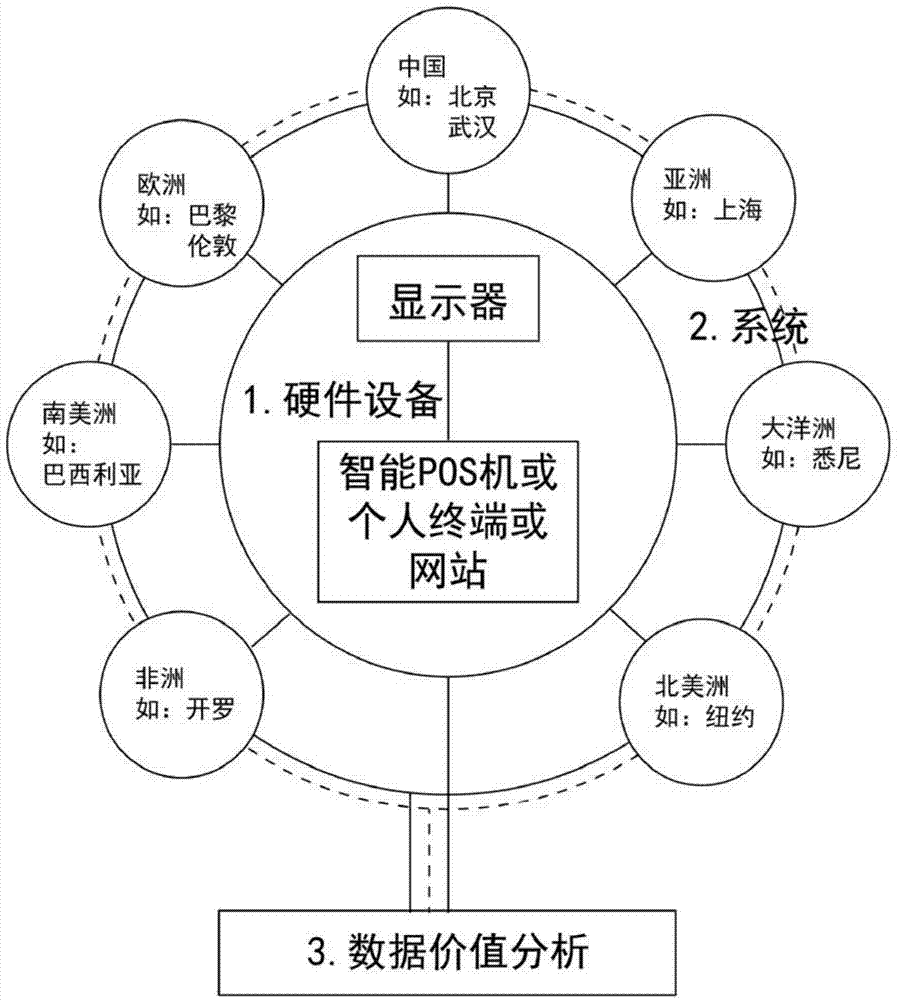

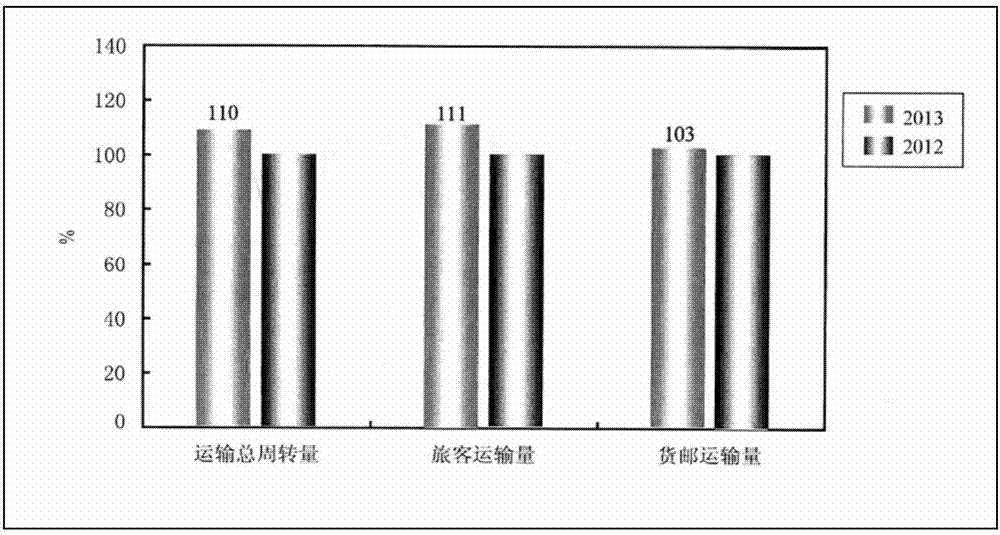

Method for trading products in matching system in price guessing manner

Inactive | CN104778617A | Reduce the verification process, Increase occupancy, Buying/selling/leasing transactions, The Internet, Data acquisition

The invention provides a method for trading products in a matching system in a price-guessing manner. Product information is acquired in real time by a data acquisition module of the matching system, which is either pre-installed on an intelligent POS (point-of-sale) machine or downloaded by a passenger onto a personal mobile terminal via the Internet, and the passenger trades products in the matching system according to that information. The product information includes the price range of each product; according to these price ranges, the passenger enters one or more bids in the matching system through an input device and, after confirming that the bids are correct, uploads them to a data storage module of the matching system. The benefits of the method are as follows: particularly for seat-upgrade trades at the boarding gate, the seat utilization rate of each flight is increased, the vacancy rate is decreased, and the marginal income is increased.

Owner:陈雨淅

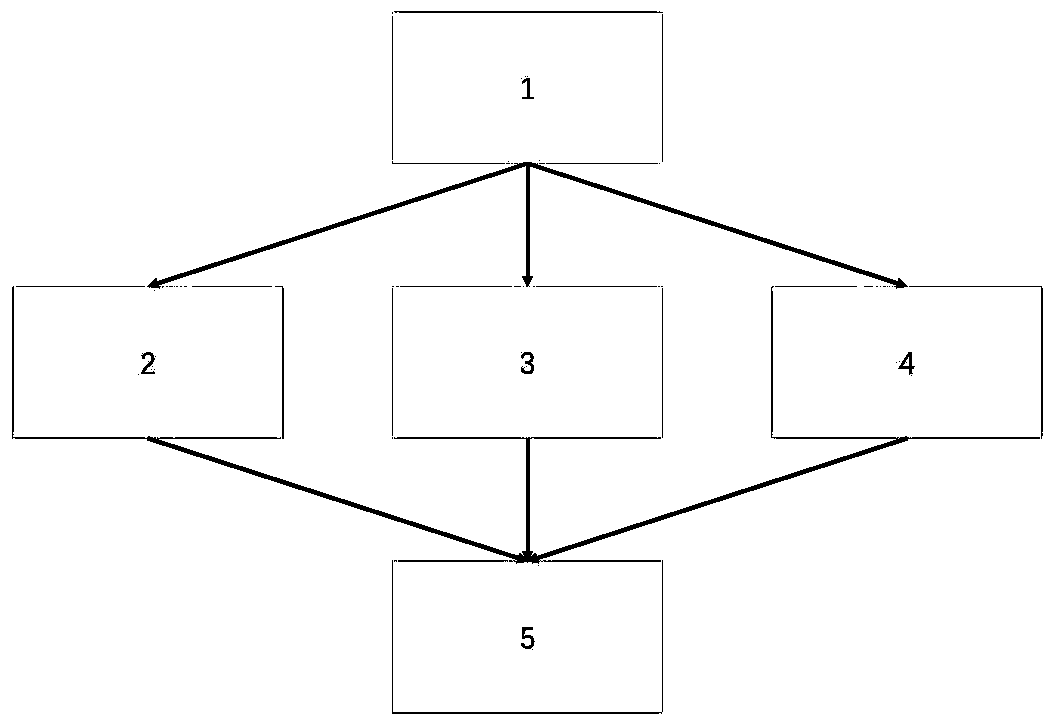

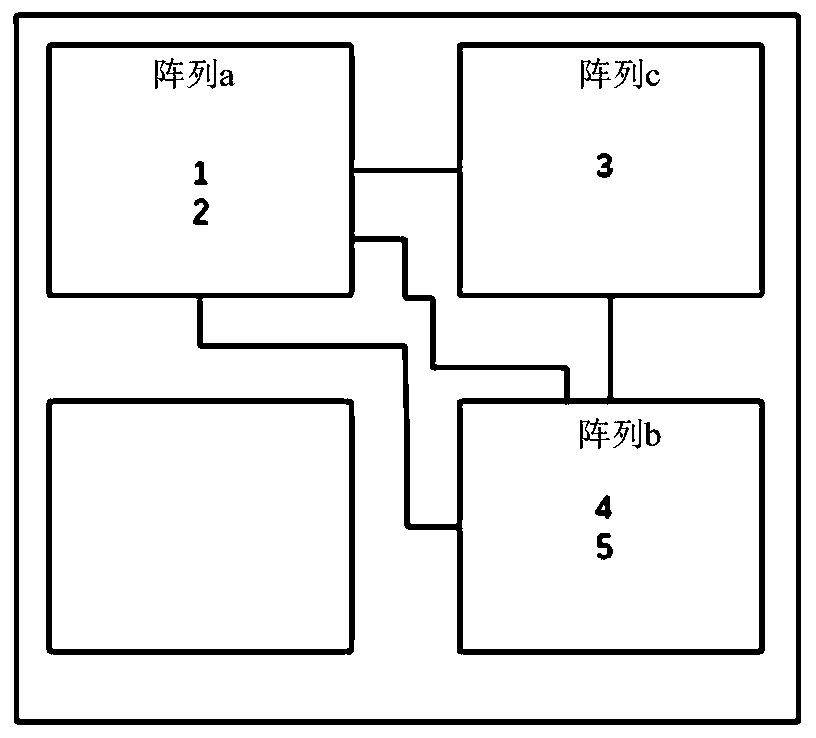

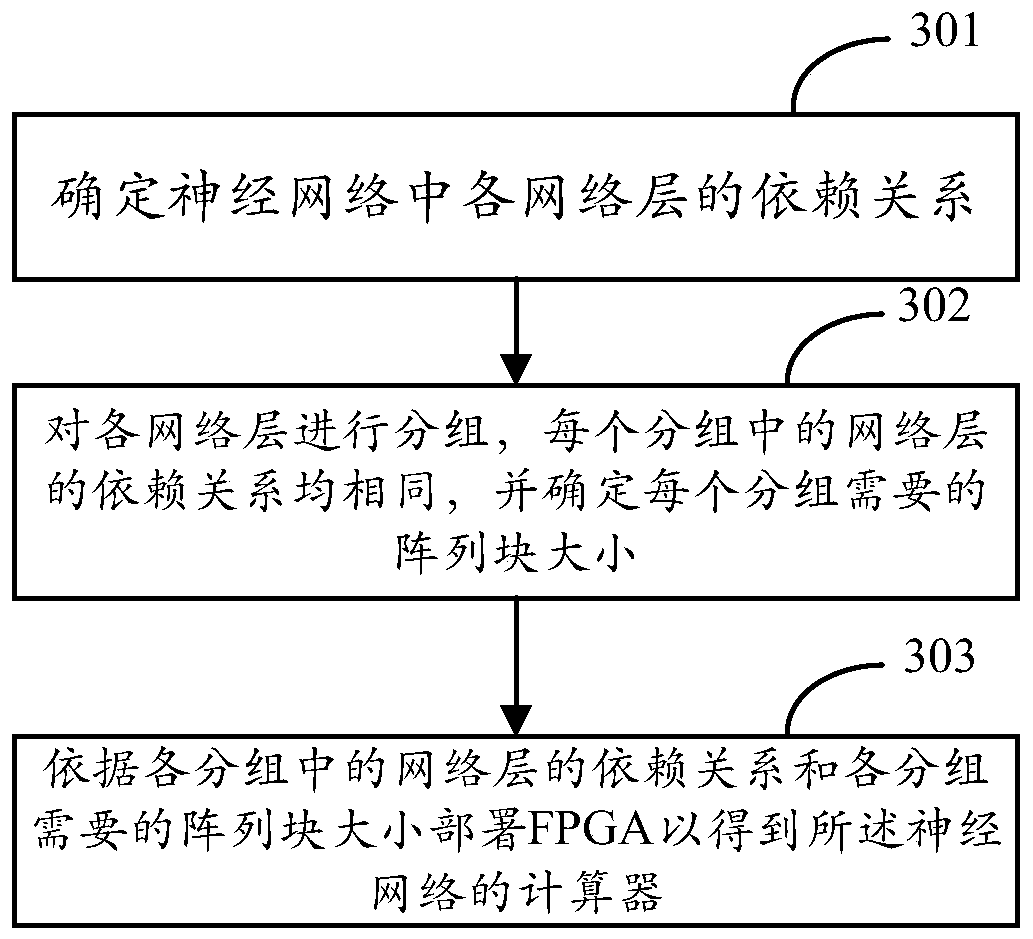

FPGA-based neural network calculator generation method and device

Pending | CN111027688A | Reduce data exchange, Shorten the length, Physical realisation, Computer simulations, PathPing, Concurrent computation

The invention discloses an FPGA-based neural network calculator generation method and device. The method comprises the steps of: determining the dependency relationship of each network layer in a neural network; grouping the network layers so that the layers in each group have the same dependency relationship; determining the size of the array block required by each group; and deploying the FPGA according to the dependency relationship of the network layers in each group and the array-block size required by each group, so as to obtain the calculator of the neural network. By analyzing the dependency relationships of the neural network, the method places network layers that do not depend on each other (i.e., that have the same dependency relationship) in one group, so that they can be computed in parallel in the array blocks allocated to them; this effectively shortens the critical path length and improves computing efficiency. In addition, the array blocks required by each group are positioned on the FPGA according to the dependency relationship, which reduces data exchange between arrays and further improves computing efficiency.

Owner:HANGZHOU WEIMING XINKE TECH CO LTD +1
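
One way to read "layers with the same dependency relationship" is to group layers by their depth in the layer dependency graph: a layer always sits deeper than every layer it consumes, so layers at equal depth cannot depend on each other and can run in parallel. The sketch assumes an acyclic graph given as a dict of hypothetical layer names; it is an illustration, not the patented grouping rule, and it does not model array-block sizing or placement.

```python
from collections import defaultdict


def group_layers(deps: dict[str, list[str]]) -> list[list[str]]:
    """Group layers by dependency depth; layers within a group are independent.

    deps maps every layer to the layers whose outputs it consumes.
    The graph is assumed to be acyclic (a cycle would recurse forever).
    """
    depth: dict[str, int] = {}

    def level(layer: str) -> int:
        # Depth = 1 + deepest producer; layers with no producers have depth 0.
        if layer not in depth:
            depth[layer] = 1 + max((level(p) for p in deps[layer]), default=-1)
        return depth[layer]

    groups: dict[int, list[str]] = defaultdict(list)
    for layer in deps:
        groups[level(layer)].append(layer)
    return [groups[d] for d in sorted(groups)]
```

For a small branch-and-merge network, the two parallel branches land in the same group, and the number of groups equals the length of the critical path through the network.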

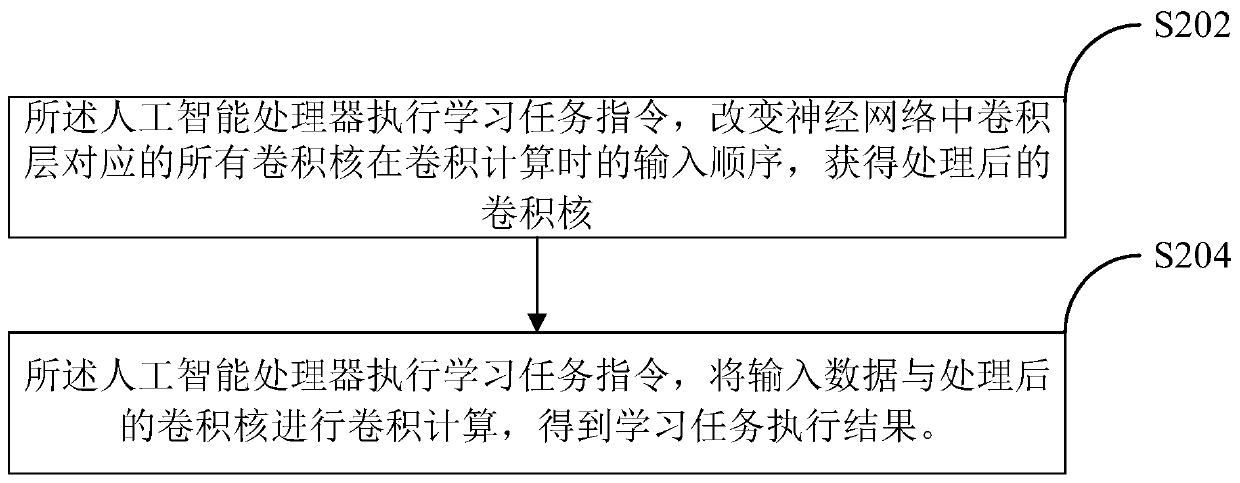

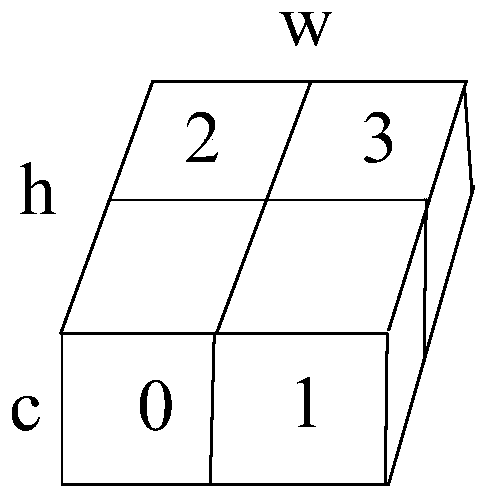

Method for executing learning tasks by artificial intelligence processor and related product

Pending | CN111325339A | Simple structure, Reduce in quantity, Neural architectures, Physical realisation, Algorithm, Neural network nn

The invention relates to a method for an artificial intelligence processor to execute a learning task, and a related product. The method comprises the steps of: the artificial intelligence processor executing a learning task instruction and, during convolution calculation, changing the input order of all convolution kernels corresponding to a convolutional layer in the neural network to obtain processed convolution kernels; and the artificial intelligence processor executing the learning task instruction and performing convolution calculation on the input data with the processed convolution kernels to obtain the learning task execution result.

Owner:SHANGHAI CAMBRICON INFORMATION TECH CO LTD

Learning task compiling method of artificial intelligence processor and related product

Active | CN110766146A | Simple structure, Reduce in quantity, Neural architectures, Neural learning methods, Engineering, Data exchange

The invention relates to a learning task compiling method of an artificial intelligence processor and a related product. The method comprises the following steps: first, fusing a redundant neural network layer into a convolutional layer to optimize the convolutional neural network structure; and then compiling a learning task of the artificial intelligence processor based on the optimized convolutional neural network. Compiling learning tasks with this method is efficient, and data exchange during processing can be reduced when the learning task is executed on a device.

Owner:CAMBRICON TECH CO LTD

A traffic adaptive control method and device

Active | CN103778791B | Shorten the time, Reduce the number of stops, Controlling traffic signals, Engineering, Signal light

An adaptive traffic control method and device, the method comprising: acquiring a first-type traffic status parameter corresponding to the traffic status of the first straight-ahead phase in the green wave direction of a current intersection (11); acquiring a second-type traffic status parameter corresponding to the traffic status of the first straight-ahead phase in the green wave direction of an upstream intersection (12); determining the first green light turn-on time of the first straight-ahead phase of the current intersection according to the first-type and second-type traffic status parameters (13); determining the first green light duration corresponding to the first straight-ahead phase of the current intersection according to the first-type and second-type traffic status parameters (14); and turning on the green light of the first straight-ahead phase of the current intersection at the first green light turn-on time and holding it for the first green light duration (15). By adjusting the green light turn-on time and duration of the signal lights, the method enables as many vehicles as possible to pass through the multiple signalized intersections of the green wave band without stopping.

Owner:ZTE CORP

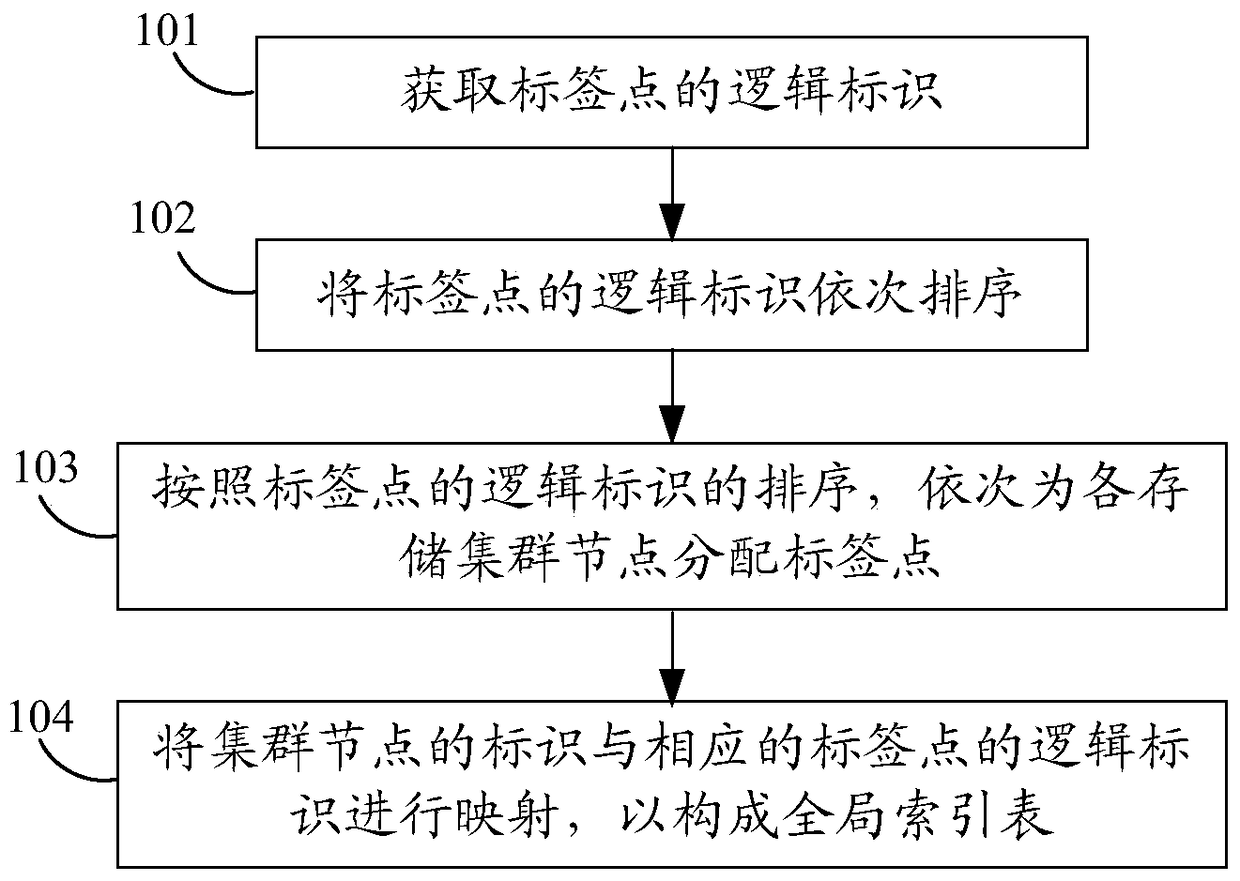

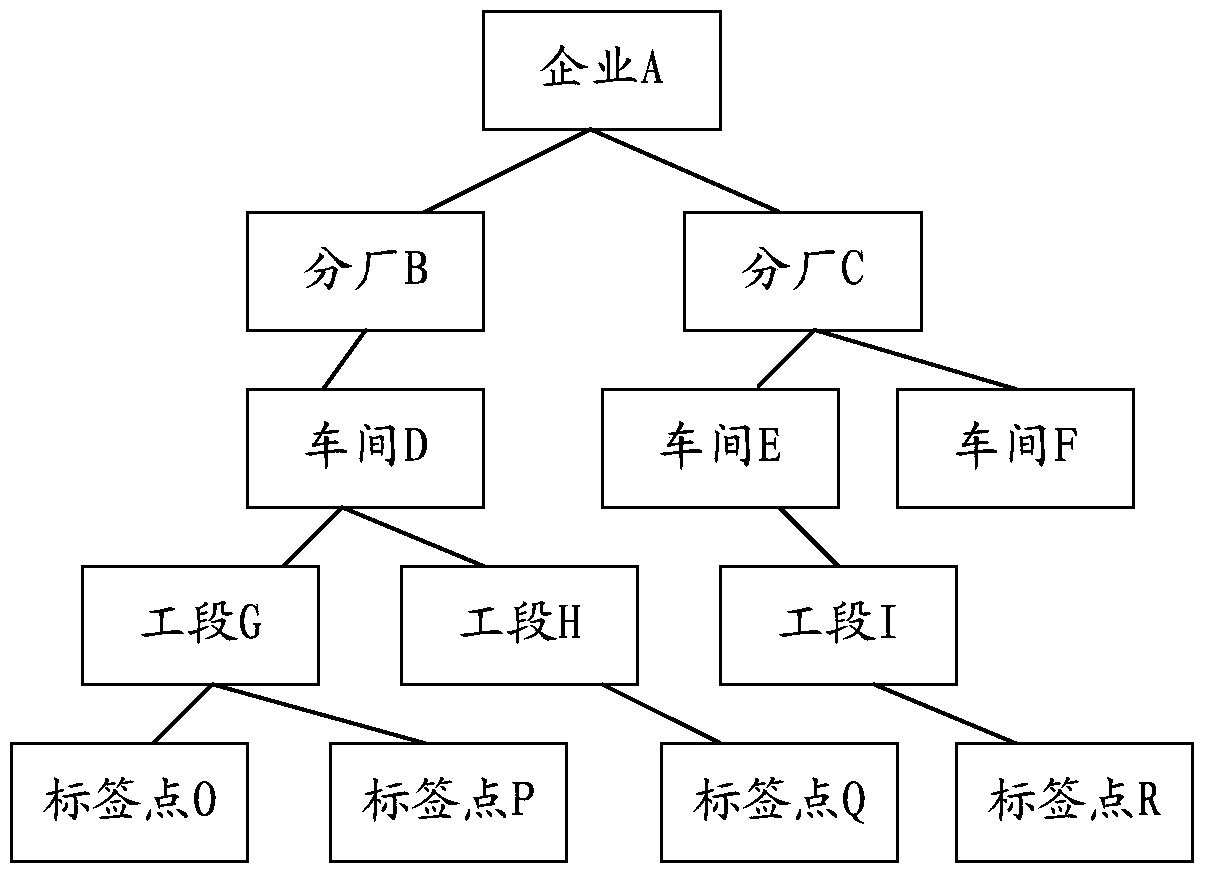

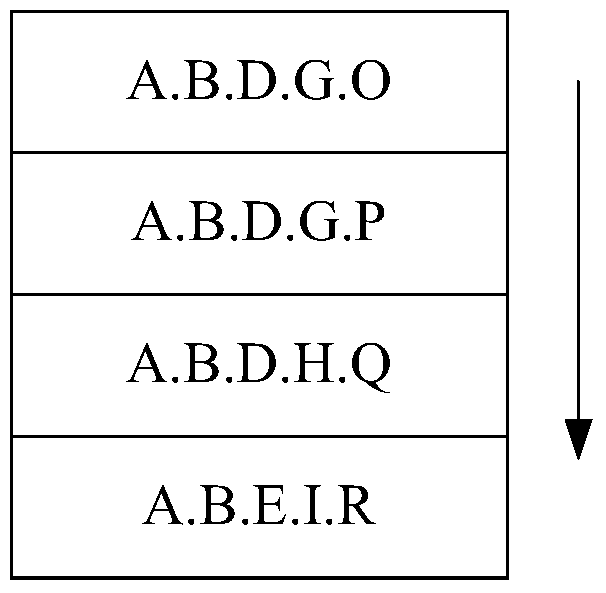

A method and system for global indexing

Active | CN105160002B | Improve continuity, Reduce instability, Other databases indexing, Special data processing applications, Instability, Computation process

The invention provides a global indexing method comprising the following steps: obtaining the logic identifiers of tag points, where the logic identifier of a tag point is formed, from top to bottom, by the actual identifiers of each entity in the factory logic structure that contains the tag point; sorting the logic identifiers of the tag points in sequence; allocating the tag points to the storage cluster nodes in the order of their logic identifiers; and mapping the identifier of each cluster node to the logic identifiers of its tag points to form a global index table. The method effectively improves the spatial continuity of tag-point storage, reduces the data exchanged among multiple storage nodes by a database collector or a secondary computation process, and lowers the instability of the network transmission load and business traffic. The resulting global index table, which maps storage cluster node identifiers to tag-point logic identifiers, effectively improves data-use efficiency.

Owner:ZHEJIANG SUPCON TECH
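
The indexing steps can be illustrated with hierarchical identifiers represented as tuples. Sorting them keeps tags of the same plant unit adjacent, and assigning contiguous slices of the sorted list to nodes keeps related tags on the same node. Equal-sized contiguous slices, the `/` separator and the function name `build_global_index` are assumptions made for this sketch.

```python
def build_global_index(tag_paths: list[tuple[str, ...]], nodes: list[str]) -> dict[str, str]:
    """Map each tag's logic identifier to a storage node (hypothetical sketch).

    tag_paths holds top-to-bottom identifiers, e.g. ("plant1", "area2", "pump3", "temp").
    Sorting keeps tags of the same equipment adjacent; each node receives one
    contiguous slice of the sorted list, so related tags tend to share a node.
    """
    ordered = sorted(tag_paths)
    per_node = -(-len(ordered) // len(nodes))  # ceiling division: tags per node
    index: dict[str, str] = {}
    for i, path in enumerate(ordered):
        index["/".join(path)] = nodes[i // per_node]
    return index
```

A collector that reads all tags of one area then usually touches a single node, which is the kind of cross-node traffic reduction the abstract describes.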

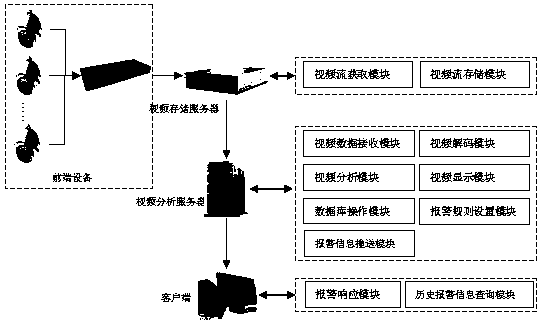

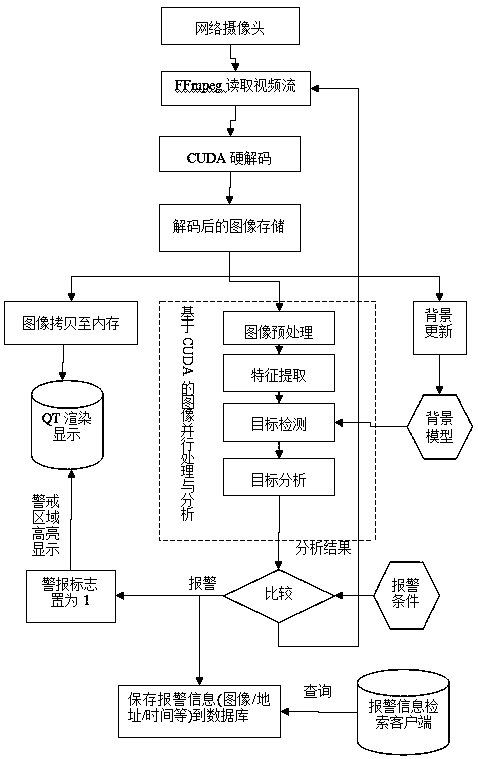

An intelligent video analysis and monitoring system

Active | CN108040221B | With cruise function, Reduce occupancy, Television system details, Color television details, Video storage, Alarm message

The invention provides an intelligent video analysis and monitoring system. A front-end device is connected to a video storage server, the video storage server is connected to a video analysis server, and the video analysis server is connected to a client. The front-end device comprises a webcam and a switch; the video storage server comprises a video stream acquisition module and a video storage module; the video analysis server comprises a video data receiving module, a video decoding module, a video analysis module, a video display module, an alarm rule setting module, a database operation module and an alarm information push module; and the client comprises a historical alarm information query module and an alarm response module. The video analysis module uses CUDA hardware decoding and a CUDA-based parallel image processing method to analyze images, which effectively reduces CPU resource occupancy; the parallel processing also accelerates the analysis, effectively reducing analysis time and supporting real-time analysis.

Owner:JIANGXI HONGDU AVIATION IND GRP

Method and apparatus of computing location of safe exit for moving range query in road network

Active | US9752888B2 | Effective information, Reduce data exchange, Instruments for road network navigation, Road networks, Real-time computing

Owner:AJOU UNIV IND ACADEMIC COOP FOUND

Learning task compiling method of artificial intelligence processor and related product

Active | CN110889497A | Simple structure, Reduce in quantity, Neural architectures, Machine learning, Engineering, Data exchange

The invention relates to a learning task compiling method of an artificial intelligence processor and a related product. The method comprises the following steps: first, fusing a redundant neural network layer into a convolutional layer to optimize the convolutional neural network structure; and then compiling a learning task of the artificial intelligence processor based on the optimized convolutional neural network. Compiling learning tasks with this method is efficient, and data exchange during processing can be reduced when the method is executed on a device.

Owner:CAMBRICON TECH CO LTD

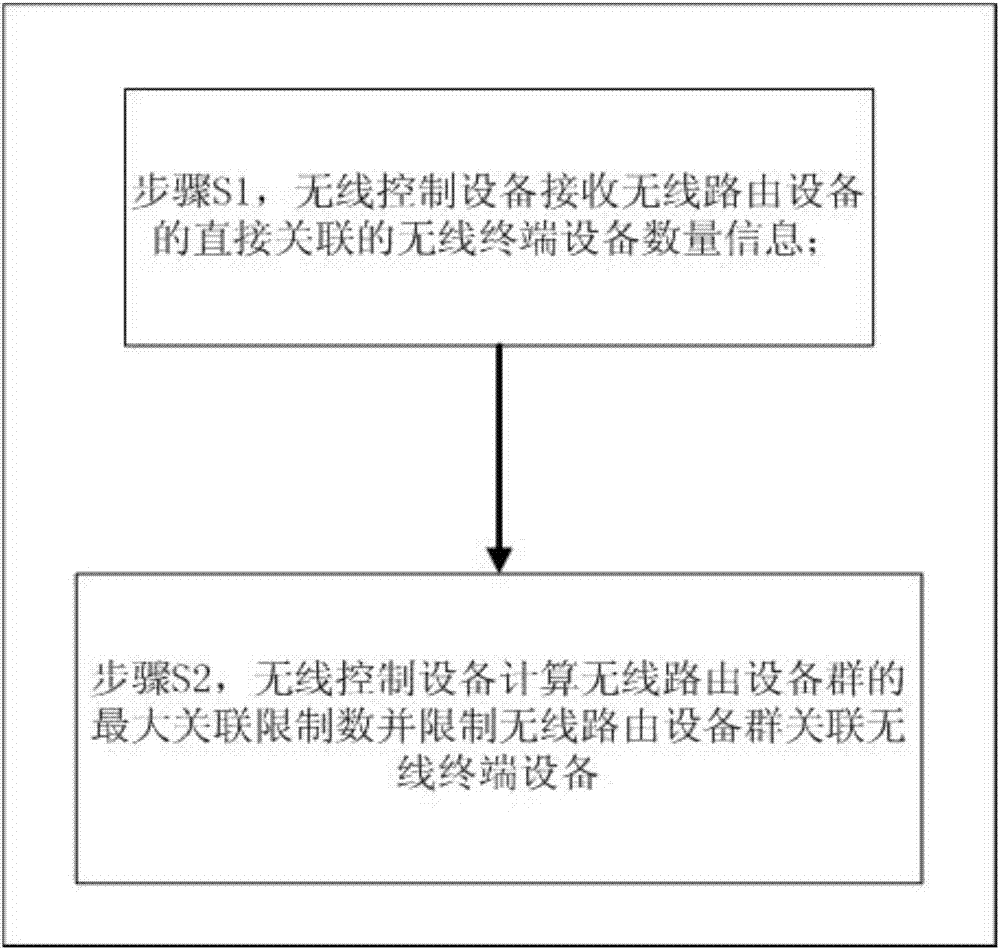

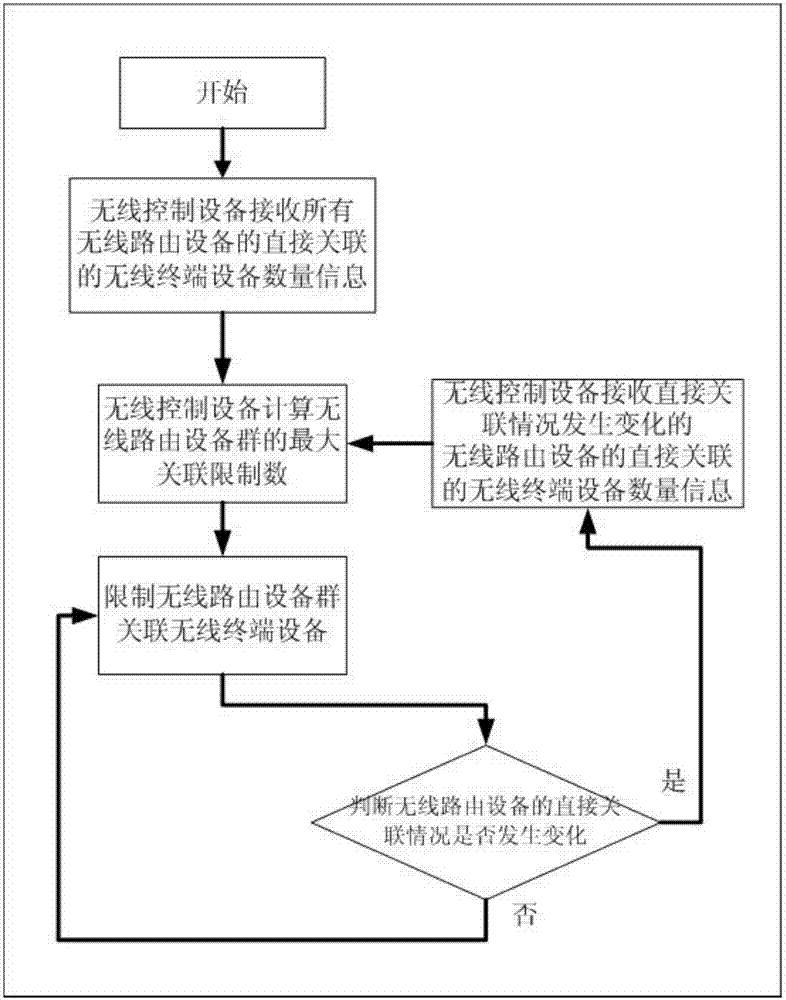

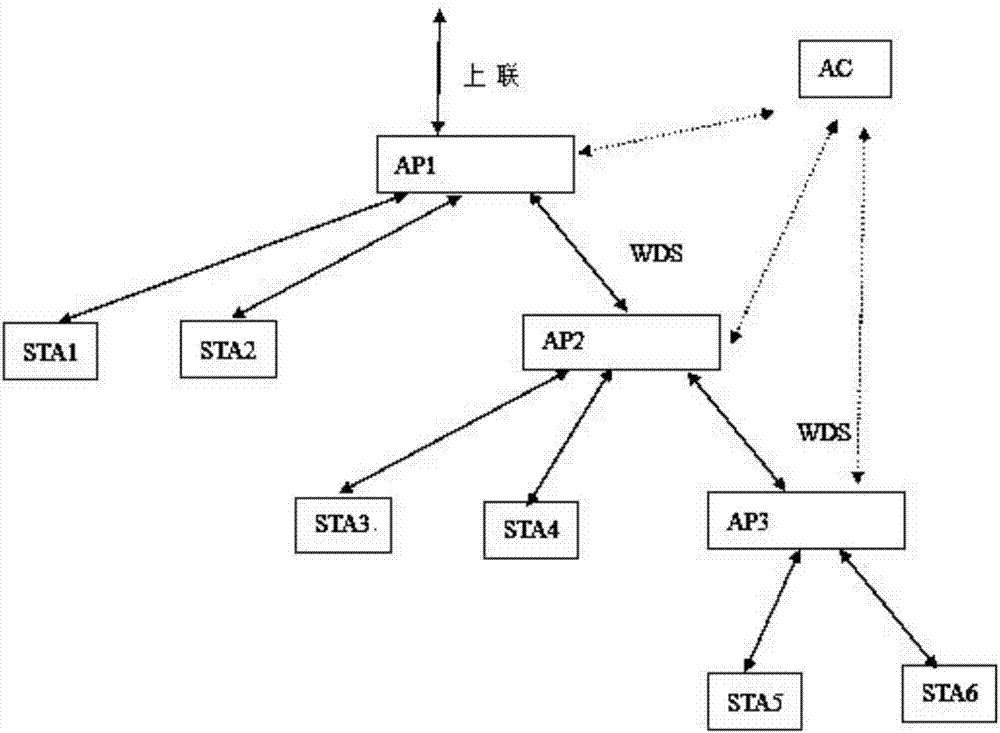

Association number limiting method and system based on bridging function of wireless distribution system

Inactive | CN107148065A | Avoid congestion, Solve problems such as congestion, Assess restriction, Network topologies, Local area network, Controllability

The invention relates to the technical field of wireless local area network processing, and in particular to an association number limiting method and system based on the bridging function of a wireless distribution system. The method comprises the steps of: S1, wireless control equipment receives information on the number of wireless terminal devices directly associated with each wireless routing device in a wireless routing equipment group formed through the bridging function of the wireless distribution system; and S2, according to the number of wireless terminal devices directly associated with each wireless routing device in step S1, the wireless control equipment calculates the maximum association limit of the wireless routing equipment group and uses it to limit the number of wireless terminal devices that the group may associate with. The method offers high controllability, is easy to implement, is highly fault-tolerant, and has a good limiting effect.

Owner:PHICOMM (SHANGHAI) CO LTD

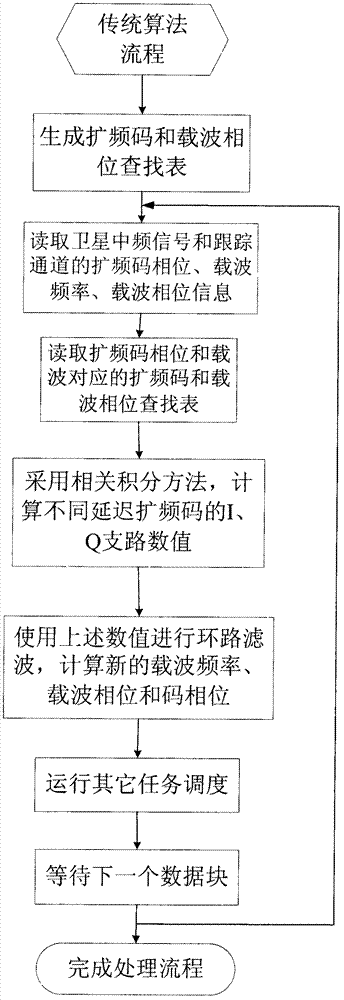

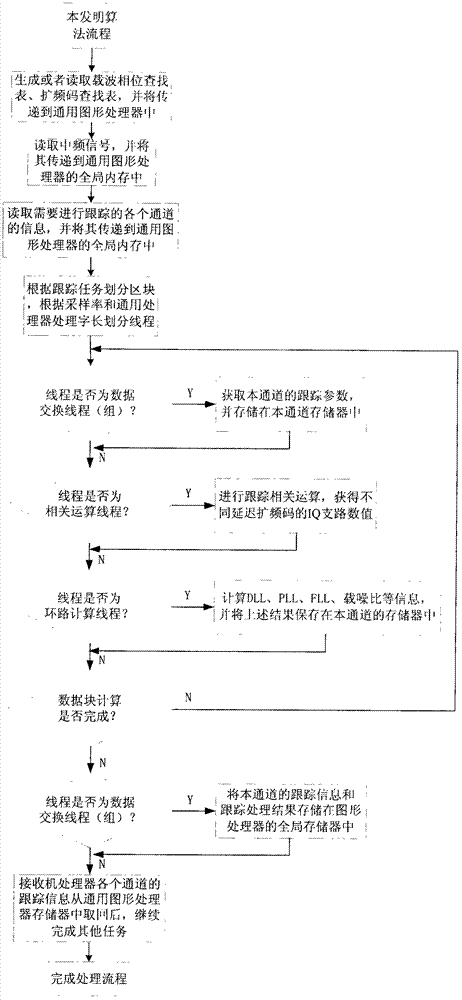

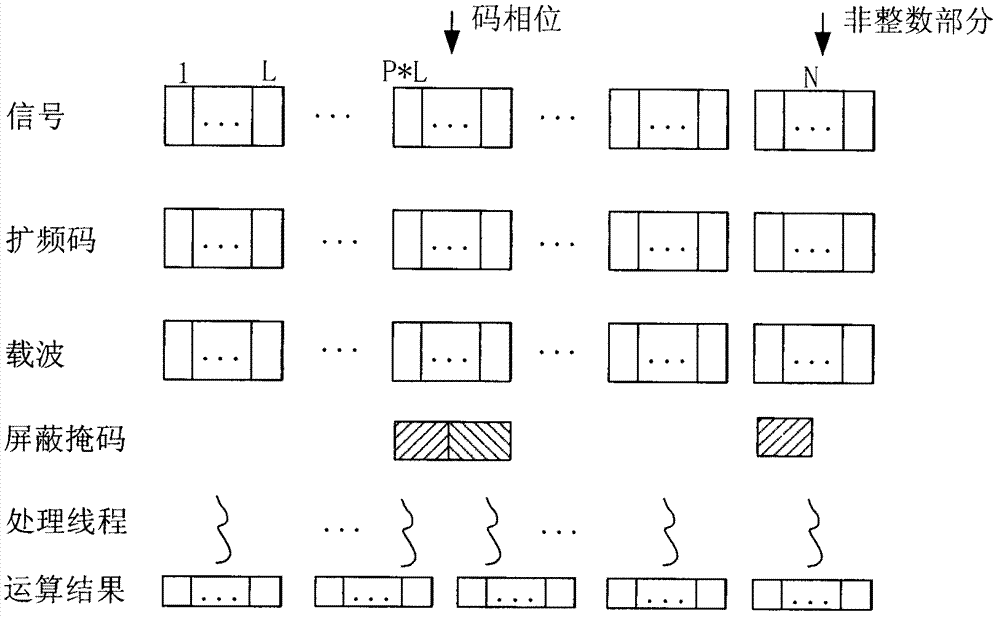

Universal graphic processor based bit compression tracking method for satellite navigation system

Inactive | CN102176033B | Speed up task scheduling, High speed, Satellite radio beaconing, Graphics, Computer science

The invention provides a bit-compression tracking method for a satellite navigation system based on a general-purpose graphics processor (GPGPU). According to the number of tracking channels of the satellite navigation system, the tracking task of each channel is assigned to one block of the general-purpose graphics processor; meanwhile, according to the system sampling rate N and the processing field length L of the processor, every L sampling points are bit-compressed and assigned to one thread. The L sampling points at a code-phase jump point, and the final group of fewer than L sampling points, are each processed separately by a dedicated thread. All threads together complete the correlation calculations of the tracking process, which comprises four steps: data bit alignment, mask calculation, segmented correlation, and vector subtraction and summation. In each block, one thread computes the tracking loop from the summed result and adjusts the spreading-code phase, the carrier frequency and the carrier phase. The invention thus achieves efficient tracking computation for the satellite navigation system by exploiting the parallel computing capability of the general-purpose graphics processor.

Owner:BEIHANG UNIV
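
The abstract leaves the encoding unspecified. One form of bit compression used in software GNSS receivers is 1-bit (sign) quantization, where L samples are packed into one machine word and correlated with XOR plus a population count. The sketch below assumes that encoding and runs sequentially in Python; on a GPU, each packed chunk, including the final shorter one, would correspond to one thread. The function names are hypothetical.

```python
def pack_bits(samples: list[int], L: int) -> list[tuple[int, int]]:
    """Split ±1 samples into chunks of L and pack each chunk's sign bits into an int.

    Returns (packed_word, chunk_length) pairs; the final chunk may be shorter than L.
    """
    words = []
    for start in range(0, len(samples), L):
        chunk = samples[start:start + L]
        word = 0
        for s in chunk:
            word = (word << 1) | (1 if s >= 0 else 0)  # bit 1 for +1, bit 0 for -1
        words.append((word, len(chunk)))
    return words


def correlate(a: tuple[int, int], b: tuple[int, int]) -> int:
    """Correlation of two packed ±1 chunks: matching bits minus mismatching bits."""
    (wa, n), (wb, m) = a, b
    if n != m:
        raise ValueError("chunks must have the same length")
    mismatches = bin(wa ^ wb).count("1")  # population count of differing bits
    return n - 2 * mismatches
```

For ±1 samples, each chunk's `correlate` value equals the ordinary dot product of that chunk, so summing over all chunks reproduces the full correlation while each chunk can be handled independently.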

A learning task compiling method for an artificial intelligence processor and related products

Active | CN110889497B | Simple structure, Reduce in quantity, Machine learning, Neural architectures, Theoretical computer science, Network structure

This application relates to a learning task compiling method for an artificial intelligence processor and related products. The method includes: first, optimizing the convolutional neural network structure by fusing redundant neural network layers into a convolutional layer; and then compiling the learning tasks of the artificial intelligence processor based on the optimized convolutional neural network. Compiling learning tasks for artificial intelligence processors with this method is efficient and can reduce data exchange during processing when the tasks are executed on a device.

Owner:CAMBRICON TECH CO LTD

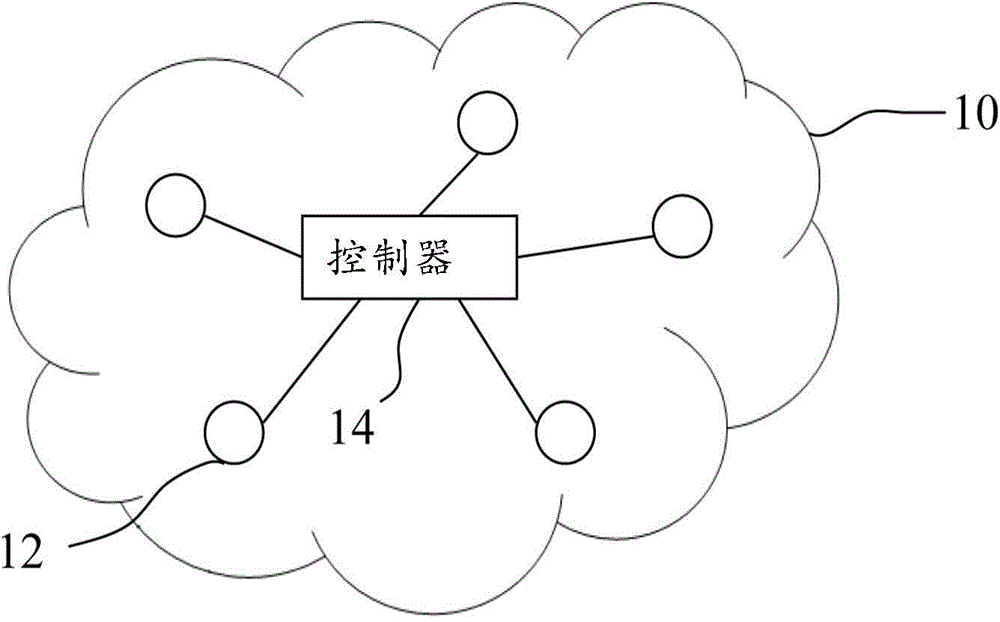

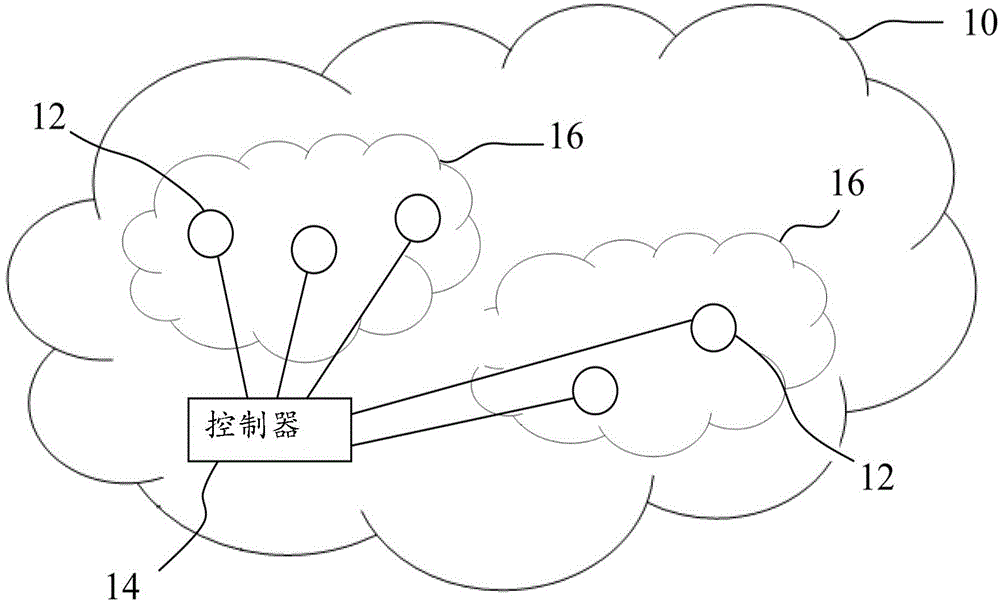

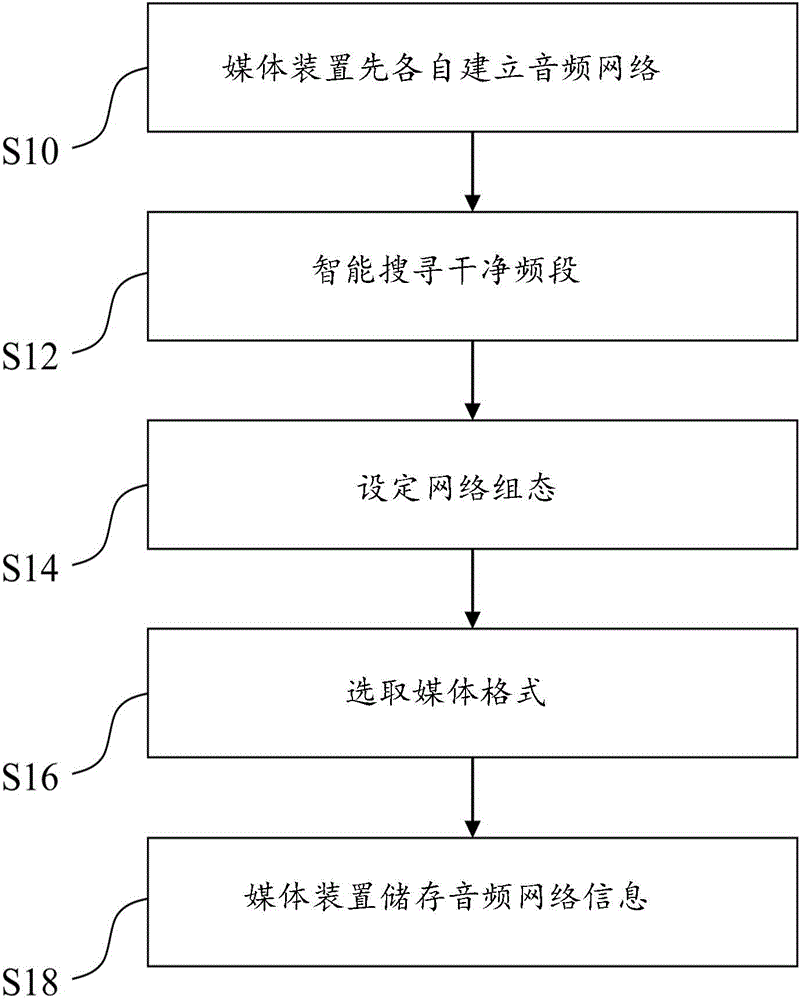

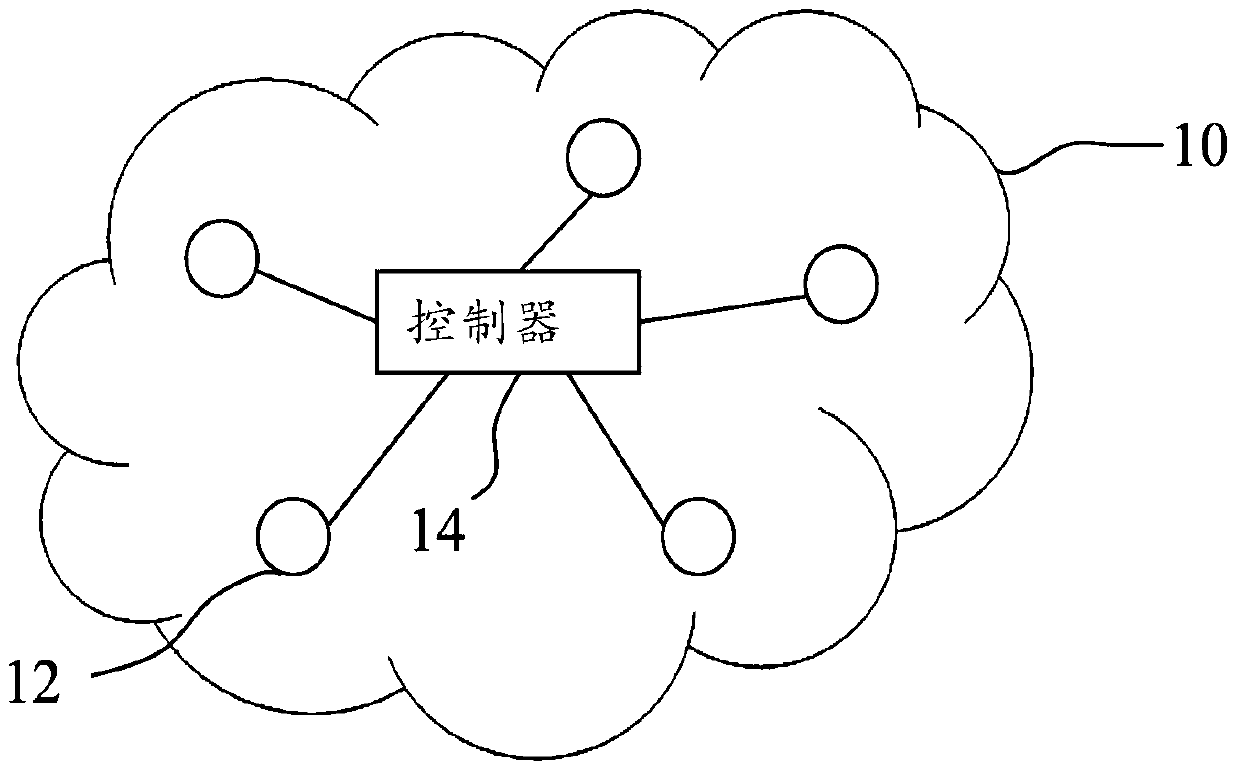

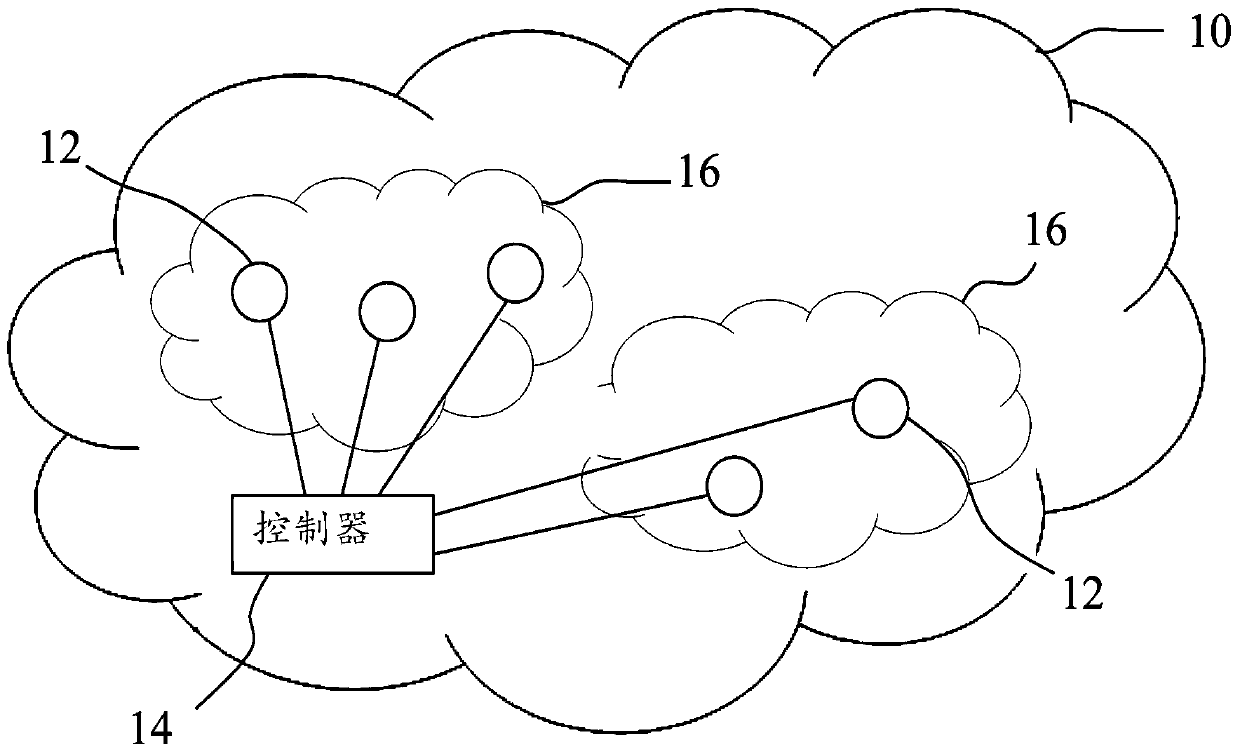

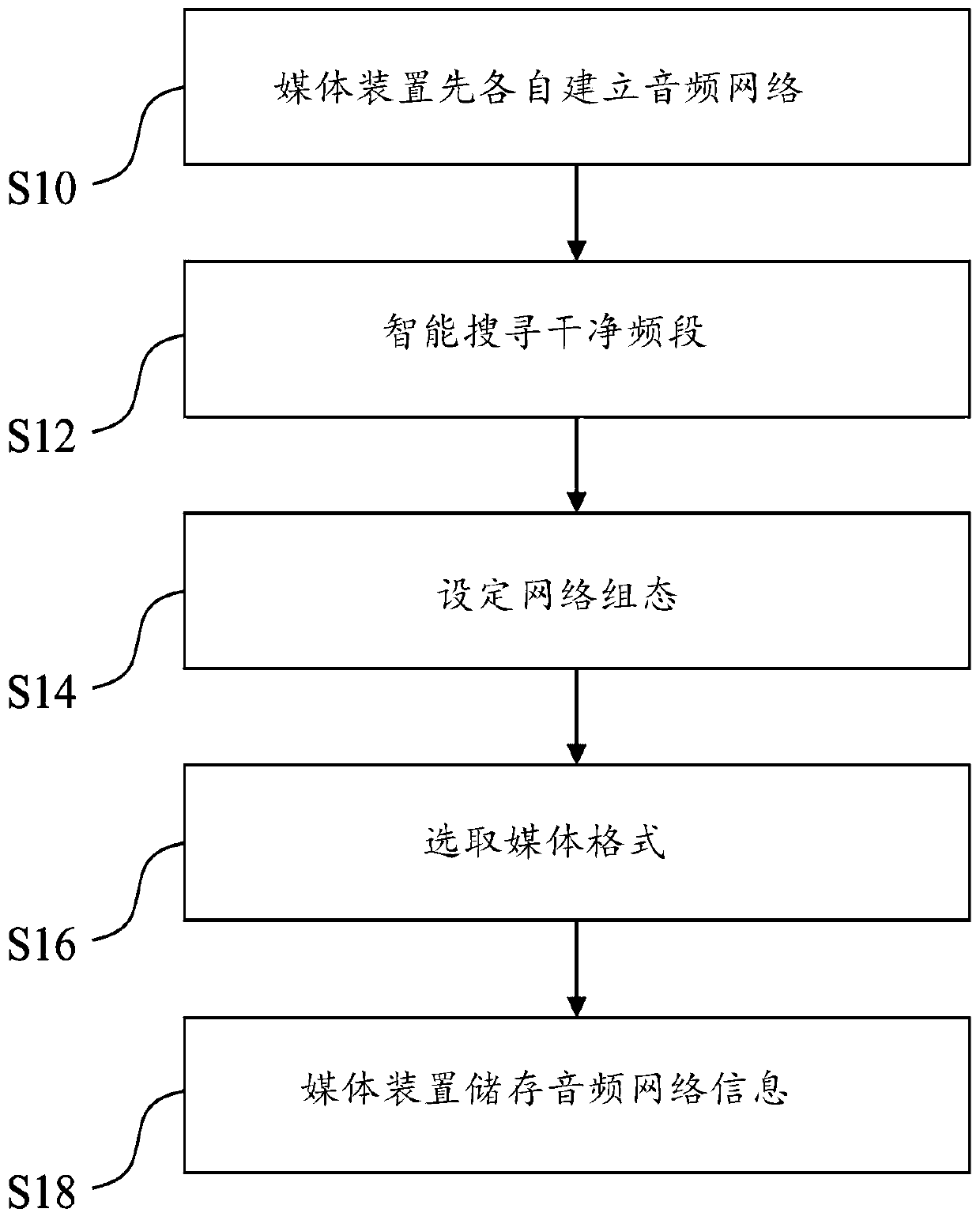

Audio network construction method for multiple domain media devices

Inactive | CN106161148A | Reduce data exchange, Data switching by path configuration, Computer hardware, Network construction

The invention discloses an audio network construction method for multiple domain media devices. Multiple media devices capable of connecting to multiple domains are added to the same audio network, and all of the media devices are linked to a controller. The method comprises the following steps: each media device builds an audio network, and the audio network information is optimized and stored; the controller issues a linking instruction to at least one first device among the media devices, requiring it to join the audio network of a second device among the media devices; and the first device queries the second device for its stored audio network information, acquires that information, and attempts to join the second device's audio network.

Owner:NFORE TECH

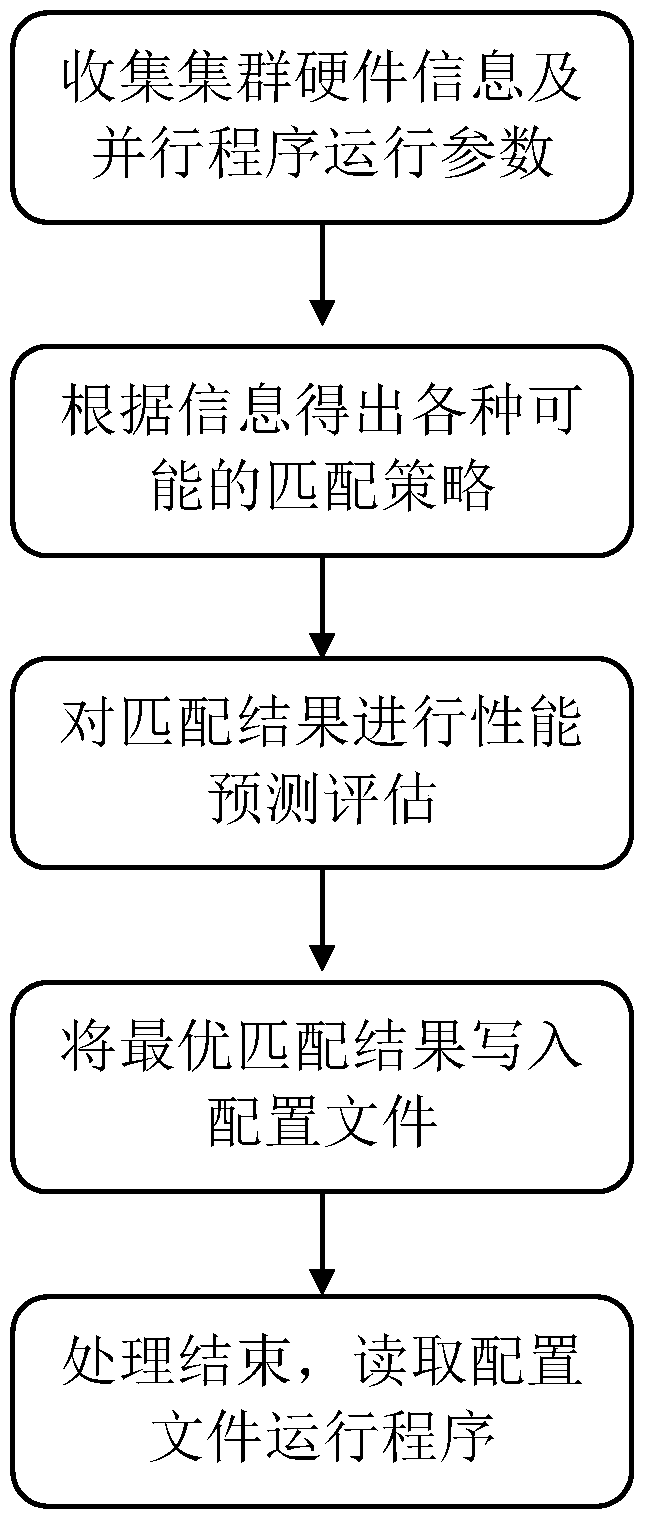

A parallel I/O optimization method and system based on reducing inter-process communication overhead

Active | CN104778088B | Reduce overhead, Improve I/O performance, Interprogram communication, Concurrent instruction execution, Parallel I/O, System configuration

The invention discloses a method that reduces inter-process communication based on process affinity in order to improve parallel I/O efficiency. First, parallel I/O programs that mainly use collective I/O are analyzed, and cluster machine-node information and MPI program configuration information are collected and counted. Next, the system computes, through preprocessing, the possible matchings between machine nodes and agent processes, and a performance prediction module determines the best matching strategy. Finally, the matching strategy obtained by preprocessing is written to a configuration file. Experimental results show that the system is simple to configure and, through simple preprocessing and without modifying the original program code, can determine the best process allocation scheme for running the program, thereby reducing inter-process communication overhead and improving parallel I/O performance.

Owner:HUAZHONG UNIV OF SCI & TECH

Audio network construction method of multi-network domain media device

Inactive | CN106161148B | Reduce data exchange, Data switching by path configuration, Computer hardware, Network construction

The invention discloses an audio network construction method for multiple domain media devices. Multiple media devices capable of connecting to multiple domains are added to the same audio network, and all of the media devices are linked to a controller. The method comprises the following steps: each media device builds an audio network, and the audio network information is optimized and stored; the controller issues a linking instruction to at least one first device among the media devices, requiring it to join the audio network of a second device among the media devices; and the first device queries the second device for its stored audio network information, acquires that information, and attempts to join the second device's audio network.

Owner:NFORE TECH CO LTD

Method and system for expanding capacity of memory device

Active | CN101751233B | Increase profit, Increase read and write bandwidth, Input/output to record carriers, Operating system, Storage cell

The invention relates to a method and a system for expanding the capacity of a memory device. The method comprises connecting a plurality of memory devices to form a memory device group, and is characterized by further comprising the steps of: recording the connection address of each memory device and all available ID numbers of its basic memory cells; assigning and recording a unique virtual ID number for each basic memory cell; associating each virtual ID number with the connection address of the corresponding memory device and the ID number of the basic memory cell; and accessing the corresponding basic memory cell via its virtual ID number. The invention also discloses a system implementing the method. The invention virtualizes a plurality of memory devices as one large hard disk whose capacity equals the total capacity of the individual devices, thereby allowing storage capacity to be expanded without limit, greatly increasing capacity, and enabling network storage.

Owner:CHENGDU SOBEY DIGITAL TECH CO LTD
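The virtual-ID scheme amounts to a lookup table from a global virtual ID to a (device address, local cell ID) pair. This is a minimal sketch assuming sequentially assigned virtual IDs and basic memory cells of uniform size; `VirtualStore`, `CellLocation`, and the example addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CellLocation:
    device_address: str  # connection address of the memory device
    local_cell_id: int   # ID of the basic memory cell on that device


class VirtualStore:
    """Map unique virtual IDs to physical (device address, cell ID) locations."""

    def __init__(self):
        self._table = {}
        self._next_id = 0

    def add_device(self, address, cell_ids):
        """Record a device and its available cell IDs; assign each a virtual ID."""
        assigned = []
        for cell_id in cell_ids:
            self._table[self._next_id] = CellLocation(address, cell_id)
            assigned.append(self._next_id)
            self._next_id += 1
        return assigned

    def resolve(self, virtual_id):
        """Translate a virtual ID into the physical cell to access."""
        return self._table[virtual_id]

    def capacity(self, cell_size):
        """Total capacity, assuming every basic memory cell has the same size."""
        return len(self._table) * cell_size
```

Adding a device with three cells and then one with two yields virtual IDs 0 to 2 and 3 to 4, and `resolve(3)` points at cell 0 of the second device. The combined capacity is the sum over all devices, which is the "one large disk" view the abstract describes.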

A Multi-thread Upload Optimization Method Based on Memory Allocation

Active | CN109547566B | Reduce waste | Implement control transfer | Error prevention | File access structures | Parallel computing | Network conditions

The invention discloses a multi-thread upload optimization method based on memory allocation. The method comprises the steps of: 1) acquiring basic data about the current user; 2) preprocessing the upload request using the acquired information; 3) determining, from the size of the uploaded file, whether slicing and multi-thread uploading are needed; 4) storing a temporary file of the upload in a designated cache location chosen according to the server's current free memory; 5) recording the uploaded file's metadata in a database; and 6) writing the cached temporary file to the disk location where the file is ultimately to be stored. By having the client and the server cooperate on both service logic and server memory management, the method achieves a relatively high upload success rate even when network conditions are poor; it also supports resuming interrupted uploads (breakpoint resume), which improves the client's upload speed and the server's bandwidth utilization.

Owner:SOUTH CHINA UNIV OF TECH
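Steps 3 and 4, together with breakpoint resume, can be sketched as an upload plan. This is a minimal sketch under stated assumptions: the 8 MiB slicing threshold, the 4 MiB chunk size, the "memory"/"disk" staging labels, and the names `UploadPlan` and `plan_upload` are hypothetical, and the multi-threaded transfer itself is not shown.

```python
import math
from dataclasses import dataclass, field

SLICE_THRESHOLD = 8 * 1024 * 1024  # hypothetical: slice files larger than 8 MiB
CHUNK_SIZE = 4 * 1024 * 1024       # hypothetical chunk size per upload thread


@dataclass
class UploadPlan:
    sliced: bool
    num_chunks: int
    cache: str  # staging area for the temporary file: "memory" or "disk"
    received: set = field(default_factory=set)  # chunk indices already stored

    def missing_chunks(self):
        """Chunks still to send; lets an interrupted upload resume."""
        return [i for i in range(self.num_chunks) if i not in self.received]


def plan_upload(file_size, free_memory):
    """Decide slicing (step 3) and the cache location (step 4)."""
    sliced = file_size > SLICE_THRESHOLD
    num_chunks = math.ceil(file_size / CHUNK_SIZE) if sliced else 1
    # Stage in memory only if the server currently has room for the whole file.
    cache = "memory" if file_size <= free_memory else "disk"
    return UploadPlan(sliced, num_chunks, cache)
```

A 20 MiB file on a server with 64 MiB free is split into five 4 MiB chunks staged in memory; if chunks 0, 1, and 3 arrive before the connection drops, the client only needs to resend chunks 2 and 4.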

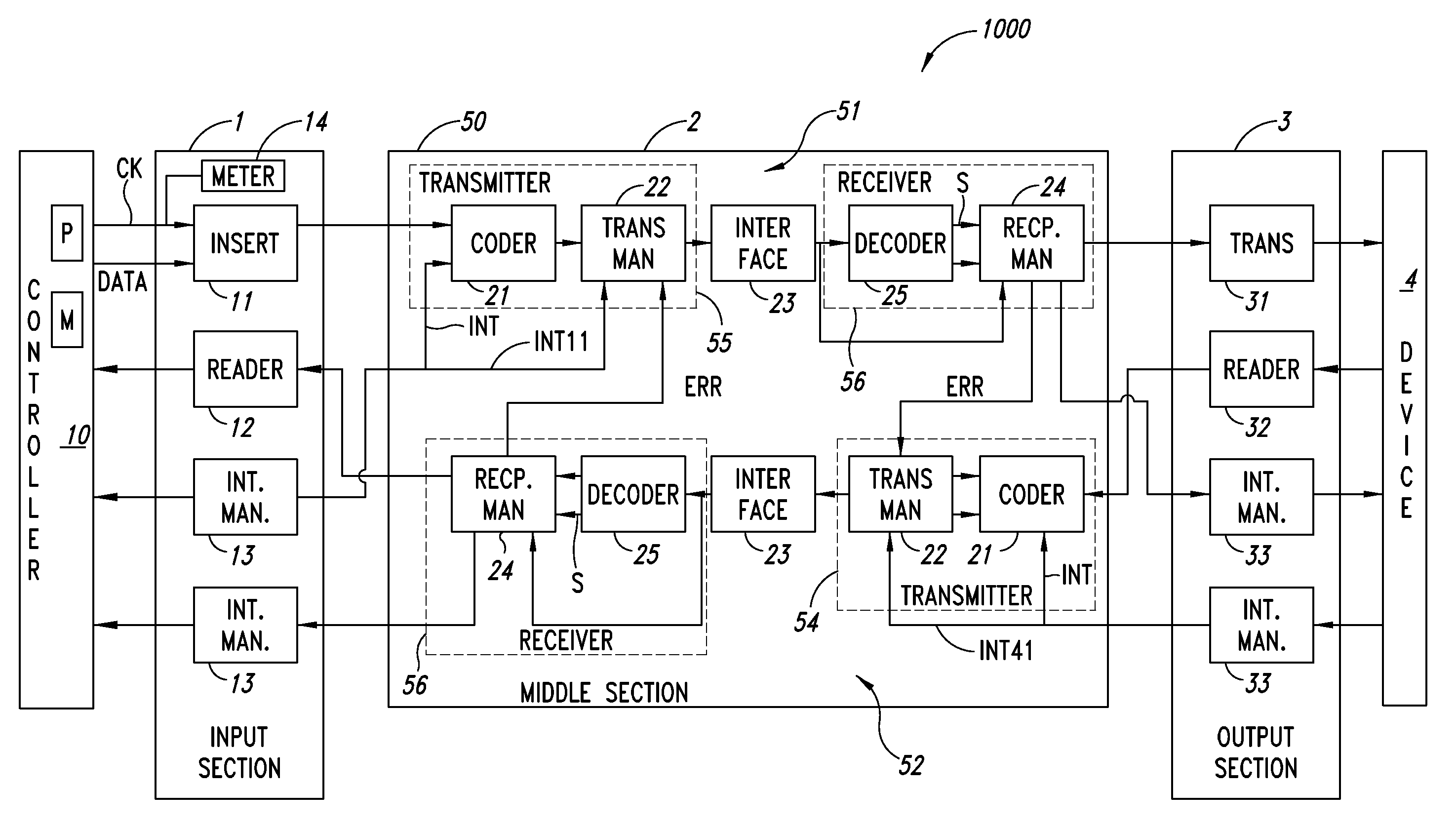

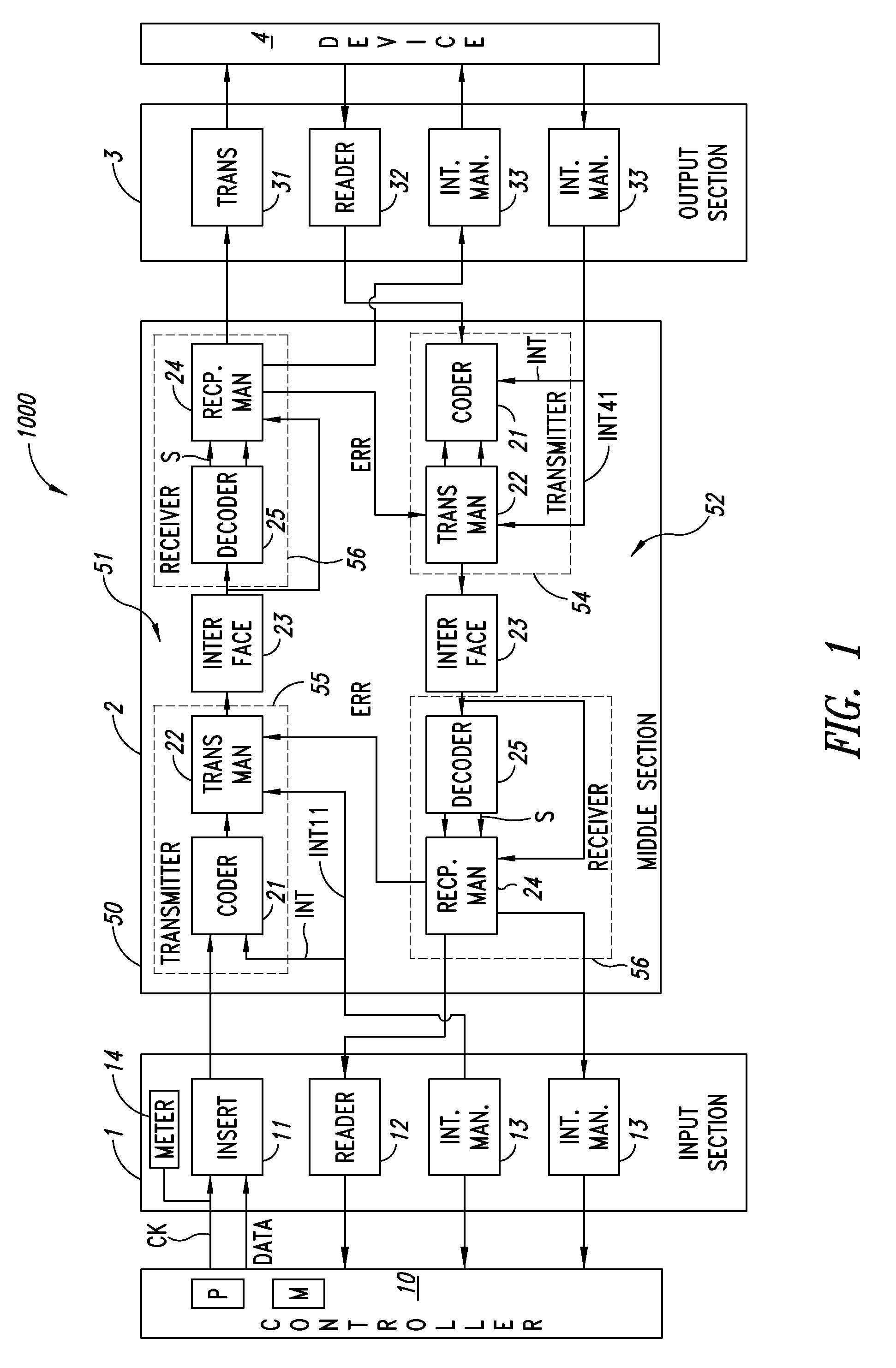

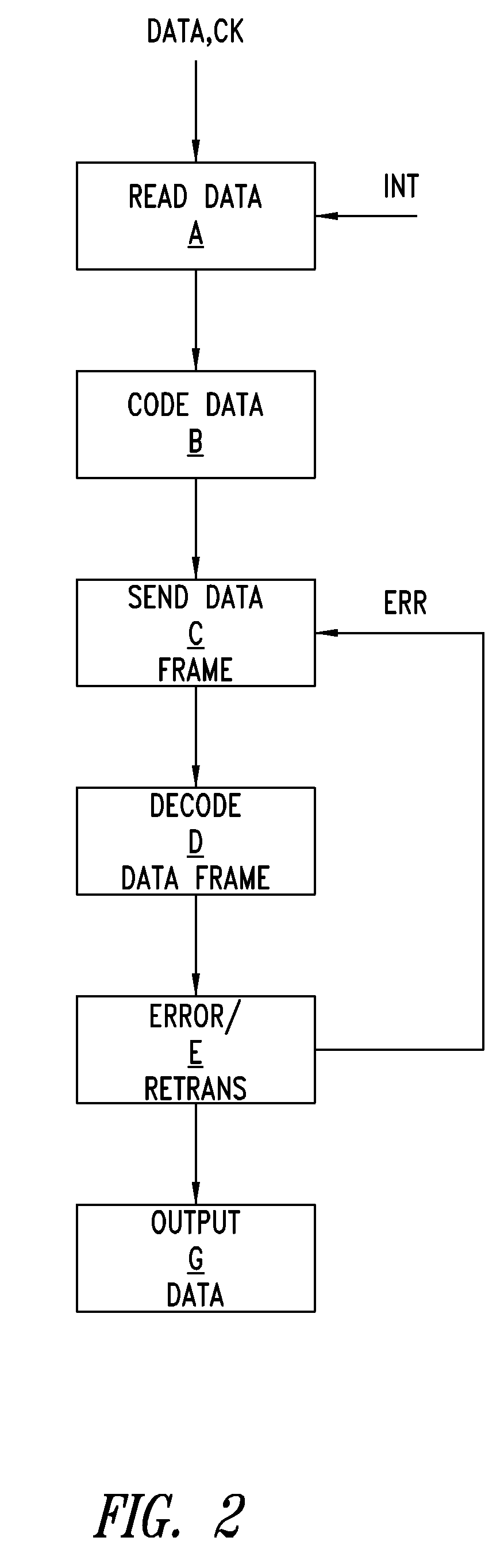

Signals communication apparatus

Active | US8416717B2 | Reduce data exchange | Reduce power consumption | Error prevention | Transmission control/equalising | Engineering | Transmitter

A signal communication apparatus comprises first and second channels for communicating signals between two electronic devices. Each of the first and second channels has a transmitter for transmitting signals, a receiver for receiving signals, and an interface arranged between the transmitter and the receiver. The transmitters encode the signals as frames of H bits, H being an integer, and are adapted to transmit each H-bit frame serially through the interface; the receivers decode the H-bit frames. An H-bit frame contains P data bits, K redundancy bits, and a sequence of L control bits that identifies the type of frame being transmitted, L, P, and K being integers.

Owner:STMICROELECTRONICS SRL
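The abstract fixes only the frame's composition. The sketch below assumes H = L + P + K (the abstract lists the three fields but does not state the sum), lays the frame out as control, then data, then redundancy bits, and uses interleaved parity for the K redundancy bits purely as an illustration; the field order and the parity scheme are assumptions, not the patented coding.

```python
def interleaved_parity(data_bits, k):
    """K redundancy bits: bit j is the XOR of all data bits at indices i with i % k == j."""
    parity = [0] * k
    for i, bit in enumerate(data_bits):
        parity[i % k] ^= bit
    return parity


def encode_frame(control_bits, data_bits, k):
    """Build an H-bit frame laid out as [L control | P data | K redundancy]."""
    return list(control_bits) + list(data_bits) + interleaved_parity(data_bits, k)


def decode_frame(frame, l, p, k):
    """Split an H-bit frame into its fields and check the redundancy bits."""
    if len(frame) != l + p + k:
        raise ValueError("frame length must equal L + P + K")
    control = frame[:l]
    data = frame[l:l + p]
    redundancy = frame[l + p:]
    ok = redundancy == interleaved_parity(data, k)
    return control, data, ok
```

With L = 2, P = 8, and K = 2 the frame is 12 bits long; the transmitter would shift these bits out one at a time over the serial interface, and a single flipped data bit makes the receiver's parity check fail.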

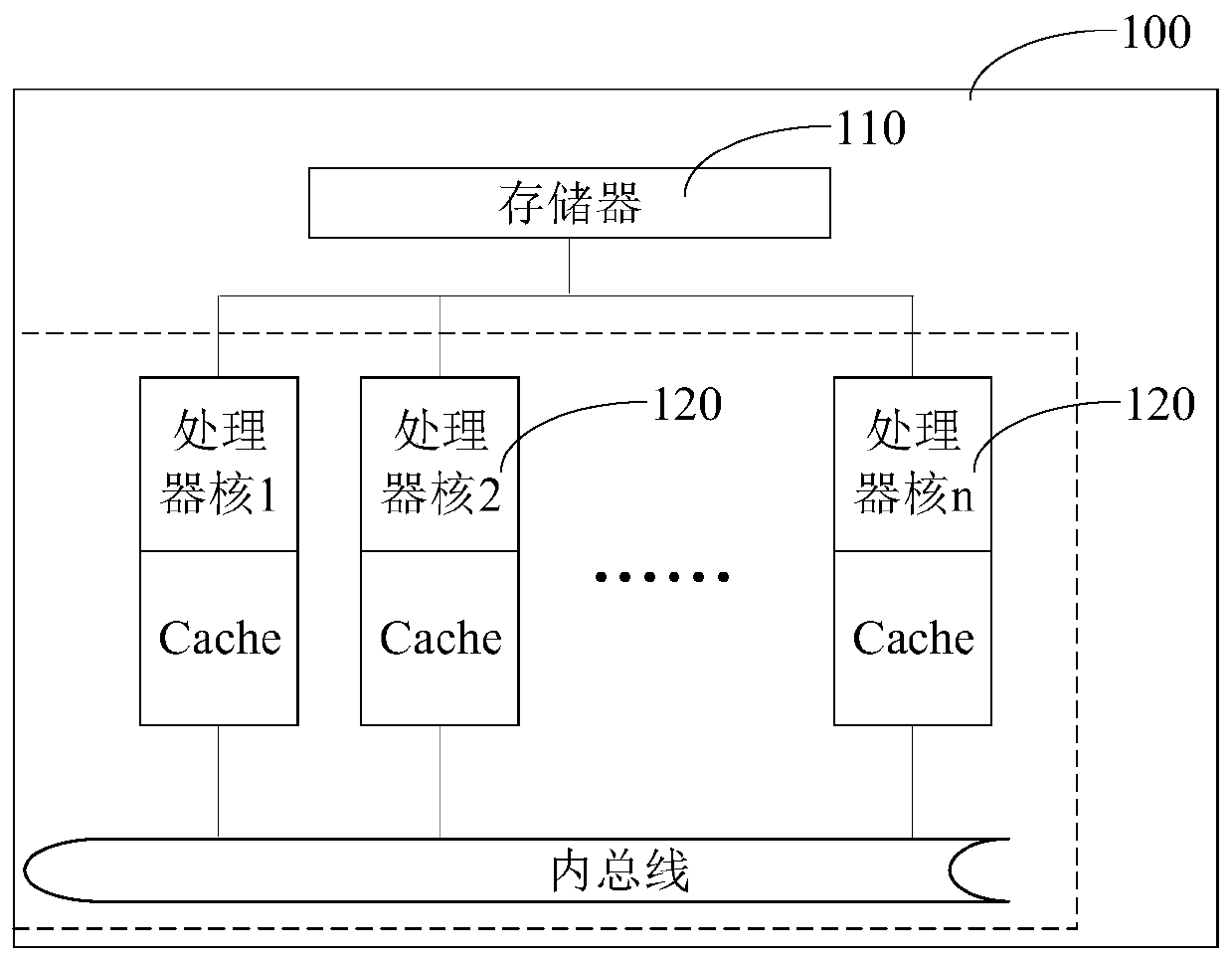

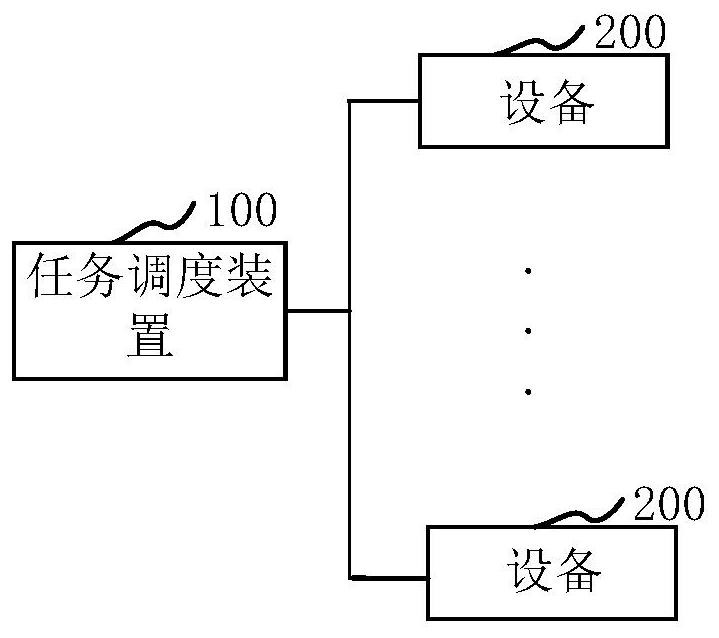

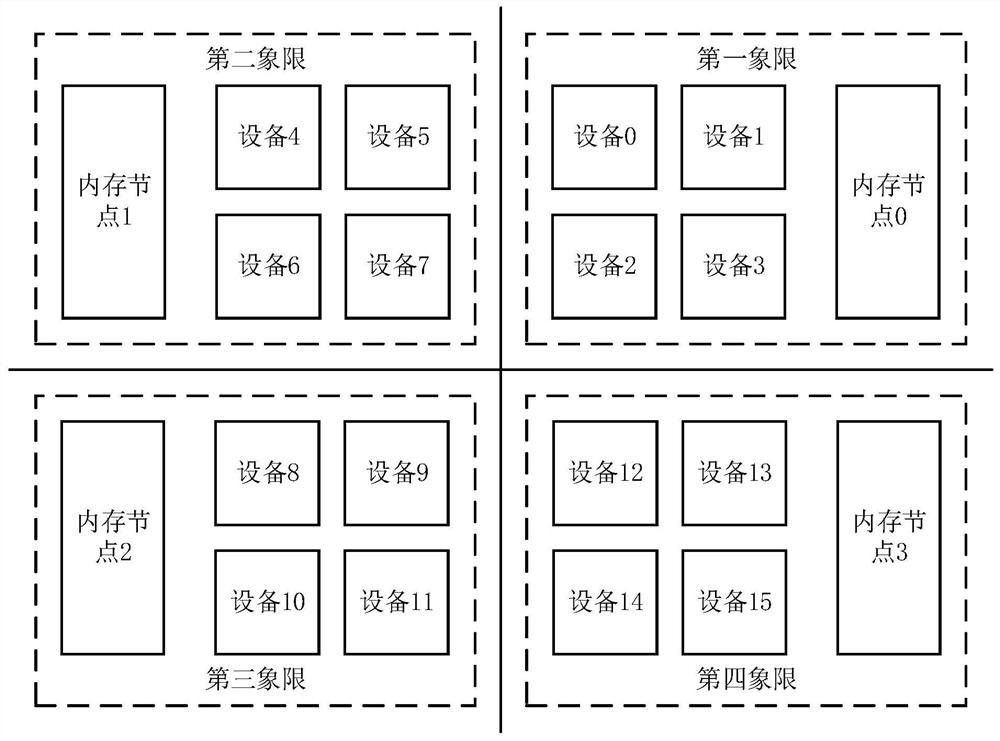

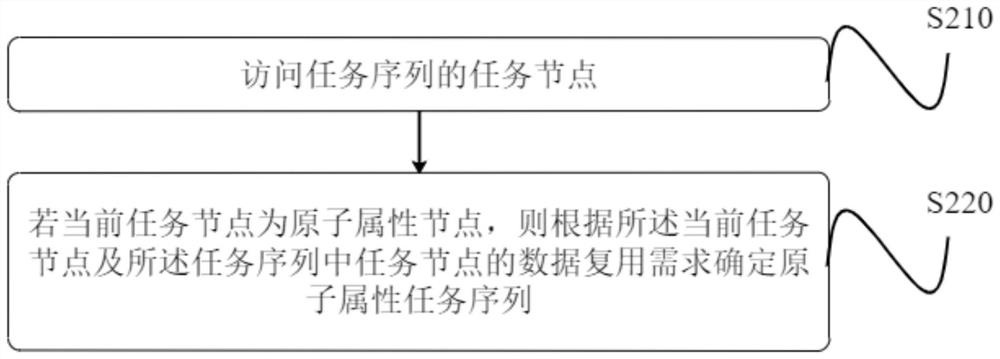

Task processing method and device, computer equipment and storage medium

Pending | CN113282382A | Reduce data exchange | Reduce data copying | Program initiation/switching | Resource allocation | Software engineering | Data exchange

The invention relates to a task processing method and device, computer equipment, and a storage medium. Atomic attribute nodes in a task sequence are identified, an atomic attribute task sequence is obtained from those nodes, and the tasks in the atomic attribute task sequence are executed consecutively on the target device. Because each task in the atomic attribute task sequence can reuse data already on the device, the method of this embodiment reduces data exchange between devices during task execution and also reduces data copying when tasks need to be executed consecutively.

Owner:CAMBRICON TECH CO LTD
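Why consecutive execution saves transfers can be shown with a simple cost model. This is a minimal sketch under hypothetical assumptions: each task lists the buffers it reads, a buffer is copied to the target device unless an earlier task in the same run already placed it there, device data is discarded between runs, and the `atomic` flag is simply given (the abstract does not say how atomic attribute nodes are identified). `Task`, `atomic_runs`, and `copies_needed` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    inputs: frozenset  # buffers the task reads
    atomic: bool       # hypothetical flag marking an atomic attribute node


def atomic_runs(tasks):
    """Group maximal runs of consecutive atomic tasks; other tasks stand alone."""
    runs, current = [], []
    for task in tasks:
        if task.atomic:
            current.append(task)
        else:
            if current:
                runs.append(current)
                current = []
            runs.append([task])
    if current:
        runs.append(current)
    return runs


def copies_needed(runs):
    """Count buffer copies to the device: data stays resident within a run only."""
    copies = 0
    for run in runs:
        resident = set()
        for task in run:
            copies += len(task.inputs - resident)
            resident |= task.inputs
    return copies
```

For three atomic tasks reading `{x}`, `{x, y}`, and `{y}`, executing them as one run needs 2 copies, while executing each task separately needs 4, which is the kind of reduction in data exchange and copying the abstract claims.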

© 2025 PatSnap. All rights reserved.