40 results about how to "Reduce the number of dispatches": patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

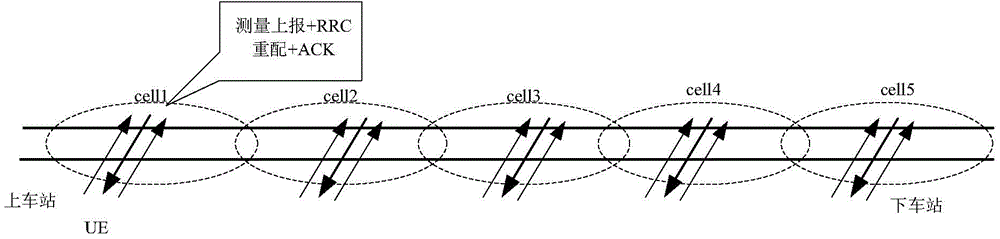

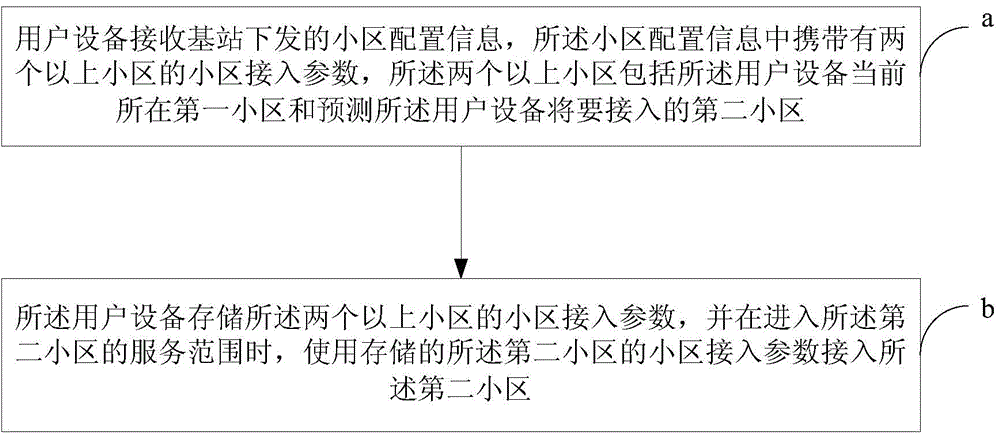

Cell configuration method and device

Status: Active | Publication: CN105228192A | Tags: Reduce transmission interruption time, Reduced throughput time, Assess restriction, Connection management, User equipment, Throughput

The present invention provides a cell configuration method and device in the field of wireless communications. A base station issues cell configuration information to the user equipment it serves, and the user equipment receives it. The cell configuration information contains the cell access parameters of two or more cells: a first cell in which the user equipment is currently located and a second cell that the user equipment is predicted to access. The user equipment stores the cell access parameters of these cells and, on entering the service range of the second cell, accesses the second cell using the stored access parameters for that cell. This scheme reduces the transmission interruption time and the low-throughput time of the user equipment, reduces the number of PDCCH scheduling operations, lowers signaling overhead, and reduces the power consumption of the user equipment.

Owner:CHINA MOBILE COMM GRP CO LTD
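
The abstract describes a UE that caches access parameters for a predicted target cell so that it can access that cell without a new configuration exchange. The Python sketch below illustrates only that caching behaviour under stated assumptions: the class names and the parameter fields (`frequency_mhz`, `preamble_index`) are hypothetical, since the abstract does not list the actual cell access parameters.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class CellAccessParams:
    # Hypothetical fields; the real cell access parameters are not listed in the abstract.
    cell_id: int
    frequency_mhz: float
    preamble_index: int


class UserEquipment:
    def __init__(self, serving_cell: int) -> None:
        self.serving_cell = serving_cell
        self._stored: Dict[int, CellAccessParams] = {}

    def receive_cell_configuration(self, params: List[CellAccessParams]) -> None:
        # One configuration message carries parameters for the serving cell and
        # for each cell the UE is predicted to access; keep all of them locally.
        for p in params:
            self._stored[p.cell_id] = p

    def on_enter_coverage(self, cell_id: int) -> Optional[CellAccessParams]:
        # On entering a new cell's service range, access it with the stored
        # parameters instead of waiting for a fresh configuration.
        p = self._stored.get(cell_id)
        if p is not None:
            self.serving_cell = cell_id
        return p


ue = UserEquipment(serving_cell=1)
ue.receive_cell_configuration([CellAccessParams(1, 2600.0, 3), CellAccessParams(2, 2620.0, 7)])
print(ue.on_enter_coverage(2))  # stored parameters of cell 2; the UE now serves in cell 2
```

Returning `None` for an unknown cell leaves room for a conventional fallback (requesting a new configuration), which the sketch does not model.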

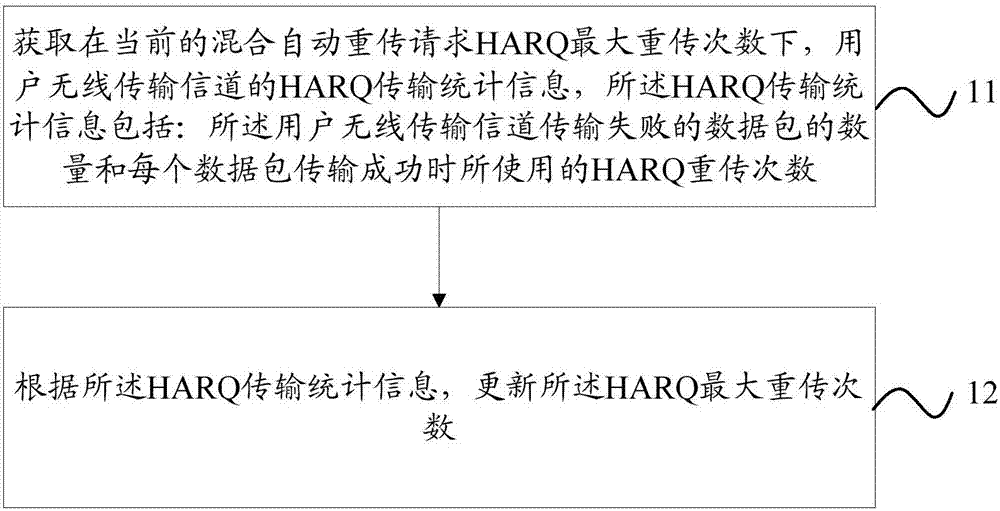

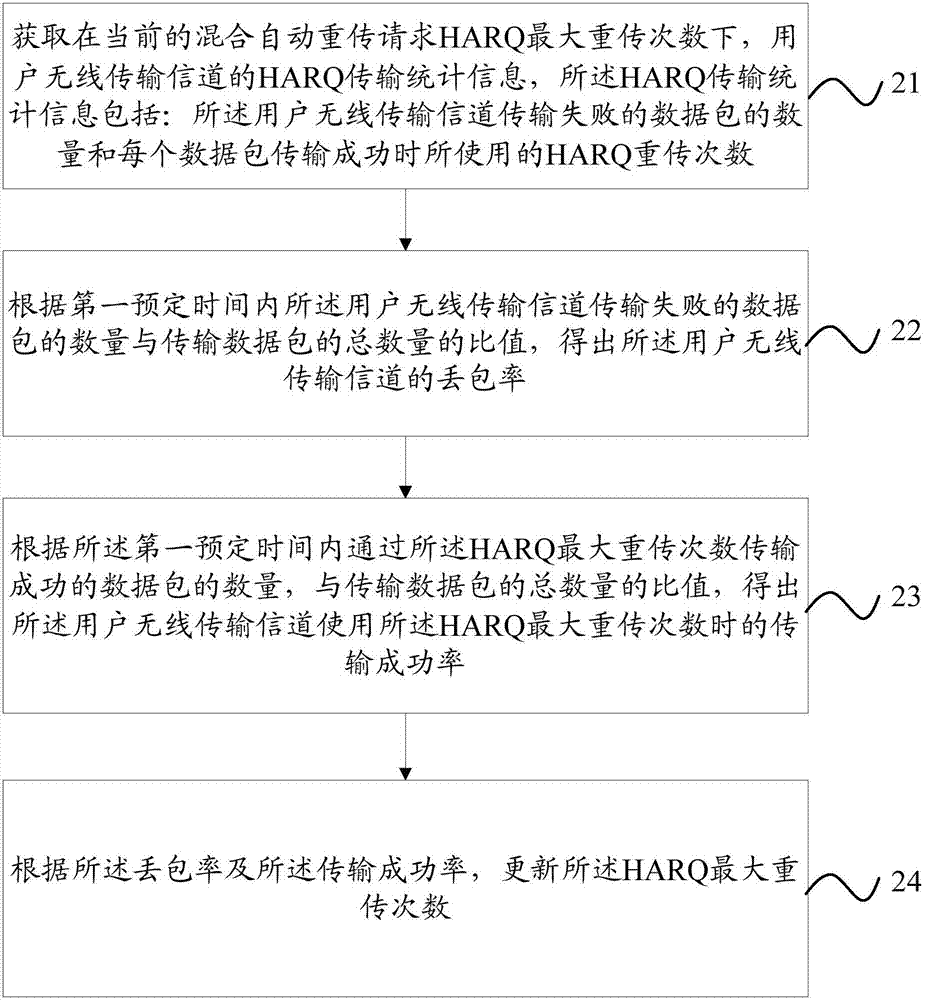

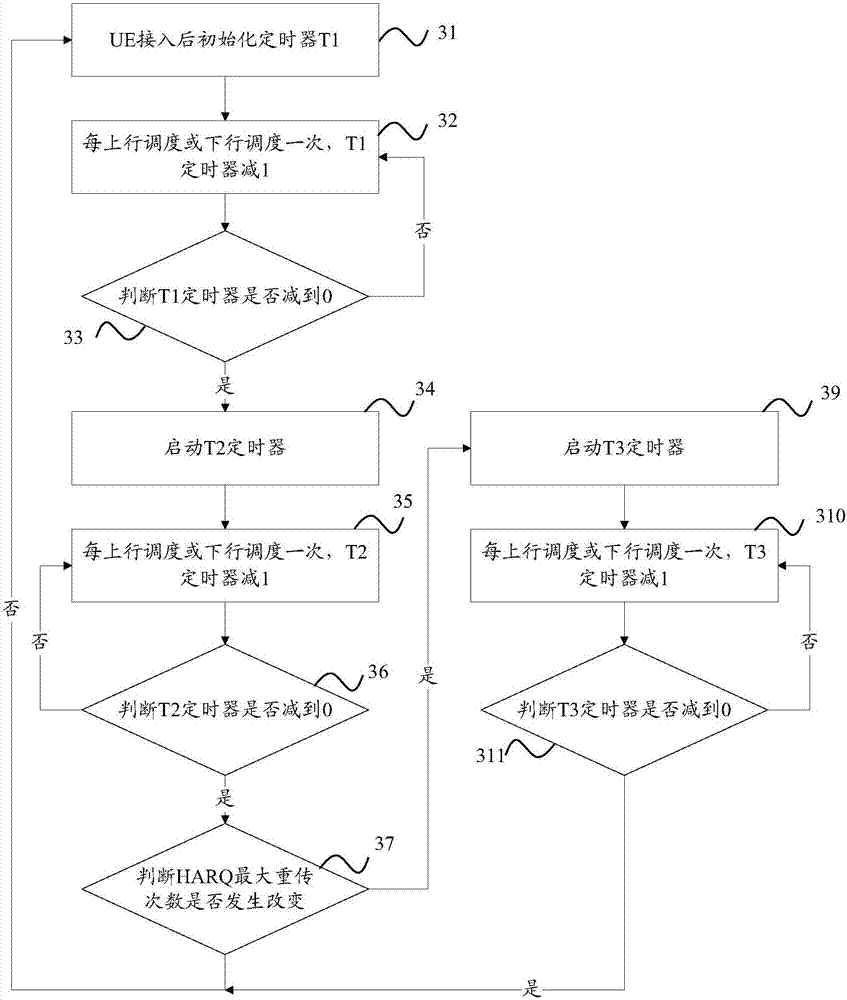

Method and device for adjusting maximum repeat times of hybrid automatic repeat request (HARQ) and base station

Status: Inactive | Publication: CN107294661A | Tags: Reduce the probability of packet loss, Reduce the number of dispatches, Error prevention/detection by using return channel, Data switching networks, Wireless transmission, Successful transmission

The invention provides a method and a device for adjusting the maximum number of hybrid automatic repeat request (HARQ) repetitions, and a base station. It addresses the problem that, when different users transmit with the same HARQ maximum repeat count, packet loss is likely, scheduling operations are wasted on redundant repetitions, and resource utilization is low. Under the current HARQ maximum repeat count, the method acquires HARQ transmission statistics for the wireless transmission channel of a user; the statistics comprise the number of data packets whose transmission failed on that channel and the number of HARQ repetitions used by each successfully transmitted data packet. The HARQ maximum repeat count is then updated according to these statistics. Adjusting the maximum per user in this way improves resource utilization while still reducing the data packet loss rate.

Owner:ZTE CORP
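
The abstract names the inputs of the update (failed packets and the repeat count of each successful packet) but not the update rule. The Python sketch below is a hypothetical rule chosen only for illustration: raise the limit by one when the loss ratio exceeds a target, otherwise shrink it to the repeat count that already covers a given fraction of delivered packets. The thresholds `loss_target`, `coverage`, `lower` and `upper` are assumptions.

```python
import math
from typing import Sequence


def update_harq_max_repeats(current_max: int, failed_packets: int, success_repeats: Sequence[int],
                            loss_target: float = 0.01, coverage: float = 0.95,
                            lower: int = 1, upper: int = 8) -> int:
    """Return a new per-user HARQ maximum repeat count (hypothetical rule).

    failed_packets: packets that failed even after `current_max` repetitions.
    success_repeats: repetitions used by each successfully delivered packet.
    """
    total = failed_packets + len(success_repeats)
    if total == 0:
        return current_max  # no statistics collected yet
    if failed_packets / total > loss_target:
        # Too many packets exhausted the limit: allow one more repetition.
        return min(current_max + 1, upper)
    # Loss is acceptable: shrink the limit to the repeat count that already covers
    # `coverage` of delivered packets, so fewer scheduling rounds go to repeats.
    ordered = sorted(success_repeats)
    idx = max(0, math.ceil(coverage * len(ordered)) - 1)
    return max(lower, min(ordered[idx], current_max, upper))


# 5 % loss with a limit of 4: the limit is raised.
print(update_harq_max_repeats(4, 5, [1] * 95))
# No loss, and 95 % of packets needed at most 2 repetitions: the limit drops to 2.
print(update_harq_max_repeats(4, 0, [1] * 90 + [2] * 10))
```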

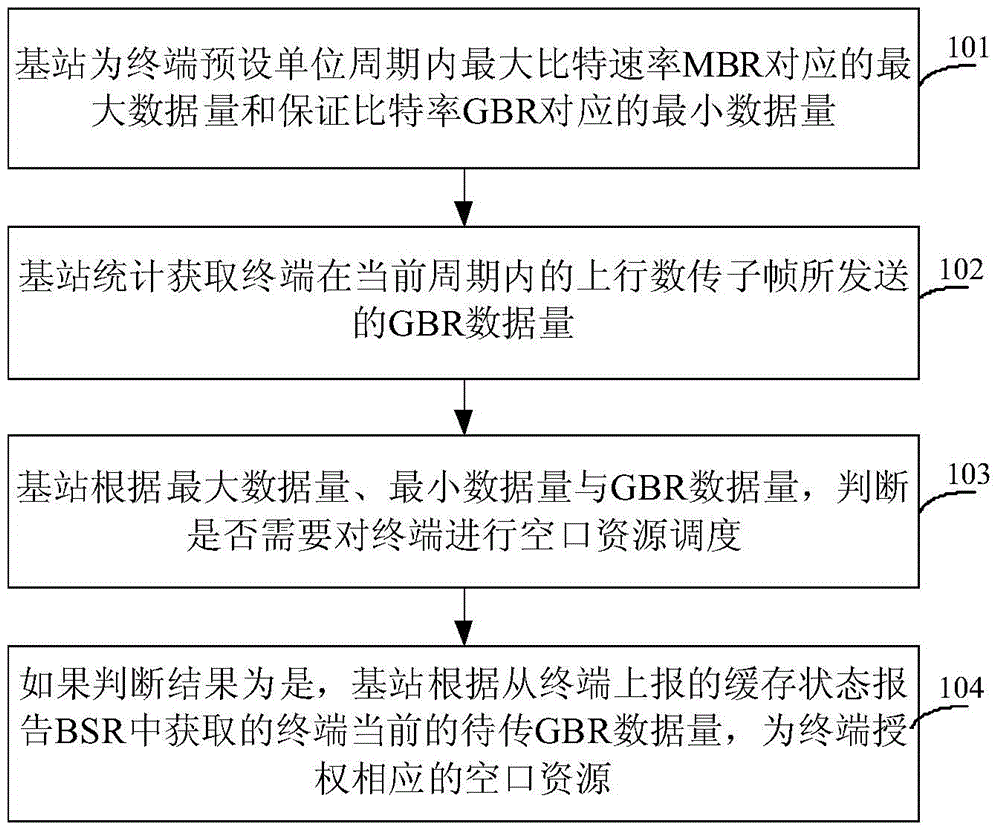

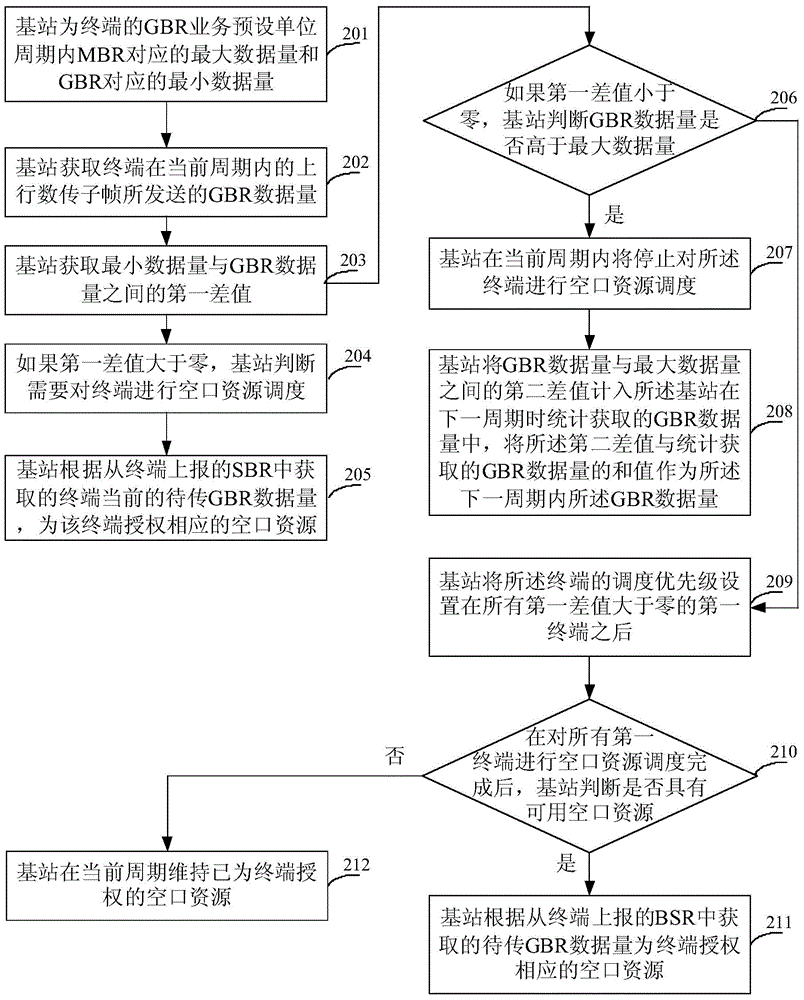

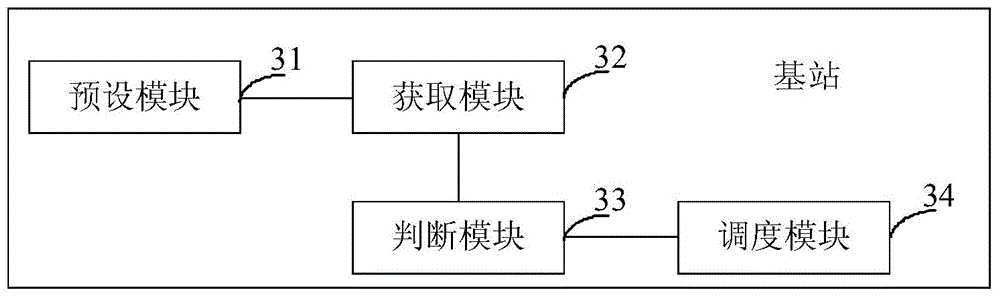

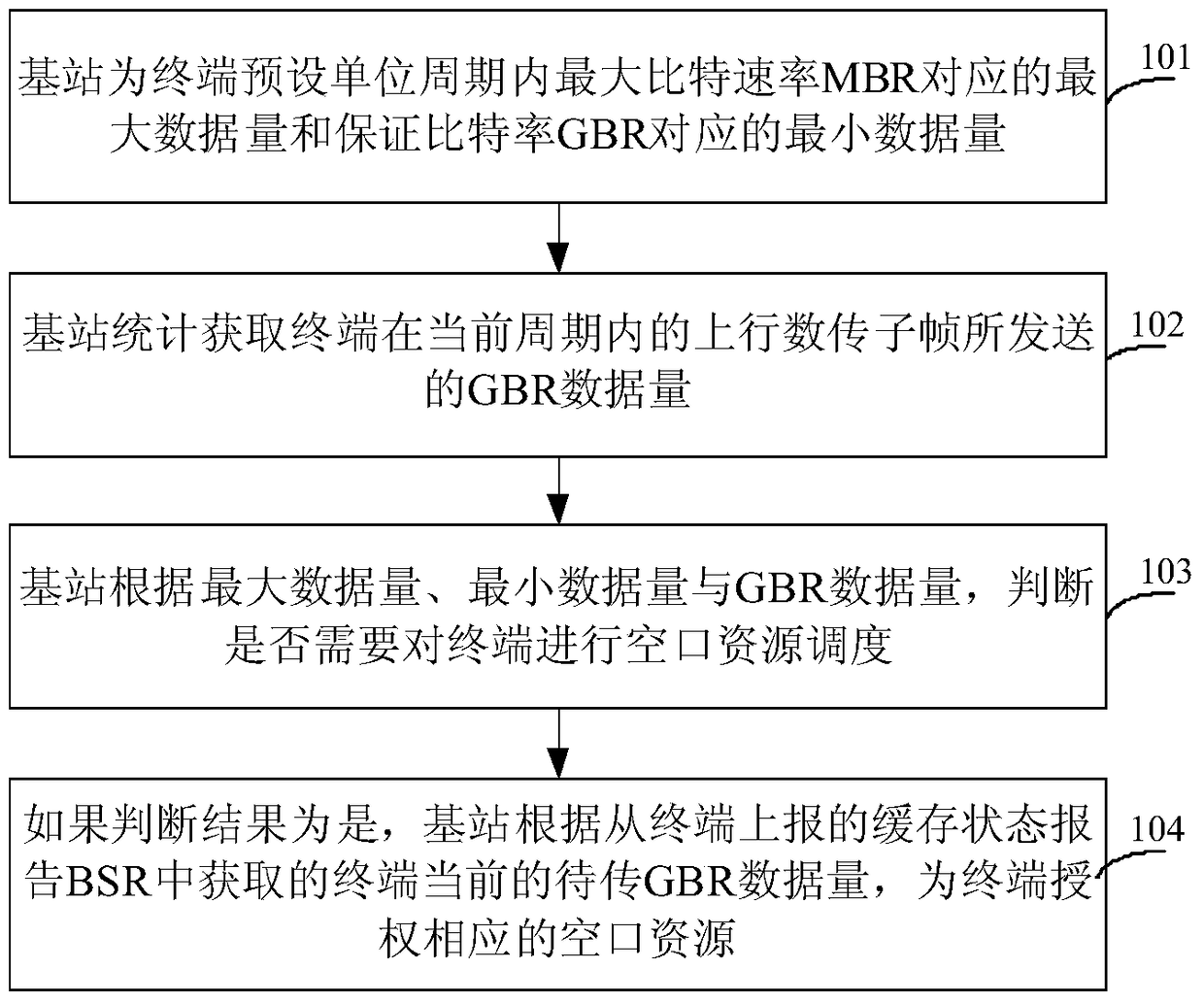

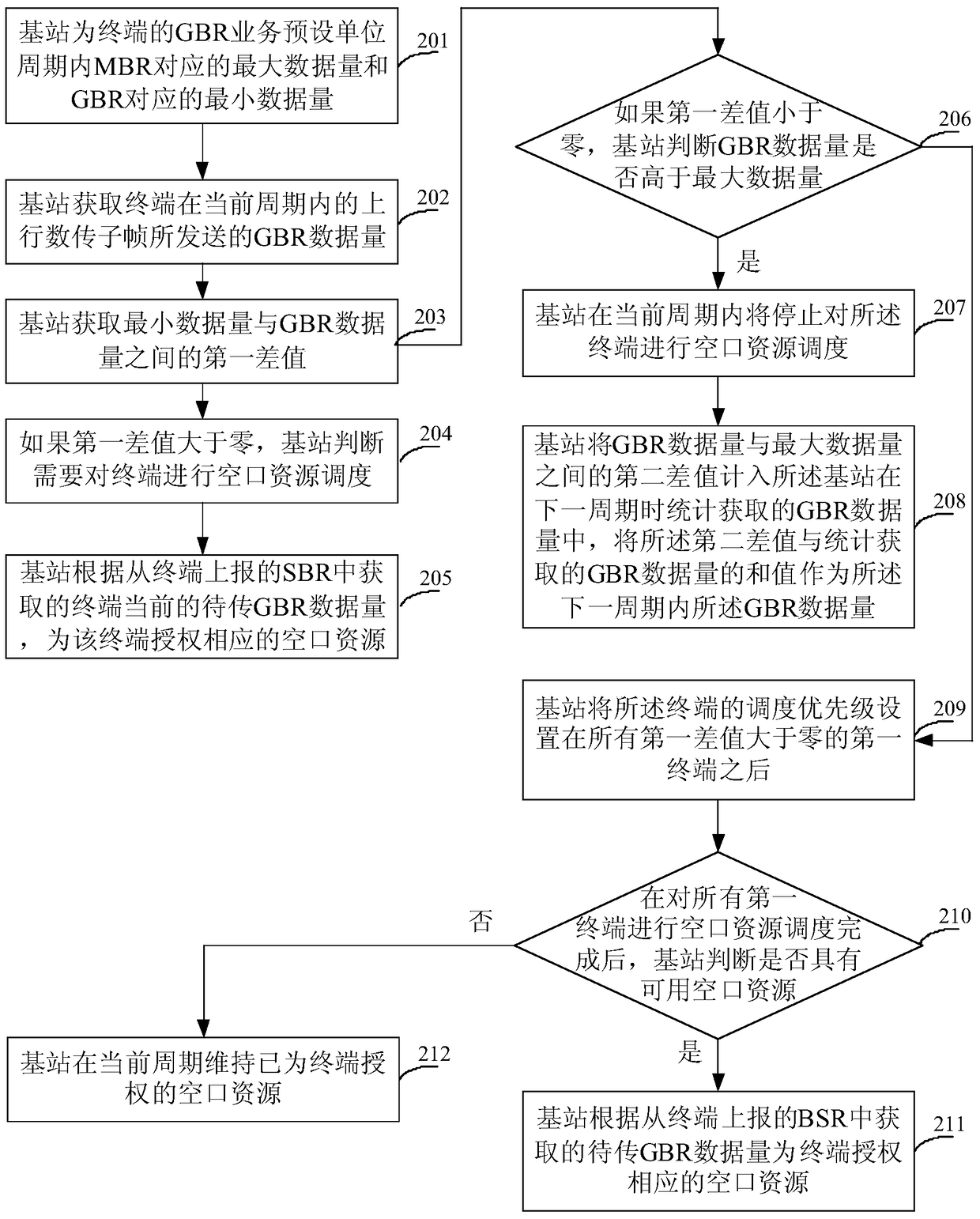

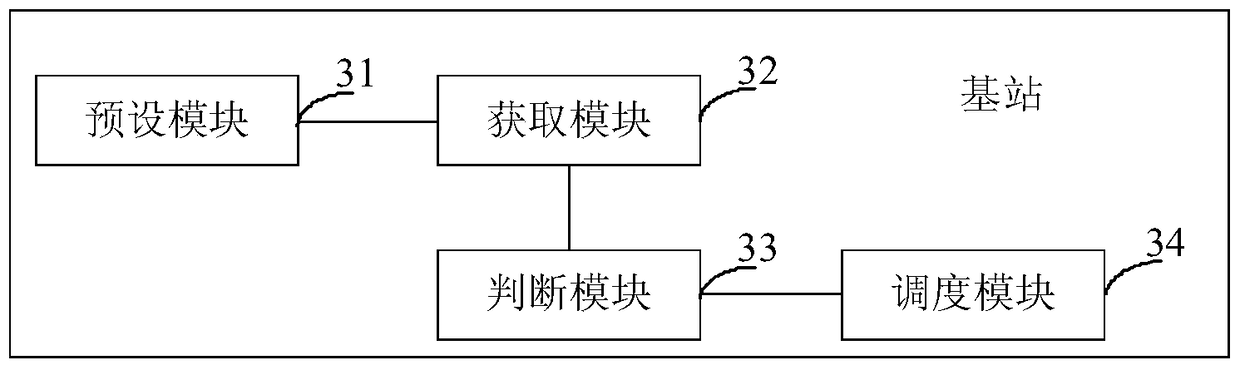

Uplink GBR (Guaranteed Bit Rate) service scheduling method and base station

Status: Active | Publication: CN104981020A | Tags: Reduce occupancy, Increase flexibility, Wireless communication, Air interface, Control channel

The invention provides an uplink GBR (Guaranteed Bit Rate) service scheduling method and a base station. For the GBR service of a terminal, the base station presets, per unit period, a maximum data size corresponding to the MBR (Maximum Bit Rate) and a minimum data size corresponding to the GBR. It counts the GBR data size that the terminal has sent in uplink data transmission subframes during the current period, and determines from the maximum data size, the minimum data size and the sent GBR data size whether to schedule air interface resources. If scheduling is to be carried out, it grants air interface resources matching the GBR data size currently awaiting transmission, as obtained from the BSR (Buffer Status Report) reported by the terminal. Because the base station decides whether to schedule based on the GBR data actually transmitted in the current period and, when it does schedule, grants resources for the whole amount currently waiting, scheduling frequency is lowered and the occupation of CCE (Control Channel Element) resources is reduced.

Owner:CHENGDU TD TECH LTD
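
A minimal Python sketch of the per-period grant decision, assuming one plausible rule that the abstract does not spell out: skip the grant when nothing is buffered or the MBR budget for the period is exhausted, and otherwise grant the whole BSR amount in one go, capped by the remaining budget. The function name, the byte units and the `below_gbr` flag are hypothetical; how that flag would be used (for example, to prioritise terminals) is left open.

```python
from typing import NamedTuple


class GrantDecision(NamedTuple):
    schedule: bool
    grant_bytes: int
    below_gbr: bool  # True while this period's guaranteed (GBR) amount is not yet met


def decide_uplink_grant(sent_bytes: int, min_bytes: int, max_bytes: int, bsr_bytes: int) -> GrantDecision:
    """sent_bytes: GBR data already sent this period; min_bytes/max_bytes: per-period
    GBR/MBR sizes; bsr_bytes: data waiting in the terminal's buffer per its latest BSR."""
    below_gbr = sent_bytes < min_bytes
    if bsr_bytes <= 0 or sent_bytes >= max_bytes:
        # Nothing buffered, or the MBR budget for this period is used up: no grant,
        # so no PDCCH/CCE resources are spent on this terminal.
        return GrantDecision(False, 0, below_gbr)
    # One grant for everything the BSR reports, capped by the remaining MBR budget.
    return GrantDecision(True, min(bsr_bytes, max_bytes - sent_bytes), below_gbr)


print(decide_uplink_grant(sent_bytes=1000, min_bytes=2000, max_bytes=5000, bsr_bytes=3000))
```

Granting the full reported backlog in a single grant, rather than in several small ones, is what keeps the number of scheduling decisions (and hence CCEs) low in this sketch.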

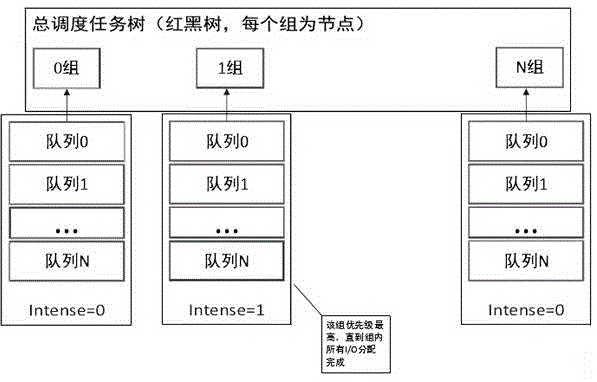

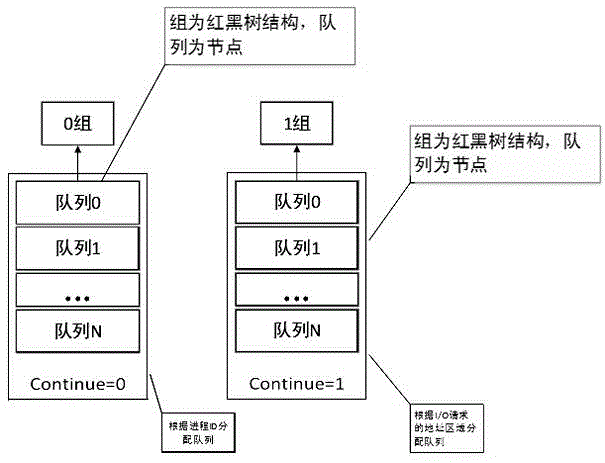

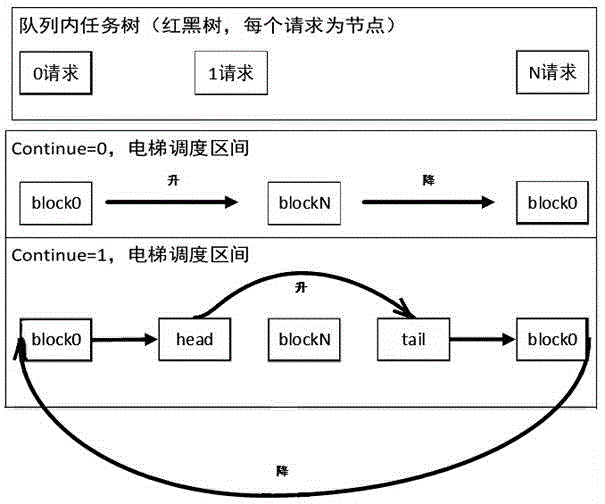

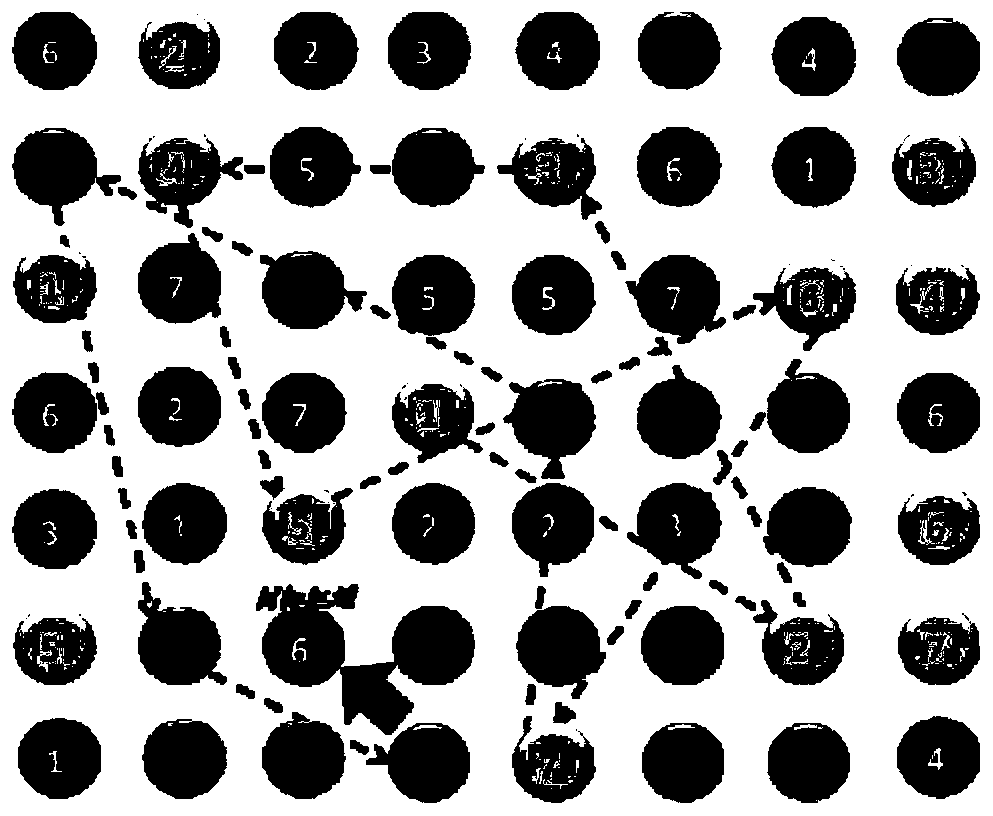

CFQ (Completely Fair Queuing) dispatching method

Status: Inactive | Publication: CN104360965A | Tags: Overcome the drop in throughput, Improve operational efficiency, Electric digital data processing, Application procedure, Linux kernel

The invention provides a CFQ (Completely Fair Queuing) dispatching method in the field of Linux kernel I/O (input/output) dispatching. The method dispatches first among groups, then within groups, and finally within queues. When an application needs to perform intensive I/O operations, it requests the kernel to modify the relevant flags, switching the dispatching mode among groups and within groups, and changing the bound of the elevator algorithm used for dispatching within queues to the frequently accessed area. When the Intense flag of a group is set to 1, the device corresponding to that group gets the highest priority and dispatching among groups is reduced. When the Continue flag of a group is set to 1, the group's queues are split into different queues according to the area requested by the I/O, so that an intensive I/O request can be completed within one queue where possible; and when the Continue flag of a group is set to 1, the operating bounds of the elevator dispatching algorithm within the queues are changed to the head and tail, so that intensive I/O requests are accessed in a centralized manner and the number of elevator dispatching rounds is reduced.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

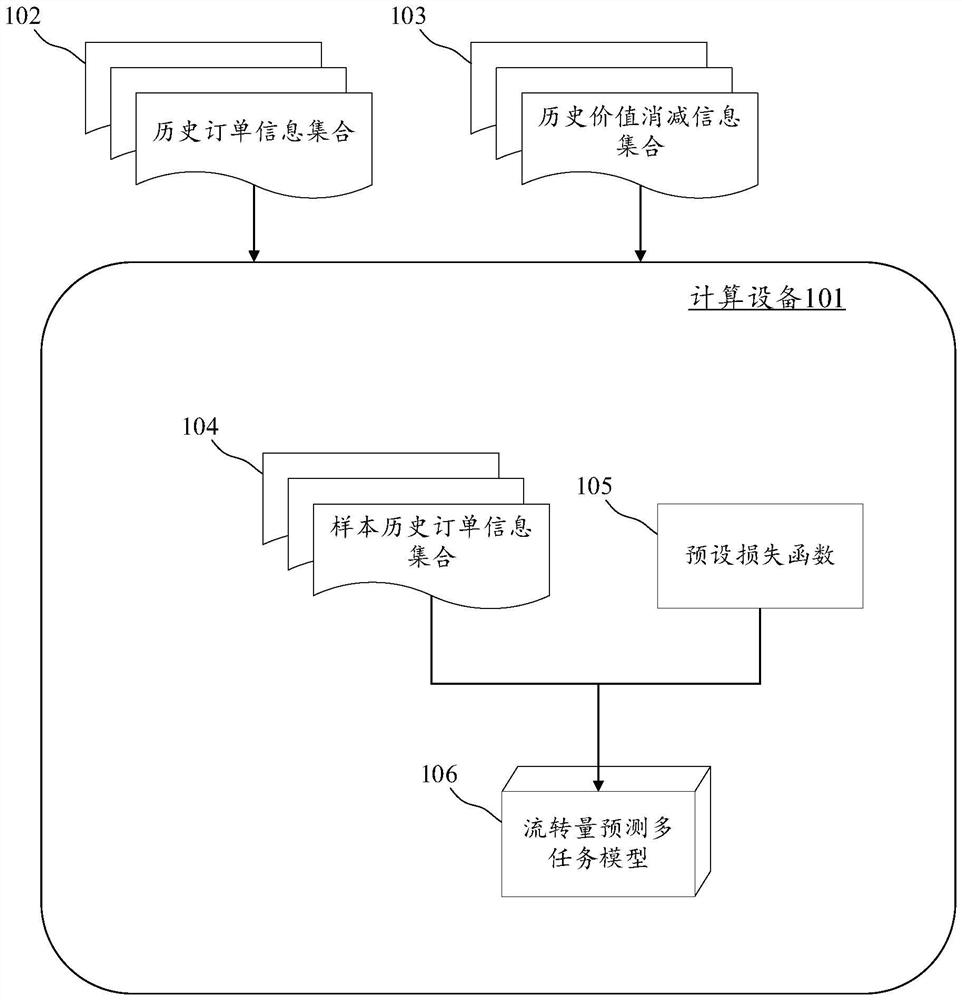

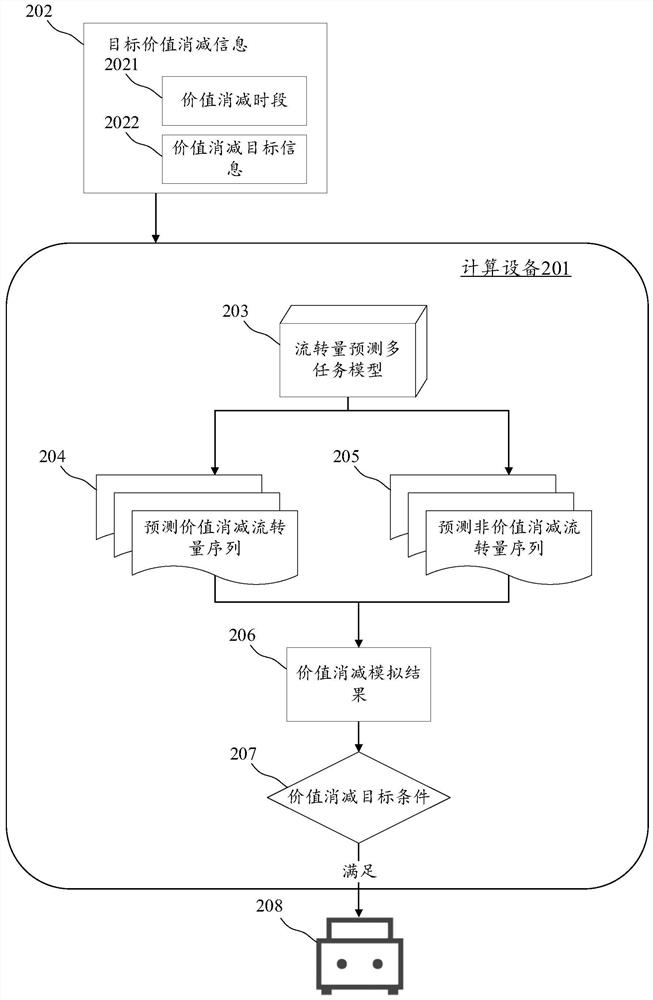

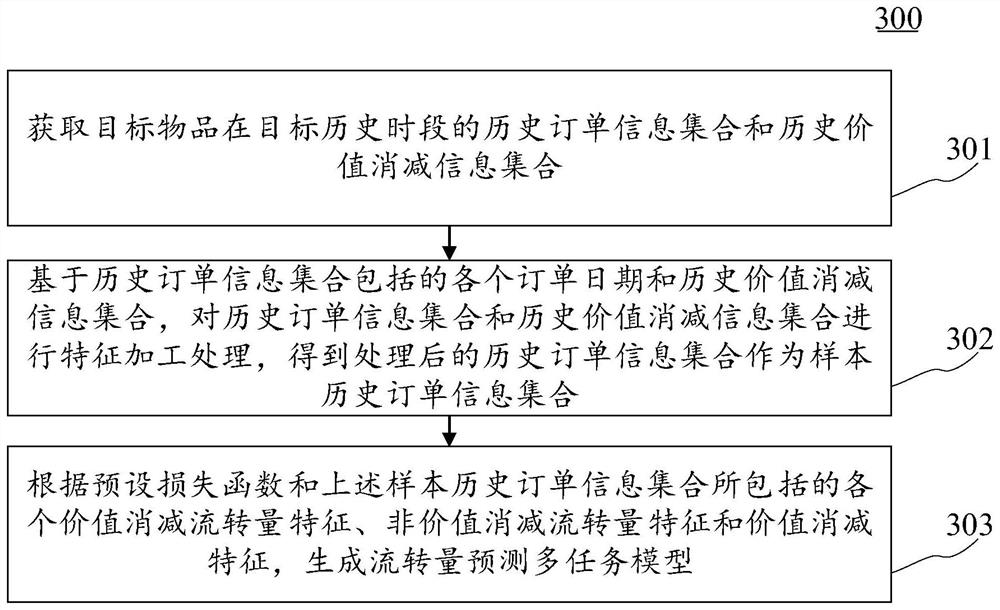

Flow prediction multi-task model generation method, scheduling method, device and equipment

Status: Pending | Publication: CN114202130A | Tags: Reduce waste, Reduce the number of times the quantity cannot meet the demand, Forecasting, Resources, Data mining, Operations research

The embodiment of the invention discloses a flow quantity prediction multi-task model generation method, a scheduling method, a device and equipment. A specific embodiment of the method comprises the steps of obtaining a historical order information set and a historical value reduction information set of a target article in a target historical time period; based on each order date and a historical value reduction information set included in the historical order information set, performing feature processing on the historical order information set and the historical value reduction information set to obtain a processed historical order information set as a sample historical order information set; and generating a flow prediction multi-task model according to a preset loss function and each value reduction flow feature, non-value reduction flow feature and value reduction feature included in the sample historical order information set. According to the embodiment, the accuracy of the flow volume prediction result is improved, and the waste of transportation resources is reduced.

Owner:BEIJING JINGDONG ZHENSHI INFORMATION TECH CO LTD

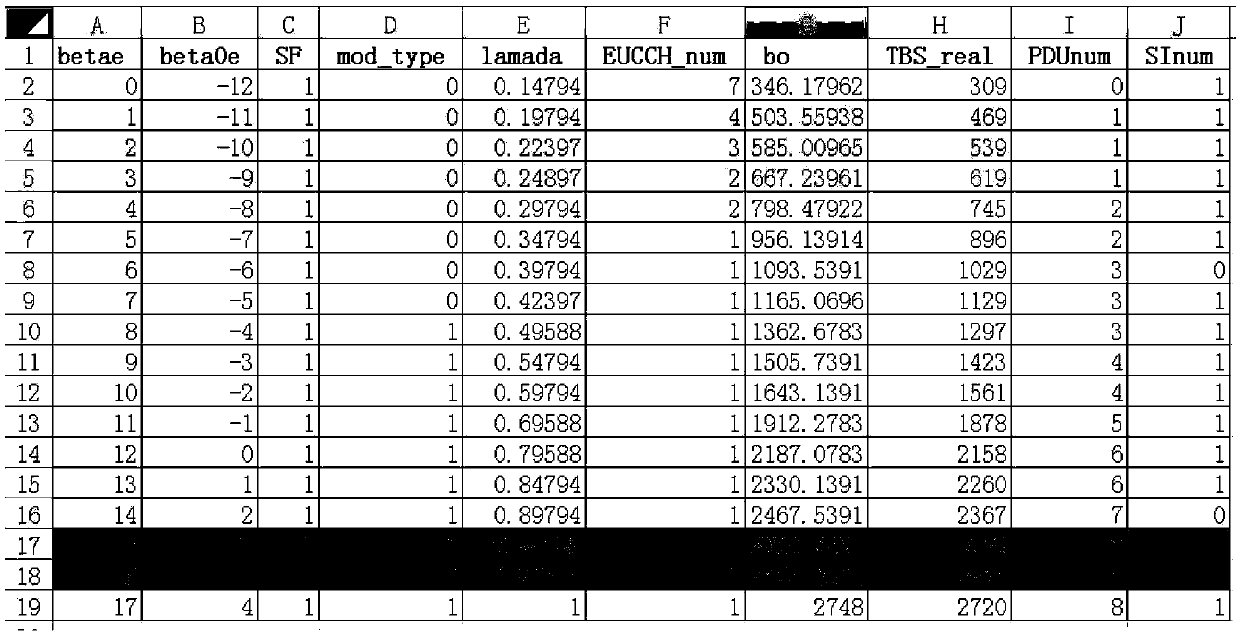

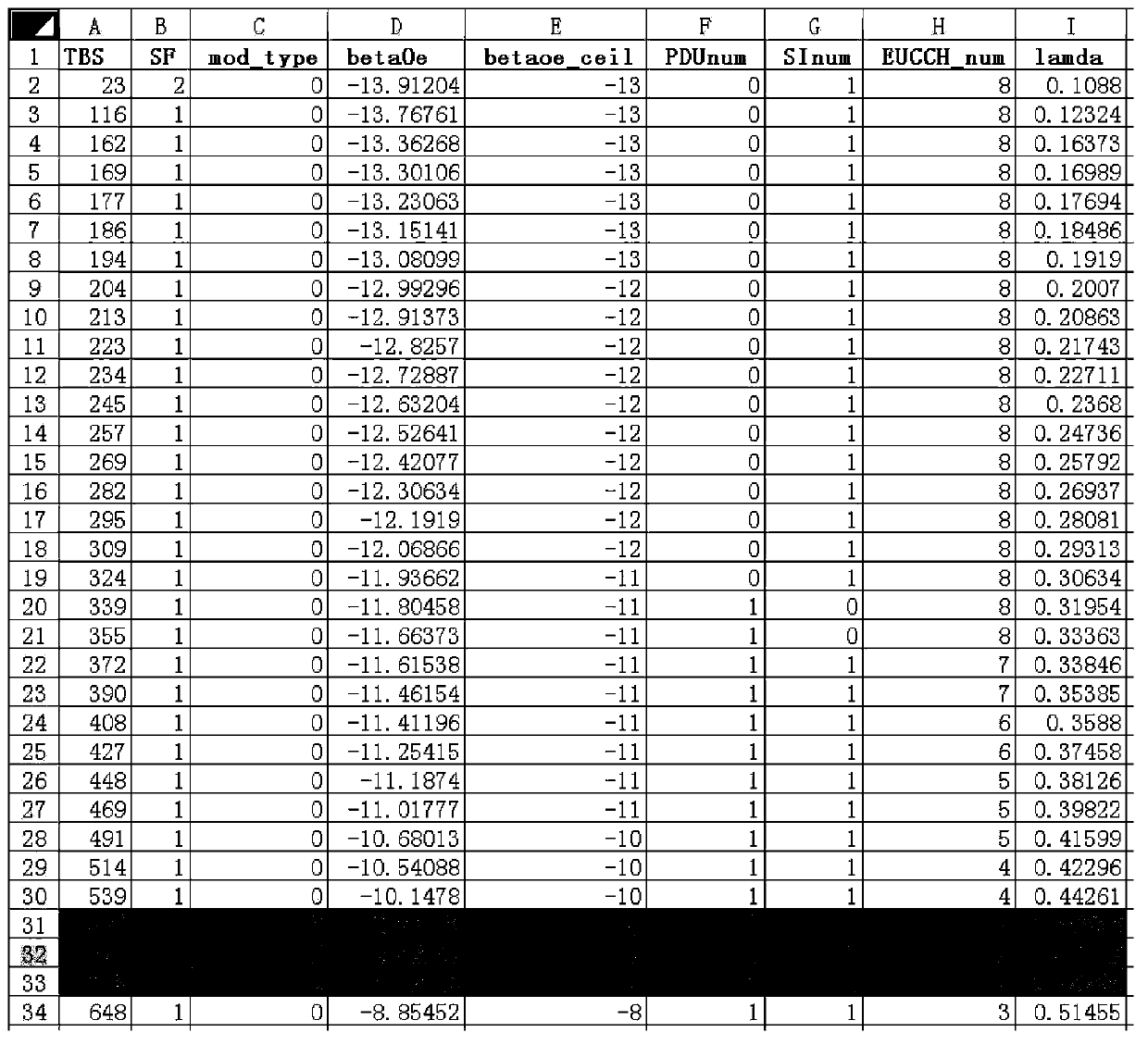

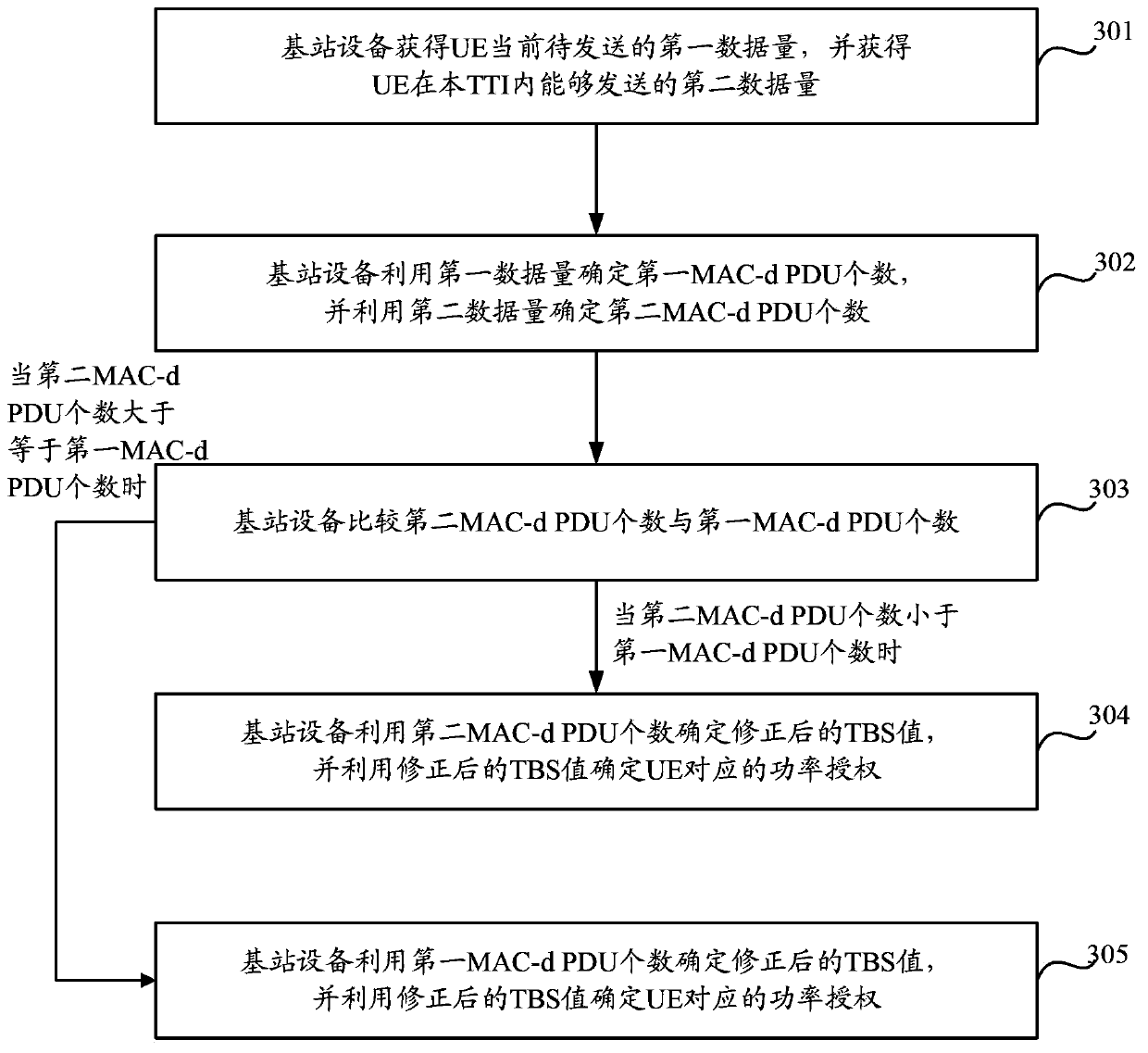

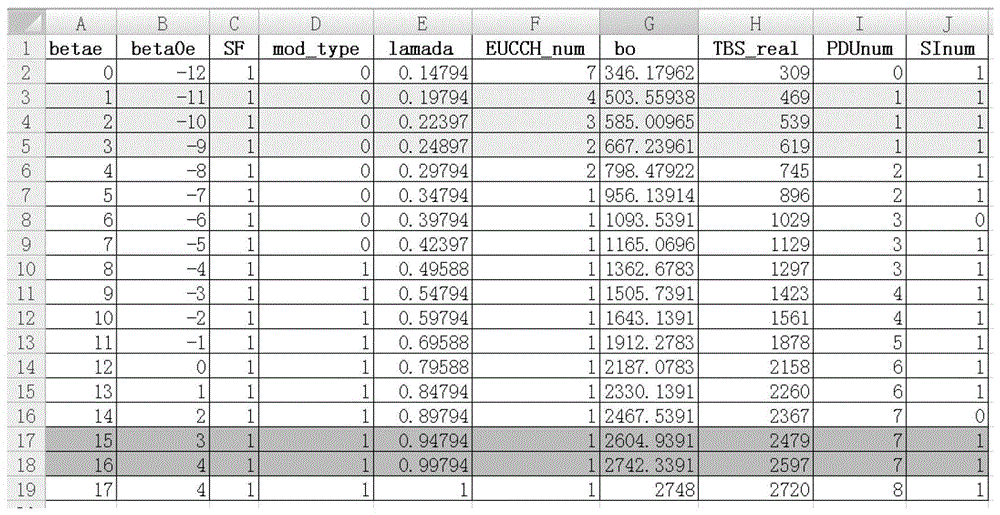

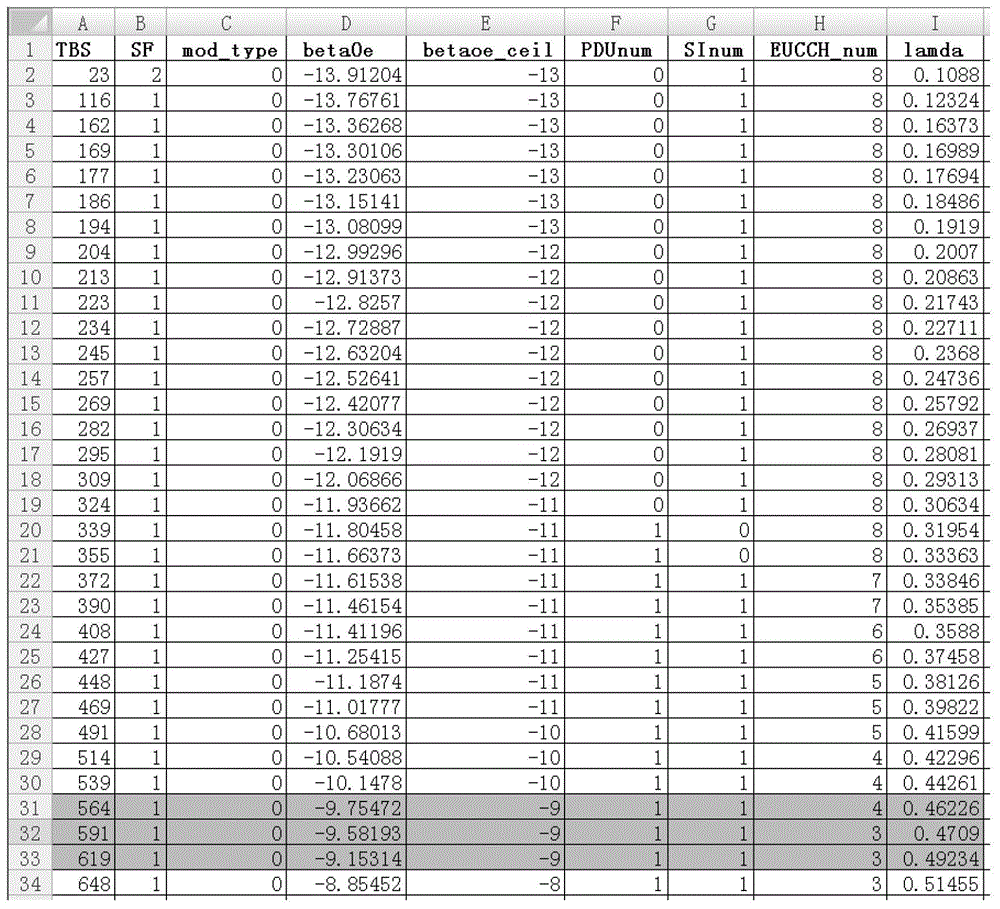

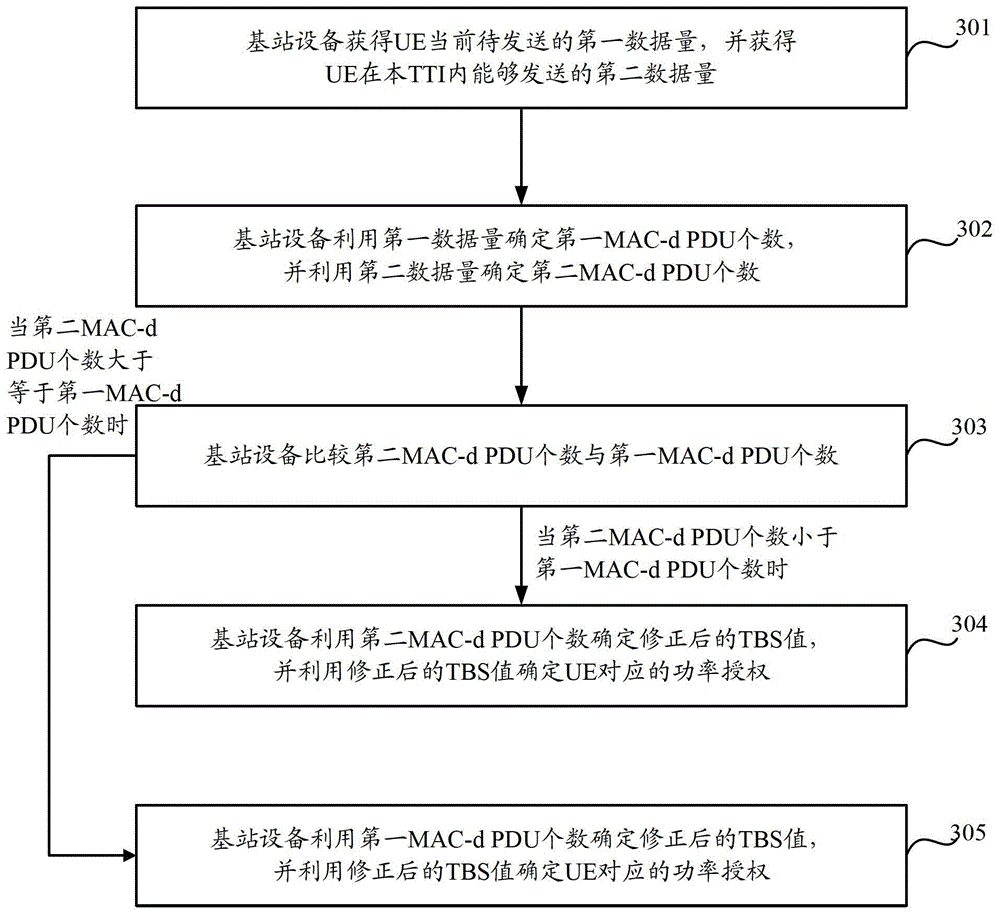

Method and device for determining power authorization

Status: Active | Publication: CN103428841A | Tags: Reduce transmit power, Reduce slot interference, Power management, Resource utilization, Authorization

The invention discloses a method and a device for determining power authorization. Base station equipment acquires a first data volume that the UE (user equipment) currently has to transmit and a second data volume that the UE can transmit within one TTI (transmission time interval). From the first data volume it determines a first number of MAC-d PDUs (protocol data units), and from the second data volume a second number of MAC-d PDUs. When the second number is smaller than the first, the base station equipment determines a modified transport block size (TBS) value from the second number; when the second number is greater than or equal to the first, it determines the modified TBS value from the first number. In both cases it then determines the power authorization for the UE from the modified TBS value. The method and device improve scheduling and transport efficiency, increase resource utilization, and raise the throughput of the cell.

Owner:DATANG MOBILE COMM EQUIP CO LTD
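
The two cases in the abstract amount to sizing the transport block by the smaller of the two MAC-d PDU counts. A Python sketch of that step, assuming a fixed MAC-d PDU size in bits and a hypothetical TBS-to-power-grant lookup table (`GRANT_TABLE`); real grant tables and PDU sizes depend on the system configuration and are not given in the abstract.

```python
import bisect
import math

# Hypothetical (TBS in bits, power-grant index) table, sorted by TBS.
# Real grant tables differ; this only illustrates the lookup step.
GRANT_TABLE = [(336, 1), (672, 2), (1344, 3), (2688, 4), (5376, 5)]


def modified_tbs_bits(pending_bits: int, tti_capacity_bits: int, pdu_bits: int) -> int:
    # First number of MAC-d PDUs: PDUs needed to carry all buffered data.
    first_pdus = math.ceil(pending_bits / pdu_bits)
    # Second number: whole PDUs the UE can send within one TTI.
    second_pdus = tti_capacity_bits // pdu_bits
    # Use the second number when it is smaller, otherwise the first.
    n_pdus = second_pdus if second_pdus < first_pdus else first_pdus
    return n_pdus * pdu_bits


def power_grant_index(tbs_bits: int) -> int:
    # Smallest table entry whose TBS covers the modified TBS (clamped to the top entry).
    sizes = [tbs for tbs, _ in GRANT_TABLE]
    i = bisect.bisect_left(sizes, tbs_bits)
    return GRANT_TABLE[min(i, len(GRANT_TABLE) - 1)][1]


tbs = modified_tbs_bits(pending_bits=1000, tti_capacity_bits=5000, pdu_bits=336)
print(tbs, power_grant_index(tbs))
```

Sizing by the buffered data when it is the limiting factor avoids granting power (and hence a transport block) the UE cannot fill.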

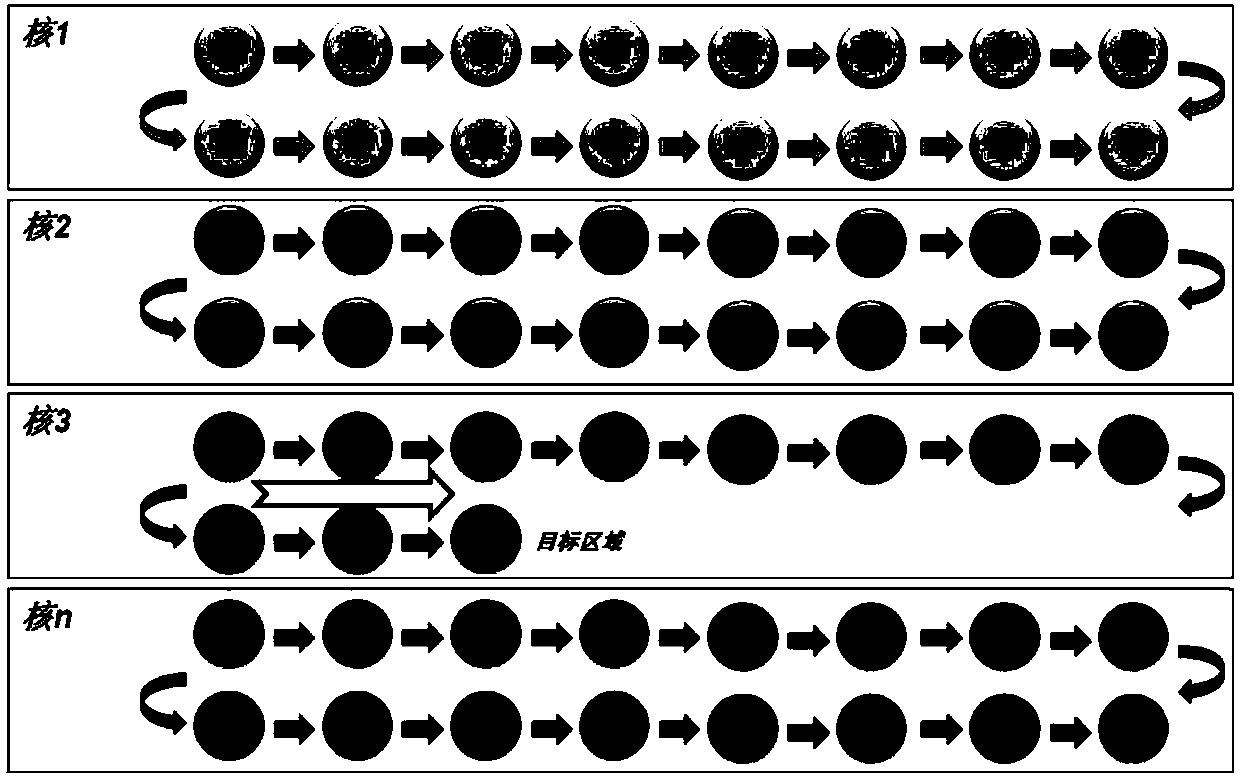

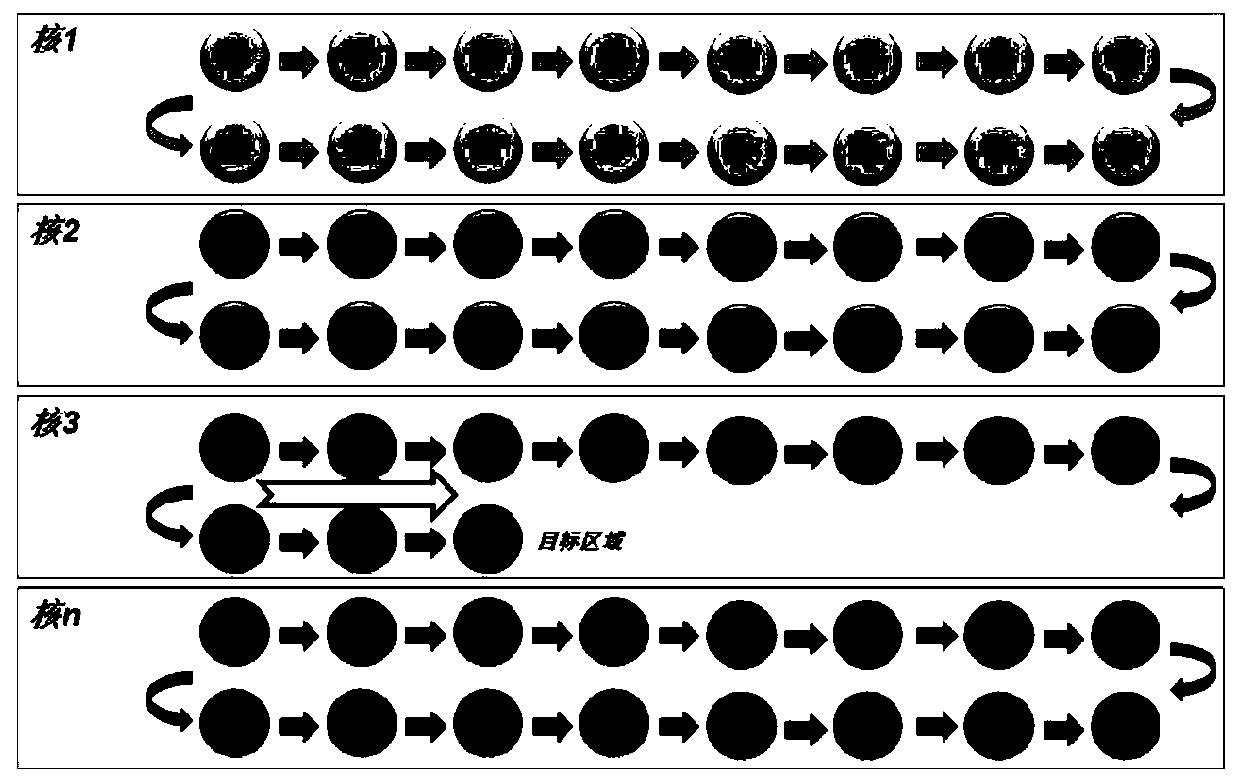

Multi-modal massive-data-flow scheduling method under multi-core DSP

Status: Active | Publication: CN107608784A | Tags: Improve versatility, Improve portability, Resource allocation, Interprogram communication, Data stream, Parallel computing

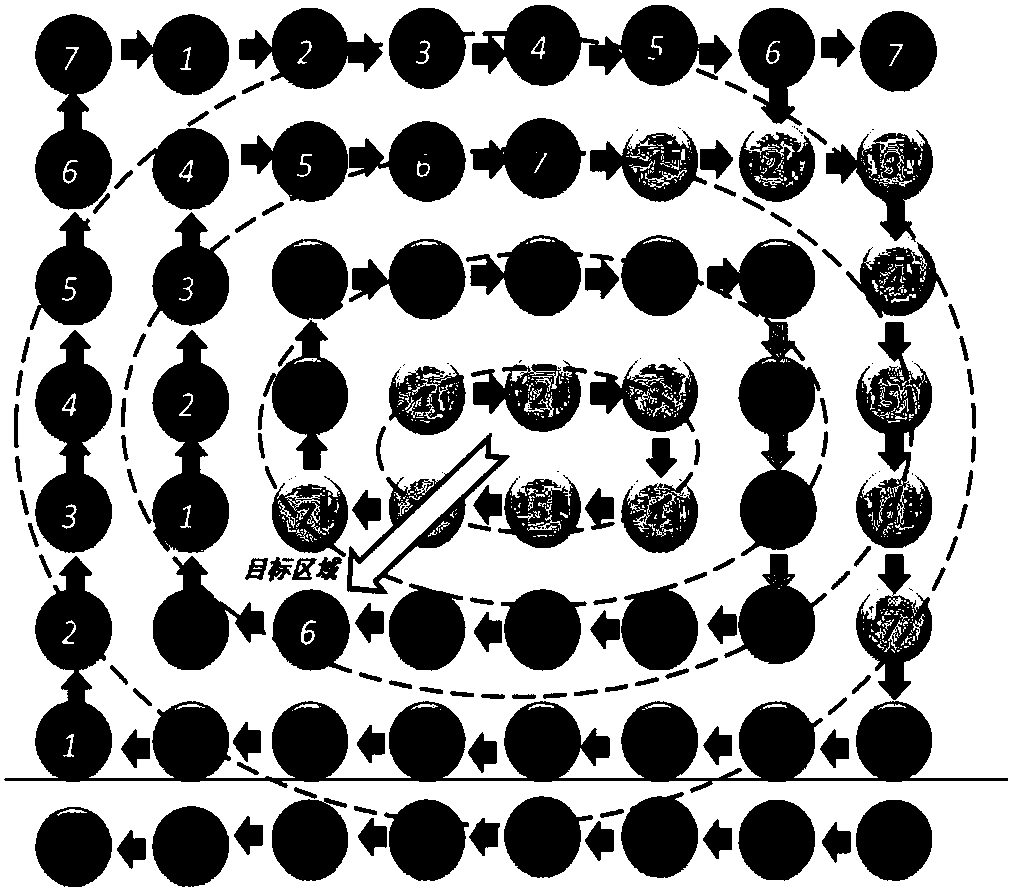

The invention discloses a multi-modal massive-data-flow scheduling method for a multi-core DSP that includes a main control core and an acceleration core. Requests pass between the main control core and the acceleration core through a request packet queue. Three data block selection methods (continuous selection, random selection and spiral selection) are defined on the basis of the data dimensions and the data priority order. Two multi-core data block allocation methods (cyclic scheduling and load-balancing scheduling) are defined according to load balancing. Data blocks selected through a data block grouping method according to the allocation granularity are loaded into multiple computing cores for processing. The method schedules data blocks at multiple levels, satisfies the requirements of system load, data correlation, processing granularity, data dimensions and ordering when scheduling data blocks, and has good generality and portability; by extending the modes and forms of data block scheduling at multiple levels, it also has a wider scope of application. A user only needs to configure the data block scheduling mode and the allocation granularity, and the system completes data scheduling automatically, which improves the efficiency of parallel development.

Owner:XIAN MICROELECTRONICS TECH INST
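
The selection and allocation modes named above can be sketched in Python on a 2-D grid of data blocks. This is an illustration under assumptions, not the patented implementation: "spiral selection" is read here as a clockwise, outside-in traversal, cyclic scheduling as plain round-robin, and load-balancing scheduling as "send each group to the least-loaded core" with caller-supplied costs. All function names are hypothetical.

```python
import heapq
import random
from typing import List, Sequence, Tuple

Block = Tuple[int, int]  # (row, col) index of a data block in a 2-D data set


def continuous_order(rows: int, cols: int) -> List[Block]:
    # Row-major order.
    return [(r, c) for r in range(rows) for c in range(cols)]


def random_order(rows: int, cols: int, seed: int = 0) -> List[Block]:
    order = continuous_order(rows, cols)
    random.Random(seed).shuffle(order)
    return order


def spiral_order(rows: int, cols: int) -> List[Block]:
    # Clockwise, outside-in spiral (one possible reading of "spiral selection").
    order: List[Block] = []
    top, bottom, left, right = 0, rows - 1, 0, cols - 1
    while top <= bottom and left <= right:
        order += [(top, c) for c in range(left, right + 1)]
        order += [(r, right) for r in range(top + 1, bottom + 1)]
        if top < bottom:
            order += [(bottom, c) for c in range(right - 1, left - 1, -1)]
        if left < right:
            order += [(r, left) for r in range(bottom - 1, top, -1)]
        top, bottom, left, right = top + 1, bottom - 1, left + 1, right - 1
    return order


def group_blocks(order: Sequence[Block], granularity: int) -> List[List[Block]]:
    # Allocation granularity: how many blocks are dispatched to a core at once.
    return [list(order[i:i + granularity]) for i in range(0, len(order), granularity)]


def round_robin(groups: List[List[Block]], n_cores: int) -> List[List[List[Block]]]:
    # Cyclic scheduling: group i goes to core i mod n_cores.
    cores: List[List[List[Block]]] = [[] for _ in range(n_cores)]
    for i, g in enumerate(groups):
        cores[i % n_cores].append(g)
    return cores


def load_balanced(groups: List[List[Block]], costs: Sequence[int], n_cores: int) -> List[List[List[Block]]]:
    # Load-balancing scheduling: each group goes to the currently least-loaded core.
    heap = [(0, core) for core in range(n_cores)]
    cores: List[List[List[Block]]] = [[] for _ in range(n_cores)]
    for g, cost in zip(groups, costs):
        load, core = heapq.heappop(heap)
        cores[core].append(g)
        heapq.heappush(heap, (load + cost, core))
    return cores


print(spiral_order(3, 3))
print(round_robin(group_blocks(spiral_order(3, 3), 3), 2))
```

The selection order and the allocation policy are independent here, which mirrors the abstract's point that the user only configures the scheduling mode and the granularity.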

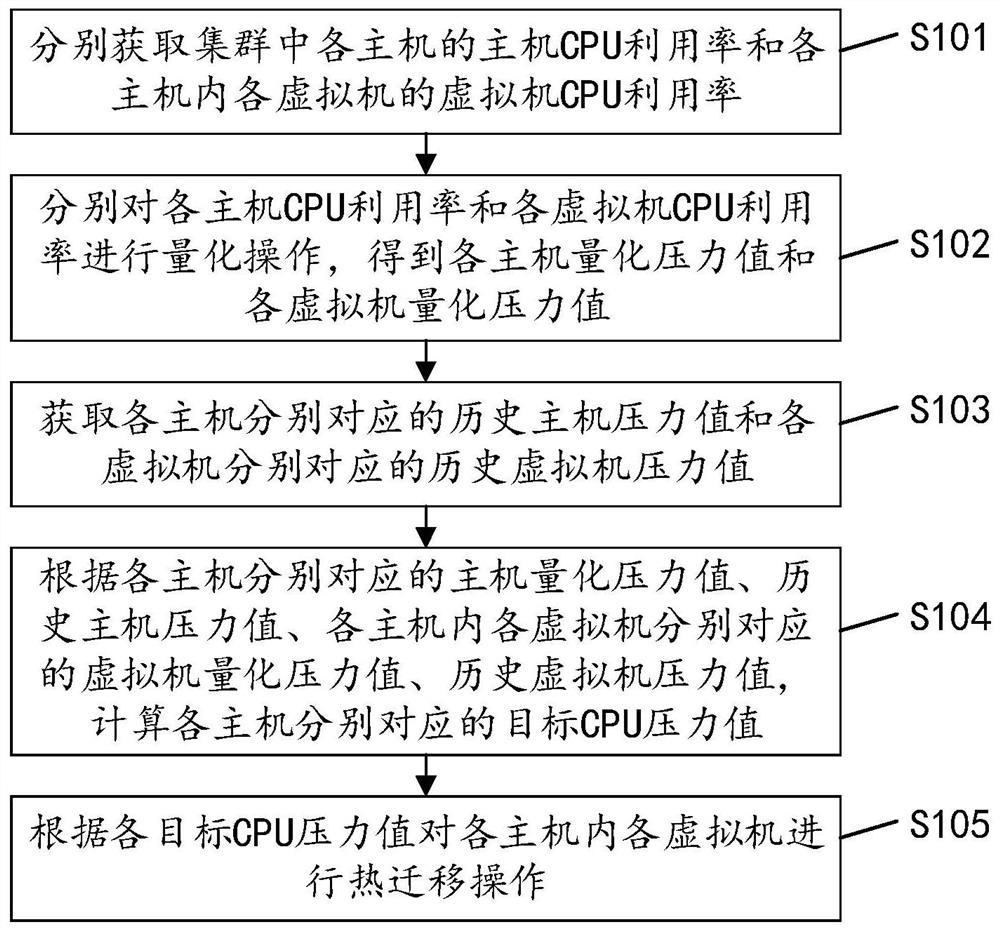

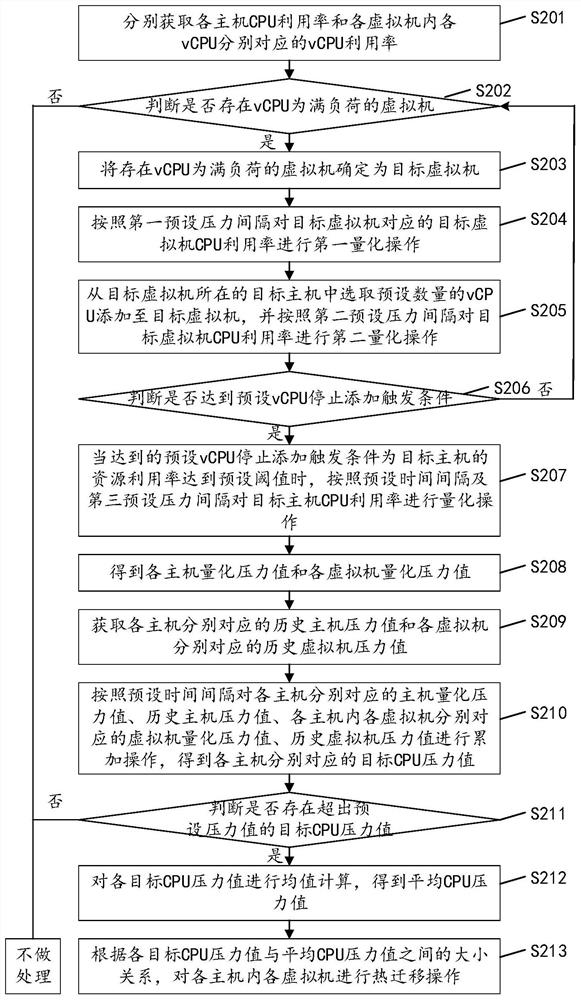

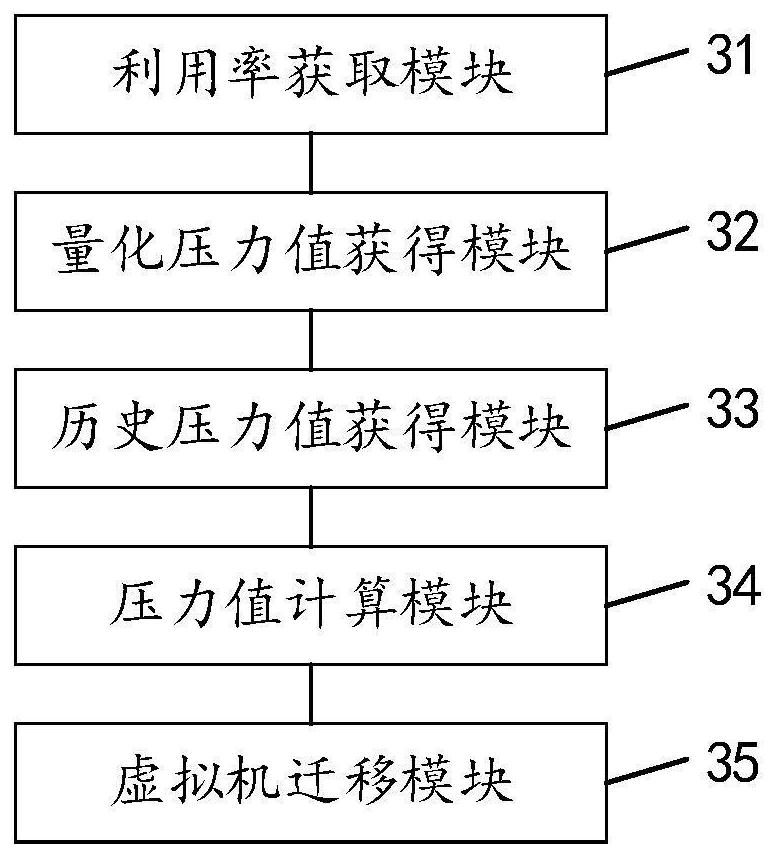

Cluster distributed resource scheduling method, device and equipment and storage medium

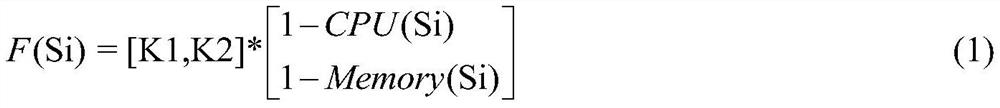

Status: Active | Publication: CN111858031A | Tags: Reduce the number of dispatches, Relieve pressure, Resource allocation, Energy efficient computing, Term memory, Data loss

The invention discloses a cluster distributed resource scheduling method. The CPU utilization of each host and of each virtual machine is acquired and quantized to obtain a quantized pressure value for each host and for each virtual machine. Historical host pressure values and historical virtual machine pressure values are also acquired. A target CPU pressure value is calculated for each host from the host's quantized and historical pressure values together with the quantized and historical pressure values of each virtual machine on that host, and live migration of the virtual machines on each host is performed according to the target CPU pressure values. The method reduces pressure on network resources and lowers the probability of memory data loss caused by frequent live migration of virtual machines. The invention further discloses a corresponding cluster distributed resource scheduling device, equipment and storage medium with the same technical effects.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
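
A Python sketch of the quantize, smooth and migrate loop, under several labelled assumptions: utilisation is quantized in 10 % steps, the "target" pressure is a fixed blend of the current level and the historical mean (`alpha`), a host is relieved only when its target exceeds a hypothetical `threshold`, and VM levels are used only to choose which VM to move. The abstract also folds VM pressure into the host target; that part is simplified away here.

```python
from statistics import mean
from typing import Dict, List, Tuple

VmStats = Tuple[int, List[int]]  # (current pressure level, historical levels)


def quantize(cpu_util: float) -> int:
    # Map CPU utilisation in [0.0, 1.0] to an integer pressure level 0..10
    # (an assumed 10 % step; the abstract does not give the quantization).
    return min(int(round(cpu_util * 100)) // 10, 10)


def smoothed(now: int, history: List[int], alpha: float = 0.5) -> float:
    # Blend the current level with the historical mean so that a short spike
    # alone does not push a host over the migration threshold.
    return float(now) if not history else alpha * now + (1 - alpha) * mean(history)


def plan_migrations(hosts: Dict[str, dict], threshold: float = 7.0) -> List[Tuple[str, str, str]]:
    """hosts maps a host name to {"now": level, "hist": [levels], "vms": {vm: VmStats}}.

    Returns (vm, source_host, destination_host) moves.
    """
    targets = {h: smoothed(d["now"], d["hist"]) for h, d in hosts.items()}
    moves = []
    for host, data in hosts.items():
        if targets[host] <= threshold or not data["vms"]:
            continue
        # Move the VM with the highest smoothed pressure off the overloaded host...
        vm = max(data["vms"], key=lambda v: smoothed(*data["vms"][v]))
        # ...to the host with the lowest target pressure.
        dest = min(targets, key=targets.get)
        if dest != host:
            moves.append((vm, host, dest))
    return moves
```

For example, a host at level 9 whose history is `[1, 1, 1]` has a target of 5.0 and is left alone, whereas a host that has stayed around level 8 to 9 is relieved. Damping transient spikes this way is one plausible route to fewer live migrations. The sketch does not update targets after planning a move.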

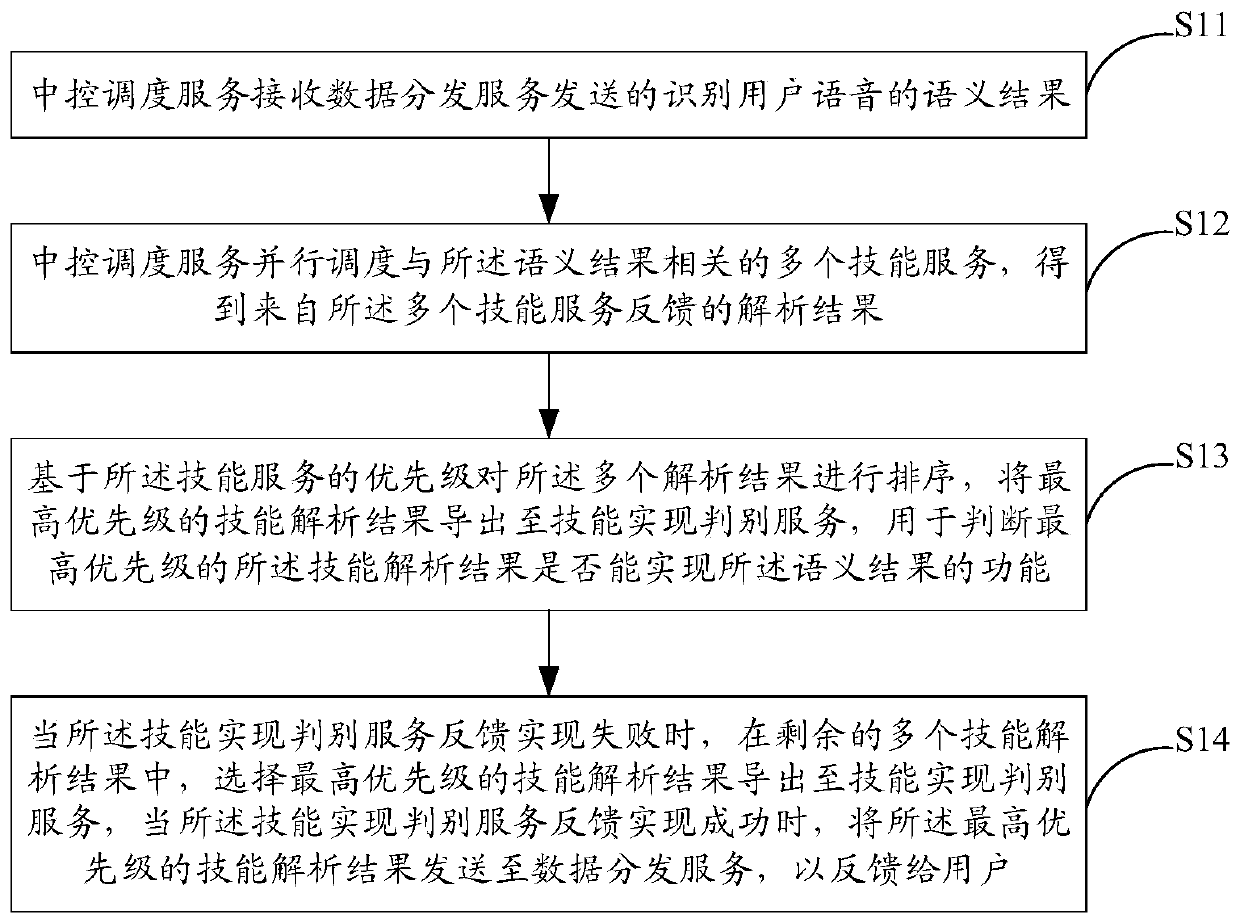

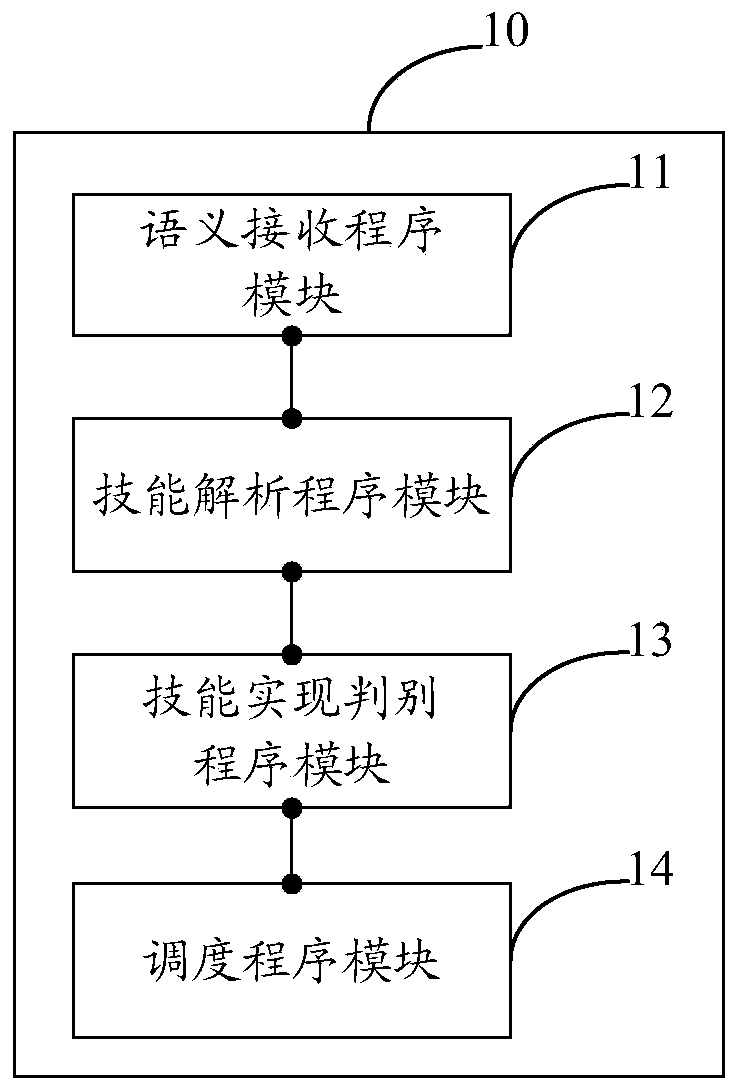

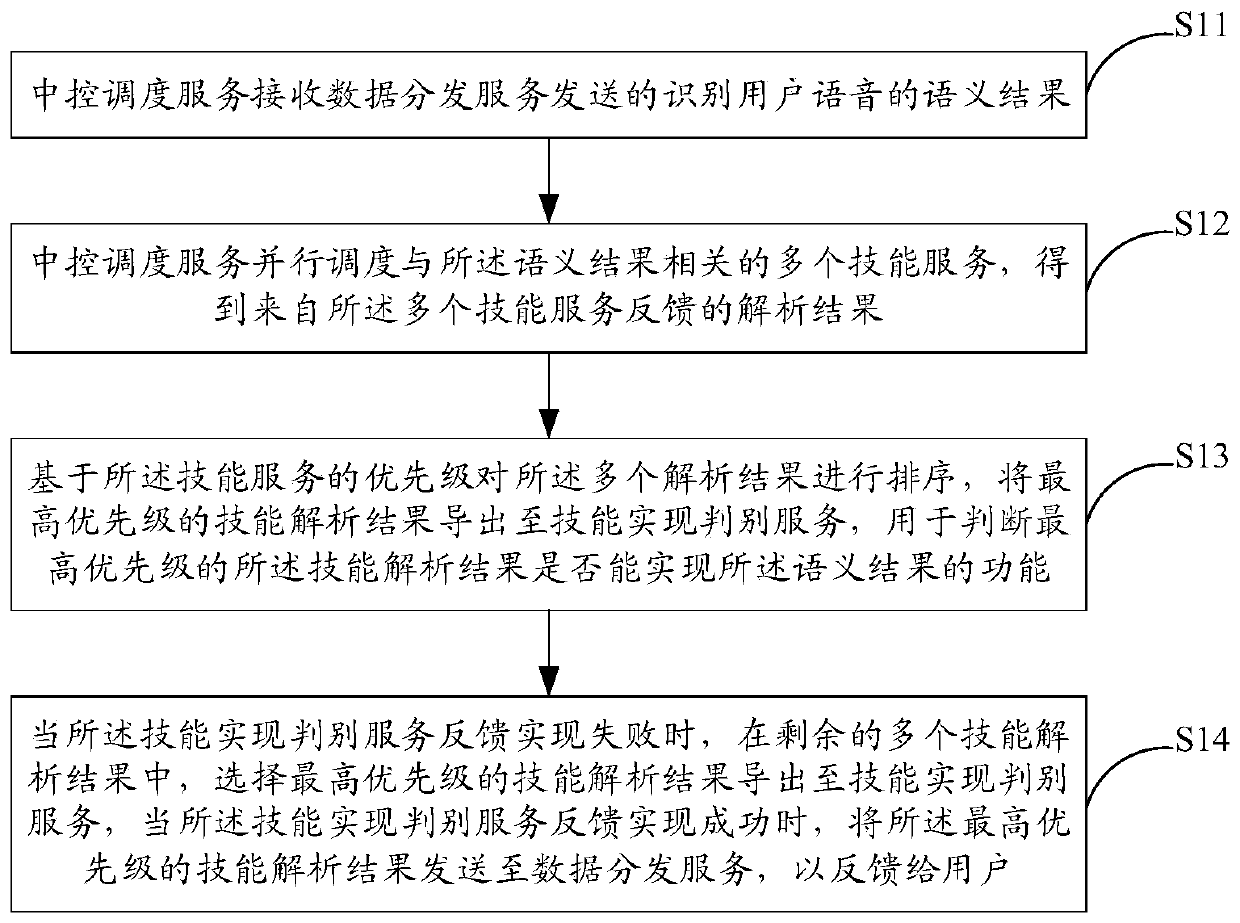

Skill scheduling method and system for voice dialogue platform

Status: Active | Publication: CN111161717A | Tags: Improve experience, Reduce the number of dispatches, Multimedia data querying, Speech recognition, Skill sets, Spoken dialog

The embodiment of the invention provides a skill scheduling method for a voice dialogue platform. A central control scheduling service receives the semantic result of the user's speech and schedules, in parallel, several skill services related to that semantic result, obtaining the analysis result returned by each. The analysis results are sorted by skill service priority, and the highest-priority result is passed to the skill realization discrimination service. If that service reports that the result cannot be realized, the highest-priority result among the remaining ones is selected and passed to the discrimination service; once the service reports that a result can be realized, that result is sent to the data distribution service, which returns it to the user. The embodiment further provides a skill scheduling system for the voice dialogue platform. Skill scheduling efficiency is improved, delay is reduced, and user experience is improved.

Owner:AISPEECH CO LTD
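
The control flow described above (parallel fan-out, rank by priority, fall back until one result can be realized) can be sketched in Python as follows. The skill services and the realization check are modelled as plain callables; their names and signatures are hypothetical stand-ins for the platform's services.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Optional, Tuple

# A skill service takes the semantic result and returns its analysis result, or None
# if the utterance is not relevant to it.
SkillService = Callable[[str], Optional[str]]


def schedule_skills(semantic: str,
                    skills: Dict[str, Tuple[int, SkillService]],
                    can_realize: Callable[[str, str], bool]) -> Optional[Tuple[str, str]]:
    # 1. Call all related skill services in parallel.
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, semantic) for name, (_, fn) in skills.items()}
        results = {name: f.result() for name, f in futures.items()}
    # 2. Rank the non-empty analysis results by skill priority (higher first).
    ranked = sorted((n for n, r in results.items() if r is not None),
                    key=lambda n: skills[n][0], reverse=True)
    # 3. Offer results to the realization check in priority order; the first one
    #    that can be realized would be forwarded to the data distribution service.
    for name in ranked:
        if can_realize(name, results[name]):
            return name, results[name]
    return None
```

Because every skill is queried once up front, a failed realization check only moves to the next cached result instead of triggering another round of skill calls.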

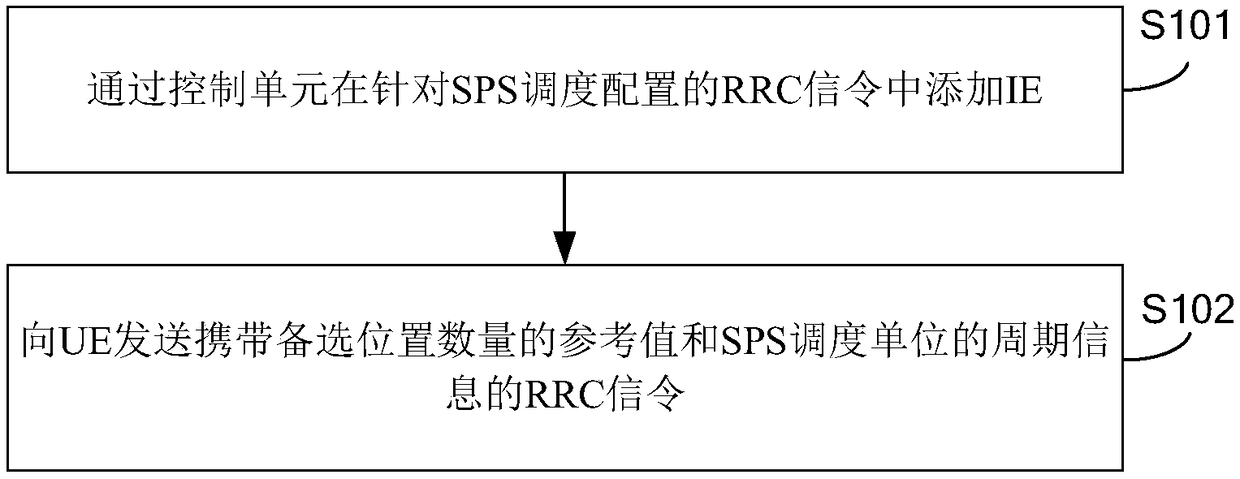

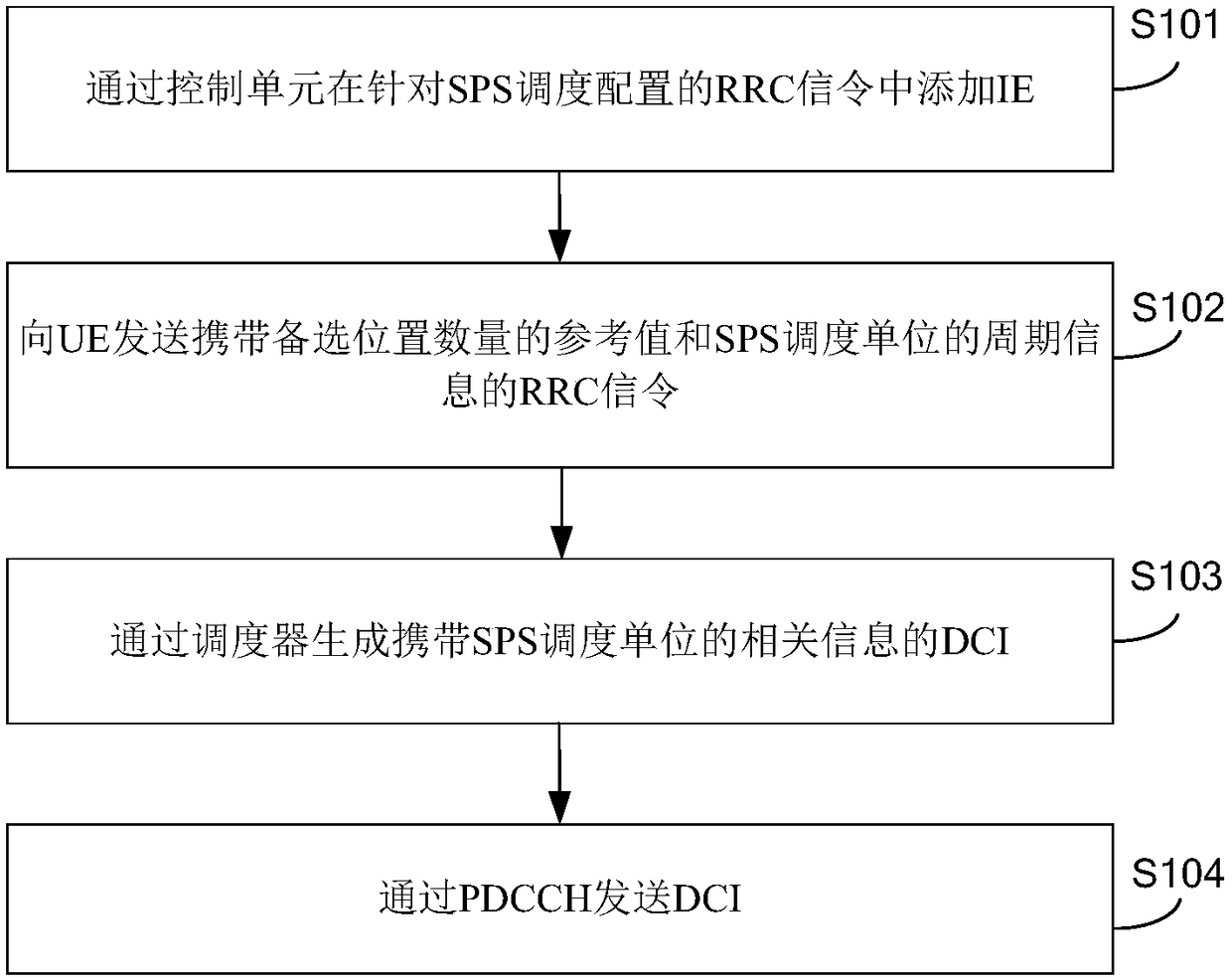

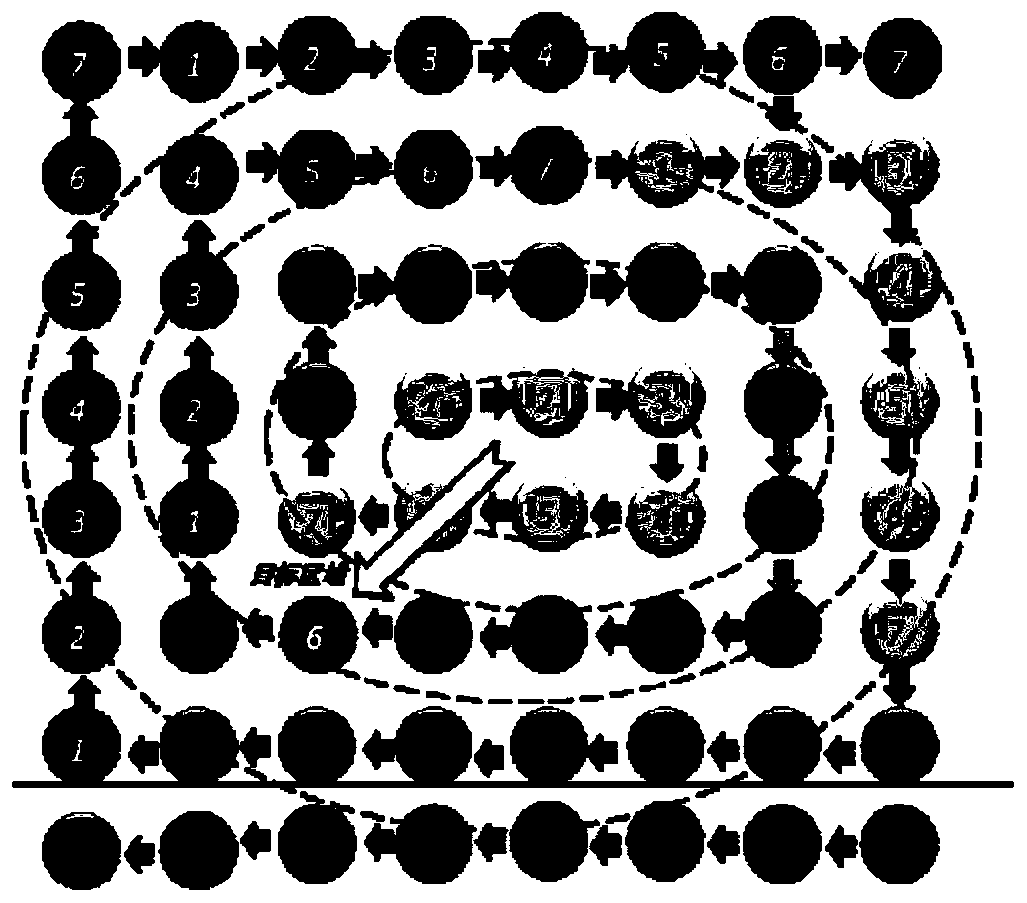

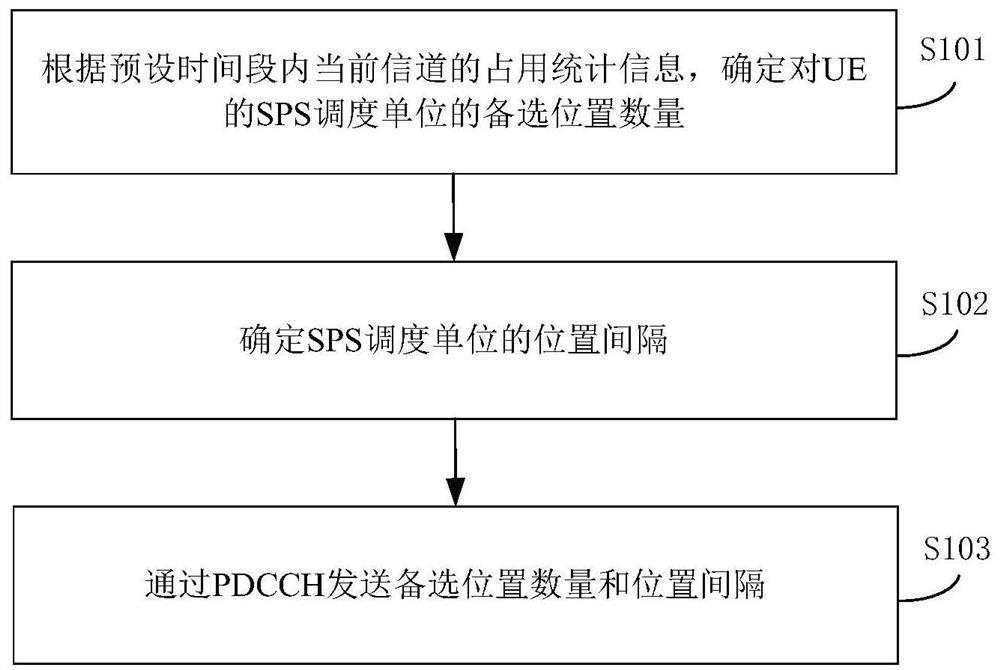

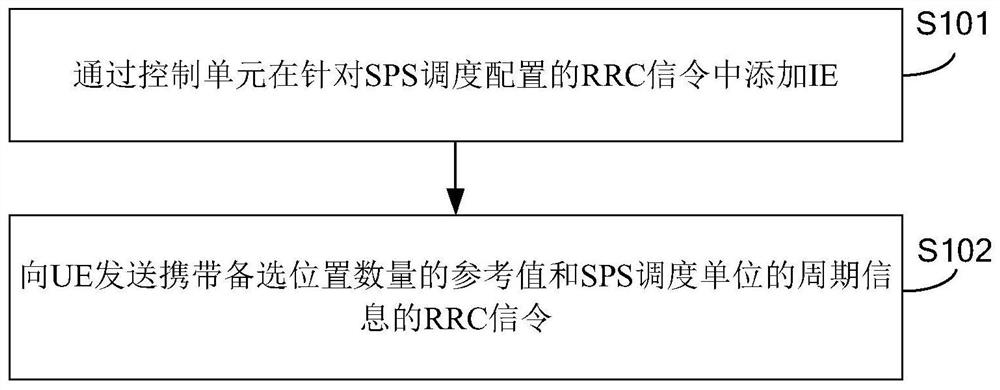

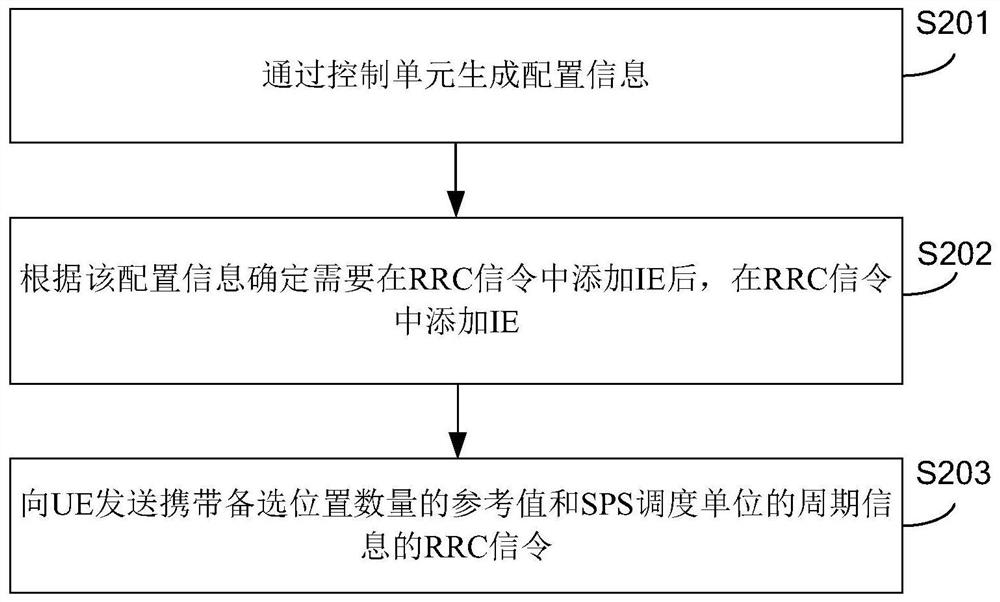

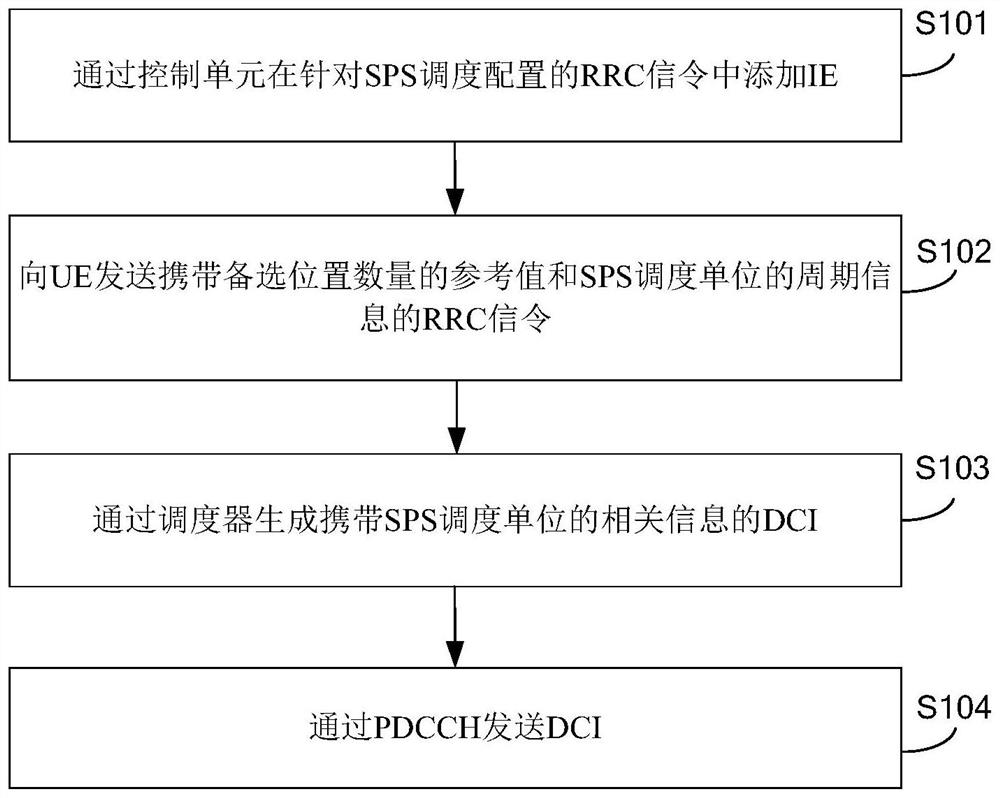

Information transmitting method and device, base station and user equipment

Status: Active | Publication: CN108702777A | Tags: Reduce the number of dispatches, Reduce waste, Signal allocation, Connection management, Control channel, Distributed computing

The present disclosure relates to an information transmitting method and apparatus, a method and apparatus for demodulating a semi-persistent scheduling (SPS) scheduling unit, a base station, a user equipment, and a computer-readable storage medium. The information sending method includes: adding, by the control unit, an information element (IE) to the radio resource control (RRC) signaling that configures SPS scheduling, where the IE indicates a reference value for the number of candidate positions of the SPS scheduling unit of the user equipment (UE); and transmitting to the UE the RRC signaling carrying the reference value of the number of candidate positions and the period information of the SPS scheduling unit. The UE can then determine the number of candidate positions from the received RRC signaling and receive the SPS scheduling unit at the candidate positions, which reduces the number of times the base station schedules the SPS scheduling unit and thereby reduces wasted common control channel resources.

Owner:BEIJING XIAOMI MOBILE SOFTWARE CO LTD

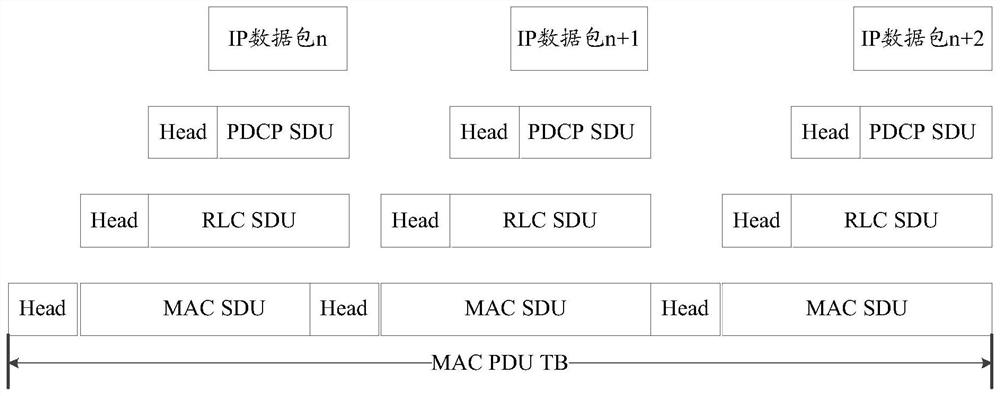

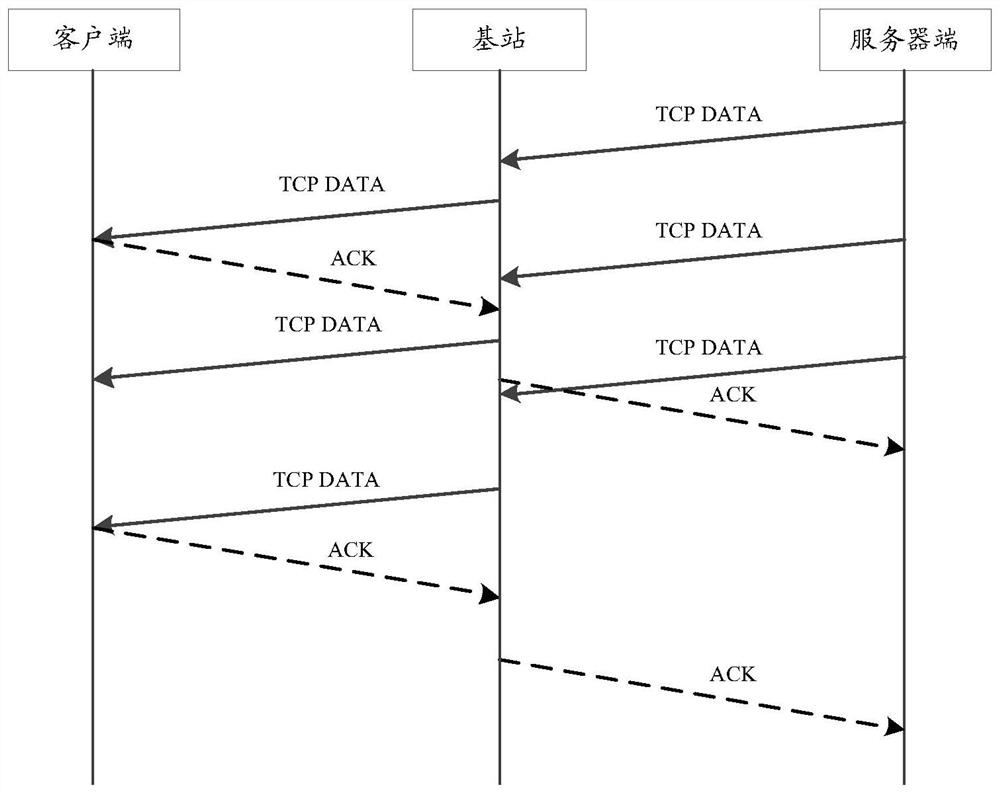

Data transmission method and device of 5G system

Status: Active | Publication: CN112188553A | Tags: Simplify the addition process, Guaranteed validity, Network traffic/resource management, Data switching networks, Data transmission, Engineering

The invention relates to the field of communication, in particular to a data transmission method and device for a 5G system, intended to reduce system load and improve processing efficiency. The method determines the number of data packets to be sent and the threshold interval that corresponds to the current system load. When the number of data packets reaches the preset threshold associated with that interval, the packets to be sent are converged into a single converged data packet, which is then sent to a receiver, triggering the receiver to parse it. Converging packets before sending reduces the number of message primitives processed in inter-layer protocol interaction and simplifies header addition, while the precisely limited convergence condition keeps the processing result valid. As a result, processor load, scheduling frequency, system operation overhead and resource consumption are all reduced, and processing efficiency is improved.

Owner:DATANG MOBILE COMM EQUIP CO LTD
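
A Python sketch of load-dependent packet convergence, assuming hypothetical load intervals and thresholds (`LOAD_INTERVALS`) and a simple length-prefixed container format; the abstract specifies neither the intervals nor the converged packet layout. The receiver-side `parse_converged` shows the "analyze the converged data packet" step.

```python
import struct
from typing import List, Sequence, Tuple

# Hypothetical load intervals: (upper bound of system load, convergence threshold).
# Higher load -> more packets per converged packet -> fewer scheduling operations.
LOAD_INTERVALS: List[Tuple[float, int]] = [(0.3, 1), (0.7, 4), (float("inf"), 8)]


def threshold_for_load(load: float) -> int:
    for upper, threshold in LOAD_INTERVALS:
        if load < upper:
            return threshold
    return LOAD_INTERVALS[-1][1]


def build_converged(batch: Sequence[bytes]) -> bytes:
    # Assumed format: 2-byte packet count, then each packet as 2-byte length + payload.
    parts = [struct.pack("!H", len(batch))]
    for pkt in batch:
        parts.append(struct.pack("!H", len(pkt)) + pkt)
    return b"".join(parts)


def parse_converged(data: bytes) -> List[bytes]:
    # Receiver side: split a converged packet back into the original packets.
    (count,) = struct.unpack_from("!H", data, 0)
    offset, packets = 2, []
    for _ in range(count):
        (length,) = struct.unpack_from("!H", data, offset)
        offset += 2
        packets.append(data[offset:offset + length])
        offset += length
    return packets


def converge(queue: List[bytes], load: float) -> Tuple[List[bytes], List[bytes]]:
    # Emit a converged packet each time the queue holds `threshold` packets;
    # leftover packets stay queued until the threshold is reached again.
    threshold = threshold_for_load(load)
    sent = []
    while len(queue) >= threshold:
        batch, queue = queue[:threshold], queue[threshold:]
        sent.append(build_converged(batch))
    return sent, queue
```

At a load of 0.5 this sketch groups five queued packets into one converged packet of four, leaving one queued, so the lower protocol layer handles one primitive instead of four.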

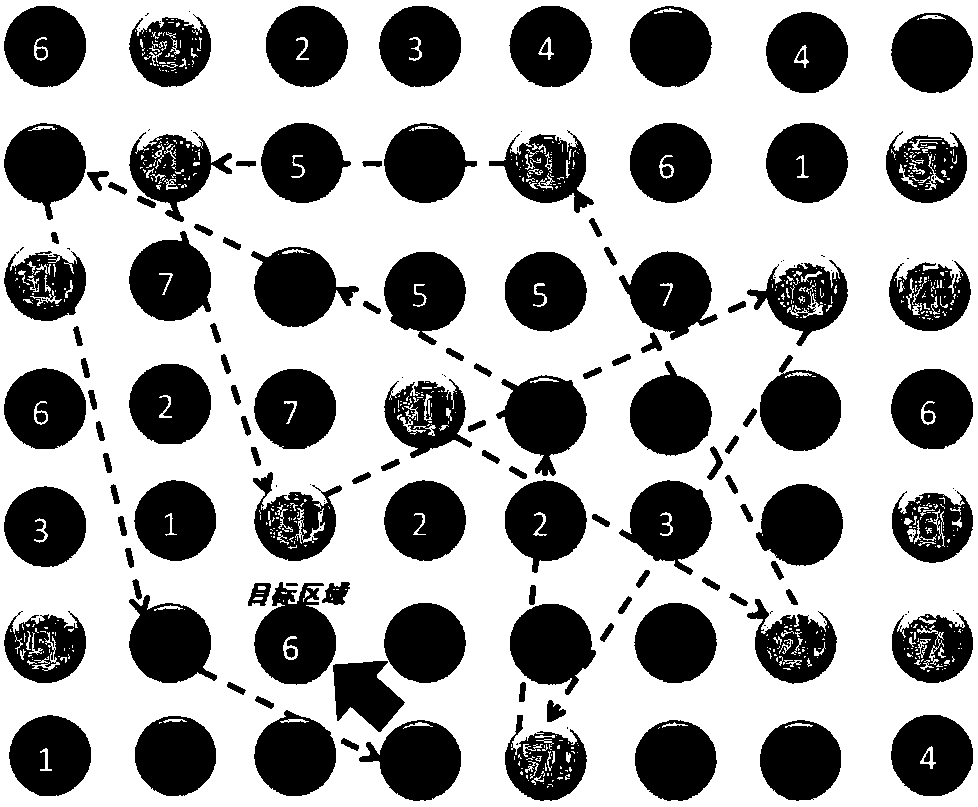

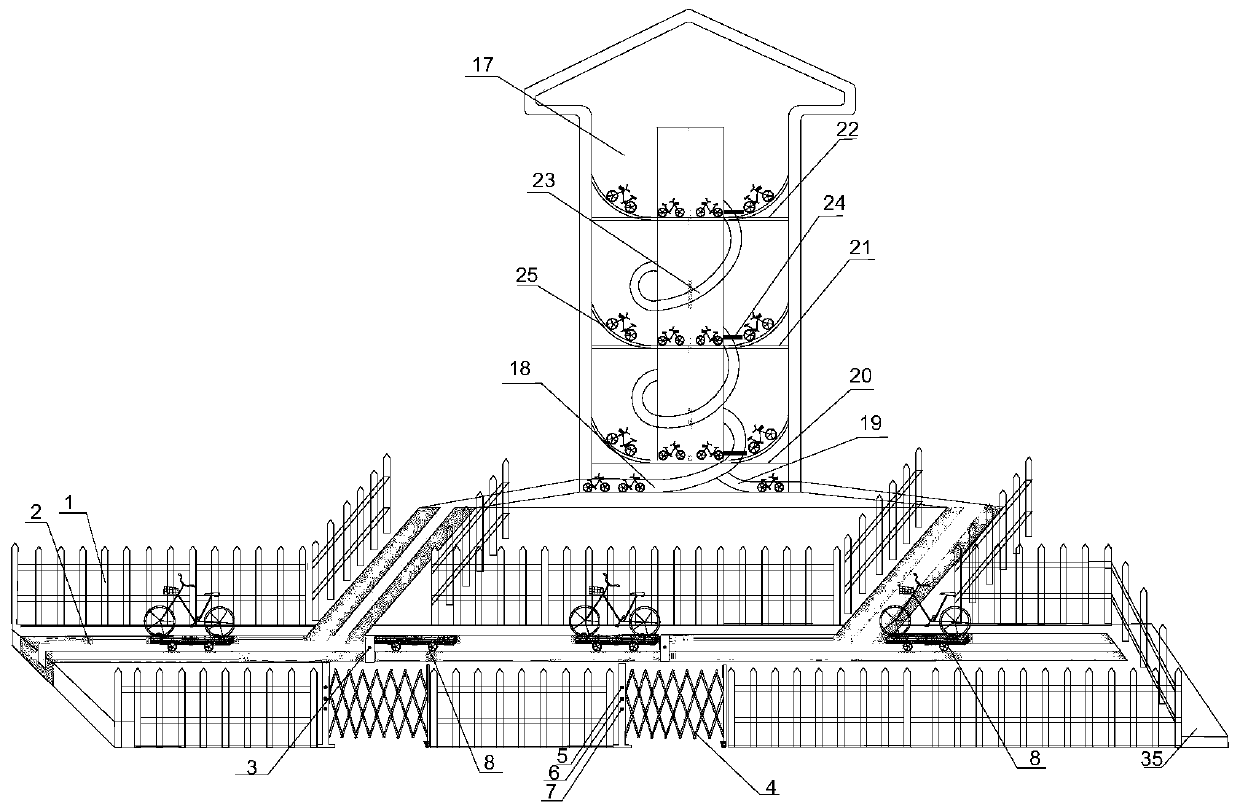

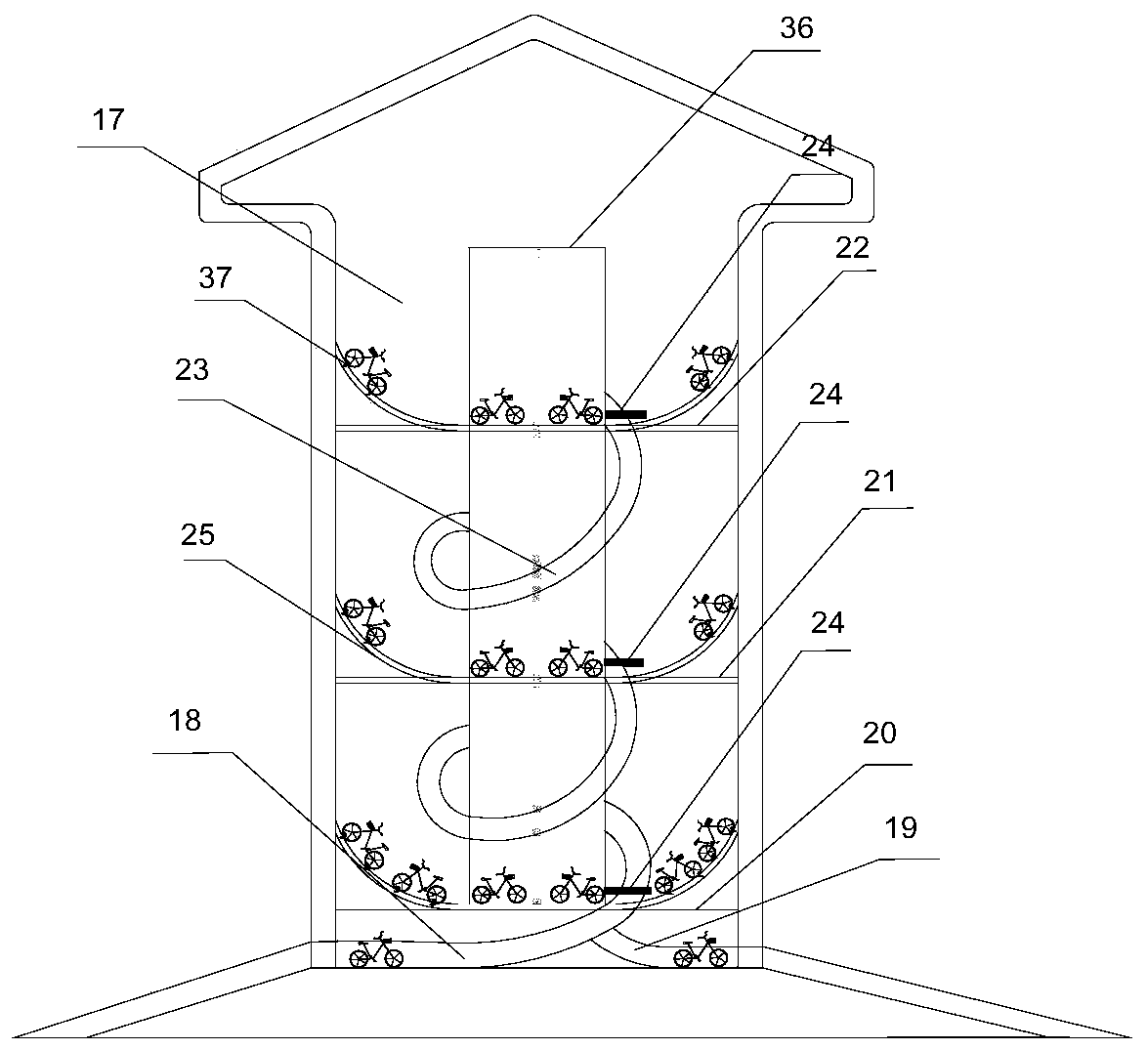

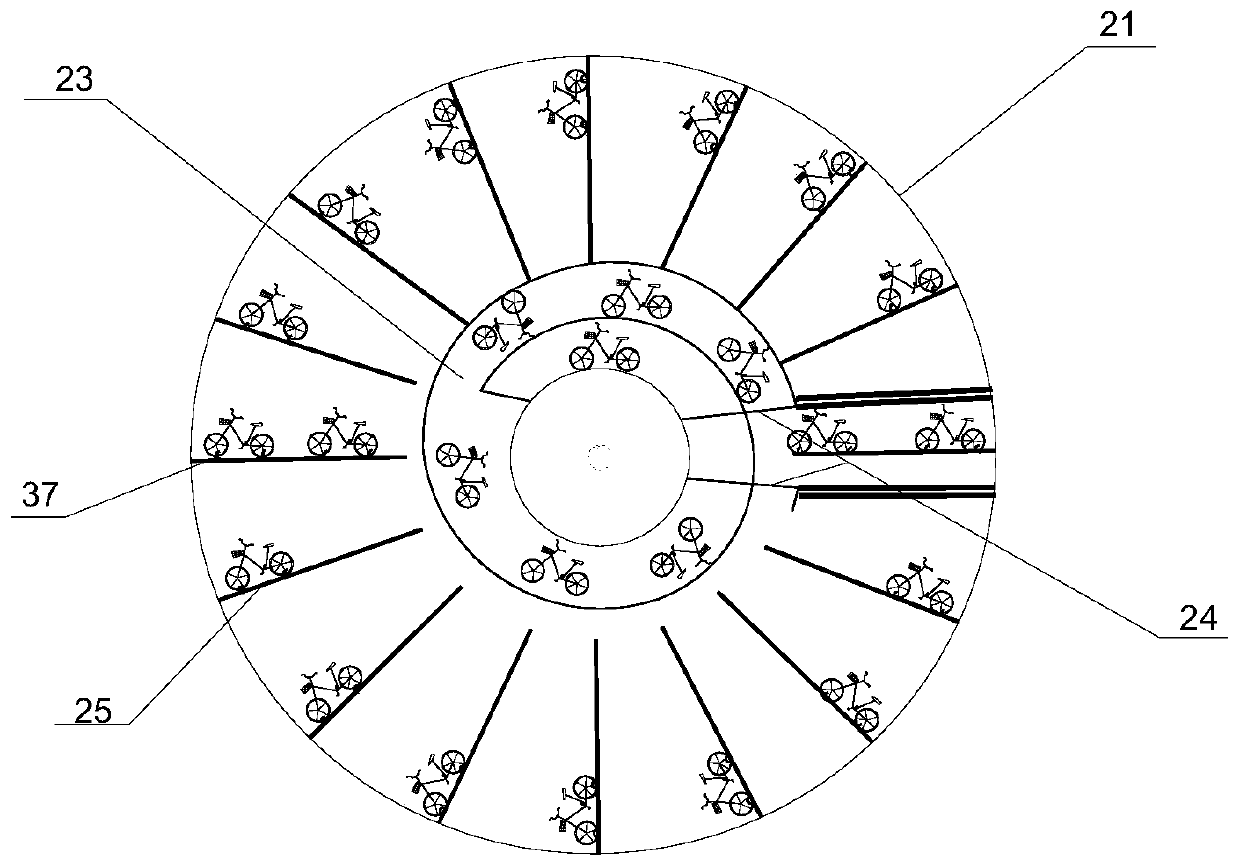

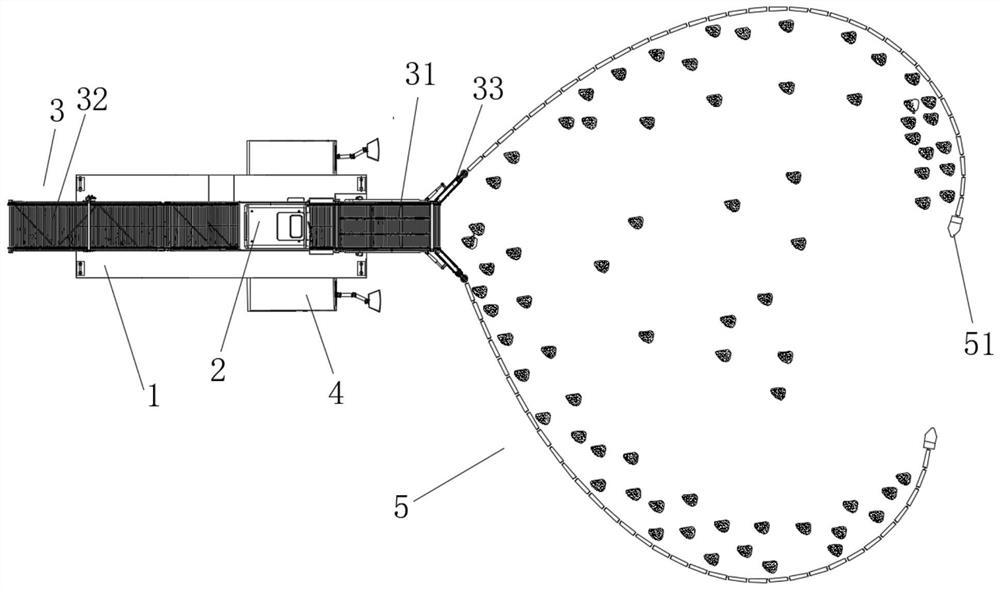

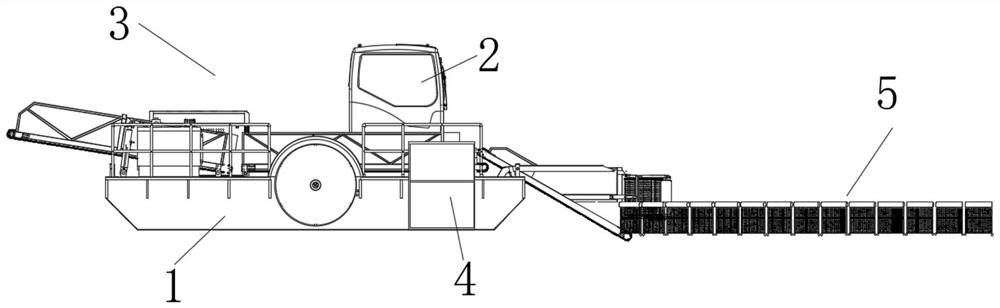

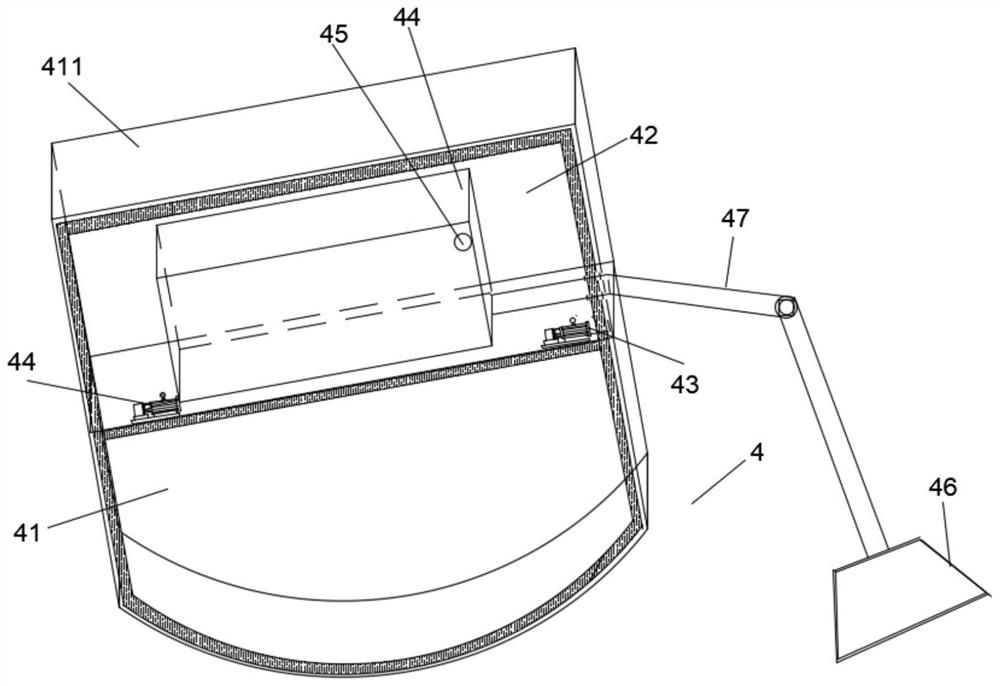

Shared bicycle flowing system and automatic scheduling system and method based on sub-region division

Status: Active | Publication: CN111598481A | Tags: Small footprint, Effectively regulate parking behavior, Road vehicles traffic control, Forecasting, Distributed computing, Bicycle sharing

The invention provides a shared bicycle flow system, and an automatic scheduling system and method based on sub-region division. The flow system comprises above-ground conveying devices arranged at bicycle pick-up and drop-off points, underground conveying devices connected to the above-ground conveying devices at those points, and a multi-layer storage device that can supply bicycles to the above-ground or underground conveying devices. Adjacent pick-up and drop-off points and the above-ground storage devices are connected through the above-ground or underground conveying devices to form a mobile conveying network for shared bicycles. The proposed flow system links the stations within a given area. The demand at each station is predicted with a comprehensive demand prediction method, dynamic sub-region division is then performed to form a demand scheduling scheme for each station in the sub-regions, and finally the mobile system transports shared bicycles automatically according to the scheduling scheme, so that whenever a user needs a bicycle, an efficient and convenient bicycle pick-up and return service is provided to the greatest possible extent.

Owner:SHANDONG JIAOTONG UNIV +2

Water surface trash cleaning ship for environment management

Status: Pending | Publication: CN113832934A | Tags: Versatile, Make up for collection width limitations, Batteries circuit arrangements, Water cleaning, Oil can, Refuse collection

The invention discloses a water surface trash cleaning ship for environment management. A trash cleaning device and a floating oil recovery device are arranged on a ship body, a water surface fence collection device is arranged at the front end of a trash collection device and comprises two traction ships and a plurality of buoys which are connected with the traction ships, the traction ships take storage batteries as power and are provided with automatic navigation charging systems, and correspondingly, the floating oil recovery device is provided with a traction ship charging device. Compared with the prior art, the water surface trash cleaning ship for environment management has the advantages of comprehensive function, multiple purposes, economy and high efficiency, and water surface trash and floating oil can be cleaned at the same time. The water surface fence collection device is additionally arranged and is suitable for operation in narrow water areas and shallow water where existing ships cannot reach; and a charging dock is arranged on the main ship body, the automatic navigation control systems and electric quantity detection systems are arranged on the traction ships and are used for automatic navigation and charging of the traction ships, and the traction ships do not need to be salvaged and recycled manually.

Owner: Qingdao Ruilong Technology Co., Ltd. (青岛瑞龙科技有限公司)

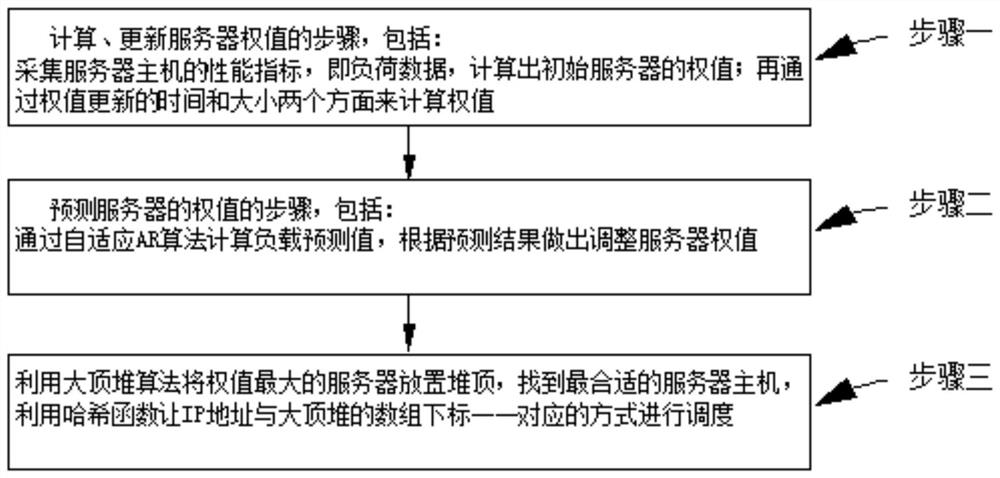

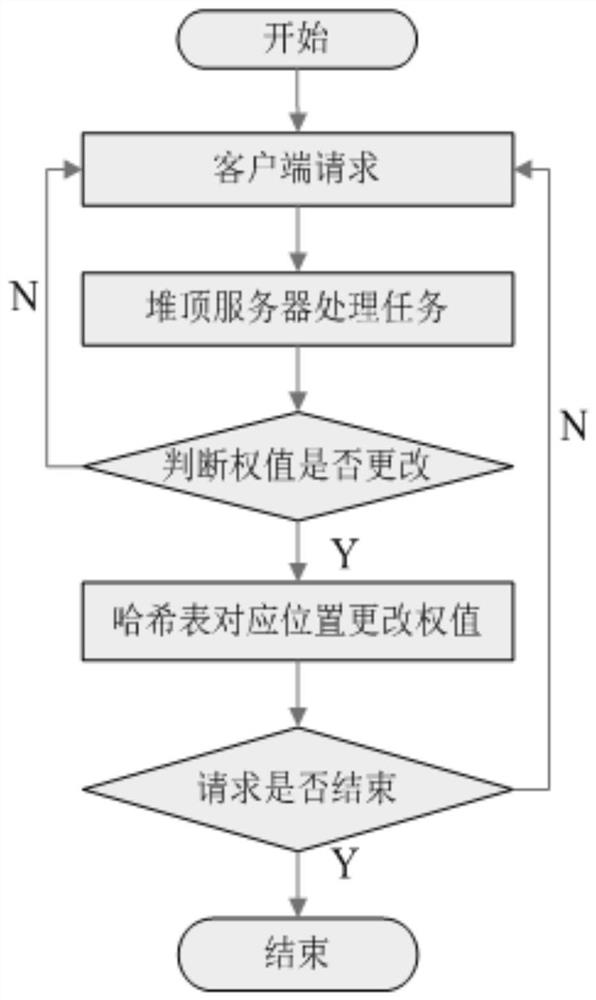

Web cluster load balancing method based on load data dynamic update rate

Status: Pending | Publication: CN113377544A | Tags: Reduce the number of dispatches, Short response time, Resource allocation, Cluster systems, Parallel computing

The invention discloses a web cluster load balancing method based on a dynamic update rate of load data, in the field of web cluster load balancing. It addresses the low average responsiveness of the nodes in existing web cluster systems. The method comprises: collecting performance indexes (load data) of each server host and calculating its initial weight; updating the weight in terms of both when it is updated and by how much; calculating a load prediction value with an adaptive AR (autoregressive) algorithm and adjusting the server weight according to the prediction; and placing the server with the largest weight at the top of a max-heap ("large top heap") to find the most suitable server host. By improving the algorithm used in each part, the method improves load balancing and makes more effective use of system resources.

Owner:HARBIN UNIV OF SCI & TECH
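
A minimal Python sketch of the prediction and max-heap selection steps. It swaps in a plain least-squares AR(1) forecast in place of the patent's adaptive AR algorithm, and uses a hypothetical weight (capacity minus predicted load). Python's `heapq` is a min-heap, so weights are negated to keep the largest at the top.

```python
import heapq
from typing import Dict, List, Tuple


def ar1_predict(history: List[float]) -> float:
    # Least-squares AR(1) fit x[t] ~ phi * x[t-1], then a one-step forecast.
    # This is a plain AR(1), not the patent's adaptive AR algorithm.
    if len(history) < 2:
        return history[-1] if history else 0.0
    num = sum(a * b for a, b in zip(history[:-1], history[1:]))
    den = sum(a * a for a in history[:-1])
    phi = num / den if den else 1.0
    return phi * history[-1]


def server_weight(capacity: float, predicted_load: float) -> float:
    # Hypothetical weight: predicted spare capacity of the host.
    return max(capacity - predicted_load, 0.0)


def pick_server(servers: Dict[str, Tuple[float, List[float]]]) -> str:
    # heapq is a min-heap, so weights are negated to keep the largest on top.
    heap = [(-server_weight(cap, ar1_predict(hist)), name)
            for name, (cap, hist) in servers.items()]
    heapq.heapify(heap)
    return heap[0][1]


servers = {"s1": (10.0, [1.0, 2.0, 4.0]), "s2": (10.0, [3.0, 3.0, 3.0]), "s3": (5.0, [1.0, 1.0])}
print(pick_server(servers))
```

In the example, `s1` has low current load but a rising trend (forecast 8.0), so the steady `s2` wins. For a single pick `max()` would do; a heap pays off when weights are updated and the top is queried repeatedly.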

Scheduling method and base station for uplink GBR service

Status: Active | Publication: CN104981020B | Tags: Reduce occupancy, Increase flexibility, Wireless communication, Air interface, Control channel

Owner:CHENGDU TD TECH LTD

Prediction and scheduling method of electric power system

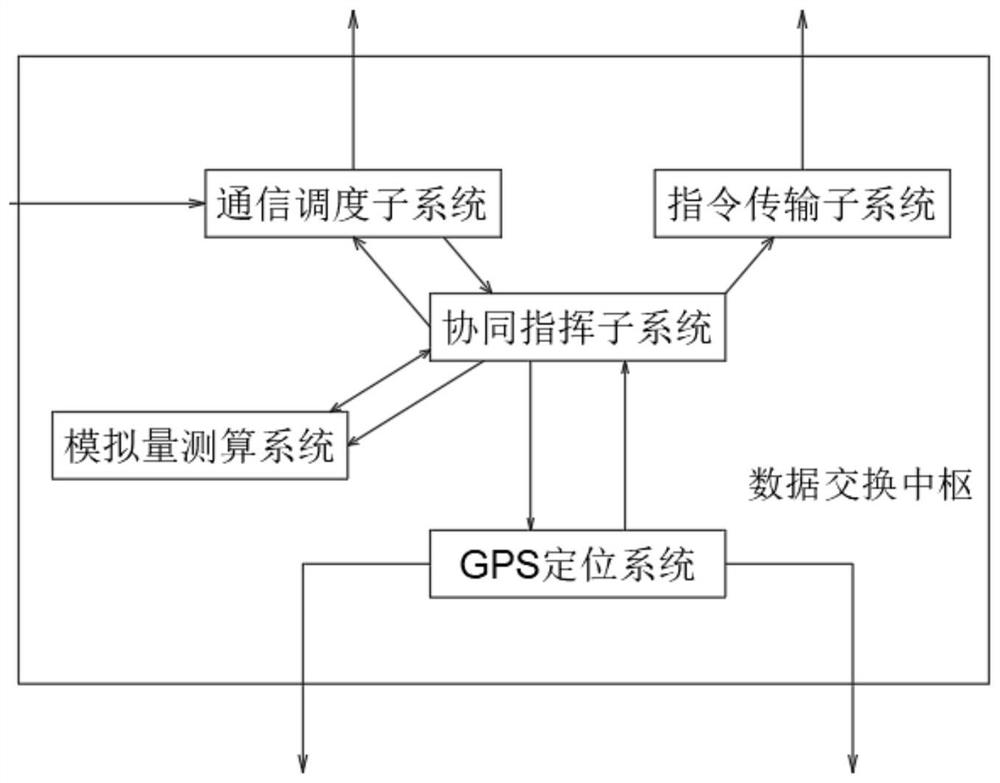

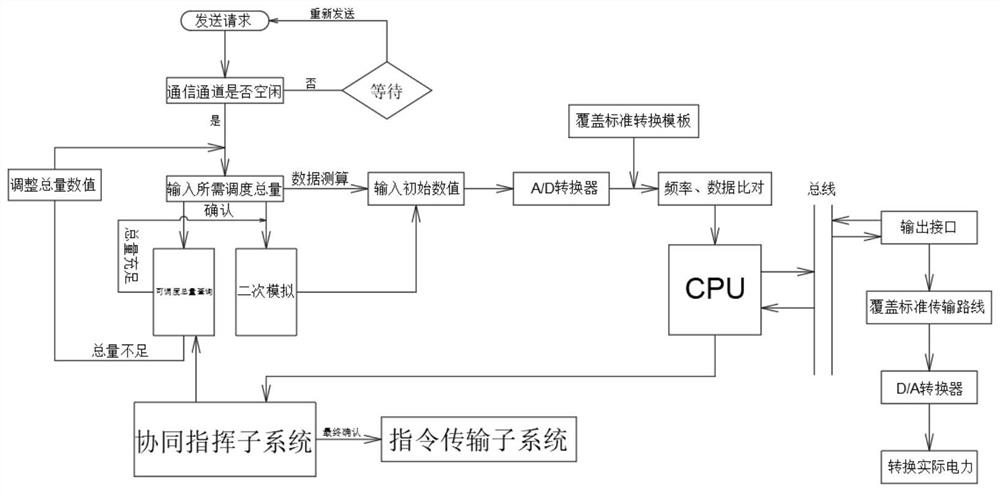

Status: Pending | Publication: CN114069620A | Tags: Reduce the number of dispatches, Reduce schedule pressure, Generation forecast in ac network, Electric power transmission, Communications system

The invention discloses a prediction and scheduling method for an electric power system, in the field of electric power management. The system comprises a data exchange center, an internal communication system, an electric power production system and an electric power transmission system. The data exchange center comprises a communication scheduling subsystem, a cooperative command subsystem, an analog quantity measuring and calculating system, an instruction transmission subsystem and a GPS positioning system, and the data interaction end of the communication scheduling subsystem is connected by signal to the data interaction end of the cooperative command subsystem. The analog quantity measuring and calculating system measures and calculates the dispatched electric power, both the effective power actually dispatched and the theoretical dispatched power, and the results of repeated measurements are recorded and used to calculate a standard template for subsequent dispatching. Because the template can be updated in real time, the error between each measurement and the actual result is reduced, so a user applying for power dispatching can judge more intuitively what value needs to be reported.

Owner:JIANGSU ELECTRIC POWER CO +1

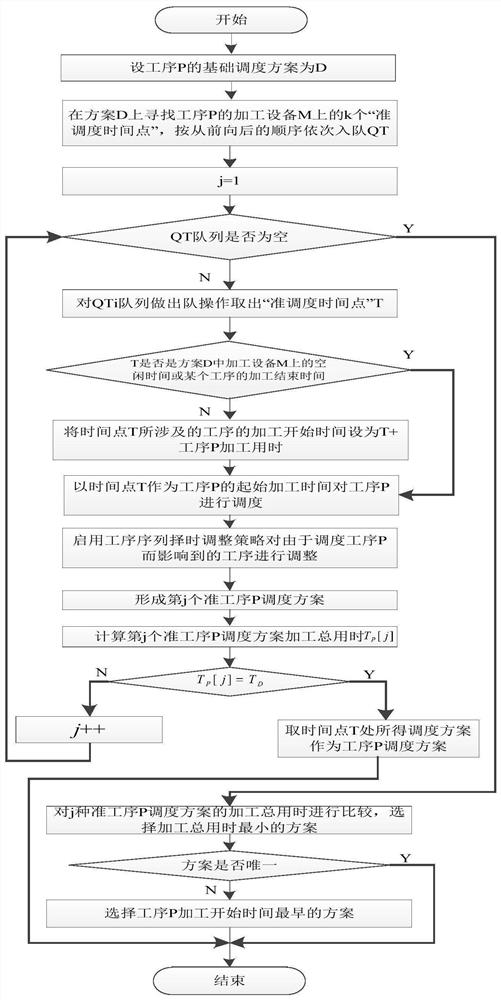

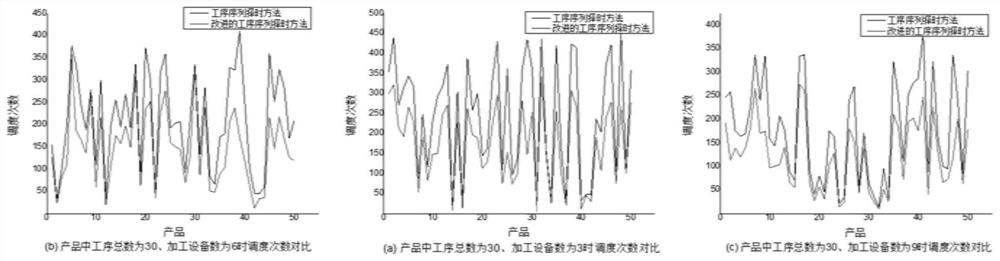

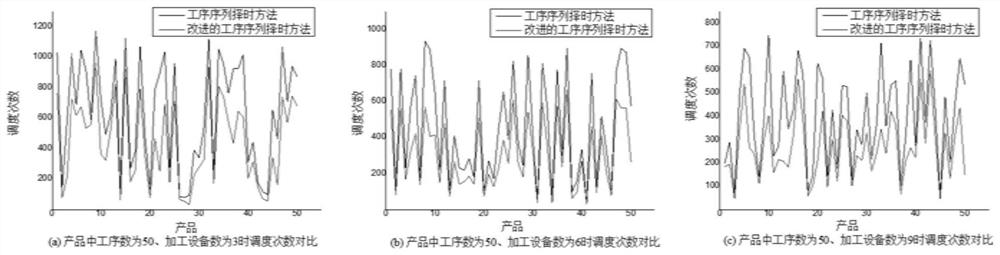

Improved process sequence time selection comprehensive scheduling method

Status: Pending | Publication: CN113159494A | Tags: Without reducing the optimization effect, Without reducing complexity, Resources, Round complexity, Algorithms performance

The invention relates to the technical field of automatic processing and provides an improved process-sequence time-selection comprehensive scheduling method. It addresses the problems that the existing process-sequence time-selection comprehensive scheduling algorithm is tedious to solve and performs redundant operations when handling a comprehensive scheduling problem. When the process-sequence time-selection strategy is used to determine a process scheduling scheme, a process sometimes does not need to be trial-scheduled at every quasi-scheduling time point: the scheme can be obtained by trial-scheduling only some of those time points, with the same result as the original strategy. Without reducing the optimization effect of the algorithm, this lowers the number of scheduling operations and the algorithm's complexity, and improves its performance.

Owner:HUIZHOU UNIV
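
One way to see how trial-scheduling only part of the quasi-scheduling time points can give the same result: if the goal is the earliest feasible start, visiting candidate points in ascending order and stopping at the first feasible one matches "try all, take the earliest". The Python sketch below assumes that reading, a single machine with busy intervals, and hypothetical helper names; it counts trials to show the saving.

```python
from typing import Callable, List, Optional, Sequence, Tuple


class CountingCheck:
    """Wraps a feasibility test and counts how many trial schedulings it performs."""

    def __init__(self, fits: Callable[[int], bool]) -> None:
        self.fits = fits
        self.calls = 0

    def __call__(self, start: int) -> bool:
        self.calls += 1
        return self.fits(start)


def make_fits(busy: List[Tuple[int, int]], duration: int) -> Callable[[int], bool]:
    # A process fits at `start` if it overlaps no busy interval [b0, b1) on the machine.
    def fits(start: int) -> bool:
        end = start + duration
        return all(end <= b0 or start >= b1 for b0, b1 in busy)
    return fits


def earliest_full(points: Sequence[int], fits: Callable[[int], bool]) -> Optional[int]:
    # Trial-schedule at every quasi-scheduling time point, then pick the earliest feasible one.
    feasible = [t for t in points if fits(t)]
    return min(feasible) if feasible else None


def earliest_pruned(points: Sequence[int], fits: Callable[[int], bool]) -> Optional[int]:
    # Visit points in ascending order and stop at the first feasible one:
    # no later point can give an earlier start, so the result is the same.
    for t in sorted(points):
        if fits(t):
            return t
    return None
```

With busy intervals `[(0, 3), (5, 9)]`, a 2-unit process and candidate points `[0, 3, 5, 9]`, both functions return 3, but the pruned version performs 2 trials instead of 4.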

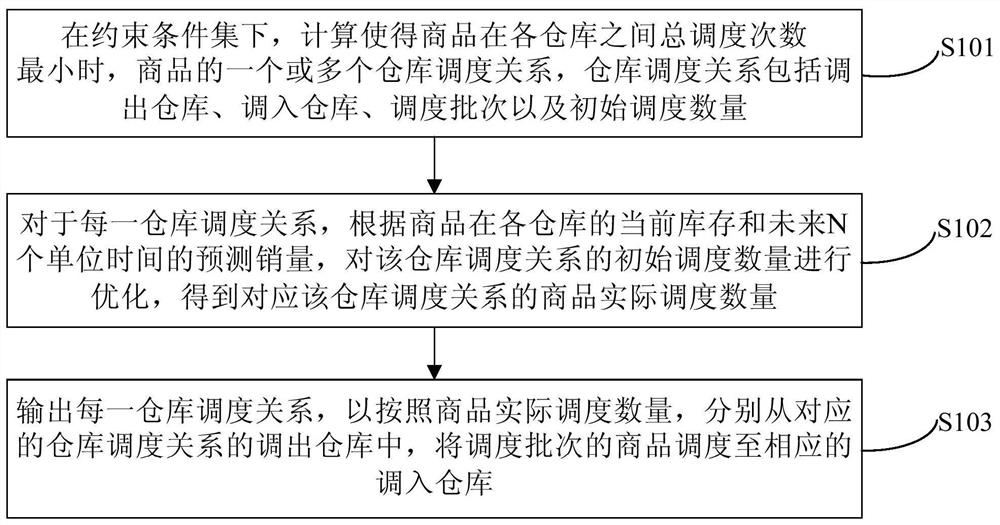

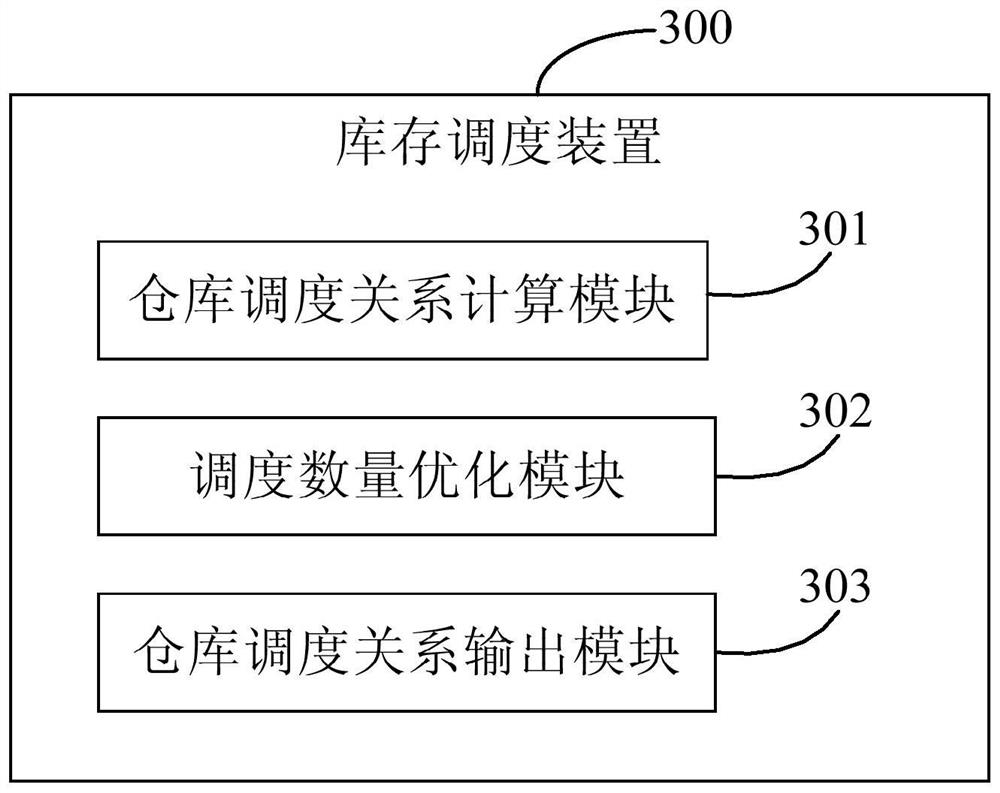

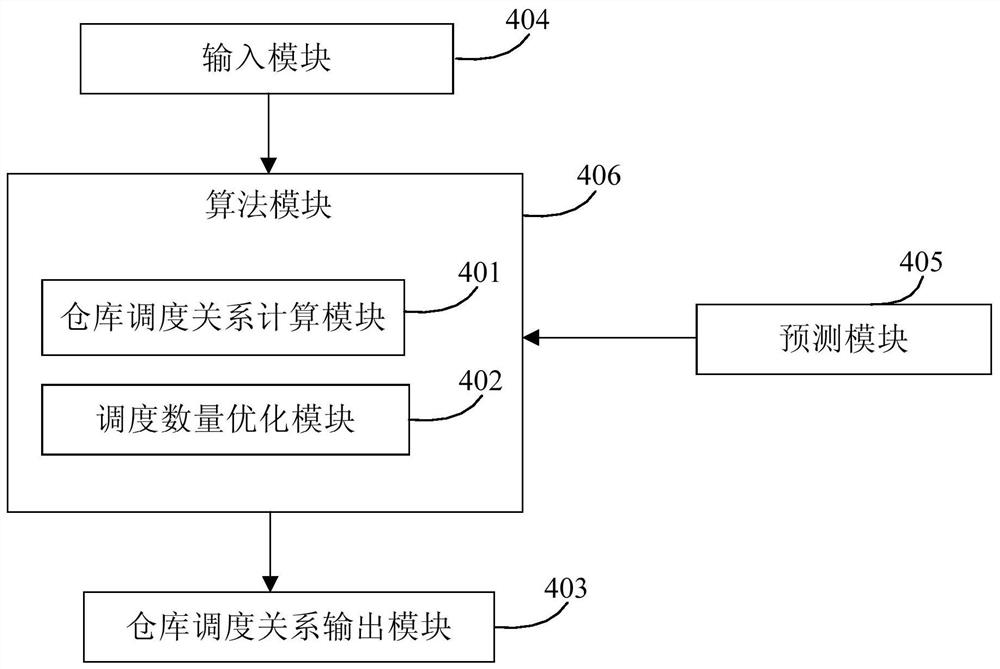

Inventory scheduling method and device

Status: Active | Publication: CN113222304A | Tags: Reduce the number of dispatches, Meet batch balancing requirements, Resources, Logistics, Operations research, Industrial engineering

Owner:BEIJING JINGDONG ZHENSHI INFORMATION TECH CO LTD

A multi-modal scheduling method for massive data streams under multi-core DSP

Status: Active | Publication: CN107608784B | Tags: Improve versatility, Improve portability, Resource allocation, Interprogram communication, Data stream, Parallel computing

The invention discloses a multi-modal massive-data-flow scheduling method for a multi-core DSP that includes a main control core and an acceleration core. Requests pass between the main control core and the acceleration core through a request packet queue. Three data block selection methods (continuous selection, random selection and spiral selection) are defined on the basis of the data dimensions and the data priority order. Two multi-core data block allocation methods (cyclic scheduling and load-balancing scheduling) are defined according to load balancing. Data blocks selected through a data block grouping method according to the allocation granularity are loaded into multiple computing cores for processing. The method schedules data blocks at multiple levels, satisfies the requirements of system load, data correlation, processing granularity, data dimensions and ordering when scheduling data blocks, and has good generality and portability; by extending the modes and forms of data block scheduling at multiple levels, it also has a wider scope of application. A user only needs to configure the data block scheduling mode and the allocation granularity, and the system completes data scheduling automatically, which improves the efficiency of parallel development.

Owner:XIAN MICROELECTRONICS TECH INST

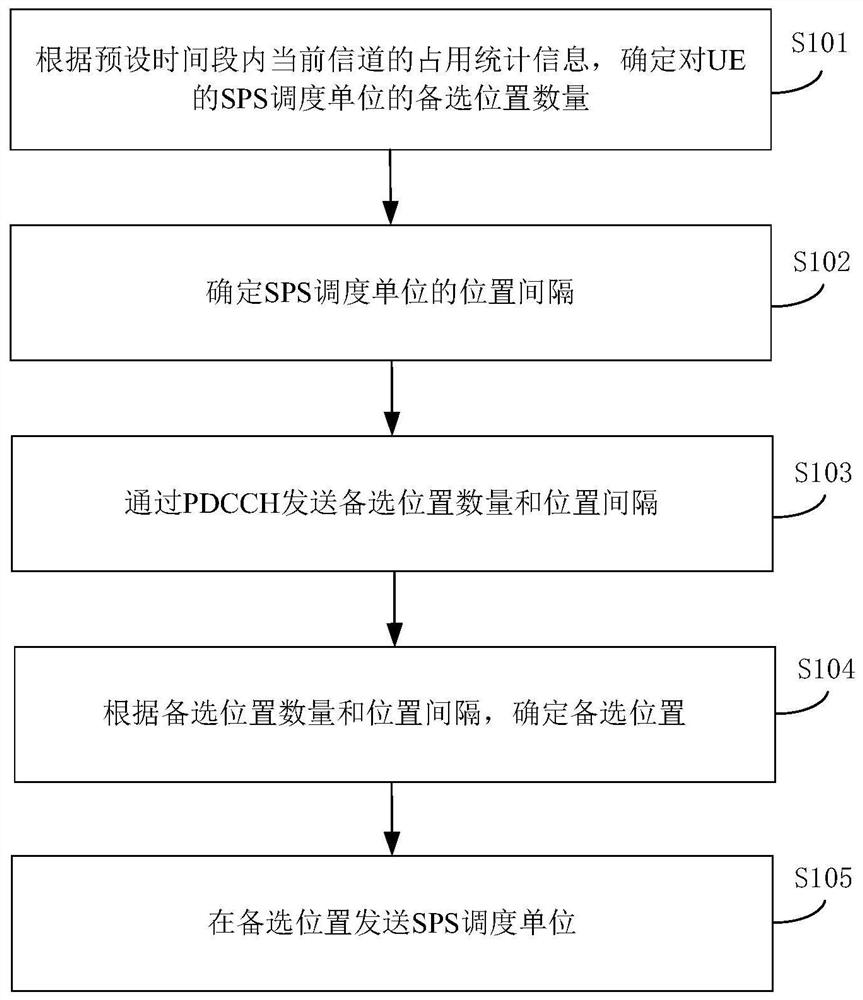

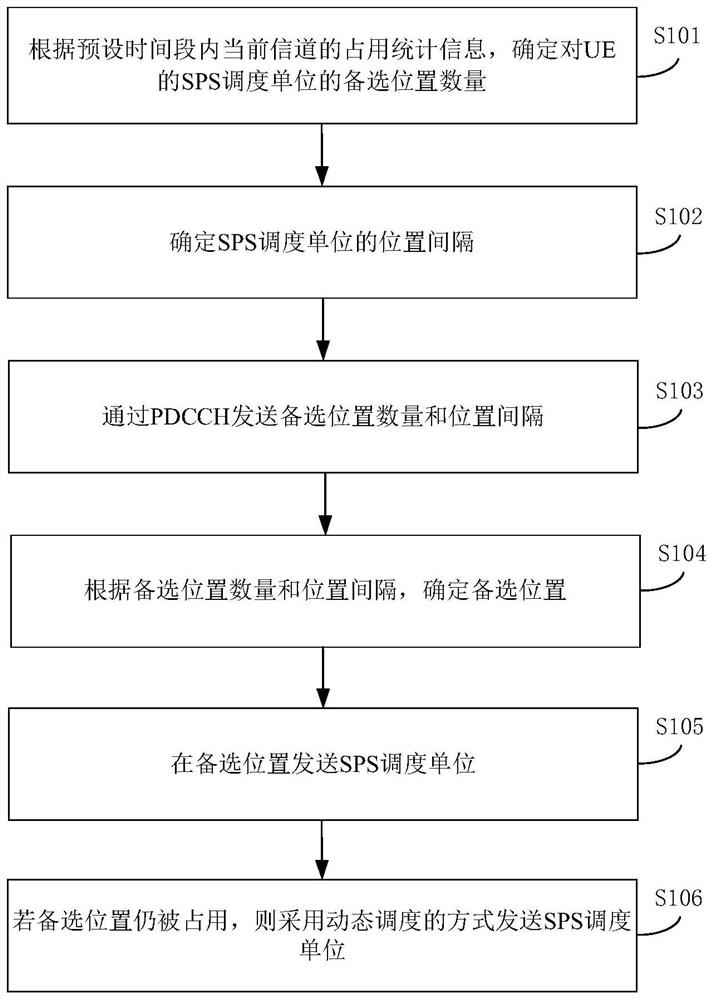

Semi-persistent scheduling dispatching unit occupied indication method and device and base station

Status: Active | Publication: CN108521886B | Tags: Reduce the number of dispatches, Reduce waste, Wireless communication, Engineering, Control channel

The present disclosure relates to a method and device for indicating that an SPS scheduling unit is occupied, a method and device for demodulating an SPS scheduling unit, a base station, user equipment, and a computer-readable storage medium. The indication method includes: determining, according to occupancy statistics of the current channel within a preset time period, the number of candidate positions of the SPS scheduling unit for the user equipment (UE); determining the position interval of the SPS scheduling unit; and transmitting the number of candidate positions and the position interval over the physical downlink control channel (PDCCH). The UE can then determine the candidate positions of the SPS scheduling unit from the number of candidate positions and the position interval, and receive the SPS scheduling unit at those positions. This reduces the number of times the SPS scheduling unit is scheduled and thereby reduces wasted common control channel resources.

Owner:BEIJING XIAOMI MOBILE SOFTWARE CO LTD
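
A Python sketch of both sides of the indication, under assumptions the abstract leaves open: the base station maps channel occupancy to a candidate count with a hypothetical rule (busier channel, more candidates, capped at `max_candidates`), and the UE derives candidate slots as a first slot plus multiples of the signalled interval, wrapped within one SPS period. The slot numbering and `first_slot` are hypothetical.

```python
import math
from typing import List


def candidate_count(busy_slots: int, total_slots: int, max_candidates: int = 4) -> int:
    # Base station side (assumed rule): the busier the channel was over the
    # statistics window, the more candidate positions are configured.
    occupancy = busy_slots / total_slots if total_slots else 0.0
    return max(1, min(max_candidates, math.ceil(occupancy * max_candidates)))


def candidate_positions(first_slot: int, count: int, interval: int, period: int) -> List[int]:
    # UE side: derive candidate slots from the signalled count and interval,
    # wrapping within one SPS period (hypothetical slot numbering).
    return [(first_slot + k * interval) % period for k in range(count)]


n = candidate_count(busy_slots=50, total_slots=100)
print(n, candidate_positions(first_slot=2, count=n, interval=4, period=20))
```

Sending only the count and the interval lets the UE compute every candidate position itself, so the base station does not need a separate scheduling message for each position.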

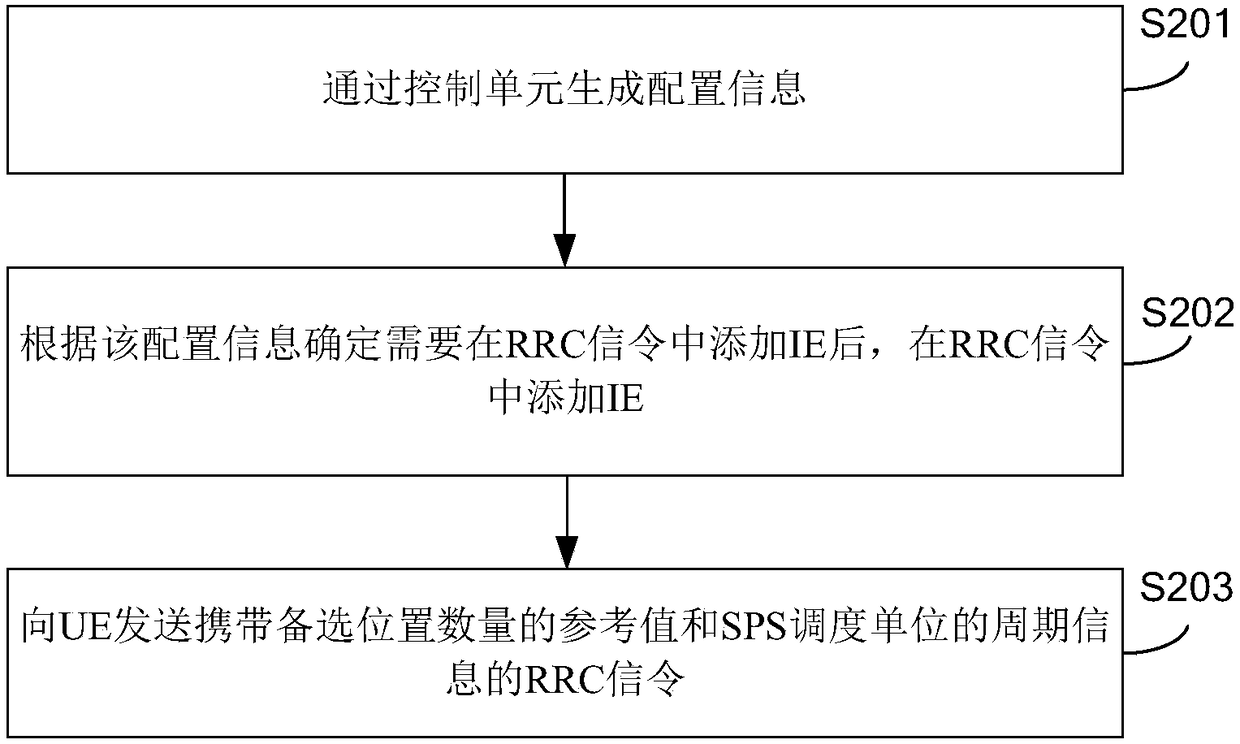

Information sending method and device, base station and user equipment

Status: Active | Publication: CN108702777B | Tags: Reduce the number of dispatches, Reduce waste, Signal allocation, Connection management, Information transmission, Control cell

The present disclosure relates to an information sending method and device, a method and device for demodulating a semi-persistent scheduling (SPS) scheduling unit, a base station, user equipment, and a computer-readable storage medium. The information sending method includes: using the control unit to add an information element (IE) to the radio resource control (RRC) signaling that configures SPS scheduling, where the IE indicates a reference value for the number of candidate positions of the SPS scheduling unit of the user equipment (UE); and sending to the UE the RRC signaling carrying the reference value of the number of candidate positions and the period information of the SPS scheduling unit. The UE can then determine the number of candidate positions from the received RRC signaling and receive the SPS scheduling unit at the candidate positions, which reduces the number of times the base station schedules the SPS scheduling unit and thereby reduces wasted common control channel resources.

Owner:BEIJING XIAOMI MOBILE SOFTWARE CO LTD

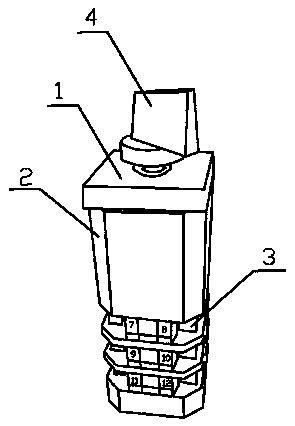

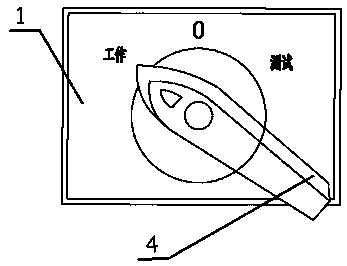

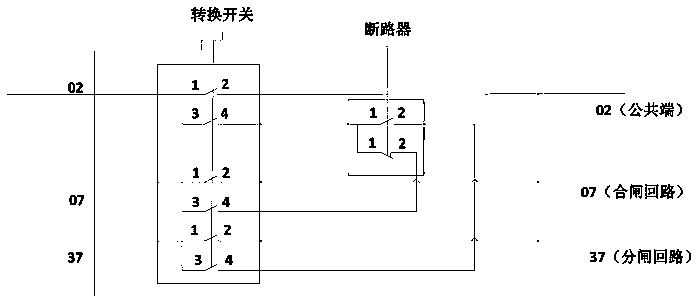

Self-looping device of circuit breaker control loop

Status: Pending | Publication: CN109270444A | Tags: Low cost, Short response time, Circuit interrupters testing, Open-circuit test, Circuit breaker

The invention relates to the technical field of fault detection for circuit breaker control loops, and in particular to a self-looping device for a circuit breaker control loop. The device comprises an operating panel, an insulating shell and a changeover switch for load conversion. The operating panel is mounted on top of the insulating shell and provides a working position, a test position, a zero-setting position and an operating handle that rotates the changeover switch. Any three groups of contacts on the changeover switch serve, respectively, as the common-terminal test contact, the closing-circuit test contact and the opening-circuit test contact. The device has a reasonable, compact structure and is convenient to use; it makes the arrangement and allocation of fault handling work on circuit breaker control loops more reasonable and lets the fault handling team be organized more specifically by expertise, which shortens the response time to fault repair commands, reduces the number of personnel needed for fault handling, and lowers cost.

Owner:ILI POWER SUPPLY COMPANY STATE GRID XINJIANG ELECTRIC POWER +1

Method and device for determining power authorization

Active | CN103428841B | Reduce transmit power | Reduce slot interference | Power management | Resource utilization | Authorization

The invention discloses a method and a device for determining power authorization (a power grant). The base station equipment acquires a first data volume, which the UE (user equipment) currently has to transmit, and a second data volume, which the UE is able to transmit within one TTI (transmission time interval). From the first data volume it determines a first number of MAC-d PDUs (protocol data units), and from the second data volume a second number of MAC-d PDUs. If the second number is smaller than the first, the base station determines a modified transport block size (TBS) value from the second number; if the second number is larger than or equal to the first, it determines the modified TBS value from the first number. In both cases, the base station then determines the power authorization for the UE from the modified TBS value. The method and device improve scheduling and transmission efficiency, raise resource utilization, and increase cell throughput.

Owner:DATANG MOBILE COMM EQUIP CO LTD
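
The two-case rule in the Datang abstract reduces to sizing the transport block for the smaller of the two MAC-d PDU counts. The sketch below assumes a fixed MAC-d PDU size, rounds buffered data up and per-TTI capacity down to whole PDUs, and maps the resulting TBS to a grant through a made-up lookup table; `TBS_LIMITS`, `GRANT_INDEX` and the optional header overhead are illustrative placeholders, not values from the patent or from any 3GPP table.

```python
import bisect

# Hypothetical TBS -> power-grant table: each entry in TBS_LIMITS is the
# largest TBS (bits) served by the grant index at the same position.
TBS_LIMITS = [1000, 2000, 4000, 8000]
GRANT_INDEX = [1, 2, 3, 4]


def pdus_buffered(volume_bits: int, pdu_bits: int) -> int:
    # Buffered data needs a whole PDU even for a partial remainder (ceiling).
    return (volume_bits + pdu_bits - 1) // pdu_bits


def pdus_per_tti(volume_bits: int, pdu_bits: int) -> int:
    # Only whole PDUs fit into one TTI (assumption: floor).
    return volume_bits // pdu_bits


def power_grant(first_bits: int, second_bits: int, pdu_bits: int,
                header_bits: int = 0) -> tuple[int, int]:
    """Return (modified TBS in bits, grant index) for one UE."""
    n_first = pdus_buffered(first_bits, pdu_bits)
    n_second = pdus_per_tti(second_bits, pdu_bits)
    # Case 1: the UE cannot send its whole buffer in this TTI -> size by capacity.
    # Case 2: capacity covers the buffer -> size by the buffered amount.
    n = n_second if n_second < n_first else n_first
    tbs = n * pdu_bits + header_bits
    # Smallest table row whose limit covers the TBS; clamp to the top row.
    i = min(bisect.bisect_left(TBS_LIMITS, tbs), len(TBS_LIMITS) - 1)
    return tbs, GRANT_INDEX[i]
```

For example, with 336-bit PDUs, 1000 bits buffered and room for 5000 bits in the TTI, the UE needs 3 PDUs, so the sketch returns a TBS of 1008 bits and grant index 2.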

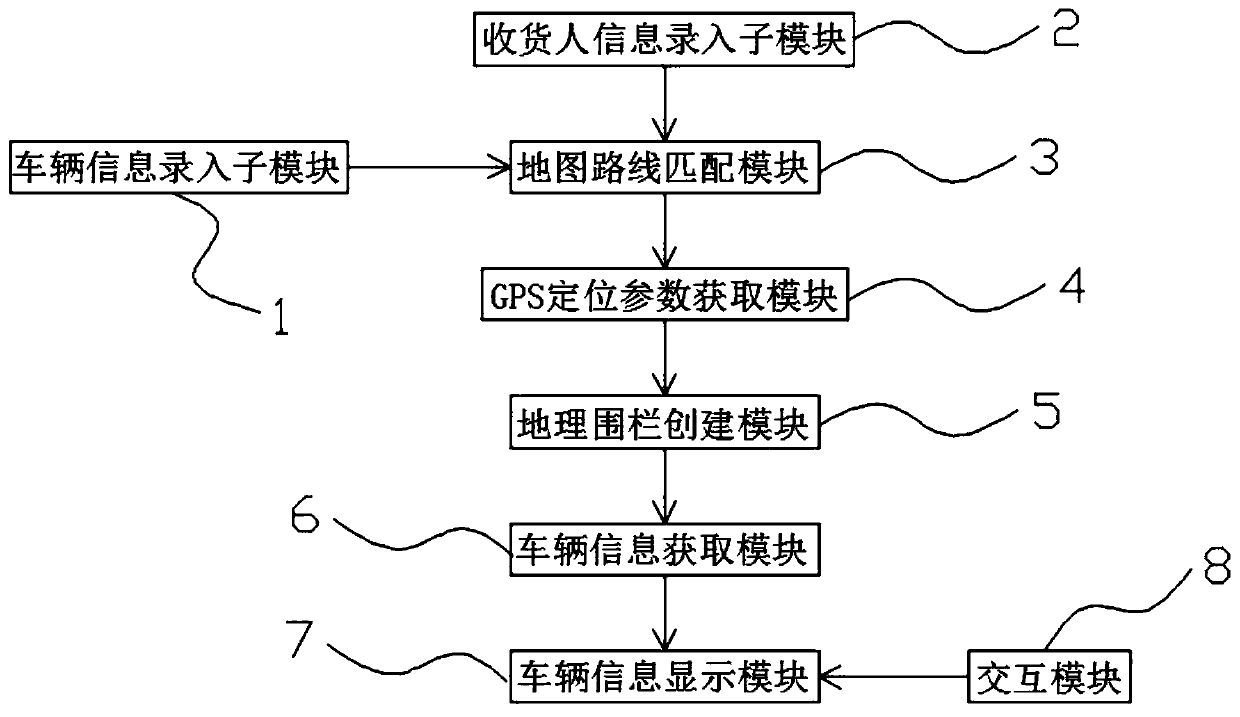

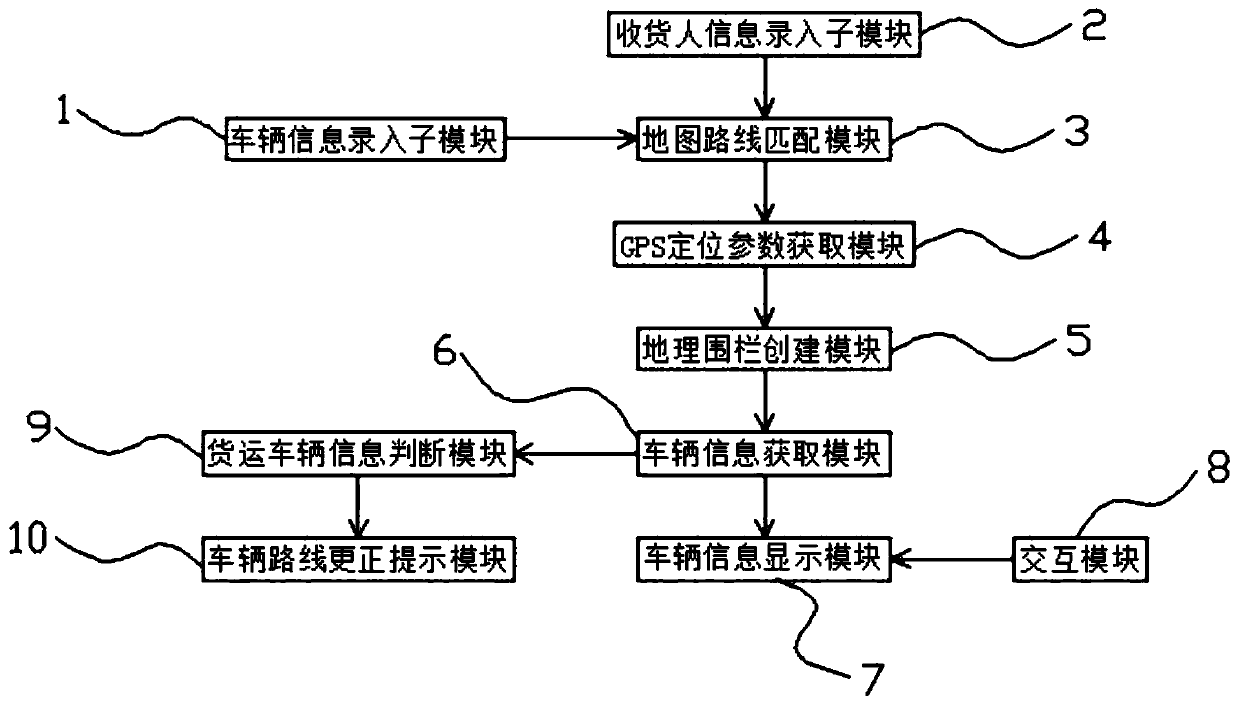

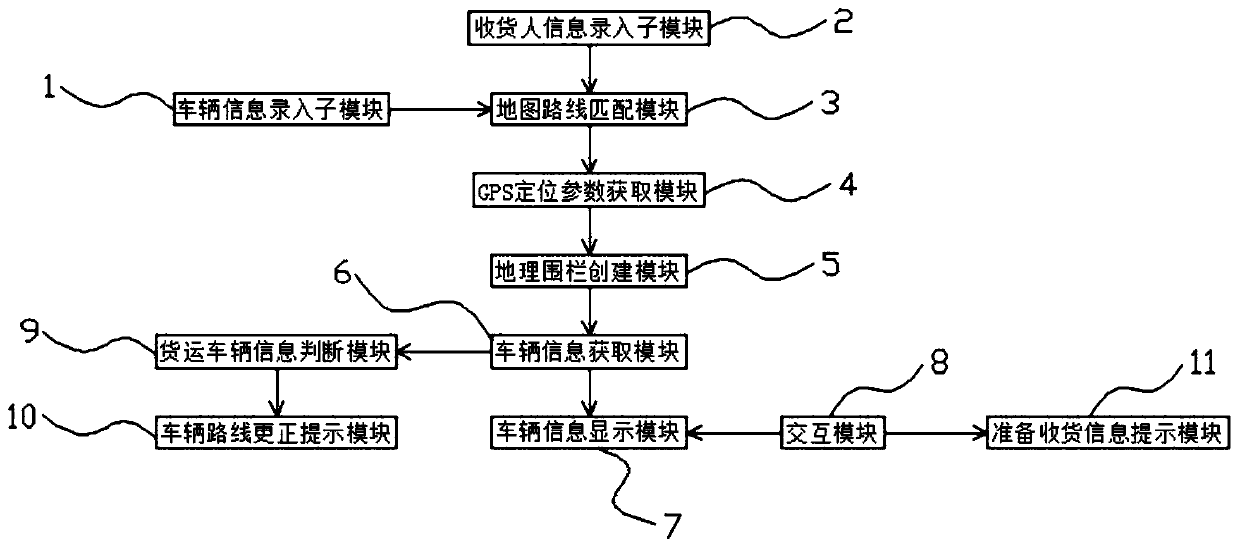

Efficient vehicle scheduling system and scheduling method

Pending | CN111554082A | Real-time access to freight vehicle information | Avoid misdirection | Road vehicles traffic control | Particular environment based services | Dynamic data | Gps positioning

The invention discloses an efficient vehicle scheduling system comprising a vehicle information input sub-module, a consignee information input sub-module, a map route matching module, a GPS positioning parameter acquisition module, a geofence creation module, a vehicle information acquisition module, a vehicle information display module and an interaction module. The invention further provides an efficient vehicle scheduling method based on this system. Dynamic data such as the position, speed and parking state of each vehicle are obtained promptly through the vehicle positioning function of positioning management, and the historical driving track of a freight vehicle can be checked through the historical track function of positioning management. This provides real, timely data to support reasonable scheduling, so misdirected commands and inefficient scheduling are avoided, the number of dispatches is greatly reduced, and transportation cost is saved.

Owner:成都快驿供应链管理有限公司 (Chengdu Kuaiyi Supply Chain Management Co., Ltd.)
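
The abstract names a geofence creation module without describing it. One common way to implement a geofence, shown here only as an assumed illustration, is a circle around a depot or consignee site, with enter and exit events raised as GPS fixes cross its boundary. `CircularFence` and `fence_events` are hypothetical names; distance uses the haversine formula on a spherical Earth.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two lat/lon points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass(frozen=True)
class CircularFence:
    """Hypothetical circular geofence around a site such as a consignee."""
    lat: float
    lon: float
    radius_m: float

    def contains(self, lat: float, lon: float) -> bool:
        return haversine_m(self.lat, self.lon, lat, lon) <= self.radius_m


def fence_events(fence: CircularFence,
                 track: list[tuple[float, float]]) -> list[tuple[str, int]]:
    """Return ("enter" or "exit", index) pairs as a GPS track crosses the fence.

    The vehicle is assumed to start outside the fence.
    """
    events: list[tuple[str, int]] = []
    inside = False
    for i, (lat, lon) in enumerate(track):
        now_inside = fence.contains(lat, lon)
        if now_inside and not inside:
            events.append(("enter", i))
        elif inside and not now_inside:
            events.append(("exit", i))
        inside = now_inside
    return events
```

A fence of 500 m radius around (30.0, 104.0) reports an entry at the second fix of the track [(30.02, 104.0), (30.002, 104.0), (30.0, 104.0), (30.01, 104.0)] and an exit at the fourth.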

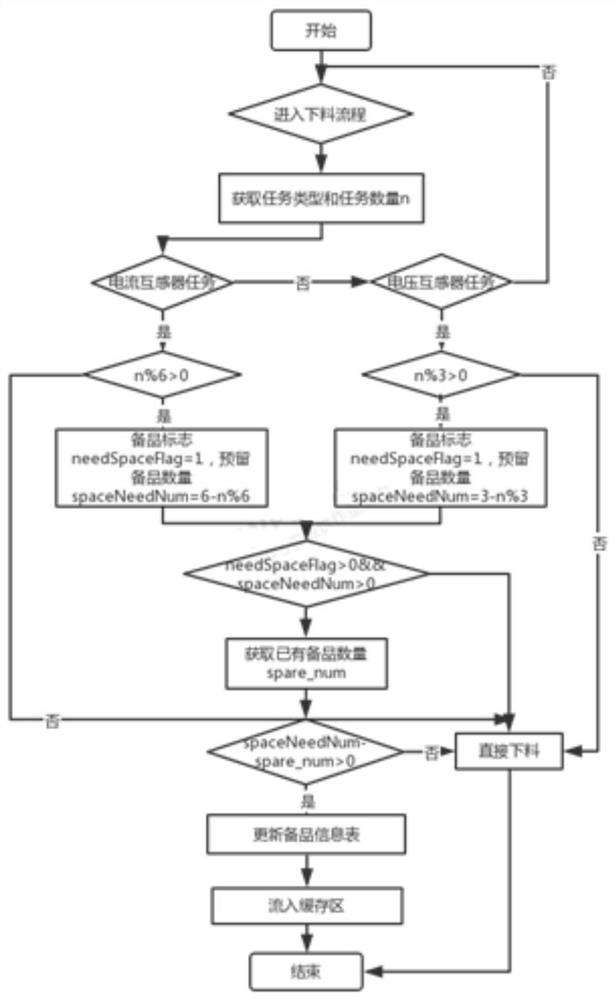

Automatic scheduling method based on dynamic collaborative position complementing strategy

Active | CN112986890A | Reduce the number of dispatches | Reduce dispatch time | Electrical testing | Control system | Reliability engineering

The invention discloses an automatic scheduling method based on a dynamic collaborative position-complementing (slot-filling) strategy. The method comprises the following steps: S1, analyzing the verification type and quantity of the current instrument transformer (mutual inductor) verification task, and calculating in advance the number of transformers that will need to be complemented; S2, controlling the transformer verification line to start verifying transformers; S3, reserving in the buffer area, from the transformers already verified, a number of samples equal to the number of transformers to be complemented; and S4, when the number of samples in the final round of the verification task falls short of the preset number, having the control system of the verification line dispatch the samples from the buffer area onto the verification platform to fill the shortfall, so that the final round of verification can be completed. The method is suitable for instrument transformer verification.

Owner:WUHAN NARI LIABILITY OF STATE GRID ELECTRIC POWER RES INST
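
Steps S1 to S4 can be sketched as a simple batch planner. It assumes a single transformer type, a fixed platform capacity per round, and that filler samples are taken from the first units verified; `units_to_fill` and `plan_batches` are hypothetical helpers, and the type-specific analysis of S1 is reduced here to a modulo calculation.

```python
def units_to_fill(task_size: int, batch_size: int) -> int:
    """S1: filler units needed so the last round is a full batch."""
    remainder = task_size % batch_size
    return 0 if remainder == 0 else batch_size - remainder


def plan_batches(unit_ids: list[int], batch_size: int):
    """S2-S4: plan verification rounds, padding the last one from a buffer.

    Returns (fill, rounds), where each round is (units, fillers) and fillers
    are already-verified units reserved in the buffer during full rounds.
    """
    fill = units_to_fill(len(unit_ids), batch_size)
    buffer: list[int] = []
    rounds = []
    for start in range(0, len(unit_ids), batch_size):
        batch = unit_ids[start:start + batch_size]
        if len(batch) == batch_size:
            # S3: keep back verified units until the buffer holds `fill` of them.
            buffer.extend(batch[:fill - len(buffer)])
            rounds.append((batch, []))
        else:
            # S4: top up the short final round with the reserved units.
            rounds.append((batch, buffer[:fill]))
    return fill, rounds
```

For a 10-unit task on a 4-position platform, 2 filler units are needed: units 1 and 2 are held back after the first round and pad the final round [9, 10]. If the task is smaller than one round, no verified units exist yet and the filler list comes back short.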

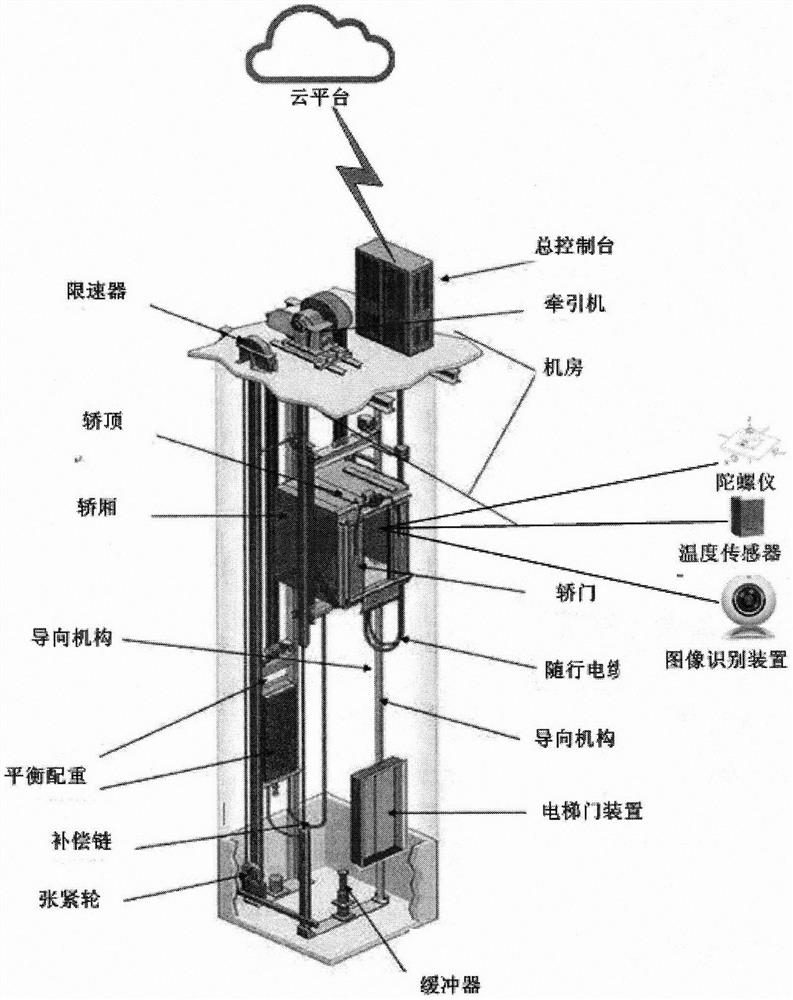

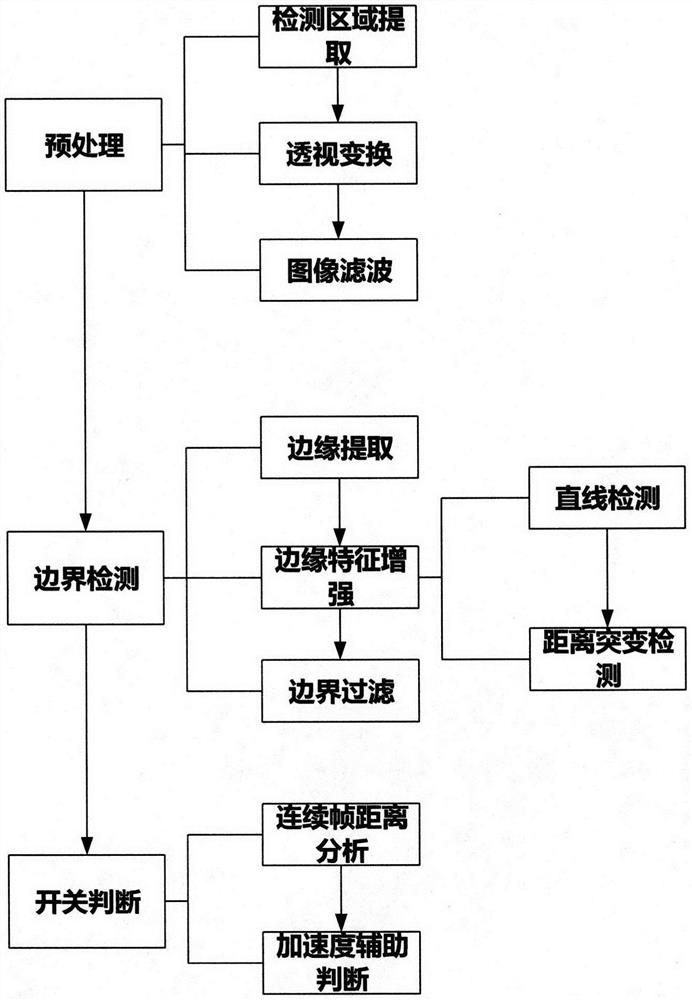

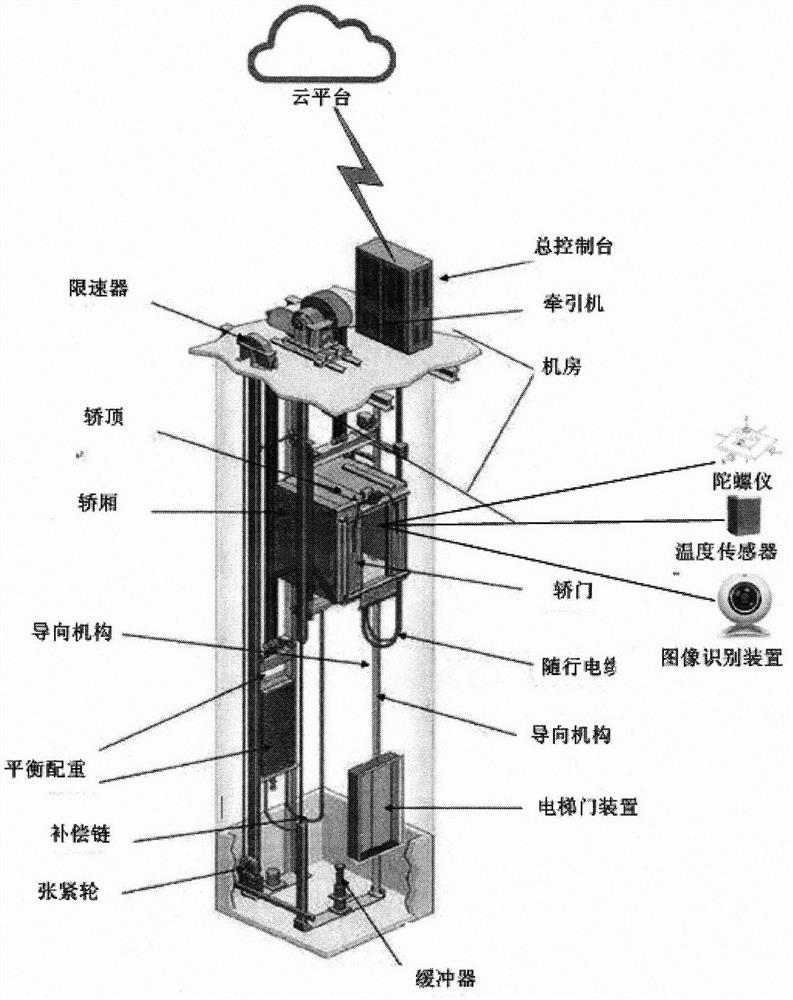

An Elevator Control System with Video Monitoring

Inactive | CN109368434B | Efficient, safe and stable switching | Reduce consumption | Closed circuit television systems | Elevators | Video monitoring | Imaging processing

The invention discloses an elevator control system with a video monitoring function. An image identification device comprises a camera, a signal processing module, a video collection module and an infrared illumination module. The signal processing module performs image processing on the received video data in sequence, detects whether the elevator door is open or closed, and encodes and caches the video. The video data and the received sensor signals are transmitted to a main control console, which uploads the relevant data to a cloud platform for real-time display and raises an alarm when an anomaly occurs.

Owner:张勇 (Zhang Yong) +1

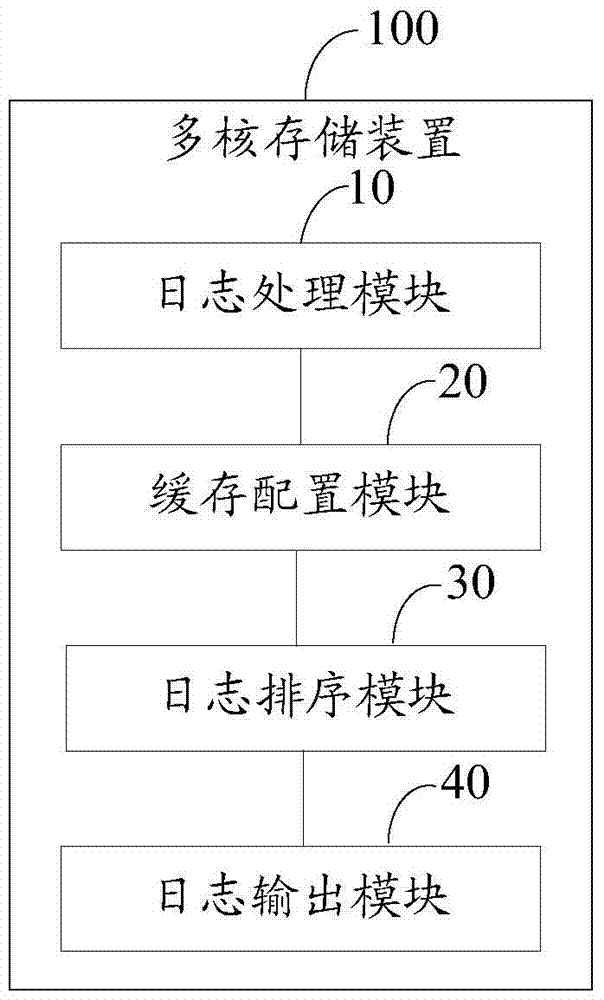

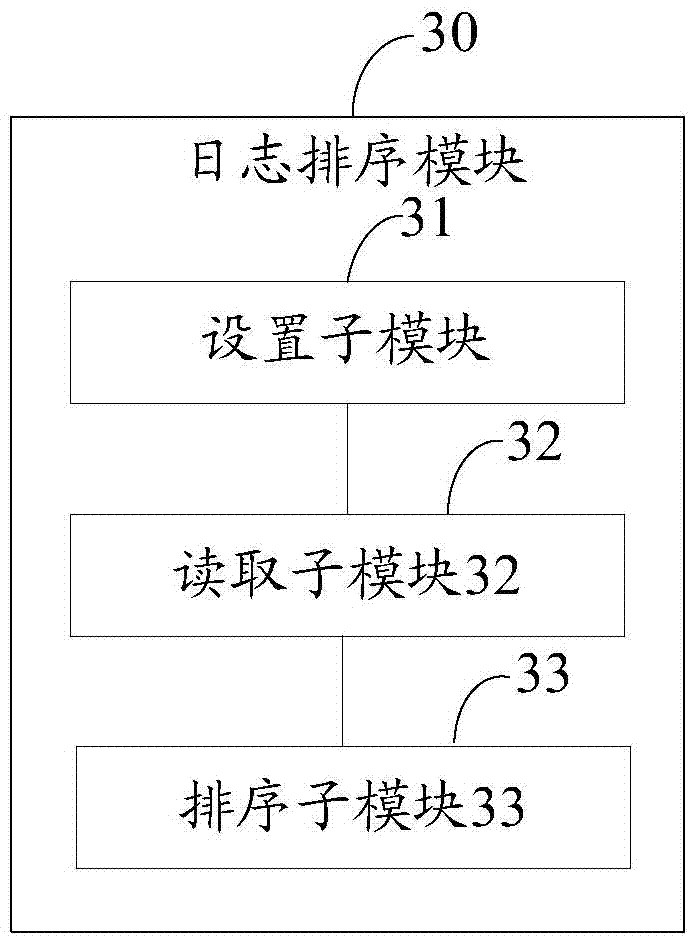

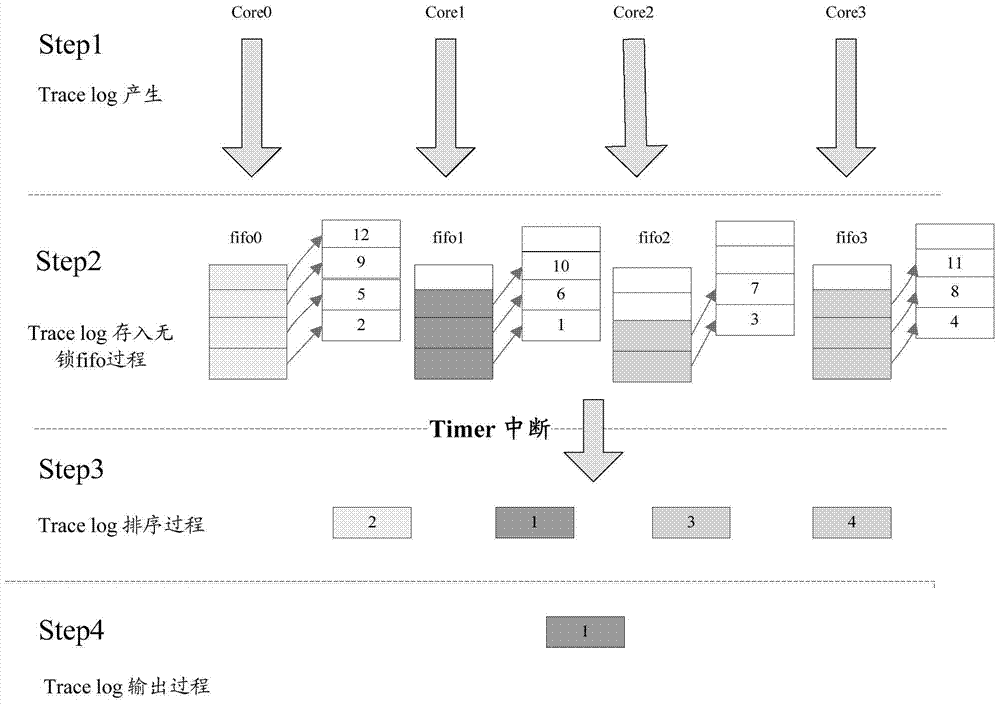

Multi-core storage device and tracking log output processing method in multi-core environment

Active | CN104461931B | The trace log is generated synchronously | Reduce the number of dispatches | Special data processing applications | Timestamp | Resource utilization

The present invention is applicable to the field of storage technology and provides a trace log output processing method for a multi-core environment. The method includes: when each core generates a trace log, packaging the trace log data and adding timestamp information, which identifies the order of the trace log records; configuring, for each of the multiple cores, a lock-free circular buffer that temporarily stores pointers to the trace logs and a static buffer that temporarily stores the trace logs generated by that core together with their timestamps; obtaining the timestamp information of the trace logs through their pointers and sorting the trace logs held in the different static buffers by timestamp; and performing trace log output processing when a timer interrupt occurs. The present invention also provides a corresponding multi-core storage device implementing the above method. As a result, caching and output can run concurrently, the number of scheduling operations is reduced, and resource utilization is improved.

Owner:RAMAXEL TECH SHENZHEN
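
The buffer layout and timer-driven merge can be illustrated in single-threaded Python. Python offers no lock-free ring buffer, so this only shows the data flow the abstract describes: a per-core static array of timestamped records, a per-core ring of pointers (indices) into it, and a merge by timestamp at each timer tick. `CoreTraceBuffer`, `on_timer_interrupt` and the shared counter used as a clock are assumptions.

```python
import heapq
import itertools
from collections import deque

# Shared, monotonically increasing counter standing in for a hardware timestamp.
_clock = itertools.count()


class CoreTraceBuffer:
    """Per-core trace storage: a static record array plus a ring of pointers."""

    def __init__(self, core_id: int, capacity: int) -> None:
        self.core_id = core_id
        self.capacity = capacity
        self.records = [None] * capacity         # static buffer: (ts, core, msg)
        self.ring = deque(maxlen=capacity)       # circular buffer of indices
        self.next_slot = 0

    def log(self, message: str) -> None:
        slot = self.next_slot
        # Package the record with its timestamp. When the ring is full, the
        # oldest record is overwritten and its pointer dropped together.
        self.records[slot] = (next(_clock), self.core_id, message)
        self.ring.append(slot)
        self.next_slot = (slot + 1) % self.capacity

    def drain(self):
        """Yield pending records in the order they were logged on this core."""
        while self.ring:
            yield self.records[self.ring.popleft()]


def on_timer_interrupt(cores: list[CoreTraceBuffer]) -> list[str]:
    """Merge every core's pending records by timestamp and format them."""
    merged = heapq.merge(*(core.drain() for core in cores))
    return [f"{ts:06d} core{cid} {msg}" for ts, cid, msg in merged]
```

Because each core's records are already in timestamp order, `heapq.merge` can interleave them without a full sort.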

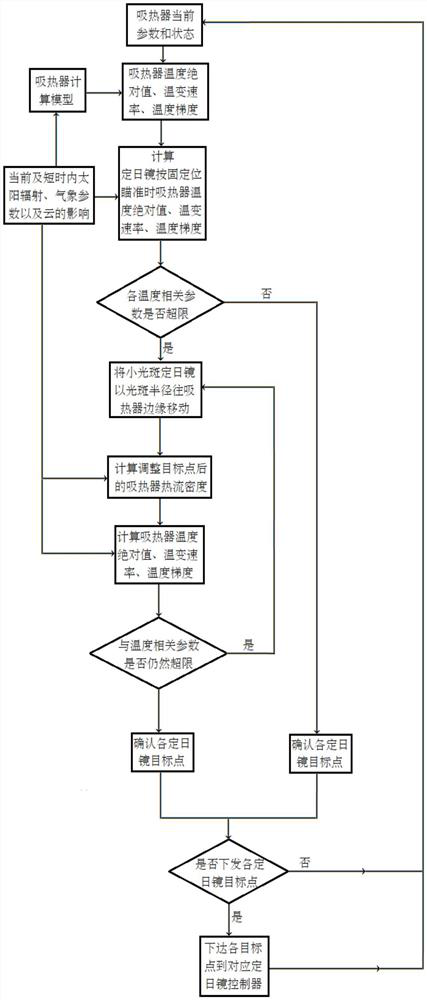

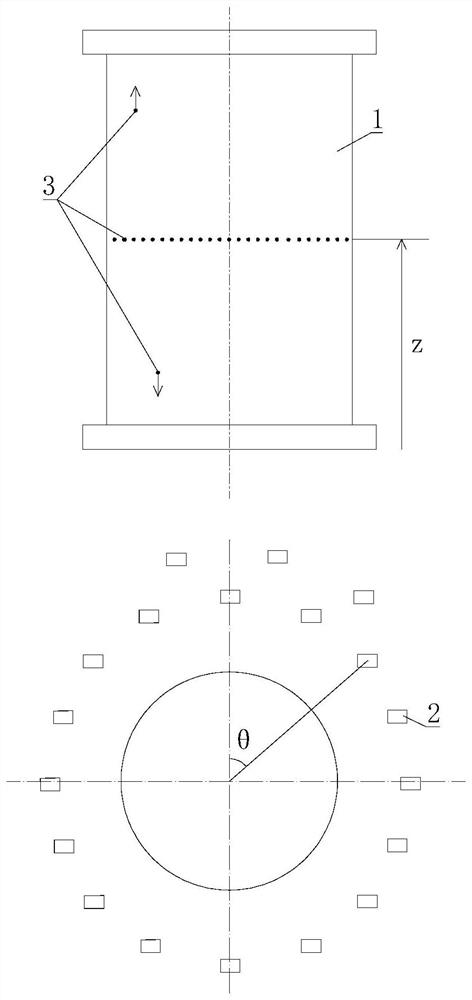

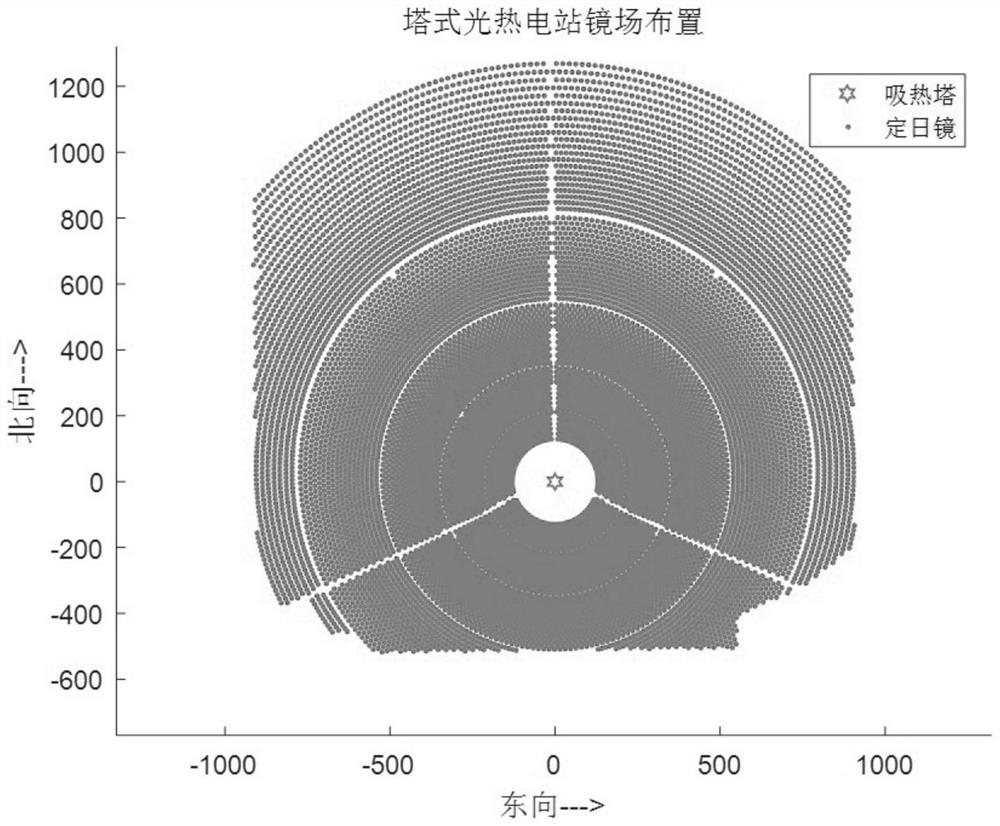

Tower type photo-thermal power station heliostat scheduling method based on heat absorber temperature control

Active | CN114877543A | To achieve the purpose of safe and controllable | Address reliability | Solar heating energy | Solar heat collector controllers | Temperature control | Heliostat

The invention belongs to the technical field of tower-type solar photo-thermal power generation, and particularly relates to a heliostat scheduling method for a tower-type photo-thermal power station based on heat absorber temperature control. The method comprises the following steps: first, obtaining the current parameters and state of the heat absorber, together with solar radiation, meteorological and cloud parameters for the present moment and the short-term period ahead; calculating the temperature-related parameters for each time step within a given horizon and judging whether they exceed their limits; if they do not, determining the target point of each heliostat for each time step; if a limit is exceeded, moving the heliostats step by step from their initial aiming points toward the edge of the heat absorber and recalculating the temperature-related parameters for each resulting aiming point until they no longer exceed the limits, then confirming the heliostat target points for those time steps and scheduling the heliostats. Because the heliostat target points are controlled and the heliostats are scheduled based on the temperature-related parameters of the heat absorber, the heat absorber temperature remains controllable under normal steady-state operation as well as during start-up, shutdown, preheating, and changing conditions such as cloud passing over the mirror field.

Owner:DONGFANG BOILER GROUP OF DONGFANG ELECTRIC CORP
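
The over-limit branch is an iterative search over aiming points. In this sketch, each aiming point is a normalized radial offset (0 at the heat absorber center, 1 at its edge), every heliostat is moved outward by a fixed step until the predicted peak temperature falls within the limit, and infeasibility is reported if edge aiming still exceeds it. Moving all heliostats together, the offset encoding and the `TempModel` callable are assumptions; a real plant would use a flux and thermal model driven by the radiation and cloud forecasts.

```python
from typing import Callable, Sequence

# Predicts the heat absorber's peak temperature (deg C) for a set of offsets.
TempModel = Callable[[Sequence[float]], float]


def schedule_aim_points(initial: Sequence[float], predict: TempModel,
                        limit: float, step: float = 0.1):
    """Move aiming points outward until the predicted temperature is in limits.

    Offsets are normalized: 0.0 = absorber center, 1.0 = absorber edge.
    Returns (offsets, within_limit).
    """
    if step <= 0:
        raise ValueError("step must be positive")
    aims = list(initial)
    while predict(aims) > limit:
        if all(a >= 1.0 for a in aims):
            return aims, False  # even edge aiming violates the limit
        aims = [min(1.0, a + step) for a in aims]
    return aims, True


def schedule_horizon(forecast: Sequence[TempModel], initial: Sequence[float],
                     limit: float, step: float = 0.1):
    """Apply the aiming-point search to every time step of the forecast."""
    return [schedule_aim_points(initial, predict, limit, step)
            for predict in forecast]
```

With the purely hypothetical model `lambda aims: 300 + 100 * sum(1 - a for a in aims)`, two heliostats starting at the center and a limit of 450, the search stops at offsets of about 0.3.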

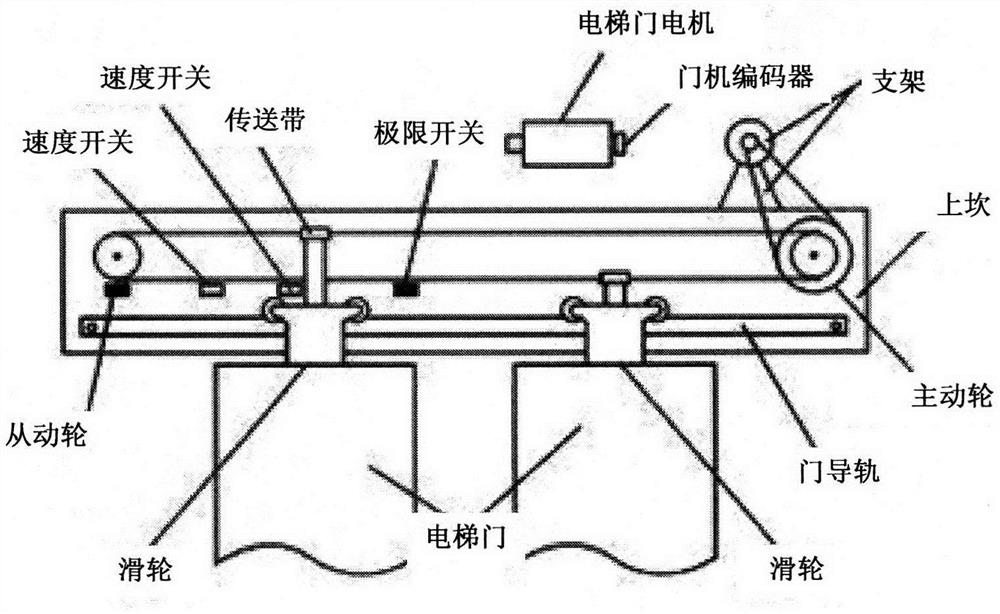

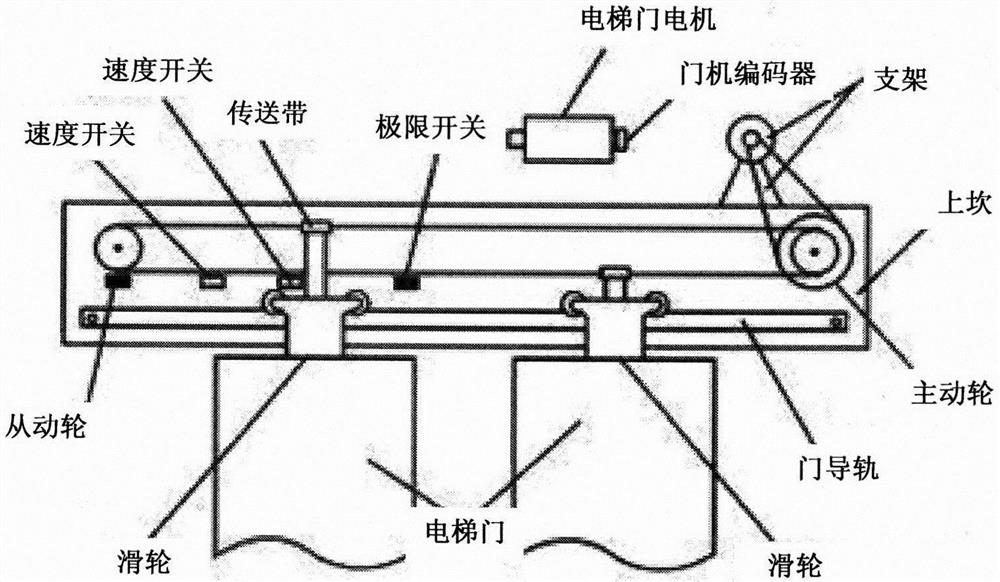

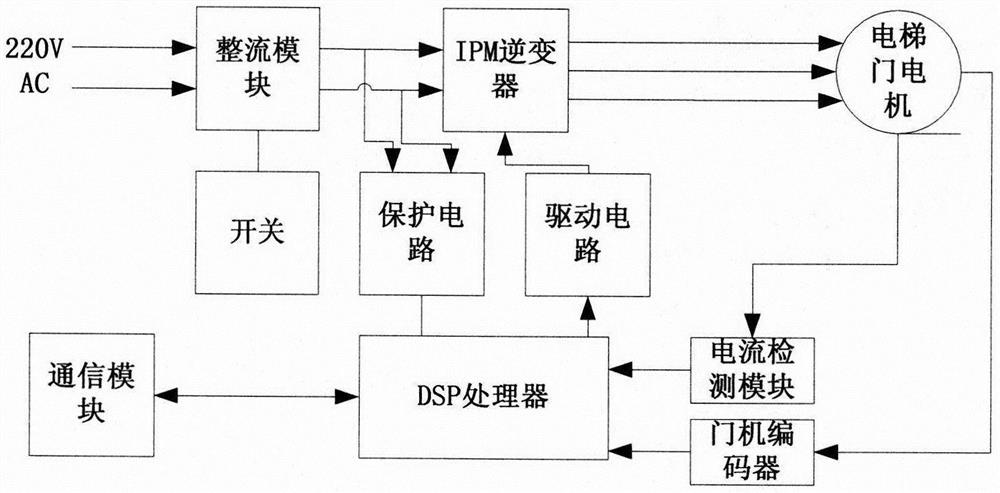

An Elevator System with Normal Speed Curve Elevator Door Control

Inactive | CN109368460B | Efficient, safe and stable switching | Avoid harm | Elevators | Building lifts | Elevator system | Control manner

The invention discloses an elevator system with normal-speed-curve elevator door control. The door operator controller mainly comprises a communication module, a switch, a DSP, a rectification module, an IPM inverter, a protection circuit, a drive circuit and a current detection module. The DSP controls the opening and closing of the elevator door according to a normal speed curve, so the door is unlikely to collide when it reaches the end of its travel; by detecting distance and speed, the motor is controlled so that the door runs, opens and closes smoothly. Here, a normal speed curve means that the door-closing speed varies along the shape of a normal-distribution (bell-shaped) curve.

Owner:张勇 (Zhang Yong) +1
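
A normal speed curve can be written as a Gaussian in door position, v(x) = v_max · exp(−(x − L/2)² / (2σ²)) for a travel of length L. The sketch assumes the bell is centered at mid-travel with a tunable width `sigma` and an optional creep speed `v_min` so the door does not stall near the ends; these parameter names are illustrative, and the patent does not state whether the curve is defined over position or time.

```python
import math


def door_speed(x: float, travel: float, v_max: float, sigma: float,
               v_min: float = 0.0) -> float:
    """Target door speed (m/s) at position x (m) along a travel of `travel` m.

    Bell-shaped (normal-distribution) profile centered at mid-travel; `v_min`
    is an optional creep speed so the door does not stall near the ends.
    """
    if not 0.0 <= x <= travel:
        raise ValueError("position outside the door travel")
    mu = travel / 2.0
    v = v_max * math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))
    return max(v, v_min)
```

For 0.8 m of travel, a 0.5 m/s peak and sigma = 0.15 m, the target speed is 0.5 m/s at mid-travel and about 0.014 m/s at each end, which is what keeps the door from striking its end stop hard.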

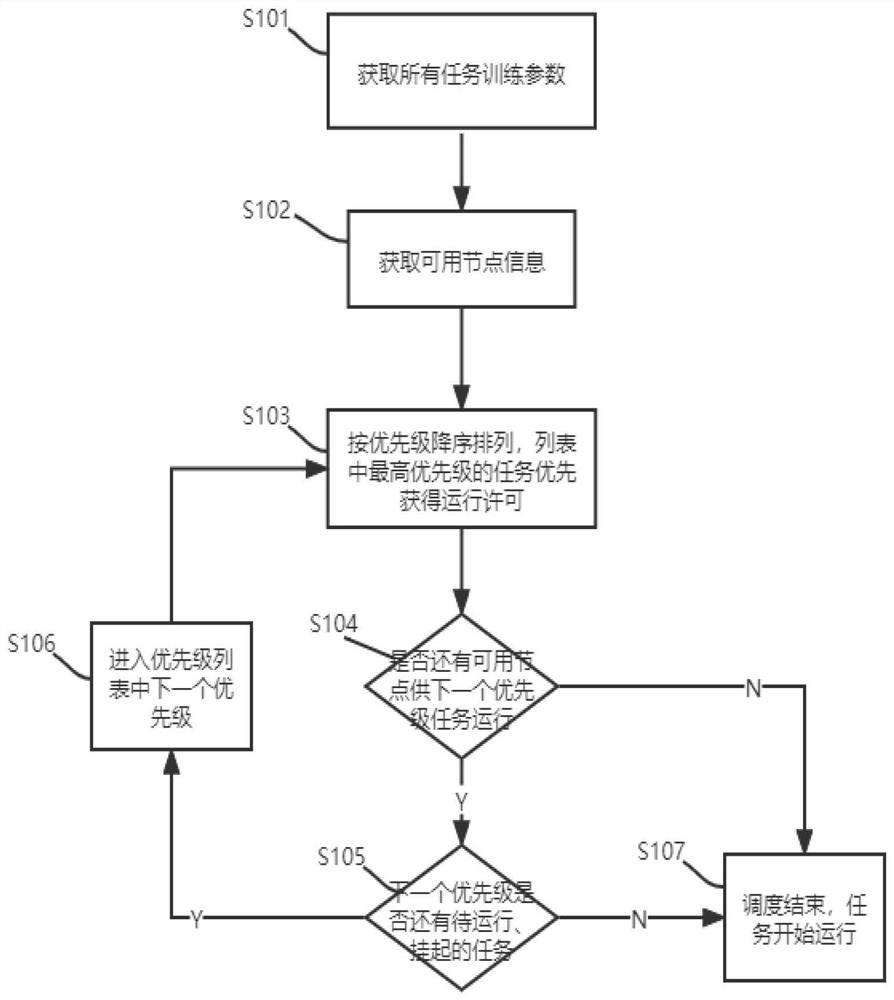

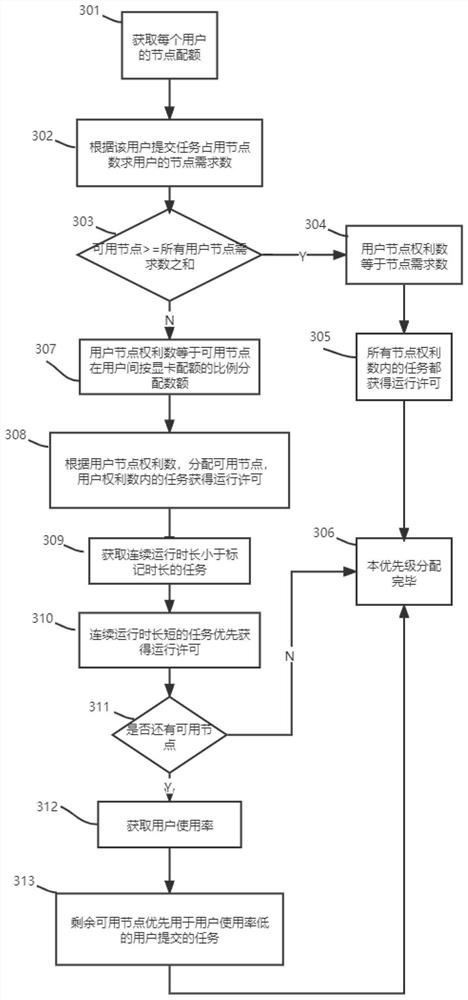

Method and device for cluster management task scheduling

Pending | CN114138441A | Achieve balanced distribution | Reduce the number of dispatches | Program initiation/switching | Resource allocation | Real-time computing | Distributed computing

The invention relates to the field of node allocation, and in particular to a cluster management task scheduling method. By weighing and ranking multiple dimensions, such as task priority, user weight, running time and user utilization rate, it allocates limited computing resources between users and tasks as reasonably as possible and keeps internal resources evenly distributed. Compared with traditional scheduling, the independent scheduling-module design removes the tight interface coupling between the scheduling module and the other modules, making the program more flexible and easier to extend. Interruption and start-up logic are merged into a single mode, so the scheduling level does not need to track task states or distinguish new, interrupted or restarted tasks; it only has to compute the result. This improves the independence of the task scheduling module at the design level and the efficiency of task scheduling, and lays the groundwork for more users, larger clusters and more complex platform service scenarios.

Owner:杭州幻方人工智能基础研究有限公司 (Hangzhou Huanfang Artificial Intelligence Basic Research Co., Ltd.)
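
The multi-dimensional ranking and the merged interrupt/start logic can be sketched as pure functions: one scores tasks and picks the set that should be running, another diffs that set against what is running now. The weights, the sign chosen for each dimension and the GPU-count resource model are assumptions; the abstract lists the dimensions but not how they are combined.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    task_id: str
    user: str
    priority: int      # higher = more urgent
    gpus: int          # GPUs requested
    run_hours: float   # time the task has already been running


# Hypothetical weights and signs; the abstract names the dimensions only.
W_PRIORITY, W_USER, W_RUNTIME, W_USAGE = 1.0, 0.5, 0.1, 2.0


def score(task: Task, user_weight: dict[str, float],
          user_usage: dict[str, float]) -> float:
    """Higher score = scheduled first. Usage is the user's share of the cluster."""
    return (W_PRIORITY * task.priority
            + W_USER * user_weight.get(task.user, 0.0)
            - W_RUNTIME * task.run_hours
            - W_USAGE * user_usage.get(task.user, 0.0))


def desired_running(tasks: list[Task], capacity: int,
                    user_weight: dict[str, float],
                    user_usage: dict[str, float]) -> set[str]:
    """Greedily pick the highest-scoring tasks that fit into `capacity` GPUs."""
    ranked = sorted(tasks, key=lambda t: (-score(t, user_weight, user_usage),
                                          t.task_id))
    chosen: set[str] = set()
    free = capacity
    for t in ranked:
        if t.gpus <= free:
            chosen.add(t.task_id)
            free -= t.gpus
    return chosen


def reconcile(running: set[str], desired: set[str]) -> tuple[list[str], list[str]]:
    """One code path for start and interrupt: diff target state vs. current."""
    return sorted(desired - running), sorted(running - desired)
```

Because the scheduler only returns a target set, new, interrupted and restarted tasks all go through the same `reconcile` diff, which is one way to realize the single interrupt-and-start mode the abstract describes.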

© 2025 PatSnap. All rights reserved.