32 results about how to achieve "Fast inference" (patented technology)

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

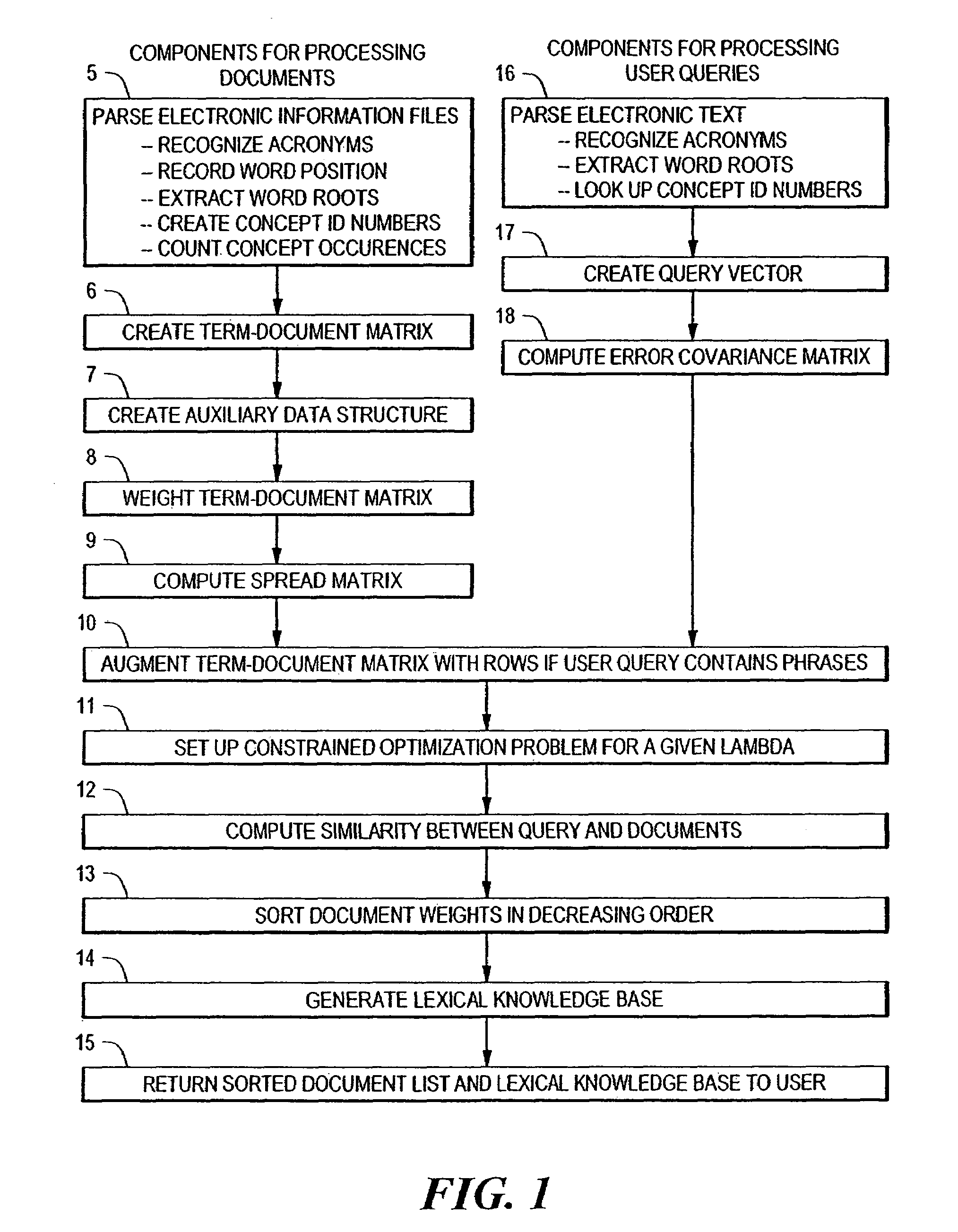

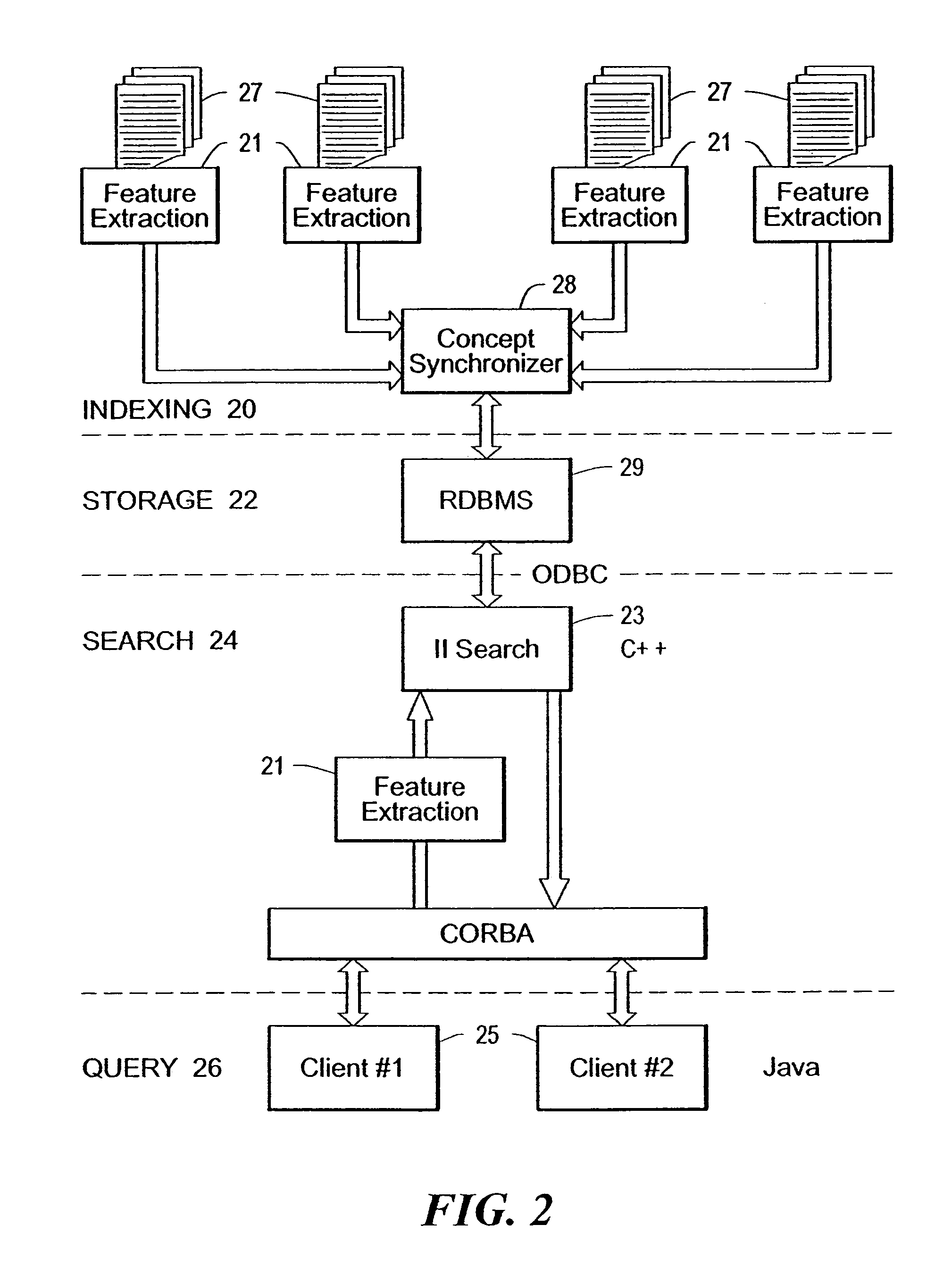

Extended functionality for an inverse inference engine based web search

Inactive | US20050021517A1 | Improve efficiency; Fast and scalable; Natural language translation; Data processing applications; Main diagonal; Algorithm

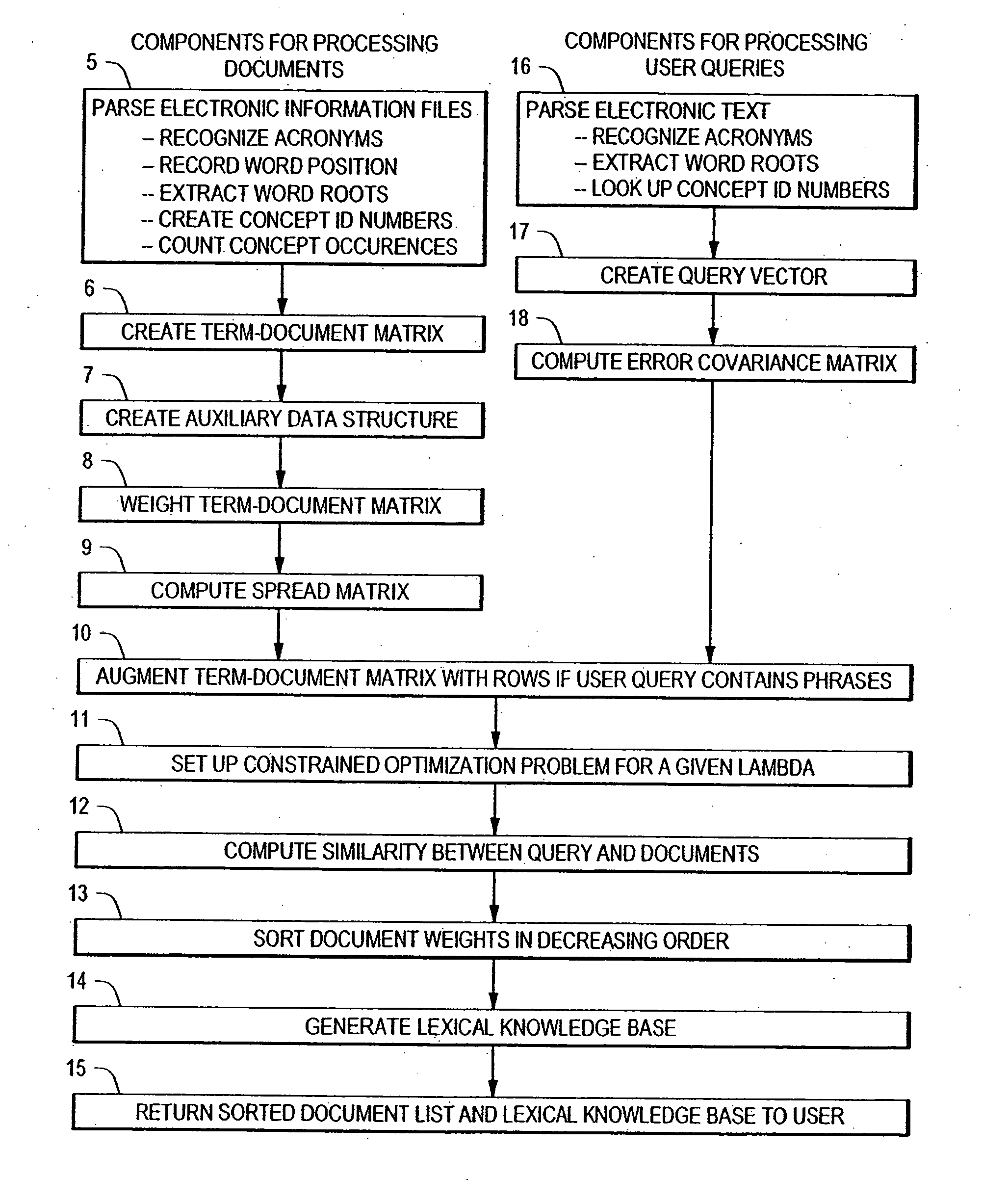

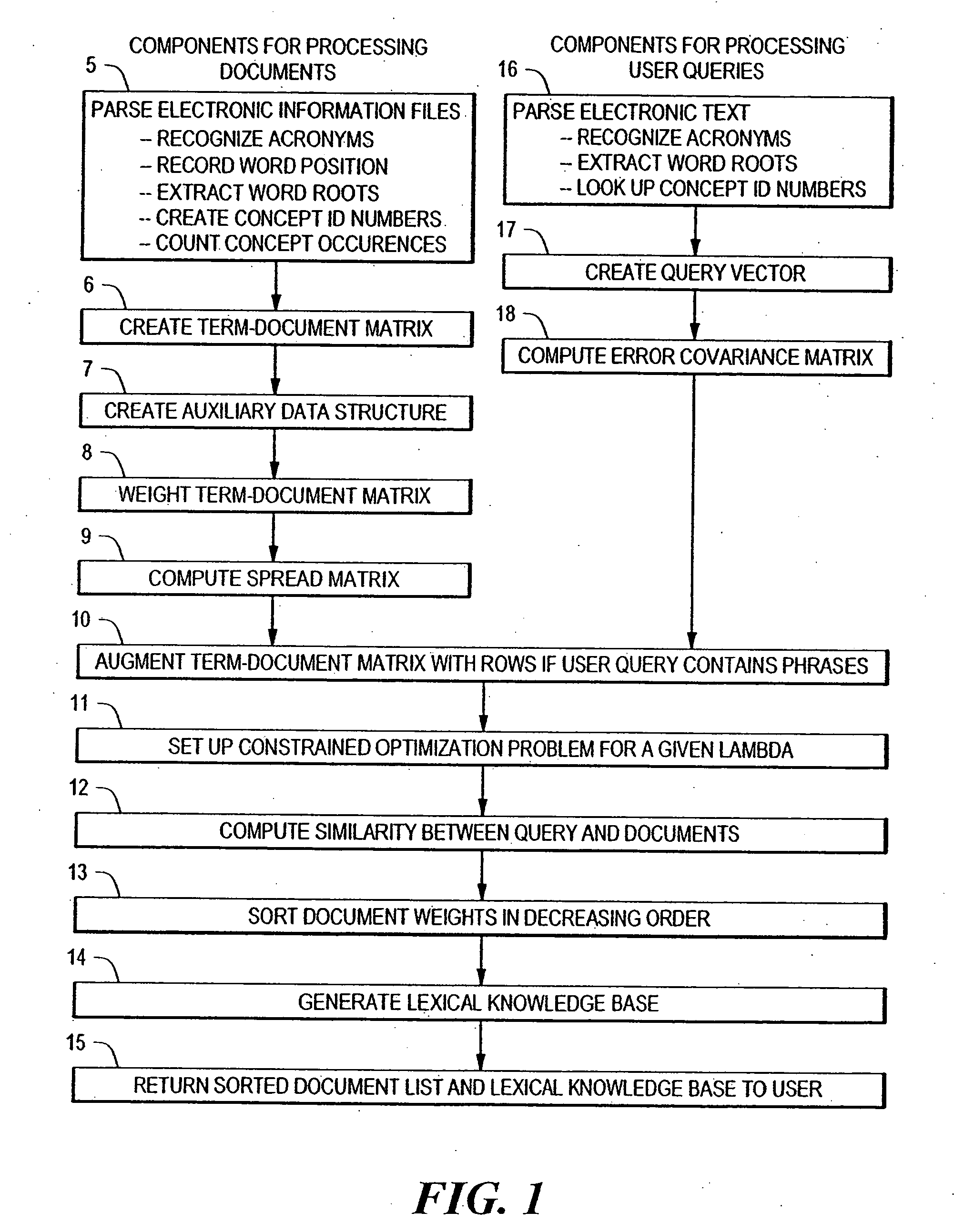

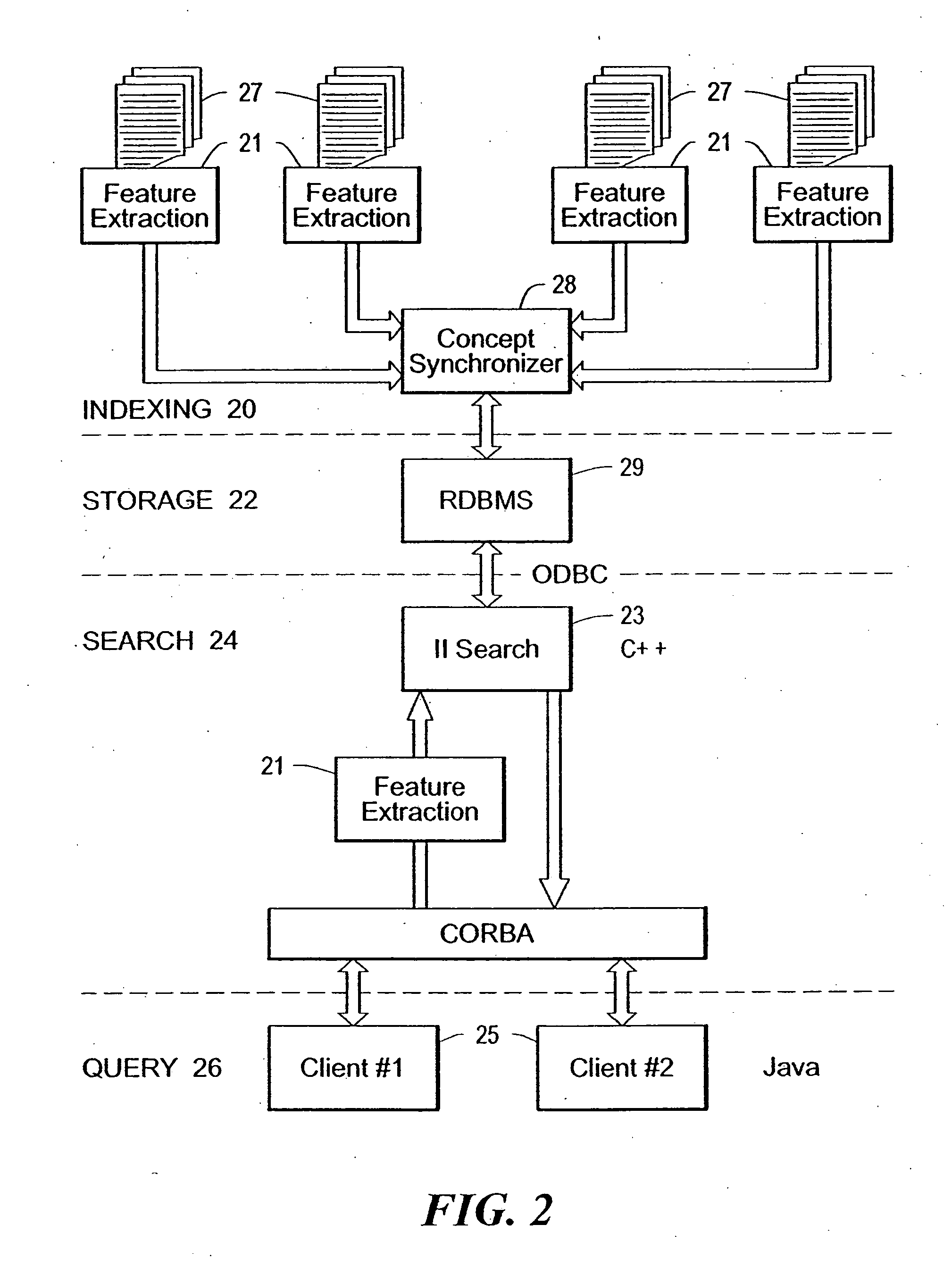

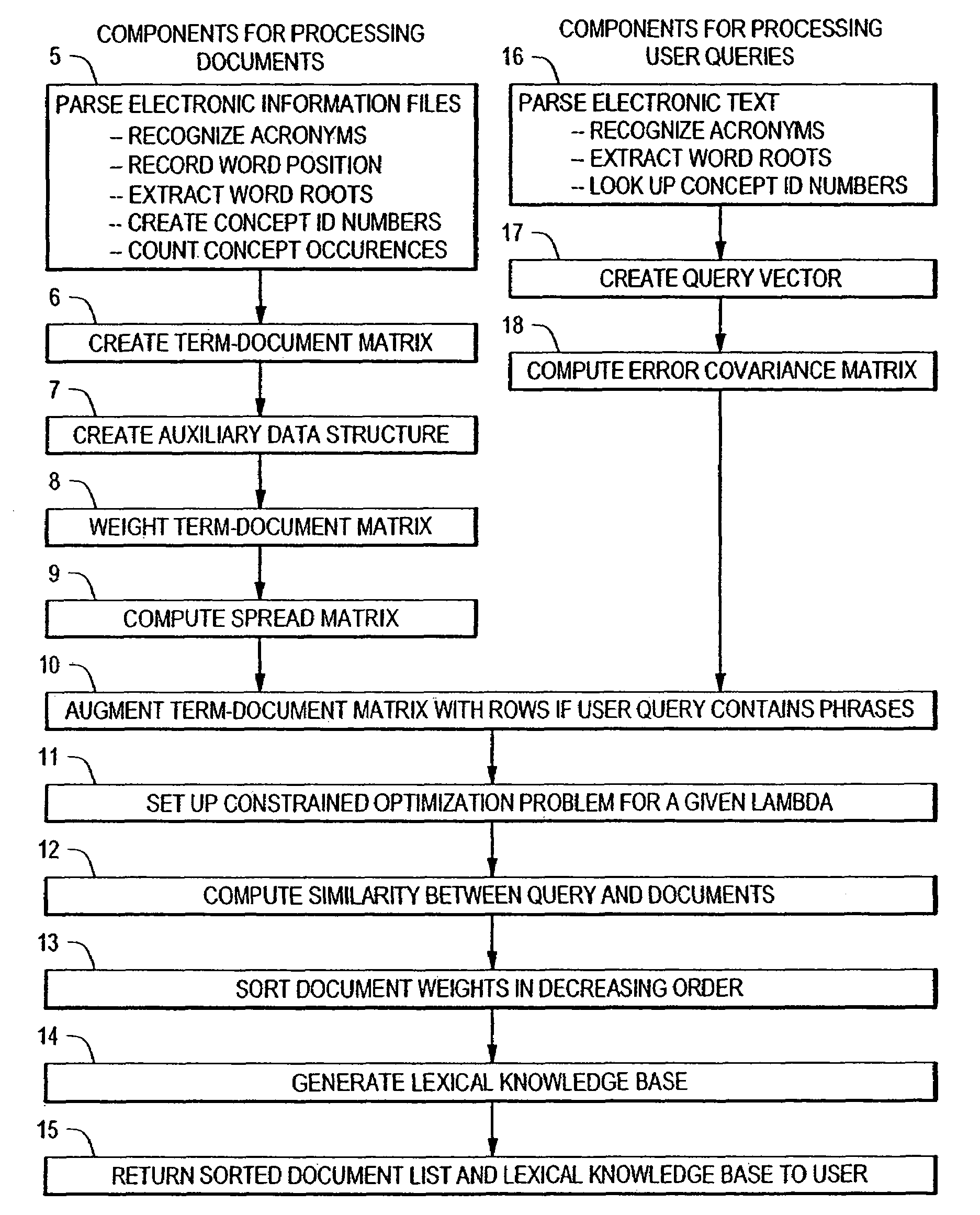

An extension of an inverse inference search engine is disclosed that provides cross-language document retrieval. The information matrix used as input to the inverse inference engine is organized into rows of blocks, one block per language in a predetermined set of natural languages, and into two column-wise partitions. The first partition consists of blocks of entries representing fully translated documents; the second is a matrix of blocks of entries representing documents for which translations are not available in all of the predetermined languages. In the second partition, entries in blocks outside the main block diagonal are zero. Another disclosed extension to the inverse inference document retrieval system supports automatic, knowledge-based training. This approach applies the idea of a training set to searching databases in which information is too diluted or unreliable to allow the creation of robust semantic links. To address this, the disclosed system loads the left-hand partition of the inverse inference engine's input matrix with information from reliable sources.

Owner:FIVER LLC
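
The block layout described in this abstract can be sketched in NumPy. This is a minimal illustration under stated assumptions: documents are plain term-count vectors, each language owns its own block of term rows, and every document in the second partition exists in exactly one language. The helper name `build_information_matrix` is hypothetical, and the inverse inference engine that consumes the matrix is not reproduced.

```python
import numpy as np

def build_information_matrix(full_docs, partial_docs, vocab_sizes):
    """Assemble the two-partition information matrix (hypothetical helper).

    full_docs:    list of dicts {language_index: term_vector}, one vector per language
                  (fully translated documents -> left partition).
    partial_docs: partial_docs[k] is a list of term vectors for documents available
                  only in language k (-> right partition, column block k).
    vocab_sizes:  number of term rows in each language block.
    """
    n_lang = len(vocab_sizes)
    offsets = np.concatenate([[0], np.cumsum(vocab_sizes)])  # row range of each language block
    n_rows = int(offsets[-1])

    # Left partition: every column has entries in every language block.
    left = np.zeros((n_rows, len(full_docs)))
    for j, doc in enumerate(full_docs):
        for k in range(n_lang):
            left[offsets[k]:offsets[k + 1], j] = doc[k]

    # Right partition: column block k fills only row block k, so off-diagonal blocks stay zero.
    right_blocks = []
    for k in range(n_lang):
        block = np.zeros((n_rows, len(partial_docs[k])))
        for j, vec in enumerate(partial_docs[k]):
            block[offsets[k]:offsets[k + 1], j] = vec
        right_blocks.append(block)

    return np.hstack([left] + right_blocks)
```

Leaving the off-diagonal blocks of the right partition at zero is what encodes "no translation available" for those documents; the knowledge-based training variant would fill the left partition from trusted sources instead.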

Extended functionality for an inverse inference engine based web search

Inactive | US7269598B2 | Improve efficiency; Fast and scalable; Natural language translation; Data processing applications; Main diagonal; Algorithm

An extension of an inverse inference search engine is disclosed that provides cross-language document retrieval. The information matrix used as input to the inverse inference engine is organized into rows of blocks, one block per language in a predetermined set of natural languages, and into two column-wise partitions. The first partition consists of blocks of entries representing fully translated documents; the second is a matrix of blocks of entries representing documents for which translations are not available in all of the predetermined languages. In the second partition, entries in blocks outside the main block diagonal are zero. Another disclosed extension to the inverse inference document retrieval system supports automatic, knowledge-based training. This approach applies the idea of a training set to searching databases in which information is too diluted or unreliable to allow the creation of robust semantic links. To address this, the disclosed system loads the left-hand partition of the inverse inference engine's input matrix with information from reliable sources.

Owner:FIVER LLC

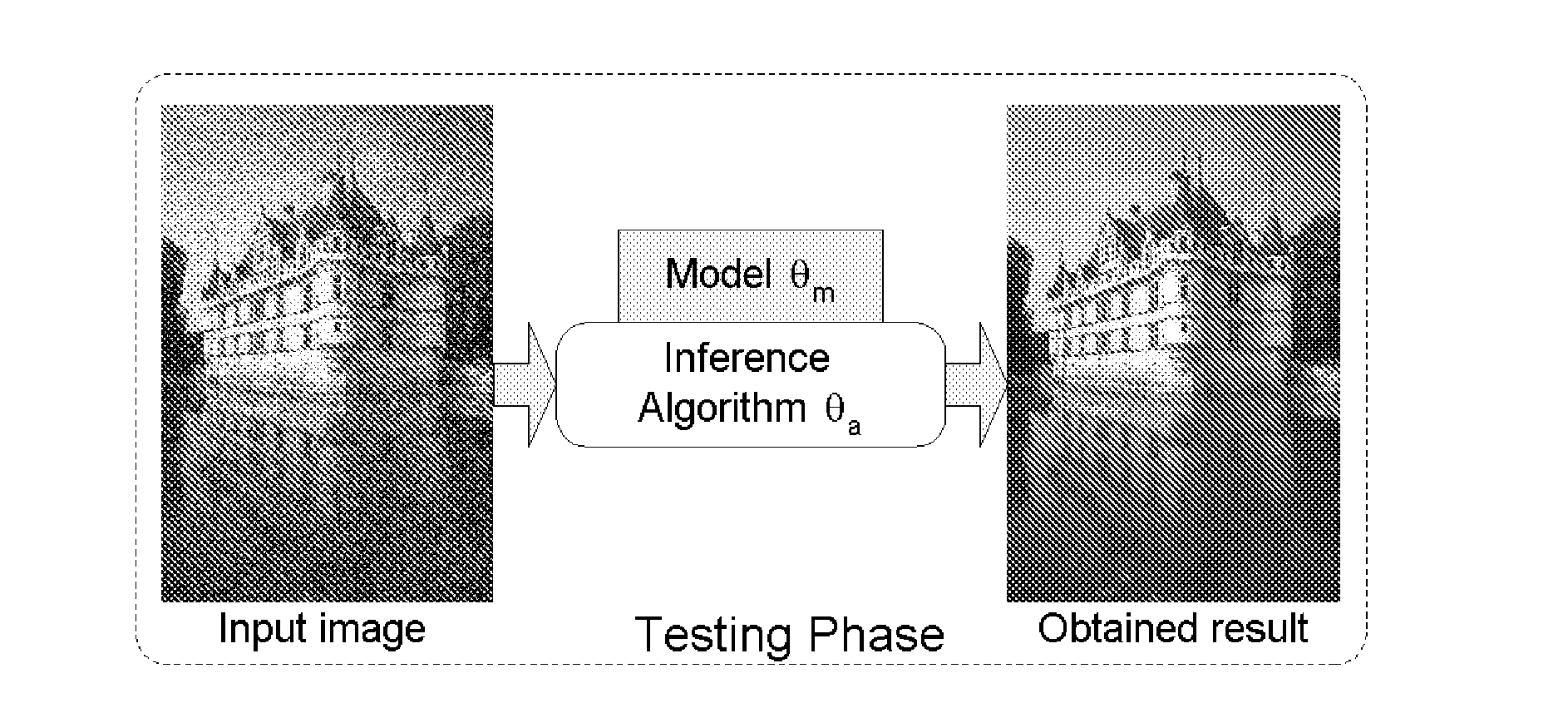

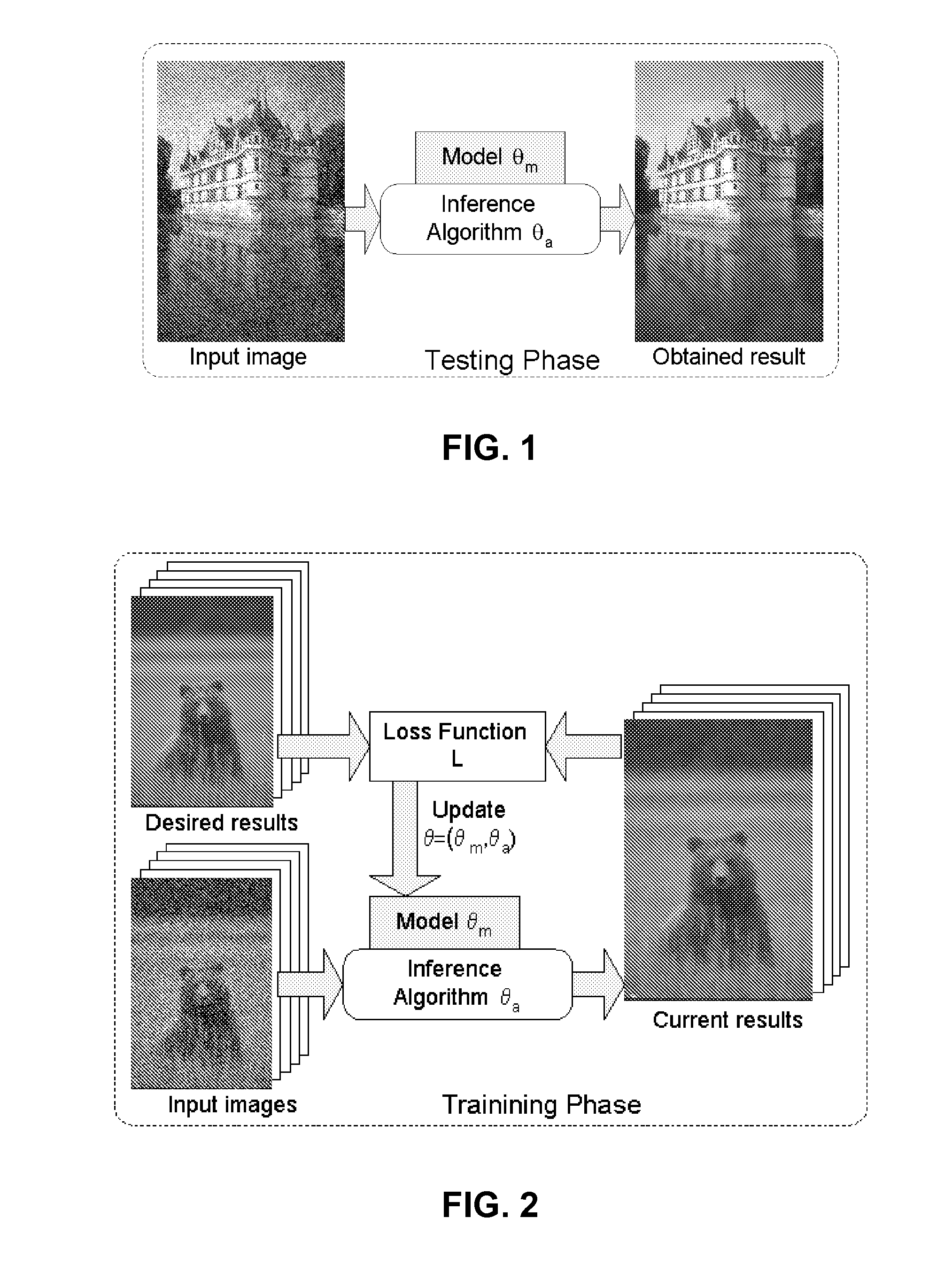

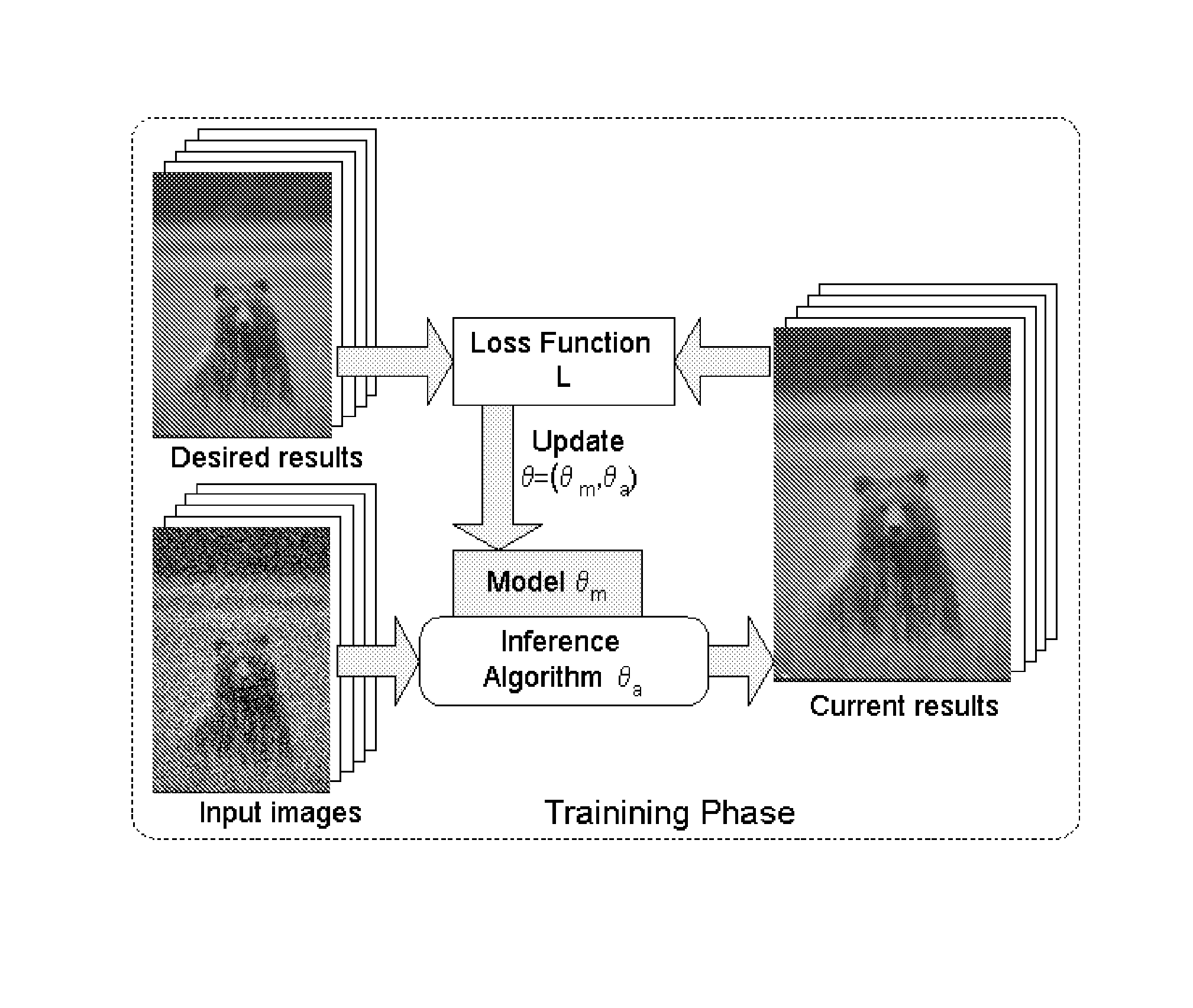

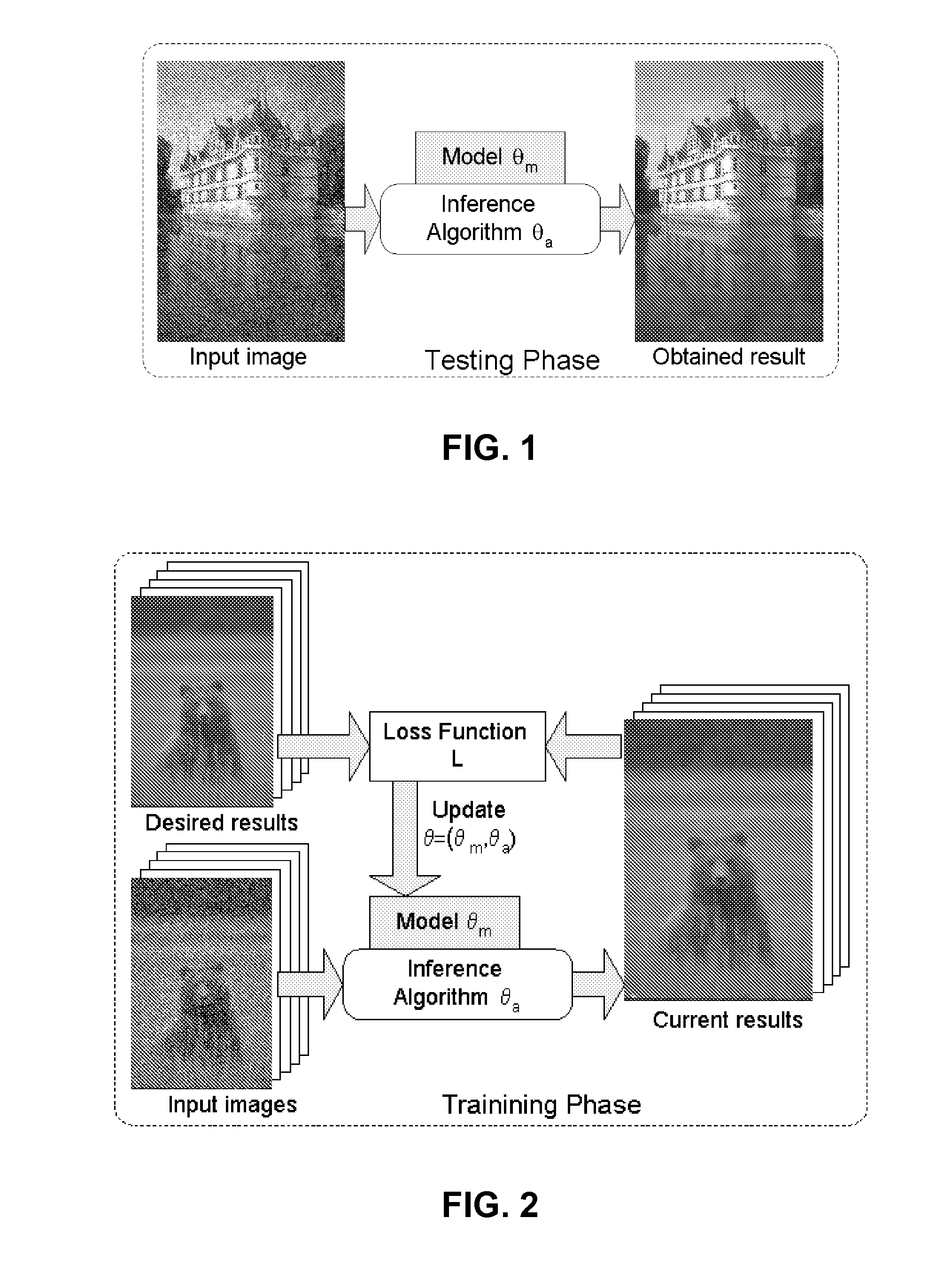

Systems and methods for training an active random field for real-time image denoising

Inactive | US20100020208A1 | Benchmark performance; Close to state-of-the-art accuracy; Television system details; Image enhancement; Image denoising; Conditional random field

An Active Random Field is presented, in which a Markov Random Field (MRF) or a Conditional Random Field (CRF) is trained together with a fast inference algorithm using pairs of input and desired output and a benchmark error measure.

Owner:FLORIDA STATE UNIV RES FOUND INC

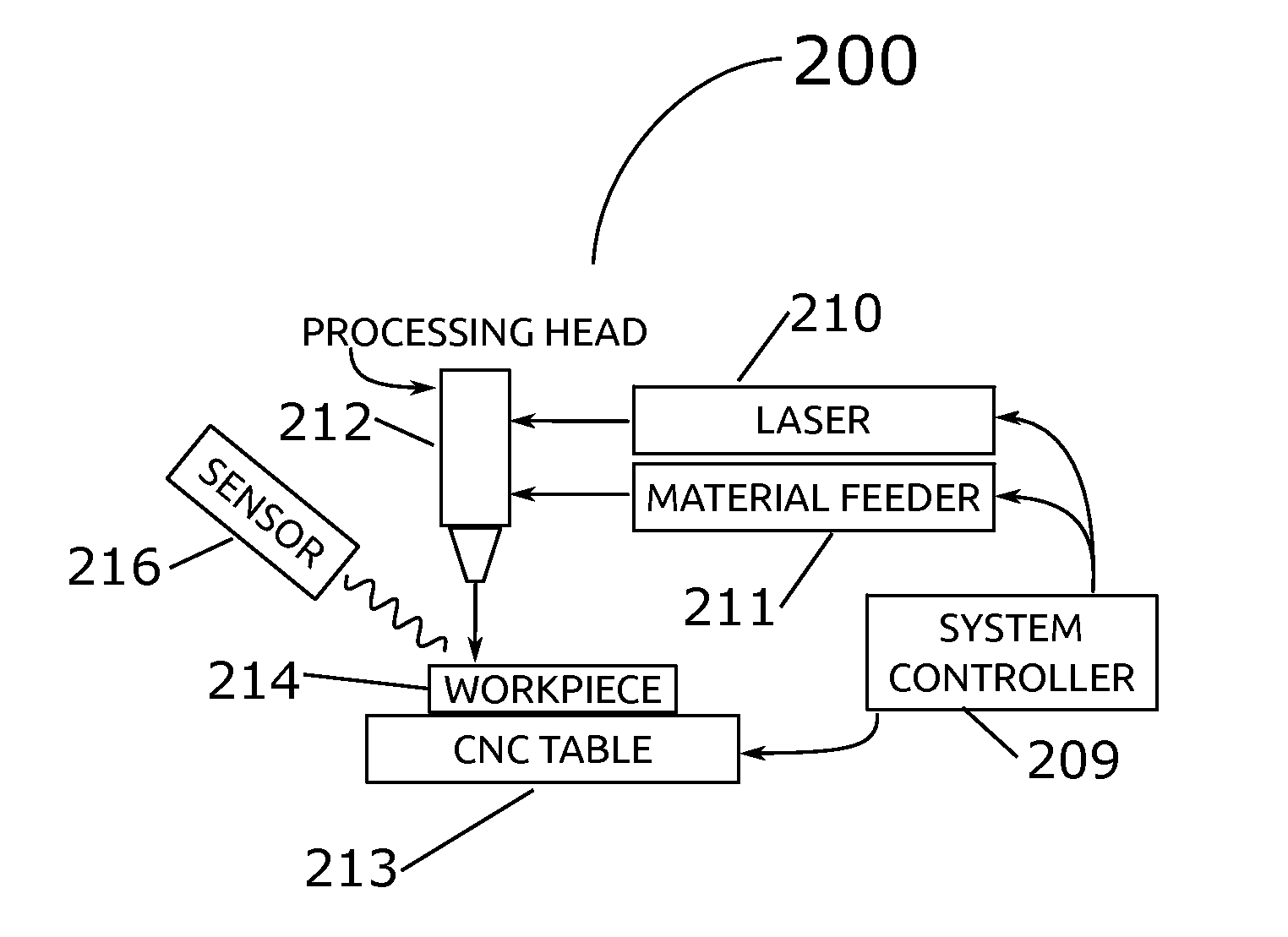

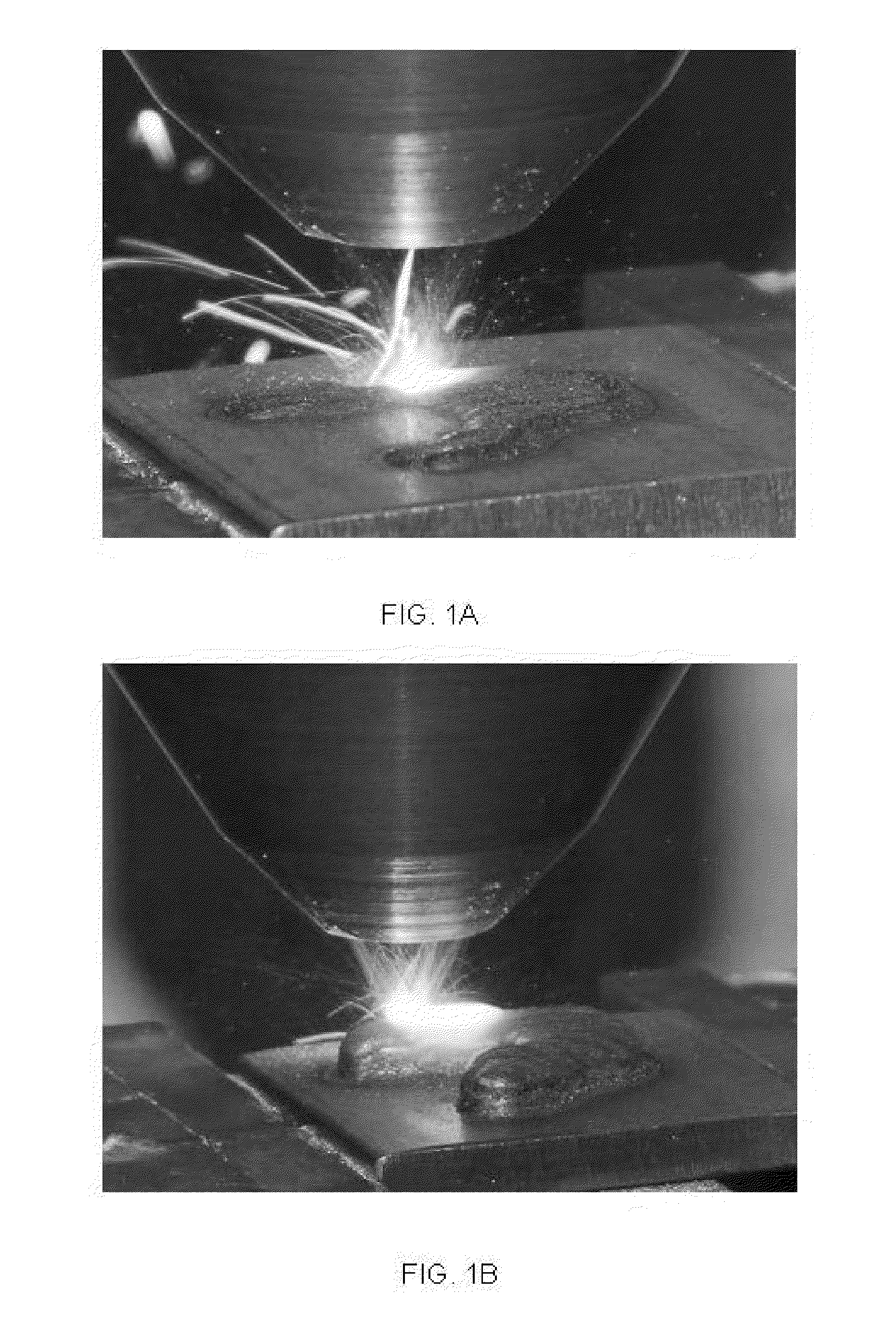

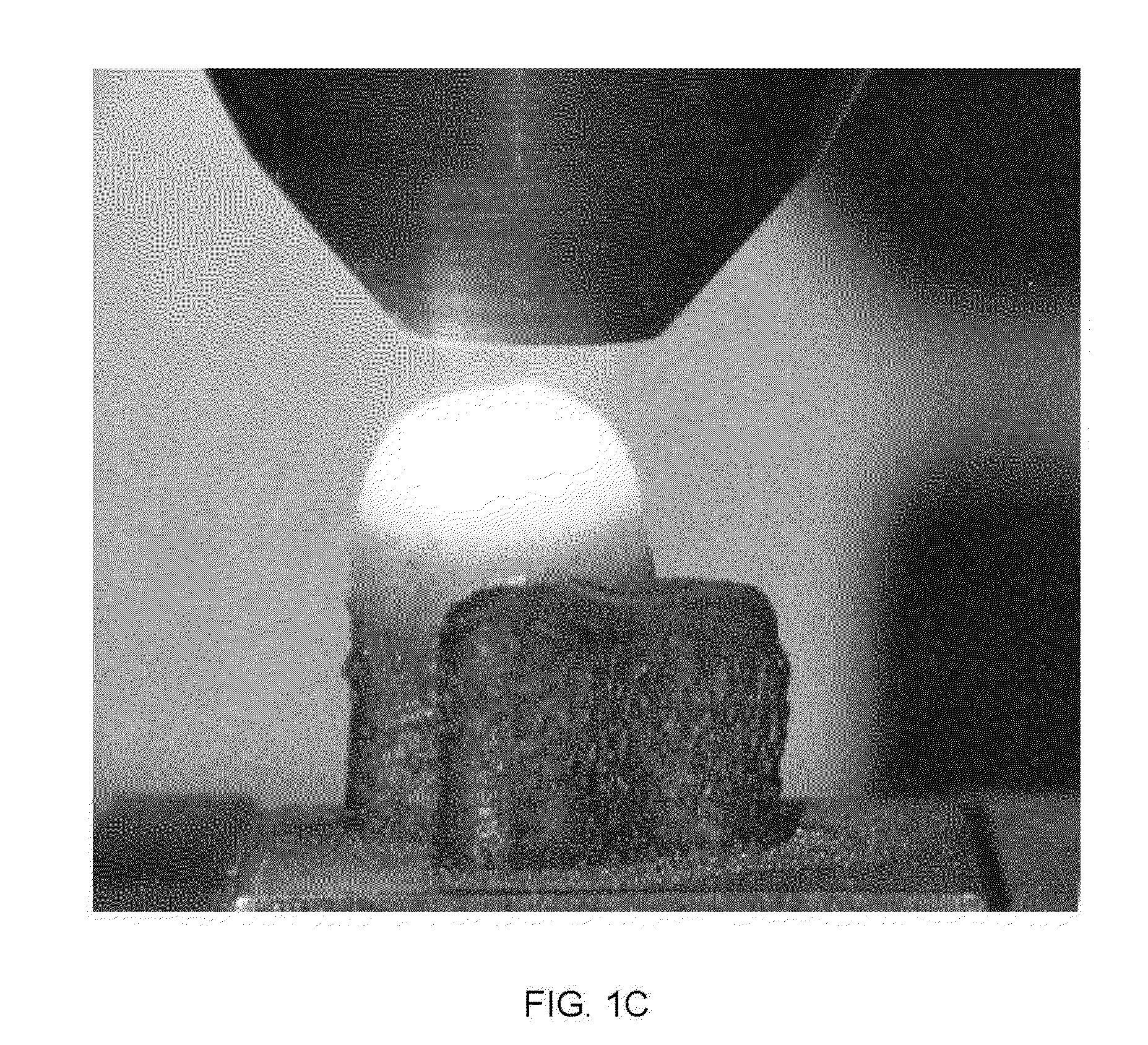

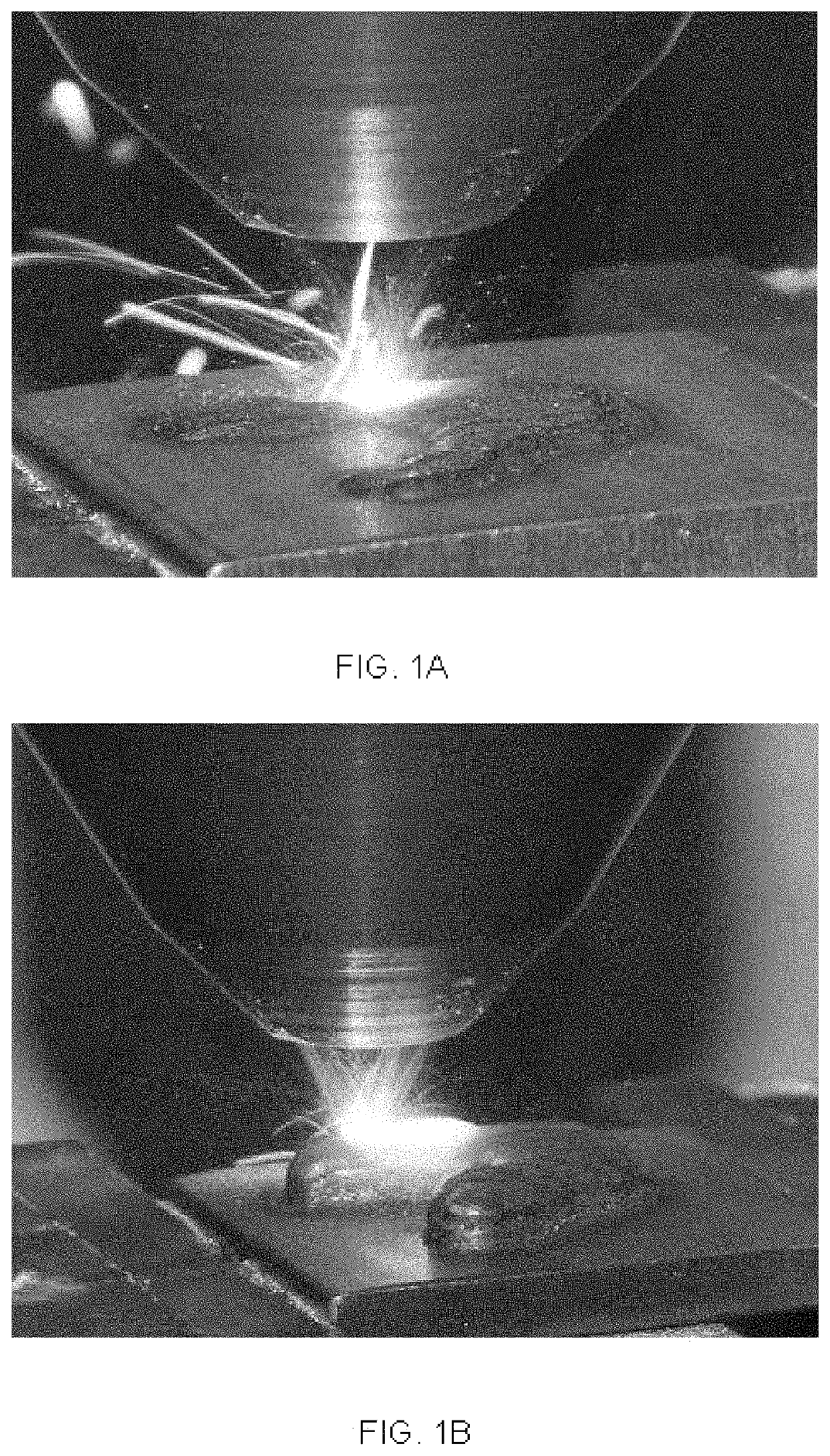

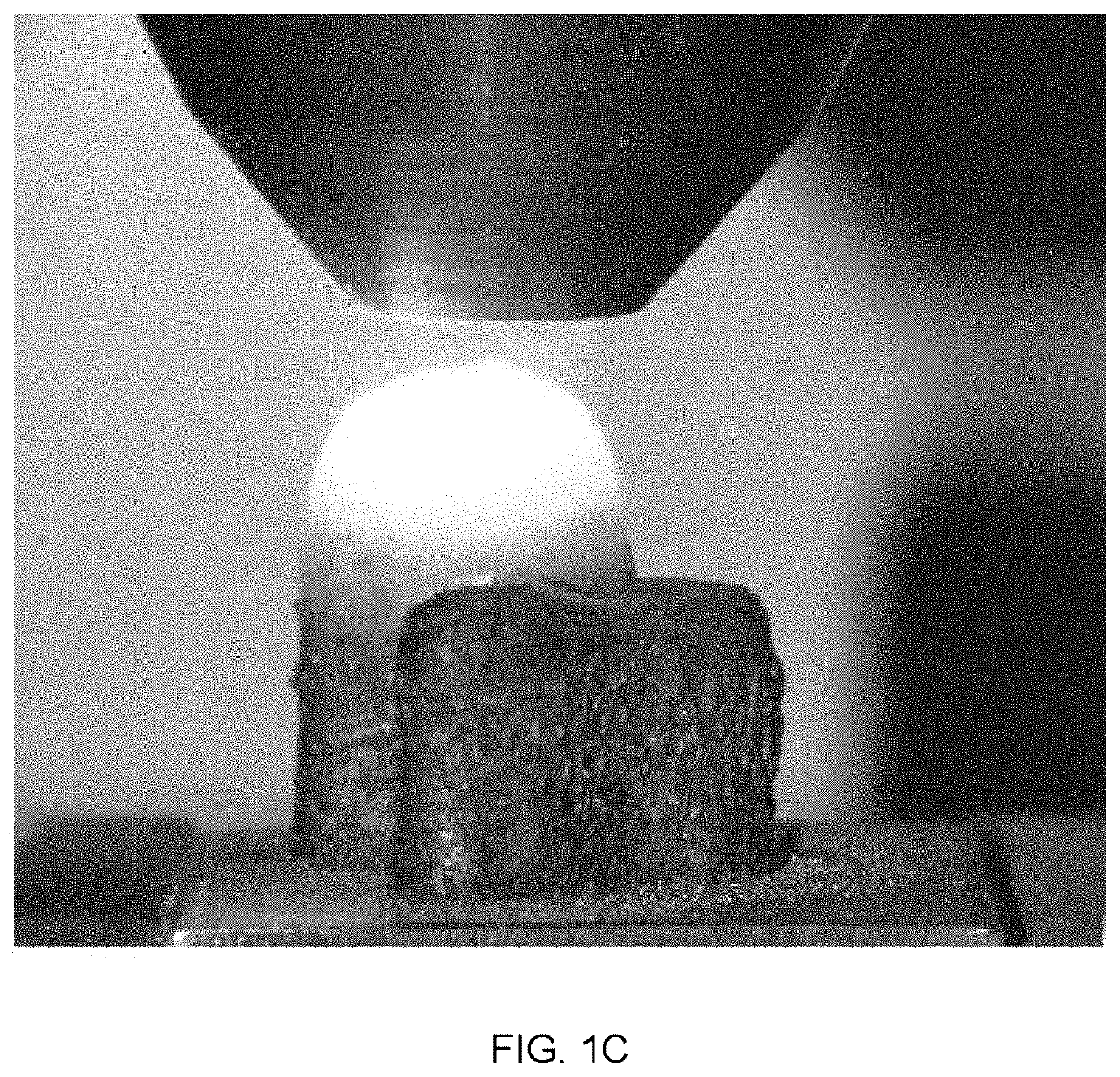

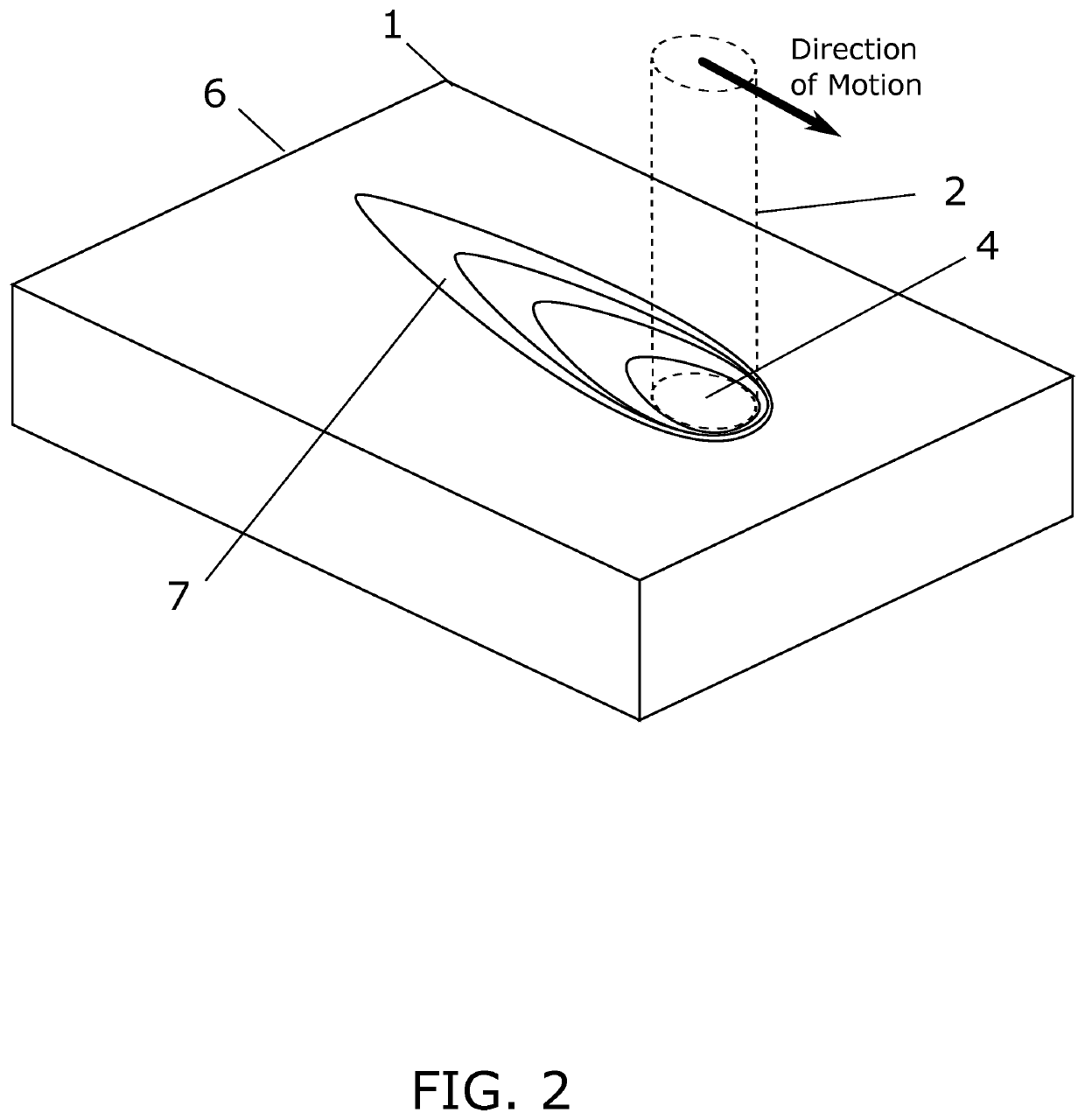

System and method for controlling the input energy from an energy point source during metal processing

Active | US20160151859A1 | Fast balance; Fast inference; Additive manufacturing apparatus; Arc welding apparatus; Physics; Wavelength range

A method is disclosed for controlling, during metal processing, the input energy from an energy point source that directs focused emitted energy onto a metal workpiece having a geometry; the directed energy creates a melt pool and a hot zone on the workpiece, both of which emit radiation during the process. The method comprises: determining a wavelength range for the emitted radiation that lies within a spectral range in which the hot zone emits comparatively more radiation than the melt pool during processing; directing the beam onto the workpiece to generate a melt pool and hot zone on it; measuring the intensity of radiation within the determined wavelength range; and adjusting the input energy from the energy point source based on that measured intensity.

Owner:PROD INNOVATION & ENG L LC
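
The abstract does not specify the control law. The sketch below assumes a simple proportional controller acting on the mean intensity inside the selected band, plus a hypothetical linear plant (`k * power`) used only to show the loop settling. All names, gains, limits and units are illustrative assumptions, not taken from the patent.

```python
import numpy as np

def band_intensity(wavelengths_nm, spectrum, band):
    """Mean measured intensity inside the chosen wavelength band [lo, hi] in nm."""
    lo, hi = band
    in_band = (wavelengths_nm >= lo) & (wavelengths_nm <= hi)
    return float(spectrum[in_band].mean())

def adjust_power(power, measured, setpoint, gain, p_min, p_max):
    """One proportional step: reduce input power when the hot-zone band reads above setpoint."""
    new_power = power - gain * (measured - setpoint)
    return min(p_max, max(p_min, new_power))  # respect the source's power limits

def simulate(steps, power, setpoint, gain, k=0.1, p_min=0.0, p_max=5000.0):
    """Toy closed loop with a hypothetical linear plant: band intensity = k * power."""
    for _ in range(steps):
        measured = k * power
        power = adjust_power(power, measured, setpoint, gain, p_min, p_max)
    return power
```

For this toy plant the loop converges only when `0 < gain * k < 2`; a real process would need the band selection and gains tuned to the measured hot-zone response.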

Systems and methods for training an active random field for real-time image denoising

Inactive | US8866936B2 | Improve accuracy; Improve performance; Image enhancement; Television system details; Conditional random field; Image denoising

An Active Random Field is presented, in which a Markov Random Field (MRF) or a Conditional Random Field (CRF) is trained together with a fast inference algorithm using pairs of input and desired output and a benchmark error measure.

Owner:FLORIDA STATE UNIV RES FOUND INC

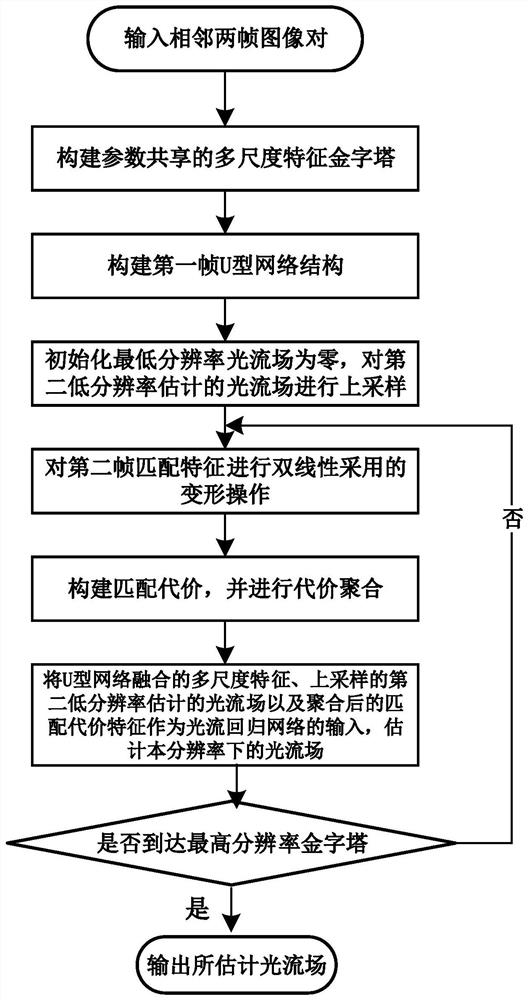

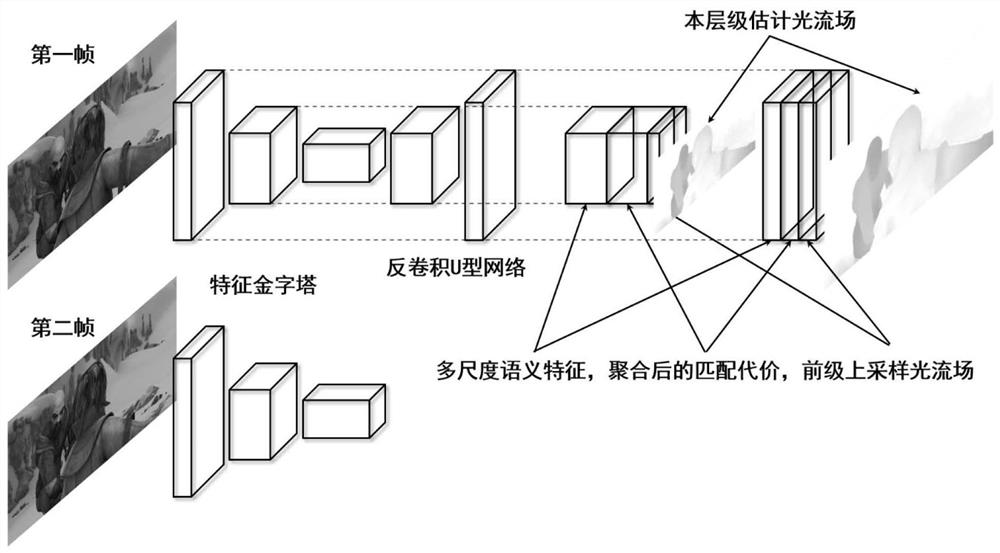

Real-time optical flow estimation method based on lightweight convolutional neural network

Active | CN111626308A | Accurate estimate; Shorten the space distance; Character and pattern recognition; Neural architectures; Image resolution; Network structure

The invention discloses a real-time optical flow estimation method based on a lightweight convolutional neural network. Given two adjacent image frames, the method: constructs a multi-scale feature pyramid with shared parameters; on top of that pyramid, builds a U-shaped network for the first frame that uses deconvolution to fuse multi-scale information; initializes the optical flow field at the lowest resolution to zero and, after up-sampling the flow estimated at the next-lower resolution, warps the second frame's matching features by bilinear sampling; computes an inner-product local similarity between the first-frame features and the warped second-frame features to construct a matching cost, then aggregates the cost; feeds the multi-scale features, the up-sampled flow field and the aggregated matching-cost features into an optical flow regression network to estimate the flow field at the current resolution; and repeats until the flow at the highest resolution is estimated. The invention makes optical flow estimation more accurate, and the model is lightweight, efficient and fast enough for real-time use.

Owner:SHANGHAI JIAO TONG UNIV
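
Two steps of the abstract, bilinear warping of the second frame's features by an up-sampled flow and the inner-product local matching cost, can be sketched in NumPy as follows. The `(C, H, W)` layout, the clamping of samples at the border, the search radius and the function names are assumptions for illustration; the patent's pyramid, U-shaped network and regression network are not reproduced.

```python
import numpy as np

def bilinear_warp(feat, flow):
    """Warp feat (C, H, W) by flow (2, H, W): out[:, y, x] samples feat at (y + v, x + u)."""
    C, H, W = feat.shape
    ys, xs = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    x = np.clip(xs + flow[0], 0, W - 1)  # clamp sample positions to the image
    y = np.clip(ys + flow[1], 0, H - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, W - 1)
    y1 = np.minimum(y0 + 1, H - 1)
    wx, wy = x - x0, y - y0              # bilinear interpolation weights
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bot = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return top * (1 - wy) + bot * wy

def local_cost_volume(f1, f2w, radius=1):
    """Channel-averaged inner product between f1 and f2w shifted by each (dy, dx) in a window."""
    C, H, W = f1.shape
    pad = np.pad(f2w, ((0, 0), (radius, radius), (radius, radius)))  # zeros outside the image
    costs = []
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = pad[:, radius + dy: radius + dy + H, radius + dx: radius + dx + W]
            costs.append((f1 * shifted).sum(axis=0) / C)
    return np.stack(costs)  # shape ((2 * radius + 1) ** 2, H, W)
```

In a coarse-to-fine loop, the cost volume from `local_cost_volume(f1, bilinear_warp(f2, upsampled_flow))` would be one of the inputs to the regression network at each level.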

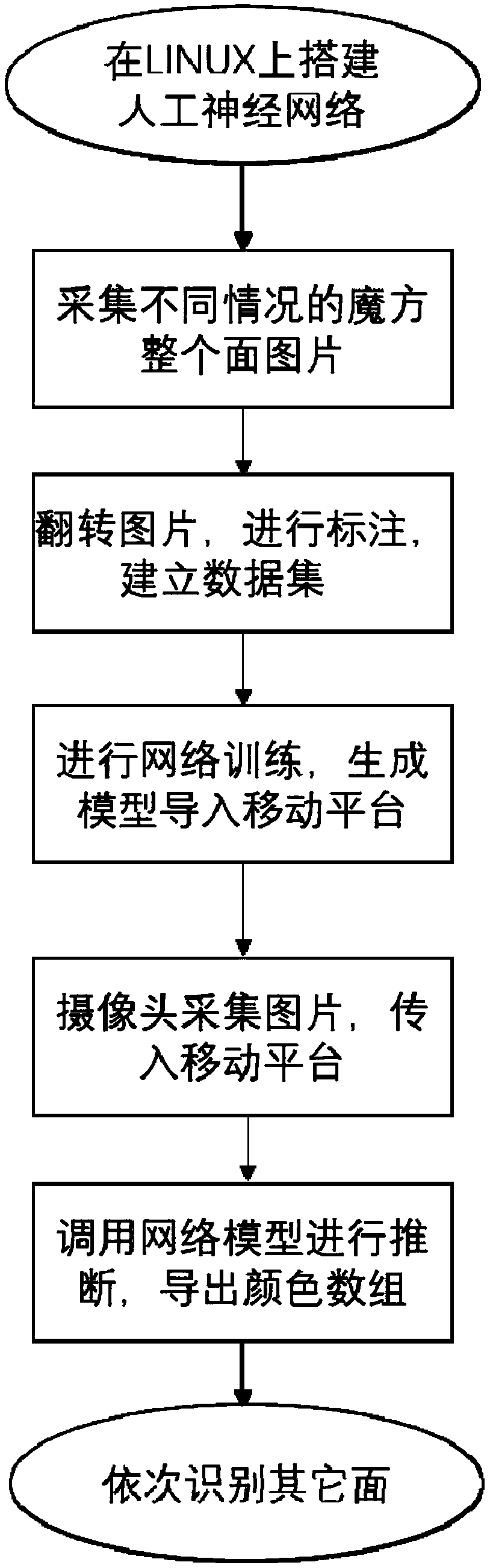

Magic cube color identification method based on artificial neural network

Inactive | CN108830908A | Avoid the disadvantages of being affected by light; Intelligent recognition processing; Image enhancement; Image analysis; Data set; Array data structure

The invention discloses a magic cube (Rubik's cube) color identification method based on an artificial neural network. The method comprises: establishing an artificial neural network training model; acquiring pictures of the cube's faces, drawing rectangular bounding boxes along the contours of the color blocks in each picture, labeling each block's color with a category number, and storing the upper-left and lower-right corner coordinates of each box, together with its category number, in a label file for that picture; training the model on the data set formed by the label files and the corresponding pictures, and storing the resulting network model file on a mobile platform; and, during identification, having the mobile platform's camera capture the faces of the cube to be processed in sequence, loading the network model file to identify each face as soon as its picture is captured, outputting that face's array of color numbers, and finally assembling a two-dimensional rectangular color output to complete identification. By introducing the neural network into the cube robot's color identification algorithm, the method raises the identification rate in complex environments.

Owner:TIANJIN UNIV
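
The final step, turning one face's detections into a two-dimensional color array, can be sketched as below. It assumes the detector returns exactly nine boxes per face in the same `(x1, y1, x2, y2, category)` form as the corner-coordinate labels, and that the face is roughly axis-aligned in the picture; `face_color_grid` is a hypothetical name.

```python
def face_color_grid(boxes, n=3):
    """Arrange n*n detections into an n x n grid of category numbers, row-major from top-left.

    boxes: list of (x1, y1, x2, y2, category) tuples for one cube face.
    """
    if len(boxes) != n * n:
        raise ValueError(f"expected {n * n} boxes, got {len(boxes)}")
    # Use box centres to decide row and column positions.
    centers = [((x1 + x2) / 2, (y1 + y2) / 2, cat) for x1, y1, x2, y2, cat in boxes]
    centers.sort(key=lambda c: c[1])  # top to bottom
    grid = []
    for r in range(n):
        row = sorted(centers[r * n:(r + 1) * n], key=lambda c: c[0])  # left to right
        grid.append([c[2] for c in row])
    return grid
```

Sorting by centre y and then by centre x in chunks of three tolerates small jitter in box positions but not a strongly rotated face.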

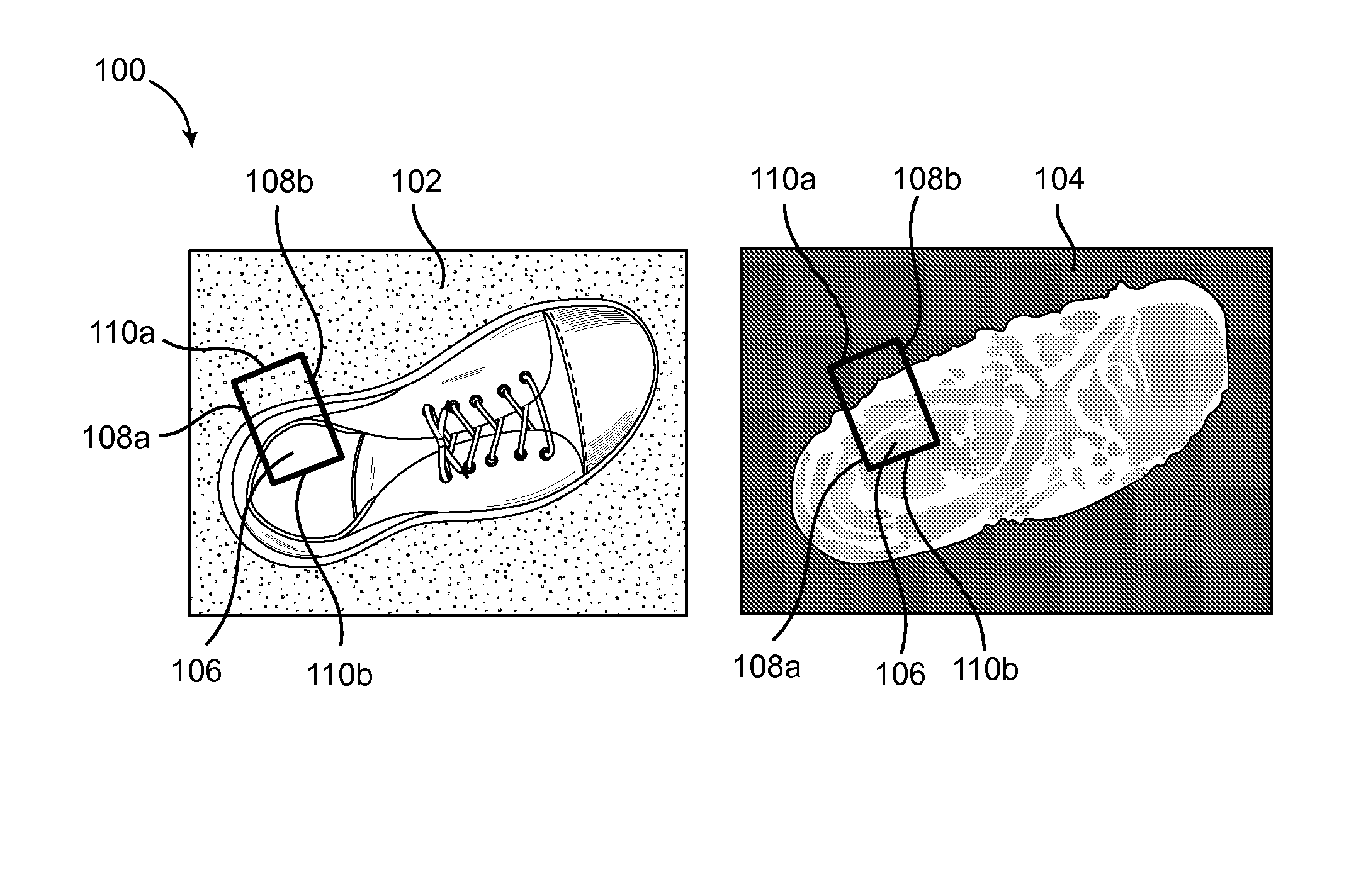

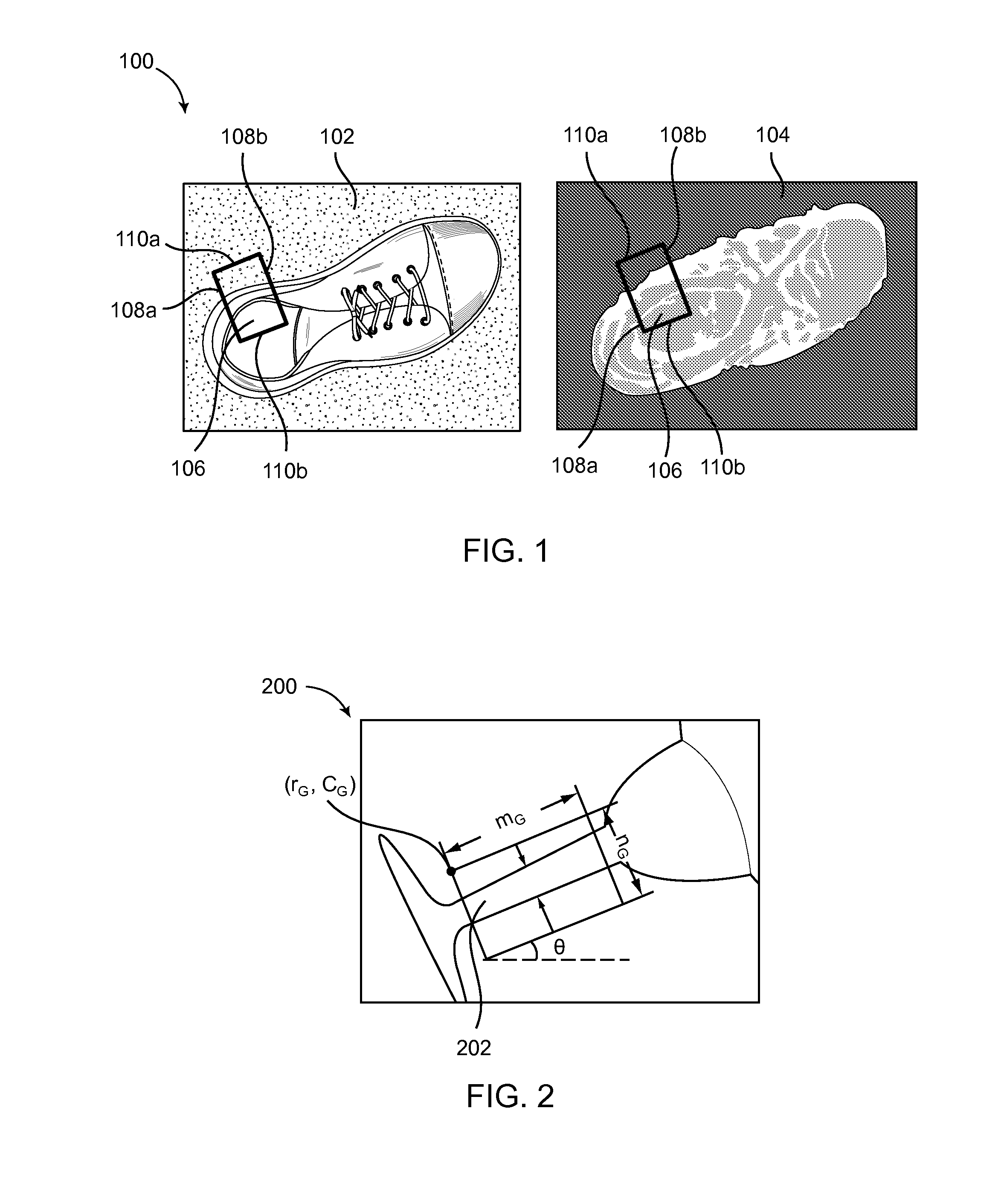

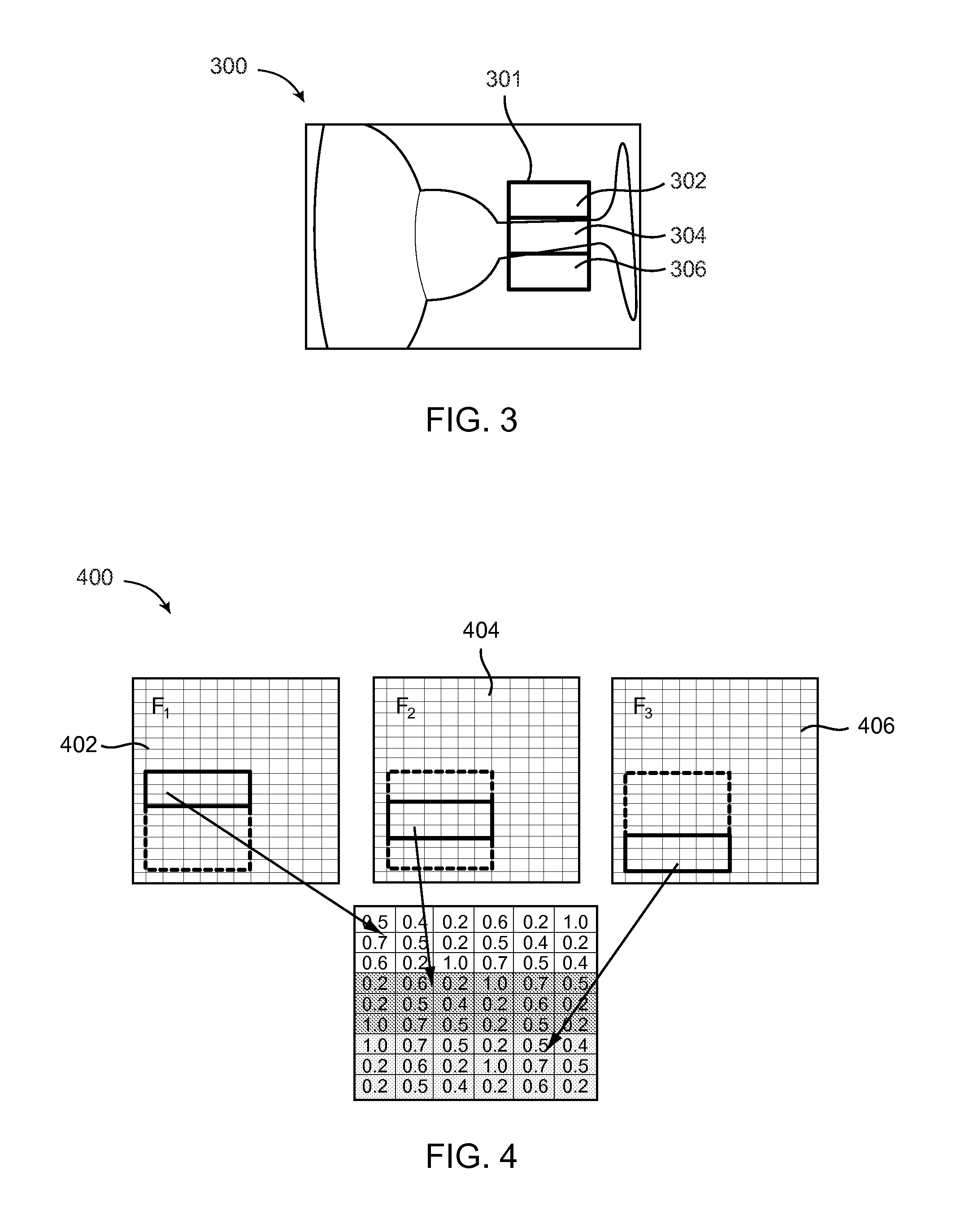

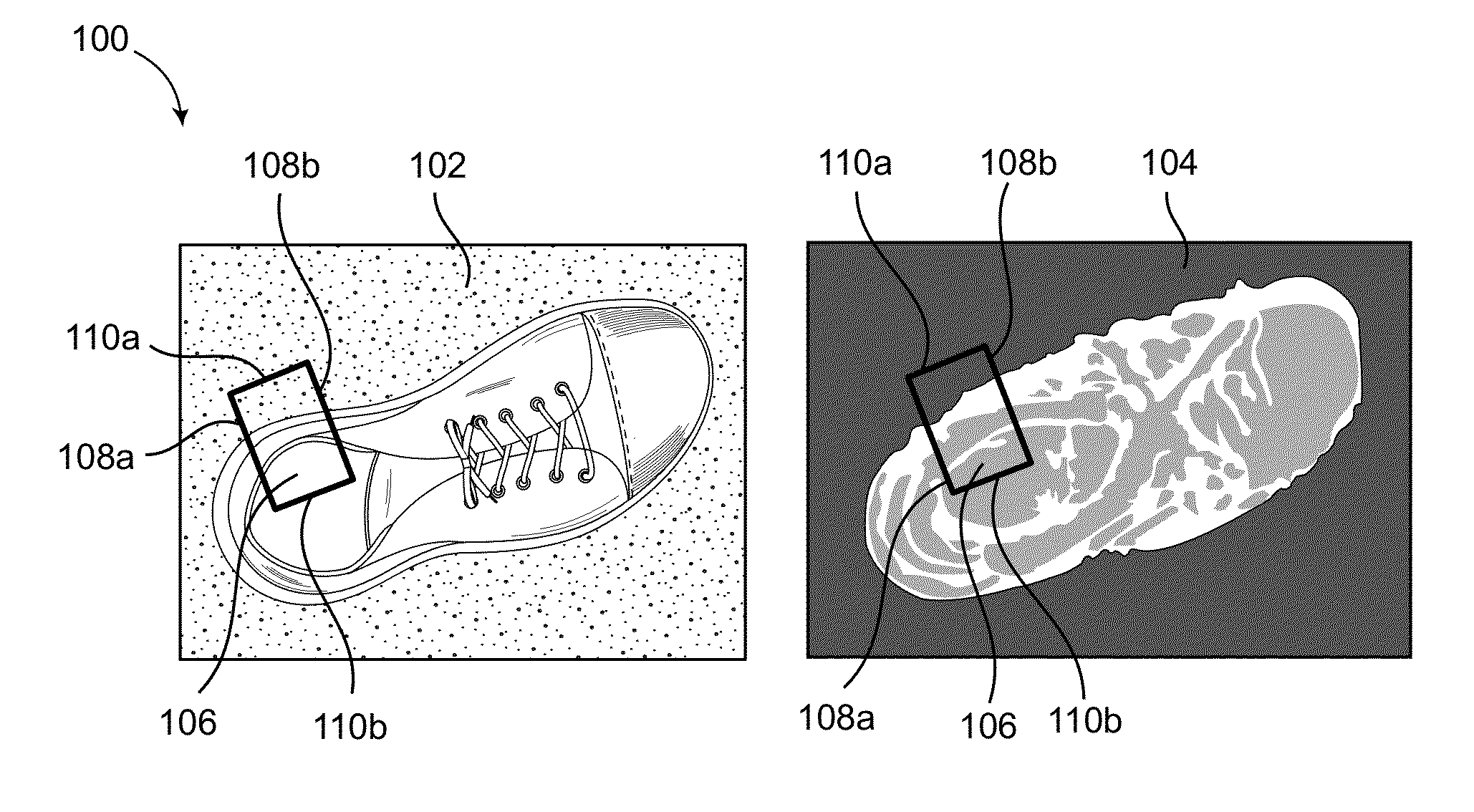

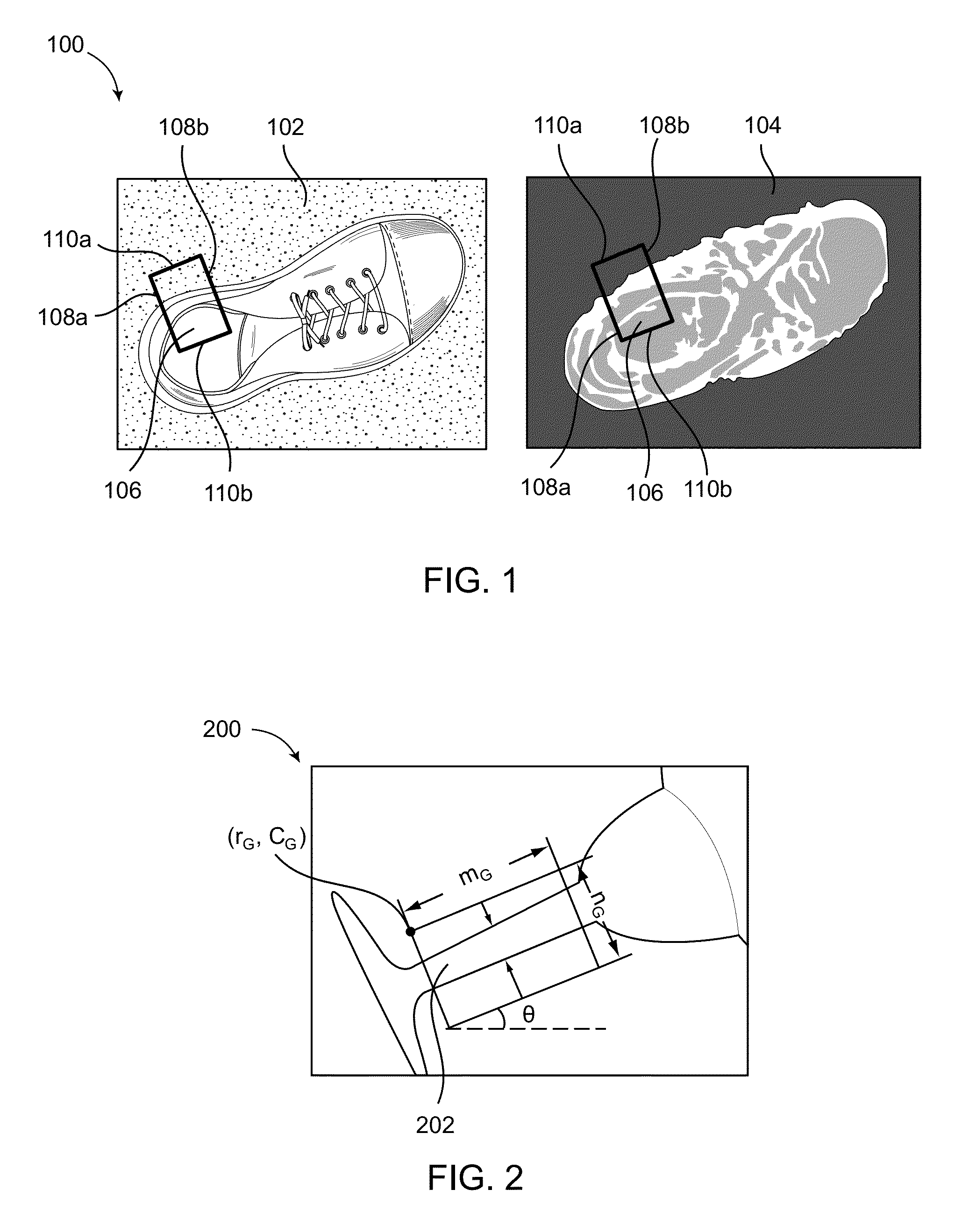

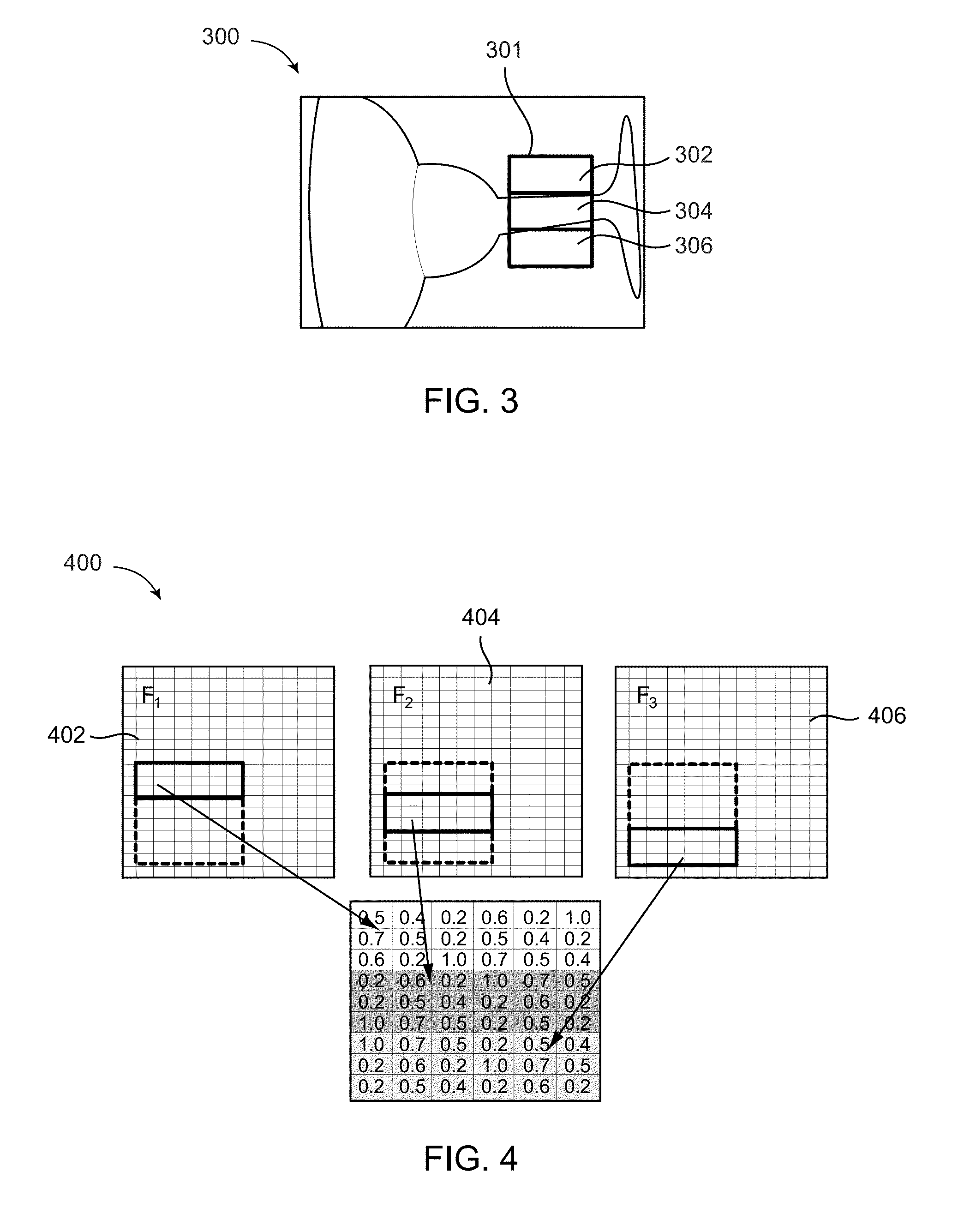

Prediction of successful grasps by end of arm tooling

Active | US20140016856A1 | Fast computer; Choose accurately; Image enhancement; Image analysis; Physical model; Engineering

Given an image and an aligned depth map of an object, the invention predicts the 3D location, 3D orientation and opening width or area of contact for an end of arm tooling (EOAT) without requiring a physical model.

Owner:CORNELL UNIVERSITY

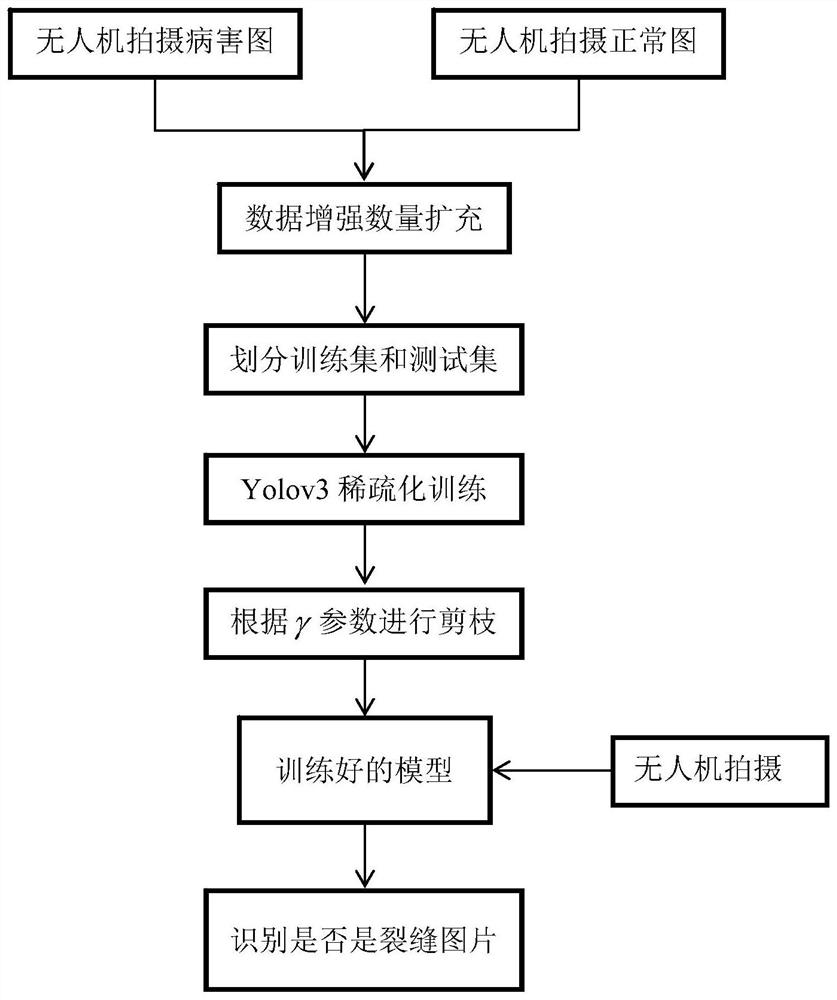

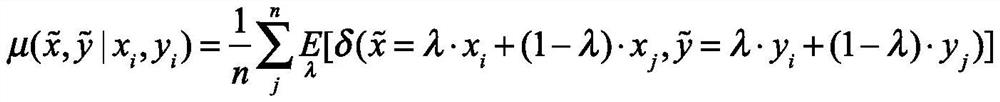

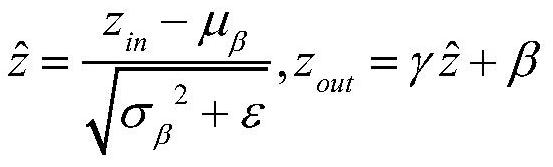

Bridge disease real-time detection method based on model pruning

Active | CN111832607A | Fast inference; Good pruning effect; Character and pattern recognition; Neural architectures; Data set; Engineering

The invention discloses a real-time detection method for bridge disease (structural defects) based on model pruning. The method comprises: first acquiring images of visible bridge damage with an unmanned aerial vehicle and dividing the data set proportionally into training, validation and test sets; enlarging the data set with image enhancement (augmentation); constructing a YOLOv3 network, adding an L1 regularization constraint on the γ (scale) parameters of every batch normalization (BN) layer, setting a progressive, locally decaying sparsity adjustment strategy, and training the modified network to obtain a trained model; after obtaining the γ parameters of all trained BN layers, pruning layers and channels according to the γ magnitudes across and within layers, deleting channels with small weights to obtain a pruned model; and automatically identifying visible bridge defects with the trained model. The invention is efficient and low-cost and, compared with traditional training based on manual labeling, is markedly more automated and efficient.

Owner:SOUTHEAST UNIV
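
The γ-based channel selection described above resembles the well-known "network slimming" recipe. The sketch below shows only that part: the extra L1 subgradient added to each BN γ during sparse training, and a global |γ| threshold that keeps at least one channel per layer. The pruning ratio, the keep-one rule and the function names are assumptions; the patent's progressive sparsity schedule and layer-level pruning are not reproduced.

```python
import numpy as np

def l1_gamma_subgradient(gammas, lam):
    """Subgradient of lam * sum(|gamma|), added to each BN layer's gamma gradient."""
    return [lam * np.sign(g) for g in gammas]

def select_channels(gammas, prune_ratio):
    """Global threshold on |gamma| across all BN layers; returns one keep-mask per layer."""
    all_abs = np.concatenate([np.abs(g) for g in gammas])
    thresh = np.quantile(all_abs, prune_ratio)  # prune roughly this fraction of channels
    masks = []
    for g in gammas:
        m = np.abs(g) > thresh
        if not m.any():                 # never empty a layer completely
            m[np.argmax(np.abs(g))] = True
        masks.append(m)
    return masks
```

After selection, the convolution filters feeding each pruned channel and the matching input slices of the next layer would be removed before fine-tuning or deployment.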

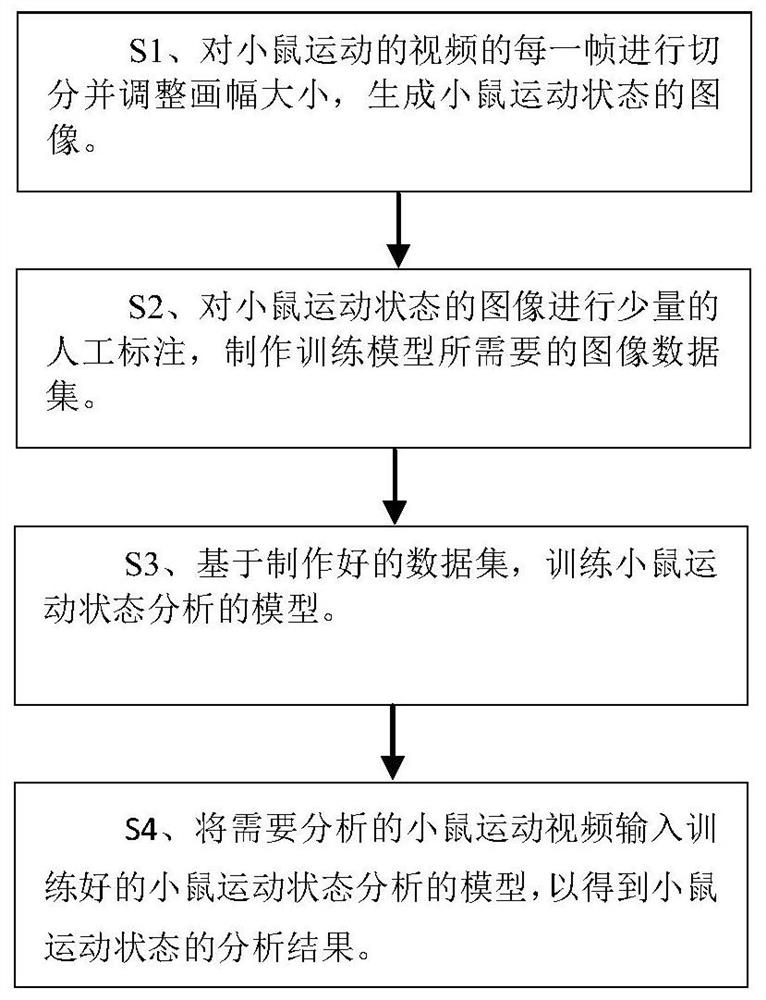

Mouse motion state analysis method based on deep learning algorithm

Pending | CN112507961A | Increase training speed; Improve accuracy; Character and pattern recognition; Neural architectures; Manual annotation; Data set

The invention provides a method for analyzing animal motion states in video based on a deep learning algorithm. The method comprises: splitting a mouse motion video into frames, resizing the pictures, and generating images of the mouse's motion state; manually annotating a small number of these images to build the image data set needed for training; training a mouse motion state analysis model on that data set; and feeding the mouse motion video to be analyzed into the trained model to obtain the analysis result. Using convolutional neural networks and transfer learning, the method builds an end-to-end mouse motion state analysis model with fast training, high accuracy and fast inference, even when only a small amount of video data is labeled and the data distribution is unbalanced.

Owner:SHANGHAI TECH UNIV

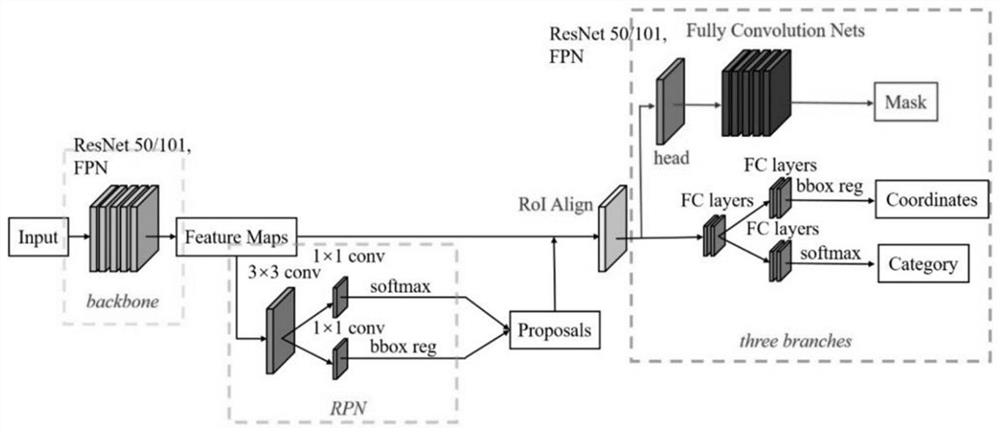

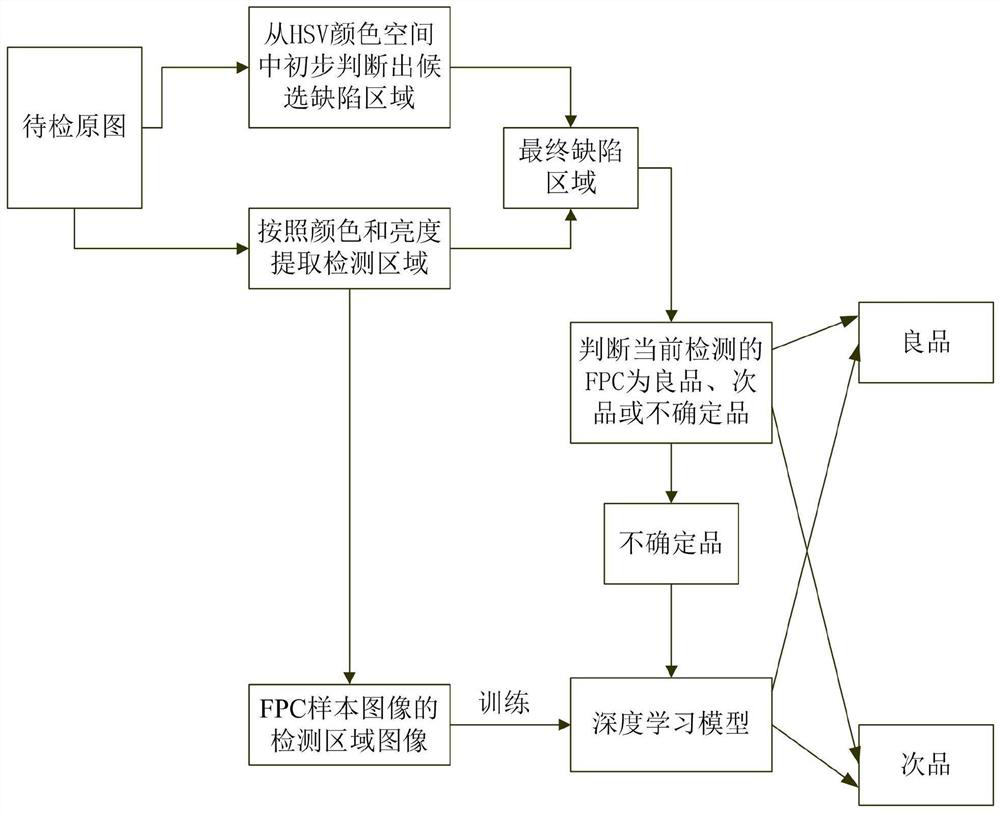

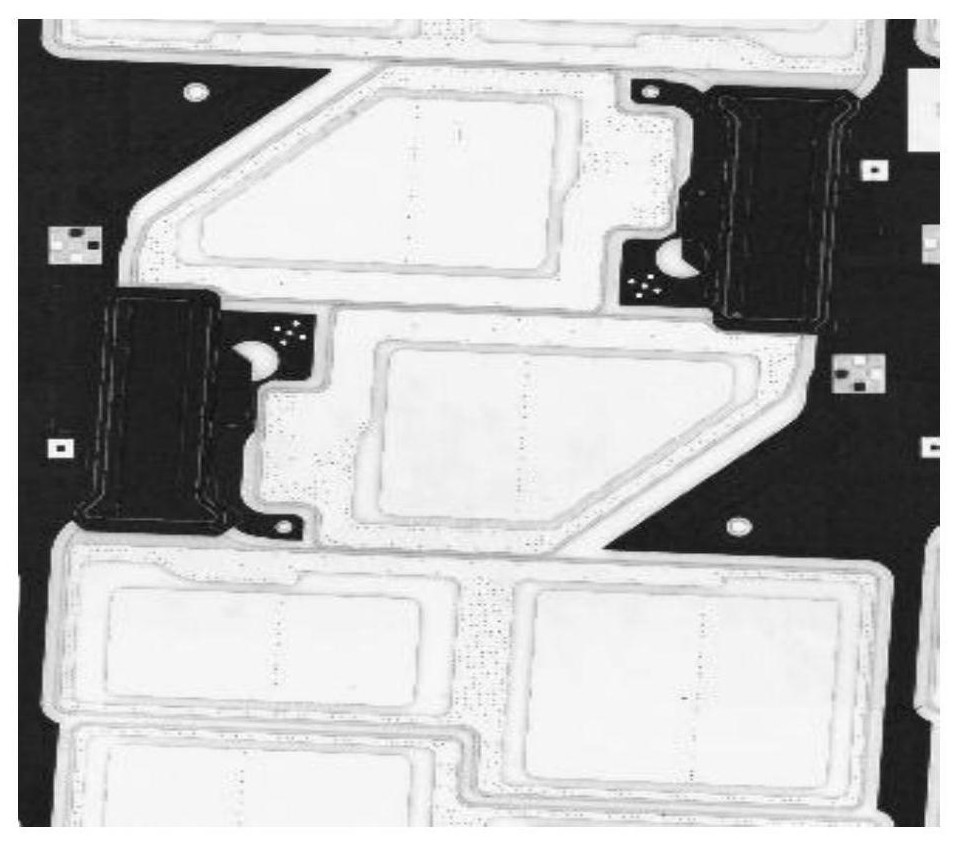

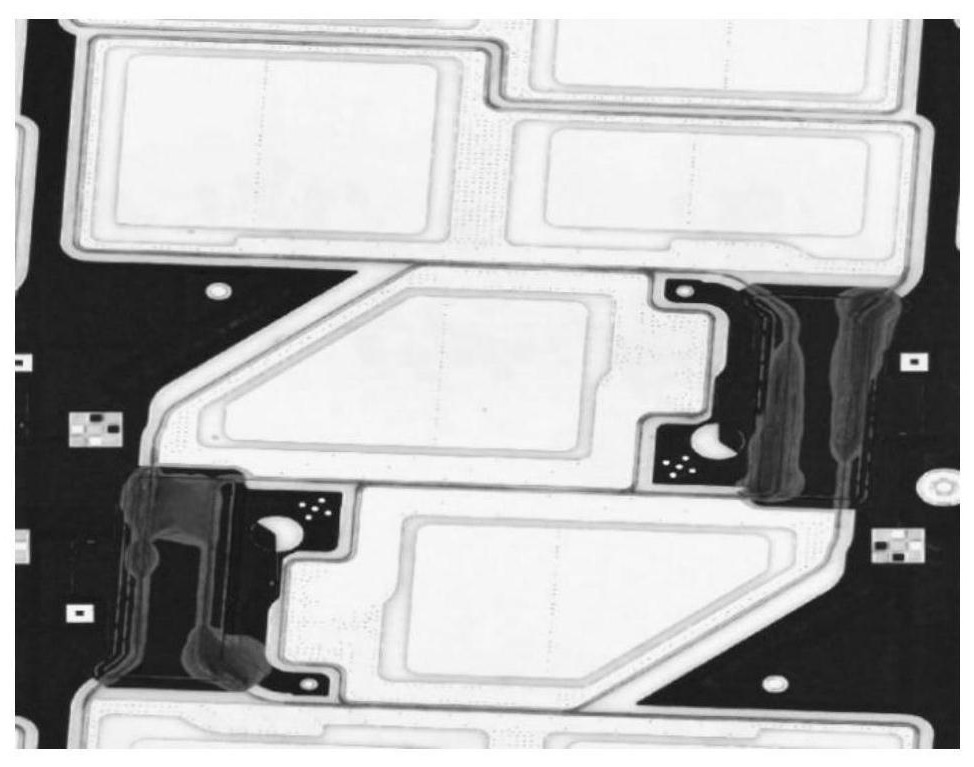

FPC defect detection method and system based on HSV and CNN, and storage medium

Pending | CN114266743A | Short deployment time; Describe well; Image enhancement; Image analysis; Imaging processing; Algorithm

The invention discloses an HSV- and CNN-based defect detection method, system and storage medium for FPCs (flexible printed circuits). The detection method comprises: extracting the detection region from the original image to be inspected according to color and brightness; converting that image from the RGB color space to the HSV color space and preliminarily identifying candidate defect areas in HSV space according to preset defect parameters; taking the intersection of the candidate defect areas and the detection region as the final defect area; comparing the final defect area with a preset threshold to judge whether the FPC under inspection is a good product, a defective product or an uncertain product; and, for an uncertain product, running a pre-trained deep learning model on its detection region to predict whether it is good or defective. By combining image processing with a deep learning model, the invention inspects FPCs at low cost and with high precision.

Owner:SHENZHEN TECH UNIV
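
The HSV screening and three-way decision can be sketched with the standard-library `colorsys` module. The defect rule (low saturation and high brightness), the thresholds and the pixel-count area measure are hypothetical stand-ins for the patent's unspecified "preset defect parameters"; the CNN that would handle the "uncertain" case is not shown.

```python
import colorsys
import numpy as np

def candidate_defects_hsv(rgb, s_max, v_min):
    """Hypothetical rule: pixels with saturation <= s_max and value >= v_min are candidates.

    rgb: float array in [0, 1] with shape (H, W, 3).
    """
    _, s, v = np.vectorize(colorsys.rgb_to_hsv)(rgb[..., 0], rgb[..., 1], rgb[..., 2])
    return (s <= s_max) & (v >= v_min)

def classify(rgb, region_mask, s_max=0.2, v_min=0.8, good_max=5, bad_min=50):
    """Three-way decision on the defect area (pixel count) inside the detection region."""
    defect = candidate_defects_hsv(rgb, s_max, v_min) & region_mask  # intersection step
    area = int(defect.sum())
    if area <= good_max:
        return "good", area
    if area >= bad_min:
        return "defective", area
    return "uncertain", area  # would be passed on to the deep learning model
```

Only the uncertain band between `good_max` and `bad_min` reaches the neural network, which is what keeps the combined pipeline cheap.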

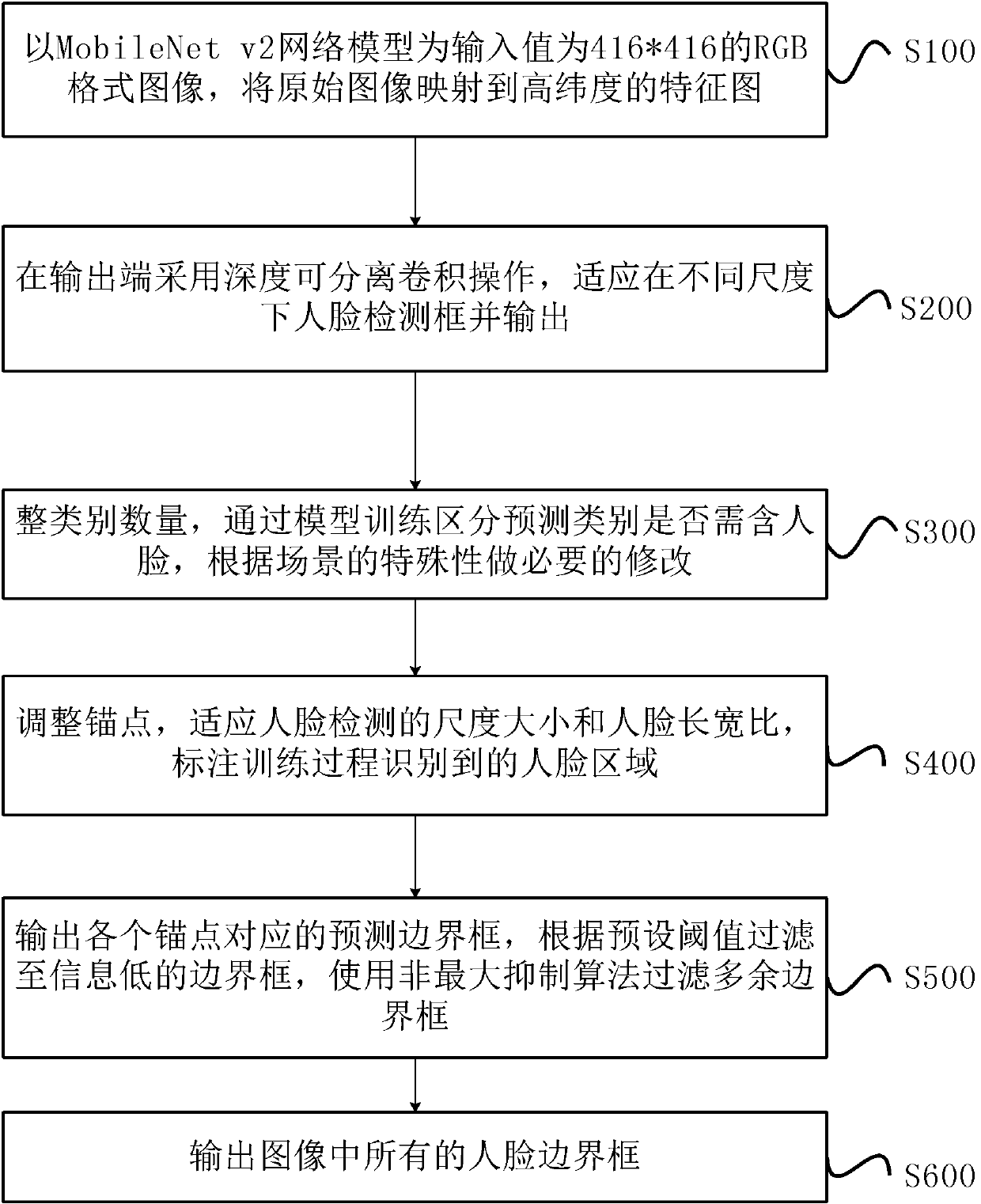

YOLO-based face detection method

Pending | CN110826537A | Fast inference; Improve compatibility; Character and pattern recognition; Face detection; Engineering

The invention discloses a YOLO-based face detection method that works end-to-end without additional feature engineering. It infers quickly, taking about 0.09 s per computation, so it can be applied in real time; it copes relatively well with varying poses, angles and partial occlusion; and it has a high recall rate and strong robustness.

Owner:GUANGZHOU JIUBANG DIGITAL TECH

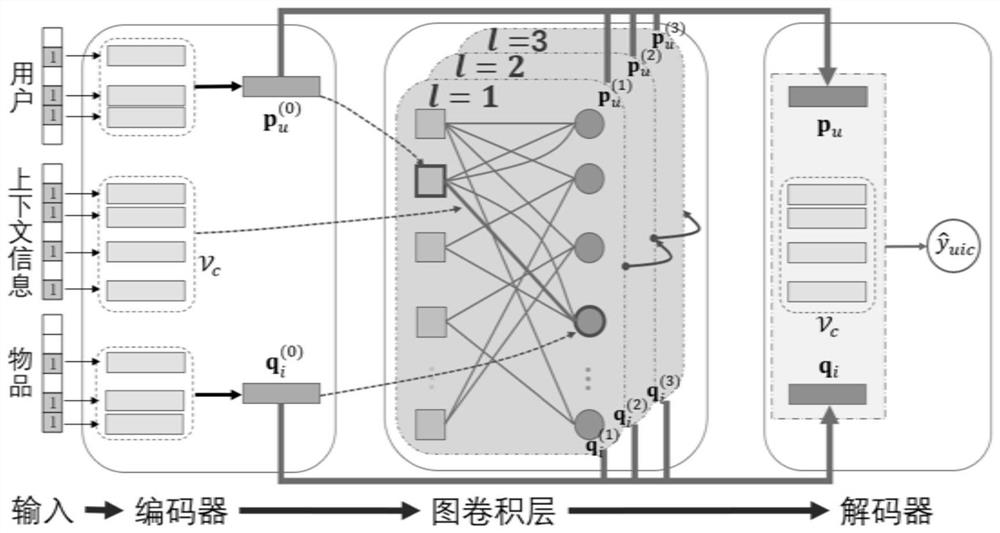

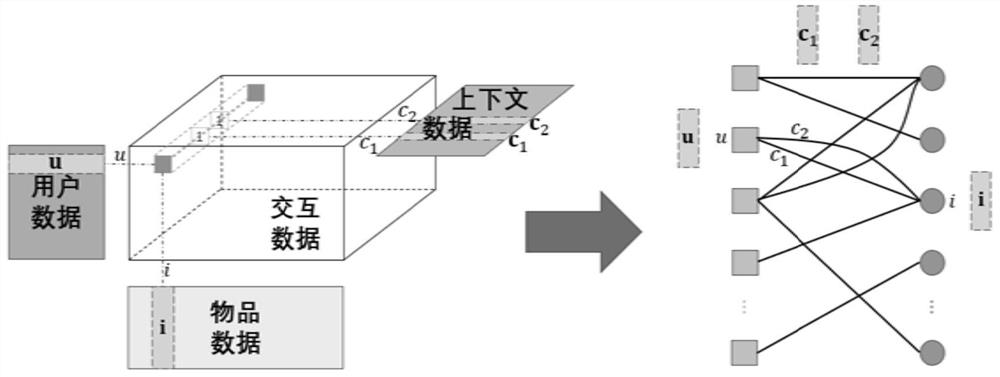

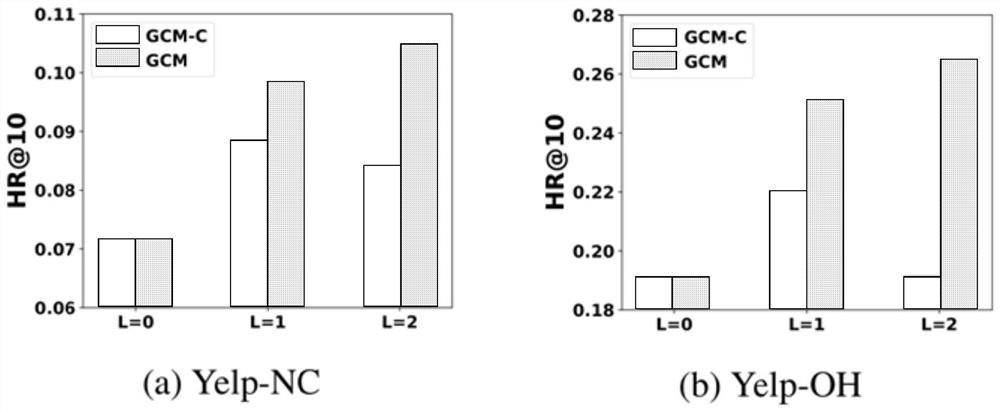

Context-aware graph convolution recommendation system

Pending | CN112364242A | Fast inference; High precision; Digital data information retrieval; Neural architectures; Algorithm; Theoretical computer science

The invention discloses a context-aware graph convolution recommendation system comprising an encoder, a graph convolution layer and a decoder. The encoder associates each non-zero feature of the input user information, item information and context information with a latent-space vector, and combines the latent vectors from these three domains. The graph convolution layer performs graph convolution on a pre-constructed, attributed user-item bipartite graph using the encoder's output, obtaining the final feature representations of users and items after several rounds of graph convolution. The decoder predicts the user's preference for an item under the given context from the final user and item representations and the associated embedding set of the context information. The system is a general recommendation framework suited to online services: it can incorporate various kinds of auxiliary information, captures the collaborative filtering effect, and improves model performance.

Owner:UNIV OF SCI & TECH OF CHINA
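
The encoder, graph convolution and decoder stages can be sketched in NumPy. The symmetric normalization, the layer averaging (a LightGCN-style choice) and the `(user + context) · item` decoder are assumptions made for illustration; the patent's actual aggregation and decoder are not specified in the abstract, and all function names are hypothetical.

```python
import numpy as np

def encode(features, table):
    """Sum the latent vectors of non-zero features, weighted by value. features: (N, F), table: (F, d)."""
    return features @ table

def normalized_adjacency(R):
    """D^-1/2 A D^-1/2 for the user-item bipartite graph; R is a (U, I) 0/1 interaction matrix."""
    U, I = R.shape
    A = np.zeros((U + I, U + I))
    A[:U, U:] = R          # user -> item edges
    A[U:, :U] = R.T        # item -> user edges
    deg = A.sum(axis=1)
    inv_sqrt = np.where(deg > 0, 1.0 / np.sqrt(np.maximum(deg, 1e-12)), 0.0)
    return inv_sqrt[:, None] * A * inv_sqrt[None, :]

def propagate(A_hat, H0, layers=2):
    """Run `layers` rounds of neighbour aggregation and average all layer outputs."""
    outs, H = [H0], H0
    for _ in range(layers):
        H = A_hat @ H
        outs.append(H)
    return np.mean(outs, axis=0)

def score(user_vec, item_vec, ctx_vec):
    """Hypothetical context-aware decoder: inner product of (user + context) with item."""
    return float((user_vec + ctx_vec) @ item_vec)
```

Node rows are ordered users first, then items, so after `propagate` the first `U` rows are user representations and the rest are item representations.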

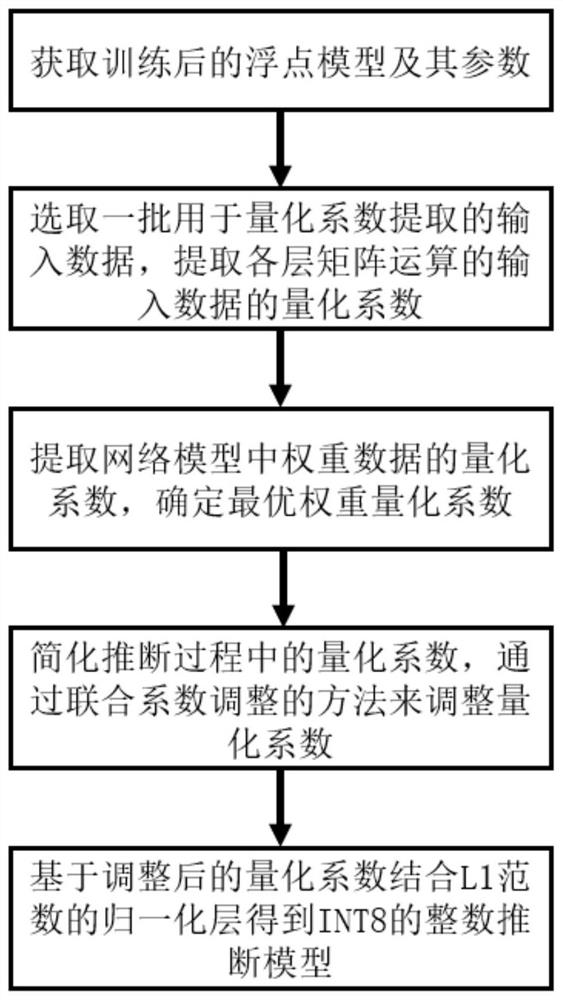

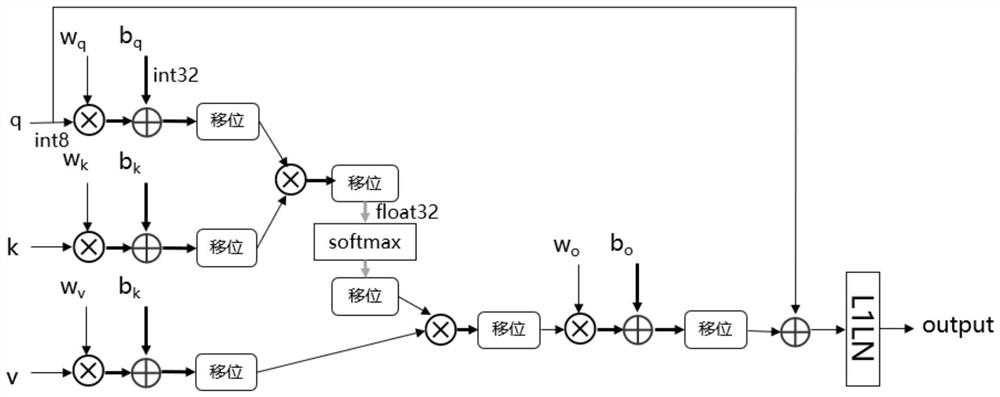

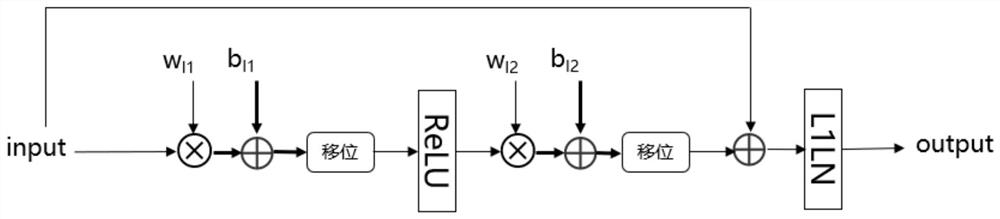

INT8 offline quantization and integer inference method based on Transformer model

Active | CN113011571A | Reduce precision loss; Fast inference; Digital data processing details; Neural architectures; Algorithm; Theoretical computer science

The invention provides an INT8 offline quantization and integer inference method for Transformer models. It comprises the following steps: replacing the L2 norm in the normalization layers of the original floating-point Transformer model with an L1 norm; training the model; running forward inference on a small amount of data to obtain the quantization coefficient of the input data of every matrix-operation layer, and extracting it as ordinary floating-point data; obtaining the weight quantization coefficient of each linear layer in the floating-point model, extracting it as ordinary floating-point data, and choosing the optimal weight quantization coefficient for each layer by a mean-square-error criterion; converting the quantization coefficients used by quantization operations during inference into powers of two (2^-n) and tuning them with a joint coefficient adjustment method; and building an INT8 integer inference model from the adjusted coefficients together with the L1-norm normalization layers. The invention reduces both the hardware resources required for model computation and the errors introduced by quantization, lowers hardware resource consumption, and speeds up model inference.

Owner:SOUTH CHINA UNIV OF TECH
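
The power-of-two coefficients and MSE-based selection can be sketched as follows: symmetric INT8 quantization with scale `2**-n`, a search over `n` minimizing mean squared error, and integer rescaling by a rounding right shift. The candidate range, the tie-breaking (smallest `n` wins) and all function names are assumptions; the patent's joint coefficient adjustment is not reproduced.

```python
import numpy as np

def quantize_pow2(x, n):
    """Symmetric INT8 quantization with scale 2**-n: q = clip(round(x * 2**n), -128, 127)."""
    return np.clip(np.round(x * 2.0 ** n), -128, 127).astype(np.int8)

def dequantize_pow2(q, n):
    return q.astype(np.float32) * 2.0 ** -n

def best_pow2_exponent(w, candidates=range(0, 16)):
    """Exponent n whose 2**-n scale gives the lowest mean squared error (first one on ties)."""
    errs = {n: float(np.mean((w - dequantize_pow2(quantize_pow2(w, n), n)) ** 2))
            for n in candidates}
    return min(errs, key=errs.get)

def rescale_by_shift(acc, shift):
    """Multiply an int32 accumulator by 2**-shift using a rounding right shift (no floats)."""
    if shift == 0:
        return acc
    return (acc + (1 << (shift - 1))) >> shift
```

Restricting coefficients to powers of two is what lets the requantization between integer layers become a bit shift, which is cheap on integer-only hardware.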

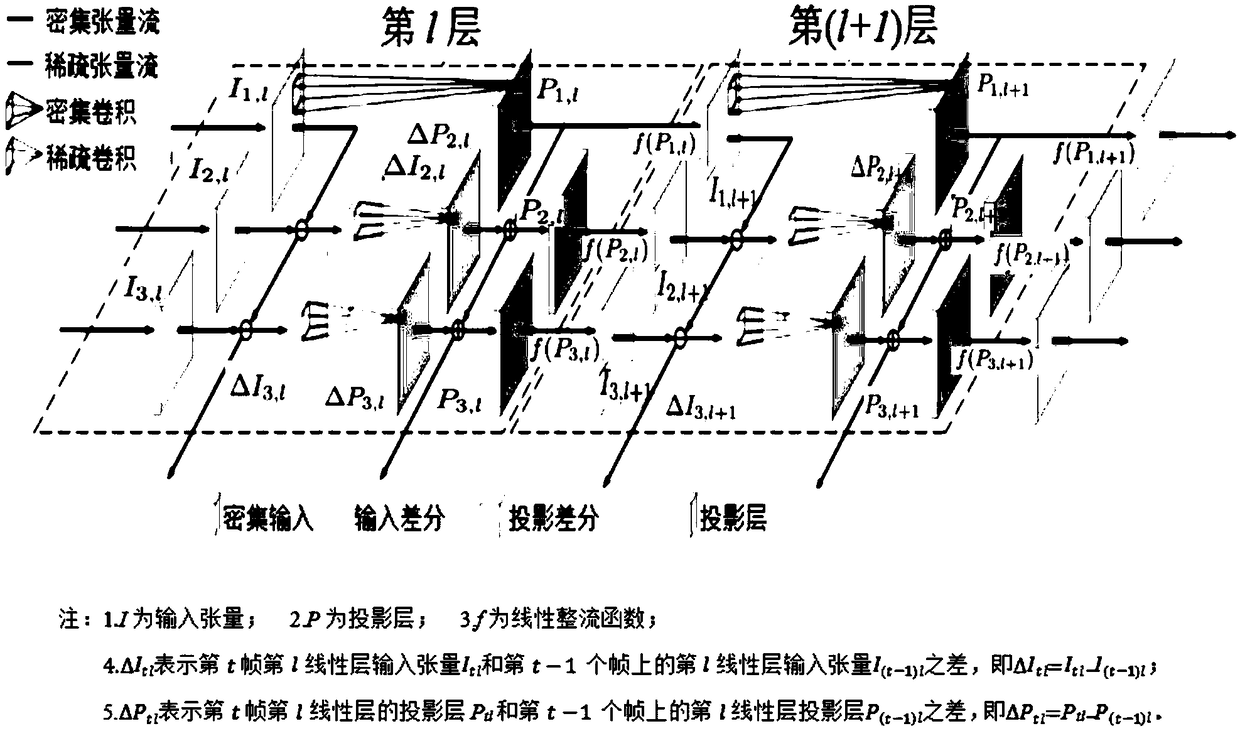

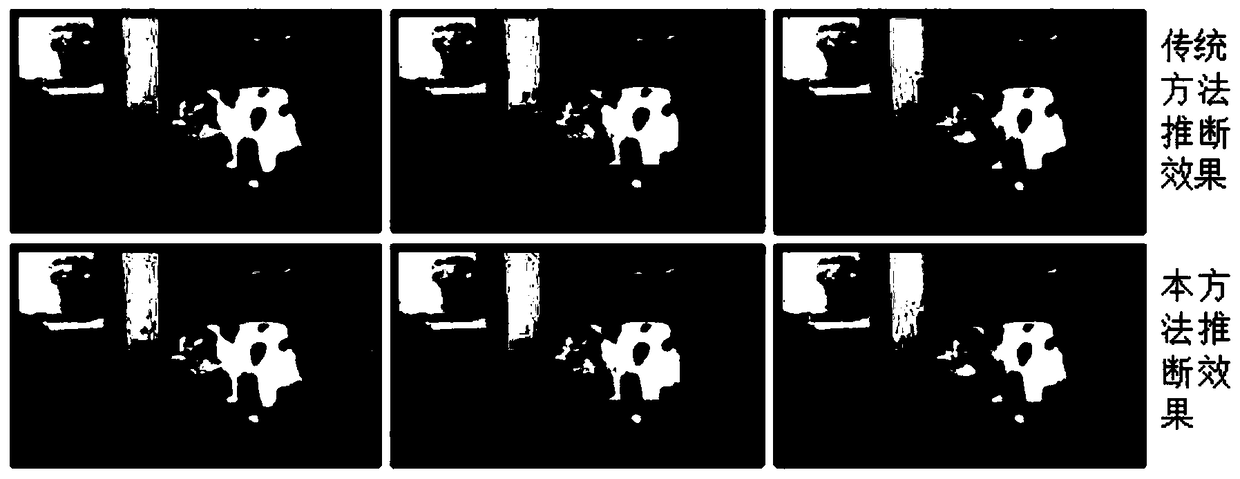

A fast video inference method based on cyclic residual module

Inactive | CN109272035A | Reduce operational complexity; Avoid time cost; Character and pattern recognition; Neural architectures; Vision processing; Time changes

The invention provides a fast video inference method based on a cyclic residual module, which reduces the operational complexity of the neural network, increases the sparsity of intermediate feature maps, and is paired with a synthesized efficient inference engine. The process is as follows: first, the cyclic residual module exploits inter-frame similarity to reduce redundant computation in frame-by-frame convolutional neural network inference on video; next, sparse approximation of the intermediate feature map outputs accelerates inference, while an error control mechanism preserves inference precision; finally, an efficient inference engine is synthesized that performs matrix-vector multiplication exploiting dynamic sparsity in the accelerator, so that the cyclic residual module runs efficiently. Compared with traditional methods, the invention markedly improves the running speed of vision processing systems and strengthens the network's understanding of video clips that change in real time, accelerating video inference while maintaining recognition accuracy.

Owner:SHENZHEN WEITESHI TECH
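
The inter-frame residual idea relies on linearity: for any linear layer (a convolution included), `W @ x_t = W @ x_prev + W @ (x_t - x_prev)`, so only the changed inputs need to be multiplied. The sketch below uses a dense matrix as a stand-in for a convolution; the threshold-based sparsification and the reference-tracking error control are assumptions for illustration, and `ResidualLinear` is a hypothetical name.

```python
import numpy as np

class ResidualLinear:
    """Delta-based evaluation of a linear layer across video frames (hypothetical helper)."""

    def __init__(self, W, threshold):
        self.W = W
        self.threshold = threshold
        self.ref_x = None     # input that self.ref_y exactly corresponds to
        self.ref_y = None
        self.last_nnz = 0     # number of input entries processed in the last call

    def __call__(self, x):
        if self.ref_x is None:                       # first frame: full computation
            self.ref_x = x.astype(float).copy()
            self.ref_y = self.W @ self.ref_x
            self.last_nnz = x.size
            return self.ref_y.copy()
        delta = x - self.ref_x
        delta[np.abs(delta) < self.threshold] = 0.0  # sparse approximation of the change
        nz = np.nonzero(delta)[0]
        self.last_nnz = nz.size
        self.ref_y = self.ref_y + self.W[:, nz] @ delta[nz]  # touch only changed columns
        # Advance the reference by the applied delta only, so skipped small changes keep
        # accumulating and get processed once they exceed the threshold.
        self.ref_x = self.ref_x + delta
        return self.ref_y.copy()
```

With `threshold=0` this reproduces the exact output; larger thresholds trade accuracy for sparsity, which is the speed-accuracy balance the abstract's error control mechanism addresses.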

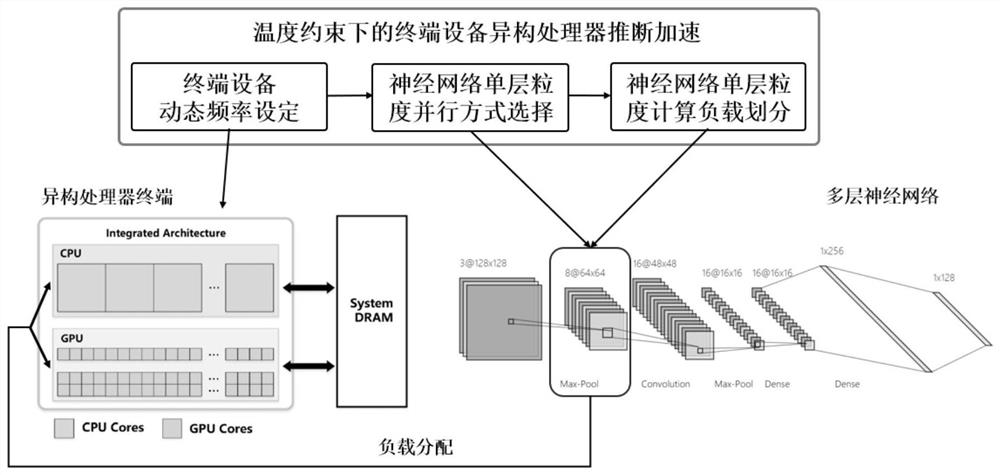

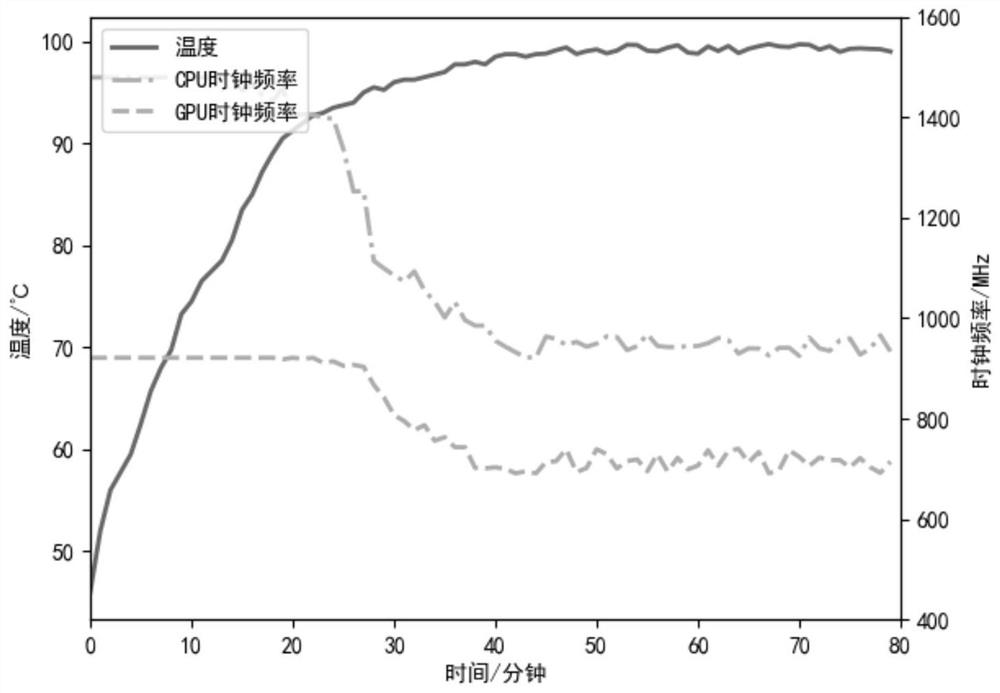

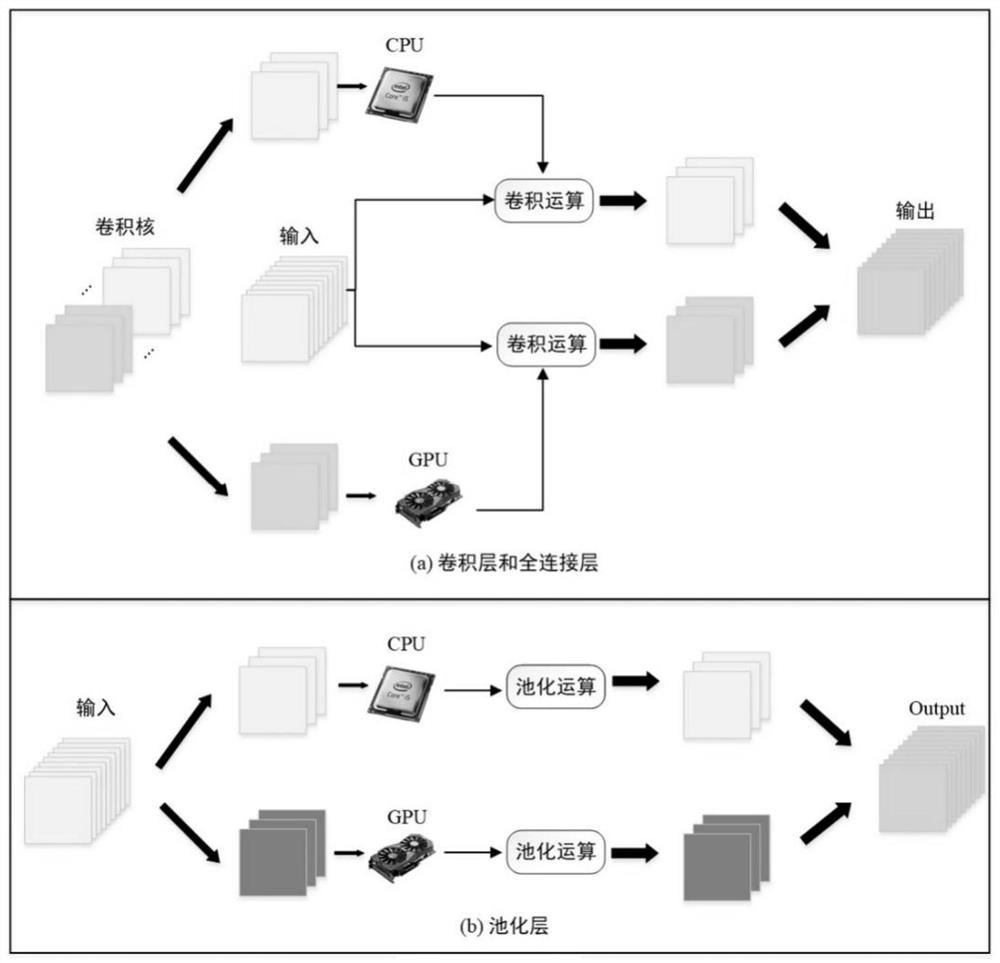

Terminal equipment heterogeneous processor inference acceleration method under temperature constraint

Pending | CN114117918A | Fast inference; Guaranteed uptime; Design optimisation/simulation; Constraint-based CAD; Algorithm; Terminal equipment

The invention provides an inference acceleration method for heterogeneous processors in terminal equipment under a temperature constraint. It targets intelligent terminal devices equipped with several heterogeneous processors in industrial production environments, and addresses the low inference efficiency caused by inter-layer heterogeneity of deep neural networks, processor heterogeneity and ambient temperature. First, taking into account the ambient temperature of industrial production and the power of the device's processors, the method establishes a dynamic frequency model of the terminal device under the temperature constraint and sets the device frequency with a temperature-aware dynamic frequency algorithm. Second, it designs a single-layer parallelization method for the deep neural network according to the computation patterns and structural characteristics of its different layers. Finally, using the heterogeneous processors in the device, it designs a heterogeneous-processor-oriented task allocation method for single-layer computation, ensuring low latency and robustness of collaborative inference across the device's heterogeneous processors.

Owner:SOUTHEAST UNIV +1
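
A single-layer task allocation of this kind can be sketched by splitting a layer's output channels across processors in proportion to their effective throughput, so that each processor finishes its share at about the same time. The linear thermal derating in `thermal_cap`, its temperature constants and both function names are hypothetical; the patent's dynamic frequency model is not given in the abstract.

```python
def thermal_cap(f_max, temp, t_nominal=25.0, t_critical=85.0):
    """Hypothetical linear derating: full frequency at or below t_nominal, zero at t_critical."""
    frac = (t_critical - temp) / (t_critical - t_nominal)
    return f_max * min(1.0, max(0.0, frac))

def split_channels(n_channels, throughputs):
    """Largest-remainder split of a layer's output channels proportional to processor throughput."""
    total = sum(throughputs)
    exact = [n_channels * t / total for t in throughputs]
    alloc = [int(e) for e in exact]
    # Hand out the channels lost to truncation, largest fractional part first.
    order = sorted(range(len(exact)), key=lambda i: exact[i] - alloc[i], reverse=True)
    for i in order[: n_channels - sum(alloc)]:
        alloc[i] += 1
    return alloc
```

For example, throughput could be estimated as each processor's operations per cycle times `thermal_cap(f_max, ambient_temp)`, and recomputed whenever the temperature changes.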

System and method for controlling the input energy from an energy point source during metal processing

Active | US10632566B2 | Fast balance; Fast inference; Additive manufacturing apparatus; Increasing energy efficiency; Hot zone; Light beam

Owner:PROD INNOVATION & ENG L LC

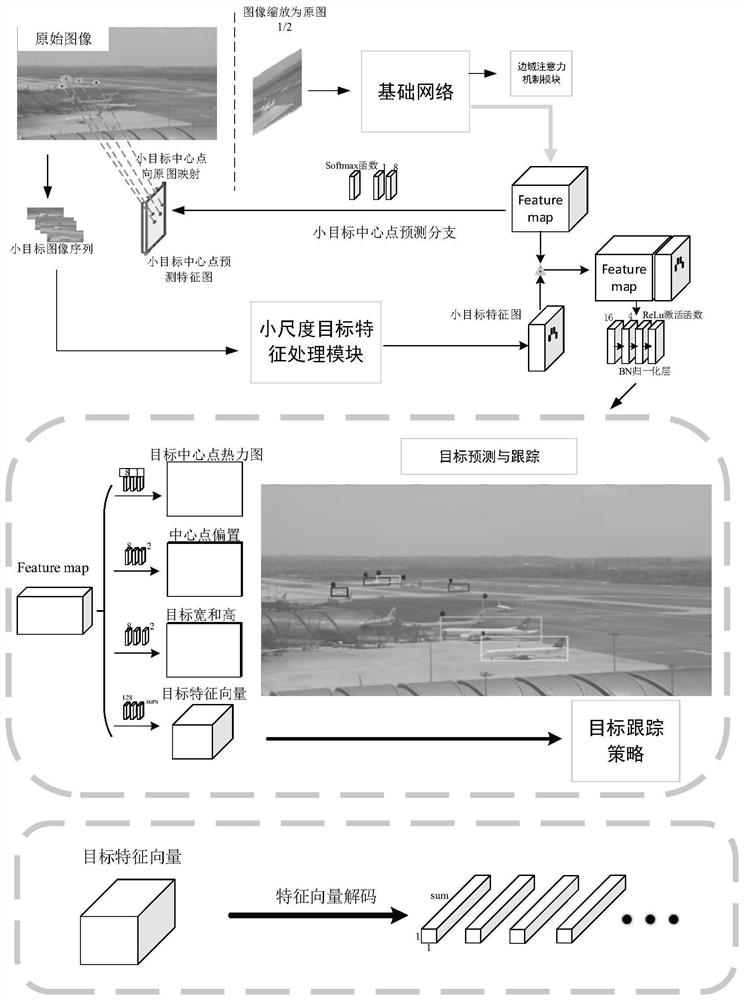

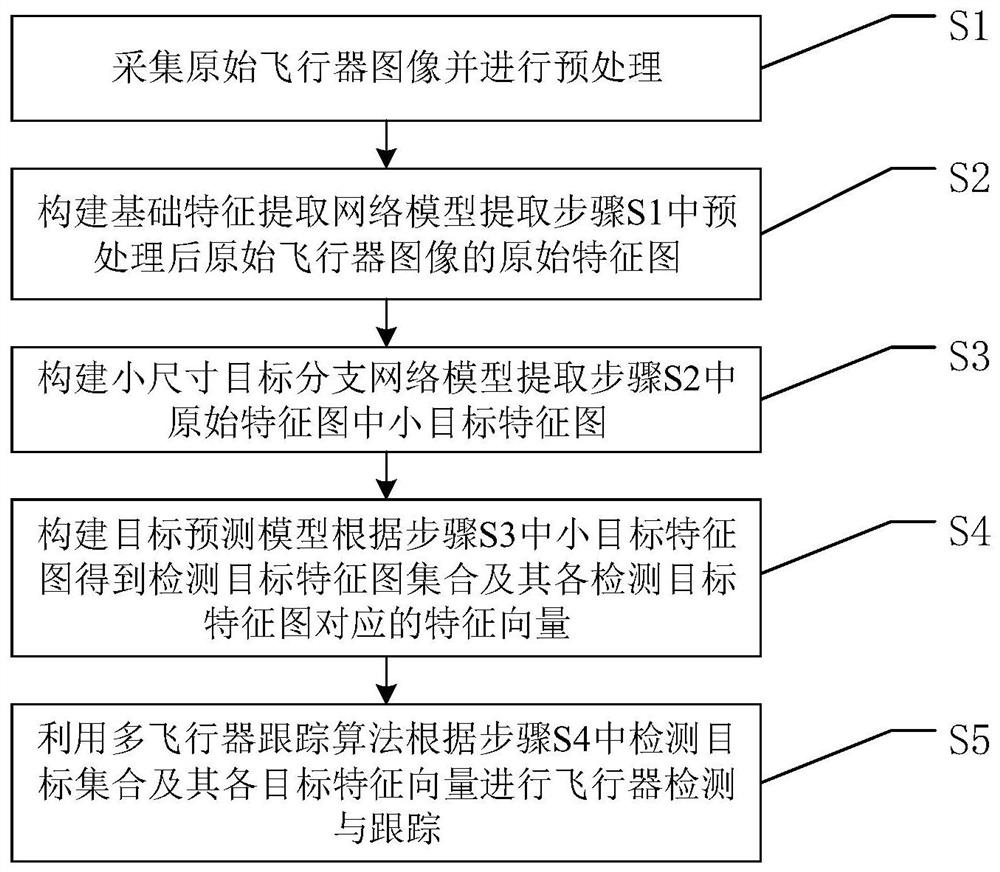

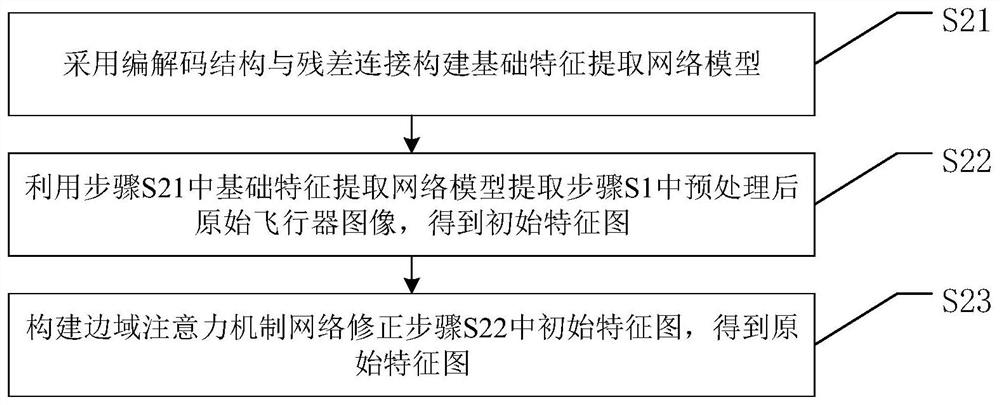

Aircraft detection and tracking method based on multi-scale self-adaption and side domain attention

Active | CN113792631A | Improve tracking performance; Improve surveillance capabilities; Image enhancement; Image analysis; Feature vector; Feature extraction

The invention discloses an aircraft detection and tracking method based on multi-scale self-adaption and side domain attention. The method comprises: constructing a basic feature extraction network to extract an original feature map from the preprocessed aircraft image, and combining it with a small-size target branch network model to extract a small-target feature map from the original feature map; using a target prediction model to obtain, from the small-target feature maps, a set of detection-target feature maps and their corresponding feature vectors; and performing aircraft detection and tracking with a multi-aircraft tracking algorithm. An encoder-decoder structure with residual connections optimizes the fusion and transmission of shallow texture features and deeper semantic features, which improves inference speed and makes information fusion more thorough, while a side domain attention network effectively strengthens the network's feature extraction. The small-size target branch reduces information loss and effectively improves small-target detection accuracy, which in turn improves the management efficiency of aircraft on the airport surface.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

Prediction of successful grasps by end of arm tooling

Active | US9098913B2 | Effective space; Fast computer; Image enhancement; Image analysis; Physical model; Computer science

Given an image and an aligned depth map of an object, the invention predicts the 3D location, 3D orientation and opening width or area of contact for an end of arm tooling (EOAT) without requiring a physical model.

Owner:CORNELL UNIVERSITY

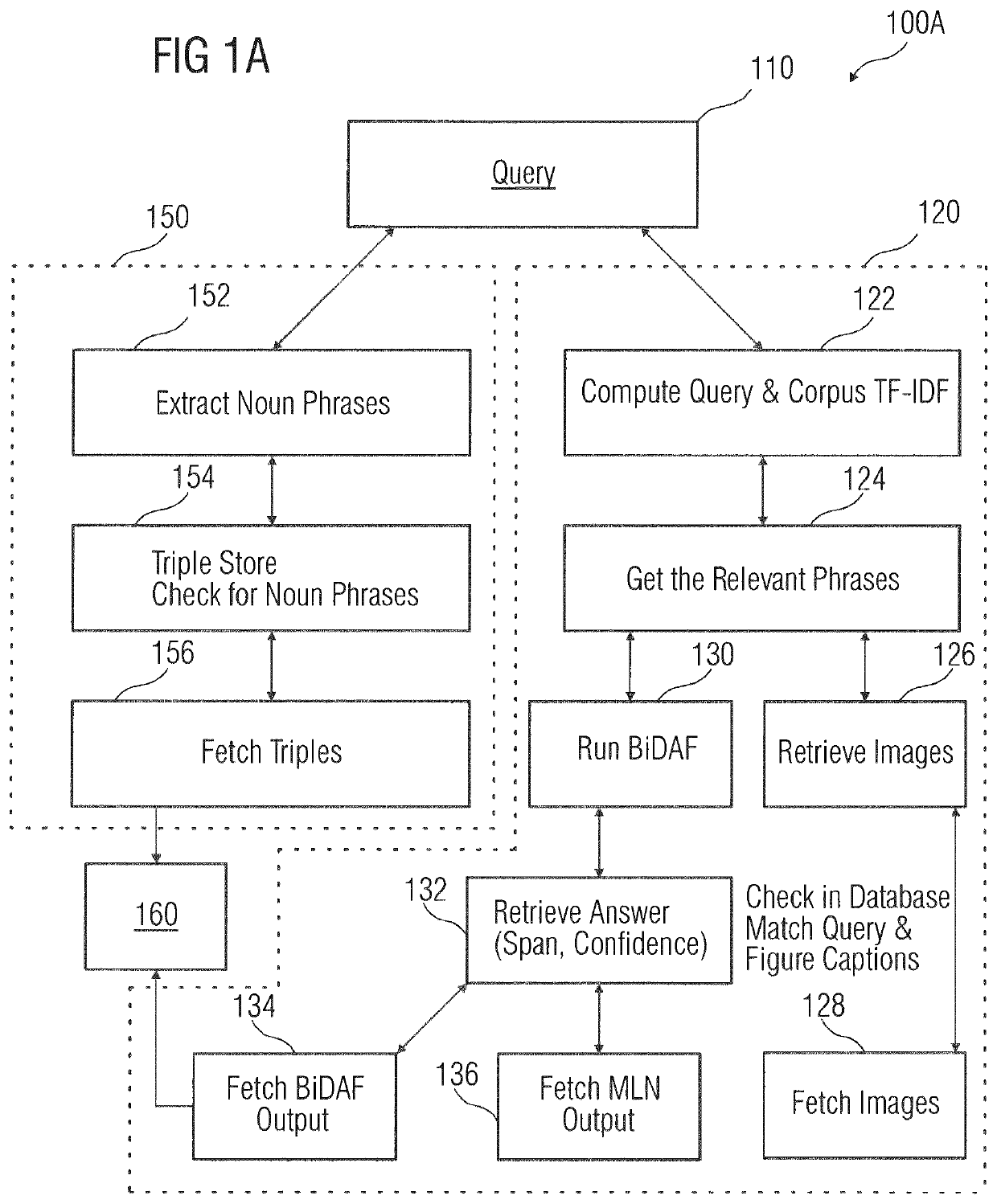

System, apparatus and method of managing knowledge generated from technical data

Pending | US20220358379A1 | Fast inference; Promote production; Semantic analysis; Knowledge representation; Knowledge-based systems; Data system

System, apparatus and method for managing knowledge generated from technical data are disclosed. The method comprises receiving a user query for technical data stored as a knowledge base (842A) on a knowledge-based system (842); determining, by an inference engine (822), a contextual relevance between the user query and the knowledge base (842A), wherein the knowledge base (842A) comprises a queryable framework of the technical data including processed textual sections and indexed images; identifying textual sections and images of the knowledge base (842A) associated with the user query based on the contextual relevance; determining, by the inference engine (822), the relevancy of the identified textual sections and indexed images based on the frequency of the query terms in them; and generating, by the inference engine (822), a response (818A) to the user query that includes the extracted textual sections and indexed images whose relevancy score exceeds a threshold.

Owner:JAIN SAMYAK +8
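
The term-frequency relevancy and threshold filtering can be sketched for text sections as follows. The scoring formula (share of a section's tokens that are query terms), the tokenizer and the function names are assumptions; the patent's contextual-relevance step and image indexing are not reproduced.

```python
import re
from collections import Counter

def tokenize(text):
    """Lower-case alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def relevancy(query, section):
    """Share of the section's tokens that are query terms (a plain term-frequency score)."""
    terms = set(tokenize(query))
    tokens = tokenize(section)
    if not tokens:
        return 0.0
    counts = Counter(tokens)
    return sum(counts[t] for t in terms) / len(tokens)

def respond(query, sections, threshold):
    """Return (section_id, score) pairs whose score exceeds the threshold, best first."""
    scored = [(sid, relevancy(query, text)) for sid, text in sections.items()]
    return sorted([s for s in scored if s[1] > threshold], key=lambda s: s[1], reverse=True)
```

Normalizing by section length keeps long sections from winning merely because they mention a term more often; a production system would more likely use TF-IDF or BM25.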

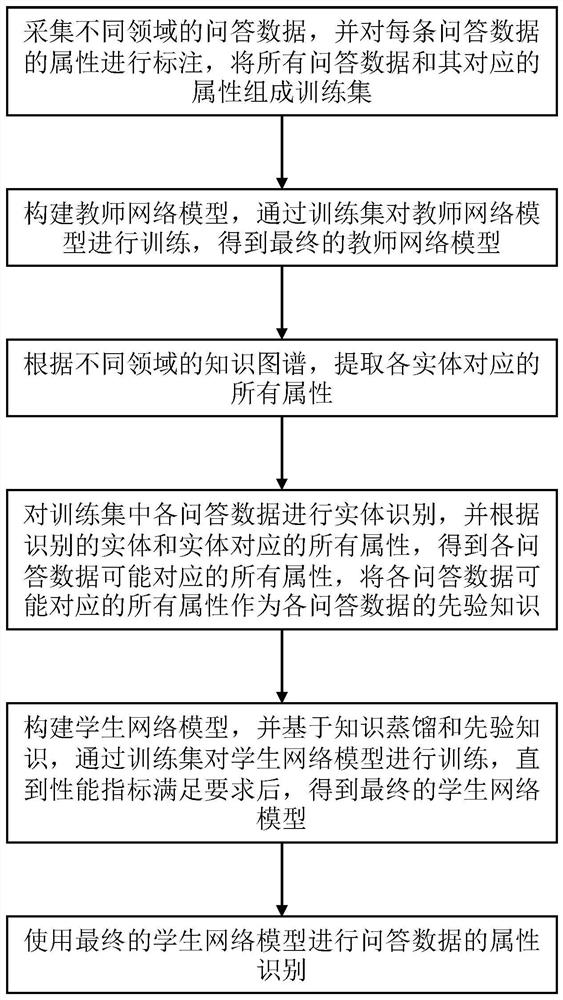

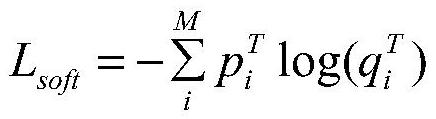

Attribute recognition method based on knowledge distillation, terminal equipment and storage medium

Pending | CN113515614A | Realize attribute recognition; Improve efficiency; Natural language data processing; Knowledge representation; Terminal equipment; Engineering

Owner:Xiamen Yuanting Information Technology Co., Ltd. (厦门渊亭信息科技有限公司)

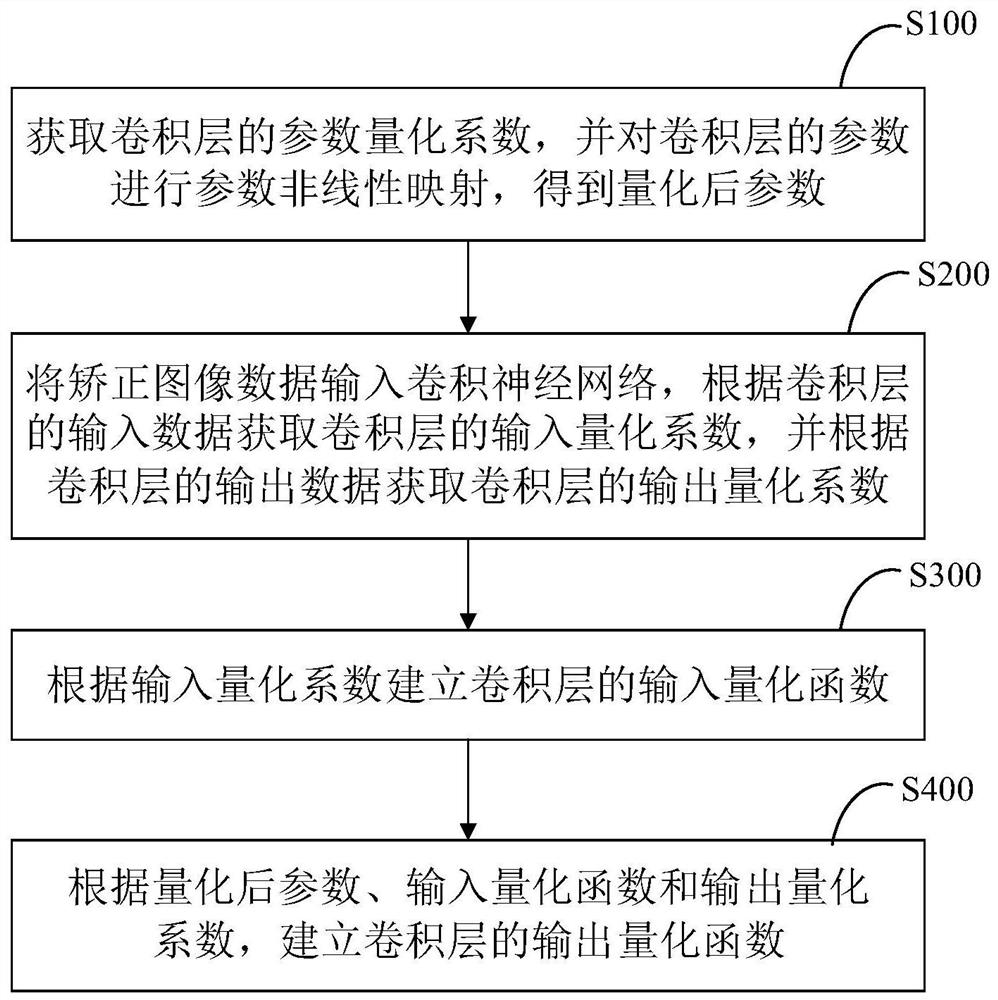

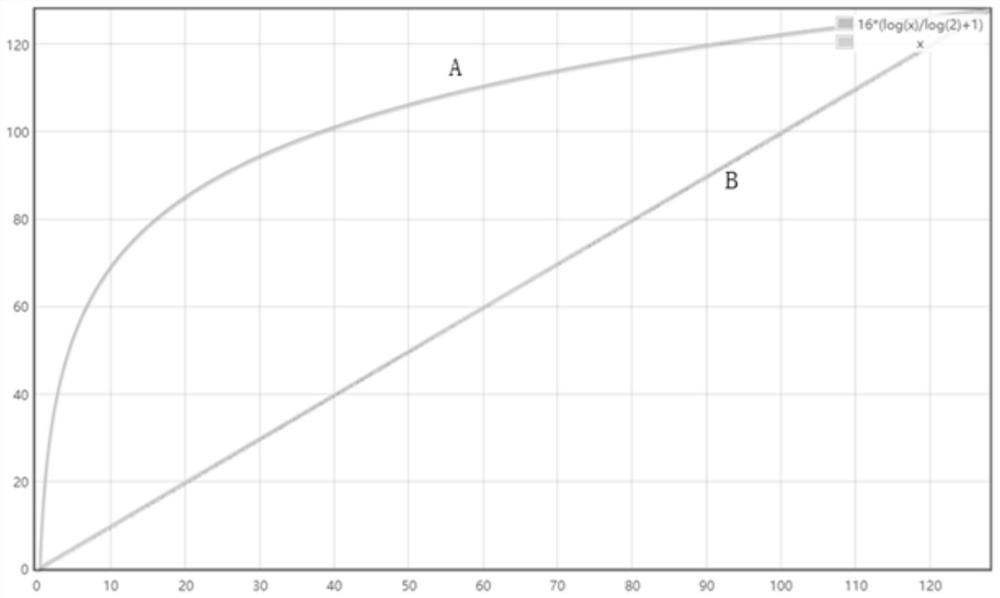

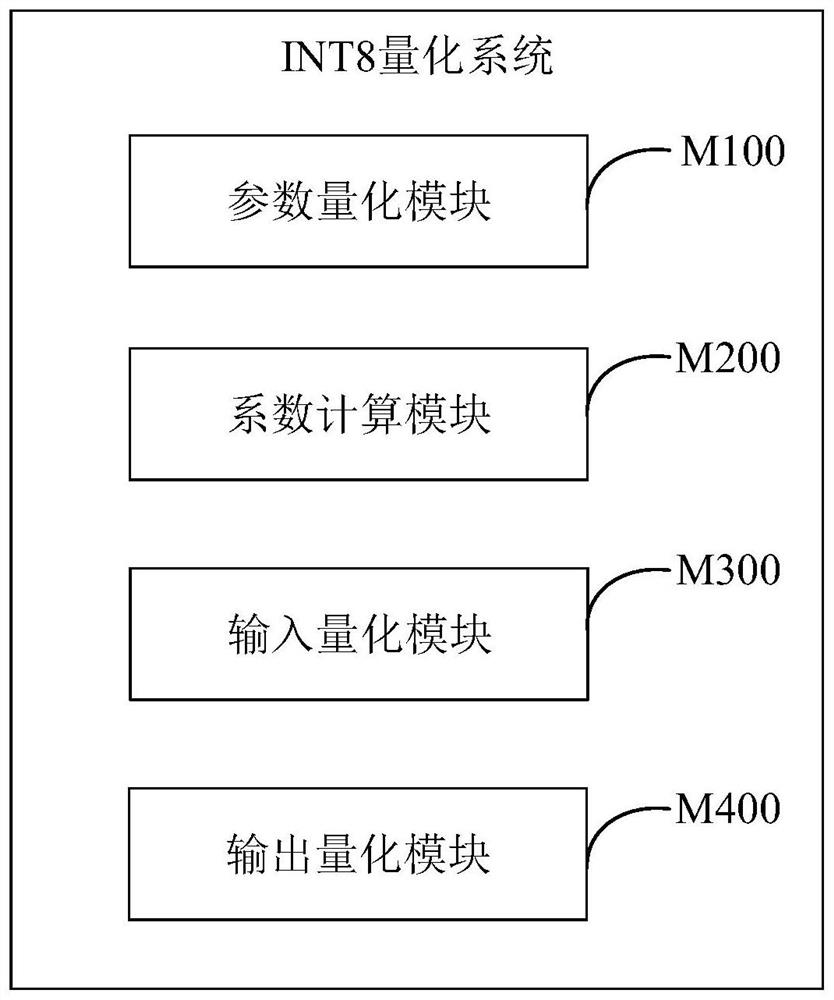

Convolutional neural network INT8 quantization method, system, device and storage medium

Pending | CN111767993A | Fast inference; Reduce precision loss; Neural architectures; Neural learning methods; Engineering; Convolution

The invention provides an INT8 quantization method, system, device and storage medium for convolutional neural networks. The method comprises: obtaining a parameter quantization coefficient for a convolution layer and nonlinearly mapping the layer's parameters to obtain quantized parameters; obtaining an input quantization coefficient for the layer and establishing an input quantization function that nonlinearly maps the layer's input data to quantized input data; and obtaining an output quantization coefficient for the layer and establishing, from the quantized parameters and the input quantization function, an output quantization function that nonlinearly maps the layer's output data to quantized output data. With this method and device, offline nonlinear quantization is applied to the model's parameters, inputs and outputs, the whole model runs on pure integer arithmetic, and quantization precision is improved.

Owner:SUZHOU KEDA TECH

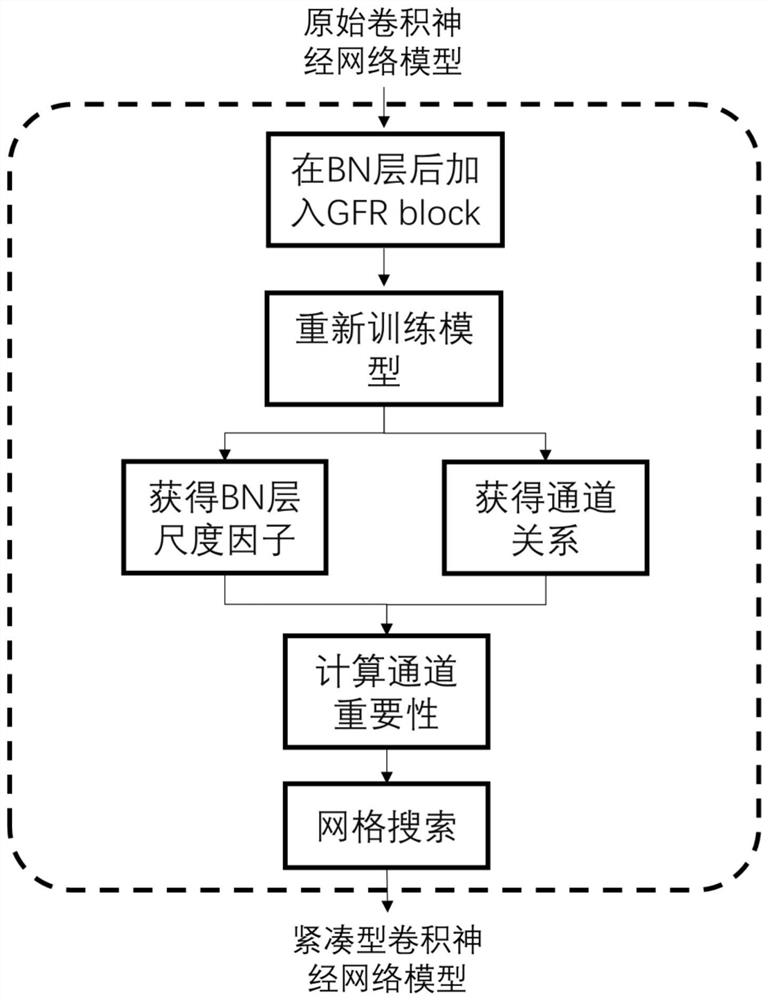

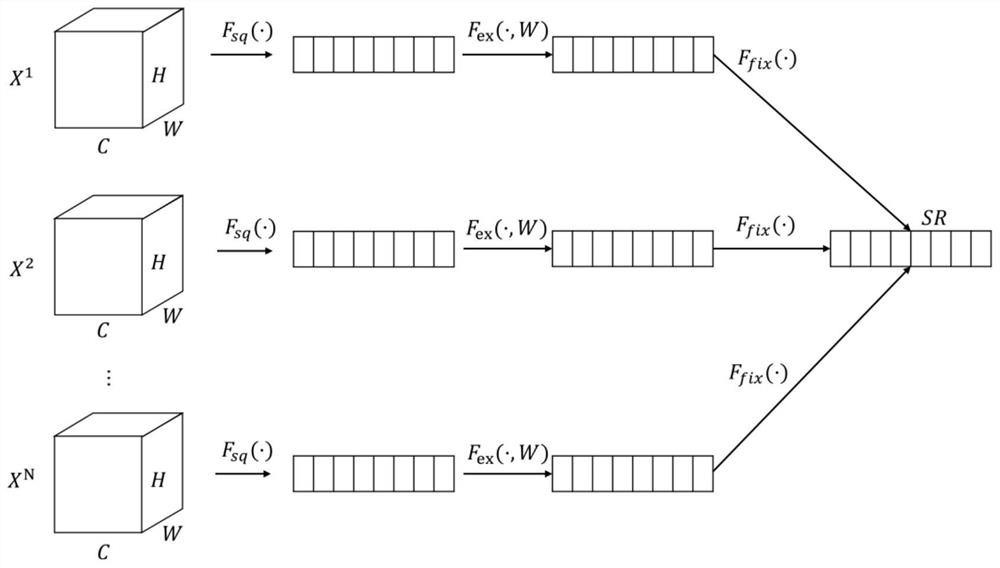

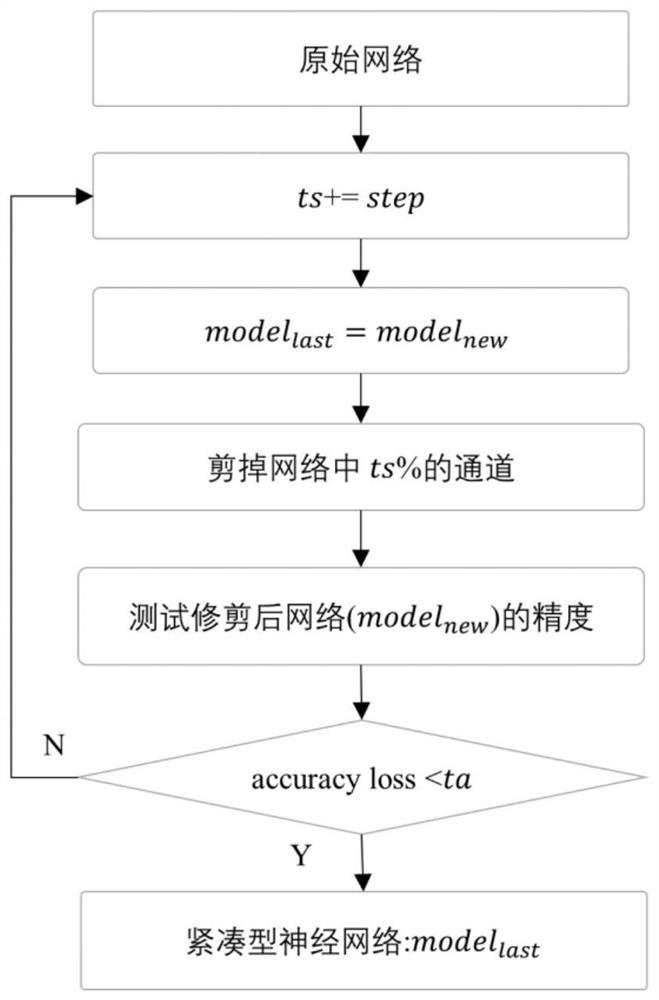

Convolutional neural network compression method based on global feature relationship

Inactive | CN112308213A | High precision; Fast inference; Character and pattern recognition; Neural architectures; Algorithm; Engineering

The invention belongs to the field of computer science and discloses a convolutional neural network compression method based on global feature relationships. A global feature relationship (GFR) submodule is added after each batch normalization (BN) layer of the network to extract inter-channel relationships and suppress unimportant channels; the GFR submodule mainly involves pooling, fully connected layers and a moving-average operation. The importance of each channel is then evaluated by combining the channel relationship with the BN layer's channel scale factor, an index that helps the compression method pick out the model's important channels more accurately. Finally, a channel pruning algorithm and grid search complete the compression of the network in less time and with fewer computing resources, without fine-tuning. The model's runtime memory and storage are markedly reduced and its inference speed increased, which makes deploying convolutional neural networks on small mobile devices far more feasible.

Owner:HUNAN UNIV

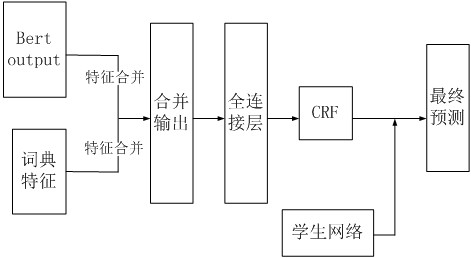

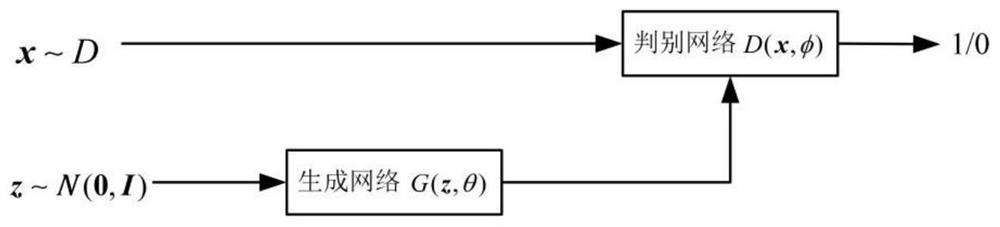

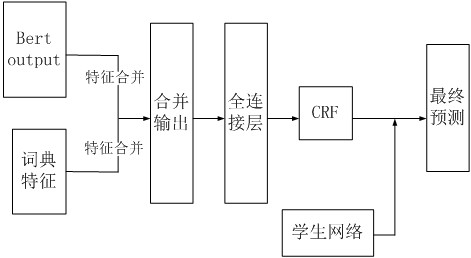

A dynamic vocabulary enhancement combined model distillation method

Active | CN112699678A | Will not increase the burden; Make up for the problem of inaccurate semantic understanding; Natural language data processing; Machine learning; Algorithm; Engineering

The invention relates to natural language processing within artificial intelligence and discloses a model distillation method combined with dynamic vocabulary enhancement. Starting from an ALBERT language model, the model is adjusted by fine-tuning combined with dynamic vocabulary enhancement, and the fine-tuned language model is taken as the teacher model. Unlike conventional fine-tuning, the features of the dictionary information are first combined with the language model's output features during fine-tuning, and the combination is then fine-tuned. After fine-tuning, the teacher model is distilled, and its predictions serve as the training basis for the student model. Because dictionary information is introduced as key information, the model can still capture it as a feature even when its size is greatly reduced, so the model becomes much smaller and faster at inference without sacrificing extraction accuracy.

Owner:Da'erguan Data (Chengdu) Co., Ltd. [达而观数据(成都)有限公司]
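
The step "the teacher's predictions serve as the training basis for the student" is commonly implemented with a temperature-scaled soft-target loss. The sketch below shows that common loss as an assumption; the temperature `T`, the weight `alpha` and the function names are illustrative, and the patent's dictionary-feature fusion is not shown.

```python
import numpy as np

def softmax(z, T=1.0):
    z = np.asarray(z, dtype=float) / T
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, labels, T=2.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * cross-entropy on hard labels."""
    p_t = softmax(teacher_logits, T)
    log_p_s_T = np.log(softmax(student_logits, T))
    kl = np.sum(p_t * (np.log(p_t) - log_p_s_T), axis=-1).mean()
    p_s = softmax(student_logits)
    ce = -np.log(p_s[np.arange(len(labels)), labels]).mean()
    return alpha * T * T * kl + (1 - alpha) * ce
```

The `T * T` factor keeps the soft-target gradients on a similar scale to the hard-label term as the temperature changes.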

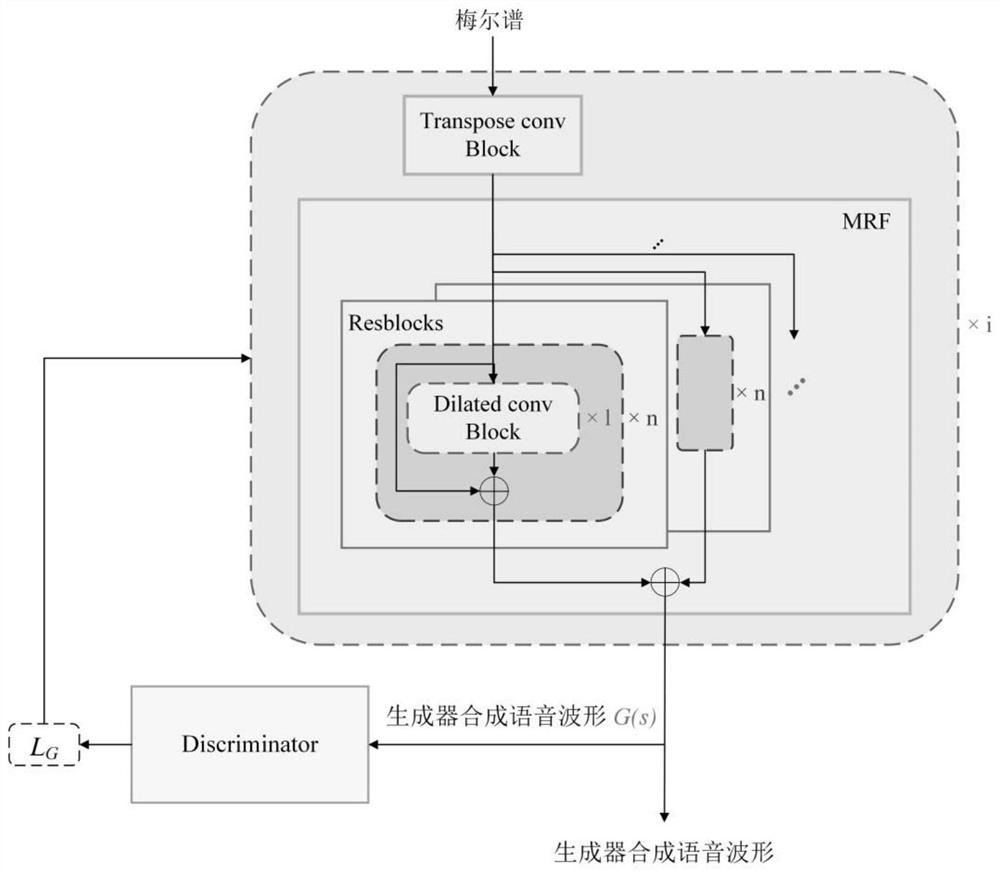

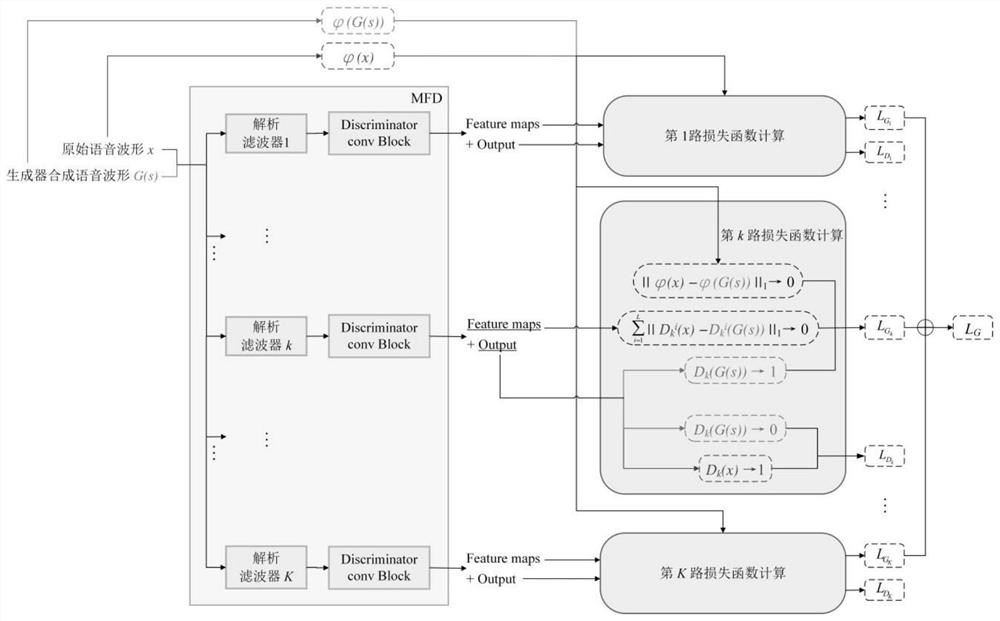

Deep network waveform synthesis method and device based on filter bank frequency discrimination

Pending | CN114882867A | Fast inference; Applicable to the development of real-time scenarios; Speech synthesis; Frequency spectrum; Synthesis methods

The invention discloses a deep network waveform synthesis method and device based on filter bank frequency discrimination. The method comprises: analytically designing several filter banks with arbitrary frequency passbands; feeding the speech signal output by the generator into the filter banks in parallel to obtain several narrow-band signals; feeding each narrow-band signal into its own sub-discriminator, and training the parameters of the generative adversarial network (GAN) with a loss function that combines the sub-discriminators' losses; and, at test time, feeding a test text into a given acoustic-model front-end network to generate a test mel spectrogram, which is input to the generator to produce a speech signal. The device comprises a processor and a memory. The proposed speech waveform synthesis GAN solves the aliasing failures in the high-frequency range and greatly reduces spectral distortion in the high-frequency band.

Owner:TIANJIN UNIV
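
The band-splitting step that feeds the sub-discriminators can be sketched with ideal FFT-domain band-pass masks. These masks are a stand-in for the patent's analytically designed filter banks, and the band edges are illustrative; the sub-discriminators and GAN training are not shown.

```python
import numpy as np

def split_bands(x, sr, edges):
    """Split signal x into narrow bands with ideal FFT-domain band-pass masks.

    edges: ascending band edges in Hz, e.g. [0, 1000, 4000, sr / 2].
    """
    X = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / sr)
    bands = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        last = hi == edges[-1]
        # Half-open bands [lo, hi), with the top band closed so Nyquist is included.
        mask = (freqs >= lo) & ((freqs <= hi) if last else (freqs < hi))
        bands.append(np.fft.irfft(X * mask, n=len(x)))
    return bands
```

Because the bands tile the spectrum, they sum back to the input; each band would then go to its own sub-discriminator so that high-frequency errors cannot be masked by the louder low band.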

A Model Distillation Method Combined with Dynamic Vocabulary Augmentation

Active | CN112699678B | Will not increase the burden; Make up for the problem of inaccurate semantic understanding; Natural language data processing; Machine learning; Engineering; Data mining

The invention relates to natural language processing within artificial intelligence and discloses a model distillation method combined with dynamic vocabulary enhancement. On the basis of the ALBERT language model, the language model is adjusted by fine-tuning combined with dynamic vocabulary enhancement to obtain a fine-tuned language model, which is used as the teacher model. This fine-tuning differs from the conventional logic: the features of the dictionary information are first combined with the output features of the language model, and the result is then fine-tuned. After fine-tuning, the teacher model is distilled, and the obtained predictions are used as the training basis for the student model. By introducing dictionary information as key information, the method lets the model still capture dictionary information as a feature while its size is greatly reduced, thereby greatly reducing model size and increasing inference speed without sacrificing extraction accuracy.

Owner:达而观数据(成都)有限公司
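
The two ingredients described above, fusing dictionary features with the language model's output features and training a student on the teacher's predictions, can be sketched in NumPy. This assumes Hinton-style soft-target distillation (a temperature-softened KL term plus hard-label cross-entropy) and represents dictionary information as per-token match indicators; the abstract confirms neither detail, and the function names, temperature, and weighting `alpha` are hypothetical.

```python
import numpy as np


def fuse_dictionary_features(lm_features, dict_match):
    """Concatenate per-token LM outputs (T, H) with per-token dictionary-match
    indicators (T, D) along the feature axis, giving (T, H + D)."""
    return np.concatenate([lm_features, dict_match.astype(lm_features.dtype)], axis=-1)


def softmax(z, temperature=1.0):
    z = np.asarray(z, dtype=float) / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def soft_target_kl(student_logits, teacher_logits, temperature):
    """Mean KL(teacher || student) between temperature-softened distributions."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    return float(np.mean(np.sum(p_t * (np.log(p_t) - np.log(p_s)), axis=-1)))


def distillation_loss(student_logits, teacher_logits, labels, temperature=2.0, alpha=0.5):
    """alpha * T^2 * soft-target KL + (1 - alpha) * hard-label cross-entropy."""
    kl = soft_target_kl(student_logits, teacher_logits, temperature)
    p = softmax(student_logits)
    ce = float(-np.mean(np.log(p[np.arange(len(labels)), labels])))
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


# Usage with random stand-ins for LM features, dictionary matches, and logits.
rng = np.random.default_rng(0)
fused = fuse_dictionary_features(rng.normal(size=(6, 8)), rng.integers(0, 2, size=(6, 3)))
teacher = rng.normal(size=(4, 5))
student = rng.normal(size=(4, 5))
labels = np.array([0, 2, 1, 4])
loss = distillation_loss(student, teacher, labels)
```

The `T^2` factor keeps the soft-target gradients on a comparable scale to the hard-label term as the temperature changes.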

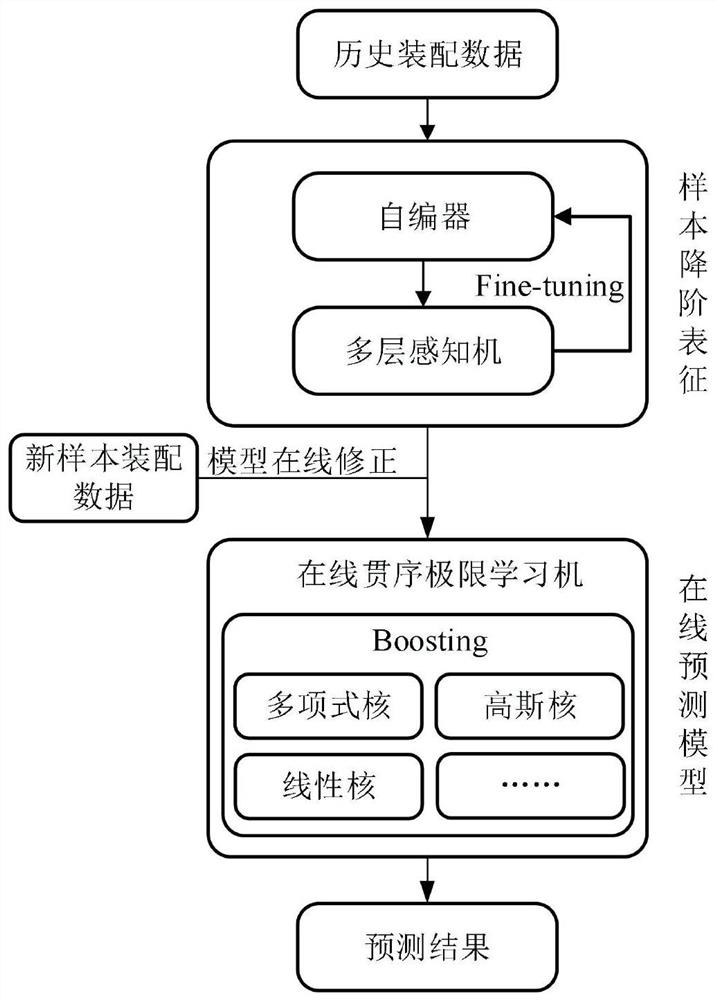

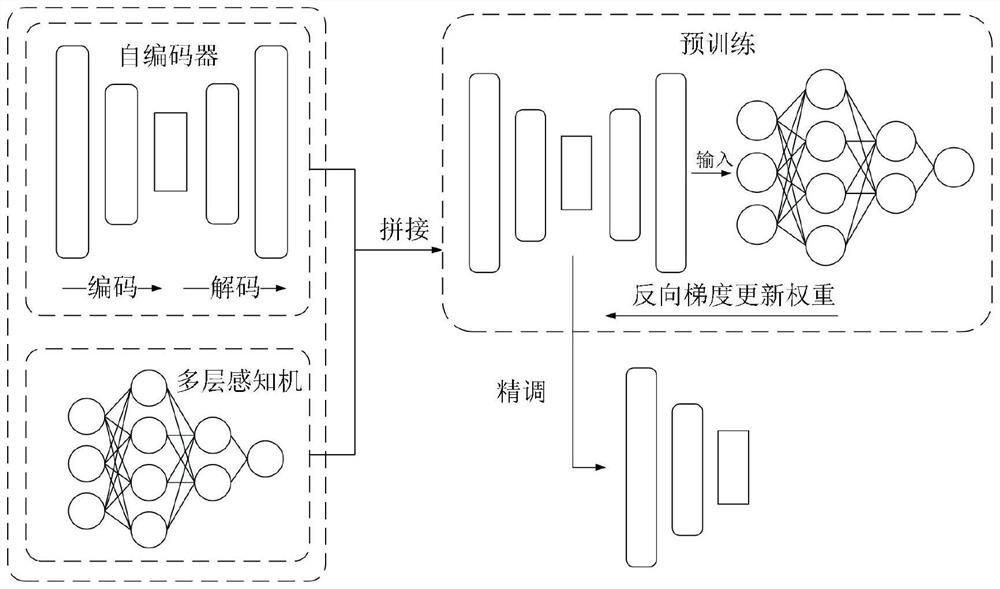

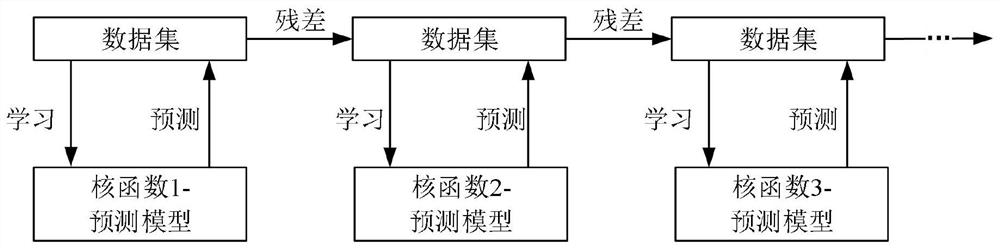

Electromechanical product assembly error online prediction method

Pending | CN114529040A | Reduce distance | Fast inference | Forecasting | Character and pattern recognition | Incremental learning | Autoencoder

The invention provides an online prediction method for assembly errors of electromechanical products, based on an autoencoder and a Boosting-OSKELM algorithm. The method comprises the following steps: first, a sample data set is constructed for a given assembly process from the assembly technology and historical assembly-error data; second, the input process data of each sample point are reduced in dimensionality by an autoencoder trained with fine-tuning, and the reduced process data together with the original assembly-error data form a new reduced-order representation; third, the dimension-reduced process data are input into a Boosting-KELM (kernel extreme learning machine) model, and the boosting sequence of kernel functions and the corresponding hyperparameters are determined from the prediction performance on a test set; finally, a Boosting-OSKELM model is formed using the incremental-learning recursion formula of the online sequential kernel extreme learning machine, enabling online prediction of assembly errors. With this method, the assembly error of an electromechanical product can be predicted online.

Owner:NANJING UNIV OF SCI & TECH
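
The incremental-learning recursion in the final step can be illustrated with the standard online sequential ELM (OS-ELM) update. This minimal NumPy sketch swaps in a random-feature OS-ELM for the kernel variant (OSKELM) and omits the boosting over kernels; the hidden size, tanh activation, and ridge constant `C` are illustrative assumptions, and the class and method names are hypothetical. With the ridge-regularized initialization shown, the recursive updates reproduce the batch ridge solution up to floating-point error.

```python
import numpy as np


class OSELM:
    """Online sequential extreme learning machine with a fixed random hidden layer."""

    def __init__(self, n_inputs, n_hidden=50, C=1e3, seed=0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(size=(n_inputs, n_hidden))  # fixed random input weights
        self.b = rng.normal(size=n_hidden)              # fixed random biases
        self.C = C                                       # ridge regularization constant
        self.P = None                                    # inverse of (H^T H + I / C)
        self.beta = None                                 # output weights

    def hidden(self, X):
        return np.tanh(X @ self.W + self.b)

    def fit_initial(self, X0, T0):
        """Batch ridge solution on the initial chunk."""
        H = self.hidden(X0)
        self.P = np.linalg.inv(H.T @ H + np.eye(H.shape[1]) / self.C)
        self.beta = self.P @ H.T @ T0

    def partial_fit(self, X, T):
        """Recursive least-squares (Woodbury) update for a new chunk (X, T)."""
        H = self.hidden(X)
        S = np.eye(H.shape[0]) + H @ self.P @ H.T
        self.P = self.P - self.P @ H.T @ np.linalg.solve(S, H @ self.P)
        self.beta = self.beta + self.P @ H.T @ (T - H @ self.beta)

    def predict(self, X):
        return self.hidden(X) @ self.beta


# Usage: initial batch of 100 samples, then five chunks of 20 arriving online.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 4))
T = X[:, :1] ** 2 + 0.1 * X[:, 1:2]  # toy target, shape (200, 1)
model = OSELM(n_inputs=4, n_hidden=20, C=10.0)
model.fit_initial(X[:100], T[:100])
for start in range(100, 200, 20):
    model.partial_fit(X[start:start + 20], T[start:start + 20])

# Batch ridge fit on all 200 samples, for comparison with the online result.
H_all = model.hidden(X)
beta_batch = np.linalg.solve(H_all.T @ H_all + np.eye(20) / model.C, H_all.T @ T)
```

Each update costs a solve of size equal to the chunk, not the full history, which is what makes online prediction on a production line cheap.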

Method for robustly classifying pictures by using sparse network based on retention dynamics process

Pending | CN114692834A | Reduce occupancy | Fast inference | Character and pattern recognition | Neural architectures | Pattern recognition | Algorithm

The invention provides a method for robust image classification using a sparse network based on preserving the training dynamics. It combines neural tangent kernel (NTK) theory with the dynamics of adversarial training: adversarial examples for both the sparse network and the dense network are generated with adversarial attacks, and a sparse network suitable for adversarial training is obtained. The sparse network is then adversarially trained on the image set to obtain a classifier that can effectively resist adversarial attacks. The resulting sparse network performs on par with the original dense network, and its adversarial robustness is superior to that of the recently proposed Inverse Weight Inheritance (2020). Because the target sparse network is found at initialization, the adversarial training and pruning process does not need to be iterated as in existing methods, which greatly shortens training time and improves training efficiency. The method therefore outperforms existing methods for the same task and makes it possible to deploy robust adversarially trained neural networks on resource-limited devices.

Owner:NANJING UNIV
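
The overall shape of the method, fixing a sparse structure once at initialization and then training only that structure on adversarial examples, can be sketched on a toy model. This sketch makes substantial substitutions that the abstract does not describe: magnitude pruning of a random initialization stands in for the NTK-based selection criterion, a logistic-regression classifier stands in for the network, and the fast gradient sign method (FGSM) is the attack. The parameters `eps`, `lr`, `steps`, and all function names are hypothetical.

```python
import numpy as np


def init_mask(n_weights, keep, rng):
    """Pick the sparse structure once, at initialization: keep the `keep`
    largest-magnitude entries of a random init (stand-in for the NTK criterion)."""
    w0 = rng.normal(size=n_weights)
    mask = np.zeros(n_weights)
    mask[np.argsort(np.abs(w0))[-keep:]] = 1.0
    return mask


def logistic_loss(w, X, y):
    """Mean logistic loss for labels y in {-1, +1}."""
    return float(np.mean(np.logaddexp(0.0, -y * (X @ w))))


def fgsm(w, X, y, eps):
    """Fast gradient sign method: move each input eps along sign(d loss / d x)."""
    margin = y * (X @ w)
    grad_x = (-y / (1.0 + np.exp(margin)))[:, None] * w[None, :]
    return X + eps * np.sign(grad_x)


def adversarial_train(X, y, mask, eps=0.1, lr=0.5, steps=200):
    """Gradient descent on FGSM examples, with pruned weights held at zero."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        X_adv = fgsm(w, X, y, eps)  # attack the current sparse model
        margin = y * (X_adv @ w)
        grad_w = np.mean((-y / (1.0 + np.exp(margin)))[:, None] * X_adv, axis=0)
        w = (w - lr * grad_w) * mask  # no pruning/retraining loop: the mask never changes
    return w


# Usage on synthetic data in which every feature is informative.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 20))
y = np.sign(X @ np.ones(20))
mask = init_mask(20, keep=5, rng=rng)
w = adversarial_train(X, y, mask)
clean = logistic_loss(w, X, y)
attacked = logistic_loss(w, fgsm(w, X, y, 0.1), y)
```

Because the mask is chosen before training, the loop runs a single adversarial training pass rather than alternating training and pruning, which mirrors the training-time saving the abstract claims.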

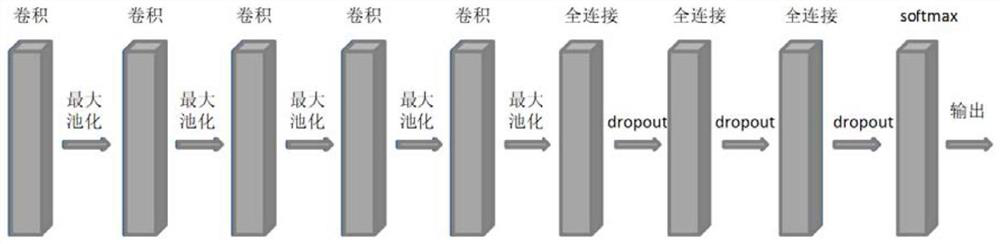

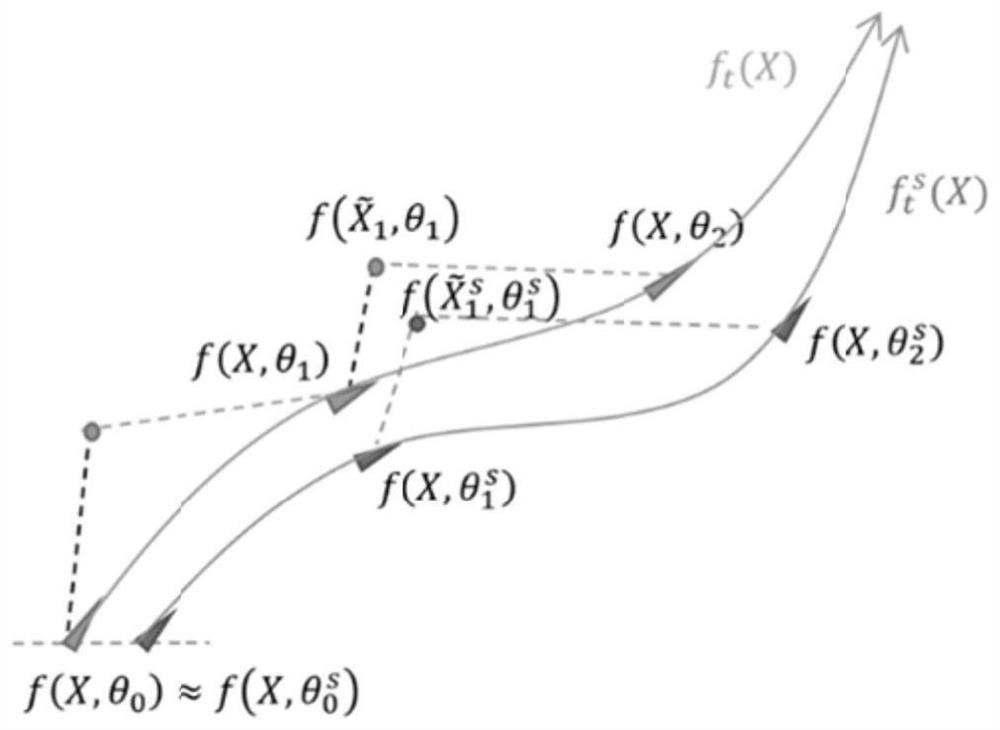

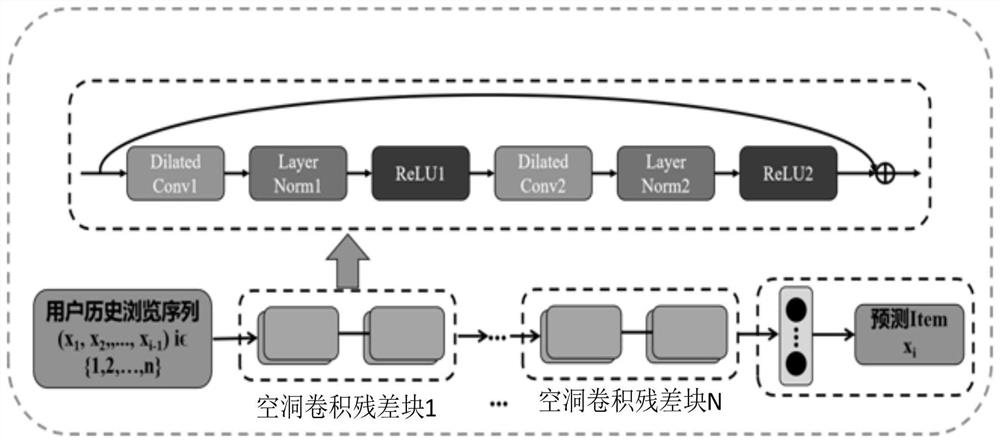

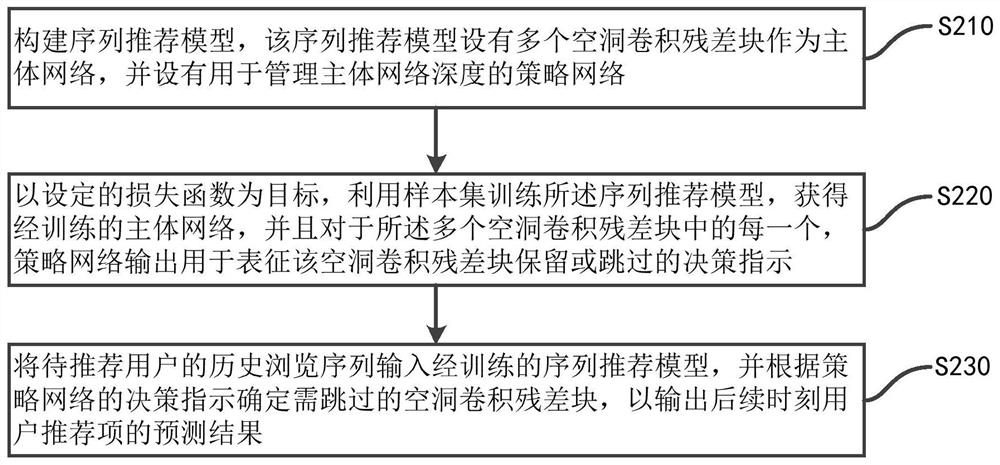

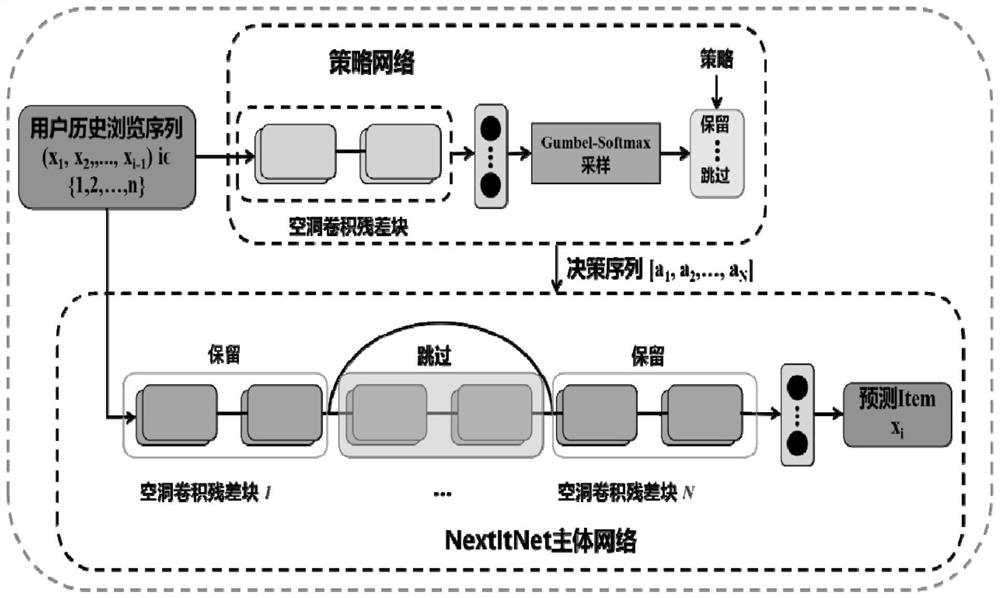

Sequence recommendation method and system based on adaptive network depth

Pending | CN111931058A | Fast and accurate recommendation service | Reduce computational overhead | Digital data information retrieval | Character and pattern recognition | Recommendation model | Network output

The invention discloses a sequence recommendation method and system based on adaptive network depth. The method comprises the following steps: constructing a sequence recommendation model whose backbone network consists of a plurality of dilated (atrous) convolution residual blocks, together with a policy network that manages the depth of the backbone; training the sequence recommendation model on a sample set with a set loss function as the objective, to obtain a trained backbone network and, for each dilated convolution residual block, a decision indication output by the policy network that represents whether the block is kept or skipped; and inputting the historical browsing sequence of the target user into the trained model, determining which dilated convolution residual blocks to skip according to the policy network's decision indications, and outputting a prediction of the item to recommend to the user at the next time step. With the policy network, the depth of the backbone can be adjusted adaptively, providing users with fast and accurate recommendation services.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI
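
The keep-or-skip mechanism described above can be sketched as a NumPy forward pass. This is an inference-only illustration under assumptions the abstract does not confirm: each block is a causal dilated 1-D convolution with a ReLU residual branch, the policy network is a single linear layer with a sigmoid applied to the mean item embedding, and a block is kept when its probability exceeds a threshold. Training the policy (which would need, for example, a reinforcement-learning or Gumbel-softmax estimator for the discrete decisions) is omitted, and all names and shapes are hypothetical.

```python
import numpy as np


def causal_dilated_conv(x, weights, dilation):
    """Causal 1-D dilated convolution. x: (T, C); weights: (K, C, C).
    y[t] = sum_k x[t - k * dilation] @ weights[k], with zeros before t = 0."""
    T = x.shape[0]
    y = np.zeros((T, weights.shape[2]))
    for k in range(weights.shape[0]):
        shift = k * dilation
        if shift < T:
            y[shift:] += x[: T - shift] @ weights[k]
    return y


def residual_block(x, weights, dilation):
    """Residual block: x + ReLU(causal dilated conv(x))."""
    return x + np.maximum(causal_dilated_conv(x, weights, dilation), 0.0)


def forward(x, blocks, policy_w, threshold=0.5):
    """Run the backbone, skipping blocks the policy network votes to drop.
    policy_w: (C, n_blocks) weights of a hypothetical linear policy network
    applied to the mean embedding of the browsing sequence."""
    keep_prob = 1.0 / (1.0 + np.exp(-(x.mean(axis=0) @ policy_w)))
    decisions = keep_prob > threshold  # True = keep the block, False = skip it
    h = x
    for keep, (weights, dilation) in zip(decisions, blocks):
        if keep:
            h = residual_block(h, weights, dilation)
    return h, decisions


# Usage: score a hypothetical catalogue of 50 items from a 10-item history.
rng = np.random.default_rng(0)
T, C = 10, 8
x = rng.normal(size=(T, C))  # embeddings of the browsed items
blocks = [(0.1 * rng.normal(size=(2, C, C)), d) for d in (1, 2, 4)]
item_emb = rng.normal(size=(50, C))
h, decisions = forward(x, blocks, rng.normal(size=(C, 3)))
scores = h[-1] @ item_emb.T  # next-item scores from the last position
next_item = int(np.argmax(scores))
```

A skipped block reduces to the identity thanks to the residual connection, so dropping it changes the representation gracefully while saving its convolution cost.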

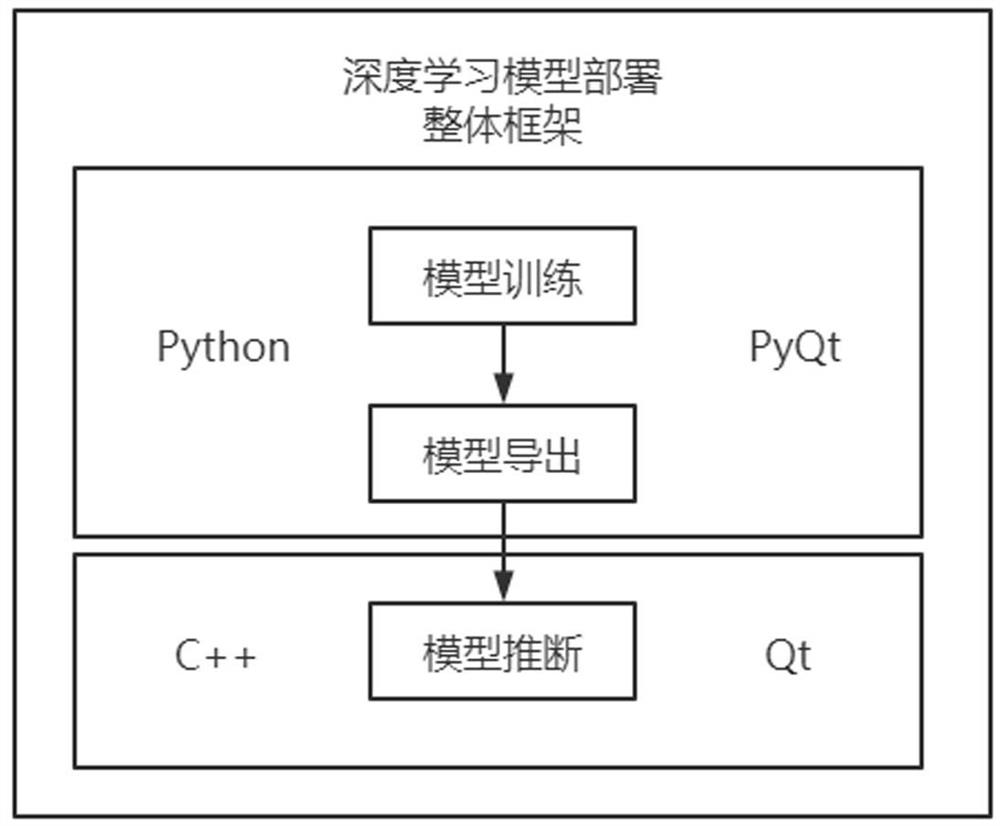

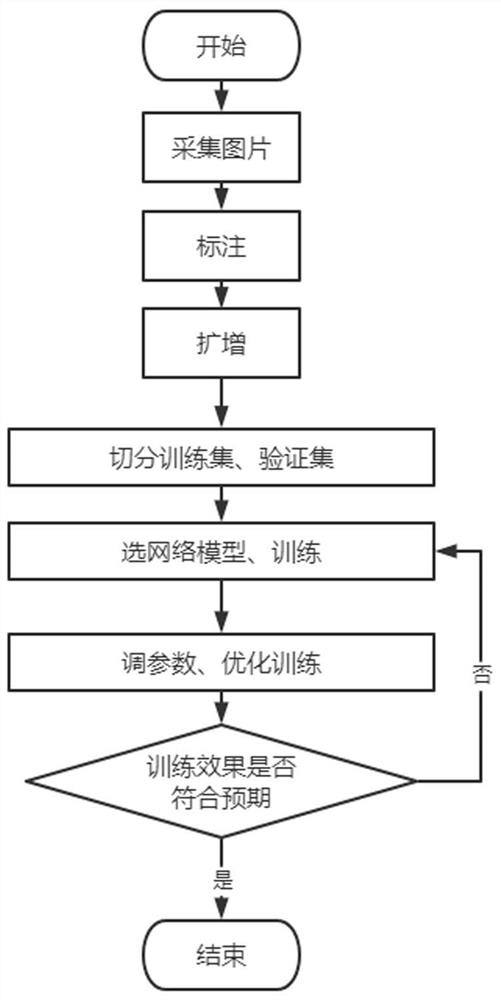

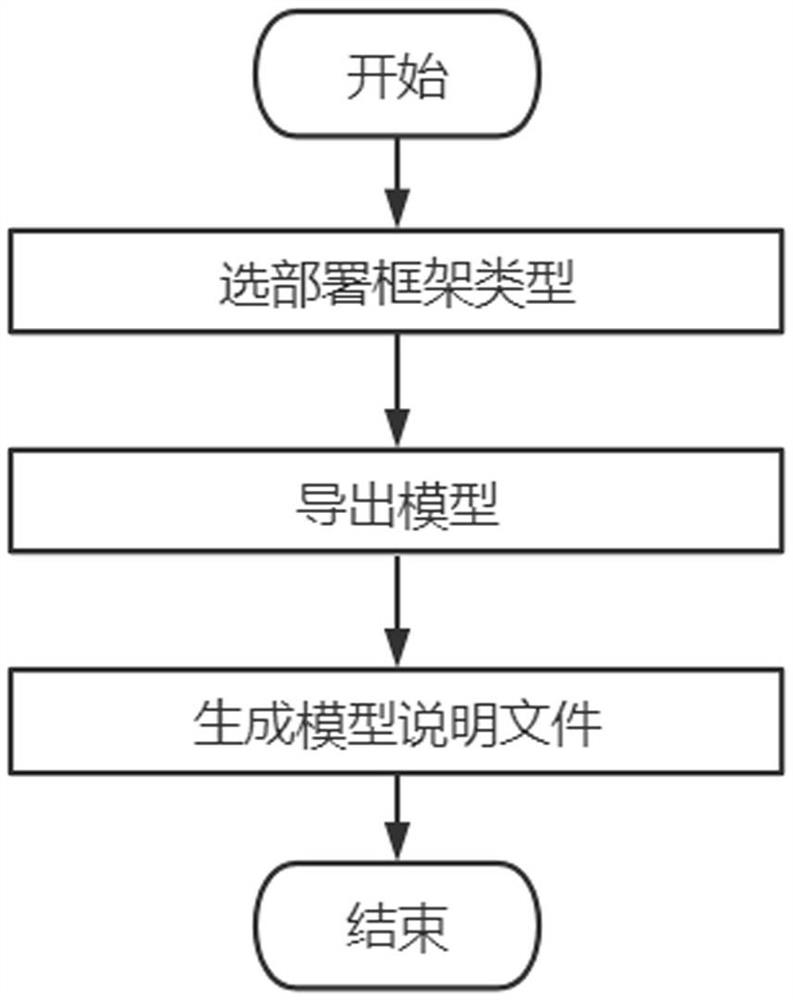

Deep learning model deployment method and device, and medium

Inactive | CN114663437A | Easy to operate | Improve efficiency | Image enhancement | Image analysis | Engineering | Data mining

The invention discloses a deep learning model deployment method, device, and medium. The method comprises the following steps: S100, model training: defect images obtained from an industrial camera are preprocessed, and the model is trained on the processed defect images; S200, model export: a deployment framework type is selected, and the model is exported together with a model description file; S300, model deployment: an industrial image obtained from an industrial camera is imported, the model description file is loaded and read, the corresponding inference parameters are selected automatically according to the description file, and the model performs inference and outputs the detection result. Because the model description file is generated when the model is exported and loaded directly at the deployment end, the inference framework type is matched and selected automatically without manual selection, making the method simple to operate.

Owner:苏州中科行智智能科技有限公司
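
Steps S200 and S300 can be sketched as a description file written at export time and a loader that dispatches on it at deployment time. This is a minimal Python sketch: the JSON field names (`framework`, `inference`, `input_size`, `score_threshold`), the framework keys `onnx` and `tensorrt`, and every function are hypothetical, and the loaders only return a configuration record instead of calling a real inference runtime.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical registry mapping a framework name to its loader. Real loaders
# would open the model with the chosen runtime; these only describe the setup.
LOADERS = {}


def register(framework):
    def wrap(fn):
        LOADERS[framework] = fn
        return fn
    return wrap


@register("onnx")
def load_onnx(model_path, params):
    return {"backend": "onnx", "model": model_path, **params}


@register("tensorrt")
def load_tensorrt(model_path, params):
    return {"backend": "tensorrt", "model": model_path, **params}


def export_description(model_path, framework, input_size, score_threshold, out_path):
    """S200: write a description file alongside the exported model."""
    desc = {
        "model_path": str(model_path),
        "framework": framework,
        "inference": {"input_size": input_size, "score_threshold": score_threshold},
    }
    Path(out_path).write_text(json.dumps(desc, indent=2))


def load_for_deployment(desc_path):
    """S300: read the description file and select the matching backend automatically."""
    desc = json.loads(Path(desc_path).read_text())
    framework = desc["framework"]
    if framework not in LOADERS:
        raise ValueError(f"unsupported framework: {framework}")
    return LOADERS[framework](desc["model_path"], desc["inference"])


# Usage: export a description, then load it without choosing a backend by hand.
with tempfile.TemporaryDirectory() as d:
    desc_file = Path(d) / "model.json"
    export_description("defect_model.onnx", "onnx", [640, 640], 0.5, desc_file)
    runtime = load_for_deployment(desc_file)
```

Keeping the backend choice in data rather than code means adding a new deployment framework only requires registering another loader.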

© 2025 PatSnap. All rights reserved.