51 results about how to achieve "Rich texture details" with patented technology

Image denoising method based on generative adversarial network

Active | CN110473154A | Reduce the "checkerboard effect" | Reduce "chessboard effect" | Image enhancement | Image analysis | Image denoising | Pattern recognition

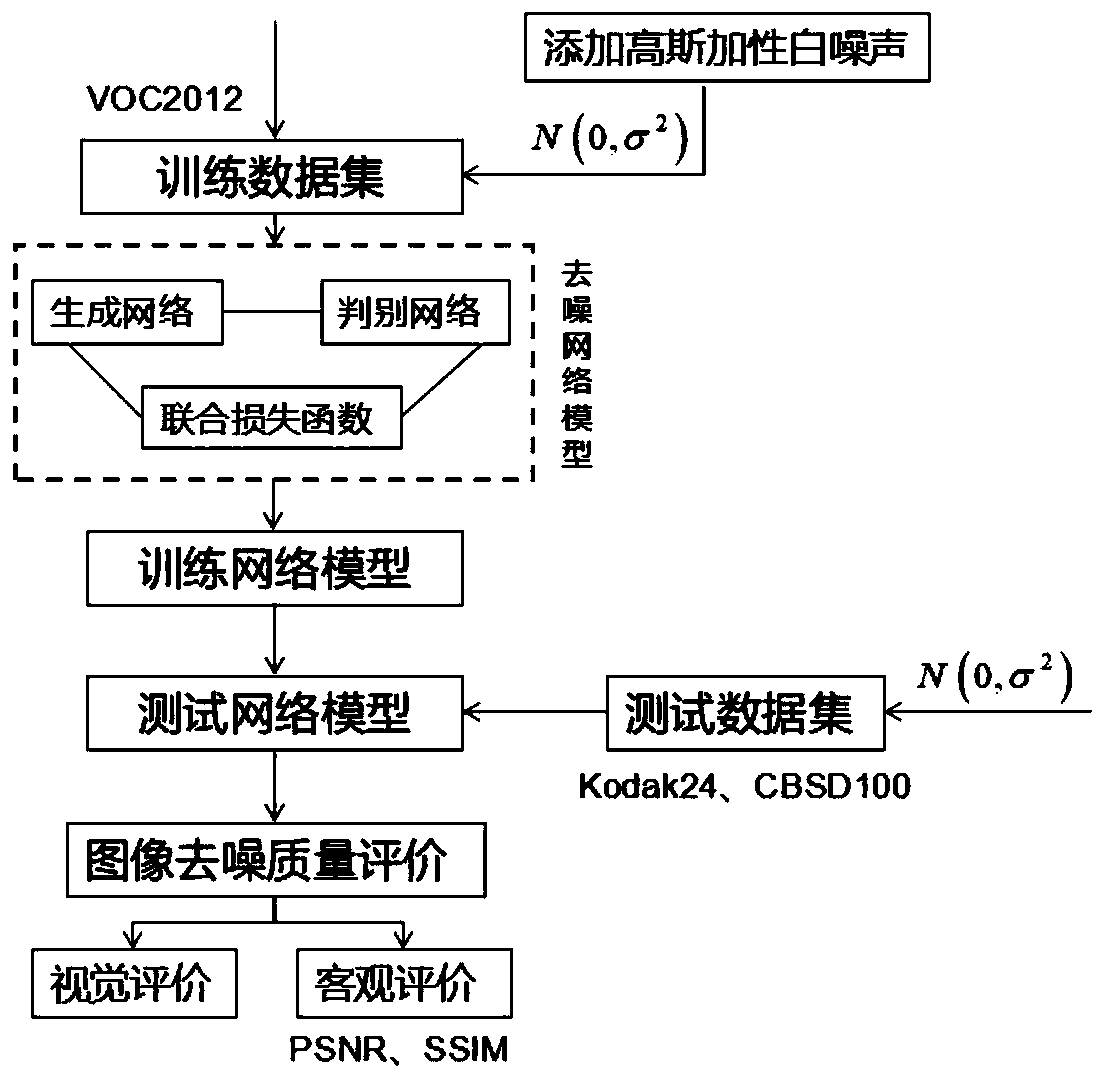

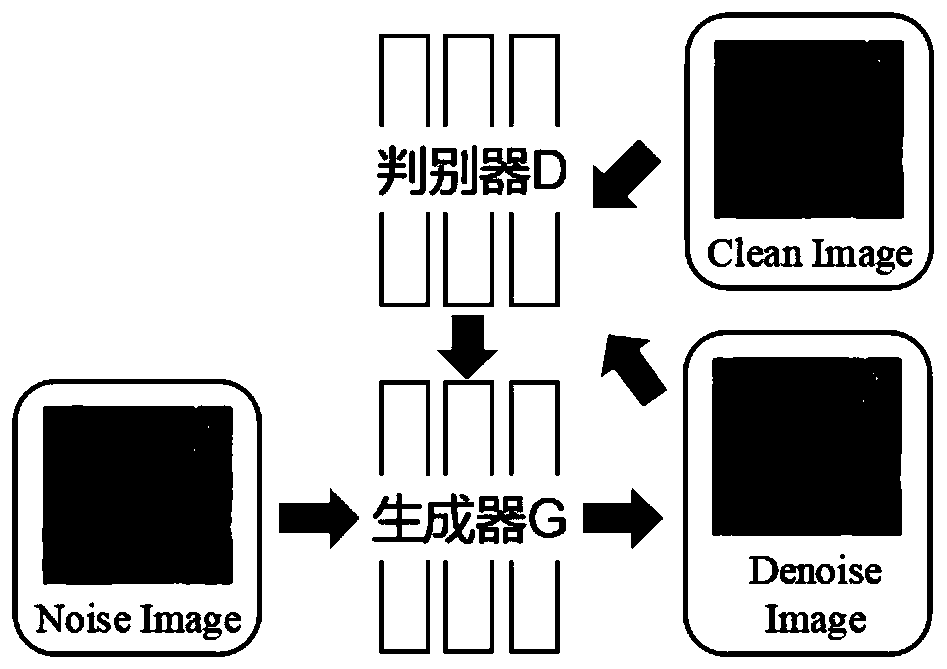

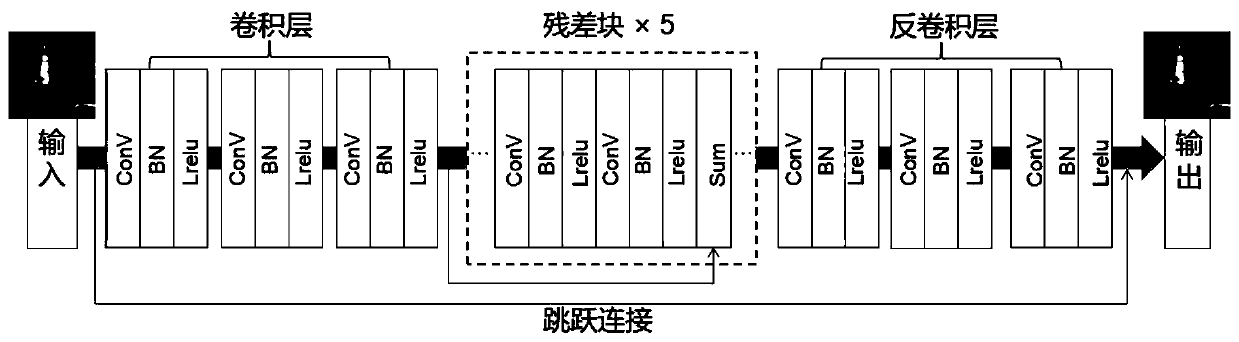

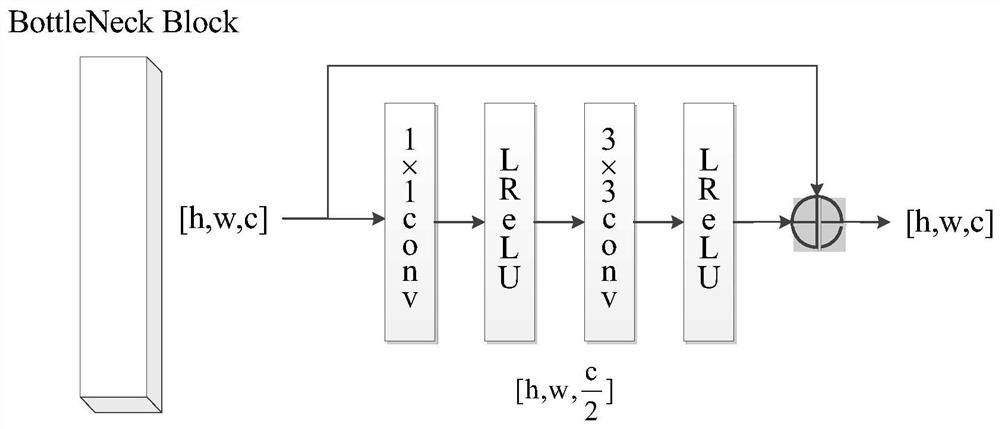

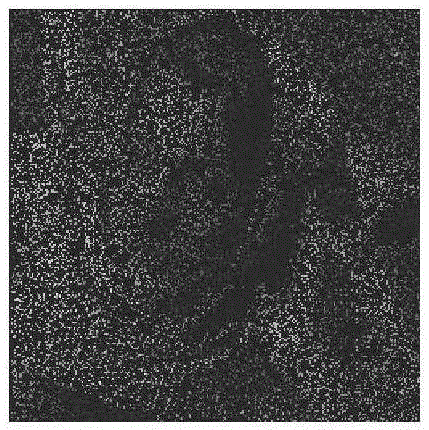

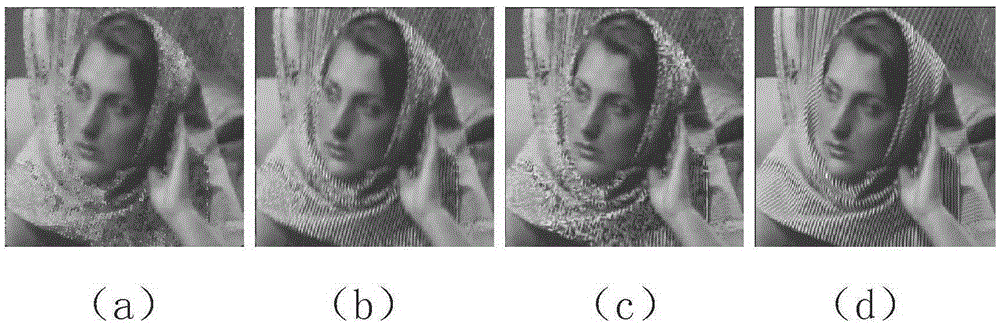

The invention discloses an image denoising method based on a generative adversarial network, characterized by the following steps: 1, selecting an experimental data set; 2, selecting additive white Gaussian noise as the noise model; 3, building a generative network model and training a generator network G for denoising; 4, building a discriminative network model, in which a discriminator D classifies each input image as real or fake; 5, constructing a joint loss function model; 6, training the generative adversarial network; and 7, evaluating image denoising quality. The method denoises images while preserving more texture details and edge features.

Owner: XIAN UNIV OF TECH
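
Steps 2 and 7 of this method (the noise model and the quality evaluation) can be illustrated in plain Python. This is a sketch, not the patented method. It assumes 8-bit grayscale images stored as lists of rows and uses PSNR as the quality metric, which the abstract does not name. `add_gaussian_noise` and `psnr` are illustrative helpers, and the generator, discriminator, and joint loss are omitted.

```python
import math
import random

def add_gaussian_noise(img, sigma, seed=None):
    """Return a copy of `img` with zero-mean additive white Gaussian noise of std `sigma`,
    clipped to the 8-bit range [0, 255]."""
    rng = random.Random(seed)
    return [[min(255.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row] for row in img]

def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images (inf if identical)."""
    squared_error = 0.0
    count = 0
    for ref_row, test_row in zip(reference, test):
        for r, t in zip(ref_row, test_row):
            squared_error += (r - t) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)

clean = [[128.0] * 16 for _ in range(16)]
noisy = add_gaussian_noise(clean, sigma=25.0, seed=0)
print(f"PSNR of the noisy input: {psnr(clean, noisy):.2f} dB")
```

A denoiser would then be judged by how far it raises `psnr(clean, denoised)` above the noisy baseline.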

Attention mechanism-based image blind deblurring method and system

Active | CN111709895A | Clear texture | Improve features | Image enhancement | Image analysis | Feature extraction | Deblurring

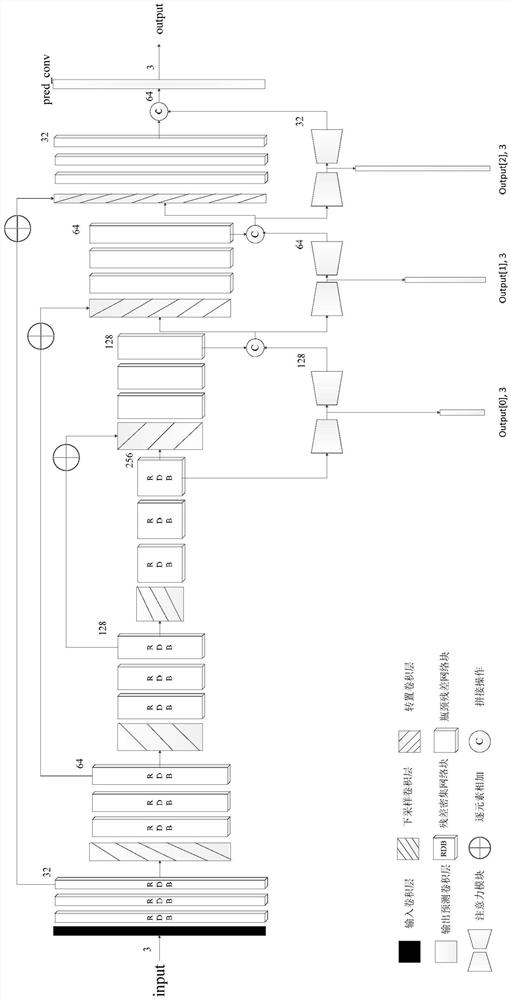

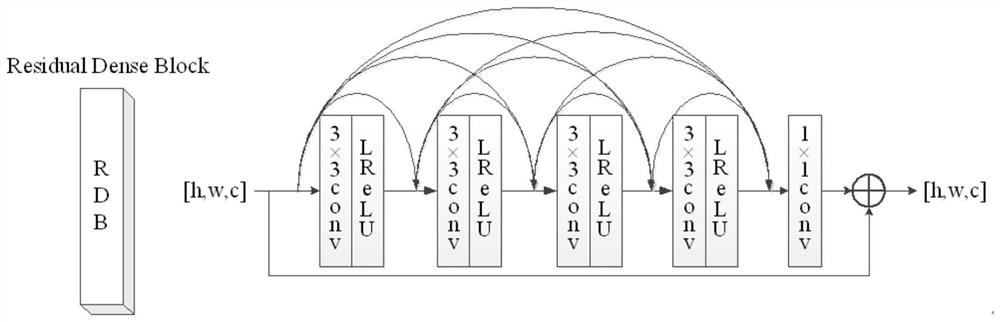

The invention provides an attention mechanism-based image blind deblurring method and system. A multi-scale attention network recovers a sharp image directly, end to end. The network uses an asymmetric encoder-decoder structure. On the encoding side, residual dense network blocks extract and represent the features of the input image. On the decoding side, several attention modules each output a preliminary restored image, and these images form a pyramid-type multi-scale representation. Each attention module also outputs an attention feature map, which models relationships between distant regions from a global perspective to process the blurred image. A dark channel prior loss and a multi-scale content loss together form the loss function. This loss is used to optimize the network by backpropagation and is not itself optimized.

Owner: INNOVATION ACAD FOR MICROSATELLITES OF CAS +1
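
The dark channel prior loss term can be sketched as follows. The abstract does not give the loss formula, so this sketch makes two assumptions. First, it uses the common definition of the dark channel: the minimum over the RGB channels and over a local window. Second, it takes the mean absolute difference between the dark channels of the restored and sharp images as the loss. `dark_channel` and `dark_channel_loss` are hypothetical names, and the multi-scale content loss and the network are not shown.

```python
def dark_channel(img, radius):
    """img: H x W list of (r, g, b) tuples in [0, 1]. Returns the H x W dark channel:
    the minimum channel value inside a (2*radius+1)^2 window, clipped at the borders."""
    h, w = len(img), len(img[0])
    min_rgb = [[min(px) for px in row] for row in img]
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y][x] = min(
                min_rgb[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            )
    return out

def dark_channel_loss(restored, sharp, radius=1):
    """Assumed loss form: mean absolute difference between the two dark channels."""
    d_r = dark_channel(restored, radius)
    d_s = dark_channel(sharp, radius)
    total = sum(abs(a - b) for row_r, row_s in zip(d_r, d_s) for a, b in zip(row_r, row_s))
    return total / (len(d_r) * len(d_r[0]))

white = [[(1.0, 1.0, 1.0)] * 3 for _ in range(3)]
spot = [row[:] for row in white]
spot[1][1] = (0.0, 0.0, 0.0)  # one black pixel darkens every 3x3 window that contains it
print(dark_channel_loss(white, spot))
```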

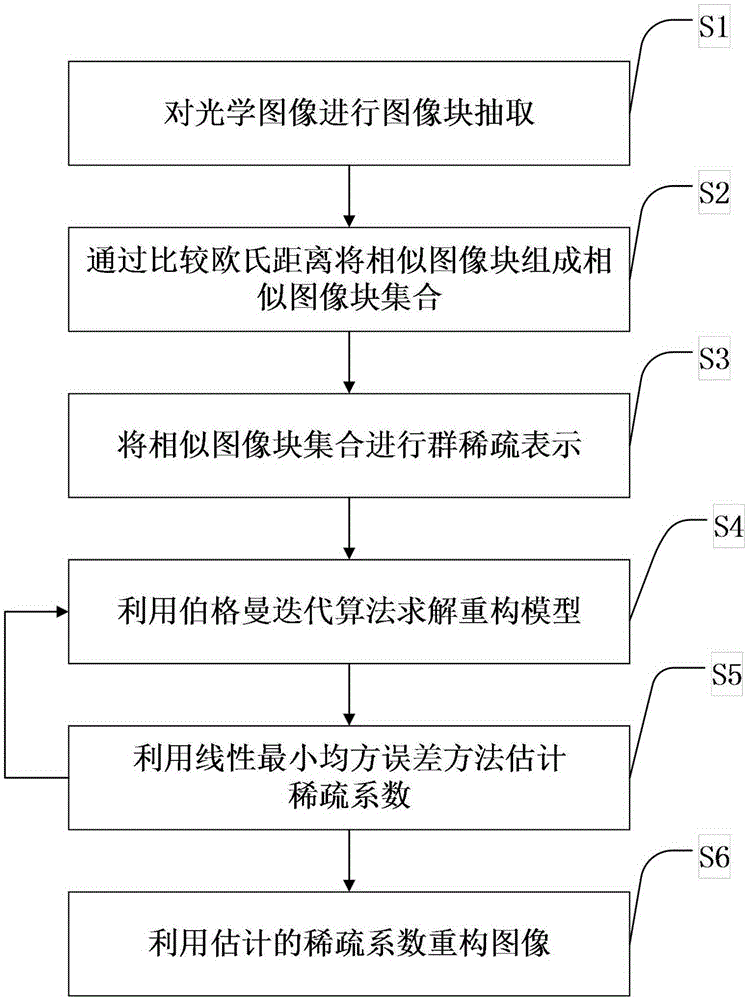

Image reconstruction method based on group sparsity coefficient estimation

Inactive | CN105427264A | Improve estimation accuracy | Preserve texture details | Image enhancement | Digital signal processing | Deblurring

The invention discloses an image reconstruction method based on group sparsity coefficient estimation. It belongs to the technical field of digital image processing and reconstructs images by estimating the sparse coefficients of sets of similar blocks. The method runs as follows:

- Similar image blocks are first found using the Euclidean distance.
- Local and nonlocal sparse representations are applied to each set of similar blocks to obtain sparser, more accurate coefficients.
- The reconstruction model is solved by Bregman iteration.
- The sparse coefficients are estimated with the linear minimum mean square error (LMMSE) criterion. This ensures accurate estimation of the small coefficients that carry image texture detail.

Because LMMSE estimation is applied to the sparse representation coefficients of similar block sets, the method performs well in inpainting, deblurring, and related tasks. The reconstructed image contains richer detail and looks clearer overall. The method can be used to inpaint and deblur optical images.

Owner: CHONGQING UNIV
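
Two ingredients named in this abstract, Euclidean block matching and LMMSE estimation of sparse coefficients, can be sketched as below. This is a minimal illustration, not the patented algorithm. It assumes each coefficient position is zero-mean across the similar blocks of a group. It estimates each position's signal variance as the mean squared coefficient minus a known noise variance. The sparse transform and the Bregman iteration are omitted, and both function names are hypothetical.

```python
def find_similar_blocks(blocks, ref_index, k):
    """Indices of the k blocks closest to blocks[ref_index] in squared Euclidean distance
    (the reference block itself is included, at distance 0)."""
    ref = blocks[ref_index]
    ranked = sorted(
        (sum((a - b) ** 2 for a, b in zip(ref, blk)), i) for i, blk in enumerate(blocks)
    )
    return [i for _, i in ranked[:k]]

def lmmse_shrink_group(coeffs, noise_var):
    """LMMSE estimate of noisy coefficients for one group of similar blocks.
    coeffs[i][j] is coefficient j of block i. Position j is modelled as zero-mean with
    signal variance max(mean_i coeffs[i][j]^2 - noise_var, 0) and scaled by the gain
    signal_var / (signal_var + noise_var)."""
    n_blocks, dim = len(coeffs), len(coeffs[0])
    out = [list(c) for c in coeffs]
    for j in range(dim):
        energy = sum(c[j] ** 2 for c in coeffs) / n_blocks
        signal_var = max(energy - noise_var, 0.0)
        gain = signal_var / (signal_var + noise_var) if signal_var > 0.0 else 0.0
        for i in range(n_blocks):
            out[i][j] = gain * coeffs[i][j]
    return out

blocks = [[0.0, 0.0], [10.0, 10.0], [0.1, 0.0], [5.0, 5.0]]
print(find_similar_blocks(blocks, 0, 2))
print(lmmse_shrink_group([[3.0, 0.5], [-3.0, -0.5]], noise_var=1.0))
```

Strong, consistent coefficients are shrunk only slightly. Coefficients whose energy does not exceed the noise level are set to zero.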

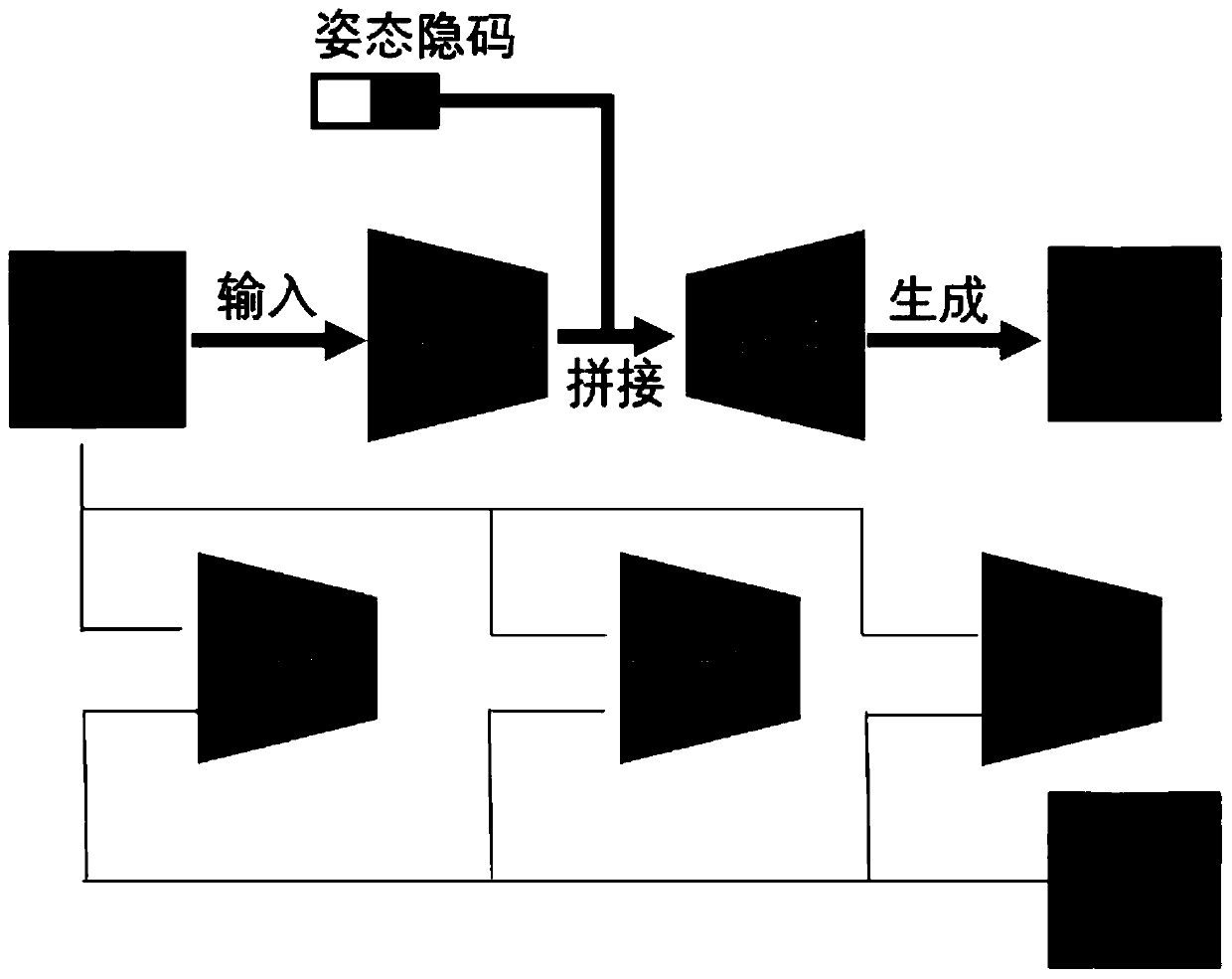

Face image correction method based on decoupling expression learning generative adversarial network

Inactive | CN111428667A | Rich texture details | Fast convergence | Character and pattern recognition | Neural architectures | Pattern recognition | Quality of vision

The invention discloses a face image correction method based on a disentangled representation learning generative adversarial network. The method trains a model made up of an auto-encoder with a U-Net structure and three discriminators. The auto-encoder learns an identity representation of the face image. Combined with a pose latent code, this representation lets the pose of the generated face be controlled explicitly. The three discriminators predict the authenticity, pose, and identity of the face image respectively, so that face images with rich texture details are generated. The invention can significantly improve the visual quality of generated face images.

Owner: 天津中科智能识别有限公司 (Tianjin Zhongke Intelligent Identification Co., Ltd.)

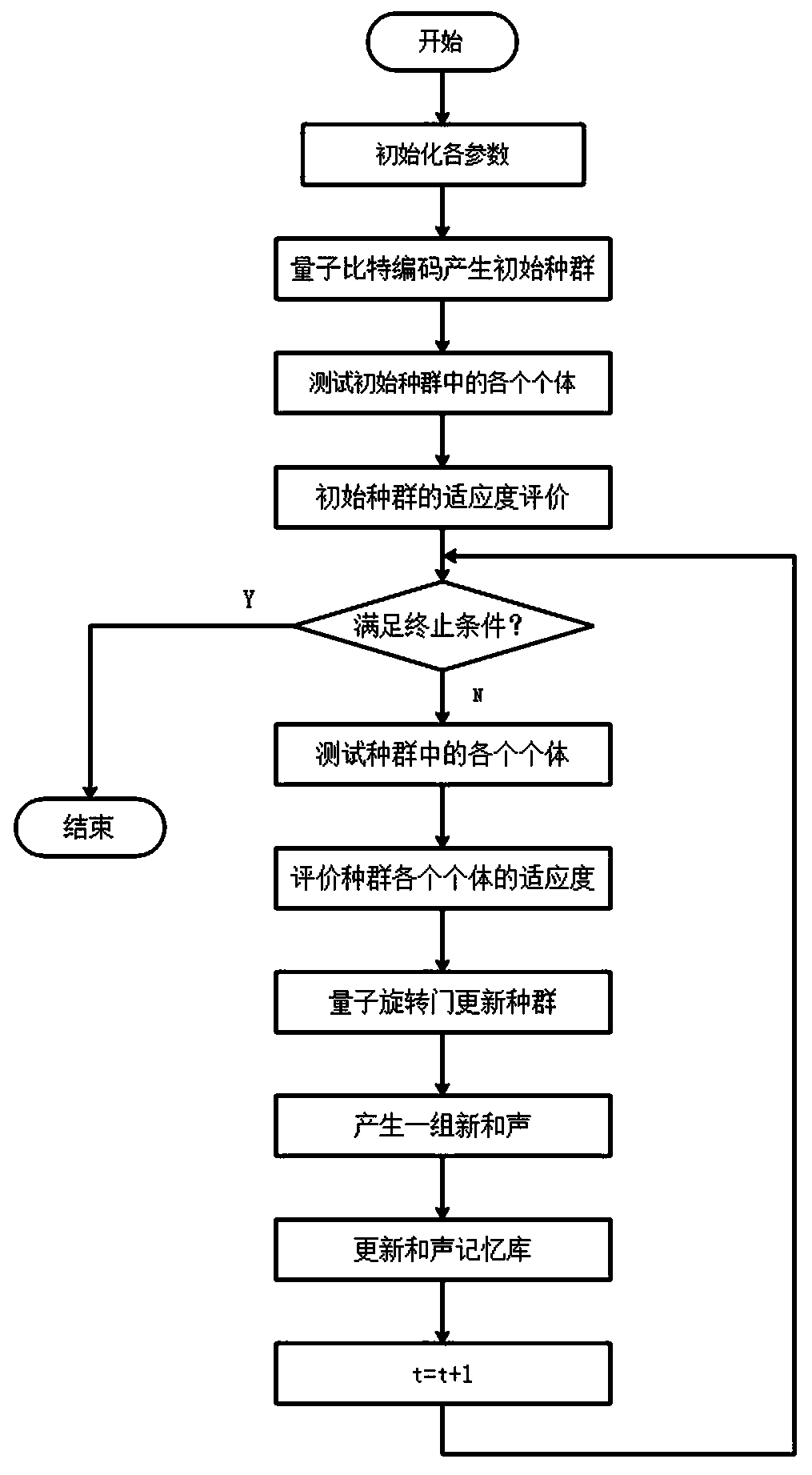

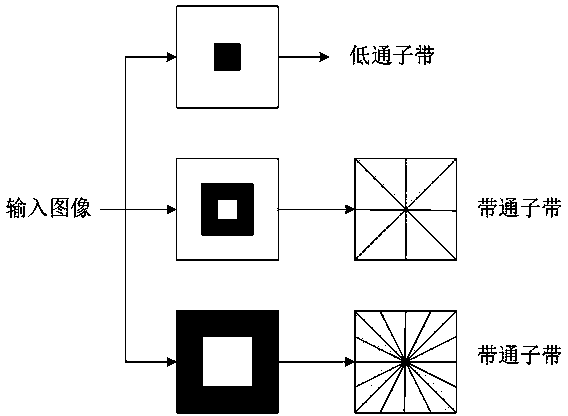

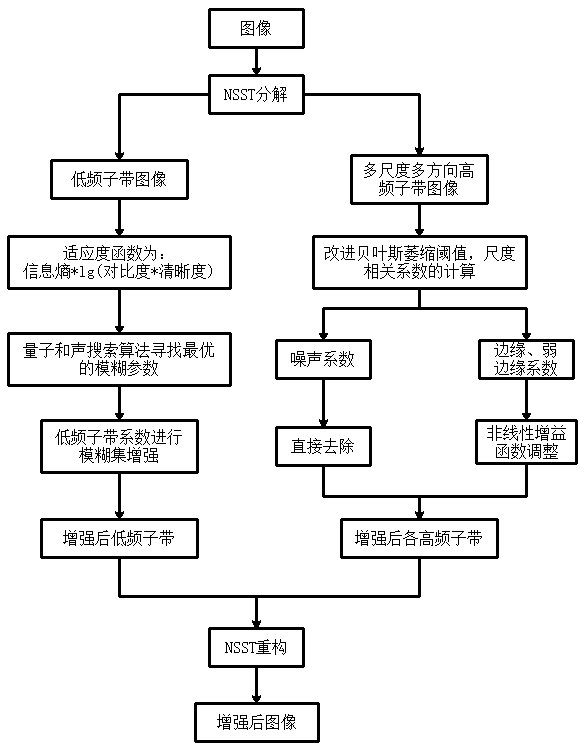

NSST domain flotation froth image enhancement and denoising method based on quantum harmony search fuzzy set

Active | CN110246106A | Increase brightness | Increase contrast | Image enhancement | Image analysis | Pattern recognition | Decomposition

The invention relates to an NSST-domain flotation froth image enhancement and denoising method based on a quantum harmony search fuzzy set. The method comprises the following steps:

- Performing NSST decomposition on a flotation froth image to obtain a low-frequency sub-band image and multi-scale high-frequency sub-bands.
- Enhancing the low-frequency sub-band image with a quantum harmony search fuzzy set.
- For the multi-scale high-frequency sub-bands, removing noise coefficients by combining an improved BayesShrink threshold with inter-scale correlation, and enhancing edge and texture coefficients with a nonlinear gain function.
- Performing NSST reconstruction on the processed low-frequency and high-frequency sub-band coefficients to obtain an enhanced, denoised image.

The method improves the brightness, contrast, and sharpness of froth images. It markedly enhances froth edges while effectively suppressing noise and preserving more texture detail. This facilitates later processing such as froth segmentation and edge detection.

Owner: FUZHOU UNIV
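
The high-frequency denoising step builds on BayesShrink. The sketch below shows only the classic BayesShrink rule, T = sigma_n^2 / sigma_x, with a robust median noise estimate, followed by soft thresholding on a flattened sub-band. It does not reproduce the patent's improved variant, its inter-scale correlation test, the nonlinear gain function, or the NSST itself. The function names are illustrative.

```python
import math
import statistics

def estimate_noise_sigma(finest_subband):
    """Robust median estimator sigma_n = median(|c|) / 0.6745 on the finest high-frequency band."""
    return statistics.median(abs(c) for c in finest_subband) / 0.6745

def bayes_shrink_threshold(subband, sigma_n):
    """Classic BayesShrink threshold T = sigma_n^2 / sigma_x for one sub-band."""
    sigma_y2 = sum(c * c for c in subband) / len(subband)
    sigma_x = math.sqrt(max(sigma_y2 - sigma_n ** 2, 0.0))
    if sigma_x == 0.0:
        # The sub-band looks like pure noise, so pick a threshold that removes every coefficient.
        return max(abs(c) for c in subband)
    return sigma_n ** 2 / sigma_x

def soft_threshold(subband, t):
    """Shrink each coefficient toward zero by t; coefficients with |c| <= t become zero."""
    return [math.copysign(max(abs(c) - t, 0.0), c) for c in subband]

band = [0.1, -0.2, 5.0, -4.0, 0.05, -0.15]
sigma_n = estimate_noise_sigma(band)
print(soft_threshold(band, bayes_shrink_threshold(band, sigma_n)))
```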

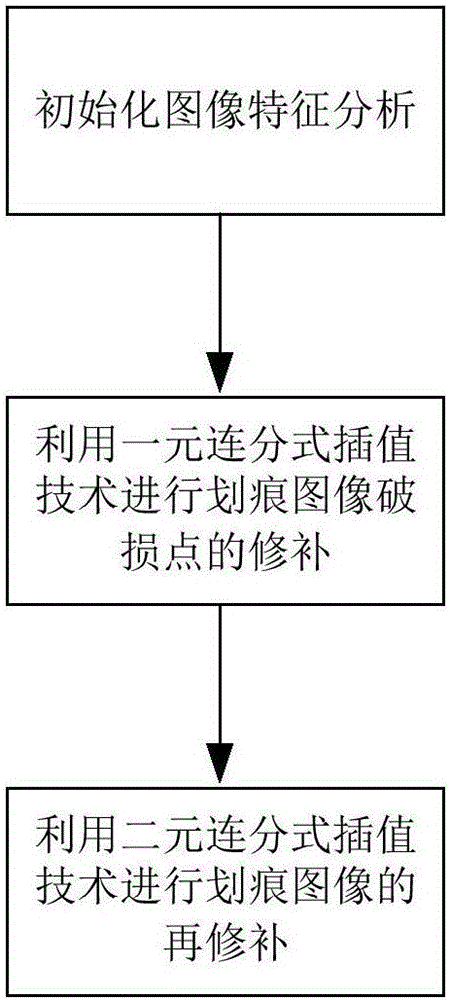

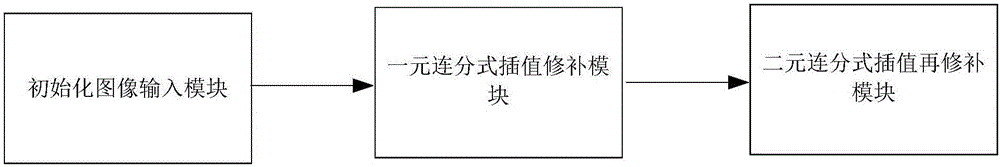

Adaptive image inpainting method based on continued fraction interpolation technology and system thereof

Active | CN106846279A | Quality improvement | Improve efficiency | Image enhancement | Image analysis | Pattern recognition | Self adaptive

The present invention relates to an adaptive image inpainting method and system based on continued fraction interpolation. It overcomes the poor inpainting quality and low efficiency of the prior art. The method comprises the following steps:

- Performing an initial image feature analysis.
- Inpainting the damaged points of a scratched image using univariate continued fraction interpolation.
- Inpainting the scratched image again using bivariate continued fraction interpolation. In this pass, the initial inpainted image A serves as the information image and the position of each damaged point is determined from a mask image. Bivariate continued fraction interpolation then updates the pixel information around each damaged point to obtain its pixel value, yielding the final inpainted image B.

The method and system improve the quality and efficiency of image inpainting, can inpaint scratches of any type, and are suitable for all types of images.

Owner: HEFEI UNIV OF TECH
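
The univariate continued fraction step can be illustrated with Thiele's interpolating continued fraction, which is built from inverse differences. This sketch works under stated assumptions:

- It works on a single pixel row.
- It uses up to two undamaged neighbours on each side of a damaged pixel (a choice made here, not taken from the abstract).
- It falls back to the neighbour mean when the fraction is undefined.

The bivariate pass and the feature analysis are not shown, and `repair_row` is a hypothetical helper.

```python
def thiele_coefficients(xs, ys, tol=1e-9):
    """Coefficients b0, b1, ... of Thiele's continued fraction
    R(x) = b0 + (x - x0) / (b1 + (x - x1) / (b2 + ...)), built from inverse differences.
    Raises ZeroDivisionError when an inverse difference is undefined."""
    n = len(xs)
    phi = [float(y) for y in ys]  # phi[i]: current-order inverse difference at node x_i
    b = [phi[0]]
    for k in range(1, n):
        prev = phi[k - 1]  # equals b[k - 1]
        if all(abs(phi[i] - prev) <= tol * max(1.0, abs(prev)) for i in range(k, n)):
            break  # the truncated fraction already passes through every remaining node
        phi = phi[:k] + [(xs[i] - xs[k - 1]) / (phi[i] - prev) for i in range(k, n)]
        b.append(phi[k])
    return b

def thiele_eval(xs, b, x):
    """Evaluate the continued fraction from the innermost term outwards."""
    acc = b[-1]
    for k in range(len(b) - 2, -1, -1):
        acc = b[k] + (x - xs[k]) / acc
    return acc

def repair_row(row, damaged, half_window=2):
    """Hypothetical helper: fill damaged pixels of one row from up to `half_window`
    undamaged neighbours on each side, clamping the estimate to [0, 255]."""
    damaged = set(damaged)
    out = [float(v) for v in row]
    for d in sorted(damaged):
        left = [i for i in range(d - 1, -1, -1) if i not in damaged][:half_window]
        right = [i for i in range(d + 1, len(row)) if i not in damaged][:half_window]
        nodes = sorted(left + right)
        if not nodes:
            continue
        xs = [float(i) for i in nodes]
        ys = [float(row[i]) for i in nodes]
        try:
            value = thiele_eval(xs, thiele_coefficients(xs, ys), float(d))
        except ZeroDivisionError:
            value = sum(ys) / len(ys)  # degenerate fraction: fall back to the neighbour mean
        out[d] = min(255.0, max(0.0, value))
    return out

row = [100.0 / (1 + x) for x in range(5)]  # a rational intensity profile
print(repair_row(row, [2]))
```

Because continued fractions reproduce rational functions, the damaged value in this example is recovered almost exactly. Flat neighbourhoods collapse to a constant fraction.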

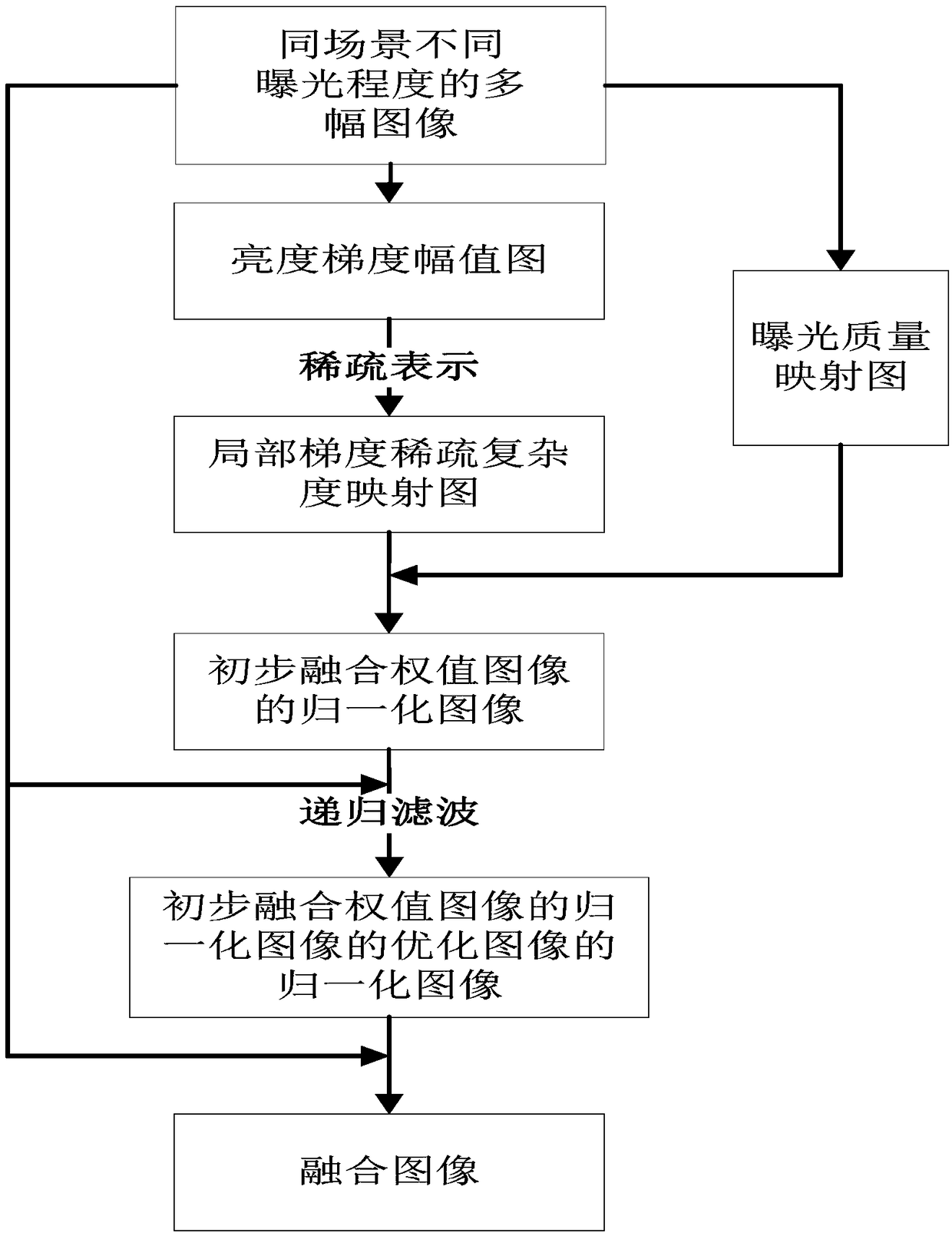

A multi-exposure image fusion method for halo removal

Active | CN109035155A | Eliminate halo phenomenon | Improve visual effects | Image enhancement | Image fusion | High definition

The invention discloses a multi-exposure image fusion method for halo removal. For the luminance image of each exposure, the method computes a local gradient sparse complexity map and an exposure quality map. These two maps are combined into a preliminary fusion weight map for each exposure. The preliminary weight map is normalized, optimized, and normalized again, and the final fused image of all exposures is then obtained. Both maps are constructed from a sparse representation of the gradient magnitude of the luminance image, which brings three benefits. The influence of invalid large-magnitude gradients during fusion is effectively suppressed, and halos in the fused image are eliminated. In addition, texture details under the different exposures in the sequence are extracted more effectively, so the fused image contains more texture detail and has higher definition.

Owner: NINGBO UNIV
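
The final step, fusing the exposures with normalized per-pixel weights, can be sketched as below. The patent's local gradient sparse complexity map and its weight optimization are not reproduced. As a stand-in, this sketch assumes a Mertens-style well-exposedness weight (a Gaussian around mid-grey) on luminance values in [0, 1]. The function names and the sigma value are assumptions.

```python
import math

def well_exposedness(v, sigma=0.2):
    """Gaussian weight that favours luminance near mid-grey (0.5); v is in [0, 1]."""
    return math.exp(-((v - 0.5) ** 2) / (2.0 * sigma ** 2))

def fuse_exposures(images, eps=1e-12):
    """Weighted per-pixel average of same-size luminance images (lists of rows in [0, 1]).
    Weights are normalized so that they sum to 1 at every pixel."""
    h, w = len(images[0]), len(images[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            weights = [well_exposedness(img[y][x]) + eps for img in images]
            total = sum(weights)
            fused[y][x] = sum(wt / total * img[y][x] for wt, img in zip(weights, images))
    return fused

under = [[0.05, 0.10], [0.20, 0.30]]
over = [[0.60, 0.80], [0.95, 1.00]]
print(fuse_exposures([under, over]))
```

Plain per-pixel weighting like this can leave visible seams where the weights change abruptly. Multi-scale blending is the usual remedy in practice.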

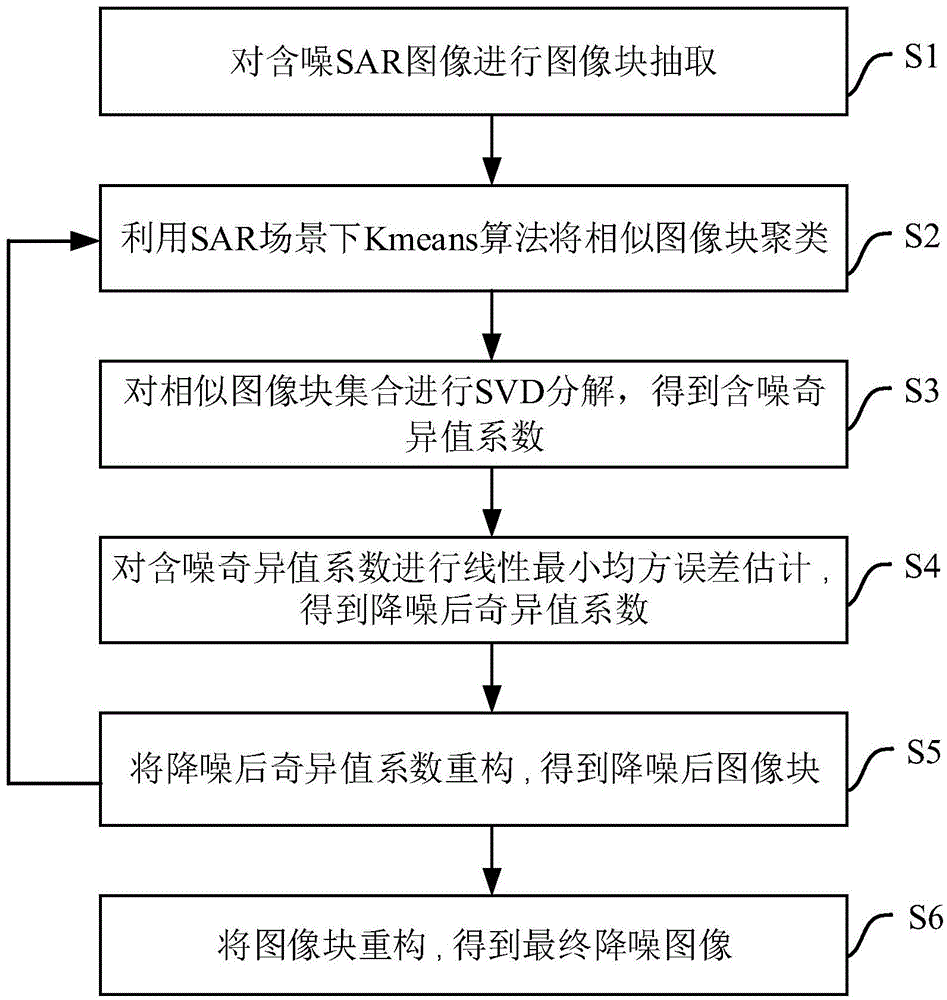

SAR image noise reduction method based on linear minimum mean square error estimation

Inactive | CN104978716A | Improve estimation accuracy | Improve denoising effect | Image enhancement | Character and pattern recognition | Singular value decomposition | Pattern recognition

The present invention discloses an SAR image noise reduction method based on linear minimum mean square error (LMMSE) estimation. It belongs to the technical field of digital image processing and combines nonlocal image self-similarity with sparse representation. The method comprises the following steps:

- Clustering similar image blocks with the k-means method.
- Performing singular value decomposition on each set of similar blocks to obtain noisy singular value coefficients that capture row and column correlations.
- Estimating the singular value coefficients with the LMMSE criterion, so that the denoised coefficients better approximate the true ones.
- Reconstructing from the estimated coefficients to obtain initial denoised image blocks.
- Clustering and denoising the noisy blocks again, guided by the initial result.
- Reconstructing the denoised blocks to obtain the final denoised image.

The method reduces noise markedly while effectively preserving image texture details, and the final image has a good visual effect. It is applicable to SAR image noise reduction.

Owner: CHONGQING UNIV
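
The first step, grouping similar image blocks with k-means, can be sketched with a plain Lloyd's-algorithm implementation. Blocks are assumed to be flattened into equal-length vectors. The random initialization, the iteration count, and the name `kmeans` are choices made here, and the SVD and LMMSE stages are not shown.

```python
import random

def kmeans(points, k, iters=20, seed=0):
    """Lloyd's algorithm on equal-length vectors. Returns (labels, centers)."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point goes to its nearest centre (squared Euclidean distance).
        for i, p in enumerate(points):
            labels[i] = min(
                range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centers[c]))
            )
        # Update step: each centre moves to the mean of its members (unchanged if empty).
        for c in range(k):
            members = [points[i] for i in range(len(points)) if labels[i] == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers

blocks = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]]
labels, centers = kmeans(blocks, 2)
print(labels, centers)
```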

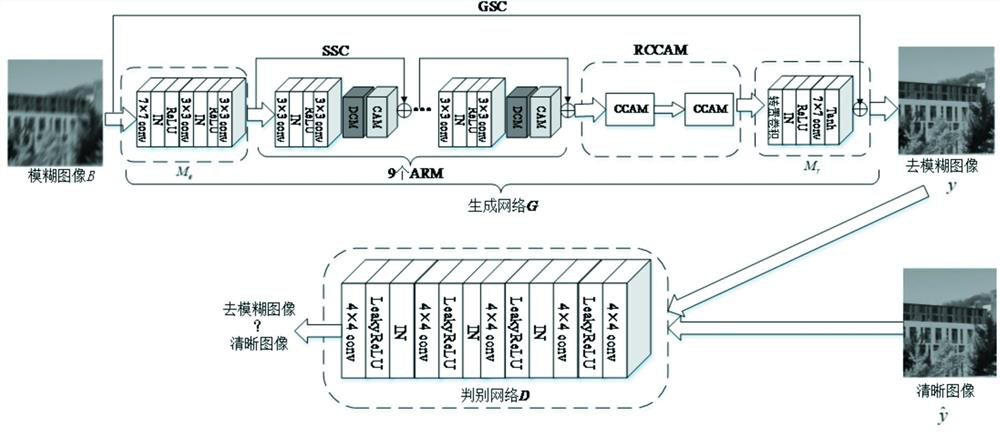

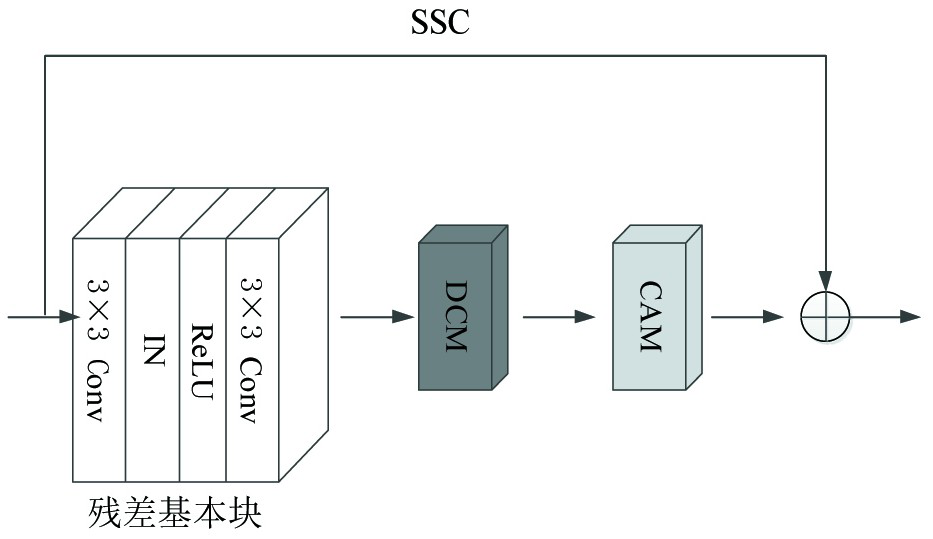

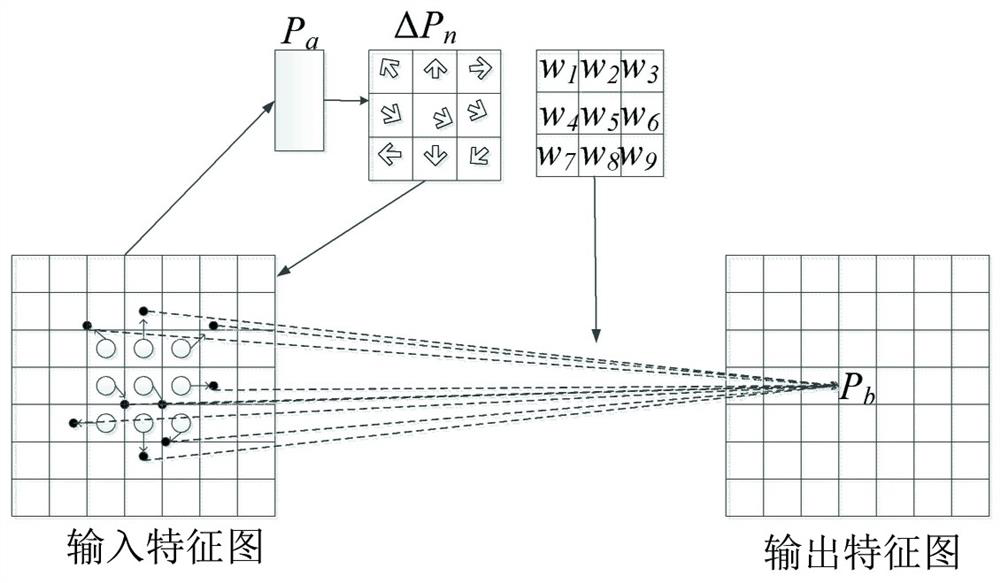

Moving image deblurring method based on self-adaptive residual errors and recursive cross attention

Active | CN112164011A | Achieve removal | Solve the problem of inhomogeneity that cannot effectively adapt to motion blurred images | Image enhancement | Image analysis | Feature extraction | Deblurring

The invention discloses a moving image deblurring method based on self-adaptive residual errors and recursive cross attention. It is characterized by the following steps: (1) establishing a deblurring network framework; (2) extracting shallow features; (3) performing an adaptive residual process; (4) performing a recursive cross attention process; (5) reconstructing the image; and (6) performing network model discrimination. The method addresses the non-uniformity of motion blur, removes artifacts, recovers more high-frequency image features, and reconstructs high-quality images with rich texture details.

Owner: GUILIN UNIV OF ELECTRONIC TECH

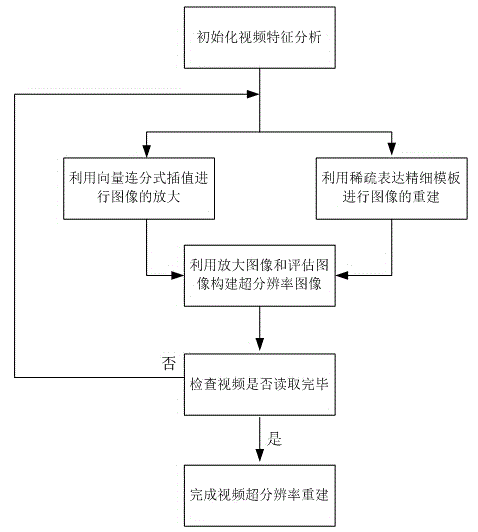

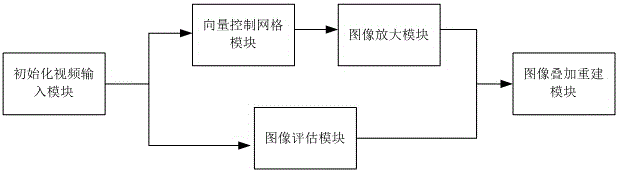

Video super-resolution reestablishing method and system based on sparse representation and vector continued fraction interpolation under polar coordinates

The invention relates to a video super-resolution reconstruction method and system based on sparse representation and vector continued fraction interpolation under polar coordinates. It overcomes two shortcomings of the prior art: super-resolution reconstruction techniques cannot be applied to all videos, and reconstructed video images can be blurry. The method comprises the following steps:

- Performing an initial analysis of the video's features.
- Enlarging an image through vector continued fraction interpolation.
- Refining the image with a sparse-representation template.
- Constructing a super-resolution image from the enlarged image and the estimated image.
- Checking whether the whole video has been read. If it has, reconstruction ends; if not, the process returns to enlarging images through vector continued fraction interpolation.

The method and system improve the quality and efficiency of video image reconstruction and broaden the applicability of super-resolution reconstruction to different videos.

Owner: HEFEI UNIV OF TECH

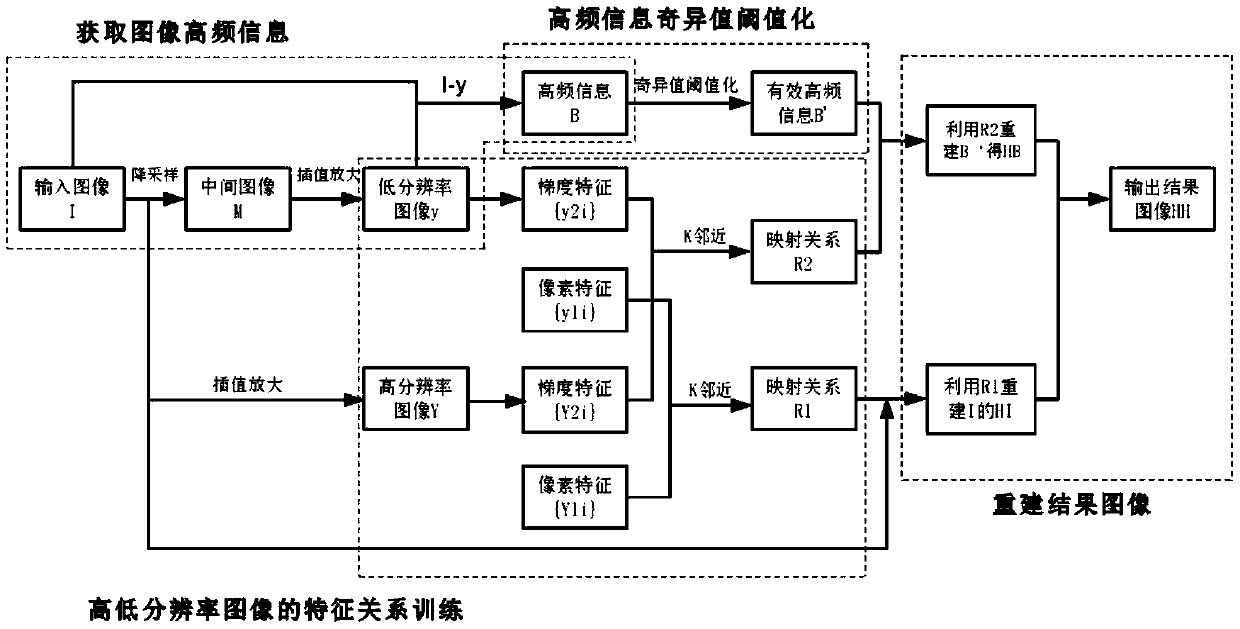

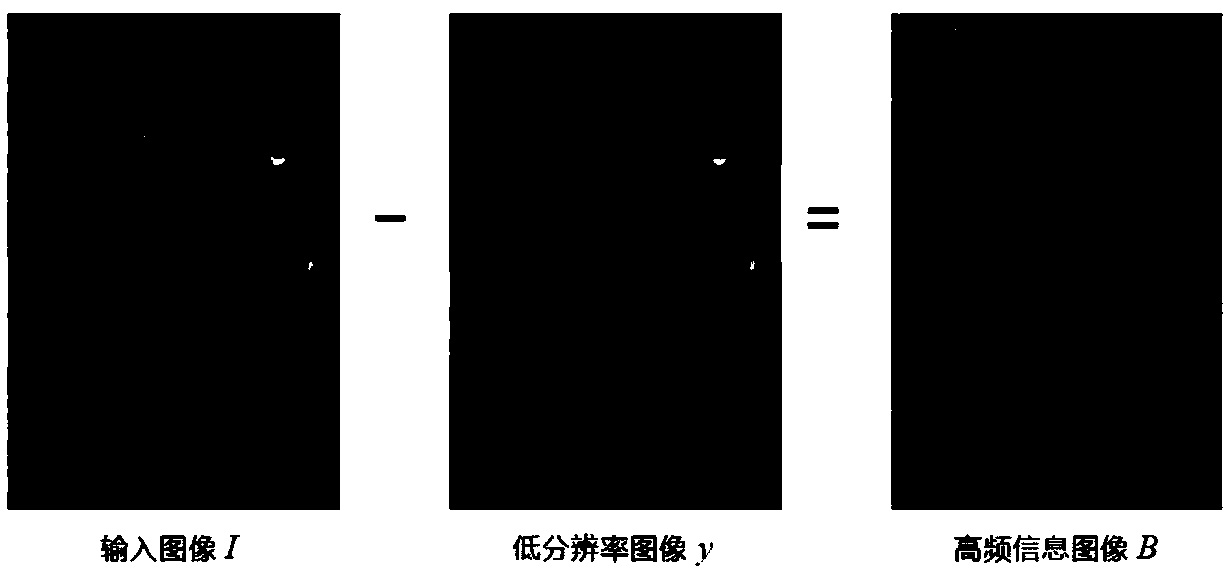

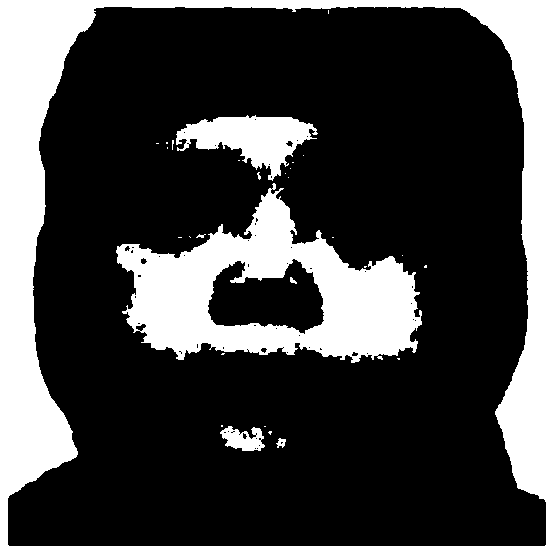

A super-resolution image reconstruction method and system based on multi-feature learning

Active | CN109559278A | Preserve edge texture | Inhibition | Image enhancement | Image analysis | Singular value thresholding | Imaging feature

The invention discloses a super-resolution image reconstruction method and system based on multi-feature learning. The method makes full use of the rich information in a single input image and does not depend on an external database. It establishes a mapping between image features based on the cross-scale similarity of images. It then uses this mapping to reconstruct, directly from the input, a high-resolution image that contains high-frequency information. This overcomes the loss of high-frequency information caused by interpolation-based upscaling. Effective high-frequency information is extracted by singular value thresholding and amplified through a gradient feature mapping. It is then superimposed block by block onto the high-resolution image to obtain the final reconstruction. By combining image features in this way, the method suppresses noise in the reconstructed image, preserves edge and texture information, and enhances image detail.

Owner: SHANDONG UNIV OF FINANCE & ECONOMICS

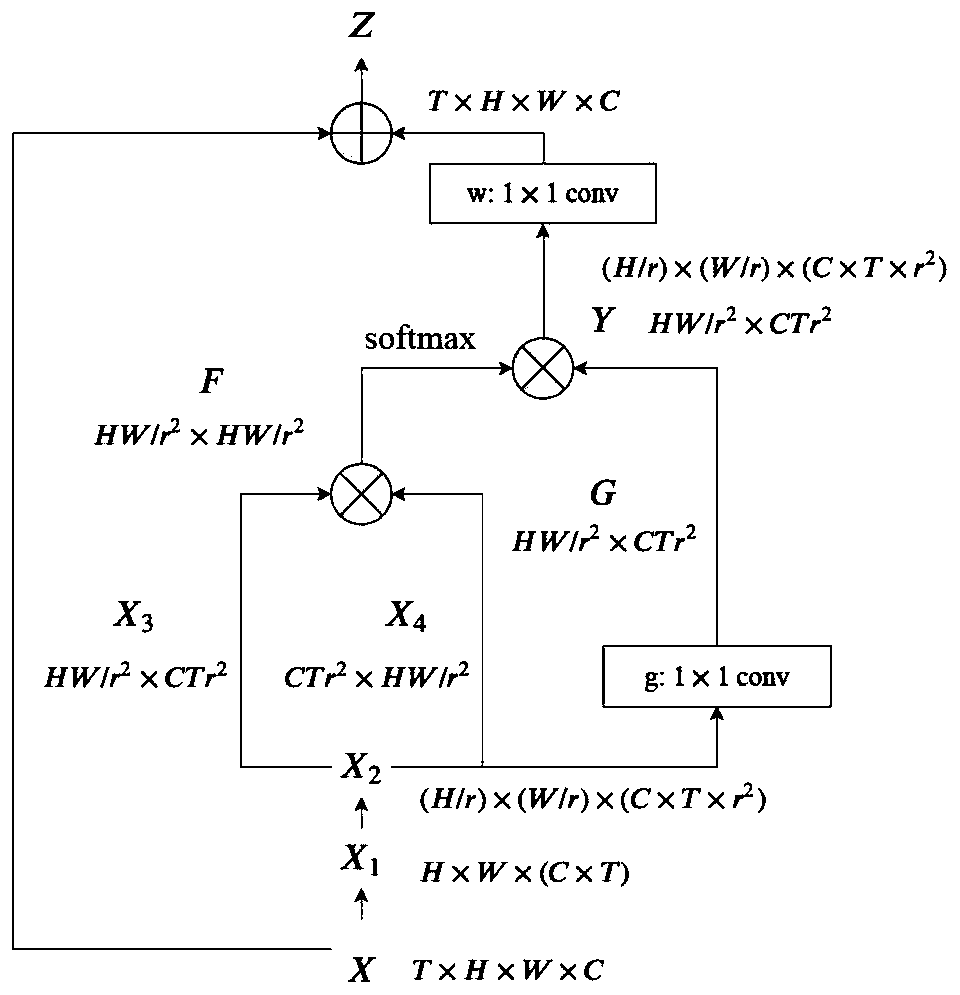

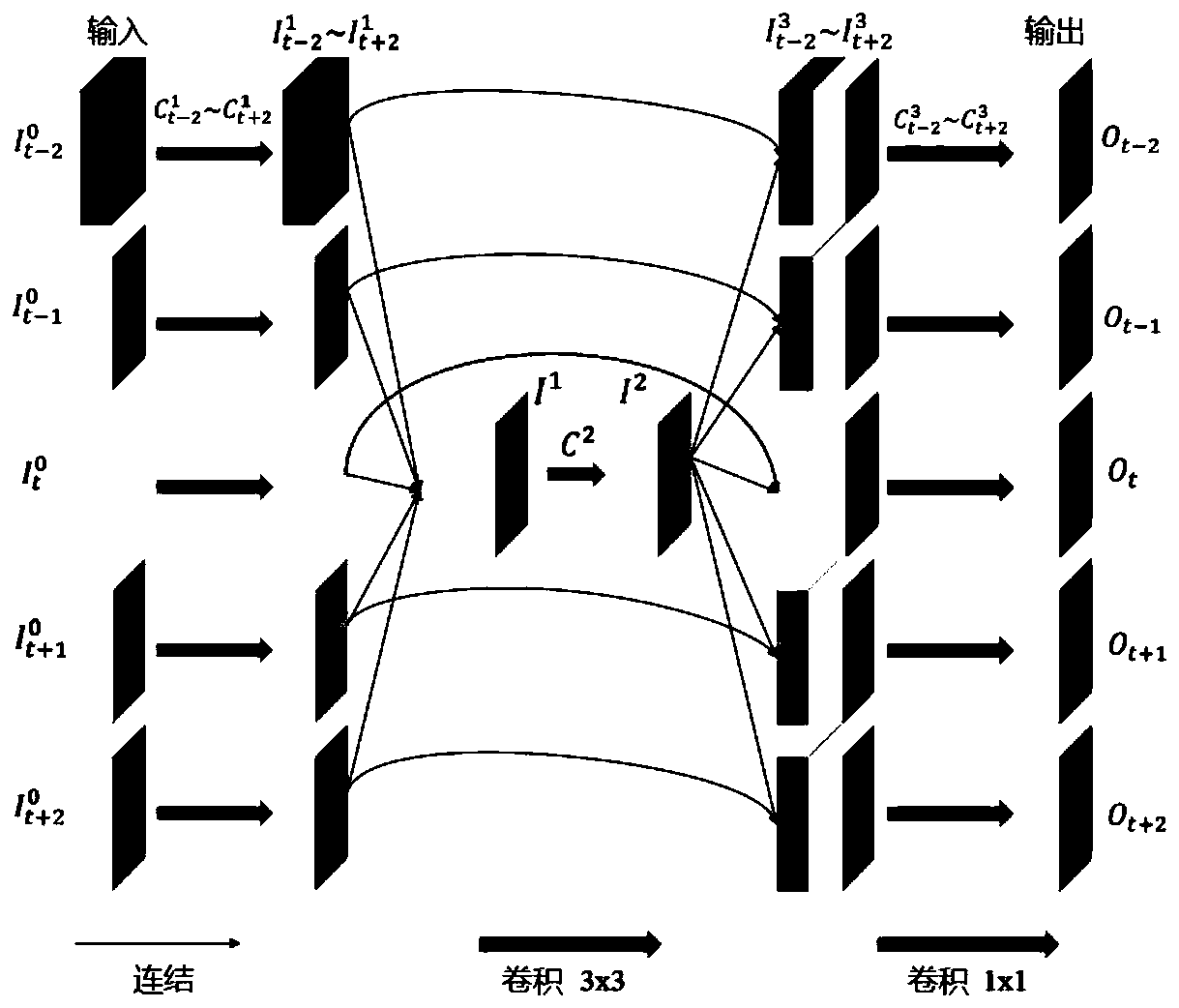

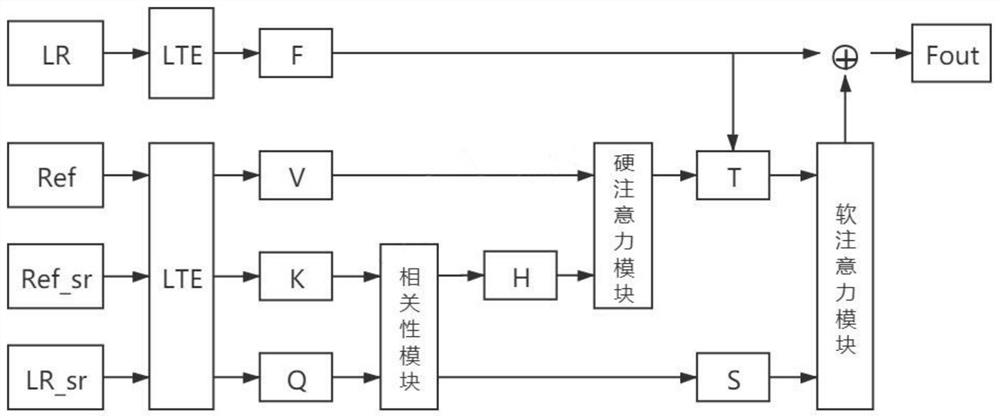

Video super-resolution reconstruction method

Active | CN110706155A | Improve fusion effect | Rich texture details | Image enhancement | Image analysis | Temporal correlation | Image resolution

The invention discloses a video super-resolution reconstruction method built from a non-local spatio-temporal network and a progressive fusion network. In the non-local spatio-temporal network, the input frames are fused into a single high-dimensional feature tensor. This tensor is reshaped, split, and processed to extract multi-frame features that incorporate non-local spatio-temporal correlations. In the progressive fusion network, the frames output by the non-local network are fed into progressive fusion residual blocks, which gradually fuse the spatio-temporal correlations among frames. Finally, the fused low-resolution feature tensor is upscaled to obtain the final high-resolution video frame. The method effectively fuses spatio-temporal correlations across frames and recovers rich texture details while increasing video resolution.

Owner: WUHAN UNIV

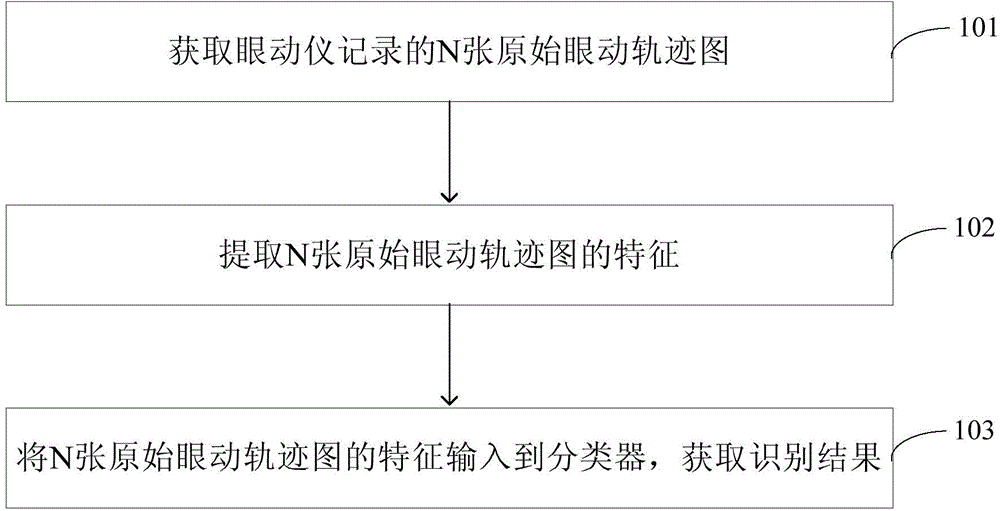

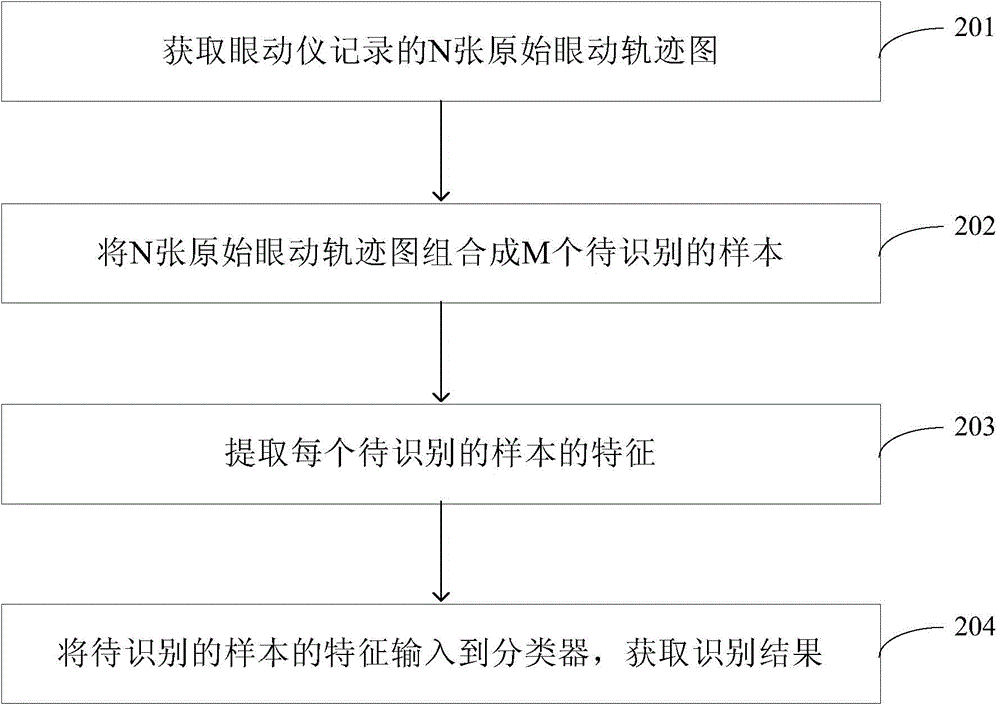

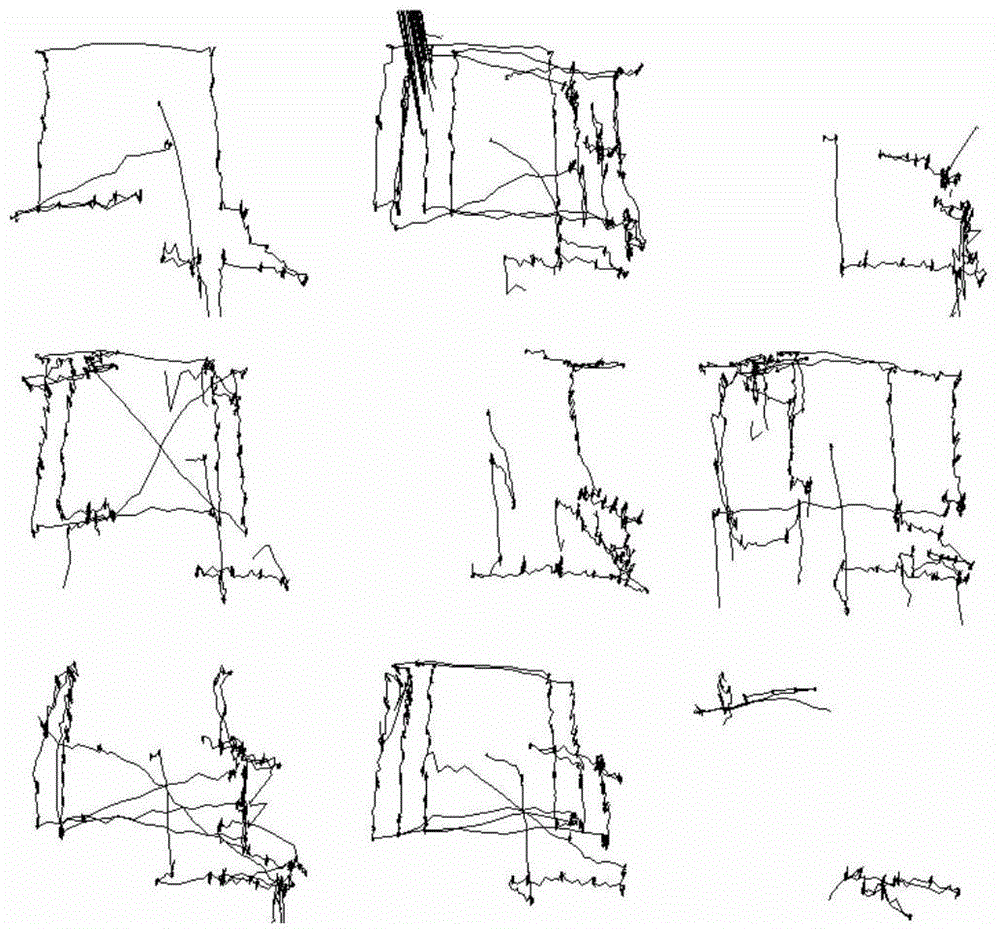

Eye movement track recognition method and apparatus based on textural features

Inactive | CN104899565A | Rich texture details | Improve recognition accuracy | Acquiring/recognising eyes | Eye movement | Textural feature

The invention provides an eye movement track recognition method and apparatus based on textural features. An original eye movement track diagram recorded by an eye tracker is acquired, and features are extracted from it. The extracted features are input into a classifier to obtain a recognition result. Because recognition is performed on the original eye movement track diagram, the texture details of the track are richer, which improves recognition accuracy.

Owner: INST OF RADIATION MEDICINE ACAD OF MILITARY MEDICAL SCI OF THE PLA +1

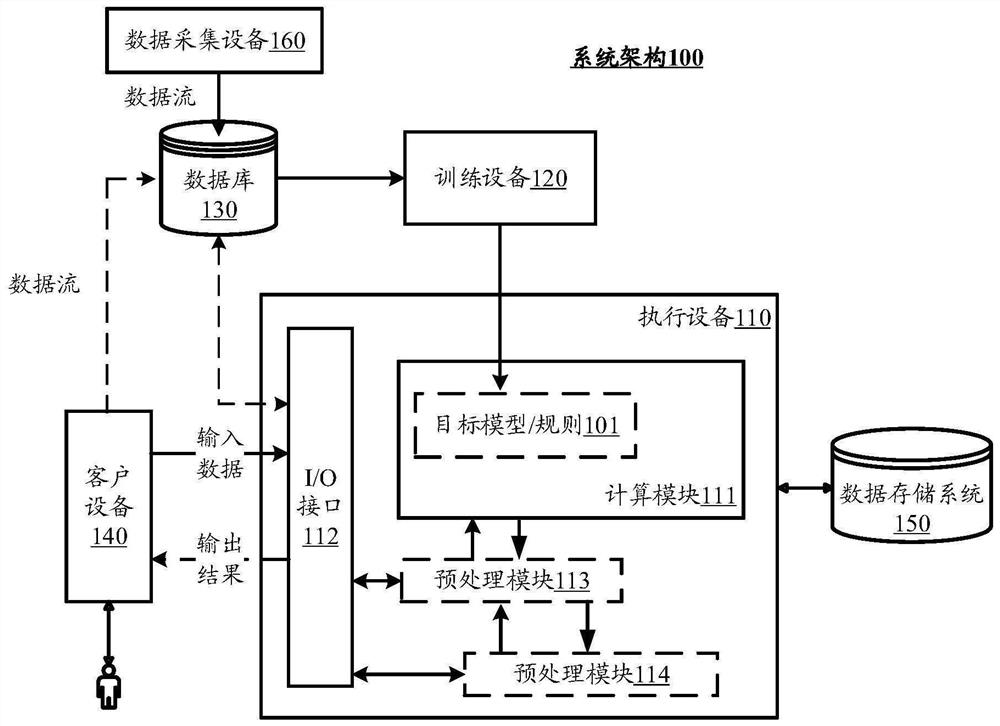

Image fusion method, and training method and device of image fusion model

Pending | CN114119378A | Improve background quality | Quality improvement | Image enhancement | Image analysis | Color image | Feature extraction

The invention provides an image fusion method, a method for training an image fusion model, and a corresponding device. It relates to artificial intelligence, in particular computer vision. The image fusion method acquires three inputs: a color image to be processed, an infrared image, and a background reference image. The infrared image and the color image capture the same scene. The three images are fed into the image fusion model for feature extraction and fused based on the extracted features to obtain a fused image. The method improves the quality of the fused image while keeping its colors accurate and natural.

Owner: HUAWEI TECH CO LTD

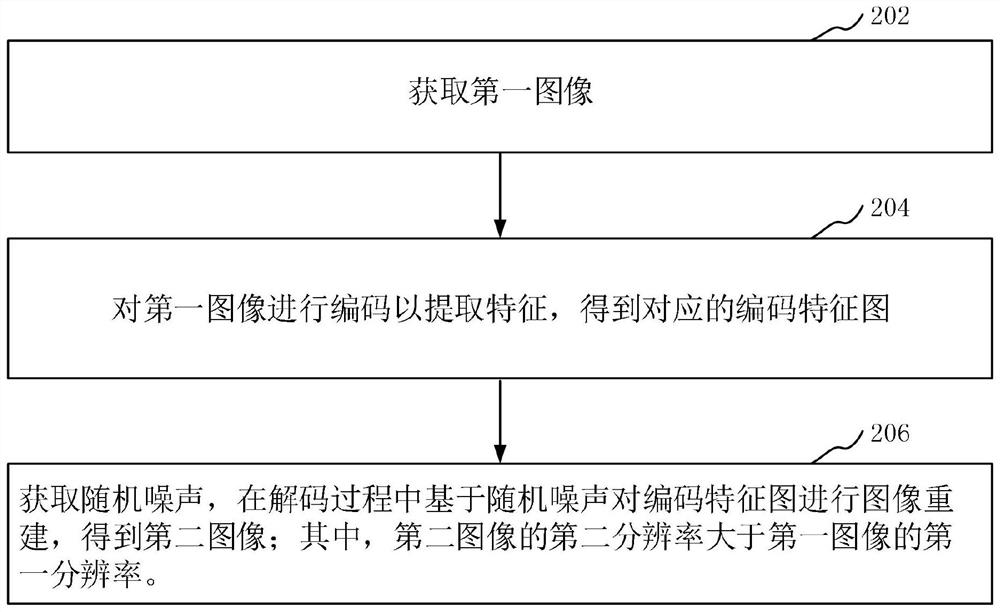

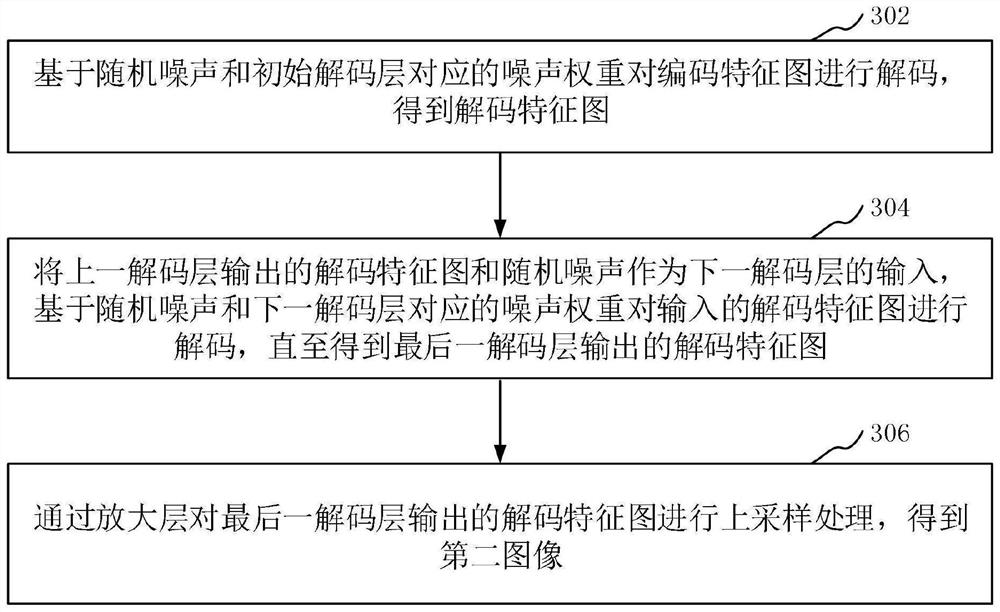

Image processing method and device, electronic equipment and computer readable storage medium

Pending | CN113592965A | Rich texture details | 2D-image generation | Image coding | Imaging processing | Computer graphics (images)

The invention relates to an image processing method and device, electronic equipment, and a computer-readable storage medium. The method first obtains a first image and encodes it to extract features, yielding an encoded feature map. It then obtains random noise and, during decoding, reconstructs the image from the encoded feature map based on that noise. The result is a second image whose resolution is higher than that of the first image. The method adds texture detail during super-resolution reconstruction, so the reconstructed image has richer texture details.

Owner: GUANGDONG OPPO MOBILE TELECOMM CORP LTD

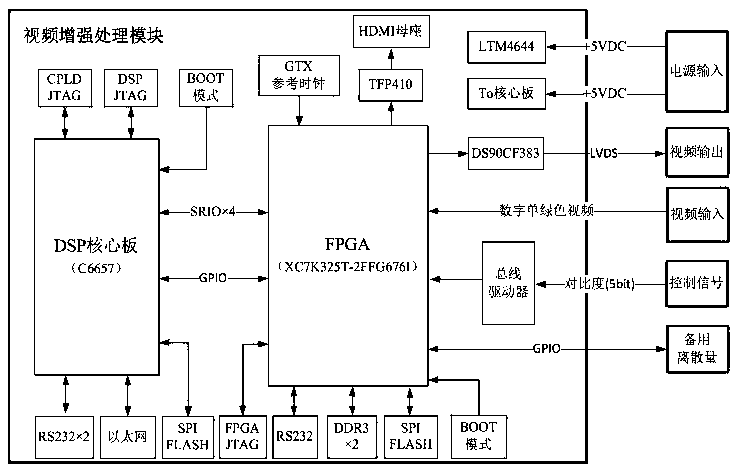

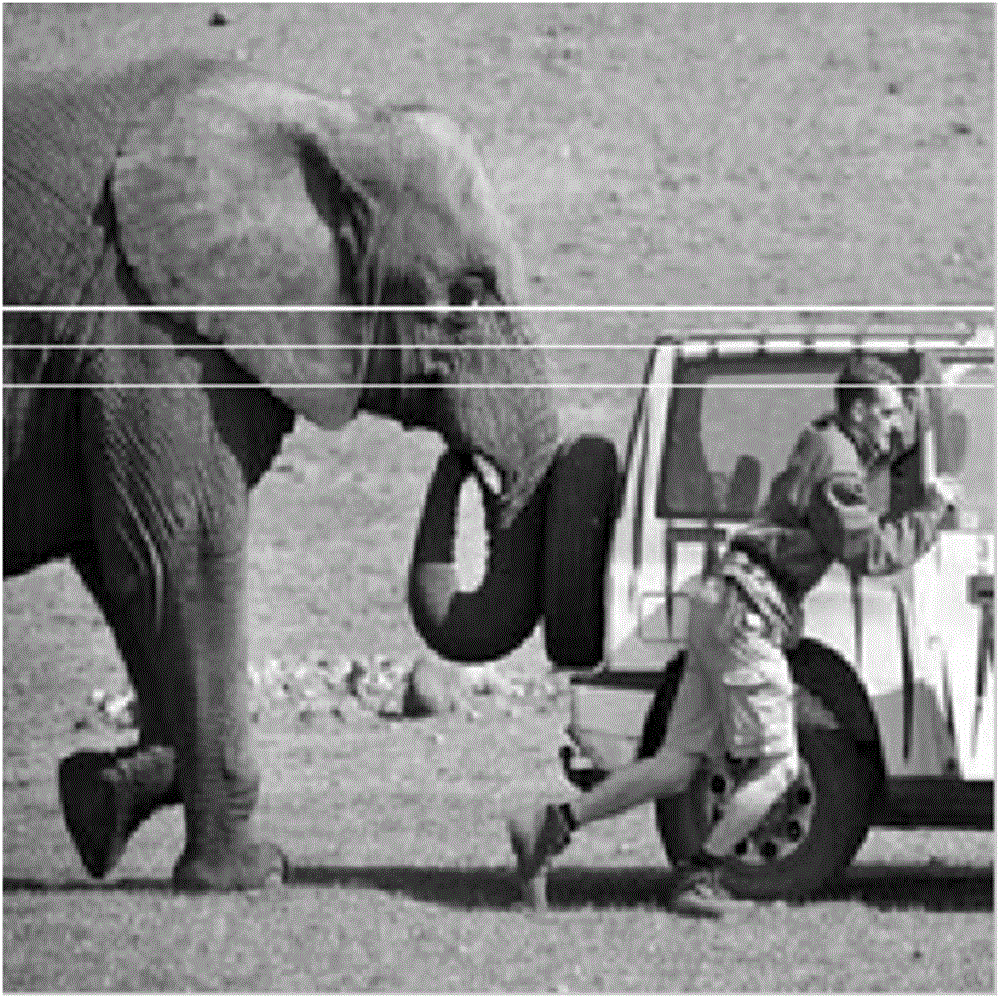

An infrared video enhancement system based on multi-level guided filtering

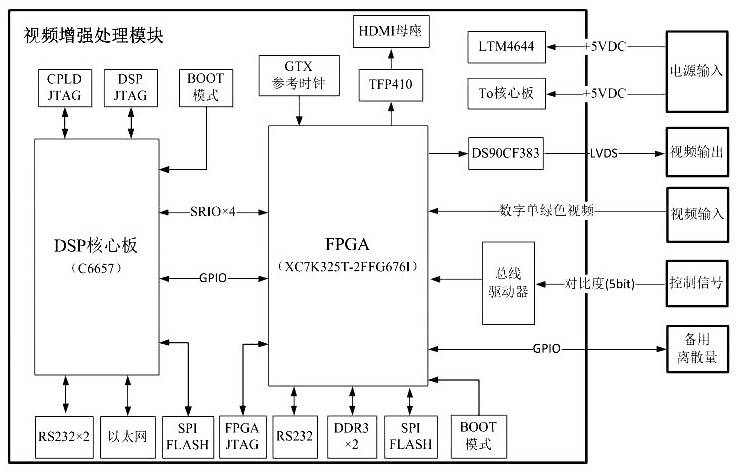

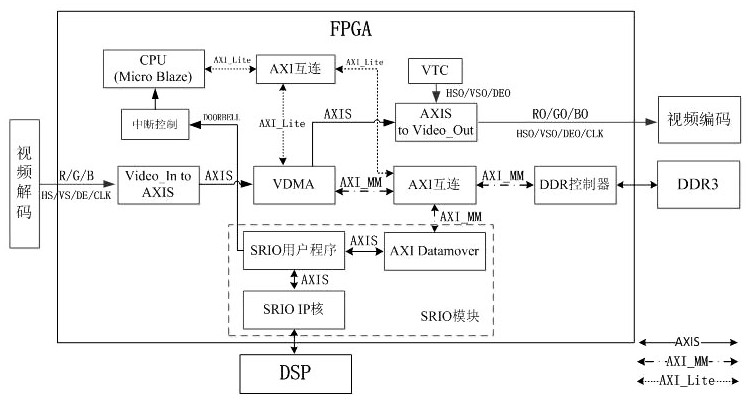

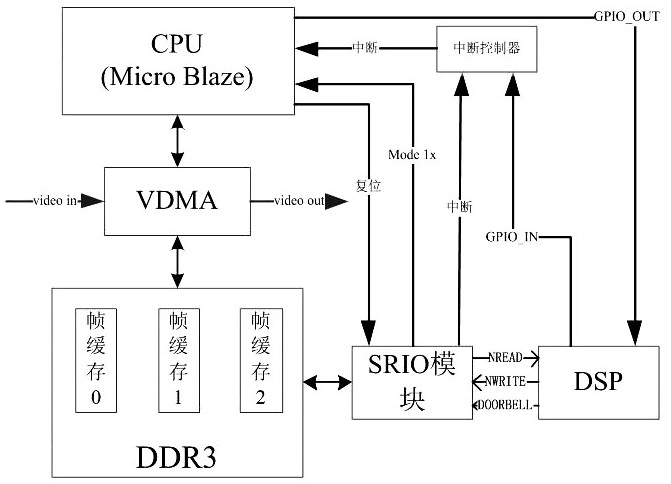

Active | CN109873998A | Controllable processing time | Guaranteed scalability | Television system details | Color signal processing circuits | Computation complexity | Imaging processing

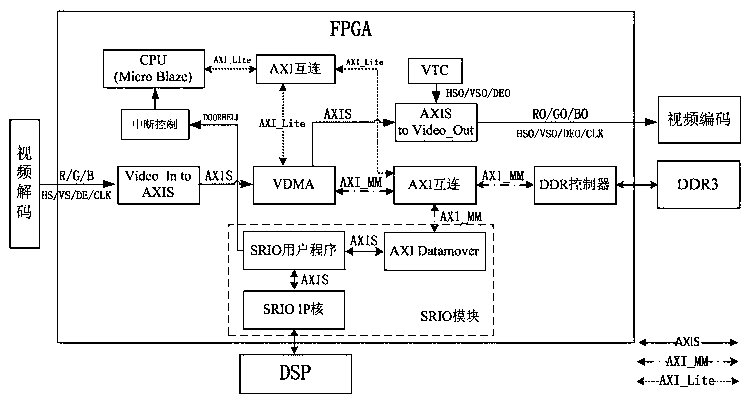

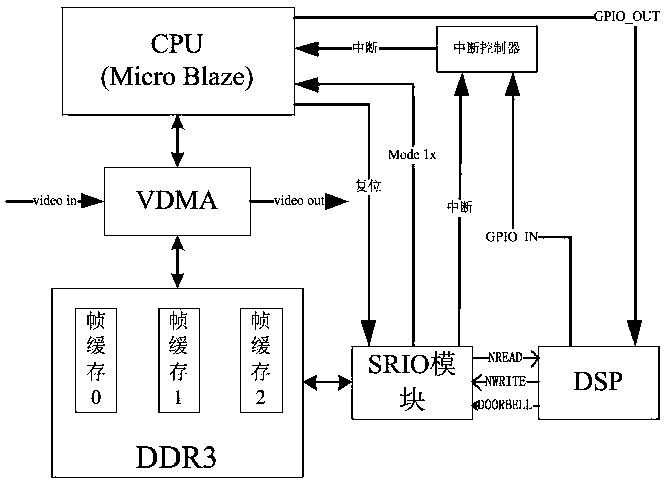

The invention discloses an infrared video enhancement system based on multi-level guided filtering. The system comprises an FPGA module and a DSP module, connected through an SRIO interface, and the video data path runs as follows:

- The FPGA module acquires digital single-green video data and sends it to the DSP module over SRIO.
- The DSP module performs video enhancement and sends the data back to the FPGA module over SRIO.
- The FPGA module converts the data to the output format and sends it to an external display through its LVDS interface.

The DSP-plus-FPGA architecture makes single-frame processing efficient. Transferring video between the two modules over SRIO offers high bandwidth, high efficiency, good real-time performance, and low latency. The multi-level guided filtering is implemented with box filtering, so its computational complexity is independent of the window size. The filtering preserves clear structural features and rich texture details, and the system suits applications with strict real-time requirements.

Owner: SUZHOU CHANGFENG AVIATION ELECTRONICS
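
The statement that box filtering makes the cost independent of the window size refers to computing window means from an integral image. This is the standard way to implement the guided filter of He et al. The sketch below shows three pieces: that box filter, a grayscale guided filter, and a multi-level detail enhancement built from successive self-guided smoothings. The abstract does not describe how its levels are combined, so `enhance_multilevel`, its radii, and its gain are hypothetical.

```python
def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window (clipped at borders) using an integral image,
    so the cost per pixel is O(1) regardless of r."""
    h, w = len(img), len(img[0])
    s = [[0.0] * (w + 1) for _ in range(h + 1)]  # s[y][x] = sum of img over rows < y, cols < x
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            s[y + 1][x + 1] = s[y][x + 1] + row_sum
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        y0, y1 = max(0, y - r), min(h, y + r + 1)
        for x in range(w):
            x0, x1 = max(0, x - r), min(w, x + r + 1)
            total = s[y1][x1] - s[y0][x1] - s[y1][x0] + s[y0][x0]
            out[y][x] = total / ((y1 - y0) * (x1 - x0))
    return out

def guided_filter(guide, src, r, eps):
    """Grayscale guided filter: q = mean(a) * I + mean(b), with a, b fitted per window."""
    h, w = len(guide), len(guide[0])
    def mul(p, q):
        return [[p[y][x] * q[y][x] for x in range(w)] for y in range(h)]
    mean_i, mean_p = box_mean(guide, r), box_mean(src, r)
    corr_ii, corr_ip = box_mean(mul(guide, guide), r), box_mean(mul(guide, src), r)
    a = [[0.0] * w for _ in range(h)]
    b = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            var_i = corr_ii[y][x] - mean_i[y][x] ** 2
            cov_ip = corr_ip[y][x] - mean_i[y][x] * mean_p[y][x]
            a[y][x] = cov_ip / (var_i + eps)
            b[y][x] = mean_p[y][x] - a[y][x] * mean_i[y][x]
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)
    return [[mean_a[y][x] * guide[y][x] + mean_b[y][x] for x in range(w)] for y in range(h)]

def enhance_multilevel(img, radii=(1, 2, 4), eps=0.01, gain=2.0):
    """Hypothetical multi-level detail boost: successive self-guided smoothings give one
    base layer plus one detail layer per level; detail layers are scaled by `gain`."""
    layers = [img]
    for r in radii:
        layers.append(guided_filter(layers[-1], layers[-1], r, eps))
    h, w = len(img), len(img[0])
    out = [row[:] for row in layers[-1]]  # start from the coarsest base layer
    for fine, coarse in zip(layers, layers[1:]):
        for y in range(h):
            for x in range(w):
                out[y][x] += gain * (fine[y][x] - coarse[y][x])
    return out

frame = [[float((x * 7 + y * 3) % 11) for x in range(8)] for y in range(6)]
print(enhance_multilevel(frame)[0][:4])
```

With `gain=1.0` the detail layers add back up exactly to the input, which is a convenient sanity check. Larger gains amplify texture on top of the edge-aware base layer.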

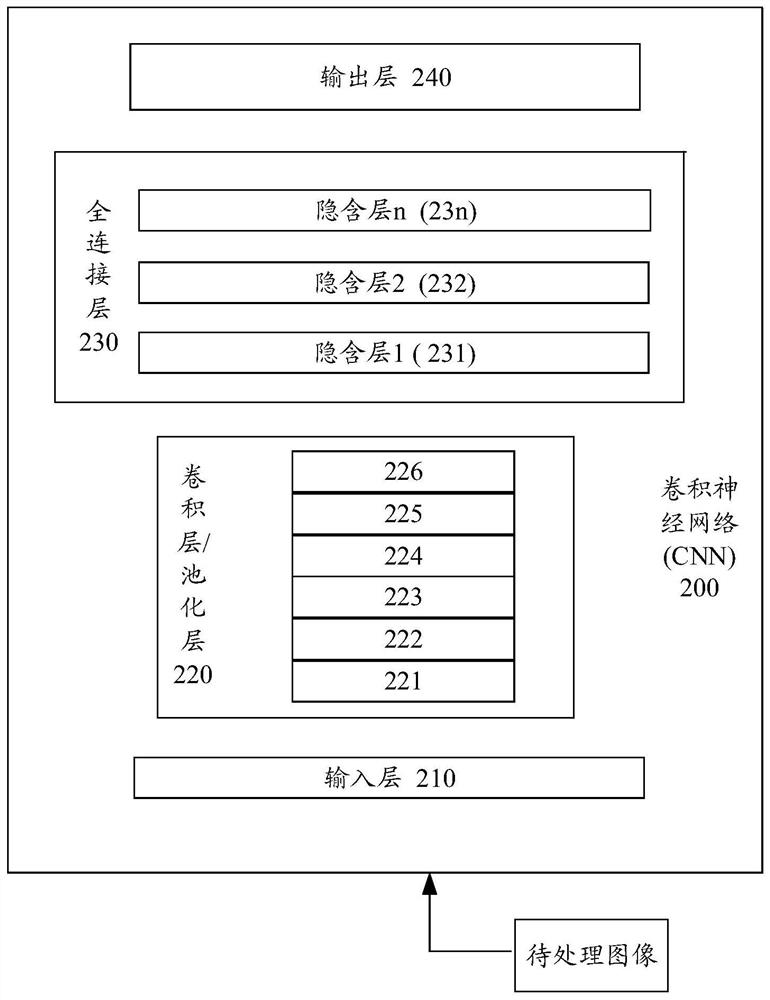

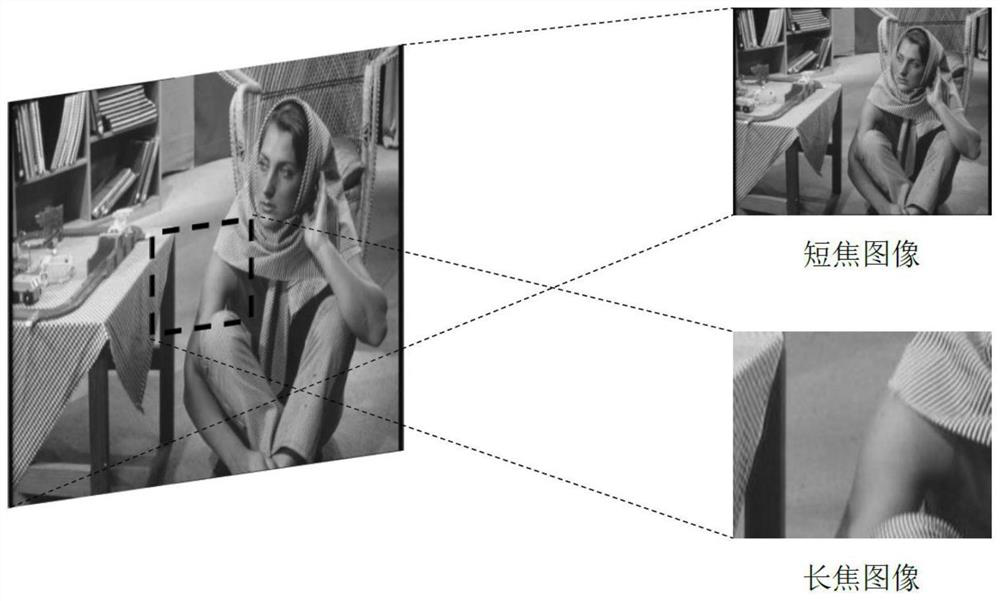

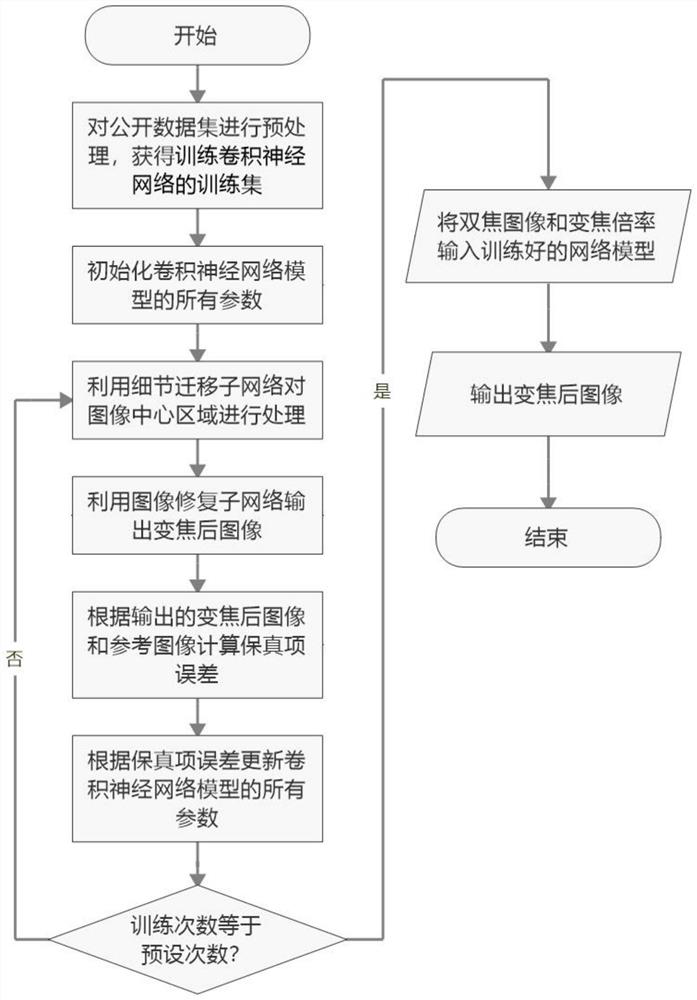

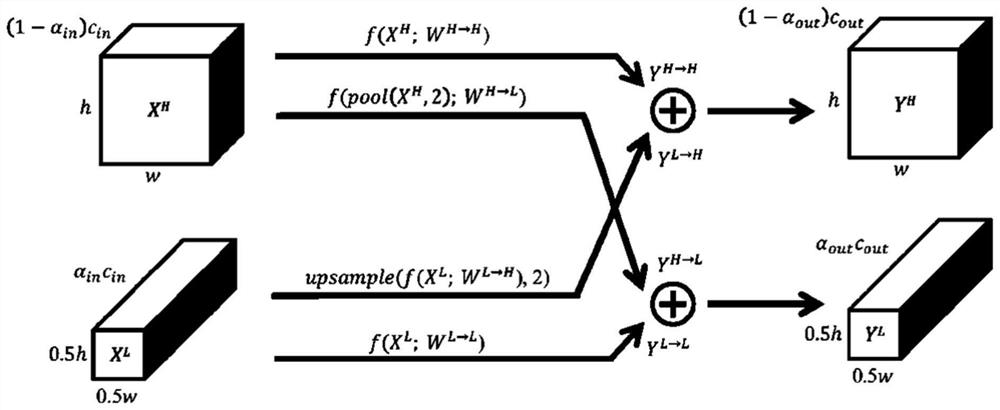

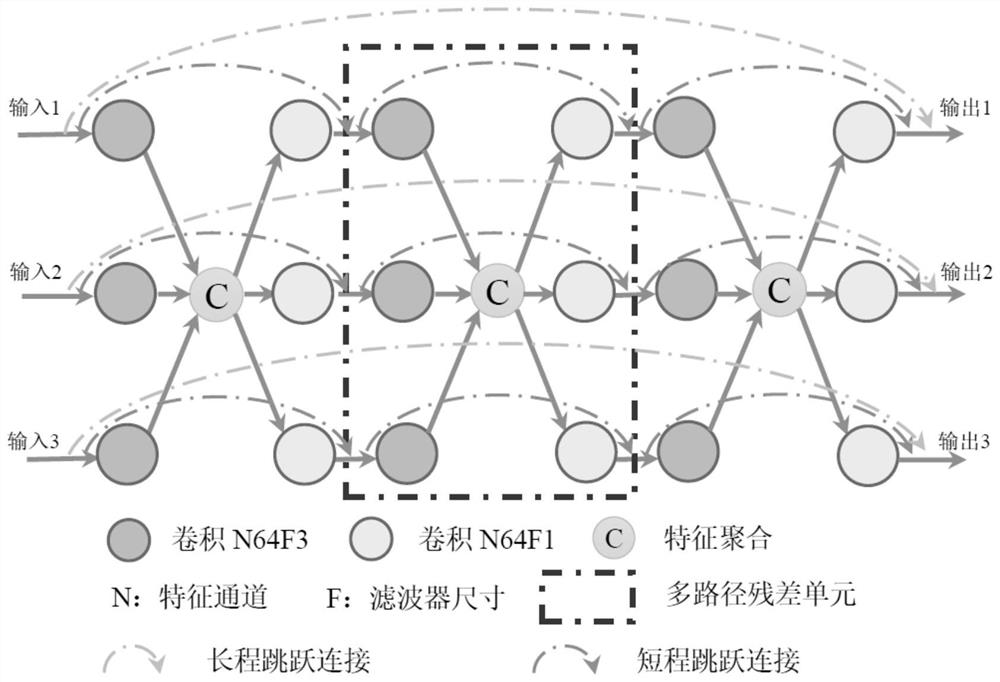

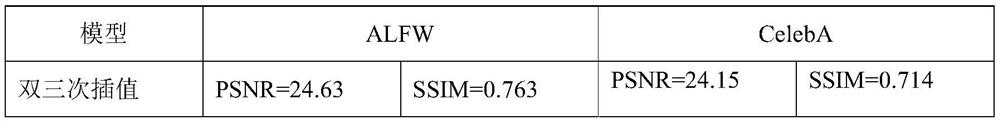

Bifocal camera continuous digital zooming method based on convolutional neural network model

Active | CN111654621A | High zoom ratio | Improve robustness | Television system details | Color television details | Data set | Digital zoom

The invention discloses a continuous digital zoom method for a bifocal camera based on a convolutional neural network model. The method comprises three stages:

- Preprocessing a public data set to obtain high-resolution images, low-resolution images, and corresponding reference images of the same size. These form the image pairs of the training set.
- Building a convolutional neural network model and training it on the training set for a preset number of iterations.
- Taking as input the low-resolution image from the short-focus camera, the high-resolution image from the long-focus camera, and the zoom ratio. A cropped image is upsampled by bicubic interpolation. The trained model then takes this upsampled image together with the high-resolution image and outputs the zoomed image.

The model enables digital zoom synthesis at continuous magnifications. Compared with existing continuous digital zoom methods, it makes more effective use of the rich texture details in the telephoto camera's high-resolution image.

Owner: ZHEJIANG UNIV
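
The bicubic upsampling step can be sketched with the Keys cubic convolution kernel (a = -0.5), applied separably with clamped borders. Here `digital_zoom` crops the central 1/zoom of the frame and resamples it back to full size. The centred crop and all function names are illustrative assumptions, and the CNN that fuses in the telephoto image is not shown.

```python
import math

def cubic_kernel(t, a=-0.5):
    """Keys cubic convolution kernel; it is 1 at t = 0 and 0 at every other integer."""
    t = abs(t)
    if t <= 1.0:
        return (a + 2.0) * t ** 3 - (a + 3.0) * t ** 2 + 1.0
    if t < 2.0:
        return a * t ** 3 - 5.0 * a * t ** 2 + 8.0 * a * t - 4.0 * a
    return 0.0

def bicubic_sample(img, y, x):
    """Interpolate img (list of rows) at real-valued (y, x) from a 4x4 neighbourhood,
    clamping indices at the image border."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for m in range(-1, 3):
        wy = cubic_kernel(y - (y0 + m))
        yy = min(max(y0 + m, 0), h - 1)
        for n in range(-1, 3):
            wx = cubic_kernel(x - (x0 + n))
            xx = min(max(x0 + n, 0), w - 1)
            value += wy * wx * img[yy][xx]
    return value

def digital_zoom(img, zoom):
    """Hypothetical helper: magnify the centre of `img` by `zoom`, keeping the output size."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    return [
        [bicubic_sample(img, cy + (i - cy) / zoom, cx + (j - cx) / zoom) for j in range(w)]
        for i in range(h)
    ]

img = [[float(10 * y + x) for x in range(6)] for y in range(6)]
print(digital_zoom(img, 2.0)[2])
```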

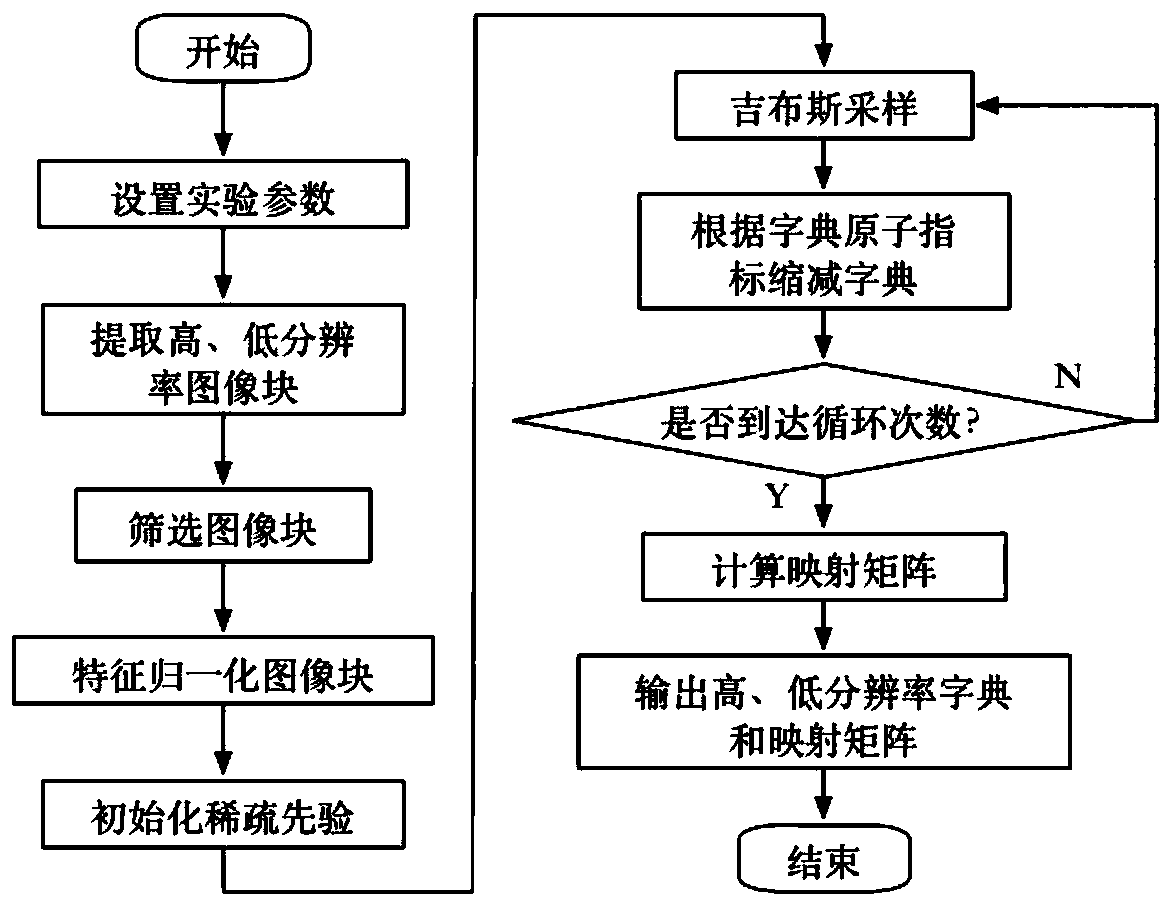

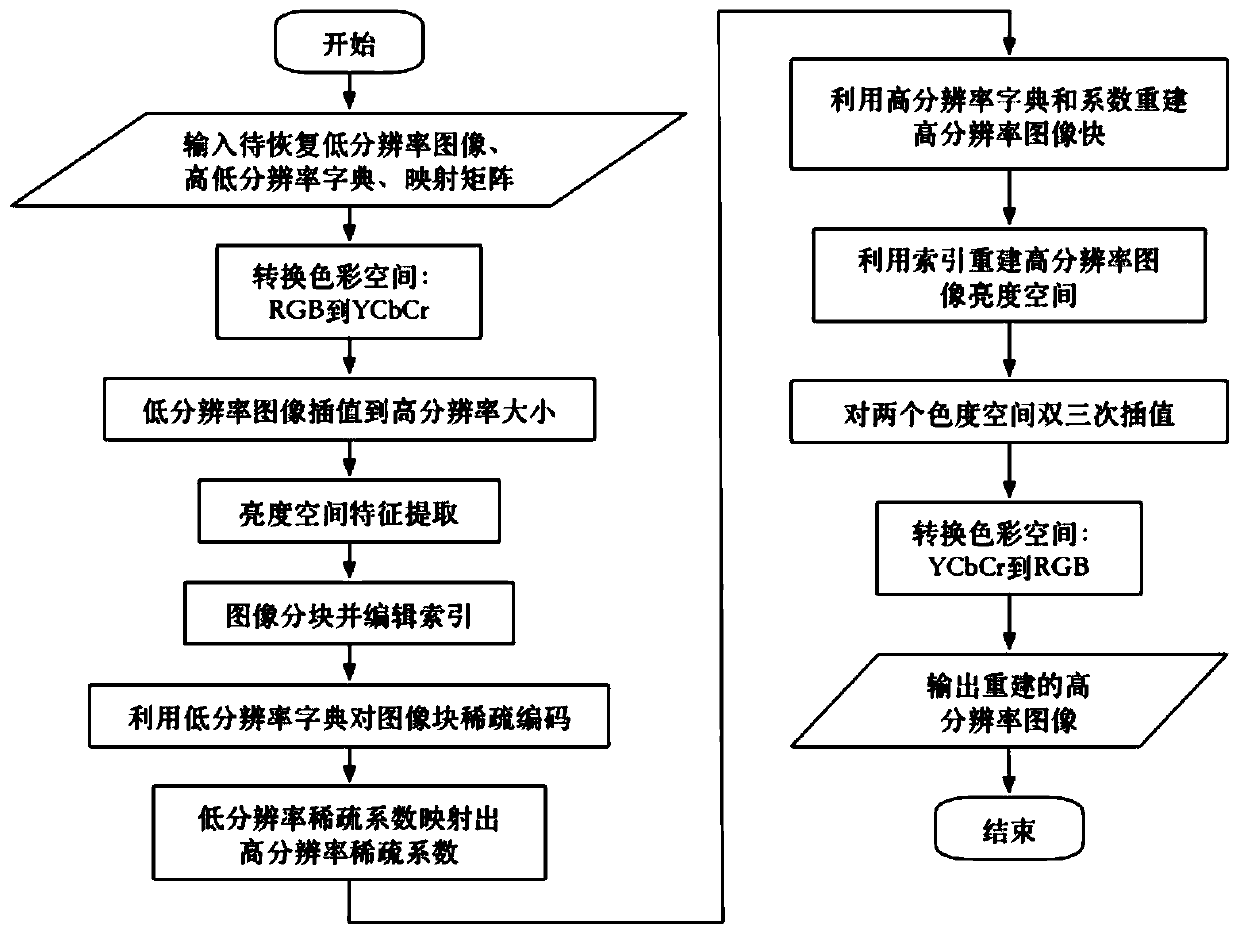

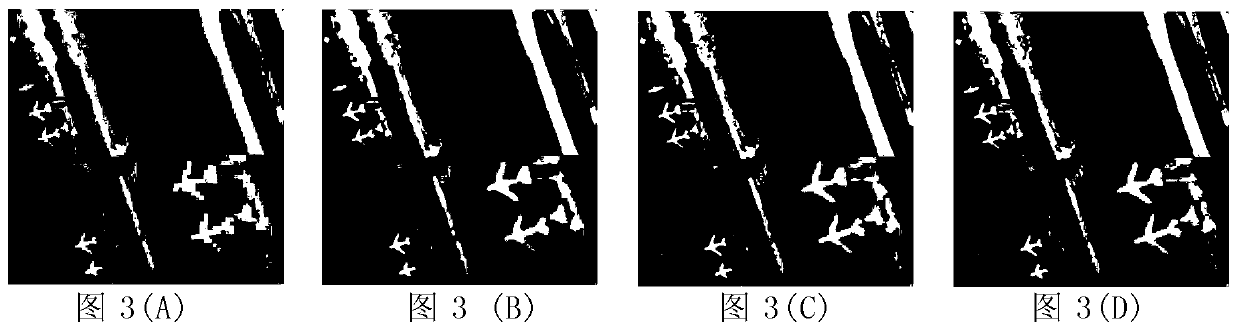

A remote sensing image super-resolution reconstruction method combining a two-parameter beta process with a dictionary

Pending | CN109712074A | Solve training problems | Reduce dimensionality | Image enhancement | Geometric image transformation | Pattern recognition | Image resolution

The invention provides an accurate, low-computation super-resolution reconstruction method for remote sensing images that combines a two-parameter beta process with dictionaries. It belongs to the technical field of remote sensing image processing. The method comprises the following steps:

- S1, inputting the low-resolution image to be reconstructed, a high-resolution image dictionary D(x), a low-resolution image dictionary D(y), and a mapping matrix A.
- S2, sparse-coding the input low-resolution image over dictionary D(y) to obtain low-resolution sparse coefficients. Matrix A maps these to the corresponding high-resolution sparse coefficients, and the high-resolution image is reconstructed from those coefficients and dictionary D(x).

D(x), D(y), and A are learned from paired high- and low-resolution training images through the two-parameter beta process. A maps sparse coefficients over D(y) to sparse coefficients over D(x). The sparse coefficients are products of coefficient weights and dictionary atoms.

Owner: HEILONGJIANG UNIV
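
Step S2 can be sketched as below. The abstract does not specify the coding algorithm, so matching pursuit stands in for the sparse coding. The dictionaries and the mapping matrix are assumed to be given; their beta-process training is not shown. Dictionaries are lists of unit-norm atoms, the matrix is a list of rows, and all names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matching_pursuit(signal, atoms, n_iter):
    """Greedy sparse code of `signal` over unit-norm `atoms` (a list of vectors)."""
    residual = [float(s) for s in signal]
    coeffs = [0.0] * len(atoms)
    for _ in range(n_iter):
        scores = [dot(atom, residual) for atom in atoms]
        j = max(range(len(atoms)), key=lambda i: abs(scores[i]))  # best-correlated atom
        coeffs[j] += scores[j]
        residual = [r - scores[j] * a for r, a in zip(residual, atoms[j])]
    return coeffs

def reconstruct_hr_patch(lr_patch, d_y, a_matrix, d_x, n_iter=3):
    """S2 sketch: alpha_y over D(y), alpha_x = A alpha_y, HR patch = D(x) alpha_x."""
    alpha_y = matching_pursuit(lr_patch, d_y, n_iter)
    alpha_x = [dot(row, alpha_y) for row in a_matrix]
    dim = len(d_x[0])
    return [sum(alpha_x[k] * d_x[k][i] for k in range(len(d_x))) for i in range(dim)]

d_y = [[1.0, 0.0], [0.0, 1.0]]            # toy low-resolution dictionary (2 atoms)
a_matrix = [[1.0, 0.0], [0.0, 1.0]]       # toy coefficient mapping
d_x = [[1.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # toy high-resolution dictionary (2 atoms)
print(reconstruct_hr_patch([2.0, 0.0], d_y, a_matrix, d_x))
```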

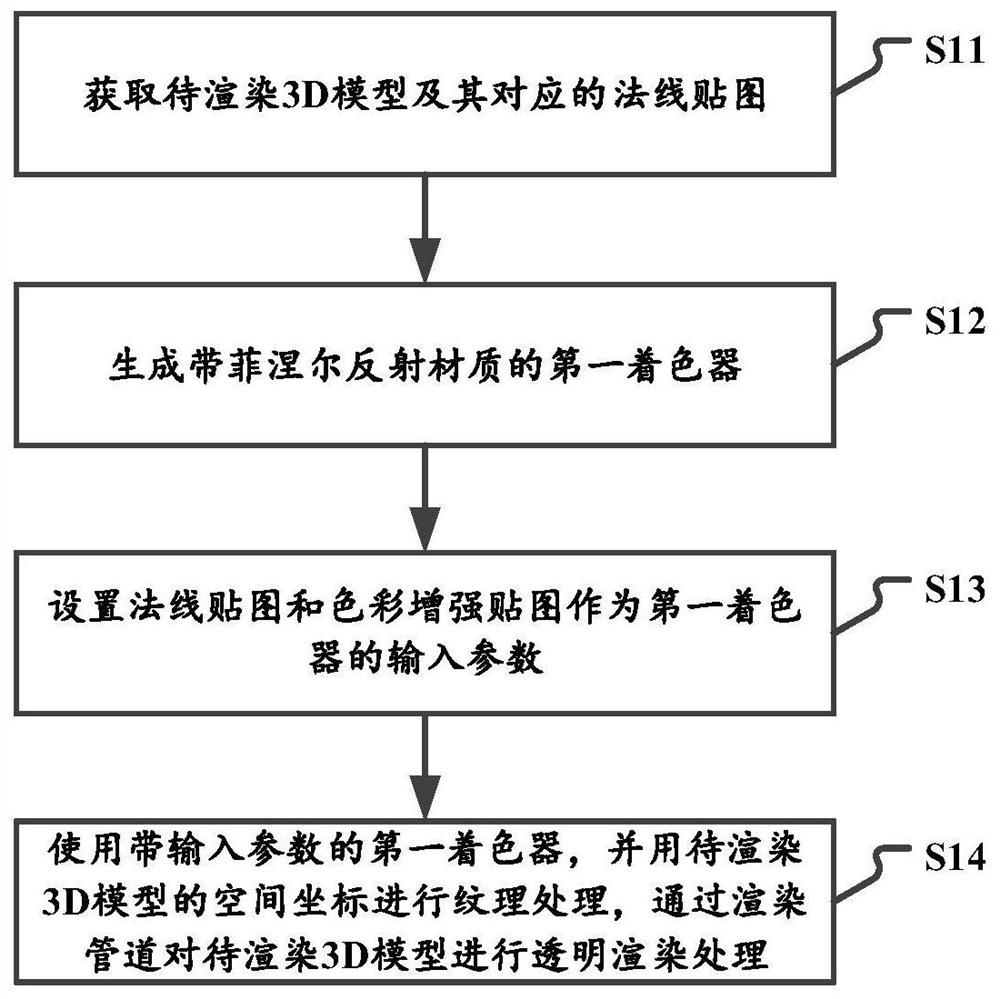

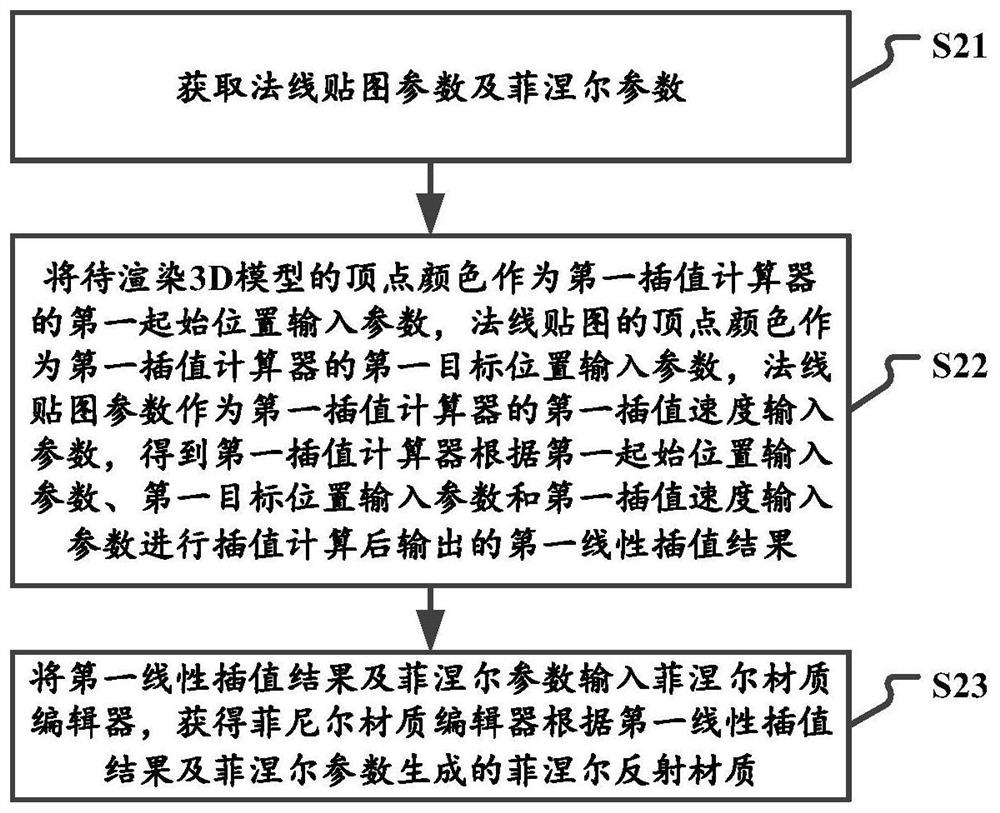

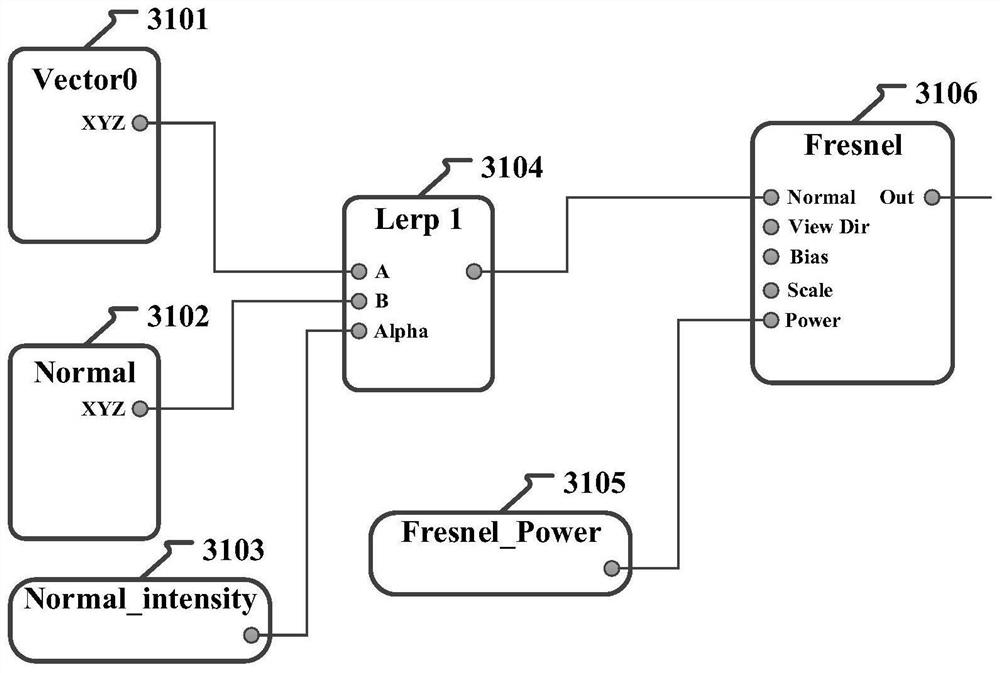

3D model rendering method and device

Pending | CN112053424A | Rich texture details | Rich color variation | Details involving 3D image data | Animation | Computer graphics (images) | Algorithm

The invention relates to a 3D model rendering method and device. The method comprises the following steps:

- Obtaining a 3D model to be rendered and its normal map.
- Generating a first shader with a Fresnel reflection material.
- Setting the normal map and a color enhancement map as input parameters of the first shader.
- Performing texture processing with the first shader, its input parameters, and the spatial coordinates of the model, then rendering the model transparently through a rendering pipeline.

Because the Fresnel material is generated from the normal map, the model surface shows rich texture details, and reflections of different intensities appear as the camera moves. Selecting different normal maps produces different Fresnel effects. The color enhancement map adds flowing light and shadow to the surface, producing rich color variation rather than a plain light-sweep effect.

Owner: BEIJING PERFECT ZEALKING TECH CO LTD
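
Shaders commonly compute a view-dependent Fresnel term with Schlick's approximation, F = F0 + (1 - F0)(1 - cos θ)^5. The sketch below evaluates it on the CPU for a normal decoded from an 8-bit normal-map texel. The abstract does not say whether the patent uses Schlick's form. F0 = 0.04, the decoding convention, and the function names are all assumptions.

```python
import math

def decode_normal(rgb):
    """Map an 8-bit normal-map texel (r, g, b) from [0, 255] to a unit vector in [-1, 1]^3."""
    n = [c / 255.0 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in n))
    return [c / length for c in n]

def schlick_fresnel(normal, view_dir, f0=0.04):
    """Schlick's approximation; `normal` and `view_dir` (surface-to-camera) are unit vectors."""
    cos_theta = max(0.0, sum(a * b for a, b in zip(normal, view_dir)))
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5

n = decode_normal((128, 128, 255))
print(schlick_fresnel(n, [0.0, 0.0, 1.0]))  # facing the camera: close to F0
print(schlick_fresnel(n, [1.0, 0.0, 0.0]))  # grazing view: much stronger reflection
```

Because the normal comes from the texture, each texel can take a different reflection strength as the camera moves. This is one way a normal map can drive a varying Fresnel effect.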

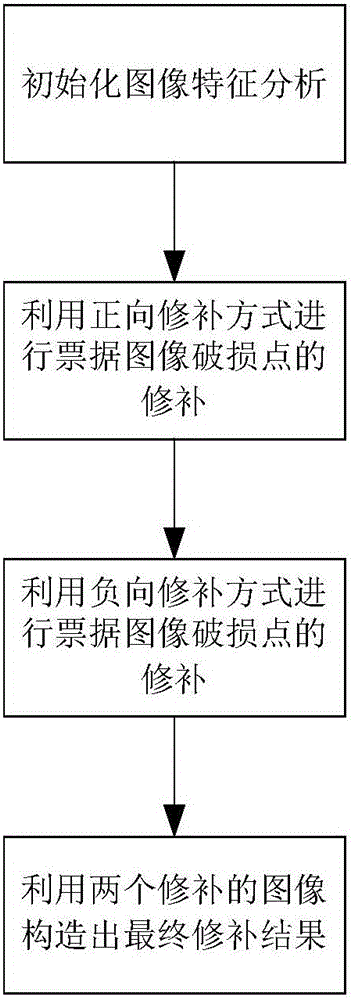

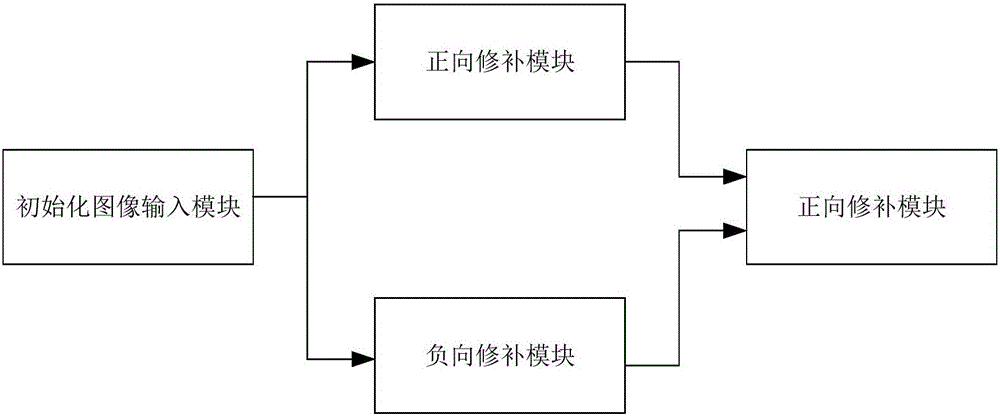

Damaged bank note image positive and negative repair method and damaged bank note image positive and negative repair system based on continued fraction interpolation technology

Active | CN105957038A | Quality improvement | Improve efficiency | Image enhancement | Pattern recognition | Imaging feature

The invention relates to a positive and negative repair method and system for damaged banknote images based on continued fraction interpolation. It overcomes the shortcomings of existing image repair techniques, which lack stability and generality and cannot repair image details. The method comprises the following steps:

- Performing an initial image feature analysis.
- Repairing the damaged points of the banknote image in a positive repair pass.
- Repairing the damaged points in a negative repair pass.
- Constructing the final repair result C from the two repaired images.

The method improves the quality and efficiency of image repair.

Owner: 安徽兆尹信息科技股份有限公司 (Anhui Zhaoyin Information Technology Co., Ltd.)

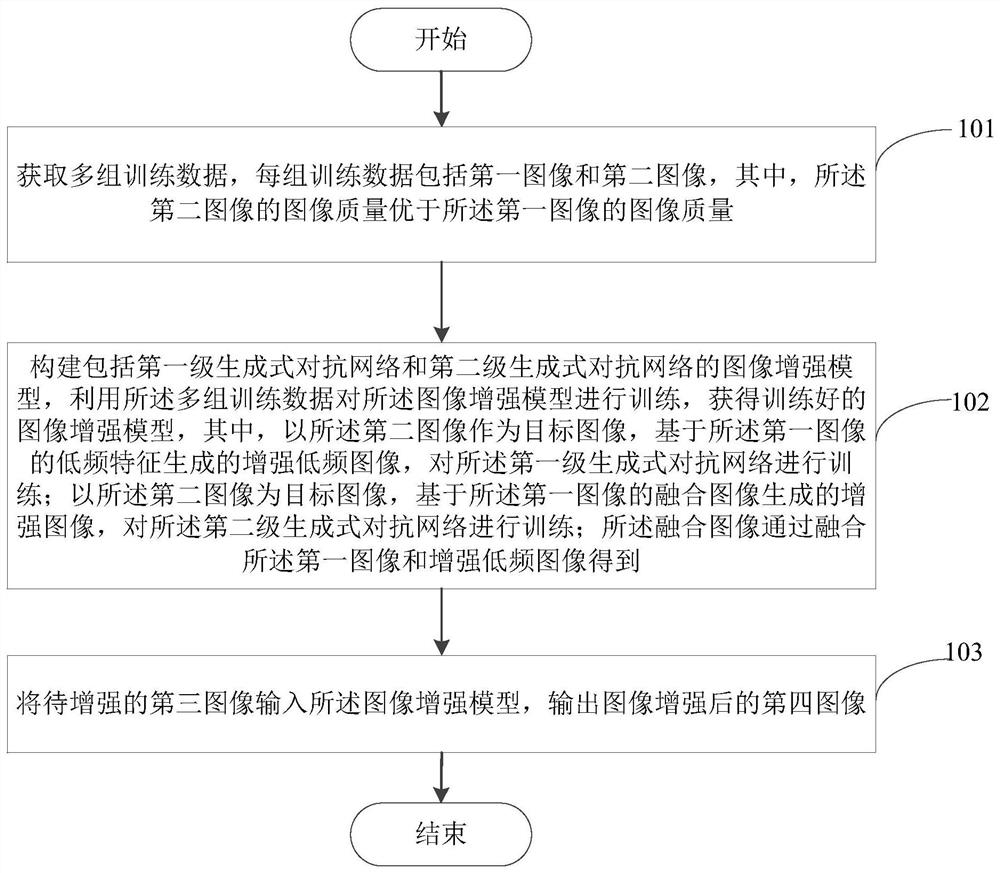

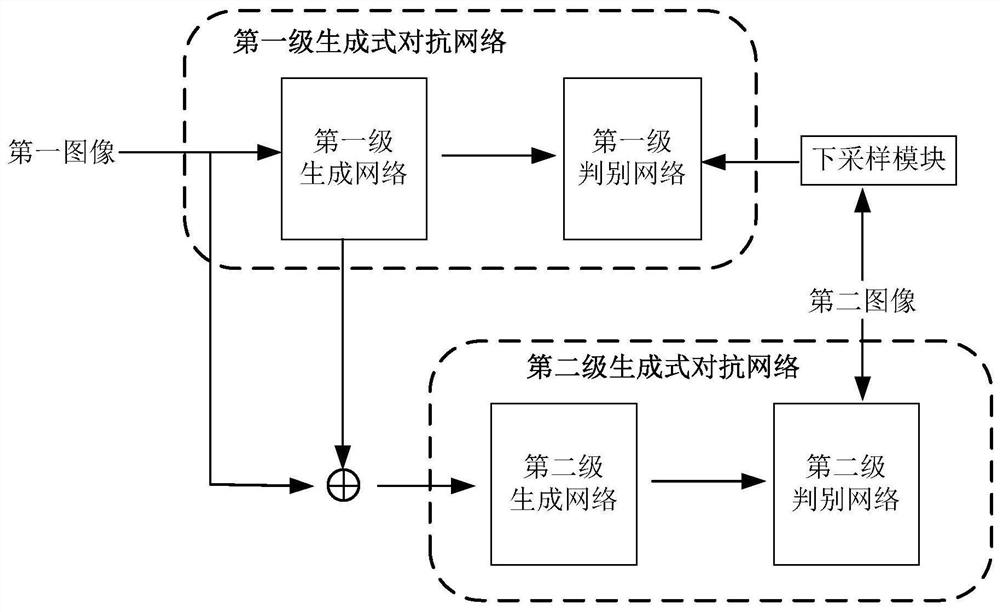

Image enhancement method and device and computer readable storage medium

Pending | CN114511449A | Reduce generation | Reduce the impact of noise | Image enhancement | Image analysis | Pattern recognition | Imaging processing

The invention provides an image enhancement method and device and a computer-readable storage medium, in the technical field of image processing. The method acquires multiple groups of training data, each consisting of a first image and a second image. It builds an image enhancement model containing a first-stage and a second-stage generative adversarial network and trains it on these groups. The two stages are trained as follows:

- The first-stage network, with the second image as the target, learns to generate an enhanced low-frequency image from the low-frequency features of the first image.
- The second-stage network, again with the second image as the target, learns to generate an enhanced image from a fused image. The fused image is obtained by fusing the first image with the enhanced low-frequency image.

Finally, a third image to be enhanced is input into the trained model, which outputs an enhanced fourth image. The method improves image quality.

Owner: RICOH KK

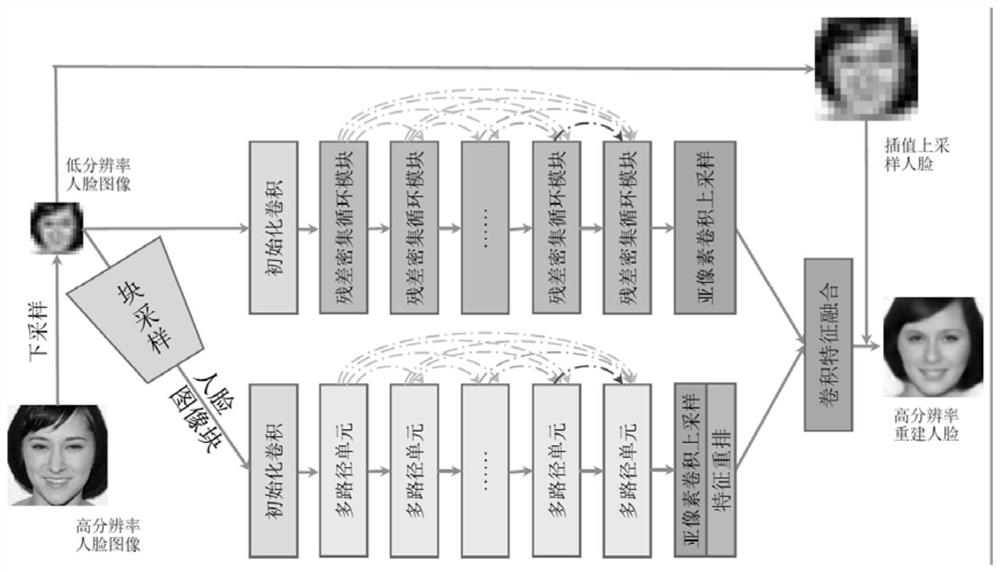

Face illusion structure method and system based on double-path deep fusion

Active | CN112288626A | Quality improvement | Accurate modeling | Geometric image transformation | Character and pattern recognition | Image resolution | Radiology

The invention discloses a face hallucination method and system based on dual-path deep fusion. The method has three stages:

- In the global face feature extraction path, face features are extracted through recurrent convolution and residual learning to model the global contour of the face.
- In the block-based local face structure path, the input face is sampled into a large number of local face blocks. The mapping between low-resolution and high-resolution local blocks is modeled independently for each block, and the blocks' feature representations are then rearranged and mapped back into the global face space.
- In the global-local fusion stage, the global contour features and the local features are fused to produce a high-quality hallucinated face.

The method extracts global contour features effectively, learns the low-to-high-resolution mapping of local blocks independently, and fuses the two well. As a result, the hallucinated face has a clearer contour and richer texture details.

Owner: WUHAN UNIV
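
The block-based local path relies on cutting the face into overlapping blocks and later mapping block results back into the global face space. The following minimal sketch shows that bookkeeping. It assumes square blocks, a fixed stride, and averaging where blocks overlap. The per-block low-to-high-resolution mapping is omitted, and the function names are hypothetical.

```python
def patch_positions(length, size, stride):
    """Top-left offsets along one axis so that blocks of `size` cover [0, length)."""
    if size > length:
        raise ValueError("block larger than image")
    pos = list(range(0, length - size + 1, stride))
    if pos[-1] != length - size:
        pos.append(length - size)  # make sure the last rows/columns are covered
    return pos

def extract_patches(img, size, stride):
    """Return ((y, x), block) pairs for overlapping square blocks of a list-of-rows image."""
    h, w = len(img), len(img[0])
    return [
        ((y, x), [row[x:x + size] for row in img[y:y + size]])
        for y in patch_positions(h, size, stride)
        for x in patch_positions(w, size, stride)
    ]

def merge_patches(patches, h, w, size):
    """Put (possibly processed) blocks back in place, averaging where they overlap."""
    acc = [[0.0] * w for _ in range(h)]
    cnt = [[0] * w for _ in range(h)]
    for (y, x), patch in patches:
        for dy in range(size):
            for dx in range(size):
                acc[y + dy][x + dx] += patch[dy][dx]
                cnt[y + dy][x + dx] += 1
    return [[acc[y][x] / cnt[y][x] for x in range(w)] for y in range(h)]

face = [[y * 5 + x for x in range(5)] for y in range(5)]
blocks = extract_patches(face, size=3, stride=2)
print(len(blocks), merge_patches(blocks, 5, 5, 3) == face)
```

In a real pipeline each block would be replaced by its high-resolution estimate before merging. The round trip shown here only checks the bookkeeping.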

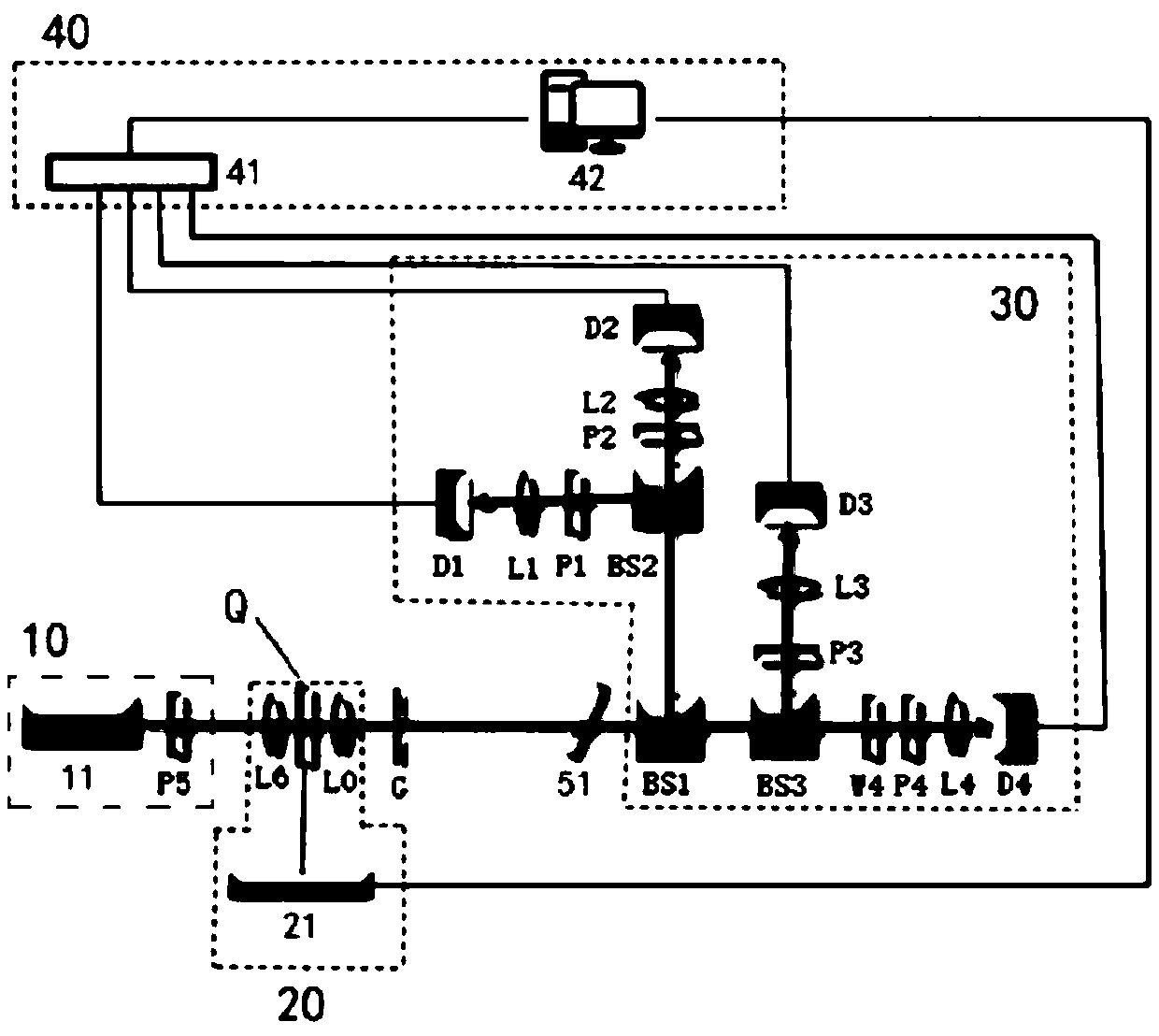

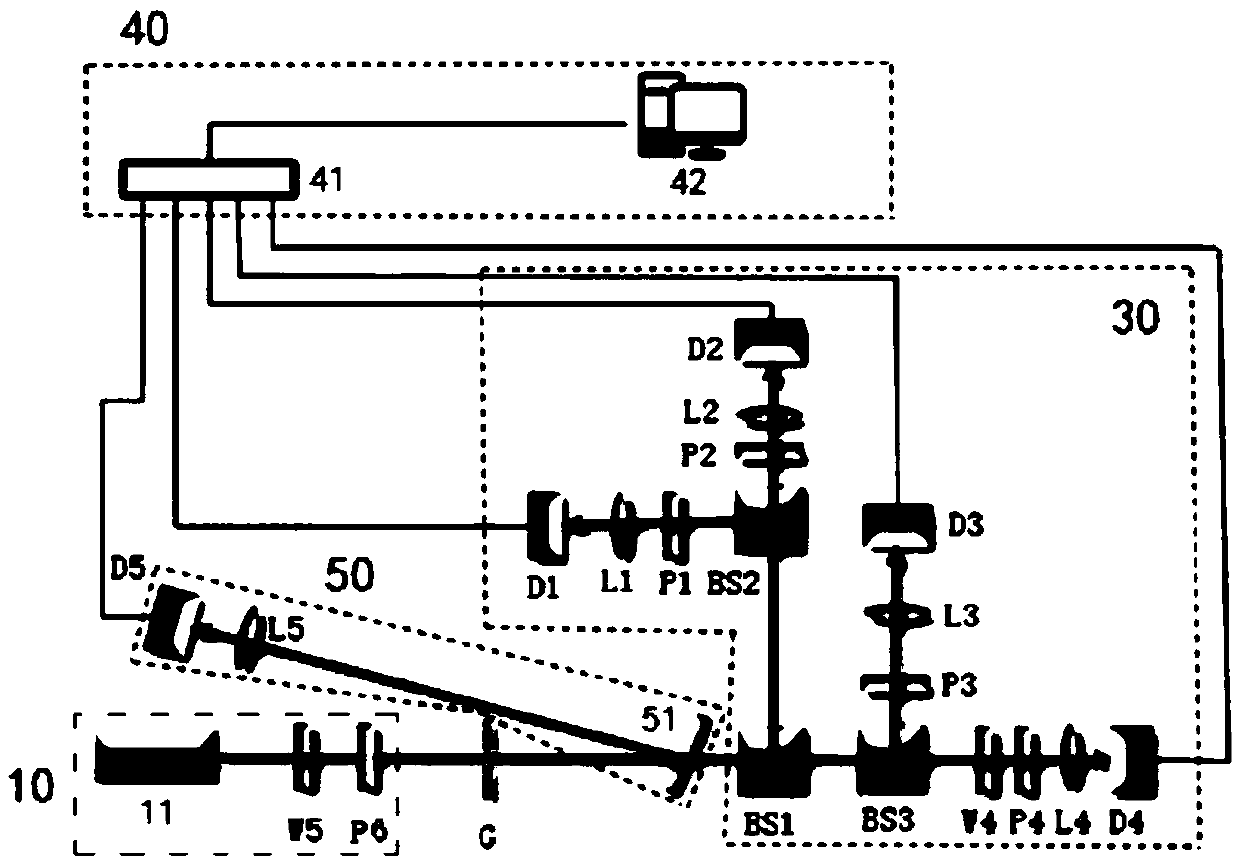

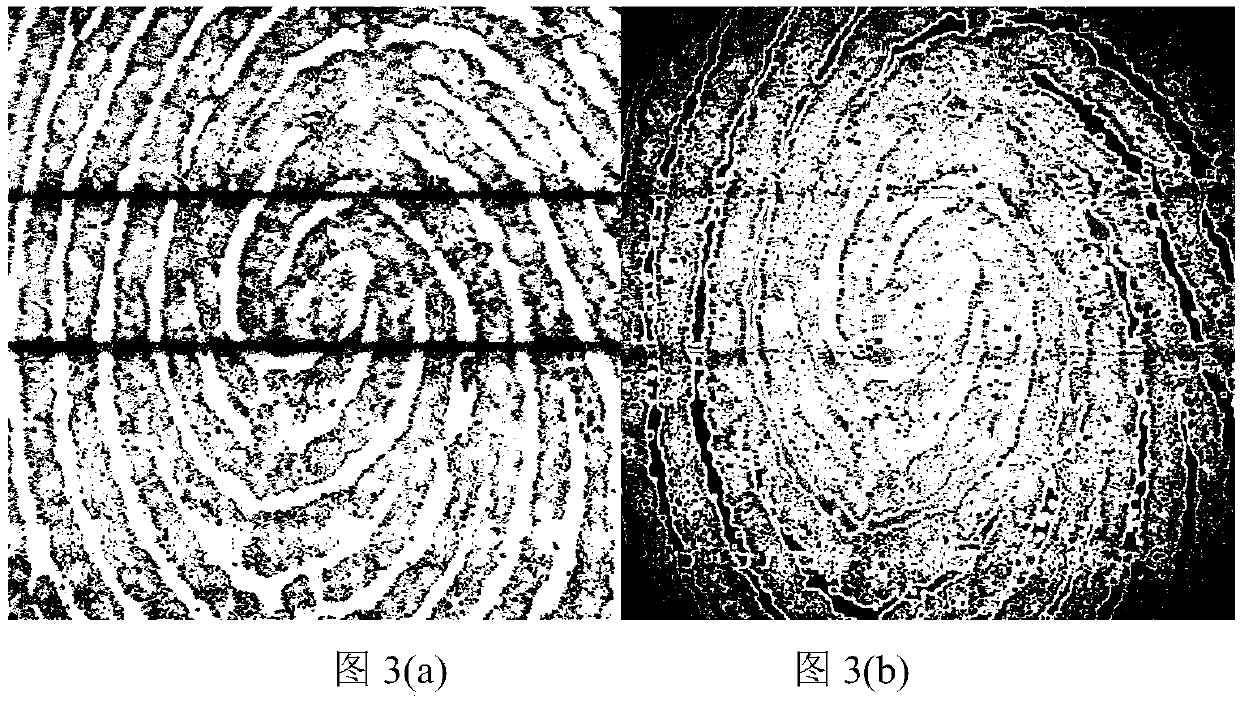

Latent fingerprint label-free Stokes parameter polarized confocal microimaging system and method

Inactive | CN108982373A | Improve visibility | Rich texture details | Polarisation-affecting properties | Latent fingerprint | Data acquisition

The invention discloses a label-free Stokes parameter polarized confocal microimaging system and method for latent fingerprints. The system comprises a polarization generation module, a confocal scanning module, a polarization measurement module, and a data acquisition and imaging module. It performs polarization scanning imaging of fingerprints based on Stokes parameter measurement. After monochromatic plane-polarized light passes through an object bearing fingerprint marks, Stokes parameter images and polarization parameter images of the fingerprint are obtained. The background brightness caused by transmission through an object such as a mirror is greatly reduced, which brings several benefits:

- The fingerprint contrasts strongly with the background.
- Background interference is weakened.
- The fingerprint is easier to distinguish.

The fingerprint image has rich texture details. It clearly shows level 1 and level 2 features, as well as some level 3 features that are hard to observe with other fingerprint imaging methods. The method is simple to operate, requires no treatment of the fingerprint sample, and avoids destroying fingerprints.

Owner:SOUTH CHINA NORMAL UNIVERSITY
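The Stokes-parameter images in the fingerprint system above are per-pixel maps of S0 to S3 and of quantities derived from them. The abstract does not give its measurement scheme, so this sketch uses the textbook six-intensity definition (linear analyzer at 0°, 45°, 90°, 135° plus right and left circular analyzers) as an assumption; the patent's polarization measurement module may obtain the same vector differently, and the sign convention for S3 varies between texts.

```python
import math
from typing import Tuple

Stokes = Tuple[float, float, float, float]


def stokes_from_intensities(i_0: float, i_45: float, i_90: float, i_135: float,
                            i_rcp: float, i_lcp: float) -> Stokes:
    """Per-pixel Stokes vector from six analyzer intensities.

    i_0 ... i_135: intensities behind a linear analyzer at 0/45/90/135 degrees;
    i_rcp, i_lcp: intensities behind right/left circular analyzers.
    """
    s0 = i_0 + i_90      # total intensity
    s1 = i_0 - i_90      # horizontal vs vertical linear polarization
    s2 = i_45 - i_135    # +45 vs -45 degree linear polarization
    s3 = i_rcp - i_lcp   # right vs left circular polarization
    return s0, s1, s2, s3


def degree_of_polarization(s: Stokes) -> float:
    s0, s1, s2, s3 = s
    return math.sqrt(s1 ** 2 + s2 ** 2 + s3 ** 2) / s0 if s0 > 0 else 0.0


def degree_of_linear_polarization(s: Stokes) -> float:
    s0, s1, s2, _ = s
    return math.hypot(s1, s2) / s0 if s0 > 0 else 0.0


def angle_of_polarization(s: Stokes) -> float:
    """Orientation of the linear component, in radians."""
    _, s1, s2, _ = s
    return 0.5 * math.atan2(s2, s1)
```

Applied pixel by pixel, fully polarized light gives a degree of polarization of 1 and unpolarized light gives 0; a depolarizing fingerprint residue on a polarization-preserving background would therefore stand out in the degree-of-polarization map, which is one plausible reading of the contrast gain the abstract describes.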

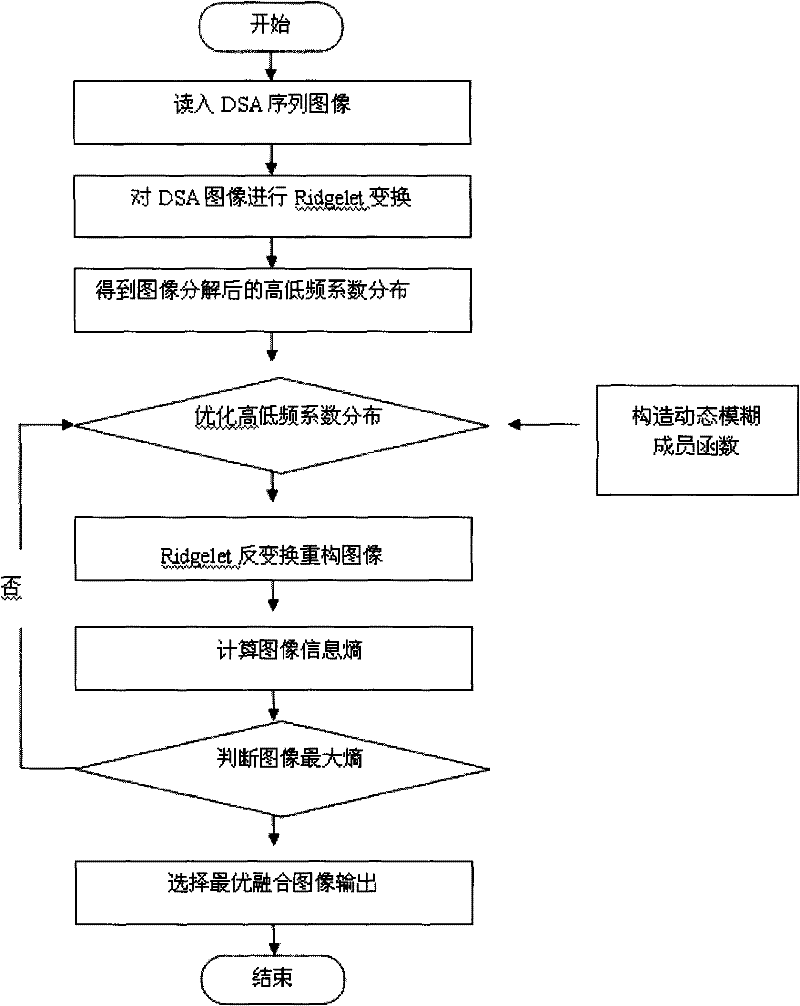

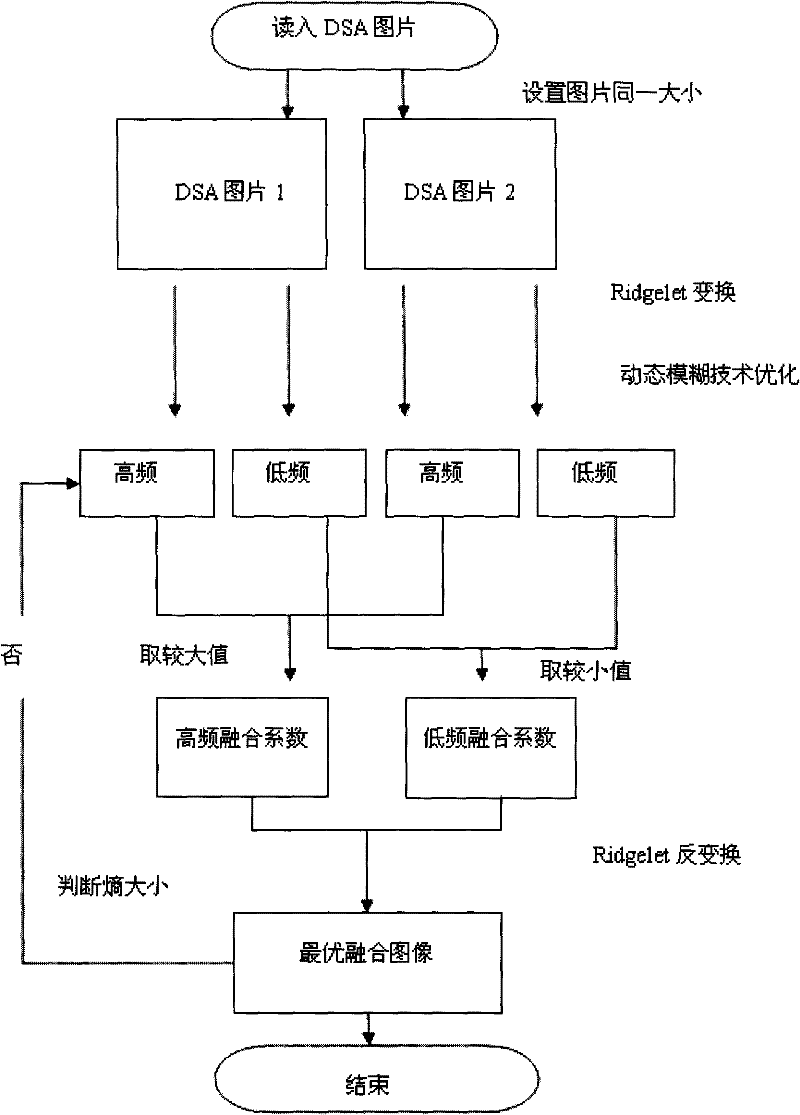

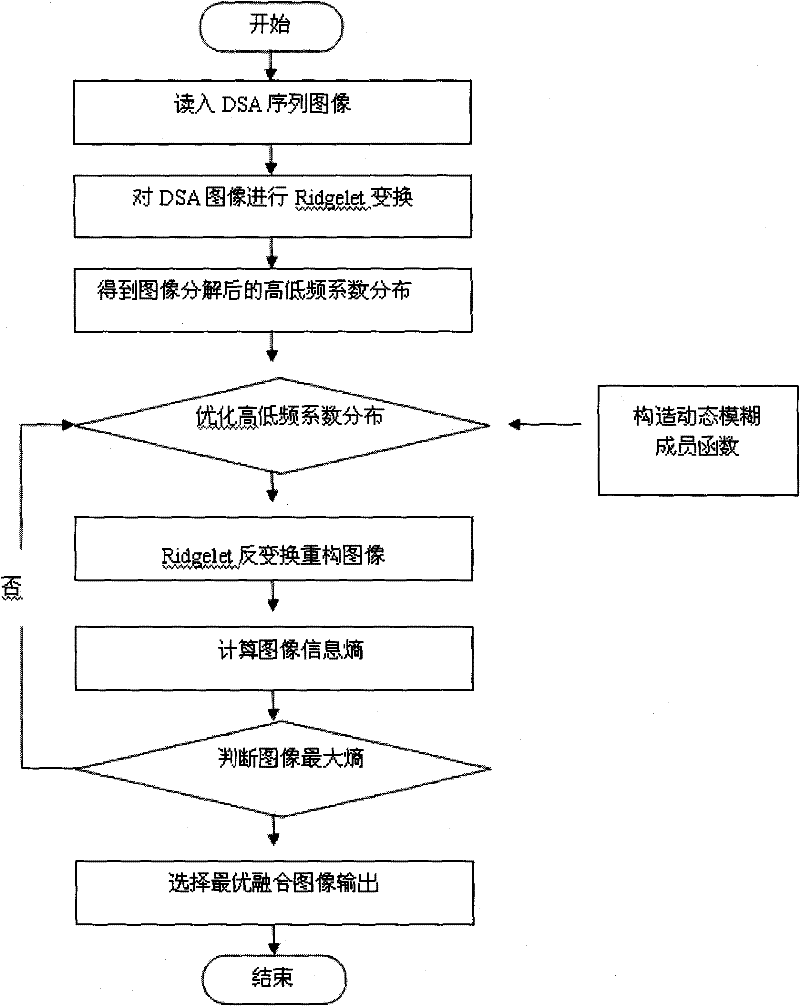

Medical digital subtraction image fusion method based on ridgelet transform

Inactive · CN101799918B · Increase information entropy · Reduce error rate · Image enhancement · Time complexity · Root-mean-square deviation

The invention discloses a medical digital subtraction image fusion method based on the ridgelet transform, comprising the following steps: (1) performing the ridgelet transform on each of the two images to obtain ridgelet transform matrices; (2) performing fusion processing; and (3) performing the inverse ridgelet transform on the fused ridgelet transform matrix, the reconstructed image being the fused image. The method is characterized in that, in step (1), an initial decision threshold and a step size are set and the ridgelet transform is carried out accordingly; the fused image is reconstructed by the inverse transform and its information entropy is calculated; the decision threshold is then changed by the step size using a dynamic fuzzy method and the above operations are repeated; and the decision threshold that yields the maximum information entropy is taken as the final decision threshold, giving the ridgelet transform matrix described in step (1). The invention effectively increases the information entropy of the fused image, reduces the root-mean-square error, and outperforms other traditional fusion methods. The algorithm has low time complexity and gives relatively accurate results, which greatly enriches the texture details of medical images.

Owner:SUZHOU UNIV
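The threshold selection in the ridgelet fusion method above is a search that keeps the decision threshold whose reconstructed fusion has the highest information entropy. A minimal sketch, assuming entropy is the Shannon entropy of the gray-level histogram; the abstract's "dynamic fuzzy method" for updating the threshold is not specified, so a plain fixed-step search is swapped in here, and `fuse_and_reconstruct` is a hypothetical callable standing in for the transform, fusion, and inverse transform.

```python
import math
from collections import Counter
from typing import Callable, Sequence


def information_entropy(pixels: Sequence[int]) -> float:
    """Shannon entropy (bits) of the gray-level histogram of a flattened image."""
    n = len(pixels)
    counts = Counter(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def search_threshold(fuse_and_reconstruct: Callable[[float], Sequence[int]],
                     t0: float, step: float, n_steps: int) -> float:
    """Return the decision threshold whose reconstructed fusion has max entropy.

    fuse_and_reconstruct(t) is a hypothetical callable that runs ridgelet
    transform -> fusion with threshold t -> inverse transform and returns
    the fused image as flat integer gray levels.
    """
    best_t, best_h = t0, -math.inf
    for k in range(n_steps):
        t = t0 + k * step
        h = information_entropy(fuse_and_reconstruct(t))
        if h > best_h:
            best_t, best_h = t, h
    return best_t
```

Each candidate threshold costs one full transform and reconstruction, so the step size trades search resolution against run time.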

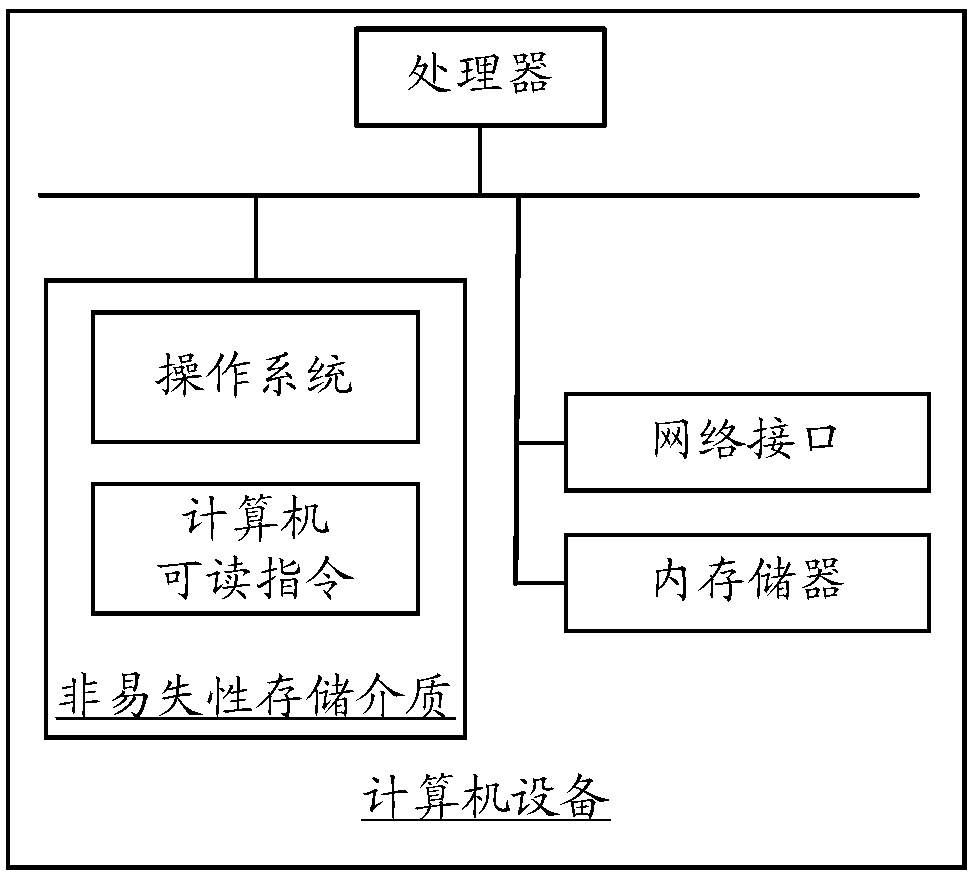

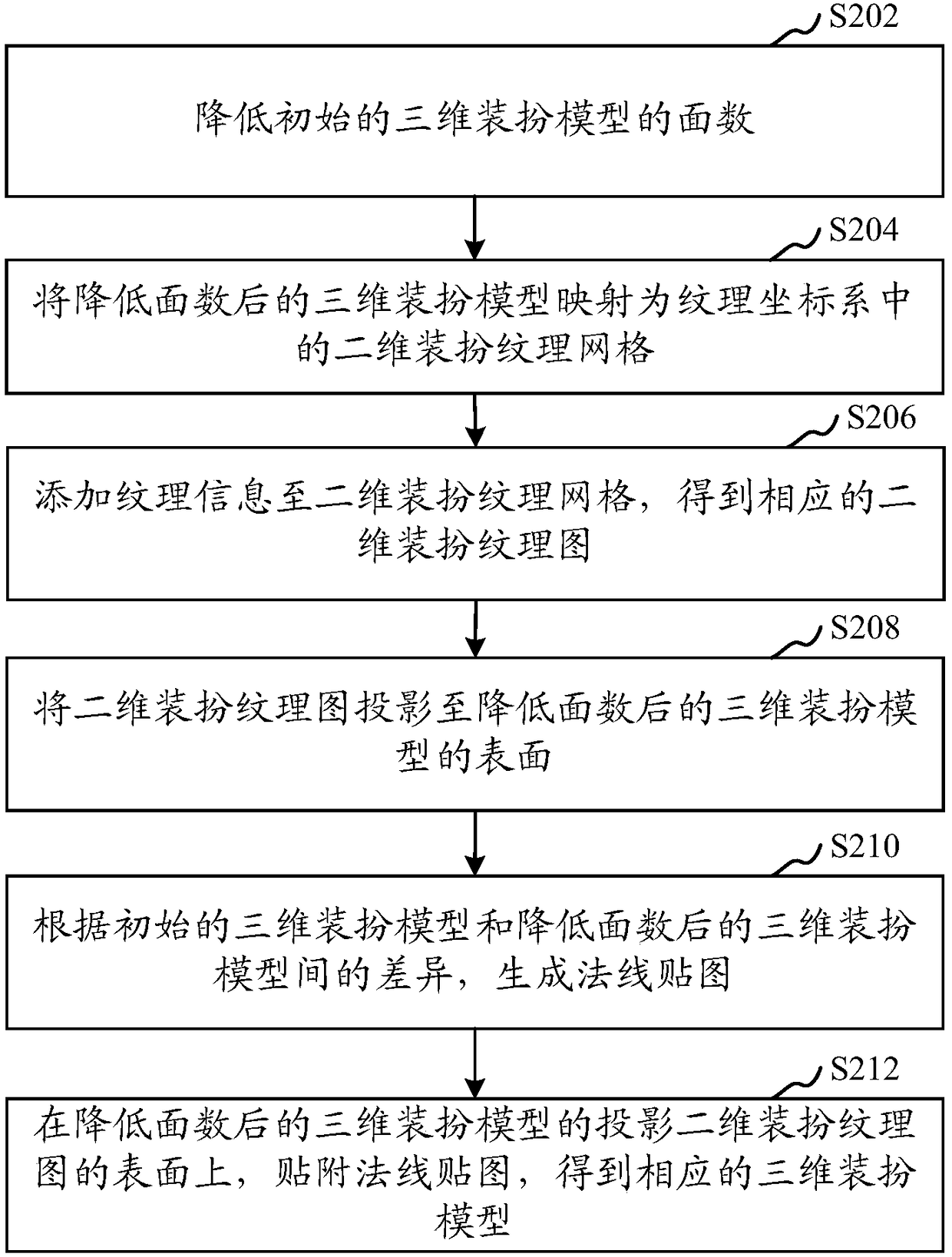

Three-dimensional dress-up model processing method and device, computer equipment and storage medium

Active · CN108876921A · Rich texture details · Improve efficiency · Image generation · 3D-image rendering · Computer graphics (images) · High surface

The invention relates to a method and device for processing a three-dimensional dress-up model, computer equipment, and a storage medium. The method comprises: reducing the face count of an initial three-dimensional dress-up model; mapping the reduced model into a two-dimensional dress-up texture grid in a texture coordinate system; adding texture information to the two-dimensional dress-up texture grid to obtain a corresponding two-dimensional dress-up texture image; projecting the two-dimensional dress-up texture image onto the surface of the reduced model; generating a normal map from the difference between the initial model and the reduced model; and attaching the normal map to the surface of the reduced model onto which the texture image has been projected, thereby obtaining the corresponding three-dimensional dress-up model. By combining the projected texture image, which carries the added texture information, with a normal map that captures the texture details of the high-face-count model, the final three-dimensional dress-up model has rich texture details, and processing efficiency is improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD
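The dress-up model method above derives a normal map from the difference between the high-face-count and reduced models, but the abstract does not say how. A common approach, assumed here, is tangent-space baking: at each texel, the high-poly surface normal is expressed in the reduced model's tangent frame and packed into RGB with the usual `n * 0.5 + 0.5` mapping. The sketch covers one texel and assumes the matching high-poly point (normally found by ray casting) and an orthonormal tangent/bitangent/normal frame are already known.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def bake_texel(high_normal: Vec3, tangent: Vec3, bitangent: Vec3,
               normal: Vec3) -> Tuple[int, int, int]:
    """Encode a high-poly normal in the low-poly surface's tangent frame.

    tangent, bitangent, normal: orthonormal TBN frame of the reduced model at
    this texel. Returns 8-bit RGB using the common n * 0.5 + 0.5 mapping.
    """
    n = normalize(high_normal)
    ts = (dot(n, tangent), dot(n, bitangent), dot(n, normal))
    return tuple(round((c * 0.5 + 0.5) * 255) for c in ts)


def decode_texel(rgb: Tuple[int, int, int]) -> Vec3:
    """Inverse mapping used at render time (up to 8-bit quantization)."""
    return normalize(tuple(c / 255 * 2.0 - 1.0 for c in rgb))
```

Where the two surfaces agree, the texel encodes to the familiar flat blue (128, 128, 255); deviations of the high-poly surface tilt the stored vector, which is how fine detail survives face reduction.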

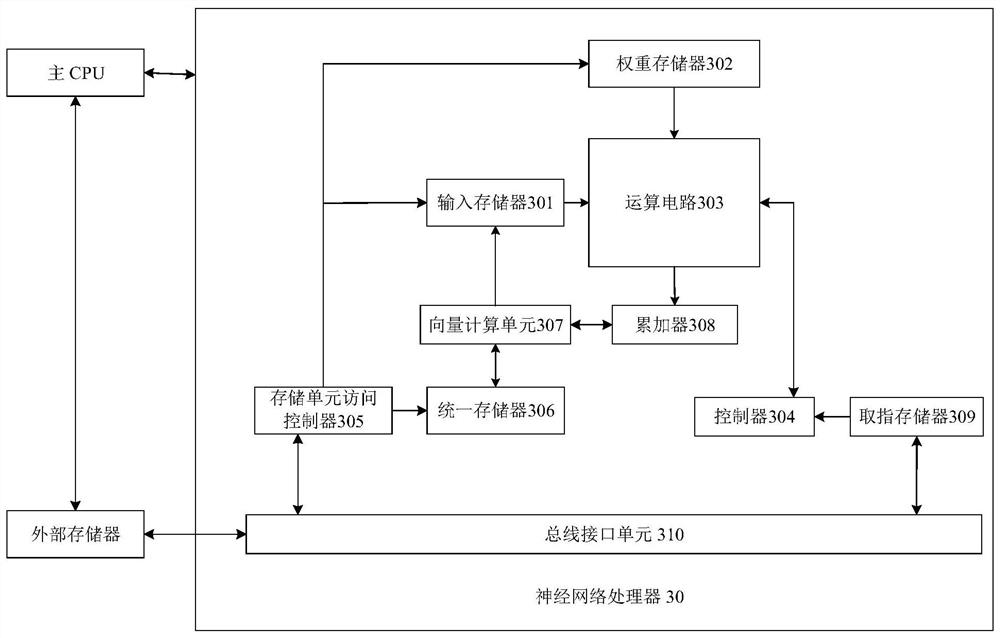

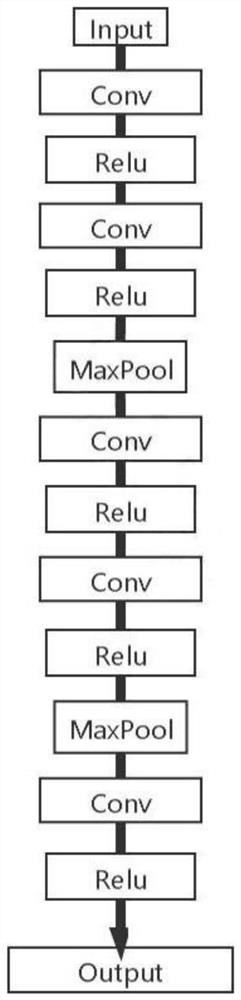

Seismic data texture feature reconstruction method based on deep learning

Pending · CN113269818A · Avoid manual operation · Avoiding Spatial Aliasing Problems · Image enhancement · Image analysis · Texture extraction · Imaging Feature

The invention discloses a seismic data texture feature reconstruction method based on deep learning. The method uses a texture extraction network built from a shallow convolutional network that is trained along with the rest of the model, so the texture extraction network keeps updating its own parameters during training and learns to extract the most appropriate texture feature information. A normalized inner product is used to calculate the similarity r(i, j) between each feature block i of the up-sampled low-resolution image feature map Q and each feature block j of the down-sampled-then-up-sampled reference image feature map K; transfer learning is carried out through this block-wise similarity, and texture transfer is performed with an attention mechanism. Adversarial loss and perceptual loss are added to the loss function. The method updates its parameters automatically, needs no other prior information, can learn complex texture feature structures, effectively avoids spatial aliasing, and can quickly reconstruct clear high-resolution seismic data.

Owner:HEBEI UNIV OF TECH
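The similarity r(i, j) in the seismic reconstruction method above is a normalized inner product (cosine similarity) between flattened feature blocks of Q and K. The sketch computes that matrix and then, as an assumption in the style of texture-transformer super-resolution, reduces each row to a hard index (which reference block to borrow texture from) and a soft weight (how confident the match is); the abstract only says an attention mechanism is used, so this reduction is illustrative.

```python
import math
from typing import List, Tuple

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def similarity(q_blocks: List[Vector], k_blocks: List[Vector]) -> List[List[float]]:
    """r[i][j] = normalized inner product of block i of Q and block j of K."""
    qn = [l2_normalize(q) for q in q_blocks]
    kn = [l2_normalize(k) for k in k_blocks]
    return [[sum(a * b for a, b in zip(q, k)) for k in kn] for q in qn]


def hard_soft_attention(r: List[List[float]]) -> List[Tuple[int, float]]:
    """For each Q block: index of the most similar K block and that similarity."""
    out = []
    for row in r:
        j = max(range(len(row)), key=row.__getitem__)
        out.append((j, row[j]))
    return out
```

Normalizing before the dot product makes the match depend on texture pattern rather than amplitude, which matters for seismic traces whose energy varies strongly with depth.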

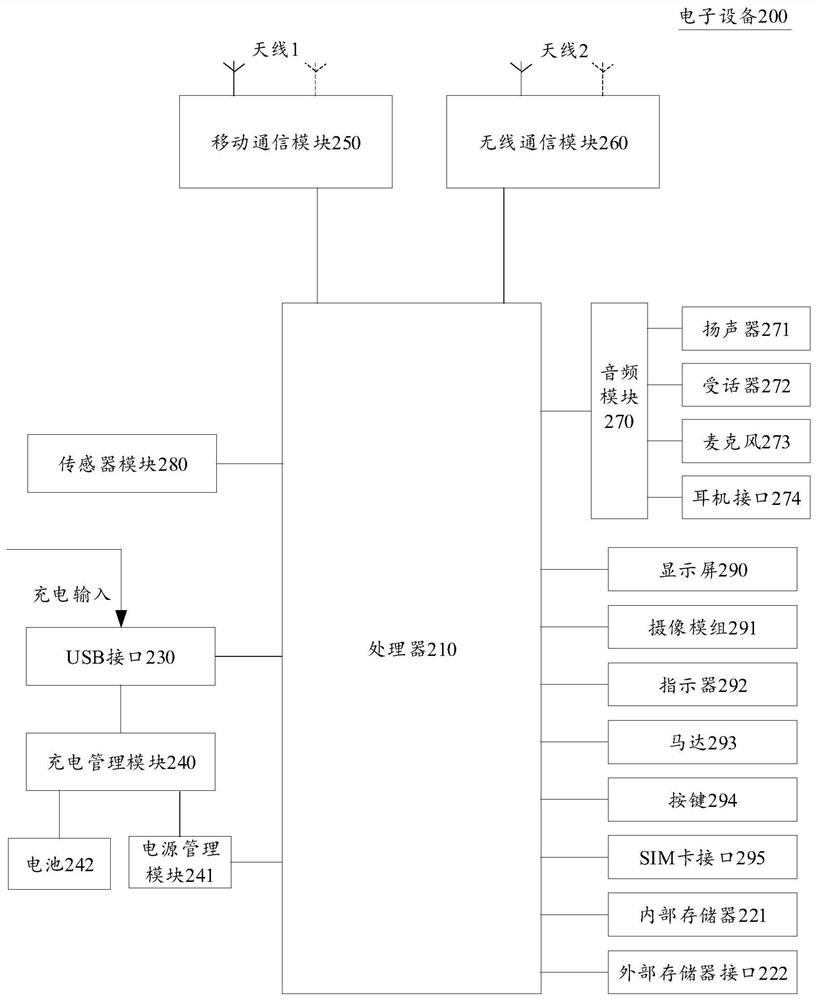

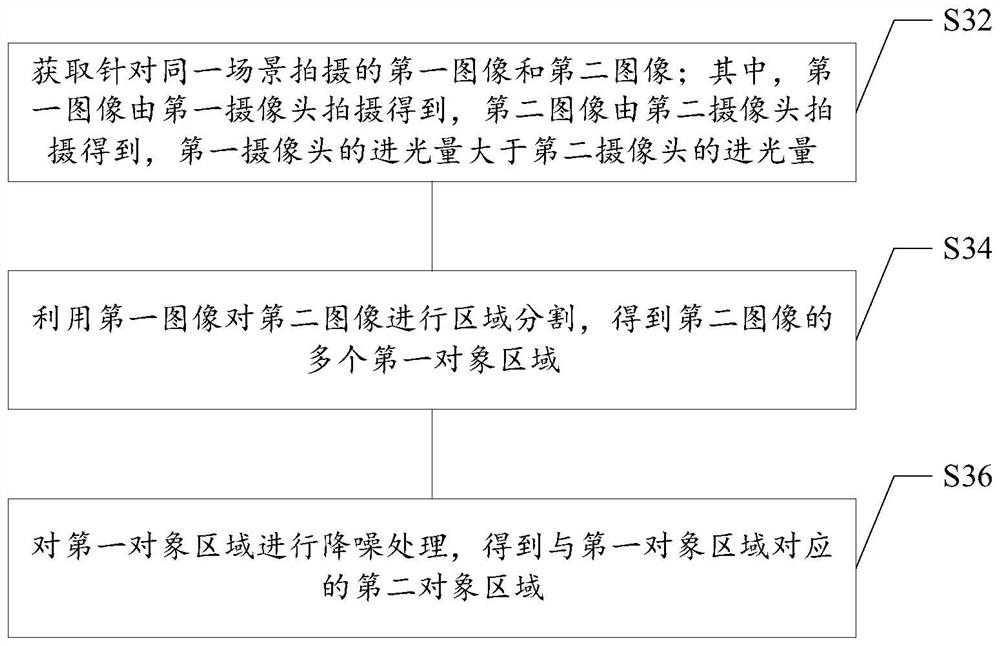

Image processing method and device, computer readable storage medium and electronic equipment

Pending · CN113538462A · Rich texture details · Improve noise reduction · Image enhancement · Image analysis · Imaging processing · Computer graphics (images)

The invention provides an image processing method and device, a computer readable storage medium, and electronic equipment, and relates to the technical field of image processing. The image processing method comprises the following steps: acquiring a first image and a second image of the same scene, where the first image is shot by a first camera, the second image is shot by a second camera, and the first camera admits more light than the second camera; performing region segmentation on the second image with the aid of the first image to obtain at least one first object region of the second image; and performing noise reduction on the first object region to obtain a corresponding second object region. The method can improve image quality.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
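The dual-camera method above segments regions with the help of the brighter first image and then denoises only those regions of the second image. A minimal sketch, assuming the two images are already registered and the same size; the brightness threshold (`segment_by_threshold`) and the box filter are hypothetical stand-ins, since the abstract names neither the segmentation nor the noise-reduction technique.

```python
from typing import List

Image = List[List[float]]
Mask = List[List[bool]]


def segment_by_threshold(guide: Image, thresh: float) -> Mask:
    """Hypothetical stand-in for segmentation: mark pixels of the bright,
    low-noise first image at or above a brightness threshold."""
    return [[p >= thresh for p in row] for row in guide]


def denoise_region(img: Image, mask: Mask, radius: int = 1) -> Image:
    """Box-filter the noisy second image only inside the object region."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for i in range(h):
        for j in range(w):
            if not mask[i][j]:
                continue  # pixels outside the region are left untouched
            window = [img[y][x]
                      for y in range(max(0, i - radius), min(h, i + radius + 1))
                      for x in range(max(0, j - radius), min(w, j + radius + 1))]
            out[i][j] = sum(window) / len(window)
    return out
```

Segmenting on the first image rather than the second is the point of the design: the camera with more light produces cleaner edges, so the mask is less disturbed by the very noise it is meant to isolate.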

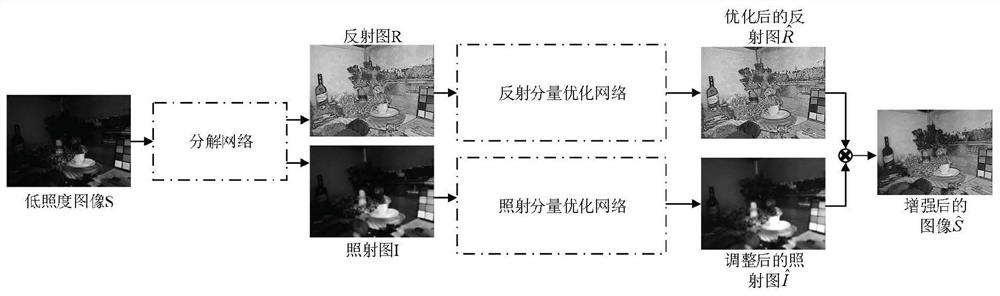

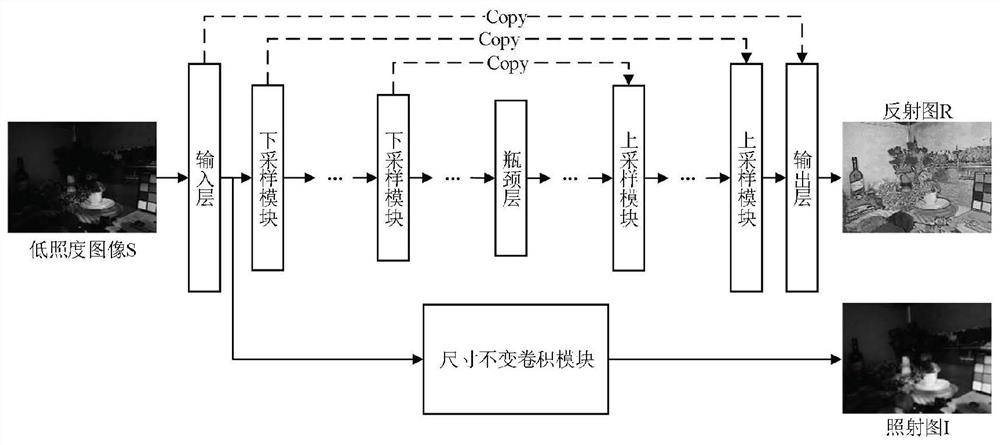

No-reference low-illumination image enhancement method based on deep convolutional neural network

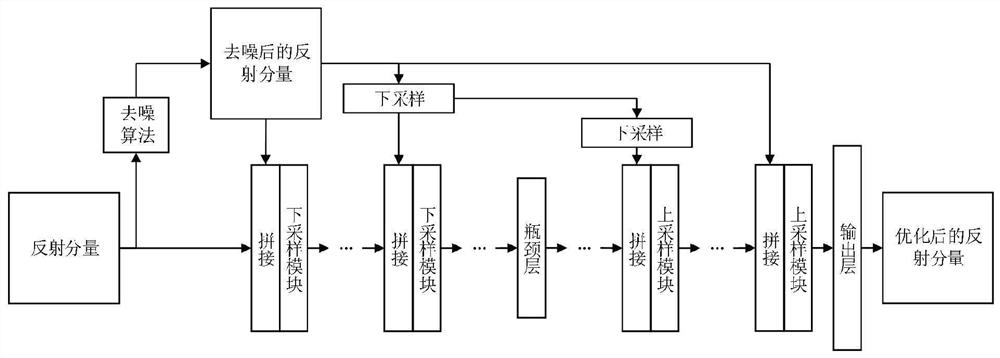

Active · CN112927164A · Good detail improvement ability · Reduce difficulty · Image enhancement · Image analysis · Imaging processing · Feature extraction

The invention relates to a no-reference low-illumination image enhancement method based on a deep convolutional neural network, and belongs to the field of image processing. The method comprises the following steps: firstly, constructing a feature extraction module comprising two branches by using a deep convolutional neural network, and extracting an irradiation component and a reflection component from an input low-illumination image; and then, denoising the reflection component, and integrating the denoised reflection component into an optimization network to obtain an optimized reflection component; then, inputting the irradiation component into the optimization network to obtain an optimized irradiation component; and finally, multiplying the optimized irradiation component by the reflection component to obtain a final enhancement result. According to the method, the reflection component extracted from the input image is fully utilized, the interference of noise in the image is effectively reduced, and the detail expression capability is improved.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
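The low-illumination method above follows the Retinex model, in which an image is the element-wise product of reflection and illumination components, and the enhanced result is the product of the optimized components. A minimal sketch of that decomposition and recombination on grayscale values in [0, 1], assuming the illumination map is given (in the patent it comes from a CNN branch); the optimization network is replaced by a hypothetical gamma lift, and the reflection denoising step is omitted.

```python
import math
from typing import List

Image = List[List[float]]


def reflectance(img: Image, illum: Image, eps: float = 1e-6) -> Image:
    """Retinex model I = R * L, so R = I / L (clipped to [0, 1])."""
    return [[min(1.0, p / max(lum, eps)) for p, lum in zip(pr, lr)]
            for pr, lr in zip(img, illum)]


def brighten_illumination(illum: Image, gamma: float = 0.5) -> Image:
    """Hypothetical stand-in for the optimization network: gamma-lift L."""
    return [[lum ** gamma for lum in row] for row in illum]


def recombine(refl: Image, illum: Image) -> Image:
    """Final enhancement: element-wise product of reflectance and illumination."""
    return [[r * lum for r, lum in zip(rr, lr)] for rr, lr in zip(refl, illum)]
```

Because only the illumination is brightened, the reflectance (which carries edges and texture) passes through unchanged, so any noise left in it is amplified too; that is why the method denoises the reflection component before recombining.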

Novel infrared and visible light image fusion algorithm

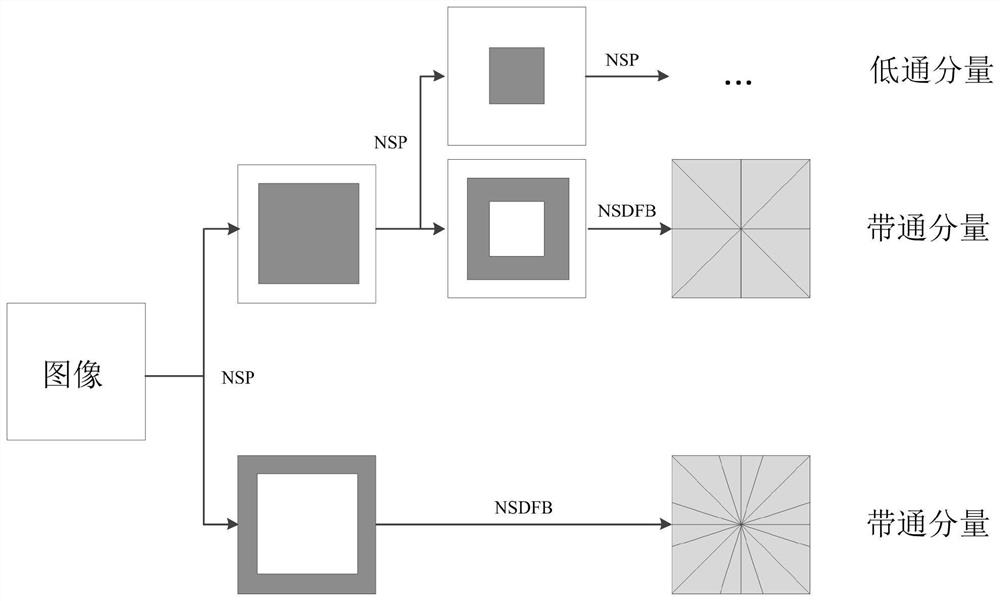

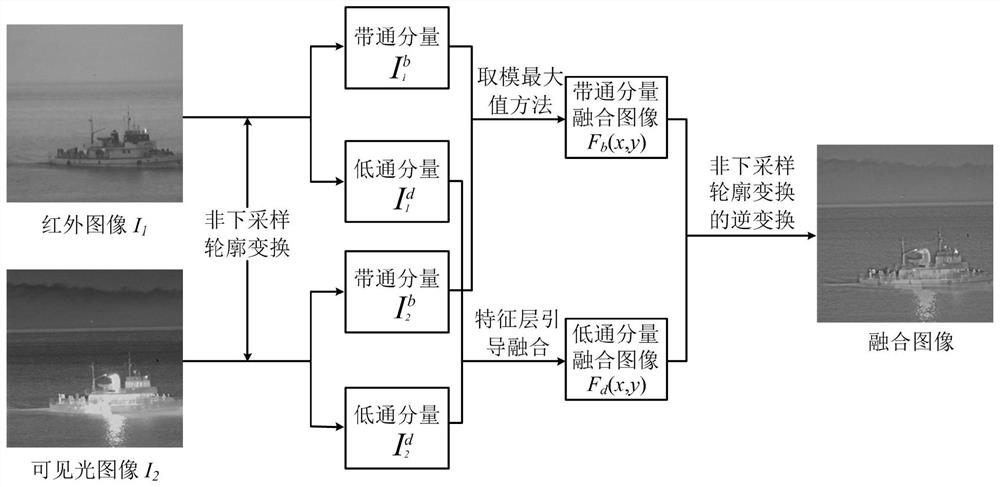

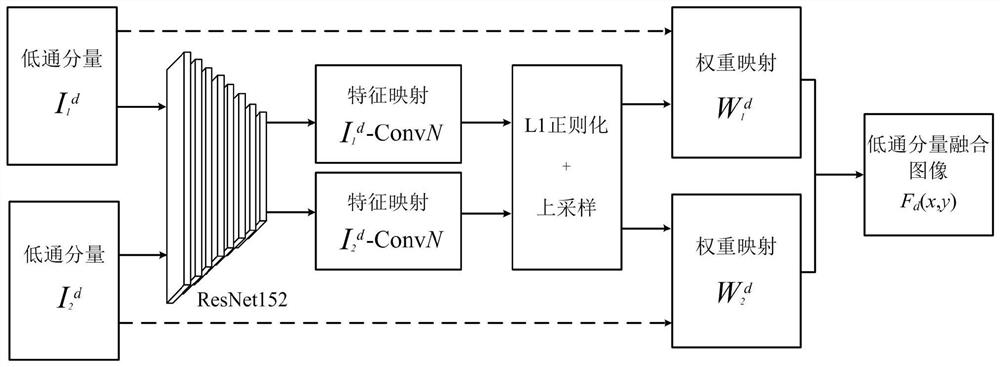

Active · CN112950519A · Preserve contour information · Clear visual expression · Image enhancement · Image analysis · Algorithm · Image fusion algorithm

The invention relates to a novel infrared and visible light image fusion algorithm comprising the following steps: performing multi-scale decomposition on pre-registered infrared and visible light images with the non-subsampled contourlet transform to obtain the band-pass and low-pass components of each image; fusing the low-pass components using image depth features extracted by a deep neural network as guidance, to obtain a fused low-pass component image; comparing the band-pass components with the modulus maximum method, taking the maximum as the weight for band-pass fusion, and fusing the band-pass components according to these weights to obtain a fused band-pass component image; and reconstructing the fused low-pass and band-pass component images with the inverse non-subsampled contourlet transform to obtain the final fused image. The method preserves the main information of the source images in the result to the greatest extent and does not introduce noise or artifacts into the fused image.

Owner:CHANGCHUN INST OF OPTICS FINE MECHANICS & PHYSICS CHINESE ACAD OF SCI
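The two fusion rules in the infrared and visible light algorithm above operate coefficient by coefficient on each subband after decomposition. A minimal sketch of both rules, assuming the subbands are already computed (the non-subsampled contourlet transform itself is not shown): the modulus-maximum rule keeps whichever coefficient has the larger magnitude, and the low-pass rule is written as a weighted average whose weight map `w_ir` is a hypothetical input standing in for the deep-feature guidance the abstract describes.

```python
from typing import List

Coeffs = List[List[float]]


def fuse_bandpass(ir: Coeffs, vis: Coeffs) -> Coeffs:
    """Modulus-maximum rule: keep the coefficient with the larger magnitude."""
    return [[a if abs(a) >= abs(b) else b for a, b in zip(ra, rb)]
            for ra, rb in zip(ir, vis)]


def fuse_lowpass(ir: Coeffs, vis: Coeffs, w_ir: Coeffs) -> Coeffs:
    """Weighted average; w_ir is a per-coefficient weight map in [0, 1]
    (hypothetically derived from deep features of the source images)."""
    return [[w * a + (1.0 - w) * b for a, b, w in zip(ra, rb, rw)]
            for ra, rb, rw in zip(ir, vis, w_ir)]
```

Selecting by magnitude rather than averaging keeps the sharper edge from whichever source has it, which is why band-pass detail does not get washed out; averaging is reserved for the low-pass band, where brightness from both sources should blend.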

Infrared video enhancement system based on multi-level guided filtering

Active · CN109873998B · Controllable processing time · Guaranteed scalability · Television system details · Color signal processing circuits · Computer hardware · Imaging processing

Owner:SUZHOU CHANGFENG AVIATION ELECTRONICS

© 2025 PatSnap. All rights reserved.