91 results about "High Bandwidth Memory" patented technology

High Bandwidth Memory (HBM) is a high-performance RAM interface for 3D-stacked SDRAM from Samsung, AMD and SK Hynix. It is intended for use with high-performance graphics accelerators and network devices. The first HBM chip was produced by SK Hynix in 2013, and the first devices to use HBM were the AMD Fiji GPUs in 2015.

High bandwidth memory interface

Inactive | US7299330B2 | Improved high bandwidth chip-to-chip interface; Data transmission error; Energy efficient ICT; Digital storage; Computer hardware; High bandwidth

Owner:CONVERSANT INTPROP MANAGEMENT INC

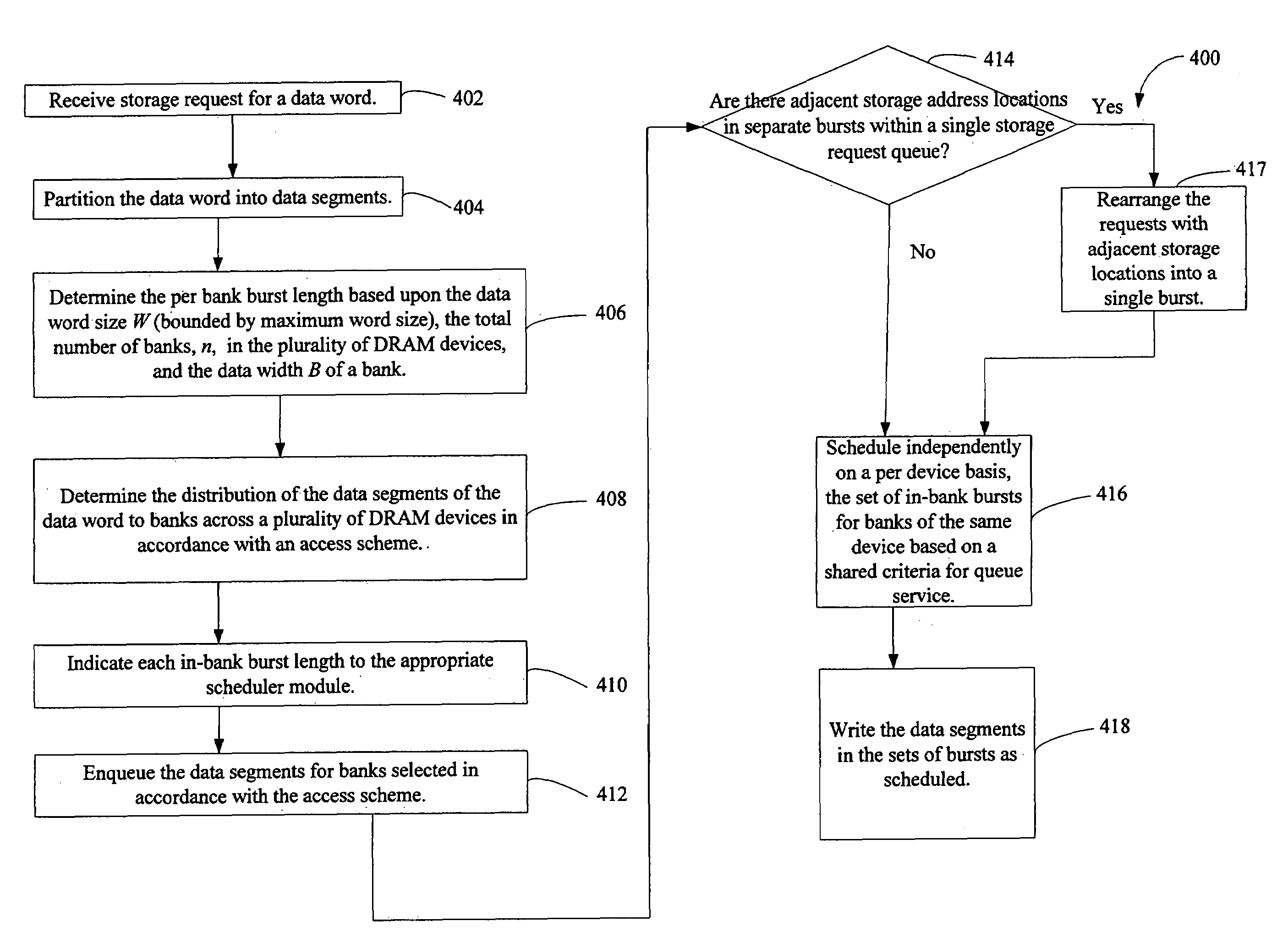

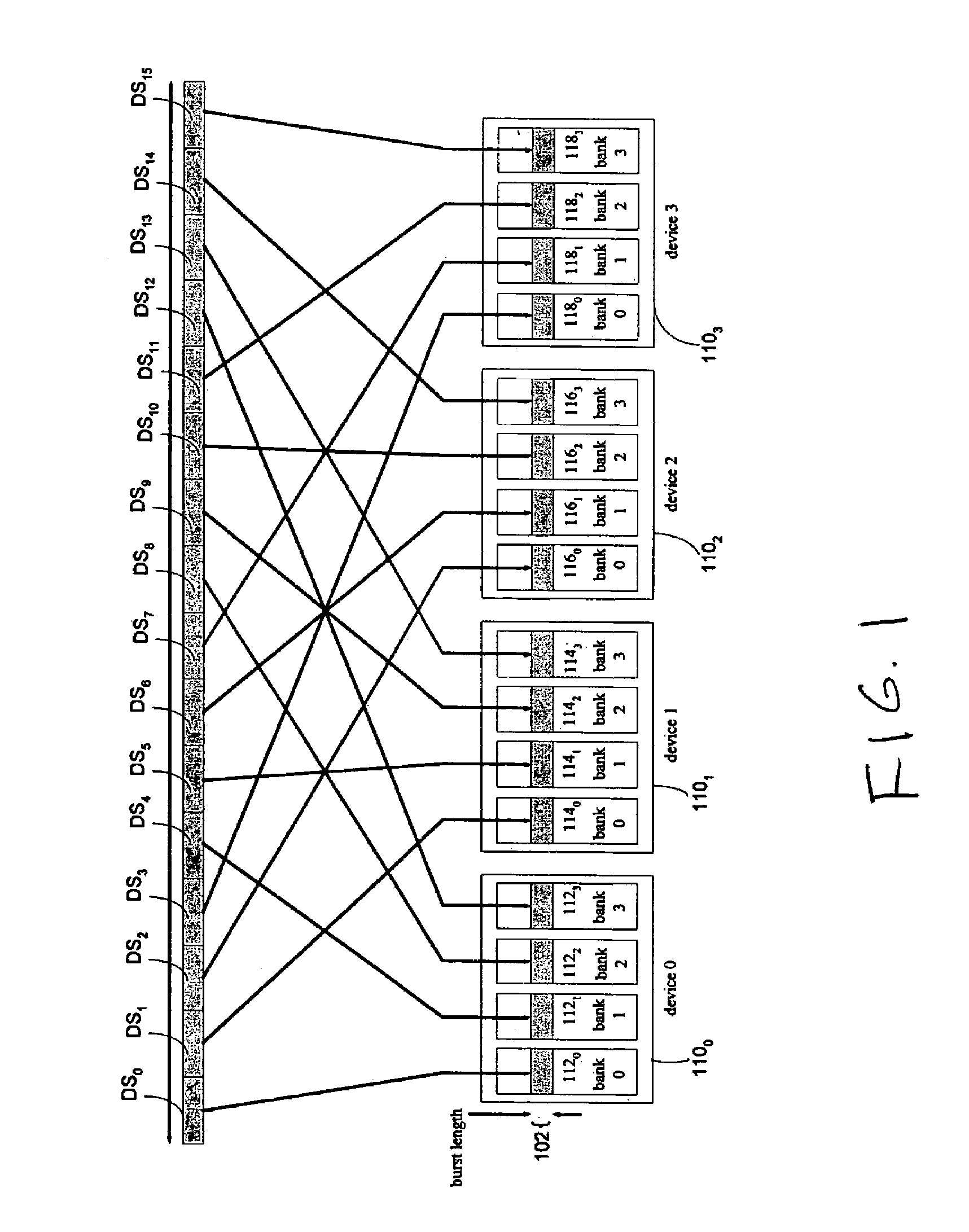

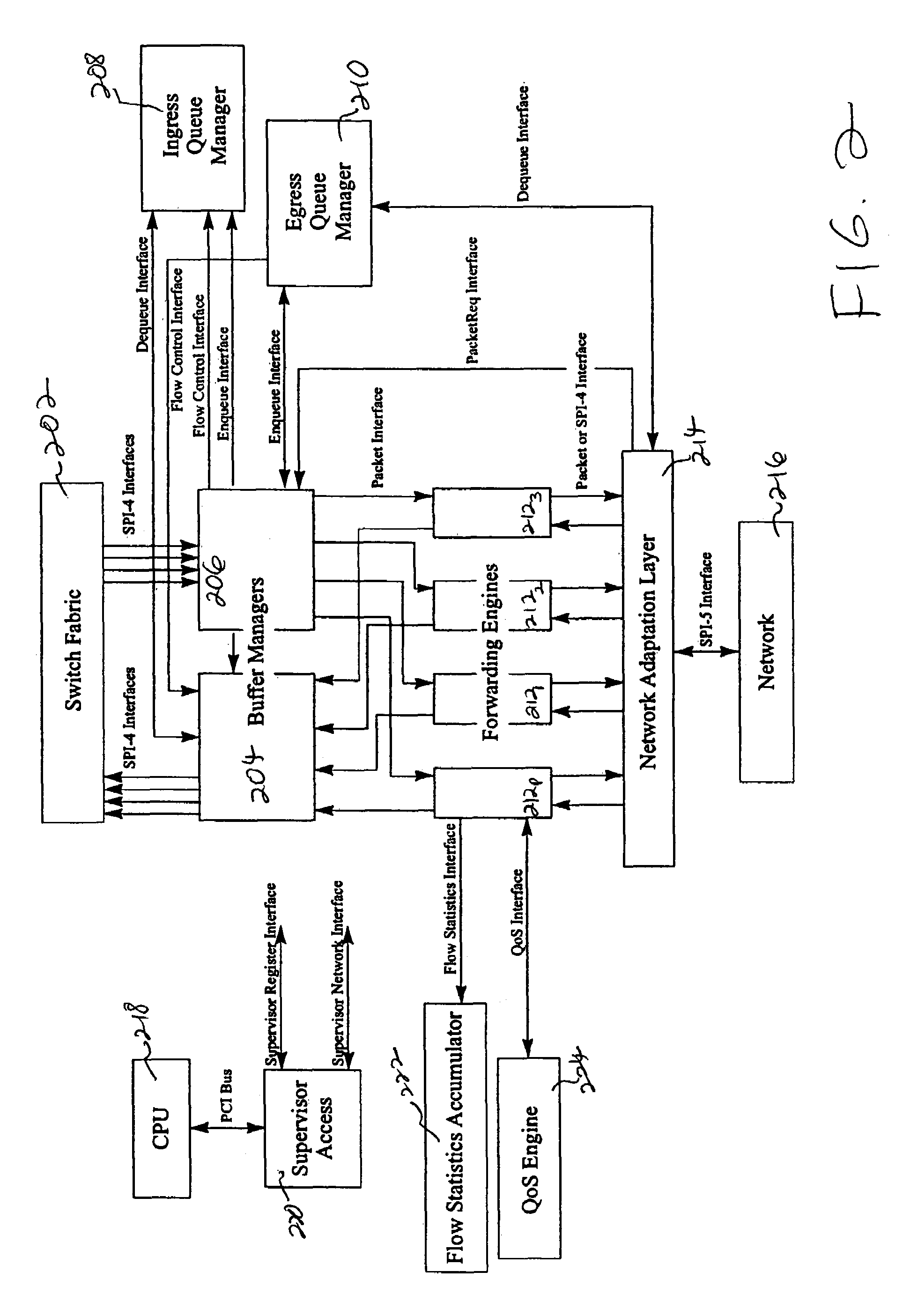

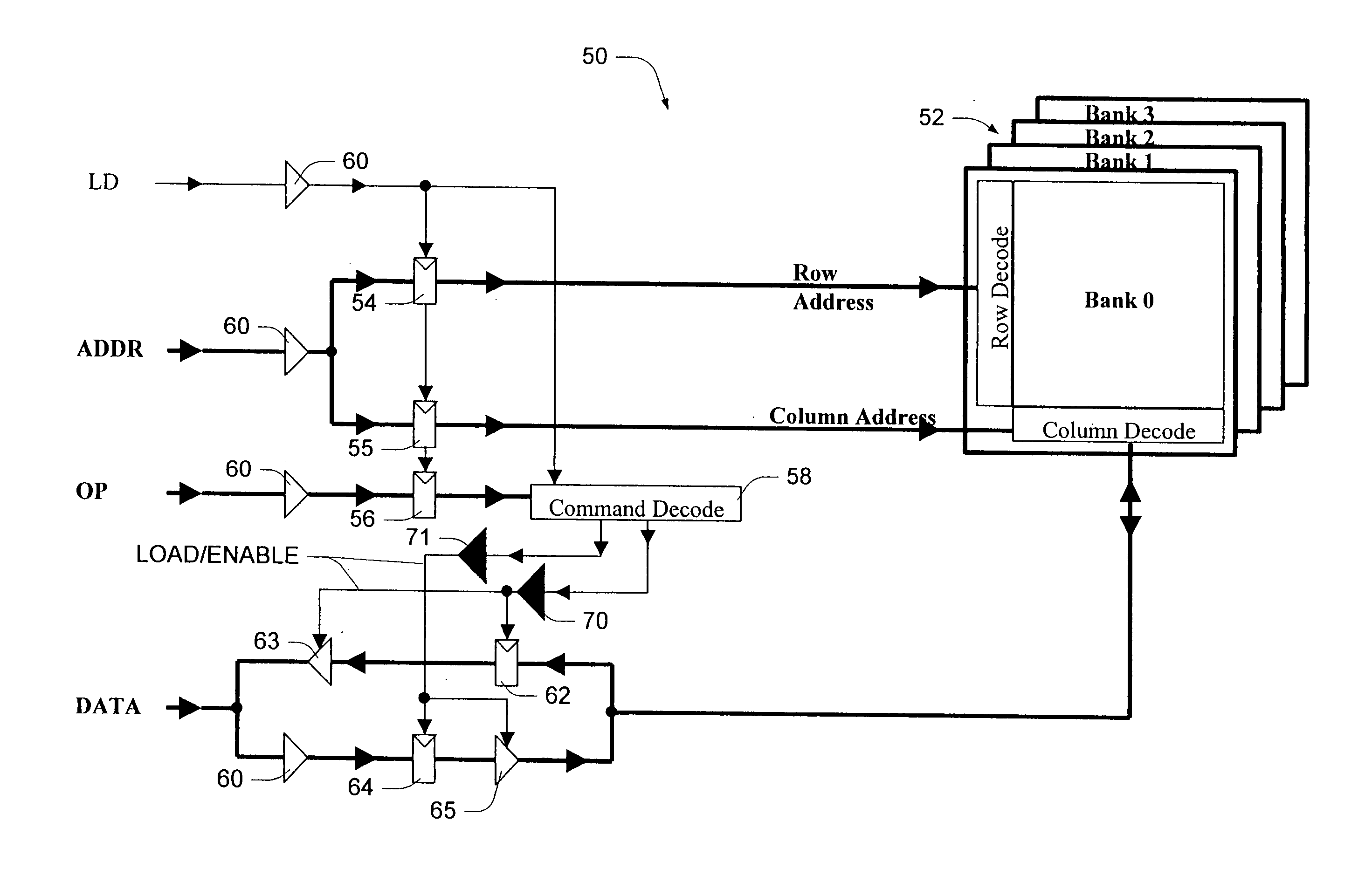

High bandwidth memory management using multi-bank DRAM devices

Inactive | US7296112B1 | Performance maximization; High bandwidth; Memory addressing/allocation/relocation; Hidden data; Data segment

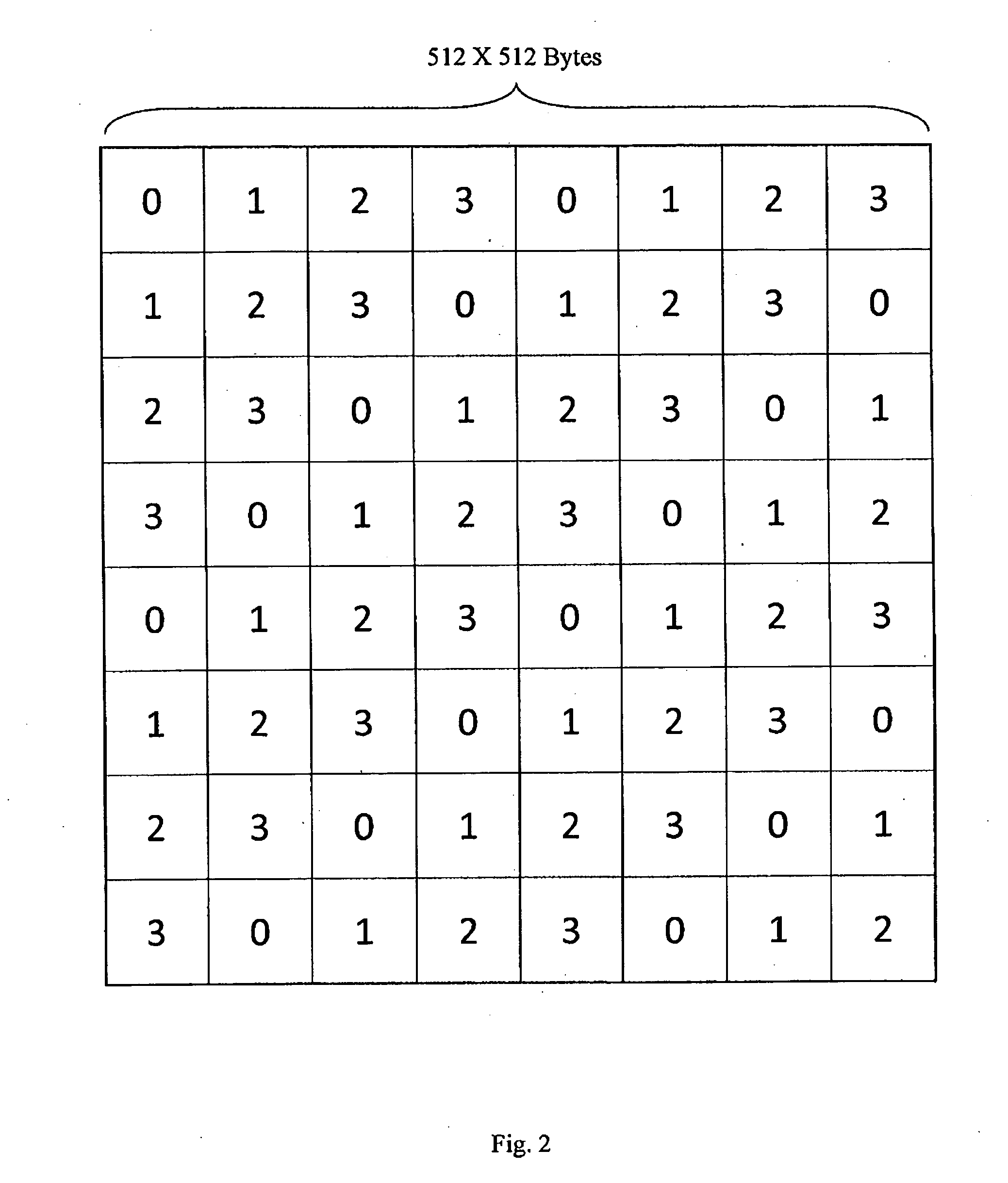

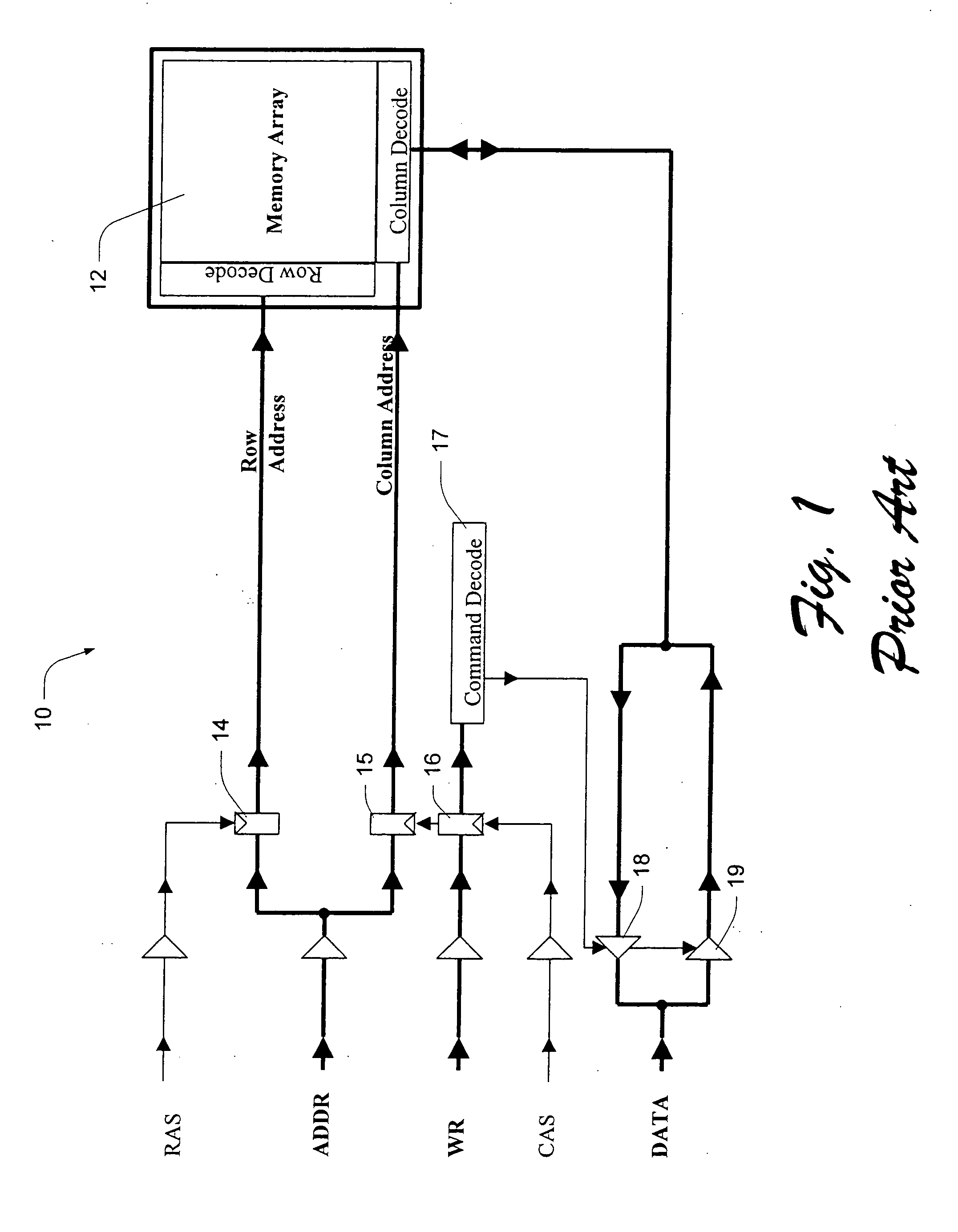

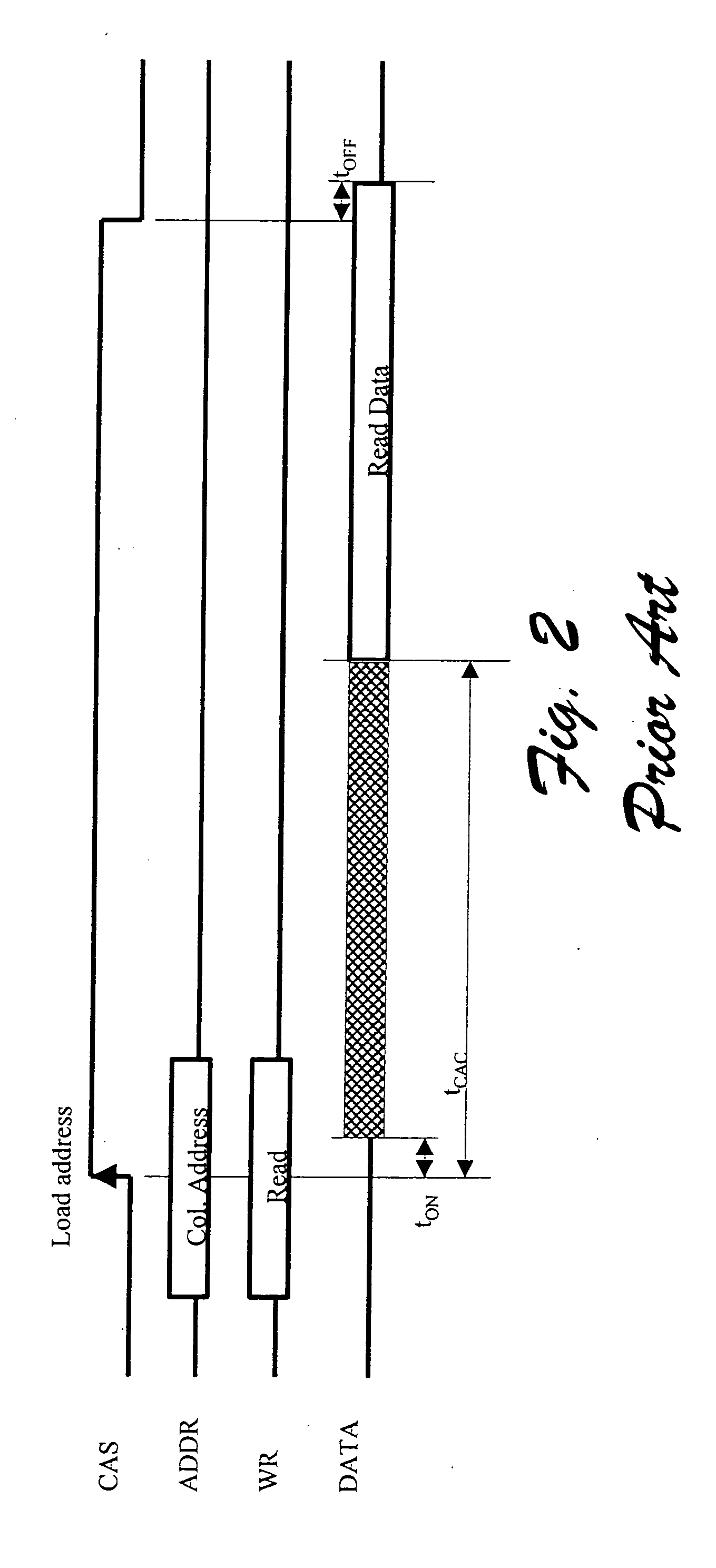

The disclosure describes implementations for accessing, in parallel, a plurality of banks across a plurality of DRAM devices. These implementations are suited for operation within a parallel packet processor. A data word is partitioned into data segments, which are stored in the plurality of banks according to an access scheme that hides the precharging of rows behind data transfers. A storage distribution control module is communicatively coupled to a memory comprising a plurality of storage request queues, and a retrieval control module is communicatively coupled to a memory comprising a plurality of retrieval request queues. In one example, each request queue may be implemented as a first-in-first-out (FIFO) memory buffer. The storage request queues are subdivided into sets, as are the retrieval request queues, and each set is associated with a respective DRAM device. A scheduler for each DRAM device schedules data transfers between that device and its storage queue set, and between that device and its retrieval queue set. Each scheduler works independently of the schedulers for the other devices but uses a shared criterion for queue service.

Owner:CISCO TECH INC
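The storage path in this abstract can be illustrated with a small Python model. A data word is partitioned into segments, and the segments are spread across the banks of several DRAM devices. Each device has its own scheduler, and that scheduler serves only its own set of FIFO queues. Several details are assumptions for illustration and do not come from the patent: the geometry (`NUM_DEVICES`, `BANKS_PER_DEVICE`, `SEGMENT_BYTES`), the rotation order in `place`, and the round-robin service criterion. The retrieval path would mirror the storage path and is omitted.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical geometry chosen for illustration; the patent gives no numbers.
NUM_DEVICES = 2
BANKS_PER_DEVICE = 4
SEGMENT_BYTES = 8


def partition(word: bytes, segment_bytes: int = SEGMENT_BYTES) -> list:
    """Split a data word into fixed-size segments (the last one may be shorter)."""
    return [word[i:i + segment_bytes] for i in range(0, len(word), segment_bytes)]


def place(segment_index: int) -> tuple:
    """Map segment i to (device, bank), rotating across devices first and then
    banks, so successive segments on a device hit different banks and one bank
    can precharge while another is transferring."""
    device = segment_index % NUM_DEVICES
    bank = (segment_index // NUM_DEVICES) % BANKS_PER_DEVICE
    return device, bank


@dataclass
class DeviceScheduler:
    """One scheduler per DRAM device, owning that device's set of FIFO storage queues."""
    device: int
    queues: list = field(default_factory=lambda: [deque() for _ in range(BANKS_PER_DEVICE)])
    next_bank: int = 0

    def enqueue(self, bank: int, segment: bytes) -> None:
        self.queues[bank].append(segment)

    def step(self):
        """Serve one request using a shared criterion (assumed here: round-robin
        over non-empty bank queues). Runs independently of other devices."""
        for offset in range(BANKS_PER_DEVICE):
            bank = (self.next_bank + offset) % BANKS_PER_DEVICE
            if self.queues[bank]:
                self.next_bank = (bank + 1) % BANKS_PER_DEVICE
                return bank, self.queues[bank].popleft()
        return None  # nothing queued for this device


def store(word: bytes, schedulers: list) -> None:
    """Storage distribution: partition the word and enqueue each segment."""
    for i, segment in enumerate(partition(word)):
        device, bank = place(i)
        schedulers[device].enqueue(bank, segment)


schedulers = [DeviceScheduler(d) for d in range(NUM_DEVICES)]
store(bytes(range(64)), schedulers)
```

Each device's scheduler drains its own queues without consulting the others. Only the service rule is shared between them.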

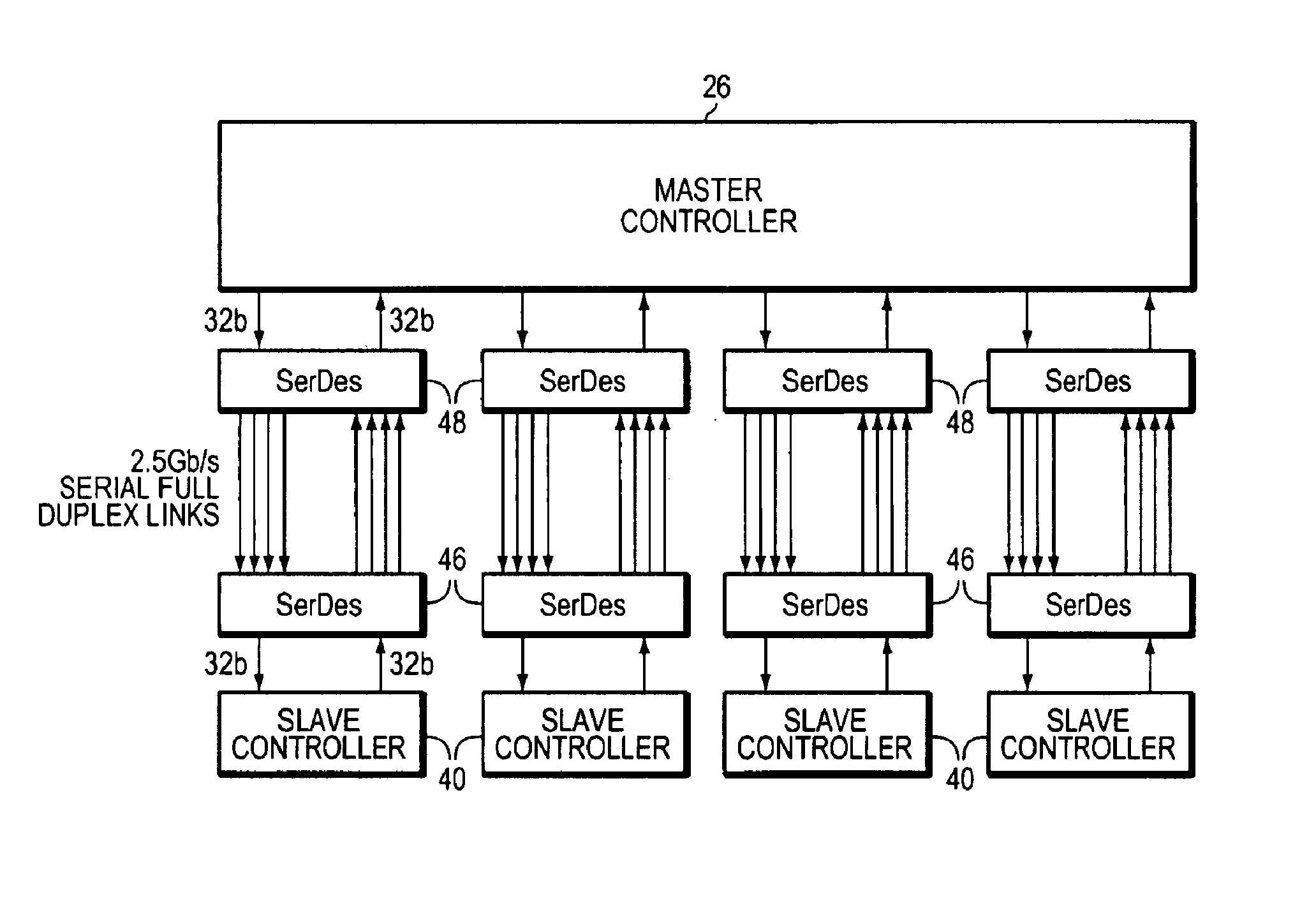

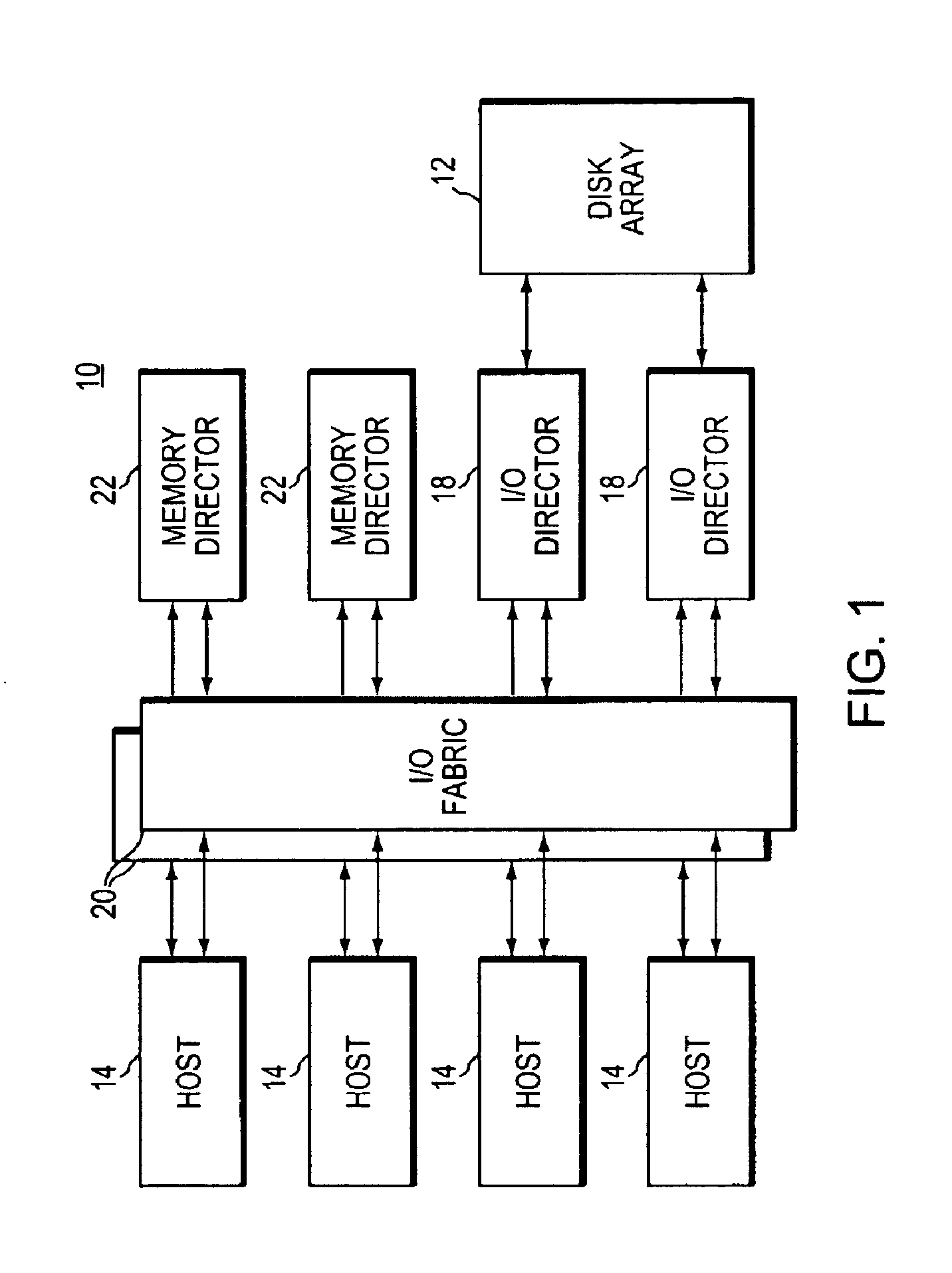

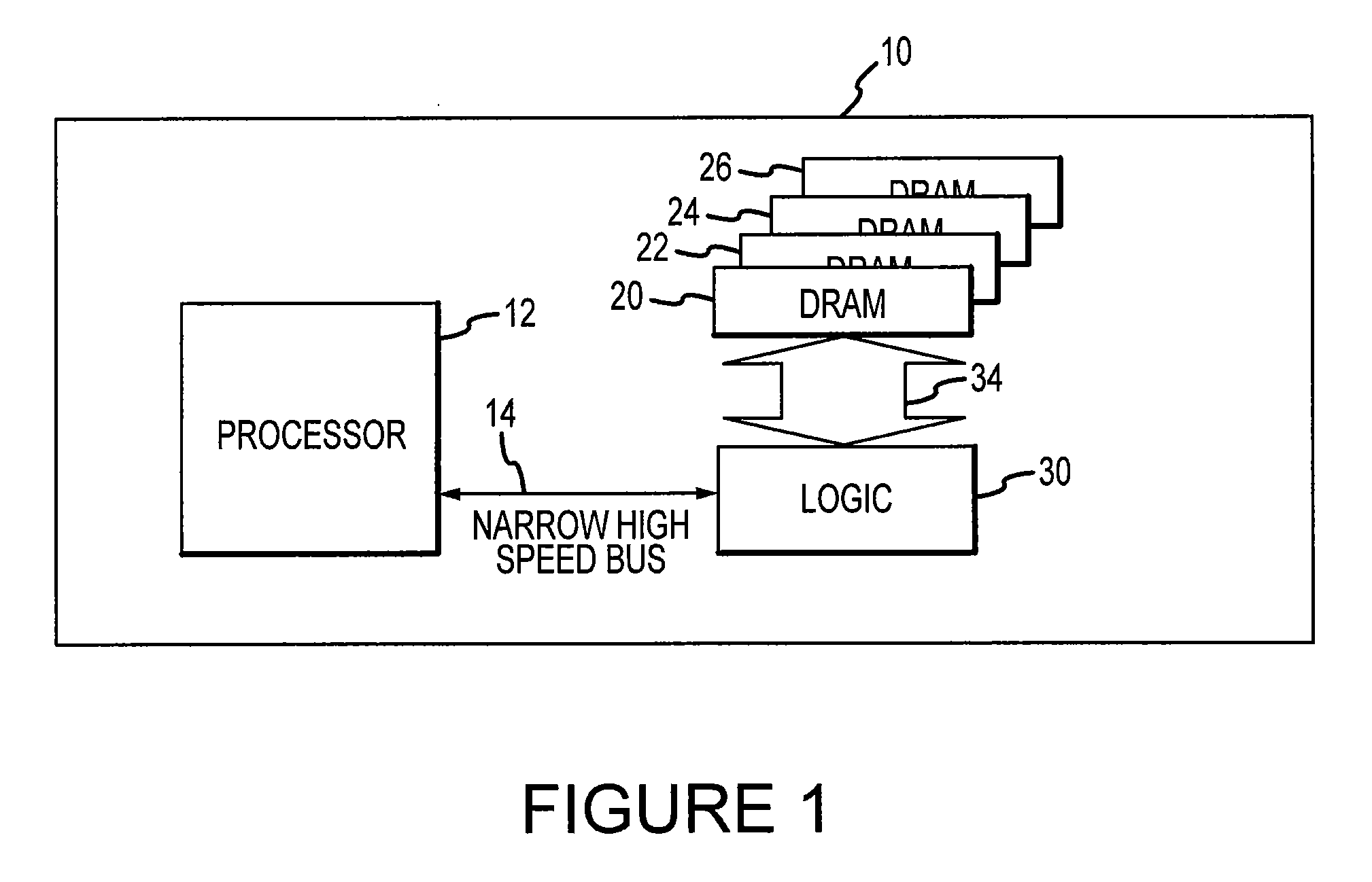

Large high bandwidth memory system

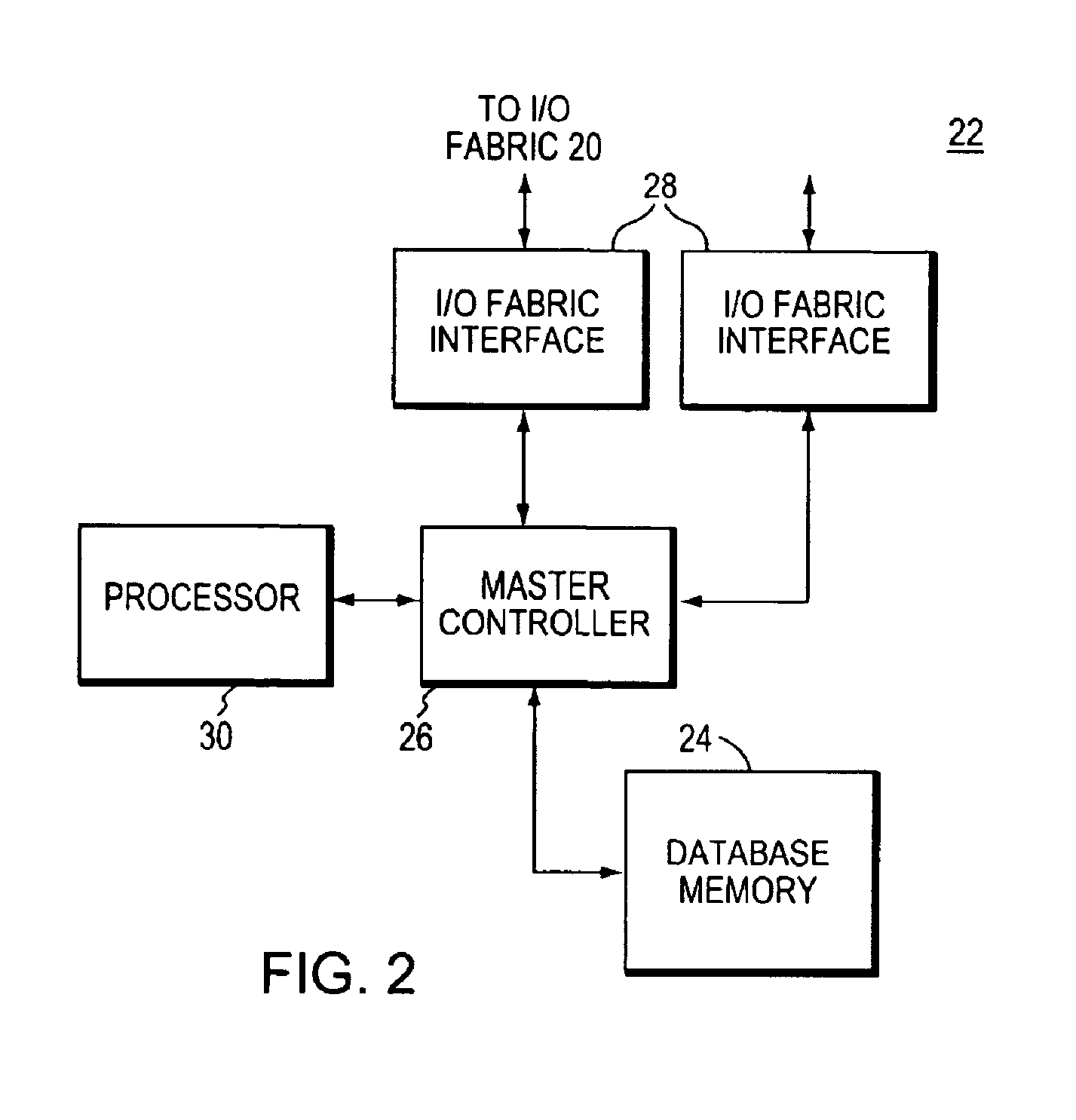

Inactive | US7089379B1 | Memory addressing/allocation/relocation; Digital storage; Network packet; Master controller

A memory system is divided into memory subsystems. Each subsystem includes a slave controller. Each slave controller is coupled to a serial link. A master controller is coupled to the slave controllers via the serial links, and the master controller is capable of initiating a memory access to a memory subsystem by communicating with the slave controller via the serial link. Each memory subsystem includes memory arrays coupled to the slave controller. Each memory array includes memory channels. Memory accesses to a memory array on a memory subsystem are interleaved by the slave controller between the memory channels, and memory accesses to a memory subsystem are striped by the slave controller between the memory arrays on the memory subsystem. Memory accesses are striped between memory subsystems by the master controller. The master controller and slave controllers communicate by sending link packets and protocol packets over the serial links.

Owner:EMC IP HLDG CO LLC
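The three levels of striping and interleaving in this abstract can be pictured as a mixed-radix decomposition of a flat address. The sketch below assumes a fixed 64-byte striping unit and small, hypothetical counts of subsystems, arrays and channels. The patent does not state these values, nor the exact order in which the levels are applied.

```python
from typing import NamedTuple

# Hypothetical geometry for illustration only.
SUBSYSTEMS = 4
ARRAYS_PER_SUBSYSTEM = 2
CHANNELS_PER_ARRAY = 2
STRIPE_BYTES = 64  # assumed striping/interleaving granularity


class Location(NamedTuple):
    subsystem: int  # selected by the master controller
    array: int      # selected by the slave controller (striping)
    channel: int    # selected by the slave controller (interleaving)
    offset: int     # byte offset inside the chosen channel


def map_address(addr: int) -> Location:
    """Decompose a flat address level by level, like a mixed-radix number."""
    unit, byte = divmod(addr, STRIPE_BYTES)
    unit, subsystem = divmod(unit, SUBSYSTEMS)           # master: stripe across subsystems
    unit, array = divmod(unit, ARRAYS_PER_SUBSYSTEM)     # slave: stripe across arrays
    unit, channel = divmod(unit, CHANNELS_PER_ARRAY)     # slave: interleave across channels
    return Location(subsystem, array, channel, unit * STRIPE_BYTES + byte)


example = map_address(1029)  # Location(subsystem=0, array=0, channel=0, offset=69)
```

The subsystem index comes from the lowest-order bits above the striping unit. As a result, a sequential stream touches every subsystem before it revisits any one of them, which spreads the load across the serial links.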

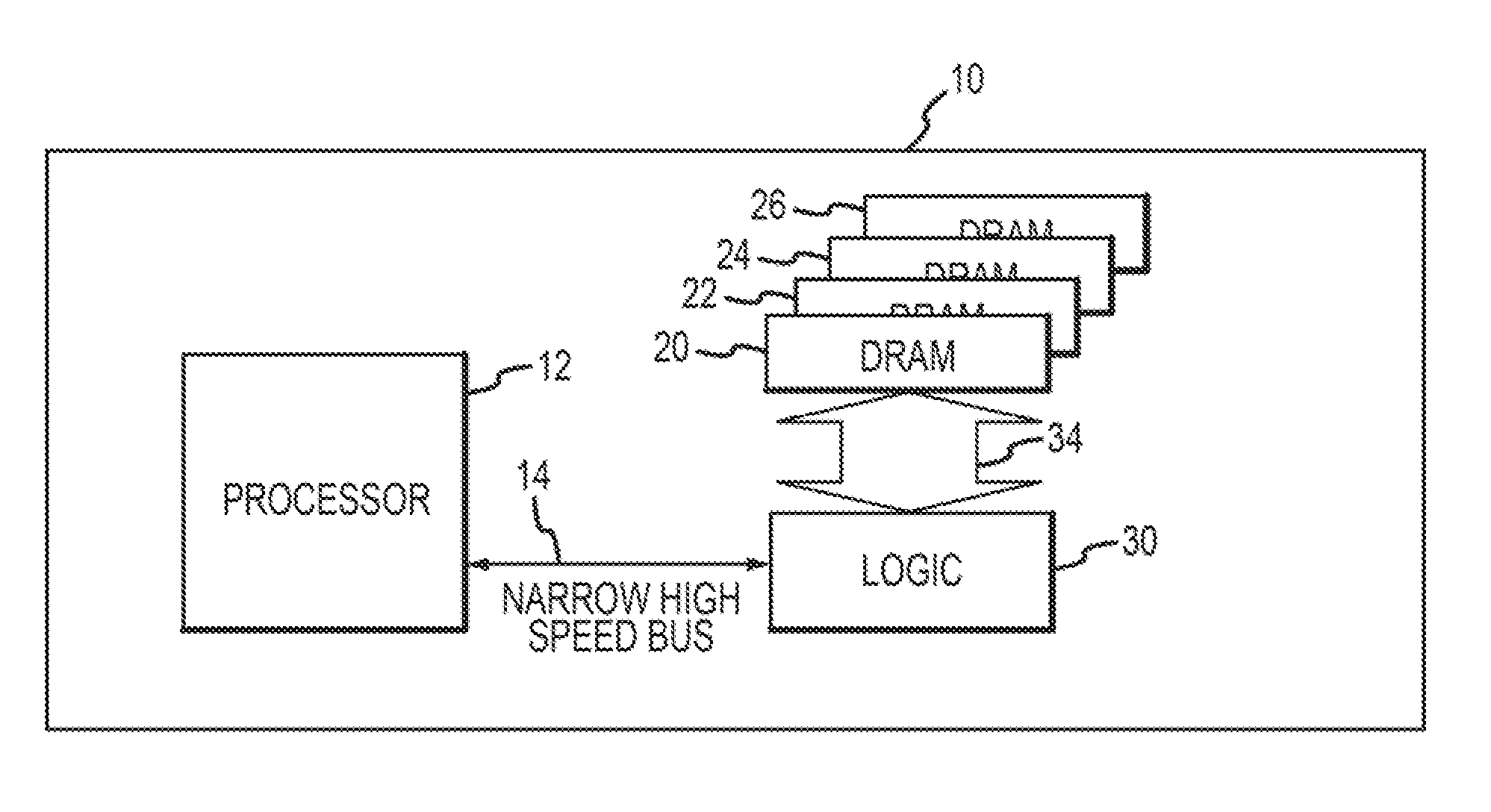

Computer accelerator system with improved efficiency

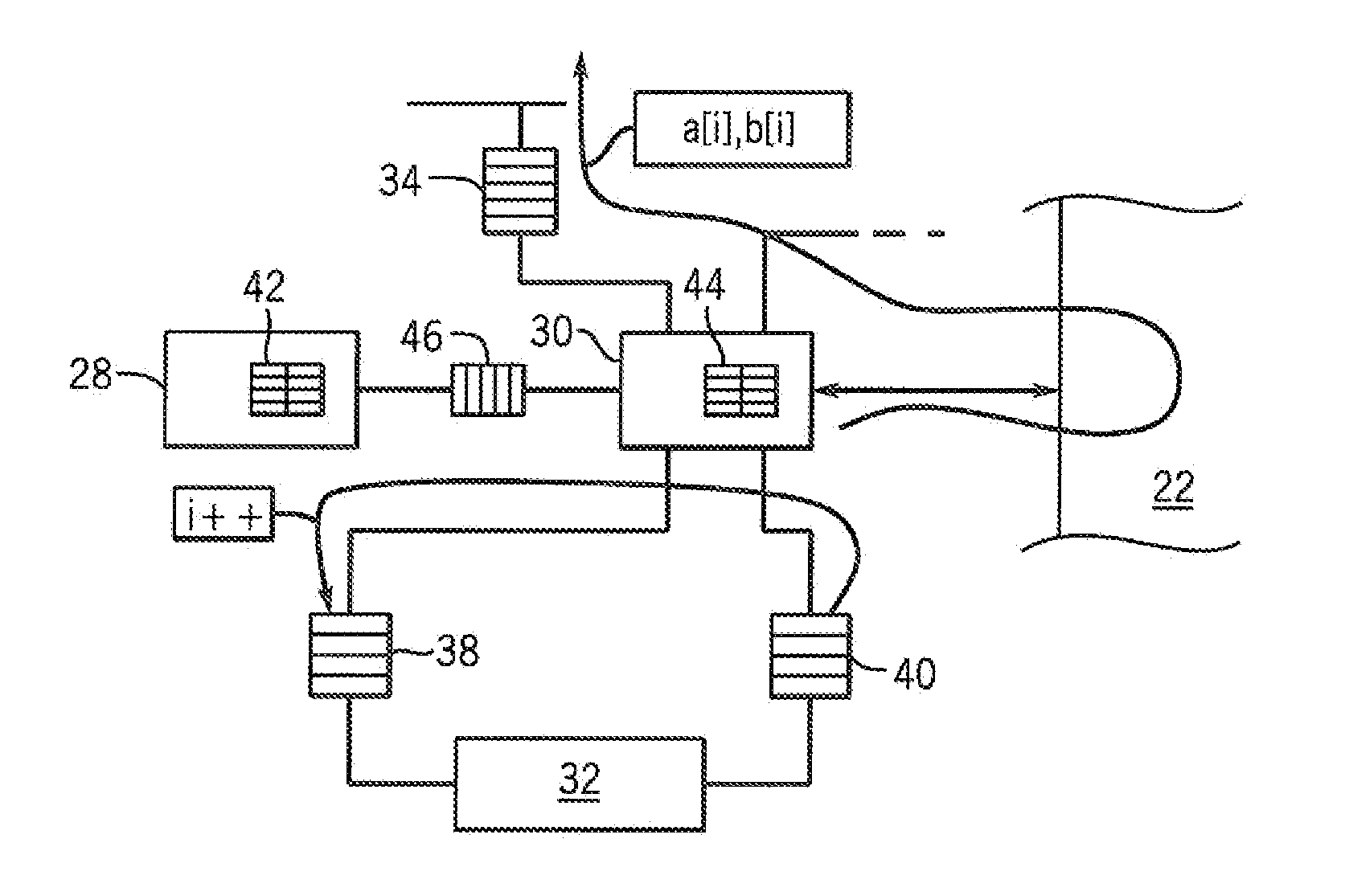

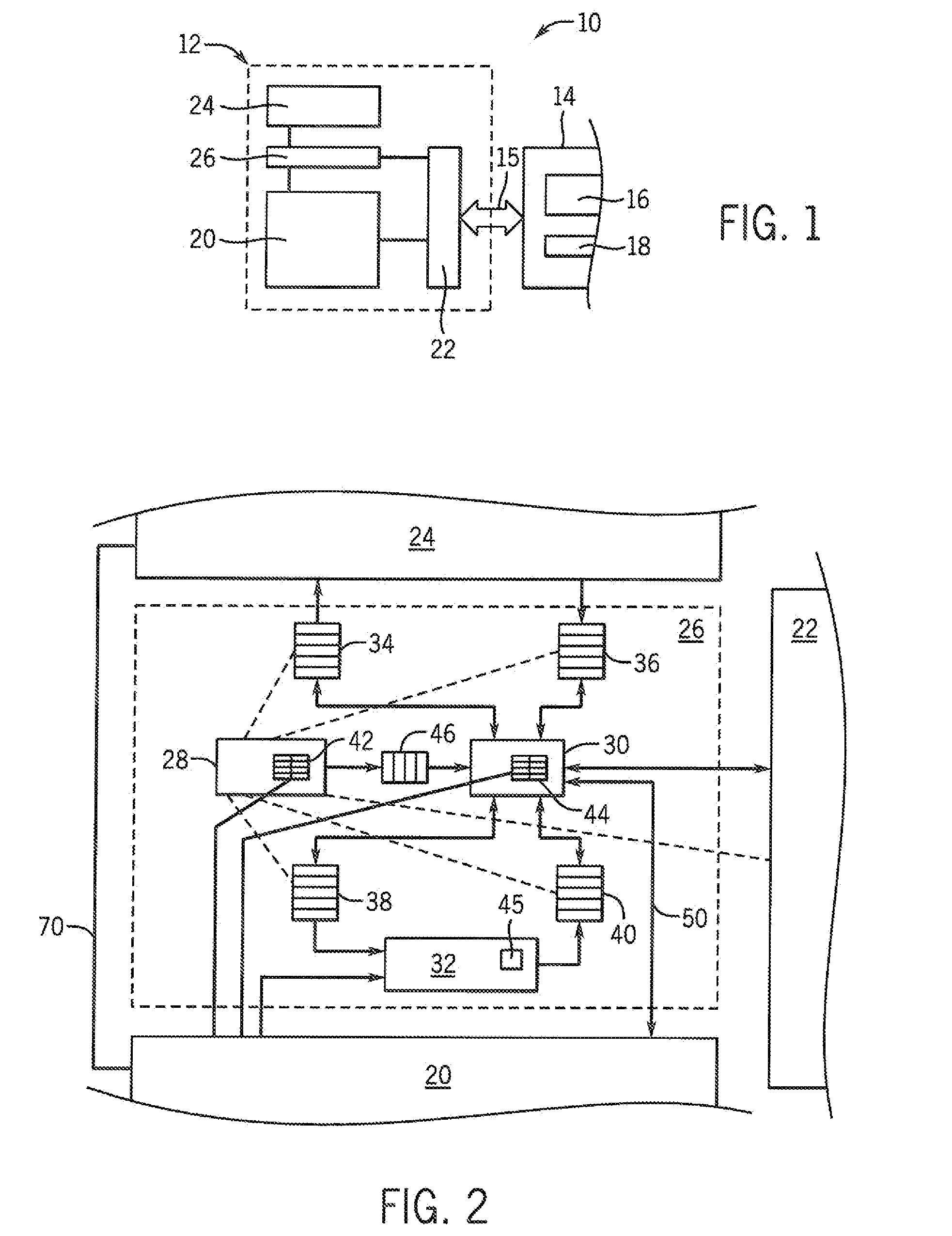

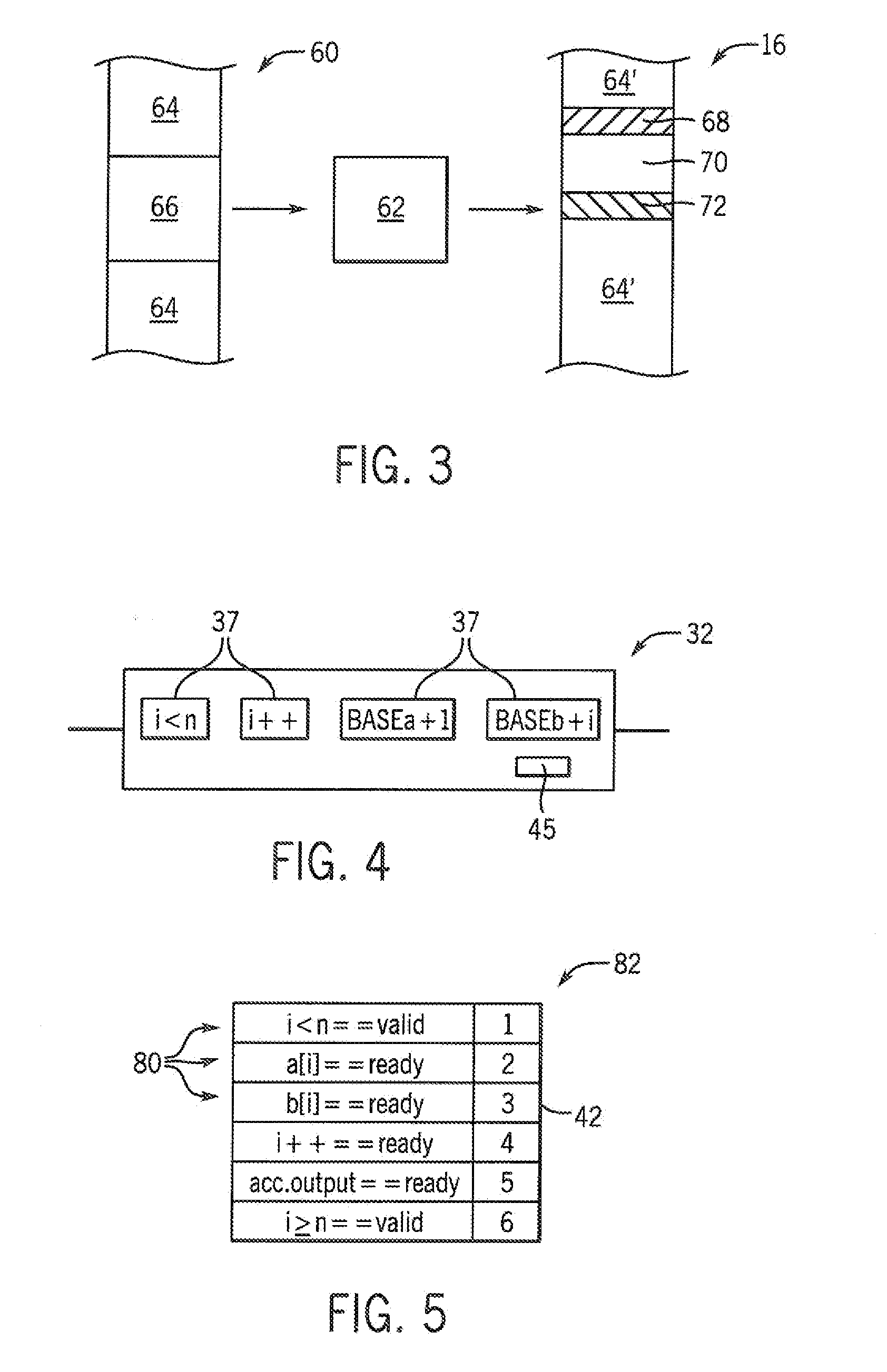

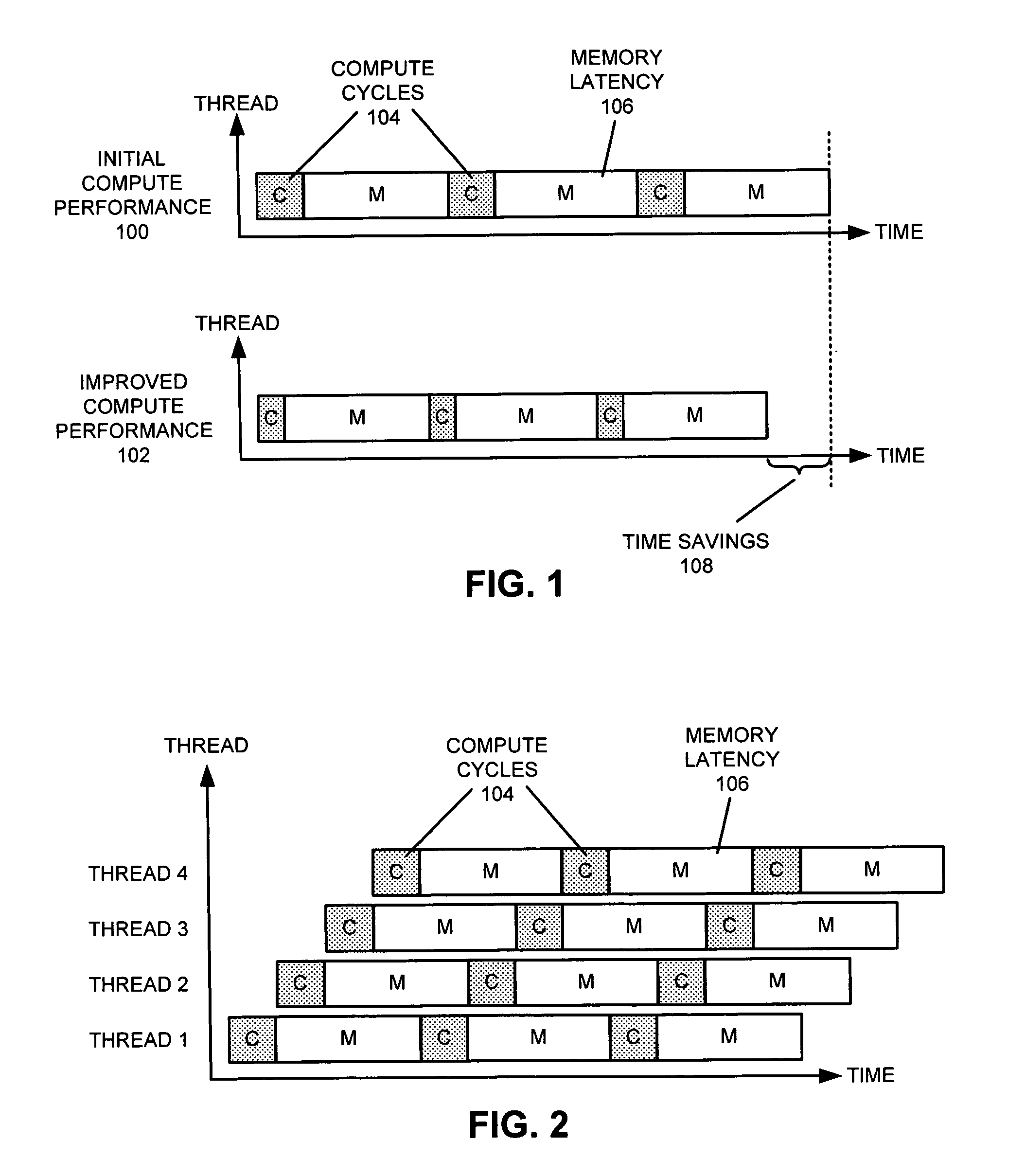

Active | US20150261528A1 | Efficient execution; Reduced computational ability; Resource allocation; Digital computer details; Sleep state; Parallel computing

A specialized memory access processor is placed between a main processor and accelerator hardware to handle memory access for the accelerator hardware. The architecture of the memory access processor is designed to allow lower energy memory accesses than can be obtained by the main processor in providing data to the hardware accelerator while providing the hardware accelerator with a sufficiently high bandwidth memory channel. In some embodiments, the main processor may enter a sleep state during accelerator calculations to substantially lower energy consumption.

Owner:WISCONSIN ALUMNI RES FOUND

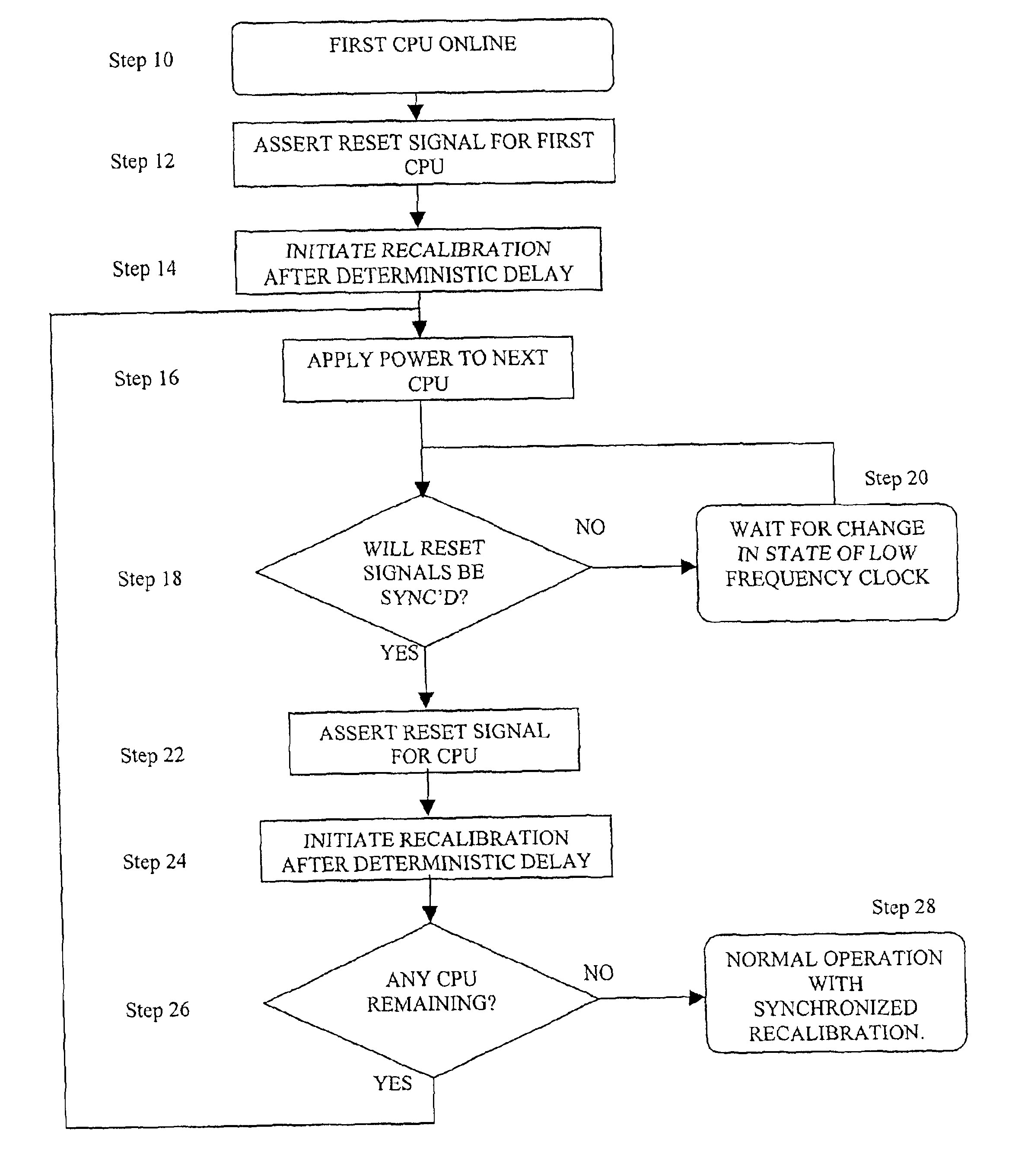

Coordinated recalibration of high bandwidth memories in a multiprocessor computer

Methods and apparatus are disclosed for implementing high-bandwidth memory subsystems in a multiprocessor computing environment. Each component in the memory subsystem has a recalibration procedure. The computer provides a low-frequency clock signal whose period is substantially equal to the interval between recalibration cycles of the memory subsystem components. Each transition in the low-frequency clock signal starts a delay of deterministic length. When the delay lapses, it triggers recalibration of the memory subsystem components, so that all of them recalibrate synchronously. Synchronizing the recalibration procedures minimizes the time during which the memory subsystems are unavailable, which in turn reduces voting errors between CPUs.

Owner:STRATUS TECH IRELAND LTD
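The benefit of synchronized recalibration can be shown with interval arithmetic. If every component recalibrates in the same window, the memory is unavailable only for that window, not for the sum of all components' windows. The sketch below makes several assumptions: arbitrary time units, a fixed deterministic delay, and equal recalibration durations. The per-component phase offsets in the uncoordinated case are invented for comparison.

```python
def recalibration_windows(edges, delay, duration):
    """Each slow-clock transition starts a fixed delay; when it lapses, the
    component recalibrates for `duration` time units."""
    return [(t + delay, t + delay + duration) for t in edges]


def unavailable_time(per_component_windows):
    """Total time during which at least one component is recalibrating
    (the length of the union of all recalibration intervals)."""
    intervals = sorted(w for windows in per_component_windows for w in windows)
    total = 0
    cur_start = cur_end = None
    for start, end in intervals:
        if cur_end is None or start > cur_end:
            if cur_end is not None:
                total += cur_end - cur_start
            cur_start, cur_end = start, end
        else:
            cur_end = max(cur_end, end)
    if cur_end is not None:
        total += cur_end - cur_start
    return total


# Hypothetical numbers: three components, slow-clock period 1000 time units,
# a 10-unit deterministic delay and a 5-unit recalibration.
shared_edges = [0, 1000, 2000]
coordinated = [recalibration_windows(shared_edges, 10, 5) for _ in range(3)]
# Without a shared clock each component runs at its own phase (assumed offsets).
uncoordinated = [recalibration_windows([t + 100 * k for t in shared_edges], 10, 5)
                 for k in range(3)]
```

With these numbers, `unavailable_time(coordinated)` is 15 units and `unavailable_time(uncoordinated)` is 45 units.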

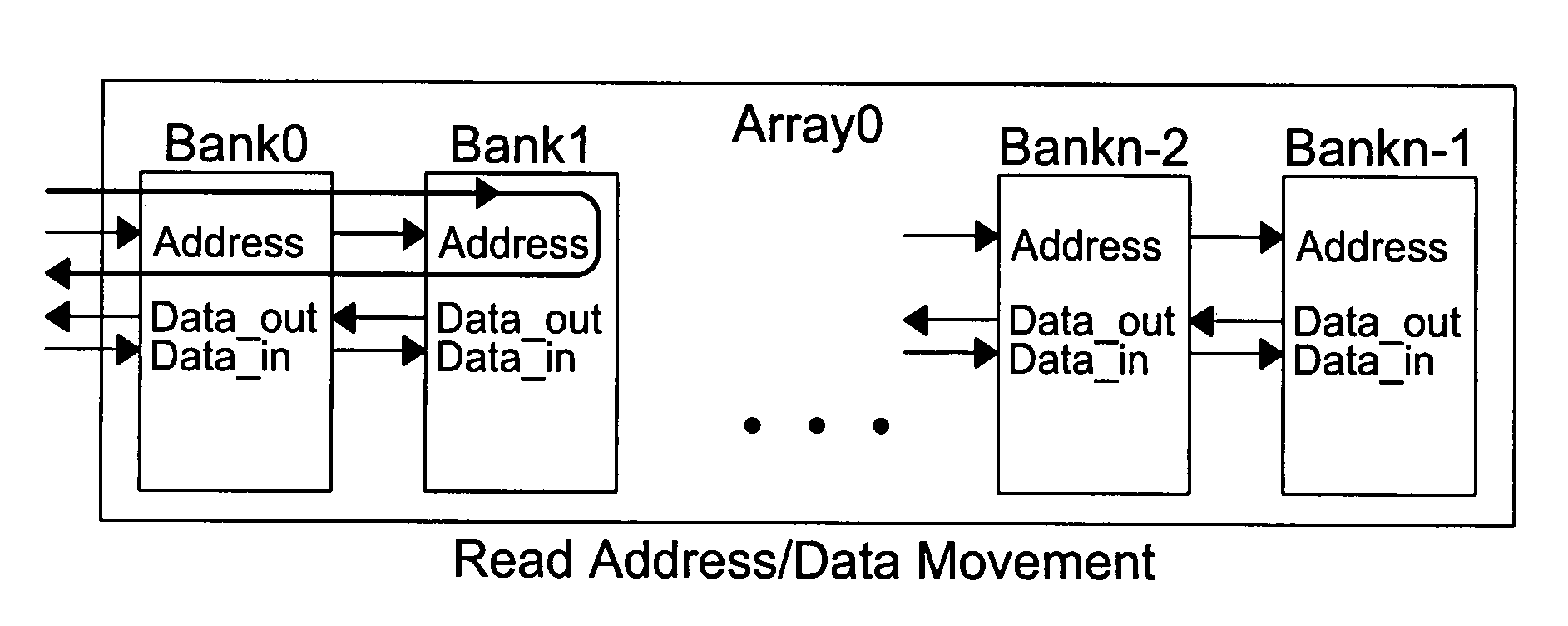

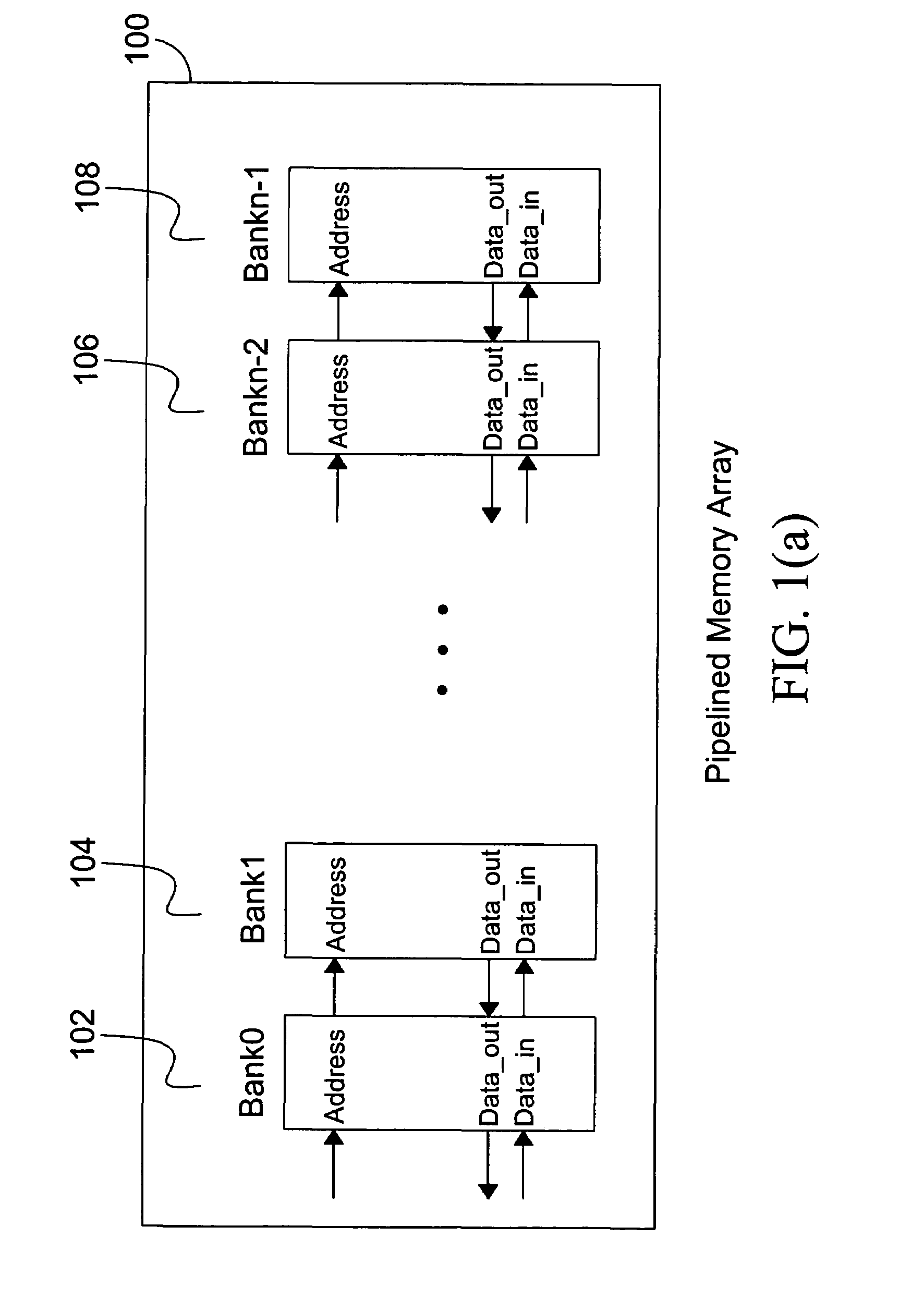

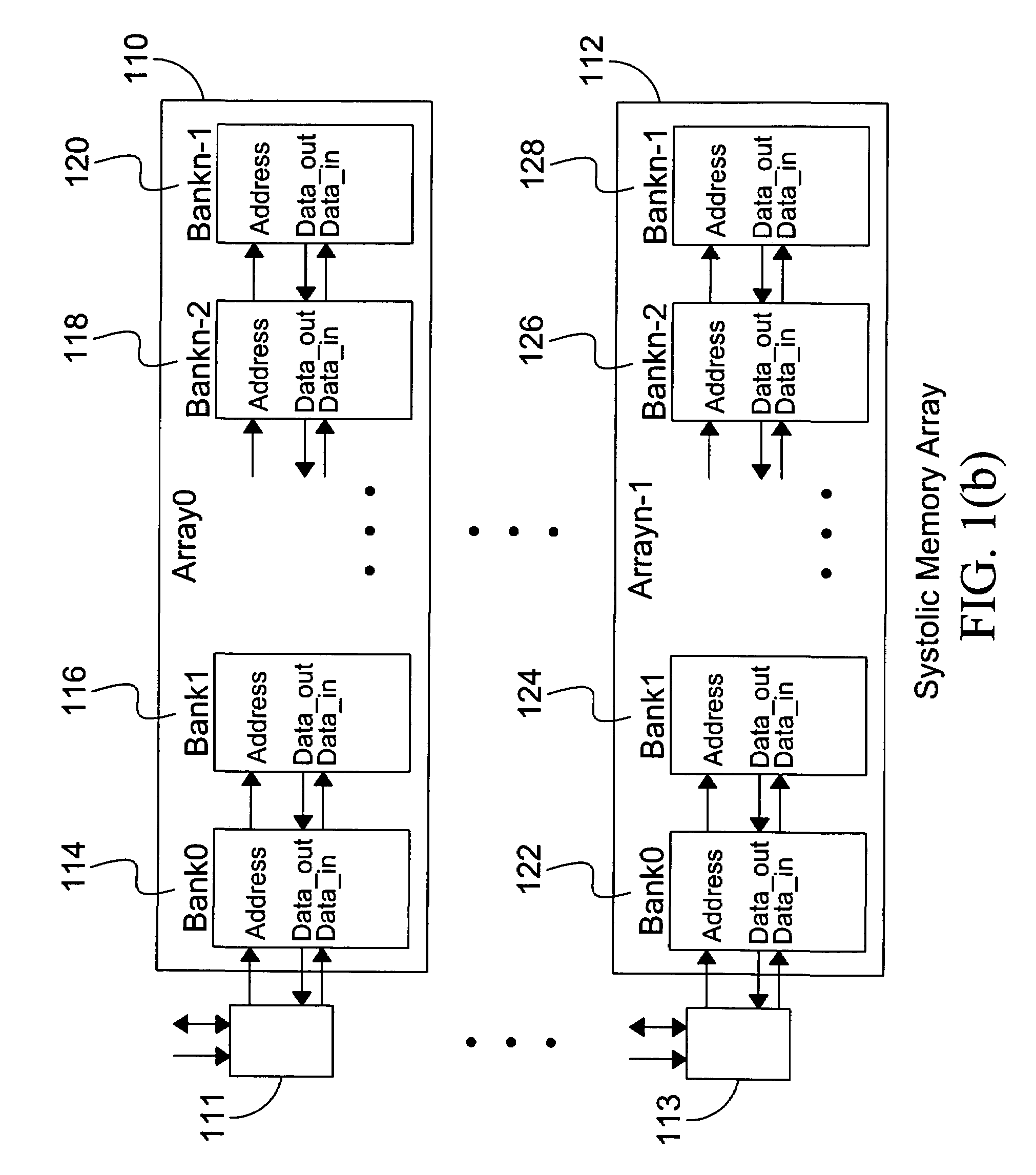

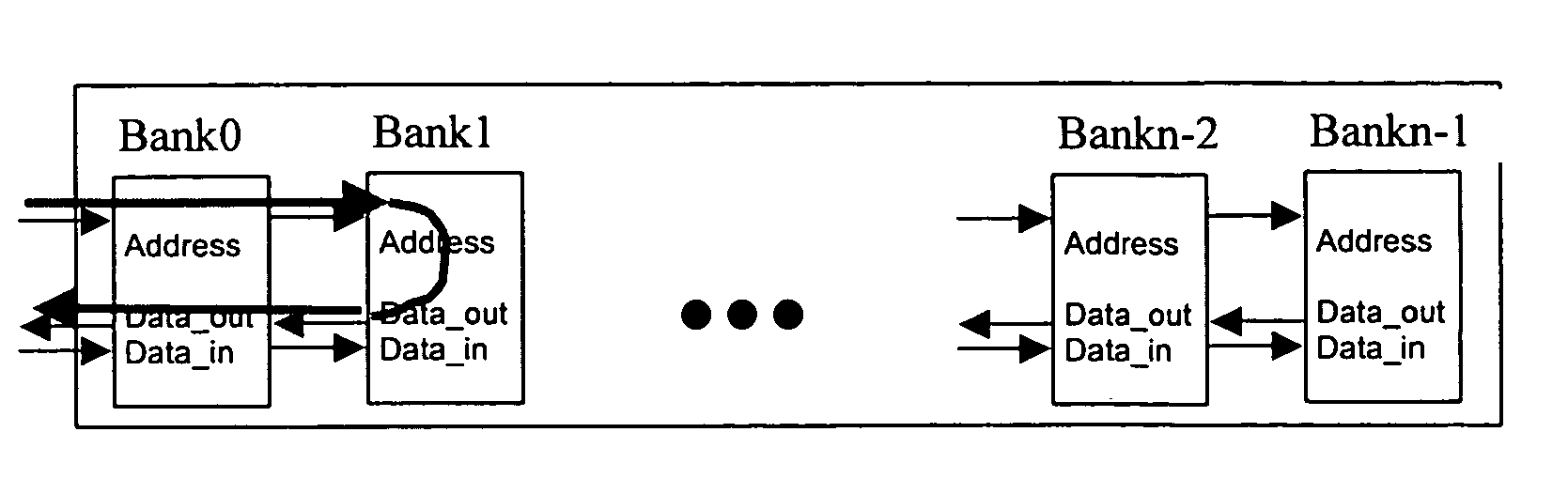

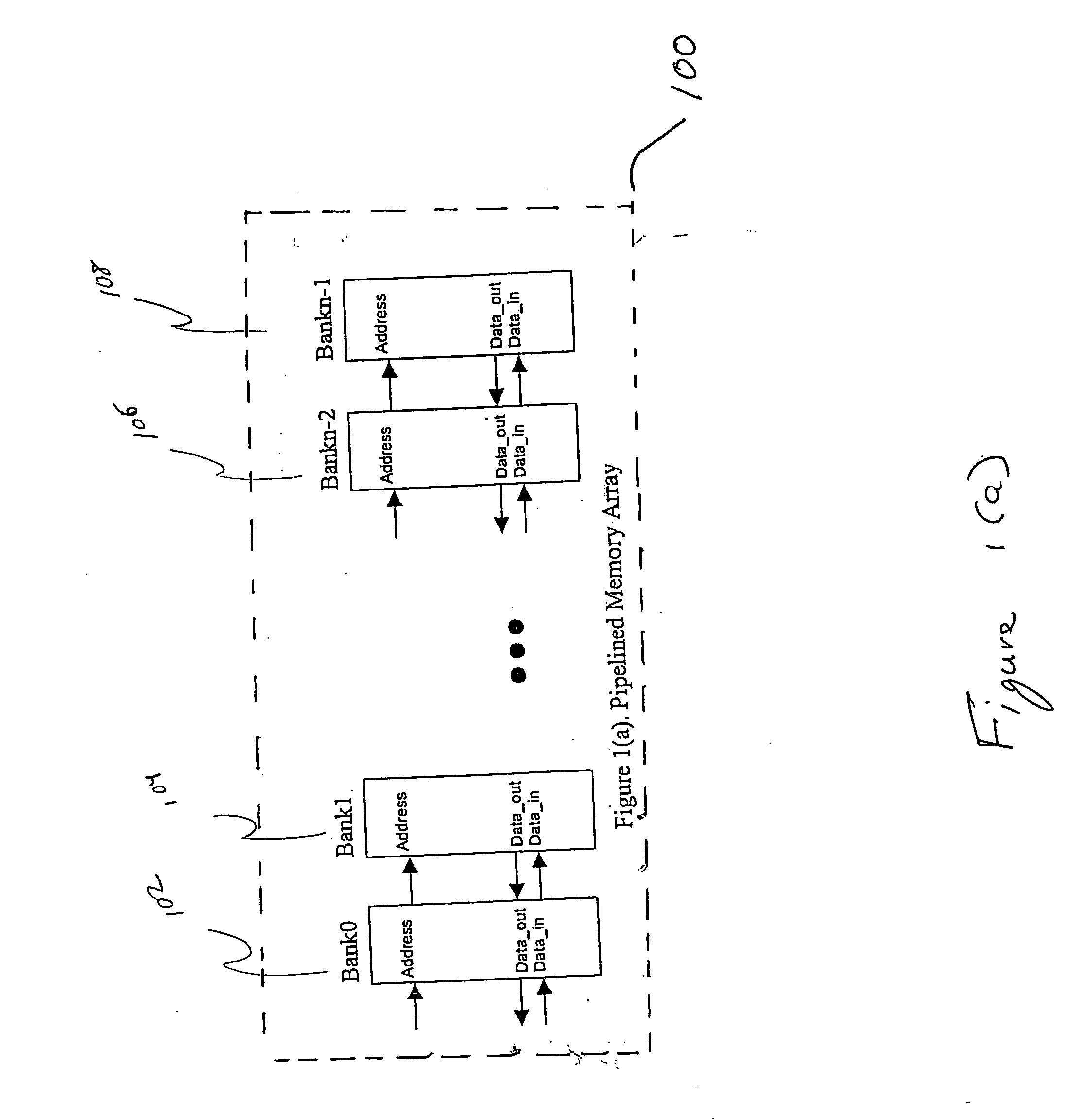

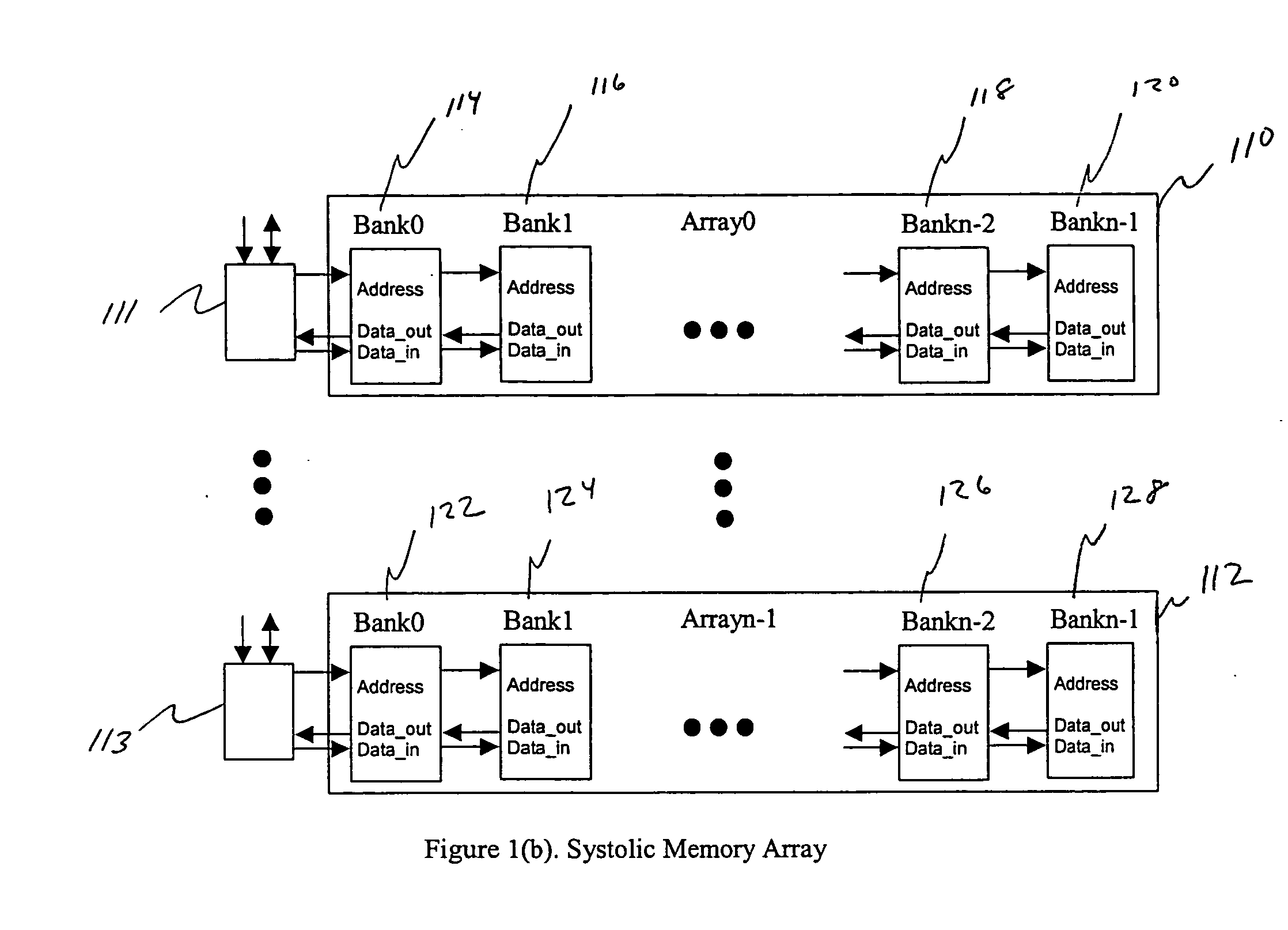

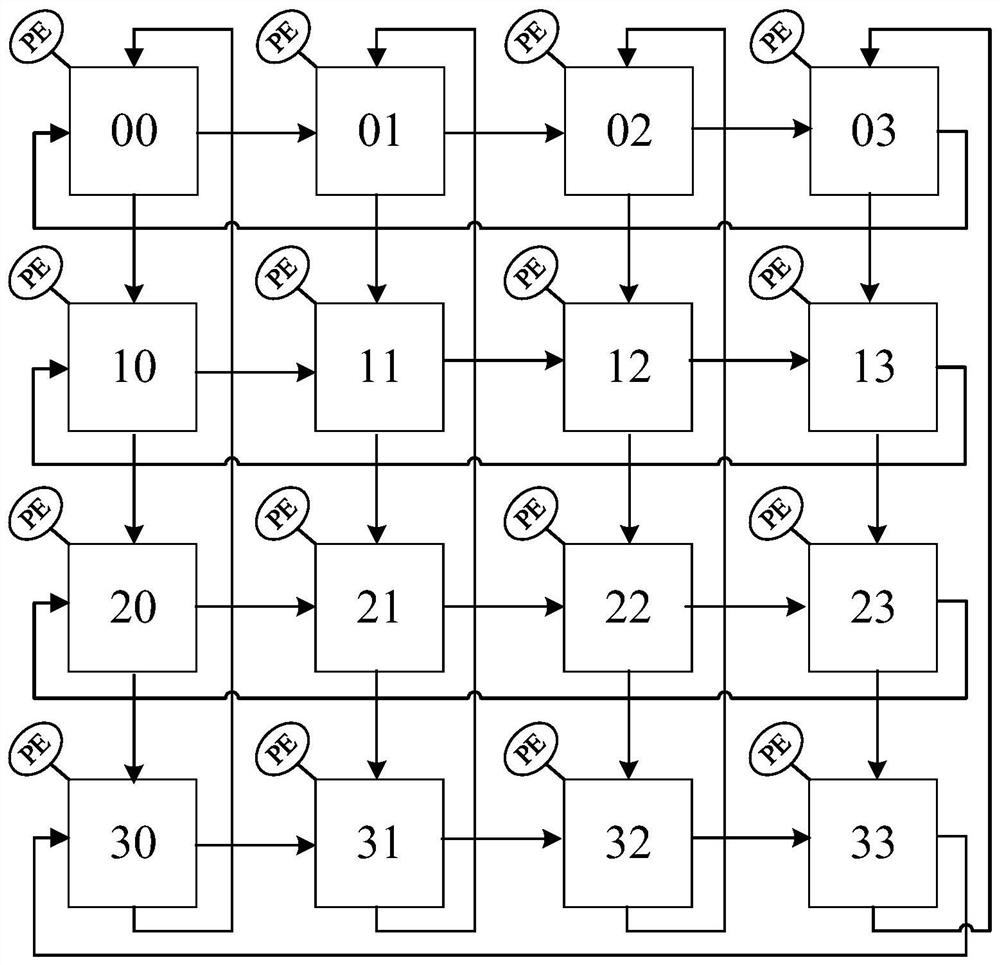

Systolic memory arrays

Inactive | US7246215B2 | Memory architecture accessing/allocation; Digital storage; Array data structure; Parallel computing

A short-latency, high-bandwidth memory includes a systolic memory that is subdivided into a plurality of memory arrays, including banks and pipelines that access these banks. Shorter latency and faster performance are achieved because each bank is smaller and is accessed more rapidly. A high throughput rate is achieved through pipelining. Memory is accessed at the pipeline frequency with the proposed read and write mechanism. Design complexity is reduced because every bank within the memory is identical and repeated. The memory array size is reconfigured and organized to fit within desired size and area parameters.

Owner:INTEL CORP
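Pipelining gives high throughput even though each access still passes through several stages. The Python sketch below shows why, using a generic shift-register pipeline. It is a simplified stand-in and not Intel's circuit: it assumes that one request enters per cycle and that every stage takes one cycle.

```python
from collections import deque


def simulate_pipeline(requests, num_stages):
    """Shift-register model of a pipelined memory: one request enters per cycle,
    every in-flight request advances one stage per cycle, and a request
    completes in the cycle it reaches the last stage (its bank).
    Returns {request: completion_cycle}."""
    pending = deque(requests)
    stages = [None] * num_stages
    completed = {}
    cycle = 0
    while pending or any(s is not None for s in stages):
        cycle += 1
        # Advance: a new request (if any) enters stage 0; the last stage drains.
        stages = [pending.popleft() if pending else None] + stages[:-1]
        if stages[-1] is not None:
            completed[stages[-1]] = cycle
    return completed


done = simulate_pipeline(range(5), num_stages=3)
```

Each request still takes `num_stages` cycles. Once the pipeline fills, however, one request completes per cycle, so `n` requests finish in `n + num_stages - 1` cycles instead of `n * num_stages`.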

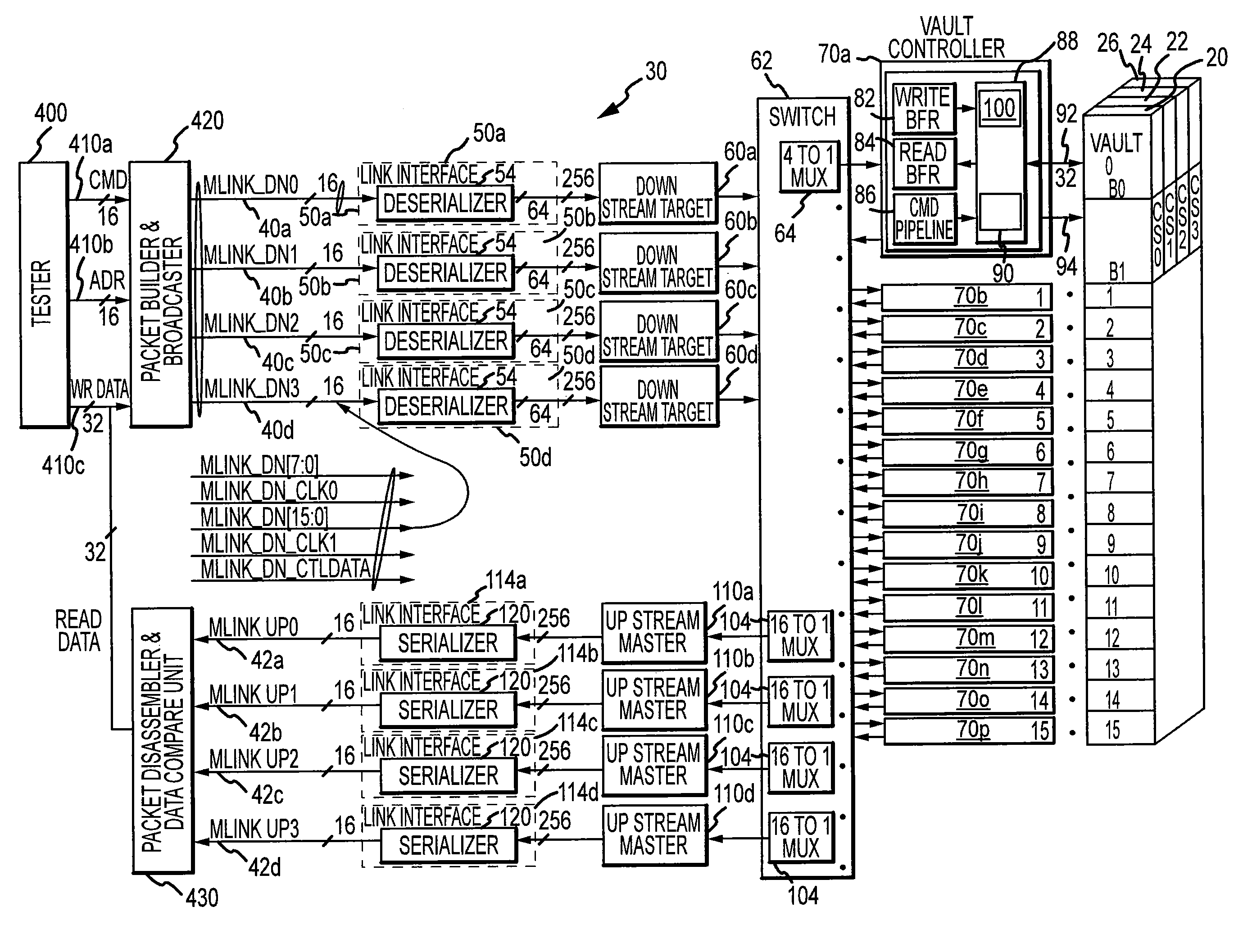

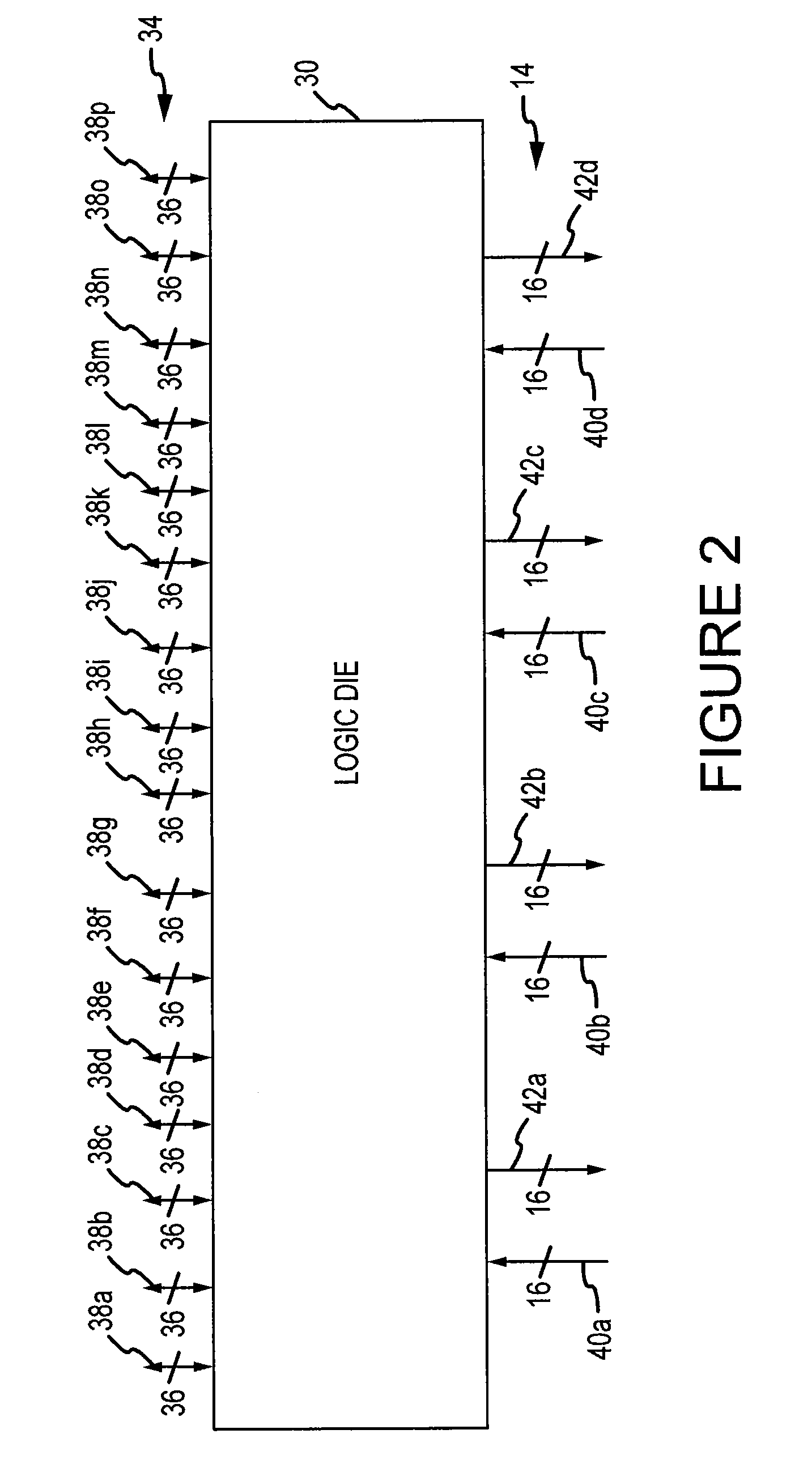

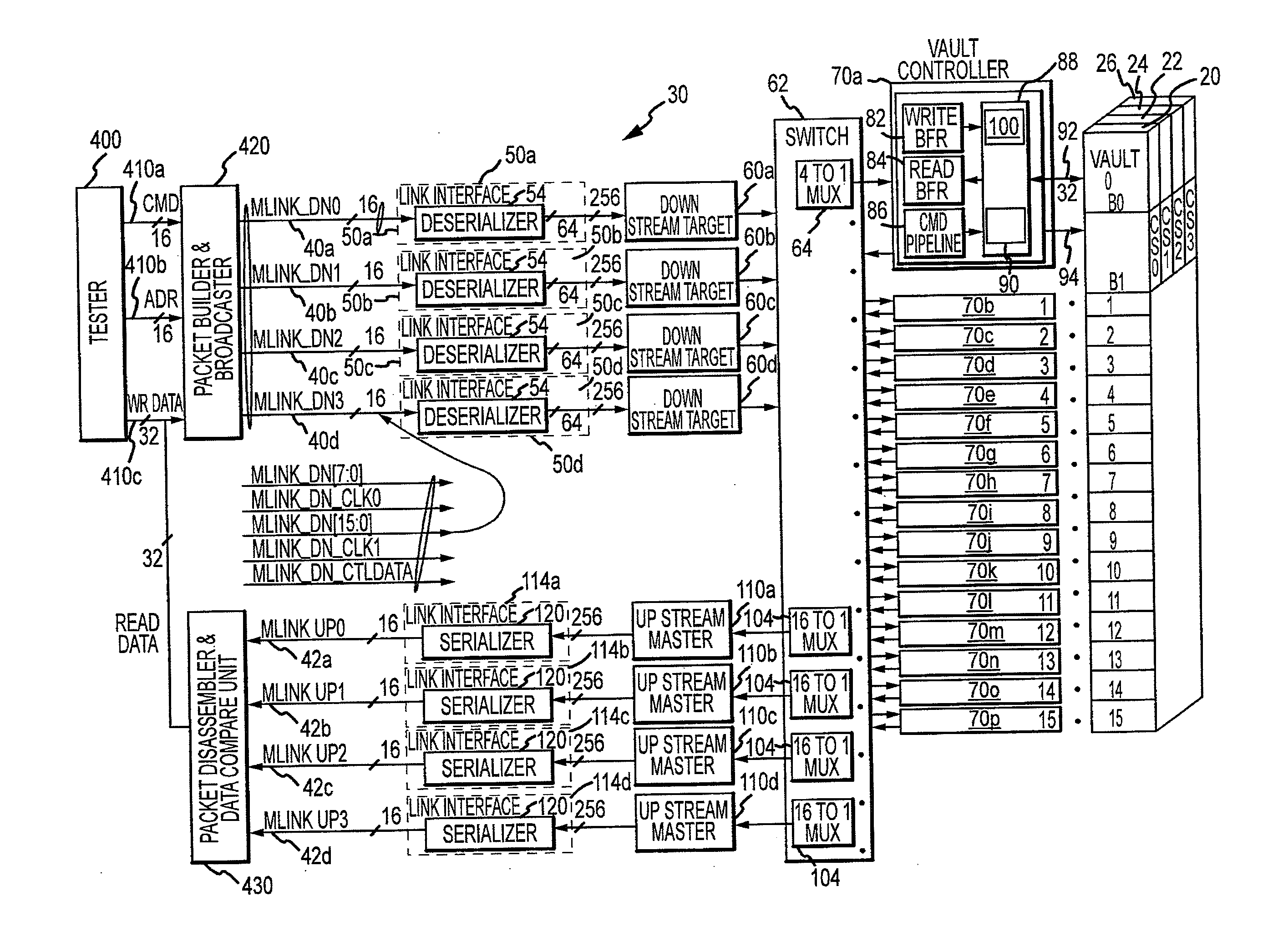

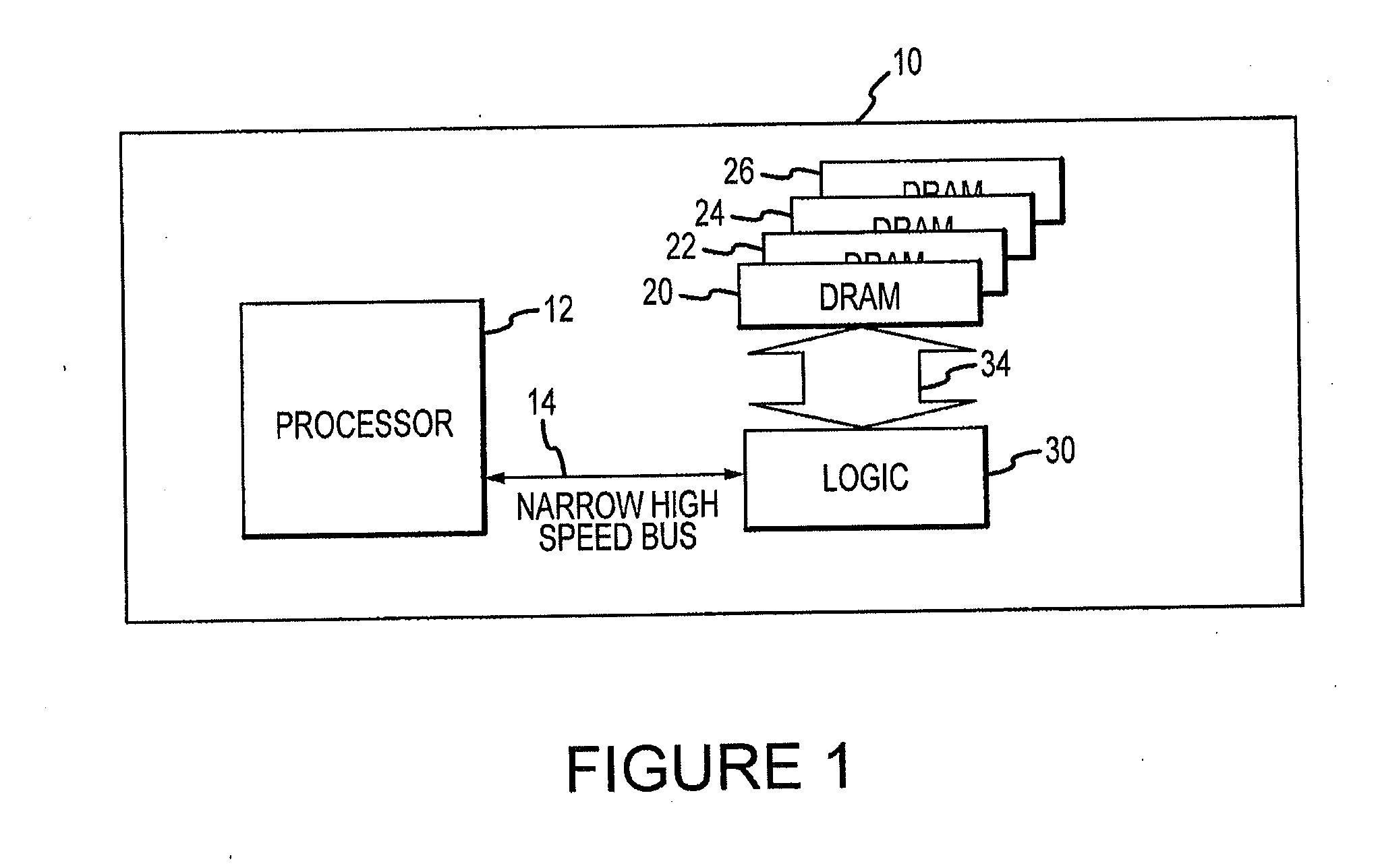

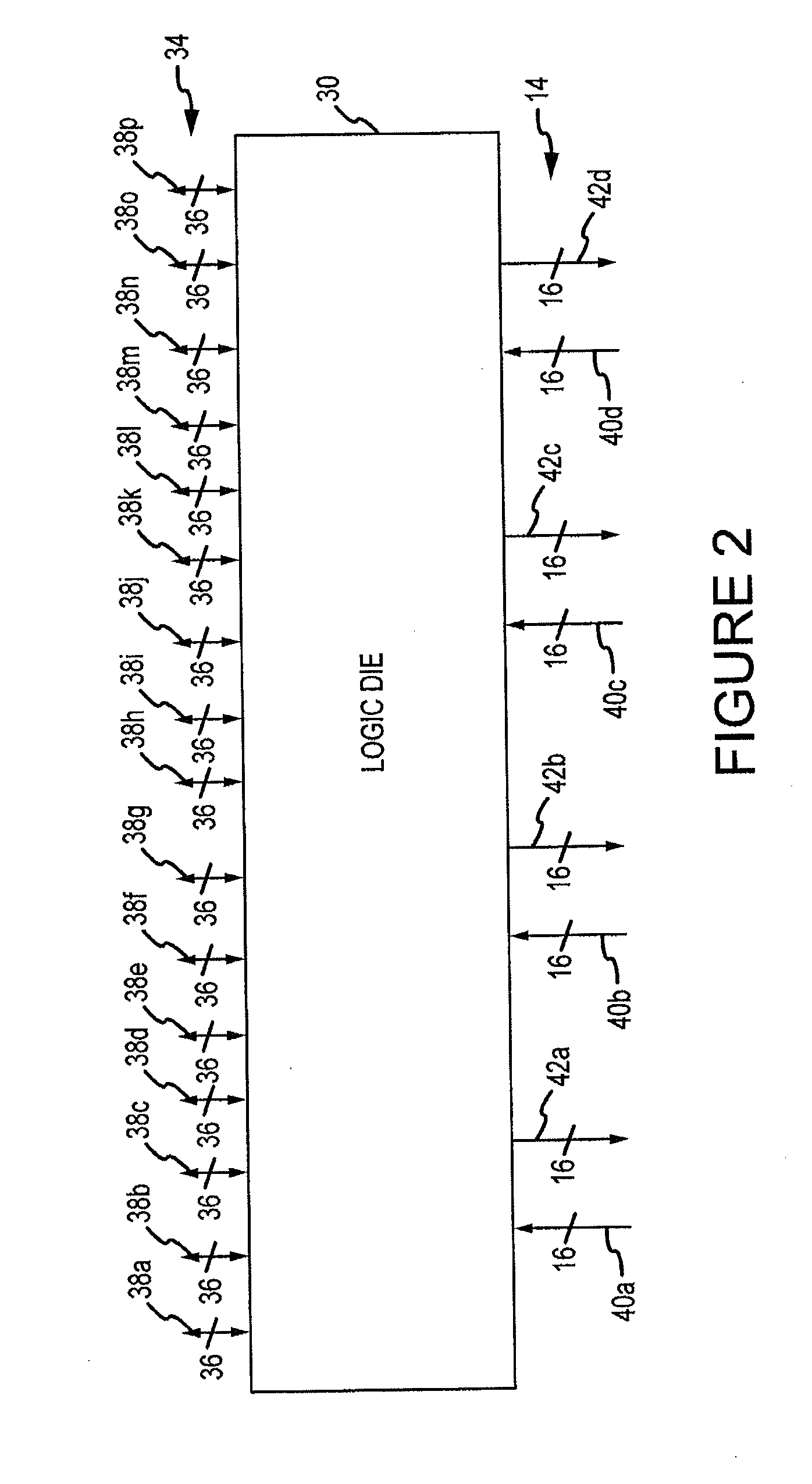

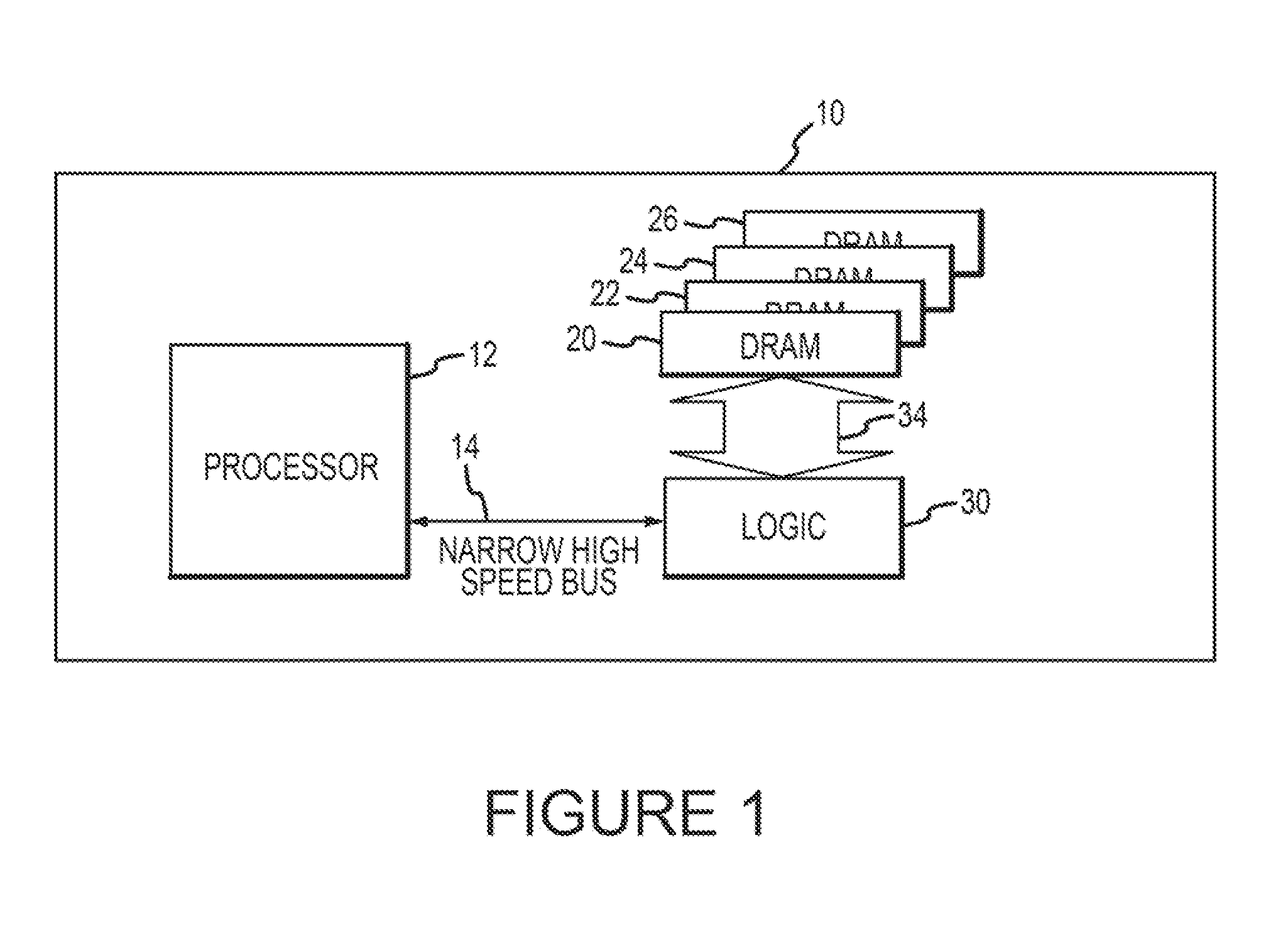

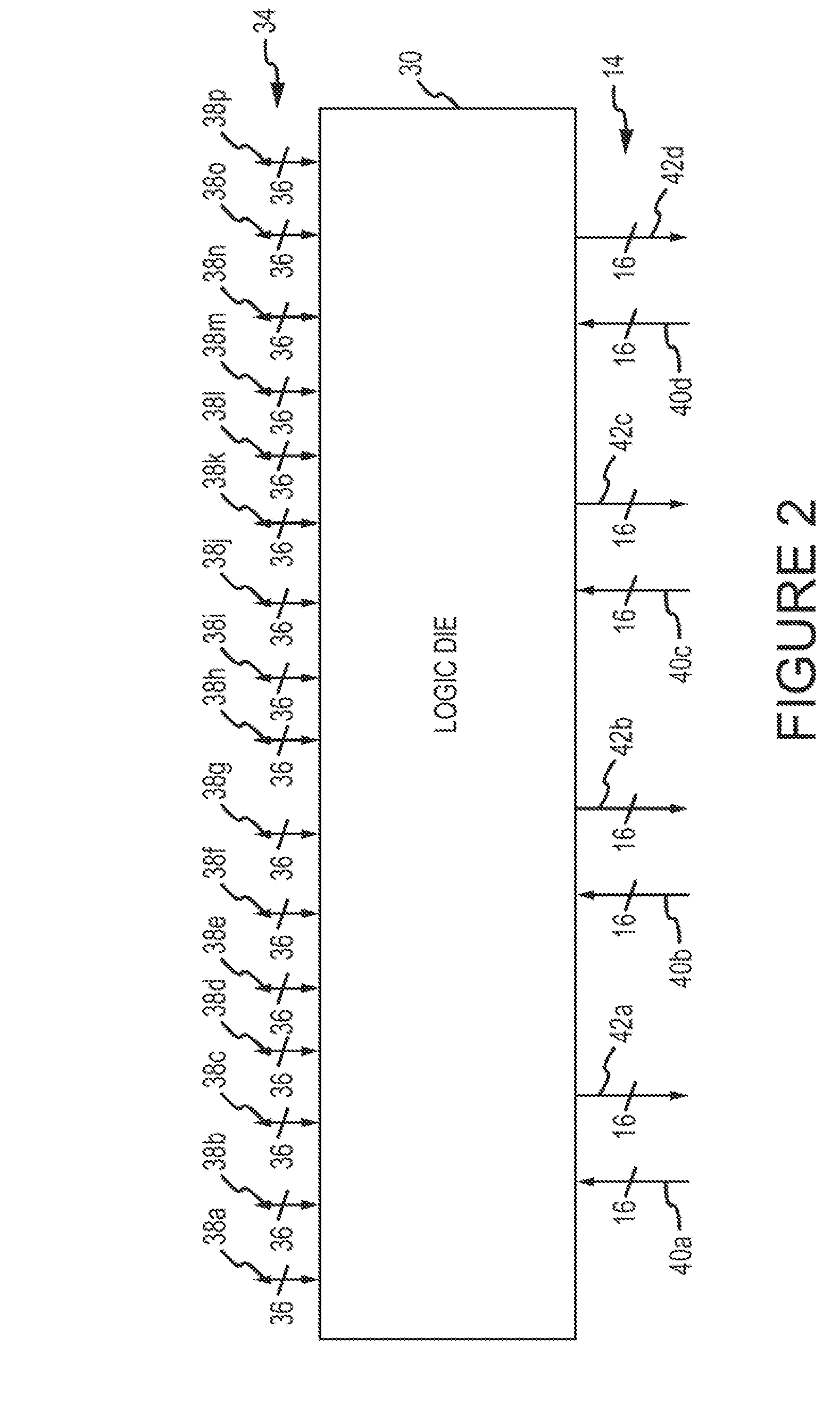

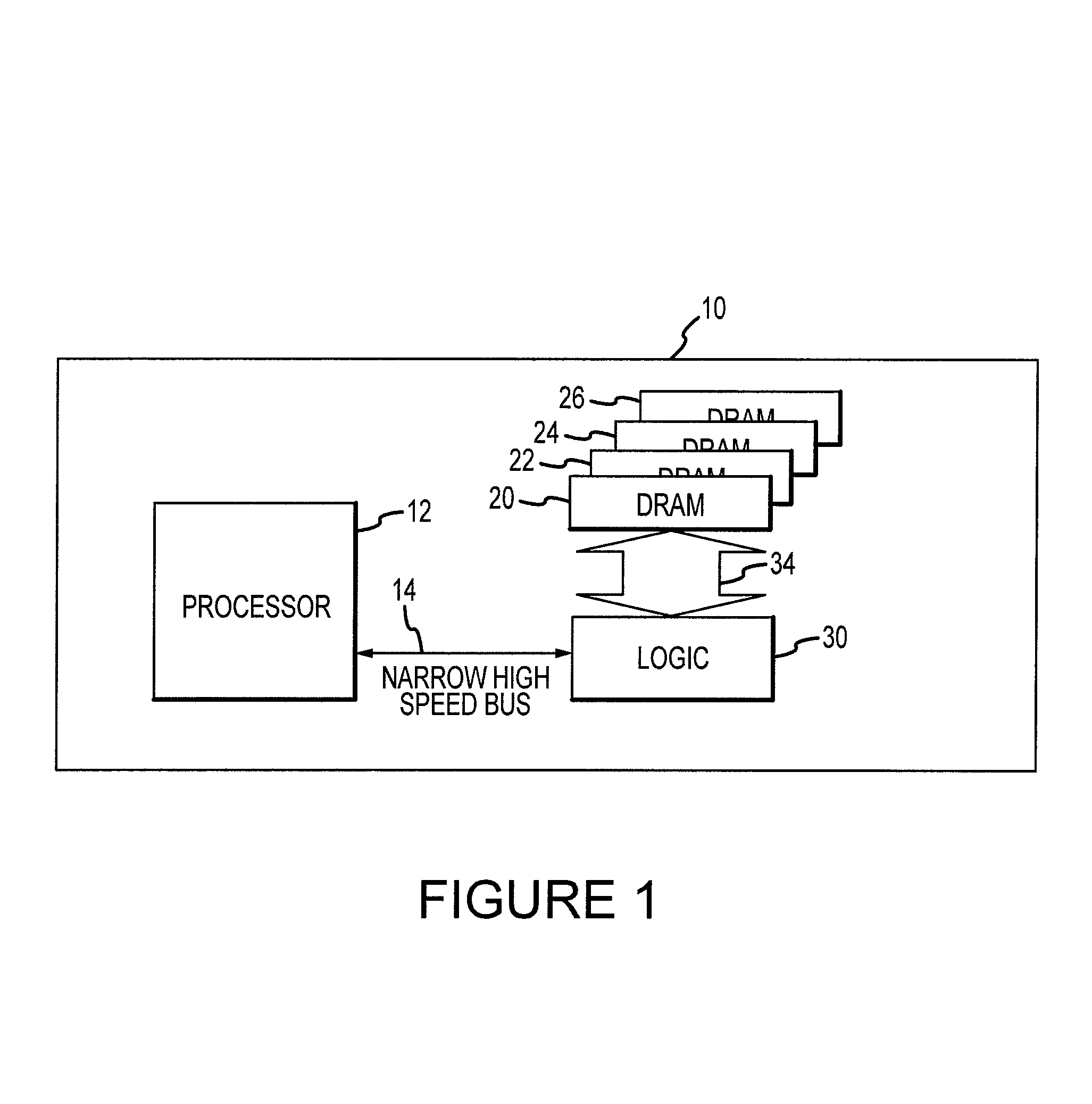

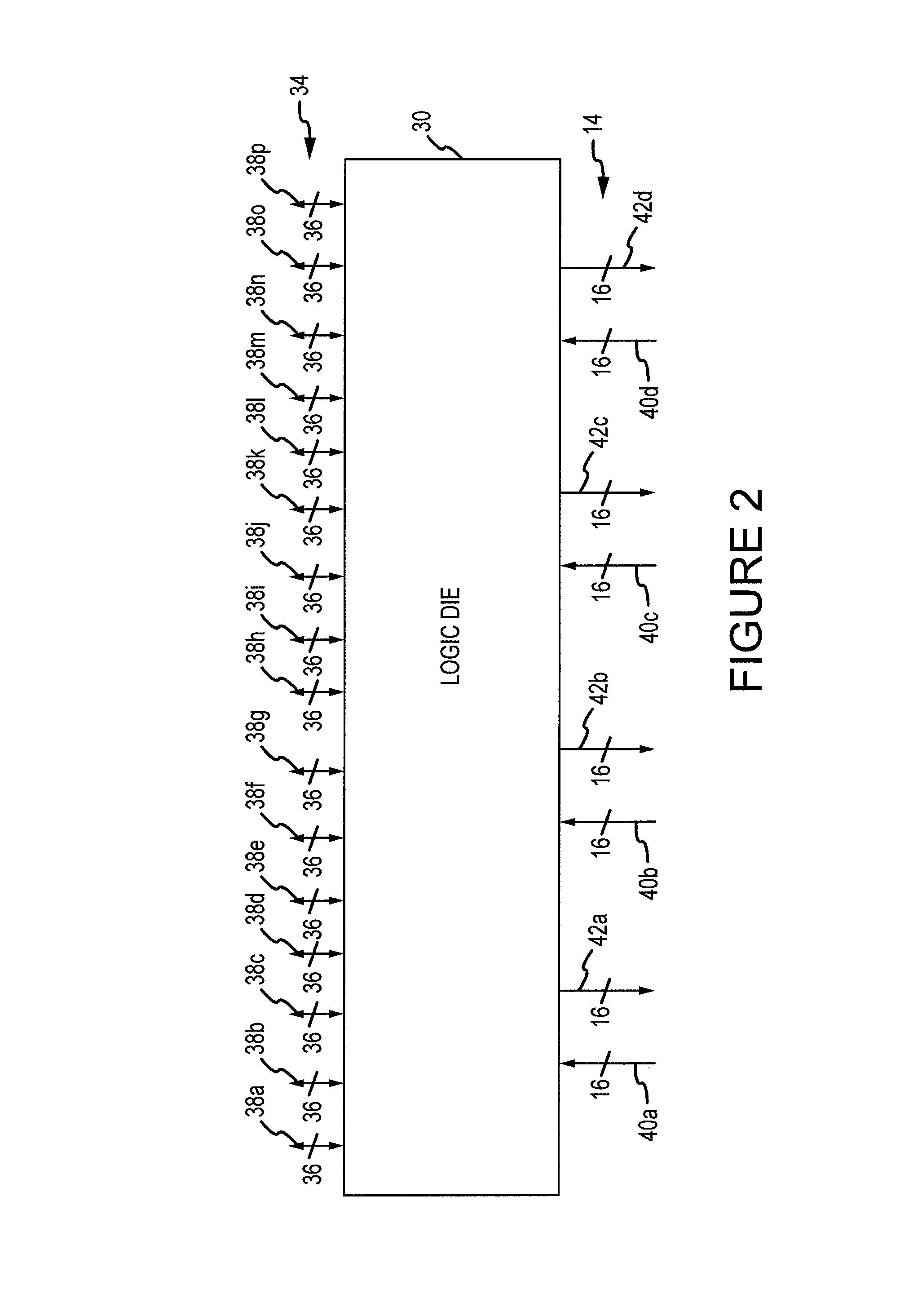

Method and apparatus for testing high capacity/high bandwidth memory devices

A plurality of stacked memory device die and a logic circuit are connected to each other through a plurality of conductors. The stacked memory device die are arranged in a plurality of vaults. The logic circuit die serves as a memory interface device to a memory access device, such as a processor. The logic circuit die includes a plurality of link interfaces and downstream targets for transmitting received data to the vaults. The logic circuit die includes a packet builder and broadcaster configured to receive command, address and data signals over separate interfaces from a conventional tester, format the signals into a packet and broadcast the signals to a plurality of vaults.

Owner:MICRON TECH INC
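The packet builder and broadcaster described in this abstract work in two steps. The command, address and data, which arrive on separate interfaces, are first merged into one packet. That same packet is then delivered to every vault, so a single tester stimulus exercises all vaults at once. The sketch below models this flow. The vault count, the `Packet` fields and the `WRITE`/`READ` command names are hypothetical.

```python
from dataclasses import dataclass

NUM_VAULTS = 16  # hypothetical vault count


@dataclass(frozen=True)
class Packet:
    command: str      # e.g. "WRITE" or "READ"
    address: int
    data: bytes = b""


def build_packet(command: str, address: int, data: bytes = b"") -> Packet:
    """Packet builder: merge signals that arrive on separate tester interfaces."""
    return Packet(command, address, data)


class Vault:
    def __init__(self, vault_id: int):
        self.vault_id = vault_id
        self.cells = {}

    def handle(self, packet: Packet):
        if packet.command == "WRITE":
            self.cells[packet.address] = packet.data
            return None
        if packet.command == "READ":
            return self.cells.get(packet.address)
        raise ValueError(f"unknown command {packet.command!r}")


def broadcast(packet: Packet, vaults: list) -> dict:
    """Broadcaster: deliver the same packet to every vault."""
    return {v.vault_id: v.handle(packet) for v in vaults}


vaults = [Vault(i) for i in range(NUM_VAULTS)]
pattern = b"\xa5" * 4
broadcast(build_packet("WRITE", 0x40, pattern), vaults)
results = broadcast(build_packet("READ", 0x40), vaults)
failing_vaults = [vid for vid, data in results.items() if data != pattern]
```

Comparing each vault's response with the expected pattern then isolates any failing vaults in one pass.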

Method and apparatus for testing high capacity/high bandwidth memory devices

Active | US20110271158A1 | Detecting faulty computer hardware; Static storage; Electrical conductor; Memory interface

A plurality of stacked memory device die and a logic circuit are connected to each other through a plurality of conductors. The stacked memory device die are arranged in a plurality of vaults. The logic circuit die serves as a memory interface device to a memory access device, such as a processor. The logic circuit die includes a plurality of link interfaces and downstream targets for transmitting received data to the vaults. The logic circuit die includes a packet builder and broadcaster configured to receive command, address and data signals over separate interfaces from a conventional tester, format the signals into a packet and broadcast the signals to a plurality of vaults.

Owner:MICRON TECH INC

Systolic memory arrays

Inactive | US20050114618A1 | Memory architecture accessing/allocation; Digital storage; Array data structure; Parallel computing

A short-latency, high-bandwidth memory includes a systolic memory that is subdivided into a plurality of memory arrays, including banks and pipelines that access these banks. Shorter latency and faster performance are achieved because each bank is smaller and is accessed more rapidly. A high throughput rate is achieved through pipelining. Memory is accessed at the pipeline frequency with the proposed read and write mechanism. Design complexity is reduced because every bank within the memory is identical and repeated. The memory array size is reconfigured and organized to fit within desired size and area parameters.

Owner:INTEL CORP

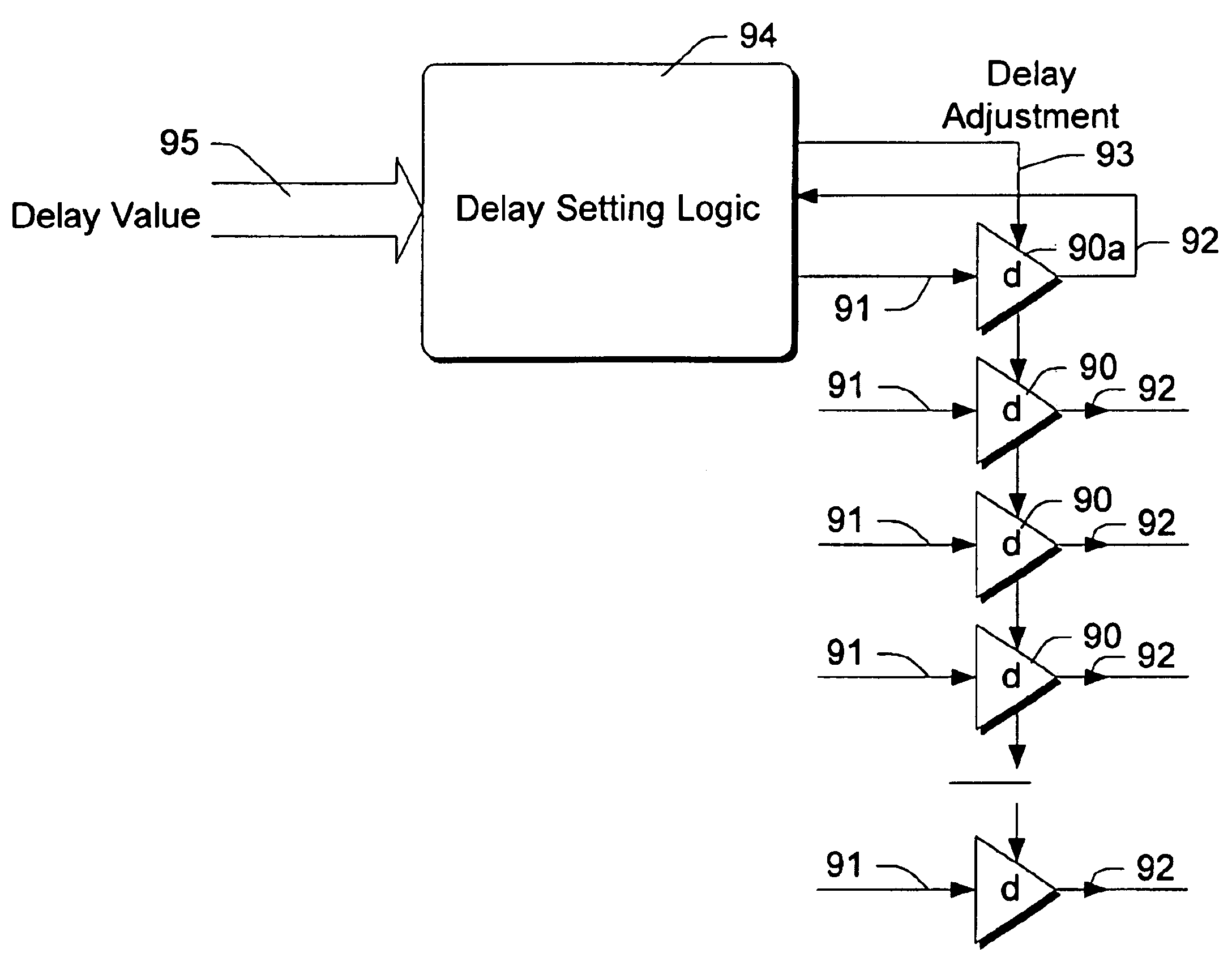

Asynchronous, high-bandwidth memory component using calibrated timing elements

Disclosed herein are embodiments of an asynchronous memory device that use internal delay elements to enable memory access pipelining. In one embodiment, the delay elements are responsive to an input load control signal, and are calibrated with reference to periodically received timing pulses. Different numbers of the delay elements are configured to produce different asynchronous delays and to strobe sequential pipeline elements of the memory device.

Owner:RAMBUS INC

High bandwidth, efficient graphics hardware architecture

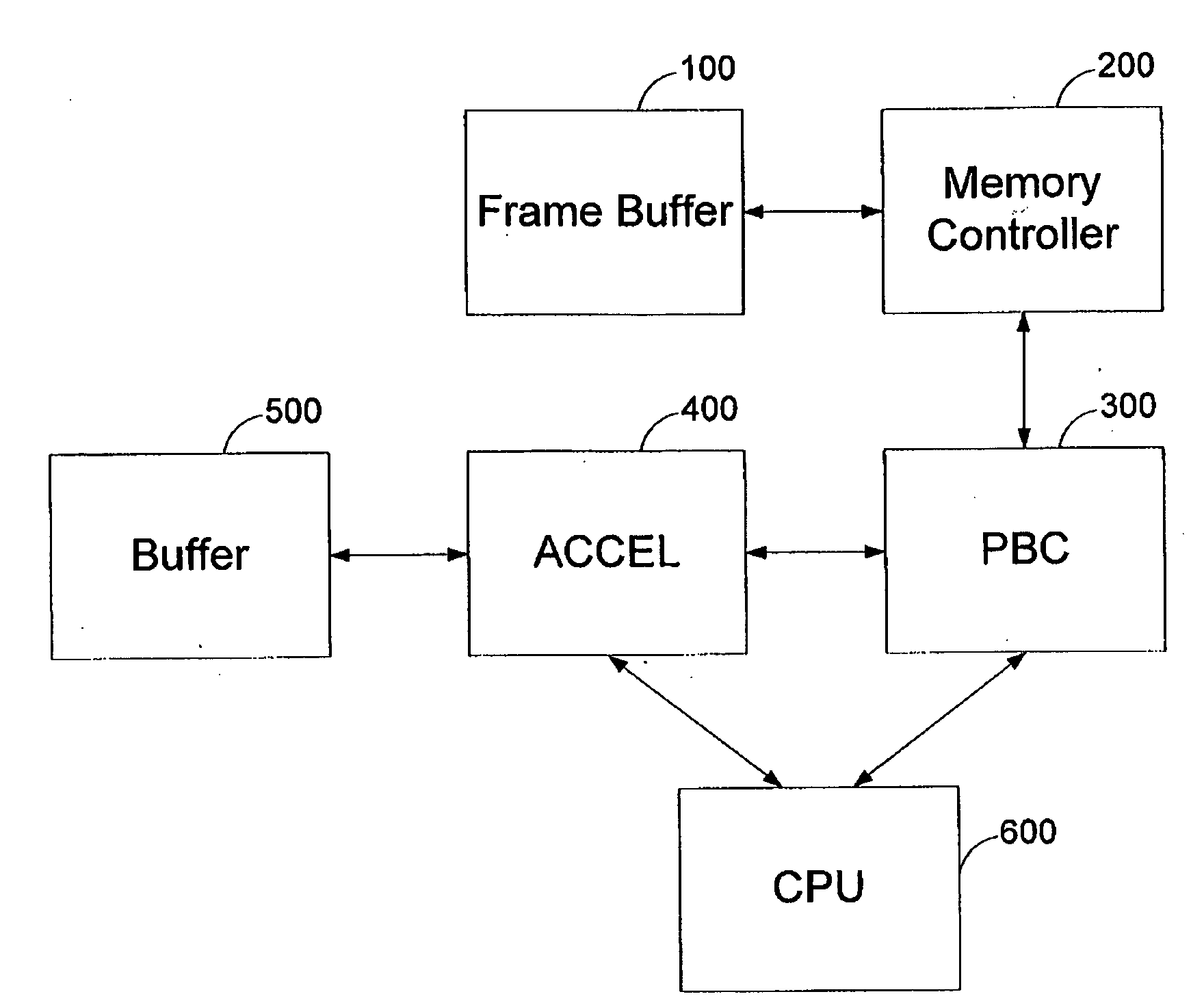

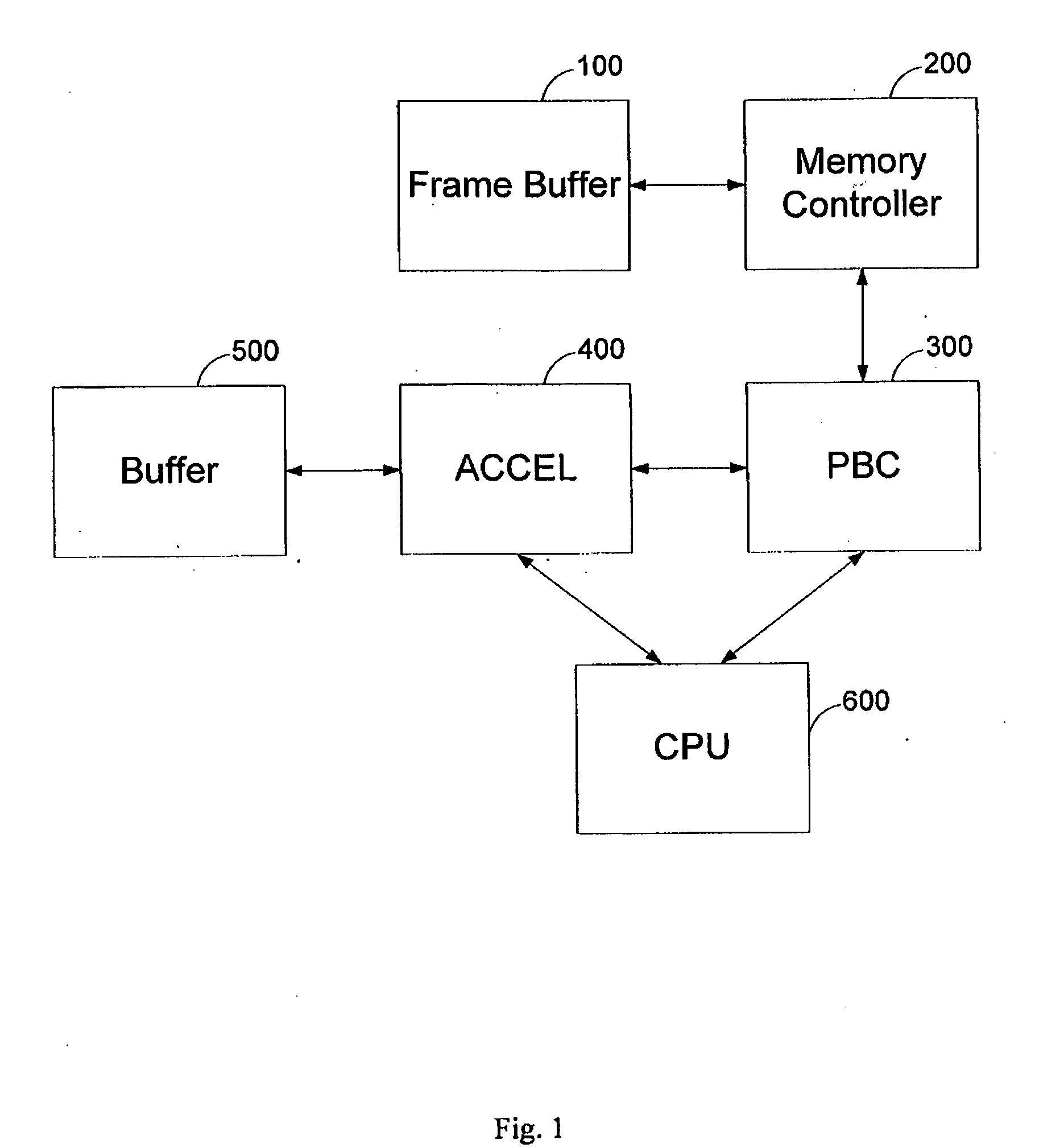

A system for providing high bandwidth memory access to a graphics processor comprises: (a) a frame buffer for storing at least one frame, the frame being stored in a tiled manner; (b) a memory controller for controlling the frame buffer; (c) a pixel buffer cache for storing multiple sections of at least one memory row of the frame buffer, and for processing requests to access pixels of the frame buffer; (d) a graphics accelerator having an interface to the pixel buffer cache, for processing a group of related pixels; and (e) a CPU for processing graphic commands and controlling the graphics accelerator and the pixel buffer cache. In a further embodiment of this system, the pixel buffer cache comprises at least one row descriptor for tracking and monitoring the read and write requests of a particular tile.

Owner:DIGITALPTICS CORP INT
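Storing the frame in tiles matters because a graphics accelerator typically processes a block of neighbouring pixels together. With tiling, that block sits in one DRAM row instead of many. The sketch below compares a tiled layout with a scanline layout. The tile size, pixel size and DRAM row size are assumptions. The row descriptors of the pixel buffer cache are not modeled.

```python
# Hypothetical parameters; the patent does not specify them.
TILE_W, TILE_H = 8, 8     # tile size in pixels
BYTES_PER_PIXEL = 4
DRAM_ROW_BYTES = 2048     # one 8x8 tile is 256 bytes, so 8 tiles fit in a row


def tiled_offset(x: int, y: int, frame_width: int) -> int:
    """Byte offset of pixel (x, y) when the frame is stored tile by tile.
    Assumes frame_width is a multiple of TILE_W."""
    tiles_per_row = frame_width // TILE_W
    tile_index = (y // TILE_H) * tiles_per_row + (x // TILE_W)
    within_tile = (y % TILE_H) * TILE_W + (x % TILE_W)
    return (tile_index * TILE_W * TILE_H + within_tile) * BYTES_PER_PIXEL


def linear_offset(x: int, y: int, frame_width: int) -> int:
    """Byte offset in a conventional scanline (row-major) layout, for comparison."""
    return (y * frame_width + x) * BYTES_PER_PIXEL


def rows_touched(offset_fn, x0, y0, w, h, frame_width):
    """Number of distinct DRAM rows that a w x h pixel block touches."""
    return len({offset_fn(x, y, frame_width) // DRAM_ROW_BYTES
                for y in range(y0, y0 + h) for x in range(x0, x0 + w)})
```

For a frame 1024 pixels wide, an 8 x 8 block touches one DRAM row in the tiled layout but eight rows in the scanline layout.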

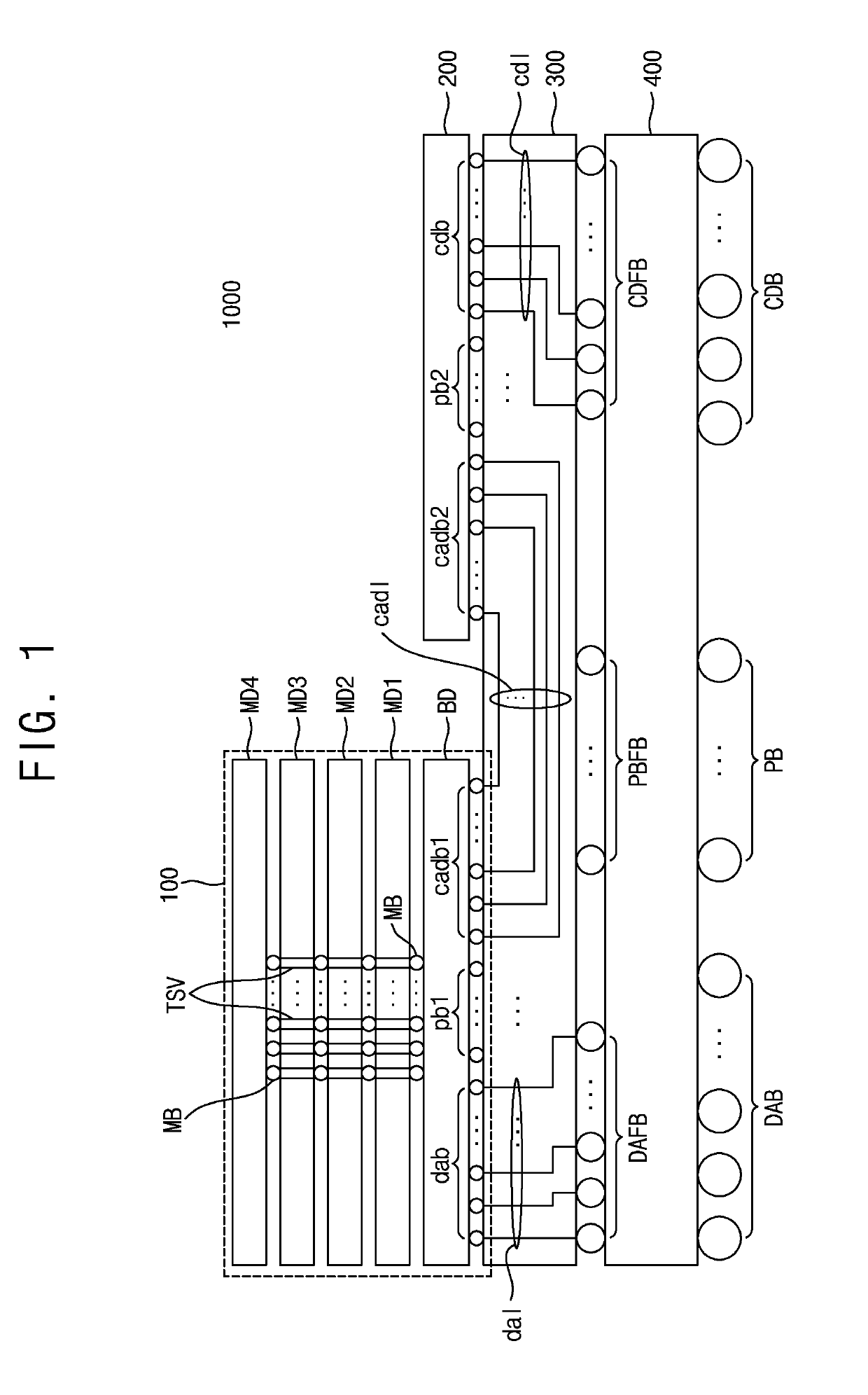

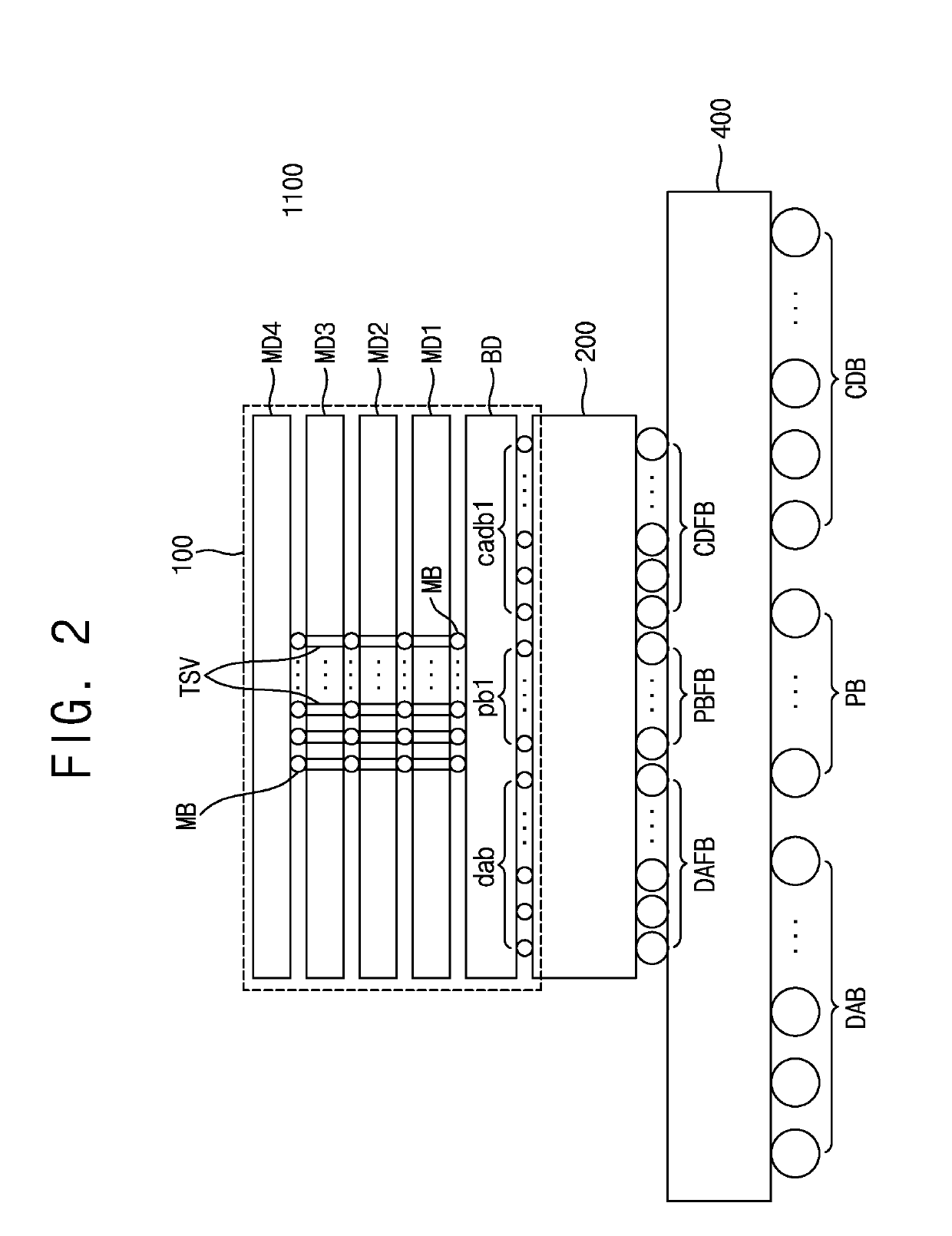

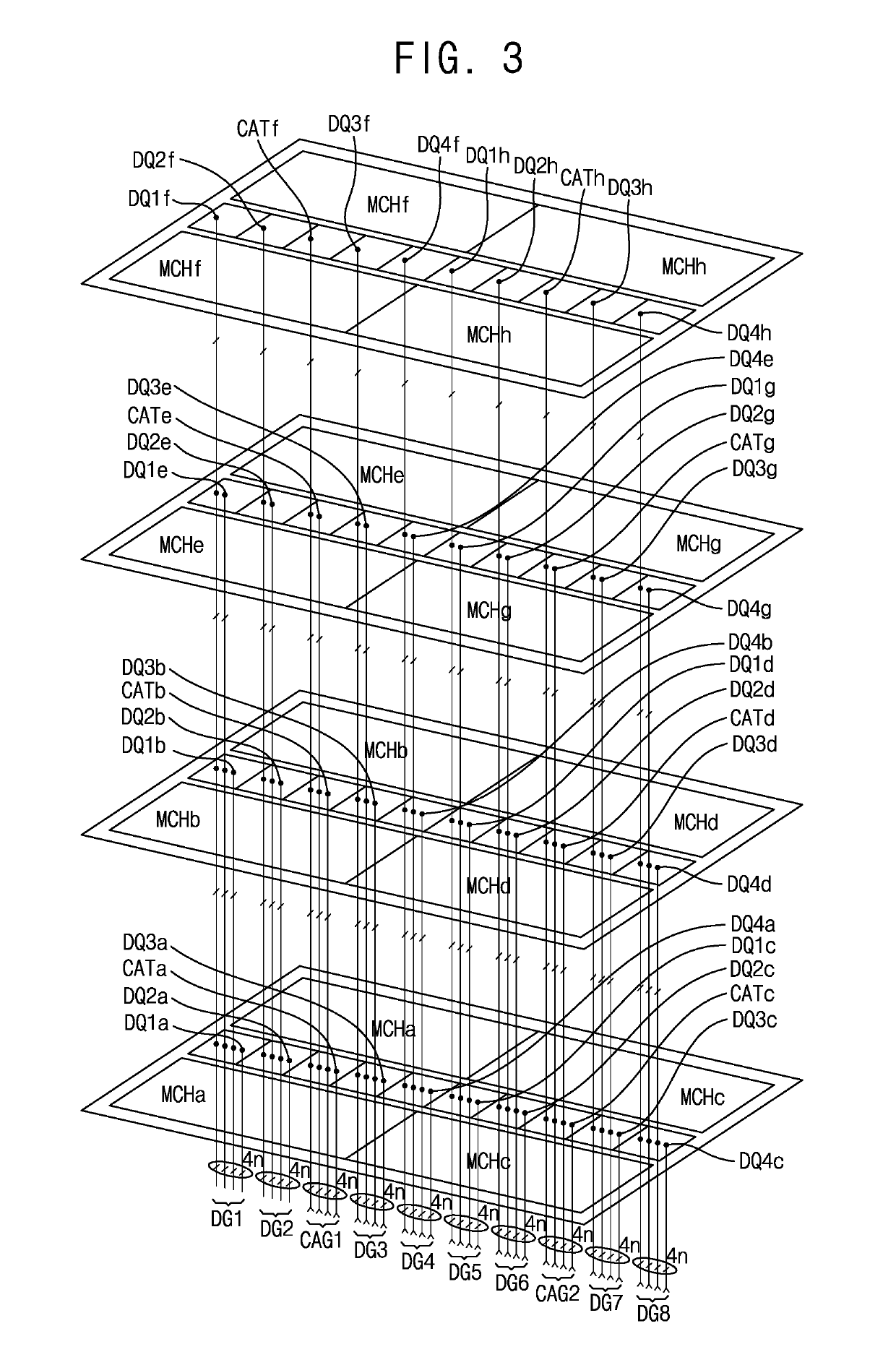

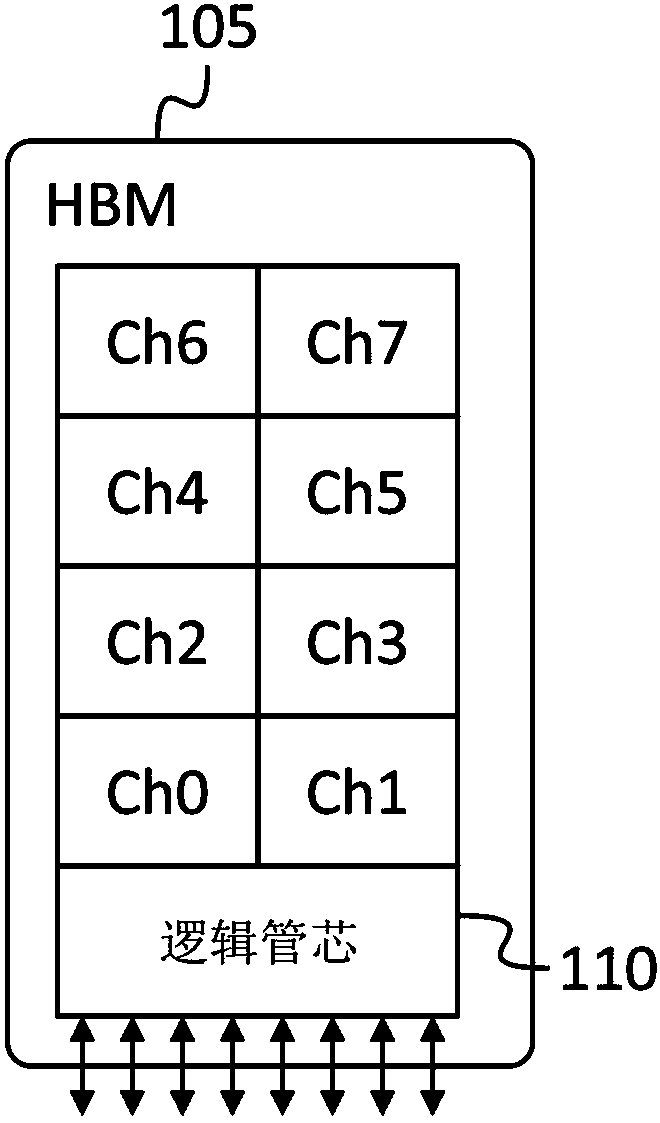

High bandwidth memory device and system device having the same

Active | US20190278511A1 | Input/output to record carriers; Memory addressing/allocation/relocation; Channel data; Electricity

According to some embodiments, a high bandwidth memory device includes a base die and a plurality of memory dies stacked on the base die and electrically connected to the base die through a plurality of through substrate vias. The base die includes a plurality of first input buffers configured to receive channel clock signals, channel command / addresses, and channel data from a plurality of first bumps connected to the outside of the base die, a plurality of second input buffers configured to receive test clock signals, test command / addresses, and test data from a plurality of second bumps connected to the outside of the base die, a monitoring unit, a plurality of first output buffers connected to the monitoring unit and configured to output monitored data from the monitoring unit to the plurality of second bumps, and a plurality of paths from the plurality of first input buffers to the monitoring unit. The plurality of second bumps are connected to receive test clock signals, test command / addresses, and test data from the outside of the base die during a first operation mode, and to receive monitored data from the plurality of first output buffers during a second operation mode.

Owner:SAMSUNG ELECTRONICS CO LTD

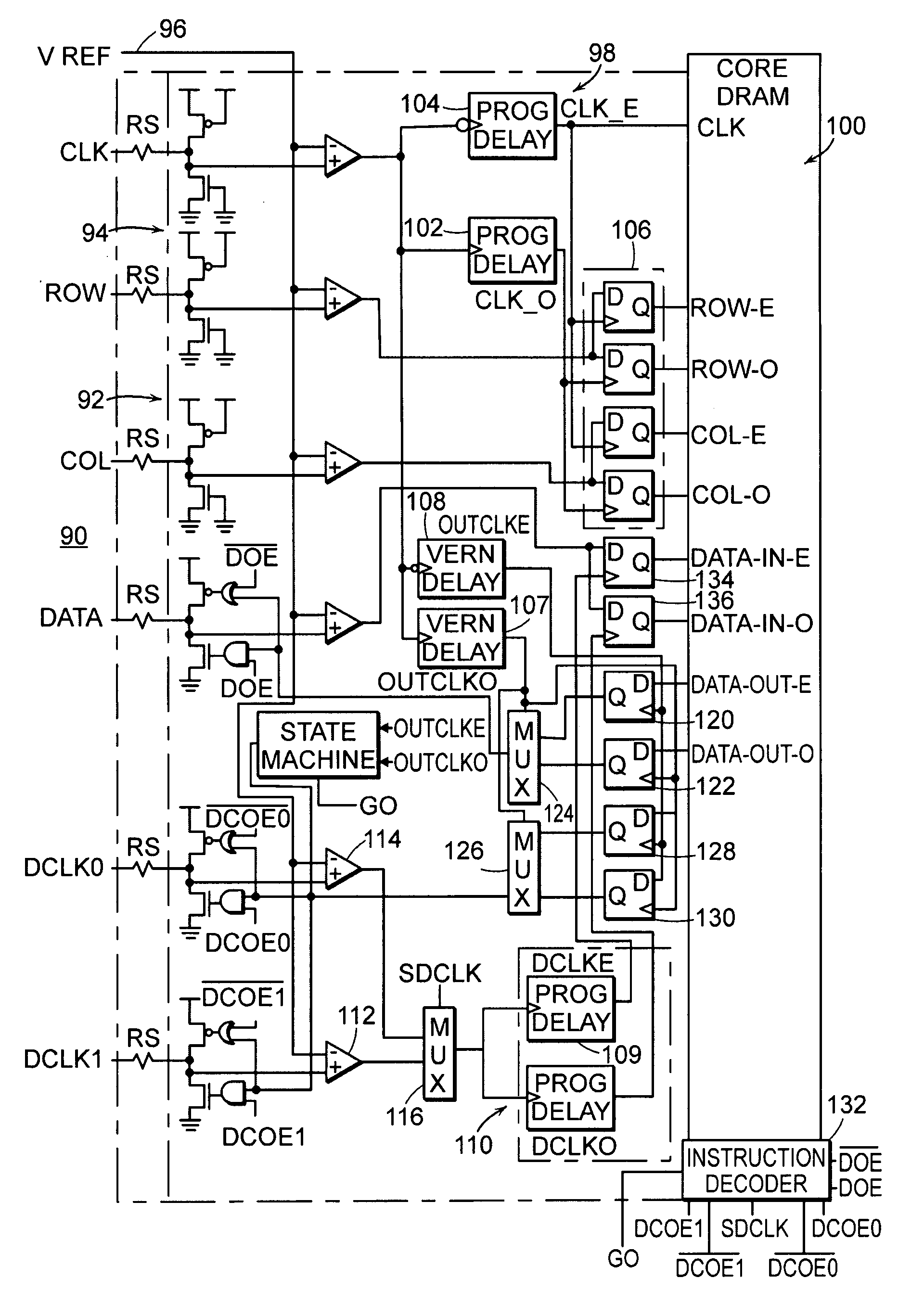

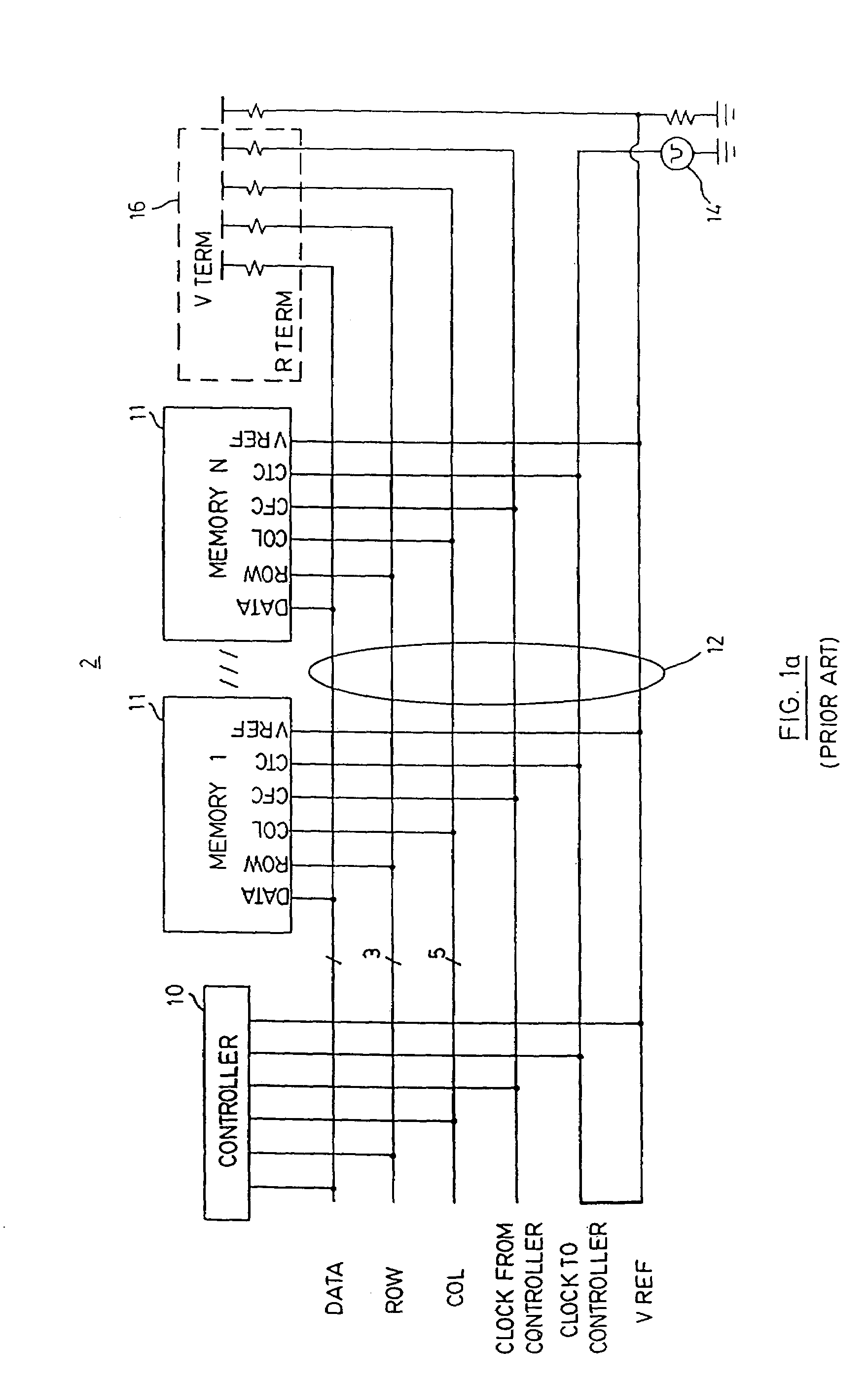

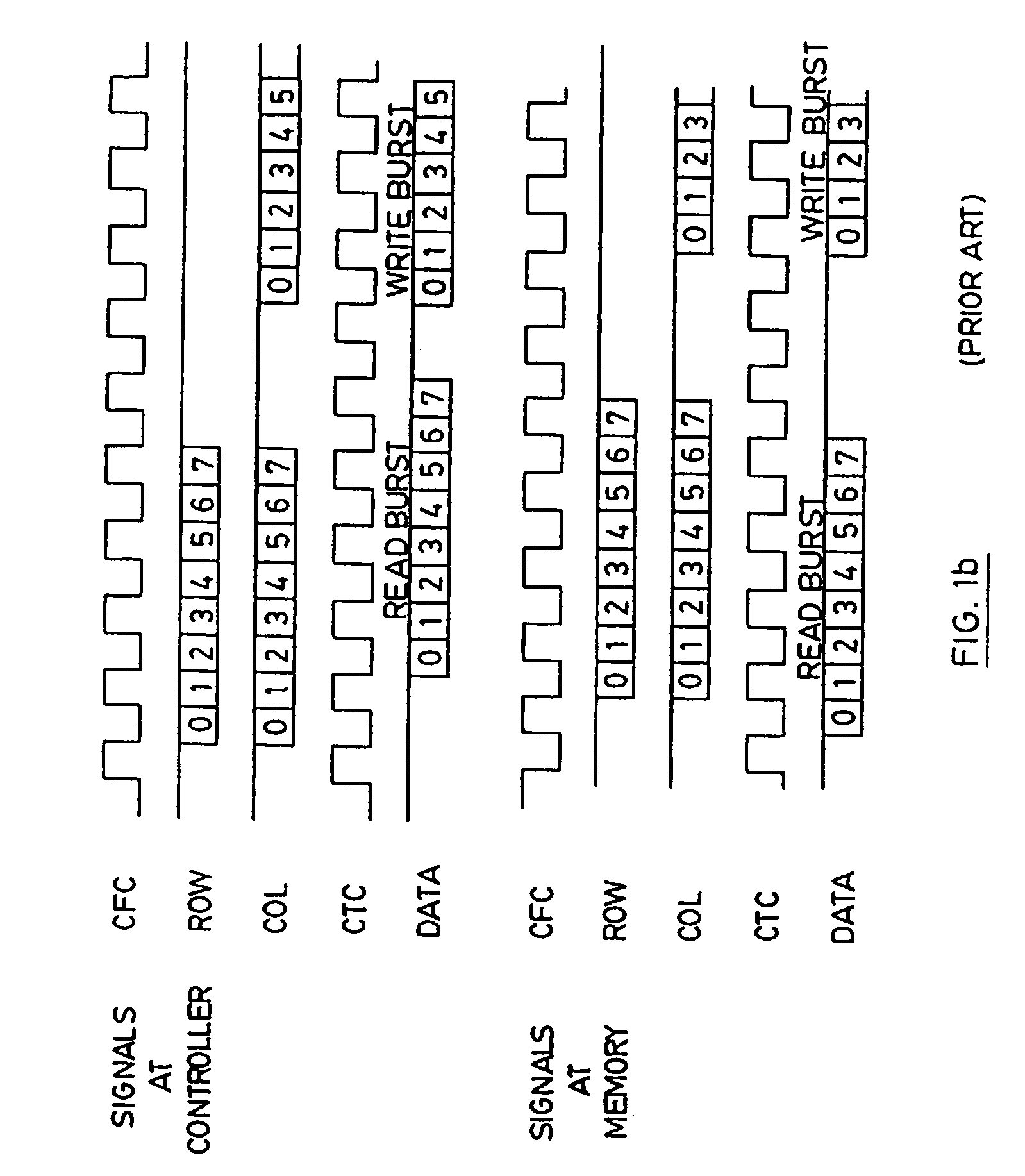

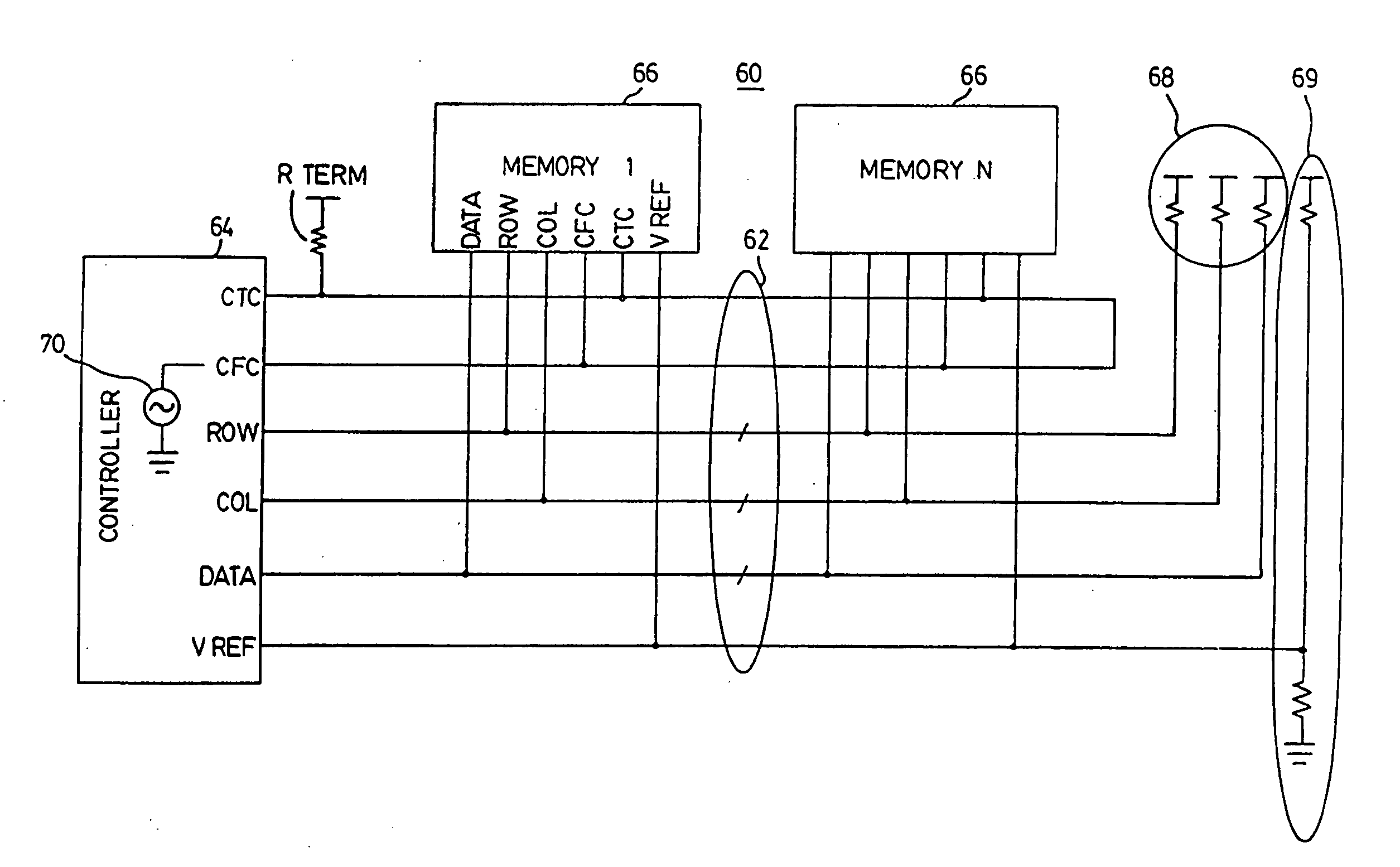

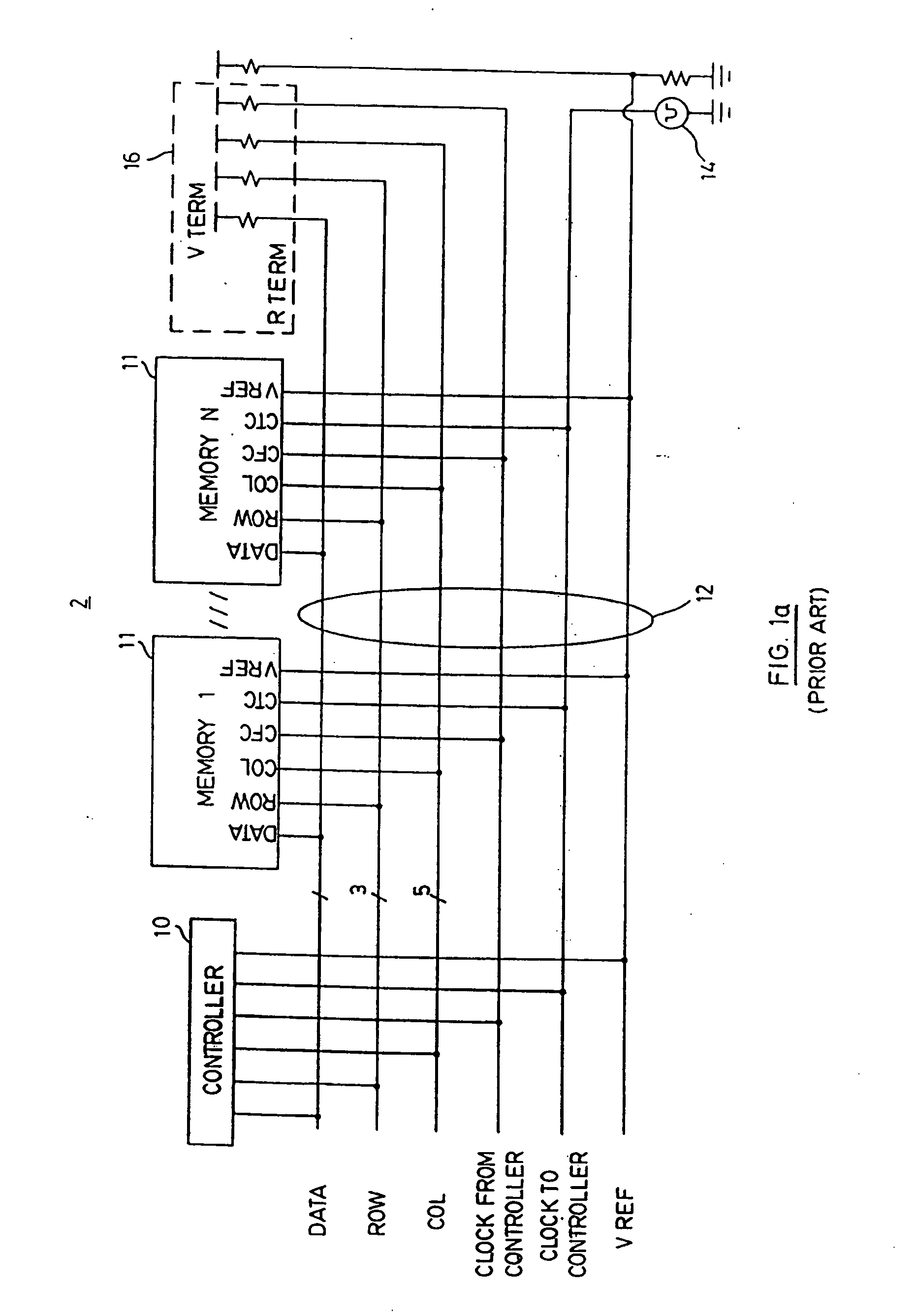

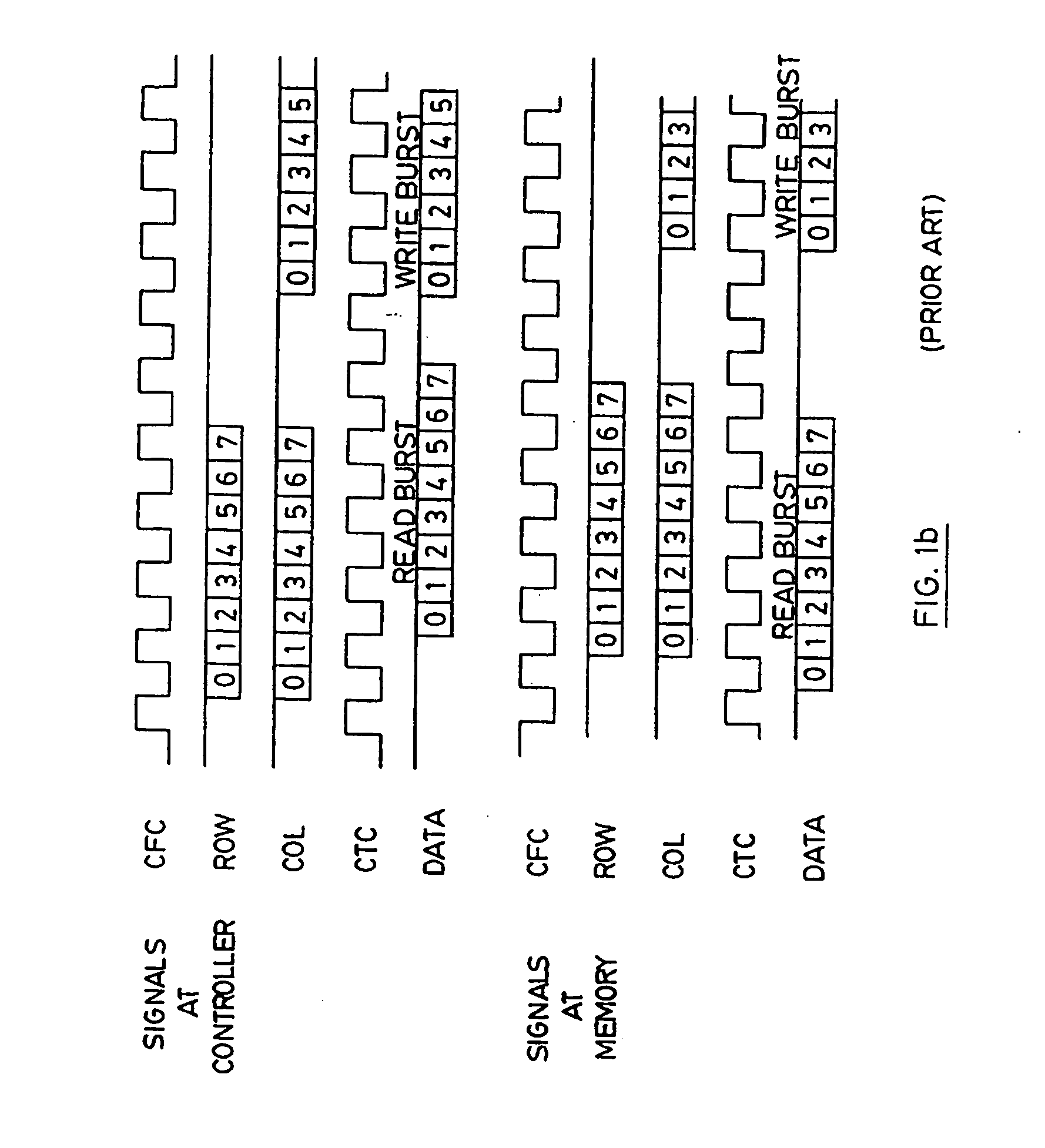

High bandwidth memory interface

Inactive | US20050081012A1 | Increase speed; Maintaining error free data transmission; Energy efficient ICT; Digital storage; Computer hardware; High bandwidth

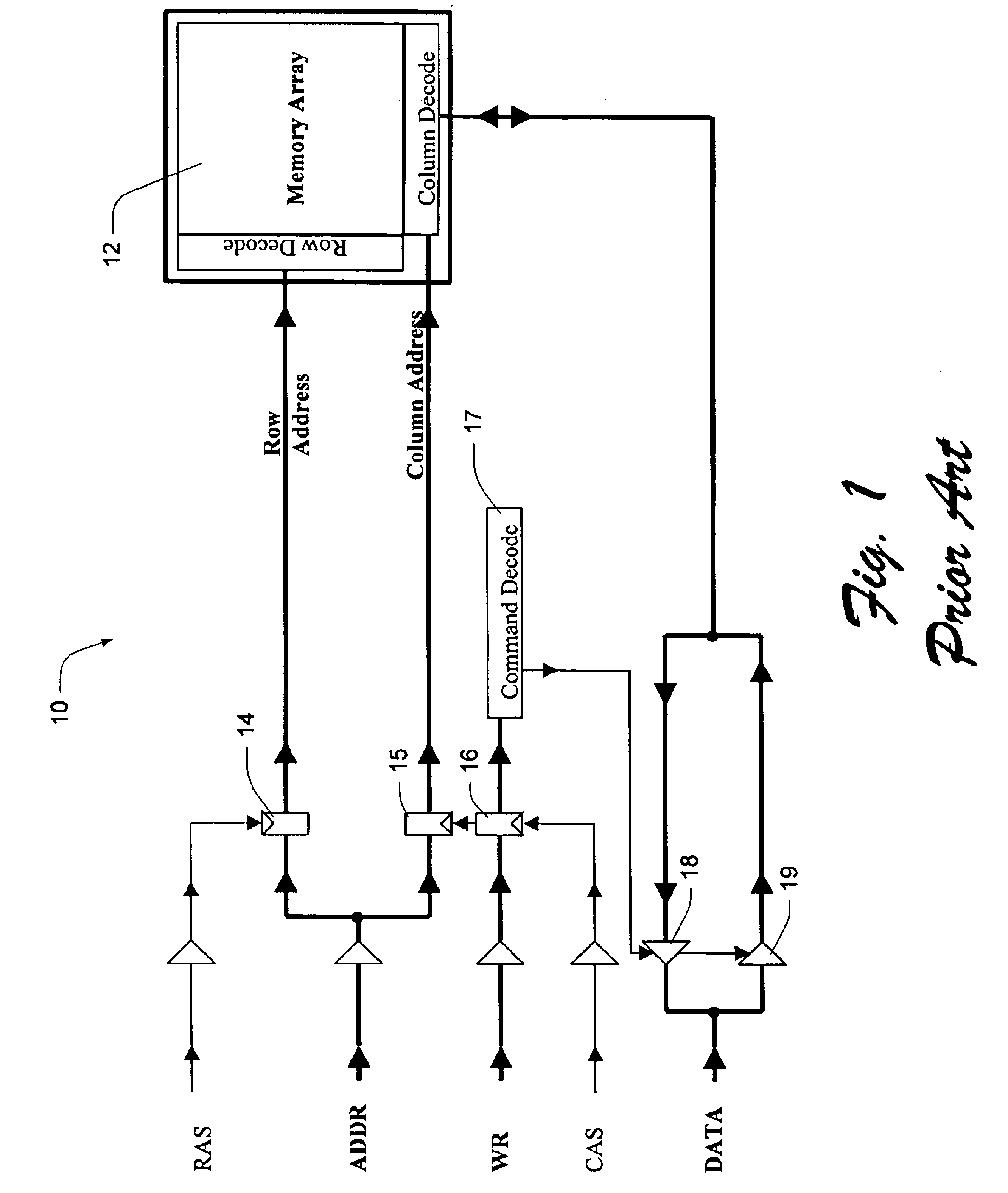

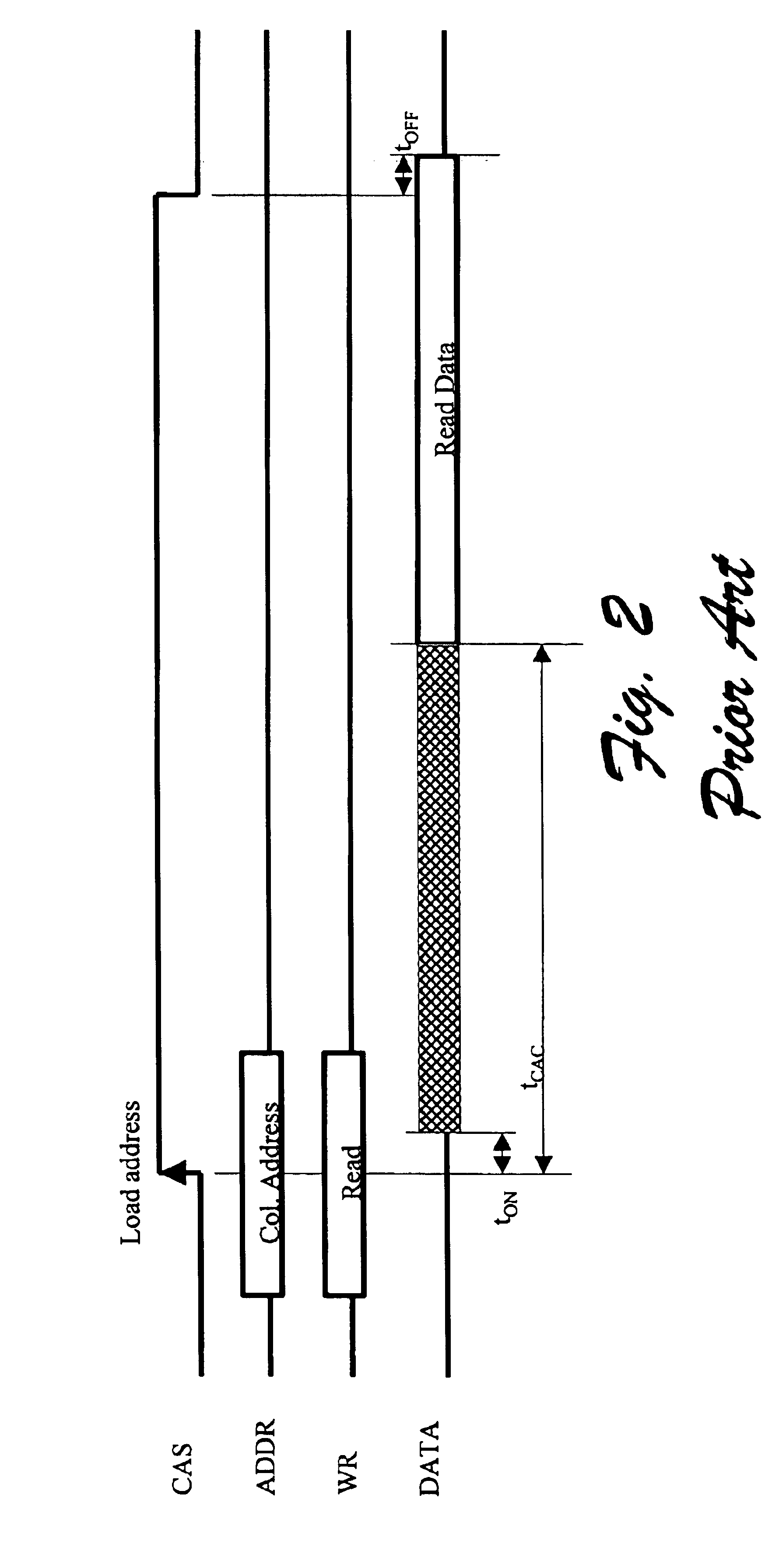

This invention describes an improved high bandwidth chip-to-chip interface for memory devices, which is capable of operating at higher speeds, while maintaining error free data transmission, consuming lower power, and supporting more load. Accordingly, the invention provides a memory subsystem comprising at least two semiconductor devices; a main bus containing a plurality of bus lines for carrying substantially all data and command information needed by the devices, the semiconductor devices including at least one memory device connected in parallel to the bus; the bus lines including respective row command lines and column command lines; a clock generator for coupling to a clock line, the devices including clock inputs for coupling to the clock line; and the devices including programmable delay elements coupled to the clock inputs to delay the clock edges for setting an input data sampling time of the memory device.

Owner:CONVERSANT INTPROP MANAGEMENT INC

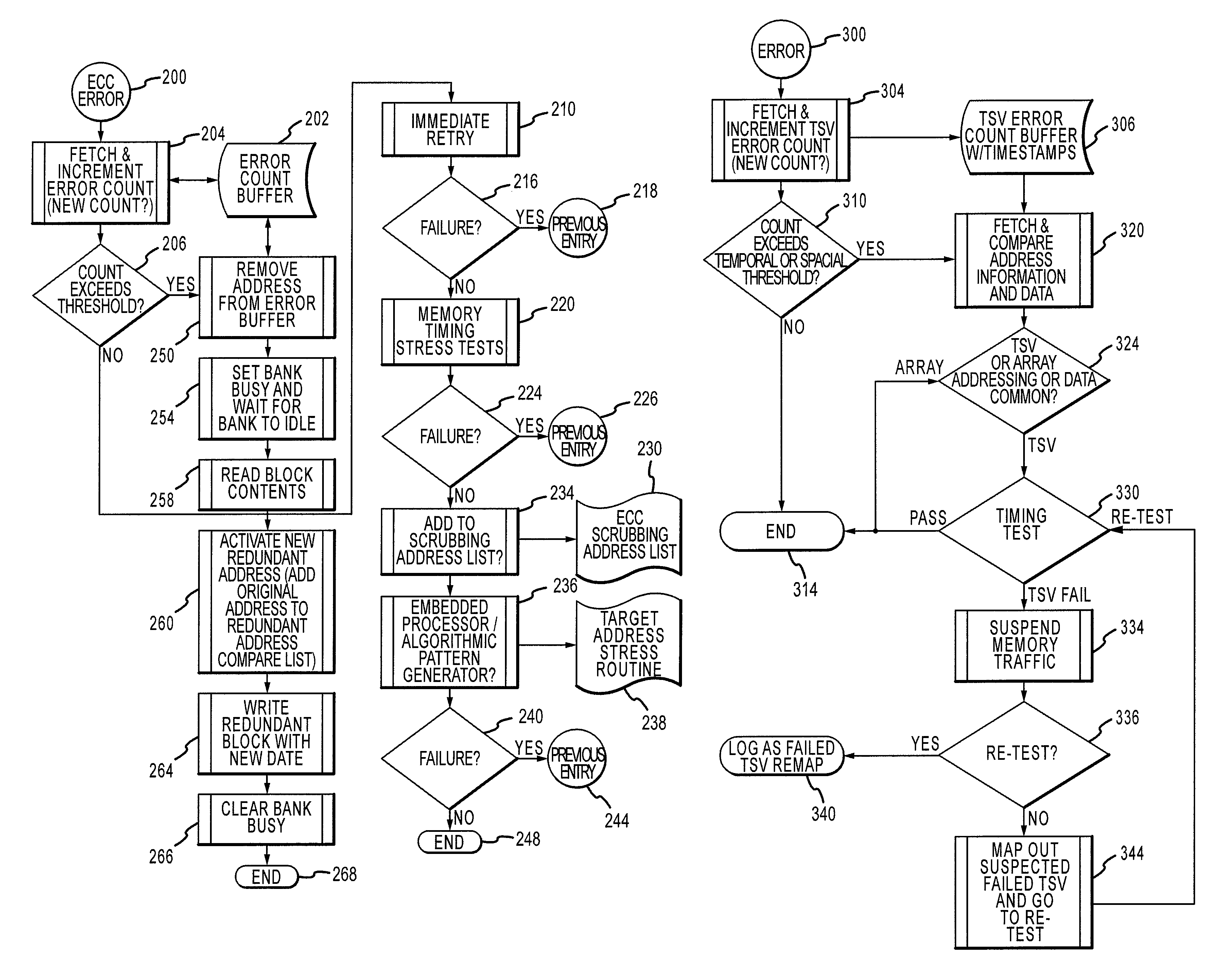

Method and apparatus for repairing high capacity/high bandwidth memory devices

Memory systems, systems and methods are disclosed that may include a plurality of stacked memory device dice and a logic die connected to each other by through silicon vias. One such logic die includes an error code generator that generates error checking codes corresponding to write data. The error checking codes are stored in the memory device dice and are subsequently compared to error checking codes generated from data subsequently read from the memory device dice. In the event the codes do not match, an error signal can be generated. The logic die may contain a controller that records the address from which the data was read. The controller or memory access device may redirect accesses to the memory device dice at the recorded addresses. The controller can also examine addresses or data resulting in the error signals being generated to identify faults in the through silicon vias.

Owner:MICRON TECH INC
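The detect, record and redirect flow in this abstract can be sketched with a dictionary standing in for the memory dice. CRC-32 from Python's `zlib` is used only as a convenient stand-in for the patent's error checking code. The spare-address remapping policy is an assumption.

```python
import zlib


class LogicDie:
    """Toy model: `cells` stands in for the stacked memory dice, and CRC-32
    stands in for the patent's unspecified error checking code."""

    def __init__(self, spare_base: int = 0x10000):
        self.cells = {}        # address -> data stored in the memory dice
        self.codes = {}        # address -> check code stored alongside the data
        self.remap = {}        # recorded faulty address -> spare address
        self.next_spare = spare_base

    def _resolve(self, addr: int) -> int:
        return self.remap.get(addr, addr)

    def write(self, addr: int, data: bytes) -> None:
        target = self._resolve(addr)
        self.cells[target] = data
        self.codes[target] = zlib.crc32(data)   # code generated from the write data

    def read(self, addr: int):
        """Return (data, error_signal). On a mismatch, record the address and
        redirect later accesses to a spare location."""
        target = self._resolve(addr)
        data = self.cells[target]
        if zlib.crc32(data) != self.codes[target]:
            self.remap[addr] = self.next_spare
            self.next_spare += 1
            return data, True
        return data, False


die = LogicDie()
die.write(5, b"abc")
die.cells[5] = b"abd"          # inject a fault, e.g. a corrupted bit
data, error = die.read(5)      # mismatch detected, address 5 recorded
die.write(5, b"abc")           # the rewrite now lands in the spare location
```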

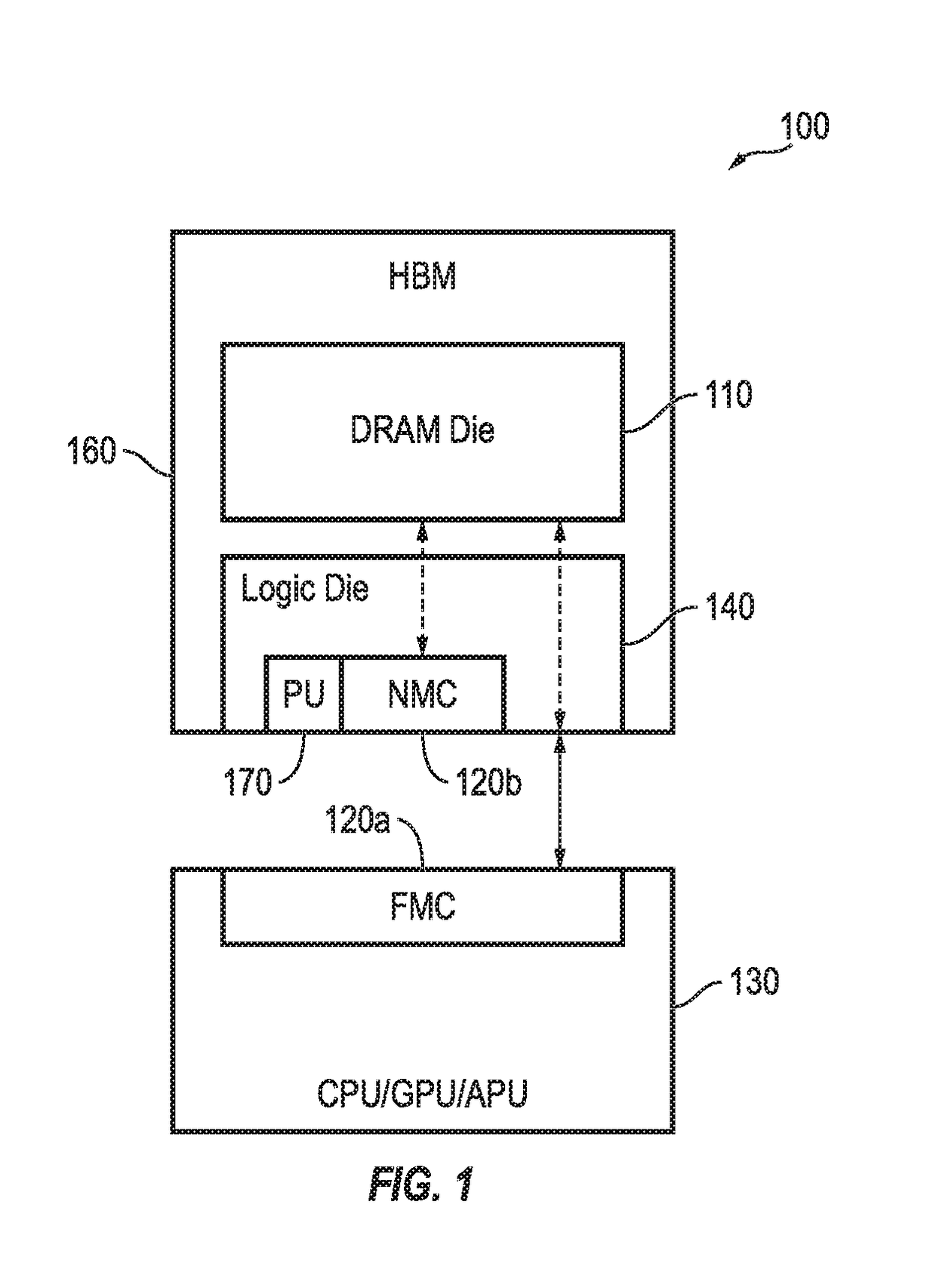

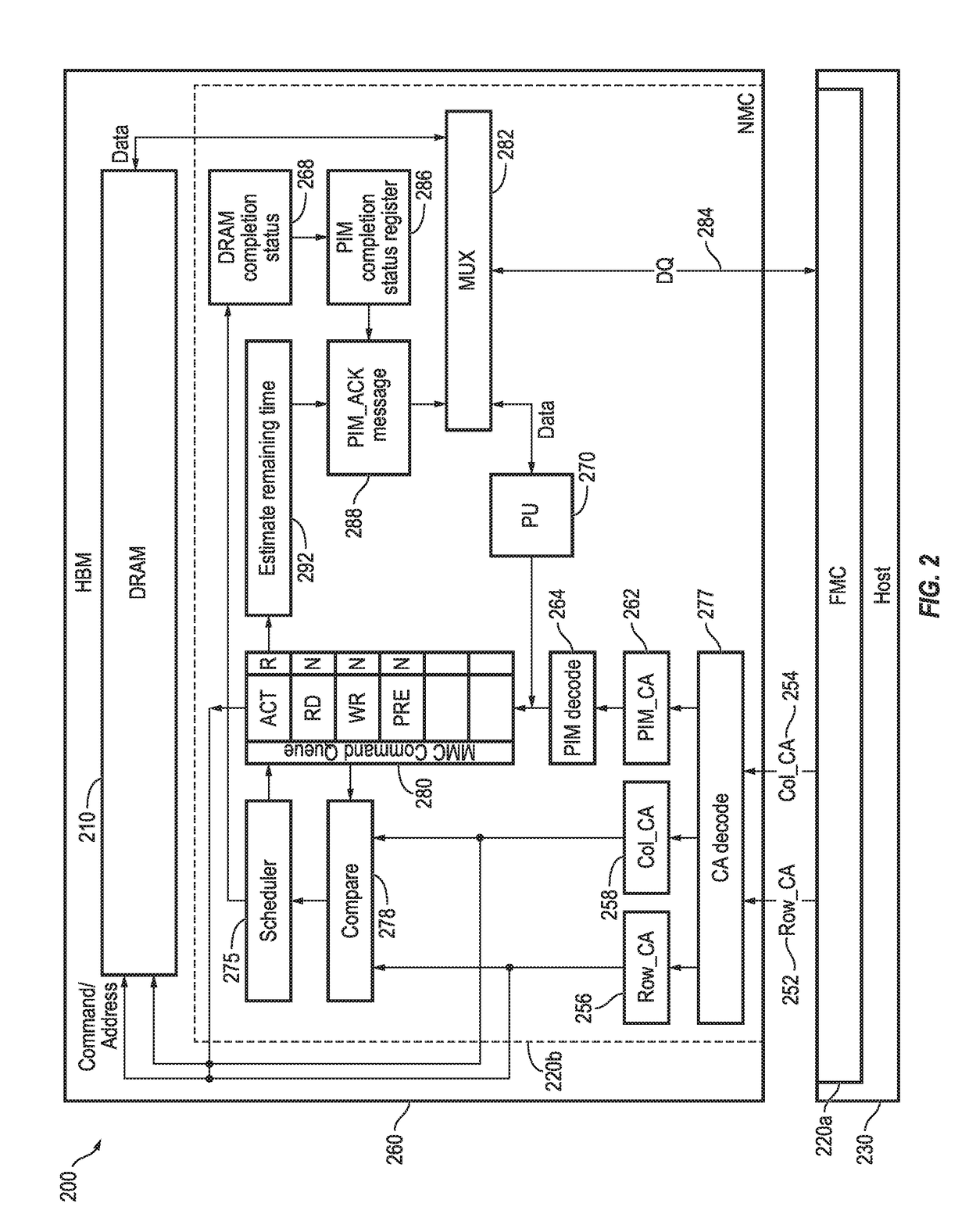

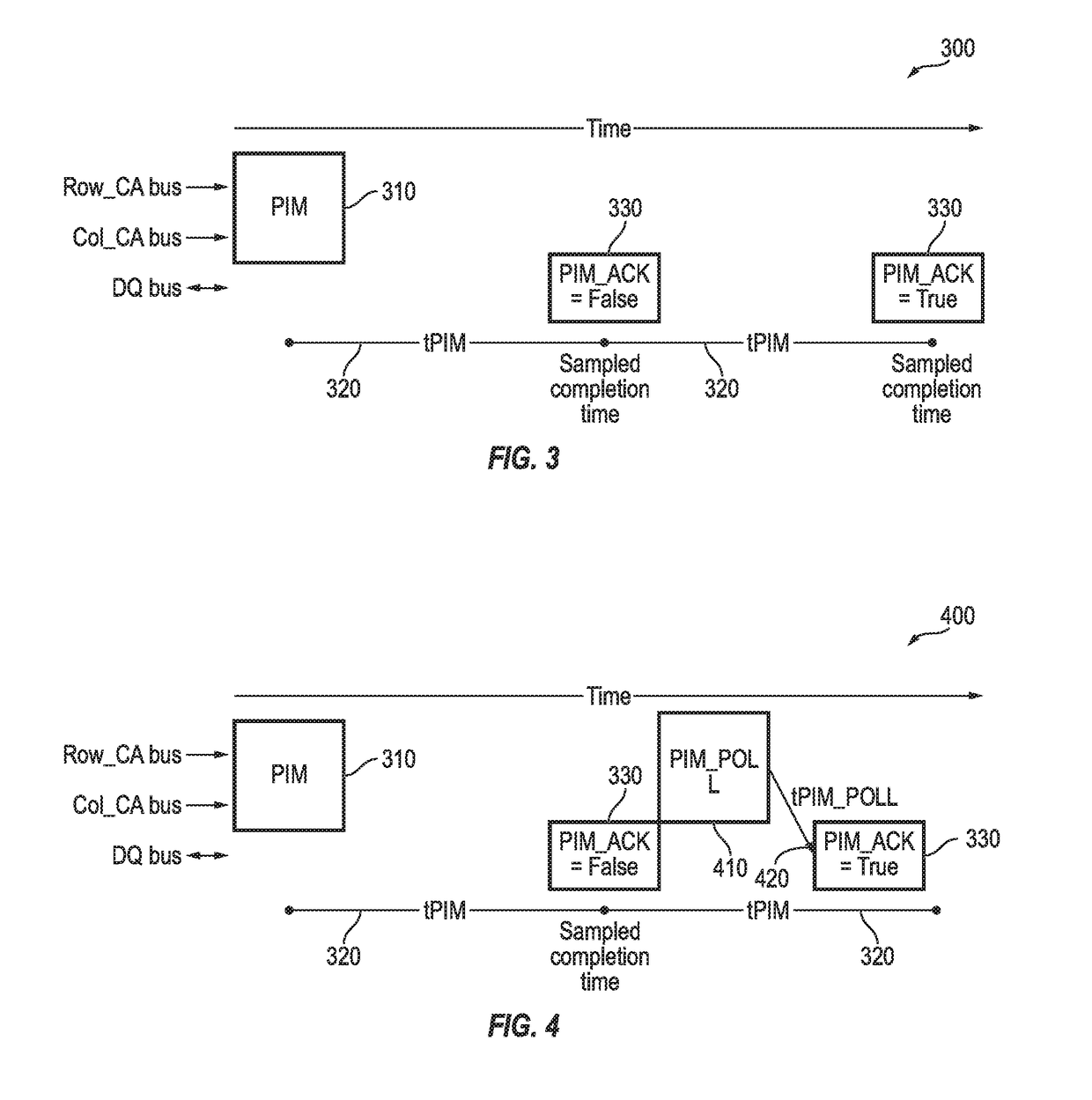

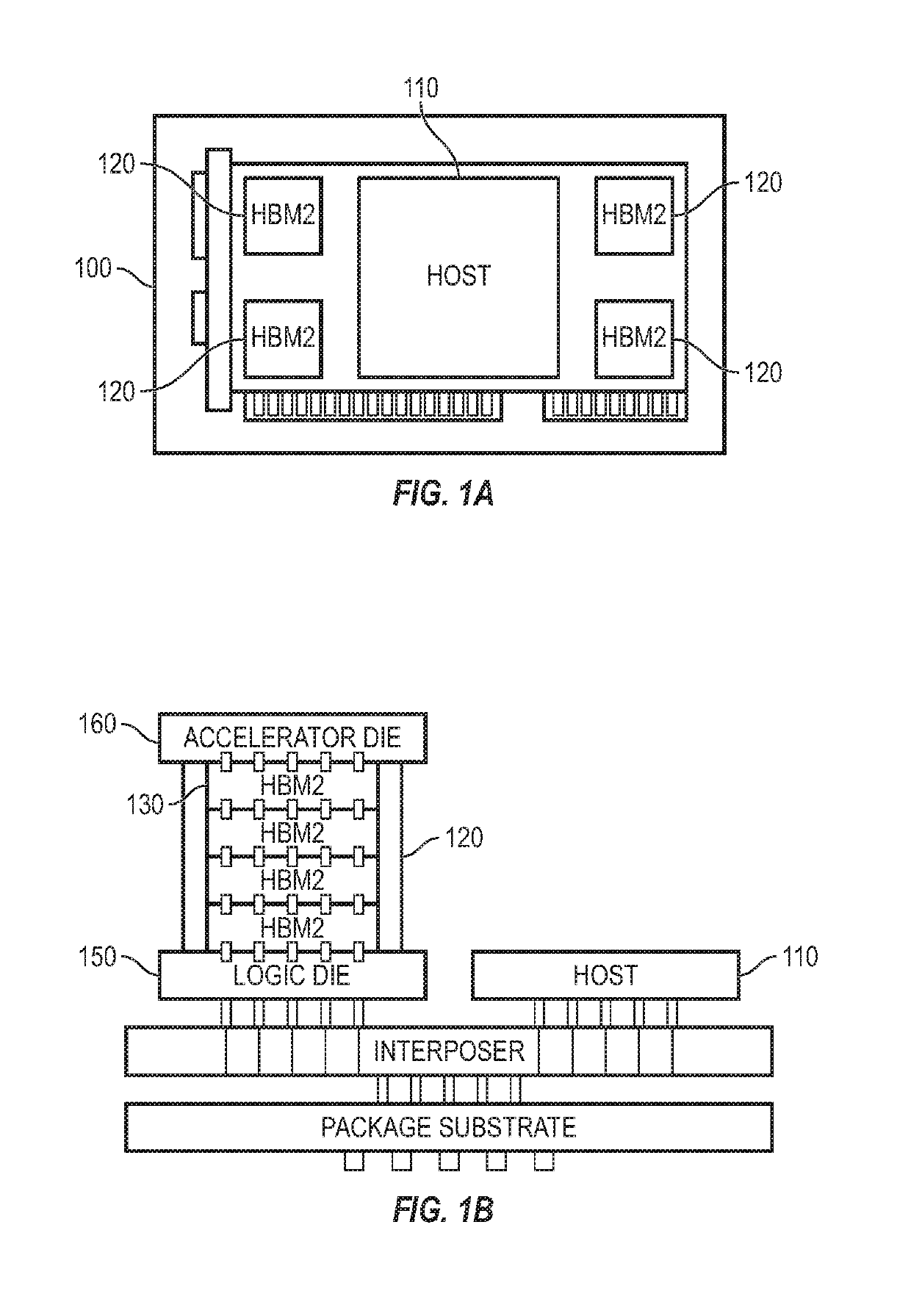

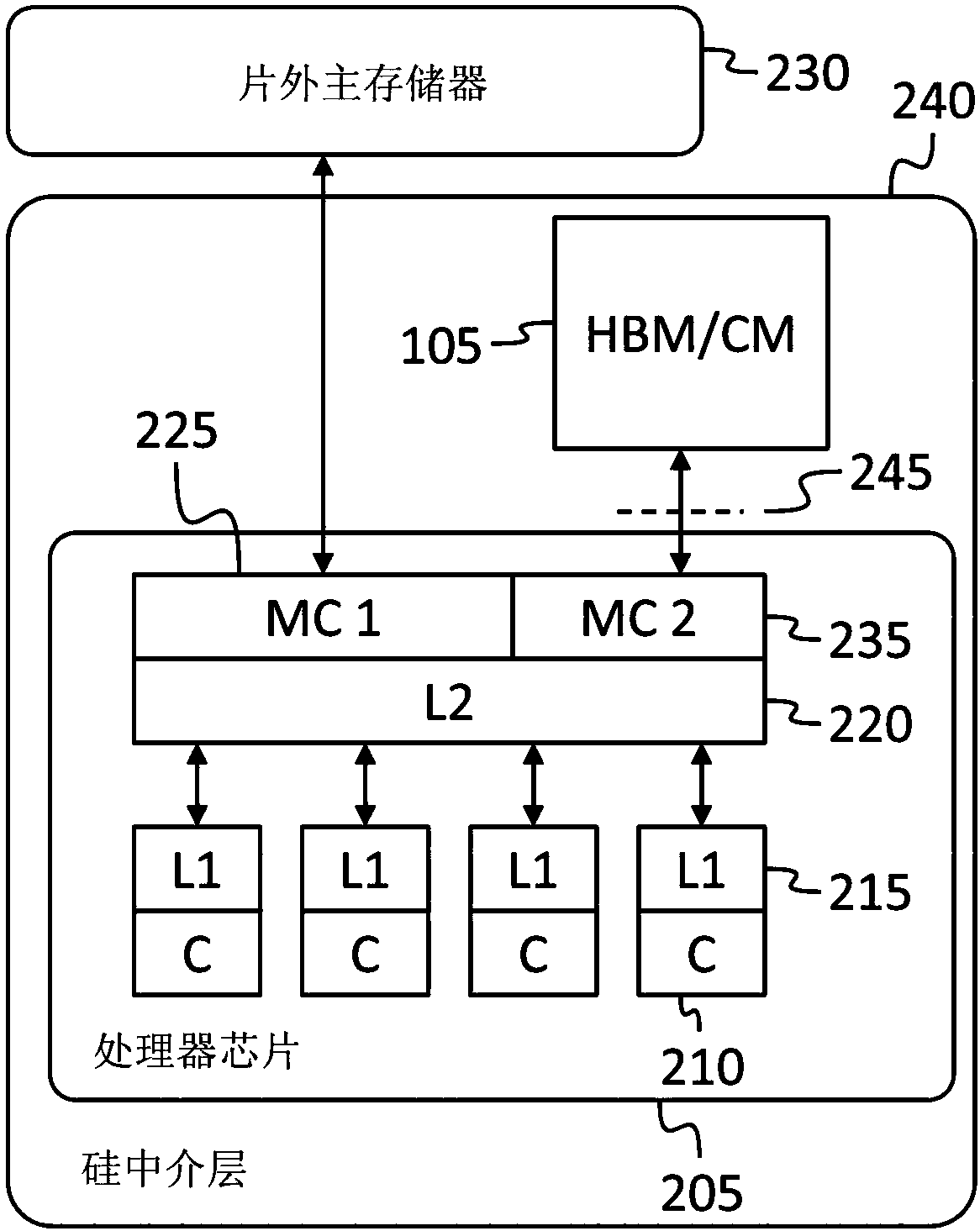

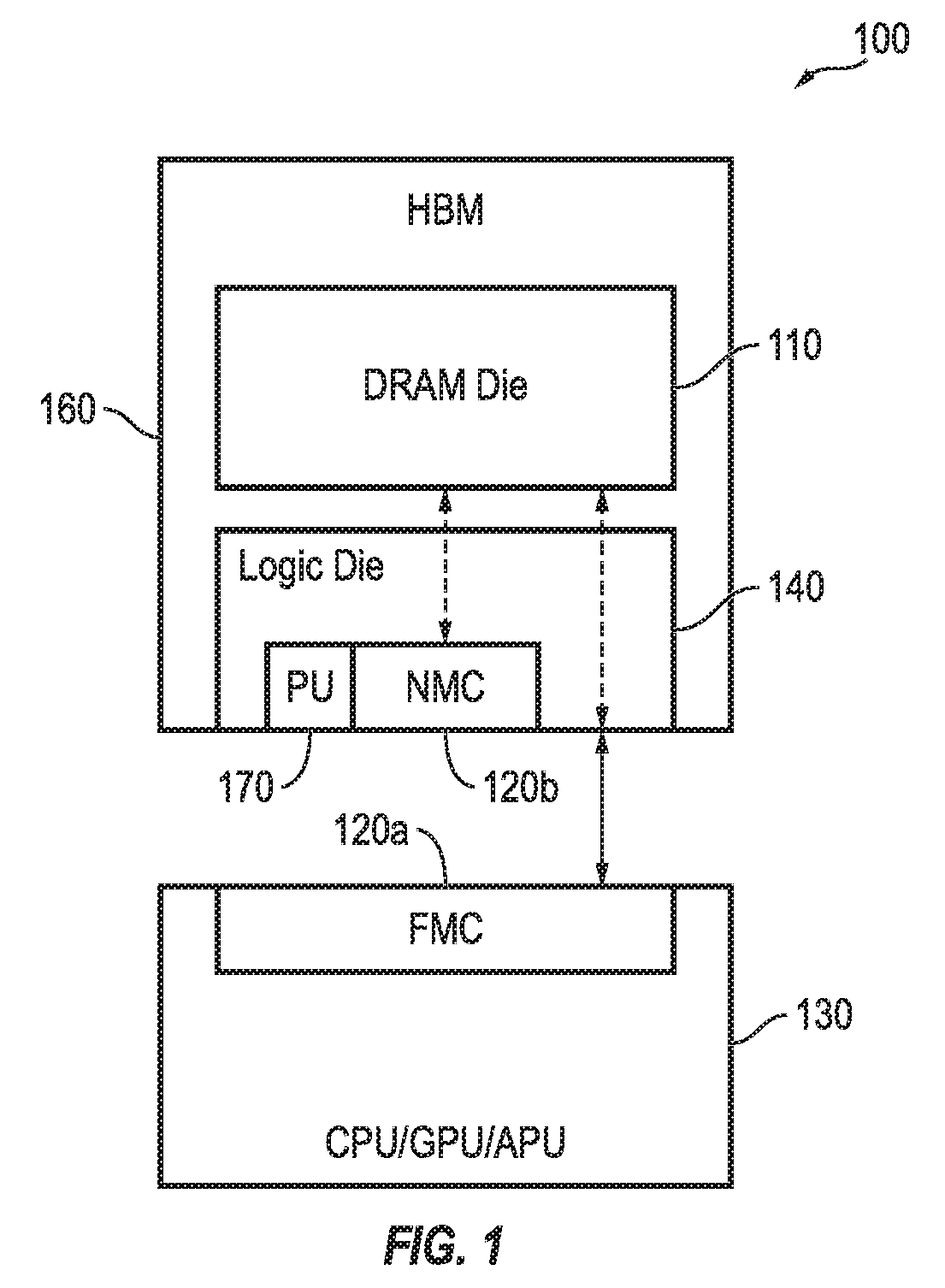

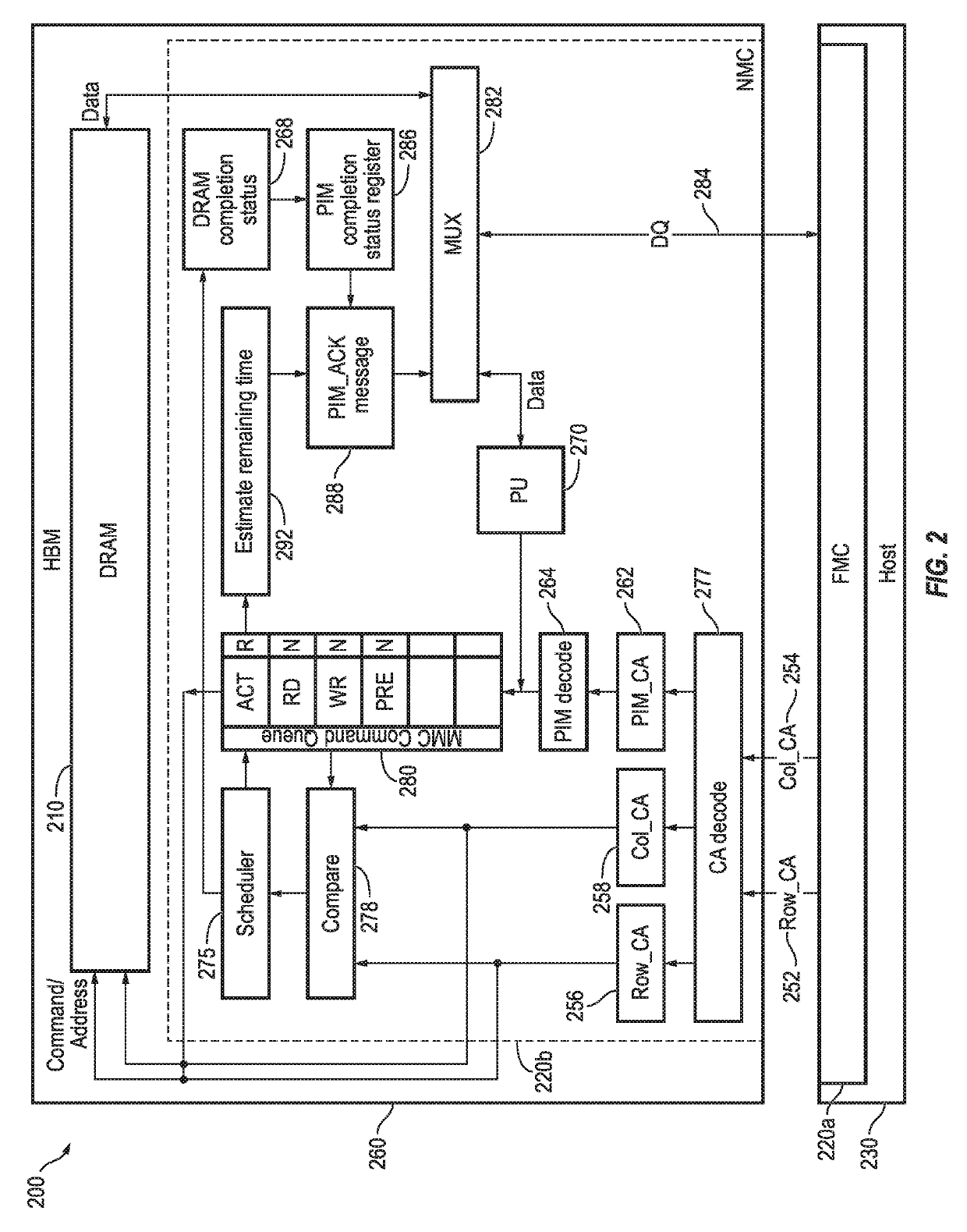

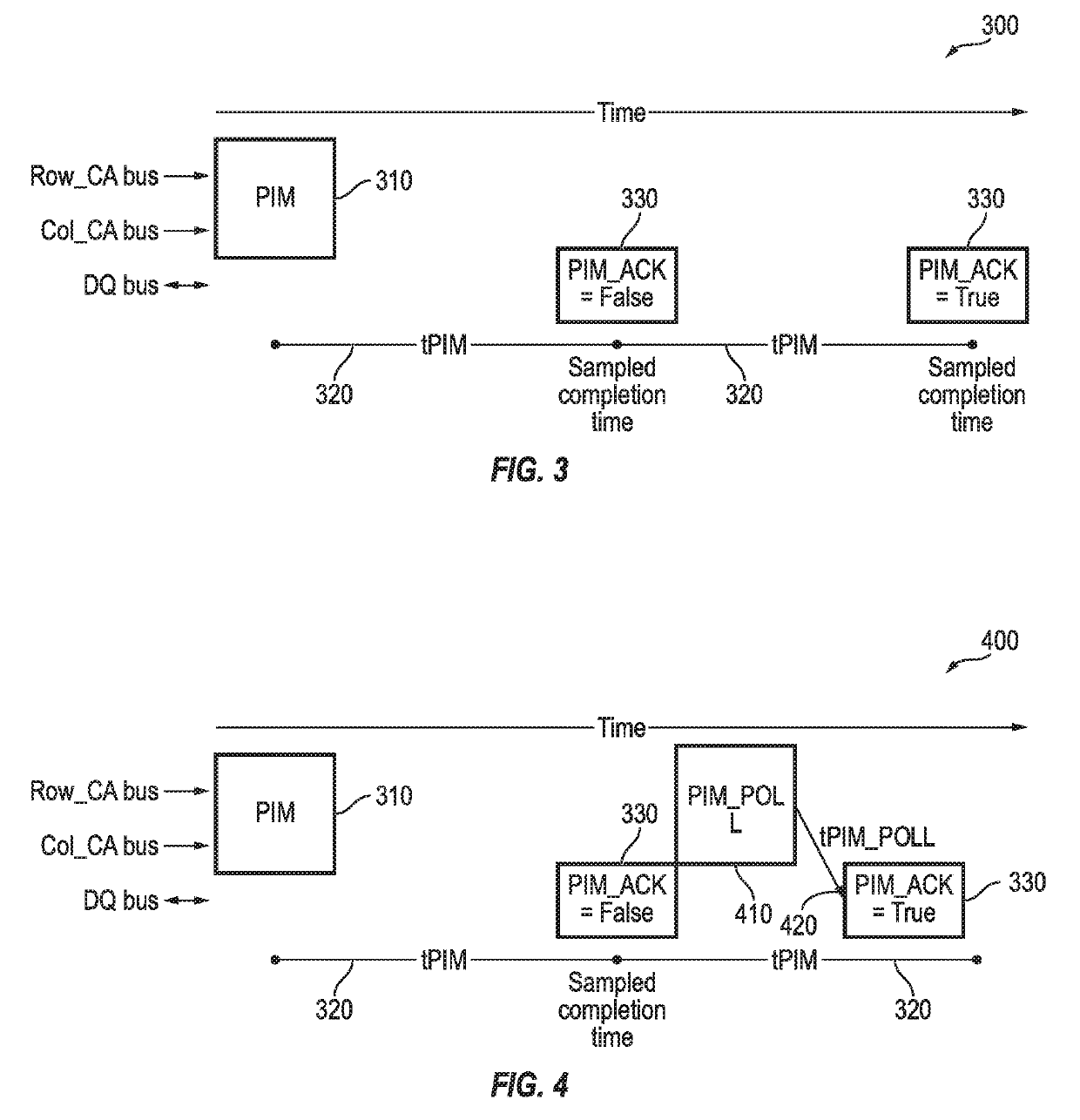

Coordinated near-far memory controller for process-in-HBM

Active | US20190034097A1 | Improve performance; Memory architecture accessing/allocation; Input/output to record carriers; Term memory; Memory controller

A method of coordinating memory commands in a high-bandwidth memory (HBM+) system, the method including sending a host memory controller command from a host memory controller to a memory, receiving the host memory controller command at a coordinating memory controller, forwarding the host memory controller command from the coordinating memory controller to the memory, and scheduling, by the coordinating memory controller, a coordinating memory controller command based on the host memory controller command.

Owner:SAMSUNG ELECTRONICS CO LTD
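The coordinating memory controller can be illustrated with a toy model. It forwards each host command unchanged and uses that command to decide which queued in-memory (PIM) command it can issue next. The abstract does not specify the scheduling policy. The bank-conflict rule and the command tuples below are assumptions chosen to make the idea concrete.

```python
from collections import deque


class Memory:
    """Records the command stream the HBM+ stack actually receives."""

    def __init__(self):
        self.log = []

    def issue(self, source: str, command: tuple) -> None:
        self.log.append((source, command))


class CoordinatingController:
    """Forwards host commands unchanged and, based on each one, schedules a
    queued PIM command. Assumed policy: pick the oldest PIM command whose bank
    the host command is not using."""

    def __init__(self, memory: Memory):
        self.memory = memory
        self.pim_queue = deque()

    def submit_pim(self, bank: int, op: str) -> None:
        self.pim_queue.append((bank, op))

    def on_host_command(self, bank: int, op: str) -> None:
        self.memory.issue("host", (bank, op))  # forward to memory first
        idx = next((i for i, (b, _) in enumerate(self.pim_queue) if b != bank), None)
        if idx is not None:
            pim_cmd = self.pim_queue[idx]
            del self.pim_queue[idx]
            self.memory.issue("pim", pim_cmd)


mem = Memory()
cc = CoordinatingController(mem)
cc.submit_pim(0, "MAC")
cc.submit_pim(1, "MAC")
cc.on_host_command(0, "RD")   # bank 0 busy, so the bank-1 PIM command goes out
```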

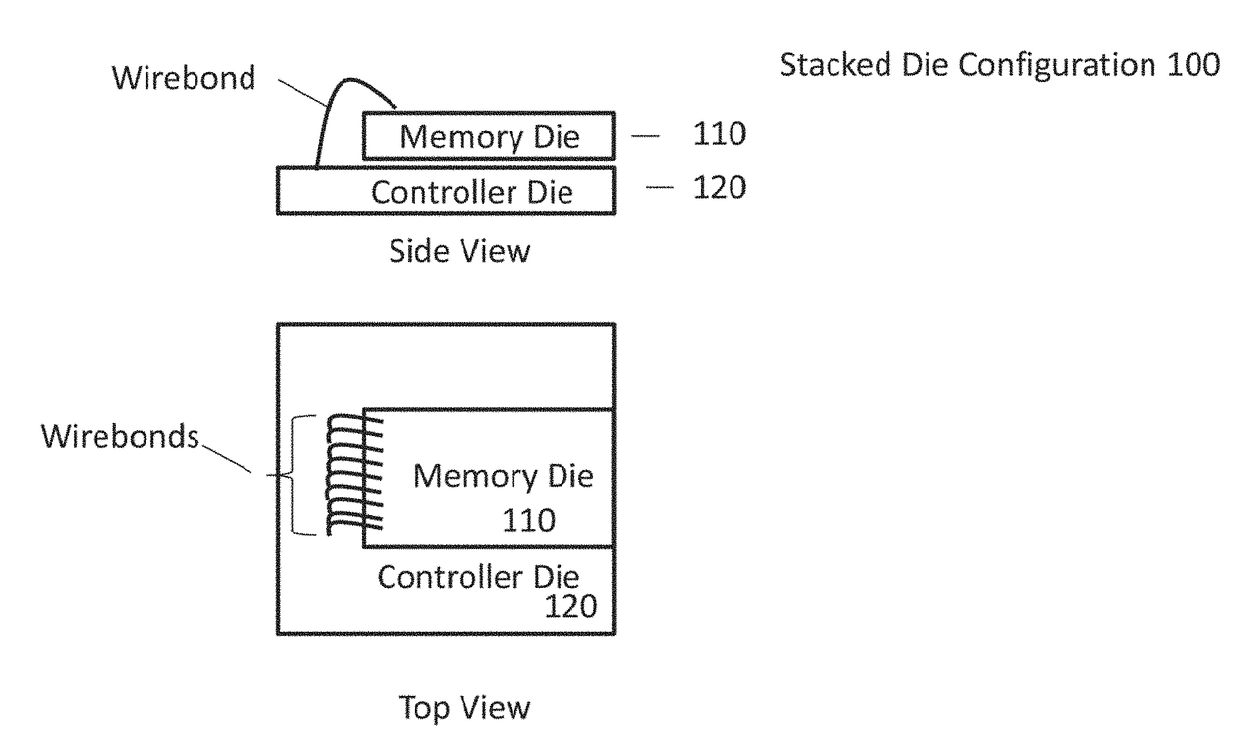

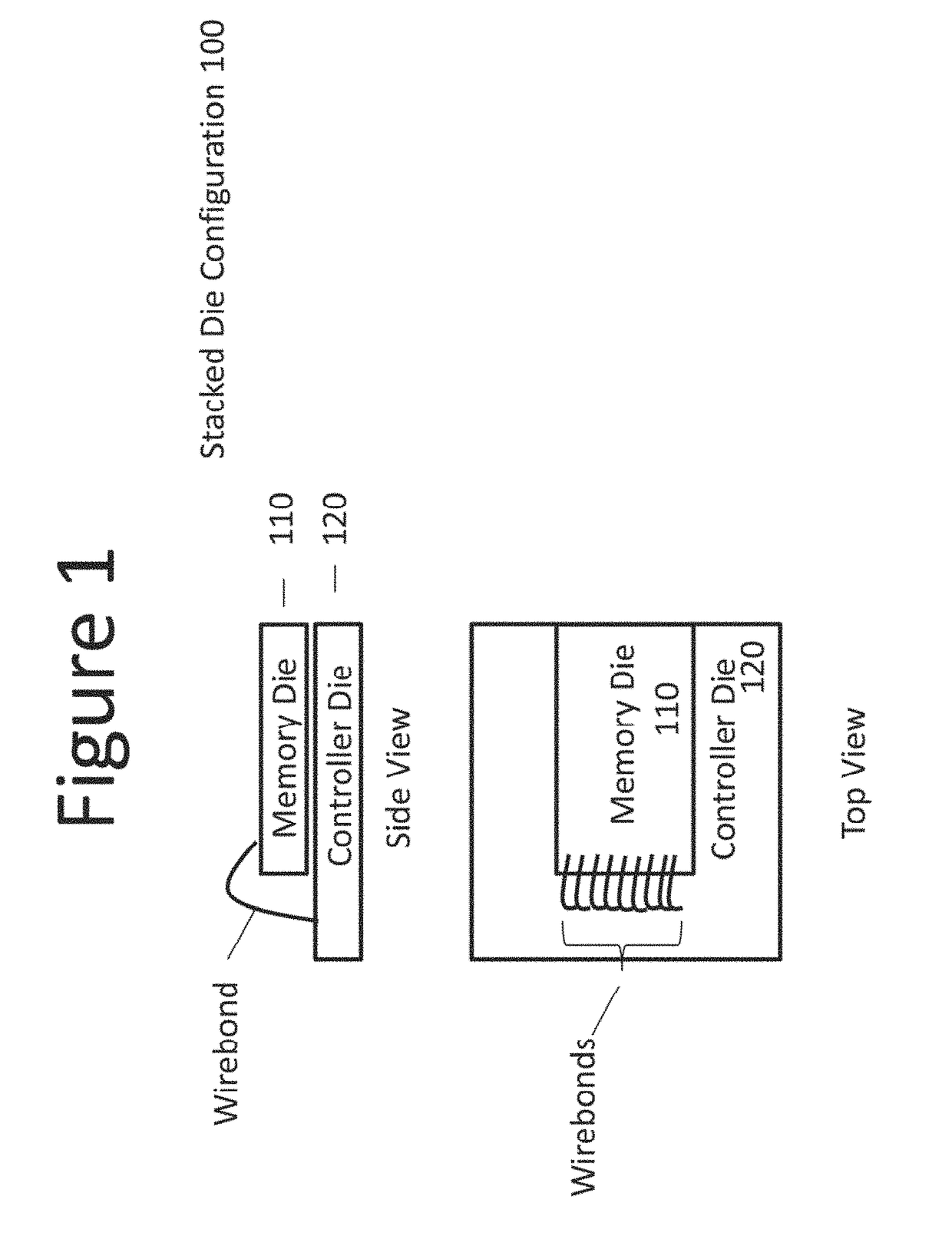

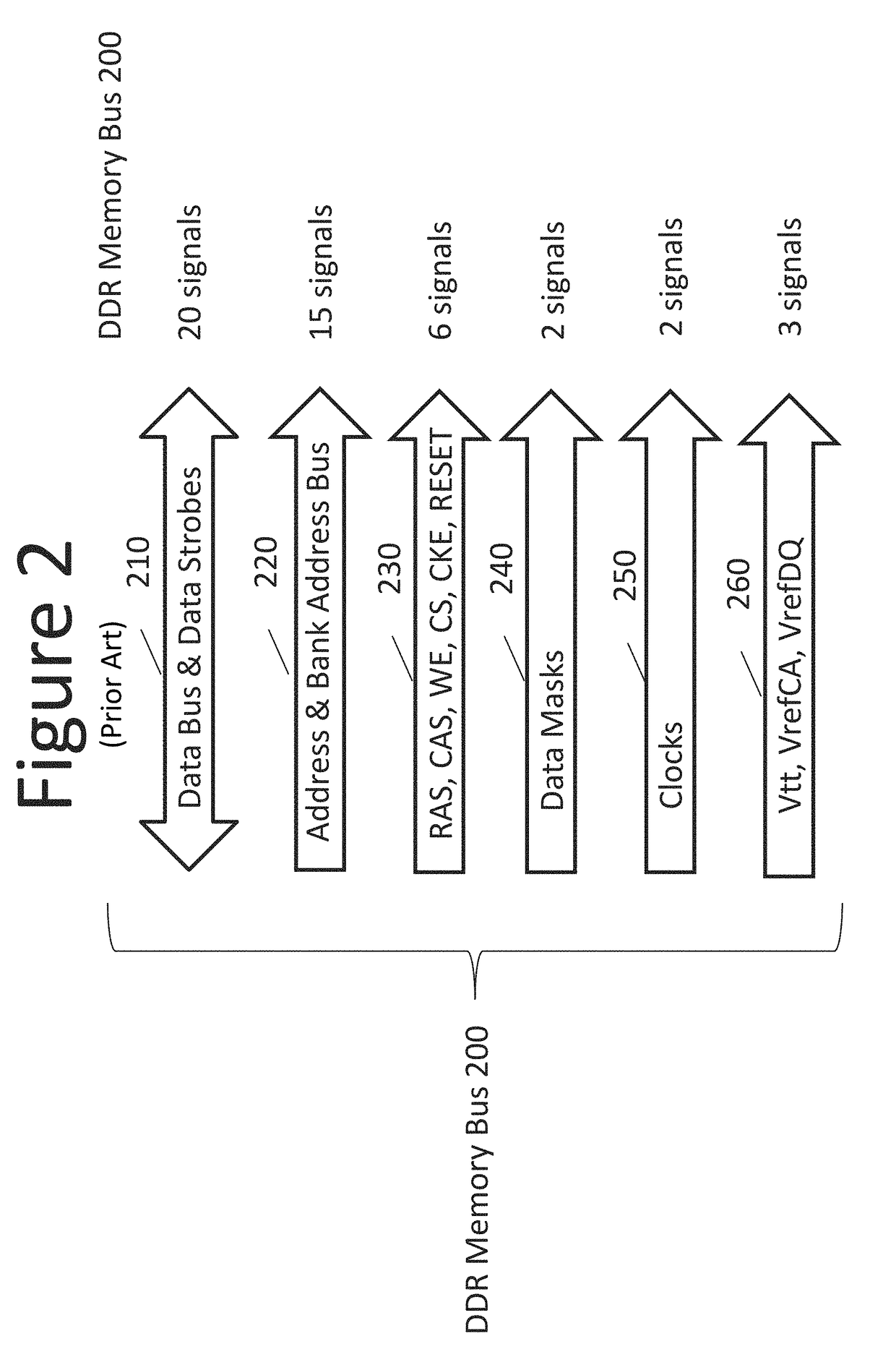

Low-pincount high-bandwidth memory and memory bus

Active | US20170364469A1 | Operation control; Reduce in quantity; Digital storage; Electric digital data processing; Memory interface; Megabyte

A memory subsystem is provided that includes a memory controller integrated circuit (IC), a memory bus and a memory IC, all of which use fewer signals than common DDR-type memory of the same peak bandwidth. Using no more than 22 switching signals, the subsystem can transfer more than 3000 megabytes/second across the bus interconnecting the ICs. Signal count is reduced by time-multiplexing address/control commands onto at least some of the same signals used for data transfer. A single bus signal is used to initiate bus operation. Once the bus is operating, this signal can transfer addressing and control information to the memory IC concurrently with data transfer, using a serial protocol based on 16-bit samples of the signal. Bus bandwidth can be scaled by adding data and data strobe I/O signals, which might be used only for data and data mask transport. In one version of the memory IC, the physical layout places the switching signal terminals adjacent to one short edge of the memory die. This minimizes the die area overhead of the controller IC's memory interface circuitry when the memory IC and the controller IC are stacked in a multi-chip package. The memory IC's interface signal placement and signal count minimize signal length and circuitry for the memory bus signals.

Owner:ETRON TECH AMERICA INC

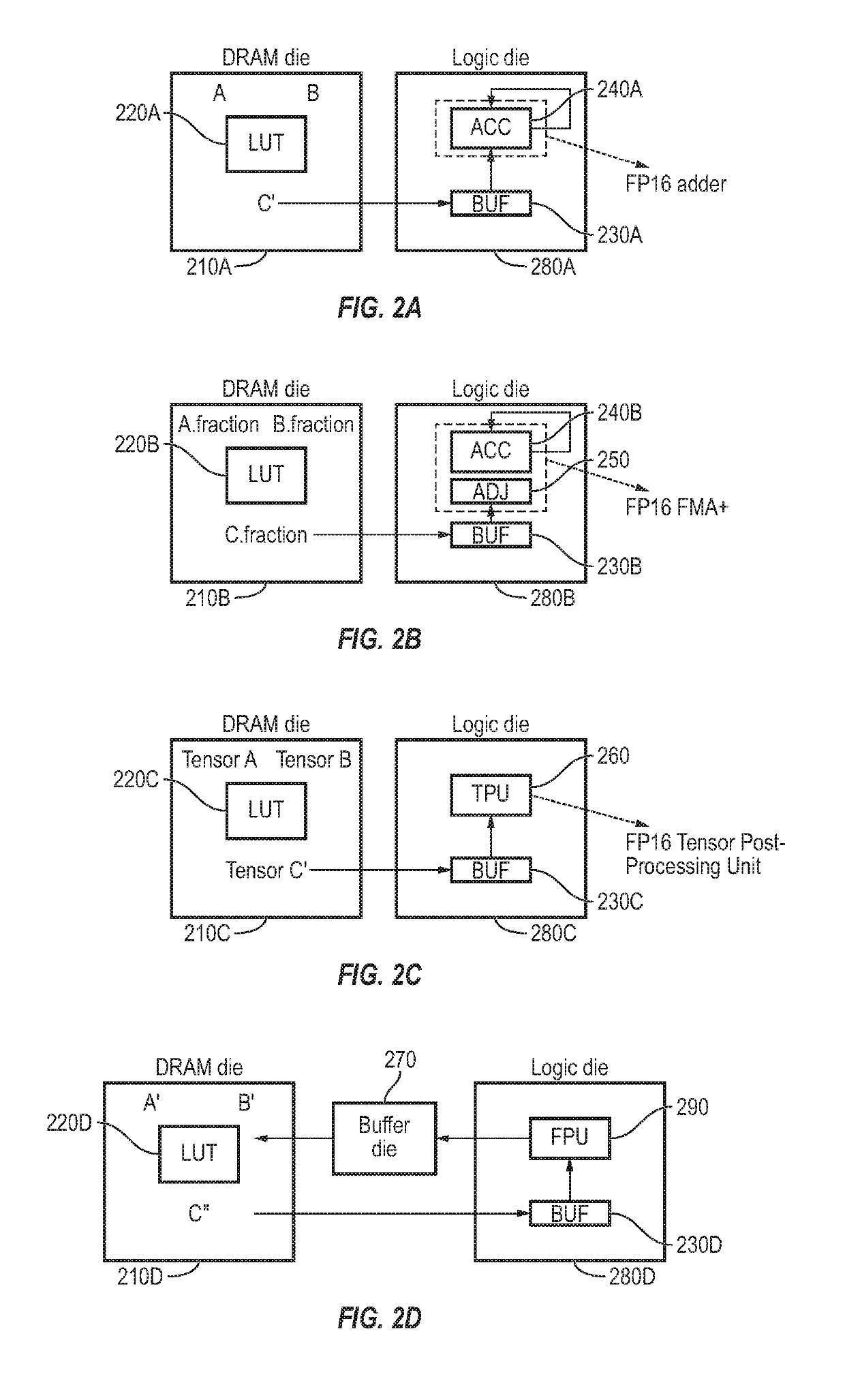

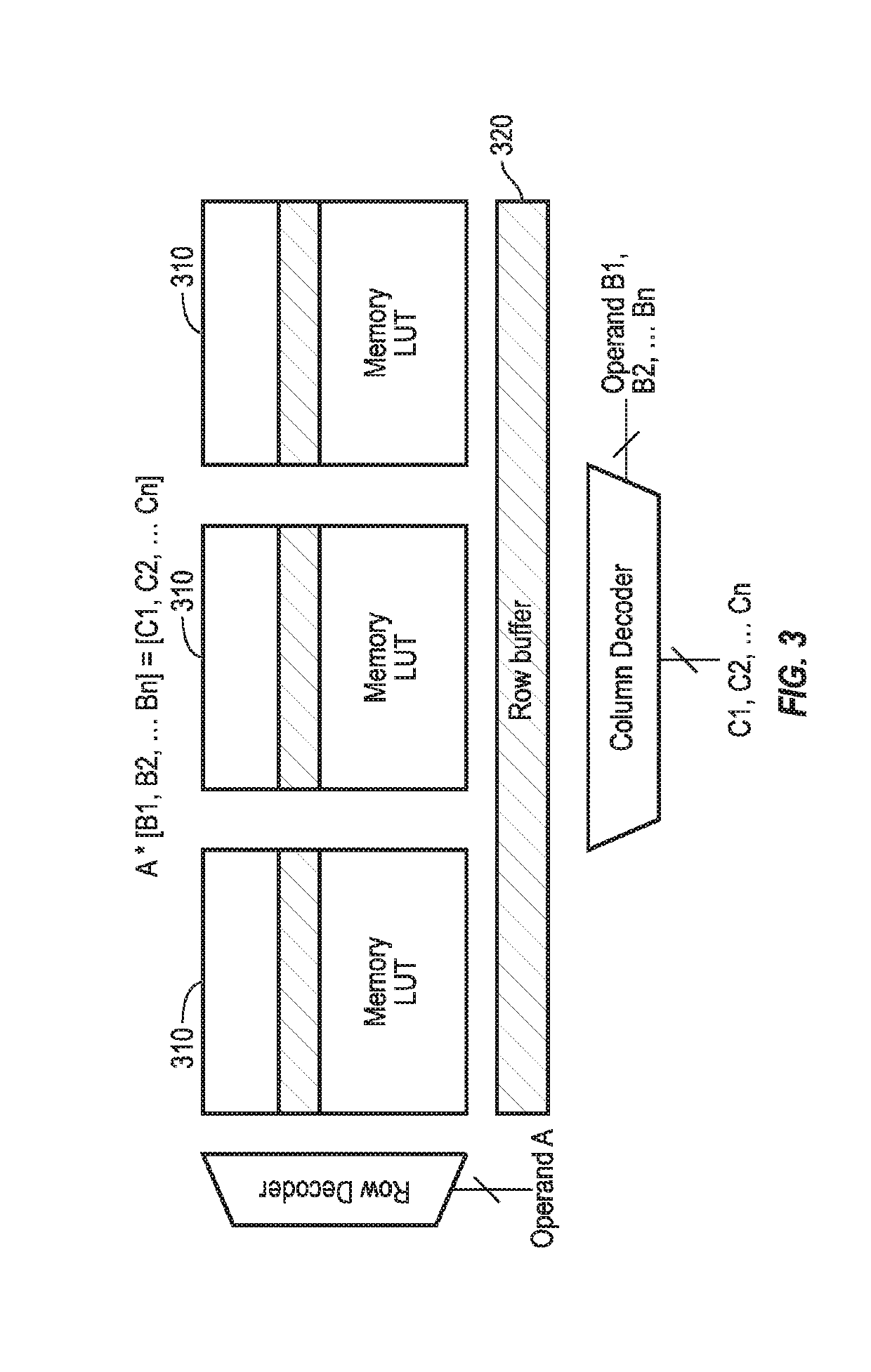

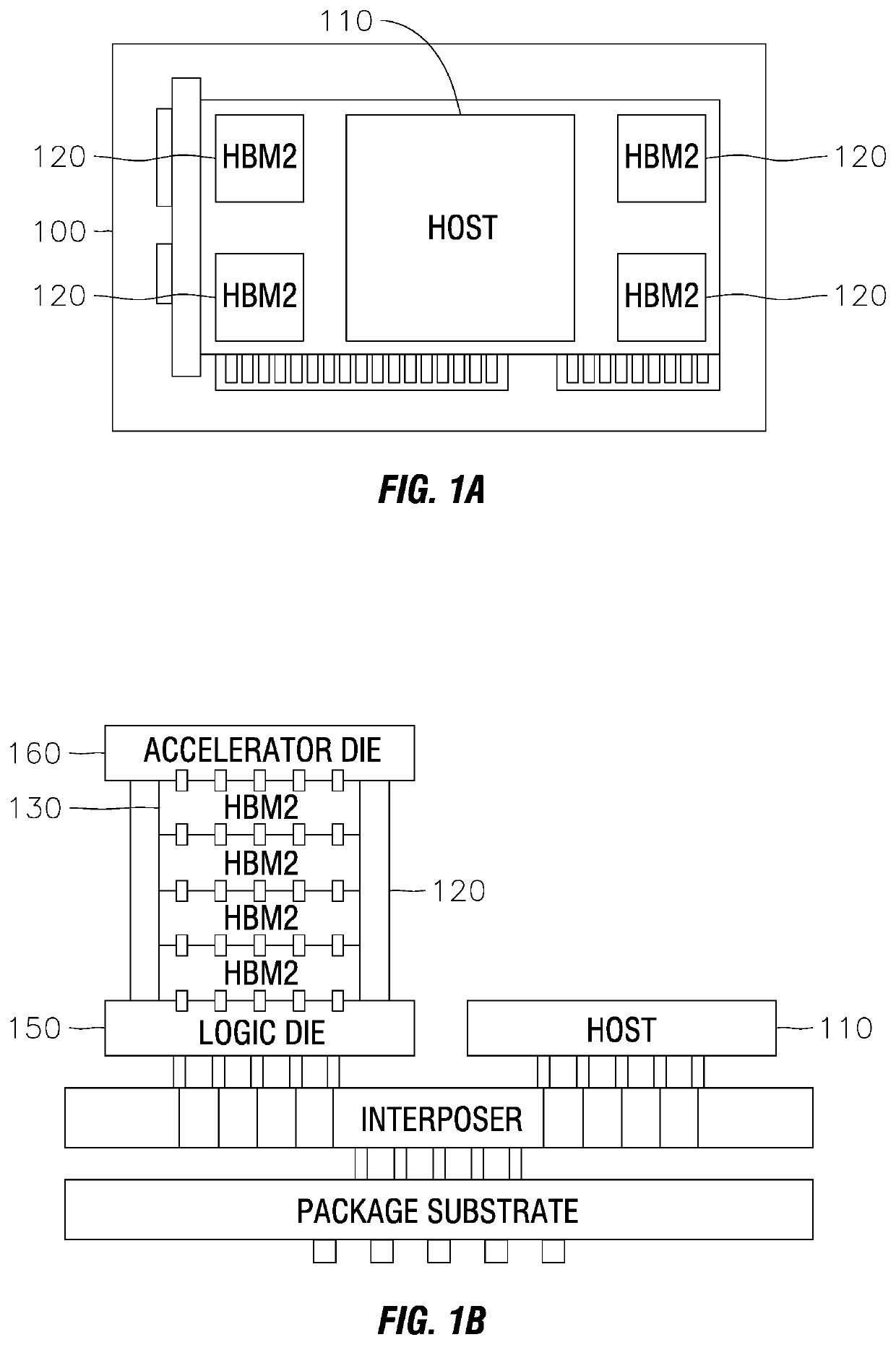

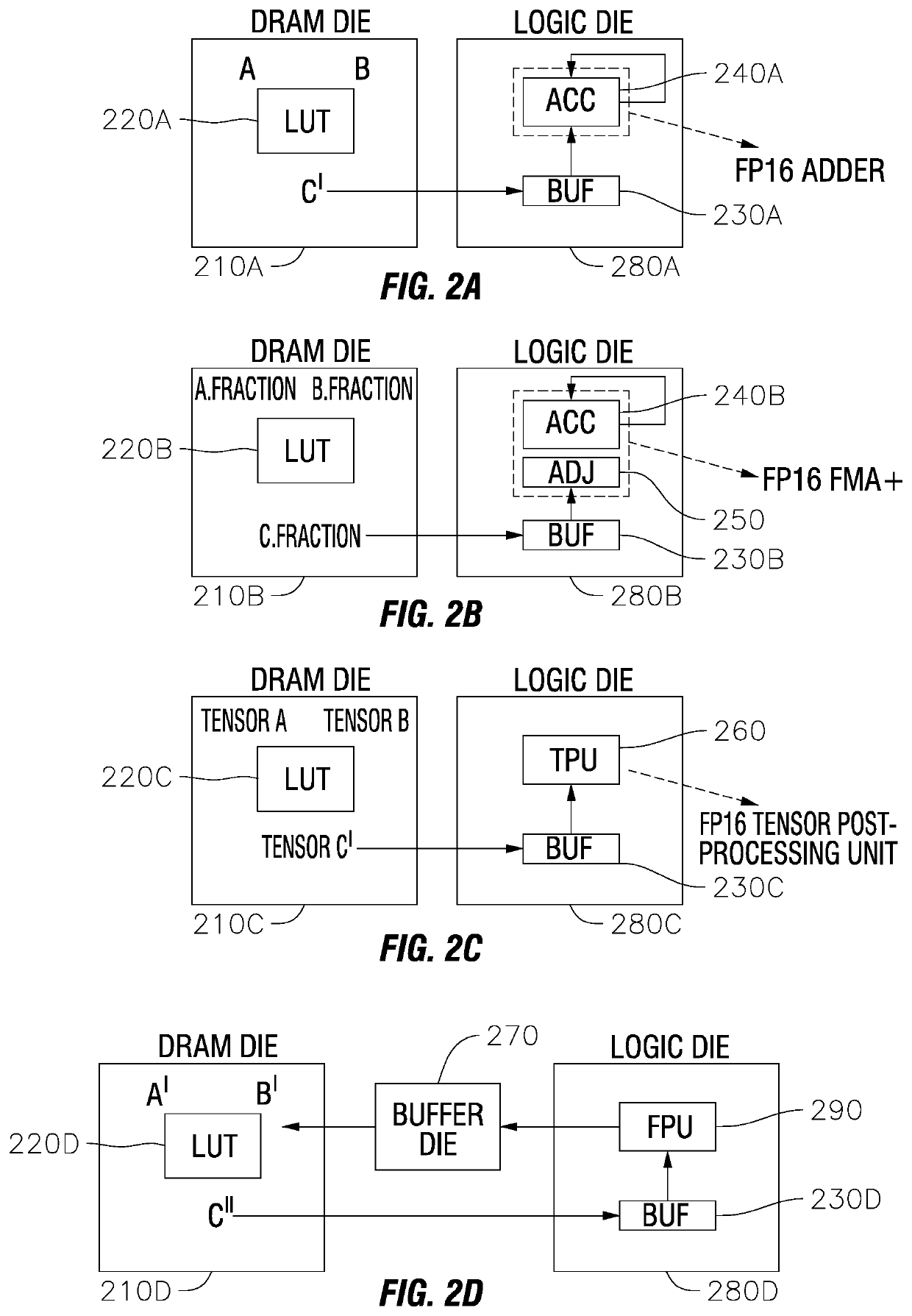

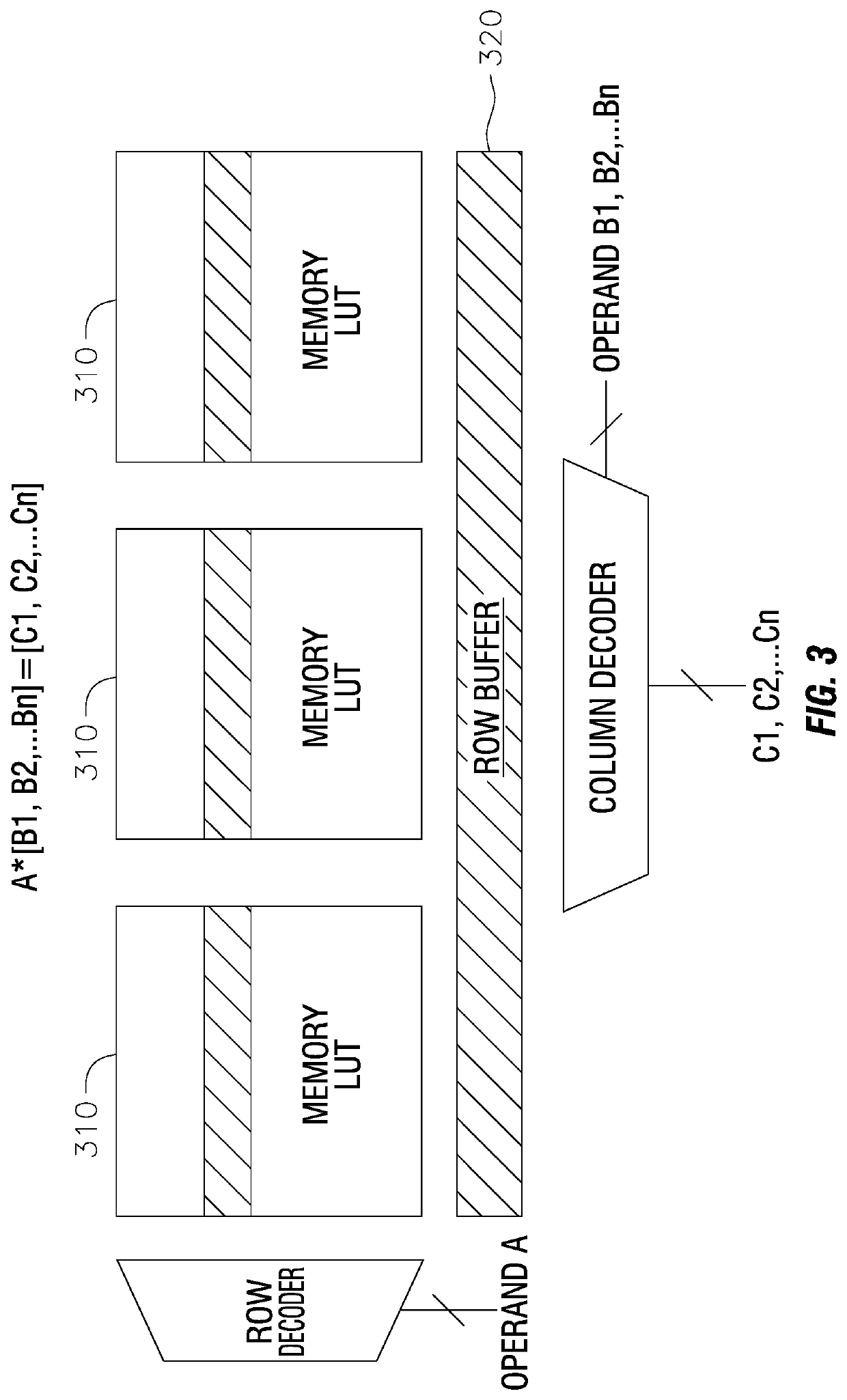

Memory lookup computing mechanisms

Active | US20190196953A1 | Memory architecture accessing/allocation; Digital data processing details; Parallel computing; Floating point

According to some example embodiments of the present disclosure, in a method for a memory lookup mechanism in a high-bandwidth memory system, the method includes: using a memory die to conduct a multiplication operation using a lookup table (LUT) methodology by accessing a LUT, which includes floating point operation results, stored on the memory die; sending, by the memory die, a result of the multiplication operation to a logic die including a processor and a buffer; and conducting, by the logic die, a matrix multiplication operation using computation units.

Owner:SAMSUNG ELECTRONICS CO LTD
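Lookup-table multiplication can be illustrated with a small quantized table. The "memory die" holds precomputed products of every pair of representable values. The "logic die" then sums looked-up products into a matrix product. The 16-level quantization grid is hypothetical; the abstract says only that the LUT contains floating-point operation results.

```python
# Hypothetical 16-level quantization: -2.0, -1.75, ..., 1.75.
LEVELS = [i / 4 for i in range(-8, 8)]

# "Memory die": a precomputed table of floating-point products of every level pair.
PRODUCT_LUT = [[a * b for b in LEVELS] for a in LEVELS]


def quantize(x: float) -> int:
    """Index of the nearest representable level."""
    return min(range(len(LEVELS)), key=lambda i: abs(LEVELS[i] - x))


def lut_multiply(i: int, j: int) -> float:
    """Multiplication by table lookup instead of arithmetic (memory-die side)."""
    return PRODUCT_LUT[i][j]


def lut_matmul(a, b):
    """Logic-die side: accumulate LUT products into a matrix product."""
    qa = [[quantize(x) for x in row] for row in a]
    qb = [[quantize(x) for x in row] for row in b]
    rows, inner, cols = len(a), len(b), len(b[0])
    return [[sum(lut_multiply(qa[r][t], qb[t][c]) for t in range(inner))
             for c in range(cols)]
            for r in range(rows)]


A = [[1.0, 0.5], [0.25, -1.0]]
B = [[0.5, 1.0], [1.5, -0.75]]
C = lut_matmul(A, B)
```

Every input in this example lies on the grid, so `C` equals the exact product `[[1.25, 0.625], [-1.375, 1.0]]`. Inputs that fall off the grid would incur quantization error.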

Method and apparatus for repairing high capacity/high bandwidth memory devices

Memory systems, systems and methods are described that may include a plurality of stacked memory device dice and a logic die connected to each other by through silicon vias. One such logic die includes an error code generator that generates error checking codes corresponding to write data. The error checking codes are stored in the memory device dice and are subsequently compared to error checking codes generated from data subsequently read from the memory device dice. In the event the codes do not match, an error signal can be generated. The logic die may contain a controller that records the address from which the data was read. The controller or memory access device may redirect accesses to the memory device dice at the recorded addresses. The controller can also examine addresses or data resulting in the error signals being generated to identify faults in the through silicon vias.

Owner:MICRON TECH INC

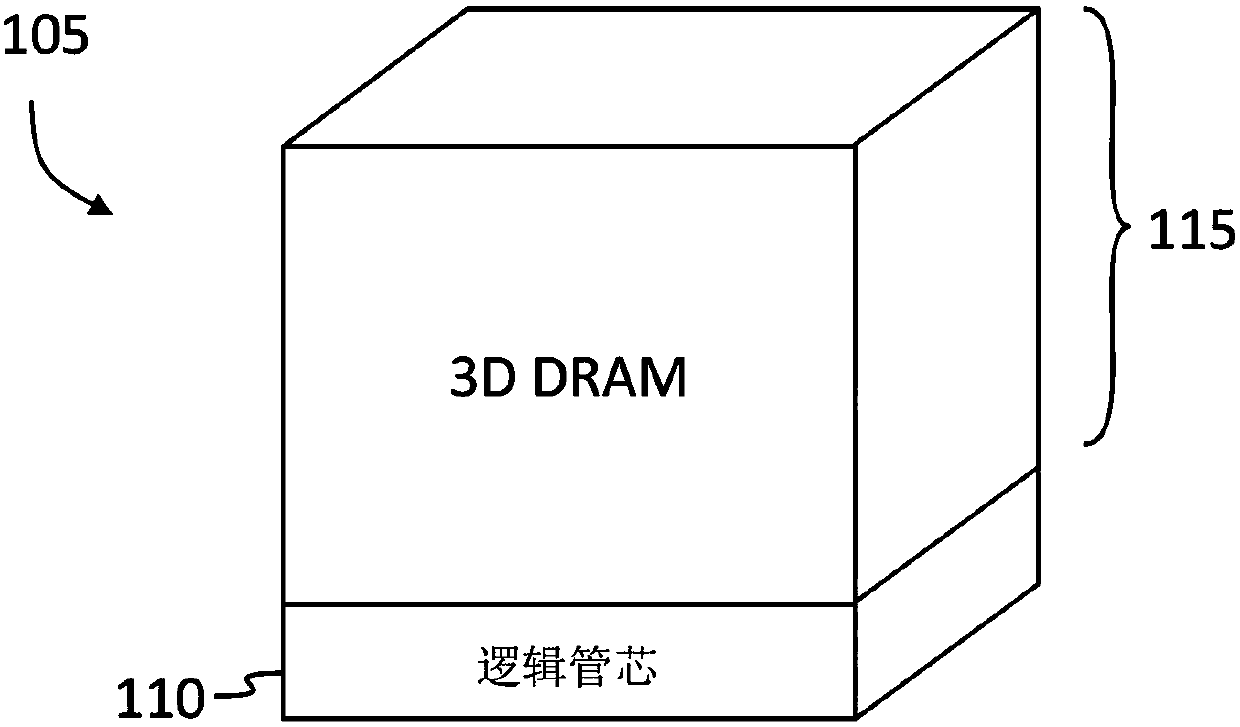

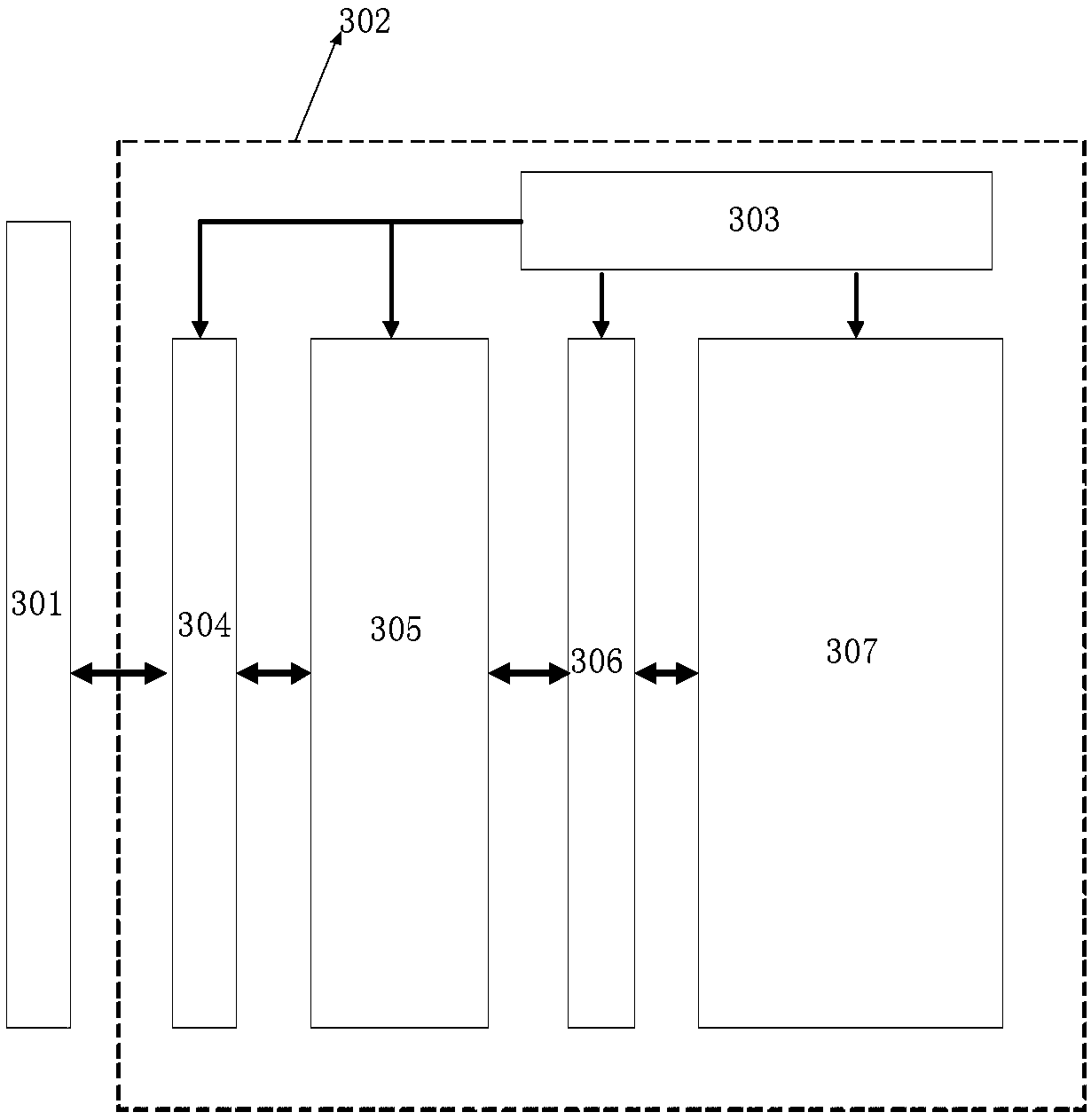

HBM with in-memory cache manager

Active | CN107656878A | Memory architecture accessing/allocation; Static storage; Computer architecture; Physical address

A system and method for using high bandwidth memory as cache memory. A high bandwidth memory may include a logic die, and, stacked on the logic die, a plurality of dynamic read-only memory dies. The logic die may include a cache manager that may interface to external systems through an external interface conforming to the JESD235A standard, and that may include an address translator, a command translator, and a tag comparator. The address translator may translate each physical address received through the external interface into a tag value, a tag address in the stack of memory dies, and a data address in the stack of memory dies. The tag comparator may determine whether a cache hit or cache miss has occurred, according to whether the tag value generated by the address translator matches the tag value stored at the tag address.

Owner:SAMSUNG ELECTRONICS CO LTD
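Below is a minimal sketch of the address translator and the tag comparator. It assumes a direct-mapped cache whose tags and data lines both live in the DRAM stack. The line size, set count, tag width and region layout are illustrative assumptions. The command translator and the JESD235A interface are not modeled.

```python
# Hypothetical direct-mapped organization; sizes are for illustration only.
LINE_BYTES = 64
NUM_SETS = 1024
TAG_BYTES = 4
TAG_REGION_BASE = 0                          # tags kept at the start of the stack
DATA_REGION_BASE = NUM_SETS * TAG_BYTES      # data lines follow the tag region


def translate(phys_addr: int):
    """Address translator: physical address -> (tag value, tag address, data address)."""
    line, byte = divmod(phys_addr, LINE_BYTES)
    tag_value, index = divmod(line, NUM_SETS)
    tag_address = TAG_REGION_BASE + index * TAG_BYTES
    data_address = DATA_REGION_BASE + index * LINE_BYTES + byte
    return tag_value, tag_address, data_address


class CacheManager:
    def __init__(self):
        self.tag_store = {}   # tag address -> tag value held in the DRAM dies

    def lookup(self, phys_addr: int):
        """Tag comparator: hit if the stored tag equals the translated tag.
        On a miss the new tag is installed (fill policy assumed)."""
        tag_value, tag_address, data_address = translate(phys_addr)
        hit = self.tag_store.get(tag_address) == tag_value
        if not hit:
            self.tag_store[tag_address] = tag_value
        return hit, data_address
```

For example, `translate(0x1234)` returns `(0, 288, 8756)`. Two addresses that differ by `NUM_SETS * LINE_BYTES` share a tag address and therefore evict each other.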

Computing mechanisms using lookup tables stored on memory

Active | US10628295B2 | Memory architecture accessing/allocation; Digital data processing details; Computer architecture; High bandwidth

According to some example embodiments of the present disclosure, in a method for a memory lookup mechanism in a high-bandwidth memory system, the method includes: using a memory die to conduct a multiplication operation using a lookup table (LUT) methodology by accessing a LUT, which includes floating point operation results, stored on the memory die; sending, by the memory die, a result of the multiplication operation to a logic die including a processor and a buffer; and conducting, by the logic die, a matrix multiplication operation using computation units.

Owner:SAMSUNG ELECTRONICS CO LTD

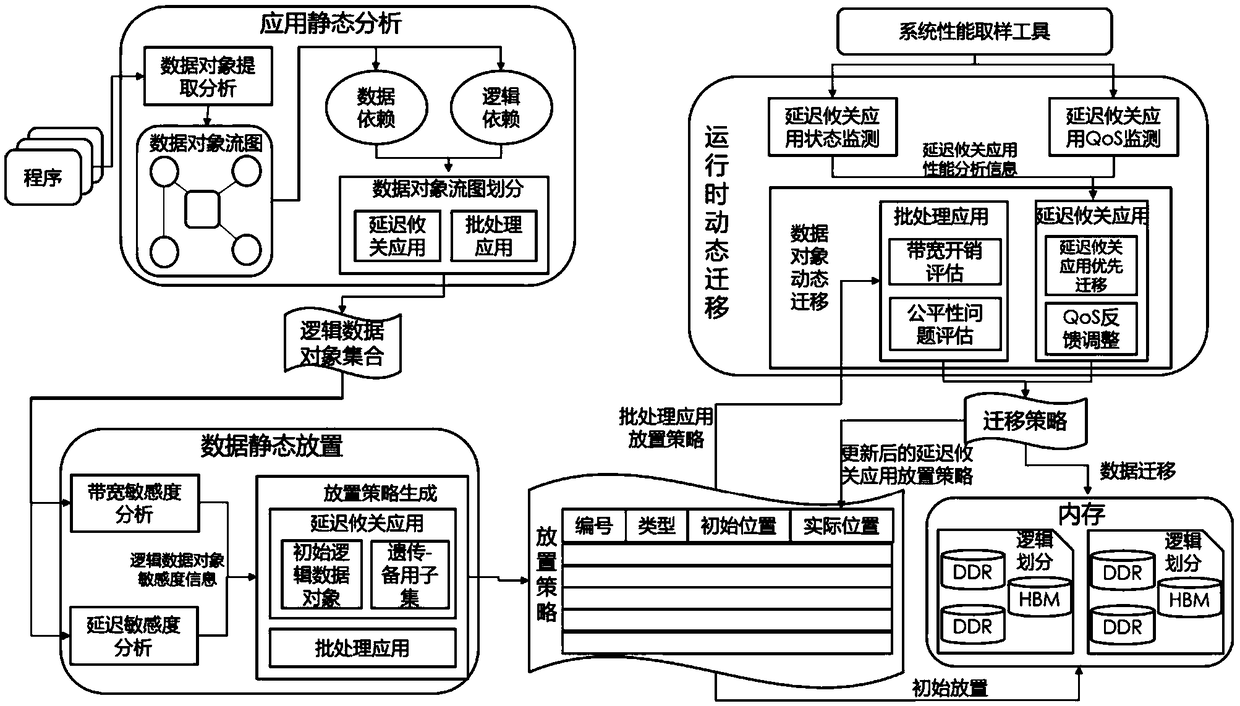

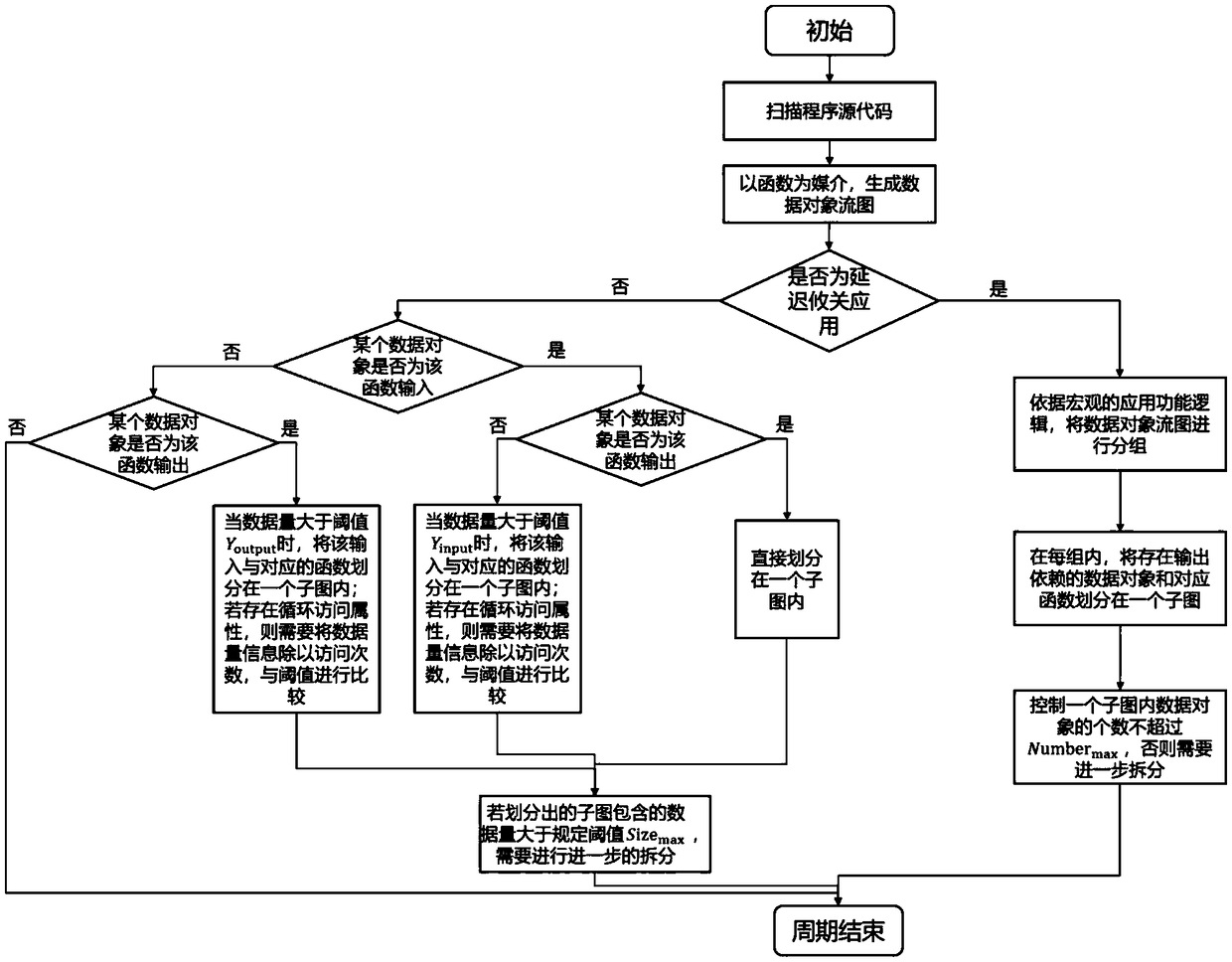

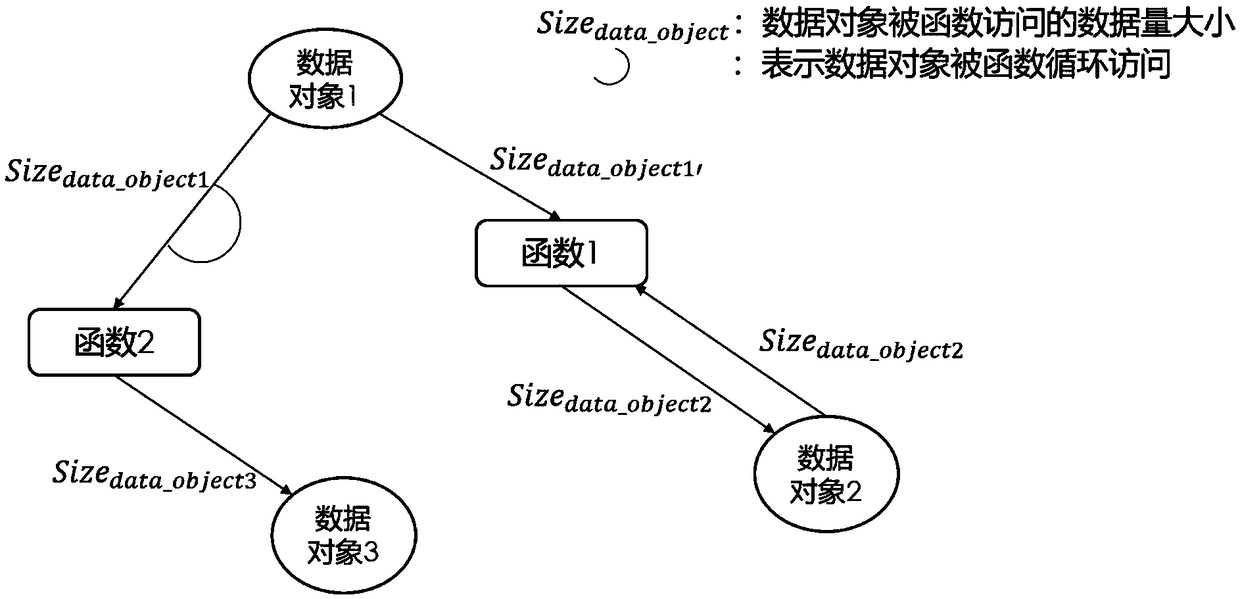

A data placement and migration method for mixed deployment scenarios of heterogeneous memory and multi-type applications

Active | CN109388486A | Satisfy Latency Critical Applications; Meet needs; Input/output to record carriers; Program initiation/switching; Dynamic monitoring; Heterogeneous network

A data placement and migration method for mixed deployment scenarios of heterogeneous memory and multiple application types consists of three parts: an application static analysis method, a static data placement method, and a runtime dynamic migration mechanism. The method applies to heterogeneous memory systems built from high bandwidth memory and double data rate (DDR) memory. It targets mixed deployment scenarios that run a single latency-critical application alongside multiple batch applications. Working from two angles, a static data placement tool and a dynamic migration runtime system, the method divides each application into sets of logical data objects using the dependencies between data objects. Based on the different characteristics of the two memories, it applies different placement strategies to different types of logical data objects. While the application runs, it also monitors data use and migrates data in real time. This makes full use of the memory resources of the heterogeneous system and meets the performance requirements of the latency-critical and batch applications, respectively.

Owner:BEIHANG UNIV

Asynchronous, high-bandwidth memory component using calibrated timing elements

Disclosed herein are embodiments of an asynchronous memory device that use internal delay elements to enable memory access pipelining. In one embodiment, the delay elements are responsive to an input load control signal, and are calibrated with reference to periodically received timing pulses. Different numbers of the delay elements are configured to produce different asynchronous delays and to strobe sequential pipeline elements of the memory device.

Owner:RAMBUS INC

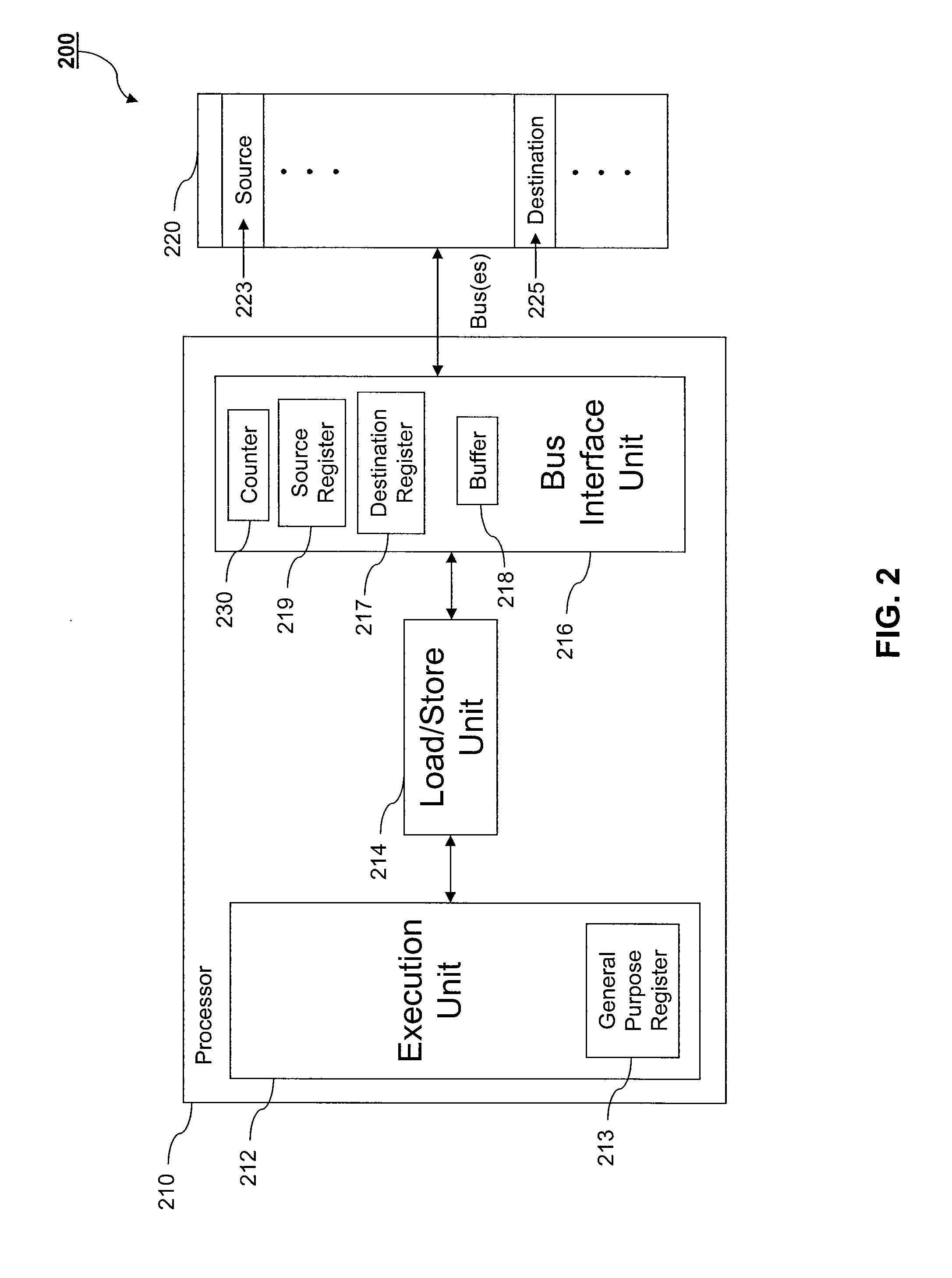

System and Method for Improving Memory Transfer

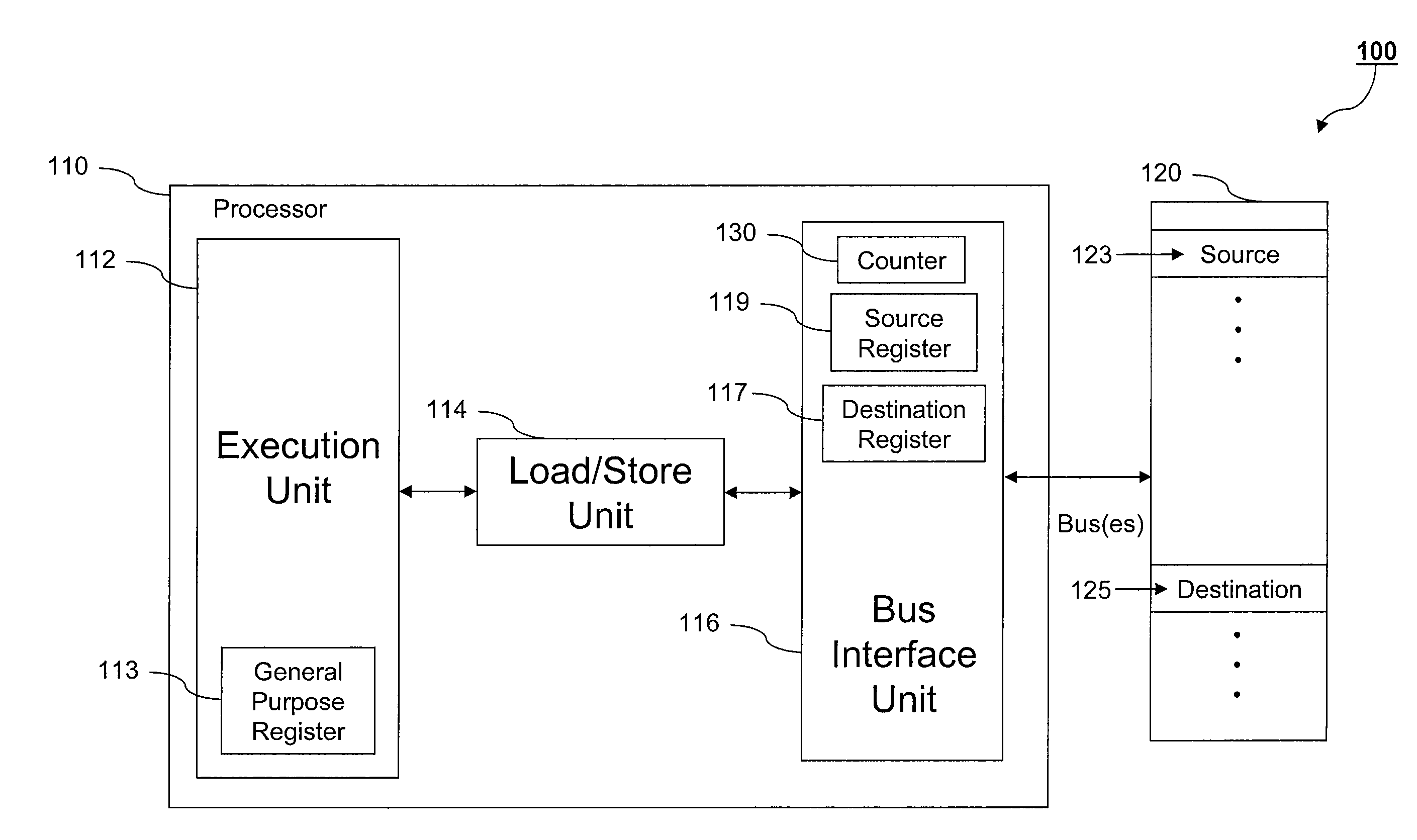

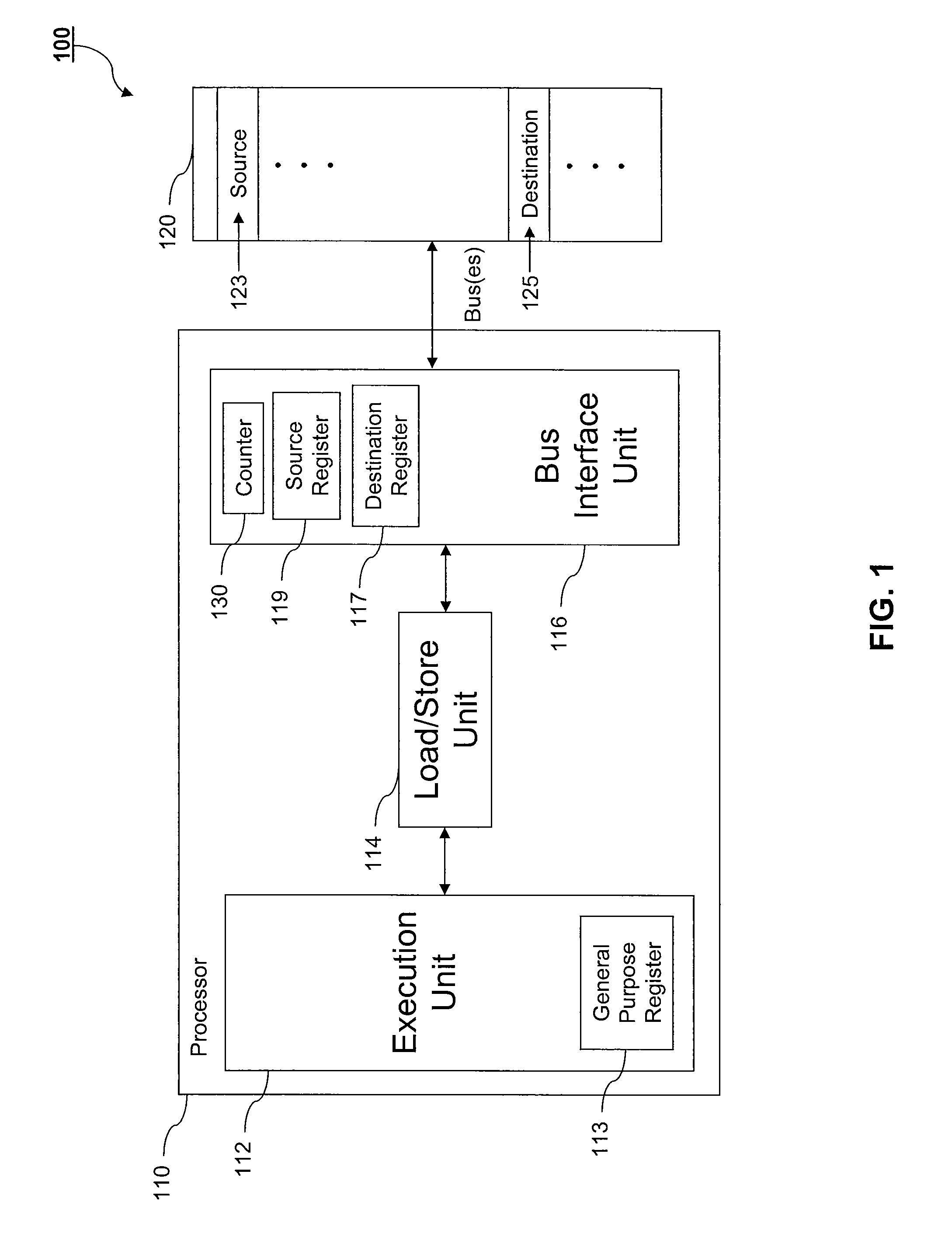

Active | US20100199054A1 | High bandwidth; Memory architecture accessing/allocation; Memory loss protection; Memory address

System and method for performing a high-bandwidth memory copy. Memory transfer instructions allow for copying of data from a first memory location to a second memory location without the use of load and store word instructions thereby achieving a high-bandwidth copy. In one embodiment, the method includes the steps of (1) decoding a destination address from a first memory transfer instruction, (2) storing the destination address in a register in the bus interface unit, (3) decoding a source address from a second memory transfer instruction, and (4) copying the contents of a memory location specified by the source memory address to a memory location specified by the contents of the register. Other methods and a microprocessor system are also presented.

Owner:ARM FINANCE OVERSEAS LTD
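The two-instruction copy can be modeled in two steps. The first instruction latches the destination address in a bus interface unit register. The second instruction supplies the source address, and the copy happens then. In the sketch below, the method names `first_transfer` and `second_transfer` and the fixed 32-byte block size are hypothetical stand-ins for the patent's memory transfer instructions.

```python
class BusInterfaceUnit:
    """Toy model of the two-instruction copy. The method names stand in for the
    first and second memory transfer instructions and are hypothetical."""

    def __init__(self, memory: bytearray, block_bytes: int = 32):
        self.memory = memory
        self.block_bytes = block_bytes   # assumed transfer size per copy
        self.dest_register = None        # register inside the bus interface unit

    def first_transfer(self, dest_addr: int) -> None:
        # Steps 1-2: decode the destination address and store it in the BIU register.
        self.dest_register = dest_addr

    def second_transfer(self, src_addr: int) -> None:
        # Steps 3-4: decode the source address and copy memory-to-memory,
        # without separate load-word and store-word instructions.
        if self.dest_register is None:
            raise RuntimeError("destination not set by a first transfer instruction")
        n = self.block_bytes
        dst = self.dest_register
        self.memory[dst:dst + n] = self.memory[src_addr:src_addr + n]


mem = bytearray(128)
mem[0:32] = bytes(range(32))
biu = BusInterfaceUnit(mem)
biu.first_transfer(64)
biu.second_transfer(0)
```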

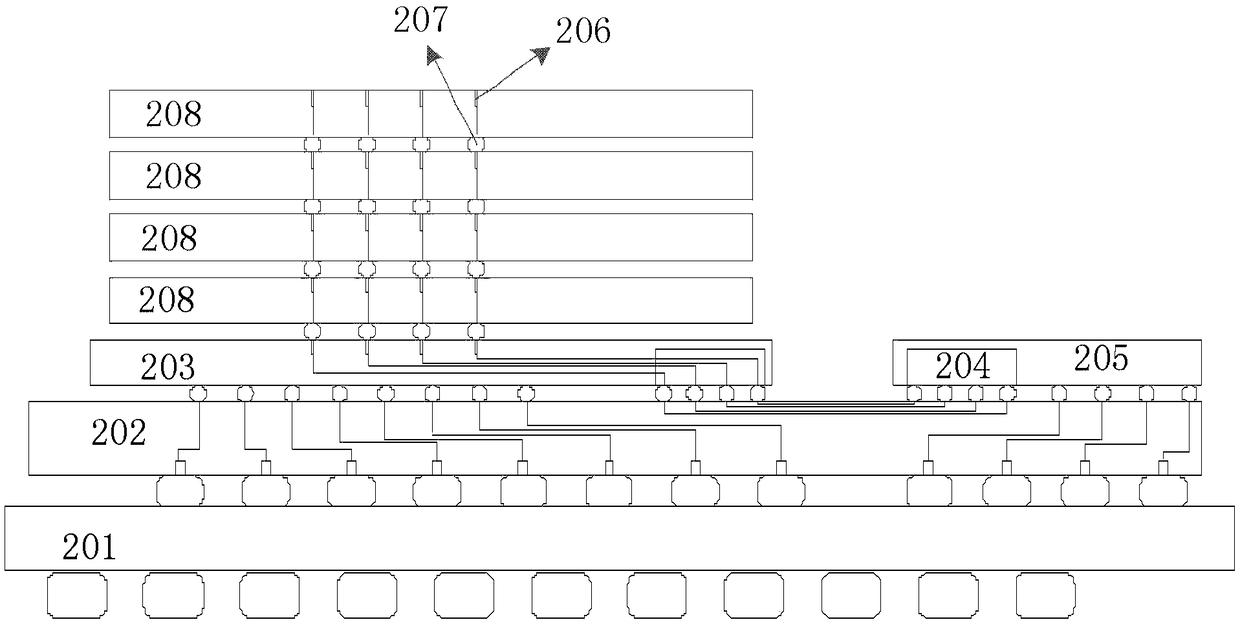

High-bandwidth memory-based neural network calculation apparatus and method

Active | CN108241484A | Fast data exchange; IO time shortened; Digital data processing details; Physical realisation; Neural network; Transverse plane

The invention provides a high-bandwidth memory-based neural network calculation apparatus and method. The apparatus comprises at least one high-bandwidth memory and a neural network accelerator. Each high-bandwidth memory comprises multiple memories stacked on top of one another. The neural network accelerator is electrically connected to the high-bandwidth memory, exchanges data with it, and executes neural network calculations. The apparatus and method can greatly increase storage bandwidth. Because high-bandwidth memory serves as the memory of the neural network calculation apparatus, input data and calculation parameters can be exchanged between a buffer and the memory more quickly, which greatly shortens I/O time. The high-bandwidth memory uses a stacked structure and does not occupy lateral (planar) area. As a result, the area of the apparatus can be greatly reduced, to about 5% of that in the prior art, and its power consumption is also reduced.

Owner:SHANGHAI CAMBRICON INFORMATION TECH CO LTD

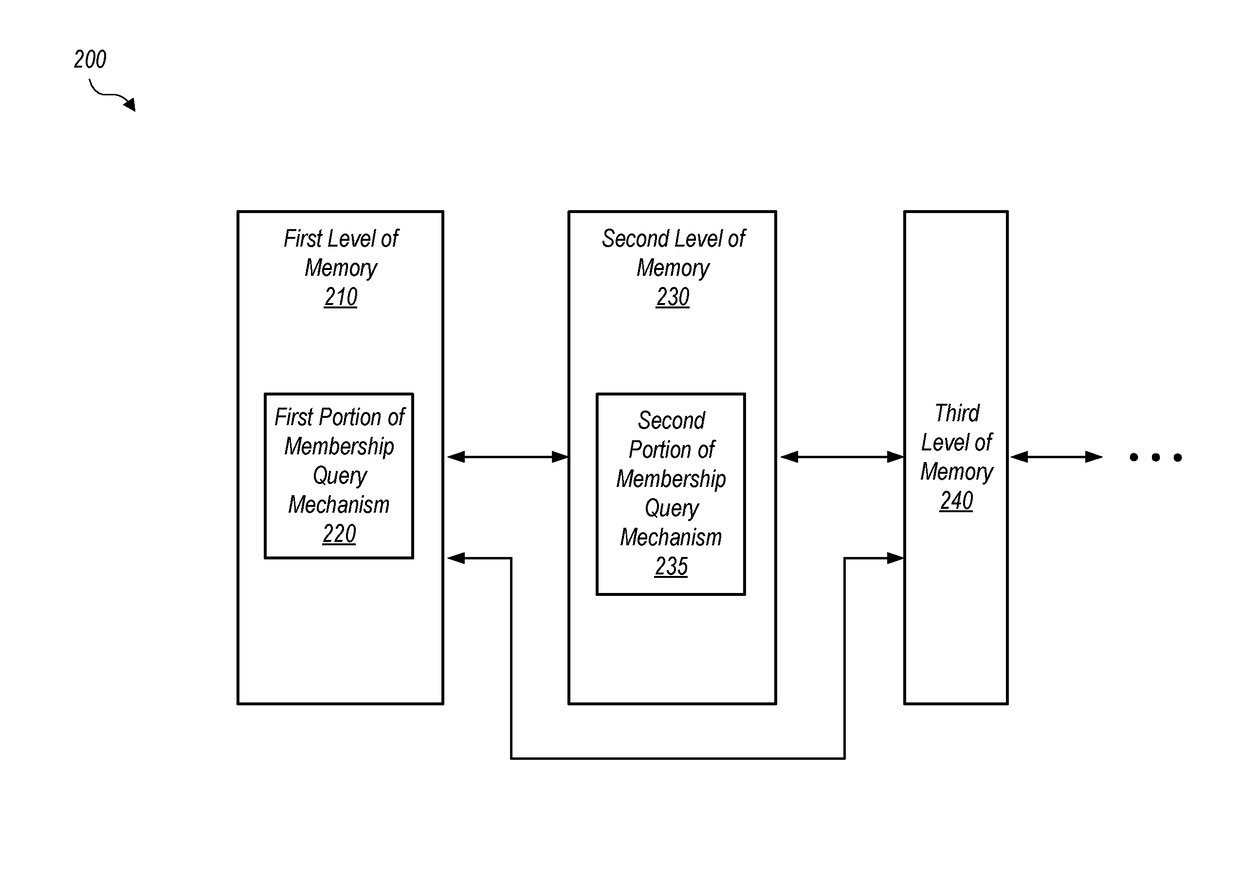

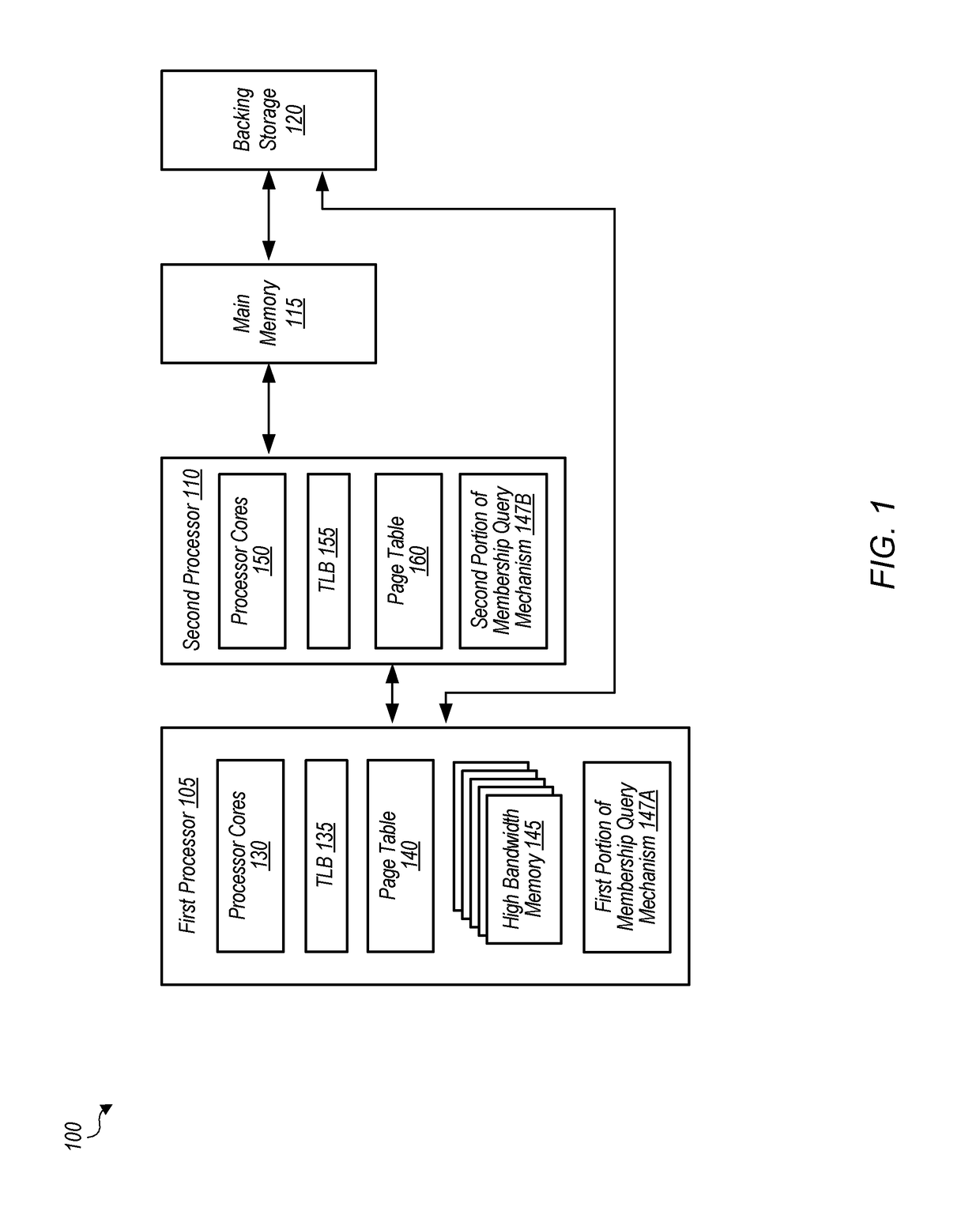

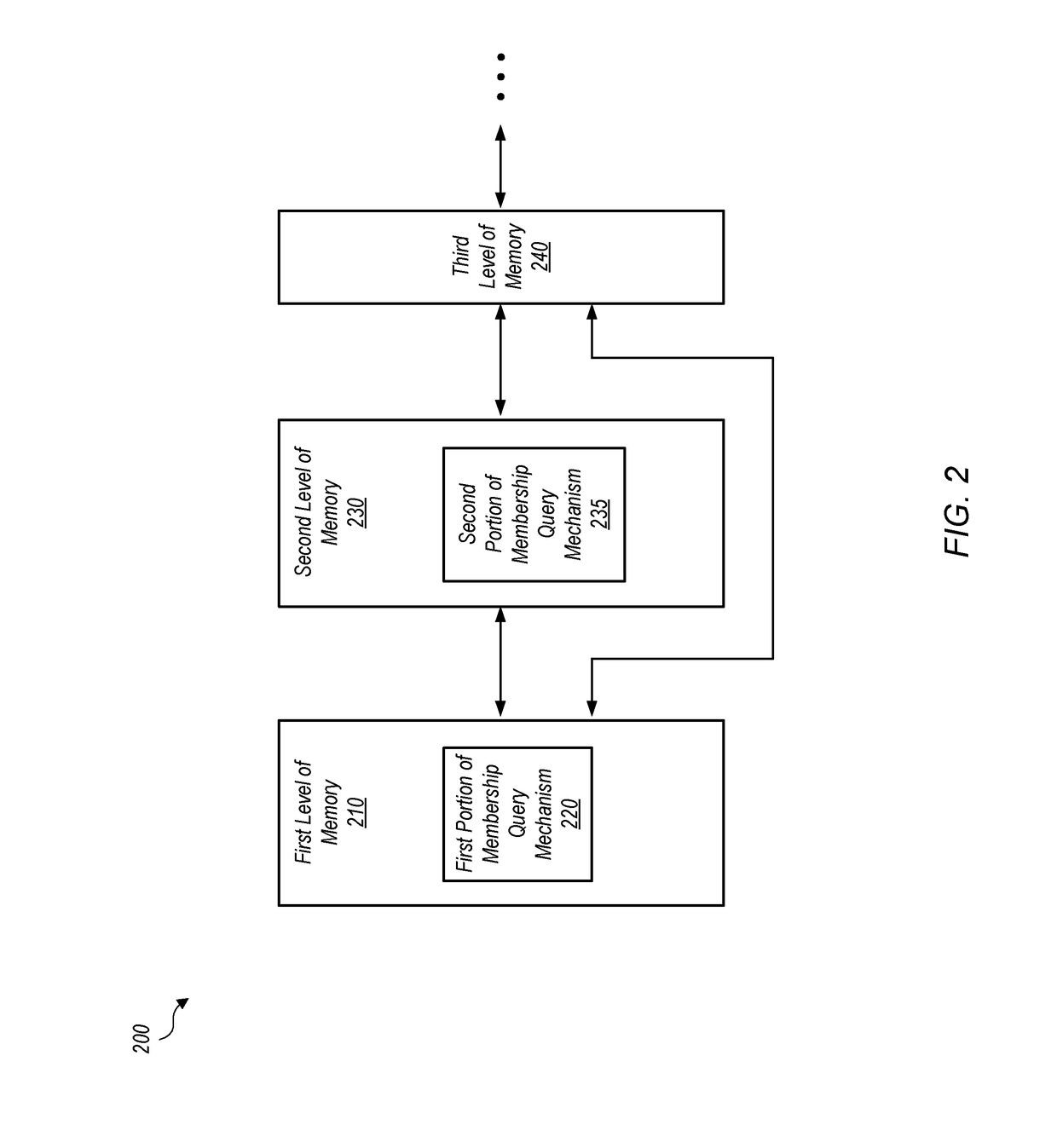

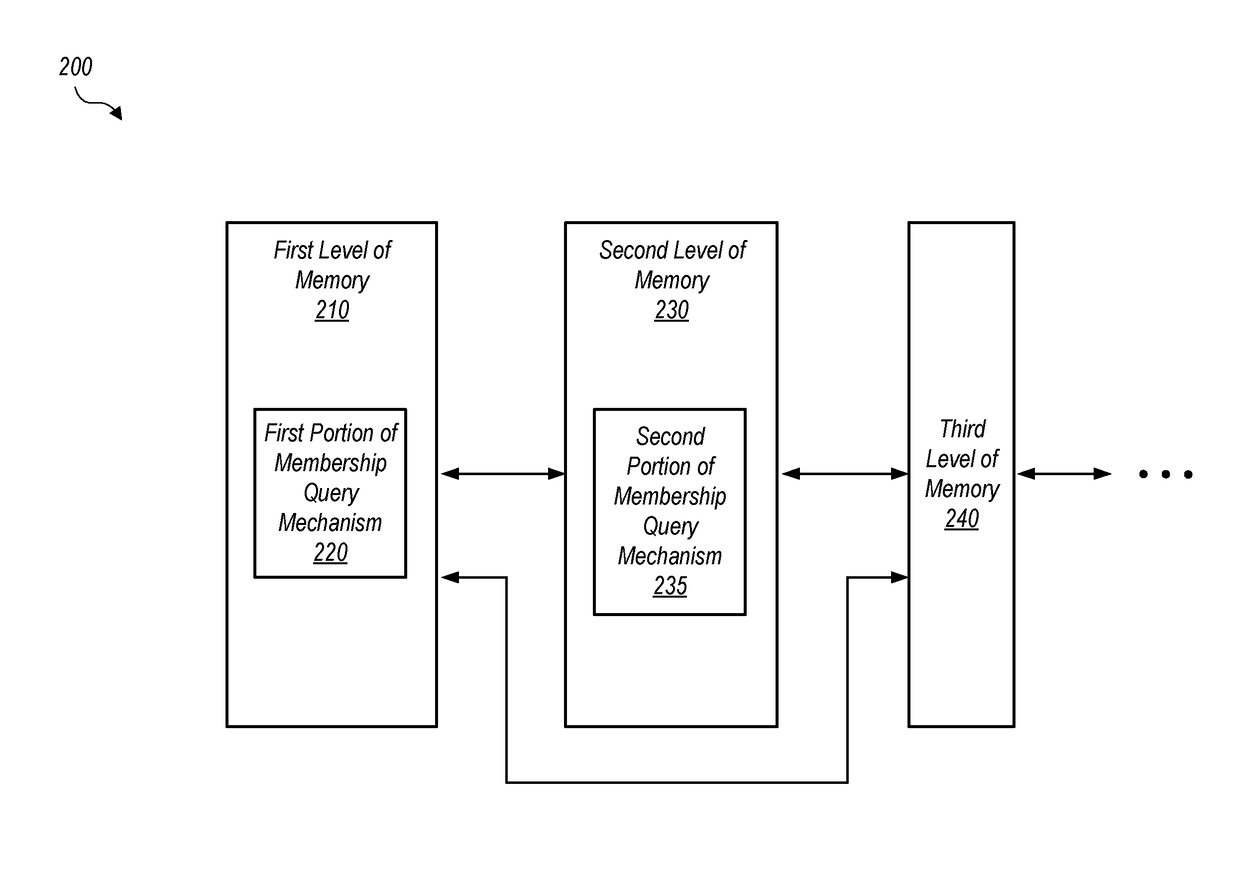

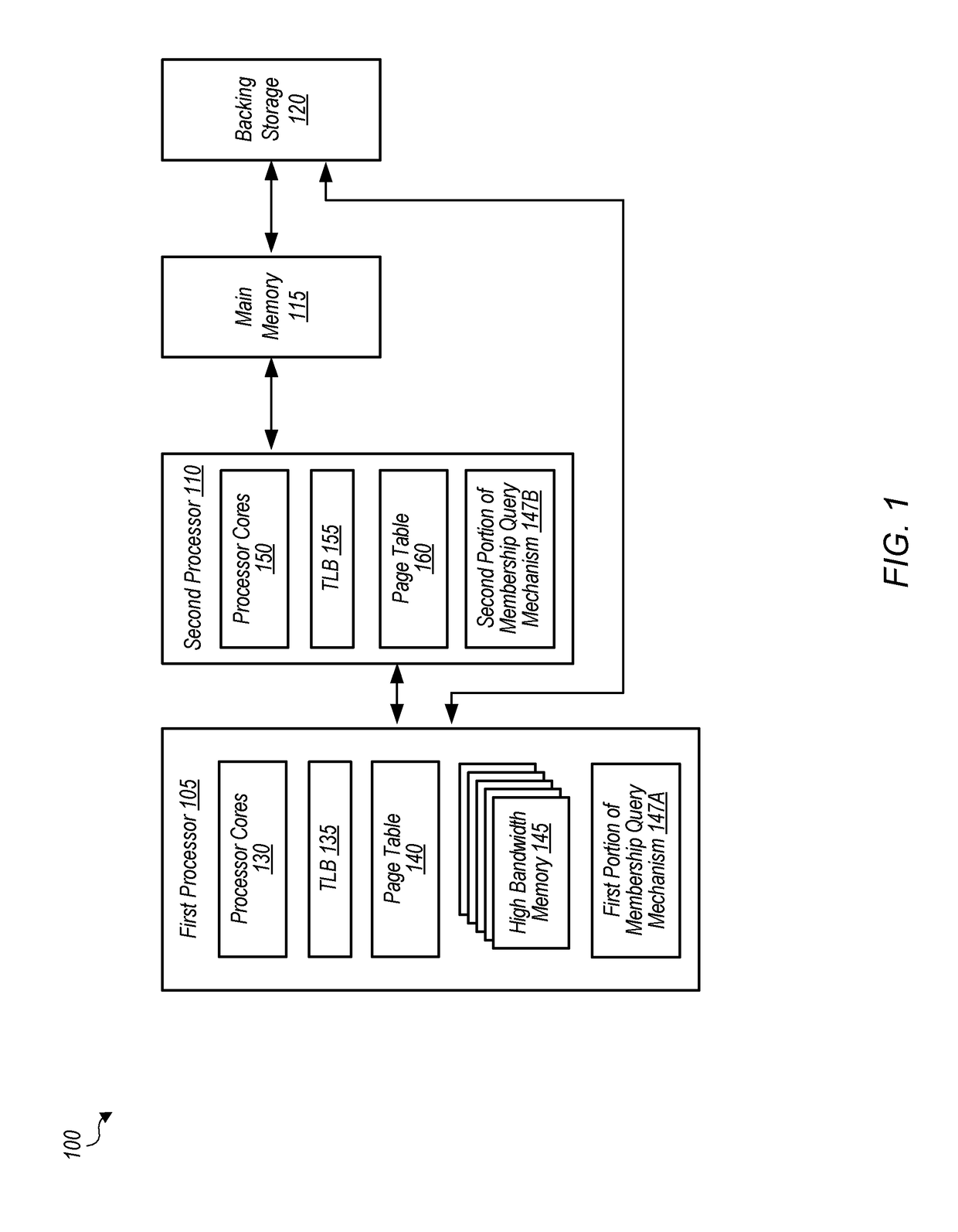

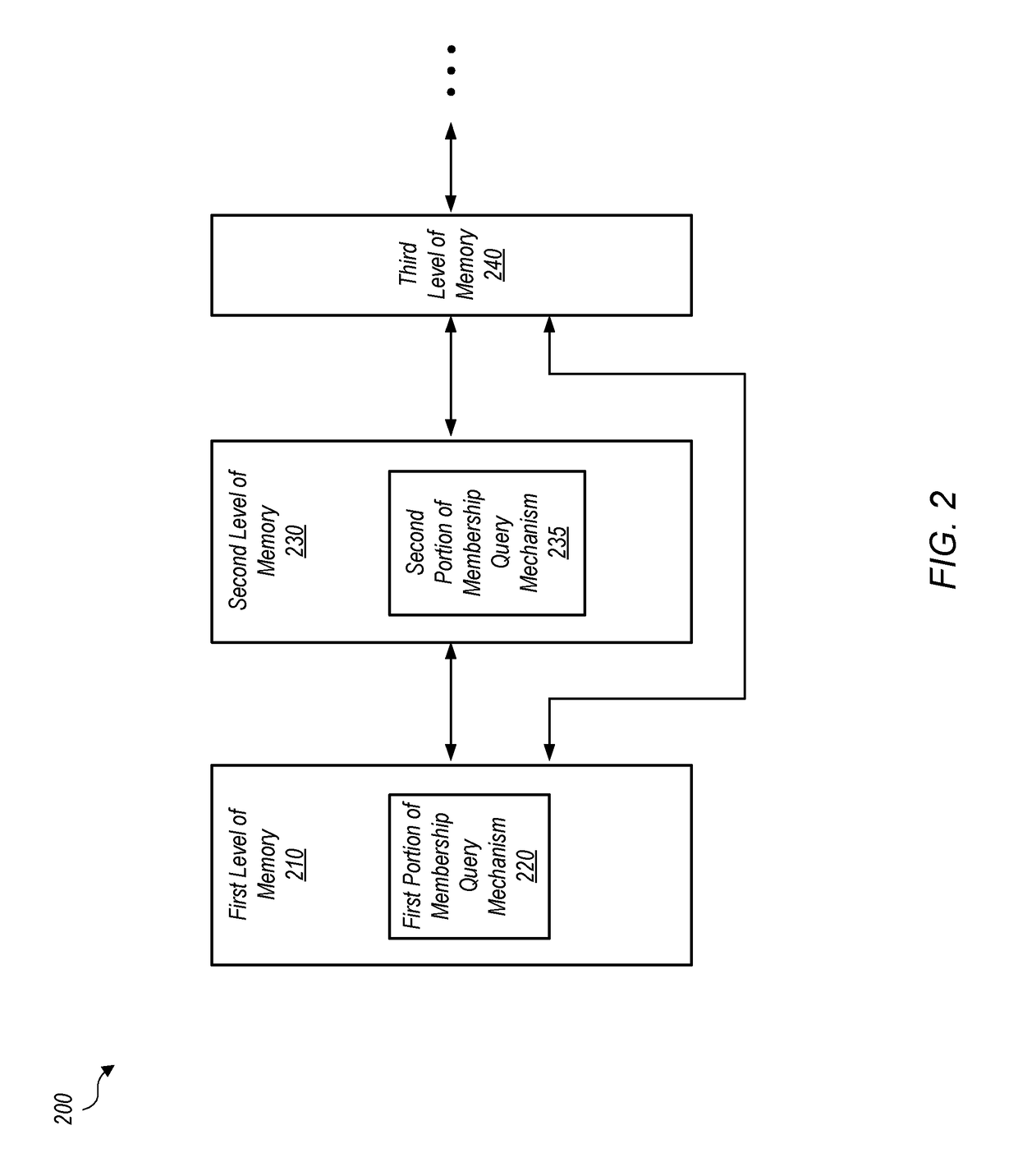

Page migration acceleration using a two-level bloom filter on high bandwidth memory systems

Active | US20180081585A1 | Memory architecture accessing/allocation; Digital data information retrieval; Memory hierarchy; Multilevel memory

Systems, apparatuses, and methods for accelerating page migration using a two-level bloom filter are disclosed. In one embodiment, a system includes a GPU and a CPU and a multi-level memory hierarchy. When a memory request misses in a first memory, the GPU is configured to check a first level of a two-level bloom filter to determine if a page targeted by the memory request is located in a second memory. If the first level of the two-level bloom filter indicates that the page is not in the second memory, then the GPU generates a page fault and sends the memory request to a third memory. If the first level of the two-level bloom filter indicates that the page is in the second memory, then the GPU sends the memory request to the CPU.

Owner:ADVANCED MICRO DEVICES INC
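Below is a minimal sketch of a Bloom filter together with the GPU's routing decision from the abstract. The bit count, the hash count and the use of BLAKE2b hashing are assumptions. The abstract does not describe how the second filter level is used, so the second level is left out.

```python
import hashlib


class BloomFilter:
    """Probabilistic set: no false negatives, occasional false positives."""

    def __init__(self, num_bits: int, num_hashes: int):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, key):
        for i in range(self.num_hashes):
            digest = hashlib.blake2b(f"{i}:{key}".encode(), digest_size=8).digest()
            yield int.from_bytes(digest, "little") % self.num_bits

    def add(self, key) -> None:
        for p in self._positions(key):
            self.bits[p // 8] |= 1 << (p % 8)

    def might_contain(self, key) -> bool:
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(key))


def route_gpu_miss(page: int, level1: BloomFilter) -> str:
    """GPU-side decision after a miss in the first memory, following the abstract."""
    if not level1.might_contain(page):
        return "page fault: request sent to third memory"
    return "request sent to CPU"  # second-level handling is not modeled here
```

A Bloom filter never reports an added page as absent. Sending a "not present" answer straight to a page fault is therefore safe. A false positive costs only an extra round trip to the CPU.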

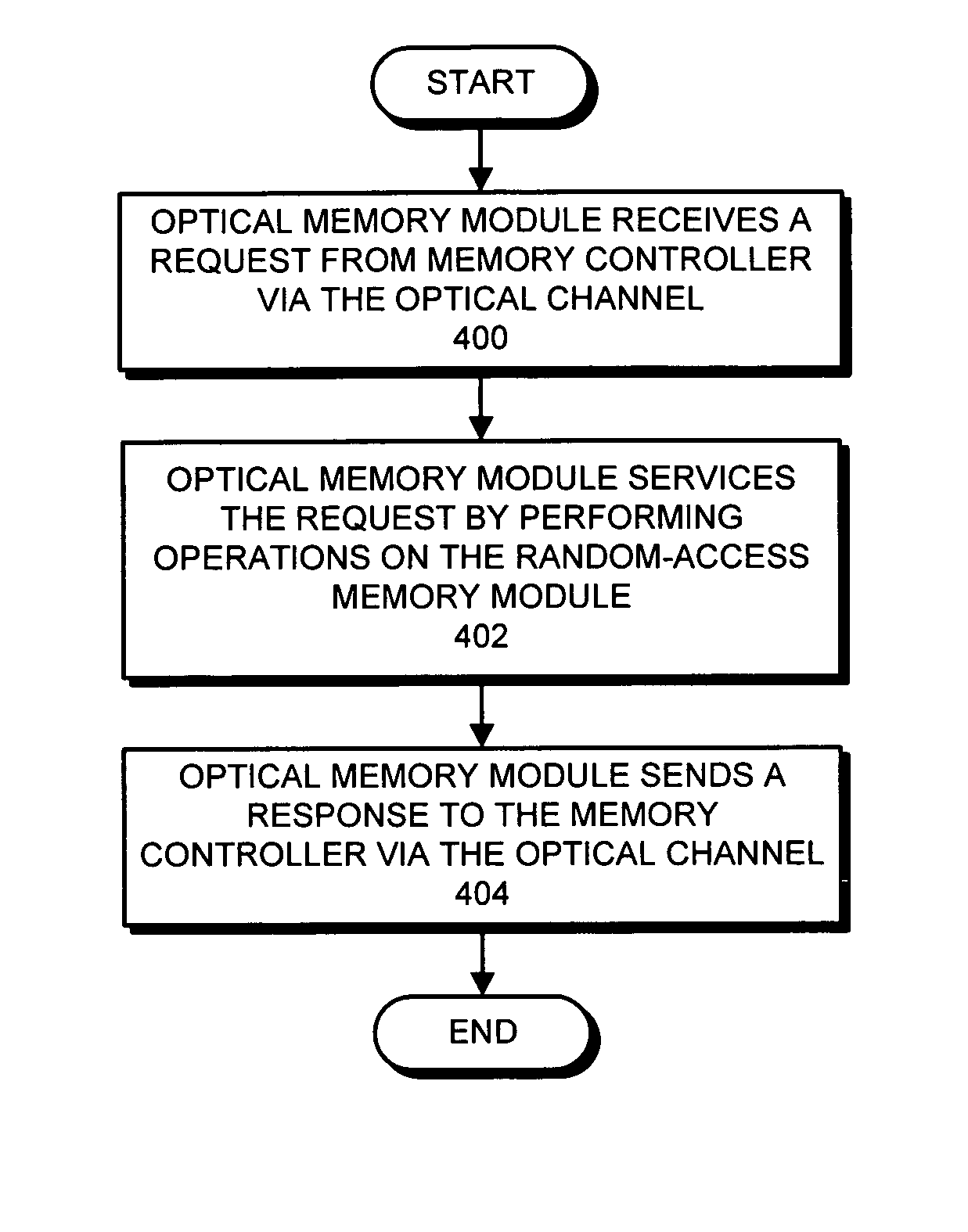

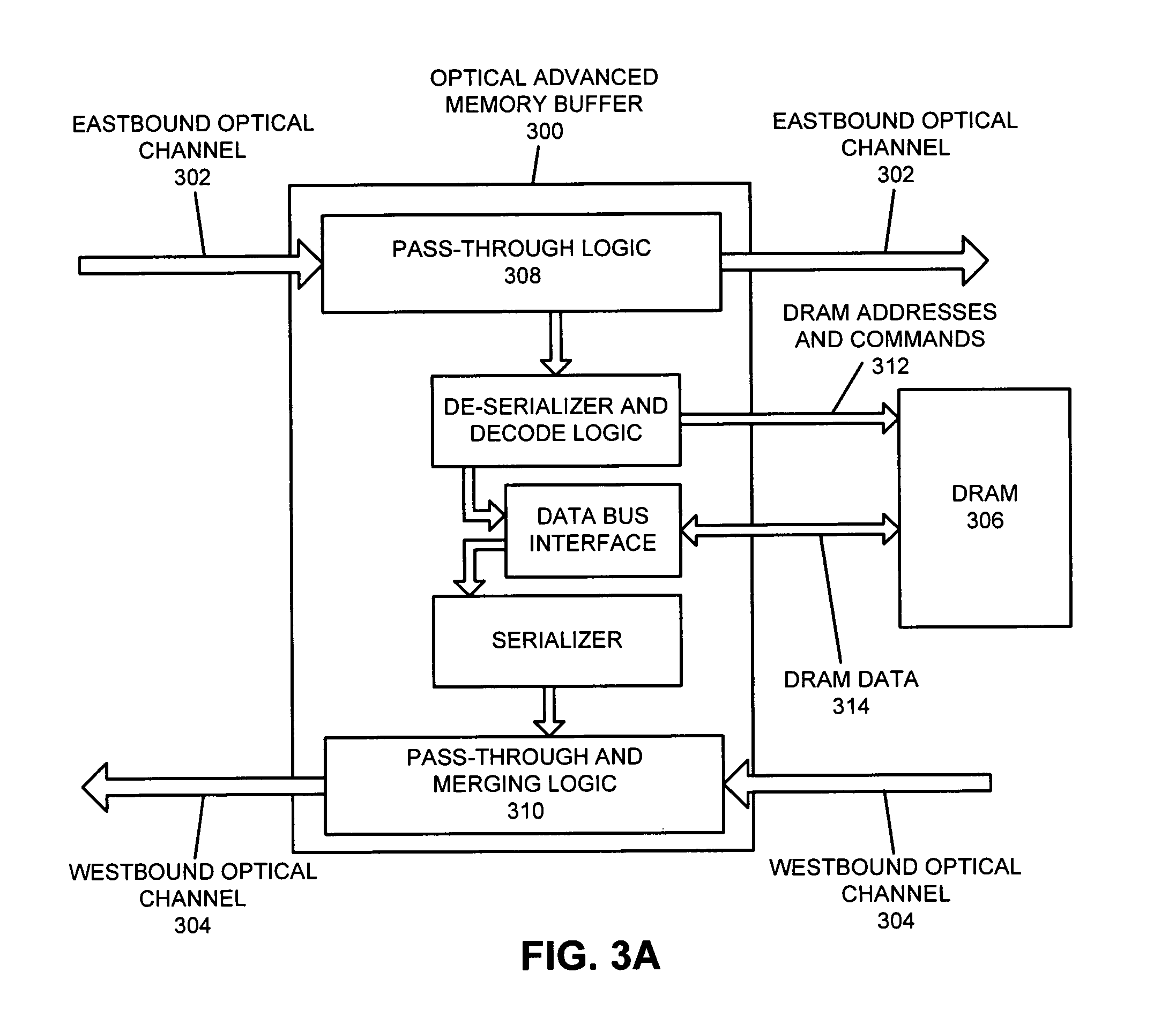

Memory module with optical interconnect that enables scalable high-bandwidth memory access

Active | US7970990B2 | Facilitates scalable; Increase speed; Digital storage; Memory systems; Random access memory; Memory controller

One embodiment of the present invention provides a system that facilitates scalable high-bandwidth memory access using a memory module with optical interconnect. This system includes an optical channel, a memory buffer, and a random-access memory module. The memory buffer is configured to receive a request from a memory controller via the optical channel. The memory buffer handles the received request by performing operations on the random-access memory module and then sending a response to the memory controller via the optical channel. Hence, the memory module with optical interconnect provides a high-speed serial link to the random-access memory module without consuming a large number of pins per channel on the memory controller.

Owner:ORACLE INT CORP

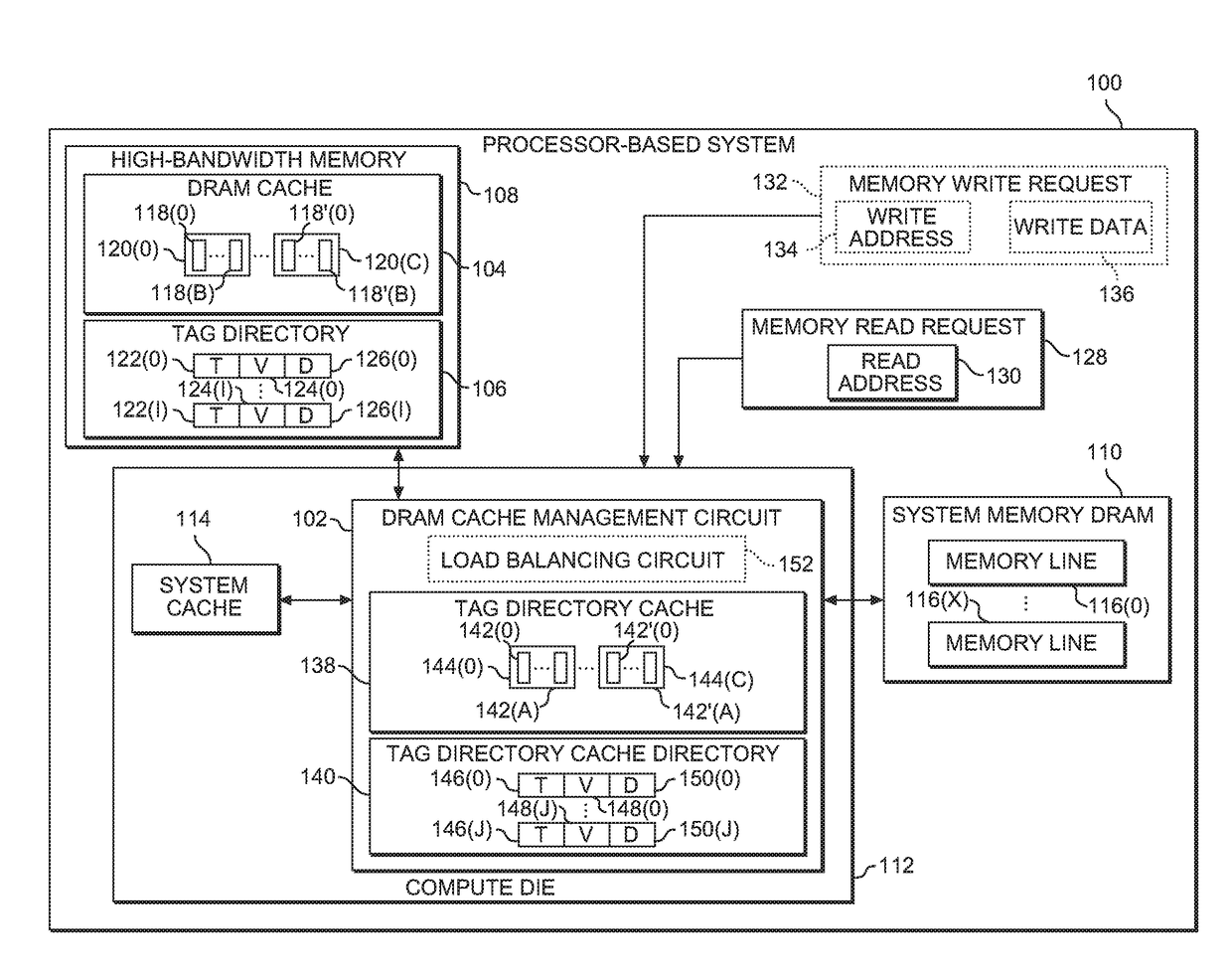

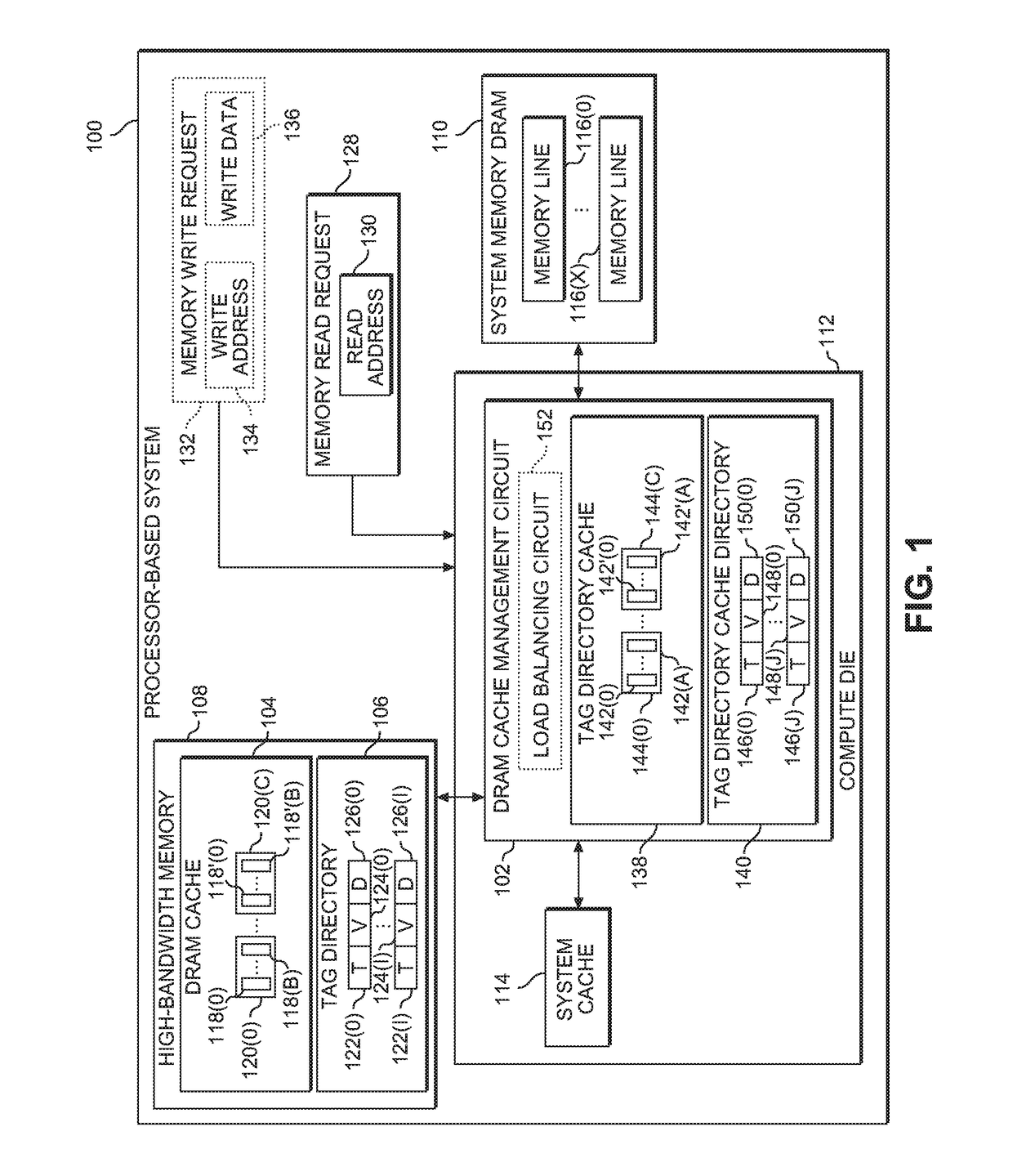

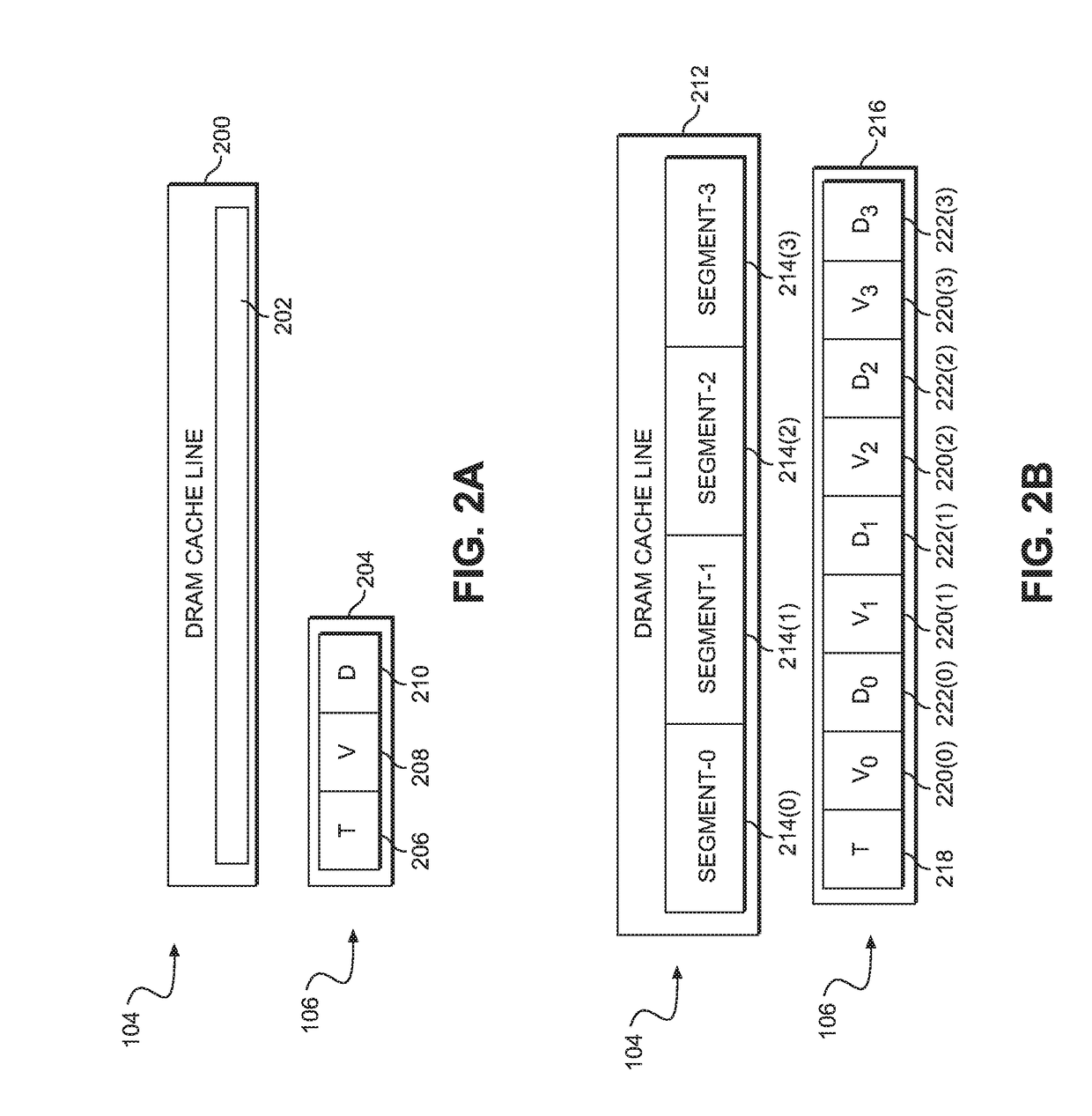

Providing scalable dynamic random access memory (DRAM) cache management using tag directory caches

Inactive | US20170212840A1 | Minimize latency penalty; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Memory address; Parallel computing

Scalable dynamic random access memory (DRAM) cache management using tag directory caches is provided. In one aspect, a DRAM cache management circuit is provided to manage access to a DRAM cache in a high-bandwidth memory. The DRAM cache management circuit comprises a tag directory cache and a tag directory cache directory. The tag directory cache stores tags of frequently accessed cache lines in the DRAM cache, while the tag directory cache directory stores tags for the tag directory cache. The DRAM cache management circuit uses the tag directory cache and the tag directory cache directory to determine whether data associated with a memory address is cached in the DRAM cache of the high-bandwidth memory. Based on the tag directory cache and the tag directory cache directory, the DRAM cache management circuit may determine whether a memory operation can be performed using the DRAM cache and/or a system memory DRAM.

Owner:QUALCOMM INC
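The two-level lookup can be modeled as two direct-mapped structures. The first is a small tag directory cache that holds copies of DRAM-cache tags. The second is a tag directory cache directory that records which DRAM-cache set each slot currently holds. With this arrangement, a slow read of the tag array in HBM happens only when the directory misses. The sizes, the direct-mapped organization and the fill-on-miss policy are assumptions and are not details from the patent.

```python
class DramCacheManager:
    """Toy model of DRAM-cache management with a tag directory cache (TDC).
    The DRAM cache is assumed direct-mapped; its full tag array lives in HBM."""

    def __init__(self, dram_sets: int = 4096, tdc_slots: int = 64):
        self.dram_sets = dram_sets
        self.tdc_slots = tdc_slots
        self.dram_tags = [None] * dram_sets   # DRAM-cache tags stored in HBM (slow)
        self.tdc = [None] * tdc_slots         # on-chip copies of DRAM-cache tags
        self.tdc_dir = [None] * tdc_slots     # which DRAM-cache set each TDC slot holds
        self.slow_tag_reads = 0

    def _cached_tag(self, dram_set: int):
        slot = dram_set % self.tdc_slots
        if self.tdc_dir[slot] != dram_set:    # TDC directory miss
            self.slow_tag_reads += 1          # fetch the tag from HBM
            self.tdc_dir[slot] = dram_set
            self.tdc[slot] = self.dram_tags[dram_set]
        return self.tdc[slot]

    def access(self, line_addr: int) -> str:
        """Return where the access is served from."""
        dram_set = line_addr % self.dram_sets
        tag = line_addr // self.dram_sets
        if self._cached_tag(dram_set) == tag:
            return "DRAM cache"
        # Miss: fill the DRAM cache and keep the TDC copy consistent (assumed policy).
        self.dram_tags[dram_set] = tag
        self.tdc[dram_set % self.tdc_slots] = tag
        return "system memory"


m = DramCacheManager()
first = m.access(10)    # cold miss, one slow tag read
second = m.access(10)   # served from the DRAM cache, tag found on chip
```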

Coordinated near-far memory controller for process-in-HBM

Active | US10437482B2 | Improve performance; Memory architecture accessing/allocation; Input/output to record carriers; Term memory; Memory controller

A method of coordinating memory commands in a high-bandwidth memory (HBM+) system, the method including sending a host memory controller command from a host memory controller to a memory, receiving the host memory controller command at a coordinating memory controller, forwarding the host memory controller command from the coordinating memory controller to the memory, and scheduling, by the coordinating memory controller, a coordinating memory controller command based on the host memory controller command.

Owner:SAMSUNG ELECTRONICS CO LTD

Page migration acceleration using a two-level bloom filter on high bandwidth memory systems

Active | US10067709B2 | Memory architecture accessing/allocation; Digital data information retrieval; Memory hierarchy; Multilevel memory

Owner:ADVANCED MICRO DEVICES INC

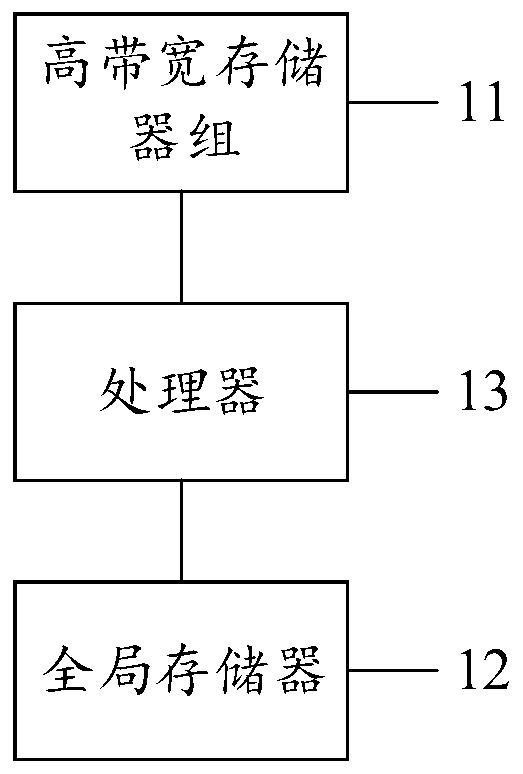

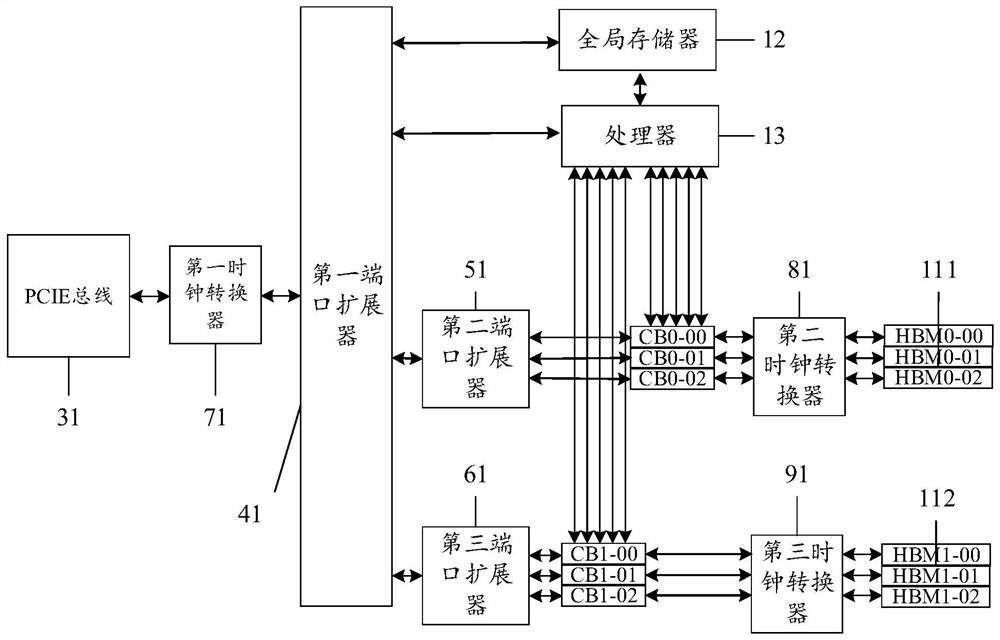

Model training device, method and system and computer readable storage medium

Active | CN111723907A | Improve training efficiency; Reduce the number of reads and writes; Neural architectures; Physical realisation; High bandwidth; Algorithm

The invention discloses a model training device, method and system and a computer-readable storage medium. The device comprises: a high-bandwidth memory bank used to store the data to be trained; a global memory used to store the model parameters of the target model; and a processor built on a network-on-chip and connected to the high-bandwidth memory bank and the global memory. Using the training algorithm of the target model, the processor operates on the data to be trained and on the initial model parameters stored in the global memory. It then obtains the target model parameters and stores them in the global memory. Because the high-bandwidth memory has a large storage capacity, more data to be trained can be stored, which reduces how often the high-bandwidth memory is read and written. Because the processor is built on a network-on-chip, it can move data internally over that network, which reduces reads and writes of the data being transferred. Compared with the prior art, this can improve model training efficiency.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

© 2025 PatSnap. All rights reserved.