36 results for patented technology on how to "Reduce data transfer overhead"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

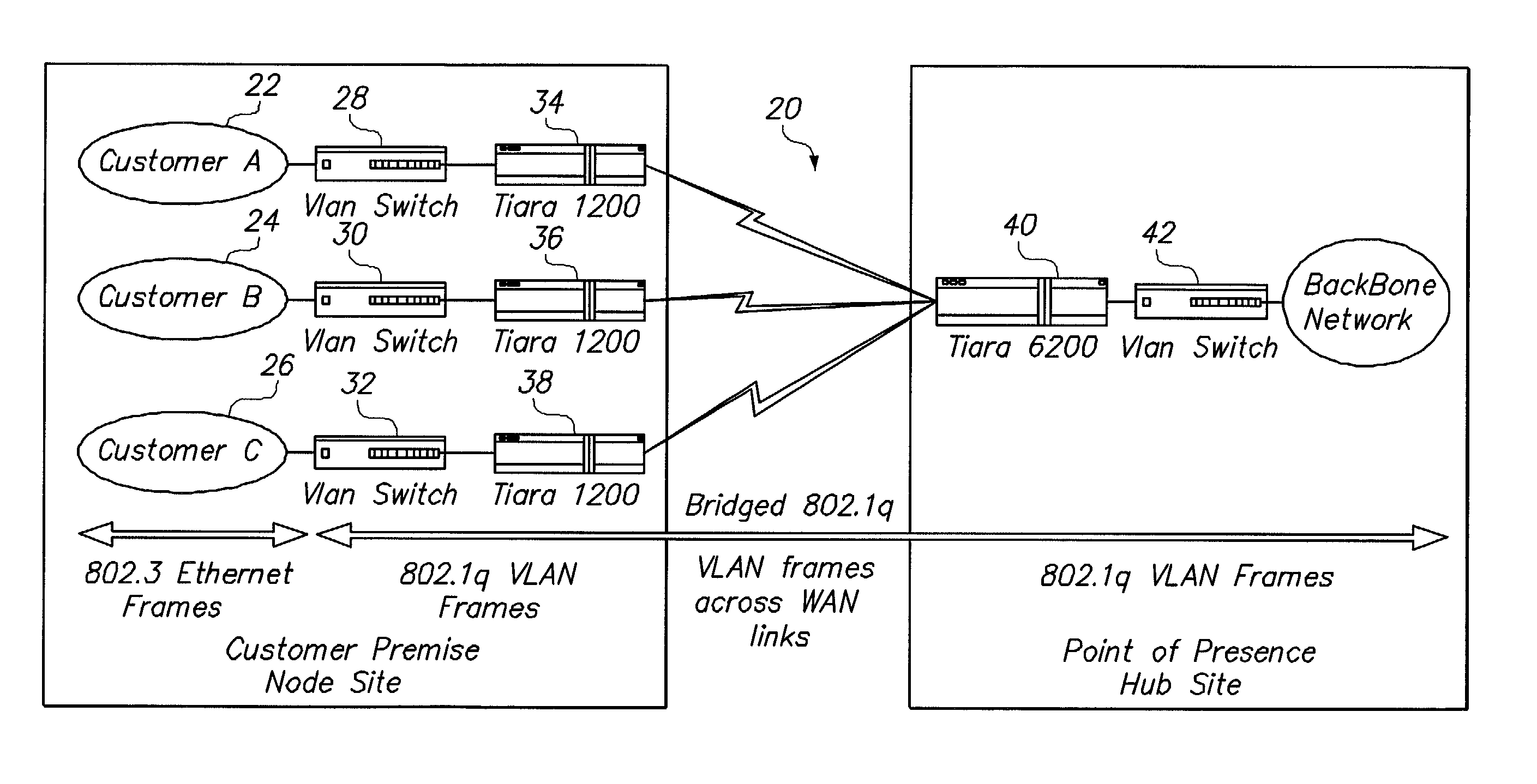

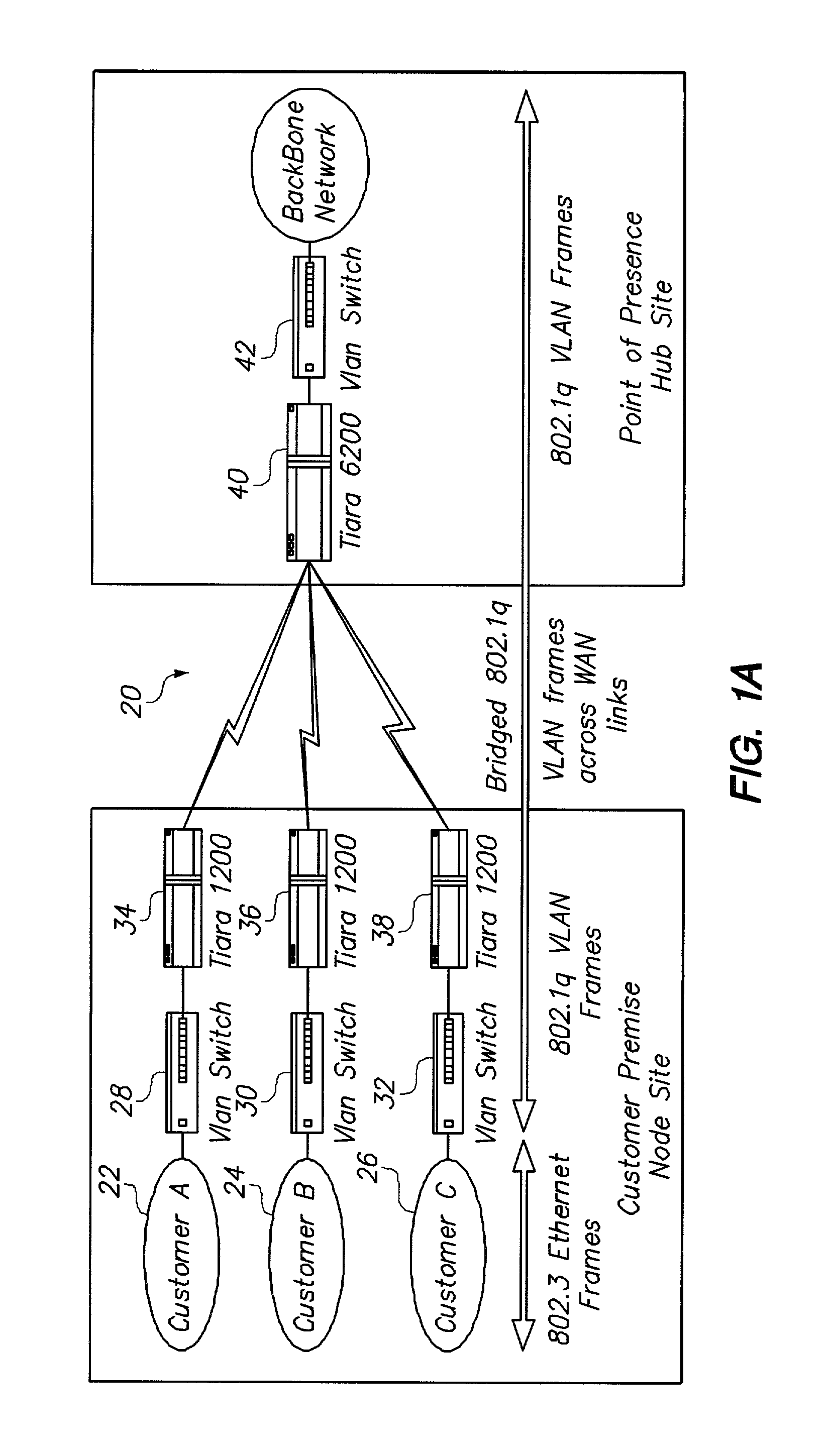

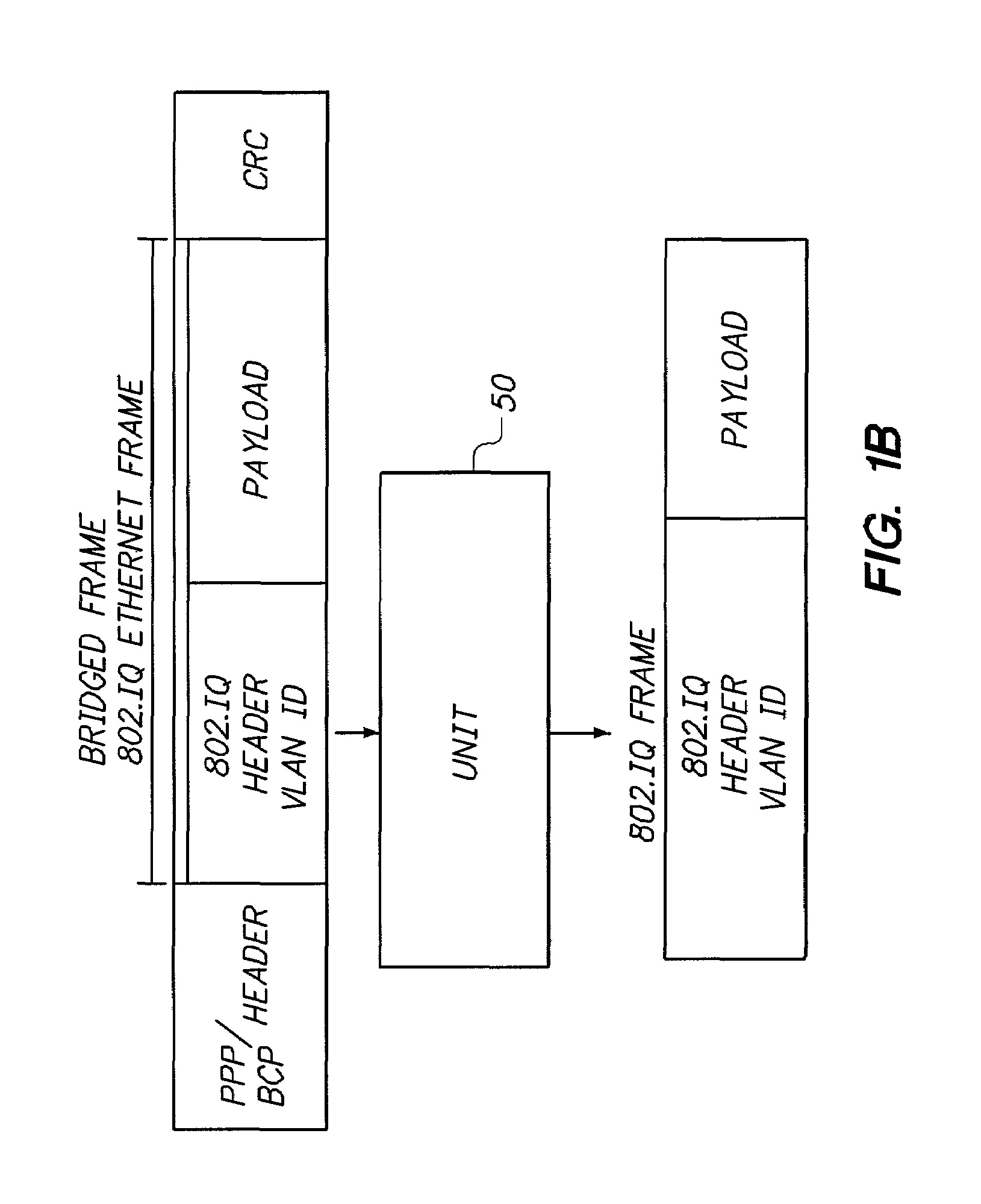

System and method for connecting geographically distributed virtual local area networks

Status: Active | Publication: US7088714B2 | Tags: Reduced flexibility; Adds to overhead of data transfer; Time-division multiplex; Circuit switching systems; Virtual LAN; Distributed computing

Owner:AVAYA INC +1

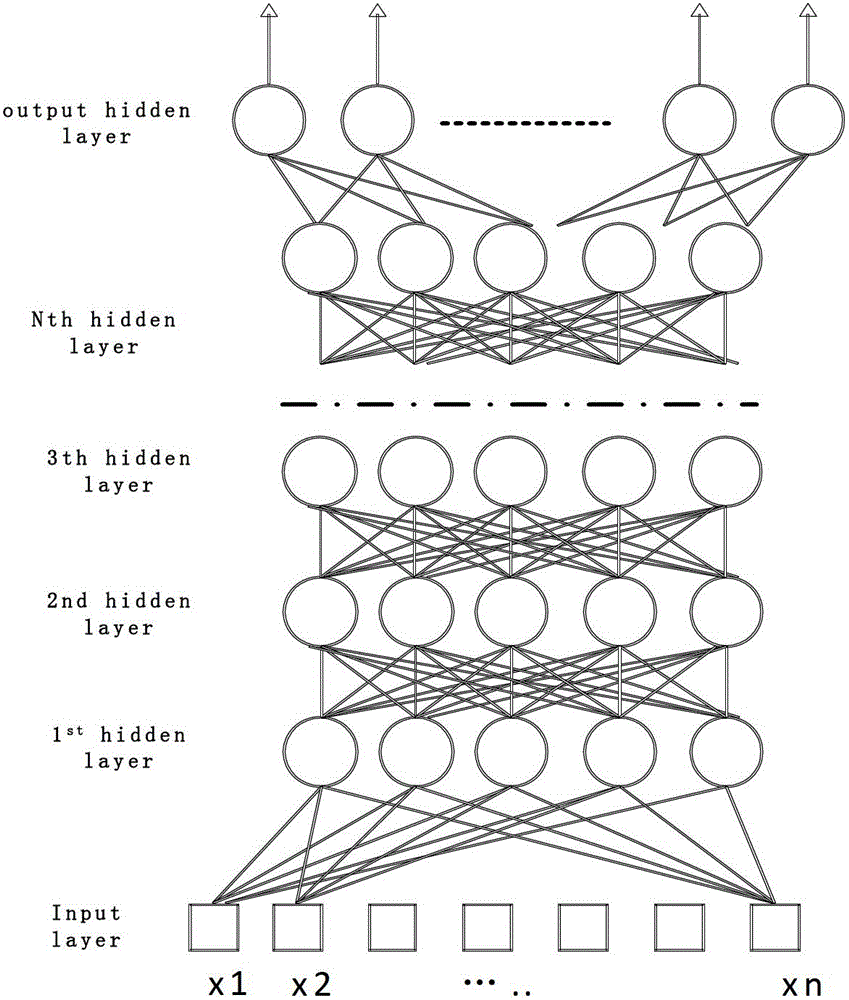

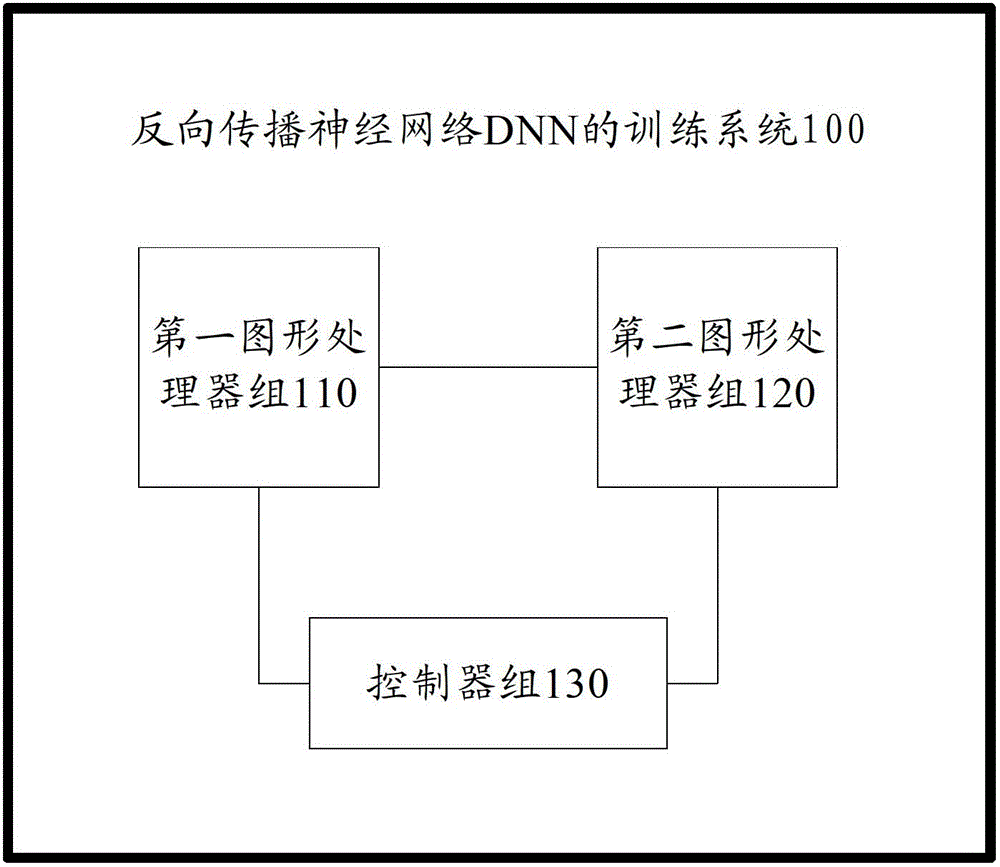

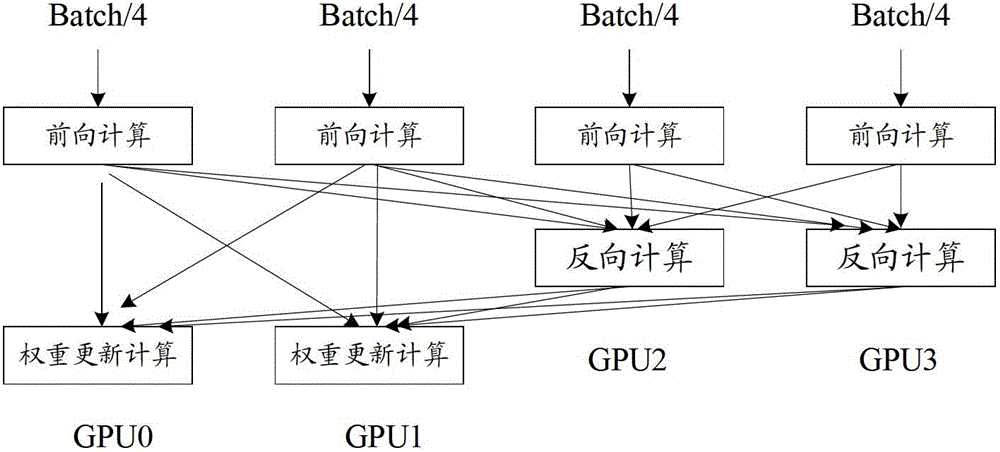

Training system of back propagation neural network DNN (Deep Neural Network)

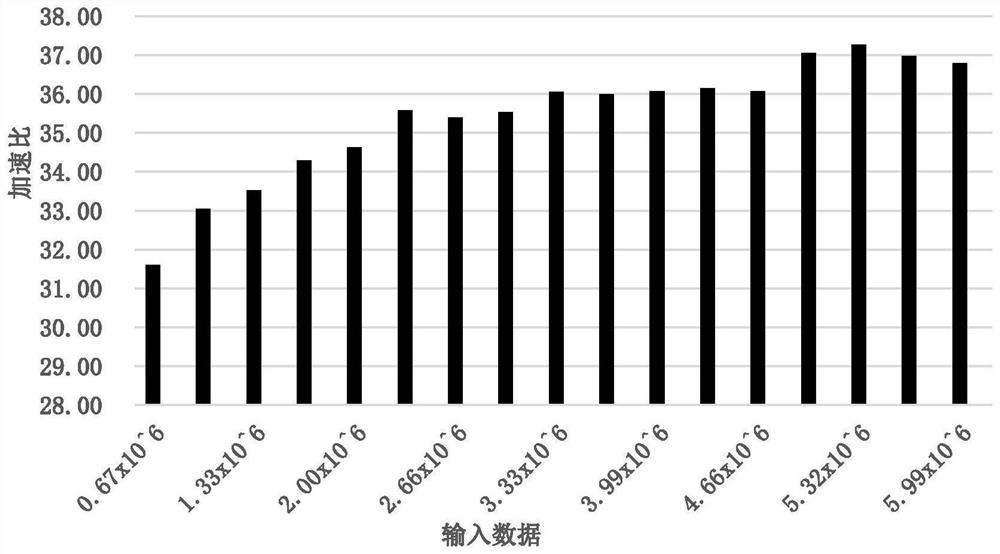

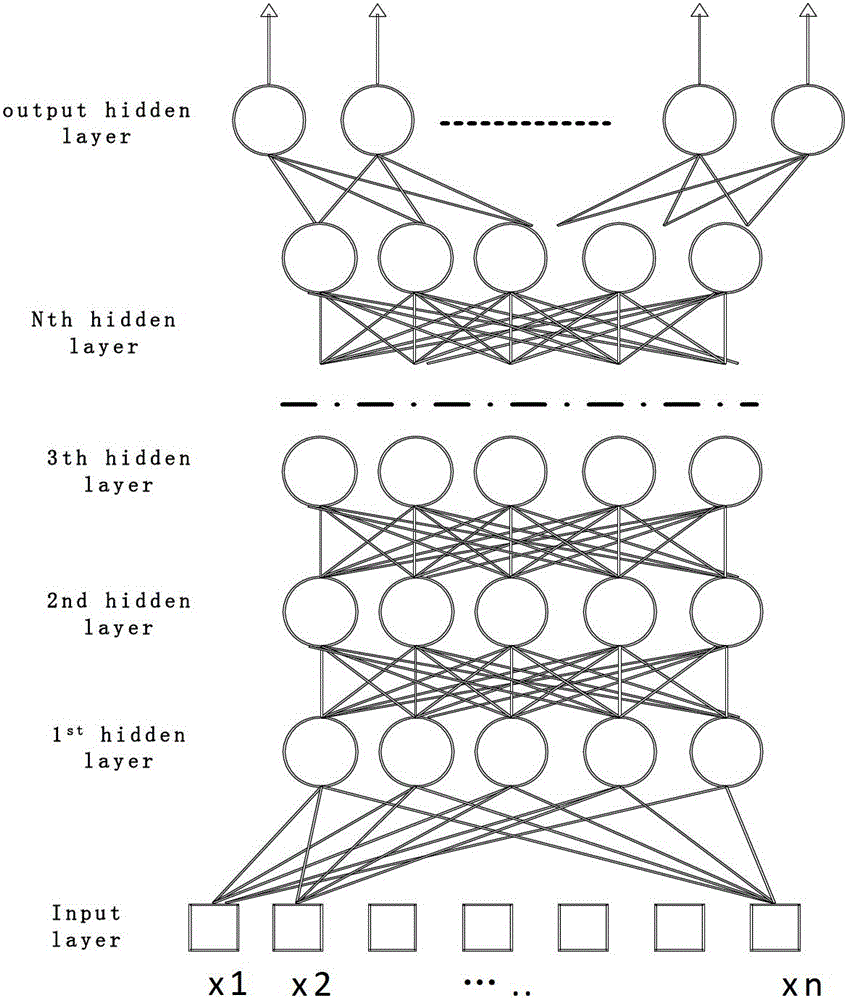

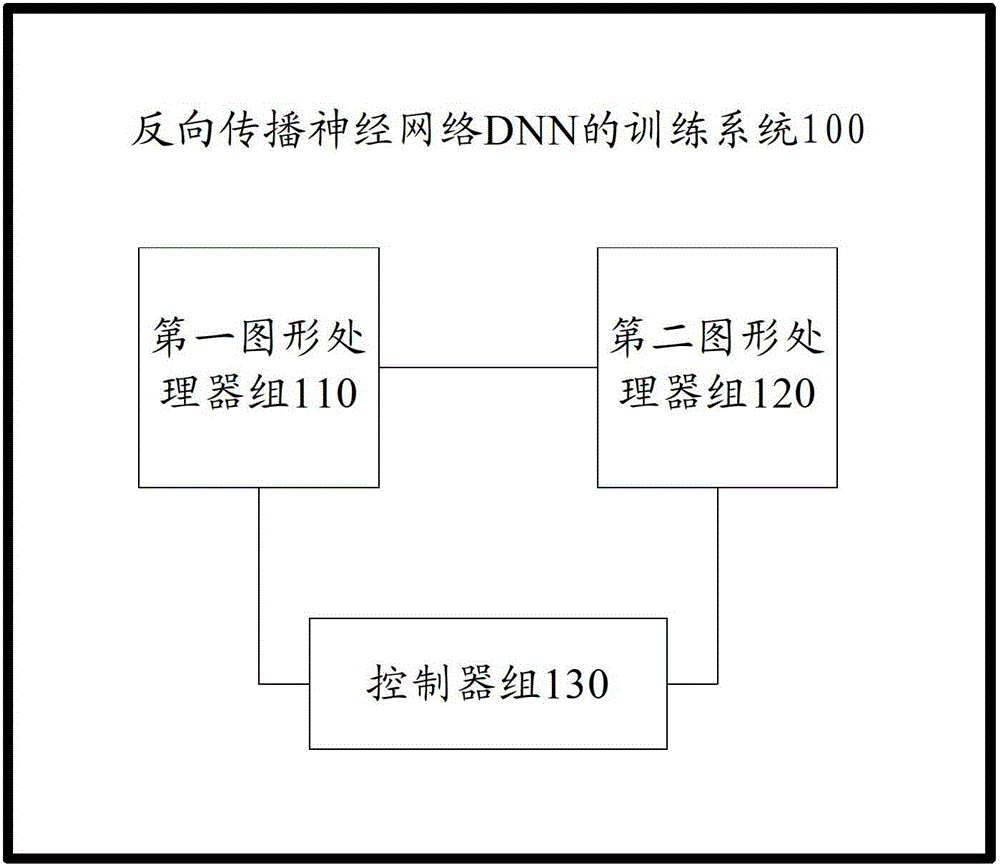

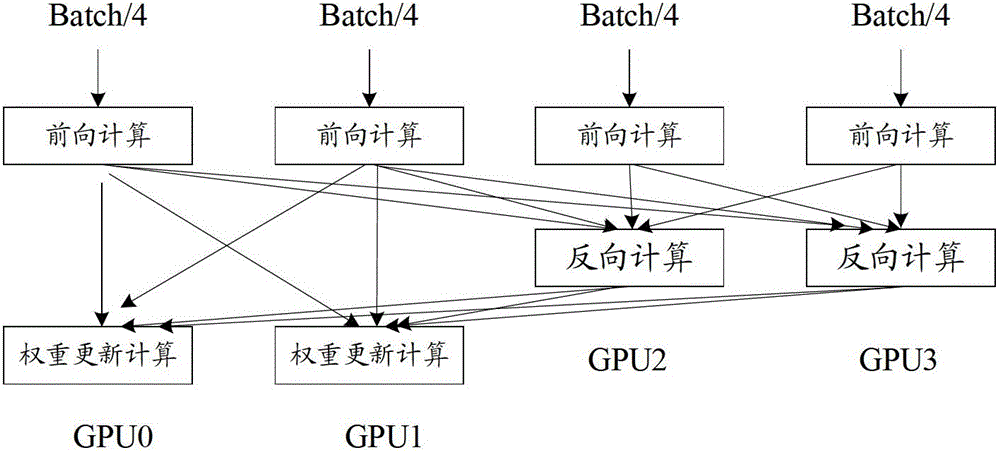

Status: Active | Publication: CN103150596A | Tags: Reduce transmission overhead; Increase training speed; Neural learning methods; Graphics; Simulation

The invention provides a training system for a back-propagation deep neural network (DNN). The system comprises a first graphics processor assembly, a second graphics processor assembly and a controller assembly. The first graphics processor assembly performs DNN forward calculation and weight update calculation; the second graphics processor assembly performs DNN forward calculation and DNN backward calculation. The controller assembly controls the first and second graphics processor assemblies to each perform the Nth-layer DNN forward calculation on their respective input data and, once the forward calculation is complete, controls the first graphics processor assembly to perform the weight update calculation and the second graphics processor assembly to perform the DNN backward calculation, where N is a positive integer. The training system offers high training speed and low data transmission cost, thereby speeding up training of the back-propagation DNN.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

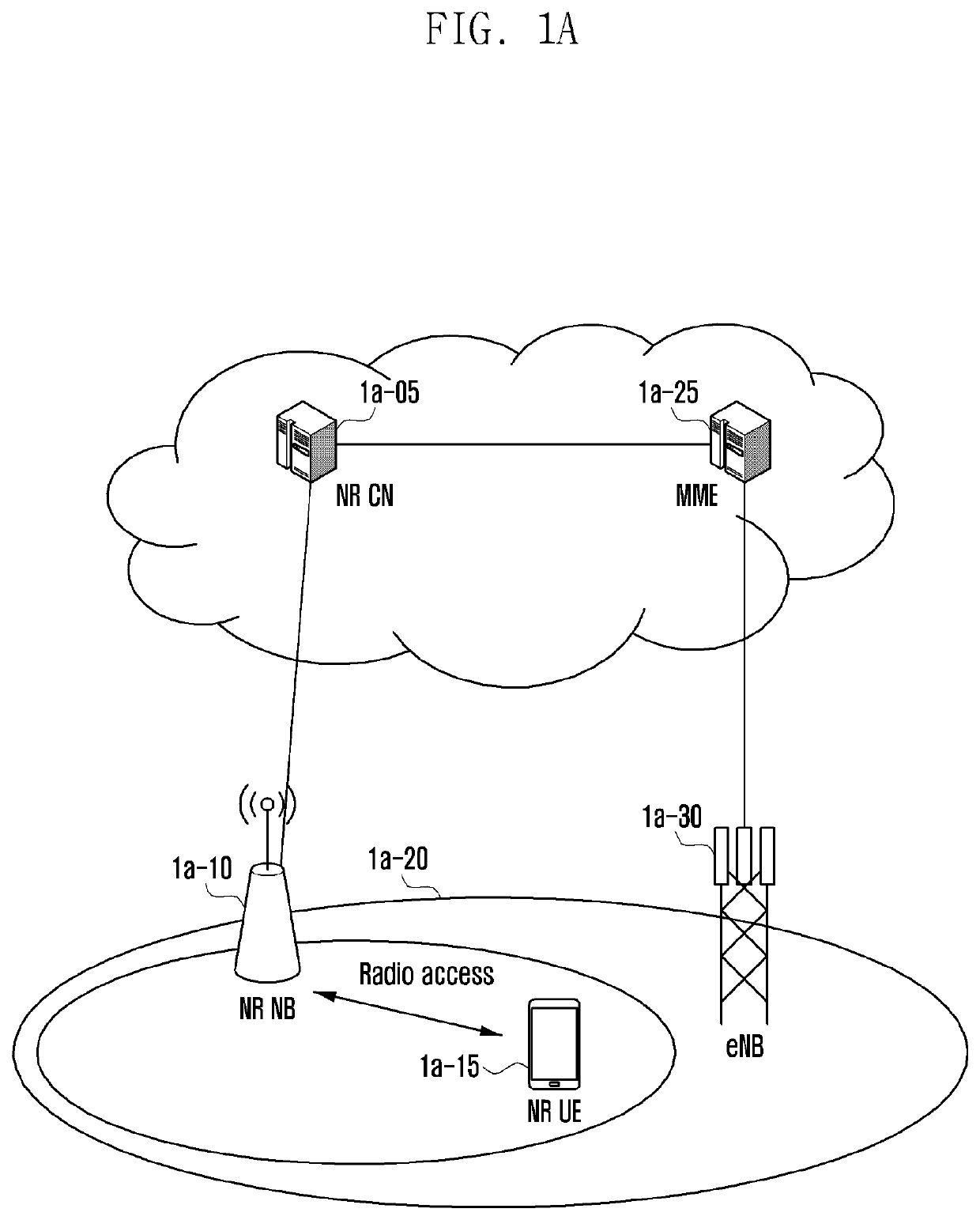

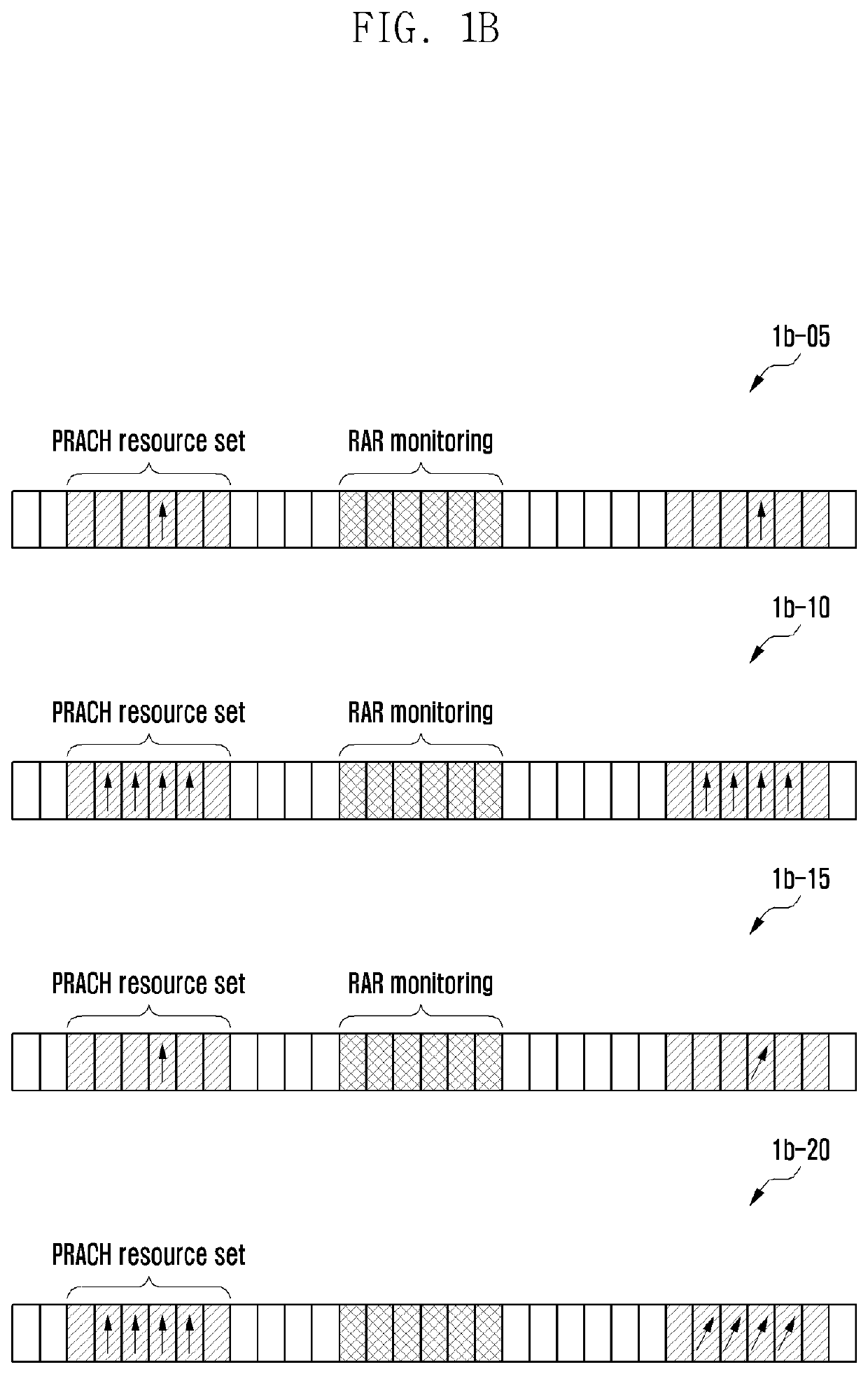

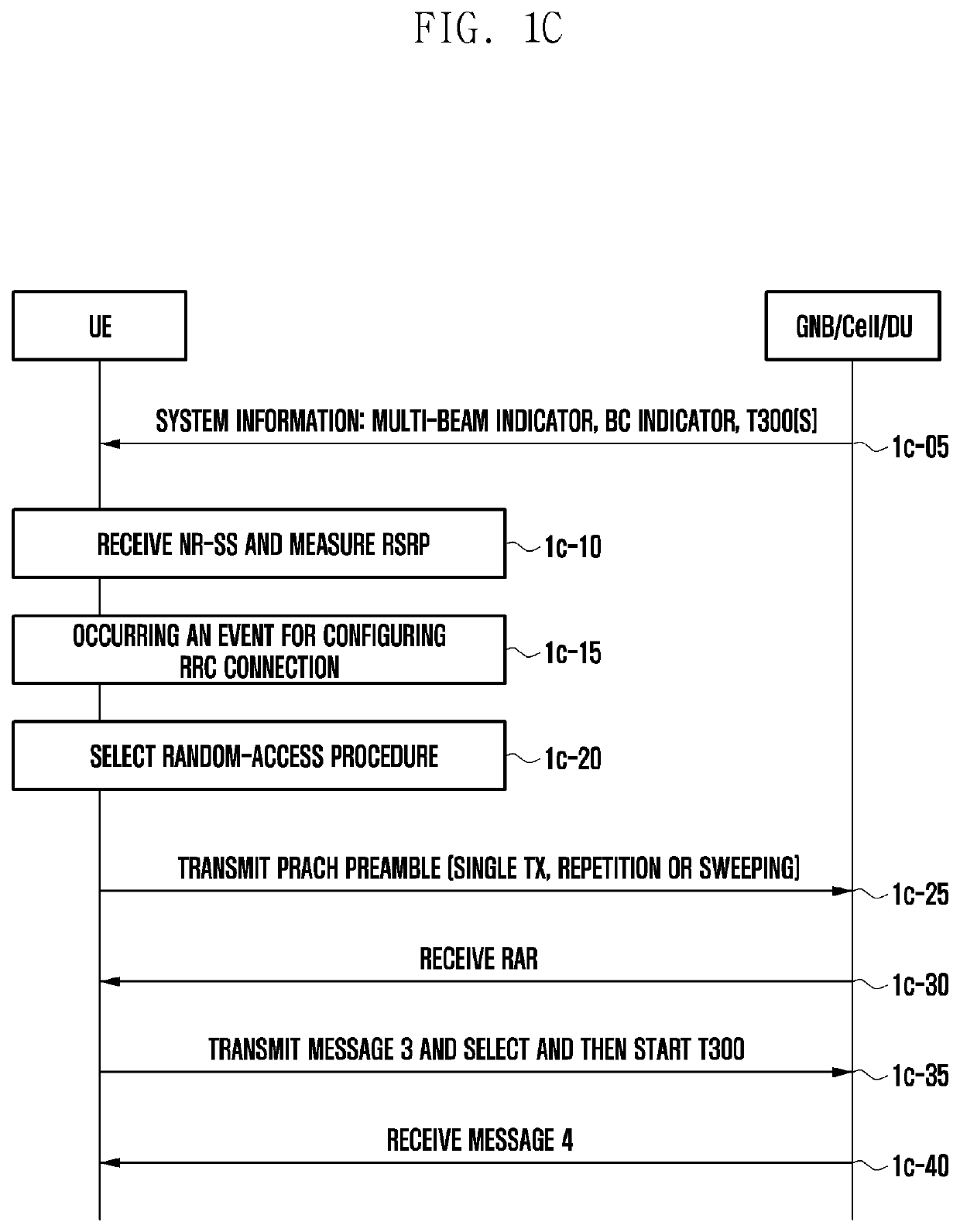

Method and device for efficient communication in next generation mobile communication system

Status: Active | Publication: US20200229111A1 | Tags: Reduce data transfer overhead; Easy to use; Power management; Network traffic/resource management; Data transmission; 5G

The present disclosure relates to a communication technique for convergence of a 5G communication system for supporting a higher data transmission rate beyond a 4G system with an IoT technology, and a system therefor. The present disclosure may be applied to an intelligent service (for example, smart home, smart building, smart city, smart car or connected car, health care, digital education, retail business, security and safety-related service, etc.) on the basis of a 5G communication technology and an IoT-related technology. The present disclosure relates to a method of a terminal, the method comprising the steps of: determining a path loss reference beam on the basis of whether information indicating the path loss reference beam is received; obtaining a path loss on the basis of the path loss reference beam; obtaining a power headroom (PH) on the basis of the path loss; and transmitting a power headroom report (PHR) including the PH.

Owner:SAMSUNG ELECTRONICS CO LTD
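
As a rough illustration of the terminal-side steps (choose the path-loss reference beam, derive the path loss, compute the power headroom), here is a minimal Python sketch. It assumes a simplified Type-1 PUSCH headroom formula in the style of 3GPP TS 38.213, with closed-loop and transport-format terms omitted; the fallback to a default beam when no reference beam is signalled, and all function names, are hypothetical and not taken from the patent.

```python
import math
from typing import Dict, Optional


def select_pathloss_reference(signalled_beam: Optional[int], default_beam: int) -> int:
    # Use the path-loss reference beam indicated by the network if that
    # information was received; otherwise fall back to a default beam
    # (this fallback rule is an assumption for illustration).
    return signalled_beam if signalled_beam is not None else default_beam


def path_loss_db(ref_signal_power_dbm: float, rsrp_dbm: float) -> float:
    # Path loss = transmitted reference-signal power minus measured RSRP.
    return ref_signal_power_dbm - rsrp_dbm


def power_headroom_db(p_cmax_dbm: float, p0_dbm: float, alpha: float,
                      pl_db: float, num_rb: int, mu: int = 0) -> float:
    # Simplified Type-1 PUSCH headroom: maximum transmit power minus the power
    # the UE would need (closed-loop and transport-format terms omitted).
    required_dbm = p0_dbm + 10 * math.log10((2 ** mu) * num_rb) + alpha * pl_db
    return p_cmax_dbm - required_dbm


def build_phr(signalled_beam: Optional[int], default_beam: int,
              rsrp_by_beam: Dict[int, float], ref_signal_power_dbm: float,
              p_cmax_dbm: float, p0_dbm: float, alpha: float, num_rb: int) -> Dict[str, float]:
    beam = select_pathloss_reference(signalled_beam, default_beam)
    pl = path_loss_db(ref_signal_power_dbm, rsrp_by_beam[beam])
    ph = power_headroom_db(p_cmax_dbm, p0_dbm, alpha, pl, num_rb)
    # The PH value is what the power headroom report (PHR) would carry.
    return {"beam": beam, "path_loss_db": pl, "ph_db": ph}
```

For example, `build_phr(None, 0, {0: -82.0, 1: -90.0}, 18.0, 23.0, -80.0, 0.8, 1)` falls back to beam 0, derives a path loss of 100 dB and reports a headroom of about 23 dB.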

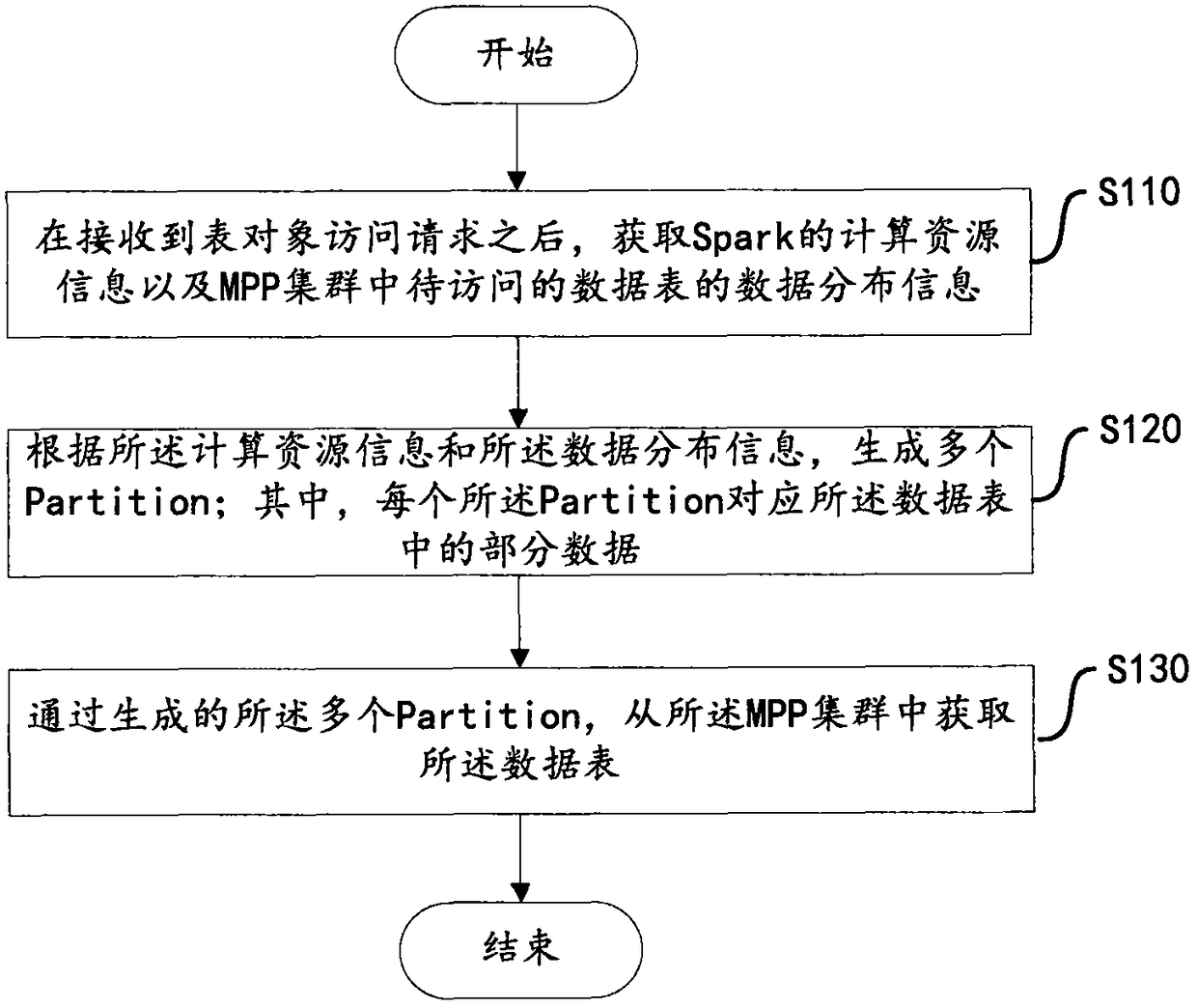

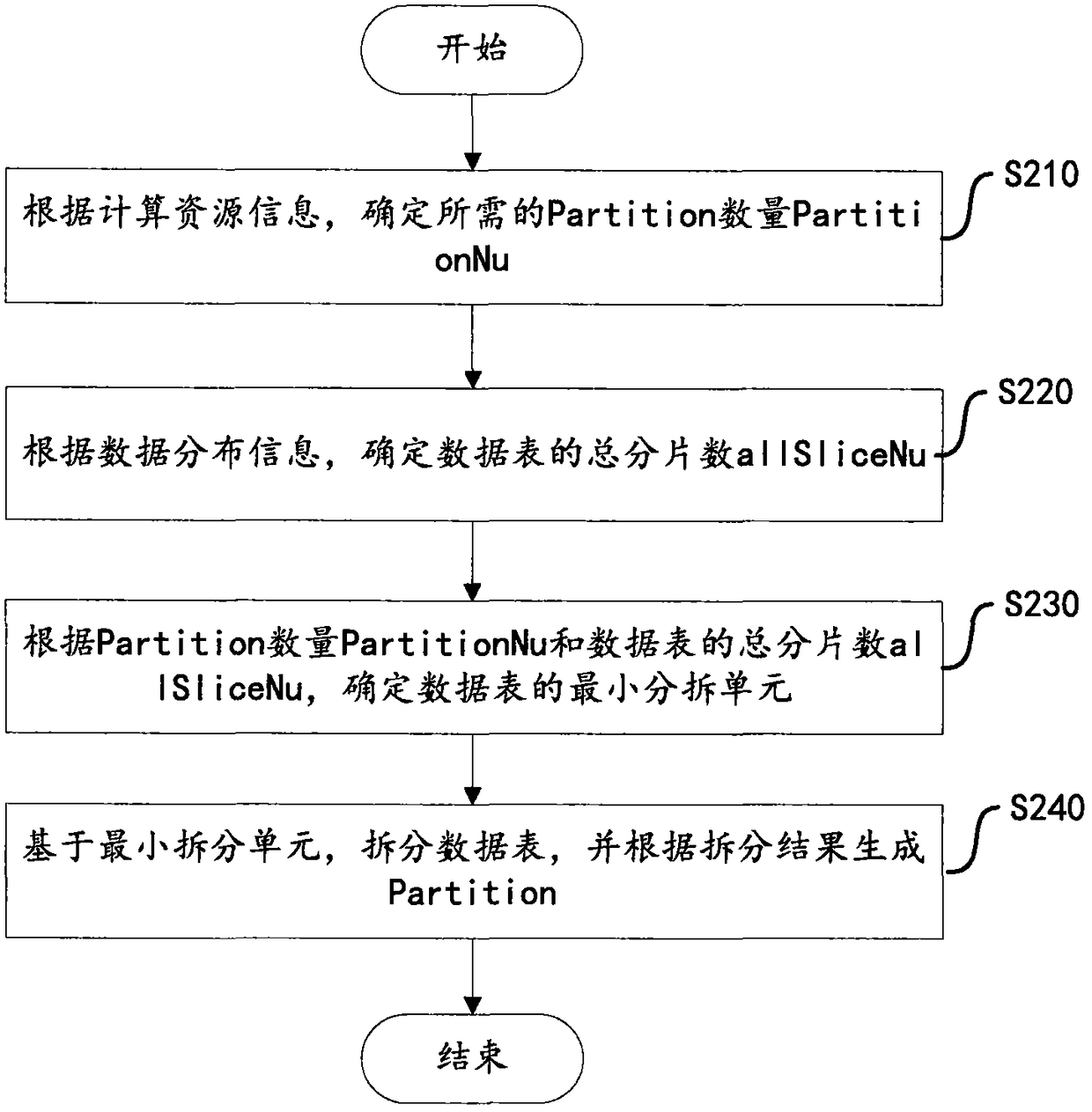

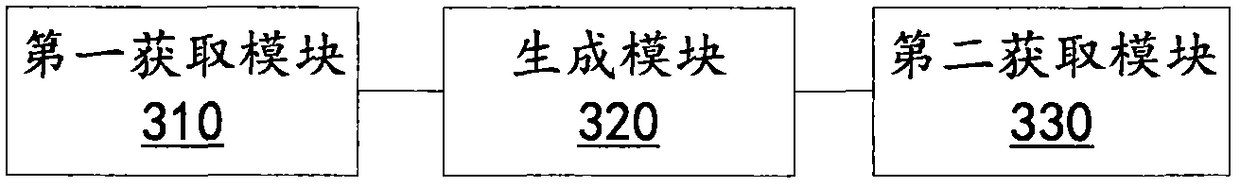

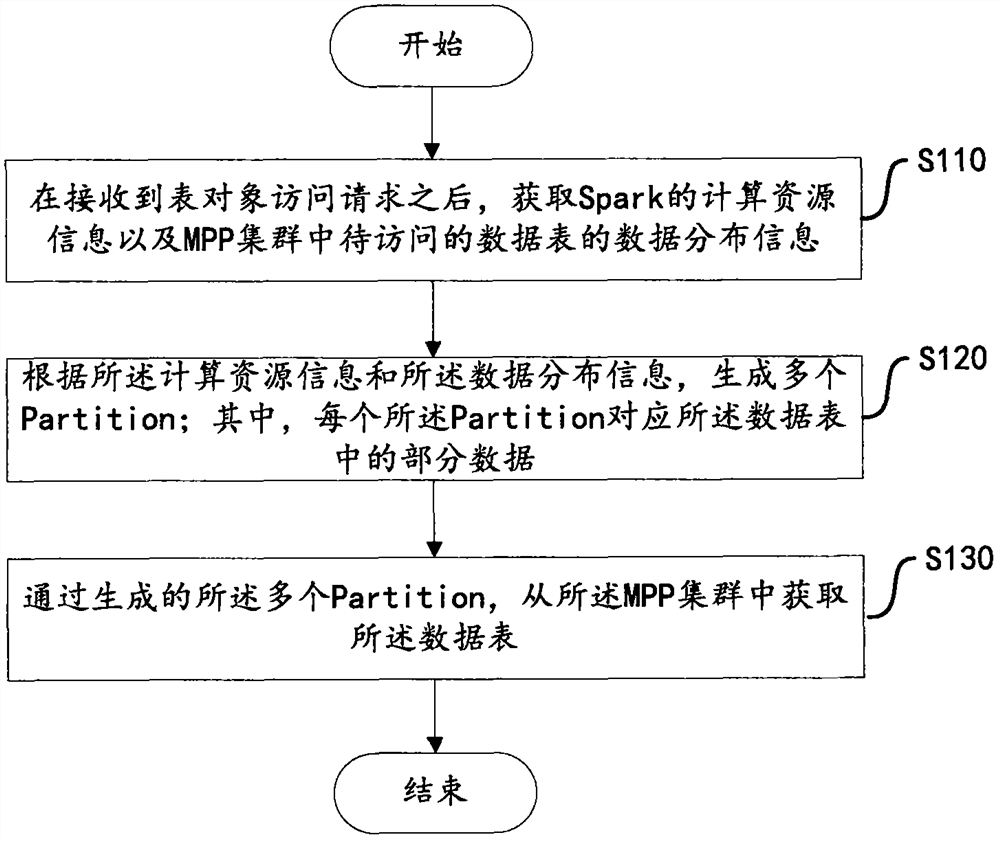

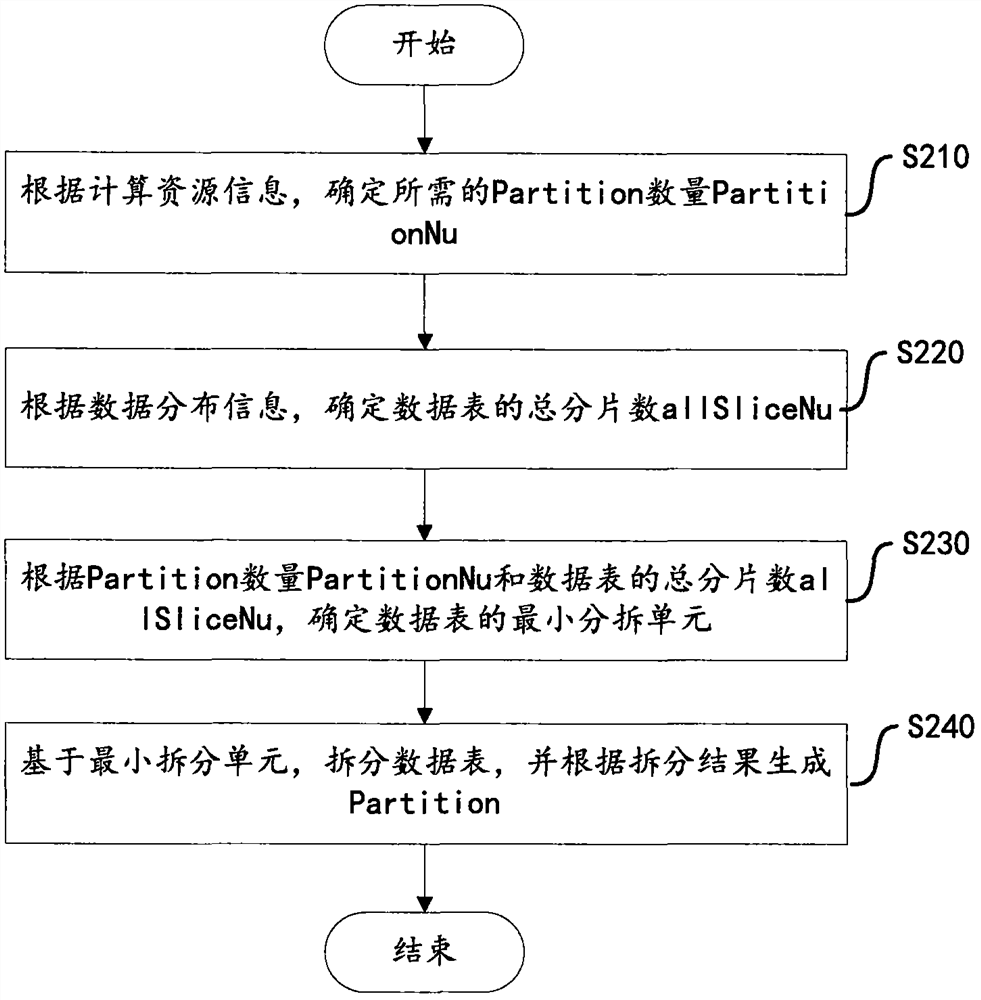

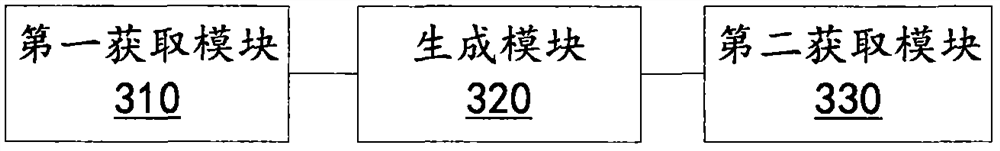

Data acquisition method and device based on Spark computing framework

Status: Active | Publication: CN108536808A | Tags: Improve import performance; Lower latency; Special data processing applications; Data set; Resource information

The invention discloses a data acquisition method and device based on the Spark computing framework. The method includes: receiving a table object access request, and acquiring Spark's computing resource information and the data distribution information, in an MPP cluster, of the data table to be accessed; generating a plurality of Partitions according to the computing resource information and the data distribution information, each Partition corresponding to part of the data in the data table; and obtaining the data table from the MPP cluster through the generated Partitions. The method makes full use of the MPP cluster's data storage characteristics and quickly acquires the data set directly from the MPP storage nodes through the multiple Partitions. Further, when computing resources are sufficient, the data table on a storage node can be split further to increase parallelism and improve data import performance. Guided by the data distribution of the MPP cluster, data can be read preferentially from local storage, which reduces data transmission overhead, saves network bandwidth, lowers network latency and improves computing performance.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT +1
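
A minimal sketch of the partition-planning idea, assuming the MPP data distribution is known as rows per segment per storage node and that Spark's computing resources reduce to a number of parallel task slots (`cores`). The `Partition` type and `plan_partitions` function are hypothetical; a real implementation would presumably expose each partition's storage node to Spark as its preferred location.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Partition:
    node: str     # preferred location: the MPP storage node holding the data
    segment: str  # data segment (shard) of the table on that node
    start: int    # first row offset (inclusive)
    end: int      # last row offset (exclusive)

    @property
    def rows(self) -> int:
        return self.end - self.start


def plan_partitions(distribution: Dict[str, Dict[str, int]], cores: int) -> List[Partition]:
    # One partition per segment, pinned to the node that stores it.
    parts = [Partition(node, seg, 0, rows)
             for node, segs in distribution.items()
             for seg, rows in segs.items()]
    # While spare task slots remain, split the largest partition in half.
    # Both halves stay on the same node, so reads remain local.
    while len(parts) < cores:
        parts.sort(key=lambda p: p.rows, reverse=True)
        big = parts[0]
        if big.rows < 2:
            break
        mid = big.start + big.rows // 2
        parts[0] = Partition(big.node, big.segment, big.start, mid)
        parts.append(Partition(big.node, big.segment, mid, big.end))
    return parts
```

Splitting always halves the largest partition on its own node, so extra parallelism never sacrifices read locality.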

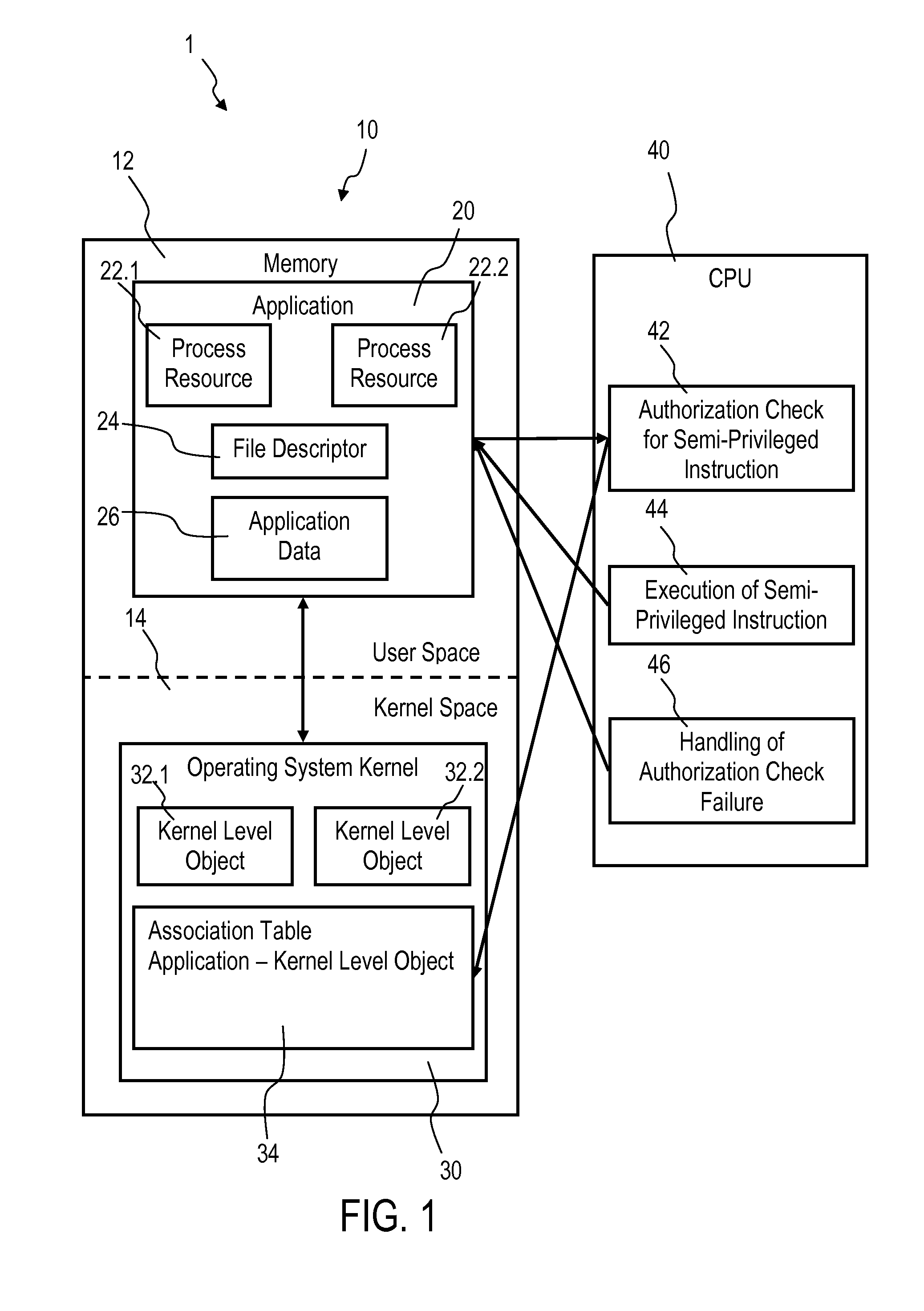

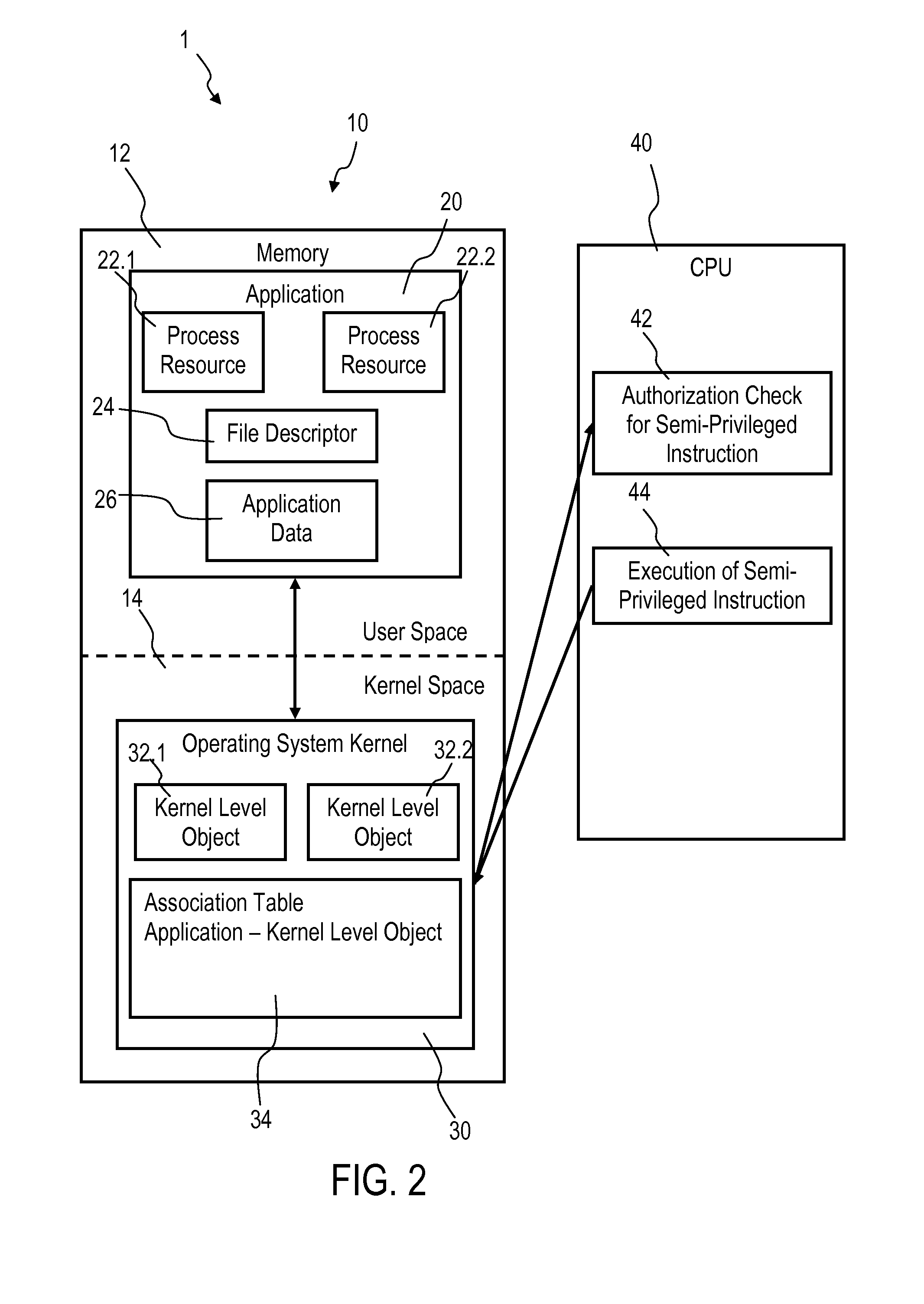

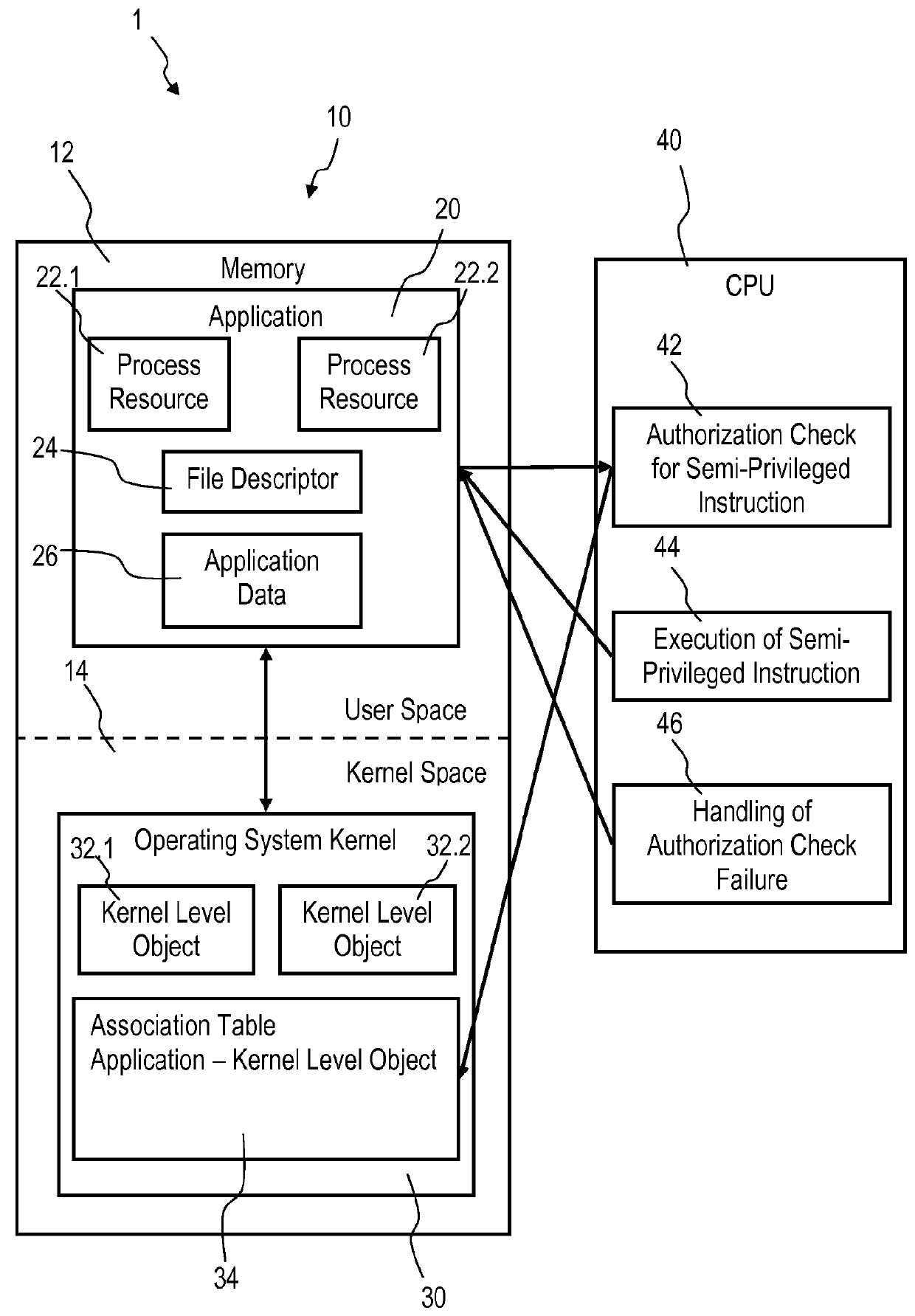

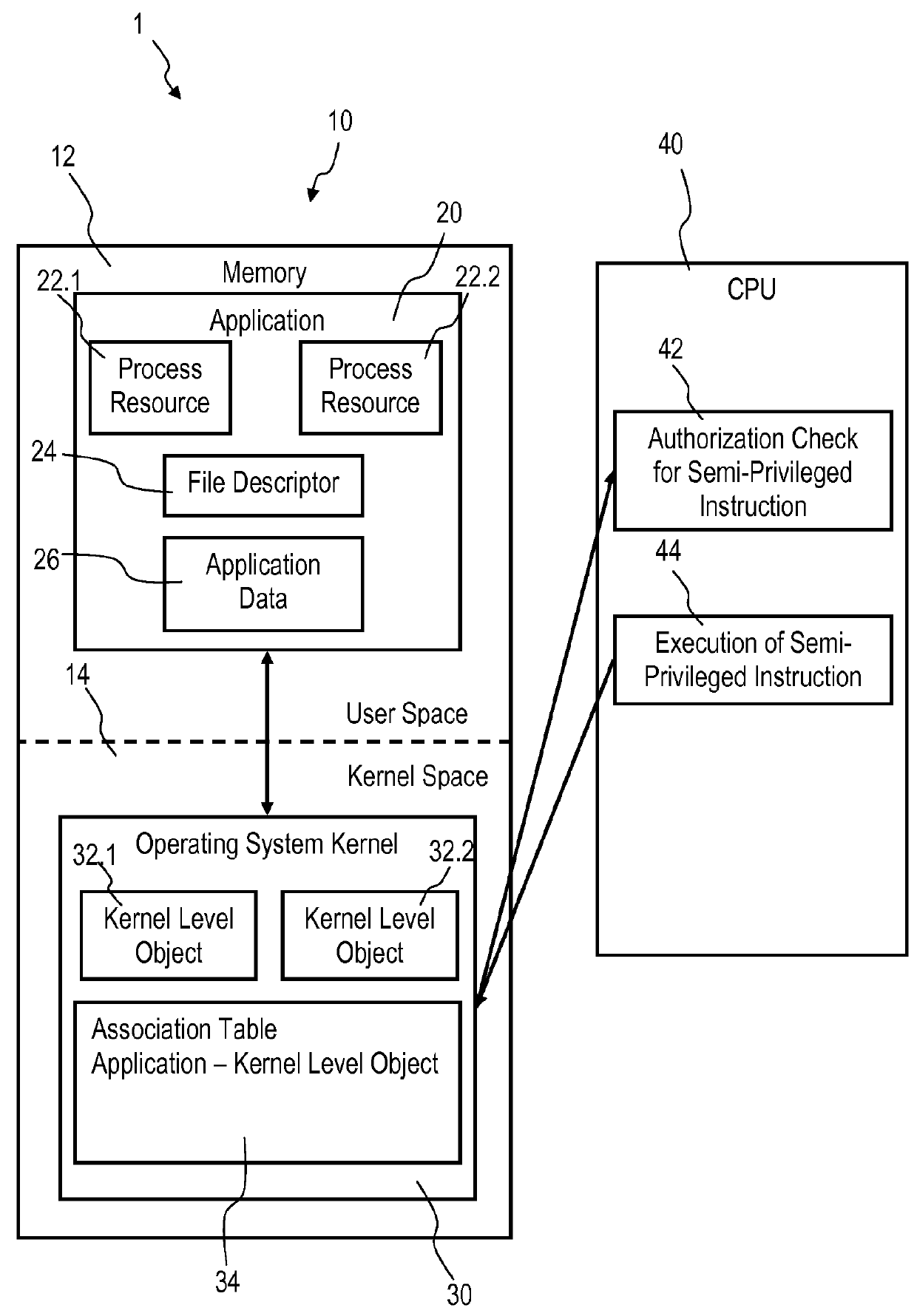

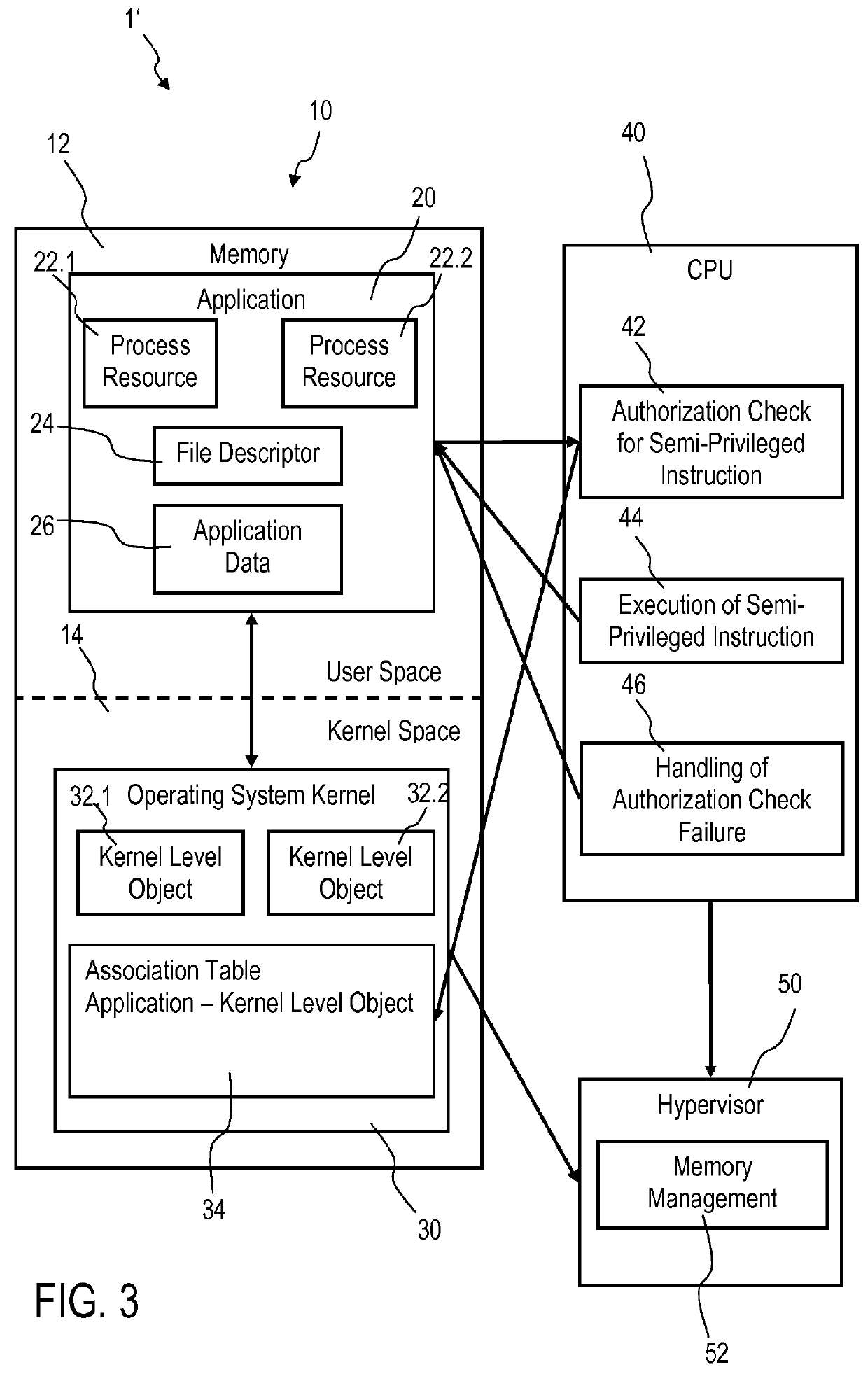

Accessing privileged objects in a server environment

Status: Inactive | Publication: US20140123238A1 | Tags: Speed up network communication; Reduce data transfer overhead; Specific access rights; Digital data processing details; Authorization; Engineering

Accessing privileged objects in a server environment. A privileged object is associated with an application comprising at least one process resource and a corresponding semi-privileged instruction. The association is filed in an entity of an operating system kernel. A central processing unit (CPU) performs an authorization check if the semi-privileged instruction is issued and attempts to access the privileged object. The CPU executes the semi-privileged instruction and grants access to the privileged object if the operating system kernel has issued the semi-privileged instruction; or accesses the entity if a process resource of the application has issued the semi-privileged instruction to determine authorization of the process resource to access the privileged object. Upon positive authorization the CPU executes the semi-privileged instruction and grants access to the privileged object, and upon authorization failure denies execution of the semi-privileged instruction and performs a corresponding authorization check failure handling.

Owner:IBM CORP
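
The authorization decision described above can be sketched as a small Python function. The kernel-side "entity" is modelled as a hypothetical dictionary from privileged object to permitted processes; in the patent the check is performed by the CPU when the semi-privileged instruction executes, which a user-space sketch can only imitate.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

KERNEL = "kernel"  # hypothetical identifier for the operating system kernel


@dataclass
class KernelEntity:
    # Hypothetical kernel table: privileged object -> processes allowed to use it.
    grants: Dict[str, Set[str]] = field(default_factory=dict)

    def associate(self, obj: str, process: str) -> None:
        self.grants.setdefault(obj, set()).add(process)


class AuthorizationError(Exception):
    pass


def execute_semi_privileged(issuer: str, obj: str, entity: KernelEntity) -> str:
    if issuer == KERNEL:
        # Issued by the kernel: execute and grant access without a lookup.
        return f"granted:{obj}"
    if issuer in entity.grants.get(obj, set()):
        # Issued by an application process that the entity authorizes.
        return f"granted:{obj}"
    # Authorization failure: refuse execution and hand off to failure handling.
    raise AuthorizationError(f"{issuer} may not access {obj}")
```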

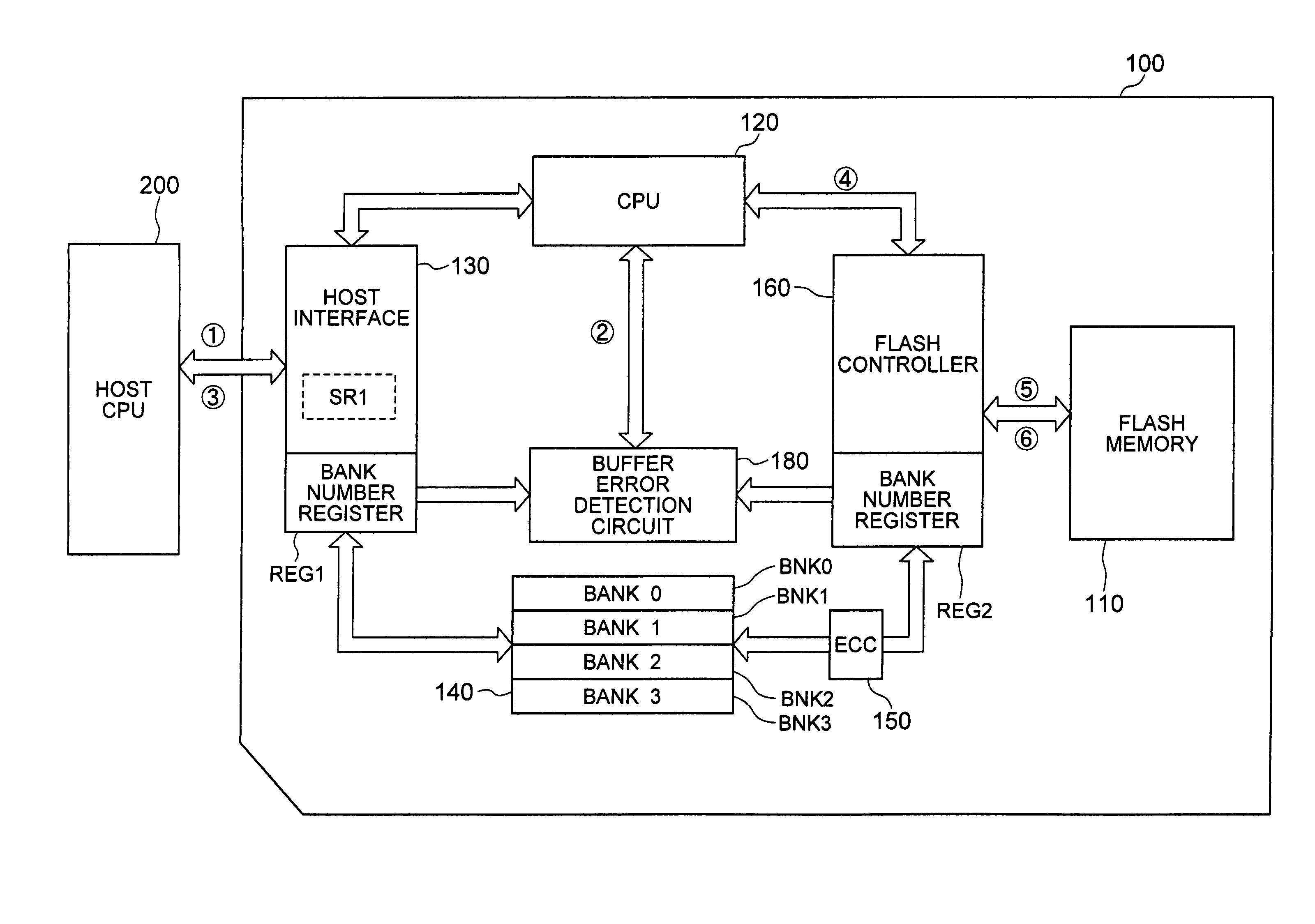

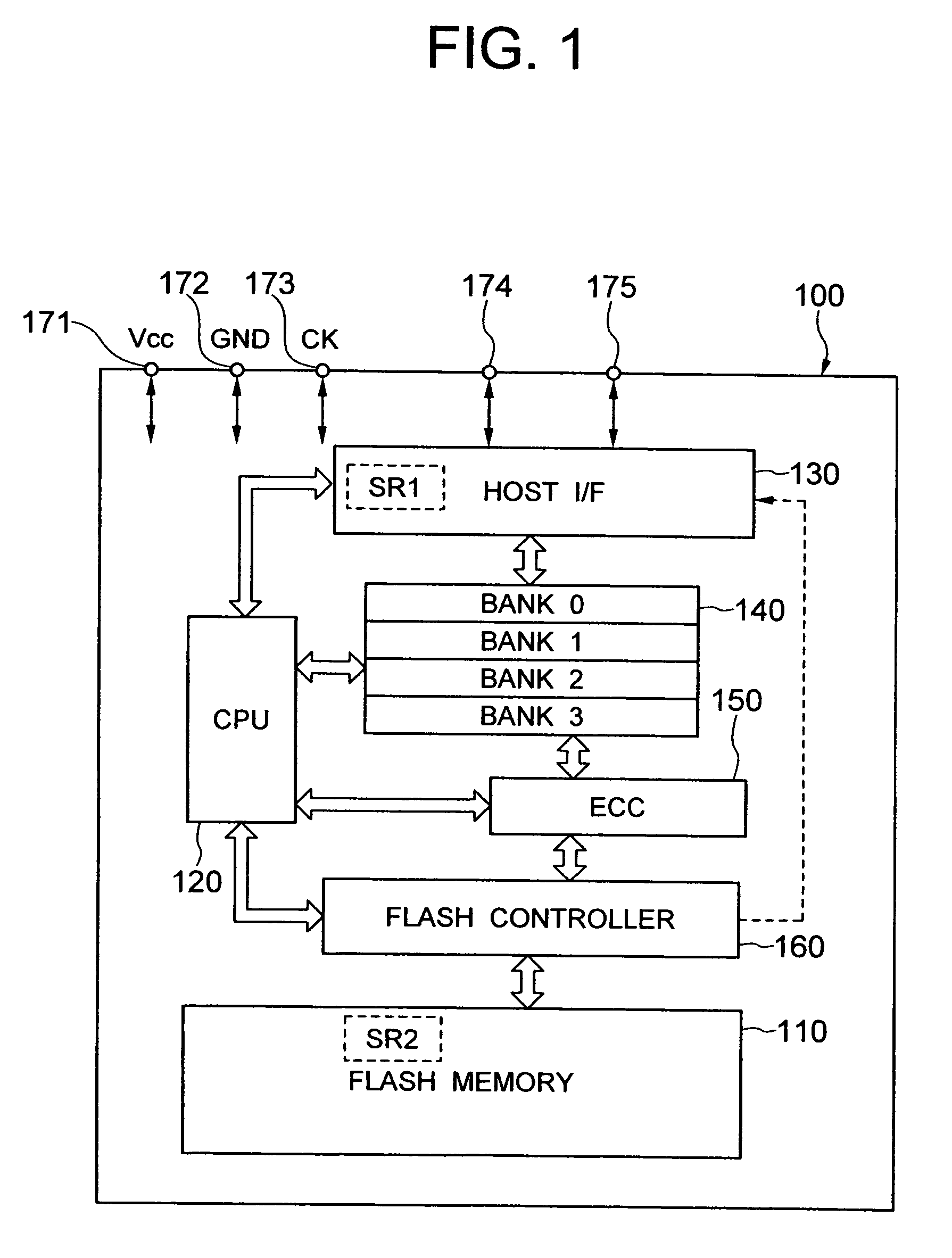

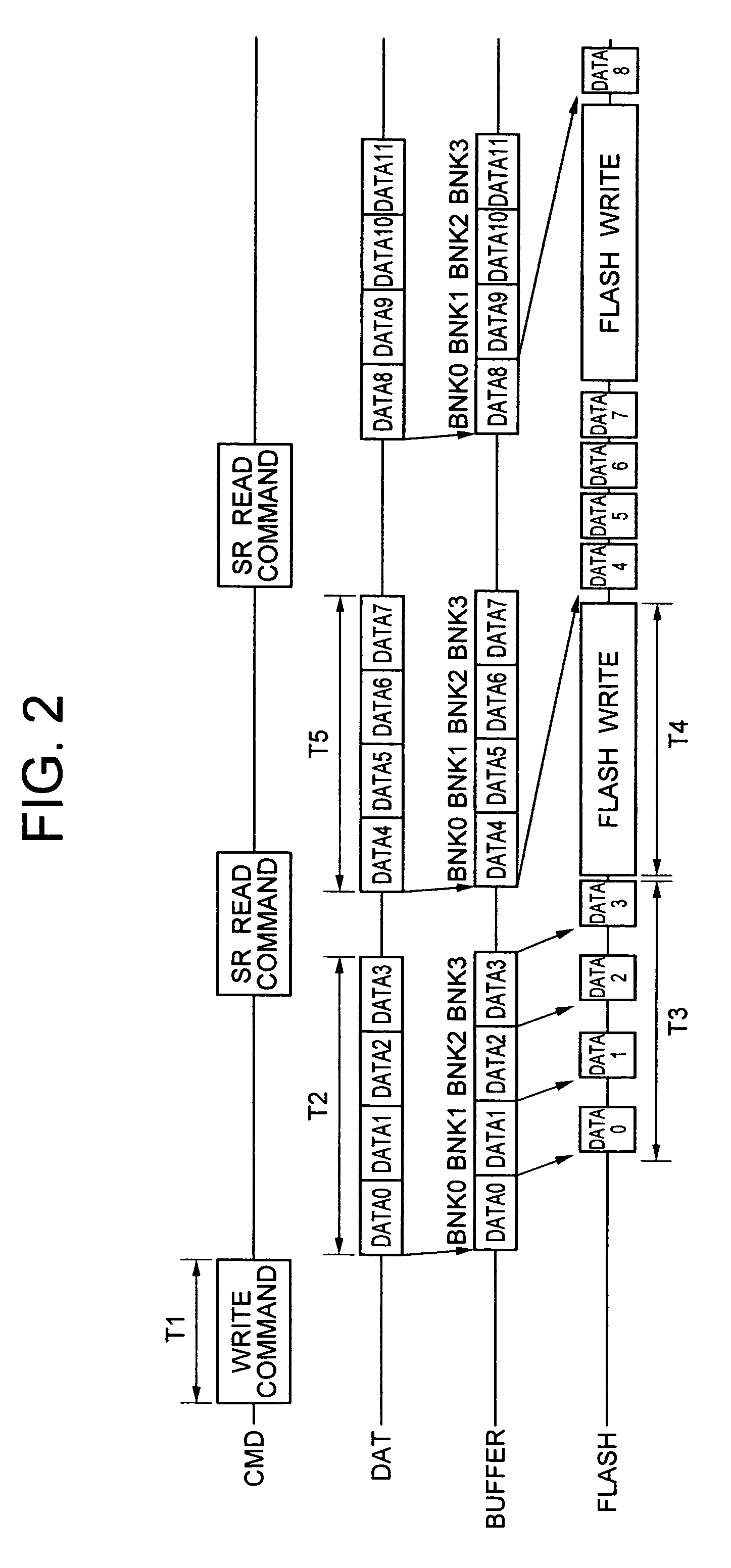

Non-volatile memory device and data storing method

Status: Inactive | Publication: US7116578B2 | Tags: Reduces write-data transfer overhead; Reduce data write time; Memory addressing/allocation/relocation; Read-only memories; Data storing; Data store

In a card storage device containing a non-volatile memory and a buffer memory, the buffer memory includes a plurality of banks. Data is transferred sequentially from a host CPU to the banks of the buffer memory, and data is transferred to the non-volatile memory from whichever bank becomes full. A write operation starts as soon as one unit of data (the amount written into the non-volatile memory at a time) has been transferred; without waiting for that data to be written, the next write data is transferred from the host CPU into a bank whose write data has already been transferred out.

Owner:RENESAS ELECTRONICS CORP
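
The benefit of the multi-bank buffer can be estimated with a simple timing model, sketched below in Python. It assumes a fixed host-to-bank transfer time `t` and non-volatile program time `w` per unit, and that a bank becomes free as soon as its data has been moved into the non-volatile memory (before the write finishes); these parameters are illustrative, not figures from the patent.

```python
from typing import List


def pipelined_time(n: int, t: float, w: float, banks: int = 2) -> float:
    host_free = 0.0                          # when the host link is next idle
    flash_free = 0.0                         # when the non-volatile memory finishes its write
    bank_free: List[float] = [0.0] * banks   # when each bank can accept new data
    for i in range(n):
        b = i % banks                        # fill banks round-robin
        start_xfer = max(host_free, bank_free[b])
        done_xfer = start_xfer + t
        host_free = done_xfer
        # Move the bank's data into the non-volatile memory once it is idle,
        # start the write, and do not wait for it before reusing the bank.
        start_write = max(done_xfer, flash_free)
        bank_free[b] = start_write
        flash_free = start_write + w
    return flash_free


def sequential_time(n: int, t: float, w: float) -> float:
    # No overlap: every unit is transferred and then written before the next.
    return n * (t + w)
```

With four units and `t = w = 1`, the pipelined schedule finishes at time 5 instead of 8, because each host transfer overlaps the previous write.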

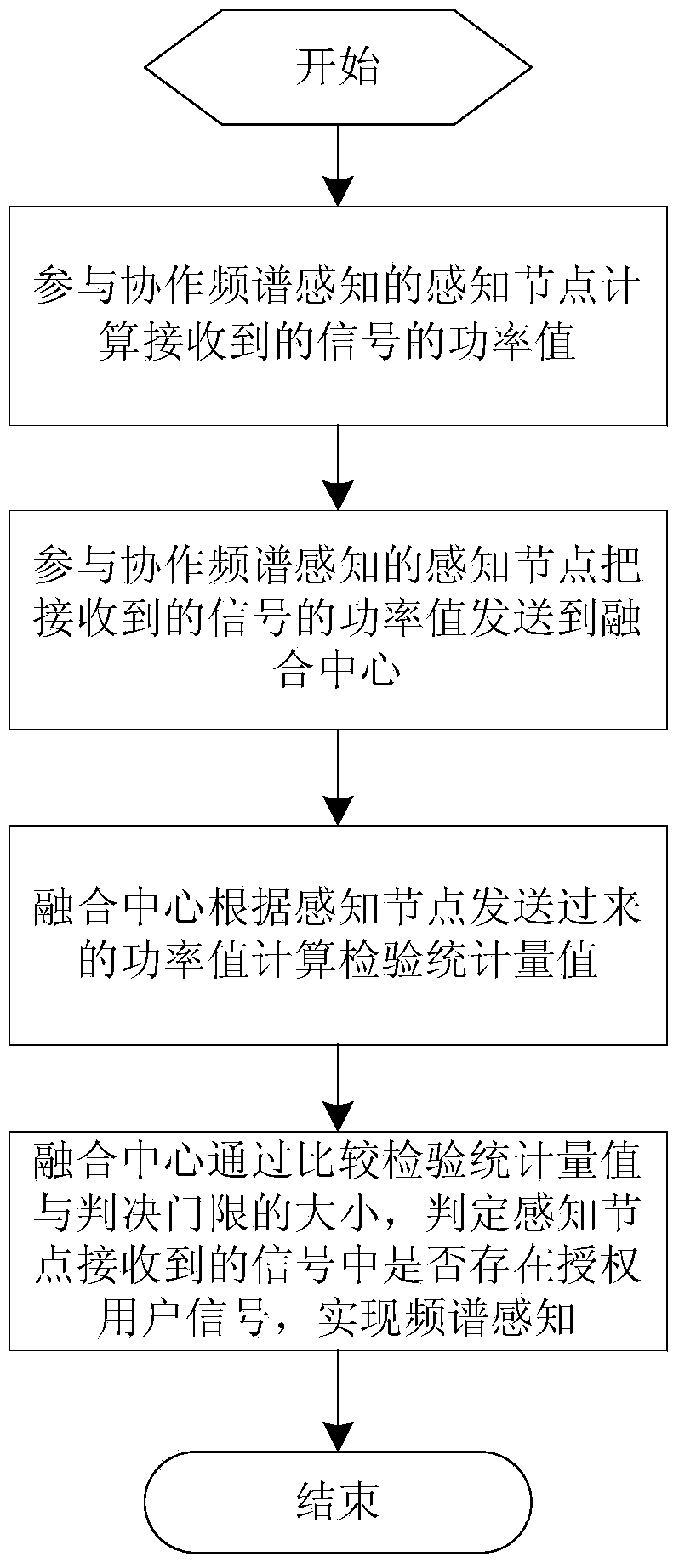

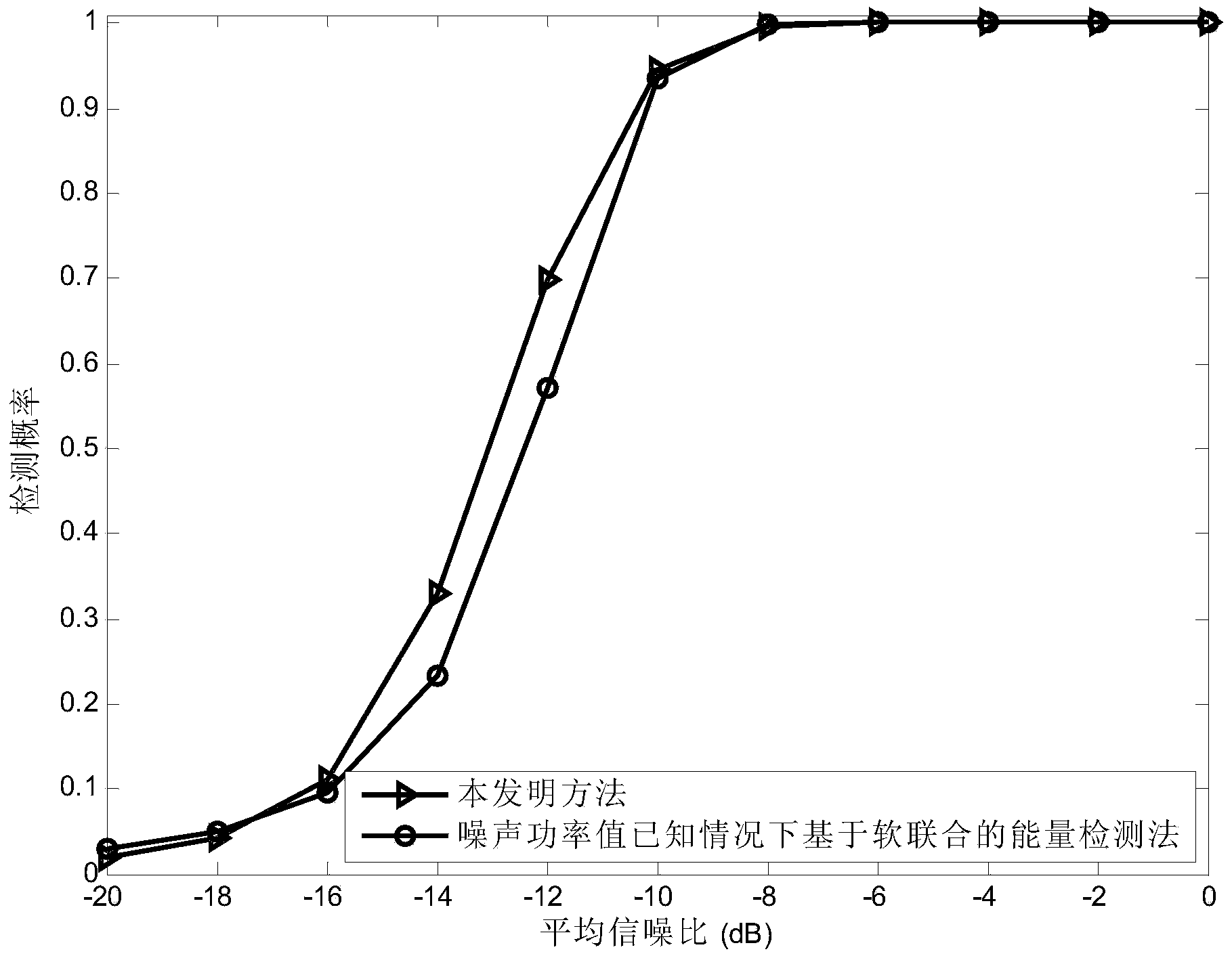

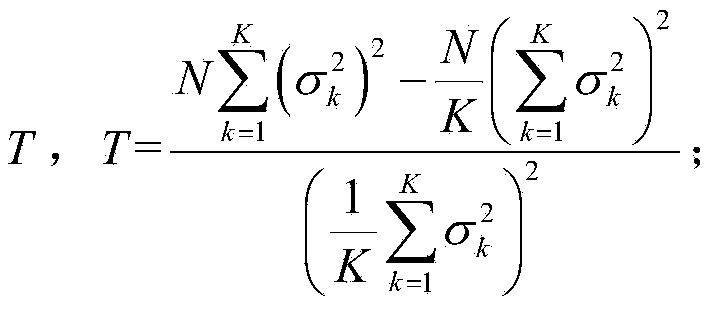

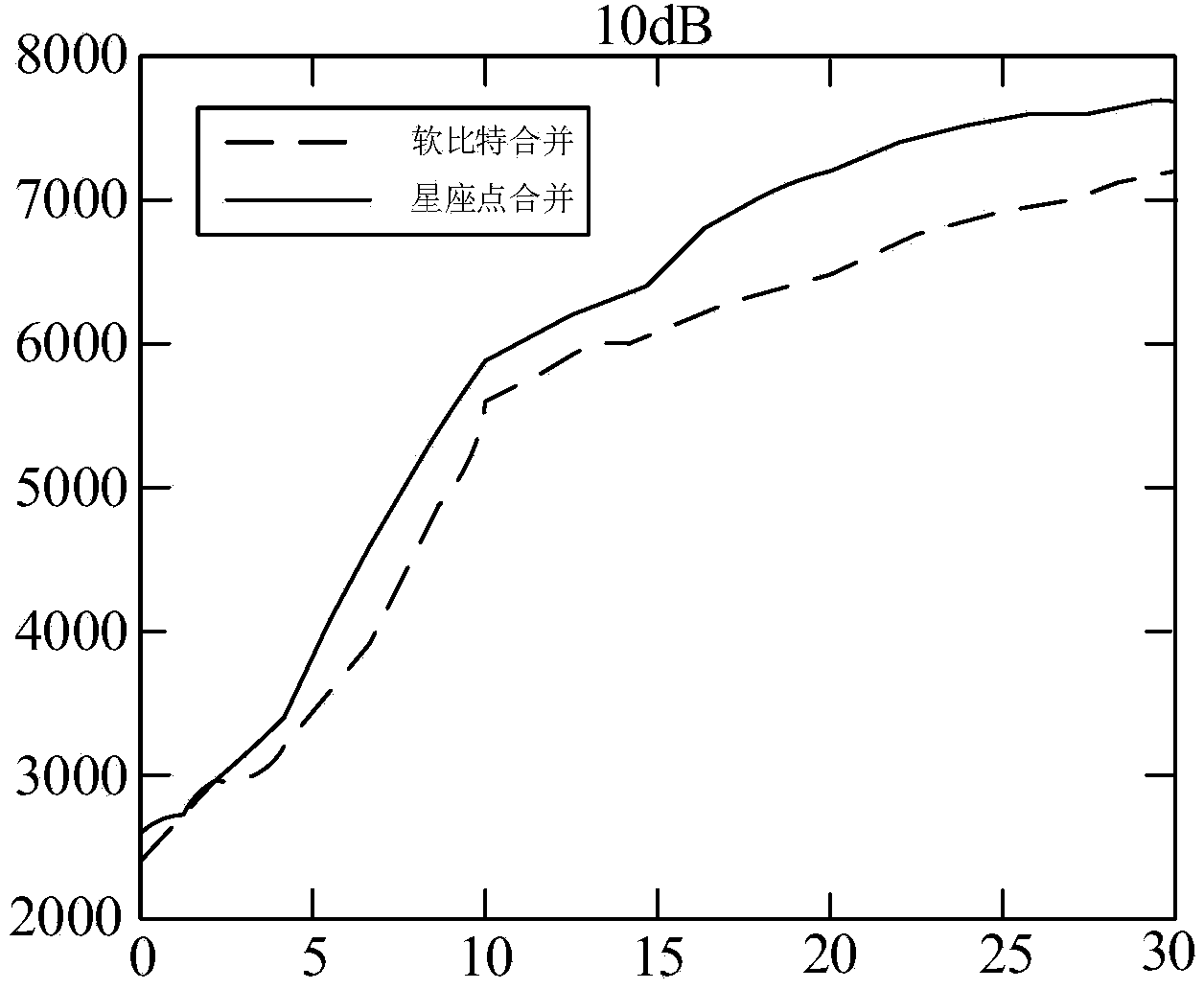

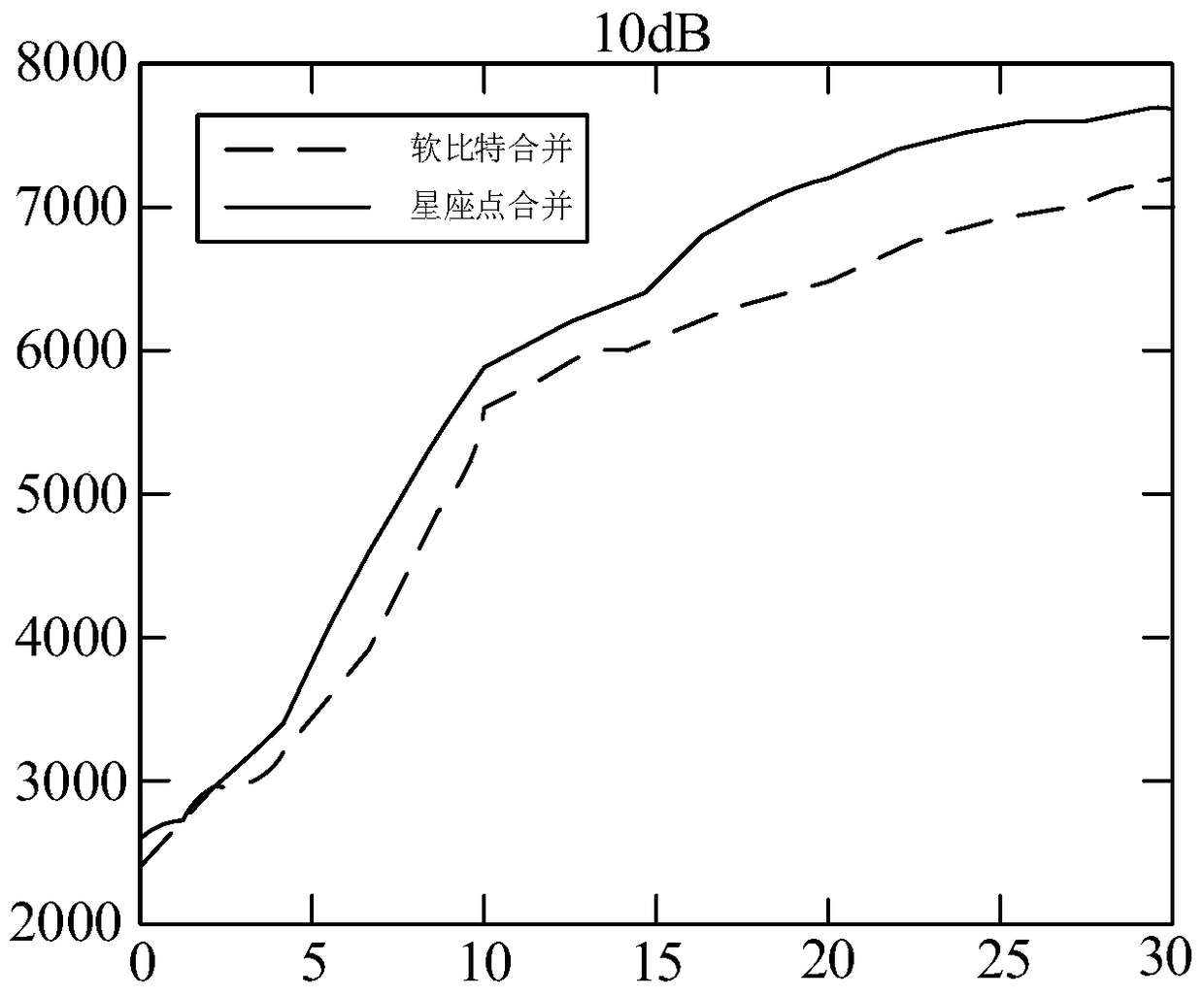

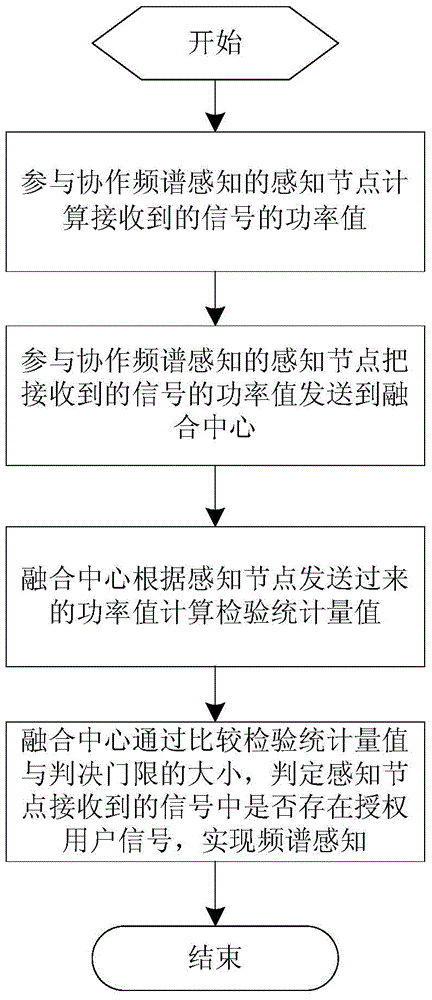

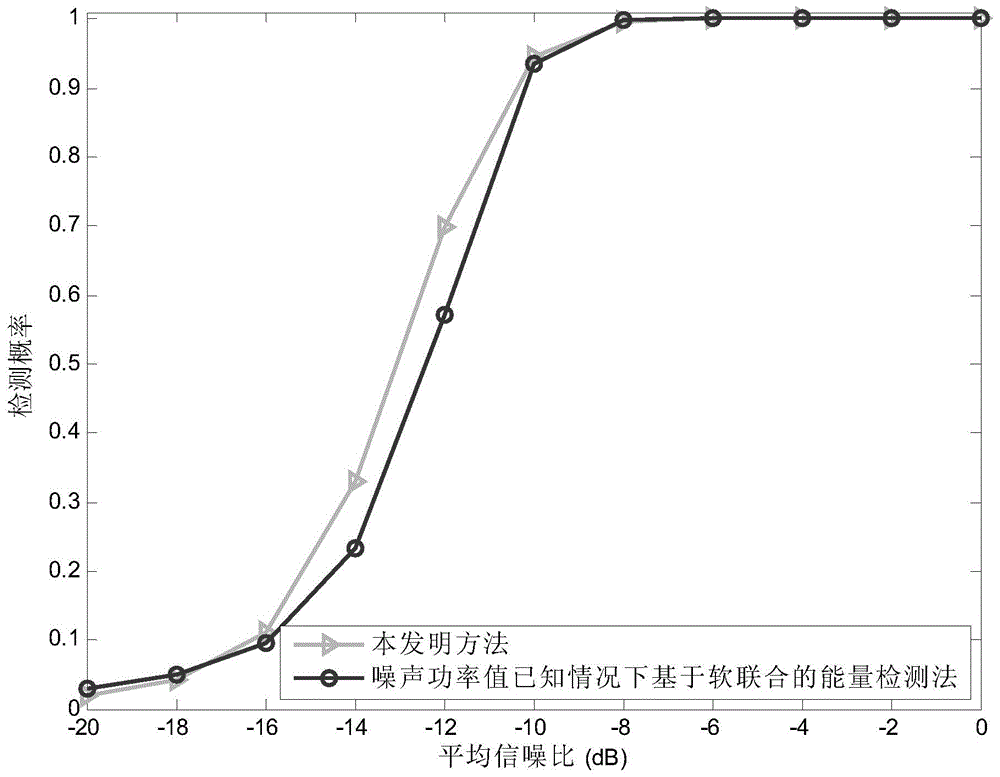

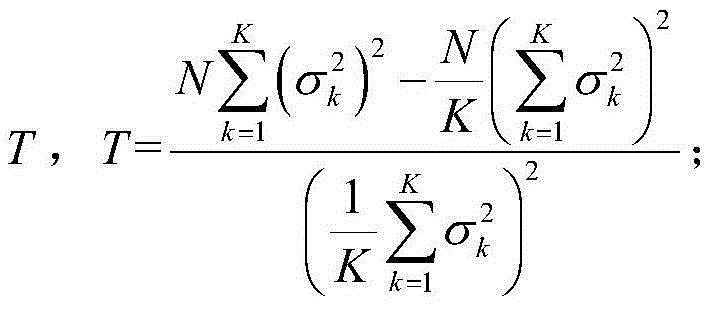

Cooperative spectrum sensing method utilizing space diversity

Status: Inactive | Publication: CN103888201A | Tags: Reduce data transfer overhead; Unaffected by noise uncertainty; Spatial transmit diversity; Transmission monitoring; Fusion center; Frequency spectrum

The invention discloses a cooperative spectrum sensing method utilizing space diversity. Sensing nodes participating in cooperative spectrum sensing calculate the power of their received signals and send these power values to a fusion center. The fusion center calculates a test statistic from the received-signal powers reported by the participating nodes and, by comparing the test statistic with a decision threshold, decides whether a licensed (primary) user signal is present in the signals received by the sensing nodes. The method does not require the noise power to be known, and its performance is close to that of soft-combining energy detection with known noise power.

Owner:NINGBO UNIV
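
The division of work between sensing nodes and the fusion center can be sketched as follows. The abstract does not give the test statistic, so this sketch swaps in a hypothetical one: the ratio of the largest to the smallest reported power. Assuming every node sees the same noise power, that noise power cancels in the ratio, which illustrates why such a statistic needs no noise estimate; the threshold value is also an assumption.

```python
from typing import List, Sequence


def received_power(samples: Sequence[float]) -> float:
    # Run at each sensing node: average power of its received samples.
    return sum(x * x for x in samples) / len(samples)


def fusion_decide(powers: List[float], threshold: float) -> bool:
    # Run at the fusion center on the reported powers. Hypothetical statistic:
    # a common noise-power factor cancels in the max/min ratio.
    statistic = max(powers) / min(powers)
    return statistic > threshold  # True means "primary user present"
```

Only one scalar per node reaches the fusion center, rather than raw samples.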

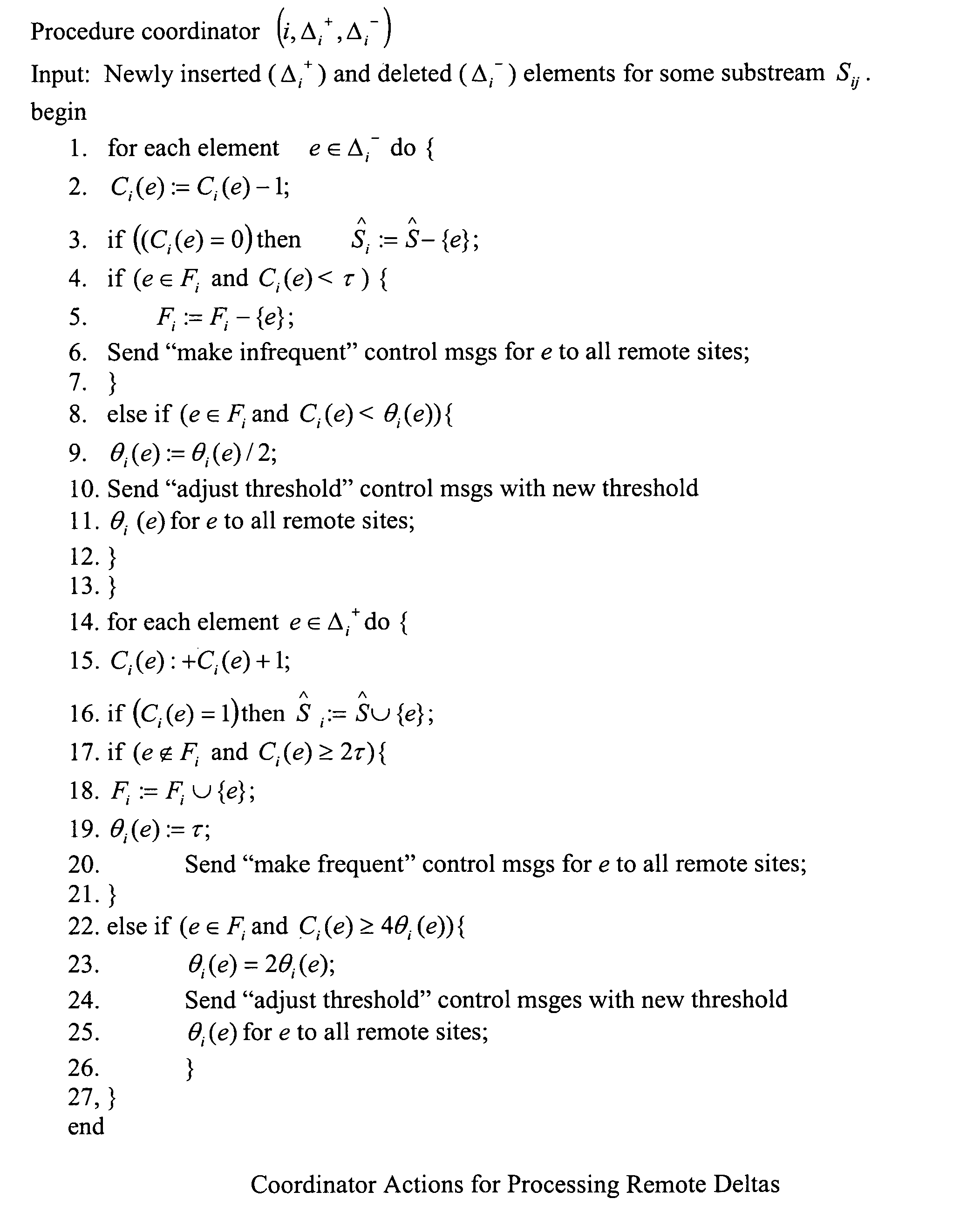

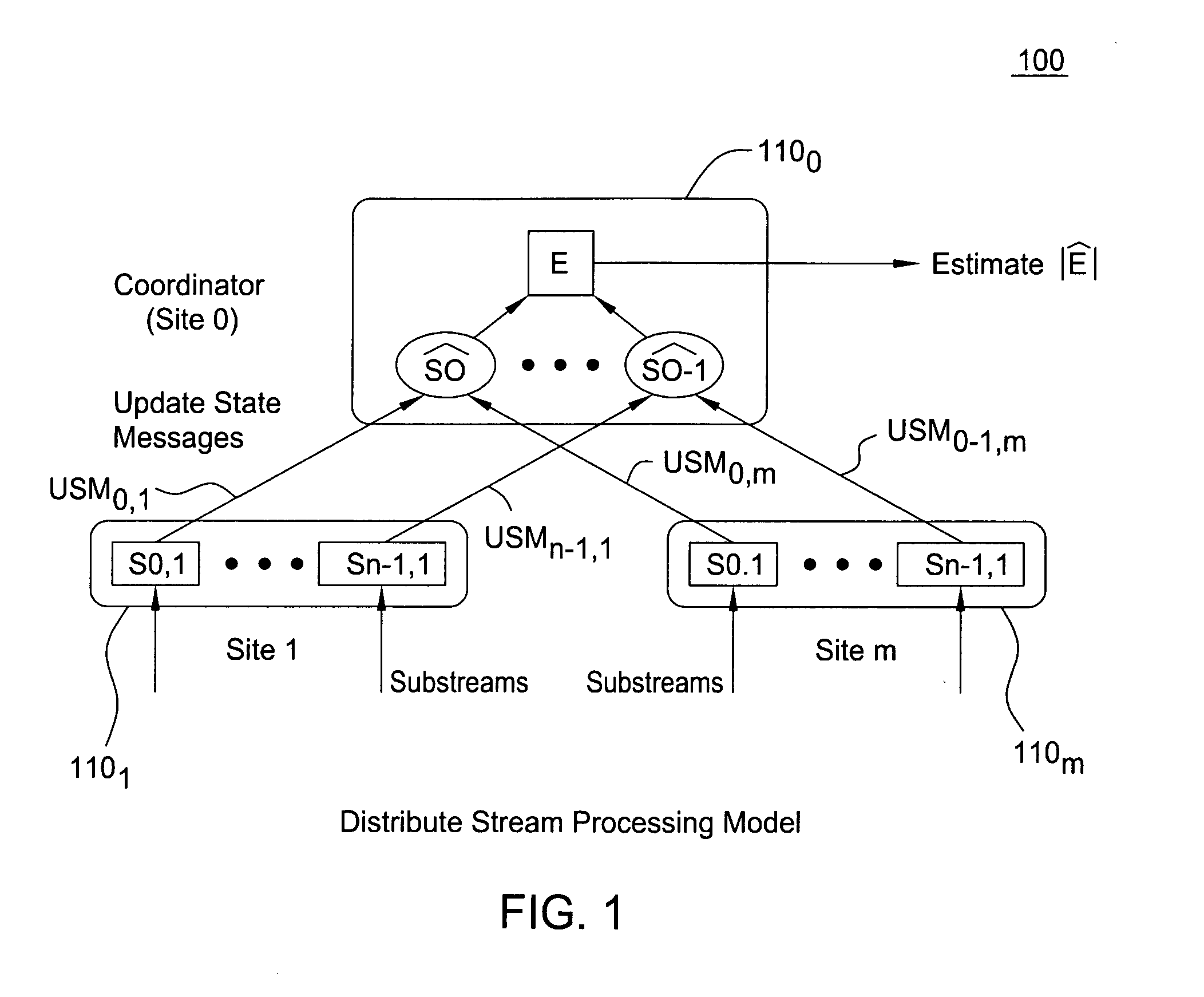

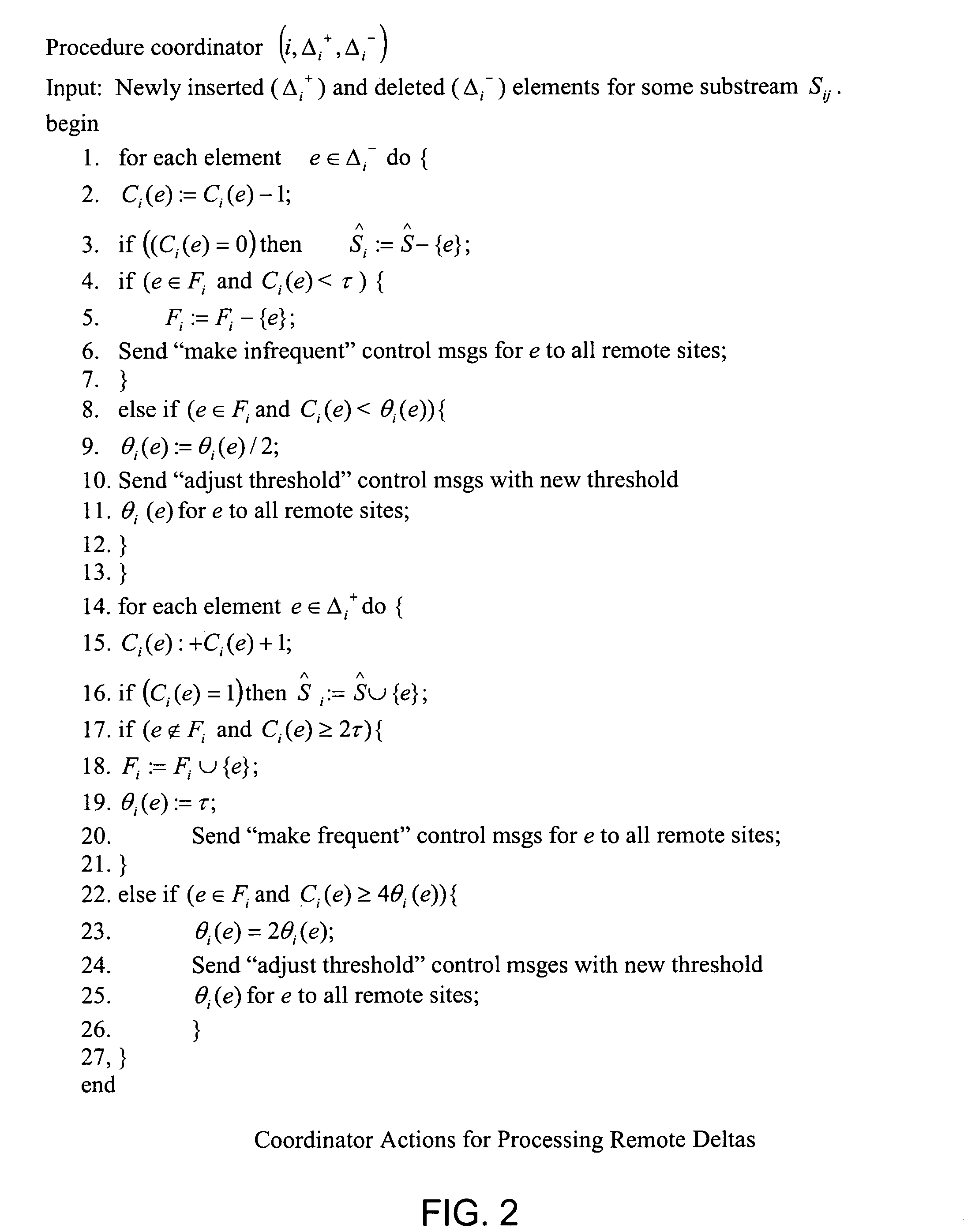

Distributed set-expression cardinality estimation

Status: Inactive | Publication: US20060149744A1 | Tags: Reduce data communication costs; Reduced state; Transmission; Special data processing applications; Semantics; Data mining

A method and system for answering set-expression cardinality queries while lowering data communication costs by utilizing a coordinator site to provide global knowledge of the distribution of certain frequently occurring stream elements to significantly reduce the transmission of element state information to the central site and, optionally, capturing the semantics of the input set expression in a Boolean logic formula and using models of the formula to determine whether an element state change at a remote site can affect the set expression result.

Owner:ALCATEL-LUCENT USA INC +1

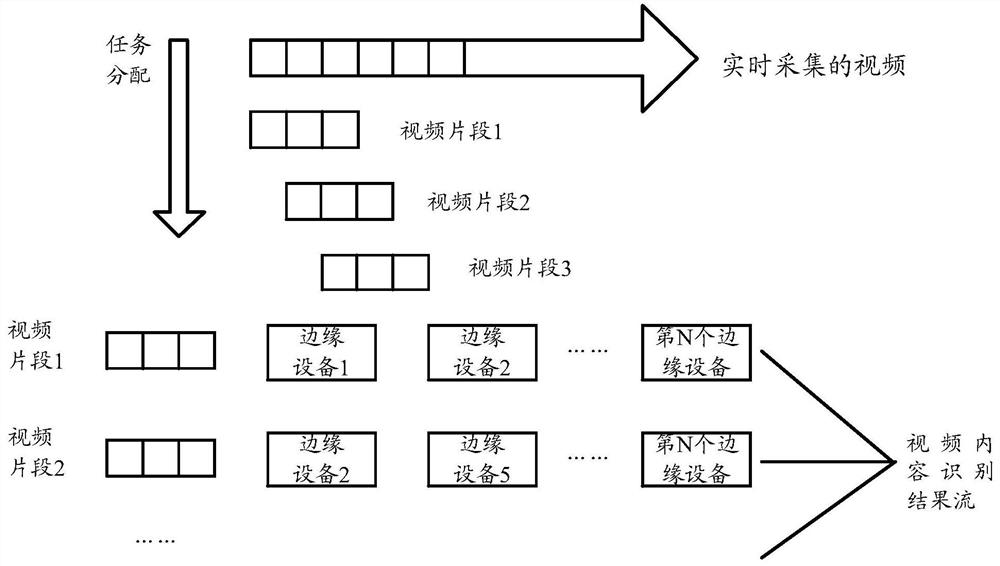

Video content identification method, device, medium and system

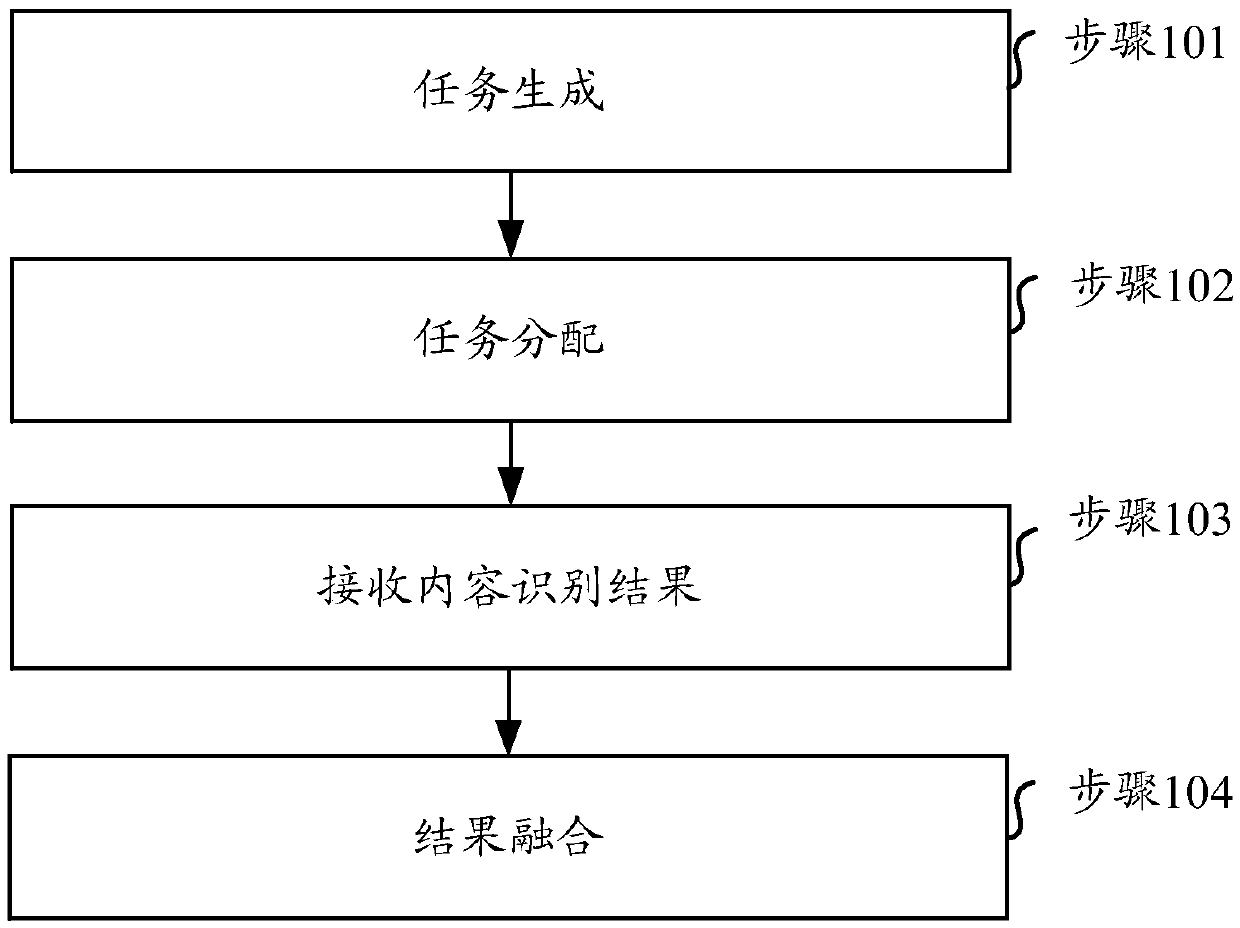

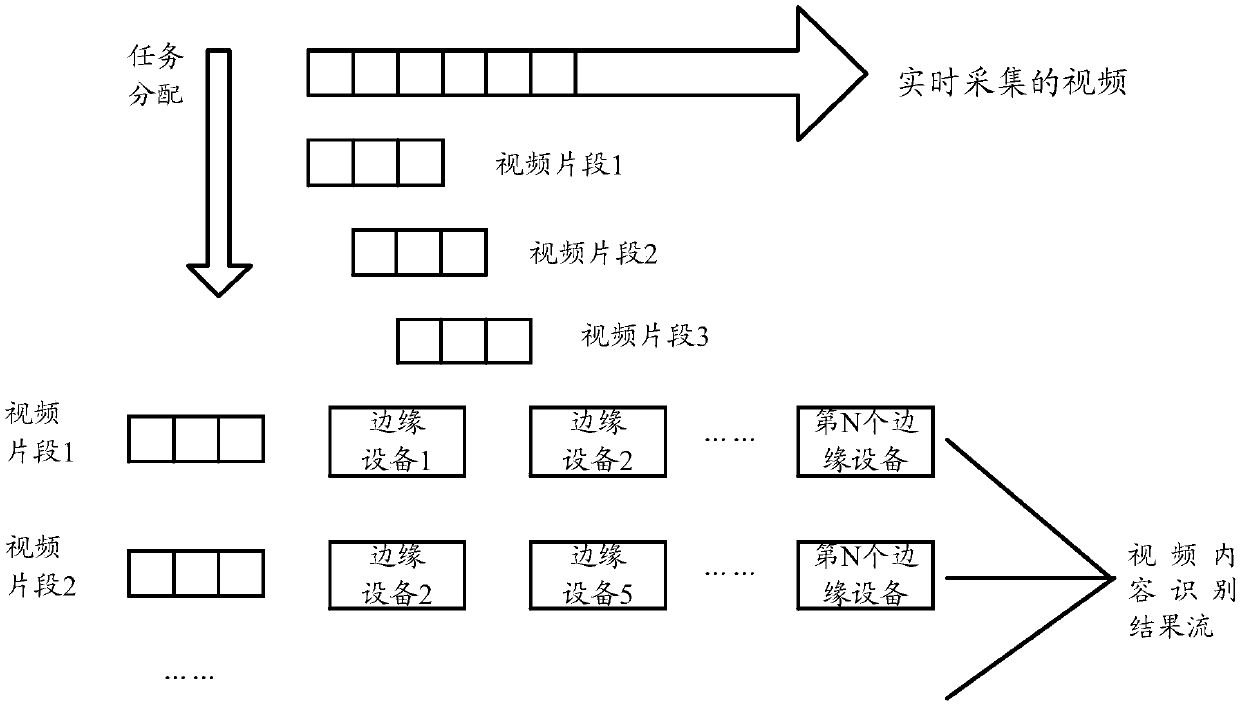

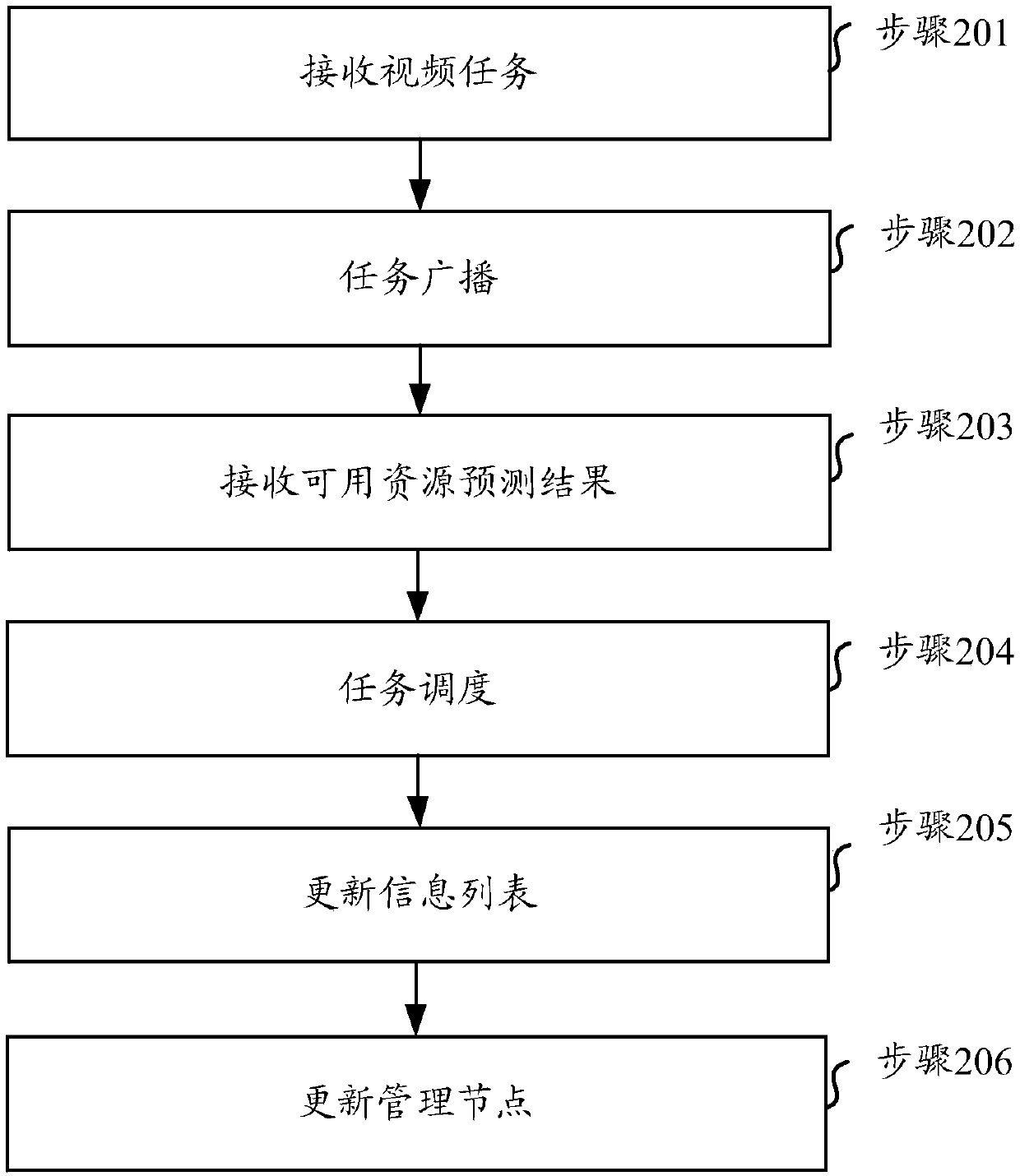

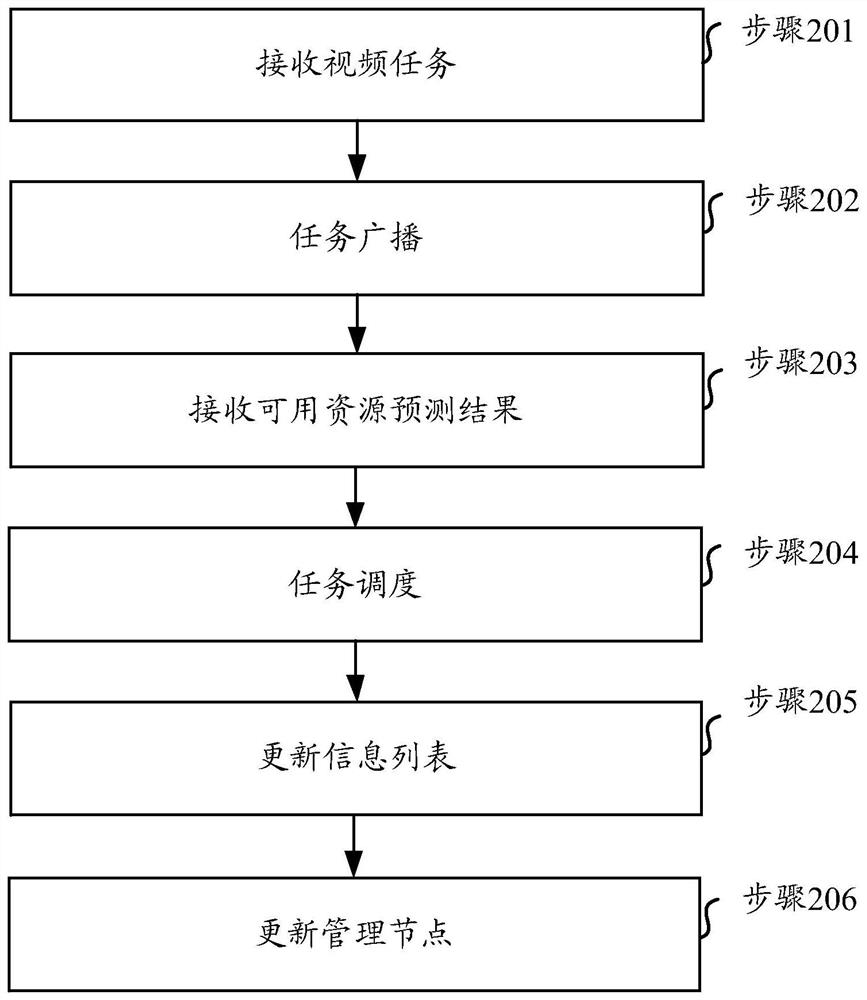

Status: Active | Publication: CN110557679A | Tags: Integrity guaranteed; Guaranteed accuracy; Television system details; Color television details; Occupancy rate; Edge computing

The invention relates to the technical field of video processing, and in particular to a video content identification method, device, medium and system. In existing security monitoring systems, automatic real-time identification of video content suffers from either high resource occupancy at the video identification end or low identification accuracy. To address this, the embodiment of the invention performs real-time video processing on the edge side, completing real-time video identification cooperatively with the redundant resources and computing power of edge devices. Existing edge devices (such as set-top boxes and smart home gateways) form an edge computing network, and real-time video processing is completed on the edge side through methods such as video task allocation and scheduling and prediction of available resources.

Owner:CHINA MOBILE COMM LTD RES INST +1

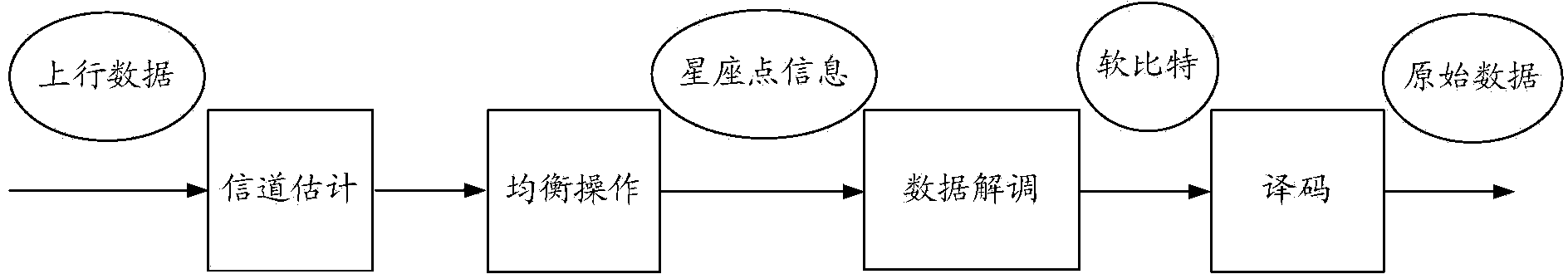

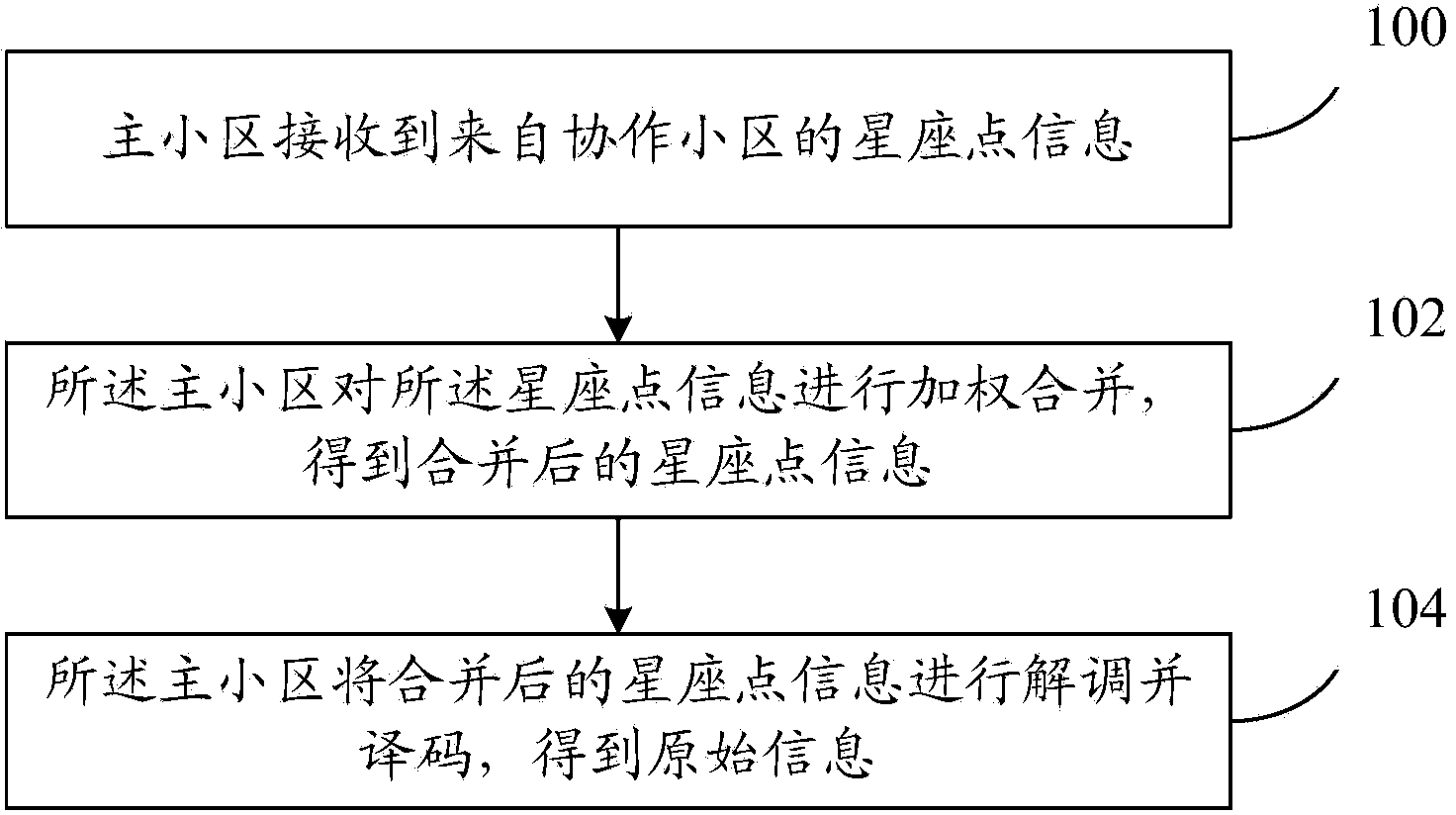

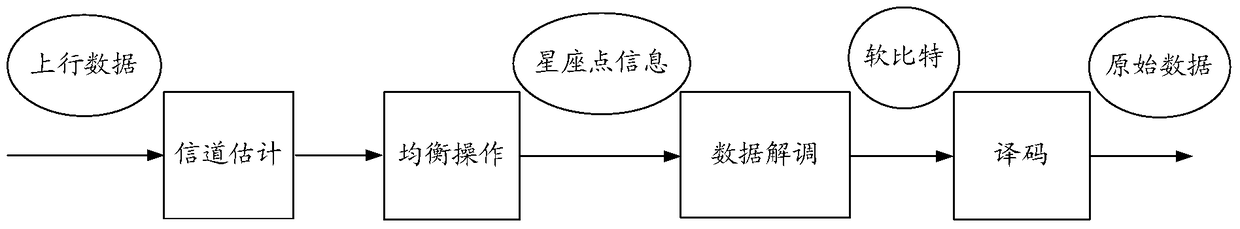

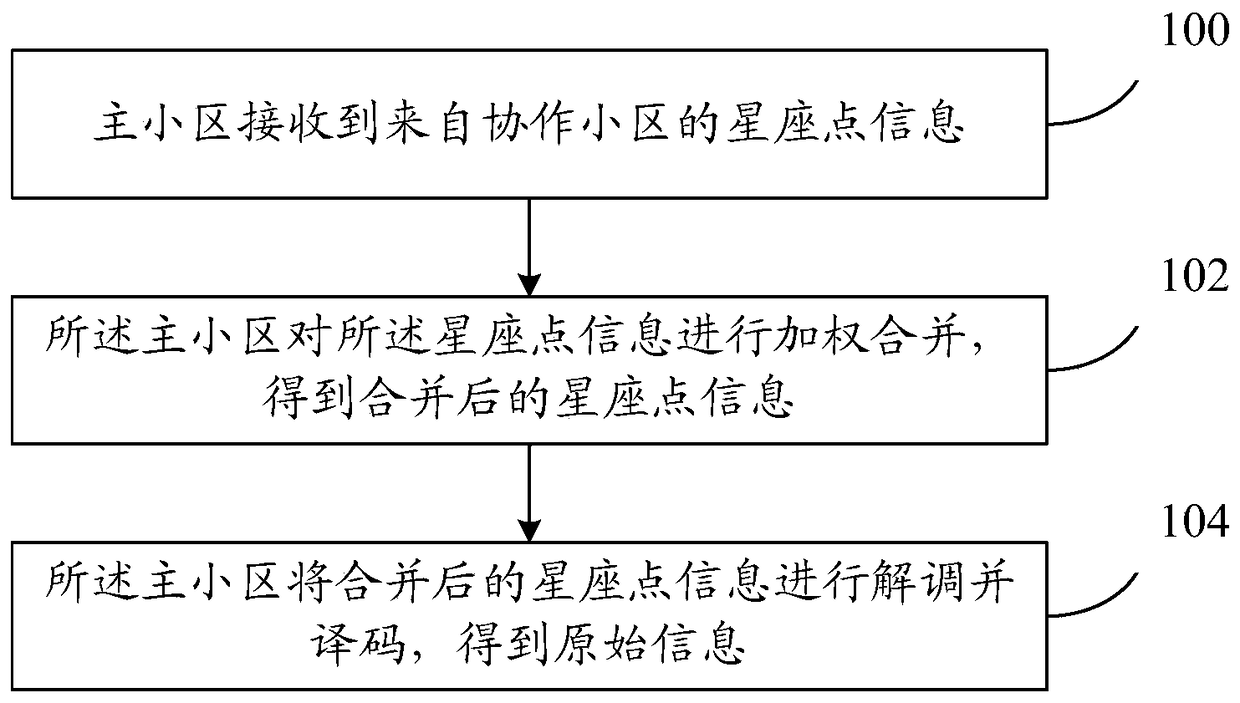

Data decoding method and equipment with uplink coordinated multiple points

Status: Active | Publication: CN104283644A | Tags: Reduce data transfer overhead; Avoid gain reduction issues; Site diversity; Modulated-carrier systems; Data transmission; Constellation

The invention provides a data decoding method and equipment for uplink coordinated multi-point (CoMP) reception. The method comprises: a main cell receives constellation point information from a cooperating cell, where the data volume of the constellation point information is smaller than that of the uplink data received by the cooperating cell; the main cell performs weighted combination of the constellation point information to obtain combined constellation point information; and the main cell demodulates and decodes the combined constellation point information to recover the original information. The scheme reduces the data transmission cost between the main cell and the cooperating cell and avoids the gain reduction that occurs when soft bits are combined and then decoded.

Owner:DATANG MOBILE COMM EQUIP CO LTD
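
A minimal Python sketch of the main-cell processing, assuming both cells deliver equalized QPSK symbol estimates and that the combining weights are per-cell reliability values (for example, linear SNRs). The weighting rule and the hard-decision Gray-mapped QPSK demapper are illustrative assumptions; the abstract states only that constellation points are combined before demodulation and decoding.

```python
from typing import List


def combine(own: List[complex], coop: List[complex],
            w_own: float, w_coop: float) -> List[complex]:
    # Weighted combination of the two cells' constellation points, per symbol.
    total = w_own + w_coop
    return [(w_own * a + w_coop * b) / total for a, b in zip(own, coop)]


def qpsk_demod(symbols: List[complex]) -> List[int]:
    # Hard-decision Gray-mapped QPSK: first bit from the sign of the real part,
    # second bit from the sign of the imaginary part (non-negative -> 0).
    bits: List[int] = []
    for s in symbols:
        bits.append(0 if s.real >= 0 else 1)
        bits.append(0 if s.imag >= 0 else 1)
    return bits


own = [0.2 + 0.9j, -1.1 - 0.1j]    # main cell's noisy estimates
coop = [1.0 + 1.0j, -0.9 + 0.8j]   # constellation points sent by the cooperating cell
bits = qpsk_demod(combine(own, coop, 1.0, 3.0))
```

The cooperating cell sends one complex value per symbol rather than its raw uplink samples, which is where the transfer saving comes from. In this example the main cell alone mis-detects the second symbol, while the combined estimate recovers `[0, 0, 1, 0]`.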

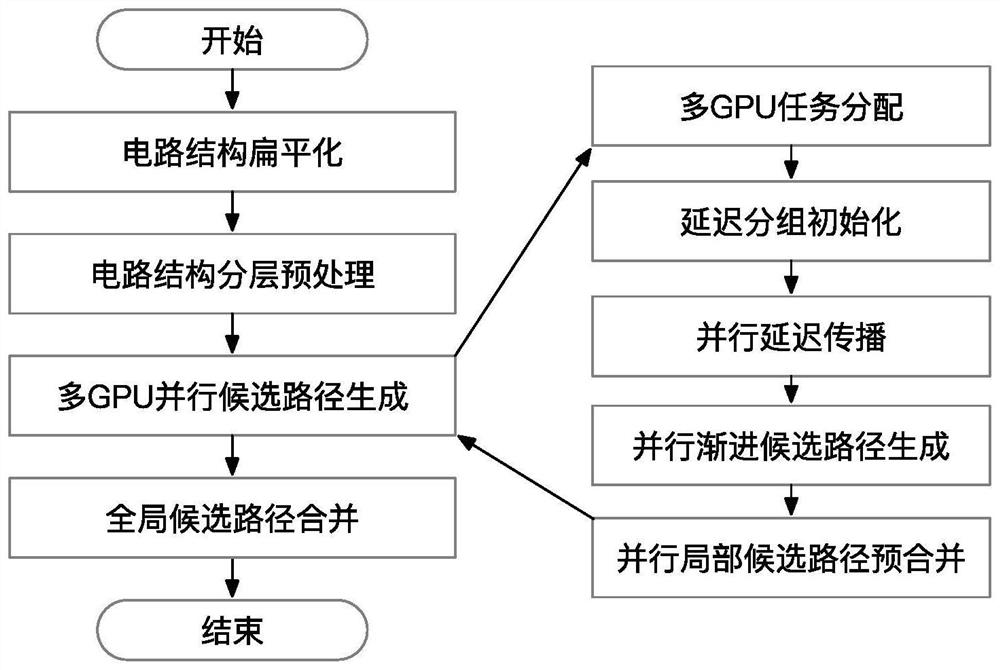

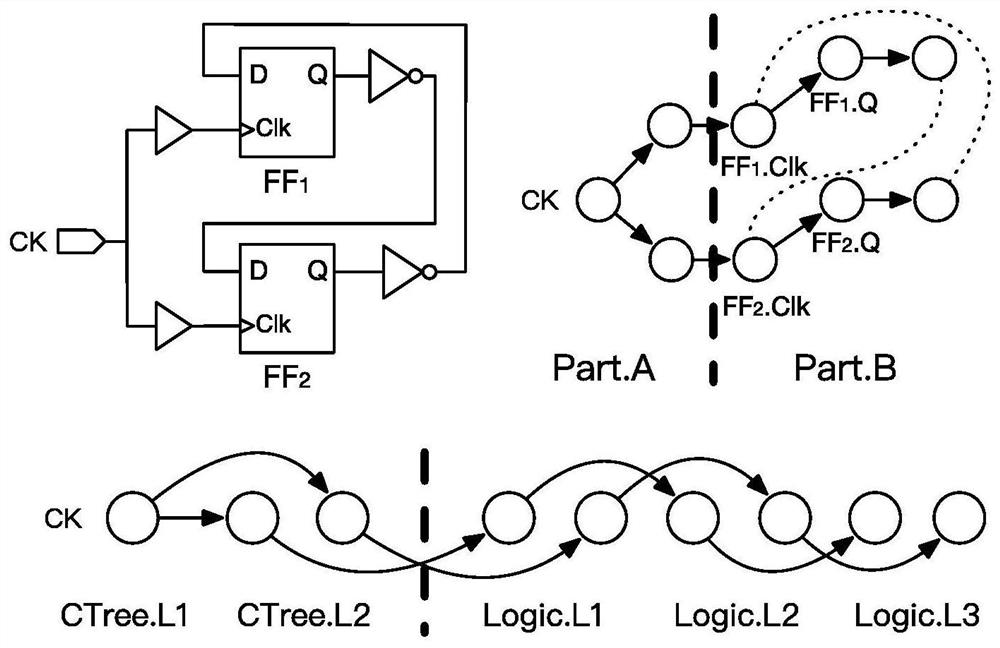

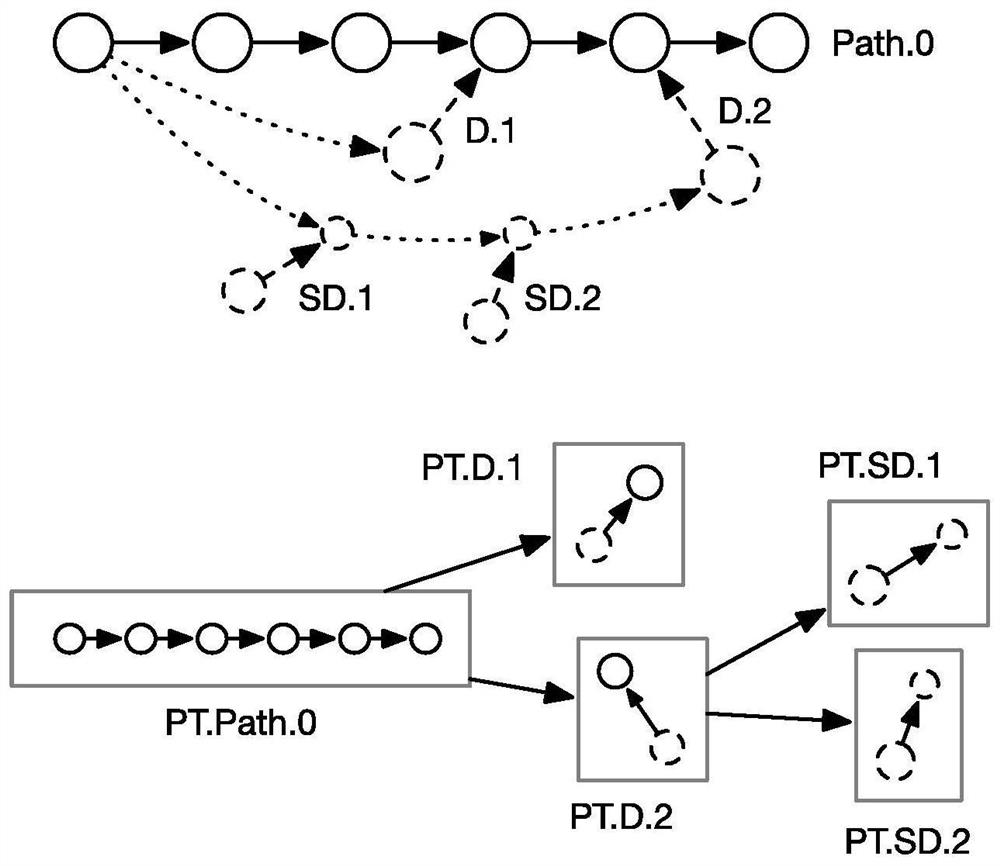

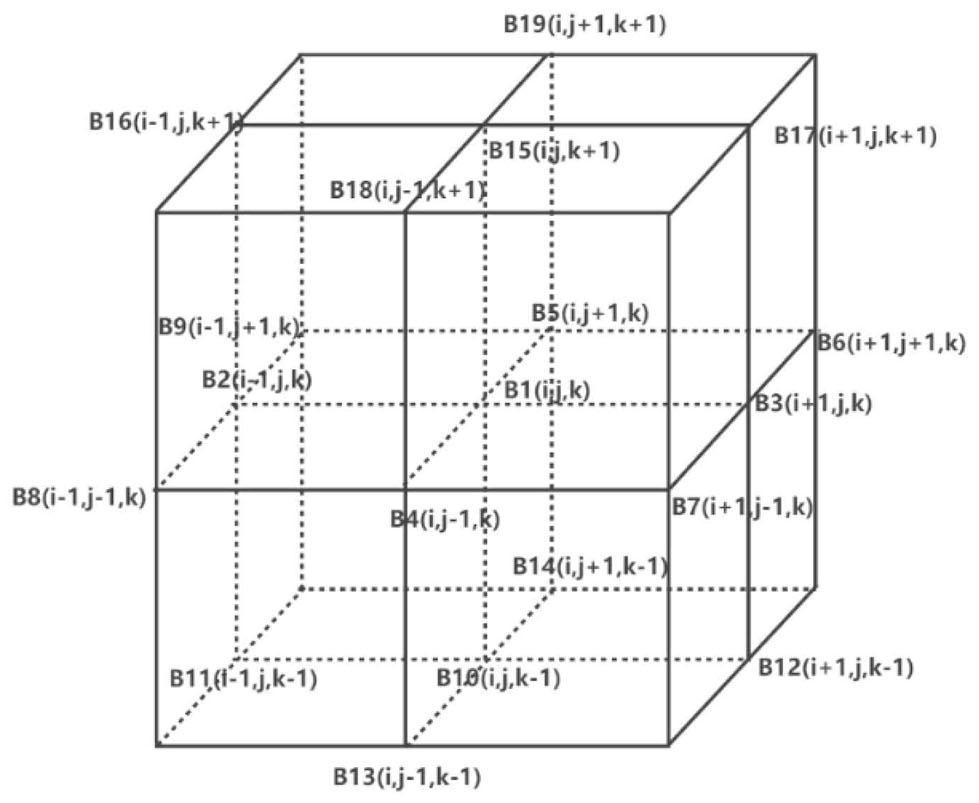

Integrated circuit non-pessimistic path analysis method for GPU accelerated calculation

Status: Pending | Publication: CN113836846A | Tags: High degree of parallelism; Reduce data transfer overhead; Computer aided design; Special data processing applications; Integrated circuit; Engineering

The invention discloses a GPU-accelerated non-pessimistic path analysis method for integrated circuits. The method comprises circuit structure flattening, circuit structure layering preprocessing, multi-GPU parallel candidate path generation and global candidate path merging. Multi-GPU parallel candidate path generation comprises multi-GPU task allocation, delay group initialization, parallel delay propagation, parallel progressive candidate path generation and parallel pre-merging of local candidate paths. By introducing equivalent transformations of the algorithm and data structures, the compute-intensive work of non-pessimistic timing analysis is performed in parallel on multiple GPUs, while a CPU handles data and control scheduling among the GPUs. With this single-CPU, multi-GPU heterogeneous computing model, performance can be improved by dozens of times compared with the original CPU algorithm, greatly reducing the computational cost of non-pessimistic path analysis, and the method can be widely applied in chip design automation.

Owner:PEKING UNIV

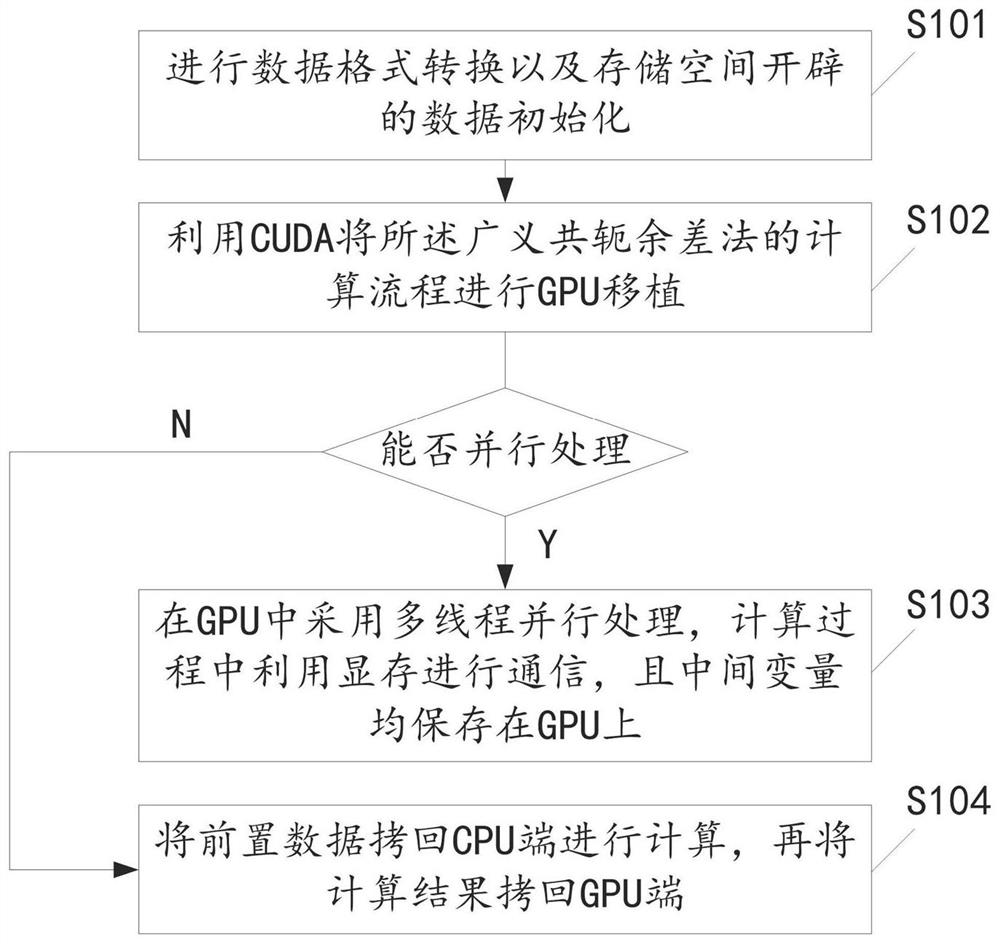

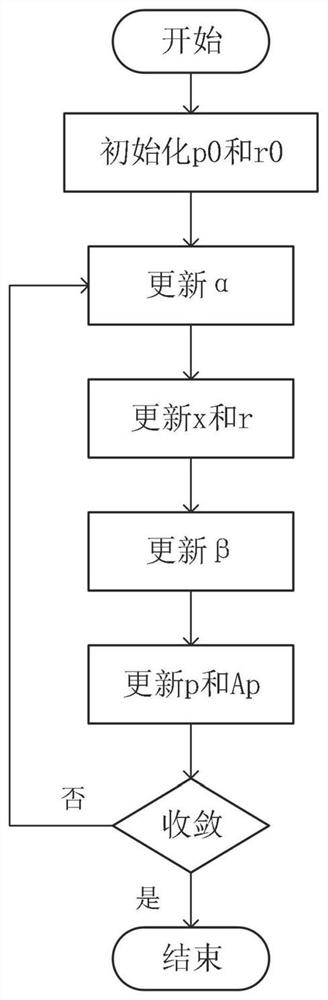

GPU-based GRAPES system optimization method and system, medium and equipment

Status: Pending | Publication: CN112486671A | Tags: Reduce data transfer overhead; Frequent data transfer; Resource allocation; Video memory; Computer architecture

The invention relates to a GPU-based GRAPES system optimization method and system, medium and equipment. The method comprises: converting data formats and initializing data in the allocated storage space; porting the calculation process of the generalized conjugate residual (GCR) method to the GPU through CUDA so that it can be processed in parallel, using multi-threaded parallel processing on the GPU, communicating through video memory during the calculation, and keeping intermediate variables on the GPU; and, for data that cannot be processed in parallel, copying the prerequisite data back to the CPU for calculation and copying the result back to the GPU. Because the calculation is ported to the GPU and parallelized with multiple threads, video memory is used for communication during the calculation, and all intermediate variables are stored on the GPU, frequent data transfers with the CPU are avoided, the data transmission overhead between the GPU and the CPU is reduced, and the overall performance of the program improves.

Owner:QINGHAI UNIVERSITY
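
For reference, the generalized conjugate residual iteration that the method ports to CUDA can be written as a plain CPU sketch in Python. It shows which vectors (`x`, `r`, the directions `p` and their images `ap`) would stay resident in GPU memory between iterations; the dense list-based matrix, the tolerance and the absence of restarts are simplifying assumptions, not details of the GRAPES implementation.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(a: Matrix, x: Vector) -> Vector:
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def dot(u: Vector, v: Vector) -> float:
    return sum(ui * vi for ui, vi in zip(u, v))


def axpy(alpha: float, x: Vector, y: Vector) -> Vector:
    # Returns alpha * x + y as a new vector.
    return [alpha * xi + yi for xi, yi in zip(x, y)]


def gcr(a: Matrix, b: Vector, tol: float = 1e-10, max_iter: int = 100) -> Vector:
    x = [0.0] * len(b)
    r = list(b)                 # r0 = b - A x0 with x0 = 0
    p = [list(r)]               # search directions p_j
    ap = [matvec(a, r)]         # A p_j, stored alongside p_j
    for k in range(max_iter):
        denom = dot(ap[k], ap[k])
        if denom == 0.0:
            break
        alpha = dot(r, ap[k]) / denom   # minimizes ||r - alpha * A p_k||
        x = axpy(alpha, p[k], x)
        r = axpy(-alpha, ap[k], r)
        if dot(r, r) ** 0.5 < tol:
            break
        ar = matvec(a, r)
        new_p, new_ap = list(r), list(ar)
        for j in range(k + 1):          # make A p_{k+1} orthogonal to every A p_j
            beta = -dot(ar, ap[j]) / dot(ap[j], ap[j])
            new_p = axpy(beta, p[j], new_p)
            new_ap = axpy(beta, ap[j], new_ap)
        p.append(new_p)
        ap.append(new_ap)
    return x
```

Keeping `p` and `ap` together means each iteration needs only one new matrix-vector product, which is the step that benefits most from running on the GPU.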

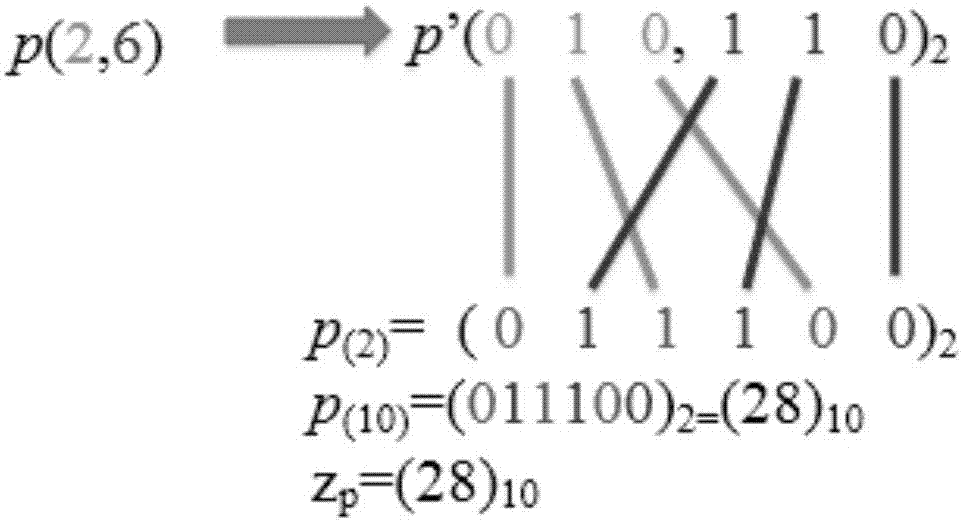

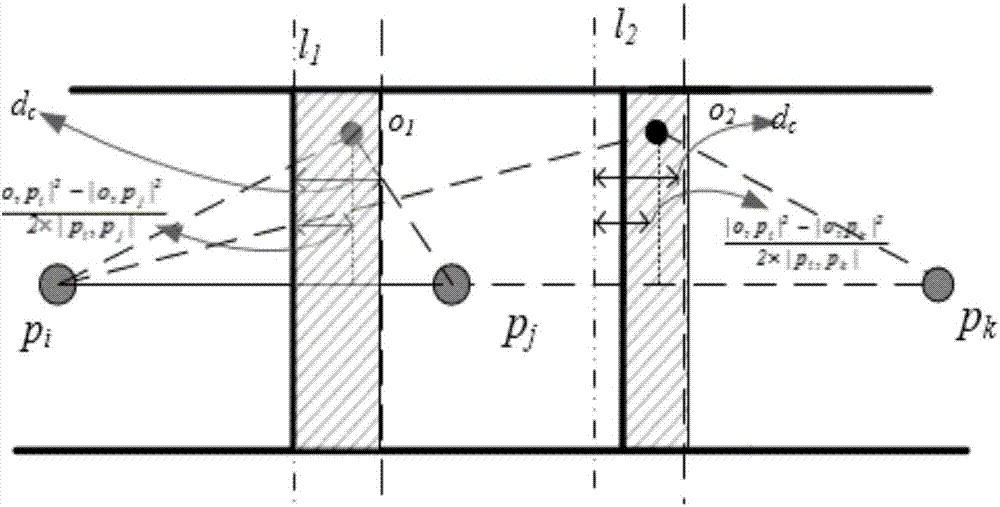

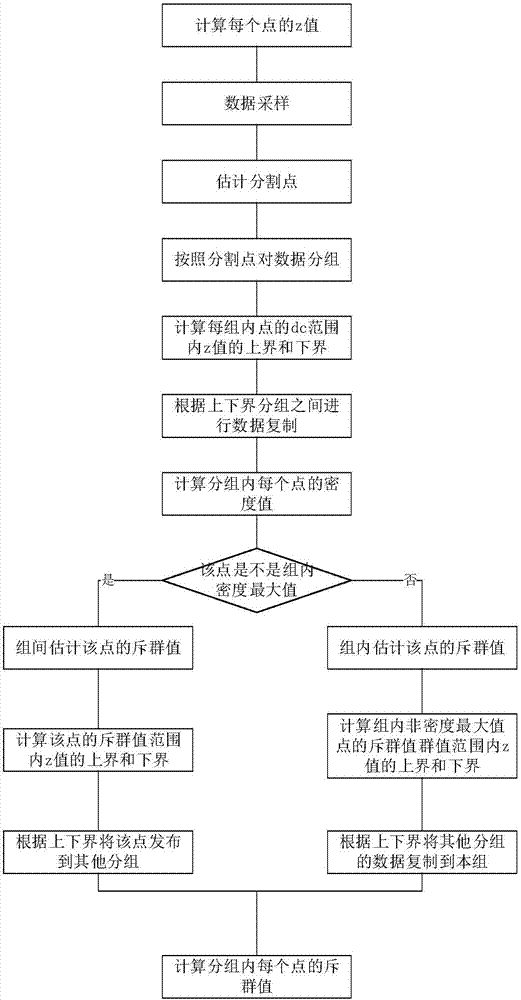

Distributed density peak value clustering algorithm based on z value

Status: Inactive | Publication: CN107516106A | Tags: Improve execution efficiency; Reduce distance; Relational databases; Character and pattern recognition; Cluster algorithm; Data set

A distributed density peak clustering algorithm based on z-values is disclosed. The algorithm comprises the following steps: (1) preparing a data set; (2) setting up the software and hardware environment; (3) preprocessing the data; (4) sampling the data set; (5) determining the cut-off distance parameter of the density formula in the z-value-based density clustering algorithm and selecting the subgroup quantiles; (6) sending points in the data set to different groups according to their z-values; (7) calculating density values in the z-value-based distributed density peak clustering algorithm; (8) calculating global outliers in the z-value-based distributed density peak clustering algorithm; and (9) performing big-data clustering with the z-value-based density peak clustering algorithm in a Hadoop environment. By exploiting the characteristics of z-values and applying a filtering strategy to data exchanged between subgroups, much unnecessary distance calculation and data transmission is avoided, which effectively improves the algorithm's execution efficiency.

Owner:SHENYANG POLYTECHNIC UNIV
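
The grouping step (6) can be sketched with a Morton (Z-order) code, which is one common way to define a z-value; the abstract does not say which encoding the patent uses, so treat that choice, the 2-D integer coordinates and the helper names as assumptions. Points are interleaved into z-values, quantile boundaries are taken from a sample, and each point is sent to the group whose z-range contains it.

```python
import bisect
from typing import Dict, List, Tuple

Point = Tuple[int, int]


def z_value(x: int, y: int, bits: int = 16) -> int:
    # Morton code: bit i of x goes to position 2i, bit i of y to position 2i + 1.
    z = 0
    for i in range(bits):
        z |= ((x >> i) & 1) << (2 * i)
        z |= ((y >> i) & 1) << (2 * i + 1)
    return z


def quantile_boundaries(sample_z: List[int], groups: int) -> List[int]:
    # Take groups - 1 cut points from the sorted sample of z-values.
    s = sorted(sample_z)
    return [s[(len(s) * g) // groups] for g in range(1, groups)]


def assign_groups(points: List[Point], boundaries: List[int]) -> Dict[Point, int]:
    # Group index = number of boundaries that are <= the point's z-value.
    return {p: bisect.bisect_right(boundaries, z_value(*p)) for p in points}
```

On a 4 x 4 grid each 2 x 2 quadrant maps to a contiguous run of four z-values, so spatially close points tend to land in the same group; that locality is what lets a filtering strategy skip distance calculations between distant subgroups.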

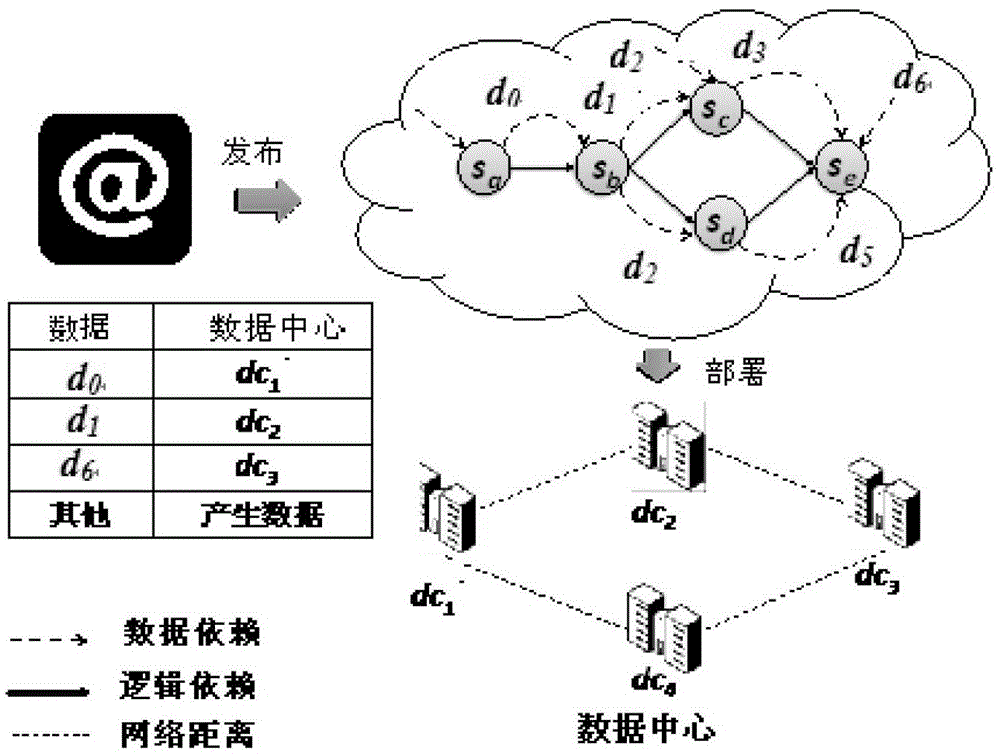

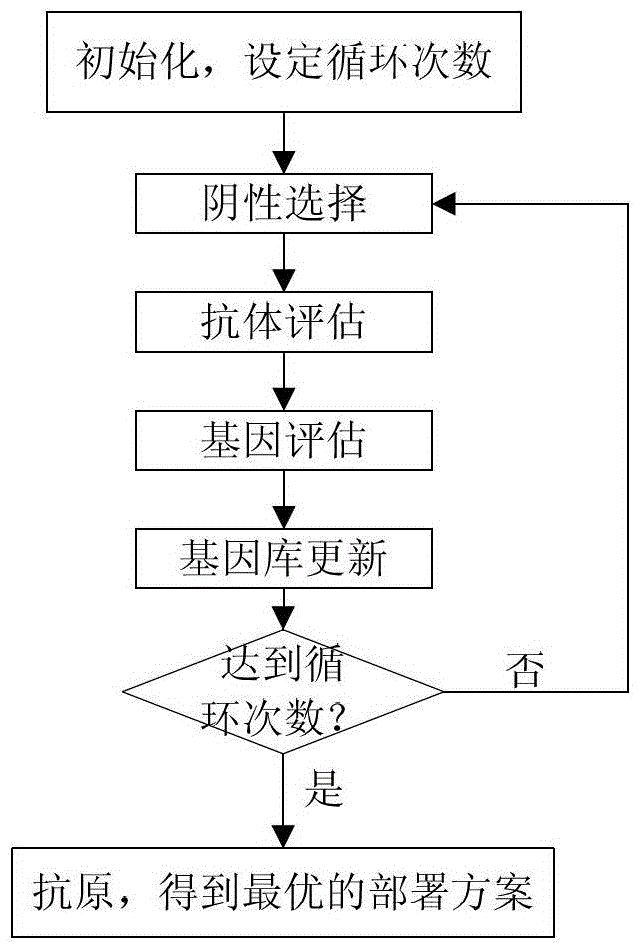

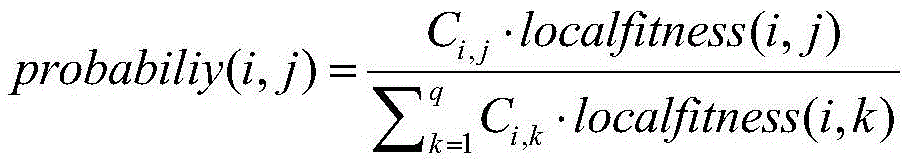

Component service deployment method for data-intensive service collaboration system

Status: Active | Publication: CN103413040B | Tags: Reduce data transfer overhead; Transmission; Special data processing applications; Antigen; Negative selection algorithm

The invention discloses a method for deploying component services in a data-intensive service collaboration system, in which the component services are deployed optimally by a multi-objective optimization algorithm based on negative selection. The method maps component service deployment in data-intensive service collaboration onto a negative selection algorithm: the deployment scheme of each single component service is mapped to a gene, a deployment scheme covering all component services is mapped to an antibody, and all possible genes are created to form a gene library. Antibodies are then matched iteratively, following the negative selection process, until the final result is obtained, and the deployment schemes corresponding to the resulting antibodies are used as the optimal deployment schemes. In each iteration, a number of antibodies are first generated by gene recombination to form an antibody population, and low-quality antibodies in the population are then eliminated by negative selection, which reduces the search space. After each iteration, the gene library is updated according to the iteration result, so that optimal antibodies can be generated by gene recombination in subsequent iterations.

Owner:ZHEJIANG UNIV

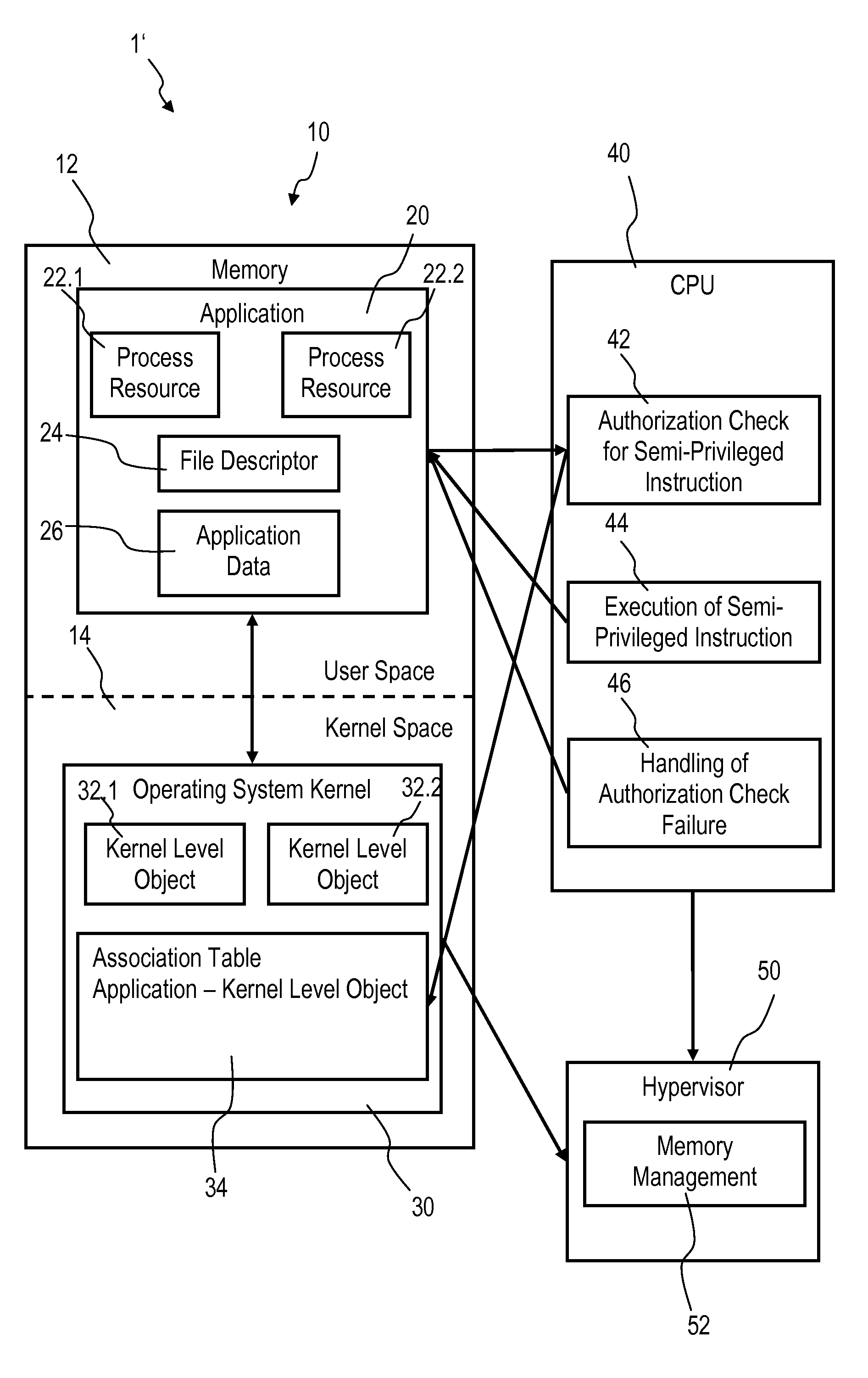

Accessing privileged objects in a server environment

Status: Inactive | Publication: US9230110B2 | Tags: Network communication; Reduce data transfer overhead; Specific access rights; Digital data processing details; Application software; Authorization

Accessing privileged objects in a server environment. A privileged object is associated with an application comprising at least one process resource and a corresponding semi-privileged instruction. The association is filed in an entity of an operating system kernel. A central processing unit (CPU) performs an authorization check if the semi-privileged instruction is issued and attempts to access the privileged object. The CPU executes the semi-privileged instruction and grants access to the privileged object if the operating system kernel has issued the semi-privileged instruction; or accesses the entity if a process resource of the application has issued the semi-privileged instruction to determine authorization of the process resource to access the privileged object. Upon positive authorization the CPU executes the semi-privileged instruction and grants access to the privileged object, and upon authorization failure denies execution of the semi-privileged instruction and performs a corresponding authorization check failure handling.

Owner:IBM CORP

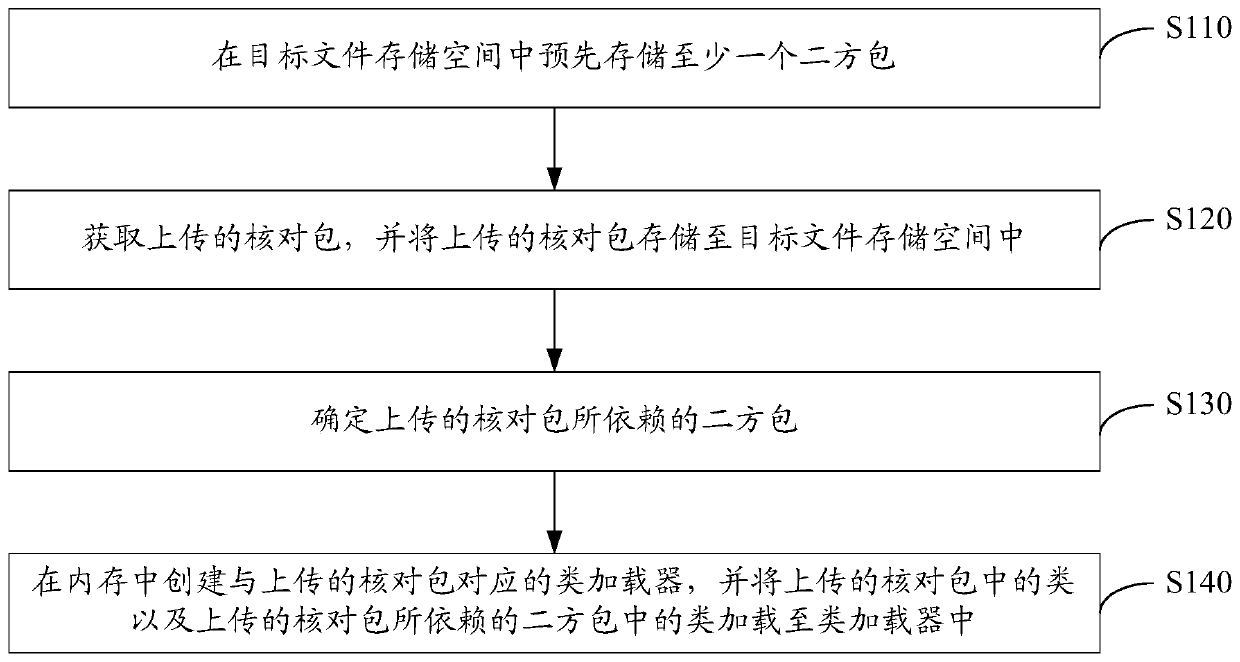

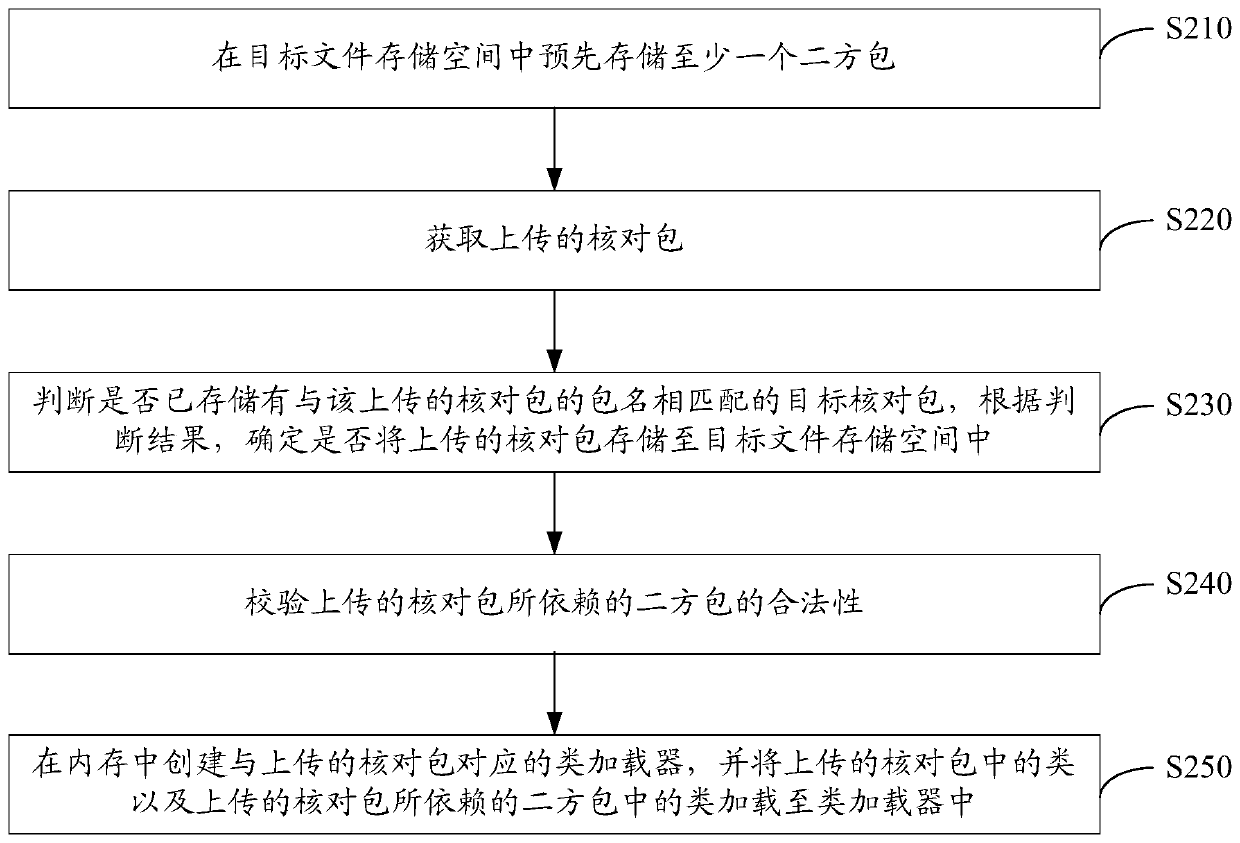

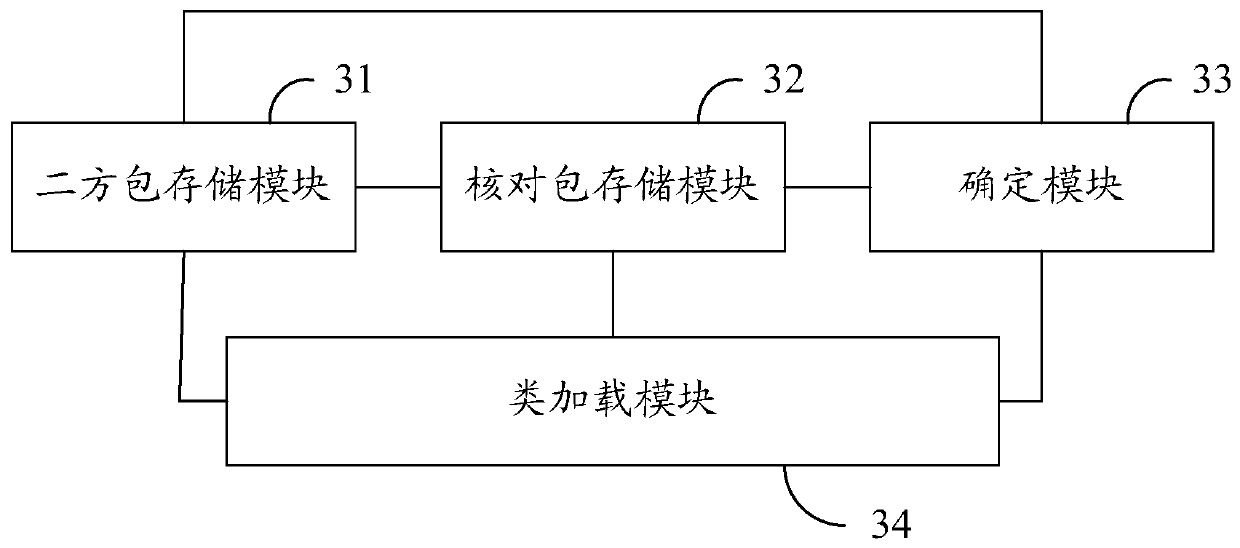

Method and device for checking code package deployment

Status: Active | Publication: CN110286913B | Tags: Guaranteed uptime; Reduce storage overhead; Version control; Software deployment; Storage management; Term memory

The invention discloses a check code package deployment method and device. The method comprises: pre-storing at least one secondary package in a target file storage space; obtaining an uploaded check package and storing it in the target file storage space; determining the secondary package on which the uploaded check package depends; and creating, in memory, a class loader corresponding to the uploaded check package, and loading the classes in the uploaded check package and in the secondary package it depends on into that class loader. Because the check package is decoupled from the secondary packages in the check code package, and the secondary packages are stored and managed centrally, storage overhead and data transmission overhead can be greatly reduced and centralized management of the secondary packages becomes easier. Moreover, loading the classes of the check package and of the secondary package it depends on into the same class loader ensures that the check code package runs efficiently.

Owner:KOUBEI SHANGHAI INFORMATION TECH CO LTD

A data processing method, system, device and readable storage medium

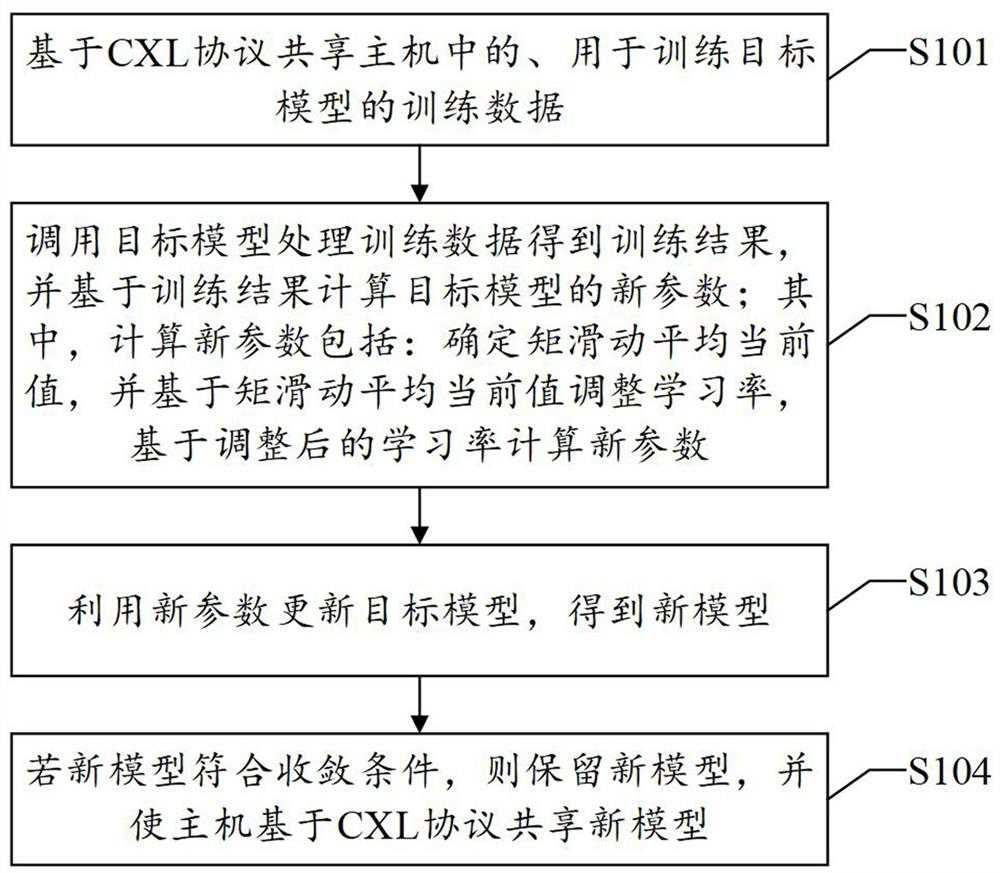

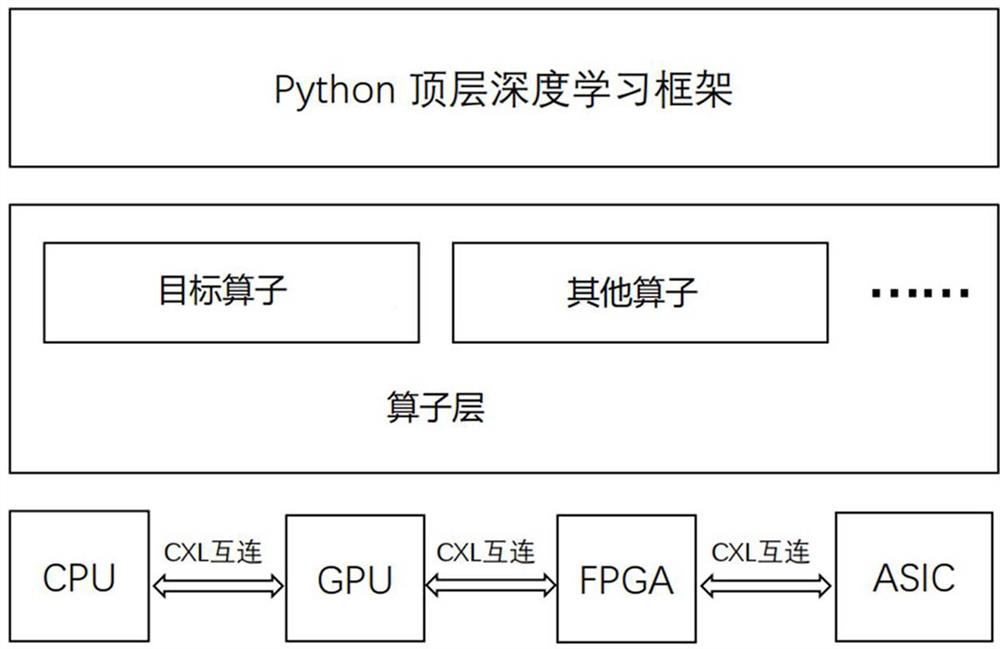

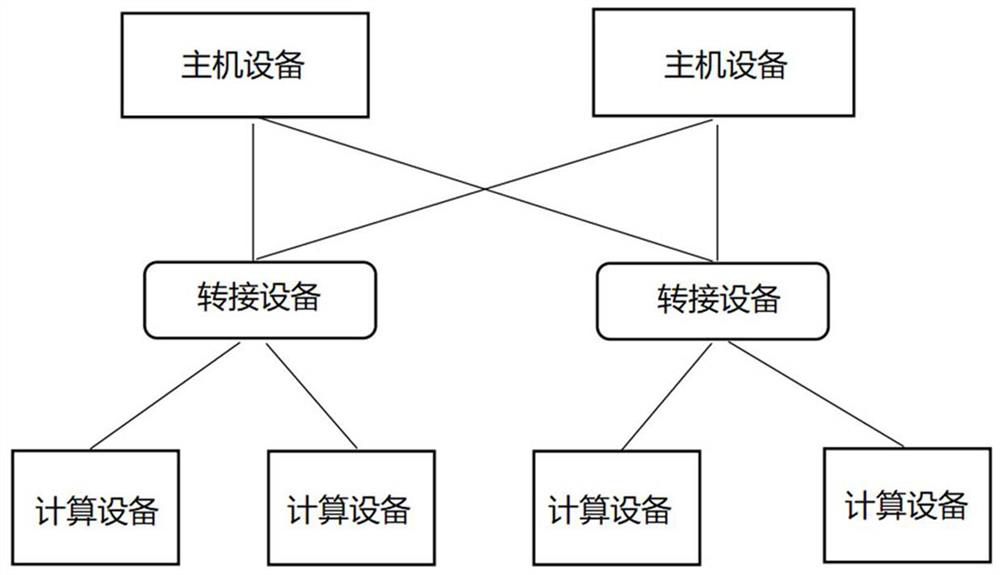

Status: Active | Publication: CN114461568B | Tags: Guaranteed accuracy; Improve training efficiency; Interprogram communication; Machine learning; Data processing system; Computer architecture

The present application discloses a data processing method, system, device and readable storage medium in the field of computer technology. The application connects the host and the hardware computing platform through the CXL protocol, so that they can share each other's memory, IO and cache; as a result, training data does not need to pass through storage media such as host memory, GPU cache and GPU memory, and the hardware computing platform reads the training data directly from host memory, reducing data transmission overhead. At the same time, the hardware computing platform can adjust the learning rate based on the current value of the moving average of the moments and then calculate the new model parameters, which stabilizes the model parameters, preserves model accuracy and improves training efficiency. The scheme therefore reduces the data transmission overhead between the host and the hardware module and improves model training efficiency. Correspondingly, the data processing system, device and readable storage medium provided by the application have the same technical effects.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
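
The abstract describes adjusting the learning rate from the current value of a moving average of the moments before computing new parameters. Adam is a well-known update of that kind, so the sketch below uses it as a stand-in; whether the patent uses exactly Adam, and the hyperparameter defaults shown, are assumptions.

```python
import math
from typing import List, Tuple


def adam_step(params: List[float], grads: List[float], m: List[float], v: List[float],
              t: int, lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-8) -> Tuple[List[float], List[float], List[float]]:
    new_p, new_m, new_v = [], [], []
    for p, g, mi, vi in zip(params, grads, m, v):
        mi = beta1 * mi + (1 - beta1) * g          # moving average of the gradient
        vi = beta2 * vi + (1 - beta2) * g * g      # moving average of the squared gradient
        m_hat = mi / (1 - beta1 ** t)              # bias corrections for step t (t >= 1)
        v_hat = vi / (1 - beta2 ** t)
        # The effective per-parameter learning rate is lr / (sqrt(v_hat) + eps).
        new_p.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(mi)
        new_v.append(vi)
    return new_p, new_m, new_v
```

Because the step is scaled by the running second moment, parameters with consistently large gradients take proportionally smaller steps, which is the stabilizing effect the abstract refers to.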

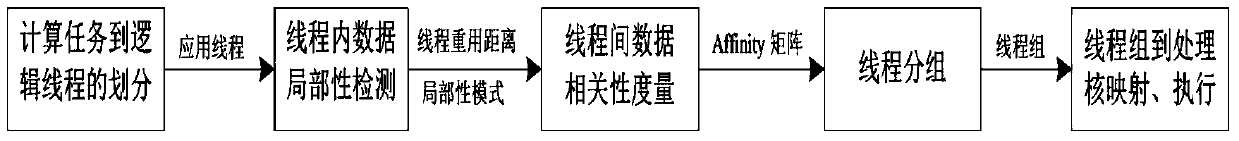

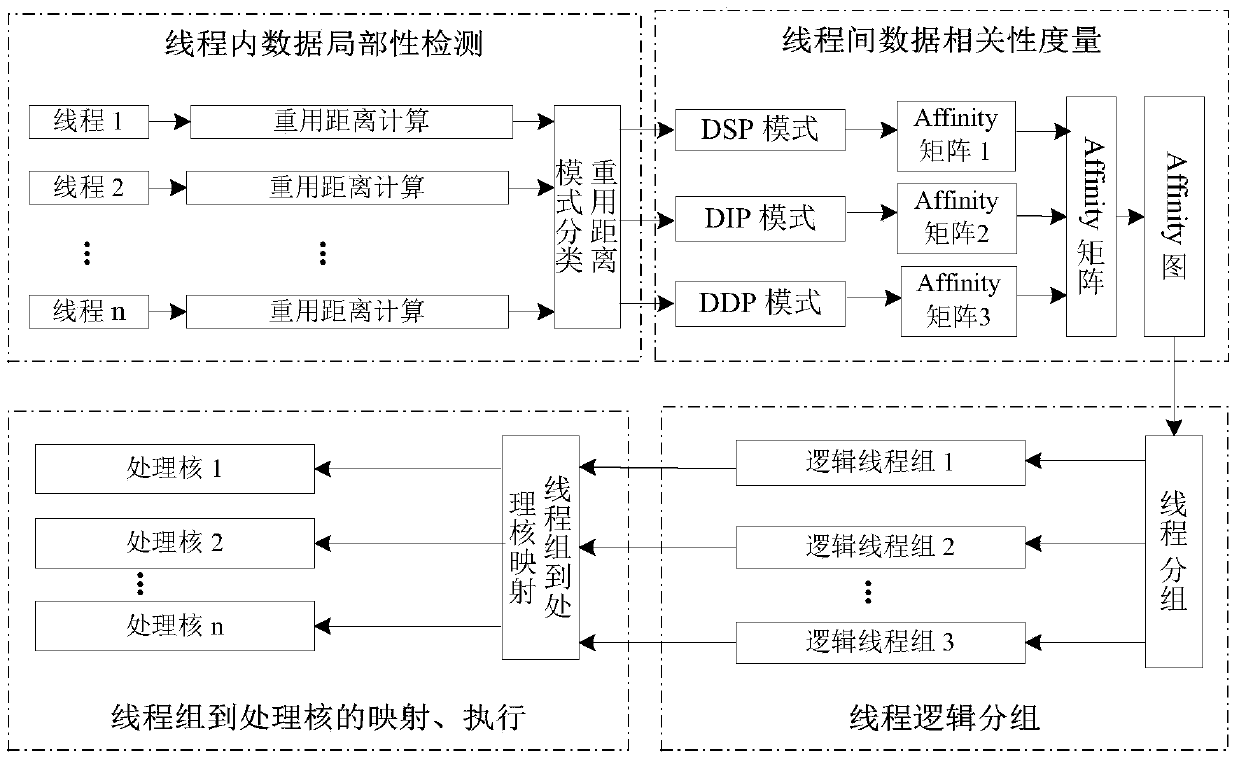

A data-dependent thread grouping mapping method for many-core systems

Status: Active | Publication: CN105808358B | Tags: Improve computing power; Reduce shared storage access conflicts; Resource allocation; Hardware thread; Effective solution

Owner:XI AN JIAOTONG UNIV

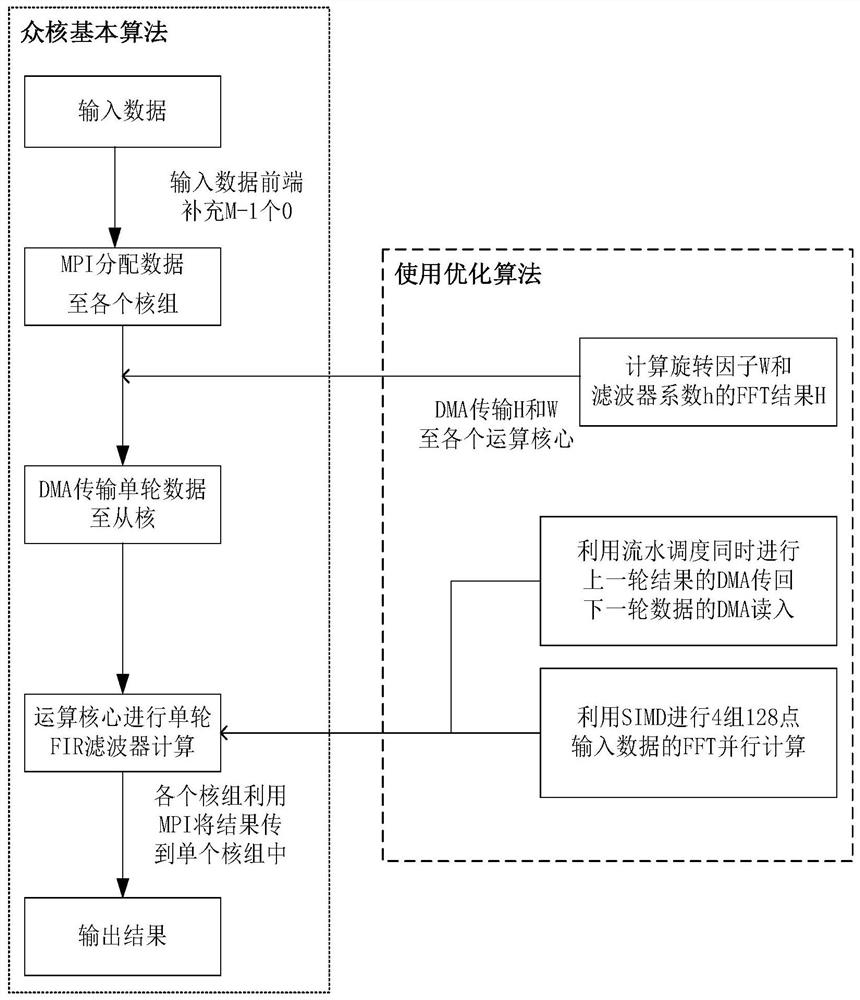

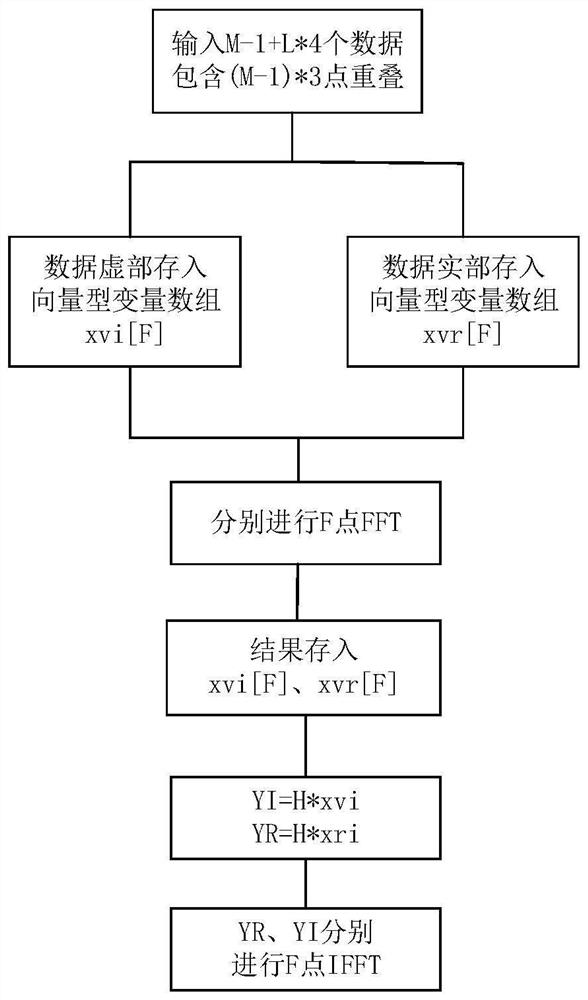

FIR (Finite Impulse Response) filter high-performance implementation method based on domestic many-core processor

Status: Pending | Publication: CN114237716A | Tags: Improve parallelism; Improve processing parallelism; Digital technique network; Concurrent instruction execution; Finite impulse response; Message delivery

The invention provides a high-performance FIR filter implementation method based on a domestic many-core processor. On the domestic many-core processor platform, an analog signal undergoes analog-to-digital conversion to obtain input data, and the control core distributes the input data to four core groups through a message passing interface. M-1 zeros are prepended to the input data, the twiddle factors W and the FFT result H of the filter coefficients h[M] are calculated, and W and H are transmitted to each compute core by direct memory access (DMA). Each round's data is then transmitted to each compute core by DMA for single-round FIR filtering, and the results of all rounds are concatenated in order on the control core to obtain the final result. Compared with computing the FIR filter directly on a single core of the domestic processor, the method increases core-level parallelism and parallelizes data processing, thereby speeding up the algorithm.

Owner:ZHEJIANG UNIV
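
The per-round scheme above (prepend M-1 zeros, precompute the filter spectrum H, filter block by block, then concatenate the results) matches the classic overlap-save method, sketched below in pure Python with a small radix-2 FFT. The block size, the function names and the sequential loop are assumptions; in the patent the rounds are distributed over compute cores via DMA.

```python
import cmath
from typing import List


def fft(x: List[complex], inverse: bool = False) -> List[complex]:
    # Recursive radix-2 FFT; len(x) must be a power of two.
    n = len(x)
    if n == 1:
        return list(x)
    sign = 1 if inverse else -1
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    out = [0j] * n
    for k in range(n // 2):
        w = cmath.exp(sign * 2j * cmath.pi * k / n)  # twiddle factor W
        out[k] = even[k] + w * odd[k]
        out[k + n // 2] = even[k] - w * odd[k]
    return out


def ifft(spectrum: List[complex]) -> List[complex]:
    n = len(spectrum)
    return [v / n for v in fft(spectrum, inverse=True)]


def fir_overlap_save(x: List[float], h: List[float], n_fft: int = 16) -> List[float]:
    m = len(h)
    if n_fft < m or n_fft & (n_fft - 1):
        raise ValueError("n_fft must be a power of two and at least len(h)")
    step = n_fft - (m - 1)                                  # new input samples per round
    H = fft([complex(c) for c in h] + [0j] * (n_fft - m))   # filter spectrum, computed once
    padded = [0.0] * (m - 1) + list(x)                      # prepend M-1 zeros
    y: List[float] = []
    for start in range(0, len(x), step):                    # one round per block
        block = padded[start:start + n_fft]
        block += [0.0] * (n_fft - len(block))               # zero-fill the last block
        X = fft([complex(v) for v in block])
        out = ifft([a * b for a, b in zip(X, H)])
        y.extend(v.real for v in out[m - 1:])               # drop the M-1 aliased samples
    return y[:len(x)]
```

Each round yields `n_fft - (M - 1)` valid samples; the first M-1 outputs of every inverse FFT are discarded because circular convolution aliases them.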

A training system for a back-propagation neural network (DNN)

Status: Active | Publication: CN103150596B | Tags: Fast training; Reduce data transfer overhead; Neural learning methods; Graphics; Algorithm

The invention provides a training system for a back-propagation deep neural network (DNN). The system comprises a first graphics processor assembly, a second graphics processor assembly and a controller assembly. The first graphics processor assembly performs DNN forward calculation and weight update calculation; the second graphics processor assembly performs DNN forward calculation and DNN backward calculation. The controller assembly controls the first and second graphics processor assemblies to each perform the Nth-layer DNN forward calculation on their respective input data and, once the forward calculation is complete, controls the first graphics processor assembly to perform the weight update calculation and the second graphics processor assembly to perform the DNN backward calculation, where N is a positive integer. The training system offers high training speed and low data transmission cost, thereby speeding up training of the back-propagation DNN.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

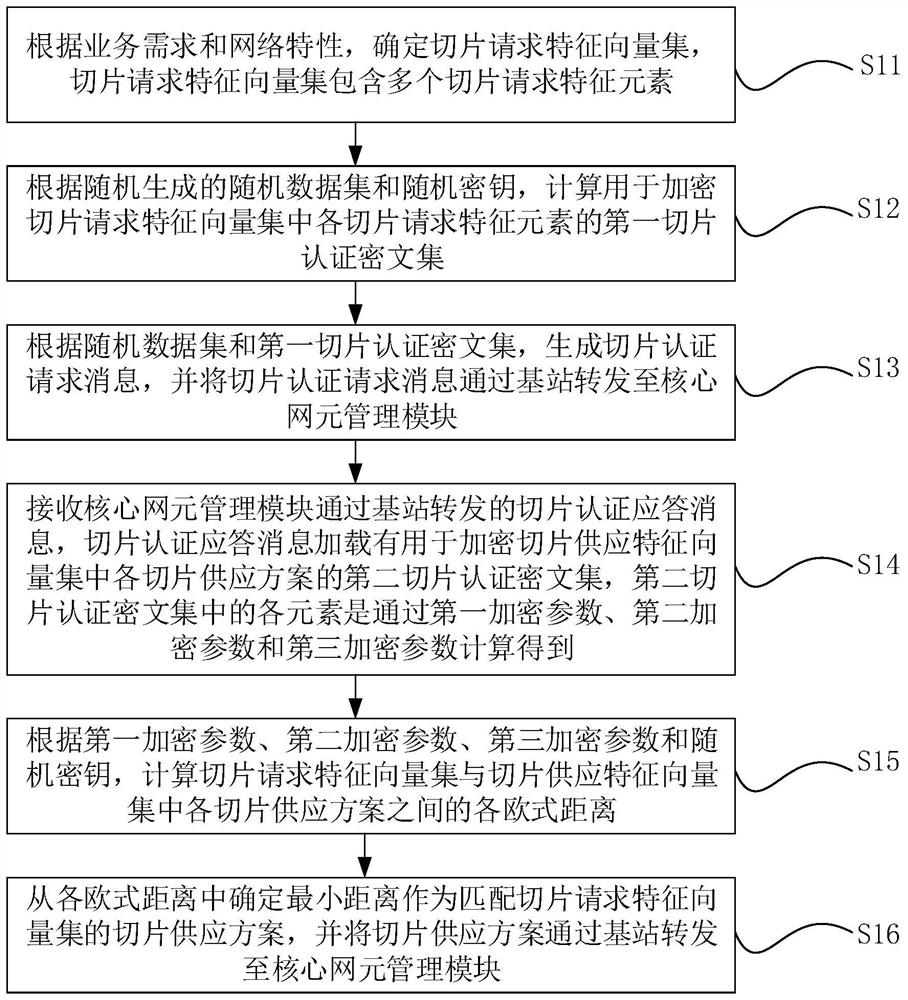

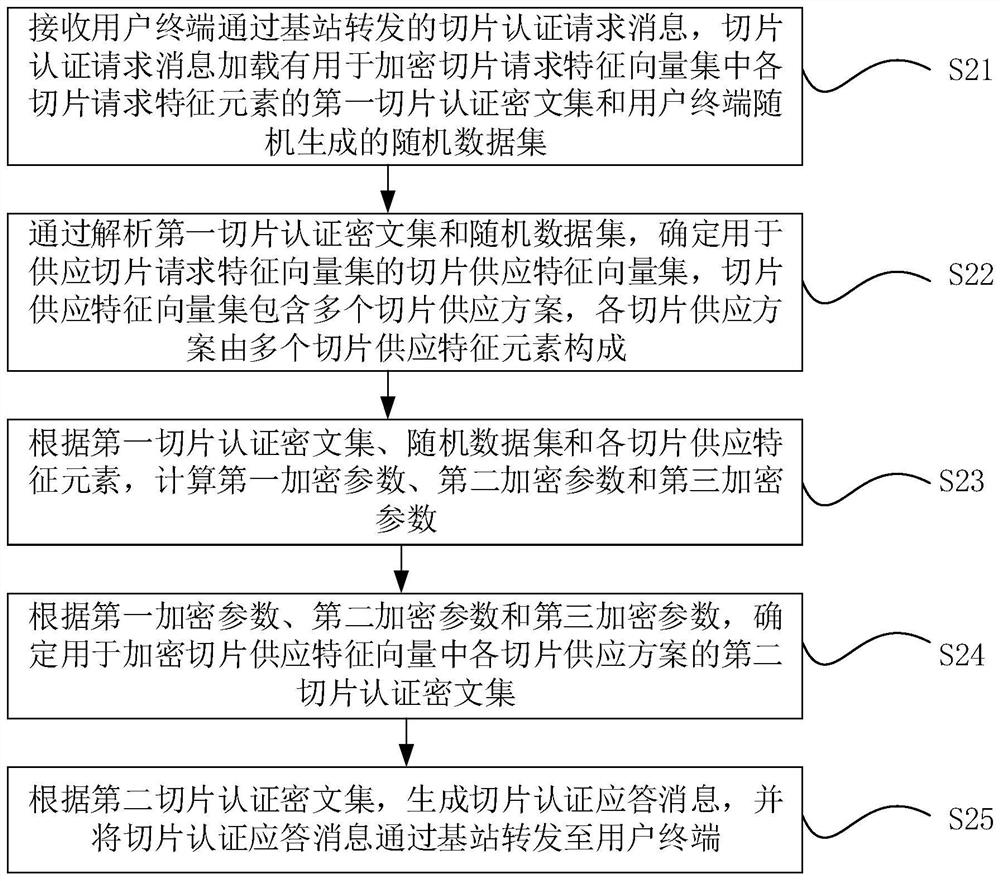

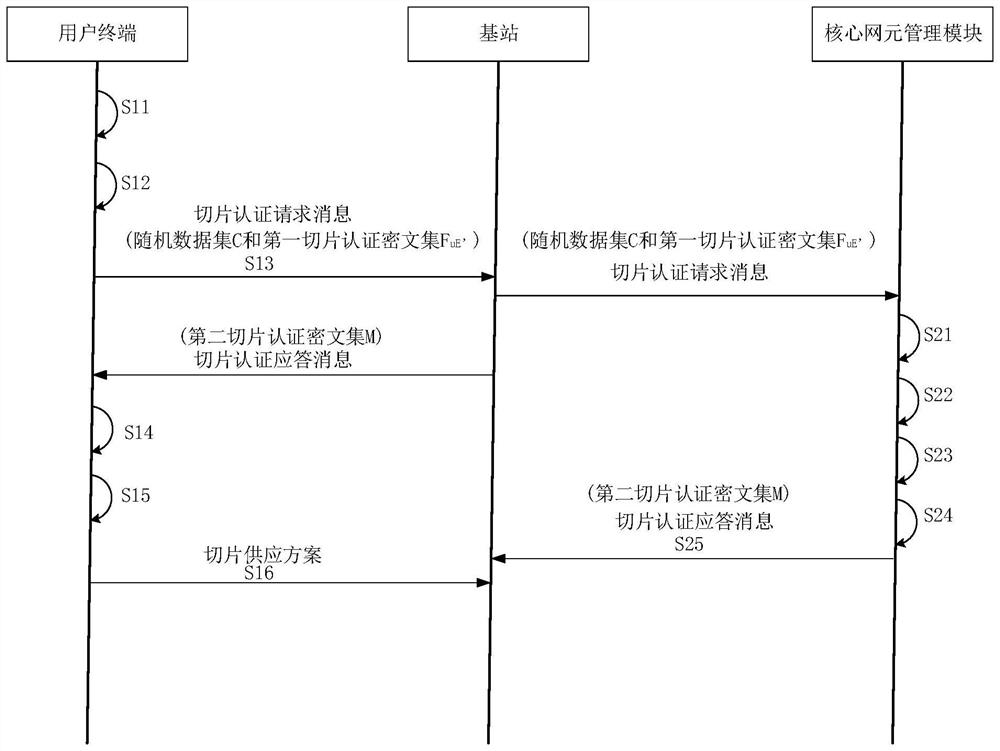

Electric power 5G network slice authentication message matching method and device based on edge calculation

Status: Active | Publication: CN114745151A | Tags: Avoid illegal acquisition; Ensure safety; Key distribution for secure communication; Information technology support system; Computer hardware; Engineering

The invention discloses a method and device, based on edge computing, for matching electric power 5G network slice authentication messages. The user equipment (UE) exchanges data with the core network element management module AMF; the UE calculates the Euclidean distance between its slice request feature vector set and each slice supply scheme feature vector provided by the AMF, and selects the nearest slice supply scheme as the user's slice selection. During slice selection matching, a random number set C and a random key r are used to hide the feature information of the slice request scheme and the slice supply scheme, which prevents a third-party attacker from illegally obtaining slice information and ensures the security of the slice scheme feature information. Moreover, the deployment scheme is simple and requires no PKI system; because only simple arithmetic operations are used, data computation and data transmission overhead are reduced, transmission delay is lowered, and computing and communication efficiency improve.

Owner:GLOBAL ENERGY INTERCONNECTION RES INST CO LTD +3
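
The selection rule itself (pick the supply scheme whose feature vector is nearest in Euclidean distance) can be sketched in a few lines of Python. The feature vectors and slice names are hypothetical, and the masking with the random number set C and key r is deliberately not modelled, since the abstract does not describe it.

```python
import math
from typing import Dict, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def select_slice(request: Sequence[float], offers: Dict[str, Sequence[float]]) -> str:
    # Return the name of the supply scheme nearest to the request vector.
    return min(offers, key=lambda name: euclidean(request, offers[name]))


# Hypothetical feature vectors: (bandwidth, latency, reliability).
offers = {
    "eMBB": [100.0, 10.0, 0.9],
    "URLLC": [10.0, 1.0, 0.99999],
    "mMTC": [1.0, 100.0, 0.9],
}
choice = select_slice([12.0, 2.0, 0.999], offers)
```

In practice the features would need comparable scales (for example after normalization); otherwise the largest-valued feature dominates the distance.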

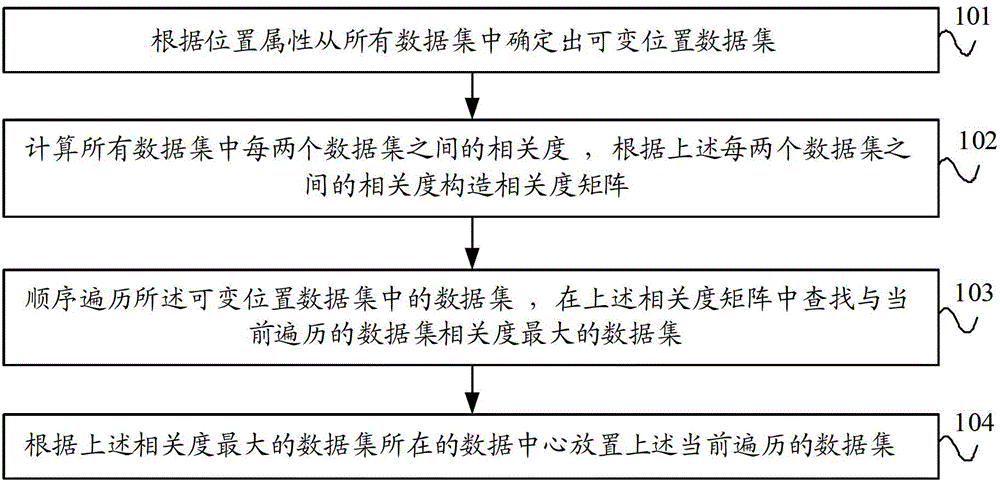

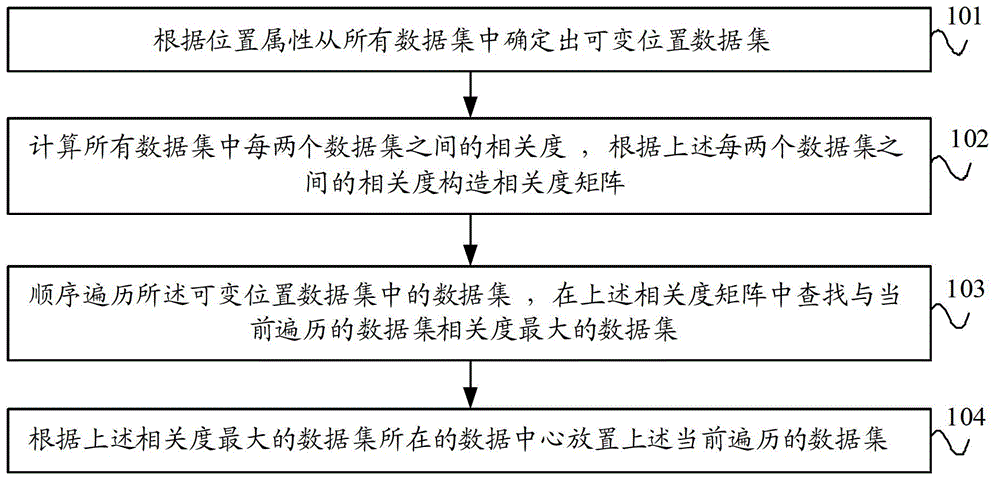

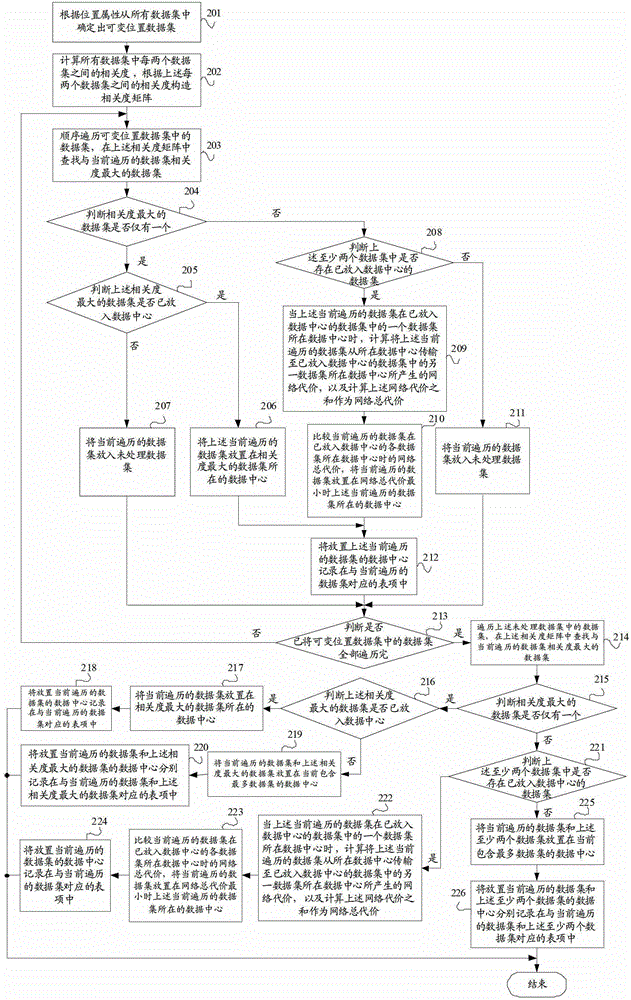

Data configuration method, data configuration device and server

Status: Active | Publication: CN102945245A | Tags: Reduce data transfer overhead; Improve efficiency; Special data processing applications; Data set; Data center

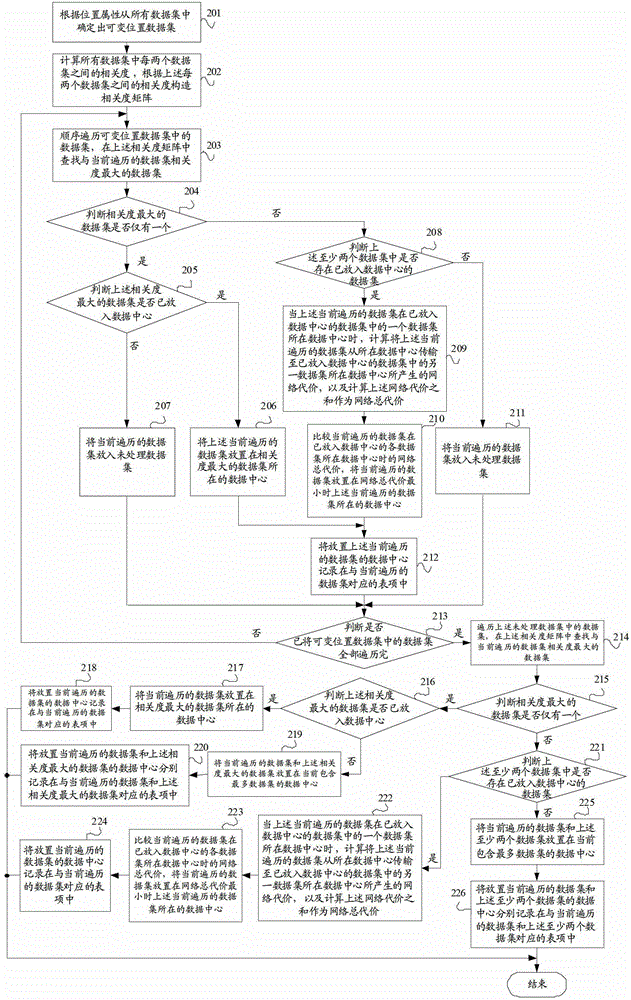

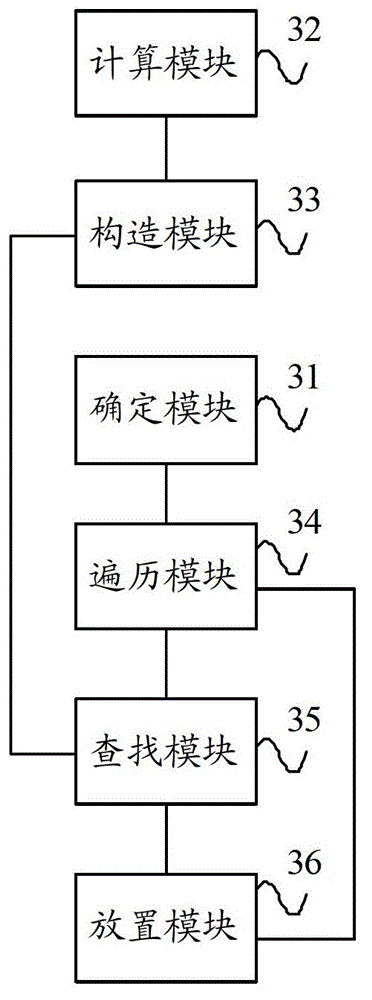

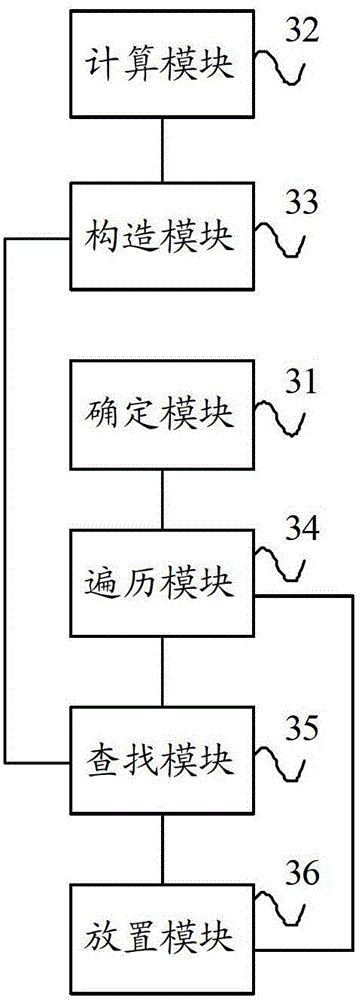

The invention provides a data configuration method, a data configuration device and a server. The data configuration method comprises: determining, according to a position attribute, the variable-position data sets among all data sets; calculating the relevance between every pair of data sets and constructing a relevance matrix from these values; traversing the variable-position data sets in turn and searching the relevance matrix for the data set most relevant to the currently traversed data set; and placing the currently traversed data set according to the data center of that most relevant data set. The invention thus places data sets in data centers according to the relevance between data sets, reducing the cost of cross-data-center data transmission and improving the overall efficiency of the system.

Owner:CHINA UNITED NETWORK COMM GRP CO LTD
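
A minimal Python sketch of the placement loop, assuming relevance between two data sets is measured as the number of tasks that use both; the abstract does not define relevance, so that measure and the function names are hypothetical.

```python
from typing import Dict, List


def relevance_matrix(tasks: List[List[str]], datasets: List[str]) -> Dict[str, Dict[str, int]]:
    # Hypothetical relevance: how many tasks access both data sets.
    rel = {a: {b: 0 for b in datasets} for a in datasets}
    for used in tasks:
        for a in set(used):
            for b in set(used):
                if a != b:
                    rel[a][b] += 1
    return rel


def place_datasets(location: Dict[str, str], movable: List[str],
                   rel: Dict[str, Dict[str, int]]) -> Dict[str, str]:
    placed = dict(location)                       # do not mutate the input mapping
    for ds in movable:                            # traverse the variable-position data sets
        others = {o: r for o, r in rel[ds].items() if o != ds}
        best = max(others, key=others.get)        # most relevant data set (first wins ties)
        placed[ds] = placed[best]                 # co-locate with its data center
    return placed


datasets = ["d1", "d2", "d3"]
rel = relevance_matrix([["d3", "d2"], ["d3", "d2"], ["d3", "d1"]], datasets)
location = {"d1": "DC-A", "d2": "DC-B", "d3": "DC-A"}
result = place_datasets(location, ["d3"], rel)
```

Here `d3` is used twice with `d2` and once with `d1`, so it is moved to `d2`'s data center, `DC-B`.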

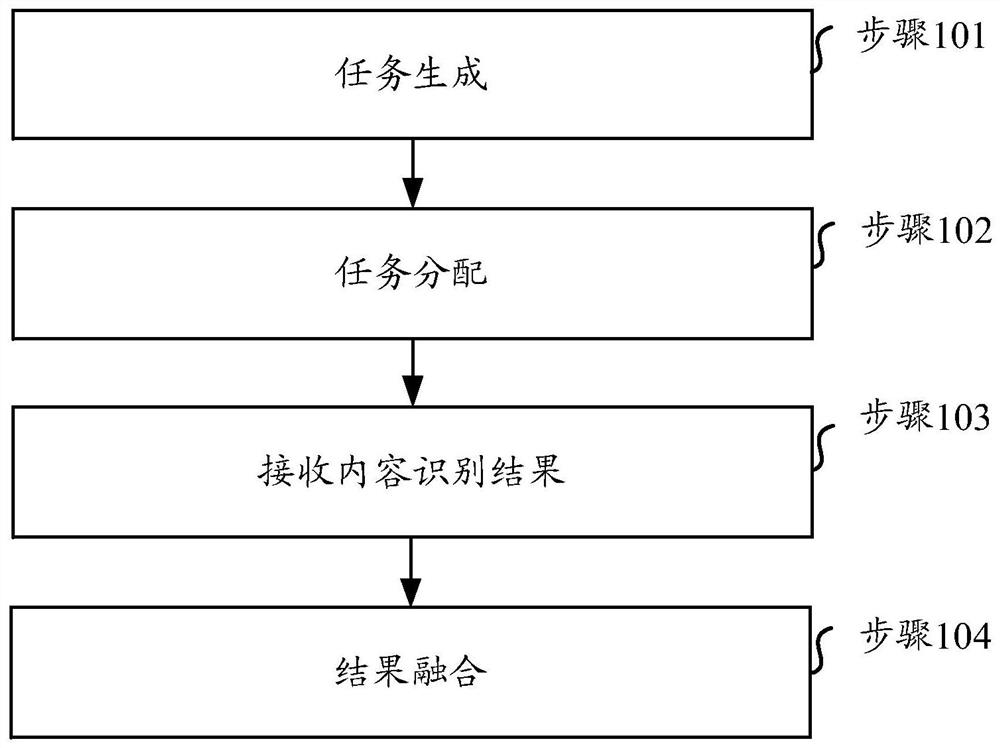

A video content recognition method, device, medium and system

Status: Active | Publication: CN110557679B | Tags: Integrity guaranteed; Guaranteed accuracy; Television system details; Color television details; Edge computing; Video processing

The present invention relates to the technical field of video processing, and in particular to a method, device, medium and system for identifying video content. In existing security monitoring systems, automatic real-time recognition of video content leads either to high resource occupancy at the video recognition terminal or to low recognition accuracy. To address this, the embodiment of the present invention provides a scheme that performs real-time video processing on the edge side, using the redundant resources and computing power of edge devices to cooperatively complete real-time video recognition. The solution uses existing edge devices (such as set-top boxes and smart home gateways) to form an edge computing network, and completes real-time video processing on the edge side through methods such as video task allocation, scheduling and prediction of available resources.

Owner:CHINA MOBILE COMM LTD RES INST +1

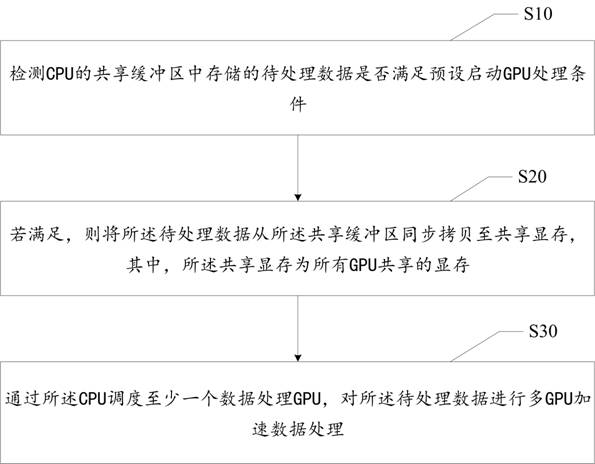

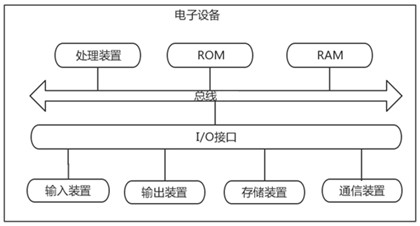

Data processing optimization method, device, electronic device and readable storage medium

Status: Active | Publication: CN114116238B | Tags: Reduce data transfer overhead; Improve data processing efficiency; Resource allocation; Video memory; Computer architecture

The application discloses a data processing optimization method, device, electronic equipment and readable storage medium in the field of computer technology. The method includes: detecting whether the data to be processed stored in the CPU's shared buffer satisfies a preset condition for starting GPU processing; if so, synchronously copying the data from the shared buffer to shared video memory, which is video memory shared by all GPUs; and scheduling, via the CPU, at least one data processing GPU to perform multi-GPU accelerated processing of the data. The application addresses the low efficiency of CPU-based data processing in the prior art.

Owner:SHENZHEN MICROPROFIT ELECTRONICS

A data decoding method and device for uplink coordinated multi-point

Active | CN104283644B | Reduce data transfer overhead | Avoid gain reduction issues | Site diversity | Modulated-carrier systems | Decoding methods | Multiple point

Owner:DATANG MOBILE COMM EQUIP CO LTD

Data configuration method, data configuration device and server

Active | CN102945245B | Reduce data transfer overhead | Improve efficiency | Special data processing applications | Data set | Data center

The invention provides a data configuration method, a data configuration device and a server. The data configuration method comprises the following steps: determining, according to a position attribute, the variable-position data sets among all data sets; calculating the relevance between every two data sets and constructing a relevance matrix from these values; traversing the variable-position data sets in sequence and, for the currently traversed data set, searching the relevance matrix for the data set with the highest relevance to it; and placing the currently traversed data set in the data center of that most relevant data set. By placing data sets in data centers according to the relevance between them, the invention reduces the cost of transferring data across data centers and improves the overall efficiency of the system.

Owner:CHINA UNITED NETWORK COMM GRP CO LTD
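
The traversal-and-placement loop can be sketched as follows. The abstract does not say how relevance is computed, so `build_relevance` uses a hypothetical measure (the number of tasks that read both data sets); the sketch also assumes that only data sets that already have a location are considered as partners, which means at least one data set must start with a fixed location.

```python
from typing import Dict, List, Set


def build_relevance(n: int, task_inputs: List[Set[int]]) -> List[List[int]]:
    """Hypothetical relevance: how many tasks read both data set i and data set j."""
    rel = [[0] * n for _ in range(n)]
    for used in task_inputs:
        for i in used:
            for j in used:
                if i != j:
                    rel[i][j] += 1
    return rel


def place_data_sets(rel: List[List[int]], fixed: Dict[int, str], variable: List[int]) -> Dict[int, str]:
    """Place each variable-position data set in the data center of its most relevant partner."""
    placement = dict(fixed)
    for ds in variable:  # traverse the variable-position data sets in order
        partners = [j for j in placement if j != ds]  # assumption: placed data sets only
        best = max(partners, key=lambda j: rel[ds][j])  # ties go to the earliest partner
        placement[ds] = placement[best]
    return placement


rel = build_relevance(4, [{0, 2}, {0, 2}, {1, 3}, {2, 3}])
print(place_data_sets(rel, fixed={0: "DC-A", 1: "DC-B"}, variable=[2, 3]))
# → {0: 'DC-A', 1: 'DC-B', 2: 'DC-A', 3: 'DC-B'}
```

Data set 3 is equally relevant to data sets 1 and 2 here; the tie-breaking rule decides it, which is one place a real system would need a more deliberate policy.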

A Data Management Method Based on Data Access Characteristics of Rendering Application

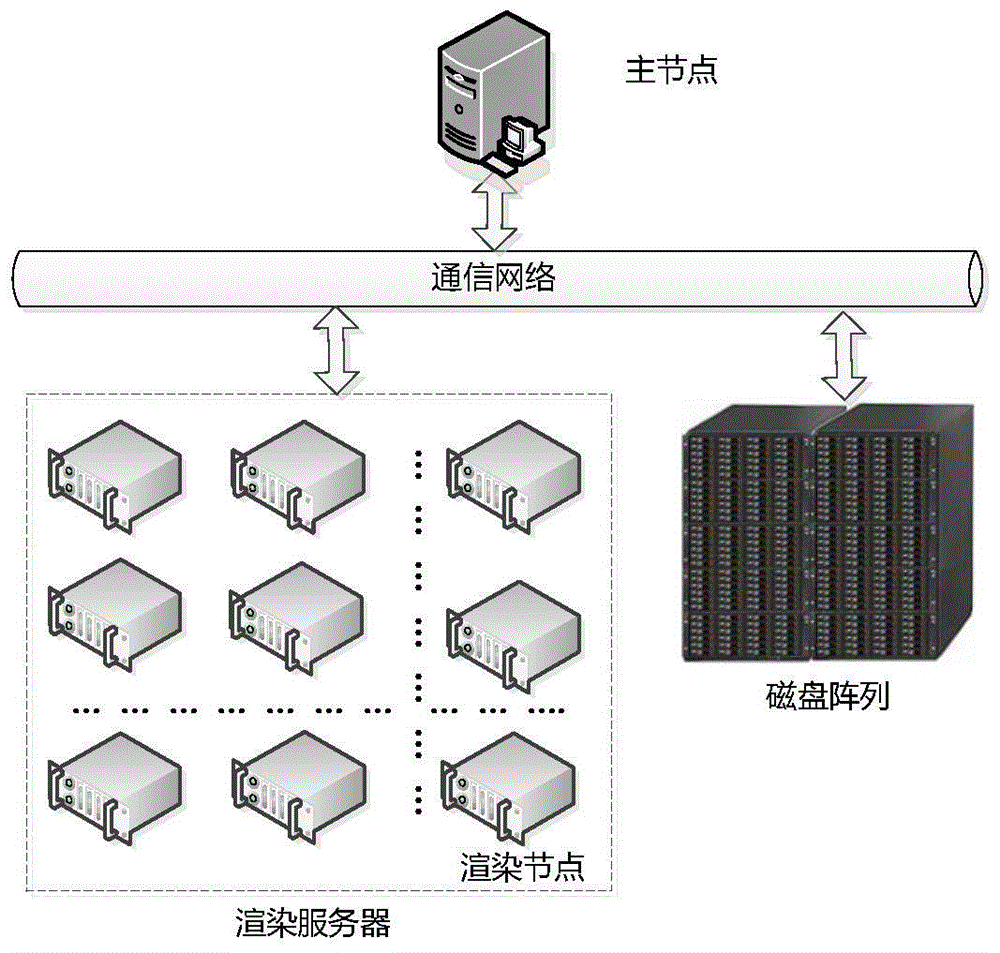

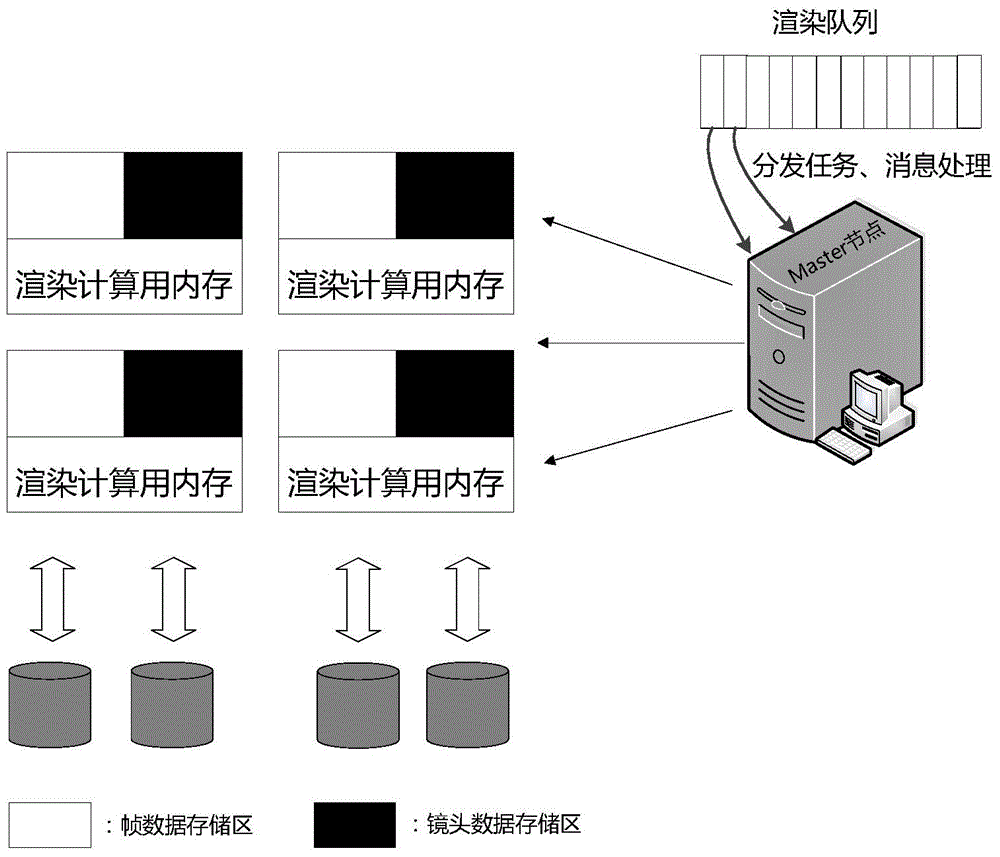

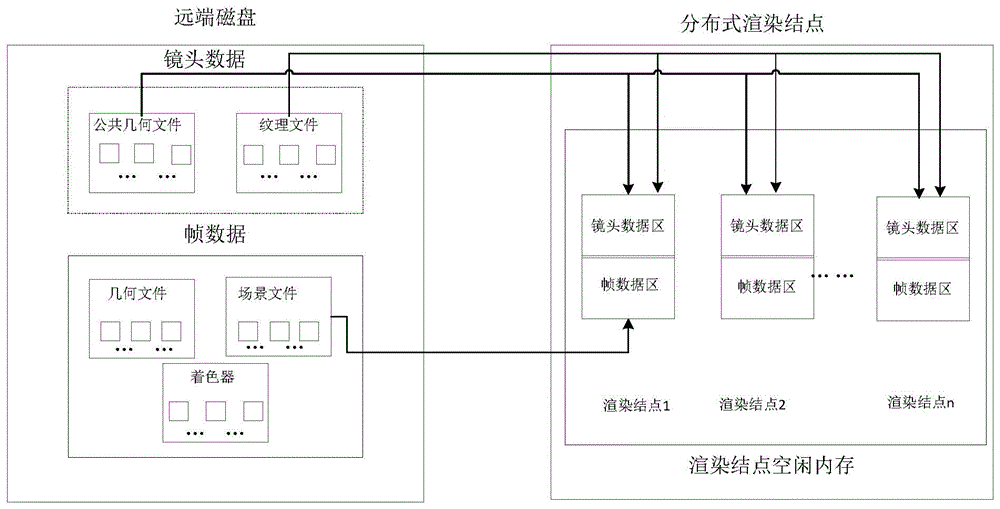

Active | CN104239224B | Reduce read | Reduce data transfer overhead | Input/output to record carriers | Memory addressing/allocation/relocation | Data access | Data management

The invention discloses a data management method based on the data access characteristics of rendering applications. Unlike the disk-array storage used by existing rendering applications, the technical scheme summarizes the data access characteristics of the rendering application and adopts a different storage design for each data category. While the rendering application runs normally, the memory of each rendering node is used effectively and the data access time of traditional rendering storage is reduced. In addition, data pre-access is applied to frame data according to its characteristics, so that almost all data required for rendering can be held in memory during the rendering operation, improving memory utilization. The design is flexible and feasible, which makes the method convenient to use: the flexibility lies in memory allocation, since available memory space is allocated to each node according to the size of the scene file; the feasibility lies in the scheme remaining applicable when nodes are added to or removed from the distributed environment.

Owner:HUAZHONG UNIV OF SCI & TECH
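
Two ideas from the abstract, sizing each node's memory budget from the scene file and pre-accessing frame data, can be illustrated with a small per-node cache. This is a sketch only: the fixed frame size, the sliding-window prefetch policy and the `FrameCache` name are assumptions, and the abstract does not describe the actual storage design for each data category.

```python
from typing import Callable, Dict, List


class FrameCache:
    """Hypothetical per-node store: scene data pinned in memory, frame data prefetched ahead."""

    def __init__(self, node_memory_mb: float, scene_size_mb: float, frame_size_mb: float,
                 total_frames: int, loader: Callable[[int], bytes]):
        if scene_size_mb > node_memory_mb:
            raise ValueError("scene file does not fit in this node's memory")
        # Memory left after the scene file is divided into whole frame slots.
        self.capacity = int((node_memory_mb - scene_size_mb) // frame_size_mb)
        self.total_frames = total_frames
        self.loader = loader
        self.frames: Dict[int, bytes] = {}

    def get(self, idx: int) -> bytes:
        # Frames before the current one are finished; free their memory.
        for done in [k for k in self.frames if k < idx]:
            del self.frames[done]
        if idx not in self.frames:
            self.frames[idx] = self.loader(idx)
        # Pre-access the following frames while free slots remain.
        nxt = idx + 1
        while len(self.frames) < self.capacity and nxt < self.total_frames:
            if nxt not in self.frames:
                self.frames[nxt] = self.loader(nxt)
            nxt += 1
        return self.frames[idx]


loads: List[int] = []

def load(i: int) -> bytes:
    loads.append(i)  # record which frames were actually read from storage
    return f"frame-{i}".encode()

cache = FrameCache(1000, 600, 100, total_frames=10, loader=load)
cache.get(0)
cache.get(2)
print(sorted(cache.frames), loads)  # → [2, 3, 4, 5] [0, 1, 2, 3, 4, 5]
```

When frame 2 is requested, frames 2 and 3 are already resident, so only frames 4 and 5 are loaded; that saved storage access is the effect the pre-access idea aims for.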

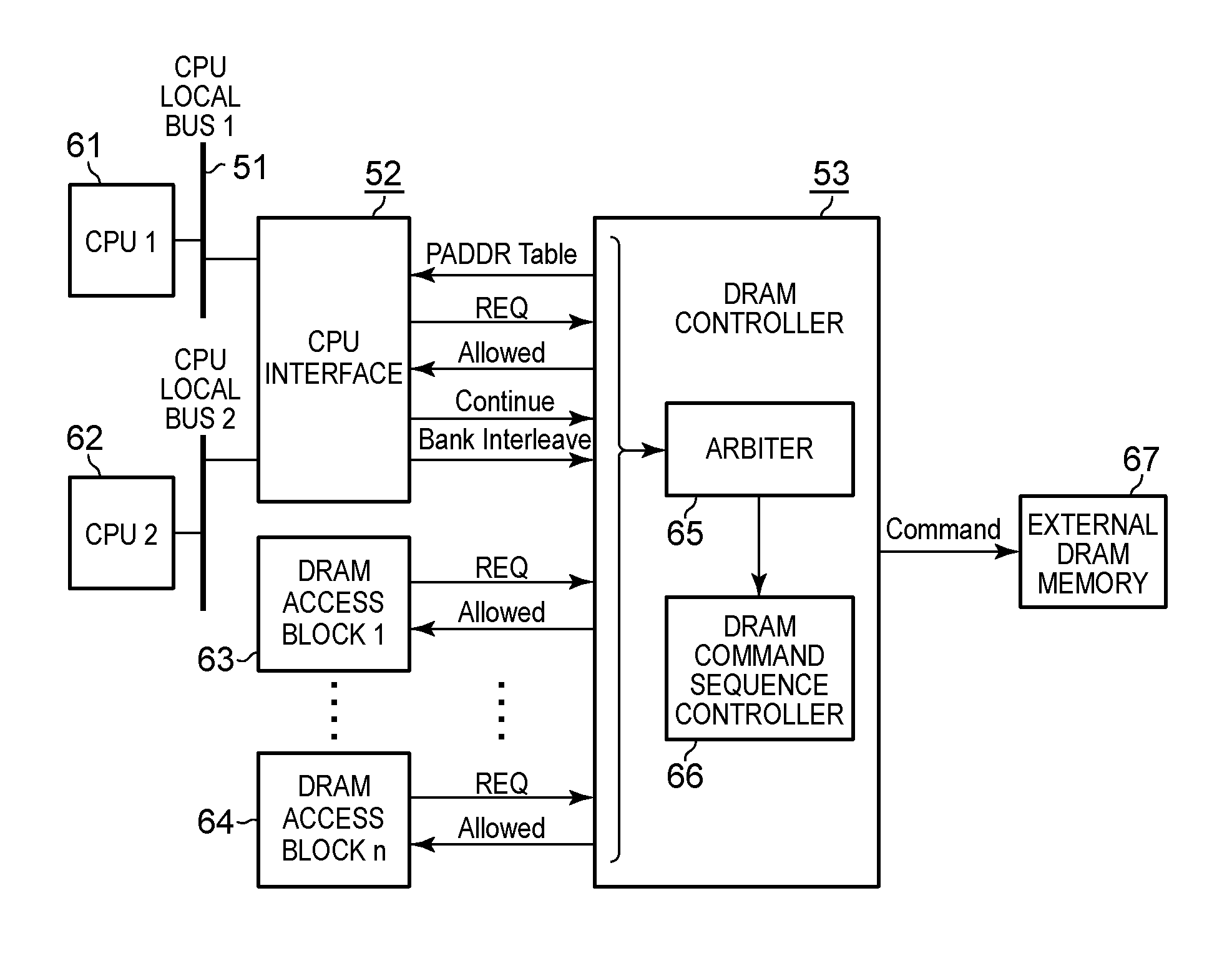

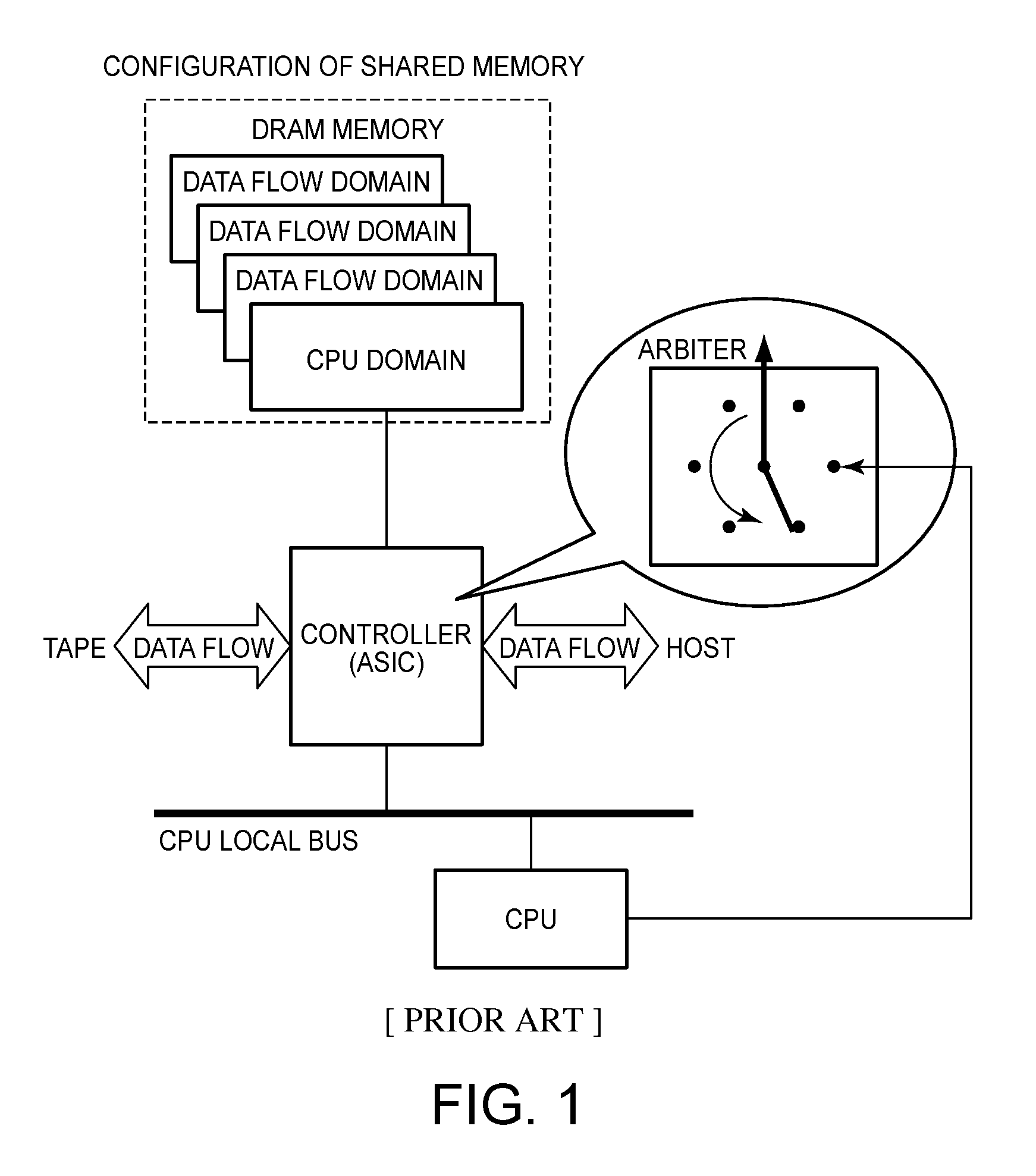

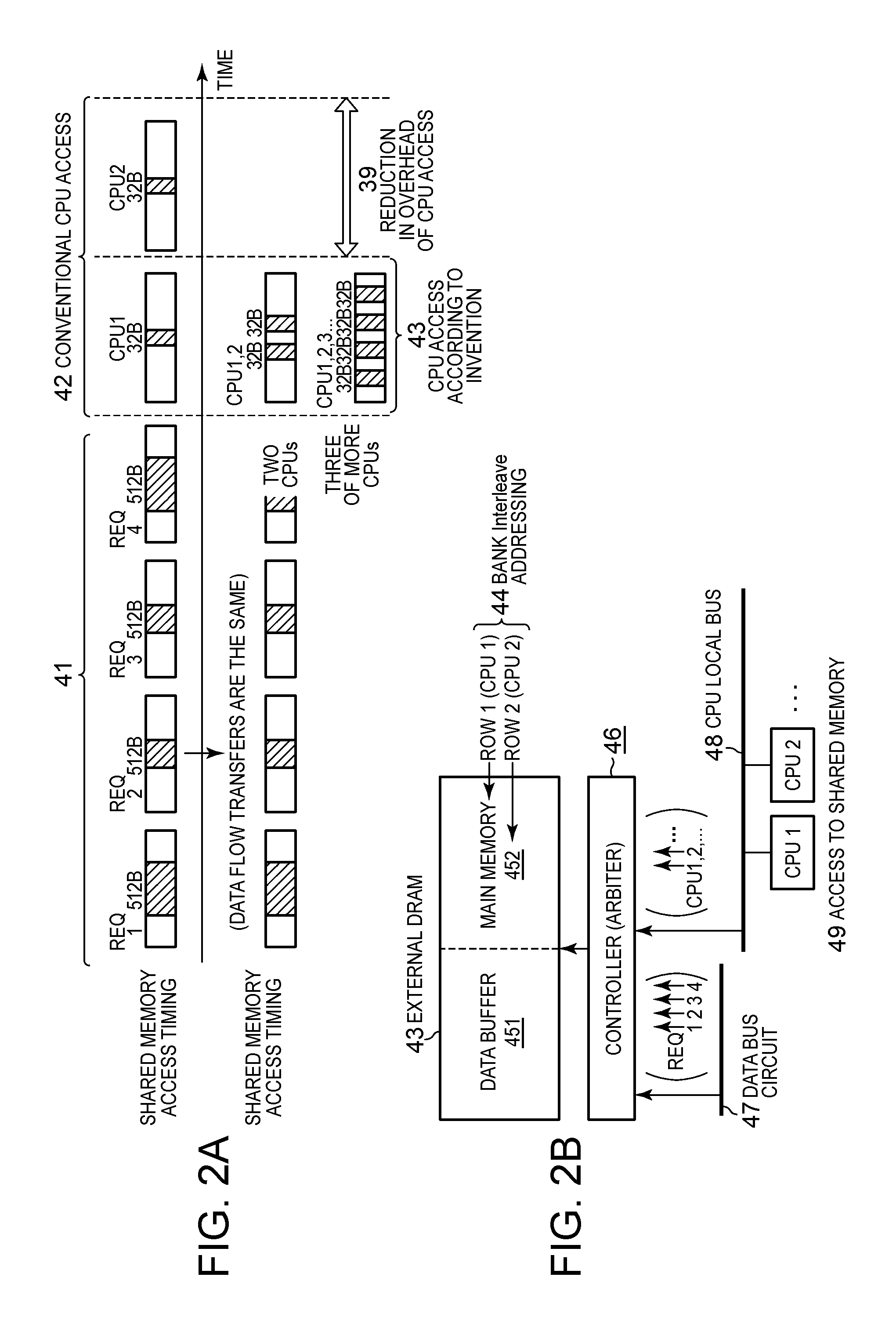

Holding by a memory controller multiple central processing unit memory access requests, and performing the multiple central processing unit memory requests in one transfer cycle

Active | US9268721B2 | Reduce data transfer overhead | Minimize delay | Digital storage | Input/output processes for data processing | Transfer mode | Memory controller

The present invention includes a plurality of CPUs that use memory as main memory, another function block that uses memory as a buffer, a CPU interface that controls access transfers from the plurality of CPUs to memory, and a DRAM controller that arbitrates the access transfers to memory. The CPU interface holds the access requests from the plurality of CPUs, receives and stores the address, data transfer mode and data size of each access, and notifies the DRAM controller of the access requests. On the basis of access arbitration, the DRAM controller identifies the CPUs whose transfers have been granted and sends grant signals for them to the CPU interface. Upon receiving the grant signals, the CPU interface sends the stored access information to the DRAM controller according to those signals.

Owner:INT BUSINESS MASCH CORP
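
The point of the arrangement, per the title, is that several CPUs' requests held by the CPU interface are served in one DRAM transfer cycle rather than one cycle each. A behavioral sketch in Python (not hardware), assuming a simple oldest-first arbitration with a fixed per-cycle limit; the class names and `max_per_cycle` are hypothetical, and traffic from the other function block's buffer is omitted.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AccessRequest:
    cpu_id: int
    address: int
    mode: str  # data transfer mode, e.g. "read" or "write"
    size: int  # bytes


class CpuInterface:
    """Holds CPU requests and keeps the address, transfer mode and size of each."""

    def __init__(self) -> None:
        self.pending: List[AccessRequest] = []

    def submit(self, req: AccessRequest) -> None:
        self.pending.append(req)  # the issuing CPU waits until its request is granted


class DramController:
    def __init__(self, max_per_cycle: int) -> None:
        if max_per_cycle < 1:
            raise ValueError("max_per_cycle must be at least 1")
        self.max_per_cycle = max_per_cycle
        self.cycles: List[List[AccessRequest]] = []

    def arbitrate(self, pending: List[AccessRequest]) -> List[AccessRequest]:
        # Hypothetical policy: grant the oldest requests, up to the per-cycle limit.
        return pending[: self.max_per_cycle]

    def run_cycle(self, iface: CpuInterface) -> List[AccessRequest]:
        granted = self.arbitrate(iface.pending)
        iface.pending = iface.pending[len(granted):]
        self.cycles.append(granted)  # all granted requests share this one transfer cycle
        return granted


iface, dram = CpuInterface(), DramController(max_per_cycle=2)
iface.submit(AccessRequest(0, 0x100, "read", 64))
iface.submit(AccessRequest(1, 0x200, "write", 32))
iface.submit(AccessRequest(0, 0x140, "read", 64))
while iface.pending:
    dram.run_cycle(iface)
print([[r.cpu_id for r in c] for c in dram.cycles])  # → [[0, 1], [0]]
```

Three requests finish in two transfer cycles instead of three; the per-cycle limit stands in for whatever burst size the real DRAM controller supports.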

A Cooperative Spectrum Sensing Method Using Spatial Diversity

Inactive | CN103888201B | Reduce data transfer overhead | Unaffected by noise uncertainty | Spatial transmit diversity | Transmission monitoring | Fusion center | Frequency spectrum

The invention discloses a cooperative spectrum sensing method that exploits spatial diversity. The method comprises the following steps: each sensing node participating in cooperative spectrum sensing calculates the power of its received signal and sends this power value to a fusion center; the fusion center calculates a test statistic from the received-signal powers reported by the participating sensing nodes; finally, the fusion center compares the test statistic with a decision threshold to judge whether a licensed (primary) user signal is present in the signals received by the sensing nodes, thereby achieving spectrum sensing. The advantages of the method are that the noise power does not need to be known, and its performance is close to that of soft-combining energy detection with known noise power.

Owner:NINGBO UNIV
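
The abstract does not give the test statistic, so the sketch below uses a hypothetical one, the ratio of the largest to the smallest reported power, chosen only because it illustrates why noise power need not be known: scaling every power by the same factor leaves the ratio unchanged. It assumes equal noise power at all nodes and different signal gains per node (the spatial diversity); `simulate_node` and its constant-tone signal are illustrative only.

```python
import random
from typing import List, Sequence, Tuple


def received_power(samples: Sequence[complex]) -> float:
    """Node side: average power of the received samples."""
    return sum(abs(s) ** 2 for s in samples) / len(samples)


def fusion_decision(powers: List[float], threshold: float) -> Tuple[float, bool]:
    """Fusion-center side, with a HYPOTHETICAL statistic: max/min reported power.

    The common noise power scales every entry equally and cancels in the ratio.
    """
    statistic = max(powers) / min(powers)
    return statistic, statistic > threshold


def simulate_node(gain: float, n: int, rng: random.Random, noise_std: float = 1.0) -> List[complex]:
    # Constant tone with a node-specific gain plus complex Gaussian noise (illustrative model).
    return [gain + complex(rng.gauss(0, noise_std), rng.gauss(0, noise_std)) for _ in range(n)]


rng = random.Random(7)
for label, gains in [("noise only", [0.0, 0.0, 0.0]), ("signal present", [0.0, 1.0, 2.0])]:
    powers = [received_power(simulate_node(g, 2000, rng)) for g in gains]
    stat, detected = fusion_decision(powers, threshold=1.5)
    print(f"{label}: T = {stat:.2f}, detected = {detected}")
```

With noise only, all nodes report roughly the same power and the ratio stays near 1; with unequal signal gains the ratio grows well above the threshold. If noise power differed between nodes, this particular statistic would break down.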

A data acquisition method and device based on the Spark computing framework

Active | CN108536808B | Improve import performance | Lower latency | Database management systems | Database indexing | Datasheet | Data set

The invention discloses a data acquisition method and device based on the Spark computing framework. The method includes: after receiving a table object access request, obtaining Spark's computing resource information and the data distribution information of the requested data table in the MPP cluster; generating multiple Partitions according to the computing resource information and the data distribution information, where each Partition corresponds to part of the data in the data table; and obtaining the data table from the MPP cluster through the generated Partitions. The invention makes full use of the data storage characteristics of the MPP cluster and acquires data sets directly and quickly from the MPP storage nodes through multiple Partitions. Furthermore, when computing resources are sufficient, the data table on a storage node can be split further to increase parallelism and improve data import performance. Because data can be read from local storage first according to the data distribution of the MPP cluster, data transmission overhead is reduced, network bandwidth is saved, network latency is lowered, and computing performance is improved.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT +1
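
The partition-planning step (one Partition per storage segment, split further when cores are spare, each tagged with the host that holds its rows) can be sketched in plain Python. It does not touch any Spark API; the `(segment_id, host, row_count)` input format and the greedy split-the-largest-piece policy are assumptions. In a real Scala data source, a locality hint like `preferred_host` would typically be reported through an RDD's `getPreferredLocations`.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class PartitionPlan:
    segment_id: int
    preferred_host: str  # MPP storage node that holds these rows (locality hint)
    row_start: int
    row_end: int  # exclusive


def plan_partitions(segments: List[Tuple[int, str, int]], available_cores: int) -> List[PartitionPlan]:
    """segments: (segment_id, host, row_count) for the table, from the MPP data distribution."""
    splits: Dict[int, int] = {seg_id: 1 for seg_id, _, _ in segments}  # one partition per segment
    rows: Dict[int, int] = {seg_id: n for seg_id, _, n in segments}
    spare = available_cores - len(segments)
    # With spare cores, keep splitting whichever segment currently has the largest pieces.
    while spare > 0 and splits:
        seg = max(splits, key=lambda s: rows[s] / splits[s])
        if rows[seg] <= splits[seg]:
            break  # pieces are already single rows; nothing left to split
        splits[seg] += 1
        spare -= 1
    plans = []
    for seg_id, host, n in segments:
        k = splits[seg_id]
        for i in range(k):
            plans.append(PartitionPlan(seg_id, host, n * i // k, n * (i + 1) // k))
    return plans


for p in plan_partitions([(0, "mpp-node-1", 1000), (1, "mpp-node-2", 400)], available_cores=4):
    print(p)
```

With four cores and two segments, the larger segment is split into three row ranges on `mpp-node-1` and the smaller one stays whole, so every partition can still be read from its local storage node.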

© 2025 PatSnap. All rights reserved.