176 results about "Motion intensity" patented technology

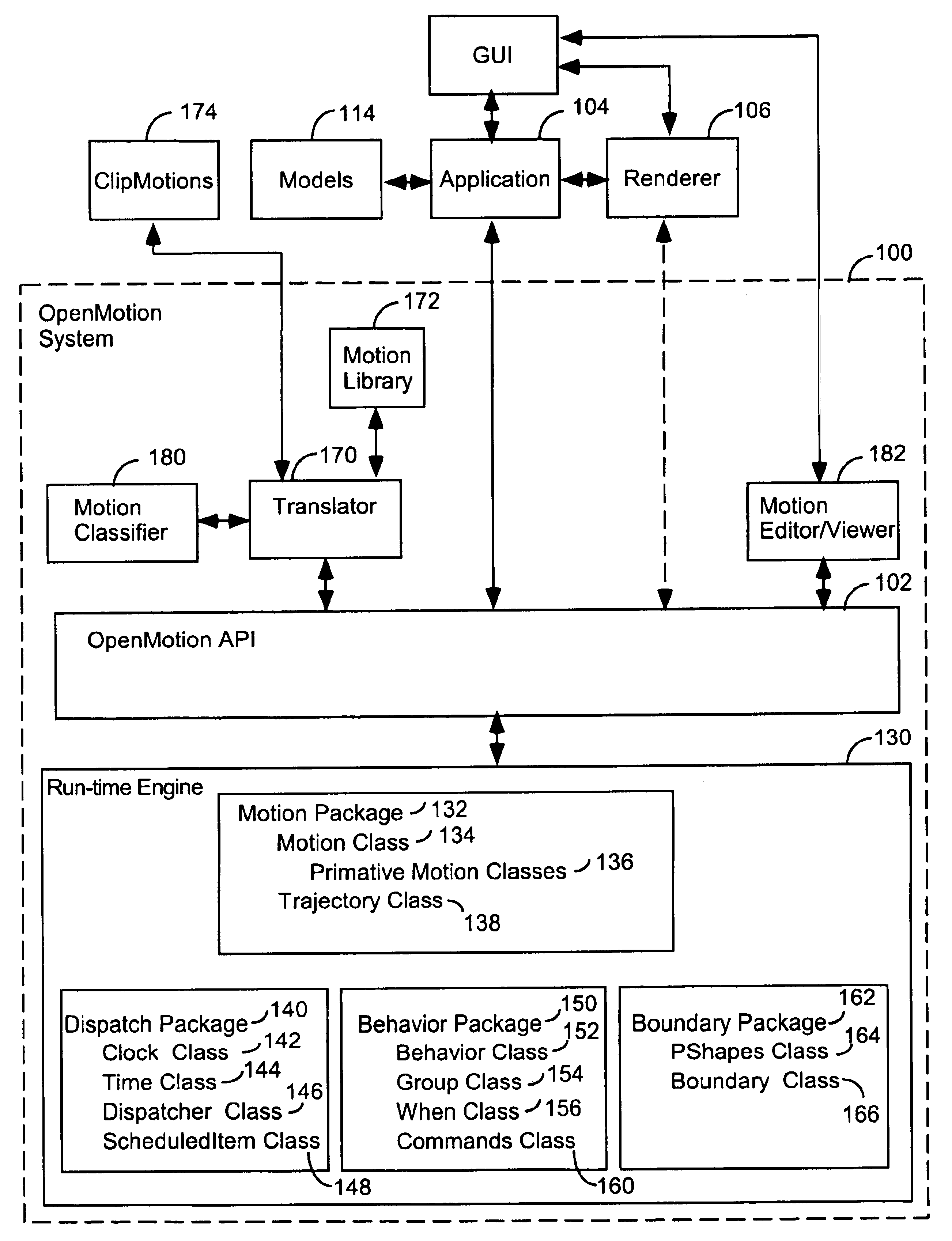

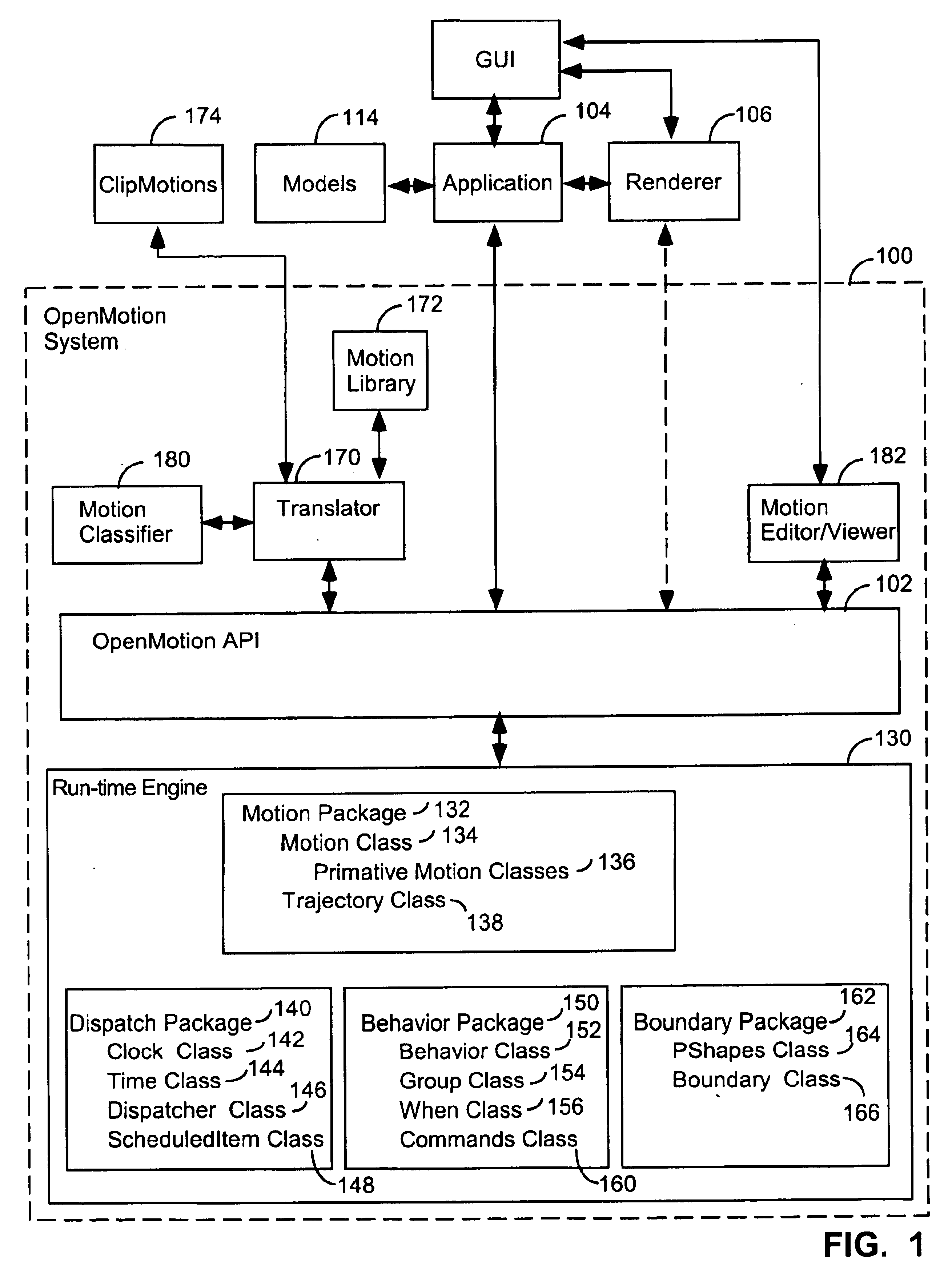

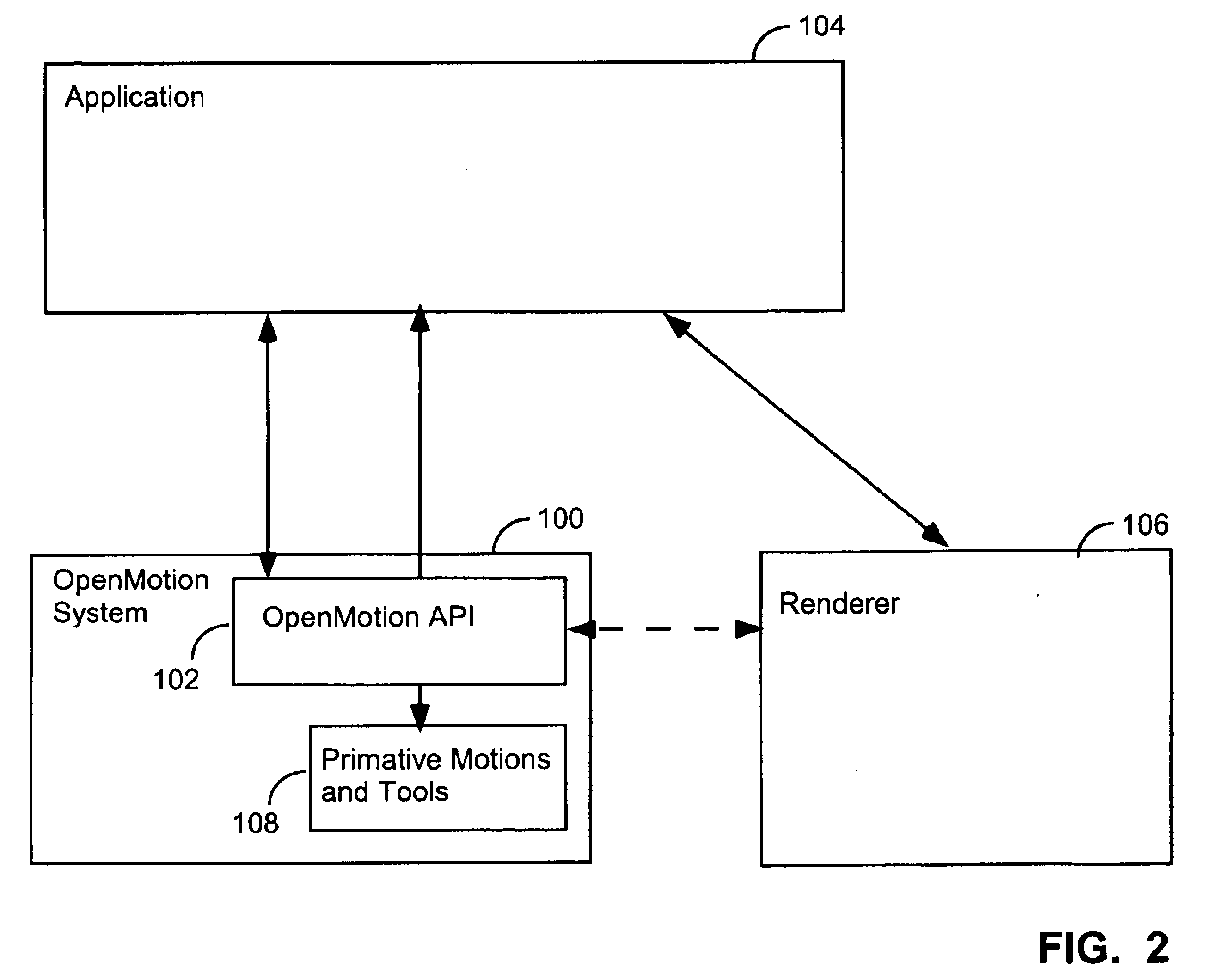

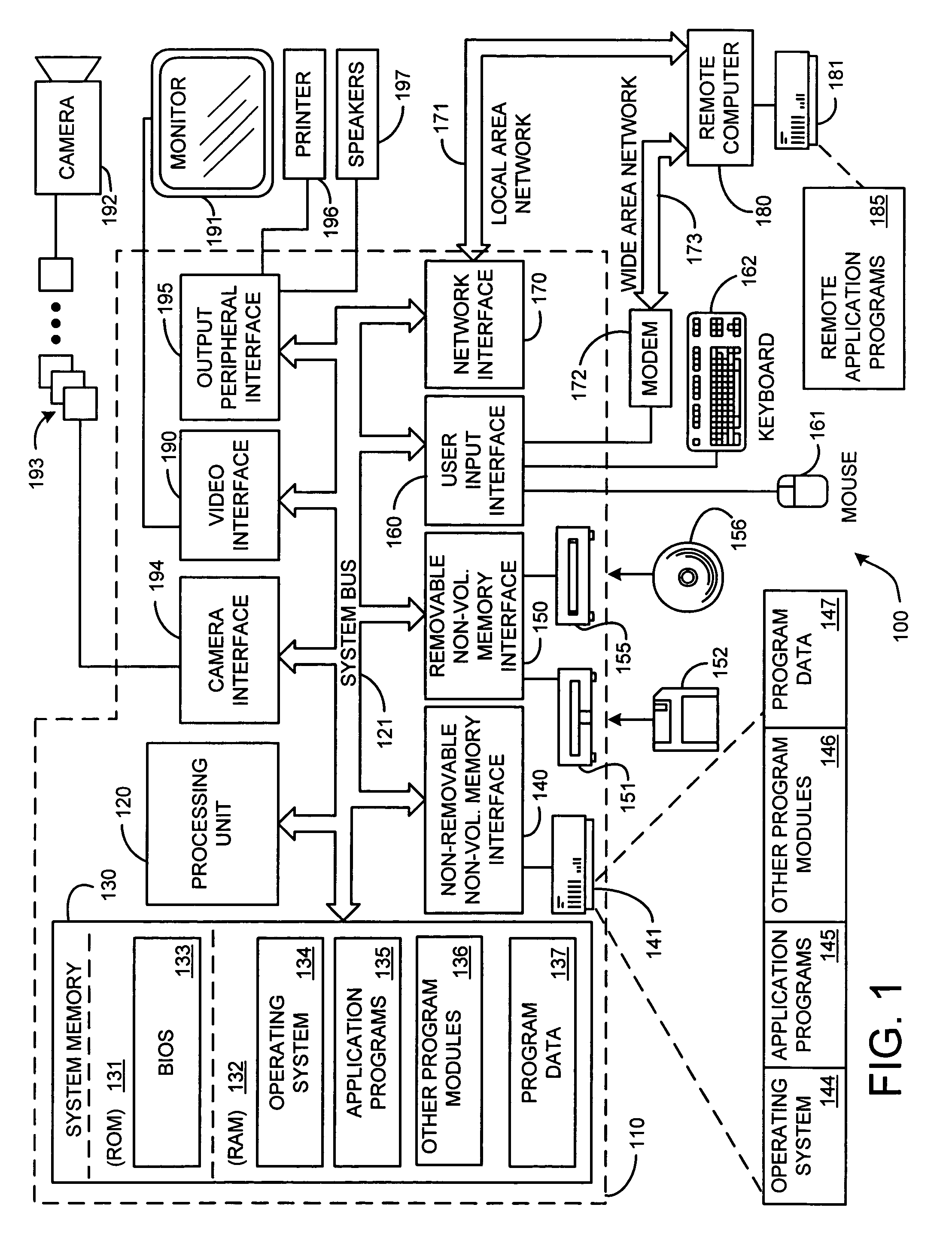

Apparatuses, methods, computer programming, and propagated signals for modeling motion in computer applications

Inactive | US6714201B1 | Easy to build | The process is simple and fast | Computer control | Simulator control | Graphics | Time function

A hierarchical 3D graphics model can be viewed as a hierarchical graph of its nodes and their associated motions, with the mathematical type of each motion indicated graphically. A user can click on a displayed motion to edit it. A motion API provides one or more of the following features: (1) spatial predicate functions; (2) functions for scaling motion intensity; (3) classes for shake, spin, and swing motions; (4) motion classes with a GUI interface for defining their duty cycle; (5) functions for defining and computing 0th- through 2nd-order derivatives of 3D position and orientation as a function of time; (6) behaviors that constrain motions by boundaries, in which the constraint is a reflection, clamp, and/or onto constraint, or in which the boundary is a composite boundary. Motions can be defined by successive calls to an API and then saved in a file.

Owner:3D OPEN MOTION
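The clamp and reflection constraints named in feature (6) can be illustrated in one dimension. This is a minimal sketch, not the patent's API: the function names `clamp` and `reflect` and the single-axis boundary `[lo, hi]` are assumptions made for illustration.

```python
def clamp(x: float, lo: float, hi: float) -> float:
    """Clamp constraint: a position past the boundary is held at the boundary."""
    return max(lo, min(hi, x))


def reflect(x: float, lo: float, hi: float) -> float:
    """Reflection constraint: a position past the boundary bounces back inside.

    The position is folded into [lo, hi] as if the motion had bounced off
    the walls, which is a triangle-wave mapping with period 2 * (hi - lo).
    """
    span = hi - lo
    t = (x - lo) % (2 * span)
    return lo + (t if t <= span else 2 * span - t)
```

With a boundary of `[0, 10]`, an unconstrained position of 12 is held at 10 by `clamp` and folded back to 8 by `reflect`.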

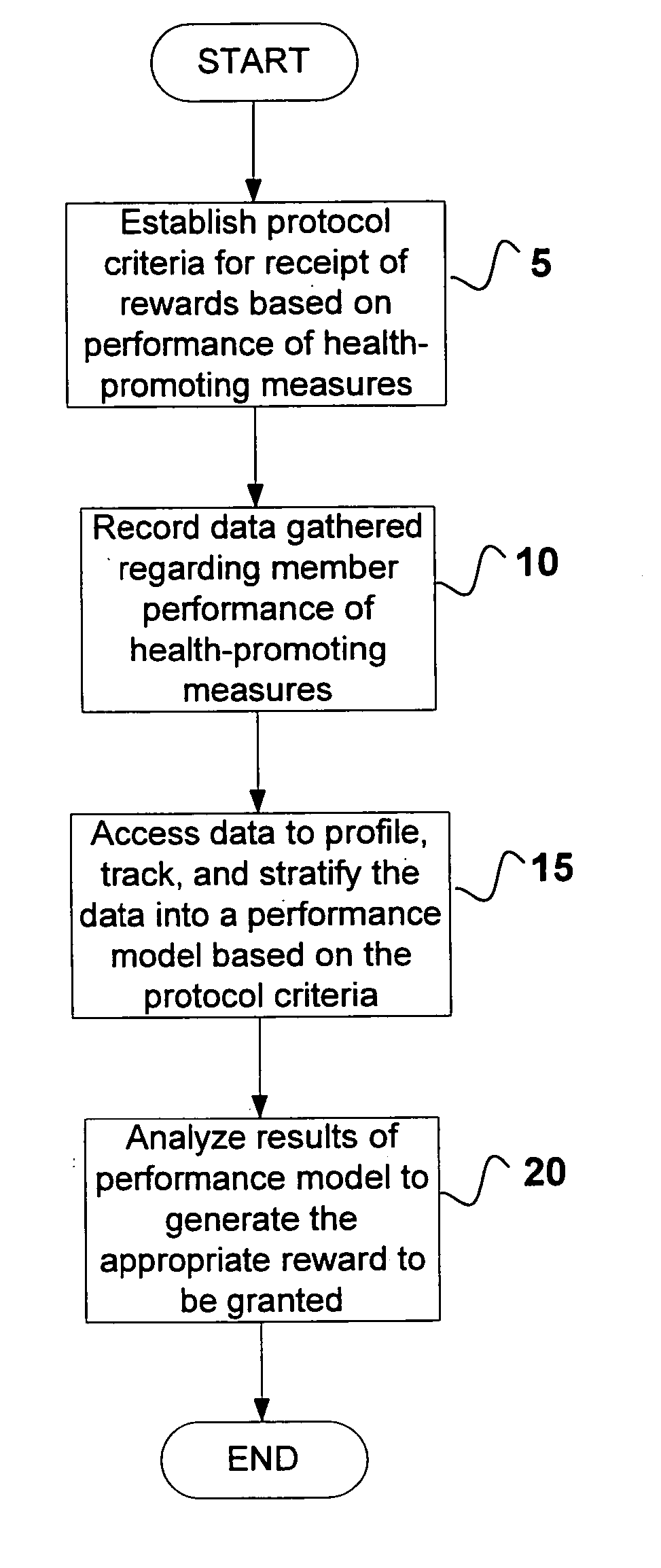

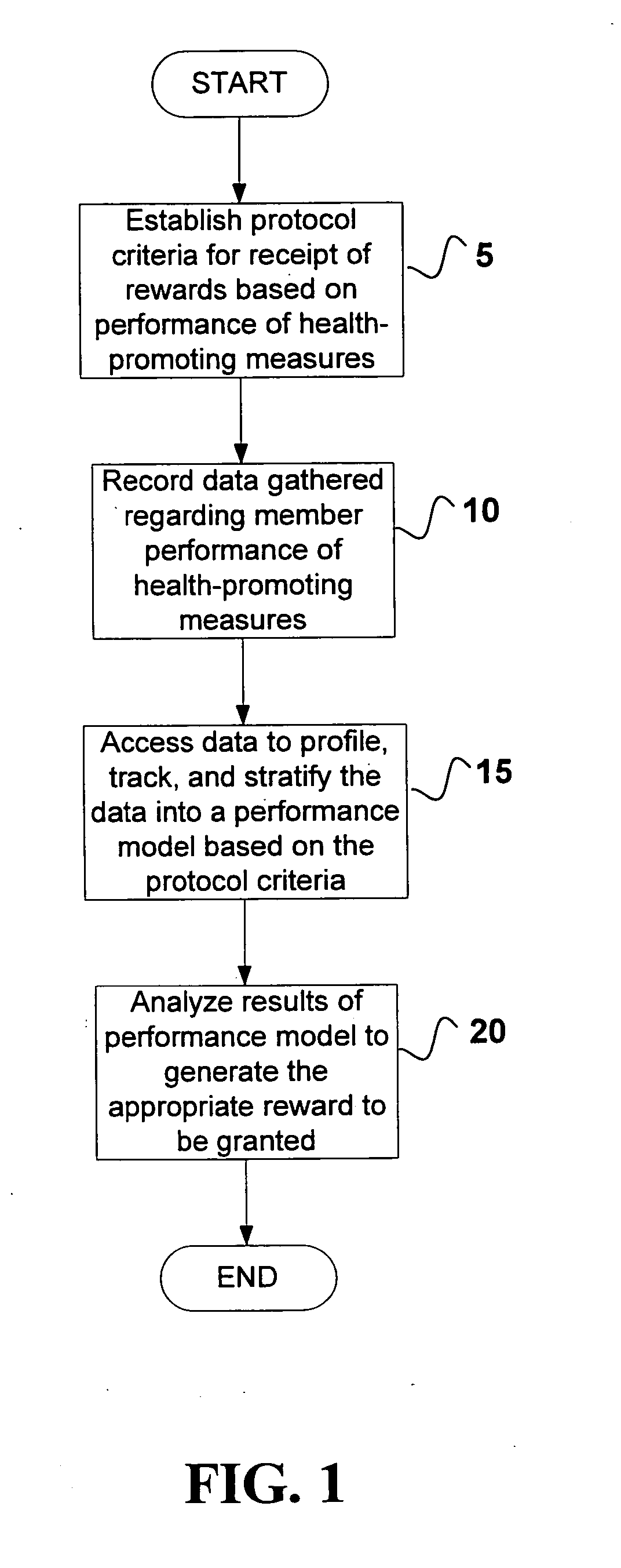

System and method for evaluating insurance member activity and pricing insurance products

Inactive | US20050102172A1 | Reduced premium | Finance | Special data processing applications | Health related information | Product base

The present invention relates to systems and methods for evaluating and pricing insurance products based on insured members' compliance with health-promoting measures. Member participation in health-promoting measures is monitored and used as a basis for establishing incentives (e.g., a reduction in insurance premiums) for the member. In one embodiment, members wear exercise/activity monitors that verify their identity and record their compliance in performing health-promoting measures (e.g., heart rate, type of exercise, exercise intensity and duration). All health-related information, including physical examination results and recorded participation in health-promoting measures, is used to determine appropriate incentives (e.g., subsidized health club membership fees) to be awarded to the member.

Owner:SIRMANS JAMES R JR

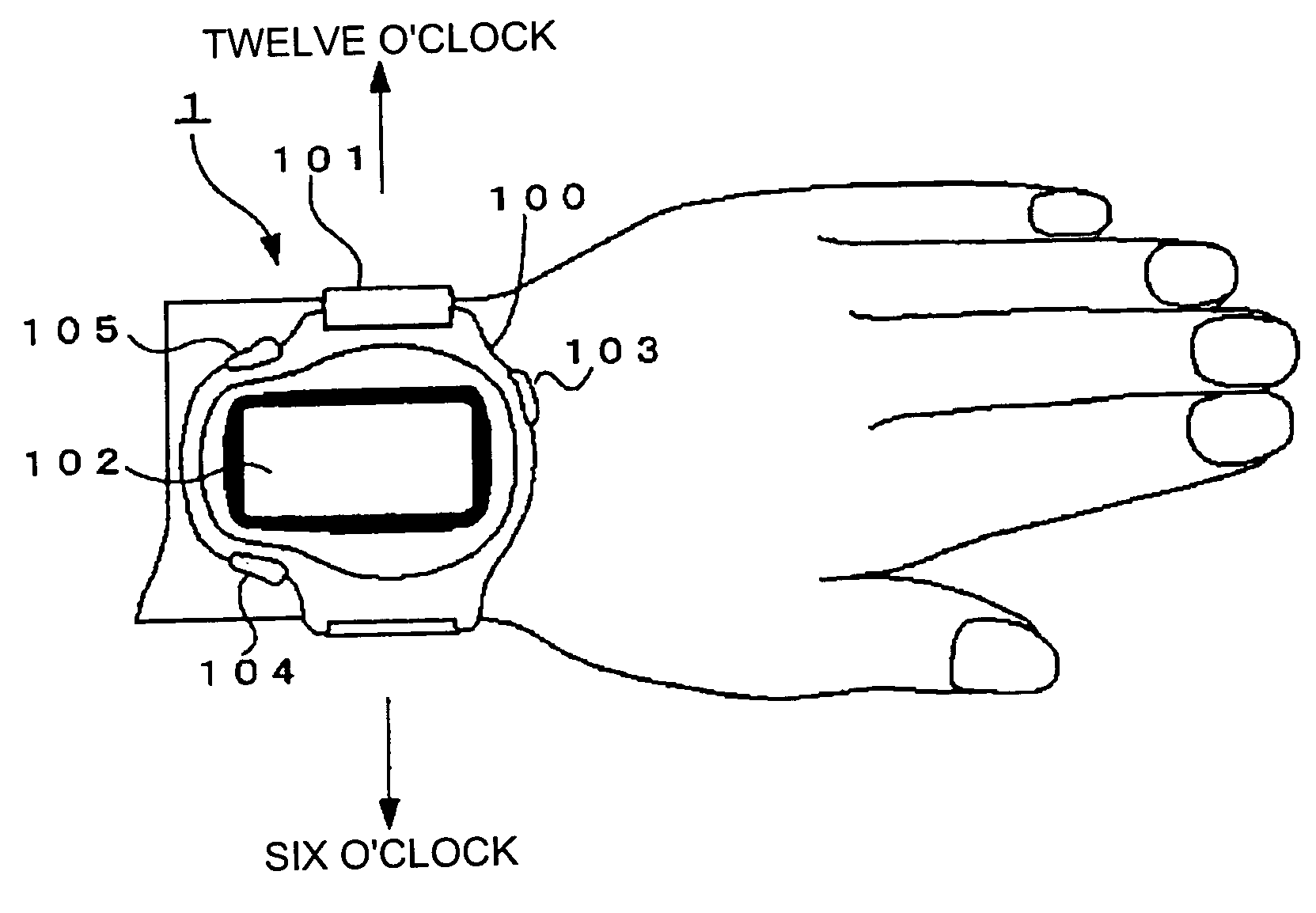

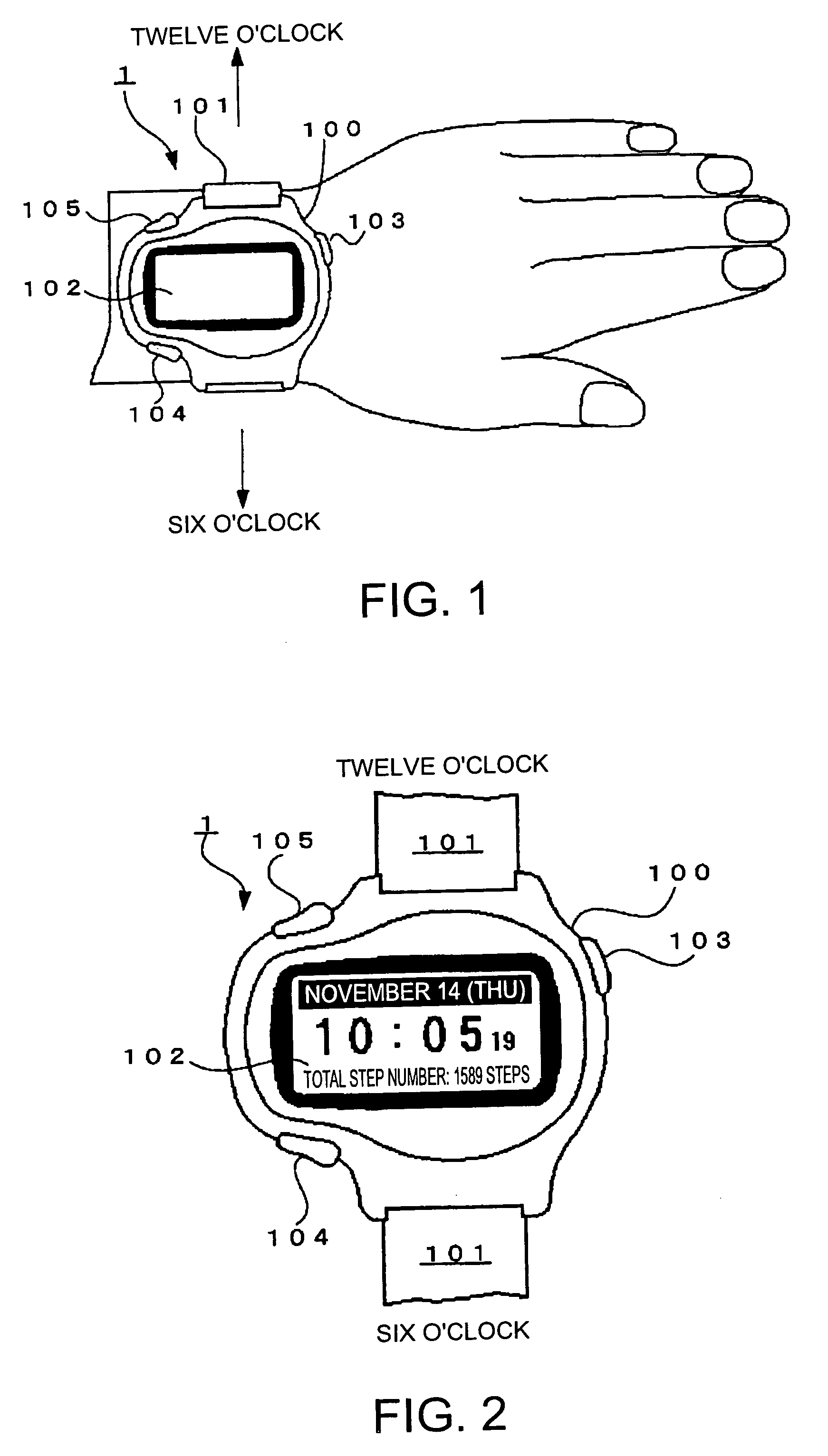

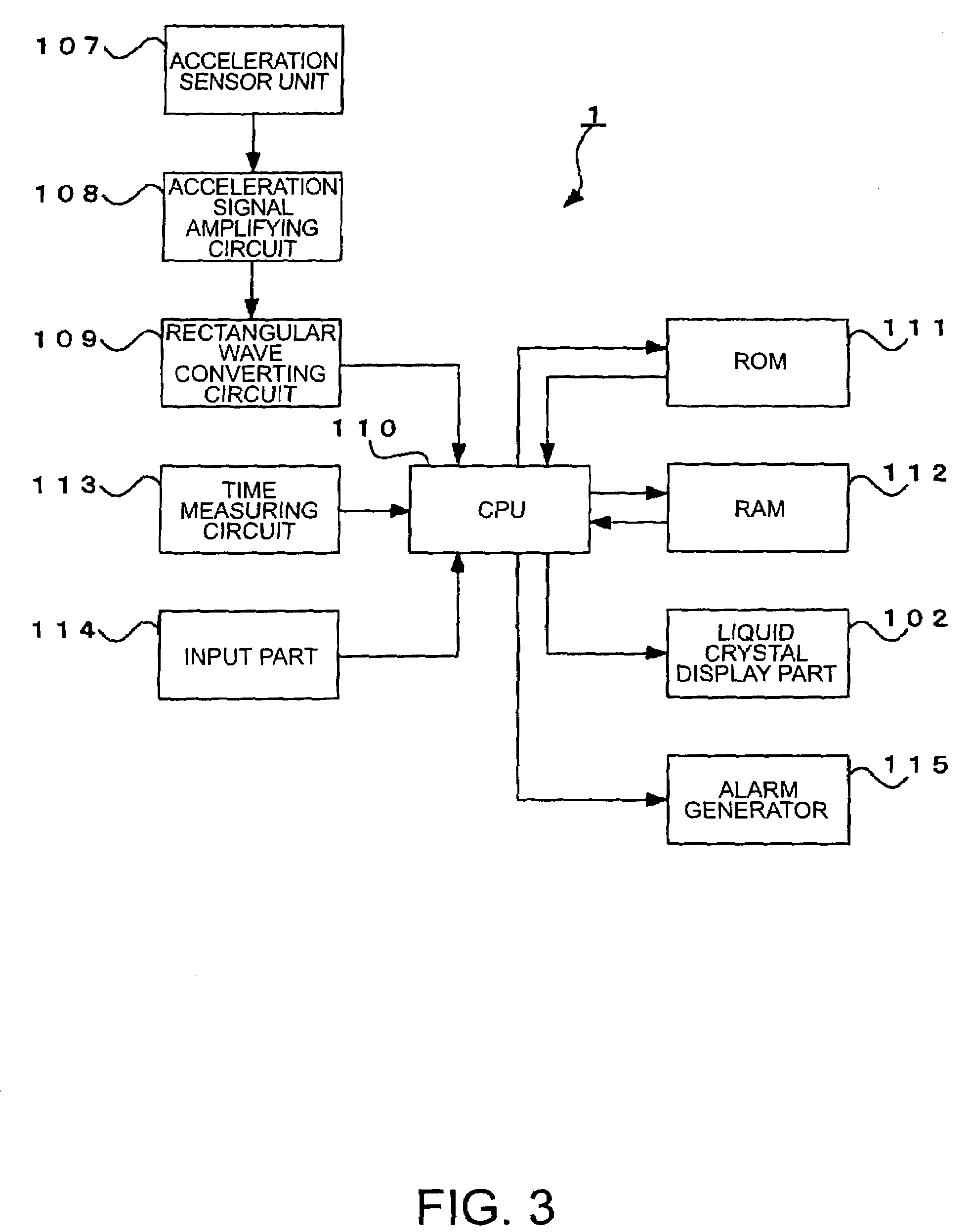

Body motion detector

Active | US7034694B2 | Increase exercise | Appropriate intensity | Anti-theft devices | Surgery | Motion detector | Engineering

The invention provides a body motion detector that lets a user check, for every motion, whether he or she is moving with appropriate motion intensity, so as to obtain an excellent exercise effect during exercise such as walking and running. While the user moves, a CPU determines whether the motion is appropriate from the amplitude, the period, and the detection frequency of an acceleration signal input from an acceleration sensor unit. When it determines that the motion is appropriate, it operates an alarm generator to notify the user that he or she is moving with appropriate motion intensity.

Owner:SEIKO EPSON CORP
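The decision described in this abstract (amplitude, period, and detection frequency all checked before notifying the user) might be sketched as follows. The threshold values, the `IntensityBand` type, and the function names are hypothetical; the abstract gives no numbers and does not describe its decision rule in this detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntensityBand:
    """Hypothetical target band; the abstract states no numeric thresholds."""
    min_amplitude_g: float = 1.2   # peak acceleration, in g
    max_amplitude_g: float = 2.5
    min_period_s: float = 0.3      # time between detected motions, in seconds
    max_period_s: float = 0.7


def is_appropriate(amplitude_g: float, period_s: float,
                   band: IntensityBand = IntensityBand()) -> bool:
    """True when one detected motion falls inside the target band."""
    return (band.min_amplitude_g <= amplitude_g <= band.max_amplitude_g
            and band.min_period_s <= period_s <= band.max_period_s)


def should_notify(motions, band: IntensityBand = IntensityBand(),
                  min_detections: int = 3) -> bool:
    """Notify once enough motions in the window are in the band.

    `motions` is a list of (amplitude_g, period_s) pairs; `min_detections`
    stands in for the abstract's "detection frequency" criterion.
    """
    hits = sum(1 for a, p in motions if is_appropriate(a, p, band))
    return hits >= min_detections
```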

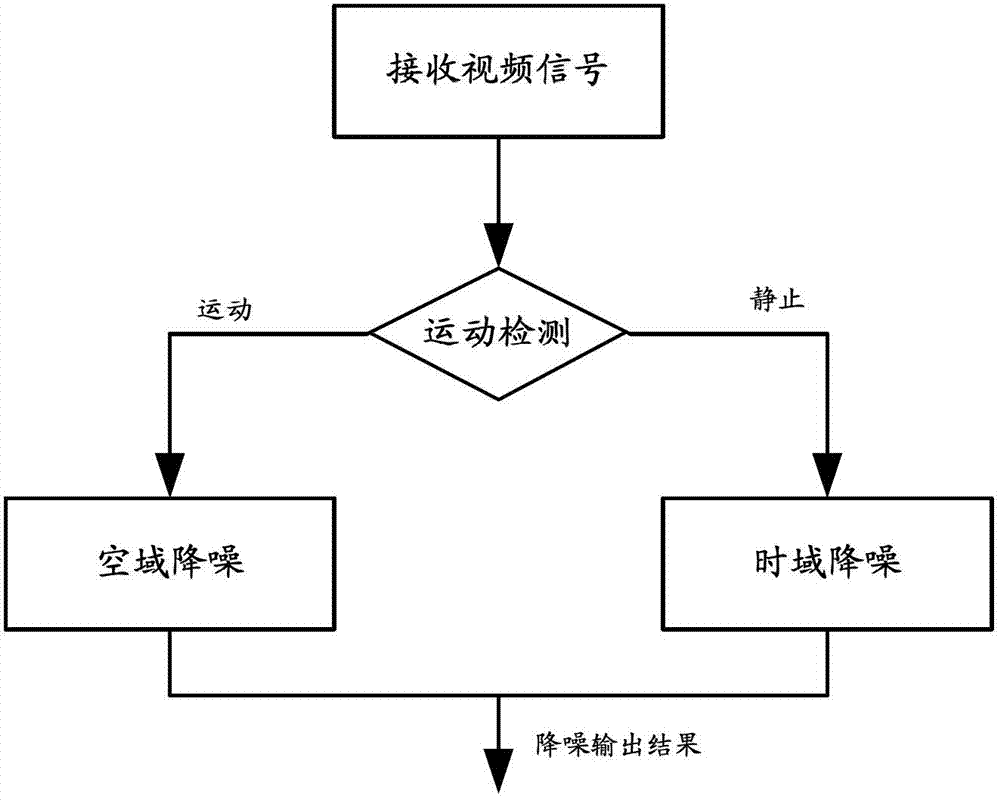

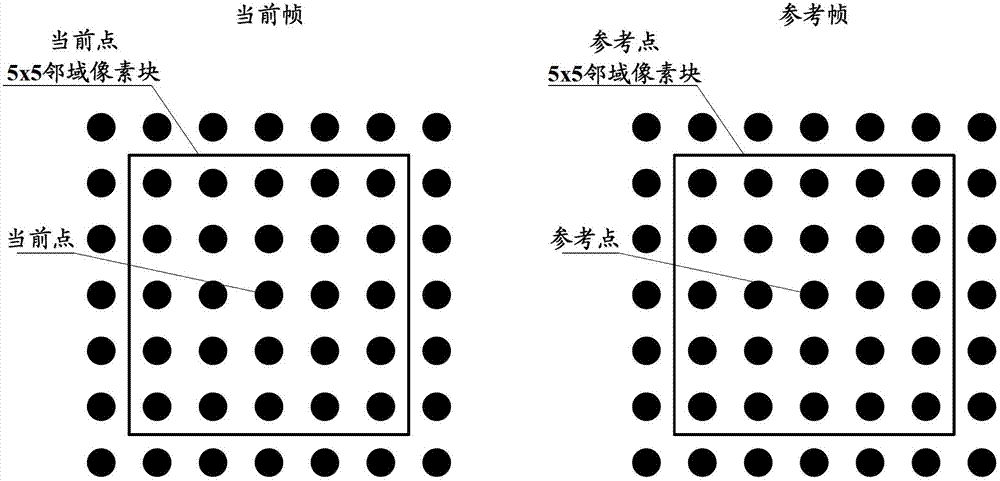

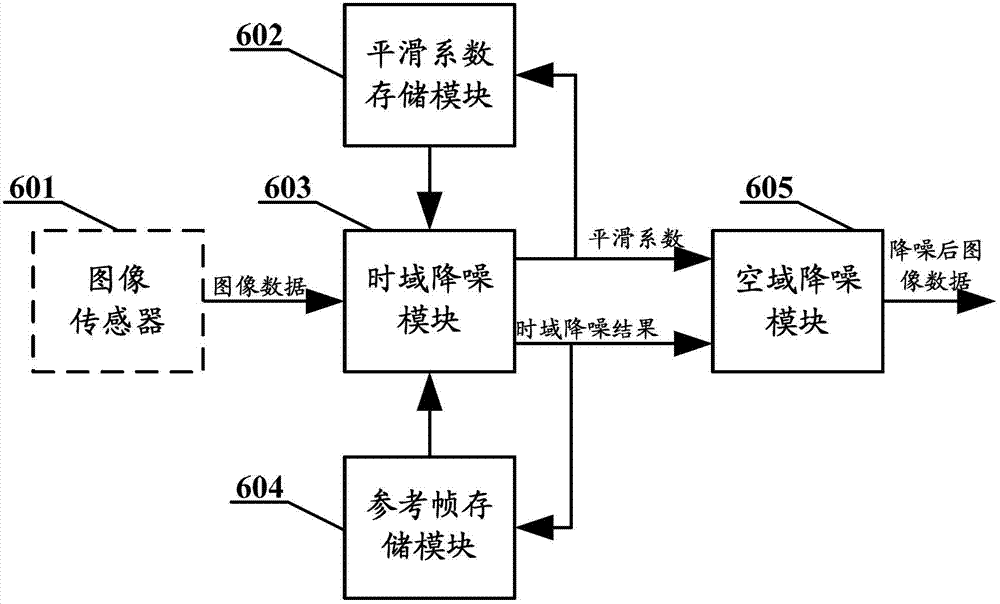

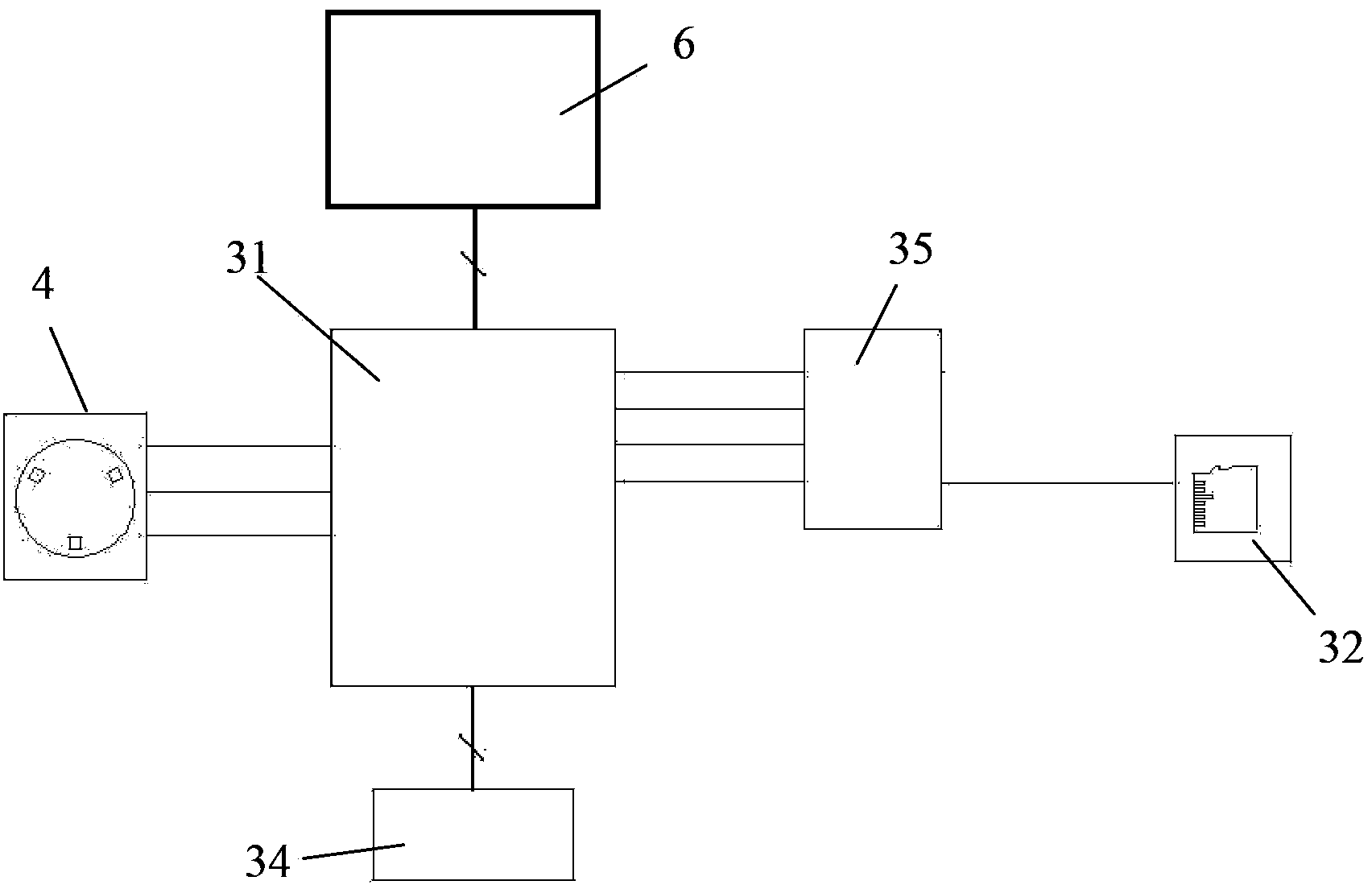

Time-space domain hybrid video noise reduction device and method

Active | CN102769722A | Simple structure | Easy to apply | Television system details | Color television details | Pattern recognition | Time domain

The invention relates to a time-space domain hybrid video noise reduction device and method, and belongs to the technical field of video image processing. The device comprises a time domain noise reduction module, a smoothing coefficient storage module, a reference frame storage module and a space domain noise reduction module. The method comprises the following steps: the time domain noise reduction module calculates the noise variance, the motion intensity of a current point, and a weighting coefficient of the current point from the current point and a reference point; the time domain noise reduction module calculates and stores a smoothing coefficient of the current point and a time domain filtering result of the current frame; and the space domain noise reduction module performs space domain filtering to obtain noise-reduced image data. Combining time domain and space domain noise reduction in this way achieves a good noise reduction effect while reducing the system overhead required for noise reduction and lowering system cost. The noise reduction device is simple in structure, and the noise reduction method can be applied conveniently and widely.

Owner:SHANGHAI FULLHAN MICROELECTRONICS

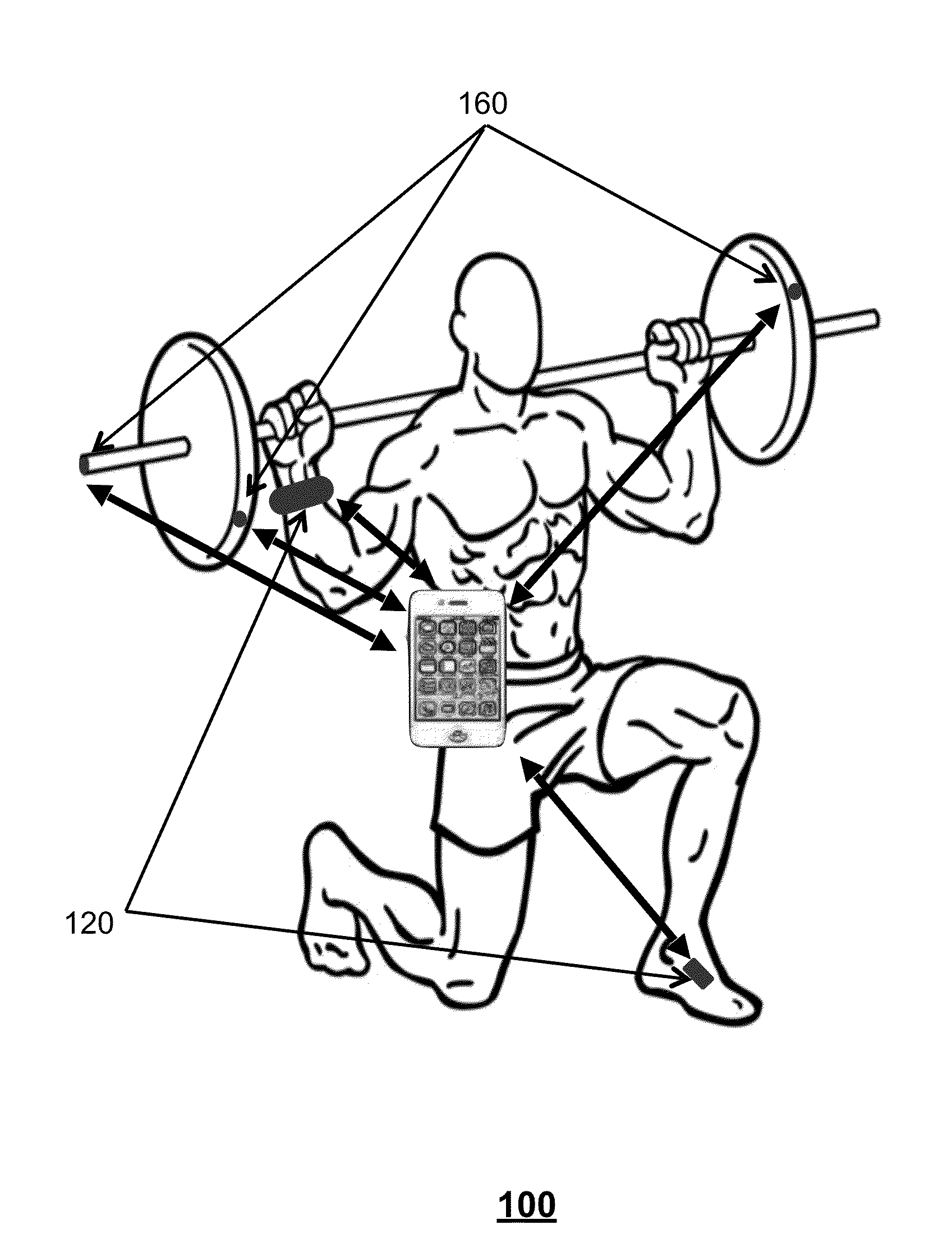

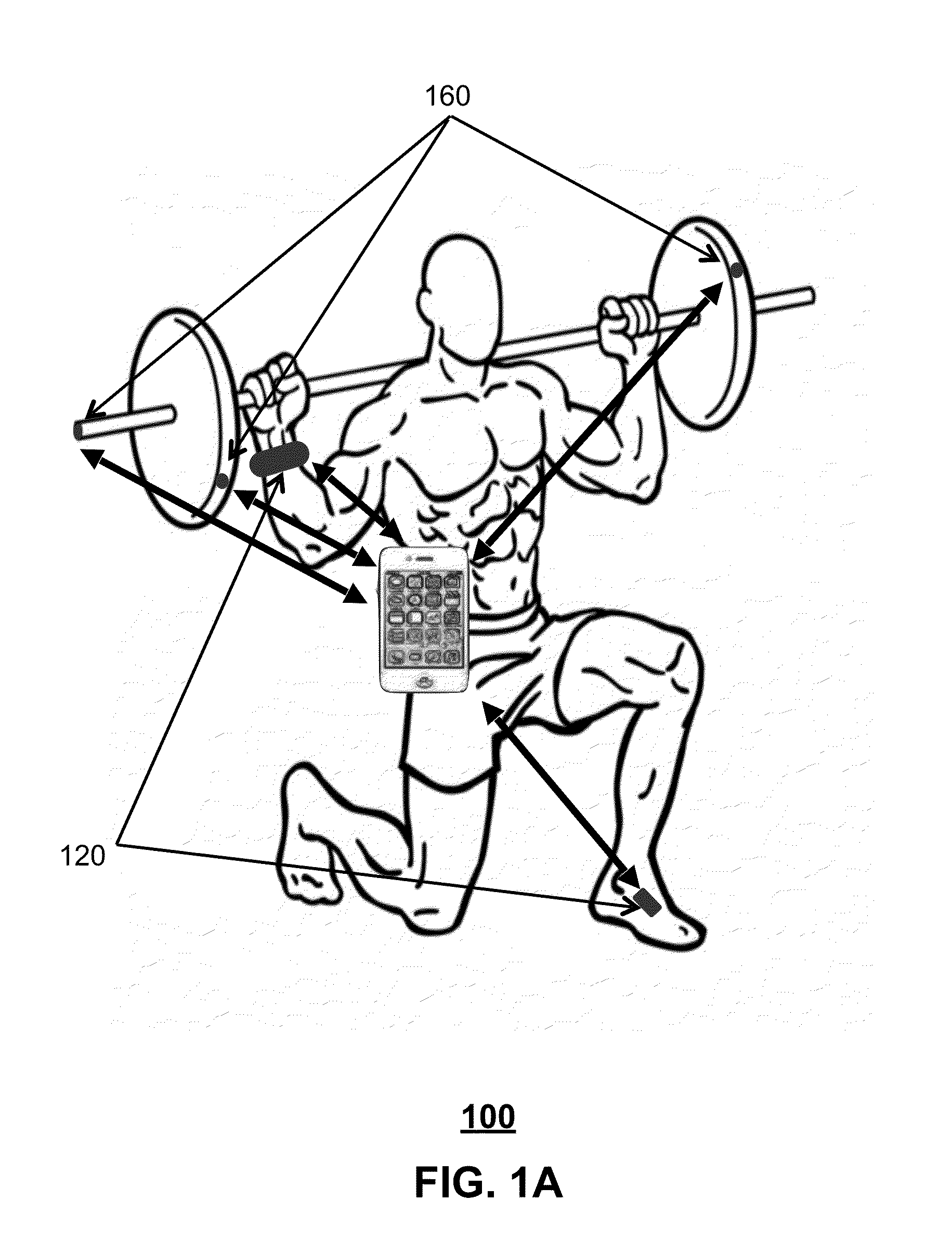

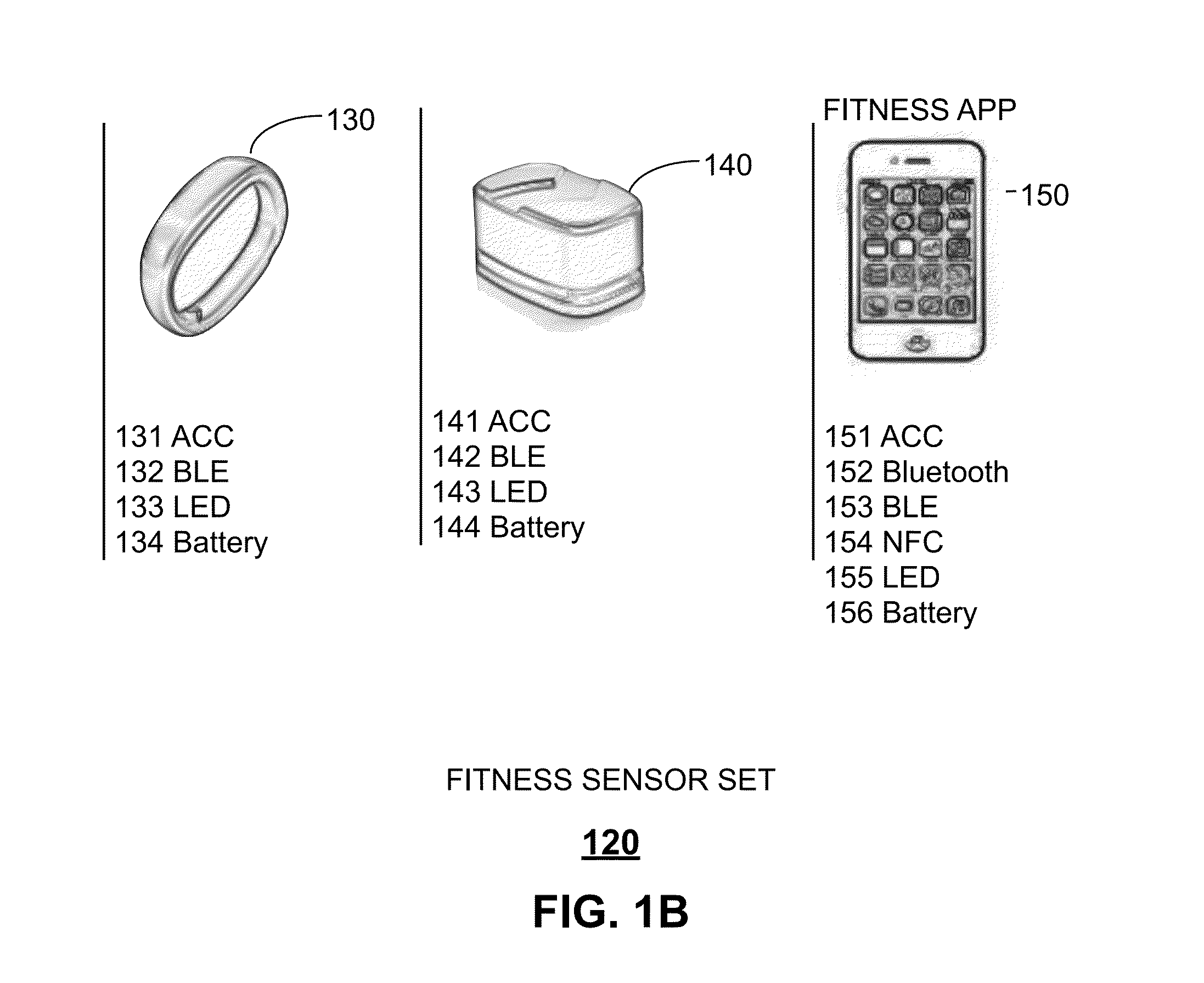

Method and System for Identification of Concurrently Moving Bodies and Objects

Herein provided is a method and system for wearable data collection in sports strength training and fitness exercise. The system includes an admin sensor set, including sensors that couple to fitness equipment, and a fitness sensor set, which includes a wrist band sensor. A fitness app communicates with the sensors via Bluetooth Low Energy and Near Field Communication and detects concurrent movement based on proximity, user parameters and fitness object parameters. A fitness training report is generated that identifies the fitness objects used in a detected fitness exercise. Other embodiments are disclosed.

Owner:FLOMIO

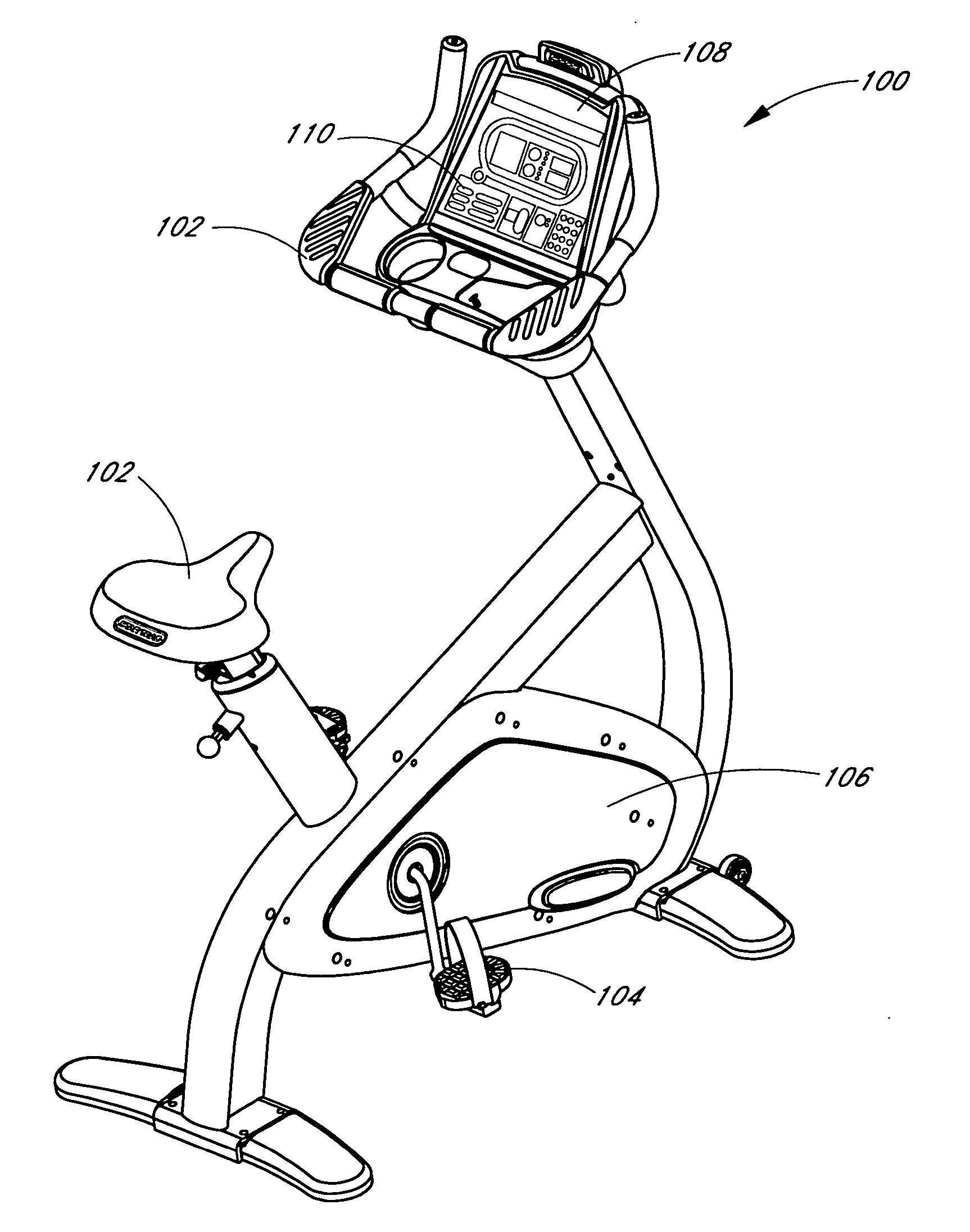

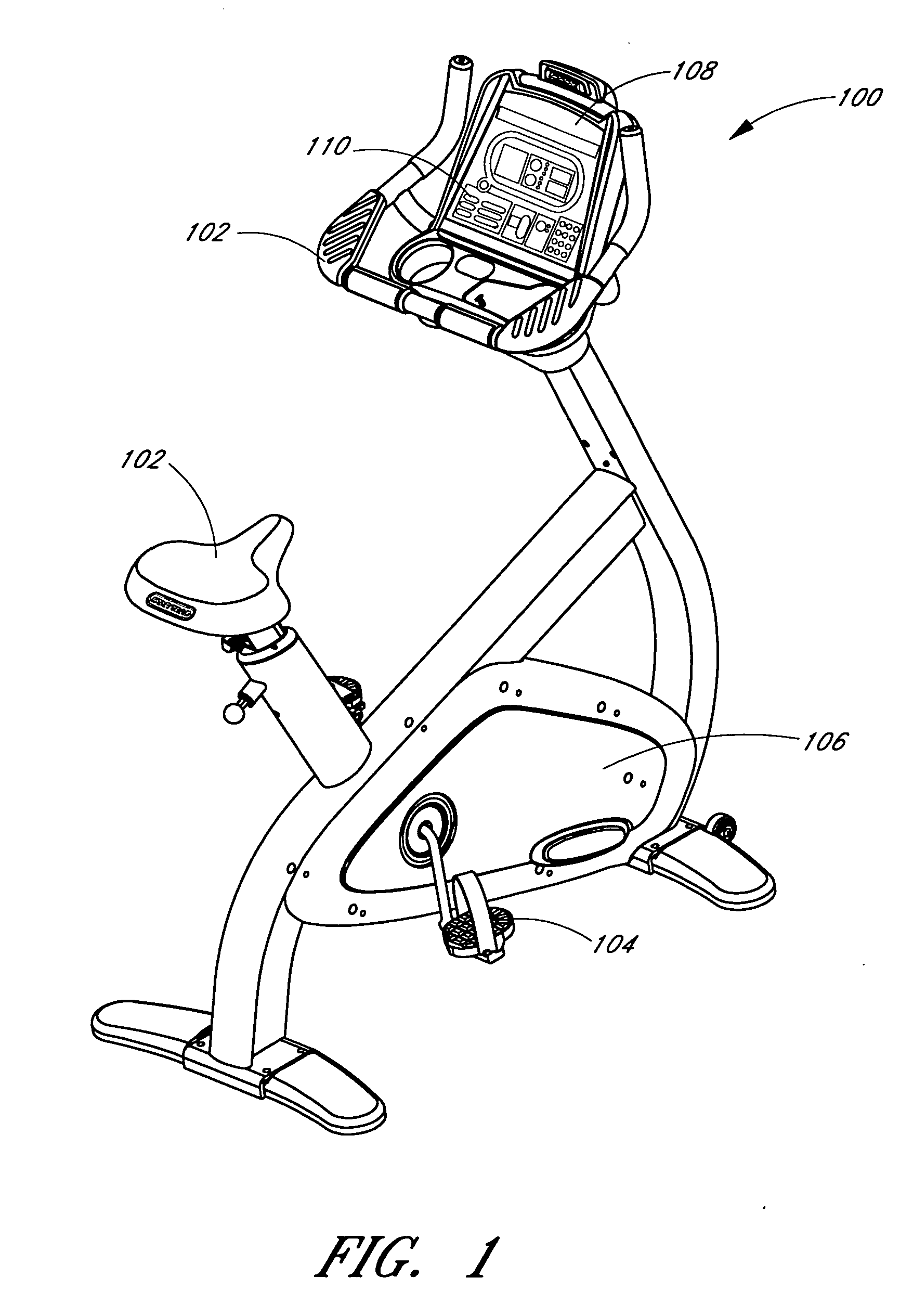

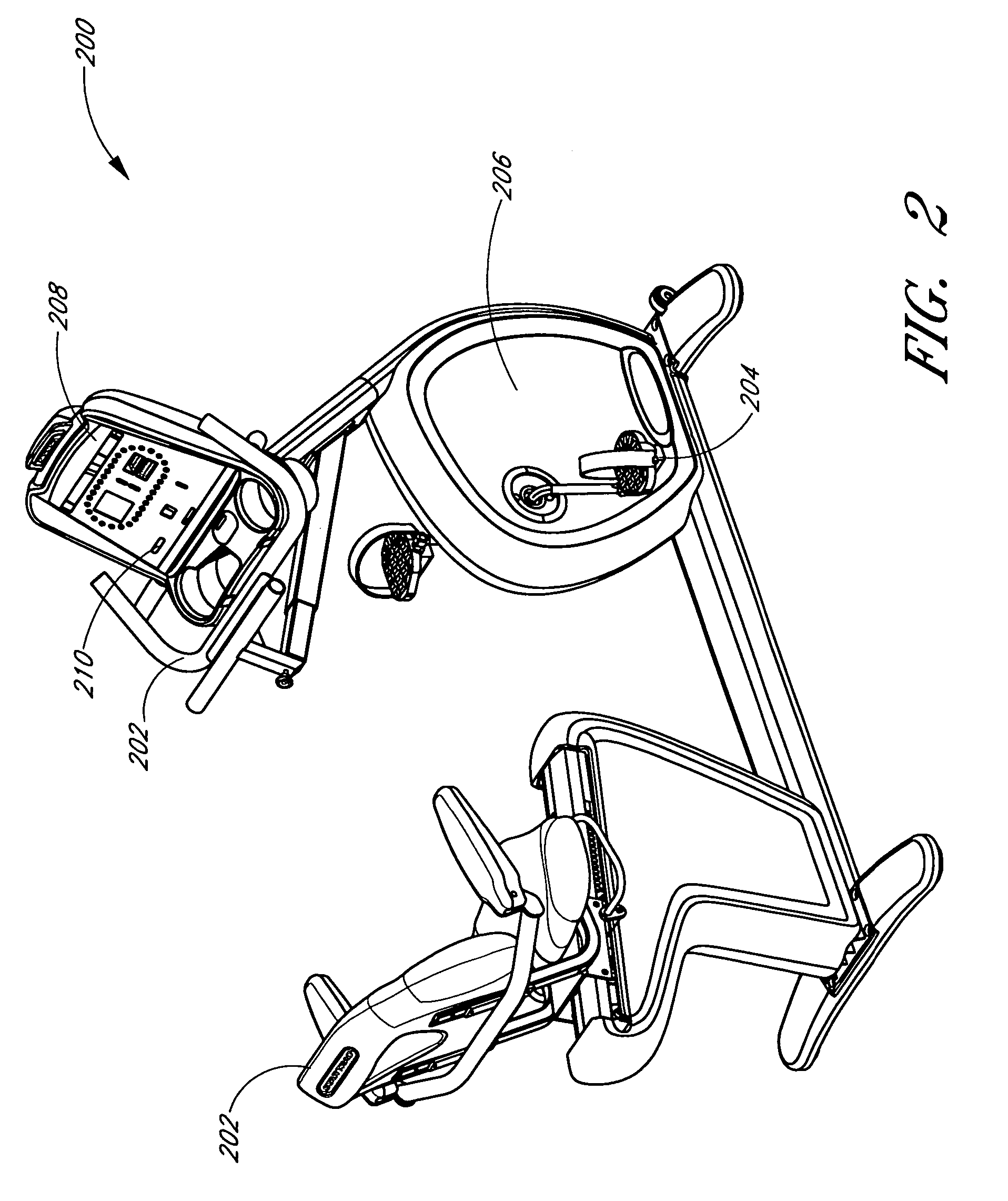

Load variance system and method for exercise machine

Inactive | US20060046905A1 | Direct control | Therapy exercise | Muscle exercising devices | Electrical resistance and conductance | Control system

An exercise machine that varies a resistive load based on sensed changes in intensity of exercise. In one example, an electronic control system of a stationary bicycle adjusts a flywheel resistive load based on changes in the user's pedal cadence. During the exercise routine, subsequent increases or decreases in the pedal cadence cause, respectively, increases or decreases in the flywheel resistive load. In addition, the control system may execute the exercise routine after actuation of a single input key. In another embodiment, the user may simply start to exercise. The electronic control system may calculate a default flywheel resistive load based on initialization parameters, such as demographic data and / or exercise preferences.

Owner:CORE INDS MICHIGAN
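The cadence-following behavior in this abstract can be sketched as a simple proportional update. The gain, the load limits, and the function name `adjust_load` are assumptions for illustration; the abstract does not say how the control system maps cadence changes to load.

```python
def adjust_load(load: float, prev_cadence_rpm: float, cadence_rpm: float,
                gain: float = 0.5, min_load: float = 1.0,
                max_load: float = 20.0) -> float:
    """Raise the resistive load when cadence rises, lower it when cadence falls.

    `gain` (load units per rpm) and the load limits are hypothetical values.
    """
    new_load = load + gain * (cadence_rpm - prev_cadence_rpm)
    # Keep the result inside the range the flywheel brake can deliver.
    return max(min_load, min(max_load, new_load))
```

Starting from a load of 10, a cadence increase from 60 to 70 rpm gives 15, and a decrease from 60 to 50 rpm gives 5.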

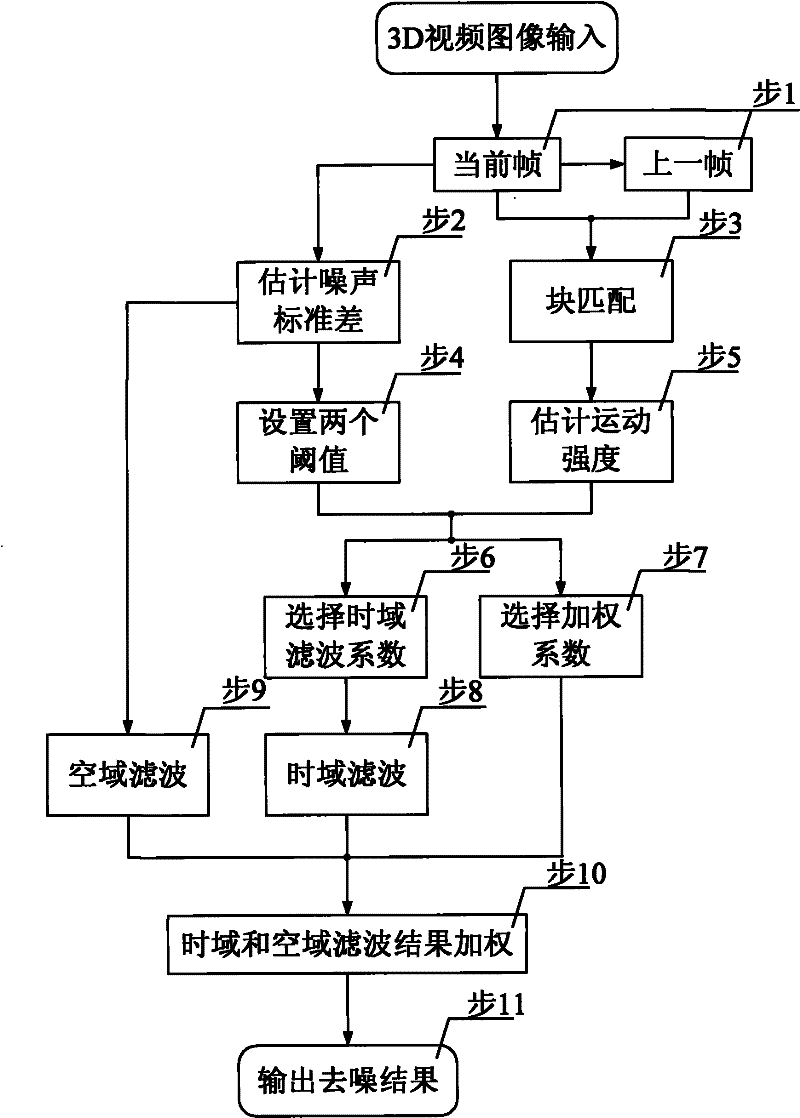

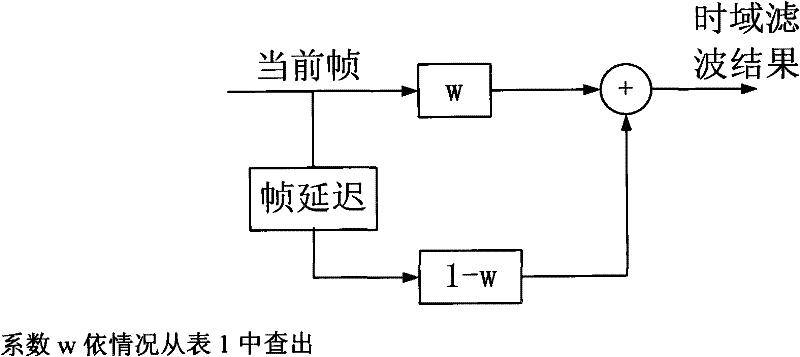

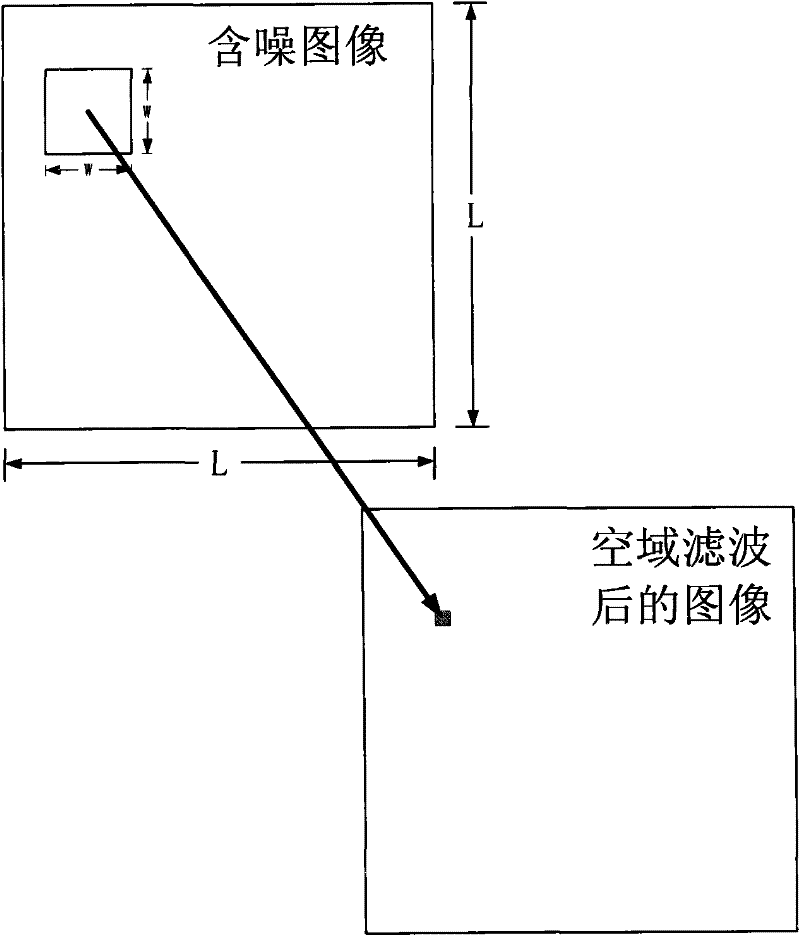

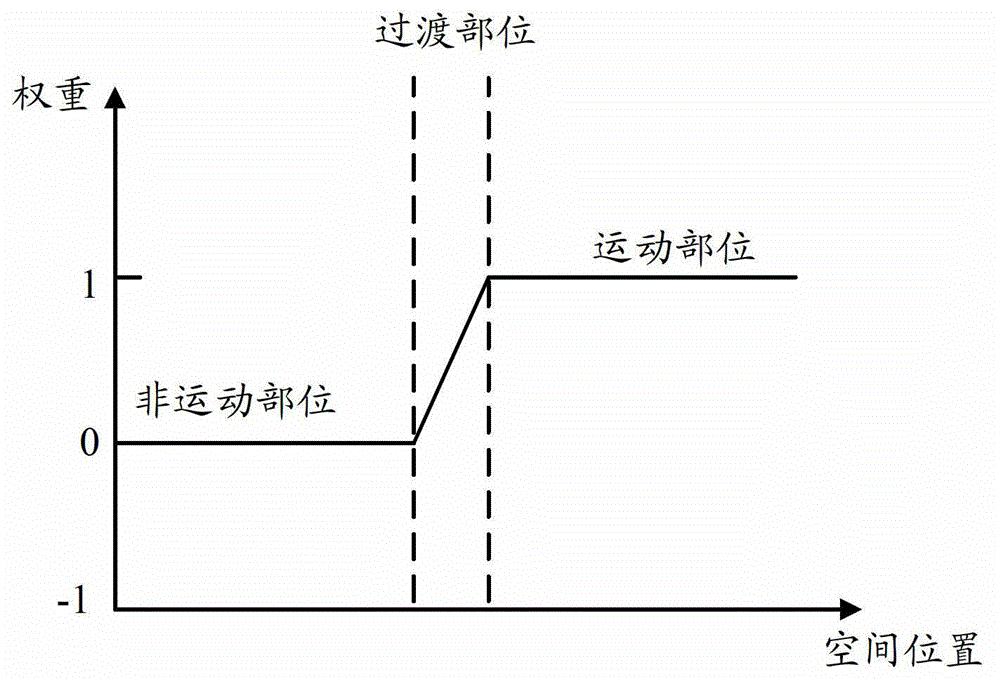

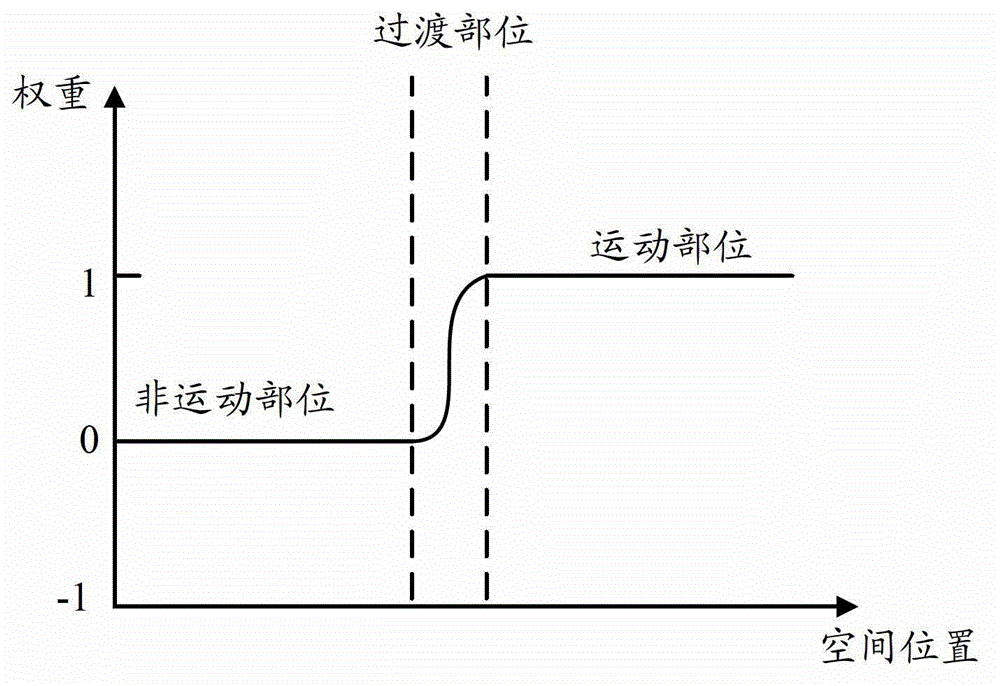

Self-adaptive real-time denoising scheme for 3D digital video image

Inactive | CN102238316A | Improve comfort | Reduce the amount of calculation | Television system details | Color television details | Digital video | Time domain

The invention provides a self-adaptive real-time denoising scheme for 3D digital video images, which filters and denoises a video image in two dimensions in the space domain and one dimension in the time domain. The method comprises the following steps: detecting the motion intensity of the video image, estimating the noise standard deviation of the image, and setting two different threshold limits according to the estimated noise standard deviation; then adaptively adjusting the time domain filtering parameters according to the relation between the motion intensity and the two limits, and adaptively weighting the time domain and space domain filtering results according to the same relation to obtain the denoised output. The scheme needs little computation and memory, denoises 3D digital video images effectively in real time, preserves the edges and details of the image, and greatly improves viewing comfort.

Owner:BEIJING KEDI COMM TECH
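The two-threshold weighting described in this abstract could look like the sketch below for a single pixel. The factors that derive the limits from the noise standard deviation (1 and 3 times sigma) and the linear ramp between them are assumptions; the abstract only says that two limits are set from the noise estimate and that the two filter results are weighted adaptively.

```python
def temporal_weight(motion: float, low: float, high: float) -> float:
    """Weight given to the temporal result: 1 for still areas, 0 for strong motion."""
    if motion <= low:
        return 1.0
    if motion >= high:
        return 0.0
    return (high - motion) / (high - low)  # linear ramp between the two limits


def denoise_pixel(temporal: float, spatial: float,
                  motion: float, noise_sigma: float) -> float:
    """Blend the temporal and spatial filter outputs for one pixel."""
    low, high = 1.0 * noise_sigma, 3.0 * noise_sigma  # hypothetical factors
    w = temporal_weight(motion, low, high)
    return w * temporal + (1.0 - w) * spatial
```

Trusting the temporal result only where motion is below the noise-derived limit is what keeps moving edges from smearing across frames.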

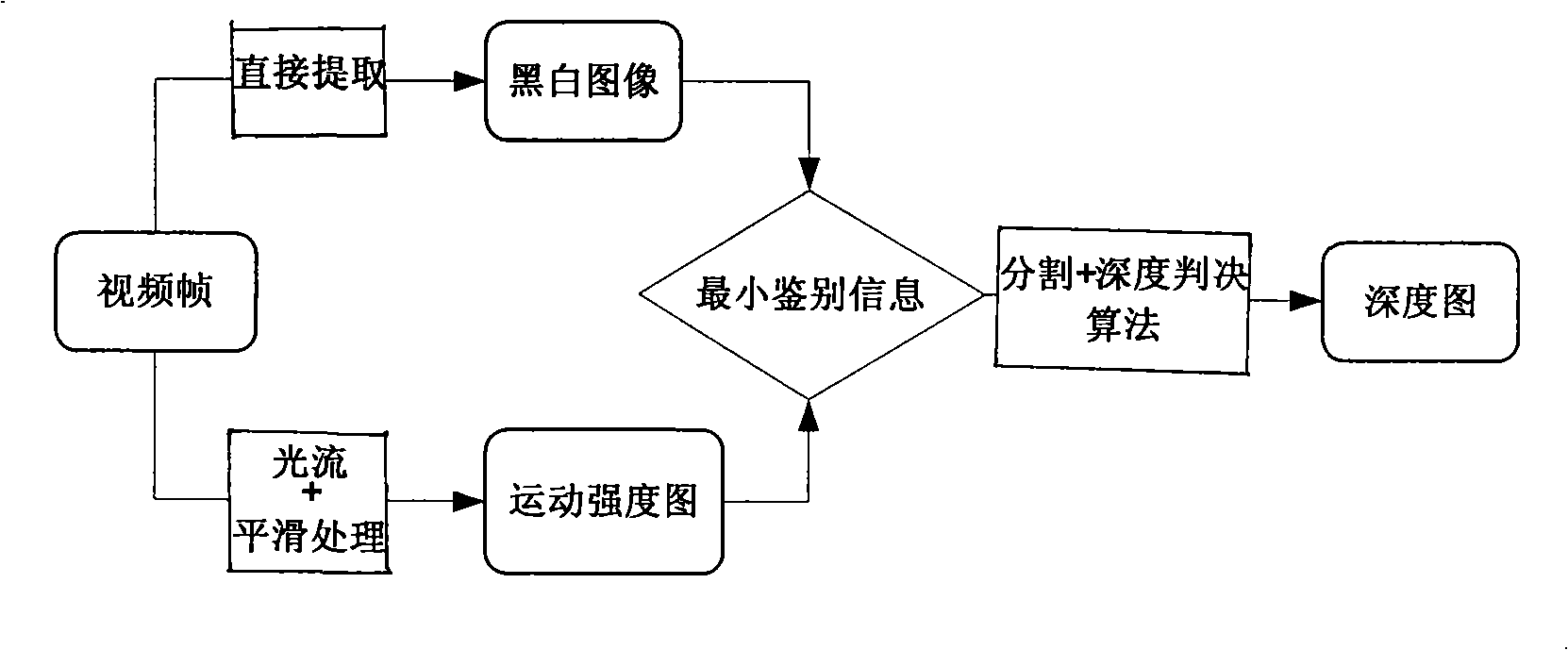

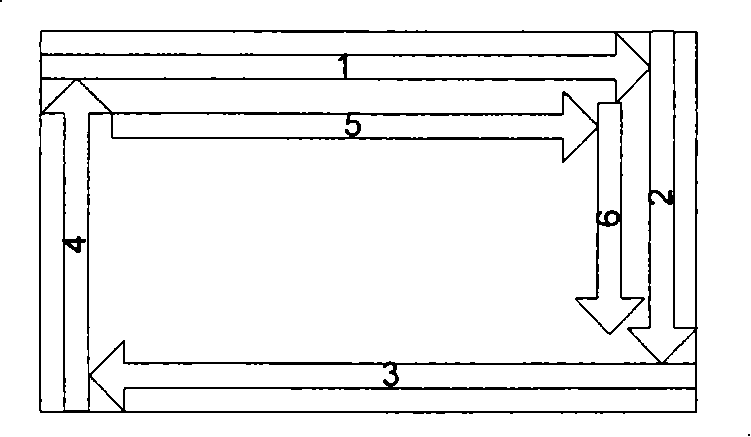

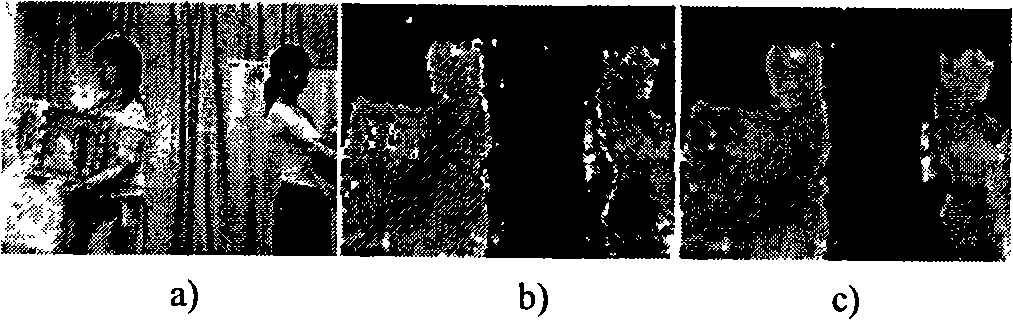

Depth sequence generation method of technology for converting plane video into stereo video

Active | CN101271578A | In line with the real depth relationship | Segmentation results are accurate and reliable | Image analysis | Stereoscopic systems | Time information | Stereoscopic video

The invention relates to a depth sequence generation method for converting planar (2D) video to stereoscopic video, and belongs to the technical field of computer multimedia. The method includes: extracting, with an optical flow algorithm, the two-dimensional motion of the pixels of each frame in the original two-dimensional video sequence to obtain a motion intensity image of the frame; using the minimum discrimination information principle to fuse the color information of each frame with its motion intensity image, yielding a motion-color discrimination image used for segmenting the video image; segmenting the image according to the luminance of the motion-color discrimination image; and assigning a different depth value to each segmented region to obtain a depth map for each frame. The depth maps of all frames constitute the depth sequence. The advantage of the method is that the spatial and temporal information of the video sequence are used jointly, so the segmentation and depth decisions are accurate and reliable.

Owner:TSINGHUA UNIV

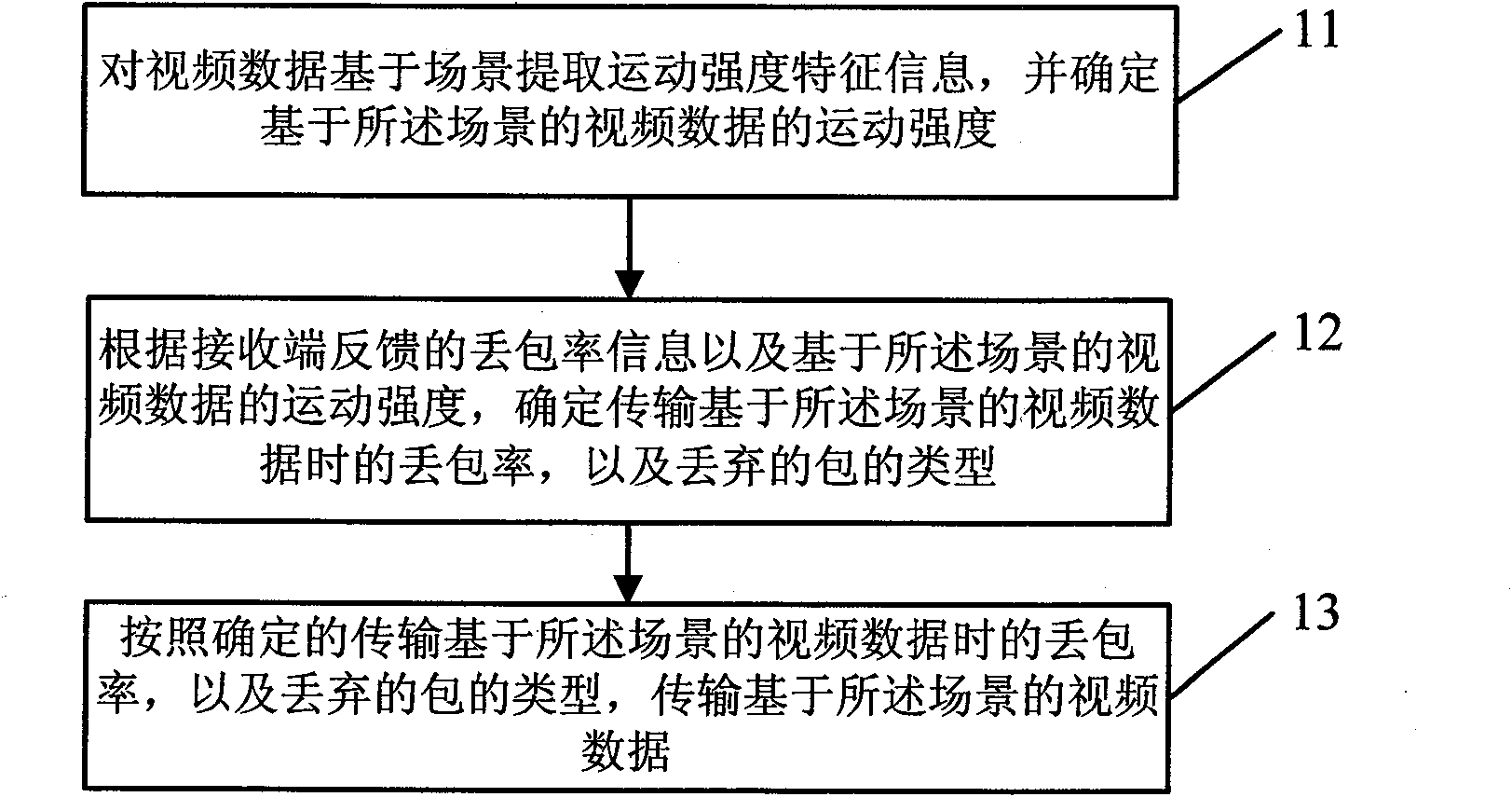

Method, device and system for video transmission

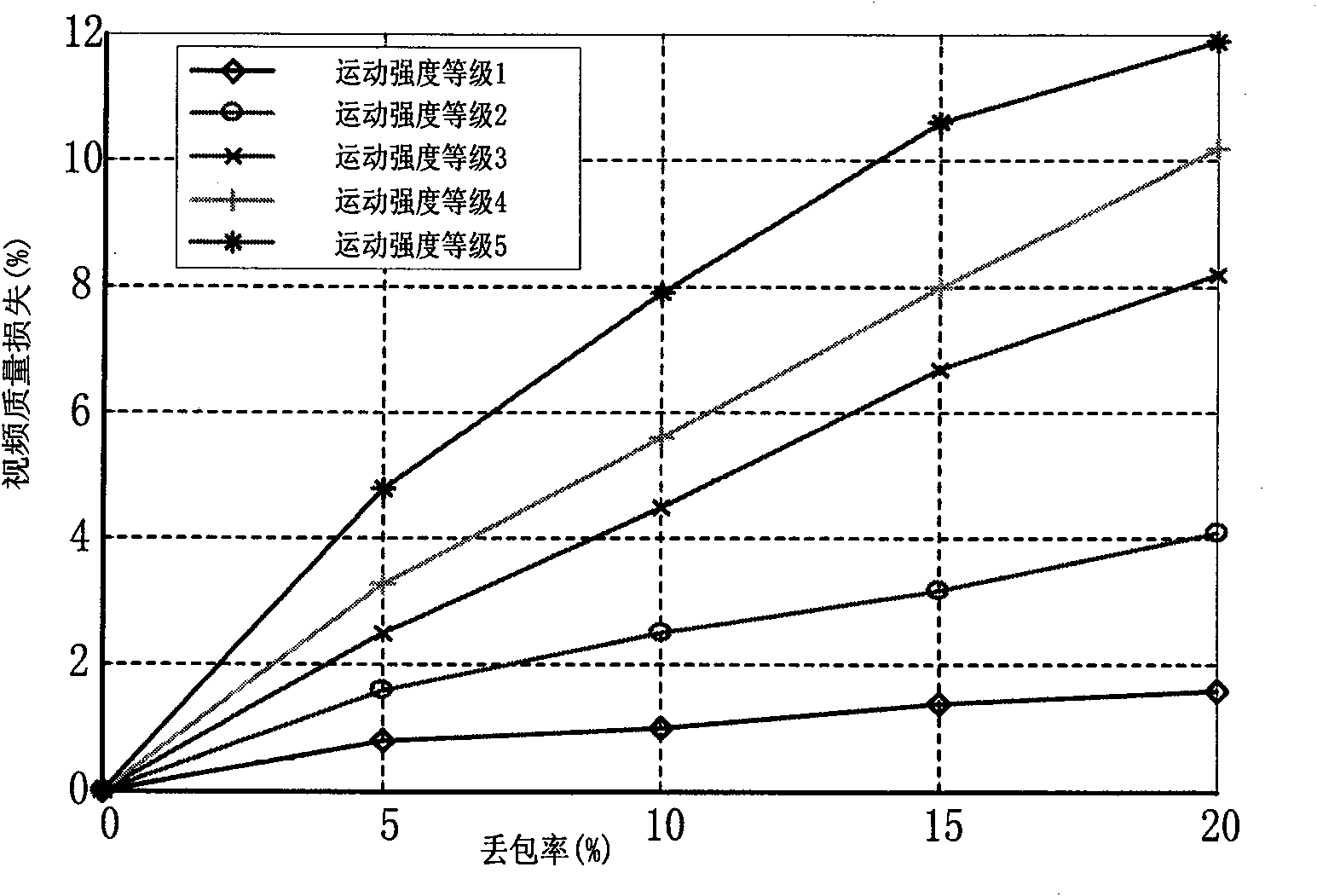

Inactive | CN101656888A | Control congestion | Congestion effective | Television systems | Digital video signal modification | Data ingestion | Computation complexity

The invention provides a method, a device and a system for video transmission. The method comprises the following steps: extracting scene-based movement intensity characteristic information from video data and determining the scene-based movement intensity of the video data; determining, from packet loss rate information fed back by the receiving end and the scene-based movement intensity, the packet loss rate and the type of packet to drop when the scene-based video data are transmitted; and transmitting the scene-based video data according to the determined packet loss rate and packet type. The method, device and system lower computing complexity, control network congestion more effectively, avoid the indiscriminate packet loss that occurs when a large amount of data is transmitted over a congested network, control the quality of each video stream even when several streams are transmitted at the same time, and improve video playback quality while keeping network congestion under control.

Owner:HUAWEI TECH CO LTD +1

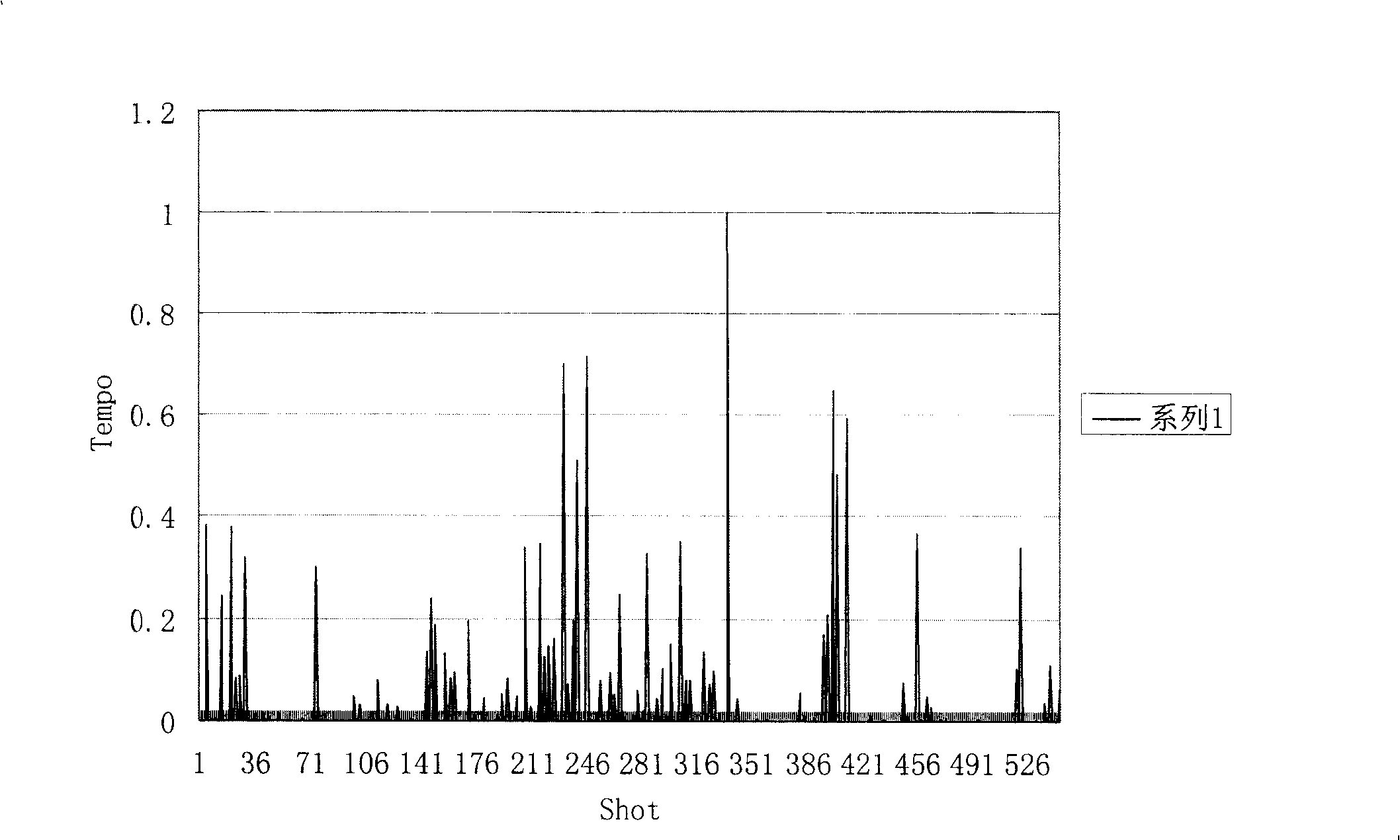

Movie action scene detection method based on story line development model analysis

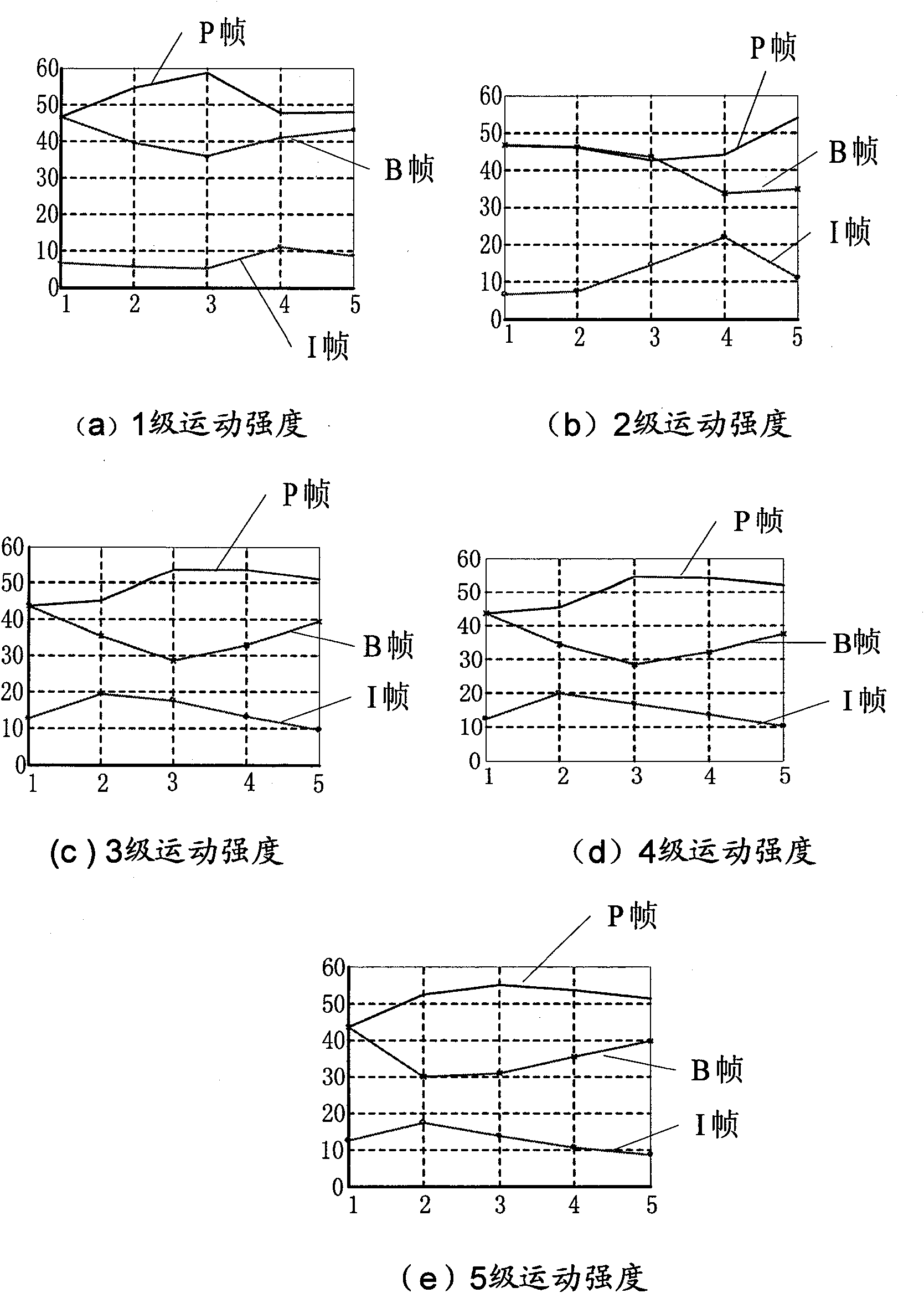

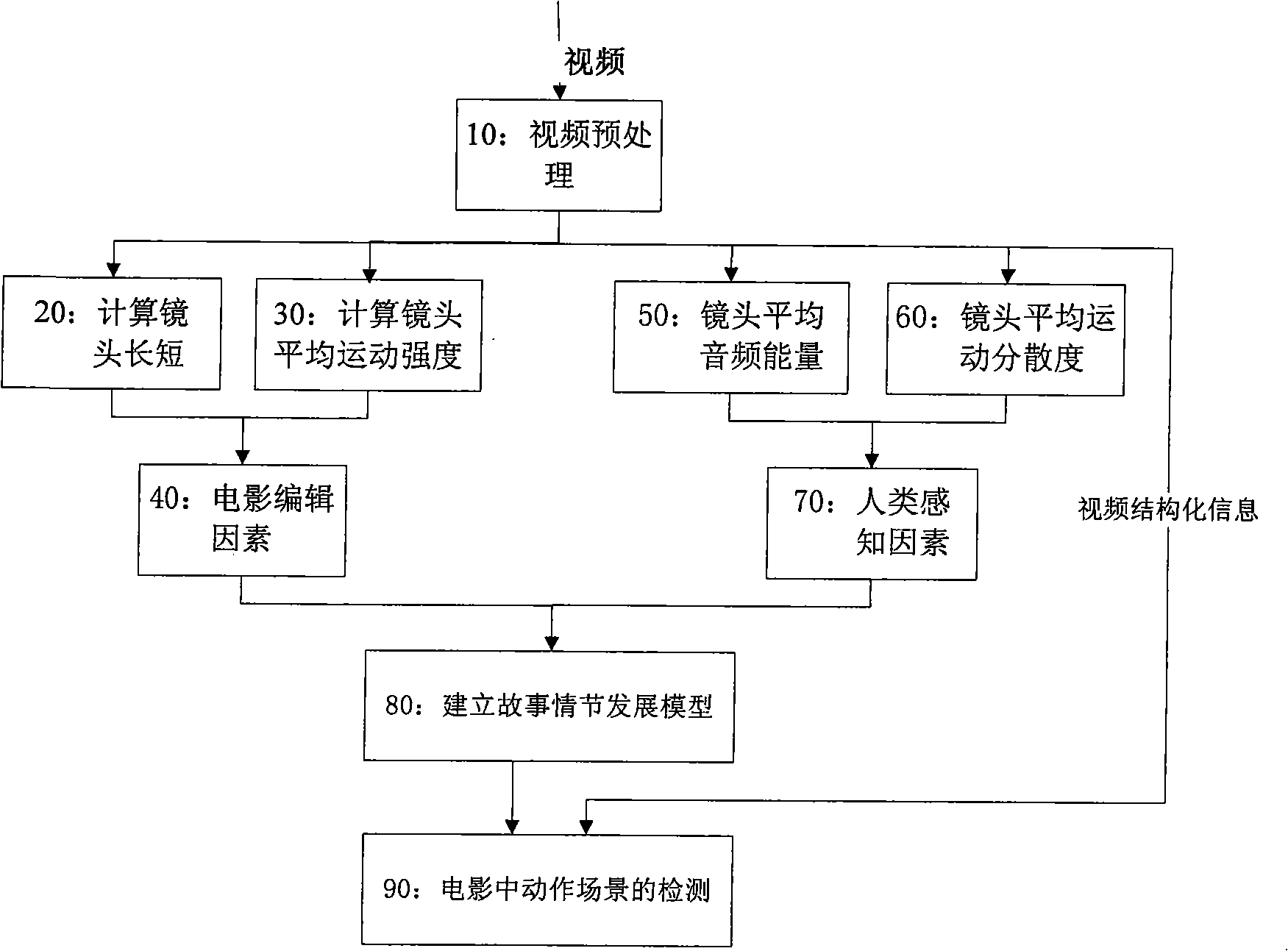

Inactive | CN101316362A | Accurate detection | Television system details | Color television details | Dispersity | Viewpoints

The invention discloses a movie action scene detection method based on analysis of a story line development model, comprising the steps of: preprocessing the video; calculating the length of each scene; calculating the average action intensity of each scene; calculating a movie editing factor from the scene length and the average action intensity; calculating the short-term audio energy of each audio frame and the average audio energy of each scene; calculating the average action dispersity of each scene; calculating a human perception factor from the average audio energy and the average action dispersity; establishing the story line development model from the movie editing factor and the human perception factor, and generating a story line development flow graph in time order; and detecting action scenes in the movie according to the story line development model. The advantage of the method is that the model is built from two viewpoints, movie editing technique and human perception, and takes both visual and auditory factors into account to simulate how the story line develops and changes, thereby detecting action scenes in the movie accurately.

Owner:欢瑞世纪(东阳)影视传媒有限公司

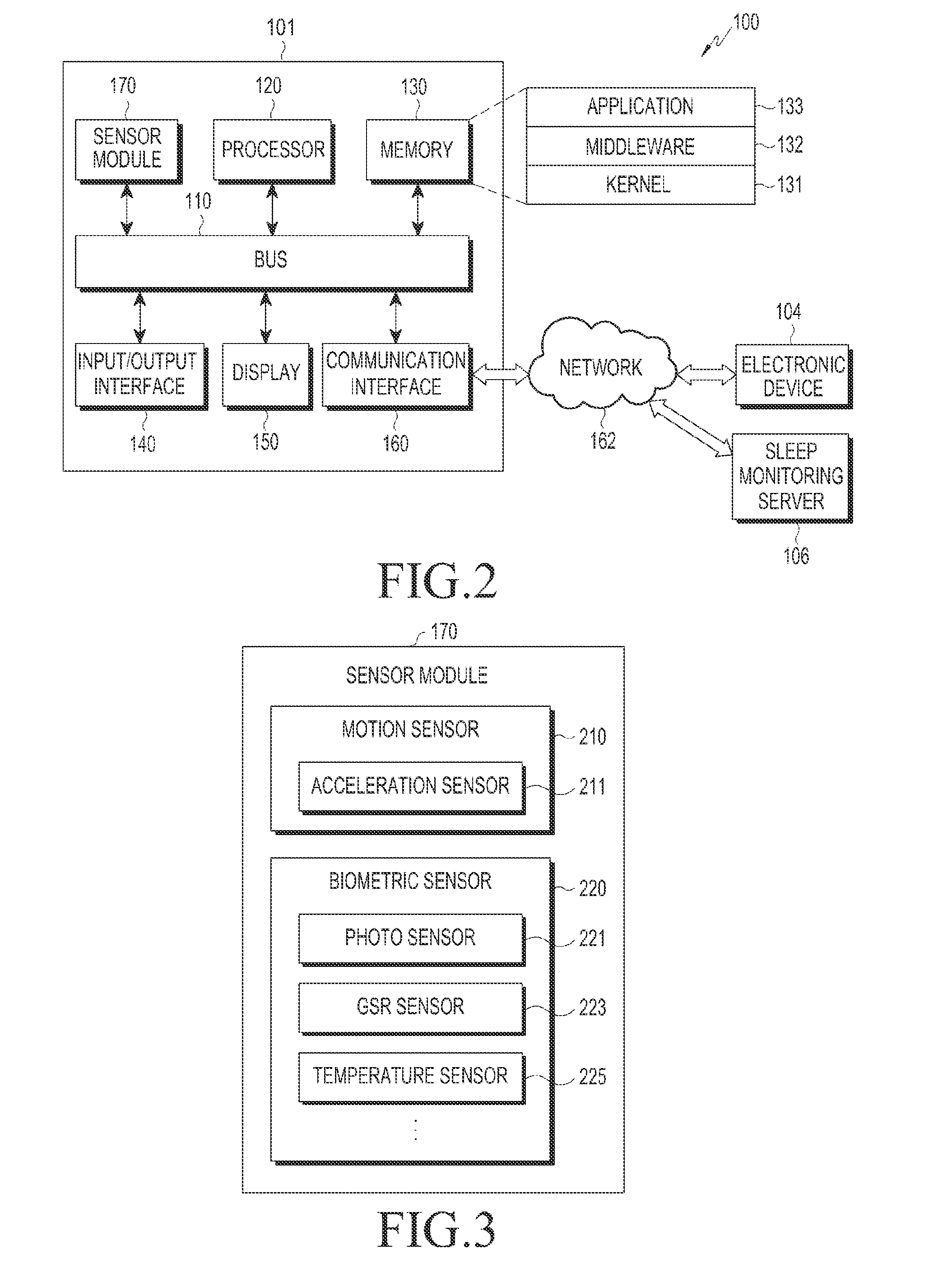

Electronic device and sleep monitoring method in electronic device

Inactive | US20160058366A1 | Reduce power consumption | Diagnostics using light | Person identification | Simulation | Motion intensity

Wearable electronic devices, systems, and methods for monitoring sleep are described. In one method, motion sensor values of the current motion of the electronic device are acquired and the change in motion intensity of an electronic device is calculated by comparing motion sensor values over two or more time periods. If the change in motion intensity fits a predetermined pattern, it is determined whether the electronic device is currently being worn. If it is determined that the electronic device is currently being worn, sleep monitoring is performed.

Owner:SAMSUNG ELECTRONICS CO LTD
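The comparison of motion intensity across time periods described in this abstract might be sketched as below. The intensity measure (mean deviation of the acceleration magnitude from 1 g), the "settling" pattern, and its threshold are all assumptions for illustration; the abstract does not define them.

```python
import math


def motion_intensity(samples) -> float:
    """Mean deviation of acceleration magnitude from gravity (1 g).

    `samples` is a non-empty list of (x, y, z) accelerometer readings in g.
    This particular measure is an assumption, not taken from the patent.
    """
    return sum(abs(math.sqrt(x * x + y * y + z * z) - 1.0)
               for x, y, z in samples) / len(samples)


def intensity_change(previous, current) -> float:
    """Change in motion intensity between two time periods."""
    return motion_intensity(current) - motion_intensity(previous)


def fits_settling_pattern(change: float, drop_threshold: float = -0.2) -> bool:
    """Hypothetical pattern: intensity dropped by at least |drop_threshold| g."""
    return change <= drop_threshold
```

In the abstract's flow, a match on the pattern only triggers the check of whether the device is being worn; sleep monitoring starts after that check succeeds.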

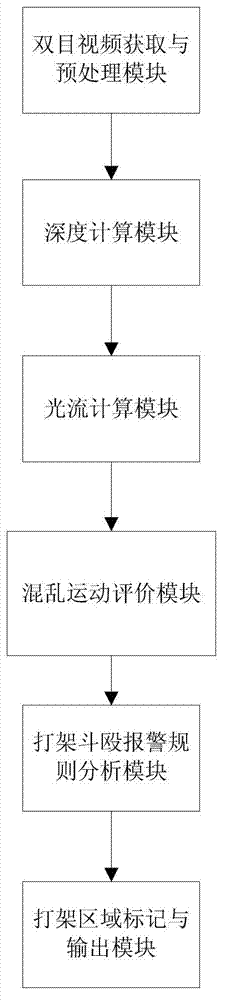

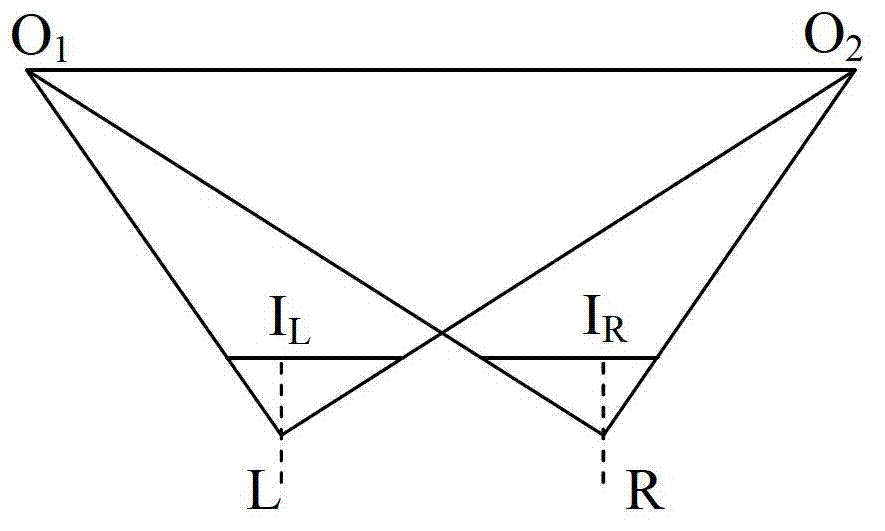

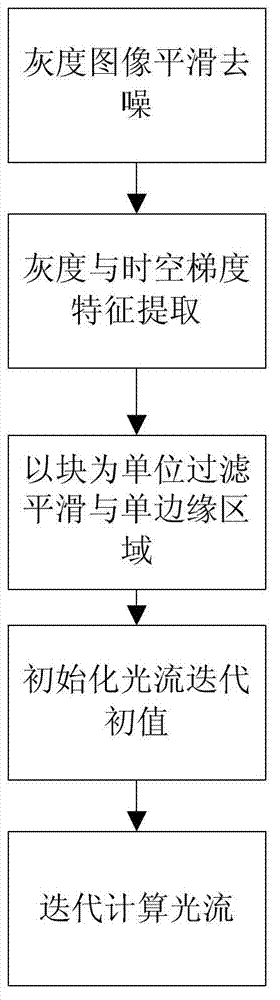

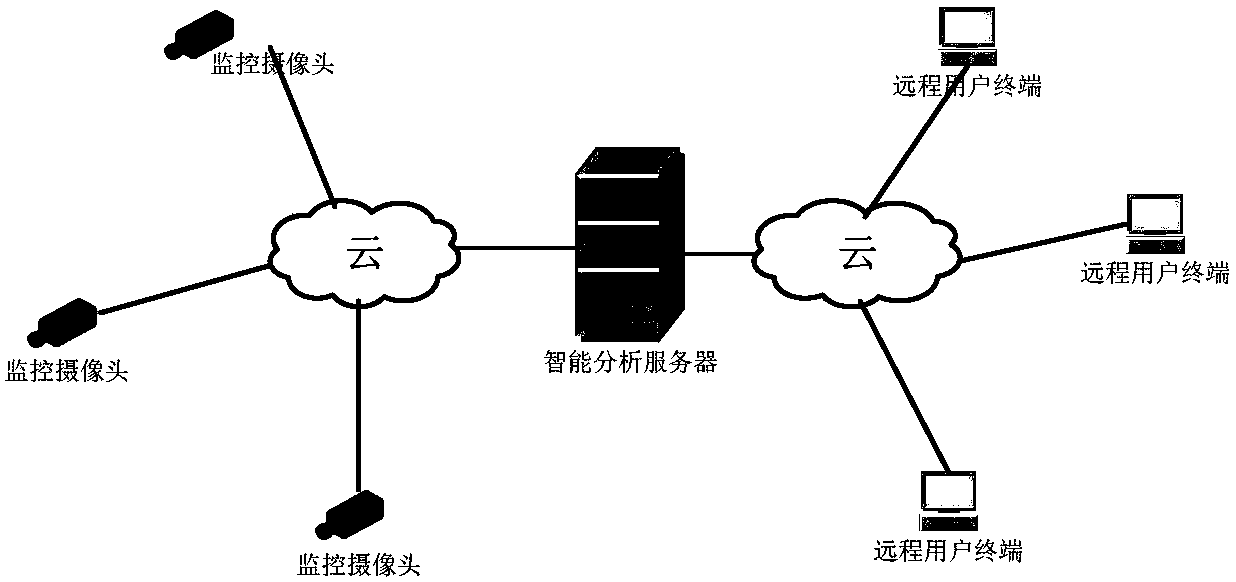

Fighting detecting method based on stereoscopic vision motion field analysis

Inactive | CN102880444A | Overcome the effects of adverse conditions | Judgment of severity is reasonable | Data comparison | Human body | Motion vector

The invention relates to a fighting detection method based on stereoscopic vision motion field analysis. The method is as follows: the target human body is extracted from the motion vector field and the depth map of the target; taking the motion vector field as the main feature, a cumulative motion vector direction histogram is computed per block, and the entropy of the cumulative histogram over a certain period of time is calculated; a motion intensity evaluation strategy is designed according to the depth information of the target by calculating the average motion vector magnitude within each block; finally, the disorder and intensity evaluation scores are combined to obtain the probability of fighting behavior, and, through accumulation over time and space, fighting behavior is confirmed when that probability stays above a threshold. The method can filter out motion vector calculation errors caused by scene illumination changes and occlusion of the target in a surveillance environment. Using the scene depth information makes the intensity judgment more reasonable, and building a fighting probability model and analyzing its distribution over time and space yields a robust detection result.

Owner:ZHEJIANG ICARE VISION TECH

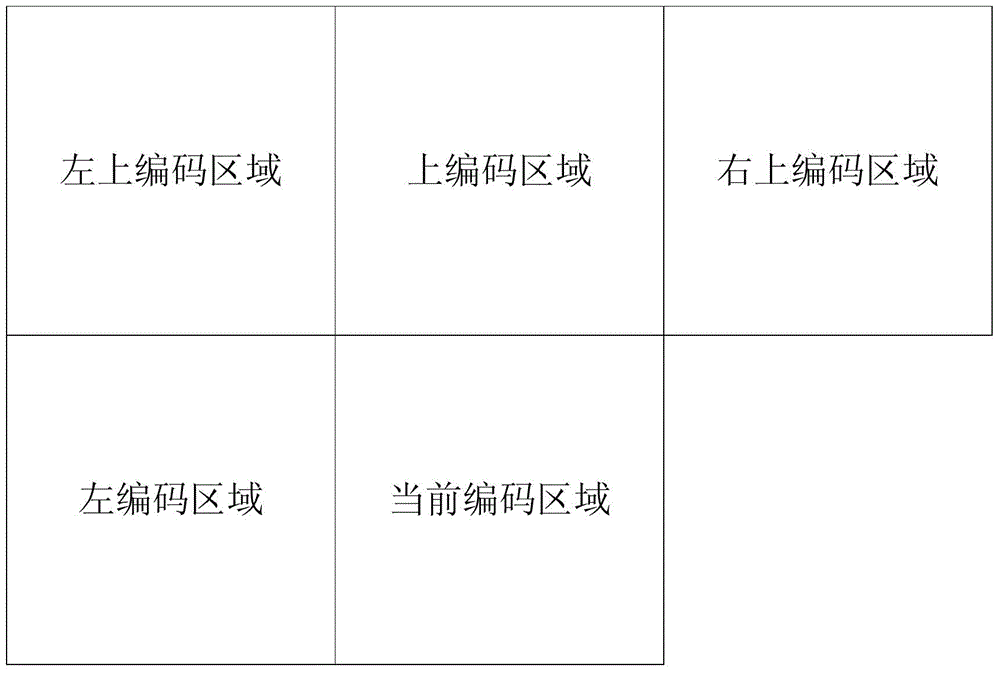

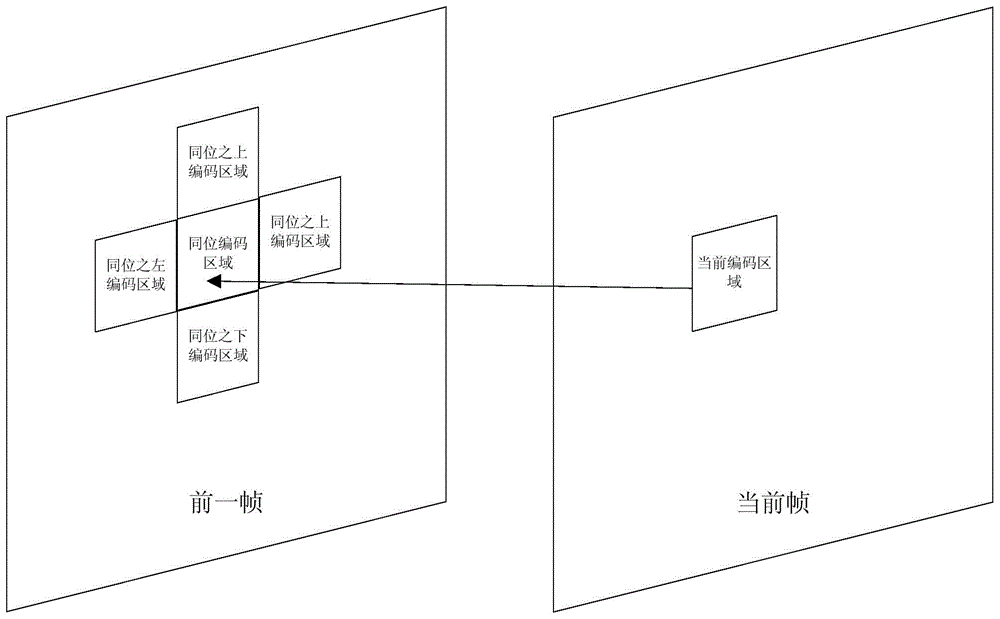

High efficiency video coding sensing rate-distortion optimization method based on structural similarity

Active | CN103607590A | Improve perceived visual quality | Does not add much computational complexity | Digital video signal modification | Time correlation | Computer architecture

Provided is a perceptual rate-distortion optimization method for High Efficiency Video Coding (HEVC) based on structural similarity. The method comprises the following steps: (1) before the encoder performs mode decision, image distortion is calculated using structural similarity as the distortion criterion, and this calculation replaces the calculation of the coded image distortion value in the encoder's rate-distortion decision; (2) the Lagrangian multiplier used in the rate-distortion decision is corrected according to the motion intensity consistency between adjacent regions of the coded image in the spatial and temporal domains, and rate-distortion optimization of the current coding region is carried out. Because structural similarity serves as the image distortion criterion, and because the spatial and temporal correlation between the preceding and following frames in inter-frame coding is used to infer the motion intensity consistency of the current coding region and correct the parameters used in the rate-distortion decision accordingly, the perceived visual quality of the coded image improves considerably without a large increase in computational complexity.

Owner:BEIJING UNIV OF POSTS & TELECOMM

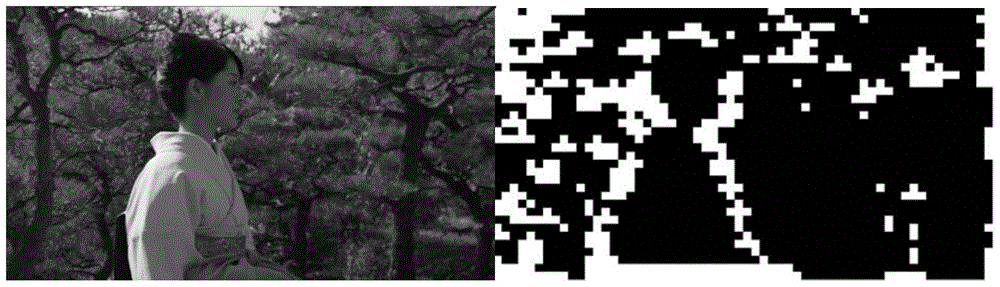

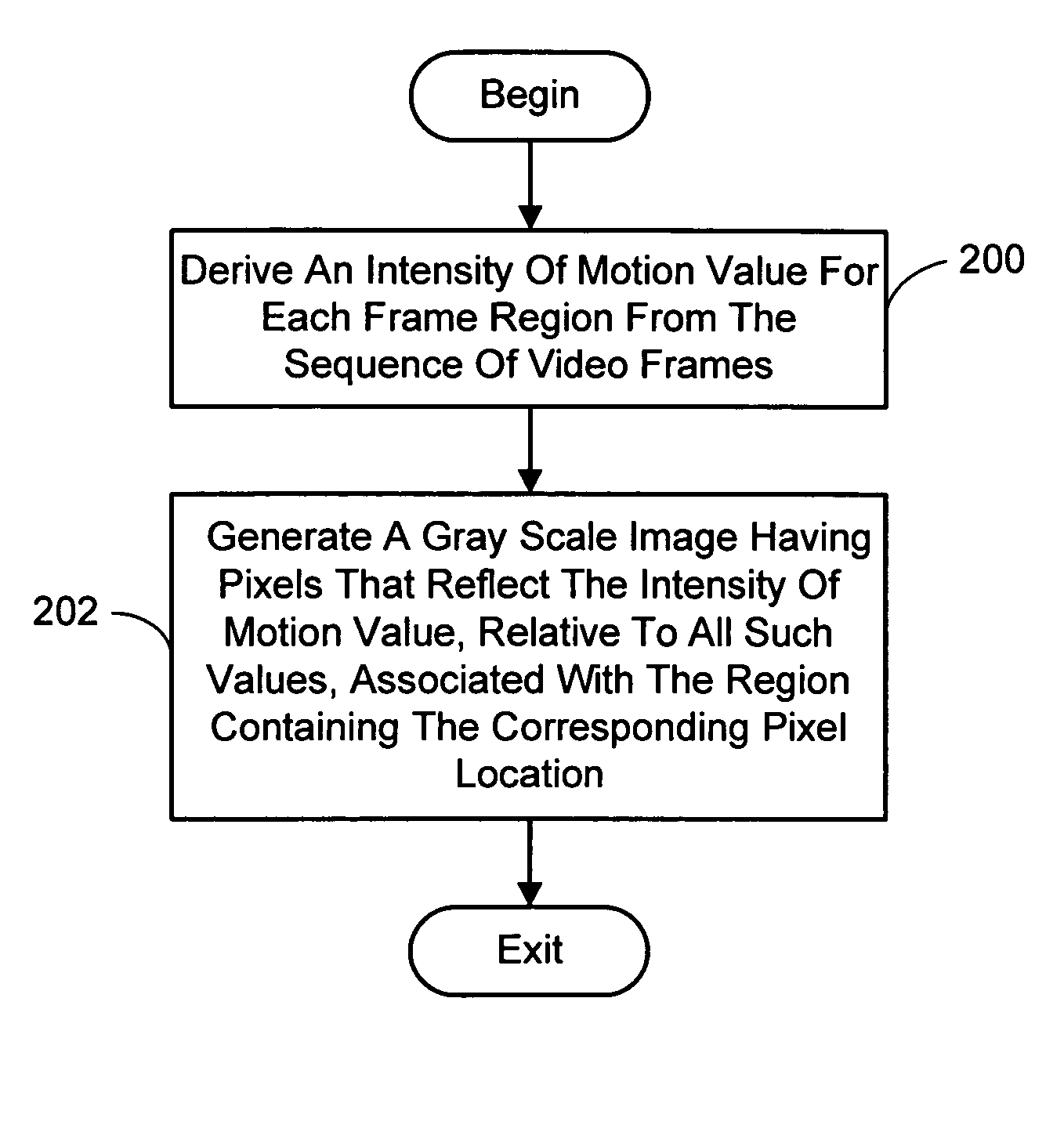

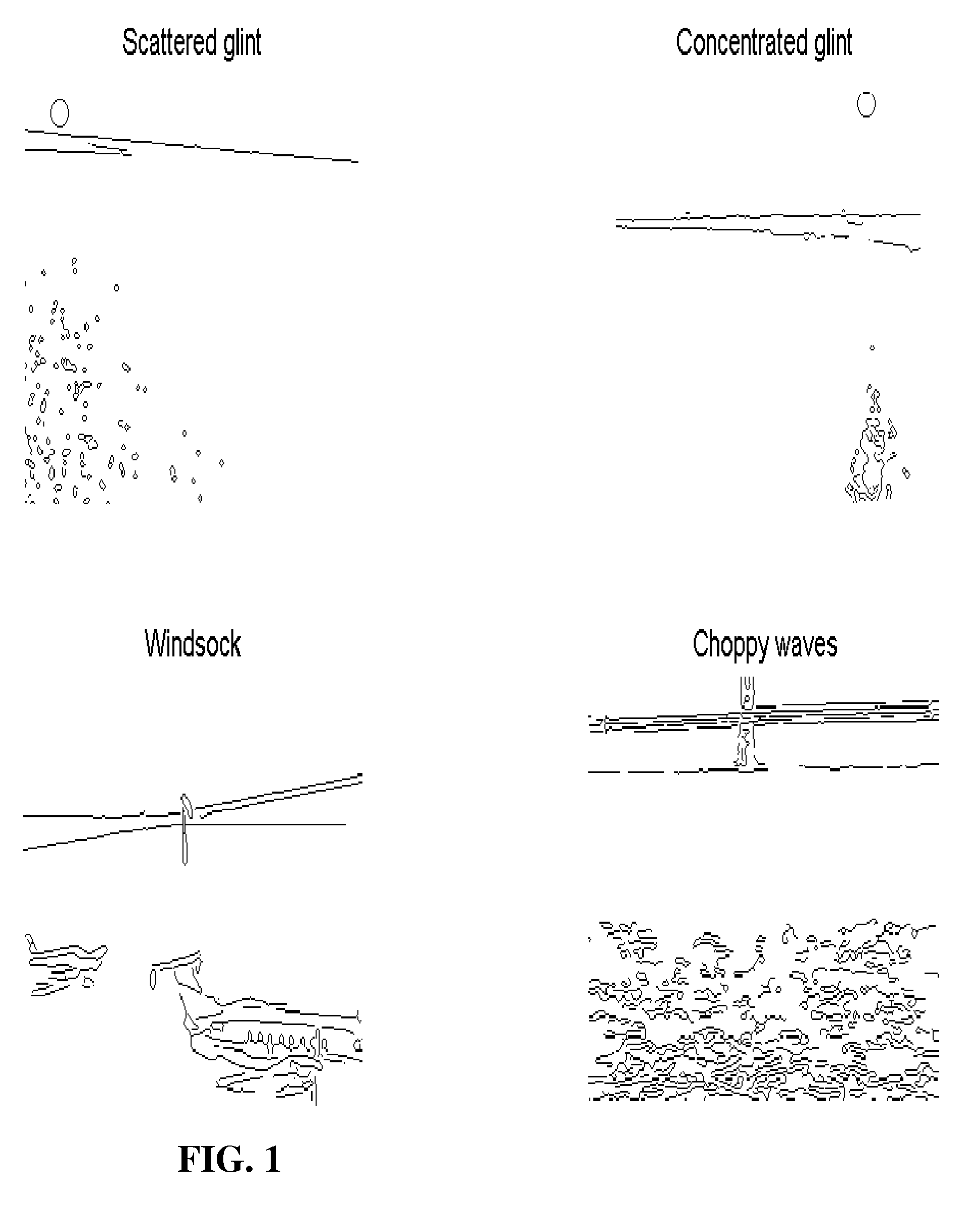

Content-based characterization of video frame sequences

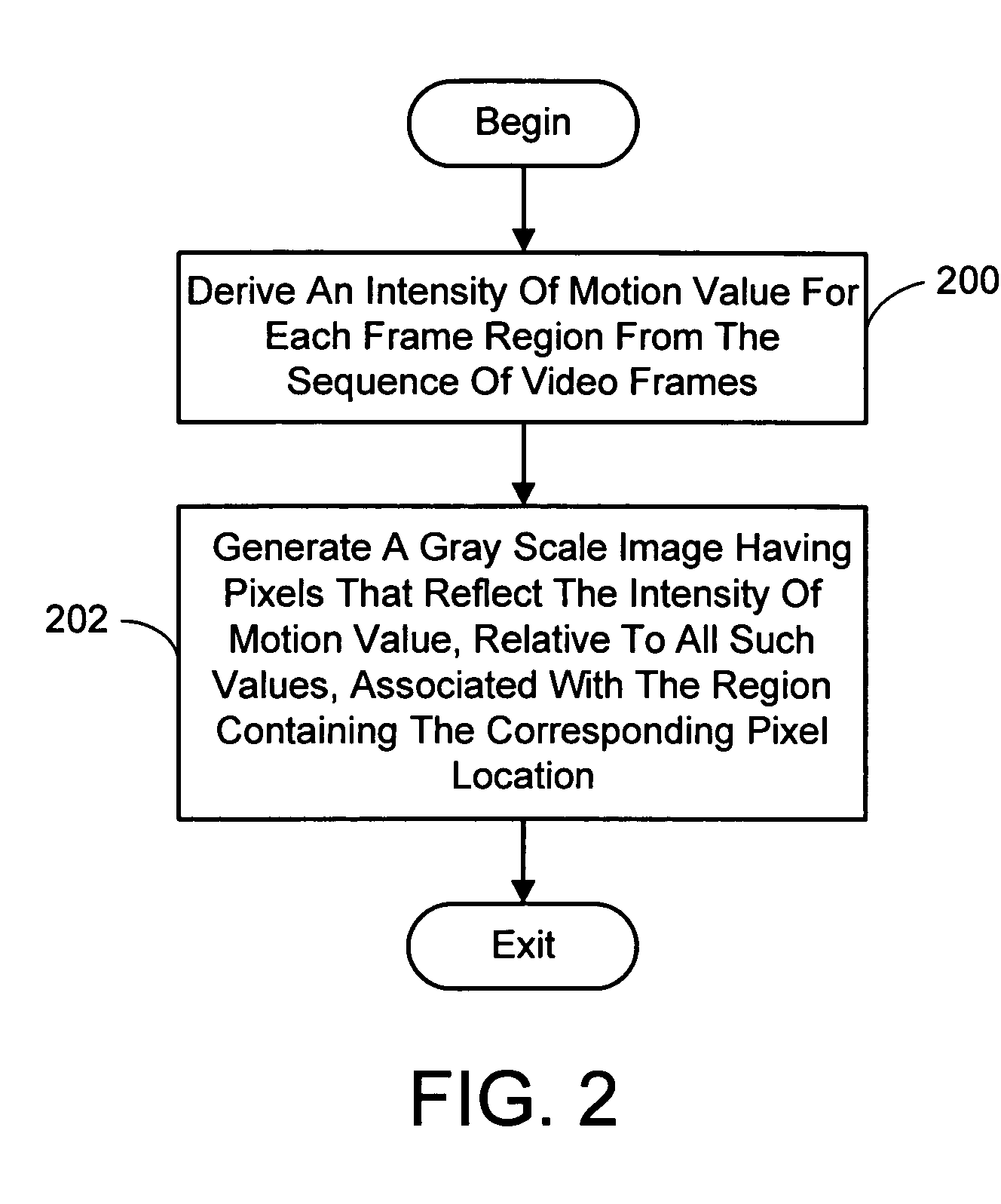

Inactive | US20050147170A1 | Eliminate high frequency noise | Television system details | Image analysis | Object based | Frame sequence

A system and process for video characterization that facilitates video classification and retrieval, as well as motion detection applications. This involves characterizing a video sequence with a gray scale image having pixel levels that reflect the intensity of motion associated with a corresponding region in the sequence of video frames. The intensity of motion is defined using any of three characterizing processes: a perceived motion energy spectrum (PMES) characterizing process, which represents object-based motion intensity over the sequence of frames; a spatio-temporal entropy (STE) characterizing process, which represents the intensity of motion based on color variation at each pixel location; and a motion vector angle entropy (MVAE) characterizing process, which represents the intensity of motion based on the variation of motion vector angles.

Owner:MICROSOFT TECH LICENSING LLC
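The idea behind an angle-entropy measure such as MVAE can be illustrated with a Shannon entropy over a histogram of motion vector directions. This is a generic sketch, not the patented process: the bin count and the function name `angle_entropy` are assumptions, and the patent may weight or accumulate vectors differently.

```python
import math
from collections import Counter


def angle_entropy(vectors, bins: int = 8) -> float:
    """Shannon entropy (in bits) of the motion vector direction histogram.

    `vectors` is a list of (dx, dy) motion vectors collected at one region
    over a sequence of frames. Zero vectors carry no direction and are skipped.
    """
    counts = Counter()
    for dx, dy in vectors:
        if dx == 0 and dy == 0:
            continue
        angle = math.atan2(dy, dx) % (2 * math.pi)       # map to [0, 2*pi)
        counts[int(angle / (2 * math.pi) * bins) % bins] += 1
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum(c / total * math.log2(c / total) for c in counts.values())
```

Vectors that all point the same way give an entropy of 0, while vectors spread evenly over four directions give 2 bits, so higher values indicate more varied motion at that location.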

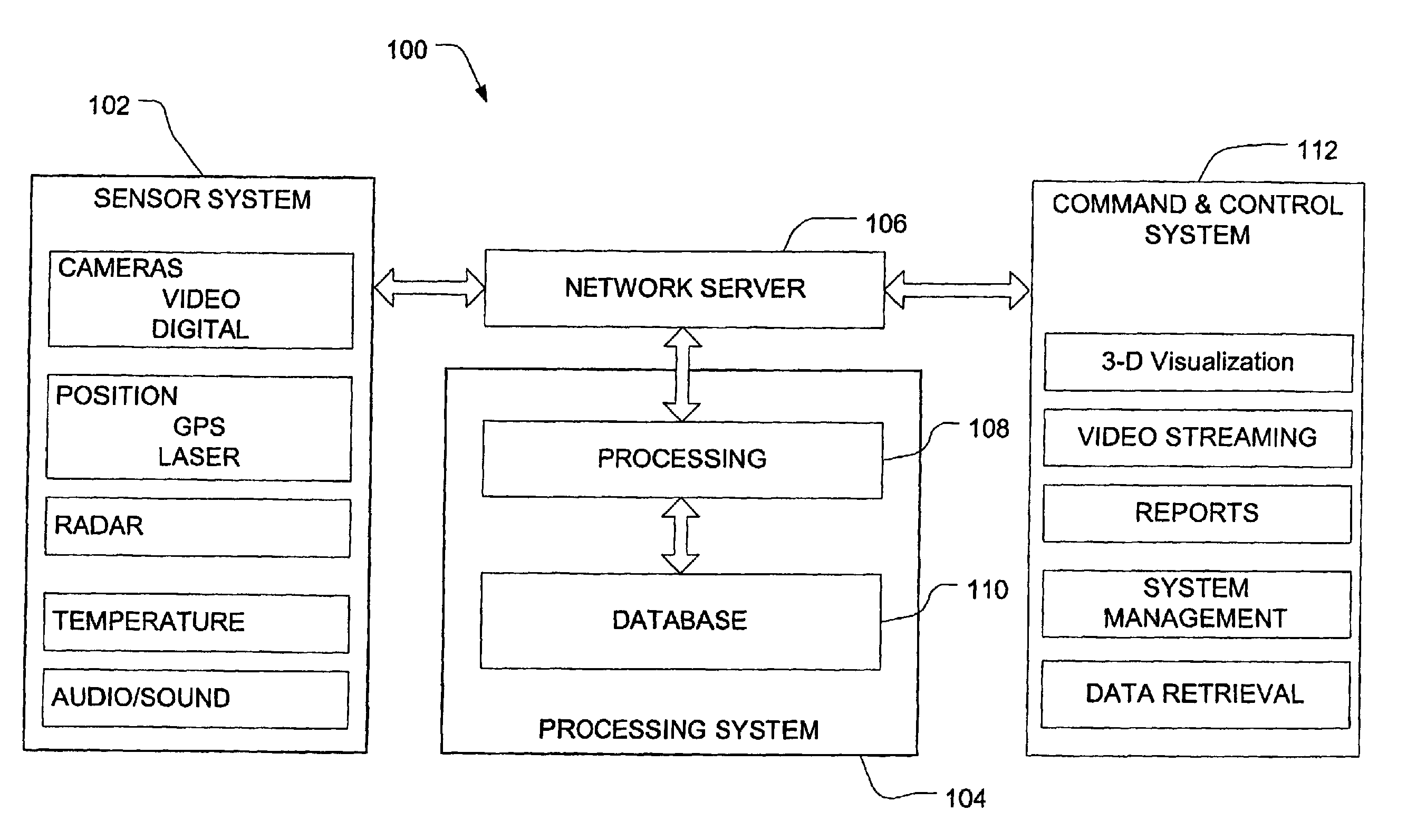

Device, system and method for automated detection of orientation and/or location of a person

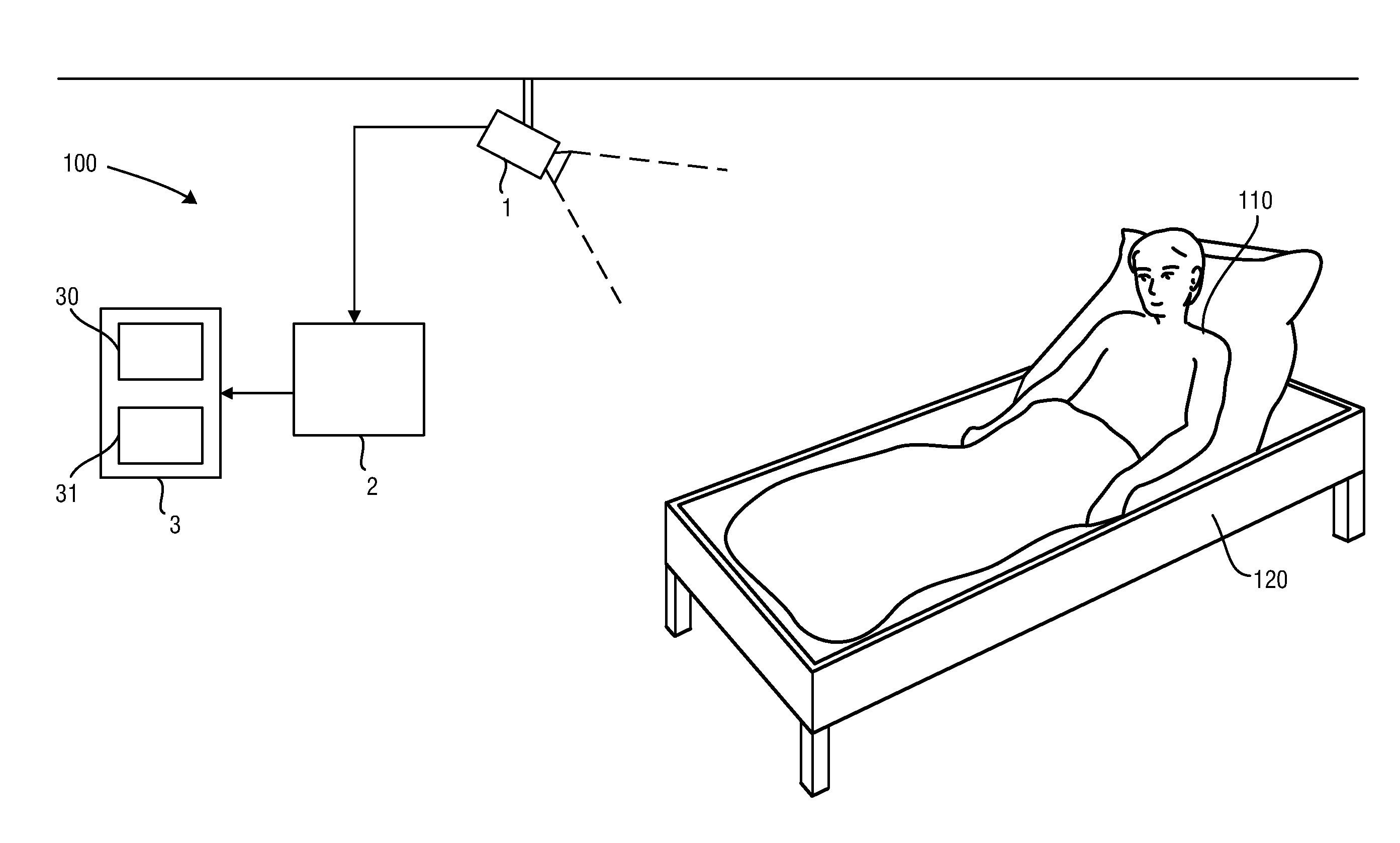

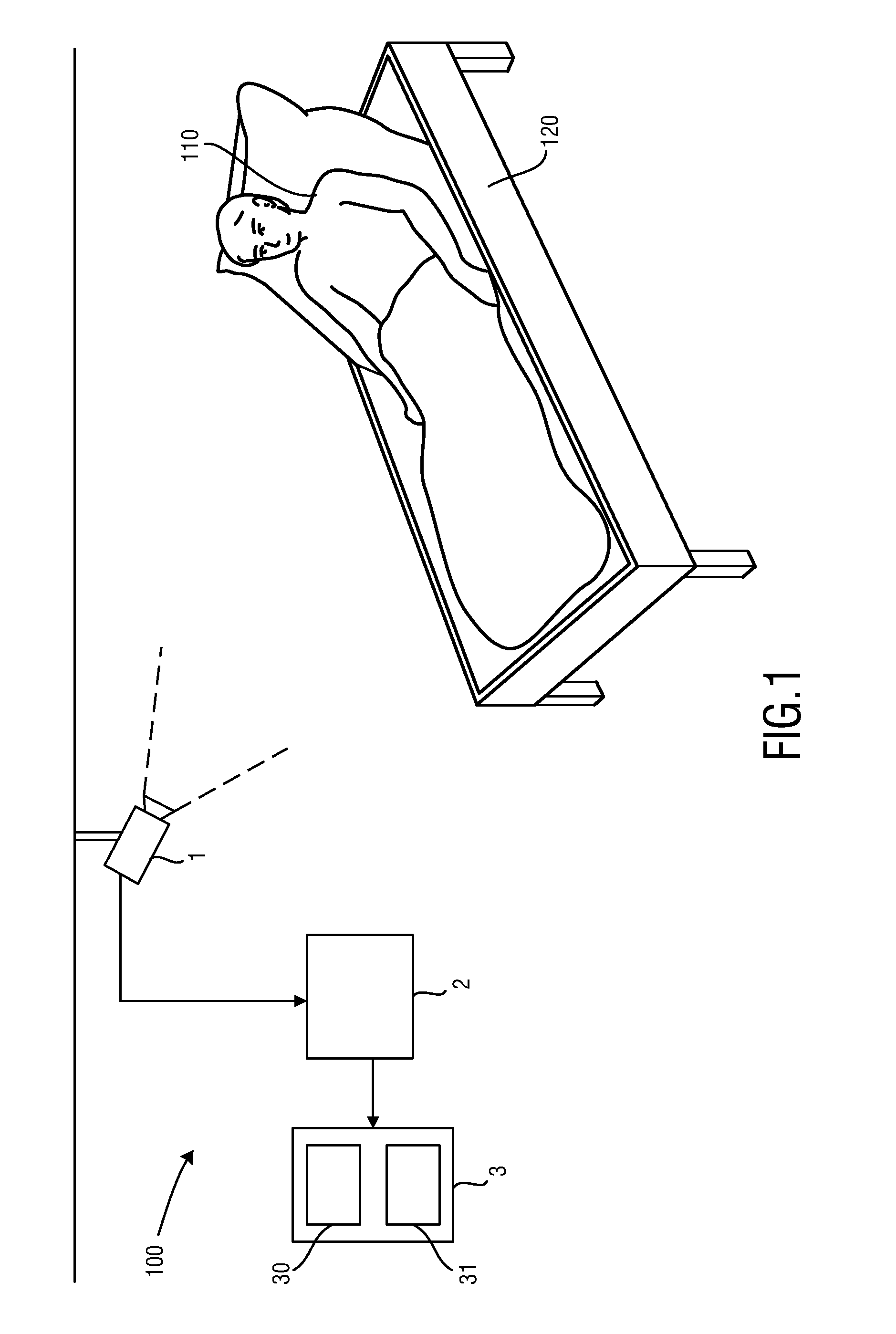

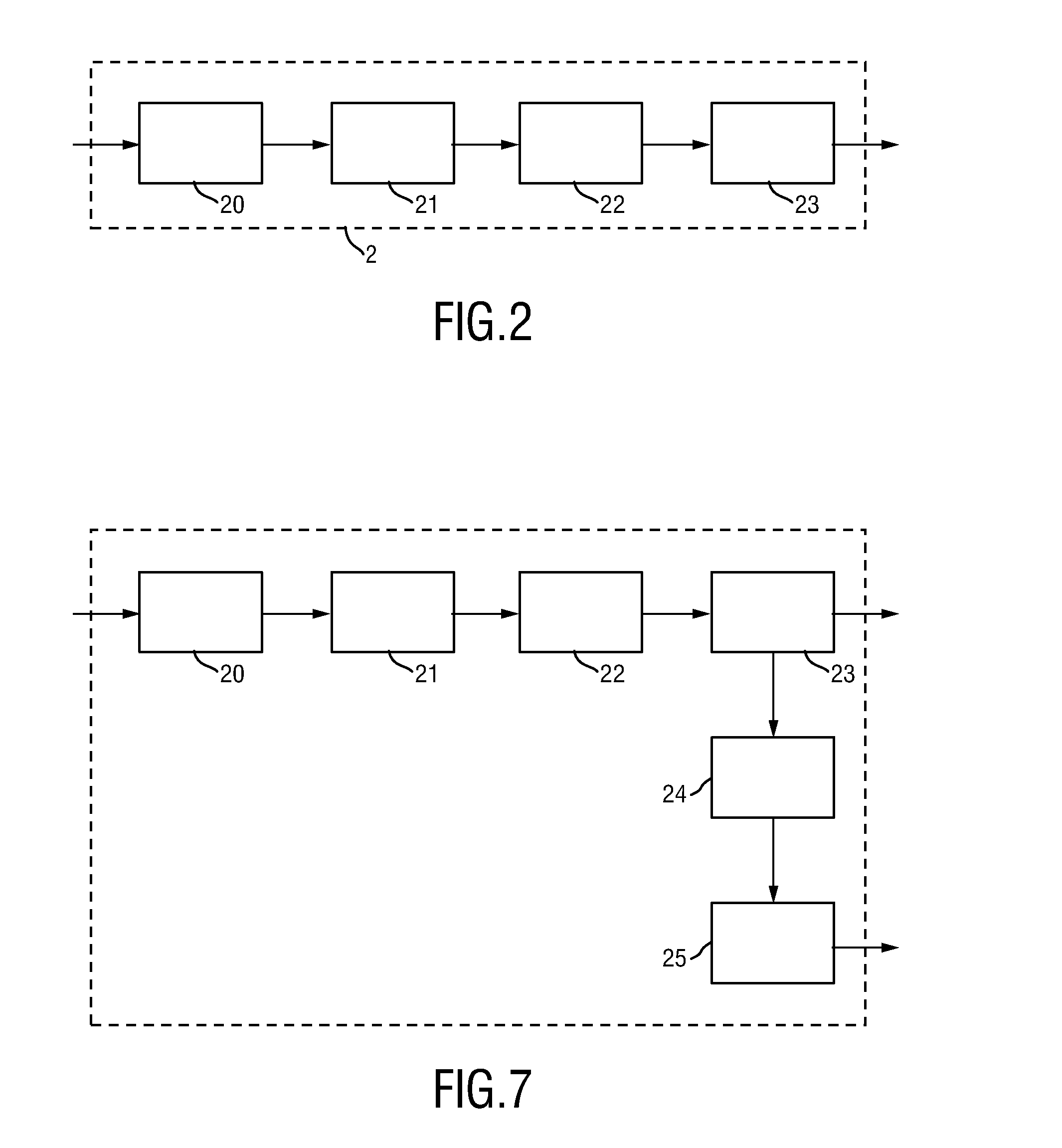

Active | US20160125620A1 | Robust automated detection | Robust detection | Image enhancement | Medical imaging | Motion detector | Temporal change

The present invention relates to a device, system and method for automated detection of orientation and / or location of a person. To increase the robustness and accuracy, the proposed device comprises an image data interface (20) for obtaining image data of a person (110), said image data comprising a sequence of image frames over time, a motion detector (21) for detecting motion within said image data, a motion intensity detector (22) for identifying motion hotspots representing image areas showing frequently occurring motion, and a person detector (23) for detecting the orientation and / or location of at least part of the person (110) based on the identified motion hotspots.

Owner:KONINKLIJKE PHILIPS NV

Portable electronic device and computer software product

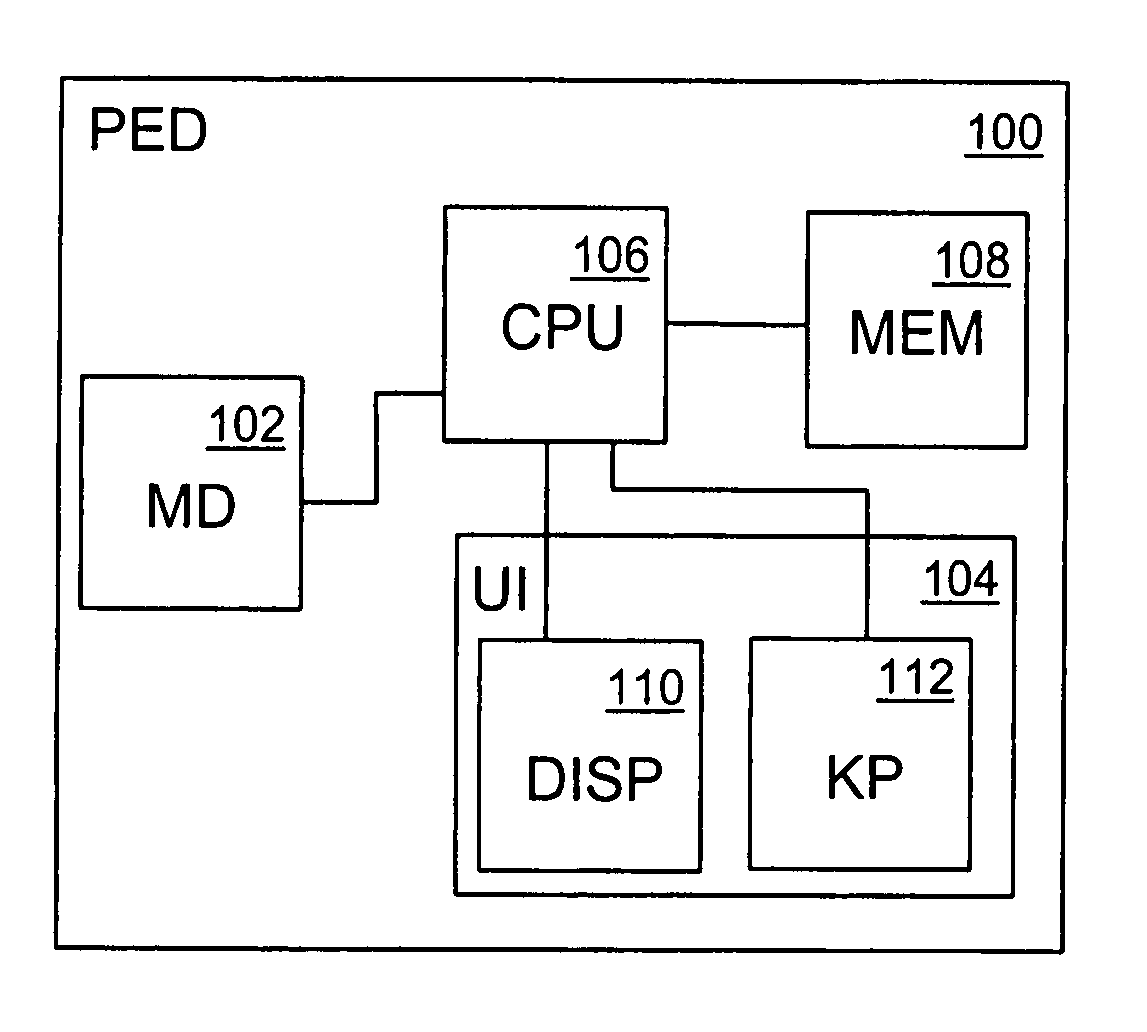

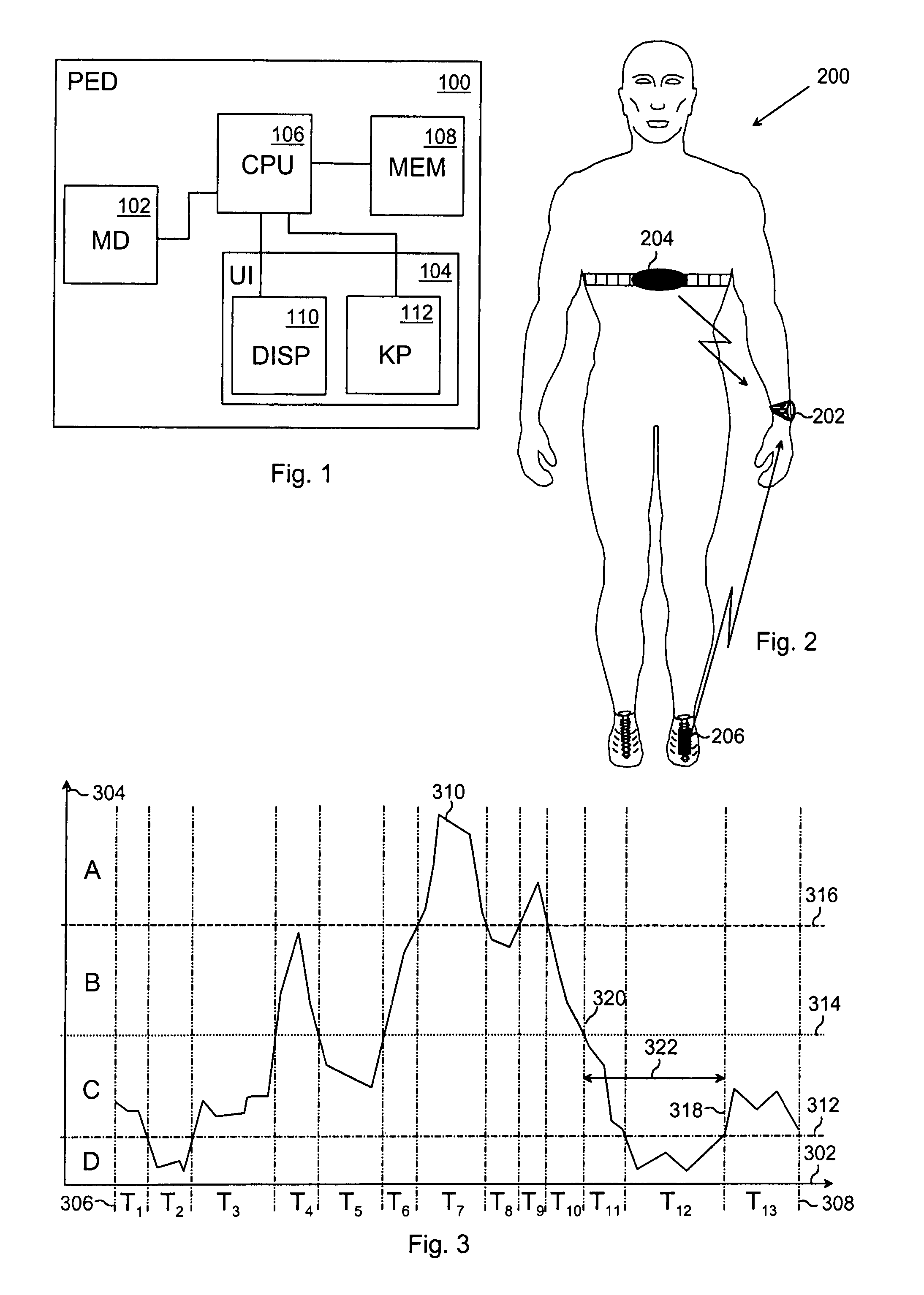

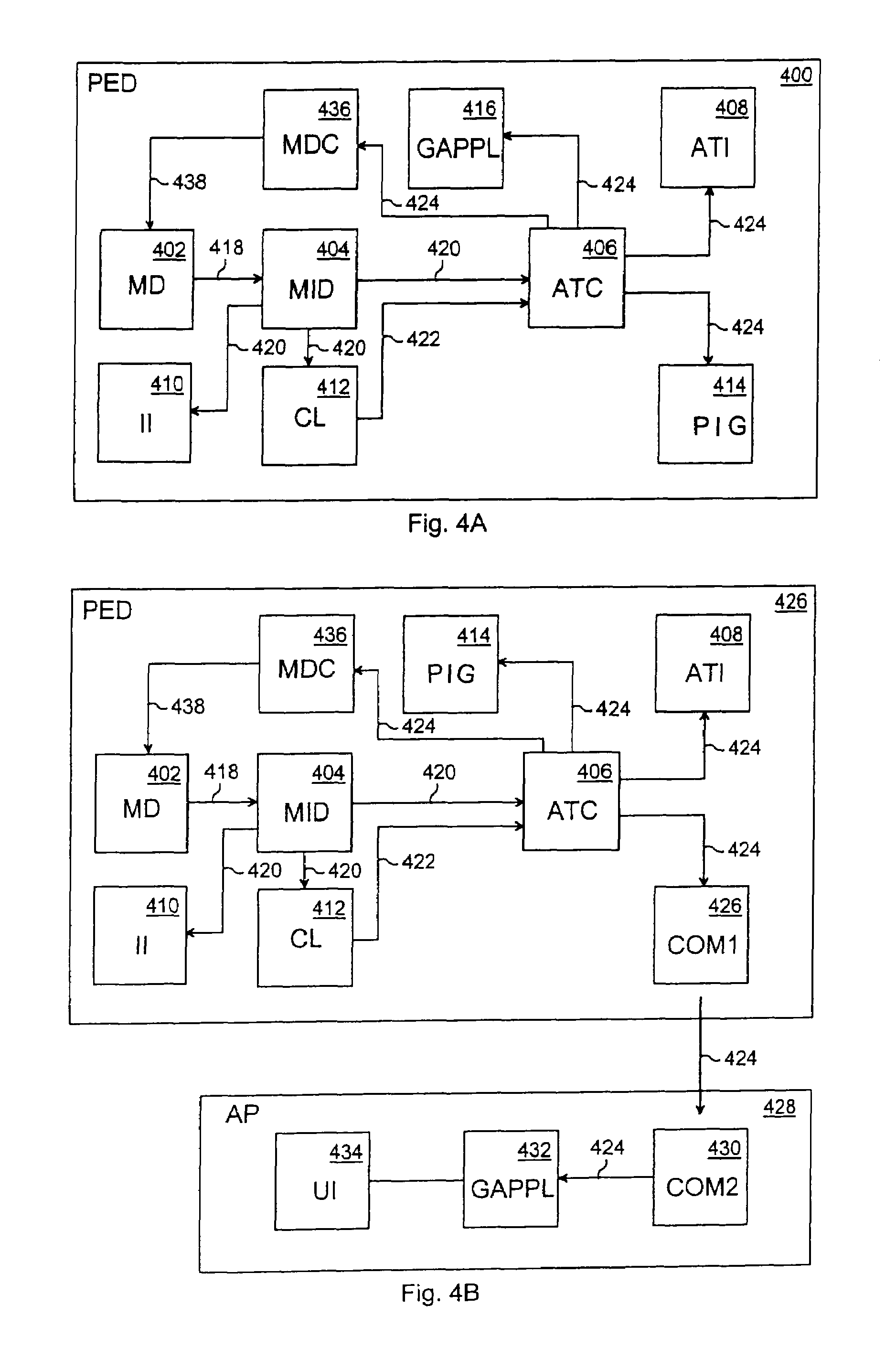

The invention relates to a portable electronic device and computer software product. The portable electronic device comprises a motion detector for generating motion data characterizing the local movement of the portable electronic device, a motion intensity determiner for determining an instantaneous motion intensity value of the user of the portable electronic device from the motion data, and an active time counter for determining an active time accumulation that sums up the time periods during which the instantaneous motion intensity value meets predefined activity criteria.

Owner:POLAR ELECTRO
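The active time accumulation described in this abstract can be sketched as a running sum over fixed-rate intensity samples. The single lower threshold used as the "predefined activity criteria" and the function name `active_time` are assumptions; the abstract does not specify the criteria.

```python
def active_time(intensities, sample_period_s: float, threshold: float) -> float:
    """Sum the time during which the instantaneous intensity meets the criterion.

    `intensities` holds one motion intensity value per sample period.
    The criterion here is simply `value >= threshold` (an assumption).
    """
    return sum(sample_period_s for value in intensities if value >= threshold)
```

For one-second samples `[0.1, 0.6, 0.9, 0.2]` and a threshold of 0.5, two samples qualify and the accumulated active time is 2.0 seconds.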

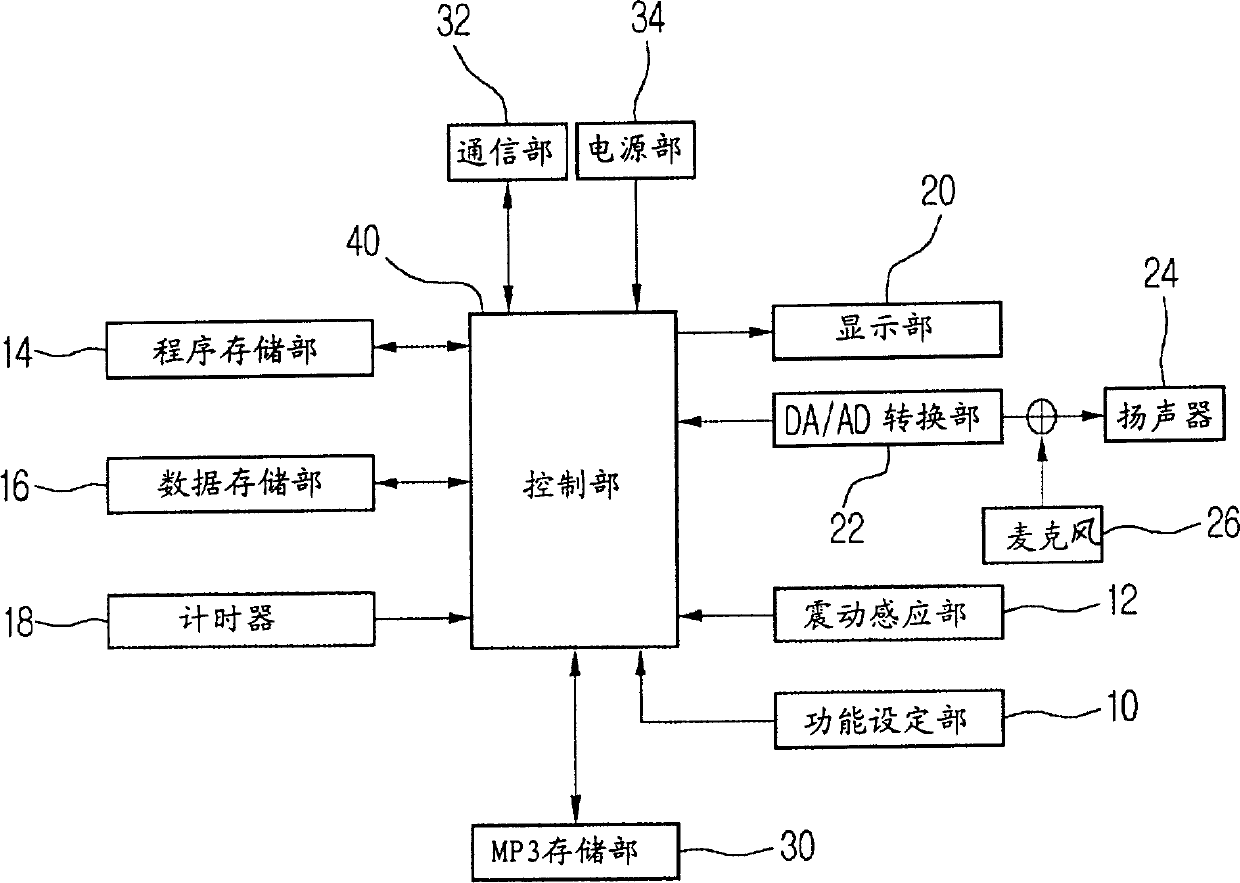

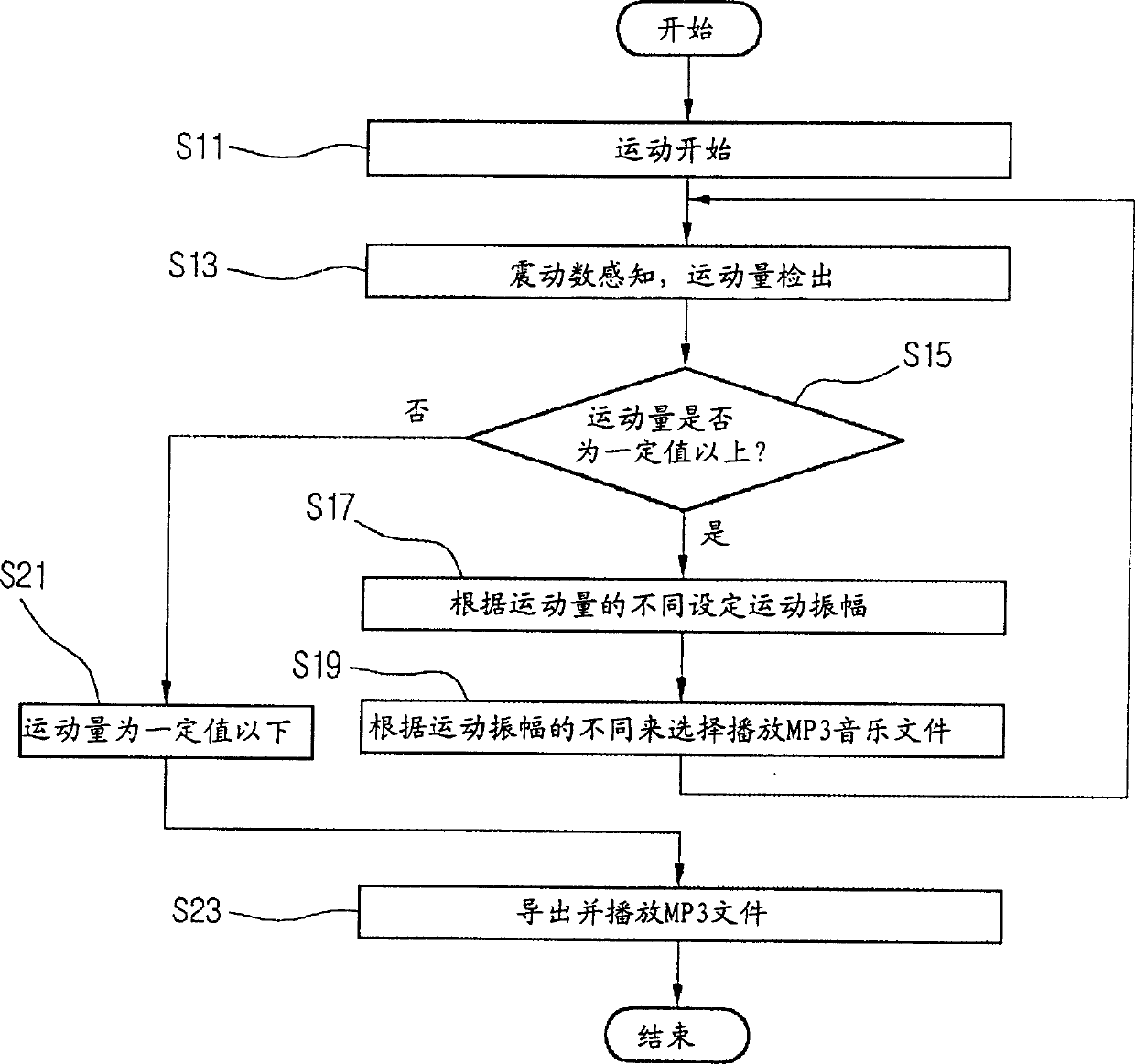

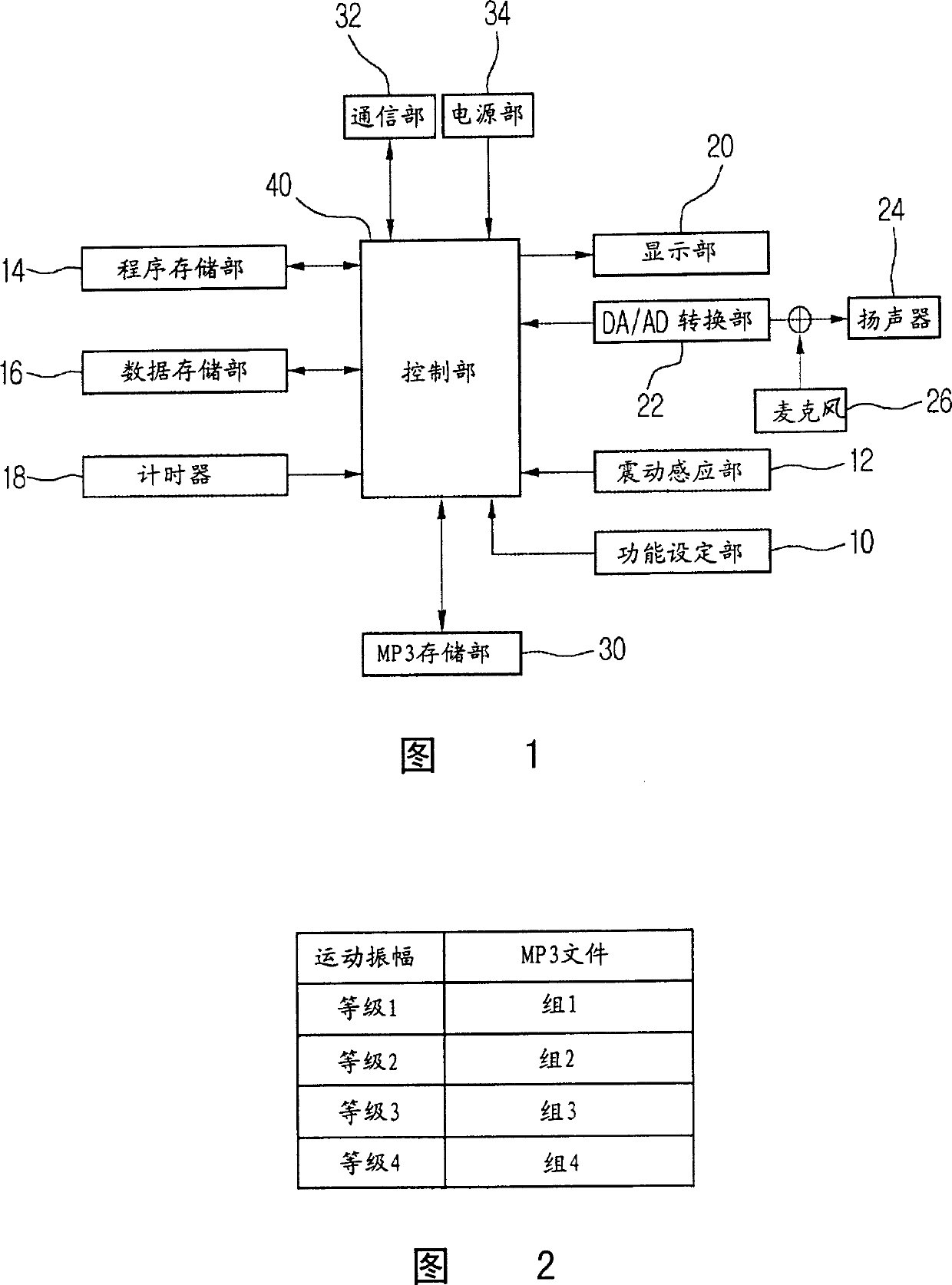

Sport assistance device capable of playing music

Inactive | CN1728276A | Improve athletic ability | Digital storage | Diagnostic recording/measuring | Loudspeaker | Timer

A sport assistance device capable of playing music consists of: a function setting unit; a vibration sensing unit for sensing motion intensity; a program storing unit for calculating and storing the motion amplitude per unit time so that music matching that amplitude can be played; a data storing unit for storing the calculated motion amplitude; a timer; a display unit for displaying the motion intensity per unit time and MP3 file information; an A/D converting unit for converting a music file into an analog signal output through a loudspeaker; a microphone; an MP3 storing unit; a communication unit; a power unit; and a control unit.

Owner:LG ELECTRONIC (HUIZHOU) CO LTD

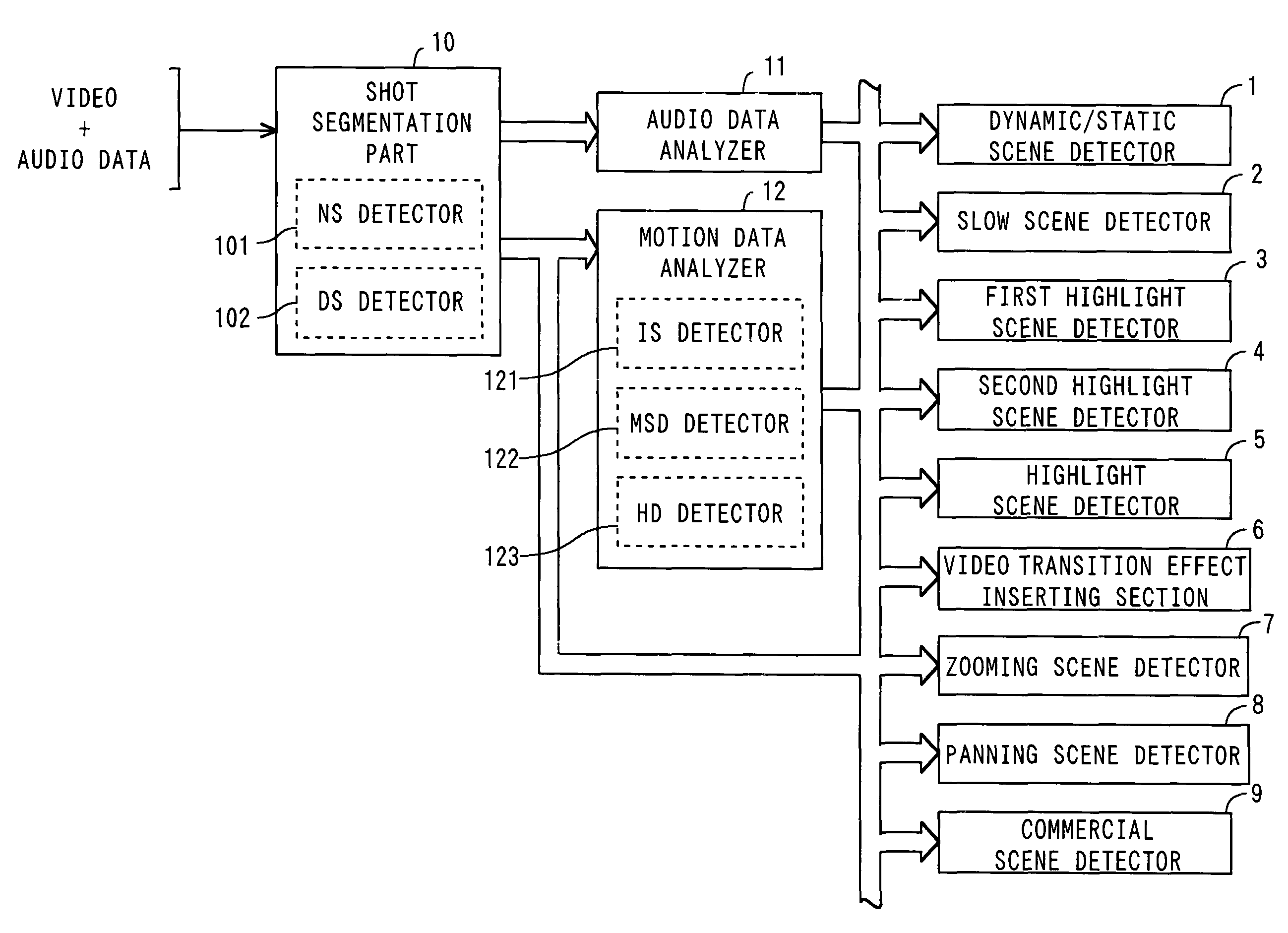

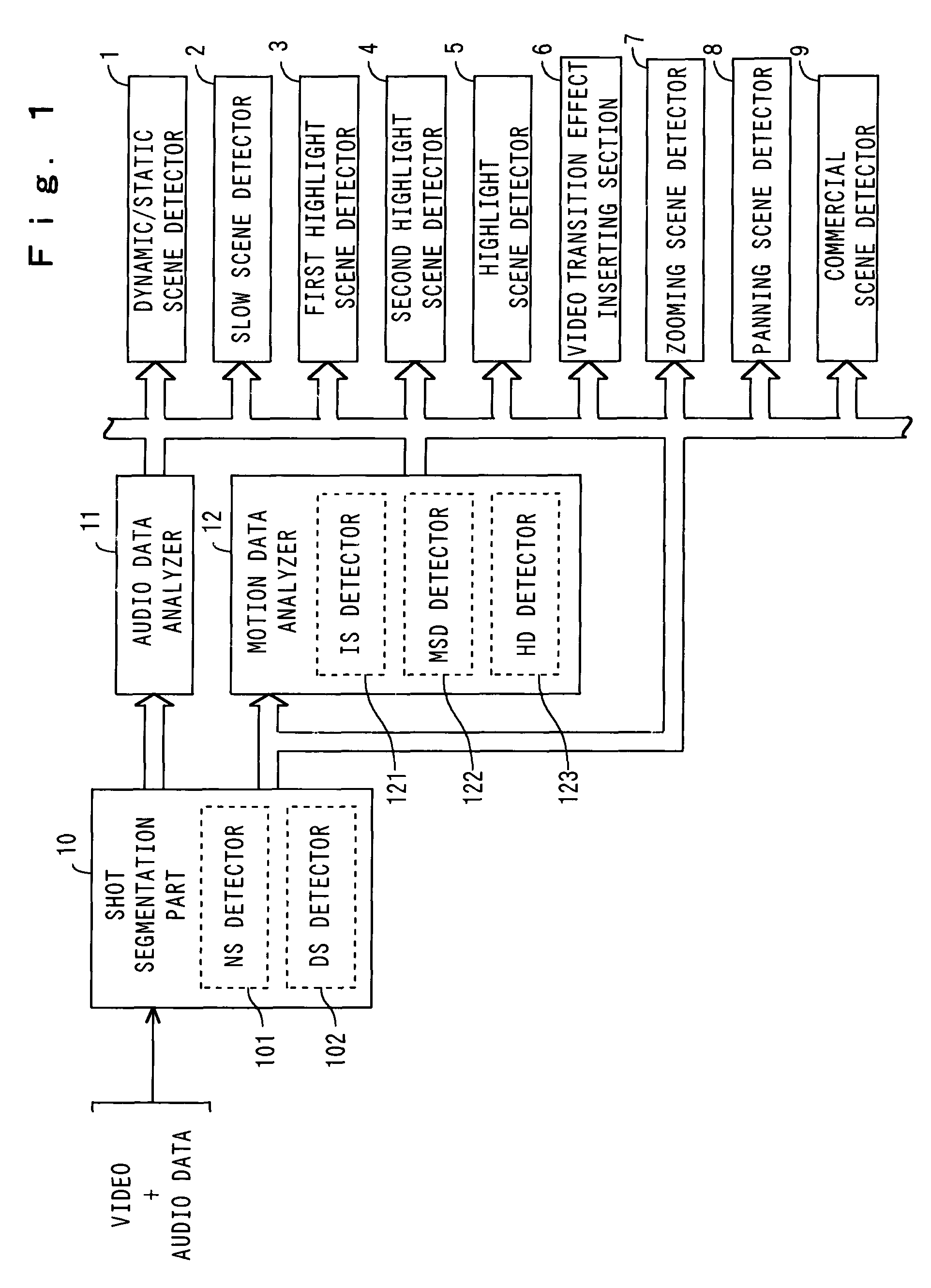

Scene classification apparatus of video

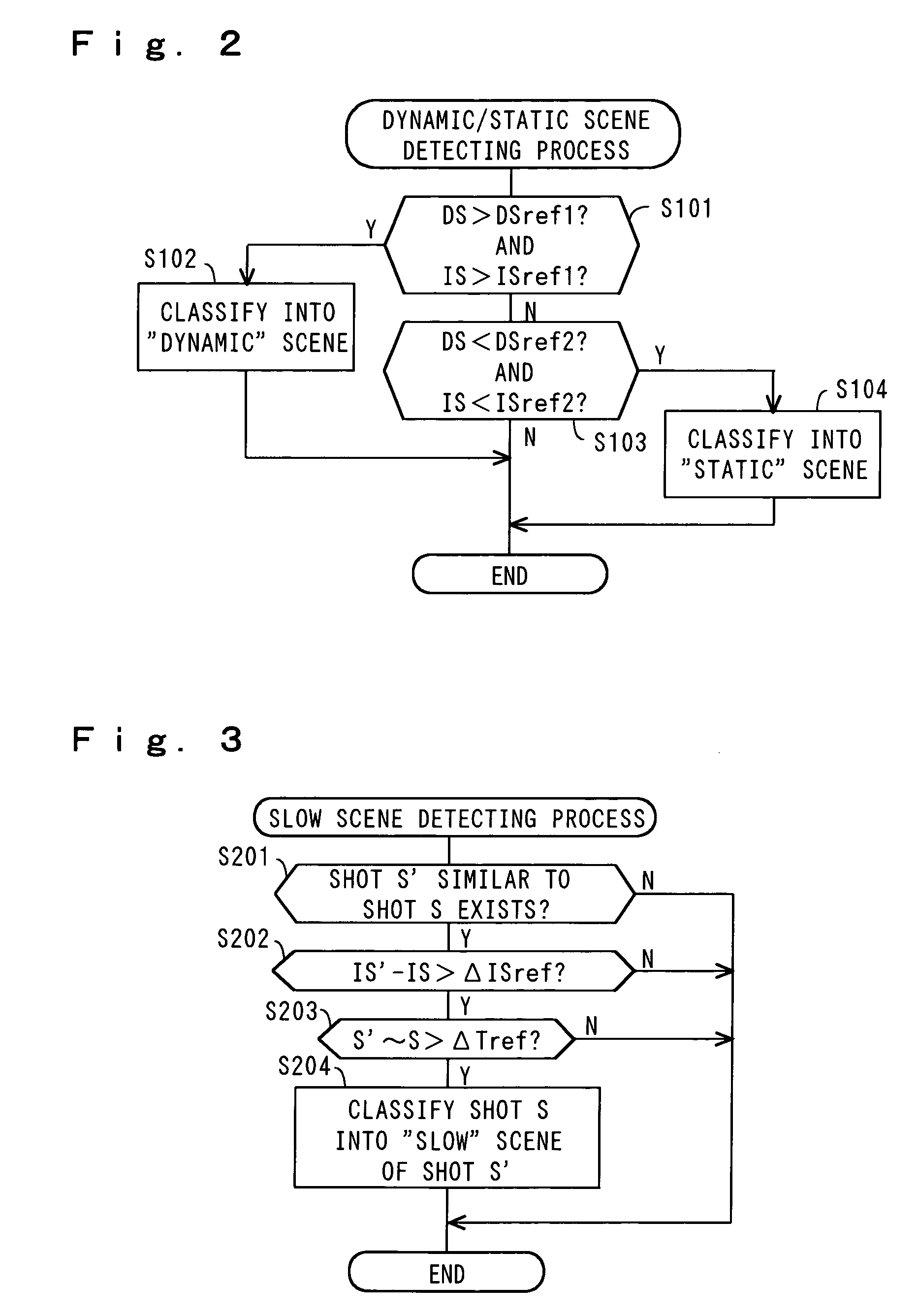

Inactive | US8264616B2 | Low cost | Improve accuracy | Television system details | Color signal processing circuits | Motion vector | Motion intensity

The present invention provides a scene classification apparatus for classifying uncompressed or compressed video into various types of scenes at low cost and with high accuracy, using characteristics of the video and of the audio that accompanies it. When the video is compressed data, its motion intensity, spatial distribution of motion and histogram of motion direction are detected using the values of the motion vectors of the predictive-coded images in each shot, and the shots of the video are classified into a dynamic scene, a static scene, a slow scene, a highlight scene, a zooming scene, a panning scene, a commercial scene and the like based on the motion intensity, the spatial distribution of motion, the histogram of motion direction and the shot density.

Owner:KDDI R&D LAB INC

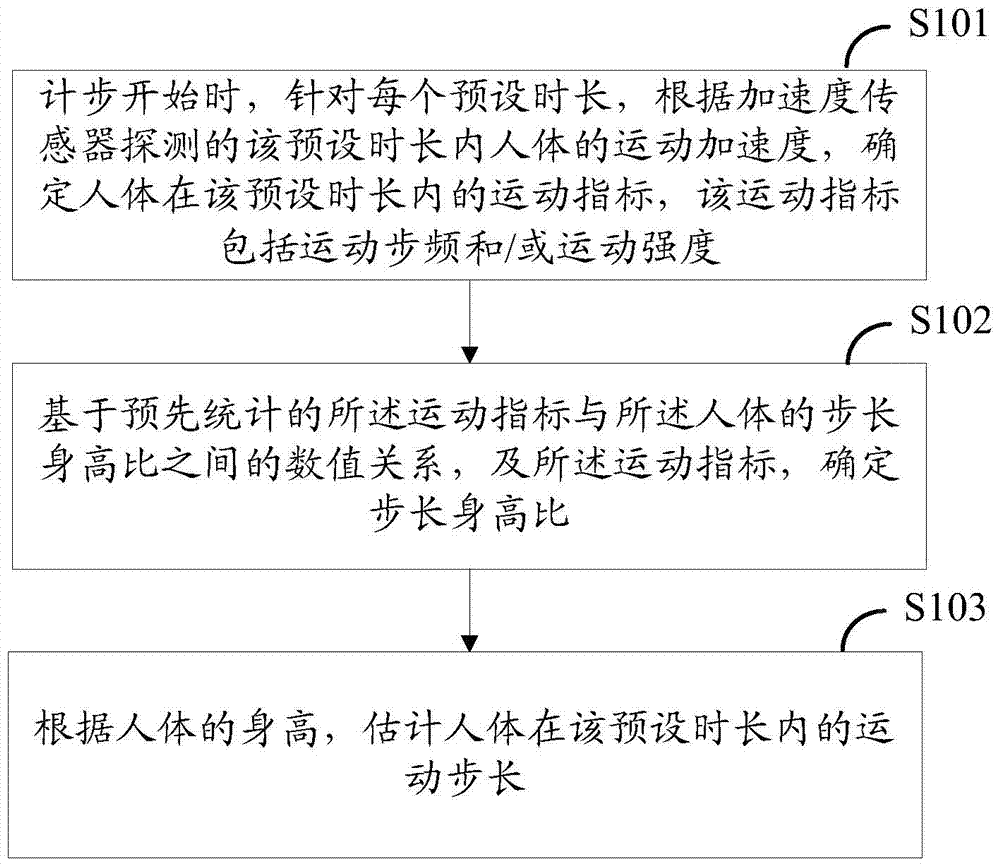

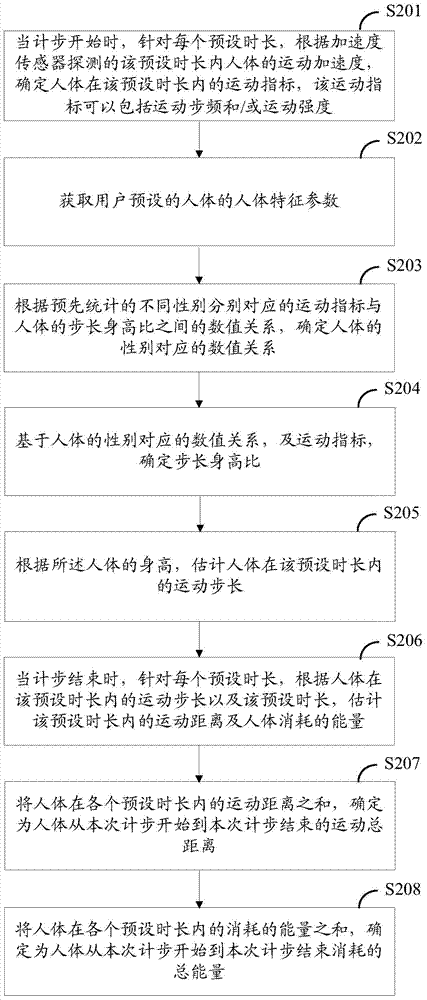

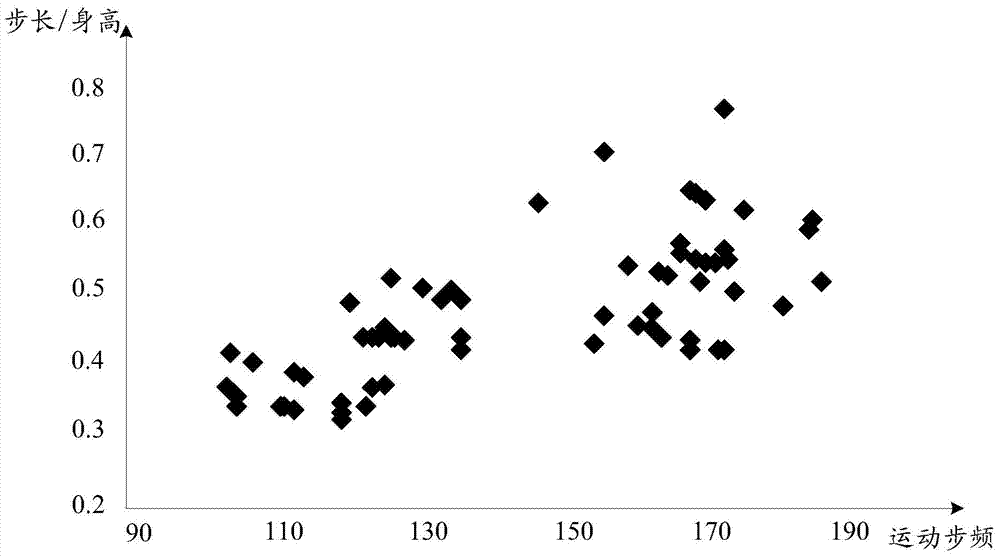

Step length estimation method, pedometer and step counting system

Inactive | CN104729524A | Accurately calculate exercise distance | Distance measurement | Human body | Estimation methods

Embodiments of the invention provide a step length estimation method, a pedometer and a step counting system, in the technical field of computers. Once step counting starts, for each preset duration, the motion indexes of the human body within that duration are determined from the body's motion acceleration detected by an acceleration sensor during that duration, the motion indexes comprising motion step frequency and/or motion intensity. The step length/height ratio is then determined from the motion indexes and a previously collected statistical relationship between motion indexes and the step length/height ratio, and the motion step length within the preset duration is estimated from the person's height. This solves the prior-art problem that a pedometer calculates the motion distance inaccurately when it relies on a step length entered by the user.

Owner:CHINA MOBILE COMM GRP CO LTD
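The estimation chain in this abstract (motion index, then step length/height ratio, then step length and distance) might be sketched as follows. The linear relationship and its coefficients are placeholders; the abstract only says the relationship is obtained from prior statistics and does not give its form.

```python
def step_length_m(height_m: float, step_freq_hz: float,
                  intercept: float = 0.2, slope: float = 0.1) -> float:
    """Estimate step length from height and step frequency.

    `intercept` and `slope` describe a hypothetical linear fit of the
    step length/height ratio against step frequency.
    """
    ratio = intercept + slope * step_freq_hz
    return ratio * height_m


def distance_m(height_m: float, windows) -> float:
    """Total distance over preset-duration windows.

    `windows` is a list of (step_freq_hz, step_count) pairs, one per window.
    """
    return sum(step_length_m(height_m, freq) * steps for freq, steps in windows)
```

Estimating the step length per window, instead of once per user, lets the distance follow changes in pace such as a switch from walking to running.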

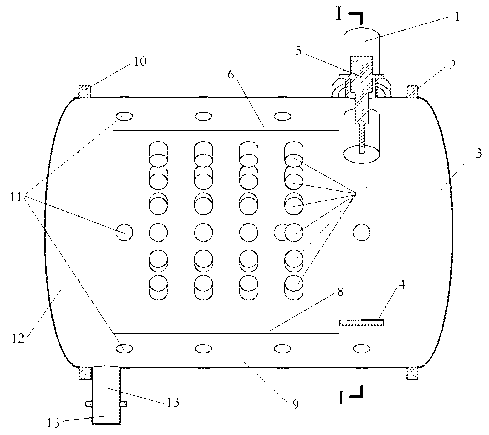

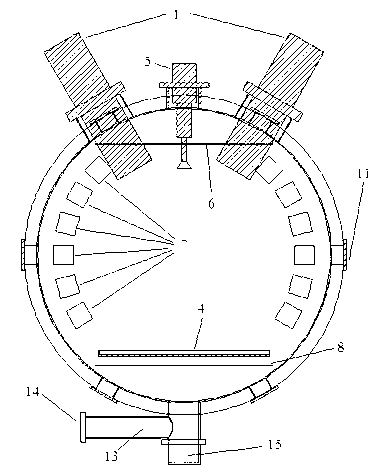

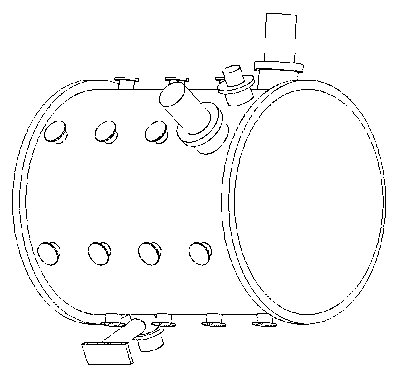

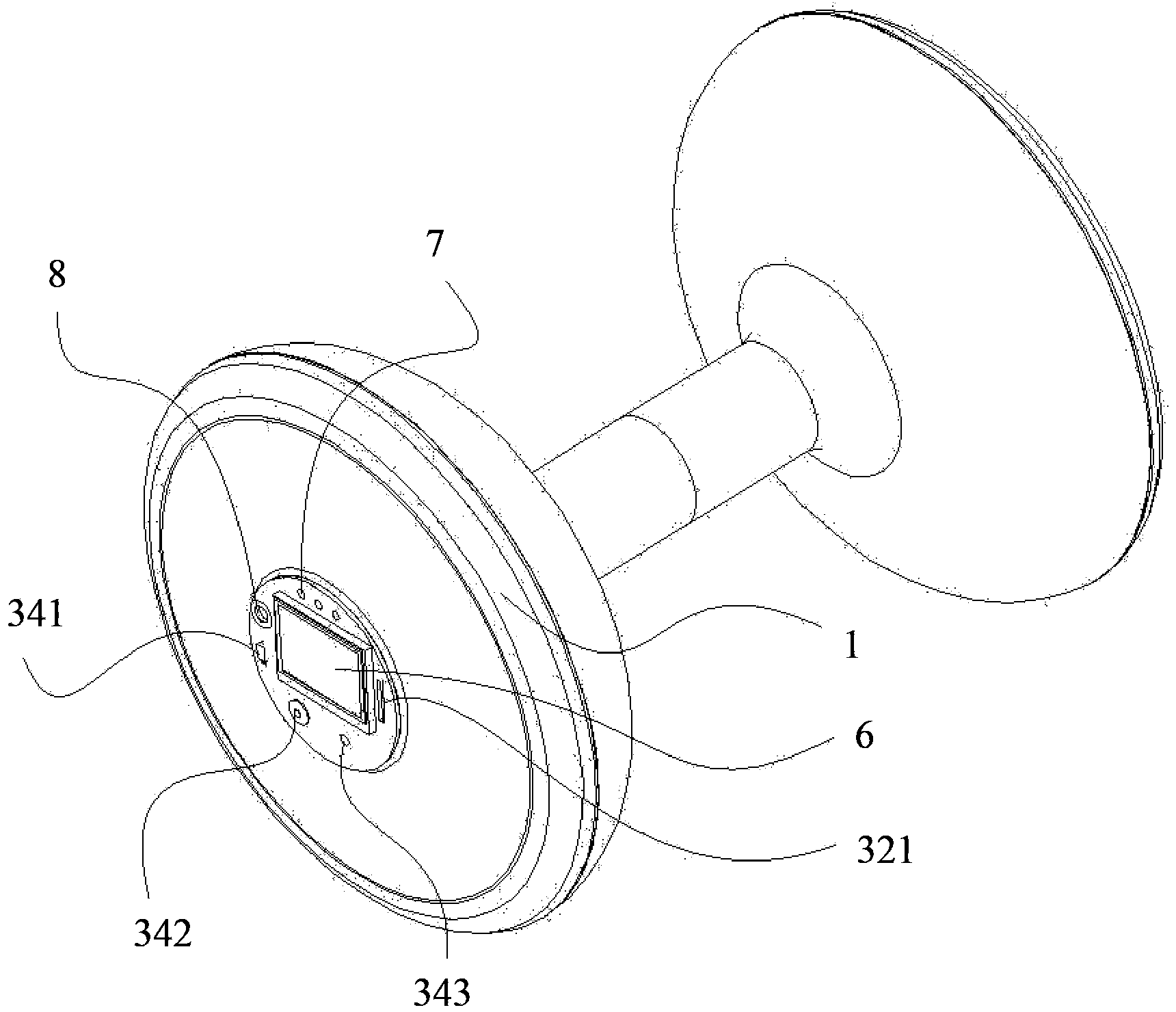

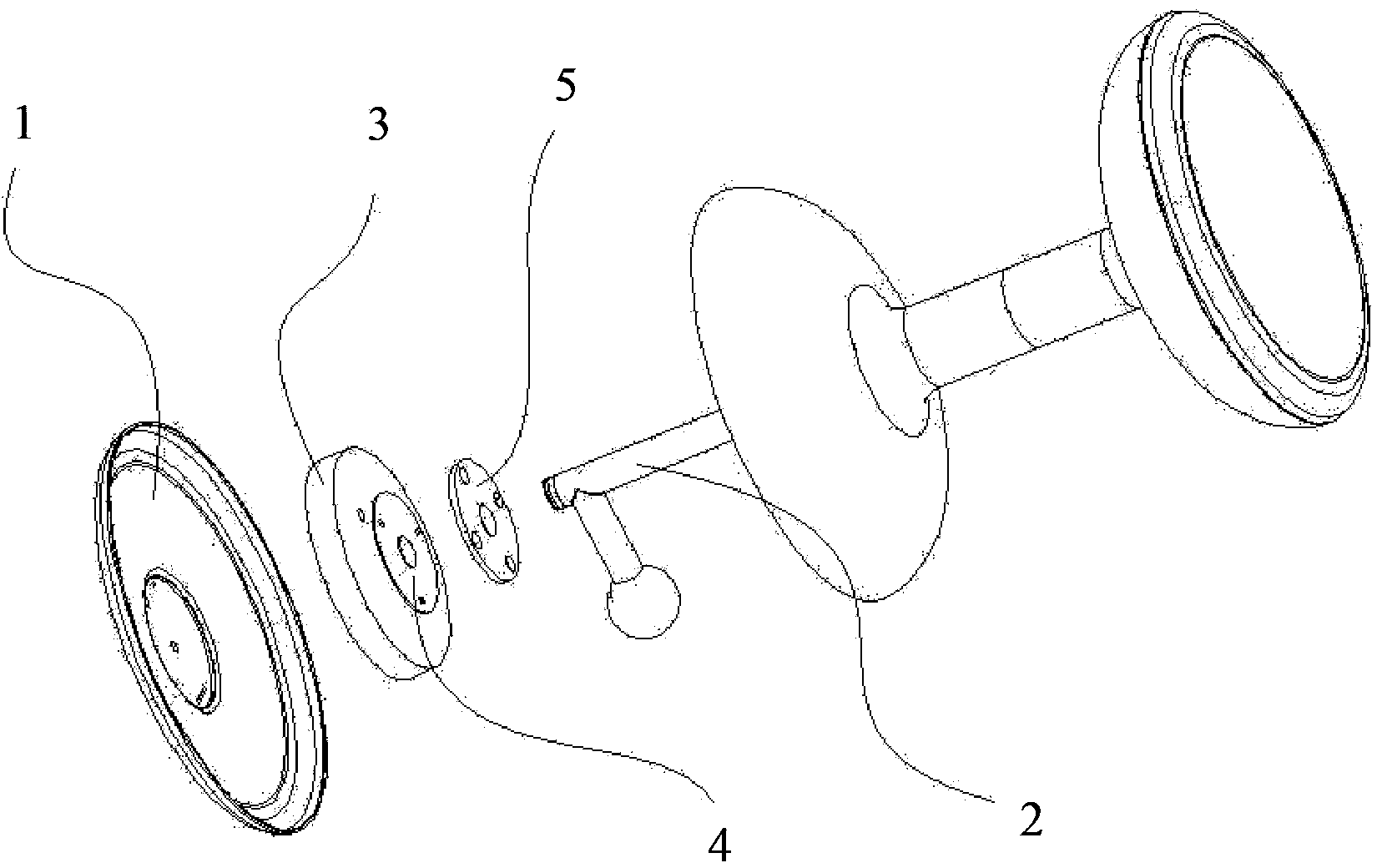

Moon surface dust environment simulating method and simulating device

Active | CN103318428A | Solve the problem that the actual lunar surface dust environment cannot be effectively simulated | Easy to implement | Cosmonautic condition simulations | Controlling environment | Motion intensity

The invention discloses a moon surface dust environment simulating method and a simulating device. The method includes: a first step of evacuating the atmosphere in a cavity with vacuum equipment so that the vacuum degree approximates the moon surface environment; a second step of placing dust samples in a sample groove in the cavity, adjusting the temperature of the dust samples within the range of -190 °C to 150 °C, and sealing the dust samples in the sample groove with a microporous film; a third step of irradiating the dust samples with deep ultraviolet light so that they become charged through the external photoelectric effect; and a fourth step of making the dust samples float by electric field attraction to create the dust environment, controlling the environmental temperature with a quartz lamp array, and controlling dust density and motion intensity by adjusting the ray intensity and electric field intensity, so as to simulate the cosmic dust environment. The method and device solve the prior-art problem that the actual moon surface dust environment cannot be simulated effectively. The method is easy to implement, and the device is simple in structure and works well in use.

Owner:INST OF GEOCHEM CHINESE ACADEMY OF SCI

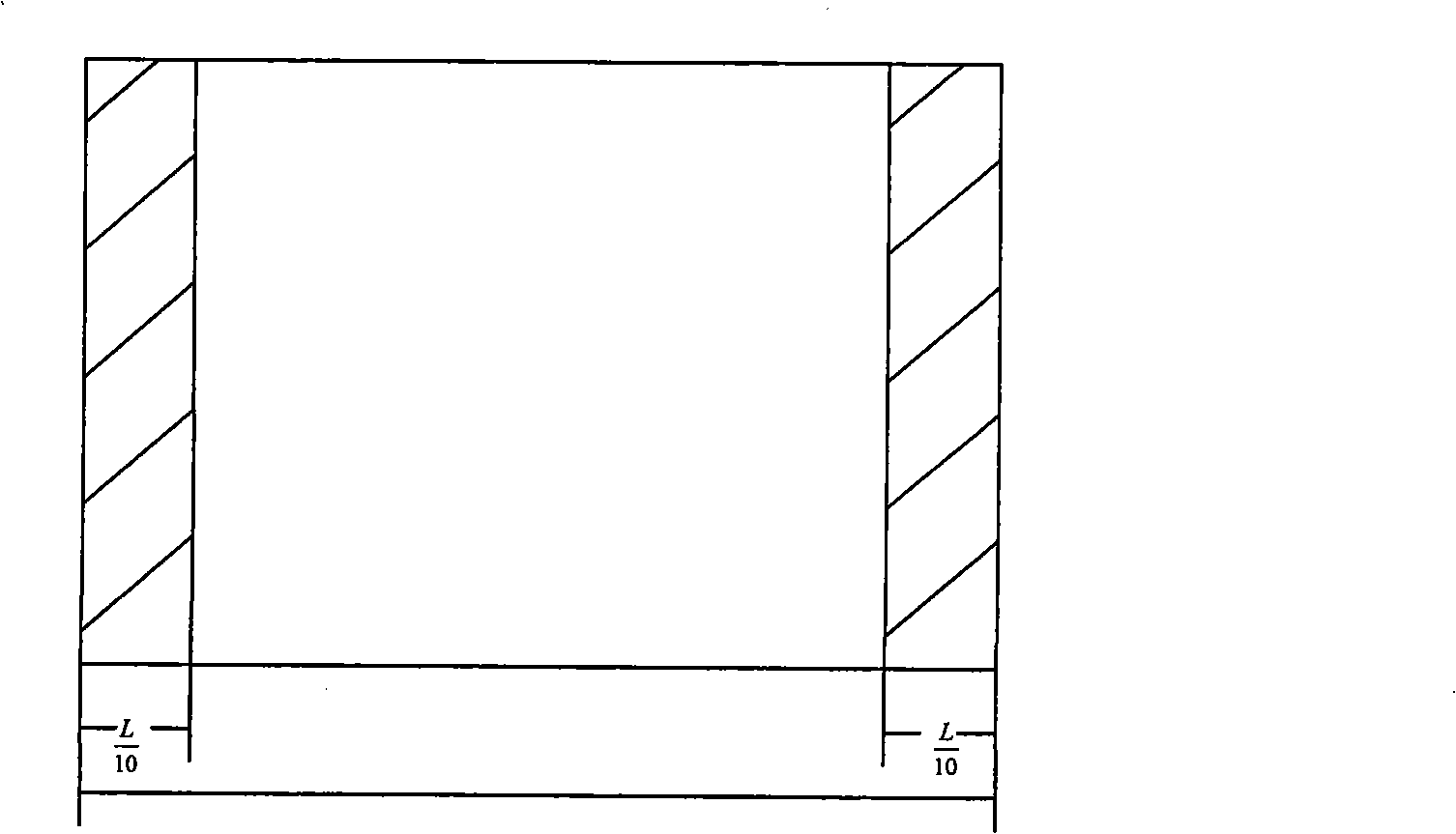

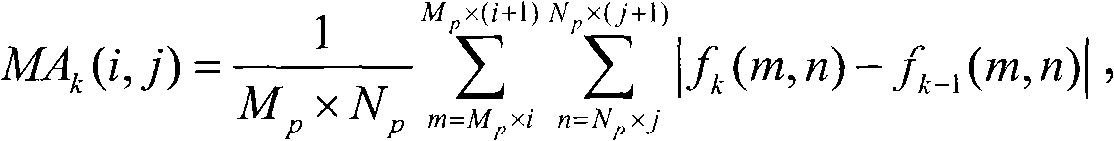

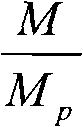

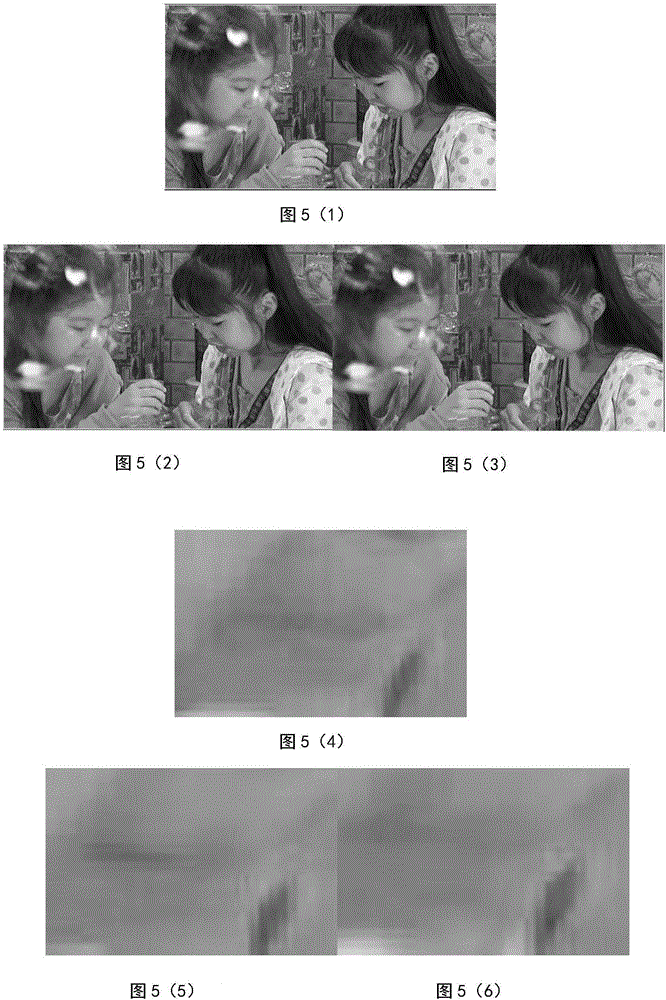

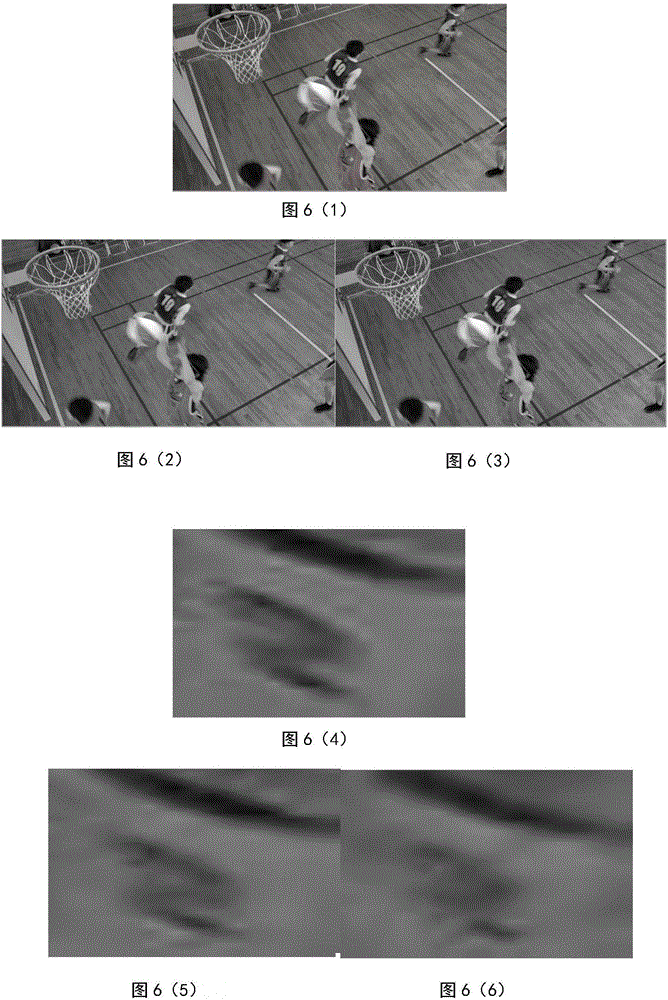

Selecting method of keyframe for video quality evaluation

Inactive | CN102572502A | Reduce operational complexity | Accurately reflect | Television with combined individual color signal | Television systems | Motion intensity | Video quality

The invention relates to a method of selecting keyframes for video quality evaluation, which includes: first describing the motion intensity of each image block in each frame of the video using the mean absolute deviation (MAD) method; then weighting the motion intensity of each image block with weight factors based on human visual interest; calculating the total motion intensity of each frame; and determining the keyframes according to a set judging condition. The method overcomes defects of the prior art, markedly reduces the computational complexity of evaluating a video frame by frame, and can be applied to practical video quality evaluation. Because human visual interest is used as the weighting factor when calculating motion intensity, the selected keyframes reflect the actual video quality accurately and agree with subjective viewing experience. In addition, the method has simple operation steps, is easy to integrate, and is well suited to wider use in video quality evaluation applications.

Owner:北京东方文骏软件科技有限责任公司 +1
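The selection steps in this abstract (per-block MAD, visual-interest weighting, per-frame total, threshold test) can be sketched as below. MAD is computed here as the mean absolute difference between co-located blocks of consecutive frames, which is one common reading; that interpretation, the weights, and the simple threshold used as the "judging condition" are assumptions.

```python
def block_mad(block, prev_block) -> float:
    """Mean absolute difference between co-located blocks of two frames."""
    return sum(abs(a - b) for a, b in zip(block, prev_block)) / len(block)


def frame_motion(blocks, prev_blocks, weights) -> float:
    """Weighted total motion intensity of a frame relative to the previous one."""
    return sum(w * block_mad(b, p)
               for b, p, w in zip(blocks, prev_blocks, weights))


def select_keyframes(frames, weights, threshold: float):
    """Indices of frames whose weighted motion intensity exceeds the threshold.

    Each frame is a list of blocks and each block a flat list of pixel values.
    `weights` holds one hypothetical visual-interest weight per block.
    """
    return [i for i in range(1, len(frames))
            if frame_motion(frames[i], frames[i - 1], weights) > threshold]
```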

Code rate control method fused with visual perception characteristic

Active | CN106303530A | Improve encoding performance | Improve subjective quality | Digital video signal modification | Subjective quality | Motion intensity

The invention discloses a code rate control method fused with a visual perception characteristic. Code rate allocation at the LCU layer is guided by the influence of brightness and motion intensity on human visual perception, and, to improve rate-distortion performance, the Lagrange multiplier lambda and the quantization parameter QP are corrected on the basis of the visual perception characteristic. Compared with the reference implementation of the international coding standard HEVC (HM15.0), the method leaves code rate control accuracy essentially unchanged while improving the subjective quality of the video, achieving better rate-distortion performance, saving bit resources, easing the conflict between the quality of the reconstructed coded image and the coding resources, and improving HEVC coding performance.

Owner:BEIJING UNIV OF TECH

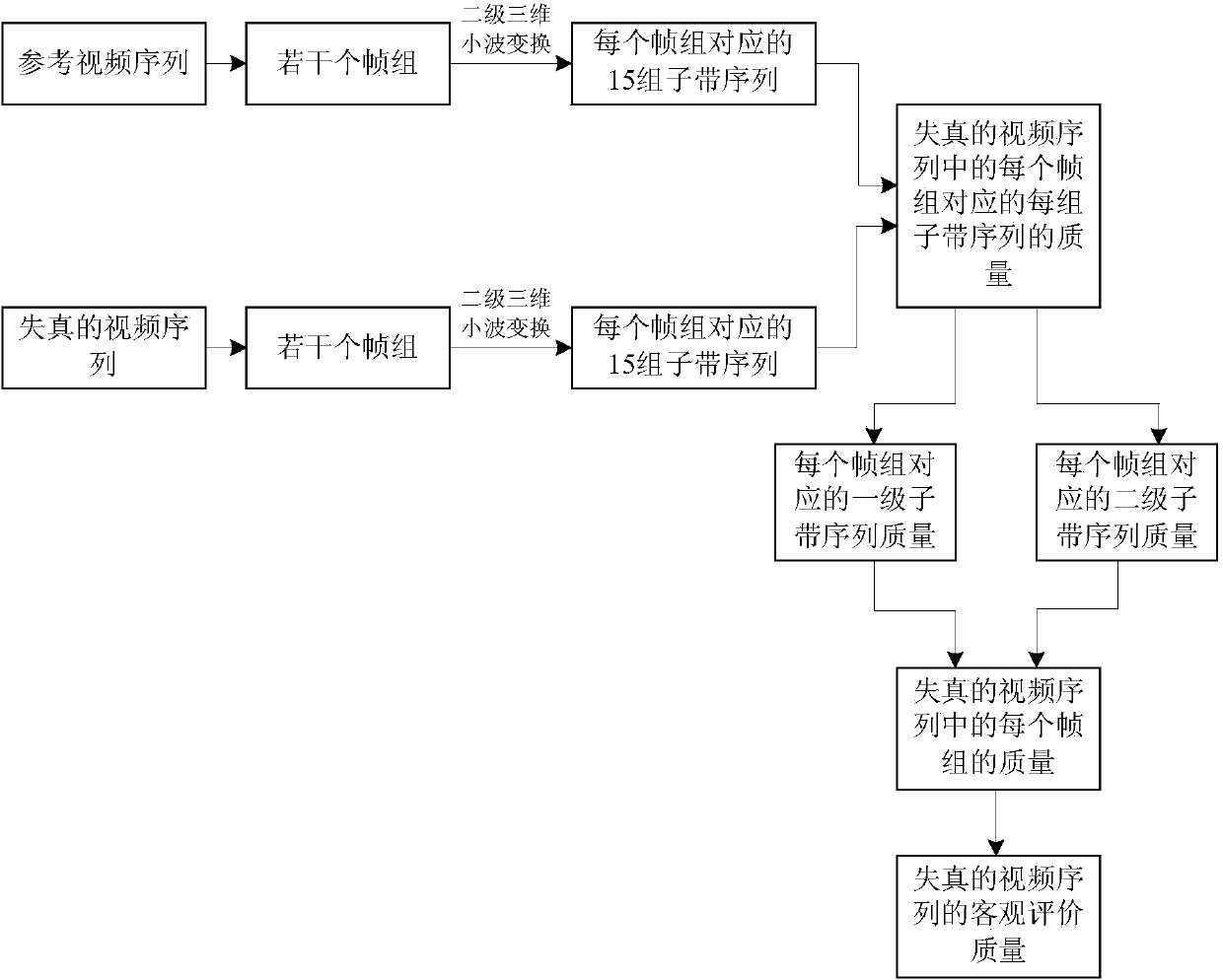

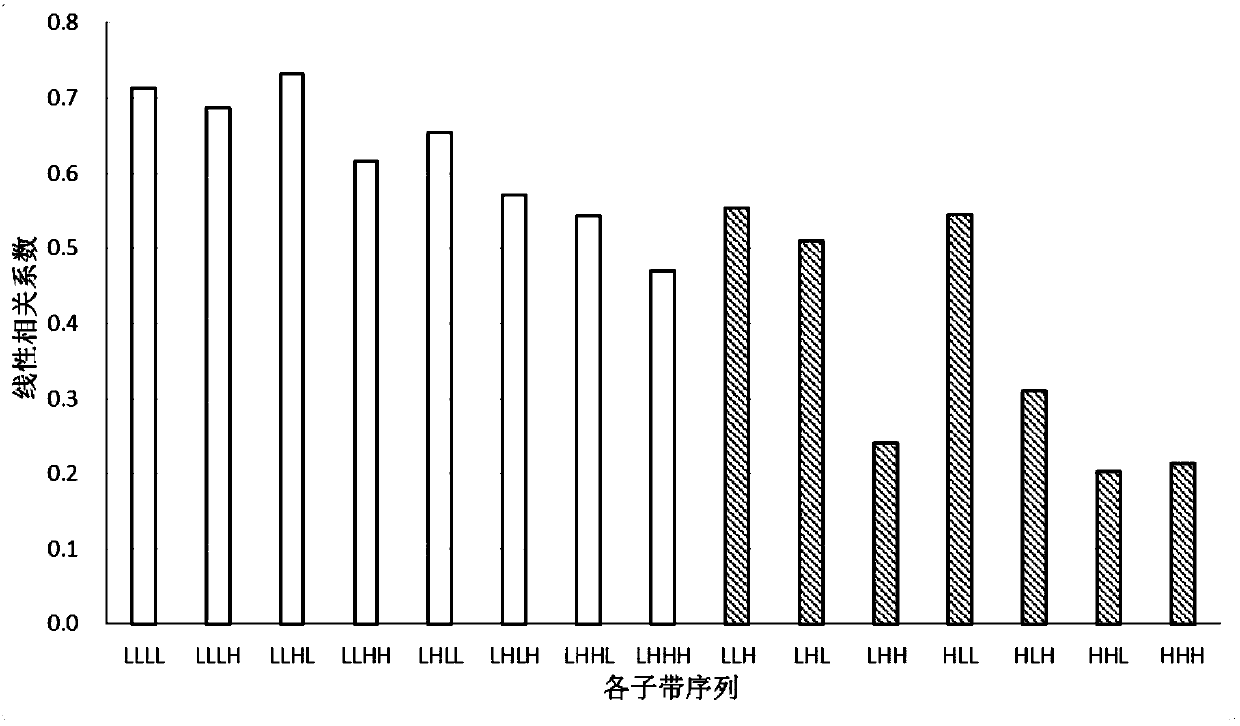

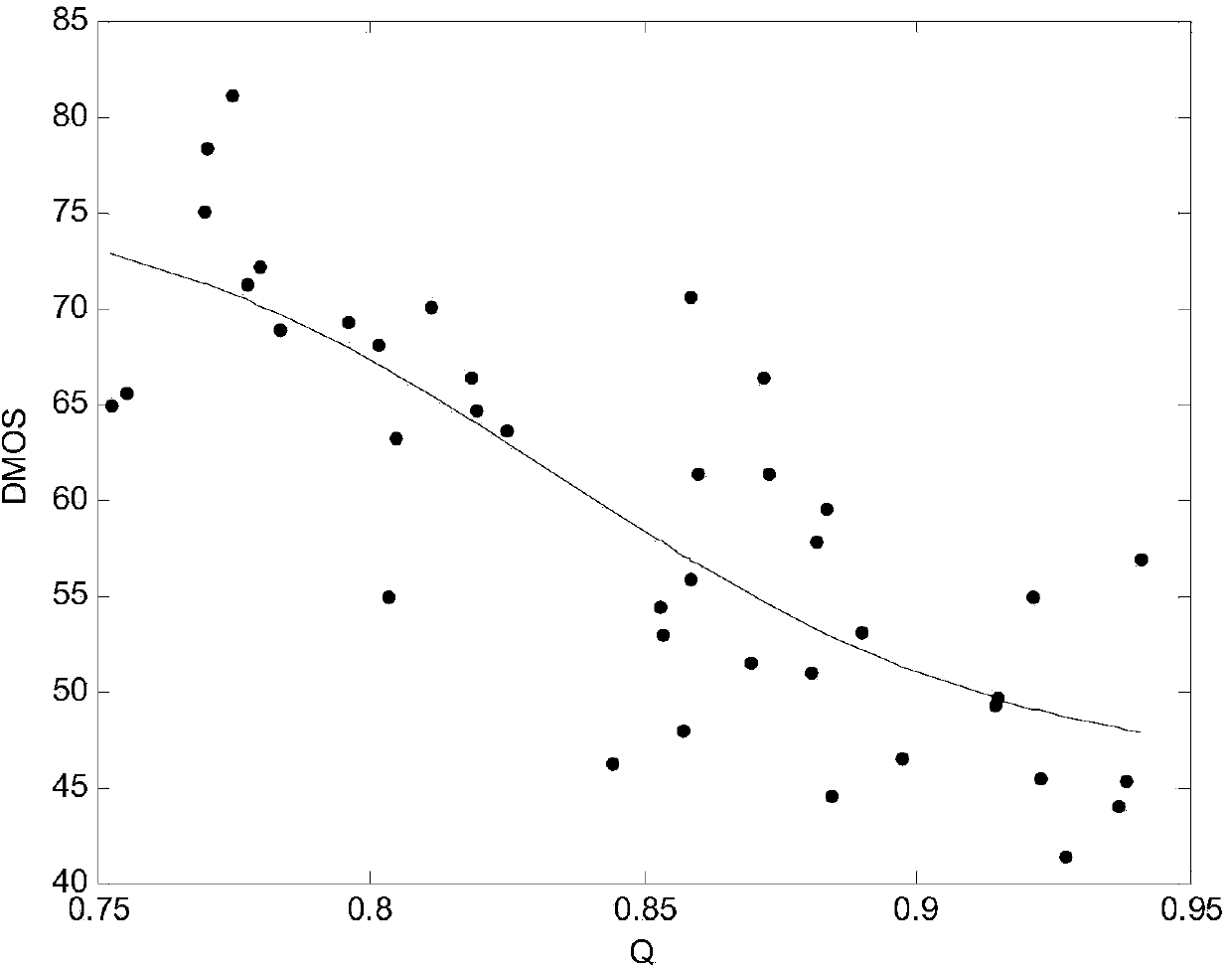

Video quality evaluation method based on three-dimensional wavelet transform

Active | CN104202594A | Troubleshoot difficult descriptions | Improve accuracy | Image enhancement | Image analysis | Objective quality | Decomposition

The invention discloses a video quality evaluation method based on three-dimensional wavelet transform. The three-dimensional wavelet transform is applied to video quality evaluation: a secondary three-dimensional wavelet transform is performed on each frame group in a video, and the time domain information within the frame groups is described by decomposing the video sequence along the time axis. This goes some way toward solving the difficulty of describing the time domain information of a video, effectively increases the accuracy of objective video quality evaluation, and improves the correlation between the objective evaluation result and the quality subjectively perceived by the human eye. To account for the time domain correlation among frame groups, the quality of each frame group is weighted by its motion intensity and brightness characteristics, so that the method conforms well to the characteristics of human vision.

Owner:陕西时青网络科技有限公司

Digital inertia dumbbell capable of measuring motion parameters

Inactive | CN103394178A | Number of real-time measurements | Measure time in real time | Dumb-bells | Dumbbell | Computer module

Provided is a digital inertia dumbbell capable of measuring motion parameters. A circuit board is fixed inside a shield, and a Hall code disc carrying a Hall component is connected to the circuit board; the axis of the Hall code disc coincides with the axis of an eccentric rotary shaft. A magnet rotary disc, parallel and adjacent to the Hall code disc, is arranged inside the shield; it is fixed at one end of the eccentric rotary shaft, is coaxial with the shaft, and carries a permanent magnet. A micro-processor on the circuit board is connected with the Hall component on the Hall code disc and with a display screen and a data storage module; the display screen is fixed in the shield. The dumbbell can measure in real time the number of rotations of the eccentric rotary shaft, their duration and speed, and the number of interruptions. These motion parameters are displayed on the display screen in real time or stored by the data storage module, so that the motion intensity, coordination and training progress are reflected accurately.

Owner:SHANGHAI UNIV OF SPORT

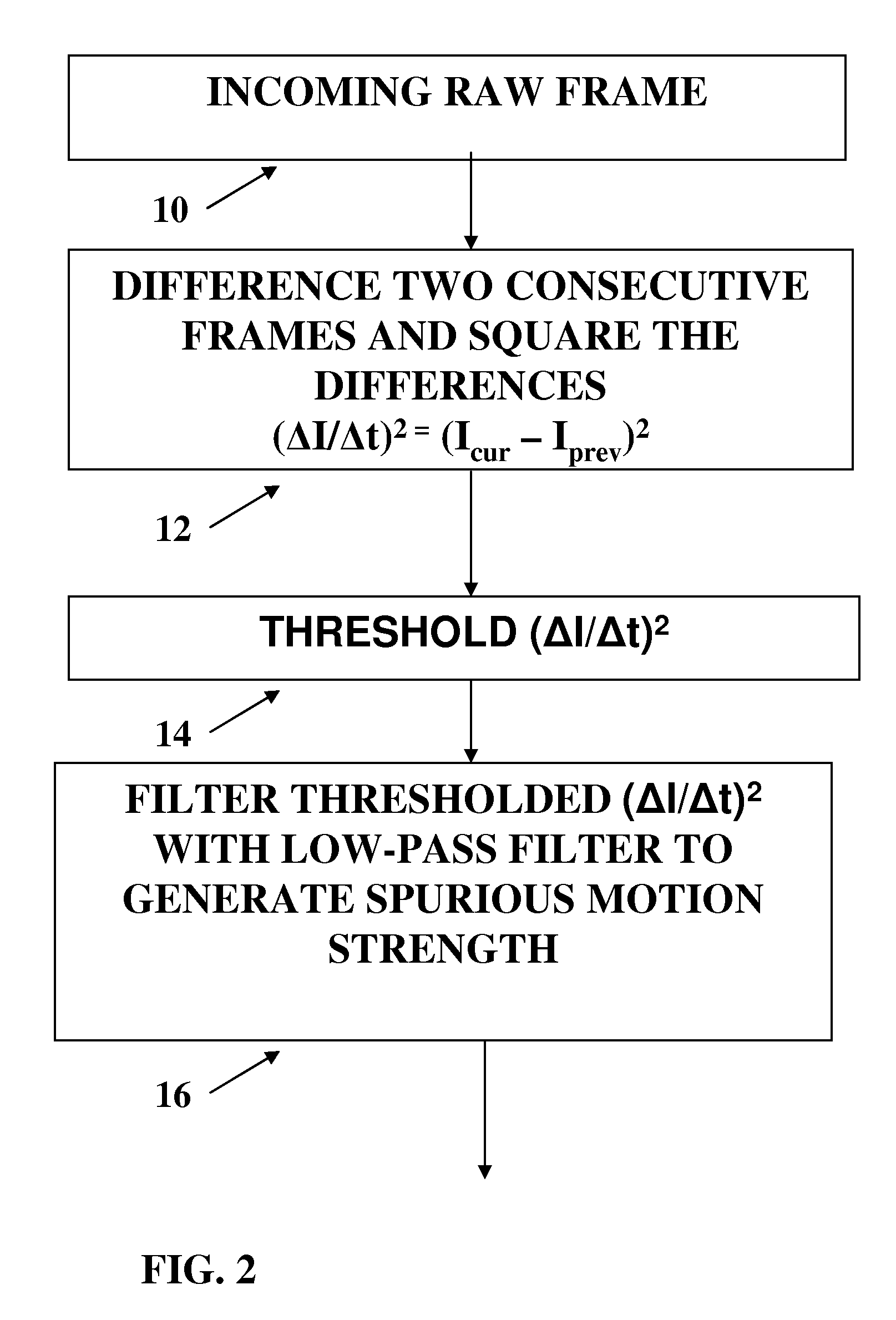

Spurious Motion Filter

Inactive | US20070223596A1 | Easy to detect | Television system details | Image enhancement | Motion intensity | Video image

A filter for filtering out spurious motion from a sequence of video images, for use in video image signal processing to identify objects in motion in the sequence of video images. Spurious motion consists of chaotic, repetitive, jittering portions of an image that constitute noise and interfere with motion detection in video signals. The filter keeps track of the locations and strengths of spurious motion and applies low pass filtering of a strength matched to the spurious motion strength in real time. Regular pixels without spurious motion pass through the filter unaltered, while pixels with spurious motion are “smoothed” to avoid being detected as noise.

Owner:SIEMENS SWITZERLAND

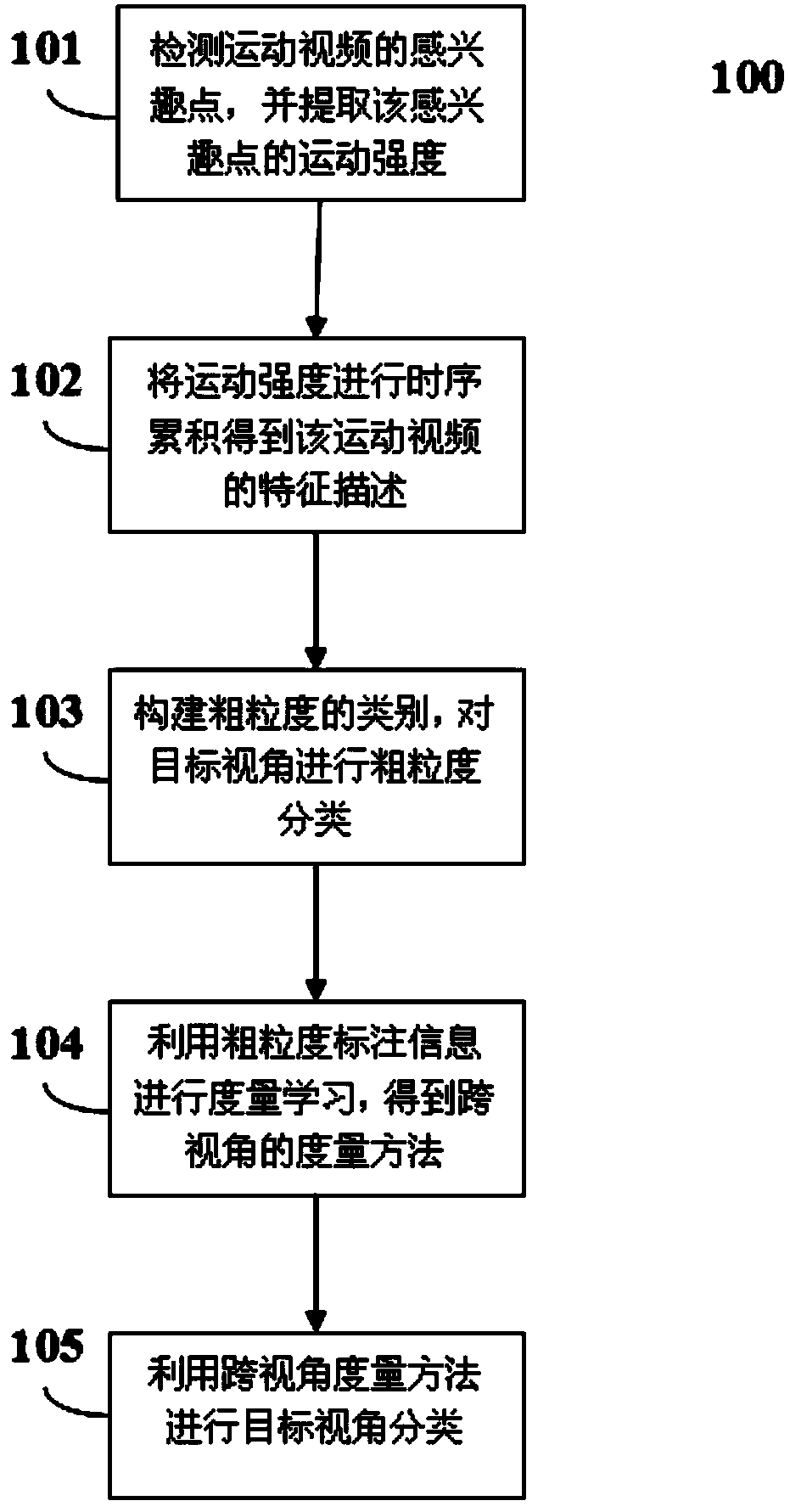

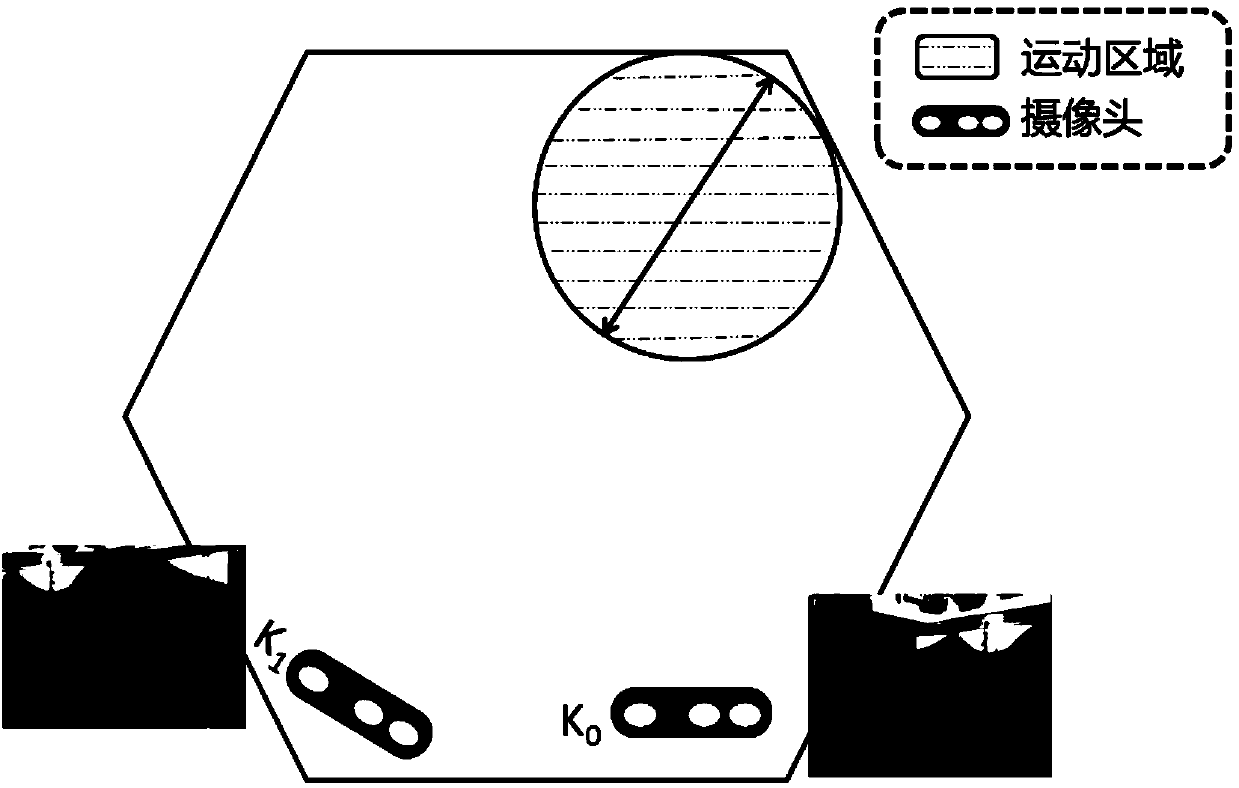

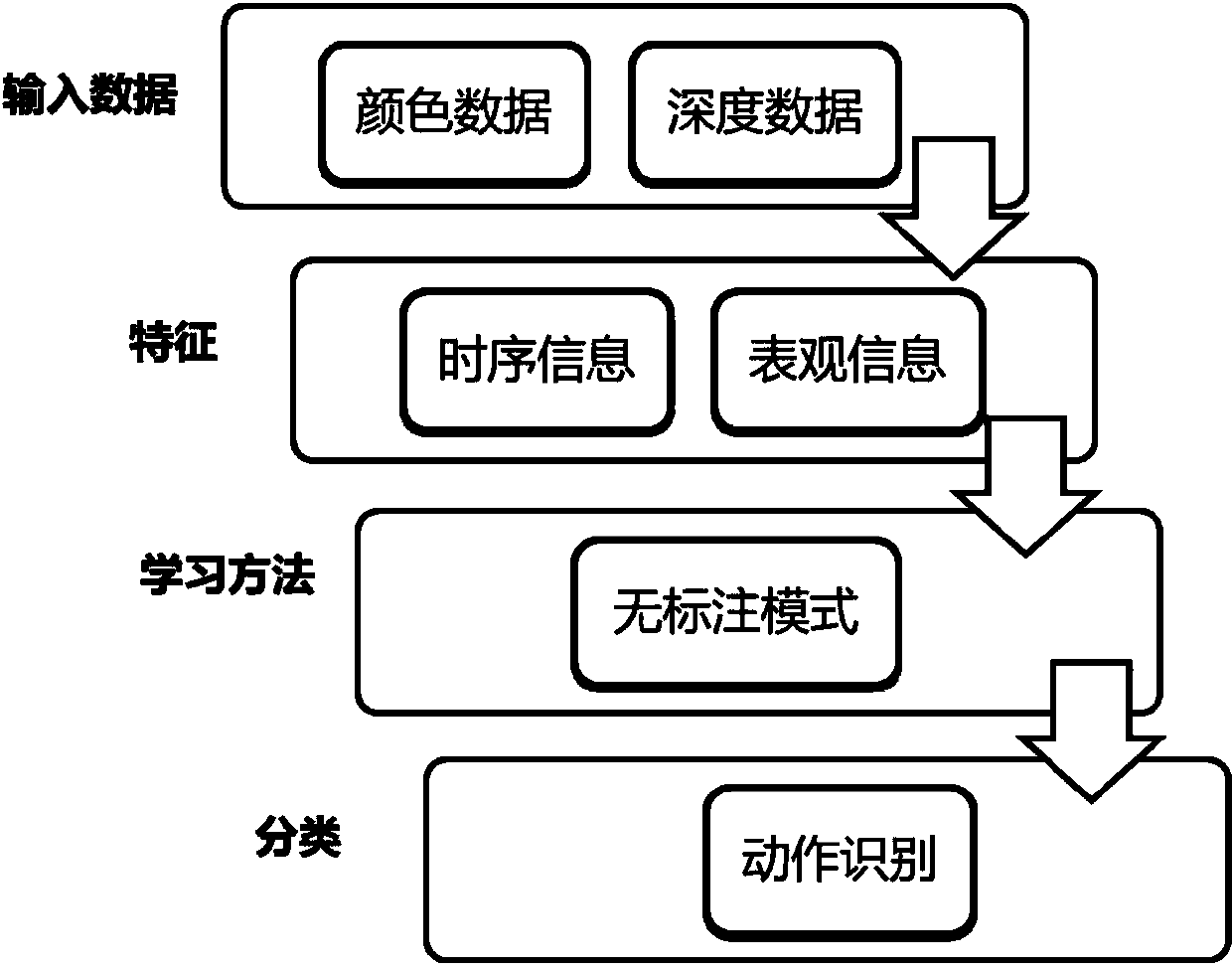

Cross-view-angle action identification method and system based on time sequence information

ActiveCN104200218AResolve differencesAchieving cross-view motion recognitionCharacter and pattern recognitionMotion intensityStudy methods

The invention discloses a cross-view action recognition method and system based on temporal information and relates to the technical field of pattern recognition. The method includes: detecting interest points in videos and extracting the motion intensity of the interest points, wherein the videos include a source-view video and a target-view video; accumulating the motion intensity over time according to the temporal information of the videos to obtain a motion feature description of the videos; performing coarse-grained labeling of the target-view video according to the motion feature description and the source coarse-grained labeling information of the source-view video to obtain target coarse-grained labeling information; performing metric learning on the source-view video and the target-view video according to the source and target coarse-grained labeling information to obtain a cross-view metric; and classifying the actions in the target-view video using the cross-view metric to complete cross-view action recognition.

Owner:中科海微(北京)科技有限公司

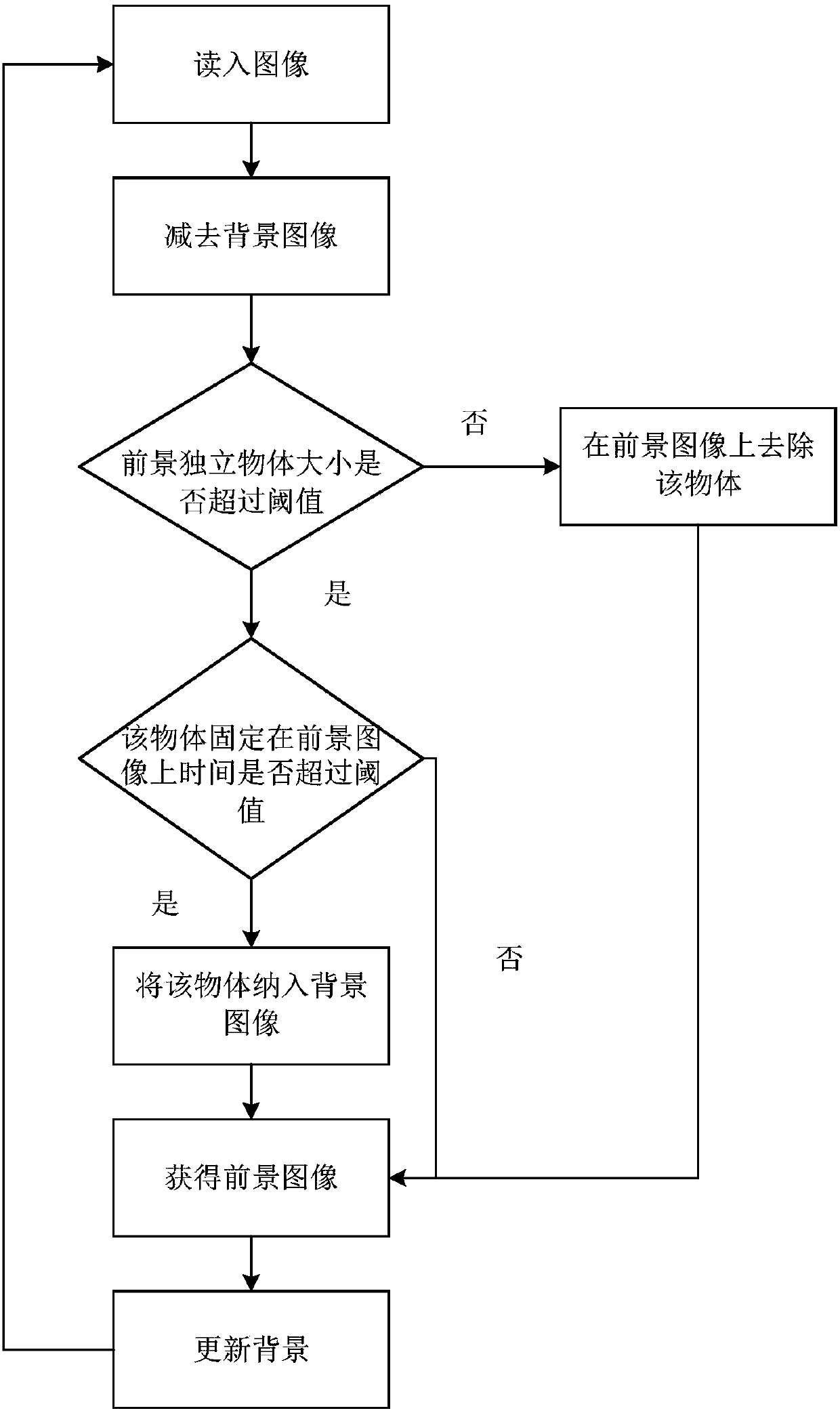

Violent behavior monitoring method

InactiveCN107330414AAccurate detectionTimely detectionCharacter and pattern recognitionPattern recognitionMotion intensity

The invention discloses a violent behavior monitoring method comprising the following steps: the image of the current video frame is acquired and its binary foreground image is extracted; the binary foreground image is compared with a violent behavior template to obtain a similarity R; a global motion intensity E is calculated from the image of the current video frame and the image of the previous video frame; a violent behavior index is computed by fusing the similarity R and the global motion intensity E; and if the violent behavior index is greater than a preset threshold, violent behavior is deemed to occur and the system transmits an alarm signal. Violent crime can thus be discovered more accurately and promptly, especially in enclosed places, so that victims can be rescued sooner.
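The steps above can be sketched in Python under several assumptions that the abstract does not specify: the similarity R is taken as the intersection-over-union of the binary foreground and the template, the global motion intensity E as the normalized mean absolute frame difference, and the fusion as a weighted sum. All function names, the weight and the threshold are hypothetical.

```python
def template_similarity(foreground, template):
    """Similarity R as intersection-over-union of two binary masks (assumed measure)."""
    inter = union = 0
    for f_row, t_row in zip(foreground, template):
        for f, t in zip(f_row, t_row):
            inter += 1 if (f and t) else 0
            union += 1 if (f or t) else 0
    return inter / union if union else 0.0


def global_motion_intensity(curr, prev, max_value=255.0):
    """Intensity E as mean absolute frame difference scaled to [0, 1] (assumed measure)."""
    total = 0.0
    count = 0
    for c_row, p_row in zip(curr, prev):
        for c, p in zip(c_row, p_row):
            total += abs(c - p)
            count += 1
    return total / (count * max_value) if count else 0.0


def violence_index(r, e, weight=0.5):
    """Fuse R and E with a weighted sum (the real fusion rule is not given)."""
    return weight * r + (1.0 - weight) * e


def should_alarm(index, threshold=0.6):
    """Raise an alarm when the index exceeds the preset threshold."""
    return index > threshold
```

In practice the weight and threshold would have to be tuned on labeled footage; the values here are placeholders.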

Owner:ZHENGZHOU UNIVERSITY OF LIGHT INDUSTRY

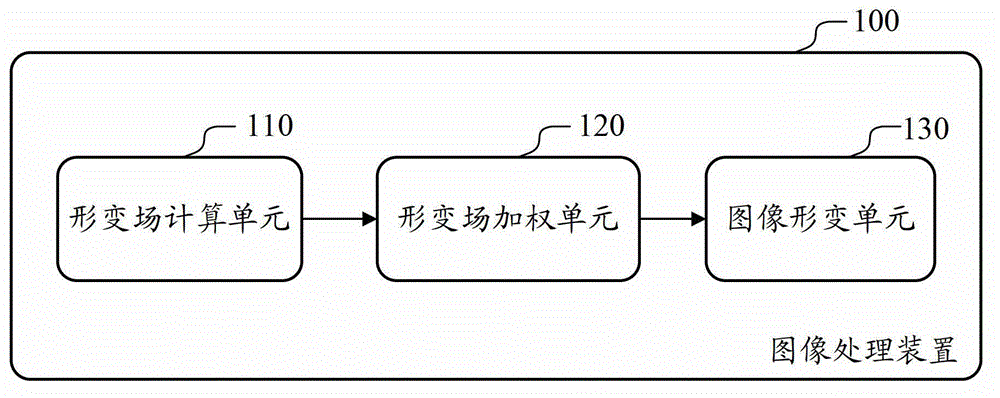

Image processing device, image processing method and medical image equipment

ActiveCN104036452AReduce image noiseImage enhancementImage analysisImaging processingReference image

The invention relates to an image processing device, an image processing method and a piece of medical image equipment. The image processing device comprises a deformation field calculation unit, a deformation field weighting unit and an image deformation unit. The deformation field calculation unit can calculate a deformation field of a second image of an object relative to a reference image based on non-rigid registration with a first image of the object as the reference image. The deformation field weighting unit can weight the deformation field according to the motion intensities of parts of the object. The image deformation unit can deform the second image by use of the weighted deformation field to obtain a third image.
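To make the weighting and deformation steps concrete, here is a minimal Python sketch. It assumes the deformation field is already available as per-pixel `(dy, dx)` displacements, that the per-pixel weights in [0, 1] have already been derived from motion intensity (the abstract does not say how), and that warping uses backward mapping with nearest-neighbor sampling. The non-rigid registration itself is not shown, and all names are hypothetical.

```python
import math


def weight_field(field, weights):
    """Scale each displacement vector by its per-pixel weight."""
    return [[(w * dy, w * dx) for (dy, dx), w in zip(f_row, w_row)]
            for f_row, w_row in zip(field, weights)]


def warp_nearest(image, field):
    """Deform `image` by sampling it at (y + dy, x + dx) for each output pixel.

    Assumption: backward mapping, nearest-neighbor sampling, edges clamped.
    """
    height = len(image)
    width = len(image[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            dy, dx = field[y][x]
            # Round half up, then clamp to the image bounds.
            sy = min(max(int(math.floor(y + dy + 0.5)), 0), height - 1)
            sx = min(max(int(math.floor(x + dx + 0.5)), 0), width - 1)
            row.append(image[sy][sx])
        out.append(row)
    return out
```

Applying `warp_nearest(second_image, weight_field(field, weights))` would then yield the third image under these assumptions. A production implementation would normally use sub-pixel interpolation rather than nearest-neighbor sampling.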

Owner:TOSHIBA MEDICAL SYST CORP

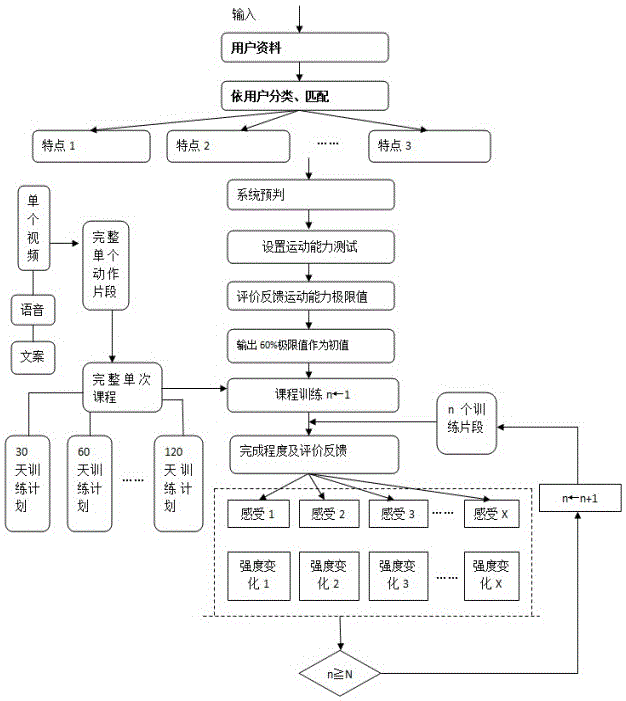

Service platform for guiding exercise based on evaluation feedback

InactiveCN106845938AImprove the effect of sports trainingAdjust in timeOffice automationTraining planExercise testings

The invention discloses a service platform for guiding exercise based on evaluation feedback. The service platform comprises a user classification management module, an information release system, an exercise testing system, an evaluation feedback system, an action management system module and a course management system module. The evaluation feedback system classifies users' subjective evaluations of how the training felt, produces different feedback for different evaluations, and recommends the exercise intensity of the next training session according to that feedback. Targeted, scientifically grounded training courses can be provided according to each user's condition and exercise test results, and the training plan can be adjusted promptly during a course according to the user's training evaluation and subjective feeling combined with in-training test results, so that training intensity is arranged more scientifically and the user's training effect improves.

Owner:江苏康兮运动健康研究院有限公司

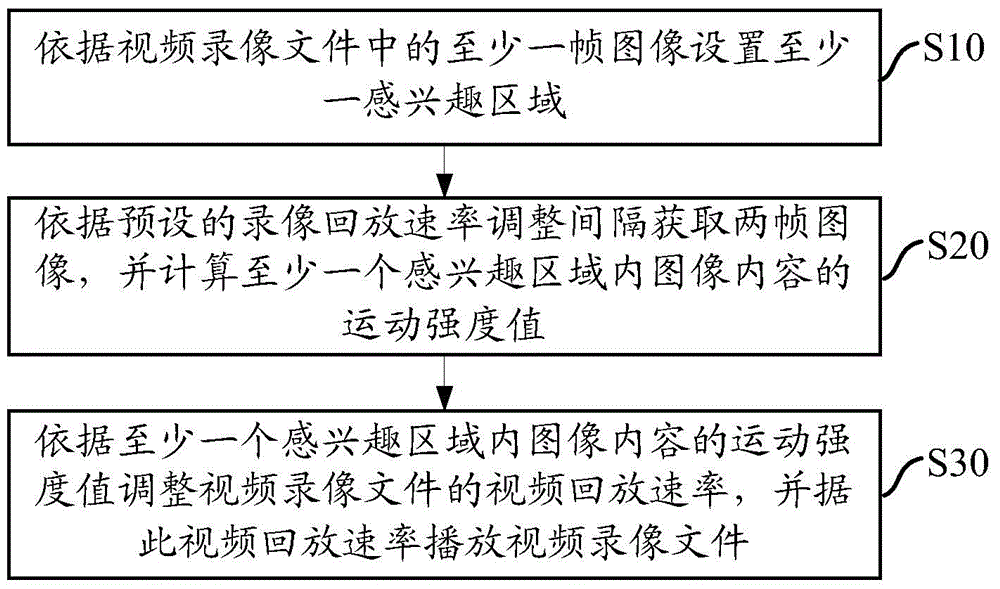

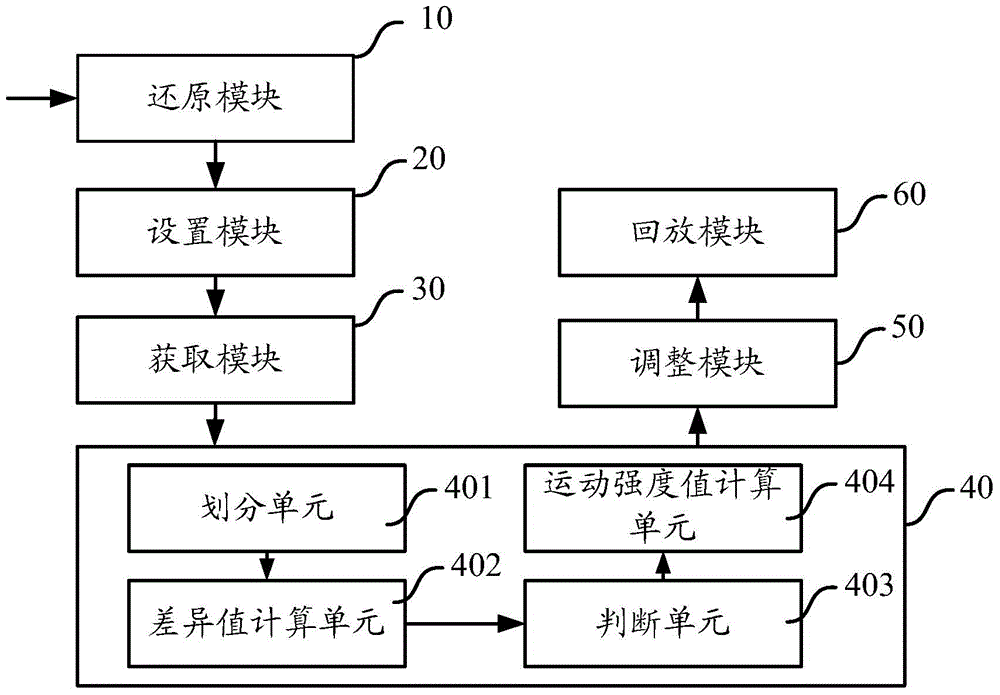

Video playback method and video playback device

ActiveCN105245817APlayback high efficiencyImprove work efficiencyTelevision system detailsColor television detailsVideo retrievalComputer graphics (images)

The invention discloses a video playback method and a video playback device. The method comprises the steps of: setting at least one region of interest according to at least one frame image in a video recording file; acquiring frame images at an interval adjusted according to a preset video playback rate, and calculating a motion intensity value of the image content of the at least one region of interest; and adjusting the video playback rate of the video recording file according to that motion intensity value, and playing the video recording file at the adjusted rate. When playing the video recording file, no participation of the encoder side is required; efficient playback can be achieved for any video stream while ensuring that users obtain enough information of interest, and the efficiency of video retrieval is improved.
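A minimal Python sketch of the rate adjustment follows. It assumes motion intensity is the normalized mean absolute frame difference inside the region of interest and that the playback rate falls linearly from a fast rate (no motion) to normal speed (strong motion). The abstract does not specify either choice, and the names and default values are hypothetical.

```python
def roi_motion_intensity(prev, curr, roi, max_value=255.0):
    """Mean absolute difference inside roi = (top, left, bottom, right).

    bottom and right are exclusive; the result is scaled to [0, 1].
    """
    top, left, bottom, right = roi
    total = 0.0
    count = 0
    for y in range(top, bottom):
        for x in range(left, right):
            total += abs(curr[y][x] - prev[y][x])
            count += 1
    return total / (count * max_value) if count else 0.0


def playback_rate(intensity, min_rate=1.0, max_rate=8.0, full_motion=0.2):
    """Map motion intensity to a playback speed multiplier.

    Assumption: linear ramp; intensity >= full_motion plays at min_rate.
    """
    level = min(max(intensity / full_motion, 0.0), 1.0)
    return max_rate - level * (max_rate - min_rate)
```

With several regions of interest, one plausible choice is to use the maximum intensity across regions so that activity in any of them slows playback.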

Owner:SHENZHEN ZTE NETVIEW TECH


© 2025 PatSnap. All rights reserved.