33 results about How to "Implement parallel execution" patented technology

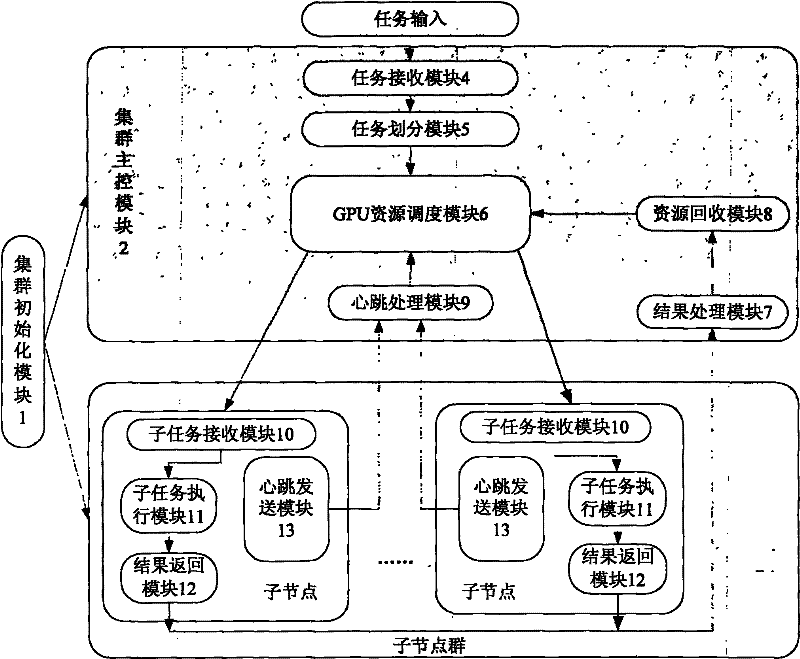

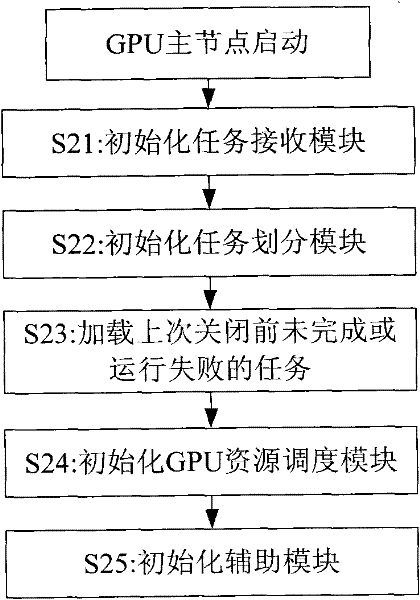

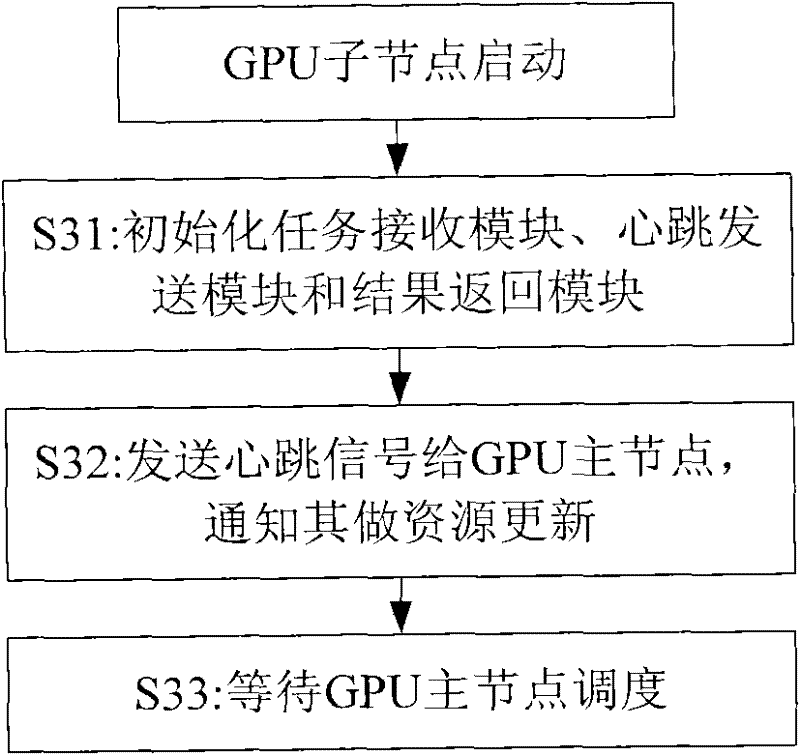

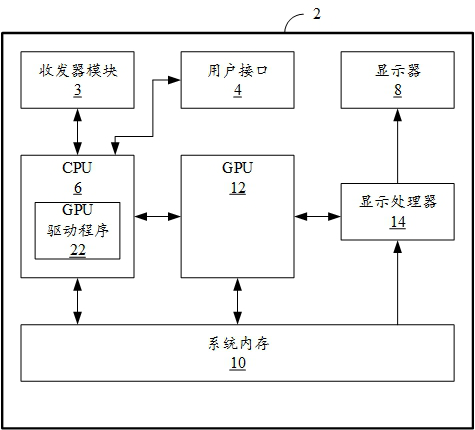

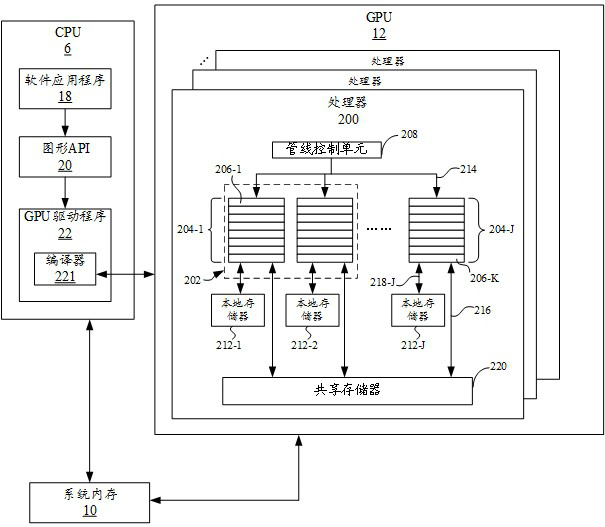

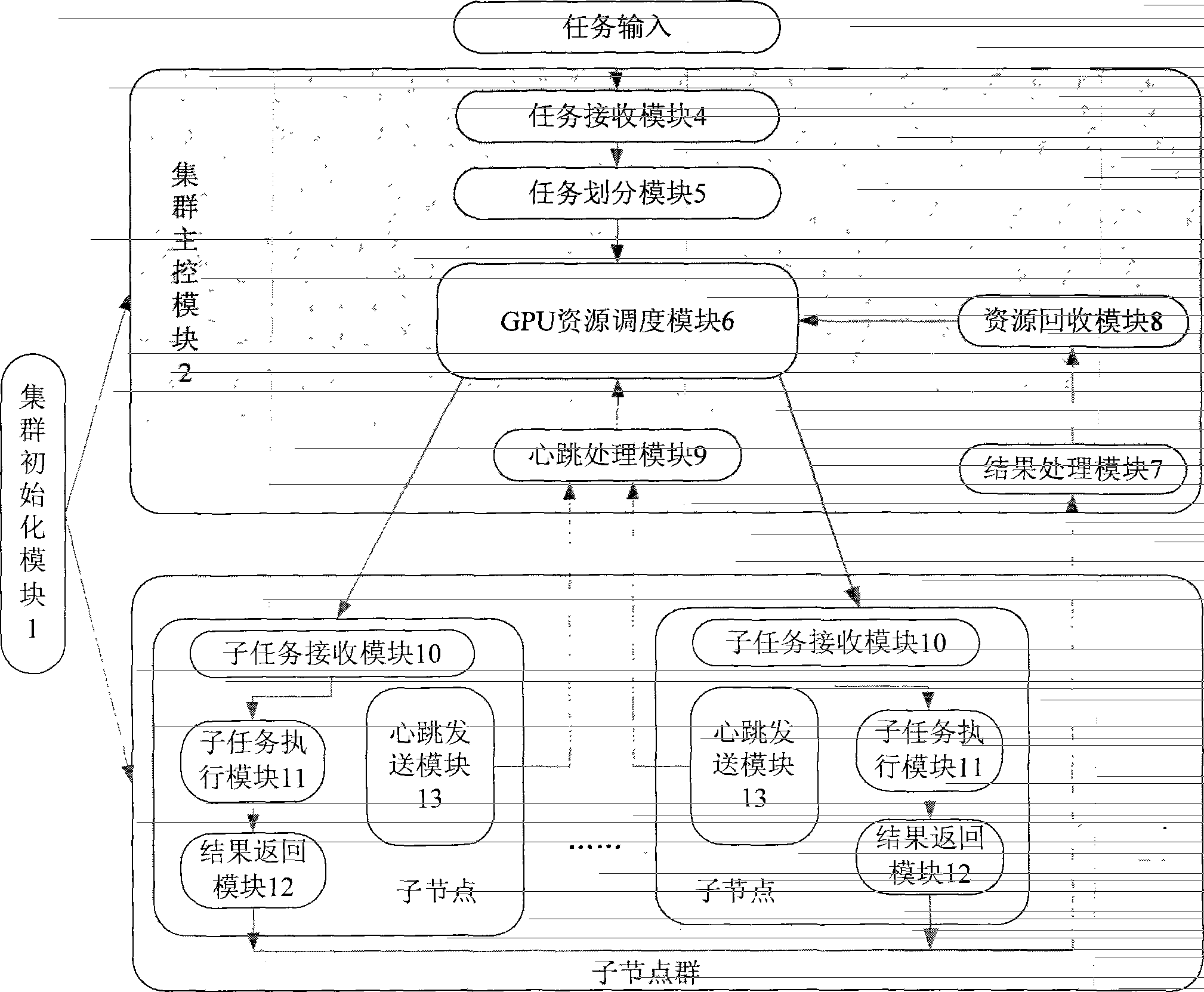

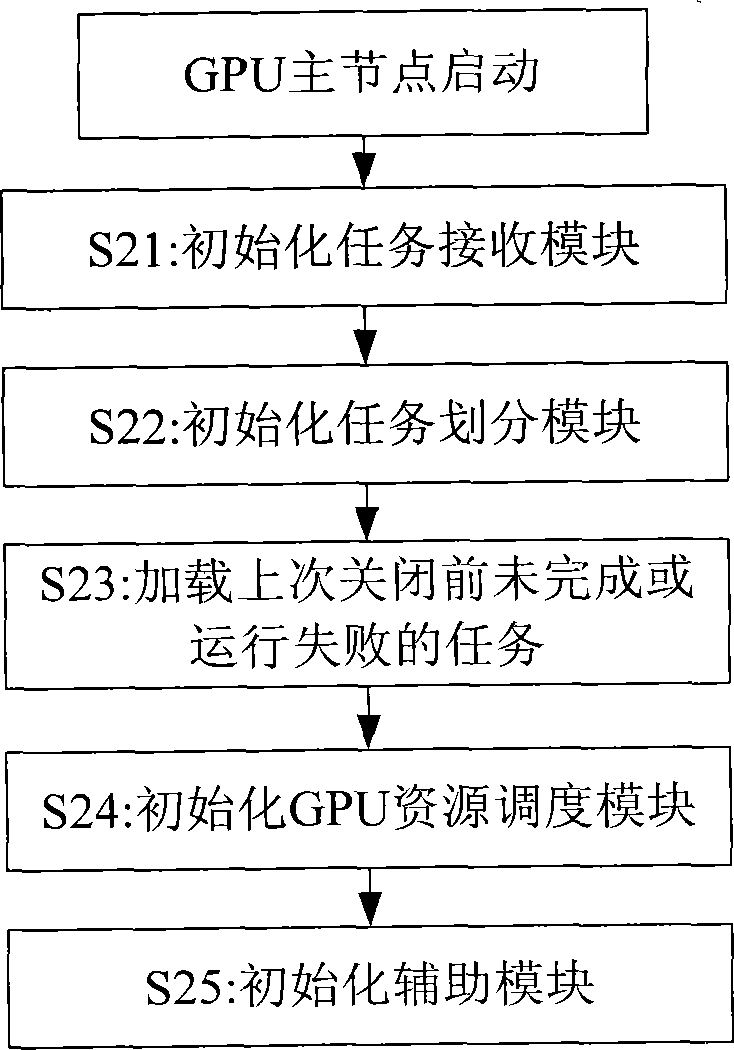

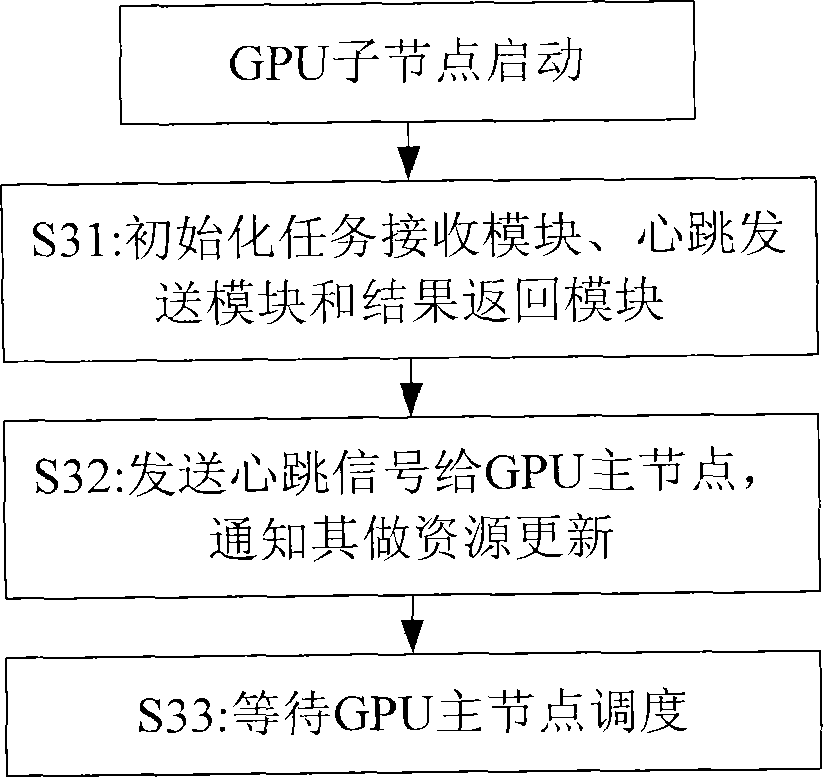

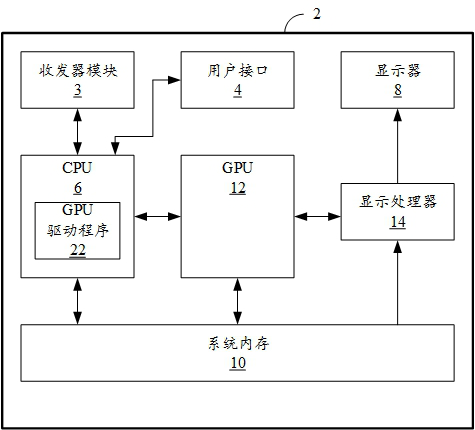

Cluster GPU (graphic processing unit) resource scheduling system and method

The invention provides a cluster GPU resource scheduling system. The system comprises a cluster initialization module, a GPU master node, and a plurality of GPU child nodes, wherein the cluster initialization module is used for initializing the GPU master node and the plurality of GPU child nodes; the GPU master node is used for receiving a task inputted by a user, dividing the task into a plurality of sub-tasks, and allocating the plurality of sub-tasks to the plurality of GPU child nodes by scheduling the plurality of GPU child nodes; and the GPU child nodes are used for executing the sub-tasks and returning the task execution result to the GPU master node. The cluster GPU resource scheduling system and method provided by the invention can fully utilize the GPU resources so as to execute a plurality of computation tasks in parallel. In addition, the method can also achieve plug and play function of each child node GPU in the cluster.

Owner:XIAMEN MEIYA PICO INFORMATION

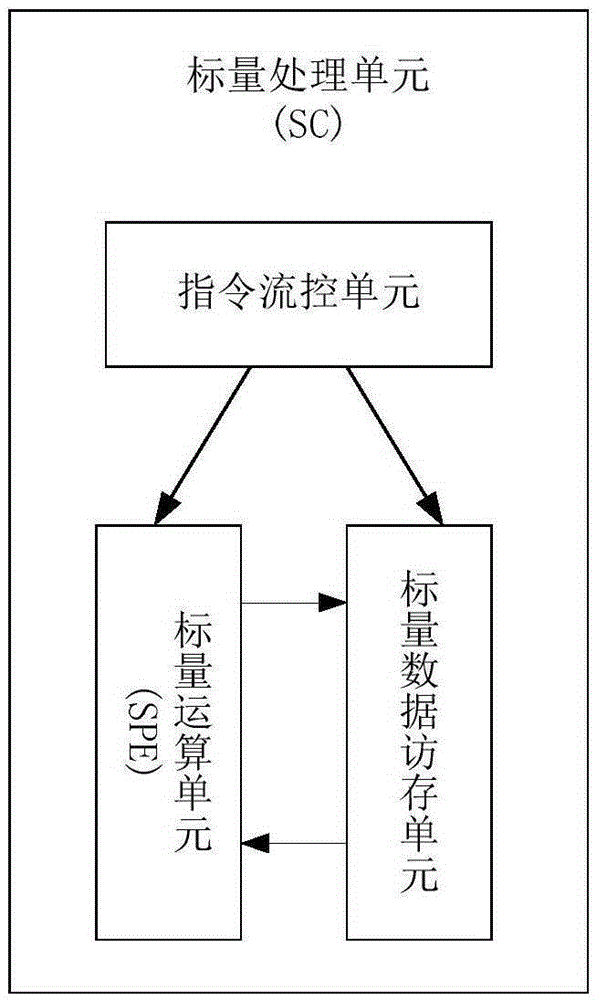

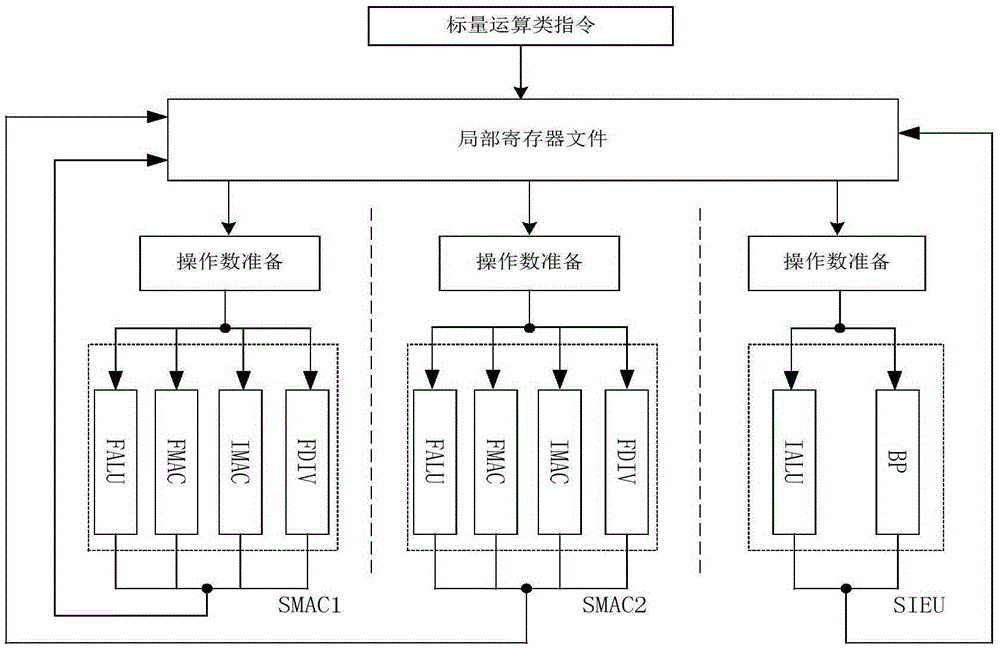

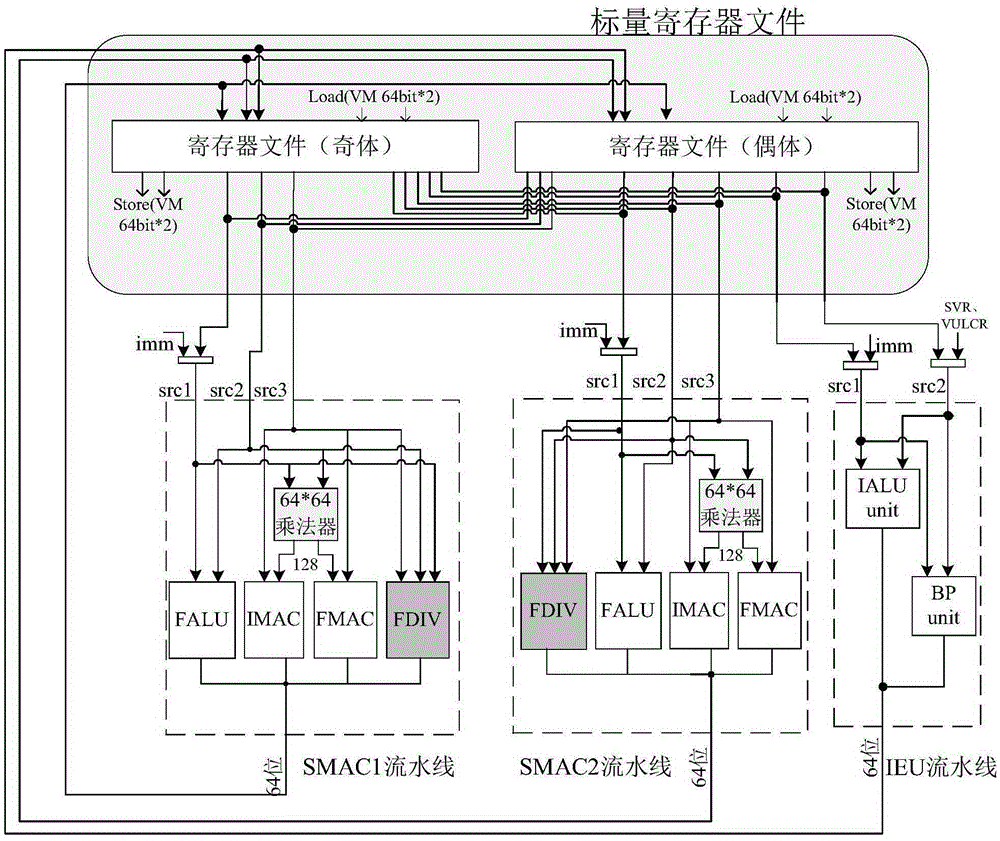

Scalar operation unit structure supporting floating-point division method in GPDSP

Inactive · CN105335127A · Fewer instruction execution cycles · Lower latency · Computation using non-contact making devices · Concurrent instruction execution · Parallel computing · Floating point

The invention discloses a scalar operation unit structure supporting a floating-point division method in a GPDSP. The scalar operation unit structure comprises a first component SMAC1, a second component SMAC2 and a third component SIEU, which are used as scalar calculation components and are used for supporting the scalar basic calculation; each scalar calculation component corresponds to one scalar instruction in a VLIW execute packet. The scalar operation unit structure has the advantages that the instruction execution periods are few; the delay is small; the structure is simple; the feasibility is high, and the like.

Owner:NAT UNIV OF DEFENSE TECH

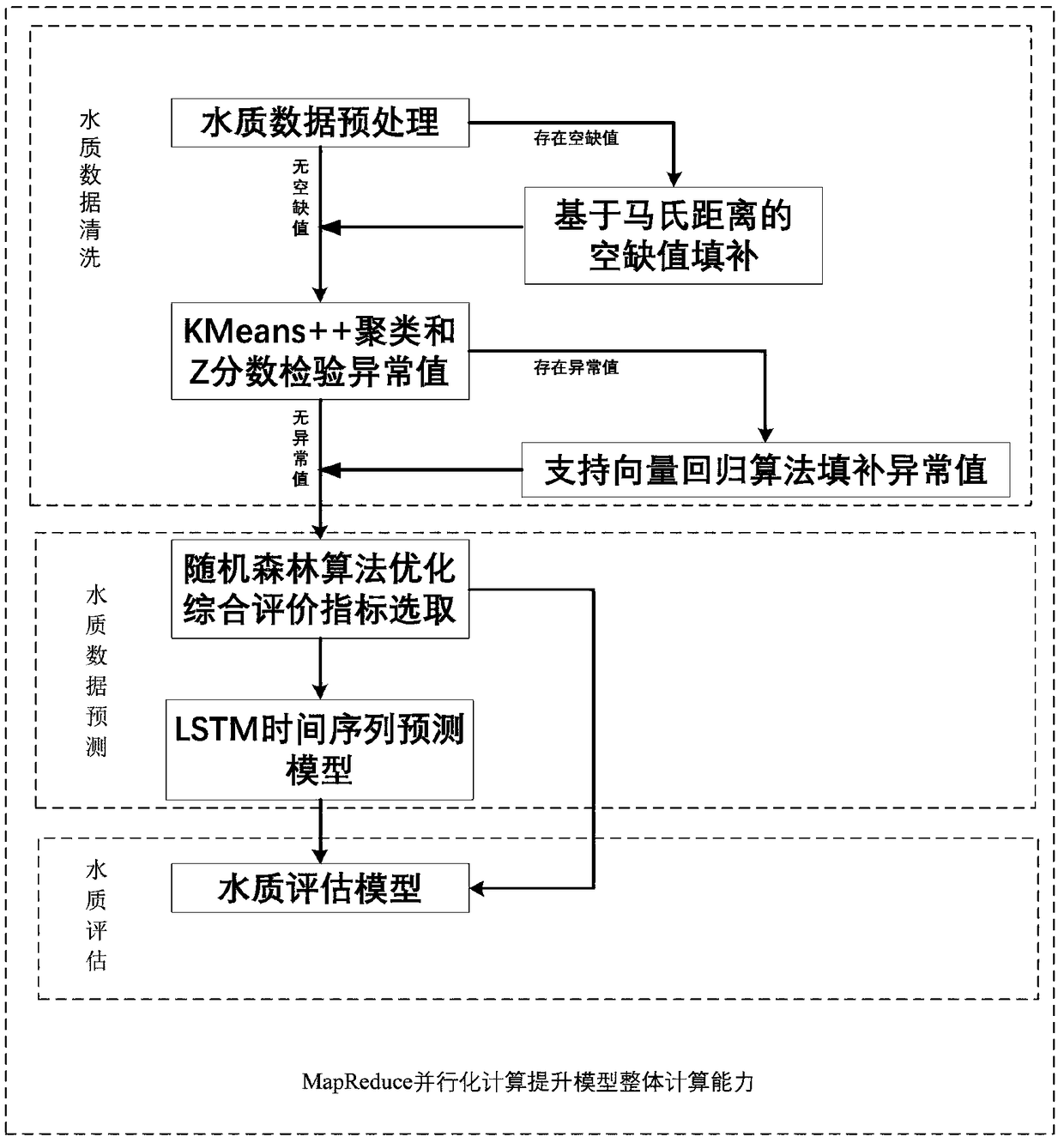

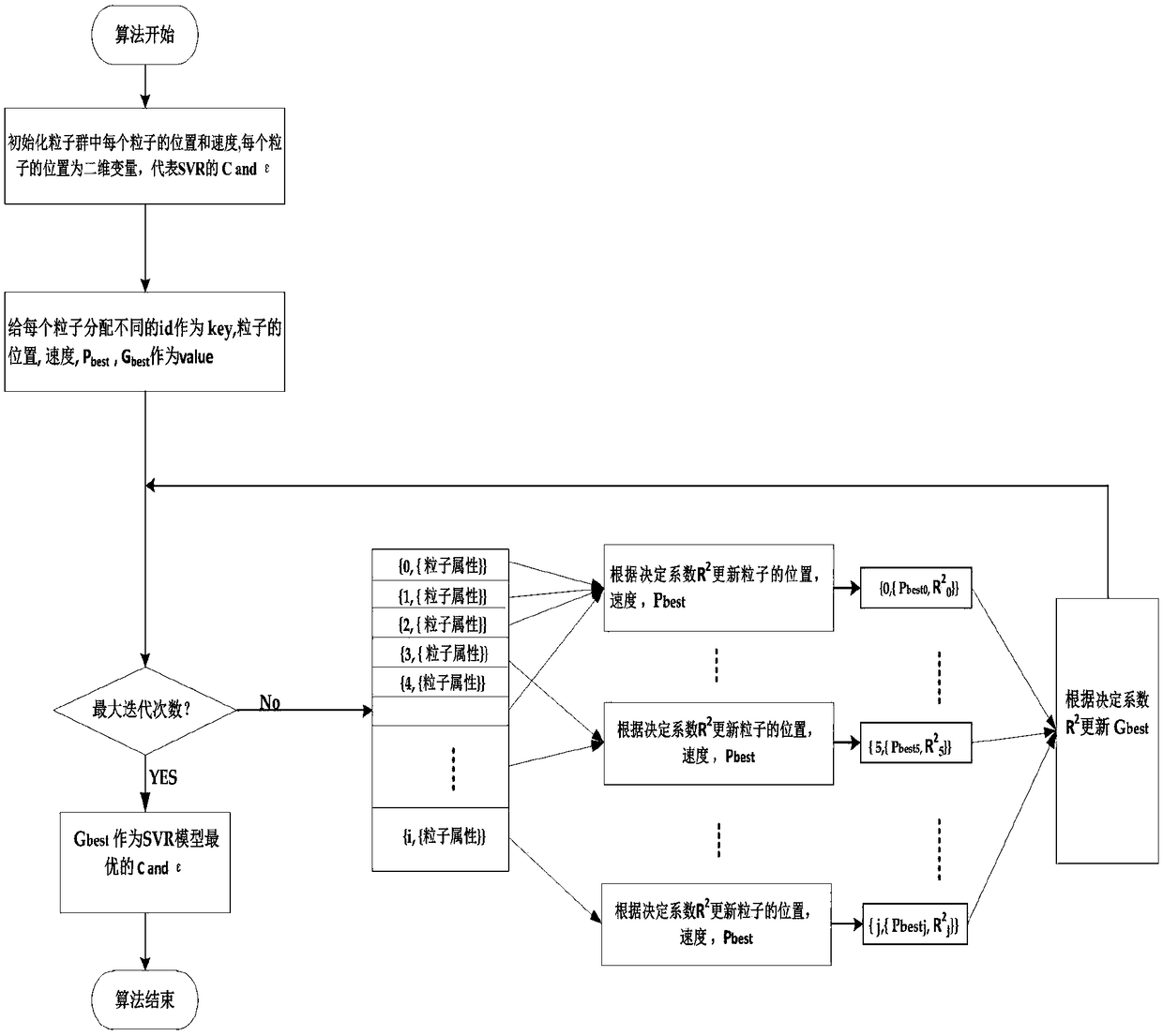

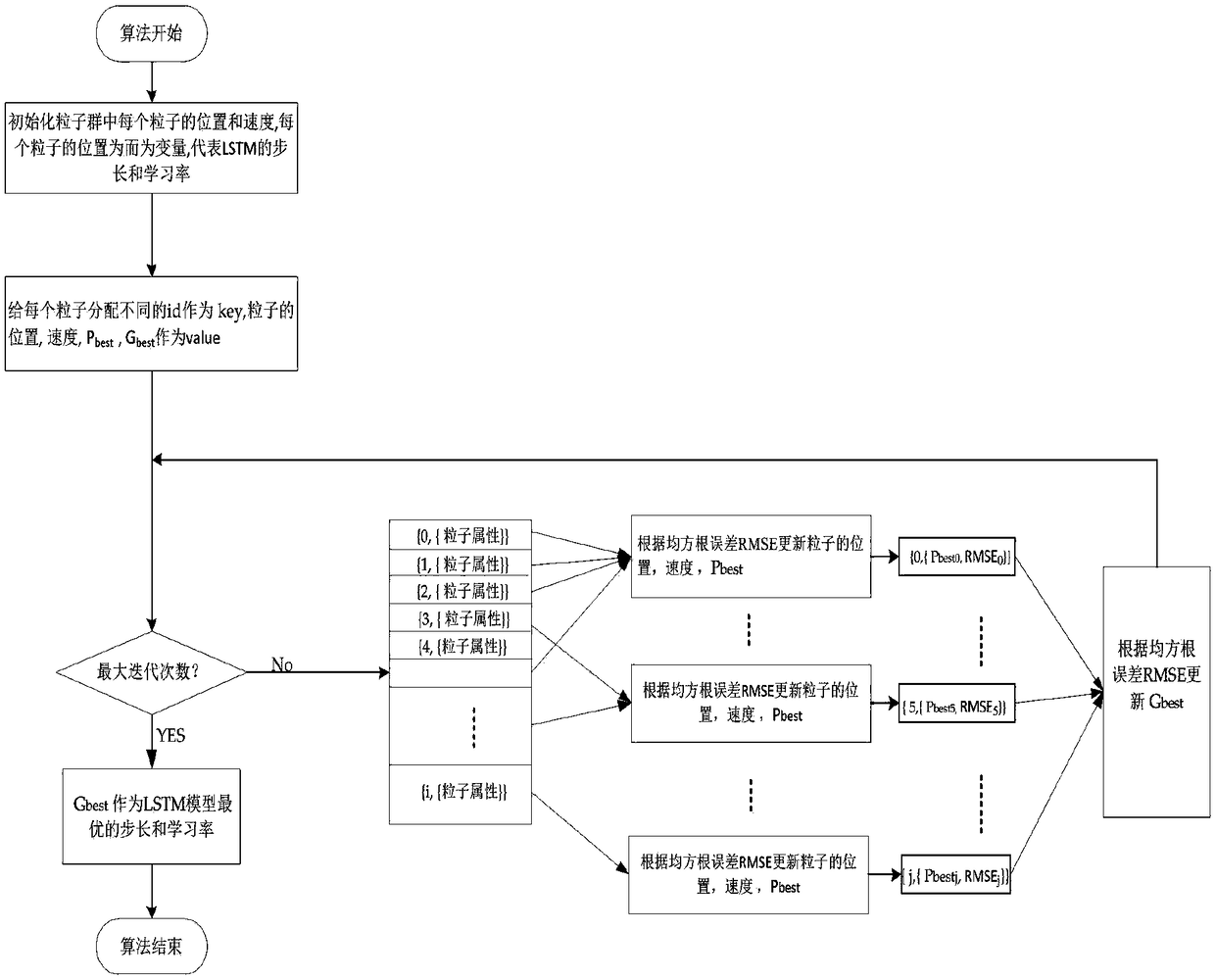

A method for constructing a prediction and assessment model of time series surface water quality big data

Active · CN109472321A · Efficient and accurate filling · Improve predictive performance · General water supply conservation · Character and pattern recognition · Cluster algorithm · Feature extraction

The invention discloses a method for constructing a prediction and evaluation model for time-series surface water quality big data. The method first removes values that obviously contradict common sense. For each time point with a missing value, it finds the time point nearest in Mahalanobis distance, computed over all the data at that time point, and fills the missing value with the data from that nearest point. Outliers in the water quality data are then detected with an improved K-means++ clustering algorithm and a Z-score detection algorithm, and are replaced using support vector regression. A random forest algorithm extracts the important features of the water quality indicators, and the indicators with high importance are selected to evaluate the overall state of water quality. An LSTM model then predicts the time series of the overall water quality state. Finally, Hadoop MapReduce is used to run the programs in parallel, which improves the execution efficiency of each algorithm, completes the construction of the prediction and evaluation model, and improves the efficiency, integrity and accuracy of water quality big data analysis.

Owner:BEIJING UNIV OF TECH

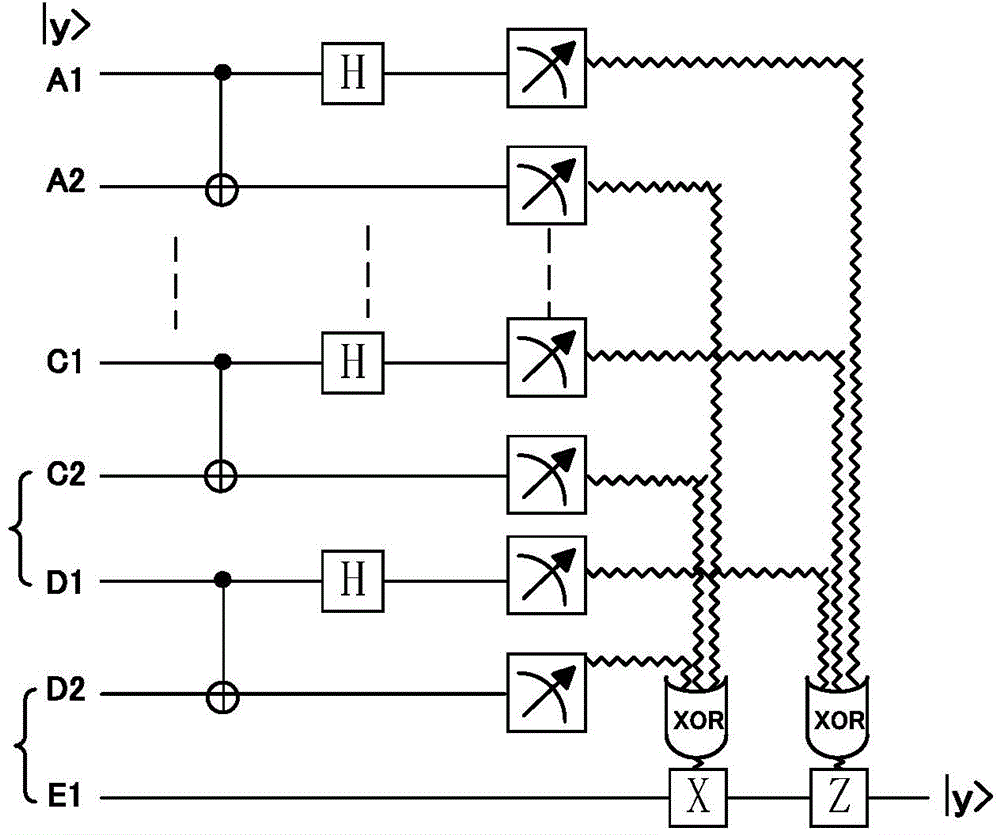

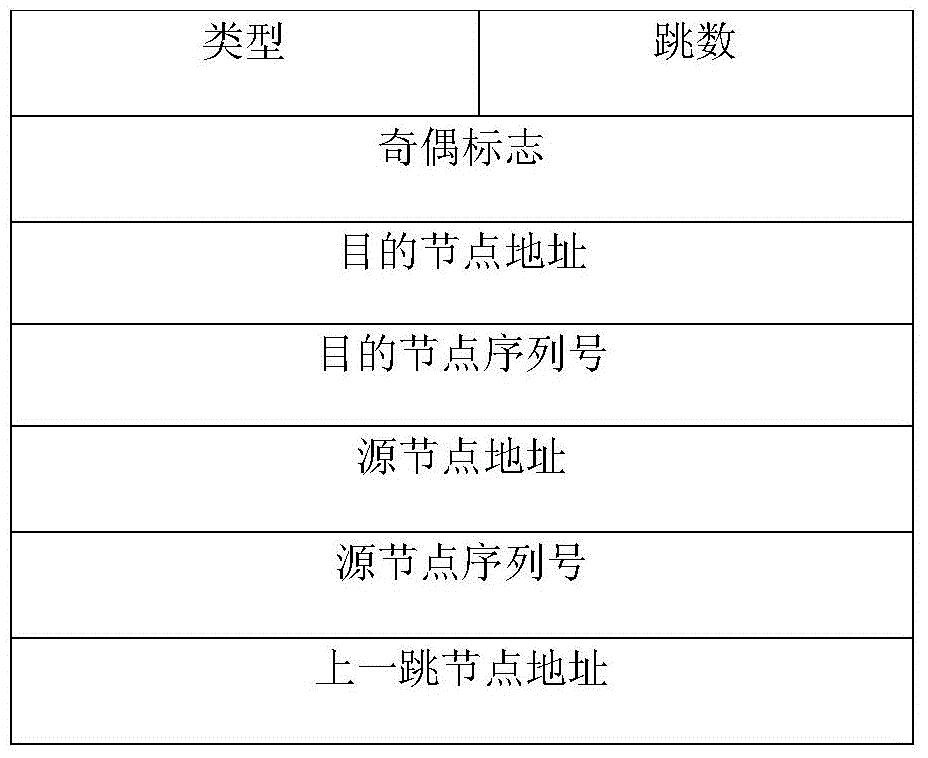

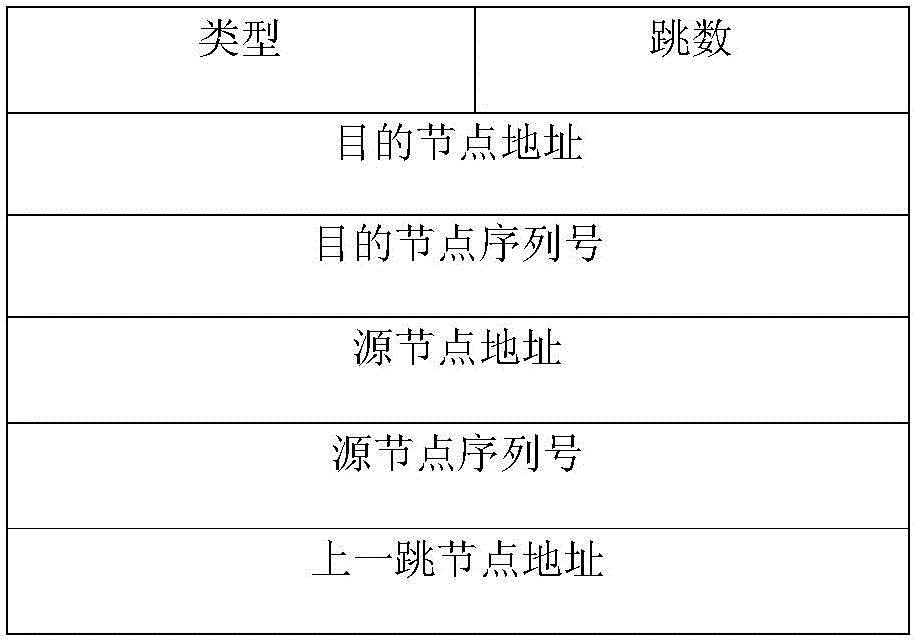

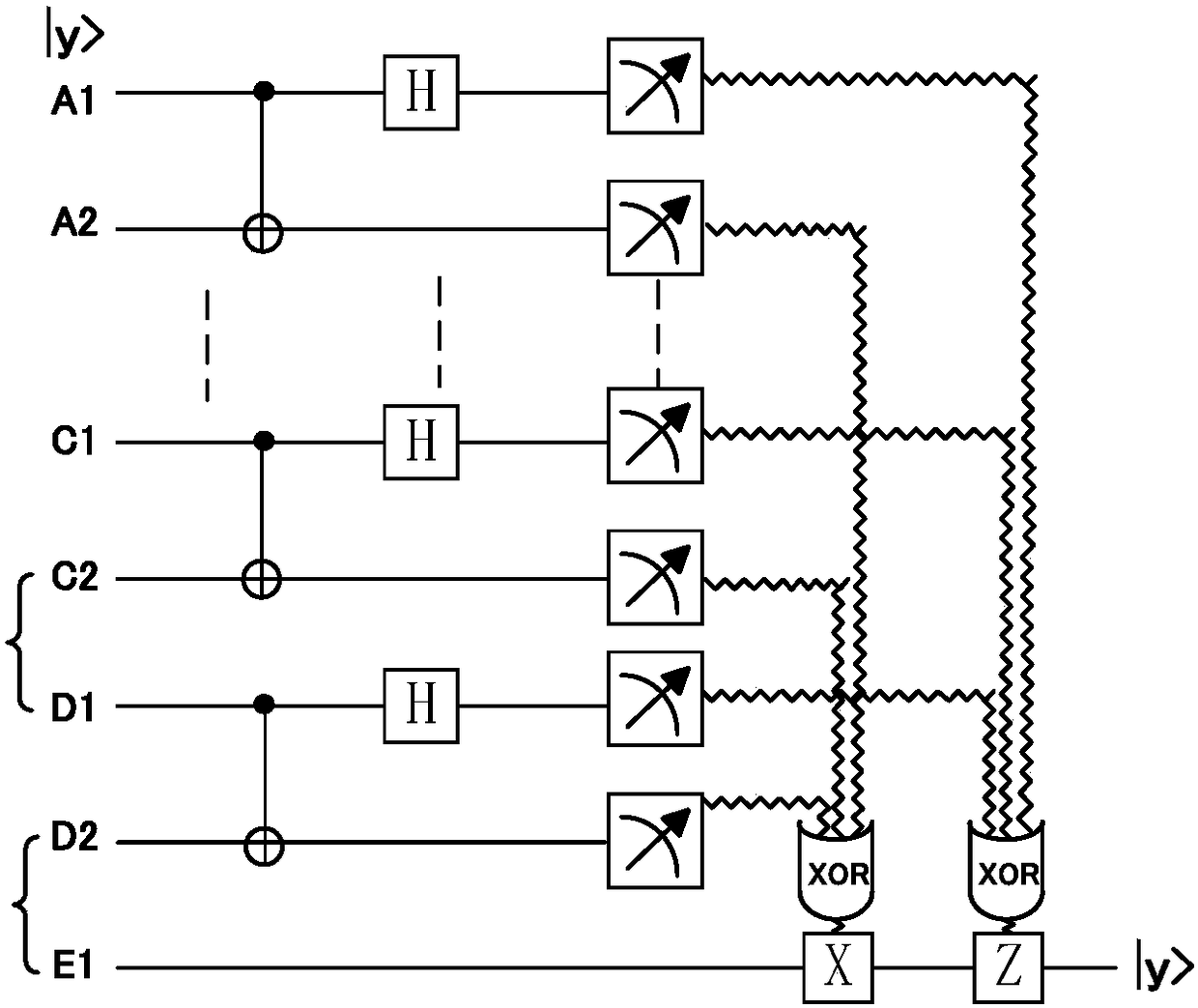

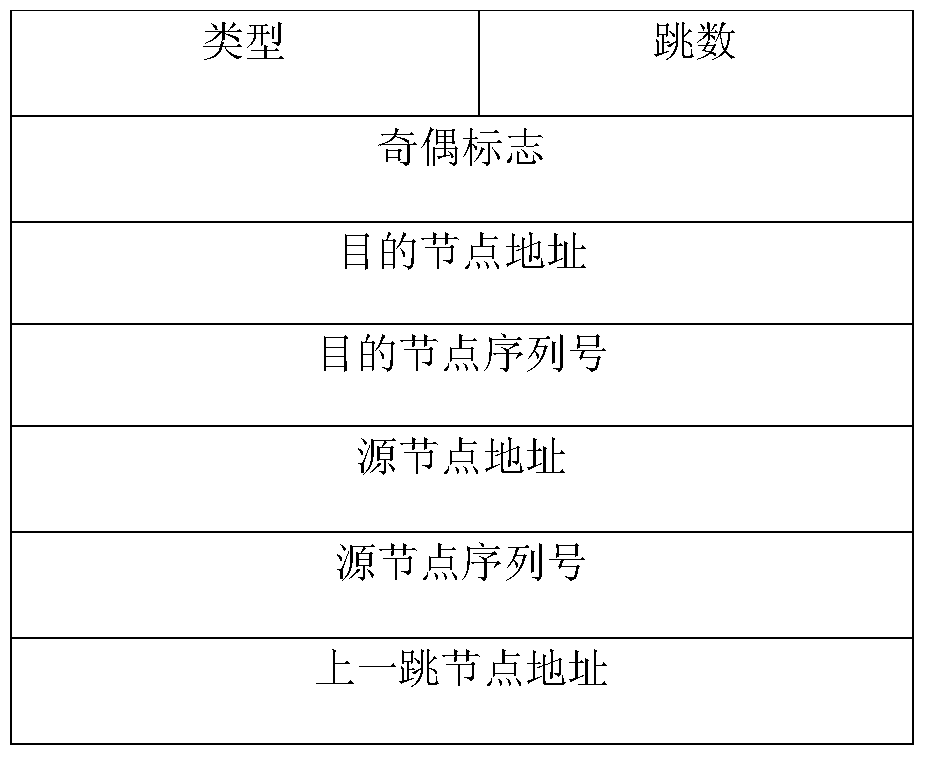

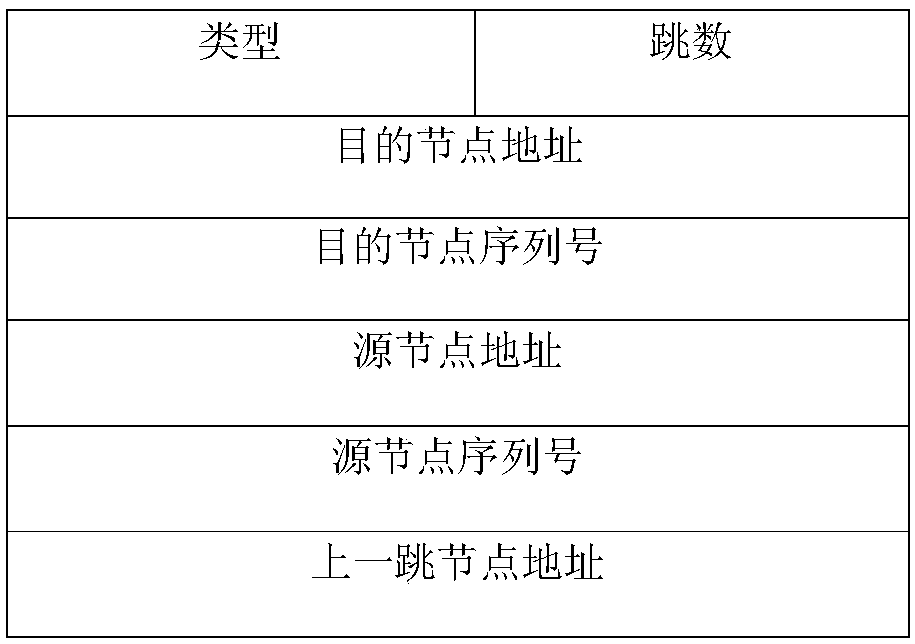

Method for routing part entangled quantum pair bridging communication network

Active · CN104883304A · Implement parallel execution · Large capacity · Data switching networks · Quantum · Distributed computing

Owner:SOUTHEAST UNIV

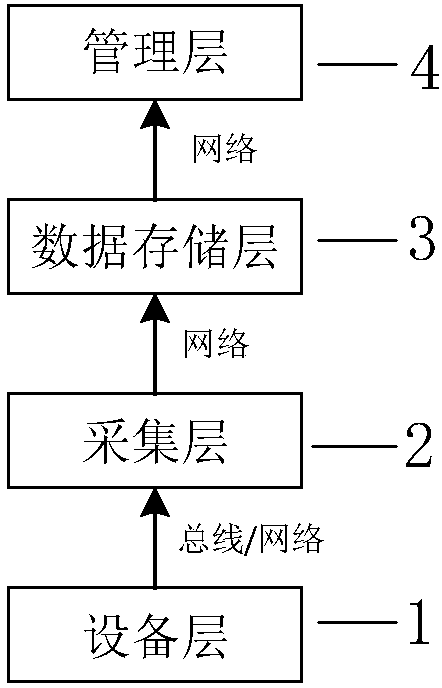

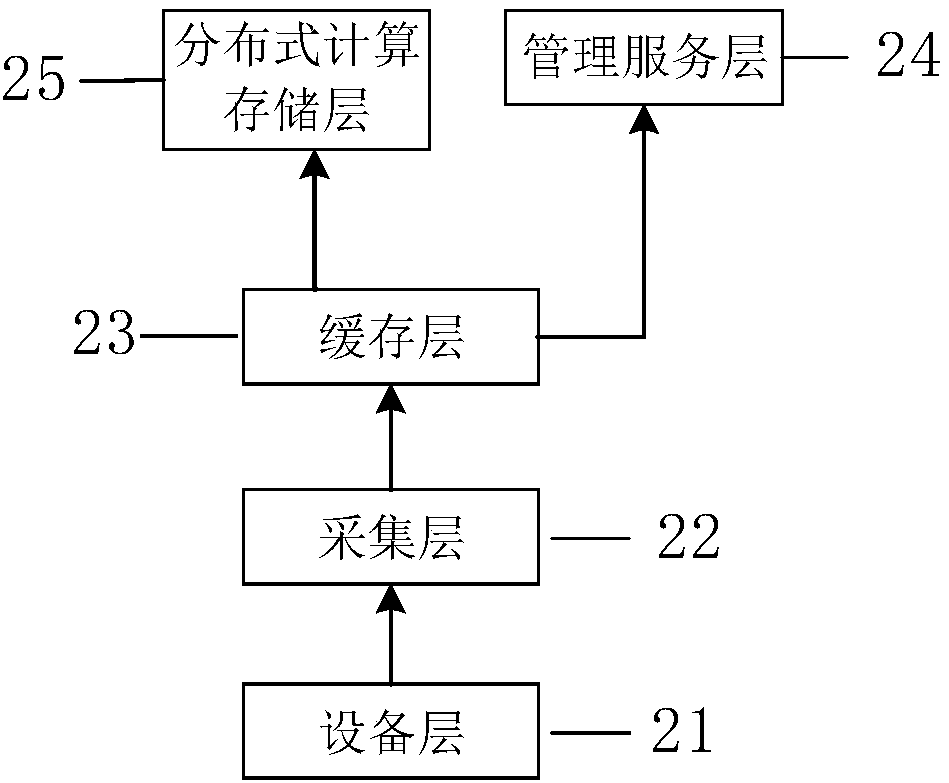

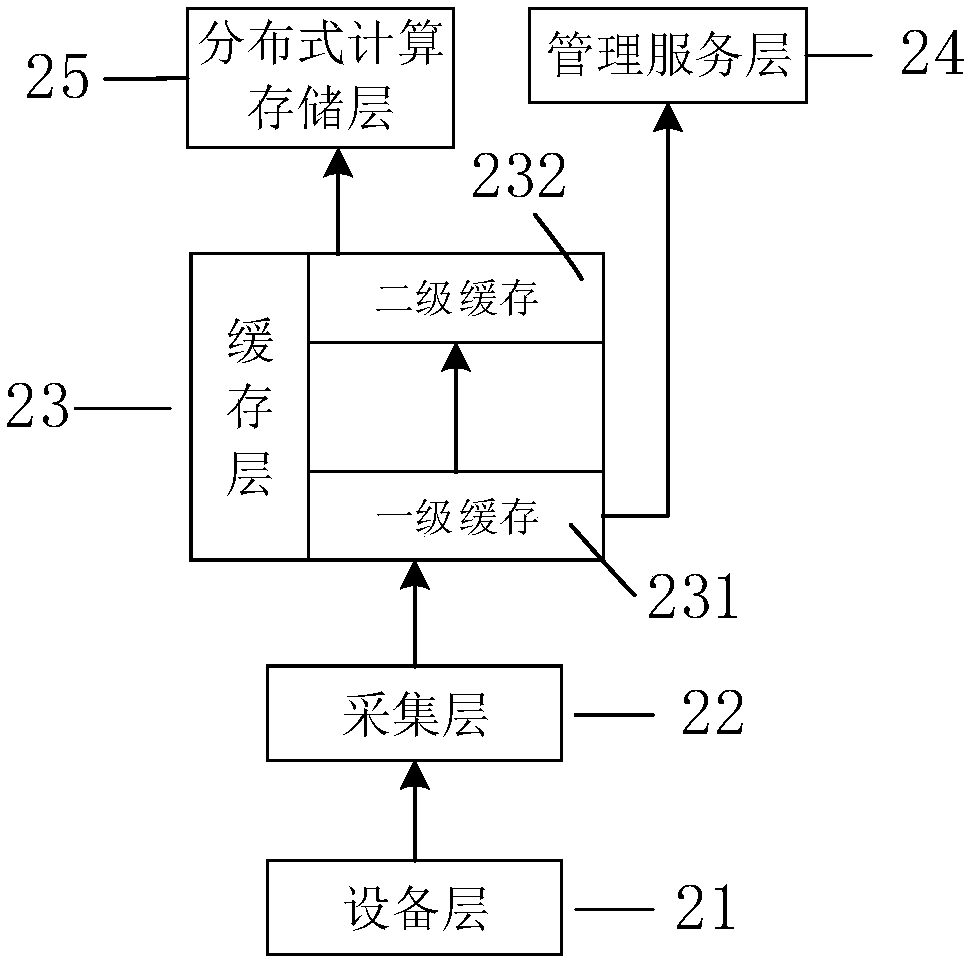

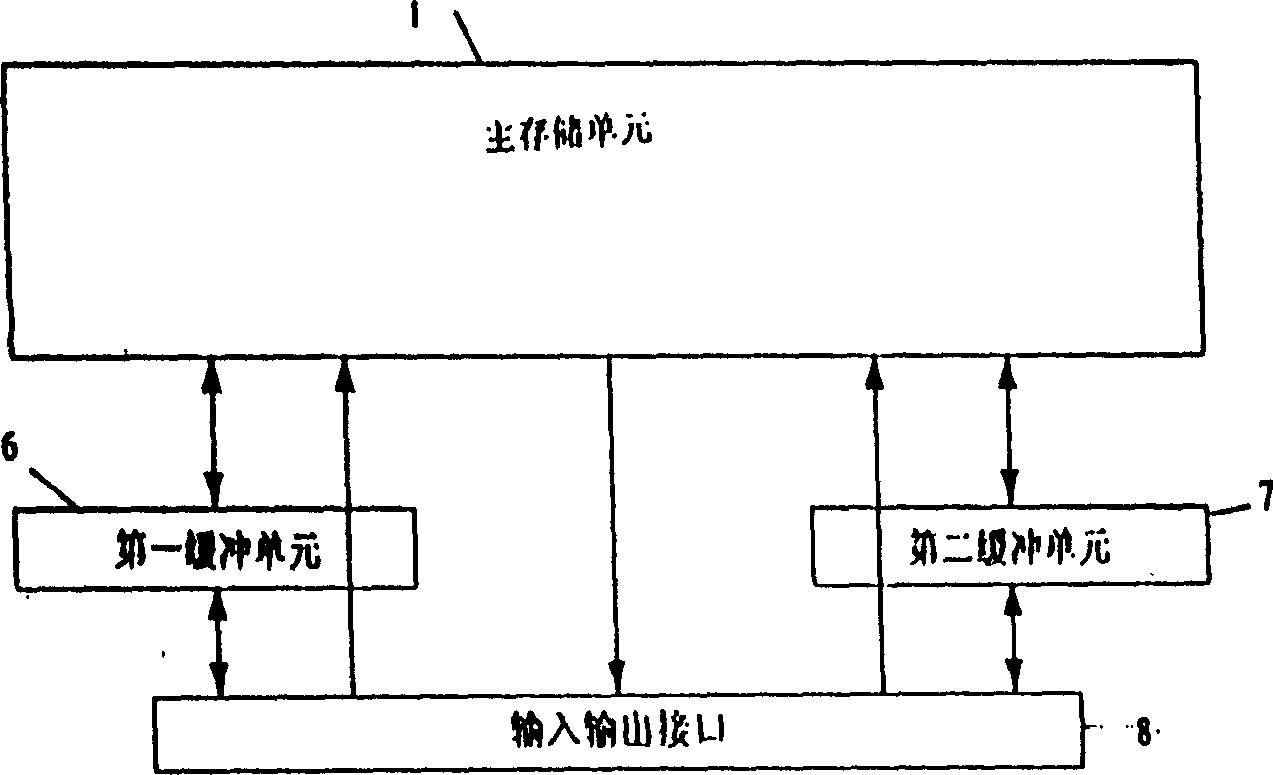

Buffer layer used in industrial big data aggregation, aggregation system and method

Inactive · CN108804347A · Reduce network congestion · Avoid disk I/O operations · Memory systems · Data aggregator · Data acquisition

The invention discloses a buffer layer used in a data aggregation system based on a distributed computing technology. The buffer layer includes a first-level cache for storing data collected by an acquisition layer, and a second-level cache communicatively connected with the first-level cache and used for pulling data from the first-level cache once that data reaches a set threshold value. The invention also provides a data aggregation system and a data aggregation method based on the distributed computing technology. The invention places the two-level buffer layer between the acquisition layer and a distributed computing storage layer, and integrates a distributed computing framework at the writing end of the distributed computing storage layer to realize the parallel pulling and writing of data, which is a fundamental scheme for guiding the construction of a large data center of an intelligent factory. The invention solves the technical problems that, in the prior art, the data acquired from numerical control equipment or systems remains in a discrete state and cannot form an organic whole, and that the acquisition/storage speed is low.

Owner:HUAZHONG UNIV OF SCI & TECH

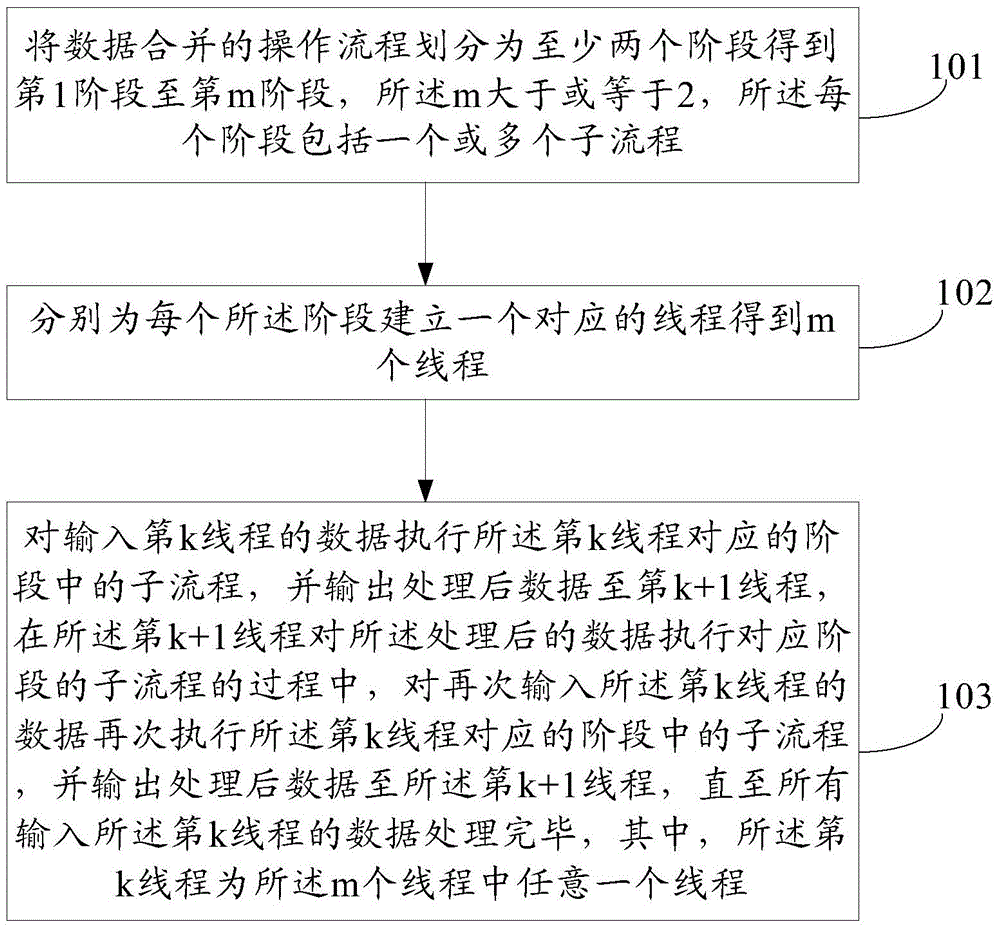

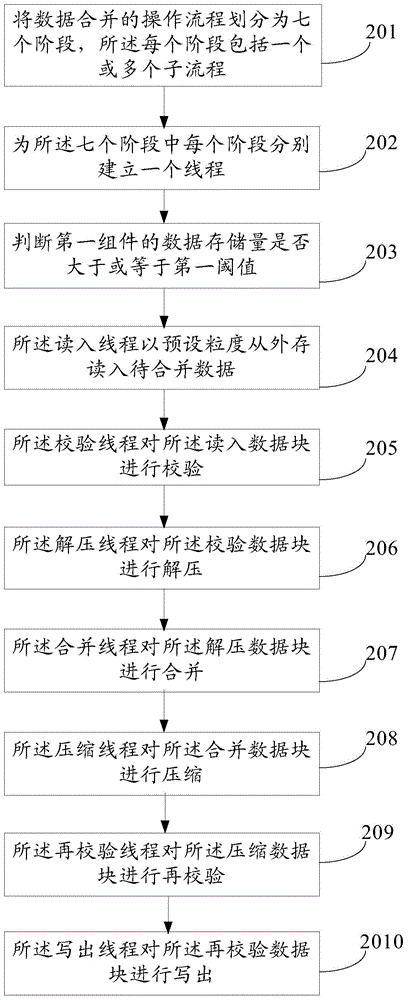

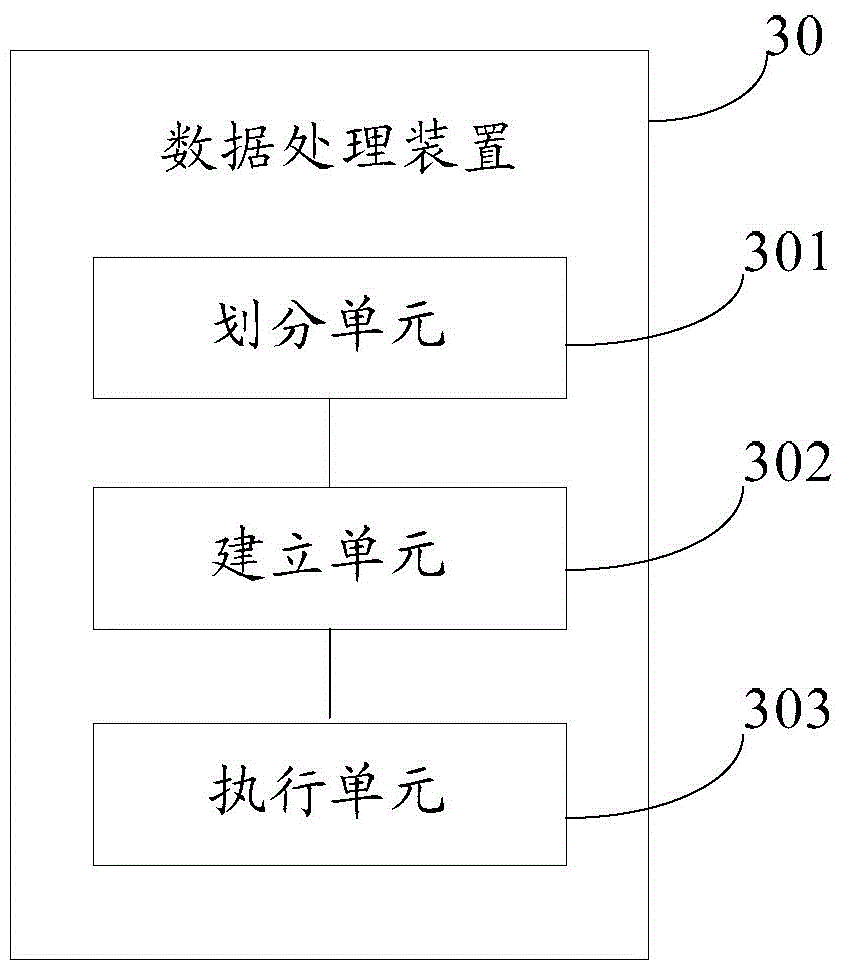

Data processing method and device

Inactive · CN104424326A · Shorten the time · Implement parallel execution · Digital data information retrieval · Special data processing applications · Parallel computing · Data input

Provided are a data processing method and device, which relate to the field of computers and can shorten the operation flow of merging data and reduce the probability and duration of stop-and-wait phenomena. The method comprises: dividing an operation flow of merging data into at least two phases to obtain 1st to mth phases, where m is greater than or equal to 2 and each phase comprises one or more sub-flows; establishing a corresponding thread for each phase to obtain m threads; and having the kth thread execute the sub-flow of its phase on the data input to it and output the processed data to the (k+1)th thread. While the (k+1)th thread executes the sub-flow of its phase on that processed data, the kth thread executes its sub-flow again on the next data input to it and again outputs the processed data to the (k+1)th thread, until all of the data input to the kth thread has been processed. The data processing method and device are applied to data processing.

Owner:HUAWEI TECH CO LTD +1
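
The phase-per-thread scheme described in the CN104424326A abstract can be illustrated with a minimal Python sketch. It assumes each phase is a plain function and that neighbouring threads are linked by FIFO queues; the names `run_pipeline` and `SENTINEL` are hypothetical and do not come from the patent.

```python
import queue
import threading

# Hypothetical end-of-input marker; the patent abstract does not specify one.
SENTINEL = object()


def run_pipeline(phases, items):
    """Run each phase in its own thread; thread k feeds thread k+1 via a queue."""
    # queues[k] is the input of phase k; queues[-1] collects the final output.
    queues = [queue.Queue() for _ in range(len(phases) + 1)]

    def worker(k):
        while True:
            item = queues[k].get()
            if item is SENTINEL:
                queues[k + 1].put(SENTINEL)  # propagate shutdown downstream
                return
            # While the next thread works on this output, this thread
            # loops back and picks up the next input item.
            queues[k + 1].put(phases[k](item))

    threads = [threading.Thread(target=worker, args=(k,)) for k in range(len(phases))]
    for t in threads:
        t.start()
    for item in items:
        queues[0].put(item)
    queues[0].put(SENTINEL)
    for t in threads:
        t.join()

    results = []
    while True:
        out = queues[-1].get()
        if out is SENTINEL:
            break
        results.append(out)
    return results


if __name__ == "__main__":
    print(run_pipeline([lambda x: x + 1, lambda x: x * 2], [1, 2, 3]))
```

Because every phase has exactly one thread and the queues are FIFO, output order matches input order in this sketch.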

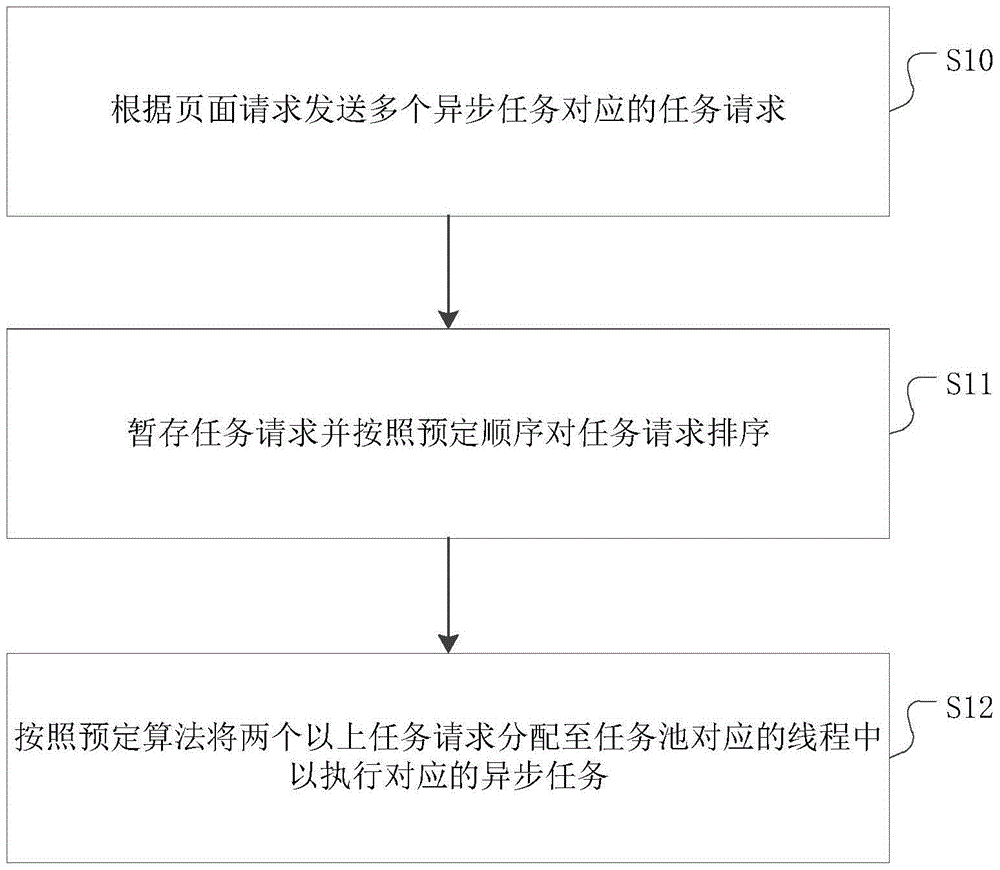

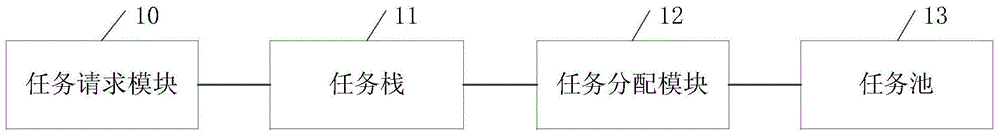

Method and device for controlling execution of asynchronous tasks

Inactive · CN105740065A · Reduced execution time · Improve execution efficiency · Program initiation/switching · Operating system · Request distribution

The invention discloses a method for controlling the execution of asynchronous tasks, comprising: sending a plurality of task requests corresponding to the asynchronous tasks according to a page request; temporarily storing the task requests and sorting them according to a predetermined order; and assigning more than two task requests to threads in a task pool to execute the corresponding asynchronous tasks, wherein the task pool contains at least two threads. The method can realize the parallel execution of a plurality of asynchronous tasks, reduce the execution time of the tasks, and improve the execution efficiency. In addition, the invention also discloses a device for controlling the execution of asynchronous tasks.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND
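
The store, sort and dispatch steps in the CN105740065A abstract might look roughly like the following Python sketch. It assumes each task request is a `(priority, name, callable)` tuple and uses the standard-library `ThreadPoolExecutor` as the task pool; the tuple layout and the function name `run_task_requests` are illustrative assumptions, not details from the patent.

```python
from concurrent.futures import ThreadPoolExecutor


def run_task_requests(requests, max_workers=2):
    """Sort buffered task requests, then run them on a thread pool.

    requests: list of (priority, name, fn) tuples (hypothetical layout).
    Returns a dict mapping each name to the result of fn().
    """
    if max_workers < 2:
        raise ValueError("the abstract calls for at least two threads in the pool")
    # The requests are held in a list (temporary storage) and sorted
    # by a predetermined order, here the numeric priority.
    ordered = sorted(requests, key=lambda r: r[0])
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit in sorted order; the pool runs them concurrently.
        futures = [(name, pool.submit(fn)) for _, name, fn in ordered]
        return {name: fut.result() for name, fut in futures}


if __name__ == "__main__":
    print(run_task_requests([(2, "b", lambda: 2), (1, "a", lambda: 1)]))
```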

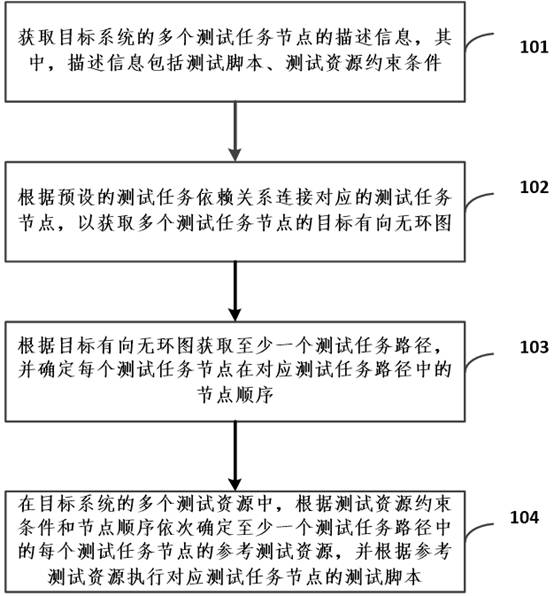

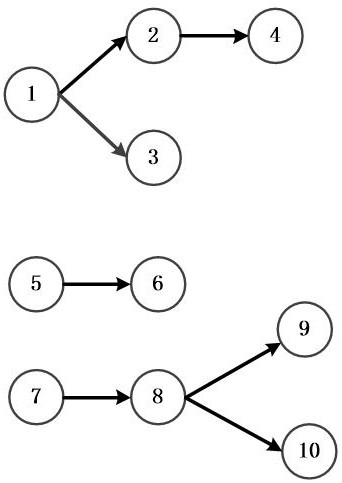

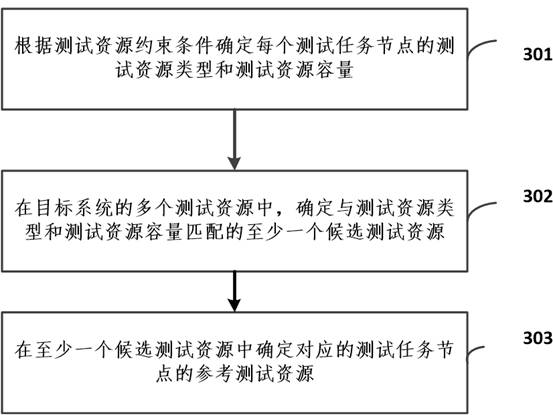

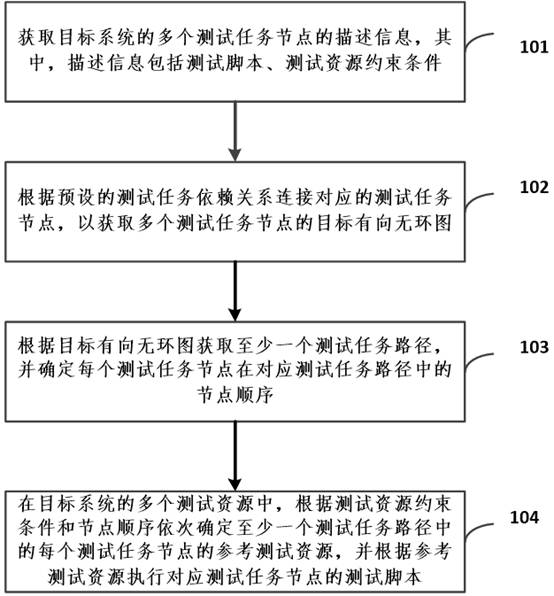

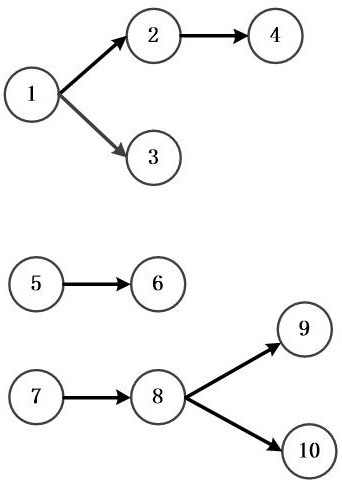

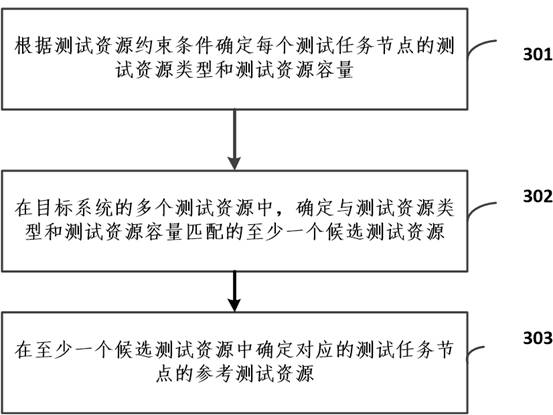

Complex resource constraint-oriented test task scheduling method

Active · CN113282402A · Implement parallel execution · Increase profit · Program initiation/switching · Faulty hardware testing methods · Task dependency · Real-time computing

The invention relates to a complex resource constraint-oriented test task scheduling method, and relates to the field of automated testing, and the method comprises the following steps: connecting test task nodes of a target system according to a preset test task dependency relationship to obtain a target directed acyclic graph of a plurality of test task nodes; obtaining at least one test task path according to the target directed acyclic graph, and determining a node sequence of each test task node in the corresponding test task path; sequentially determining a reference test resource of each test task node in at least one test task path according to the test resource constraint condition and the node sequence, and executing a corresponding test script according to the reference test resource. Therefore, the test task is executed based on the target directed acyclic graph, parallel execution of the test task can be achieved, the test efficiency and the utilization rate of the test resources are improved, the test resources are matched based on the test resource constraint conditions, and the selection reliability and efficiency of the test resources are improved.

Owner:航天中认软件测评科技(北京)有限责任公司
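
As a rough illustration of how a dependency graph exposes parallelism (CN113282402A), the sketch below groups test tasks into levels whose members have no unmet dependencies and runs each level on a thread pool. This is a level-by-level, Kahn-style traversal swapped in for simplicity; it is not the patent's path-based procedure, and resource-constraint matching is omitted. The `deps` and `scripts` structures and both function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def parallel_levels(deps):
    """Group tasks into levels that can run in parallel.

    deps maps each task to the set of tasks it depends on. Every task,
    including those with no dependencies, must appear as a key.
    """
    remaining = {task: set(pre) for task, pre in deps.items()}
    levels = []
    while remaining:
        ready = sorted(t for t, pre in remaining.items() if not pre)
        if not ready:
            raise ValueError("dependency cycle detected")
        levels.append(ready)
        for t in ready:
            del remaining[t]
        for pre in remaining.values():
            pre.difference_update(ready)
    return levels


def run_dag(deps, scripts, max_workers=4):
    """Run scripts[task]() level by level; tasks within a level run concurrently."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for level in parallel_levels(deps):
            outputs = pool.map(lambda t: scripts[t](), level)
            for task, out in zip(level, outputs):
                results[task] = out
    return results


if __name__ == "__main__":
    deps = {"a": set(), "b": {"a"}, "c": {"a"}, "d": {"b", "c"}}
    print(parallel_levels(deps))
```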

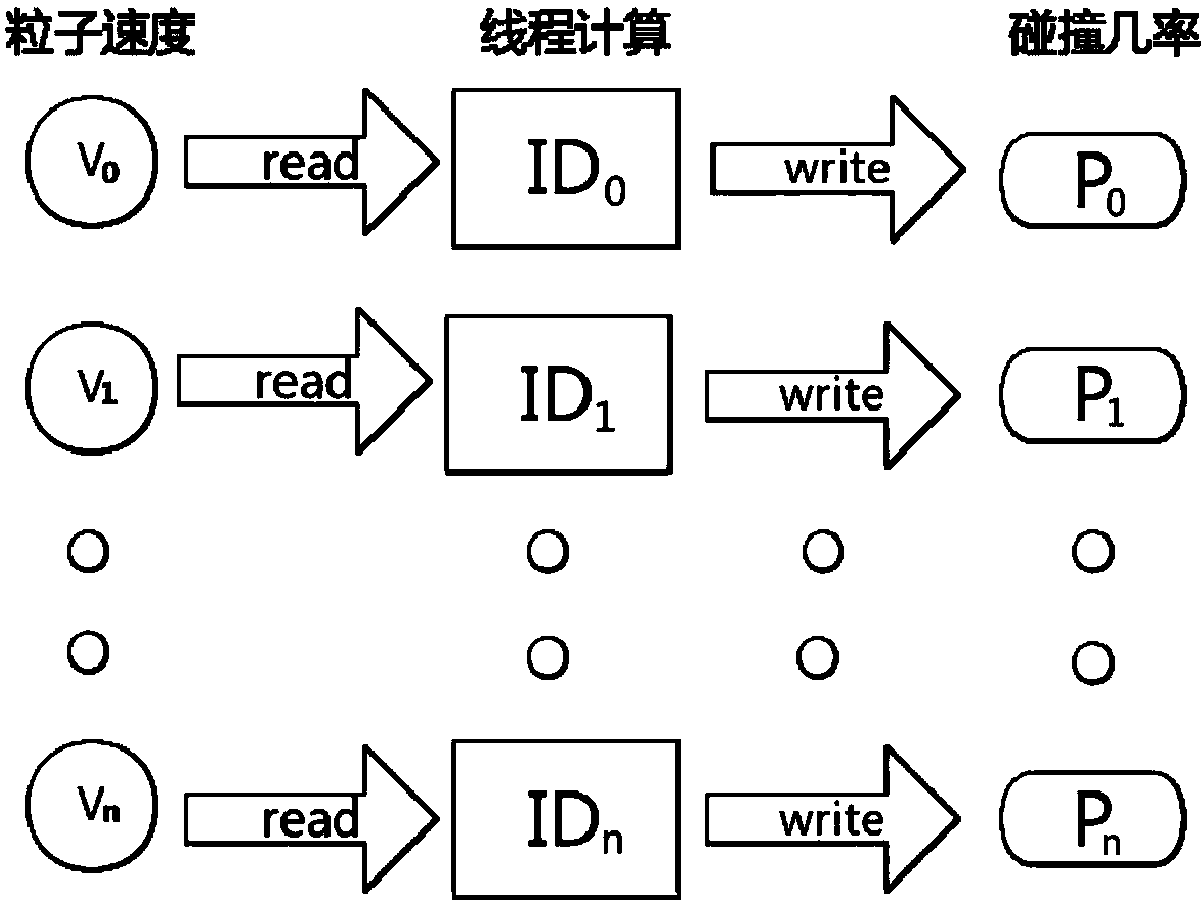

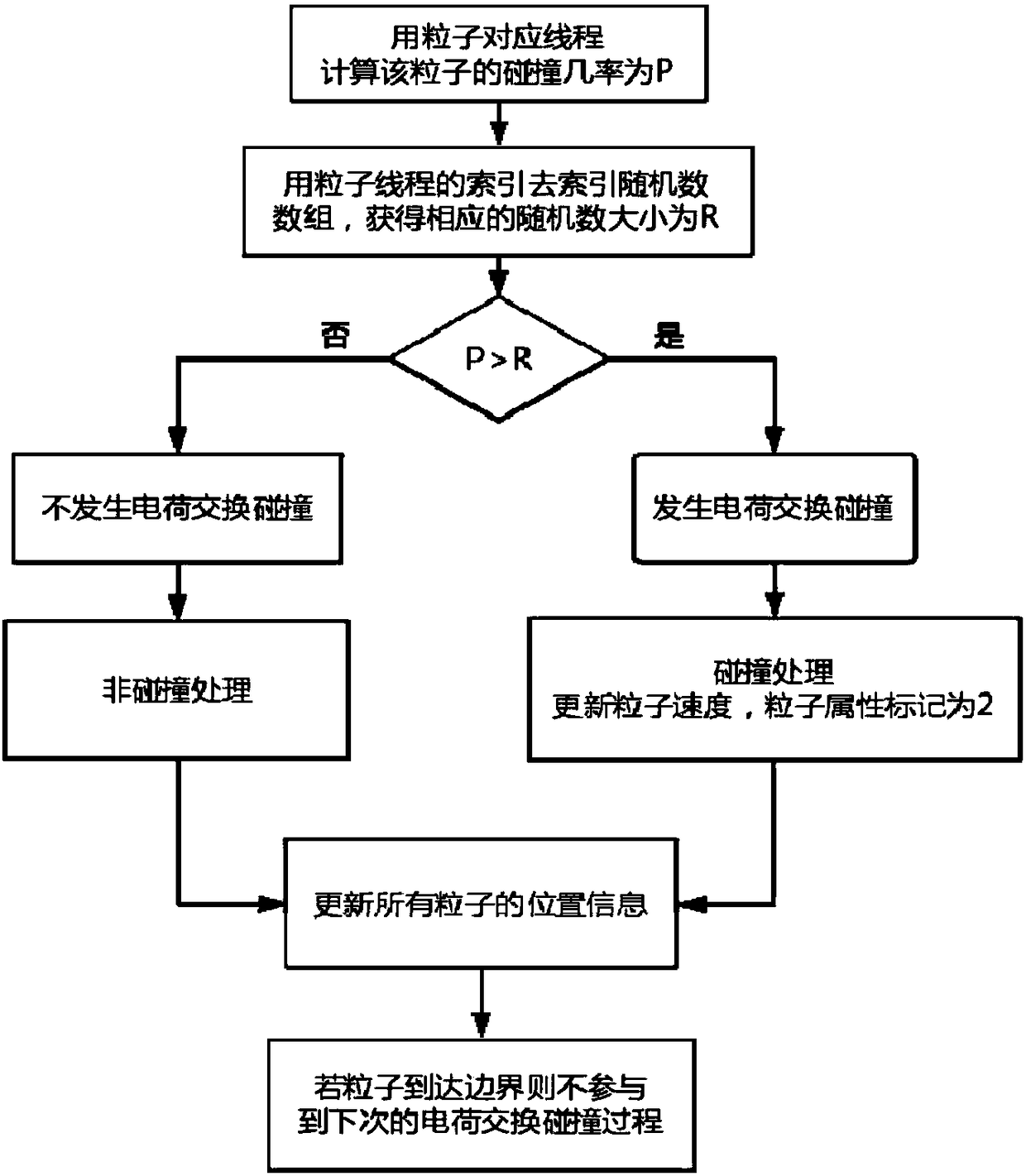

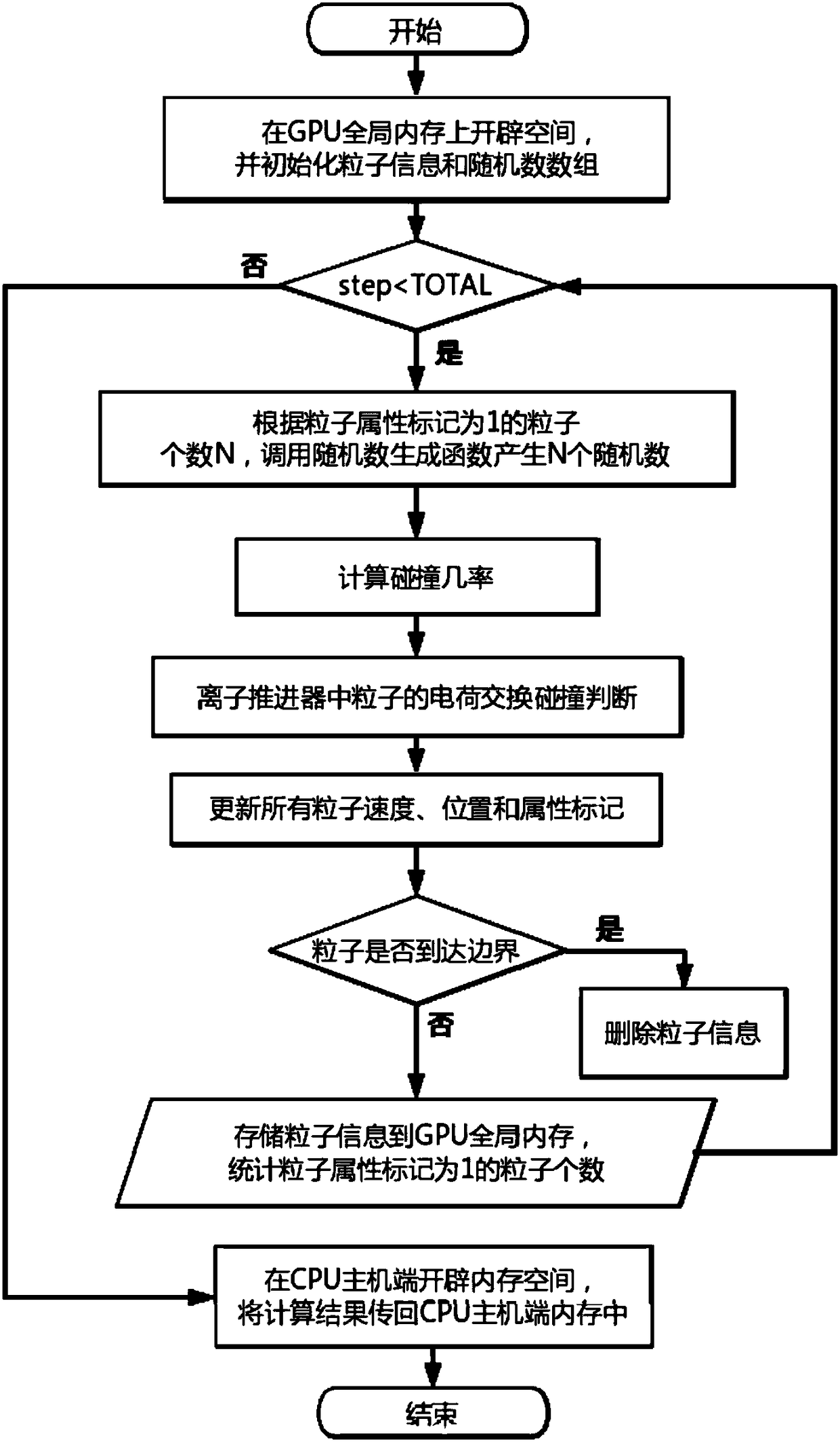

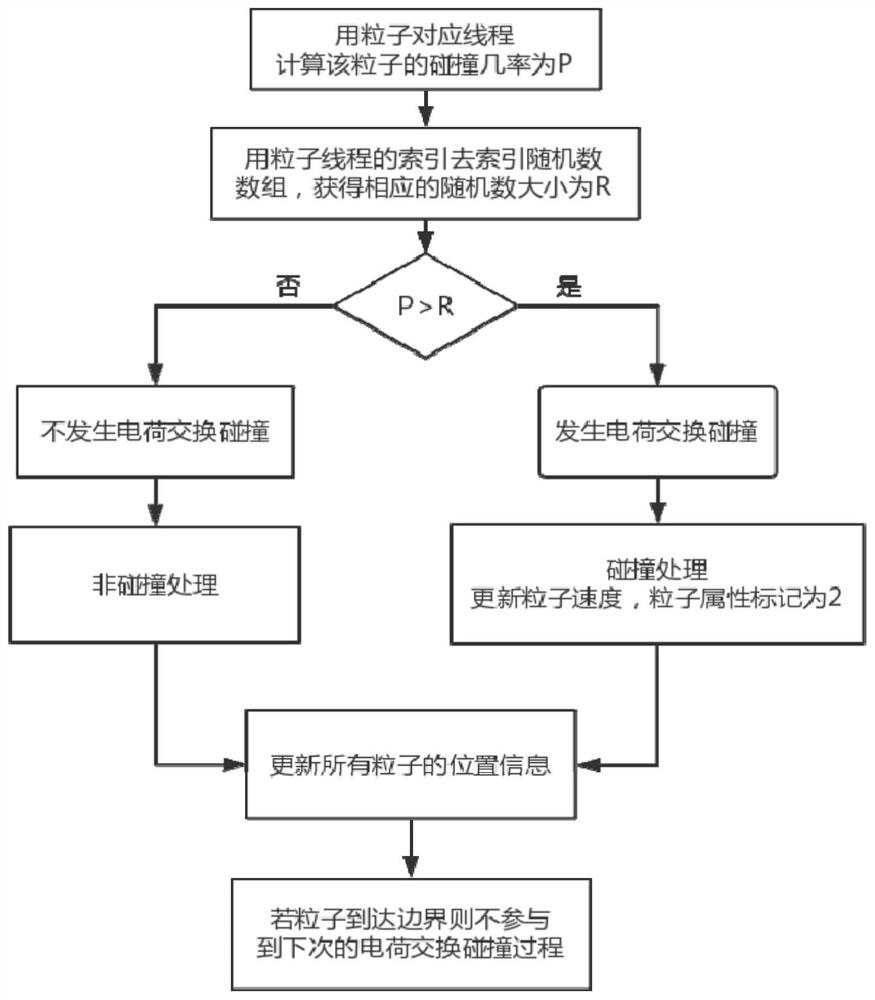

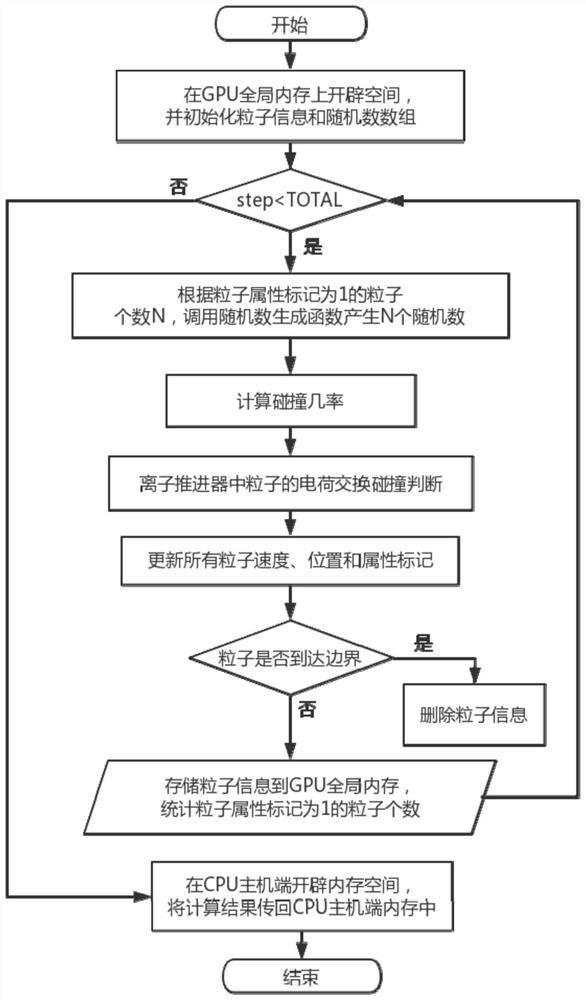

Charge exchange collision MCC method used for ion thruster numerical simulation

Active · CN108549763A · Improve computing efficiency · Reduce computing time · Geometric CAD · Special data processing applications · Single instruction, multiple threads · Charge exchange

The invention relates to the field of ion thruster numerical simulation, in particular to a charge exchange collision MCC method used for ion thruster numerical simulation. By utilizing a physical characteristic of charge exchange collision of an ion thruster, and in combination with a single-instruction multi-thread characteristic of a graphic processing unit (GPU) in the field of computers, parallel execution of single instruction multiple data of a charge exchange collision MCC algorithm for the ion thruster is realized, so that the calculation efficiency is greatly improved and the calculation time is shortened.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

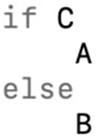

Conditional branch instruction fusion method and device and computer storage medium

Active · CN111930428A · Implement parallel execution · Avoid wasting · Instruction analysis · Concurrent instruction execution · Programming language · Coding block

The embodiment of the invention discloses a conditional branch instruction fusion method and device and a computer storage medium. The method comprises the following steps: in a compiling stage, respectively generating corresponding code blocks from branch statements in a conditional branch statement sequence in response to detecting that the conditional branch statement sequence appears in a program; fusing the code blocks according to a set instruction fusion strategy to obtain a fusion instruction, and storing the fusion instruction in an instruction memory, wherein the branch statement corresponds to a judgment result corresponding to a conditional judgment statement in the conditional branch statement sequence; in an execution stage, decoding a fusion instruction read from the instruction memory to obtain a code block contained in the fusion instruction; scheduling code blocks obtained by decoding to corresponding execution units in parallel according to an execution result obtained by executing the conditional judgment statement; and executing the scheduled code block through the execution unit.

Owner:烟台芯瞳半导体科技有限公司

Routing method for partially entangled quantum pairs bridging communication networks

Active · CN104883304B · Implement parallel execution · Large capacity · Data switching networks · Distributed computing · Quantum

The invention relates to a method for routing a part entangled quantum pair bridging communication network. The method comprises the following steps: launching routing request information 1 by an original node; answering the routing request information by a destination node; launching routing request information 2 by the original node; launching routing request information 3 by a path node; answering the routing request information of each node by the destination node; and establishing routing. The routing metric of each node is updated on time by the node, wherein the routing metric is calculated through a special formula, and the node selects and determines routing according to the routing metric.

Owner:SOUTHEAST UNIV

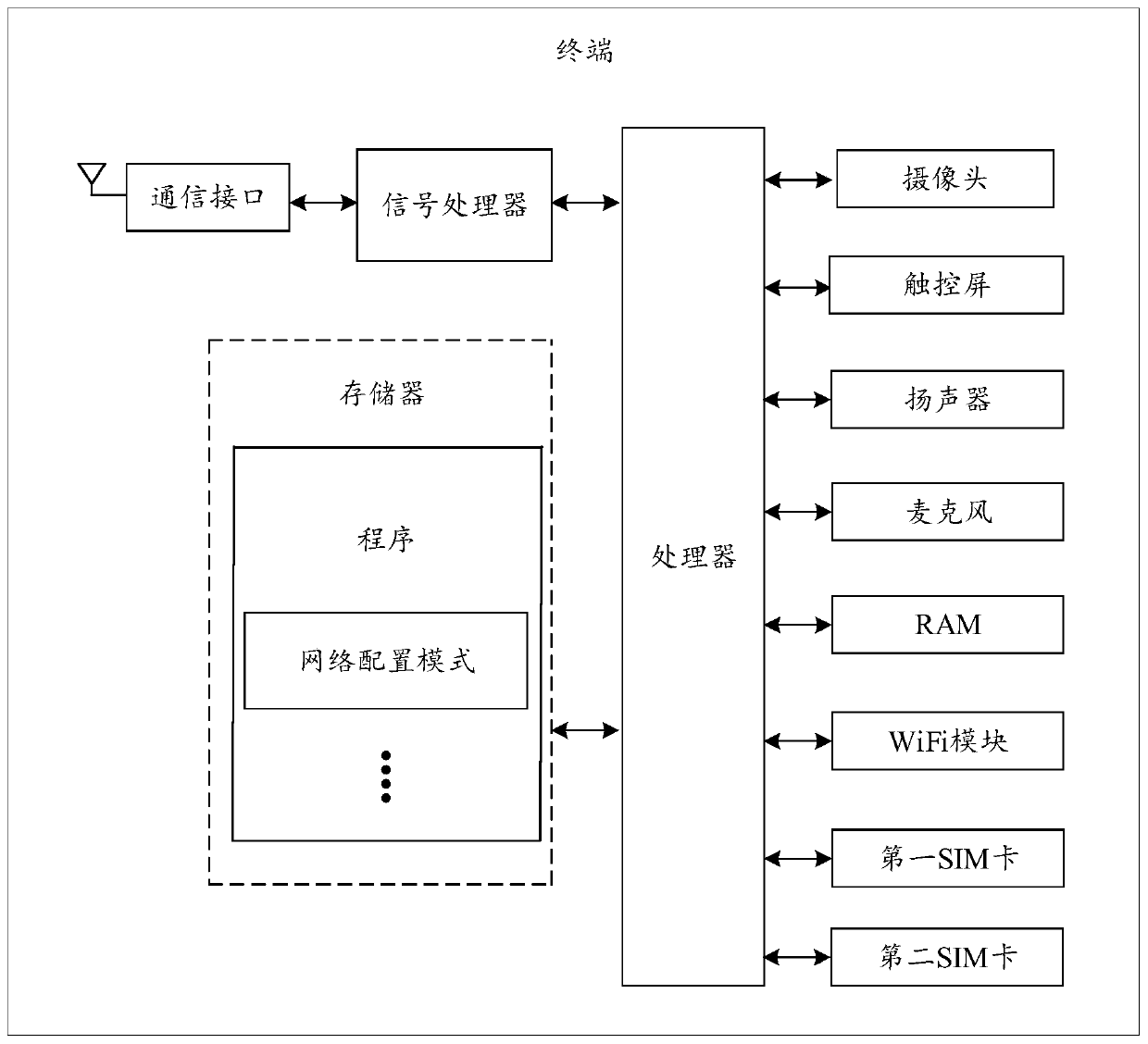

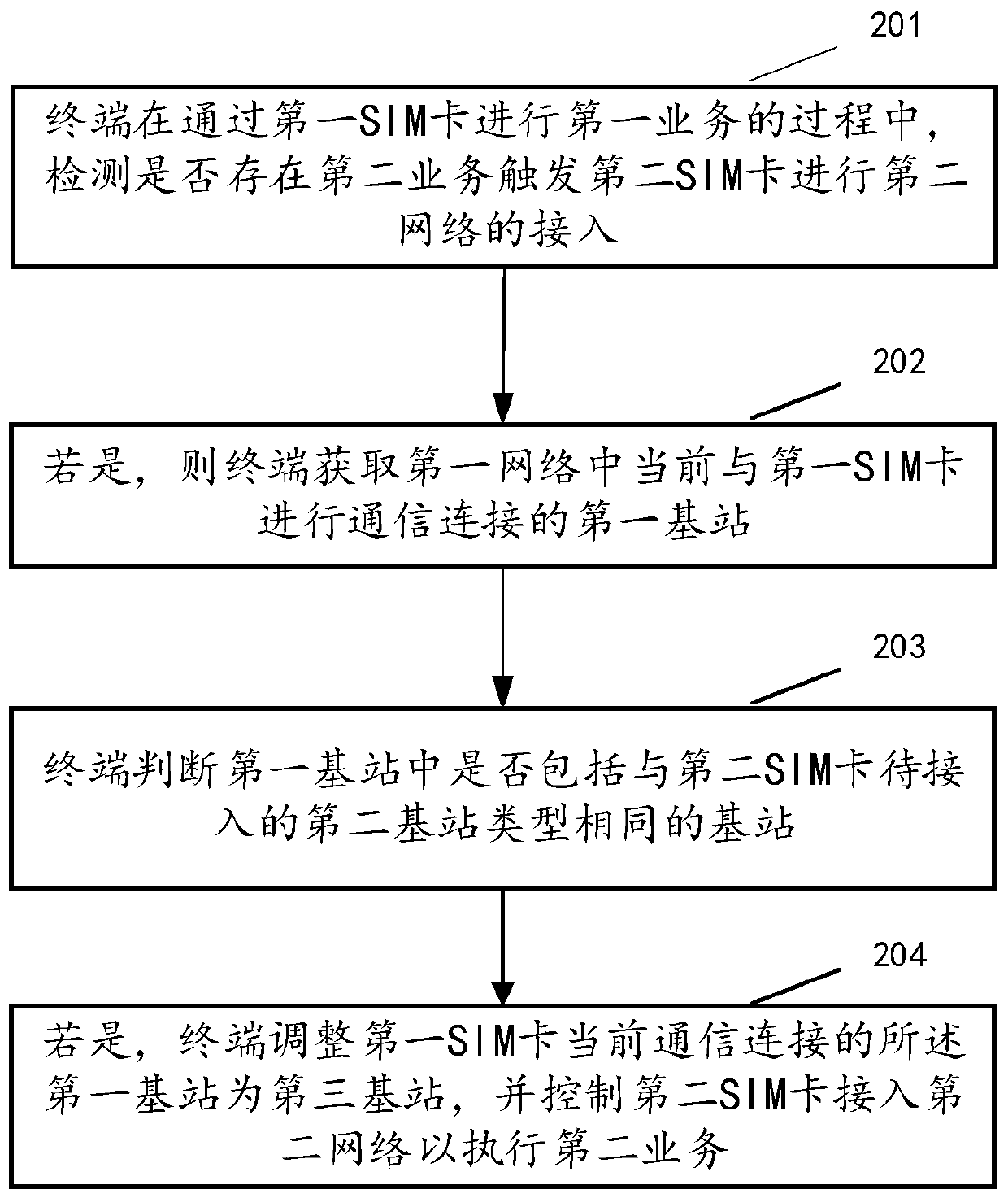

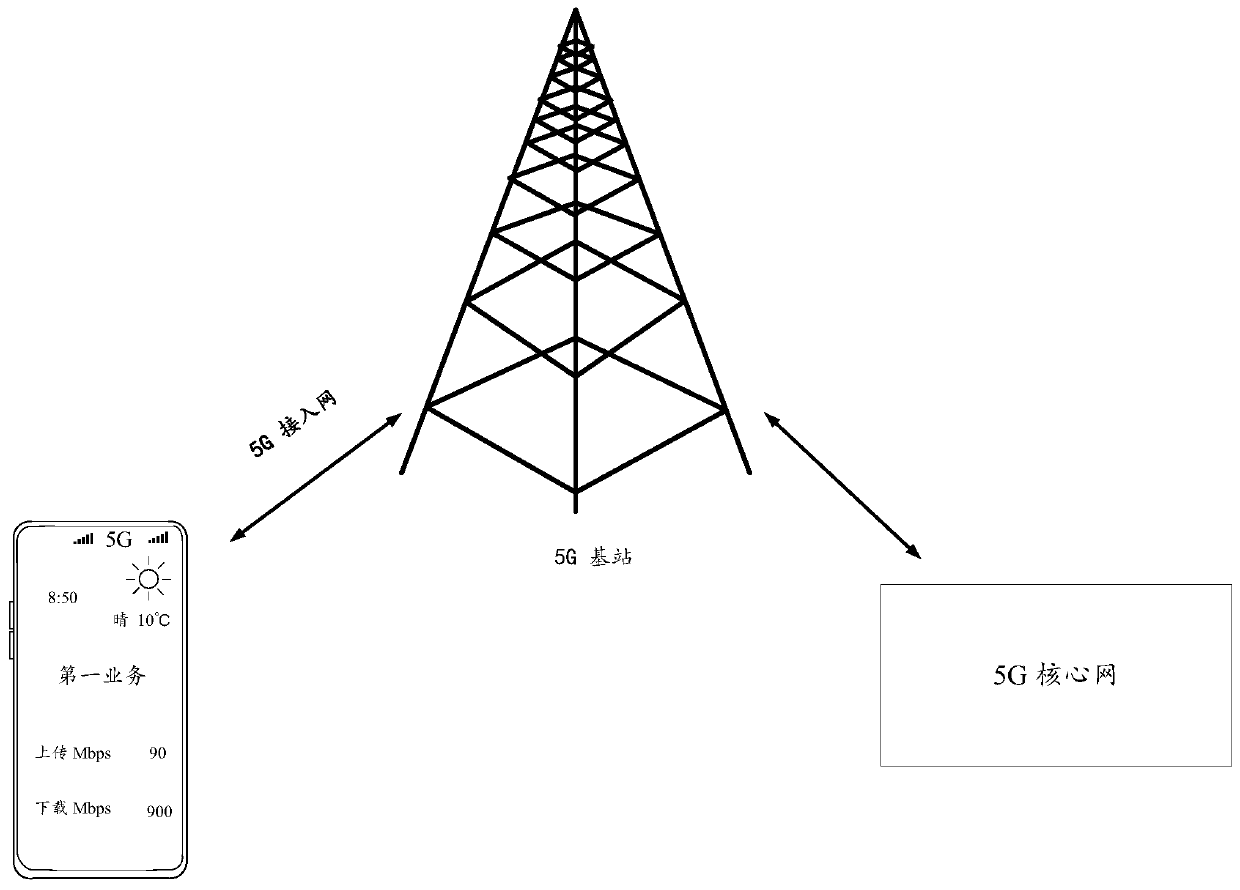

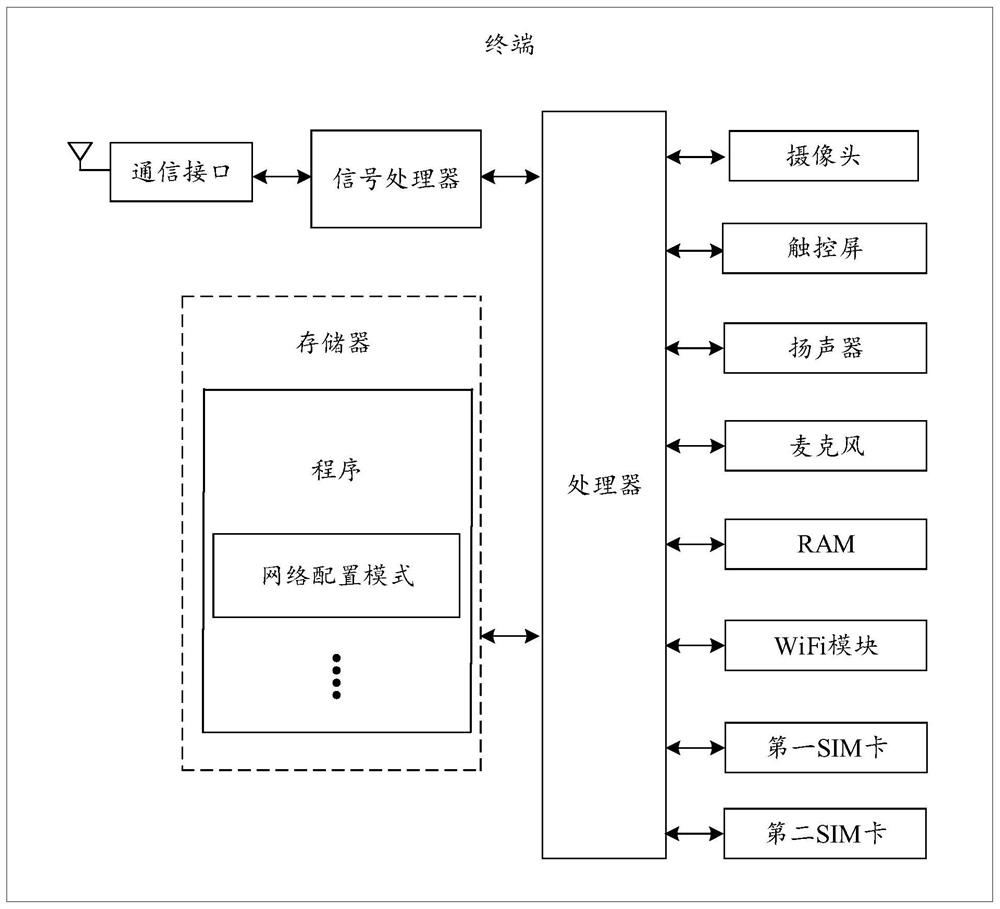

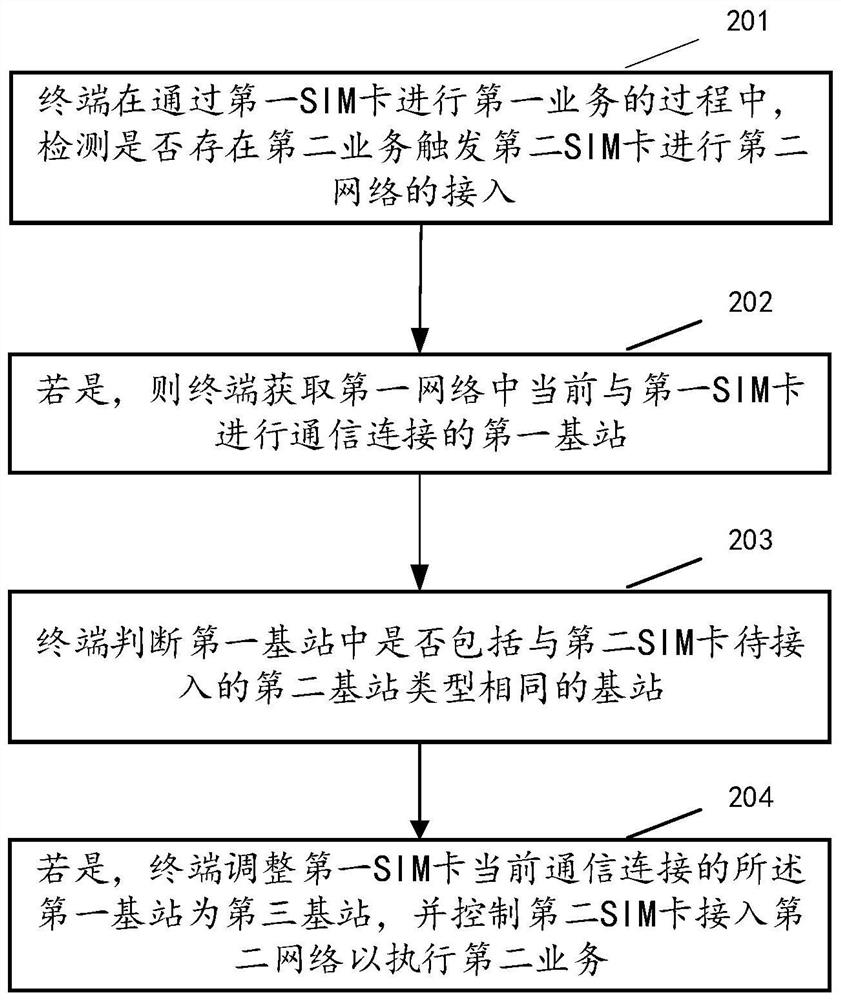

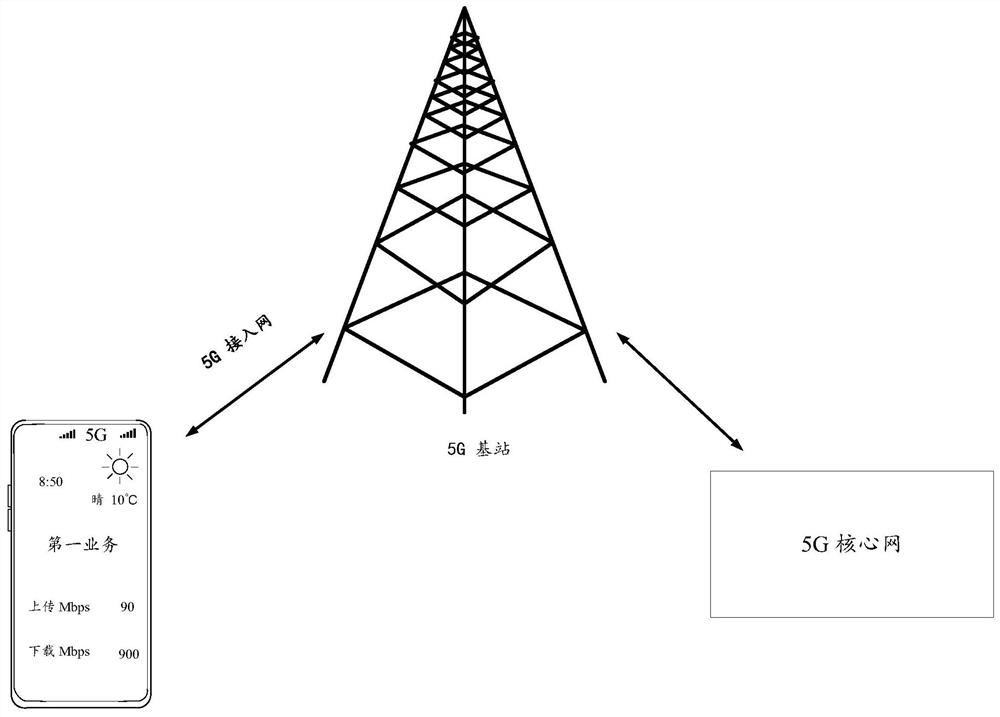

Network configuration method and related device

Active · CN110856162A · Improve data processing efficiency · Avoid conflict · Access restriction · Network data management · Embedded system · Base station

The embodiment of the invention discloses a network configuration method and a related device. The method comprises: while a terminal carries out a first service through a first SIM card, detecting whether a second service triggers a second SIM card to access a second network; if yes, obtaining the first base station with which the first SIM card is currently in communication connection in the first network; judging whether the first base station comprises a base station of the same type as a second base station that the second SIM card is to access; and if yes, adjusting the first base station currently in communication connection with the first SIM card to a third base station and controlling the second SIM card to access the second network to execute the second service, wherein the third base station does not comprise a base station of the same type as the second base station. By implementing the embodiment of the invention, the terminal can execute services in parallel on two SIM cards, and the data processing efficiency of the terminal is improved.

Owner:OPPO CHONGQING INTELLIGENT TECH CO LTD

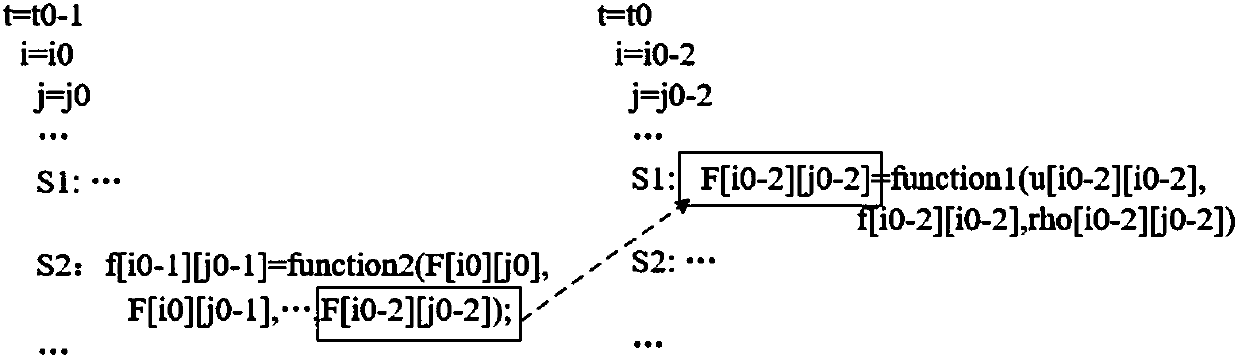

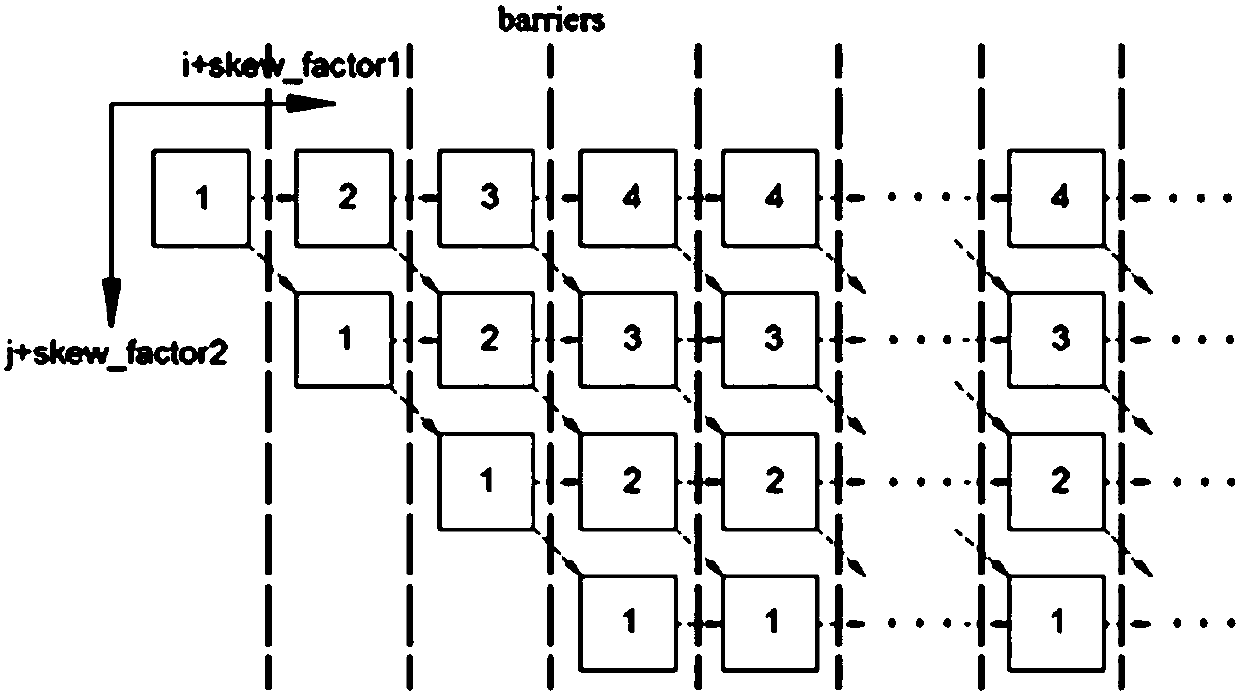

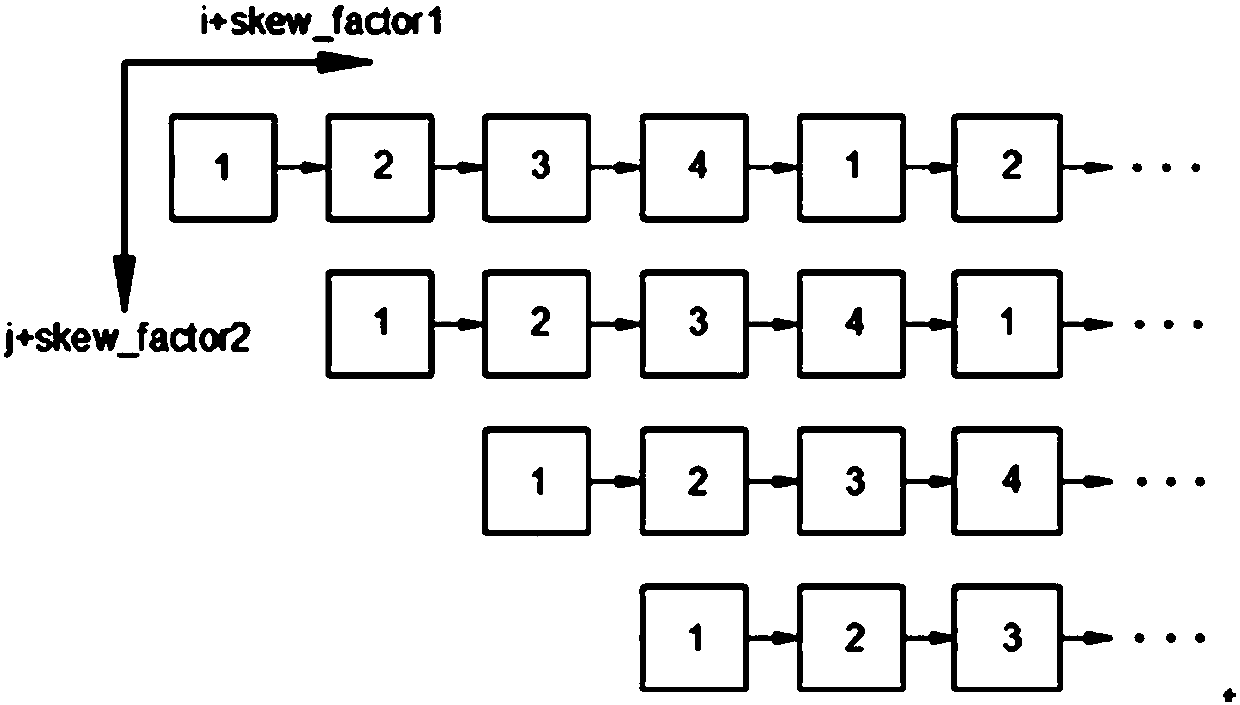

Lattice Boltzmann method parallel acceleration method by using time locality

Active · CN108038304A · Implement parallel execution · Increased temporal locality · Design optimisation/simulation · Special data processing applications · Wavefront · Parallel computing

The invention provides a lattice Boltzmann method parallel acceleration method by using time locality. The method comprises the steps of 1) merging DOALL loops of three spatial dimensions within a single-time iterative step into a DOACROSS loop; 2) performing cycle deflection on the merged DOACROSS loop, eliminating negative dependencies related to the time dimensions, and forming a DOACROSS loop which is merged with the time dimensions; 3) using a loop tiling technique to perform loop tiling on the DOACROSS loop which is merged with the time dimensions to form multiple blocks at the block size of a*a*t; 4) achieving parallel wave fronts for the blocks. The method can significantly improve the calculation speed of the lattice Boltzmann method.

Owner:XI AN JIAOTONG UNIV

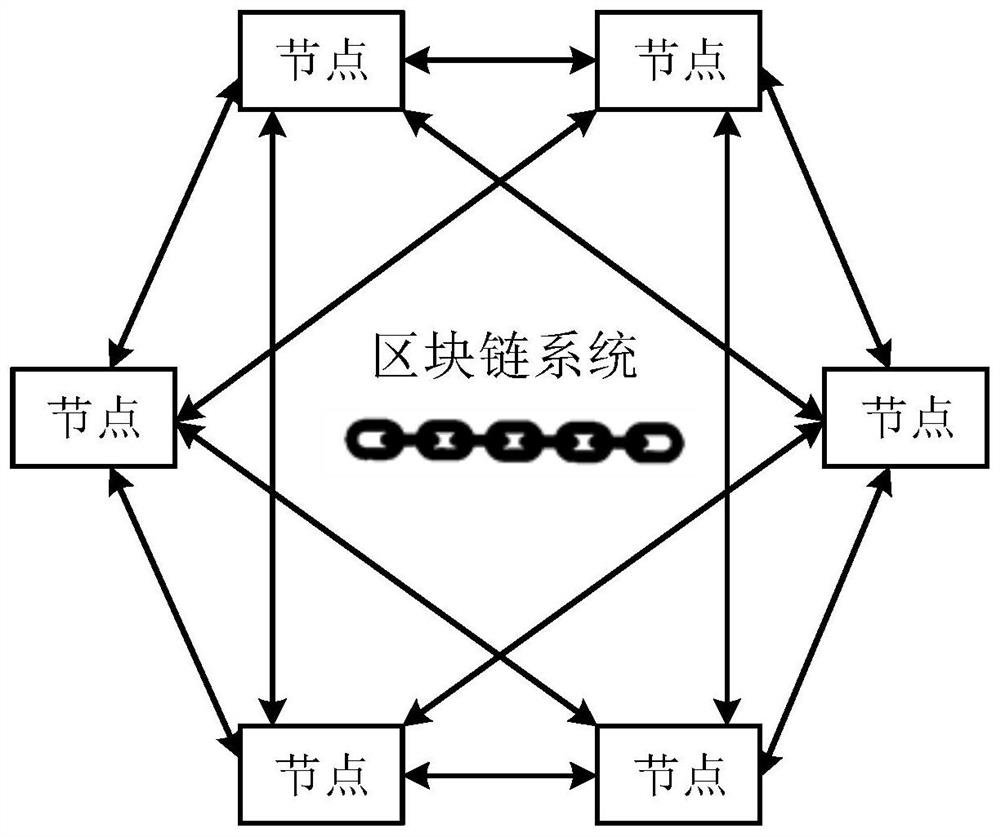

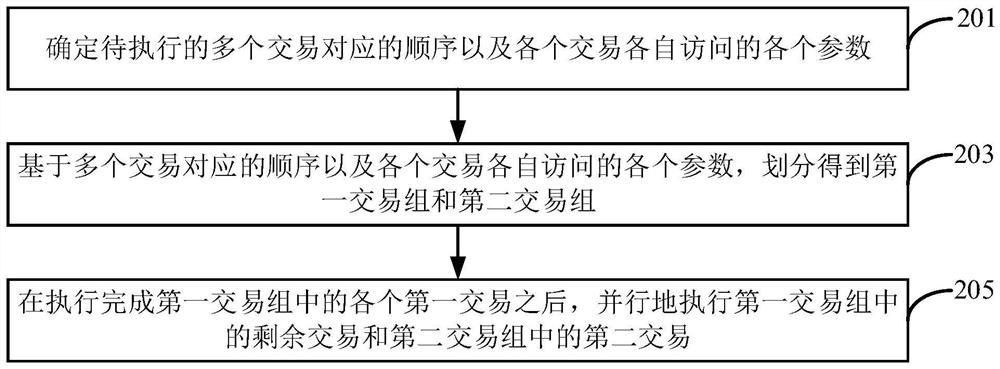

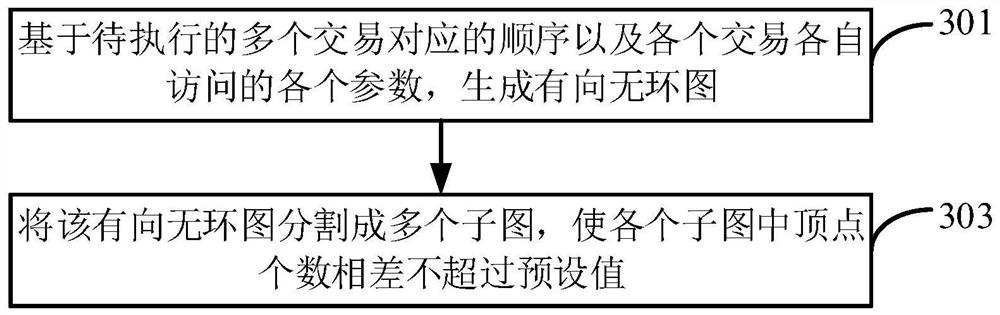

Method and device for executing transactions in block chain system

Pending · CN113656507A · Implement parallel execution · Finance · Database distribution/replication · Parallel computing · Financial transaction

The invention provides a method and device for executing transactions in a block chain system. The method is executed by a node of a block chain, and the method comprises the following steps: determining a sequence corresponding to a plurality of transactions to be executed and each parameter accessed by each transaction; based on the sequence and each parameter accessed by each transaction, dividing the transactions to obtain a first transaction group and a second transaction group, wherein the second transaction group comprises at least one second transaction, and a random second transaction has a read-write conflict with at least one first transaction in the first transaction group, and the sequence of the second transaction is after the first transaction having the read-write conflict with the second transaction; and after the execution of each first transaction in the first transaction group is completed, executing the remaining transactions in the first transaction group and the second transaction in the second transaction group in parallel.

Owner:ALIPAY (HANGZHOU) INFORMATION TECH CO LTD +2
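
The grouping step in the CN113656507A abstract can be sketched as follows, assuming each transaction carries explicit read and write sets and that a conflict means one transaction writes a parameter the other reads or writes. That conflict definition, the dictionary layout and the function names are assumptions made for illustration; the abstract does not spell them out.

```python
def conflicts(a, b):
    """True if one transaction writes a parameter the other reads or writes.

    This definition of a read-write conflict is an assumption for the sketch.
    """
    return bool(a["writes"] & (b["reads"] | b["writes"]) or b["writes"] & a["reads"])


def split_transactions(txs):
    """Split an ordered transaction list into two groups.

    A transaction joins the second group if it conflicts with an earlier
    transaction already placed in the first group; otherwise it joins the
    first group, whose members are mutually conflict-free.
    """
    first, second = [], []
    for tx in txs:  # txs is already in execution order
        if any(conflicts(tx, earlier) for earlier in first):
            second.append(tx)
        else:
            first.append(tx)
    return first, second


if __name__ == "__main__":
    txs = [
        {"id": 1, "reads": {"x"}, "writes": {"y"}},
        {"id": 2, "reads": {"y"}, "writes": {"z"}},
        {"id": 3, "reads": {"a"}, "writes": {"b"}},
    ]
    first, second = split_transactions(txs)
    print([t["id"] for t in first], [t["id"] for t in second])
```

In this sketch the first group can be executed in parallel because its members touch disjoint parameters; how second-group transactions are released as their conflicting predecessors finish is left out.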

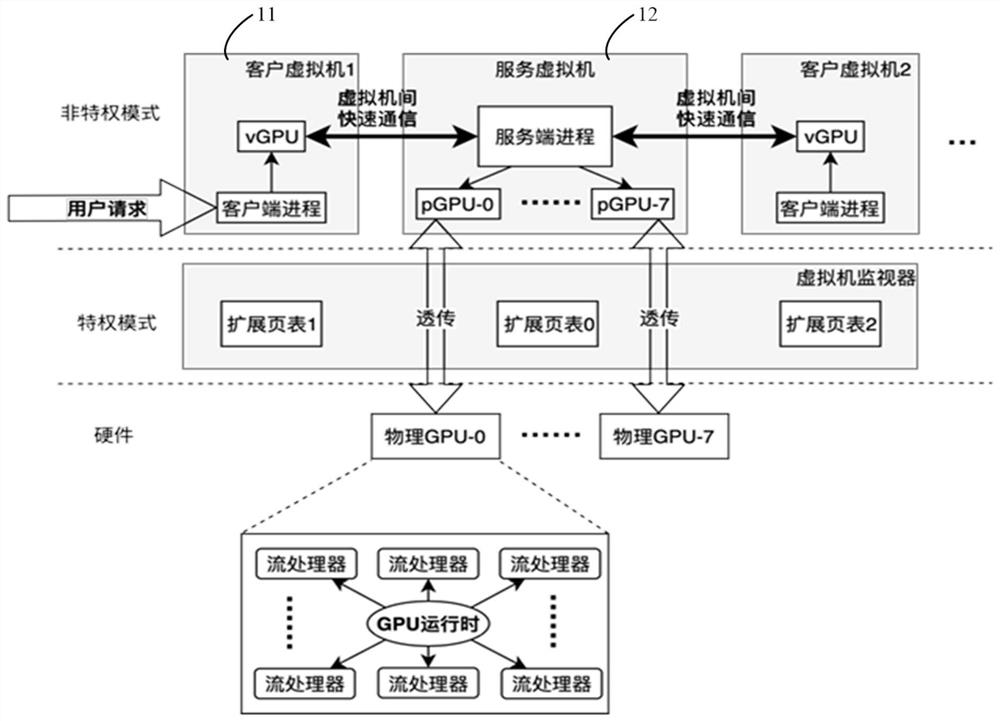

A cluster GPU resource scheduling system and method

Active · CN102541640B · Fast parallel processing · Unplug at will · Resource allocation · Computer architecture · Engineering

The invention provides a cluster GPU resource scheduling system. The system comprises a cluster initialization module, a GPU master node, and a plurality of GPU child nodes, wherein the cluster initialization module is used for initializing the GPU master node and the plurality of GPU child nodes; the GPU master node is used for receiving a task inputted by a user, dividing the task into a plurality of sub-tasks, and allocating the plurality of sub-tasks to the plurality of GPU child nodes by scheduling the plurality of GPU child nodes; and the GPU child nodes are used for executing the sub-tasks and returning the task execution result to the GPU master node. The cluster GPU resource scheduling system and method provided by the invention can fully utilize the GPU resources so as to execute a plurality of computation tasks in parallel. In addition, the method can also achieve plug and play function of each child node GPU in the cluster.

Owner:XIAMEN MEIYA PICO INFORMATION

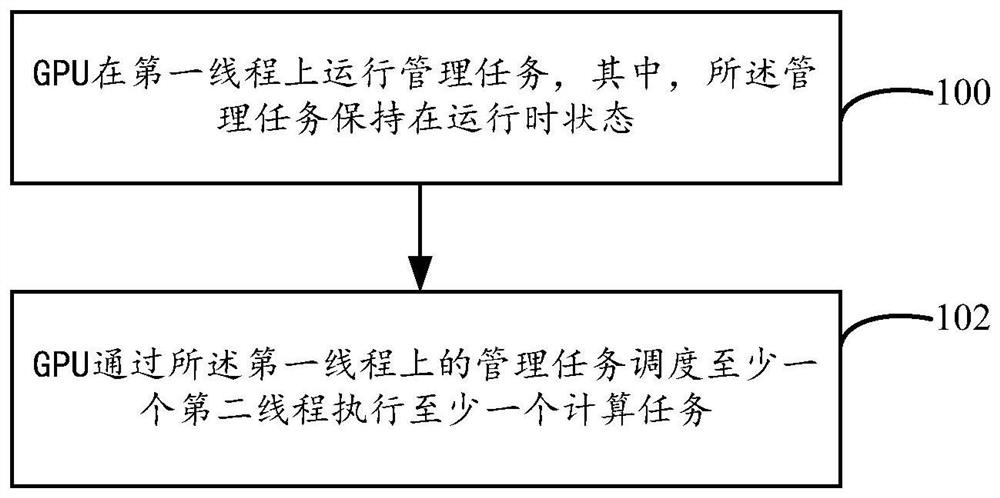

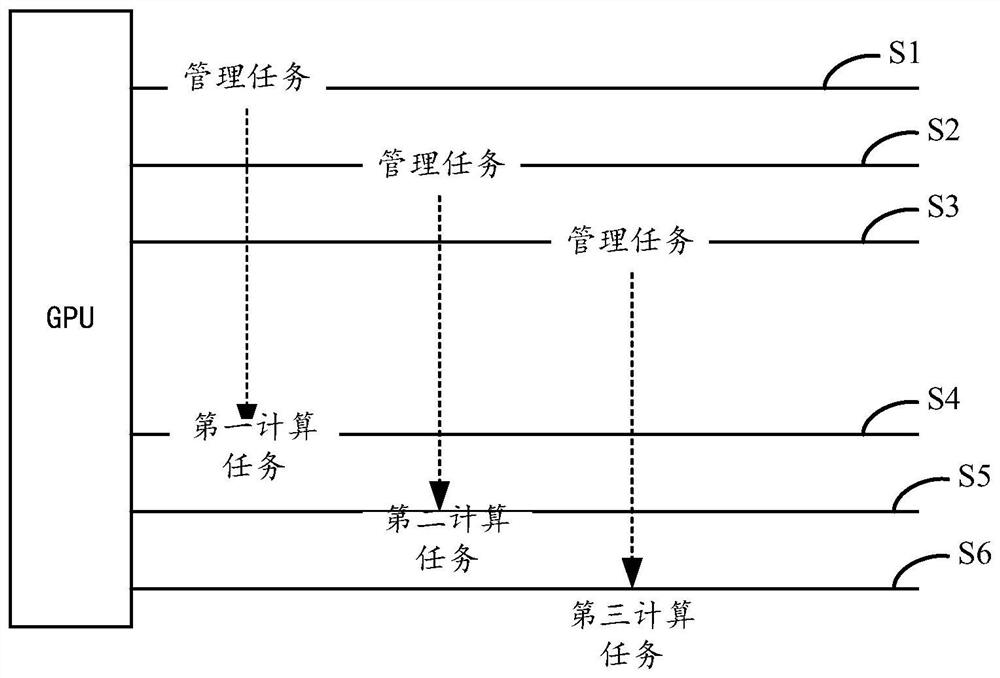

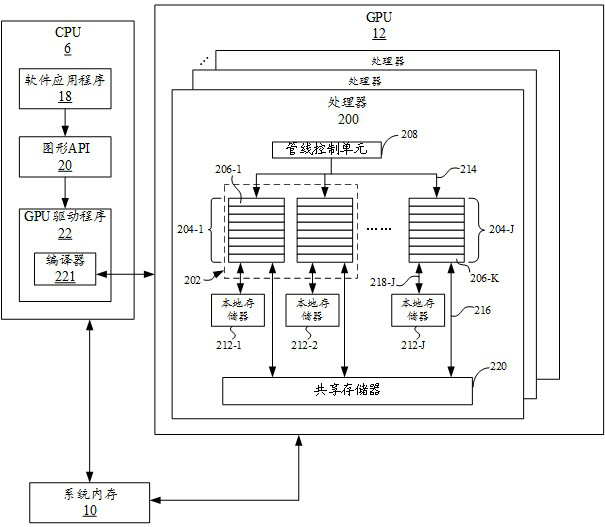

Task processing method, device and system

Pending · CN111722915A · Reduce task switching overhead · Increase profit · Program initiation/switching · Resource allocation · Engineering · Graphics

The embodiment of the invention provides a task processing method, device and system. The method can comprise the steps that a graphics processing unit GPU operates a management task on a first thread, and the management task is kept in a runtime state; and the GPU schedules at least one second thread to execute at least one computing task through the management task on the first thread.

Owner:SHANGHAI SENSETIME INTELLIGENT TECH CO LTD

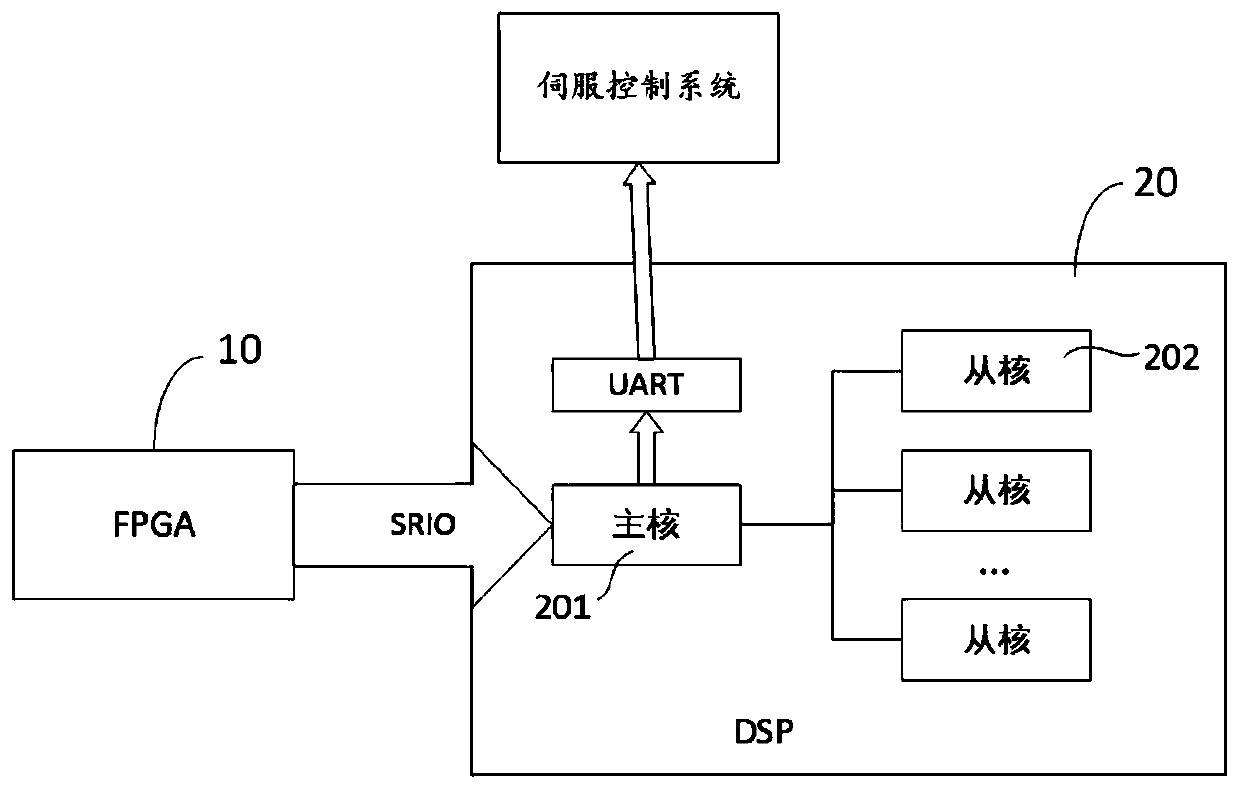

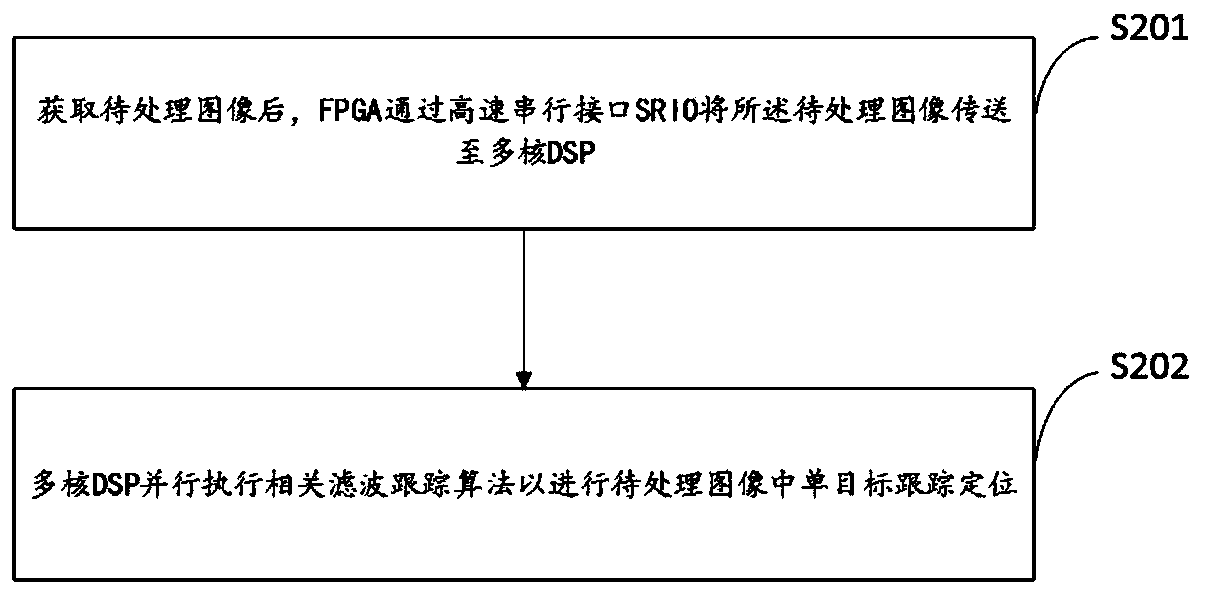

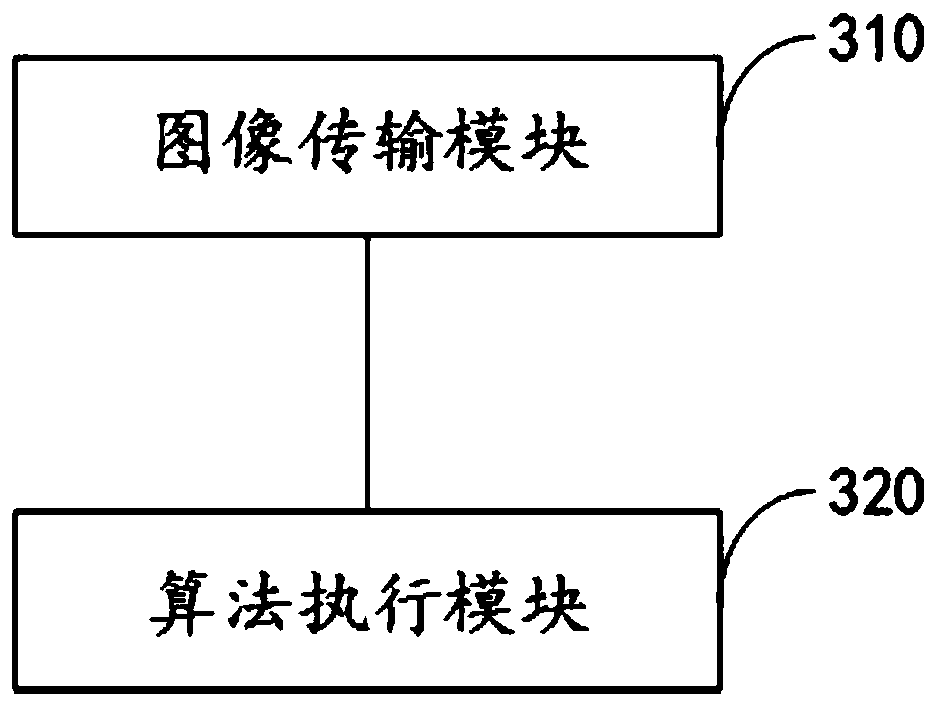

Coring correlation filtering target tracking method and system

Pending · CN111221770A · Improve processing efficiency · Guaranteed real-time · Multiple digital computer combinations · Electric digital data processing · Computer graphics (images) · Correlation filter

The invention provides a coring correlation filtering target tracking method and a system, and the method comprises the steps that an FPGA transmits a to-be-processed image to a multi-core DSP through a high-speed serial interface SRIO after the to-be-processed image is obtained; and the multi-core DSP executes a correlation filtering tracking algorithm in parallel to track and position a single target in the to-be-processed image. By means of the method, the problem that a front-end embedded platform is low in processing speed when processing high-resolution and large-scale image data is solved, the image data processing speed can be effectively increased, and the real-time performance and robustness of target tracking are guaranteed.

Owner:NO 717 INST CHINA MARINE HEAVY IND GRP

Network configuration method and related device

Active · CN110856162B · Improve data processing efficiency · Avoid conflict · Access restriction · Network data management · Embedded system · Base station

Owner:OPPO CHONGQING INTELLIGENT TECH CO LTD

A Test Task Scheduling Method Oriented to Complex Resource Constraints

Active · CN113282402B · Implement parallel execution · Increase profit · Program initiation/switching · Faulty hardware testing methods · Test efficiency · Test script

The disclosure relates to a test task scheduling method oriented to complex resource constraints, in the field of automated testing. The method includes: connecting the test task nodes of a target system according to preset test task dependencies to obtain a target directed acyclic graph of multiple test task nodes; obtaining at least one test task path according to the target directed acyclic graph, and determining the node sequence of each test task node in the corresponding test task path; and, according to the test resource constraints and the node sequence, determining the reference test resource of each test task node in the at least one test task path and executing the corresponding test script according to the reference test resource. Executing test tasks based on the target directed acyclic graph enables parallel execution of test tasks and improves test efficiency and the utilization of test resources, while matching test resources based on the test resource constraints improves the reliability and efficiency of test resource selection.

Owner:航天中认软件测评科技(北京)有限责任公司

Conditional branch instruction fusion method, device and computer storage medium

Active · CN111930428B · Implement parallel execution · Avoid wasting · Instruction analysis · Concurrent instruction execution · Programming language · Coding block

The embodiment of the invention discloses a conditional branch instruction fusion method and device and a computer storage medium. The method comprises the following steps: in a compiling stage, respectively generating corresponding code blocks from branch statements in a conditional branch statement sequence in response to detecting that the conditional branch statement sequence appears in a program; fusing the code blocks according to a set instruction fusion strategy to obtain a fusion instruction, and storing the fusion instruction in an instruction memory, wherein the branch statement corresponds to a judgment result corresponding to a conditional judgment statement in the conditional branch statement sequence; in an execution stage, decoding a fusion instruction read from the instruction memory to obtain a code block contained in the fusion instruction; scheduling code blocks obtained by decoding to corresponding execution units in parallel according to an execution result obtained by executing the conditional judgment statement; and executing the scheduled code block through the execution unit.

Owner:西安芯云半导体技术有限公司

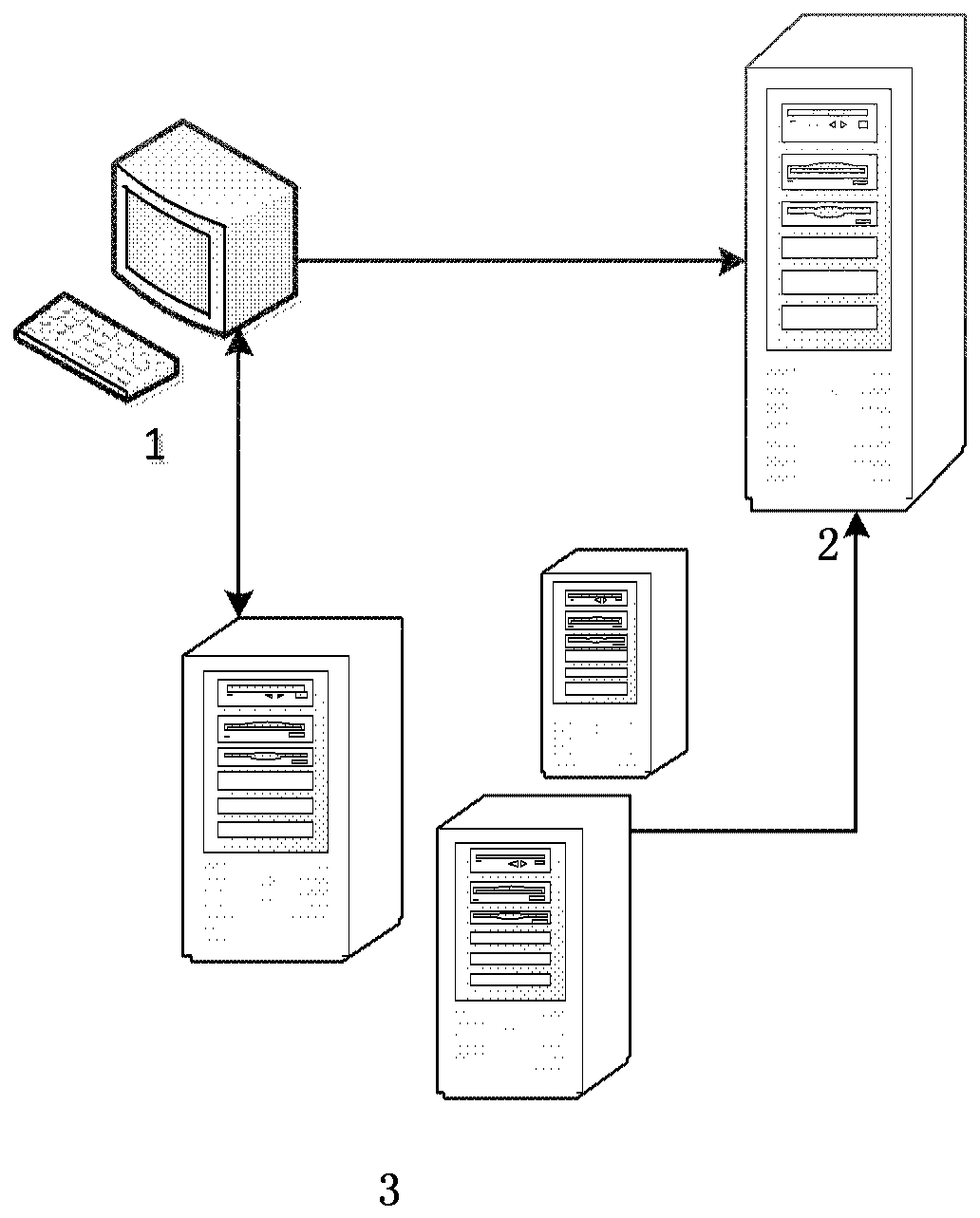

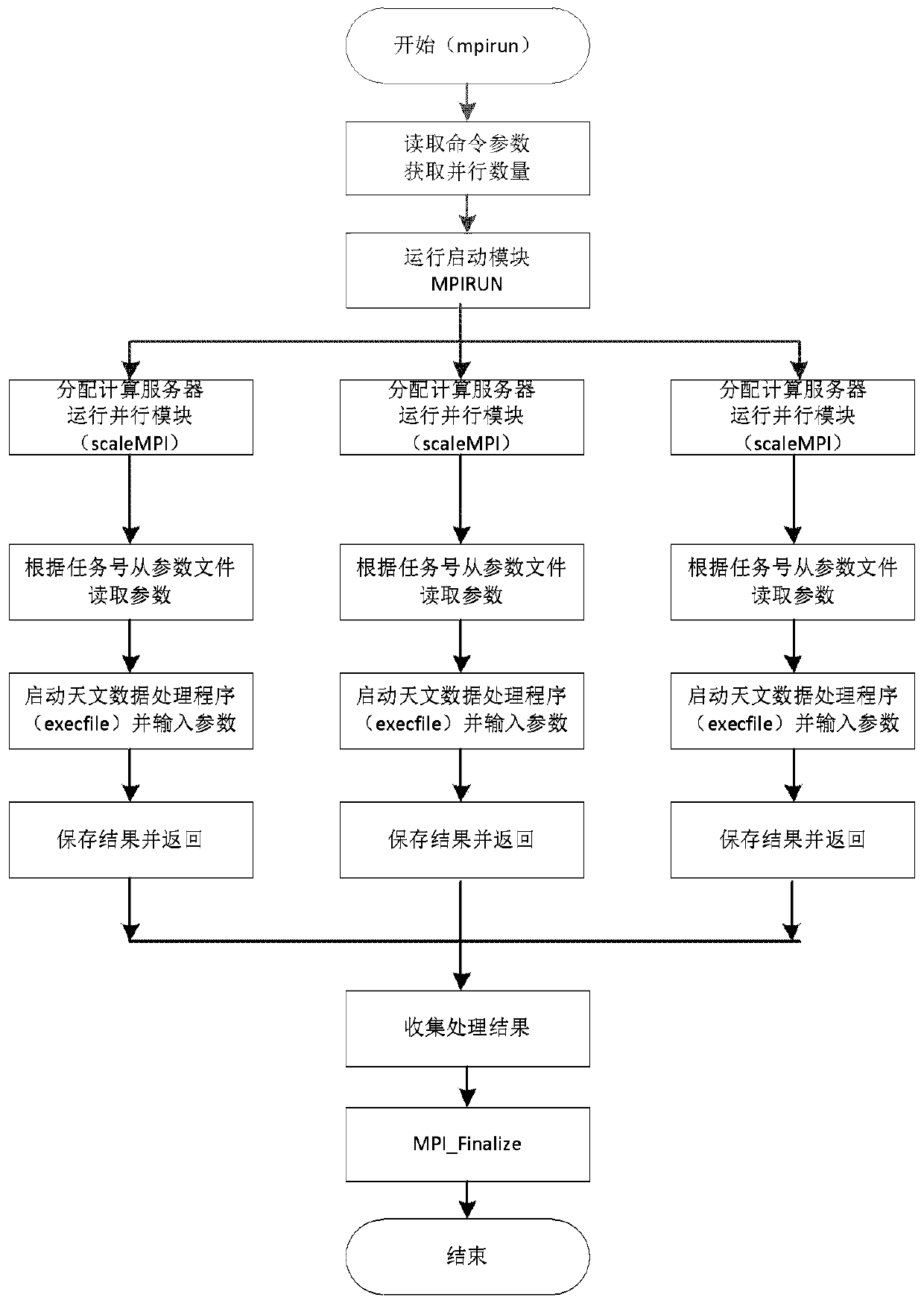

Astronomical data parallel processing device and method

Active · CN110543361A · Improve processing efficiency · Capable of parallel computing · Resource allocation · Energy efficient computing · Data file · Computer module

The invention discloses an astronomical data parallel processing device and method. The astronomical data parallel processing device comprises a computing cluster, wherein the computing cluster comprises a management server, a storage server and a plurality of computing servers; the storage server is used for storing a plurality of astronomical data files, parameter files and astronomical data processing instructions; and the astronomical data processing instructions are executed by the plurality of computing servers at the same time. The astronomical data parallel processing method comprises the steps that the management server operates a starting module; the starting module allocates the parallel modules to a plurality of computing servers for operation and allocates a task number to the astronomical data processing program; the parallel module starts an astronomical data processing program, extracts parameters from the parameter file in the storage server according to the task number and inputs the parameters into the astronomical data processing program; and the astronomical data processing program processes the astronomical data files in the storage server according to the parameters and stores results in the storage server.

Owner:NAT ASTRONOMICAL OBSERVATORIES CHINESE ACAD OF SCI

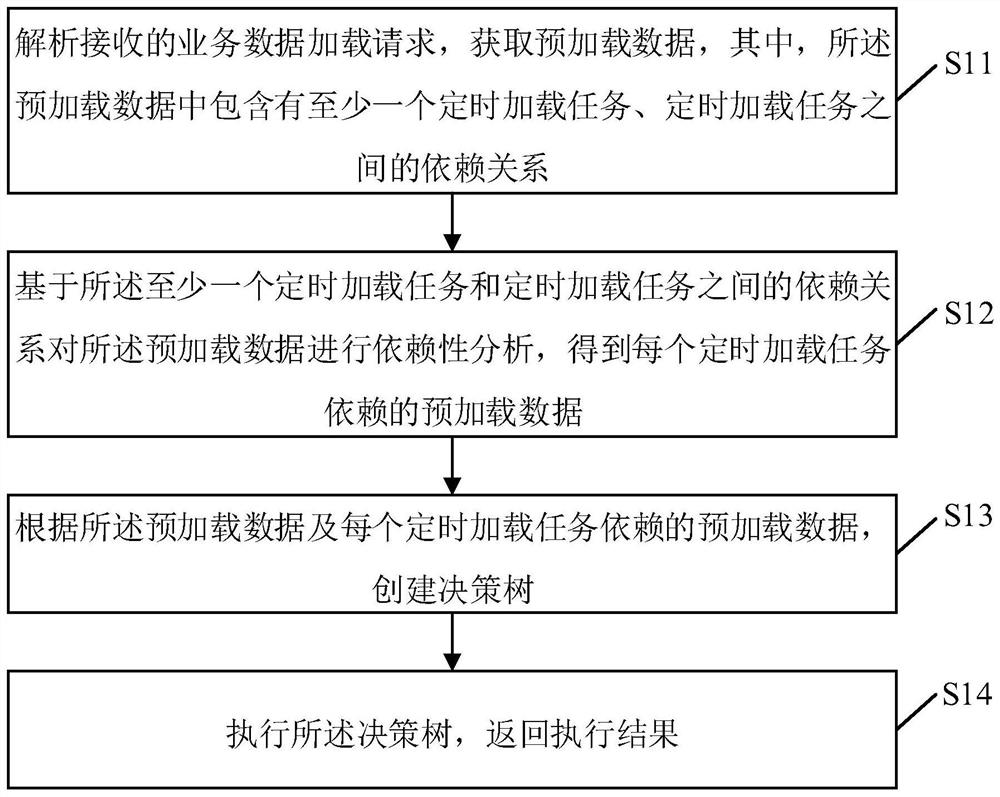

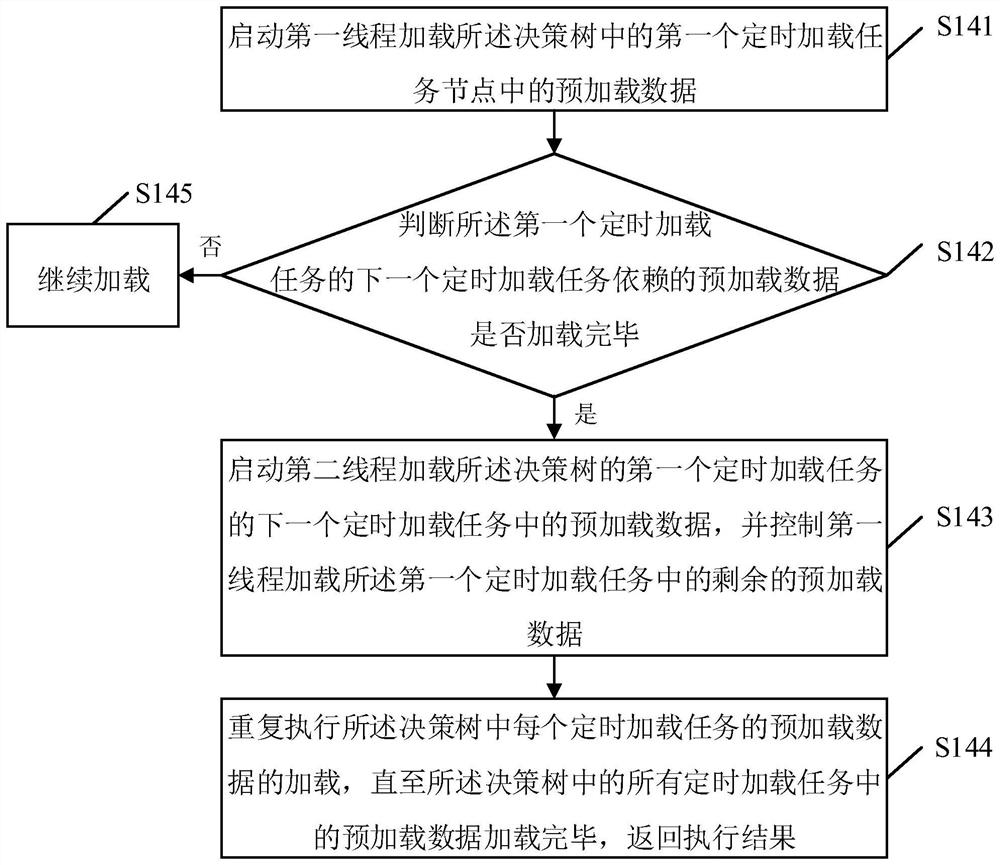

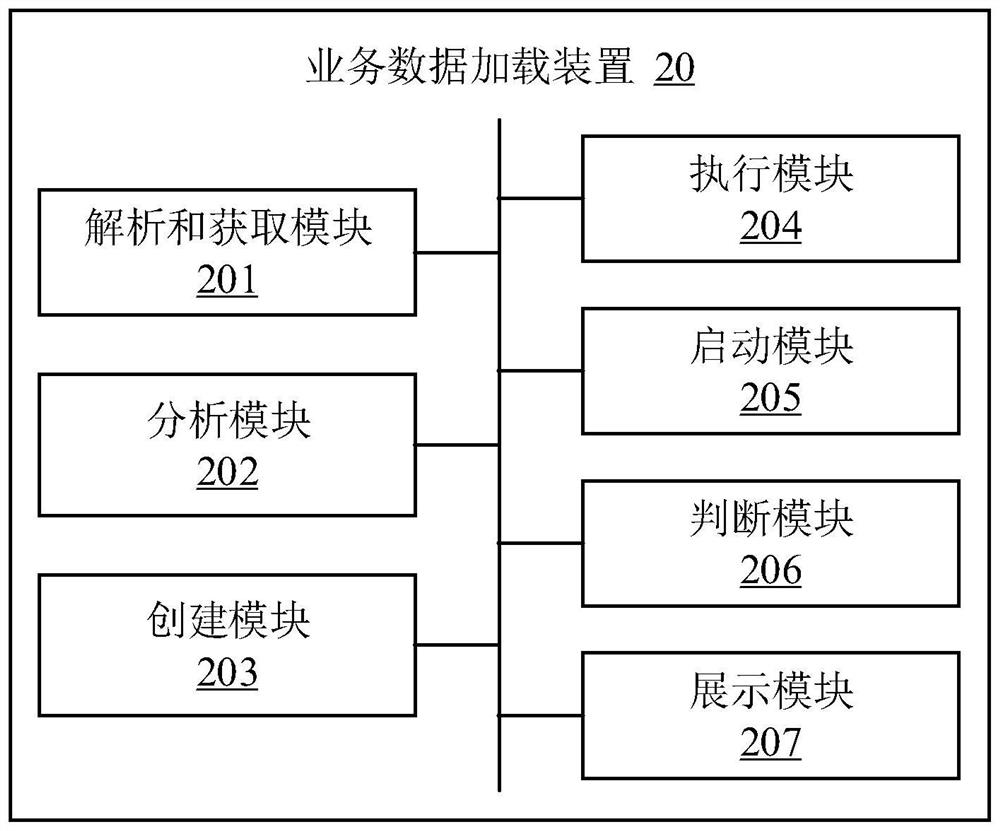

Business data loading method and device, electronic equipment and storage medium

Pending · CN114840325A · Improve loading efficiency · Improve accuracy · Digital data information retrieval · Program initiation/switching · Business data · Decision tree

The invention relates to the technical field of big data, and provides a business data loading method and device, electronic equipment and a storage medium, and the method comprises the following steps: carrying out dependency analysis on preloaded data; creating a decision tree according to the preloading data and the preloading data on which each timed loading task depends; when it is detected that loading of the preloaded data depended on by the next timed loading task of the first timed loading task is completed, a second thread is started to load the preloaded data in the next timed loading task of the first timed loading task of the decision tree, controlling the first thread to load the residual preloading data in the first timed loading task; and the decision tree is loaded. According to the method and the device, when the loading of the preloading data on which the next timed loading task of the first timed loading task depends is finished, the second thread is started to load the next timed loading task of the first timed loading task, so that the data loading efficiency is improved.

Owner:CHINA PING AN PROPERTY INSURANCE CO LTD

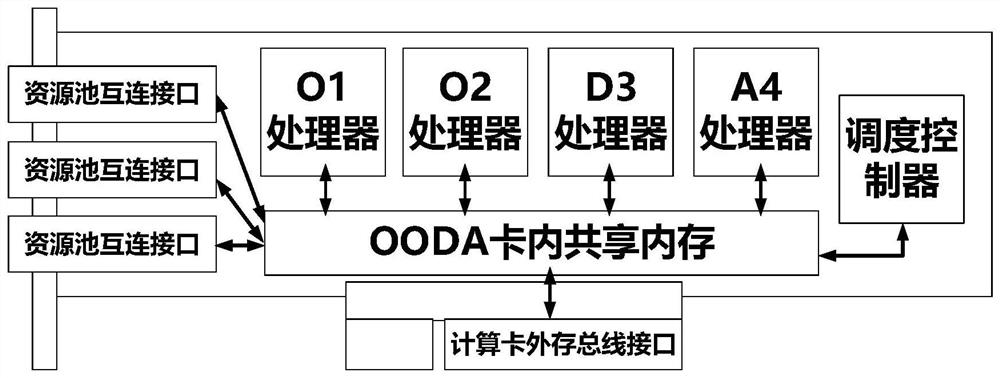

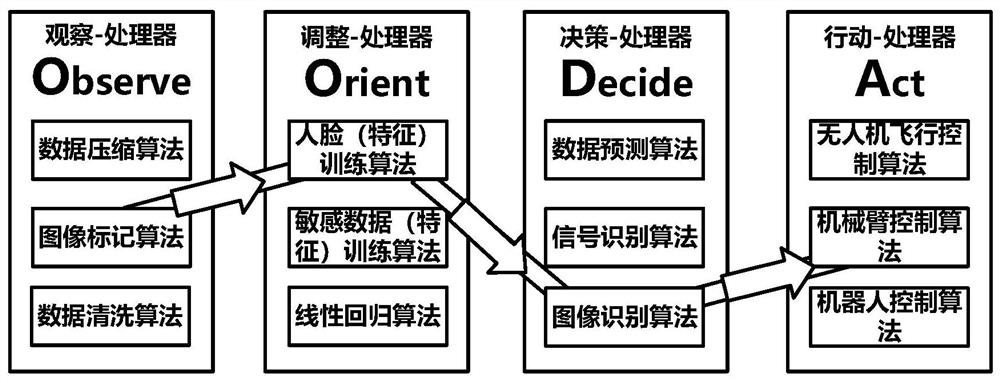

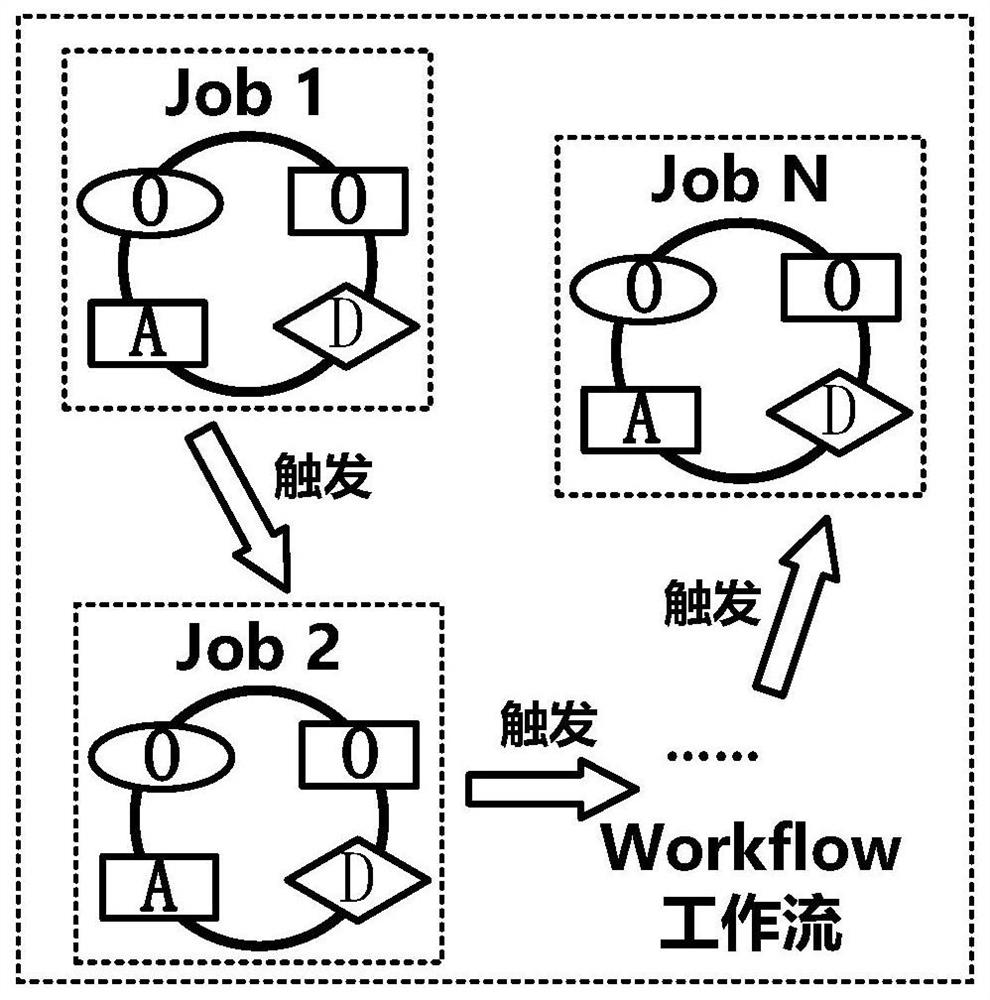

Computing board card with OODA multiprocessor

Active · CN111813453A · Improve processing efficiency · Implement parallel execution · Resource allocation · Interprogram communication · Multi processor · Parallel computing

The invention discloses a computing board card with an OODA multiprocessor. The board card comprises four processors, each with a different OODA computing function, and a scheduling controller allocates different workflow jobs to them. When a streaming job is processed, the four processors are called sequentially and cyclically in a preset calculation order to execute it. When processing OODA workflow loads, the four processors respectively execute the four kinds of calculation algorithms: observation, orientation, decision and action. Because an OODA workload occupies the different processors in sequence, multiple OODA workloads occupy the processors one after another in pipeline fashion, which improves the execution efficiency of the load pipeline.

Owner:中科院计算所西部高等技术研究院

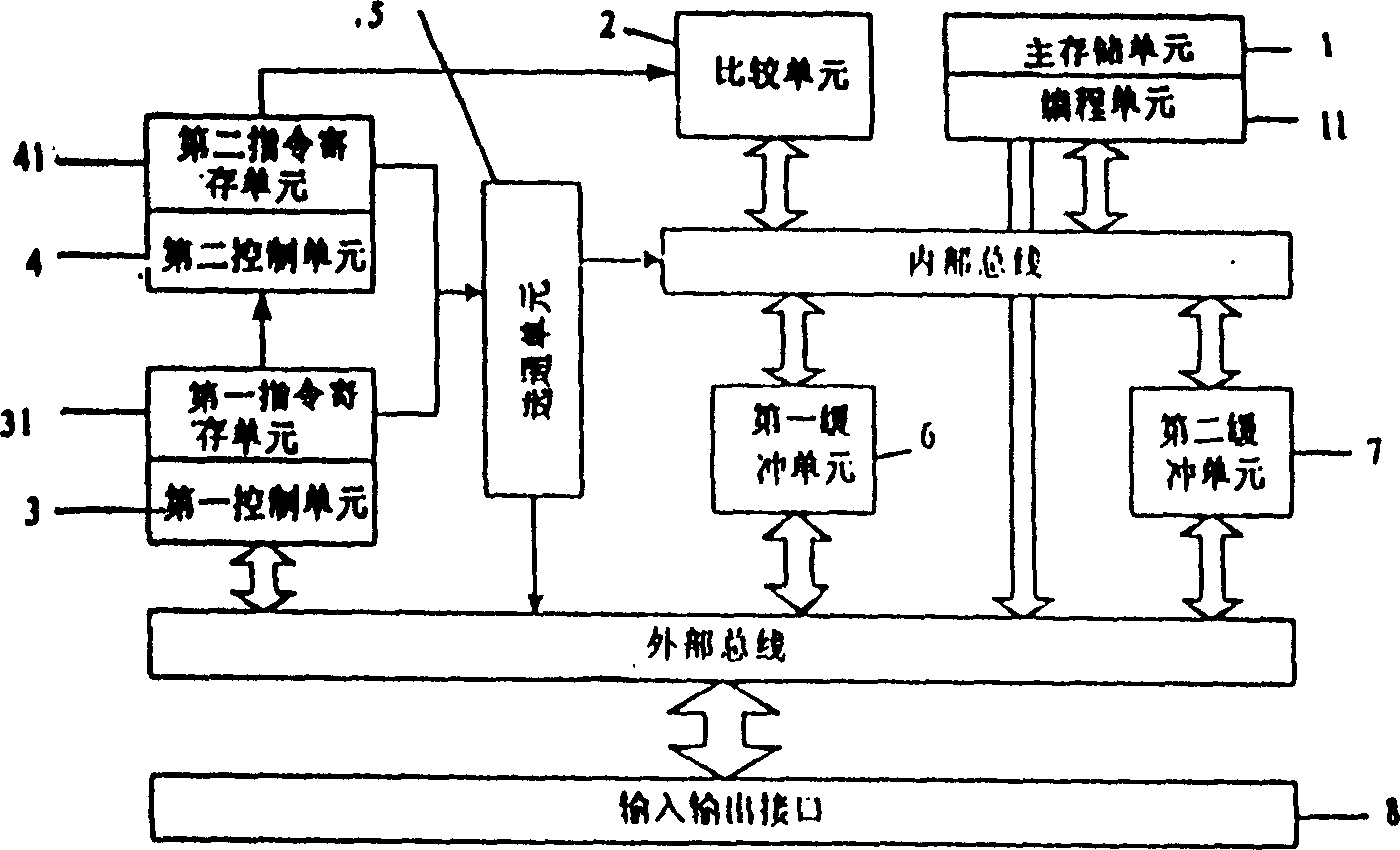

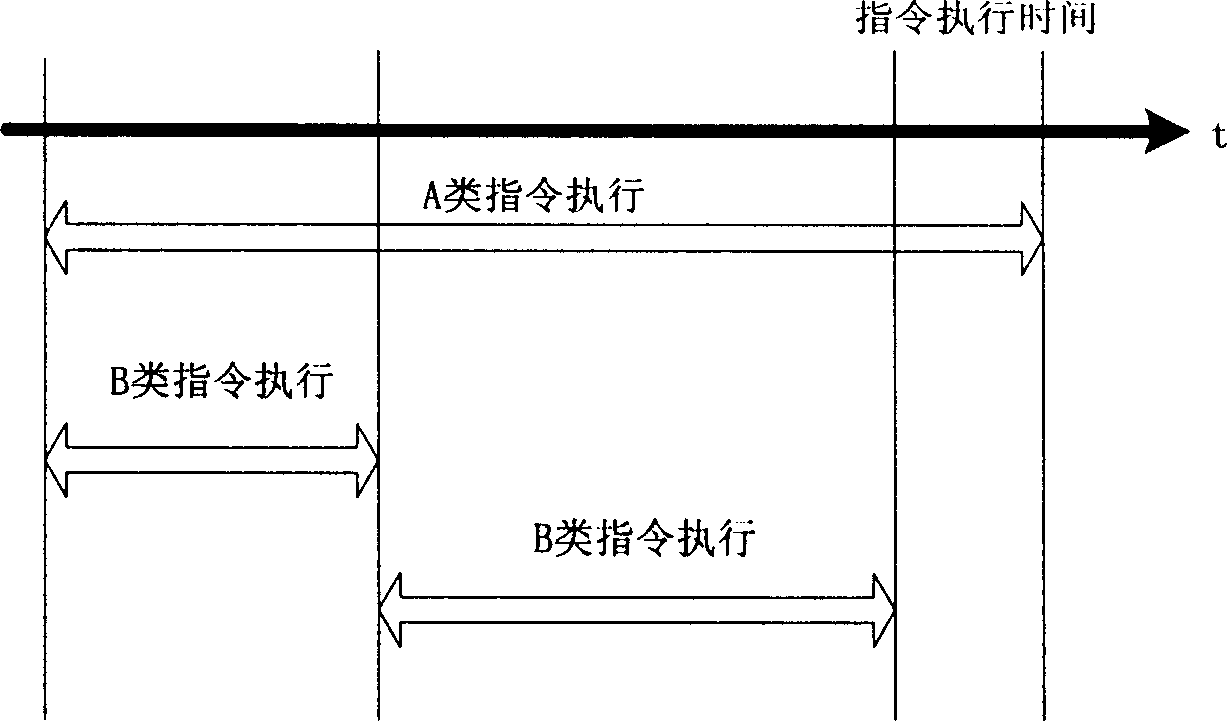

IC storage card

Inactive · CN1521691A · Low cost · High density · Record carriers used with machines · Control signal · Control system

An IC storage card in which the main storage unit and the programming unit use flash memory units, together with two static random-access memory units and a serial input/output interface. When a command is input, it is temporarily stored in a command register unit; type A commands are sent to the second control unit for execution, and type B commands are sent to the first control unit for execution. During execution, the control signals produced by the control units use selectors to control the data flowing over the system bus to the two static random-access memory units, which prevents data communication errors between system resources when two commands run simultaneously.

Owner:上海华园微电子技术有限公司

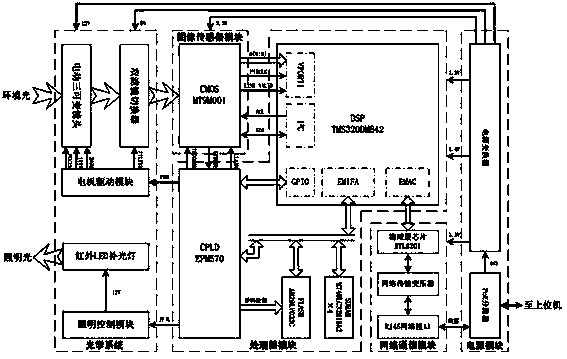

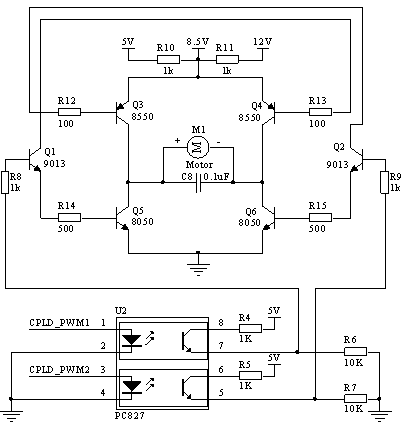

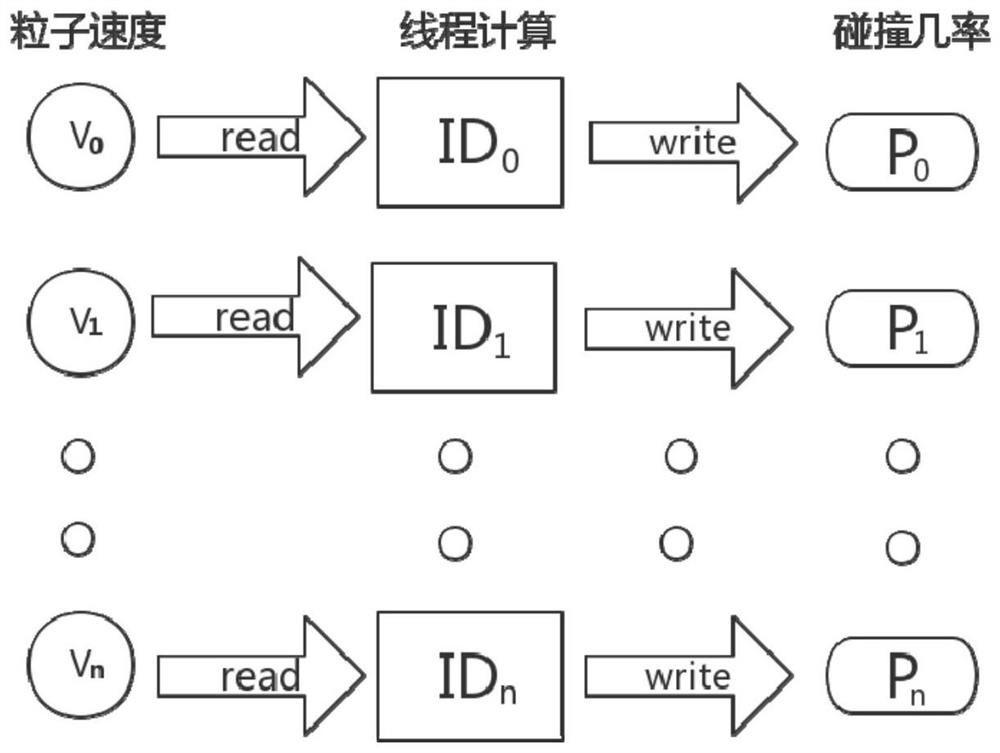

Large-scale particle image velocimeter based on near-infrared smart camera

Active · CN102914667B · Increase Brightness Contrast · Improve accuracy · Television system details · Color television details · Anti jamming · Network communication

The invention discloses a large-scale particle image velocimeter based on a near-infrared smart camera, which belongs to the technical field of non-contact open channel current surveying. The instrument consists of a processor module, an image sensor module, an optical system, a network communication module and a power module. The processor module takes a 32-bit fixed-point DSP (digital signal processor) chip as its core and replaces a PC (personal computer) for image collection, image processing and data transmission. The image sensor module adopts a monochrome CMOS (complementary metal oxide semiconductor) image sensor with a digital interface. The optical system consists of a double-filter switcher, an electric three-variable lens, an infrared LED fill light, a motor driving module and an illumination control module, and is used for realizing the remote control of optical imaging under a near-infrared waveband or full-spectrum waveband. The instrument transmits images, flow field data, state information and control commands over Ethernet conforming to the IEEE (Institute of Electrical and Electronics Engineers) 802.3 protocol, and power supply and data transmission can share one cable by supplying power in a PoE mode. The large-scale particle image velocimeter has the characteristics of strong anti-jamming capability, high temporal-spatial resolution and a flexible and efficient system.

Owner:HOHAI UNIV

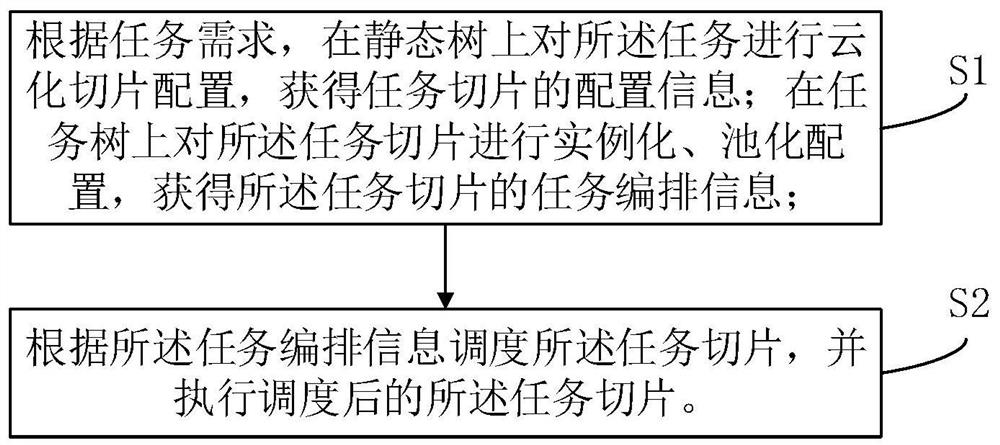

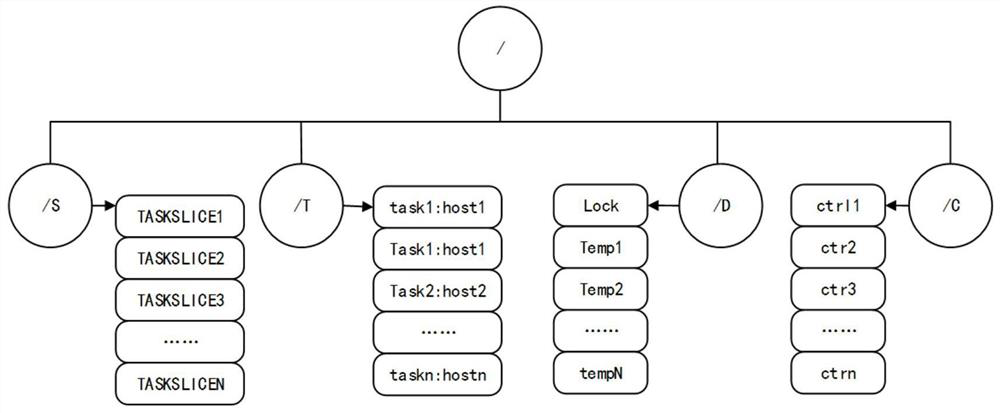

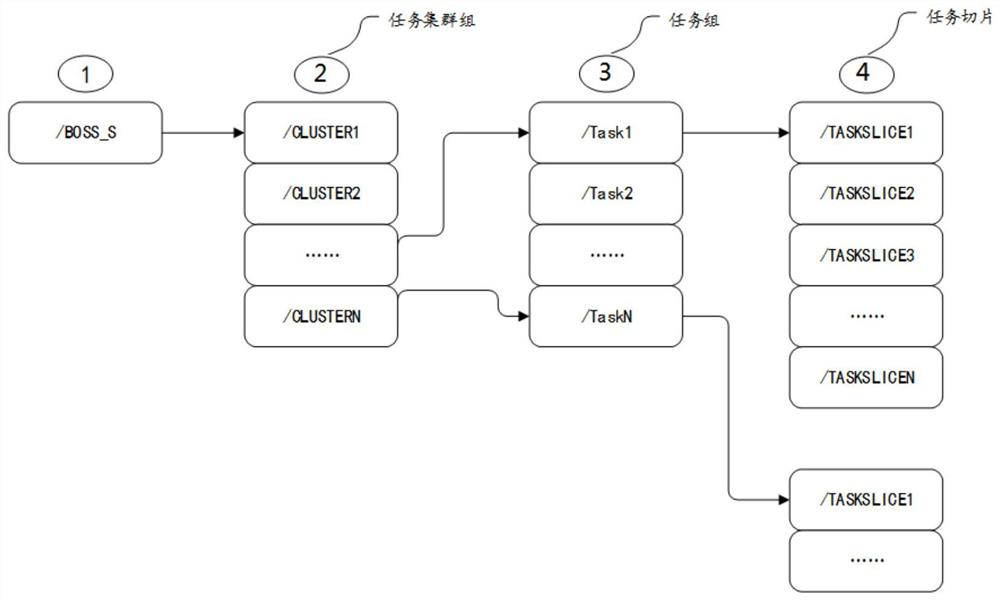

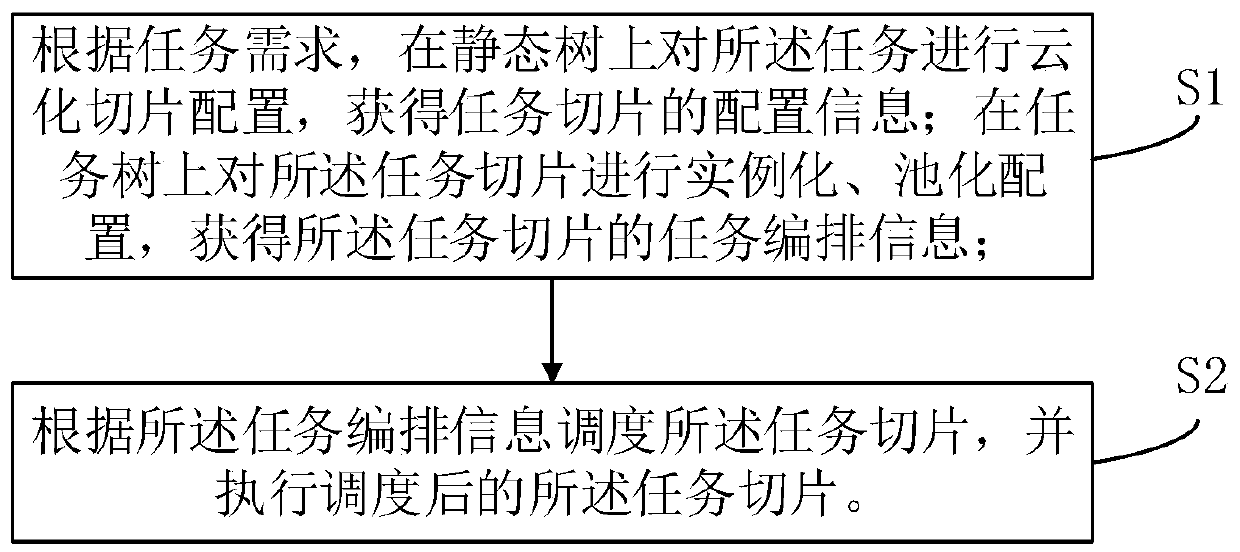

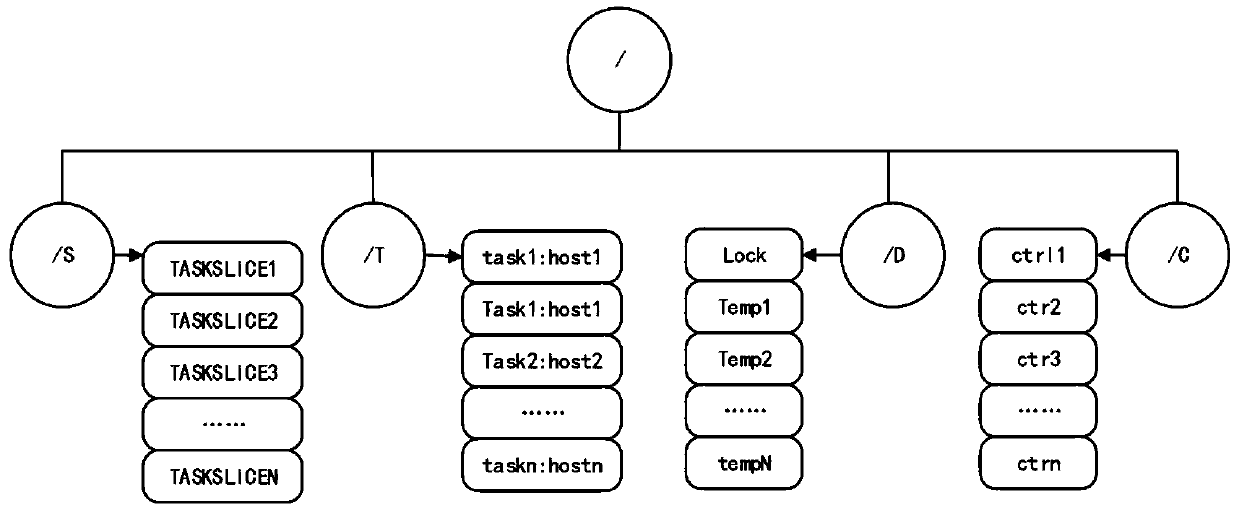

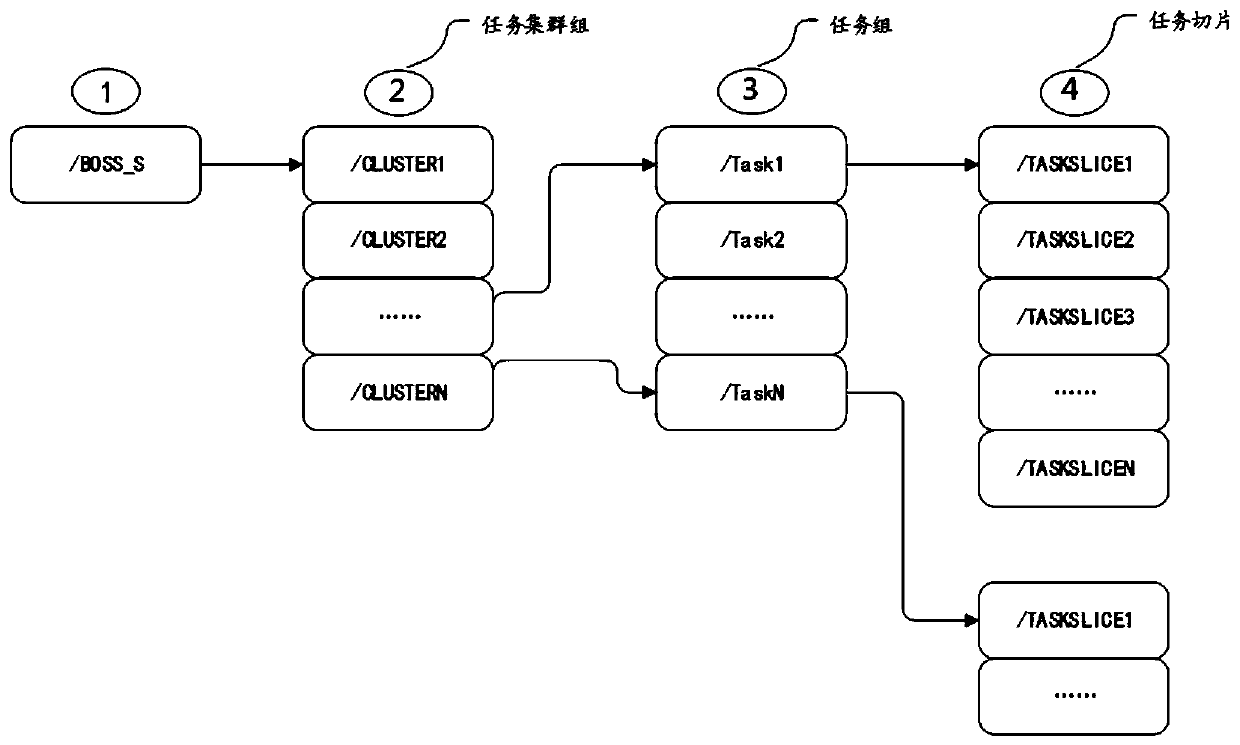

A method and system for implementing cloud task orchestration and scheduling based on ZooKeeper

Active · CN110928668B · Orchestration implementation · Implement scheduling · Program initiation/switching · Parallel computing · Usability

The invention discloses a method for implementing cloud task orchestration and scheduling based on ZooKeeper. The method comprises: configuring cloud slices for a task on a static tree according to the task requirements to obtain the configuration information of the task slices; instantiating and pooling the task slices on the task tree to obtain the task orchestration information of the task slices; recording the running state of the task slices on a dynamic tree; recording the execution state of the task slices on a control tree; and scheduling the task slices according to the task orchestration information and executing the scheduled task slices. This realizes the orchestration, scheduling and execution of tasks without manual intervention. Orchestration and scheduling can be carried out through the cloud task orchestration and scheduling client, which improves the usability and robustness of batch tasks.

Owner:北京思特奇信息技术股份有限公司

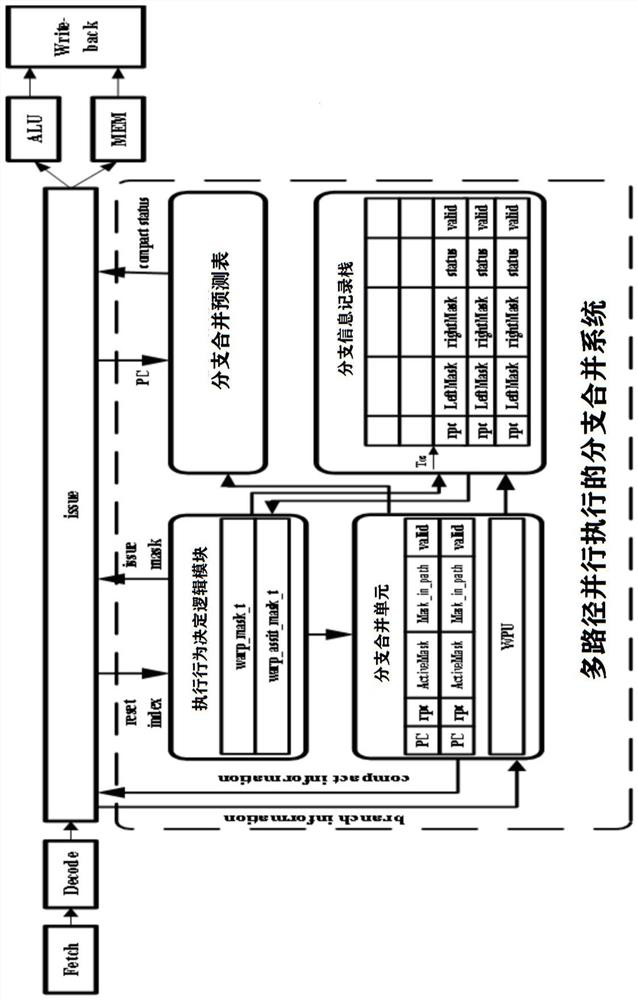

Multi-path parallel execution branch merging system and branch merging method

Pending · CN113778529A · Implement parallel execution · Concurrent instruction execution · Parallel computing · Branch

The invention relates to a multi-path parallel execution branch merging system and a branch merging method. The system comprises an execution behavior decision logic module, a branch merging prediction table, a branch merging unit and a branch information recording stack, the execution behavior decision logic module is connected with the branch merging unit and the branch information recording stack, and the branch merging unit is connected with the branch merging prediction table and the branch information recording stack. The branch information record stack is connected with the execution behavior decision logic module, and the branch merging prediction table is used for predicting whether all warps currently executing the branch separation instruction need to be merged or not; the branch merging unit completes the merging of warps under different branch paths when a branch instruction is executed once; the branch information recording stack records execution information of the warp under different branch paths at the same time; and the execution behavior decision logic module records the warp which can be scheduled and executed in parallel under the main branch path and other branch paths at the same time, and controls the issue module to complete the warp parallel scheduling. According to the invention, parallel execution of the warp under a plurality of branch paths is effectively realized.

Owner:XIDIAN UNIV
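The splitting and merging of warps described in this abstract can be illustrated with a minimal software sketch. It is not the patented hardware: it only assumes that each branch path is tracked as a `(pc, mask)` pair, where `mask` is a bitmask of active threads, and that paths arriving at the same program counter are merged by OR-ing their masks. The function names `split_on_branch` and `merge_paths` and all program counter values are hypothetical.

```python
def split_on_branch(mask, predicate_bits, taken_pc, fallthrough_pc):
    """Split an active-thread mask into the two paths of a branch.

    mask           -- bitmask of threads active before the branch
    predicate_bits -- bitmask of threads whose branch condition is true
    Returns a list of (pc, mask) pairs, one per non-empty path.
    """
    taken = mask & predicate_bits
    not_taken = mask & ~predicate_bits
    candidates = ((taken_pc, taken), (fallthrough_pc, not_taken))
    return [(pc, m) for pc, m in candidates if m]


def merge_paths(paths):
    """Merge paths that have reached the same pc by OR-ing their masks."""
    merged = {}
    for pc, m in paths:
        merged[pc] = merged.get(pc, 0) | m
    return sorted(merged.items())


# Four threads diverge: threads 0 and 2 take the branch, 1 and 3 fall through.
paths = split_on_branch(0b1111, 0b0101, taken_pc=8, fallthrough_pc=4)
# Both paths are independently schedulable here; once each reaches the
# (hypothetical) reconvergence point at pc 12, they are merged again.
rejoined = merge_paths([(12, m) for _, m in paths])
```

In this sketch both entries returned by `split_on_branch` remain available for scheduling at once, which is the property the abstract attributes to multi-path execution; a single-path design would instead serialize them.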

Method and system for realizing cloud task arrangement and scheduling based on ZooKeeper

Active | CN110928668A | Improve ease of use and robustness | Integrity guaranteed | Program initiation/switching | Client | Usability

The invention discloses a method for realizing cloud task arrangement and scheduling based on ZooKeeper. The method comprises the steps of: carrying out cloud slice configuration for a task on a static tree according to the task demand, to obtain the configuration information of the task slices; instantiating and pooling the task slices on the task tree to obtain task arrangement information for the task slices; recording the running state of the task slices on the dynamic tree; recording the execution state of the task slices on the control tree; and scheduling the task slices according to the task arrangement and scheduling information and executing the scheduled task slices. The arrangement, scheduling and execution of tasks are thereby realized without manual intervention. Arrangement and scheduling can be carried out through the cloud task arrangement and scheduling client, which improves the usability and robustness of batch tasks.

Owner:北京思特奇信息技术股份有限公司
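The four trees named in this abstract can be sketched as path prefixes in a ZooKeeper-style namespace. The example below is only an illustration under stated assumptions: it uses an in-memory dictionary (`ZNodeStore`) rather than a real ZooKeeper client, and the path layout (`/static`, `/task`, `/dynamic`, `/control`), the status strings, and all function names are hypothetical, not taken from the patent.

```python
from dataclasses import dataclass, field


@dataclass
class ZNodeStore:
    """In-memory stand-in for a ZooKeeper namespace (path -> data)."""
    nodes: dict = field(default_factory=dict)

    def set(self, path, data):
        self.nodes[path] = data

    def get(self, path):
        return self.nodes[path]

    def children(self, prefix):
        prefix = prefix.rstrip("/") + "/"
        return sorted(p for p in self.nodes if p.startswith(prefix))


def configure_slices(store, task, n_slices):
    # Static tree: slice configuration derived from the task requirement.
    for i in range(n_slices):
        store.set(f"/static/{task}/slice-{i}", {"index": i, "total": n_slices})


def instantiate_slices(store, task, pool):
    # Task tree: instantiate each configured slice and assign a pooled worker.
    for n, path in enumerate(store.children(f"/static/{task}")):
        name = path.rsplit("/", 1)[1]
        info = {**store.get(path), "worker": pool[n % len(pool)]}
        store.set(f"/task/{task}/{name}", info)


def schedule(store, task, run_slice):
    # Dynamic tree holds the running state, control tree the execution state.
    for path in store.children(f"/task/{task}"):
        name = path.rsplit("/", 1)[1]
        store.set(f"/dynamic/{task}/{name}", "RUNNING")
        try:
            result = run_slice(store.get(path))
            state = {"status": "DONE", "result": result}
        except Exception as exc:
            state = {"status": "FAILED", "error": str(exc)}
        store.set(f"/control/{task}/{name}", state)
        store.set(f"/dynamic/{task}/{name}", "STOPPED")


store = ZNodeStore()
configure_slices(store, "billing", 3)
instantiate_slices(store, "billing", ["w1", "w2"])
schedule(store, "billing", lambda info: info["index"] * 2)
```

Keeping configuration, arrangement, running state and execution state under separate prefixes means a client can inspect or update one concern without touching the others, which is one plausible reading of why the abstract separates the trees.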

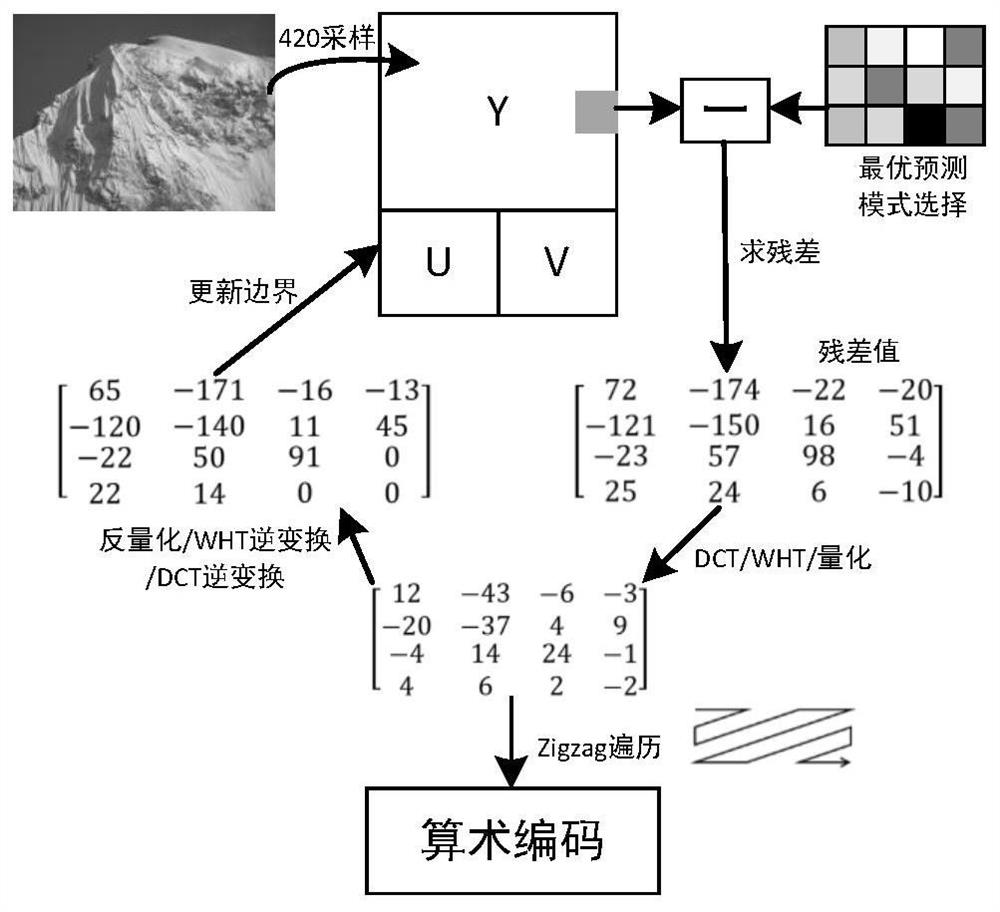

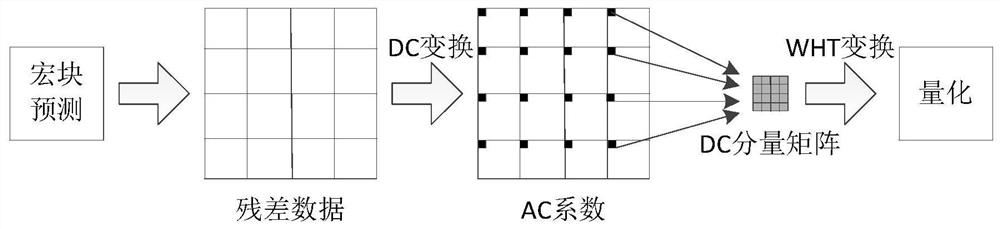

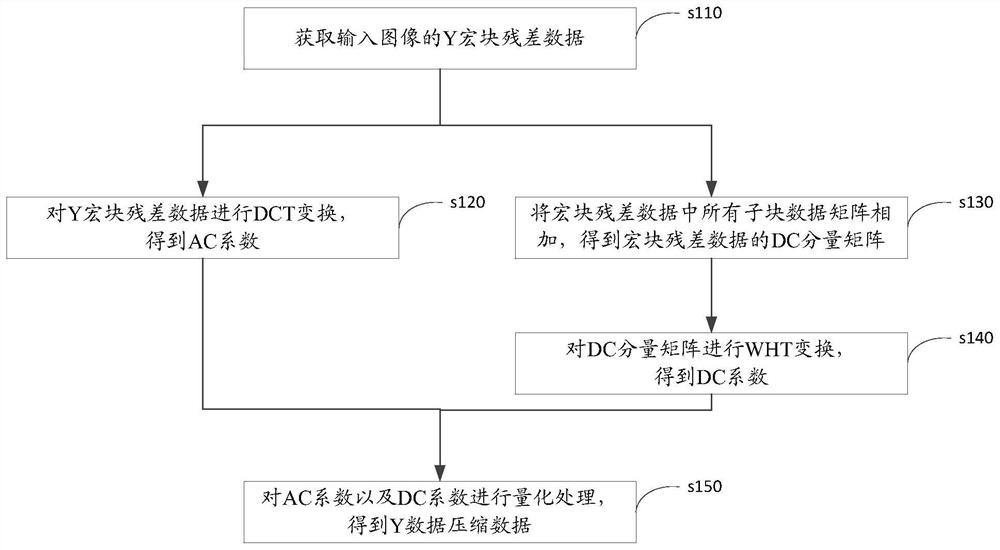

Data compression processing method, device, equipment and WebP compression system

Active | CN108900842B | Shorten the time | Cancel the serial linkage relationship | Digital video signal modification | Imaging processing | Image manipulation

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

A Charge Exchange Collision MCC Method for Numerical Simulation of Ion Thrusters

Active | CN108549763B | Implement parallel execution | Improve computing efficiency | Geometric CAD | Design optimisation/simulation | Graphics | PERQ

The invention relates to the field of ion thruster numerical simulation, in particular to a charge exchange collision MCC method for ion thruster numerical simulation. The invention combines the physical characteristics of charge exchange collisions in an ion thruster with the single-instruction multi-thread execution model of the graphics processing unit (GPU) to execute the charge exchange collision MCC algorithm in a single-instruction, multiple-data parallel fashion, which greatly improves calculation efficiency and saves calculation time.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
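A Monte Carlo collision (MCC) step is well suited to single-instruction, multiple-data execution because every particle runs the same test independently. The sketch below is a generic, serial illustration of that per-particle test, not the patented method: it assumes the standard collision probability P = 1 - exp(-n σ v Δt), a one-dimensional velocity, and a post-collision ion velocity drawn from a Gaussian with a given neutral thermal speed. All function names and parameter values are hypothetical.

```python
import math
import random


def collision_probability(speed, neutral_density, cross_section, dt):
    """Probability that one ion collides during dt: 1 - exp(-n * sigma * v * dt)."""
    return 1.0 - math.exp(-neutral_density * cross_section * speed * dt)


def cex_step(ion_velocities, neutral_density, cross_section, dt,
             neutral_thermal_speed, rng):
    """Apply one charge exchange MCC step to every ion.

    Each loop iteration depends only on its own ion, so on a GPU the loop
    body could be assigned to one thread per particle.
    Returns the updated velocities and the number of collisions.
    """
    updated = []
    n_collisions = 0
    for v in ion_velocities:
        p = collision_probability(abs(v), neutral_density, cross_section, dt)
        if rng.random() < p:
            # Charge exchange: the fast ion is replaced by a slow ion that
            # inherits a velocity sampled from the neutral gas (assumed Gaussian).
            v = rng.gauss(0.0, neutral_thermal_speed)
            n_collisions += 1
        updated.append(v)
    return updated, n_collisions


rng = random.Random(0)
ions = [4.0e4, 3.5e4, 4.2e4]  # hypothetical ion speeds in m/s
no_step, none_hit = cex_step(ions, 1.0e18, 1.0e-18, 0.0, 300.0, rng)
dense, all_hit = cex_step(ions, 1.0e30, 1.0e-18, 1.0e-6, 300.0, rng)
```

With a zero time step no ion collides, and with an extreme (unphysical) neutral density every ion does, which makes the two limiting cases easy to check.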

