83 results about How to "Reduce start-up delay" patented technology

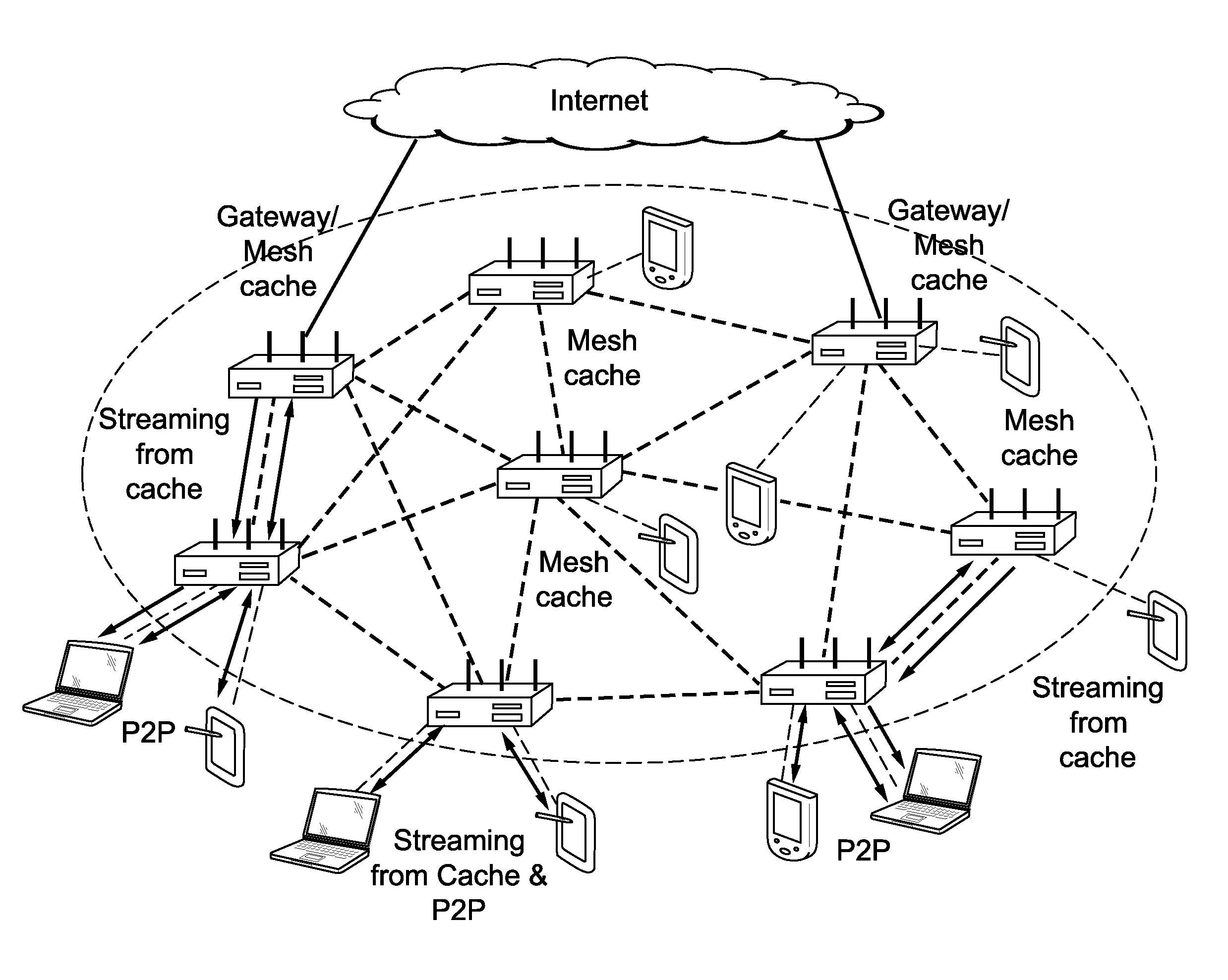

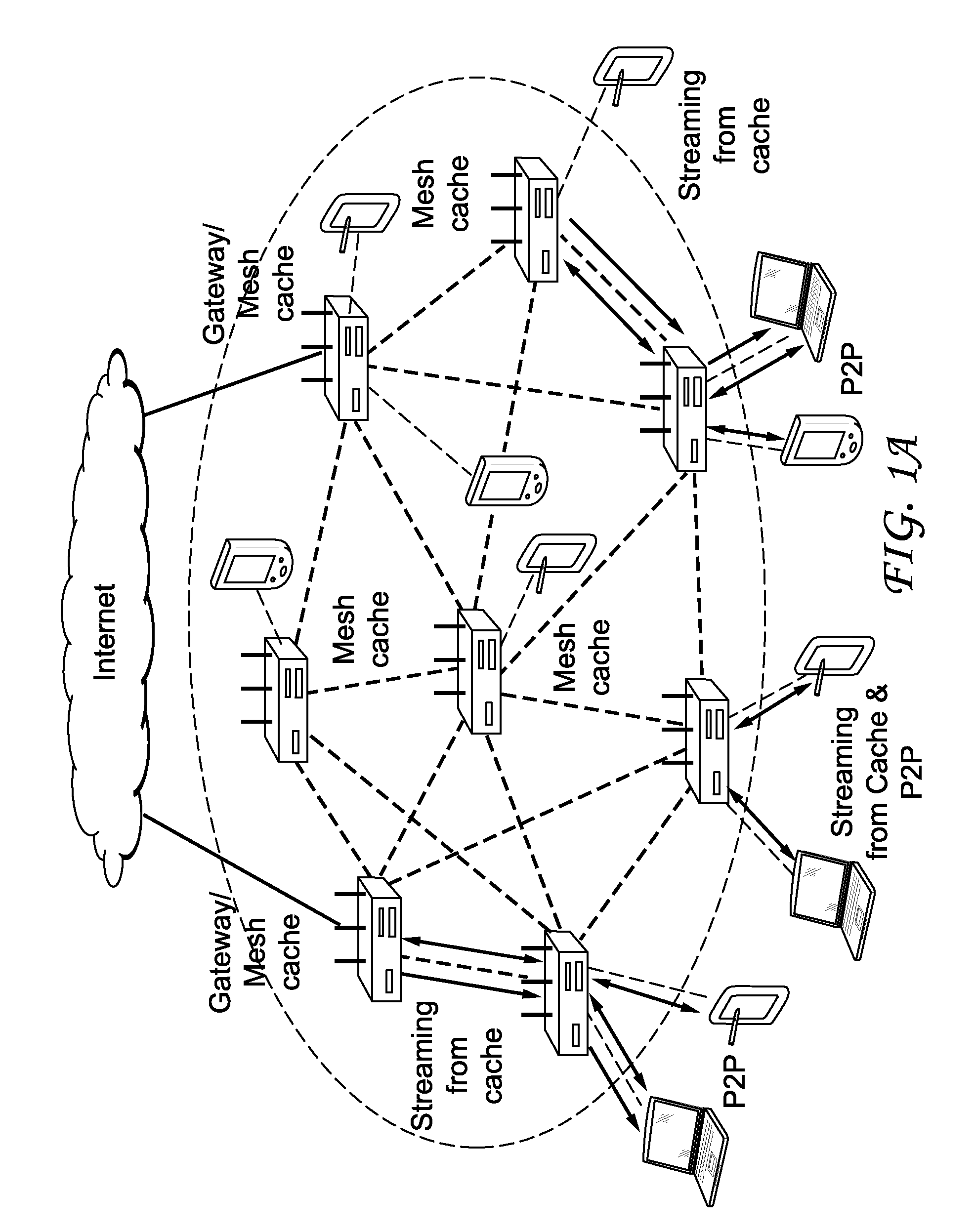

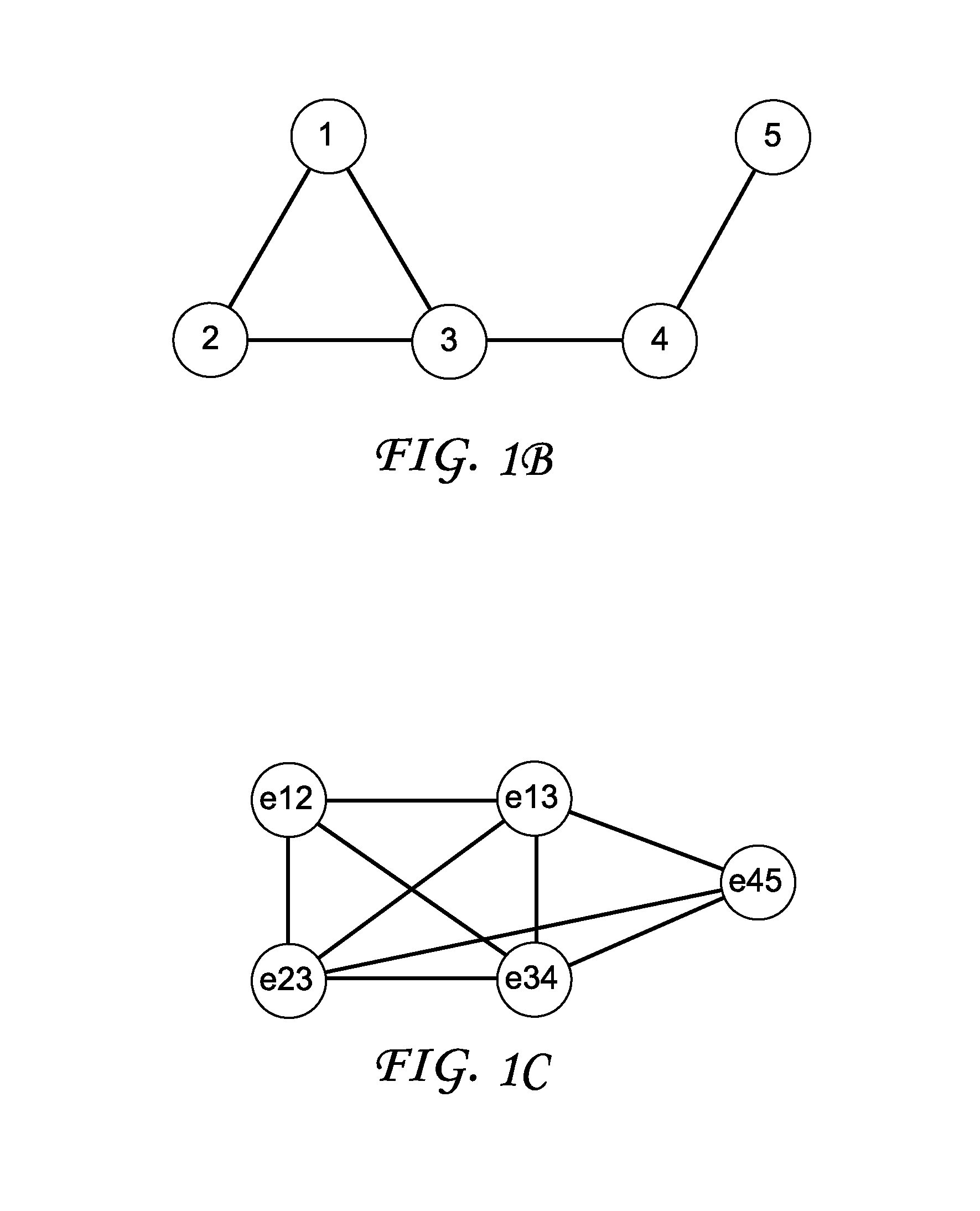

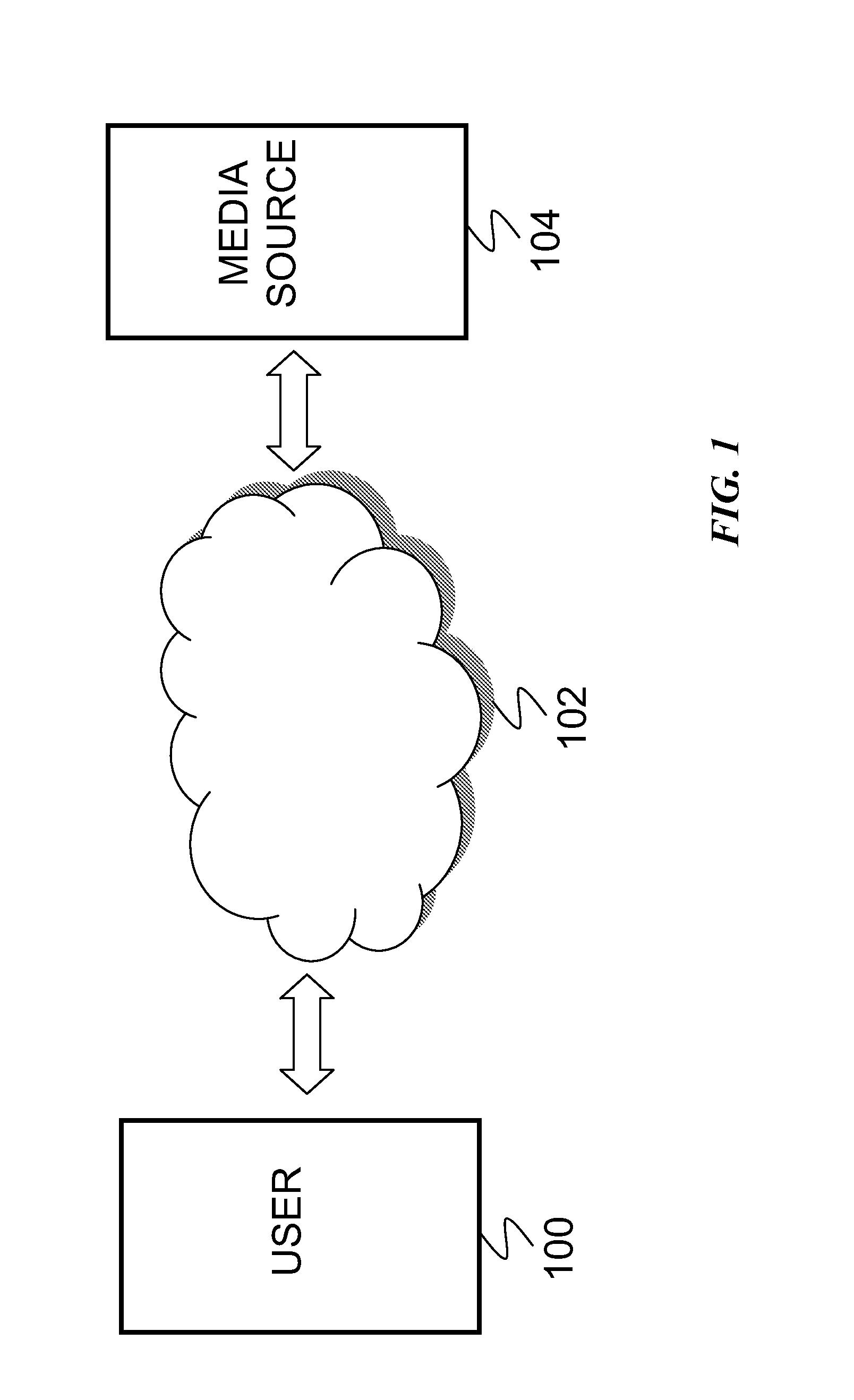

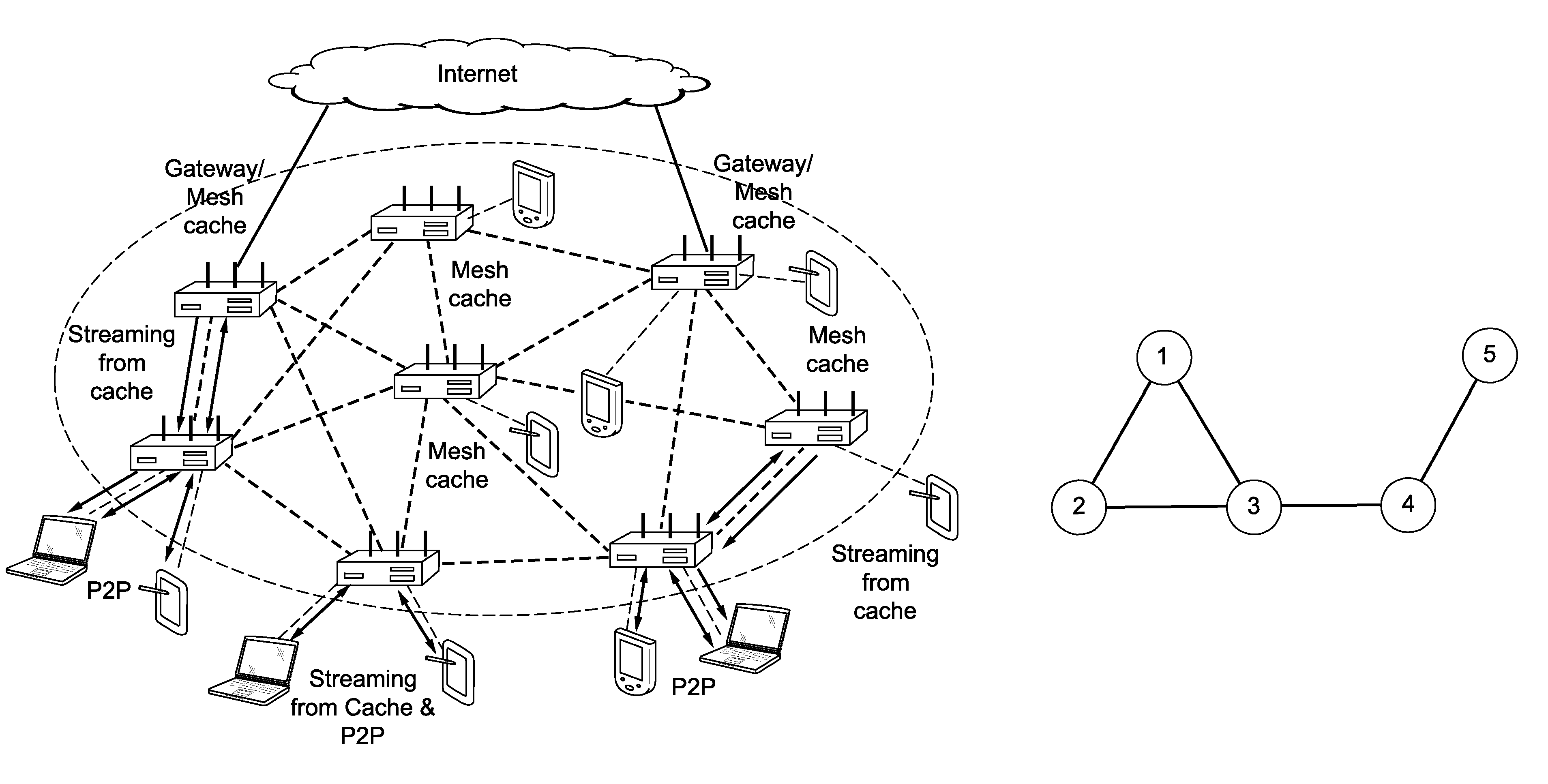

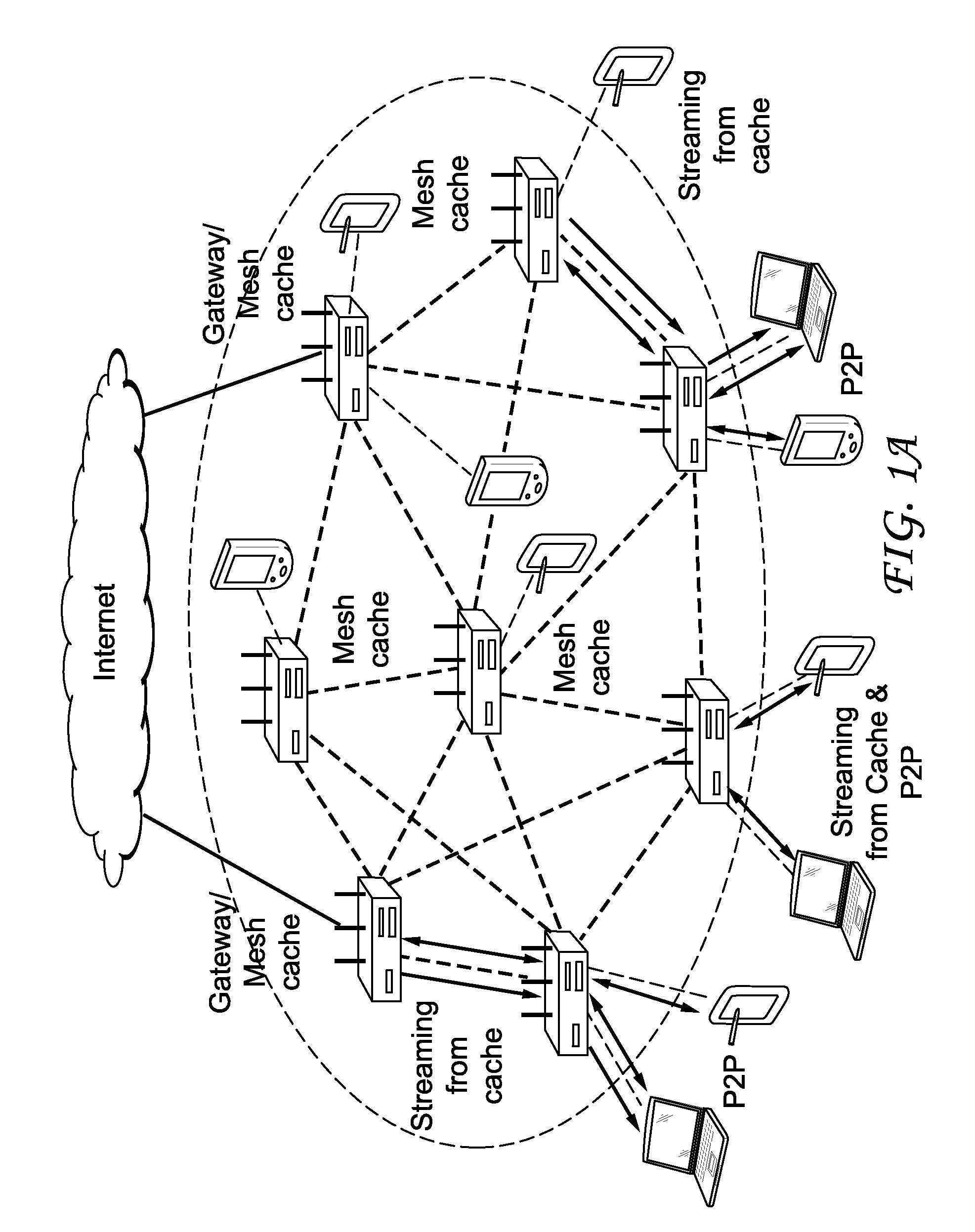

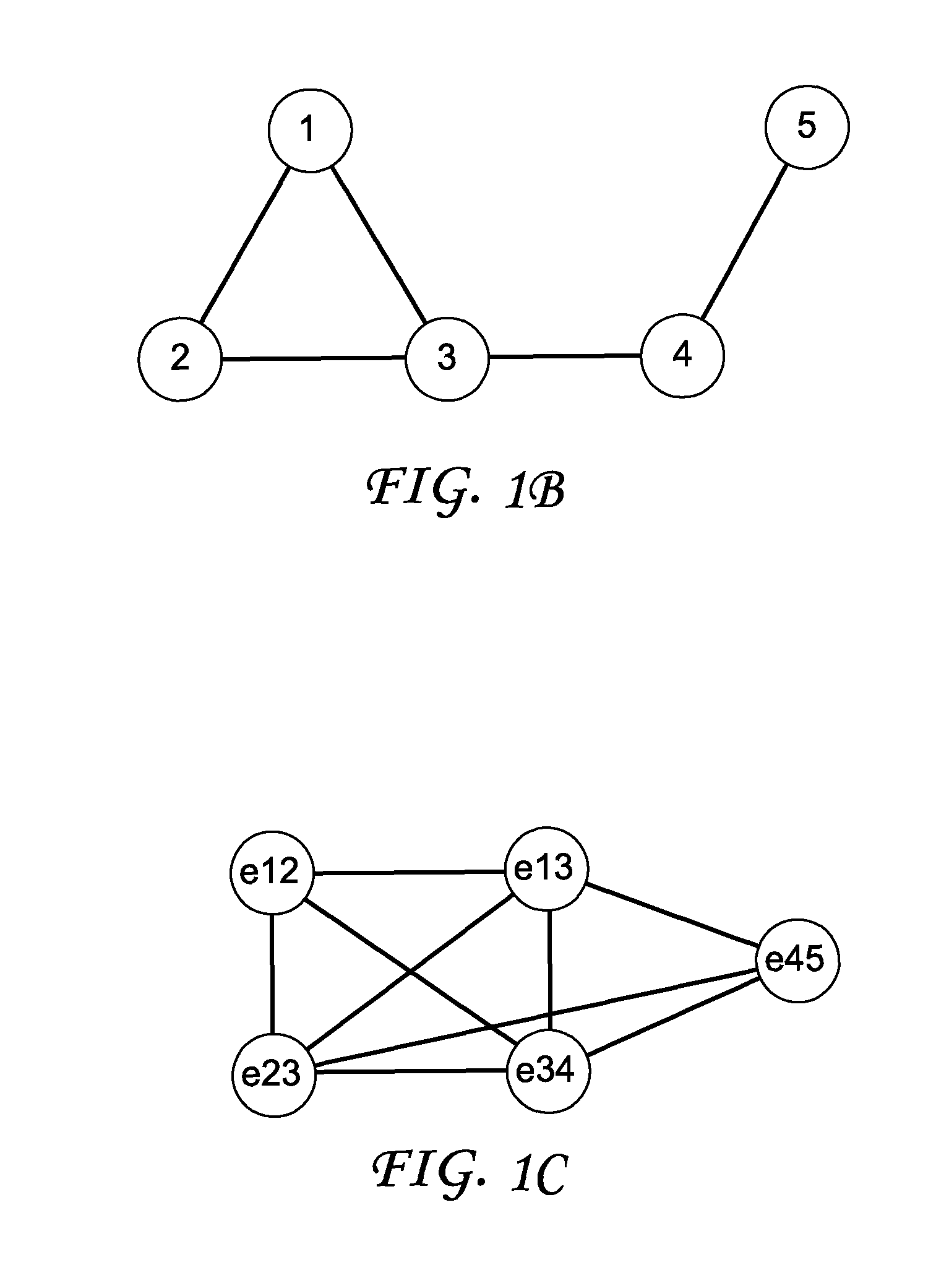

Unified cache and peer-to-peer method and apparatus for streaming media in wireless mesh networks

Inactive · US20110225311A1 · Reduce throughput · Quality improvement · Broadcast transmission systems · Data switching by path configuration · Quality of service · Routing table

A method and apparatus are described including receiving a route request message to establish a streaming route, determining a cost of a reverse route and traffic load introduced by the requested streaming route, discarding the route request message if one of wireless interference constraints for the requested streaming route cannot be satisfied and quality of service requirements for the requested streaming route cannot be satisfied, pre-admitting the route request message if wireless interference constraints for the requested streaming route can be satisfied and if quality of service requirements for the requested streaming route can be satisfied, adding a routing table entry responsive to the pre-admission, admitting the requested streaming route, updating the routing table and transmitting a route reply message to an originator if requested content is cached, updating the route request message and forwarding the updated route request message if the requested content is not cached, receiving a route reply message and deleting the pre-admitted routing table entry if a time has expired.

Owner:MAGNOLIA LICENSING LLC

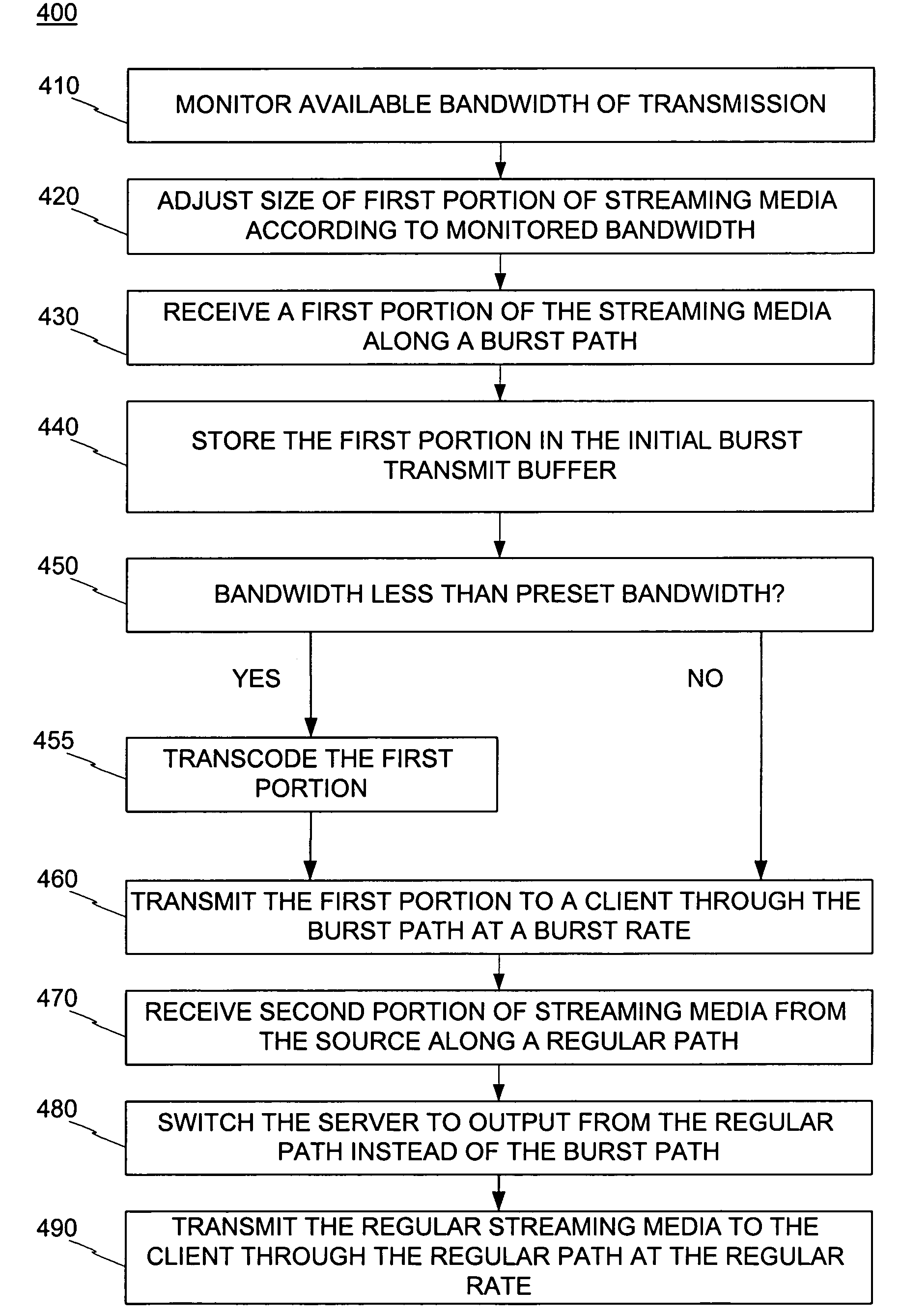

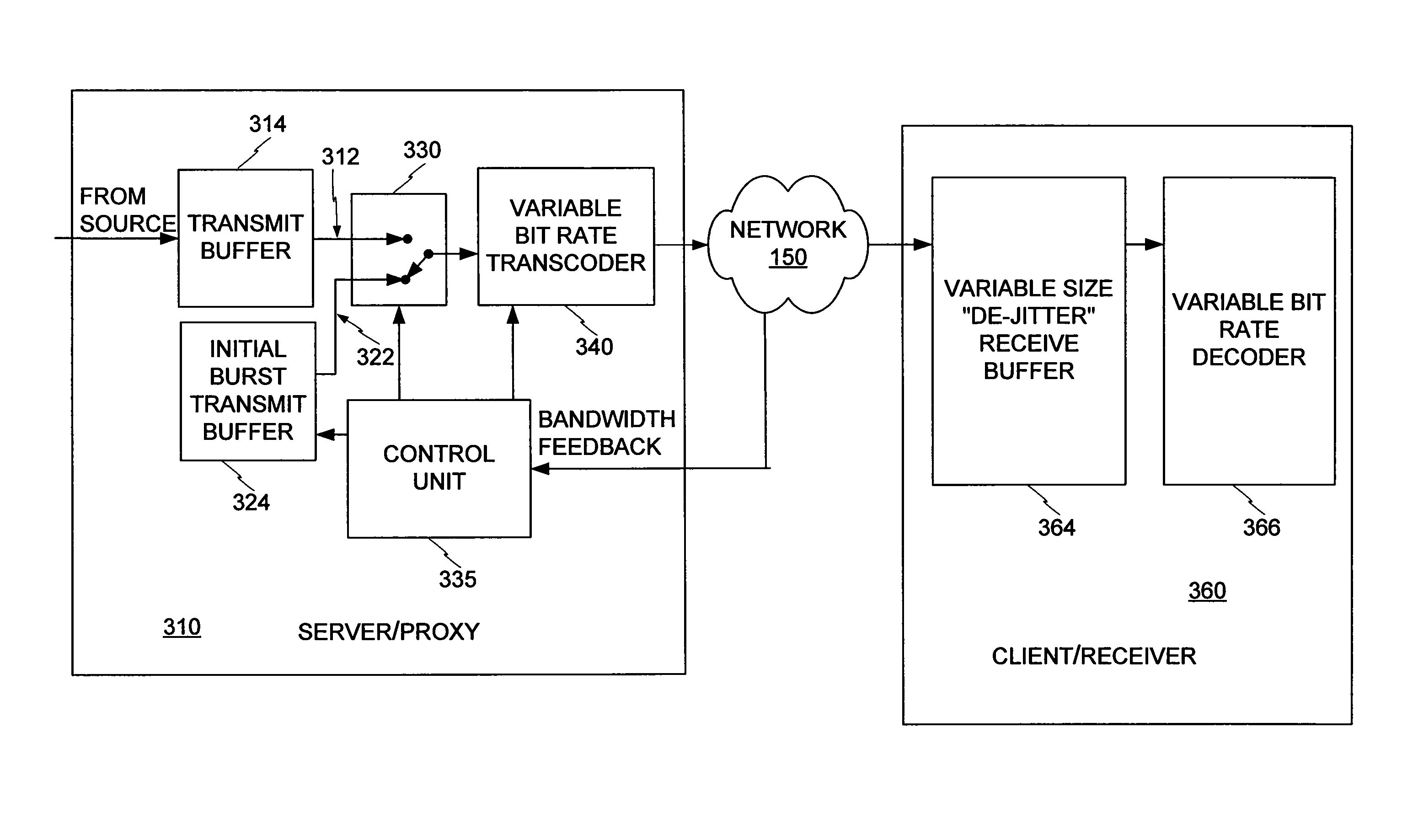

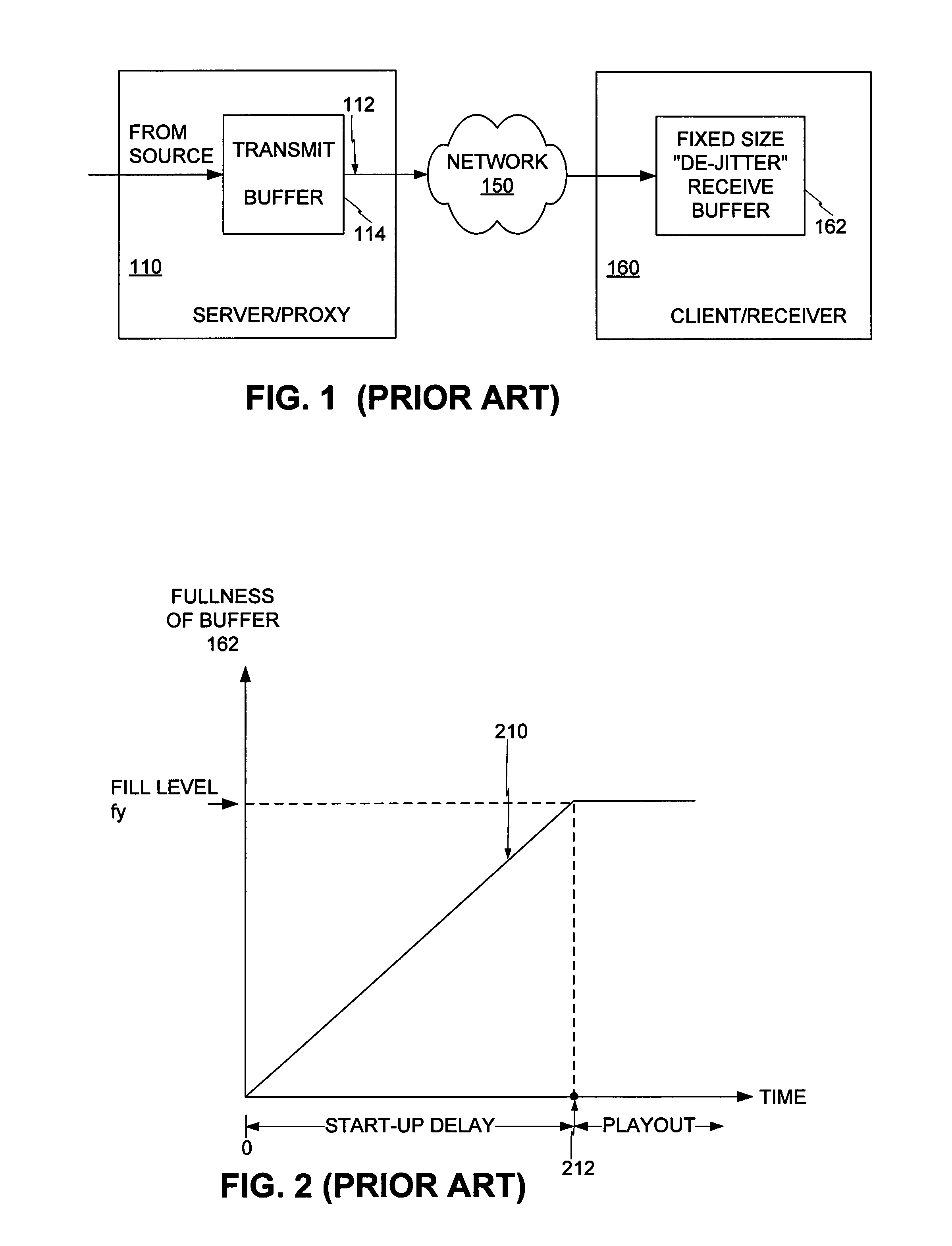

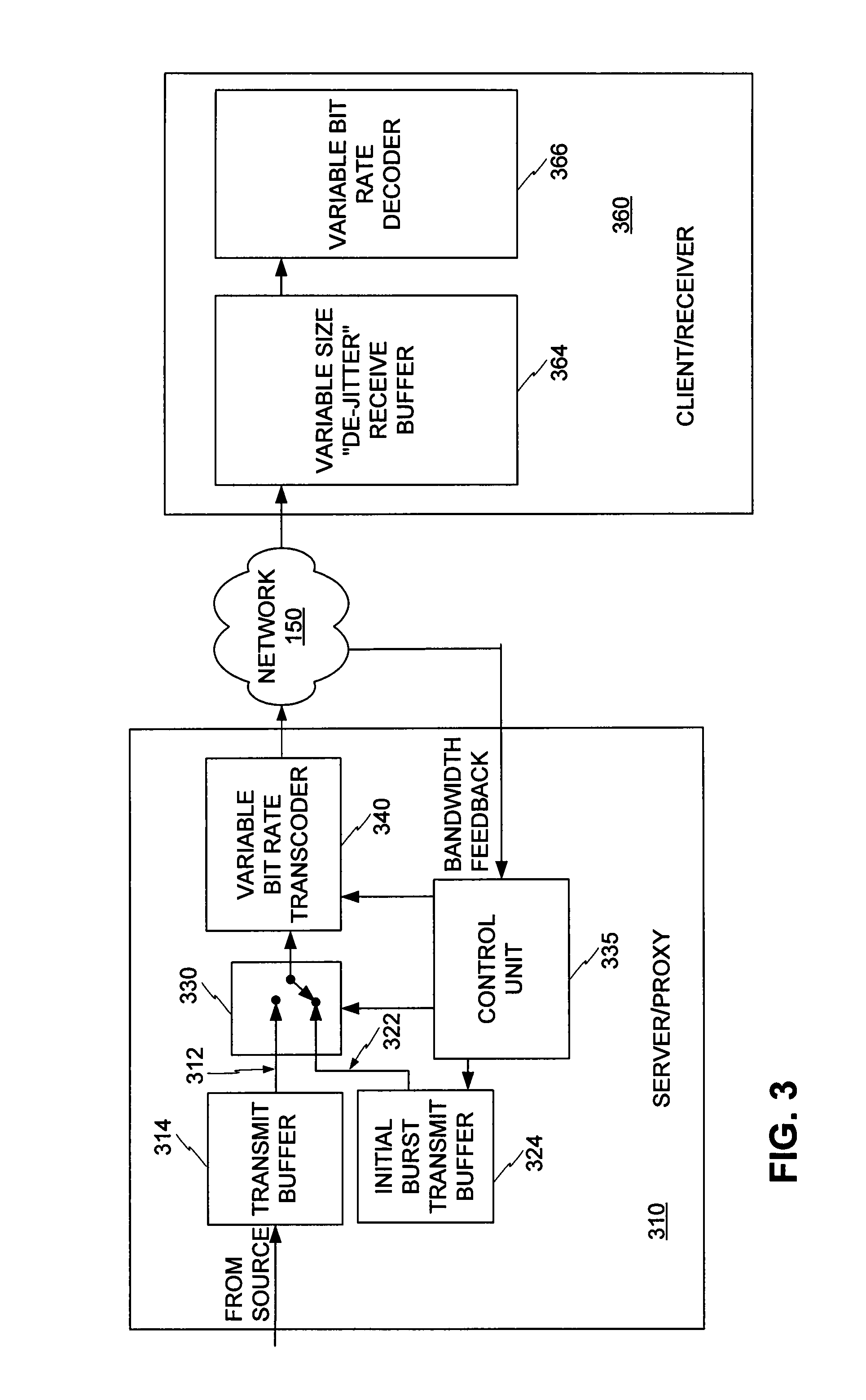

Devices and methods for minimizing start up delay in transmission of streaming media

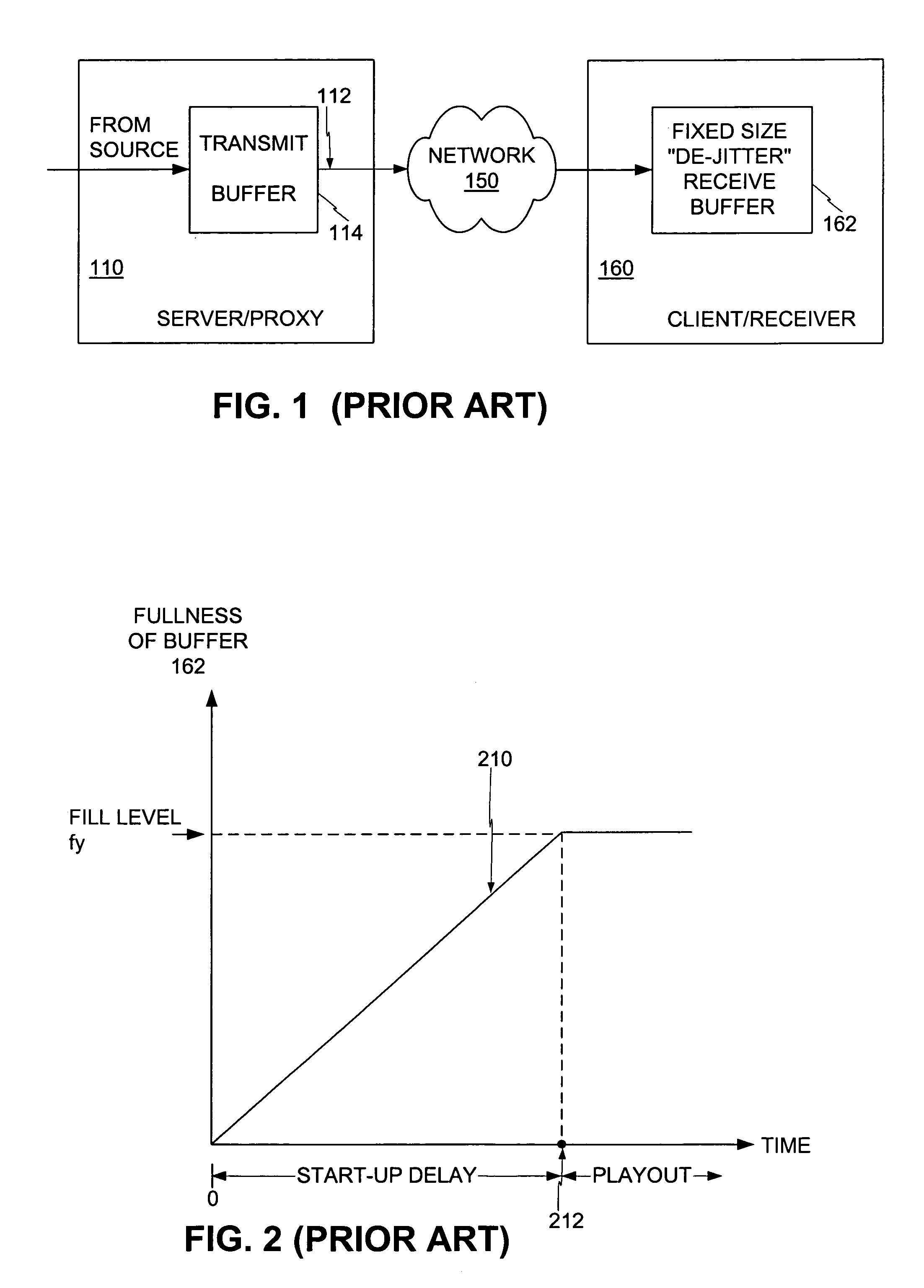

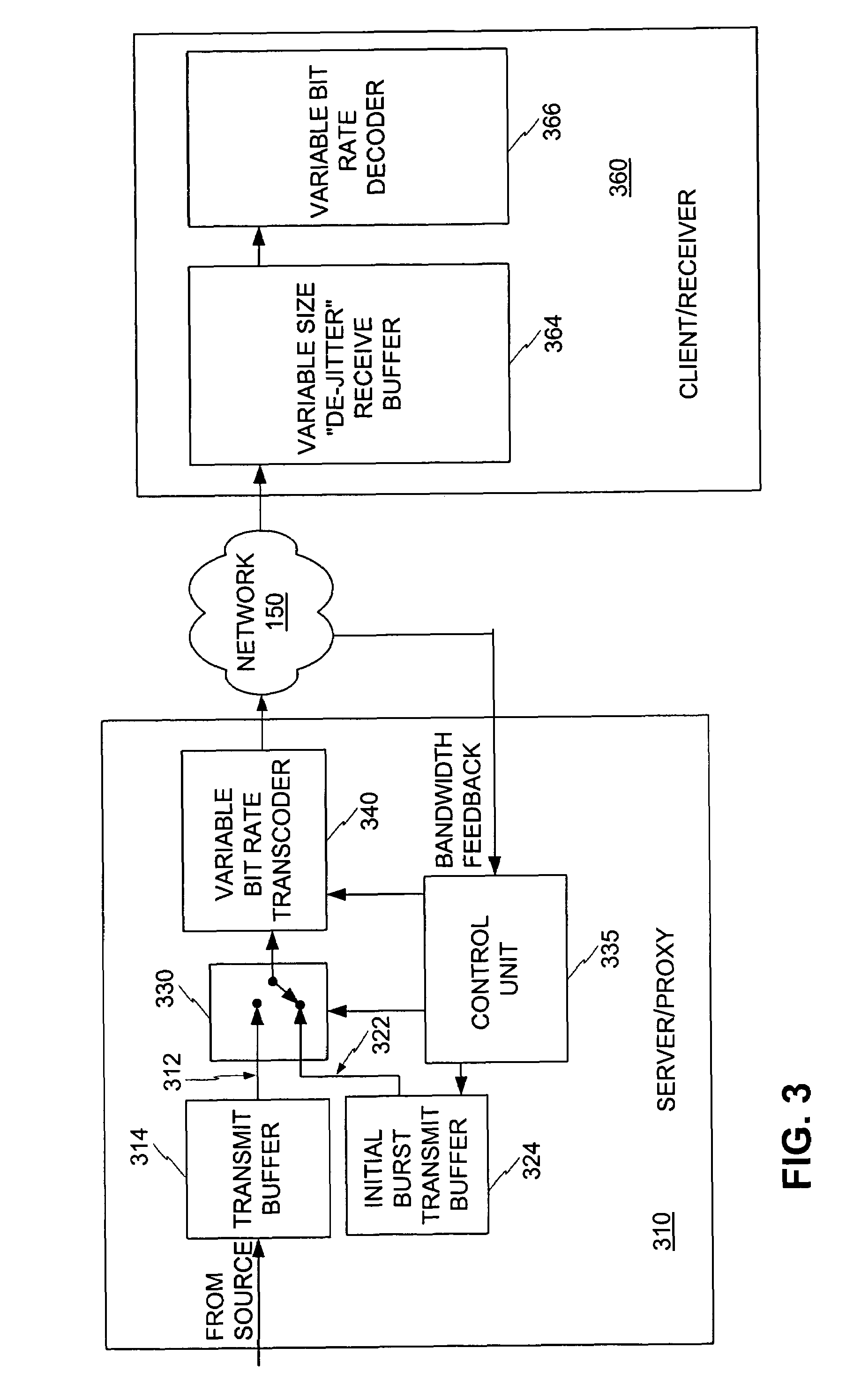

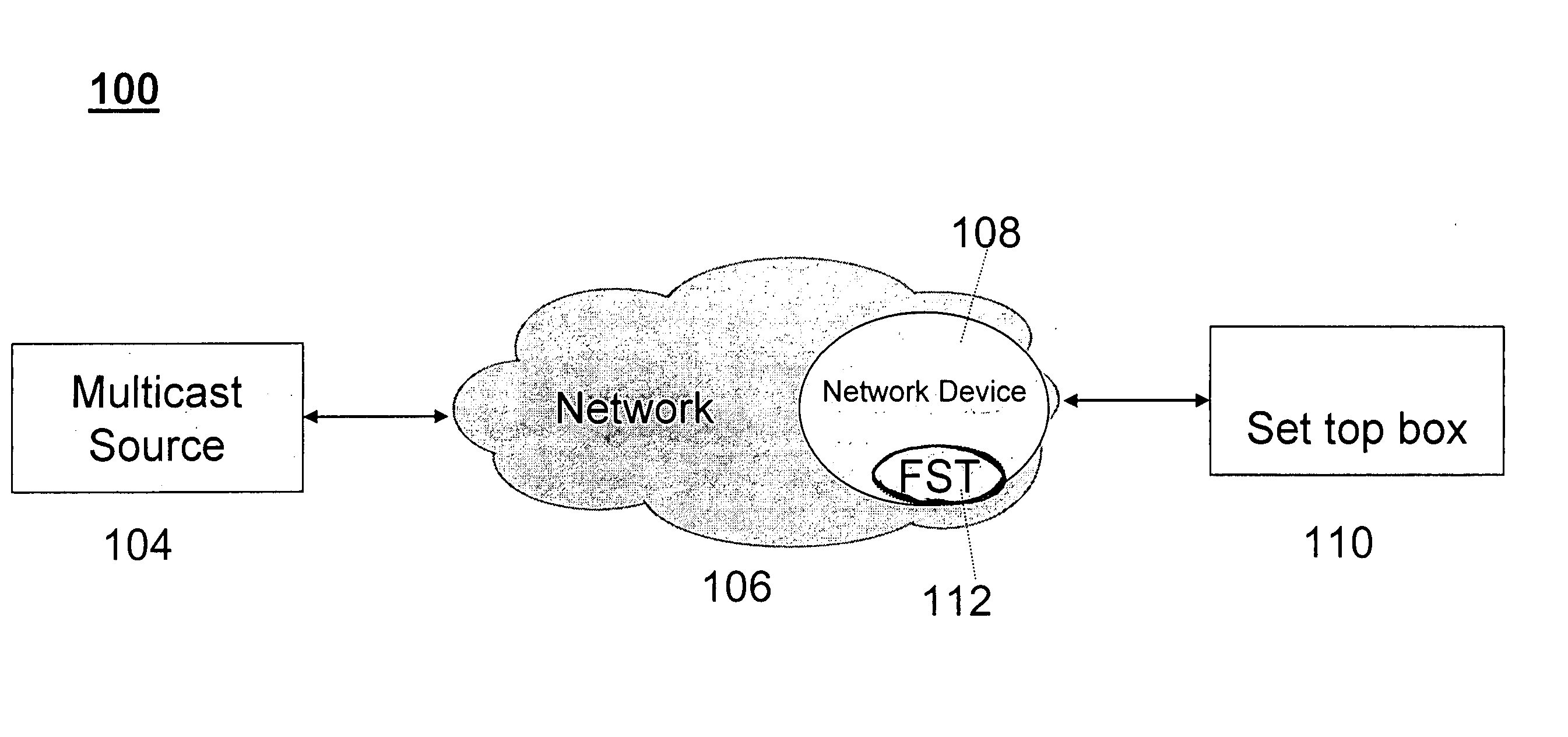

Inactive · US7373413B1 · Reduce start-up delay · Improve transmittance · Error prevention · Frequency-division multiplex details · Data buffer · Fixed frame

Devices and methods are provided for minimizing the startup delay of streaming media transmitted through networks. A server maintains a portion of the media stream stored in an initial burst transmit buffer. At startup, the stored portion is transmitted at a rate higher than the fixed frame rate, exploiting the full available bandwidth. The initial burst transmission fills up the de-jitter receive buffer at the receiving end faster, thereby shortening the startup delay. Then transmission is switched to the regular rate, from the regular buffer. A variable bit rate transcoder is optionally used for the data of the initial transmission. The transcoder diminishes the size of these frames, so they can be transmitted faster. This shortens the start up delay even more. A receiver has a buffer with a fill level started at a value lower than a final value. This triggers the beginning of play out faster, further shortening the delay time. The fill level of the de-jitter receive buffer is then gradually increased to a desired final value.

Owner:CISCO TECH INC
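
The effect of the initial burst and the lowered initial fill level described in the abstract above can be illustrated with simple arithmetic. This is a minimal sketch, not the patented implementation: the function name `startup_delay`, the `burst_factor` parameter, and all numbers are assumptions chosen for illustration.

```python
def startup_delay(fill_level_frames: int, frame_rate: float,
                  burst_factor: float = 1.0) -> float:
    """Seconds needed to fill the de-jitter buffer to its play-out threshold.

    burst_factor is the (assumed) ratio of the initial burst rate to the
    fixed frame rate; 1.0 means no burst.
    """
    if fill_level_frames < 0 or frame_rate <= 0 or burst_factor <= 0:
        raise ValueError("fill level must be >= 0; rates must be positive")
    return fill_level_frames / (frame_rate * burst_factor)


# Illustrative numbers only (not taken from the patent): a 30 fps stream.
baseline = startup_delay(60, 30.0)                           # no burst
burst = startup_delay(60, 30.0, burst_factor=4.0)            # burst at 4x
burst_low_fill = startup_delay(30, 30.0, burst_factor=4.0)   # burst + lower initial fill level
```

Under these assumed numbers the delay falls as the burst rate rises and as the initial fill level is lowered, which is the qualitative behaviour the abstract claims.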

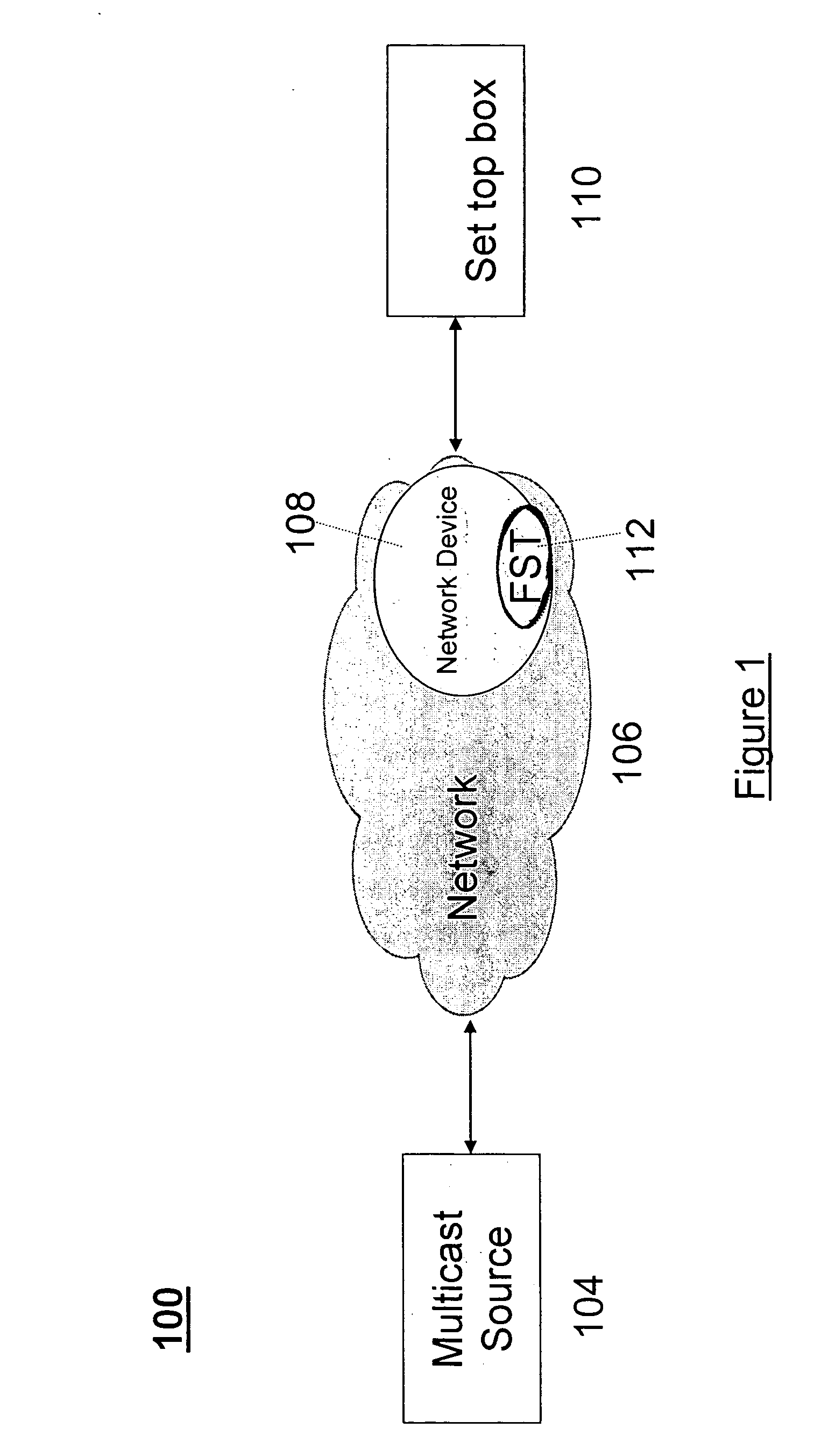

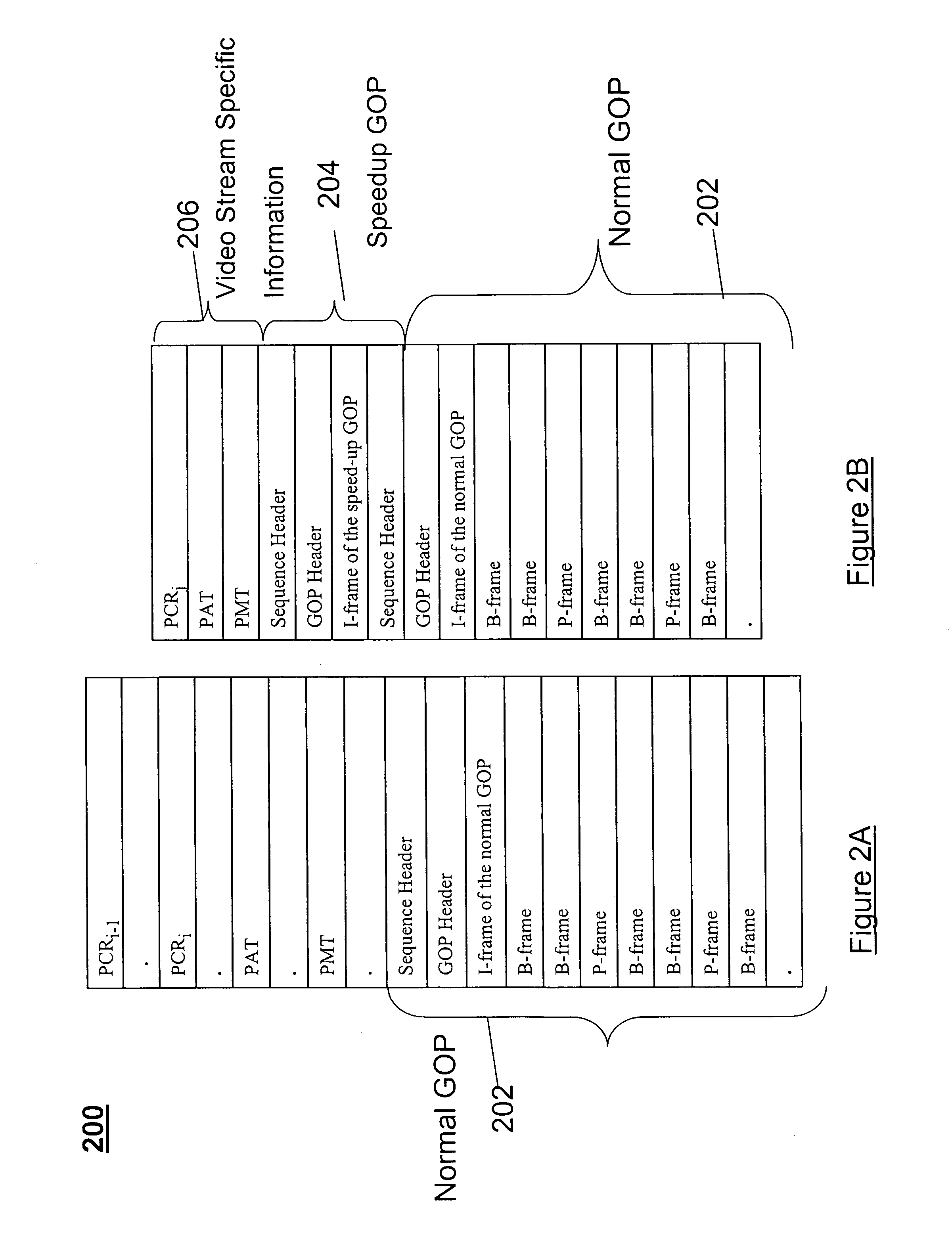

Method for reducing channel change startup delays for multicast digital video streams

Active · US20070214490A1 · Reduce start-up delay · Picture reproducers using cathode ray tubes · Picture reproducers with optical-mechanical scanning · Digital video · Video transmission

Methods and systems for reducing channel change startup delays for multicast digital video streams are described. Packets of a multicast digital video transport stream having a plurality of normal Group of Pictures are received. Further, a channel change request is received and a speed-up Group of Pictures is inserted in the stream in response to the channel change request. In one embodiment, video stream specific information is also inserted in the stream. The packets are processed and transmitted.

Owner:SYNAMEDIA LTD

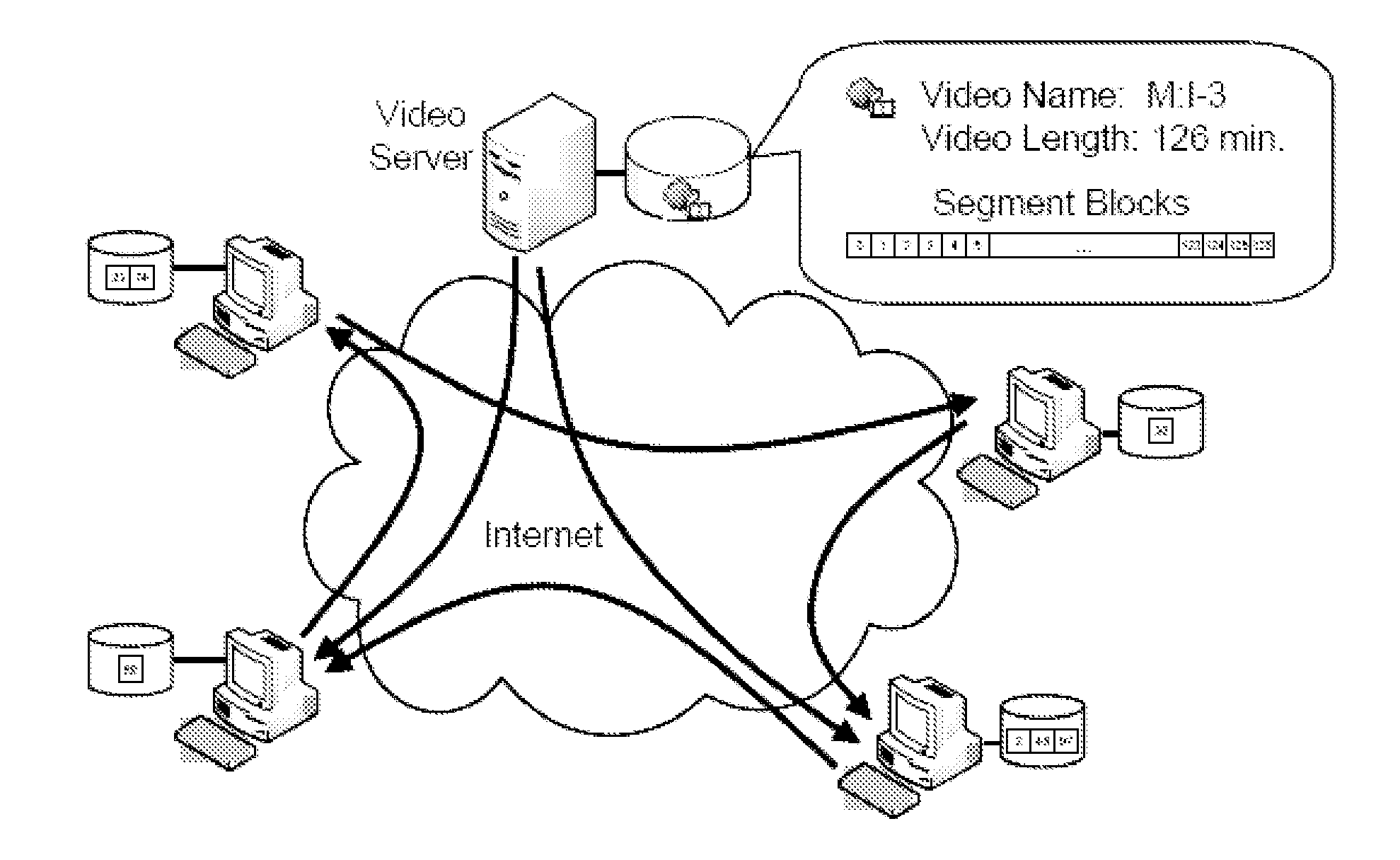

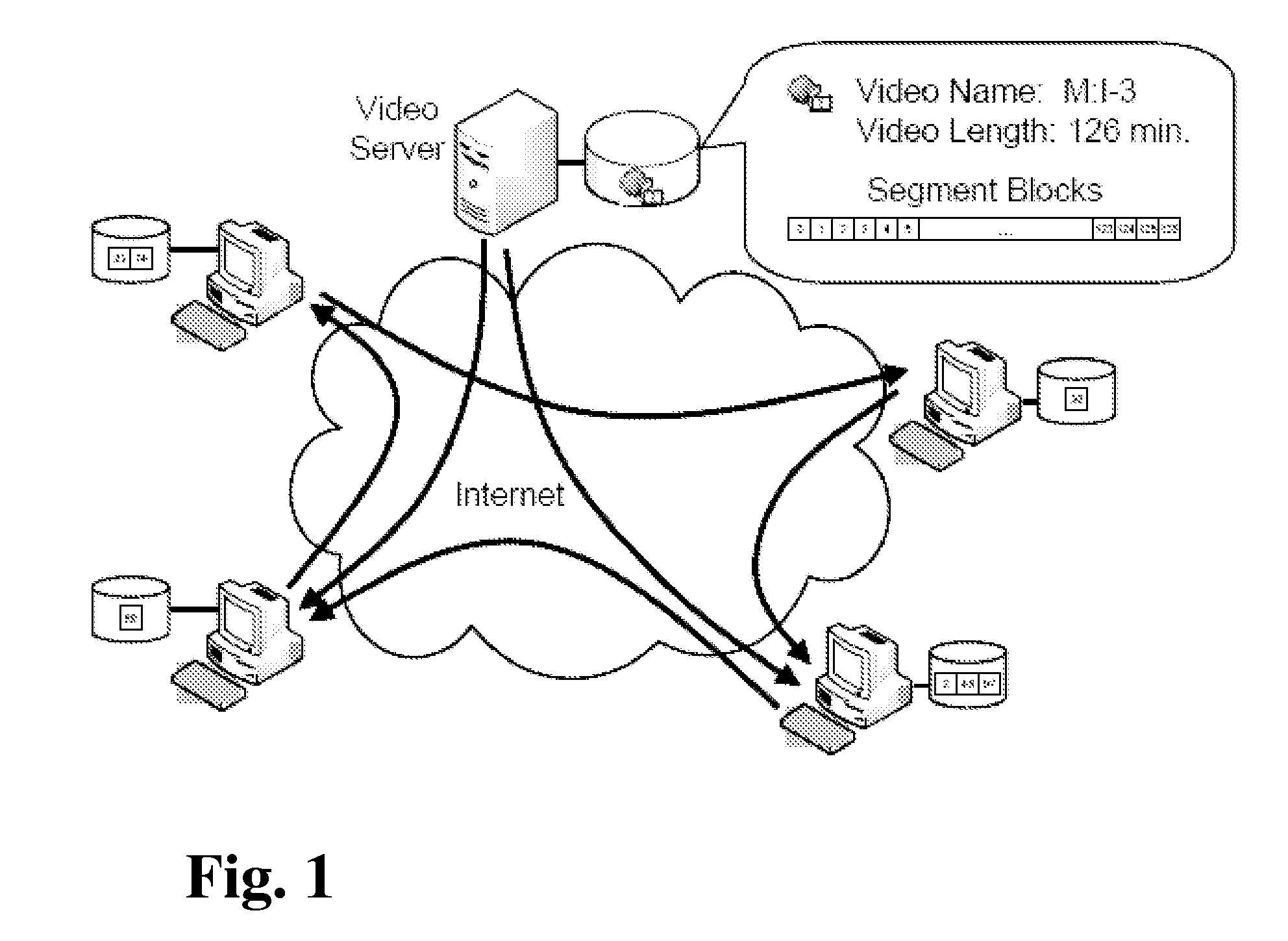

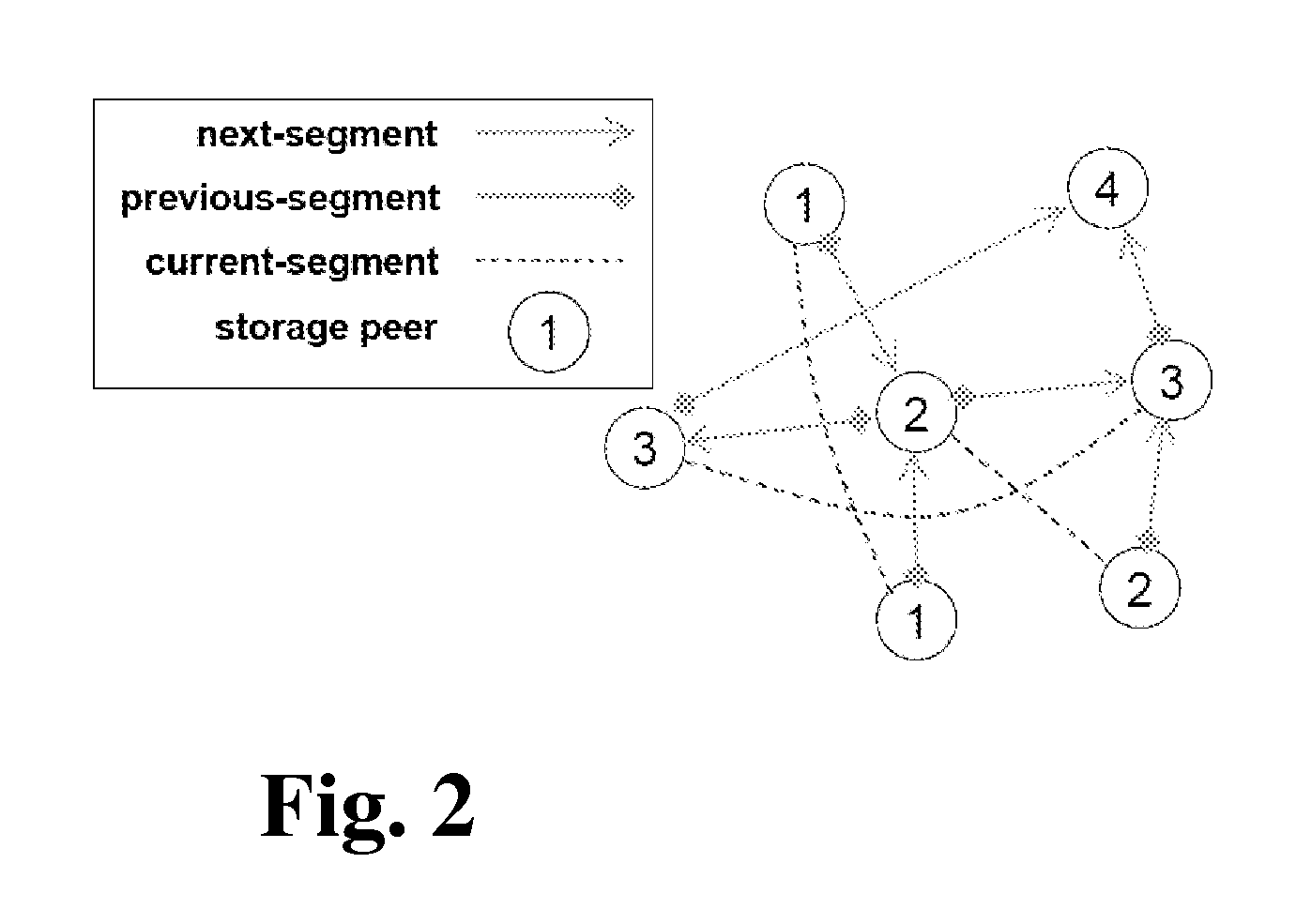

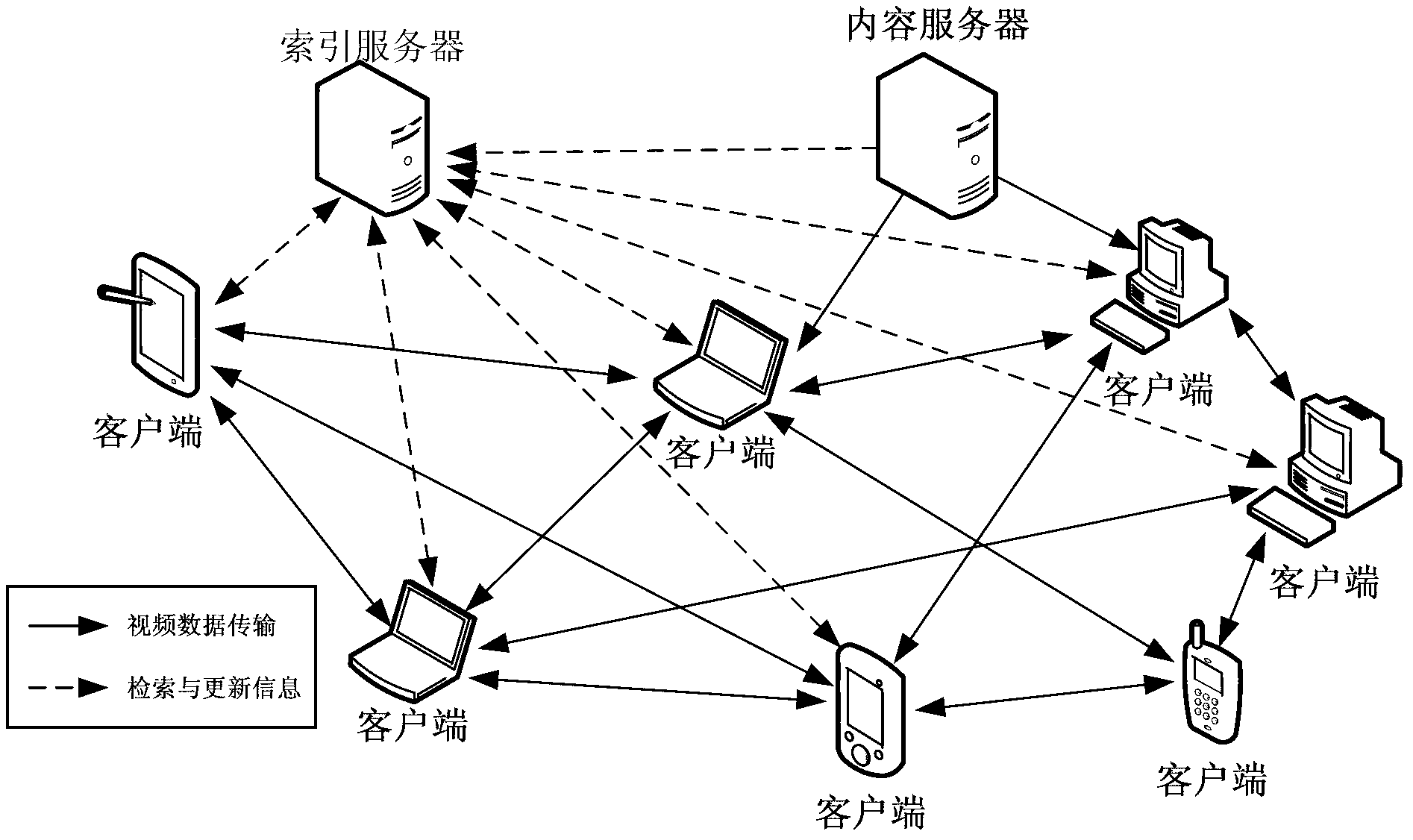

Distributed storage to support user interactivity in peer-to-peer video streaming

Inactive · US7925781B1 · Long startup delay · Reduce start-up delay · Multiple digital computer combinations · Transmission · Peer-to-peer · Video streaming

A method of video distribution within a peer-to-peer network. Once the video has initially been downloaded from a server, various fragments of it reside on various peers of the network. At the location of each fragment, the peer which stores it preferably also carries pointers to preceding-fragment, following-fragment, and same-fragment locations. This provides a list-driven capability so that, once a user has attached to any fragment of the program, the user can then transition according to the previous-fragment and following-fragment pointers to play, fast-forward, or rewind the program.

Owner:THE HONG KONG UNIV OF SCI & TECH
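
The pointer-driven navigation in the abstract above can be sketched as a doubly linked list of fragment records. This is a rough illustration under assumed names: `FragmentRecord`, `build_chain`, and `walk` are hypothetical, and same-fragment (replica) pointers are stored but not used.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class FragmentRecord:
    index: int
    peer: str                          # peer that stores this fragment
    prev_peer: Optional[str] = None    # pointer to the preceding fragment's holder
    next_peer: Optional[str] = None    # pointer to the following fragment's holder
    replica_peers: List[str] = field(default_factory=list)  # same-fragment pointers


def build_chain(holders: List[str]) -> Dict[int, FragmentRecord]:
    """Create one record per fragment, wiring prev/next pointers."""
    records = {}
    for i, peer in enumerate(holders):
        records[i] = FragmentRecord(
            index=i,
            peer=peer,
            prev_peer=holders[i - 1] if i > 0 else None,
            next_peer=holders[i + 1] if i + 1 < len(holders) else None,
        )
    return records


def walk(records: Dict[int, FragmentRecord], start: int,
         forward: bool = True, max_steps: int = 10) -> List[str]:
    """Follow next (play / fast-forward) or prev (rewind) pointers from `start`."""
    visited = [records[start].peer]
    index = start
    for _ in range(max_steps):
        record = records[index]
        pointer = record.next_peer if forward else record.prev_peer
        if pointer is None:            # reached the first or last fragment
            break
        visited.append(pointer)
        index += 1 if forward else -1
    return visited
```

A user who attaches at any fragment only needs that fragment's record to continue in either direction, which is the property the abstract relies on.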

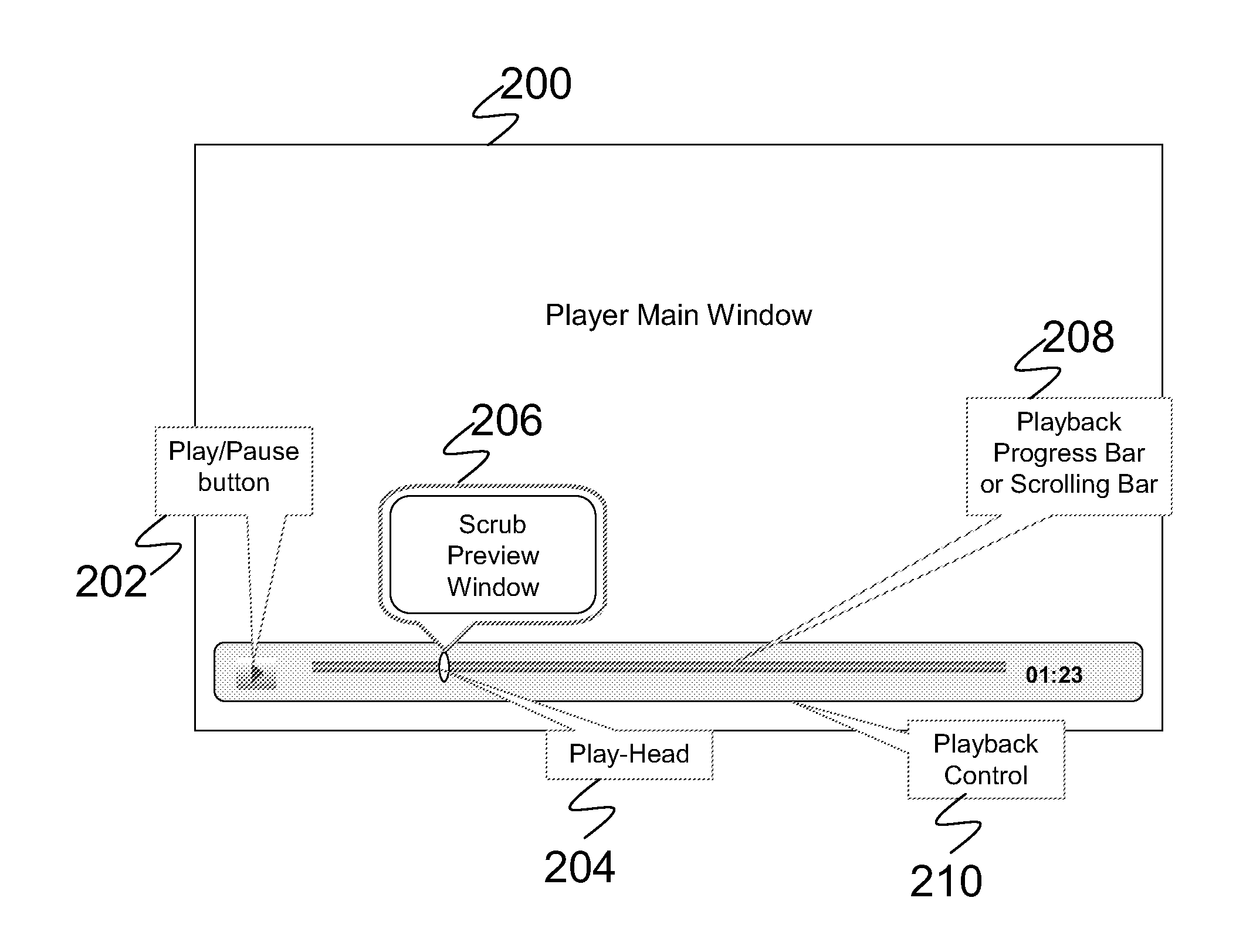

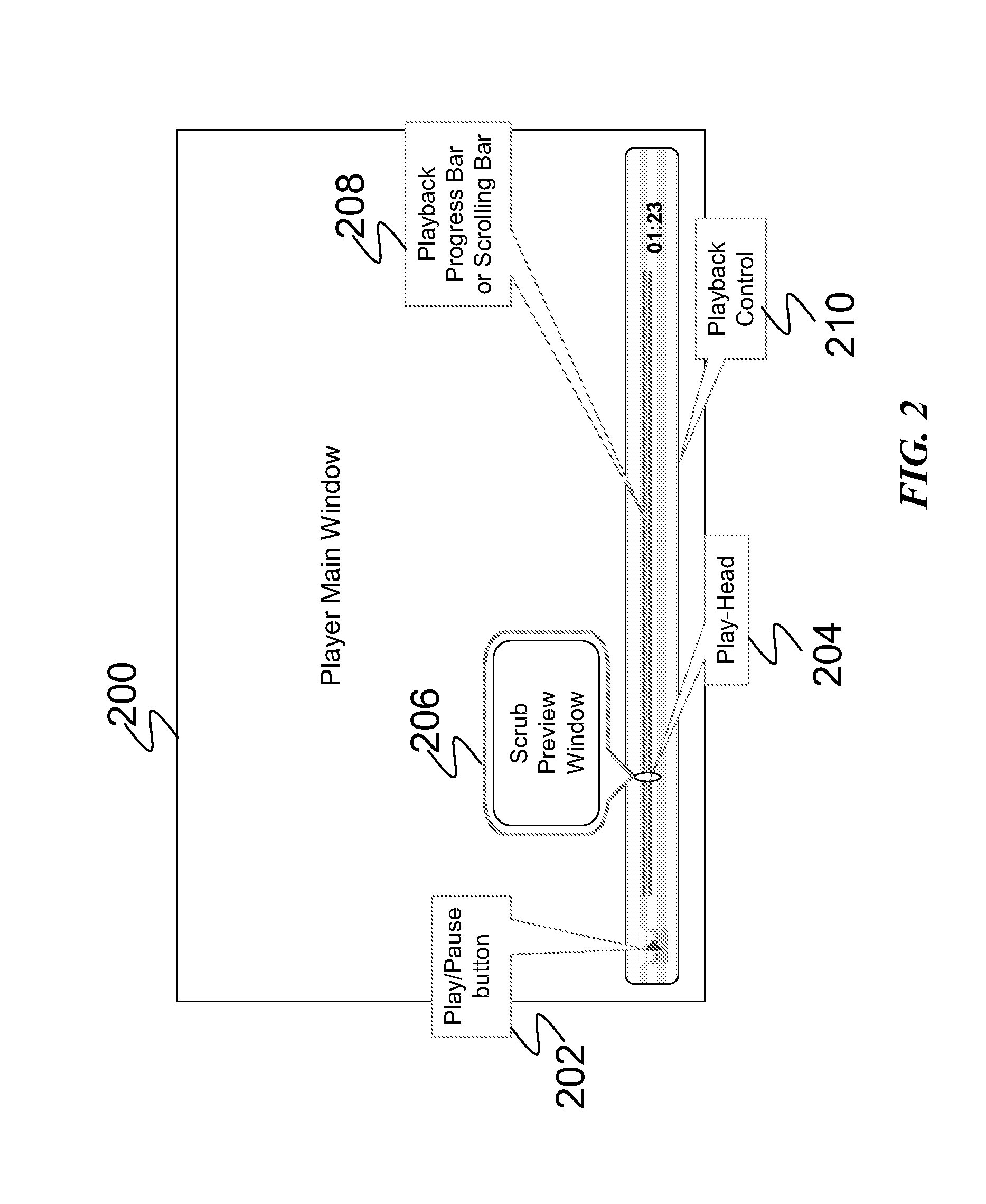

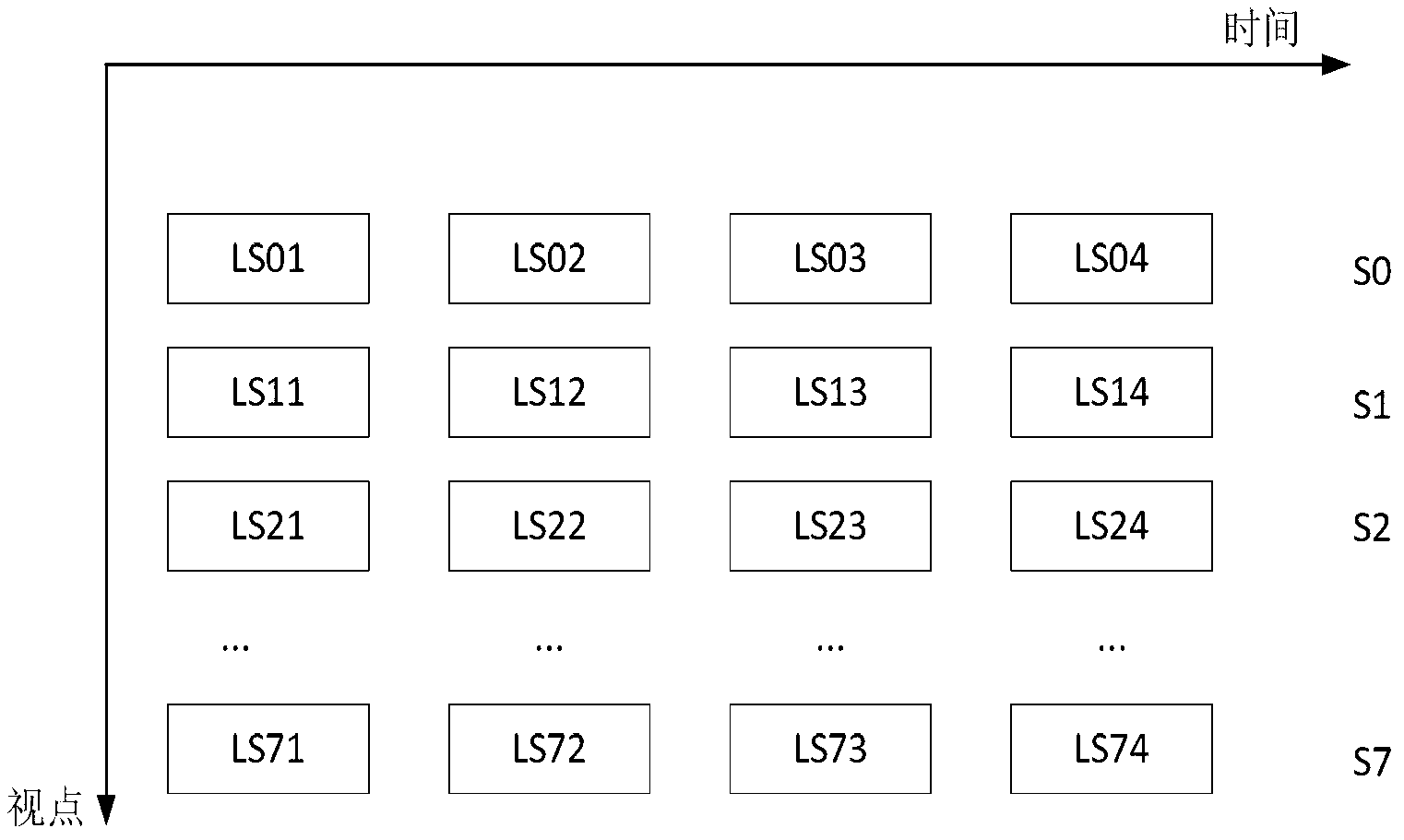

System and Method for Online Media Preview

Inactive · US20110191679A1 · Reduce start-up delay · Reduce playback jitter · Input/output for user-computer interaction · Selective content distribution · Image resolution · Low resolution

An embodiment of a system and method for online media preview extracts a plurality of preview frames from a media file. The preview frames are saved in a layered data structure. In addition, the preview frames may be scaled to a lower resolution so that the preview file formed by the preview frames is reduced in size. After receiving a preview request, a delivery scheduling scheme delivers the preview frames at selected time points to minimize startup delay and playback jitter.

Owner:FUTUREWEI TECH INC

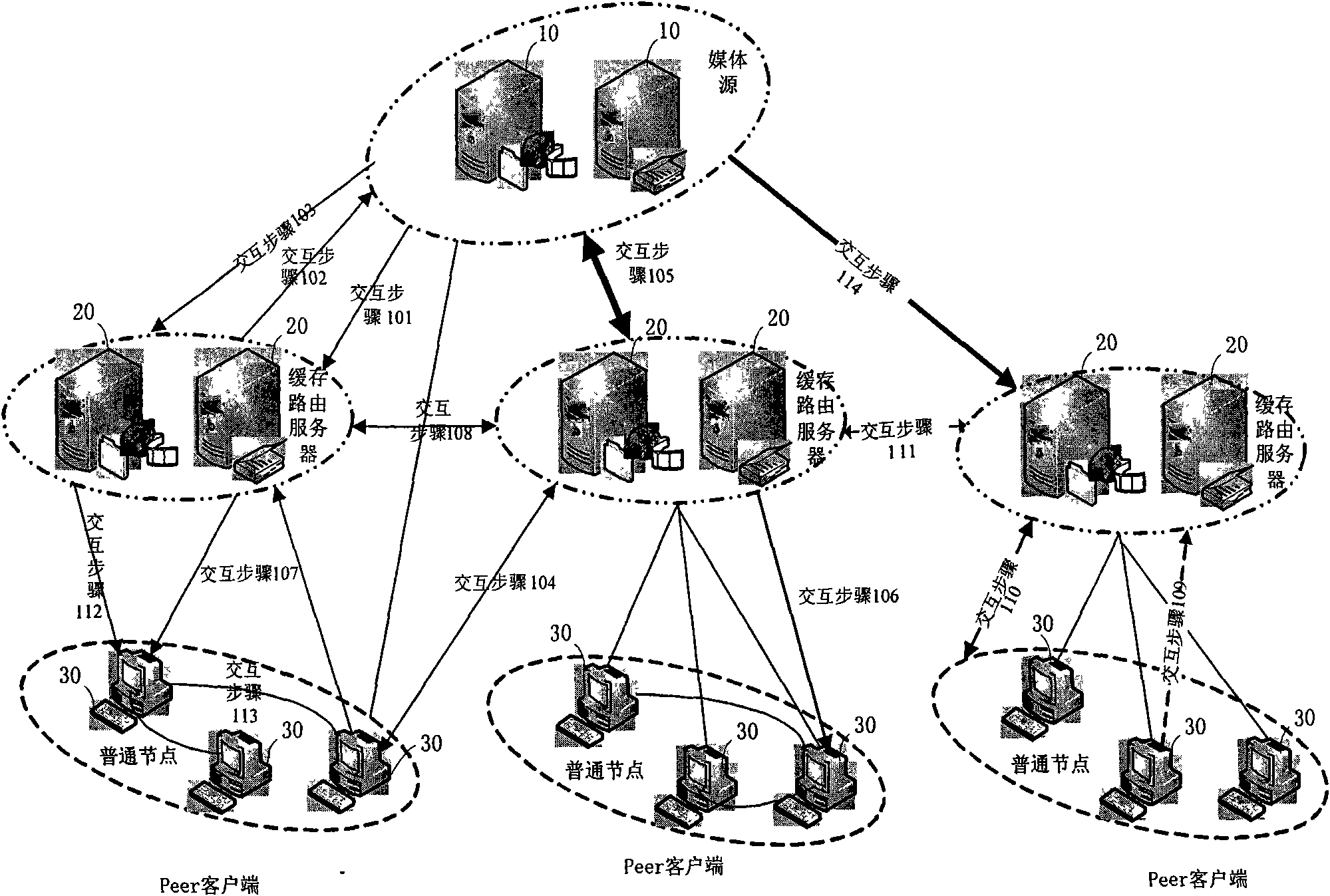

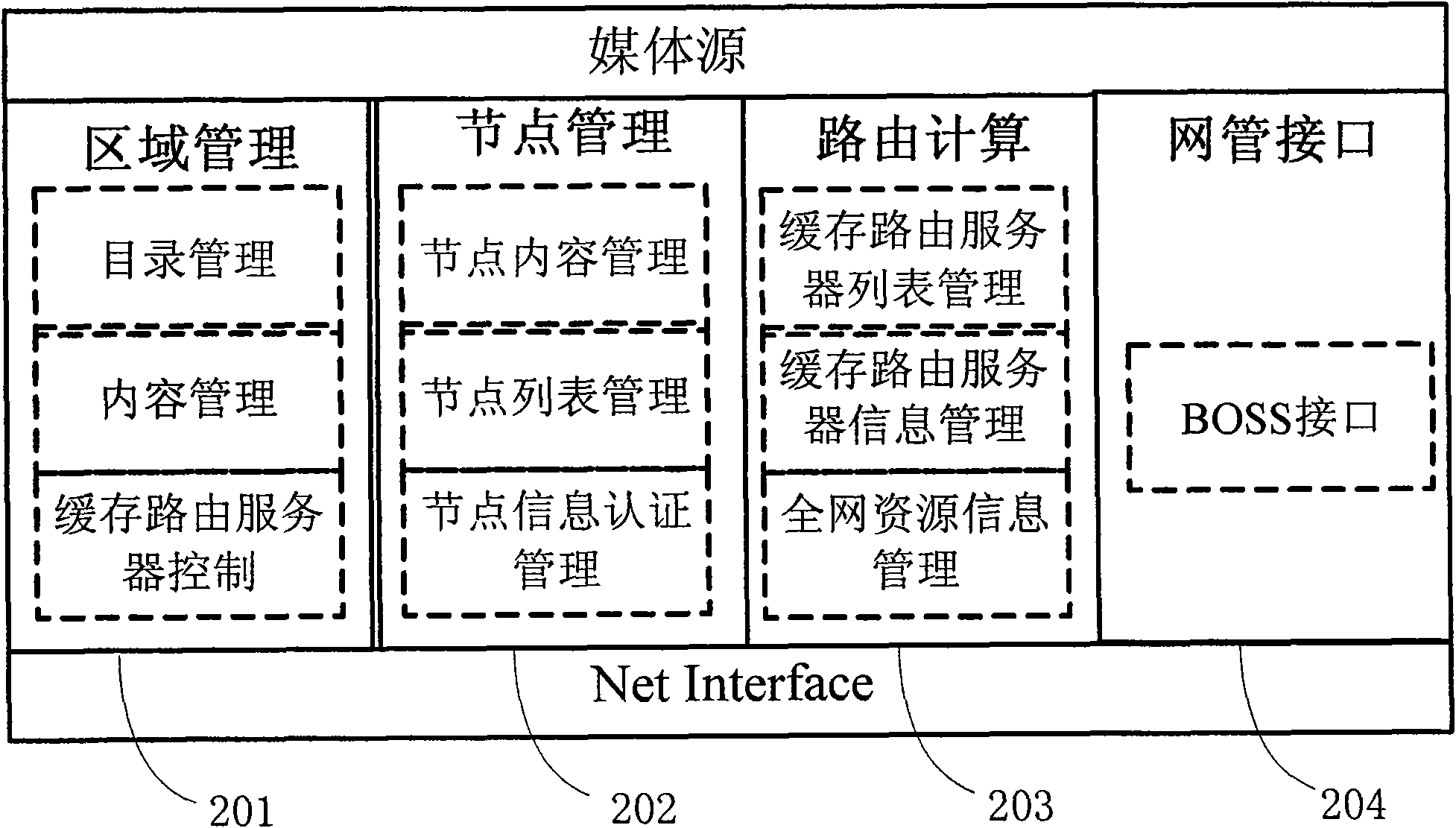

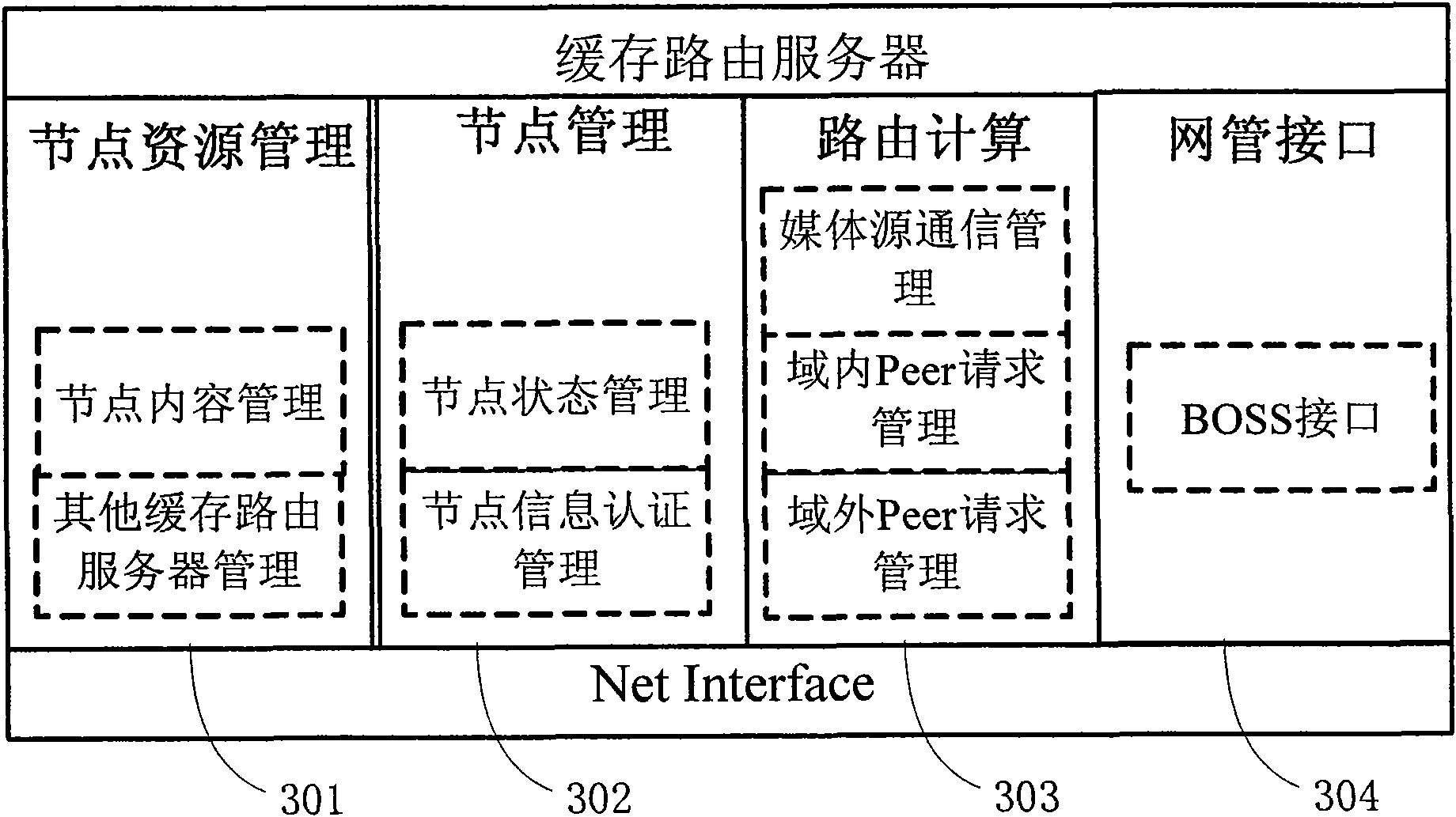

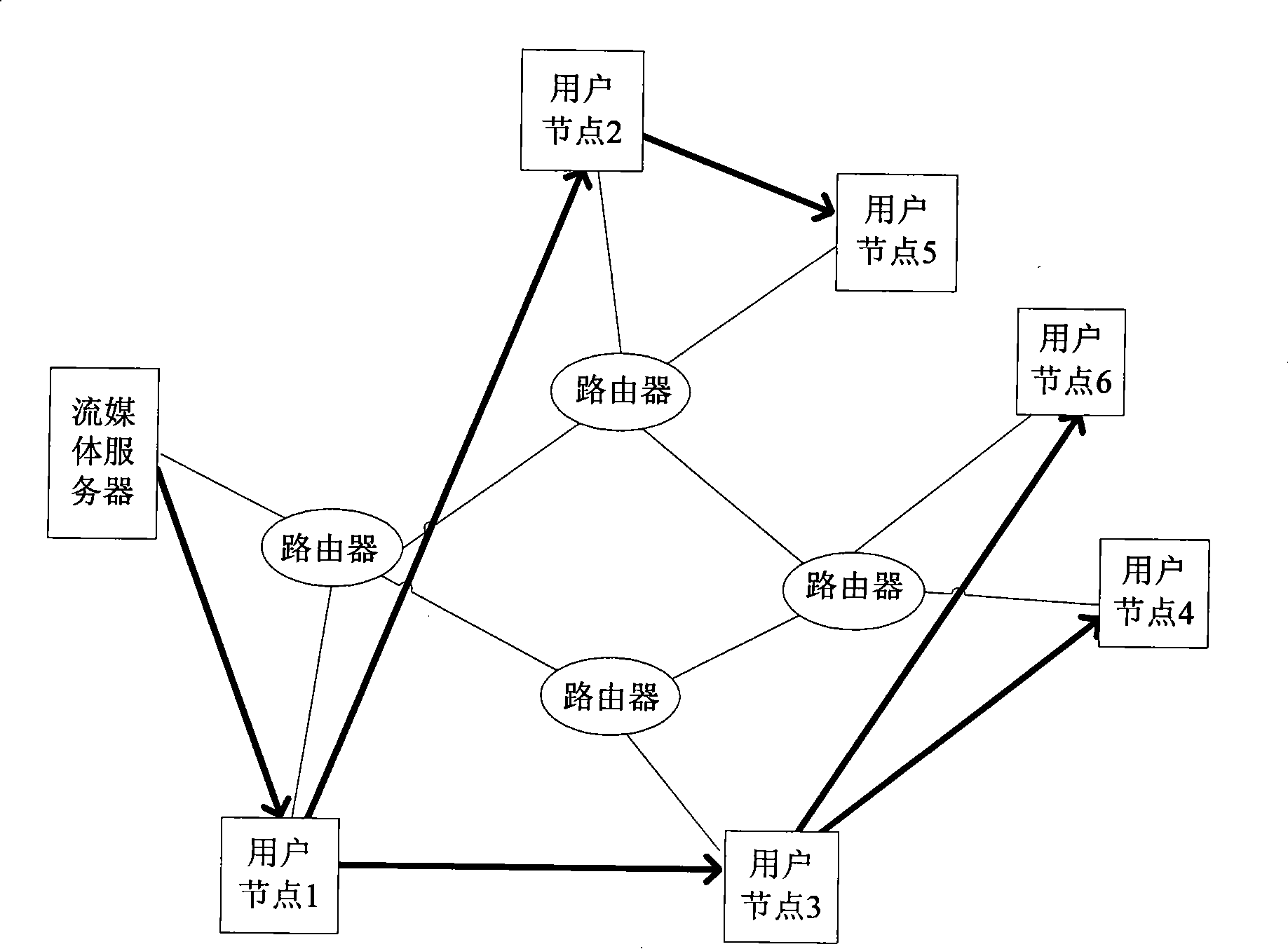

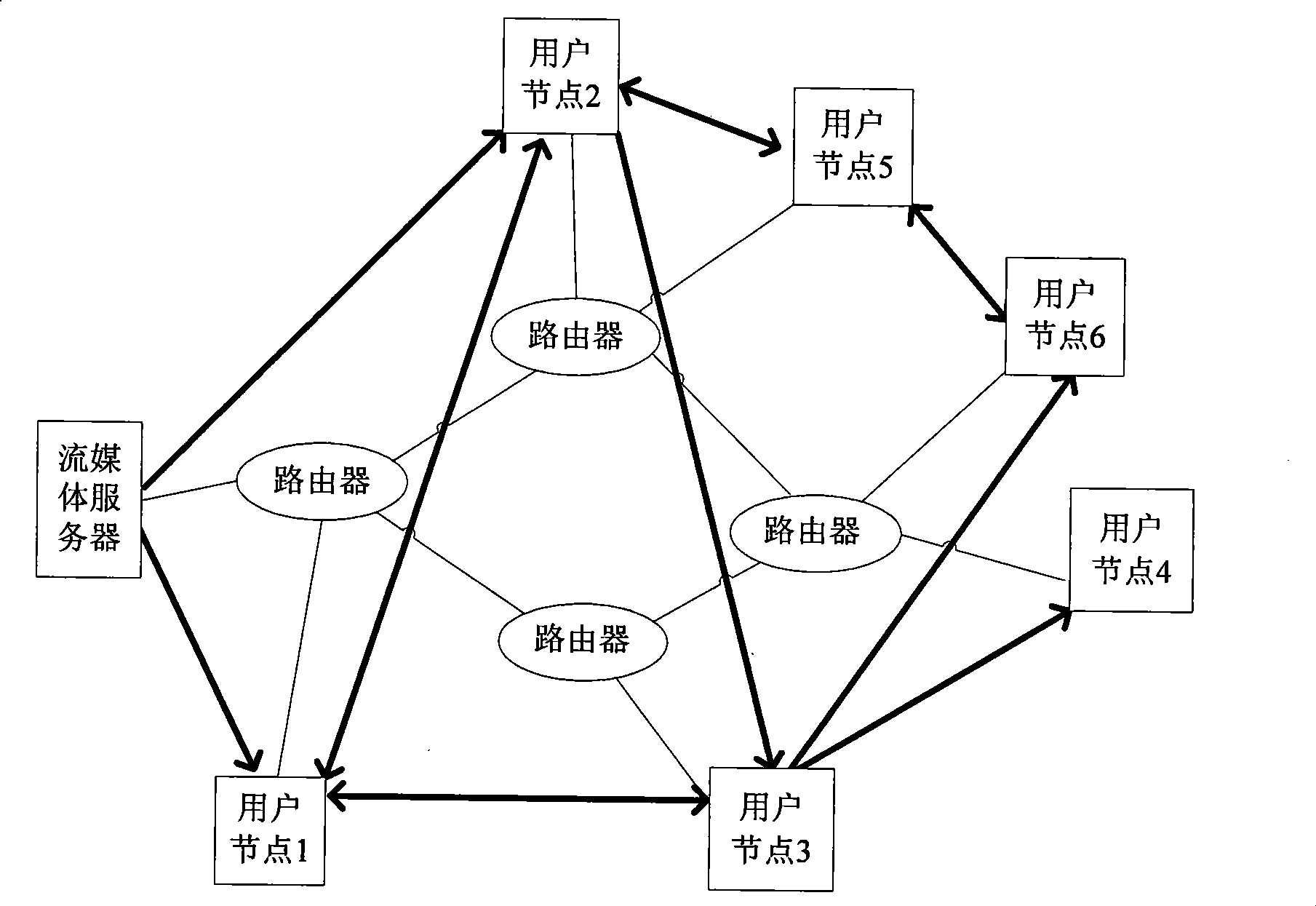

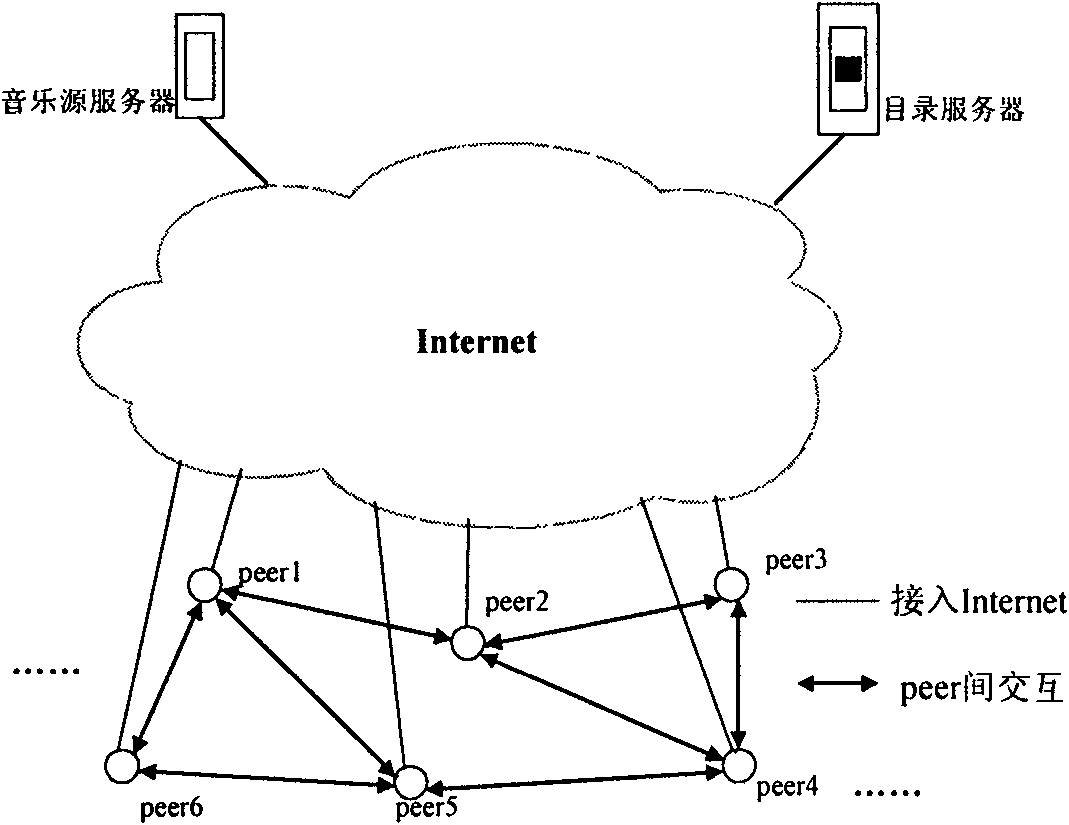

Method and system for shortening time delay in peer-to-peer network streaming media live broadcast system

Active · CN101938508A · Reduce start-up delay · Reduce consumption and load · Special service provision for substation · Selective content distribution · Route server · Client-side

The invention discloses a method and a system for shortening time delay in a peer-to-peer network streaming media live broadcast system. The system is provided with a media source node and a cache routing server. The media source node manages the media resources of the whole system; the cache routing server handles media resource management, routing calculation and peer client management within a local domain. The cache routing server selects a suitable peer client from the other peer clients it manages and establishes streaming media data interaction with the requesting peer client. When the needed media streaming data does not exist in any peer client managed by the cache routing server, the cache routing server requests the needed streaming media data information from other cache routing servers and transmits it to the requesting peer client. When the cache routing server fails to obtain the needed streaming media data from other cache routing servers, it obtains the needed data from the media source node and transmits it to the requesting peer client.

Owner:CHINA TELECOM CORP LTD
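
The three-tier lookup in the abstract above (local peers, then other cache routing servers, then the media source node) can be sketched as a simple fallback chain. The function `locate_segment`, the dictionary layout, and the tier labels are assumptions for illustration only.

```python
from typing import Dict, List, Set, Tuple


def locate_segment(segment: str,
                   local_peers: Dict[str, Set[str]],
                   remote_domains: List[Dict[str, Set[str]]],
                   source_name: str = "media-source") -> Tuple[str, str]:
    """Return (tier, provider) for the first place the segment is found.

    local_peers maps peer name -> segments held in the local domain;
    remote_domains holds the same mapping for other cache routing servers.
    """
    # 1) Prefer a peer managed by the local cache routing server.
    for peer, held in local_peers.items():
        if segment in held:
            return ("local-peer", peer)
    # 2) Otherwise ask the other cache routing servers.
    for domain in remote_domains:
        for peer, held in domain.items():
            if segment in held:
                return ("remote-domain", peer)
    # 3) Fall back to the media source node.
    return ("source", source_name)
```

Trying the nearest tier first is what keeps the common case fast; the source node is contacted only when both peer tiers miss.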

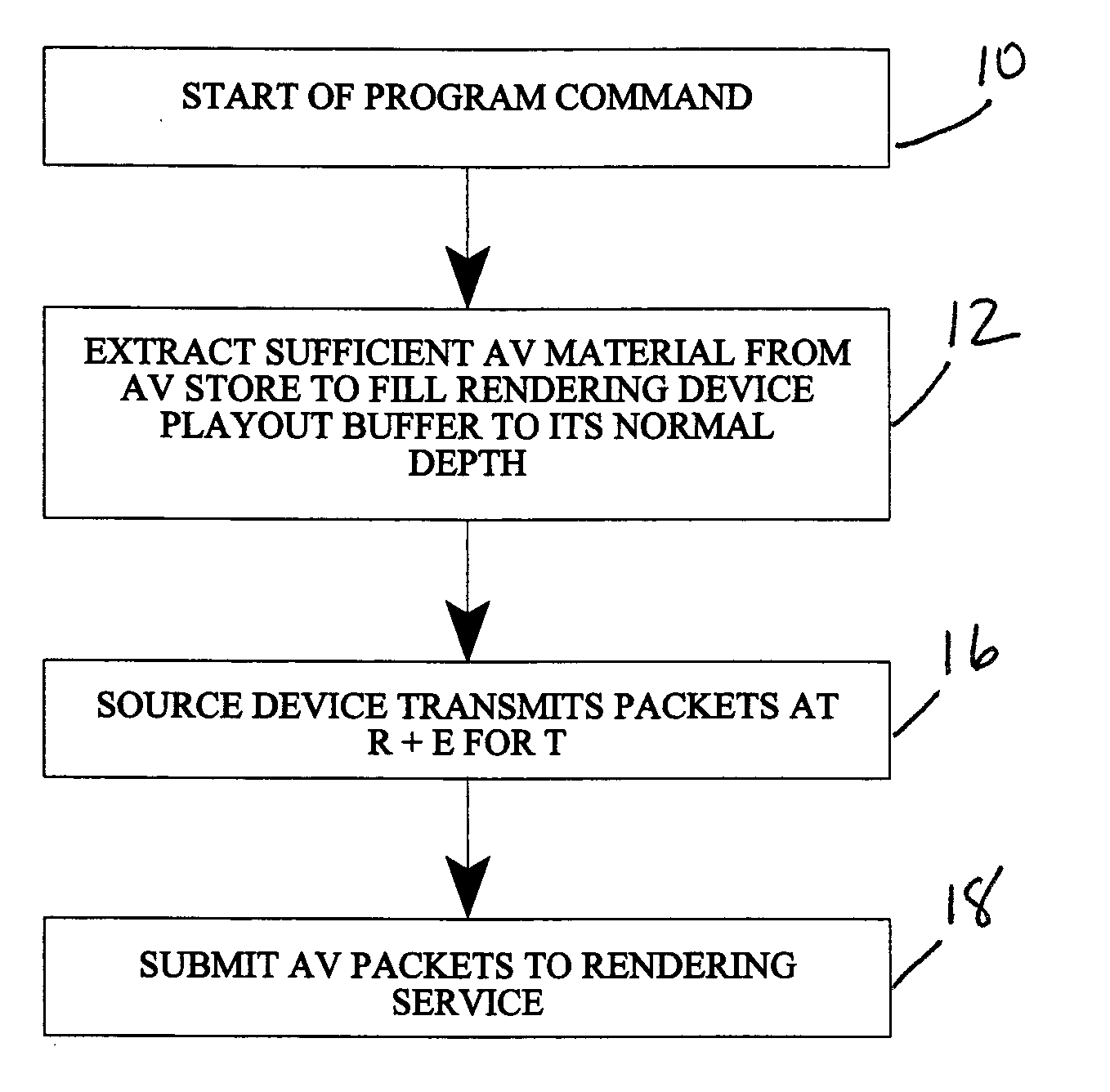

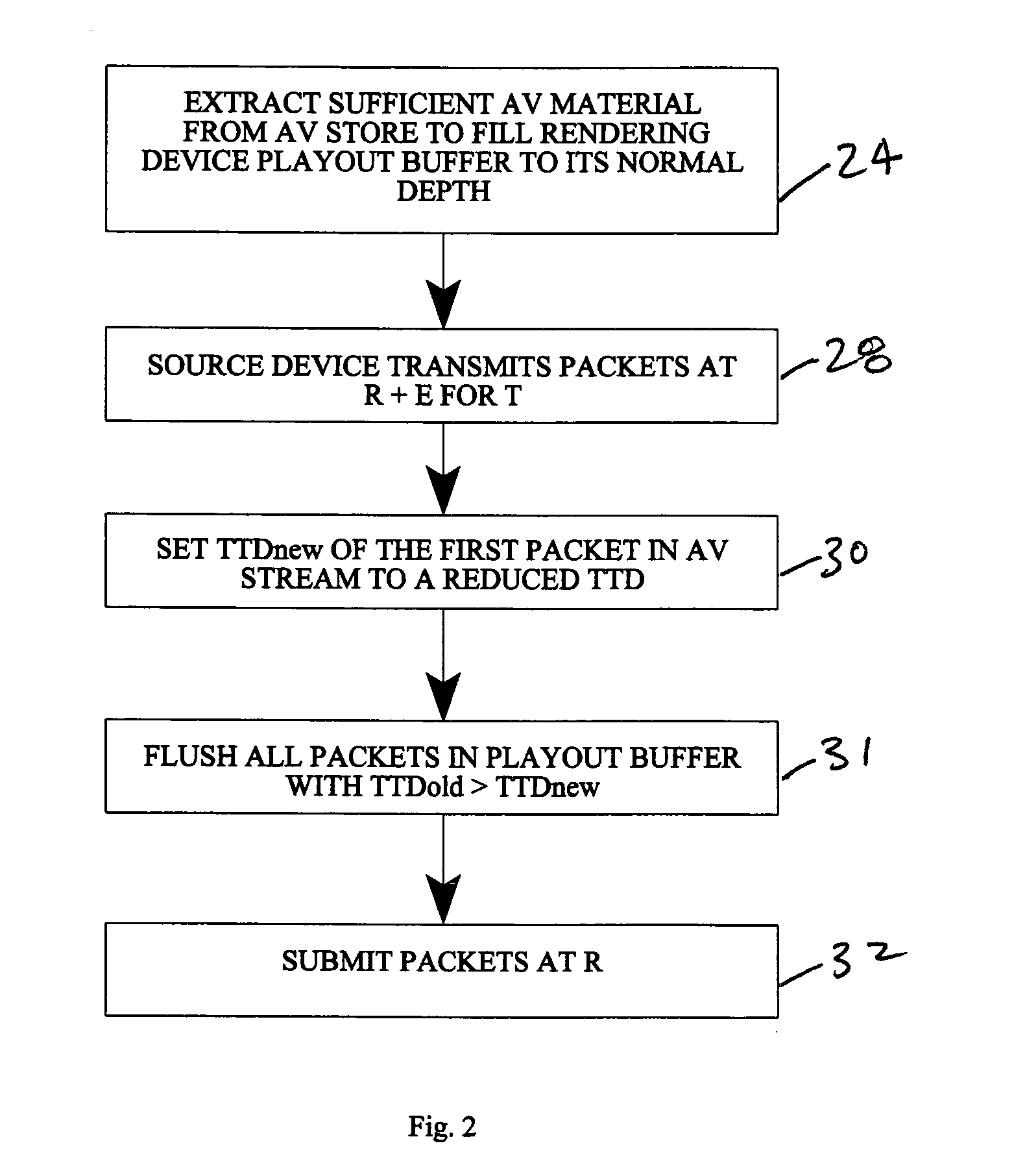

Playout buffer management to minimize startup delay

Inactive · US20050198681A1 · Low error recovery capability · Reduced maximum delivery delay · Analogue secrecy/subscription systems · Two-way working systems · Data shipping · Real-time computing

A method of playout buffer management includes delivering AV data to a destination device at a rate greater than the rate at which the destination device consumes data; rendering of AV data upon receipt of a first AV data packet while simultaneously filling a playout buffer; and flushing the playout buffer upon receipt of a command taken from the group of commands consisting of change of AV program, receipt of a new AV stream following a fast forward or fast reverse command, a pause command and a stop command.

Owner:SHARP LAB OF AMERICA INC

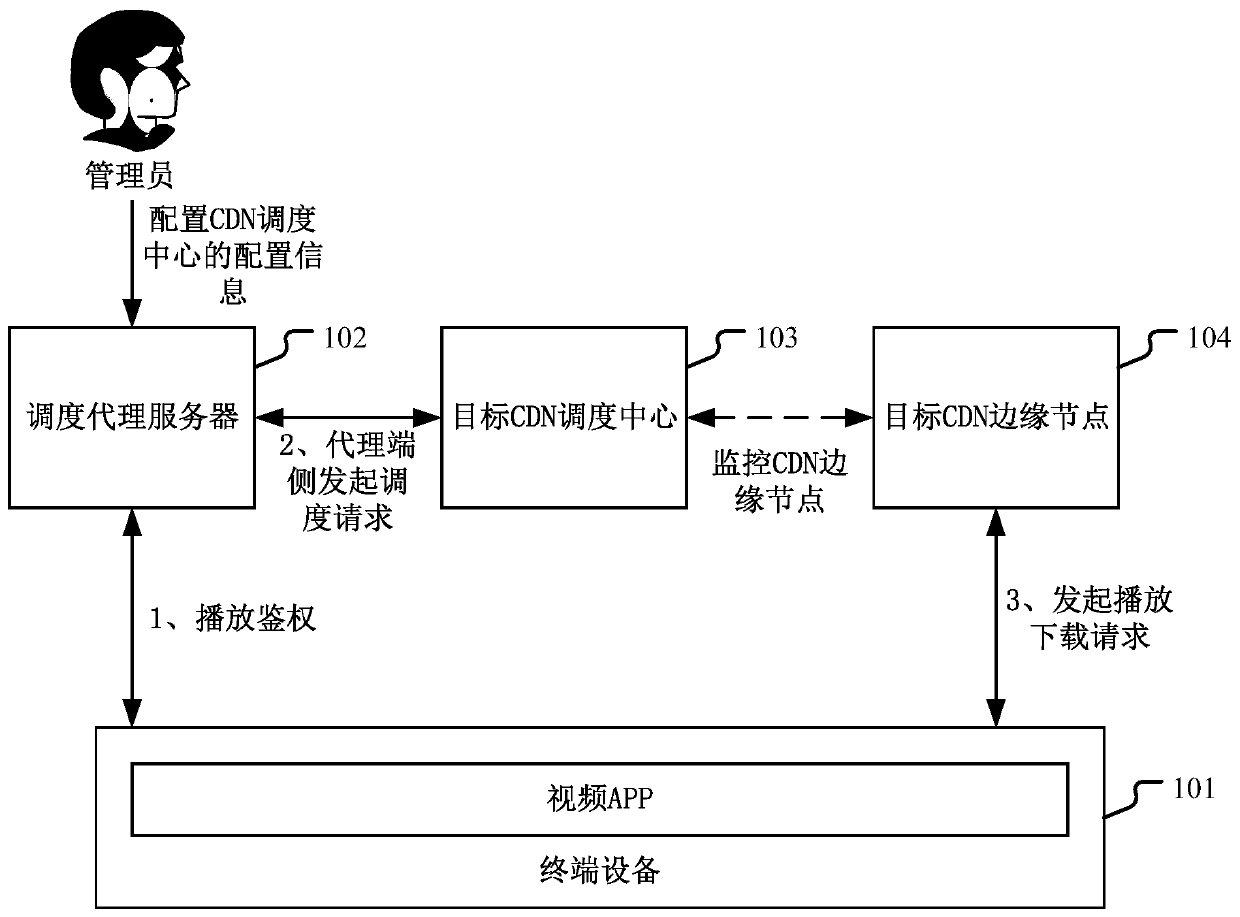

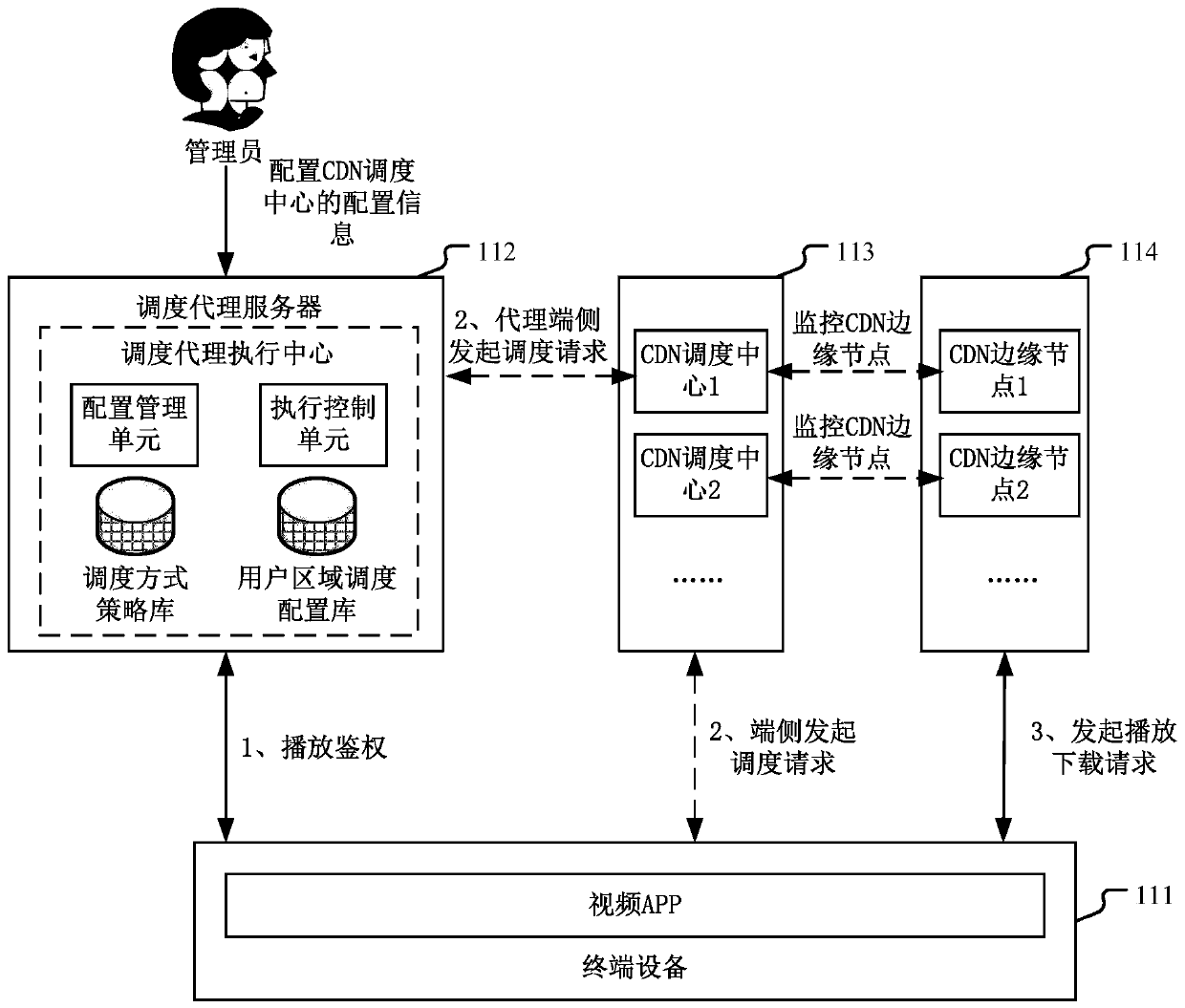

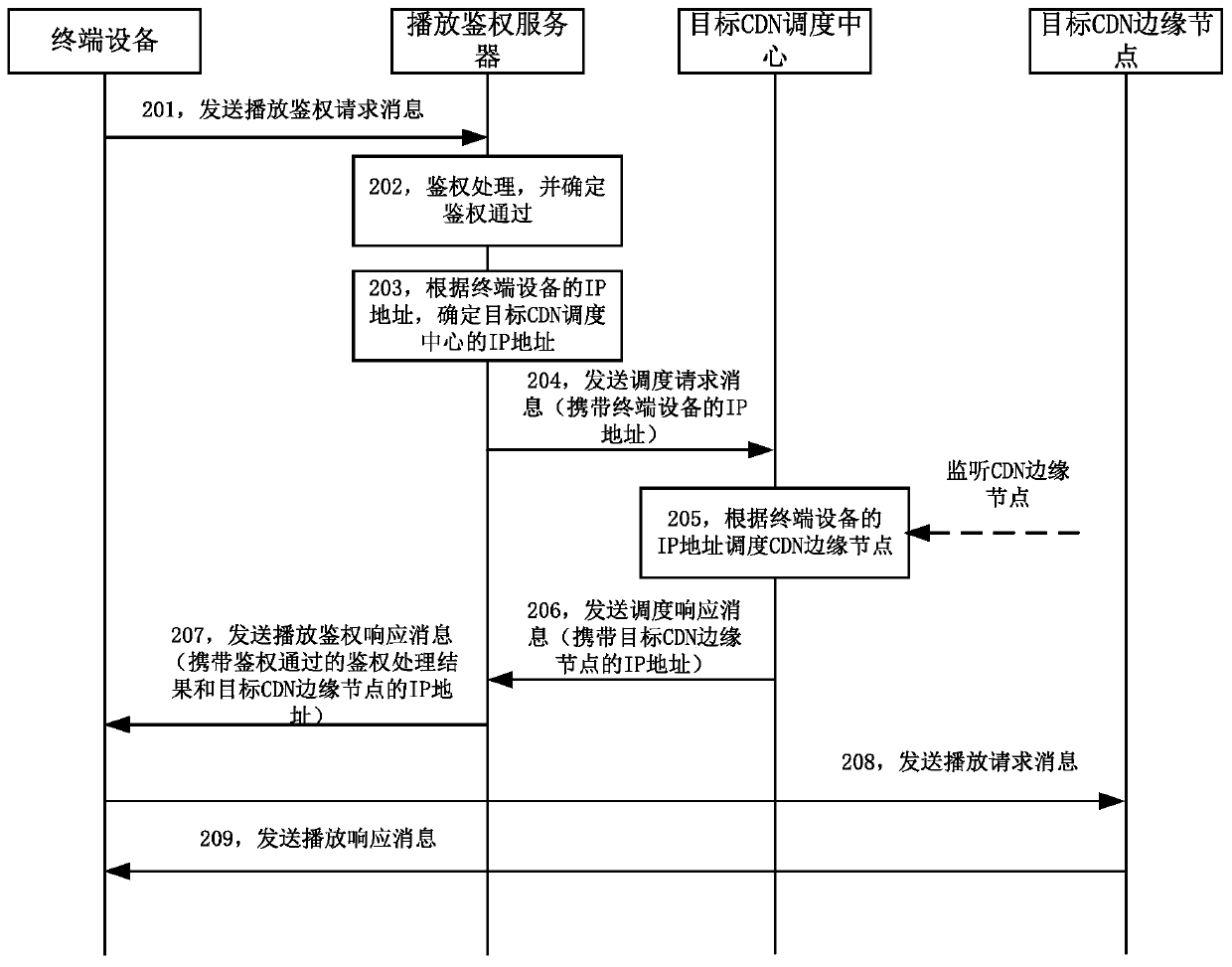

A method and equipment for scheduling CDN edge nodes

Active · CN109831511A · Guaranteed correctness · Improve acquisition success rate · Transmission · IP address · Terminal equipment

The embodiment of the invention provides a method and equipment for scheduling CDN edge nodes. The method comprises the following steps: a scheduling proxy server receives a first request message from terminal equipment, the first request message comprising a first IP address, the first IP address being the IP address of the terminal equipment; determining a target CDN scheduling center corresponding to the first IP address according to the first IP address and the configuration information; when the target CDN scheduling center supports the set agent scheduling, the target CDN scheduling center schedules the set agent; sending a second request message to the target CDN scheduling center, the second request message comprising the first IP address for the target CDN scheduling center to determine a second IP address according to the first IP address, the second IP address being an IP address of a target CDN edge node corresponding to the first IP address; receiving the second IP address sent by the target CDN scheduling center; and sending a request response message to the terminal device, the request response message carrying the second IP address for the terminal device to acquire the distribution content from the target CDN edge node according to the second IP address, thereby improving the content acquisition success rate.

Owner:PETAL CLOUD TECH CO LTD

Unified cache and peer-to-peer method and apparatus for streaming media in wireless mesh networks

Inactive · US8447875B2 · Reduce throughput · Quality improvement · Broadcast transmission systems · Data switching by path configuration · Quality of service · Routing table

A method and apparatus are described including receiving a route request message to establish a streaming route, determining a cost of a reverse route and traffic load introduced by the requested streaming route, discarding the route request message if one of wireless interference constraints for the requested streaming route cannot be satisfied and quality of service requirements for the requested streaming route cannot be satisfied, pre-admitting the route request message if wireless interference constraints for the requested streaming route can be satisfied and if quality of service requirements for the requested streaming route can be satisfied, adding a routing table entry responsive to the pre-admission, admitting the requested streaming route, updating the routing table and transmitting a route reply message to an originator if requested content is cached, updating the route request message and forwarding the updated route request message if the requested content is not cached, receiving a route reply message and deleting the pre-admitted routing table entry if a time has expired.

Owner:MAGNOLIA LICENSING LLC

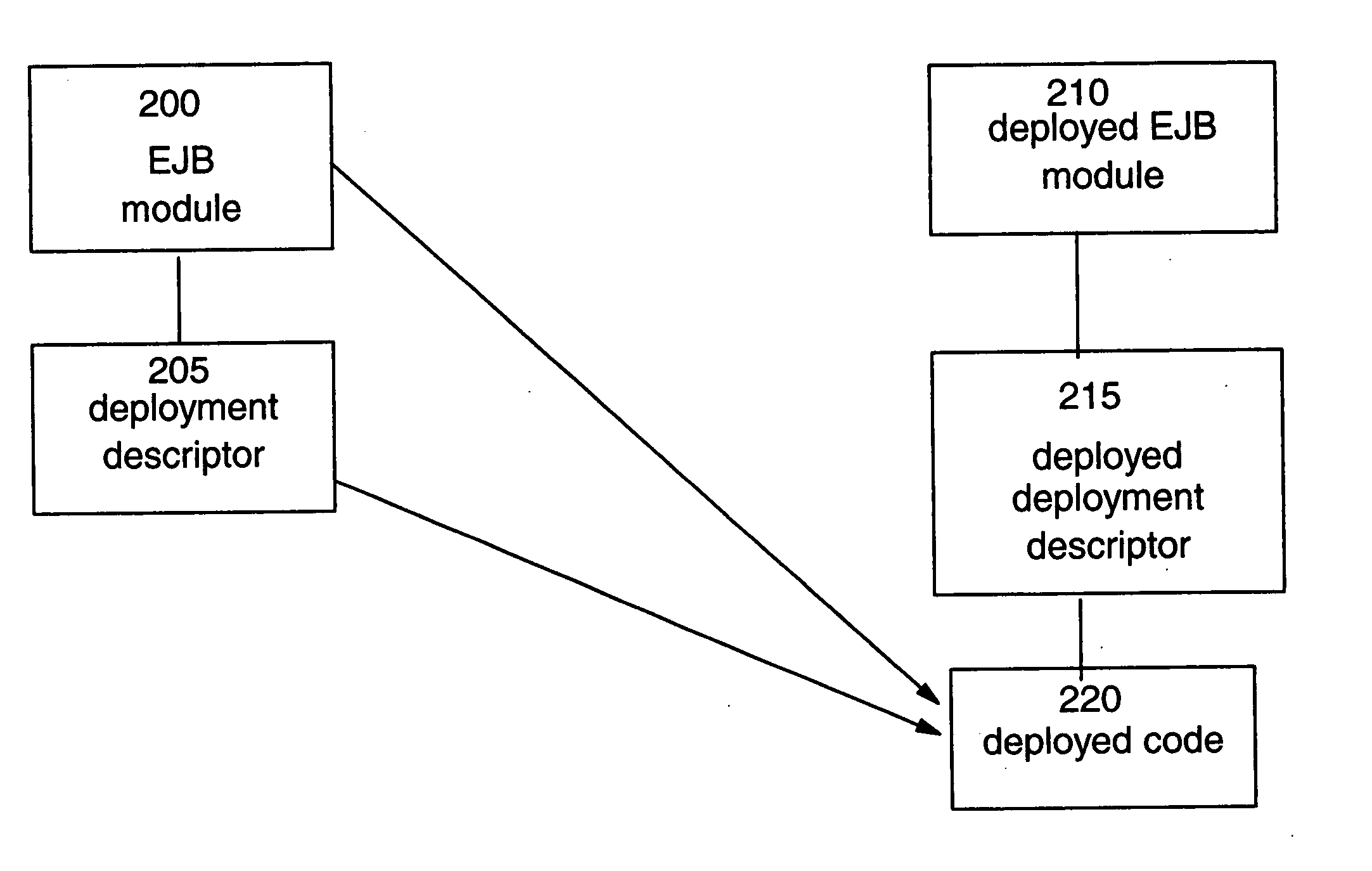

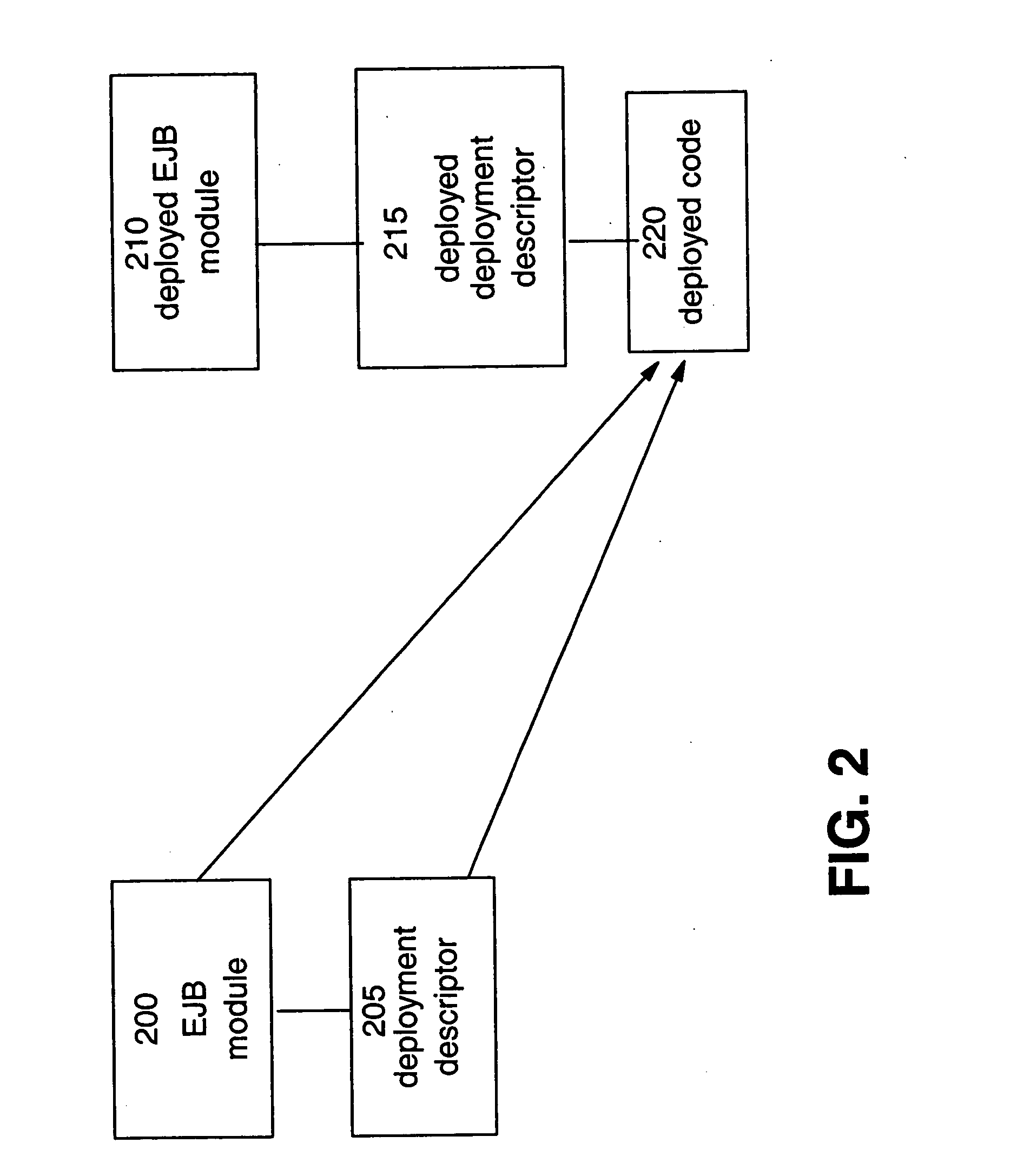

On-demand software module deployment

Inactive · US20050108702A1 · Reduce memory consumption · Reduce start-up delay · Digital computer details · Program loading/initiating · Information repository · Load time

A method, system, program product and signal bearing medium embodiments of the present invention provide for deploying software modules for software application use in a computer system thereby reducing load time as well as memory requirements. Deployment of a plurality of software modules and associated deployment descriptors into a software module depository and creation of a deployment information repository from the associated deployment descriptors occurs. A name service is initialized with information from the deployment information repository and a requested software module identifier is then mapped to a respective enabler. Having mapped the requested software module to an enabler, the respective software module is enabled for the software application use. On-demand deployment in this manner saves start-up time as well as initial and ongoing memory allocation.

Owner:KYNDRYL INC
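
The on-demand idea in the abstract above (a name service maps a requested module identifier to an enabler, and the module is enabled only when first requested) resembles lazy initialization. The sketch below is an assumption-laden illustration: `NameService`, `lookup`, and `enabled_modules` are hypothetical names, and an enabler is modelled as a plain callable.

```python
from typing import Callable, Dict, List


class NameService:
    """Maps module identifiers to enablers; modules are enabled on first lookup."""

    def __init__(self, deployment_info: Dict[str, Callable[[], object]]):
        # deployment_info stands in for the deployment information repository.
        self._enablers = dict(deployment_info)
        self._enabled: Dict[str, object] = {}

    def lookup(self, module_id: str) -> object:
        if module_id not in self._enabled:
            # First request: run the enabler now rather than at start-up.
            self._enabled[module_id] = self._enablers[module_id]()
        return self._enabled[module_id]

    def enabled_modules(self) -> List[str]:
        return sorted(self._enabled)
```

Nothing is loaded when the service is constructed, so start-up cost and memory use depend only on the modules an application actually requests.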

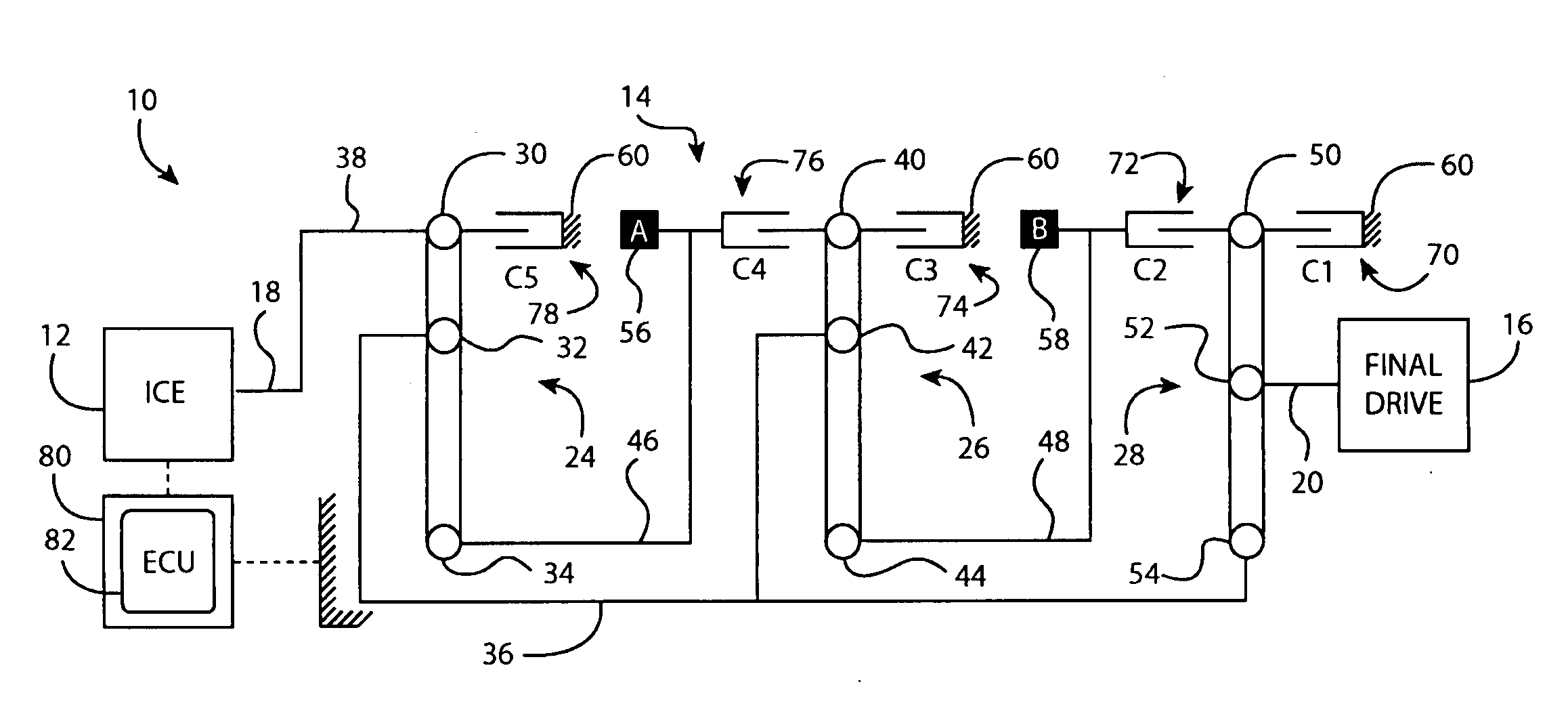

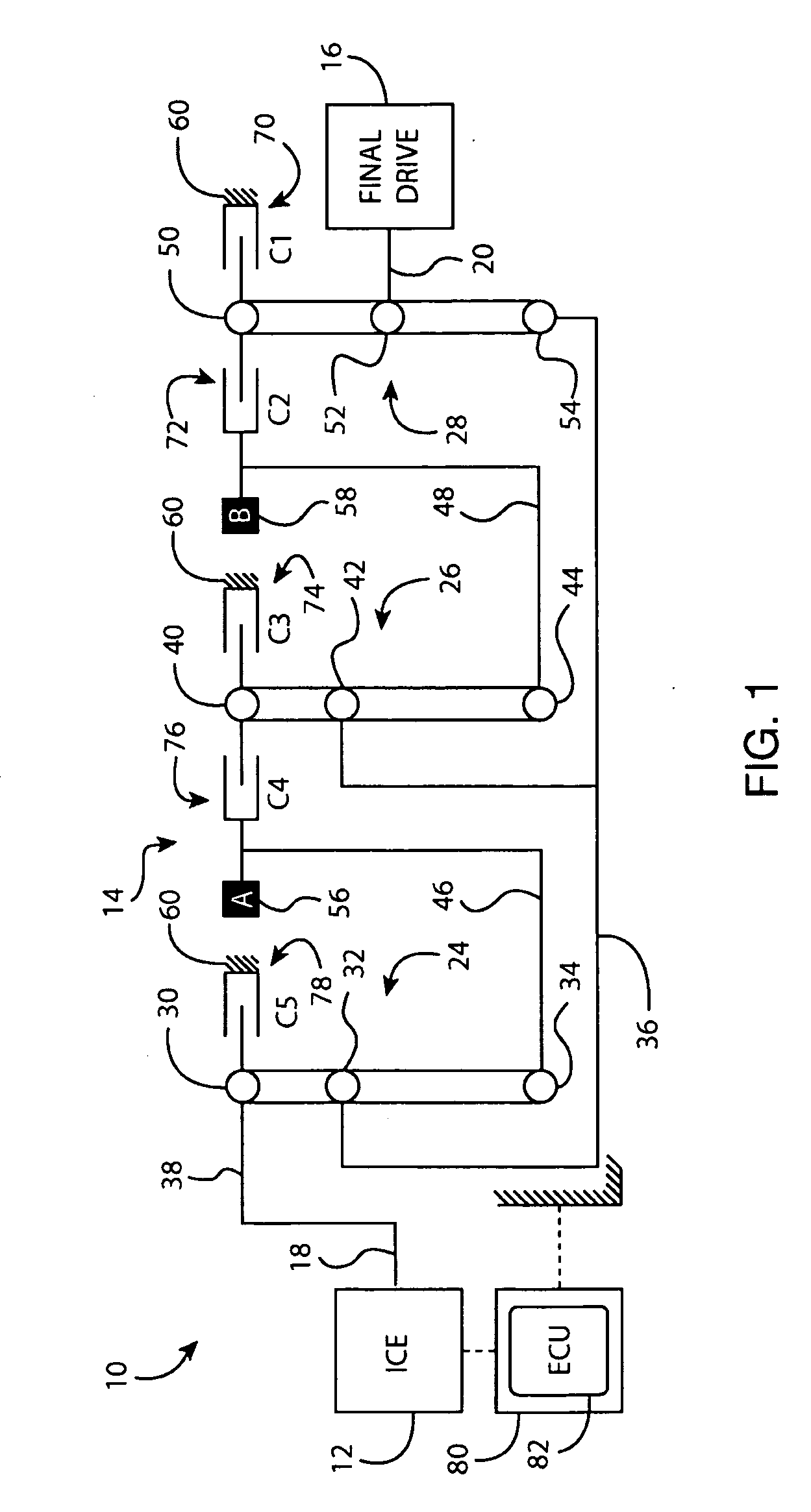

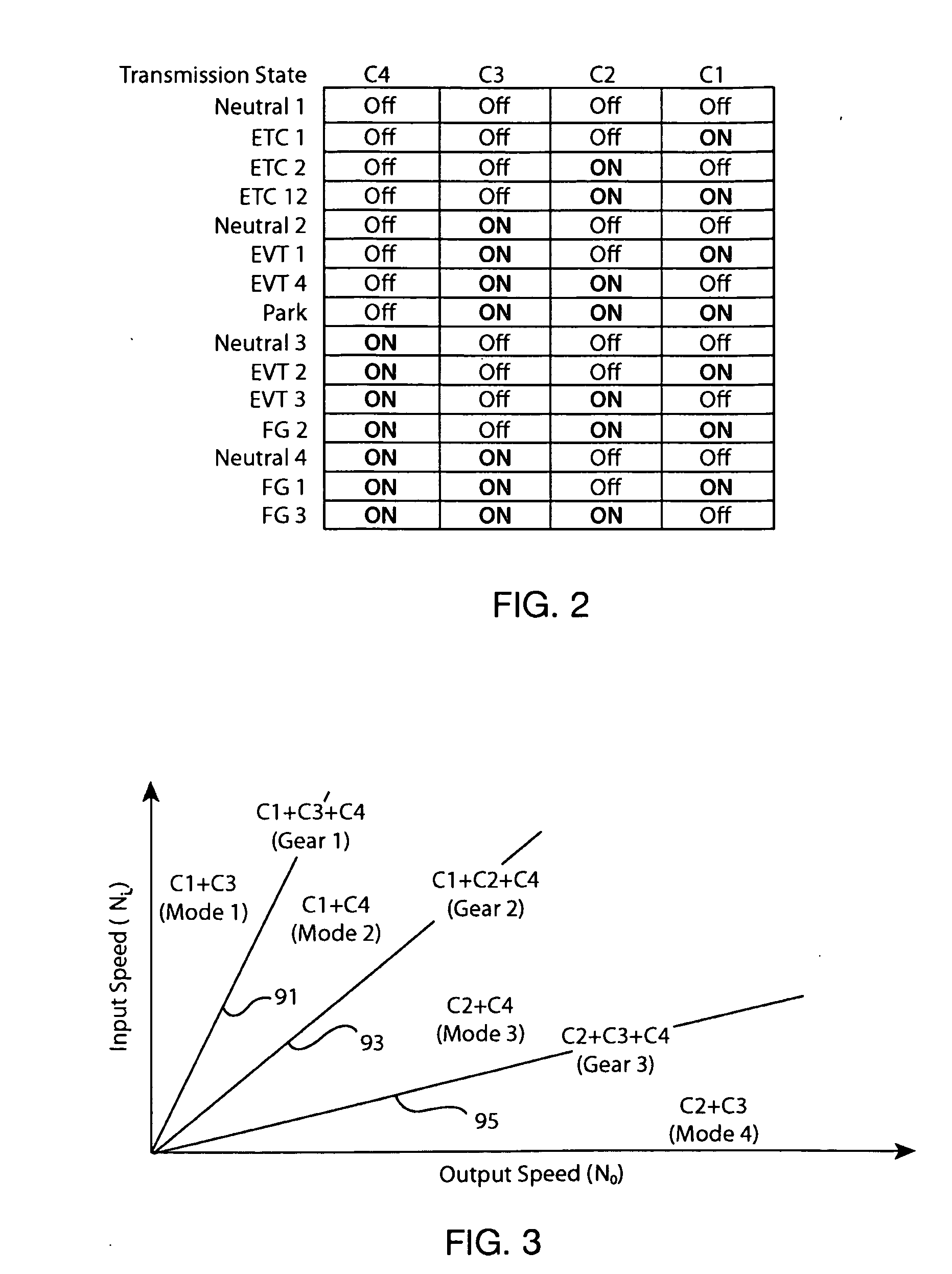

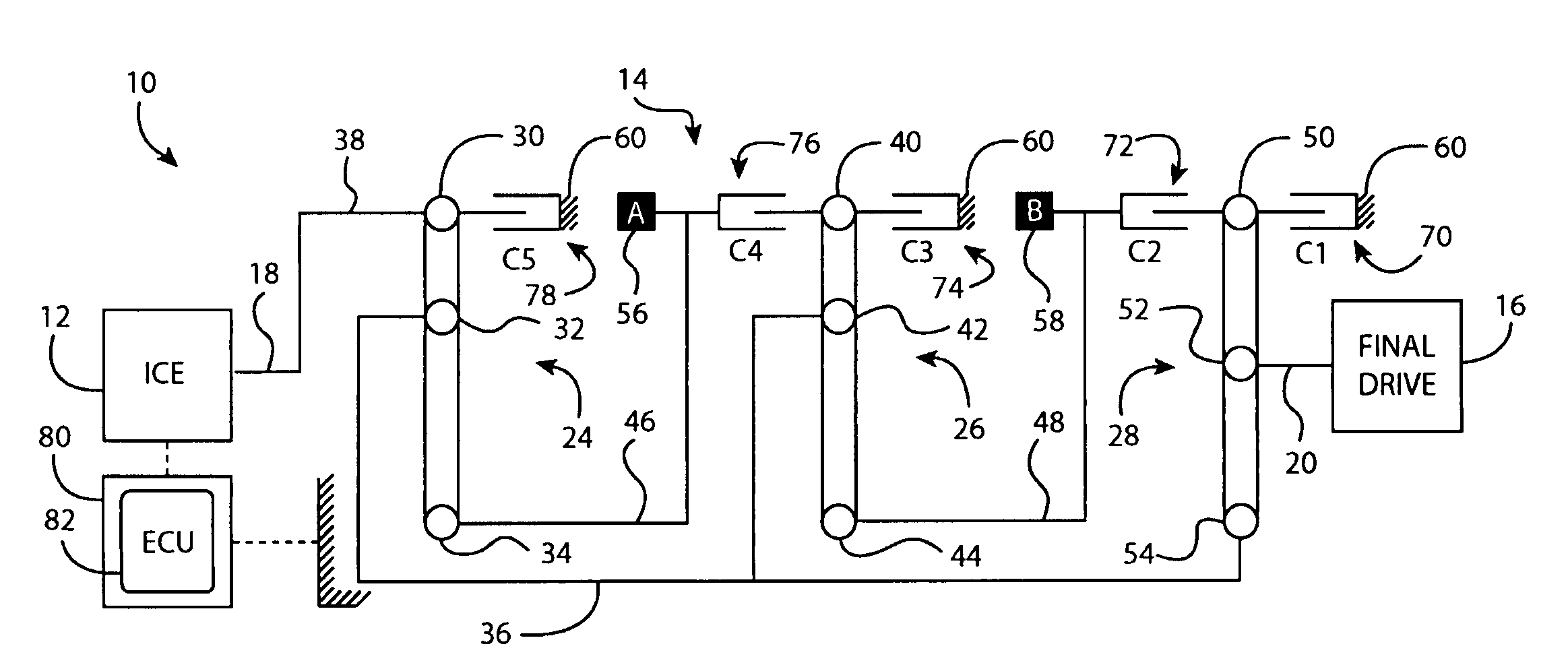

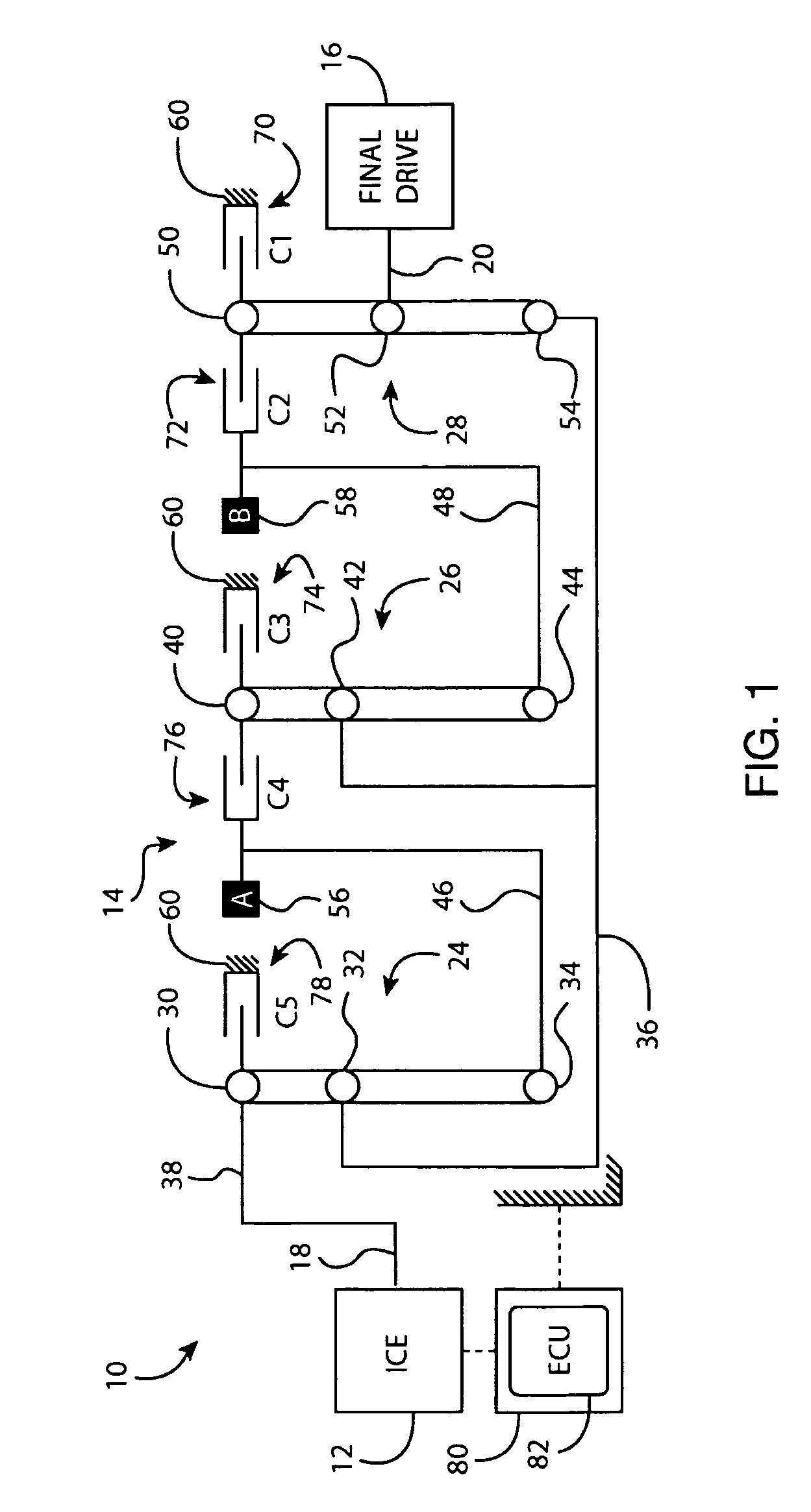

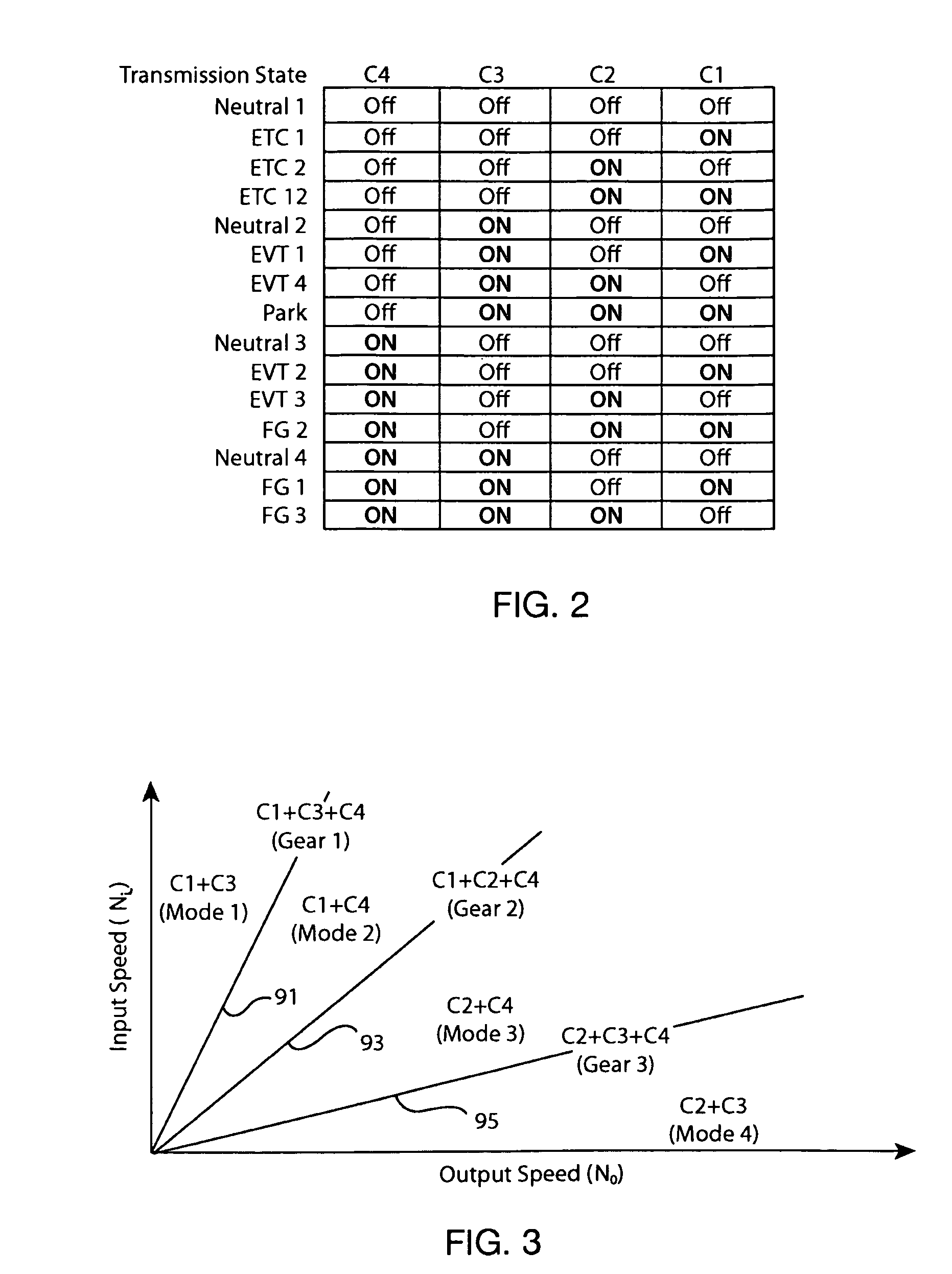

Multi-mode hybrid transmission and shift control method for a multi-mode hybrid transmission

Inactive · US20100227735A1 · Minimize time · Reduce engine start delay · Hybrid vehicles · Digital data processing details · Clutch · Variator

A multi-mode, electrically variable, hybrid transmission and improved shift control methods for controlling the same are provided herein. The hybrid transmission configuration and shift control methodology presented herein allow for shifting between different EVT modes when the engine is off, while maintaining propulsion capability and minimum time delay for engine autostart. The shift control maneuver is able to maintain zero engine speed while producing continuous output torque throughout the shift by eliminating transition through a fixed gear mode or neutral state. Optional oncoming clutch pre-fill strategies and mid-point abort logic minimize the time to complete shift, and reduce the engine start delay if an intermittent autostart operation is initiated.

Owner:GM GLOBAL TECH OPERATIONS LLC

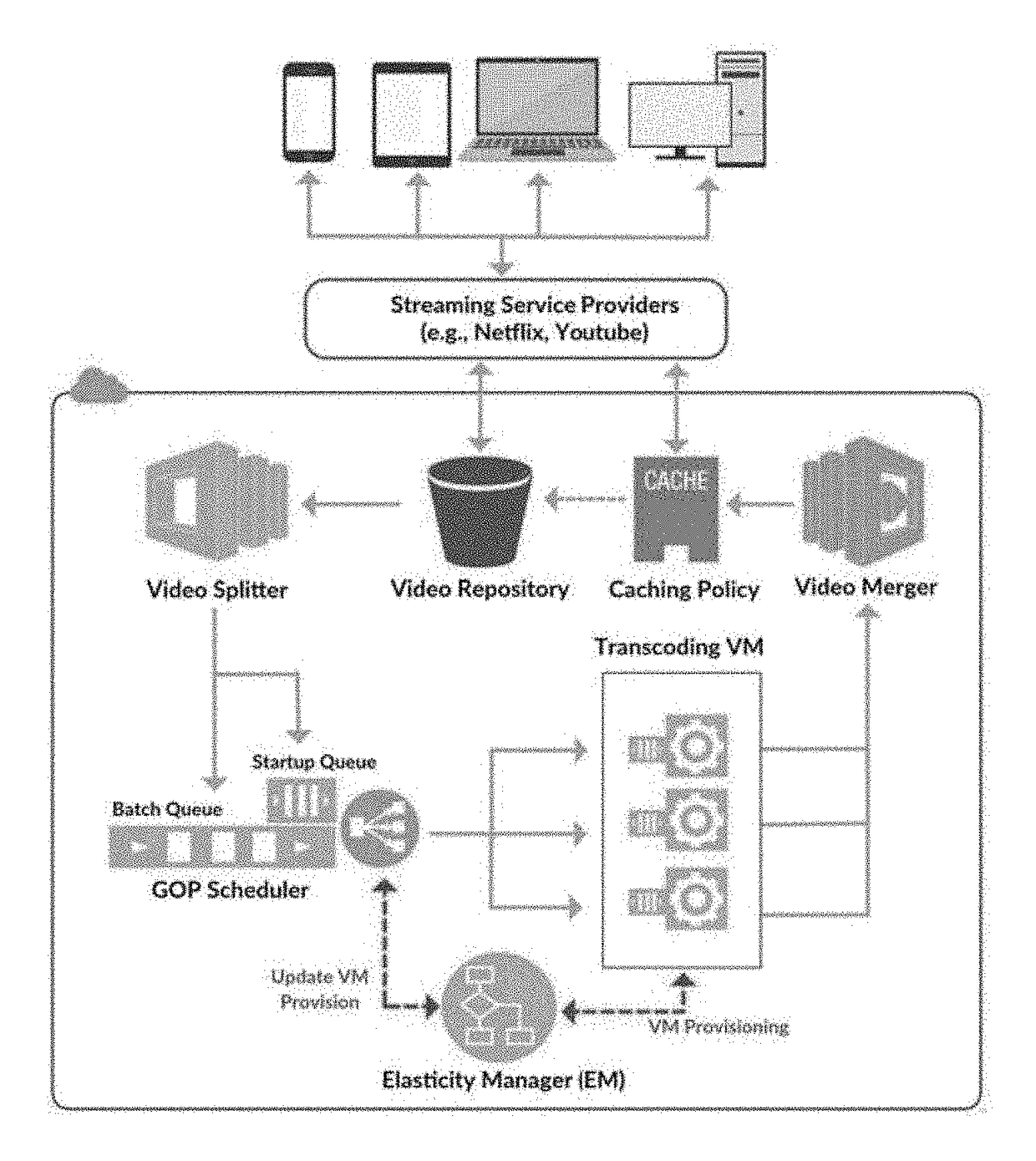

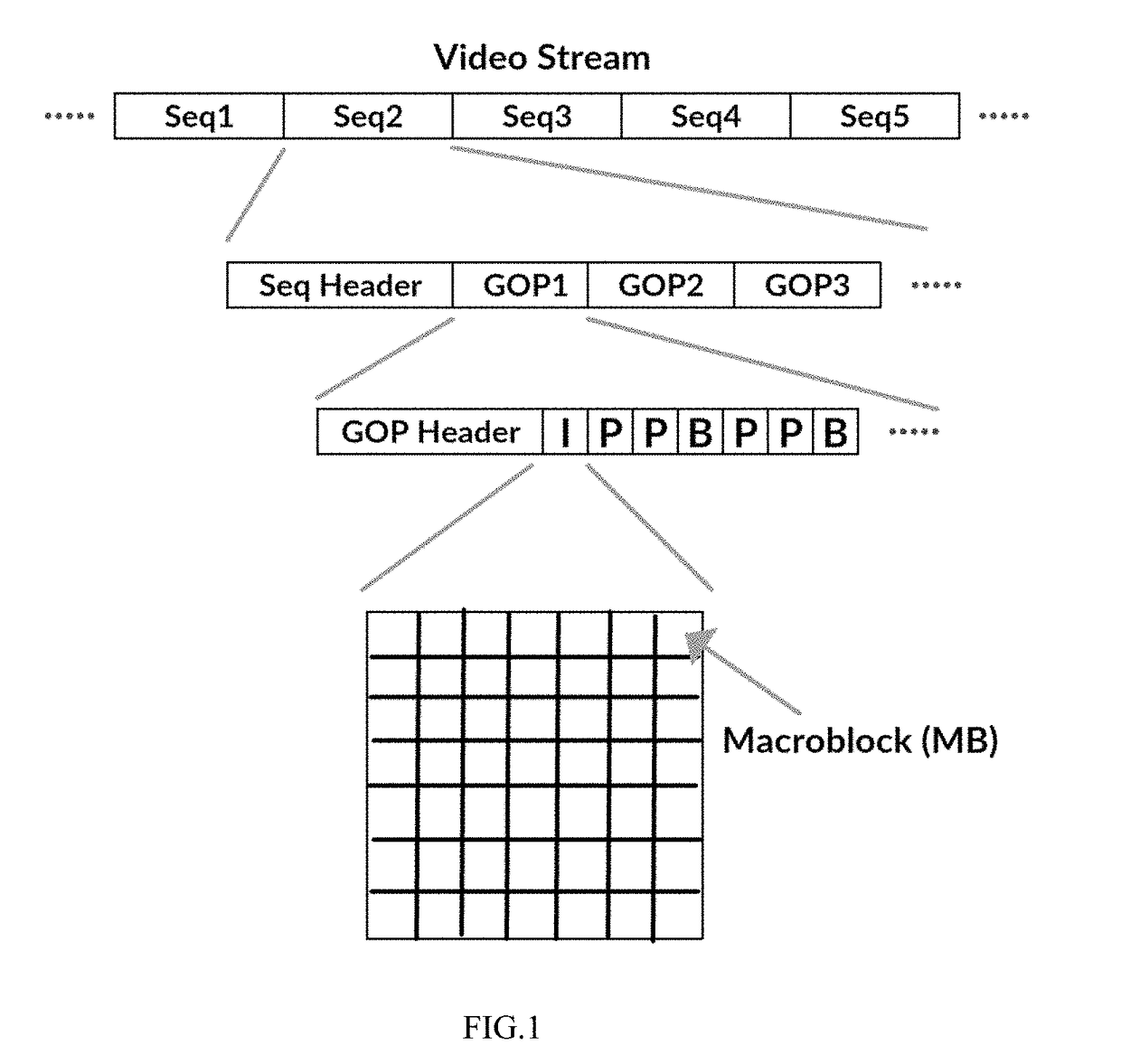

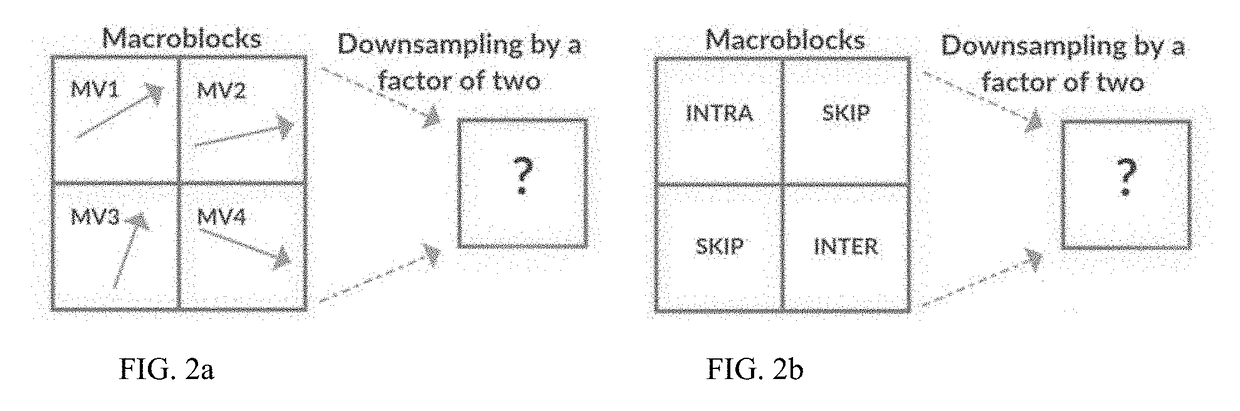

Architecture and method for high performance on demand video transcoding

Active · US20180131979A1 · Decrease deadline miss rate · Reduce start-up delay · Resource allocation · Digital video signal modification · Cloud base · Service provision

Video streams, whether on-demand or live, usually have to be transcoded based on the characteristics of clients' devices. Transcoding is a computationally expensive and time-consuming operation; therefore, streaming service providers currently store numerous transcoded versions of the same video to serve different types of client devices. Due to the expense of maintaining and upgrading storage and computing infrastructures, many streaming service providers have recently become reliant on cloud services. However, the challenge in utilizing cloud services for video transcoding is how to deploy cloud resources in a cost-efficient manner without any major impact on the quality of video streams. To address this challenge, in this paper, the Cloud-based Video Streaming Service (CVSS) architecture is disclosed to transcode video streams in an on-demand manner. The architecture provides a platform for streaming service providers to utilize cloud resources in a cost-efficient manner and with respect to the Quality of Service (QoS) demands of video streams. In particular, the architecture includes a QoS-aware scheduling method to efficiently map video streams to cloud resources, and a cost-aware dynamic (i.e., elastic) resource provisioning policy that adapts the resource acquisition with respect to the video streaming QoS demands. Simulation results based on realistic cloud traces and with various workload conditions demonstrate that the CVSS architecture can satisfy video streaming QoS demands and reduce the incurred cost of stream providers by up to 70%.

Owner:UNIVERSITY OF LOUISIANA AT LAFAYETTE

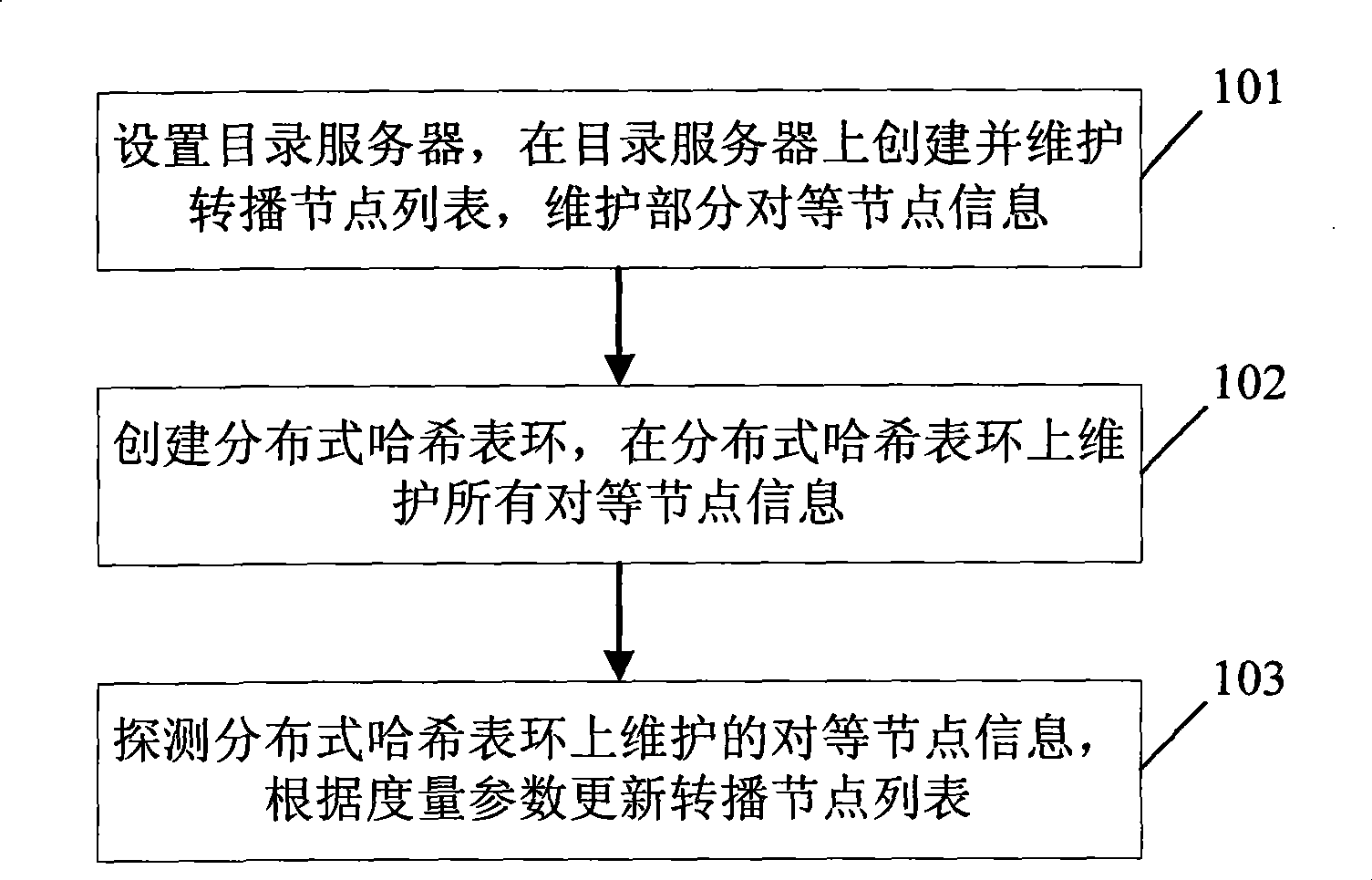

Superposition network and building method thereof

Inactive · CN101378325A · Increase memory overhead · Improve scalability · Special service provision for substation · Broadcast channels · Relationship - Father

The invention discloses an overlay network and a construction method thereof, and a peer node discovery method and user nodes, which pertain to the network communication field, wherein, the construction method comprises the steps that: a rebroadcast node list is created for each live broadcast channel, a distributed hash table ring is created for each live broadcast channel. The discovery method comprises the steps that: peer node information corresponding to the channel is searched in the rebroadcast node list; performance detection is carried out to optimal nodes and the optimal peer nodes are taken as the candidate father nodes; the minimum amount of optimal peer nodes meeting the system performance requirements are taken as the father nodes, transmission requests for different block data are respectively initiated to the father nodes. The overlay network comprises a list server, a streaming server and user nodes. The user node comprises a peer node searching module, a candidate father node storage module and a father node selection module. By adopting the technical proposal, the invention has better transmission performance in the start-up initial stage, is convenient for being expanded and can raise the peer node discovery speed.

Owner:HUAWEI TECH CO LTD

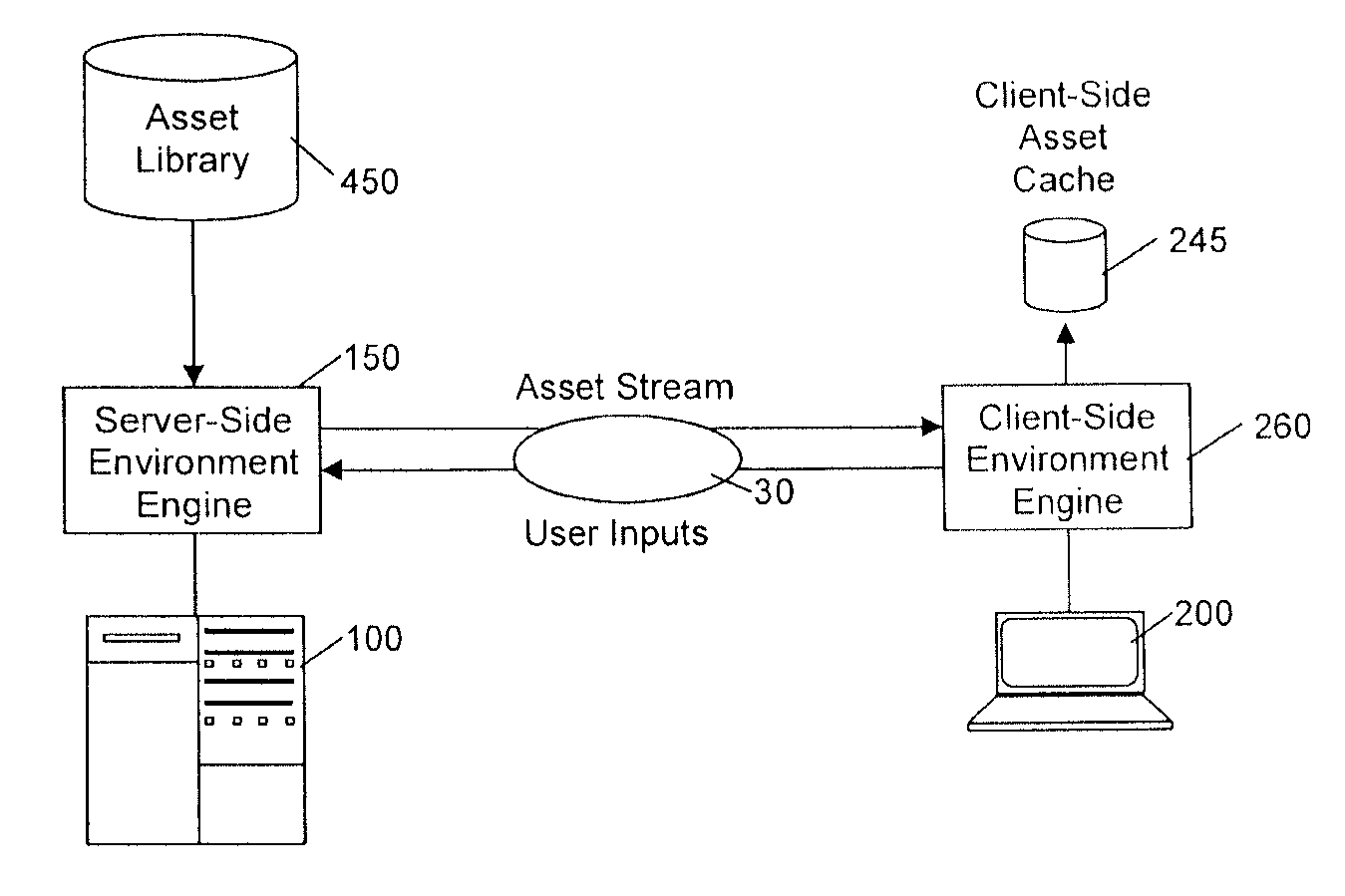

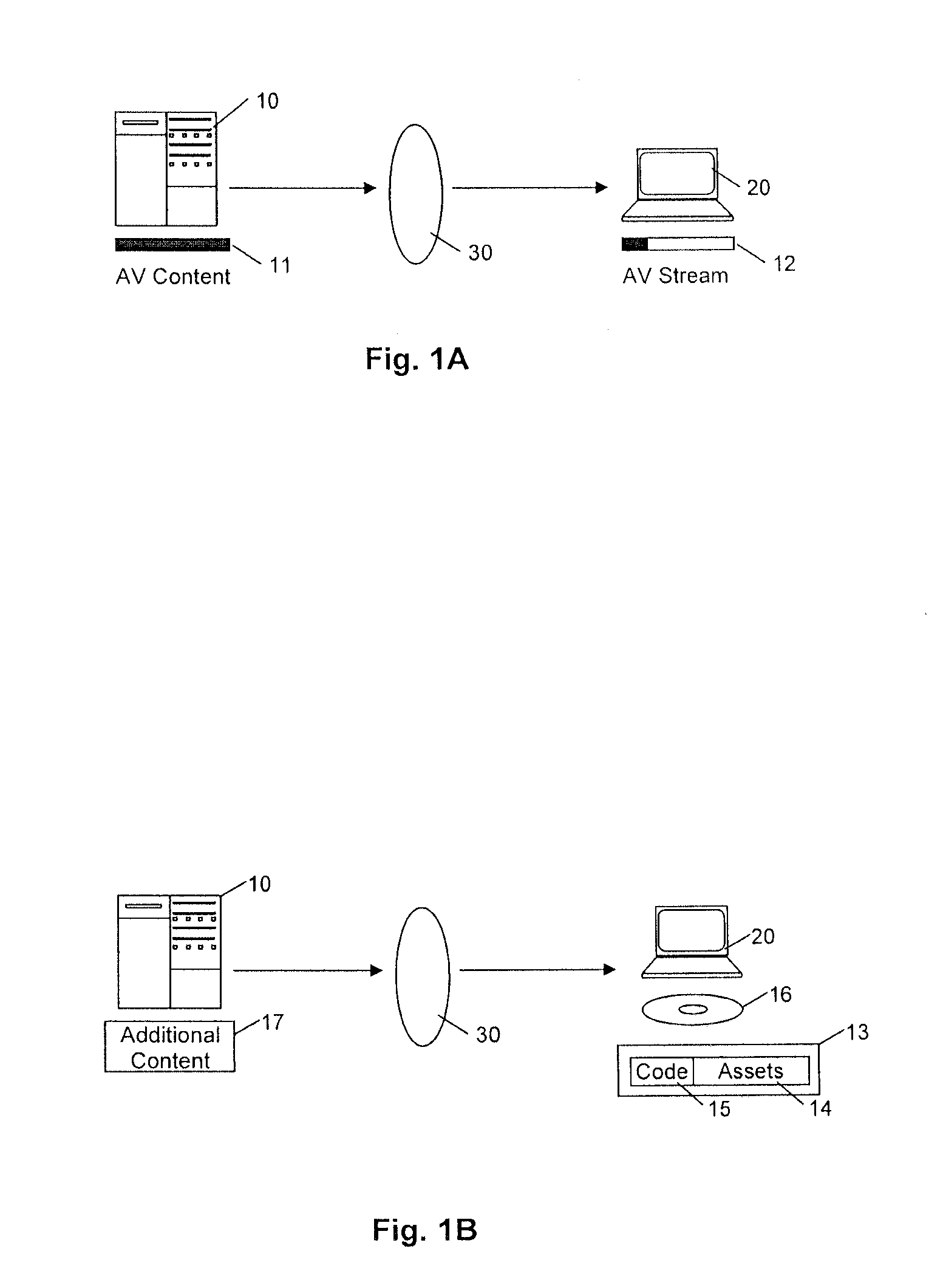

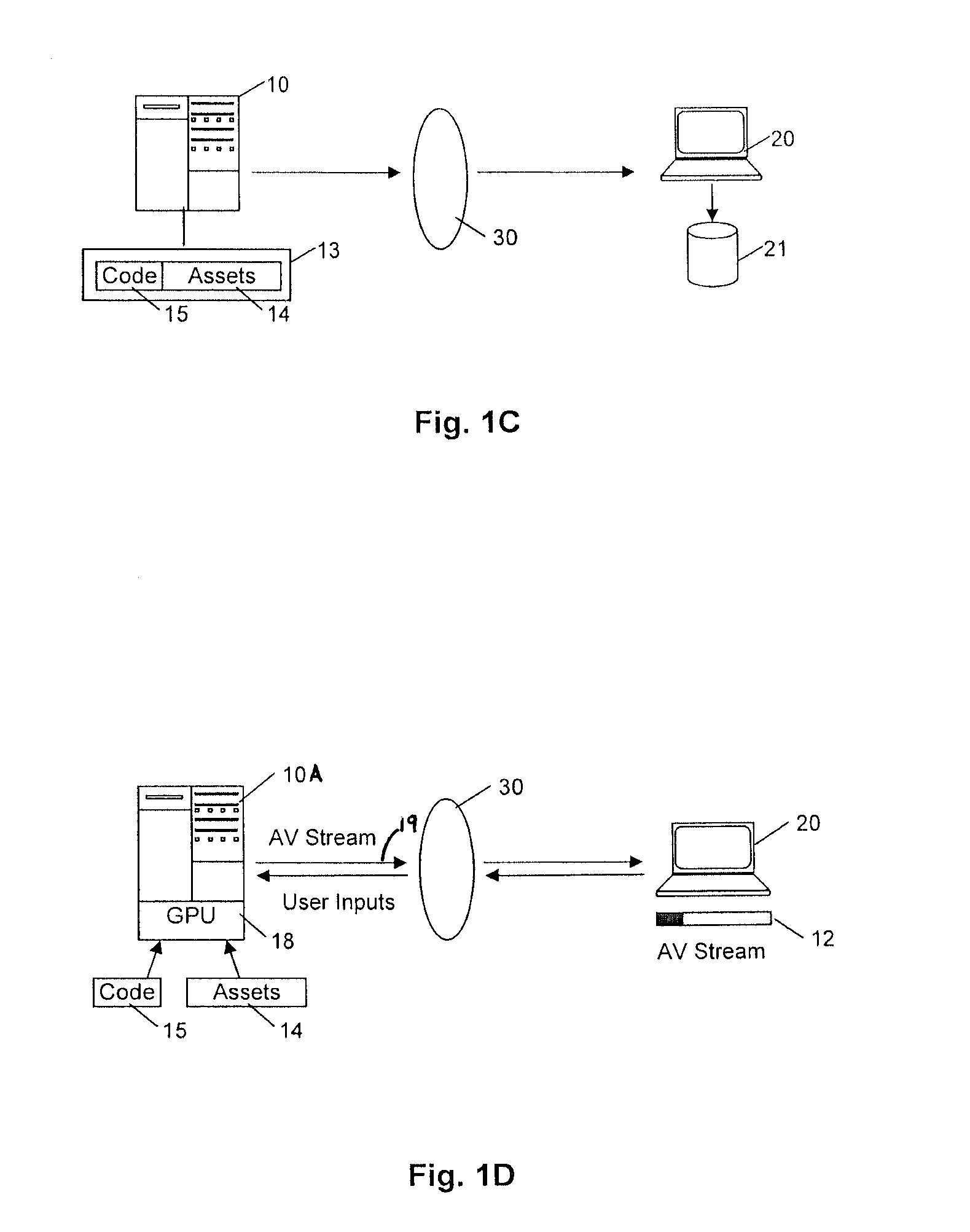

Hybrid Client-Server Graphical Content Delivery Method and Apparatus

A hybrid client-server multimedia content delivery system is provided for delivering graphical information across a network from a server to a client device. An initial set of object data is provided sufficient for the client device to begin representing the virtual environment, followed by one or more subsequent items of the object data dynamically while the client device represents the virtual environment on the visual display device. The server maintains shadow rendering information identifying the items of object data which are currently in use at the client device. Delivery of subsequent object data to the client device is ordered and prioritised with reference to the shadow rendering information.

Owner:TANGENTIX

Devices and methods for minimizing start up delay in transmission of streaming media

Inactive · US20080222302A1 · Reduce start-up delay · Improve transmittance · Error prevention · Transmission systems · Client-side · Start up

A method for a client to receive streaming media over a network includes receiving data having the streaming media encoded therein and storing the received data in a de-jitter buffer thereby increasing a fullness of the de-jitter buffer. The method further includes, when the fullness reaches a fill level, initiating play out of the stored data from the de-jitter buffer, and changing the fill level while playing out the stored data.

Owner:CISCO TECH INC
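
The client-side behaviour in the abstract above (start play-out once the buffer reaches a fill level, then change that fill level during play-out) can be sketched as follows. The class `DeJitterBuffer`, the linear ramp, and the `step` parameter are assumptions; the abstract does not say how the fill level is changed.

```python
from collections import deque
from typing import Optional


class DeJitterBuffer:
    def __init__(self, initial_fill: int, final_fill: int, step: int = 1):
        self.fill_level = initial_fill   # low threshold so play-out starts early
        self.final_fill = final_fill     # desired steady-state threshold
        self.step = step                 # assumed ramp increment per played frame
        self.frames = deque()
        self.playing = False

    def receive(self, frame: str) -> None:
        """Store received data; start play-out when the fill level is reached."""
        self.frames.append(frame)
        if not self.playing and len(self.frames) >= self.fill_level:
            self.playing = True

    def play_out(self) -> Optional[str]:
        """Play one frame and move the fill level toward its final value."""
        if not self.playing or not self.frames:
            return None
        self.fill_level = min(self.final_fill, self.fill_level + self.step)
        return self.frames.popleft()
```

Starting with a small threshold trades some early jitter protection for a faster start; the ramp restores the protection once playback is under way.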

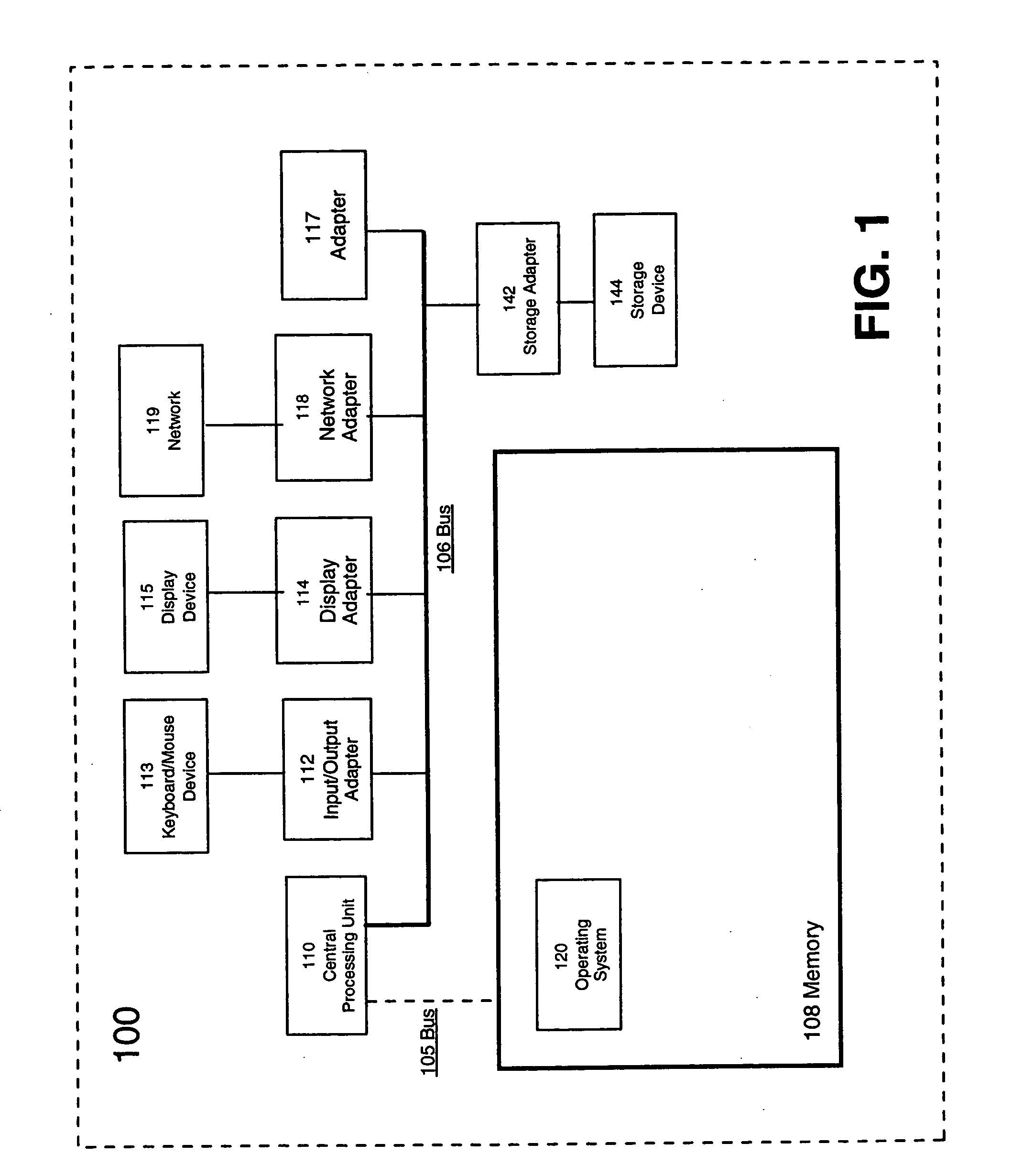

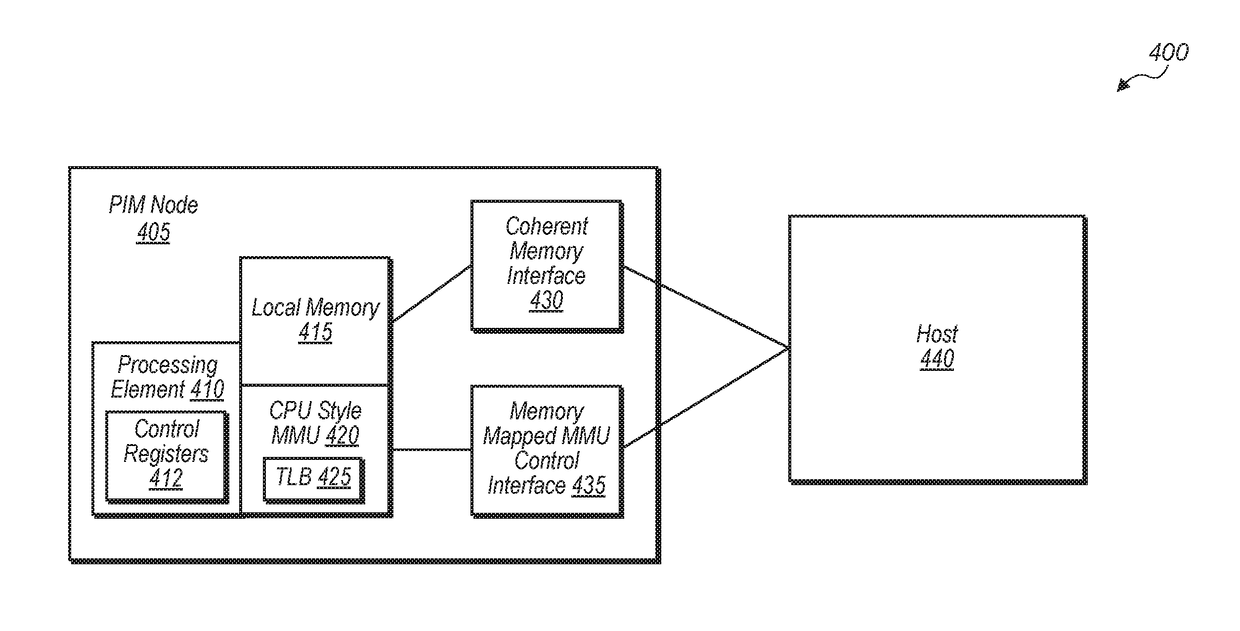

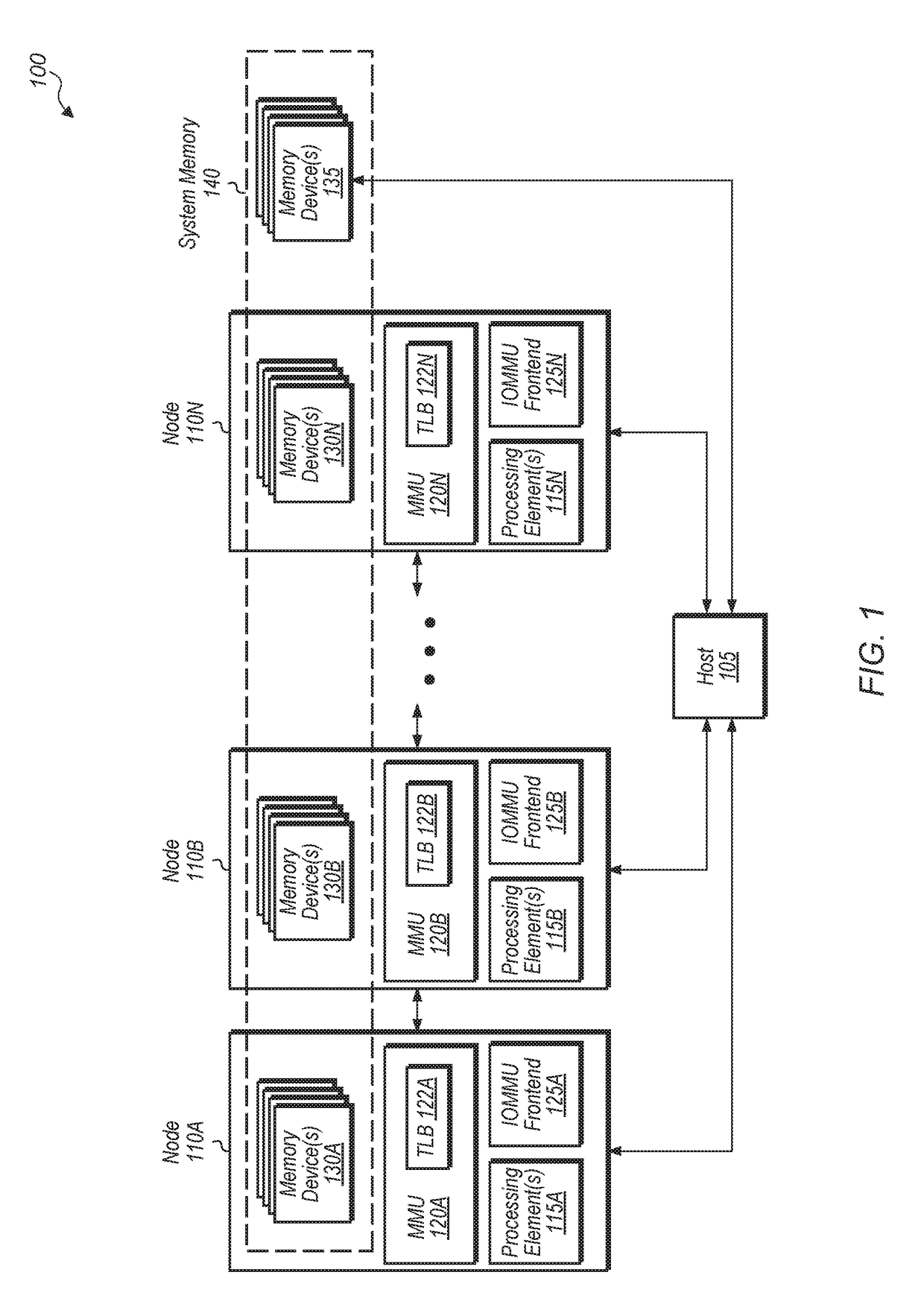

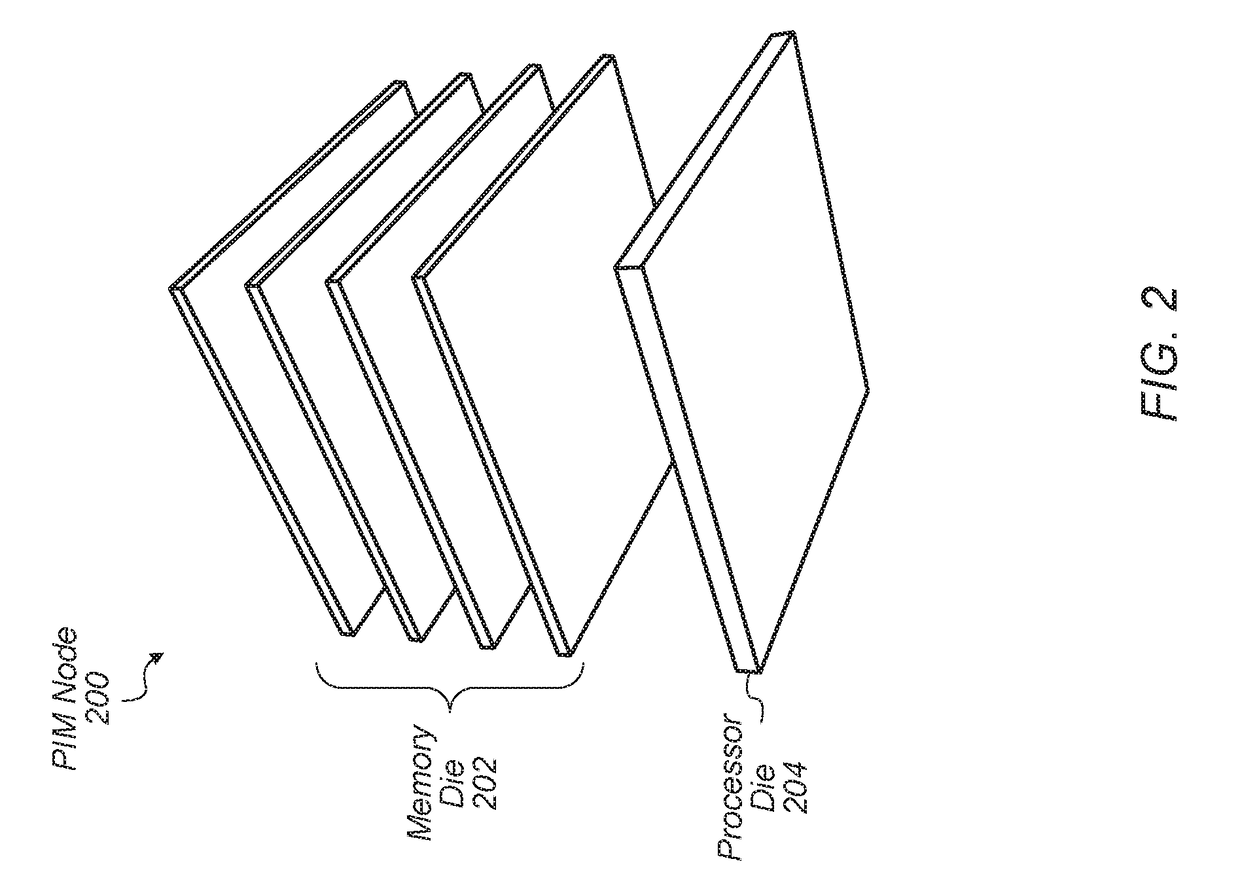

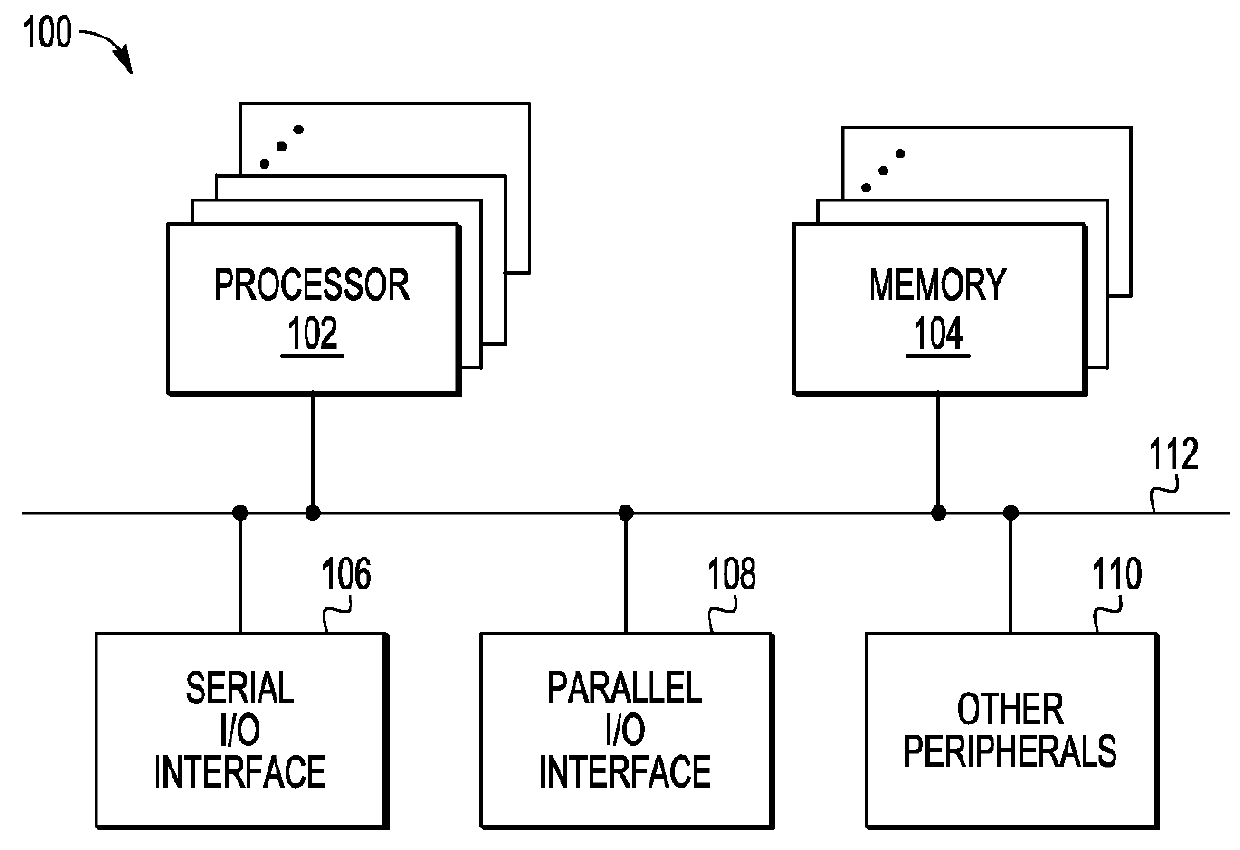

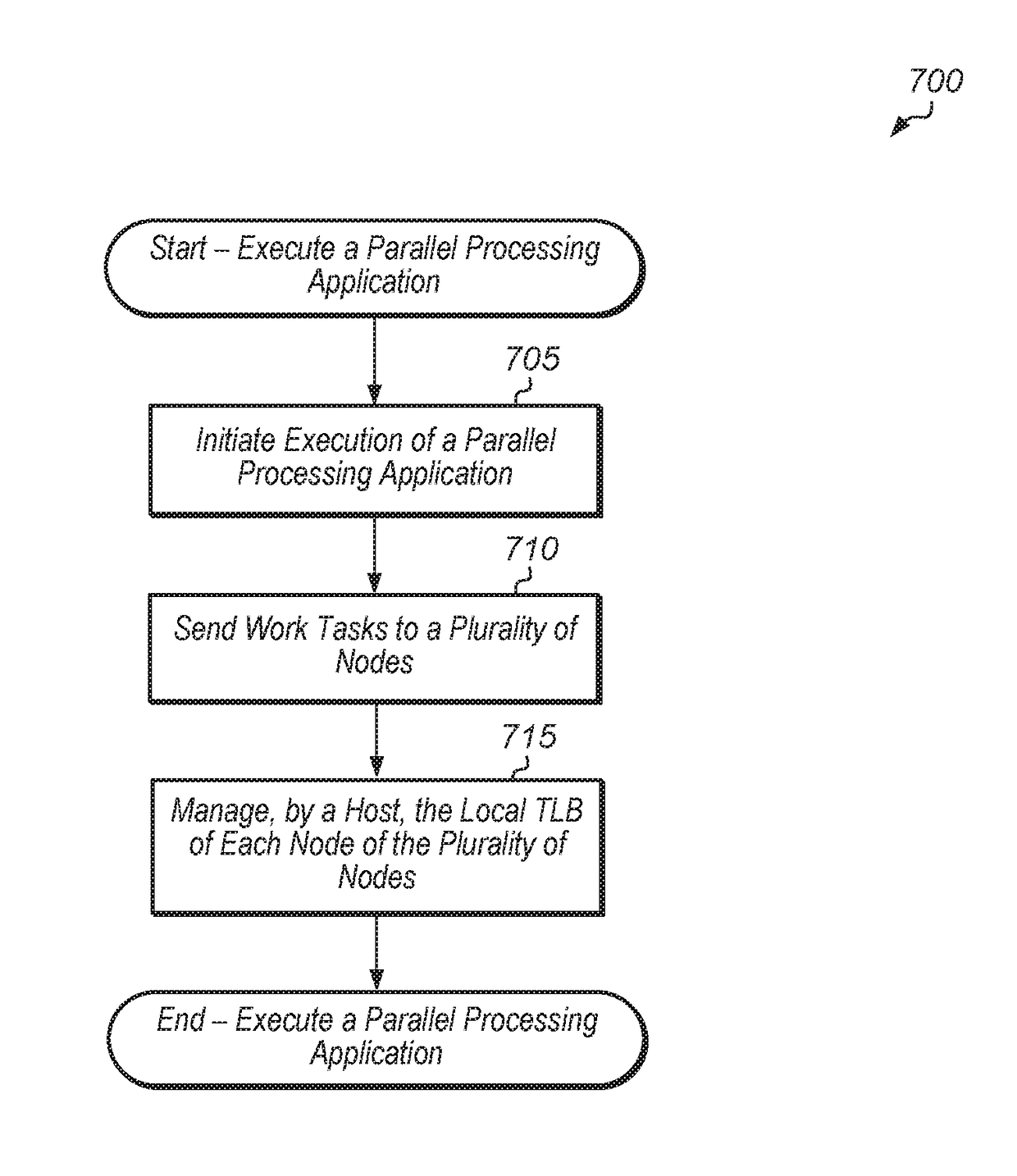

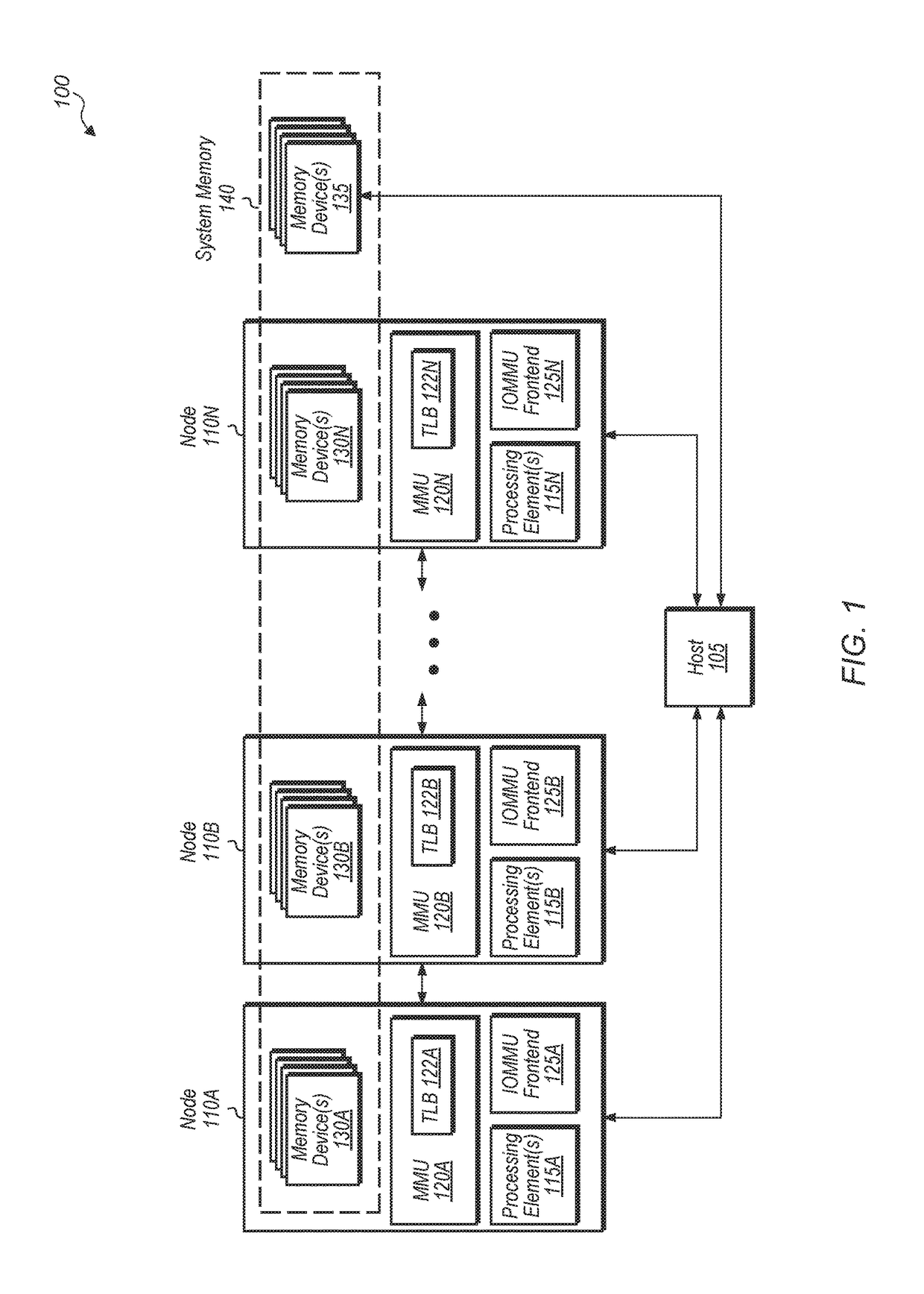

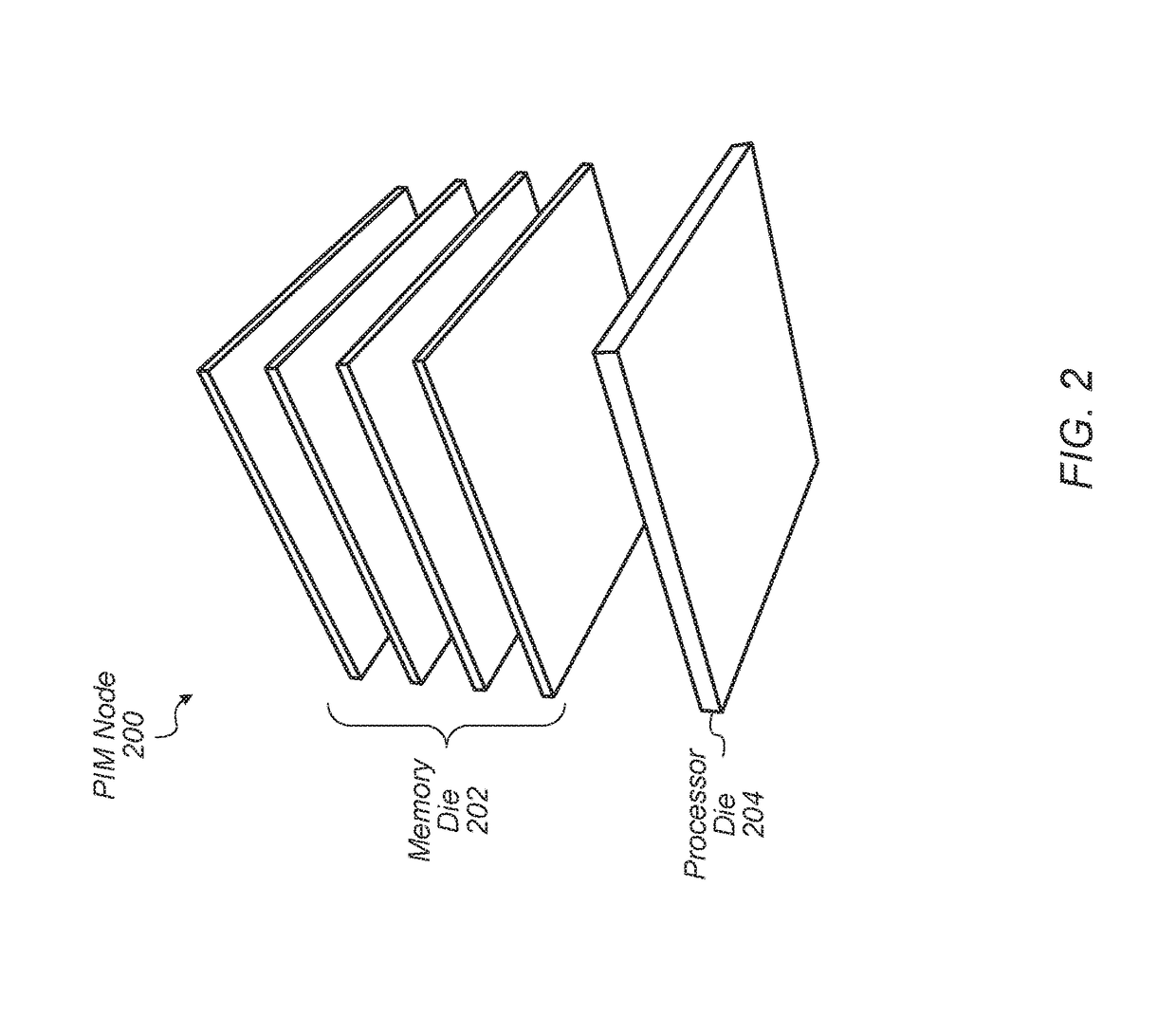

Centrally managed unified shared virtual address space

Active · US20170177498A1 · Reduce processing load · Reduce start-up delay · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Work task · Processor register

Systems, apparatuses, and methods for managing a unified shared virtual address space. A host may execute system software and manage a plurality of nodes coupled to the host. The host may send work tasks to the nodes, and for each node, the host may externally manage the node's view of the system's virtual address space. Each node may have a central processing unit (CPU) style memory management unit (MMU) with an internal translation lookaside buffer (TLB). In one embodiment, the host may be coupled to a given node via an input / output memory management unit (IOMMU) interface, where the IOMMU frontend interface shares the TLB with the given node's MMU. In another embodiment, the host may control the given node's view of virtual address space via memory-mapped control registers.

Owner:ADVANCED MICRO DEVICES INC

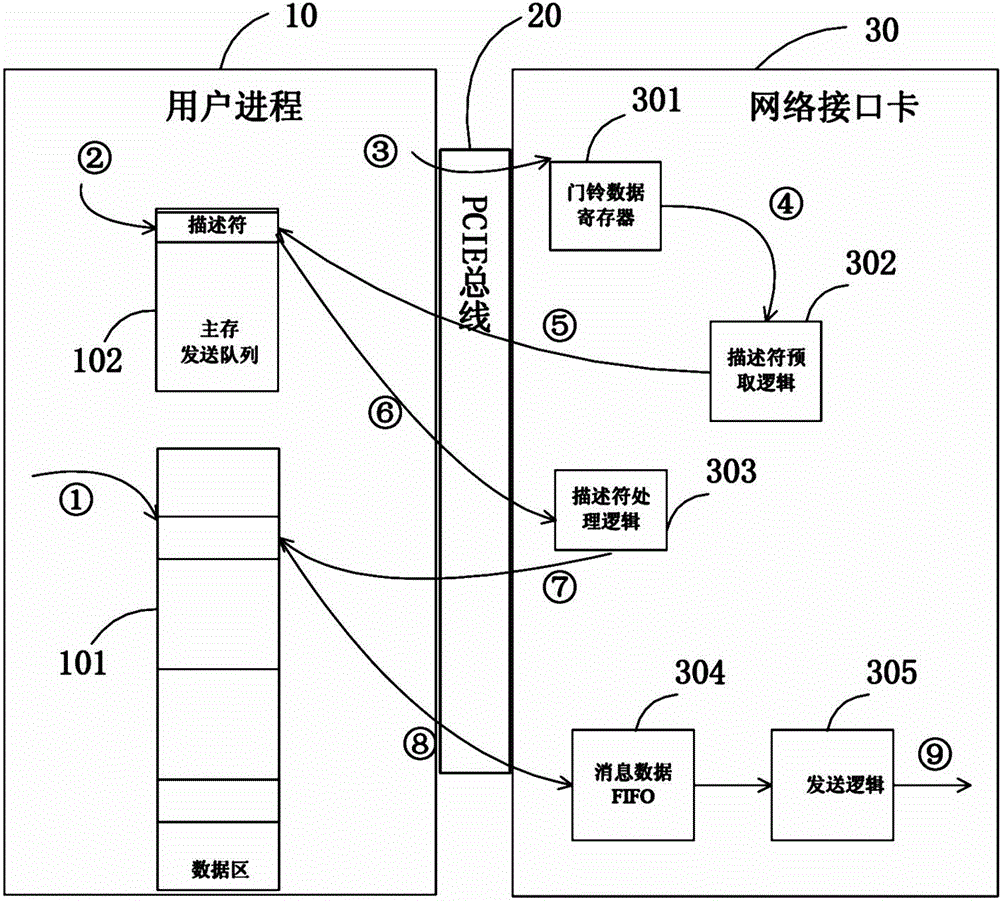

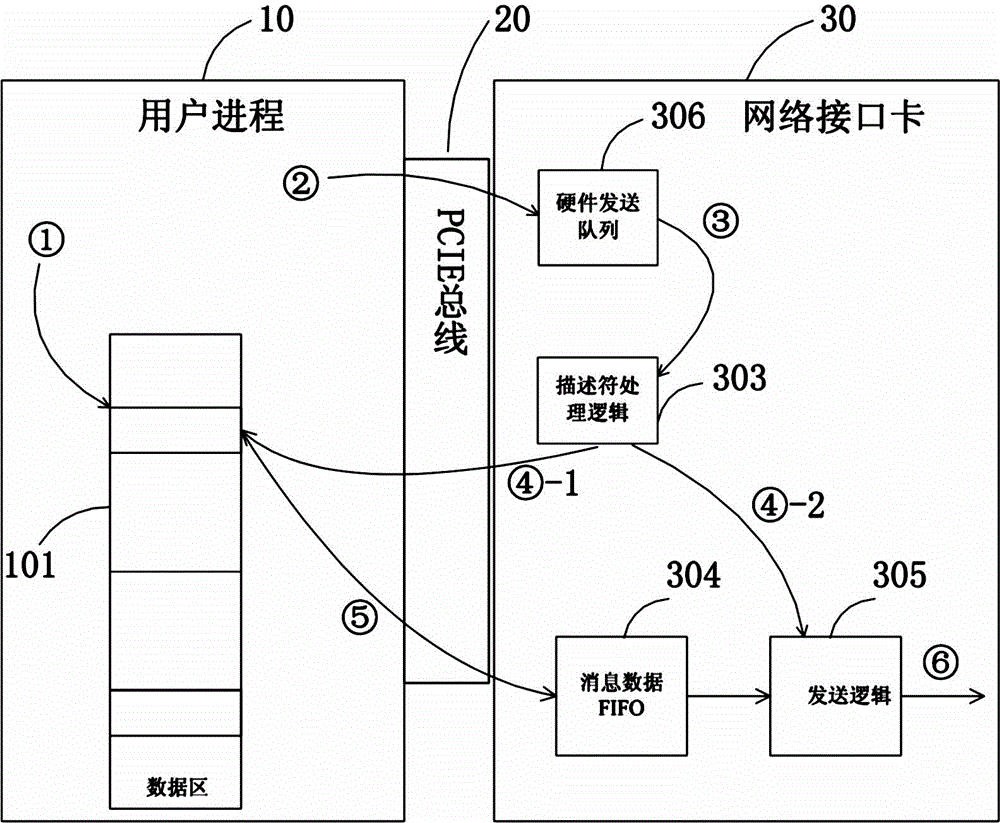

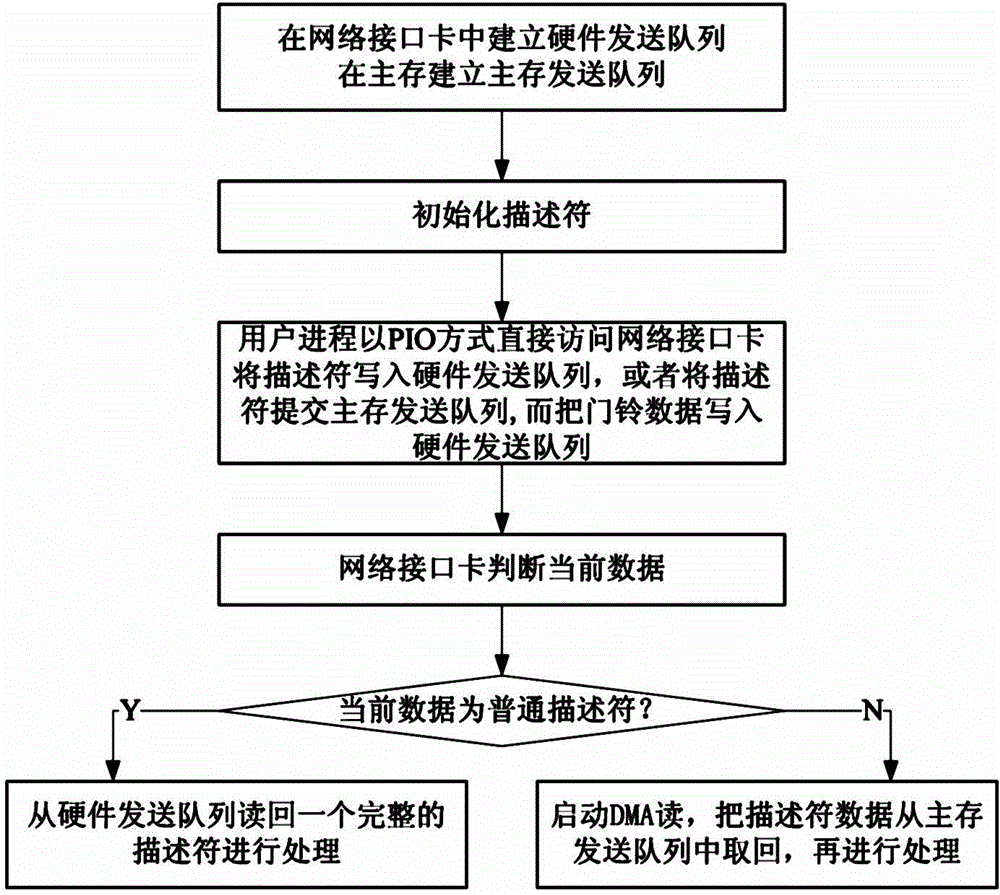

Submission method of descriptor of network interface card (NIC) based on mixing of PIO (process input output) and DMA (direct memory access)

Active · CN103150278A · Reduce startup delay · Improve submission efficiency and data communication efficiency · Data switching networks · Electric digital data processing · Direct memory access · Input/output

The invention discloses a submission method of a descriptor of an NIC based on mixing of PIO and DMA. The implementation steps are as follows: 1) a hardware transmit queue is built in the NIC, and a main-memory transmit queue is built in main memory; 2) a descriptor is initialized; and 3) a consumer process either accesses the NIC directly and writes the descriptor into the hardware transmit queue in a PIO manner, or submits the descriptor to the main-memory transmit queue and writes doorbell data into the hardware transmit queue. When the NIC processes the data in the hardware transmit queue sequentially, it judges the current data type: if the current data is a descriptor, the descriptor is read from the hardware transmit queue according to the value of its length field and processed; if the current data is doorbell data, the descriptor is fetched from the main-memory transmit queue and then processed, and the NIC's DMA read of the next descriptor overlaps with the processing of the current descriptor. The submission method has the advantages that the message start delay is small, the capacity of the transmit queue is large, and data are processed simply and efficiently.

Owner:NAT UNIV OF DEFENSE TECH
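
The mixed submission path in the abstract above can be modelled with two queues. This is a toy software model under stated assumptions, not driver code: the class `Nic`, the `use_pio` flag (the abstract does not say how the path is chosen), and the tuple encoding of queue entries are all hypothetical, and the DMA read is simulated by a queue pop.

```python
from collections import deque
from typing import List


class Nic:
    def __init__(self) -> None:
        self.hw_queue = deque()    # on-NIC queue: ("descriptor", d) or ("doorbell", None)
        self.mem_queue = deque()   # main-memory transmit queue

    def submit(self, descriptor: str, use_pio: bool) -> None:
        if use_pio:
            # PIO path: the descriptor itself is written into the hardware queue.
            self.hw_queue.append(("descriptor", descriptor))
        else:
            # DMA path: descriptor goes to main memory, only a doorbell goes to the NIC.
            self.mem_queue.append(descriptor)
            self.hw_queue.append(("doorbell", None))

    def process_all(self) -> List[str]:
        """Drain the hardware queue in order, fetching from memory on doorbells."""
        sent = []
        while self.hw_queue:
            kind, payload = self.hw_queue.popleft()
            if kind == "doorbell":
                payload = self.mem_queue.popleft()   # stands in for a DMA read
            sent.append(payload)
        return sent
```

Because every submission leaves exactly one entry in the hardware queue, ordering across the two paths is preserved while the large main-memory queue absorbs bursts.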

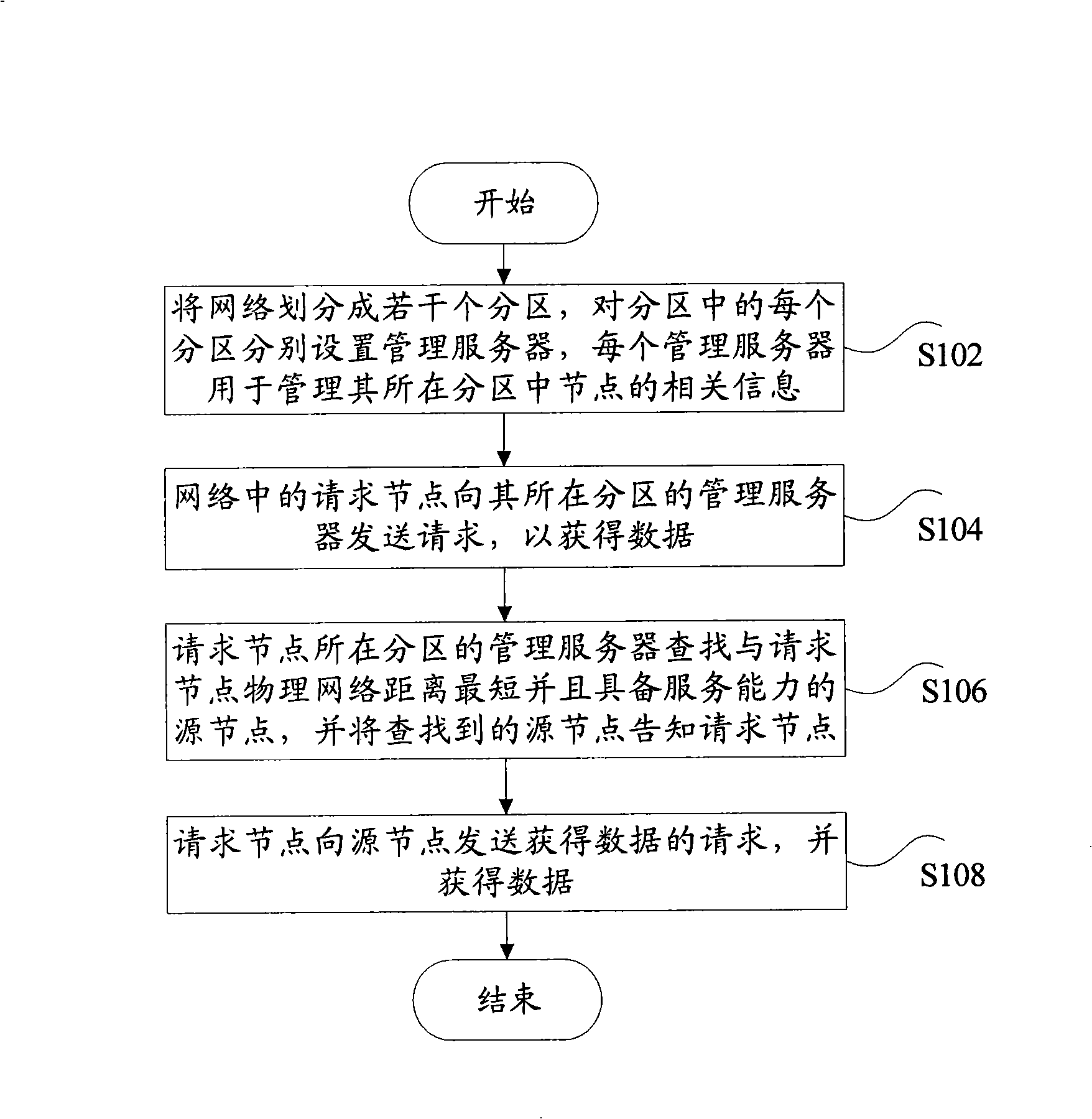

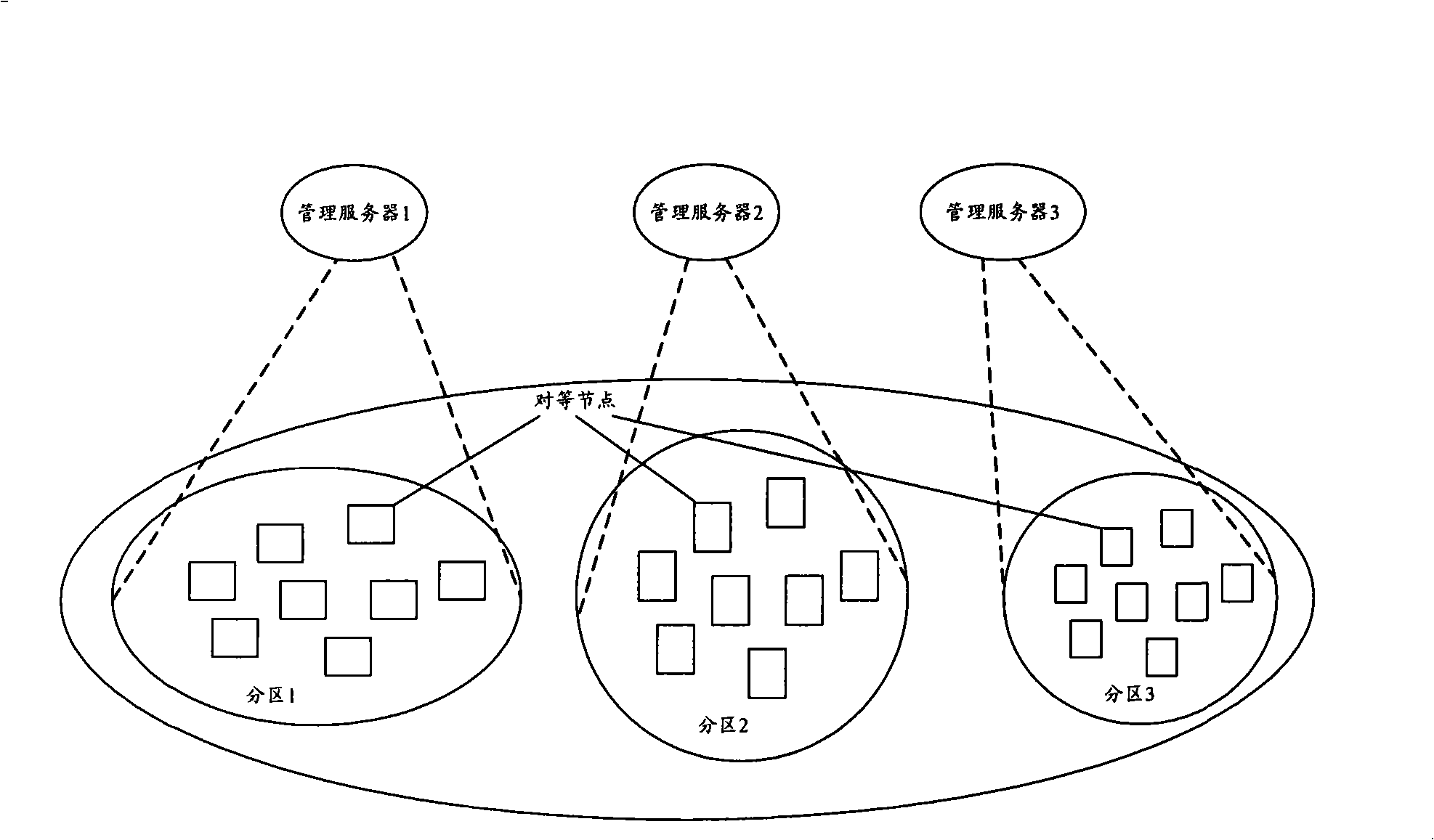

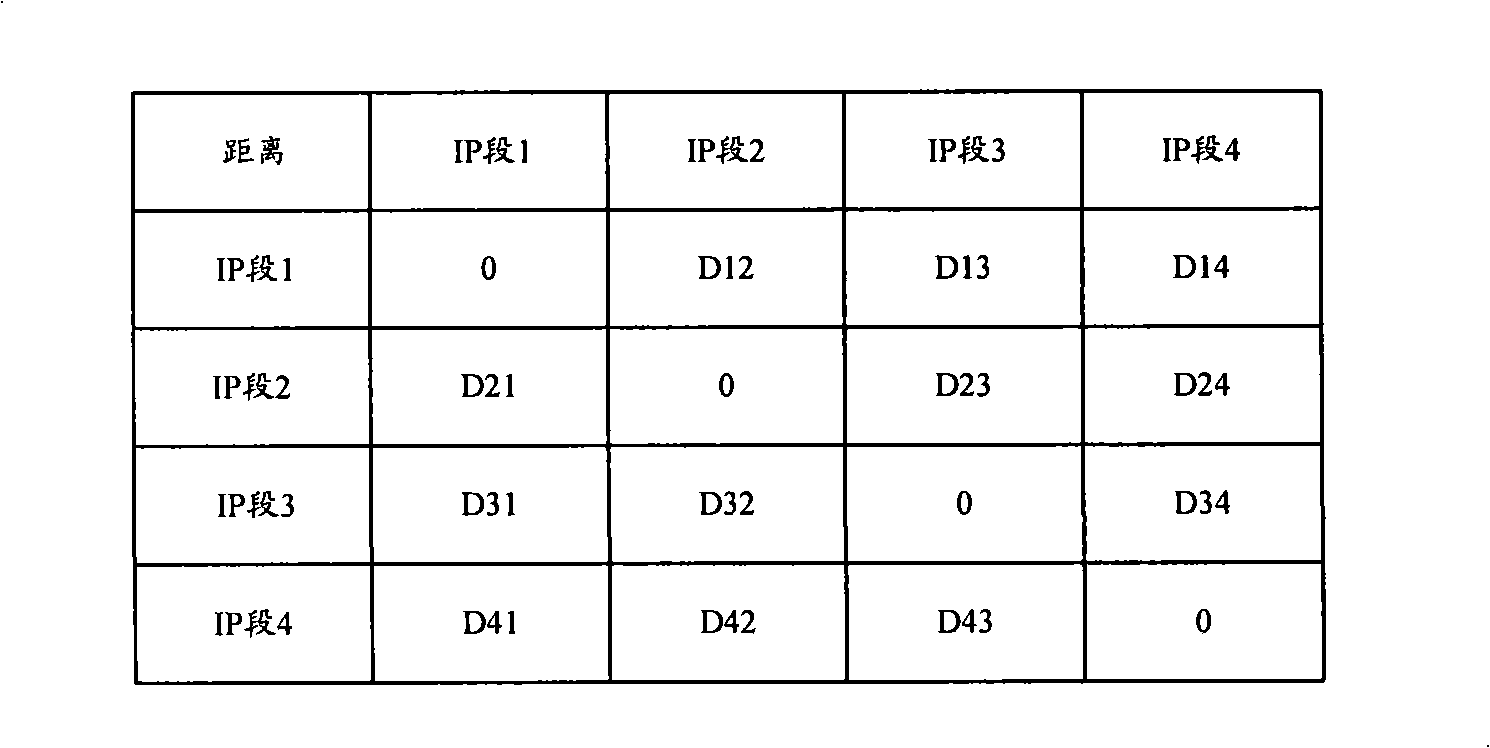

Source node selection method

Inactive · CN101345628A · Reduce start-up delay · Reduce in quantity · Data switching networks · Traffic volume · Traffic capacity

The invention discloses a source node selection method, comprising: step S102, dividing the network into a plurality of partitions and arranging, for each partition, a management server for managing related information in that partition; step S104, a request node in the network transmitting a request to the management server in its partition to obtain data; step S106, the management server of the partition finding the source node that has service ability and the shortest physical network distance to the request node, and informing the request node of the found source node; and step S108, the request node transmitting the request to the source node and obtaining the data. According to the invention, start-up delay is shortened, the amount of detection information is reduced as much as possible, and cross-regional traffic is reduced, thereby improving network utilization.

Owner:韦德宗
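
Step S106 in the abstract above (pick the source node that can still serve and is physically closest) reduces to a filtered minimum. The sketch below assumes a hypothetical `SourceNode` record in which distance is a hop count and service ability is a count of free upload slots; neither measure is specified by the abstract.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SourceNode:
    name: str
    distance: int     # assumed metric: hops between this node and the request node
    free_slots: int   # assumed metric: remaining service capacity


def select_source(candidates: List[SourceNode]) -> Optional[SourceNode]:
    """Return the nearest candidate that still has service ability, if any."""
    able = [node for node in candidates if node.free_slots > 0]
    if not able:
        return None
    return min(able, key=lambda node: node.distance)
```

Because the management server answers from information it already holds for its partition, the request node does not have to probe candidates itself.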

Multi-mode hybrid transmission and shift control method for a multi-mode hybrid transmission

Inactive · US8287427B2 · Capability minimum · Minimum delay · Hybrid vehicles · Digital data processing details · Minimum time · Engineering

A multi-mode, electrically variable, hybrid transmission and improved shift control methods for controlling the same are provided herein. The hybrid transmission configuration and shift control methodology presented herein allow for shifting between different EVT modes when the engine is off, while maintaining propulsion capability and minimum time delay for engine autostart. The shift control maneuver is able to maintain zero engine speed while producing continuous output torque throughout the shift by eliminating transition through a fixed gear mode or neutral state. Optional oncoming clutch pre-fill strategies and mid-point abort logic minimize the time to complete shift, and reduce the engine start delay if an intermittent autostart operation is initiated.

Owner:GM GLOBAL TECH OPERATIONS LLC

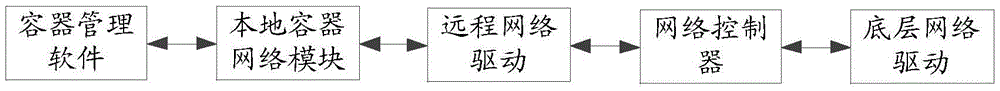

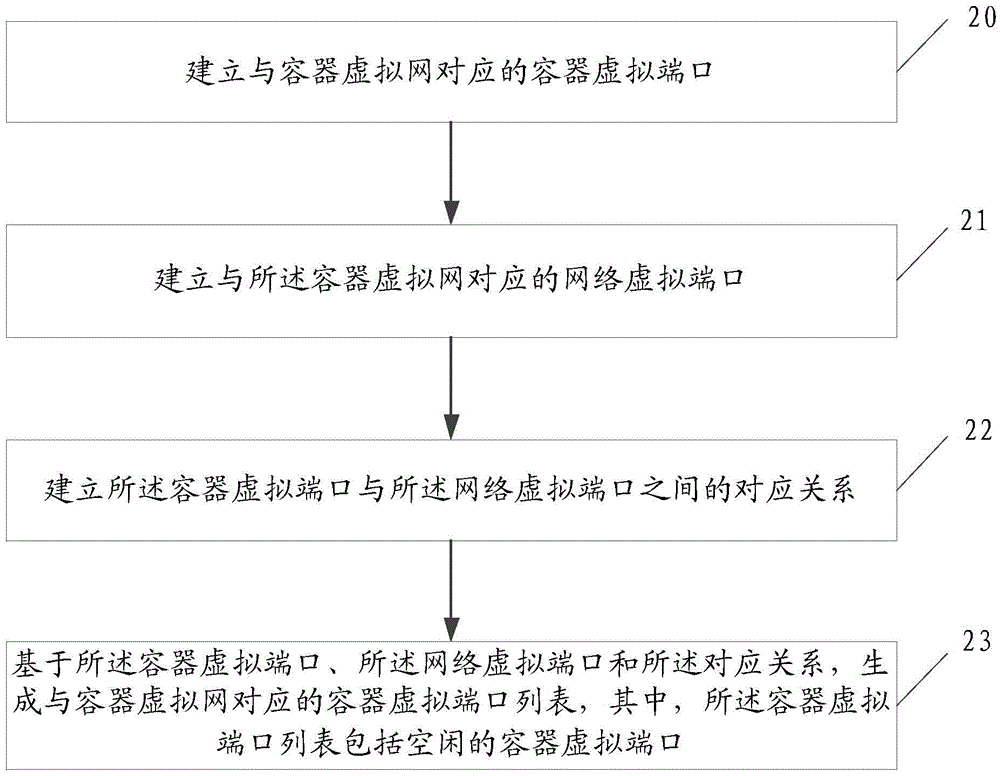

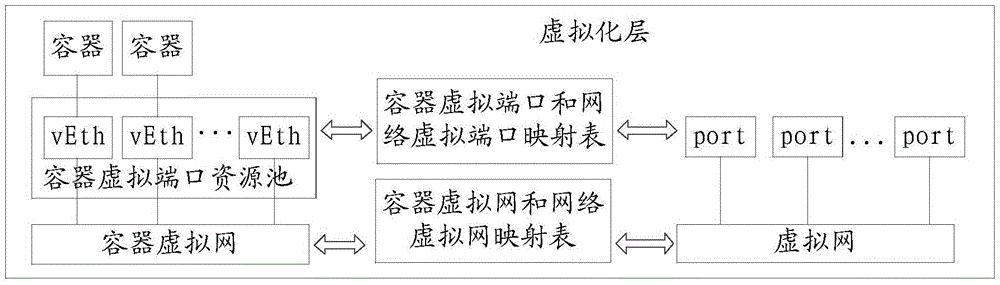

Resource pool management method, container creation method and electronic equipment

Active · CN105630607A · Fast startup · Avoid operating procedures · Resource allocation · Resource pool · Electric equipment

The invention discloses a resource pool management method, a container creation method and electronic equipment. The resource pool management method comprises the following steps: establishing a container virtual port corresponding to a container virtual network; establishing a network virtual port corresponding to the container virtual network; establishing a corresponding relationship between the container virtual port and the network virtual port; and generating a container virtual port list corresponding to the container virtual network on the basis of the container virtual port, the network virtual port and the corresponding relationship, wherein the container virtual port list comprises an unoccupied container virtual port.

Owner:LENOVO (BEIJING) LTD
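
The port list in the abstract above can be pictured as a pool that is populated before any container asks for a port. In this sketch the class `PortPool`, the port naming scheme, and the `acquire` step are assumptions; the abstract describes only building the list, not how a container later claims an entry.

```python
from typing import Dict, List, Optional


class PortPool:
    """Pre-created container/network virtual port pairs for one virtual network."""

    def __init__(self, network: str, size: int):
        self.network = network
        # container port -> its paired network port and current occupant (None = unoccupied)
        self.ports: Dict[str, Dict[str, Optional[str]]] = {
            f"cport-{i}": {"network_port": f"nport-{i}", "container": None}
            for i in range(size)
        }

    def unoccupied(self) -> List[str]:
        return [name for name, entry in self.ports.items() if entry["container"] is None]

    def acquire(self, container_id: str) -> str:
        """Hand an already-created port to a new container (assumed usage)."""
        for name, entry in self.ports.items():
            if entry["container"] is None:
                entry["container"] = container_id
                return name
        raise RuntimeError("no unoccupied container virtual port")
```

Since the ports and their pairing exist ahead of time, container creation under this model only marks an entry as occupied instead of creating network plumbing on the critical path.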

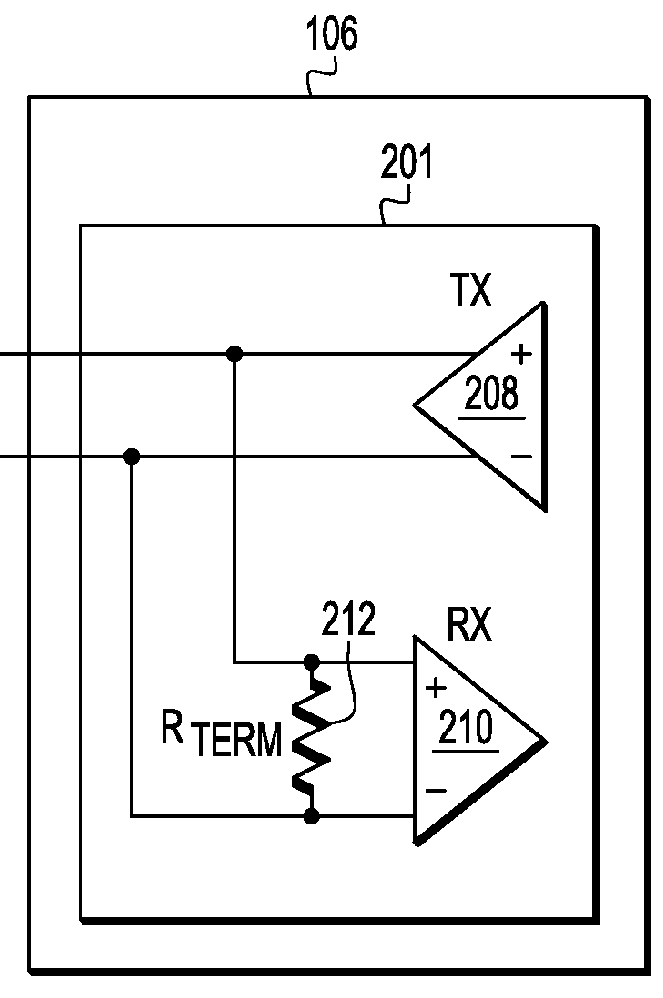

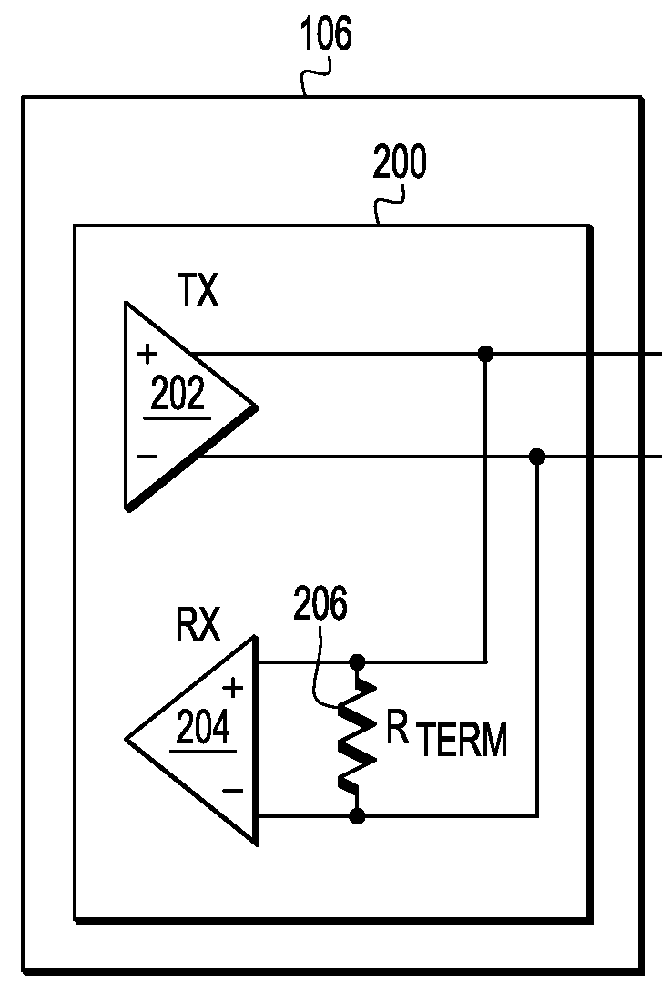

LVDS with idle state

Active · US9362915B1 · Reduce start-up delay · Shorten the time · Electric pulse generator · Baseband systems · Power flow · Differential signaling

A low voltage differential signaling generating circuit, which comprises a current source, a pair of output nodes for providing a differential signal by virtue of a voltage difference therebetween, first and second differential switch circuitries and a bypass circuitry. The first differential switch circuitry selectively connects the current source to the first output node based on a control signal to cause a current flow from the first output node to the second one. The second differential switch circuitry selectively connects the current source to the second output node based on the control signal to cause a current flow from the second output node to the first one. The bypass circuitry is arranged in parallel to the first and second differential switch circuitries and is selectively switched based on an idle mode signal to prevent a current between the output nodes.

Owner:NXP USA INC

Centrally managed unified shared virtual address space

Active · US9892058B2 · Reduce processing load · Reduce start-up delay · Memory architecture accessing/allocation · Energy efficient computing · Work task · Processor register

Systems, apparatuses, and methods for managing a unified shared virtual address space. A host may execute system software and manage a plurality of nodes coupled to the host. The host may send work tasks to the nodes, and for each node, the host may externally manage the node's view of the system's virtual address space. Each node may have a central processing unit (CPU) style memory management unit (MMU) with an internal translation lookaside buffer (TLB). In one embodiment, the host may be coupled to a given node via an input / output memory management unit (IOMMU) interface, where the IOMMU frontend interface shares the TLB with the given node's MMU. In another embodiment, the host may control the given node's view of virtual address space via memory-mapped control registers.

Owner:ADVANCED MICRO DEVICES INC

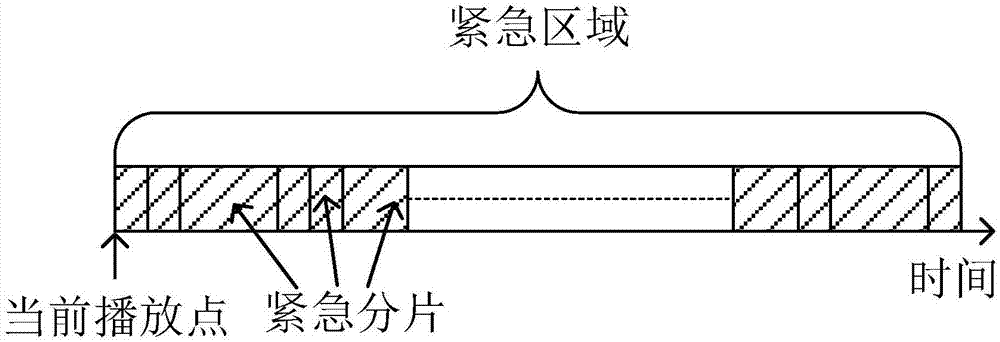

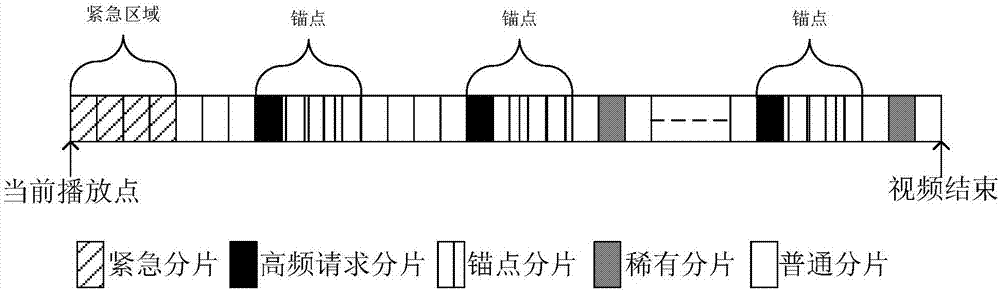

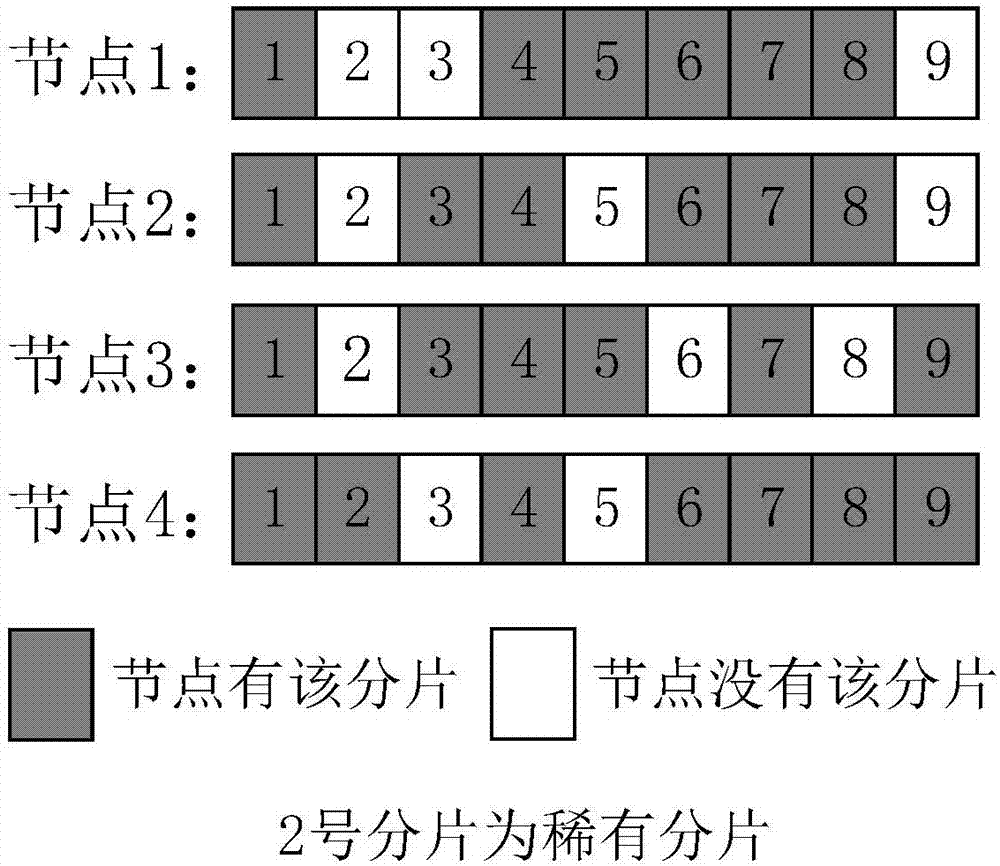

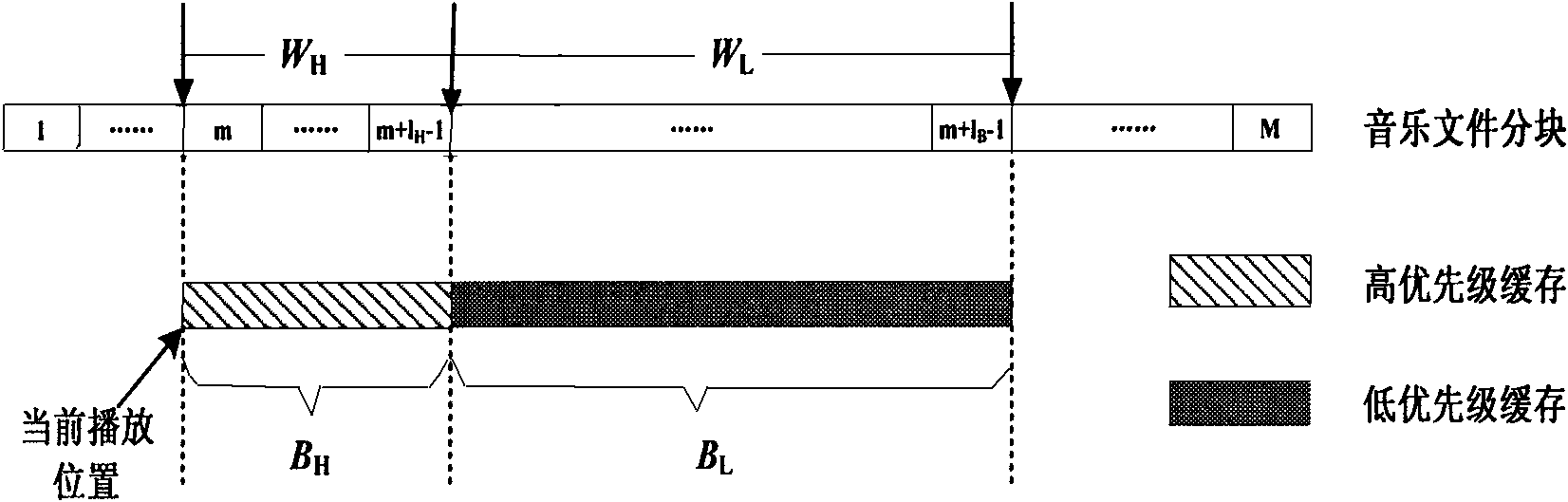

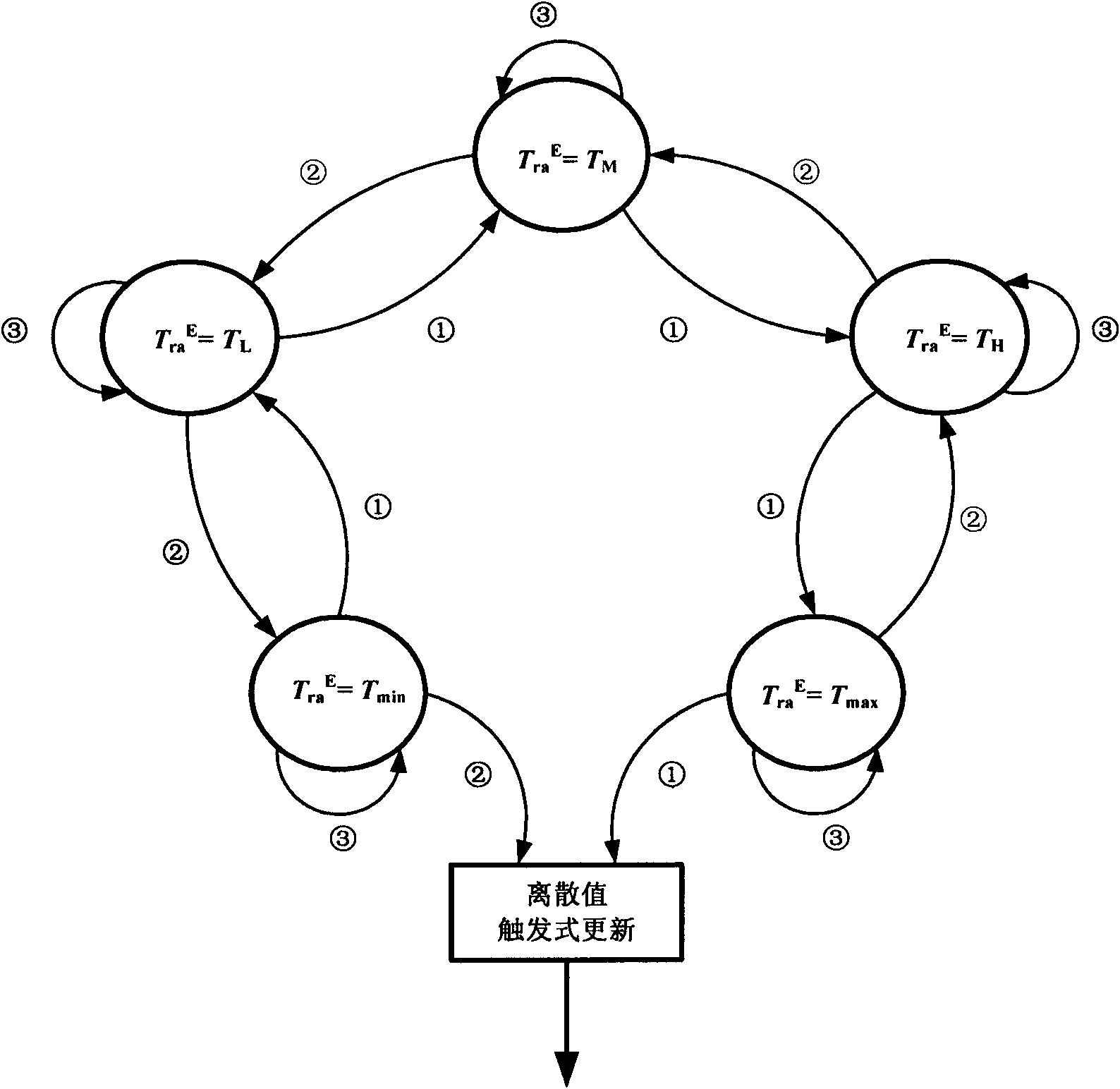

P2P data scheduling method based on feature priorities

Active · CN107396207A · Guaranteed fluency · Avoid stuttering · Selective content distribution · Theoretical computer science · Data scheduling

The invention discloses a P2P data scheduling method based on feature priorities, through which the problem of the traditional fragment scheduling strategy that only some types of fragments are given attention while other types of fragments are ignored is solved. The method is characterized in that the fragments are divided into four types, i.e., emergent fragments, anchor fragments, rare fragments and ordinary fragments, according to fragment features; anchors are set according to request frequencies of the video fragments, instead of in a manner that a video is simply equally divided in the traditional anchor strategy; and downloading priorities of all the types of fragments are set from high to low, and downloading of the fragments with a lower priority is started upon completion of downloading of the fragments with a higher priority, until all the four types of fragments are downloaded. The method adopting the fragment scheduling scheme has the advantages that good user operation experience is provided while high video playing smoothness is ensured, and the number of copies of the rare fragments in a system is increased.

Owner:NANJING NANYOU INST OF INFORMATION TECHNOVATION CO LTD
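
The type-based ordering in the abstract above can be sketched as a stable sort over fragment types. The priority order used here (emergent, anchor, rare, ordinary) follows the order in which the abstract lists the types and is an assumption, as are the names `PRIORITY` and `schedule`.

```python
from typing import List, Tuple

# Assumed priority order, highest first (lower number = downloaded earlier).
PRIORITY = {"emergent": 0, "anchor": 1, "rare": 2, "ordinary": 3}


def schedule(fragments: List[Tuple[int, str]]) -> List[int]:
    """Return fragment ids in download order.

    fragments is a list of (fragment_id, fragment_type). sorted() is stable,
    so fragments of the same type keep their original relative order.
    """
    ordered = sorted(fragments, key=lambda item: PRIORITY[item[1]])
    return [fragment_id for fragment_id, _ in ordered]
```

For example, `schedule([(7, "ordinary"), (3, "rare"), (1, "emergent"), (5, "anchor"), (2, "emergent")])` yields the two emergent fragments first, then the anchor, rare, and ordinary ones.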

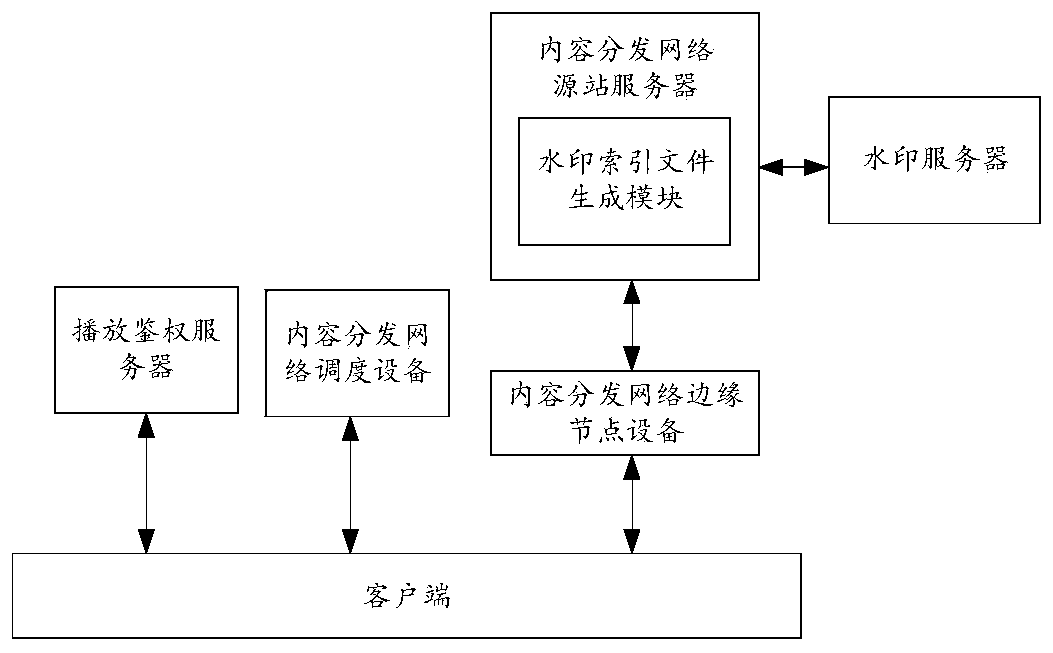

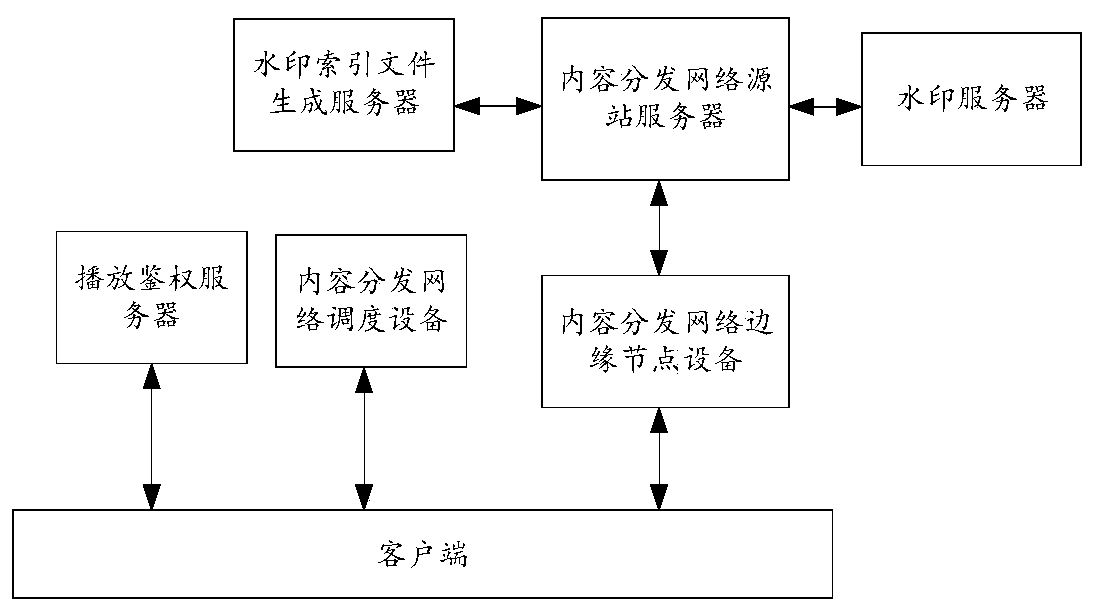

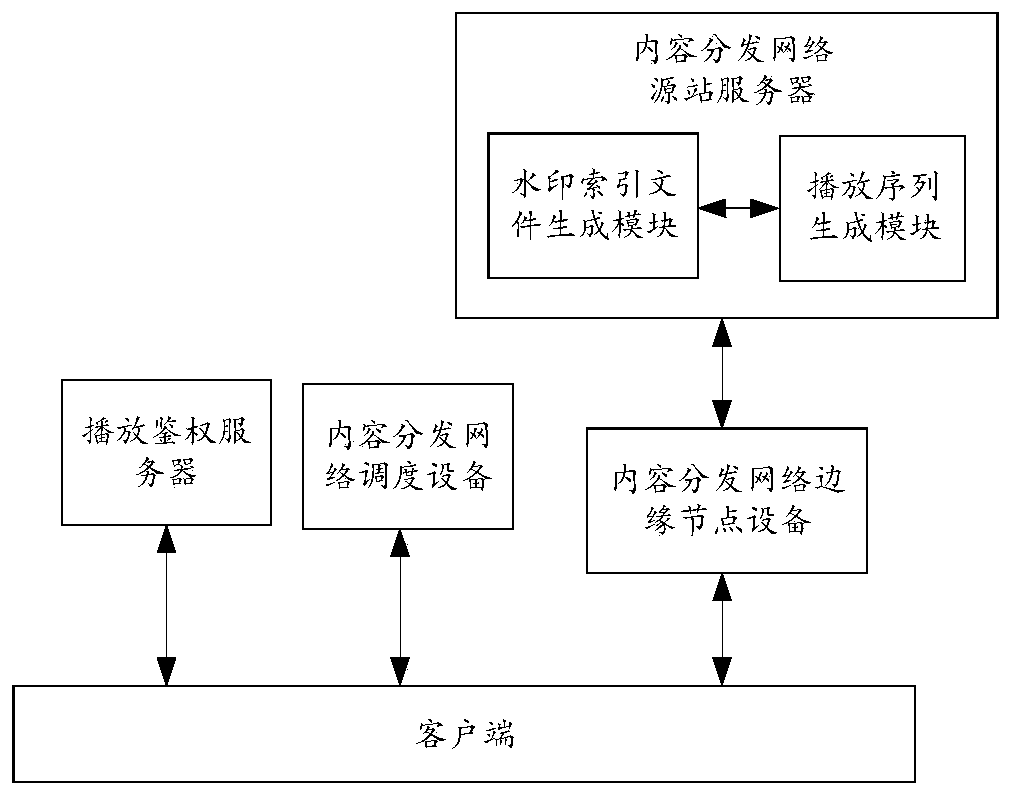

Video playing method and related equipment

Active · CN110072122A · Reduce data volume · Reduce start-up delay · Selective content distribution · Start time · Time delays

The embodiment of the invention discloses a video playing method and related equipment, which can reduce the play-start delay of a video when playing a video with a watermark. The method comprises: after a CDN source station server receives a first request sent by a client through CDN edge node equipment, a play-start index file is generated in real time, the play-start index file comprising a download address corresponding to a play-start playing sequence, the play-start playing sequence being a video clip at the beginning of the video or a sequence corresponding to the video clip after the video skips the title; the CDN source station server then sends the play-start index file to the client through the CDN edge node equipment. Because the play-start index file contains a small amount of data, its generation time and transmission time are short, so the client can obtain the play-start index file in a short time and download video content according to the download address corresponding to the play-start playing sequence to start playing, thereby reducing the video play-start delay.

Owner:PETAL CLOUD TECH CO LTD

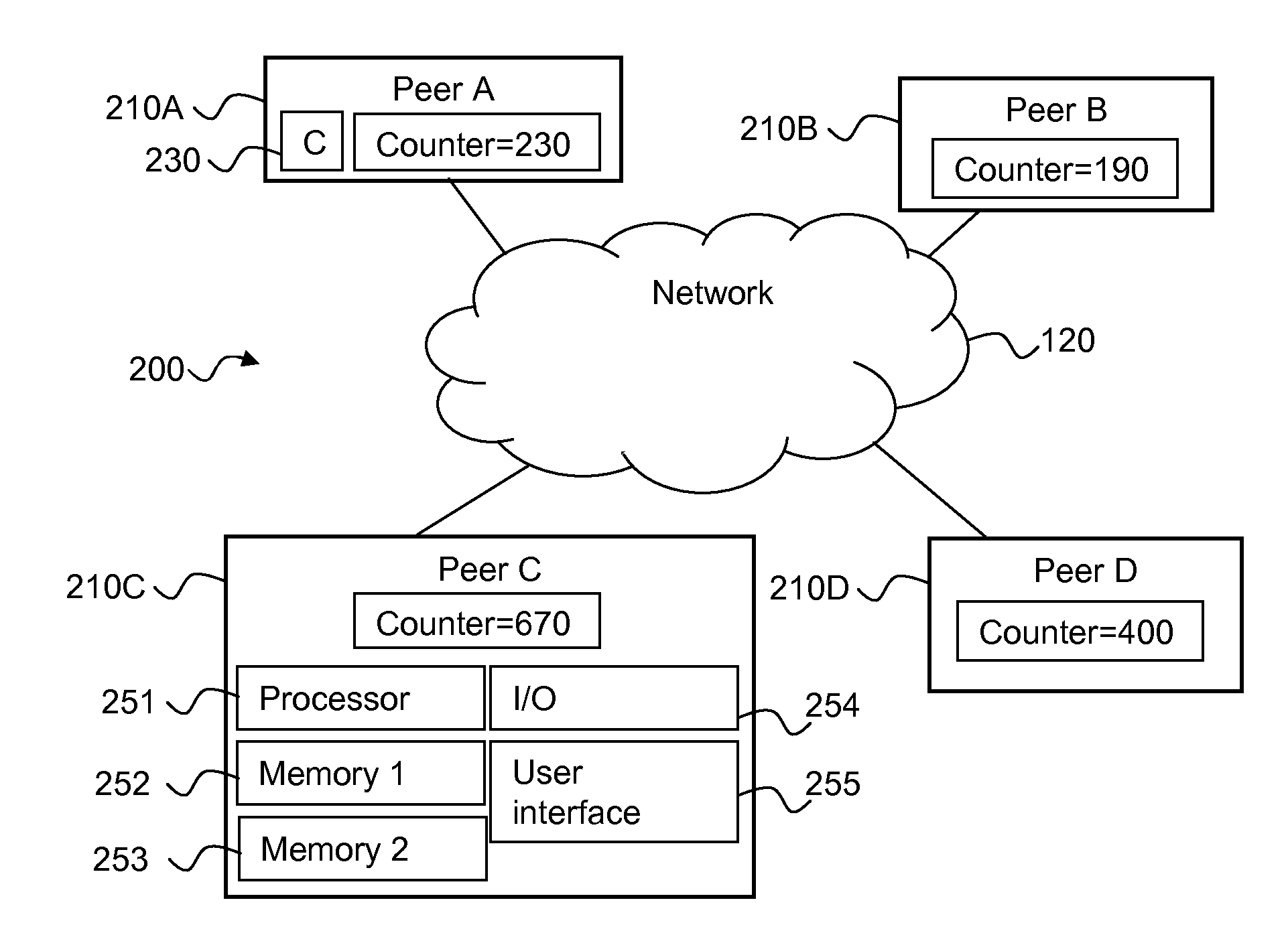

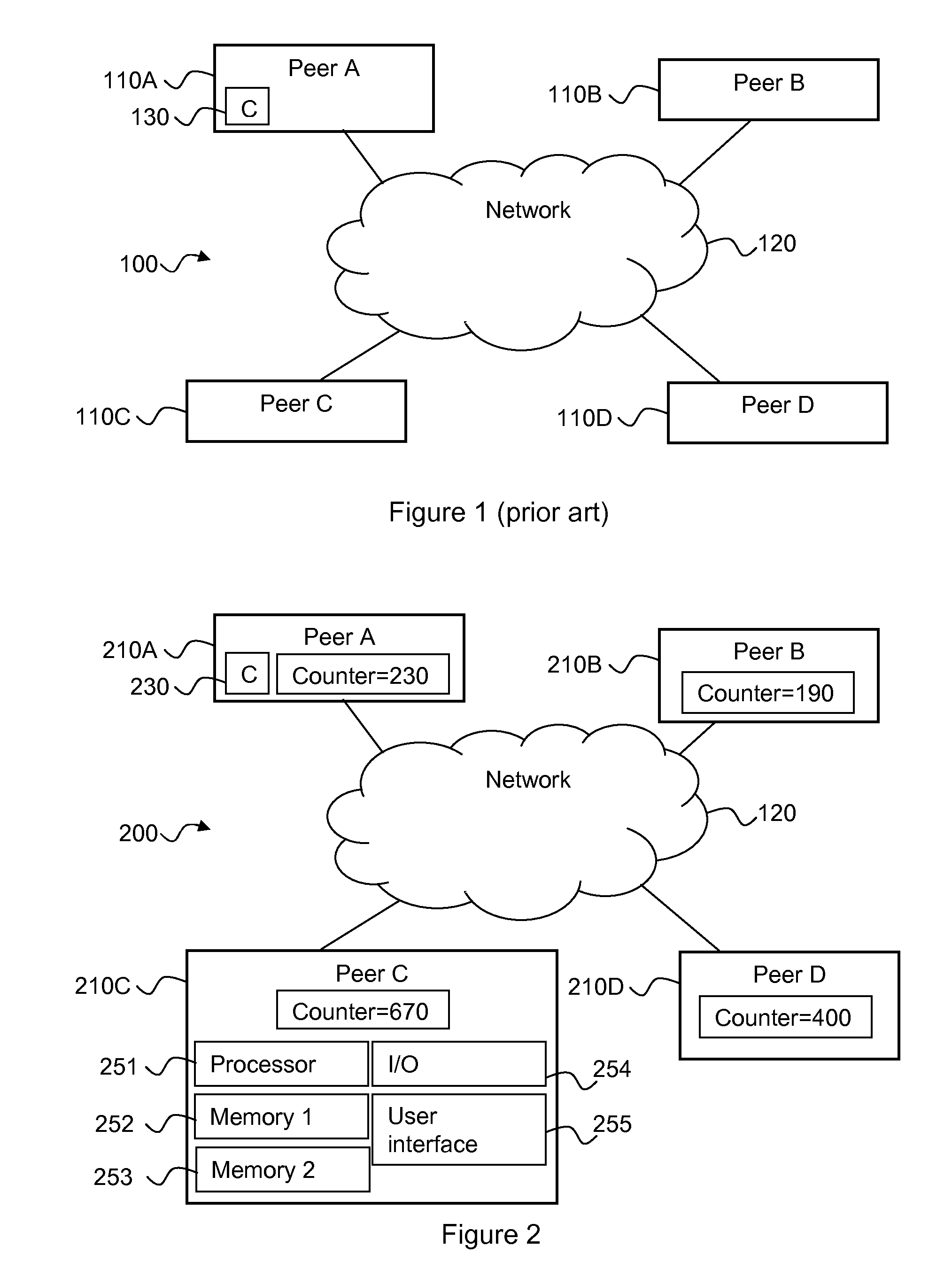

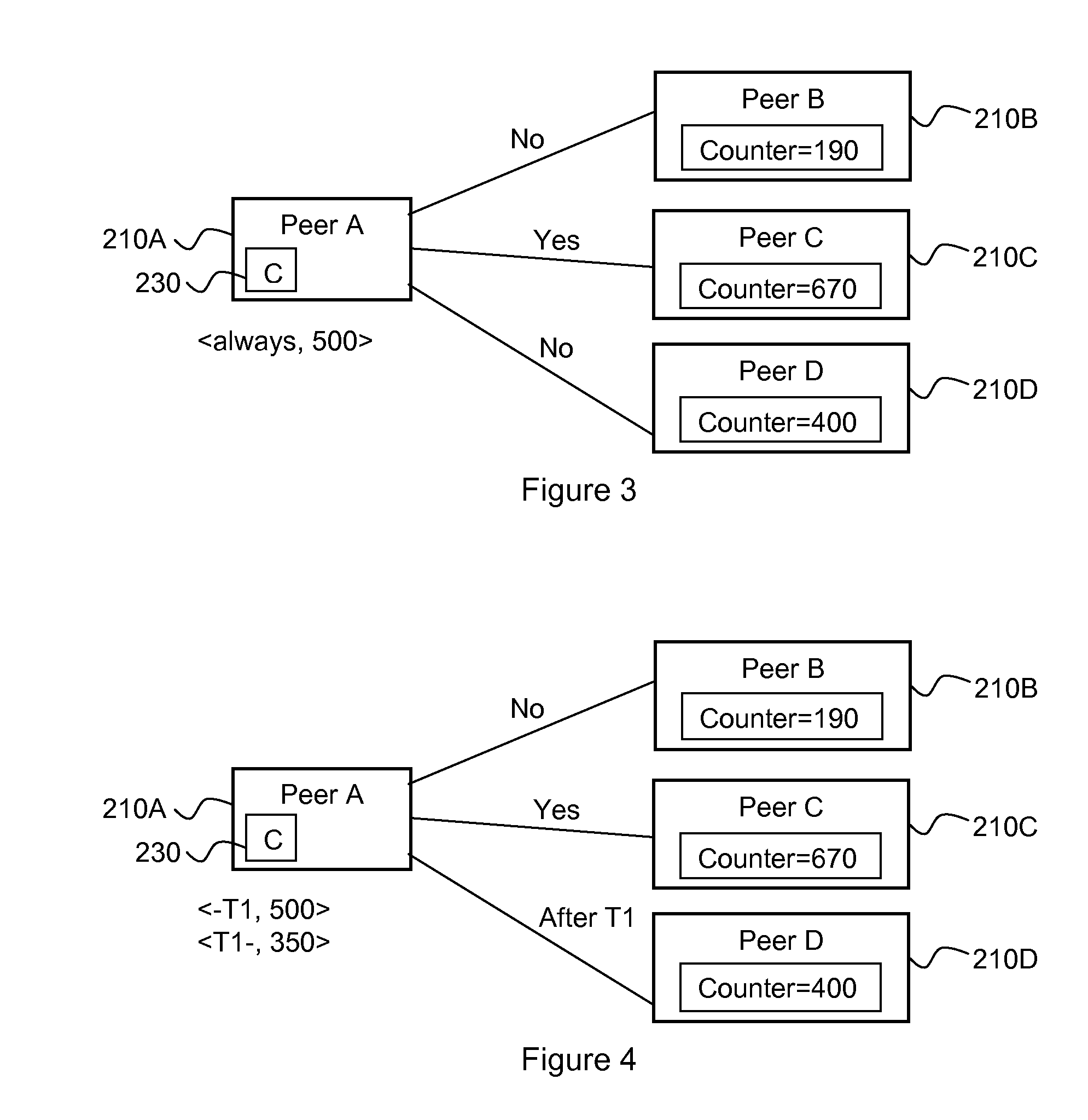

System, sharing node, server, and method for content distribution

Inactive · US20110093561A1 · Increase opportunities · Effect bandwidth · Digital data processing details · Multiple digital computer combinations · Content distribution · Distributed computing

A system for distribution of a content item in a network, particularly a peer-to-peer network. A requesting node sends a request for the content item. An access requirement value for the content item is compared to a counter value for the requesting node to determine if the requesting node may download the content item from a sharing node. The counter value is advantageously linked to the requesting node's habit of sharing content items. The access requirement value, which preferably is not only linked to the size of the content item, is modified for at least one content item in the network, either following a time rule or when the content is downloaded. In this way it can be ensured that initial downloaders are likely to share the content item and that the content then gets more accessible to other nodes. Also provided are a sharing node, a server and a method.

Owner:THOMSON LICENSING SA

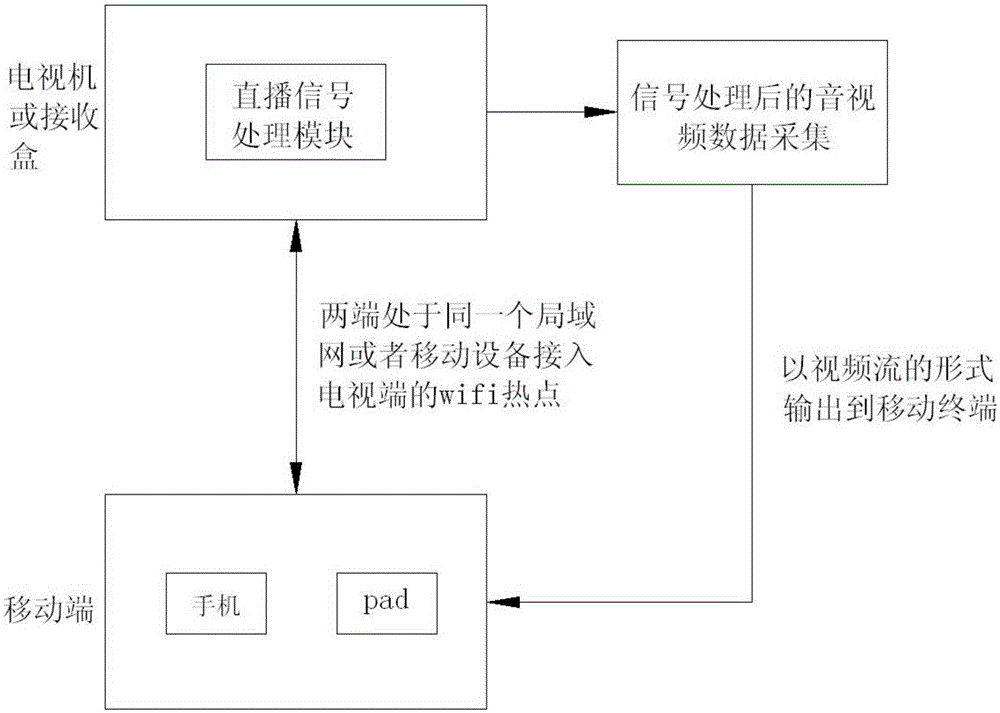

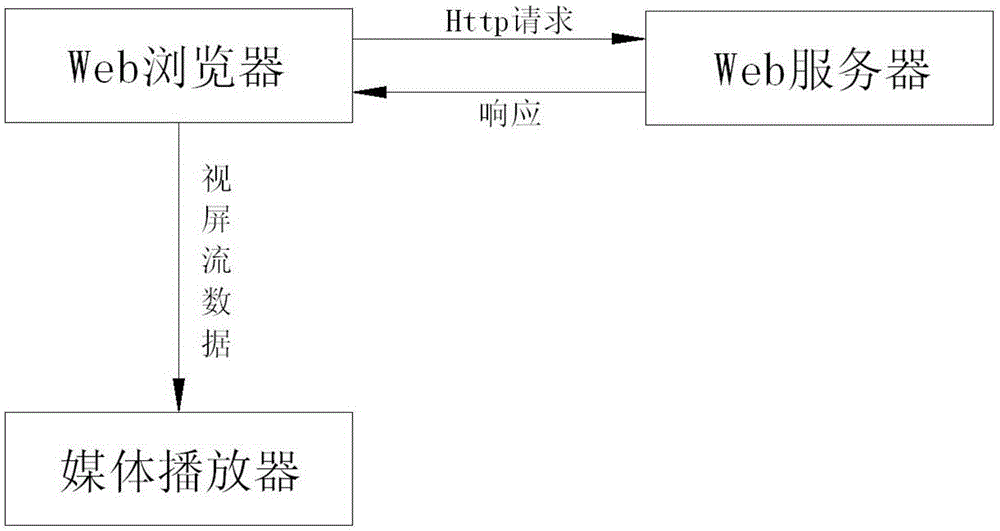

Content distribution method based on live broadcast signal

Inactive · CN105307008A · Reduced start-up delay · Reduced system cache capacity requirements · Selective content distribution · Content distribution · Local area network

The invention belongs to the field of signal transmission, and particularly relates to a novel content distribution method based on a live broadcast signal. The content distribution method comprises the following steps: a. after receiving the live broadcast signal, a TV set (or a receiving box) collects a corresponding analog or digital signal through a live broadcast signal processing module; b. a local server edits collection audio and video data to a video stream form; c. a mobile terminal and the TV set (or the receiving box) are kept in the same local area network or a wifi hotspot of the TV set (or the receiving box); d. the local server distributes video stream data to the mobile terminal through wireless or the local area network; and e. the mobile terminal obtains the video stream data through http, a media player on the mobile terminal decodes the video stream data, and plays the video stream data at least. The content distribution method provided by the invention has the advantages of distributing the live broadcast signal and taking the viewing demands of multiple users in a family into account.

Owner:CHUNGHSIN TECH GRP

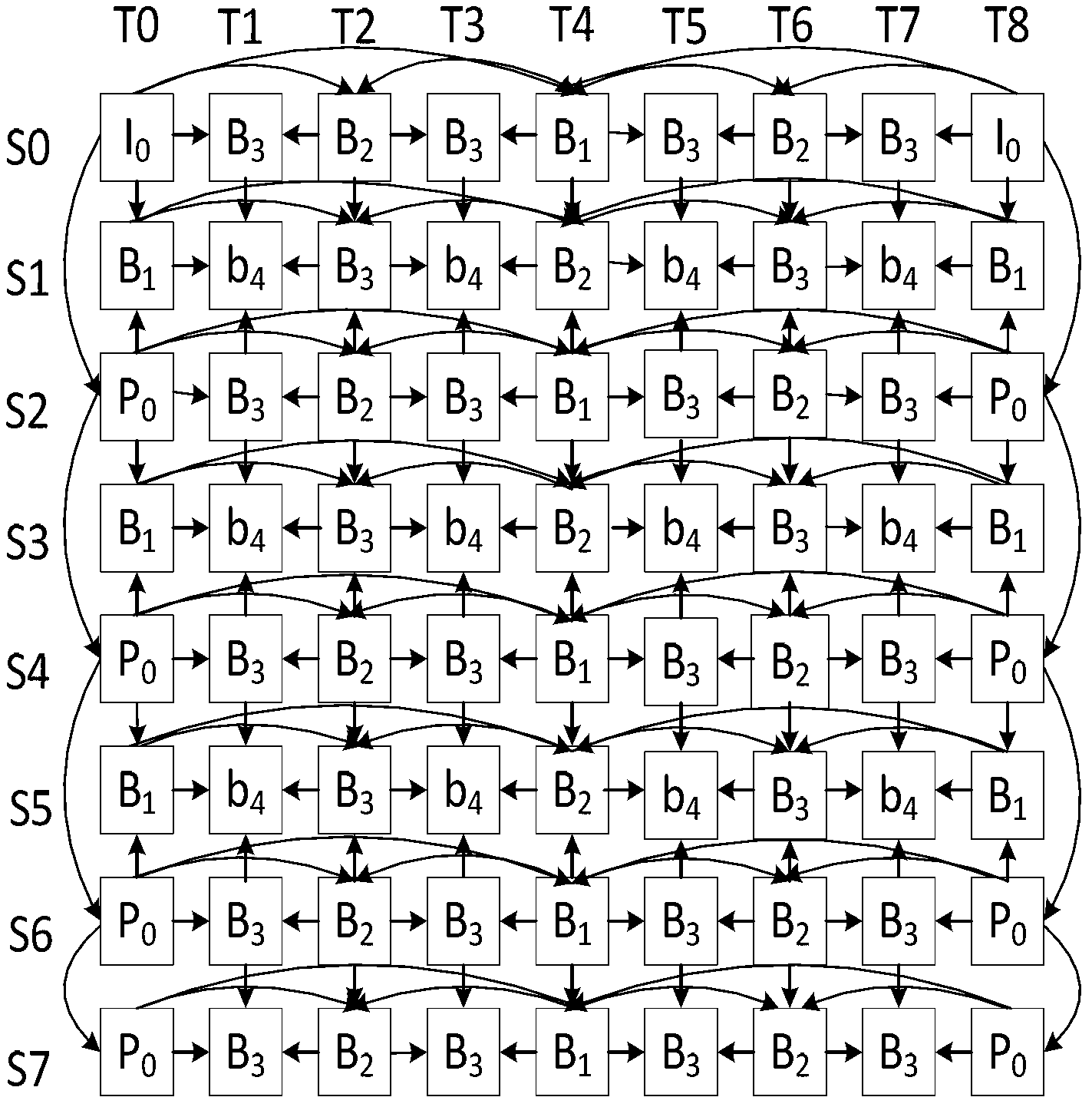

Video-frequency band scheduling and transmission method used for peer to peer (P2P) three-dimensional streaming medium system

Inactive | CN103260090A | Improve playback efficiency | Achieve conversion | Selective content distribution | Stereoscopic systems | Distributed computing | Lower priority

The invention discloses a video-frequency band scheduling and transmission method used for a peer to peer (P2P) three-dimensional streaming media system, and belongs to the field of P2P three-dimensional streaming media. The scheduling method ranks, from high to low priority, the existing related video-frequency bands of the current video-frequency band, based on the video point and play time to which the current band belongs. The related bands are, in order: the band of the next even video point at the same play time; the band of the next play time at the same video point; and, if the current band belongs to an even video point, the bands of the upper odd video point and the lower odd video point at the same play time, with the upper odd video point given higher priority than the lower one. Related bands that are already in the video-frequency band scheduling sequence are skipped; the remaining ones are sorted from high to low priority and appended to the scheduling sequence in that order. A video-frequency band transmission method based on this scheduling method is also provided. The method can improve video play efficiency and the throughput of the P2P three-dimensional streaming media system.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
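One possible reading of the scheduling rule in the abstract above is sketched below. This is not the patented algorithm. It assumes that video points and play times are integer indices, that the "next even video point" is the smallest even index above the current one, and that the "upper" and "lower" odd video points are the indices immediately above and below an even video point. All function and parameter names are hypothetical.

```python
def related_bands(video_point, play_time, num_points, num_times):
    """Return related (video_point, play_time) pairs in assumed priority order."""
    candidates = [
        # Next even video point, same play time.
        (video_point + 2 - video_point % 2, play_time),
        # Same video point, next play time.
        (video_point, play_time + 1),
    ]
    if video_point % 2 == 0:
        # Upper odd video point first, then lower odd video point.
        candidates.append((video_point + 1, play_time))
        candidates.append((video_point - 1, play_time))
    # Keep only bands that actually exist in the stream.
    return [
        (v, t)
        for v, t in candidates
        if 0 <= v < num_points and 0 <= t < num_times
    ]


def extend_schedule(schedule, current, num_points, num_times):
    """Append the related bands of `current`, skipping ones already scheduled."""
    for band in related_bands(current[0], current[1], num_points, num_times):
        if band not in schedule:
            schedule.append(band)
    return schedule


schedule = [(2, 0)]
extend_schedule(schedule, (2, 0), num_points=5, num_times=3)
print(schedule)
```

With five video points and three play times, starting from band `(2, 0)` appends `(4, 0)`, `(2, 1)`, `(3, 0)` and `(1, 0)` in that order under these assumptions.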

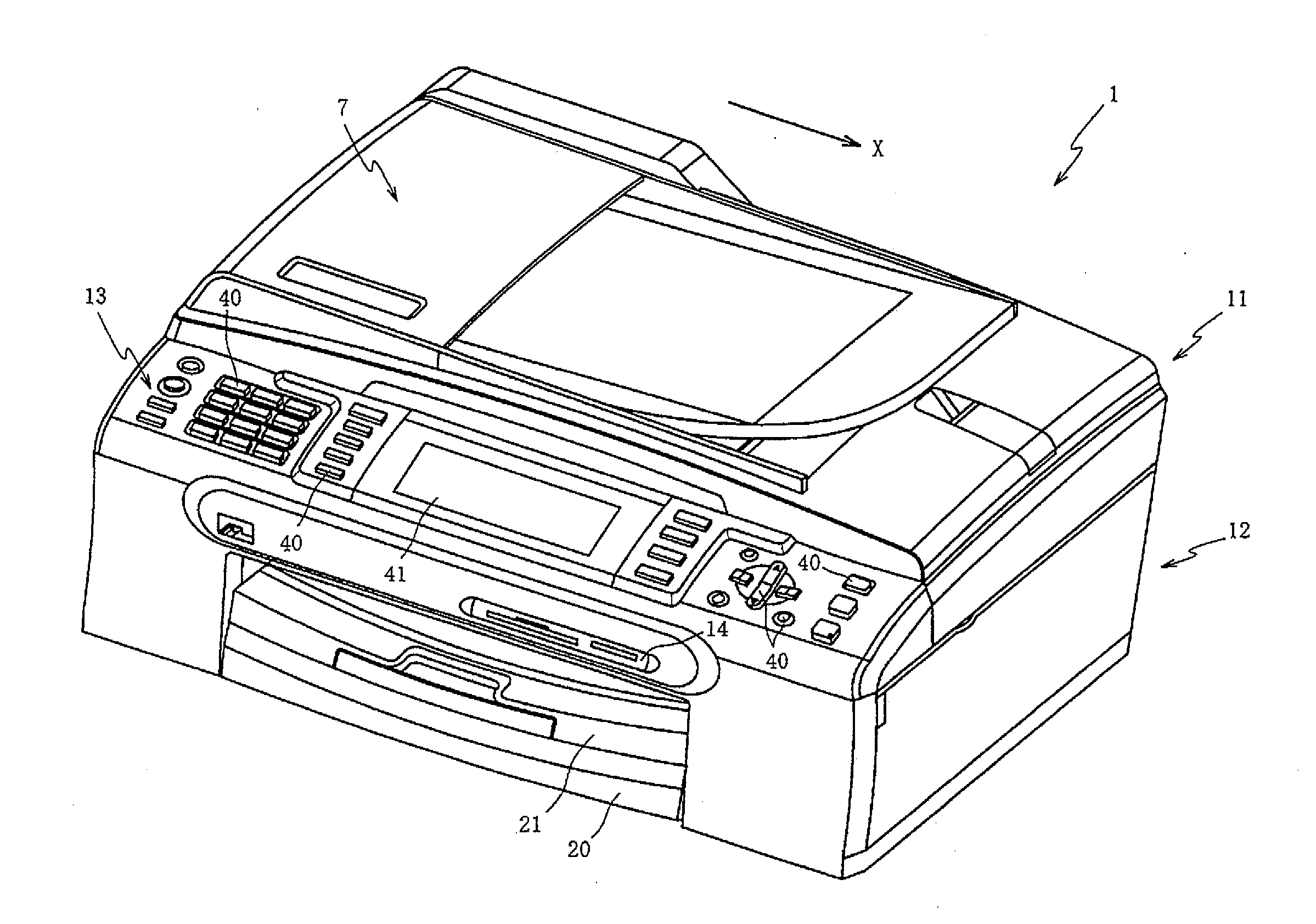

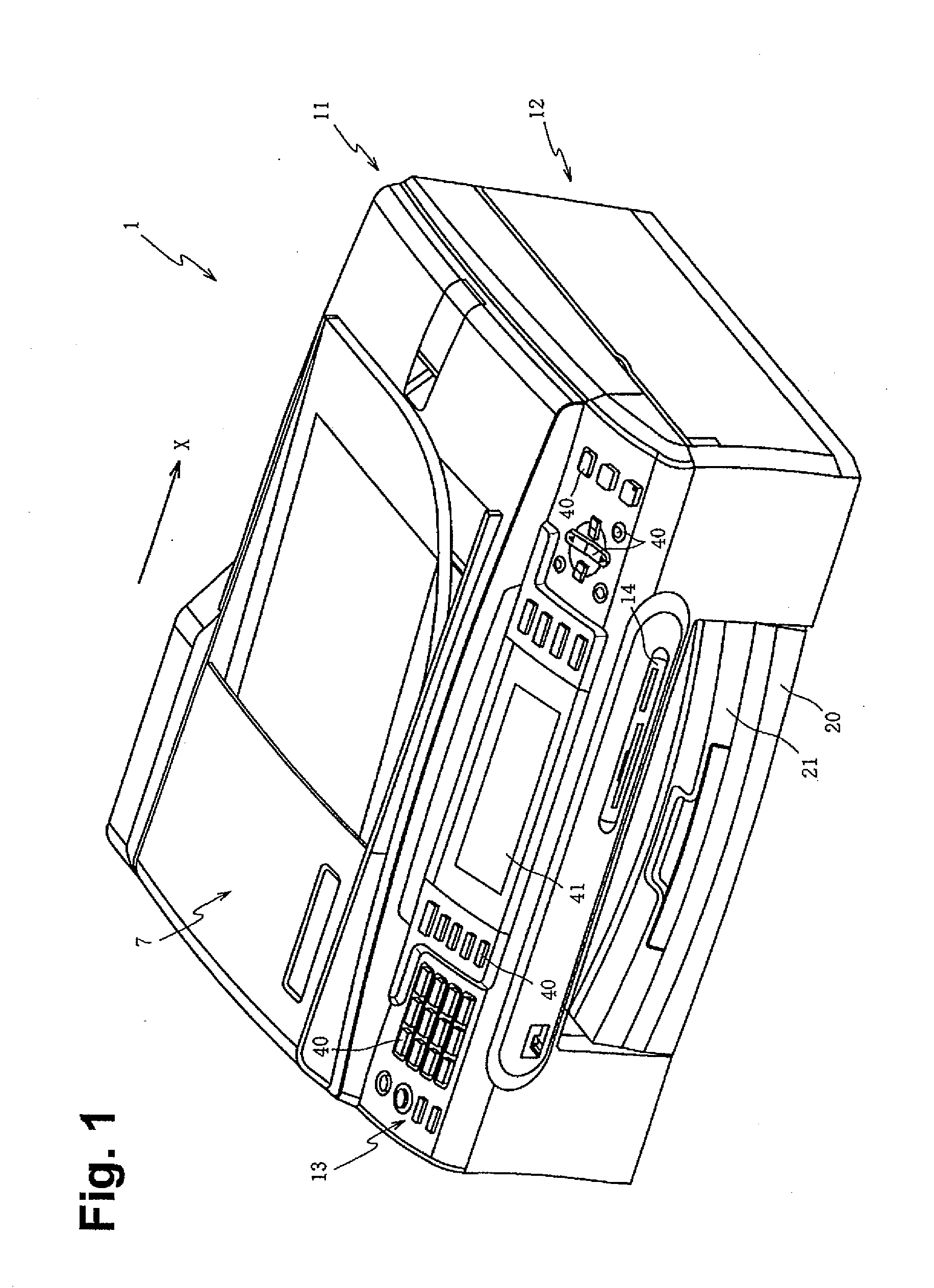

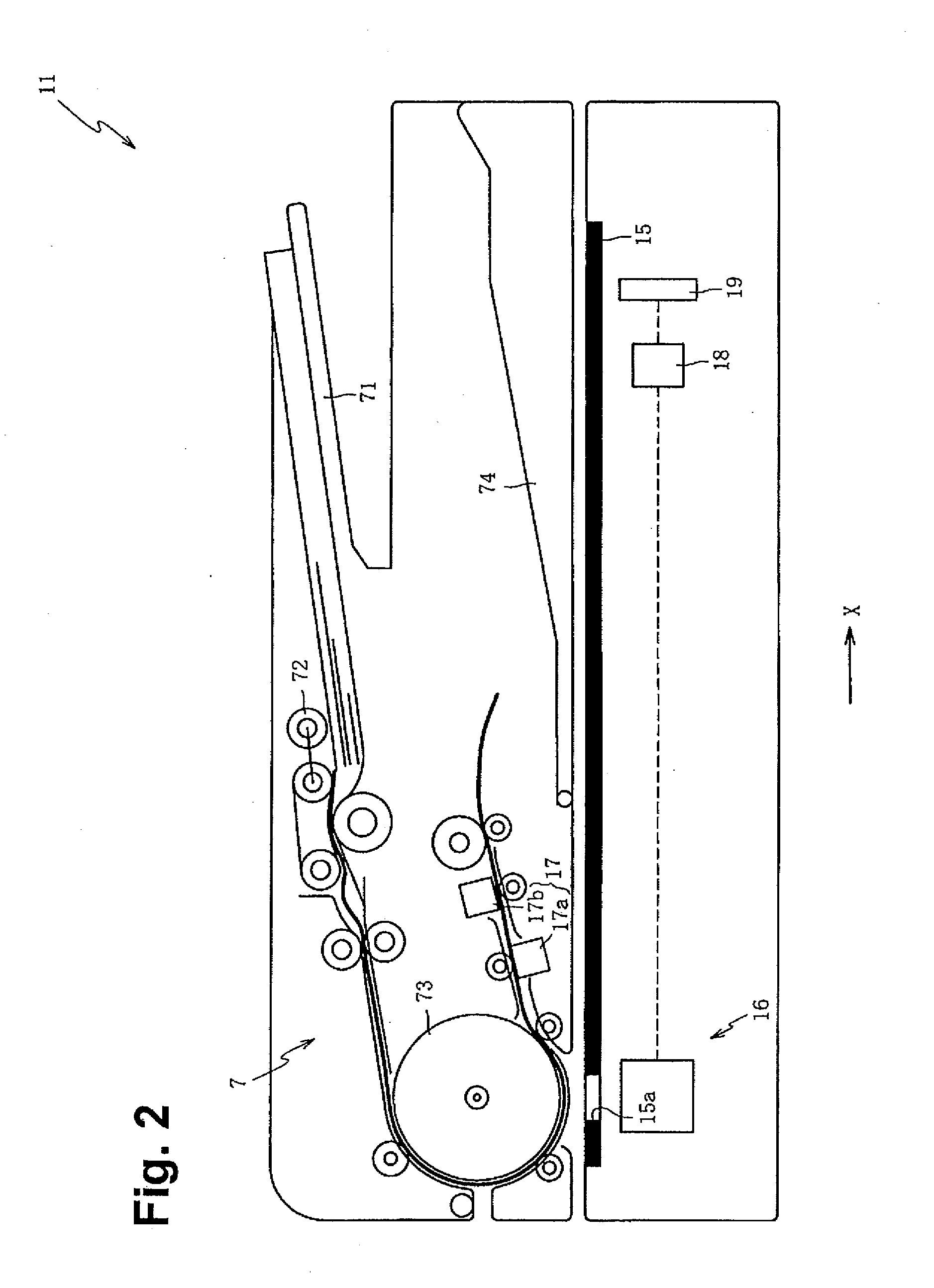

Image forming apparatus and image reading apparatus

Active | US20100220345A1 | Reduce delays | Reduce start-up delay | Digital computer details | Pictorial communication | Image formation | Paper document

Owner:BROTHER KOGYO KK

Compensation method of P2P music on-demand system

The invention belongs to the technical field of P2P network streaming media, and in particular relates to a compensation method for a P2P music on-demand system. The compensation mechanism comprises two steps: start-up compensation, applied when playback of a music data file cannot begin immediately; and in-play compensation, applied once music playback has started. The technical scheme reduces the start-up delay of music playback, ensures playback continuity, and improves the scalability of the system, thereby shortening the user's waiting time and improving the user experience.

Owner:HUAZHONG UNIV OF SCI & TECH

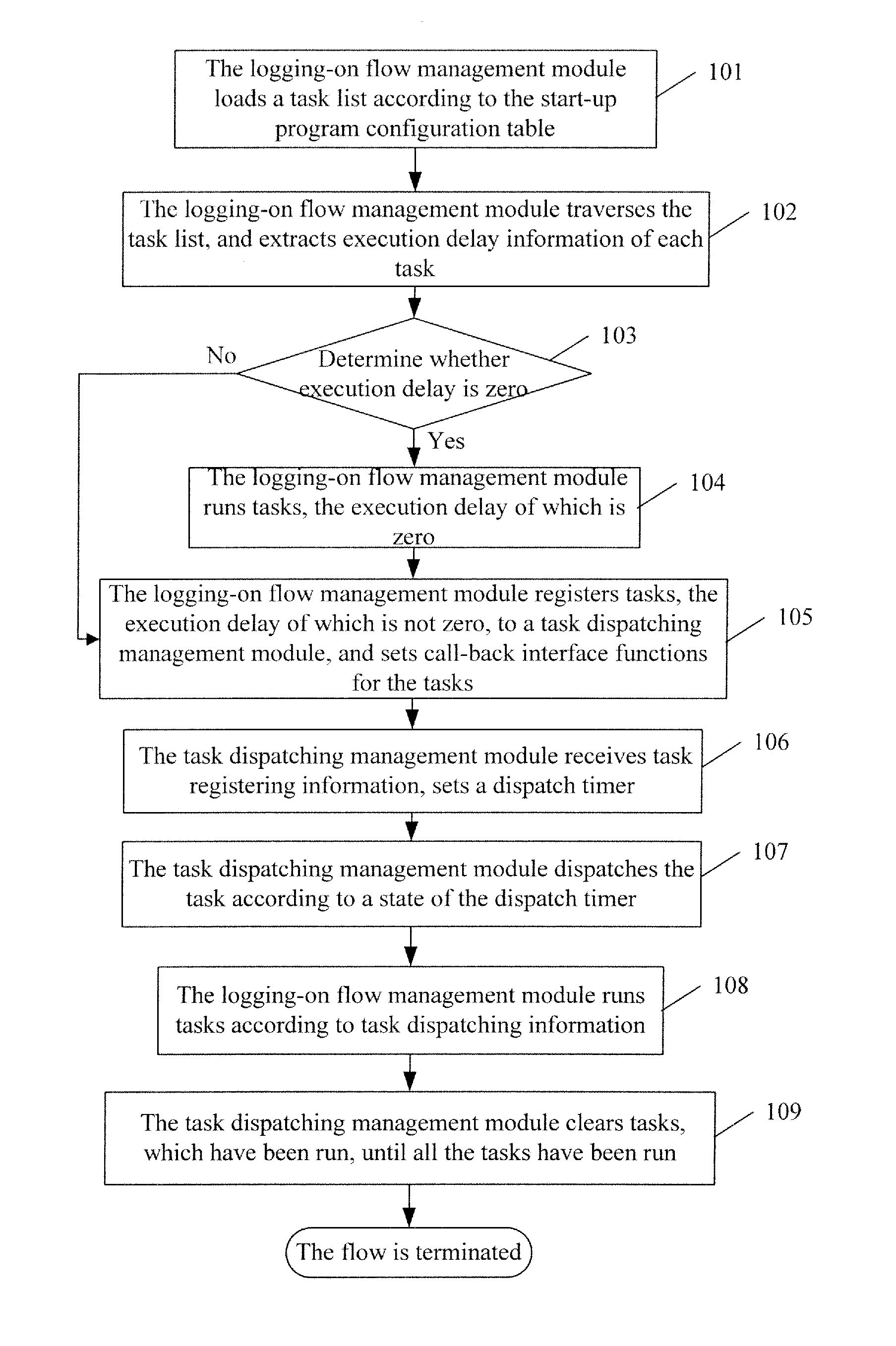

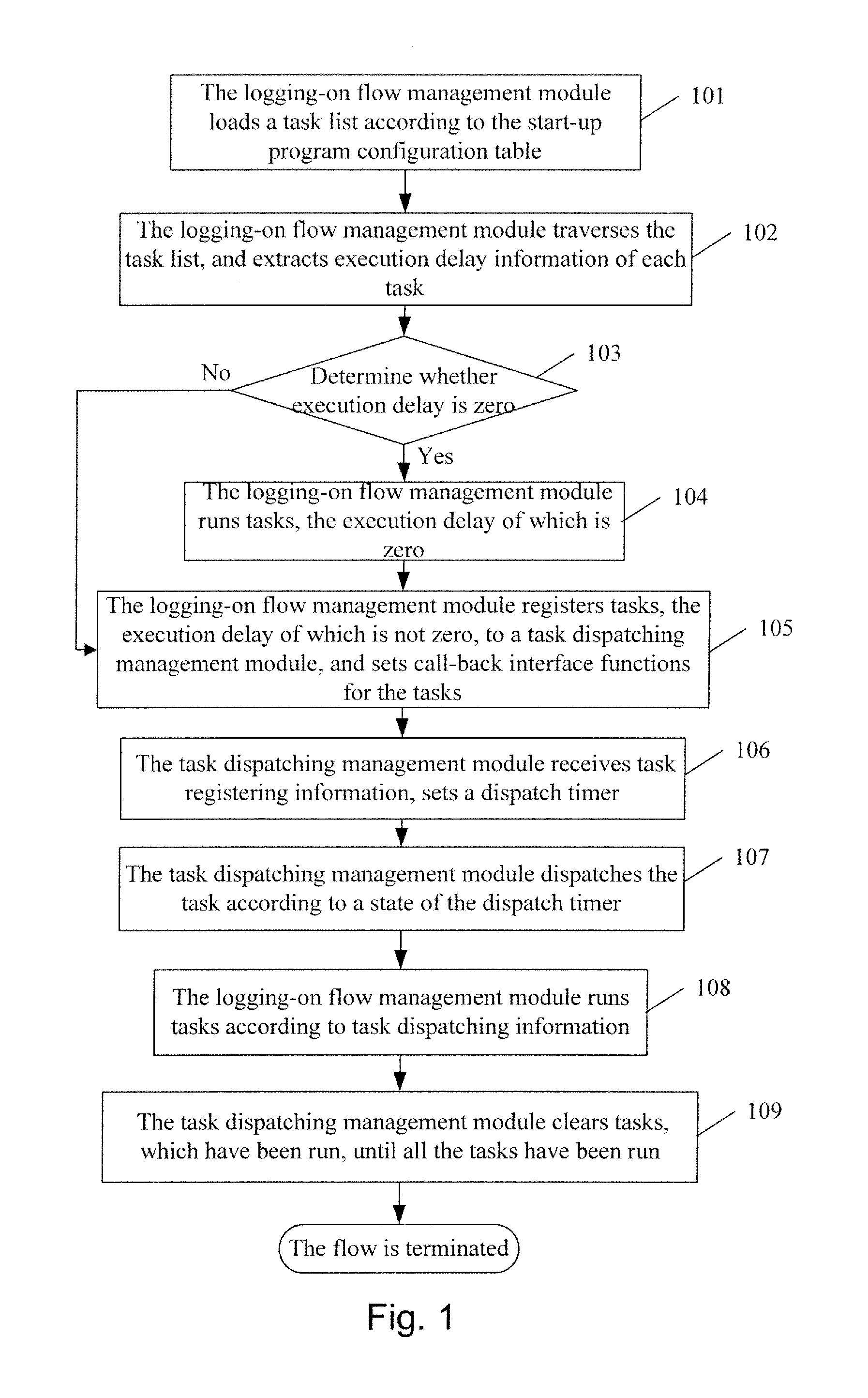

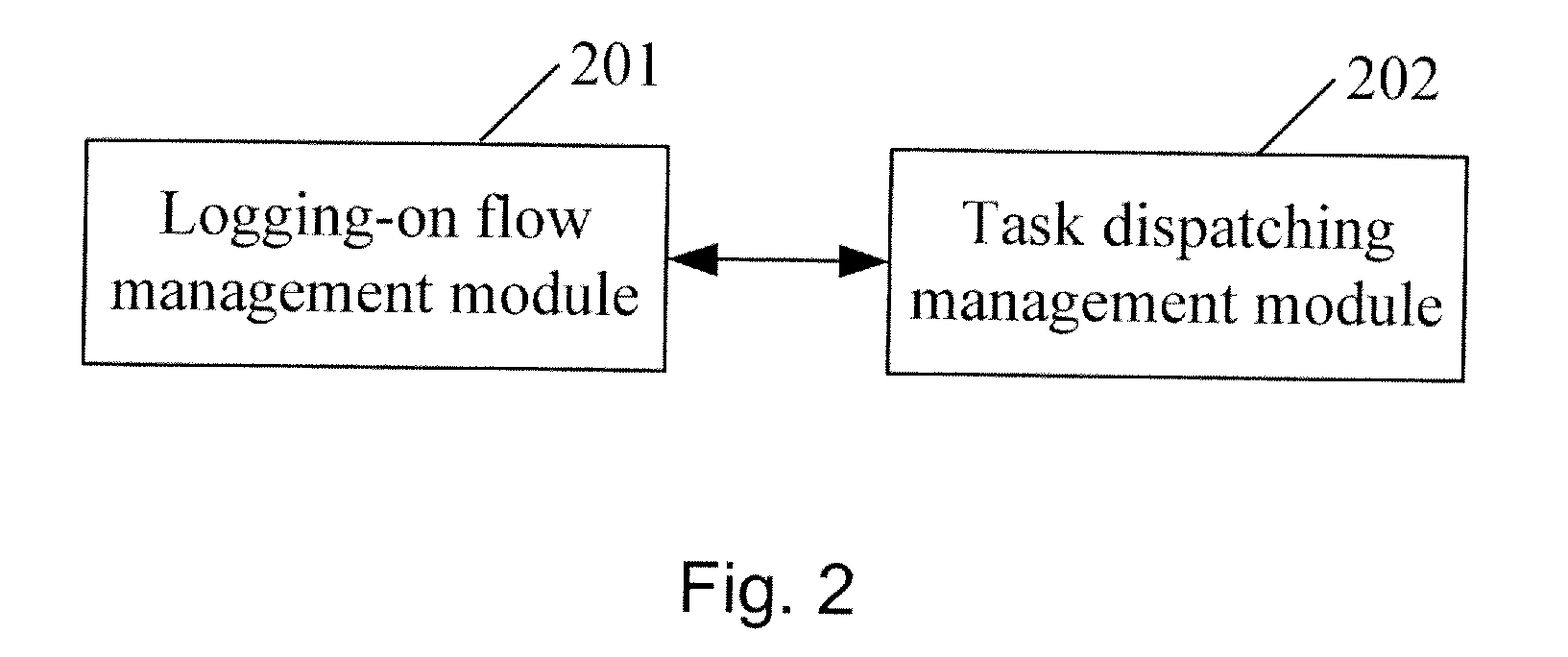

Instant messenger and method for dispatching task with instant messenger

Active | US20100269114A1 | Reduce start-up delay | Multiprogramming arrangements | Data switching networks | Message passing | Flow management

Embodiments of the present invention provide an Instant Messenger (IM) and a method for dispatching tasks by the IM. The method includes: presetting task information in a start-up program configuration table, and dispatching, by the IM, tasks in batches according to the task information in the start-up program configuration table. Preferably, the task information includes the execution delay information and priority information of the tasks. The IM includes a logging-on flow management module and a task dispatching management module. The logging-on flow management module is adapted to store the start-up program configuration table, which is configured with the task information. The task dispatching management module is adapted to dispatch the tasks in batches according to the task information in the start-up program configuration table. With embodiments of the invention, the start-up delay of the IM may be reduced.

Owner:TENCENT TECH (SHENZHEN) CO LTD
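The batched dispatch described in the abstract above might look something like the following sketch. It is an illustration under stated assumptions, not the patented design: tasks that share an execution delay are assumed to form one batch, a lower priority number is assumed to run earlier, and the task names and the `plan_batches` function are hypothetical.

```python
from collections import defaultdict
from typing import NamedTuple


class StartupTask(NamedTuple):
    name: str
    delay_s: float  # execution delay after start-up, in seconds
    priority: int   # assumed: lower number runs earlier within a batch


def plan_batches(config_table):
    """Group tasks by delay, then order each batch by priority."""
    groups = defaultdict(list)
    for task in config_table:
        groups[task.delay_s].append(task)
    return [
        (delay, [t.name for t in sorted(groups[delay], key=lambda t: t.priority)])
        for delay in sorted(groups)
    ]


# Hypothetical start-up program configuration table.
config_table = [
    StartupTask("load_contacts", 0.0, 1),
    StartupTask("check_update", 5.0, 2),
    StartupTask("show_main_window", 0.0, 0),
    StartupTask("fetch_ads", 5.0, 1),
]

for delay, names in plan_batches(config_table):
    print(f"after {delay:.0f}s: {names}")
```

Deferring non-essential tasks to later batches is what would shorten the perceived start-up delay: only the first batch has to finish before the client becomes usable.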


© 2025 PatSnap. All rights reserved.