98 results for patented "3D interaction" technology

In computing, 3D interaction is a form of human-machine interaction in which users can move and interact in 3D space. Both the human and the machine process information in which the physical position of elements in 3D space is relevant.

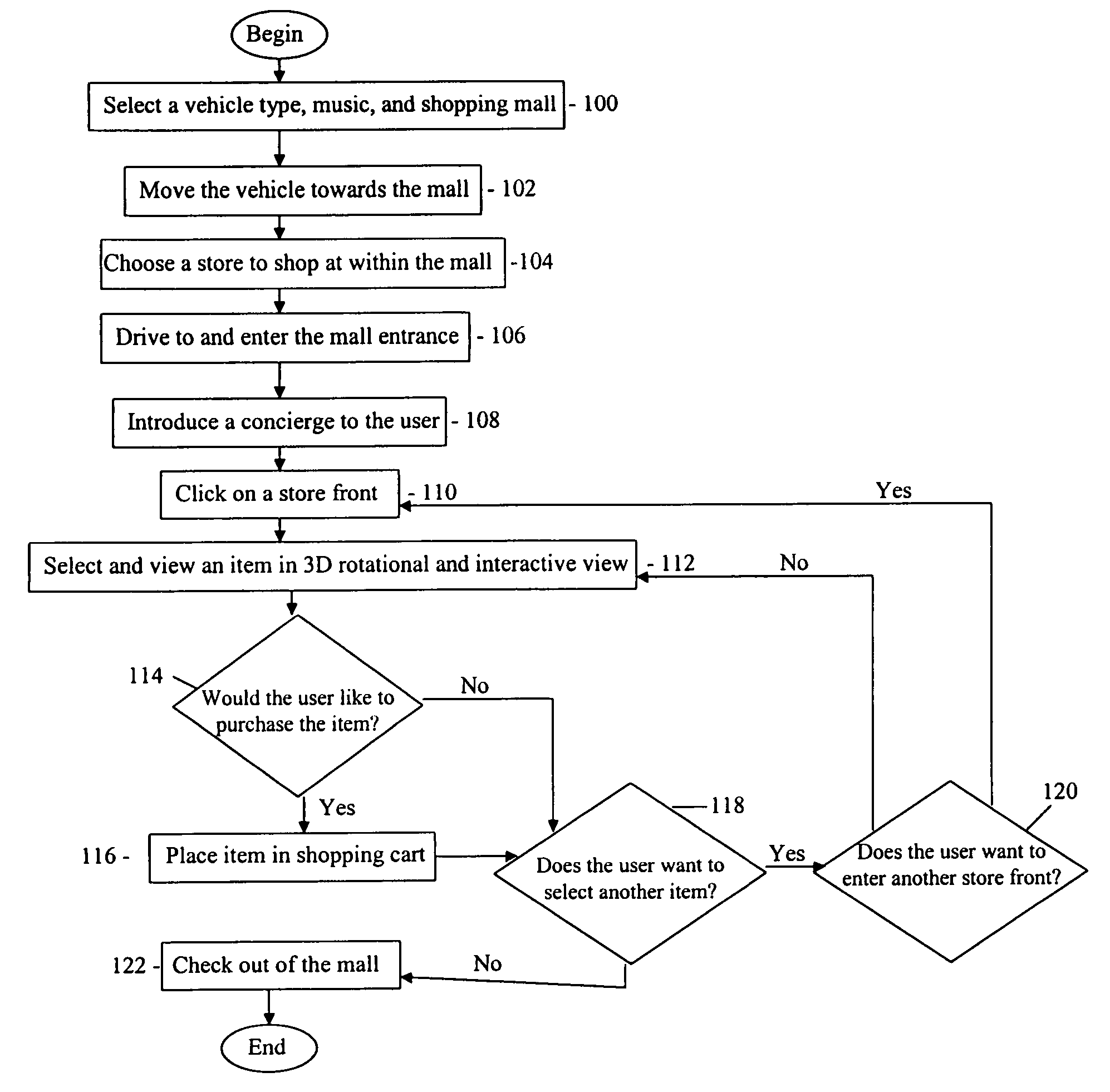

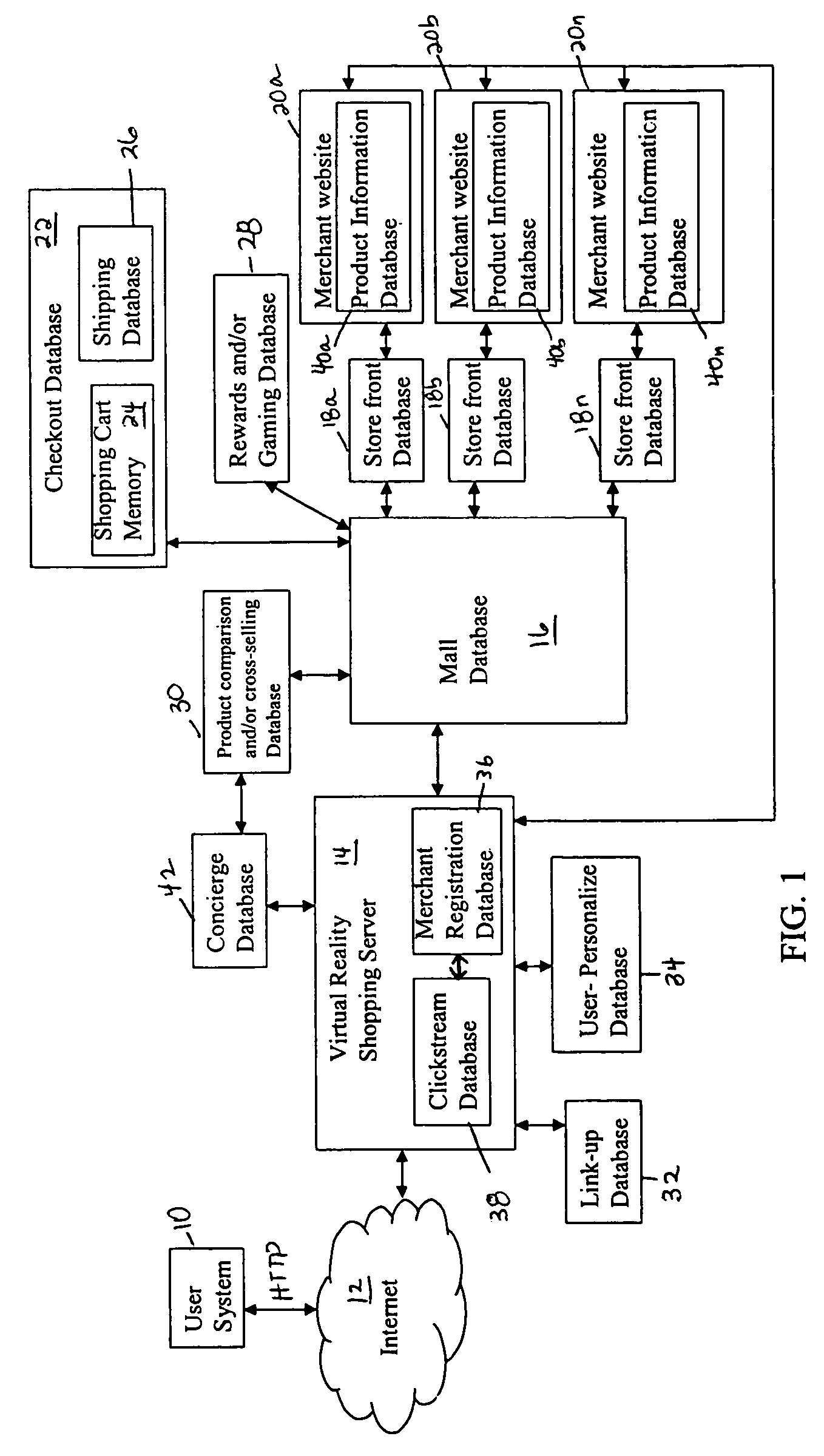

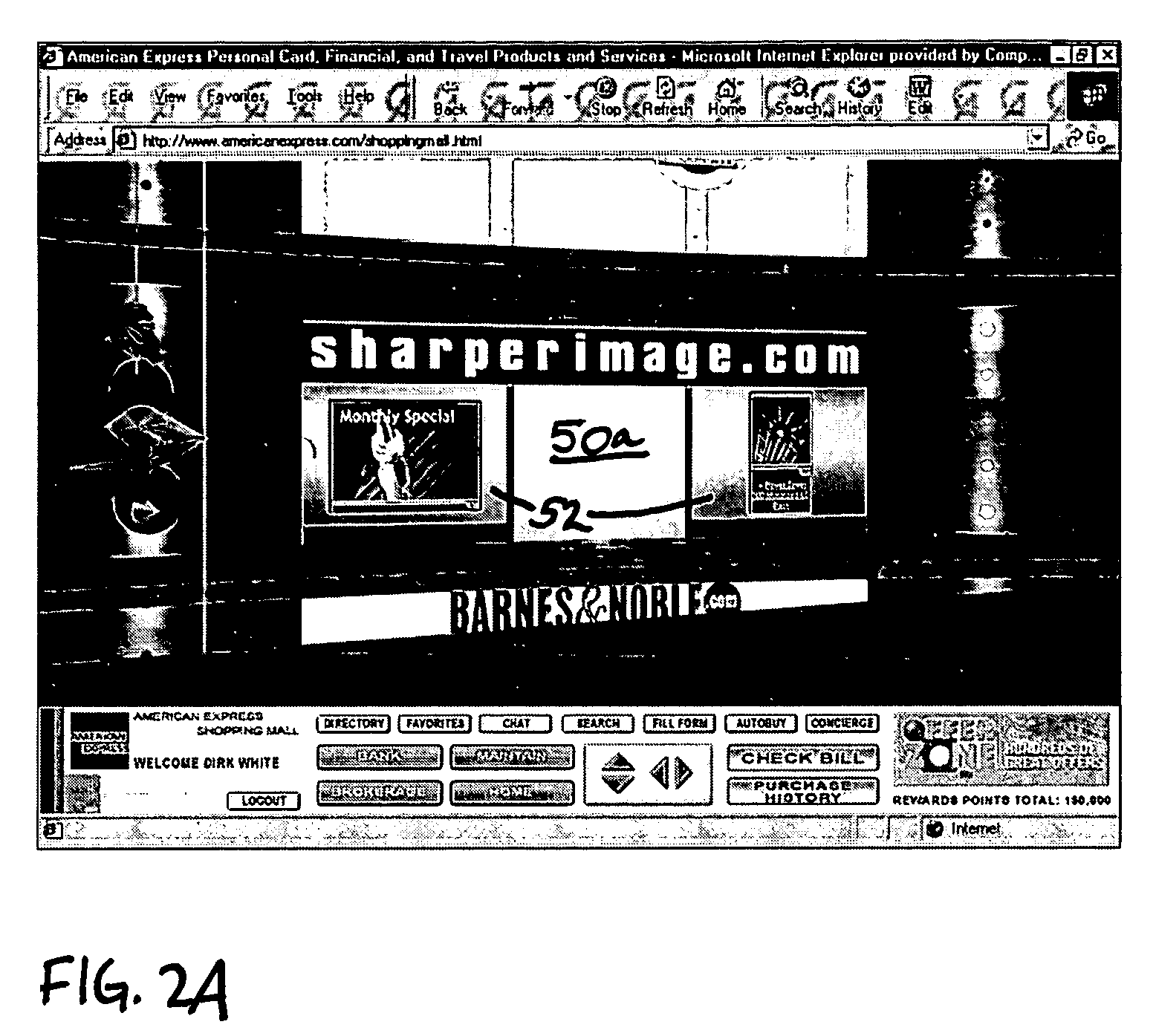

Method and apparatus for a user to shop online in a three dimensional virtual reality setting

A method is provided for a user to shop online in a three-dimensional (3D) virtual reality (VR) setting. A shopping server receives a request to view a shopping location that has at least one store and displays the shopping location on the user's computer as a 3D interactive simulation in a web browser, emulating a real-life shopping experience. The server then receives a request to enter one of the stores and displays the store's website, which has one or more enhanced VR features, in the same web browser. Finally, the server receives a request to view at least one product and presents the product in a 3D interactive simulation view that emulates viewing the product in real life.

Owner:LIBERTY PEAK VENTURES LLC

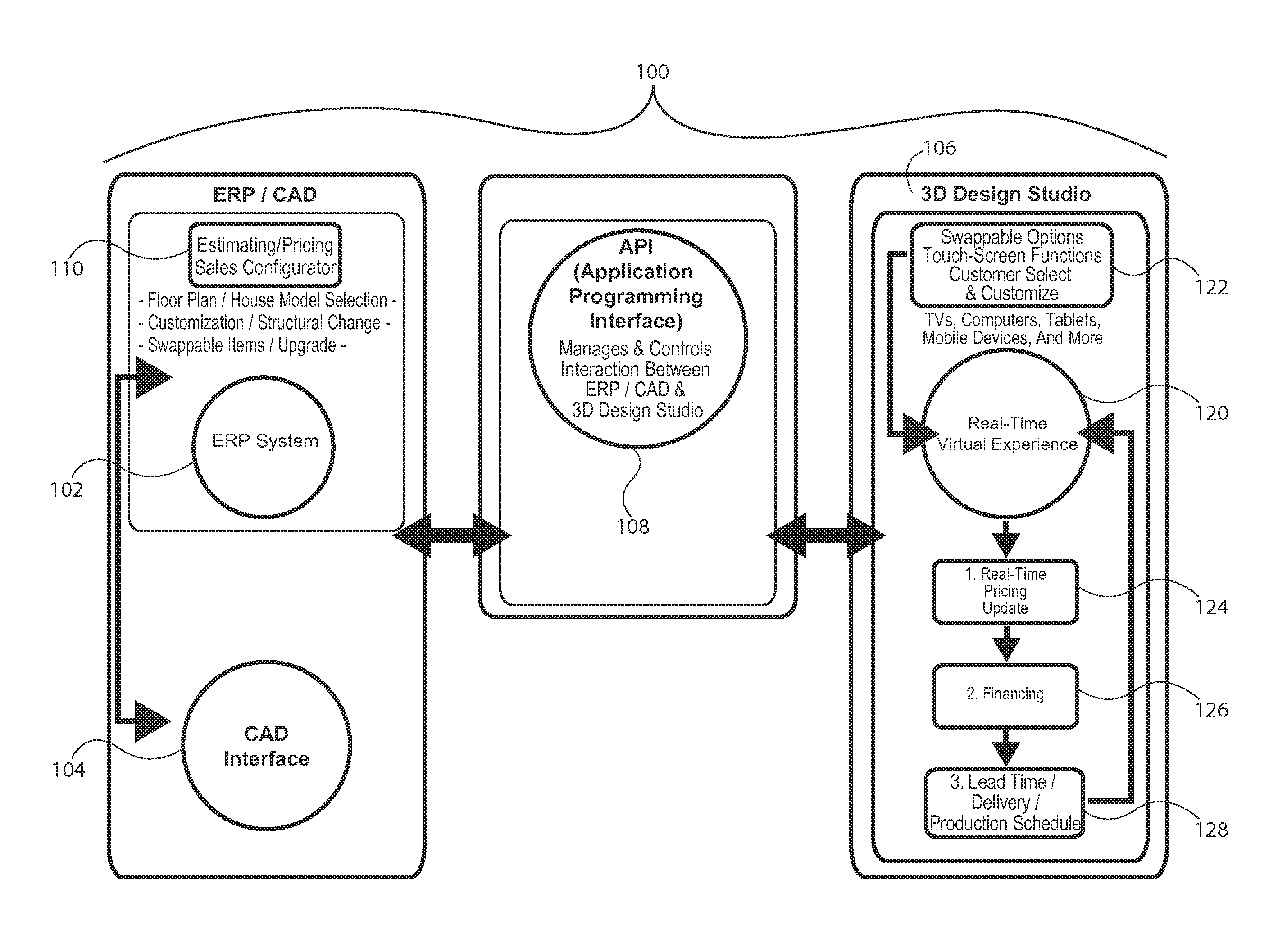

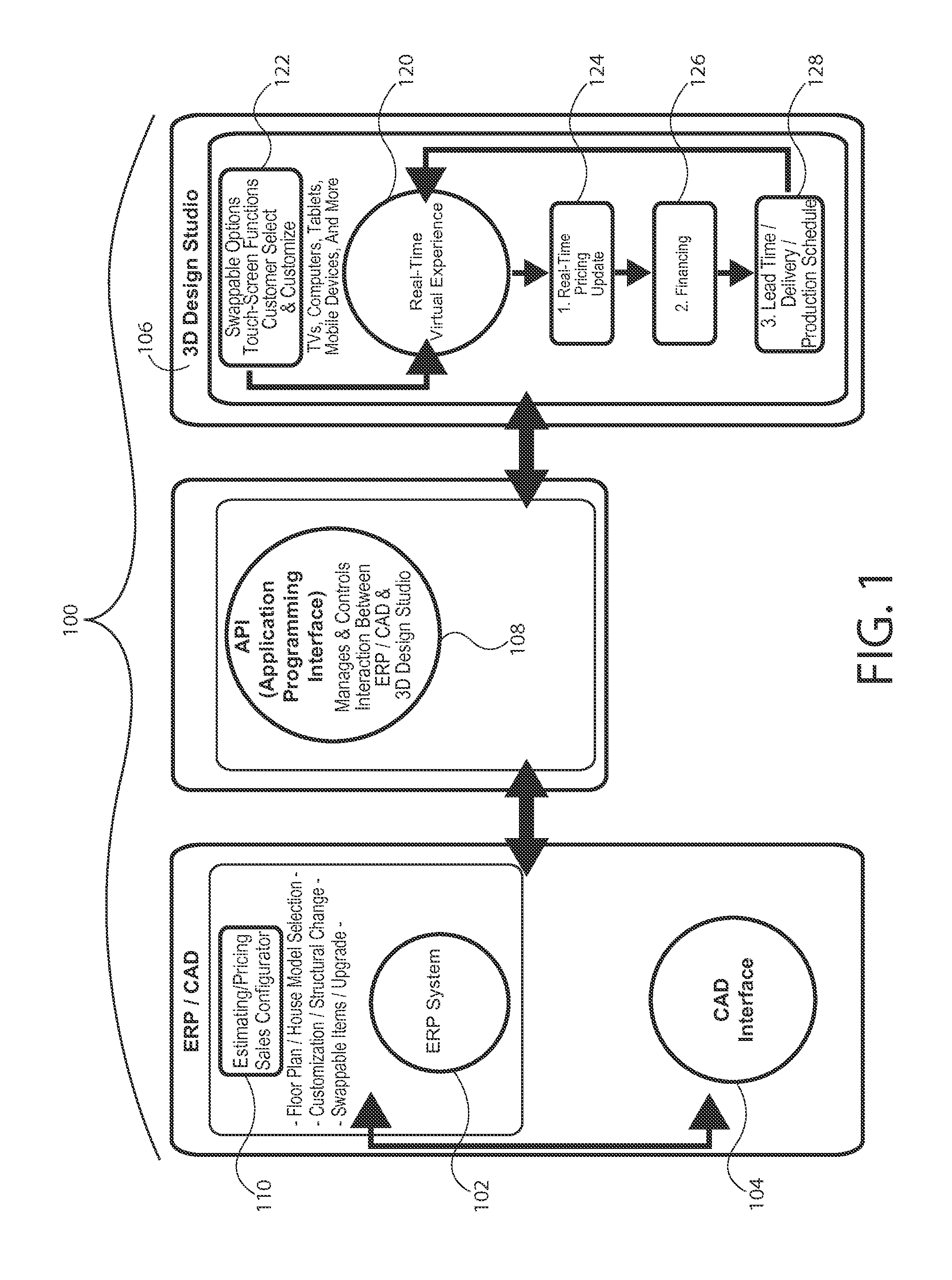

3D Interactive Construction Estimating System

Inactive | US20150324940A1 | Easy to customize; Geometric CAD; Buying/selling/leasing transactions; Graphics; 3D design

A 3D interactive construction estimating system is provided that includes a computerized, interactive ERP/3D design and estimating system for building construction projects and services. The system is real-time, visual, and transparent to both the customer and the manufacturer, and it allows the customer to design and customize a home or other building with an integrated real-time 3D virtual tour and pricing, scheduling, ordering, and financing options. The system includes an ERP system, a CAD system, and a graphical front end that gives the user a virtual design experience.

Owner:MODULAR NORTH AMERICA

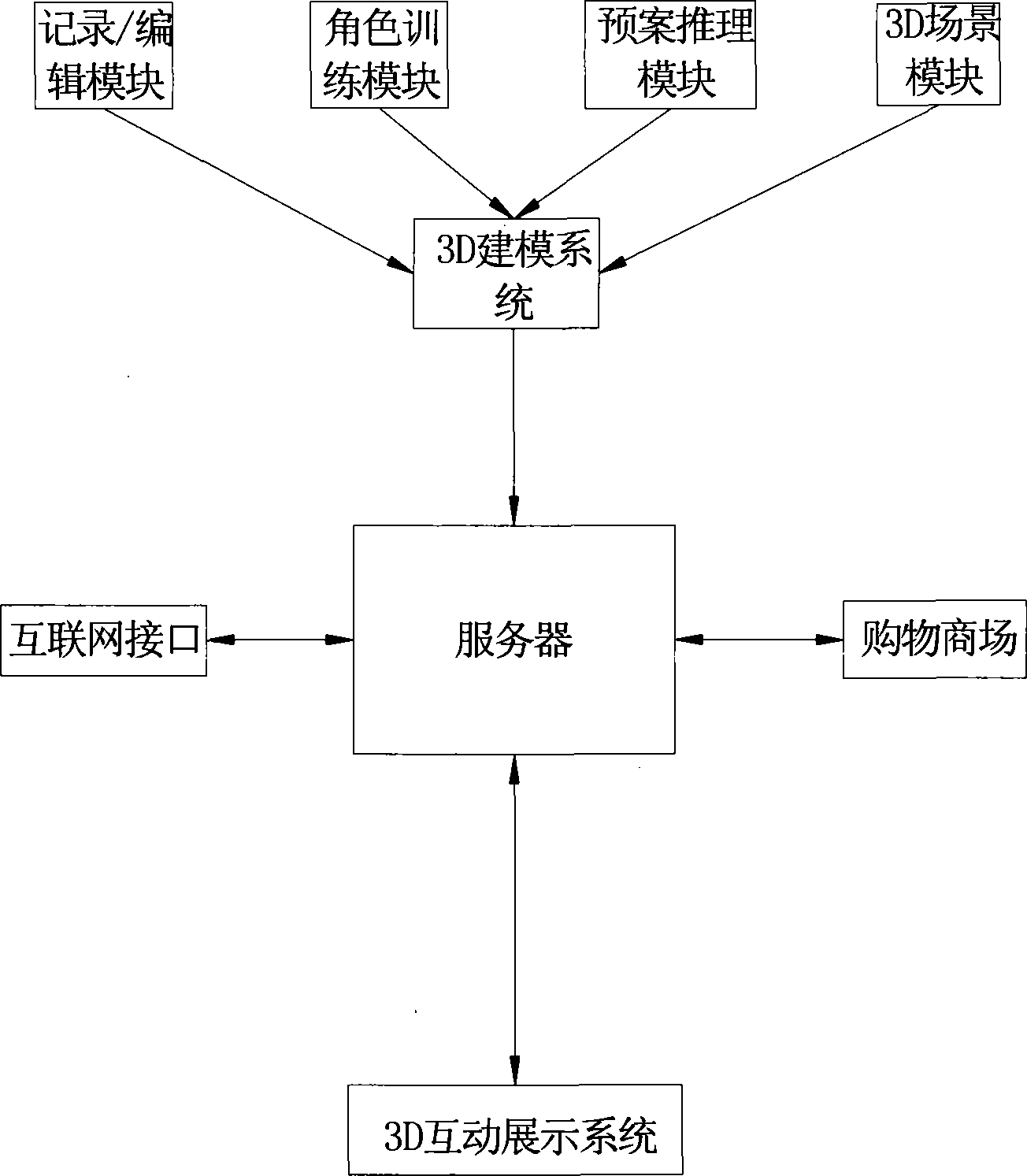

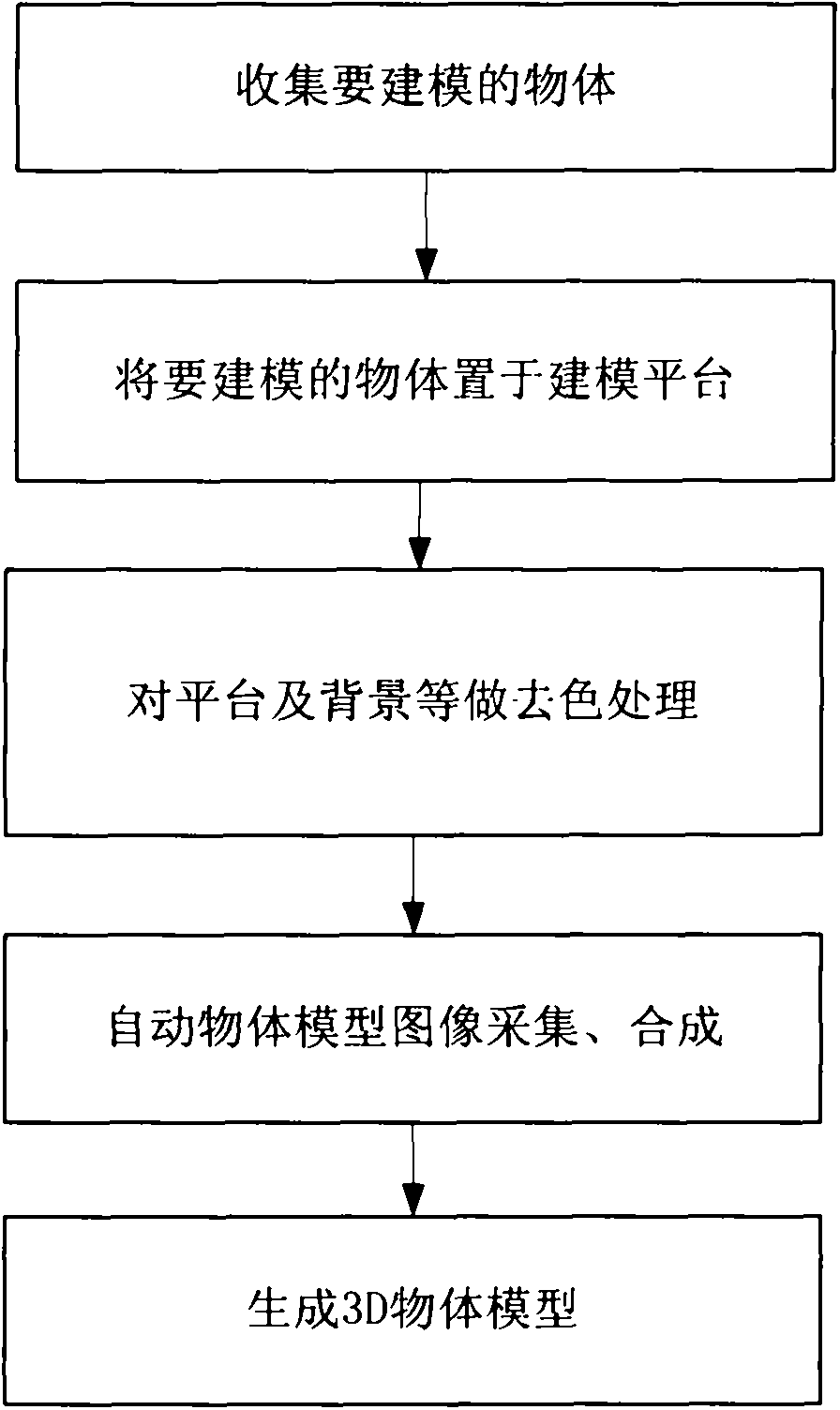

Virtual reality e-commerce platform system and application method thereof

The invention discloses a virtual reality e-commerce platform system and an application method thereof, which give users the feeling of being present in the scene. The system comprises a shopping mall, commodity information, a server, the Internet, a database, an operation system, a 3D modeling system, a 3D interaction display system and a shopping terminal. The 3D modeling system comprises a three-dimensional scene module, a plan reasoning module, a character training module and a recording/editing module. Compared with the prior art, the system has the following advantages: shoppers can try on wearable commodities when shopping online; selected commodities can be viewed in three dimensions through 360 degrees; various commodities or environments can be quickly converted into three-dimensional pictures; shoppers can roam virtually through three-dimensional malls and shops over the Internet with a sense of being on the scene, which enhances their interest and shopping experience; and, based on a real mall, an online-offline linked shopping (e-commerce) model is formed.

Owner:Guizhou Baosen Technology Co., Ltd. (贵州宝森科技有限公司)

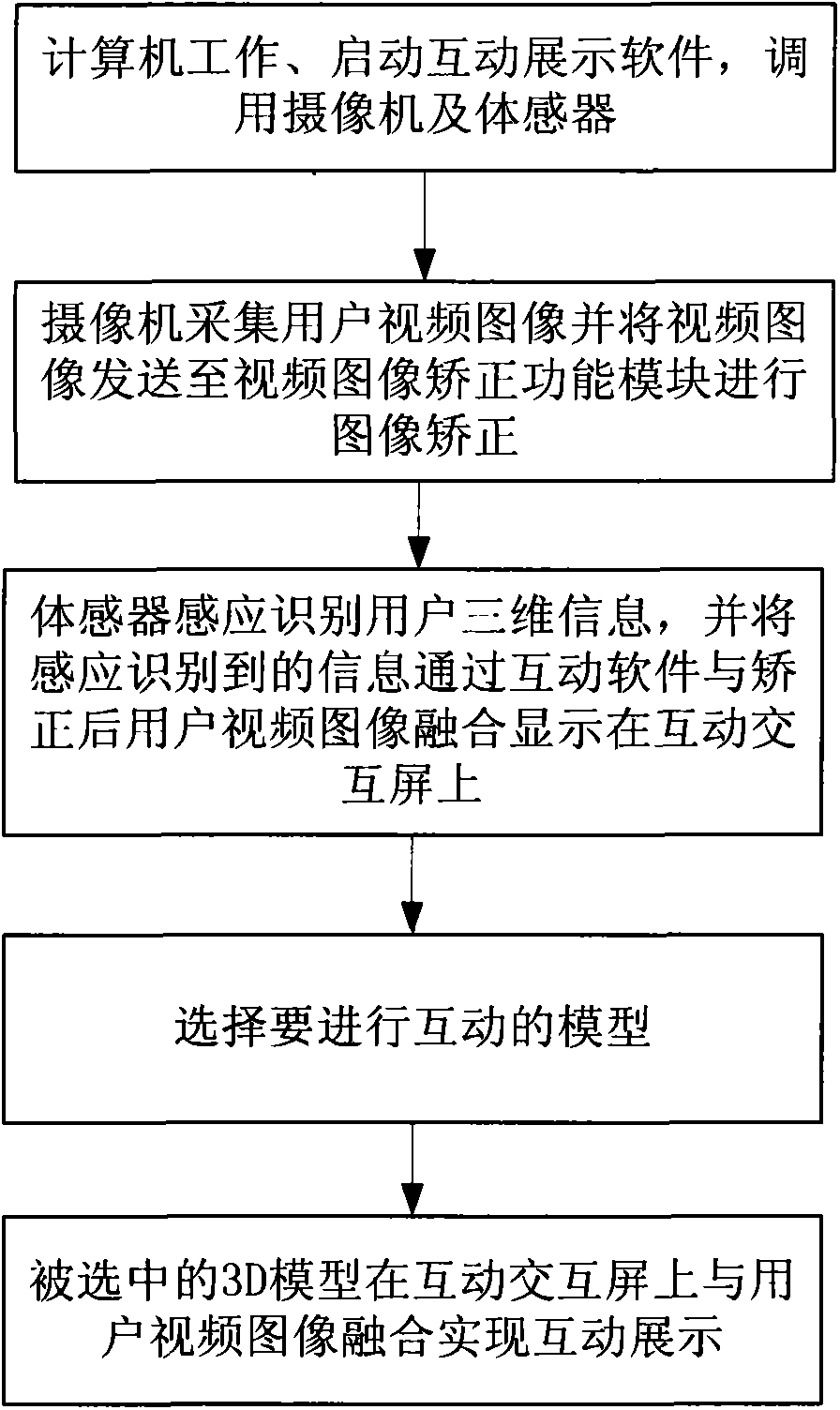

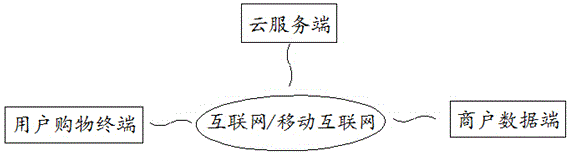

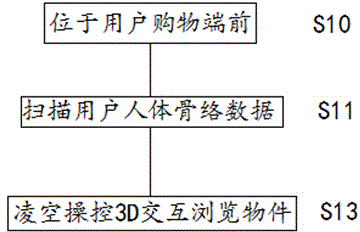

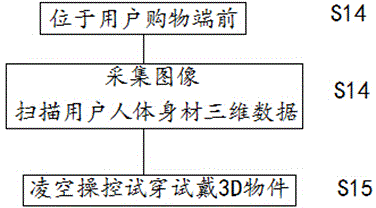

Multi-terminal 3D somatosensory shopping method and system based on internet and mobile internet

Inactive | CN104616190A | Improve the sense of real experience; Add fun; Buying/selling/leasing transactions; Virtual space; Interactive video

The invention relates to a multi-terminal 3D somatosensory shopping method and system based on the Internet and the mobile Internet. With the method and system, a user can browse or try on various objects through mid-air 3D interaction and purchase them directly once satisfied with the experience. Multi-screen combined shopping is realized: somatosensory (motion-sensing) technology is combined with video image processing so that 3D object models or 3D scenes of various commodities interact with the human body in a three-dimensional virtual space, and the resulting interaction video is displayed directly on an interactive display screen. Customers can browse or try different virtual objects through mid-air 3D interaction anytime and anywhere. Because customers interact with the commodities in the three-dimensional virtual space, the sense of a real shopping experience and the enjoyment of shopping are increased, and the return and exchange rate of online purchases is reduced.

Owner:GUANGZHOU NEWTEMPO TECH

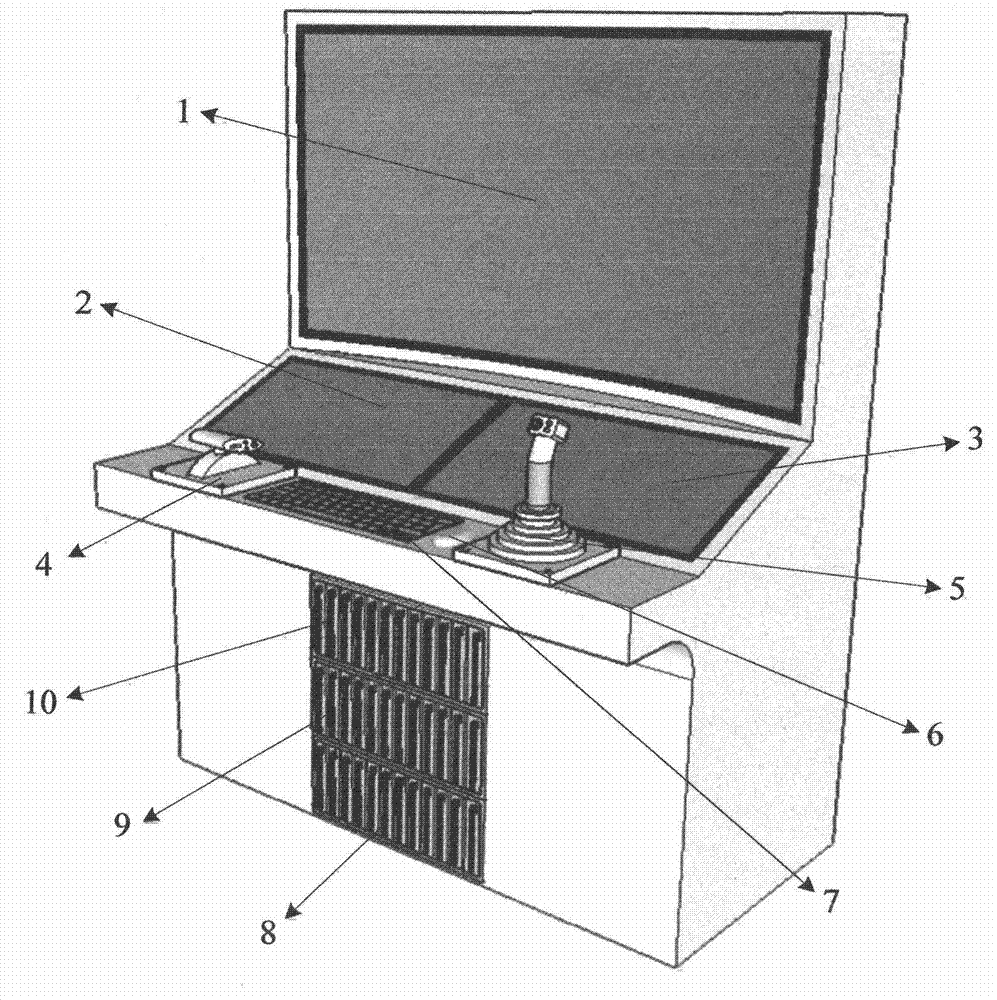

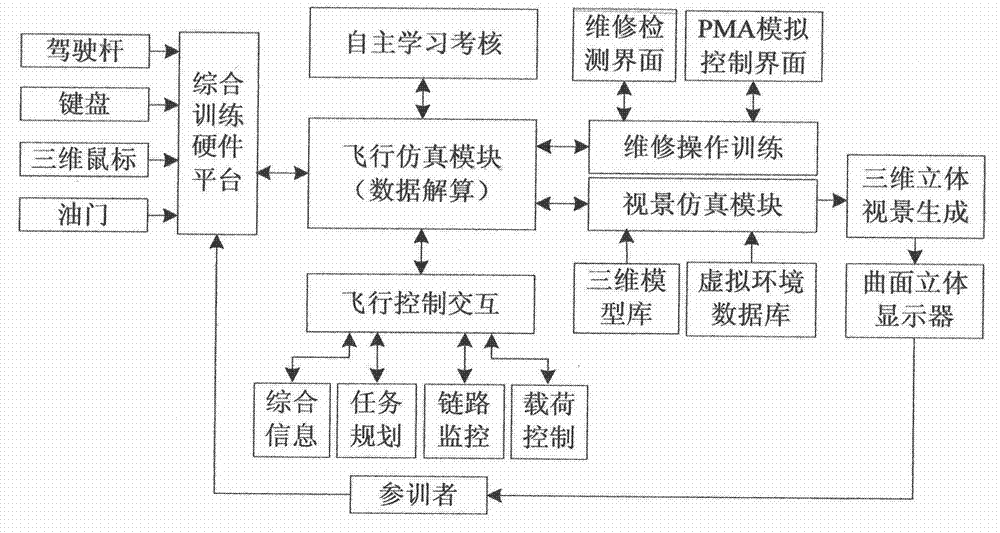

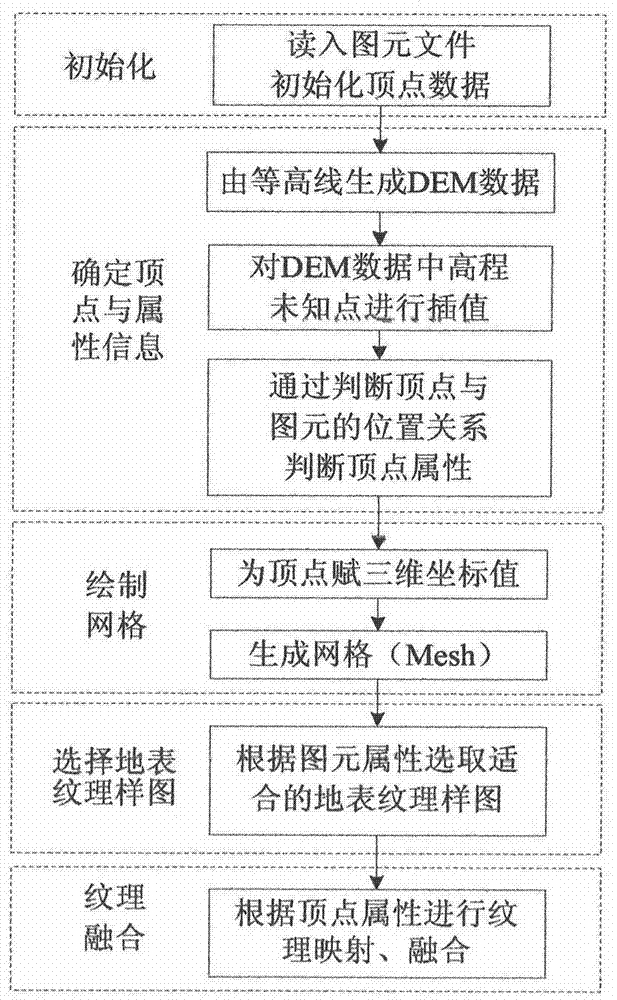

Unmanned aerial vehicle three-dimensional display control comprehensive-training system

Inactive | CN104765280A | Reduce the cost of training; Alleviate the small number of installations; Simulator control; Vehicle position/course/altitude control; Three-dimensional space; Structure and function

The invention discloses an unmanned aerial vehicle (UAV) three-dimensional display and control comprehensive training system in the technical field of training simulation. The system comprises a flight simulation module, a visual simulation module, a flight control interaction module, a maintenance operation training module and an autonomous learning and examination module. It uses a driving-type three-dimensional curved-surface display to build the display platform, applies 3D interaction and three-dimensional driving technology to drive and display three-dimensional images, achieves highly realistic human-machine interaction through interaction hardware, establishes a multi-sensory simulated ground control environment, and supports three working modes: flight control training, maintenance operation training, and autonomous learning and examination. With the system, a UAV operator can master UAV control methods, operating procedures and the handling of special situations, and can accurately perceive the aircraft's state and surroundings in three-dimensional space, while maintenance personnel can quickly become familiar with the aircraft's principles, structure and functions and master its support and maintenance procedures.

Owner:JINLIN MEDICAL COLLEGE

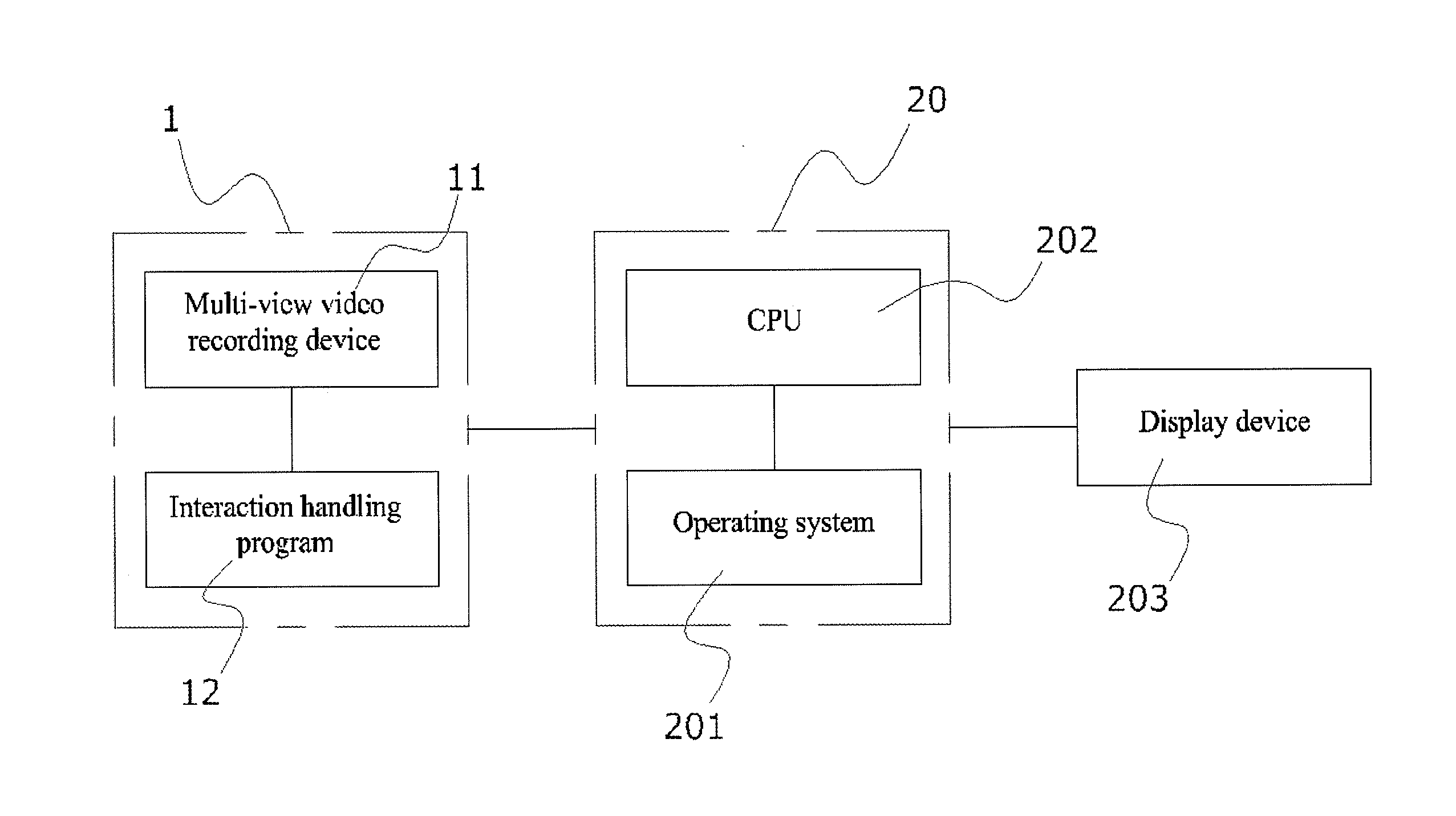

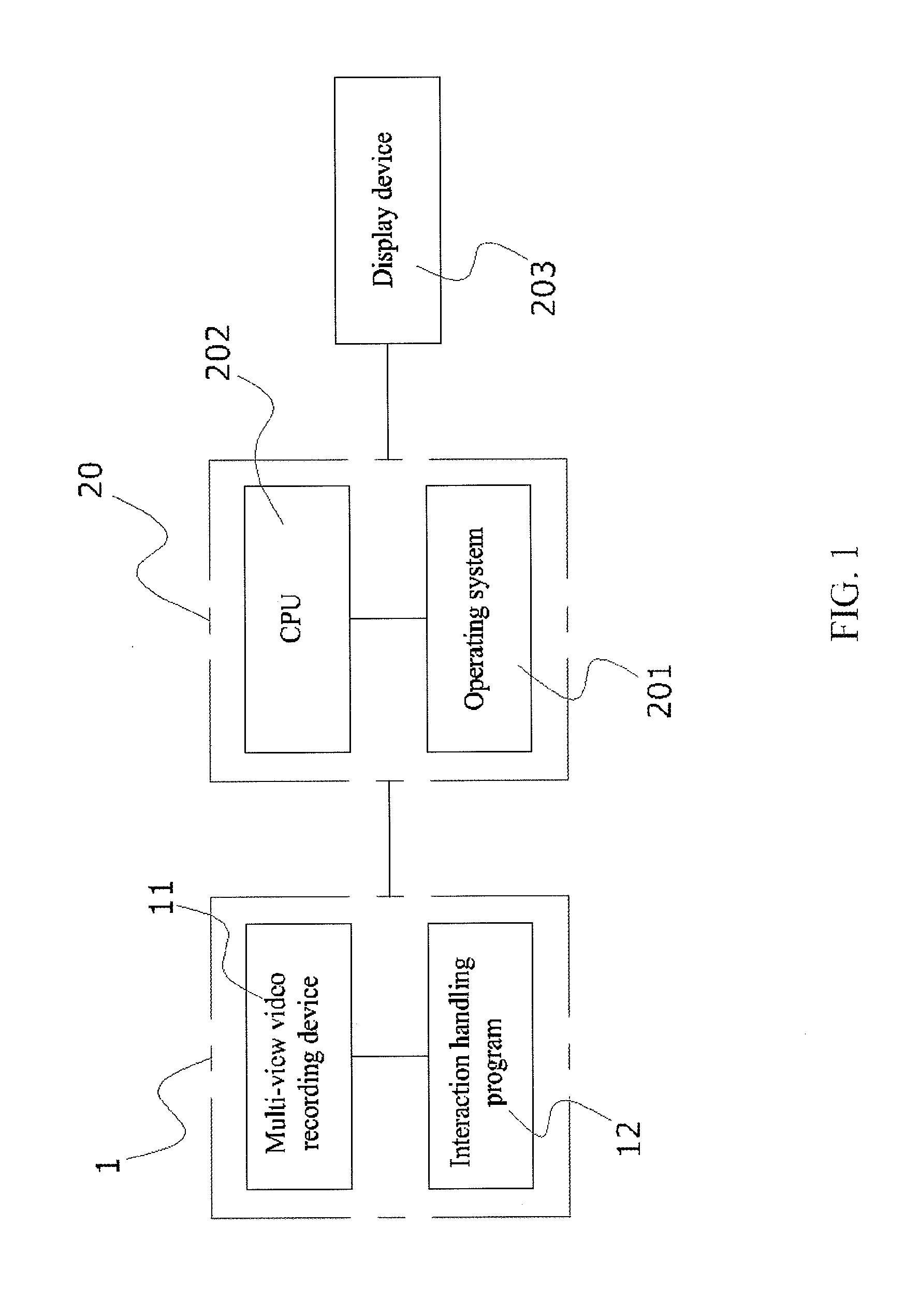

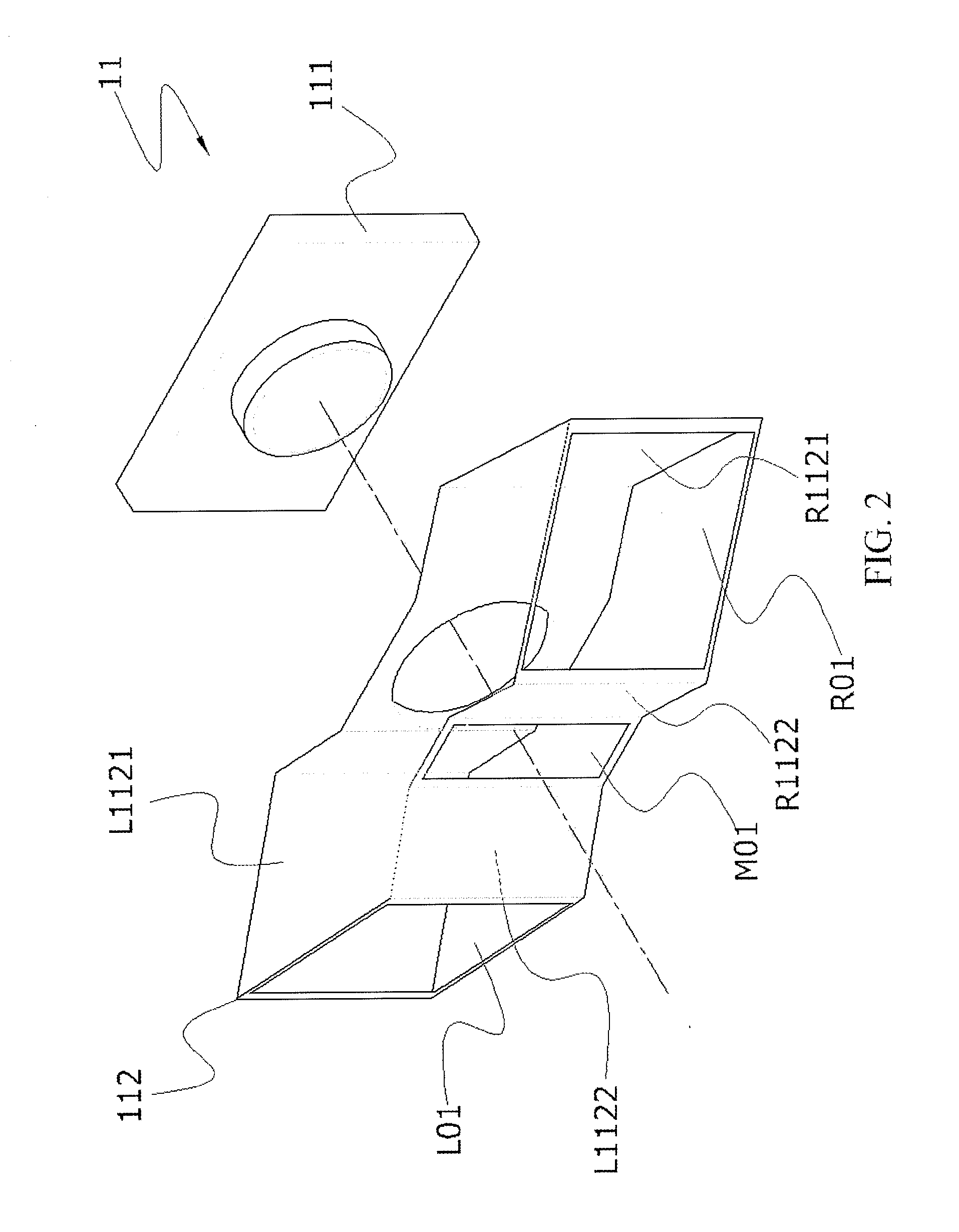

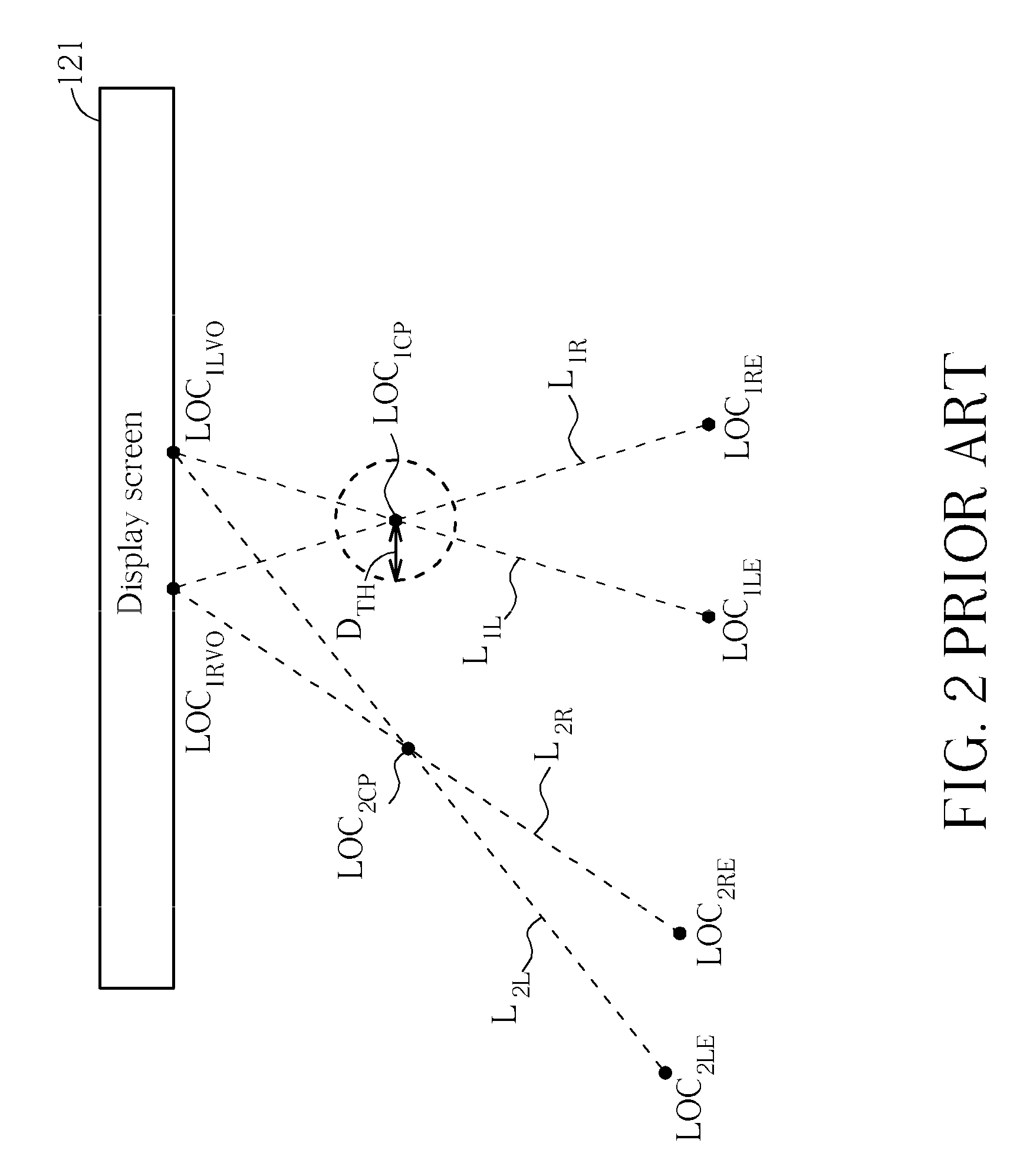

Three-dimensional human-computer interaction system that supports mouse operations through the motion of a finger and an operation method thereof

Active | US20130069876A1 | Cathode-ray tube indicators; Input/output processes for data processing; Interaction systems; Video record

A three-dimensional (3D) human-computer interaction system that supports mouse operations through the motion of a finger, and an operation method thereof, are provided. A multi-view video recording device captures images of the operator's finger and is connected to an electronic information device through an interaction handling program. When the program runs, the CPU of the electronic information device performs computation, synthesis, image presentation, gesture tracking, and command recognition on the captured images to interpret the motion of the user's finger. The user can thus operate the device's interface contactlessly by moving a finger in 3D space. The invention is particularly suited to application software that involves operations in 3D space, because it enables 3D interaction with such software.

Owner:AMCHAELVISUAL TECH LLC
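
The abstract does not specify how the multi-view images yield a 3D finger position. For a rectified two-camera rig, one standard technique is depth from disparity, Z = f·B/d. The sketch below assumes that setup; the function names, the pinhole camera model with square pixels, and the sample values are illustrative assumptions, not details taken from US20130069876A1.

```python
def fingertip_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by two rectified cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    return focal_px * baseline_m / disparity_px


def fingertip_position(u_left, v_left, u_right, focal_px, baseline_m, cx, cy):
    """Back-project the left-image fingertip pixel to camera coordinates (metres).

    Assumes rectified images (the fingertip lies on the same row in both views),
    square pixels, and a principal point at (cx, cy).
    """
    z = fingertip_depth(focal_px, baseline_m, u_left - u_right)
    x = (u_left - cx) * z / focal_px
    y = (v_left - cy) * z / focal_px
    return (x, y, z)
```

For example, with an 800 px focal length and a 6 cm baseline, a 40 px disparity places the fingertip about 1.2 m from the cameras; tracking that 3D point over time is what a gesture recognizer would then consume.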

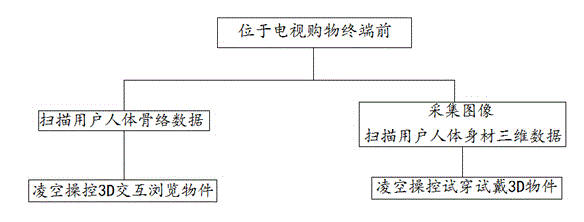

Television terminal-based 3D somatosensory shopping system and method

Inactive | CN104618819A | Improve the sense of real experience; Add fun; Input/output for user-computer interaction; Buying/selling/leasing transactions; Interactive video; Virtual space

The invention relates to a television terminal-based 3D somatosensory shopping system and method. The system comprises a television terminal; the user browses or tries on different virtual objects through mid-air 3D interaction and purchases them directly once satisfied with the experience. The objects are 3D models of various commodities pre-stored in a storage device. Shopping is experienced through the television terminal, and somatosensory (motion-sensing) technology is combined with video image processing so that 3D object models or 3D scenes of the commodities interact with the human body in a three-dimensional virtual space; the resulting interaction video is displayed directly on an interactive display screen. Family members can browse or try different virtual objects through mid-air 3D interaction anytime and anywhere. The system and method give both the old and the young in a family a realistic shopping experience, expand the shopping population, make shopping more enjoyable, and reduce the return and exchange rate of online purchases.

Owner:GUANGZHOU NEWTEMPO TECH

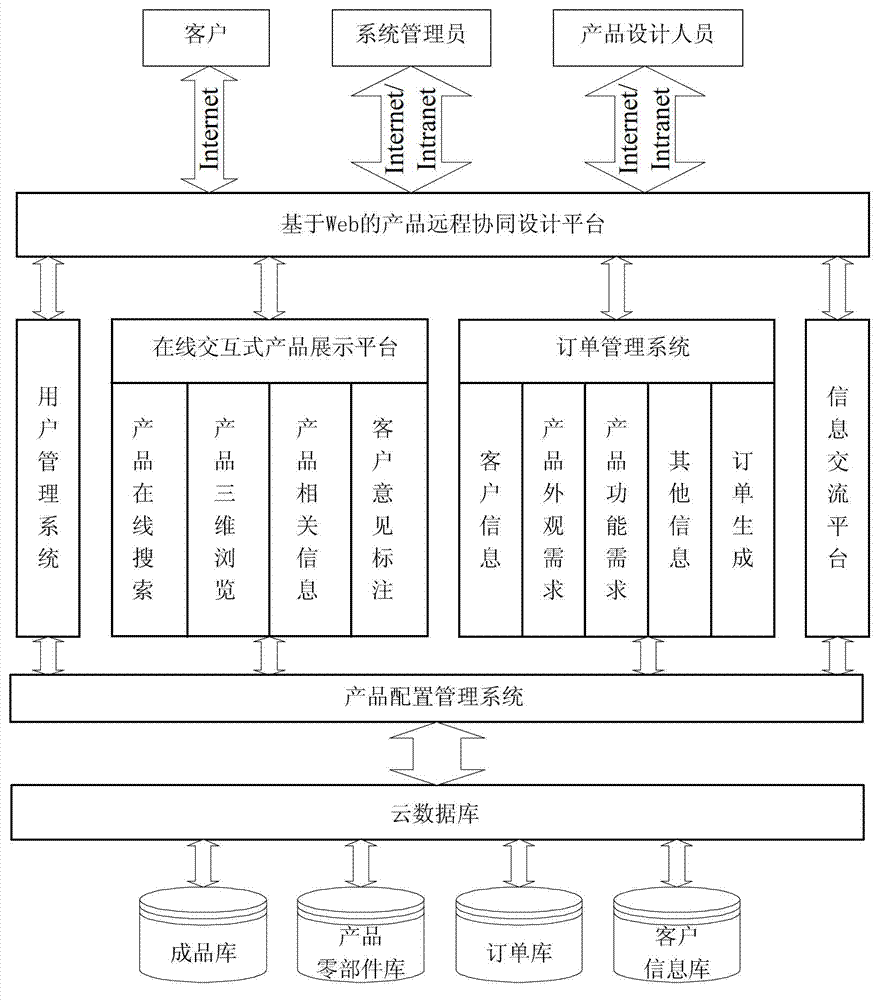

Interactive product configuration platform

Inactive | CN103093045A | Improve design and development efficiency; Guarantee data security; Resources; Special data processing applications; Bill of materials; 3D interaction

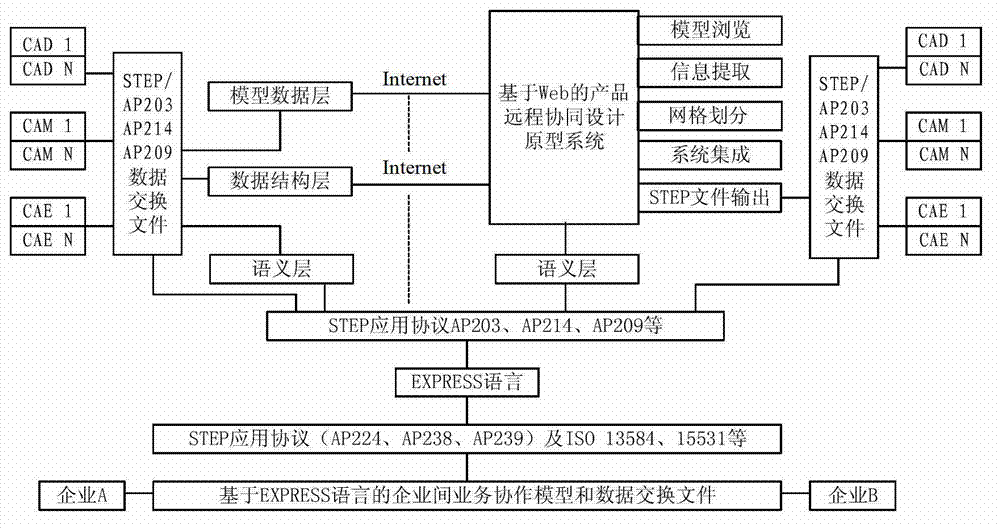

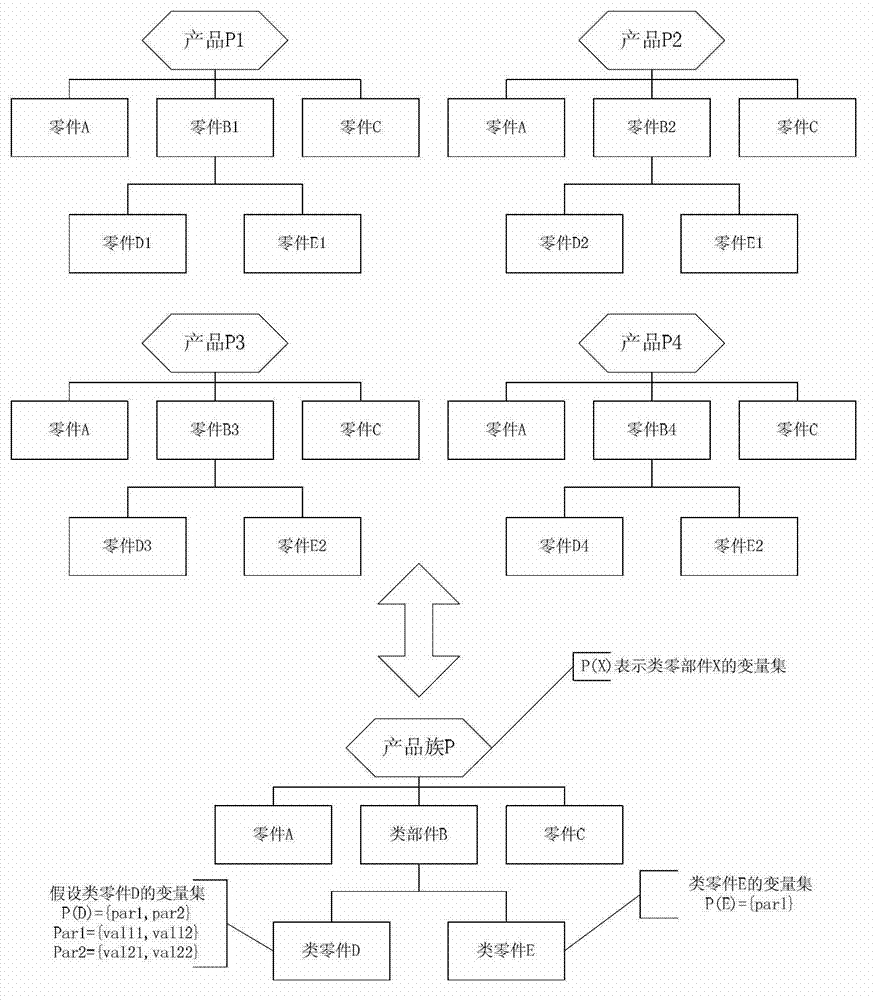

An interactive product configuration platform comprises a two-dimensional (2D) or three-dimensional (3D) information interface module, a product case base module, a parts base module, a product and parts management module, an optimal solver module, an embedded 3D product holographic information interaction module, and a parameter-driven modeling module. The 2D or 3D information interface module is associated with a generic bill of materials (GBOM) and is used to obtain user requirements and generate a bill of materials (BOM). The product case base module and the parts base module are capable of online self-learning. The product and parts management module is based on cloud computing. The optimal solver module is associated with the product structures and the case base; it adopts a quadratic-parabola fuzzy-set case-based reasoning method to find the optimal case in the case base, that is, the case most similar to the customized product. The embedded 3D product holographic information interaction module is associated with the case base and lets the user preview holographic views of the optimal product cases and modify information interactively. The parameter-driven modeling module is associated with the optimal cases and builds a 3D model of the customized product, driven automatically by parametric features, according to the user requirements and the interactive information. The platform achieves 3D interaction and improves the efficiency of product development and design.

Owner:ZHEJIANG UNIV OF TECH

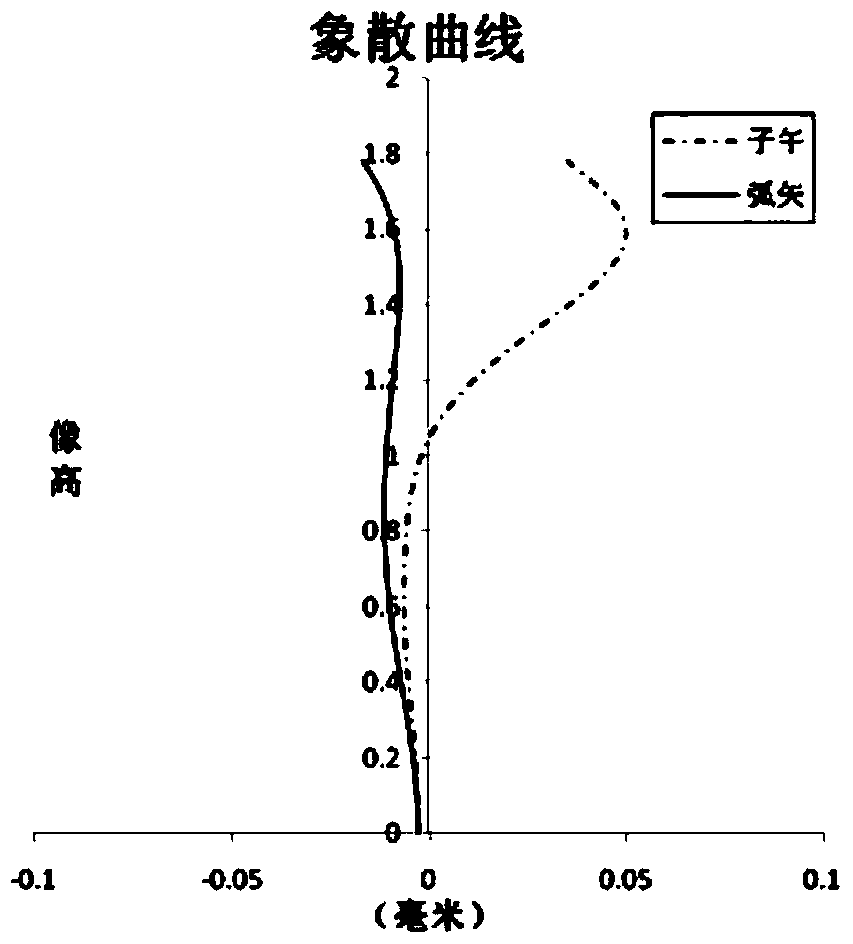

3D interaction-type projection lens

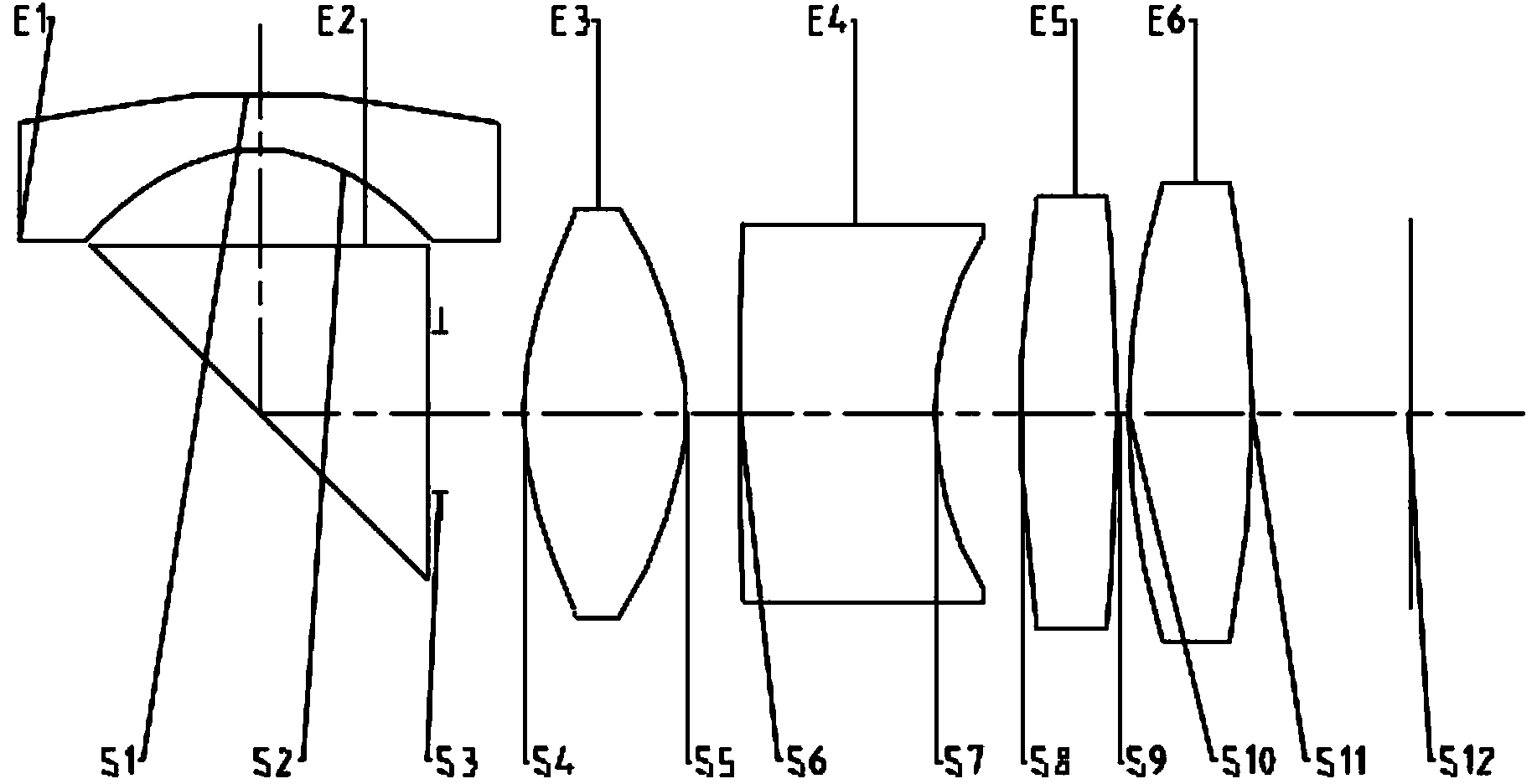

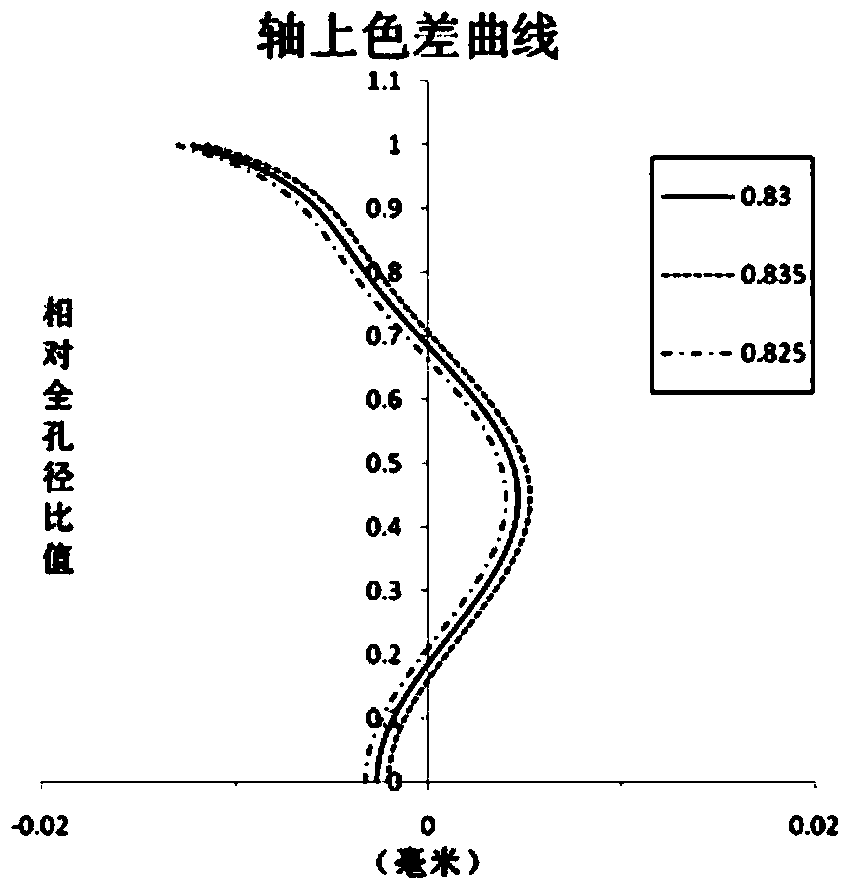

The invention provides a projection lens comprising, in order from the imaging side to the image-source side, a first, a second, a third and a fourth lens group. The first lens group comprises a first lens with negative focal power and a reflective optical surface that folds the light path; the second lens group comprises a second lens with positive focal power whose imaging-side and image-source-side surfaces are both convex; the third lens group comprises a third lens with negative focal power; and the fourth lens group has positive focal power, comprises one or more lenses with focal power, and has a convex surface closest to the imaging side. The lens satisfies ImgH/D > 0.55, where ImgH is half the diameter of the image source and D is the vertical distance between the imaging-side surface of the first lens and the center of the reflective optical surface. Because four lens groups are used, the size of the lens system can be effectively reduced while high resolution is maintained at large viewing angles, achieving a wide field of view, low distortion and high resolution.

Owner:ZHEJIANG SUNNY OPTICAL CO LTD
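
As a worked check of the ImgH/D > 0.55 condition, the helper below takes hypothetical dimensions (not values from the patent's embodiments). For instance, a 6 mm image source with D = 5 mm gives ImgH/D = 3/5 = 0.6, which satisfies the condition, whereas a 5 mm source with the same D gives 0.5, which does not.

```python
def satisfies_imgh_constraint(image_source_diameter_mm: float,
                              d_mm: float,
                              threshold: float = 0.55) -> bool:
    """Return True if ImgH / D > threshold, where ImgH is half the image-source diameter."""
    if d_mm <= 0:
        raise ValueError("D must be positive")
    img_h = image_source_diameter_mm / 2.0  # ImgH: half the image-source diameter
    return img_h / d_mm > threshold
```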

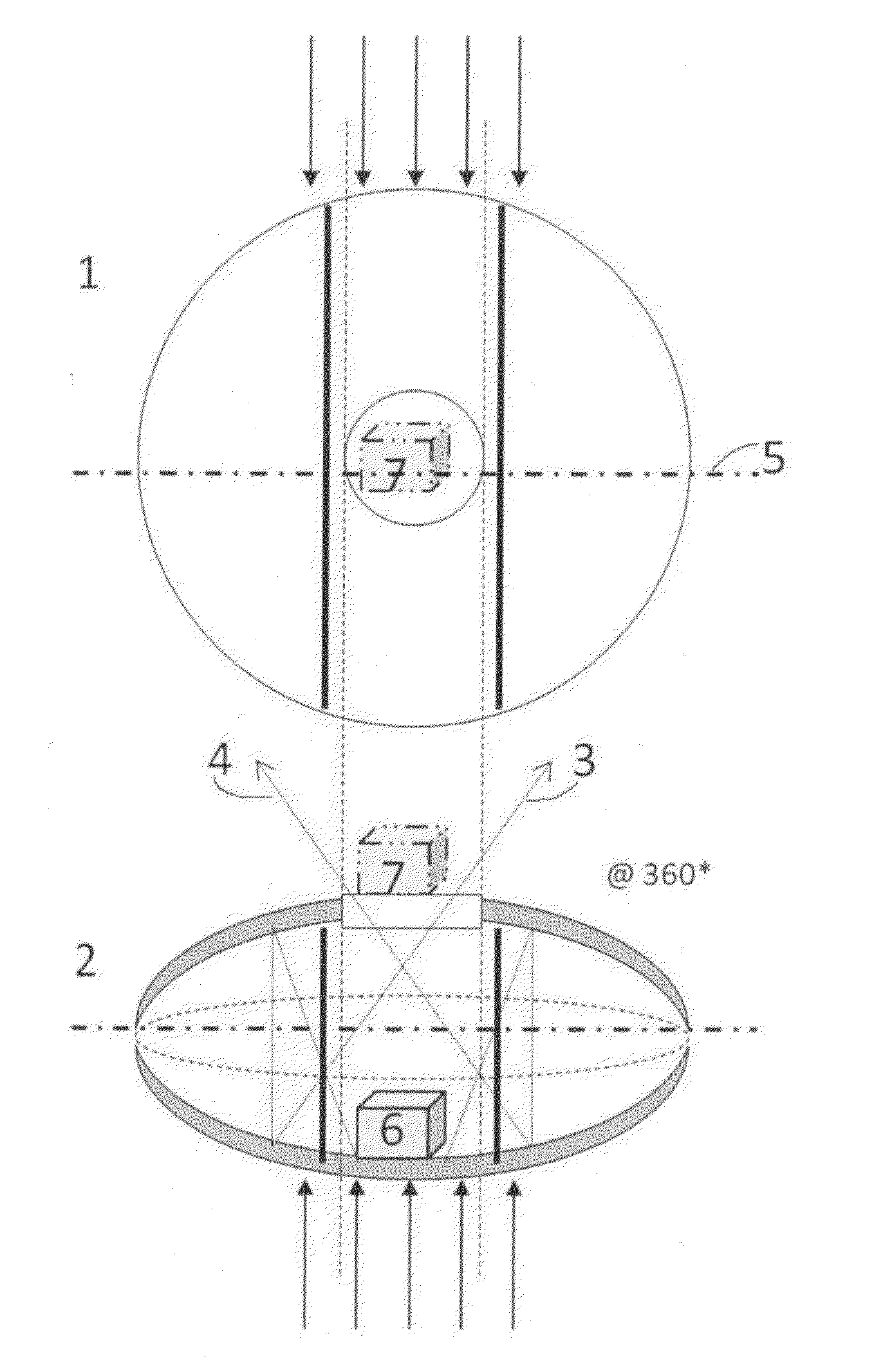

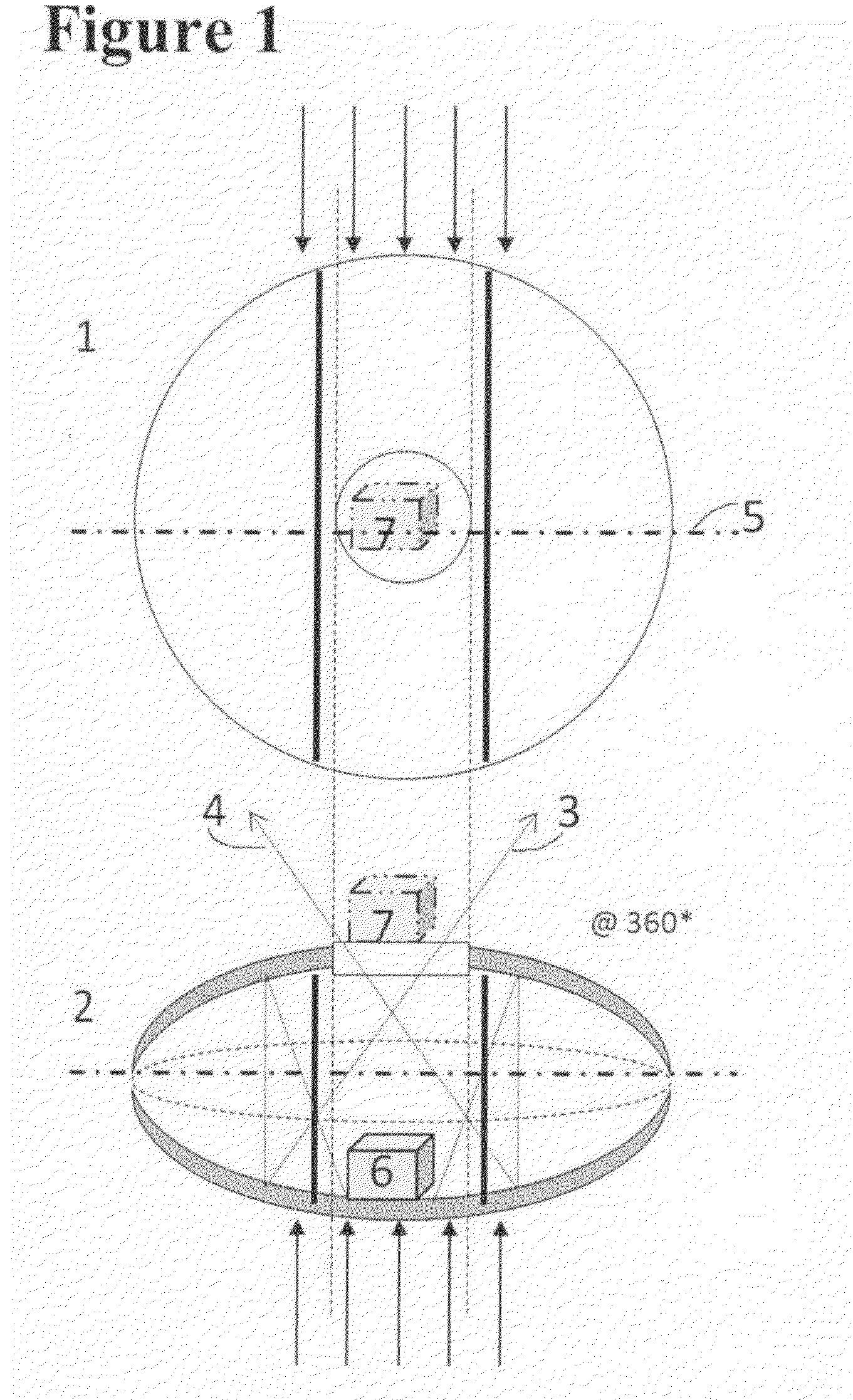

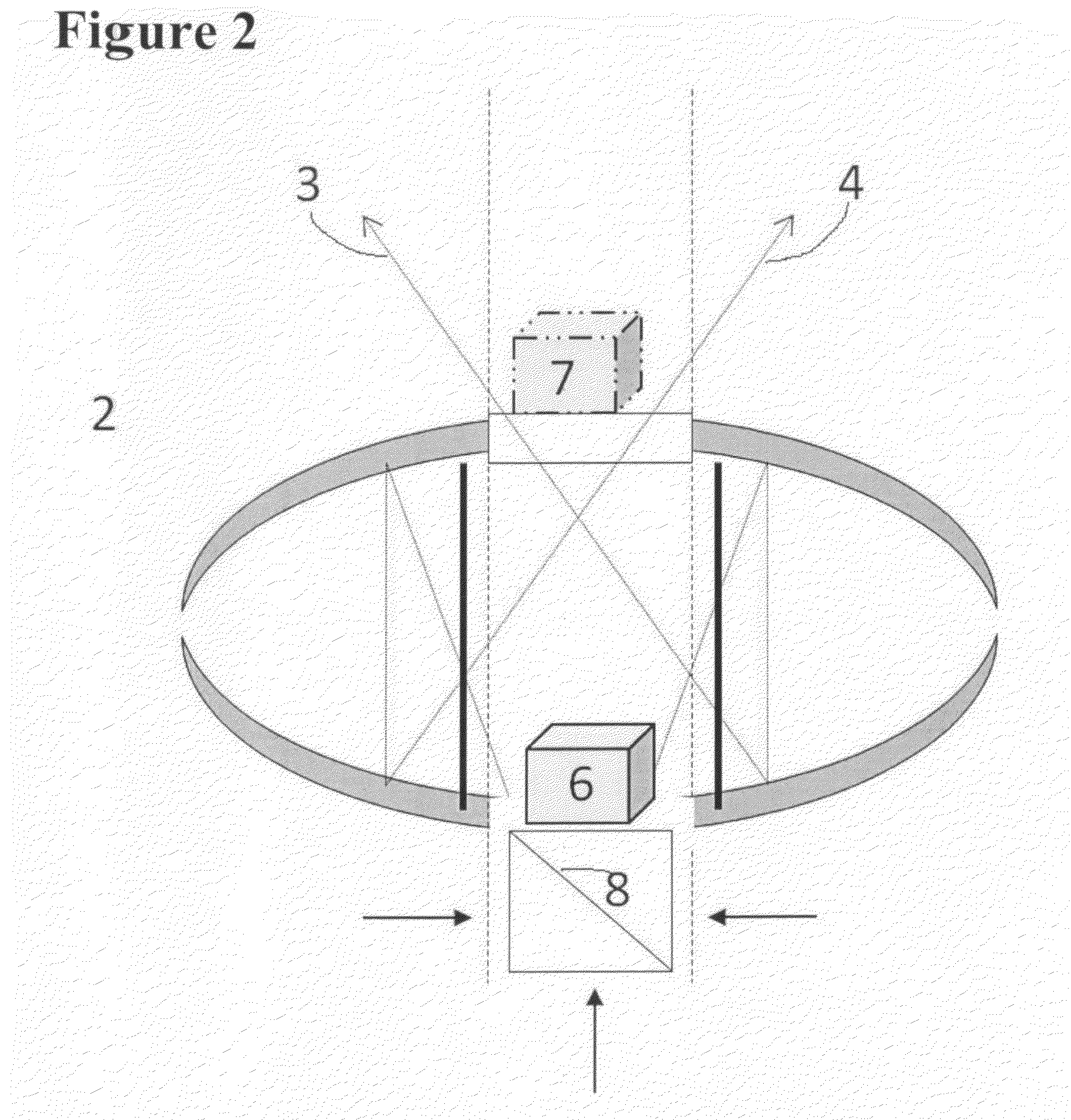

Sensor-monitored, 3D, interactive holographic freespace control unit (HFCU) and associated components

Inactive | US20140253432A1 | Effectively process input; Efficient output; Input/output for user-computer interaction; Cathode-ray tube indicators; As Directed; Display device

A sensor/camera-monitored, virtual-image or holographic-type display device, called a SENSOR-MONITORED, 3D, INTERACTIVE HOLOGRAPHIC FREESPACE CONTROL UNIT (HFCU), is provided. The invention lets users interact with virtual images or holograms without touching any physical surface, by using a visually bounded freespace with or without holographic-type assistance. The 3D Holographic-type Freespace Control Unit (HFCU) and the Gesture-Controlled 3D Interface Freespace (GCIF) are implemented to produce external and internal commands. The hardware of the invention includes concave and convex mirror slices whose size, curvature(s), repetition and locations are chosen to create the desired holograms/virtual images; optical real-object generation pieces (projectors, digital screens, or other media as desired) positioned to create the associated virtual images/holograms; one or more sensors that monitor the holographic-type spaces and report data; and the computer and software used to analyze the collected data and execute further commands as directed.

Owner:FERGUSON HOLLY TINA

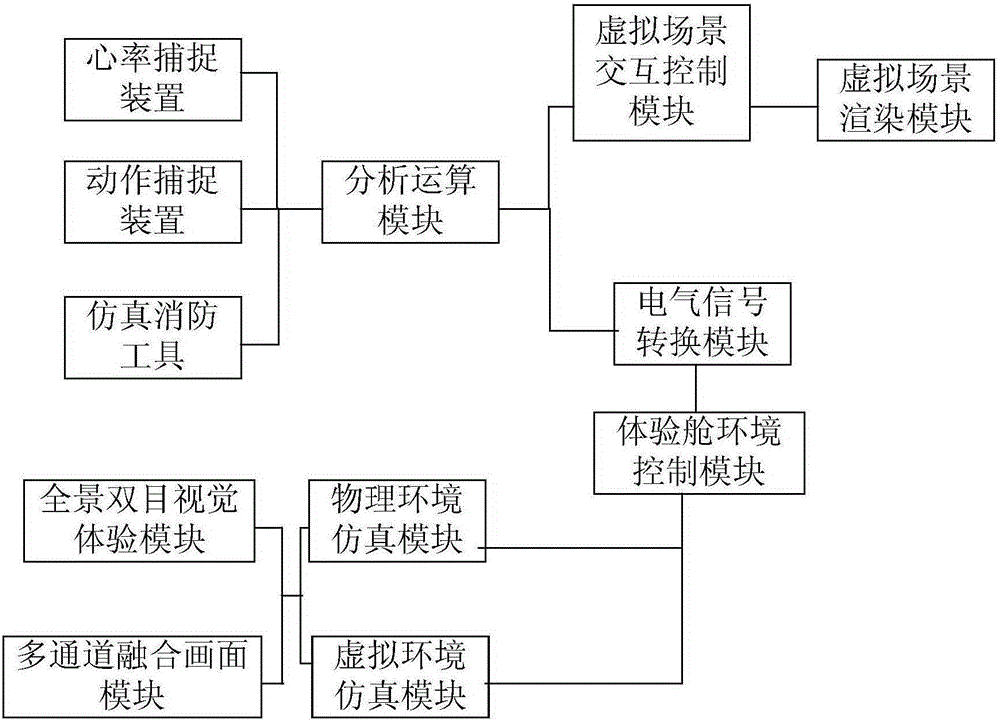

Virtual reality system for experiencing fire-fighting scene

Active | CN106601060A | Improve emergency performance; Increase brightness; Cosmonautic condition simulations; Simulators; Virtual training; Computer compatibility

The invention discloses a virtual reality system for experiencing a fire-fighting scene. The system comprises a fire-fighting virtual training interaction module, a fire-fighting training scene virtual electric control module, and a practice training cabin. The virtual training interaction module uses 3D interaction techniques to build high-precision, highly compatible models of physical entities. The scene virtual electric control module combines an environment control module with a digital-to-analog conversion device to seamlessly connect trainees' reactions in the virtual fire scene with the virtual equipment and scene objects. The outer layer of the practice training cabin is a special annular-view, high-reflectivity projection carrier 5 m in diameter, above which is mounted a 360-degree multi-channel curved-surface blended projection system consisting of five sets of high-brightness projectors. The system consumes no real fire-fighting equipment during drills, does not pollute the environment, and supports fire-fighting commanders' routine drills and the maintenance of their rescue skills.

Owner:SHANGHAI FIRE RES INST OF THE MIN OF PUBLIC SECURITY +1

3D interactive menu

Active | US20130275918A1 | Preserving the adjacency relationships; Input/output processes for data processing; Close-up; 3D interaction

A method for navigating an interactive menu that includes a first number of selectable items in a virtual environment. The method includes: providing a grid on a delimited portion of a plane in the virtual environment, the grid having a number of locations equal to the first number; providing plural navigation directions within the grid and arranging each menu item at a respective grid location according to an item-grid arrangement, so that adjacencies among grid items along the navigation directions determine corresponding adjacency relationships; determining adjacency relationships along the navigation directions among items located on the borders of the delimited portion; shooting a first item in close-up, enabling its selection; receiving a navigation command that identifies a navigation direction along which a second selectable item is located; and altering the item-grid arrangement, relocating the items to different grid locations based on the received navigation command, so that the second item is shot in close-up.

Owner:TELECOM ITALIA SPA
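
The abstract leaves open how border items are made adjacent. The sketch below assumes the simplest choice, wraparound (toroidal) adjacency, keeps the close-up location fixed at the grid centre, and relocates every item on each navigation command. The names `navigate`, `focused_item` and `DIRECTIONS` are hypothetical, not part of any Telecom Italia API.

```python
# Row/column offsets for each navigation direction (an assumed encoding).
DIRECTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def focused_item(grid):
    """Item currently in the fixed close-up cell (assumed to be the grid centre)."""
    return grid[len(grid) // 2][len(grid[0]) // 2]


def navigate(grid, direction):
    """Return a new item-grid arrangement after one navigation command.

    Every item shifts one cell opposite to `direction`, with wraparound, so the
    neighbour of the focused item moves into the close-up cell while all
    adjacency relationships, including those across borders, are preserved.
    """
    dr, dc = DIRECTIONS[direction]
    rows, cols = len(grid), len(grid[0])
    return [[grid[(r + dr) % rows][(c + dc) % cols] for c in range(cols)]
            for r in range(rows)]
```

Starting from a 3x3 grid with `e` in close-up, `right` brings `f` into close-up, and a second `right` wraps around the border to `d`.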

A 3D interaction display system based on augmented reality

Inactive | CN104820497A | Input/output for user-computer interaction; Graph reading; 3D interaction; Virtual space

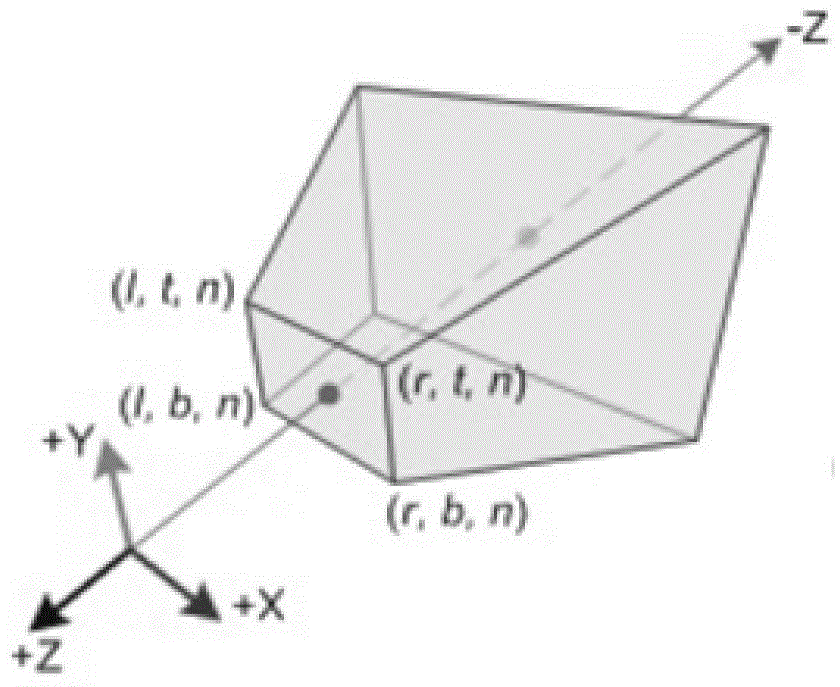

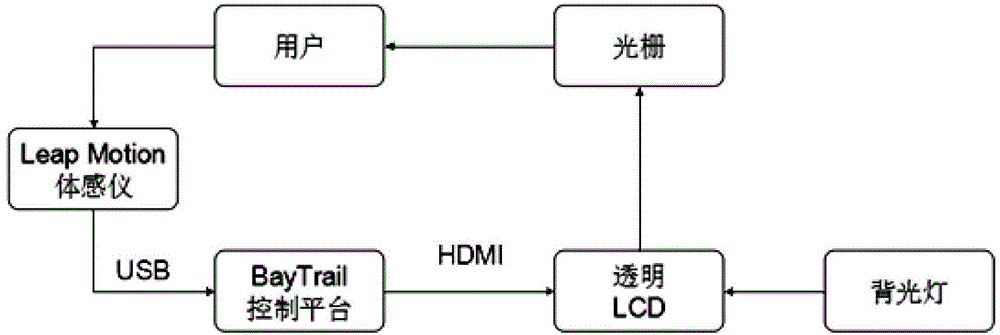

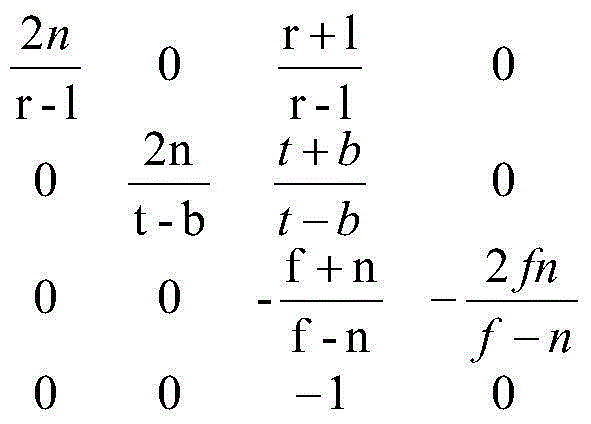

The invention relates to a 3D interaction display system based on augmented reality, comprising a 3D stereo display part and a human-machine interaction part. The 3D stereo display part comprises a stereo camera that captures the scene and an image processing device that performs even/odd pixel-column processing on the captured left and right images and renders them onto a transparent display; a grating on the transparent display projects the column-processed images into the user's left and right eyes respectively. The human-machine interaction part comprises a human-body sensing controller that obtains the position, speed and posture of a finger and provides a location tracking algorithm. Together, the two parts unify the virtual space and the real space. The system offers the user a natural, stereoscopic mode of human-machine interaction that resembles operating a real object.

Owner:DONGHUA UNIV
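
A minimal sketch of the even/odd column processing, assuming images are lists of pixel rows, that even columns come from the left view and odd columns from the right view (the abstract does not fix this mapping), and that each view is simply subsampled rather than resized first.

```python
def interleave_columns(left, right):
    """Build one frame whose even columns come from `left` and odd columns from `right`.

    A parallax grating in front of such a frame would send the even columns
    to one eye and the odd columns to the other.
    """
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right images must have the same size")
    return [[l_row[c] if c % 2 == 0 else r_row[c] for c in range(len(l_row))]
            for l_row, r_row in zip(left, right)]
```

Half of each view's columns are discarded here; real systems may instead squeeze each view to half width before interleaving so no image content is lost.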

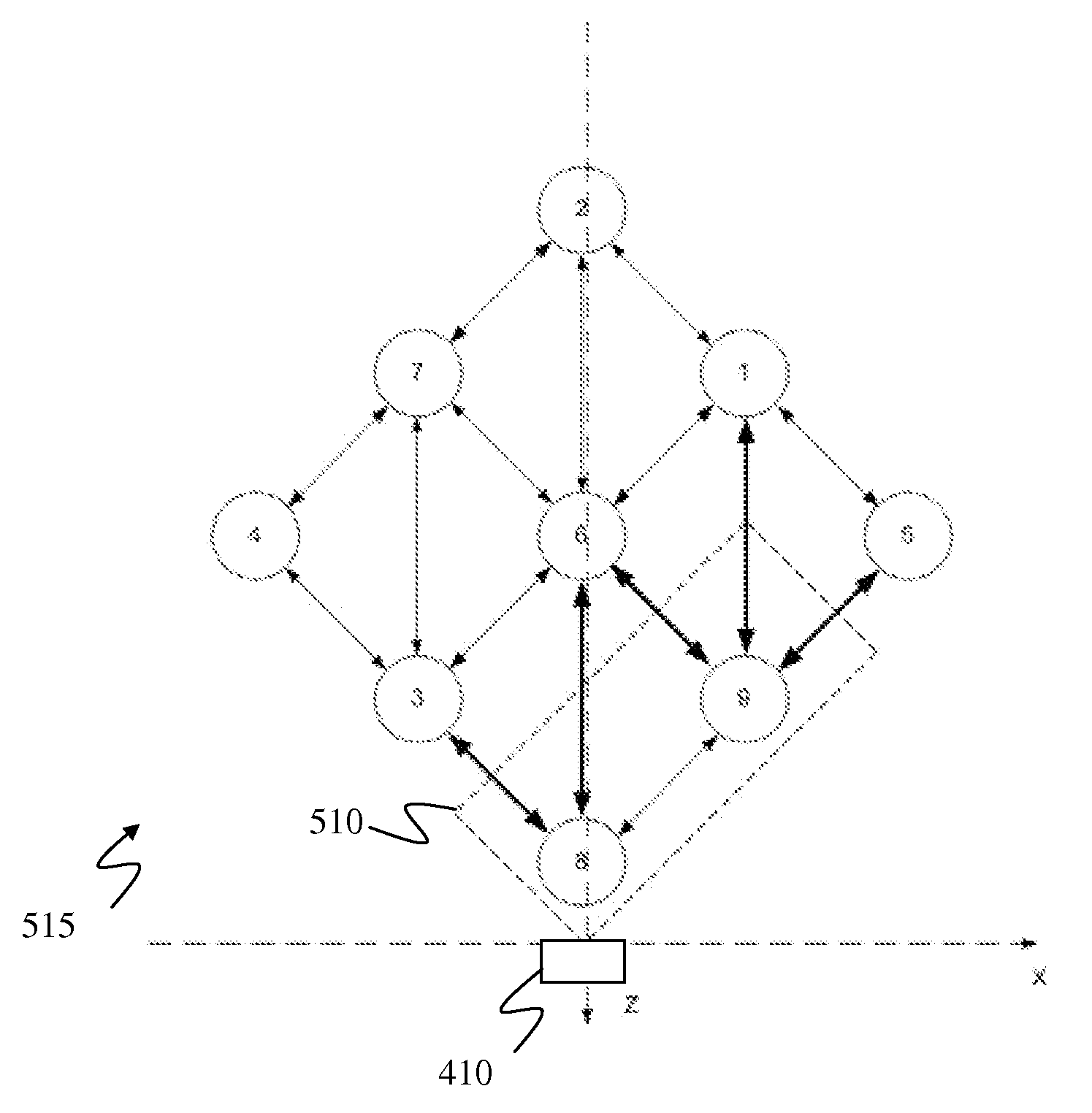

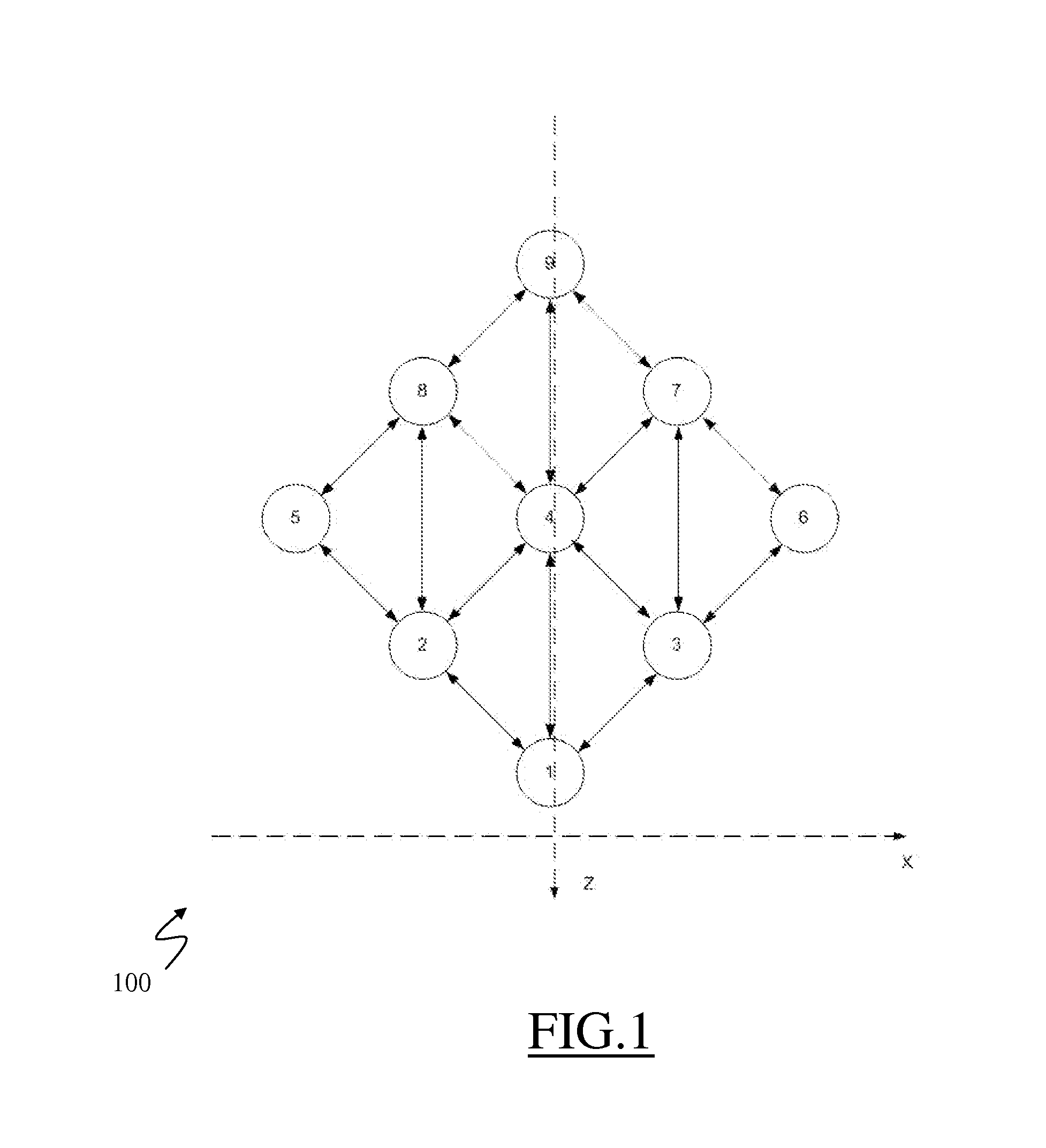

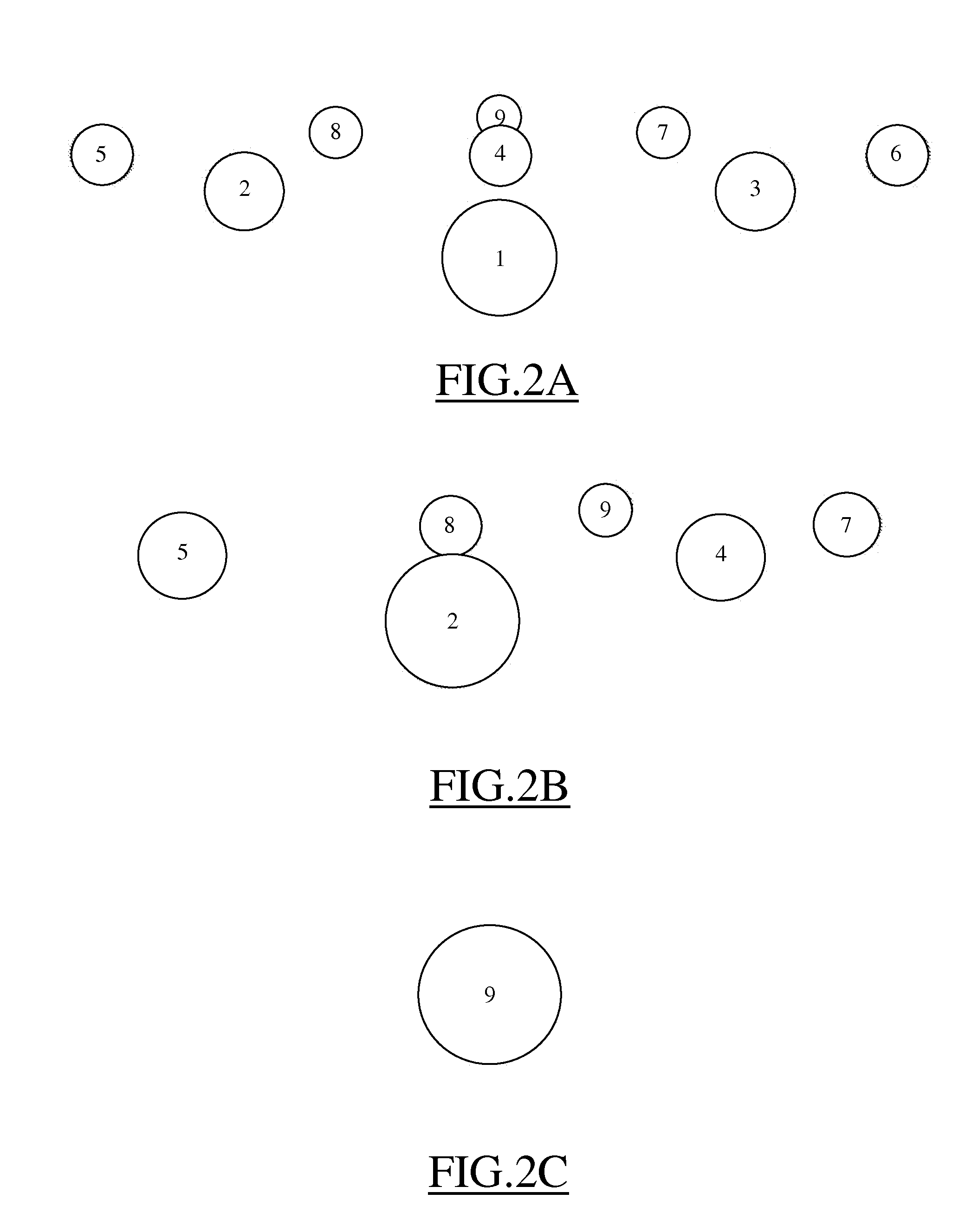

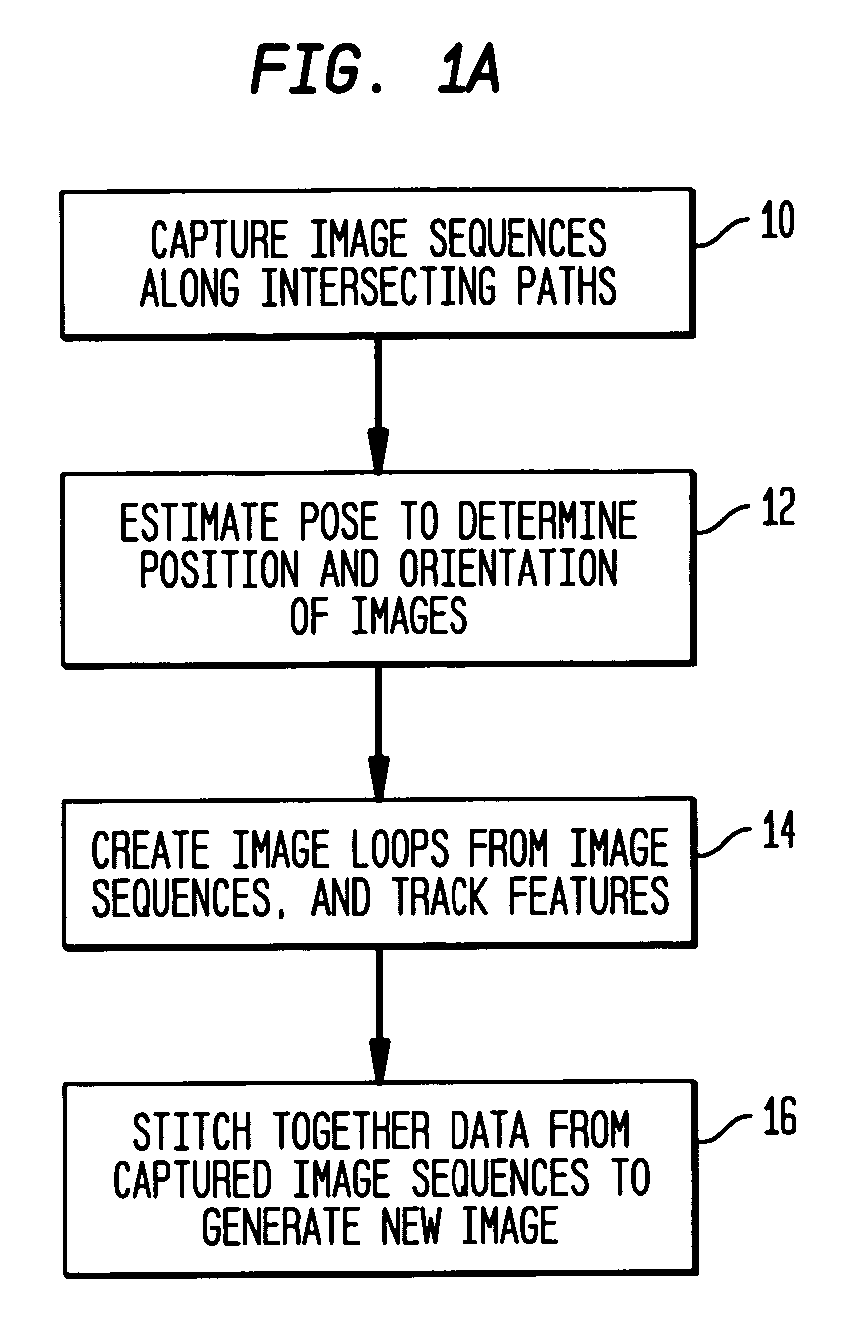

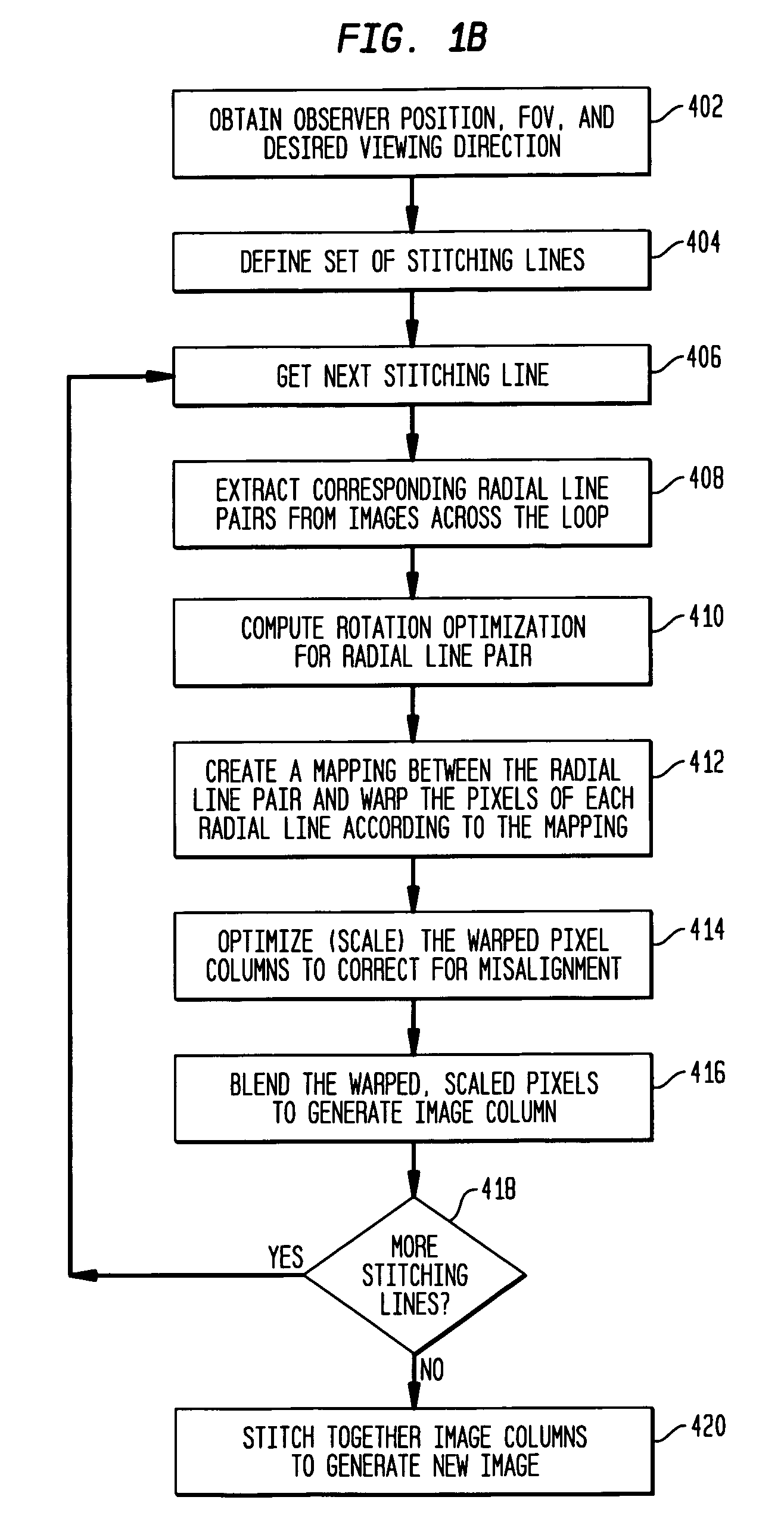

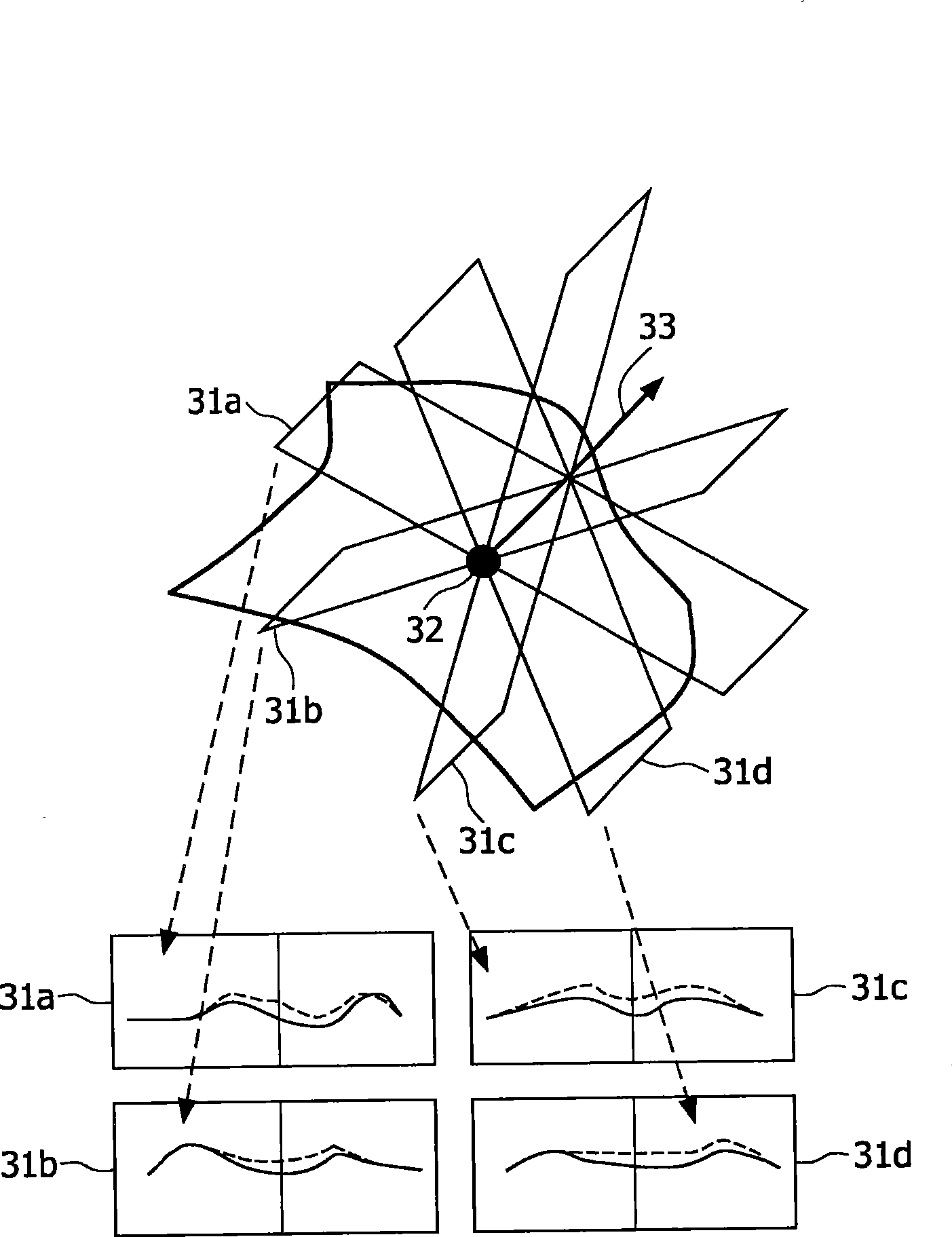

Method and system for reconstructing 3D interactive walkthroughs of real-world environments

Inactive | US7027049B2 | Quick snap; Freedom of movement; Character and pattern recognition; 3D-image rendering; Image extraction; Viewpoints

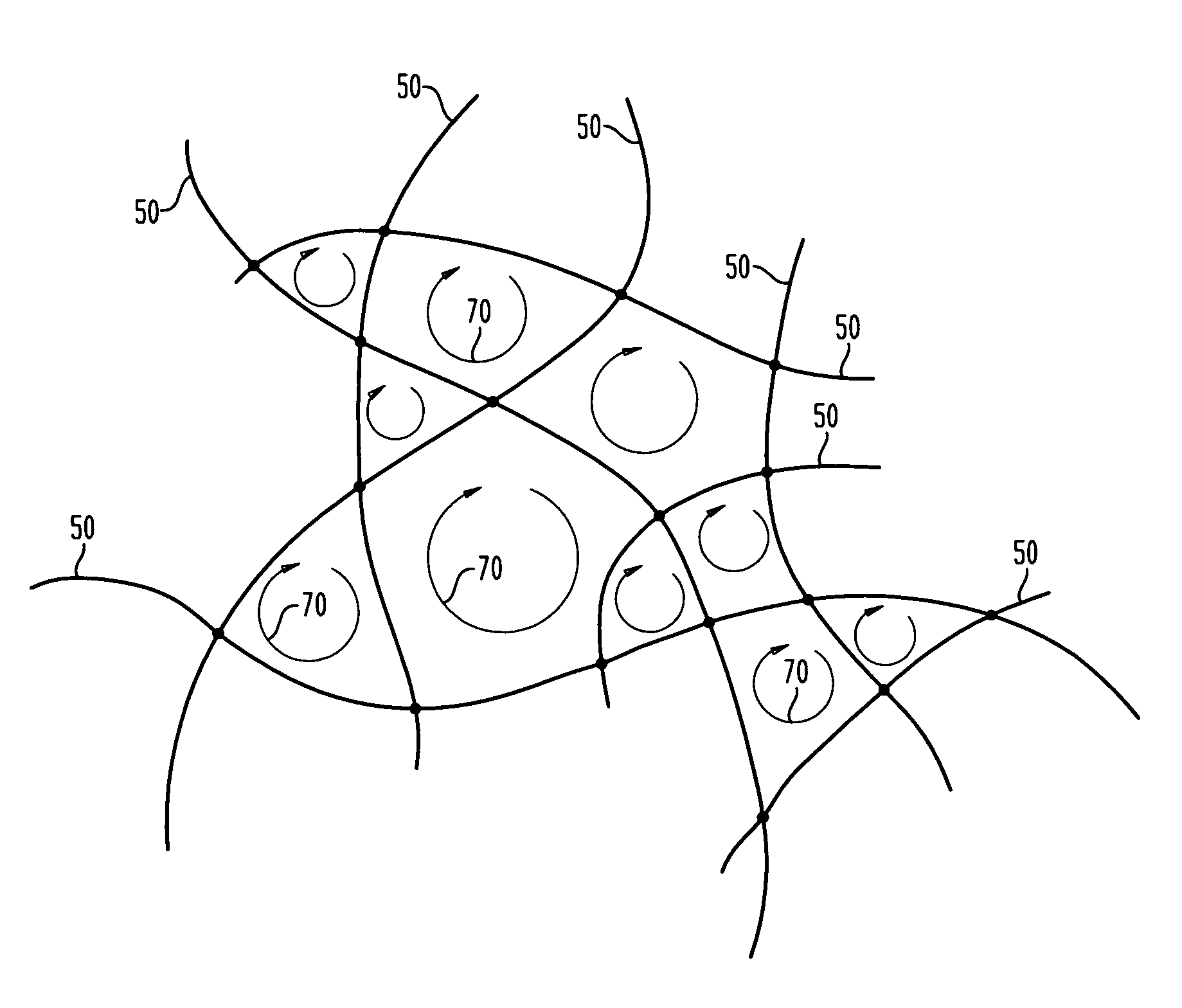

An omnidirectional video camera captures images of the environment while moving along several intersecting paths forming an irregular grid. These paths define the boundaries of a set of image loops within the environment. For arbitrary viewpoints within each image loop, a 4D plenoptic function may be reconstructed from the group of images captured at the loop boundary. For an observer viewpoint, a strip of pixels is extracted from an image in the loop in front of the observer and paired with a strip of pixels extracted from another image on the opposite side of the image loop. A new image is generated for an observer viewpoint by warping pairs of such strips of pixels according to the 4D plenoptic function, blending each pair, and then stitching the resulting strips of pixels together.

Owner:LUCENT TECH INC
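
The patent warps each strip pair according to the reconstructed 4D plenoptic function before blending. The sketch below omits that warping and shows only the blend-and-stitch steps, assuming inverse-distance weights so that the capture point closer to the observer contributes more; this weighting rule and the function names are assumptions, not Lucent's stated method.

```python
def blend_strip_pair(front_strip, back_strip, dist_front, dist_back):
    """Blend two (already warped) pixel strips, favouring the closer capture point."""
    if len(front_strip) != len(back_strip):
        raise ValueError("strips must have the same length")
    if dist_front < 0 or dist_back < 0 or dist_front + dist_back == 0:
        raise ValueError("distances must be non-negative and not both zero")
    total = dist_front + dist_back
    w_front = dist_back / total  # small dist_front -> weight close to 1
    w_back = dist_front / total
    return [w_front * f + w_back * b for f, b in zip(front_strip, back_strip)]


def stitch_columns(strips):
    """Stitch vertical strips (pixel values, top to bottom) into image rows."""
    height = len(strips[0])
    return [[strip[row] for strip in strips] for row in range(height)]
```

With the observer three times closer to the front capture point than to the back one, the front strip receives 75% of the weight.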

Interactive experience system for museum

Inactive | CN107957782A | Interpretation concept; Vivid connotation; Input/output for user-computer interaction; Electrical apparatus; Electricity; Remote control

The invention relates to the technical field of museum interaction, in particular to an interactive experience system for a museum. The system is used in a museum's experience area and comprises a sound-light-electricity effect system, a multimedia touch system, a voice-synchronized lighting control system, a multimedia remote control system, an interactive projection system, a near-field interactive push system, a holographic imaging system, and a 3D interaction and virtual reality system, each connected to and controlled by the museum's master control system. Multimedia technology is introduced into the exhibition to extend the cultural connotation of the exhibits, improve interaction with visitors, and present different aspects of each exhibit at multiple levels. Three-dimensional images of the exhibits, together with interactive games designed around the exhibits' related content, are pushed to visitors, so that visitors can understand the exhibits comprehensively and learn related knowledge in an engaging way through the games.

Owner:Guangdong Hudong Electronics Co., Ltd. (广东互动电子有限公司)

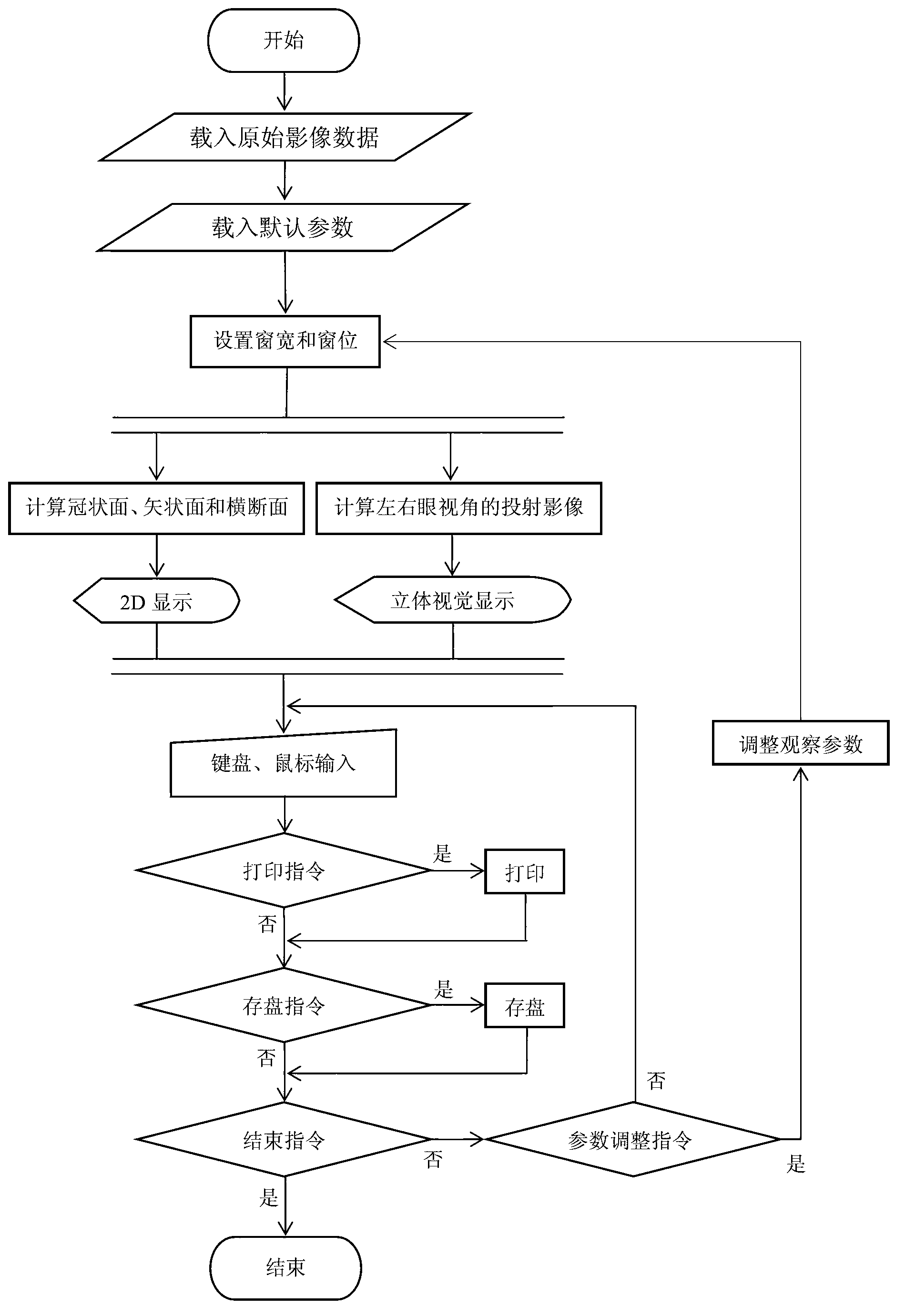

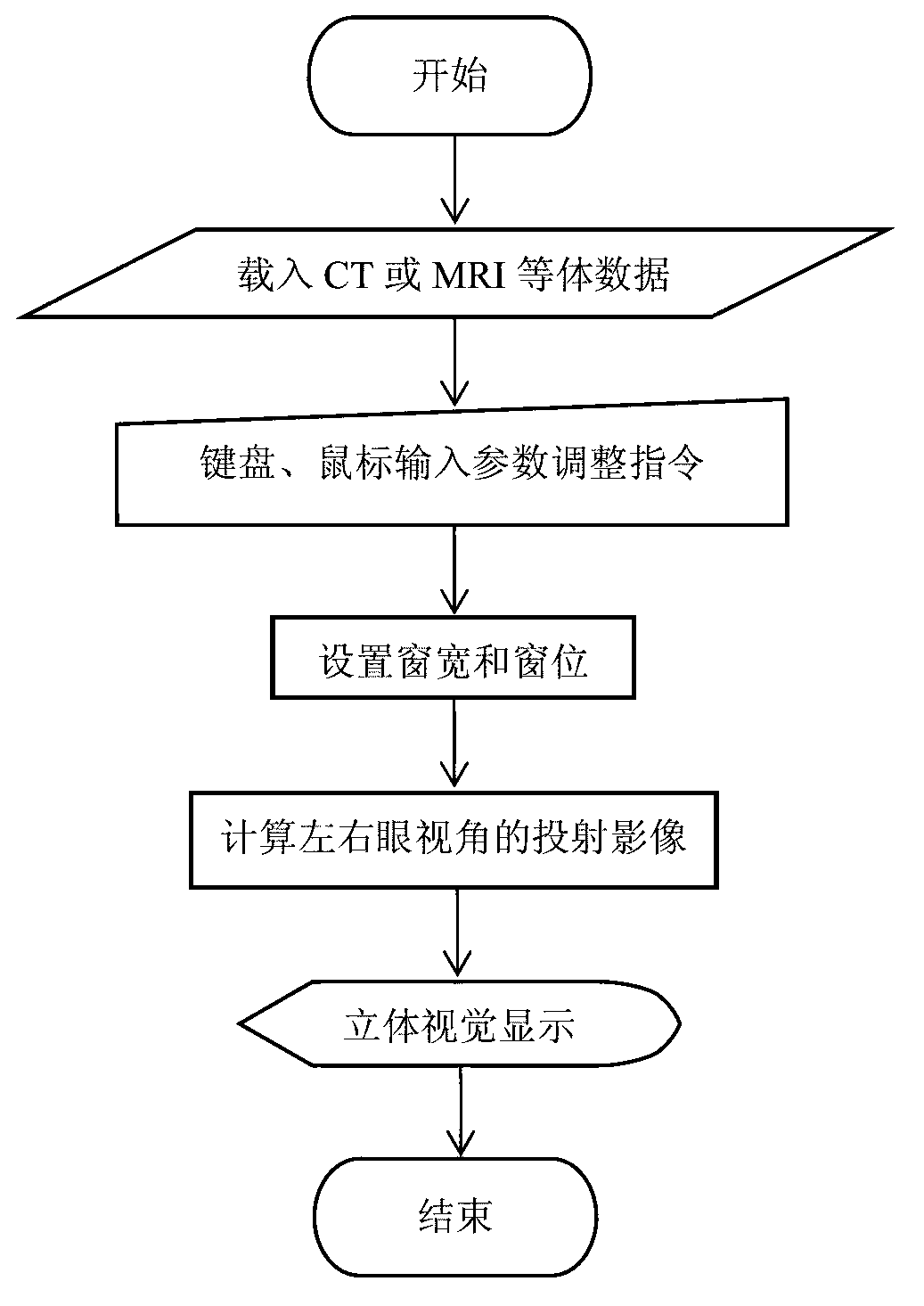

Medical image workstation with stereo visual display

Active | CN102982233A | Strong spatial realism; Strong three-dimensional sense; Stereoscopic systems; Special data processing applications; Software system; Depth of field

The invention discloses a medical image workstation with stereoscopic visual display. The workstation comprises a computer mainframe, a three-dimensional (3D) display screen, an input device and a software system. The software system comprises a software function module, a stereo visual display module, a 3D interaction module, and a 2D display and stereo visual display linkage module. The software function module provides the functions of an ordinary medical image workstation. The stereo visual display module produces stereoscopic display with depth information. The 3D interaction module receives operating commands, carrying depth information, that the user enters through the input device, and displays the operating results synchronously and in real time on the 3D display screen. The linkage module links a 2D display screen with the 3D display screen or switches between 2D display and 3D stereoscopic display. The workstation overcomes the poor integration of stereoscopic display, 3D interaction, and 2D/stereoscopic display linkage in existing medical image workstations, improving doctors' observation and work efficiency.

Owner:Hubei Dekang Technology Co., Ltd. (湖北得康科技有限公司)

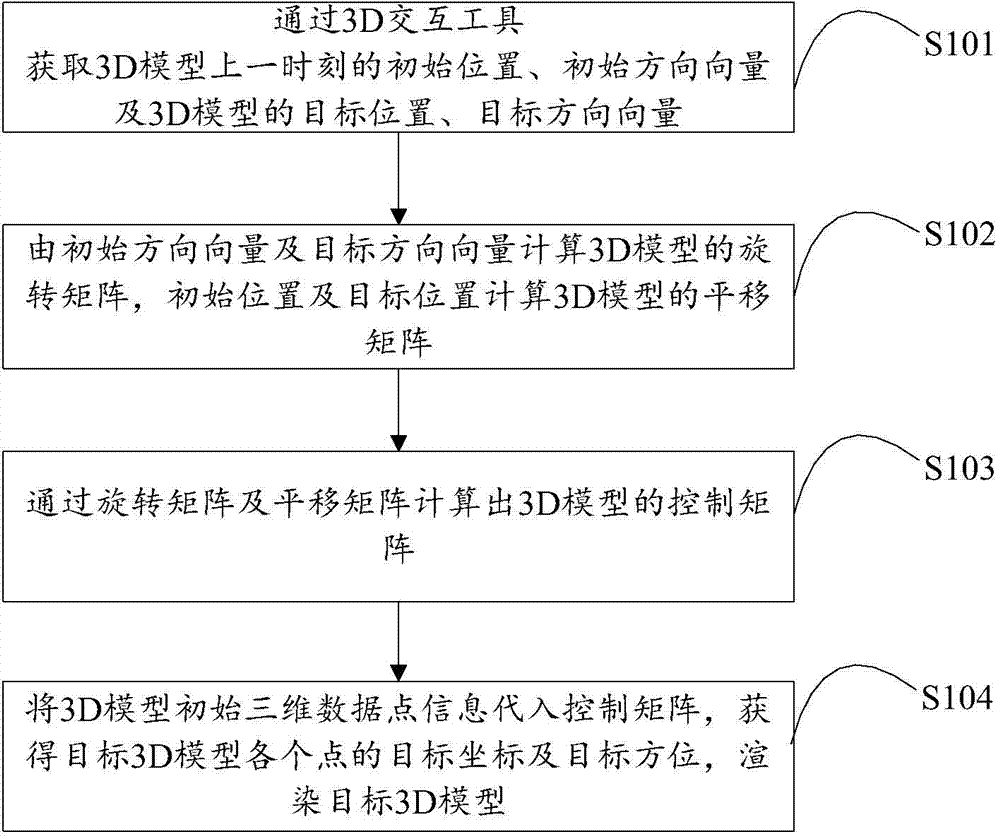

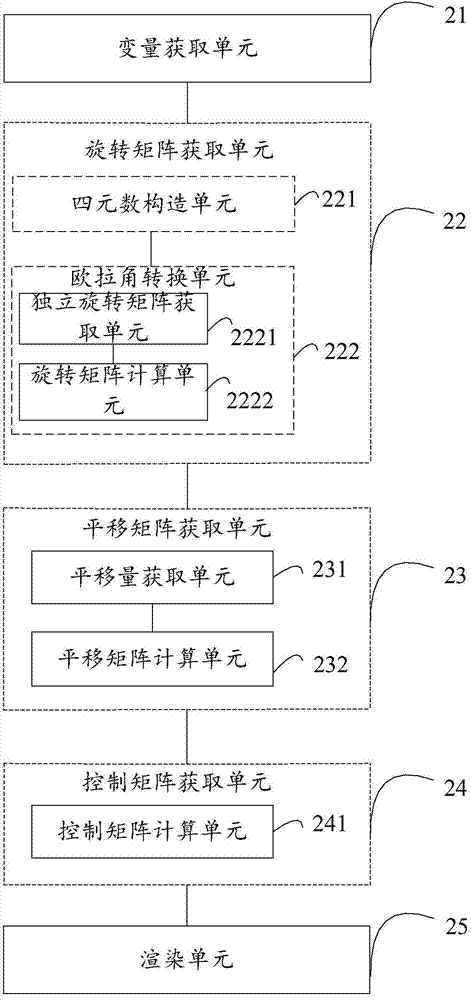

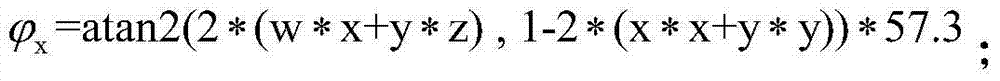

Method and device for rendering a 3D (three-dimensional) model in any orientation

Inactive | CN103810746A | Good condition; Improve the speed of determining the orientation of 3D models in real time; 3D-image rendering; Imaging processing; Computer graphics (images)

The invention is applicable to the field of image processing and provides a method and a device for rendering a 3D (three-dimensional) model in any orientation. The method comprises the steps of: obtaining, through a 3D interactive tool, the original position and original direction vector of the 3D model at the previous moment, together with its target position and target direction vector; calculating the rotation matrix of the 3D model from the original and target direction vectors, and the translation matrix of the 3D model from the original and target positions; calculating the control matrix of the 3D model from the rotation matrix and the translation matrix; and applying the control matrix to the model's original 3D data points to obtain the target coordinates and directions of all its points, then rendering the target 3D model. Any 3D model that meets the control requirements can be rendered, so the method is suitable for real-time rendering of arbitrary 3D models, speeds up real-time determination of the model's position, and effectively improves control over the 3D model.

Owner:TCL CORPORATION
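
A sketch of the rotation, translation and control matrices described above, using Rodrigues' formula to rotate the original direction vector onto the target one. Composing the control matrix as "rotate about the original position, then move it onto the target position" is one reasonable reading of the abstract, not necessarily TCL's exact formulation; all helper names are hypothetical.

```python
import math


def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    if n == 0:
        raise ValueError("direction vector must be non-zero")
    return [c / n for c in v]


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def rotation_between(d0, d1):
    """3x3 rotation taking direction d0 onto direction d1 (Rodrigues' formula)."""
    a, b = _normalize(d0), _normalize(d1)
    v, c = _cross(a, b), _dot(a, b)
    eye = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    if c < -1.0 + 1e-12:
        # Opposite directions: rotate 180 degrees about any axis perpendicular to a.
        helper = [1.0, 0.0, 0.0] if abs(a[0]) < 0.9 else [0.0, 1.0, 0.0]
        u = _normalize(_cross(a, helper))
        return [[2.0 * u[i] * u[j] - eye[i][j] for j in range(3)] for i in range(3)]
    k = [[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]]  # skew matrix [v]x
    k2 = [[_dot(k[i], [k[0][j], k[1][j], k[2][j]]) for j in range(3)] for i in range(3)]
    return [[eye[i][j] + k[i][j] + k2[i][j] / (1.0 + c) for j in range(3)] for i in range(3)]


def control_matrix(p0, d0, p1, d1):
    """4x4 matrix: rotate about the original position p0, then move p0 onto p1."""
    r = rotation_between(d0, d1)
    t = [p1[i] - _dot(r[i], p0) for i in range(3)]  # translation part
    return [r[0] + [t[0]], r[1] + [t[1]], r[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def transform_points(m, points):
    """Apply the 4x4 control matrix to (x, y, z) model points."""
    return [tuple(_dot(m[i][:3], p) + m[i][3] for i in range(3)) for p in points]
```

For a model at the origin facing +x that must end up at (1, 2, 3) facing +y, the origin maps to (1, 2, 3) and the point (1, 0, 0) maps to (1, 3, 3).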

Remote interaction control system and method for physical information space

Inactive | CN104656893A | Low cost; Strong sense of immersion in operation; Input/output for user-computer interaction; Character and pattern recognition; Interaction control; 3D interaction

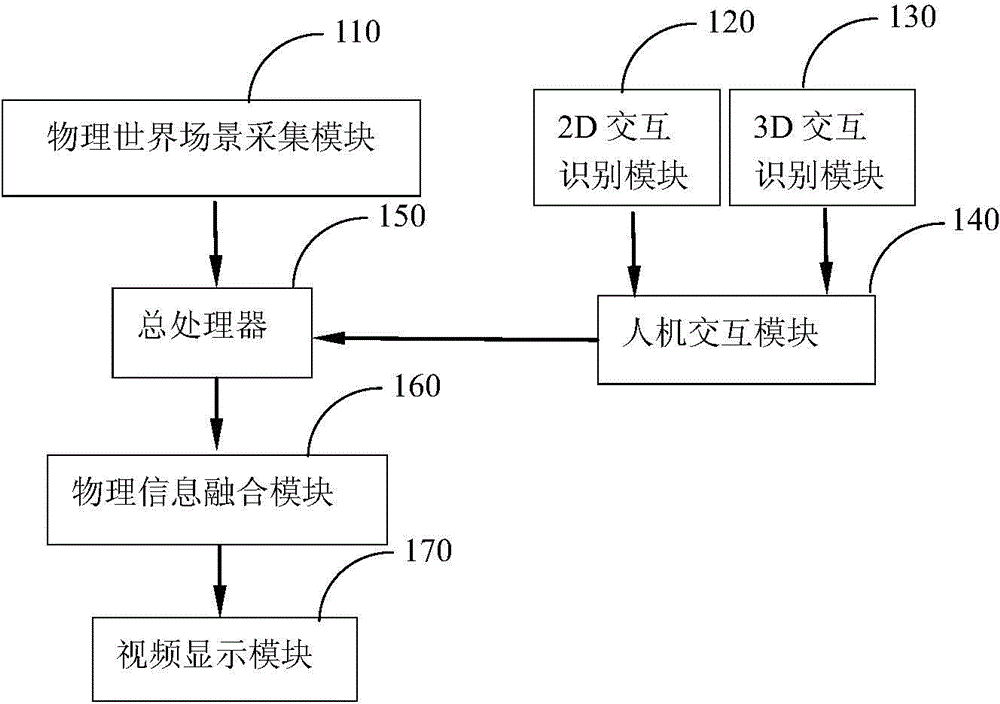

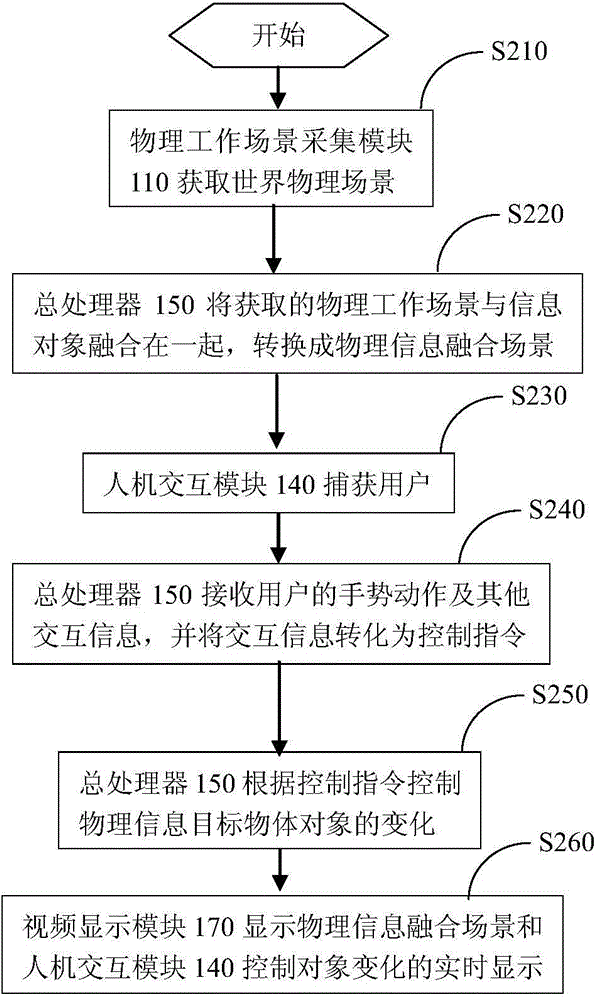

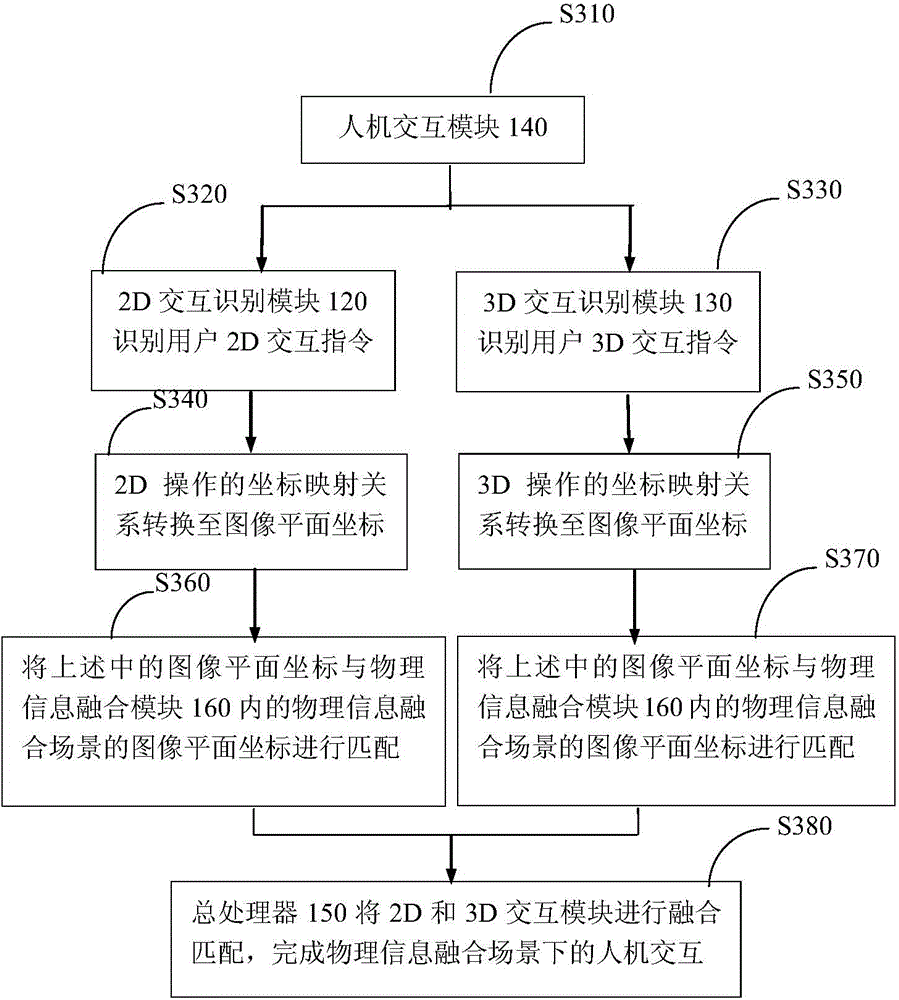

The invention relates to a remote interaction control system and method for a physical information space. The system comprises a 3D (three-dimensional) interaction identification module, a 2D (two-dimensional) interaction identification module, a physical world scene acquisition module, a master processor, a physical information fusion module and a video display module. The method combines 2D and 3D interaction to perform remote, real-time human-computer interaction control of physical information objects in a physical information fusion scene. Compared with other methods, it is low in cost, highly immersive, and efficient in real-time operation, and it has broad application prospects for human-computer interaction in future physical information fusion scenes.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

Interactive editing and stitching method and device for garment cut pieces

Active | CN107958488A | Real-time simulation; Improve design efficiency; Design optimisation/simulation; Special data processing applications; Interactive editing; 3D interaction

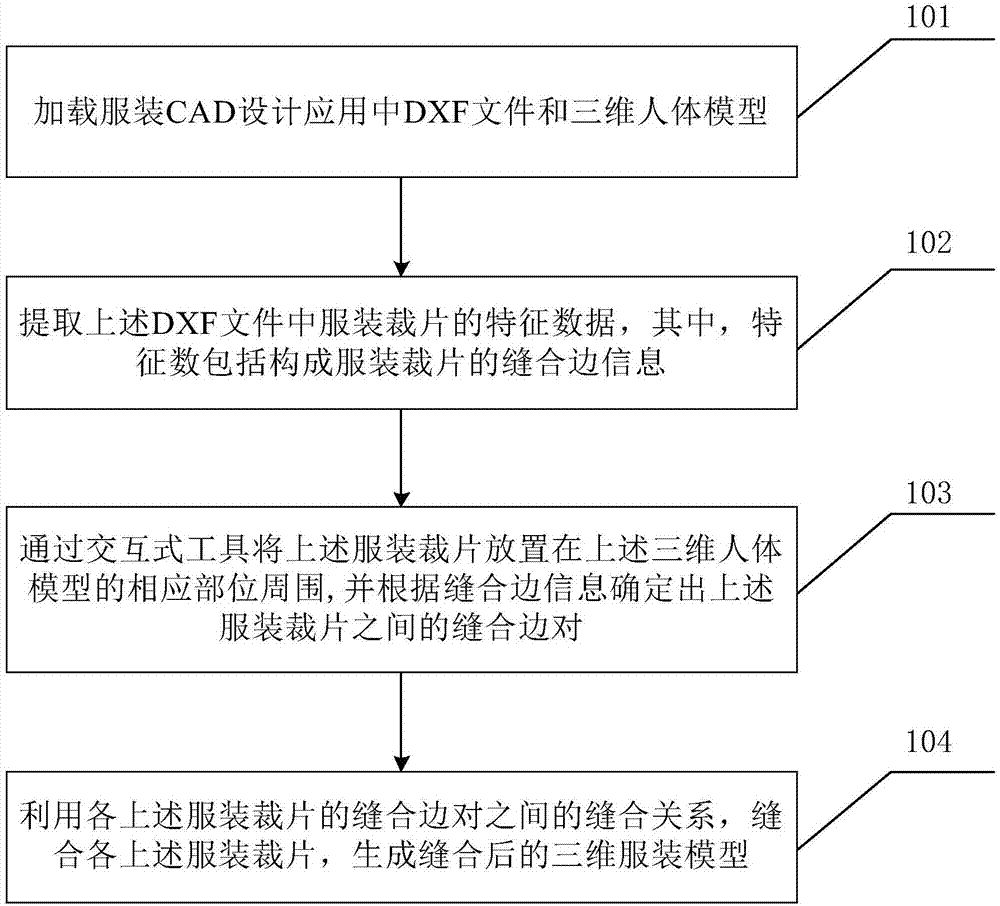

The invention relates to the field of garment design and discloses a 3D interactive editing and stitching method for garment CAD cut pieces. It addresses the problem that, in garment design, a garment must be repeatedly modified and redesigned according to how the design looks when simulated on a human body model. The method comprises the steps of: loading a DXF file from a garment CAD design application together with a three-dimensional human body model; extracting feature data of the garment cut pieces from the DXF file, including information on the stitching edges that form the pieces; using an interactive tool to place the cut pieces around the corresponding positions on the three-dimensional human body model, where the interactive operation is moving the cut pieces with the tool, and determining stitching-edge pairs between the cut pieces from the stitching-edge information; and stitching the cut pieces according to the stitching relationships between their edge pairs to generate a stitched three-dimensional garment model. Garment design and simulation thus run synchronously and the designed garment is simulated in real time, which improves garment design efficiency.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
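
The abstract says stitching-edge pairs are determined from edge information extracted from the DXF file but does not describe that data. The sketch below assumes each edge carries a seam label and a length, pairs edges with the same label on two different pieces, and rejects pairs whose lengths differ by more than a relative tolerance. `StitchEdge`, `seam_id` and the 5% tolerance are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class StitchEdge:
    piece: str      # name of the cut piece the edge belongs to
    seam_id: str    # hypothetical seam label assumed to come from the DXF data
    length: float   # edge length in the pattern's units


def pair_stitch_edges(edges, length_tolerance=0.05):
    """Return (edge_a, edge_b) pairs, one per seam label, sorted by label."""
    by_seam = defaultdict(list)
    for edge in edges:
        by_seam[edge.seam_id].append(edge)
    pairs = []
    for seam_id, group in sorted(by_seam.items()):
        if len(group) != 2 or group[0].piece == group[1].piece:
            raise ValueError(f"seam {seam_id!r} must join exactly two different pieces")
        a, b = group
        if abs(a.length - b.length) > length_tolerance * max(a.length, b.length):
            raise ValueError(f"seam {seam_id!r} edges differ too much in length")
        pairs.append((a, b))
    return pairs
```

Each resulting pair would then drive the stitching step that joins the two pieces into the three-dimensional garment model.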

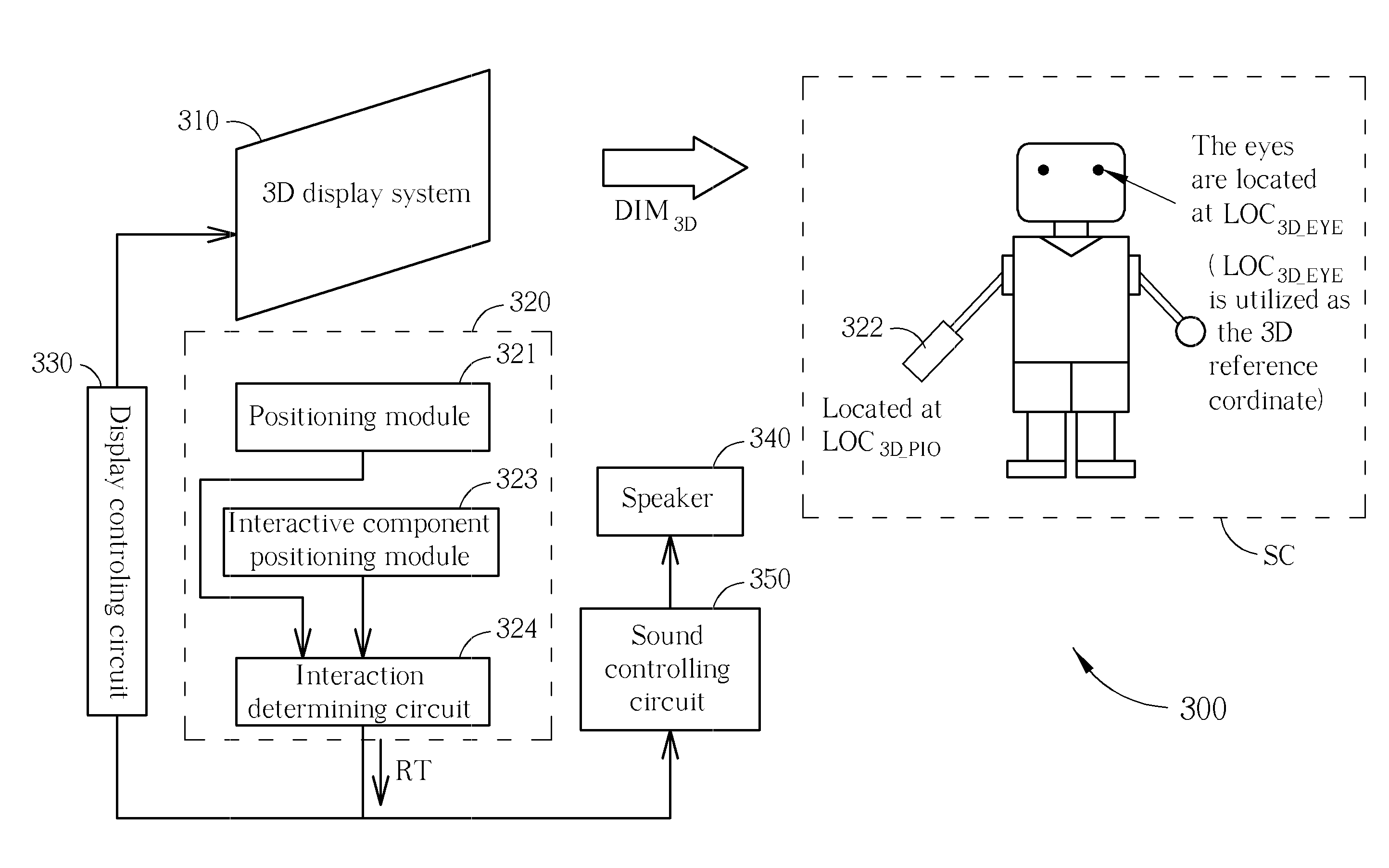

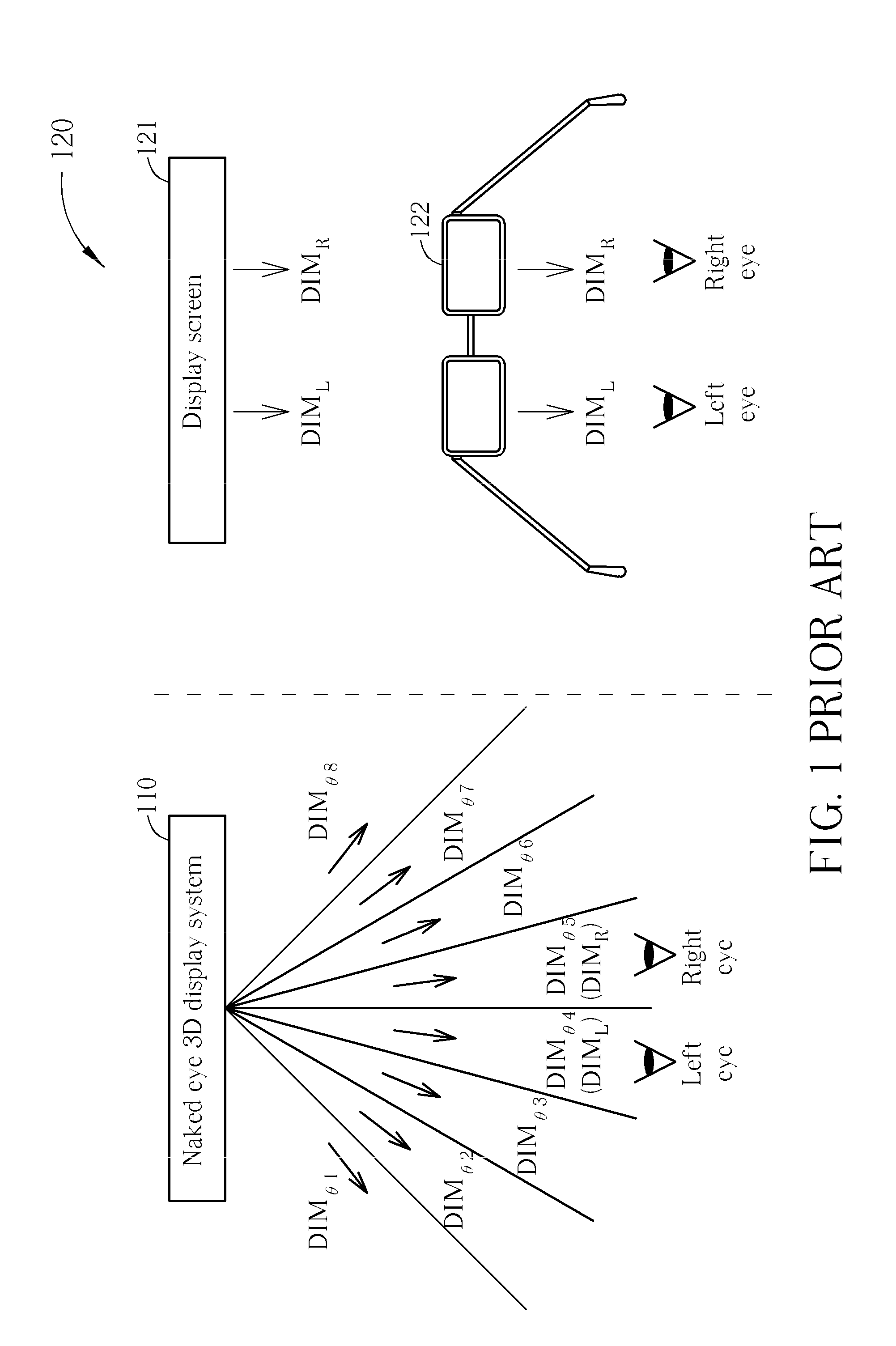

Interactive module applied in 3D interactive system and method

Inactive | US20110187638A1 | Input/output for user-computer interaction; Cathode-ray tube indicators; Computer module; 3D interaction

An interactive module applied in a 3D interactive system calibrates the location of an interactive component, or the location and interaction condition of a virtual object in a 3D image, according to the user's location. Thus, even if the user's location changes and the location of the virtual object as seen by the user changes with it, the 3D interactive system can still correctly determine the interaction result from the corrected location of the interactive component, or from the corrected location and interaction condition of the virtual object.

Owner:PIXART IMAGING INC
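
PixArt's abstract does not give the calibration math. The simplified model below, an assumption for illustration, shows why calibration is needed: on a stereoscopic screen in the plane z = 0, a virtual object appears where the rays from each eye through its image point cross, so the same image pair is perceived at a different place when the viewer moves. Recomputing that point from the tracked eye positions and testing the interactive component (here a fingertip) against the corrected point is one way to keep the interaction result correct. The function names and the touch radius are hypothetical.

```python
import math


def perceived_point(left_eye, right_eye, left_img, right_img):
    """Where the rays eye -> image point cross, for a screen in the plane z = 0.

    Assumes both eyes share the same y and z, and both image points (x, y) share
    the same y (a rectified stereo pair), so the two rays intersect exactly.
    """
    (xl, ye, ze), (xr, _, _) = left_eye, right_eye
    (pl, ys), (pr, _) = left_img, right_img
    denom = (xr - xl) - (pr - pl)
    if denom == 0:
        raise ValueError("rays are parallel: object perceived at infinity")
    t = (xr - xl) / denom  # ray parameter: 0 at the eye, 1 at the screen
    return (xl + t * (pl - xl), ye + t * (ys - ye), ze * (1.0 - t))


def is_touching(fingertip, target, radius):
    """Hypothetical interaction condition: fingertip within `radius` of target."""
    return math.dist(fingertip, target) <= radius
```

With eyes 6 cm apart and 60 cm from the screen, an image pair with crossed disparity appears 15 cm in front of the screen at x = 0. After the viewer moves 10 cm to the right, the same pair is perceived at x = 2.5 cm, and a fingertip there registers a touch only against the recomputed location.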

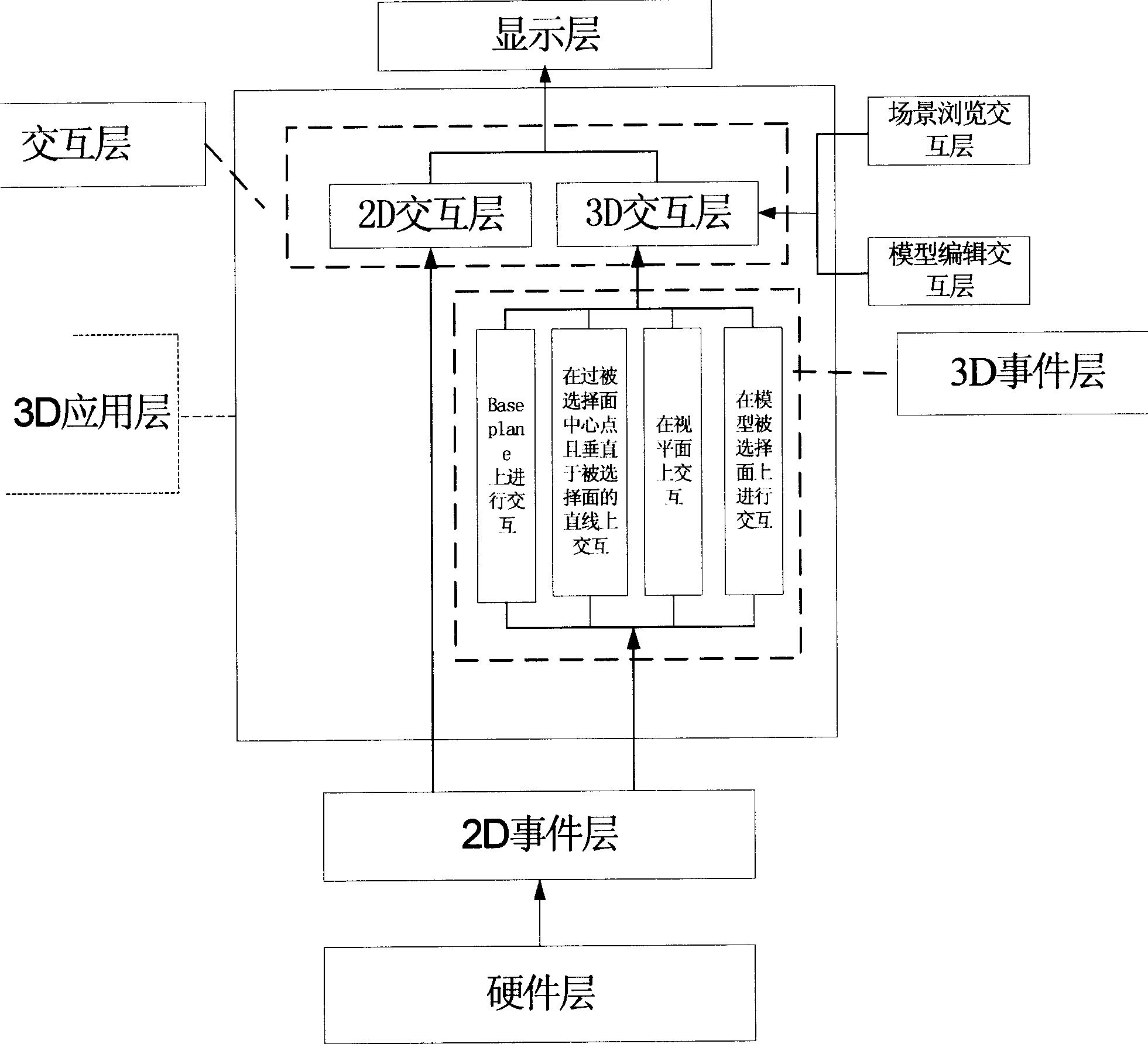

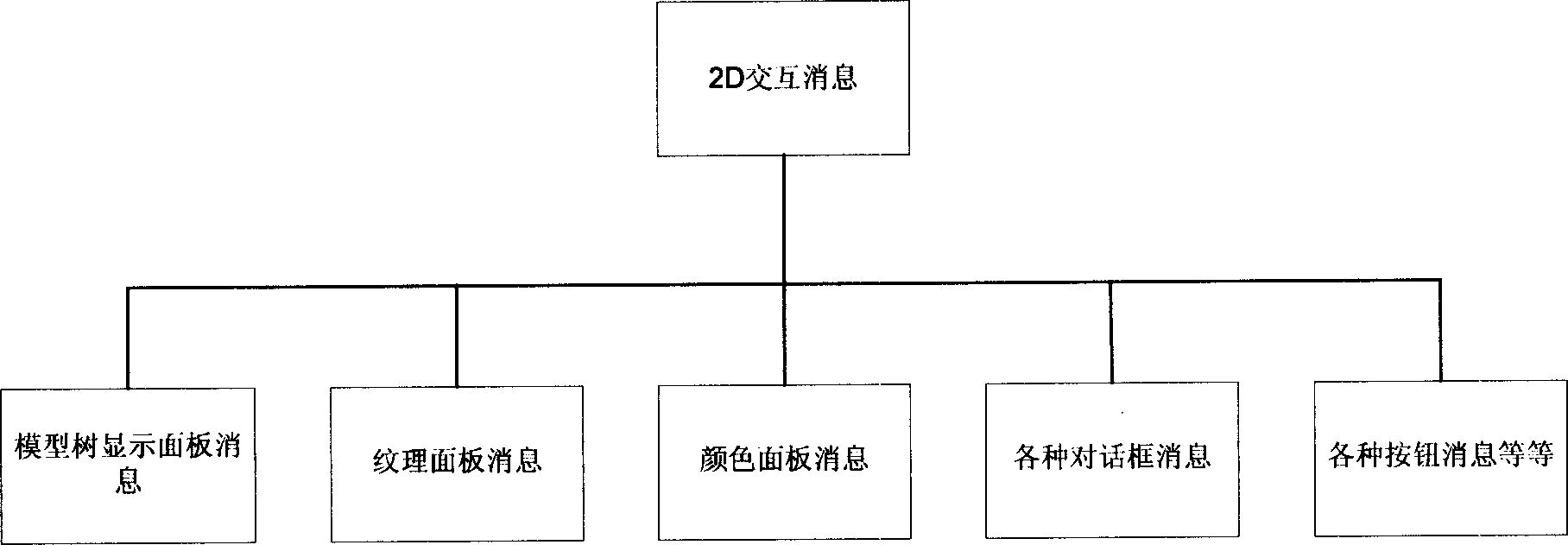

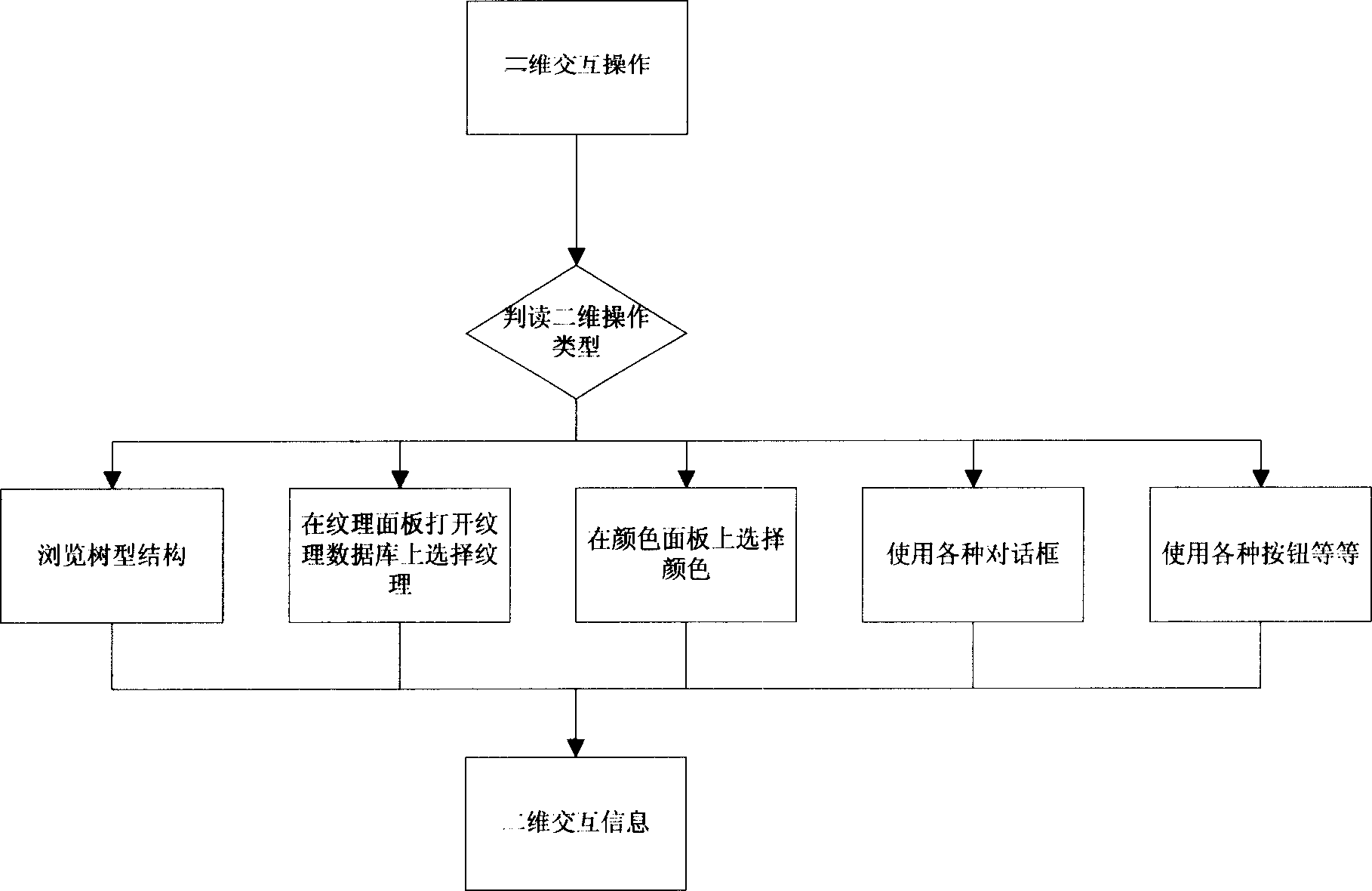

Human-machine interactive framework for three-dimensional model construction

Inactive | CN1753030A | Overall clarity; Easy to manage; Modifying/creating image using manual input; Graphics; Human–machine interface

The invention is a human-machine interactive framework for 3D modeling, consisting, from bottom to top, of a hardware layer, a 2D event layer, a 3D event layer, an interactive layer and a display layer. The hardware layer is composed of interactive devices; the 2D event layer captures the operator's input and converts it into 2D interactive information; the 3D event layer selectively converts 2D interactive information into 3D interactive information; the interactive layer is divided into a 2D interactive layer, which receives 2D interactive information and produces the corresponding 2D interactive tasks, and a 3D interactive layer, which receives 3D interactive information and produces the corresponding 3D interactive tasks; and the display layer carries out the interactive operations according to the 2D and 3D interactive tasks and feeds the results back to the user. The invention supports standard OpenFlight-format model database files and provides browsing, model building, model modification and other functions; the whole system has a user-friendly visual graphical interface, enables efficient 3D model editing, and is low in cost.

Owner:BEIHANG UNIV
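
The abstract does not say how the 3D event layer lifts 2D information into 3D. One common technique, shown here as an assumption rather than the framework's actual method, casts a ray from a pinhole camera through the clicked pixel and intersects it with a working plane at a chosen depth. The camera conventions and the function name are hypothetical.

```python
import math


def pixel_to_plane_point(u, v, width, height, vertical_fov_deg, plane_depth):
    """Map a 2D pixel event (u, v) to a 3D point on the plane z = -plane_depth.

    Camera model (an assumption): pinhole at the origin looking down -z,
    +x to the right, +y up, pixel (0, 0) at the top-left corner.
    """
    if plane_depth <= 0:
        raise ValueError("plane must lie in front of the camera")
    tan_half = math.tan(math.radians(vertical_fov_deg) / 2.0)
    aspect = width / height
    # Normalised device coordinates of the pixel centre, each in [-1, 1].
    x_ndc = 2.0 * (u + 0.5) / width - 1.0
    y_ndc = 1.0 - 2.0 * (v + 0.5) / height
    # The ray direction has z = -1; scaling by plane_depth reaches the plane.
    return (x_ndc * tan_half * aspect * plane_depth,
            y_ndc * tan_half * plane_depth,
            -plane_depth)
```

A 3D event built this way could then be routed to the 3D interactive layer, while events that need no depth stay in the 2D path.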

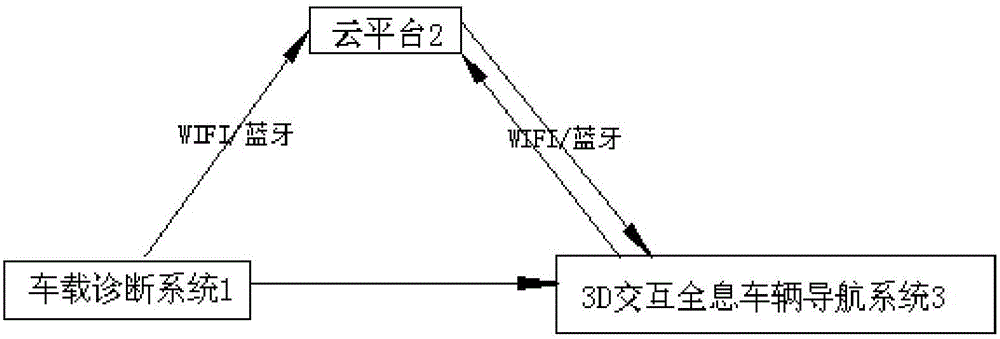

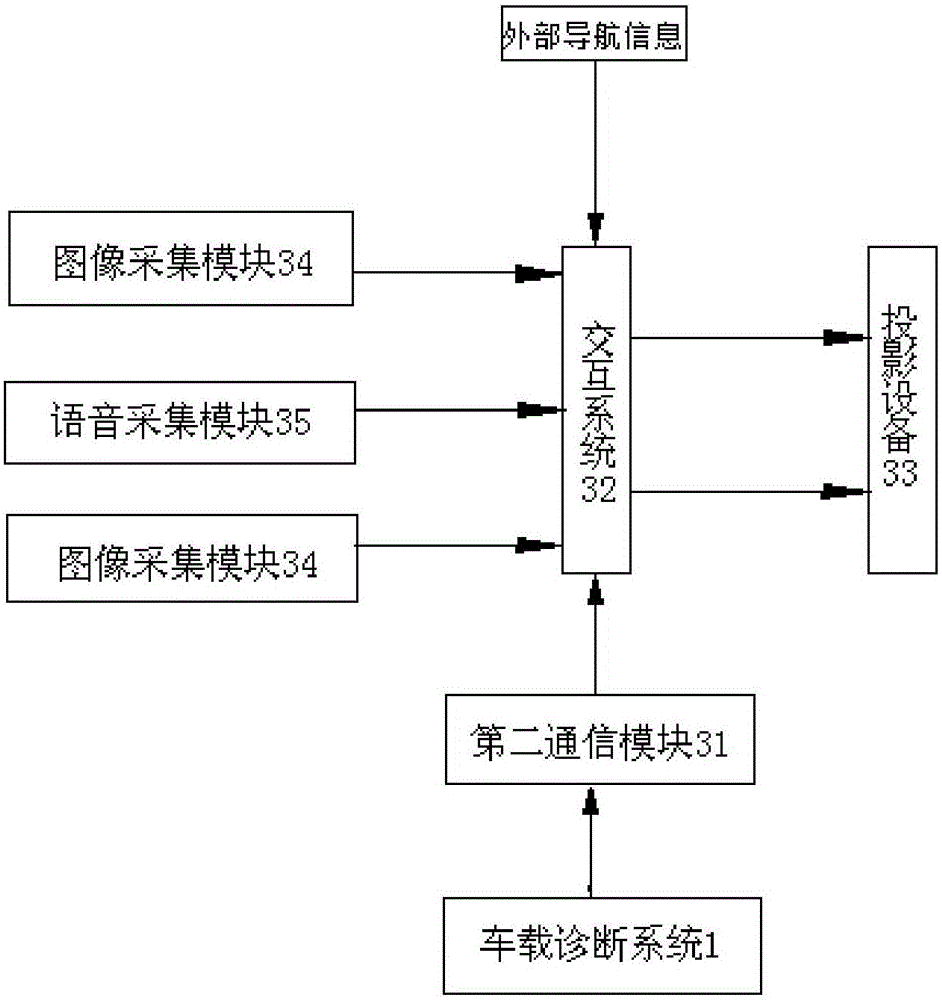

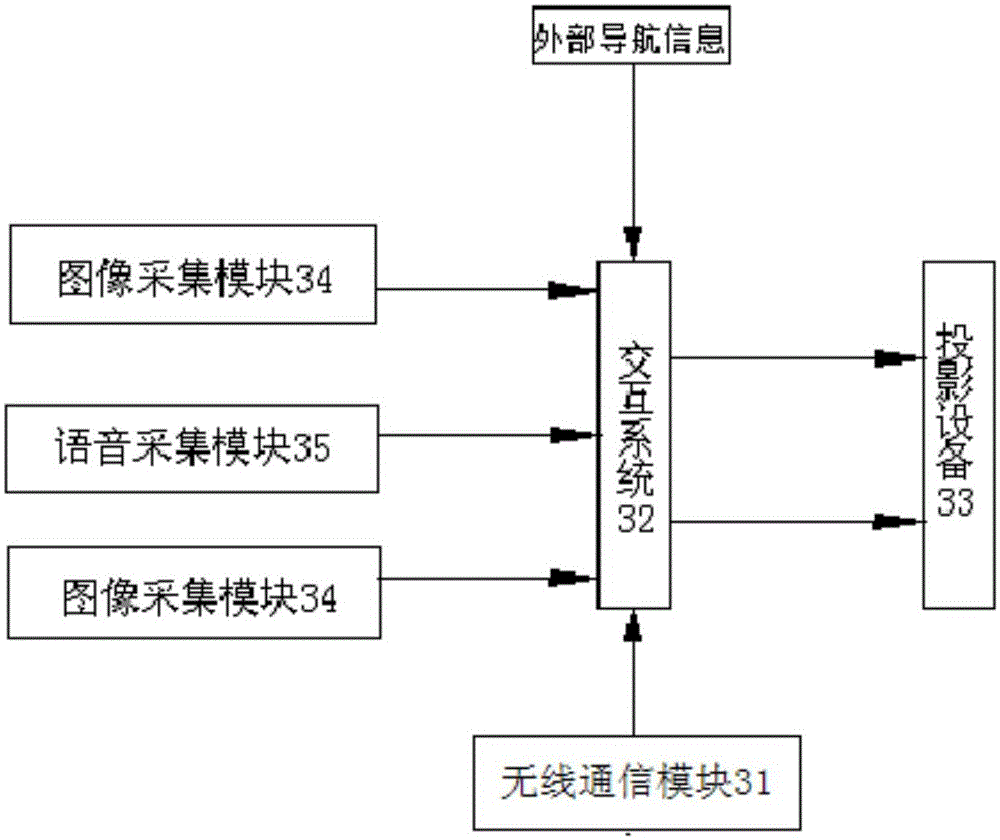

3D (three dimensional) hologram Internet of Vehicles interactive display terminal

Active | CN105136151A | Realize integrated interaction; Achieve acquisition; Instruments for road network navigation; Driver/operator; 3D image

The invention provides a 3D (three-dimensional) hologram Internet of Vehicles interactive display terminal comprising a vehicle-mounted diagnostic system, a cloud platform, and a 3D interactive holographic in-vehicle navigation system. The vehicle-mounted diagnostic system is installed in the vehicle and collects vehicle state data and vehicle diagnostic data; the cloud platform is connected to a first wireless communication module and receives and stores the vehicle state data and diagnostic data; and the 3D interactive holographic in-vehicle navigation system is located in the vehicle and connected to both the vehicle-mounted diagnostic system and the cloud platform. The navigation system comprises a second wireless communication module, an interactive system, projection equipment, a plurality of image acquisition modules, and voice acquisition modules. With this terminal, vehicle and map data interfaces can be projected into the space inside the vehicle as 3D images, so the driver can view them in real time without looking away from the road, which improves driving safety.

Owner:Wang Zhankui (王占奎)

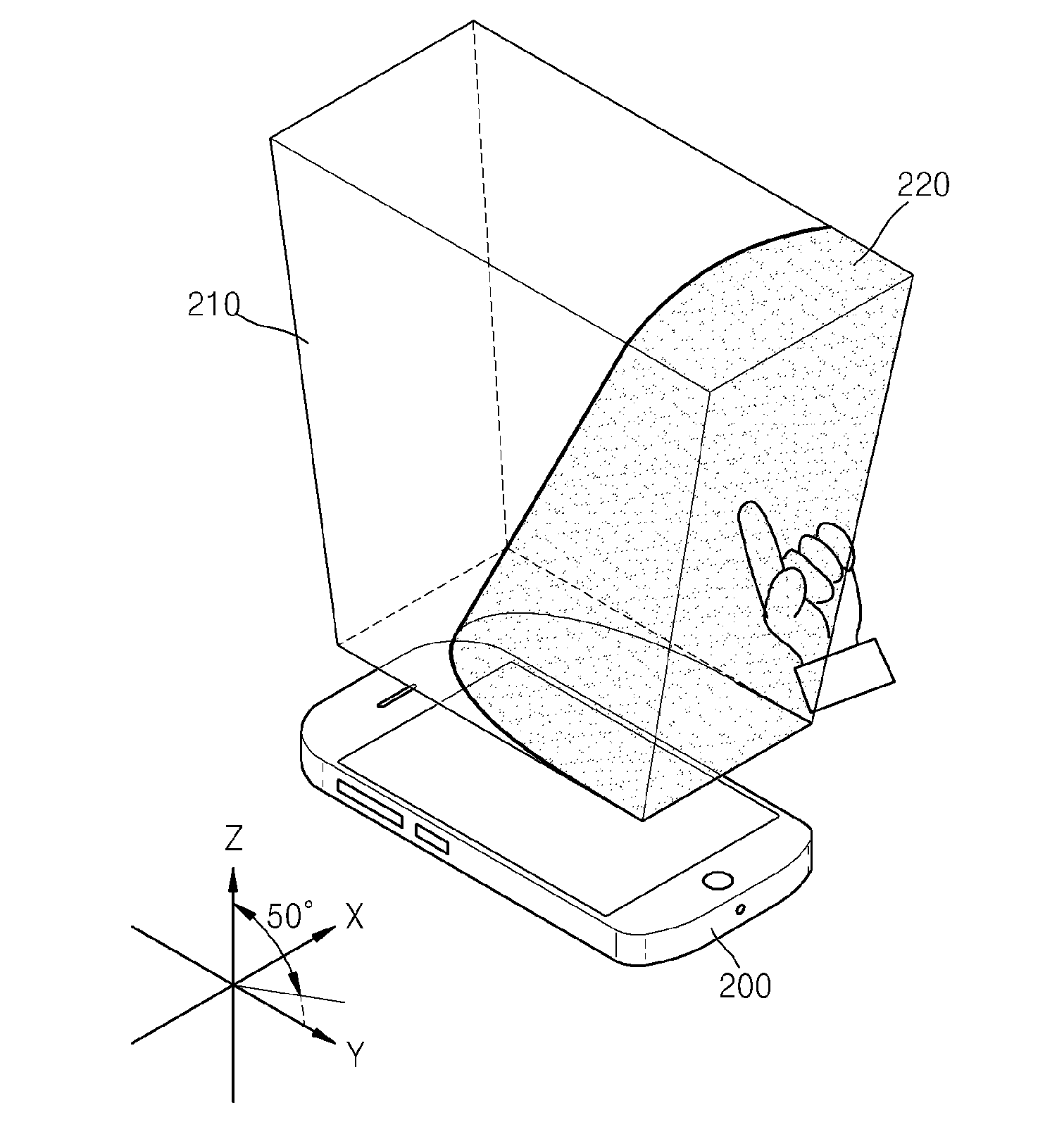

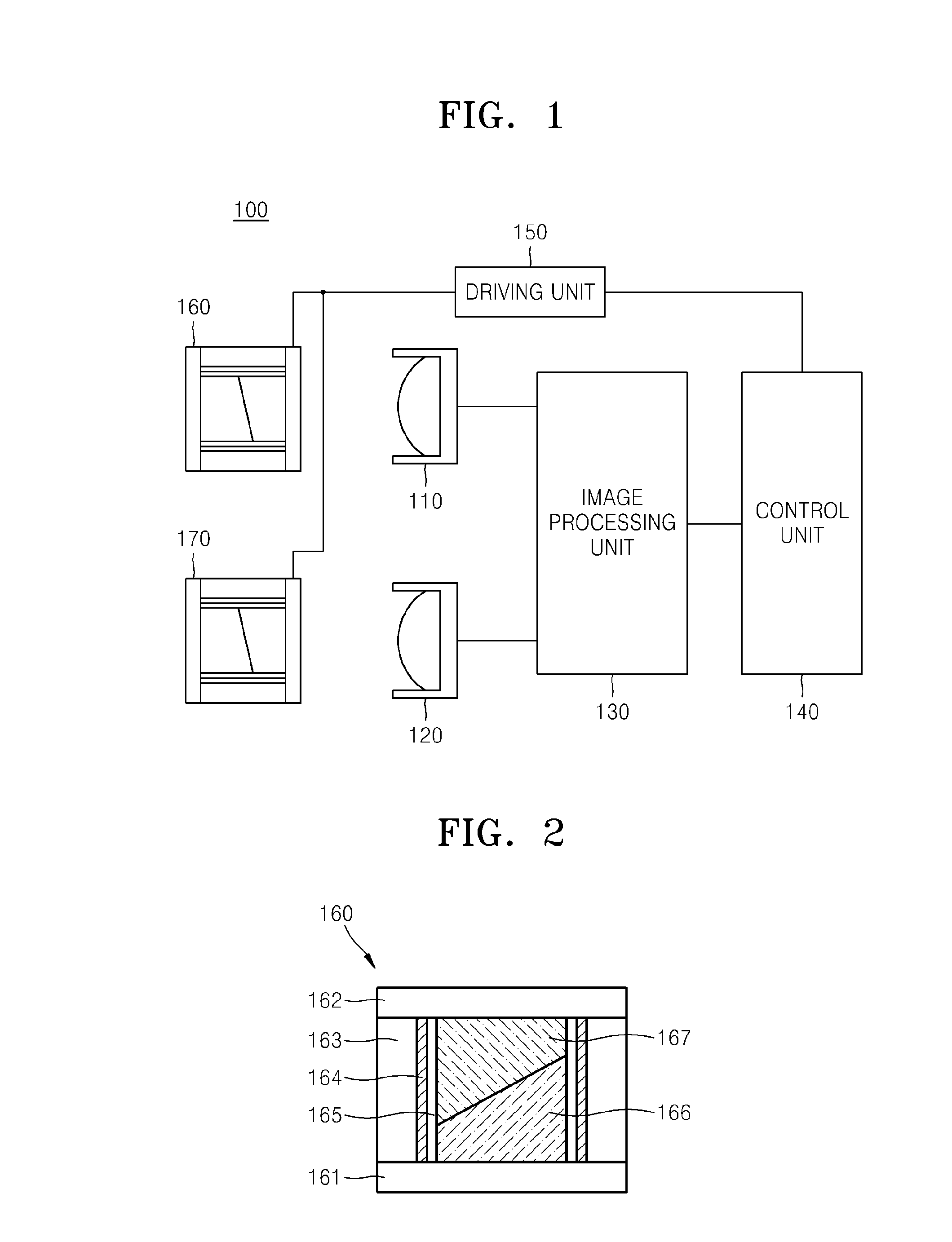

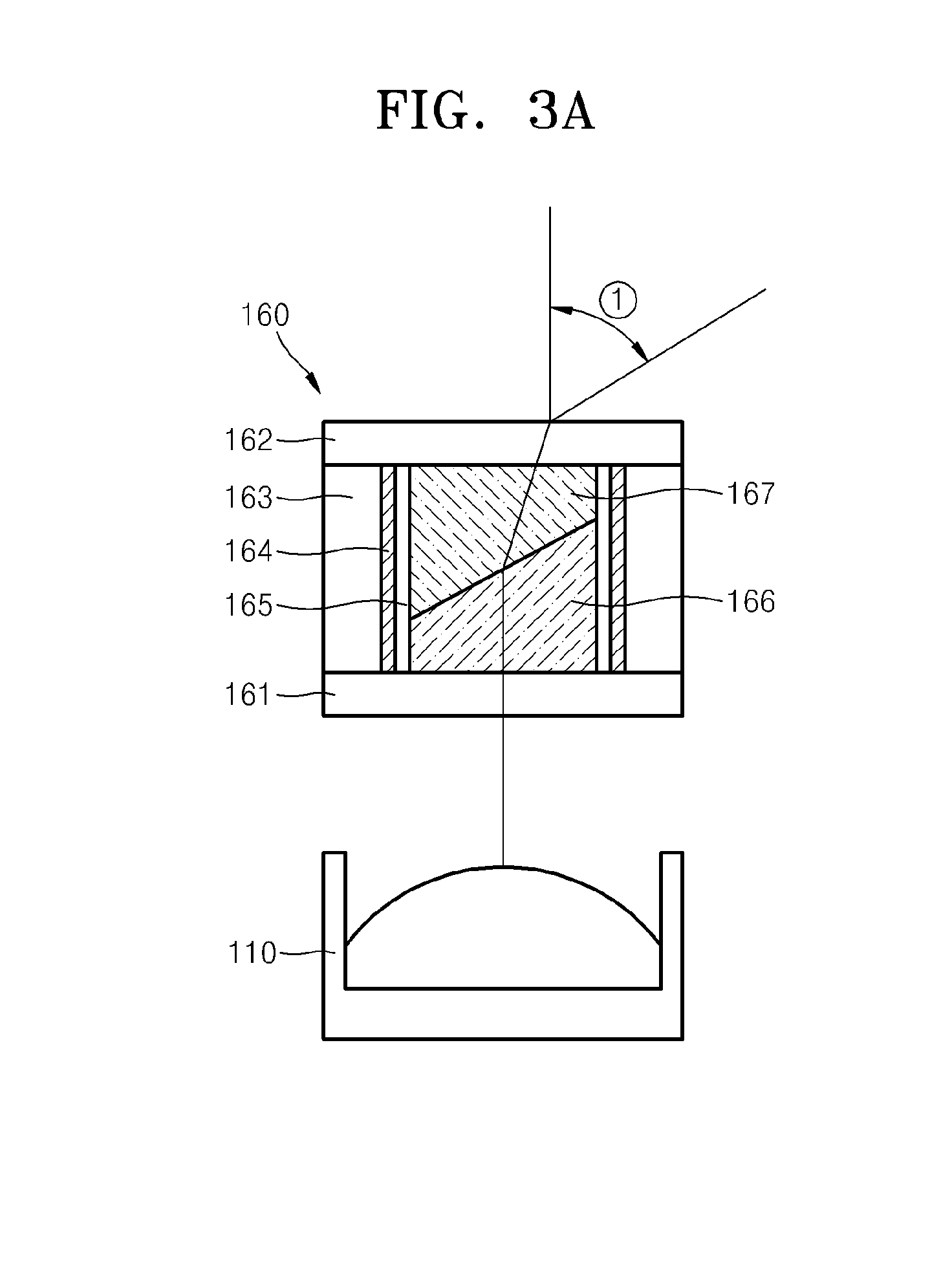

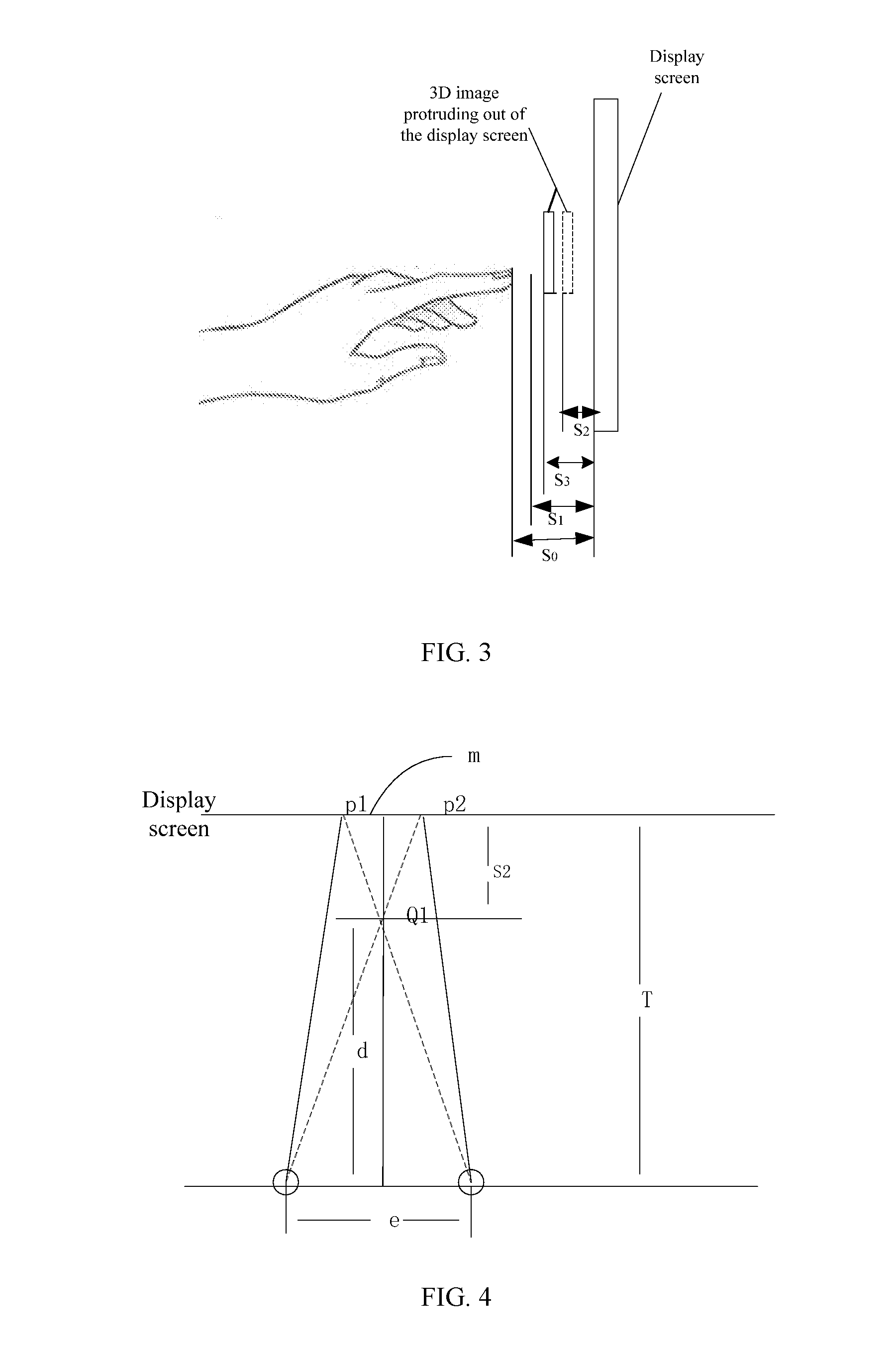

3D interaction apparatus, display device including the same, and method of driving the same

Active | US20150102997A1 | Input/output for user-computer interaction | Cathode-ray tube indicators | 3D interaction | Interaction device

Provided are a three-dimensional (3D) interaction apparatus capable of recognizing a user's motions in a 3D space for performing a 3D interaction function, a display device including the 3D interaction apparatus, and a method of driving the 3D interaction apparatus. The 3D interaction apparatus includes a depth camera which obtains a depth image including depth information of a distance between an object and the depth camera; an active optical device disposed in front of the depth camera and configured to adjust a propagation path of light by refracting incident light so as to adjust a field of view of the depth camera; and a driving unit which controls operation of the active optical device.

Owner:SAMSUNG ELECTRONICS CO LTD
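
The abstract does not say how the field of view is chosen. A simple pinhole-geometry sketch shows why an adjustable field of view helps: the horizontal angle needed to cover an interaction region of width W at distance d is 2·atan(W / 2d), which grows quickly as the user's hands approach the camera. The clamping range (40° to 90°) and the selection rule are hypothetical assumptions, not values from the patent.

```python
import math

def required_fov_deg(region_width_m: float, distance_m: float) -> float:
    """Full horizontal FOV (degrees) needed to see a region of this width at this distance."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2.0 * math.atan(region_width_m / (2.0 * distance_m)))

def choose_fov(region_width_m: float, distance_m: float,
               fov_min_deg: float = 40.0, fov_max_deg: float = 90.0) -> float:
    """Hypothetical driving-unit rule: request the needed FOV, clamped to
    the range the active optical device is assumed to support."""
    needed = required_fov_deg(region_width_m, distance_m)
    return min(max(needed, fov_min_deg), fov_max_deg)
```

For example, a 1 m wide region at 0.5 m needs a 90° field of view, while the same region at 2 m needs only about 28°, which this sketch clamps up to the assumed 40° minimum.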

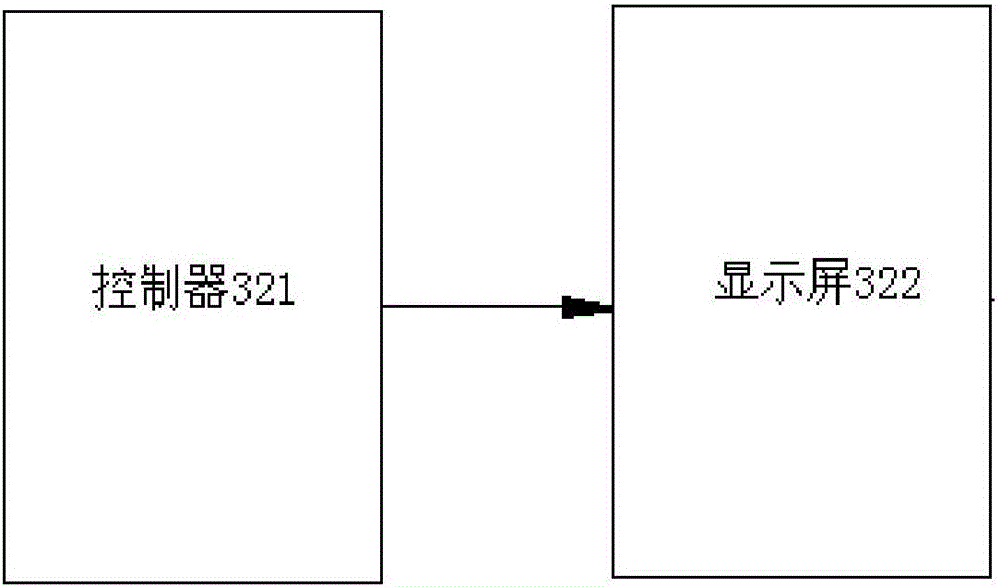

Three-dimensional interactive holographic in-car navigation system

Active | CN105091887A | Does not affect normal driving | Rapid positioning | Instruments for road network navigation | Driver/operator | On board

The invention provides a three-dimensional interactive holographic in-car navigation system that is located in the car and connected to the car's on-board diagnosis system and a cloud platform. The system comprises a wireless communication module, an interactive system, projection equipment, multiple image acquisition modules and an audio acquisition module; the interactive system is connected to the wireless communication module, the projection equipment, the image acquisition modules and the audio acquisition module. The system projects car and map data interfaces onto the car's windscreen as a three-dimensional image, so the driver can observe them without looking away from the road, which improves driving safety.

Owner:王占奎

A method, apparatus, system and computer-readable medium for interactive shape manipulation

A method and an apparatus for interactively manipulating the shape of an object comprise selecting an object to be manipulated and rendering the object depending on the manipulation type. In contrast to previous display-driven schemes, the method provides a smart, object-adapted interaction, manipulation and visualization scheme. It enables efficient shape manipulation by restricting the degrees of freedom of the manipulation to those that are meaningful for a given object or object part, which allows, for example, a 3D interaction to be reduced to a 2D interaction.

Owner:KONINK PHILIPS ELECTRONICS NV
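
Restricting degrees of freedom can be illustrated by projecting a free 3D drag onto the subspace allowed for the selected part: removing the component along a plane normal leaves two degrees of freedom, and keeping only the component along one axis leaves one. This Python sketch is an assumption about how such a restriction could work; the part names and their constraints in `CONSTRAINTS` are hypothetical and do not come from the patent.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))

def project_onto_plane(delta: Vec3, normal: Vec3) -> Vec3:
    """Remove the drag component along the plane normal: 3 DOF -> 2 DOF."""
    nn = dot(normal, normal)
    if nn == 0:
        raise ValueError("normal must be non-zero")
    k = dot(delta, normal) / nn
    return tuple(d - k * n for d, n in zip(delta, normal))

def project_onto_axis(delta: Vec3, axis: Vec3) -> Vec3:
    """Keep only the drag component along a single axis: 3 DOF -> 1 DOF."""
    aa = dot(axis, axis)
    if aa == 0:
        raise ValueError("axis must be non-zero")
    k = dot(delta, axis) / aa
    return tuple(k * a for a in axis)

# Hypothetical per-part constraints; a real system would derive these from the object model.
CONSTRAINTS: Dict[str, Tuple[str, Vec3]] = {
    "surface_patch": ("plane", (0.0, 0.0, 1.0)),  # slide within the patch's tangent plane
    "radius_handle": ("axis", (1.0, 0.0, 0.0)),   # stretch along one direction only
}

def constrained_drag(part: str, delta: Vec3) -> Vec3:
    """Apply the part's constraint; unknown parts move freely."""
    kind, vec = CONSTRAINTS.get(part, ("free", (0.0, 0.0, 0.0)))
    if kind == "plane":
        return project_onto_plane(delta, vec)
    if kind == "axis":
        return project_onto_axis(delta, vec)
    return tuple(delta)
```

Because the plane case discards one component, a 2D mouse drag is enough to drive it fully, which is the kind of 3D-to-2D reduction the abstract mentions.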

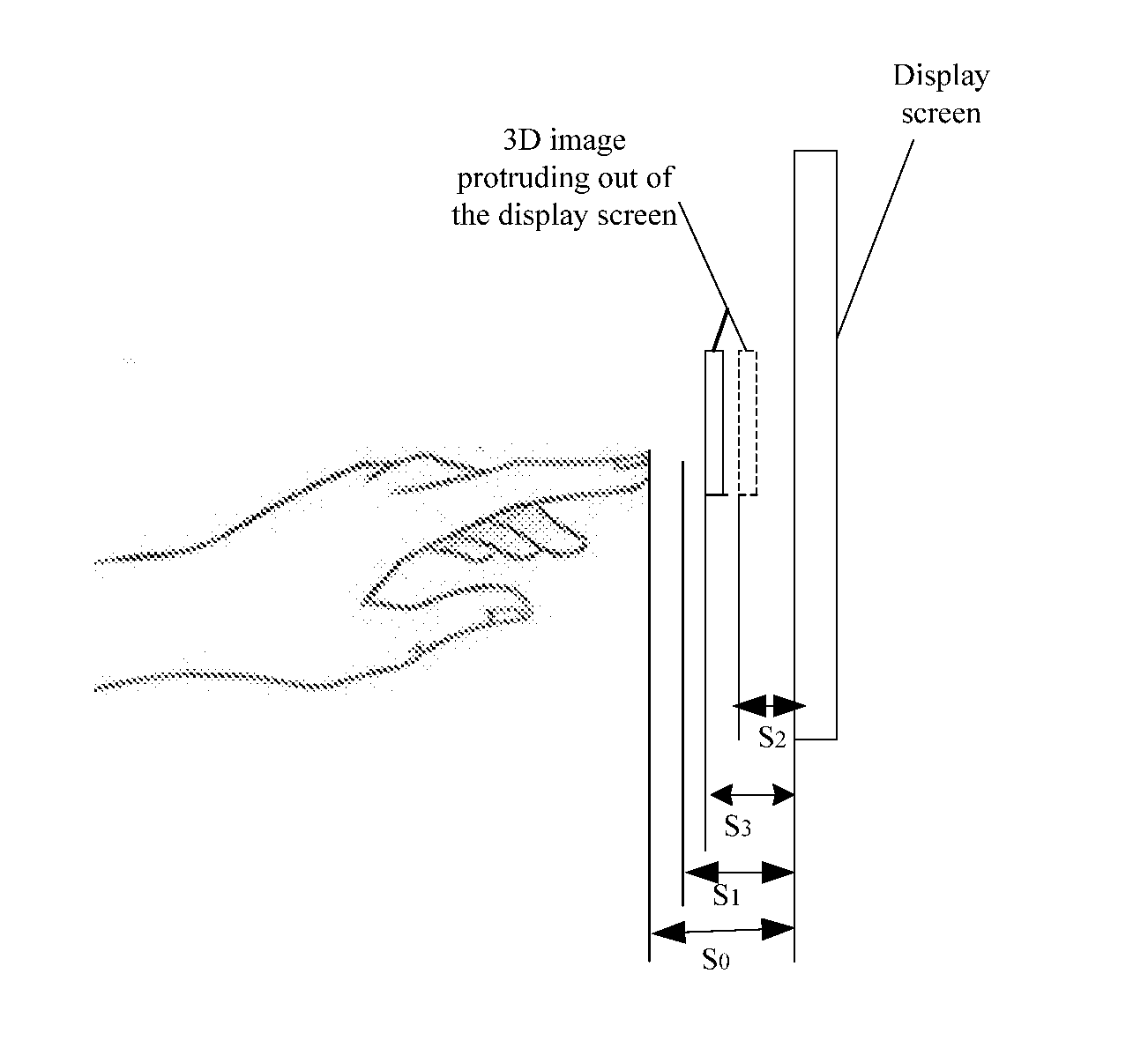

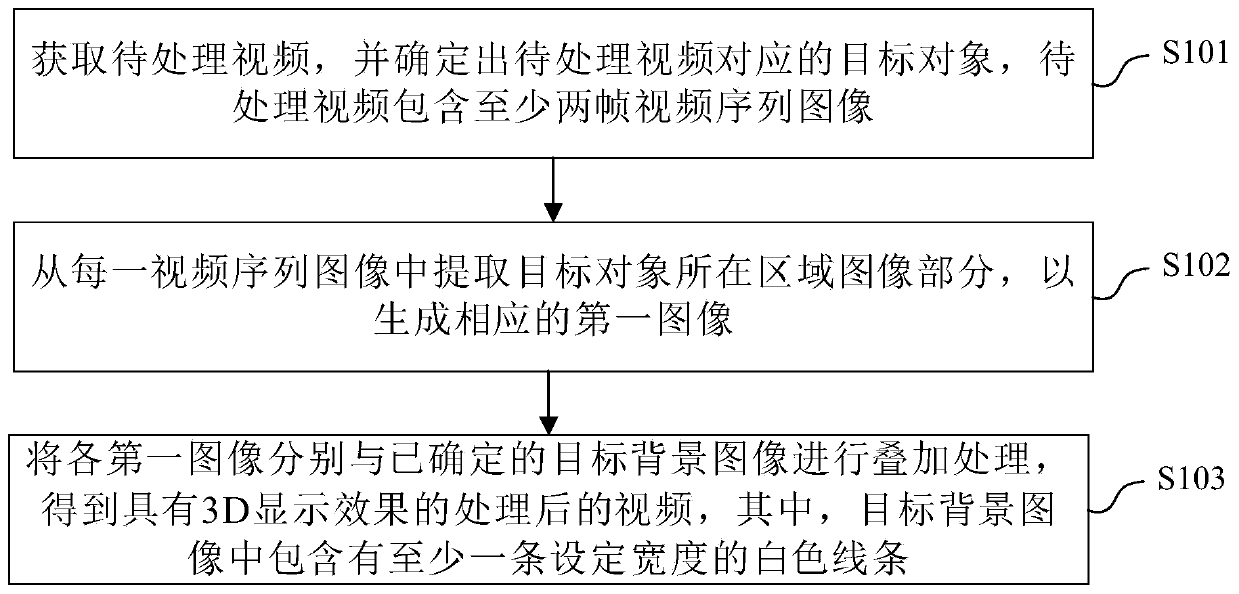

3D interaction method and display device

Active | US20160219270A1 | Improve viewing experience | Stereoscopic systems | Input/output processes for data processing | Parallax | 3d image

The present disclosure provides a 3D interaction method. The 3D display device detects the distance between an operating element and the display screen and checks whether this distance is less than the maximum distance by which the 3D image pointed at by the operating element protrudes from the screen. If it is, the device acquires the viewing distance between the viewer and the display screen. Based on this viewing distance and the distance between the operating element and the display screen, the device adjusts the parallax of the 3D image pointed at by the operating element so that the distance by which that image actually protrudes from the screen equals the distance between the operating element and the screen.

Owner:SUPERD TECH CO LTD
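
The abstract does not give the parallax formula. Under standard stereo viewing geometry (viewer centred in front of the screen at distance D, eye separation e, crossed on-screen parallax p), similar triangles give a perceived protrusion z = D·p / (e + p), so the parallax needed to place the image at the fingertip is p = e·z / (D − z). This Python sketch uses that textbook relation and an assumed eye separation of 65 mm; the patent's actual computation may differ.

```python
def protrusion_from_parallax(parallax_m: float, viewing_distance_m: float,
                             eye_sep_m: float = 0.065) -> float:
    """Perceived distance in front of the screen for a crossed on-screen parallax."""
    return viewing_distance_m * parallax_m / (eye_sep_m + parallax_m)

def parallax_for_protrusion(protrusion_m: float, viewing_distance_m: float,
                            eye_sep_m: float = 0.065) -> float:
    """Invert the relation above: p = e * z / (D - z), valid for 0 <= z < D."""
    if not 0 <= protrusion_m < viewing_distance_m:
        raise ValueError("protrusion must lie between the screen and the viewer")
    return eye_sep_m * protrusion_m / (viewing_distance_m - protrusion_m)

def adjust_parallax(finger_to_screen_m: float, viewer_to_screen_m: float,
                    max_protrusion_m: float, current_parallax_m: float,
                    eye_sep_m: float = 0.065) -> float:
    """Only when the finger is closer to the screen than the image's maximum
    protrusion, re-target the parallax so the image meets the fingertip."""
    if finger_to_screen_m < max_protrusion_m:
        return parallax_for_protrusion(finger_to_screen_m, viewer_to_screen_m, eye_sep_m)
    return current_parallax_m
```

For a viewer 0.6 m from the screen and a fingertip 0.1 m in front of it, the sketch returns a crossed parallax of 0.065 × 0.1 / 0.5 = 0.013 m.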

VR (virtual reality) equipment based VR interactive simulation practical training system

Pending | CN108961893A | Improve convenience | Quality improvement | Input/output for user-computer interaction | Cosmonautic condition simulations | Computer module | Display device

A VR equipment-based interactive simulation practical training system comprises VR interaction equipment, a PC that constructs the simulation practical training modules, and a host that performs data conversion and transmission. The VR interaction equipment comprises a head-mounted display and a sensor module. The training method of the system comprises the following steps: 1) the PC uses the 3D interaction functions of VR technology to construct virtual practical training modules, and the practitioner selects the virtual training module needed for the simulated training; 2) the practitioner puts on the VR interaction equipment, and the PC starts the corresponding virtual training module; and 3) the practitioner obtains their position and status in the virtual application scene through the head-mounted display and performs the required training operations through the sensor module. Constructing different virtual training modules improves the convenience and quality of simulated teaching, and the system is suitable for teaching exercises and simulated practical training.

Owner:浙江引力波网络科技有限公司

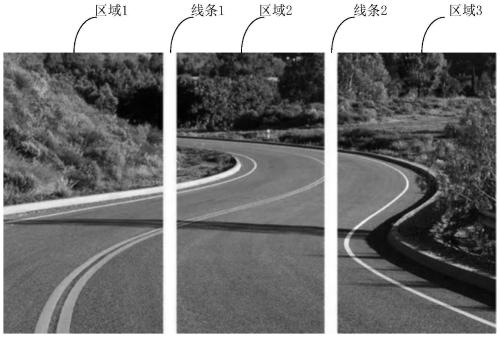

Video processing method and device, electronic equipment and computer readable storage medium

Active | CN111246196A | Easy to handle | Low cost | Stereoscopic systems | Computer graphics (images) | Video processing

The disclosure provides a video processing method and device, electronic equipment and a computer-readable storage medium. The method comprises: obtaining a to-be-processed video, which comprises at least two frames of video sequence images, and determining the target object corresponding to it; extracting, from each video sequence image, the image part of the area where the target object is located to generate a corresponding first image; and superimposing each first image on a determined target background image to obtain a processed video with a 3D display effect. The target background image includes at least one white line of a set width. When the processed video formed from the superimposed images is played, the viewer perceives depth of field, producing a naked-eye 3D display effect. Processing the video is simple and inexpensive, which facilitates the adoption of naked-eye 3D interaction.

Owner:BEIJING BYTEDANCE NETWORK TECH CO LTD
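
The extraction and superimposition steps can be sketched as per-pixel compositing: pixels inside the target object's mask come from the frame, and every other pixel comes from the background with its white lines. This pure-Python sketch assumes the per-frame object masks are already available (for example, from a segmentation step the abstract does not describe) and that the white lines are vertical. The helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
WHITE: Pixel = (255, 255, 255)

def make_background(height: int, width: int, line_xs: Sequence[int],
                    line_width: int, base: Pixel = (0, 0, 0)) -> Image:
    """Hypothetical target background: a flat colour crossed by vertical
    white lines of a set width, starting at each x in line_xs."""
    bg = [[base] * width for _ in range(height)]
    for row in bg:
        for x0 in line_xs:
            for x in range(x0, min(x0 + line_width, width)):
                row[x] = WHITE
    return bg

def extract_and_superimpose(frame: Image, mask: List[List[bool]],
                            background: Image) -> Image:
    """Keep the target object's pixels (mask True); fill everything else from the background."""
    return [[f if m else b for f, m, b in zip(frow, mrow, brow)]
            for frow, mrow, brow in zip(frame, mask, background)]

def process_video(frames: List[Image], masks: List[List[List[bool]]],
                  background: Image) -> List[Image]:
    """Apply the same background to every frame of the sequence."""
    if len(frames) < 2:
        raise ValueError("the method assumes at least two frames")
    return [extract_and_superimpose(f, m, background) for f, m in zip(frames, masks)]
```

The depth illusion relies on the object being drawn over the white lines as it moves, so the lines appear to sit behind it; the sketch covers only the compositing itself.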

Immersive naked-eye 3D interactive method and system based on simulation teaching training room

Inactive | CN107783306A | Avoid inauthentic | Avoid the disadvantage of not being able to interact with naked eyes in 3D | Input/output for user-computer interaction | Diffusing elements | Third party | Interaction control

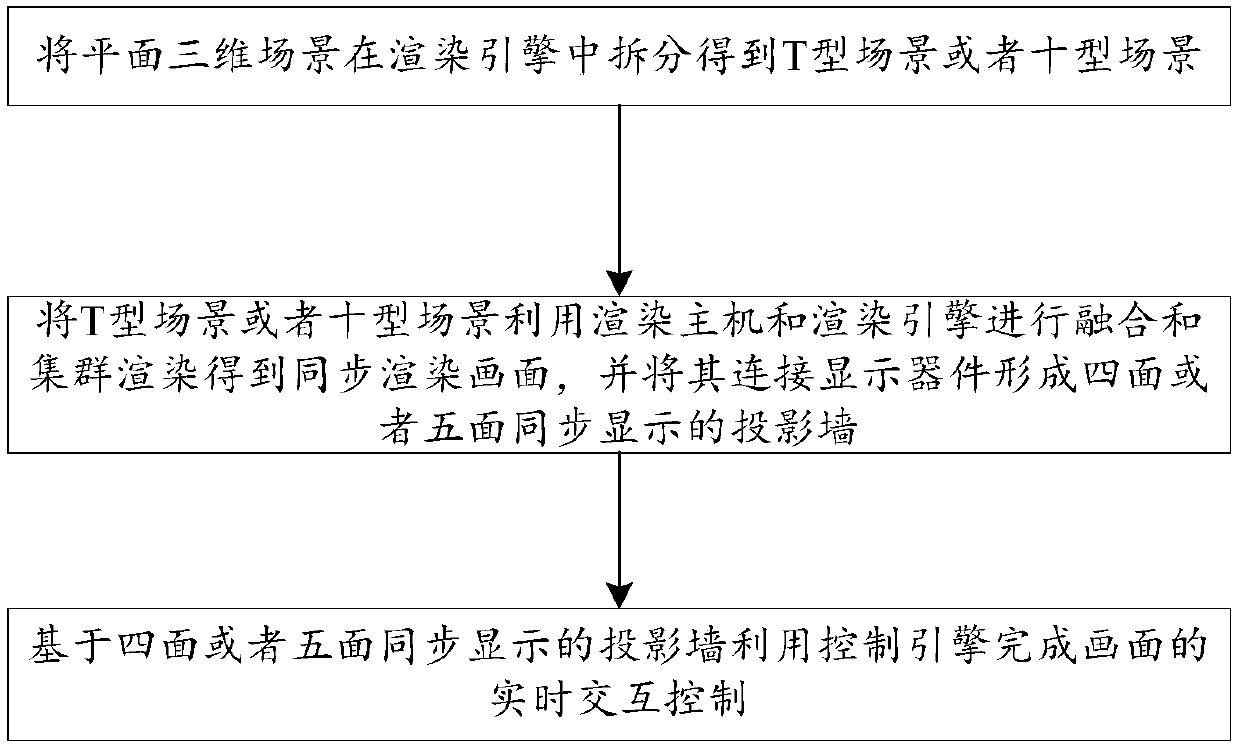

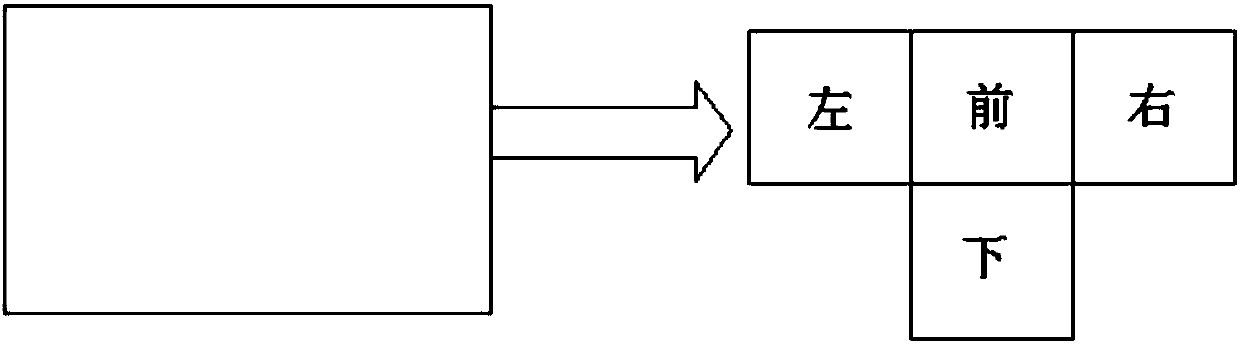

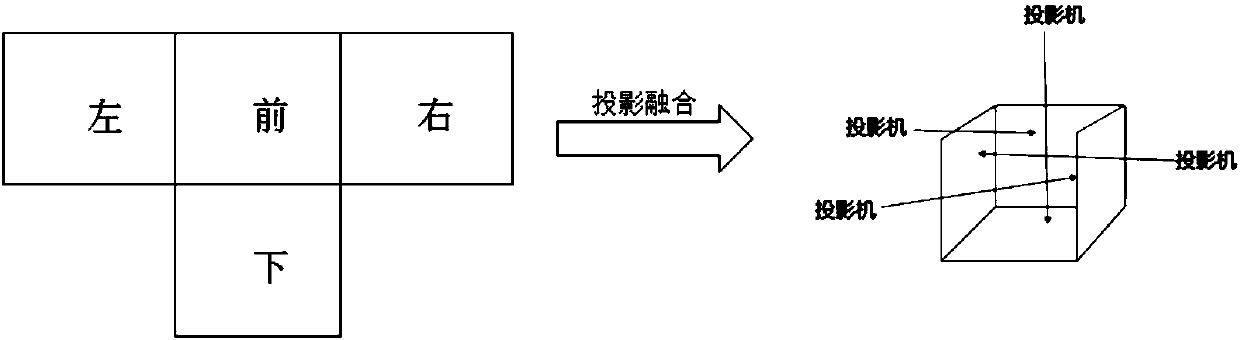

The invention discloses an immersive naked-eye 3D interactive method and system based on a simulation teaching training room, and relates to the field of virtual simulation teaching training rooms. The method comprises the following steps: 1) splitting a planar 3D scene in a rendering engine to obtain a T-type or cross-type scene; 2) performing fusion and cluster rendering on the T-type or cross-type scene with a rendering host and the rendering engine to obtain a synchronized rendered picture, and connecting it to a display device to form a four- or five-sided synchronized projection wall; and 3) on the basis of step 2, performing real-time interaction control of the picture with a control engine. The system comprises the rendering host, the rendering engine, the display device, a router and the control engine. In the existing simulation teaching and training field, third parties view virtual pictures through auxiliary equipment and picture synthesis and cannot interact immersively with them with the naked eye, which leads to poor training results; the invention solves this problem and achieves an immersive naked-eye 3D interaction effect.

Owner:河南新汉普影视技术有限公司
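
One plausible reading of the T-type and cross-type splits is as unfolded cube nets around the viewer: a T shape covers the left, front and right walls plus the floor (four sides), and a cross adds the ceiling (five sides). Each wall is then rendered by its own 90° camera, typically on separate cluster nodes kept in sync. This Python sketch encodes that reading. The layout contents, the axis convention (−z forward, +y up) and all names are assumptions; cluster synchronisation is not modelled.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# One consistent choice of (forward, up) vectors per cube face.
FACES: Dict[str, Tuple[Vec3, Vec3]] = {
    "front":   ((0, 0, -1), (0, 1, 0)),
    "back":    ((0, 0, 1), (0, 1, 0)),
    "left":    ((-1, 0, 0), (0, 1, 0)),
    "right":   ((1, 0, 0), (0, 1, 0)),
    "floor":   ((0, -1, 0), (0, 0, -1)),
    "ceiling": ((0, 1, 0), (0, 0, 1)),
}

# Hypothetical wall sets for the two split types.
LAYOUTS: Dict[str, List[str]] = {
    "T": ["left", "front", "right", "floor"],
    "cross": ["left", "front", "right", "floor", "ceiling"],
}

def wall_cameras(layout: str) -> List[dict]:
    """One square 90-degree camera per wall; together they tile the visible part of the cube."""
    return [{"wall": name, "forward": FACES[name][0], "up": FACES[name][1], "fov_deg": 90.0}
            for name in LAYOUTS[layout]]

def wall_for_direction(d: Sequence[float], layout: str) -> Optional[str]:
    """Which wall displays a given viewing direction, or None if that face is not built."""
    best = max(FACES, key=lambda n: sum(a * b for a, b in zip(FACES[n][0], d)))
    return best if best in LAYOUTS[layout] else None
```

Under this reading, looking straight up shows nothing in a T-type room but hits the ceiling wall in a cross-type room.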

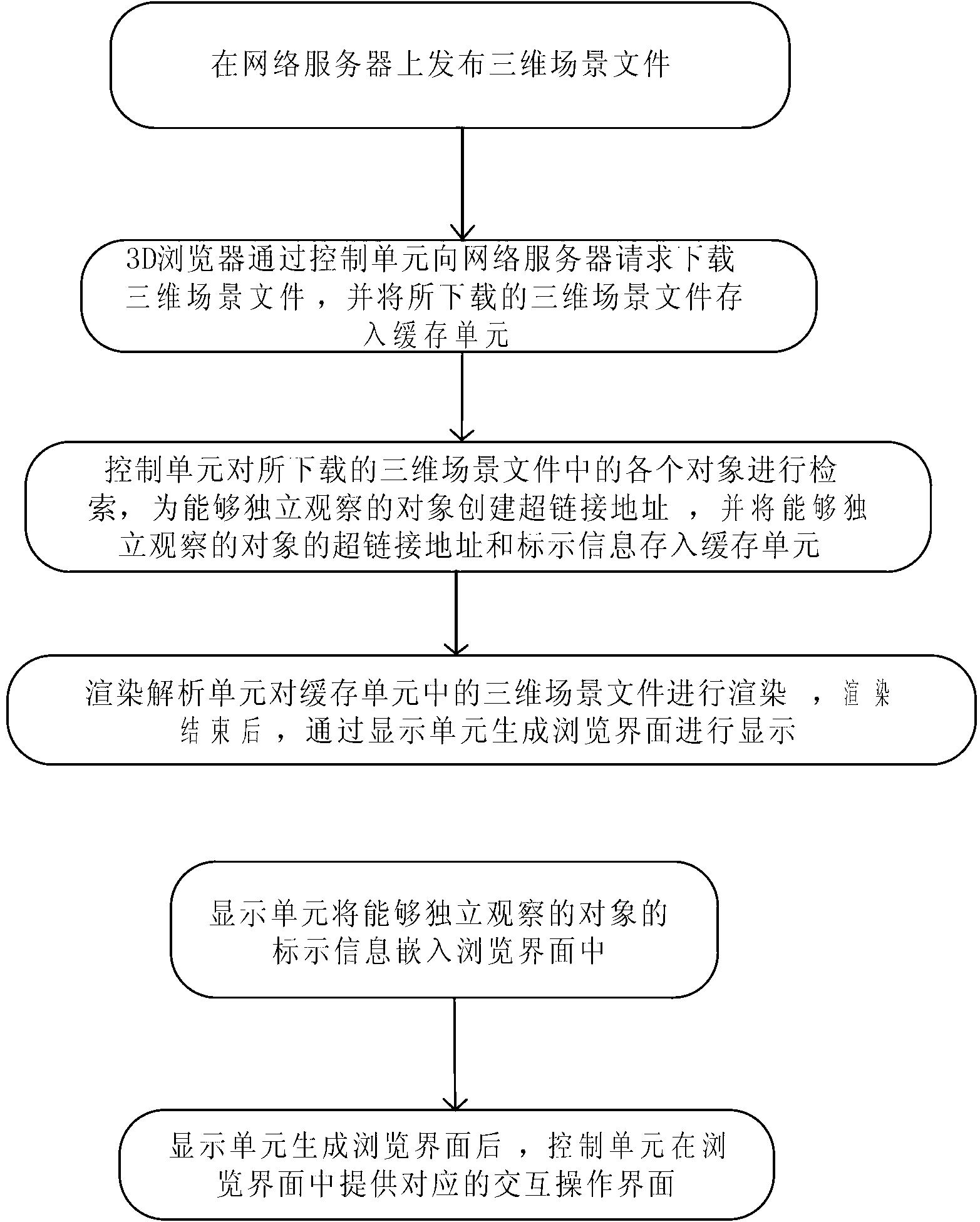

Mobile phone 3D (3-dimensional) browser system based on three-dimensional panoramic hyperlink browse and application method

Inactive | CN103226596A | To achieve mutual integration | Good effect | Transmission | Special data processing applications | Hyperlink | 3D interaction

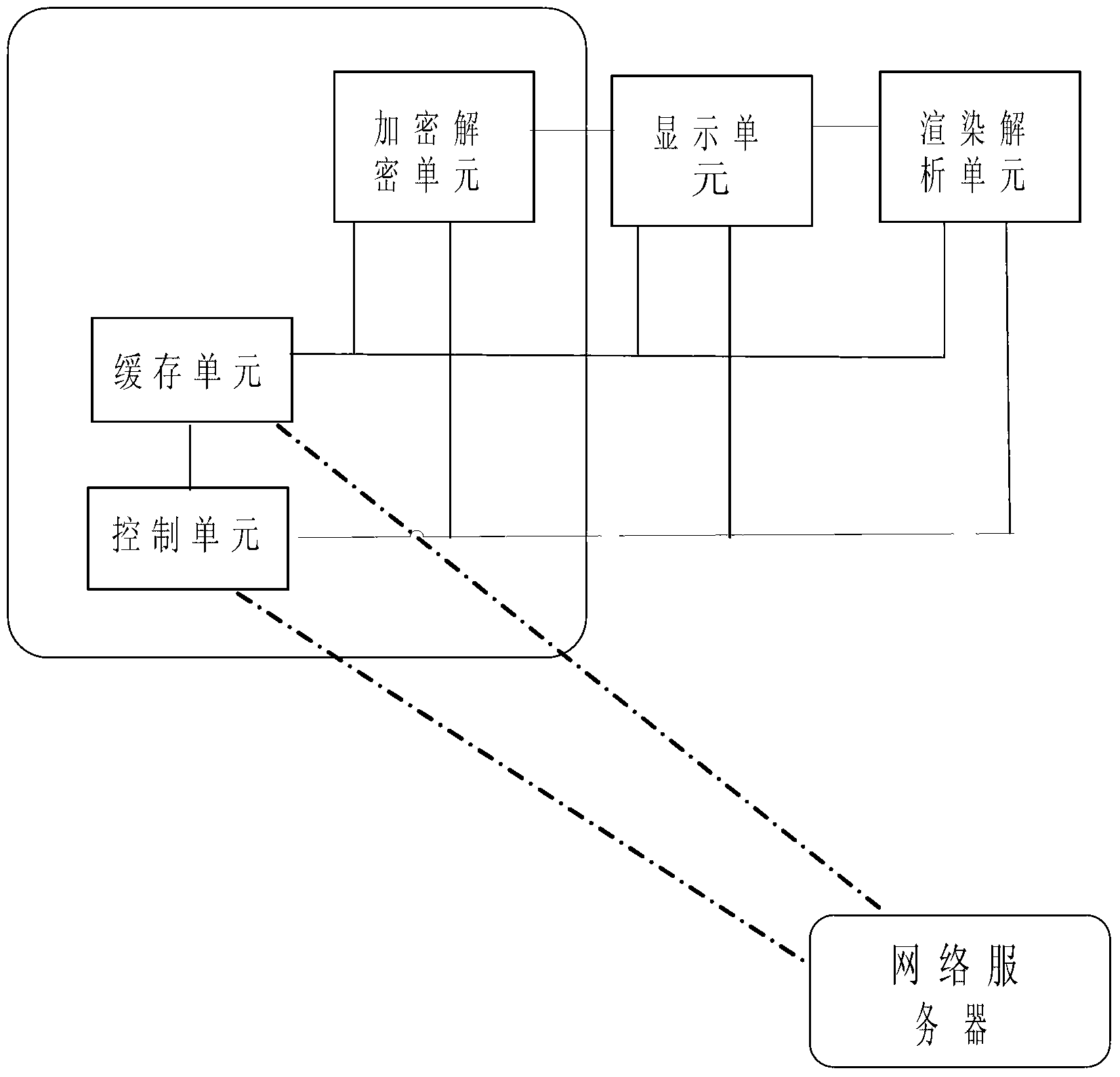

The invention provides a mobile phone 3D browser system based on three-dimensional panoramic hyperlink browsing, together with an application method. The system comprises a control unit, a network server, a cache unit, an encryption and decryption unit, a display unit and a render resolution cell; these components work together to provide 3D interactive browsing on the mobile phone user terminal. The system and method address the situation in which mobile terminals such as mobile phones are used mainly for viewing static images, games and entertainment. They fill the gap left by the lack of 3D browsers suited to mobile phones and better integrate panoramic images with independent objects, which is intended to facilitate related market and technical activities and to promote technology across the industry.

Owner:广东百泰科技有限公司 +1
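
The abstract does not explain how a panoramic hyperlink is activated. A common approach, assumed here rather than taken from the patent, is to store each link as a hotspot at a (yaw, pitch) direction with an angular radius, then hit-test the user's viewing direction using great-circle distance. The `Hotspot` fields and targets below are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Hotspot:
    yaw_deg: float
    pitch_deg: float
    radius_deg: float
    target: str  # hypothetical link to another panorama or object

def angular_distance_deg(yaw1: float, pitch1: float, yaw2: float, pitch2: float) -> float:
    """Great-circle angle between two viewing directions (spherical law of cosines)."""
    y1, p1, y2, p2 = map(math.radians, (yaw1, pitch1, yaw2, pitch2))
    c = math.sin(p1) * math.sin(p2) + math.cos(p1) * math.cos(p2) * math.cos(y1 - y2)
    return math.degrees(math.acos(max(-1.0, min(1.0, c))))  # clamp rounding error

def hit_test(yaw: float, pitch: float, hotspots: List[Hotspot]) -> Optional[str]:
    """Return the target of the nearest hotspot whose radius contains the view direction."""
    best: Optional[Hotspot] = None
    best_d = math.inf
    for h in hotspots:
        d = angular_distance_deg(yaw, pitch, h.yaw_deg, h.pitch_deg)
        if d <= h.radius_deg and d < best_d:
            best, best_d = h, d
    return best.target if best is not None else None
```

Using angular distance rather than raw yaw differences handles the wrap-around at 0°/360° automatically, so a view at yaw 358° still hits a hotspot placed at 0°.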

© 2025 PatSnap. All rights reserved.