33 results about "Overhead (computing)" patented technology

In computer science, overhead is any combination of excess or indirect computation time, memory, bandwidth, or other resources that are required to perform a specific task. It is a special case of engineering overhead. Overhead can be a deciding factor in software design, with regard to structure, error correction, and feature inclusion. Examples of computing overhead may be found in functional programming, data transfer, and data structures.
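
As a worked example of data-transfer overhead, the share of transmitted bytes that carry no payload is overhead / (payload + overhead). The sketch below assumes a 1460-byte TCP payload carried with a 20-byte IPv4 header and a 20-byte TCP header (no options) and ignores link-layer framing; the function name is illustrative.

```python
def overhead_fraction(payload_bytes: int, overhead_bytes: int) -> float:
    """Return the share of transmitted bytes that carry no payload."""
    if payload_bytes < 0 or overhead_bytes < 0:
        raise ValueError("sizes must be non-negative")
    total = payload_bytes + overhead_bytes
    if total == 0:
        raise ValueError("nothing is transmitted")
    return overhead_bytes / total


if __name__ == "__main__":
    # 1460-byte payload + 20-byte IPv4 header + 20-byte TCP header (no options)
    print(f"{overhead_fraction(1460, 20 + 20):.2%}")  # prints 2.67%
```

Roughly 2.7% of each such segment is header overhead; shrinking the payload to 100 bytes with the same headers raises it to about 29%.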

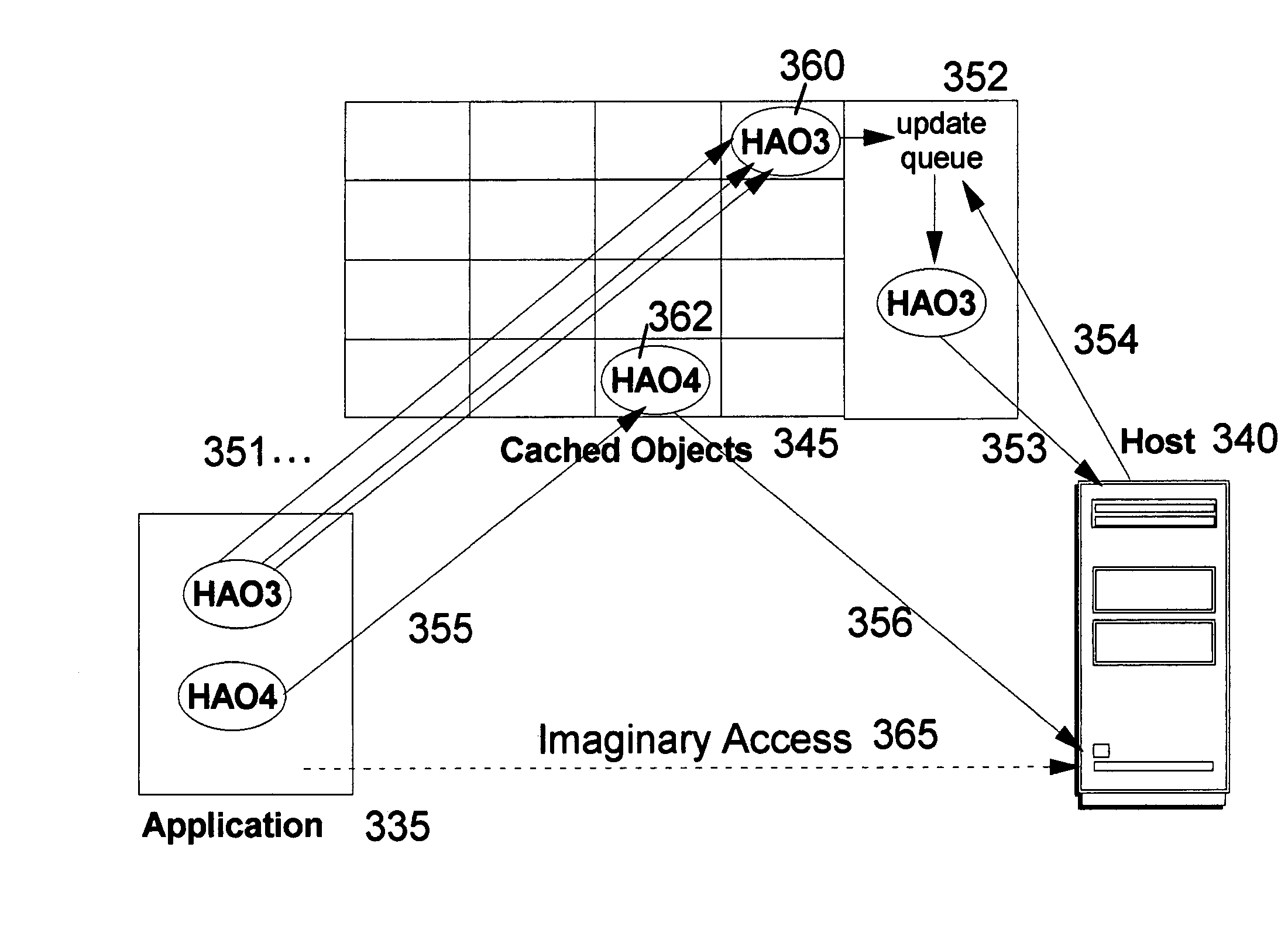

Object caching and update queuing technique to improve performance and resource utilization

Status: Inactive · Publication: US7130964B2 · Tags: Improve performance, Increase resources, Data processing applications, Digital data information retrieval, Overhead (computing), Engineering

The present invention provides a method, system, and computer program product for caching objects to improve the performance and resource utilization of software applications that interact with a back-end data source, such as a legacy host application and/or a legacy host data store or database. Read-only requests for information are satisfied from the cache, avoiding both a network round-trip and the computing overhead of repeating an interaction with the back-end data source. Refreshes of cached objects and update requests to objects may be queued for delayed processing (for example, when the system is lightly loaded), thereby improving system resource utilization. A sequence of actions that may be required to initiate, and interact with, the refresh and update processes is also preferably stored in the cached objects. The technique is application-independent and may therefore be used for objects having an arbitrary format.

Owner:INT BUSINESS MASCH CORP
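
The caching and update-queuing idea in US7130964B2 can be sketched as a read-through cache whose back-end writes are deferred until an explicit flush. This is a minimal illustration, not the patented design: the class name, the `fetch`/`store` callables standing in for the legacy host, and the choice to update the cached copy immediately while queuing the back-end write are all assumptions.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict, Hashable, Tuple


class CachedBackend:
    """Read-through object cache with a deferred update queue (illustrative)."""

    def __init__(self, fetch: Callable[[Hashable], Any],
                 store: Callable[[Hashable, Any], None]) -> None:
        self._fetch = fetch            # expensive round-trip to the back end
        self._store = store            # expensive write to the back end
        self._cache: Dict[Hashable, Any] = {}
        self._pending: Deque[Tuple[Hashable, Any]] = deque()
        self.backend_reads = 0

    def read(self, key: Hashable) -> Any:
        # Read-only requests are served from the cache after the first miss.
        if key not in self._cache:
            self.backend_reads += 1
            self._cache[key] = self._fetch(key)
        return self._cache[key]

    def update(self, key: Hashable, value: Any) -> None:
        # Assumption: the cached copy changes at once; the back-end write waits.
        self._cache[key] = value
        self._pending.append((key, value))

    def flush(self) -> int:
        # Call when the system is lightly loaded; returns the writes applied.
        applied = 0
        while self._pending:
            key, value = self._pending.popleft()
            self._store(key, value)
            applied += 1
        return applied
```

With a plain dict as the "host", `CachedBackend(host.__getitem__, host.__setitem__)` reads each key from the host only once, and queued updates reach the host only when `flush()` runs.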

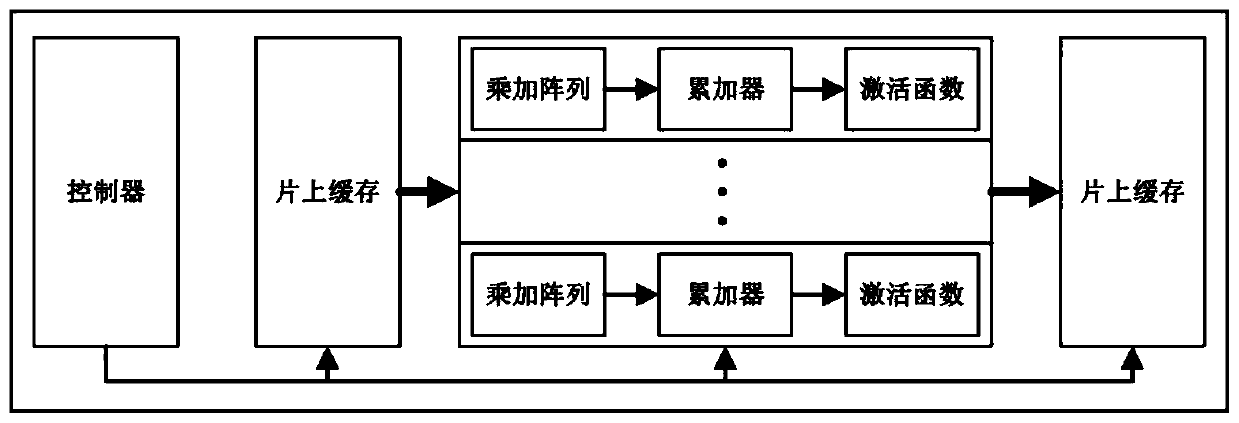

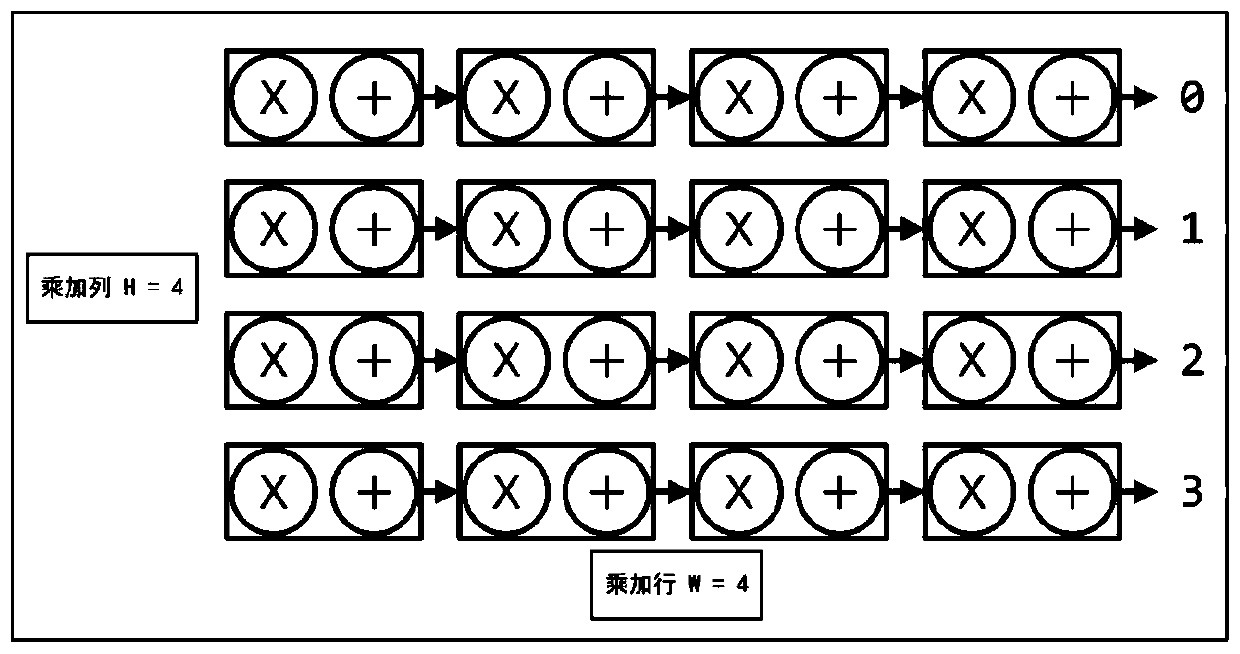

Scale-extensible convolutional neural network acceleration system and method

Status: Active · Publication: CN111242289A · Tags: Achieve acceleration, Reduce data transfer, Neural architectures, Energy efficient computing, Hardware modules, Overhead (computing)

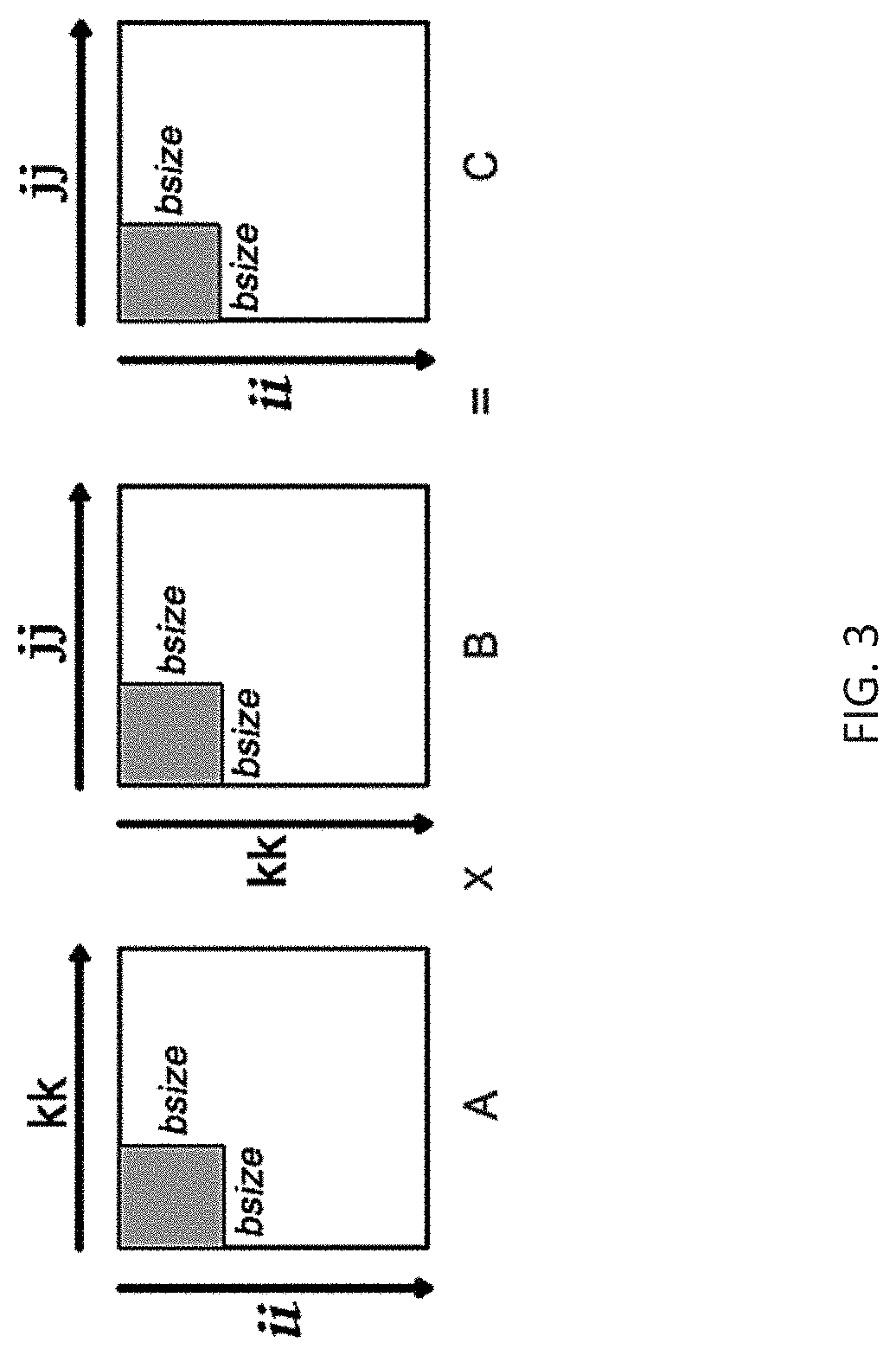

A scale-extensible convolutional neural network acceleration system comprises a processor and at least one convolution acceleration core, each of which mainly comprises a computing array, a controller and an on-chip cache. When the system is scaled up, only the number of convolution acceleration cores needs to be increased and the programs running on the processor modified; the other hardware modules do not need to be changed. In other words, additional convolution acceleration cores can be added to increase the scale and computing performance of the system. The invention further provides a method based on this system that largely reduces the extra overhead caused by scaling, so that the system can be deployed on different hardware platforms. The hardware/software co-design is general-purpose and supports different convolutional neural networks; compared with other circuits, the method offers generality and extensibility.

Owner:TSINGHUA UNIV

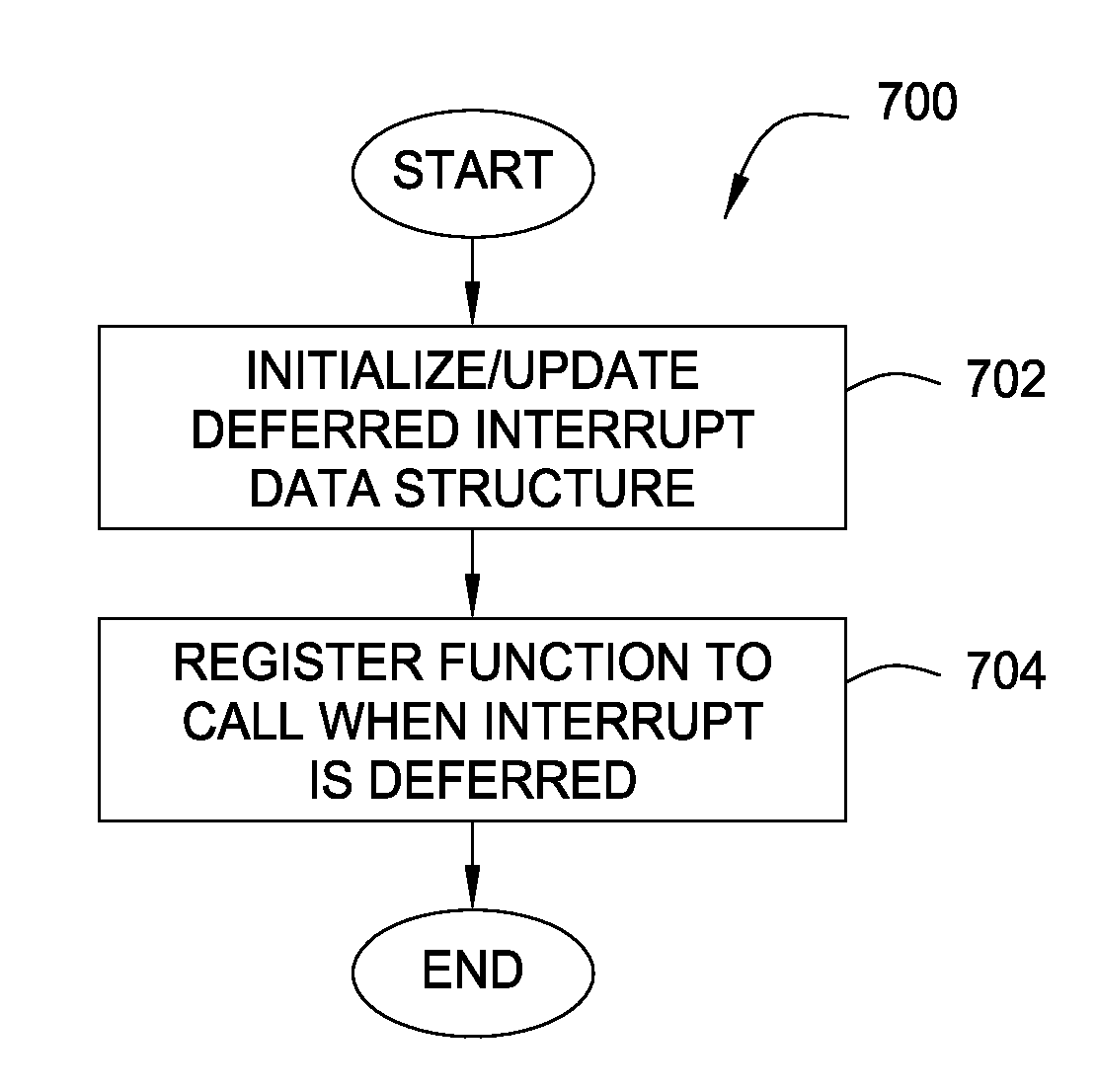

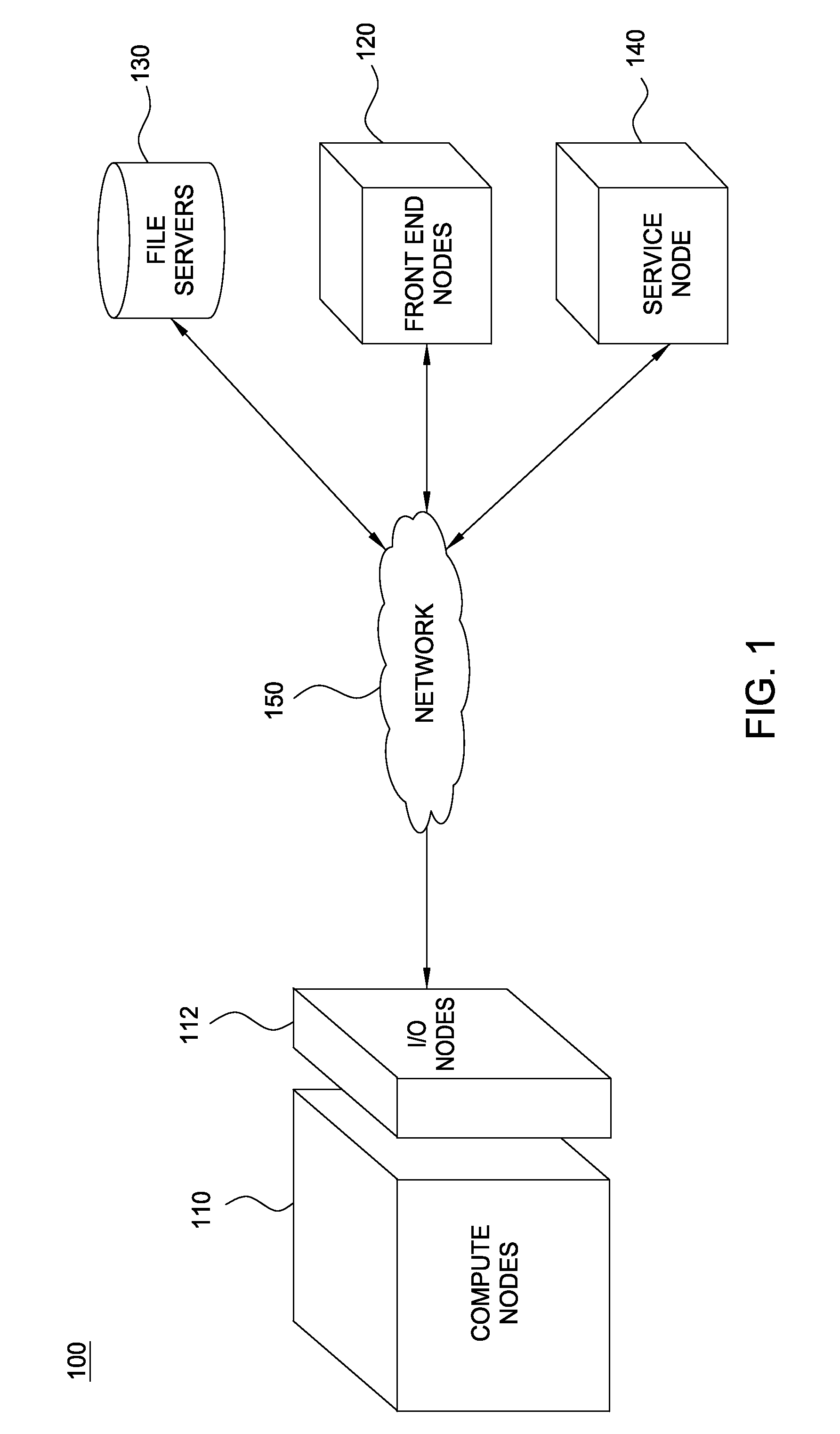

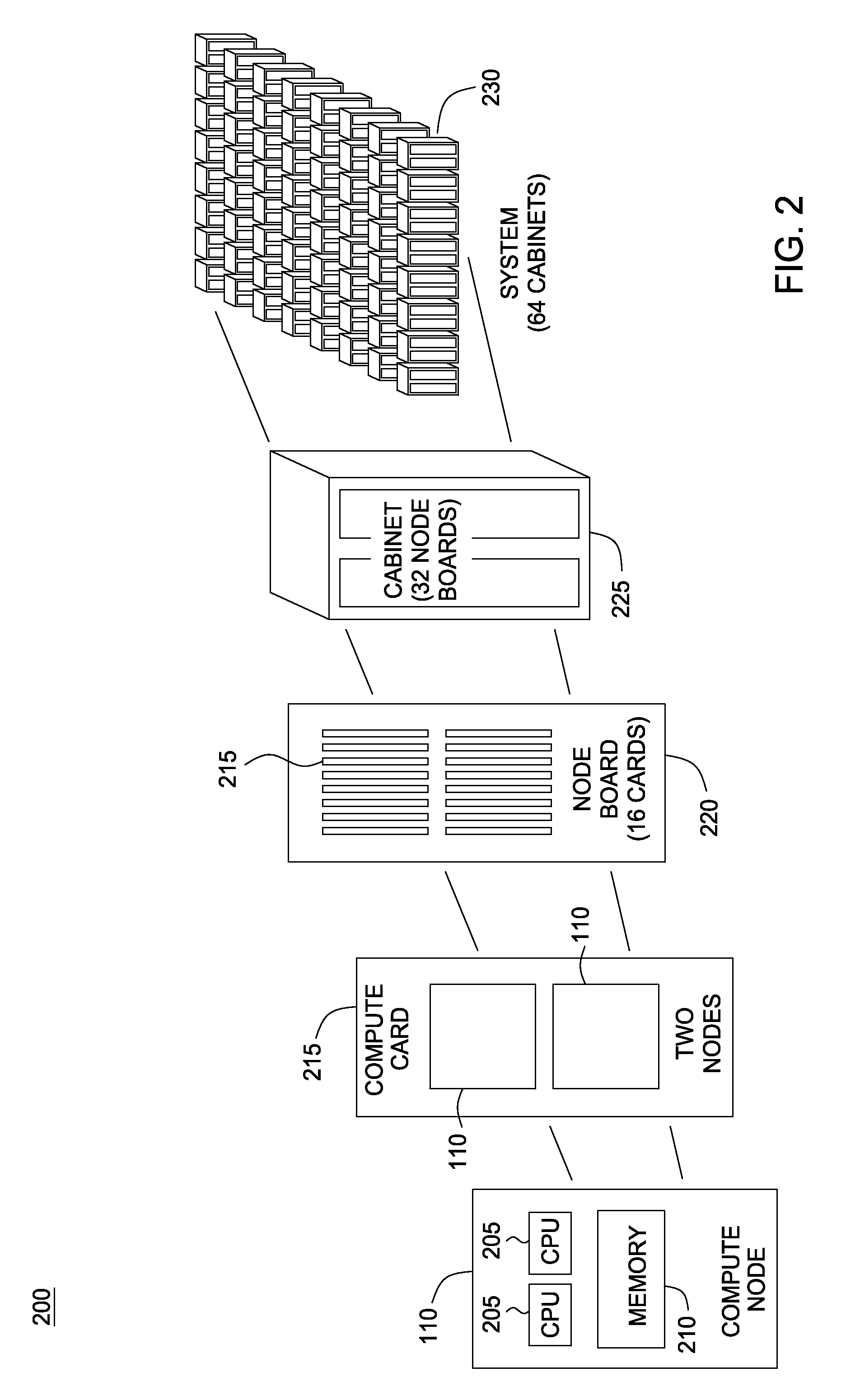

Efficient deferred interrupt handling in a parallel computing environment

Status: Inactive · Publication: US20080059676A1 · Tags: Efficient deferred interrupt handling, Fast interrupt disabling, Electric digital data processing, Spatial function, Critical section

Embodiments of the present invention provide techniques for protecting critical sections of code being executed in a lightweight kernel environment suited for use on a compute node of a parallel computing system. These techniques avoid the overhead associated with a full kernel mode implementation of a network layer, while also allowing network interrupts to be processed without corrupting shared memory state. In one embodiment, a fast user-space function sets a flag in memory indicating that interrupts should not progress and also provides a mechanism to defer processing of the interrupt.

Owner:IBM CORP
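
The flag-based deferral described in US20080059676A1 can be modeled in a single thread: entering a critical section is just a store to an in-memory flag, and interrupts that arrive while the flag is set are queued and replayed on exit. The class and method names are hypothetical, and a real lightweight kernel would need atomic flag updates and a genuine interrupt path, neither of which this sketch models.

```python
from collections import deque
from typing import Callable, Deque


class DeferredInterrupts:
    """Single-threaded model of flag-based interrupt deferral (illustrative)."""

    def __init__(self) -> None:
        self.in_critical = False                      # the in-memory "do not progress" flag
        self._deferred: Deque[Callable[[], None]] = deque()

    def enter_critical(self) -> None:
        # Cheap user-space store instead of a system call to mask interrupts.
        self.in_critical = True

    def exit_critical(self) -> None:
        self.in_critical = False
        # Replay, in arrival order, the handlers that were held back.
        while self._deferred:
            self._deferred.popleft()()

    def on_interrupt(self, handler: Callable[[], None]) -> None:
        if self.in_critical:
            self._deferred.append(handler)            # defer: shared state is being modified
        else:
            handler()                                 # no critical section: run immediately
```

The design keeps the common path (no interrupt during a critical section) down to two flag stores, which is the overhead the abstract contrasts with a full kernel-mode network layer.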

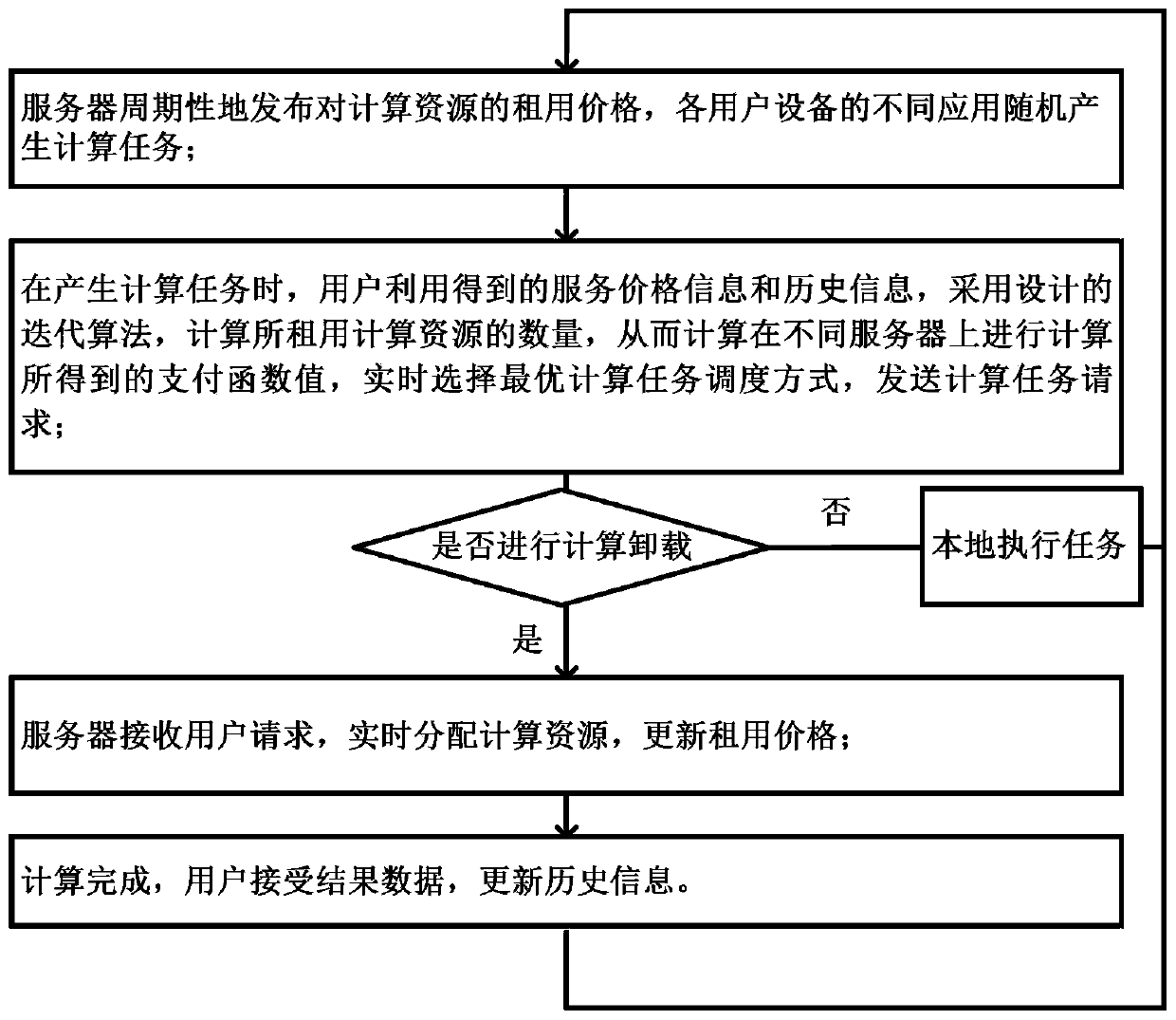

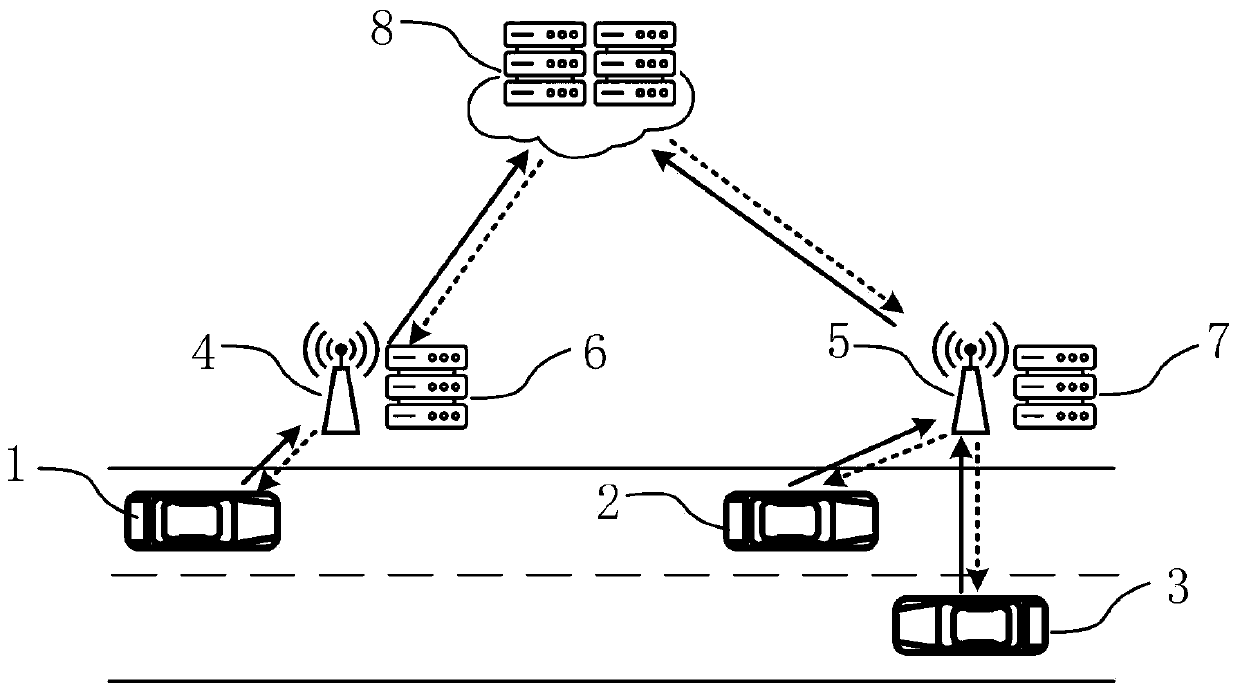

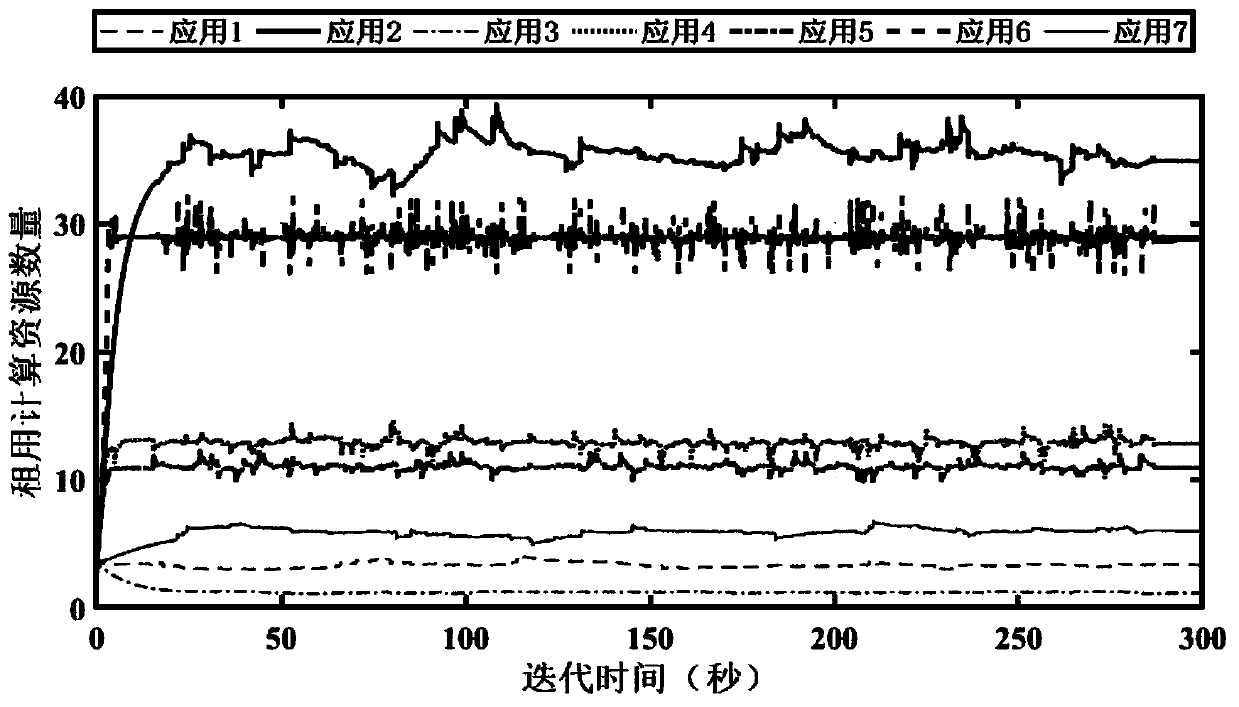

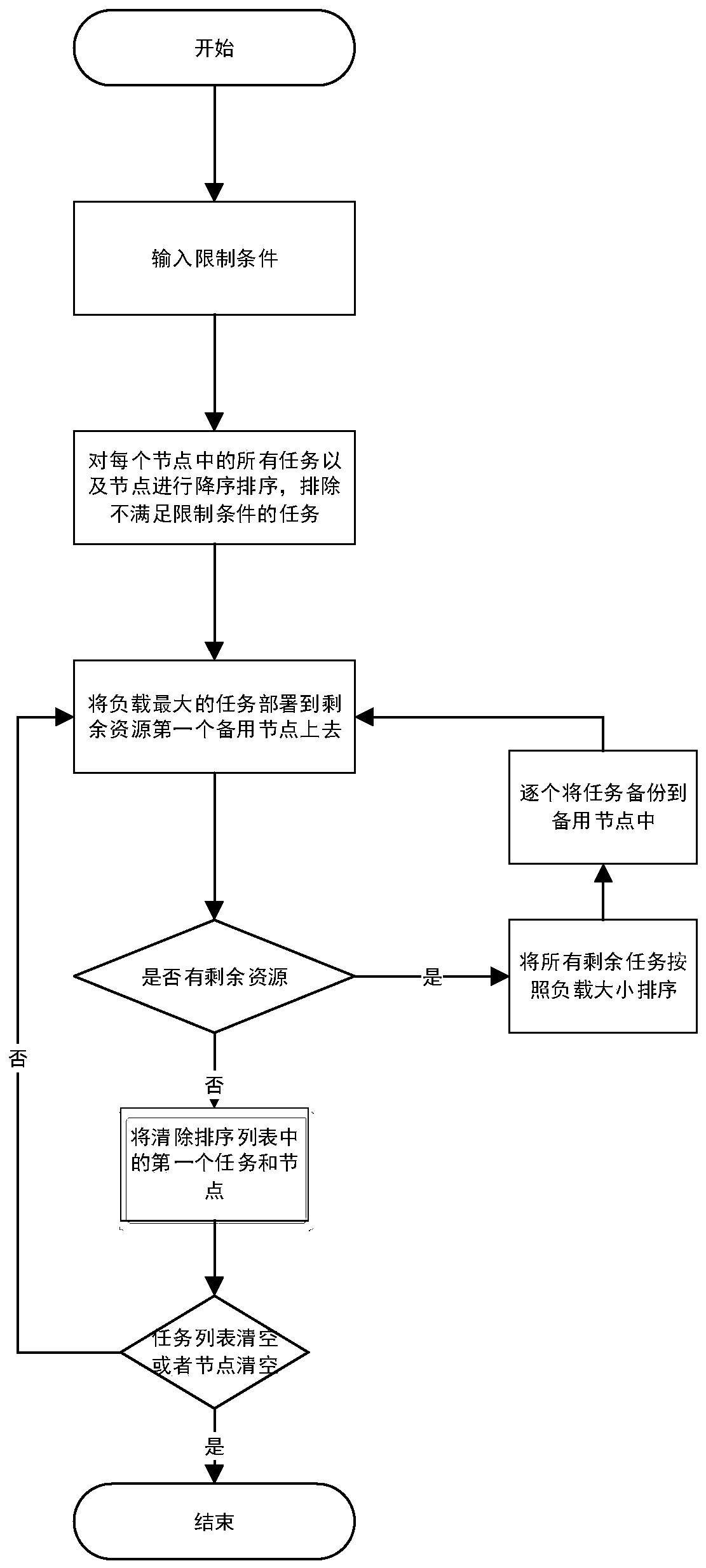

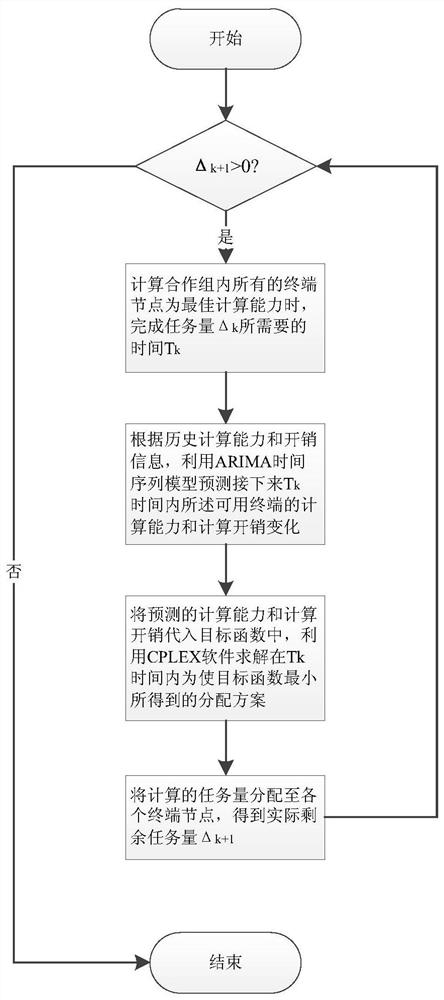

Online computing task unloading scheduling method for edge computing environment

Status: Active · Publication: CN111400001A · Tags: Reduce overhead, Reduce time complexity, Market predictions, Program initiation/switching, User device, Overhead (computing)

Owner:TSINGHUA UNIV

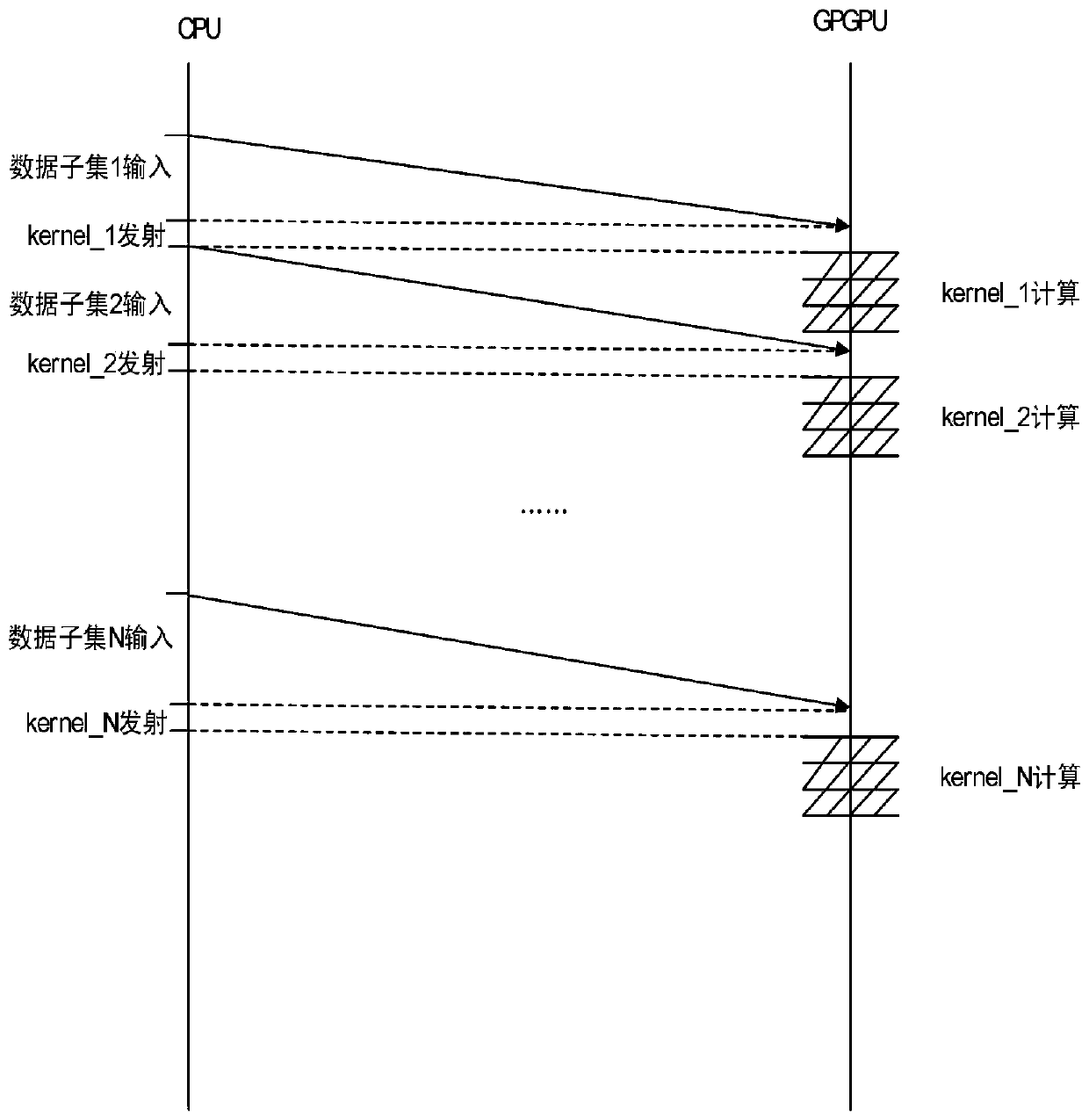

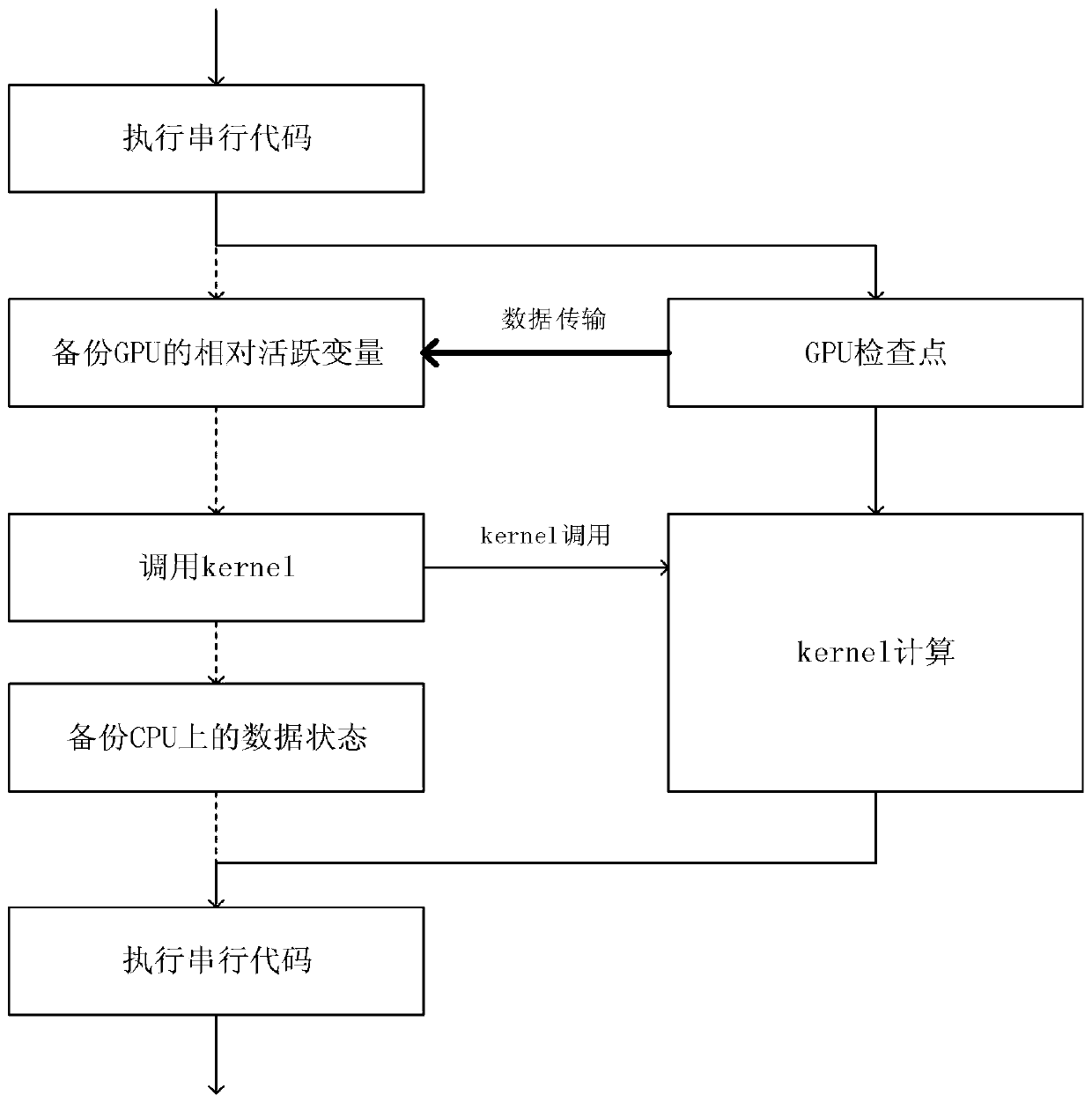

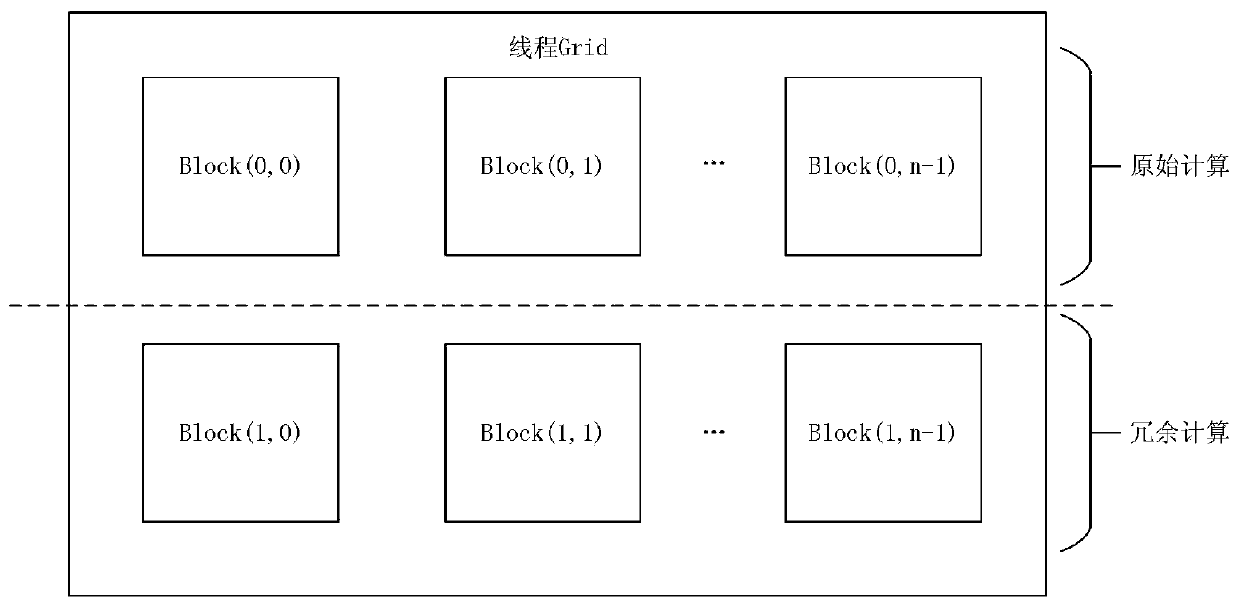

Fine-grained low-overhead fault-tolerant system for GPGPU

Status: Active · Publication: CN110083488A · Tags: Achieve overlap, Improve performance, Energy efficient computing, Redundant operation error correction, Check point, Time delays

The invention provides a fine-grained, low-overhead fault-tolerant system for GPGPUs, comprising a task division module, a checkpoint backup module, a redundant execution and error detection module, and an error repair module. The system handles transient faults in GPU computing components and addresses the problems of traditional software fault-tolerance methods for GPUs: coarse fault-tolerance granularity, high error-repair cost and poor performance. Its beneficial effects are as follows: thread tasks are divided, reducing the computation scale of each kernel; only the relevant live variables need to be backed up at a checkpoint, reducing the space and time cost of storage; only the objects affected by an error need to be recomputed during repair, reducing the fault-tolerance cost of recomputation; and the asynchronous mechanism of the CPU-GPU heterogeneous system is fully exploited to hide data-transfer latency and improve system performance.

Owner:HARBIN INST OF TECH
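
The divide, checkpoint, redundantly execute, and selectively recompute cycle described for CN110083488A can be illustrated on the CPU. This sketch assumes a deterministic integer kernel, treats any disagreement between the two runs of a chunk as a transient fault, and uses a hypothetical `corrupt_chunks` hook to inject one; on a GPU the two copies would run as separate threads or kernels, and a real system would need a tie-breaker if the recomputation disagreed again.

```python
from typing import AbstractSet, Callable, List, Tuple


def redundant_execute(data: List[int], chunk_size: int, kernel: Callable[[int], int],
                      corrupt_chunks: AbstractSet[int] = frozenset()) -> Tuple[List[int], List[int]]:
    """Run each chunk twice, compare, and recompute only chunks that disagree.

    corrupt_chunks is a test hook that flips one bit in the primary result of
    the listed chunks to simulate a transient fault.
    """
    results: List[int] = []
    repaired: List[int] = []
    for idx, start in enumerate(range(0, len(data), chunk_size)):
        checkpoint = tuple(data[start:start + chunk_size])  # back up only this chunk's inputs
        primary = [kernel(x) for x in checkpoint]
        shadow = [kernel(x) for x in checkpoint]             # redundant copy for detection
        if idx in corrupt_chunks:
            primary[0] ^= 1                                  # injected transient fault
        if primary != shadow:
            repaired.append(idx)
            primary = [kernel(x) for x in checkpoint]        # recompute this chunk only
        results.extend(primary)
    return results, repaired
```

For example, squaring `range(10)` in chunks of 4 with a fault injected into chunk 1 still yields the correct squares, and only chunk 1 is recomputed.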

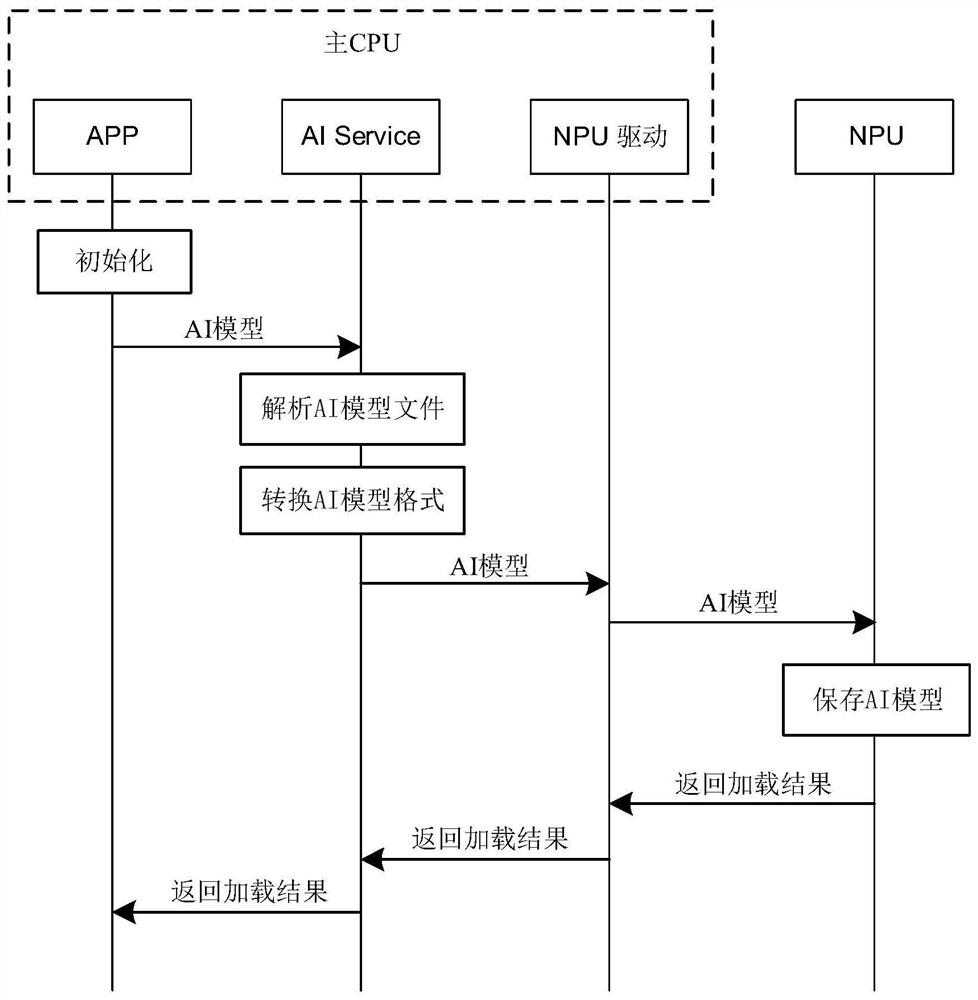

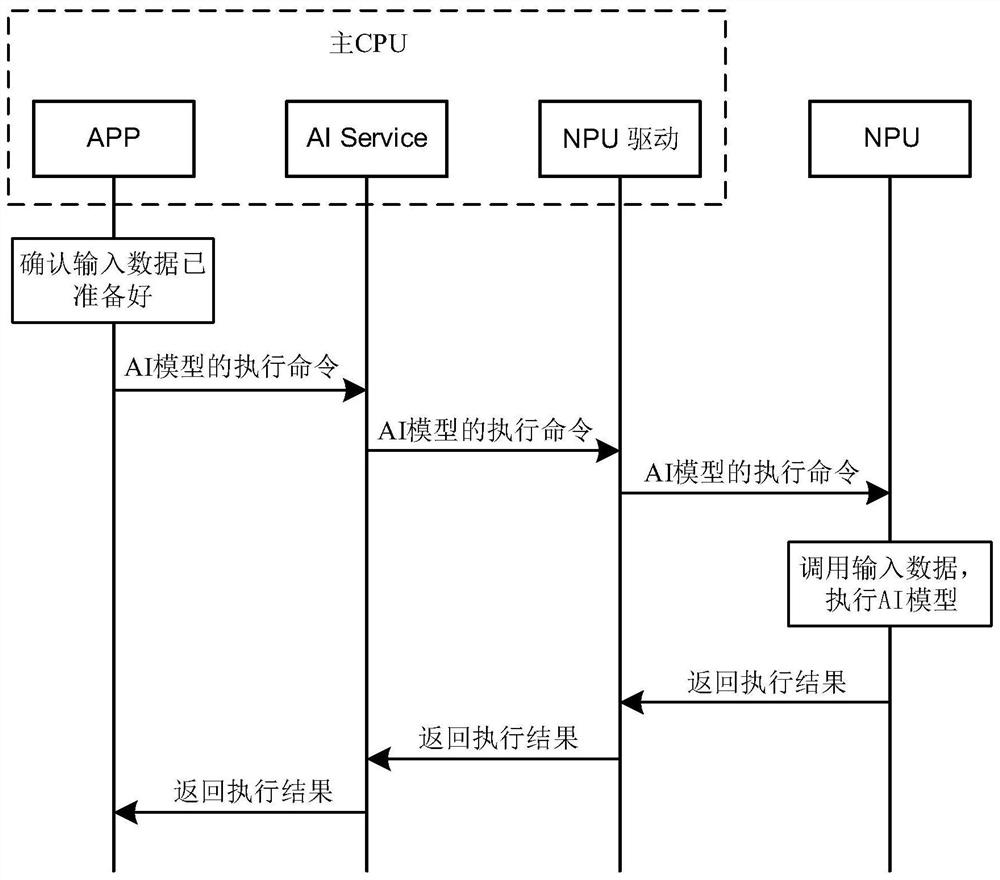

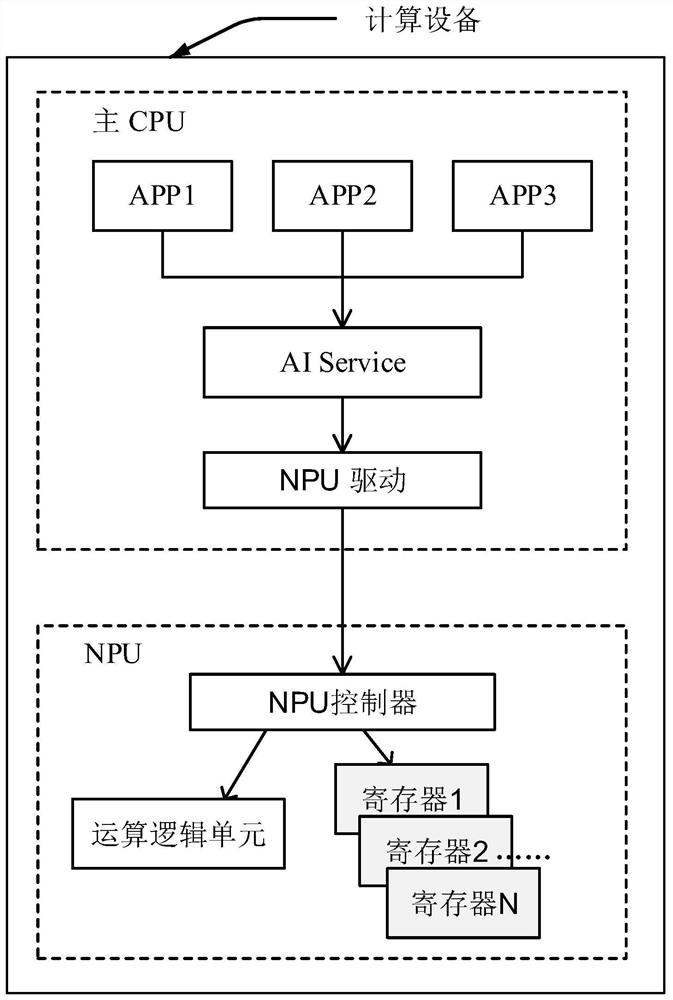

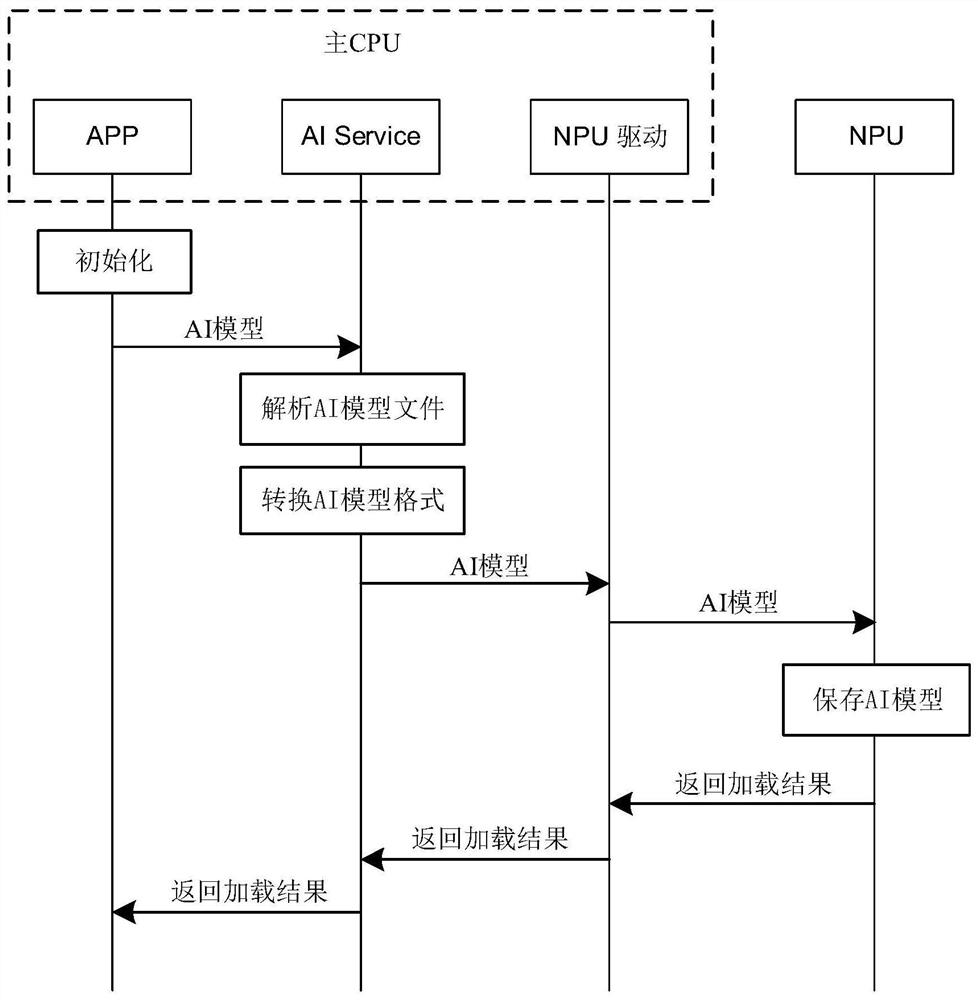

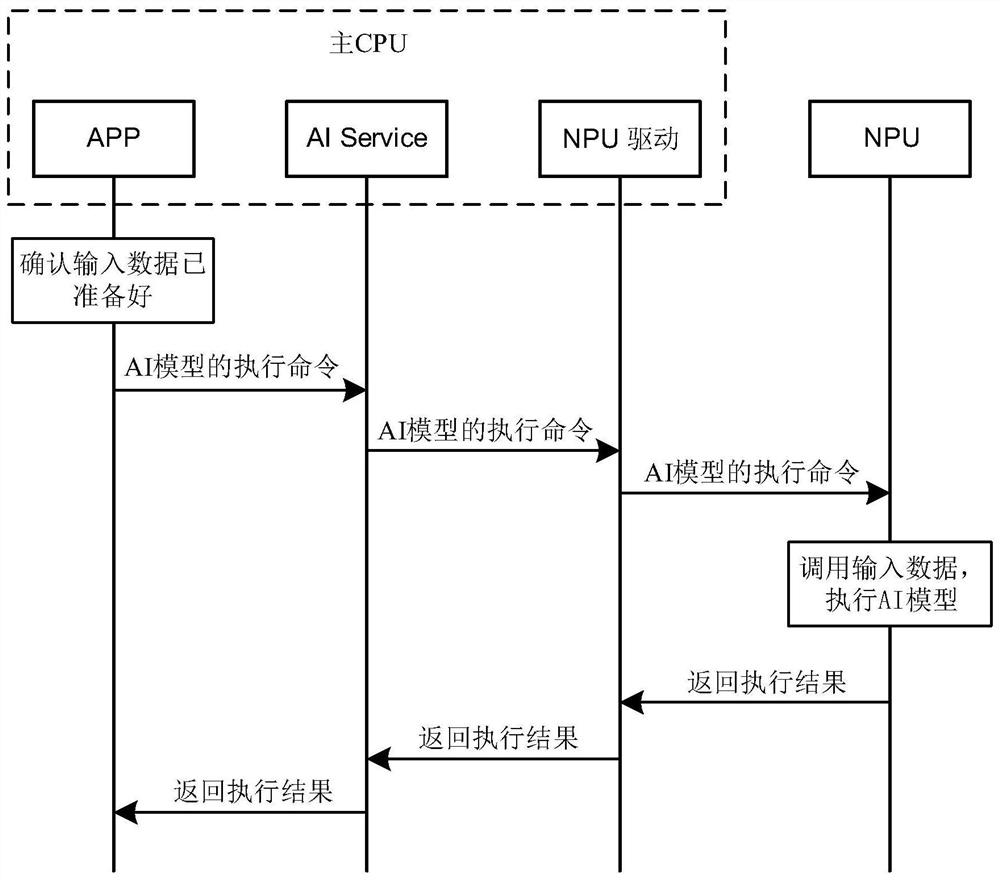

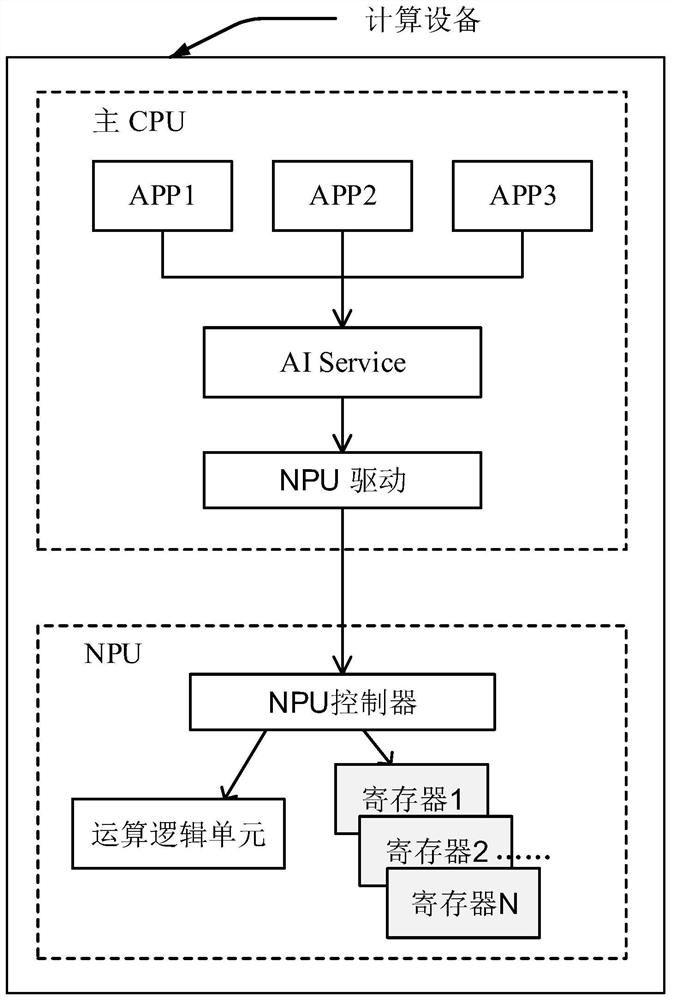

Data interaction method of main CPU and NPU and computing equipment

The embodiment of the invention discloses a data interaction method between a main CPU and an NPU, and a computing device, applicable to the field of artificial intelligence. The computing device comprises a main CPU and an NPU; a target APP runs on the main CPU, the main CPU comprises an AI Service and an NPU driver, and the NPU comprises an NPU controller, an arithmetic logic unit and N registers. The NPU allocates a register to the AI model, and after receiving the physical address of that register from the NPU, the main CPU maps the physical address to a virtual address in the target APP's virtual memory. The main CPU can then read/write the corresponding NPU register directly through the target APP, which is equivalent to a direct connection path between the target APP and the NPU. Throughout the process, from the main CPU issuing the AI model's execution command to the NPU through the target APP to obtaining the execution result, the computation path bypasses the AI Service and the NPU driver; only the register read/write overhead remains, which improves the real-time performance of AI model inference.

Owner:HUAWEI TECH CO LTD

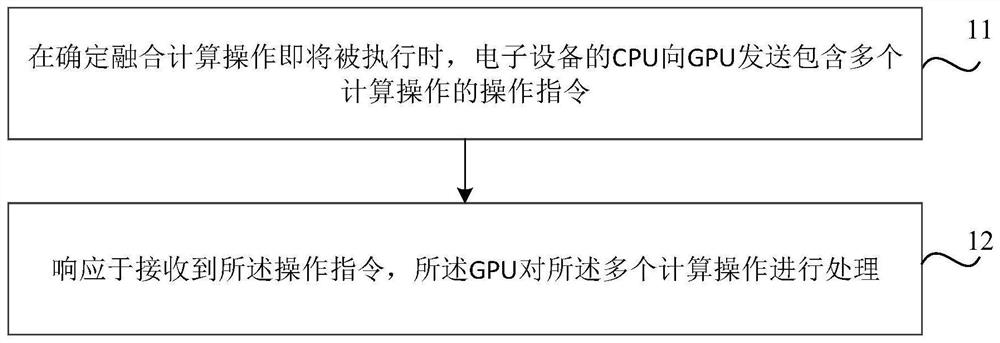

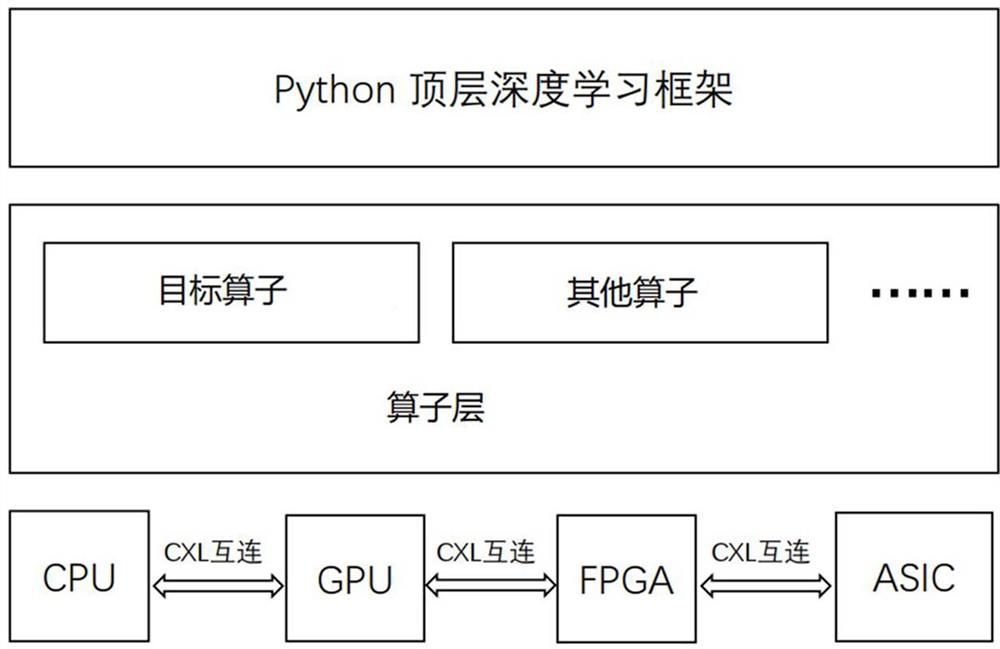

Processing method and device for language model, text generation method and device and medium

Status: Pending · Publication: CN112818663A · Tags: Improve computing efficiency, Reduce scheduling overhead, Natural language data processing, Video memory, Computer architecture

The embodiment of the invention relates to a processing method and device for a language model, a text generation method and device, and a medium. The language model is deployed on an electronic device, and multiple computation operations of a target type within the same feature layer of the language model are combined into one fused computation operation. The processing method comprises the following steps: when it is determined that the fused operation is about to be executed, the CPU of the electronic device sends the GPU (Graphics Processing Unit) an operation instruction containing the multiple computation operations; in response to receiving the instruction, the GPU performs the multiple operations. This effectively reduces both the scheduling overhead between the CPU and the GPU and the GPU's repeated reads and writes of video memory while processing the language model, which improves the computational efficiency of the GPU and, in turn, of the language model, and reduces the latency of language-model-based text processing.

Owner:BEIJING YOUZHUJU NETWORK TECH CO LTD
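
Why fusing operations, as in CN112818663A, cuts both dispatch and memory overhead can be shown by counting them on a toy device. Everything here is hypothetical: `CountingDevice` stands in for a GPU, each `launch` models one CPU-to-GPU instruction, and intermediate values inside a launch are assumed to stay on chip rather than going back to video memory.

```python
from typing import Callable, List, Sequence

Op = Callable[[float], float]


class CountingDevice:
    """Toy accelerator that counts kernel launches and memory traffic."""

    def __init__(self) -> None:
        self.launches = 0
        self.mem_reads = 0
        self.mem_writes = 0

    def launch(self, ops: Sequence[Op], buf: List[float]) -> List[float]:
        self.launches += 1                 # one host-to-device dispatch
        self.mem_reads += len(buf)         # each element read from memory once
        out = []
        for x in buf:
            for op in ops:                 # intermediates stay "on chip"
                x = op(x)
            out.append(x)
        self.mem_writes += len(out)        # each result written back once
        return out


def run_unfused(dev: CountingDevice, ops: Sequence[Op], buf: List[float]) -> List[float]:
    for op in ops:
        buf = dev.launch([op], buf)        # one launch and one memory round-trip per op
    return buf


def run_fused(dev: CountingDevice, ops: Sequence[Op], buf: List[float]) -> List[float]:
    return dev.launch(ops, buf)            # all ops in a single instruction
```

With three element-wise operations over four values, the unfused path costs 3 launches and 12 reads plus 12 writes, while the fused path costs 1 launch and 4 reads plus 4 writes, for the same results.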

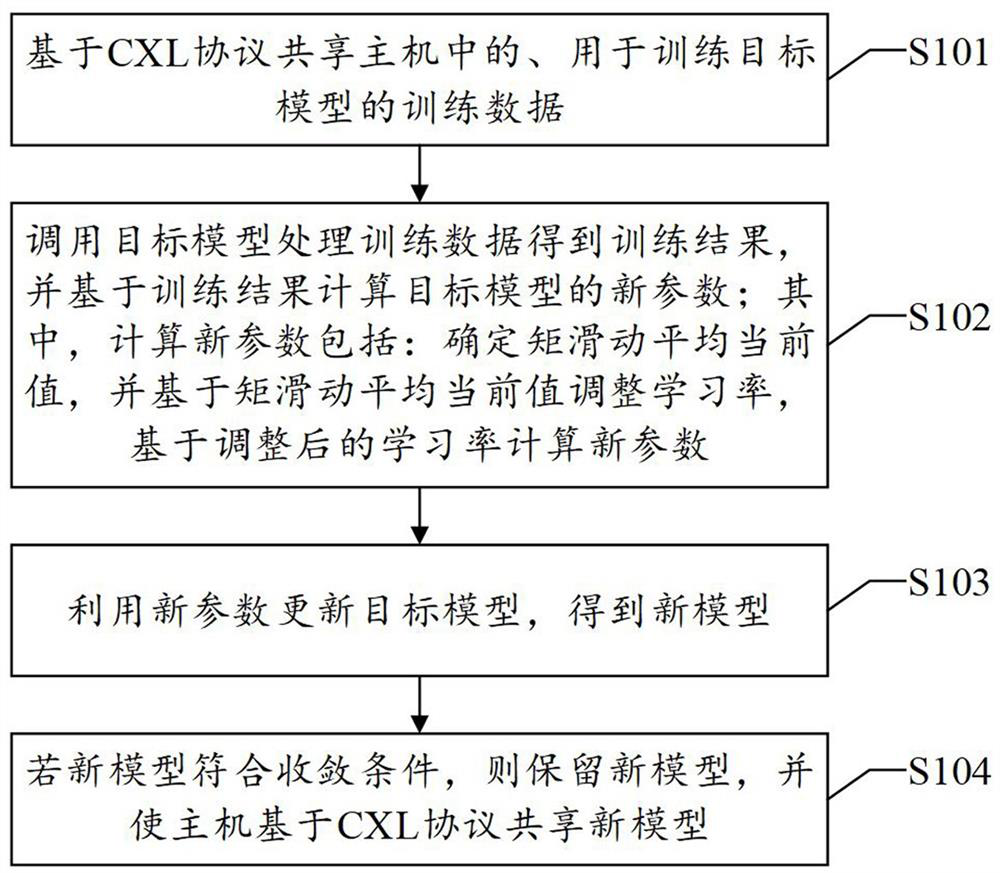

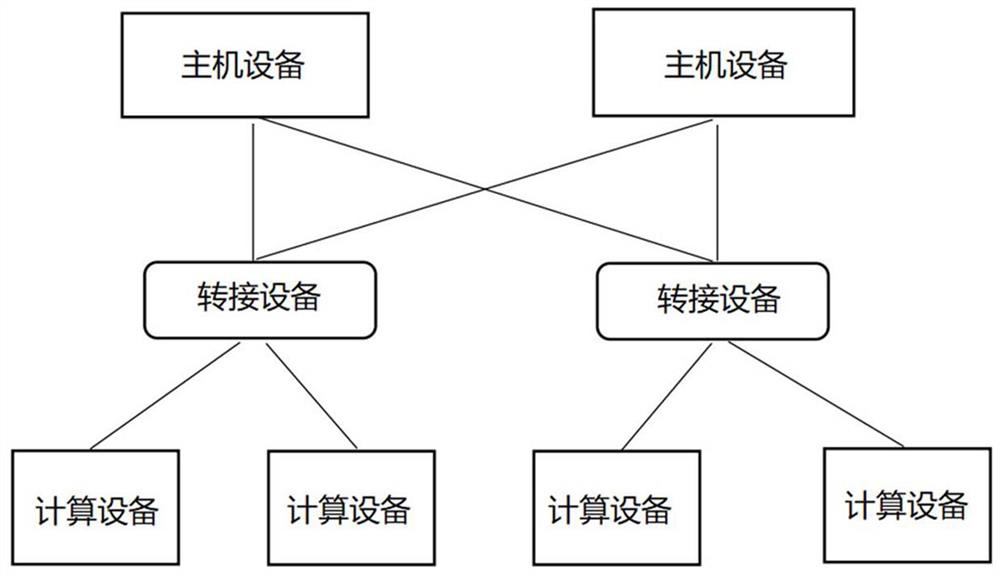

Data processing method, system and device and readable storage medium

Status: Active · Publication: CN114461568A · Tags: Guaranteed accuracy, Improve training efficiency, Interprogram communication, Machine learning, Moving average, Computer architecture

The invention discloses a data processing method, system and device, and a readable storage medium, in the field of computer technology. The host and the hardware computing platform are connected through the CXL protocol so that each can share the other's memory, I/O and cache. Training data therefore no longer has to pass through storage media such as host memory, GPU cache and GPU memory; the hardware computing platform reads the training data directly from host memory, which reduces data transmission overhead. In addition, the hardware computing platform can adjust the learning rate based on the current value of the moving average of the moments and then compute the model's new parameters, which stabilizes the parameters, preserves model accuracy and improves training efficiency. The scheme thus reduces the data transmission overhead between the host and the hardware module and improves model training efficiency. Correspondingly, the invention also provides a data processing system and device and a readable storage medium with the same technical effects.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

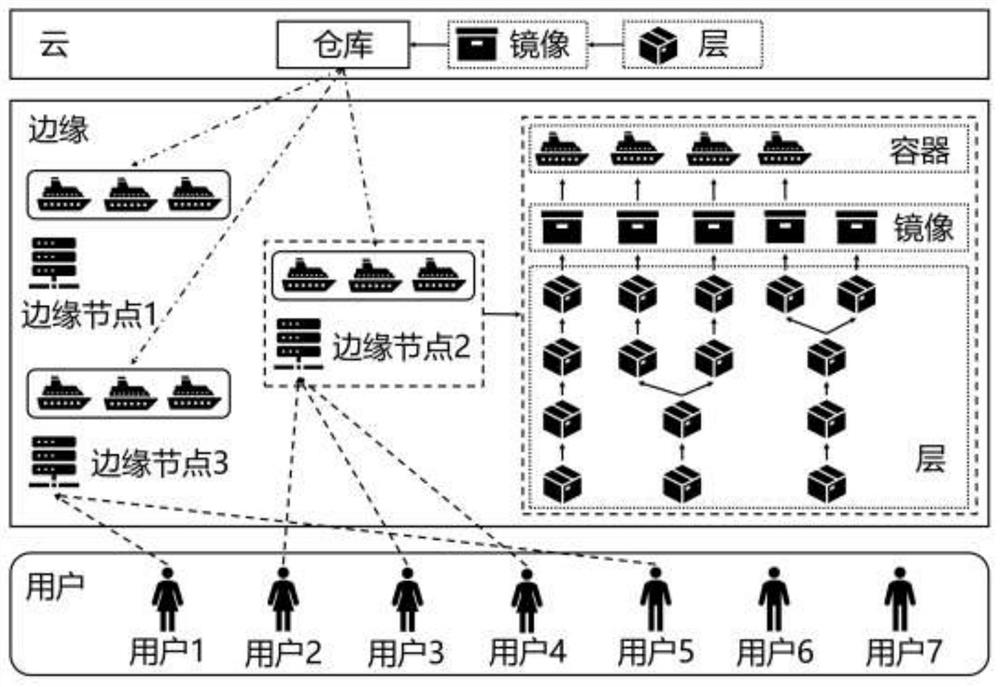

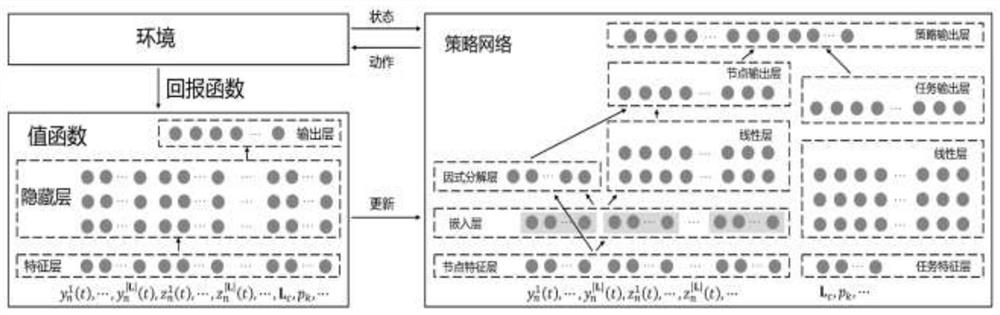

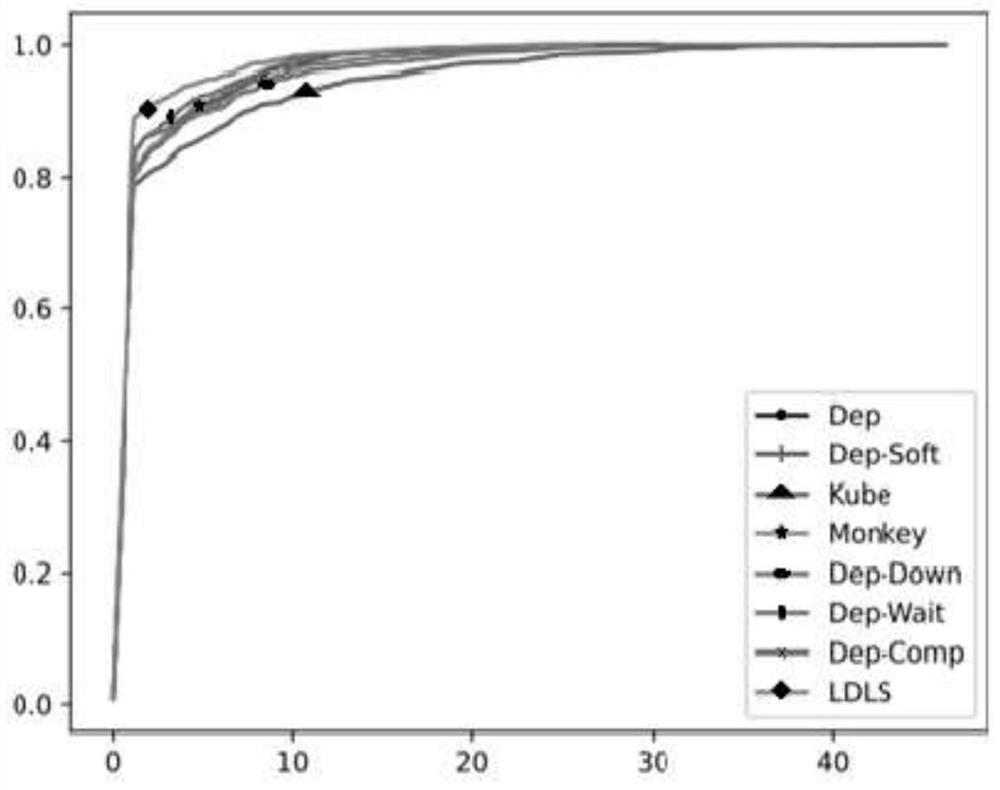

Online learning type scheduling method based on container layer dependency relationship in edge computing

The invention provides an online learning-based scheduling method that exploits container-layer dependencies in edge computing, drawing on edge computing, resource scheduling in distributed systems, and deep reinforcement learning. First, edge computing is modeled at the level of container layers, taking into account the completion time of user tasks, which comprises the download time of the containers a task requires and the task's running time. On this basis, a factorization-based algorithm extracts the container-layer dependencies in edge computing, including high-dimensional and low-dimensional sparse dependency features. Finally, a learning-based task scheduling algorithm built on policy gradients is designed from the extracted dependencies and the task and node resource characteristics, and the whole process is verified with real data. The method plans edge-computing resources more effectively and reduces both the total overhead of users' tasks in the edge computing system and the overhead of downloading container image files when containers run.

Owner:Beijing Normal University, Zhuhai Campus

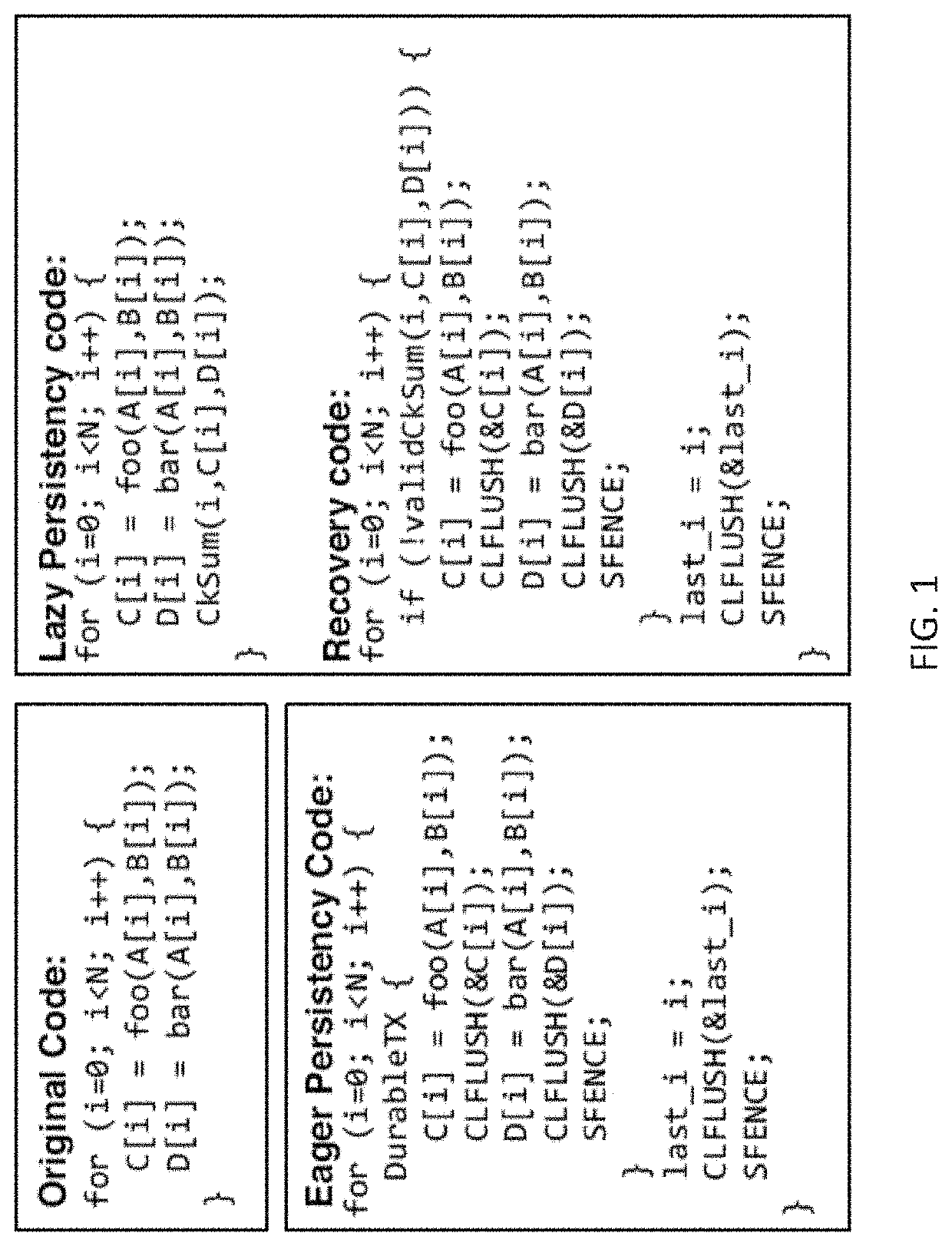

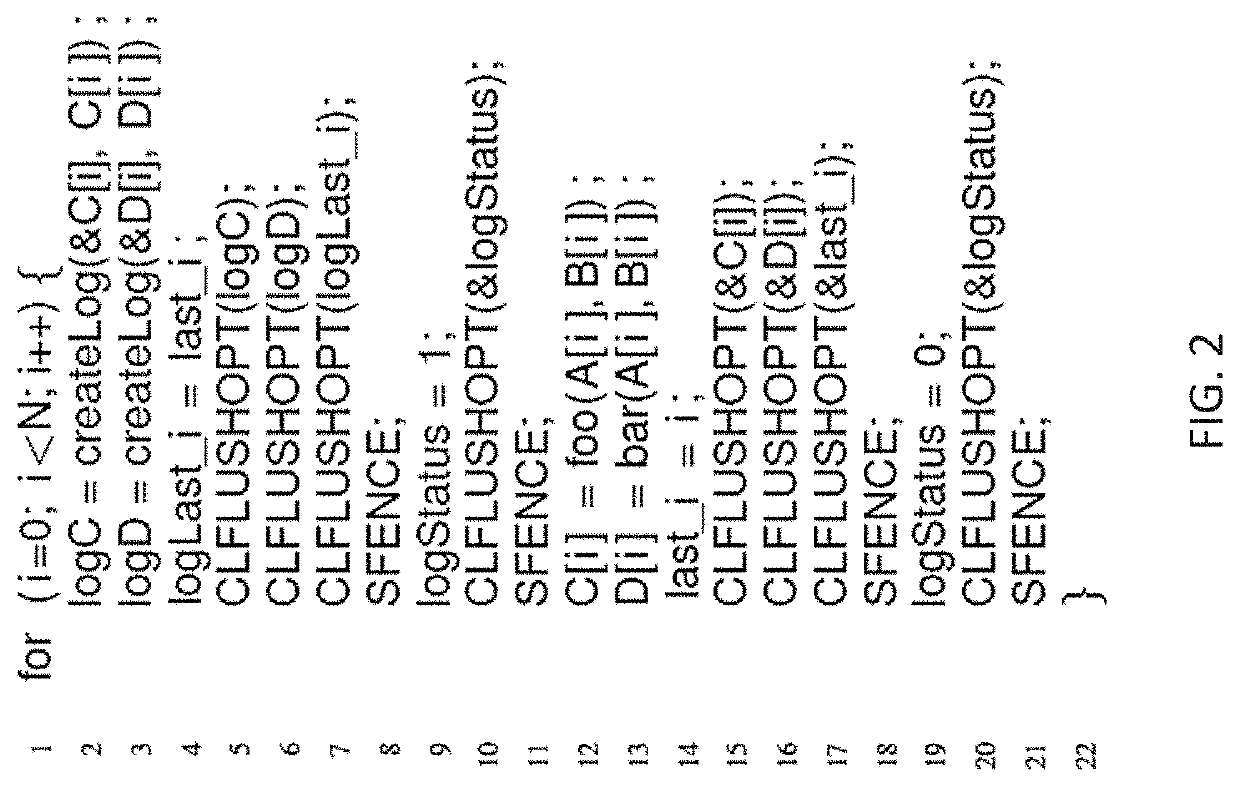

Methods of crash recovery for data stored in non-volatile main memory

Status: Active · Publication: US20200081802A1 · Tags: Reduce computing cost, Reduce overhead, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Write amplification, Software bug

Owner:UNIV OF CENT FLORIDA RES FOUND INC

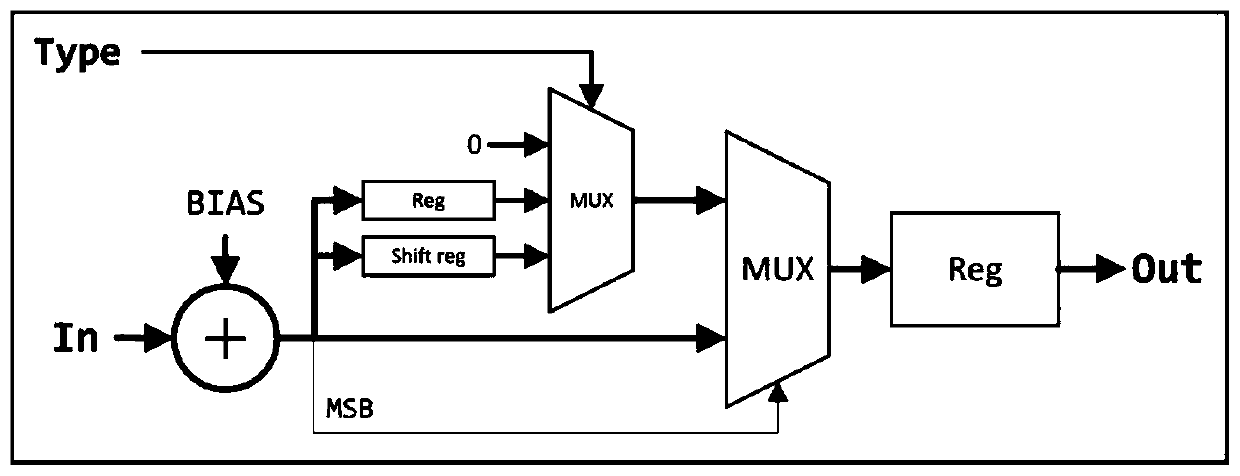

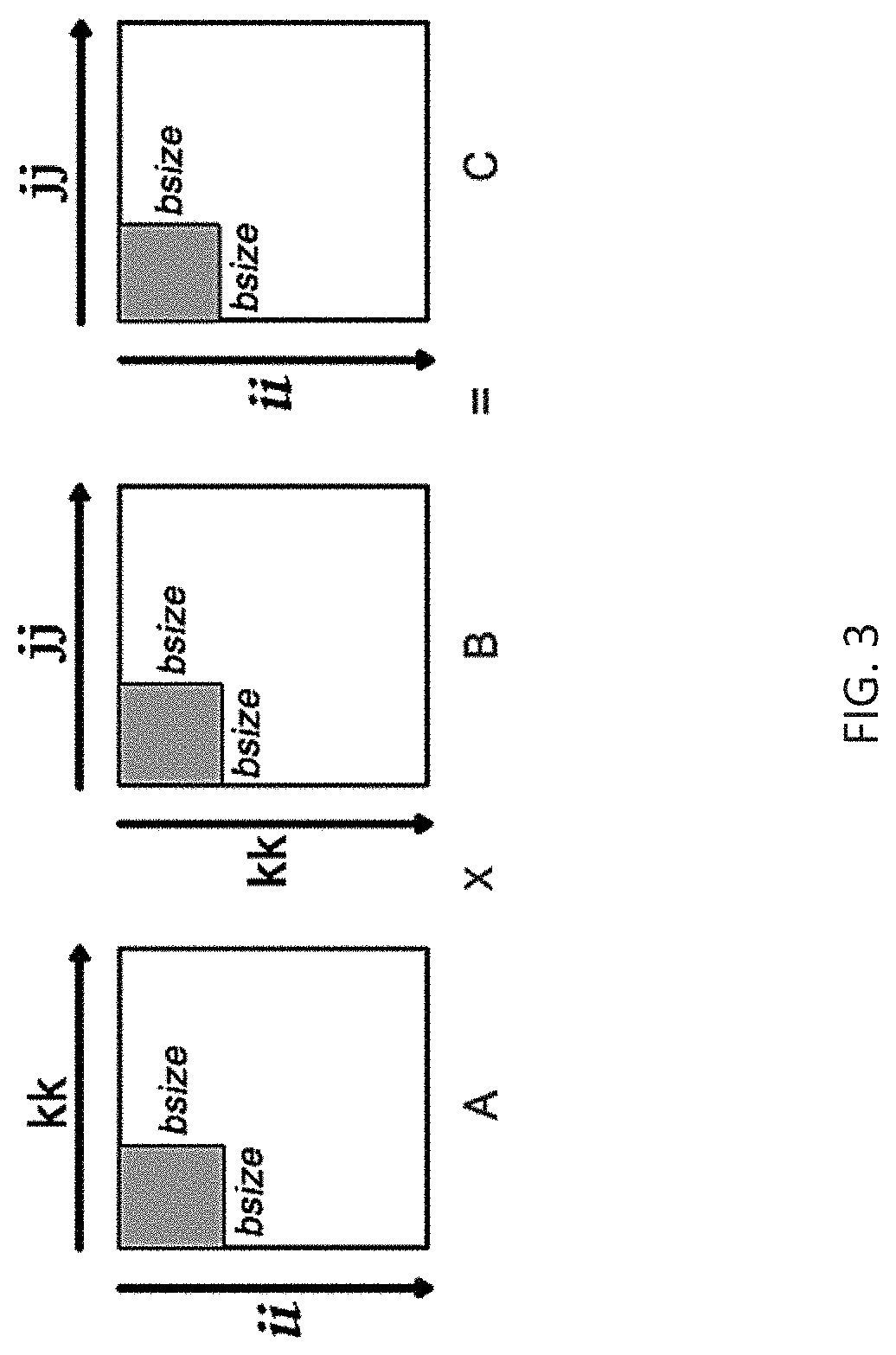

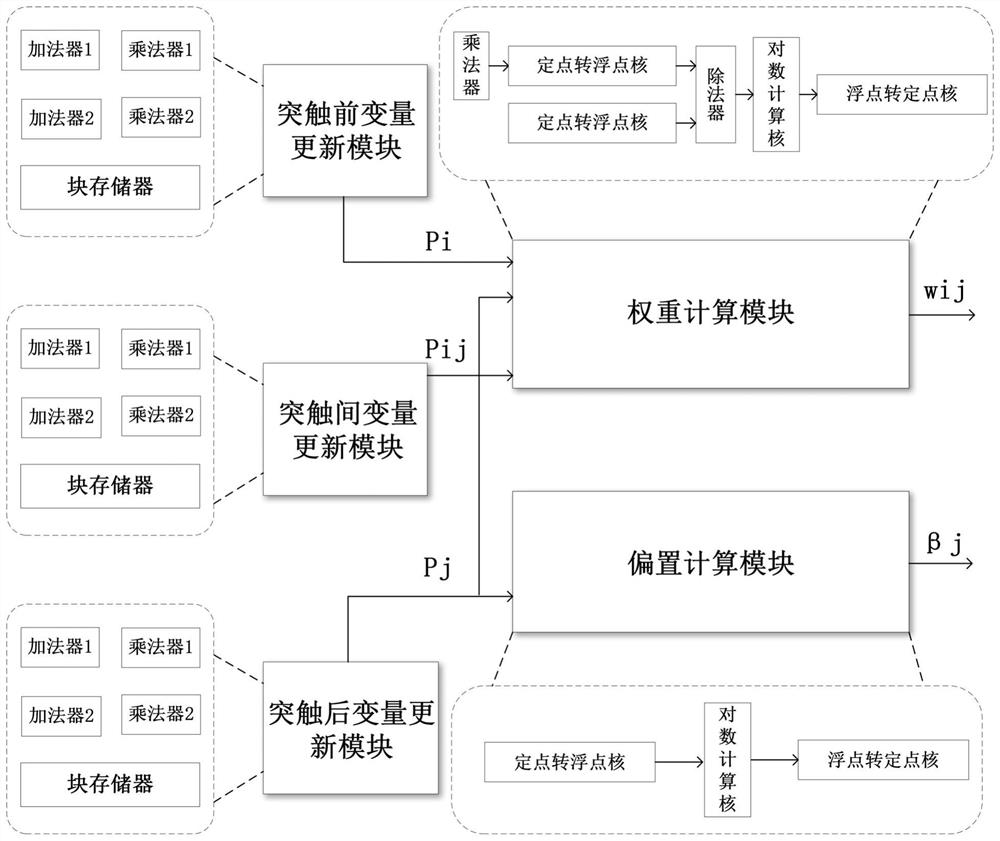

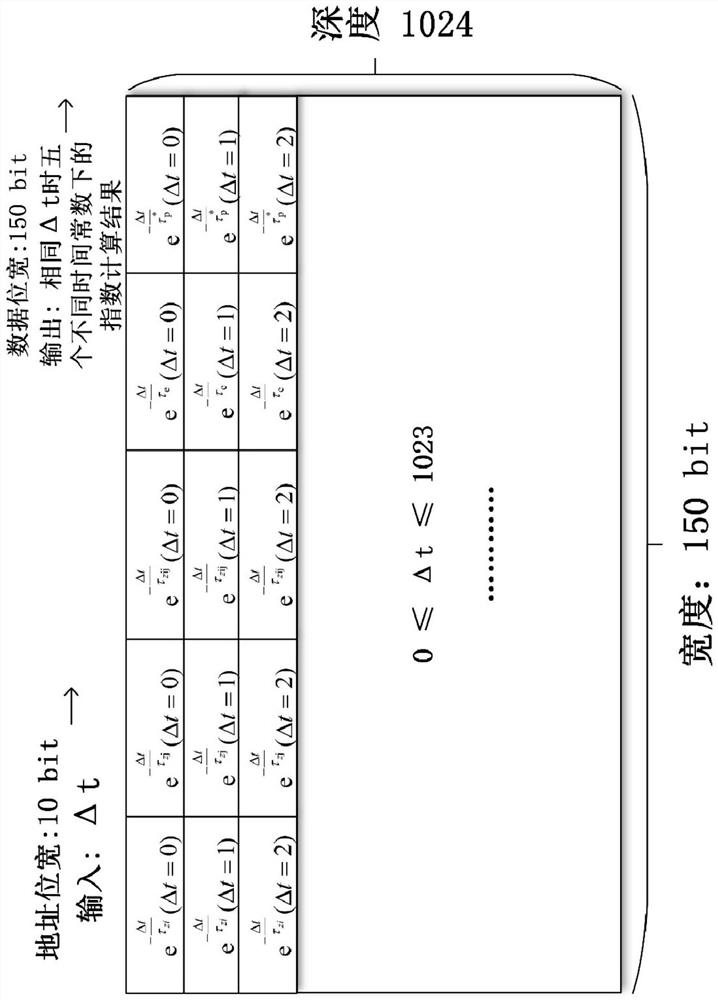

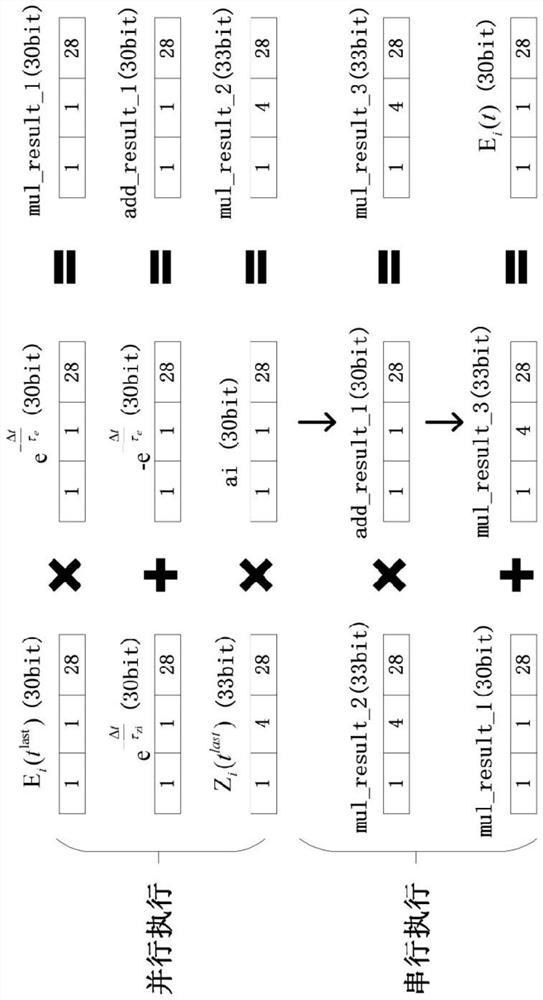

FPGA-based design method for improving the speed of BCPNN

Status: Pending · Publication: CN111882050A · Tags: Reduce overhead, Increase weight, Neural architectures, Physical realisation, Multiplexing, Binary multiplier

The invention discloses an FPGA-based design method for improving the speed of a BCPNN, in the field of artificial intelligence. The method comprises: updating the synaptic state variables, weights and biases of the BCPNN in hardware through a modular design; implementing the exponential operation on the FPGA with a lookup table; speeding up the weight and bias updates of the synaptic states with a parallel algorithm; and reducing resource overhead, while keeping the same computing performance, by multiplexing the adder and multiplier modules. The method offers both higher computing performance and higher computing accuracy, and effectively speeds up the weight and bias updates of the BCPNN.

Owner:FUDAN UNIV +1
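
Replacing an exponential unit with a lookup table, as CN111882050A does on the FPGA, can be modeled in software as a table of precomputed samples plus linear interpolation. The input range [-8, 0], the 512-segment table and the use of floating point are assumptions for illustration; hardware would more likely use fixed-point entries in block RAM. On this range the interpolation error is bounded by about STEP²/8, roughly 3.1e-5.

```python
import math
from typing import List

LO, HI, SEGMENTS = -8.0, 0.0, 512          # assumed input range and table resolution
STEP = (HI - LO) / SEGMENTS                # 1/64
TABLE: List[float] = [math.exp(LO + i * STEP) for i in range(SEGMENTS + 1)]


def lut_exp(x: float) -> float:
    """Approximate exp(x) by linear interpolation between table entries."""
    x = min(max(x, LO), HI)                # clamp to the tabulated range
    pos = (x - LO) / STEP
    i = min(int(pos), SEGMENTS - 1)        # left table index
    frac = pos - i                         # position within the segment, 0..1
    return TABLE[i] + frac * (TABLE[i + 1] - TABLE[i])
```

Each evaluation costs one table lookup pair, one subtraction, one multiply and one add, which maps naturally onto shared adder and multiplier modules.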

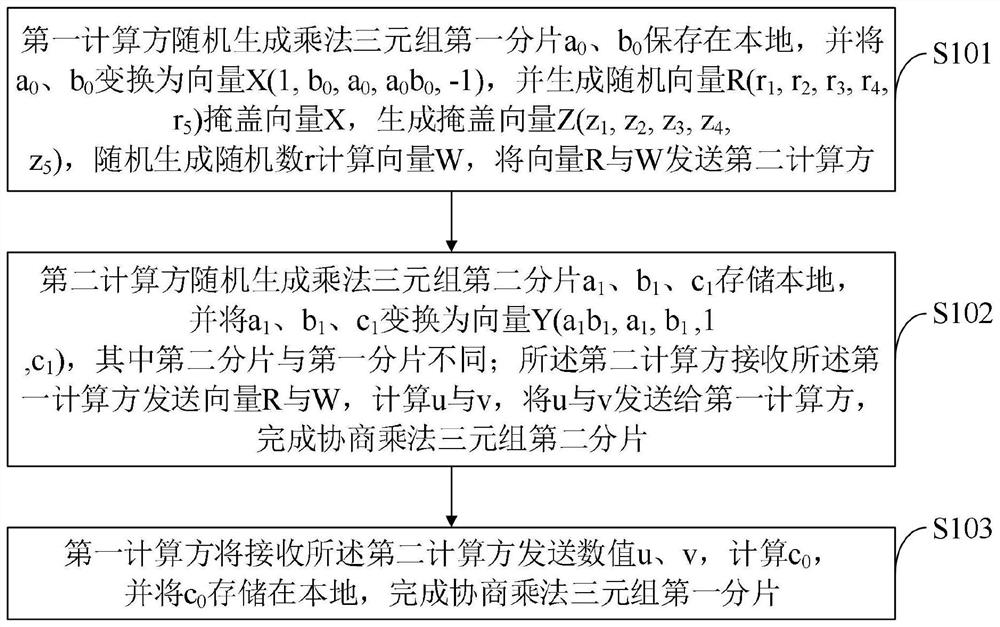

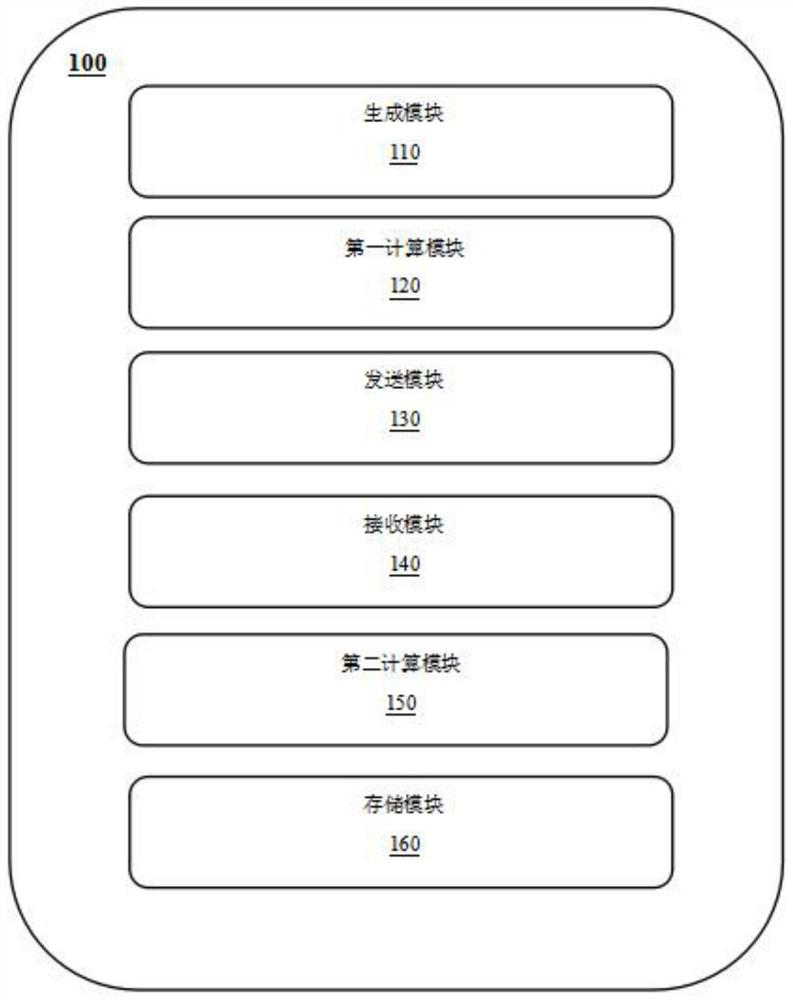

Method and system for improving the efficiency of secure multi-party computation, and storage medium

Status: Active · Publication: CN112953700A · Tags: Improve computing efficiency, Privacy protection, Securing communication, Computer network, Overhead (computing)

The invention belongs to the field of computer technology and discloses a method and system for improving the efficiency of secure multi-party computation, and a storage medium. The method involves a first computing party and a second computing party. The first party randomly generates the first share of a multiplication triple, generates a random value to mask that share, and sends the random value and the masked value to the second party. The second party receives them, generates the second share of the multiplication triple, computes u and v using its share, and sends u and v to the first party, which then computes c0 and stores it locally. The method greatly reduces computing and communication overhead and is scalable; it is backed by rigorous cryptographic theory and guarantees that no private information is leaked as long as the computing parties do not collude.

Owner:XIDIAN UNIV +1
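
CN112953700A concerns how two parties generate a multiplication (Beaver) triple; for context, the sketch below shows the standard way such a triple (a, b, c = ab) is consumed to multiply two additively secret-shared values. It is not the patent's generation protocol: a trusted dealer stands in for it, the prime modulus 2**61 - 1 is an arbitrary choice, and the names `share`, `dealer_triple` and `beaver_multiply` are hypothetical. The opened values d and e here are unrelated to the patent's u and v.

```python
import secrets
from typing import Tuple

P = 2**61 - 1                      # prime modulus (assumed field size)
Shares = Tuple[int, int]


def share(v: int) -> Shares:
    """Split v into two additive shares modulo P."""
    s0 = secrets.randbelow(P)
    return s0, (v - s0) % P


def reconstruct(s0: int, s1: int) -> int:
    return (s0 + s1) % P


def dealer_triple() -> Tuple[Shares, Shares, Shares]:
    """Trusted-dealer stand-in: shares of random a, b and c = a*b mod P."""
    a, b = secrets.randbelow(P), secrets.randbelow(P)
    return share(a), share(b), share(a * b % P)


def beaver_multiply(x: Shares, y: Shares, triple: Tuple[Shares, Shares, Shares]) -> Shares:
    (a0, a1), (b0, b1), (c0, c1) = triple
    # Each party masks its input shares with its triple shares; d = x - a and
    # e = y - b are then opened, revealing nothing because a and b are random.
    d = reconstruct((x[0] - a0) % P, (x[1] - a1) % P)
    e = reconstruct((y[0] - b0) % P, (y[1] - b1) % P)
    # z = c + d*b + e*a + d*e equals x*y; only party 0 adds the public d*e term.
    z0 = (c0 + d * b0 + e * a0 + d * e) % P
    z1 = (c1 + d * b1 + e * a1) % P
    return z0, z1
```

`reconstruct(*beaver_multiply(share(6), share(7), dealer_triple()))` returns 42. Each multiplication consumes one fresh triple, which is why cheaper triple generation translates directly into lower overall overhead.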

Method for eliminating cold start of serverless computing containers

Status: Pending · Publication: CN114528068A · Tags: Avoid repeated execution, Eliminate overhead, Energy efficient computing, Software simulation/interpretation/emulation, File system, Overhead (computing)

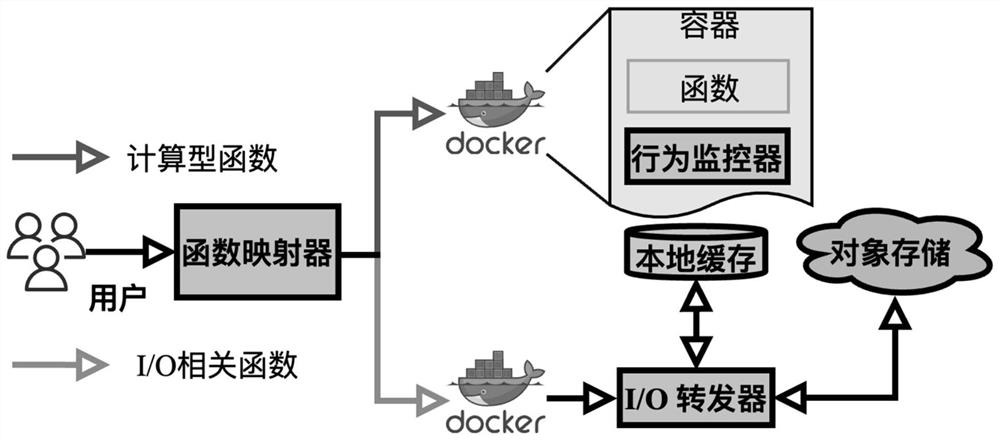

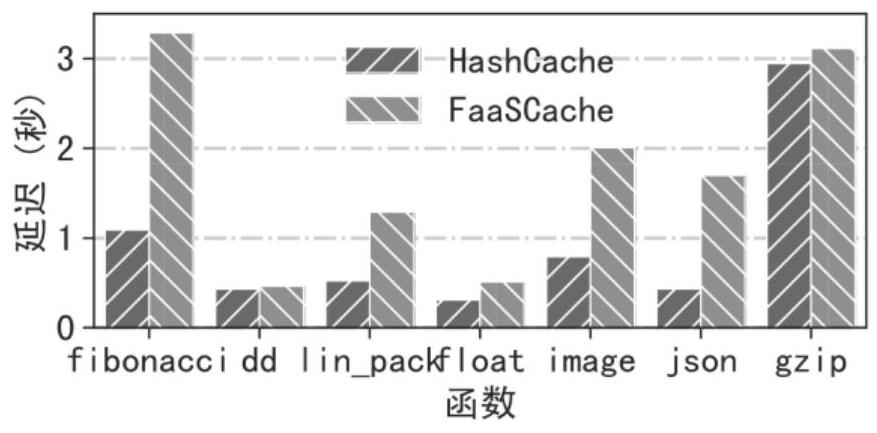

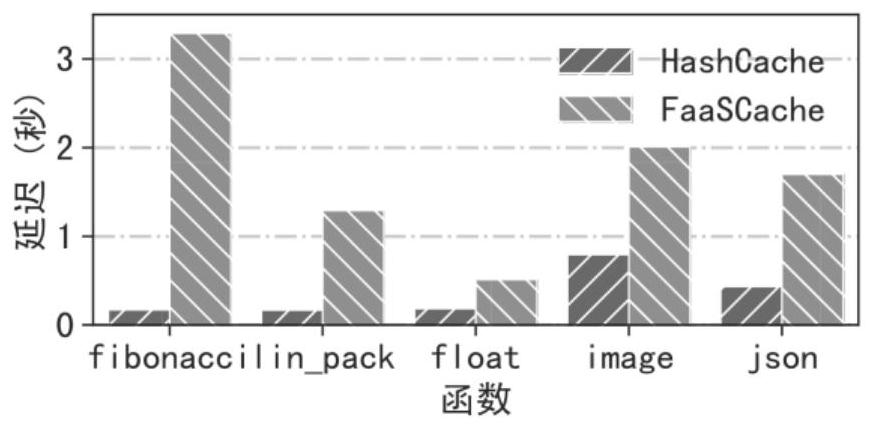

The invention discloses a method for eliminating the cold start of serverless computing containers. It aims to (1) eliminate container cold start by bypassing repeated computation when the serverless platform invokes a function, and (2) avoid requests by functions for external files, reducing invocation latency during function execution. The method uses a real-time monitoring mechanism based on the container runtime and, from the monitoring information, classifies functions into three types: computation functions, I/O functions and environment-dependent functions. For computation functions, execution is bypassed by caching the result and returning it directly; for I/O functions, the external files a function needs are kept in the local file system, reducing the latency overhead of accessing an external network. The method eliminates container cold start and reduces the end-to-end latency of function calls. In addition, because both function execution and container startup can be bypassed and the result of a computation function returned directly, the physical resources needed to serve function requests are further reduced.

Owner:JINAN UNIVERSITY
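
The two bypasses described in CN114528068A (returning cached results for computation functions, and keeping external files local for I/O functions) can be sketched as a small runtime wrapper. The class name, the `fetch_remote` callable and the counters are hypothetical; memoization is only safe for deterministic, side-effect-free functions with hashable arguments, which this sketch assumes rather than detects through monitoring.

```python
from typing import Any, Callable, Dict, Tuple


class WarmPathRuntime:
    """Illustrative bypass layer: memoize pure functions, keep fetched files local."""

    def __init__(self, fetch_remote: Callable[[str], bytes]) -> None:
        self._fetch_remote = fetch_remote
        self._results: Dict[Tuple[Callable[..., Any], Tuple[Any, ...]], Any] = {}
        self._local_files: Dict[str, bytes] = {}
        self.executions = 0
        self.remote_fetches = 0

    def invoke_compute(self, fn: Callable[..., Any], *args: Any) -> Any:
        # Computation-type function: return the cached result and skip execution.
        # Only safe if fn is deterministic and side-effect free (assumption).
        key = (fn, args)
        if key not in self._results:
            self.executions += 1
            self._results[key] = fn(*args)
        return self._results[key]

    def open_external(self, url: str) -> bytes:
        # I/O-type function: serve external files from a local copy after first use.
        if url not in self._local_files:
            self.remote_fetches += 1
            self._local_files[url] = self._fetch_remote(url)
        return self._local_files[url]
```

Repeated invocations with the same arguments, or repeated reads of the same external file, then cost a dictionary lookup instead of a container start or a network request.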

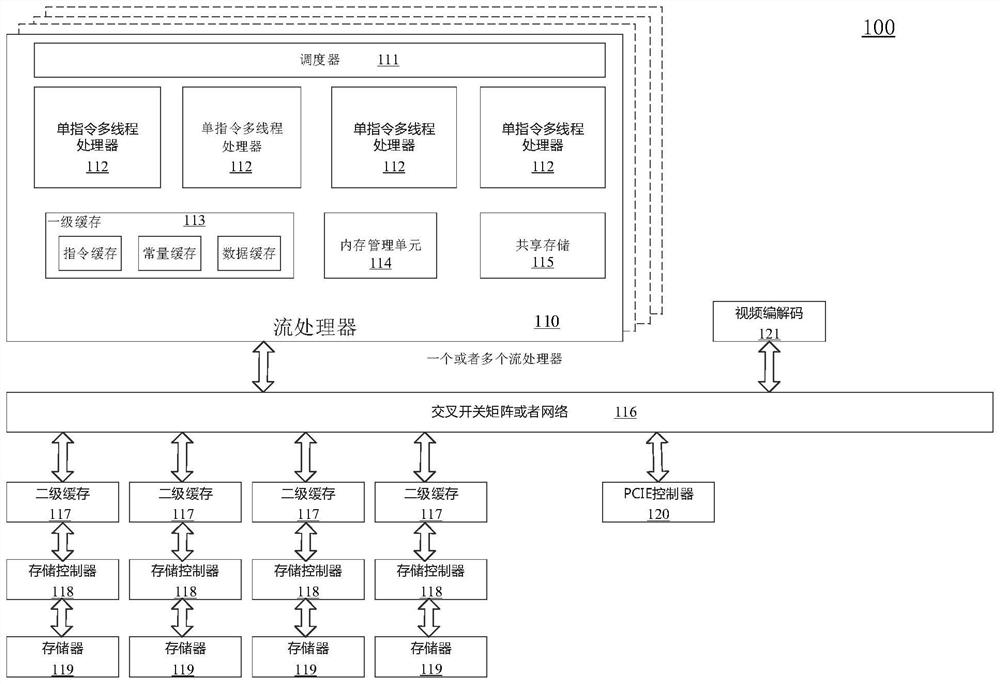

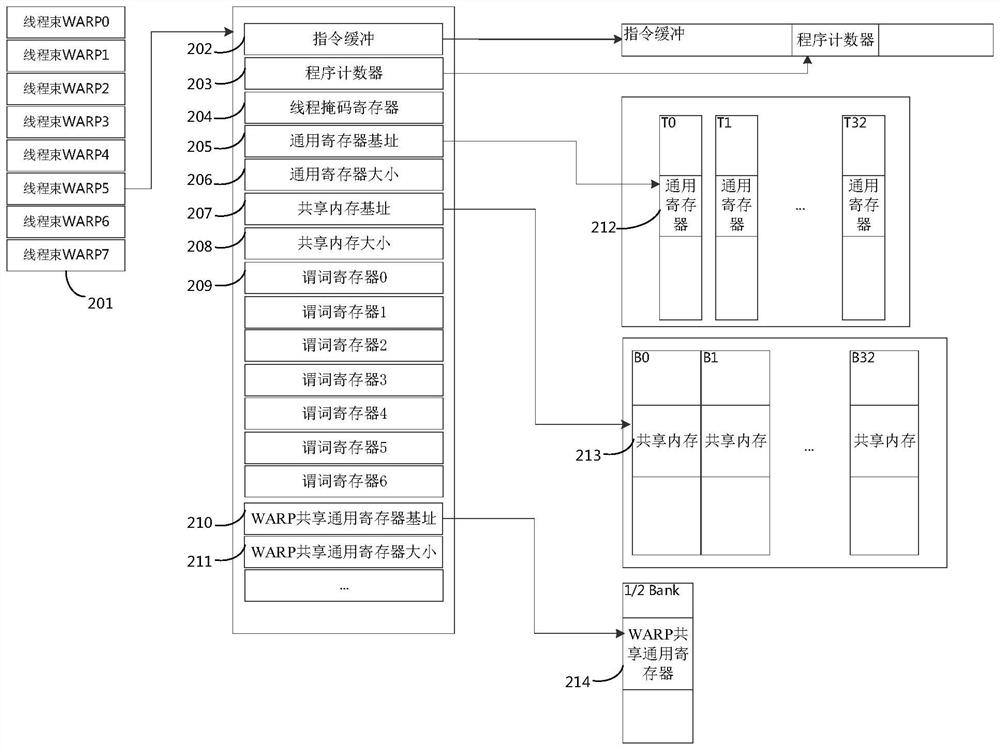

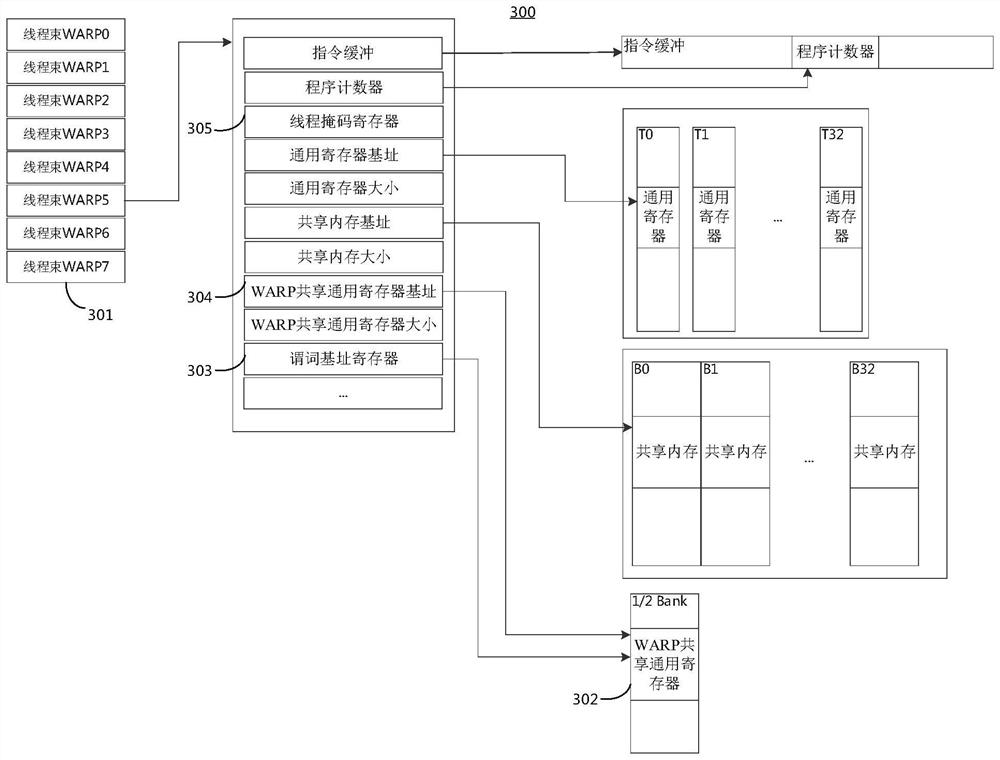

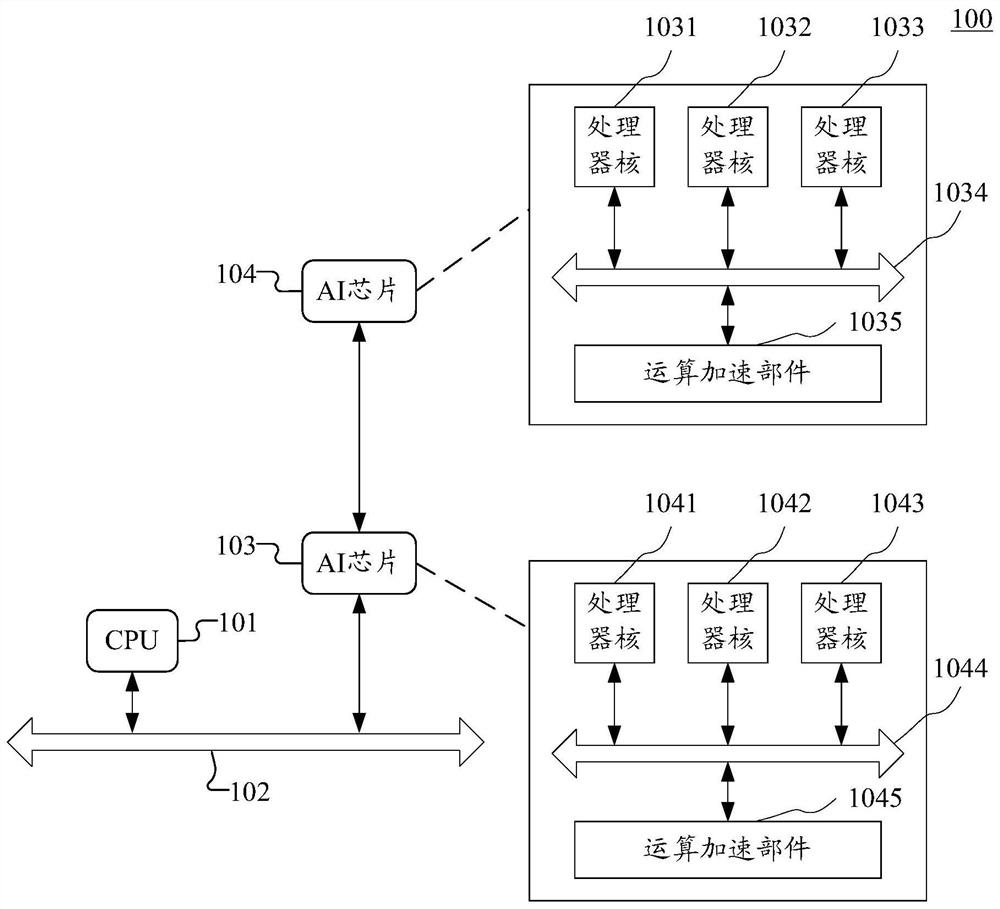

Processor device, instruction execution method thereof and computing equipment

Status: Active · Publication: CN114489791A · Tags: Realize dynamic expansion, Take advantage of, Register arrangements, Concurrent instruction execution, General purpose, Computer architecture

The embodiment of the invention discloses a processor device, an instruction execution method thereof, and a computing device. The device comprises one or more single-instruction multiple-thread (SIMT) processing units, each comprising one or more thread bundles (warps) for executing instructions; a shared register group comprising multiple general-purpose registers shared among the thread bundles; and a predicate base address register for each thread bundle, indicating the base address, within the shared register group, of the set of general-purpose registers used as that thread bundle's predicate registers. Each thread bundle performs predicated execution of instructions based on the predicate values in its set of general-purpose registers. The embodiment removes the dedicated predicate registers that each thread bundle has in the original processor architecture, allows each thread bundle's predicate register resources to grow dynamically, makes full use of processor resources, reduces instruction-switching overhead, and improves instruction processing performance.

Owner:METAX INTEGRATED CIRCUITS (SHANGHAI) CO LTD

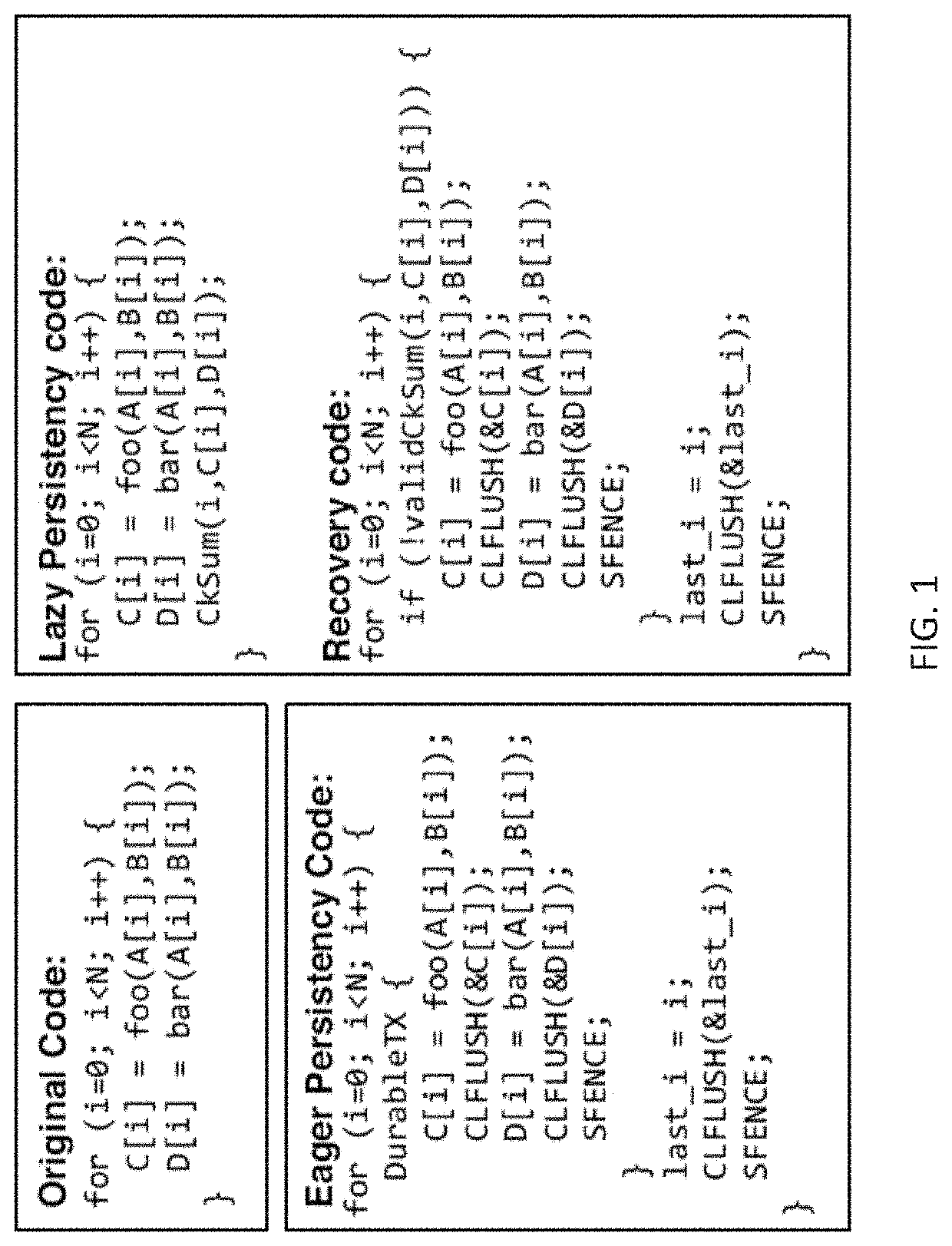

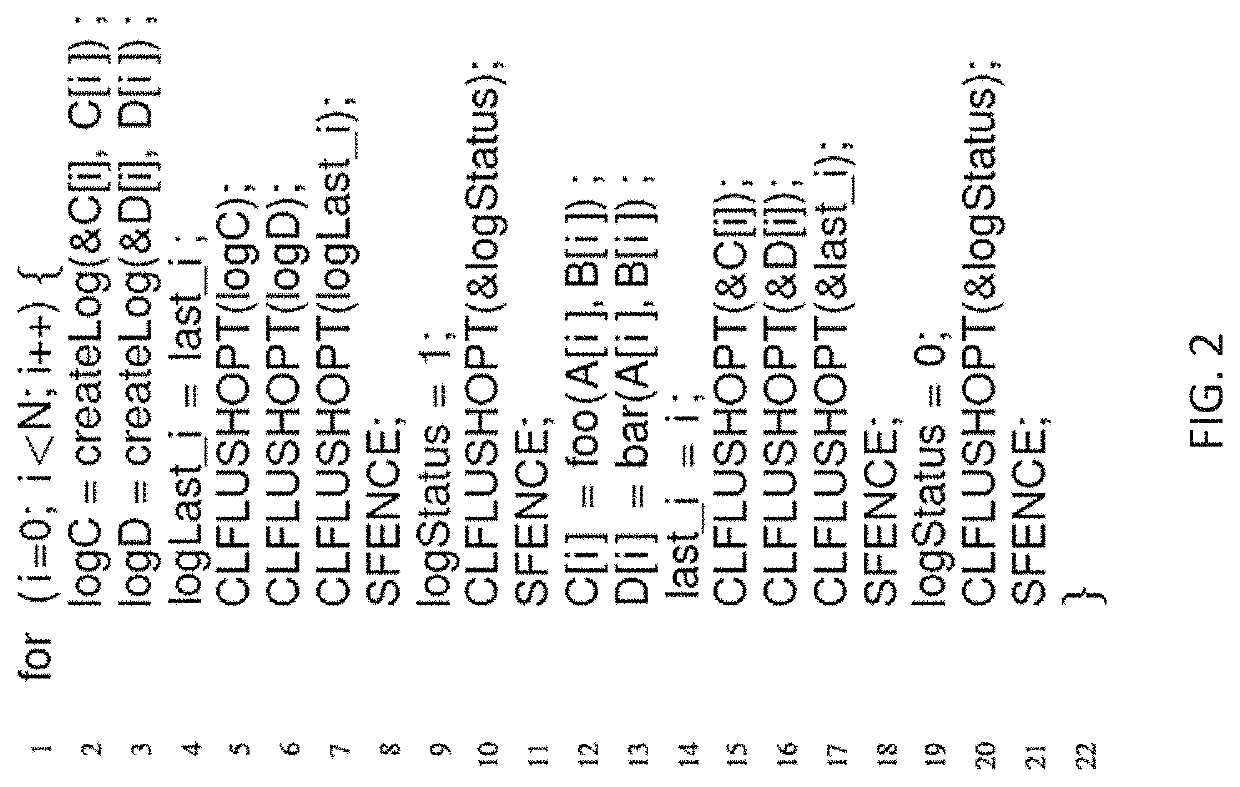

Methods of crash recovery for data stored in non-volatile main memory

Status: Active · Publication: US11281545B2 · Tags: Reduce overhead, Shorten the time, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Write amplification, Software bug

Lazy Persistency (LP) is a software persistency method that lets caches send dirty blocks to non-volatile main memory (NVMM) lazily, through natural evictions. With LP there are no additional writes to NVMM, no decrease in write endurance, and no performance degradation from cache-line flushes and barriers. Persistency failures are detected with software error detection (checksums), and the system recovers from them by recomputing inconsistent results. LP was evaluated against the state-of-the-art Eager Persistency technique from prior work: compared with Eager Persistency, LP reduces the execution-time and write-amplification overheads from 9% and 21% to only 1% and 3%, respectively.

Owner:UNIV OF CENT FLORIDA RES FOUND INC
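
The detect-and-recompute recovery step of Lazy Persistency can be sketched with a dict standing in for NVMM and CRC-32 as the software checksum. This is a simplification under stated assumptions: the region granularity, the `compute_region` callback and the use of `zlib.crc32` are illustrative choices, and in LP itself data and checksum reach NVMM independently through cache evictions rather than in a single store.

```python
import zlib
from typing import Callable, Dict, Iterable, List, Tuple

NVMM = Dict[int, Tuple[List[int], int]]


def checksum(values: List[int]) -> int:
    """CRC-32 over a canonical encoding of a region's results."""
    return zlib.crc32(",".join(map(str, values)).encode())


def persist_region(nvmm: NVMM, region: int, values: List[int]) -> None:
    # Data and checksum are stored together here; in LP either may be stale
    # after a crash because both drain to NVMM only through evictions.
    nvmm[region] = (list(values), checksum(values))


def recover(nvmm: NVMM, compute_region: Callable[[int], List[int]],
            regions: Iterable[int]) -> List[int]:
    """Recompute every region that is missing or fails its checksum."""
    recomputed = []
    for region in regions:
        entry = nvmm.get(region)
        if entry is None or checksum(entry[0]) != entry[1]:
            recomputed.append(region)
            persist_region(nvmm, region, compute_region(region))
    return recomputed
```

No flushes or barriers appear on the normal path; the cost of a crash is paid only at recovery, by recomputing the regions whose checksums do not match.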

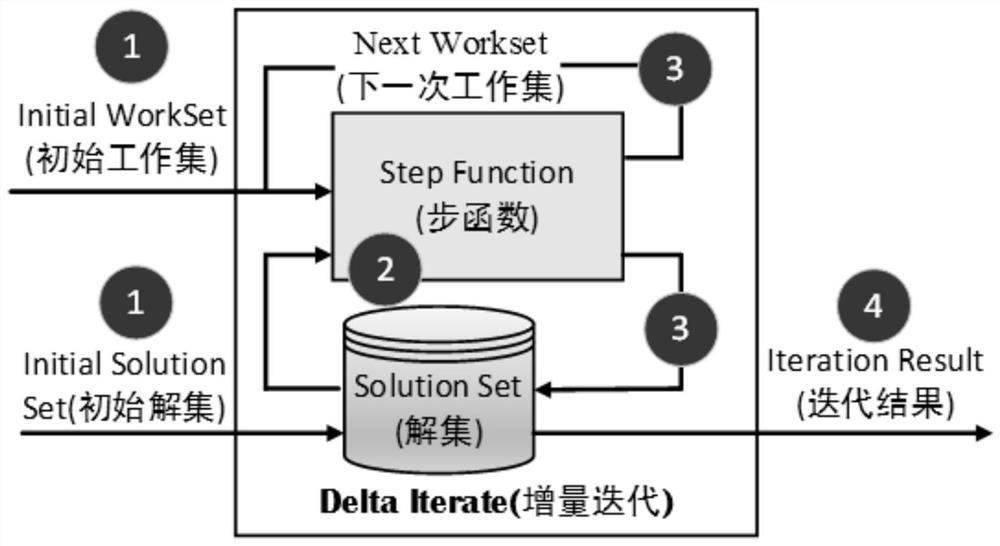

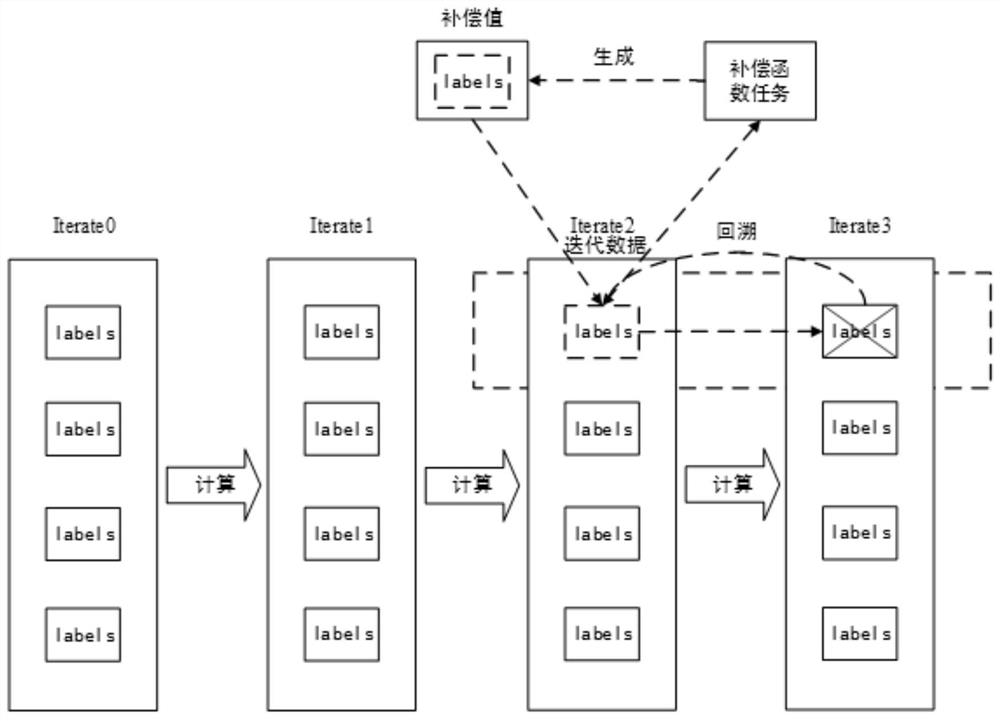

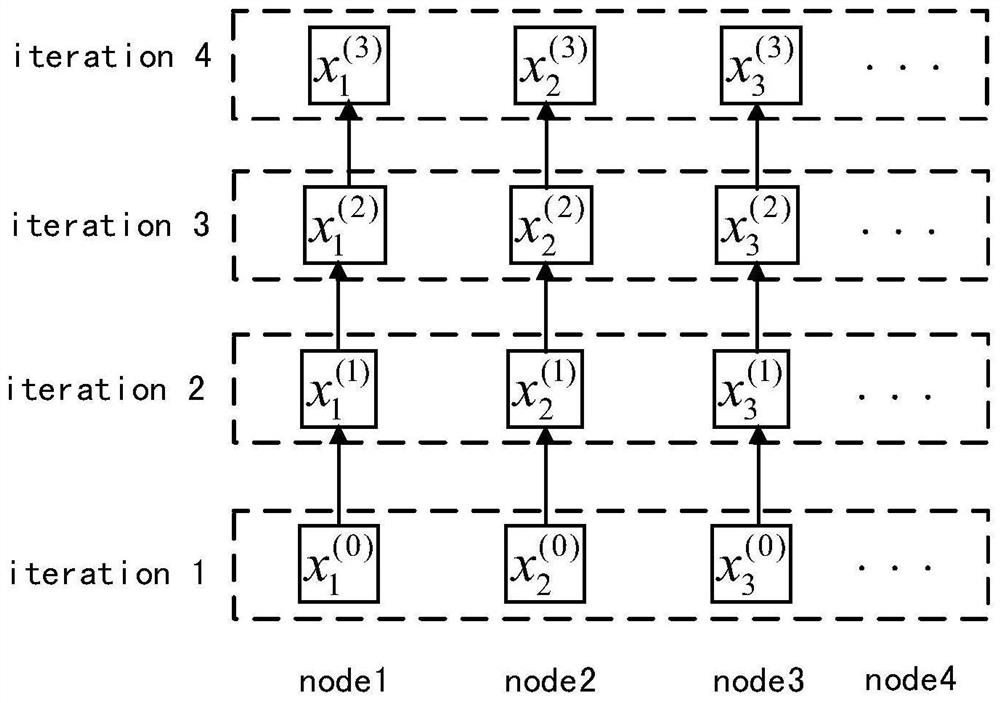

An Iterator Based on Optimistic Fault Tolerance

Status: Active · Publication: CN110795265B · Tags: Improve processing efficiency, Best trouble-free performance, Non-redundant fault processing, Execution paradigms, Failure rate, Parallel computing

The invention discloses an iterator based on an optimistic fault-tolerance method, in the field of distributed iterative computing in big-data environments. The iterator includes an incremental iterator and a batch iterator and takes into account iterative computing tasks of different sizes and different failure rates. A compensation function is introduced, which the system uses to re-initialize lost partitions: when a failure occurs, the system pauses the current iteration, ignores the failed tasks, redistributes the lost computations to newly acquired nodes, and calls the compensation function on the affected partitions to restore a consistent state and resume execution. When failures are infrequent, computation latency is greatly reduced and iterative processing efficiency is improved; when failures are frequent, the iterator's processing efficiency is no lower than that of the unoptimized iterator. Because the optimistic fault-tolerant iterator adds no extra operations to the task, it effectively reduces fault-tolerance overhead.

Owner:NORTHEASTERN UNIV LIAONING +1
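
The compensation-function idea in CN110795265B can be illustrated on a toy convergent iteration: no checkpoints are taken, and a lost partition is simply re-initialized to a valid starting value before the loop continues. The sketch assumes a fixed-point computation in which any valid start converges to the same answer; the per-partition update x -> 0.5x + p (fixed point 2p), the `failures` schedule and all names are hypothetical, and redistribution to new nodes is not modeled.

```python
from typing import AbstractSet, Callable, Dict, Mapping


def optimistic_iterate(init: Mapping[int, float],
                       step: Callable[[int, float], float],
                       compensate: Callable[[int], float],
                       iterations: int,
                       failures: Mapping[int, AbstractSet[int]]) -> Dict[int, float]:
    """Iterate per-partition updates with no checkpoints.

    failures maps an iteration number to the partitions lost in that iteration;
    lost partitions are re-initialized by `compensate` and the loop carries on.
    """
    state = dict(init)
    for it in range(iterations):
        for pid in failures.get(it, frozenset()):
            state[pid] = compensate(pid)          # consistent, though not exact, value
        state = {pid: step(pid, value) for pid, value in state.items()}
    return state
```

With 60 iterations, failures at iterations 10 and 25 still leave every partition within 1e-9 of its fixed point 2p; the failures cost only a few extra iterations of convergence, and failure-free runs pay nothing.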

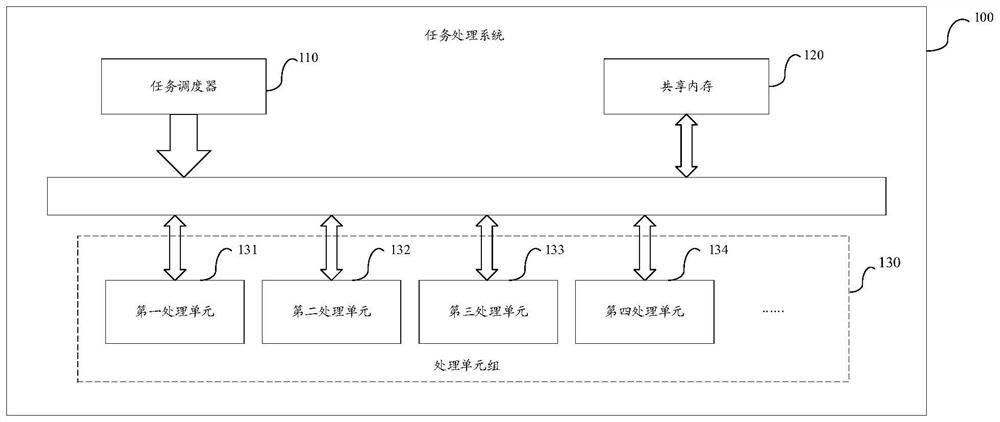

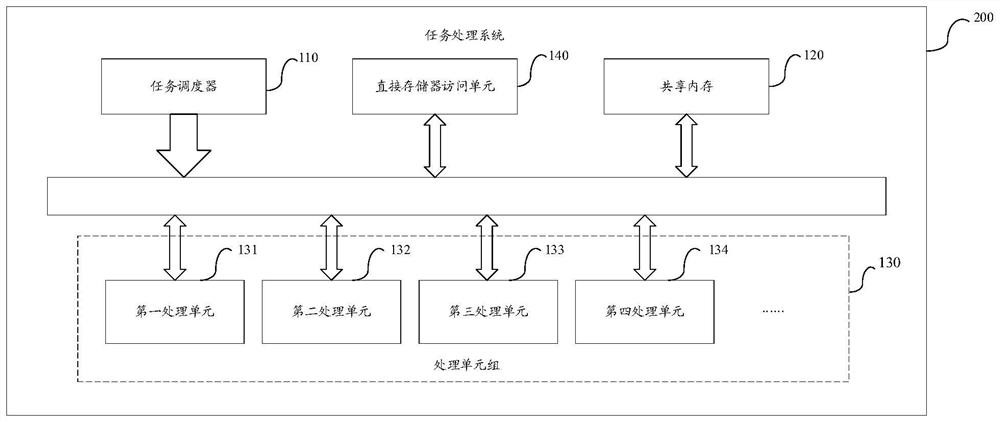

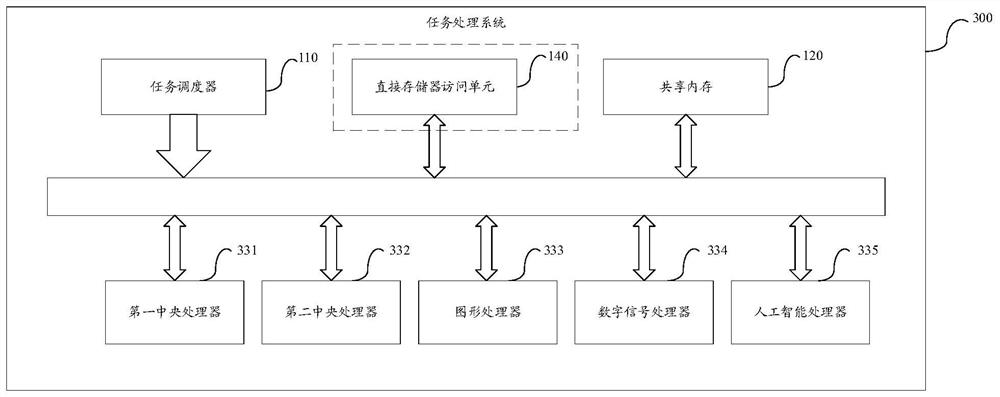

Task processing method and device for preventing side channel attacks

Status: Pending · Publication: CN112199675A · Tags: Guaranteed independence, Reduce resource overhead, Platform integrity maintenance, PERQ, Computer network

The invention provides a task processing method and device for preventing side-channel attacks in a heterogeneous computing system, and a computer-readable storage medium. The method comprises: dividing a first task to be executed into at least two first sub-tasks; determining a random probability number and dynamically adjusting the sub-tasks according to it, to obtain at least two first sub-tasks to be executed; allocating these sub-tasks to at least one processing unit matched to the task characteristics; and executing each sub-task on its corresponding processing unit. The method and device effectively resist side-channel attacks, reduce resource overhead and improve information security.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
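
The abstract of CN112199675A does not say how the random number adjusts the sub-tasks. As one plausible reading, and purely as an assumption, the sketch below randomizes both the number of sub-tasks and their boundaries, so that the execution pattern observable through a side channel varies from run to run. A real defense would draw from a cryptographic source such as `random.SystemRandom`; a seeded `random.Random` is accepted here only to make the example reproducible.

```python
import random
from typing import List, Optional


def randomized_split(total_work: int, min_parts: int = 2, max_parts: int = 4,
                     rng: Optional[random.Random] = None) -> List[int]:
    """Split total_work units into a random number of non-empty sub-tasks."""
    if not 2 <= min_parts <= max_parts <= total_work:
        raise ValueError("need 2 <= min_parts <= max_parts <= total_work")
    rng = rng or random.SystemRandom()
    parts = rng.randint(min_parts, max_parts)                  # random sub-task count
    cuts = sorted(rng.sample(range(1, total_work), parts - 1))  # random boundaries
    bounds = [0, *cuts, total_work]
    return [hi - lo for lo, hi in zip(bounds, bounds[1:])]
```

Each returned list sums to `total_work`, and its sizes could then be mapped to processing units by whatever task-characteristic matching the system uses.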

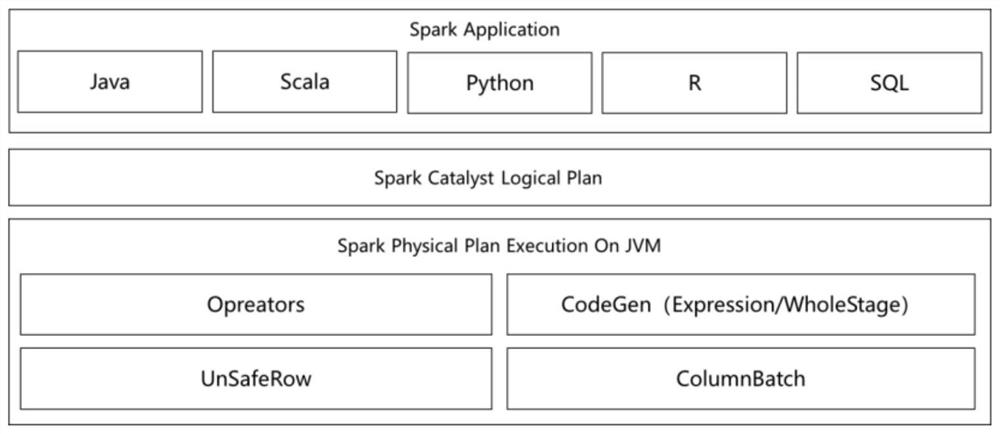

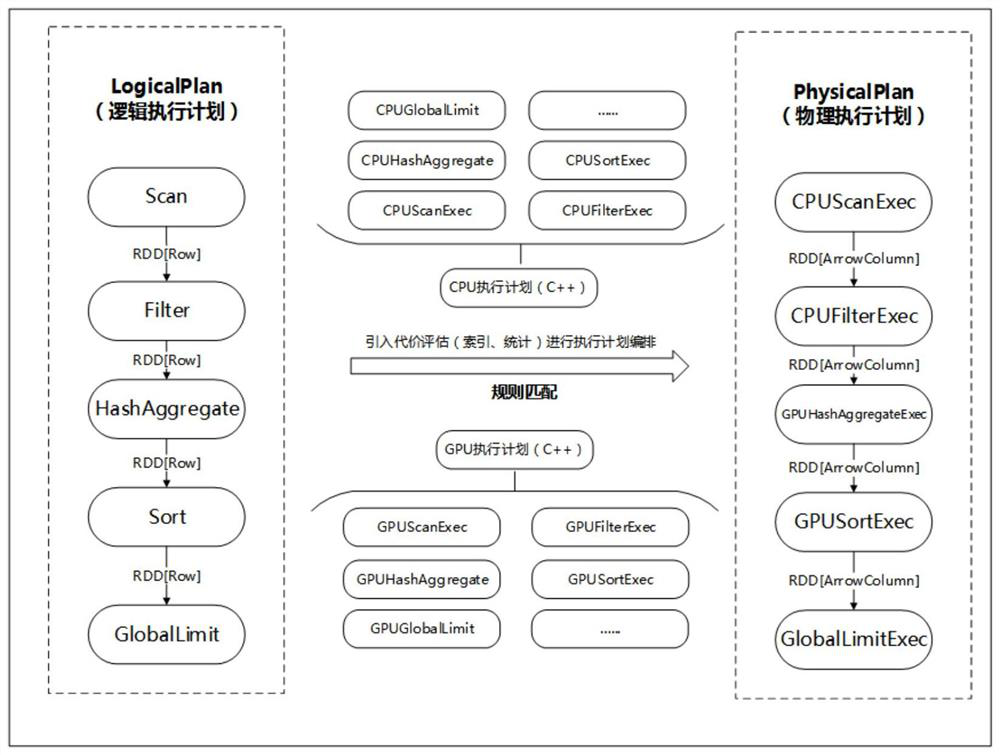

Column calculation optimization method based on Spark SQL

Status: Pending · Publication: CN114138811A · Tags: Reduce overhead, Avoid overhead, Digital data information retrieval, Program initiation/switching, Execution plan, Theoretical computer science

The invention discloses a Spark SQL (Structured Query Language)-based columnar computation optimization method comprising the following steps: S1, unified memory management: an Arrow-based unified data management mechanism is established, so that once file data has been loaded from disk into an in-memory Arrow structure, various plug-ins can access and compute on it; S2, unified scheduling of heterogeneous computing resources: the optimizer and plug-ins of Spark SQL are extended to implement a heterogeneous resource scheduling mechanism based on data characteristics; and S3, rule matching is performed on the logical execution plan of Spark SQL to generate a physical execution plan that mixes CPU and GPU operators over the unified Arrow memory structure. The Arrow columnar format compresses memory usage and avoids the GC overhead of computing in JVM memory, improving computational efficiency; Spark SQL operators are arranged into the optimal mixed execution plan by cost-based optimization, reducing overall computation time.

Owner:Xi'an Fenghuo Software Technology Co., Ltd.

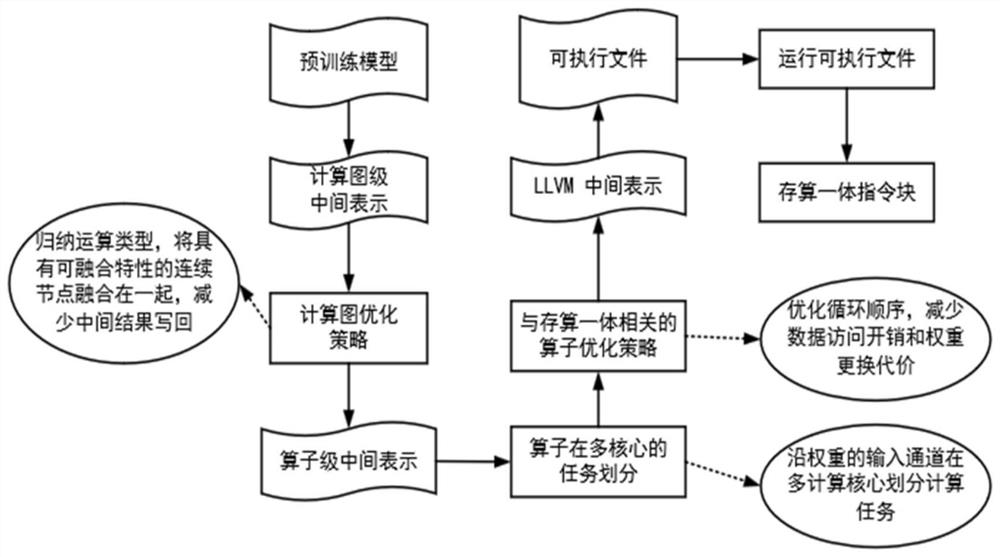

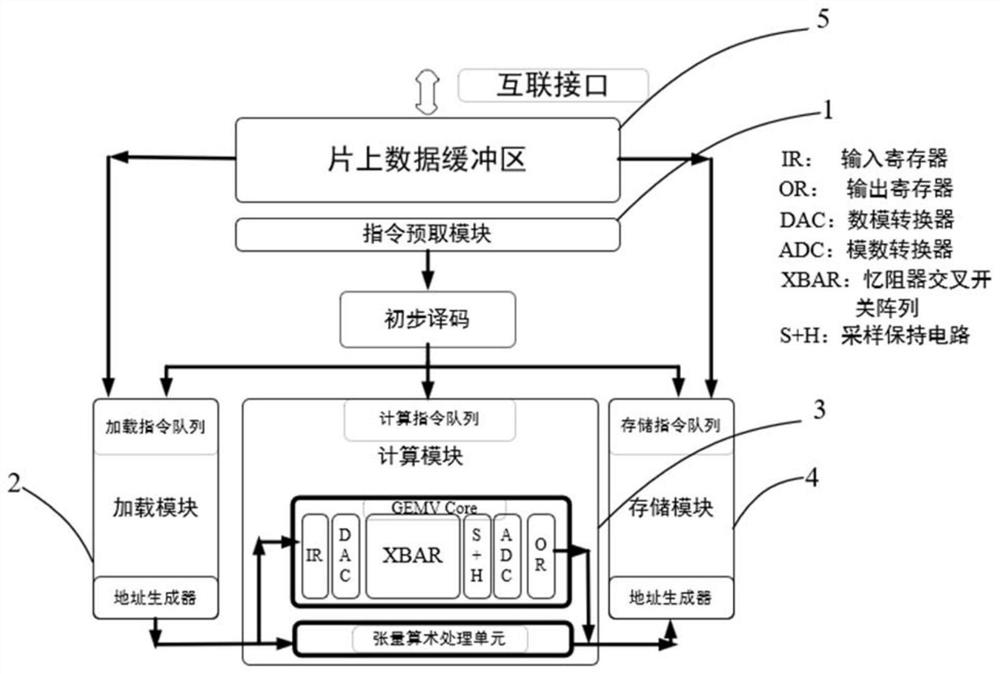

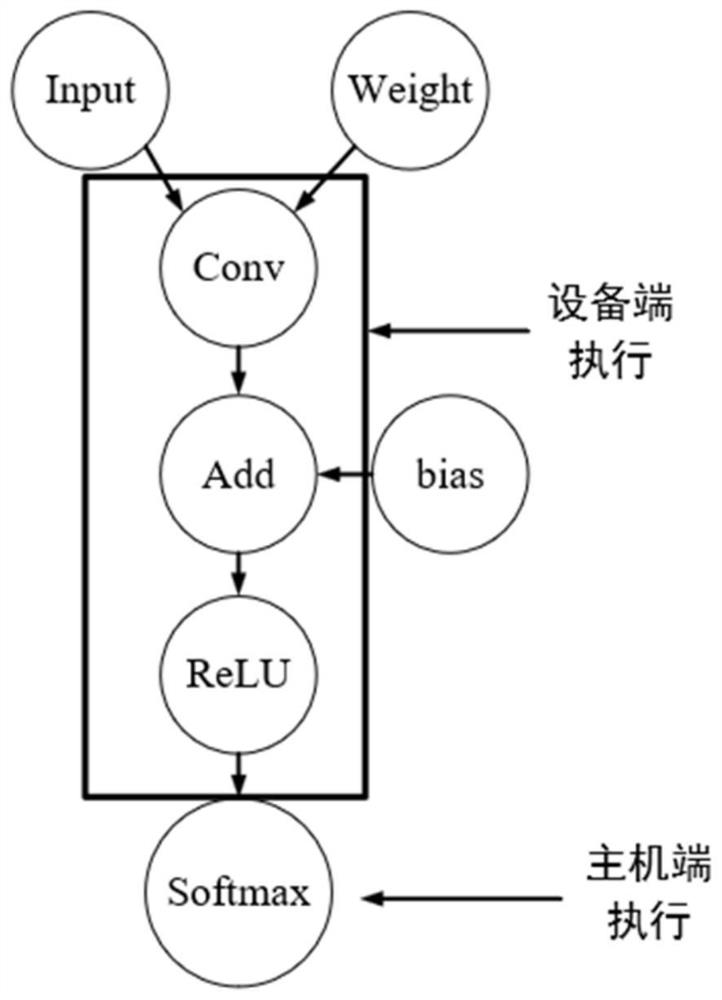

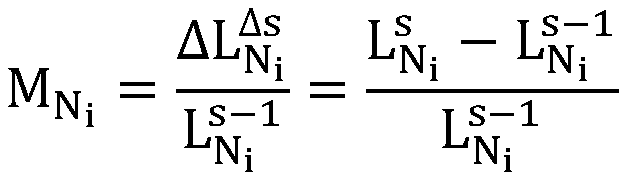

A Neural Network Compilation Method for Storage and Computing Integrated Platform

Status: Active · Publication: CN112465108B · Tags: Reduce overhead, Reduce the number of times, Neural architectures, Physical realisation, Overhead (computing), Term memory

Owner:SHANGHAI JIAOTONG UNIV

A data interaction method and computing device between a main CPU and an NPU

The embodiment of the present application discloses a data interaction method between a main CPU and an NPU, and a computing device, applicable to the field of artificial intelligence. The computing device includes a main CPU, which runs a target APP and includes an AI Service and an NPU driver, and an NPU, which includes an NPU controller, an arithmetic logic unit and N registers. In the method, after the main CPU loads the AI model onto the NPU, the NPU allocates a register for the AI model; after receiving the physical address of the register from the NPU, the main CPU maps it to a virtual address in the target APP's virtual memory. The main CPU can then read/write the corresponding NPU register directly through the target APP, which is equivalent to a direct connection between the target APP and the NPU. When the main CPU sends the AI model's execution command to the NPU through the target APP and obtains the execution result, the computation path bypasses the AI Service and the NPU driver and incurs only register read/write overhead, which improves the real-time performance of AI model inference.

Owner:HUAWEI TECH CO LTD

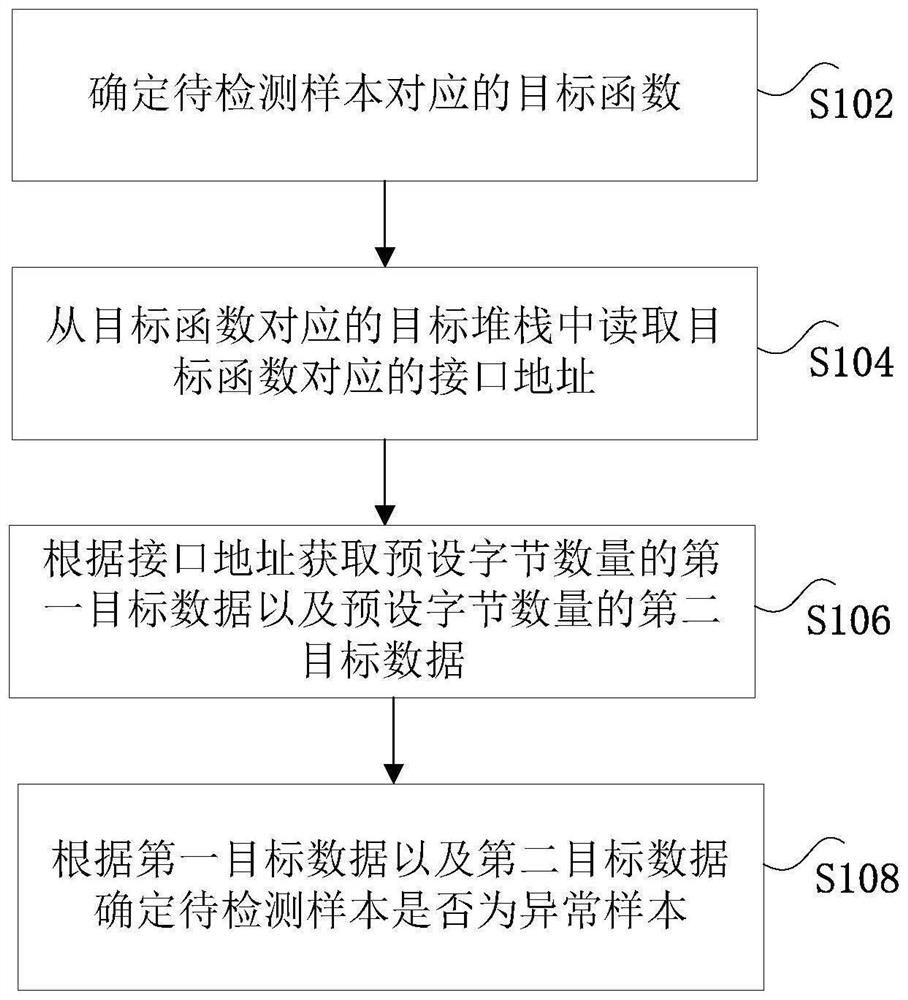

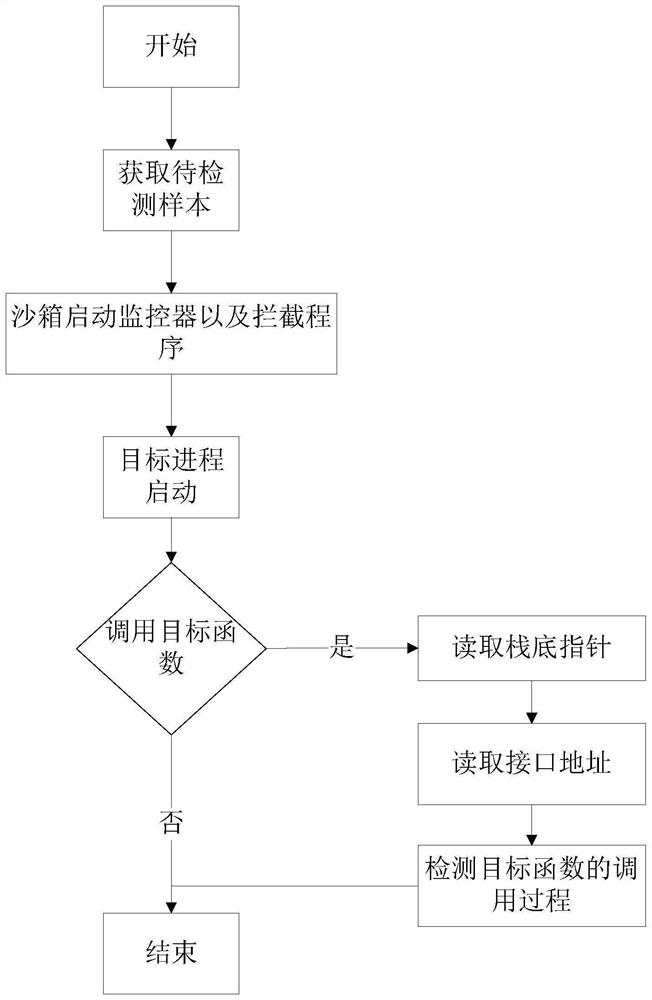

Sample detection method and device and computer readable storage medium

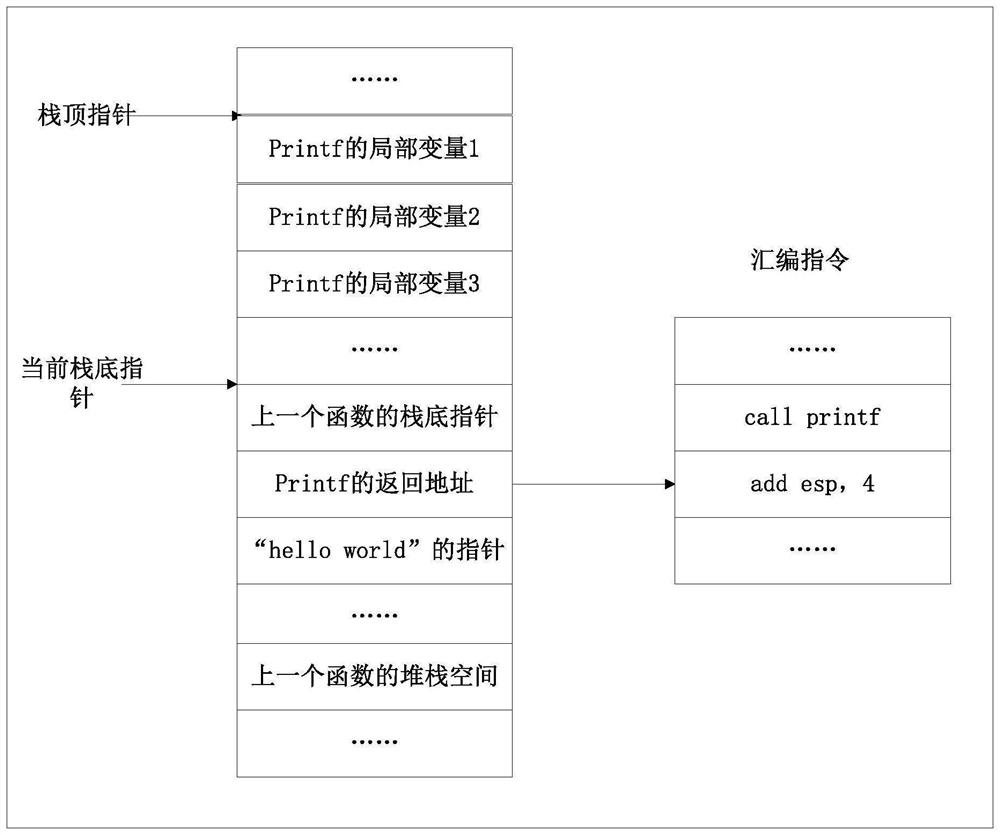

Status: Pending · Publication: CN114329440A · Tags: Improve security, Reduce overhead, Platform integrity maintenance, Operational system, Software engineering

The invention discloses a sample detection method and device and a computer-readable storage medium. The method comprises: determining a target function corresponding to a sample to be detected, the target function being a section of code executed when the operating system processes the sample; reading the interface address corresponding to the target function from the target stack corresponding to that function; obtaining, based on the interface address, first target data and second target data of a preset number of bytes, located respectively before and after the data at the interface address; and determining from the first and second target data whether the sample is abnormal. This solves the prior-art problem of high computing-resource overhead when detecting samples.

Owner:HILLSTONE NETWORKS CO LTD

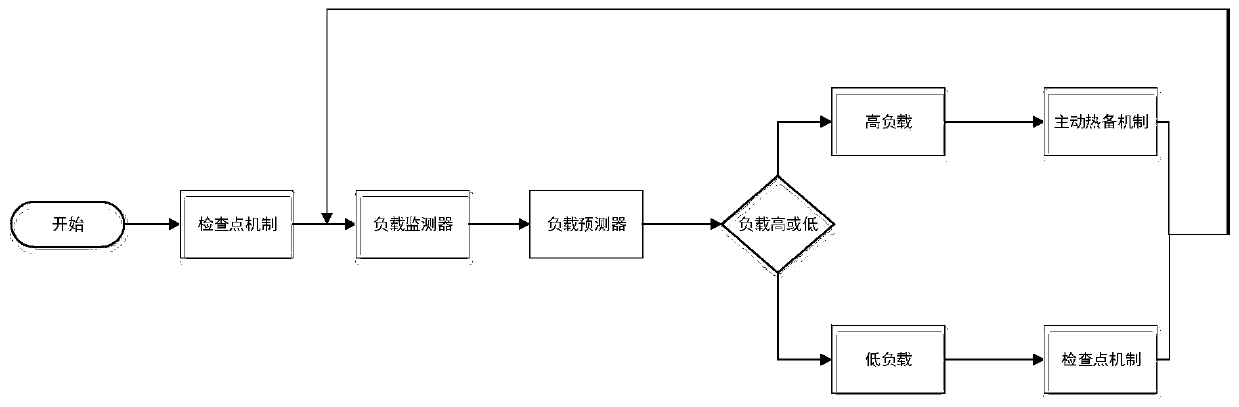

A Fault Tolerance Method for Distributed Stream Processing System in Multiple Application Scenarios

Status: Active · Publication: CN110190991B · Tags: Solve the problem of high failure recovery delay, Reduce fault tolerance overhead, Data switching networks, Overhead (computing), Engineering

The invention discloses a fault-tolerance method for a distributed stream processing system in multi-application scenarios, in the field of distributed stream processing. Historical data of all nodes is analyzed and their real-time load is monitored; depending on each node's load state, either an active backup mechanism or a checkpoint mechanism is adopted. This addresses the high failure-recovery latency of computing nodes in stream processing systems, reduces fault-tolerance overhead, greatly shortens recovery latency and improves system reliability. Hot-standby tasks and standby nodes are selected through load awareness in multi-application scenarios: backup nodes are chosen to run replicas of some tasks, and idle resources on idle nodes are used as hot standbys for tasks on busy nodes. This markedly shortens the recovery latency of busy nodes when failures occur, while improving the resource utilization and reliability of the fault-tolerance mechanism of the distributed stream processing system.

Owner:HUAZHONG UNIV OF SCI & TECH
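
The load-aware choice between active backup and checkpointing in CN110190991B can be sketched as a simple planning function: busy nodes get a hot standby on an idle node, and every other node falls back to checkpointing. The fixed thresholds (0.8 busy, 0.3 idle), the busiest-first pairing with the least-loaded idle node, and all names are assumptions; the patent derives its decisions from historical analysis and real-time monitoring, which this sketch does not model.

```python
from typing import Dict, Optional, Tuple

Plan = Dict[str, Tuple[str, Optional[str]]]


def plan_fault_tolerance(loads: Dict[str, float], busy: float = 0.8,
                         idle: float = 0.3) -> Plan:
    """Map each node to ("hot_standby", standby_node) or ("checkpoint", None)."""
    # Idle nodes sorted from least to most loaded; each can host one replica set.
    idle_nodes = sorted((load, node) for node, load in loads.items() if load < idle)
    plan: Plan = {}
    # Busiest nodes first, so they are paired with the emptiest standbys.
    for node, load in sorted(loads.items(), key=lambda kv: -kv[1]):
        if load >= busy and idle_nodes:
            _, standby = idle_nodes.pop(0)
            plan[node] = ("hot_standby", standby)
        else:
            plan[node] = ("checkpoint", None)
    return plan
```

When busy nodes outnumber idle ones, the surplus busy nodes degrade gracefully to checkpointing instead of failing to get any protection.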

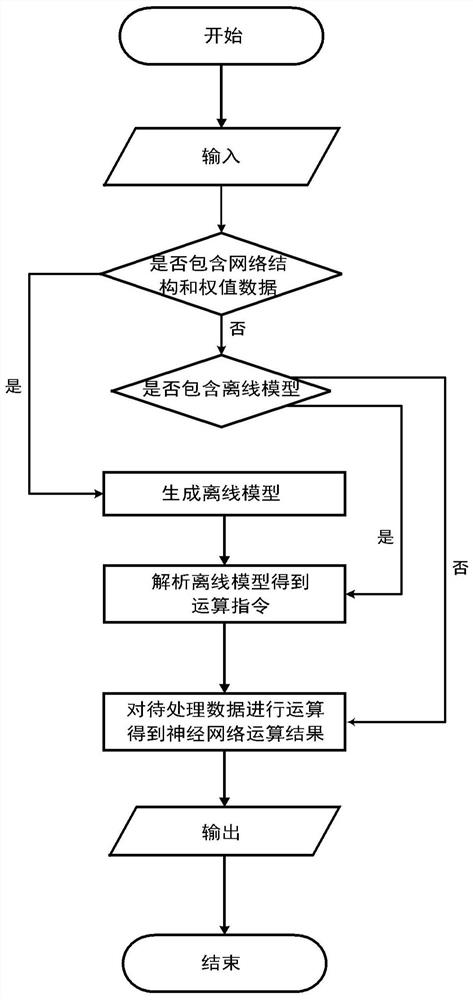

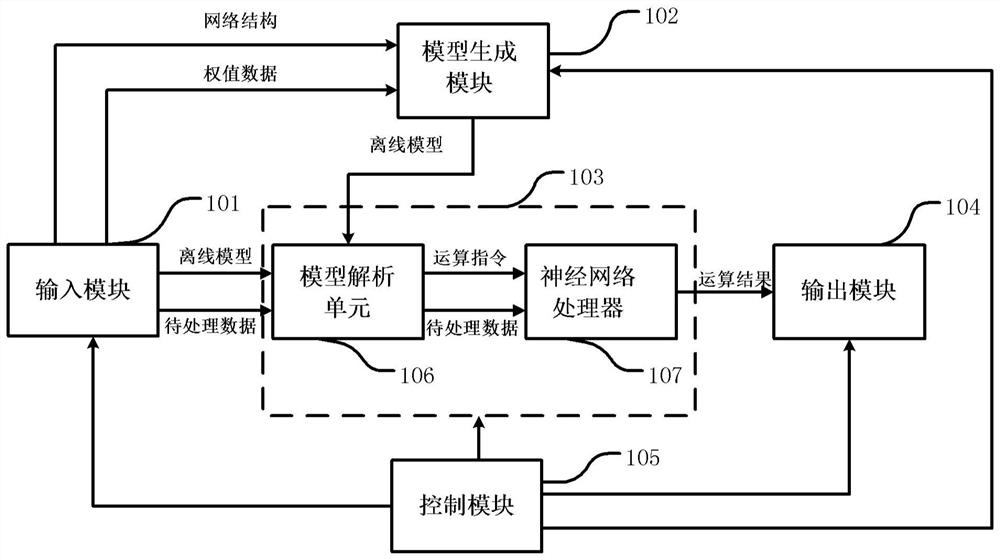

A computing method and device

Status: Active · Publication: CN108734288B · Tags: Avoid overhead, Efficient functional reconstruction, Model driven code, Neural architectures, Software architecture, Software engineering

A computing method and device. The computing device includes an input module for inputting data; a model generation module for constructing a model from the input data; a neural network operation module for generating and caching operation instructions based on the model and performing operations on the data to be processed to obtain operation results; and an output module for outputting the operation results. The disclosed device and method avoid the extra overhead of running the entire software architecture, as the traditional method does.

Owner:SHANGHAI CAMBRICON INFORMATION TECH CO LTD

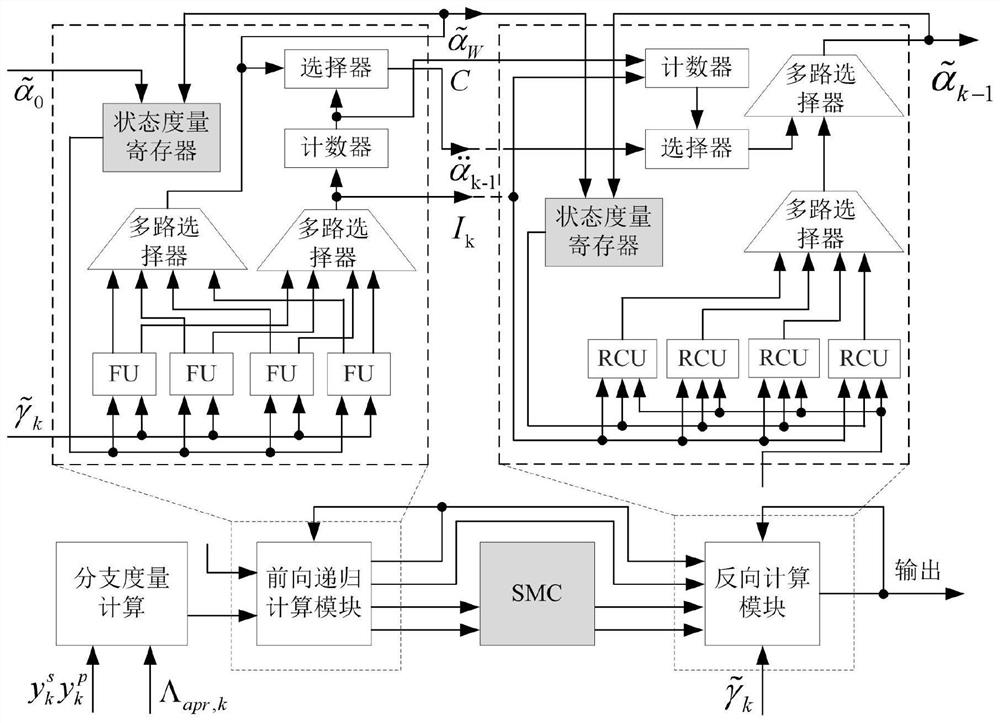

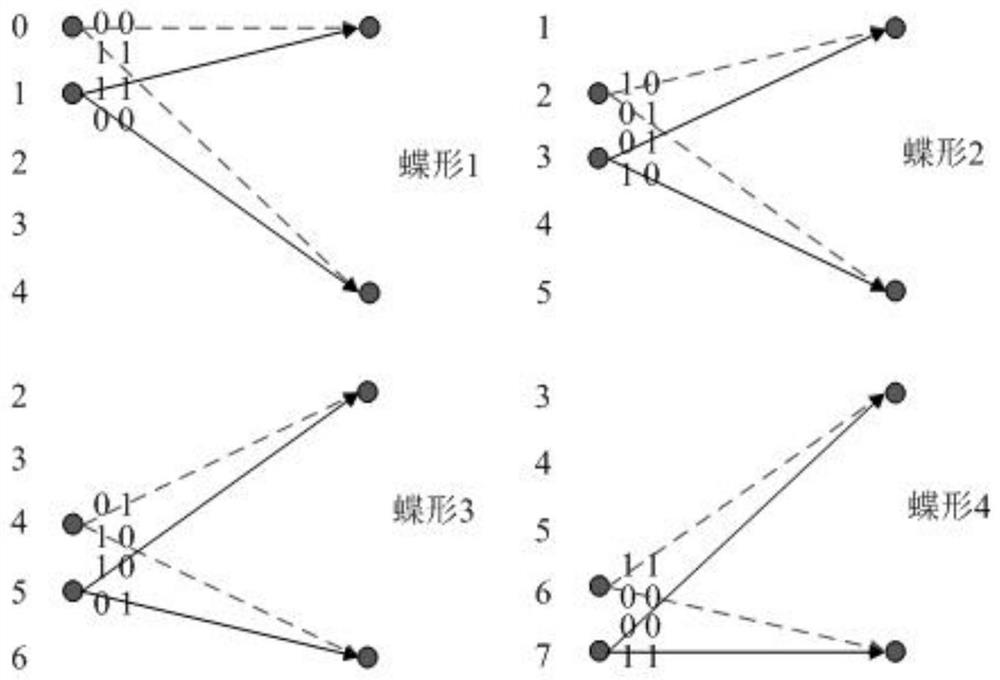

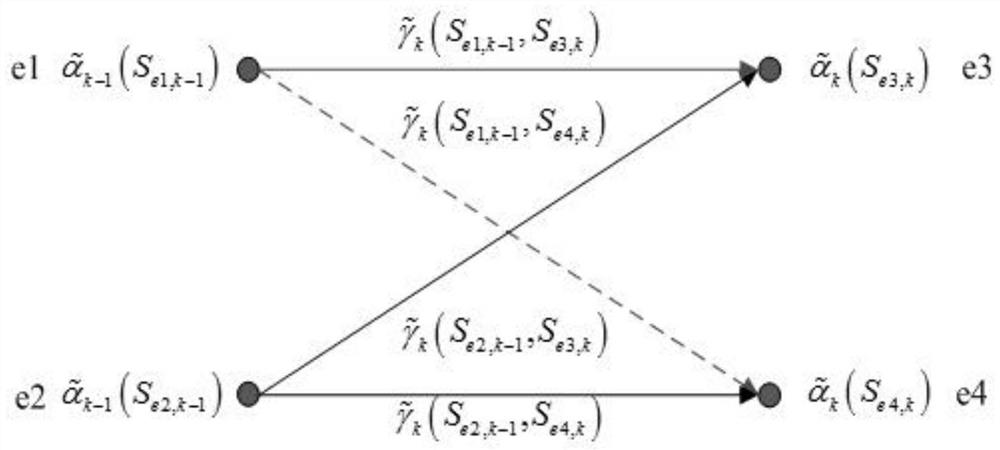

A turbo code decoder and processing method based on reverse butterfly calculation

Status: Active · Publication: CN111181575B · Tags: Reduce capacity, Guaranteed accuracy, Code conversion, Error correction/detection by combining multiple code structures, Parallel computing, Overhead (computing)

Owner:SOUTHWEST UNIV

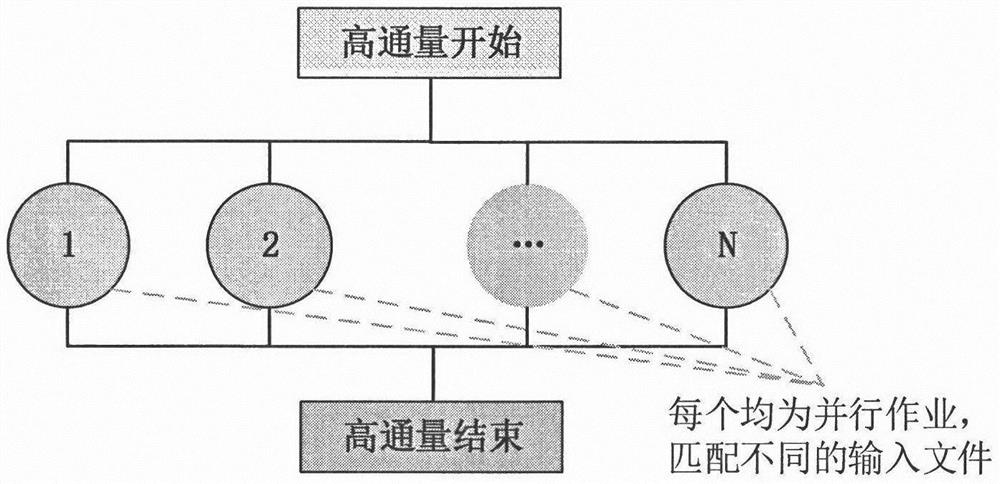

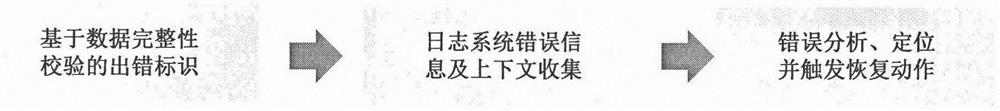

Lightweight high-throughput calculation mode and fault tolerance method thereof

Status: Pending · Publication: CN113742125A · Tags: Reduce administrative overhead, Reduce interaction frequency, Fault response, Concurrent computing, Relational model

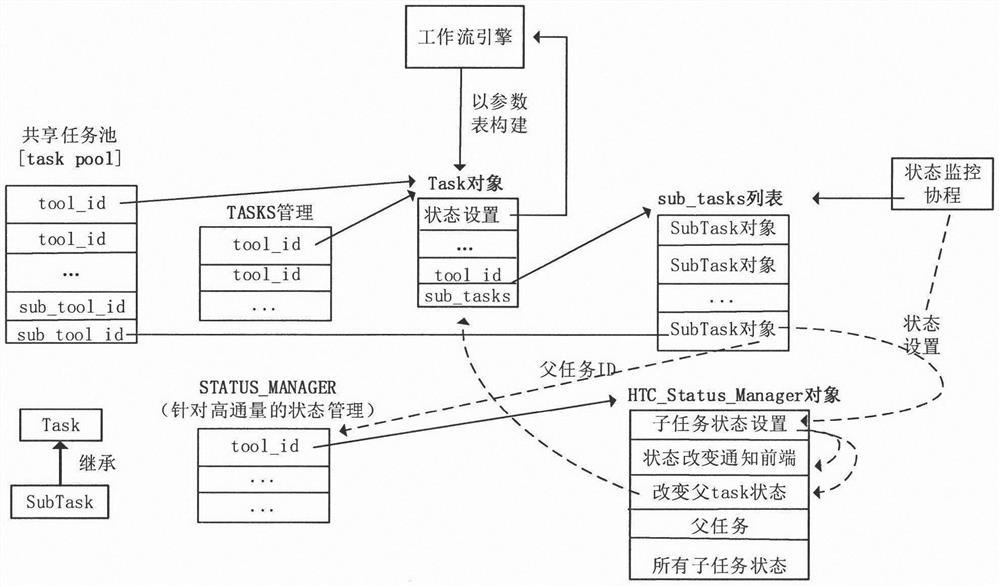

The invention discloses a lightweight high-throughput computing mode and its fault-tolerance method, in the field of computer applications. The method comprises the following steps: 1, providing a runtime high-throughput computing mode; 2, providing a threshold-based error discrimination method; and 3, designing a high-throughput computing fault-tolerance framework that achieves efficient fault tolerance for computations with thousands of concurrent tasks. A runtime high-throughput task model is provided for concurrent computing at the scale of thousands of tasks, and a parent-child task relationship model with dynamic task expansion reduces the management overhead of very large-scale concurrent computing tasks. Packaging tasks as job arrays reduces the frequency of interaction with the supercomputer's scheduling system, further reducing the overhead of job submission and job-state monitoring.

Owner:COMP APPL RES INST CHINA ACAD OF ENG PHYSICS
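
The effect of job-array packaging described in CN113742125A can be estimated with simple arithmetic: grouping tasks into arrays divides the number of scheduler submissions, and with it the monitoring traffic. The function names are hypothetical, and the cost model (one submission plus a fixed number of status polls per submitted job) is an assumption made for illustration.

```python
import math
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def pack_into_job_arrays(tasks: Sequence[T], array_size: int) -> List[List[T]]:
    """Group tasks so that each group is submitted to the scheduler as one job array."""
    if array_size < 1:
        raise ValueError("array_size must be at least 1")
    return [list(tasks[i:i + array_size]) for i in range(0, len(tasks), array_size)]


def scheduler_interactions(n_tasks: int, array_size: int, polls_per_job: int) -> int:
    """Submissions plus status polls, assuming a fixed poll count per submitted job."""
    jobs = math.ceil(n_tasks / array_size)
    return jobs * (1 + polls_per_job)
```

Under this model, 2500 tasks polled three times each cost 10,000 scheduler interactions when submitted individually, but only 12 when packed into arrays of 1000.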

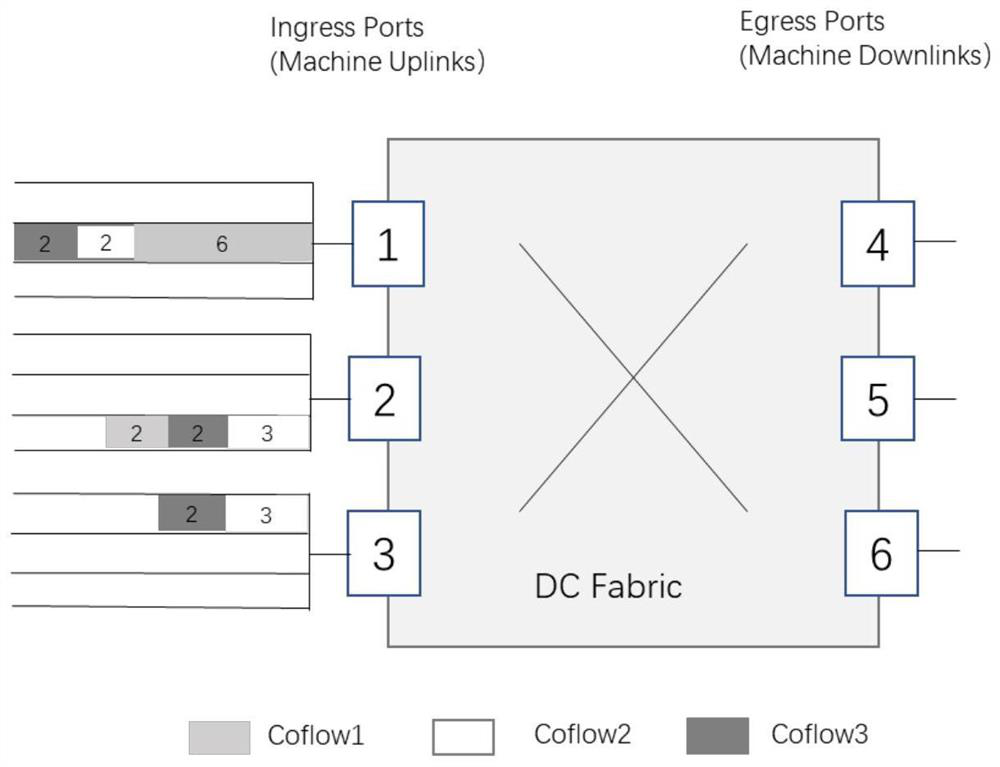

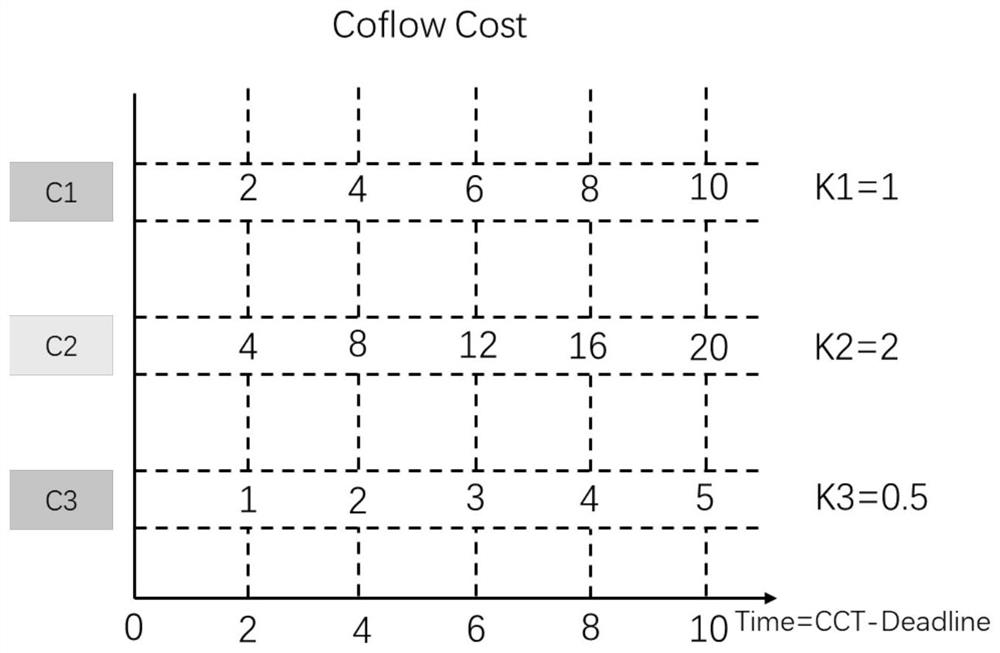

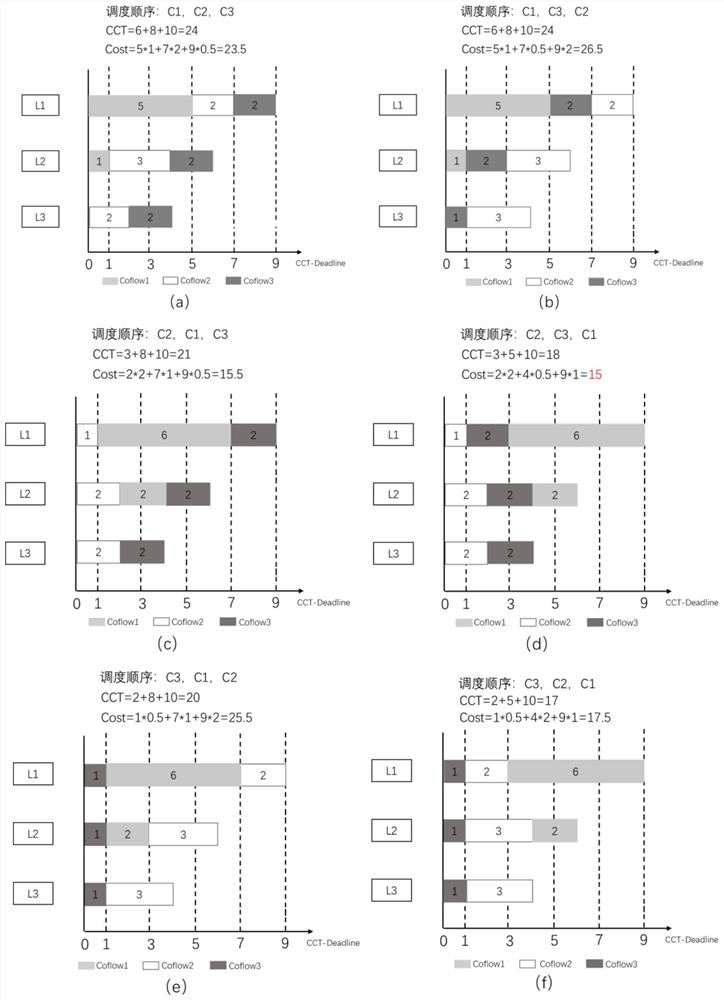

Coflow Scheduling Method Based on Deadline to Minimize System Overhead

Status: Inactive · Publication: CN110048966B · Tags: Efficient scheduling, Minimize overhead, Data switching networks, Concurrent computation, Data center

Owner:TIANJIN UNIV

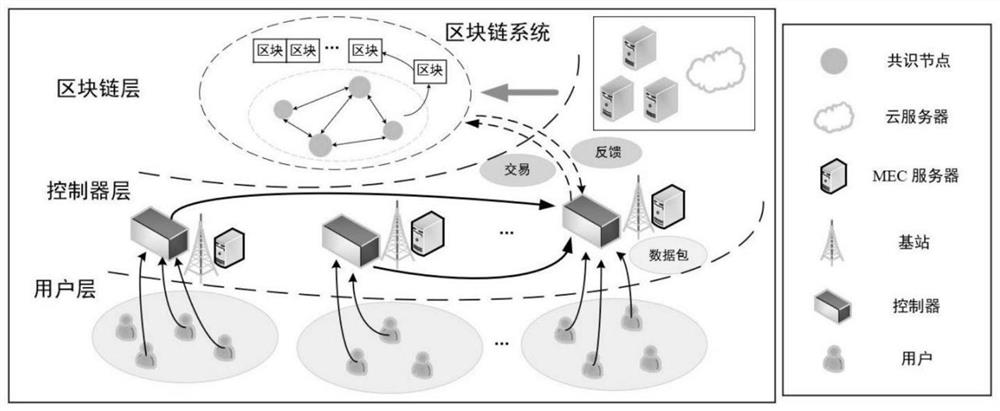

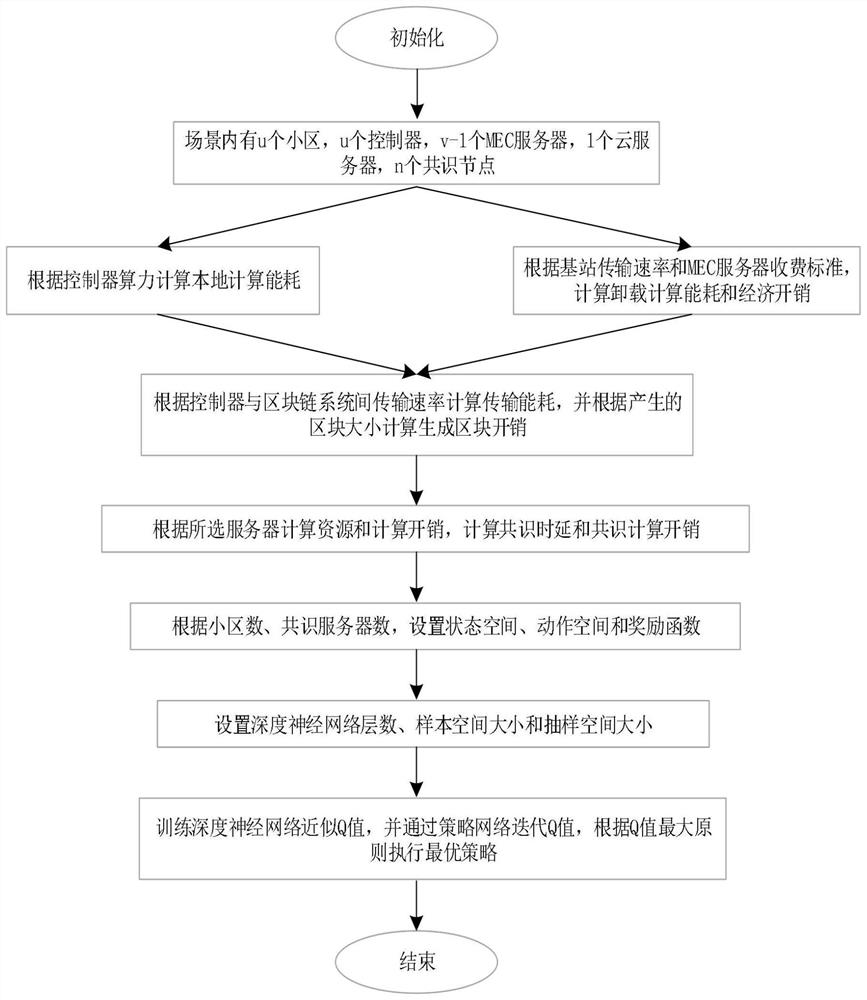

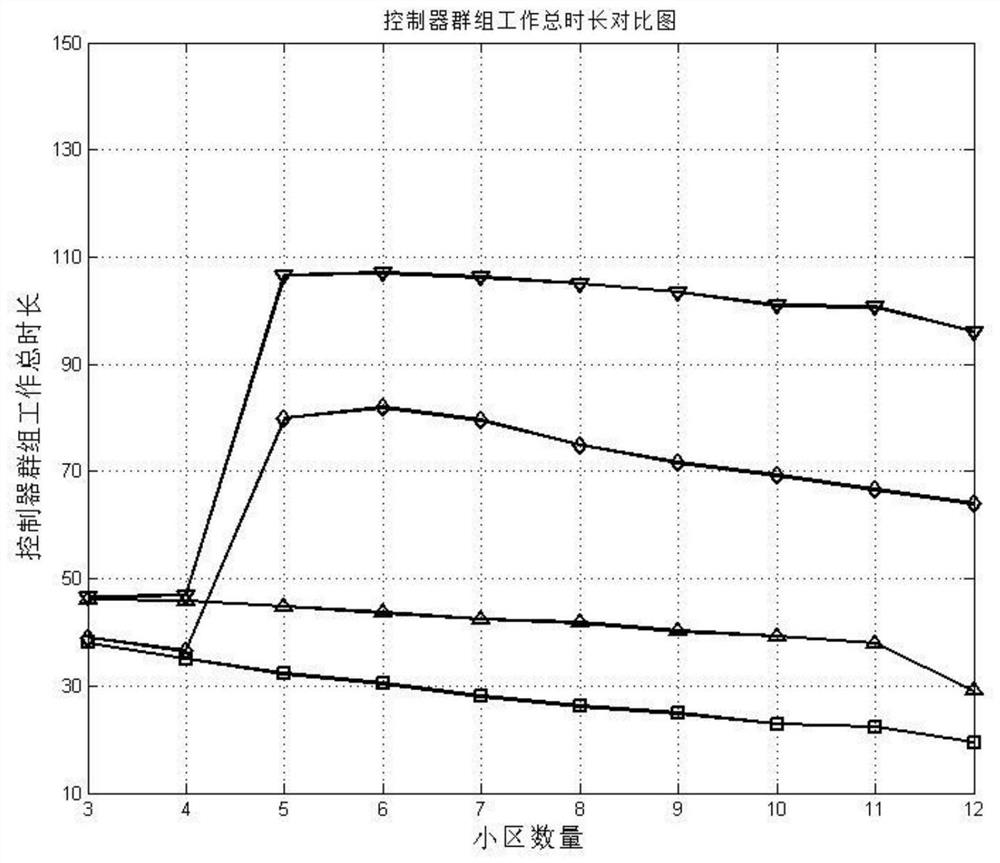

Decision-making method for optimal allocation of resources based on deep reinforcement learning and blockchain consensus

The invention discloses a resource-allocation optimization decision method based on deep reinforcement learning and blockchain consensus. A computing-task model and a server-state model are constructed and used to calculate the energy consumption and economic overhead of the main controller's local computing and offloaded computing, as well as the computational economic overhead generated by the blockchain consensus process. These quantities guide the adjustment of controller selection, offloading decisions, block size, and server selection through training of a deep neural network and a policy network, completing the optimal resource allocation for the scenario. The invention addresses the problems of data security in the industrial Internet, high device energy consumption caused by processing computing tasks, short working cycles, and high overall economic cost of the system. Simulation experiments show that the proposed method has certain advantages in saving controller energy consumption, reducing system economic overhead, and prolonging the total working time of controller groups.
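The abstract combines energy and economic overhead for local versus offloaded computing. As a rough illustration of that cost signal, here is a minimal Python sketch. It assumes the dynamic-energy model E = κ·f²·C that is common in mobile edge computing work, a transmit-energy term for offloading, and a flat per-task consensus cost; every constant is hypothetical. It is a greedy per-task rule, not the patent's deep reinforcement learning policy; a learner could, for instance, be trained on the negative of such a cost as its reward.

```python
from dataclasses import dataclass


@dataclass
class TaskSpec:
    cycles: float     # CPU cycles the task needs
    data_bits: float  # input size to upload if offloaded


# Hypothetical constants, not taken from the patent.
KAPPA = 1e-27            # effective switched capacitance of the controller CPU
F_LOCAL = 1e9            # local CPU frequency, Hz
P_TX = 0.5               # transmit power, W
RATE = 2e7               # uplink rate, bit/s
PRICE_PER_CYCLE = 1e-10  # server's price for offloaded cycles
CONSENSUS_COST = 0.002   # per-task economic cost of blockchain consensus


def local_cost(t: TaskSpec, w_energy: float = 1.0) -> float:
    """Weighted energy of running the task on the controller itself."""
    return w_energy * KAPPA * F_LOCAL ** 2 * t.cycles


def offload_cost(t: TaskSpec, w_energy: float = 1.0) -> float:
    """Weighted upload energy plus server and consensus charges."""
    energy = P_TX * t.data_bits / RATE
    economic = PRICE_PER_CYCLE * t.cycles + CONSENSUS_COST
    return w_energy * energy + economic


def decide(t: TaskSpec) -> str:
    """Greedy baseline: pick whichever option has the lower cost."""
    return "offload" if offload_cost(t) < local_cost(t) else "local"


print(decide(TaskSpec(cycles=1e8, data_bits=1e5)))  # compute-heavy task
print(decide(TaskSpec(cycles=1e6, data_bits=1e7)))  # data-heavy task
```

With these constants the compute-heavy task is offloaded and the data-heavy task stays local. The weight `w_energy` stands in for however the real system trades joules against money, which the abstract does not specify.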

Owner:BEIJING UNIV OF TECH

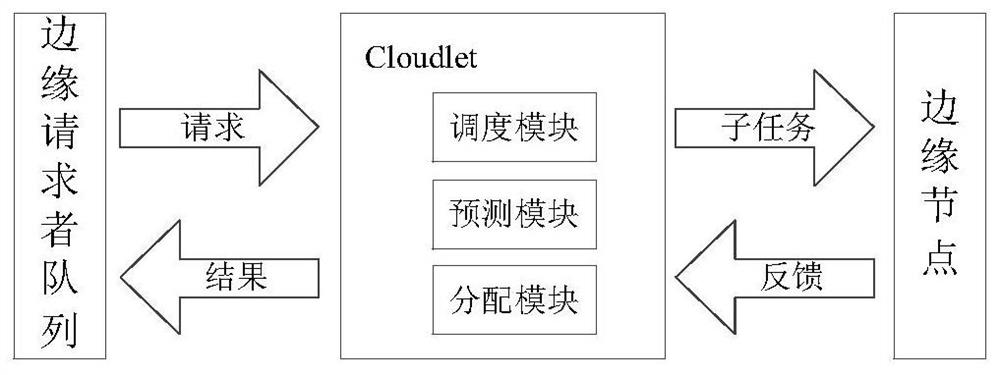

A multi-round task allocation method, edge computing system and storage medium thereof

Active | CN109947551B | Resolution time | High cost | Program initiation/switching | Resource allocation | Edge computing | Distribution method

The invention discloses a multi-round task allocation method, an edge computing system, and a storage medium. A task is sent and decomposed into multiple subtasks. On the basis of multi-round allocation, a joint optimization model that considers both system overhead and task completion time is provided for multi-stage optimization, yielding the actual size of the tasks assigned to each terminal in each round of each stage. The assigned tasks are distributed to the terminal nodes round by round, and the stages are repeated until all tasks are completed. The invention provides a video-analysis task scheduling strategy suited to a highly dynamic cooperative environment of mobile terminals. It can effectively learn how the computing capabilities of terminal nodes change while minimizing both task completion time and system overhead, addressing the long task completion times and high costs of traditional models.
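The round-by-round idea can be sketched in Python: allocate each round's share of the workload in proportion to each terminal's estimated capability, observe throughput, and update the estimate before the next round. This is a minimal sketch under stated assumptions. A proportional heuristic with an exponential moving average replaces the patent's joint optimization model, and `measure` is a hypothetical callback standing in for real throughput observations.

```python
from typing import Callable, Dict, List


def allocate_round(chunk: int, capability: Dict[str, float]) -> Dict[str, int]:
    """Split `chunk` work units among terminals in proportion to capability."""
    total = sum(capability.values())  # assumes all capabilities are positive
    shares = {k: int(chunk * c / total) for k, c in capability.items()}
    # Give any rounding remainder to the fastest terminal.
    fastest = max(capability, key=capability.get)
    shares[fastest] += chunk - sum(shares.values())
    return shares


def update_capability(capability: Dict[str, float],
                      observed: Dict[str, float],
                      alpha: float = 0.5) -> Dict[str, float]:
    """Exponential moving average of observed throughput per terminal."""
    return {k: (1 - alpha) * capability[k] + alpha * observed[k]
            for k in capability}


def multi_round(total_units: int, rounds: int,
                capability: Dict[str, float],
                measure: Callable[[str, int], float]) -> List[Dict[str, int]]:
    """Allocate work round by round, re-estimating capability after each."""
    remaining, schedule = total_units, []
    for r in range(rounds):
        # Even split of what is left; the last round takes the remainder.
        chunk = remaining if r == rounds - 1 else remaining // (rounds - r)
        shares = allocate_round(chunk, capability)
        schedule.append(shares)
        observed = {k: measure(k, u) for k, u in shares.items()}
        capability = update_capability(capability, observed)
        remaining -= chunk
    return schedule


# Hypothetical terminals: "b" is really three times faster than "a",
# but the scheduler starts out assuming they are equal.
true_speed = {"a": 10.0, "b": 30.0}
schedule = multi_round(1000, 4, {"a": 1.0, "b": 1.0},
                       lambda node, units: true_speed[node])
for shares in schedule:
    print(shares)
```

The first round splits evenly; later rounds shift work toward the faster terminal as its estimate converges, which mirrors the abstract's goal of learning changing node capabilities from round to round.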

Owner:CENT SOUTH UNIV

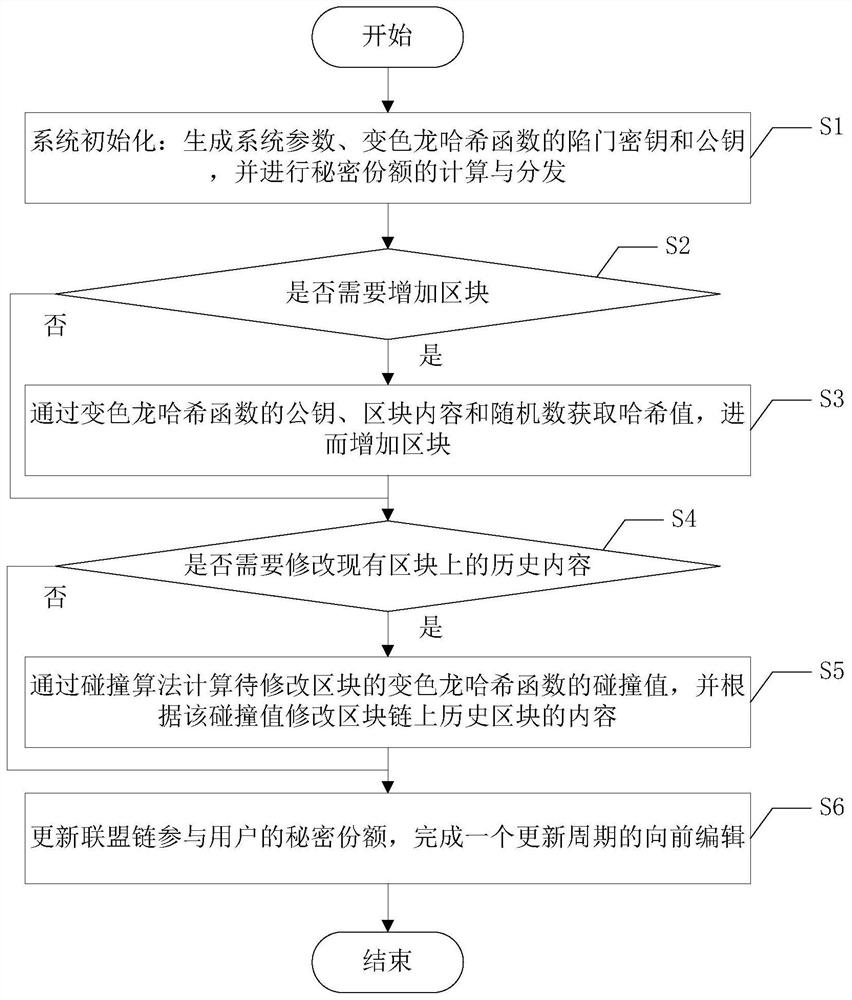

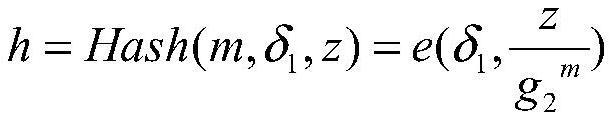

A forward-secure editable blockchain construction method suitable for consortium chains

Active | CN111526009B | Protection from being leaked | Multiple interactions | Key distribution for secure communication | Encryption apparatus with shift registers/memories | Secret share | Algorithm

The present invention discloses a forward-secure editable blockchain construction method suitable for consortium chains, comprising the following steps: S1, initialize the system; S2, judge whether a block needs to be added; if so, go to step S3, otherwise go to step S4; S3, add the block by obtaining its hash value; S4, judge whether the historical content of an existing block needs to be modified; if so, go to step S5, otherwise go to step S6; S5, modify the historical block content on the blockchain using a collision value of the chameleon hash function of the block to be modified; S6, update the secret shares of the participating users of the consortium chain, completing the forward editing of one update cycle. The present invention uses secret sharing to store the trapdoor key in a distributed manner. Compared with secure multi-party computation protocols, the collision-computation structure of the present invention not only protects the trapdoor key and secret shares from leakage, but also requires fewer interactions and has lower computational overhead.
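Steps S5 and S6 rely on two primitives: a chameleon hash whose trapdoor holder can compute collisions, and secret sharing of that trapdoor. The minimal Python sketch below assumes the classic discrete-logarithm chameleon hash CH(m, r) = g^m·h^r mod p (Krawczyk and Rabin) and Shamir secret sharing with proactive share refresh. The patent does not say which constructions it uses, and the tiny group parameters are for illustration only. Also unlike the patent, which stresses that the trapdoor is never exposed, this sketch reconstructs the trapdoor in one place for simplicity.

```python
import hashlib
import secrets

# Toy group for illustration only (far too small to be secure):
# P = 2Q + 1 is a safe prime and G = 4 generates the subgroup of prime order Q.
P, Q, G = 2039, 1019, 4


def to_zq(data: bytes) -> int:
    """Map block content to an exponent in Z_Q via SHA-256."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def keygen():
    """Return (trapdoor x, public key h = G^x mod P)."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def chameleon_hash(h: int, m: int, r: int) -> int:
    """CH(m, r) = G^m * h^r mod P."""
    return pow(G, m, P) * pow(h, r, P) % P


def find_collision(x: int, m: int, r: int, m_new: int) -> int:
    """Trapdoor holder finds r_new with CH(m_new, r_new) == CH(m, r)."""
    # m + x*r == m_new + x*r_new (mod Q)  =>  r_new = r + (m - m_new) / x
    return (r + (m - m_new) * pow(x, -1, Q)) % Q


def share_secret(secret: int, n: int, t: int):
    """Shamir-split `secret` into n shares over Z_Q; any t recover it."""
    coeffs = [secret] + [secrets.randbelow(Q) for _ in range(t - 1)]
    return [(i, sum(c * pow(i, k, Q) for k, c in enumerate(coeffs)) % Q)
            for i in range(1, n + 1)]


def refresh_shares(shares, t: int):
    """Proactive refresh (analogue of step S6): add fresh shares of zero."""
    zero = share_secret(0, len(shares), t)
    return [(i, (y + z) % Q) for (i, y), (_, z) in zip(shares, zero)]


def reconstruct(shares) -> int:
    """Lagrange interpolation of the sharing polynomial at 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num = den = 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % Q
                den = den * (xi - xj) % Q
        secret = (secret + yi * num * pow(den, -1, Q)) % Q
    return secret


x, h = keygen()
m, r = to_zq(b"original block content"), secrets.randbelow(Q)
digest = chameleon_hash(h, m, r)

shares = refresh_shares(share_secret(x, n=5, t=3), t=3)
x_rec = reconstruct(shares[:3])          # any 3 of the 5 shares suffice
m_new = to_zq(b"edited block content")
r_new = find_collision(x_rec, m, r, m_new)
assert chameleon_hash(h, m_new, r_new) == digest  # block hash unchanged
```

Because the edited block still hashes to the same digest, the links in later blocks stay valid. Refreshing shares each cycle means shares leaked in an earlier cycle cannot be combined with current ones, which is the intuition behind forward security here.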

Owner:SOUTHWEST JIAOTONG UNIV

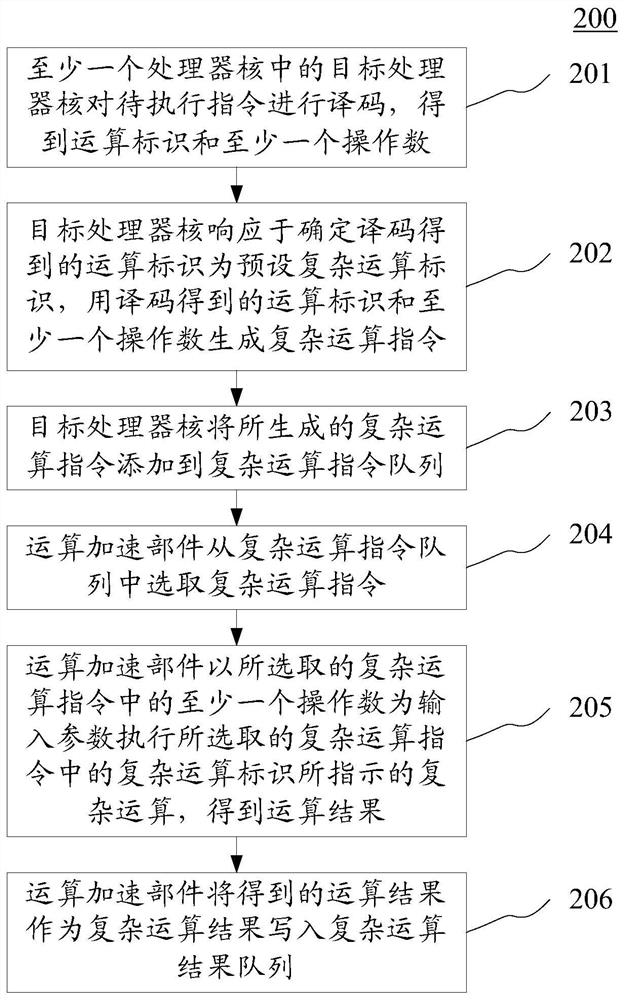

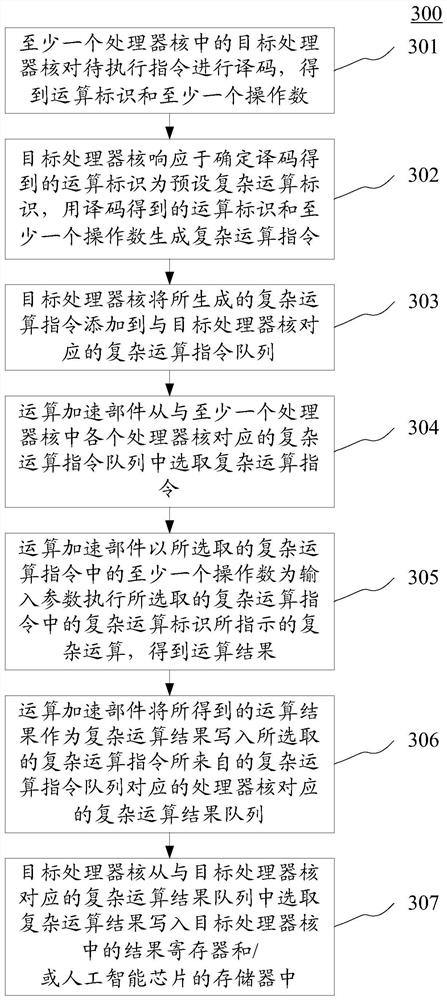

Calculation method applied to artificial intelligence chip and artificial intelligence chip

Active | CN110825436B | Improve abilities | Improve efficiency | Instruction analysis | Runtime instruction translation | Overhead (computing) | Engineering

The embodiments of the present application disclose a computing method applied to an artificial intelligence chip, and the artificial intelligence chip itself. In one implementation, in response to determining that the operation identifier obtained by decoding an instruction to be executed is a preset complex-operation identifier, the target processor core generates a complex-operation instruction from the decoded operation identifier and at least one operand, and adds it to a complex-operation instruction queue. An operation acceleration component selects a complex-operation instruction from the queue, executes the complex operation indicated by its complex-operation identifier using the instruction's operand(s) as input parameters, and writes the result into a complex-operation result queue. This embodiment reduces the area overhead and power consumption of the artificial intelligence chip.
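The decode-then-queue flow above can be modeled in a few lines of Python. This is a software sketch, not the chip's design: the operation names (`exp`, `sqrt`, `tanh`, `add`, `mul`), the functions `core_execute` and `accelerator_step`, and the use of `collections.deque` for the two queues are all hypothetical. It shows the division of labor: the core handles simple operations itself and forwards complex ones to a shared accelerator through an instruction queue, and the accelerator writes results to a result queue.

```python
import math
from collections import deque

# Hypothetical "preset complex-operation identifiers" and simple core ops.
COMPLEX_OPS = {"exp": math.exp, "sqrt": math.sqrt, "tanh": math.tanh}
SIMPLE_OPS = {"add": lambda a, b: a + b, "mul": lambda a, b: a * b}

complex_instr_queue = deque()   # filled by processor cores
complex_result_queue = deque()  # filled by the acceleration component


def core_execute(instr_id, op, *operands):
    """Processor core: run simple ops locally, enqueue complex ones."""
    if op in COMPLEX_OPS:
        complex_instr_queue.append((instr_id, op, operands))
        return None  # result will appear in complex_result_queue
    return SIMPLE_OPS[op](*operands)


def accelerator_step():
    """Acceleration component: drain the instruction queue, post results."""
    while complex_instr_queue:
        instr_id, op, operands = complex_instr_queue.popleft()
        complex_result_queue.append((instr_id, COMPLEX_OPS[op](*operands)))


print(core_execute(0, "add", 2, 3))  # prints 5
core_execute(1, "sqrt", 16.0)        # queued for the accelerator
core_execute(2, "exp", 0.0)          # queued for the accelerator
accelerator_step()
print(list(complex_result_queue))    # prints [(1, 4.0), (2, 1.0)]
```

Presumably the area saving comes from several cores sharing one complex-function unit rather than each core carrying its own; the abstract states the saving but not its source, so treat that reading as an interpretation.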

Owner:KUNLUNXIN TECH BEIJING CO LTD

© 2025 PatSnap. All rights reserved.