40 results about How to "Reduce request latency" patented technology

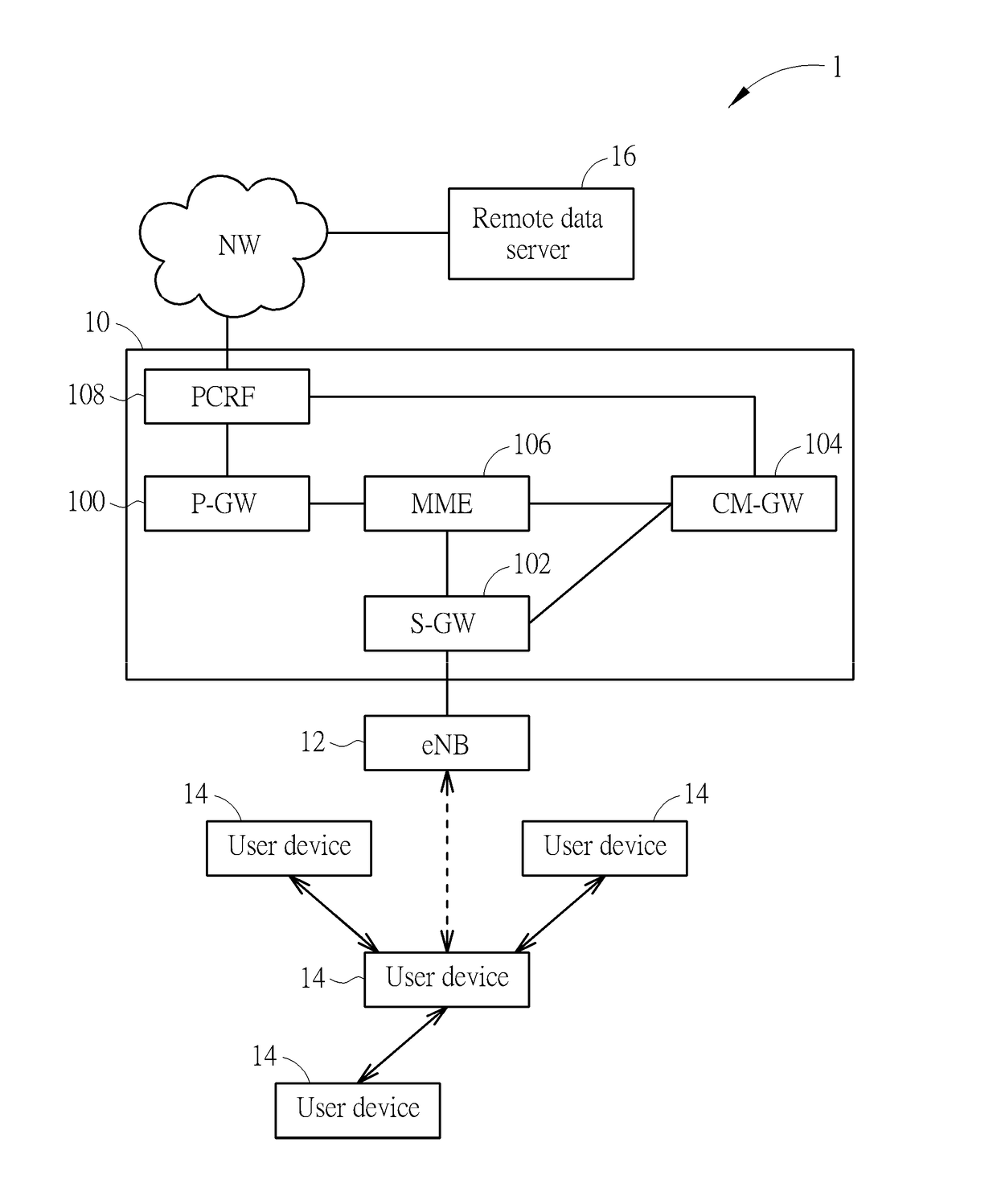

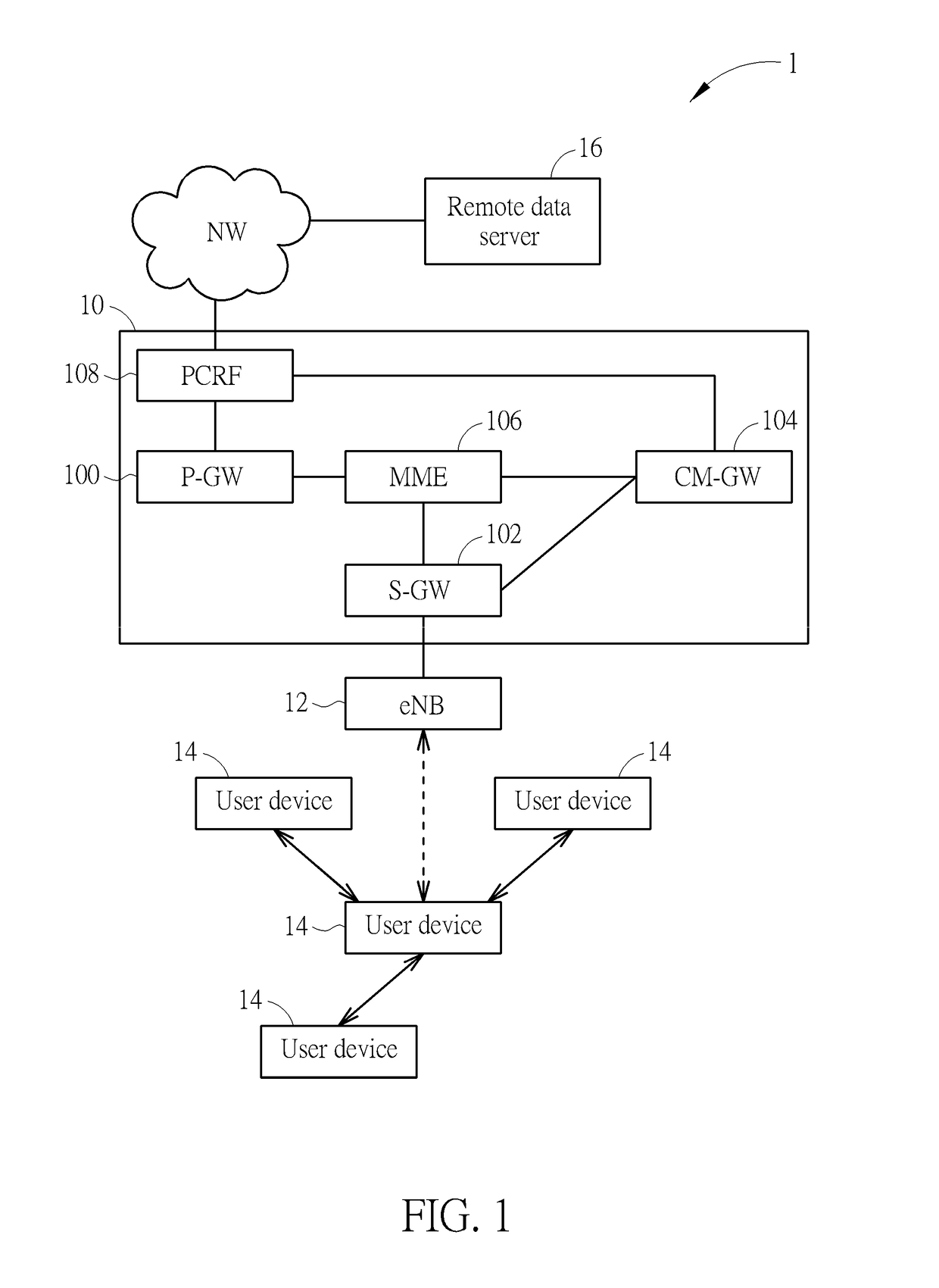

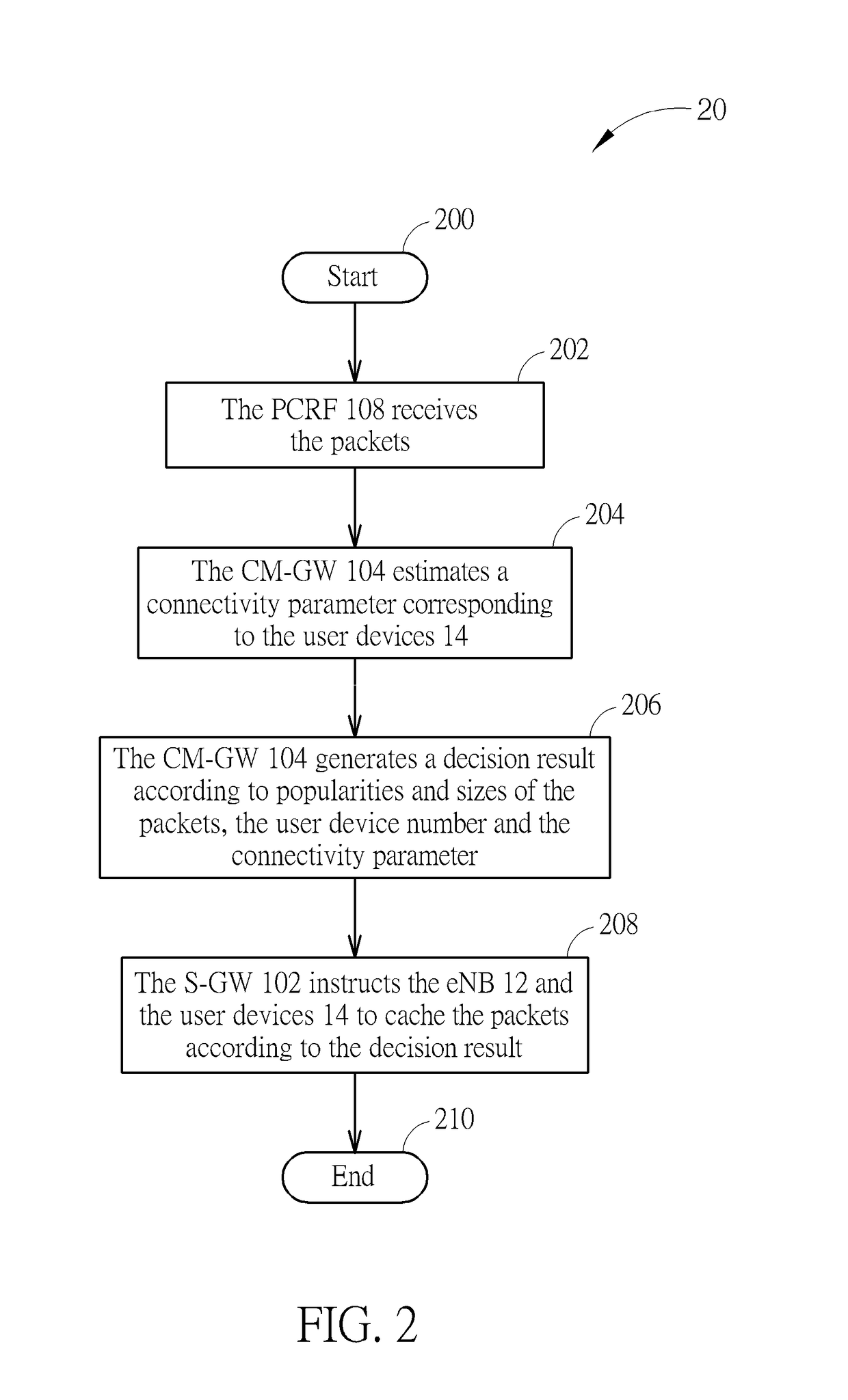

Method and Wireless Communication System for Processing Data

Inactive | US20170214761A1 | Reduce request latency | Network traffic/resource management | Transmission | Communications system | Cache management

A method processes packets in a wireless communication system that includes an evolved core network, a plurality of user devices, and an evolved Node B (eNB). The evolved core network includes a policy and charging rules function (PCRF), a serving gateway, a caching management gateway, and a mobility management entity (MME). The method includes: receiving the packets via the PCRF; obtaining a user device number via the MME; estimating, via the caching management gateway, a connectivity parameter corresponding to the user devices; generating, via the caching management gateway, a decision result according to the popularities and sizes of the packets, the user device number, and the connectivity parameter; and instructing, via the serving gateway, the eNB and the user devices to cache the packets according to the decision result.

Owner:NAT TAIWAN UNIV +1

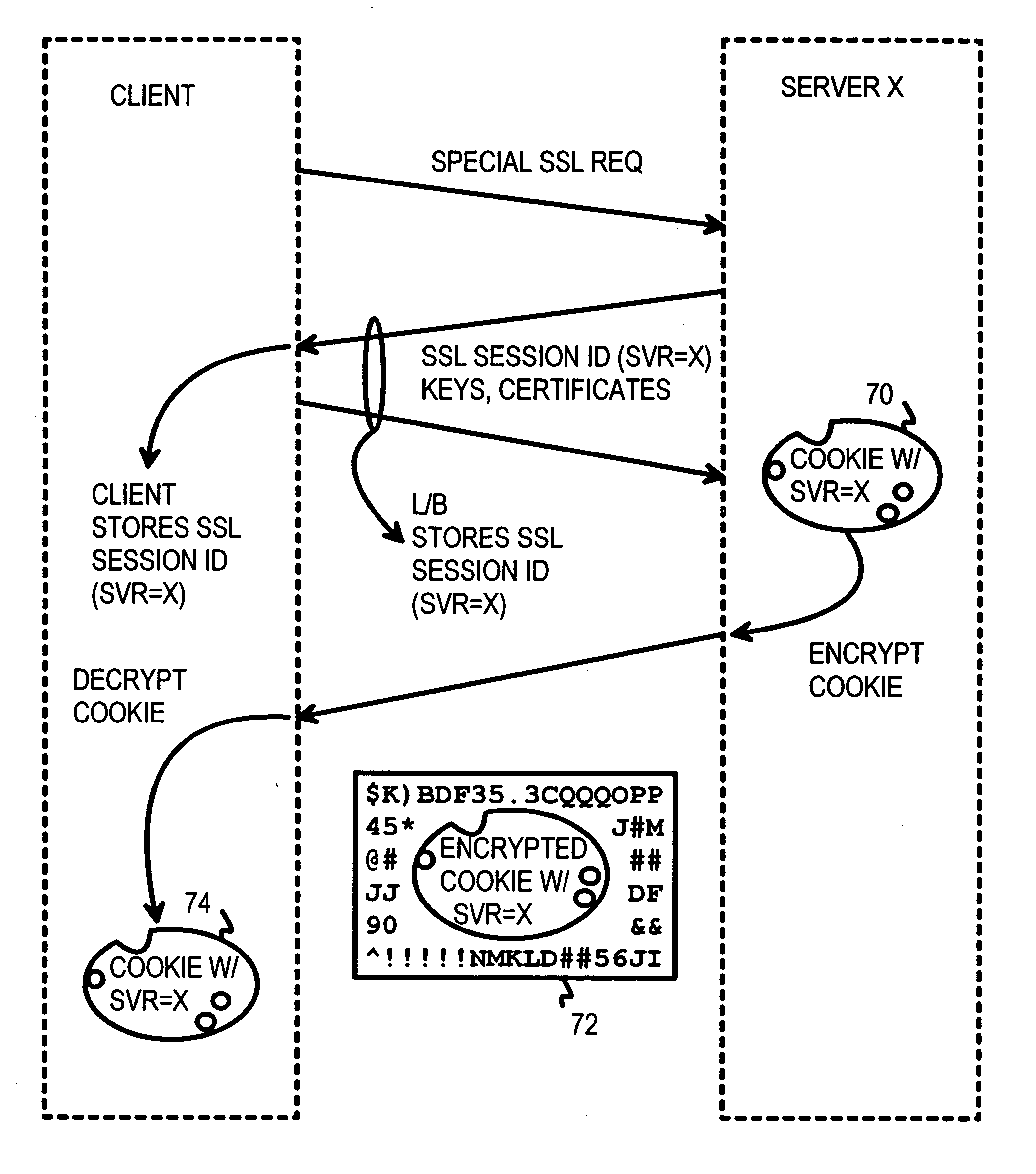

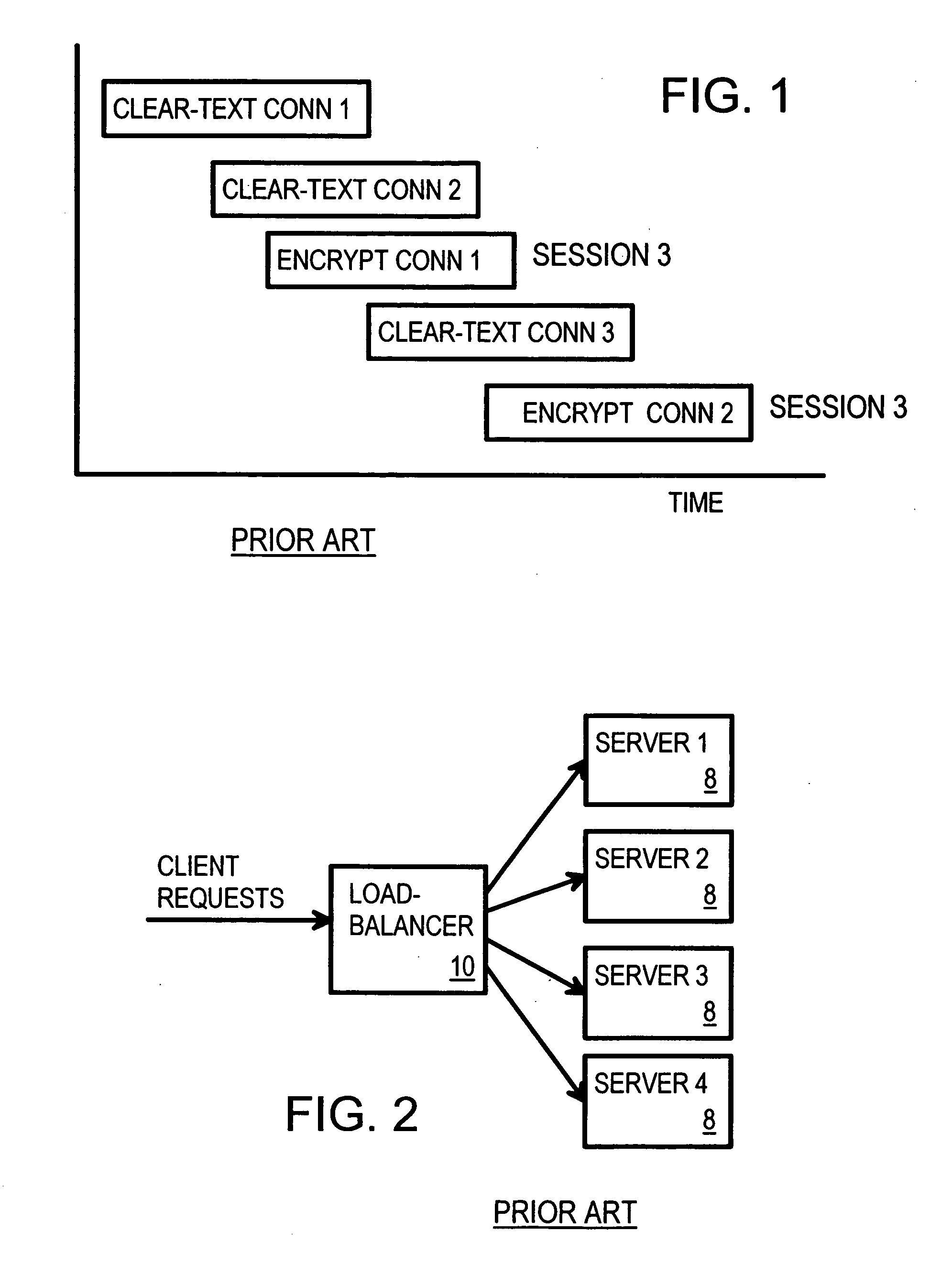

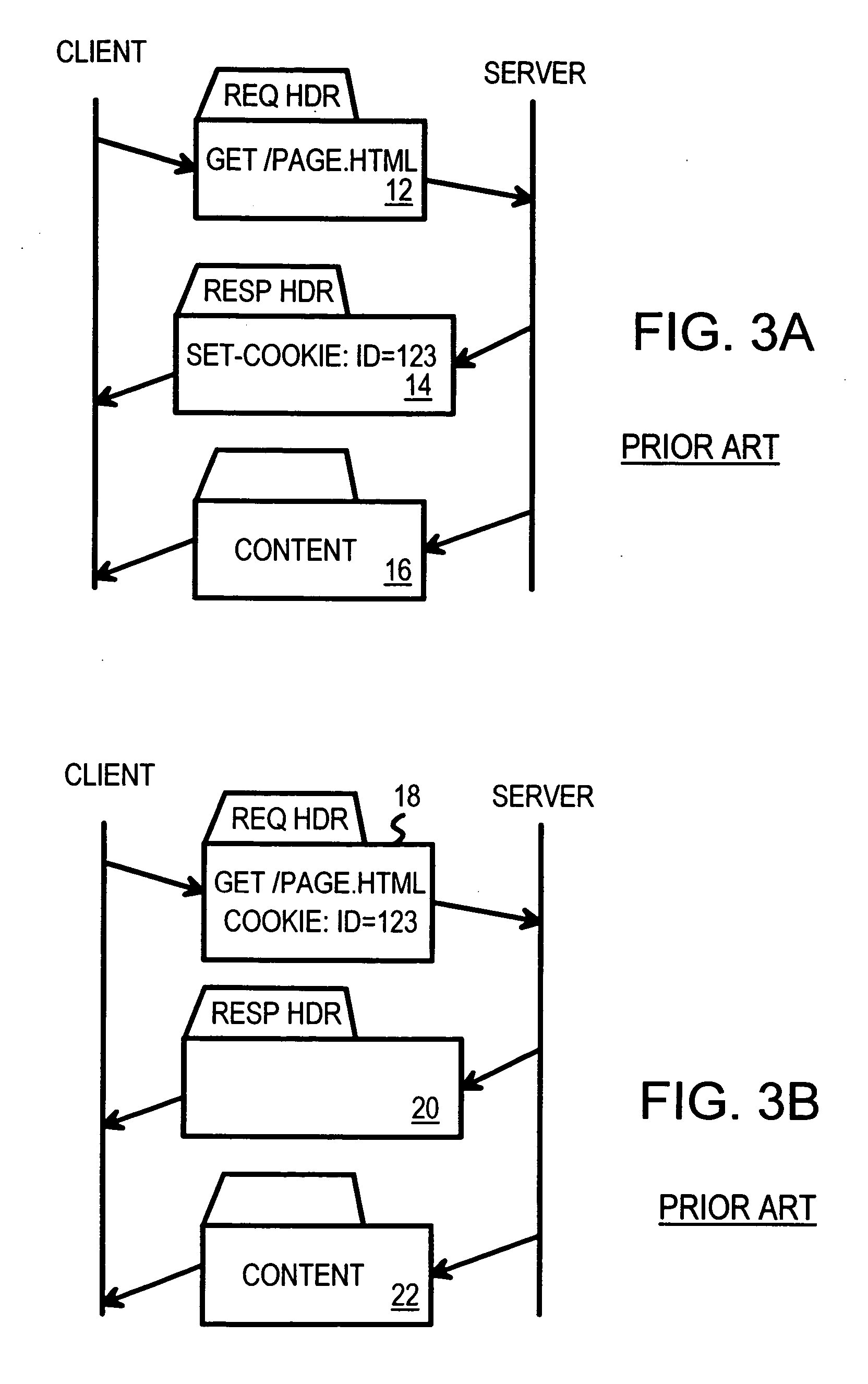

Atomic session-start operation combining clear-text and encrypted sessions to provide ID visibility to middleware such as load-balancers

Inactive | US20050010754A1 | Reducing local network traffic | Simplifying web-site architecture | Digital computer details | Multiprogramming arrangements | Plaintext | Load Shedding

A load-balancer assigns incoming requests to servers at a server farm. An atomic operation assigns both unencrypted clear-text requests and encrypted requests from a client to the same server at the server farm. An encrypted session is started early by the atomic operation, before encryption is required. The atomic operation is initiated by a special, automatically loaded component on a web page. This component is referenced by code requiring that an encrypted session be used to retrieve the component. Keys and certificates are exchanged between a server and the client to establish the encrypted session. The server generates a secure-sockets-layer (SSL) session ID for the encrypted session. The server also generates a server-assignment cookie that identifies the server at the server farm. The server-assignment cookie is encrypted and sent to the client along with the SSL session ID. The client decrypts the server-assignment cookie and stores it along with the SSL session ID. The load-balancer stores the SSL session ID along with a server assignment that identifies the server that generated the SSL session ID. When the client generates other encrypted requests to the server farm, they include the SSL session ID. The load-balancer uses the SSL session ID to send the requests to the assigned server. When the client sends a non-encrypted clear-text request to the server farm, it includes the decrypted server-assignment cookie. The load-balancer parses the clear-text request to find the server-assignment cookie and then sends the request to the assigned server.

Owner:HANGER SOLUTIONS LLC +1
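The routing rule described in this abstract can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name `LoadBalancer`, the round-robin default policy, and the use of the server name as the cookie value are hypothetical, and the sketch collapses the key exchange and cookie encryption into plain function arguments.

```python
import itertools


class LoadBalancer:
    """Keeps one client's encrypted and clear-text requests on the same server."""

    def __init__(self, servers):
        self.servers = list(servers)
        # Assumed default policy for requests with no known assignment.
        self._round_robin = itertools.cycle(self.servers)
        self.session_to_server = {}  # SSL session ID -> assigned server

    def route(self, ssl_session_id=None, cookie=None):
        # Encrypted request: the SSL session ID is visible to the load-balancer.
        if ssl_session_id is not None:
            if ssl_session_id not in self.session_to_server:
                self.session_to_server[ssl_session_id] = next(self._round_robin)
            return self.session_to_server[ssl_session_id]
        # Clear-text request: the decrypted server-assignment cookie names the server.
        if cookie in self.servers:
            return cookie
        return next(self._round_robin)


lb = LoadBalancer(["s1", "s2"])
first = lb.route(ssl_session_id="abc")   # session started early, server assigned
again = lb.route(ssl_session_id="abc")   # later encrypted request, same server
clear = lb.route(cookie=first)           # clear-text request carrying the cookie
```

In this sketch the session table and the cookie both resolve to the same server, which is the property the atomic session-start operation is meant to guarantee.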

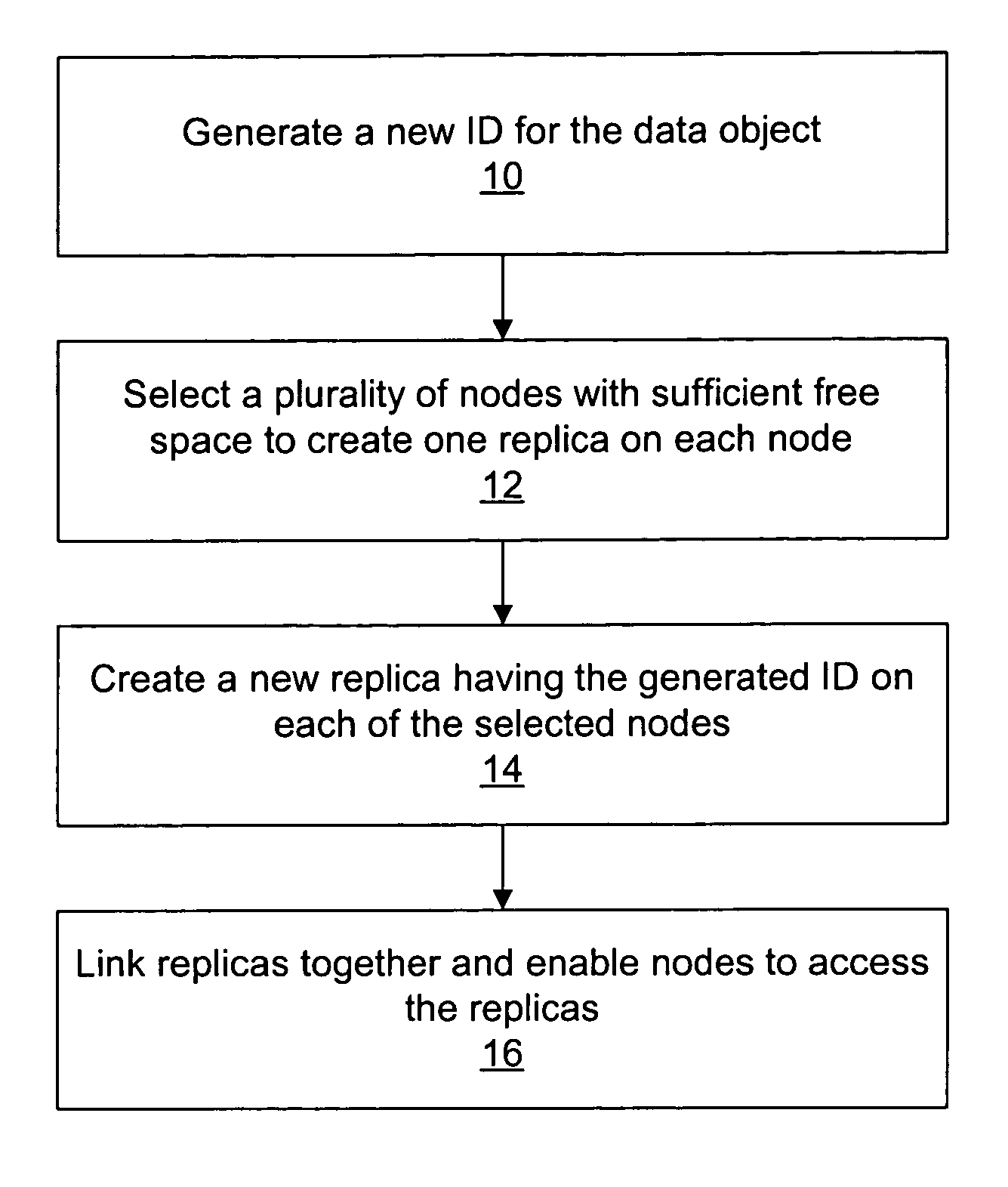

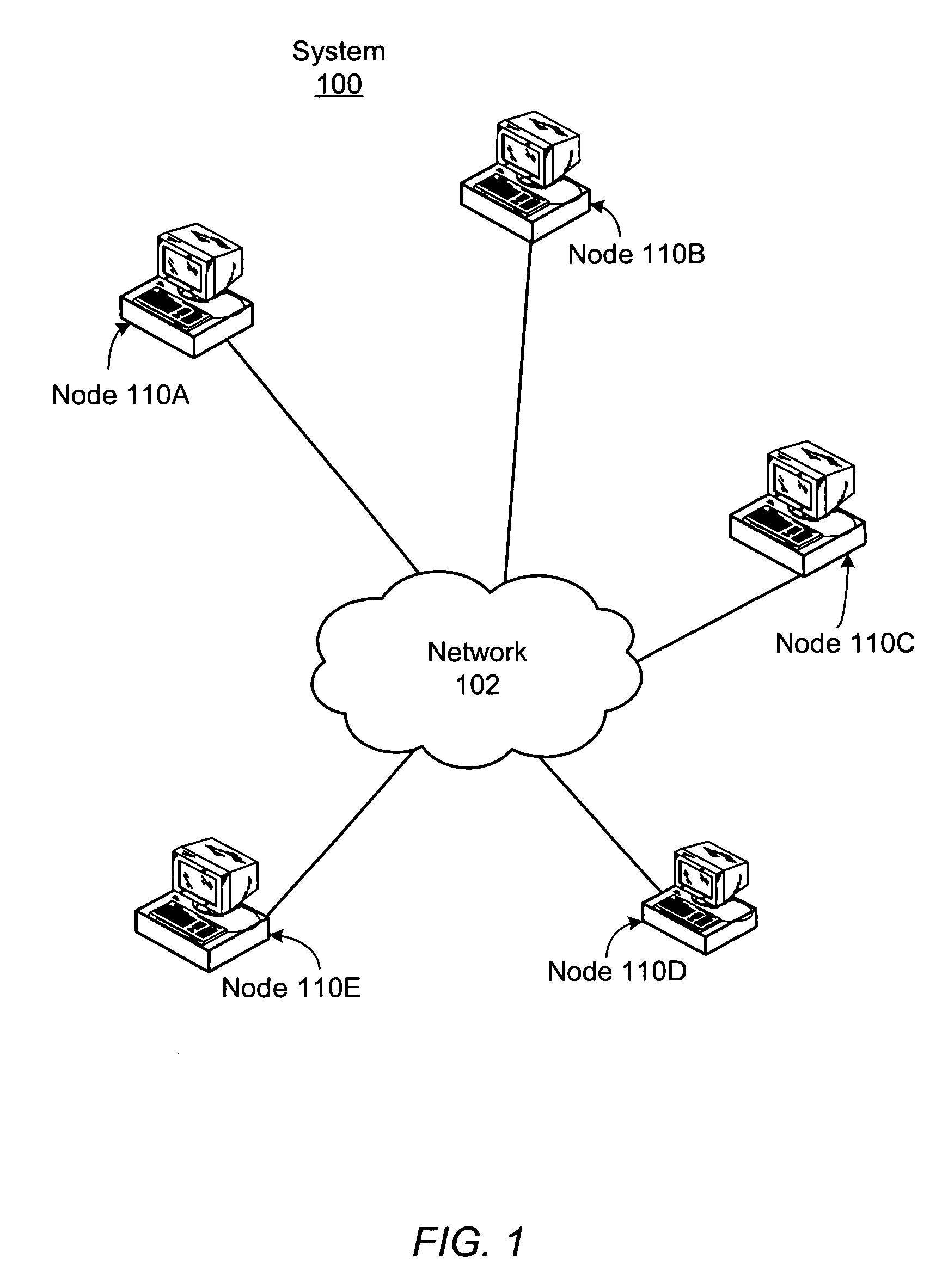

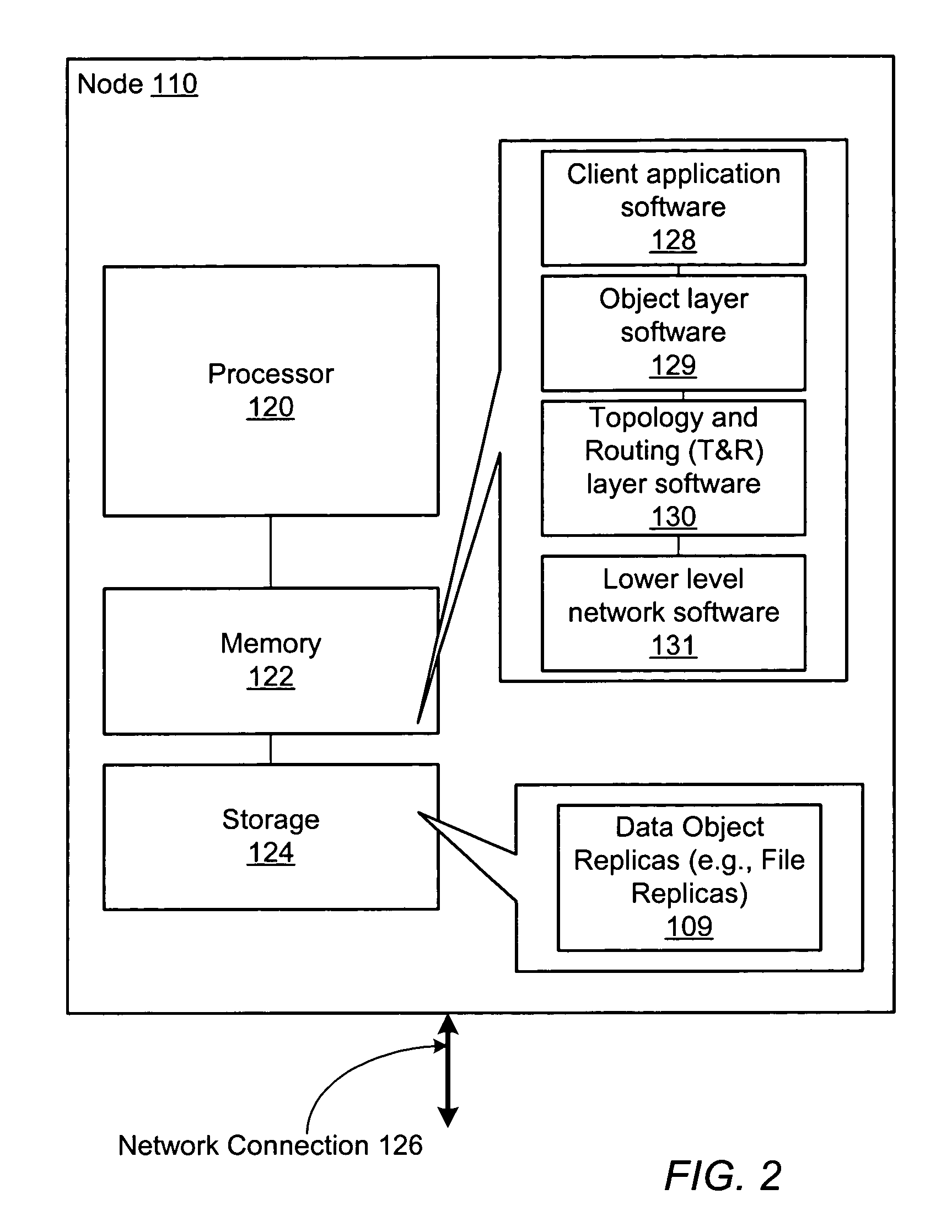

System pre-allocating data object replicas for a distributed file sharing system

Active | US7433928B1 | Reduce request latency | Digital computer details | Transmission | Operating system | Waiting time

A system and method for pre-allocating replicas for a distributed file sharing system. Creating a new file may involve creating a plurality of replicas for the file on a plurality of nodes. In one embodiment nodes in the system may pre-allocate sets of file replicas, where the pre-allocated replicas can be used to satisfy requests to create new files. Pre-allocating the file replicas may decrease the latency of file creation requests by enabling the requests to be satisfied without performing replica allocation in response to the requests.

Owner:SYMANTEC OPERATING CORP
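As a rough illustration of the pre-allocation idea in this abstract, the following Python sketch keeps a pool of ready replica sets so that file creation can skip allocation on the fast path. The names `ReplicaAllocator`, `refill`, and `on_demand_allocations`, and the rotating node choice, are assumptions made for the example and are not taken from the patent.

```python
from collections import deque
import itertools


class ReplicaAllocator:
    """Serves file-creation requests from a pool of pre-allocated replica sets."""

    def __init__(self, nodes, replicas_per_file=3, pool_size=4):
        self.nodes = list(nodes)
        self.replicas_per_file = replicas_per_file
        self.pool_size = pool_size
        self._ids = itertools.count()
        self.on_demand_allocations = 0  # counts slow-path allocations
        self.pool = deque()
        self.refill()

    def _allocate_set(self):
        """Slow path: reserve one replica on each of several nodes."""
        set_id = next(self._ids)
        chosen = [self.nodes[(set_id + i) % len(self.nodes)]
                  for i in range(self.replicas_per_file)]
        return [(node, f"replica-{set_id}") for node in chosen]

    def refill(self):
        """Top the pool back up; intended to run outside the request path."""
        while len(self.pool) < self.pool_size:
            self.pool.append(self._allocate_set())

    def create_file(self, name):
        if self.pool:
            replicas = self.pool.popleft()    # fast path: no allocation needed
        else:
            self.on_demand_allocations += 1   # pool exhausted: allocate now
            replicas = self._allocate_set()
        return {"name": name, "replicas": replicas}


alloc = ReplicaAllocator(["n1", "n2", "n3", "n4"], replicas_per_file=3, pool_size=2)
f1 = alloc.create_file("a")
f2 = alloc.create_file("b")
fast_path_only = alloc.on_demand_allocations
f3 = alloc.create_file("c")
```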

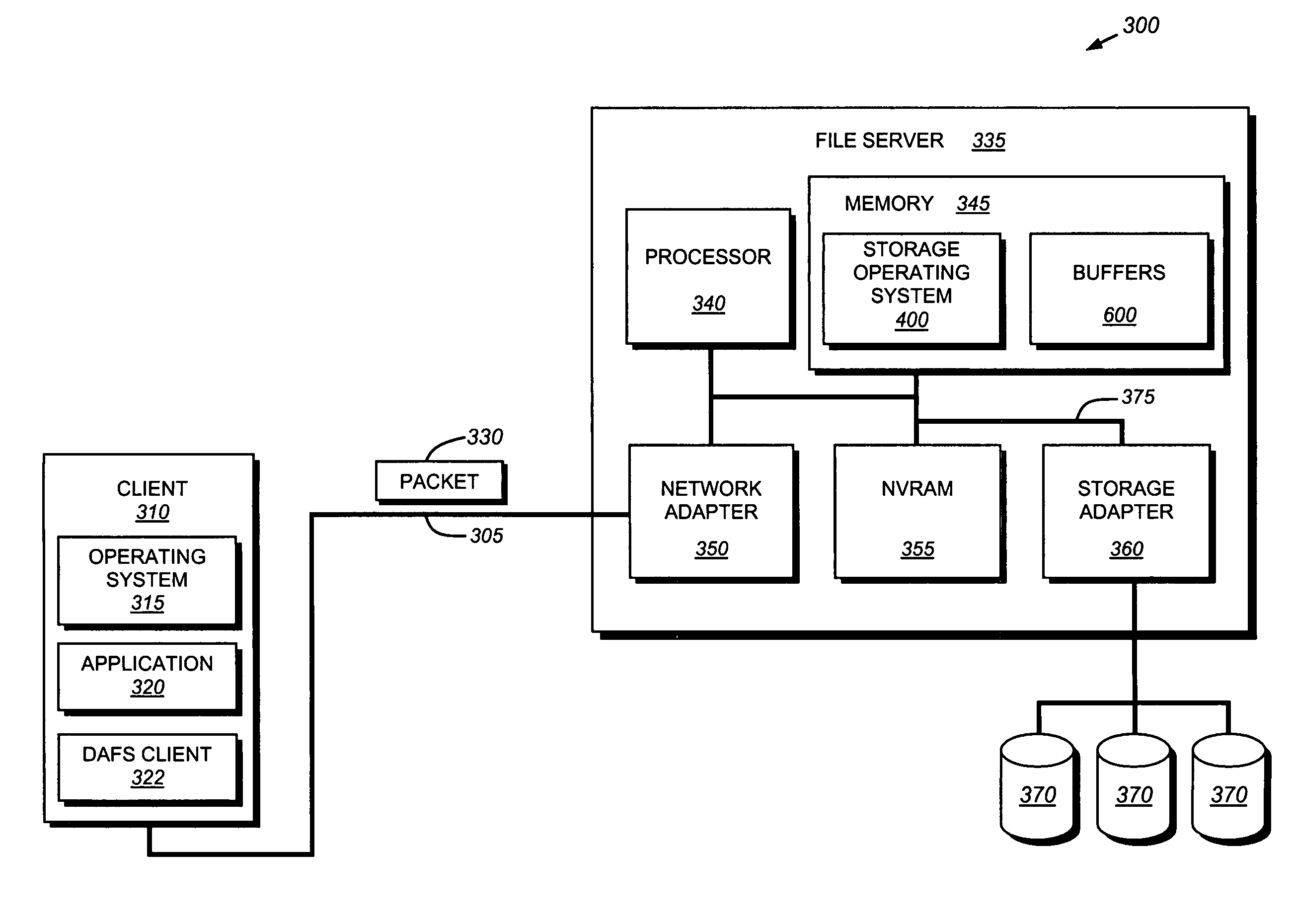

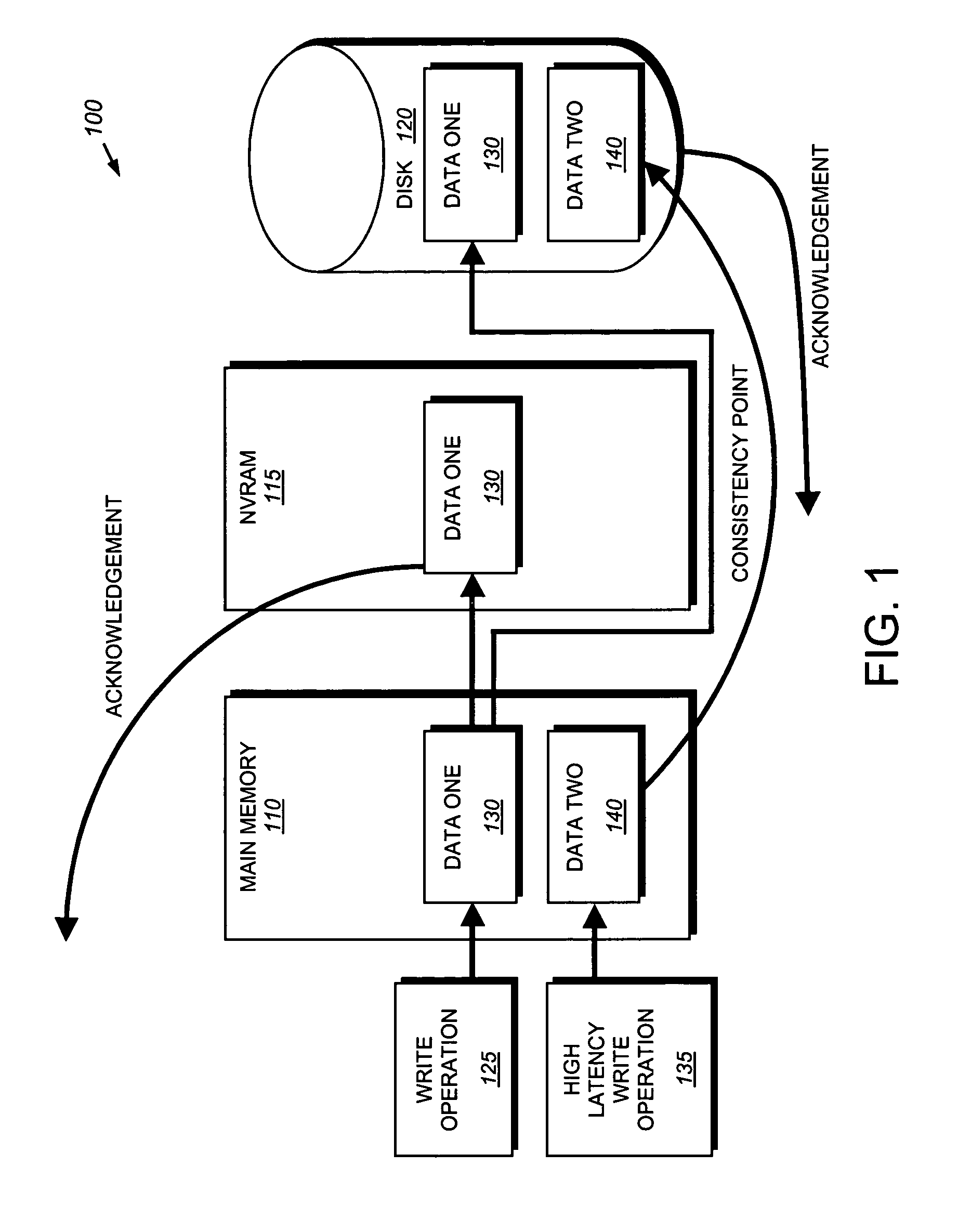

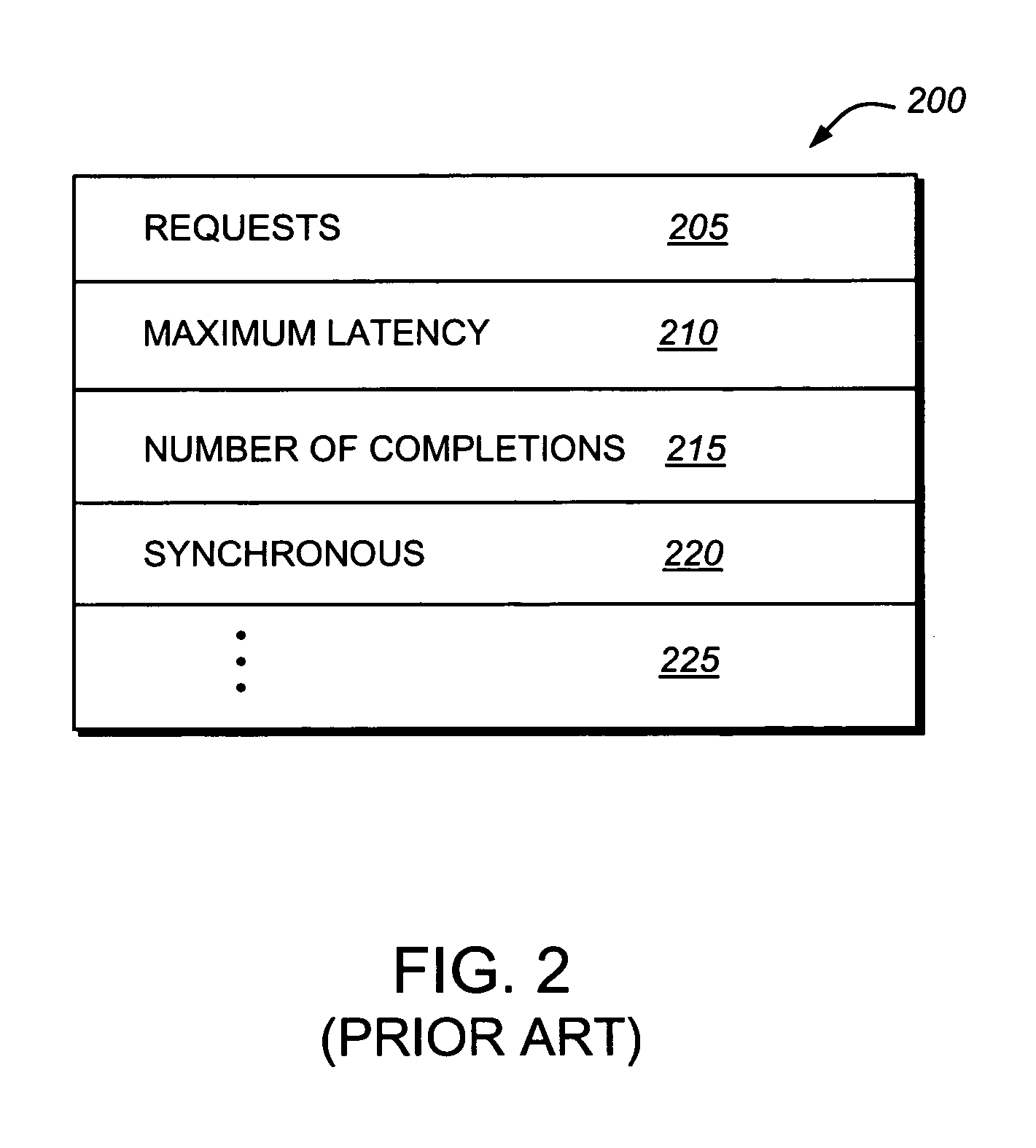

System and method for reprioritizing high-latency input/output operations

Active | US7783787B1 | Reduce request latency | Increase and decrease latency | Data processing applications | Multiprogramming arrangements | File system | Waiting time

A mechanism for reprioritizing high-latency input/output operations in a file system is provided. The mechanism extends a file access protocol, such as the Direct Access File System (DAFS) protocol, with a "hurry up" command that adjusts the latency of a given input/output operation.

Owner:NETWORK APPLIANCE INC

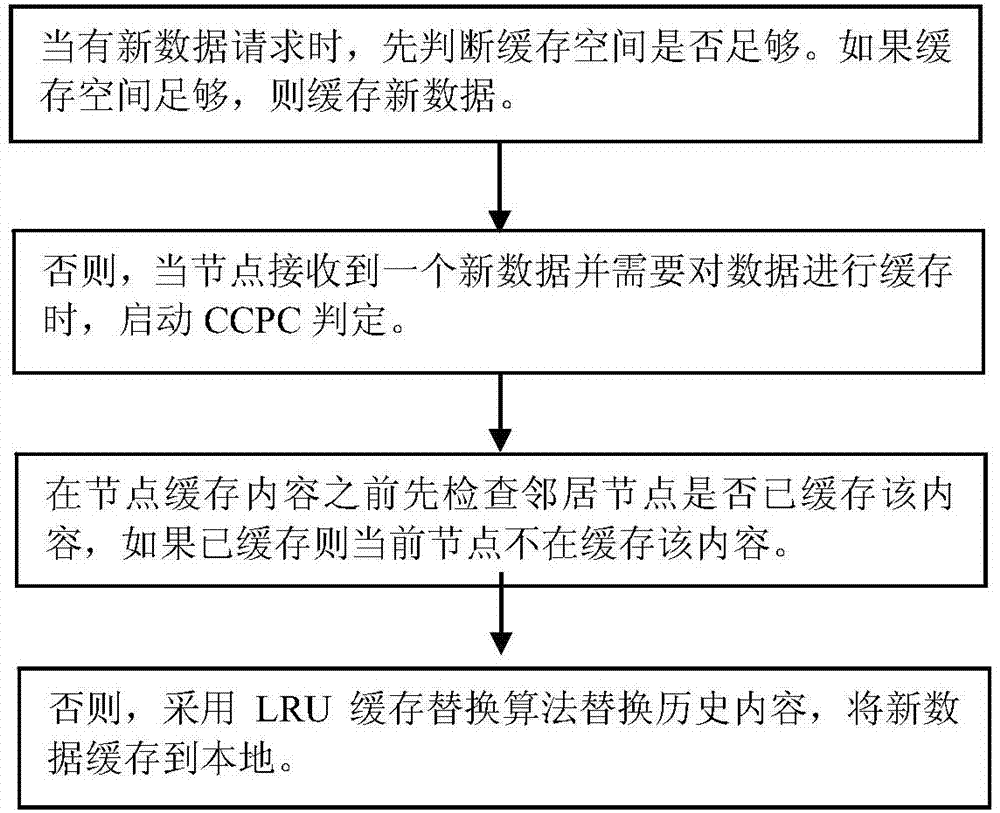

Neighbor cooperation cache replacement method in content center network

Inactive | CN103905538A | Reduce redundancy | Increase variety | Transmission | Parallel computing | Replacement method

The invention relates to a neighbor cooperation cache replacement method in a content center network. The method comprises the following steps: step 1, when node i receives a request involving new data einew, determine whether the cache space of node i is full; if it is not full, go directly to step 3; if it is full, go to step 2 to decide on cooperative cache replacement; step 2, check whether the new data einew is already cached in a neighbor node of node i; if it is, node i does not cache it; if it is not, go to step 3; step 3, cache the new data einew locally using the LRU cache replacement algorithm.

Owner:HARBIN ENG UNIV
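The three steps in this abstract map onto a short Python sketch. It assumes a hypothetical `Node` class with a capacity of at least one entry and direct access to its neighbors' caches; how a real node would query its neighbors is not described in the abstract.

```python
from collections import OrderedDict


class Node:
    """Hypothetical cache node with an LRU store and a list of neighbor nodes."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.store = OrderedDict()  # key order doubles as recency order
        self.neighbors = []

    def has(self, name):
        return name in self.store

    def on_new_data(self, name, data):
        """Apply steps 1-3; return True if the data ends up cached locally."""
        if name in self.store:
            self.store.move_to_end(name)  # refresh recency on a repeat
            return True
        # Step 1: is the local cache full?
        full = len(self.store) >= self.capacity
        # Step 2: when full, skip caching if a neighbor already holds the data.
        if full and any(n.has(name) for n in self.neighbors):
            return False
        # Step 3: LRU insert, evicting the least recently used entry if full.
        if full:
            self.store.popitem(last=False)
        self.store[name] = data
        return True


a, b = Node(1), Node(1)
a.neighbors = [b]
b.on_new_data("x", 1)
cached_y = a.on_new_data("y", 2)   # a has room, caches y
cached_x = a.on_new_data("x", 1)   # a is full and neighbor b holds x
cached_z = a.on_new_data("z", 3)   # no neighbor holds z, so y is evicted
```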

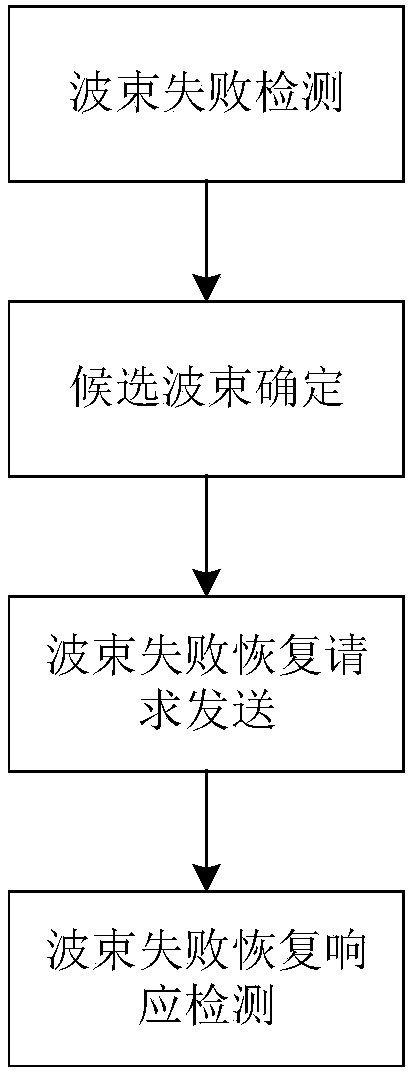

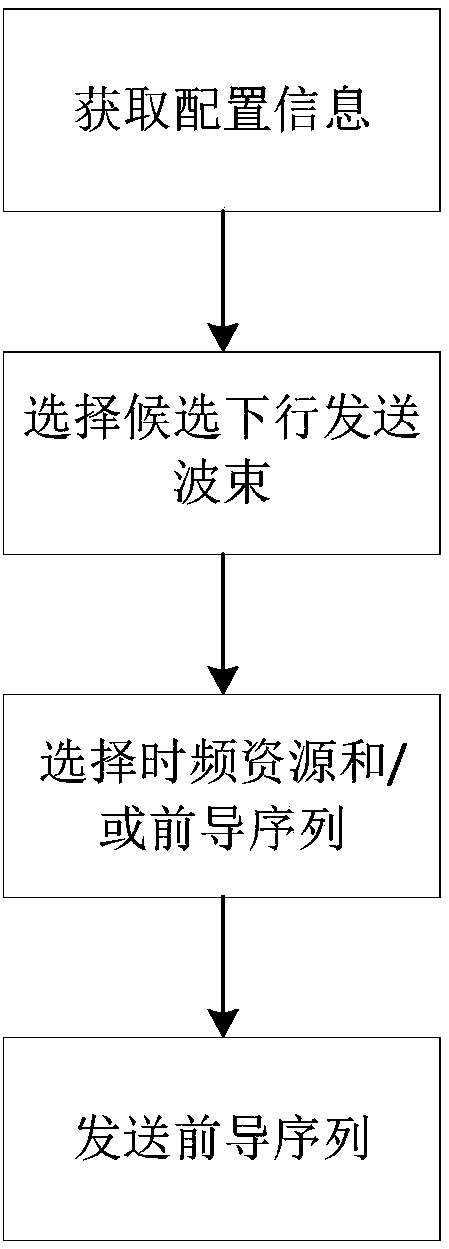

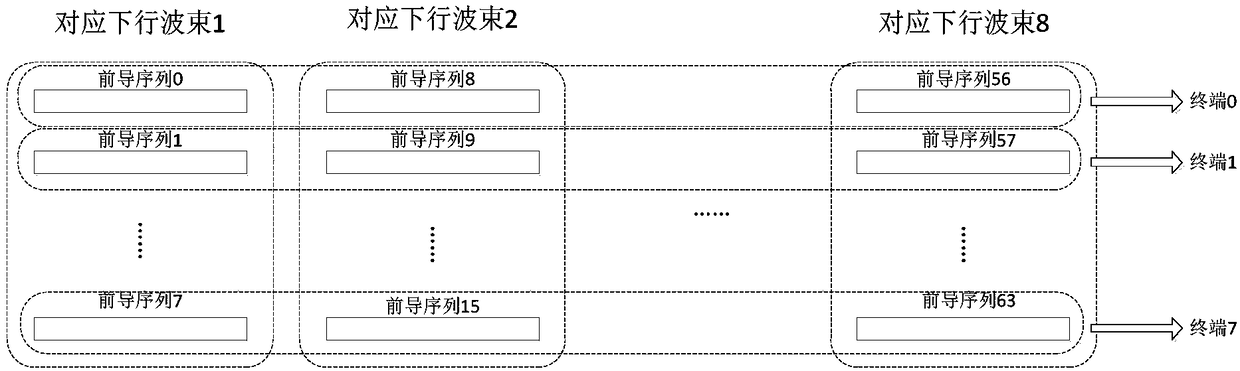

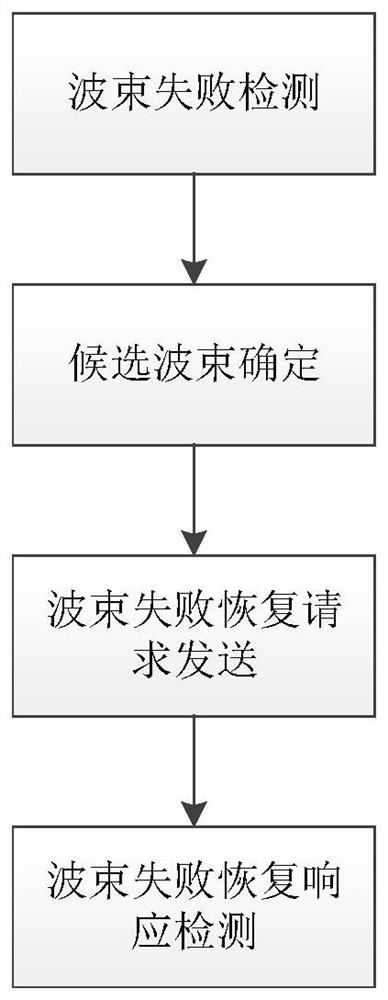

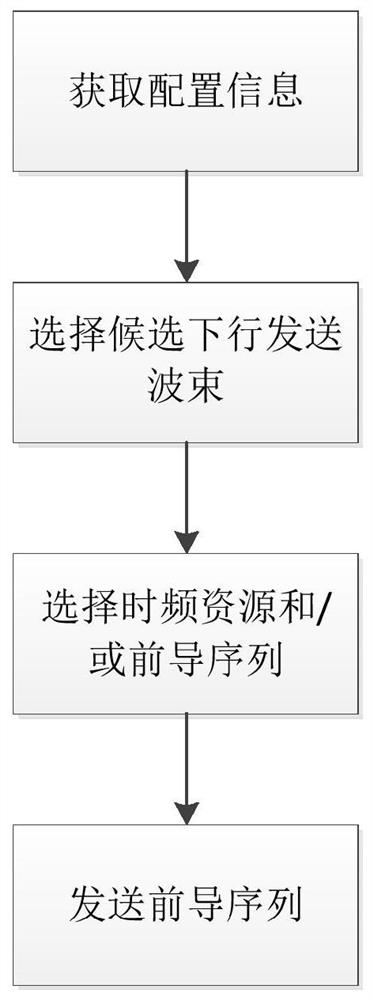

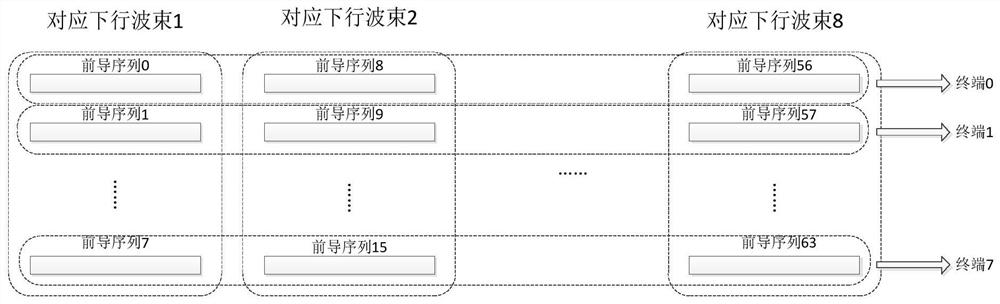

Random access method, user equipment and base station equipment

Active | CN109121223A | Reduce signaling overhead | Low latency | Pilot signal allocation | Wireless communication | Access time | Engineering

The invention discloses a beam failure recovery request method, which comprises: obtaining channel time-frequency resource allocation information and preamble sequence configuration information for transmitting a beam failure recovery request; selecting candidate downlink transmission beams according to a measurement result; selecting a channel time-frequency resource and/or preamble sequence according to the correspondence between the downlink transmission beams and the channel time-frequency resources and/or preamble sequences, the time-frequency resource allocation information, and the preamble sequence configuration information; and transmitting the preamble sequence on the channel time-frequency resource. Compared with the prior art, the resource allocation and procedure are optimized for the beam failure recovery request, which effectively reduces signaling overhead and access delay.

Owner:BEIJING SAMSUNG TELECOM R&D CENT +1

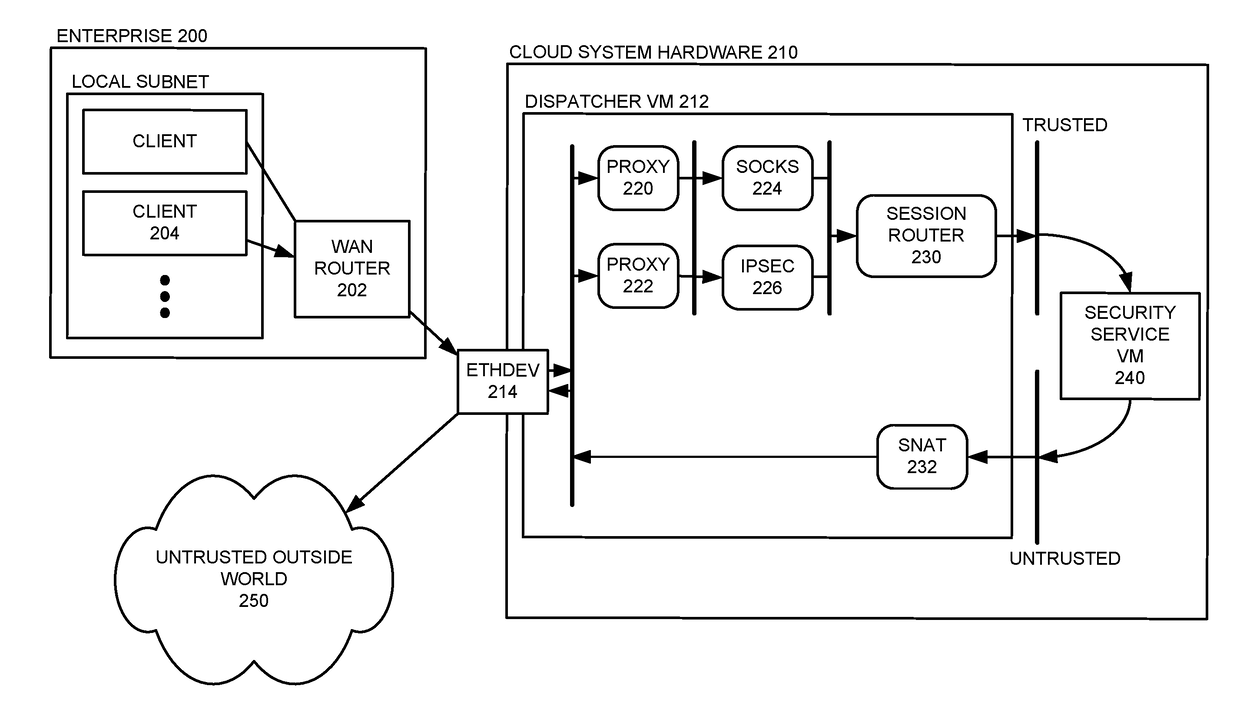

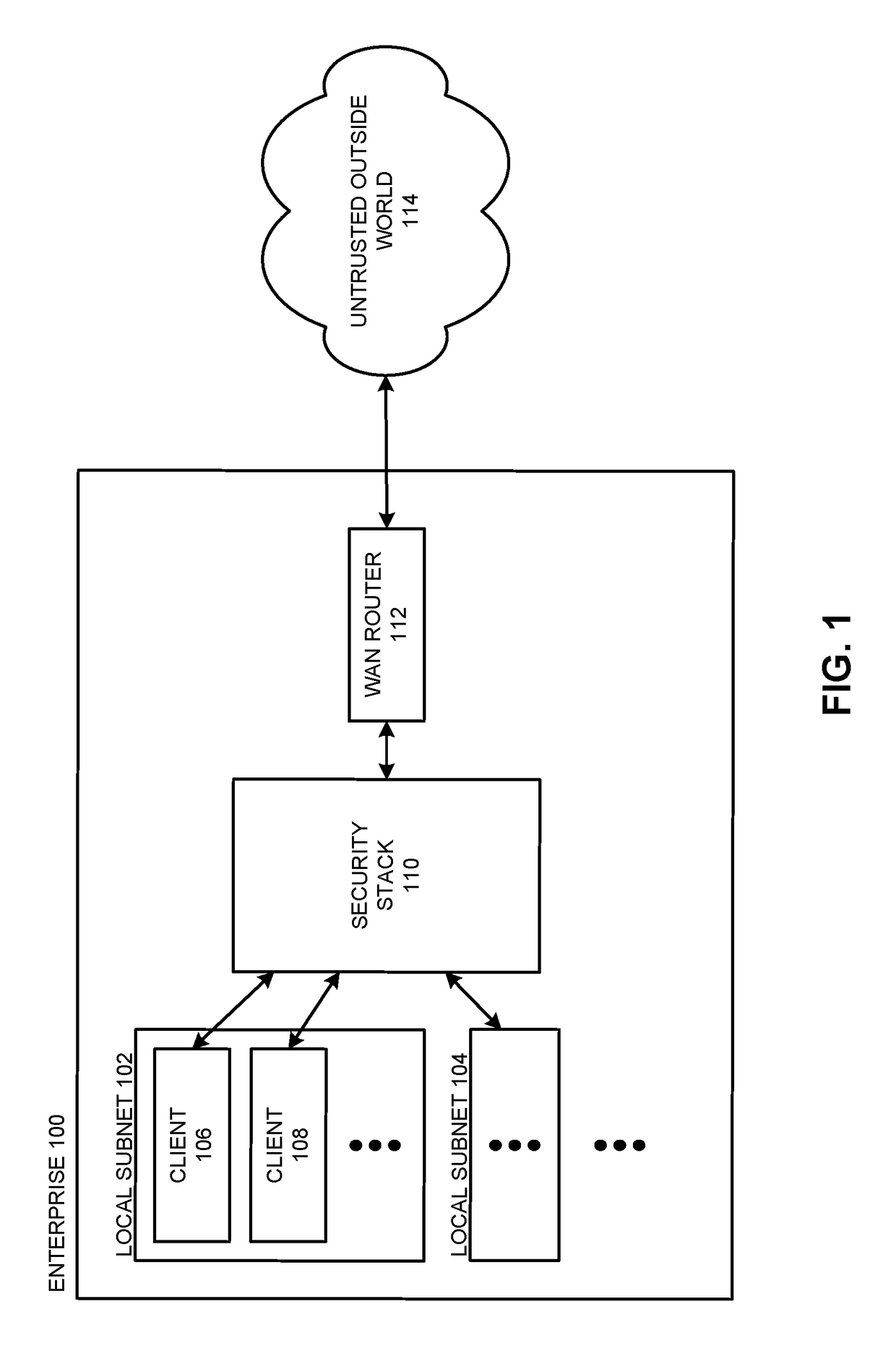

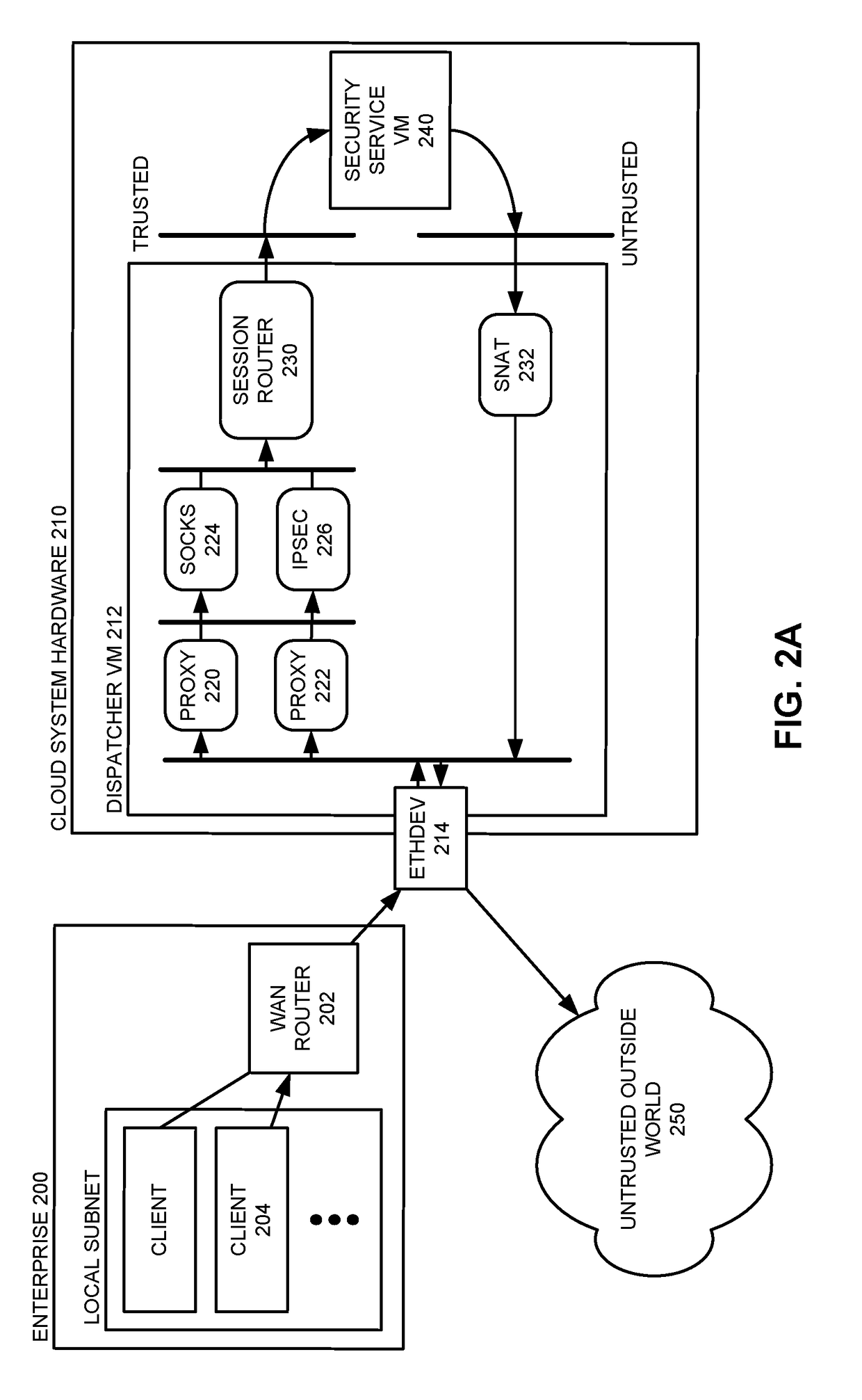

Optimizing Data Transfer Costs for Cloud-Based Security Services

The disclosed embodiments disclose techniques for optimizing data transfer costs for cloud-based security services. During operation, an intermediary computing device receives a network request from a client located in a remote enterprise location that is sending the network request to a distinct, untrusted remote site (e.g., a site separate from the distinct locations of the remote enterprise, the cloud data center, and the intermediary computing device). The intermediary computing device caches a set of data associated with the network request while forwarding the set of data to the cloud-based security service for analysis. Upon receiving a confirmation from the cloud-based security service that the set of data has been analyzed and is permitted to be transmitted to the specified destination, the intermediary computing device forwards the cached set of data to the specified destination.

Owner:NUBEVA INC

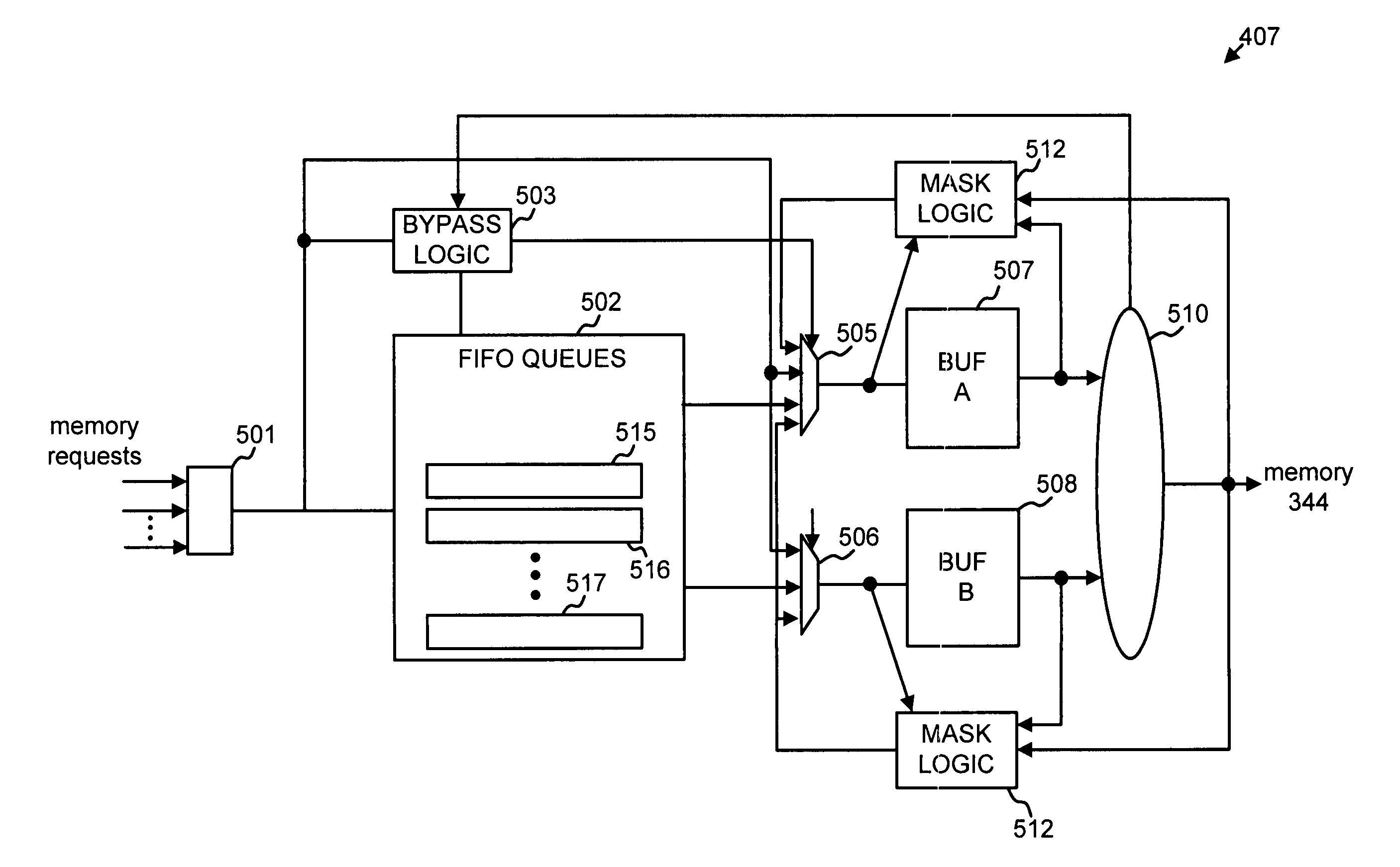

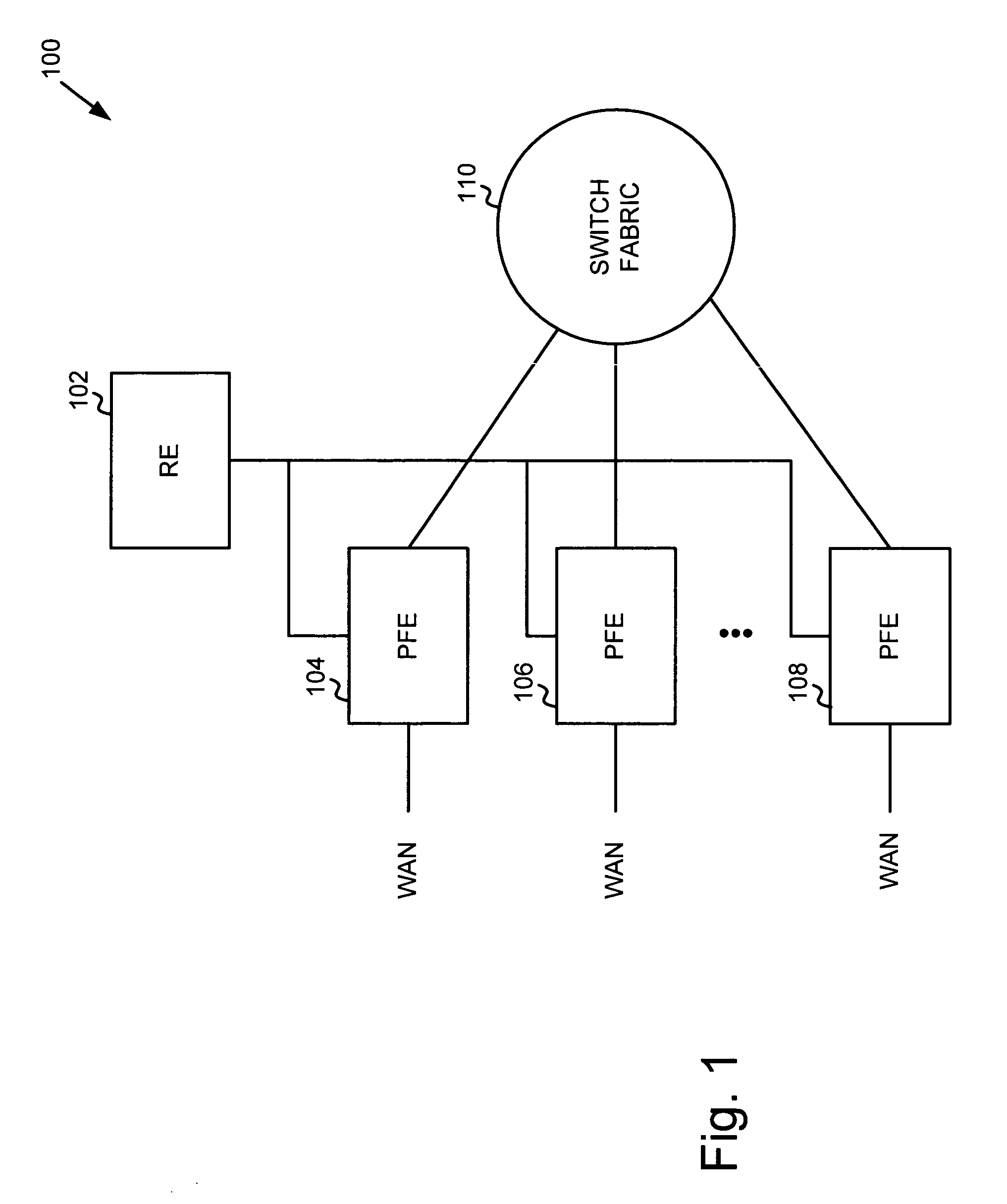

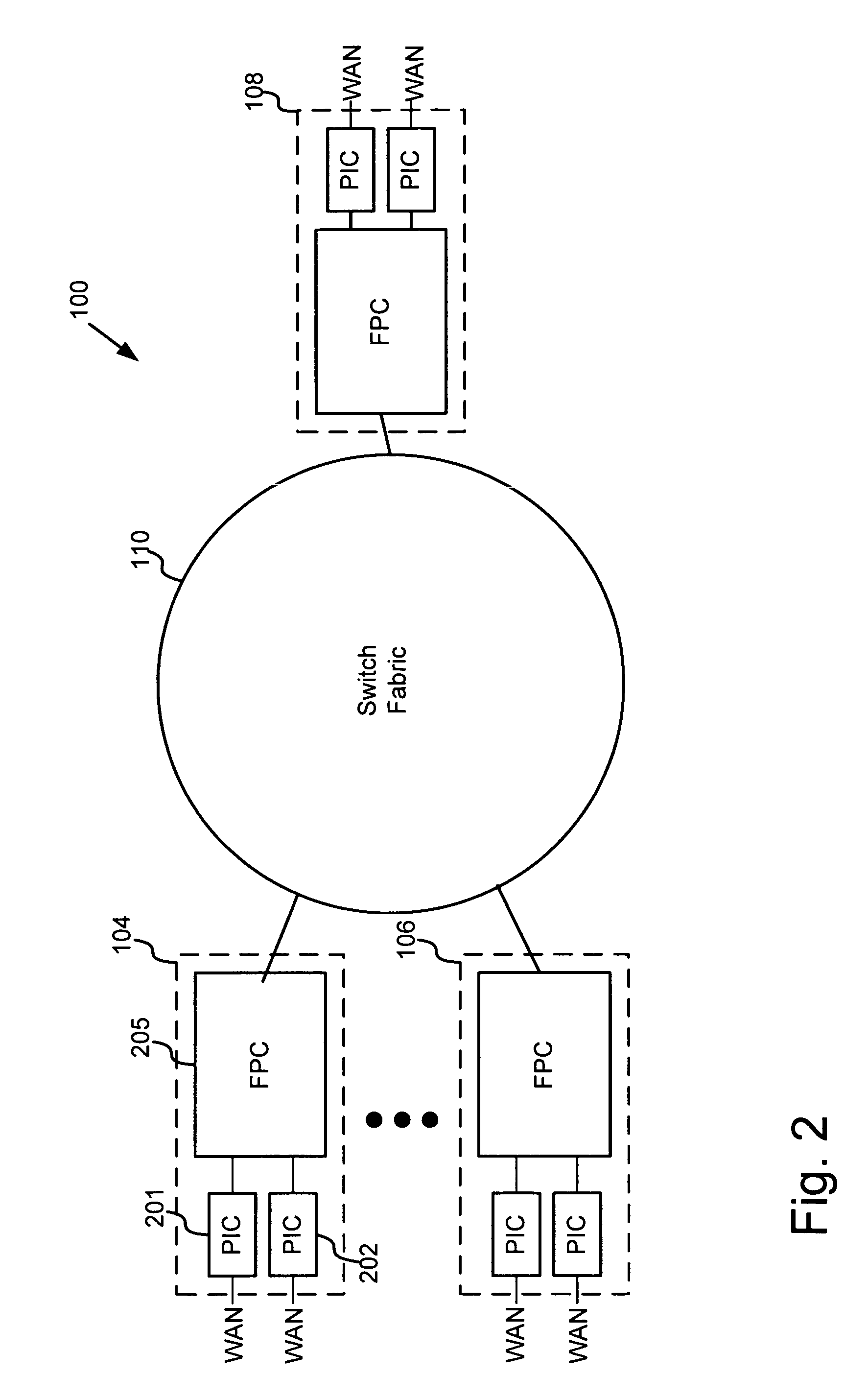

Low latency request dispatcher

Inactive | US7814283B1 | Delay minimization | Reduce request latency | Memory systems | Data conversion | Multiplexer | Latency (engineering)

A first-in-first-out (FIFO) queue optimized to reduce latency in dequeuing data items from the FIFO. In one implementation, a FIFO queue additionally includes buffers connected to the output of the FIFO queue and bypass logic. The buffers act as the final stages of the FIFO queue. The bypass logic causes input data items to bypass the FIFO and to go straight to the buffers when the buffers are able to receive data items and the FIFO queue is empty. In a second implementation, arbitration logic is coupled to the queue. The arbitration logic controls a multiplexer to output a predetermined number of data items from a number of final stages of the queue. In this second implementation, the arbitration logic gives higher priority to data items in later stages of the queue.

Owner:JUNIPER NETWORKS INC
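The first implementation in this abstract (output buffers plus bypass logic) can be modeled in software as follows. This is a behavioral sketch only; the class name `BypassFifo` and the default of two output buffers are assumptions, and the hardware arbitration logic of the second implementation is not modeled.

```python
from collections import deque


class BypassFifo:
    """FIFO whose final stages are output buffers that new items may enter directly."""

    def __init__(self, num_buffers=2):
        self.fifo = deque()      # main queue
        self.buffers = deque()   # final stages, read by the consumer
        self.num_buffers = num_buffers

    def enqueue(self, item):
        # Bypass: go straight to the buffers when the FIFO is empty and a buffer is free.
        if not self.fifo and len(self.buffers) < self.num_buffers:
            self.buffers.append(item)
        else:
            self.fifo.append(item)

    def dequeue(self):
        item = self.buffers.popleft()  # raises IndexError when nothing is queued
        if self.fifo:
            # Refill the freed buffer from the head of the main queue.
            self.buffers.append(self.fifo.popleft())
        return item


q = BypassFifo(num_buffers=2)
for value in (1, 2, 3):
    q.enqueue(value)          # 1 and 2 bypass the FIFO; 3 waits in it
first = q.dequeue()
q.enqueue(4)
rest = [q.dequeue(), q.dequeue(), q.dequeue()]
```

Because an item only bypasses the main queue when that queue is empty, arrival order is preserved.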

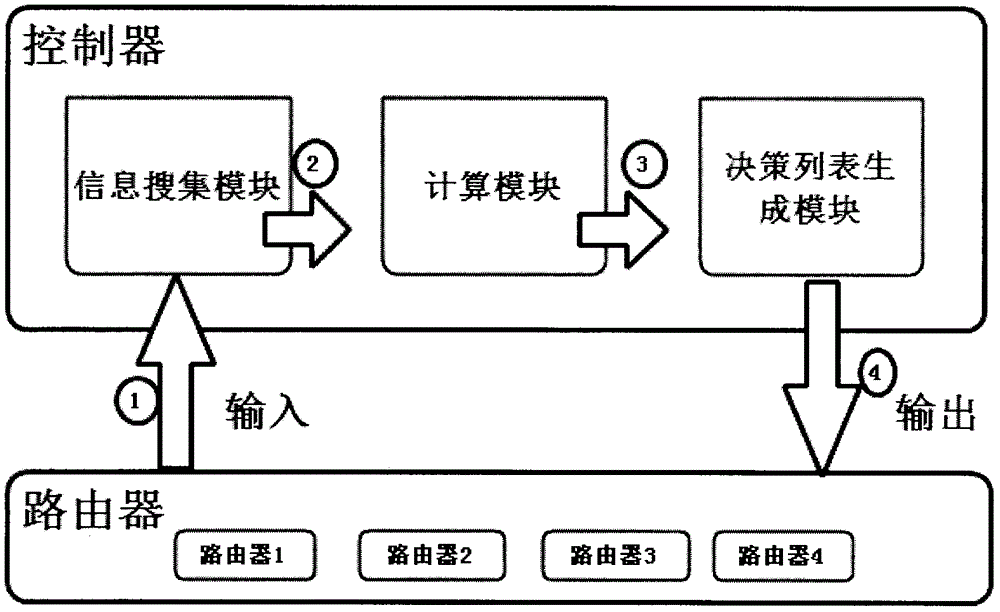

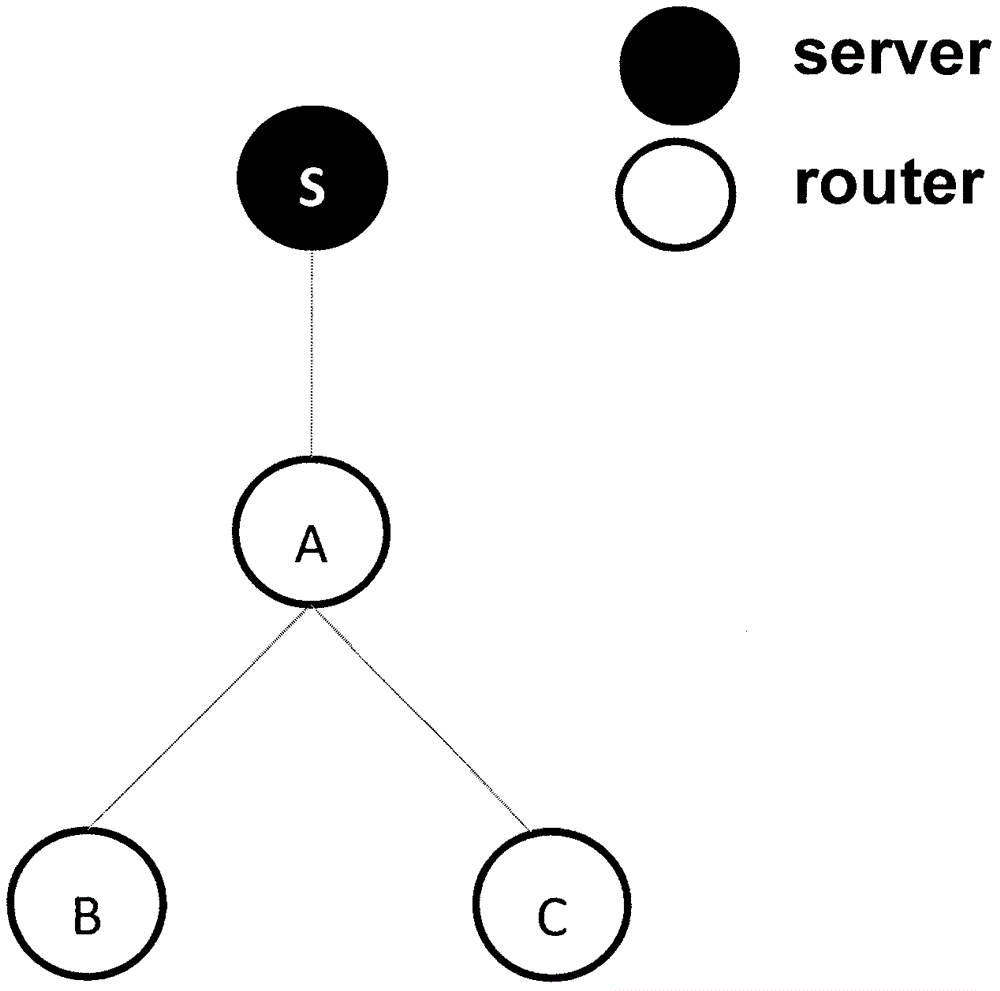

Centralized caching decision strategy in content-centric networking

Active | CN105681438A | Improve performance | Increase profit | Transmission | Decision strategy | Information-centric networking

Content-centric networking is one realization of the information-centric networking concept. The invention discloses a centralized caching decision strategy for content-centric networking. Within the content-centric networking architecture, basic functions of information-centric networking such as data naming, content forwarding, and in-network caching are implemented on an existing SDN-based framework. A centralized controller is placed in the network; it collects request information from all nodes, generates a caching list for each node, and deterministically directs each node to cache content, thereby minimizing transmission traffic in the network and reducing user request delay.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

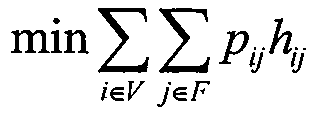

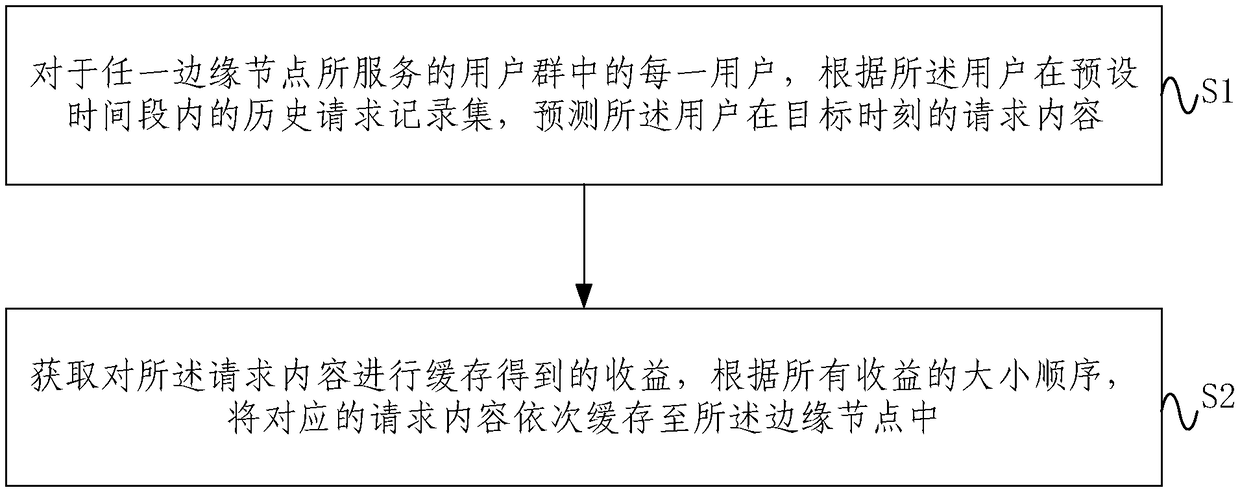

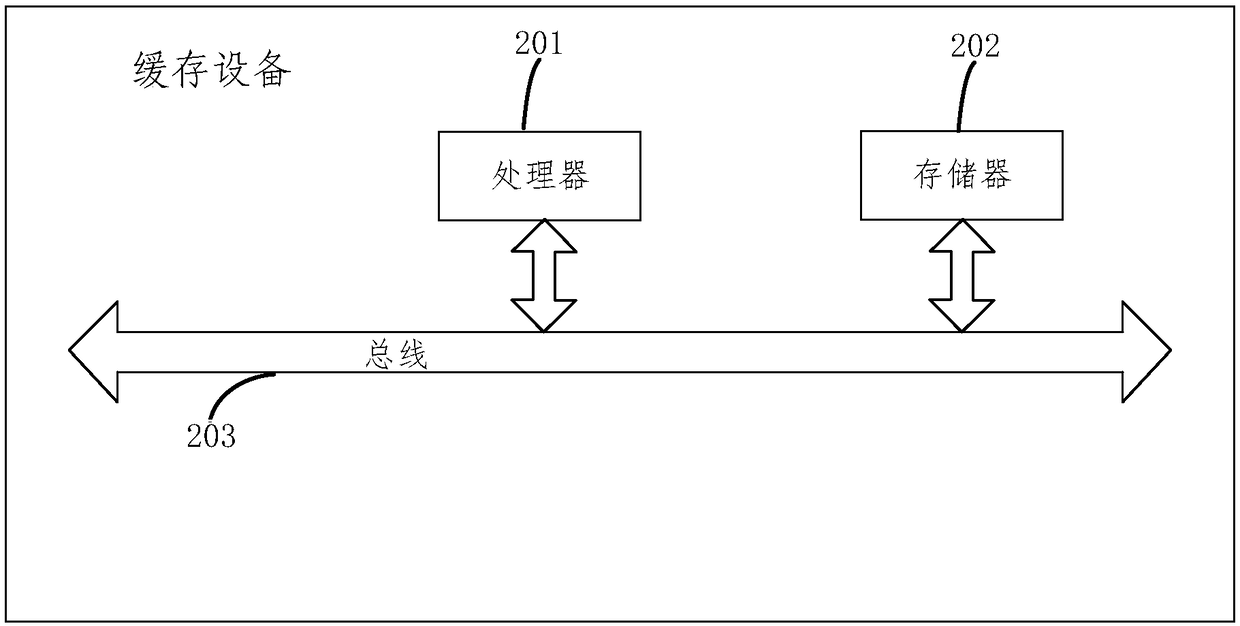

Caching method and system

The embodiment of the invention provides a caching method and system. The method includes: for each user in a user group served by any edge node, predicting the content the user will request at a target time according to the user's historical request record set within a preset period of time; obtaining the income gained by caching each requested content; and caching the corresponding requested contents to the edge node one by one in order of income. By using the users' historical request records, the method and system can fully explore user request behavior characteristics and accurately predict the content a user will request at a given future time. Moreover, because the income obtained by caching each requested content is calculated and the contents are cached in order of income, the cache space of the edge node is used efficiently and the request delay is reduced.

Owner:BEIJING UNIV OF POSTS & TELECOMM
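A toy version of the predict-then-rank flow in this abstract is sketched below. The abstract does not specify the prediction model or the income formula, so both are placeholders here: `predict_next` simply returns the user's most frequent past request, and the income of a content item is assumed to be the number of users predicted to request it.

```python
from collections import Counter


def predict_next(history):
    """Placeholder predictor: the user's most frequently requested content."""
    return Counter(history).most_common(1)[0][0]


def plan_cache(histories, capacity):
    """Rank predicted contents by assumed income and keep the top `capacity`."""
    predicted = Counter(predict_next(h) for h in histories.values() if h)
    # Sort by income (descending), then by name so ties are deterministic.
    ranked = sorted(predicted.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:capacity]]


histories = {
    "u1": ["a", "a", "b"],
    "u2": ["a", "c", "a"],
    "u3": ["b", "b"],
}
top_one = plan_cache(histories, capacity=1)
top_two = plan_cache(histories, capacity=2)
```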

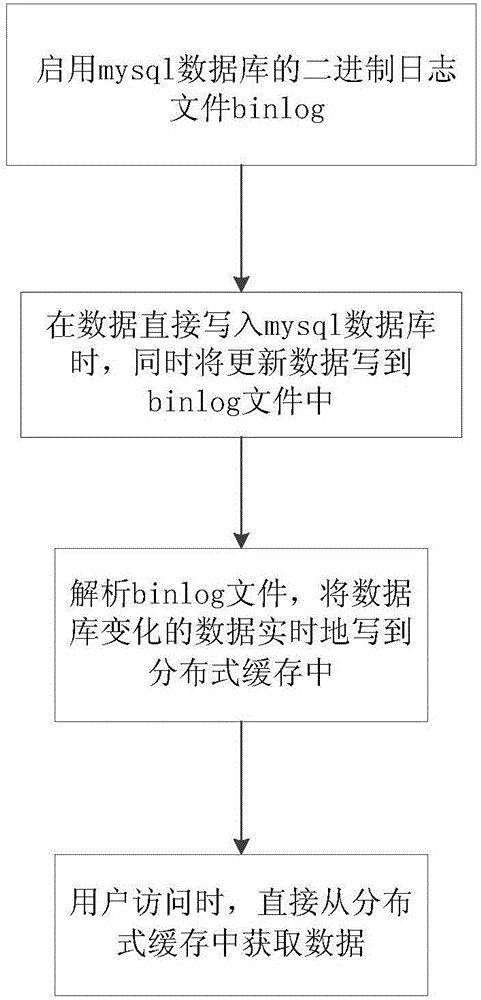

Distributed cache method and system based on mysql binlog

Inactive | CN106484869A | Guaranteed timeliness | Improve experience | Database management systems | Special data processing applications | Distributed cache | Operating system

The invention discloses a distributed cache method based on the MySQL binlog. The method keeps distributed cache data up to date, reduces load on the MySQL database, lowers request delay, and improves user experience. The method includes: (1) enabling the binary log file (binlog) of the MySQL database; (2) when data is written directly to the MySQL database, synchronously writing the updated data to the binlog file; (3) parsing the binlog file and writing the database changes to the distributed cache in real time; and (4) when a user makes a request, reading the data directly from the distributed cache. The invention also discloses a distributed cache system based on the MySQL binlog.

Owner:BEIJING GEO POLYMERIZATION TECH
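Steps (3) and (4) of this abstract can be illustrated with already-parsed change events. The event shape used here (`op`, `key`, `row`) is a made-up stand-in for whatever a real binlog parser emits, and a plain dictionary stands in for the distributed cache.

```python
def apply_binlog_events(cache, events):
    """Replay parsed change events, in log order, onto a key-value cache."""
    for event in events:
        if event["op"] in ("insert", "update"):
            cache[event["key"]] = event["row"]   # write the changed row through
        elif event["op"] == "delete":
            cache.pop(event["key"], None)        # drop rows deleted in the database
    return cache


events = [
    {"op": "insert", "key": "user:1", "row": {"name": "Ann"}},
    {"op": "insert", "key": "user:2", "row": {"name": "Bob"}},
    {"op": "update", "key": "user:1", "row": {"name": "Anna"}},
    {"op": "delete", "key": "user:2", "row": None},
]
cache = apply_binlog_events({}, events)
# Step (4): reads are served from the cache without touching the database.
row = cache.get("user:1")
```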

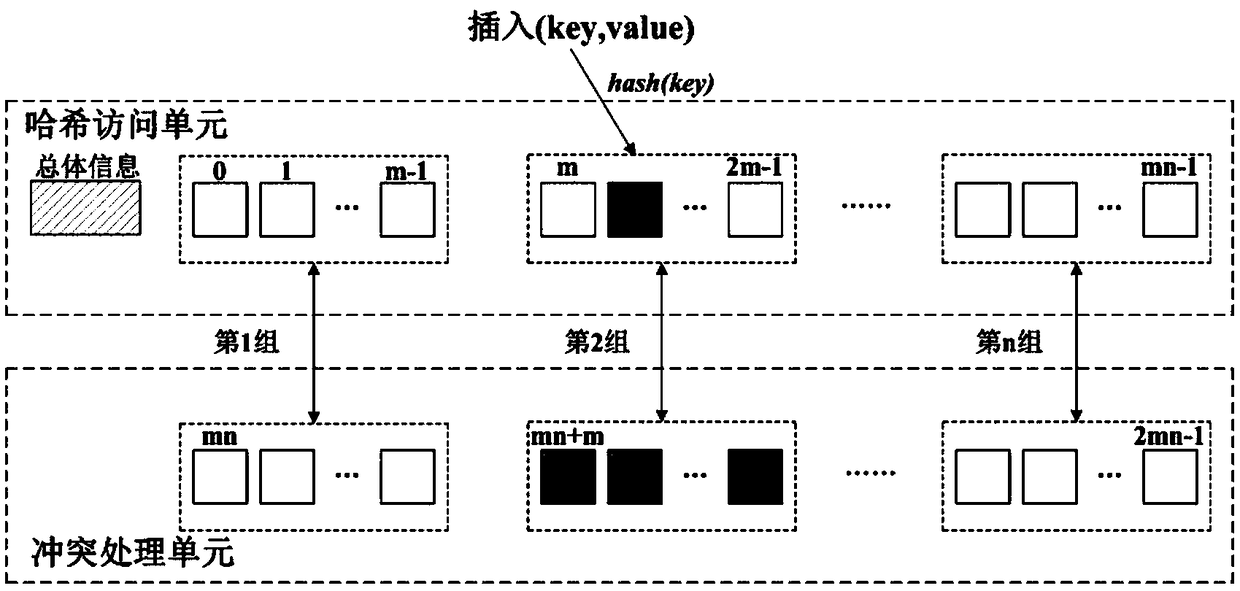

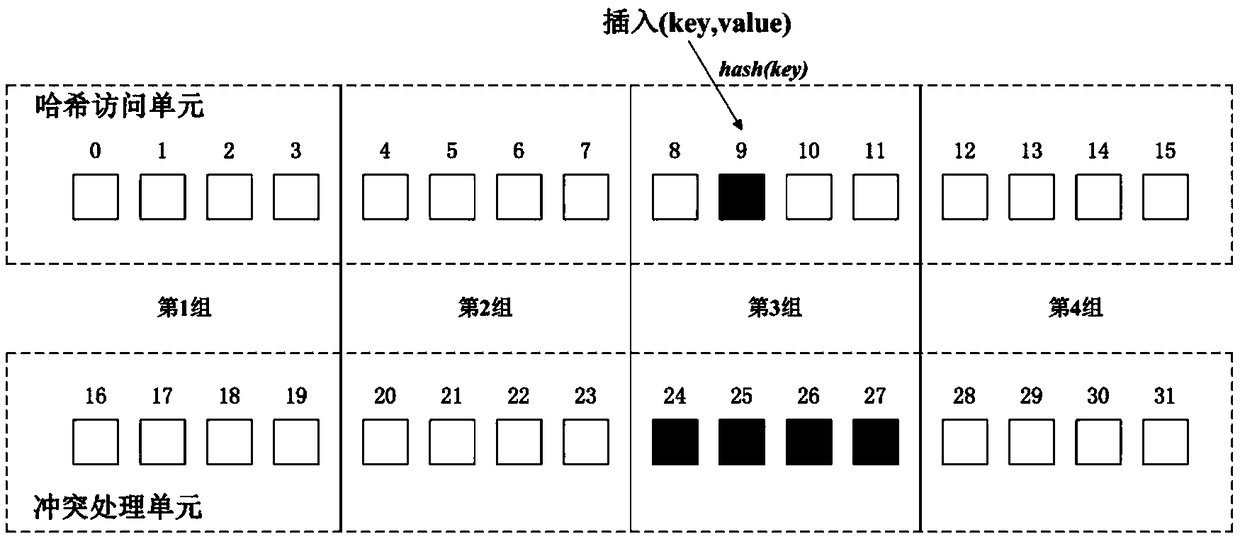

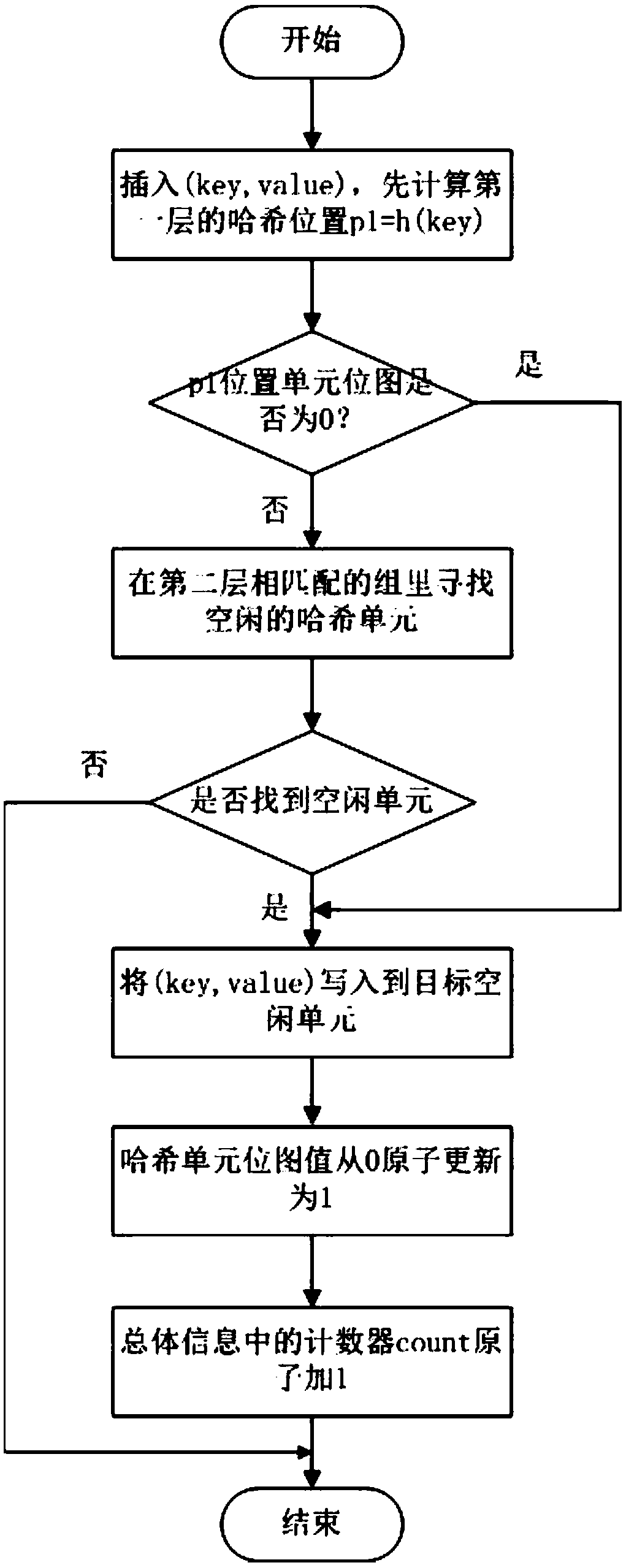

A method and a system for constructing a consistent hash table based on a non-volatile memory

Active | CN109165321A | Ensure consistency | Reduce request latency | Other databases indexing | Redundant operation error correction | Theoretical computer science | Byte

The invention discloses a method and a system for constructing a consistent hash table based on non-volatile memory. The method constructs a consistent hash table and divides all hash units in the table into two layers. The first layer consists of units addressable by the hash function; the second layer consists of units not addressable by the hash function and is used for collision handling when a hash conflict occurs in the first layer. The hash units of each layer are divided into the same number n of groups, and groups with the same number in the first and second layers are paired. When a hash collision occurs in the i-th group of the first layer, the i-th group of the second layer handles it. The hash table guarantees data consistency by using 8-byte atomic writes and can be restored to a consistent state after a system crash. The invention also provides a hash table construction system based on non-volatile memory. A hash table constructed in this way reduces consistency overhead and has low request delay.

Owner:HUAZHONG UNIV OF SCI & TECH
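The two-layer, group-paired layout in this abstract can be sketched as an in-memory structure. The sketch assumes a hypothetical `TwoLevelHash` class, ignores deletion, and does not model non-volatile memory or the 8-byte atomic write; it only shows how a collision in group i of the first layer is resolved inside group i of the second layer.

```python
class TwoLevelHash:
    """Layer 1 is addressed by the hash; layer 2 group i absorbs collisions from group i."""

    def __init__(self, n_groups=4, group_size=2):
        self.n_groups = n_groups
        self.group_size = group_size
        self.level1 = [[None] * group_size for _ in range(n_groups)]
        self.level2 = [[None] * group_size for _ in range(n_groups)]

    def _locate(self, key):
        slot = hash(key) % (self.n_groups * self.group_size)
        return divmod(slot, self.group_size)  # (group index, index within group)

    def put(self, key, value):
        group, idx = self._locate(key)
        cell = self.level1[group][idx]
        if cell is None or cell[0] == key:
            self.level1[group][idx] = (key, value)
            return True
        # Collision in layer 1: use the paired group in layer 2.
        for i, other in enumerate(self.level2[group]):
            if other is None or other[0] == key:
                self.level2[group][i] = (key, value)
                return True
        return False  # paired group is full; a real table would resize

    def get(self, key):
        group, idx = self._locate(key)
        cell = self.level1[group][idx]
        if cell is not None and cell[0] == key:
            return cell[1]
        for other in self.level2[group]:
            if other is not None and other[0] == key:
                return other[1]
        return None


table = TwoLevelHash(n_groups=4, group_size=2)
table.put(0, "a")   # hashes to group 0, slot 0 of layer 1
table.put(8, "b")   # same slot, so it lands in group 0 of layer 2
```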

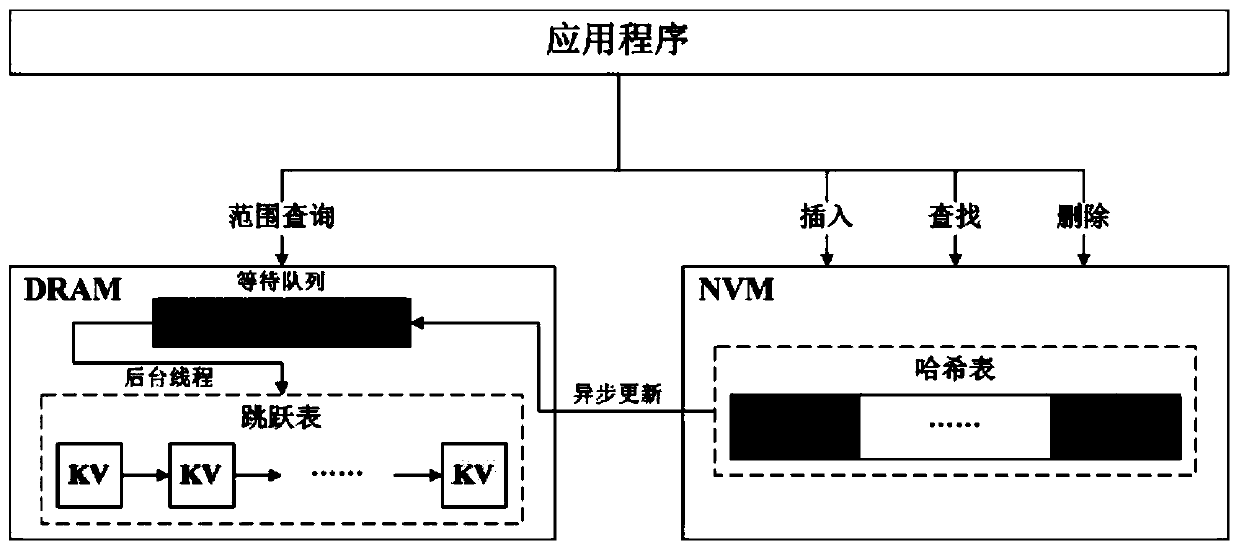

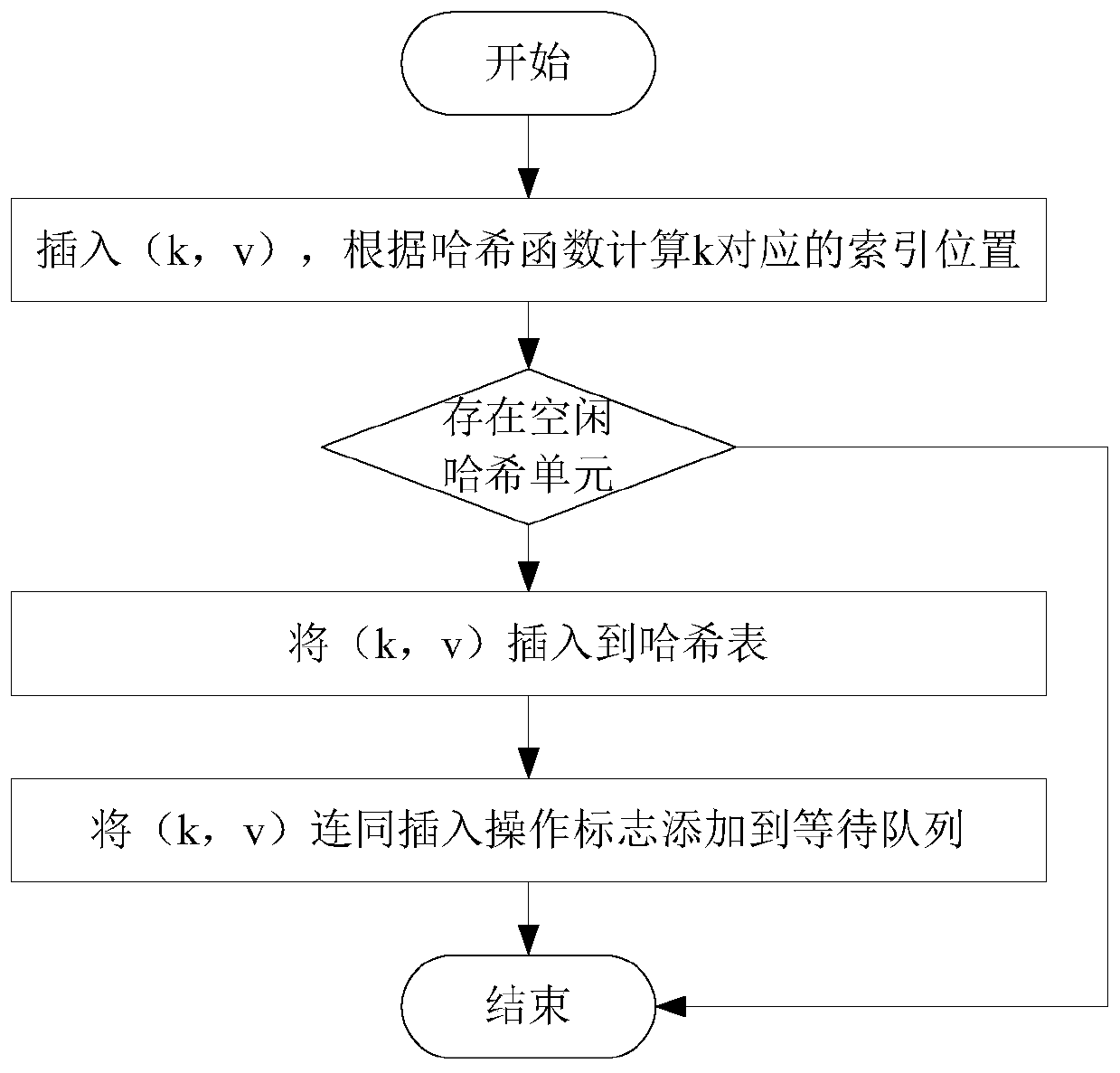

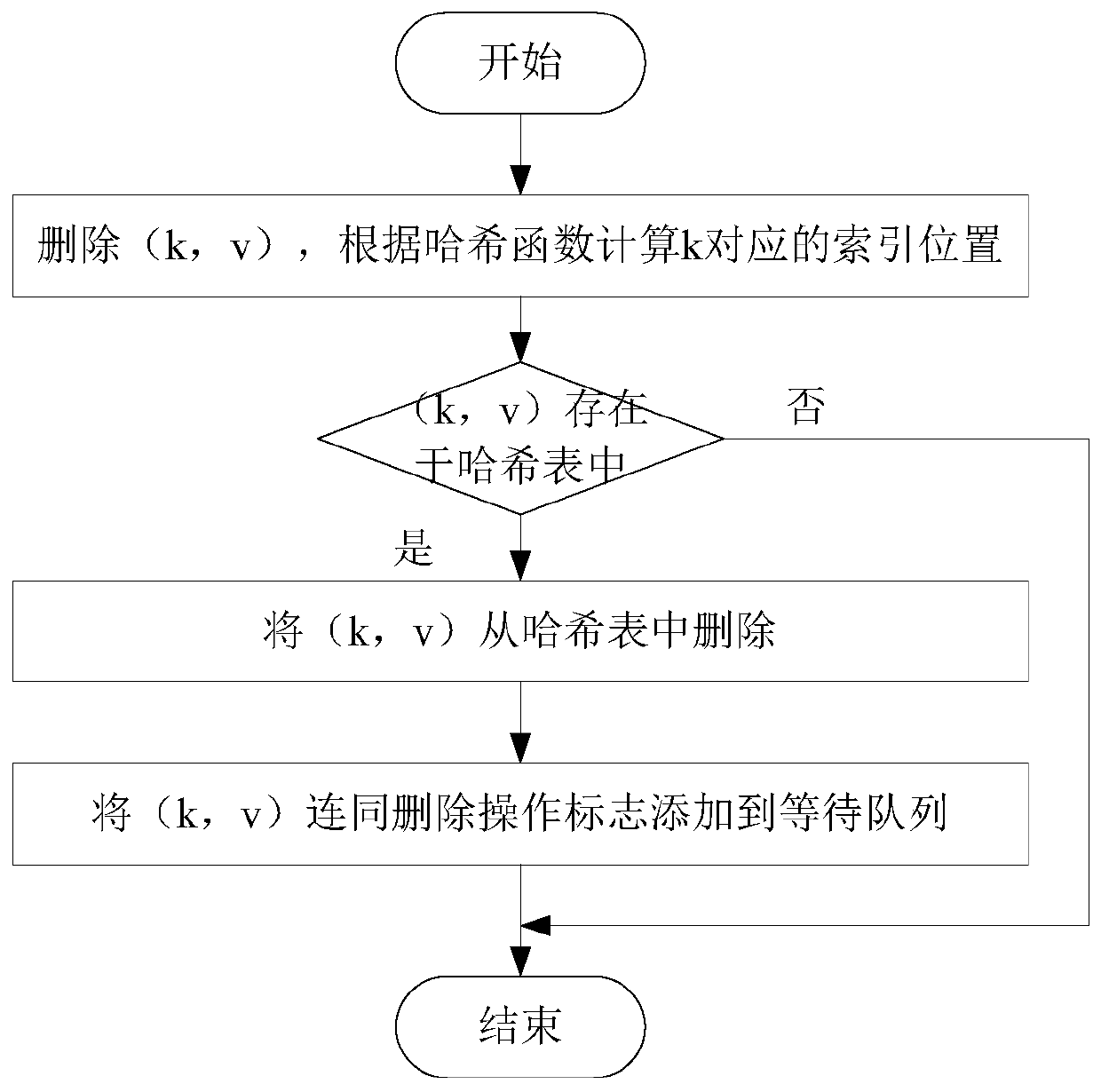

Hybrid index-based hybrid memory performance optimization method and system

Inactive | CN110413612A | Improve performance | Fulfill the operation request | Special data processing applications | Database indexing | Skip list | Theoretical computer science

The invention discloses a hybrid index-based hybrid memory performance optimization method and system, belonging to the field of computer data storage. The method comprises the following steps: establishing in advance a hash table in the NVM (Non-Volatile Memory) of a hybrid memory, and a skip list and a waiting queue in the DRAM (Dynamic Random Access Memory); then, for each operation request: if the request is an insertion, inserting the key-value pair into the hash table according to its key and adding the key-value pair together with an insertion mark to the waiting queue; if the request is a deletion, deleting the key-value pair from the hash table according to its key and adding the key-value pair together with a deletion mark to the waiting queue; if the request is a single-point query, obtaining the key-value pair from the hash table according to the queried key; and if the request is a range query, synchronizing all key-value pairs in the waiting queue into the skip list and obtaining all key-value pairs in the query range from the skip list. The invention can efficiently serve various operation requests, including range queries.

Owner:HUAZHONG UNIV OF SCI & TECH
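The division of labor in this abstract (hash table for point operations, ordered structure synchronized lazily for range queries) can be sketched as follows. Several simplifications are assumptions of this example: a `dict` stands in for the NVM hash table, a sorted Python list maintained with `bisect` stands in for the DRAM skip list, and the waiting queue records only keys and operation marks, with values read back from the hash table.

```python
import bisect
from collections import deque


class HybridIndex:
    def __init__(self):
        self.table = {}          # stand-in for the NVM hash table
        self.ordered_keys = []   # stand-in for the DRAM skip list
        self.waiting = deque()   # pending (operation mark, key) entries

    def insert(self, key, value):
        self.table[key] = value
        self.waiting.append(("insert", key))

    def delete(self, key):
        self.table.pop(key, None)
        self.waiting.append(("delete", key))

    def get(self, key):
        return self.table.get(key)  # single-point query hits the hash table only

    def _sync(self):
        """Replay queued operations, in order, into the ordered structure."""
        while self.waiting:
            op, key = self.waiting.popleft()
            pos = bisect.bisect_left(self.ordered_keys, key)
            present = pos < len(self.ordered_keys) and self.ordered_keys[pos] == key
            if op == "insert" and not present:
                self.ordered_keys.insert(pos, key)
            elif op == "delete" and present:
                del self.ordered_keys[pos]

    def range_query(self, low, high):
        self._sync()
        start = bisect.bisect_left(self.ordered_keys, low)
        end = bisect.bisect_right(self.ordered_keys, high)
        return [(k, self.table[k]) for k in self.ordered_keys[start:end]]


idx = HybridIndex()
idx.insert(5, "e")
idx.insert(1, "a")
idx.insert(3, "c")
idx.delete(1)
point = idx.get(3)
low_range = idx.range_query(0, 4)
```

Inserts and deletes pay only for a hash-table update and a queue append; the ordering cost is deferred until a range query actually needs it.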

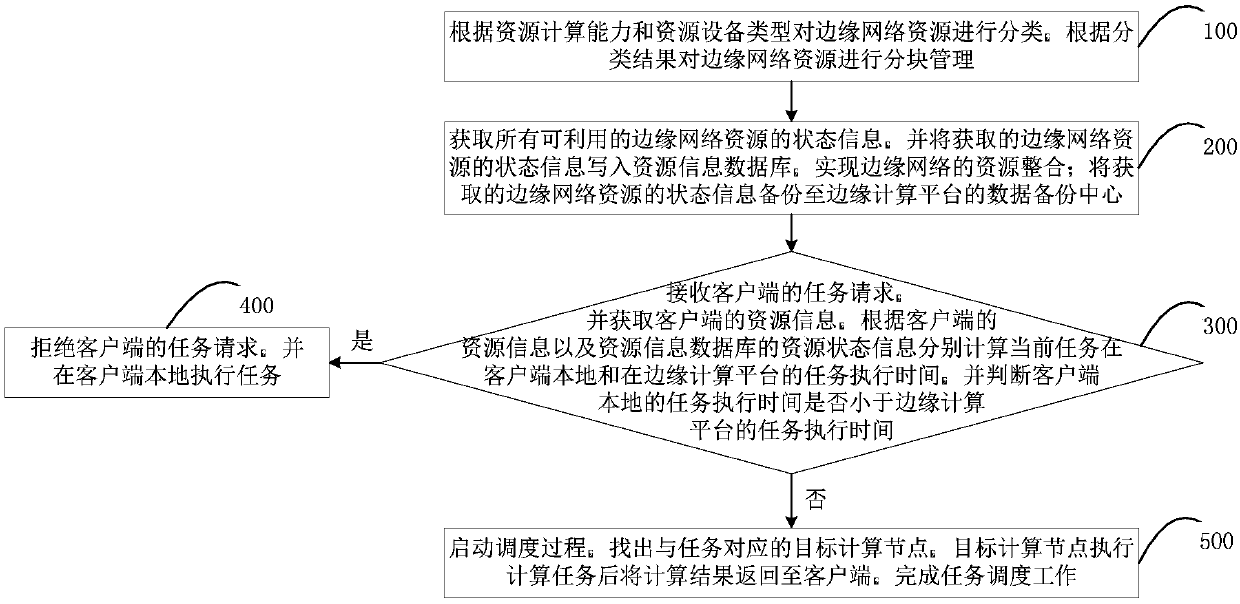

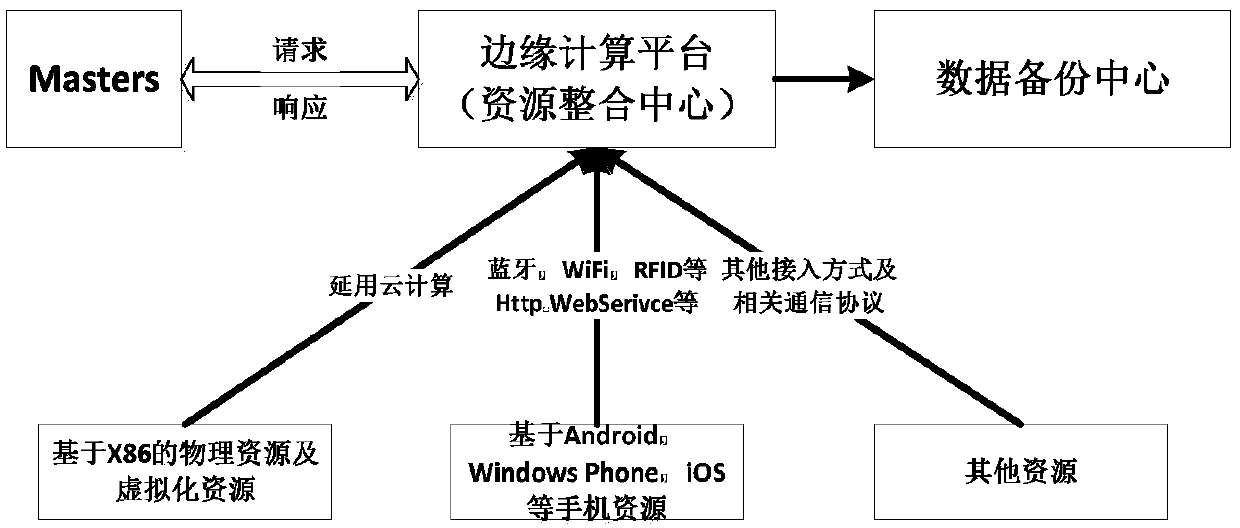

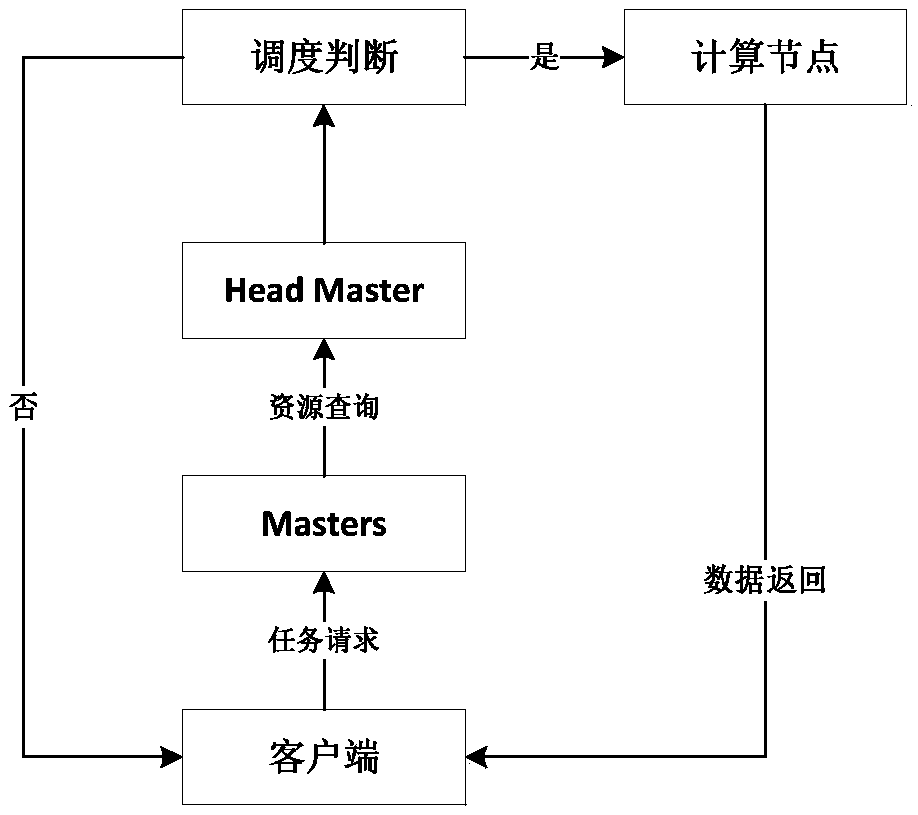

Resource management method and system based on edge computing and electronic equipment

Active | CN110688213A | Increase profit | Reduce waste | Program initiation/switching | Resource allocation | Operating system | Data bank

The invention relates to a resource management method and system based on edge computing, and to electronic equipment. The method comprises the following steps: step a, acquiring state information of all available edge network resources and writing it into a resource information database; step b, receiving a task request from a client, calculating both the execution time of the current task on the local client and its execution time on the edge computing platform according to the information about the current task and the resource state information in the resource information database, and, if the execution time on the local client is not less than the execution time on the edge computing platform, executing step c; and step c, matching a target computing node for the current task through the edge computing platform, executing the current task on the target computing node, and returning the execution result to the client. The method effectively reduces resource waste, increases resource utilization, and shortens task execution time.

Owner:SHENZHEN INST OF ADVANCED TECH
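The comparison in step b and the node matching in step c can be sketched as a small decision function. The cost model is an assumption made for illustration: edge execution time is estimated as input transfer time plus compute time, and the field names `hz` and `bytes_per_sec` are hypothetical entries of the resource information database.

```python
def estimate_edge_time(task_cycles, input_bytes, node):
    """Assumed model: upload time plus compute time on the edge node."""
    return input_bytes / node["bytes_per_sec"] + task_cycles / node["hz"]


def choose_executor(task_cycles, input_bytes, local_hz, nodes):
    """Return (executor name, estimated seconds) following steps b and c."""
    local_time = task_cycles / local_hz
    if not nodes:
        return "local", local_time
    best = min(nodes, key=lambda n: estimate_edge_time(task_cycles, input_bytes, n))
    edge_time = estimate_edge_time(task_cycles, input_bytes, best)
    # Step b: offload only when local execution is not faster than the edge.
    if local_time >= edge_time:
        return best["name"], edge_time  # step c: run on the matched node
    return "local", local_time


nodes = [
    {"name": "edge-a", "hz": 4e9, "bytes_per_sec": 1e6},
    {"name": "edge-b", "hz": 2e9, "bytes_per_sec": 1e6},
]
slow_client = choose_executor(2e9, 1e6, local_hz=1e9, nodes=nodes)
fast_client = choose_executor(2e9, 1e6, local_hz=4e9, nodes=nodes)
```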

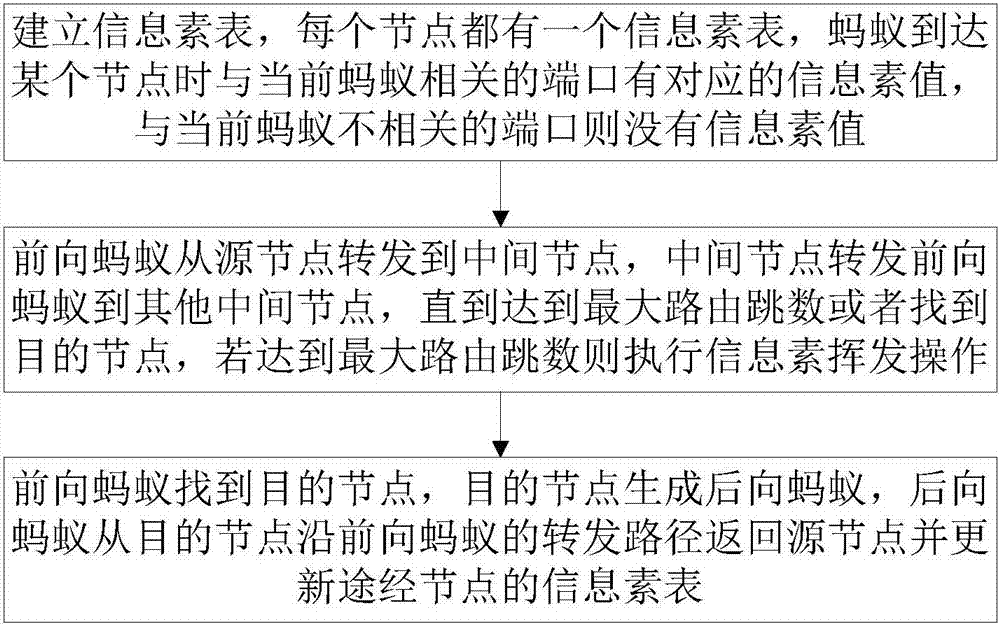

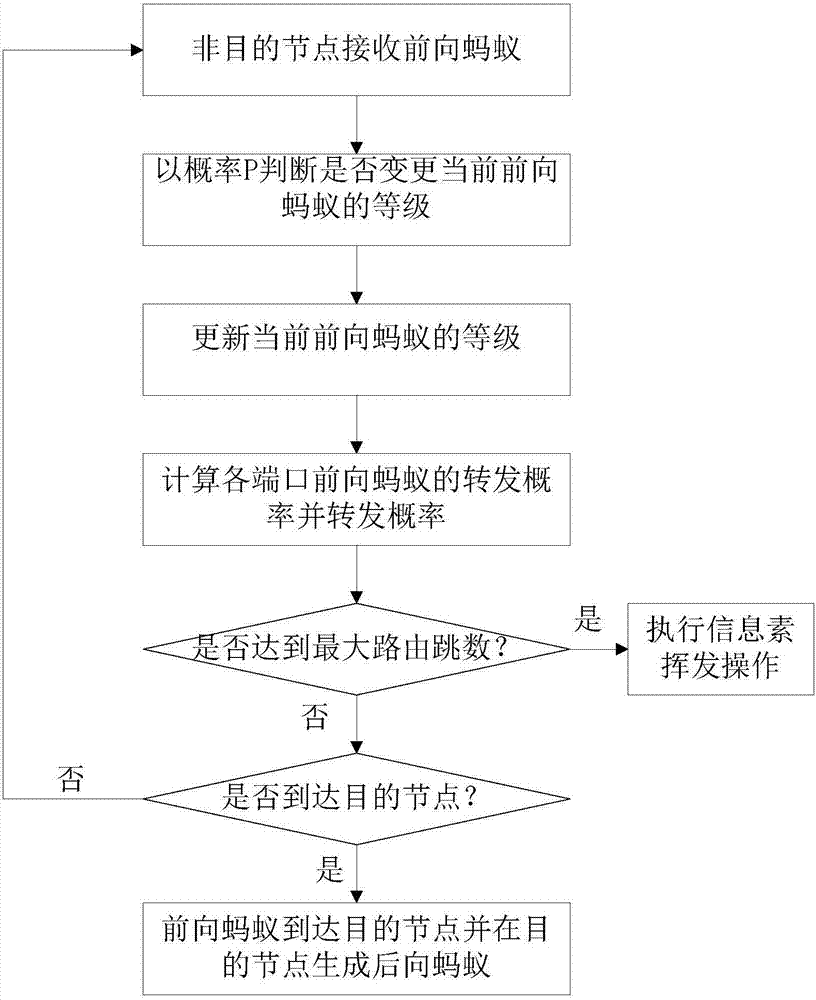

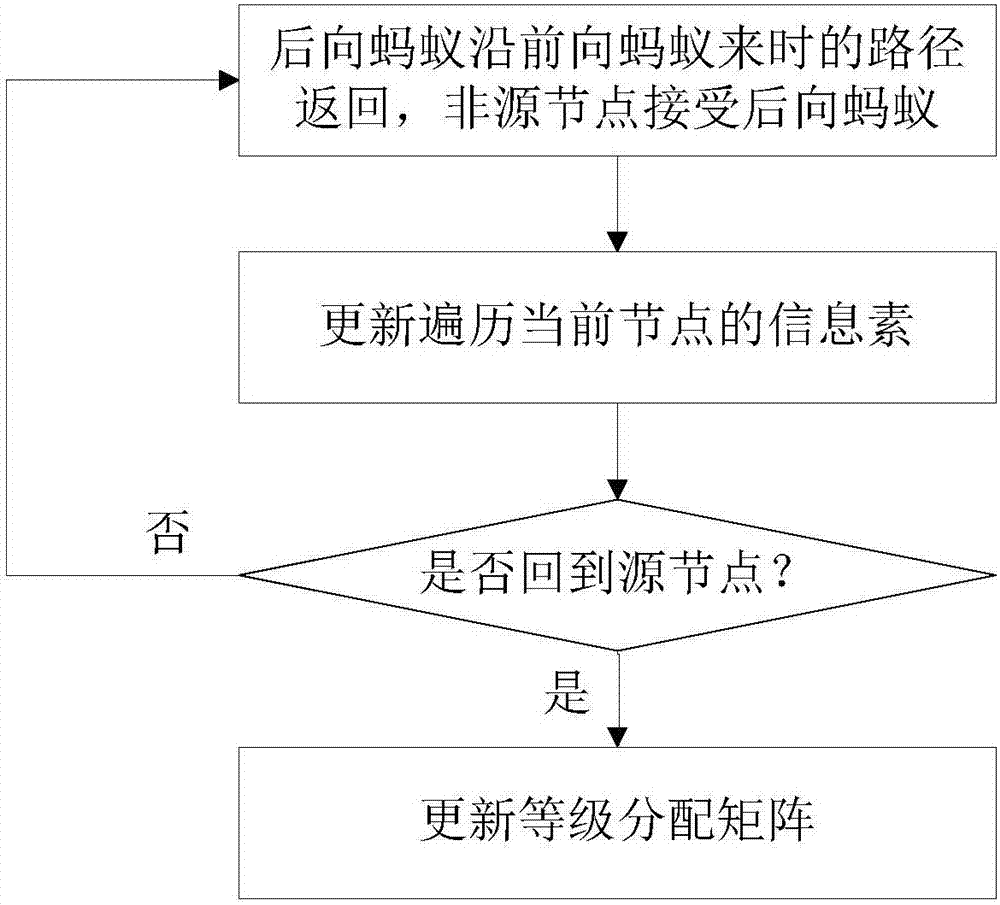

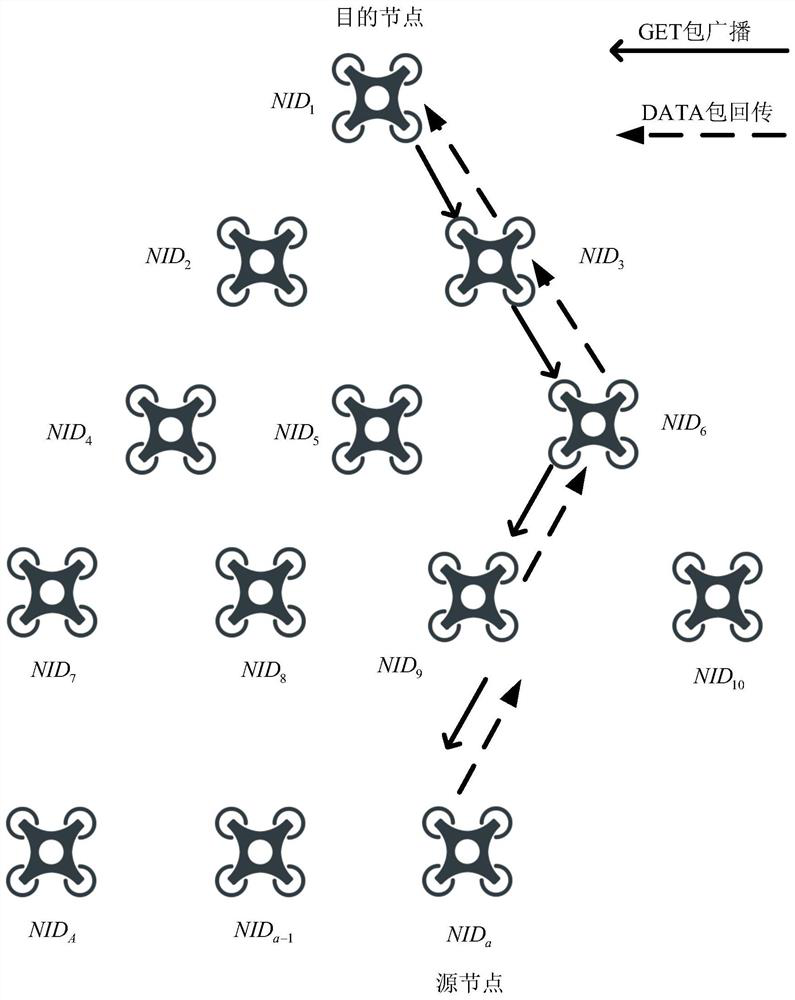

Non-hybrid ant colony routing method in content centric networking

Active | CN107888502A | Reduce request latency | Improve performance | Data switching networks | Network overhead | Content based networking

The invention relates to the technical field of communication, and particularly to a non-hybrid ant colony routing method in content-centric networking. The method comprises the following steps: building pheromone tables, wherein each node stores a pheromone table and, when a forward ant arrives at a node, the ports related to the current ant have corresponding pheromone values while ports not related to it have none; forwarding the forward ant from a source node to an intermediate node, which forwards it to further intermediate nodes until the maximum number of routing hops is reached or a destination node is found; if the maximum number of routing hops is reached, performing a pheromone evaporation operation; and if the forward ant finds the destination node, having the destination node generate a backward ant that returns to the source node along the forward ant's path and updates the pheromones of the nodes it passes. The method keeps network overhead low and effectively prevents premature convergence and stagnation.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

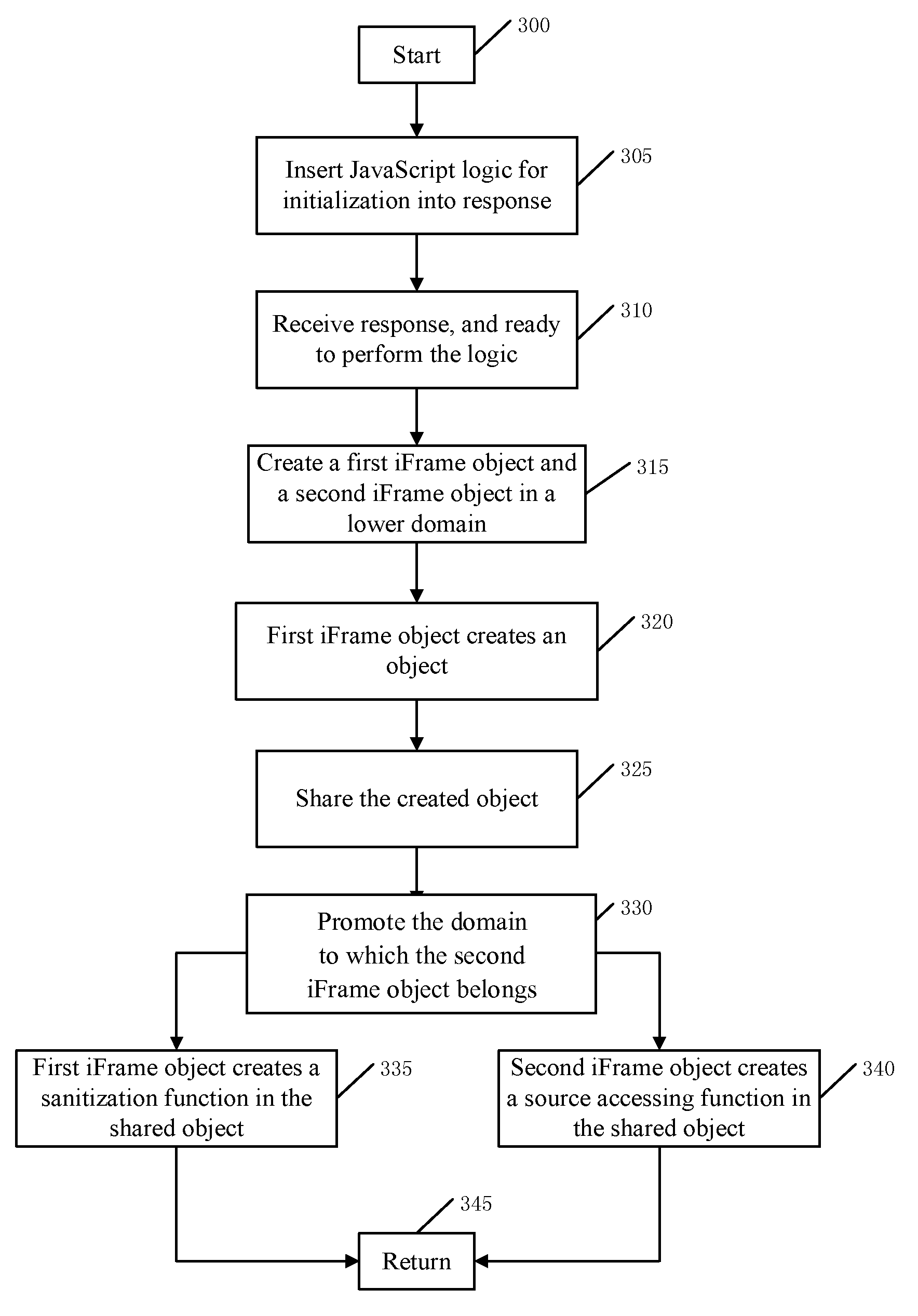

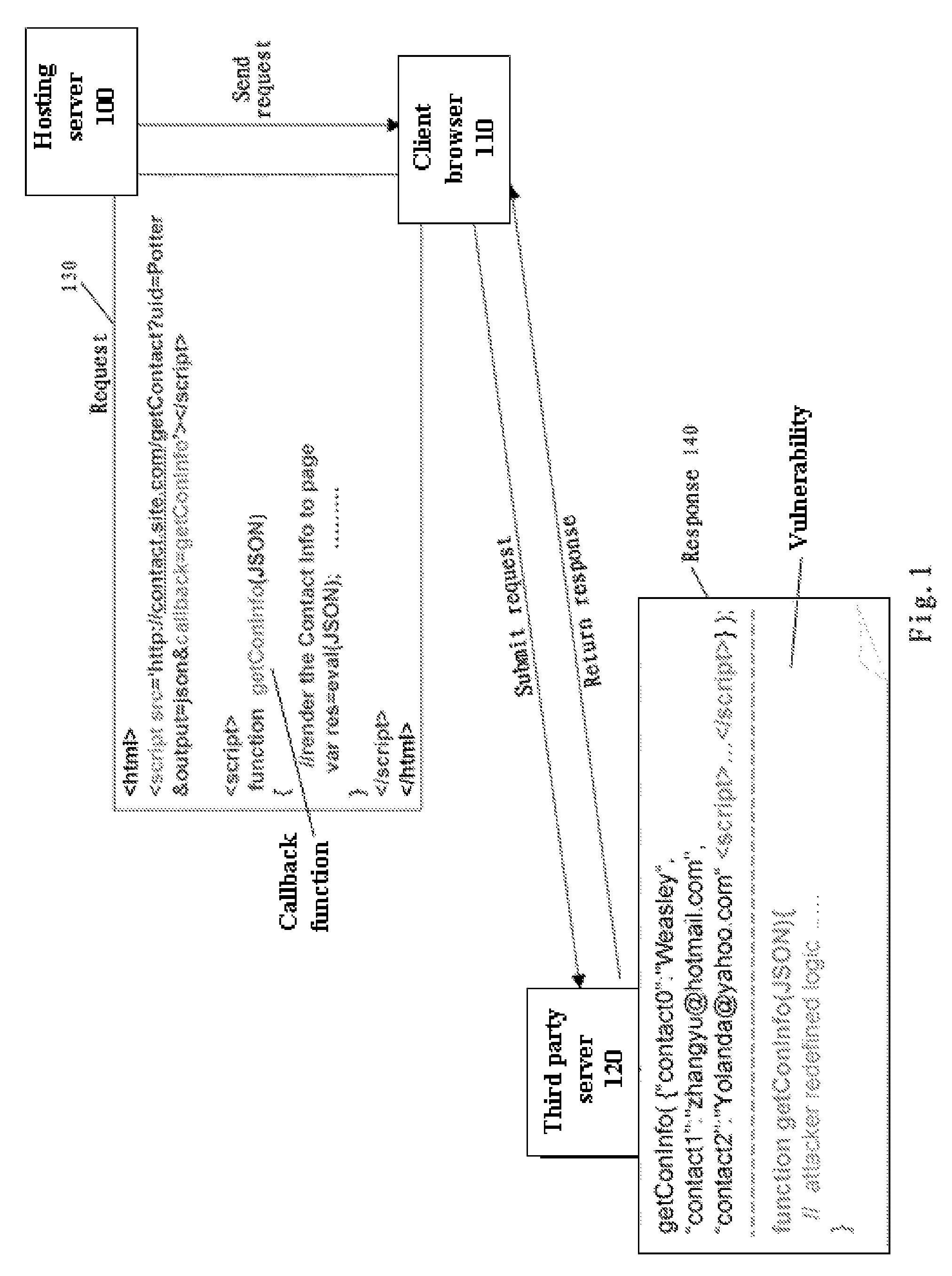

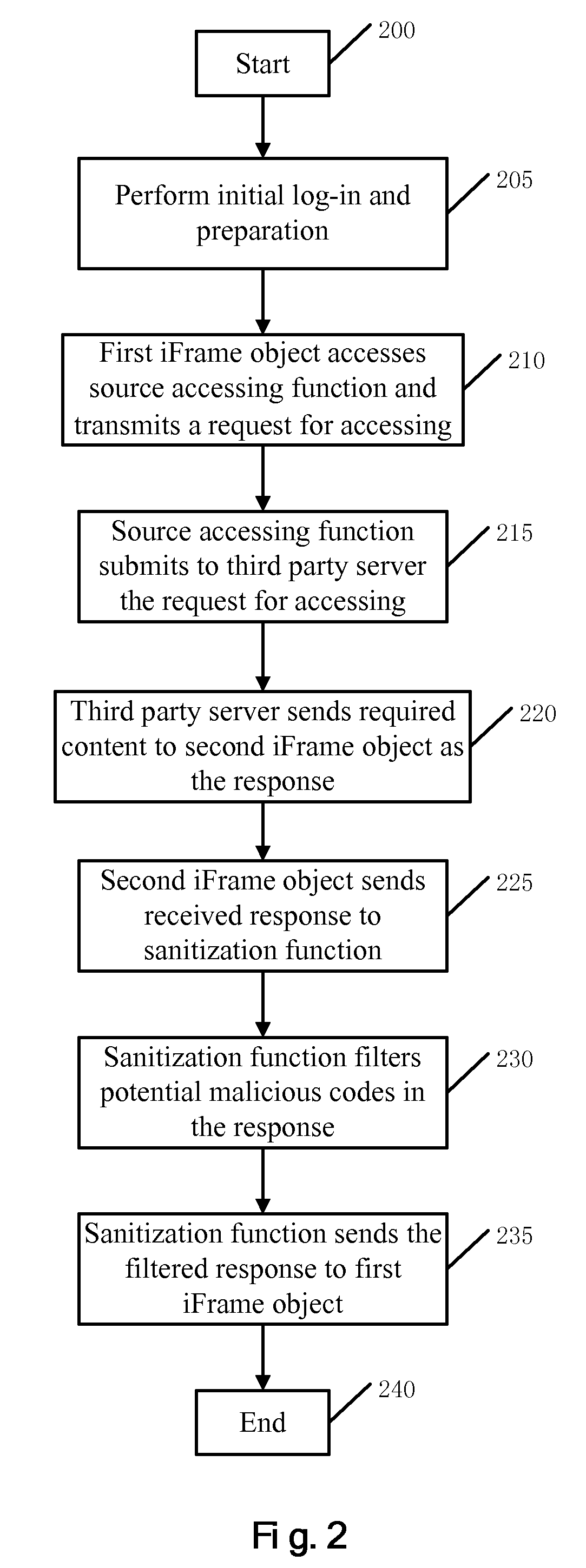

Method and system for providing runtime vulnerability defense for cross domain interactions

Inactive | US8341239B2 | Improve efficiency | Improve vulnerability | Memory loss protection | Error detection/correction | Third party | Web application

A runtime vulnerability defense method, system, and computer readable article of manufacture tangibly embodying computer readable instructions for executing the method for cross domain interactions for a Web application. The method includes: creating a first and second iFrame object by the Web application which belong to a lower domain; creating an object O by the first iFrame object; sharing the created object O by the second iFrame object; promoting the domain of the second iFrame object to an upper domain; creating in the shared object O a source accessing function for submitting to a third party server a request to access the content of the third party server; and creating in the shared object O a sanitization function for sanitizing the response received from the server.

Owner:INT BUSINESS MASCH CORP

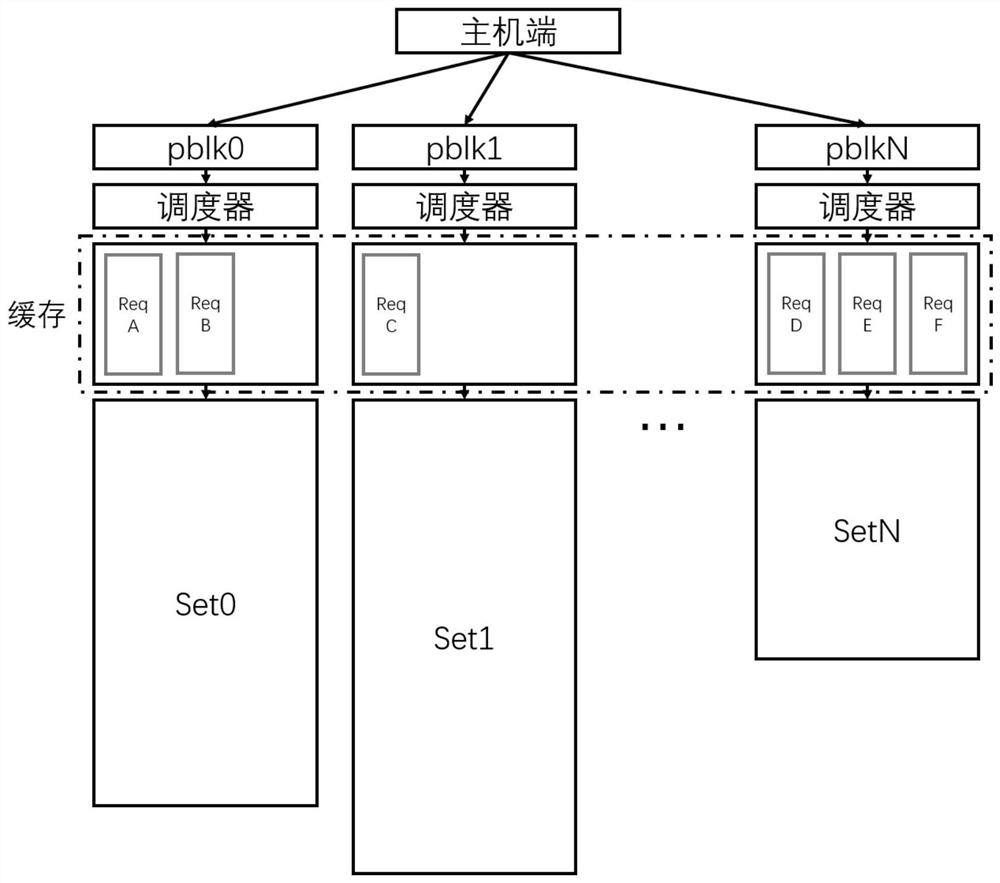

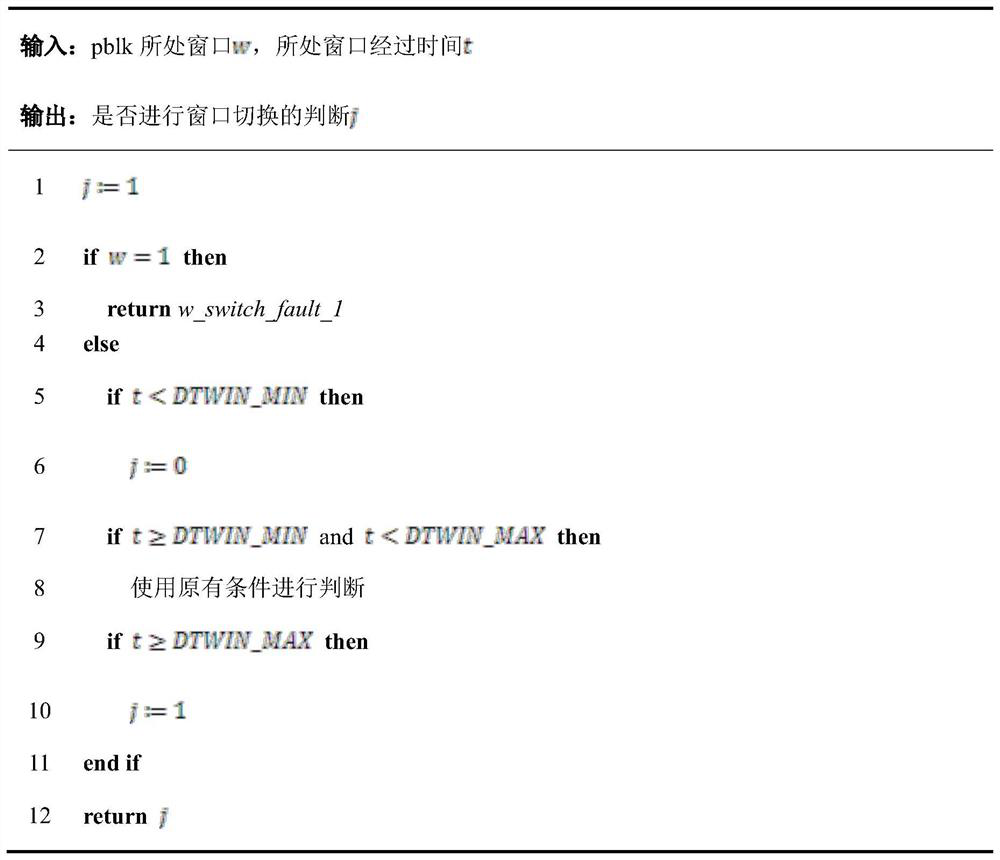

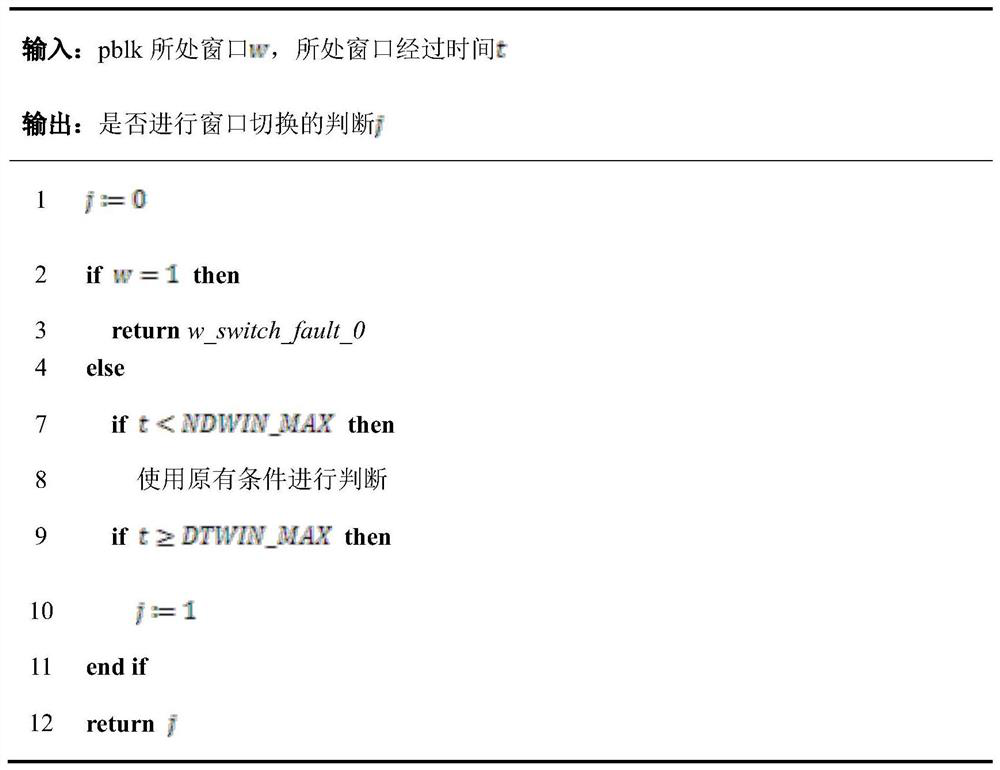

IO deterministic optimization strategy method for NVMe

Active | CN112559381A | Extend your life | Reduce Tail Latency | Memory architecture accessing/allocation | Memory adressing/allocation/relocation | Parallel computing | Cache management

An NVMe-oriented I/O determinism optimization strategy reduces the performance jitter of I/O requests by optimizing on the basis of sets and window division, which also improves garbage collection efficiency and prolongs the service life of the solid state disk. The method comprises the following steps: step A, dividing the NVMe storage units into sets so that different sets are independent of one another and read, write, and garbage collection operations can run in parallel across sets; step B, applying a new I/O request scheduling algorithm to the set-divided NVMe device to avoid conflicts between garbage collection and I/O request access; and step C, applying a new cache management algorithm that is aware of garbage collection in each NVMe set, minimizing the chance of conflict between garbage collection and I/O request access and reducing the performance jitter of I/O requests.

Owner:BEIHANG UNIV

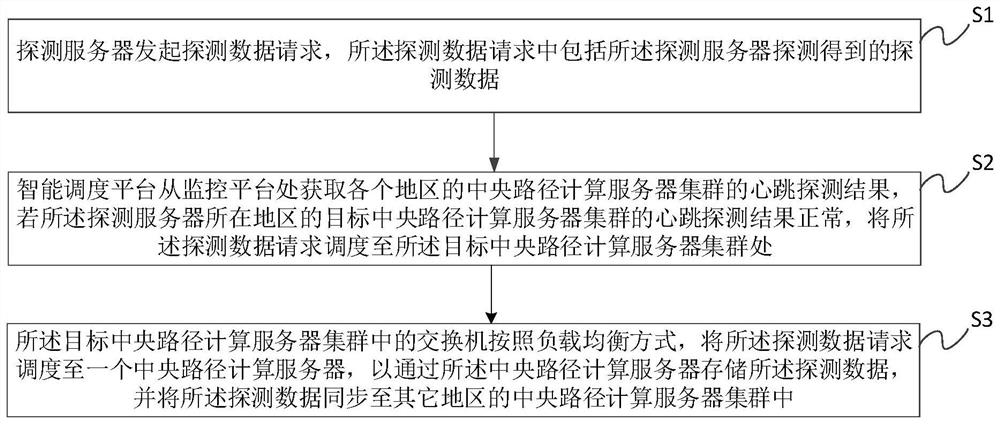

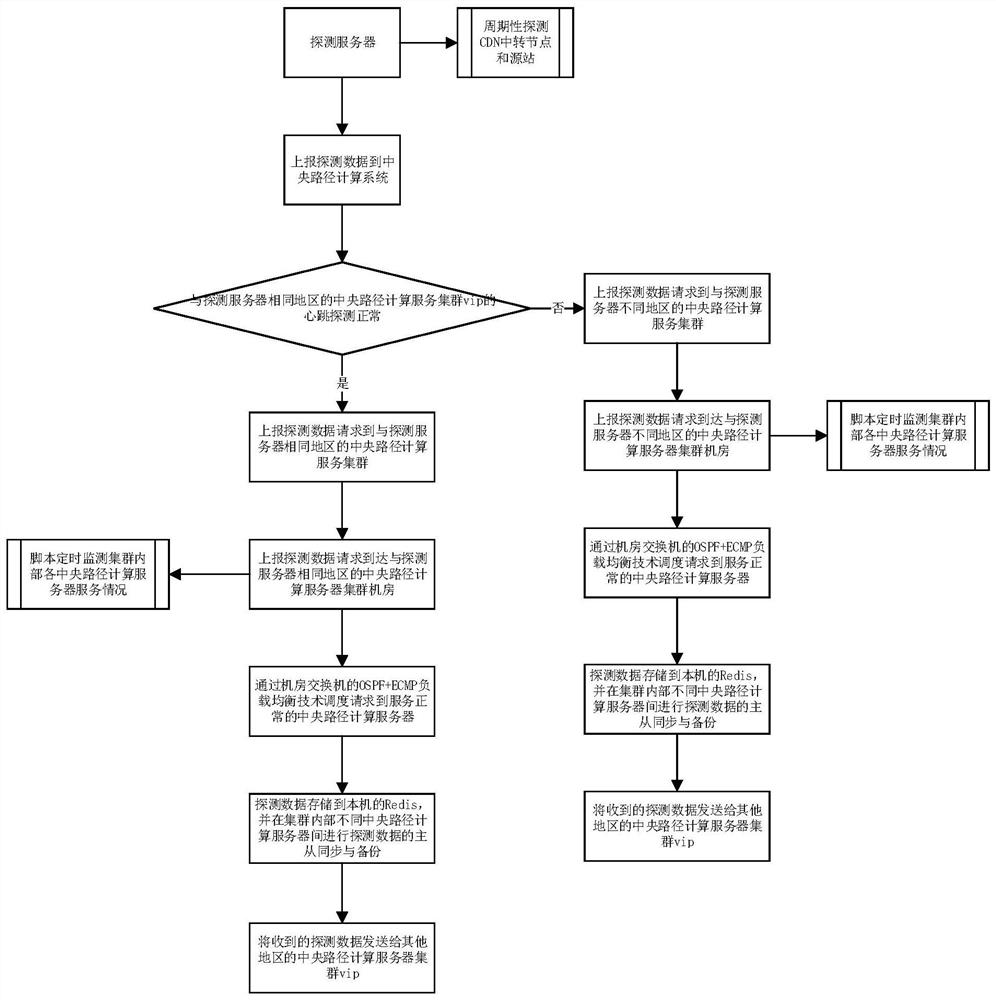

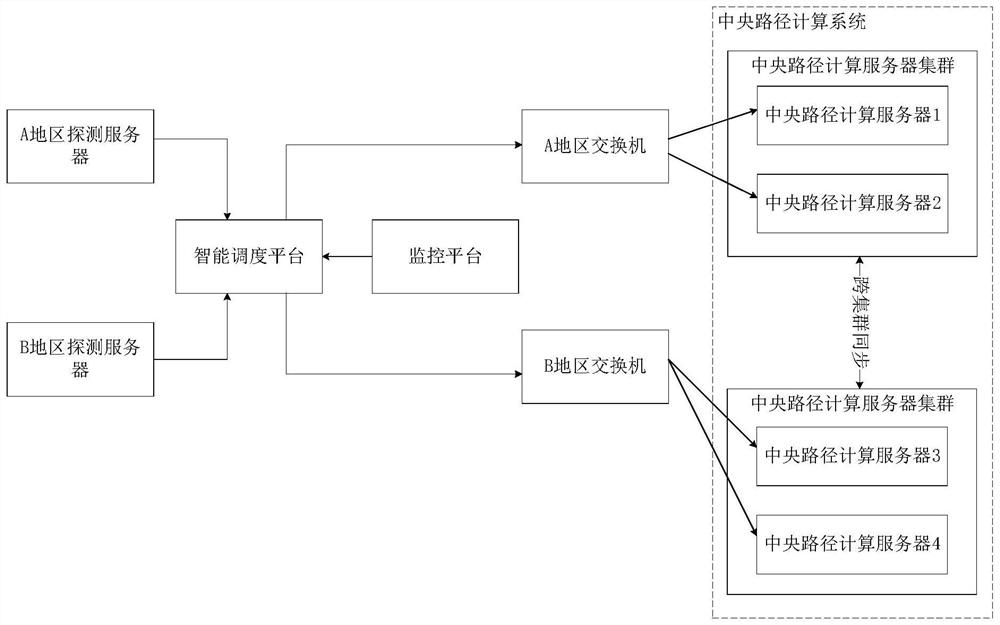

Intelligent scheduling distributed path calculation method and system

Active | CN114500340A | Increase success rate | Reduce request latency | Transmission | Data synchronization | PathPing

The invention discloses an intelligent scheduling distributed path calculation method and system. The method comprises the following steps: a detection server initiates a detection data request; the intelligent scheduling platform obtains, from the monitoring platform, the heartbeat detection results of the central path calculation server cluster of each region; if the heartbeat detection result of the target central path calculation server cluster in the region where the detection server is located is normal, the detection data request is scheduled to that target cluster; a switch in the target cluster dispatches the detection data request to a central path calculation server by load balancing, so that the detection data is stored by that server; and the detection data is synchronized to the central path calculation server clusters in other regions. The technical scheme provided by the invention improves the processing efficiency of detection requests.

Owner:CHINA TELECOM CLOUD TECH CO LTD

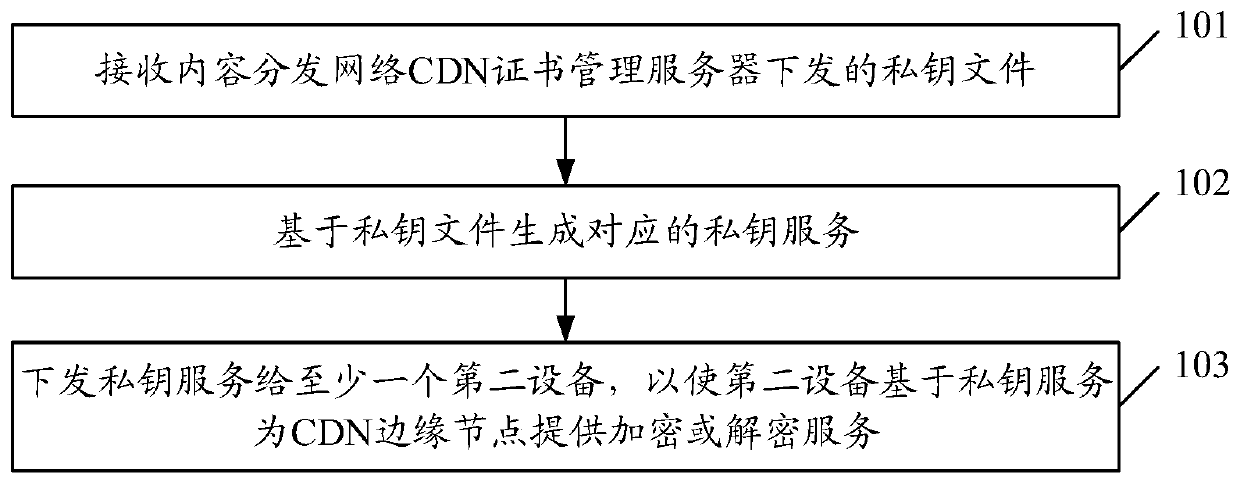

Information processing method and device and storage medium

Active | CN111404668A | Ensure safety | Reduce request latency | Key distribution for secure communication | Information processing | Edge node

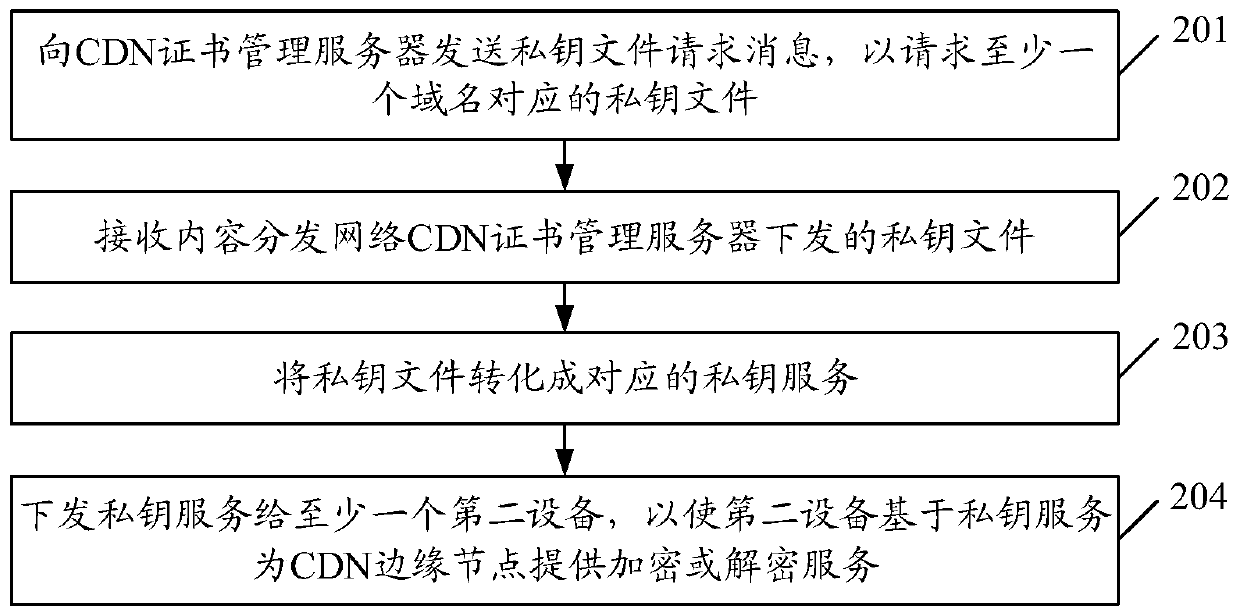

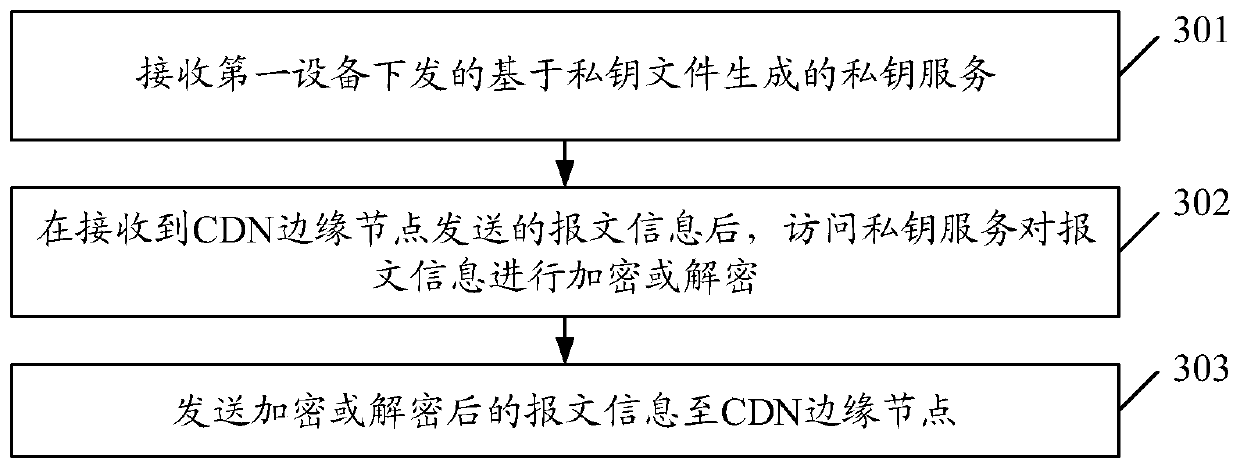

The embodiment of the invention discloses an information processing method and device and a storage medium. The method comprises: receiving a private key file issued by a CDN certificate management server d; generating a corresponding private key service based on the private key file; and issuing the private key service to at least one second device, so that the second device provides an encryption or decryption service for a CDN edge node based on the private key service.

Owner:CHINA MOBILE COMM LTD RES INST +1

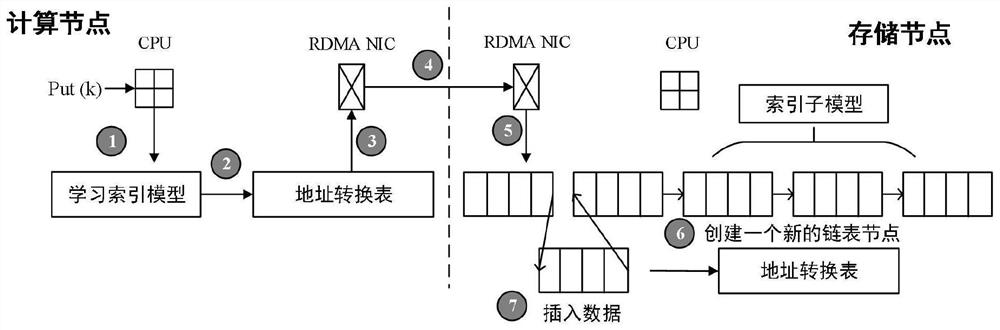

Construction method and application of distributed learning index model

Pending | CN113779154A | No loss of accuracy | Does not reduce overhead | Database updating | Database distribution/replication | Algorithm | Theoretical computer science

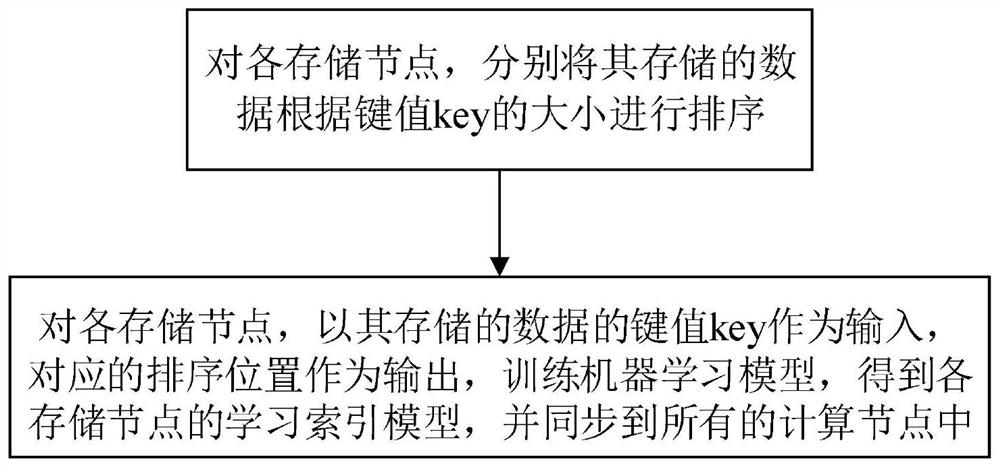

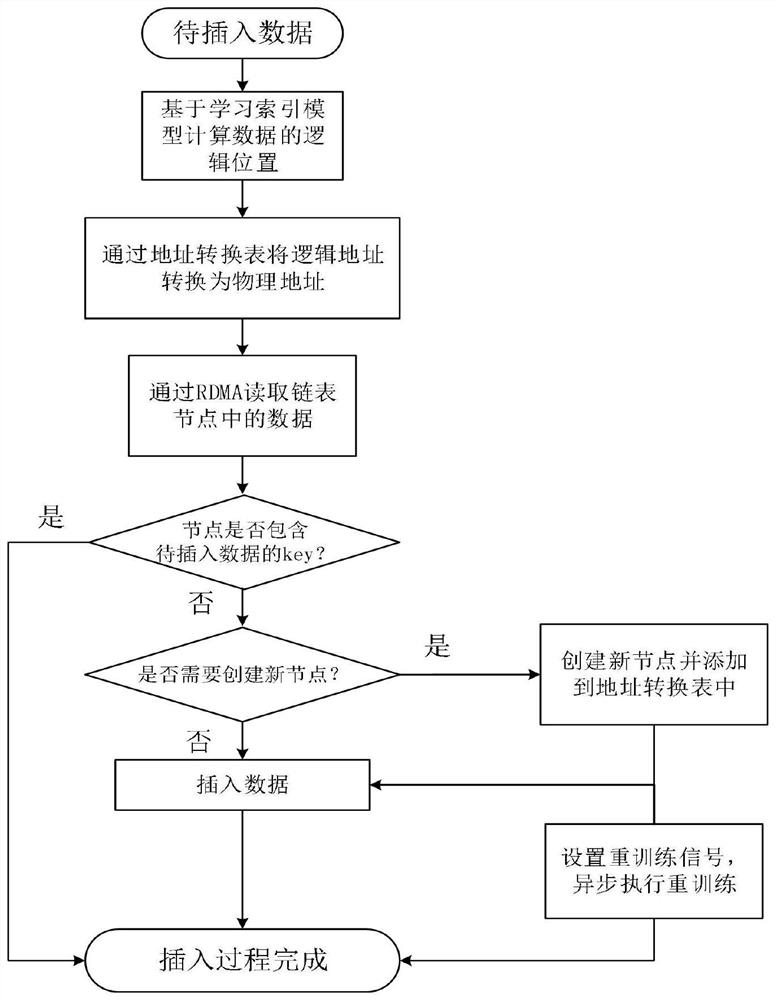

The invention discloses a construction method and application of a distributed learning index model, and belongs to the technical field of computer distributed storage. The construction method comprises the following steps: for each storage node, sorting data stored in the storage node according to the size of key values, taking the key values of the stored data as input, taking corresponding sorting positions as output, training a machine learning model to obtain a learning index model of each storage node, and synchronizing the learning index model to all calculation nodes; the calculation node directly modifies data in the storage node through RDMA operation, and a CPU of the storage node does not need to participate in work; meanwhile, the calculation node asynchronously retrains the old model and synchronizes the new model into the storage node; the operation of modifying the data and the model is executed in the calculation node in the distributed system, so that the CPU overhead of the storage node is greatly reduced.

Owner:HUAZHONG UNIV OF SCI & TECH
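The per-node model described in this abstract (sorted keys as input, sort positions as output) can be illustrated with the simplest possible learned index. The sketch below assumes a single least-squares line as the model and a recorded maximum error to bound the final search; the function names are hypothetical, and the RDMA data path, asynchronous retraining, and model synchronization are not modeled.

```python
import bisect
import math


def fit_linear(keys):
    """Fit position ~ slope * key + intercept over sorted keys; record the worst error."""
    n = len(keys)
    mean_x = sum(keys) / n
    mean_y = (n - 1) / 2
    var = sum((x - mean_x) ** 2 for x in keys)
    cov = sum((x - mean_x) * (i - mean_y) for i, x in enumerate(keys))
    slope = cov / var if var else 0.0
    intercept = mean_y - slope * mean_x
    max_err = max(abs(i - (slope * x + intercept)) for i, x in enumerate(keys))
    return slope, intercept, max_err


def lookup(keys, model, key):
    """Predict a position, then binary-search only within the error window."""
    slope, intercept, max_err = model
    guess = slope * key + intercept
    lo = max(0, math.floor(guess - max_err))
    hi = min(len(keys), math.ceil(guess + max_err) + 1)
    pos = bisect.bisect_left(keys, key, lo, hi)
    if pos < len(keys) and keys[pos] == key:
        return pos
    return None


keys = [2, 4, 8, 16, 32, 64]   # data on one storage node, sorted by key
model = fit_linear(keys)
positions = [lookup(keys, model, k) for k in keys]
missing = lookup(keys, model, 5)
```

A compute node holding `model` can narrow each lookup to a small window of positions, which is what makes a one-sided remote read of that window plausible in the design the abstract describes.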

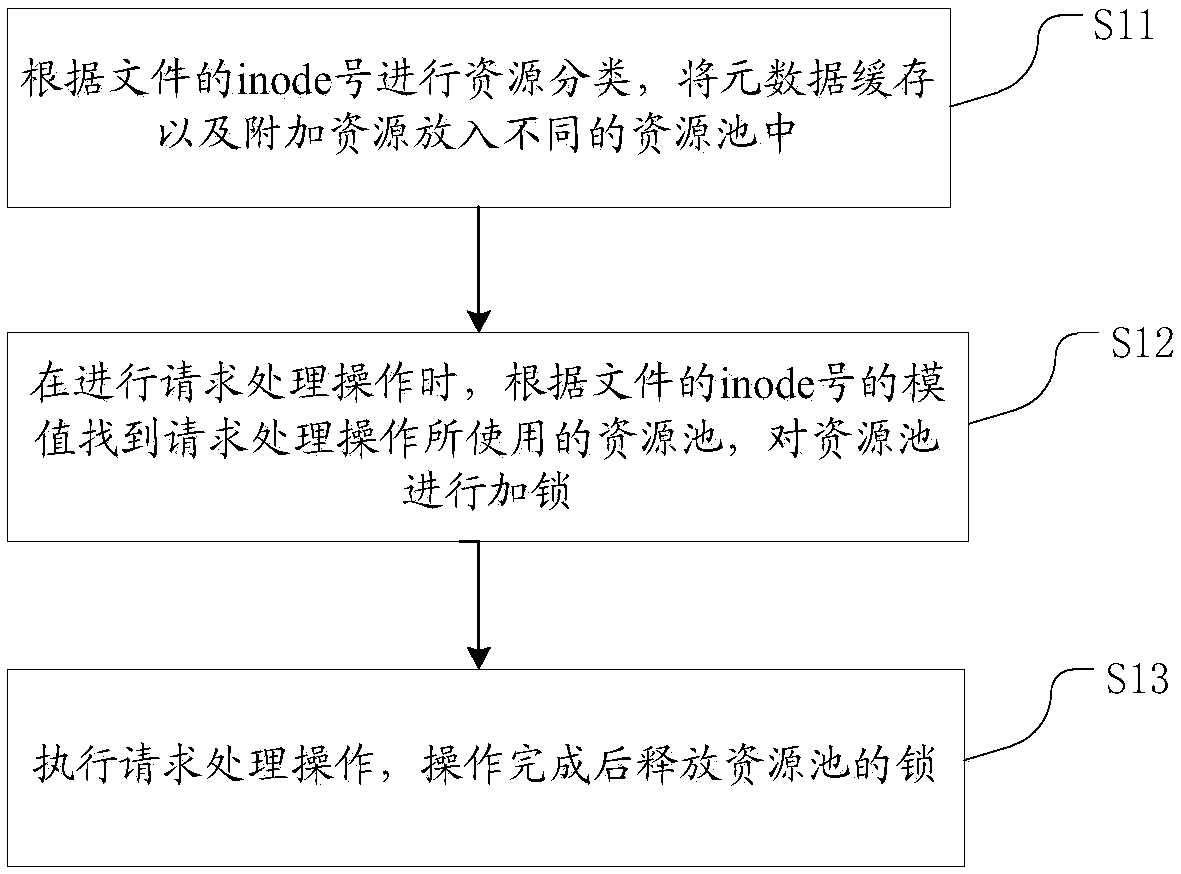

Client lock splitting method based on distributed file system

Inactive | CN107633090A | Improve concurrent processing performance | Improve concurrency | Transmission | Special data processing applications | Resource pool | Distributed File System

The invention discloses a client lock splitting method based on a distributed file system. The method comprises the following steps: classifying resources according to the inode number of a file, and placing metadata caches and additional resources in different resource pools; when processing a request, finding the resource pool used by the operation according to the inode number of the file modulo the number of pools, and locking that resource pool; and executing the request processing operation and releasing the lock on the resource pool after the operation completes. The method shortens request response delay while improving client IOPS.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD
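Lock splitting by inode number can be sketched with one lock per resource pool. The class name `ShardedResourcePools` and the default of eight pools are assumptions for the example; the abstract does not state how many pools are used.

```python
import threading


class ShardedResourcePools:
    """One lock per pool, so requests for different pools do not contend."""

    def __init__(self, n_pools=8):
        self.pools = [{} for _ in range(n_pools)]
        self.locks = [threading.Lock() for _ in range(n_pools)]

    def pool_index(self, inode):
        return inode % len(self.pools)  # modulo of the inode number picks the pool

    def update(self, inode, fn):
        """Lock only the owning pool, apply fn to the cached entry, then unlock."""
        i = self.pool_index(inode)
        with self.locks[i]:
            self.pools[i][inode] = fn(self.pools[i].get(inode))
            return self.pools[i][inode]


pools = ShardedResourcePools(n_pools=8)
pools.update(10, lambda v: (v or 0) + 1)
count = pools.update(10, lambda v: (v or 0) + 1)
other = pools.update(11, lambda v: (v or 0) + 1)   # different pool, different lock
```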

Random access method, user equipment, and base station equipment

Active | CN109121223B | Reduce overhead | Lower latency | Pilot signal allocation | Wireless communication | Resource assignment | User equipment

The invention discloses a beam failure recovery request method, which includes: acquiring channel time-frequency resource configuration information and preamble sequence configuration information used for beam failure recovery request transmission; selecting candidate downlink transmission beams according to measurement results; selecting channel time-frequency resources and/or preamble sequences according to the correspondence between the downlink transmission beams and the channel time-frequency resources and/or preamble sequences, as well as the time-frequency resource configuration information and the preamble sequence configuration information; and sending the preamble sequences on the channel time-frequency resources. Compared with the prior art, the invention optimizes the resource allocation and procedure for the beam failure recovery request and can effectively reduce signaling overhead and access delay.

Owner:BEIJING SAMSUNG TELECOM R&D CENT +1

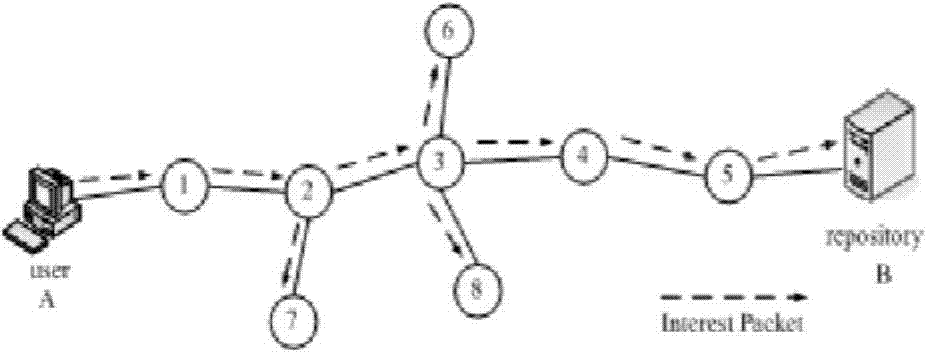

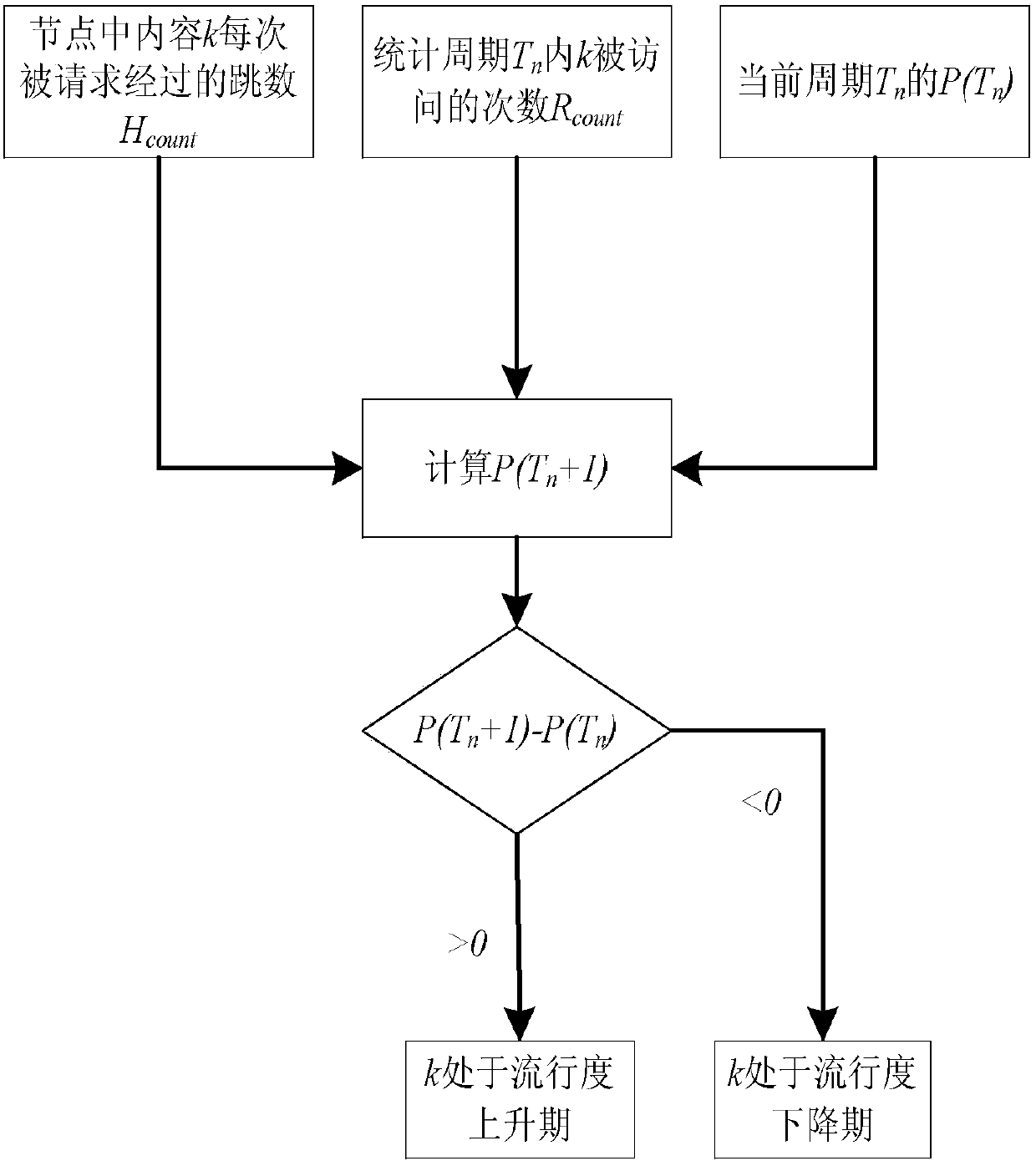

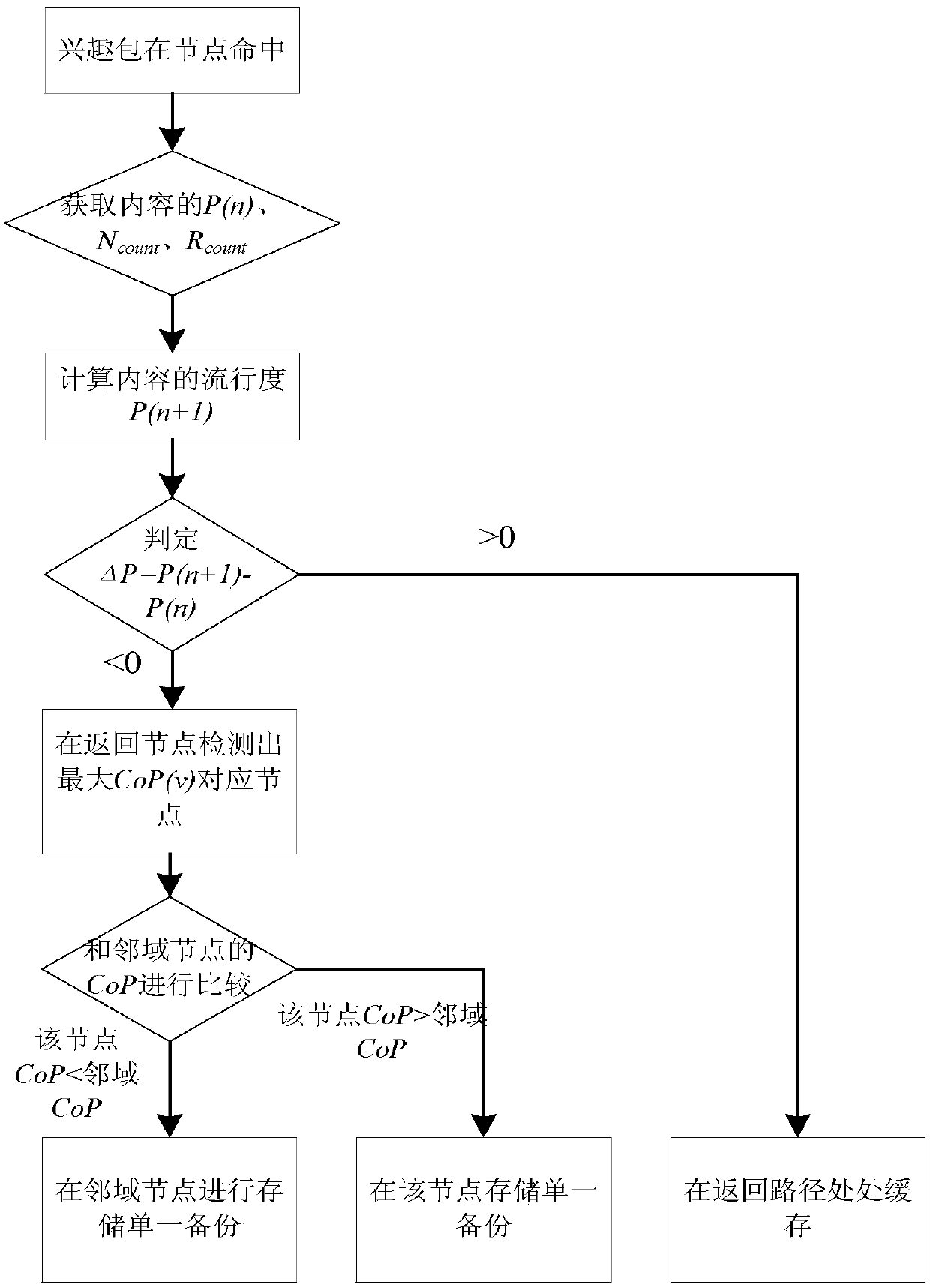

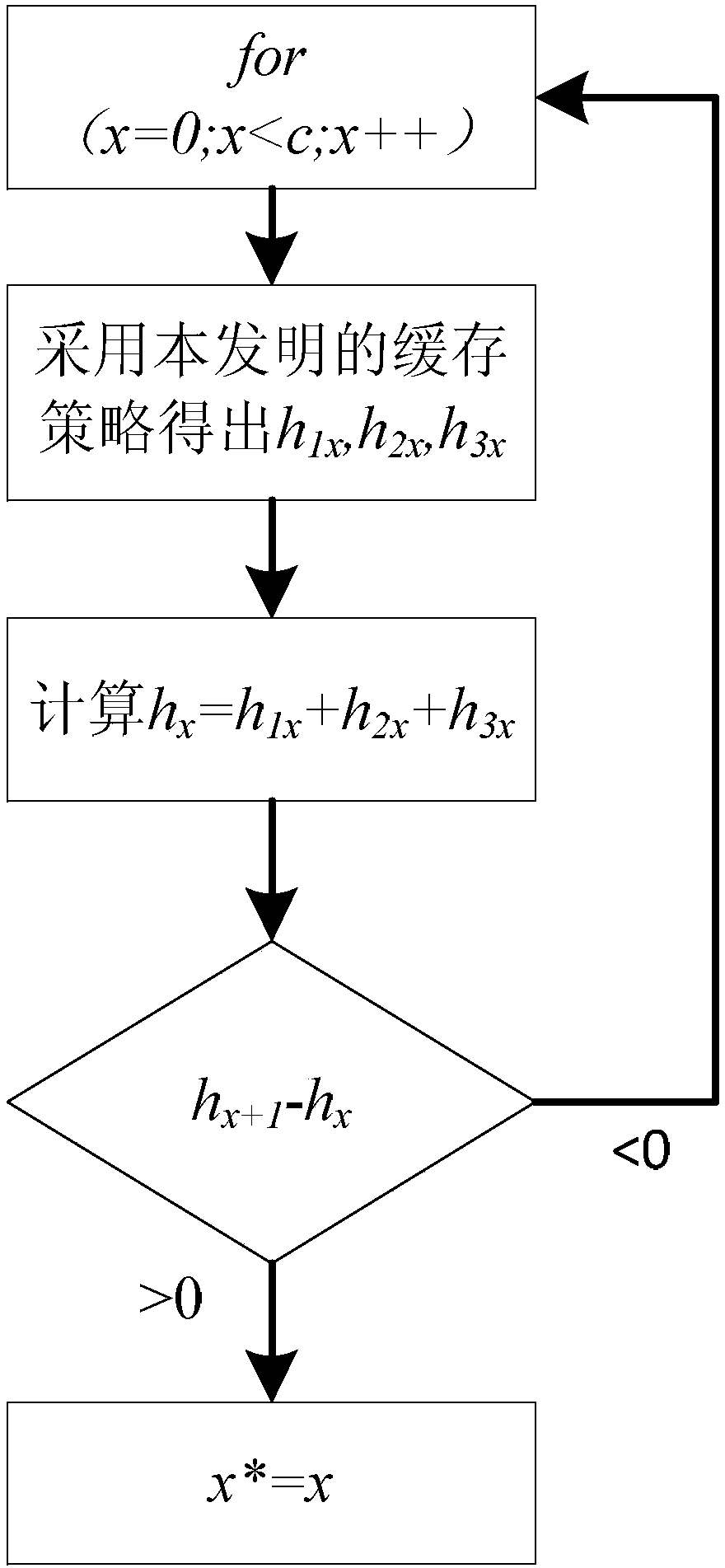

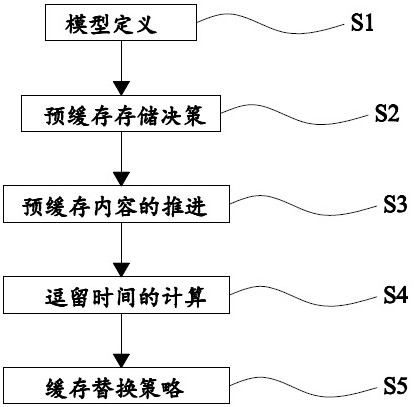

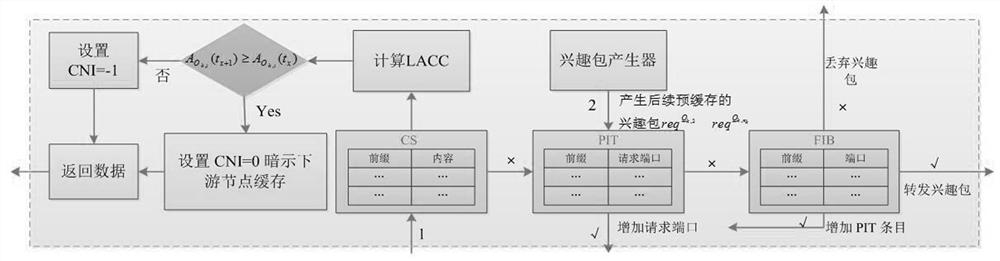

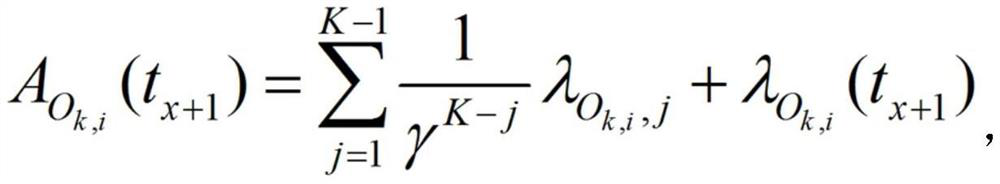

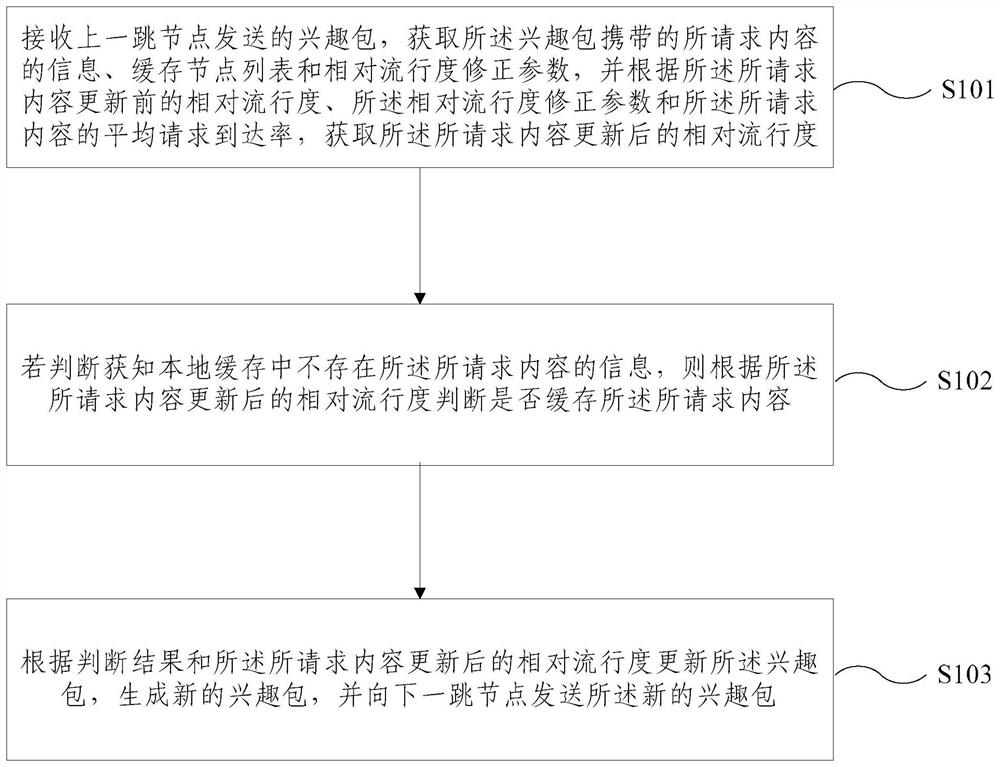

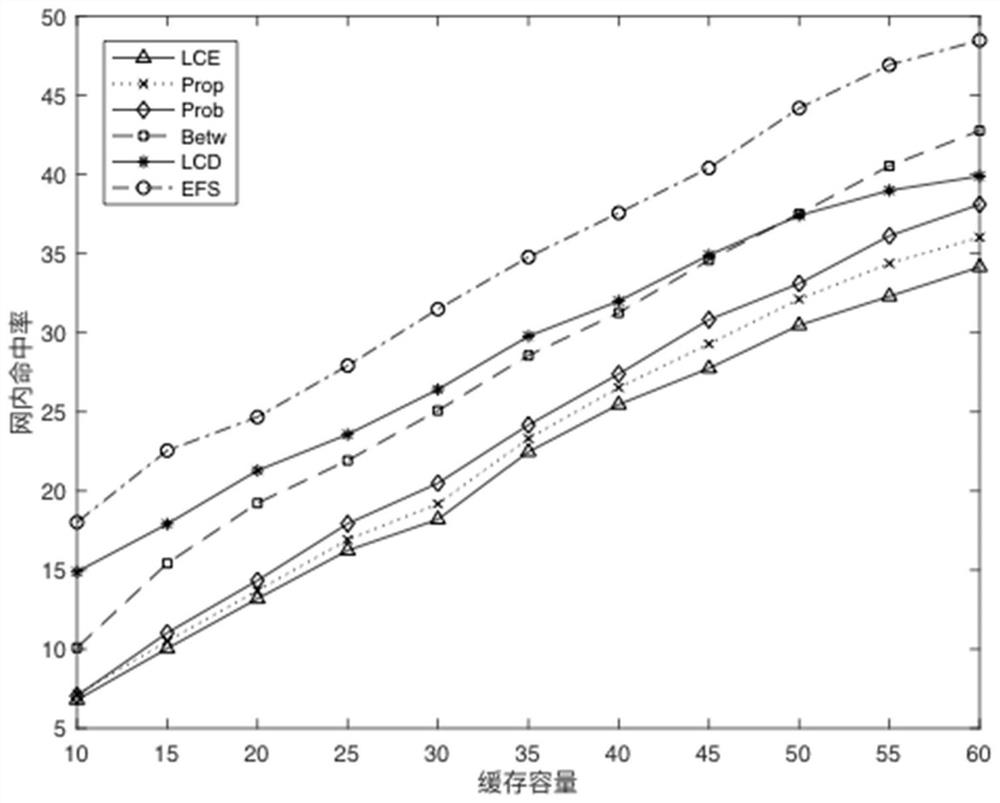

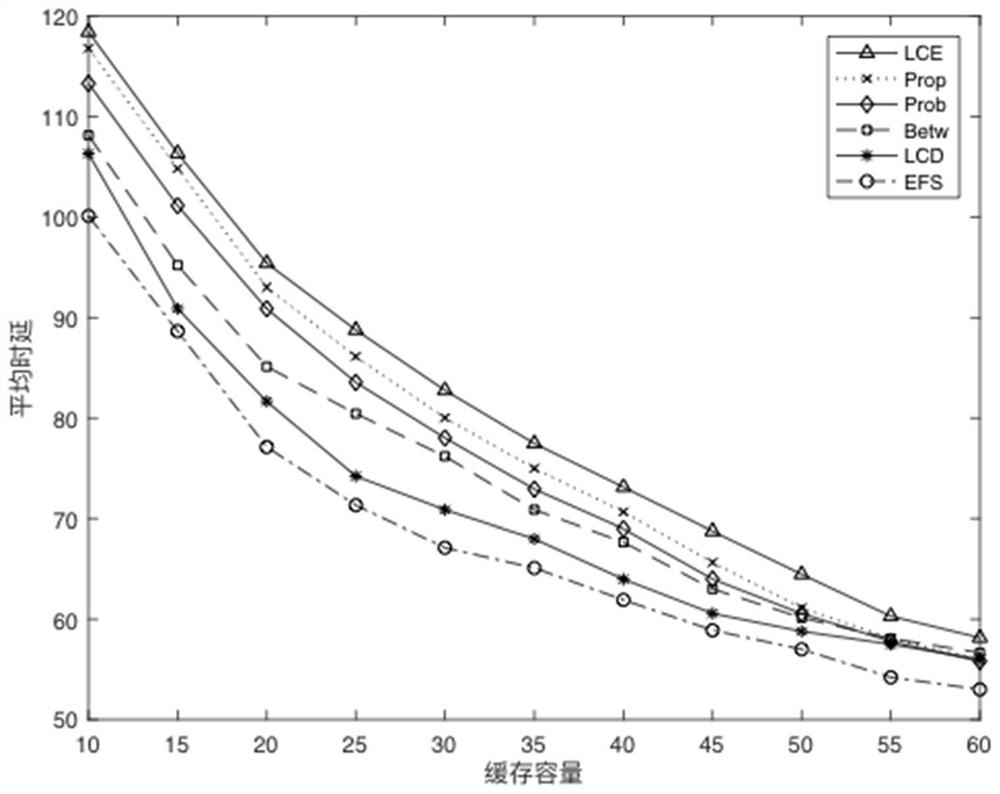

A Collaborative Caching Method Based on Popularity Prediction in Named Data Networks

The present invention claims a collaborative caching method based on popularity prediction in a named data network. When content is stored in Named Data Networking (NDN), the usual strategies of caching at multiple points along the path or at important nodes lead to high redundancy of node data in the network and low utilization of cache space. The invention instead adopts "partial collaborative caching". First, after predicting the future popularity of the content, each node allocates an optimal proportion of its cache space as local cache space for highly popular content. The remaining part of each node stores content of relatively low popularity through neighborhood cooperation. The optimal space division ratio is calculated by considering the number of hops Interest packets travel before hitting a node in the network and the requests that hit the server. Compared with traditional cache strategies, this method increases cache space utilization, reduces cache redundancy, improves the cache hit rate of nodes, and improves the performance of the entire network.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

An ICN network pre-caching method based on request content relevance

The present invention provides an ICN network pre-caching method based on the relevance of requested content. By analyzing the relationships between the content blocks that users request in an ICN network and exploiting the correlation between those blocks, the invention designs a pre-caching strategy in which nodes cooperate. Because existing caching strategies do not fully consider the relationship between a user's multiple requests, this strategy introduces pre-caching, which reduces user request delay to a certain extent. At the same time, popular content is gradually placed at the edge of the network according to the popularity of the requested content.

Owner:HENAN UNIV OF SCI & TECH

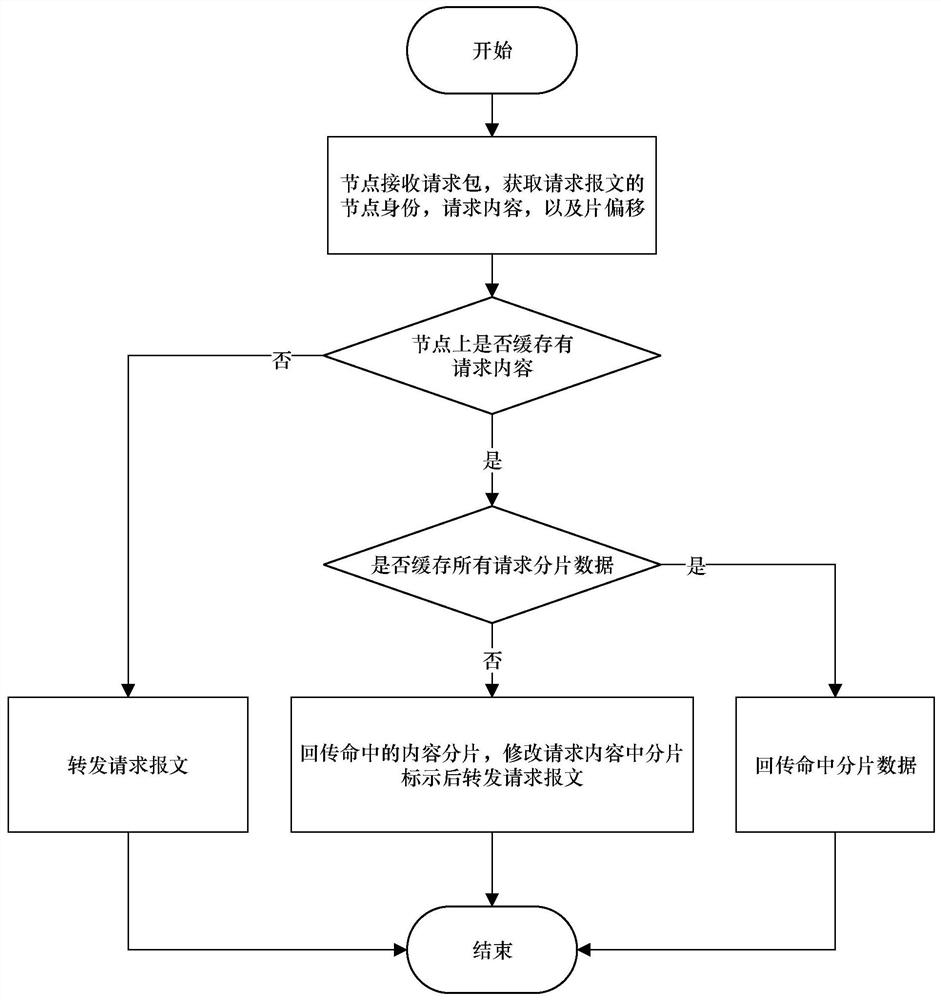

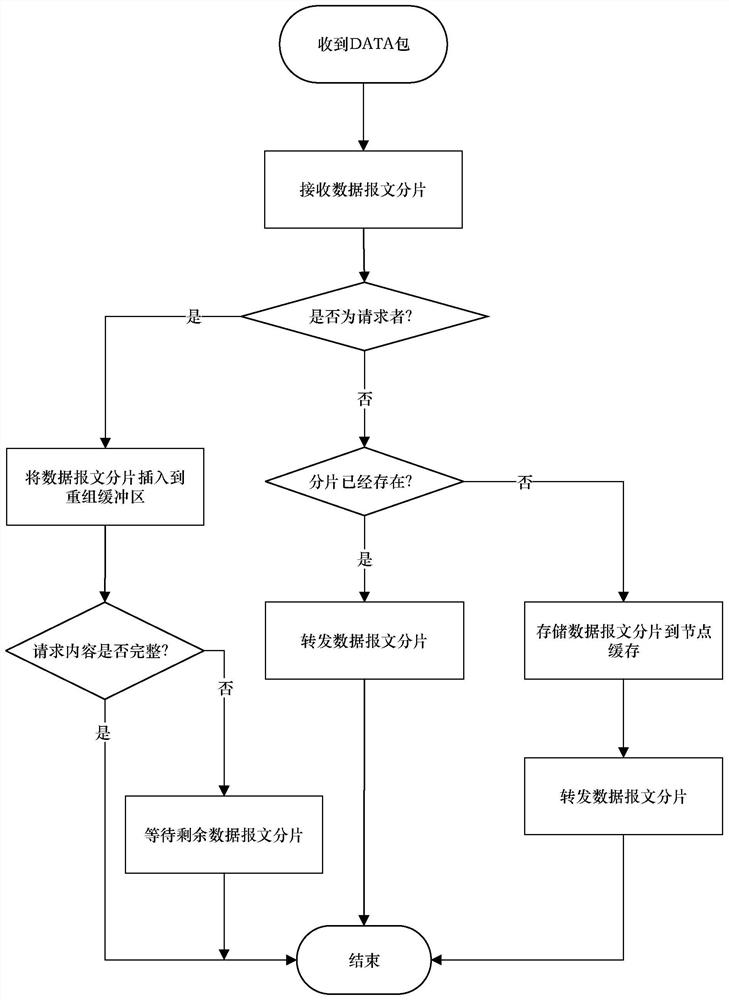

Content request method based on fragment cache in unmanned aerial vehicle ad hoc network

Pending | CN114040357A | Reduce overhead | Improve transmission efficiency | Particular environment based services | Network topologies | PathPing | Uncrewed vehicle

The invention discloses a content request method based on fragment caching in an unmanned aerial vehicle ad hoc network. On the premise that each node has caching capability, the requested content is fragmented by using a fragment offset mark in the request message, so that the number of request messages is reduced and data messages are obtained from neighbor nodes as far as possible; the total delay of data message acquisition and the load on the return path are thereby reduced. The method comprises the following steps: a destination node sets a fragment offset mark in the request message it generates; a relay node checks its own cache according to the content named in the request message and the fragment offset mark, returns the cached fragments that are hit, and modifies the fragment offset mark; and finally, the data messages corresponding to the fragment offset mark are transmitted back to the destination node. During the return, a relay node can store fragments it has not yet cached. Because a single request message obtains a plurality of data messages, the request delay and the data return load are both reduced.

Owner:BEIHANG UNIV

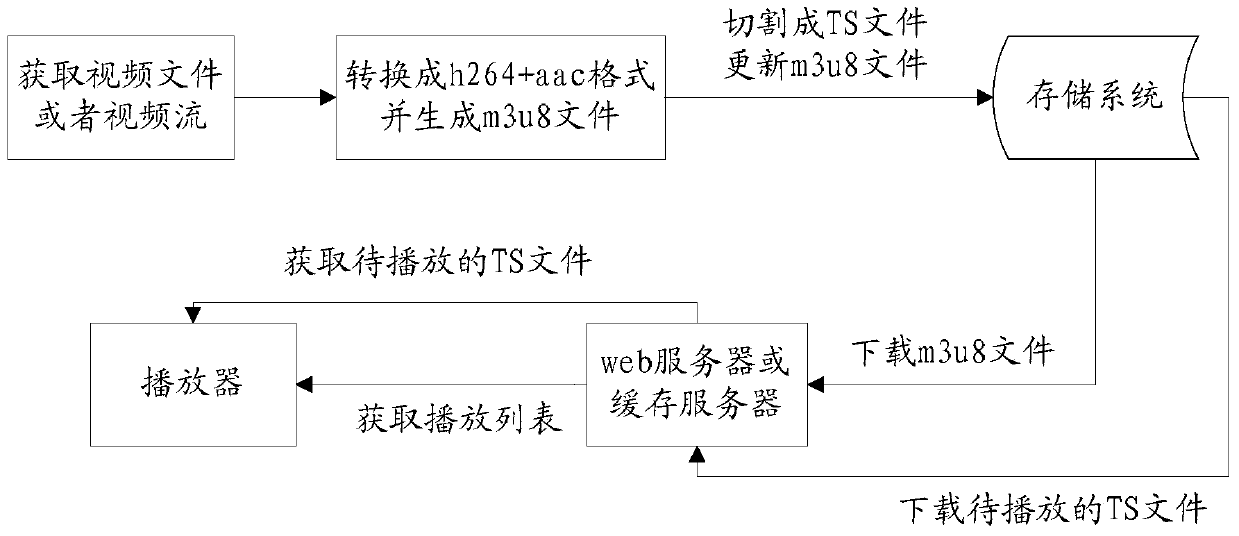

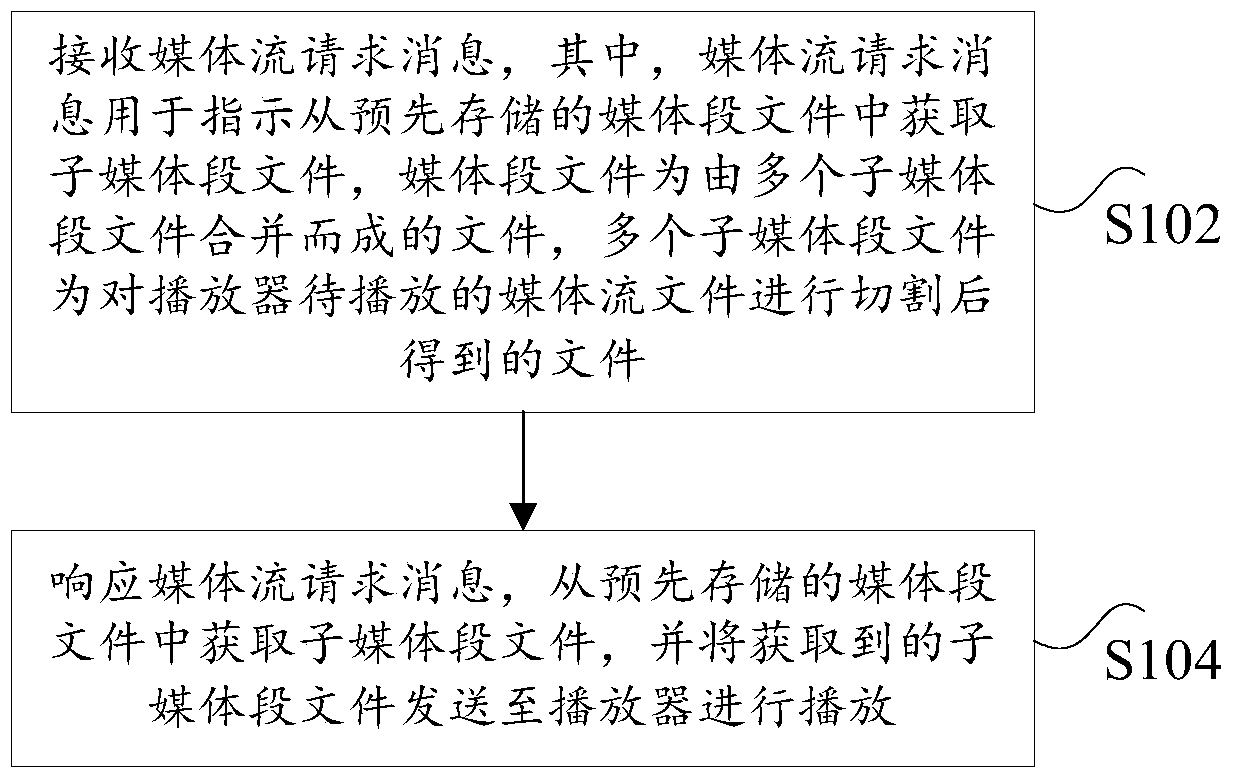

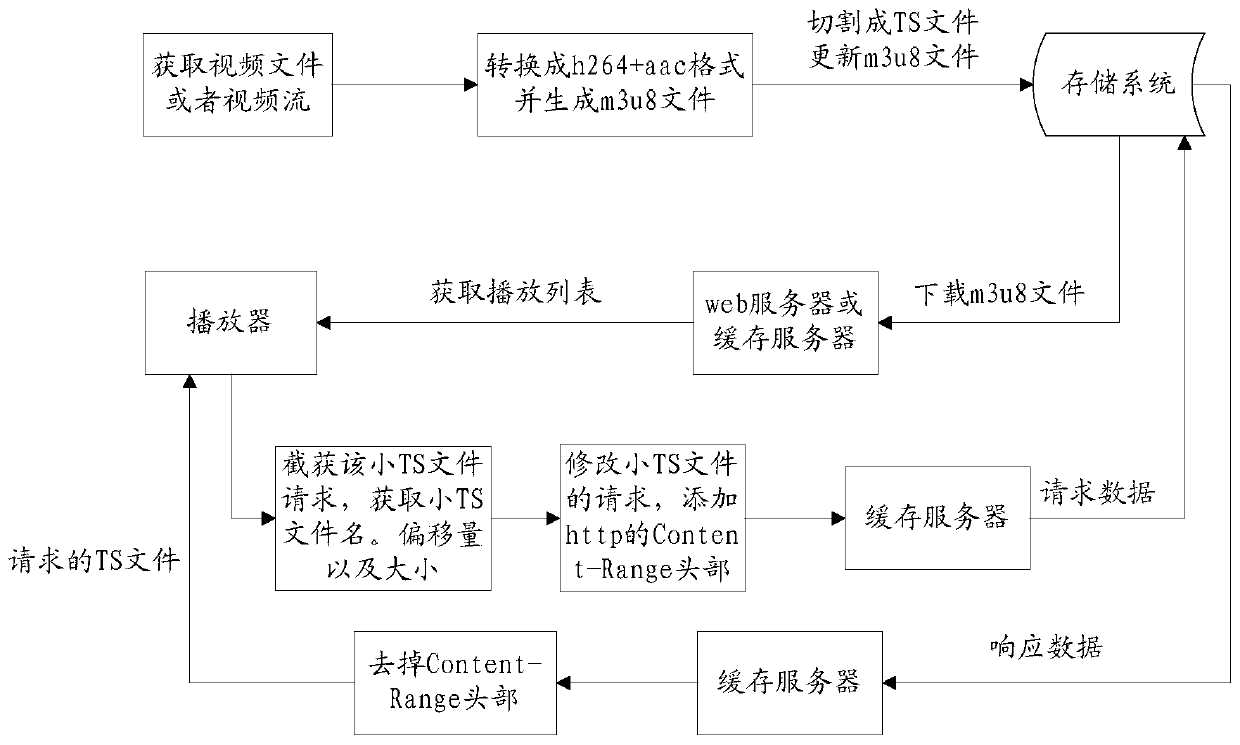

Method and device for processing media stream files

Active | CN105828096B | Avoid the problem of access efficiency | Improve access efficiency | Transmission | Selective content distribution | Computer hardware | Computer network

The invention discloses a media stream file processing method and device, wherein the method comprises following steps of receiving a media stream request message, wherein the media stream request message is used for indicating that sub-media segment files are obtained from a preset media segment file, wherein the media segment file is the file formed by combining multiple sub-media segment files, the multiple sub-media segment files are the files obtained by cutting a to-be-played media stream file of a player; obtaining the sub-media segment files from the preset media segment file in response to the media stream request message; and sending the obtained sub-media segment files to the player for playing. According to the method and the device, the technical problems that in the related technique, the efficiency of accessing small files is reduced due to cutting the media stream file into a mass of small files, and the delay of requesting for big files is high due to cutting the media stream file into the big files are solved.

Owner:CHINANETCENT TECH

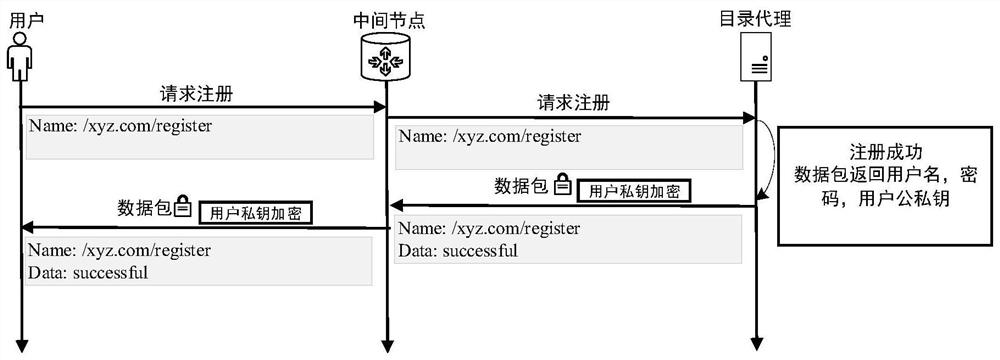

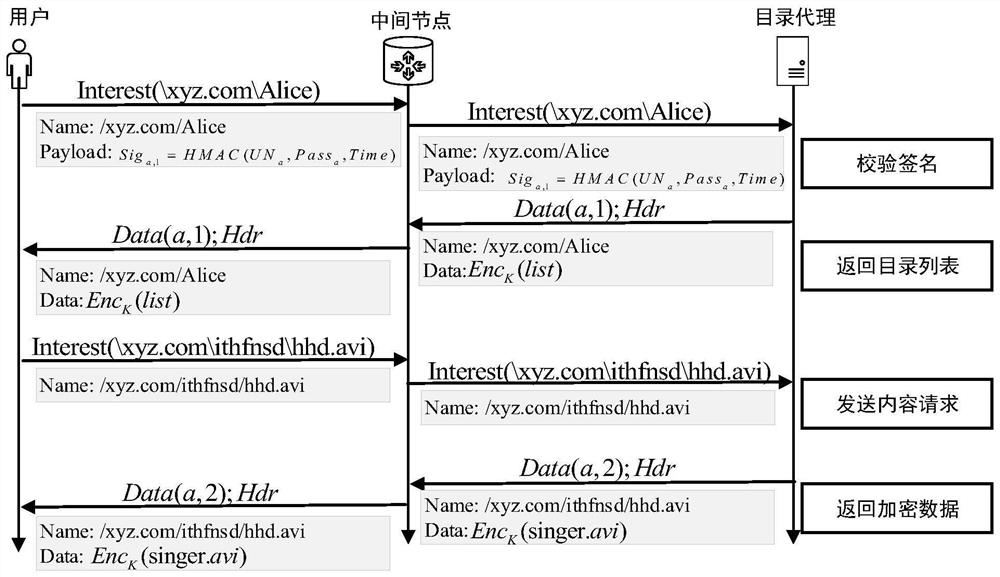

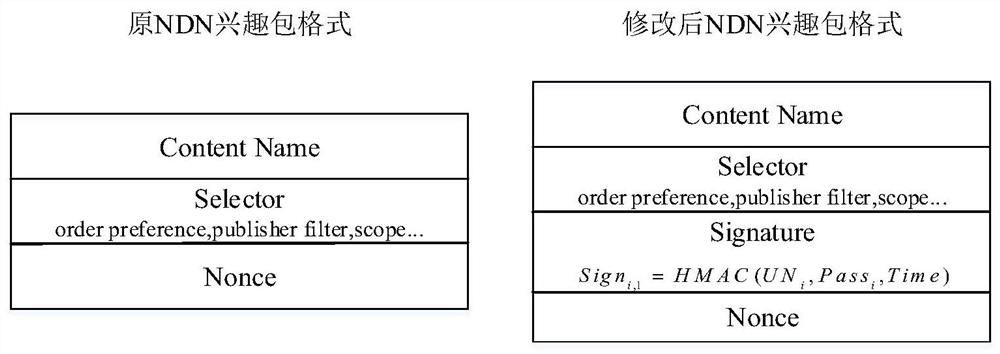

A Named Data Network Anti-Name Filtering Method Based on Directory Proxy

The invention discloses a named data network anti-name-filtering method based on a directory agent. The directory agent provides a regularly updated directory file that maps each real name one-to-one to a masquerade name. The user looks up the masquerade name corresponding to the desired real name and sends a request to the directory agent under the masquerade name; the directory agent translates the masquerade name to learn the real name requested by the user, retrieves the requested content file on the user's behalf, and then sends the content file to the user using broadcast encryption. The scheme applies to scenarios in which hijacked routers are present in the network: it effectively avoids name filtering attacks by those routers while keeping interest packets routable and preserving in-network cache utilization.
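The name translation step can be sketched as follows. This is a simplified illustration under stated assumptions: the `DirectoryAgent` class, its method names, and the `/alias/<random hex>` naming scheme are hypothetical, and the broadcast encryption of the returned content is omitted entirely.

```python
import secrets


class DirectoryAgent:
    """Hypothetical agent mapping real content names to masquerade names."""

    def __init__(self, content_store):
        self._content = dict(content_store)
        self._real_to_mask = {}
        self._mask_to_real = {}
        self.refresh_directory()

    def refresh_directory(self):
        """Regenerate the real-name to masquerade-name mapping (the periodic update)."""
        self._real_to_mask = {
            real: "/alias/" + secrets.token_hex(8) for real in self._content
        }
        self._mask_to_real = {m: r for r, m in self._real_to_mask.items()}

    def directory(self):
        """Return a copy of the directory file handed to users."""
        return dict(self._real_to_mask)

    def handle_request(self, masquerade_name):
        """Translate a masquerade name and fetch the content, or None if unknown."""
        real = self._mask_to_real.get(masquerade_name)
        if real is None:
            return None
        return self._content[real]
```

A router that filters on the real name never sees it on the wire in this sketch, because requests carry only the masquerade name.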

Owner:JIANGSU UNIV

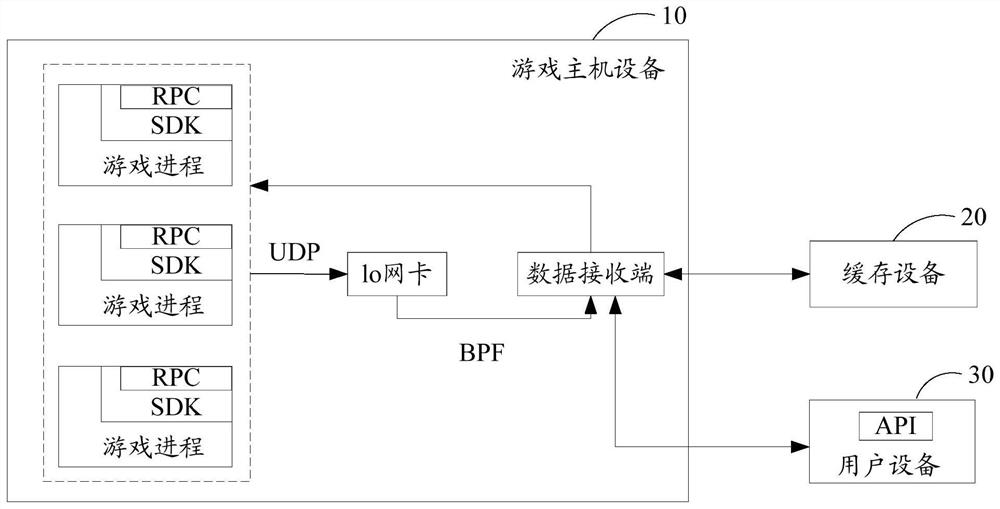

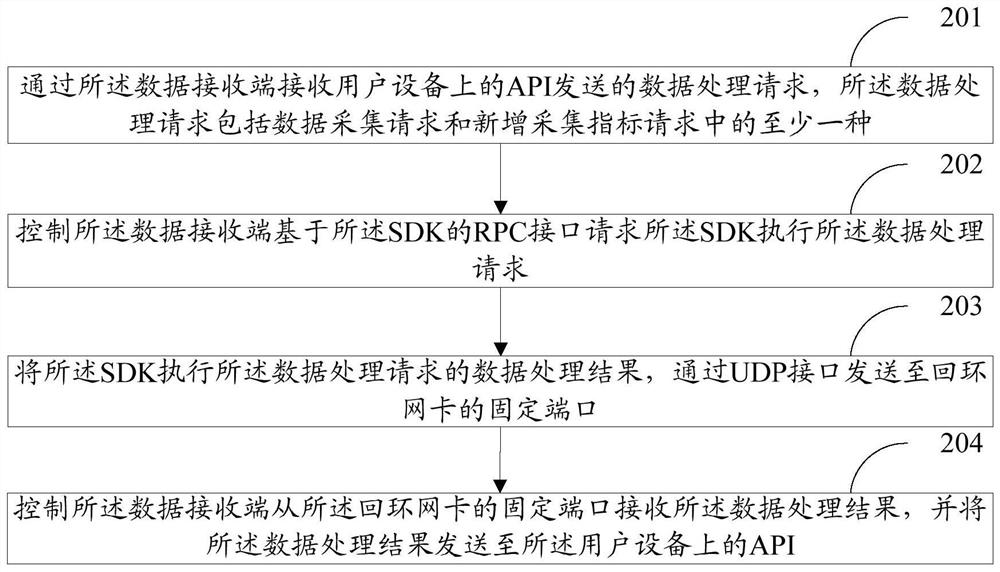

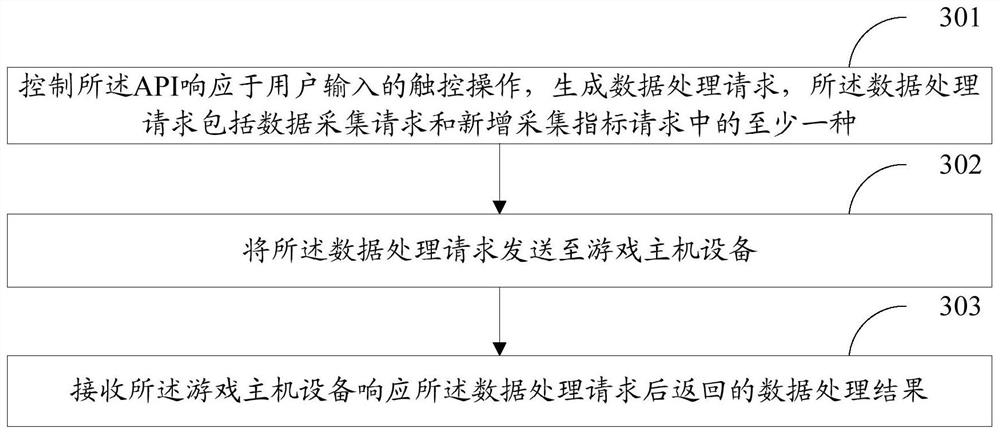

Data processing method, storage medium and electronic equipment

Pending | CN112891928A | Improve data collection efficiency | Reduce Data Acquisition Overhead | Interprogram communication | Video games | Software engineering | Control data

The embodiment of the invention discloses a data processing method, a storage medium and electronic equipment. The method comprises: receiving, through a data receiving end of game host equipment, a data processing request sent by an API (Application Program Interface) on user equipment, the data processing request comprising at least one of a data acquisition request and a request to add a new acquisition index; controlling the data receiving end to request an SDK to execute the data processing request through an RPC interface of the SDK; sending the result of the SDK's execution of the data processing request to a fixed port of a loopback network card through a UDP interface; and then controlling the data receiving end to receive the data processing result from the fixed port of the loopback network card and send it to the API on the user equipment. According to the embodiment of the invention, the data acquisition overhead of the game service can be reduced, the game process is not blocked, the request delay experienced by players is reduced, and the data acquisition efficiency of the game is improved.
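The loopback hand-off between the SDK and the data receiving end can be sketched with Python's standard `socket` module. The function names, the JSON payload format, and the default of letting the operating system pick the port (`port=0`) are assumptions for illustration; the abstract specifies a fixed port, which would be passed in explicitly.

```python
import json
import socket

LOOPBACK = "127.0.0.1"


def open_receiver(port=0):
    """Bind a UDP socket on the loopback interface (port 0 lets the OS choose)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((LOOPBACK, port))
    sock.settimeout(2.0)  # avoid blocking forever if no result arrives
    return sock


def sdk_send_result(result, port):
    """SDK side: push a result datagram to the receiver's loopback port."""
    payload = json.dumps(result).encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        sender.sendto(payload, (LOOPBACK, port))


def receive_result(sock):
    """Receiver side: read one result datagram and decode it."""
    payload, _addr = sock.recvfrom(65535)
    return json.loads(payload.decode("utf-8"))
```

Because UDP sends do not wait for the reader, the sending side in this sketch returns immediately, which matches the abstract's goal of not blocking the game process.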

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

Caching method and device for internet of things

Owner:BEIJING UNIV OF POSTS & TELECOMM +2

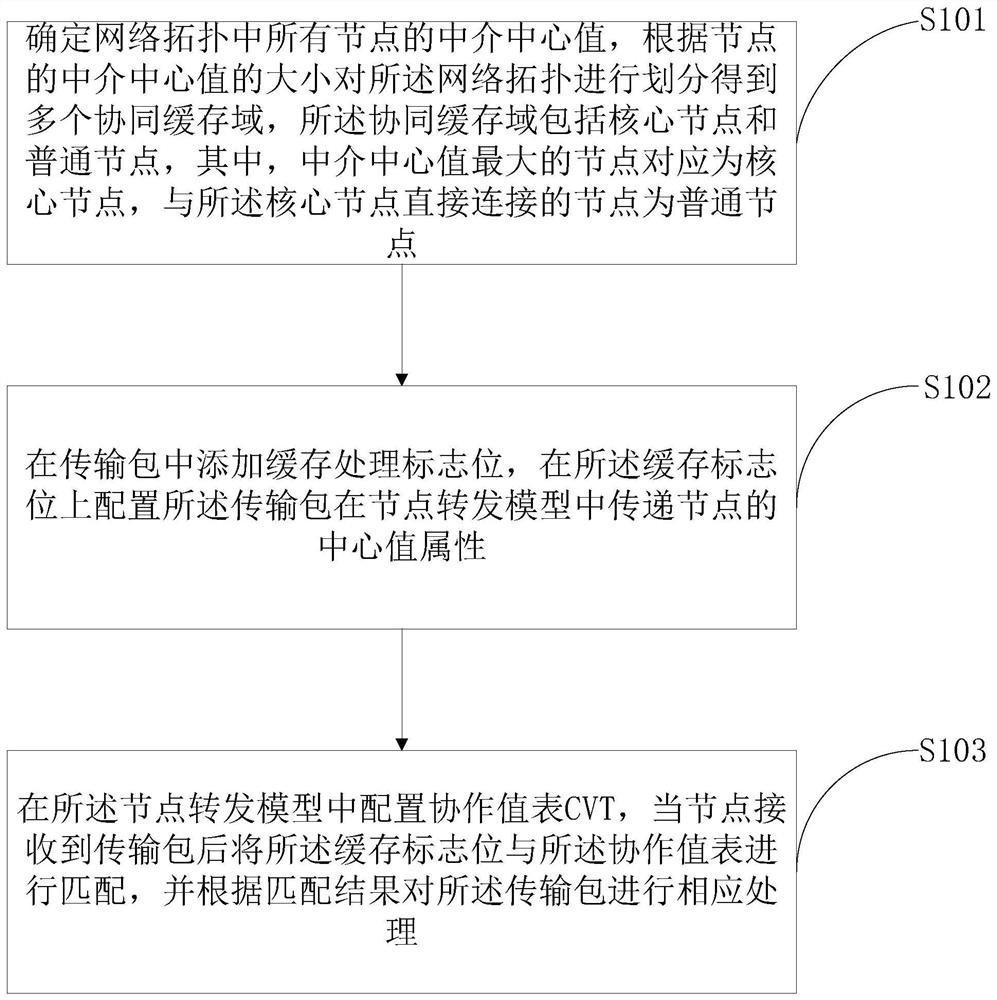

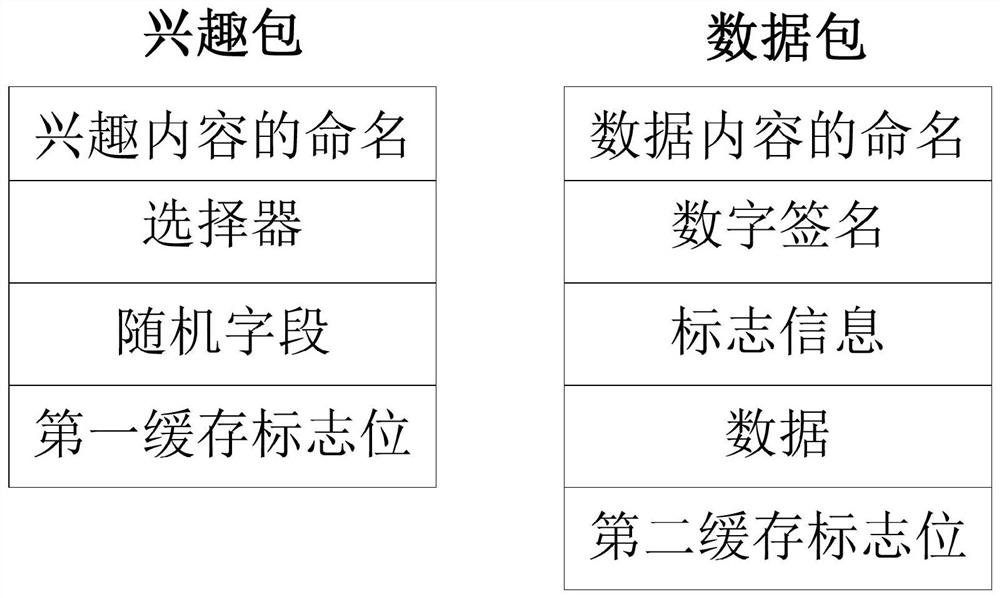

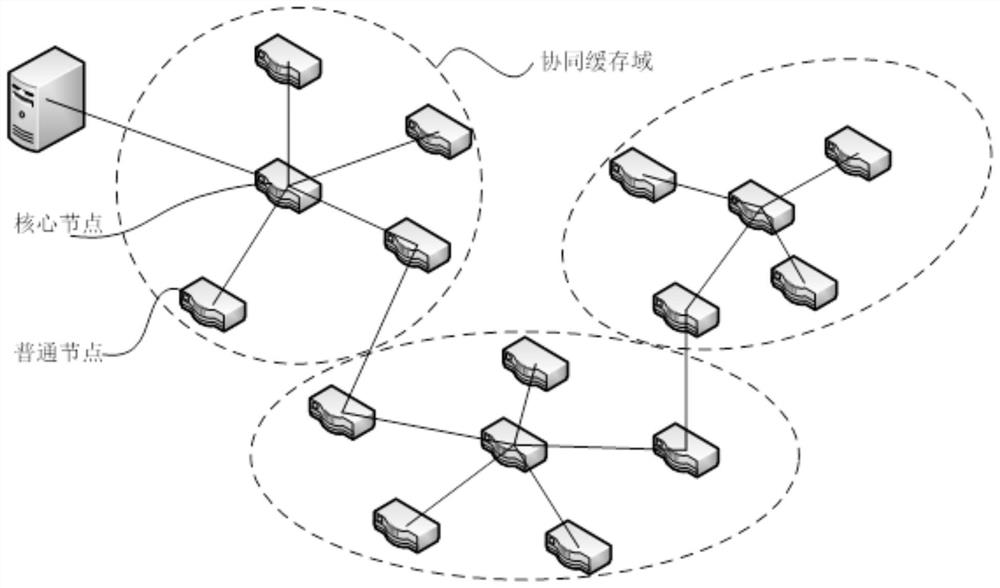

A content-centric network-based domain collaborative caching method and device

The content-centric network (CCN) based domain collaborative caching method and device provided by the present invention cache data within a collaborative domain using a collaborative caching method. The method makes full use of the cache space of off-path nodes in the collaborative domain, and the domain caches only one copy of each piece of data, which reduces data redundancy and makes full use of the hardware resources in the network topology. A reasonable cooperative caching strategy improves the cache hit rate, reduces the number of routing hops, and reduces the request delay, thereby relieving network congestion and load and providing better data services for network users in the CCN.
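The one-copy-per-domain idea can be sketched as below. The abstract does not state how the caching node is chosen, so the hash-based placement, the FIFO eviction, and the `CooperativeDomain` class are assumptions swapped in purely for illustration.

```python
import hashlib


class CooperativeDomain:
    """Hypothetical collaborative domain that keeps at most one copy per content name."""

    def __init__(self, node_names, capacity_per_node):
        self.caches = {name: {} for name in node_names}
        self.capacity = capacity_per_node
        self._order = sorted(node_names)

    def owner_of(self, content_name):
        """Pick the single node responsible for a name (assumed hash placement)."""
        digest = hashlib.sha256(content_name.encode("utf-8")).digest()
        return self._order[int.from_bytes(digest[:8], "big") % len(self._order)]

    def store(self, content_name, data):
        """Cache the content on its owner node, evicting the oldest entry if full."""
        cache = self.caches[self.owner_of(content_name)]
        if content_name not in cache and len(cache) >= self.capacity:
            cache.pop(next(iter(cache)))  # dicts keep insertion order: FIFO
        cache[content_name] = data

    def lookup(self, content_name):
        """Return the cached data from the owner node, or None on a miss."""
        return self.caches[self.owner_of(content_name)].get(content_name)

    def copies(self, content_name):
        """Count how many nodes in the domain hold the content."""
        return sum(content_name in cache for cache in self.caches.values())
```

Any node in the domain, whether or not it lies on the delivery path, can be the owner here, which is how the sketch uses off-path cache space without duplicating data.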

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

