148 results about How to "Reduce the number of reads" patented technology

Accessing and updating a configuration database from distributed physical locations within a process control system

Inactive · US7127460B2 · Reduce the number of reads · Increase speed · Data processing applications · Program loading/initiating · Cache access · Control system

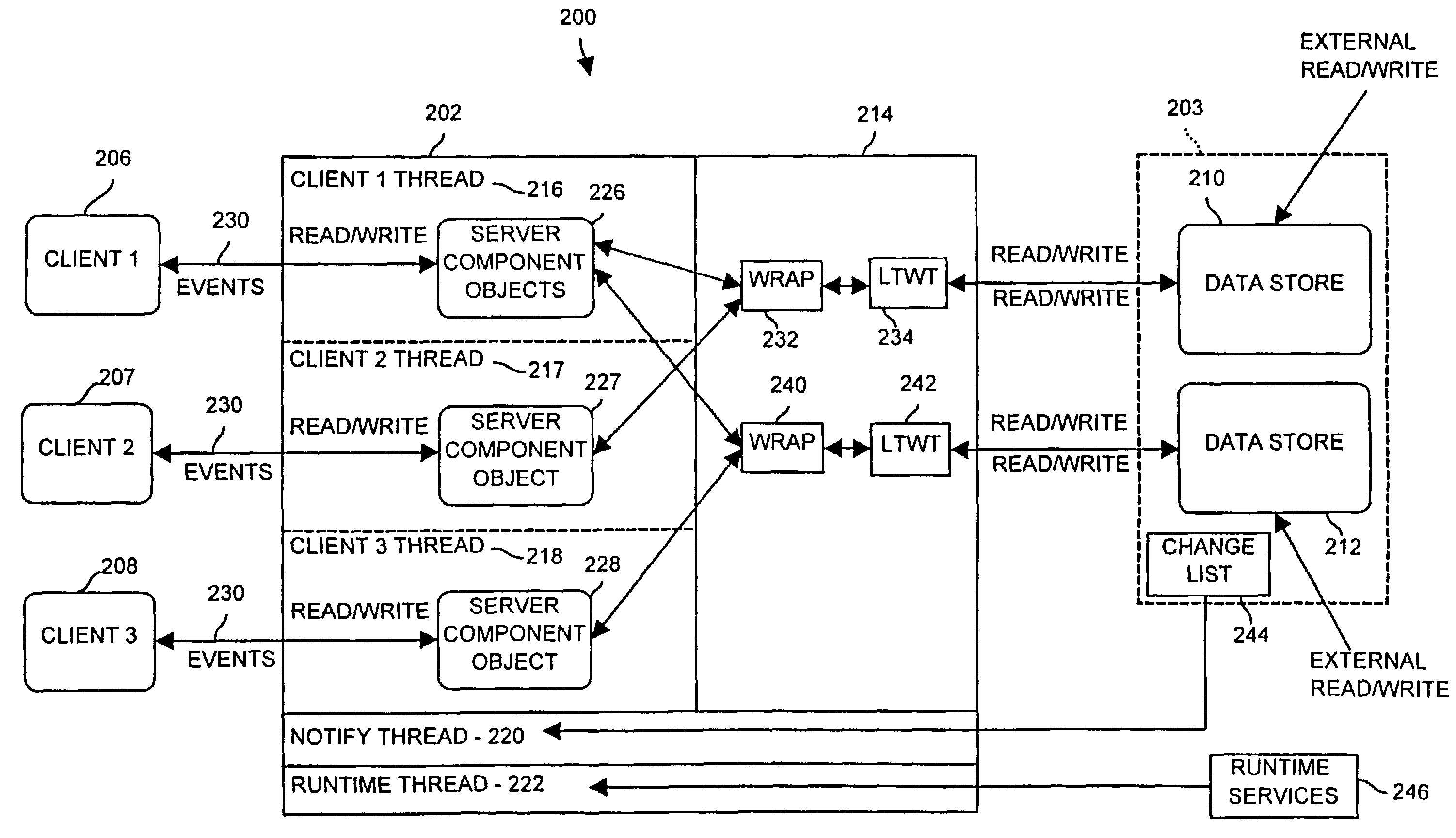

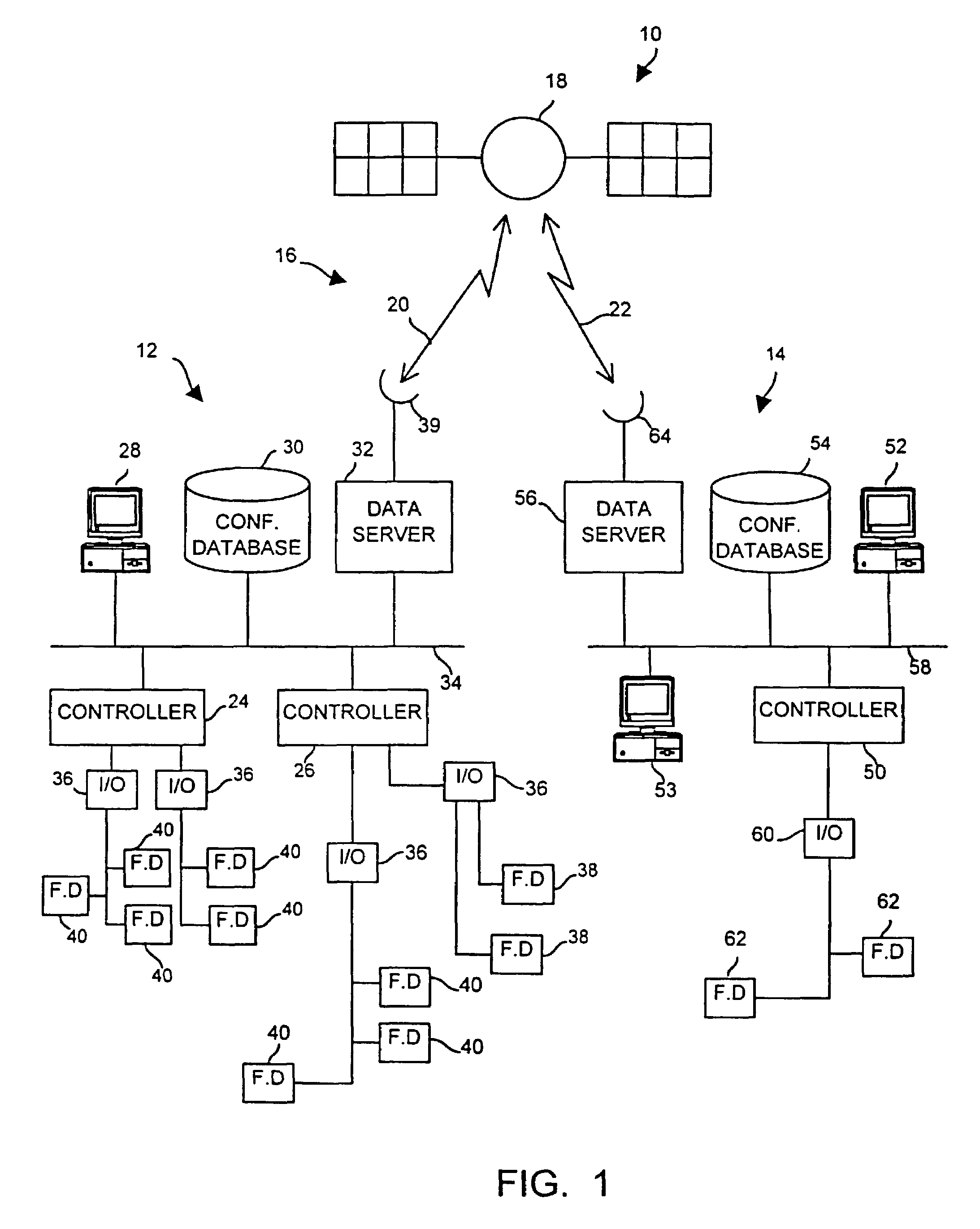

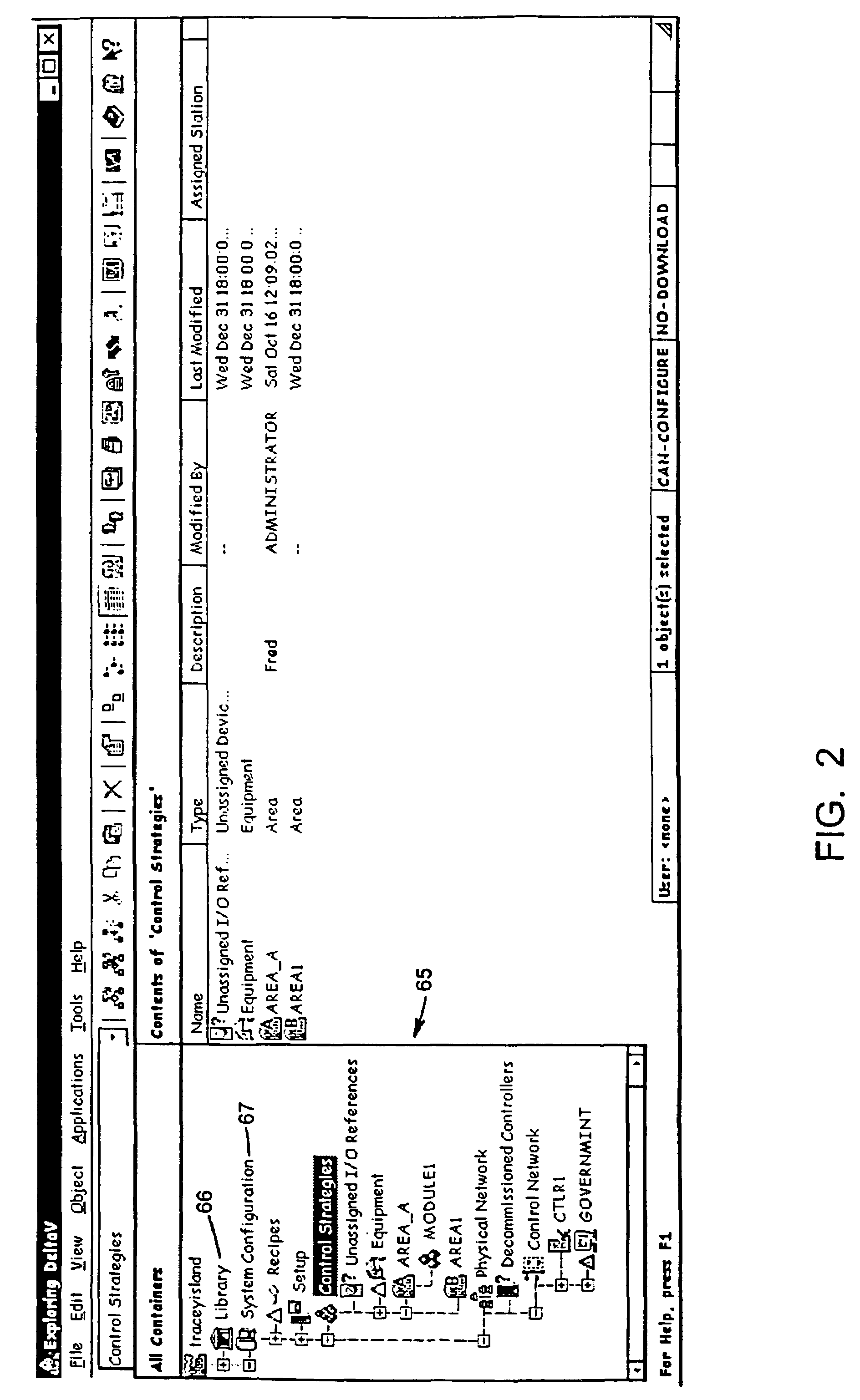

A configuration database includes multiple databases distributed at a plurality of physical locations within a process control system. Each of the databases may store a subset of the configuration data and this subset of configuration data may be accessed by users at any of the sites within the process control system. A database server having a shared cache accesses a database in a manner that enables multiple subscribers to read configuration data from the database with only a minimal number of reads to the database. To prevent the configuration data being viewed by subscribers within the process control system from becoming stale, the database server automatically detects changes to an item within the configuration database and sends notifications of changes made to the item to each of the subscribers of that item so that a user always views the state of the configuration as it actually exists within the configuration database.

Owner:FISHER-ROSEMOUNT SYST INC
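
The shared-cache idea in this abstract can be illustrated with a minimal sketch. It assumes an in-memory dictionary standing in for the configuration database; the class name `SharedCacheServer`, its methods, and the callback signature are hypothetical and are not taken from the patent.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical callback type: receives the item name and its new value.
Callback = Callable[[str, str], None]


class SharedCacheServer:
    """Illustrative server: one shared cache serves every subscriber of an item."""

    def __init__(self, database: Dict[str, str]) -> None:
        self.database = database              # stand-in for the configuration database
        self.cache: Dict[str, str] = {}       # shared by all subscribers
        self.subscribers: Dict[str, List[Callback]] = defaultdict(list)
        self.db_reads = 0                     # counts reads that reach the database

    def subscribe(self, item: str, callback: Callback) -> str:
        """Register a subscriber and return the current value of the item."""
        self.subscribers[item].append(callback)
        if item not in self.cache:            # only the first subscriber hits the database
            self.cache[item] = self.database[item]
            self.db_reads += 1
        return self.cache[item]

    def write(self, item: str, value: str) -> None:
        """Apply a change and notify every subscriber so no view goes stale."""
        self.database[item] = value
        self.cache[item] = value
        for callback in self.subscribers[item]:
            callback(item, value)
```

With two subscribers to the same item, `db_reads` stays at 1, and both callbacks fire when `write` changes the item.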

Method to reduce I/O for hierarchical data partitioning methods

Inactive · US6055539A · Generate efficiently · Short training time · Data processing applications · Digital data information retrieval · Recordset · Multi processor

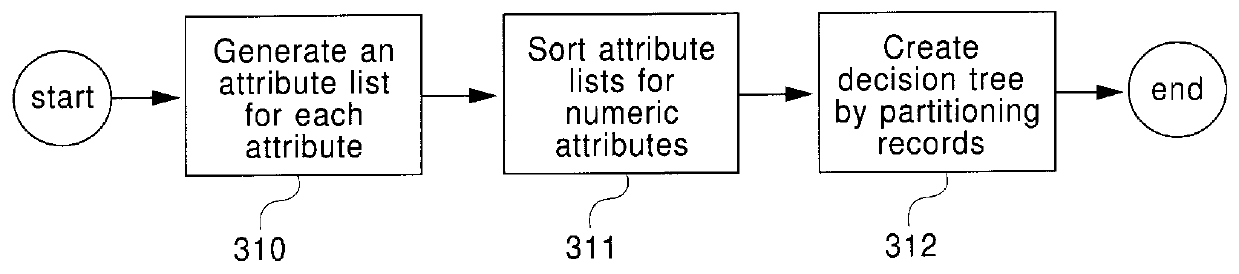

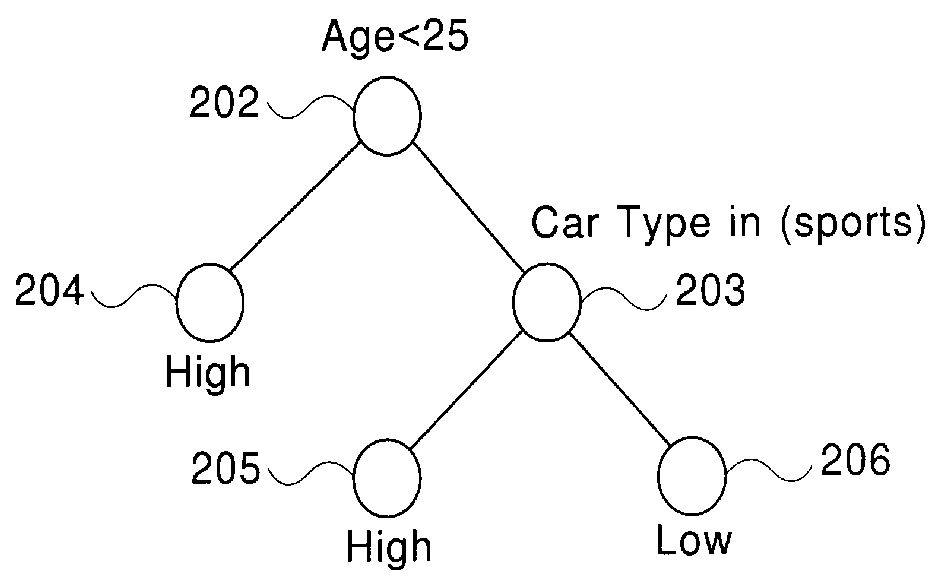

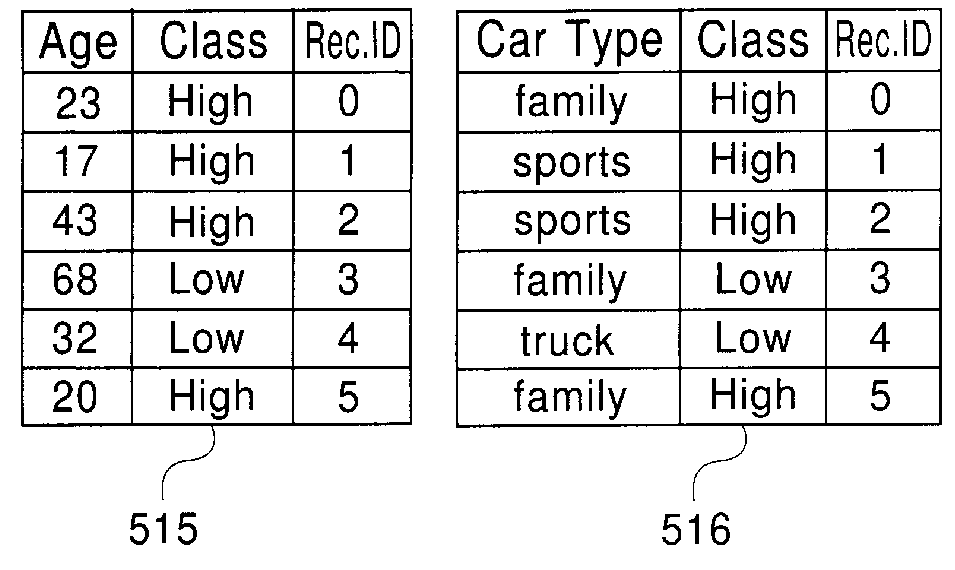

A method and system for generating a decision-tree classifier from a training set of records, independent of the system memory size. The method includes the steps of: generating an attribute list for each attribute of the records, sorting the attribute lists for numeric attributes, and generating a decision tree by repeatedly partitioning the records using the attribute lists. For each node, split points are evaluated to determine the best split test for partitioning the records at the node. Preferably, a gini index and class histograms are used in determining the best splits. The gini index indicates how well a split point separates the records while the class histograms reflect the class distribution of the records at the node. Also, a hash table is built as the attribute list of the split attribute is divided among the child nodes, which is then used for splitting the remaining attribute lists of the node. The method reduces I/O read time by combining the read for partitioning the records at a node with the read required for determining the best split test for the child nodes. Further, it requires writes of the records only at one out of n levels of the decision tree, where n ≥ 2. Finally, a novel data layout on disk minimizes disk seek time. The I/O optimizations work in a general environment for hierarchical data partitioning. They also work in a multi-processor environment. After the generation of the decision tree, any prior art pruning methods may be used for pruning the tree.

Owner:IBM CORP
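
As a rough illustration of evaluating split points with a gini index and class histograms, the sketch below scans one sorted numeric attribute list in a single pass. It covers only the split evaluation, not the I/O optimizations or disk layout the abstract describes; the function names and the choice of the midpoint as threshold are assumptions.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence, Tuple


def gini(hist: Counter, n: int) -> float:
    """Gini index of a class histogram holding n records."""
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in hist.values())


def best_split(values: Sequence[float],
               labels: Sequence[Hashable]) -> Tuple[Optional[float], float]:
    """Return (threshold, weighted gini) of the best binary split.

    The attribute list is sorted once; two class histograms ("below" and
    "above" the candidate split) are then updated incrementally.
    """
    pairs = sorted(zip(values, labels), key=lambda p: p[0])
    n = len(pairs)
    below: Counter = Counter()
    above: Counter = Counter(label for _, label in pairs)
    best_threshold, best_score = None, float("inf")
    for i in range(n - 1):
        value, label = pairs[i]
        below[label] += 1                     # record i moves to the left child
        above[label] -= 1
        if value == pairs[i + 1][0]:
            continue                          # cannot split between equal values
        n_below, n_above = i + 1, n - i - 1
        score = (n_below / n) * gini(below, n_below) \
            + (n_above / n) * gini(above, n_above)
        if score < best_score:
            # Assumption: use the midpoint between neighbours as the threshold.
            best_threshold = (value + pairs[i + 1][0]) / 2
            best_score = score
    return best_threshold, best_score
```

A lower weighted gini means the two child nodes are purer, so the scan keeps the candidate with the smallest score.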

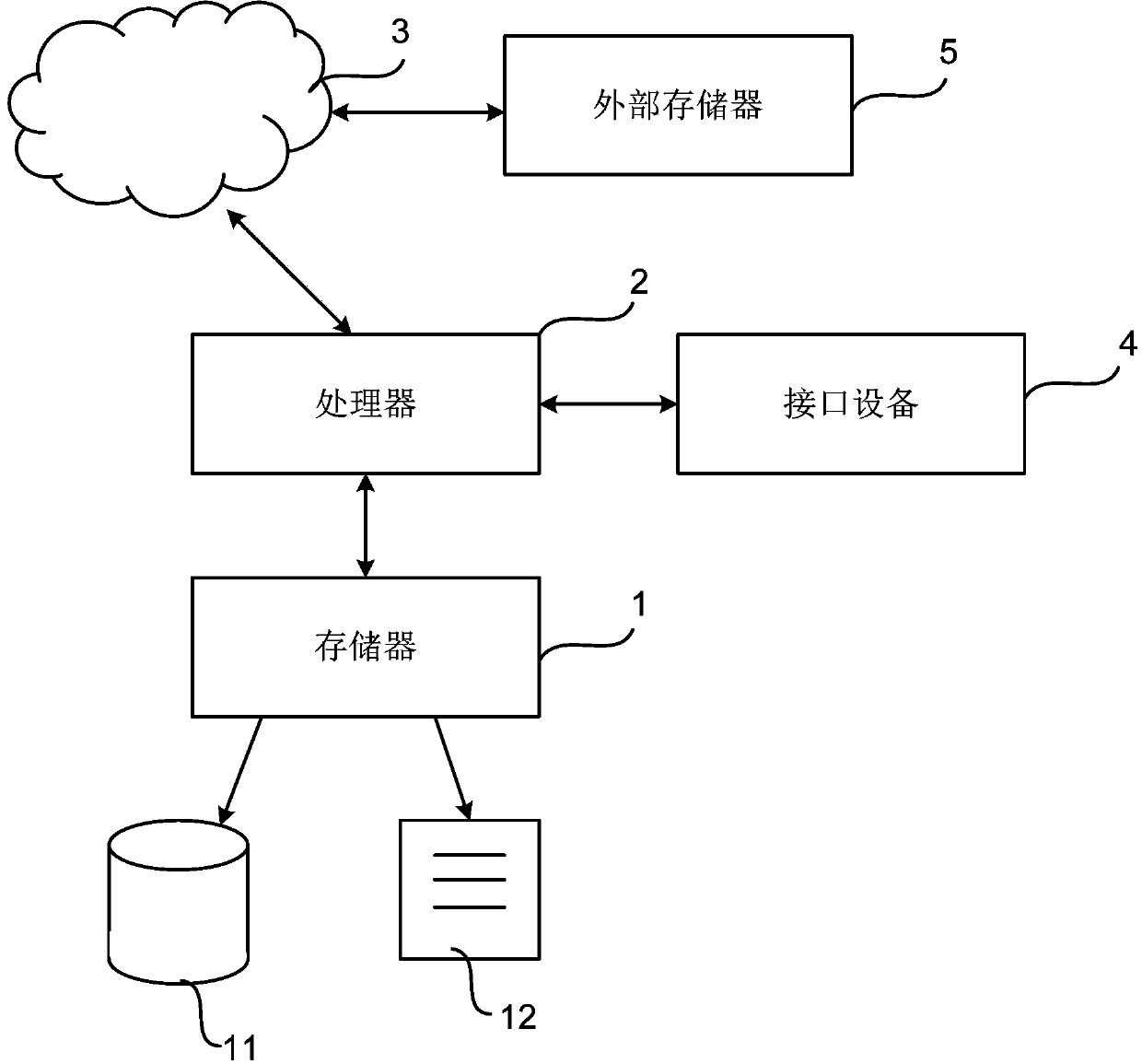

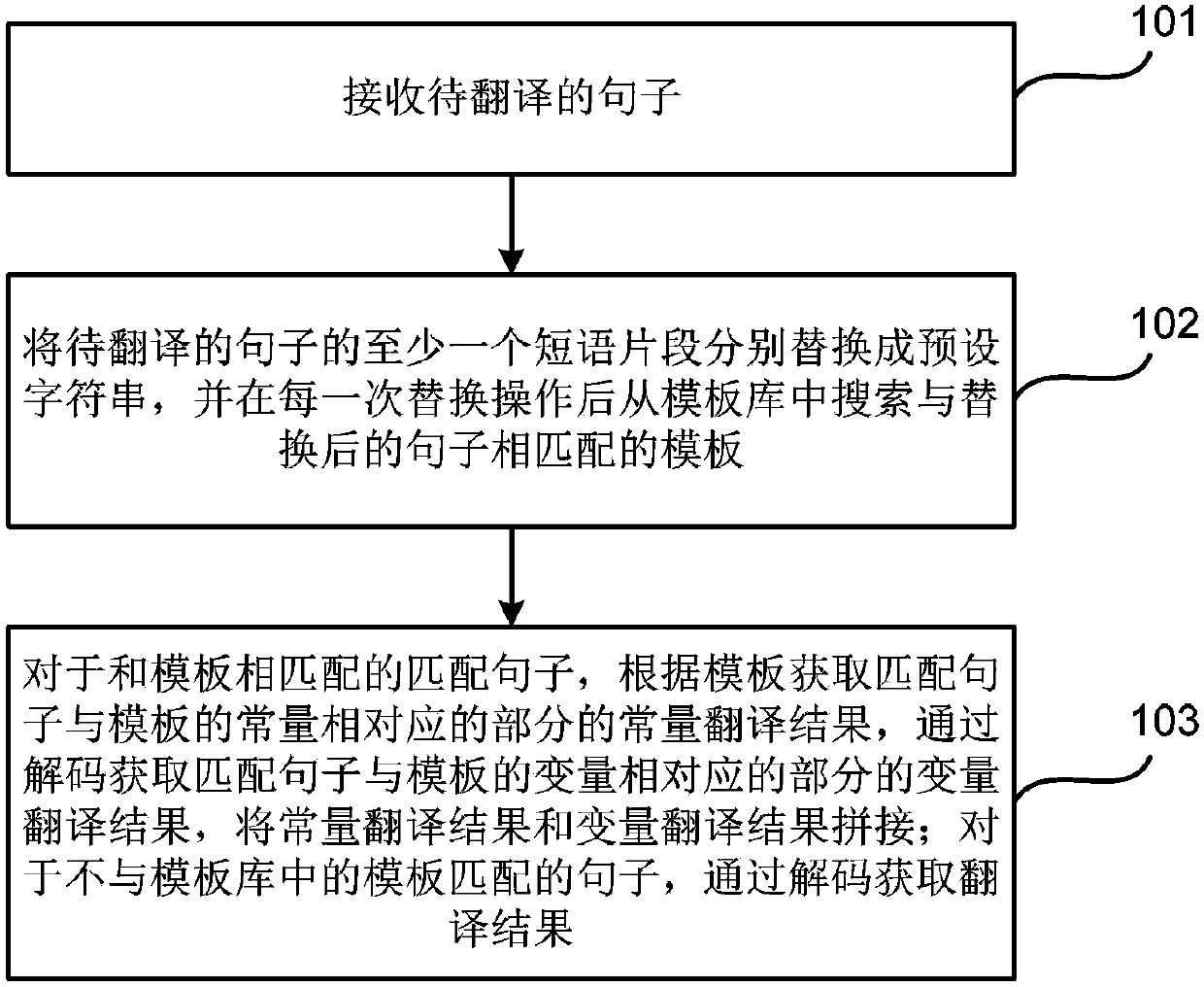

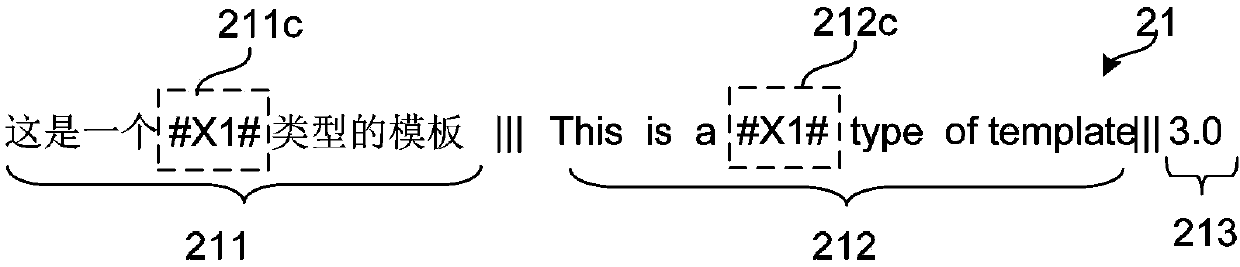

Machine translation method and device

Inactive · CN103631772A · The translation is accurate · Reduce the number of reads · Special data processing applications · Engineering · Machine translation

The invention discloses a machine translation method and device. The method comprises: receiving a sentence to be translated; replacing at least one phrase fragment in the sentence with a preset character string and, after each replacement, searching a template library for a template that matches the resulting sentence; for a sentence that matches a template, acquiring from the template a constant translation result for the part of the sentence corresponding to the template's constants, acquiring by decoding a variable translation result for the part corresponding to the template's variables, and splicing the two results together; and for a sentence that matches no template in the library, acquiring the translation result by decoding. The method and device reduce computation and improve translation quality.

Owner:ALIBABA GRP HLDG LTD

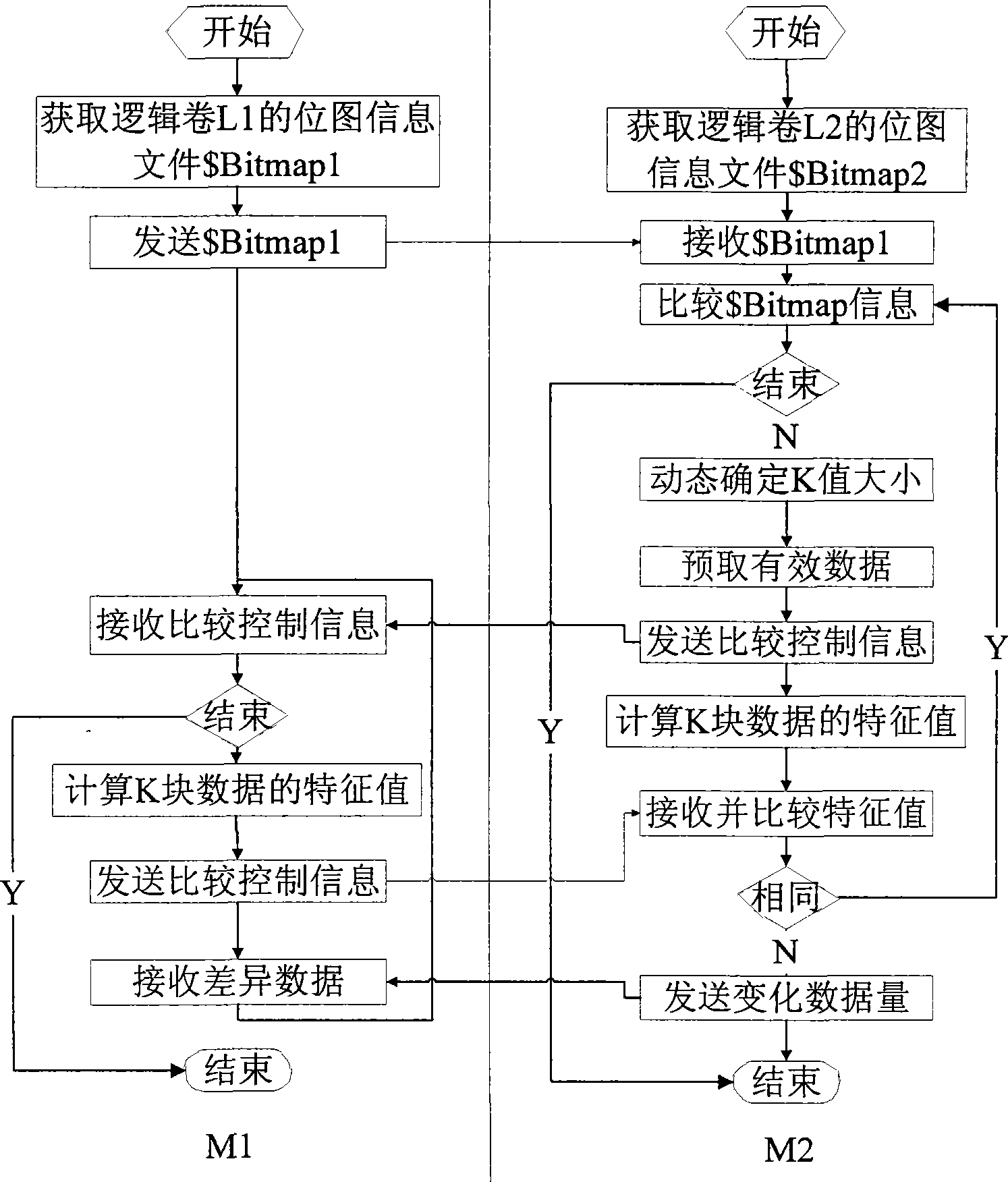

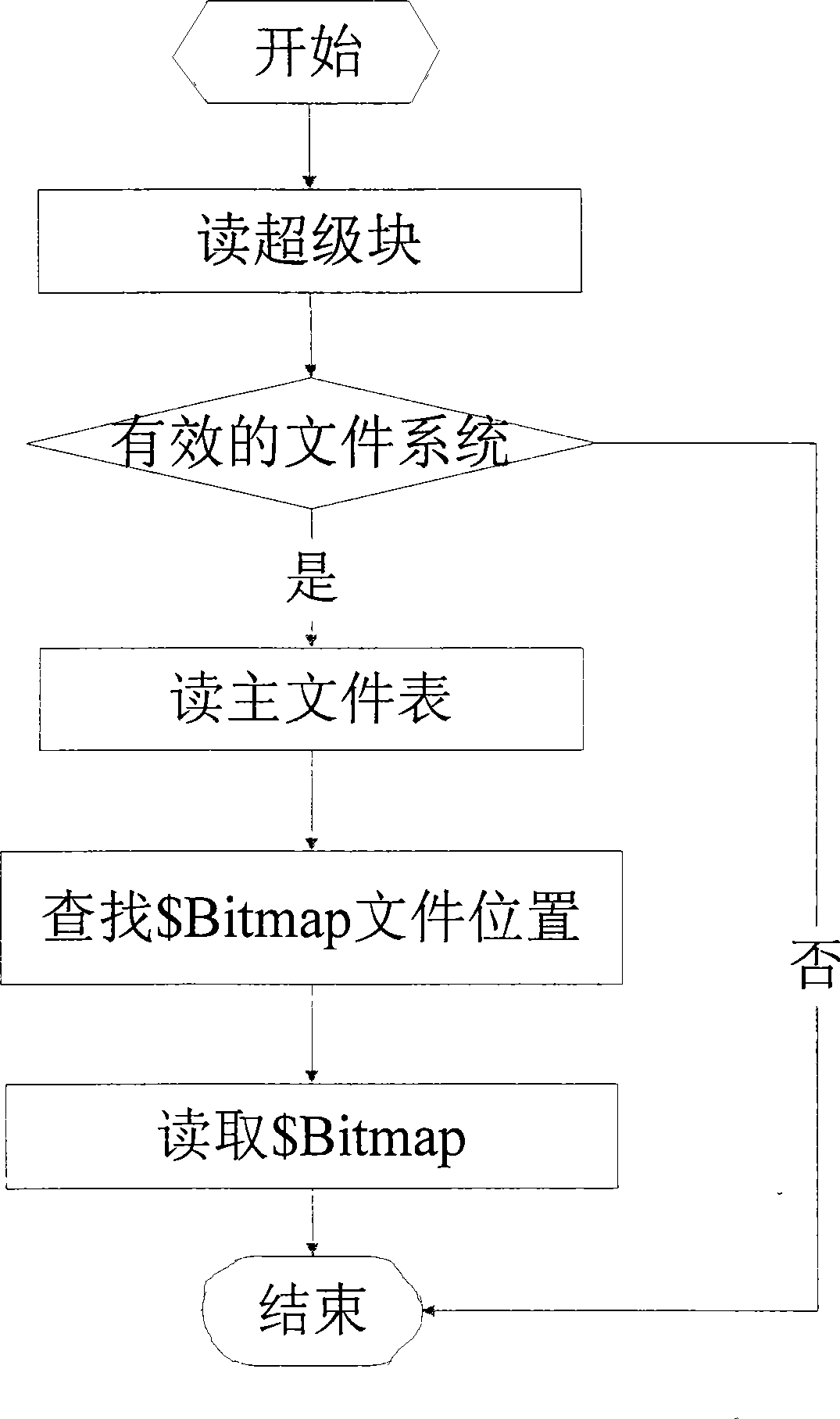

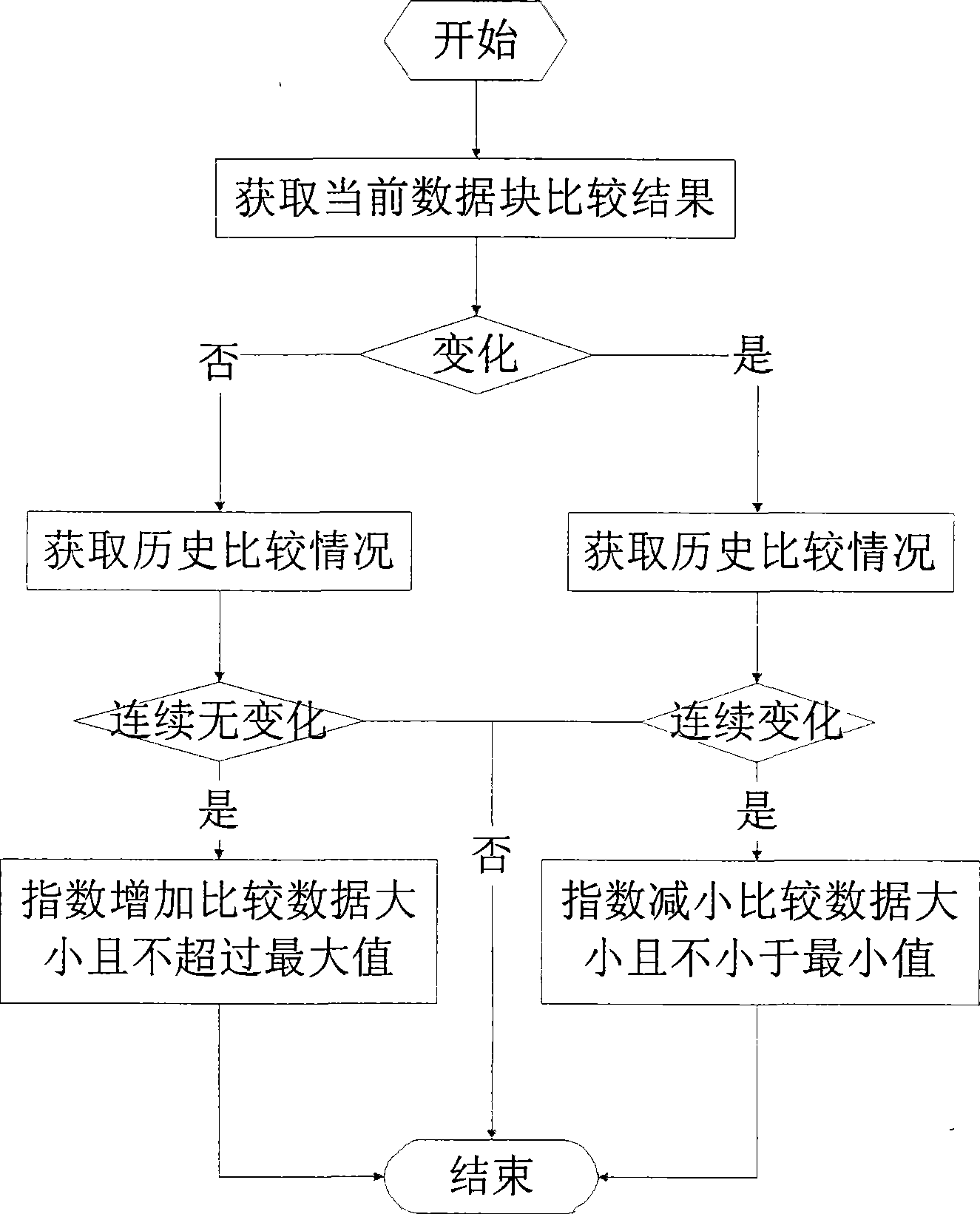

Logical volume rapid synchronization method based on data differentia

Inactive · CN101387976A · Reduce the scope of the comparison · Reduce data volume · Redundant operation error correction · Special data processing applications · File synchronization · Data synchronization

The invention relates to a rapid synchronization method for logical volumes based on data differences, and belongs to the technical field of computer data storage. The method comprises: finding the used data blocks on each logical volume according to the file system information of the source and destination volumes; comparing corresponding data block pairs on the source and destination logical volumes by computing an eigenvalue for each block of a pair; and synchronizing the pair if the two eigenvalues differ, or skipping it otherwise. Because only used data blocks are compared, the range of data comparison is markedly reduced, and because only changed data blocks are synchronized, the quantity of synchronized data is effectively reduced.

Owner:TSINGHUA UNIV +1
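
A minimal sketch of the compare-then-copy step follows. The abstract does not say how the eigenvalue is computed; SHA-256 is used here purely as an assumption, and volumes are modelled as Python lists of byte blocks. The function names are hypothetical.

```python
import hashlib
from typing import List, Set


def block_eigenvalue(block: bytes) -> str:
    """Assumption: a SHA-256 digest stands in for the patent's eigenvalue."""
    return hashlib.sha256(block).hexdigest()


def sync_used_blocks(source: List[bytes],
                     destination: List[bytes],
                     used: Set[int]) -> List[int]:
    """Copy only the used blocks whose eigenvalues differ.

    `used` holds the indices of blocks the file system reports as in use;
    unused blocks are never read or compared. Returns the indices copied.
    """
    copied = []
    for index in sorted(used):
        if block_eigenvalue(source[index]) != block_eigenvalue(destination[index]):
            destination[index] = source[index]
            copied.append(index)
    return copied
```

In a real deployment the two eigenvalues would be computed on separate hosts and only the digests exchanged, which is where the saving in transferred data comes from; this sketch keeps both volumes in one process for brevity.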

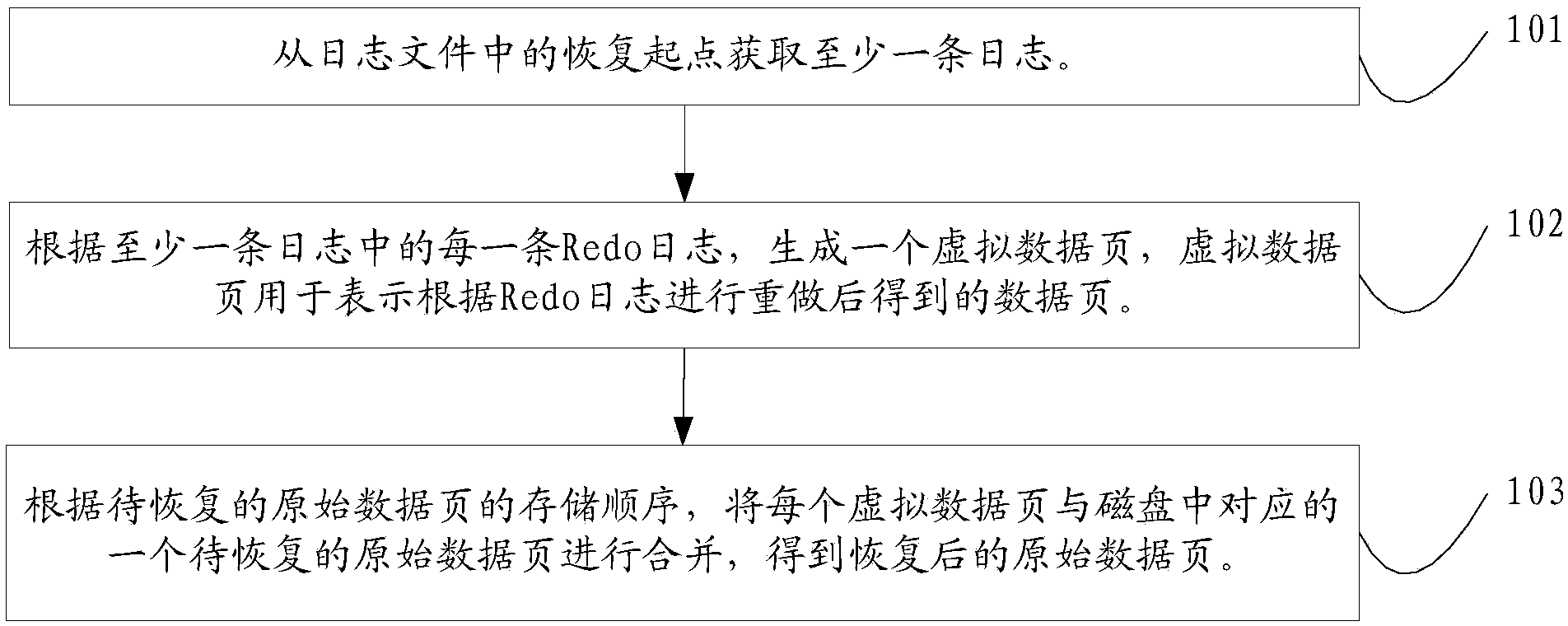

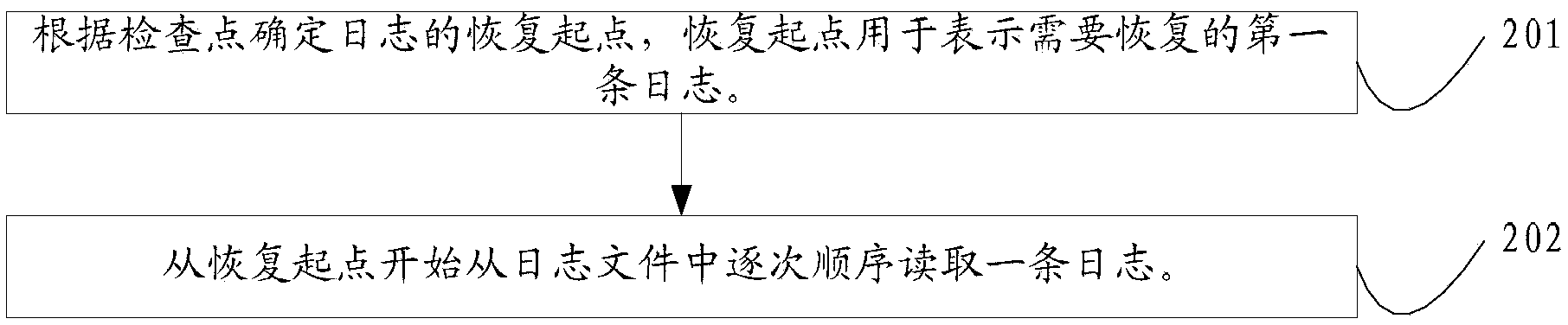

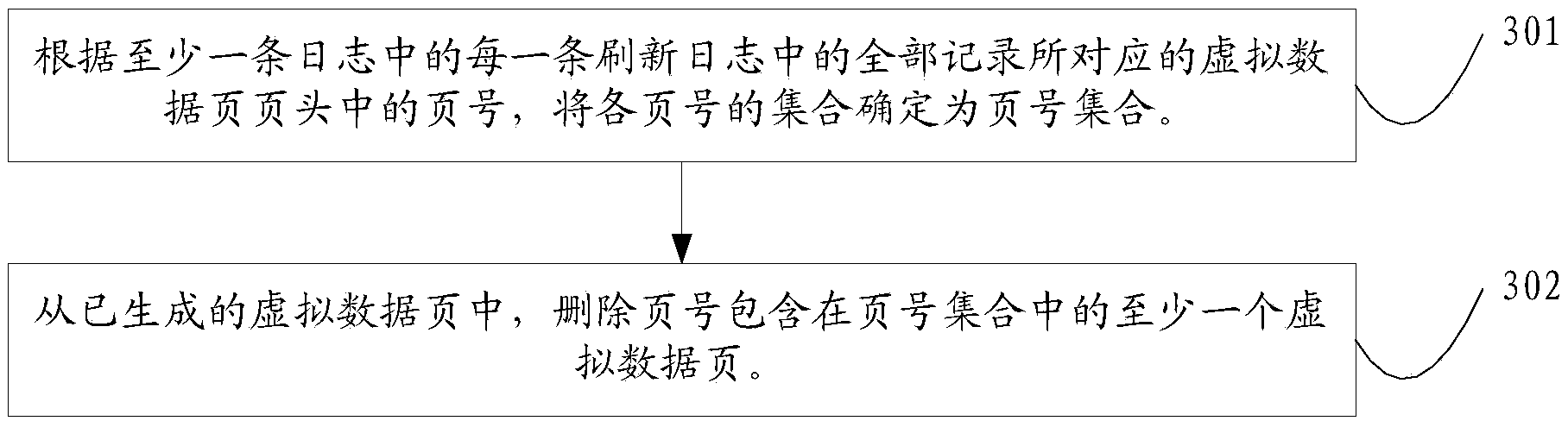

Data recovering method and device

Active · CN103412803A · Reduce the number of reads · Avoid read operations · Special data processing applications · Redundant operation error correction · Magnetic disks · Data file

The invention discloses a data recovering method and device, which can solve the problem that the performance of a database is low as a data file is frequently read from a magnetic disk in a database recovering process, and the problem that a database recovering speed is slow as continuous logs correspond to discontinuous data pages. The method comprises the following steps: obtaining at least one log from a recovering starting point in a log file; generating a virtual data page according to each Redo log in the at least one log, wherein the virtual data page is used for representing a data page obtained by redoing according to the Redo logs; and combining each virtual data page with a corresponding original data page to be recovered in the magnetic disk according to a storage sequence of the original data page to be recovered to obtain the recovered original data page. The data recovering method and device disclosed by the invention are mainly applied to the database recovering process.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD

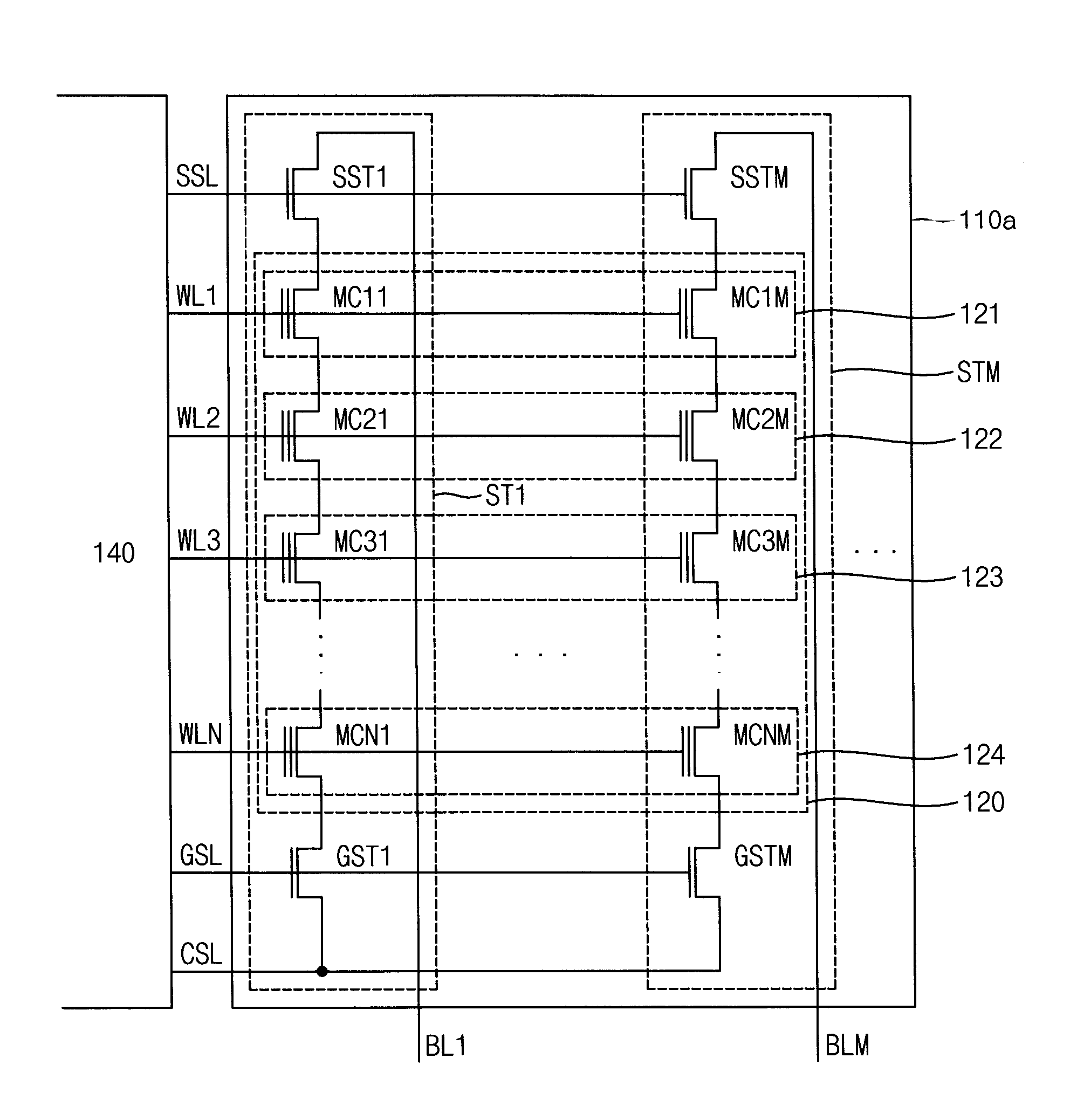

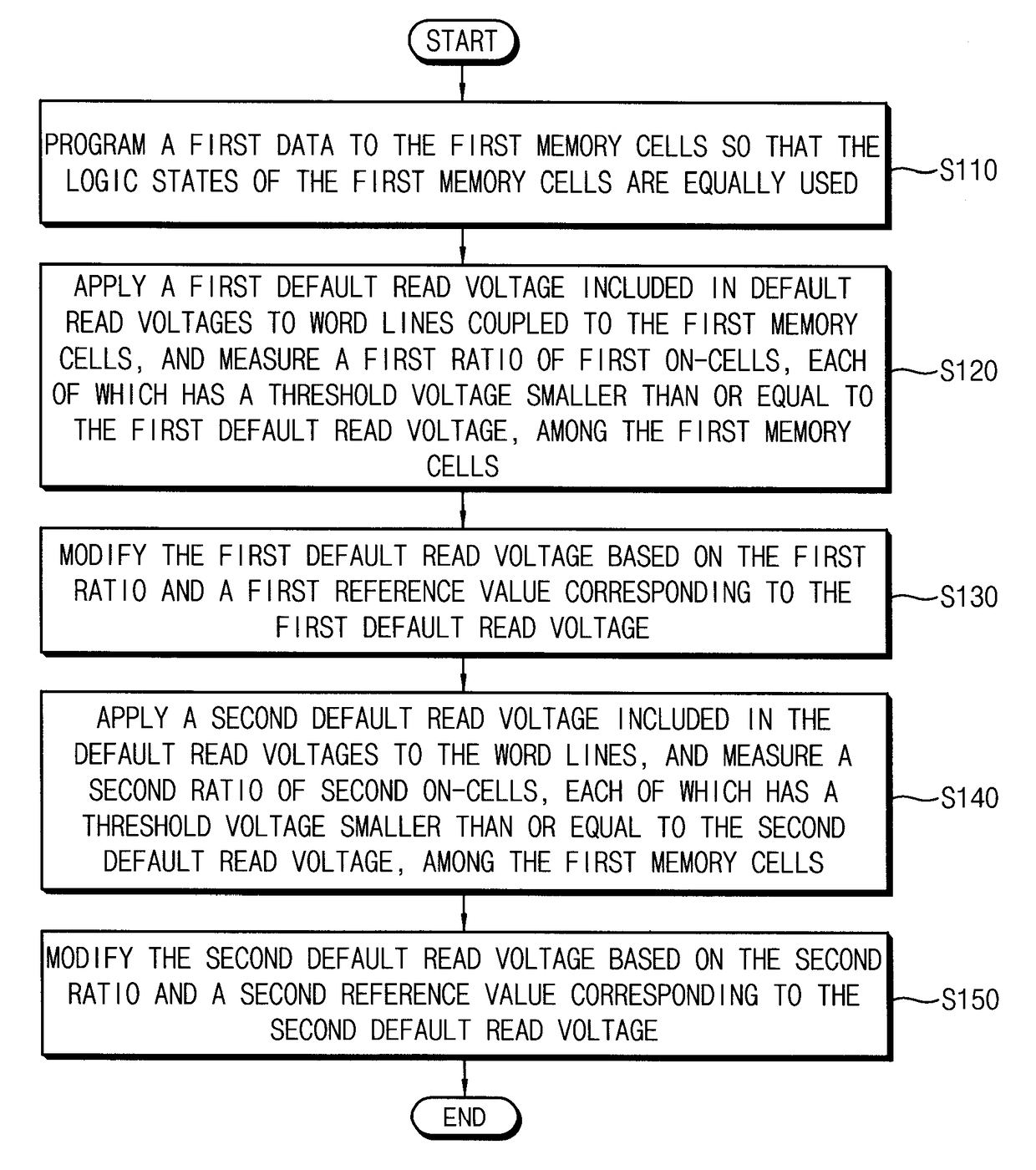

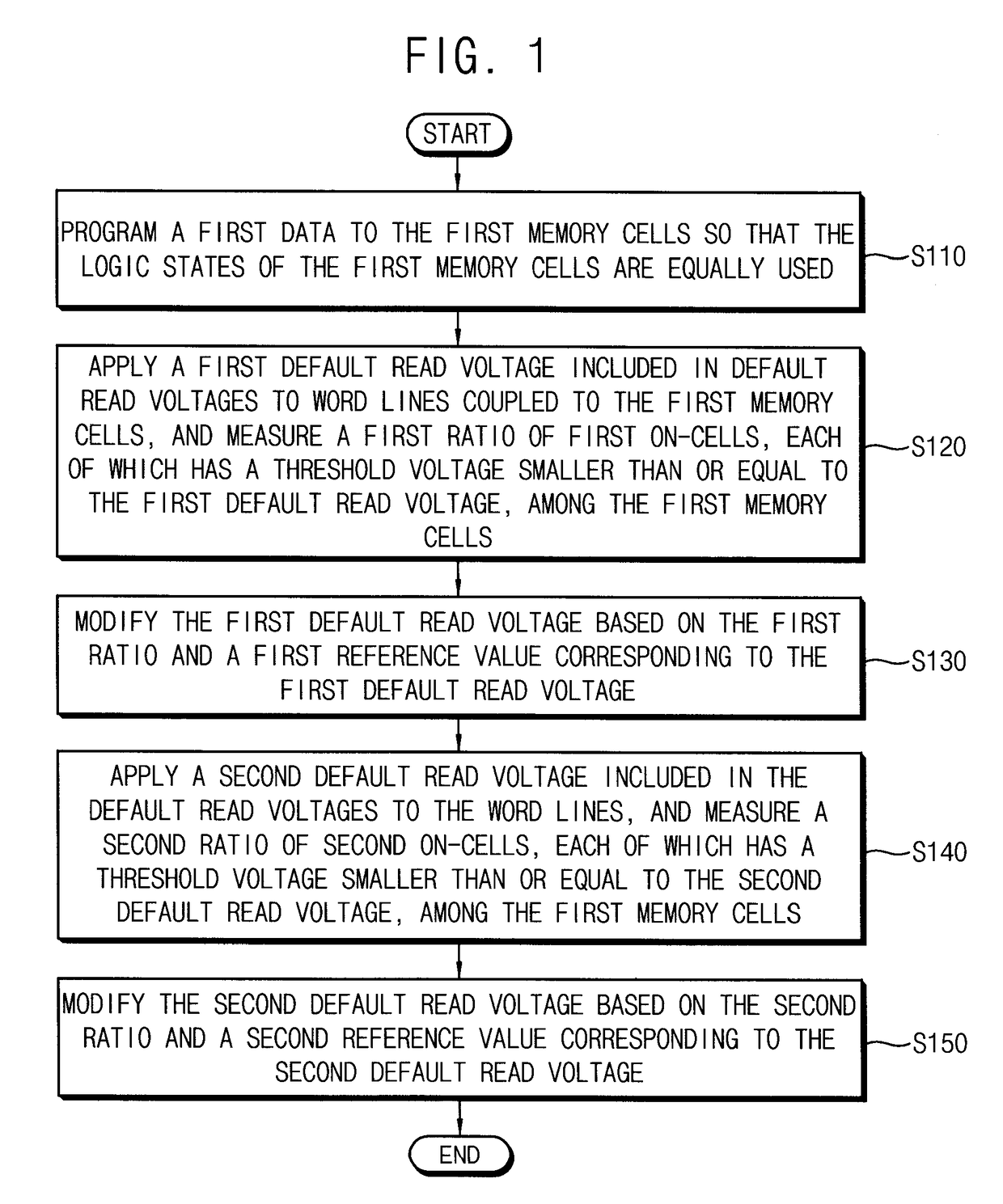

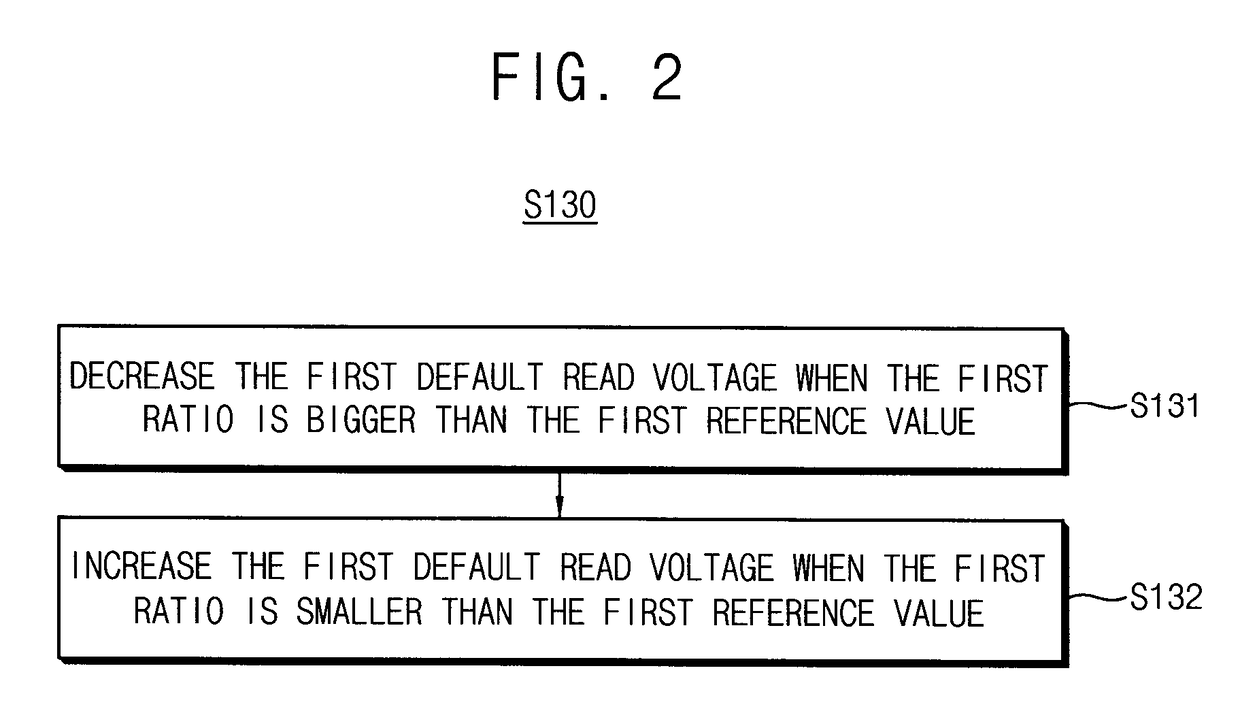

Method of determining default read voltage of non-volatile memory device and method of reading data of non-volatile memory device

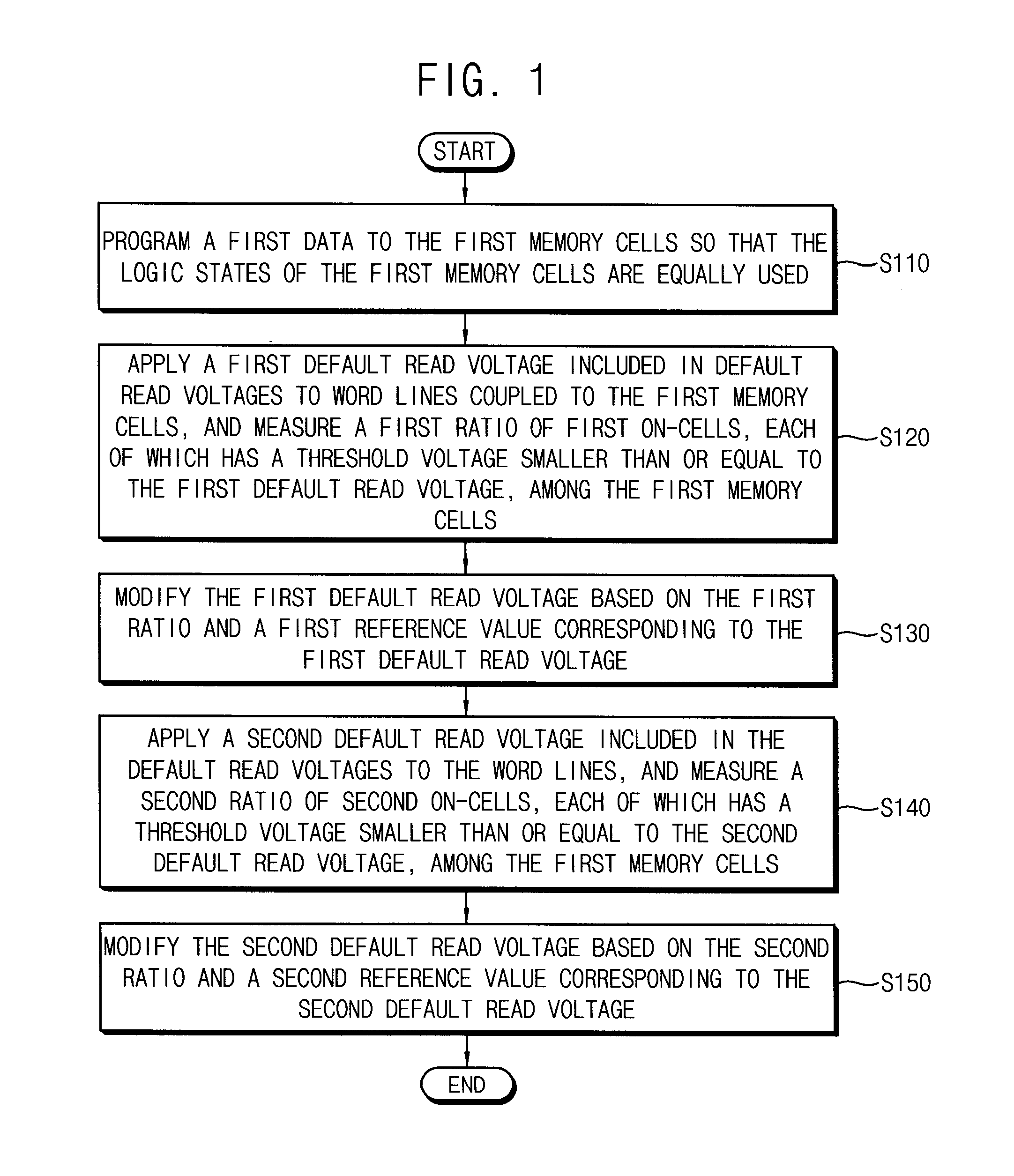

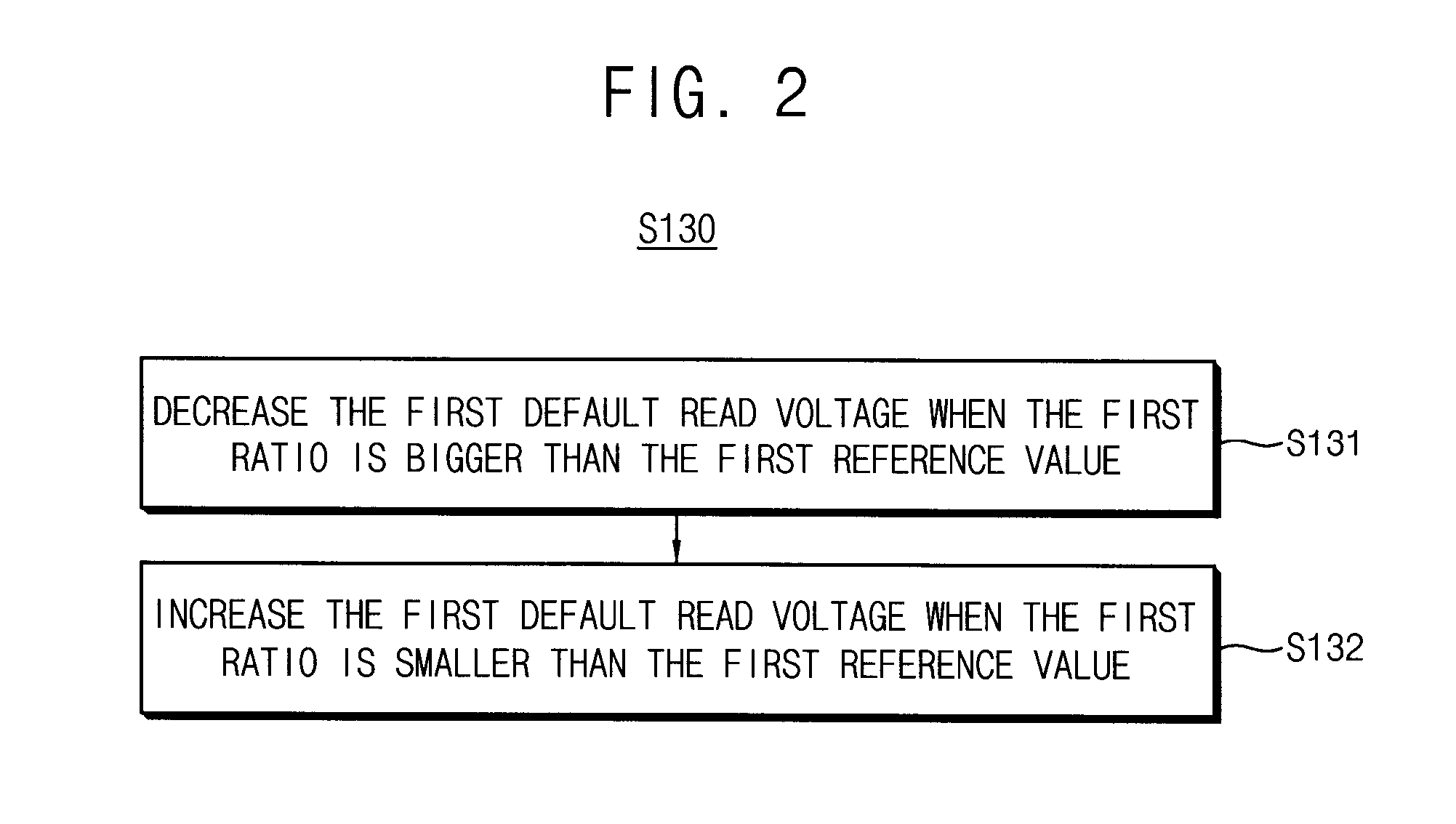

Active · US20160042797A1 · Error minimization · Reduce the number of reads · Read-only memories · Digital storage · Logic state · Computer science

A method of determining a default read voltage of a non-volatile memory device which includes a plurality of first memory cells, each of which stores a plurality of data bits as one of a plurality of threshold voltages corresponding to a plurality of logic states, includes programming a first data to the first memory cells so that the logic states of the first memory cells are balanced or equally used. The method includes applying a first default read voltage included in default read voltages to word lines coupled to the first memory cells, and measuring a first ratio of first on-cells, each of which has a threshold voltage smaller than or equal to the first default read voltage, among the first memory cells, and modifying the first default read voltage based on the first ratio and a first reference value corresponding to the first default read voltage.

Owner:SAMSUNG ELECTRONICS CO LTD

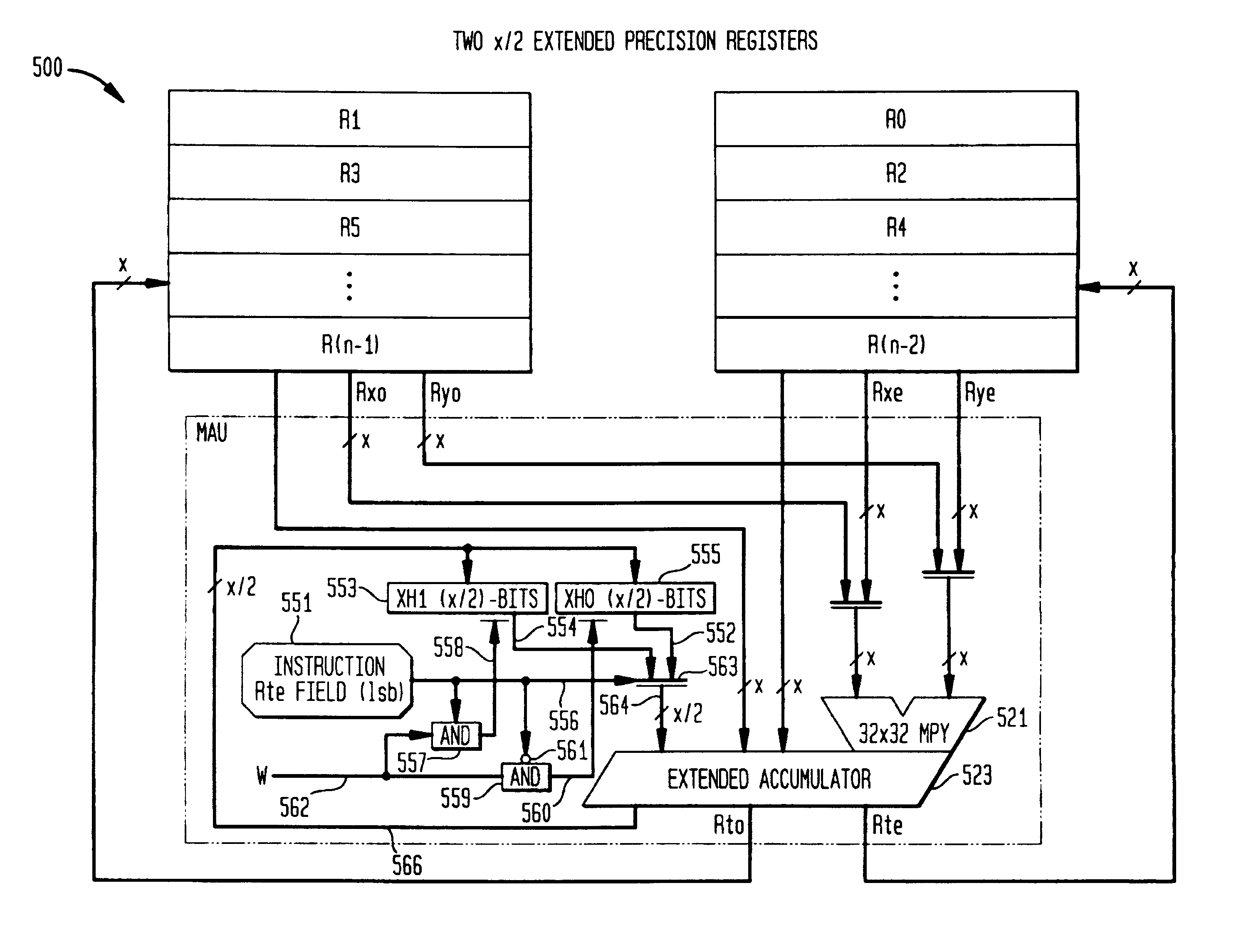

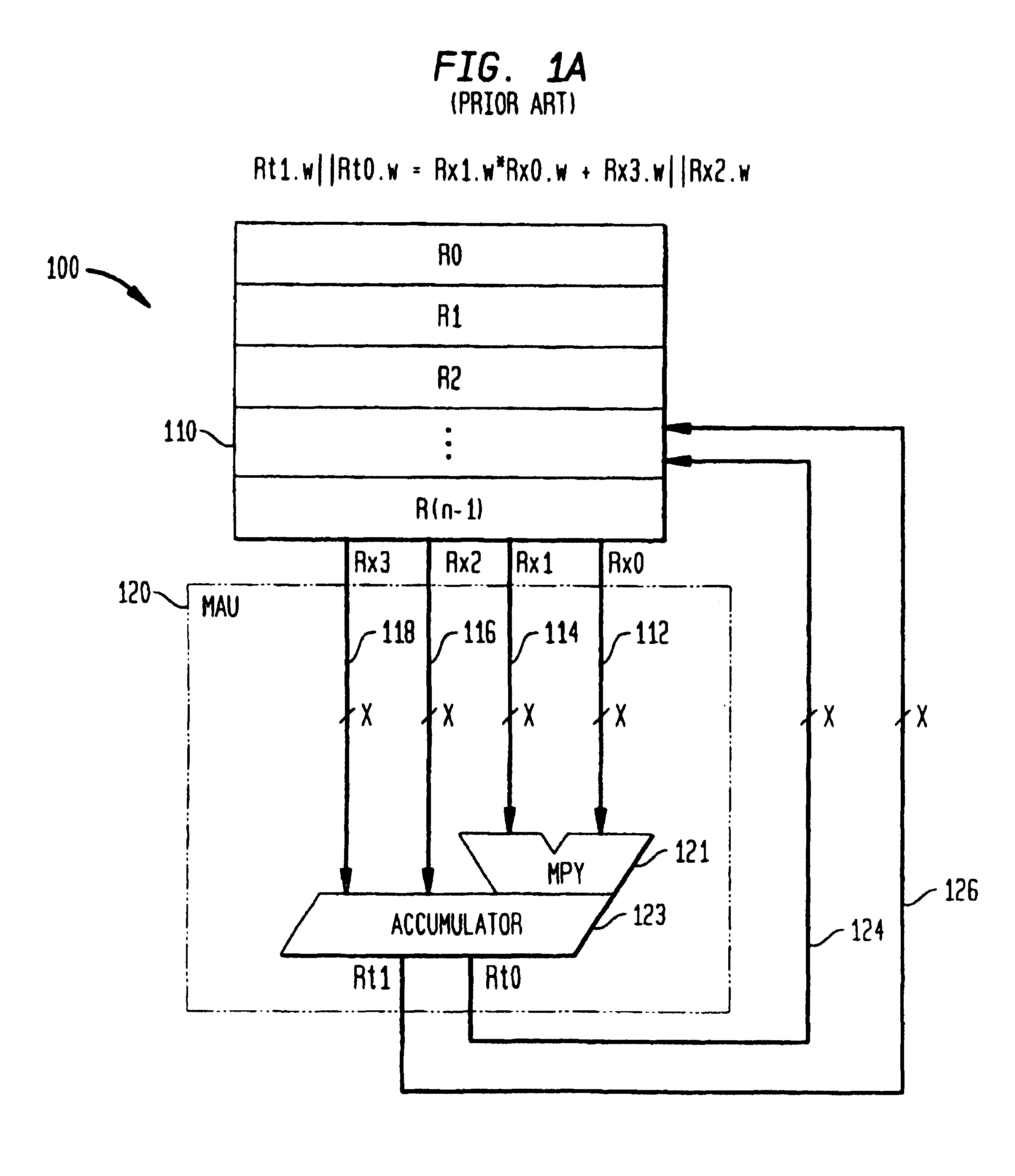

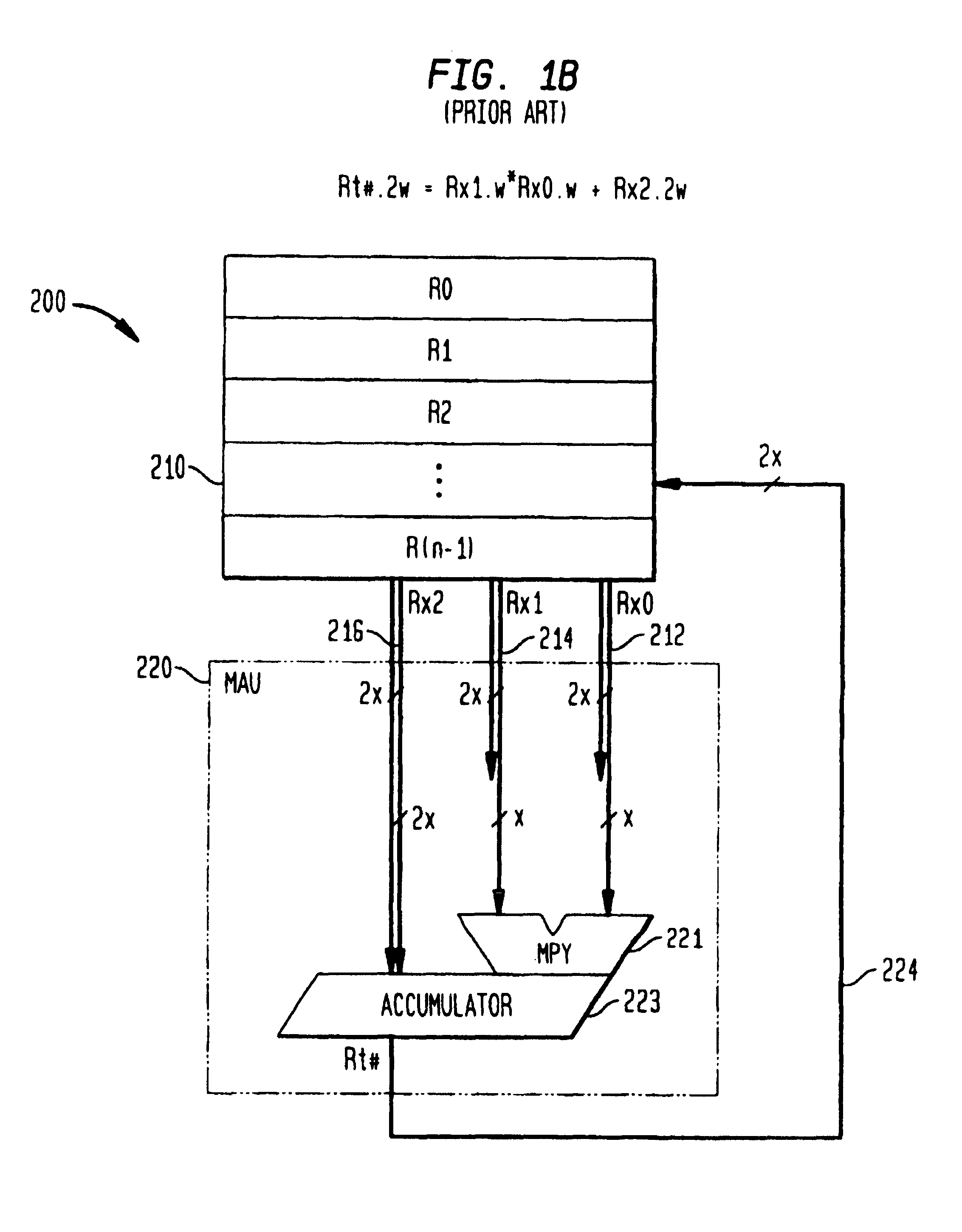

Methods and apparatus for dynamic instruction controlled reconfigurable register file with extended precision

Inactive · USRE40883E1 · Extended precision data width · Small size · Register arrangements · Instruction analysis · Processor register · Multiply–accumulate operation

A reconfigurable register file integrated in an instruction set architecture capable of extended precision operations, and also capable of parallel operation on lower precision data is described. A register file is composed of two separate files with each half containing half as many registers as the original. The halves are designated even or odd by virtue of the register addresses which they contain. Single width and double width operands are optimally supported without increasing the register file size and without increasing the number of register file ports. Separate extended registers are also employed to provide extended precision for operations such as multiply-accumulate operations.

Owner:ALTERA CORP

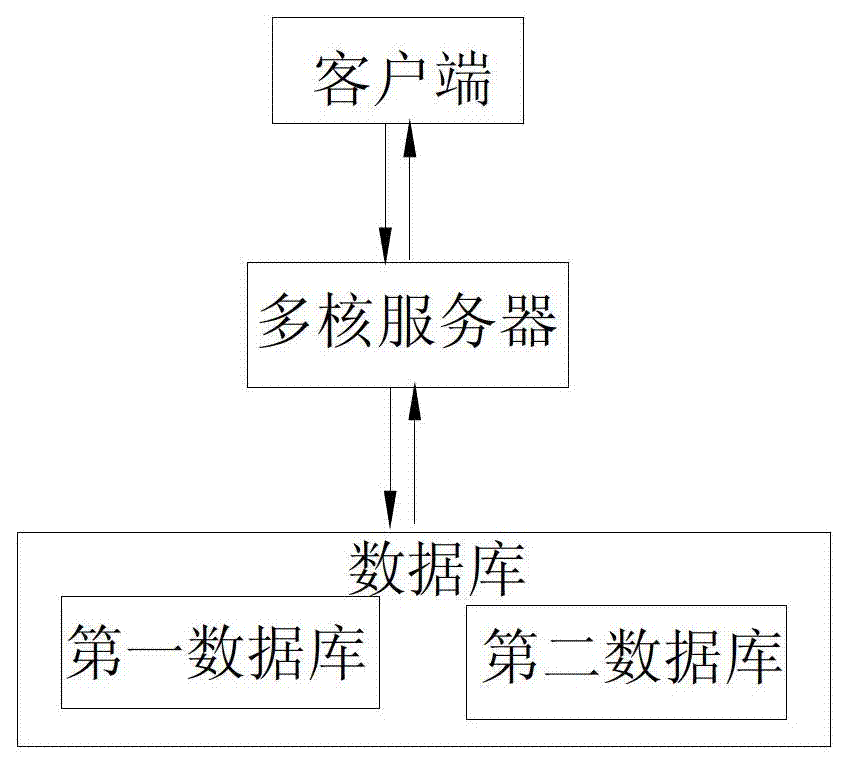

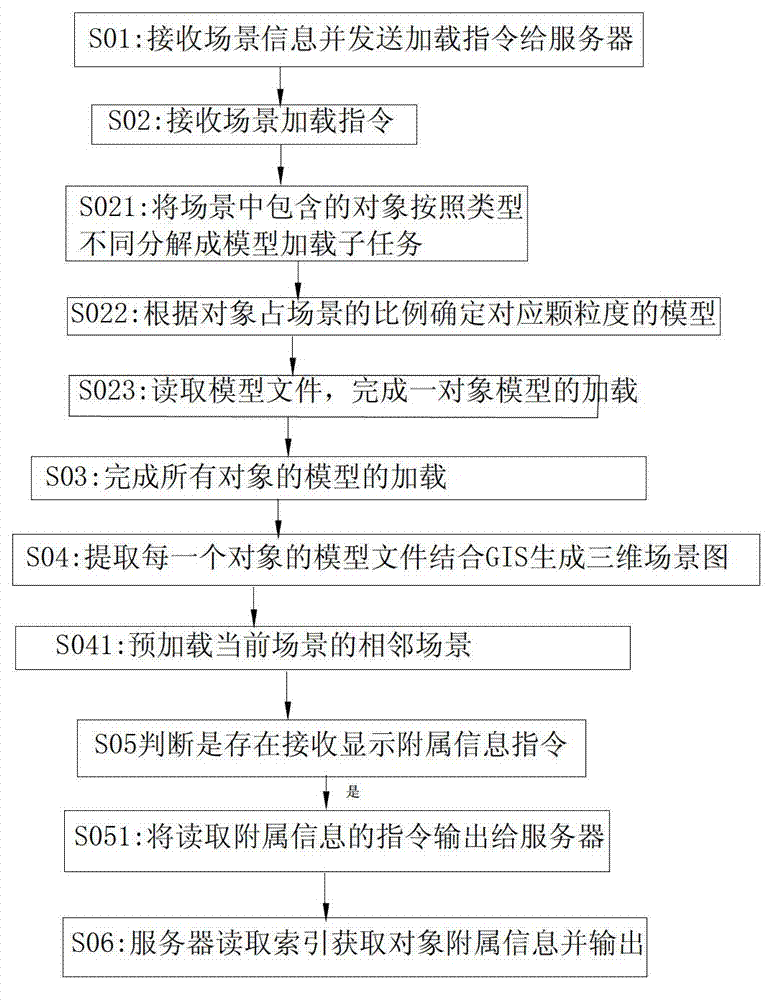

Three-dimensional GIS (Geographic Information System) technology based power grid visualization system and method

Active · CN102831307A · Avoid repeated reading · Reduce the number of reads · Computer control · Simulator control · Database · Geographic information system

The invention relates to a power grid visualization system and method based on three-dimensional GIS (Geographic Information System) technology. The method comprises: dividing the models to be uploaded into model upload sub-tasks according to the types of objects in the scene to be uploaded, and calling the model documents of the sub-tasks in parallel with multiple threads to improve upload speed; and, on that basis, reading the model document for each type of object only once. Reuse of a model document is not limited to the upload task of one client; different clients can share model documents that have already been read. The system and method thus take full account of the limited number of types and relatively consistent specifications of power devices, avoid repeated reading of model documents of the same type, markedly improve upload efficiency, and ensure rapid uploading and real-time browsing for clients.

Owner:STATE GRID SHANDONG ELECTRIC POWER

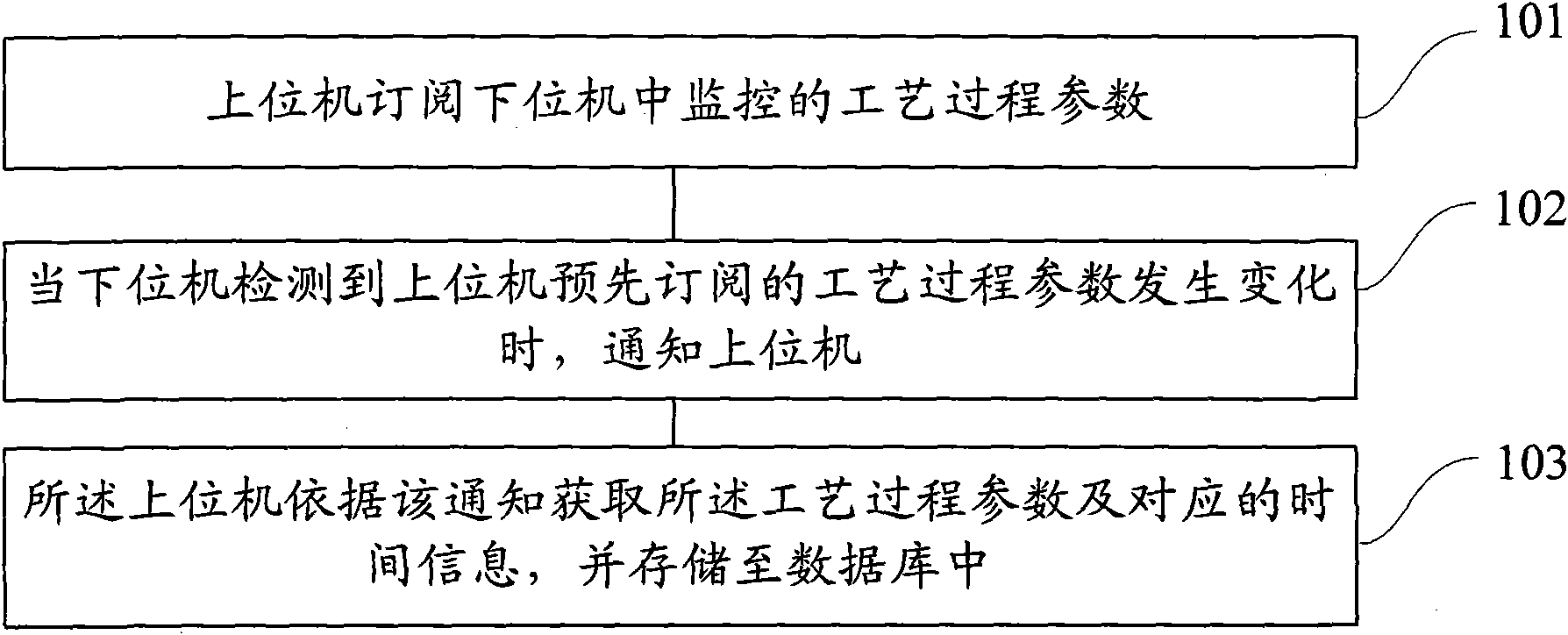

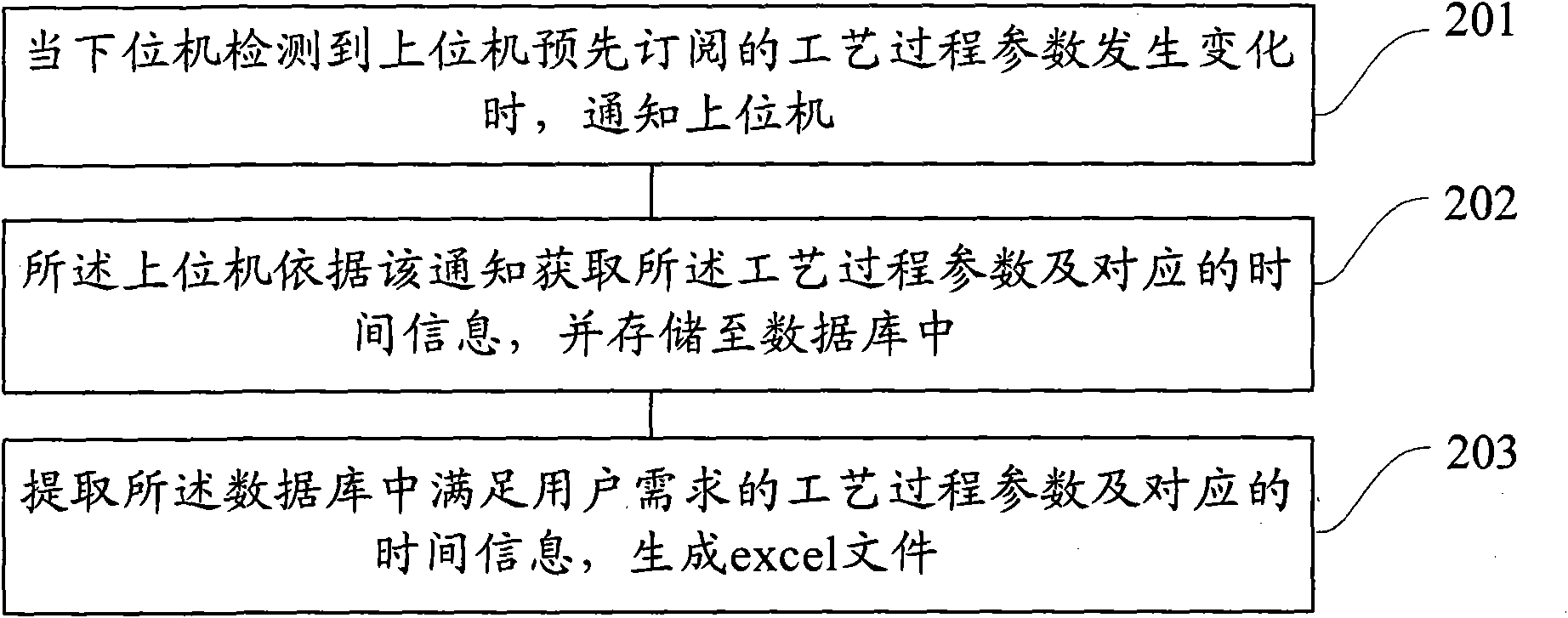

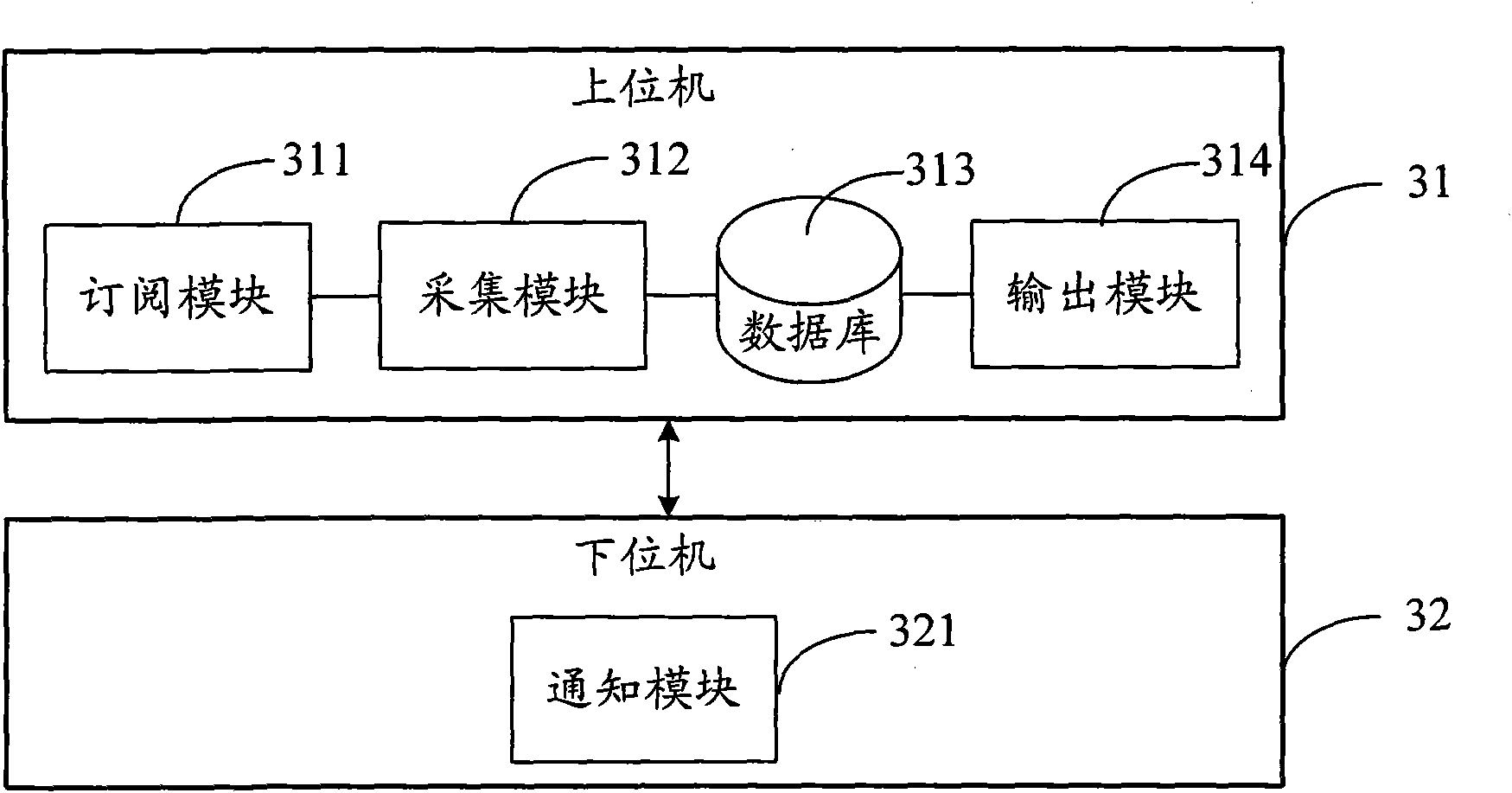

Acquisition method and system of parameters of technique process

Active · CN101673100A · Improve performance · Reduce the number of reads · Computer control · Simulator control · Time information · Temporal change

The invention discloses a method for acquiring parameters of a technique process, used to capture how the parameters change over time. The method comprises: when a lower computer detects that technique process parameters pre-subscribed by an upper computer have changed, notifying the upper computer; and the upper computer, according to the notification, acquiring the technique process parameters and the corresponding time information and storing them in a database. The invention reduces the acquisition of garbage data, guarantees the validity of the acquired data, and effectively saves system resources.

Owner:BEIJING NAURA MICROELECTRONICS EQUIP CO LTD

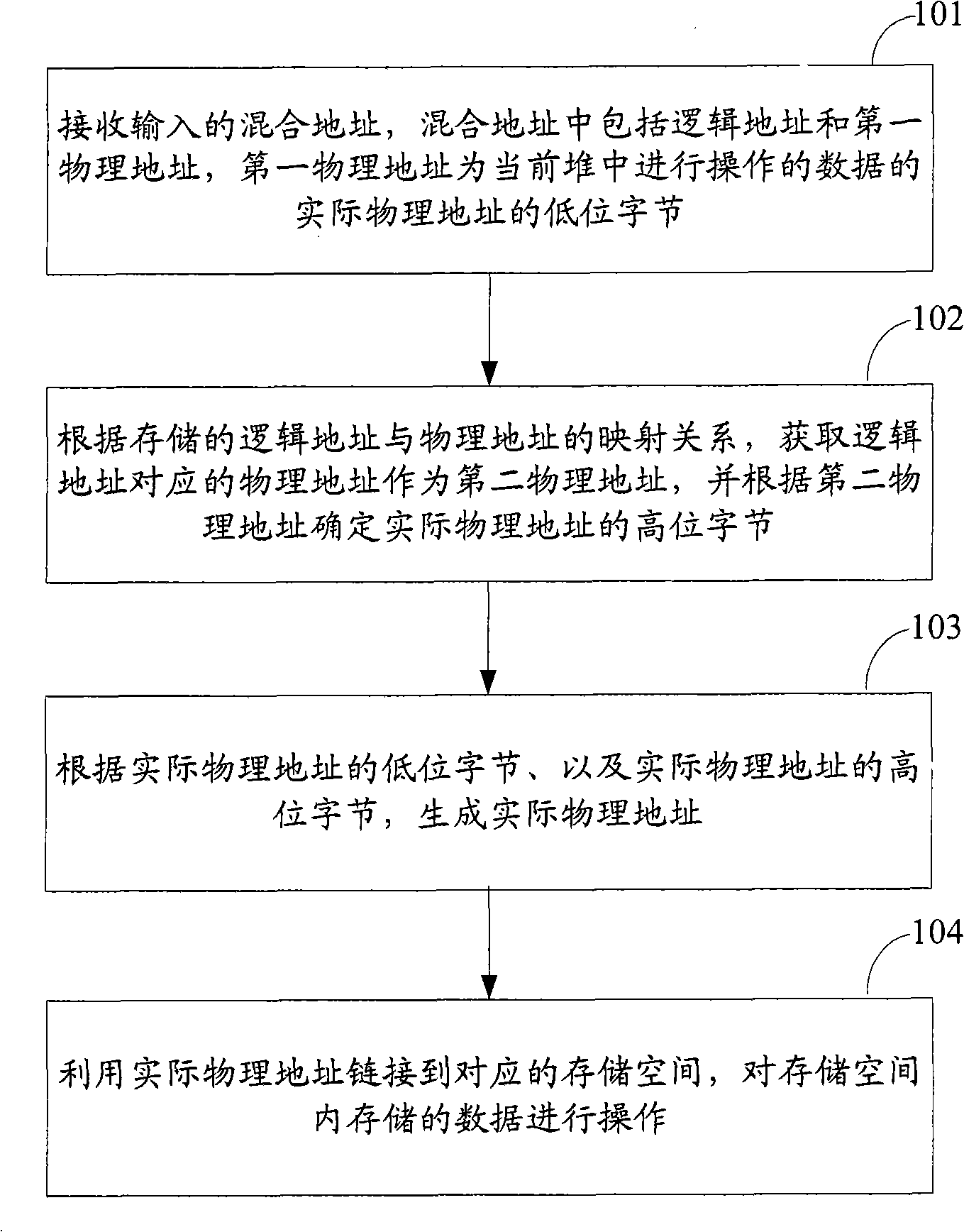

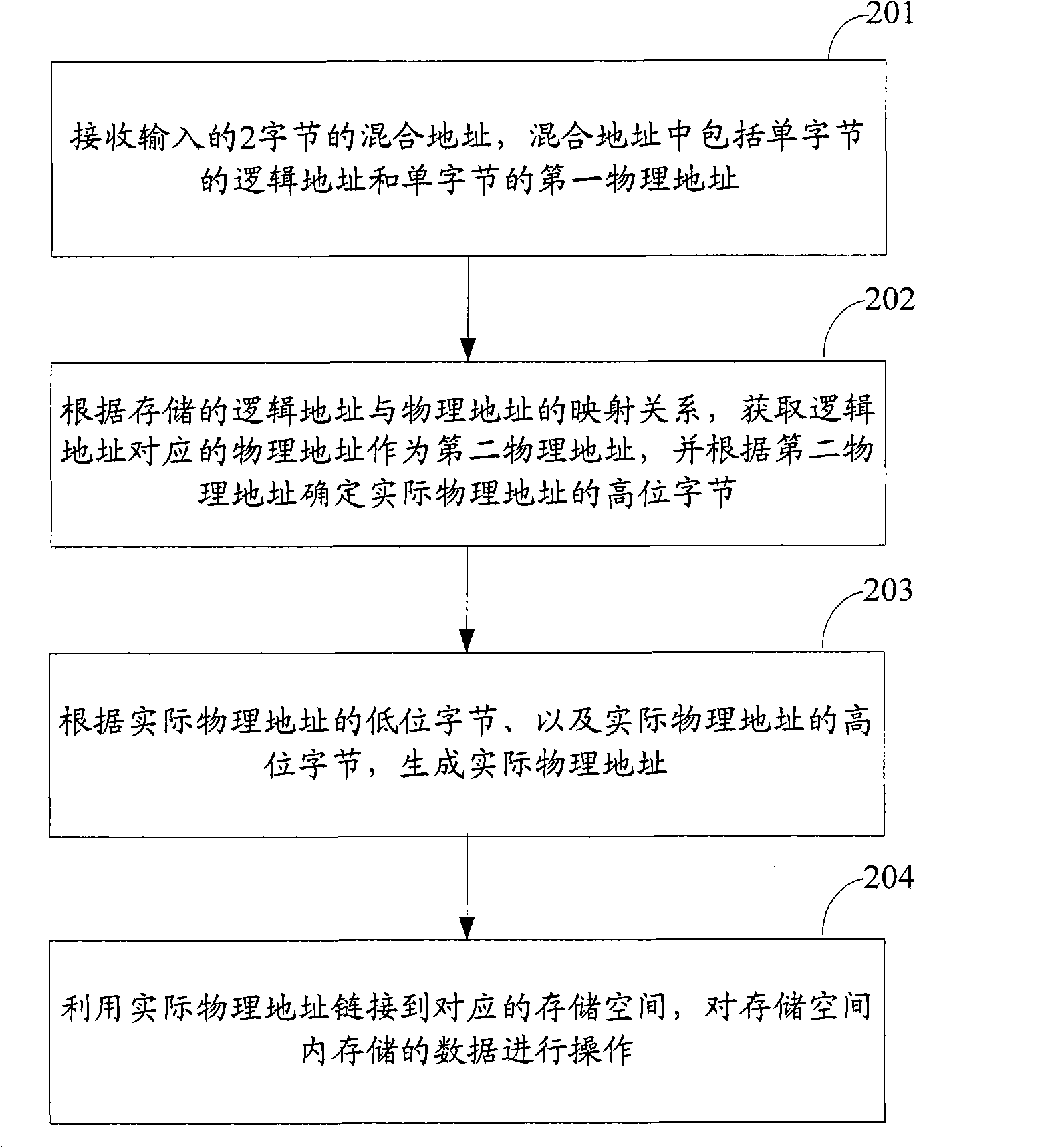

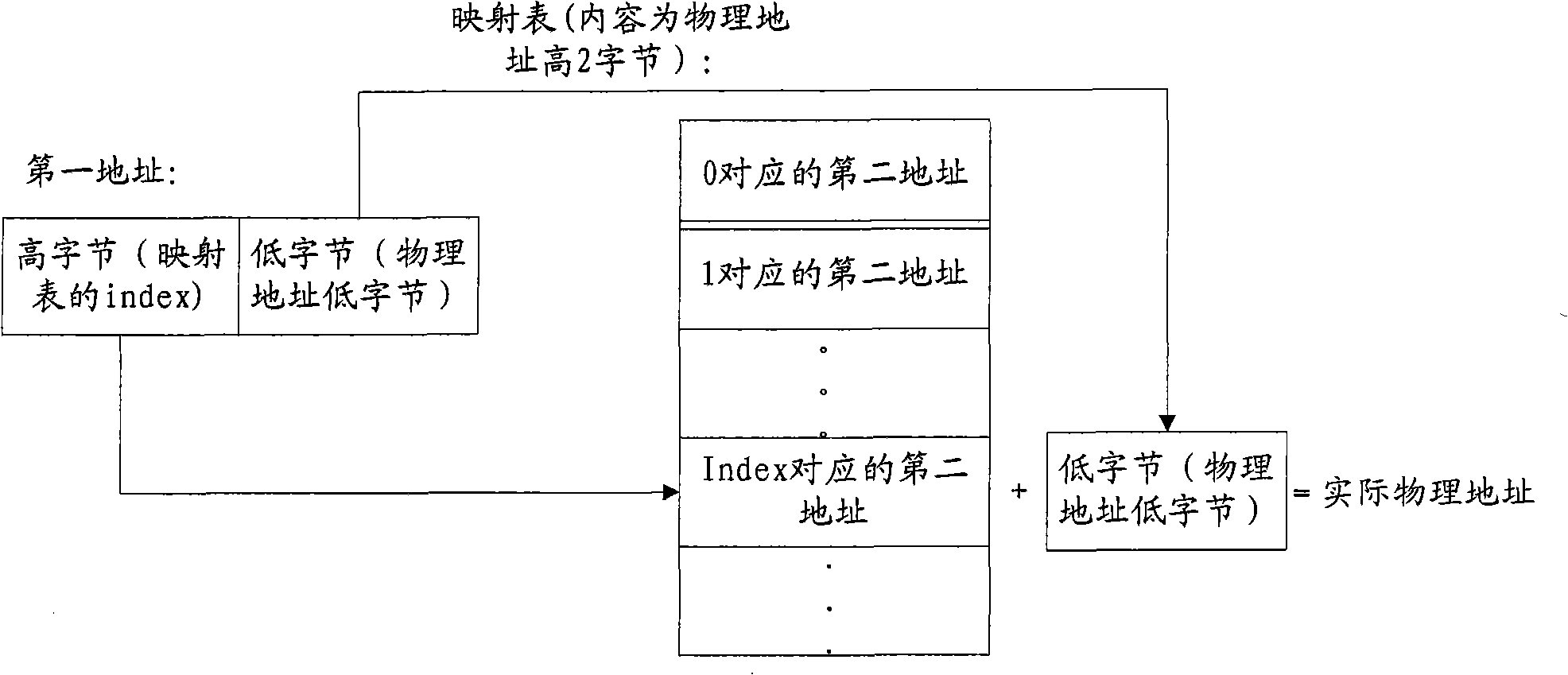

Data operating method and device, and addressing method and device

Inactive · CN101840373A · Shorten the length · Reduce the number of reads · Memory addressing/allocation/relocation · Data operations · Data storing

The invention discloses a data operating method that operates on data stored in each memory heap through the following steps: receiving a hybrid address comprising a logic address and a first physical address, where the first physical address serves as the low-order byte of the actual physical address of the data operated on in the current heap; acquiring, according to the stored mapping between logic addresses and physical addresses, the physical address corresponding to the logic address as a second physical address, and determining the high-order byte of the actual physical address from the second physical address; generating the actual physical address from its low-order and high-order bytes; and linking to the corresponding memory space using the actual physical address and operating on the data stored there. The invention also discloses a data operating device, an addressing method and an addressing device. The invention addresses the high time consumption of the prior art, in which data searching and operation require reading the EEPROM multiple times.

Owner:BEIJING WATCH DATA SYST
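
The address composition described above can be sketched as follows. The sketch assumes a 16-bit actual physical address built from two 8-bit halves, and assumes that the second physical address found in the mapping table is used directly as the high-order byte; the abstract does not fix either detail, and `resolve_address` is a hypothetical name.

```python
from typing import Dict, Tuple


def resolve_address(hybrid: Tuple[int, int], mapping: Dict[int, int]) -> int:
    """Build the actual physical address from a hybrid address.

    hybrid  -- (logic address, first physical address); the first physical
               address is the low-order byte of the result.
    mapping -- stored logic-to-physical table; the looked-up value is
               assumed to be the high-order byte.
    """
    logic_address, low_byte = hybrid
    high_byte = mapping[logic_address]        # one table lookup, no repeated reads
    if not (0 <= low_byte <= 0xFF and 0 <= high_byte <= 0xFF):
        raise ValueError("both address halves must fit in one byte")
    return (high_byte << 8) | low_byte
```

For example, with `mapping = {0x02: 0x1A}`, the hybrid address `(0x02, 0x3C)` resolves to `0x1A3C`.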

Techniques for reducing read voltage threshold calibration in non-volatile memory

Active · US10453537B1 · Reduce the number of reads · Reduce in quantity · Read-only memories · Digital storage · Short duration · Voltage

A non-volatile memory includes a plurality of cells each individually capable of storing multiple bits of data including bits of multiple physical pages including at least a first page and a second page. A controller of the non-volatile memory determines a first calibration interval for a first read voltage threshold defining a bit value in the first page and a different second calibration interval for a second read voltage threshold defining a bit value in the second page. The second calibration interval has a shorter duration than the first calibration interval. The controller calibrates the first and second read voltage thresholds for the plurality of memory cells in the non-volatile memory based on the determined first and second calibration intervals.

Owner:IBM CORP

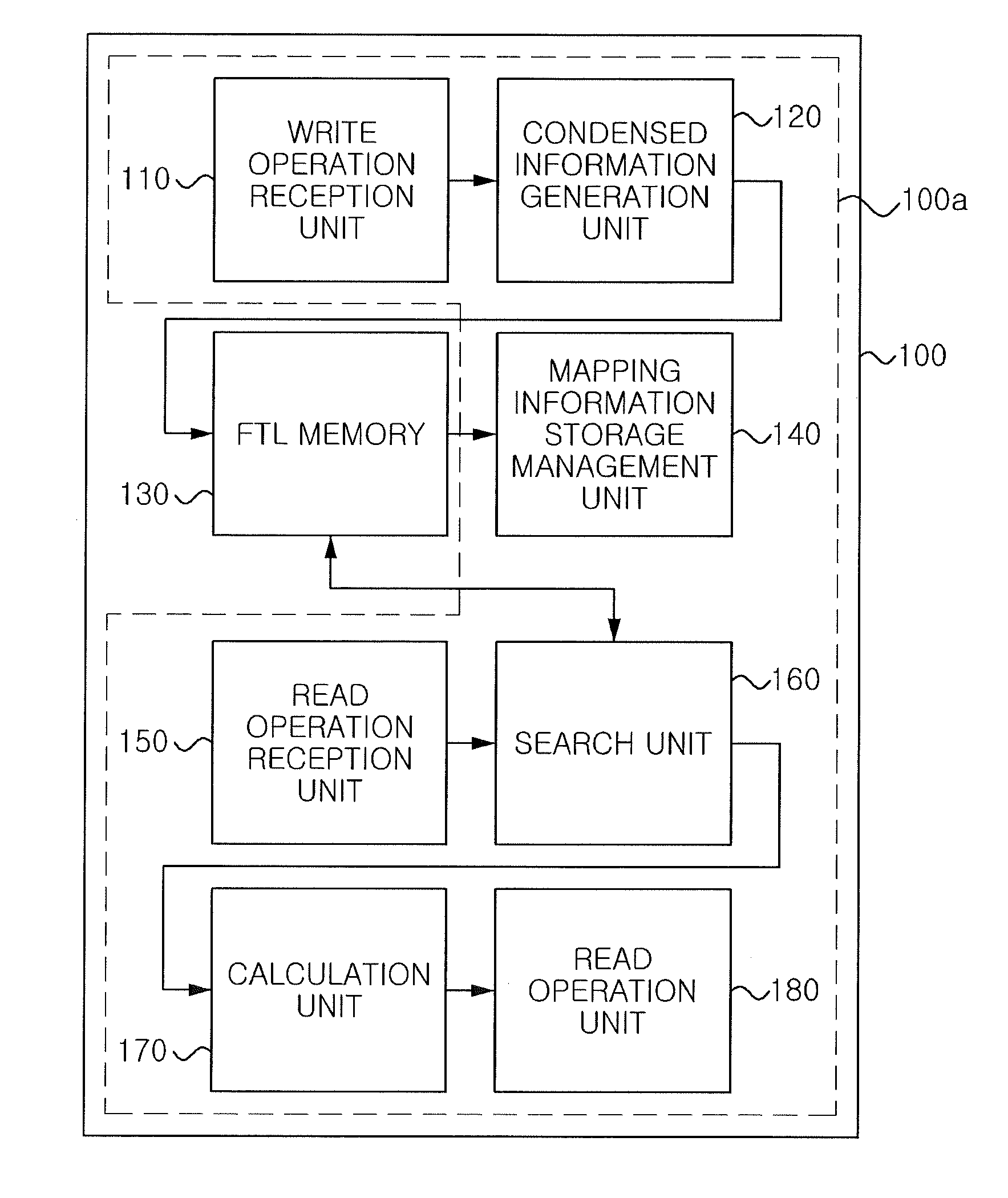

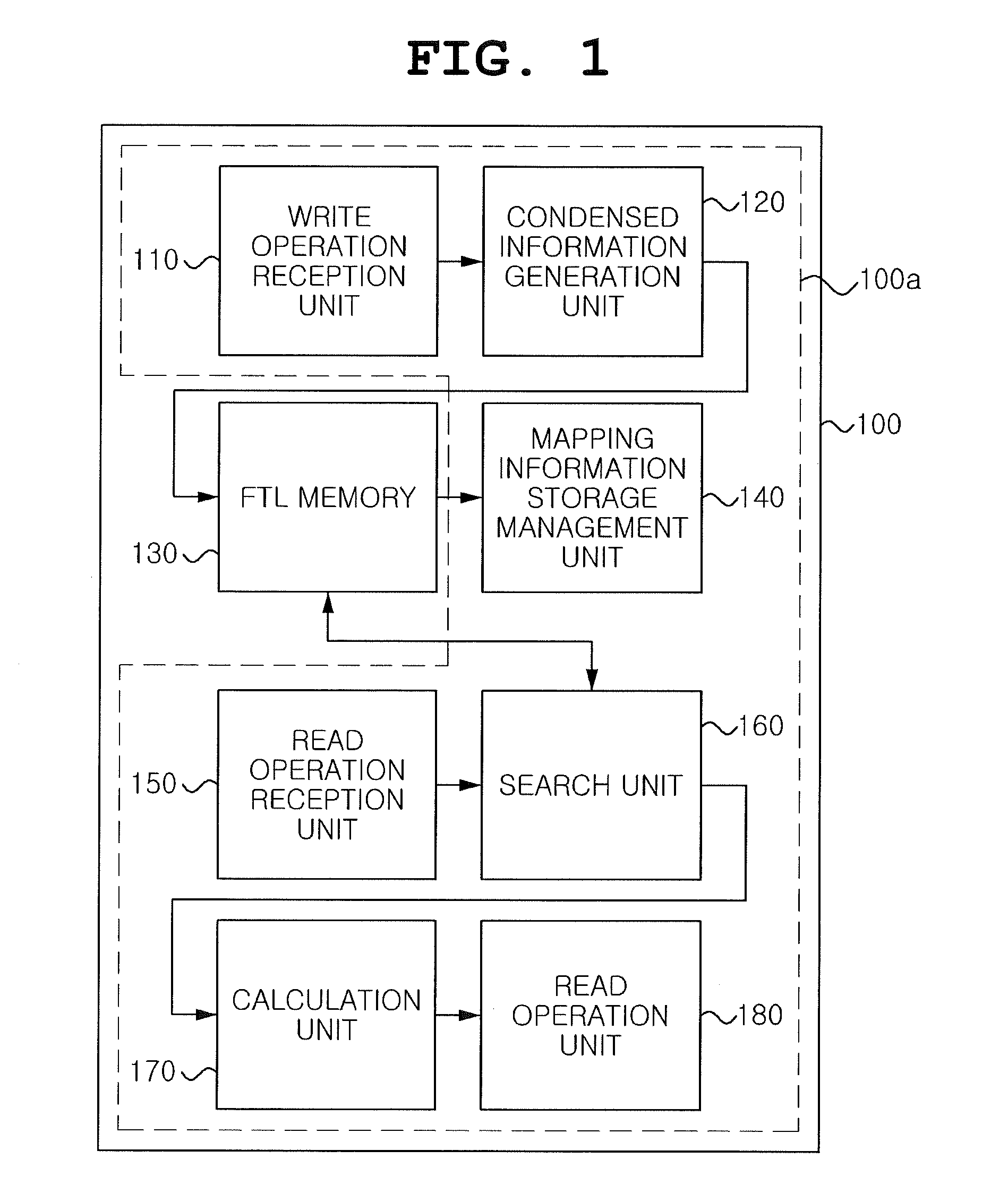

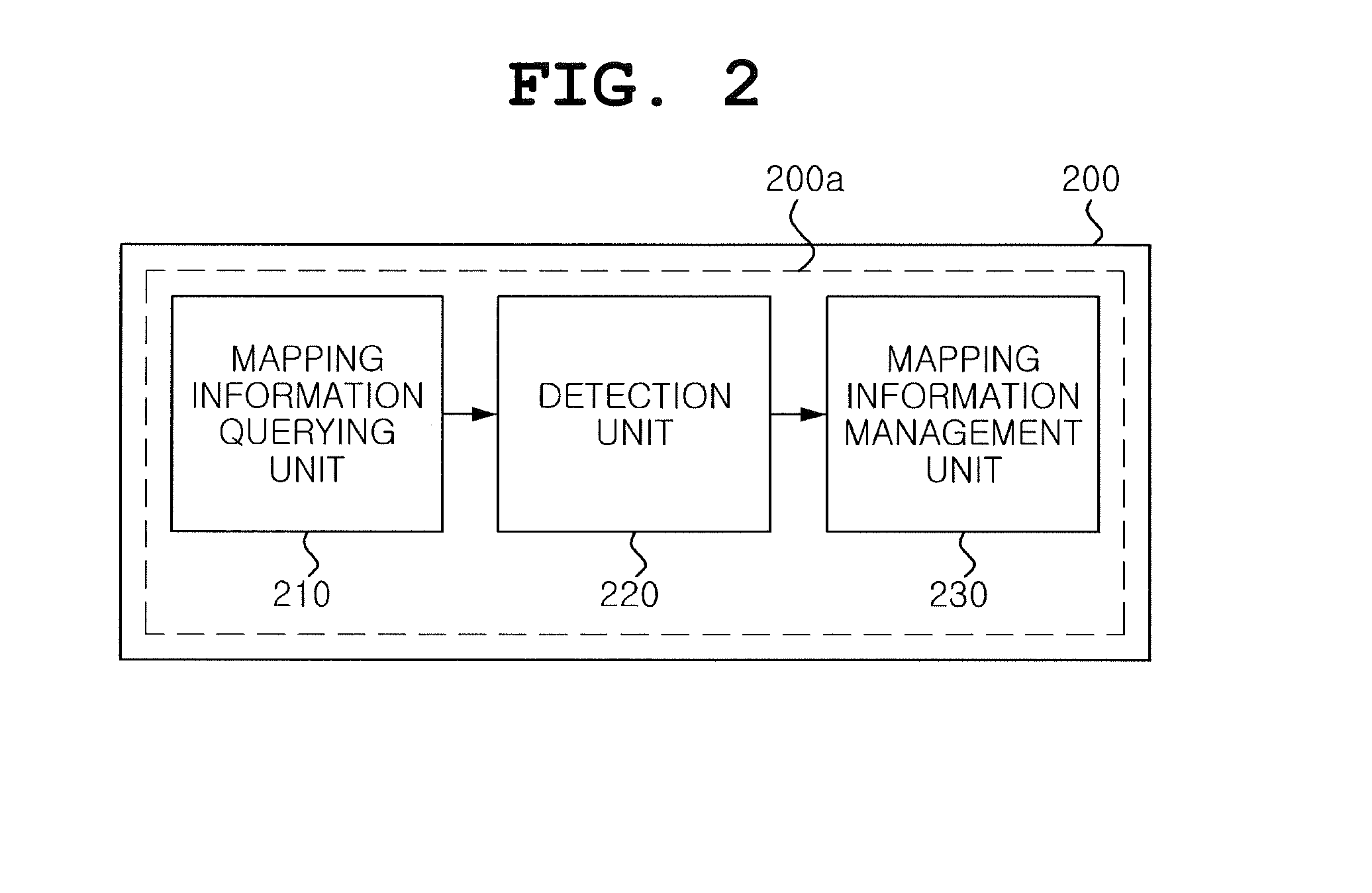

Method for mapping page address based on flash memory and system therefor

Active · US20160048448A1 · Reduce in quantity · Small size · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Data information · File system

The present invention relates to a method for a page-level address mapping based on flash memory and a system thereof. A method for a page-level address mapping based on a flash memory according to an embodiment of the present invention includes the steps of: receiving a write operation from a file system; generating condensed mapping information using a size of data information of the write operation and a start logical address of sequentially allocated logical addresses of the write operation; and storing the condensed mapping information as a first mapping table in a memory of a flash translation.

Owner:AJOU UNIV IND ACADEMIC COOP FOUND
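
One way to picture condensed mapping information is a table that stores a single entry per sequential write (start logical address plus size) instead of one entry per page. The sketch below makes two assumptions that the abstract does not state: the physical pages of a write are also allocated sequentially, and writes never overlap. The class and method names are hypothetical.

```python
import bisect
from typing import List, Optional, Tuple


class CondensedMappingTable:
    """Illustrative mapping table: one (start, length) entry per sequential write."""

    def __init__(self) -> None:
        self._starts: List[int] = []                      # sorted start logical pages
        self._entries: List[Tuple[int, int, int]] = []    # (start_lpn, start_ppn, length)

    def record_write(self, start_lpn: int, start_ppn: int, length: int) -> None:
        """Store one condensed entry for a write of `length` sequential pages."""
        i = bisect.bisect_left(self._starts, start_lpn)
        self._starts.insert(i, start_lpn)
        self._entries.insert(i, (start_lpn, start_ppn, length))

    def lookup(self, lpn: int) -> Optional[int]:
        """Translate a logical page number, or return None if it is unmapped."""
        i = bisect.bisect_right(self._starts, lpn) - 1
        if i < 0:
            return None
        start_lpn, start_ppn, length = self._entries[i]
        if lpn < start_lpn + length:
            return start_ppn + (lpn - start_lpn)
        return None

    def __len__(self) -> int:
        return len(self._entries)
```

An eight-page write recorded with `record_write(100, 7000, 8)` occupies one table entry, yet `lookup(103)` still resolves to page 7003.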

Adaptive read voltage threshold calibration in non-volatile memory

Active · US20200066353A1 · Reduce in quantity · Reduce the number of reads · Input/output to record carriers · Read-only memories · Control theory · Self adaptive

A non-volatile memory includes a plurality of physical pages each assigned to one of a plurality of page groups. A controller of the non-volatile memory performs a first calibration read of a sample physical page of a page group of the non-volatile memory. The controller determines if an error metric observed for the first calibration read of the sample physical page satisfies a calibration threshold. The controller calibrates read voltage thresholds of the page group utilizing a first calibration technique based on a determination that the error metric satisfies the calibration threshold and calibrates read voltage thresholds of the page group utilizing a different second calibration technique based on a determination that the error metric does not satisfy the calibration threshold.

Owner:IBM CORP

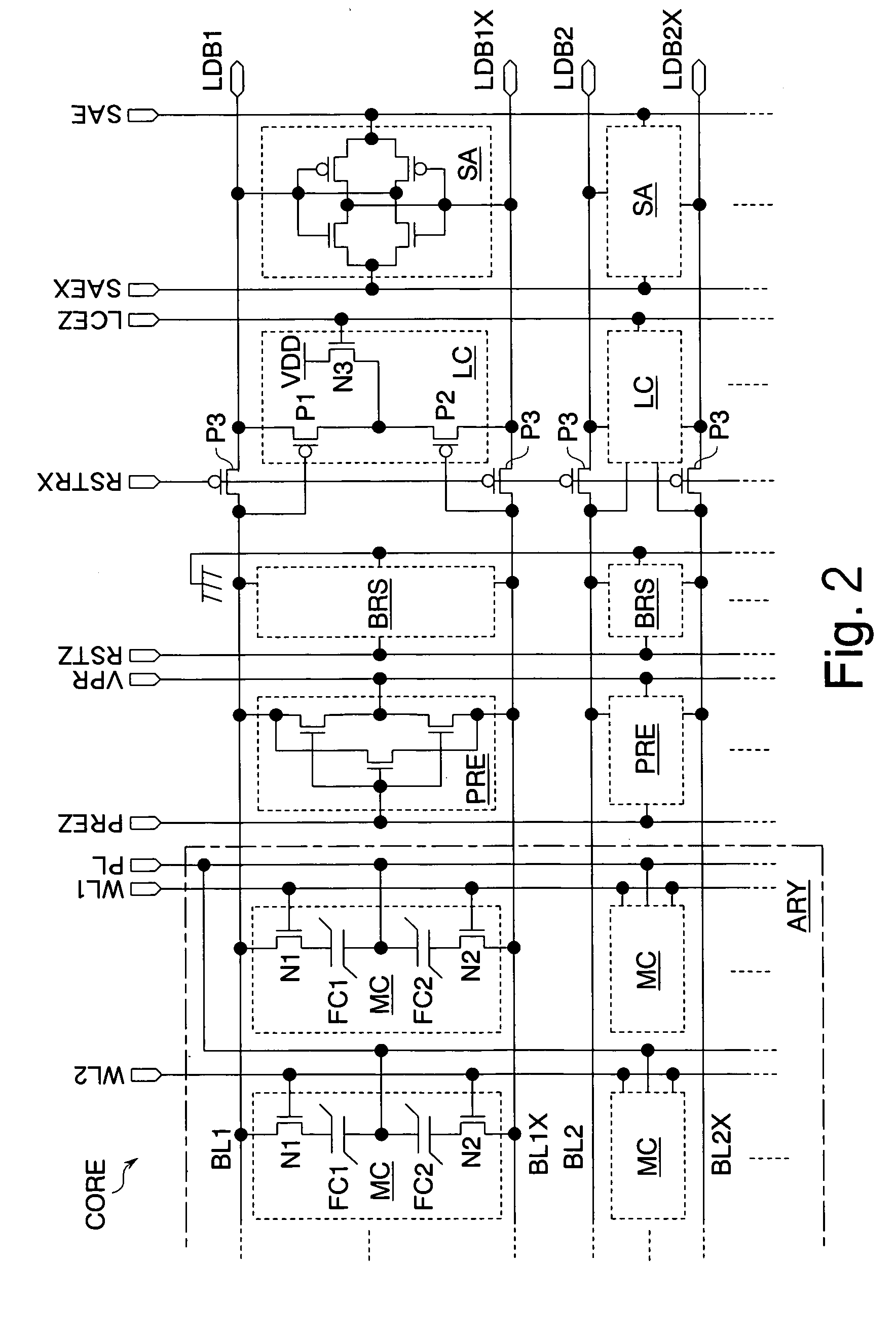

Ferroelectric memory

Active · US20060215438A1 · Reduce the number of reads · Reduce power consumption · Digital storage · Bit line · Capacitance

In order to charge a pair of ferroelectric capacitors that constitute a memory cell, a voltage setting circuit sets a voltage difference between both ends of the ferroelectric capacitors to be lower than a coercive voltage in a read operation. A differential sense amplifier amplifies a voltage difference between bit lines generated in accordance with a difference in charging amounts of the ferroelectric capacitors. A voltage difference between both ends of the ferroelectric capacitor being charged is lower than the coercive voltage; accordingly, a polarization vector of the ferroelectric capacitor is inhibited from reversing. As a result, the ferroelectric material can be inhibited from deteriorating owing to the read operation, thereby eliminating the restriction on the number of times of read operations in the ferroelectric memory.

Owner:FUJITSU SEMICON LTD

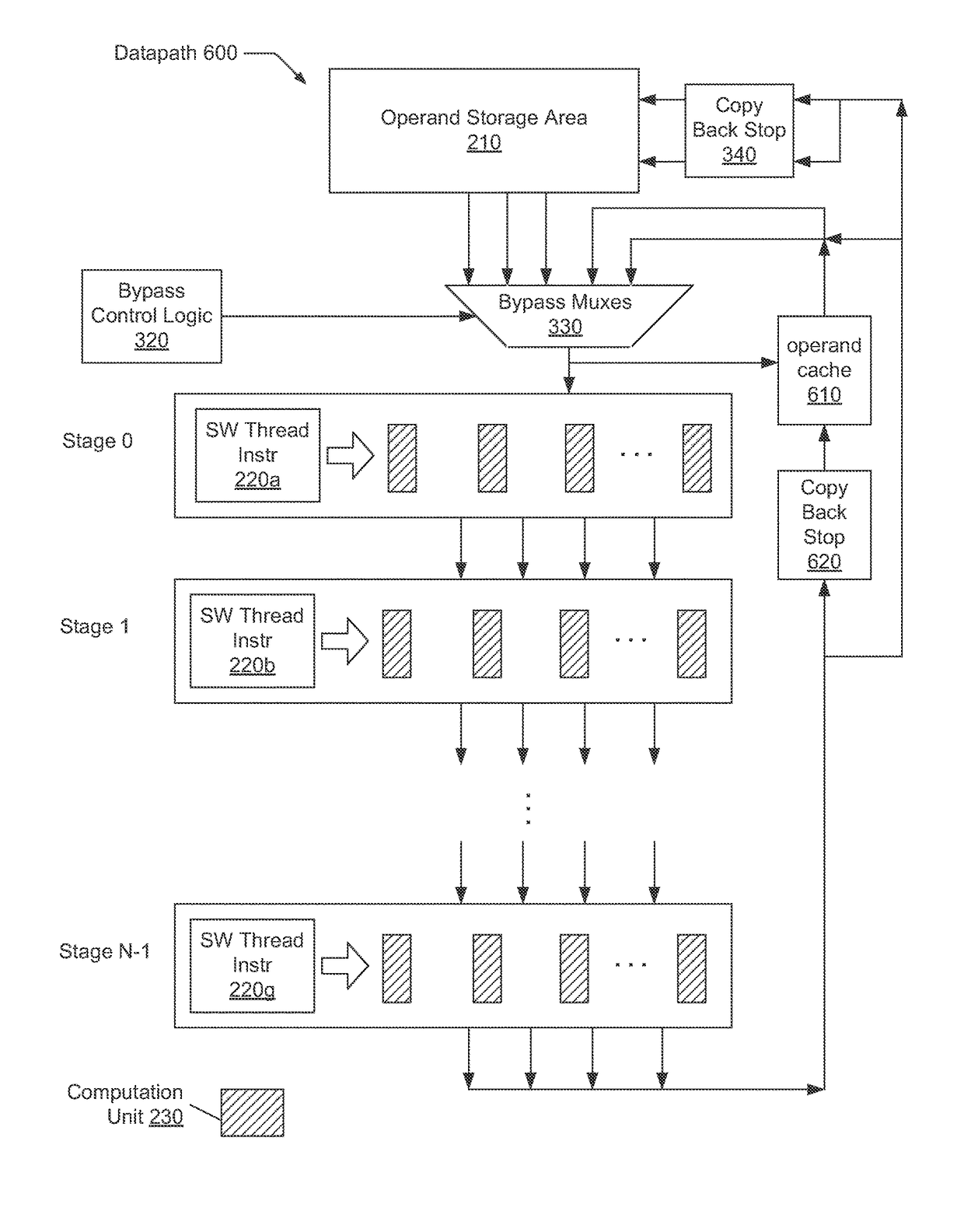

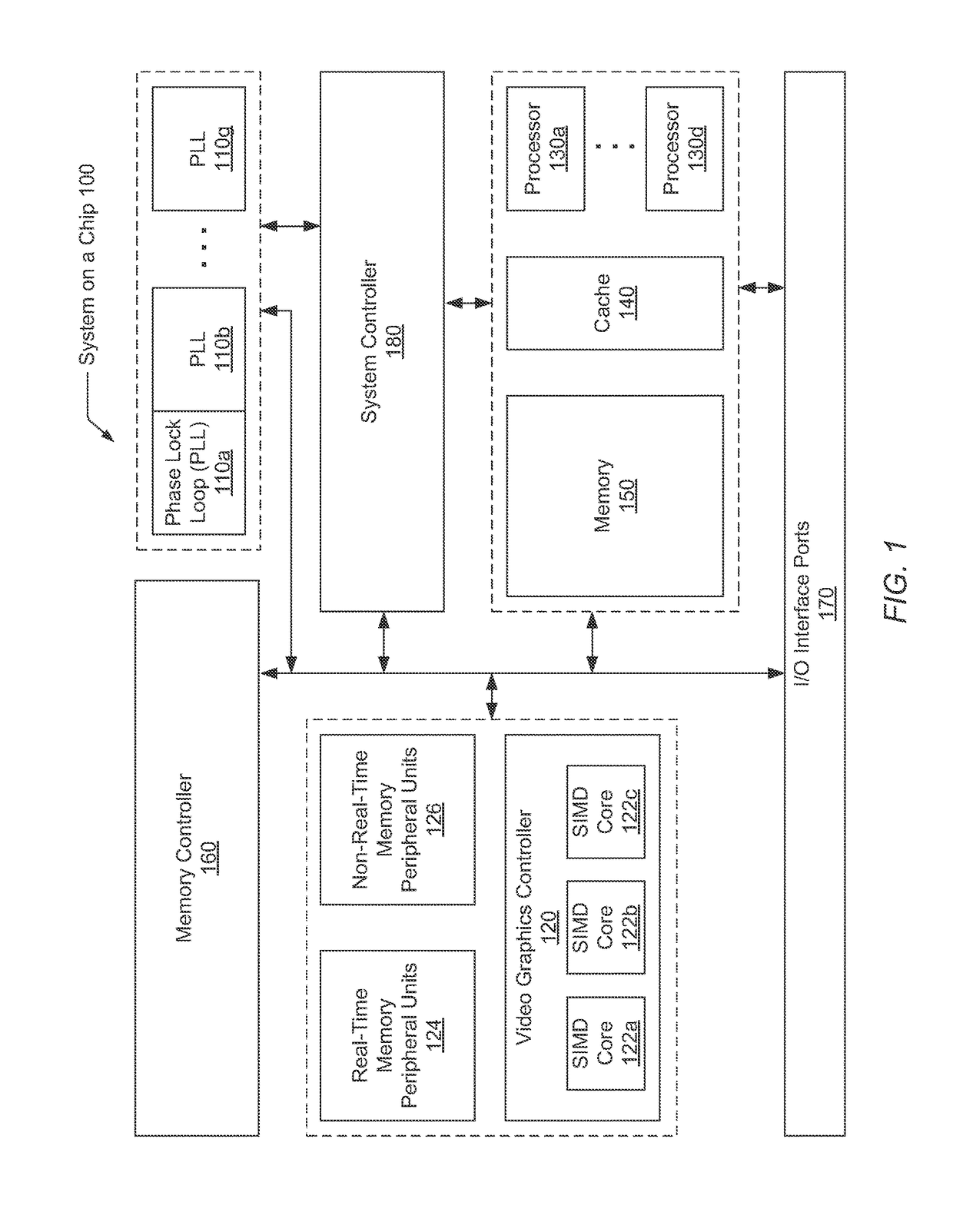

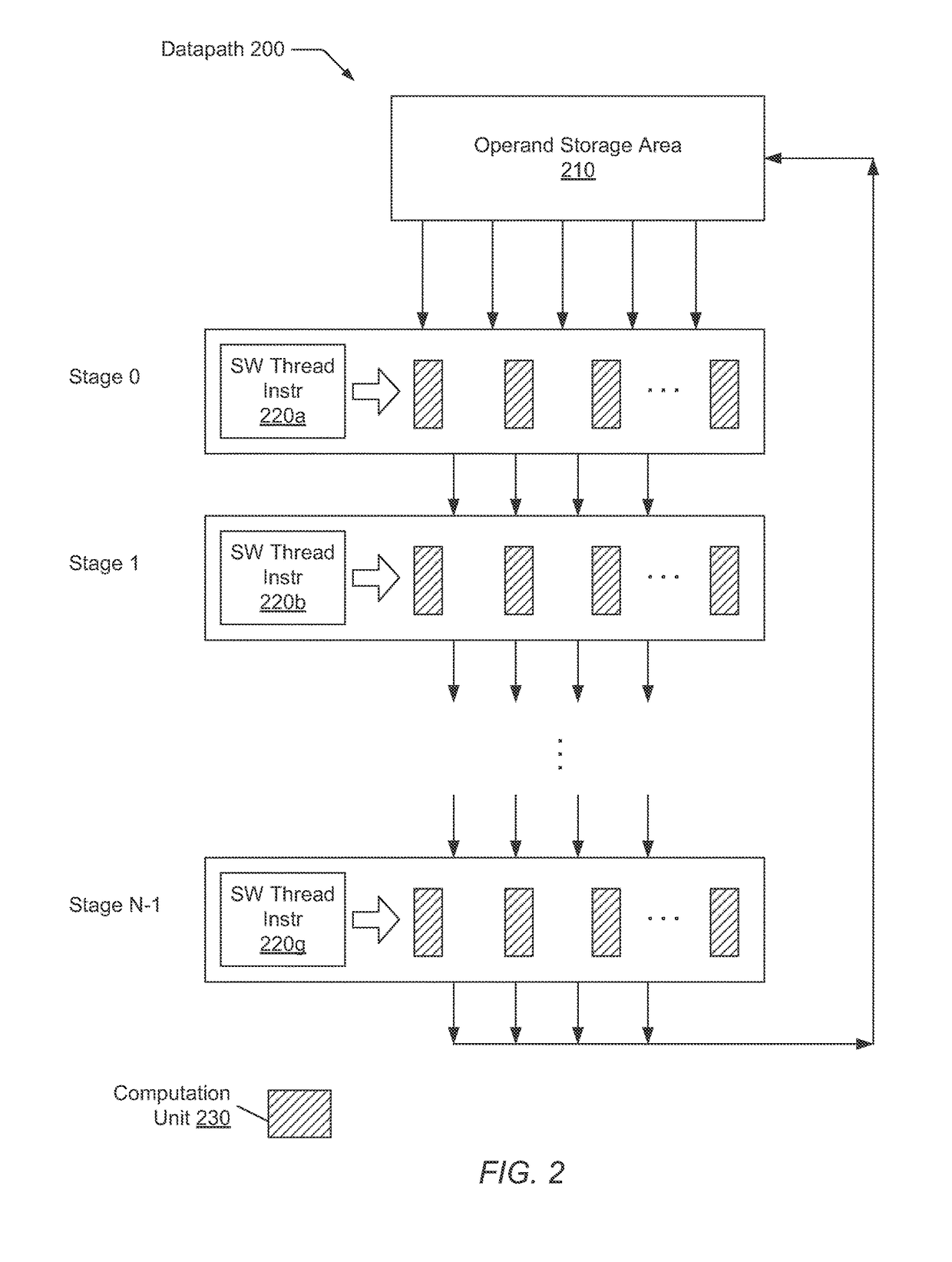

Result bypass cache

Active · US9600288B1 · Efficient access · Extend battery life · Concurrent instruction execution · Data operations · Processor register

A system and method for efficiently accessing operands in a datapath. An apparatus includes a data operand register file and an execution pipeline with multiple stages. In addition, the apparatus includes a result bypass cache configured to store data results conveyed by at least the final stage of the execution pipeline stage. Control logic is included which is configured to determine whether source operands for an instruction entering the pipeline are available in the last stage of the pipeline or in the result bypass cache. If the source operands are available in the last stage of the pipeline or the result bypass cache, they may be obtained from one of those locations rather than reading from the register file. If the source operands are not available from the last stage or the result bypass cache, then they may be obtained from the data operand register file.

Owner:APPLE INC
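
The operand lookup order in this abstract can be modelled in software as a small cache consulted before the register file. This is a behavioural sketch only: it folds the "last pipeline stage" check into the bypass cache, uses oldest-first eviction as an assumption, and all names are hypothetical.

```python
from collections import OrderedDict
from typing import Dict


class OperandFetcher:
    """Illustrative model: recent results are served without a register file read."""

    def __init__(self, register_file: Dict[str, int], bypass_capacity: int = 4) -> None:
        self.register_file = register_file
        self.bypass: "OrderedDict[str, int]" = OrderedDict()
        self.bypass_capacity = bypass_capacity
        self.register_file_reads = 0

    def writeback(self, reg: str, value: int) -> None:
        """A result leaves the final stage: update the register file and the cache."""
        self.register_file[reg] = value
        self.bypass[reg] = value
        self.bypass.move_to_end(reg)
        if len(self.bypass) > self.bypass_capacity:
            self.bypass.popitem(last=False)   # assumption: evict the oldest result

    def read_operand(self, reg: str) -> int:
        """Prefer the bypass cache; fall back to the register file on a miss."""
        if reg in self.bypass:
            return self.bypass[reg]
        self.register_file_reads += 1
        return self.register_file[reg]
```

Every hit in the bypass cache is one register file read avoided, which is the saving the abstract attributes to the design.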

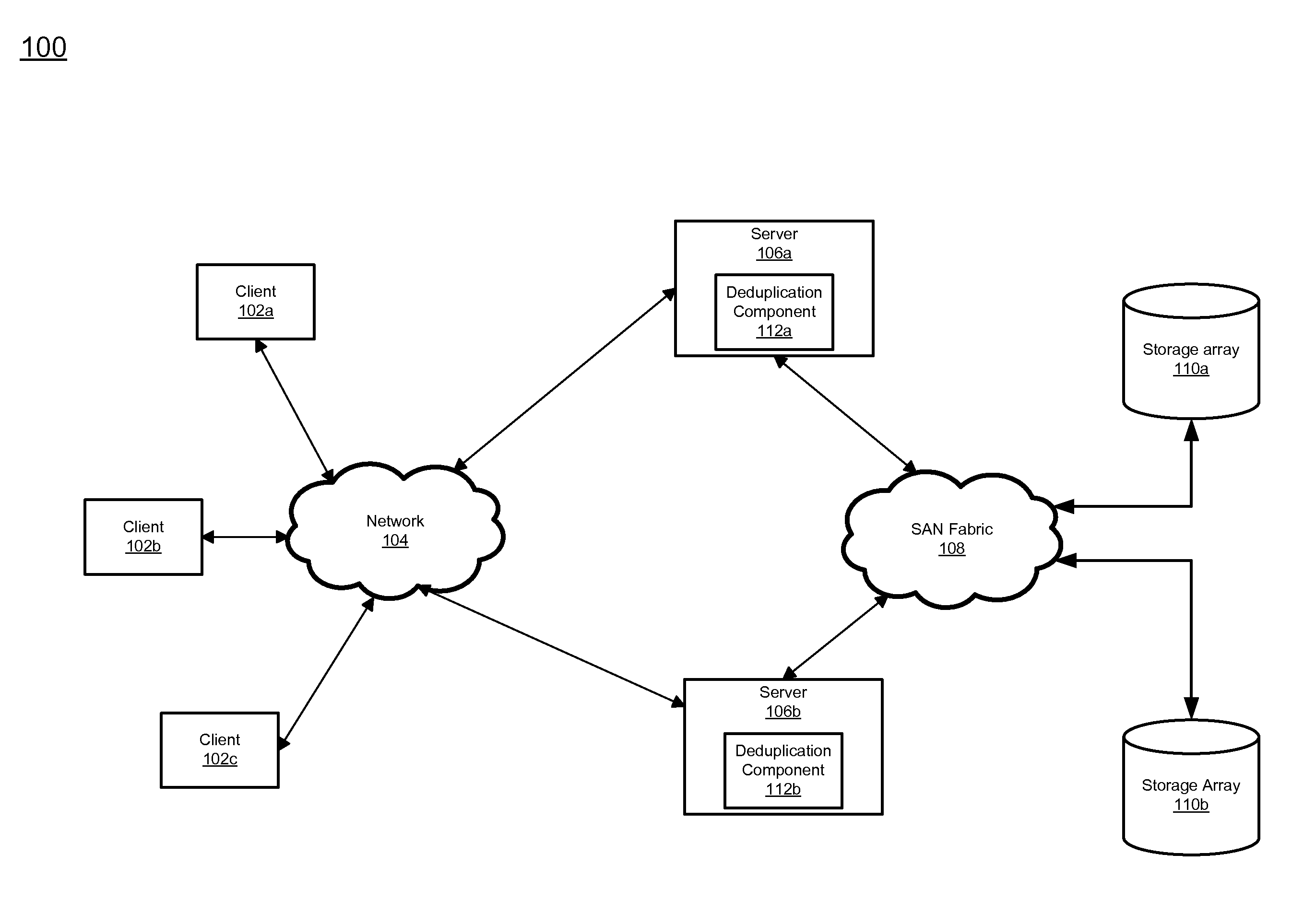

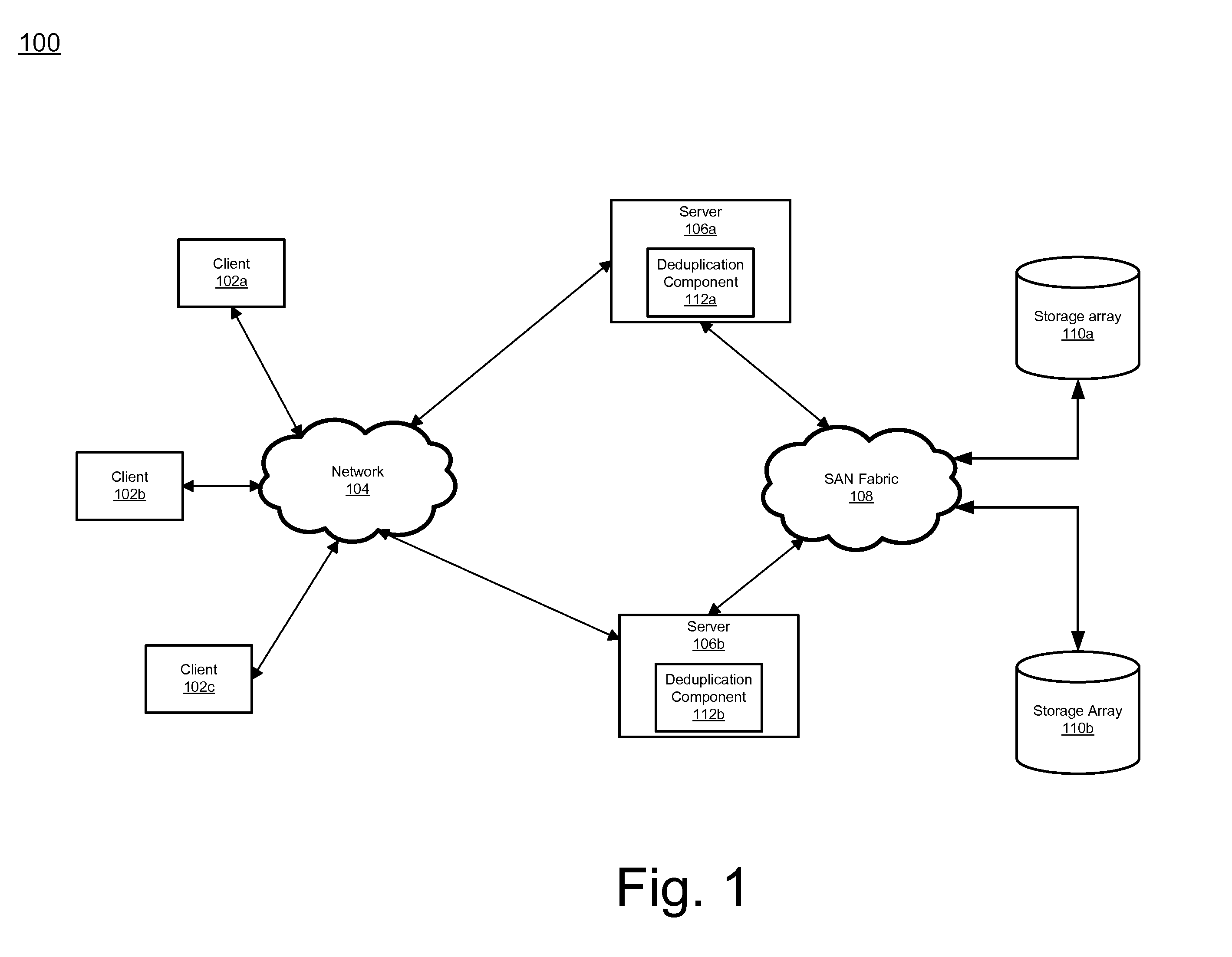

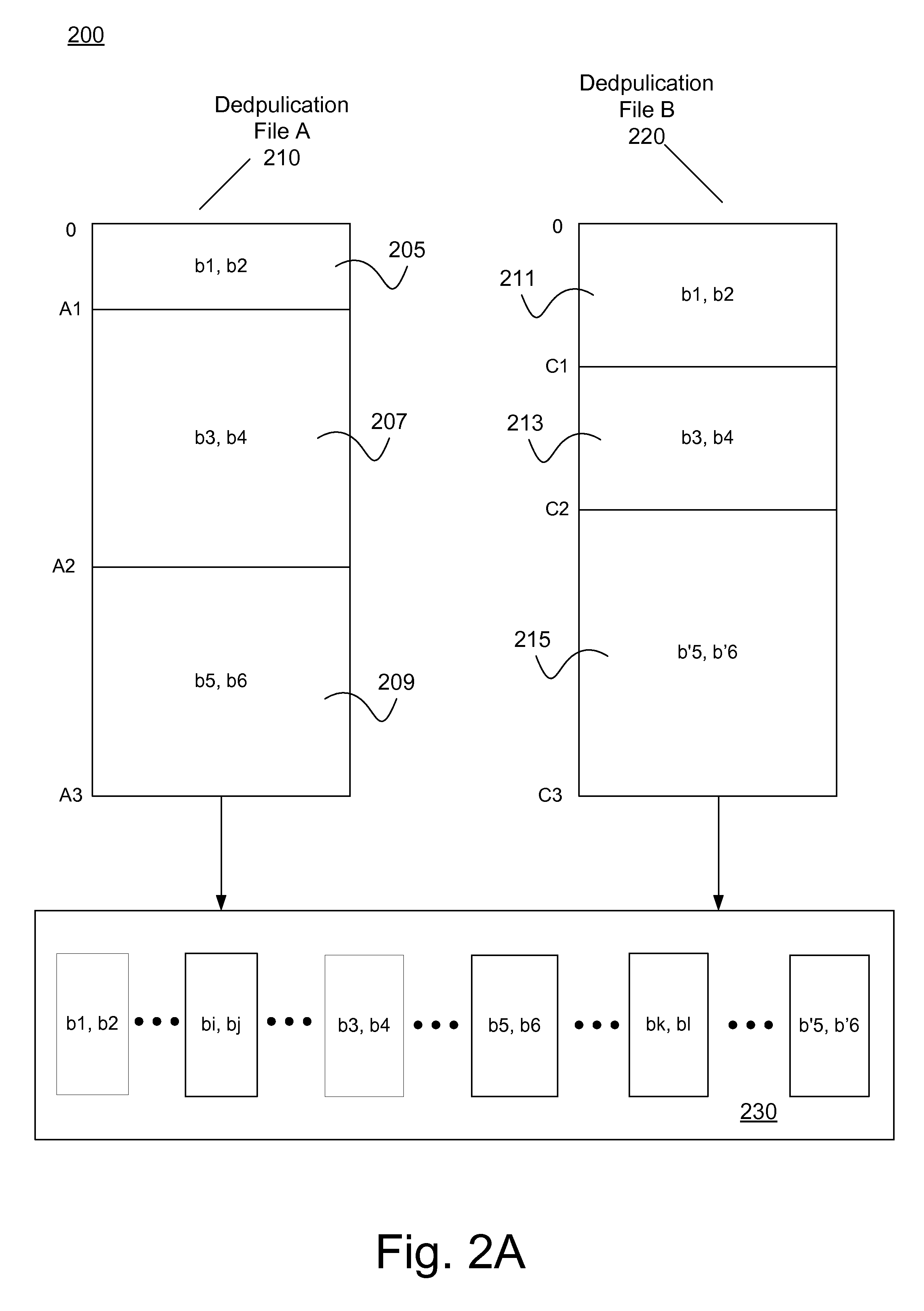

Method and system for providing deduplication information to applications

Active · US20110225211A1 · Efficient processing · Efficient data processing · Digital data information retrieval · Digital data processing details · Application software · Data deduplication

A method of maintaining and providing information relating to file deduplication. A first portion of a first file and a second portion of a second file that contain a first content are identified. A first header associated with the first portion is created. The first header identifies the first portion and the second portion containing the first content. The first header is appended to a storage location of the first content of the first portion to form a first data structure for the first file. The first data structure is stored. The first data structure is provided to an application requesting the first file so that duplicate data processing can be avoided by the application. The first data structure is updated when the first file or the second file are altered. A similar process may occur to generate a data structure for the second file.

Owner:VERITAS TECH

Method of determining default read voltage of non-volatile memory device and method of reading data of non-volatile memory device

Active · US9666292B2 · Error minimization · Reduce the number of reads · Read-only memories · Code conversion · Logic state · Computer science

A method of determining a default read voltage of a non-volatile memory device which includes a plurality of first memory cells, each of which stores a plurality of data bits as one of a plurality of threshold voltages corresponding to a plurality of logic states, includes programming a first data to the first memory cells so that the logic states of the first memory cells are balanced or equally used. The method includes applying a first default read voltage included in default read voltages to word lines coupled to the first memory cells, and measuring a first ratio of first on-cells, each of which has a threshold voltage smaller than or equal to the first default read voltage, among the first memory cells, and modifying the first default read voltage based on the first ratio and a first reference value corresponding to the first default read voltage.

Owner:SAMSUNG ELECTRONICS CO LTD

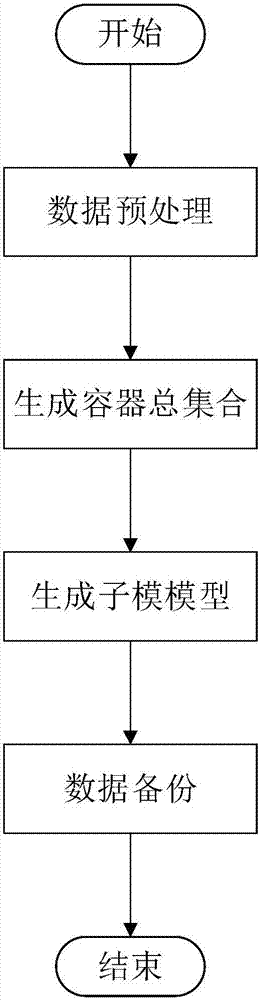

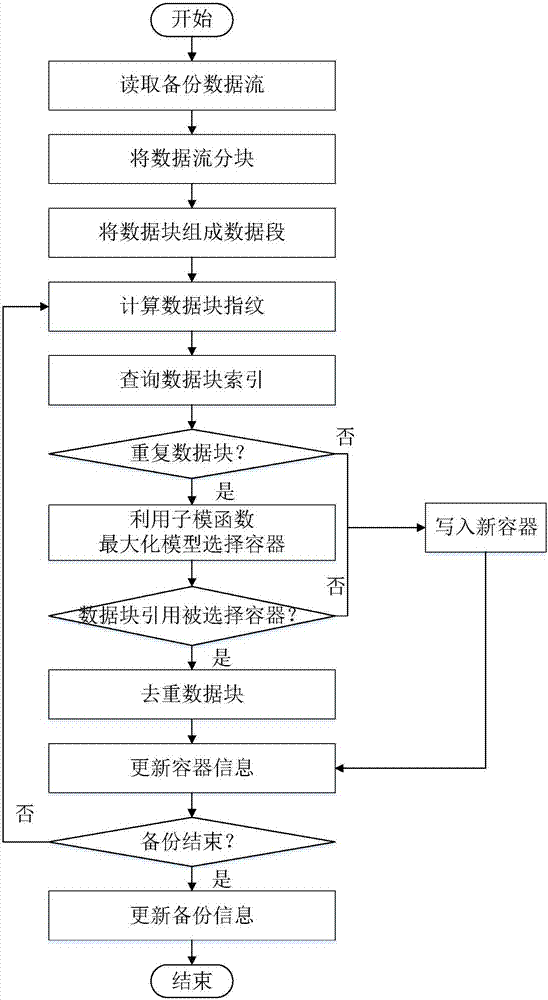

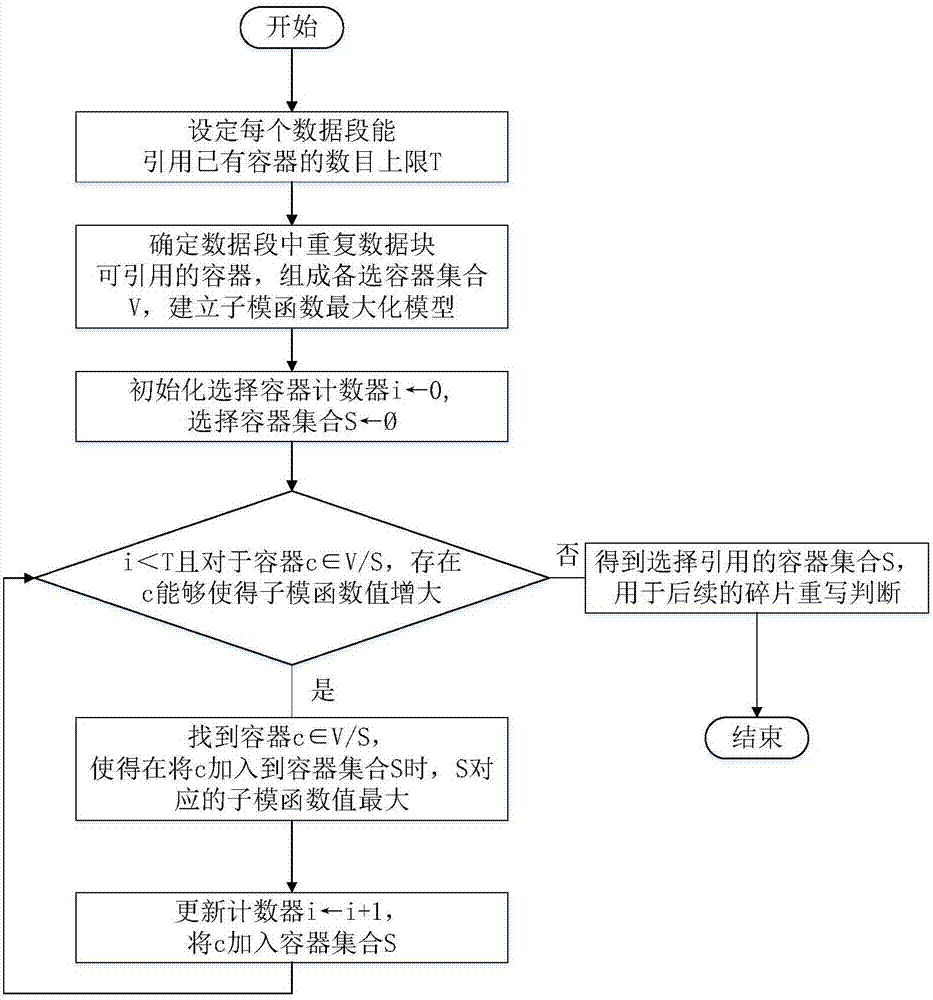

Data backup method and system based on submodule model

Active · CN107015888A · Increase data deduplication rate · Reduced number of containers · Redundant operation error correction · Fingerprint · Computer hardware

The invention discloses a data backup method and system based on a submodule model, belonging to the technical field of computer storage. The method comprises: partitioning the data stream into data segments; calculating a fingerprint for each data block of a segment and querying the fingerprint index to obtain the containers referenced by duplicate data blocks; establishing a submodule function maximization model to select the containers holding the most referenceable, non-redundant data blocks; and deduplicating the data blocks that reference the selected containers while rewriting fragmented data blocks that reference other containers. The invention further provides a data backup system based on the submodule model. With this scheme, more duplicate data blocks can be removed, the restore bandwidth consumed by redundant and unreferenced data blocks is reduced, and restore performance is improved while a high deduplication rate is maintained.

Owner:HUAZHONG UNIV OF SCI & TECH

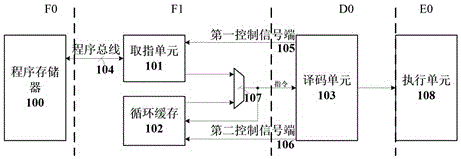

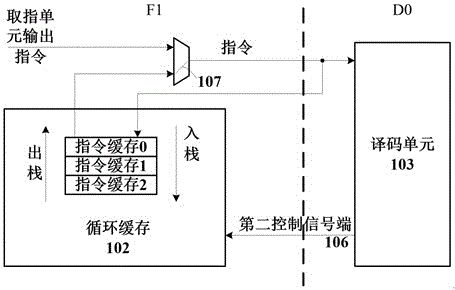

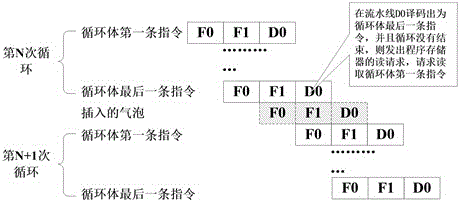

Hardware circular processing method and system of processor

Active · CN106775591A · Reduce access · Reduce power consumption · Machine execution arrangements · Computer hardware · Waiting period

The invention provides a hardware loop processing method and system for a processor. A loop buffer is added to the original fetch unit, and the first N instructions of a loop body are output directly from the loop buffer to the subsequent decoding unit. This eliminates the extra wait cycles that otherwise occur, because of program memory read latency, each time execution jumps from the last instruction of the loop body back to its first instruction, so that zero-delay jumps are achieved for hardware loops. The design is simple: only a hardware loop buffer and a corresponding selection module need to be added to the original system. The method also reduces the fetch unit's accesses to program memory, which lowers the processor's power consumption.

Owner:JIANGSU HONGYUN TECH

Filtering method of blocking-removing filter suitable for HEVC standard

Active · CN103491372A · Reduce the number of reads · Easy to handle · Digital video signal modification · Digital video · High definition

The invention belongs to the technical field of high-definition digital video compression encoding and decoding and particularly relates to a filtering method of a block-removing filter suitable for the HEVC standard. Each image is set to comprise a luminance component Y which corresponds to two chrominance components Cb and Cr. Processing is performed in a block-removing filter module based on one quarter-LCU. Pixel blocks of the quarter-LCU needing to be processed are set to be provided with vertical edges and horizontal edges. The filtering sequence of the filtering method is that filtering is performed on the vertical edges and then on the horizontal edges; the vertical edges are sequentially filtered from left to right, and the pixel blocks on the two sides of each vertical edge are sequentially filtered from top to bottom; the horizontal edges are sequentially filtered from top to bottom, and the pixel blocks on the two sides of each horizontal edge are sequentially filtered from left to right. According to the filtering method of the block-removing filter suitable for the HEVC standard, the number of times of reading of an external storage device is effectively reduced, the processing time is shortened, the processing capability of a chip is improved, and therefore real-time encoding of a high-definition video is efficiently achieved.

Owner:FUDAN UNIV

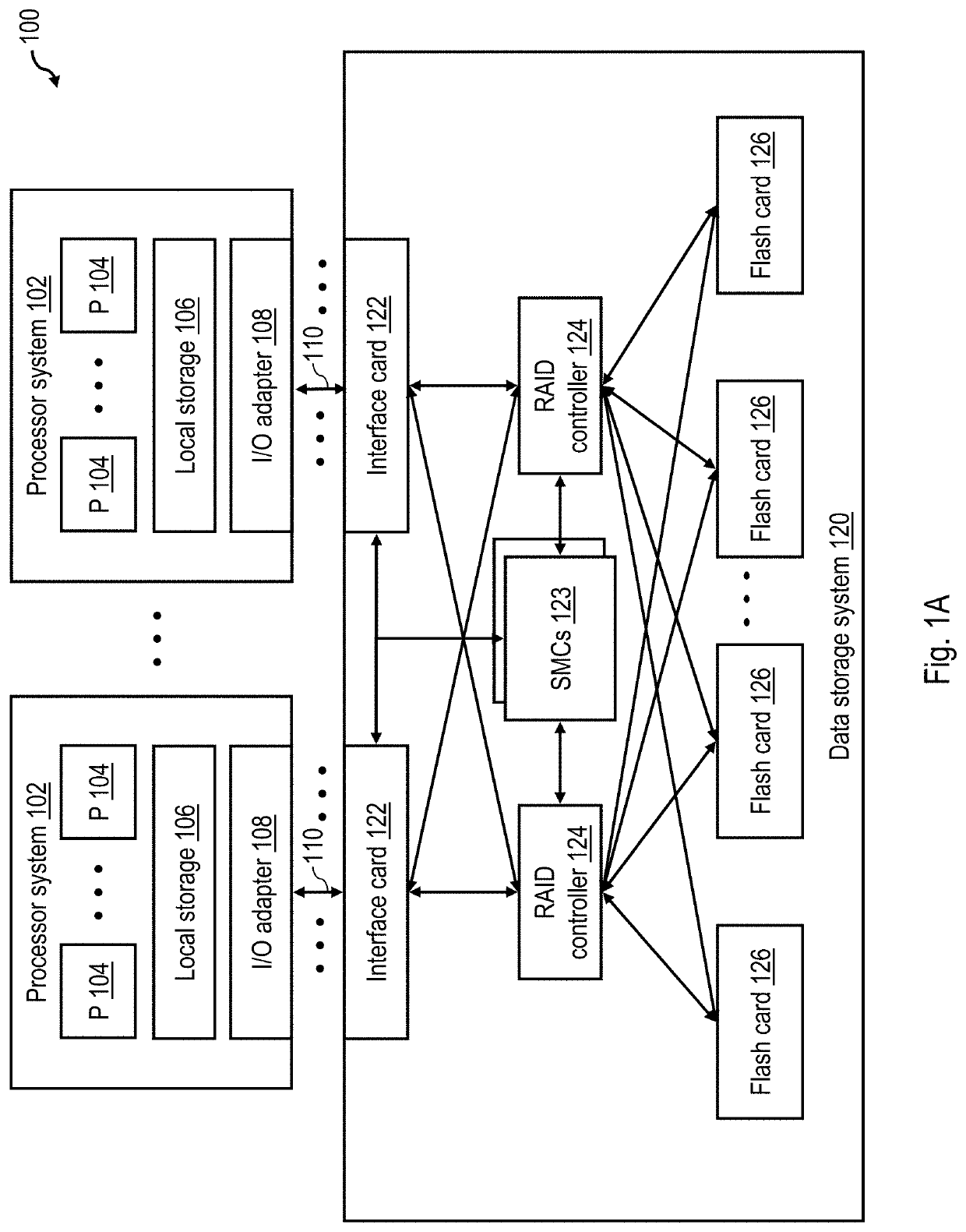

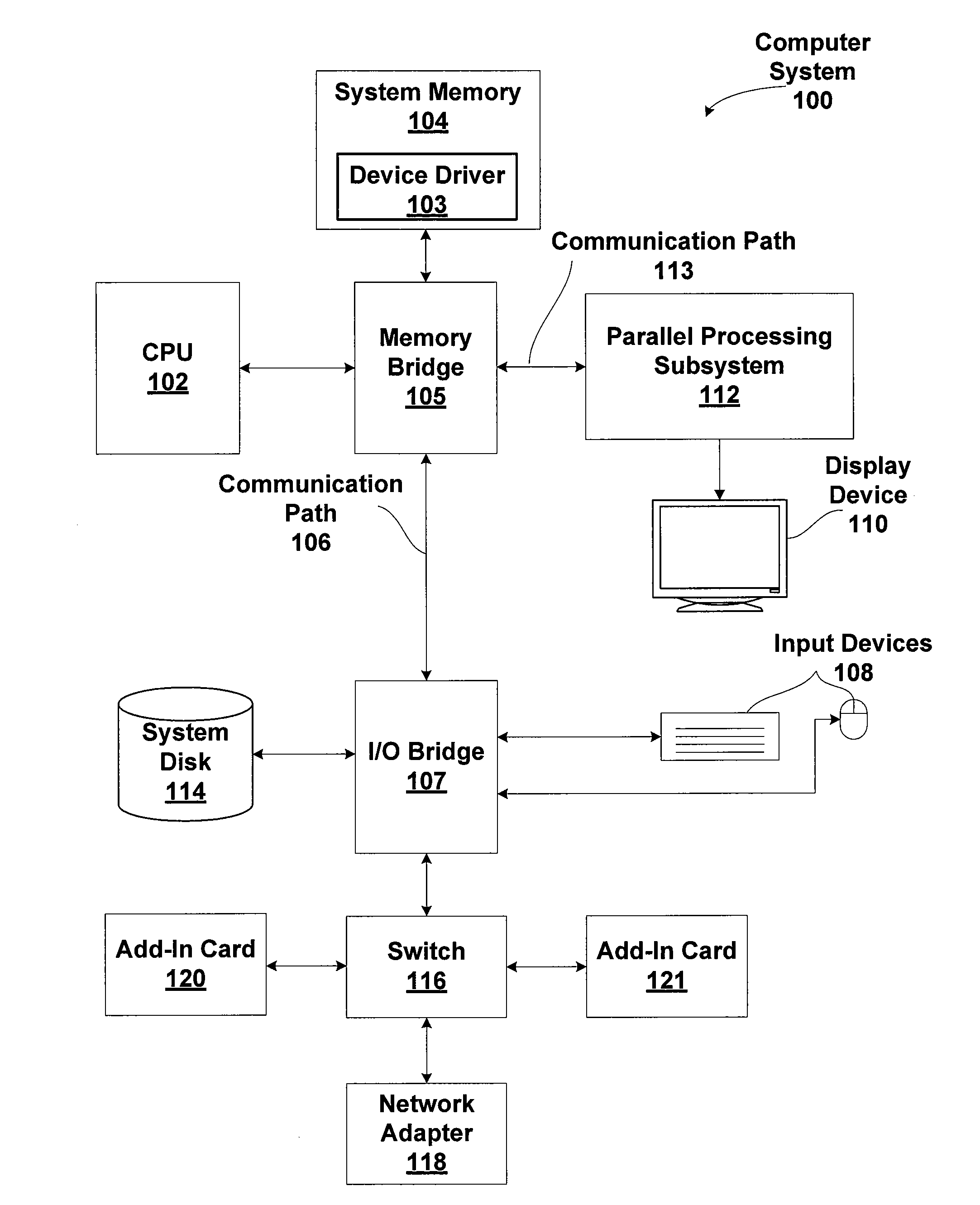

Managing conflicts on shared L2 bus

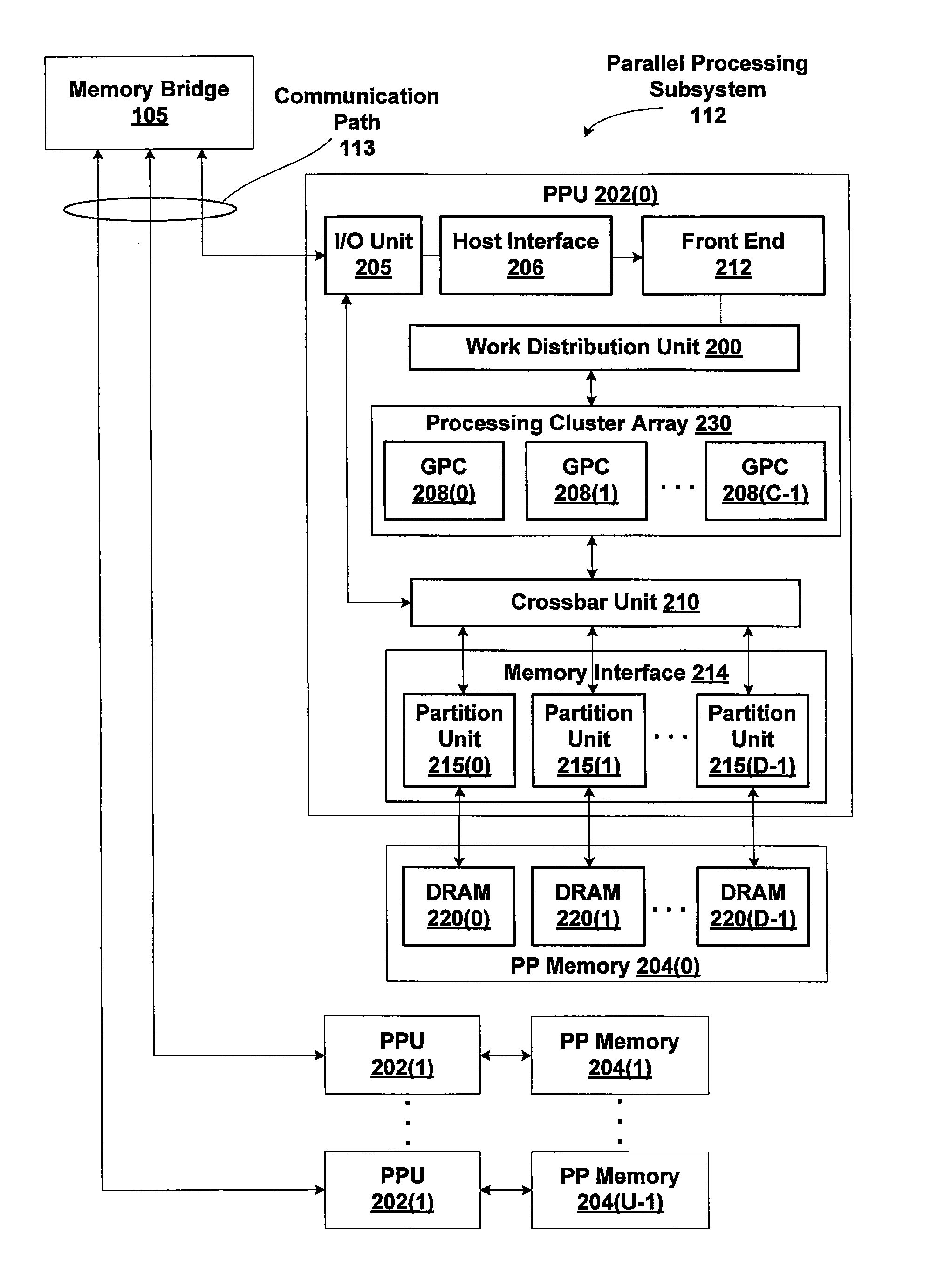

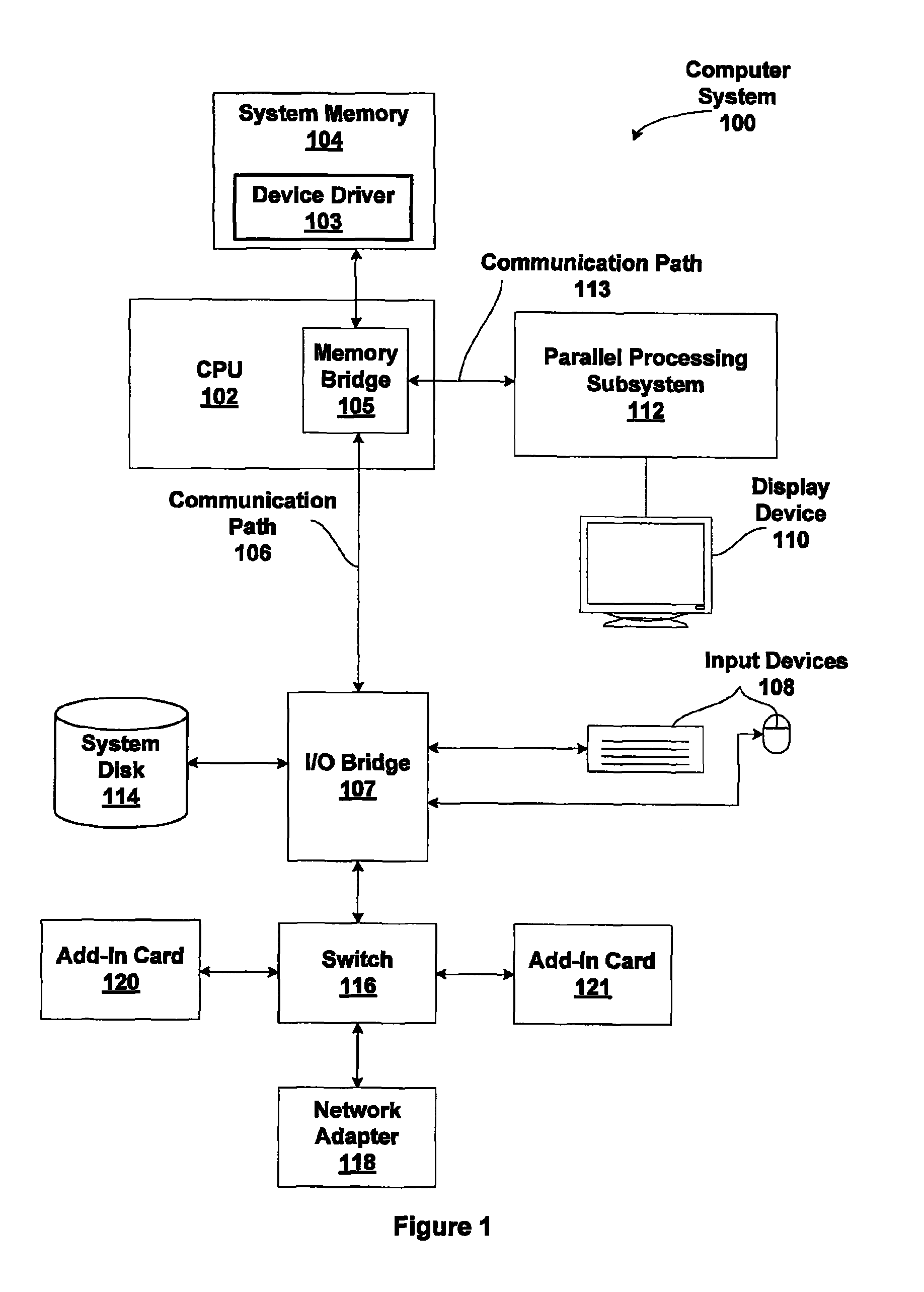

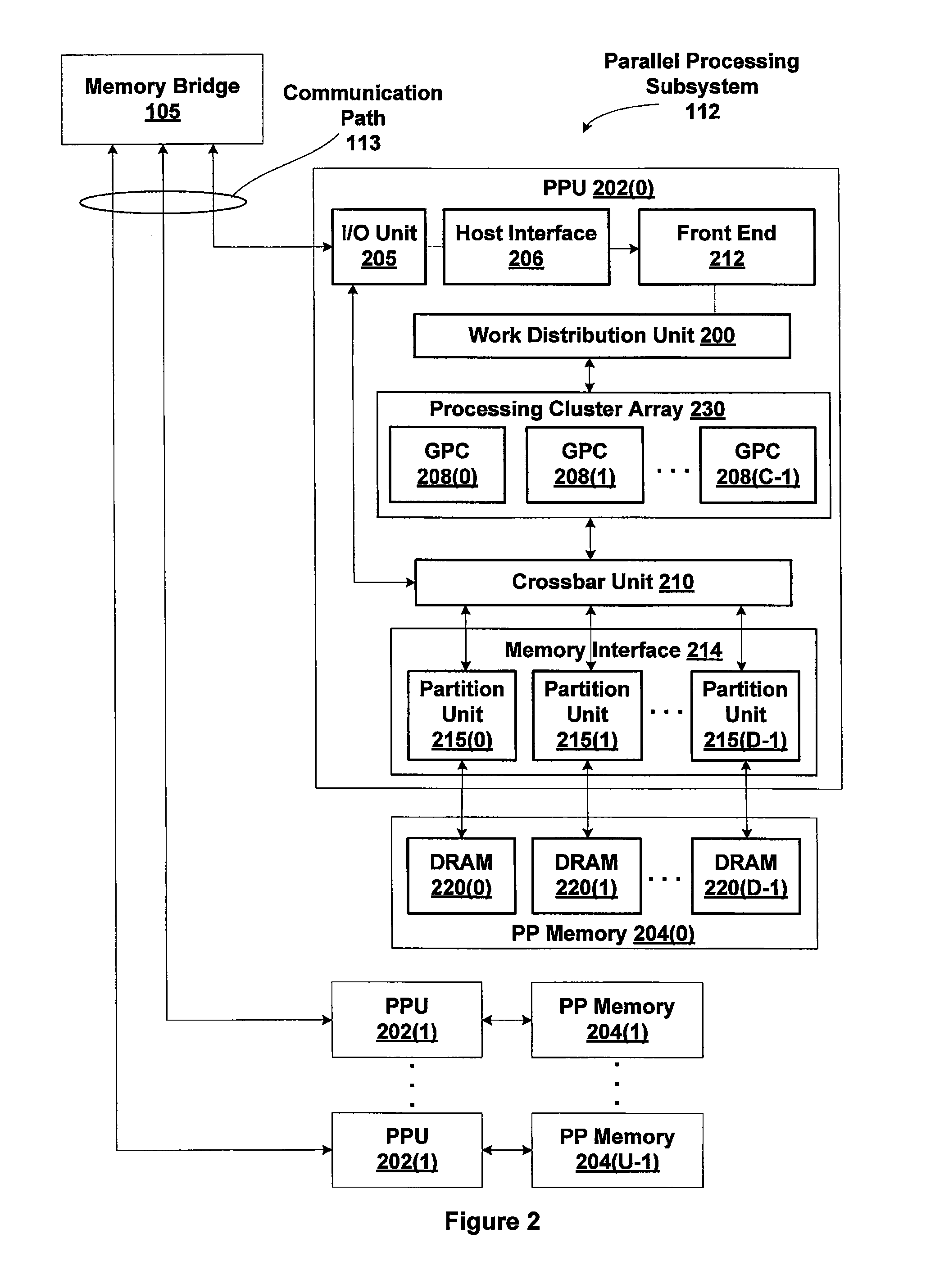

One embodiment of the present invention sets forth a mechanism to schedule read data transmissions and write data transmissions to/from a cache to frame buffer logic on the L2 bus. When processing a read or a write command, a scheduling arbiter examines a bus schedule to determine whether a read-read conflict, a read-write conflict or a write-read conflict exists, and allocates an available memory space in a read buffer to store the read data causing the conflict until the read return data transmission can be scheduled. In the case of a write command, the scheduling arbiter then transmits a write request to a request buffer. When processing a write request, the request arbiter examines the request buffers to determine whether a write-write conflict exists. If so, the request arbiter allocates a memory space in a request buffer to store the write request until the write data transmission can be scheduled.

Owner:NVIDIA CORP

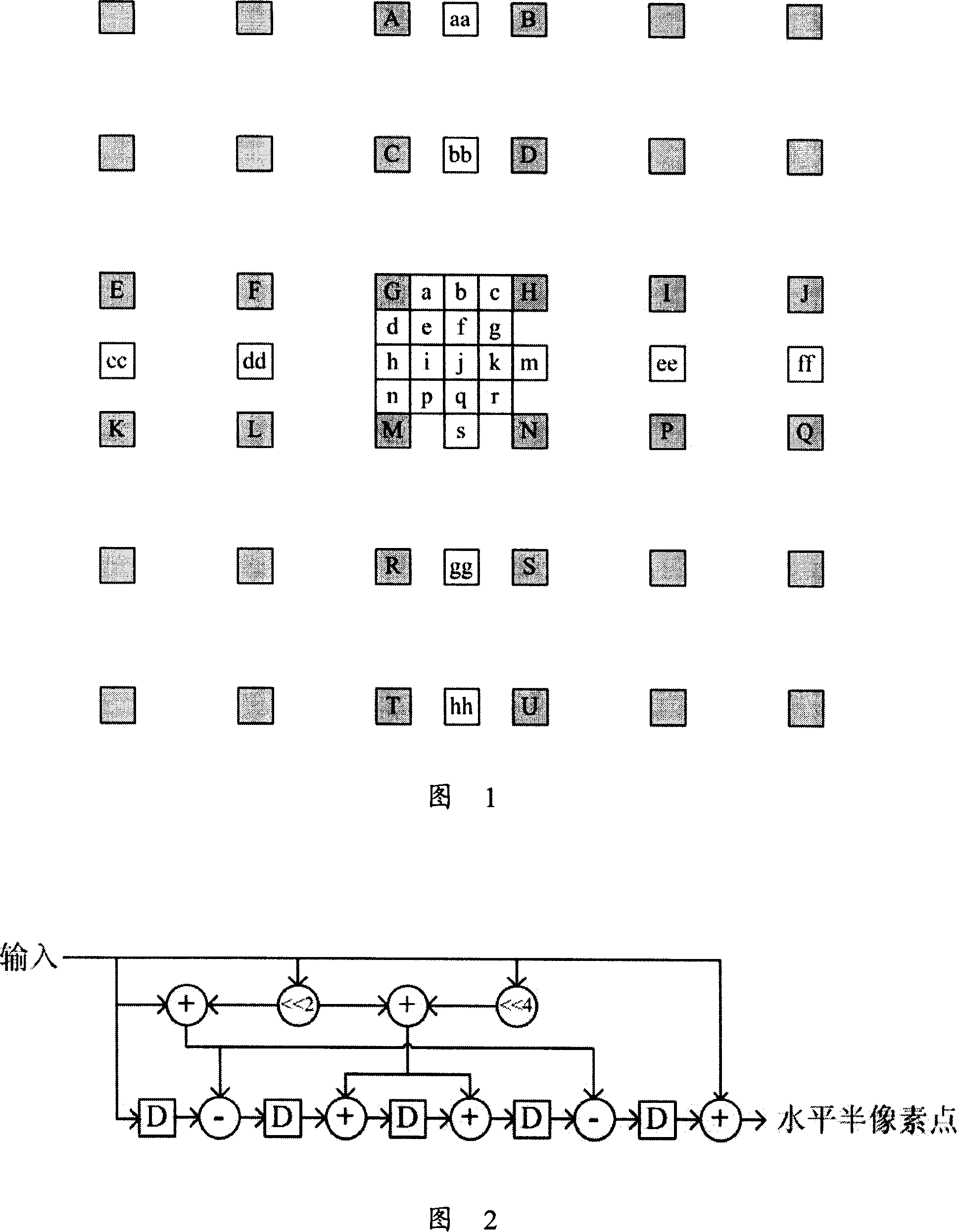

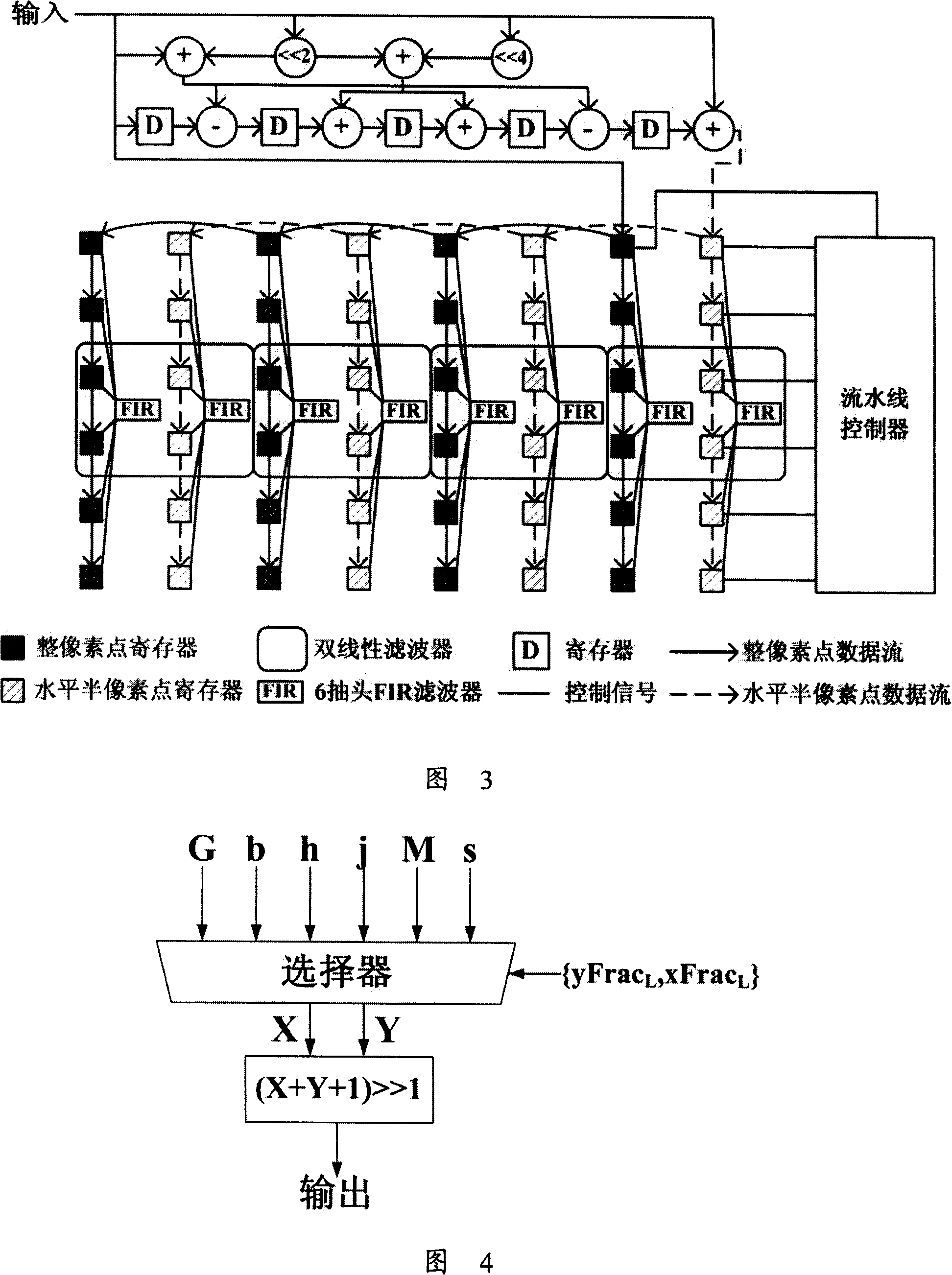

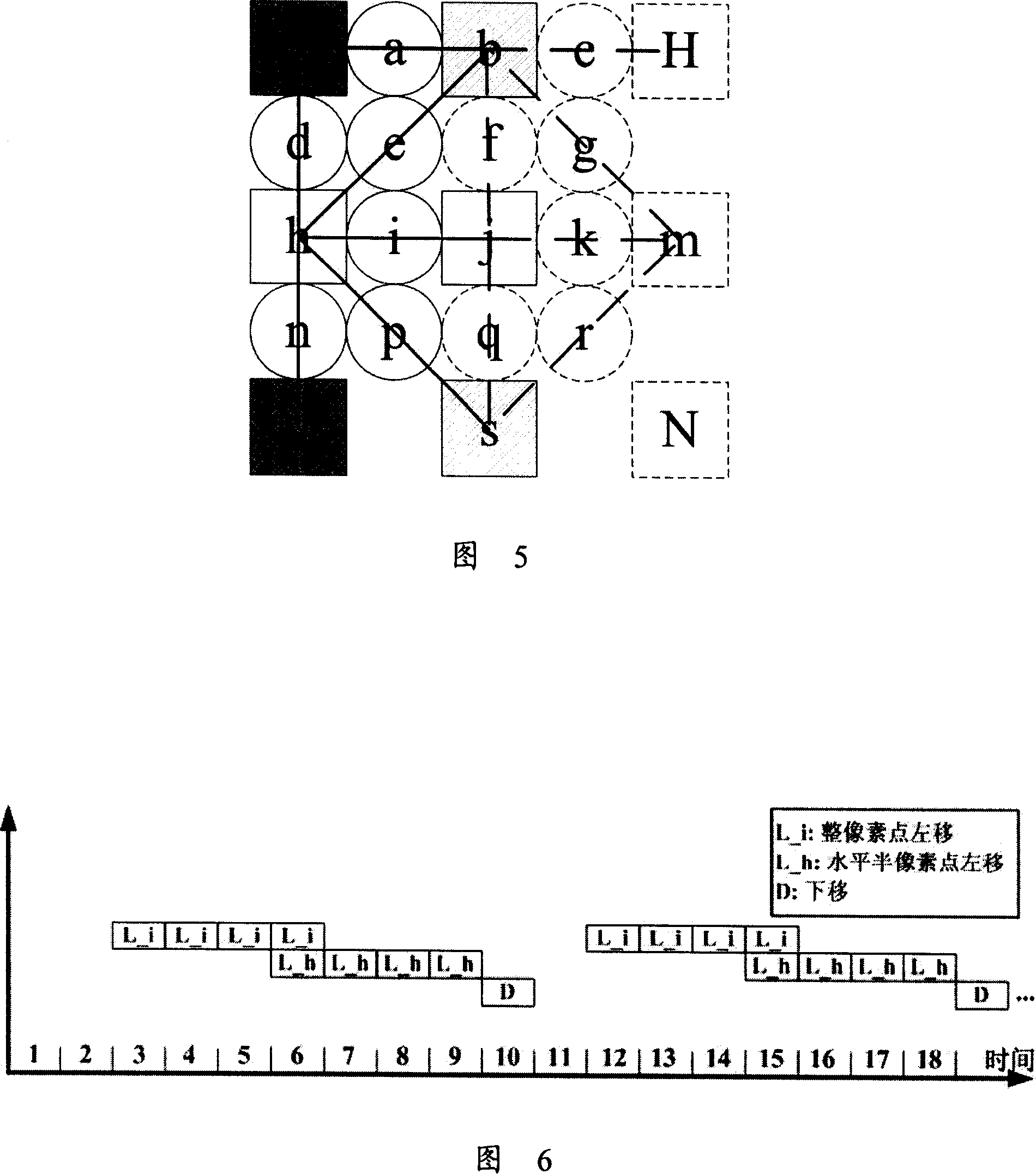

Serial input-parallel output video image brightness interpolating method and device

Inactive · CN101141559A · Reduce complexity · Reduce bandwidth · Television system details · Color television details · Processor register · Motion vector

The present invention discloses an H.264/AVC image brightness interpolation method and device based on serial input and parallel output of video. The full pixel points of each line are input serially to a Wiener filter to produce the intermediate values of the horizontal half pixel points; at the same time, the full pixel points are selected using the motion vector, so that the register array is reduced to 6×8 and the filtering algorithm for quarter pixels is optimized. Compared with the original parallel-input, parallel-output interpolation device, at least three 6-tap FIR filters in the horizontal direction are saved. Compared with the original parallel-input, serial-output interpolation device, the register array is reduced from 6×9 to 6×8 and the complexity of the pipeline controller is decreased. Compared with the original serial-input, serial-output interpolation device, bandwidth and computation cycles are saved.

Owner:ZHEJIANG UNIV

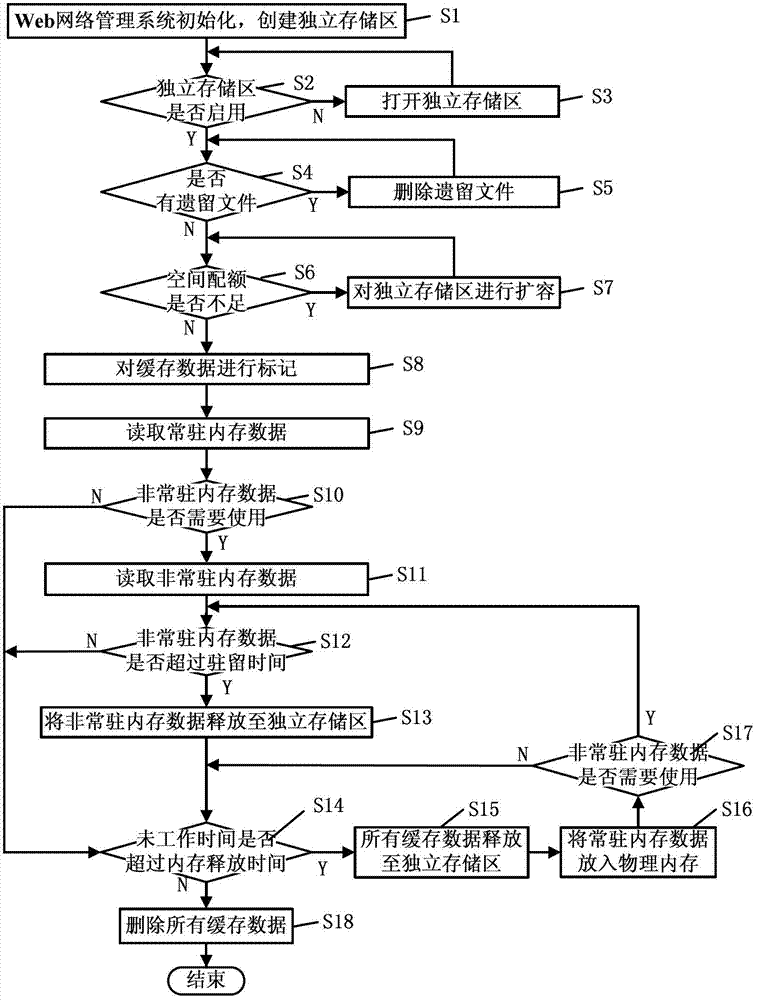

Data caching method and system in Web network management client based on rich internet application (RIA)

The invention discloses a data caching method and system in a Web network management client based on a RIA and relates to the data caching field in network management systems. The data caching method comprises the steps of establishing an independent storage area; marking areas for arranging cache data in a physical memory as a resident memory data area and a non-resident memory data area; placing resident memory data and non-resident memory data in the resident memory data area and the non-resident memory data area respectively; releasing the resident memory data and non-resident memory data in the independent storage area regularly when the data are not used by users, and placing the resident memory data and non-resident memory data in the physical memory when the data are reused by users; and deleting all cache data after being used by users. According to the data caching method and system, the cache data can be placed in the physical memory and the independent storage area respectively according to requirements of users, less physical memory is occupied, the reading frequency of discs is reduced, and performances of the Web network management system are improved.

Owner:FENGHUO COMM SCI & TECH CO LTD

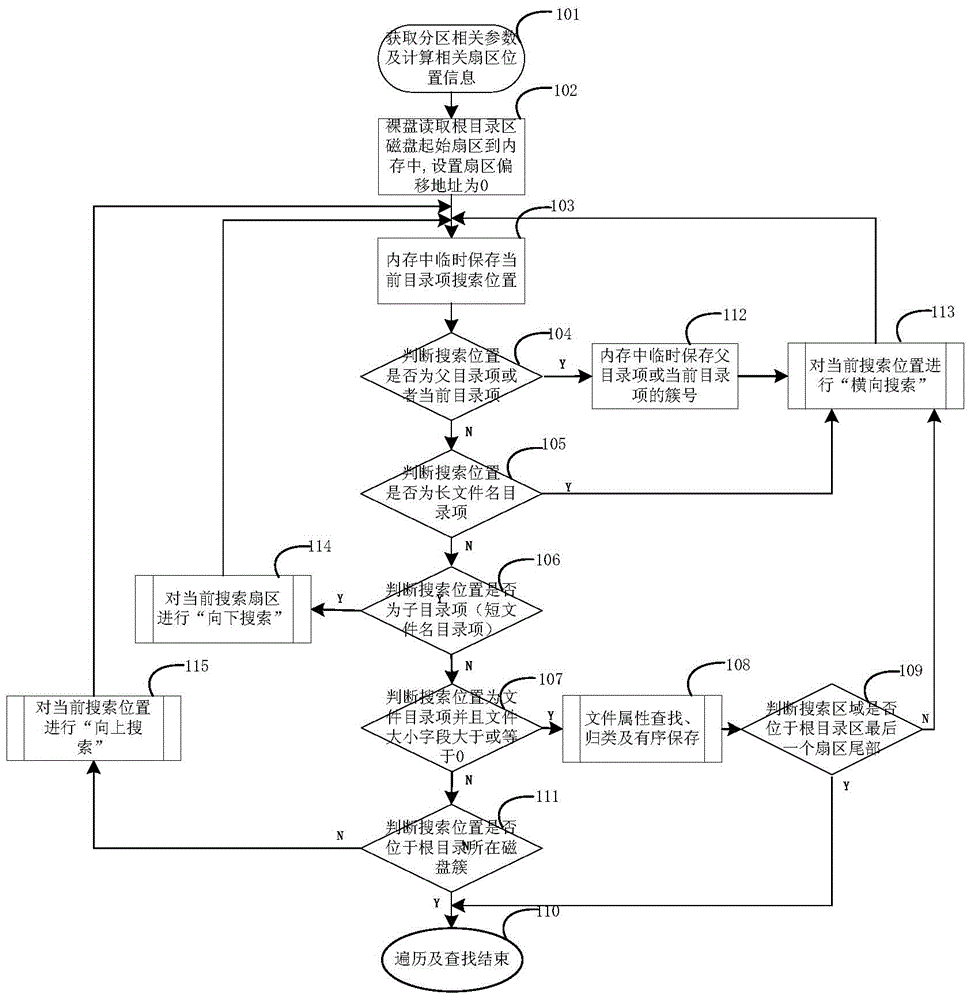

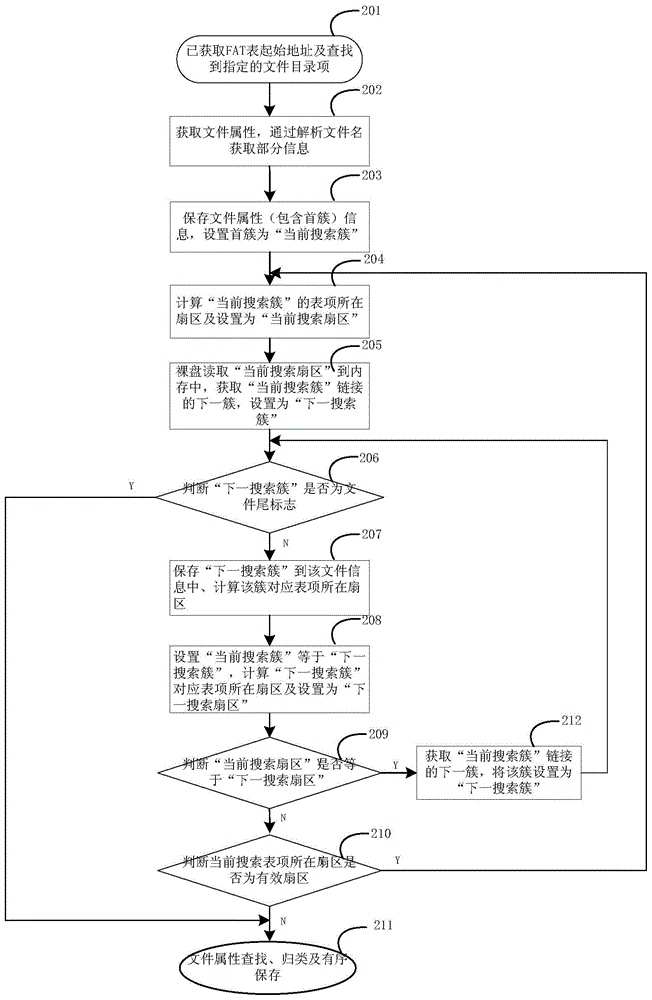

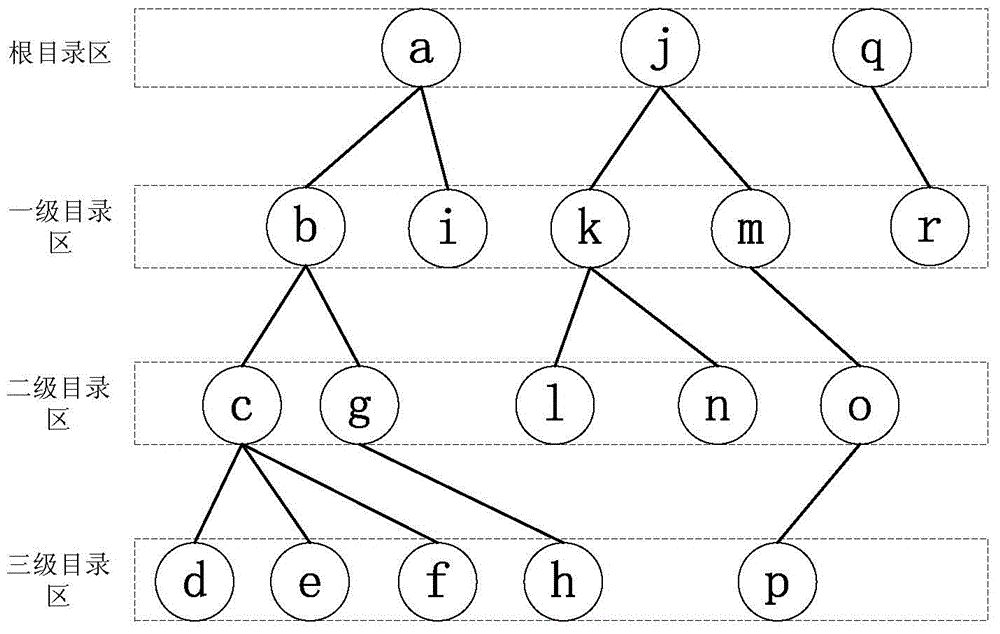

Fast FAT32 disk partition traversal and file searching method

Active · CN105045528A · Reduce the number of reads · Improve traversal · Input/output to record carriers · Special data processing applications · Byte · Computer science

The invention discloses a fast FAT32 disk partition traversal and file searching method and relates to an FAT32 file system and a magnetic disk data search and recovery technology. The method of the invention comprises the steps as follows: raw-disk-reading disk sector data of a root directory and subdirectories to internal storage, and performing directory entry search to all levels of directories: gradually adding 32 bytes in the same directory to perform horizontal search, and performing downward search to the searched subdirectory entry at a start position of the subdirectory, and returning to a search position stored in a parent directory to search by performing upward search after finishing the search of the current subdirectory, and performing traversing to finish the search of each directory entry sub-tree of the root directory in turn, and performing file attribute search, classification and ordered storage to a file directory entry. The method of the invention improves traversal and search speed, and improves overall performance for subsequent file search, read or download. The method of the invention is especially suitable for FAT32 file system partition traversal and file search in an embedded system.

Owner:武汉烽火众智智慧之星科技有限公司
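
The "horizontal search" step, advancing 32 bytes at a time through directory data already read into memory, can be sketched using the standard FAT32 short directory entry layout. The sketch handles a single directory buffer only; descending into subdirectories, following cluster chains, and long file names are left out, and `parse_directory` is a hypothetical name.

```python
import struct
from typing import List, Tuple

ENTRY_SIZE = 32          # every FAT directory entry is 32 bytes
ATTR_DIRECTORY = 0x10
ATTR_LONG_NAME = 0x0F    # marks a long-file-name entry, skipped here

# (name, is_directory, first_cluster, size_in_bytes)
Entry = Tuple[str, bool, int, int]


def parse_directory(data: bytes) -> List[Entry]:
    """Scan an in-memory directory buffer one 32-byte entry at a time."""
    entries: List[Entry] = []
    for offset in range(0, len(data) - ENTRY_SIZE + 1, ENTRY_SIZE):
        raw = data[offset:offset + ENTRY_SIZE]
        if raw[0] == 0x00:
            break                                  # no further entries in this directory
        attr = raw[11]
        if raw[0] == 0xE5 or (attr & 0x3F) == ATTR_LONG_NAME:
            continue                               # deleted entry or long-name entry
        base = raw[0:8].decode("ascii", errors="replace").rstrip()
        ext = raw[8:11].decode("ascii", errors="replace").rstrip()
        name = f"{base}.{ext}" if ext else base
        (cluster_high,) = struct.unpack_from("<H", raw, 20)
        (cluster_low,) = struct.unpack_from("<H", raw, 26)
        (size,) = struct.unpack_from("<I", raw, 28)
        first_cluster = (cluster_high << 16) | cluster_low
        entries.append((name, bool(attr & ATTR_DIRECTORY), first_cluster, size))
    return entries
```

Because the whole buffer is parsed in memory, the disk is read once per directory rather than once per entry; entries flagged as directories give the start cluster for the downward search.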

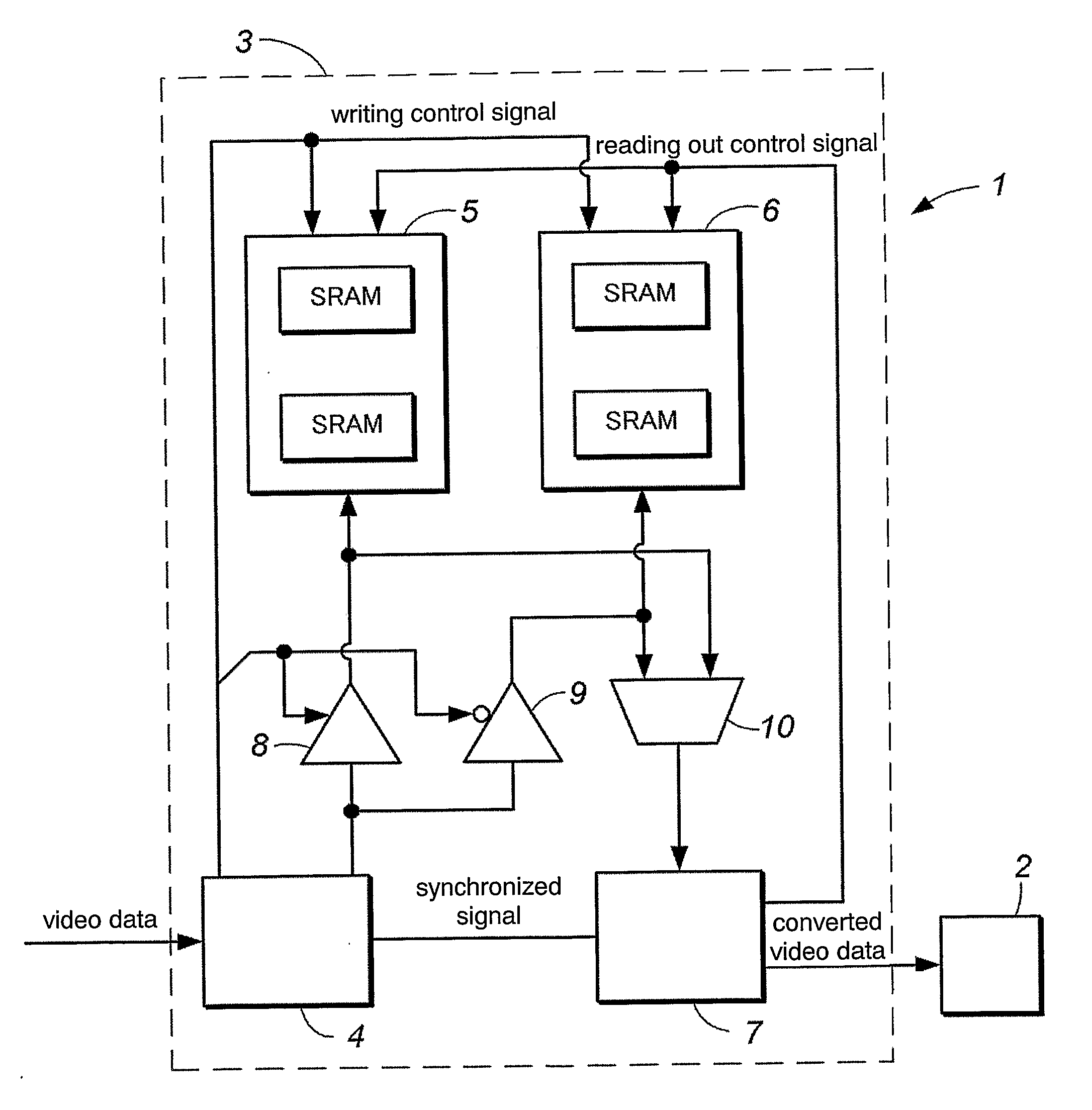

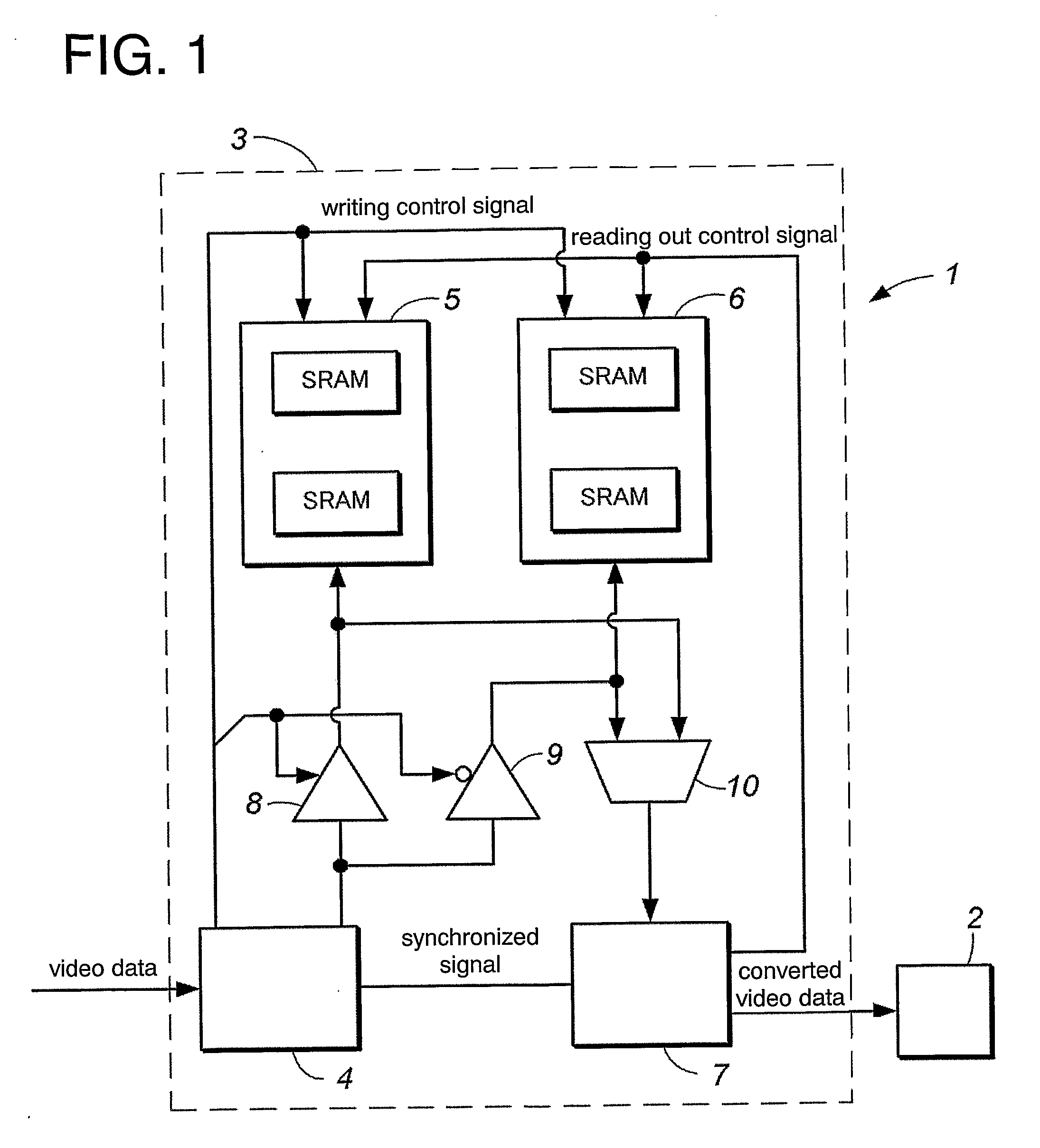

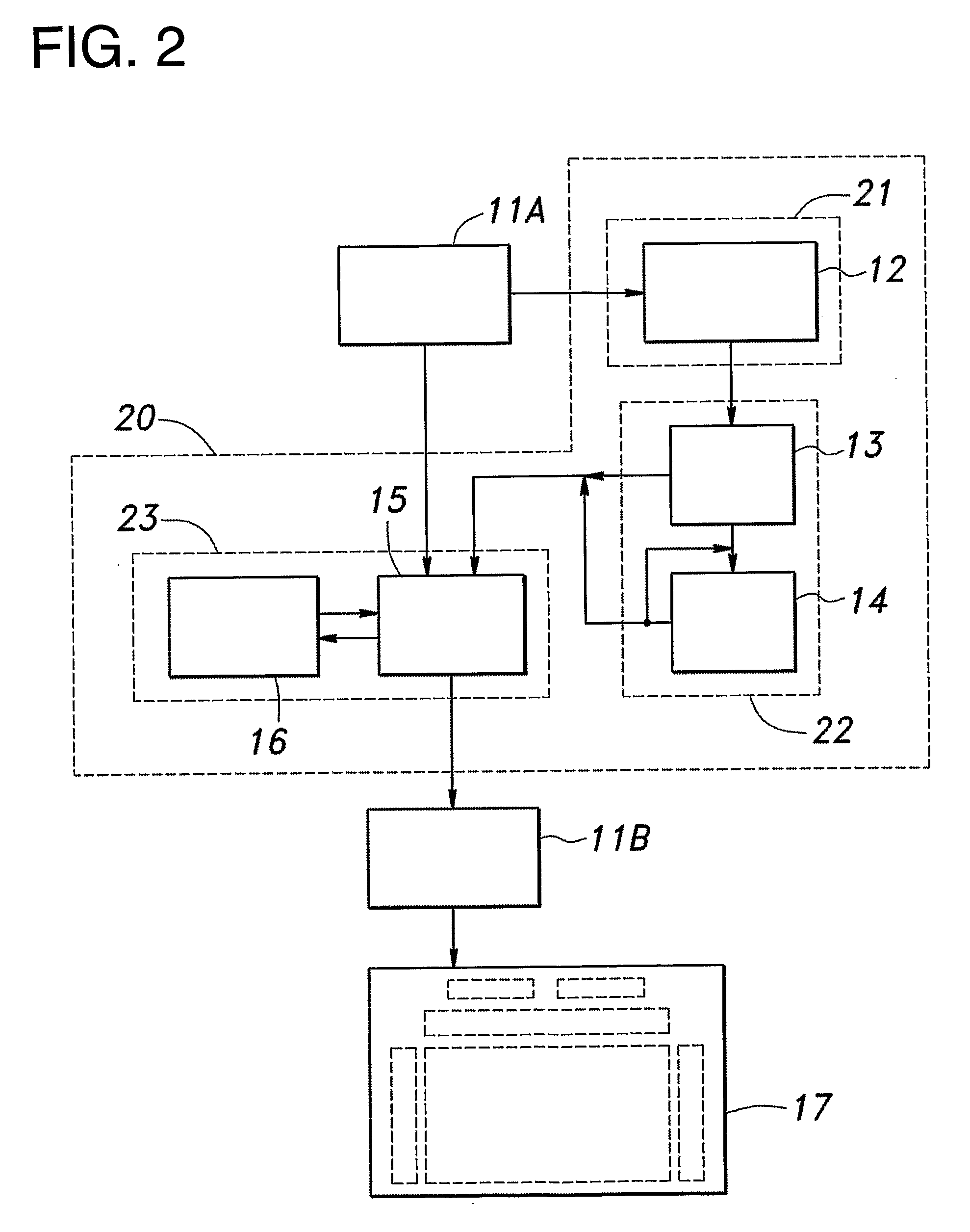

Display device and electronic device using the same

Active · US20090015604A1 · Access speed is low · Reduce in quantity · Cathode-ray tube indicators · Input/output processes for data processing · Video storage · Video memory

According to the invention, a compact and inexpensive memory with low power consumption and low access speed can be used for a panel controller and a deterioration compensation circuit of a display device. In a display device of a digital gray scale method, a plurality of pixels of a display panel are divided into first to n-th pixel regions (n is 2 or more) and a format converter portion of a panel controller converts the format of only video data corresponding to one of first to n-th pixel regions and writes the data to one of first and second video memories in each frame period. A display control portion reads out video data that is converted in format and corresponds to one of first to n-th pixel regions in which video data is written to the other of the first and second video memories in the preceding frame period, and transmits the data to the display panel.

Owner:SEMICON ENERGY LAB CO LTD
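The alternating use of the two video memories described above resembles double buffering, and can be sketched as follows. This is only an illustration under stated assumptions: the round-robin choice of region (`t % n_regions`), the `convert` callback, and the function name `run_frames` are all hypothetical, since the abstract does not say how the region is chosen or what the format conversion does.

```python
def run_frames(frames, n_regions, convert):
    """Simulate per-frame region conversion with two alternating memories.

    frames    : list of frames; each frame is a list of n_regions data items
    convert   : hypothetical format-conversion function for one region
    Returns the (region, data) items the display control portion reads out.
    """
    memories = [None, None]          # first and second video memory
    shown = []
    for t, frame in enumerate(frames):
        region = t % n_regions       # assumption: regions handled in rotation
        write_idx = t % 2            # memory written in this frame period
        read_idx = 1 - write_idx     # memory written in the preceding period
        if memories[read_idx] is not None:
            shown.append(memories[read_idx])
        # Only one region's data is converted and written per frame period.
        memories[write_idx] = (region, convert(frame[region]))
    return shown
```

Because only one region is converted and written per frame period, each memory sees roughly 1/n of the traffic a full-frame buffer would, which is consistent with the abstract's claim that a slower memory suffices.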

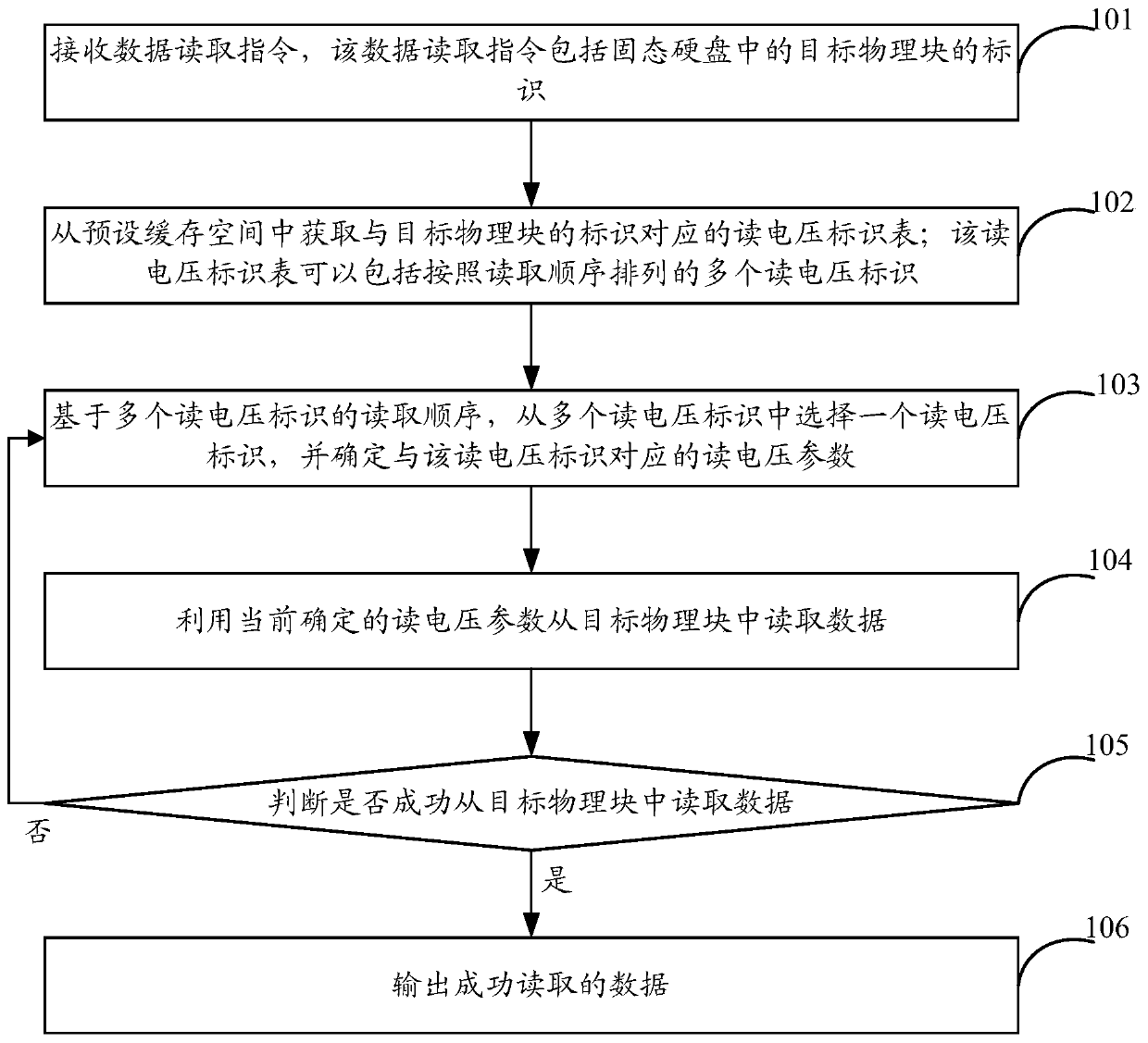

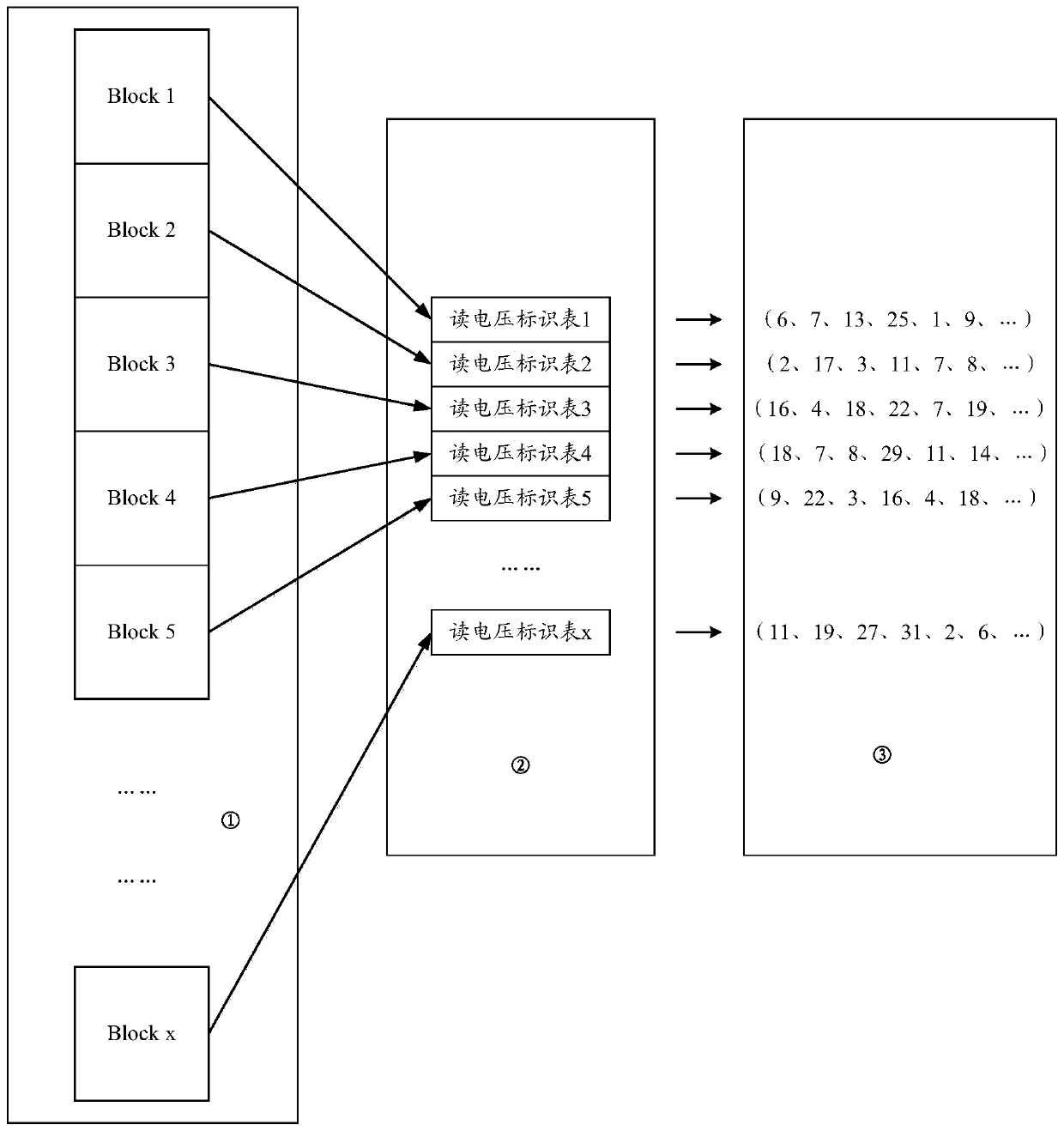

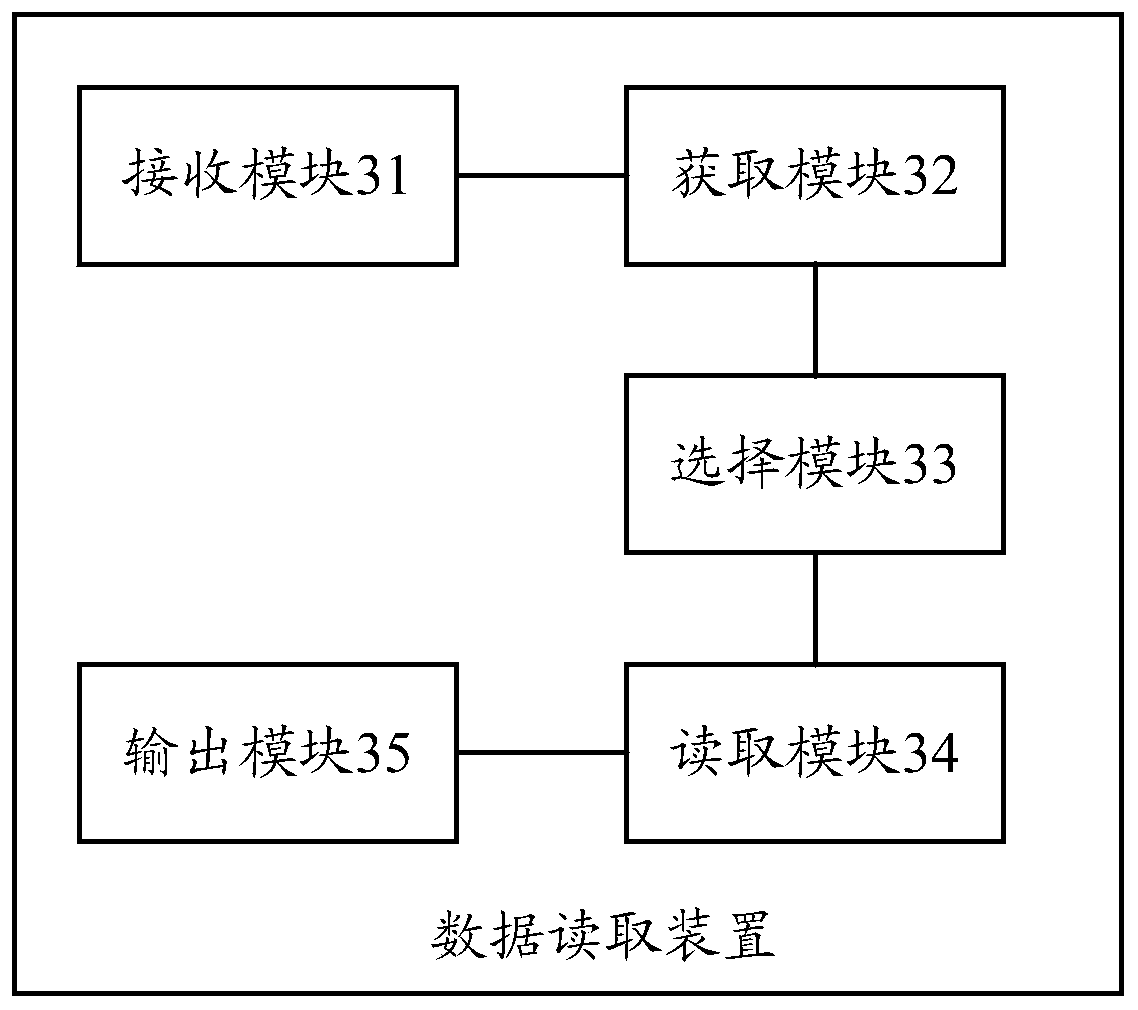

Data reading method and device

InactiveCN110007861AExtended service lifeGood effectInput/output to record carriersRedundant data error correctionComputer architectureVoltage

The invention provides a data reading method and device. The method comprises: receiving a data reading instruction that includes an identifier of a target physical block; obtaining, from a preset cache space, the reading voltage identification table corresponding to that identifier, the table comprising a plurality of reading voltage identifiers arranged in a reading sequence; selecting one reading voltage identifier from the table according to the reading sequence and determining the reading voltage parameter corresponding to it; and reading data from the target physical block using that reading voltage parameter. If the read fails, the method returns to the selection step and selects a reading voltage identifier again according to the reading sequence; if the read succeeds, it outputs the data. This scheme improves reading efficiency and performance and increases reading speed.

Owner:NEW H3C TECH CO LTD
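The retry loop in the abstract above can be sketched as below. All names here (`read_with_retry`, `table_cache`, `voltage_params`, `try_read`) are hypothetical, and the low-level read is passed in as a callback because the abstract does not describe the device interface.

```python
def read_with_retry(block_id, table_cache, voltage_params, try_read):
    """Read a physical block, trying reading voltages in table order.

    table_cache    : dict, block id -> ordered list of reading voltage ids
                     (stands in for the preset cache space)
    voltage_params : dict, reading voltage id -> reading voltage parameter
    try_read       : hypothetical callback (block_id, param) -> (ok, data)
    Returns (data, voltage_id) for the first successful read.
    """
    for voltage_id in table_cache[block_id]:      # follow the reading sequence
        param = voltage_params[voltage_id]
        ok, data = try_read(block_id, param)
        if ok:
            return data, voltage_id               # output the data read
    raise IOError("block %r unreadable with all listed voltages" % (block_id,))
```

Raising an error once the table is exhausted is a choice made for this sketch; the abstract does not say what happens when every listed voltage fails.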

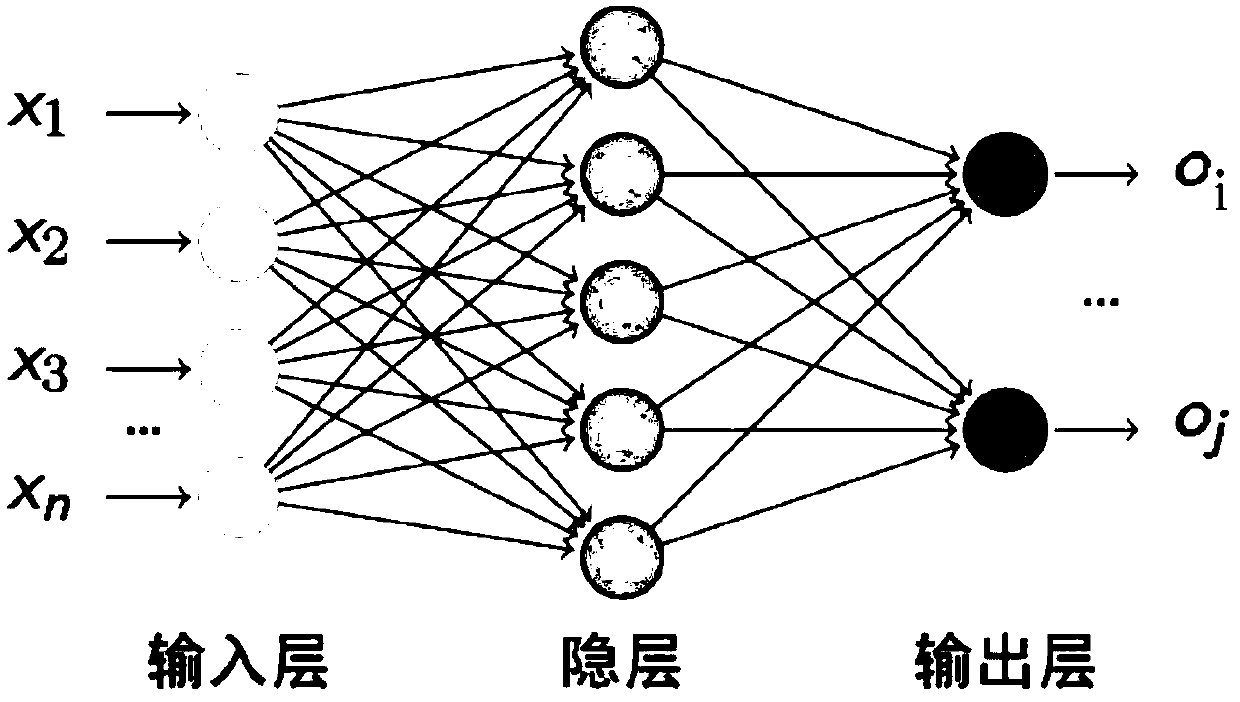

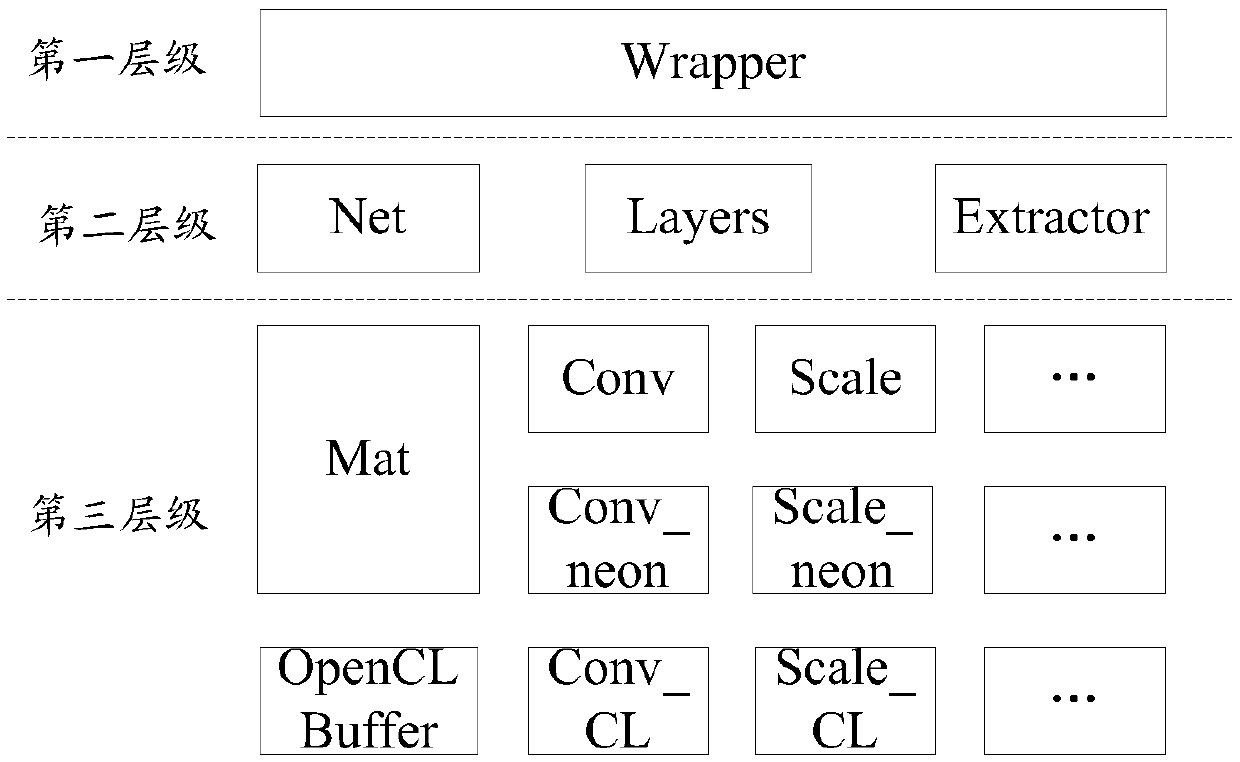

Acceleration method and device of data operation, terminal and readable storage medium

ActiveCN108681773AReduce the number of data readsGood parallel computing capabilityProcessor architectures/configurationPhysical realisationStorage cellData operations

Owner:TENCENT TECH (SHENZHEN) CO LTD

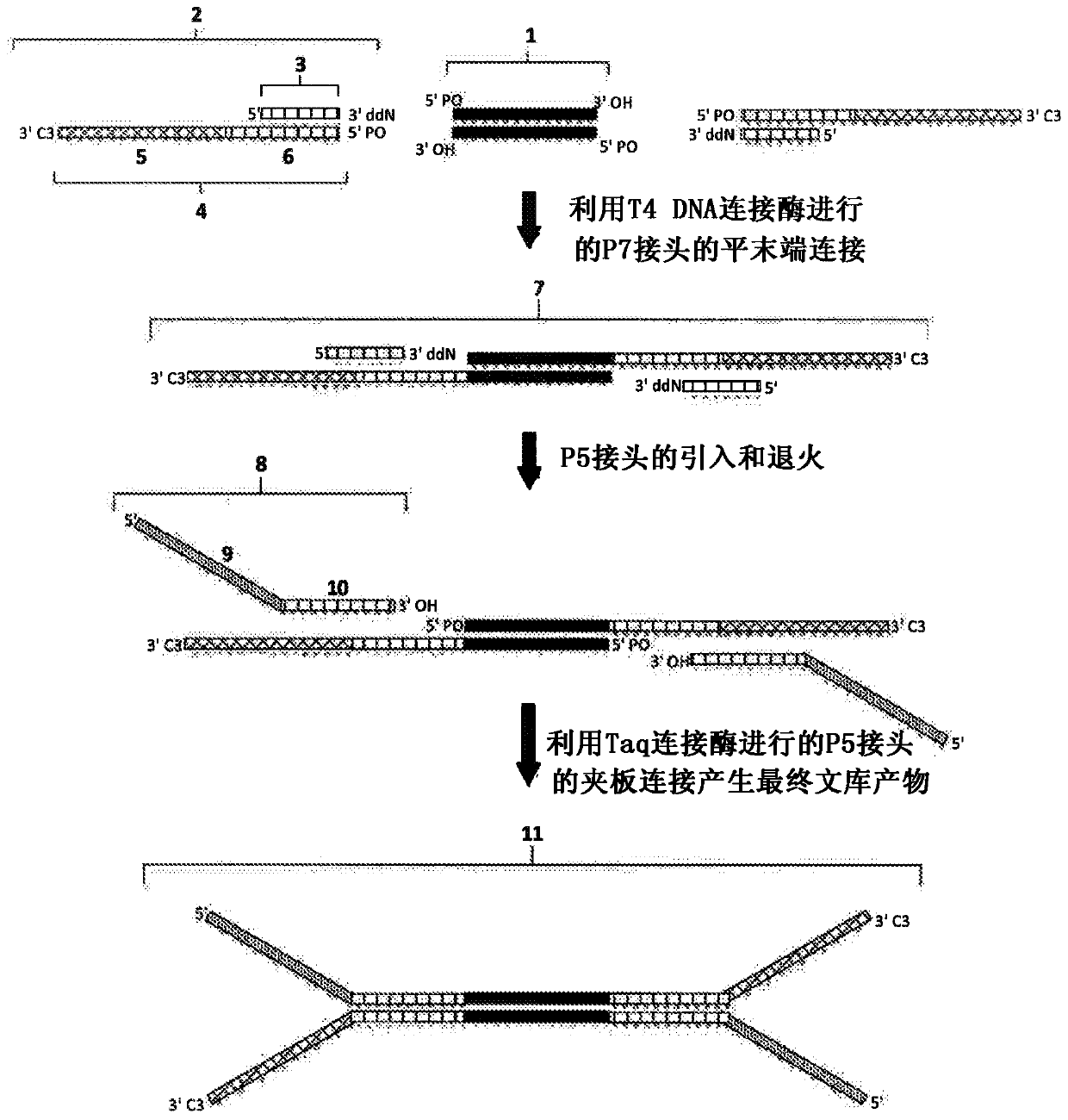

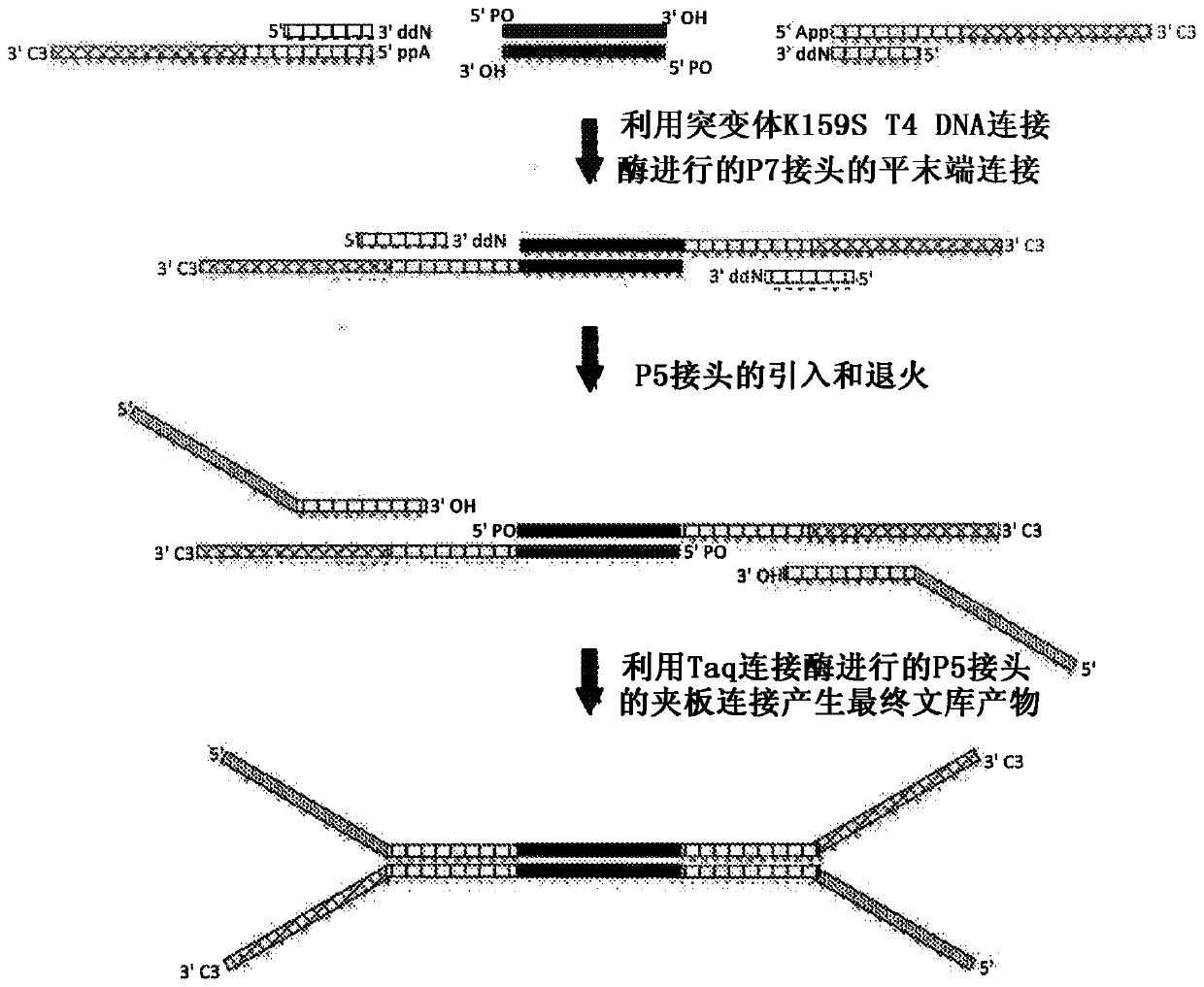

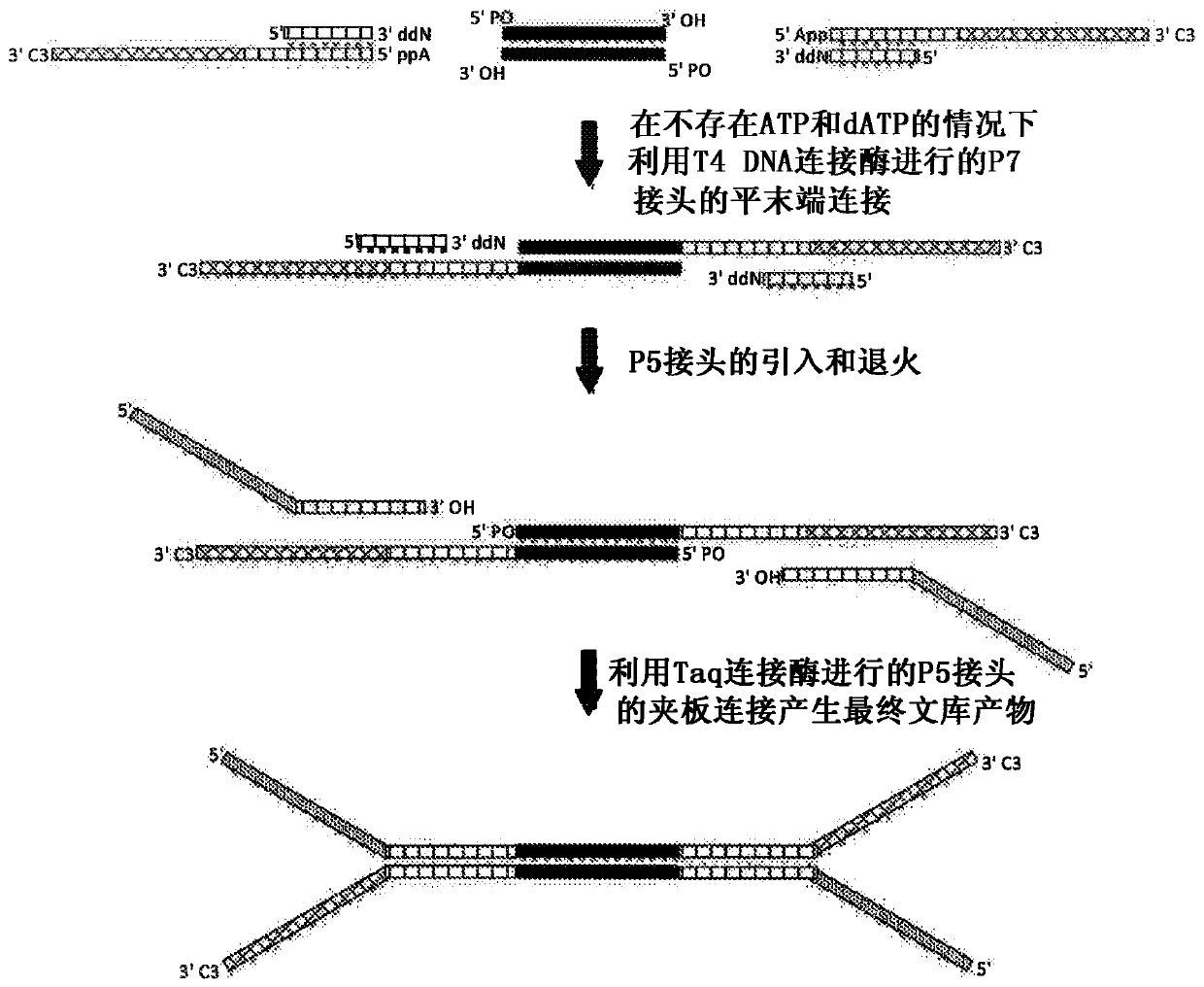

Construction of next generation sequencing (NGS) libraries using competitive strand displacement

ActiveCN110248675AEfficient recyclingIncrease complexity/coveragePeptide/protein ingredientsNucleotide librariesGenomicsWhole genome sequencing

The invention pertains to construction of next-generation DNA sequencing (NGS) libraries for whole genome sequencing, targeted resequencing, sequencing-based screening assays, metagenomics, or any other application requiring sample preparation for NGS.

Owner:INTEGRATED DNA TECHNOLOGIES

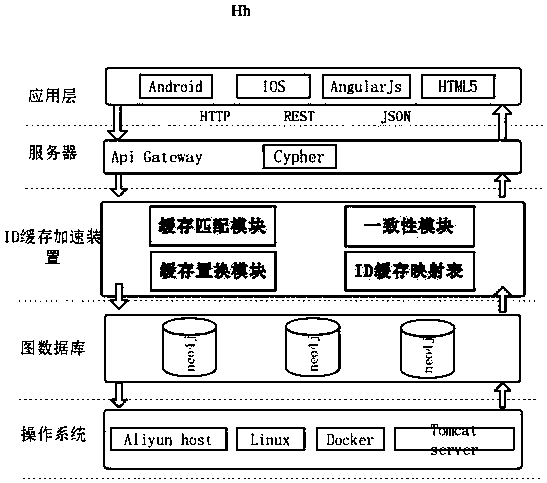

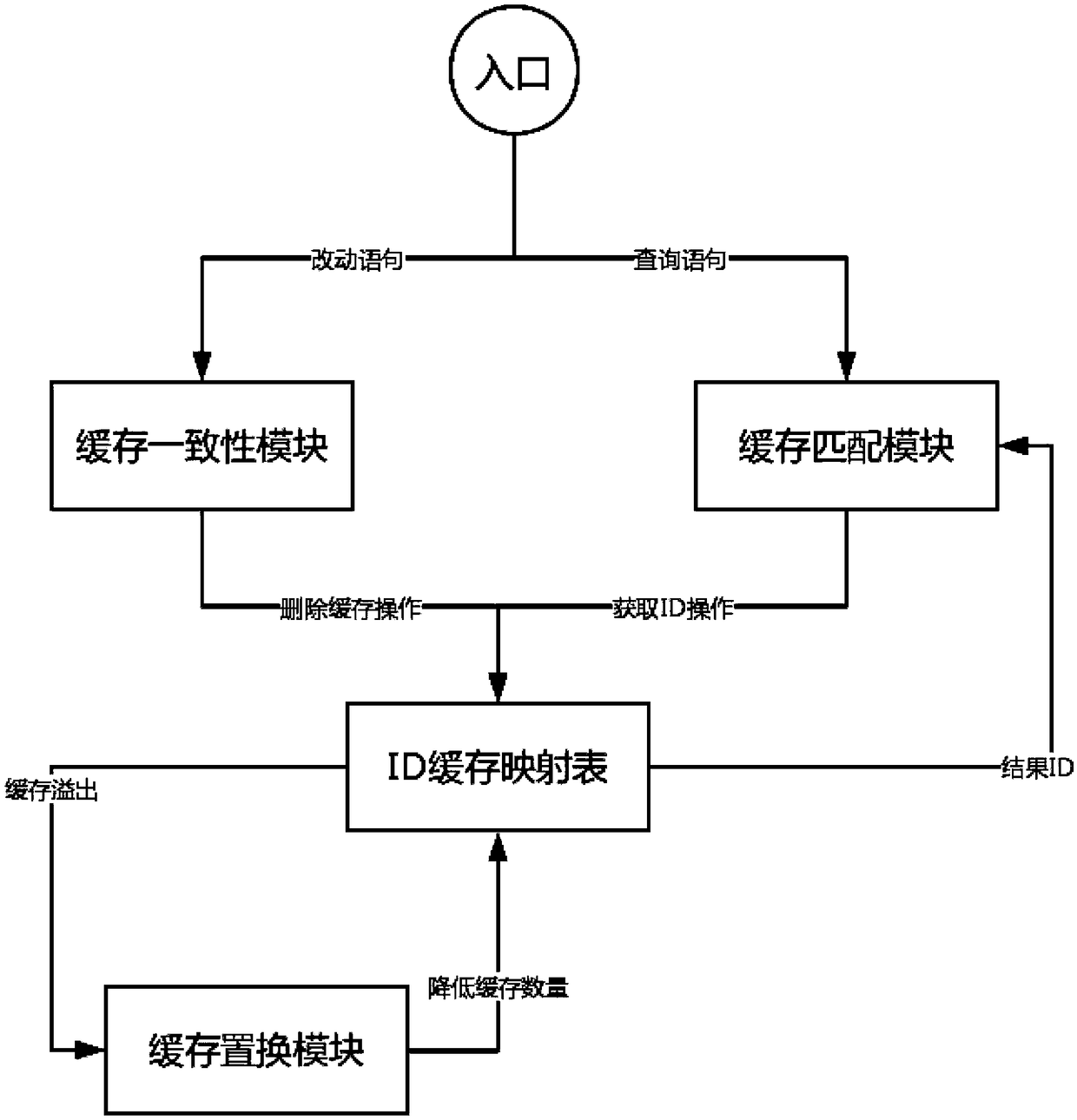

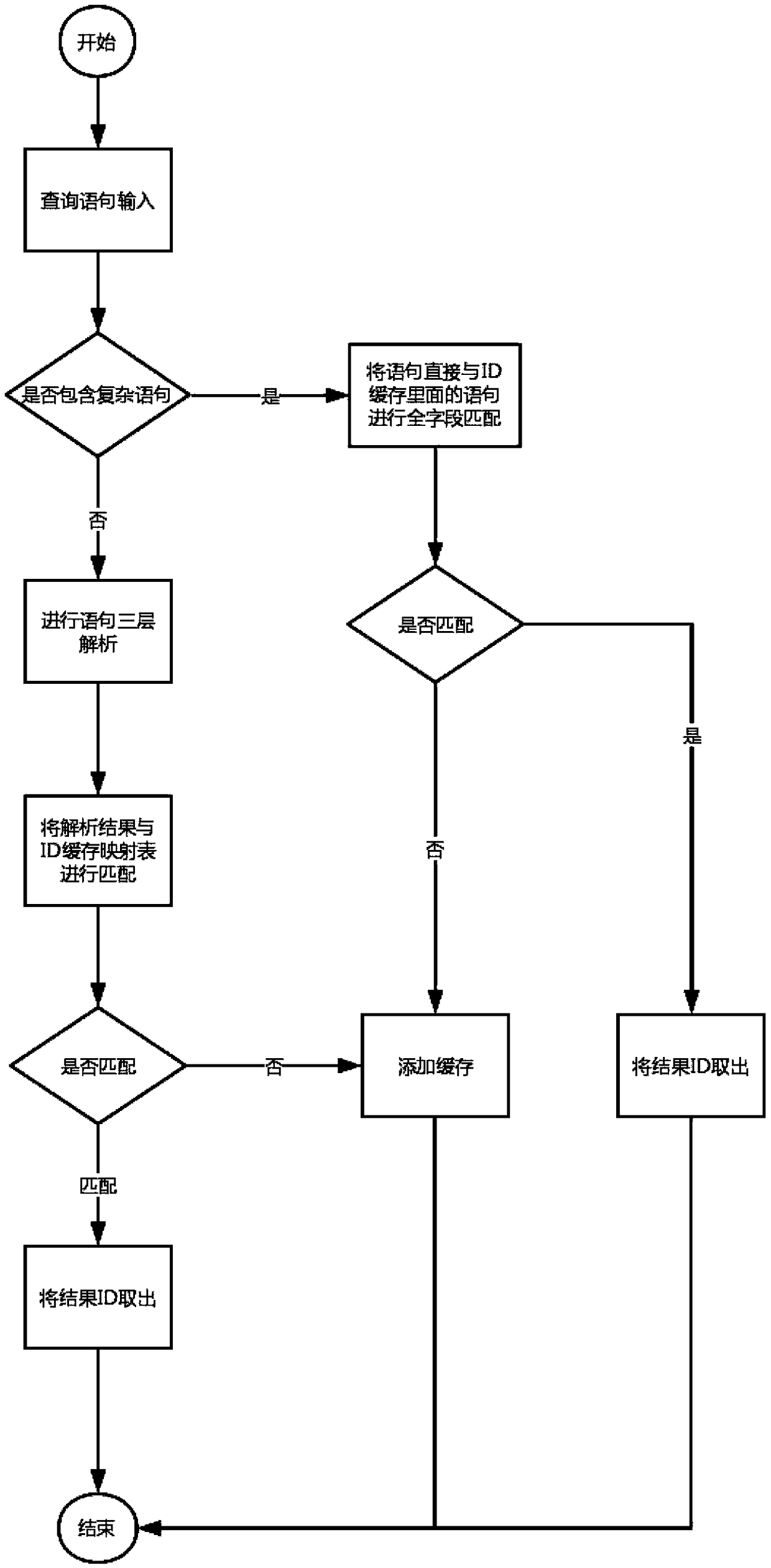

A graph database accelerating device and method based on an ID caching technology

InactiveCN109446358AReduce the number of readsImprove query speedStill image data indexingStill image data browsing/visualisationClient-sideData mining

The invention discloses a graph database ID caching technology in which dynamic caching is applied to graph databases holding millions of records or more. The technology caches IDs in memory; the graph database is still queried, but compared with the original process of traversing millions of records, each query touches very little data and is much faster. The cache is dynamic: changes to the data in the database do not conflict with the cache, which greatly reduces the probability of dirty data. The device comprises a special cache structure based on the structural characteristics of graph databases, different matching strategies for simple and complex queries, cache updates that maintain consistency when the database changes, and a replacement strategy for when the number of cached entries grows too large.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
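A minimal sketch of the idea above: cache the IDs that answer a query, fetch records by ID on a hit, invalidate on writes, and evict when the cache is full. The abstract does not name a replacement policy or a cache layout, so the LRU policy, the label-keyed cache, and every class and function name below are assumptions made for illustration. `TinyGraphStore` is a stand-in for a real graph database.

```python
from collections import OrderedDict


class IdCache:
    """Maps a query key to the node IDs that satisfied it (LRU eviction)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._entries = OrderedDict()

    def get(self, key):
        if key not in self._entries:
            return None
        self._entries.move_to_end(key)        # mark as most recently used
        return self._entries[key]

    def put(self, key, ids):
        self._entries[key] = list(ids)
        self._entries.move_to_end(key)
        while len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict least recently used

    def invalidate(self, key):
        self._entries.pop(key, None)


class TinyGraphStore:
    """Stand-in for a graph database; counts full scans for comparison."""

    def __init__(self):
        self.nodes = {}      # node id -> properties dict
        self.scans = 0

    def scan_ids(self, label):
        self.scans += 1      # expensive path: traverse every node
        return [i for i, p in self.nodes.items() if p["label"] == label]

    def fetch(self, node_id):
        return self.nodes[node_id]             # cheap lookup by ID


def query_by_label(store, cache, label):
    ids = cache.get(label)
    if ids is None:                            # miss: scan once, cache IDs
        ids = store.scan_ids(label)
        cache.put(label, ids)
    return [store.fetch(i) for i in ids]       # database is still queried


def add_node(store, cache, node_id, props):
    store.nodes[node_id] = props
    cache.invalidate(props["label"])           # keep cache consistent
```

Caching only IDs rather than full records keeps the cache small, and because records are always fetched from the store, property updates to an existing node cannot be served stale from the cache.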

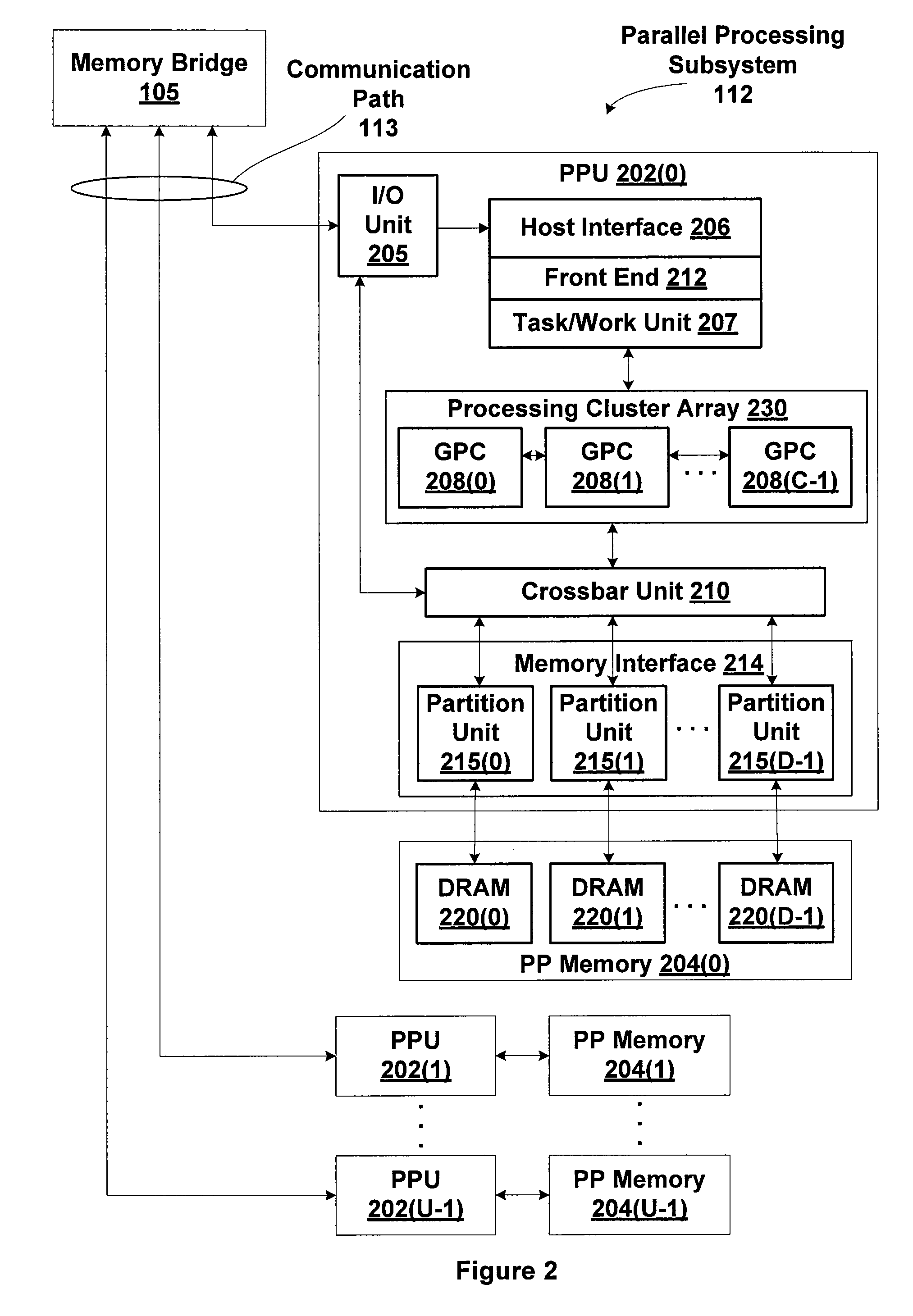

Techniques for storing ECC checkbits in a level two cache

ActiveUS20140143635A1Reduce the number of readsMoreStatic storageRedundant data error correctionData storingError correcting

A partition unit that includes a cache for storing both data and error-correcting code (ECC) checkbits associated with the data is disclosed. When a read command corresponding to particular data stored in a memory unit results in a cache miss, the partition unit transmits a read request to the memory unit to fetch the data and store the data in the cache. The partition unit checks the cache to determine if ECC checkbits associated with the data are stored in the cache and, if the ECC checkbits are not in the cache, the partition unit transmits a read request to the memory unit to fetch the ECC checkbits and store the ECC checkbits in the cache. The ECC checkbits and the data may then be compared to determine the reliability of the data using an error-correcting scheme such as SEC-DED (i.e., single error-correcting, double error-detecting).

Owner:NVIDIA CORP
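The lookup flow in the abstract above can be sketched as a cache that holds both data lines and ECC lines and counts reads to memory. Several points are assumptions of this sketch rather than statements from the abstract: one ECC line is taken to cover `LINES_PER_ECC` data lines, the class and function names are hypothetical, and the XOR checksum is only a stand-in for a real SEC-DED code (it detects some corruption but corrects nothing).

```python
def checkbits(data):
    """Stand-in check value: XOR of all bytes. NOT a real SEC-DED code."""
    value = 0
    for byte in data:
        value ^= byte
    return value


class PartitionUnit:
    LINES_PER_ECC = 8    # assumption: one ECC line covers 8 data lines

    def __init__(self, memory_data, memory_ecc):
        self.memory_data = memory_data   # line address -> bytes
        self.memory_ecc = memory_ecc     # ECC line address -> {addr: check}
        self.cache = {}                  # shared by data and ECC lines
        self.memory_reads = 0

    def _fetch(self, kind, addr, source):
        key = (kind, addr)
        if key not in self.cache:        # cache miss: read request to memory
            self.memory_reads += 1
            self.cache[key] = source[addr]
        return self.cache[key]

    def read(self, addr):
        data = self._fetch("data", addr, self.memory_data)
        ecc_addr = addr // self.LINES_PER_ECC
        ecc_line = self._fetch("ecc", ecc_addr, self.memory_ecc)
        if checkbits(data) != ecc_line[addr]:
            raise ValueError("check mismatch at line %d" % addr)
        return data
```

Under these assumptions, reading eight consecutive lines costs nine memory reads (eight data lines plus one ECC line) instead of sixteen, which illustrates how keeping checkbits in the cache can reduce the number of reads.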

