74 results about "Binary control" patented technology

Cybernetic 3D music visualizer

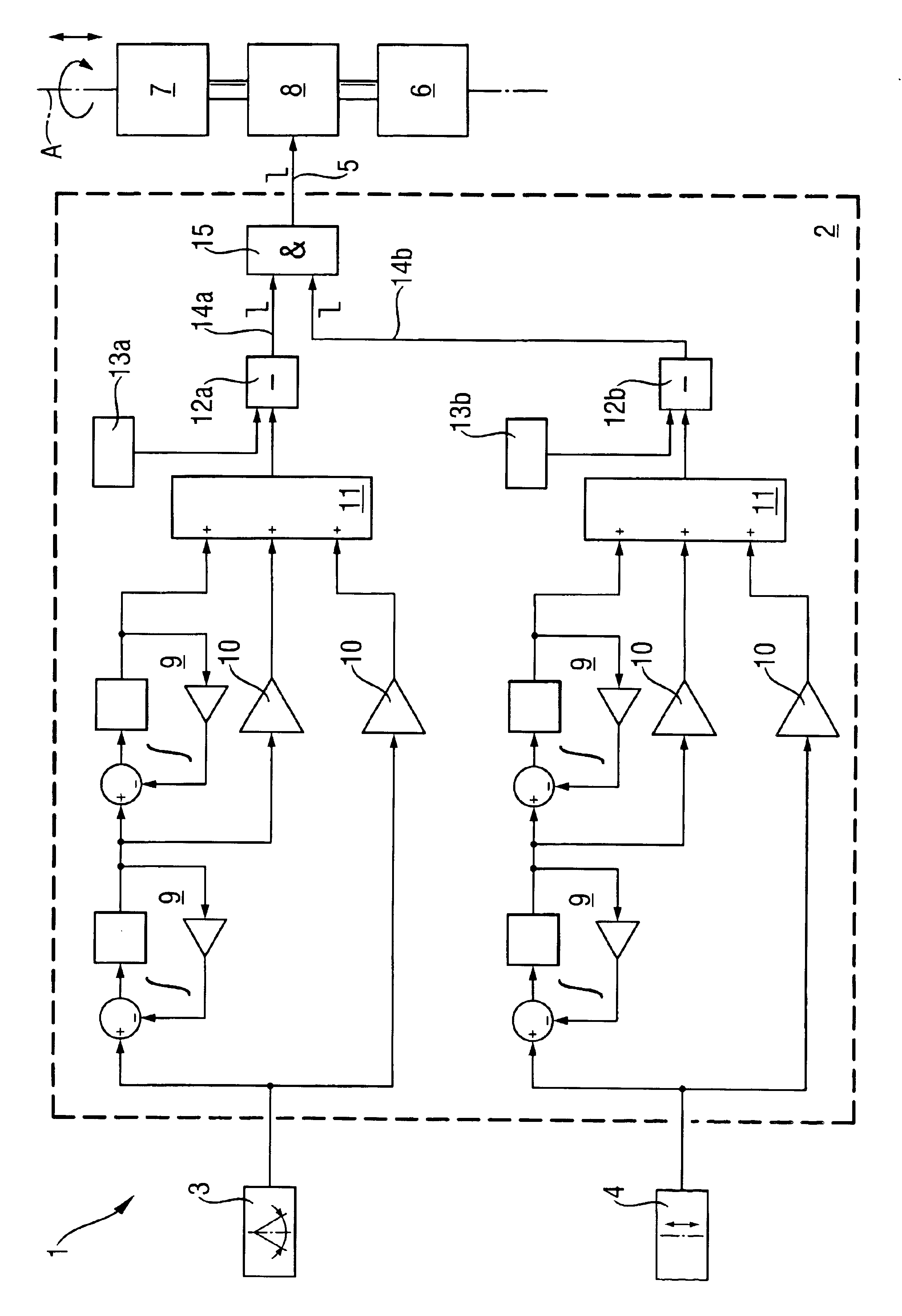

Inactive · US20060181537A1 · Control input · Improve the level of · Electrophonic musical instruments · Animation · Frequency spectrum · Input control

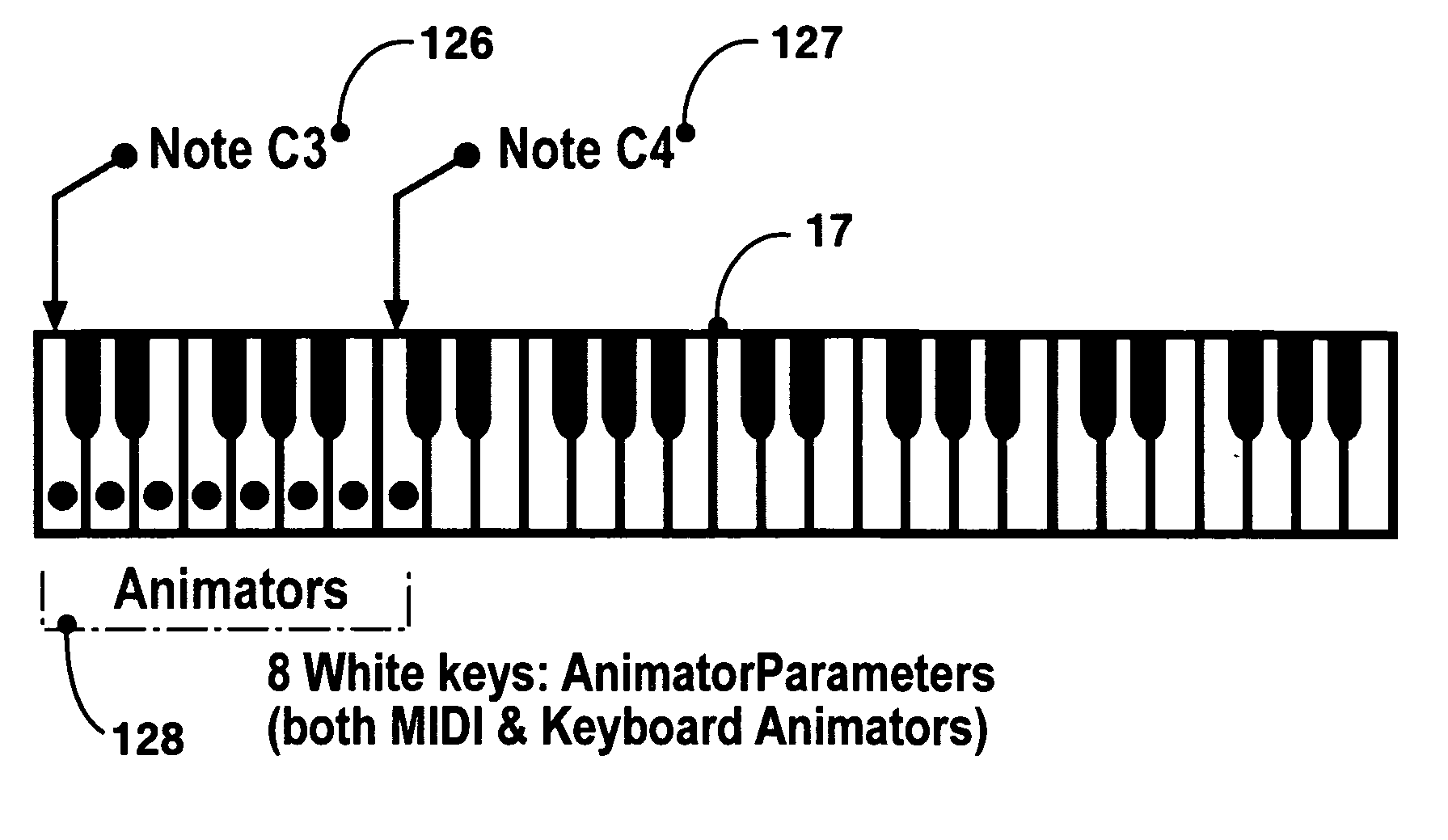

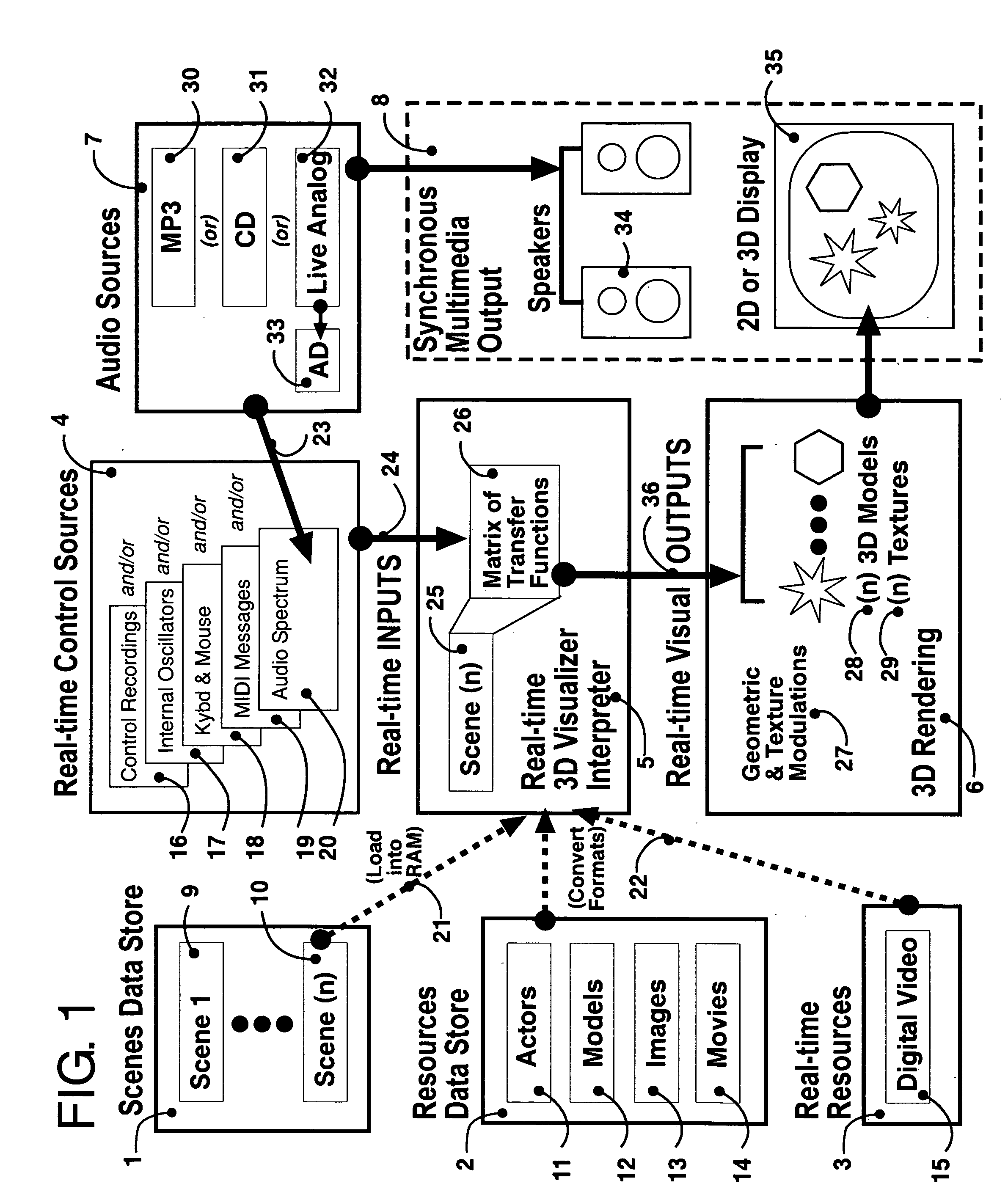

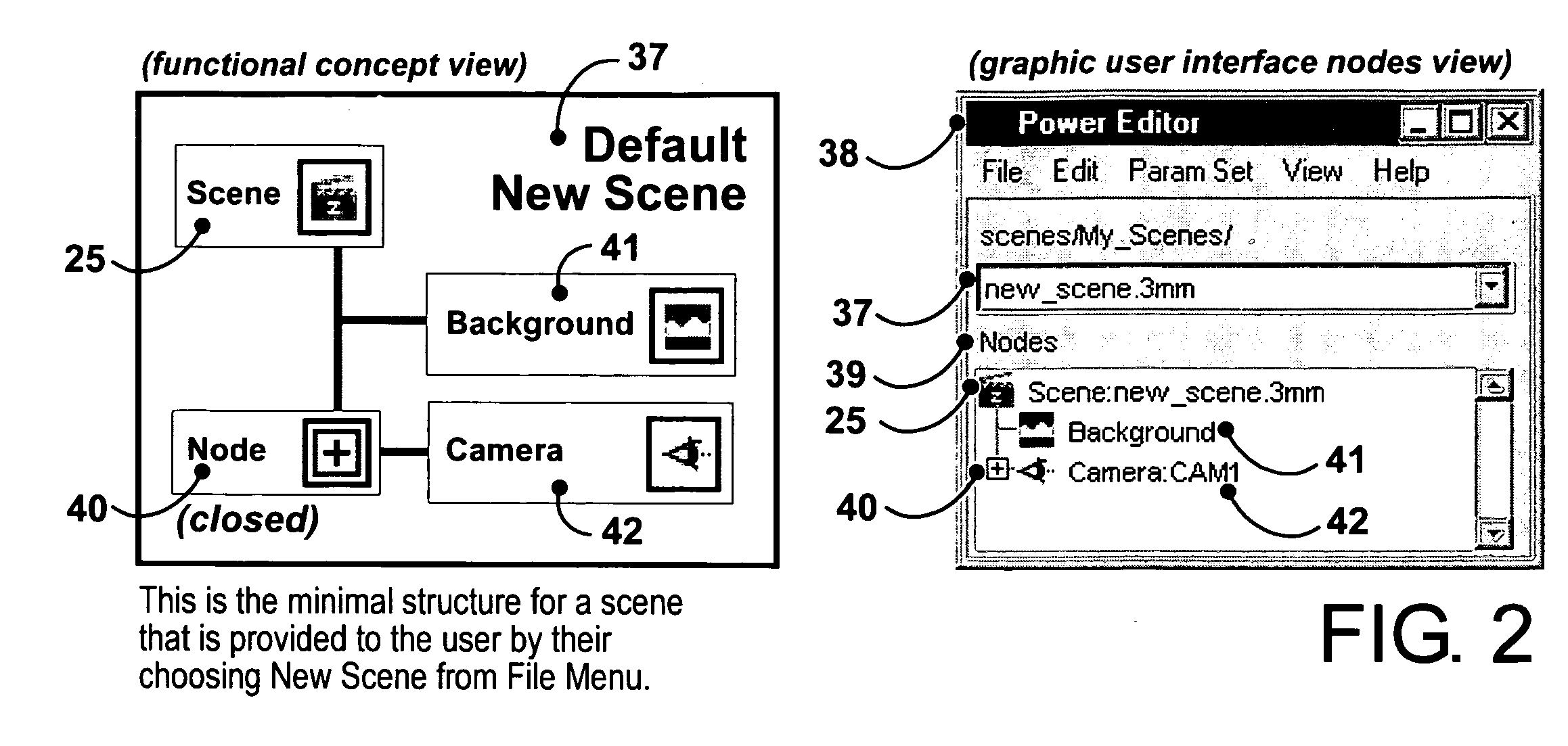

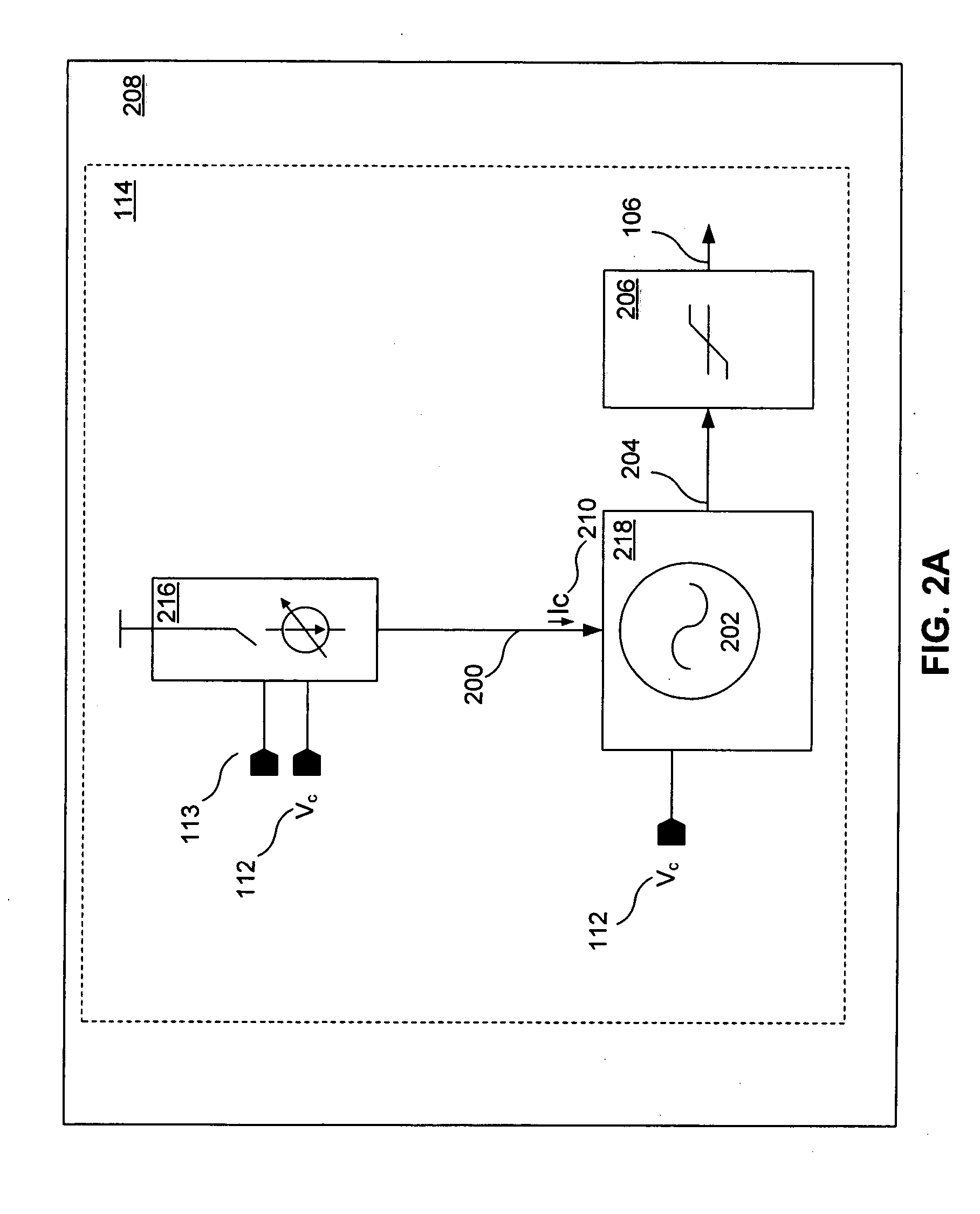

3D music visualization process employing a novel method of real-time reconfigurable control of 3D geometry and texture, employing blended control combinations of software oscillators, computer keyboard and mouse, audio spectrum, control recordings and MIDI protocol. The method includes a programmable visual attack, decay, sustain and release (V-ADSR) transfer function applicable to all degrees of freedom of 3D output parameters, enhancing even binary control inputs with continuous and aesthetic spatio-temporal symmetries of behavior. A “Scene Nodes Graph” for authoring content acts as a hierarchical, object-oriented graphical interpreter for defining 3D models and their textures, as well as flexibly defining how the control source blend(s) are connected or “Routed” to those objects. An “Auto-Builder” simplifies Scene construction by auto-inserting and auto-routing Scene Objects. The Scene Nodes Graph also includes means for real-time modification of the control scheme structure itself, and supports direct real-time keyboard / mouse adjustment to all parameters of all input control sources and all output objects. Dynamic control schemes are also supported, such as control sources modifying the Routing and parameters of other control sources. An Auto-Scene-Creator feature allows automatic scene creation by exploiting the maximum threshold of the visualizer's set of variables to create a nearly infinite set of scenes. A Realtime-Network-Updater feature allows multiple local and / or remote users to simultaneously co-create scenes in real-time and effect the changes in a networked community environment wherein universal variables are interactively updated in real-time, thus enabling scene co-creation in a global environment. In terms of human subjective perception, the method creates, enhances and amplifies multiple forms of both passive and interactive synesthesia.
The method utilizes transfer functions providing multiple forms of applied symmetry in the control feedback process yielding an increased level of perceived visual harmony and beauty. The method enables a substantially increased number of both passive and human-interactive interpenetrating control / feedback processes that may be simultaneously employed within the same audio-visual perceptual space, while maintaining distinct recognition of each, and reducing the threshold of human ergonomic effort required to distinguish them even when so coexistent. Taken together, these novel features of the invention can be employed (by means of considered Scene content construction) to realize an increased density of “orthogonal features” in cybernetic multimedia content. This furthermore increases the maximum number of human players who can simultaneously participate in shared interactive music visualization content while each still retaining relatively clear perception of their own control / feedback parameters.
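The V-ADSR idea, shaping an on/off input into a continuous control value, can be illustrated with a generic linear ADSR envelope. This is a minimal sketch, not the patent's transfer function: the names `held_level` and `adsr_level`, the linear segments, and the default timings are all assumptions made for illustration.

```python
def held_level(dt, attack, decay, sustain):
    """Envelope level dt seconds after the binary input went high (input still held).

    Assumes attack > 0 and decay > 0.
    """
    if dt < 0:
        return 0.0                      # input has not been pressed yet
    if dt < attack:
        return dt / attack              # linear rise from 0 to 1
    if dt < attack + decay:
        # linear fall from 1 down to the sustain level
        return 1.0 - (1.0 - sustain) * (dt - attack) / decay
    return sustain                      # hold at the sustain level


def adsr_level(t, t_on, t_off=None, attack=0.1, decay=0.2, sustain=0.6, release=0.5):
    """Continuous value in [0, 1] derived from a binary input.

    t_on  : time the input switched on
    t_off : time it switched off, or None while it is still held
    """
    if t_off is None or t < t_off:
        return held_level(t - t_on, attack, decay, sustain)
    # Release: fade linearly to zero from whatever level was reached at t_off.
    start = held_level(t_off - t_on, attack, decay, sustain)
    frac = (t - t_off) / release
    return max(0.0, start * (1.0 - frac))
```

With the defaults, an input held from t = 0 rises to full level over 0.1 s, settles to 0.6 by 0.3 s, and fades to zero over 0.5 s once released. In a visualizer the returned value could scale any output parameter, such as an object's size or rotation rate.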

Owner:VASAN SRINI +2

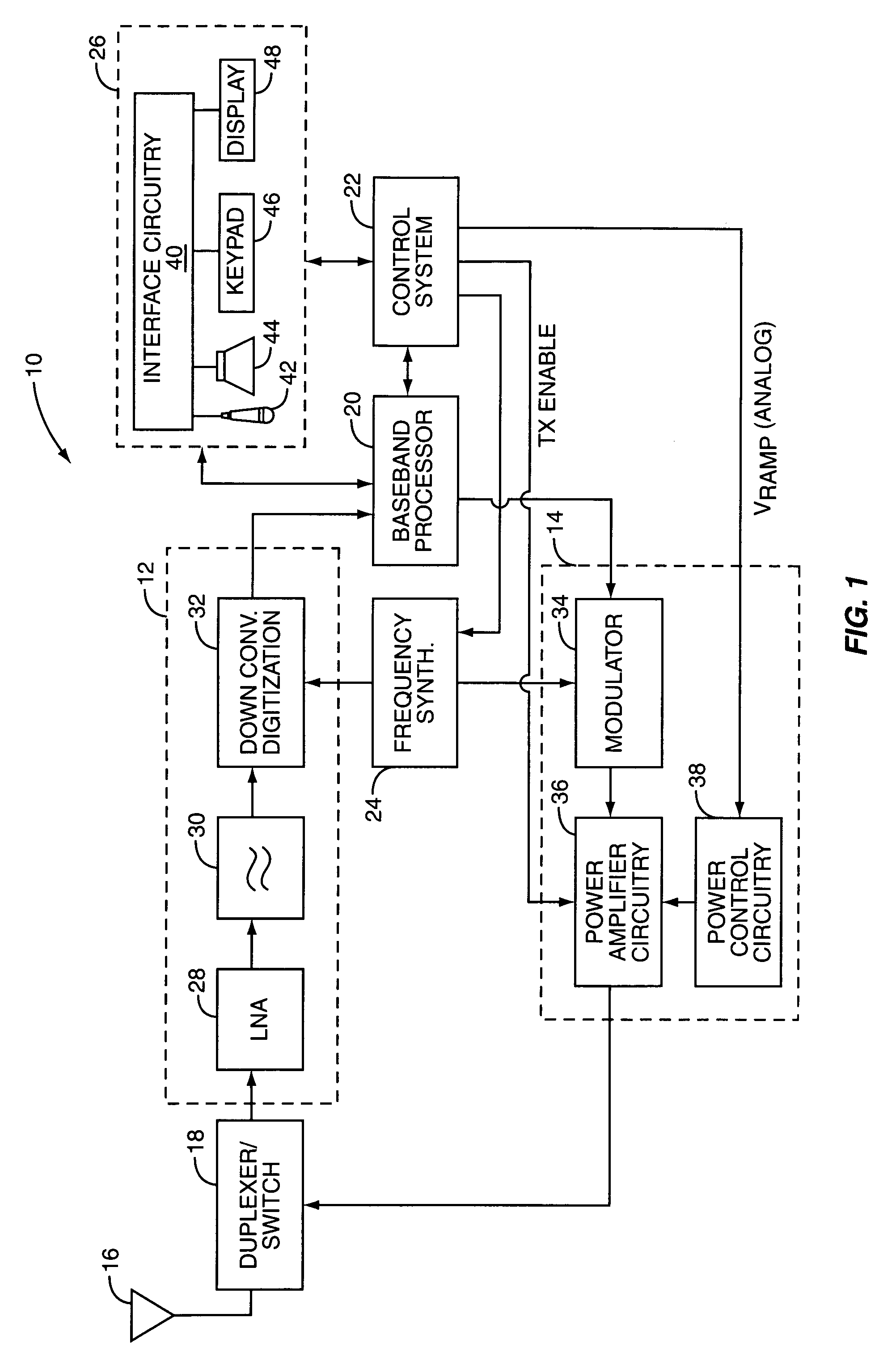

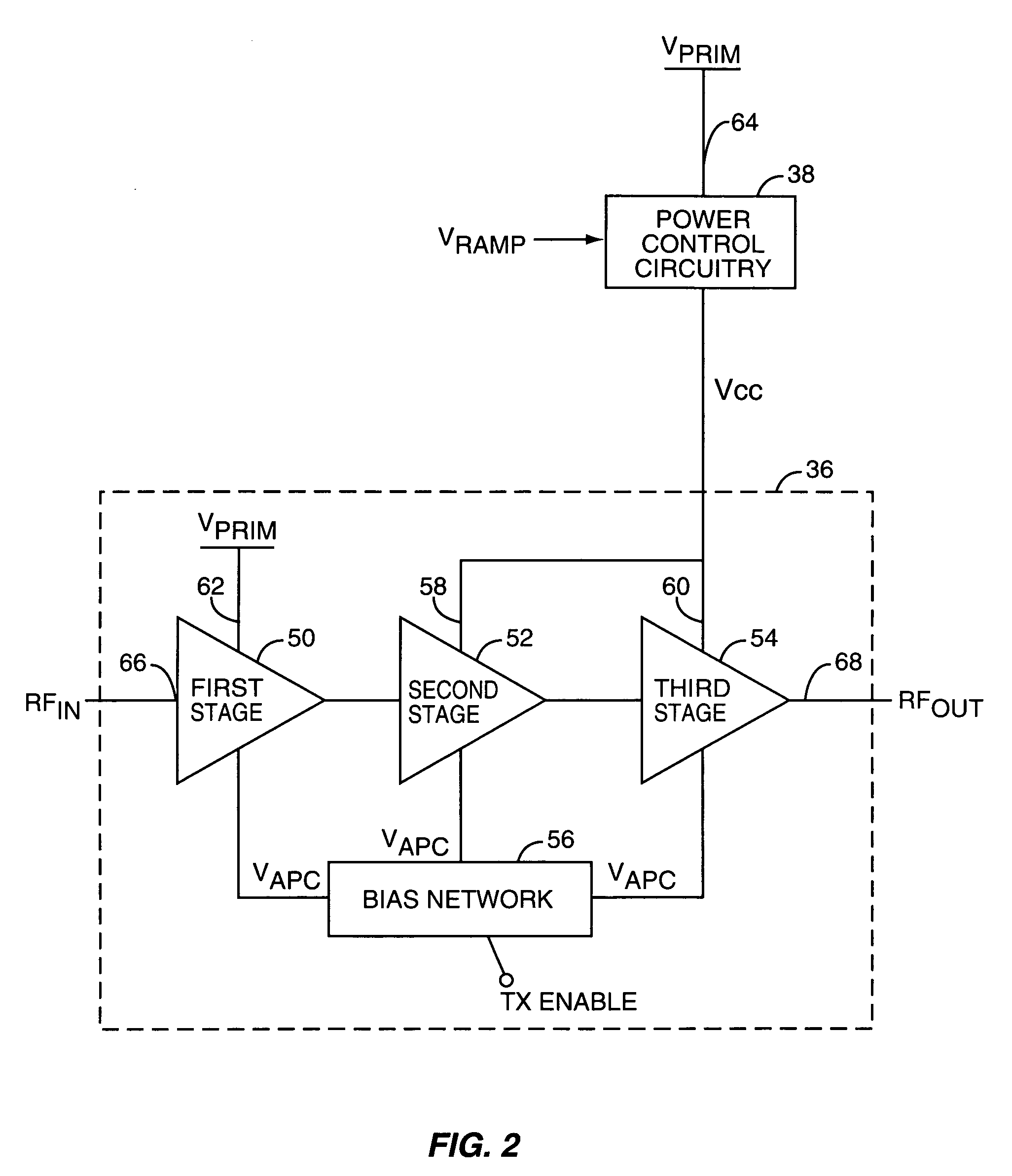

Reconfigurable power control for a mobile terminal

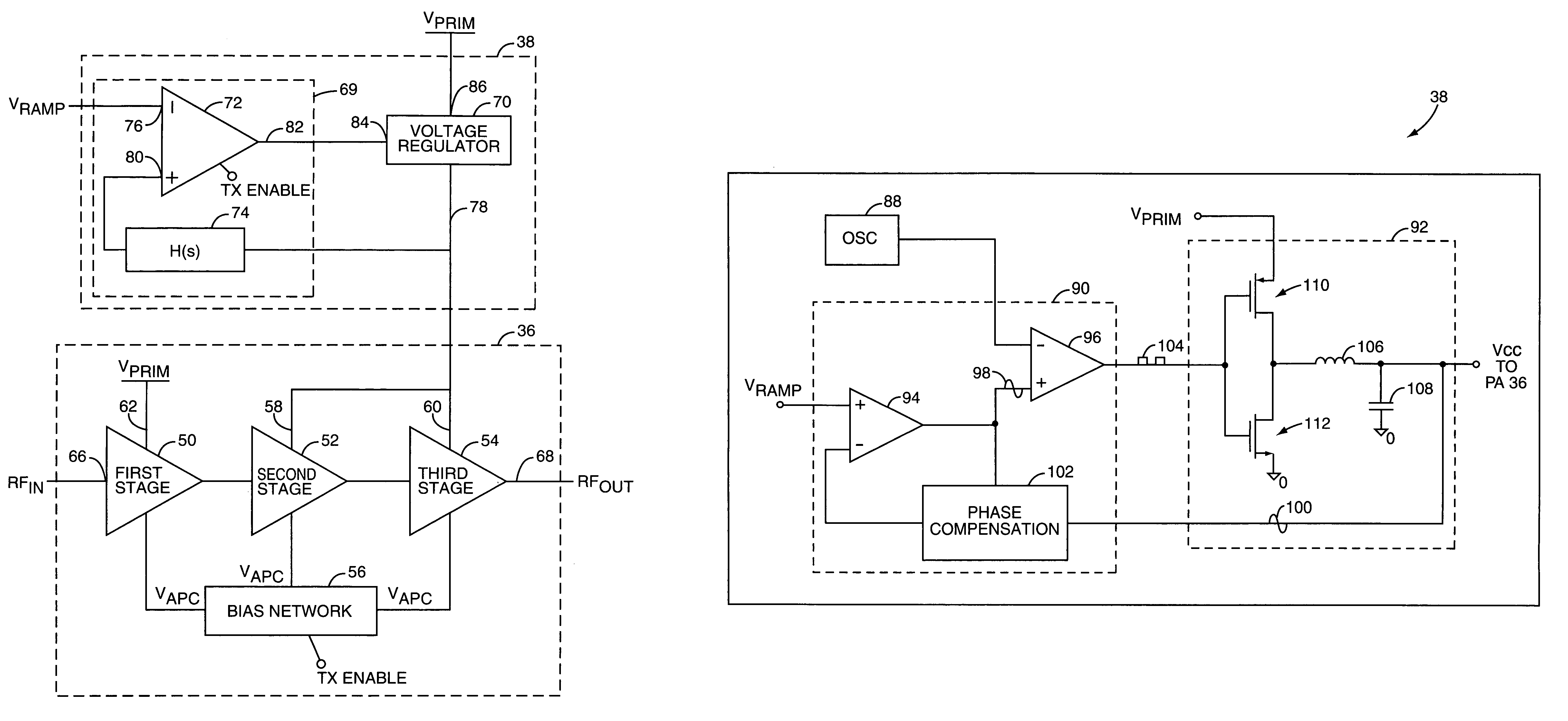

Power control circuitry that is configurable as either a Low Dropout (LDO) voltage regulator or a switching DC-DC converter for controlling a variable supply voltage provided to a power amplifier of a mobile terminal is provided. The power control circuitry includes an output stage including first and second output transistors, an analog control system, and a digital control system. When in LDO voltage regulator mode, the analog control system provides an analog control signal to the first transistor based on an adjustable power control signal and a feedback signal indicative of the variable supply voltage, and the digital control system operates to disable the second output transistor. When in DC-DC converter mode, an output stage of the analog control system is disabled, and the digital control system provides binary control signals to the first and second transistors based on the adjustable power control signal and the feedback signal.

Owner:QORVO US INC

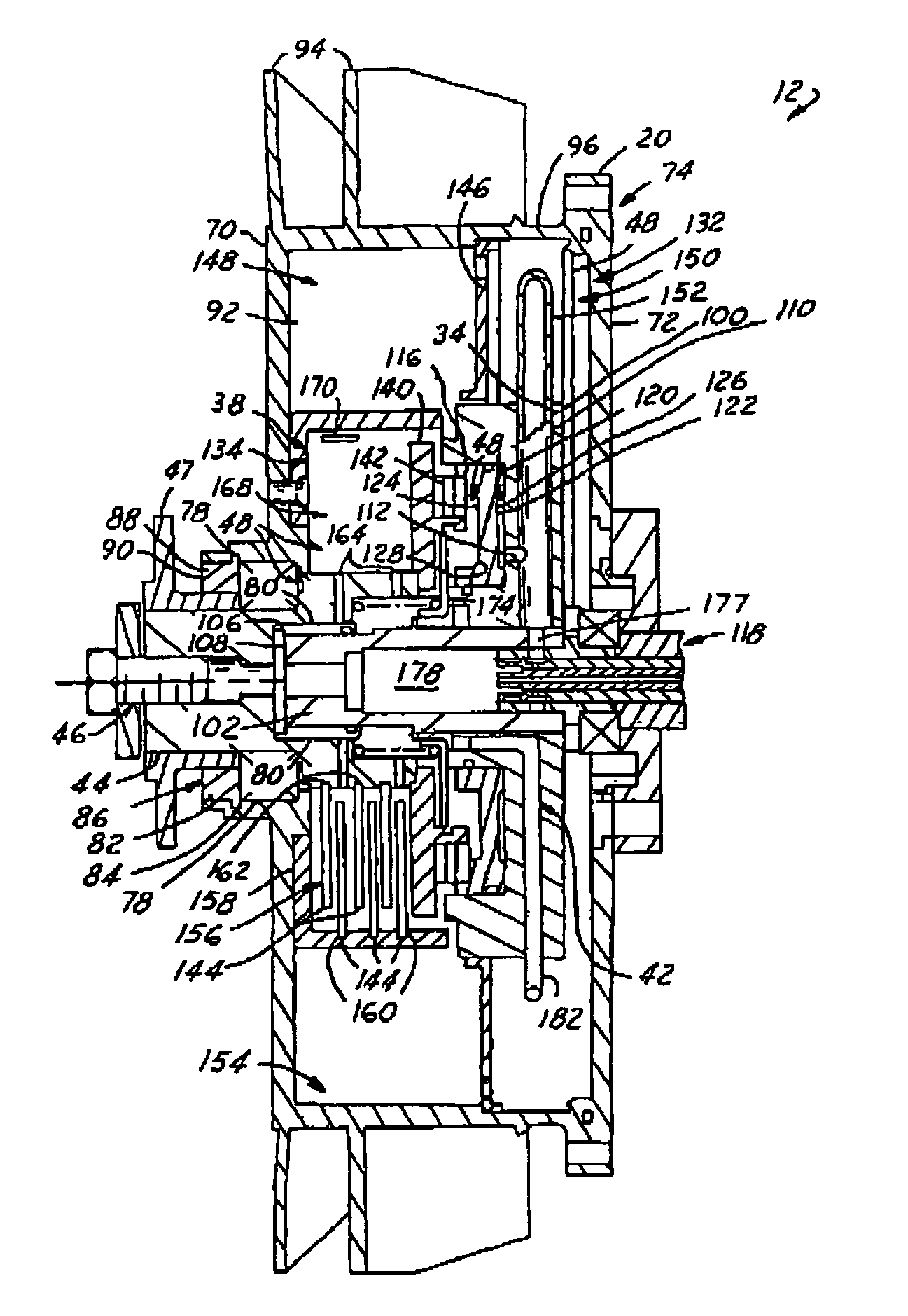

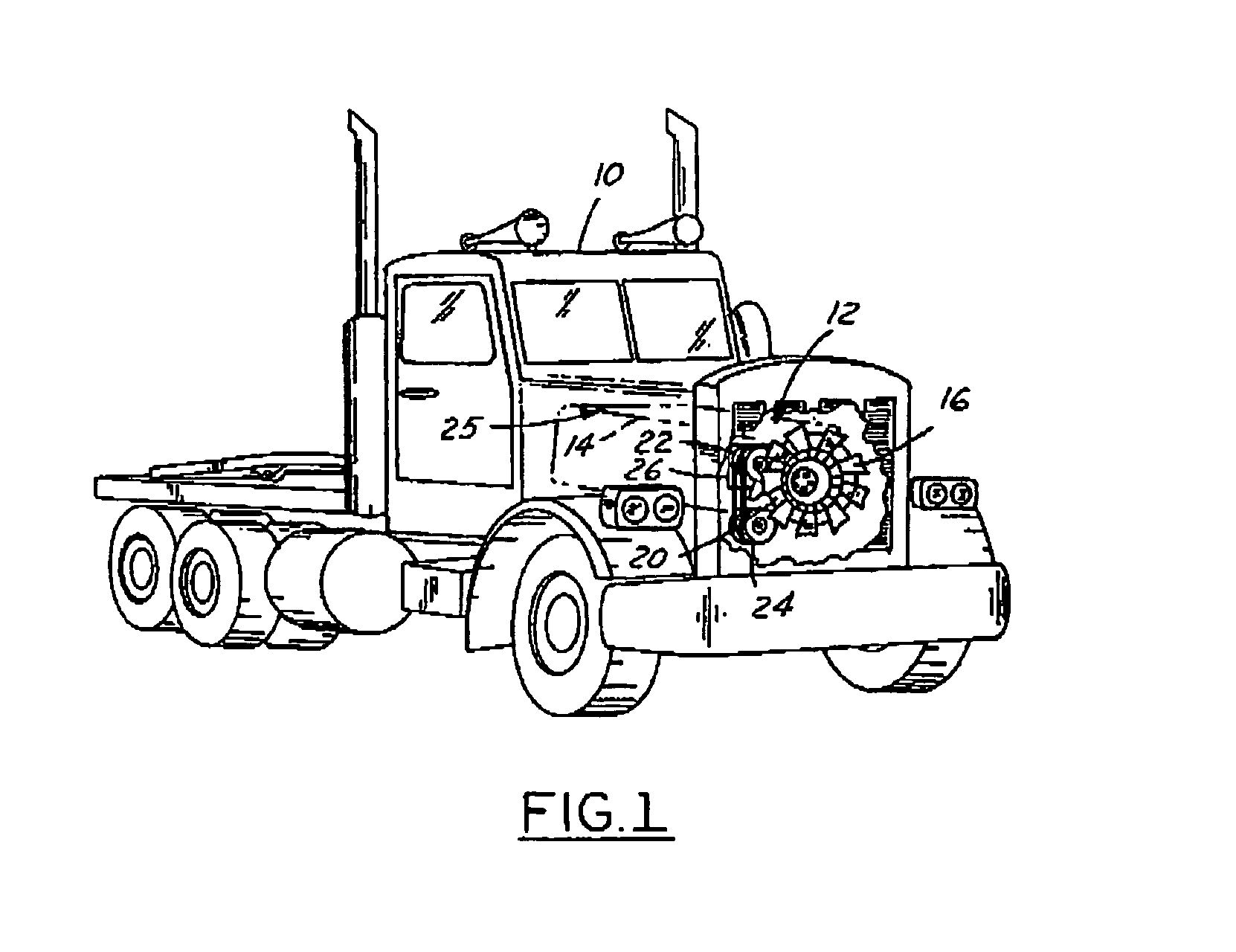

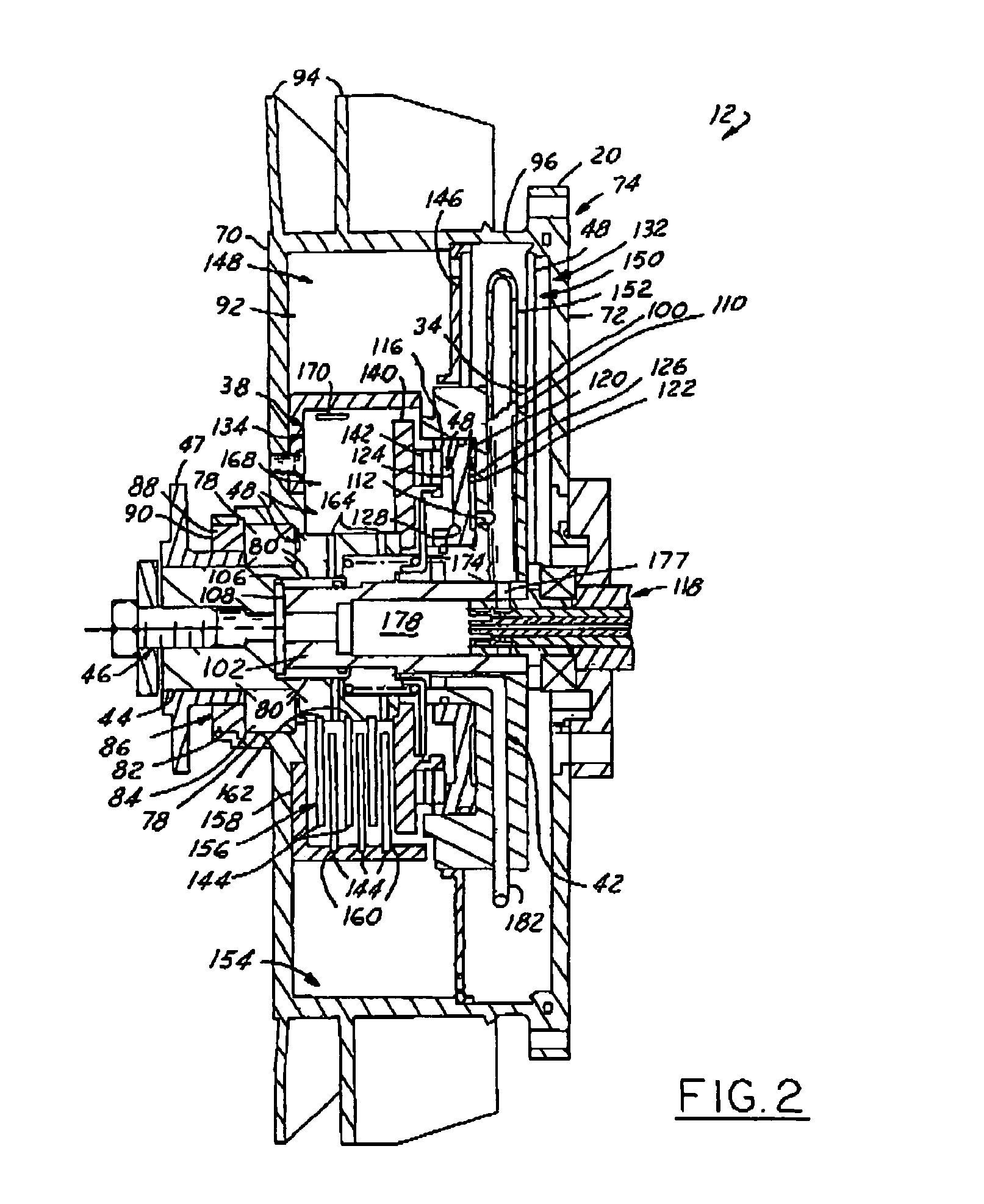

Hydraulic fan drive system employing binary control strategy

Inactive · US7047911B2 · High pressure · Coolant flow control · Fluid actuated clutches · Rotation velocity · Hydraulic fluid

A hydraulically controlled fan drive system for controlling the cooling of an engine and having a method of engagement includes a housing assembly containing a hydraulic fluid and an engaging circuit. The engaging circuit includes a pitot tube coupled within the housing assembly that receives at least a portion of the hydraulic fluid as the housing assembly rotates to drive a clutch pack (and coupled fan) via static pressure. A fluid controller having binary control adjusts the static pressure within the pitot tube at a given rotational speed, thereby controlling the engagement of the clutch pack to a fully engaged drive (utilizing friction type engagement), a fully disengaged drive, and at least two partially engaged clutch positions (i.e. partially engaged utilizing a wet viscous type clutch engagement). To control static pressure release, the fluid controller may utilize a dual spool system valving arrangement or a parallel fixed orifice binary control.

Owner:BORGWARNER INC

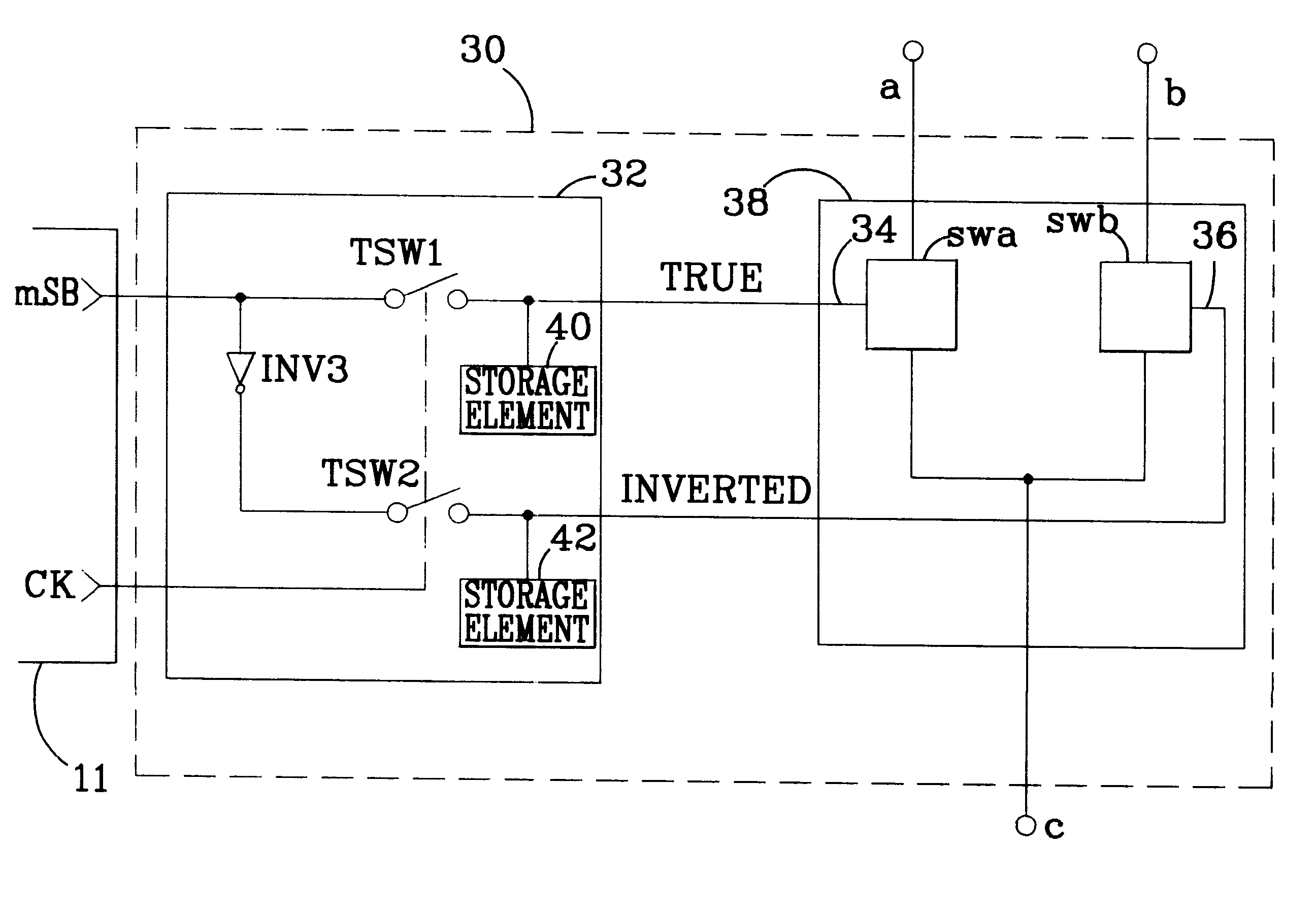

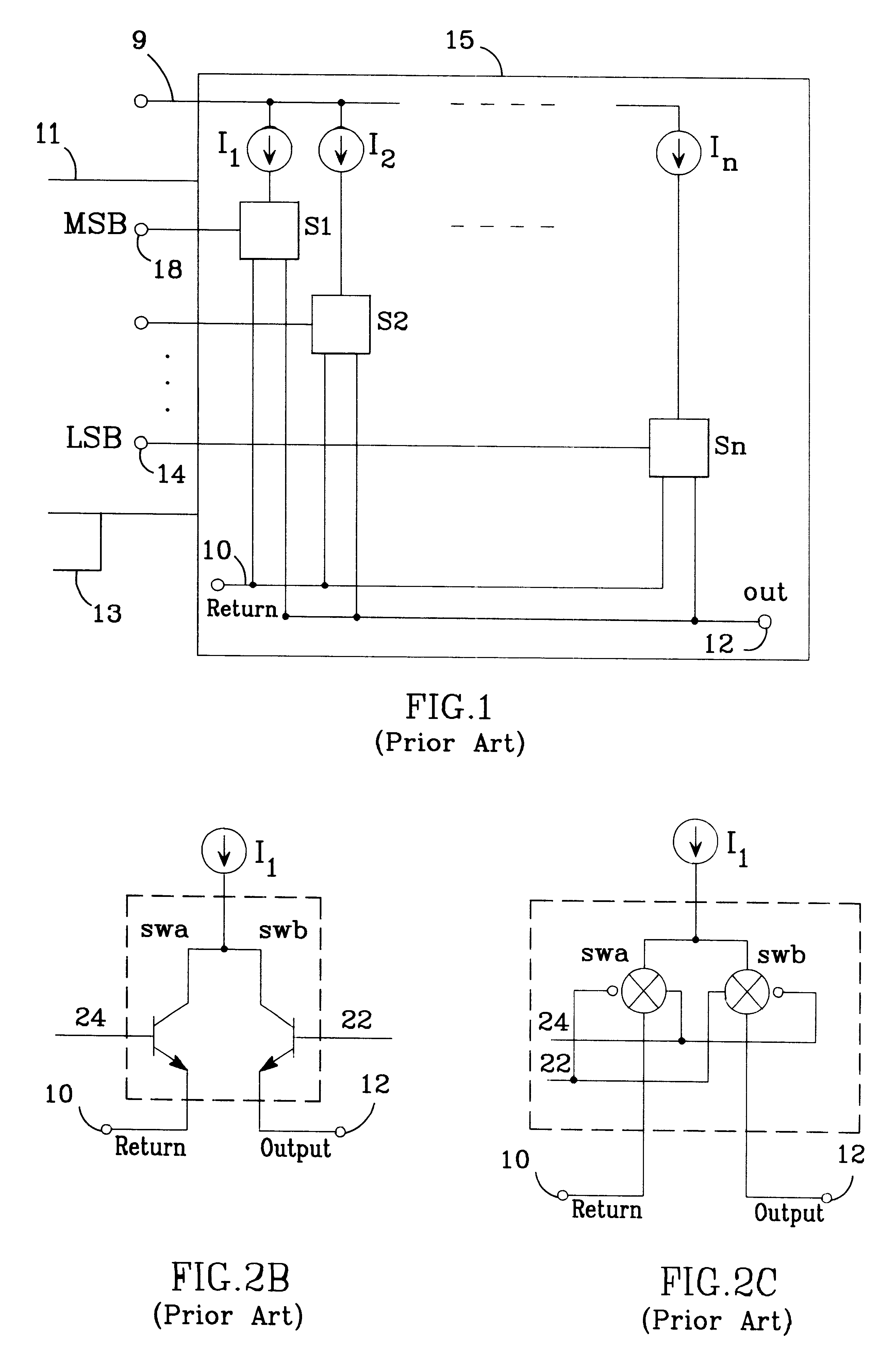

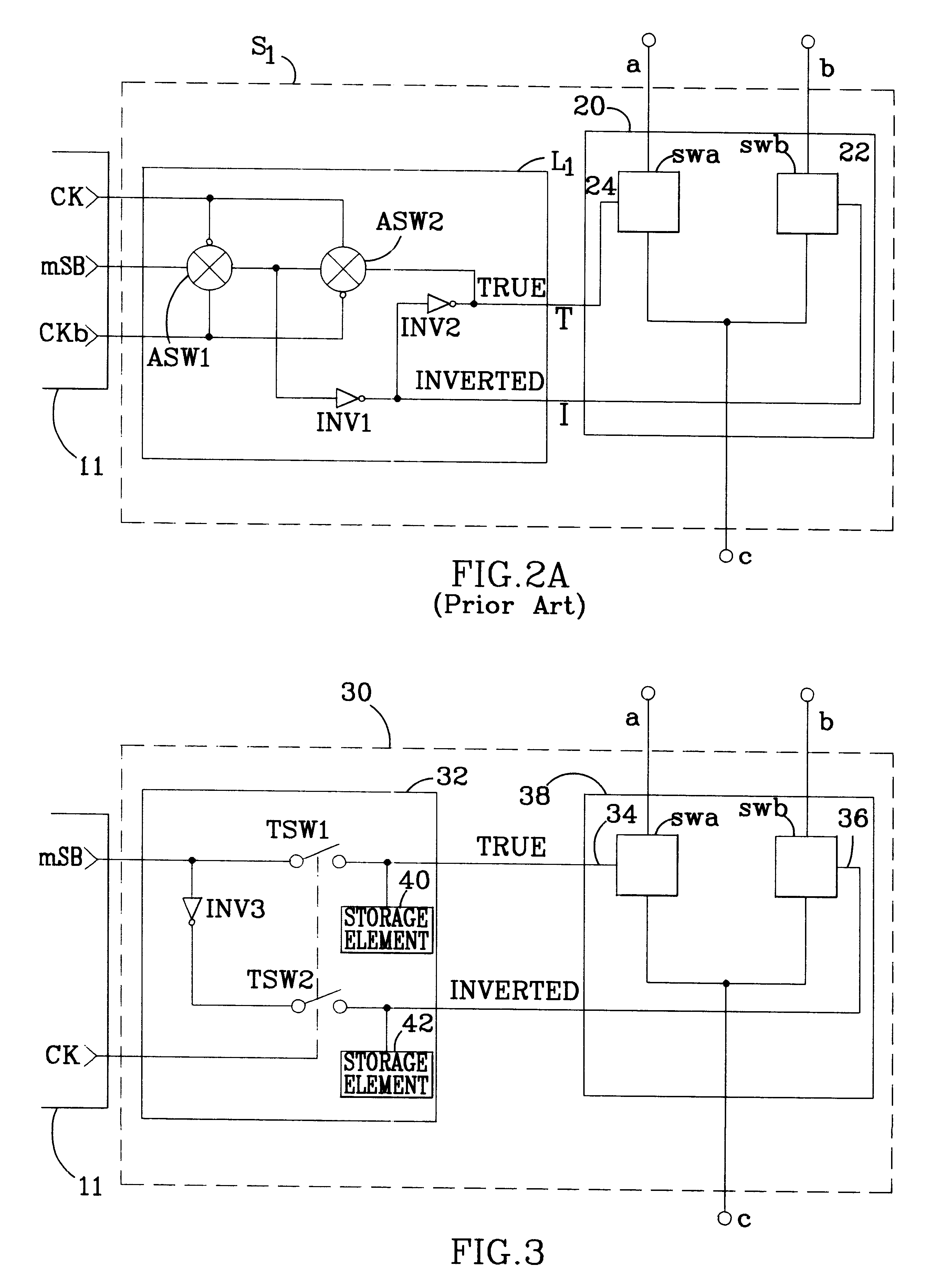

Skewless differential switch and DAC employing the same

Inactive · USRE37619E1 · Minimizes complexity of switch · Reduces spurious switching · Transistor · Electric signal transmission systems · Control signal · Digital-to-analog converter

A differential switch accepts a binary control signal and its complement (which may be skewed with respect to the control signal) and latches both signals simultaneously. The latched output signals drive the control terminals of a differential switch pair which connects one of two terminals to a third terminal, depending upon the state of the control terminals. The differential switch may optionally include an inverter which complements the binary control signal, thus eliminating the need for external inversion of the control signal. The switch is particularly applicable for use in a digital to analog converter.

Owner:ANALOG DEVICES INC

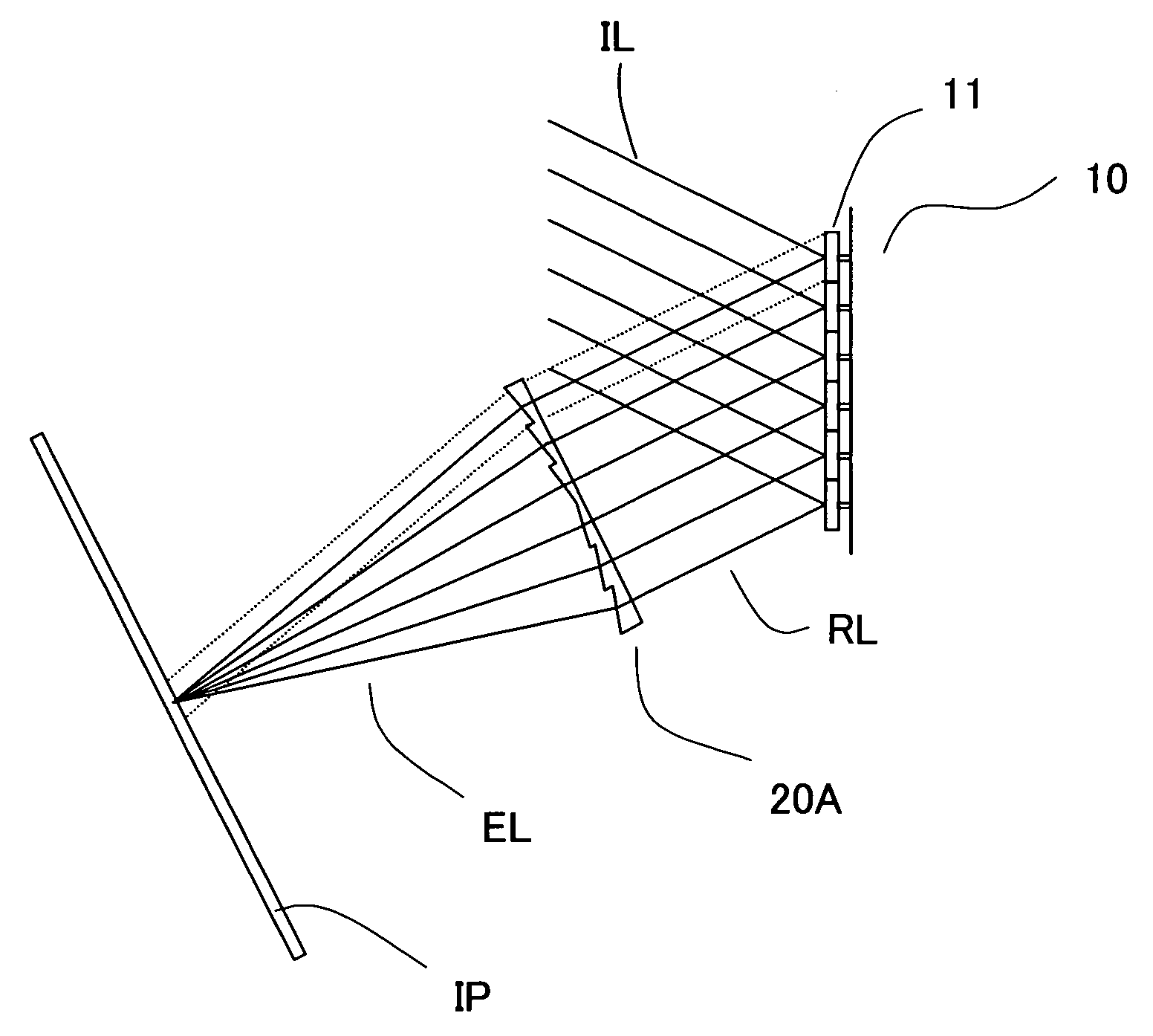

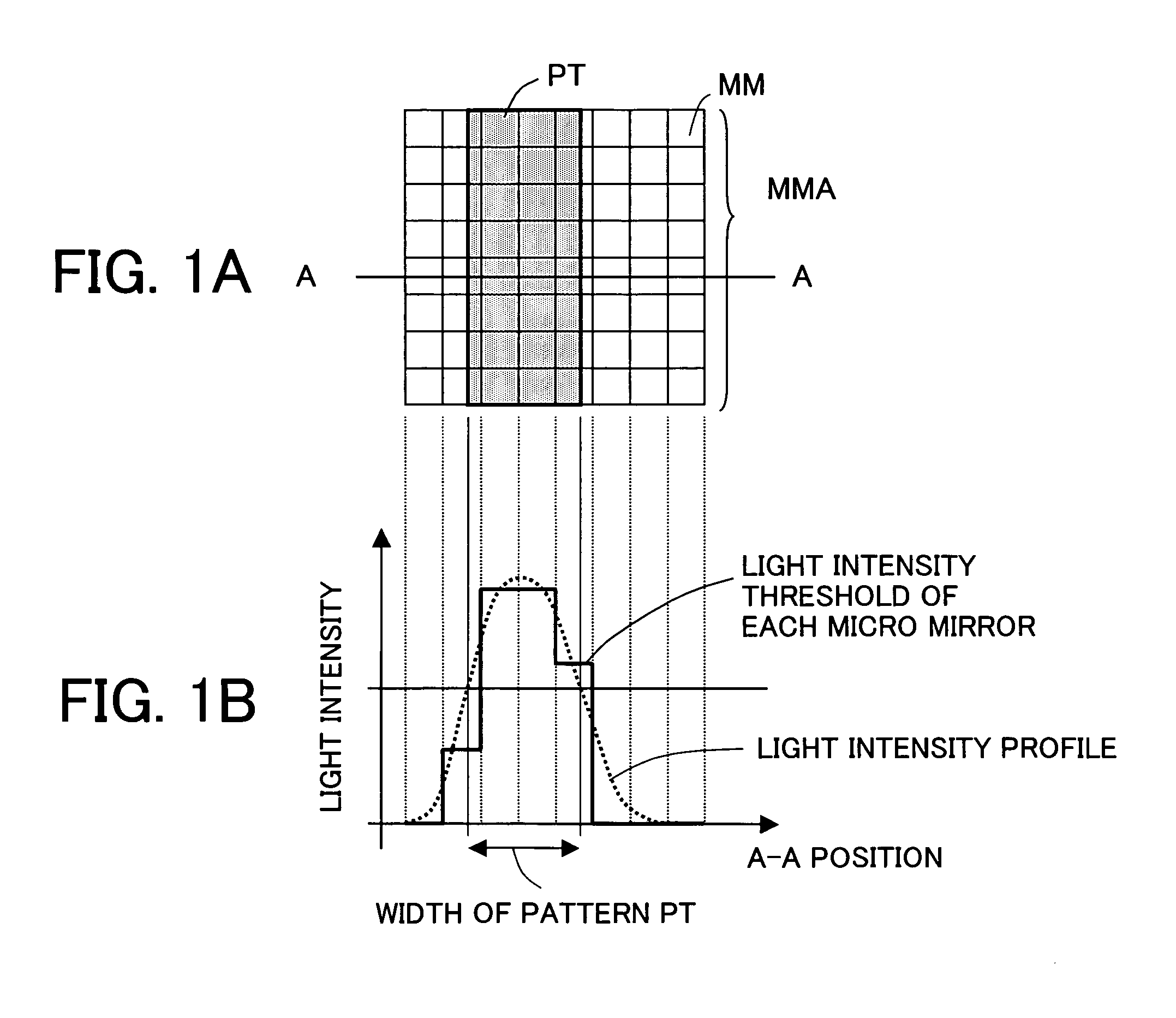

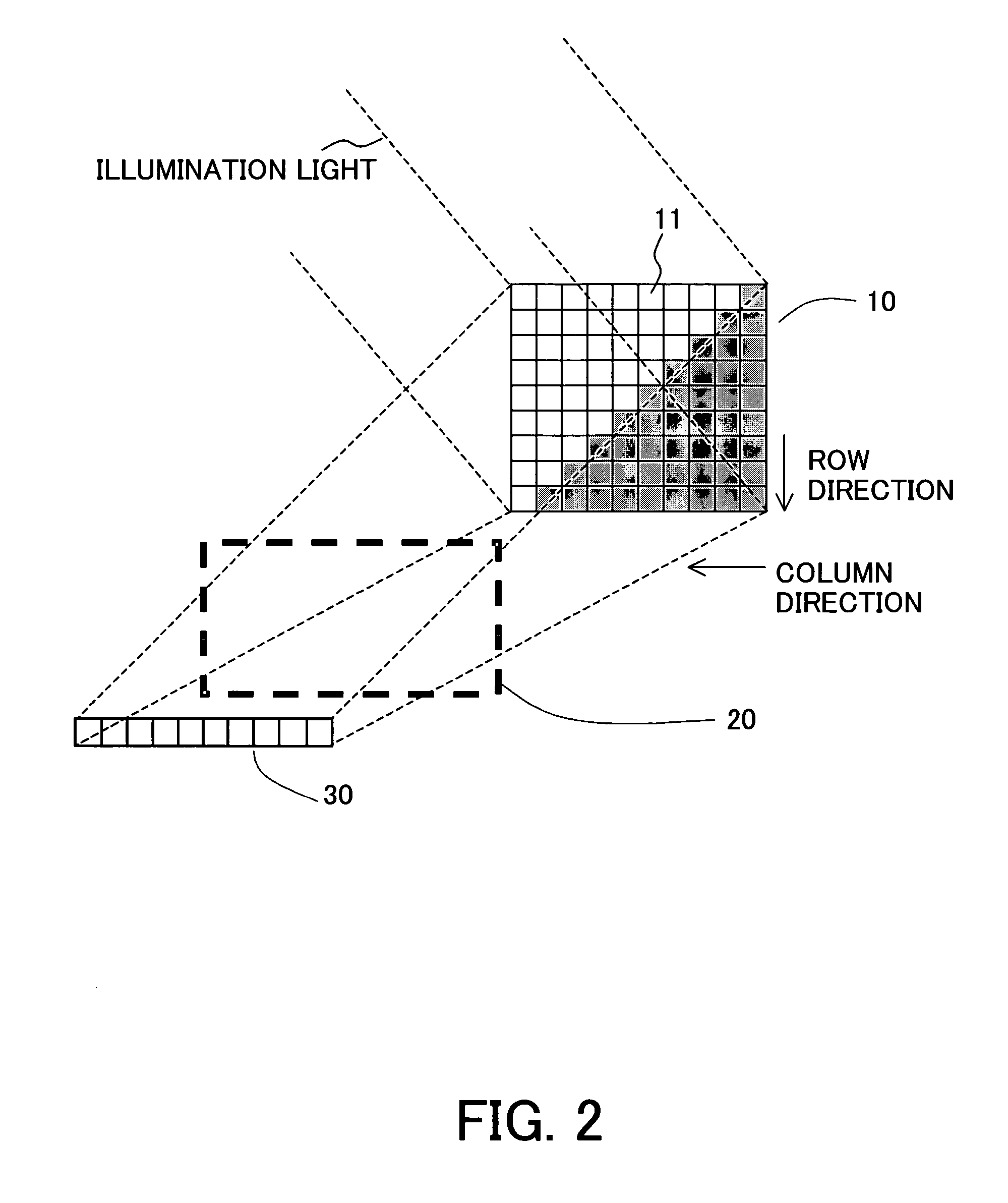

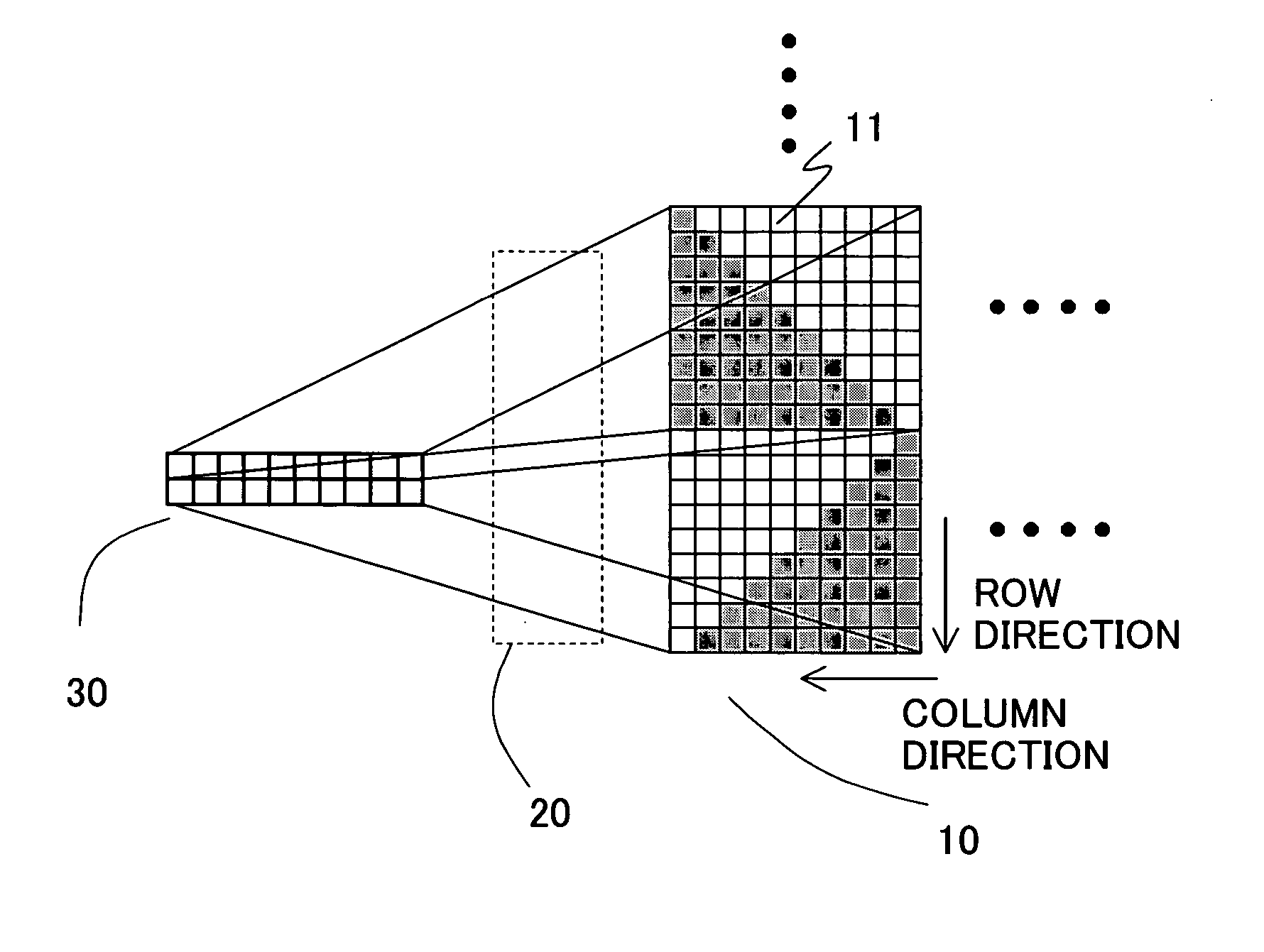

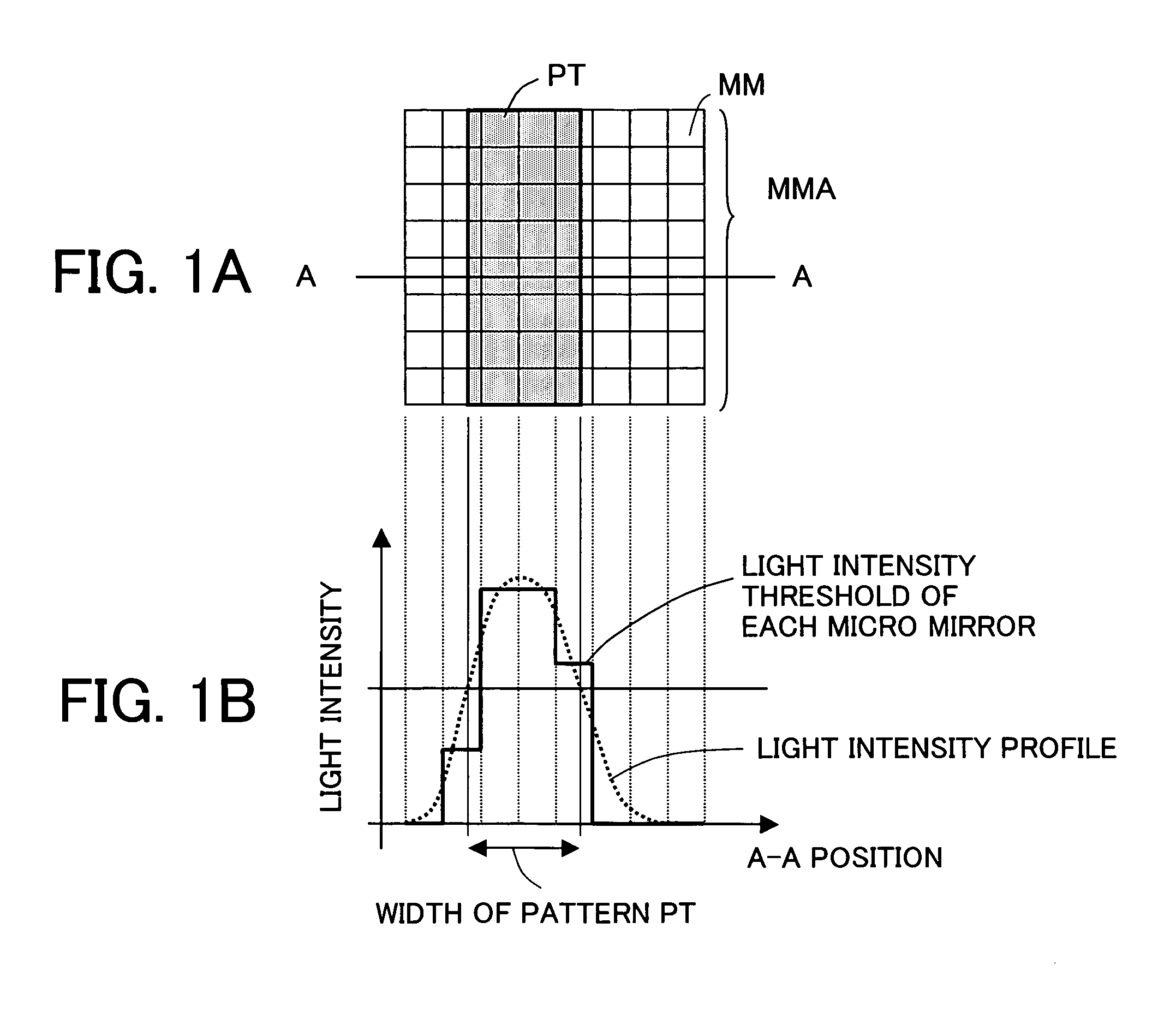

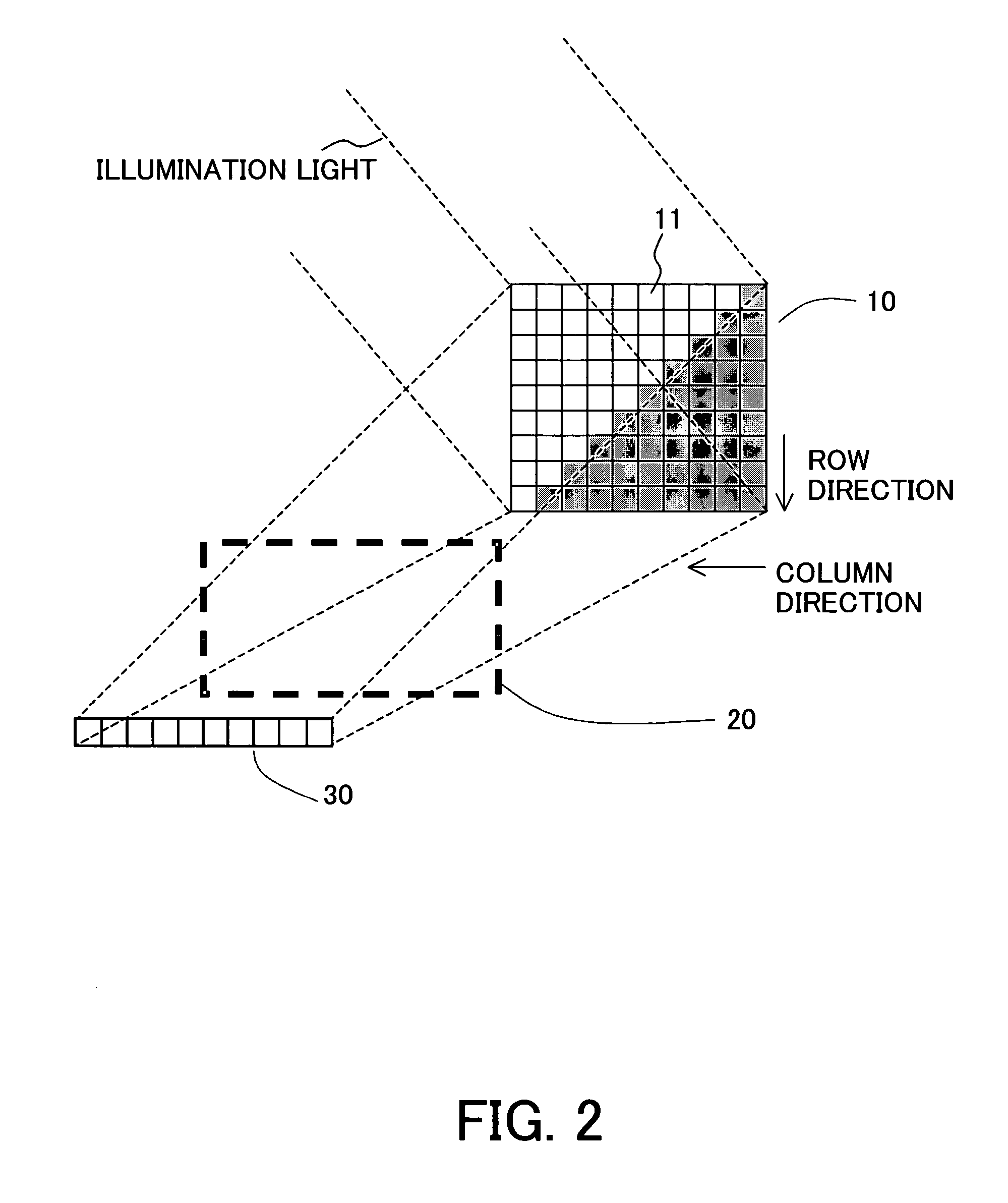

Exposure apparatus and device manufacturing method

Inactive · US20060087635A1 · Facilitate gray scale · Simple structure · Photomechanical apparatus · Photographic printing · Binary control · Spatial modulation

An exposure apparatus includes a projection optical system for projecting a multigradation pattern onto an object, a spatial modulation element that includes plural, two-dimensionally arranged pixels, and forms an optical image by binary control over each pixel, and a superposing optical system for forming the multigradation pattern by superposing the optical images for each row and / or for each column.
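How binary pixels can add up to a multigradation pattern can be shown with a toy model. The sketch below assumes the superposing optics behave as an ideal sum along each column of the pixel array; the function name `superpose_columns` and the list-of-rows layout are hypothetical and not taken from the patent.

```python
def superpose_columns(binary_image):
    """Sum the on/off pixels of each column of a 2D grid.

    binary_image is a list of rows, each row a list of 0/1 pixel states.
    With R rows, every column can express R + 1 gray levels (0..R),
    even though each individual pixel is only ever on or off.
    """
    return [sum(column) for column in zip(*binary_image)]


pixels = [
    [1, 1, 0],
    [1, 0, 0],
    [1, 1, 0],
]
gray_levels = superpose_columns(pixels)  # one gray level per column
```

The same function applied to the transposed grid would model superposition per row instead of per column.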

Owner:CANON KK

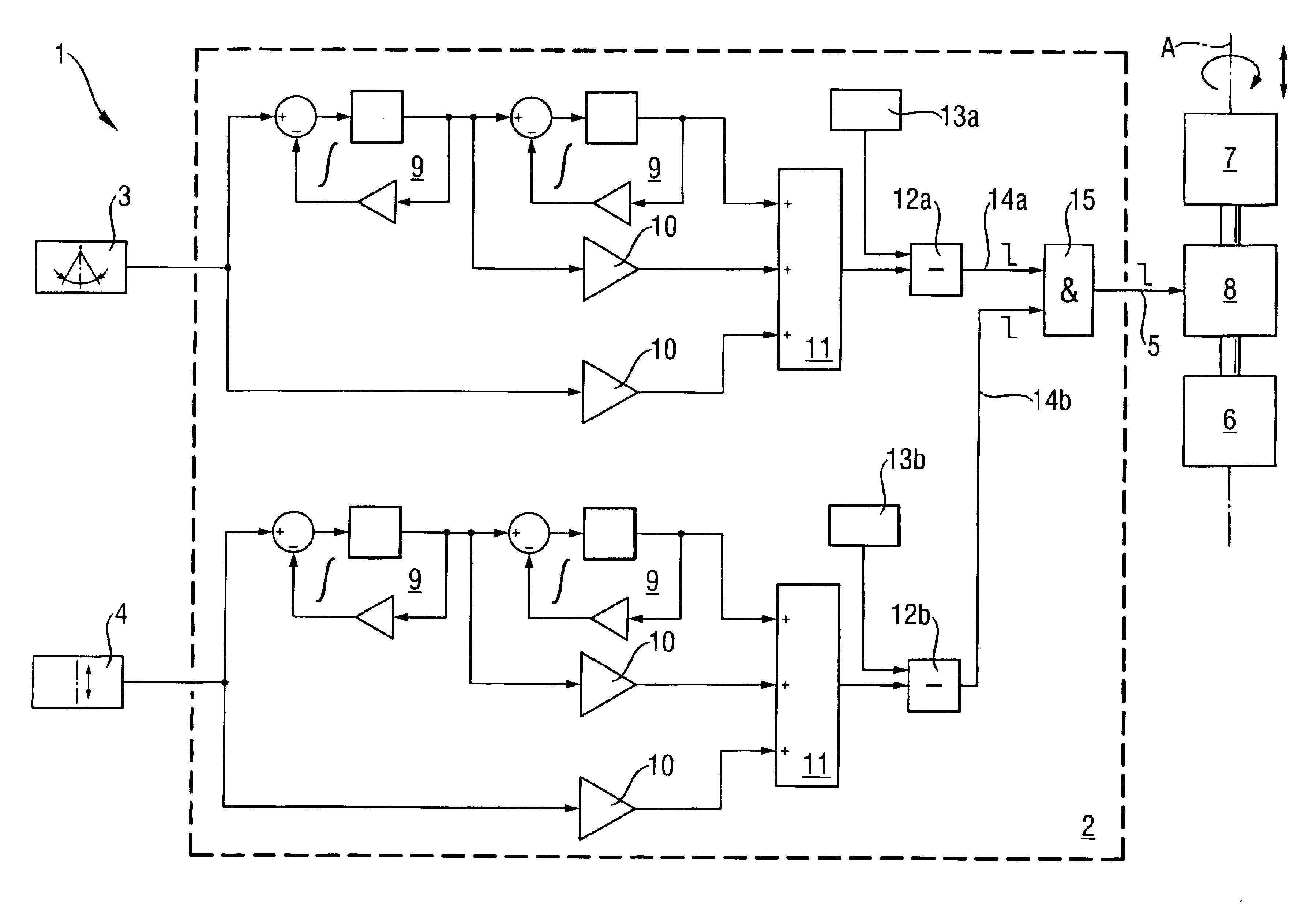

Safety module for a multifunctional handheld tool

A multifunction handheld tool machine having an at least partially axially percussive tool receptacle (7), a safety coupling (8) disposed in the power line between the tool receptacle and an electrical motor (6), a hand-guided housing and an ATC safety module (1) with a rotational sensor (3) sensitive to an angular displacement. The ATC safety module (1) is connected to an axial displacement sensor (4) and, in the event of exceeding an axial limit value (13b), the binary control signal (5) is suppressed by the axial displacement.

Owner:HILTI AG

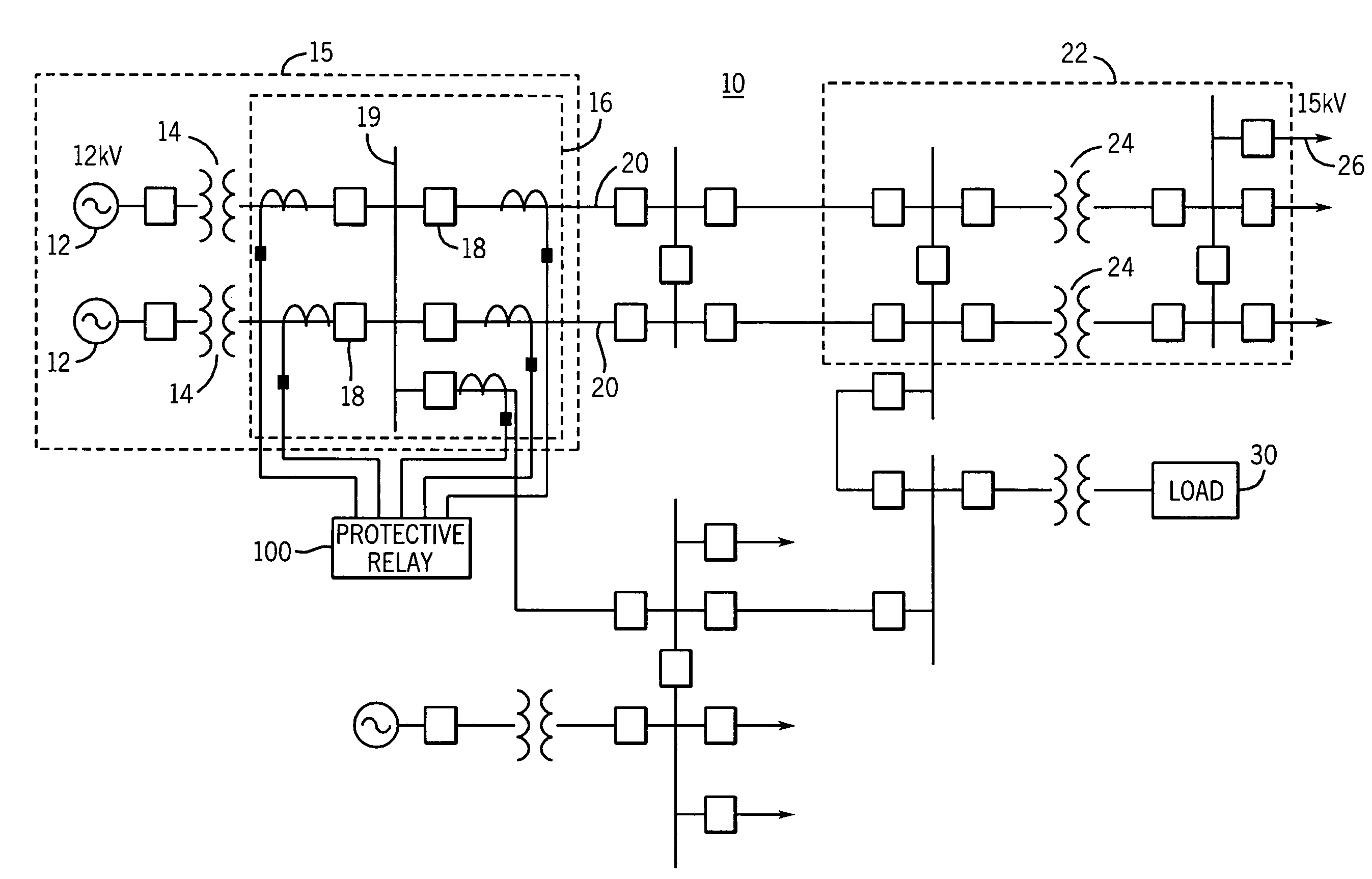

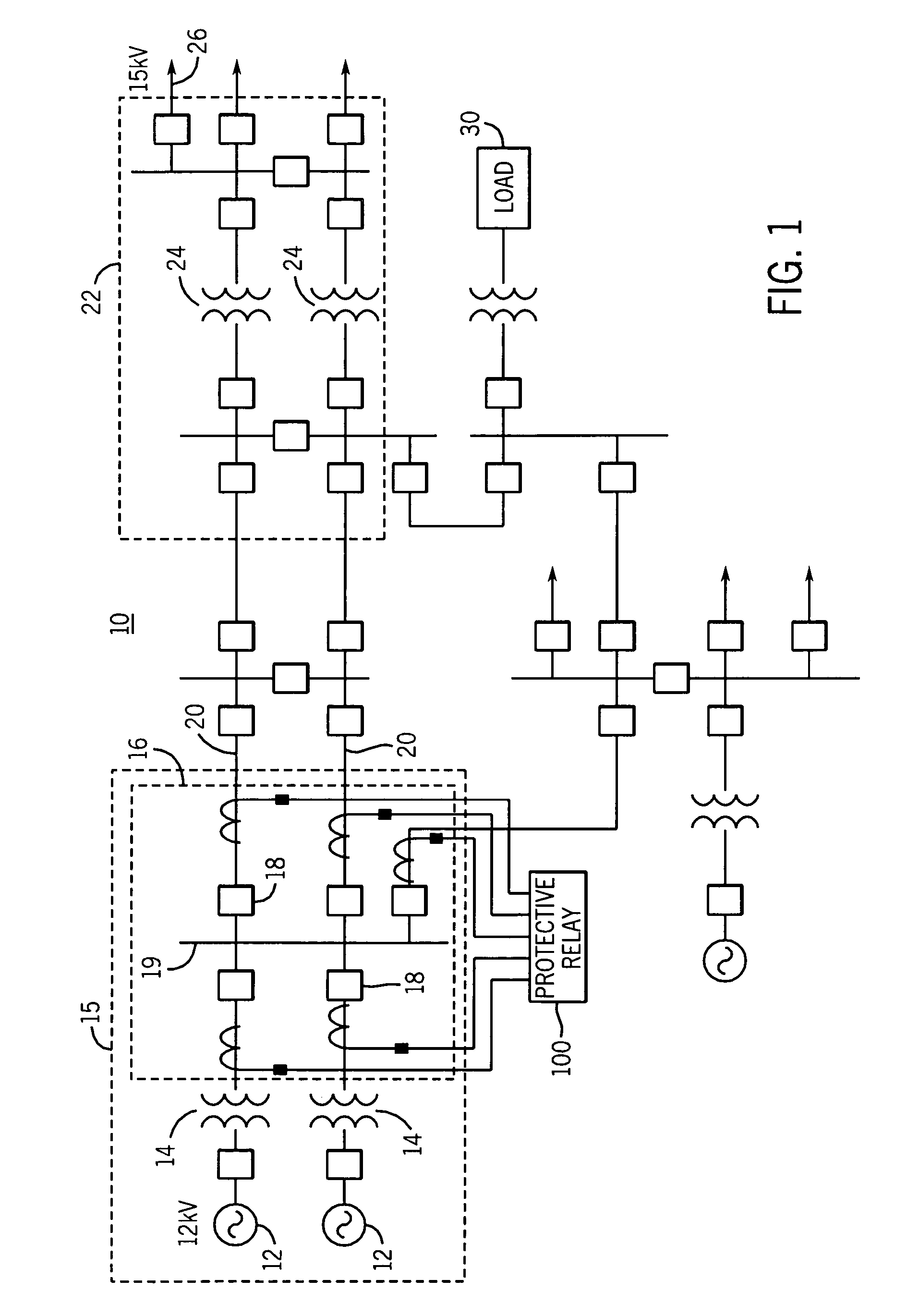

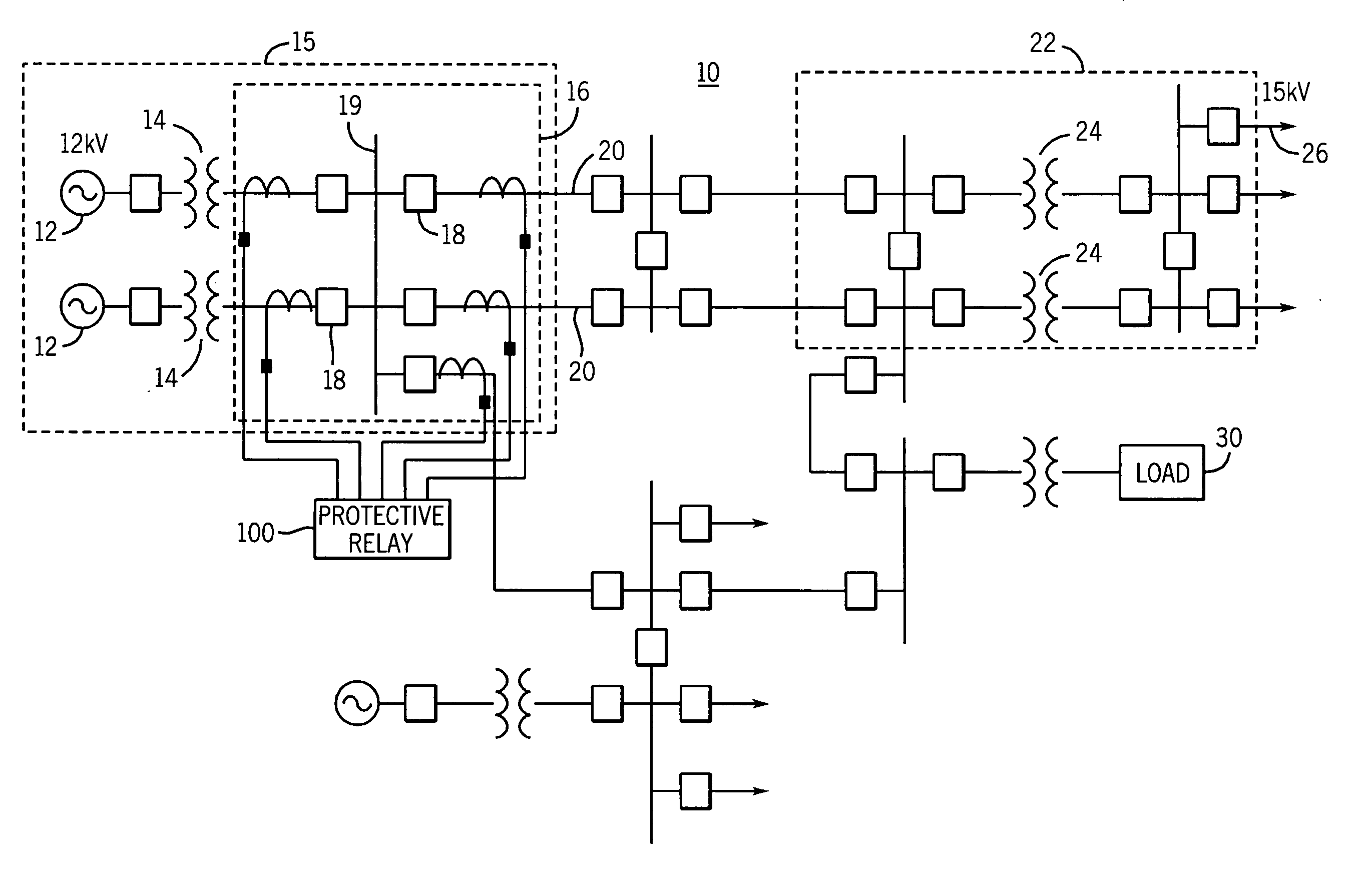

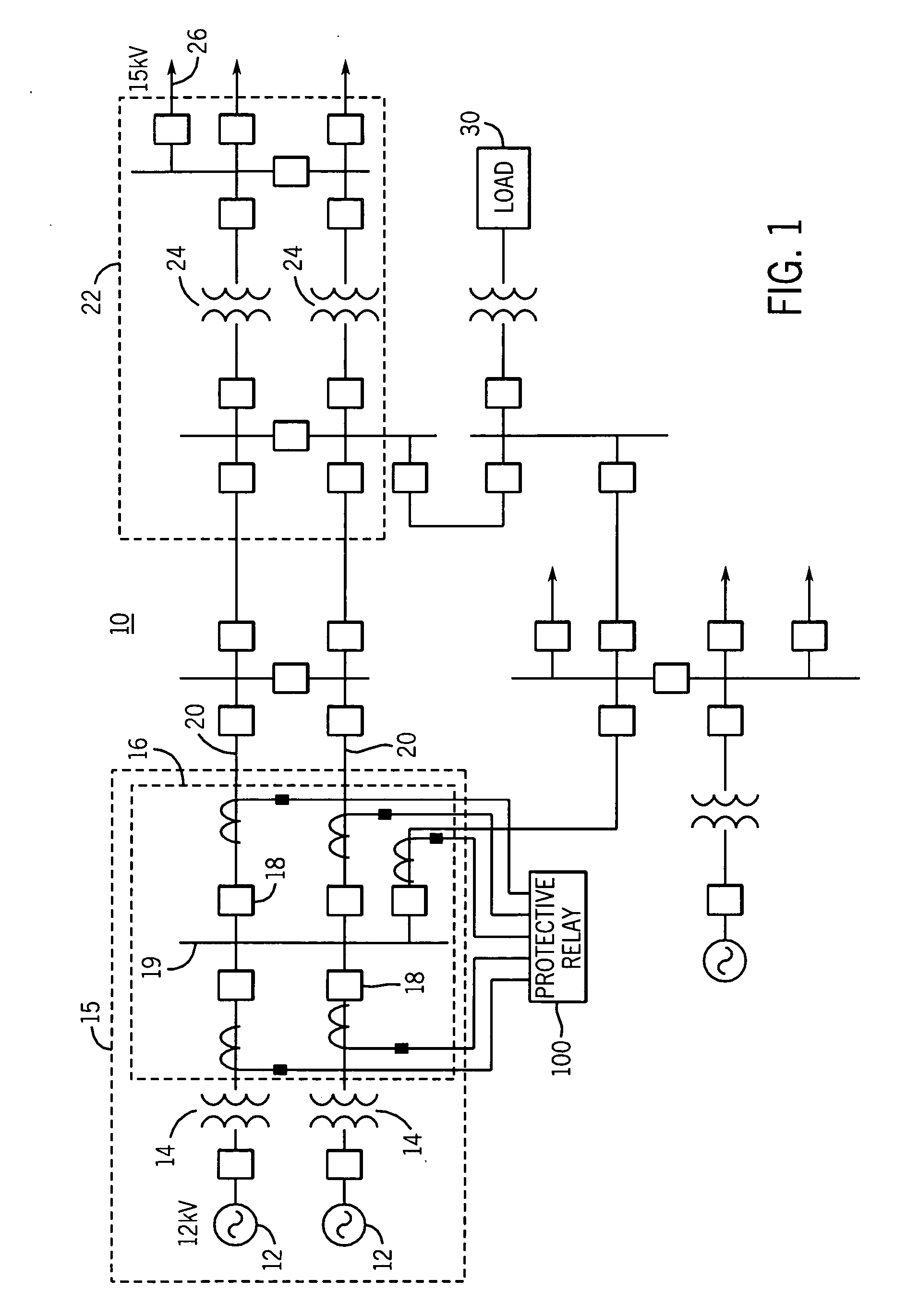

Apparatus and method for identifying a loss of a current transformer signal in a power system

Active · US7345863B2 · Circuit-breaking switches for excess currents · Emergency protection detection · Control signal · Electric power system

Owner:SCHWEITZER ENGINEERING LABORATORIES

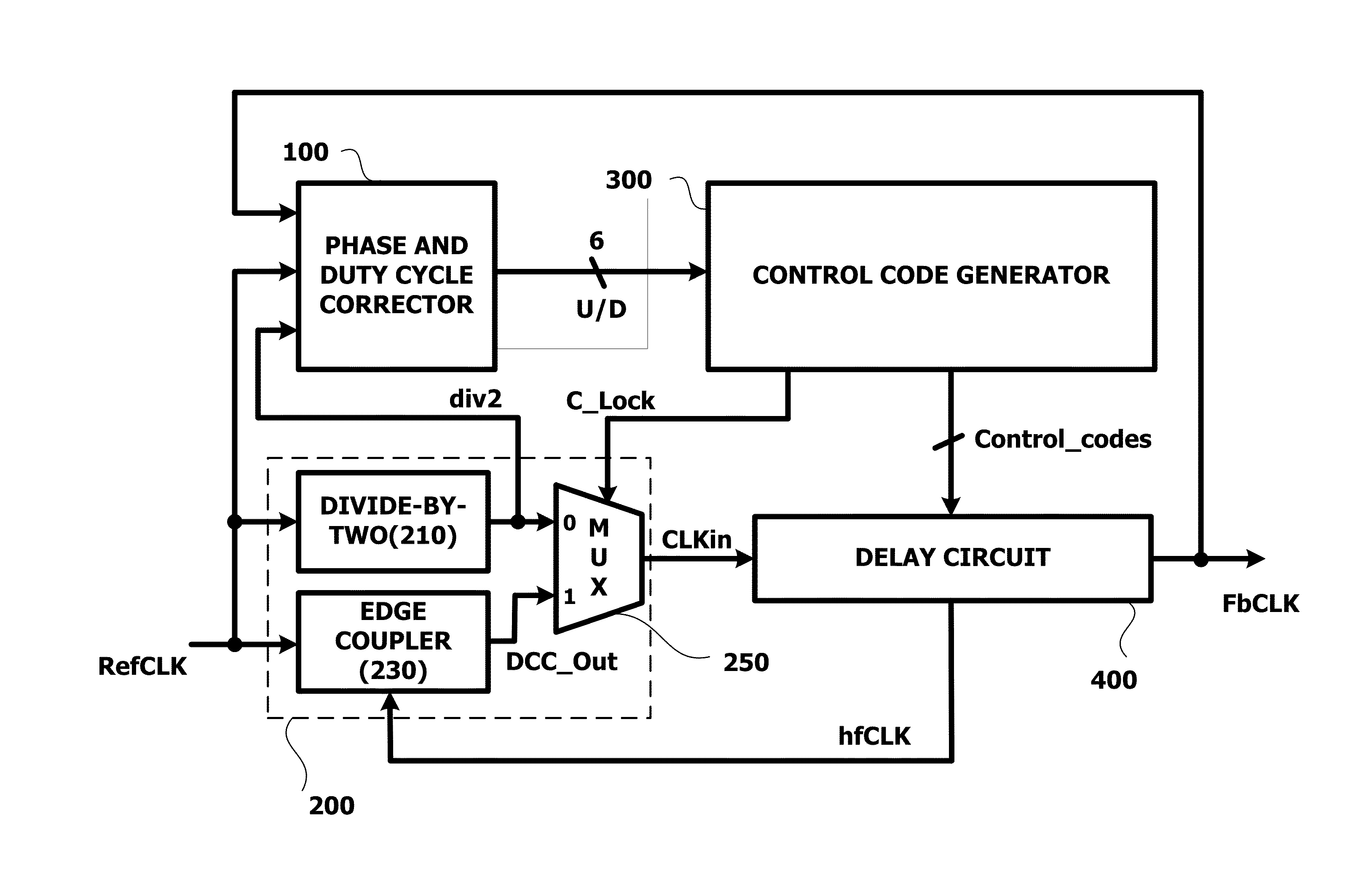

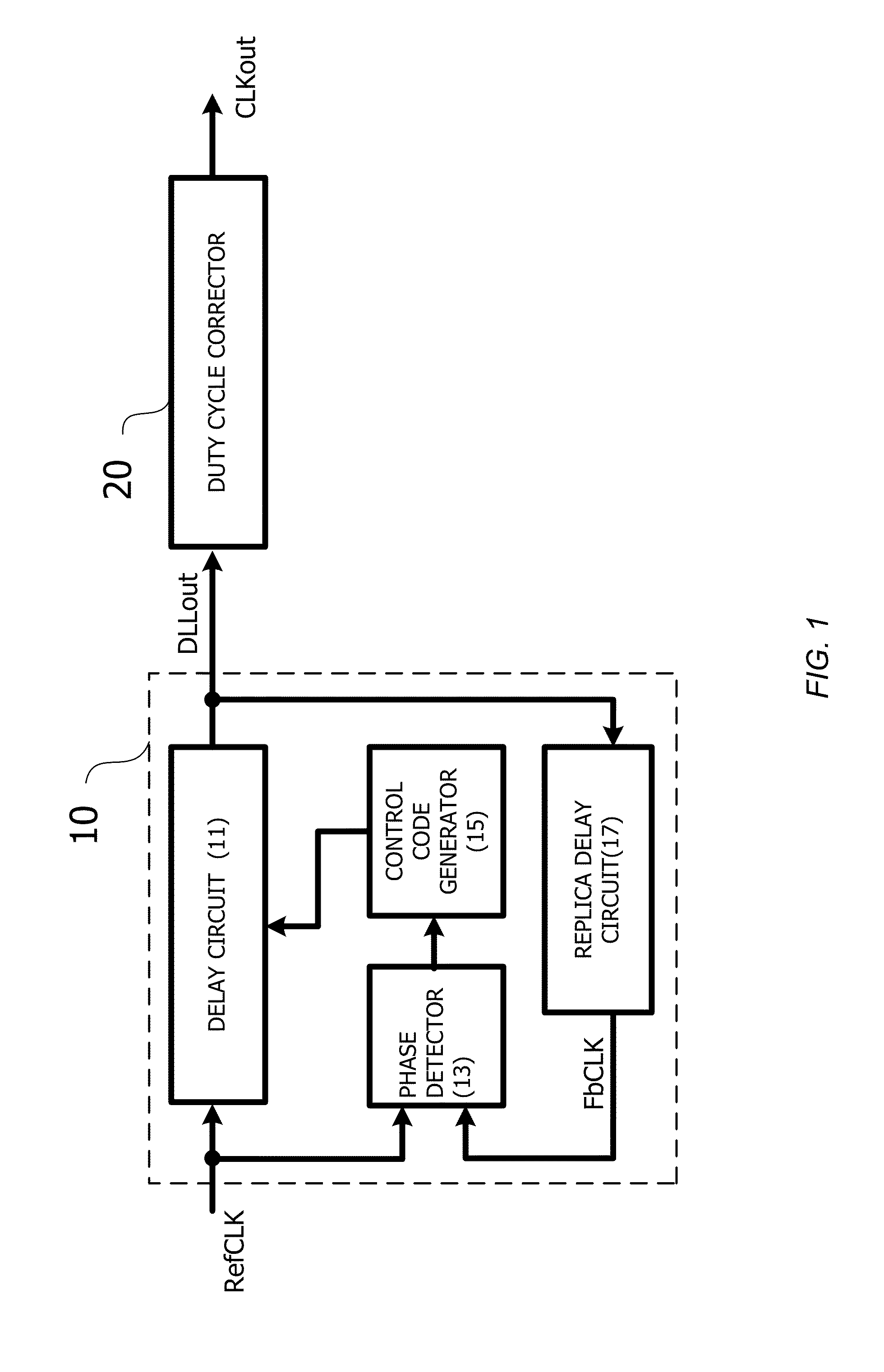

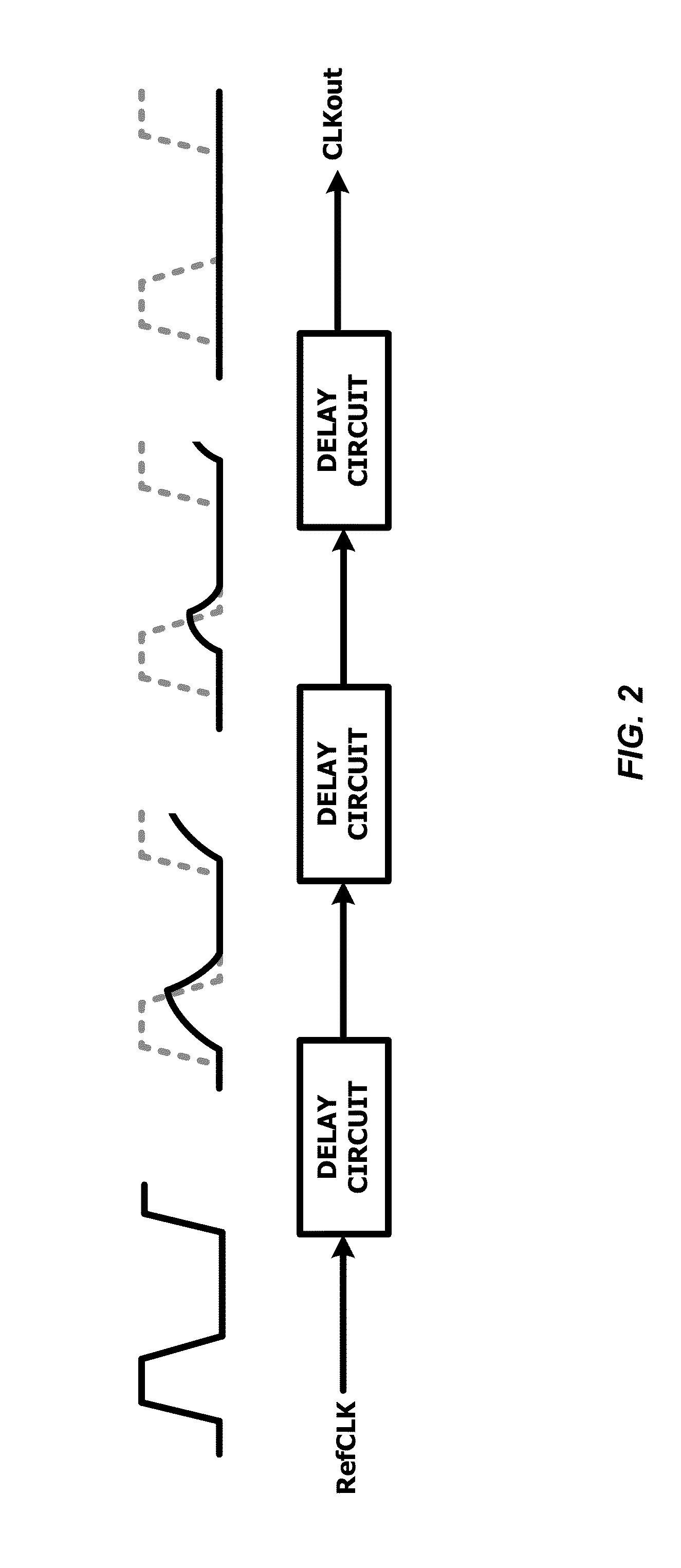

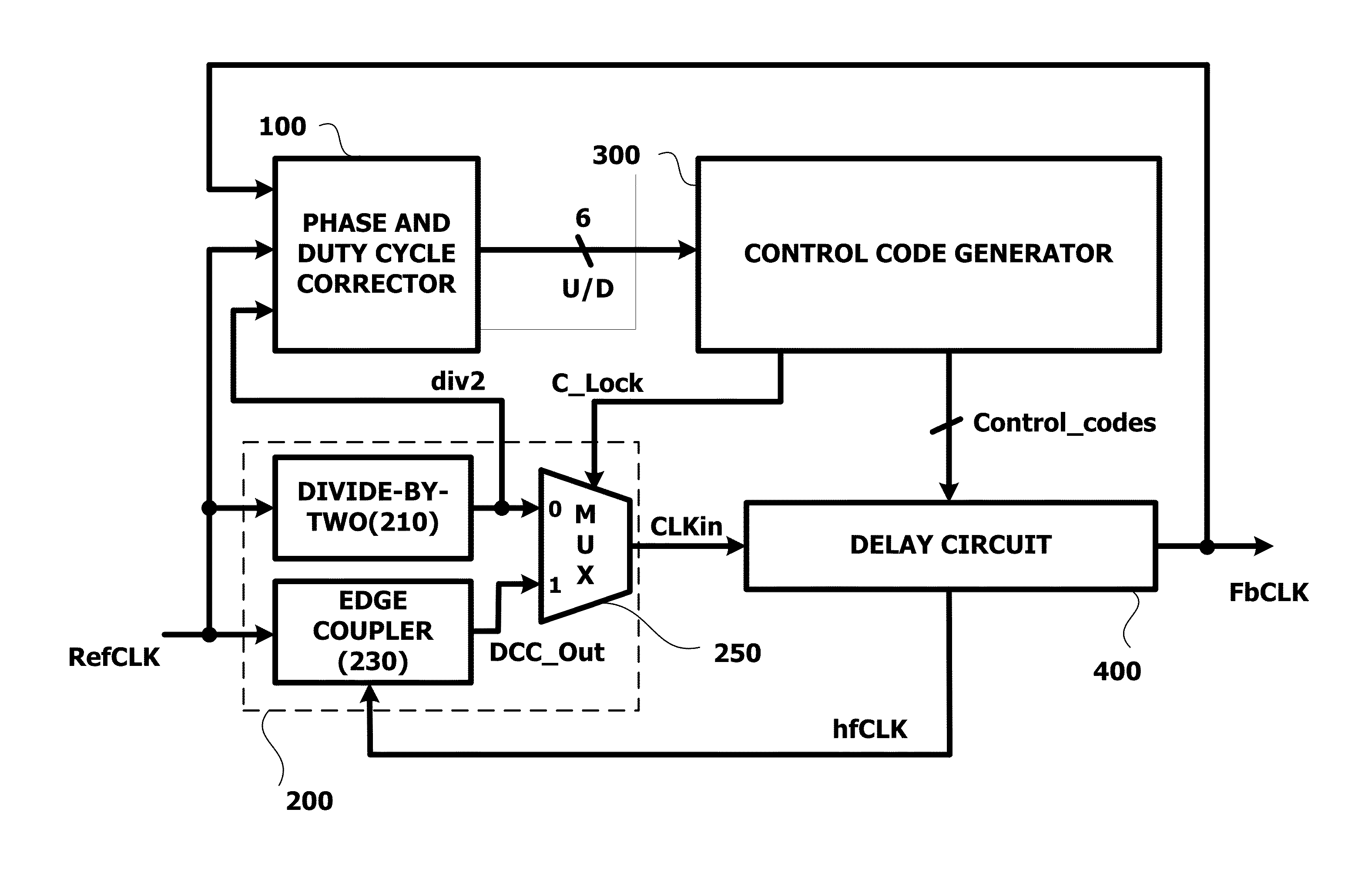

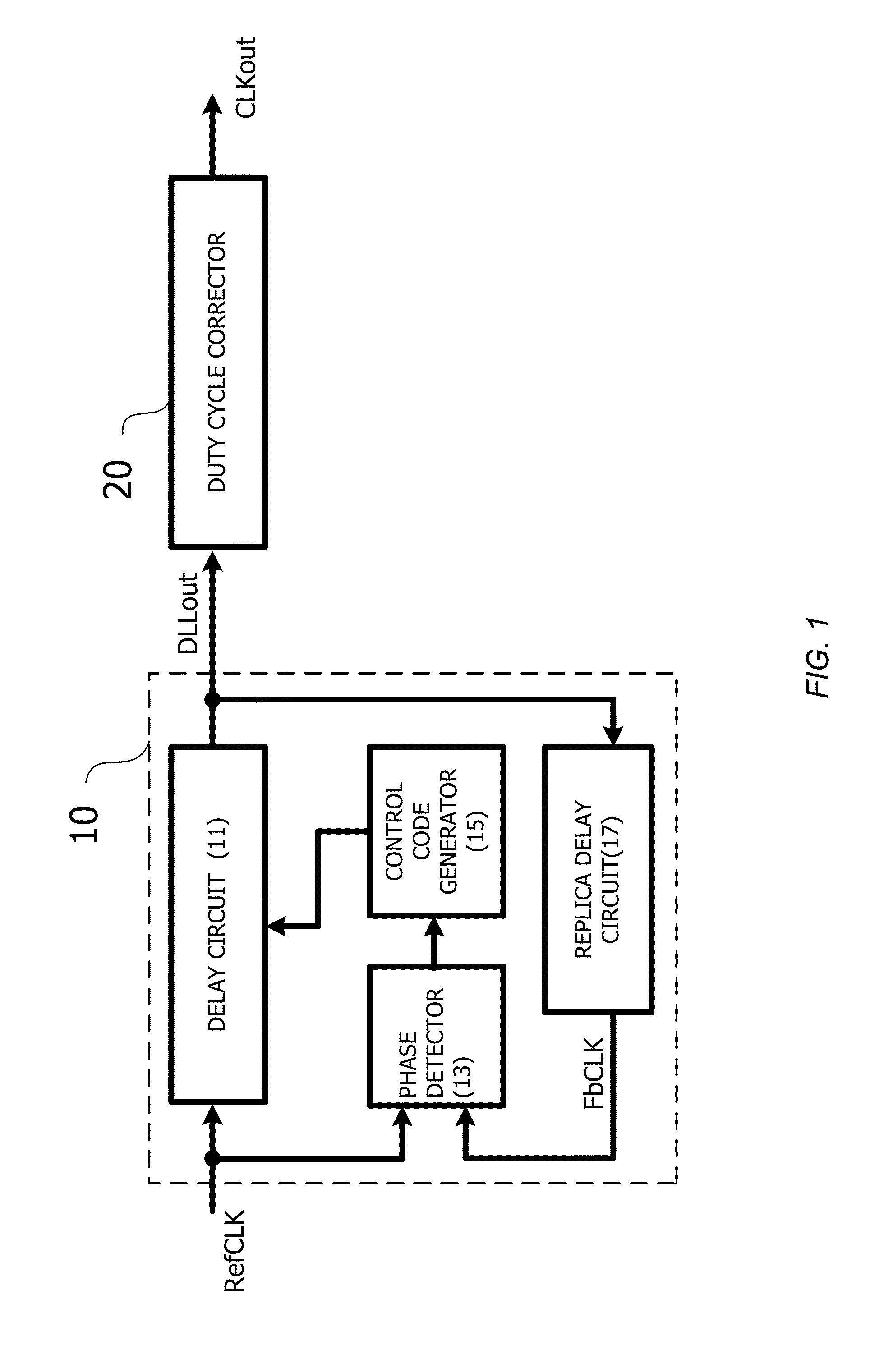

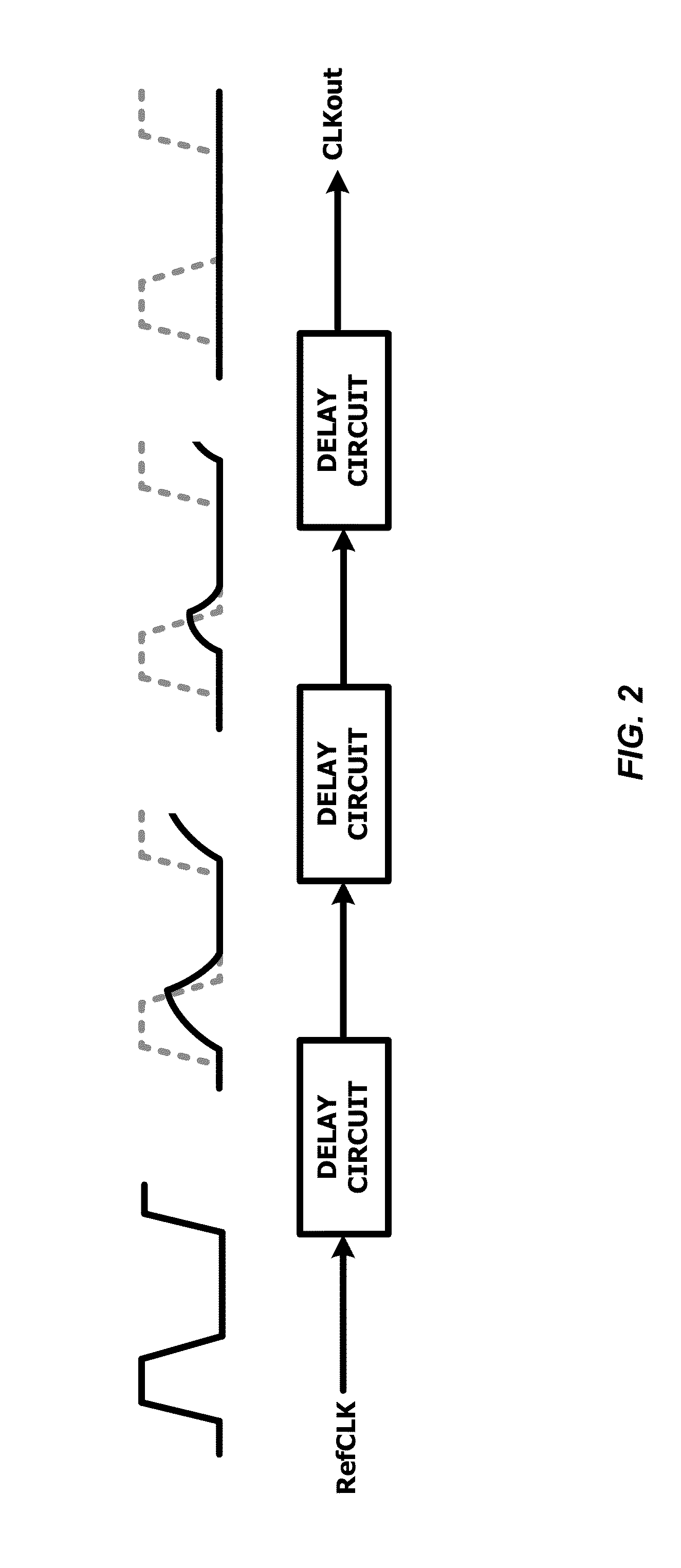

Delay locked loop with a loop-embedded duty cycle corrector

Active · US20140002155A1 · Reduce area · Reduce consumption · Pulse automatic control · Continuous to patterned pulse manipulation · Delay-locked loop · Engineering

A delay locked loop (DLL) adjusts a duty cycle of an input clock signal and outputs an output clock signal. The DLL includes a phase and duty cycle detector configured to detect a phase and duty cycle of the input clock signal, a duty cycle corrector configured to correct the duty cycle, a control code generator configured to detect coarse lock of the DLL and generate a binary control code corresponding to the detection result, and a delay circuit configured to delay an output signal of the duty cycle corrector by a predetermined time according to the binary control code, tune the duty cycle thereof, and mix the phase thereof, wherein the phase and duty cycle detector, the duty cycle corrector, the control code generator, and the delay circuit form a feedback loop.

Owner:SK HYNIX INC +1

Exposure apparatus and device manufacturing method

Inactive · US7180575B2 · Easy to scale · Simple structure · Photomechanical apparatus · Photographic printing · Optic system · Binary control

An exposure apparatus includes a projection optical system for projecting a multigradation pattern onto an object, a spatial modulation element that includes plural, two-dimensionally arranged pixels, and forms an optical image by binary control over each pixel, and a superposing optical system for forming the multigradation pattern by superposing the optical images for each row and / or for each column.

Owner:CANON KK

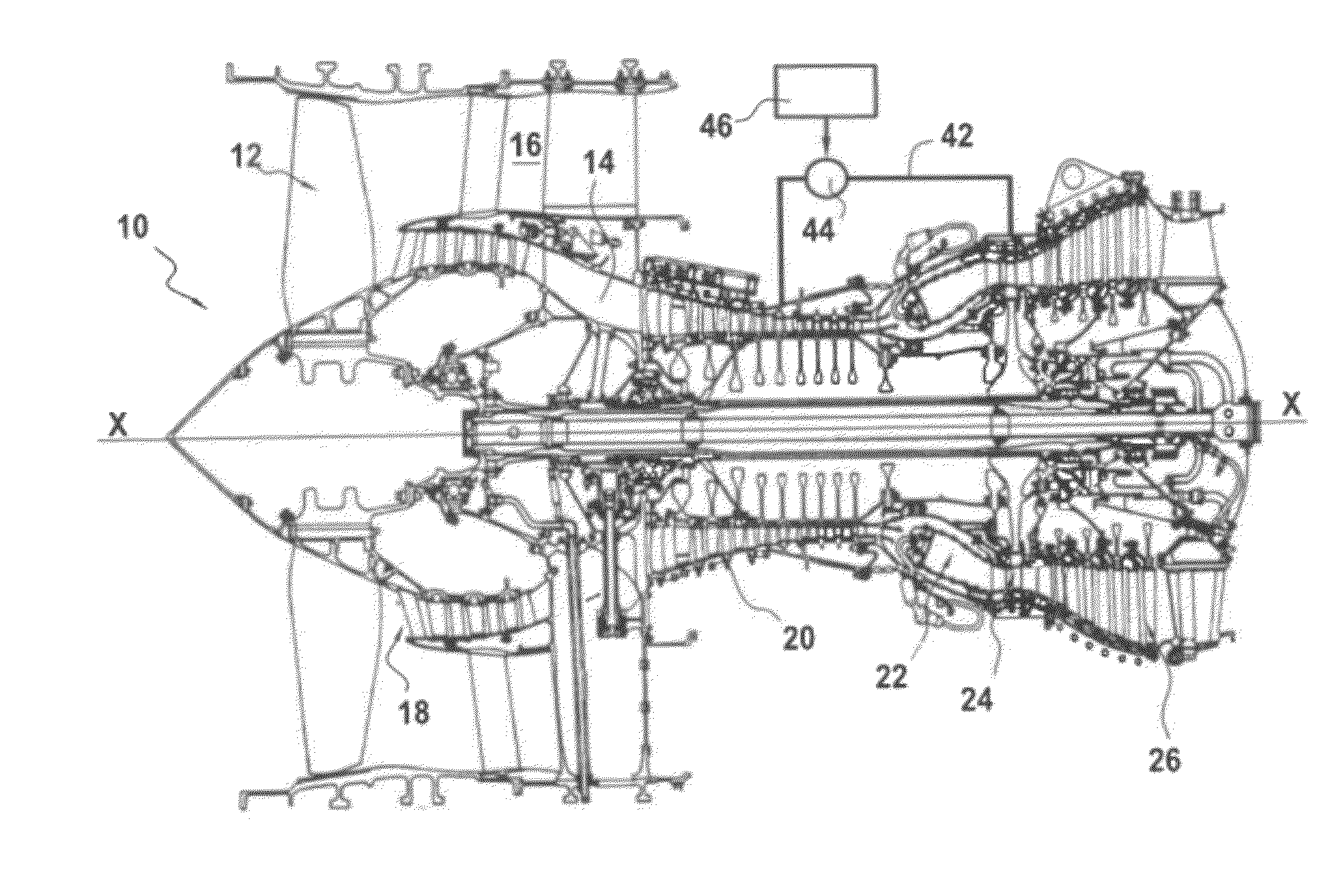

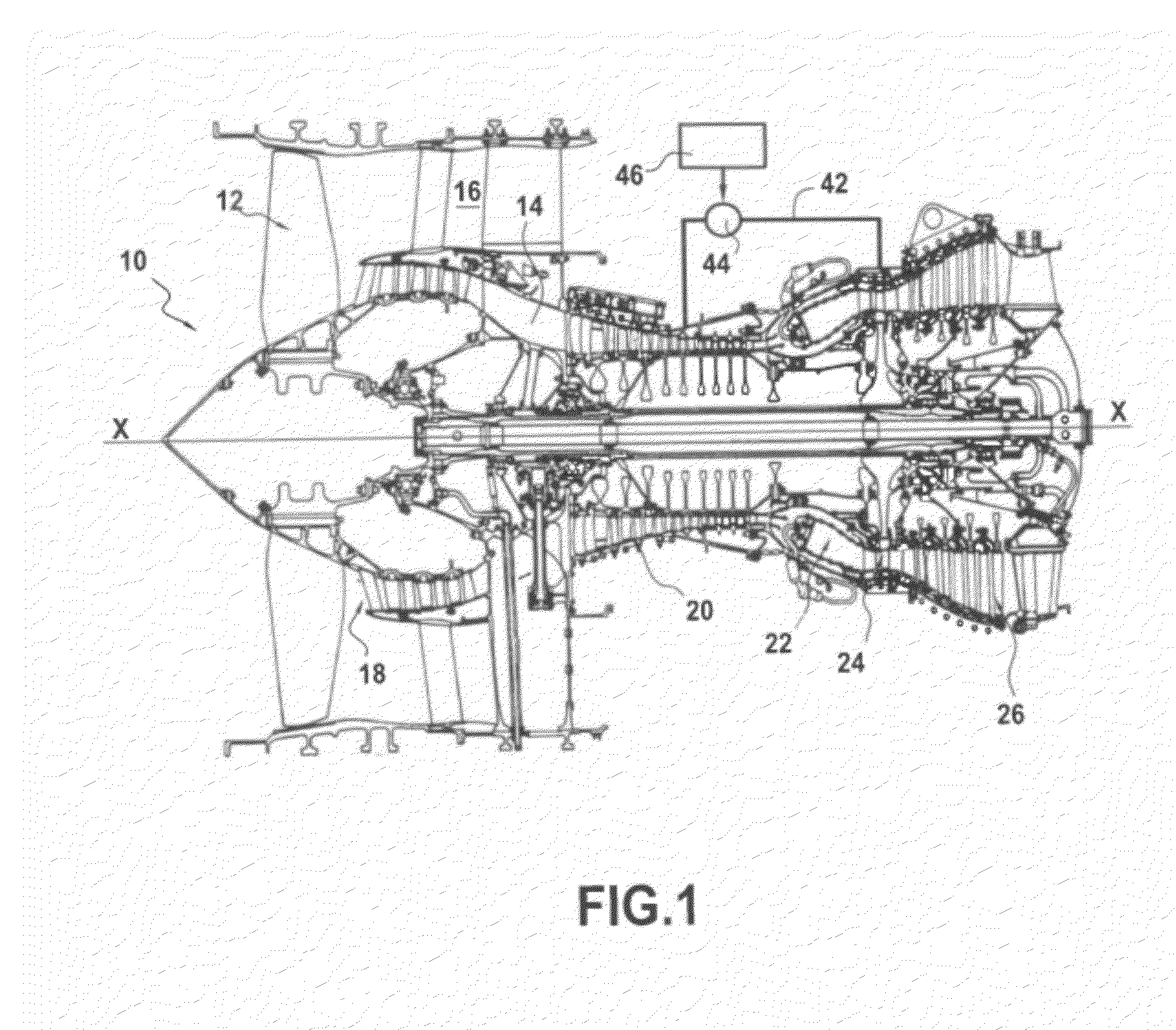

Control unit and a method for controlling blade tip clearance

Active · US20120201651A1 · Mitigate such drawback · Easy to use · Leakage prevention · Pump control · Control signal · Computer module

A method and system for controlling clearance between the tips of blades of a turbine rotor in a gas turbine airplane engine and a turbine ring of a casing surrounding the blades. A valve is controlled to act on a flow rate and / or a temperature of air directed against the casing. The valve is of the on / off type for switching between an open state and a closed state in a determined reaction time, the valve control module including a corrector for determining a first binary control signal that can take a first value corresponding to an open state and a second value corresponding to a closed state of the valve. The corrector includes a time-delay module for determining a binary control signal, with the duration between two successive changes of state in the control signal being not less than the reaction time of the valve.
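The time-delay constraint described above, that two successive changes of state are separated by at least the valve's reaction time, can be sketched as a minimum-dwell filter. This is an illustrative model only: the function name `enforce_min_dwell`, the sampled `(time, desired_state)` input, and the choice to start the dwell timer at the first sample are assumptions, not details from the patent.

```python
def enforce_min_dwell(samples, reaction_time):
    """Filter a desired on/off command so that state changes are never
    closer together than reaction_time.

    samples: list of (time, desired_state) pairs, sorted by time.
    Returns a list of (time, commanded_state) pairs.
    """
    commanded = []
    state = None
    last_change = None
    for t, desired in samples:
        if state is None:
            # The first sample sets the initial state and starts the dwell timer.
            state, last_change = desired, t
        elif desired != state and t - last_change >= reaction_time:
            # Accept a change only once the valve has had time to react.
            state, last_change = desired, t
        commanded.append((t, state))
    return commanded


requests = [(0.0, 0), (0.5, 1), (1.0, 1), (2.0, 1), (2.5, 0), (4.5, 0)]
valve = enforce_min_dwell(requests, reaction_time=2.0)
```

In this run the request to open at 0.5 s is held back until 2.0 s, and the request to close at 2.5 s is held back until 4.5 s.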

Owner:SN DETUDE & DE CONSTR DE MOTEURS DAVIATION S N E C M A

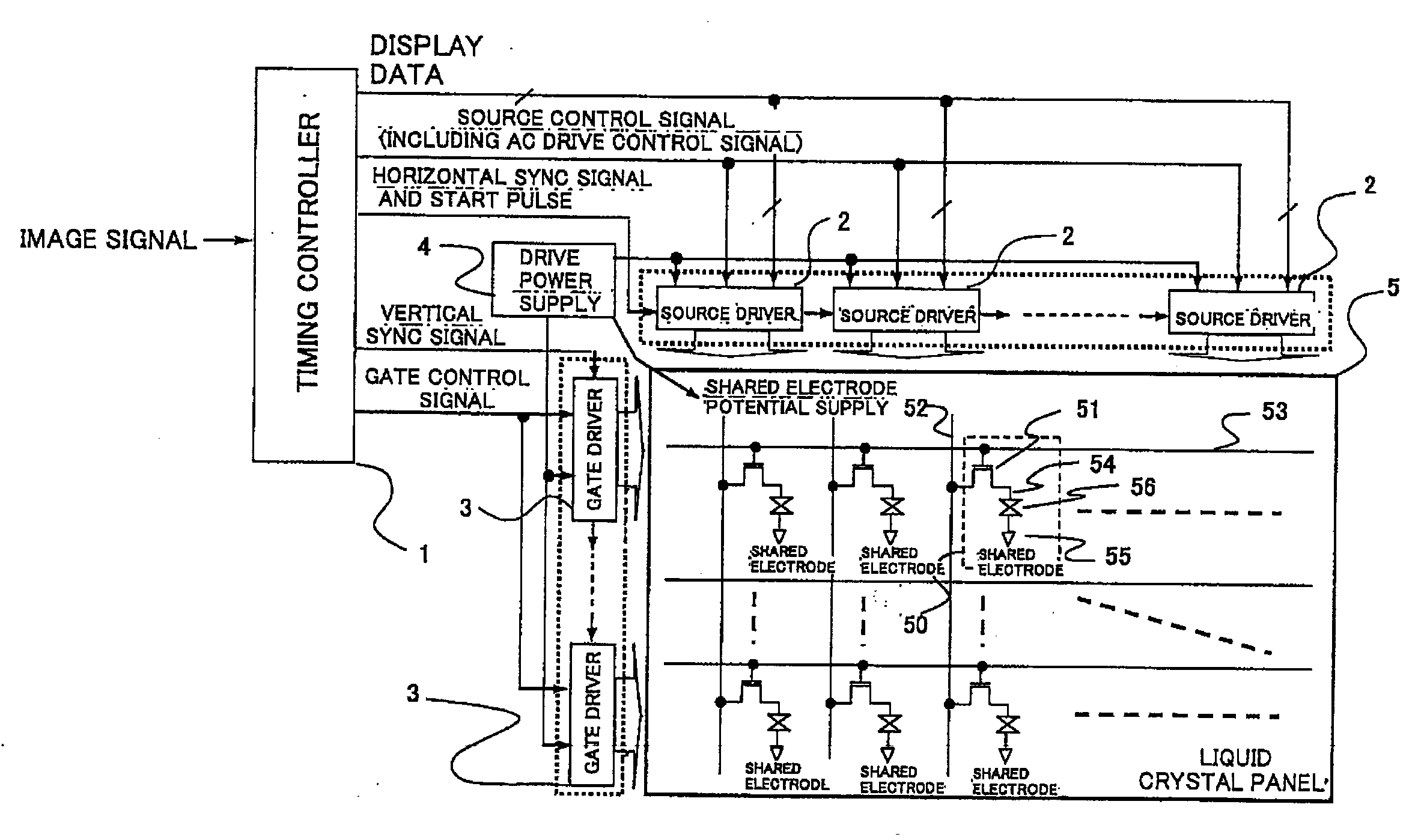

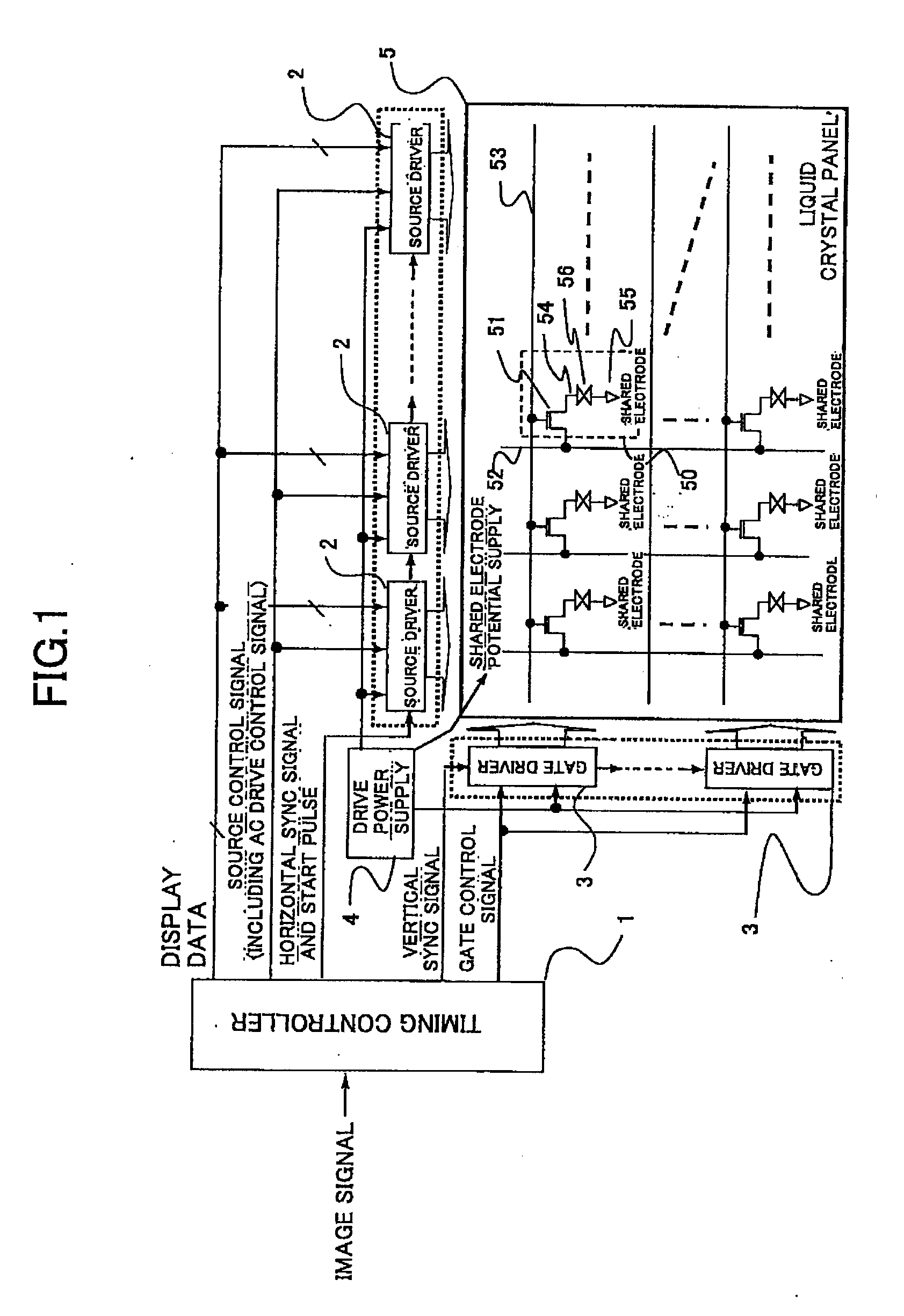

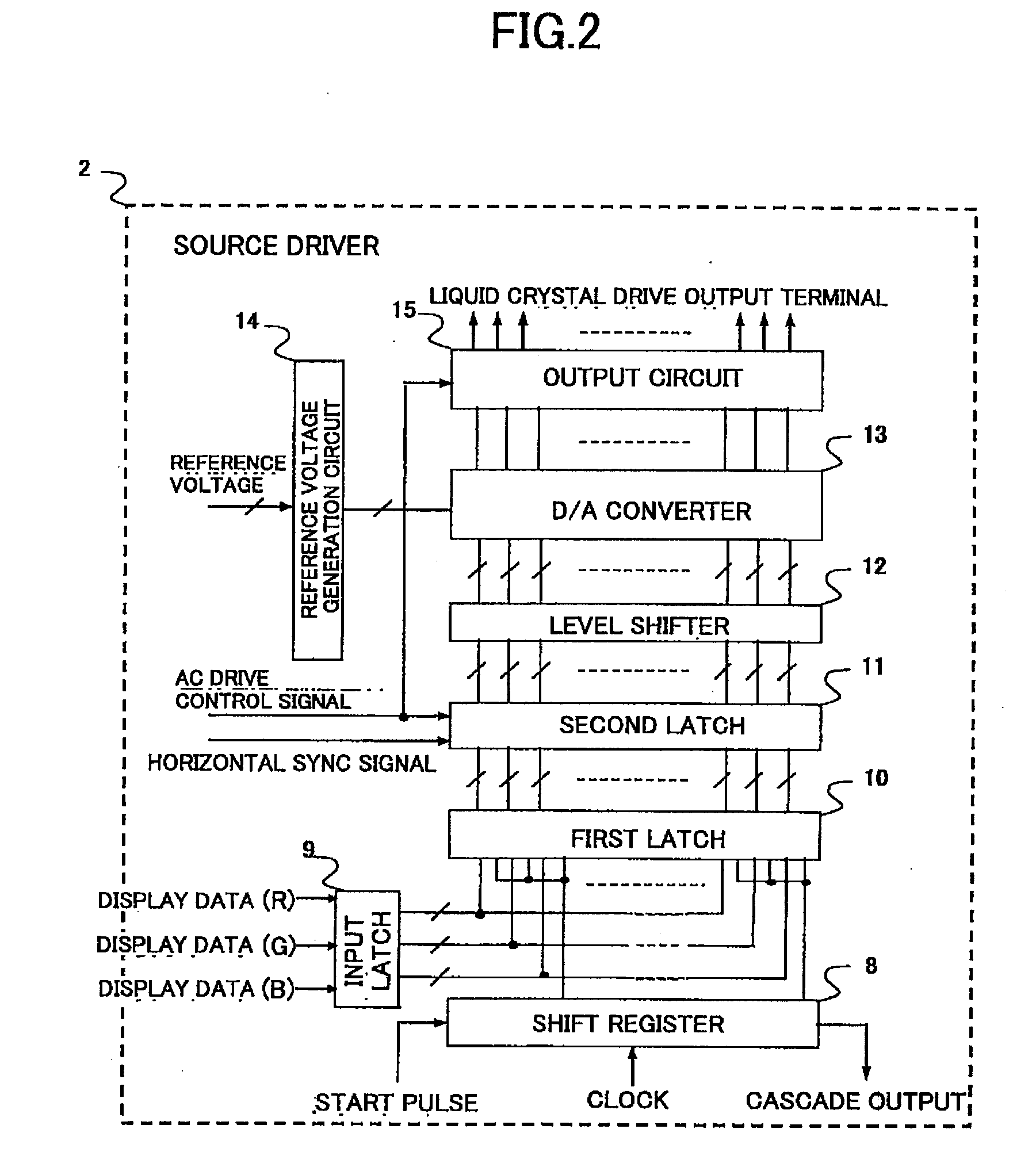

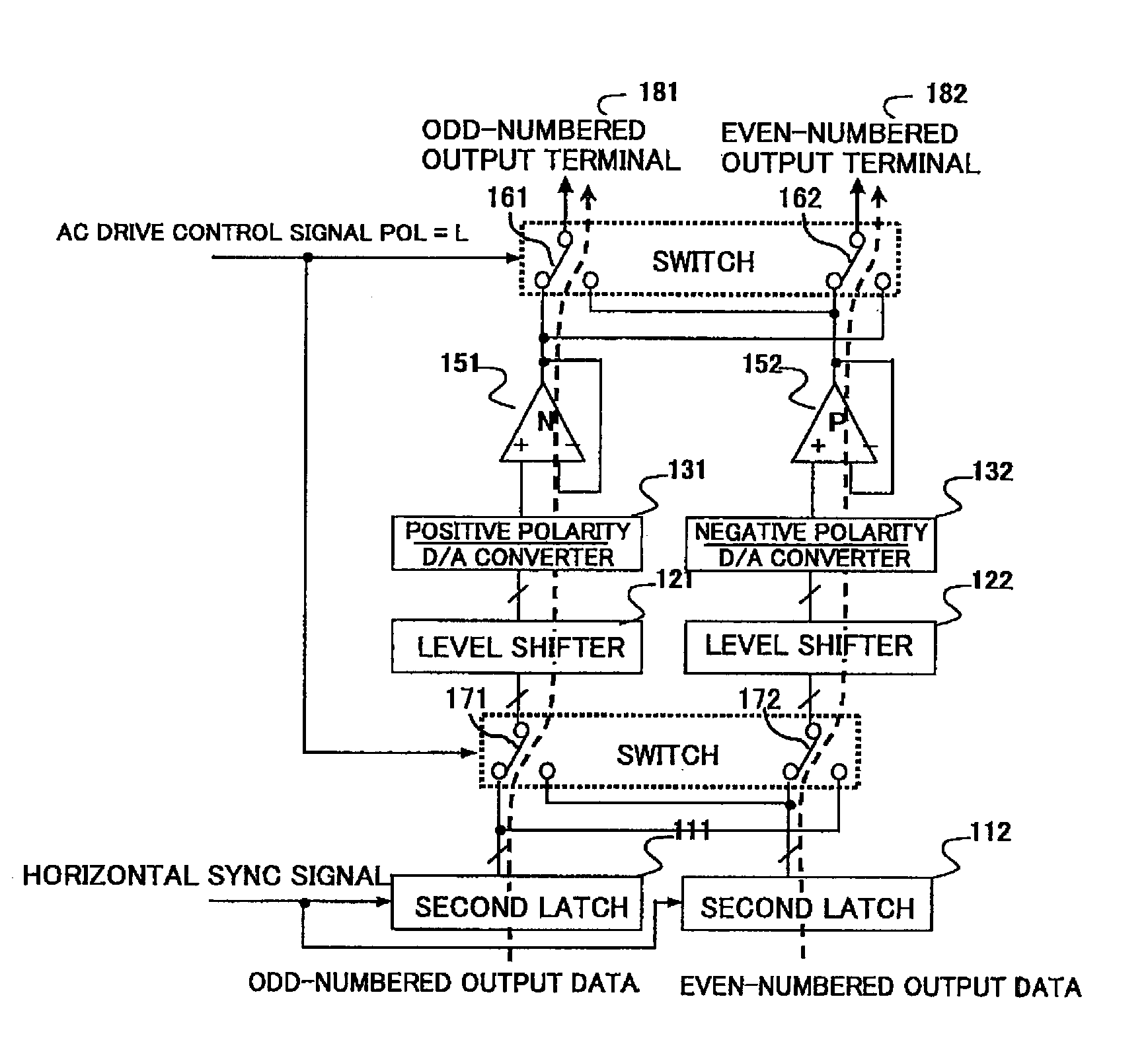

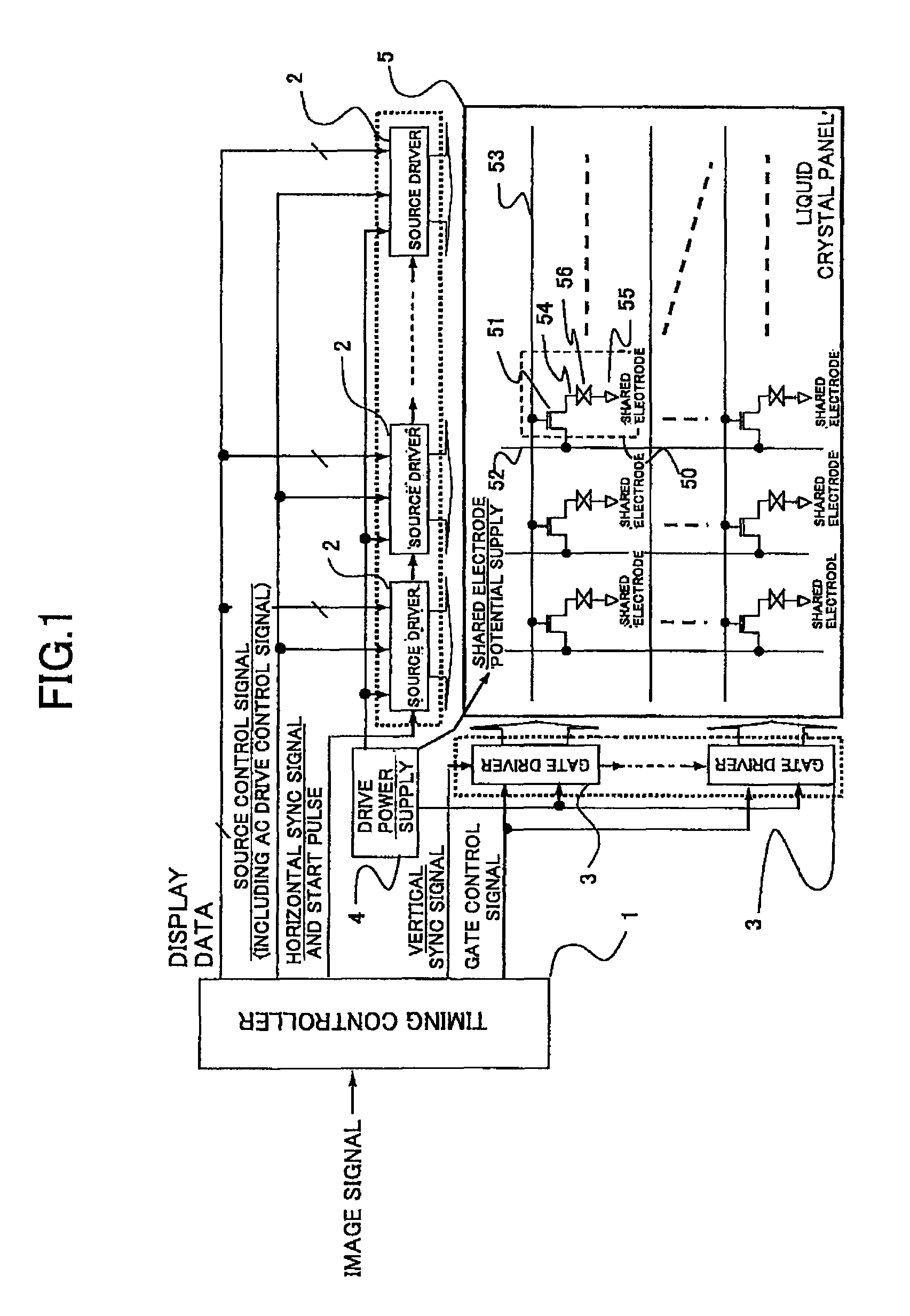

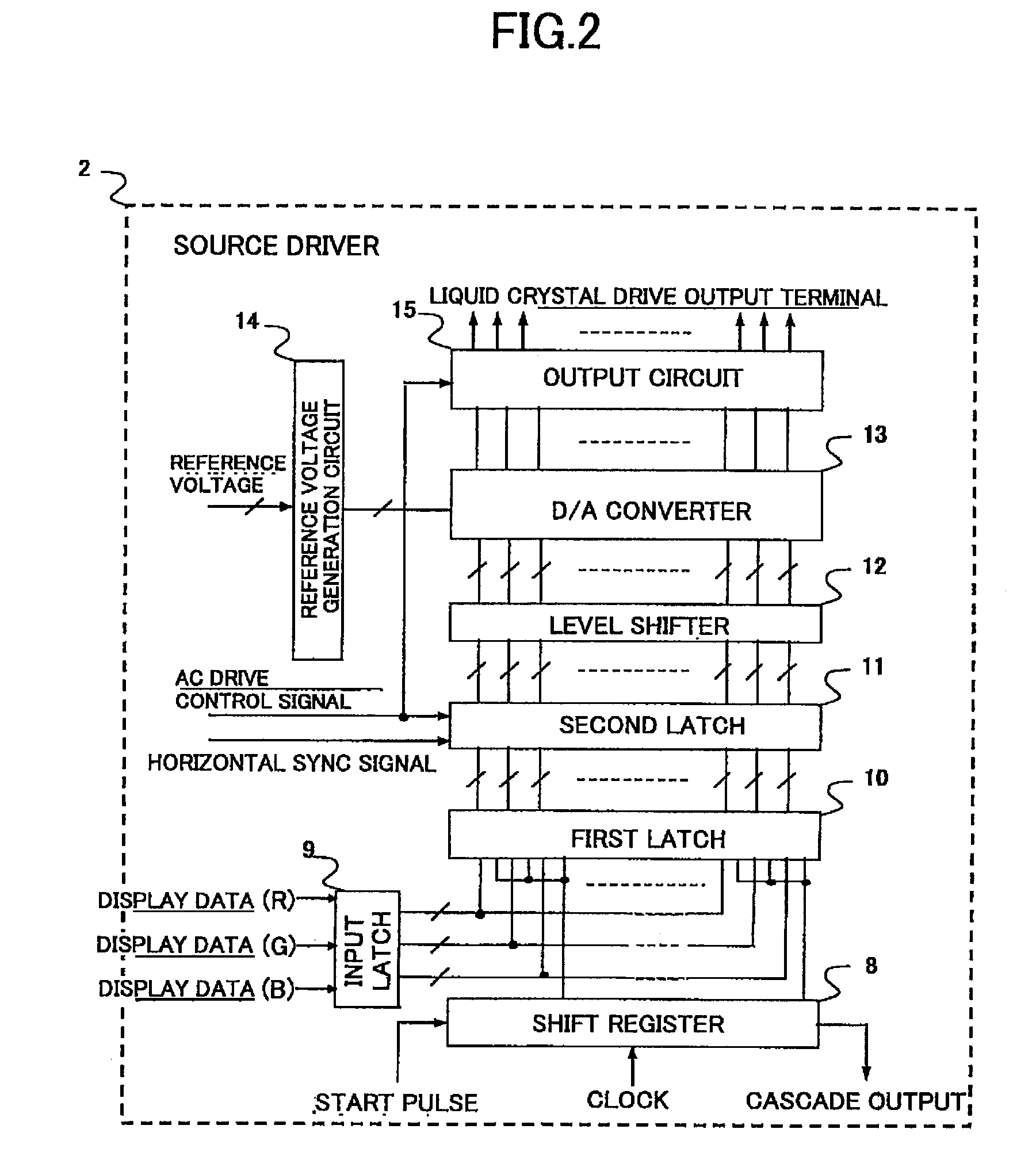

Source driver for display panel and drive control method

Active · US20090295777A1 · Improve display quality · Cathode-ray tube indicators · Input/output processes for data processing · Control signal · Engineering

A source driver and drive control method therefor that makes it possible to cancel offset voltages and to obtain good display quality even when a vertical synchronization signal is not fed to the source driver. A source driver is provided for receiving from a timing controller a horizontal synchronization signal of an image signal, and a binary control signal of which a value varies in two values in synchronization with the horizontal synchronization signal and in which start values of adjacent frames of the image signal are different, excluding a vertical synchronization signal of the image signal, to apply a drive voltage to a plurality of source signal lines of a display panel. In the source driver, the vertical cycle of the image signal is analyzed on the basis of the binary control signal; a pseudo vertical synchronization signal is generated on the basis of the vertical cycle; and a cancel operation of an offset voltage component of the drive voltage is performed on the basis of the pseudo vertical synchronization signal.

Owner:LAPIS SEMICON CO LTD

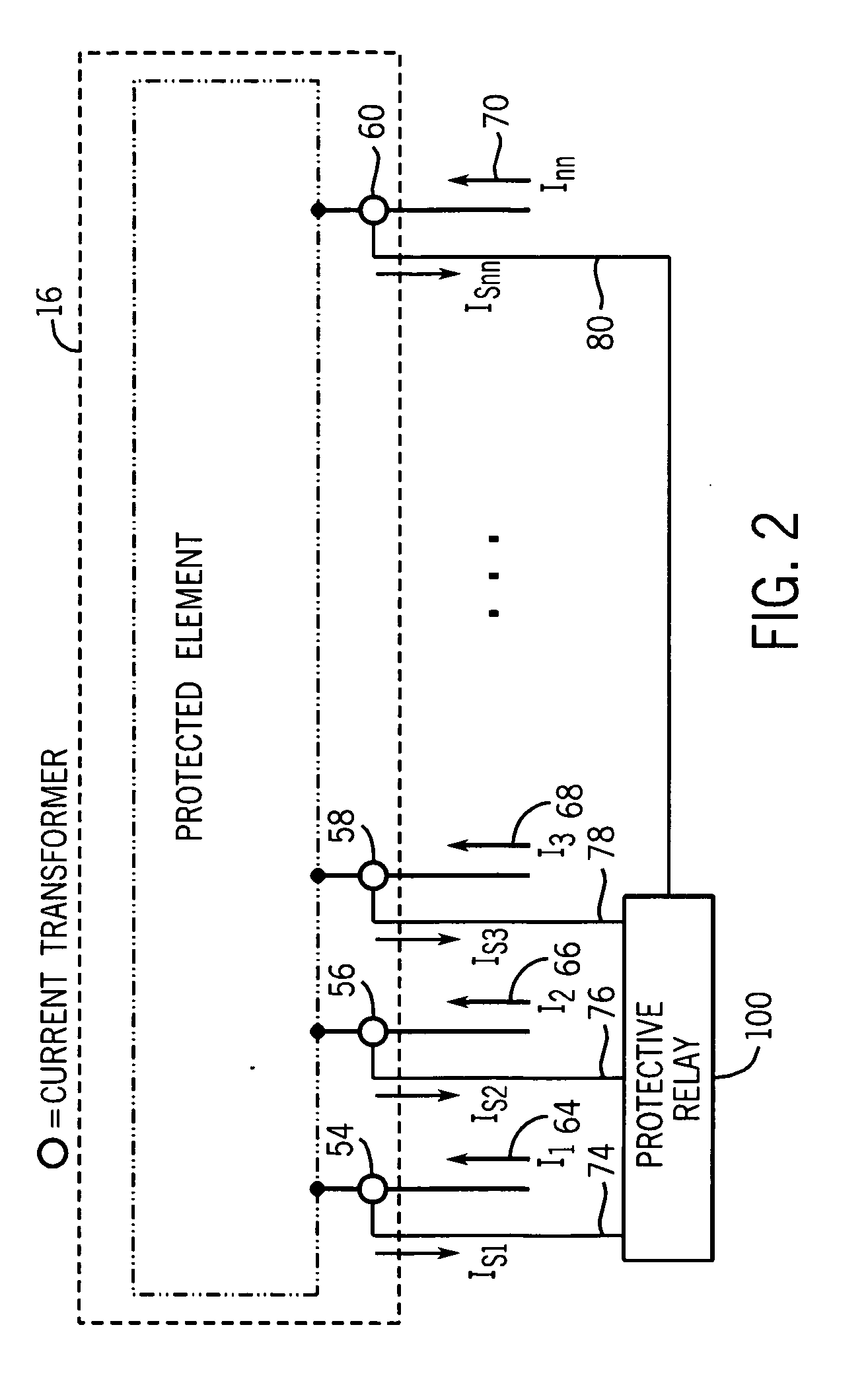

Apparatus and method for identifying a loss of a current transformer signal in a power system

Active · US20070014062A1 · Circuit-breaking switches for excess currents · Emergency protection detection · Electric power system · Control signal

Provided is an apparatus and method for identifying a specific lost current transformer (CT) signal of a number of CT signals provided by a corresponding number of CTs coupling a protective device to at least one protection zone. The method includes selectively providing pairs of first and second binary control signals corresponding to each of the CT signals in response to comparisons of RMS current changes of respective CT signals. A third binary control signal associated with a protection zone is provided in response to receipt of the pairs of first and second binary control signals. A first value for only one of the first binary control signals, a second value for all of the second binary control signals and the first value for the third binary control signal for a predetermined time indicates the loss of the CT signal corresponding to the first binary control signal having the first value.
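The decision rule in the last sentence of the abstract can be written out as plain boolean logic. The sketch below makes several assumptions for illustration: the "first value" is modeled as `True` and the "second value" as `False`, the predetermined time is modeled as a count of consecutive samples, and the names `lost_ct_index` and `detect_loss` are hypothetical. How the binary signals are derived from RMS current changes is not modeled.

```python
def lost_ct_index(first_signals, second_signals, third_signal):
    """Evaluate the rule for one sample.

    Returns the index of the CT flagged as lost, or None.
    Rule: exactly one first signal is True, every second signal is False,
    and the zone-level third signal is True.
    """
    asserted = [i for i, value in enumerate(first_signals) if value]
    if len(asserted) == 1 and not any(second_signals) and third_signal:
        return asserted[0]
    return None


def detect_loss(samples, hold_count):
    """Report a lost CT only after the rule has held for hold_count
    consecutive samples pointing at the same CT.

    samples: iterable of (first_signals, second_signals, third_signal).
    """
    candidate, run = None, 0
    for first, second, third in samples:
        idx = lost_ct_index(first, second, third)
        if idx is not None and idx == candidate:
            run += 1
        else:
            # Condition broke or switched to another CT: restart the count.
            candidate, run = idx, (1 if idx is not None else 0)
        if candidate is not None and run >= hold_count:
            return candidate
    return None


# Three CTs; only CT 1 shows the "first value" on its first signal.
sample = ([False, True, False], [False, False, False], True)
result = detect_loss([sample] * 3, hold_count=3)
```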

Owner:SCHWEITZER ENGINEERING LABORATORIES

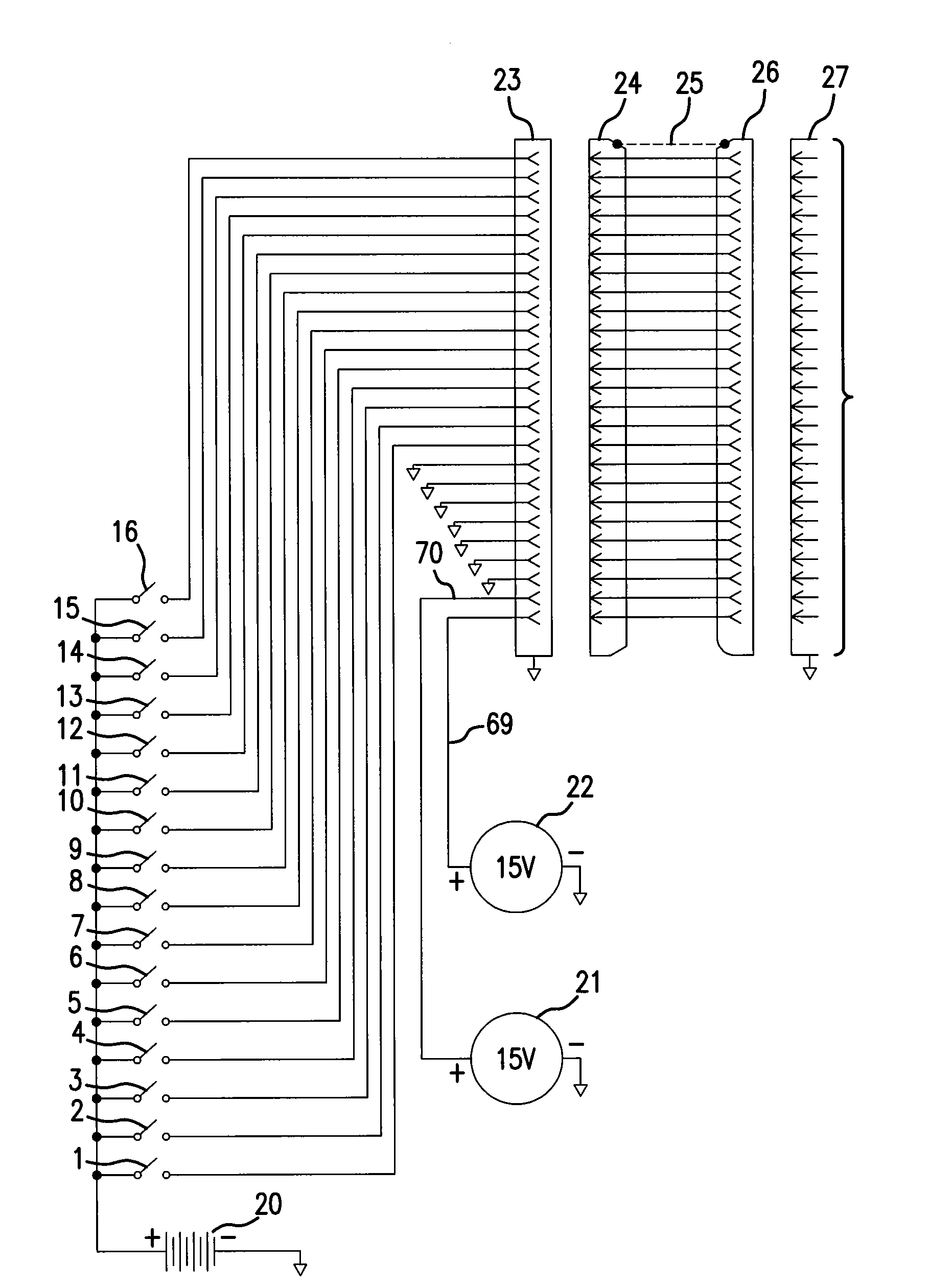

Compact remote tuned antenna

Inactive · US20090231223A1 · Easy to handle · Improve the level of · Antennas earthing switches association · Loop antennas with variable reactance · Electricity · Remote control

A compact electrically long antenna including a manual or computer remote controlled tuning system using switched electrical length capable of operation at high RF power levels. An electromechanical relay or other switch device provides remote control (by a parallel binary bit pattern over great distance) of radiating structures formed of series connected absolute binary sequence electrical length radiating elements in a main circuit loop having a total electrical length. These radiating structures are formed from individual elements, and sets of individual elements, insulated and isolated from each other. The binary controlled switch devices may unshort (connect) and short out (bypass) binary length elements in the main loop circuit. The electrical length of the main loop circuit can be set to a desired length, from a maximum total length of all binary length elements in series to a minimum length where all binary elements are shorted out and effectively bypassed.
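The relationship between the parallel bit pattern and the resulting loop length can be sketched numerically. This sketch assumes the "binary sequence" elements have lengths in the ratio 1 : 2 : 4 : ..., and that a set bit means the element is in circuit (unshorted); the function names, the `unit_length` and `base_length` parameters, and the bit ordering are all hypothetical.

```python
def element_lengths(n_bits, unit_length):
    """Electrical lengths of the switchable elements: unit, 2x unit, 4x unit, ..."""
    return [unit_length * (1 << k) for k in range(n_bits)]


def loop_length(bit_pattern, n_bits, unit_length, base_length=0.0):
    """Total electrical length of the main loop for a relay bit pattern.

    Bit k set   -> element k is in series with the loop (unshorted).
    Bit k clear -> element k is shorted out and bypassed.
    """
    lengths = element_lengths(n_bits, unit_length)
    switched_in = sum(l for k, l in enumerate(lengths) if (bit_pattern >> k) & 1)
    return base_length + switched_in


def pattern_for_length(target, n_bits, unit_length, base_length=0.0):
    """Bit pattern whose loop length is closest to target, clamped to the valid range."""
    steps = round((target - base_length) / unit_length)
    return max(0, min((1 << n_bits) - 1, steps))
```

With four elements and a 0.5 m unit, pattern `0b101` gives 0.5 + 2.0 = 2.5 m of switched length, and the full range runs from 0 to 7.5 m in 0.5 m steps. Because the lengths are binary weighted, the bit pattern is simply the target length expressed in units.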

Owner:LARONDA MICHAEL

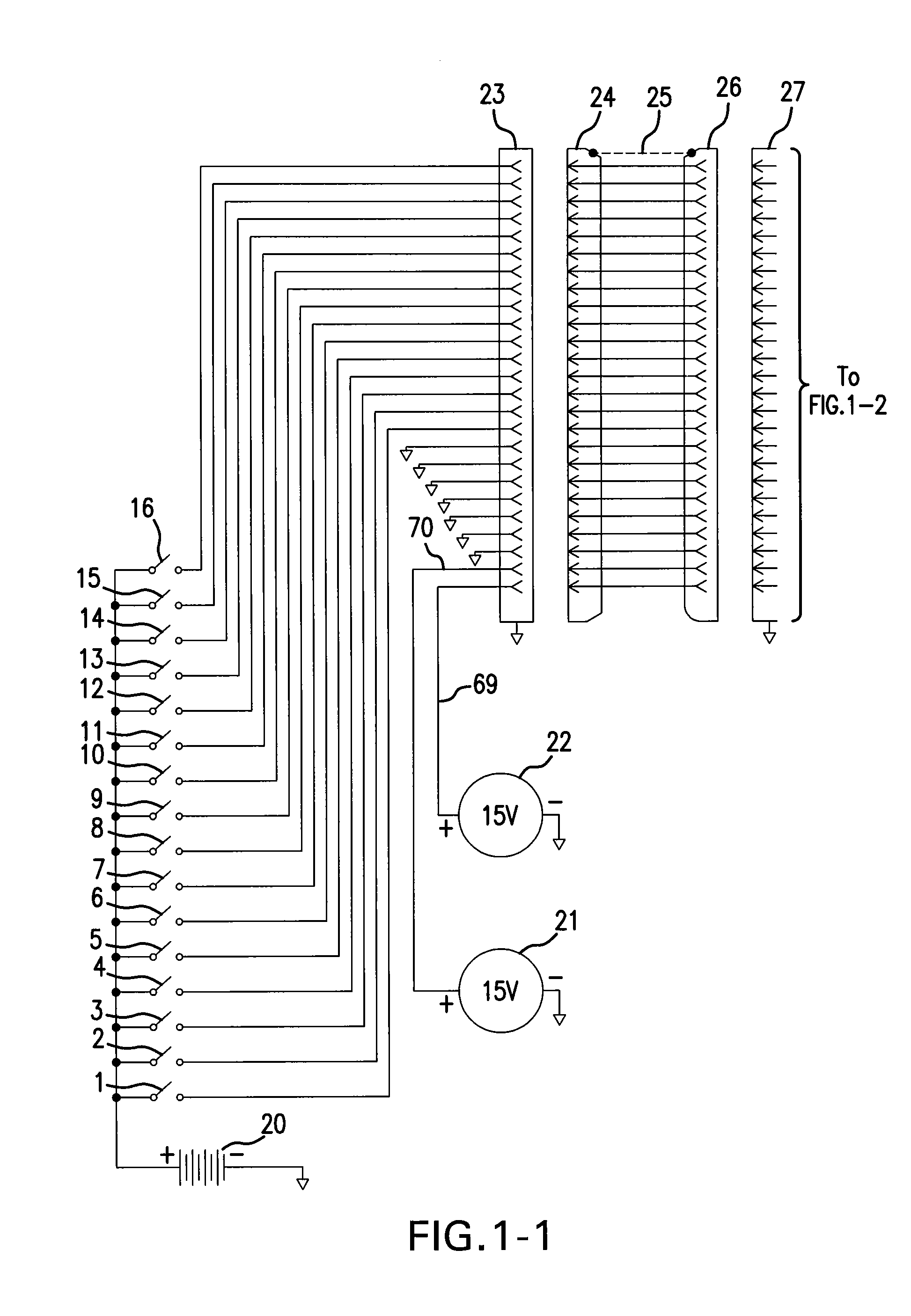

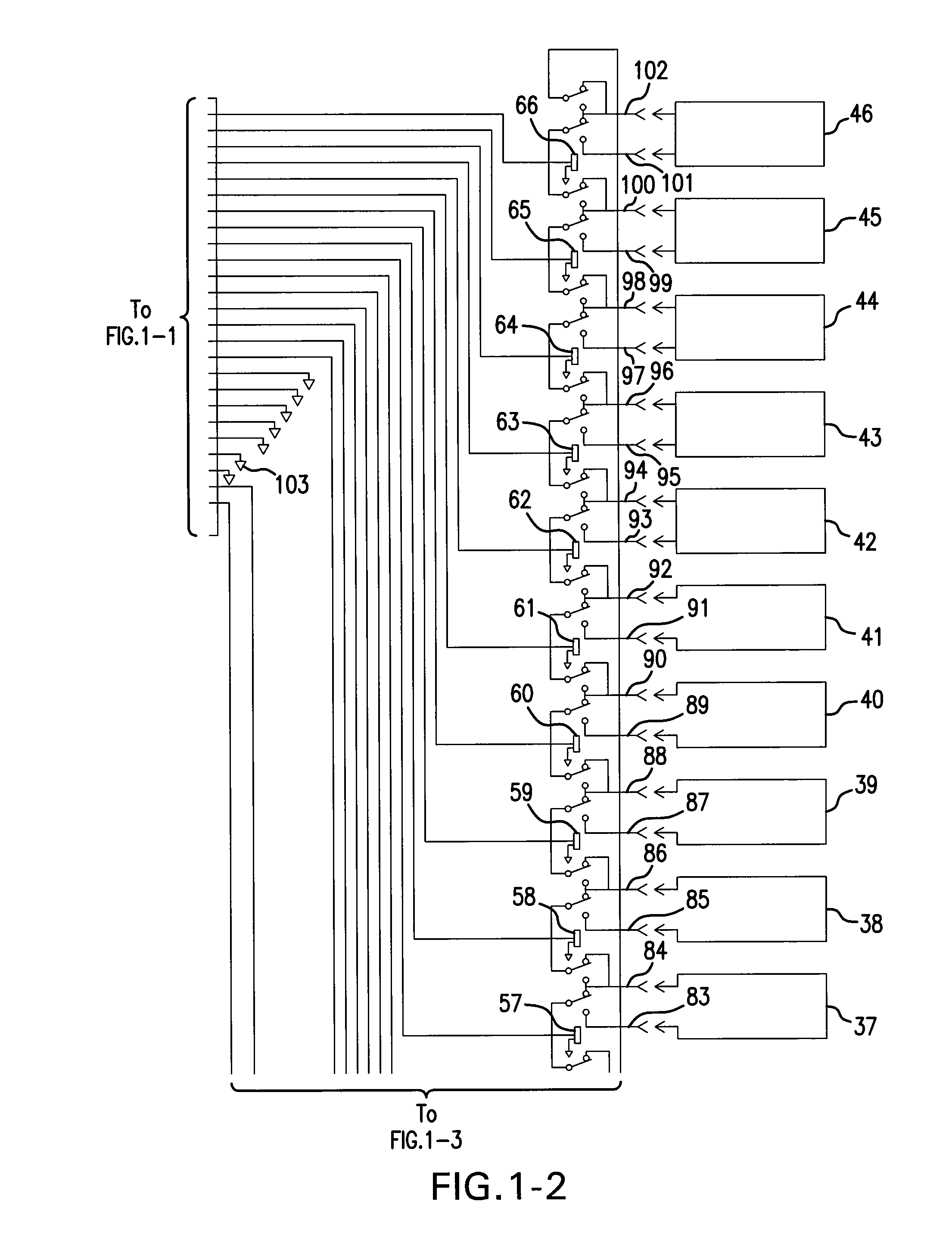

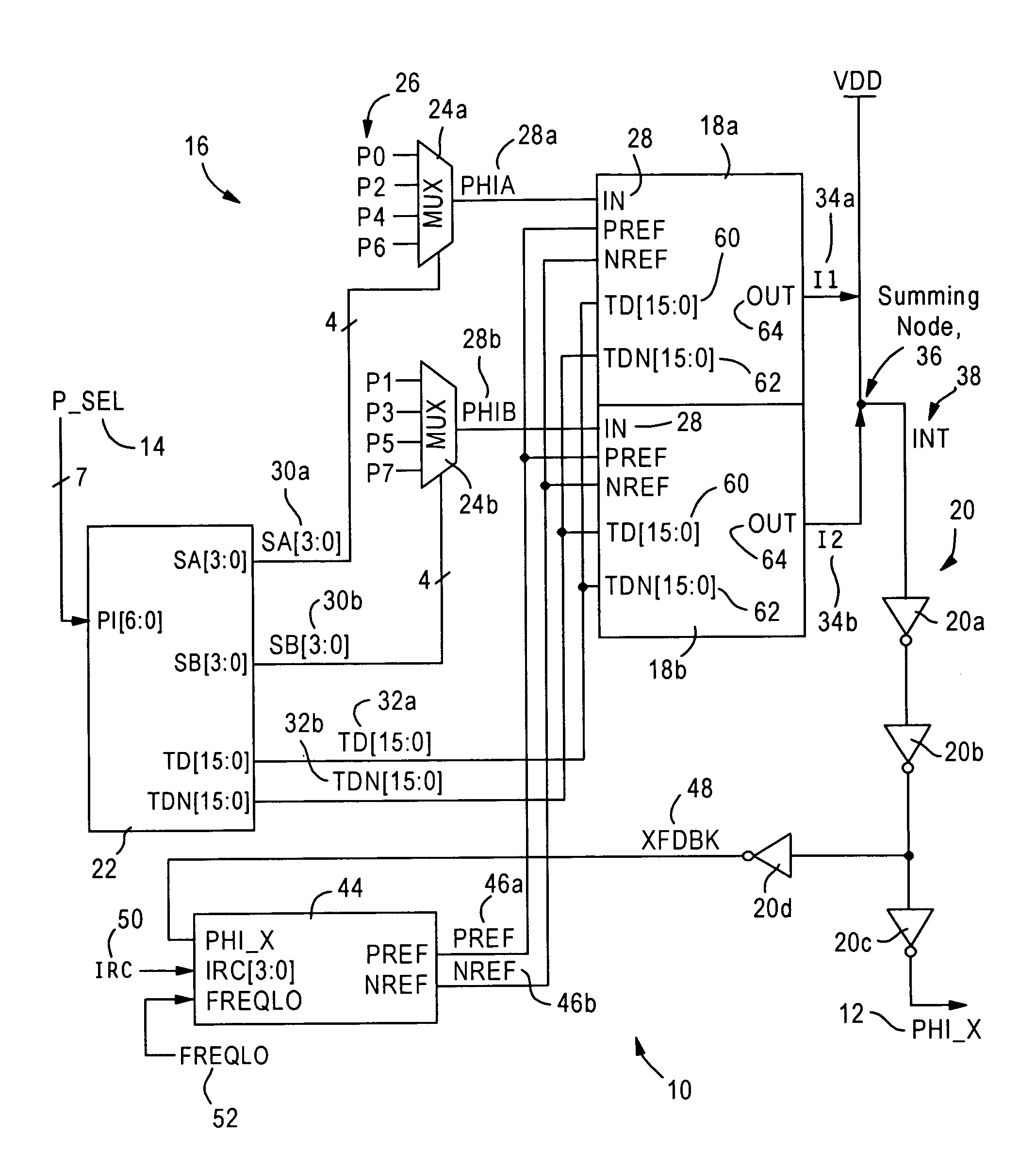

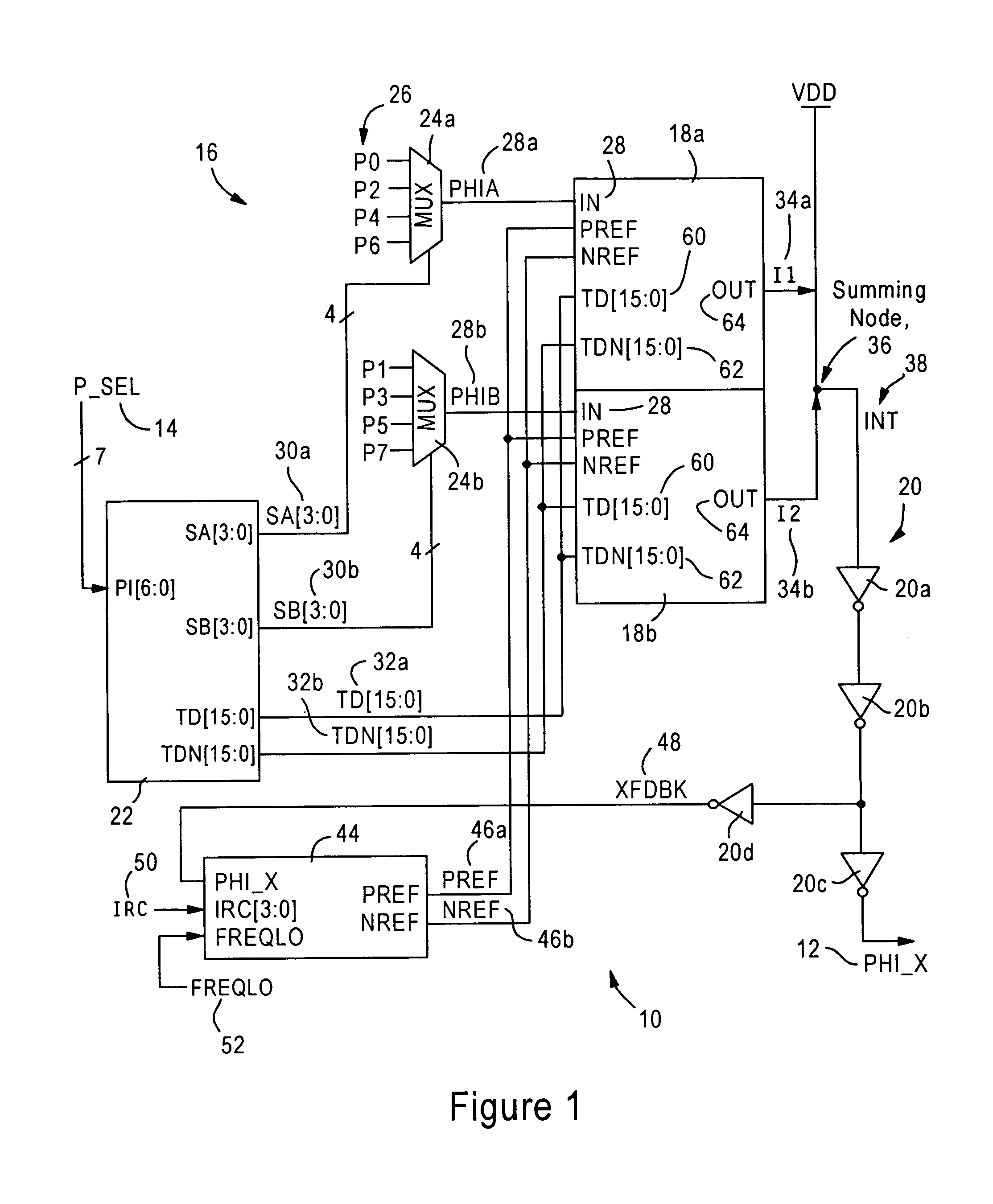

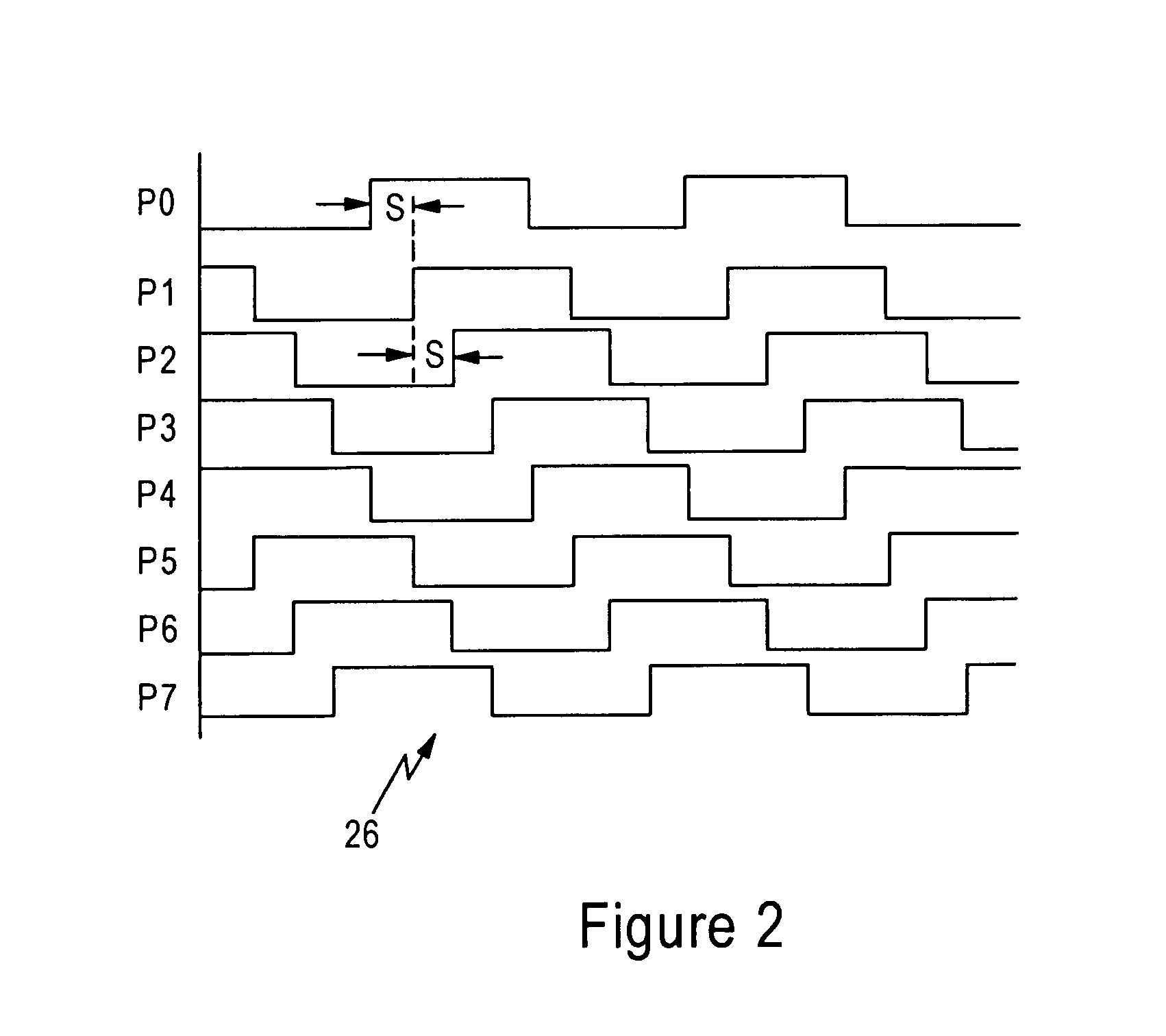

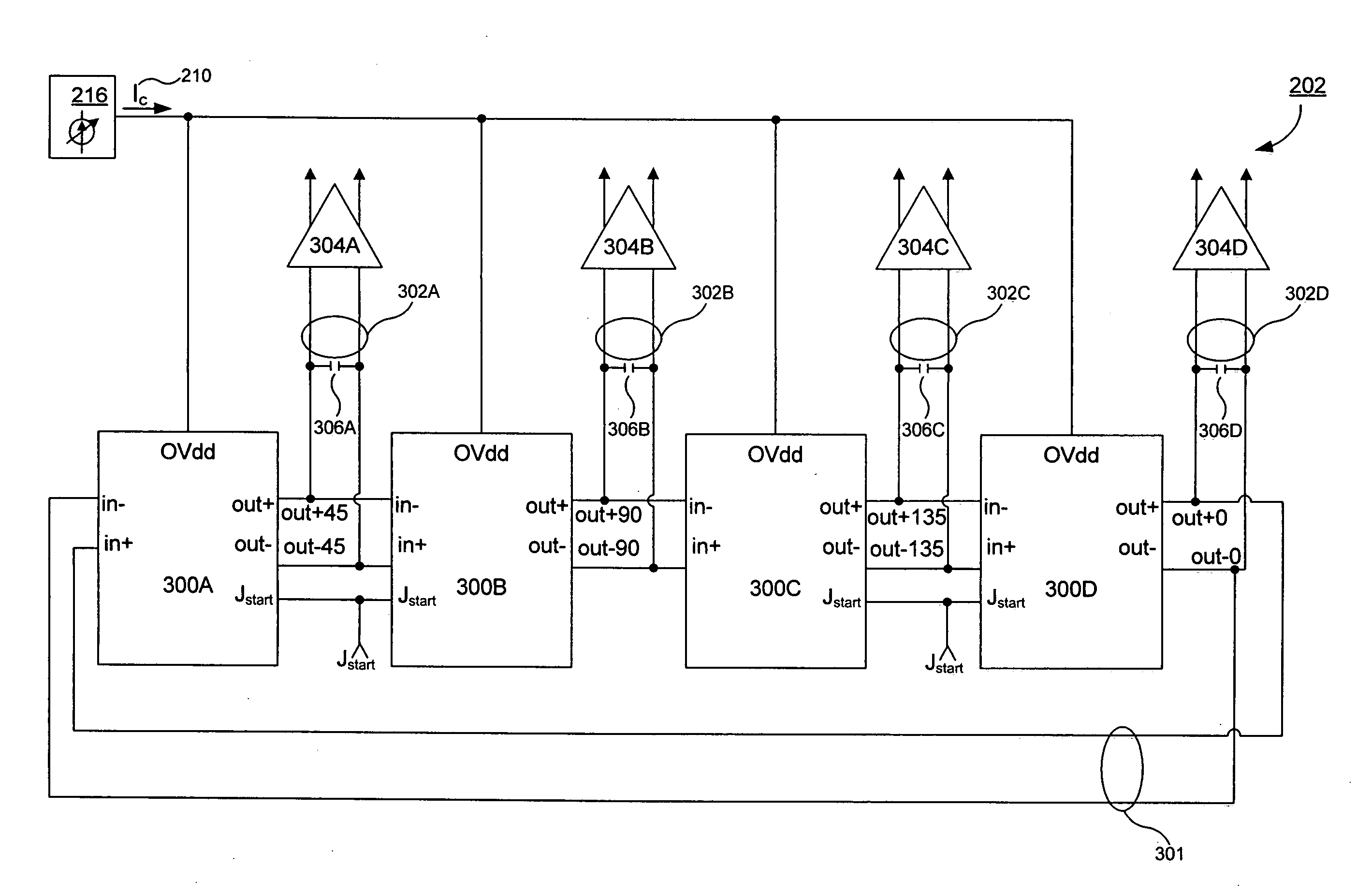

Binary controlled phase selector with output duty cycle correction

Active · US7613266B1 · Pulse automatic control · Synchronisation signal speed/phase control · Audio power amplifier · Binary control

A phase selection circuit having a selection circuit, binary weighted current sources, and an amplifier circuit. The phase selection circuit is configured for selecting adjacent phase signals from a number of equally-spaced phases of a clock signal, based on a phase selection value. The selection circuit outputs the adjacent phase signals to respective first and second binary weighted current sources, along with a digital interpolation value. The first current source outputs a contribution current onto a summing node based on the first adjacent phase signal and the digital interpolation control value, and the second current source outputs a second contribution current to the summing node based on the second adjacent phase signal and an inverse of the digital interpolation control value, resulting in an interpolated signal. An amplifier circuit outputs the interpolated signal as a phase-interpolated clock signal according to the phase selection value.
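The weighting scheme described here, one current source driven by the interpolation code and the other by its inverse, can be approximated with a linear model. The sketch below is a simplification under stated assumptions: it treats the output phase as a weighted average of the two adjacent phases rather than summing actual waveforms, and the function name, the default of 8 phases, and the 4-bit code width are hypothetical.

```python
def interpolated_phase(phase_select, interp_code, n_phases=8, interp_bits=4):
    """Approximate output phase in degrees.

    phase_select picks the first of two adjacent, equally spaced phases.
    interp_code weights the first phase; its bitwise inverse weights the second,
    so the two weights always add up to the same total.
    """
    spacing = 360.0 / n_phases
    first = phase_select * spacing
    second = first + spacing                 # adjacent phase, may reach 360
    full_scale = (1 << interp_bits) - 1
    w_first = interp_code
    w_second = full_scale - interp_code      # inverse of the code
    return ((w_first * first + w_second * second) / full_scale) % 360.0
```

With these defaults, code 15 puts all the weight on the first phase and code 0 puts it all on the second, so stepping the code sweeps the output across one 45 degree phase interval.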

Owner:GLOBALFOUNDRIES US INC

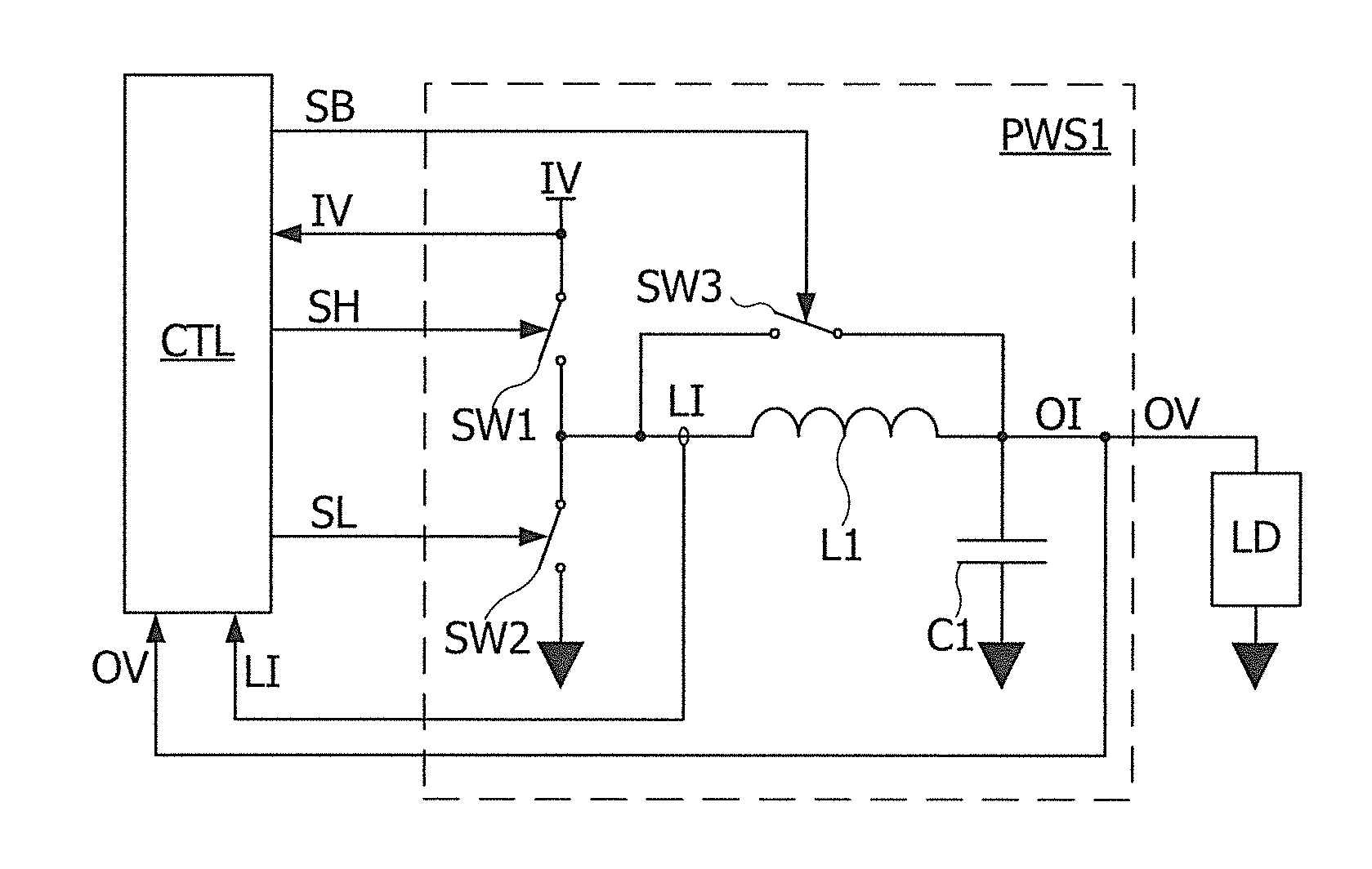

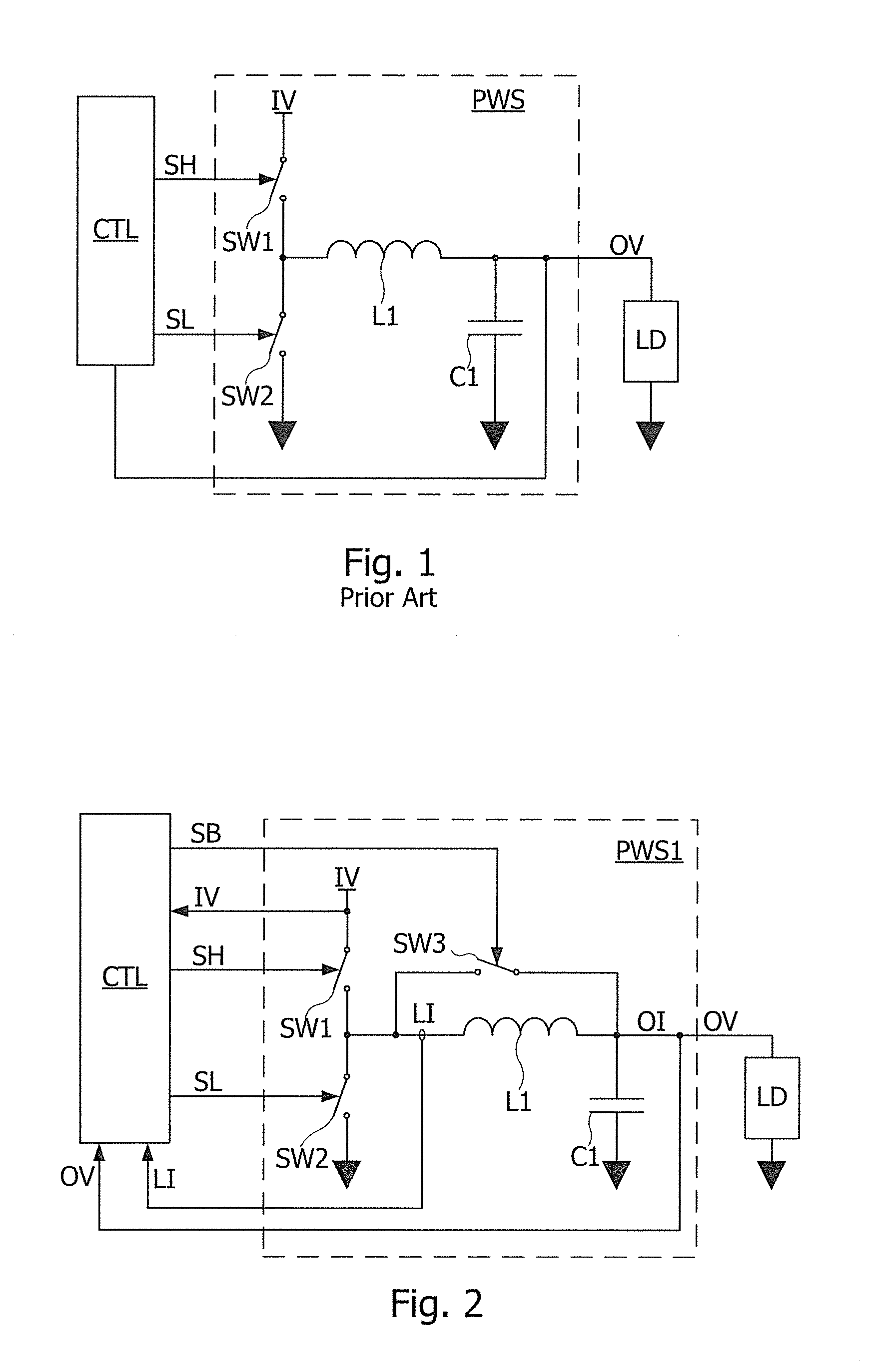

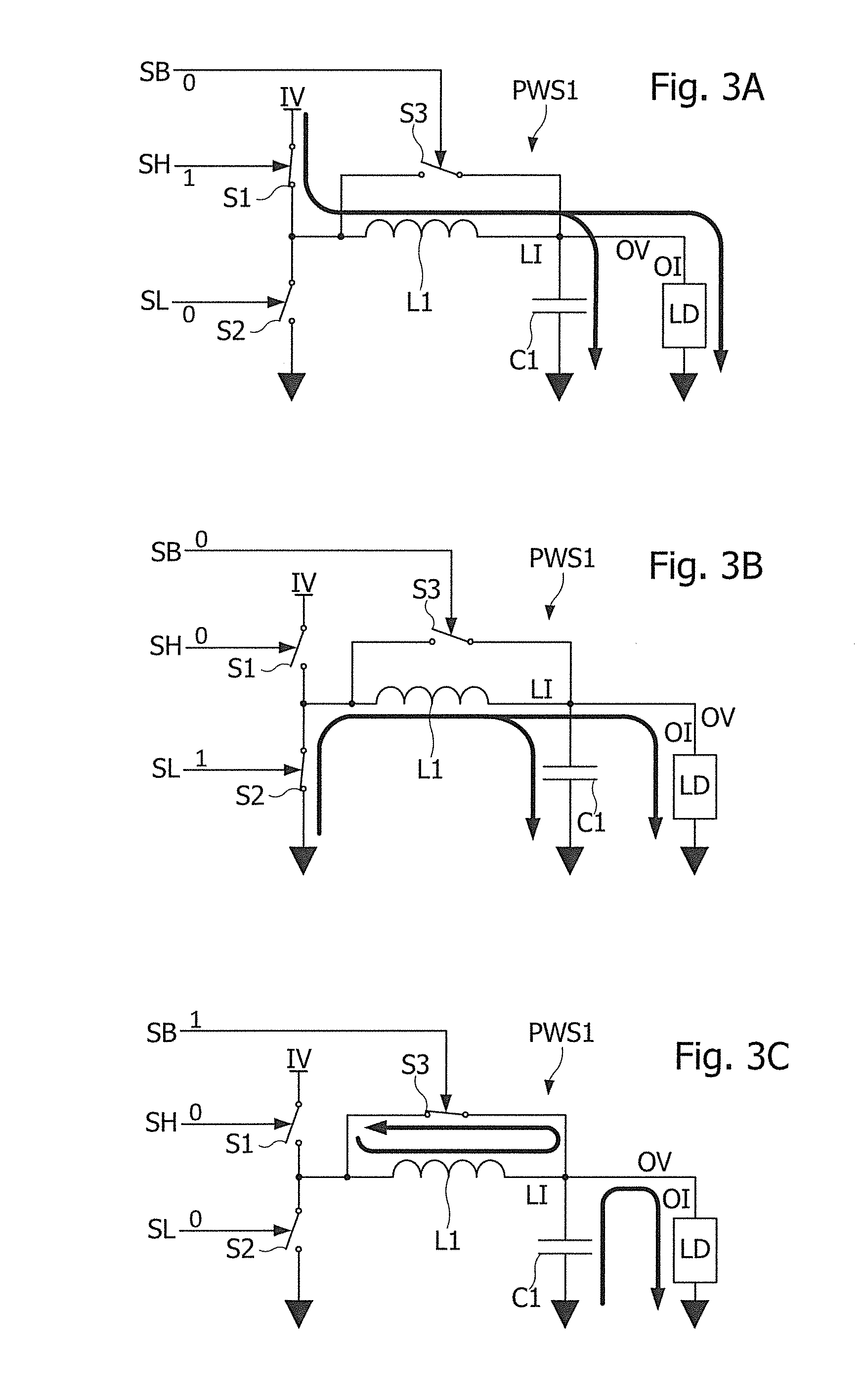

Switched power stage and a method for controlling the latter

Active · US20160049860A1 · Reduce the difference · Fully comprehended · Efficient power electronics conversion · Dc-dc conversion · Control signal · Low voltage

The disclosure relates to a method of generating an output voltage, comprising: generating a regulated output voltage from a high voltage source; providing an inductor having a first terminal and a second terminal linked to a low voltage source by a capacitor, the second inductor terminal supplying the output voltage to a load; connecting the first inductor terminal exclusively either to the high voltage source or to the low voltage source or to the second inductor terminal, as a function of command signals to reduce a difference between the output voltage and a reference voltage lower than a high voltage supplied by the high voltage source; and generating a square binary control signal having a duty cycle substantially adjusted to the ratio of the output voltage to the high voltage; the first inductor terminal being connected to the high voltage source or to the low voltage source as a function of a binary state of the control signal.
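The duty-cycle relationship in the last clause, a square binary signal whose duty cycle tracks the ratio of output voltage to high voltage, can be illustrated in a few lines. This is a sketch under assumptions: the sampled representation, the function names, and the convention that `1` means "connect to the high voltage source" are illustrative choices, and the patent's three-way switching and regulation loop are not modeled.

```python
def duty_cycle(v_out, v_high):
    """Duty cycle equal to the ratio v_out / v_high, clamped to [0, 1]."""
    if v_high <= 0:
        raise ValueError("v_high must be positive")
    return min(1.0, max(0.0, v_out / v_high))


def square_control(duty, samples_per_period, periods=1):
    """Sampled square binary control signal with the requested duty cycle.

    1 is taken to mean 'first inductor terminal on the high voltage source',
    0 to mean 'on the low voltage source' (an assumed convention).
    """
    high_samples = round(duty * samples_per_period)
    one_period = [1] * high_samples + [0] * (samples_per_period - high_samples)
    return one_period * periods


signal = square_control(duty_cycle(1.0, 4.0), samples_per_period=8, periods=2)
```

For a 1.0 V target from a 4.0 V source the duty cycle is 0.25, so two of every eight samples are high.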

Owner:ENDURA IP HLDG LTD

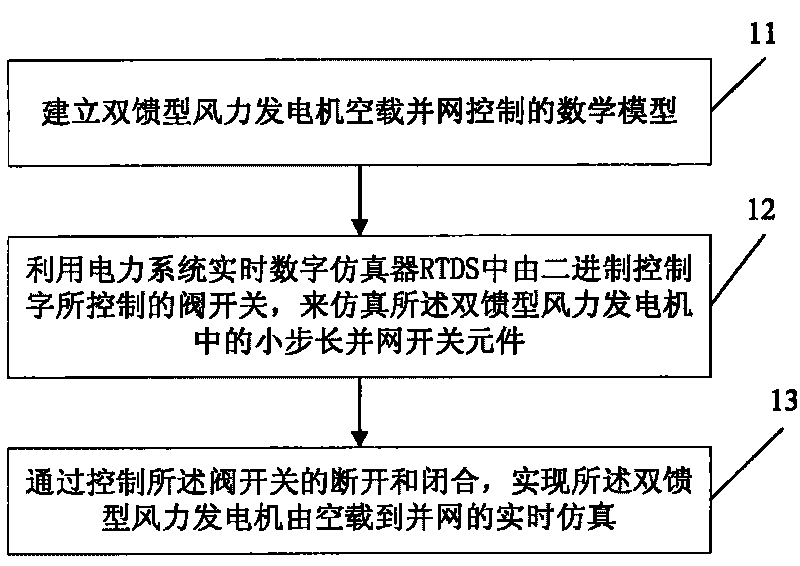

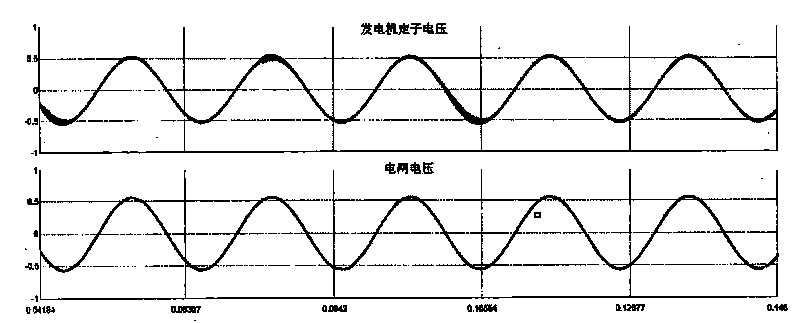

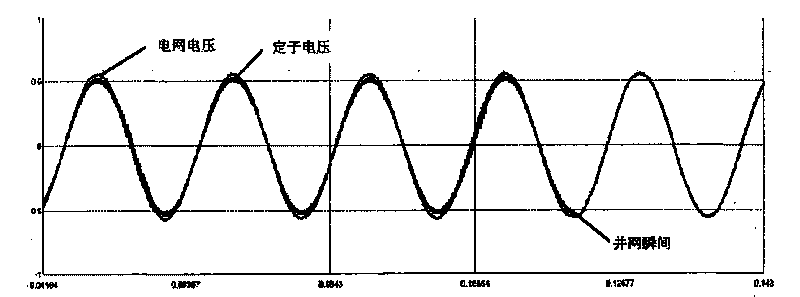

No-load cutting-in modeling and experimental method of double-fed type wind-driven generator

Active · CN101719678A · Real and accurate simulation environment · Improve verification accuracy · Single network parallel feeding arrangements · Special data processing applications · Wind driven · Real-time simulation

The embodiment of the invention provides a no-load cutting-in modeling and experimental method of a double-fed type wind-driven generator. The modeling method comprises the following concrete steps: firstly, establishing a mathematical model for no-load cutting-in control of the double-fed type wind-driven generator; simulating a small-step cutting-in switch element in the double-fed type wind-driven generator by utilizing a valve switch which is controlled by binary control words in a real-time digital simulator (RTDS) of an electric power system; and realizing the simulation control of the double-fed type wind-driven generator from the no-load state to the cutting-in state by controlling the switching-on and the switching-off of the valve switch. The technical scheme of the invention can be implemented to realize the real-time simulation process of the double-fed type wind-driven generator from the no-load state to the cutting-in state, and the simulation model is used for carrying out the no-load cutting-in experiment on a variable-flow controller system of a practical double-fed type wind-driven generator, thereby providing real and accurate simulation environment and improving the verifying accuracy.

Owner:NORTH CHINA ELECTRIC POWER UNIV (BAODING)

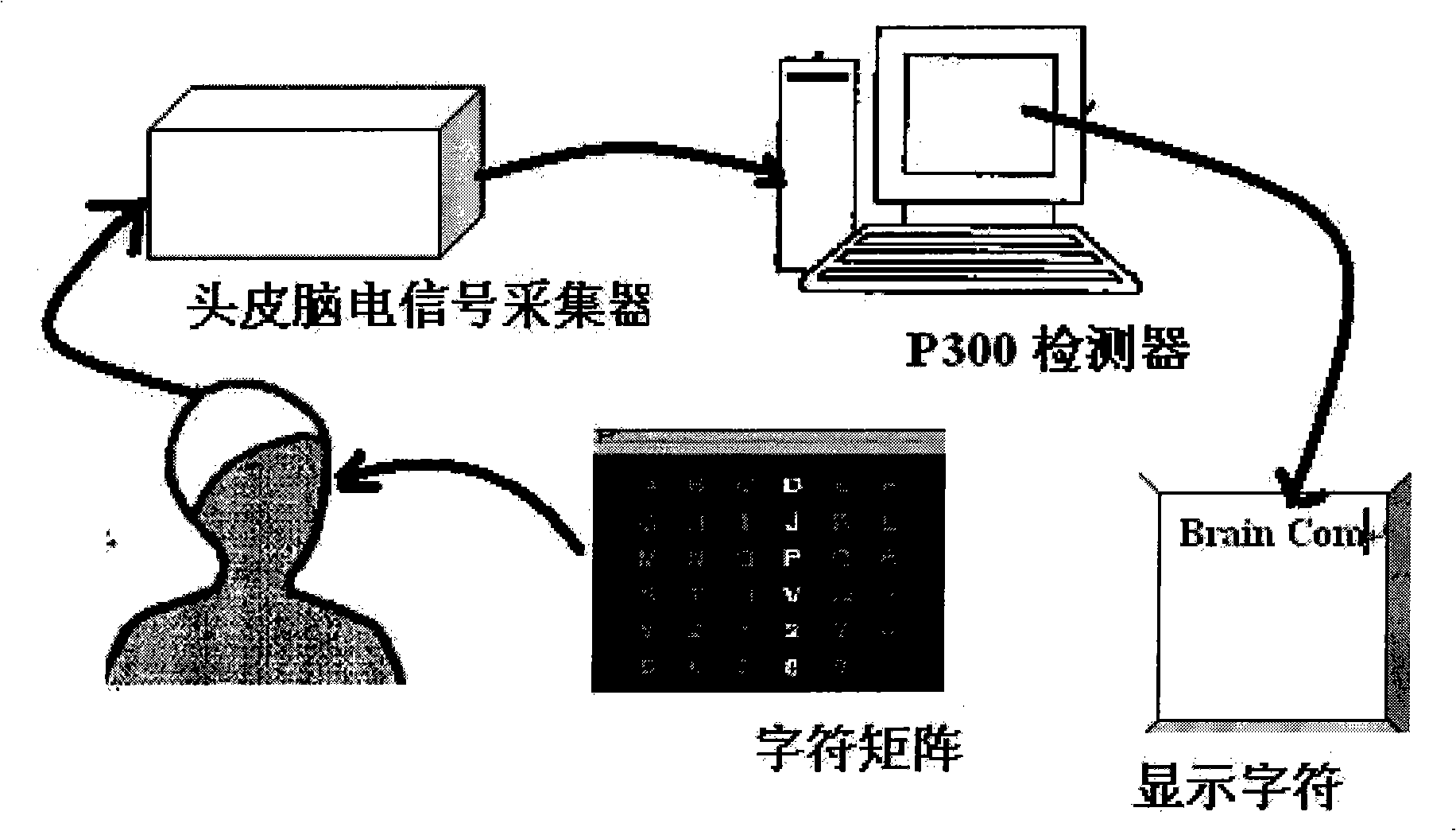

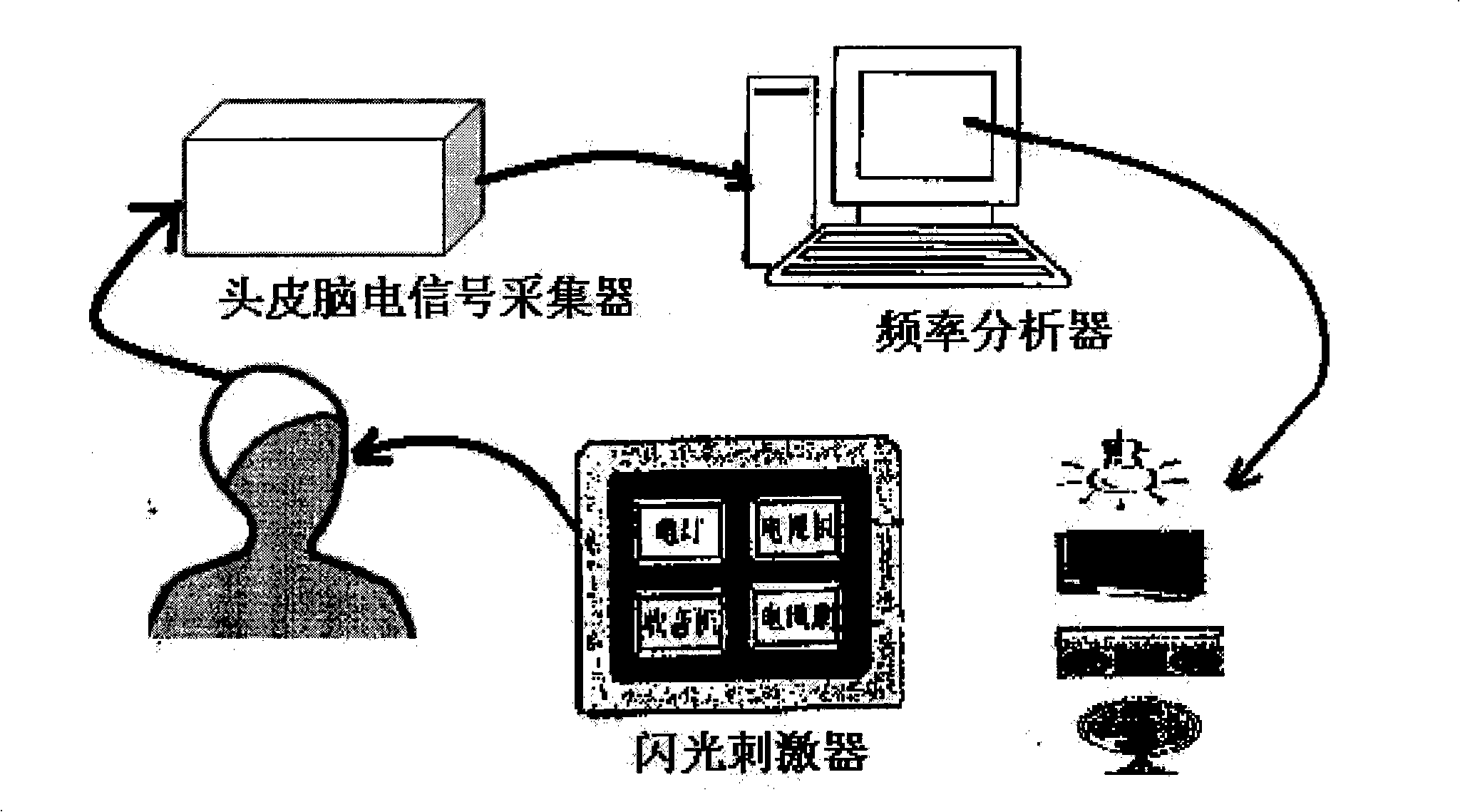

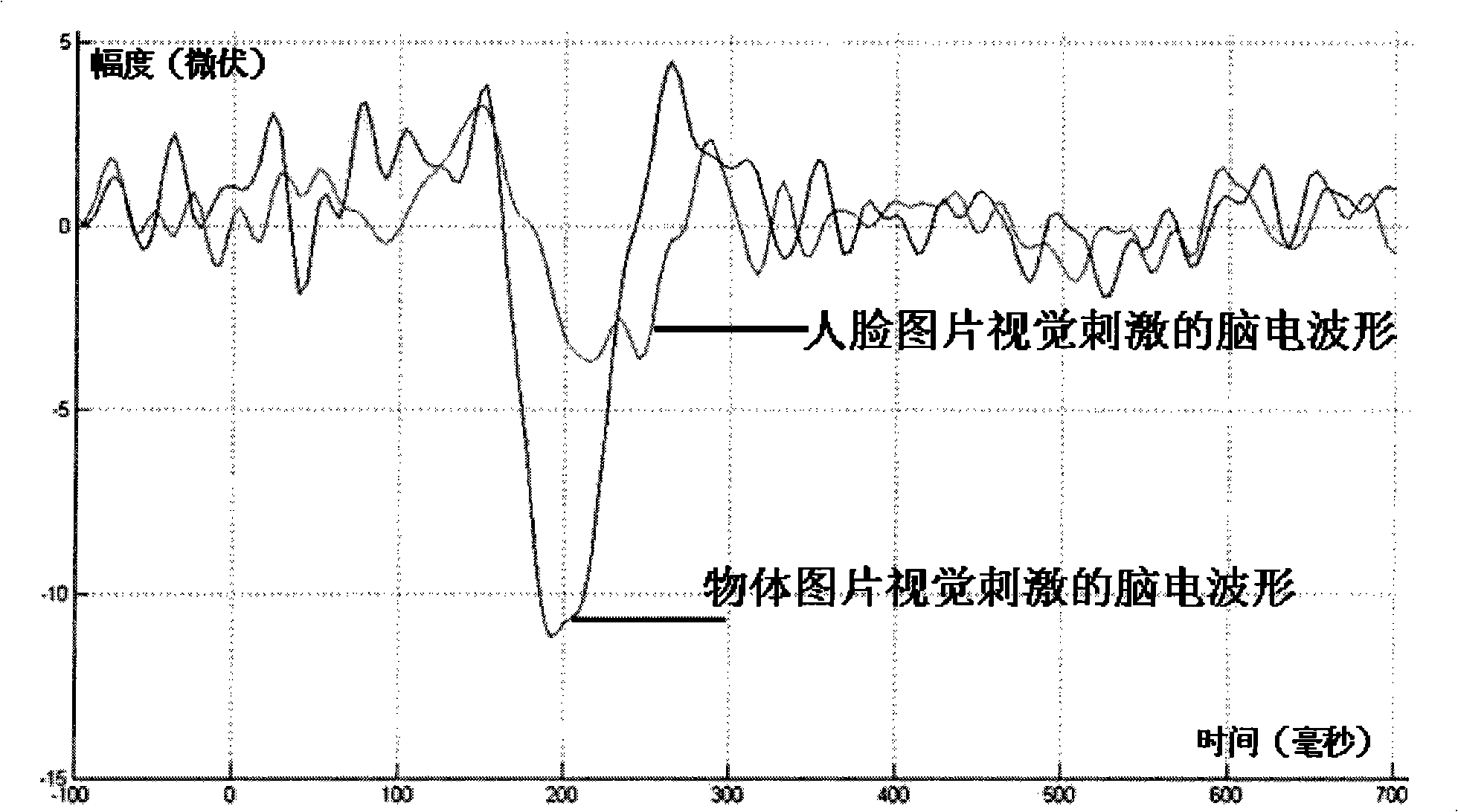

Switching control method based on brain electric activity human face recognition specific wave

Inactive · CN101339413A · Improve usability · Easy to identify · Input/output for user-computer interaction · Character and pattern recognition · Control signal · Peripheral neuron

The present invention discloses a switch control method based on face-recognition-specific waves in brain waves. The method comprises the following steps: a picture stimulus module presents human face and object pictures synchronously at a certain interval, and if a user wants to switch a device on, the user observes the human face picture, or else observes the object picture; a brain wave signal acquiring module records brain wave signals from the user's scalp and performs amplification and analog-to-digital conversion; a signal detection module detects whether specific waves evoked by the human face picture exist in the brain wave signals; a switch control module transforms the detection result into binary control signals to control the switch, and if an obvious specific wave component exists in the brain waves, the device is switched on, or else the device is switched off. In the present invention, a direct control channel between a human brain and an external switch device is established by acquiring and analyzing face-recognition-specific wave components in the brain waves, without depending on normal information transmitting channels such as peripheral nerves and muscles, thereby providing a novel method of controlling the external environment for persons who have normal thinking abilities but suffer from dyskinesia.

Owner:BEIJING NORMAL UNIVERSITY

Source driver for display panel and drive control method

Active · US8519931B2 · Improve display quality · Cathode-ray tube indicators · Input/output processes for data processing · Image signal · Computer science

A source driver and drive control method that cancel offset voltages and enable quality display when a vertical synchronization signal is not fed to the source driver. A source driver receives a horizontal synchronization signal of an image signal, and a binary control signal which varies in two values in synchronization with the horizontal synchronization signal and in which start values of adjacent frames of the image signal are different, excluding a vertical synchronization signal of the image signal, to apply a drive voltage to source signal lines of a display panel. In the source driver, the vertical cycle of the image signal is analyzed based on the binary control signal; a pseudo vertical synchronization signal is generated based on the vertical cycle; and a cancel operation of an offset voltage component of the drive voltage is performed based on the pseudo vertical synchronization signal.

Owner:LAPIS SEMICON CO LTD

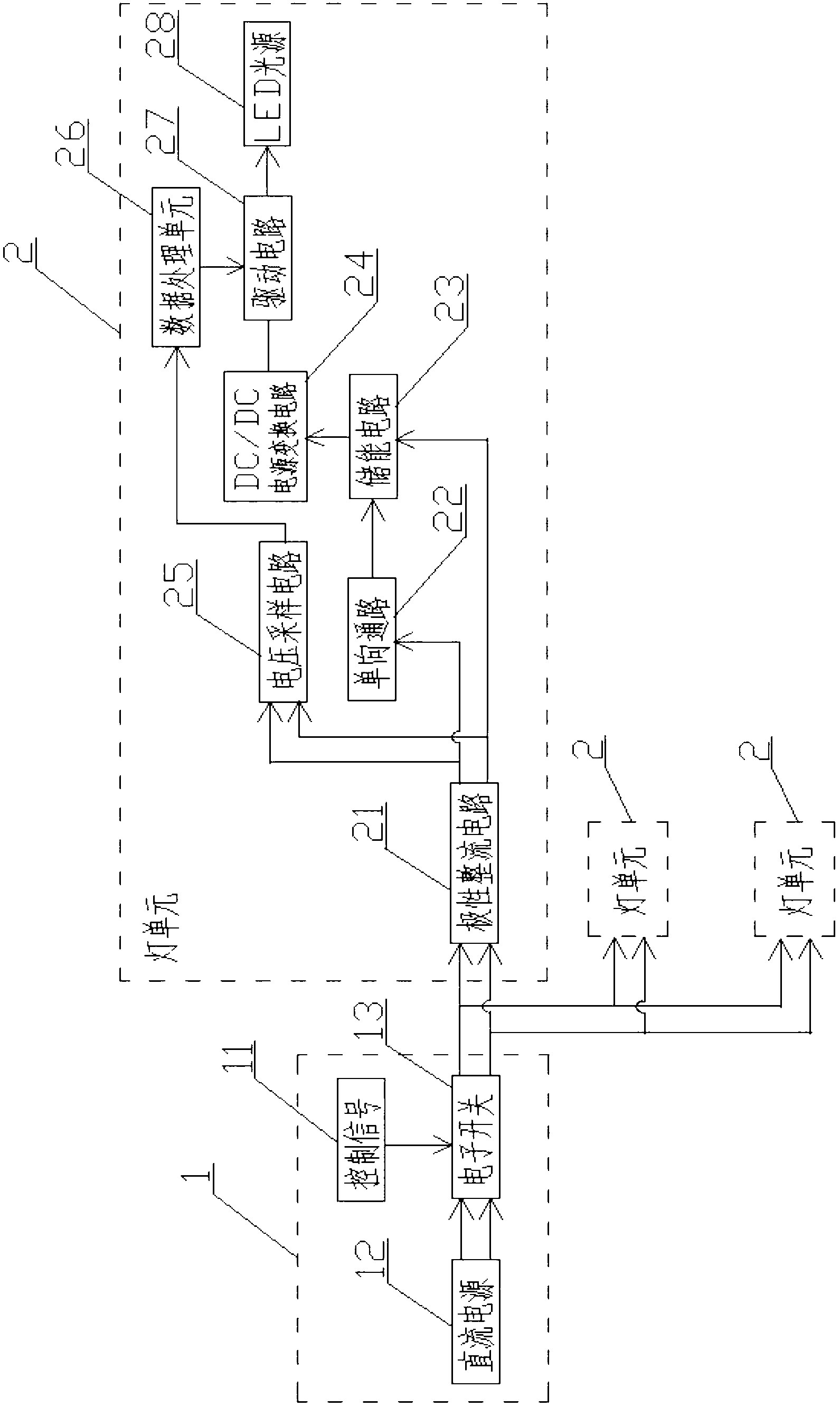

Bi-wire lamp control system based on energy storage method

Inactive · CN103220861A · With energy storage function · Will not affect power supply · Electric light circuit arrangement · Control system · Effect light

The invention belongs to the technical field of landscape lighting, and relates to a bi-wire lamp control system based on an energy storage method. The bi-wire lamp control system based on the energy storage method comprises a controllable power supply circuit and a plurality of lamp units. Each lamp circuit receives a binary control command from the controllable power supply circuit in a broadcast mode, so that a light-emitting diode (LED) light source can change its working condition according to the binary control command. Only two wires are used to transmit both energy and control information to each lamp unit at the same time, and a tank circuit supplies enough electric energy to the LED light source when voltage is temporarily absent. The bi-wire lamp control system based on the energy storage method has an energy storage function: even if the power line is interrupted, the power supply of an LED lamp is not affected, information can be obtained from the power line, and the color of each lamp can then be changed. Further, wiring is simple and cost is greatly reduced, so that most defects of an LED lighting system are overcome. In addition, the bi-wire lamp control system based on the energy storage method has good application prospects in the technical field of landscape lighting.

Owner:杭州奔云科技有限公司

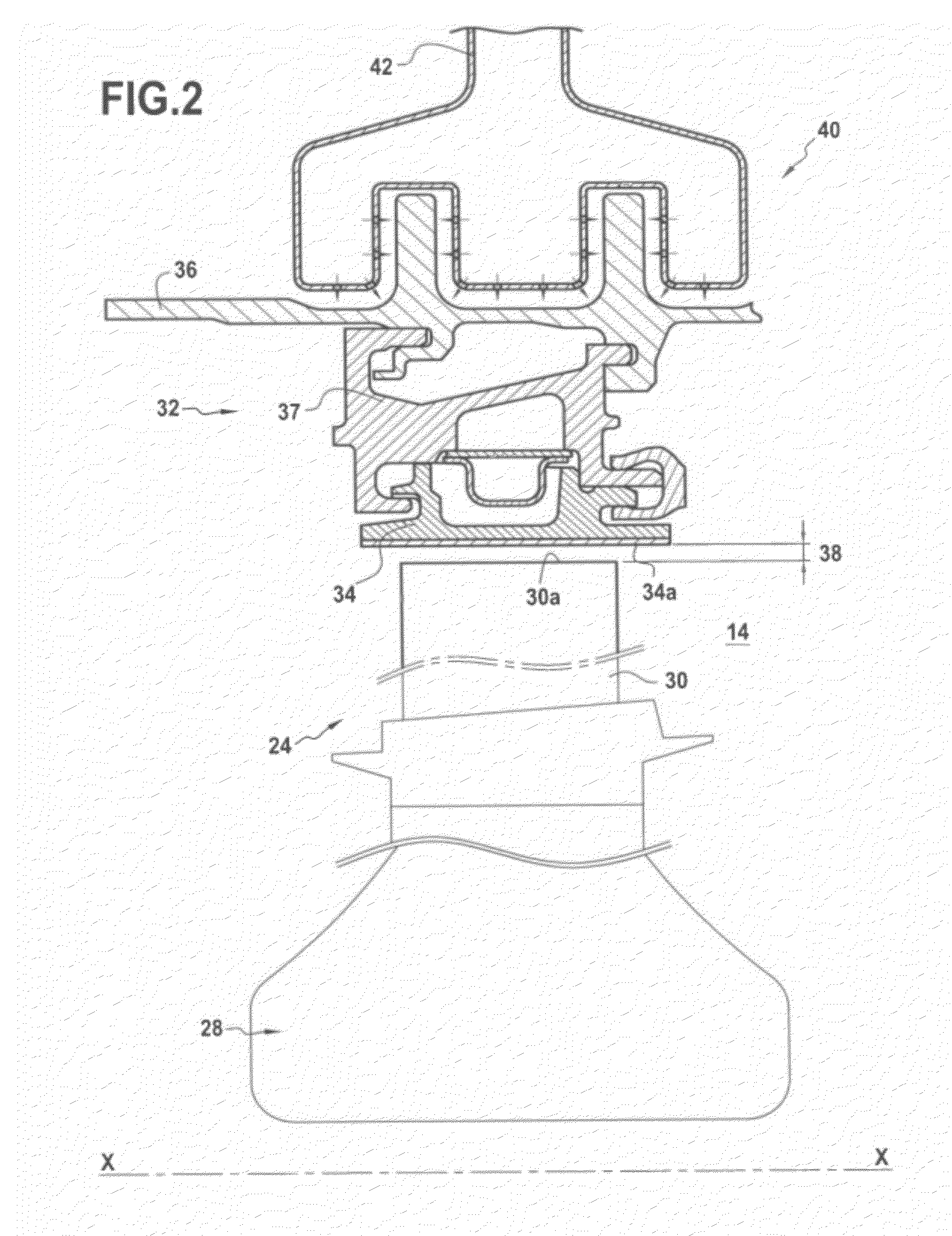

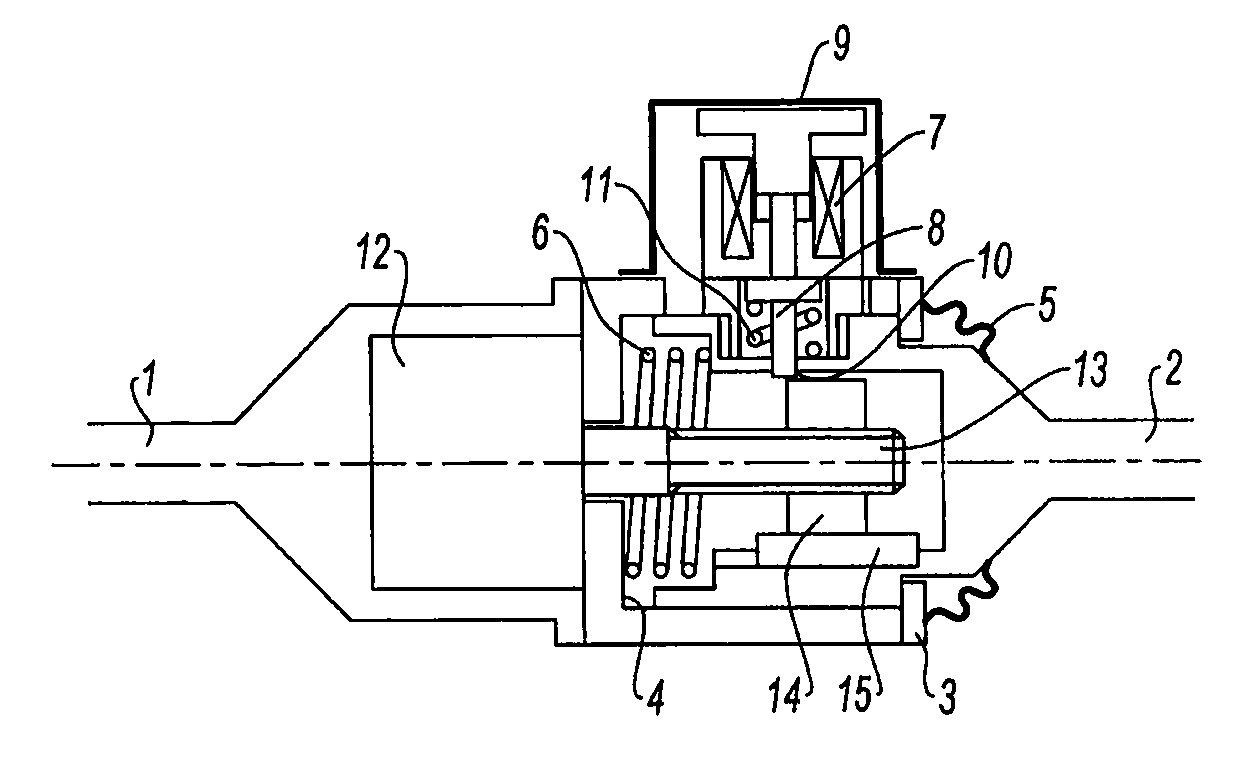

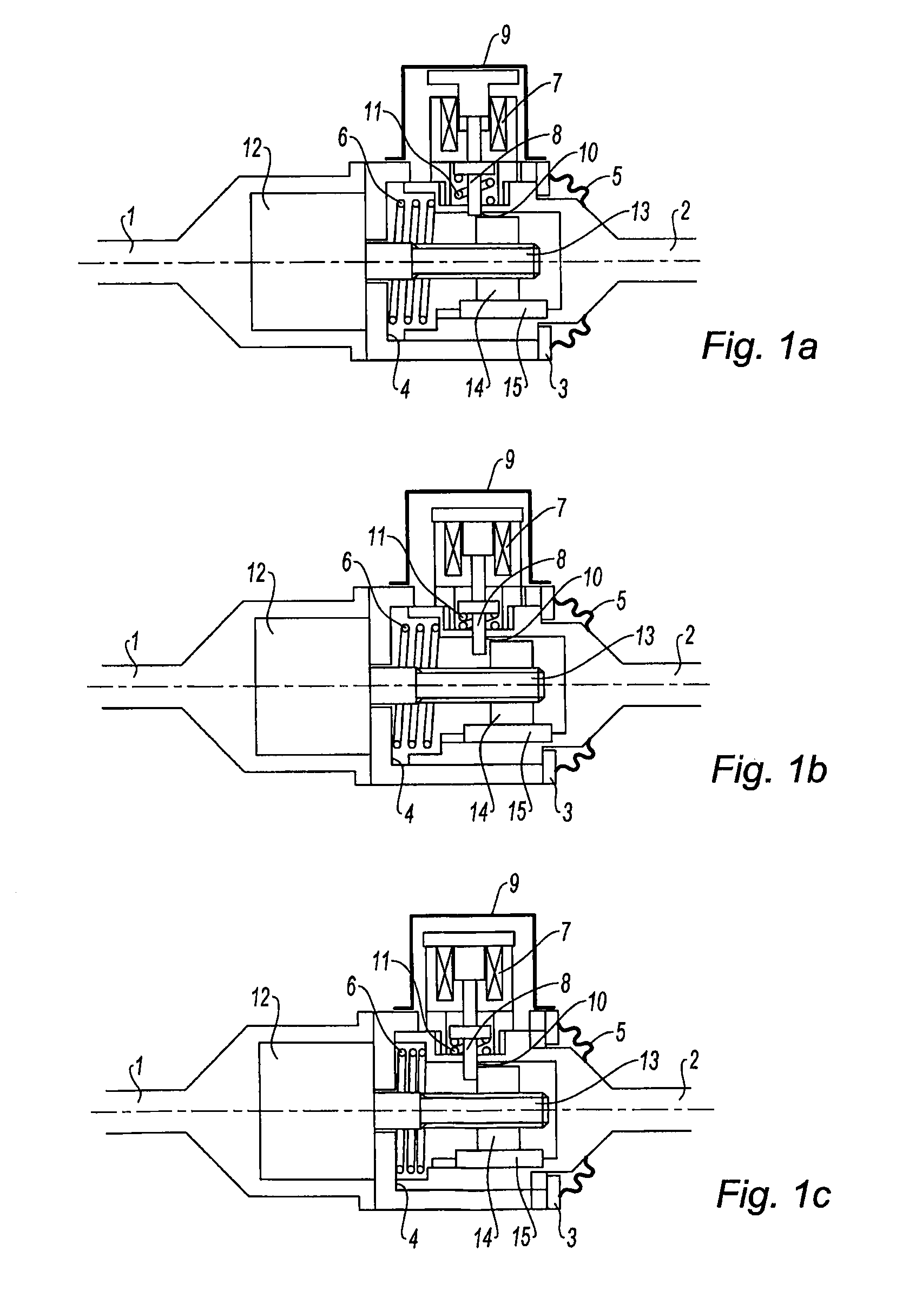

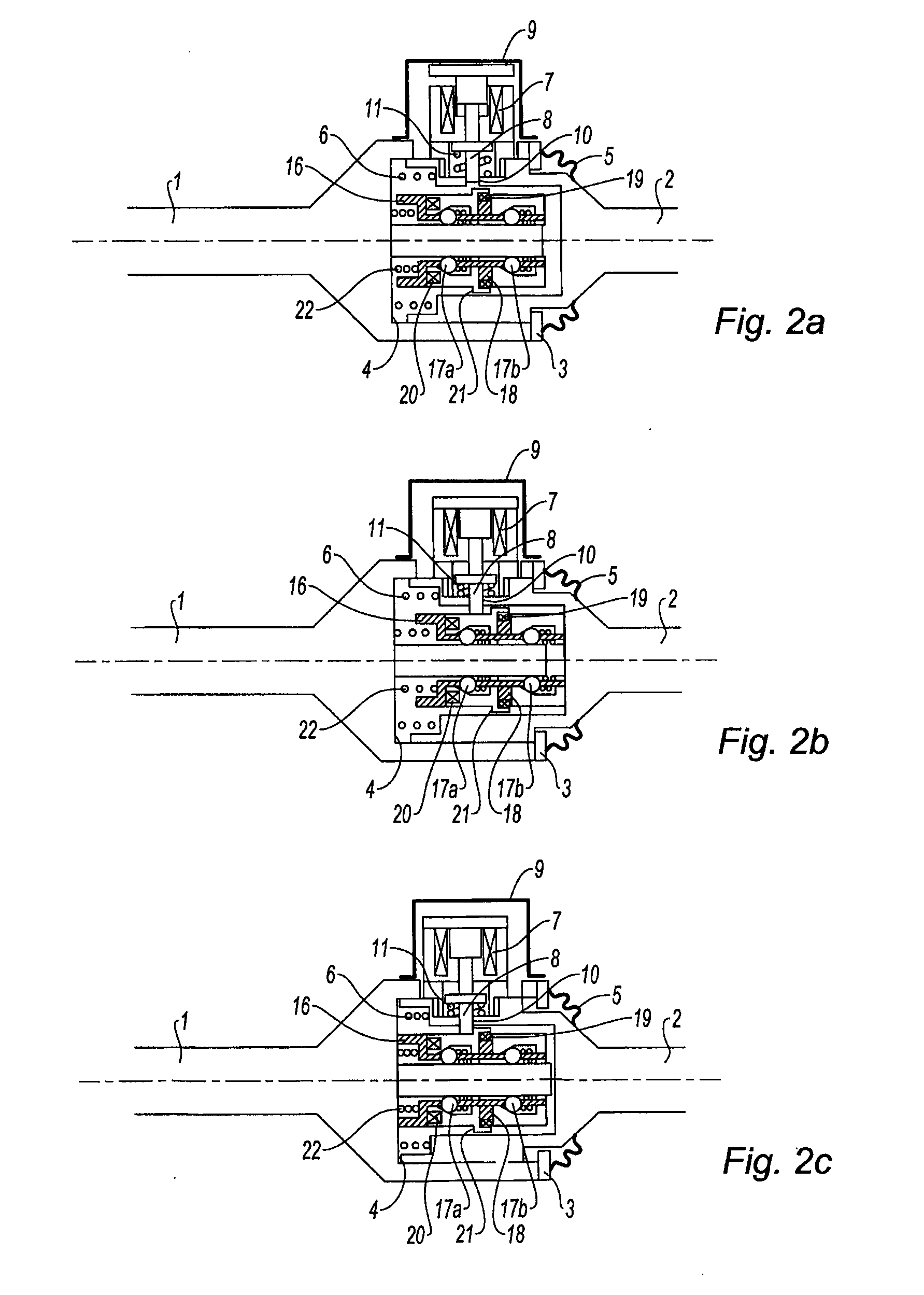

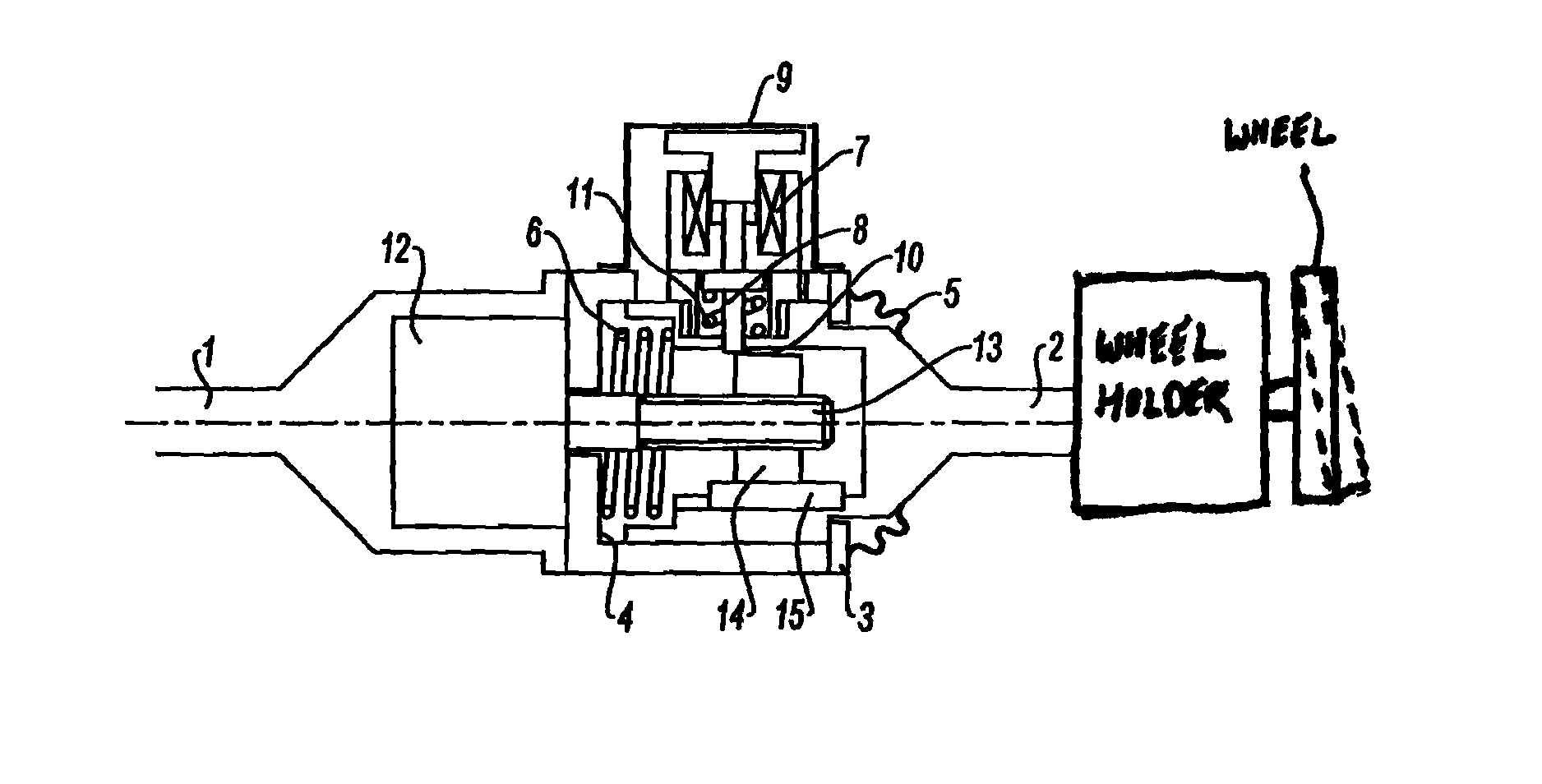

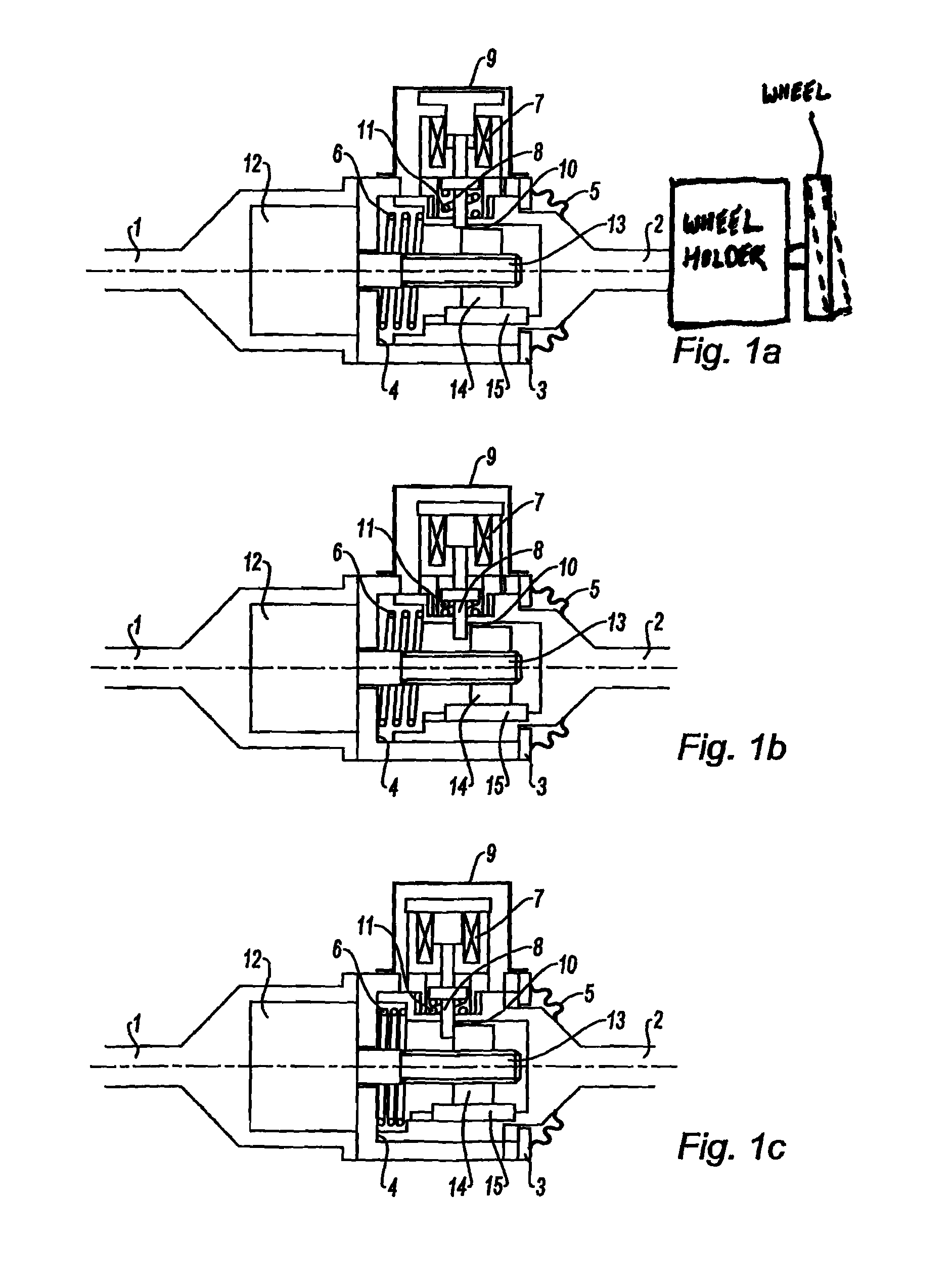

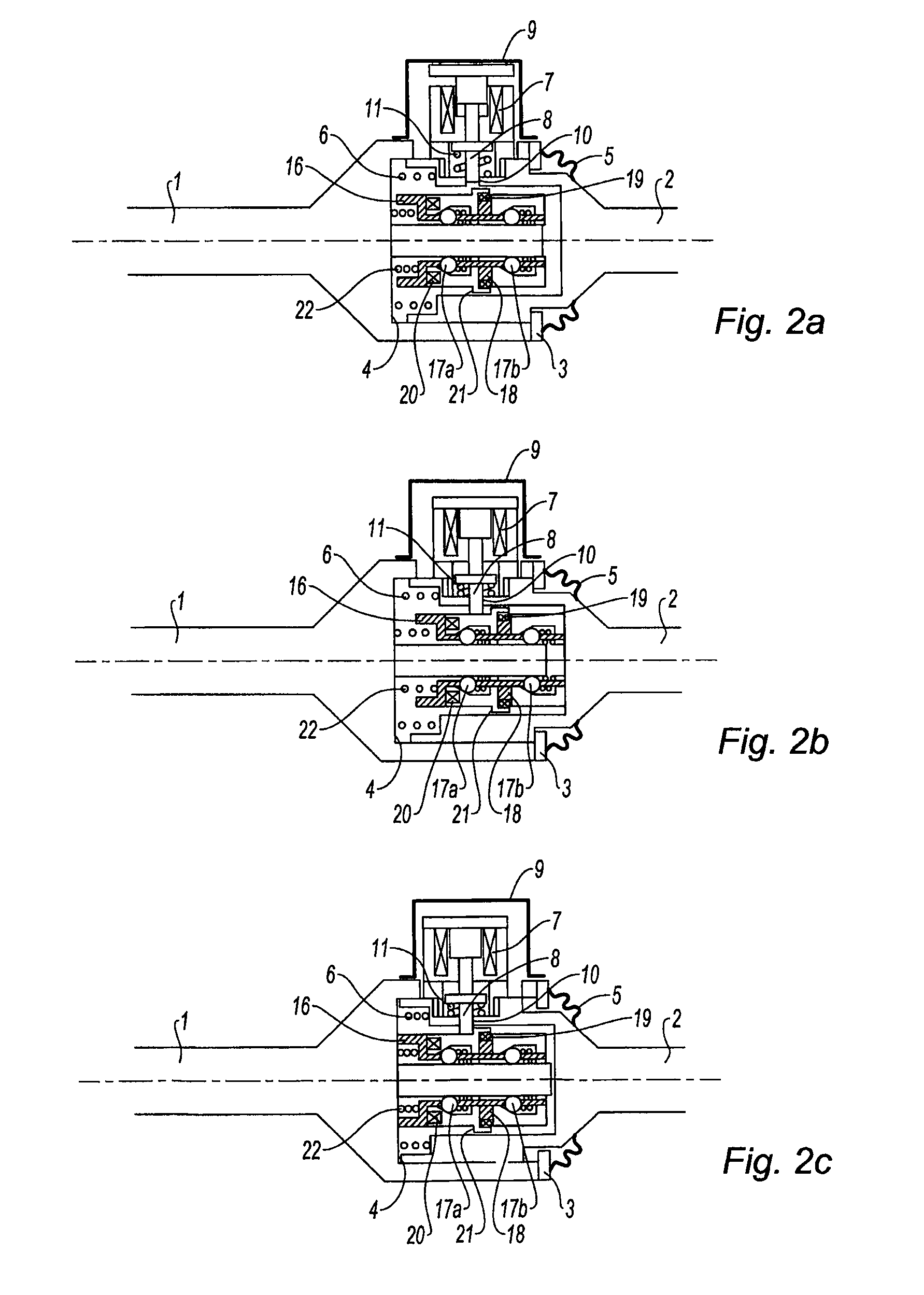

Motor Vehicle Wheel Mounting Comprising A Binary Actuator For Adjusting The Angular Position of The Plane of A Wheel

Inactive · US20100156057A1 · High energy · Improve security · Steering parts · Resilient suspensions · Stable state · Actuator

A motor vehicle wheel set-up via a wheel holder is provided, which wheel holder is associated with the vehicle via at least one pivot in such a way that the plane of the wheel can exhibit first and second angular running positions. The set-up further has a binary actuator that has a fixed component and a moving component capable of translational movement with respect to the fixed component, the moving component being secured to the wheel holder and the actuator comprising a device for the binary control of the moving component, placing it in one of two stable states, each allowing the wheel holder to be moved into an angular position corresponding to one angular position of the wheel plane.

Owner:MICHELIN & CO CIE GEN DES ESTAB MICHELIN +1

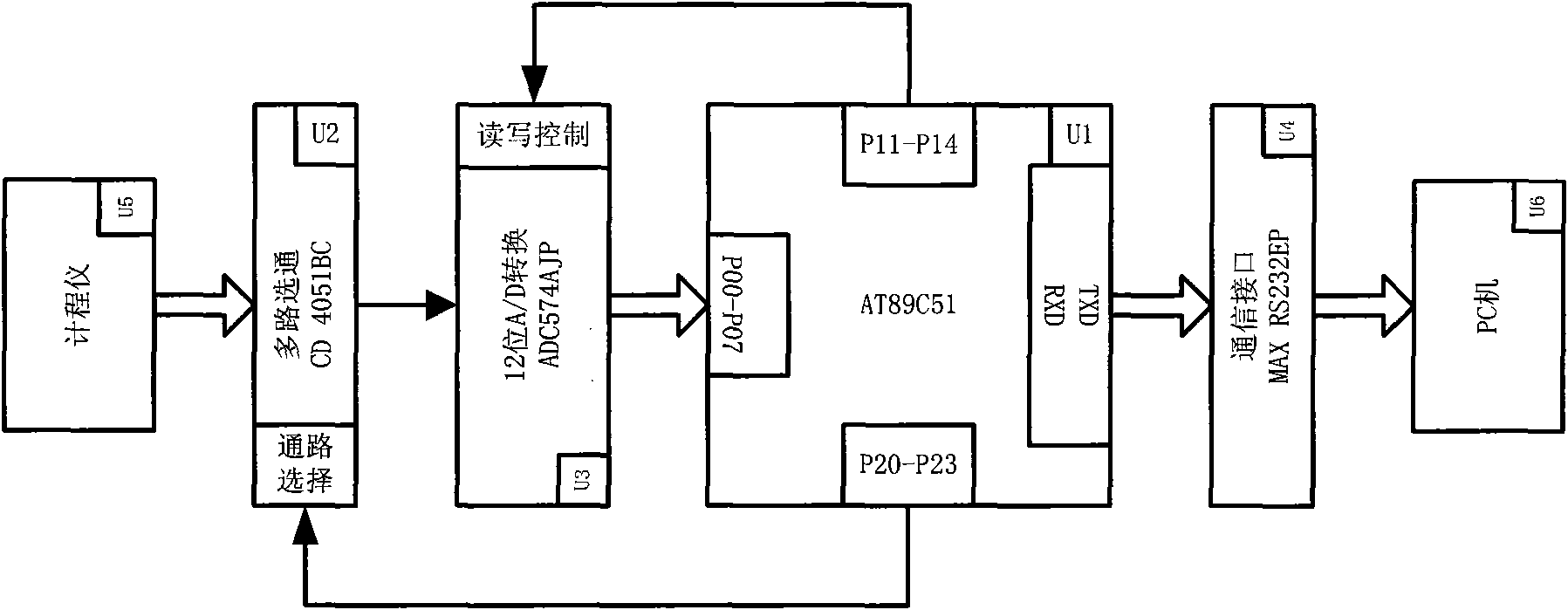

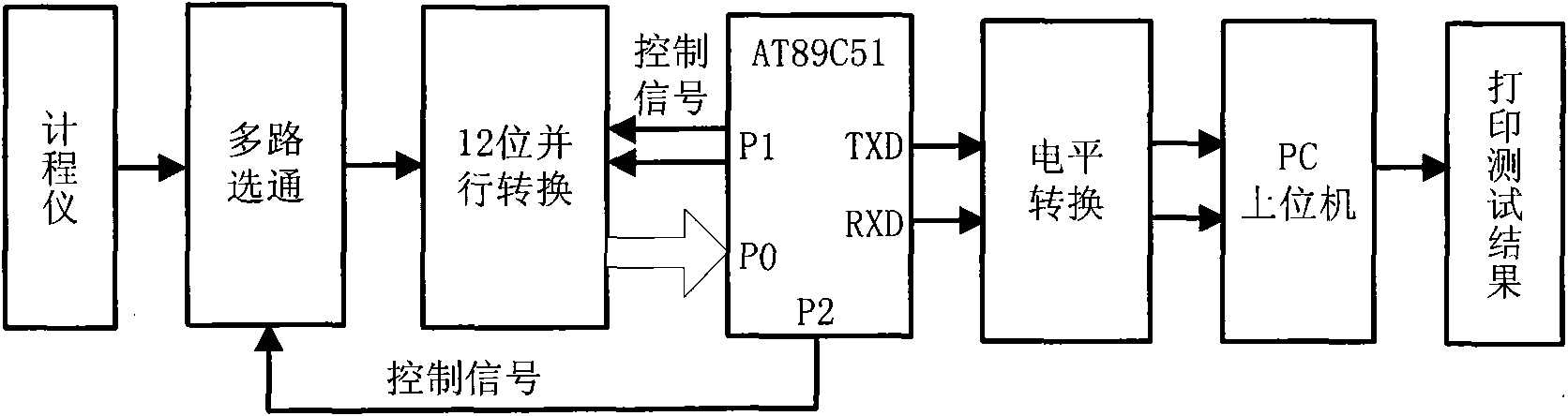

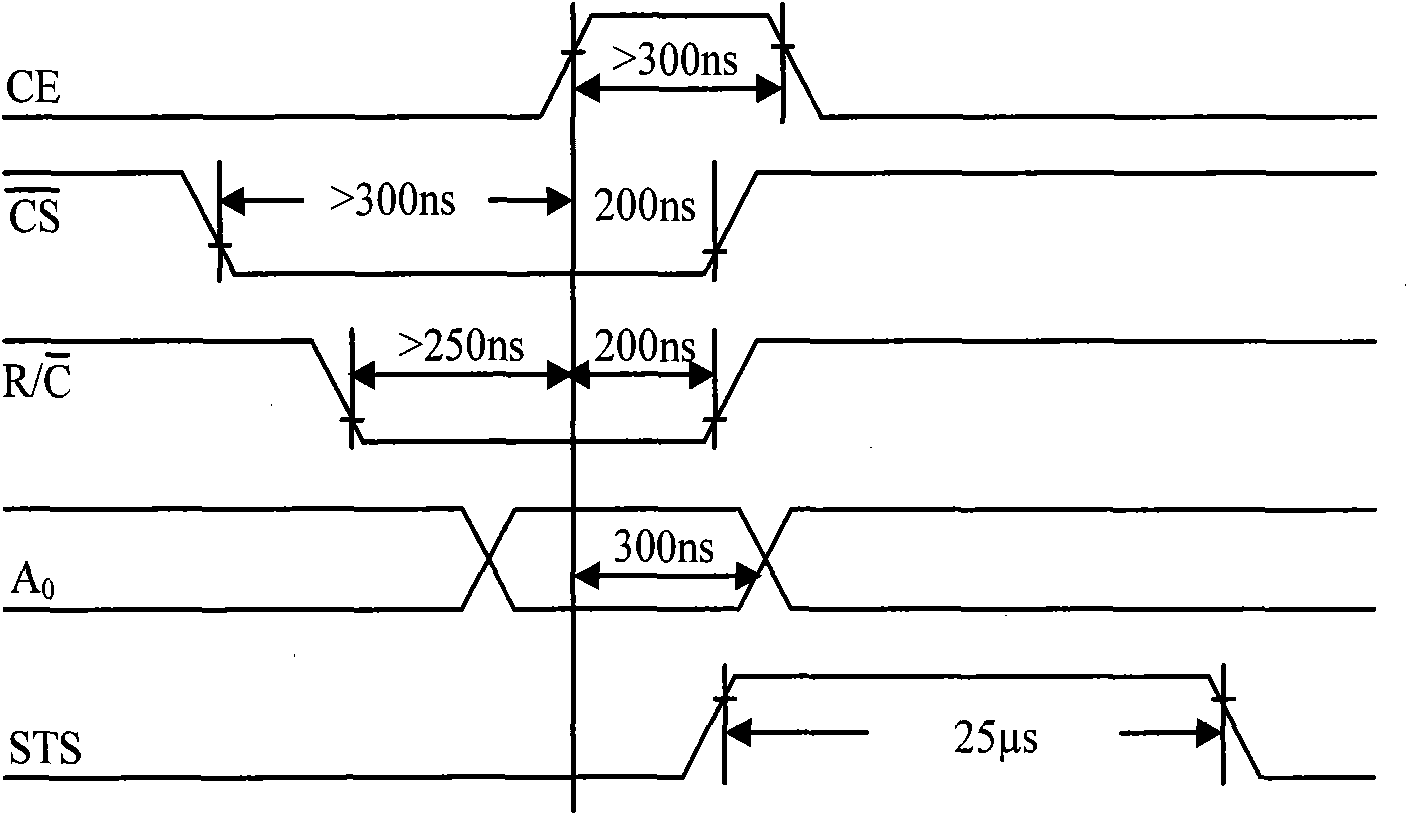

Fault detecting device of ship-used log

Inactive · CN101576393A · Functional · Shorten the time · Measurement devices · Communication interface · Personal computer

The invention provides a fault detecting device of a ship-used log, which comprises a data processor (U1), a multiplex strobe (U2), a data collecting unit (U3), a communication interface unit (U4), a log (U5), and a personal computer (PC) (U6). The input end of the multiplex strobe (U2) is connected with the log (U5). The data processor (U1) controls the three binary control input ends and the enable end of the multiplex strobe (U2) and determines which channel is strobed. The output end of the multiplex strobe (U2) is connected with the input end of the data collecting unit (U3), which carries out the A / D conversion of the signals of the strobed channel. The data processor (U1) judges the conversion status by polling and completes the reading and writing operations. Data transmission between the data processor (U1) and the PC (U6) is realized by the communication interface unit (U4). The PC (U6) can then detect the faults of all cards of the log (U5). The device can be arranged on any ship and can carry out highly efficient and reliable fault diagnosis and fault locating at any time without the help of professional personnel.
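Channel selection with three binary control inputs and an enable input can be sketched as follows. The bit order (`a2` most significant), the active-high enable, and the function name `strobed_channel` are assumptions for illustration; the abstract does not specify them.

```python
def strobed_channel(a2, a1, a0, enable):
    """Return the selected channel number (0..7), or None when disabled.

    a2, a1, a0 are the three binary control inputs (each 0 or 1);
    three bits address 2**3 = 8 input channels.
    """
    if not enable:
        return None                     # no channel is strobed
    return (a2 << 2) | (a1 << 1) | a0


def scan_all_channels():
    """Order in which a processor could step through every channel."""
    return [strobed_channel((n >> 2) & 1, (n >> 1) & 1, n & 1, True) for n in range(8)]
```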

Owner:HARBIN ENG UNIV

Reconfigurable logic gates using chaotic dynamics

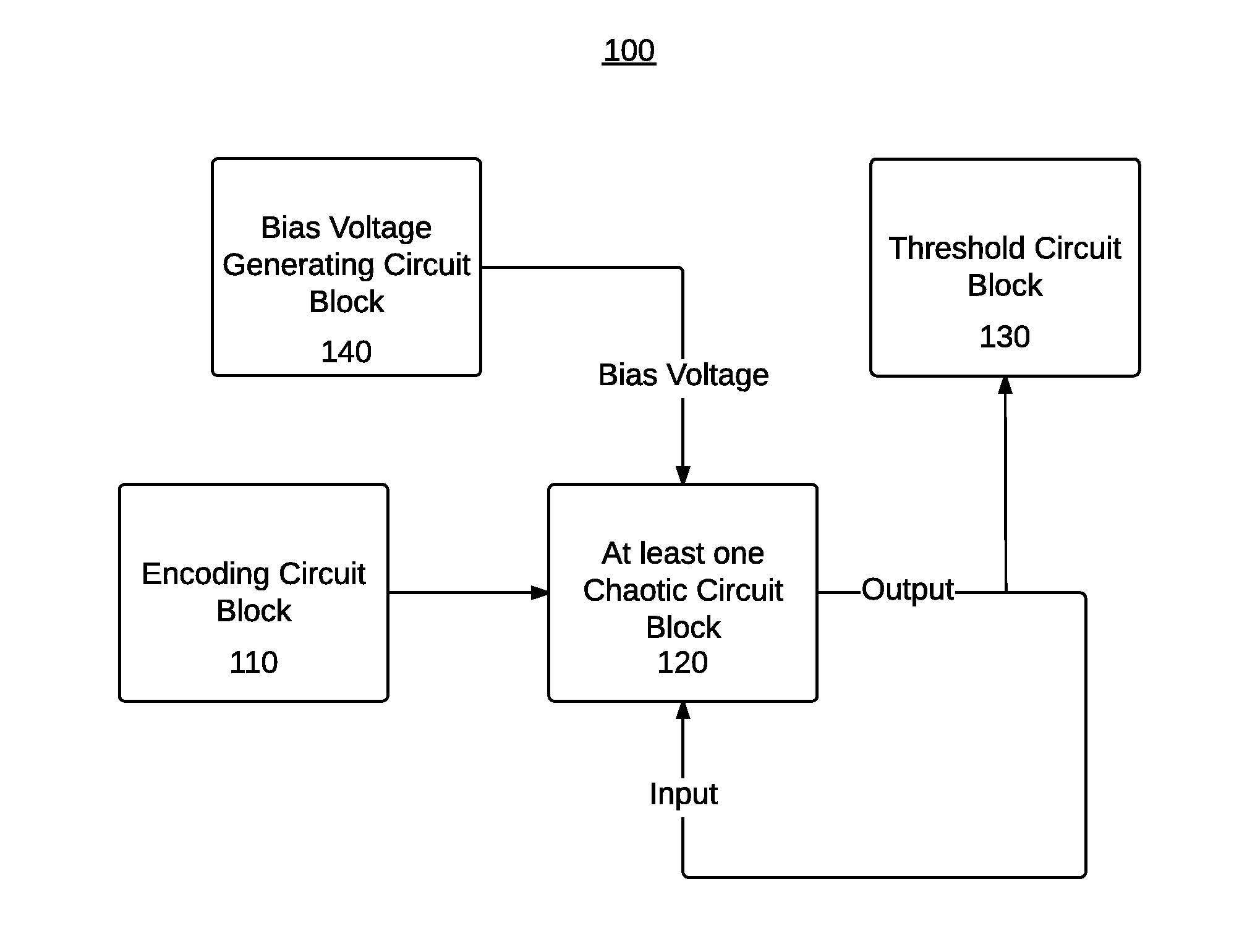

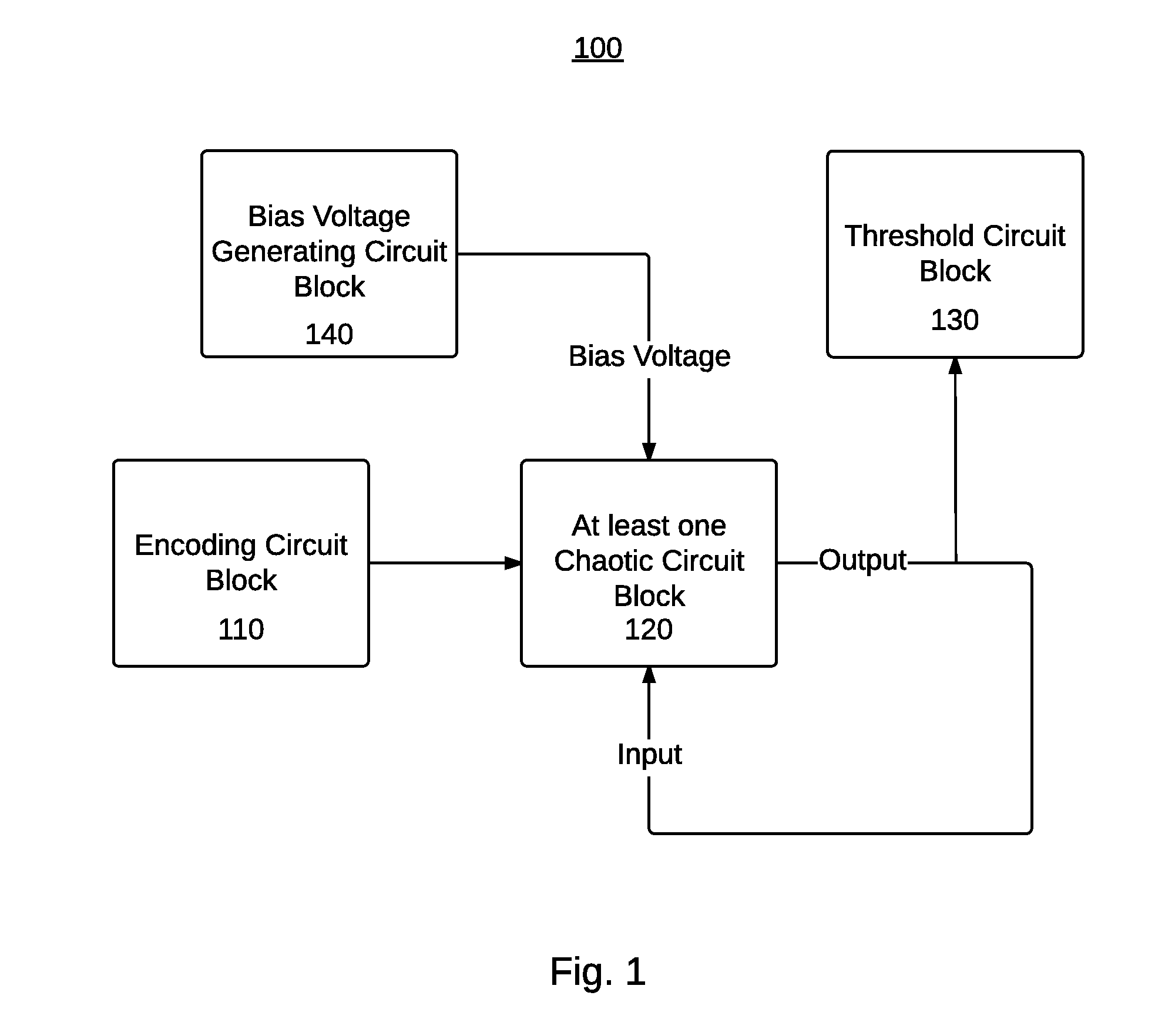

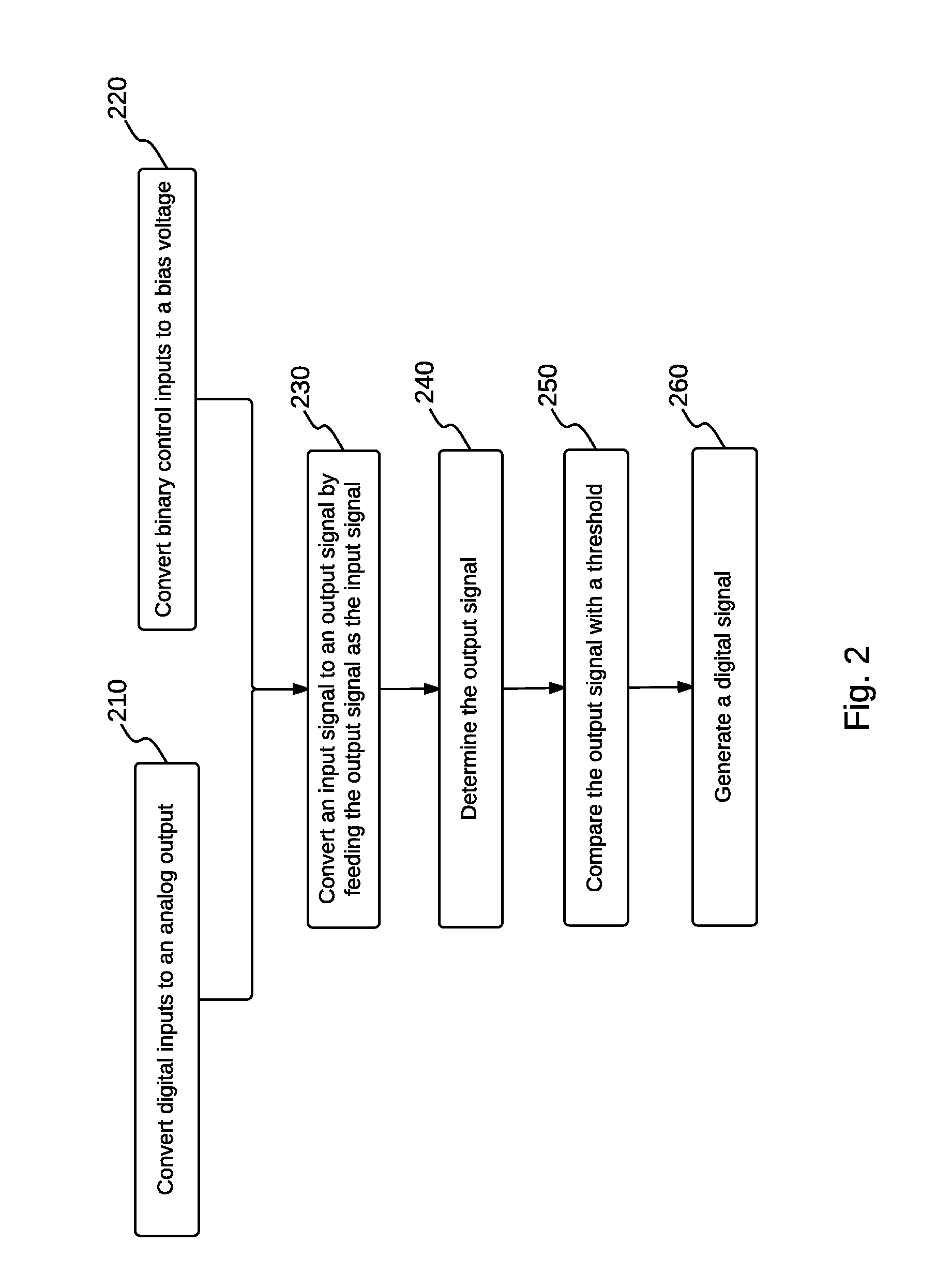

Inactive · US20160087634A1 · Electric signal transmission systems · Analogue-digital converters · Chaos computing · Digital input

The present invention provides apparatuses and methods for chaos computing. For example, a chaos-based logic block comprises an encoding circuit block, at least one chaotic circuit block, a bias voltage generating circuit block, and a threshold circuit block. The encoding circuit block converts a plurality of digital inputs to an analog output. The plurality of digital inputs may comprise at least one data input and at least one control input. At least one chaotic circuit block is configured to iterate converting an input signal to an output signal by feeding the output signal to at least one chaotic circuit as the input signal at each iteration. The bias voltage generating circuit block converts a plurality of binary control inputs to a bias voltage. The threshold circuit block compares the output signal with a predetermined threshold, thereby generating a digital signal.
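The encode, iterate, threshold pipeline can be illustrated in software with a logistic map standing in for the chaotic circuit. This is a swapped-in textbook-style scheme, not the patent's circuit: the map, the single iteration, the offset and threshold values in `GATES`, and the use of a gate name in place of binary control inputs are all assumptions chosen so that the arithmetic is exact.

```python
# (initial offset, threshold) per gate; a stand-in for the bias selected by control inputs
GATES = {
    "AND": (0.0, 0.75),
    "OR": (0.125, 0.6875),
    "XOR": (0.25, 0.75),
}
DELTA = 0.25  # analog step added for each data input that is 1


def logistic(x):
    """One iteration of the logistic map, a standard chaotic map on [0, 1]."""
    return 4.0 * x * (1.0 - x)


def chaotic_gate(gate, a, b):
    """Evaluate a two-input gate by encode -> chaotic update -> threshold.

    a and b are data inputs (0 or 1); gate selects the offset and threshold.
    """
    offset, threshold = GATES[gate]
    x = offset + DELTA * (a + b)        # encode the digital inputs as an analog value
    return 1 if logistic(x) > threshold else 0


truth_tables = {
    name: [chaotic_gate(name, a, b) for a in (0, 1) for b in (0, 1)]
    for name in GATES
}
```

The same nonlinear element produces AND, OR, or XOR depending only on the offset and threshold, which is the sense in which such a block is reconfigurable.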

Owner:UNIV OF HAWAII

Motor vehicle wheel mounting comprising a binary actuator for adjusting the angular position of the plane of a wheel

Inactive · US8419022B2 · High energy · Improve security · Steering parts · Resilient suspensions · Stable state · Actuator

A motor vehicle wheel set-up via a wheel holder is provided, which wheel holder is associated with the vehicle via at least one pivot in such a way that the plane of the wheel can exhibit first and second angular running positions. The set-up further has a binary actuator that has a fixed component and a moving component capable of translational movement with respect to the fixed component, the moving component being secured to the wheel holder and the actuator comprising a device for the binary control of the moving component, placing it in one of two stable states, each allowing the wheel holder to be moved into an angular position corresponding to one angular position of the wheel plane.

Owner:MICHELIN & CO CIE GEN DES ESTAB MICHELIN +1

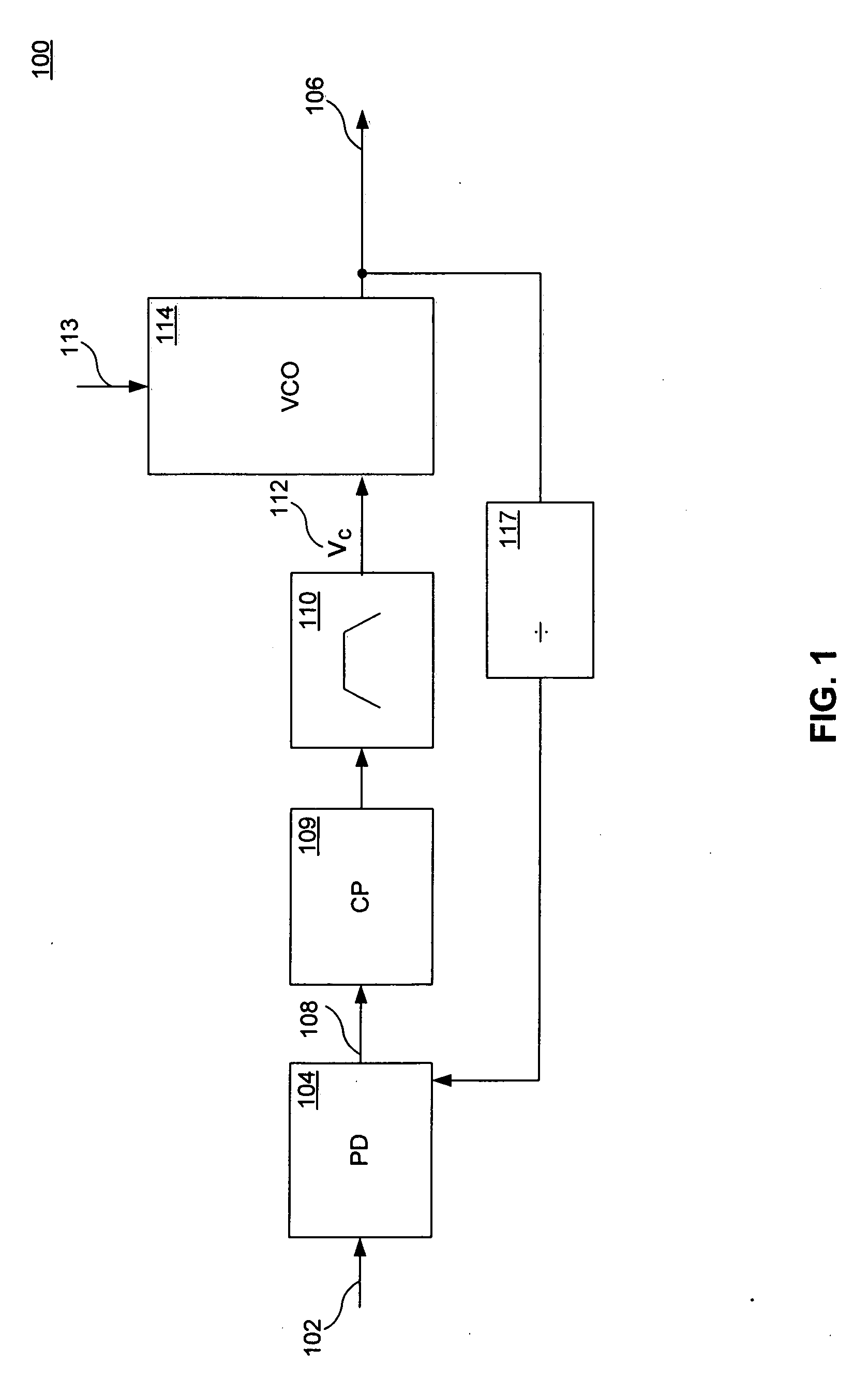

Voltage controlled oscillator with variable control sensitivity

Inactive · US20070152761A1 · High frequency · Less sensitive to noise · Pulse automatic control · Current cell · Current source

An embodiment of the invention provides an apparatus and method for varying a voltage controlled oscillator (VCO) sensitivity. A VCO has an oscillator portion coupled to a variable current supply. The variable current supply has one or more enabled variable current cells. The enable variable current cell input provides a control to change the VCO sensitivity. In an example, the oscillator portion has a ring oscillator. In an example, the variable current supply has at least two variable current cells that supply the control current. A binary control signal alters a quantity of variable current cells that supply the control current. Each successive variable current cell has an output current substantially equal to twice that of a prior variable current cell.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Passive keyless entry device

Inactive · US20060215028A1 · Prevent transfer efficiency · Simple configuration · Anti-theft devices · Color television details · Transceiver · In vehicle

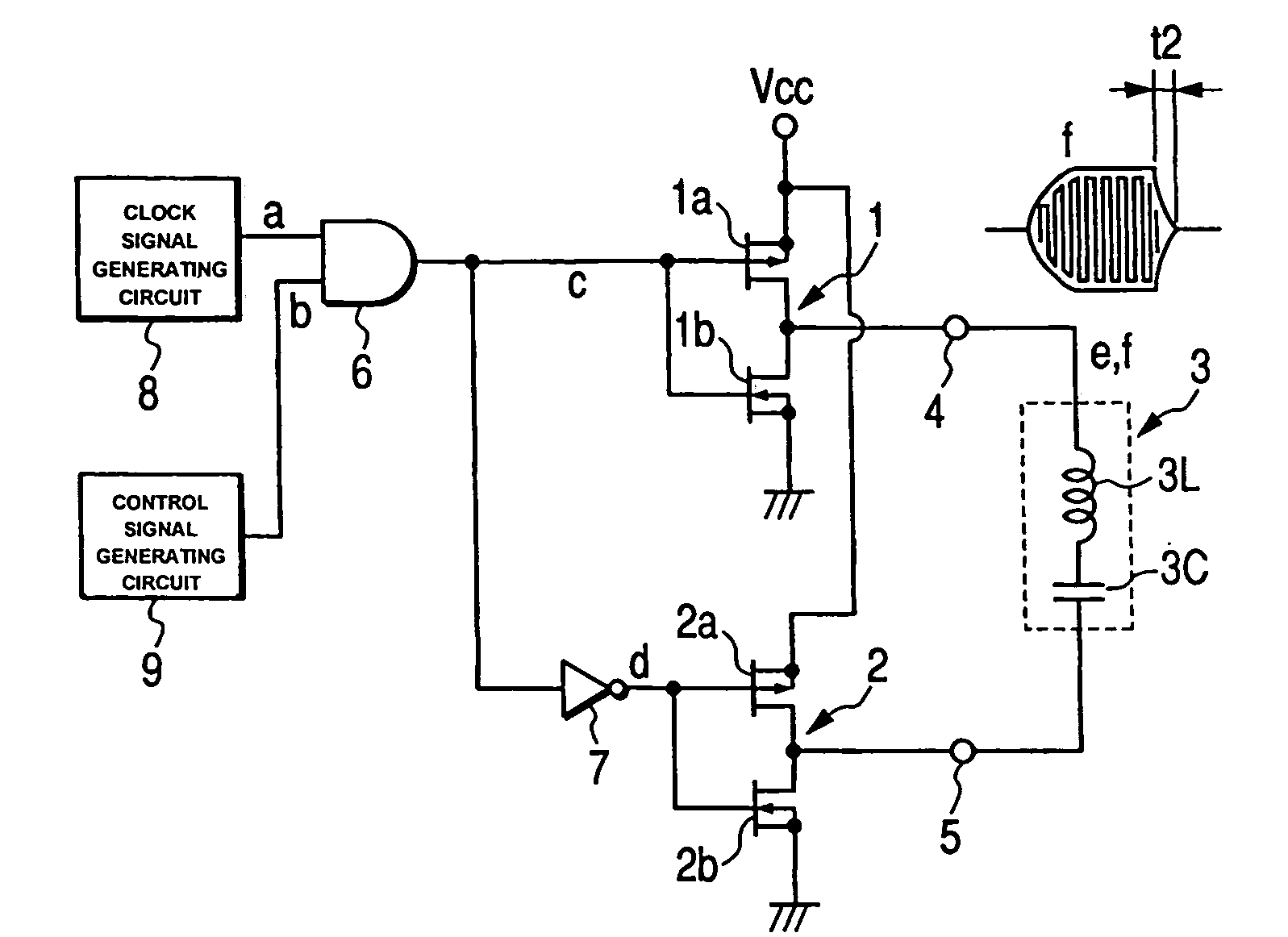

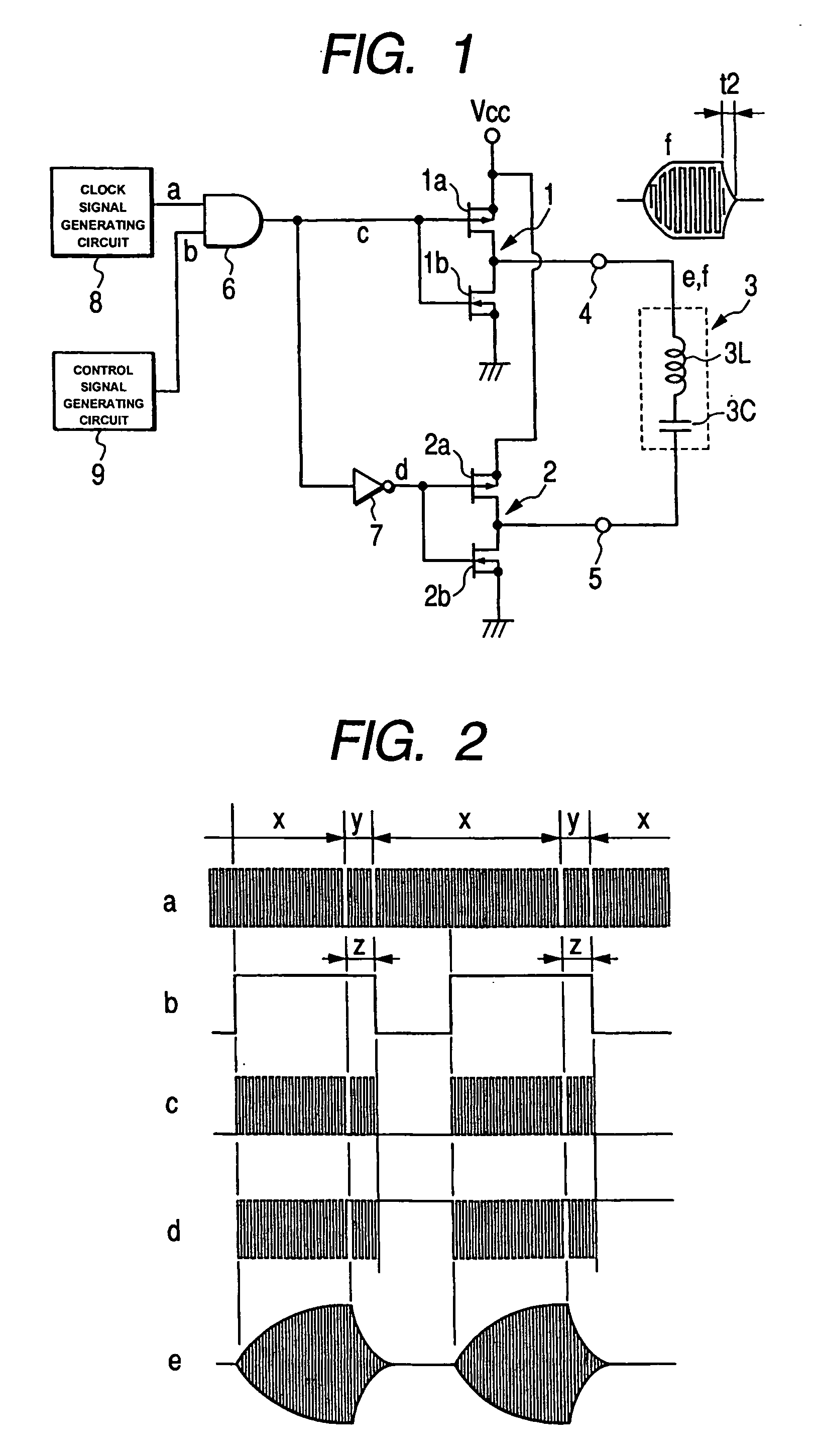

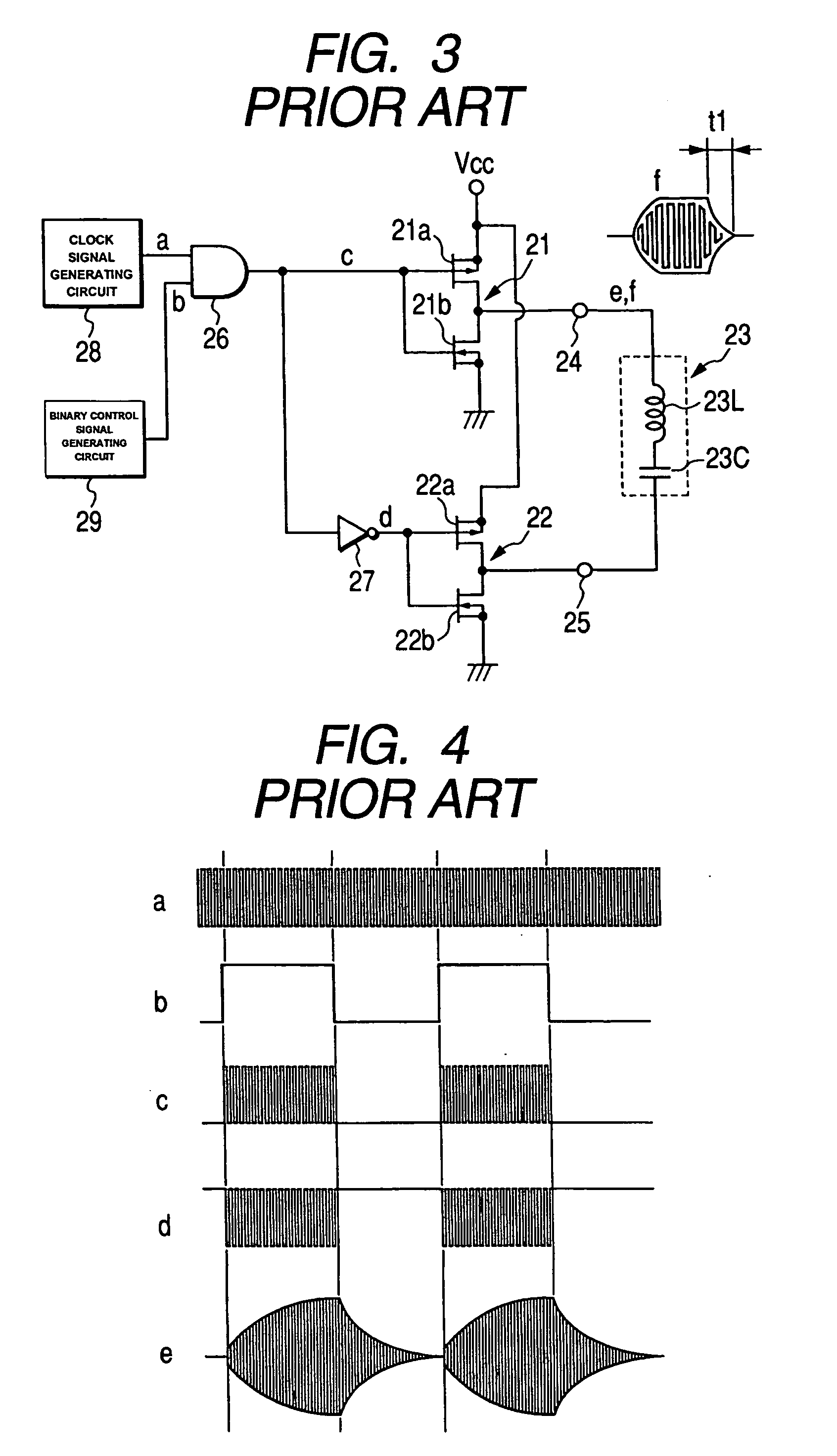

A passive keyless entry device includes an in-vehicle transceiver and a portable transceiver. The in-vehicle transceiver has a clock signal generating circuit, a control signal generating circuit that outputs a binary control signal having positive and negative values, a modulation circuit that forms a pulse modulation signal by modulating the clock signals by the control signal, and a transmission circuit that has half-bridge circuits, a low-frequency antenna being connected to output ends of the half-bridge circuits. The control signal changes from positive to negative value slightly later than the usual time. During a period from the usual time to the slightly later time, the clock signals modulated by the control signal are inverted clock signals that are shifted by half wavelength with respect to the usual clock signals.

Owner:ALPS ALPINE CO LTD

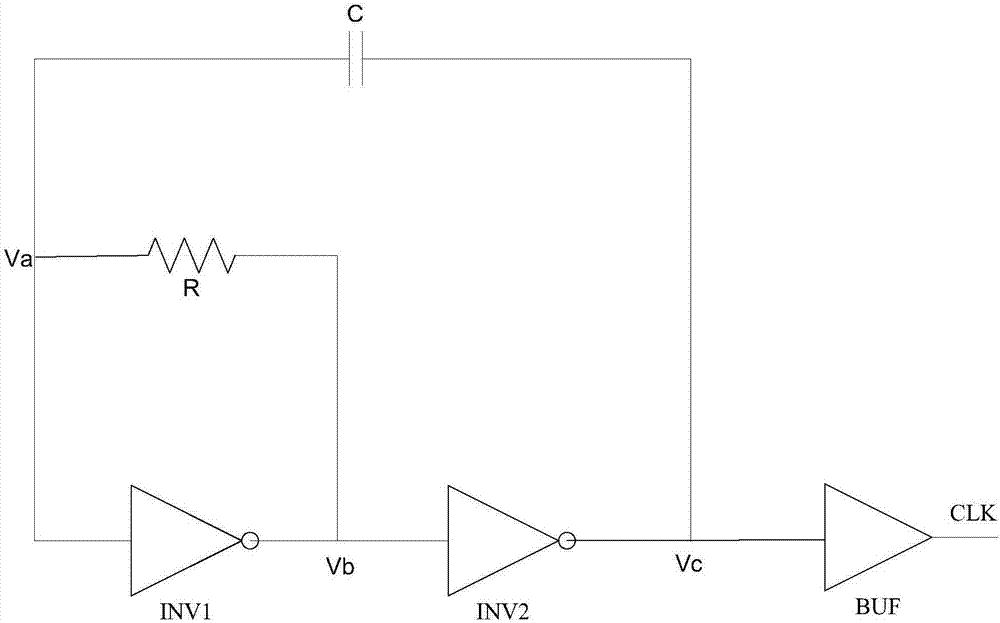

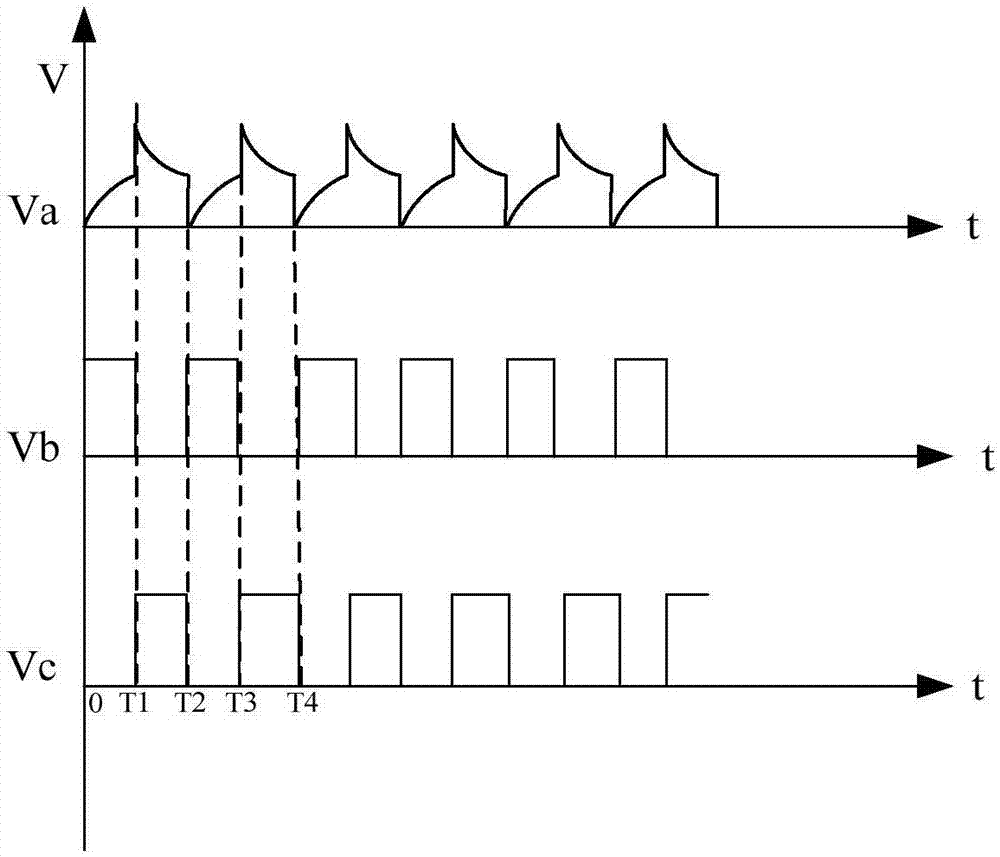

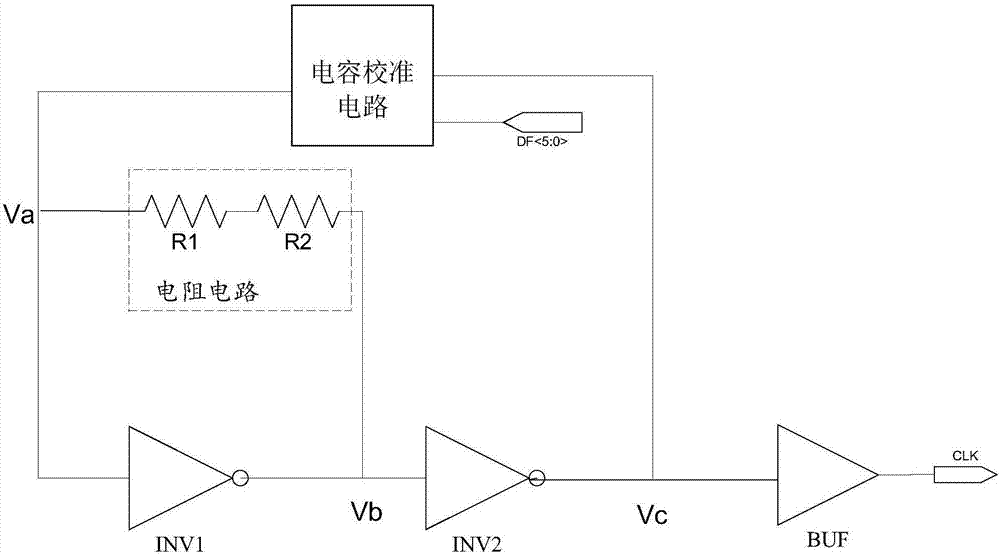

RC oscillator

Active · CN107134979A · Improve stability · Eliminate introduced bias · Oscillations generators · Capacitance · Clock rate

The invention discloses an RC oscillator, comprising a first phase inverter, a second phase inverter, a buffer and a resistor circuit. The output end of the first phase inverter is connected with the input end of the second phase inverter. The output end of the second phase inverter is connected with the input end of the buffer. The resistor circuit is connected between the input end and the output end of the first phase inverter. The RC oscillator also comprises a decoder, a basic capacitor and 2N-1 capacitor calibration units. Two ends of the basic capacitor are connected with the input end of the first phase inverter and the output end of the second phase inverter. Each capacitor calibration unit is connected with the input end of the first phase inverter and the output end of the second phase inverter. External N-bit binary control words are input into the control end of the decoder. Two output ends of the decoder output two groups of 2N-1-bit binary control words, and the two groups of 2N-1-bit binary control words are input into the capacitor calibration units. The RC oscillator is accurate in capacitance value, the capacitance value is not changed along with the temperature, a voltage and a technology, a resistance value is not changed along with the temperature, and an output clock frequency is precisely locked nearby a design frequency.

Owner:IPGOAL MICROELECTRONICS (SICHUAN) CO LTD
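The decoding step in the RC oscillator abstract above (an N-bit control word expanded to 2^N-1 control bits, one per capacitor calibration unit) can be illustrated with a thermometer decoder. This is only a sketch under assumptions: the abstract does not state the decoder's code, how its two output groups relate, or any component values, so `thermometer_decode`, `trimmed_capacitance`, and the capacitances used here are hypothetical, and only one output group is modelled.

```python
def thermometer_decode(word: int, n_bits: int) -> list[int]:
    """Expand an N-bit binary word into a (2**N - 1)-bit thermometer code.

    Assumption: the decoder is a thermometer decoder; the source only says
    that N input bits become 2**N - 1 control bits.
    """
    if not 0 <= word < 2 ** n_bits:
        raise ValueError("word does not fit in n_bits")
    width = 2 ** n_bits - 1
    # Bit i is 1 when i < word, so exactly `word` calibration units are enabled.
    return [1 if i < word else 0 for i in range(width)]


def trimmed_capacitance(word: int, n_bits: int,
                        base_cap_pf: float, unit_cap_pf: float) -> float:
    """Total capacitance: basic capacitor plus every enabled calibration unit.

    base_cap_pf and unit_cap_pf are hypothetical values, not from the source.
    """
    enabled_units = sum(thermometer_decode(word, n_bits))
    return base_cap_pf + enabled_units * unit_cap_pf


if __name__ == "__main__":
    print(thermometer_decode(3, 3))             # 7 control bits, 3 of them set
    print(trimmed_capacitance(3, 3, 10.0, 0.5))
```

A thermometer code changes one control bit per step of the input word, which is one plausible reason to drive identical calibration units this way; the patent itself may use a different scheme.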

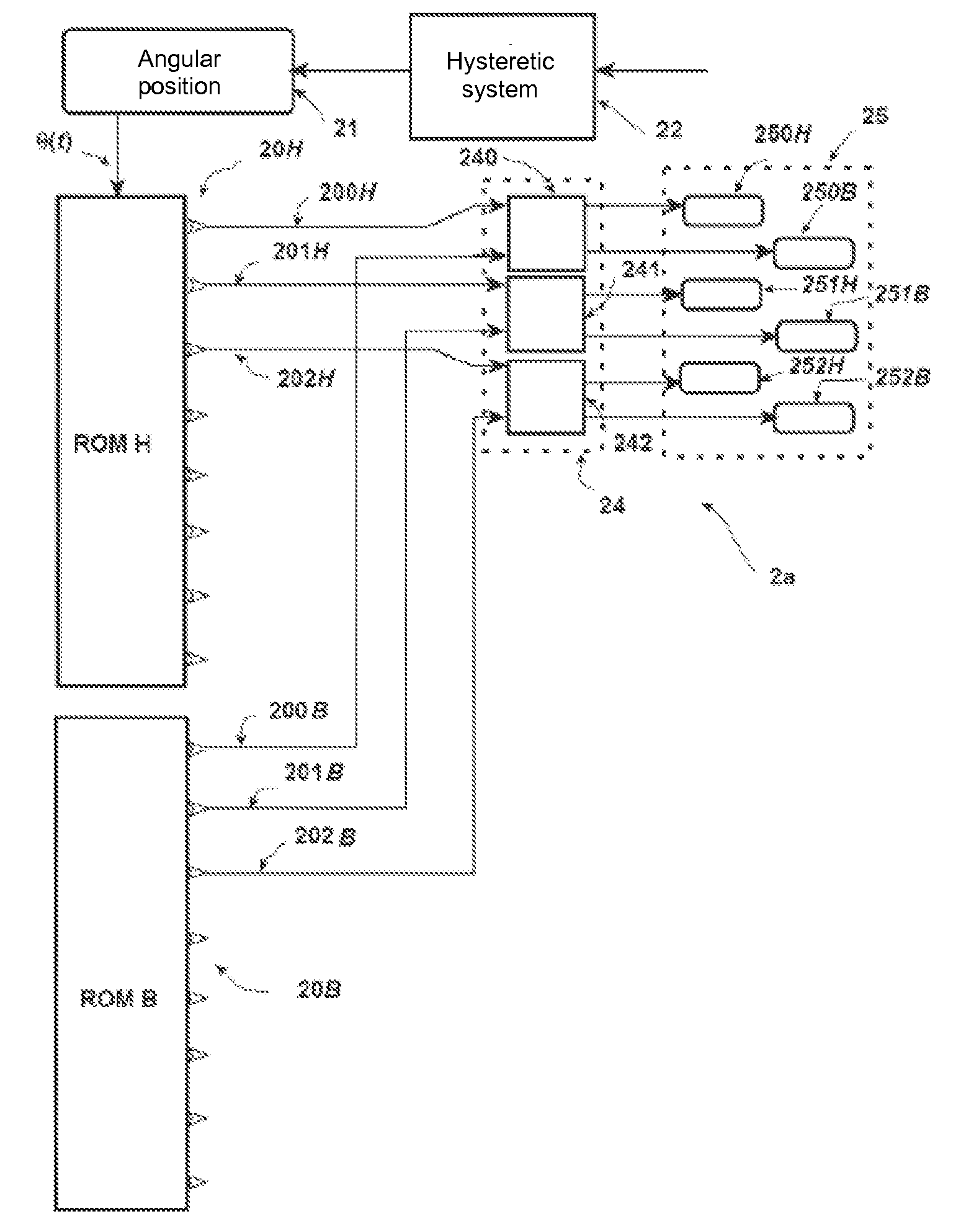

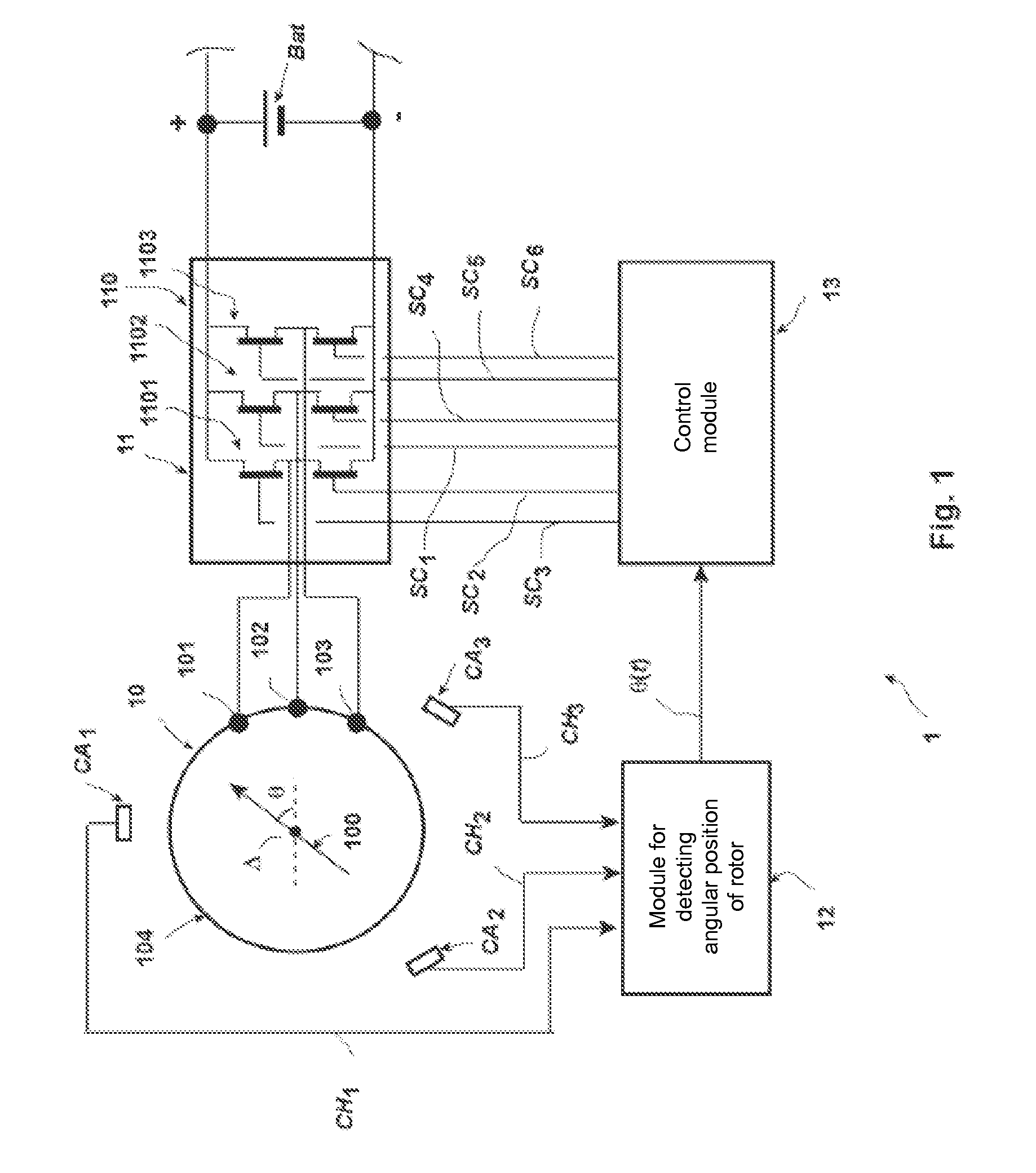

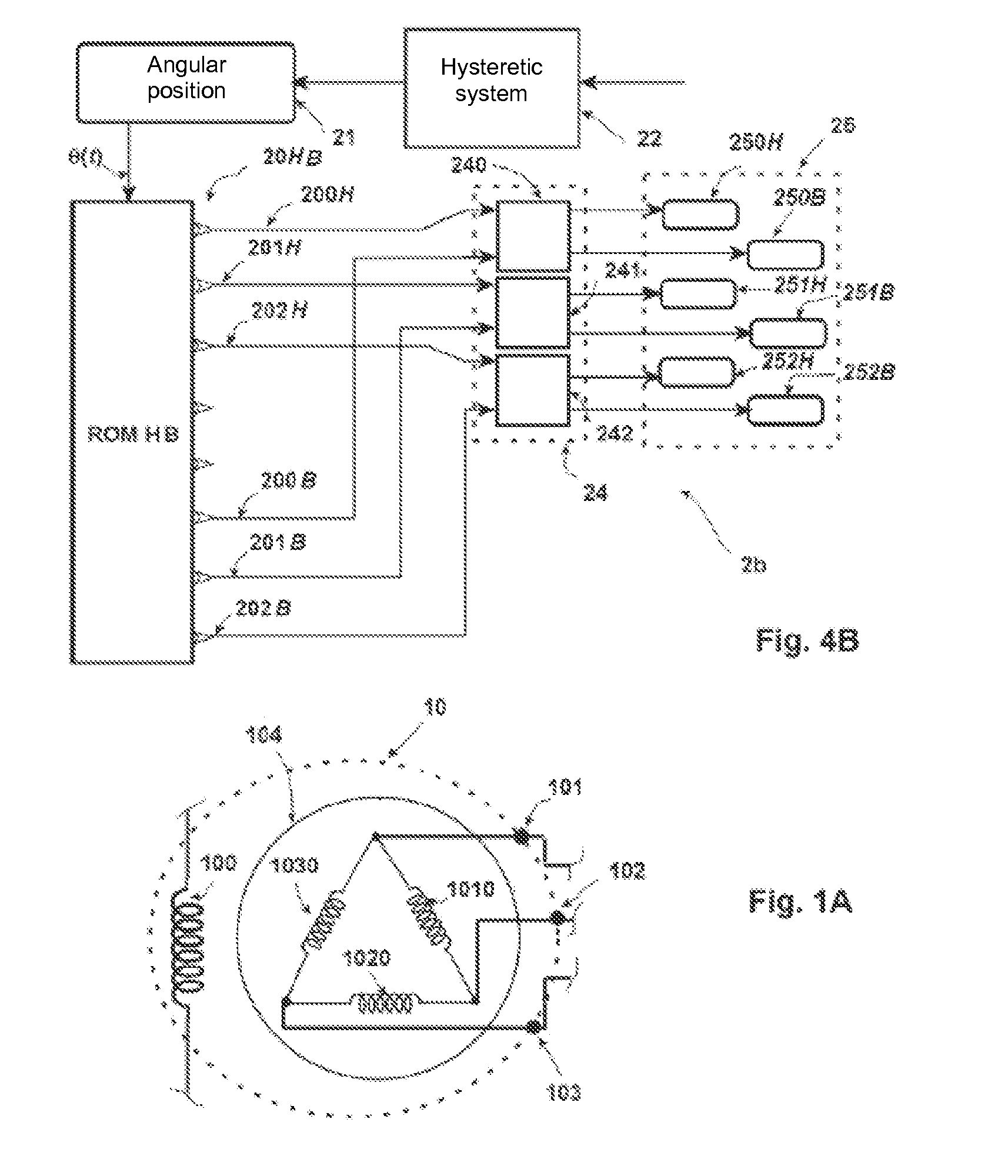

Device for controlling a polyphase synchronous rotary electrical machine and polyphase synchronous rotary electrical machine containing such a device

Active | US20090302791A1 | Good flexibility | Easy to change | Motor/generator/converter stoppers | Synchronous motors starters | Dc current | Power switching

A control device (2′″) for an AC-DC current converter associated with a polyphase synchronous rotary electrical machine. The AC-DC current converter contains, for each phase, a branch of two power switches in series, known as high and low (25). The control device (2′″) contains means of generating a signal (θ(t)) representing the angular position of the rotor. The control device contains one or more digital tables (20H, 20B) addressed by the signal of the angular position of the rotor (θ(t)) and delivering at their outputs binary control signals (200H-202H, 200B-202B), each controlling one branch of power switches (25).

Owner:VALEO EQUIP ELECTRIC MOTEUR
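The table lookup described in the abstract above, where the rotor angle addresses digital tables that deliver binary commands for the high and low switches, might look roughly like the following. The sketch assumes a three-phase machine, a 360-entry table, and a simple 180-degree conduction pattern; none of these details are given in the abstract, and the names `build_tables`, `switch_commands`, `TABLE_H` and `TABLE_B` are hypothetical.

```python
PHASES = 3        # assumption: three-phase machine
TABLE_SIZE = 360  # assumption: one table entry per mechanical degree


def build_tables(size: int = TABLE_SIZE, phases: int = PHASES):
    """Precompute high-side and low-side command tables indexed by angle."""
    high, low = [], []
    for idx in range(size):
        angle = 360.0 * idx / size
        # Assumed pattern: each high switch conducts for 180 degrees,
        # with the phases offset from each other by 360/phases degrees.
        h = tuple(
            1 if (angle - p * 360.0 / phases) % 360.0 < 180.0 else 0
            for p in range(phases)
        )
        # The low switch of each branch is driven as the complement, so the
        # two switches of one branch are never closed together.
        l = tuple(1 - bit for bit in h)
        high.append(h)
        low.append(l)
    return high, low


TABLE_H, TABLE_B = build_tables()


def switch_commands(theta_deg: float):
    """Address both tables with the rotor angle and return the commands."""
    idx = int((theta_deg % 360.0) * TABLE_SIZE / 360.0) % TABLE_SIZE
    return TABLE_H[idx], TABLE_B[idx]


if __name__ == "__main__":
    for theta in (0.0, 90.0, 200.0):
        print(theta, switch_commands(theta))
```

Because the commands come from tables rather than fixed logic, changing the switching pattern only means regenerating the table contents, which fits the flexibility goal named in the listing.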

Delay locked loop with a loop-embedded duty cycle corrector

Active | US8803577B2 | Reduce area | Reduce consumption | Pulse automatic control | Continuous to patterned pulse manipulation | Delay-locked loop | Engineering

A delay locked loop (DLL) adjusts a duty cycle of an input clock signal and outputs an output clock signal. The DLL includes a phase and duty cycle detector configured to detect a phase and duty cycle of the input clock signal, a duty cycle corrector configured to correct the duty cycle, a control code generator configured to detect coarse lock of the DLL and generate a binary control code corresponding to the detection result, and a delay circuit configured to delay an output signal of the duty cycle corrector by a predetermined time according to the binary control code, tune the duty cycle thereof, and mix the phase thereof, wherein the phase and duty cycle detector, the duty cycle corrector, the control code generator, and the delay circuit form a feedback loop.

Owner:SK HYNIX INC +1
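As a rough illustration of how a binary control code can set the delay in the DLL abstract above, the sketch below steps a code upward until the delay line first spans one clock period and reports that point as coarse lock. This is a behavioural model under assumptions: the linear delay-per-code relation, the lock criterion, and the names `delay_from_code` and `coarse_lock` are hypothetical, and the duty cycle correction and phase mixing described in the abstract are not modelled.

```python
def delay_from_code(code: int, unit_delay_ps: float) -> float:
    """Assumed delay line: delay grows linearly with the binary control code."""
    return code * unit_delay_ps


def coarse_lock(period_ps: float, unit_delay_ps: float, code_bits: int):
    """Increase the control code until the delay first reaches one period.

    Returns (code, locked). locked is False when even the largest code
    cannot cover the clock period.
    """
    max_code = 2 ** code_bits - 1
    for code in range(max_code + 1):
        if delay_from_code(code, unit_delay_ps) >= period_ps:
            return code, True
    return max_code, False


if __name__ == "__main__":
    code, locked = coarse_lock(period_ps=1000.0, unit_delay_ps=80.0, code_bits=5)
    print(format(code, "05b"), locked)
```

In a real loop the code would be adjusted from detector feedback rather than by a blind sweep; the sweep is used here only to keep the example short.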

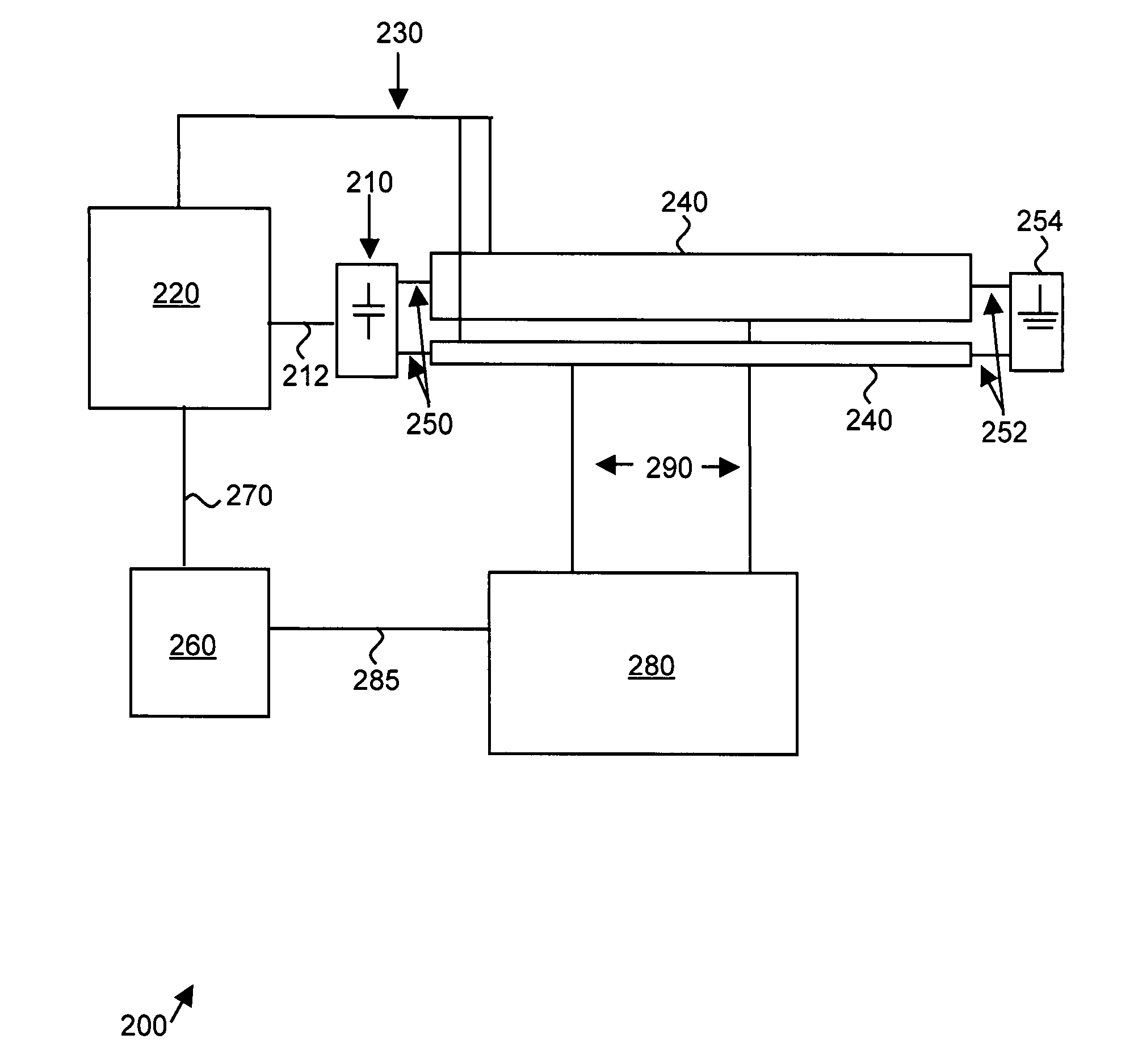

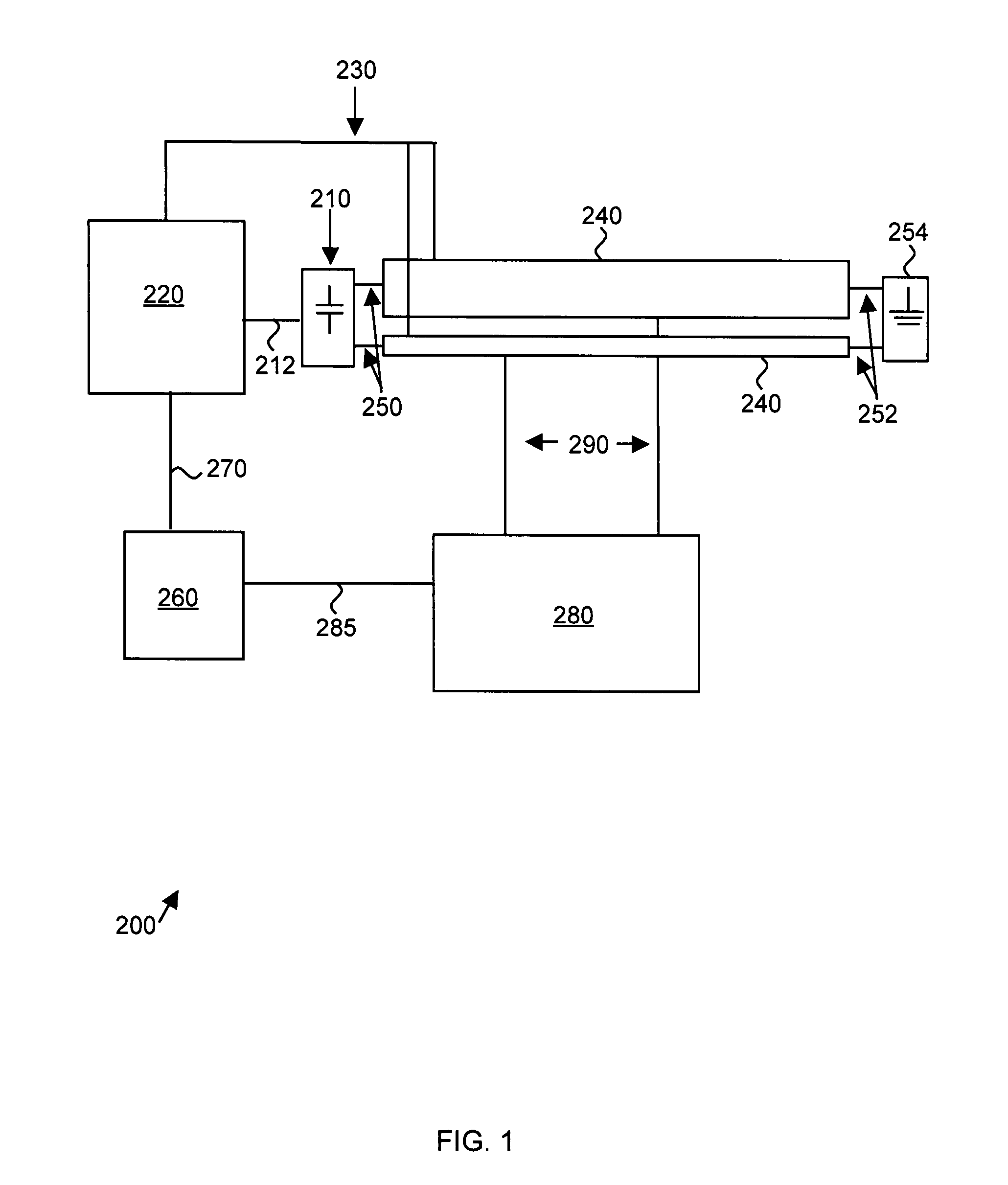

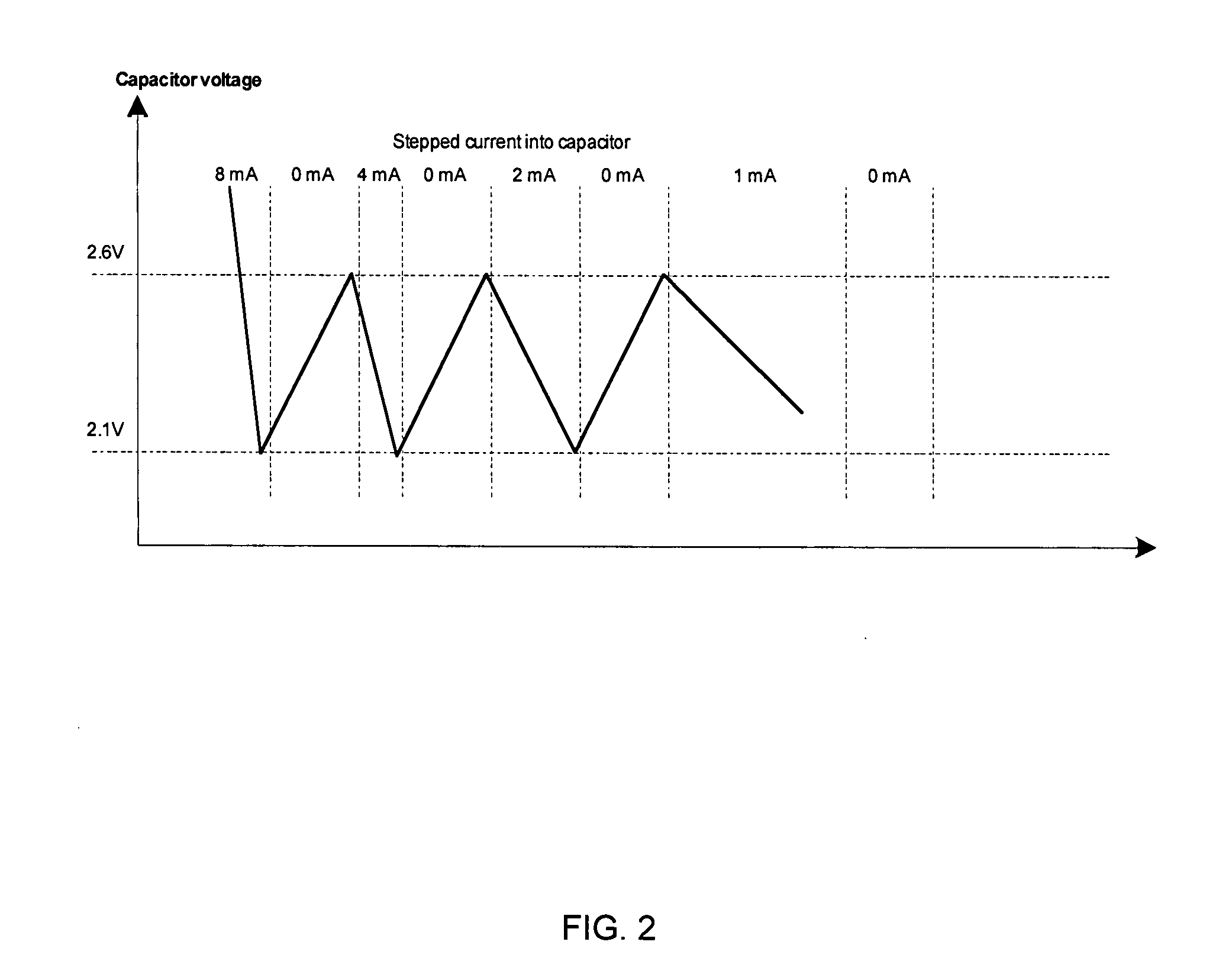

Circuit for charging a capacitor with a power source

Inactive | US20080079399A1 | Electrical storage system | Batteries circuit arrangements | Electricity | Charge current

The present invention provides a circuit and method for charging a capacitor with a power source. The circuit includes a capacitor, a power source, and at least two binary-controlled current sources. Each of these current sources is energized by the power source and provides either no current or a predetermined current to the capacitor. Importantly, the two predetermined currents are distinct. The circuit also includes a voltage comparator, which senses changes in the voltage of the power source. In addition, the circuit includes a logic device, which is electrically connected to the current sources and to the voltage comparator. The logic device selects one of the current sources to charge the capacitor based on information obtained by the voltage comparator, i.e. changes in voltage of the power source. Thus, this circuit allows a selectable charging current to be provided to the capacitor based on the voltage of the power source.

Owner:LV SENSOR
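The selection logic in the capacitor charging abstract above can be sketched as a small simulation: a comparator checks the source voltage, and the logic enables exactly one of two on/off current sources with distinct currents. The threshold rule (larger current when the source voltage is higher), the ideal capacitor model, and all names and values (`CurrentSource`, `select_source`, `charge`) are assumptions for illustration, not details taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class CurrentSource:
    """Binary-controlled source: delivers its fixed current or nothing."""
    current_a: float
    enabled: bool = False

    def output(self) -> float:
        return self.current_a if self.enabled else 0.0


def select_source(source_voltage: float, threshold_v: float,
                  low: CurrentSource, high: CurrentSource) -> CurrentSource:
    """Logic device: pick one source from the comparator result.

    Assumption: the larger current is used while the source voltage is at
    or above the threshold, the smaller one otherwise.
    """
    comparator_high = source_voltage >= threshold_v
    high.enabled = comparator_high
    low.enabled = not comparator_high
    return high if comparator_high else low


def charge(cap_f: float, source_voltages: list[float], dt_s: float,
           threshold_v: float, low: CurrentSource, high: CurrentSource) -> float:
    """Integrate the capacitor voltage over a series of time steps."""
    v_cap = 0.0
    for v_src in source_voltages:
        active = select_source(v_src, threshold_v, low, high)
        v_cap += active.output() * dt_s / cap_f  # dV = I * dt / C
    return v_cap


if __name__ == "__main__":
    low, high = CurrentSource(1e-3), CurrentSource(5e-3)
    print(charge(1e-3, [3.0, 3.0, 2.0], 1e-3, 2.5, low, high))
```

Dropping to the smaller current when the source sags is one way such a circuit could avoid loading a weak power source, though the abstract does not say which direction the selection goes.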

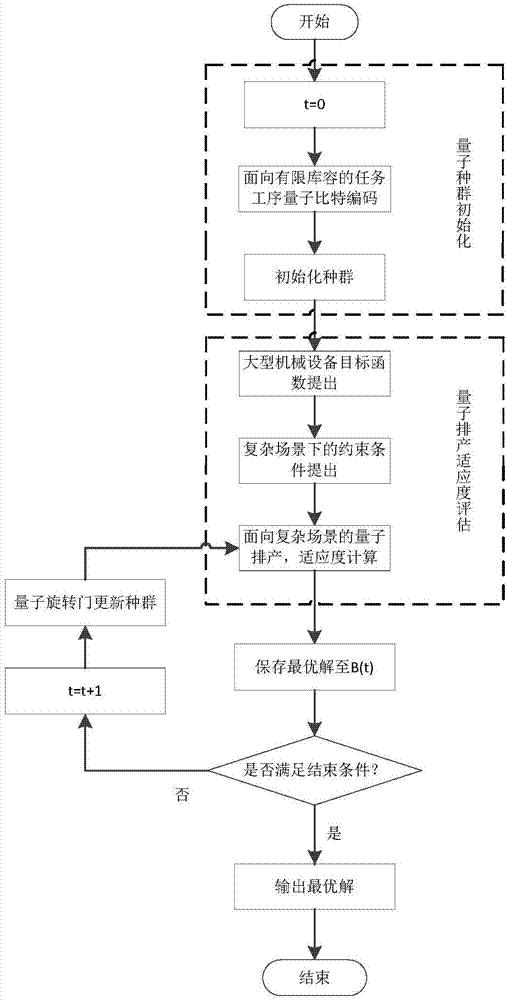

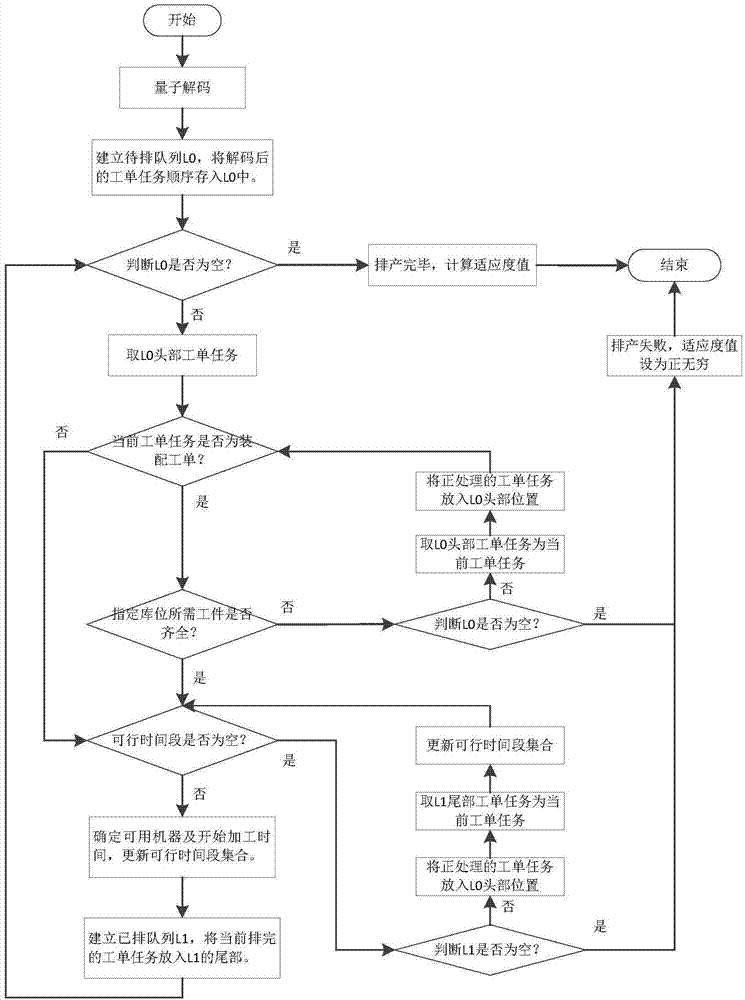

Complex scene scheduling method and system based on quantum evolutionary algorithm

Active | CN107330808A | Production Scheduling Realization | Fast operation | Data processing applications | Artificial life | Quantum evolutionary algorithm | Completion time

The invention discloses a complex scene scheduling method and system based on a quantum evolutionary algorithm. The method comprises the following steps: quantum population initialization; quantum scheduling fitness assessment, in which a quantum objective function is set and solved subject to the constraints of the manufacturing environment of large mechanical equipment, the quantum population generates a binary control variable of the objective function, the work orders are scheduled in the order given by the generated binary control variable, and the completion time of the entire large mechanical equipment work order under that schedule is calculated as the quantum fitness value; comparing fitness values, determining the optimal solution as the one with the minimum fitness value, and saving it; determining whether an end condition is reached and, if not, updating the quantum population with a quantum rotation gate; and returning to the quantum scheduling fitness assessment step to recalculate the quantum fitness value and continue searching for the optimal solution. The method and system search for the optimal solution while balancing local and global optimization, and achieve scheduling of large machinery and equipment.

Owner:山东万腾电子科技有限公司
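The loop in the scheduling abstract above (initialise a quantum population, observe binary control variables, schedule by them, score by completion time, keep the minimum, update with a rotation gate) can be sketched as a small quantum-inspired evolutionary algorithm. Everything concrete here is an assumption: the two-machine flow shop used as the scheduling model, the bit-group priority decoding, the simplified angle-based rotation update, and all function names and parameters are hypothetical stand-ins for details the abstract does not give.

```python
import math
import random


def decode_order(bits, n_jobs, bits_per_job):
    """Binary control variable -> job order; each bit group is a priority key."""
    keys = []
    for j in range(n_jobs):
        group = bits[j * bits_per_job:(j + 1) * bits_per_job]
        keys.append(int("".join(map(str, group)), 2))
    # Stable sort: jobs with equal keys keep their index order.
    return sorted(range(n_jobs), key=lambda j: keys[j])


def makespan(order, proc_times):
    """Completion time of the last job in an assumed two-machine flow shop."""
    end_m1 = end_m2 = 0
    for j in order:
        end_m1 += proc_times[j][0]
        end_m2 = max(end_m2, end_m1) + proc_times[j][1]
    return end_m2


def observe(thetas, rng):
    """Collapse each Q-bit to 0 or 1 with P(bit = 1) = sin(theta)**2."""
    return [1 if rng.random() < math.sin(t) ** 2 else 0 for t in thetas]


def rotate_towards(thetas, bits, best_bits, step):
    """Simplified rotation gate: nudge angles toward the best solution's bits."""
    updated = []
    for theta, bit, target in zip(thetas, bits, best_bits):
        if bit != target:
            theta += step if target == 1 else -step
        # Clamp so no bit becomes fully deterministic.
        updated.append(min(max(theta, 0.05), math.pi / 2 - 0.05))
    return updated


def qea_schedule(proc_times, pop_size=10, generations=50,
                 bits_per_job=3, step=0.05 * math.pi, seed=0):
    rng = random.Random(seed)
    n_jobs = len(proc_times)
    n_bits = n_jobs * bits_per_job
    # Every Q-bit starts at theta = pi/4, i.e. equal chance of 0 and 1.
    population = [[math.pi / 4] * n_bits for _ in range(pop_size)]
    best_bits, best_fit = None, math.inf
    for _ in range(generations):
        observed = [observe(ind, rng) for ind in population]
        for bits in observed:
            fit = makespan(decode_order(bits, n_jobs, bits_per_job), proc_times)
            if fit < best_fit:  # smaller completion time is better
                best_bits, best_fit = bits, fit
        population = [rotate_towards(ind, bits, best_bits, step)
                      for ind, bits in zip(population, observed)]
    return decode_order(best_bits, n_jobs, bits_per_job), best_fit


if __name__ == "__main__":
    jobs = [(3, 2), (1, 4), (2, 1), (4, 3)]  # hypothetical processing times
    print(qea_schedule(jobs))
```

The fixed generation count stands in for the abstract's unspecified end condition, and the clamped rotation keeps some randomness in every bit, which is one simple way to balance local refinement against global search.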


© 2025 PatSnap. All rights reserved.