161 results about "Central projection" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

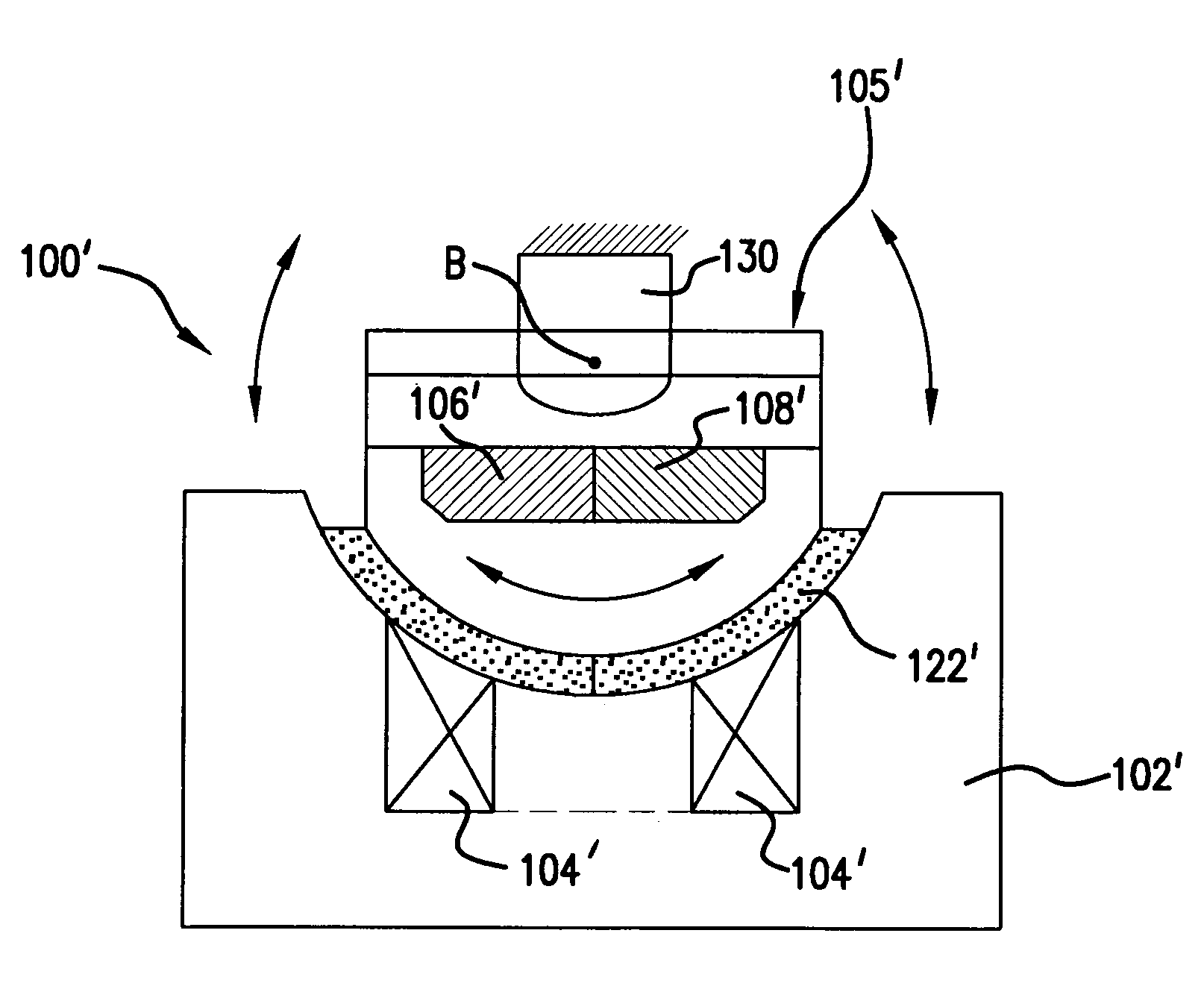

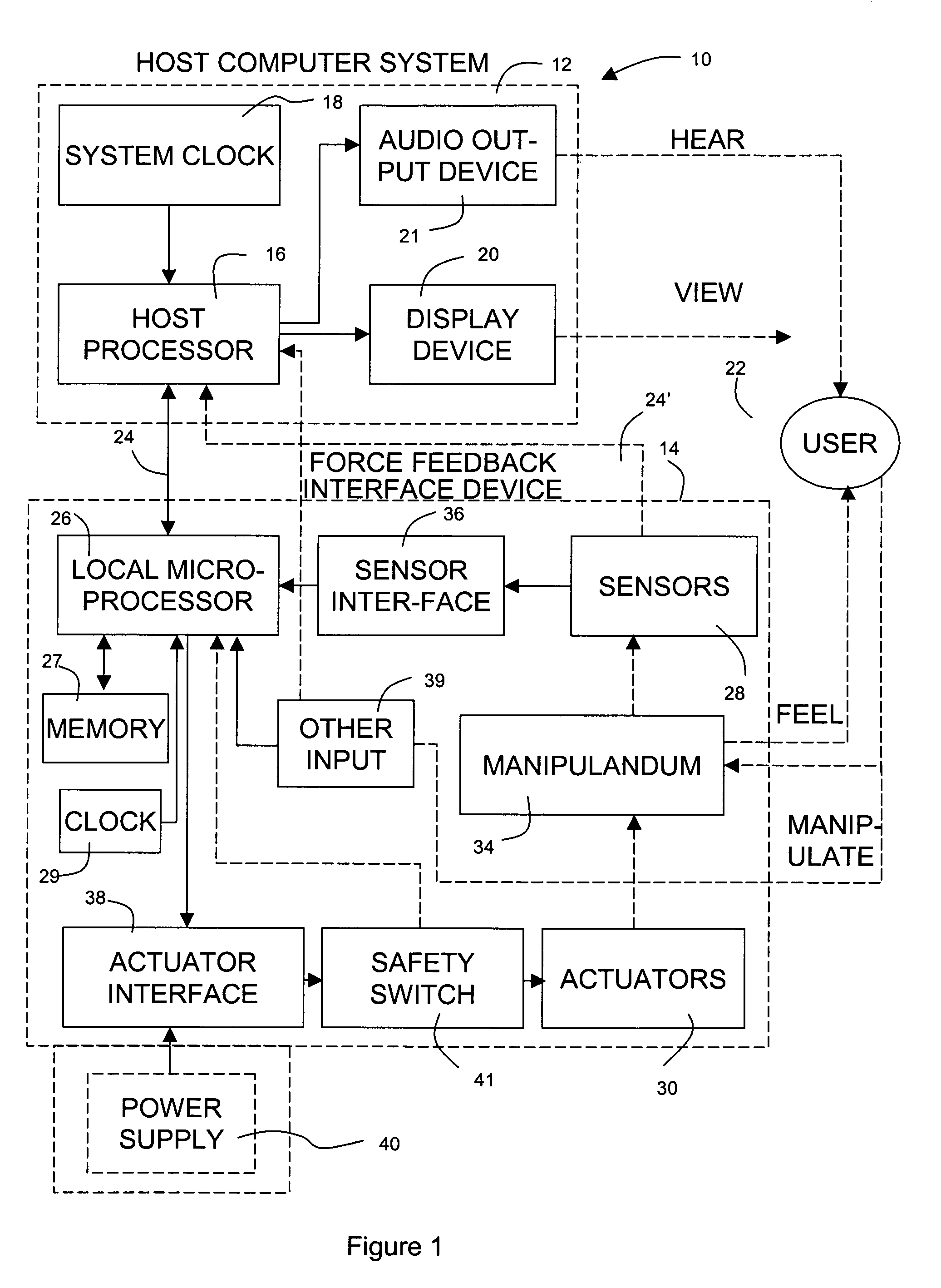

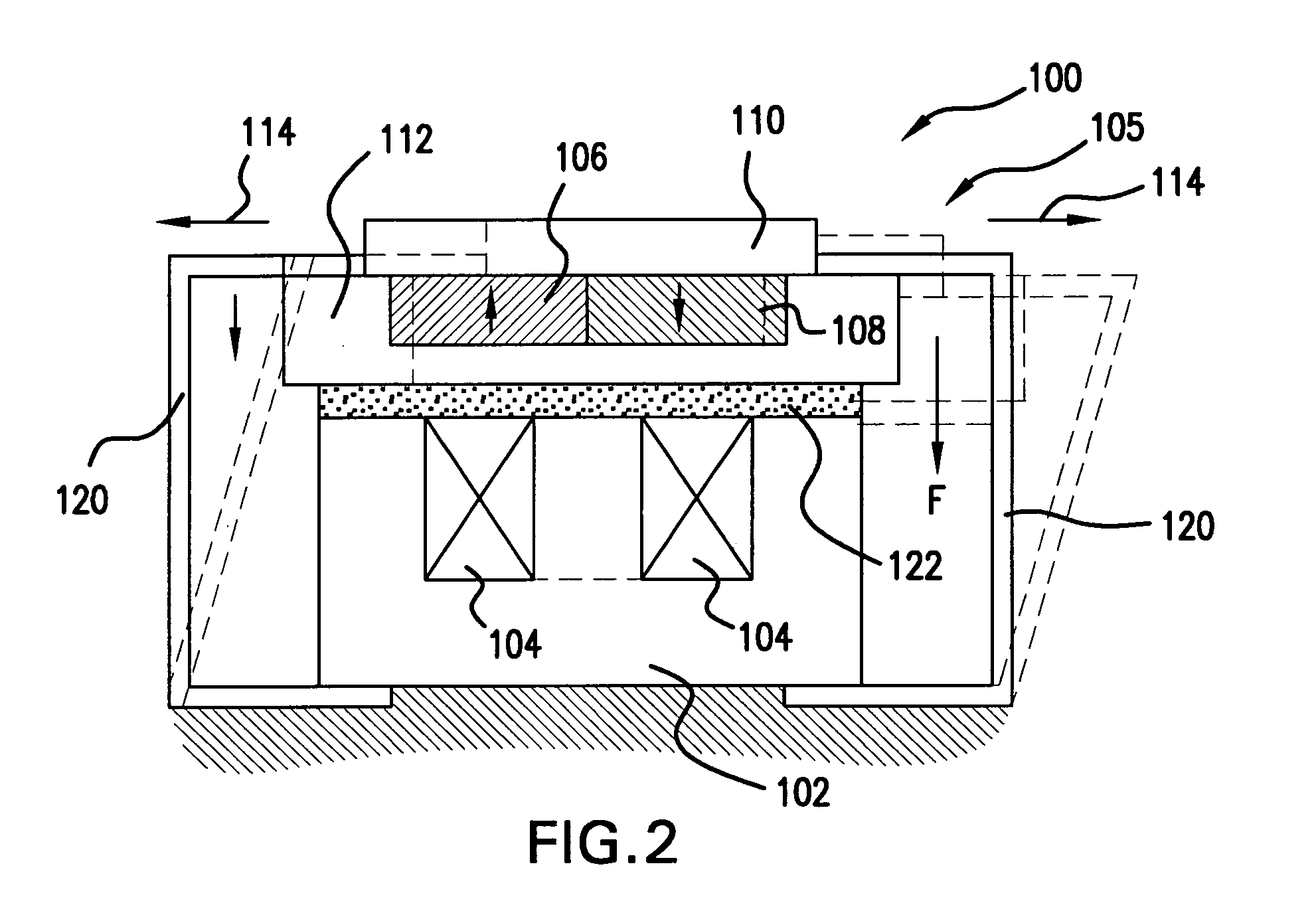

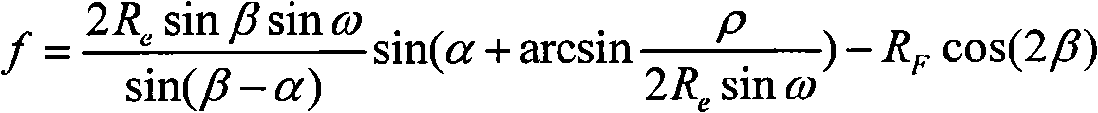

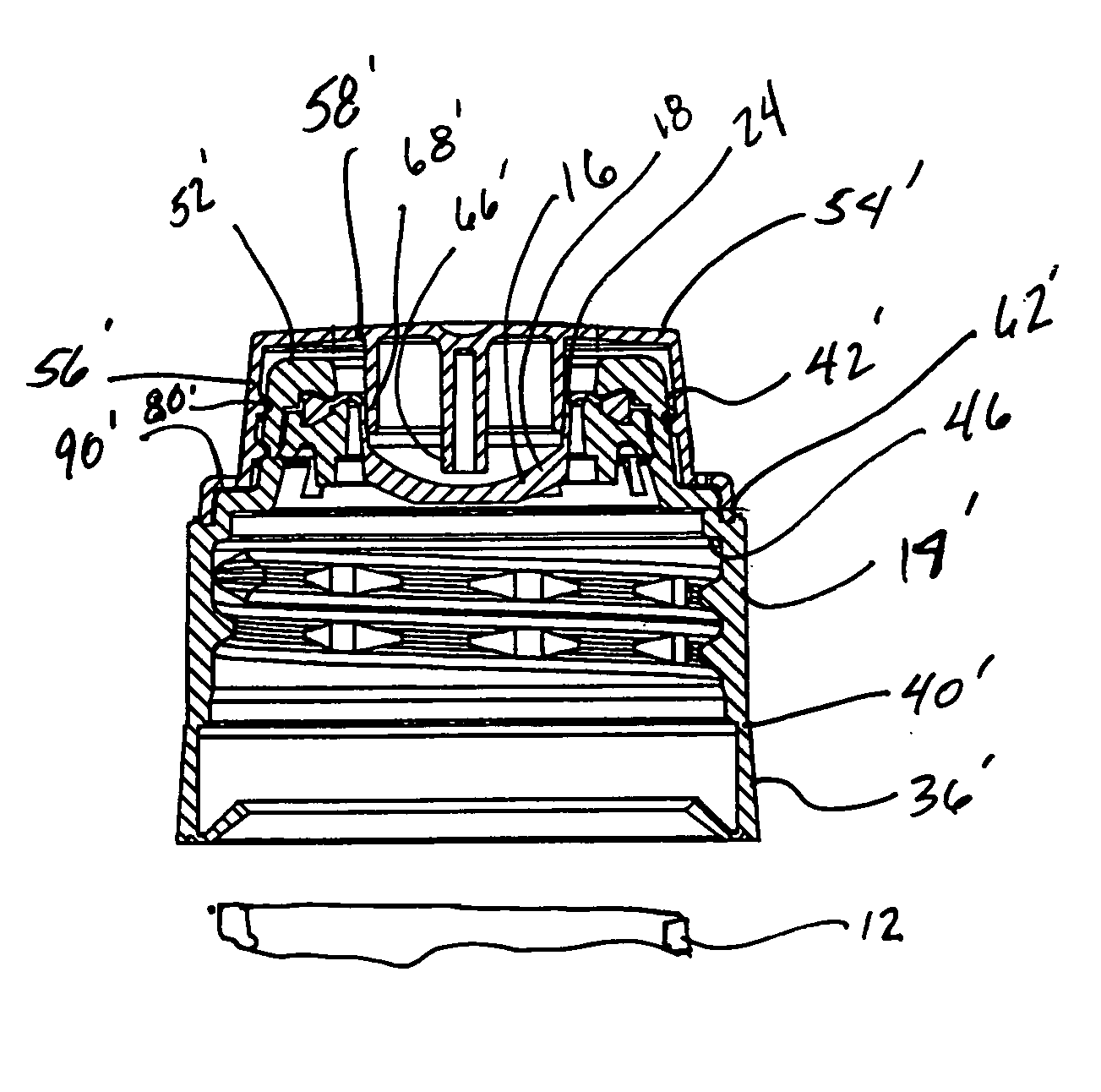

Moving magnet actuator for providing haptic feedback

Inactive | US6982696B1 | Tags: High magnitude, High bandwidth, Input/output for user-computer interaction, Manual control with multiple controlled members, Central projection, Spring force

A moving magnet actuator for providing haptic feedback. The actuator includes a grounded core member, a coil wrapped around a central projection of the core member, and a magnet head positioned so as to leave a gap between the core member and the magnet head. The magnet head moves in a degree of freedom under an electromagnetic force produced by a current flowing through the coil. An elastic material, such as foam, is positioned in the gap between the magnet head and the core member; the material is compressed and sheared when the magnet head moves and substantially prevents movement of the magnet head past a range limit determined by the compressibility and shear factor of the material. Flexible members can also be provided between the magnet head and the ground member; they flex to allow the magnet head to move, provide a centering spring force to the magnet head, and limit its motion.

Owner:IMMERSION CORPORATION

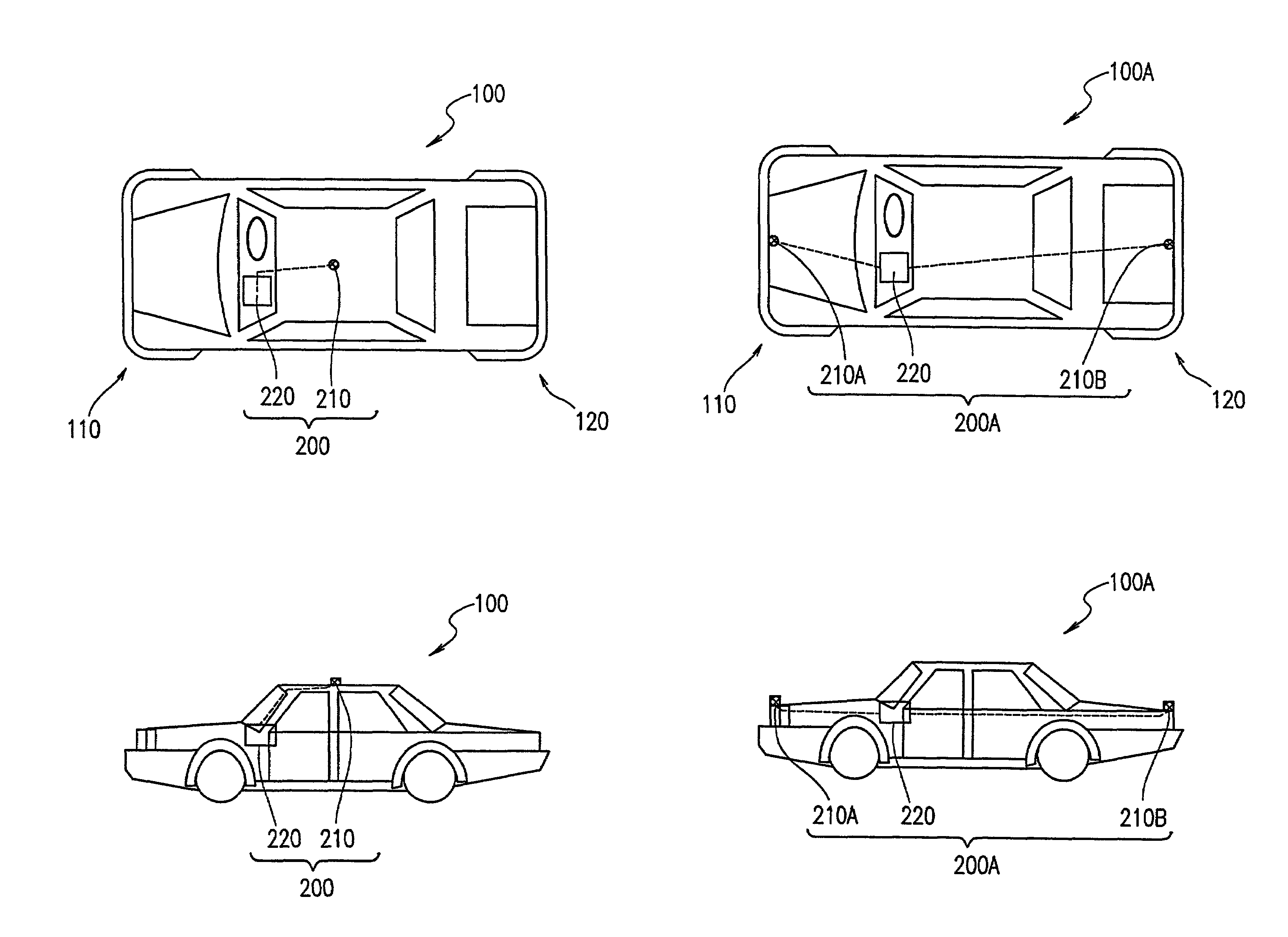

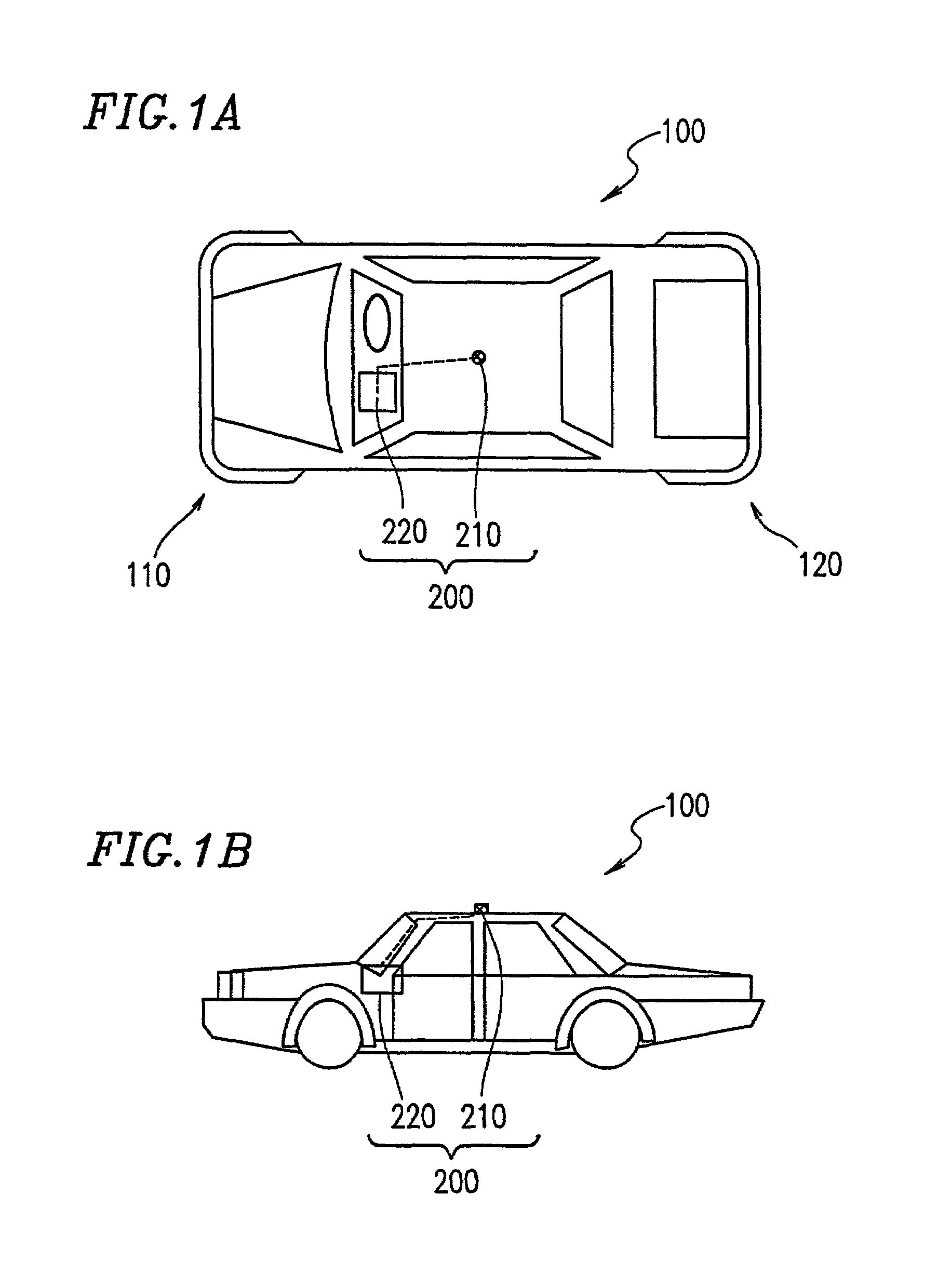

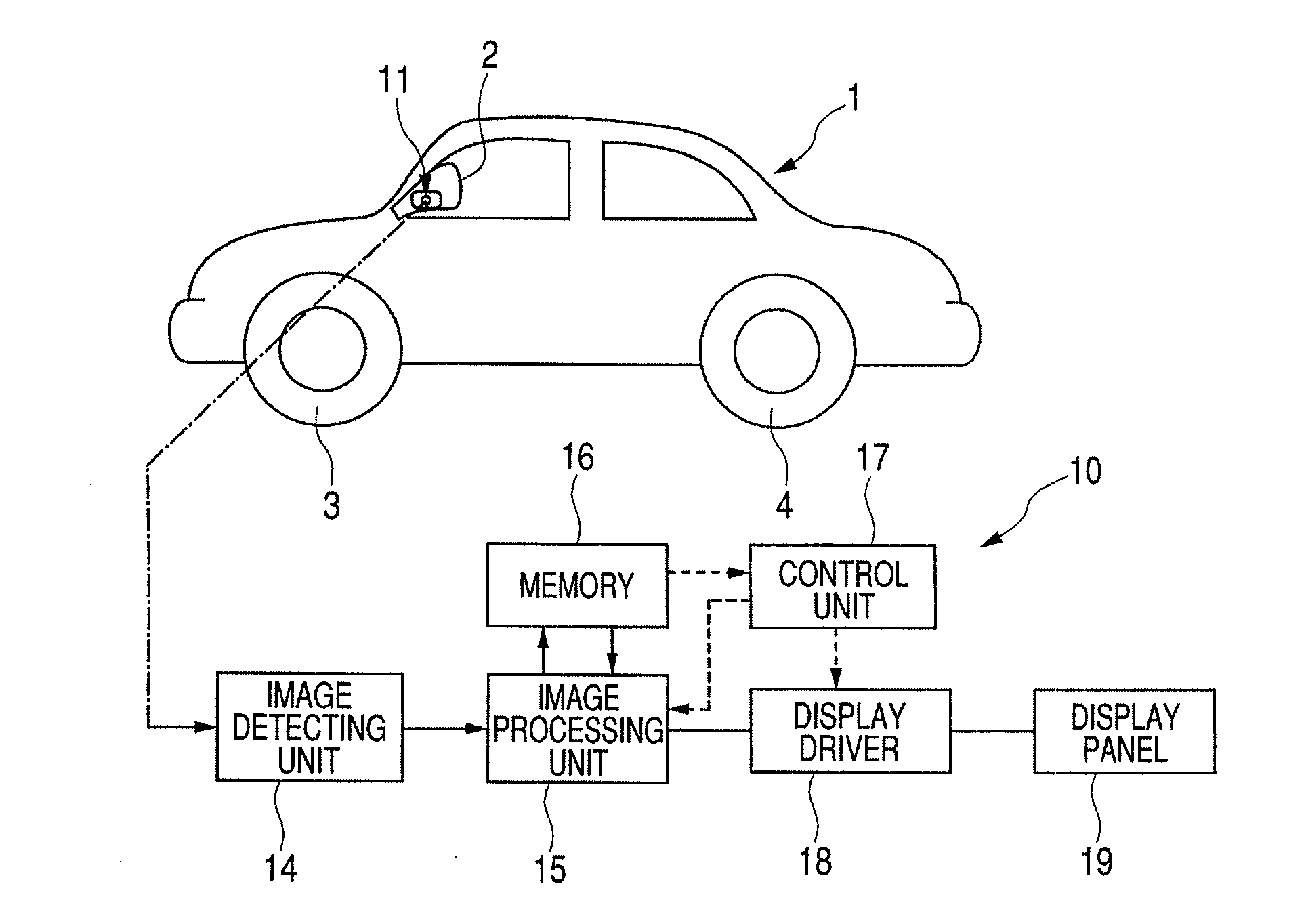

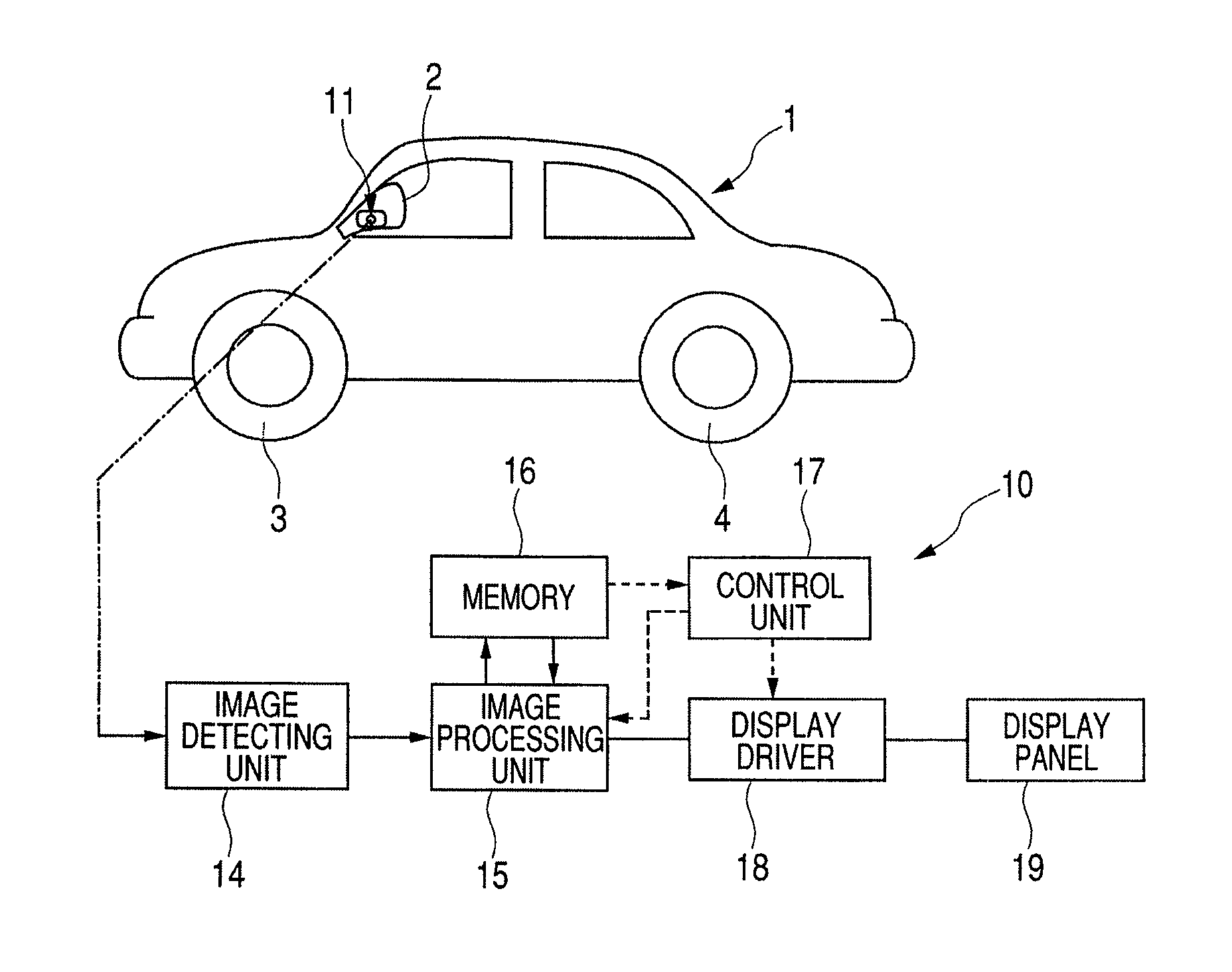

Surround surveillance apparatus for mobile body

Inactive | US7295229B2 | Tags: Easy to check, Reduce ambient temperature, Pedestrian/occupant safety arrangement, Anti-collision systems, Central projection, Imaging lens

A surround surveillance system mounted on a mobile body for monitoring the surroundings of the mobile body includes an omniazimuth visual system. The omniazimuth visual system includes: at least one omniazimuth visual sensor, which has an optical system capable of capturing an image of the omniazimuth field of view around it and of converting that image into an optical image by central projection, and an imaging section with an imaging lens that converts the optical image into image data; an image processor that transforms the image data into panoramic image data, perspective image data, or both; a display section that displays either the panoramic image or the perspective image corresponding to that data; and a display control section that controls the display section.

Owner:SHARP KK
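The panoramic transform mentioned in the US7295229B2 abstract can be illustrated with a plain polar unwrapping of the ring-shaped omnidirectional image. This is a minimal sketch, not Sharp's image processor: it assumes the mirror image is centred at (cx, cy), that the useful ring lies between r_inner and r_outer, that radius maps linearly to panorama rows, and that nearest-neighbour sampling is acceptable. The function names are hypothetical.

```python
import math


def panorama_source_coords(u, v, width, height, cx, cy, r_inner, r_outer):
    """Map panorama pixel (u, v) to a point in the ring-shaped omnidirectional image."""
    theta = 2.0 * math.pi * u / width  # azimuth around the optical axis
    # Assumption: panorama row 0 comes from the outer ring; some mirror designs invert this.
    r = r_outer - (r_outer - r_inner) * v / (height - 1)
    return cx + r * math.cos(theta), cy + r * math.sin(theta)


def unwrap(image, width, height, cx, cy, r_inner, r_outer):
    """Build a width x height panorama by nearest-neighbour sampling of `image` (list of rows)."""
    rows, cols = len(image), len(image[0])
    pano = [[0] * width for _ in range(height)]
    for v in range(height):
        for u in range(width):
            x, y = panorama_source_coords(u, v, width, height, cx, cy, r_inner, r_outer)
            xi, yi = int(round(x)), int(round(y))
            if 0 <= yi < rows and 0 <= xi < cols:
                pano[v][u] = image[yi][xi]
    return pano
```

A real system would replace the linear radius-to-row rule with the mirror's actual projection profile, and a perspective view would be generated by a separate central projection onto a virtual image plane.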

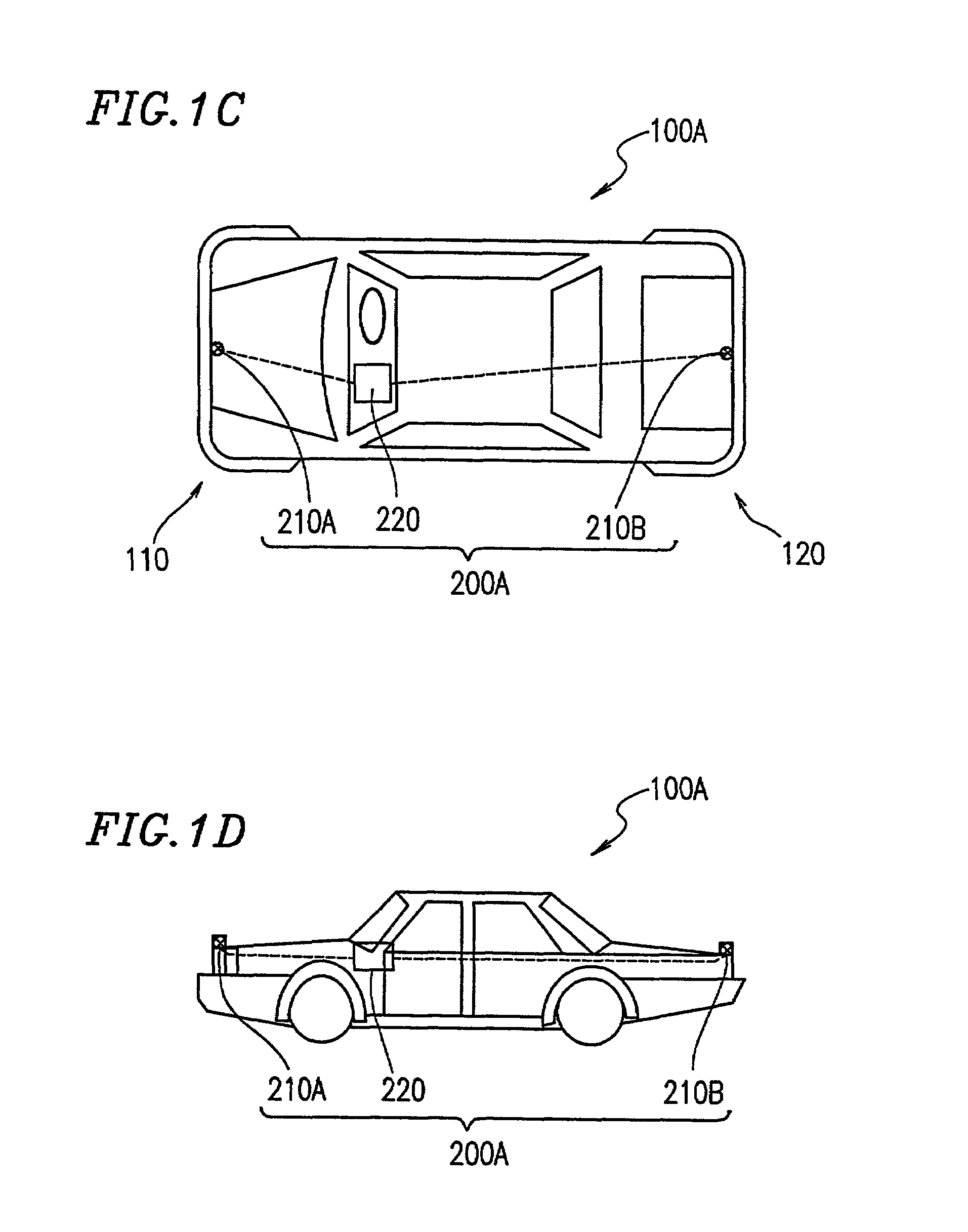

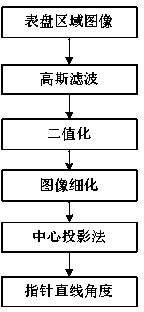

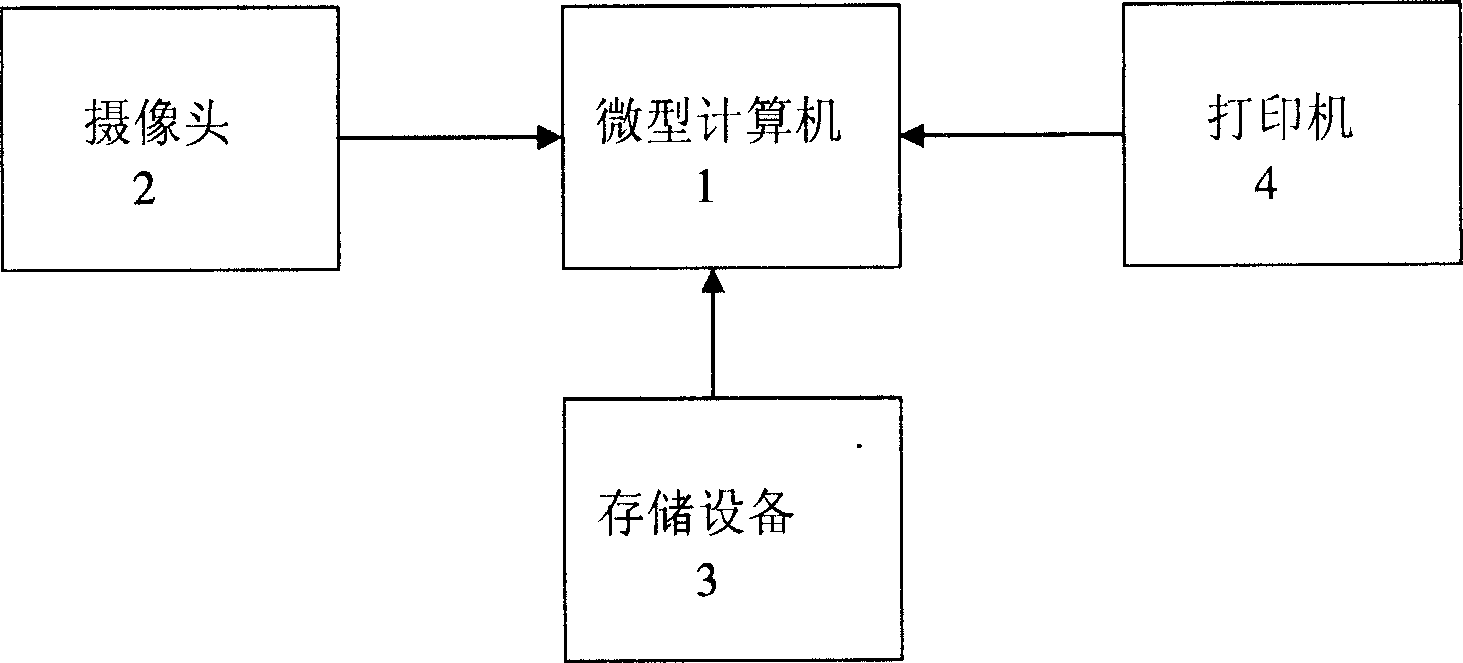

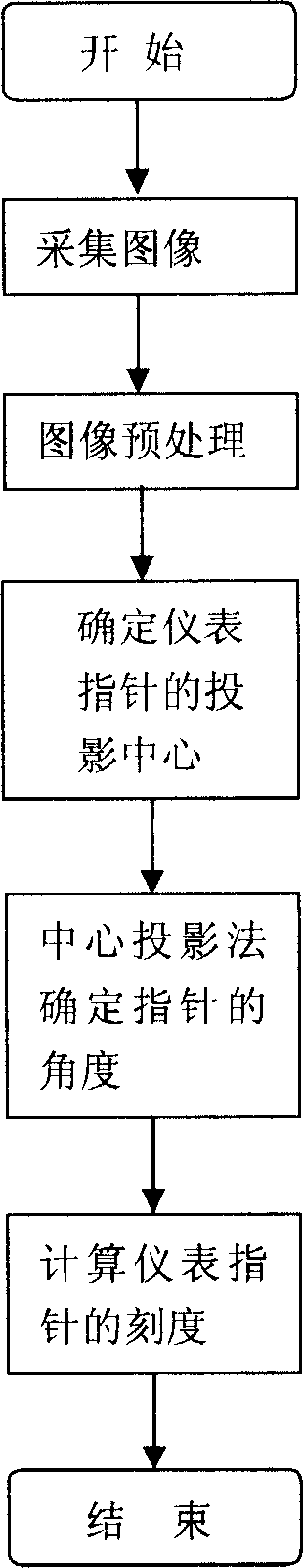

Image processing method for automatic pointer-type instrument reading recognition

Active | CN104392206A | Tags: Small amount of calculation, High positioning accuracy, Character and pattern recognition, Template matching, Imaging processing

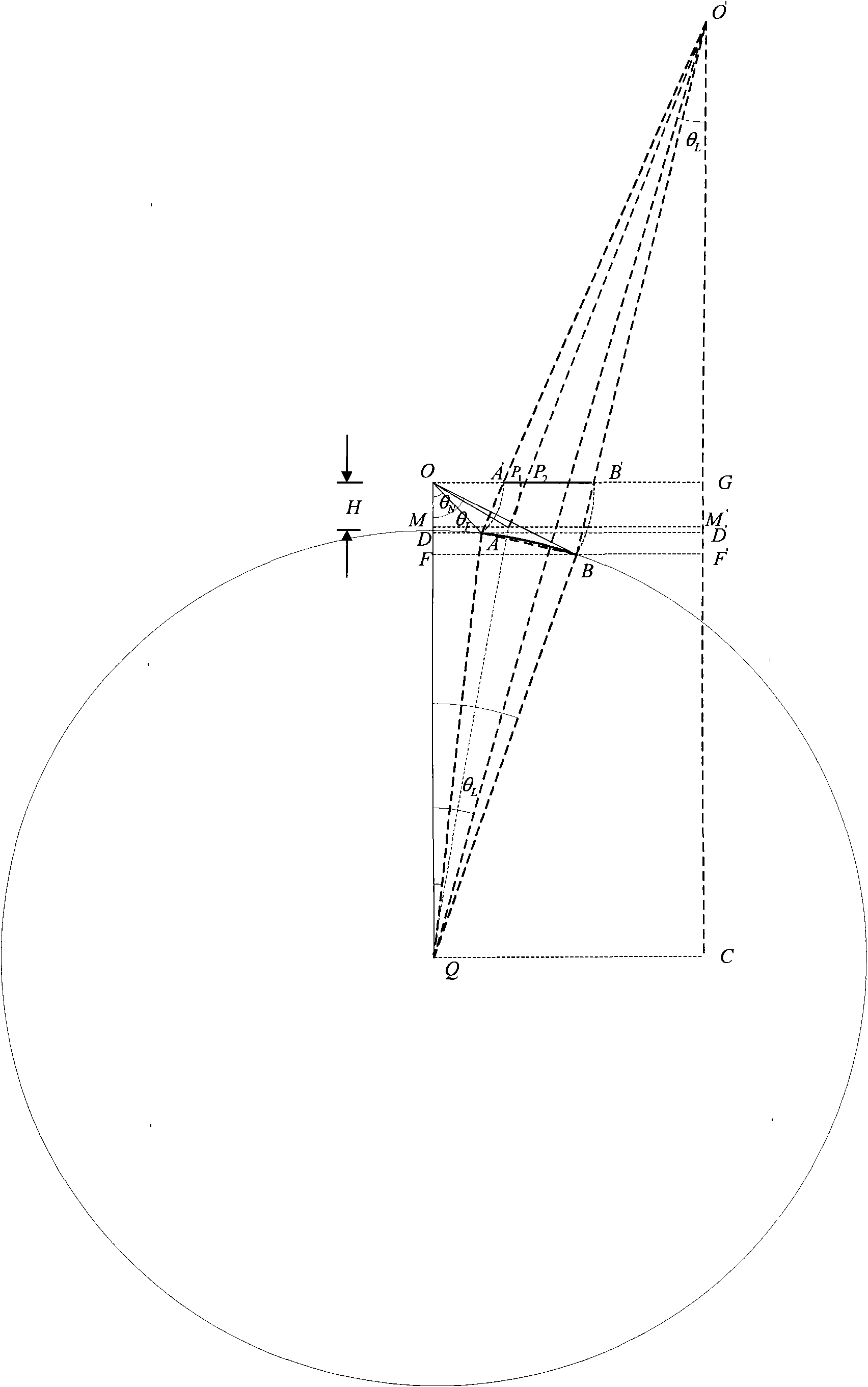

The invention discloses an image processing method for automatically recognizing the readings of pointer-type instruments. The method comprises the following steps: (1) Hough circle detection is performed on the image, the center and radius of the dial are located by a weighted-average method, and a square image of the dial region is extracted; (2) the image is preprocessed and a binary thinned image of the instrument pointer is extracted; (3) the pointer angle is determined by a central projection method; (4) position templates of the zero graduation line and the full-scale graduation line are extracted, and the start and end points of the measuring range are calibrated; (5) the angles of the zero and full-scale graduation lines are obtained by template matching; and (6) the pointer reading is calculated from the pointer angle, the zero graduation line angle, and the full-scale graduation line angle. The method copes with the dial position varying from image to image when the relative position between the camera and the instrument is not fixed, eliminates the subjective errors of manual reading, and improves efficiency and precision. It also improves personnel safety, has a wide application range, and is highly robust.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
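Step (6) of CN104392206A turns three angles into a reading. Assuming a linear scale and angles measured clockwise in degrees (which is what atan2 yields in image coordinates, where y grows downward), the calculation reduces to a proportional interpolation along the scale's sweep. `pointer_reading` and its parameters are hypothetical names, not taken from the patent.

```python
def pointer_reading(pointer_deg, zero_deg, full_deg, range_min, range_max):
    """Linearly interpolate a dial reading from clockwise angles in degrees."""
    sweep = (full_deg - zero_deg) % 360.0      # angular span of the scale
    offset = (pointer_deg - zero_deg) % 360.0  # how far the pointer has travelled from zero
    if sweep == 0:
        raise ValueError("zero and full-scale graduation angles coincide")
    return range_min + (range_max - range_min) * offset / sweep
```

For a 0 to 1.6 MPa gauge whose scale runs clockwise from 225° to 135° (a 270° sweep), a pointer at 0° sits halfway along the sweep, so `pointer_reading(0, 225, 135, 0, 1.6)` gives 0.8. A pointer resting in the dead zone beyond full scale would produce a value above `range_max`, which a production version should clamp or flag.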

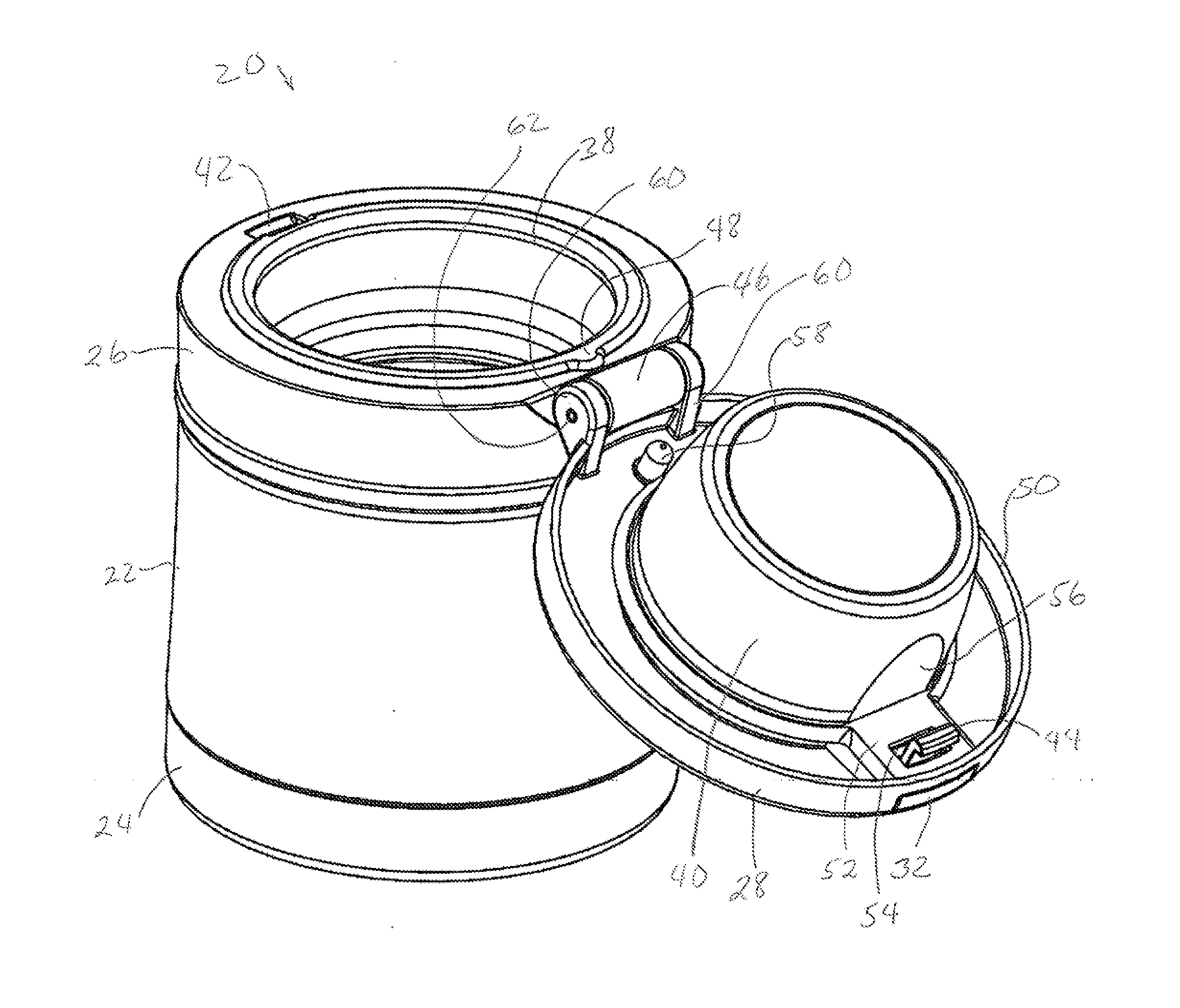

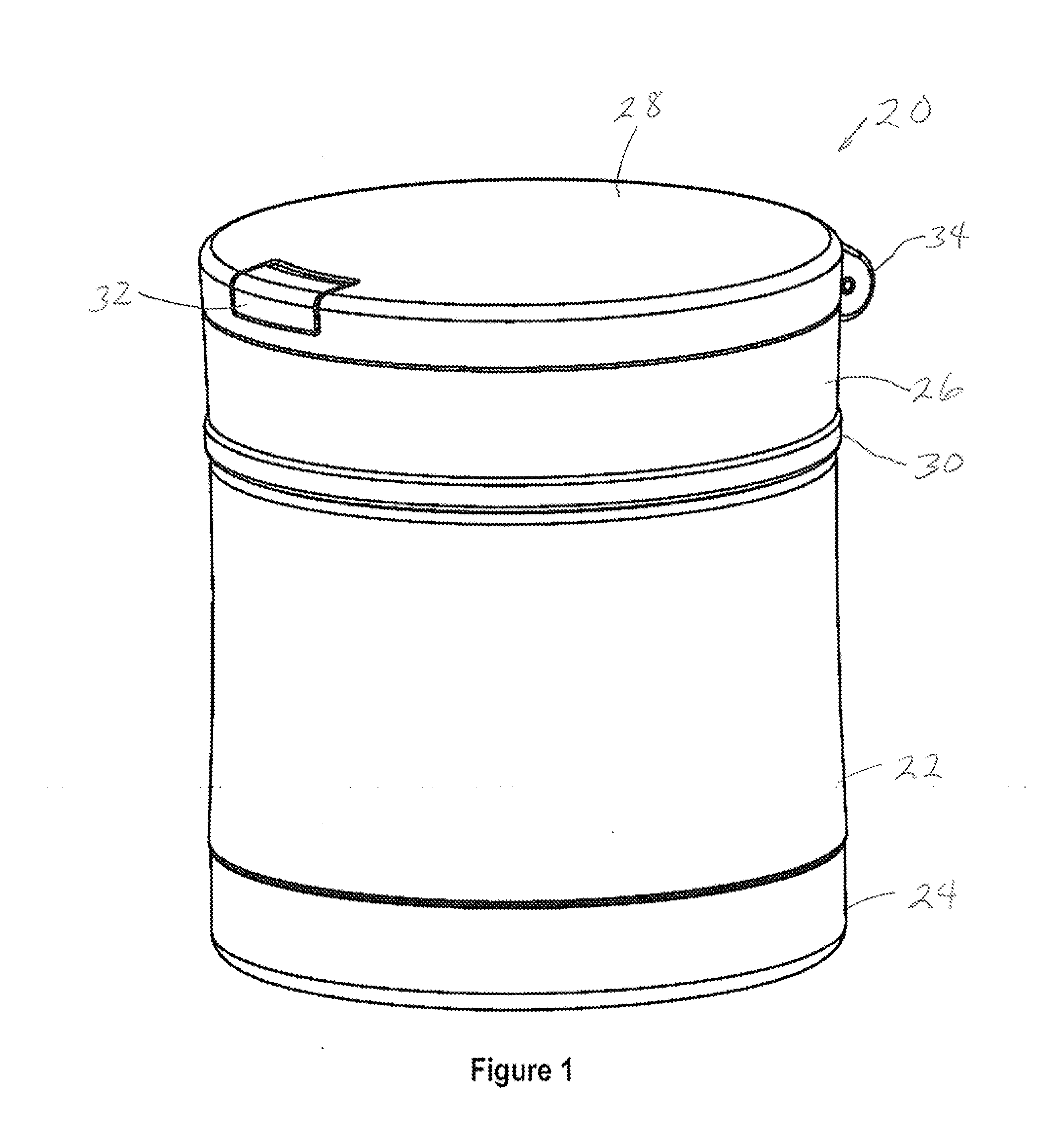

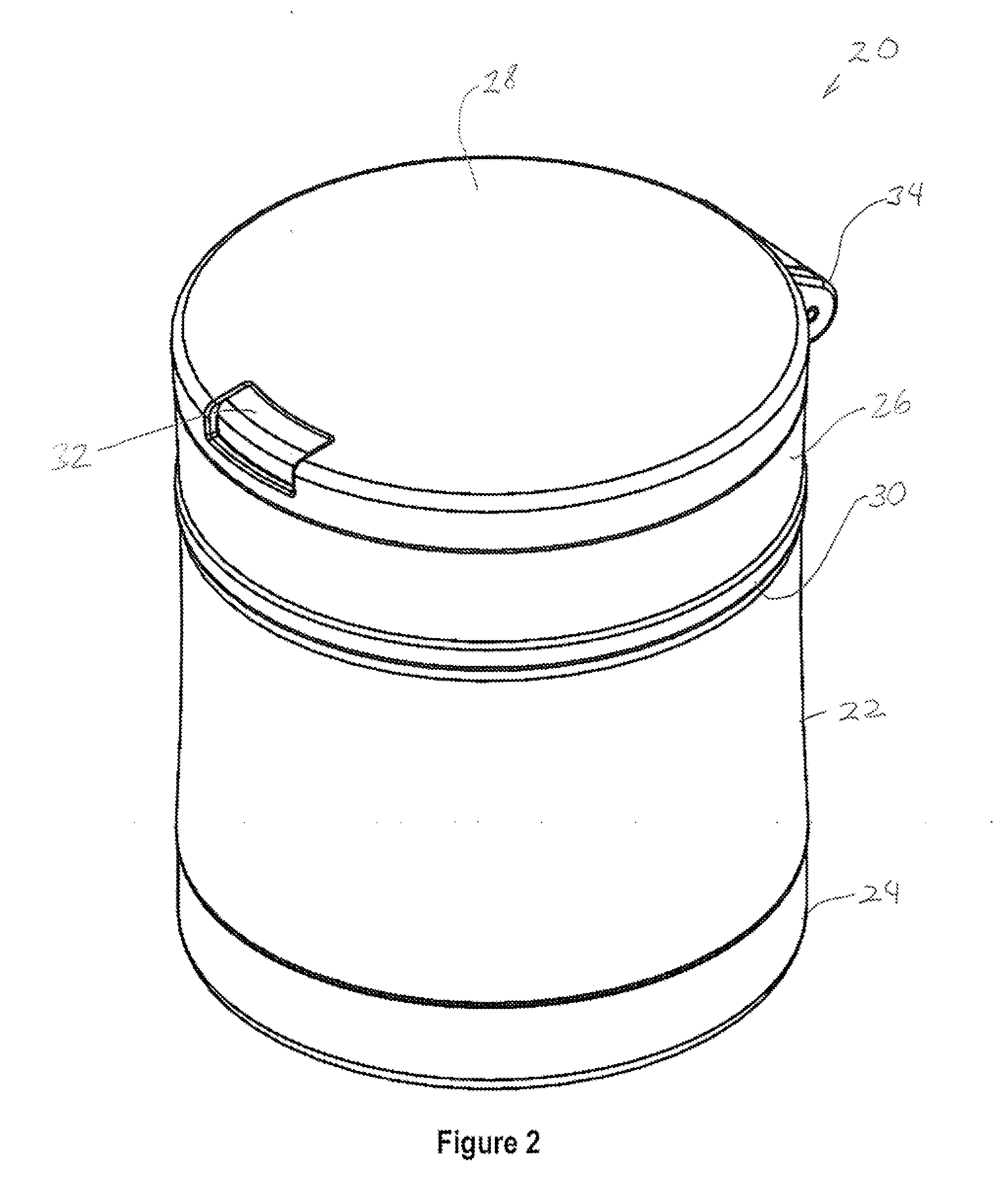

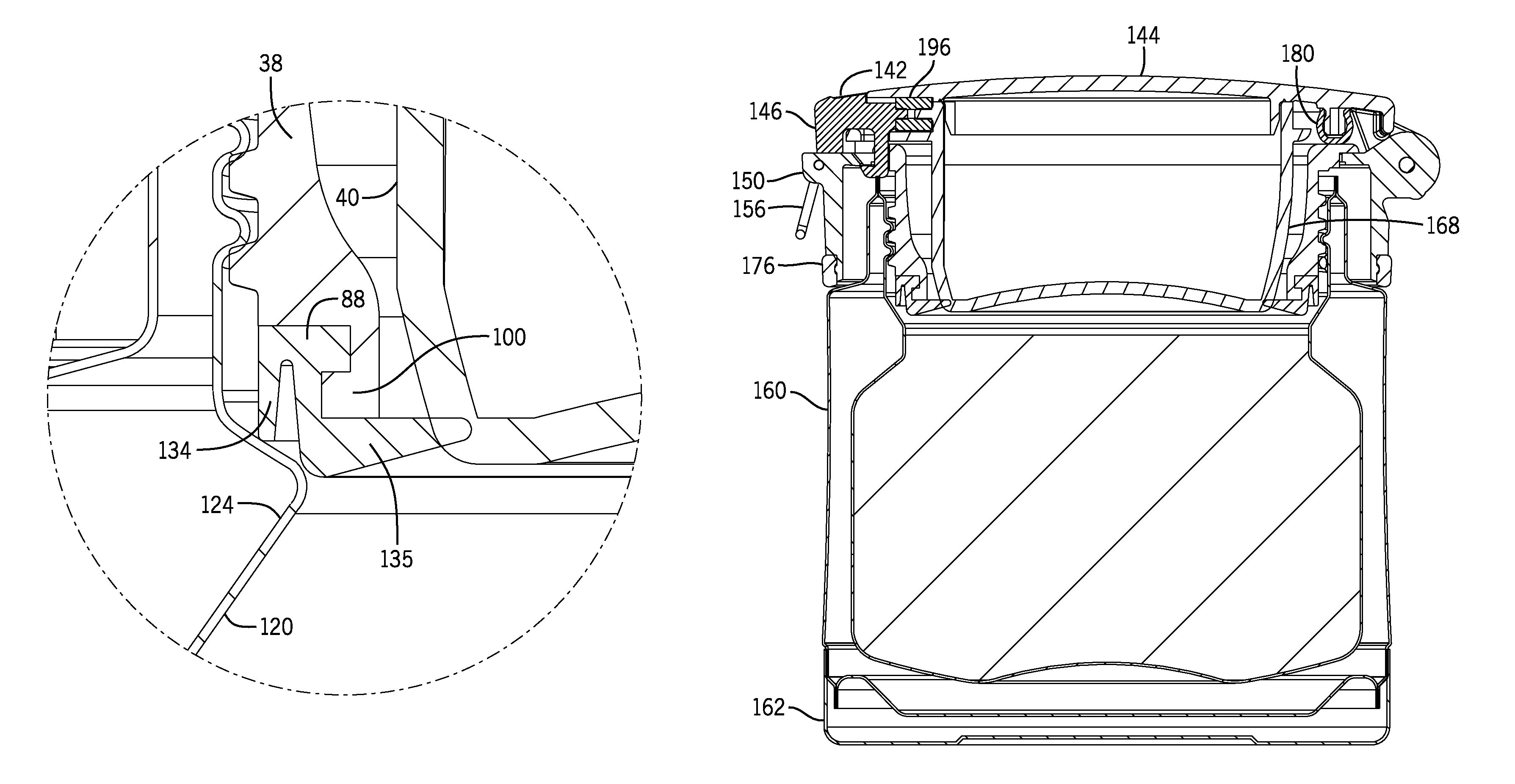

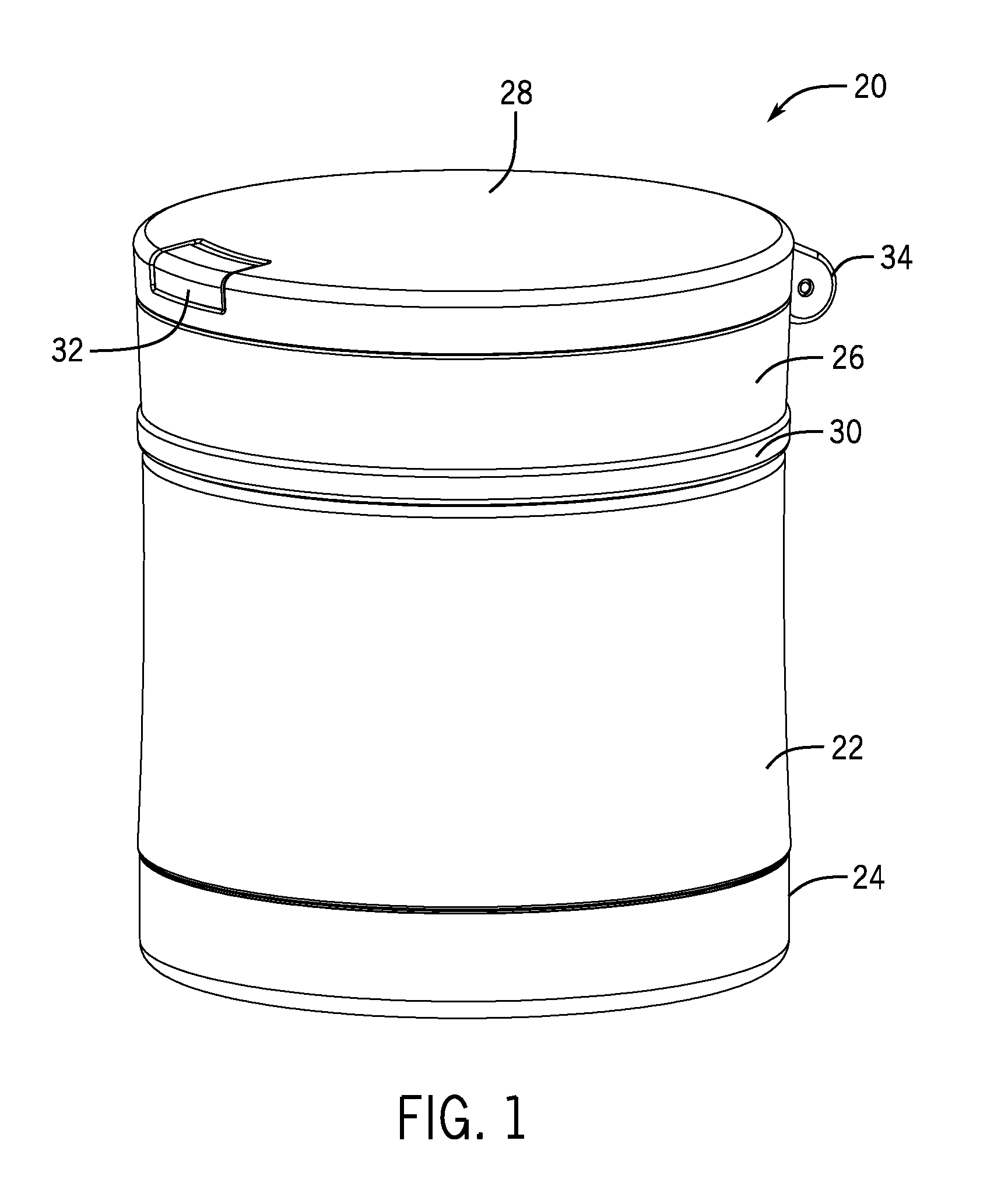

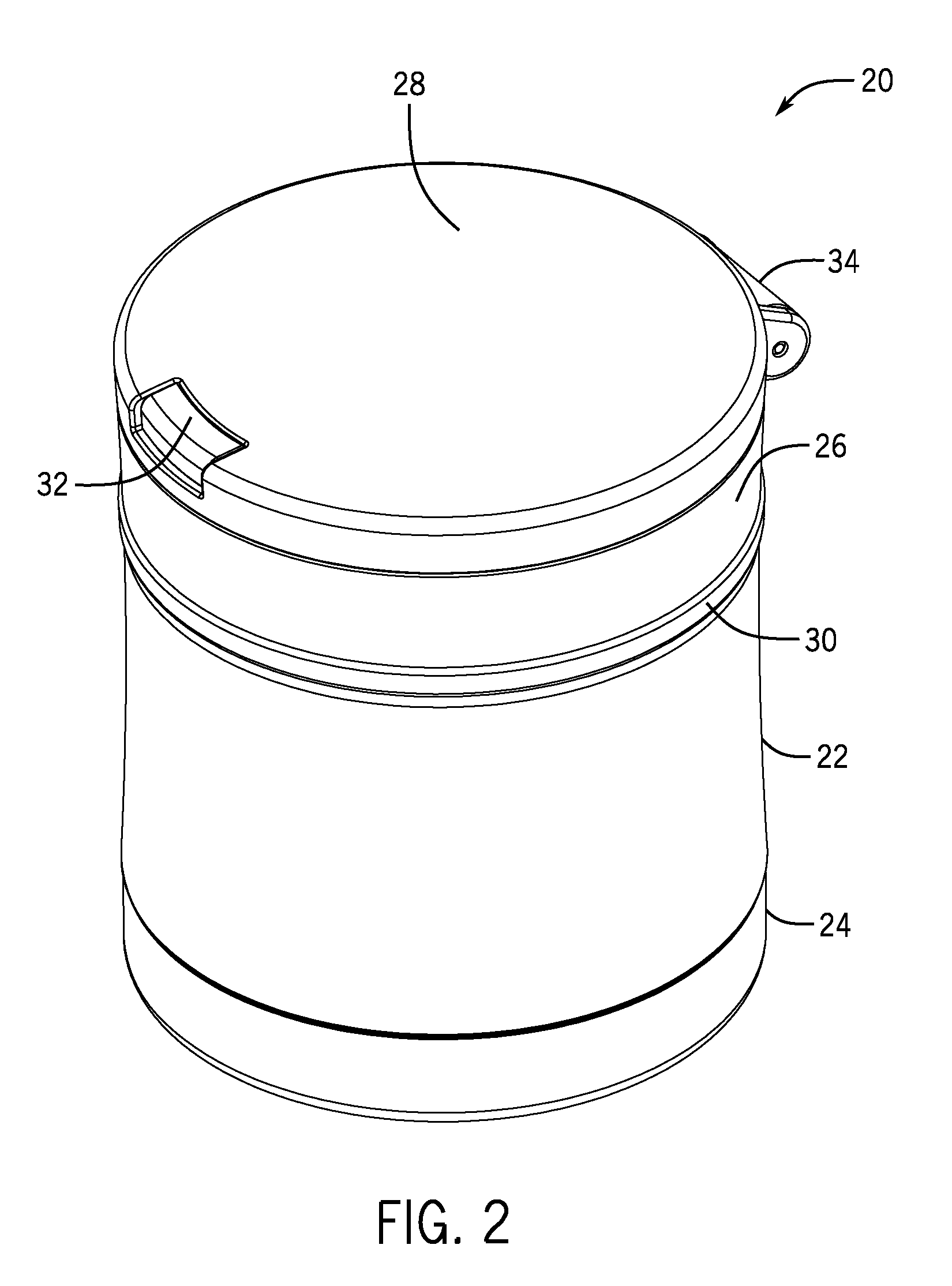

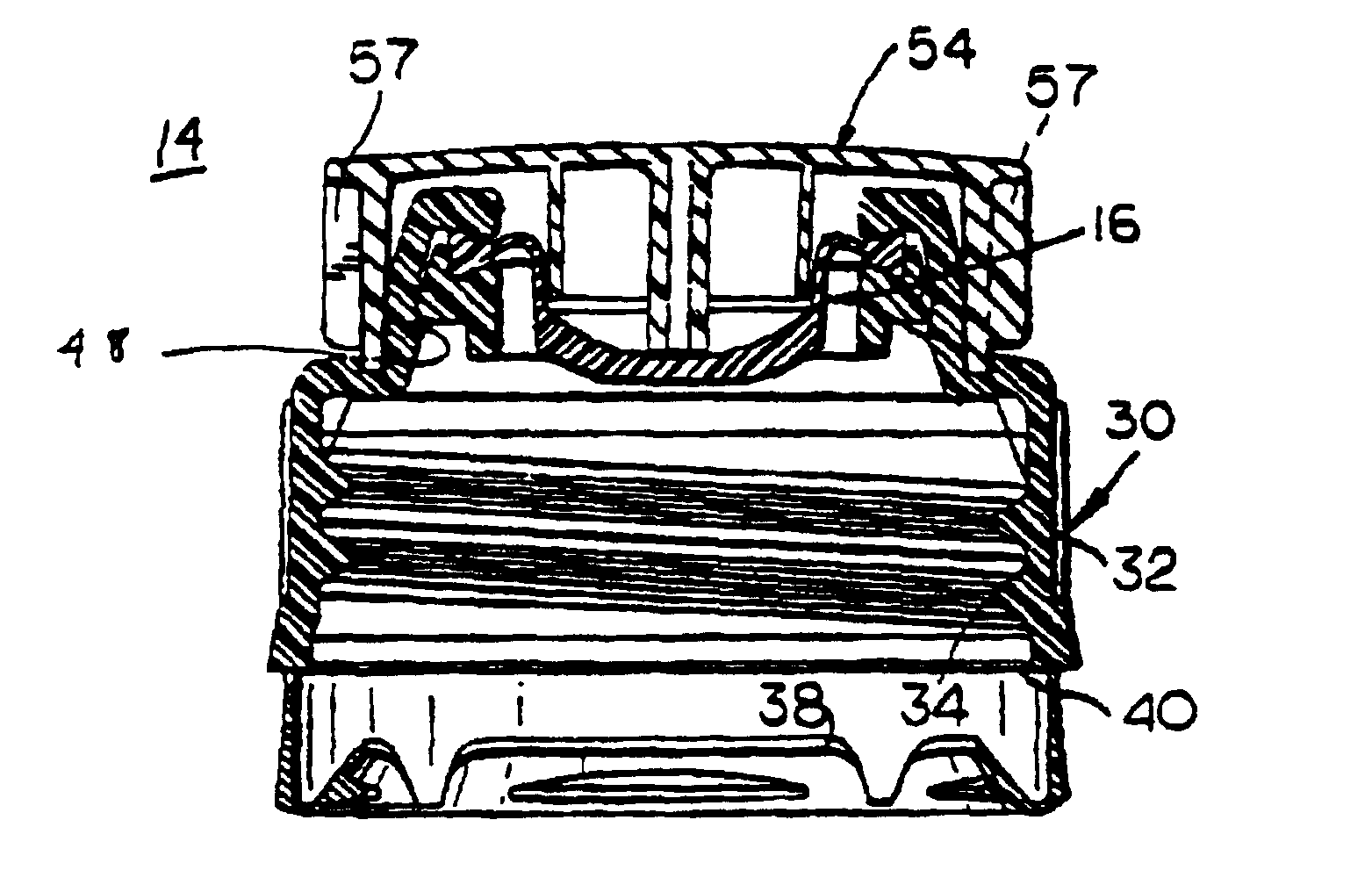

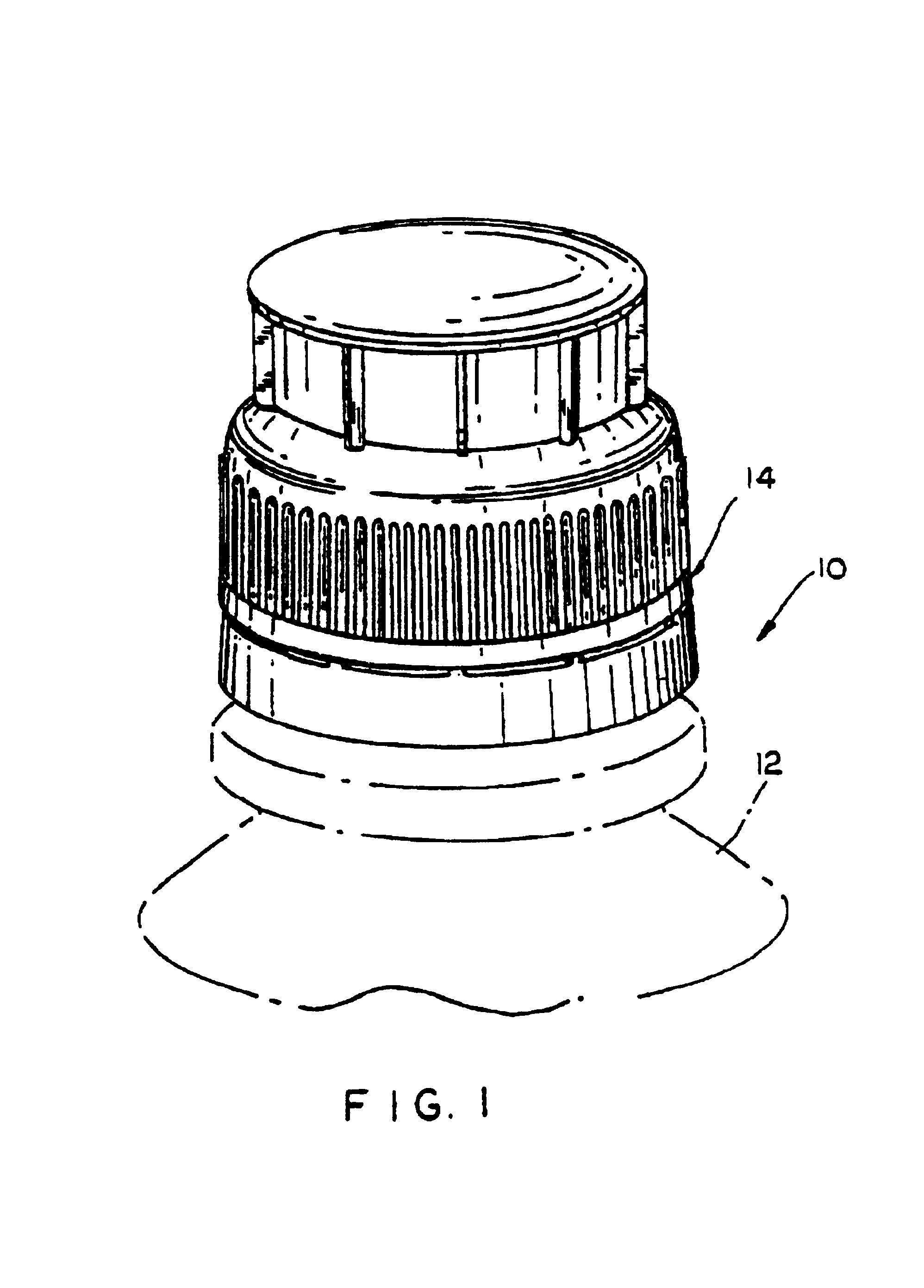

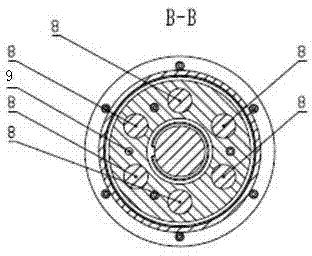

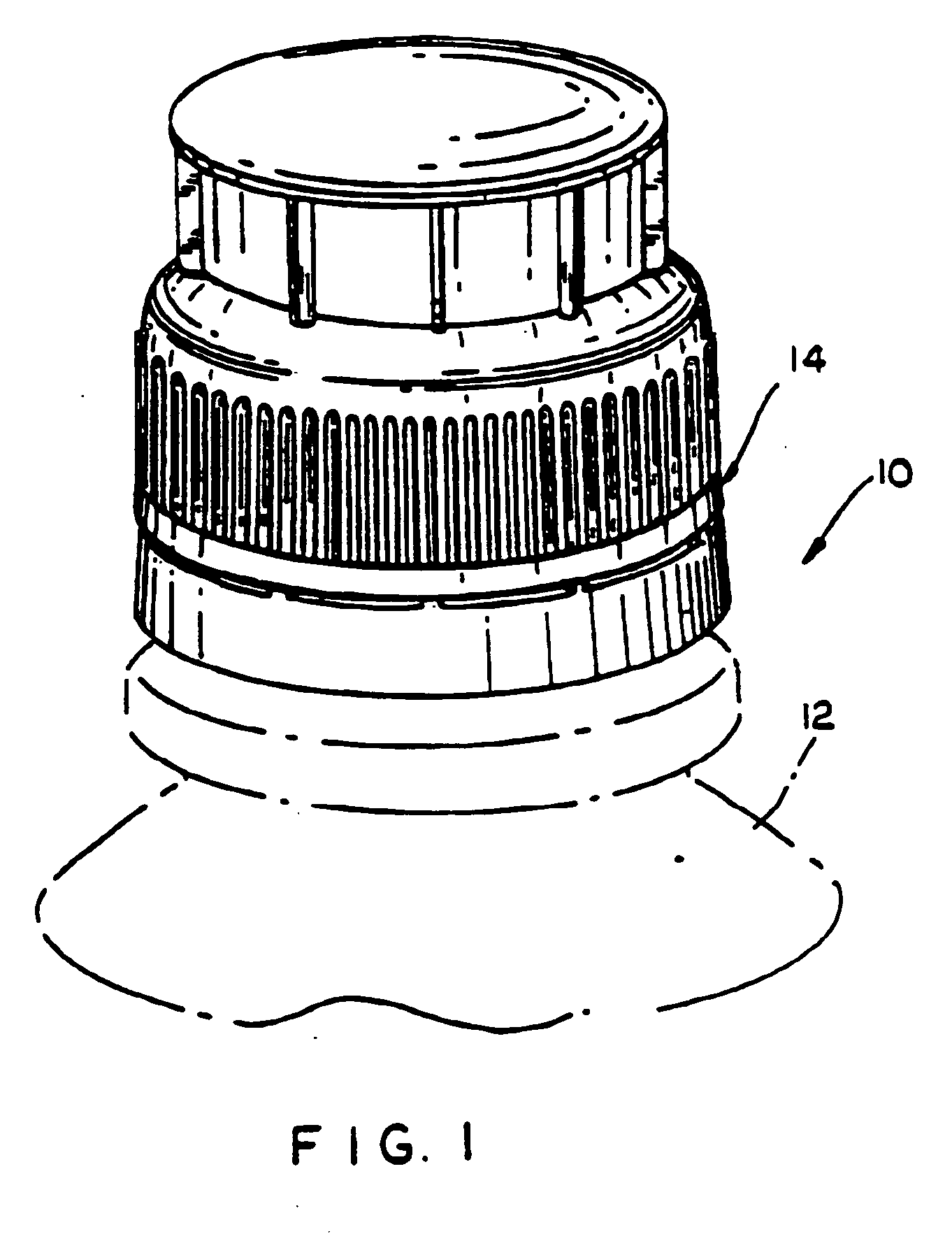

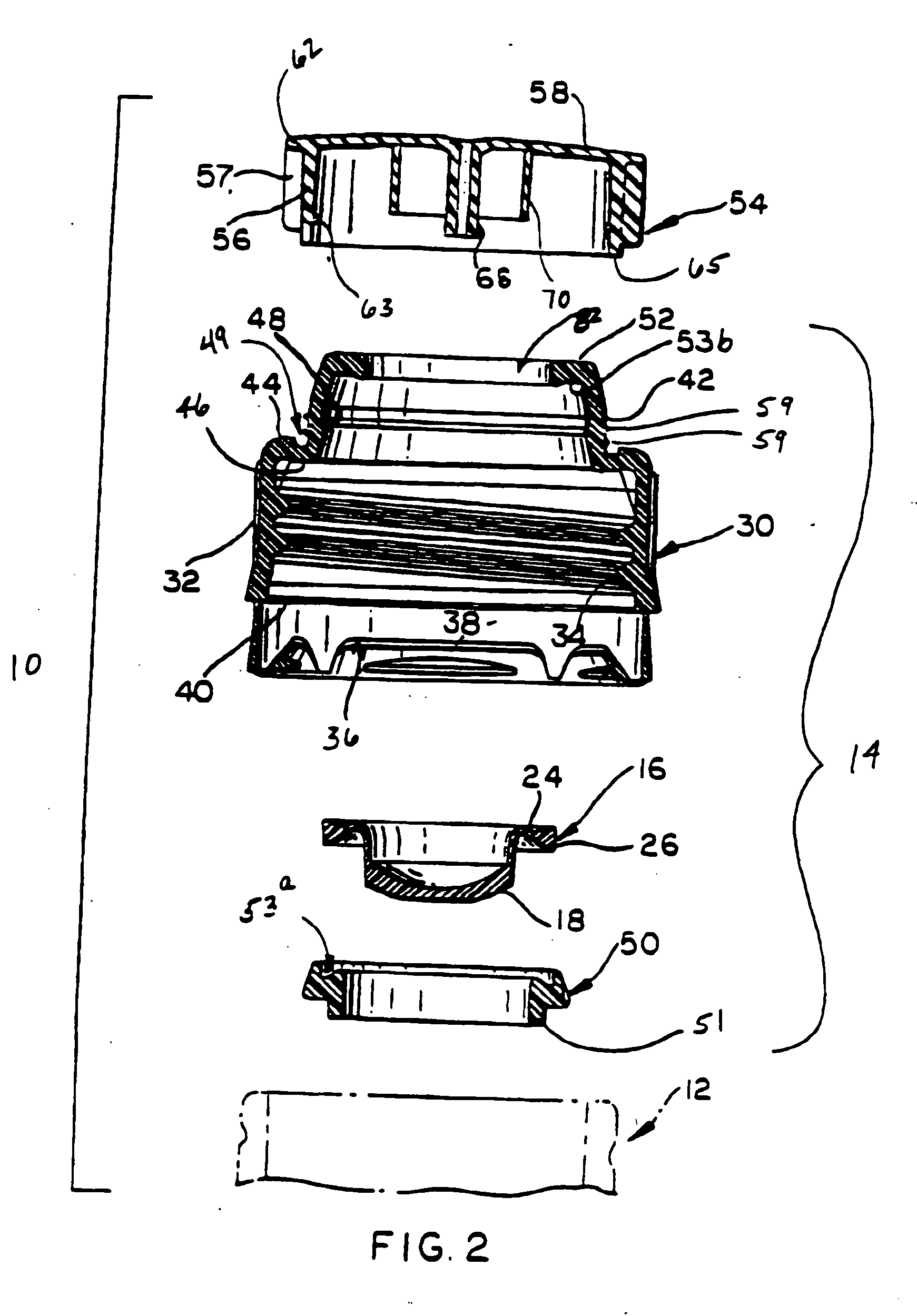

Food storage container with quick access lid

Active | US20130248531A1 | Tags: Sufficient spring force, Easy to close, Opening closed containers, Travelling sacks, Throat, Central projection

A food jar has an insulated body with a wide-mouth opening for receiving food. A collar is affixed to the opening of the food jar by affixing a throat member to the collar and threading the throat into the wide-mouth body. The throat member includes a gasket at the lower end to seal the throat to the body. A lid is affixed to the collar by a hinge. The lid includes a central projection that extends into sealing contact with the gasket on the throat member to seal the lid to the throat member. A button is mounted in a channel in the lid and biased by a spring to move into an engaging position to engage the collar at an opening in the collar to secure the lid to the collar. A wire loop is provided to engage the button in an embodiment.

Owner:THERMOS LLC

Food storage container with quick access lid

Active | US9211040B2 | Tags: Easy to close, Easy to open, Opening closed containers, Travelling sacks, Wide mouth, Central projection

A food jar has an insulated body with a wide-mouth opening for receiving food. A collar is affixed to the opening of the food jar by affixing a throat member to the collar and threading the throat into the wide-mouth body. The throat member includes a gasket at the lower end to seal the throat to the body. A lid is affixed to the collar by a hinge. The lid includes a central projection that extends into sealing contact with the gasket on the throat member to seal the lid to the throat member. A button is mounted in a channel in the lid and biased by a spring to move into an engaging position to engage the collar at an opening in the collar to secure the lid to the collar. A wire loop is provided to engage the button in an embodiment.

Owner:THERMOS LLC

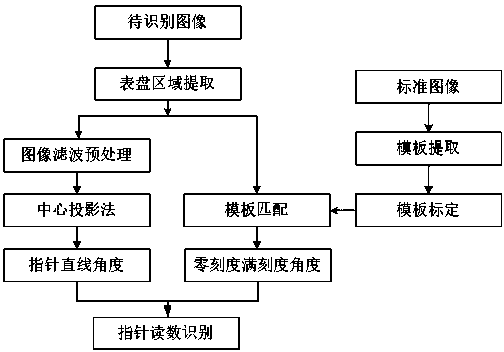

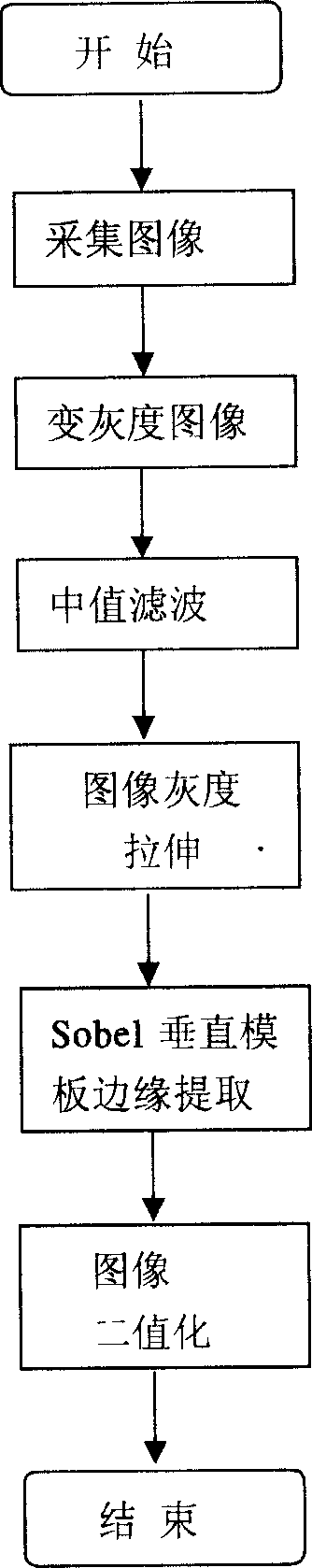

Instrument pointer automatic detection identification method and automatic reading method

Inactive | CN1693852A | Tags: Avoid human error, Reduce labor intensity, Electrical measurements, Pattern recognition, Central projection

The invention discloses an automatic detection, identification and reading method for gauge pointers. It includes acquiring a gauge image, preprocessing the image, detecting the pointer origin with the Hough transform, and detecting and identifying the pointer position by calculating the central projection point of the gauge; the gauge value is then read from the identified pointer position. The invention detects and identifies the gauge pointer automatically and overcomes the human-factor and visual errors of reading gauges by eye. Its advantages are fast computation, high identification accuracy, low sensitivity to human factors, and easy integration into automatic information processing.

Owner:SOUTH CHINA UNIV OF TECH
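One common reading of "central projection" in pointer detection is an angular projection: every foreground pixel of the binarised (ideally thinned) pointer image votes for the direction in which it lies as seen from the dial centre, and the direction with the most votes is taken as the pointer. The sketch below assumes the centre (cx, cy) is already known (for example from the Hough step) and that the image is a list of rows of 0/1 values; it illustrates the idea and is not the patented procedure.

```python
import math


def pointer_angle_by_central_projection(binary, cx, cy, bins=360):
    """Return the angle (degrees) of the ray from (cx, cy) that collects the most foreground pixels."""
    hist = [0] * bins
    for y, row in enumerate(binary):
        for x, val in enumerate(row):
            if not val or (x == cx and y == cy):
                continue  # skip background and the centre pixel itself
            # Image rows grow downward, so atan2(dy, dx) is measured clockwise from +x.
            ang = math.degrees(math.atan2(y - cy, x - cx)) % 360.0
            hist[int(ang * bins / 360.0) % bins] += 1
    best = max(range(bins), key=hist.__getitem__)
    return (best + 0.5) * 360.0 / bins  # centre of the winning angular bin
```

Because many pointers have a short tail on the opposite side of the hub, the tail produces a smaller peak about 180° away; taking the maximum keeps the longer main needle.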

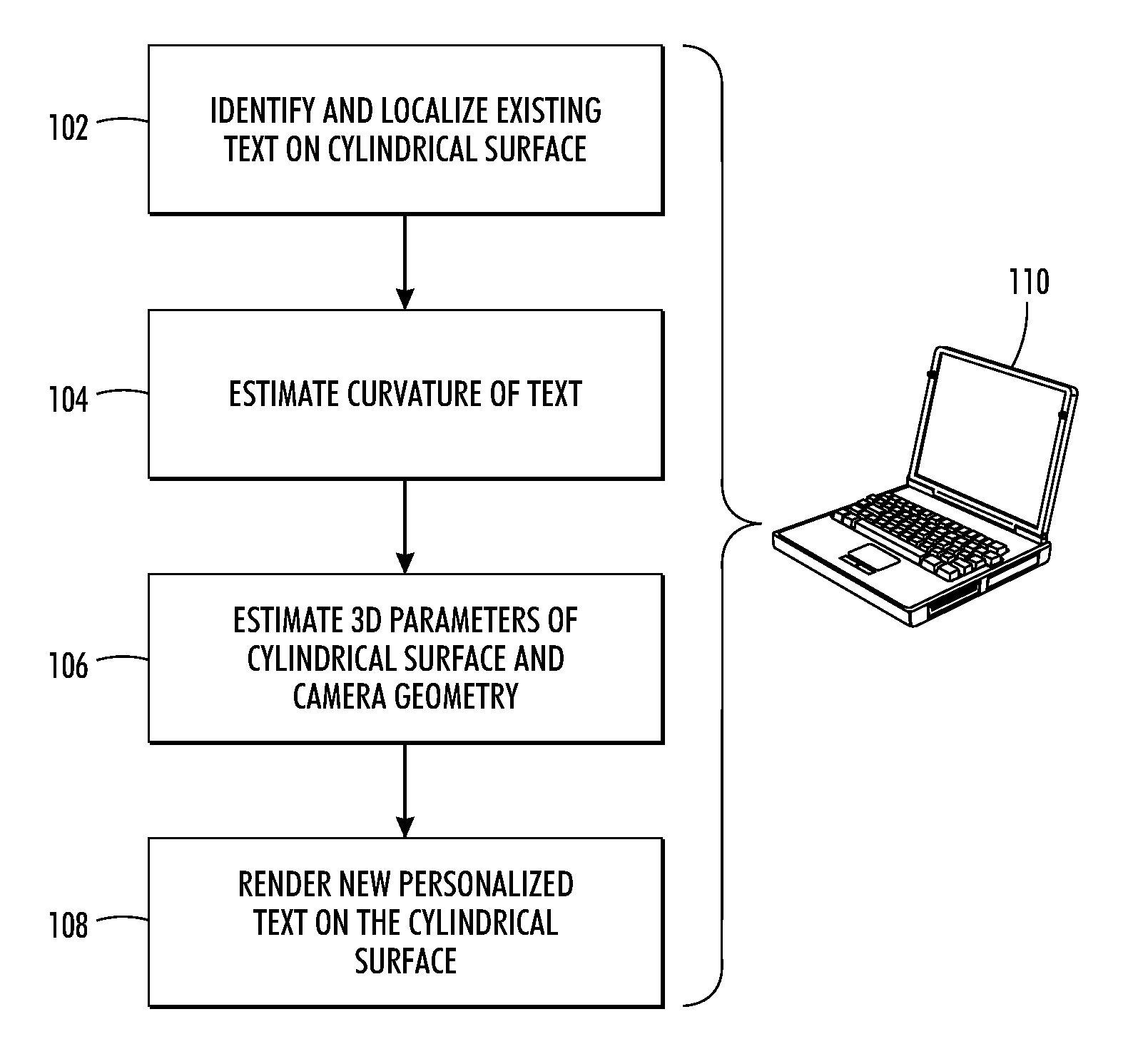

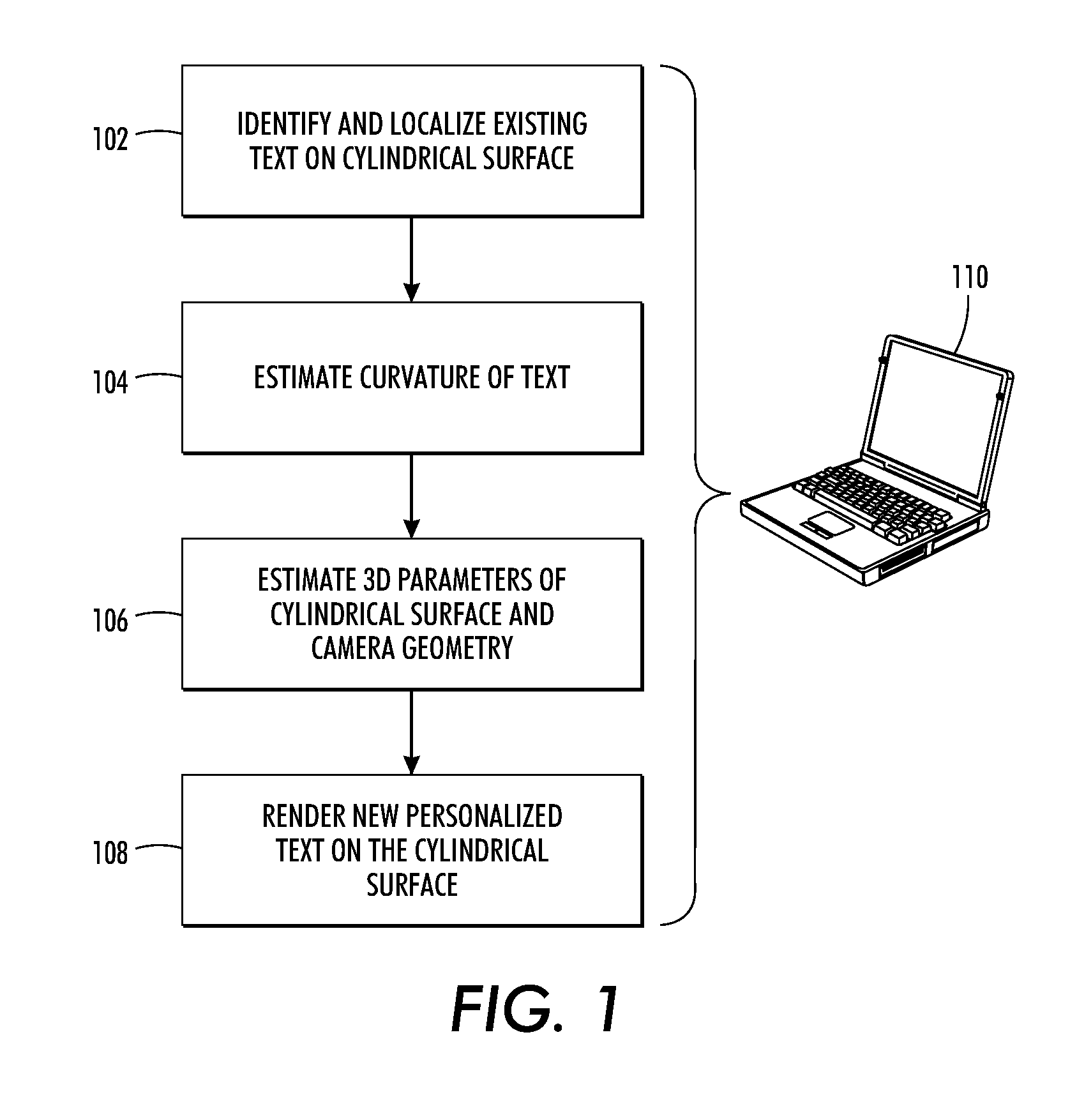

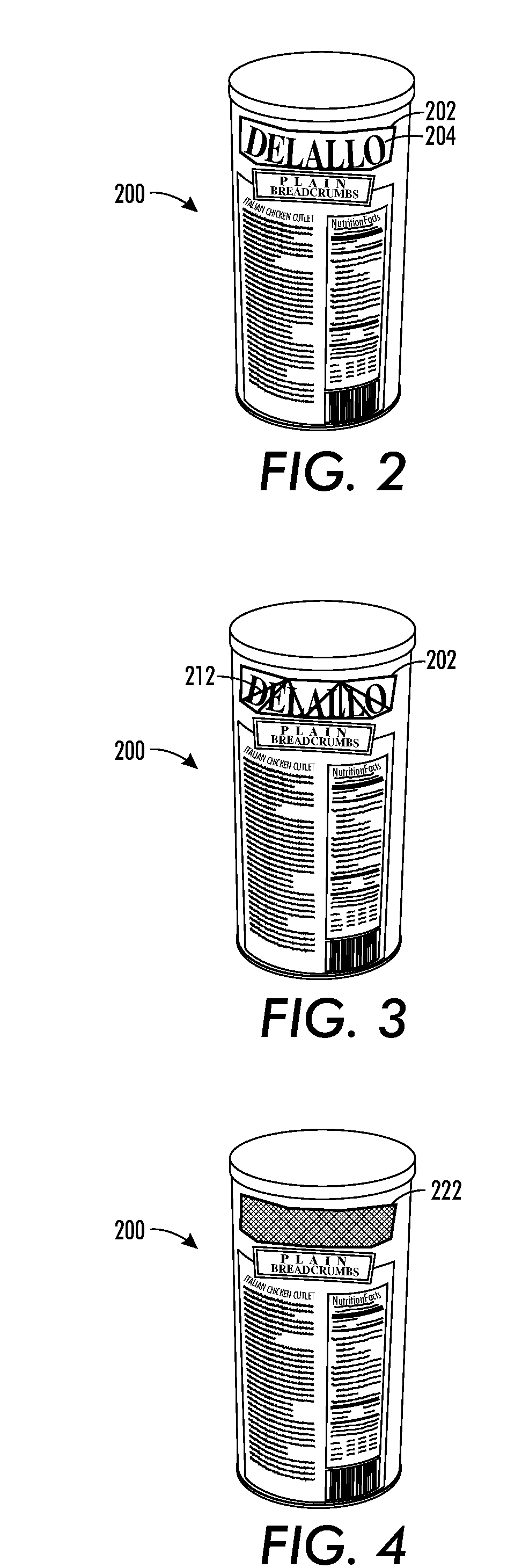

Rendering personalized text on curved image surfaces

Inactive | US20120146991A1 | Tags: Drawing from basic elements, Character and pattern recognition, Pinhole camera model, Personalization

As set forth herein, a computer-implemented method facilitates replacing text on cylindrical or curved surfaces in images. For instance, the user is first asked to perform a multi-click selection of a polygon to bound the text. A triangulation scheme is carried out to identify the pixels. Segmentation and erasing algorithms are then applied. The ellipses are estimated accurately through constrained least squares fitting. A 3D framework for rendering the text, including the central projection pinhole camera model and specification of the cylindrical object, is generated. These parameters are jointly estimated from the fitted ellipses as well as the two vertical edges of the cylinder. The personalized text is wrapped around the cylinder and subsequently rendered.

Owner:XEROX CORP
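The "central projection pinhole camera model" in US20120146991A1 maps a 3-D point (X, Y, Z) in camera coordinates to image coordinates (f·X/Z + cx, f·Y/Z + cy). The sketch below pairs that projection with a hypothetical parameterisation of a vertical cylinder, so that text laid out along the surface (arc length s, height h) can be placed in the image. Here the camera intrinsics and cylinder pose are simply assumed known, whereas the patent estimates them from the fitted ellipses and the cylinder's two vertical edges.

```python
import math


def project(point, f, cx, cy):
    """Central (pinhole) projection of a camera-frame 3-D point onto the image plane."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point is behind the camera")
    return f * X / Z + cx, f * Y / Z + cy


def cylinder_point(s, h, radius, axis_depth):
    """Camera-frame point at arc length s and height h on a cylinder facing the camera.

    Hypothetical pose: cylinder axis parallel to the camera y-axis at depth axis_depth;
    s = 0 is the surface point closest to the camera.
    """
    phi = s / radius
    return radius * math.sin(phi), h, axis_depth - radius * math.cos(phi)
```

Sampling a grid of (s, h) positions over the text box and projecting each one shows the expected foreshortening: equal steps in s map to shrinking steps in u toward the cylinder's silhouette.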

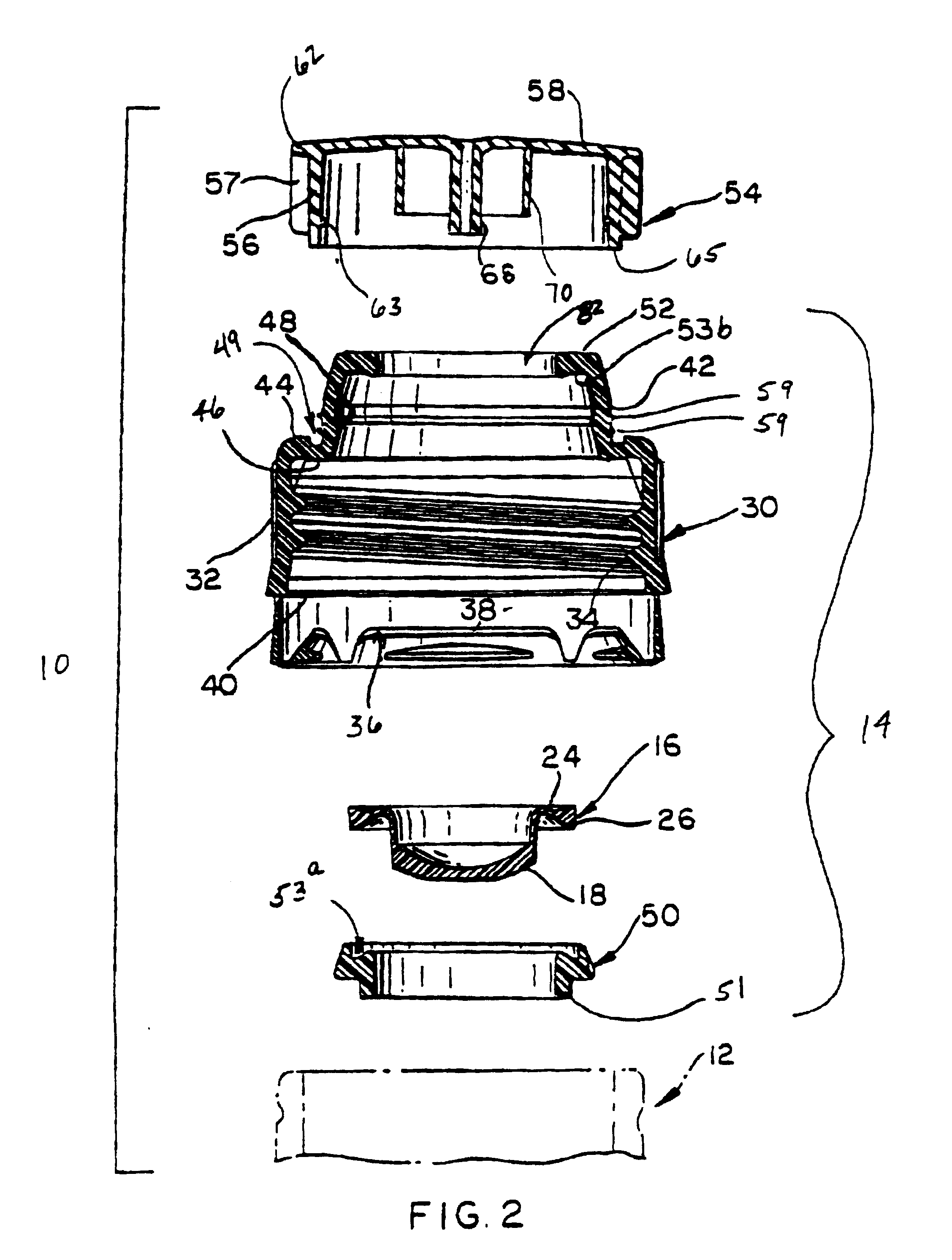

Cover for dispensing closure with pressure actuated valve

Inactive | US6910607B2 | Tags: Prevent opening, Restrict movement, Closures, Liquid flow controllers, Central projection, Engineering

A cover for a valved dispensing closure prevents the valve from opening while the cover is on the closure. The cover's central projection keeps the flaps formed in the valve from opening, and its outer projection prevents the flexible valve head from moving or inverting. The cover may also have projections extending inward from its skirt. These projections interact with mating projections formed on the exterior of the closure, and the lower end of the skirt may sit in an annular groove in the closure, to improve the seal between the closure and the cover.

Owner:OBRIST CLOSURES SWITZERLAND GMBH

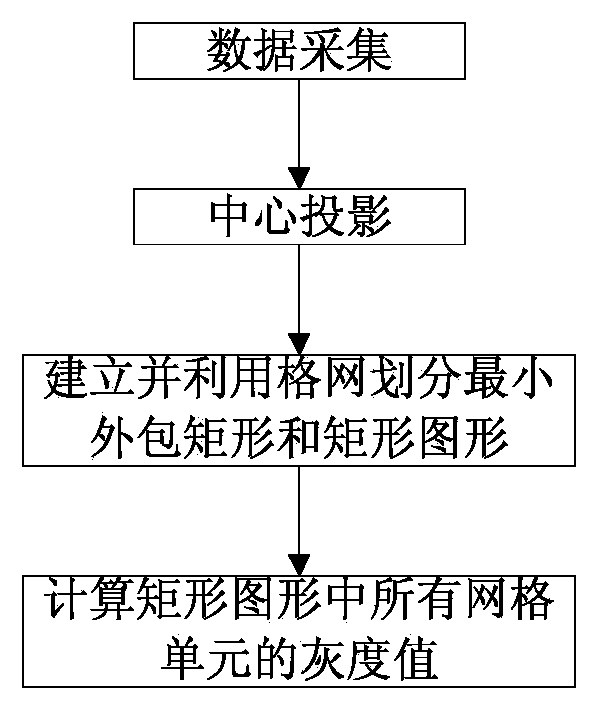

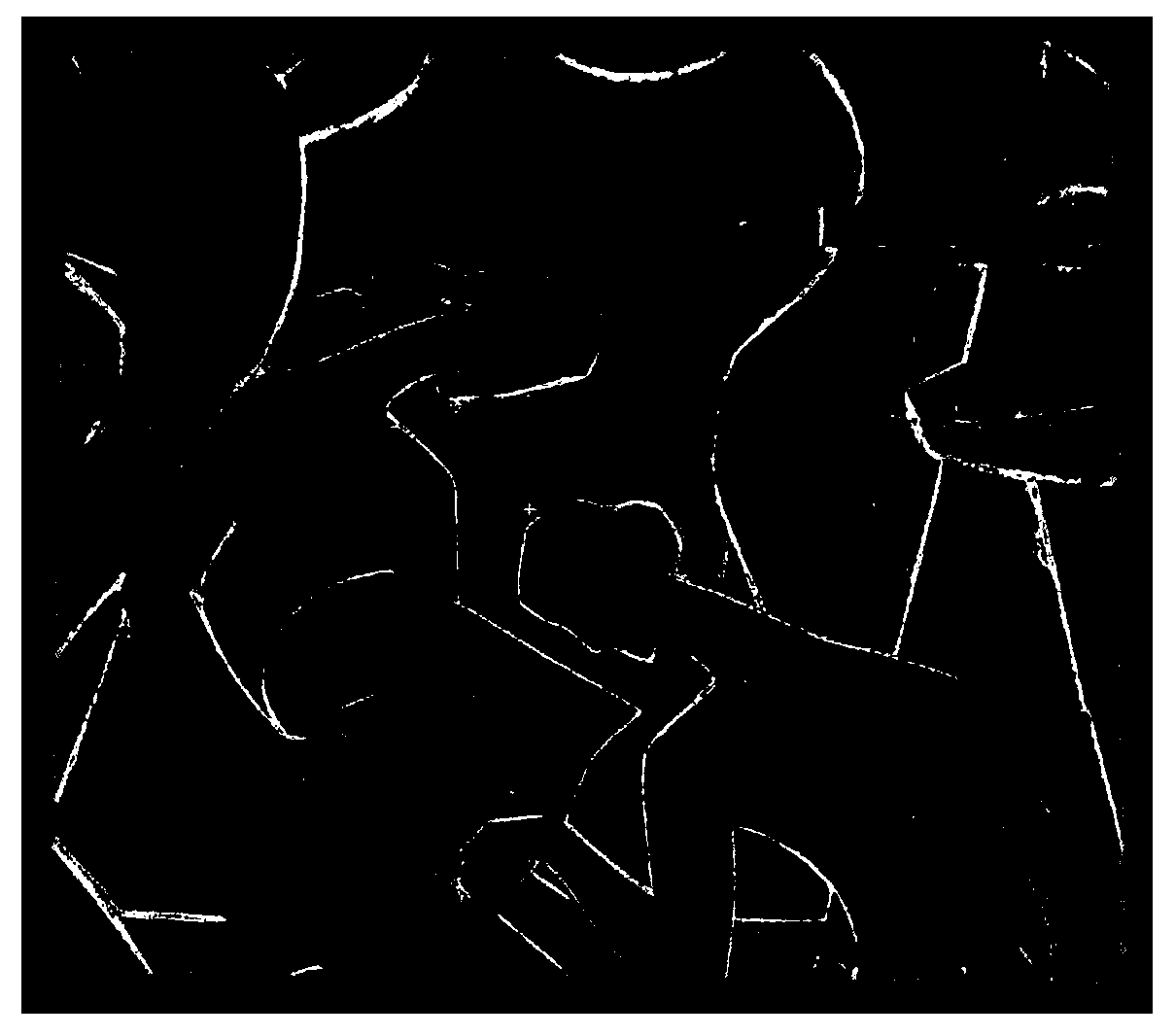

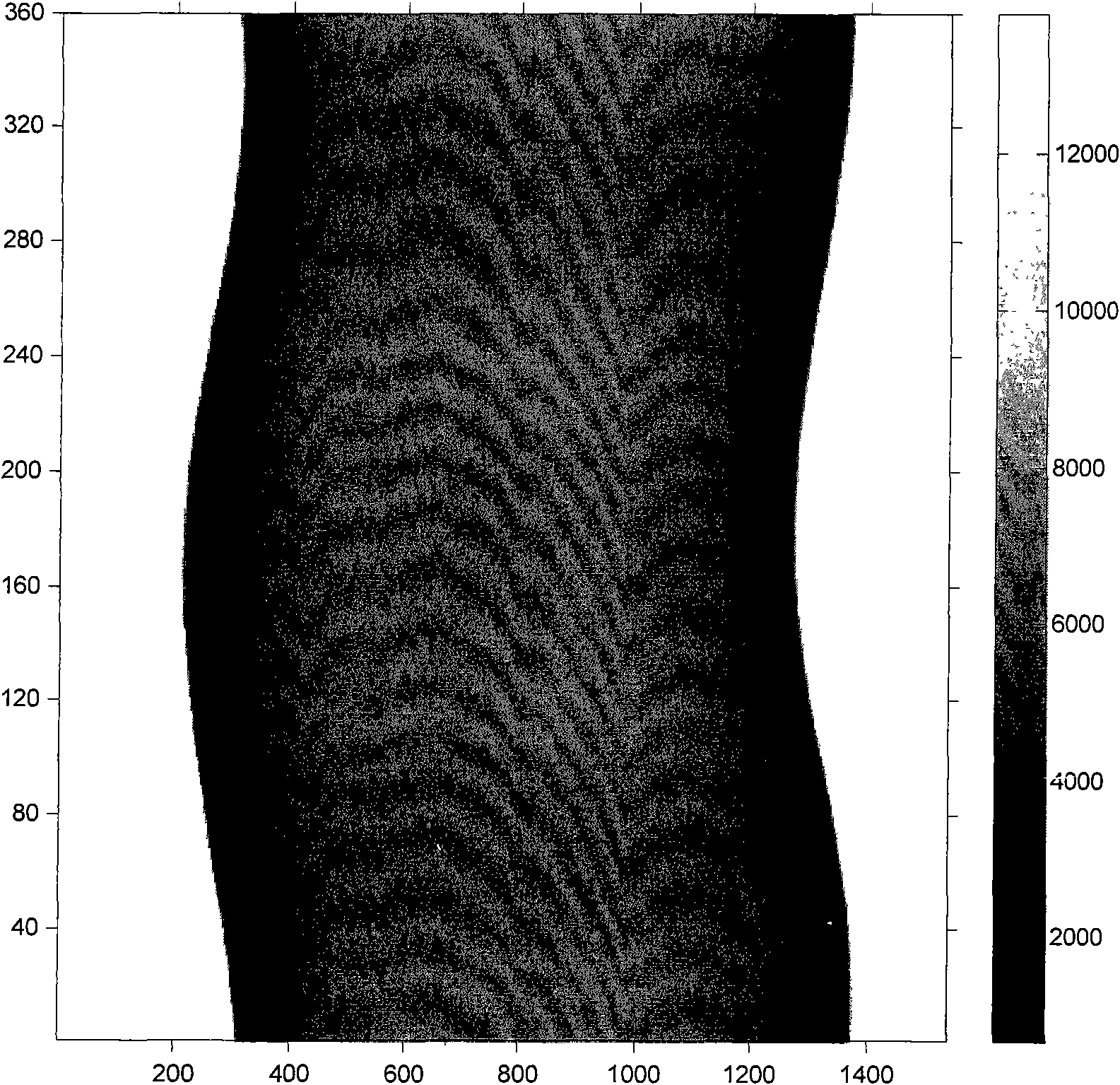

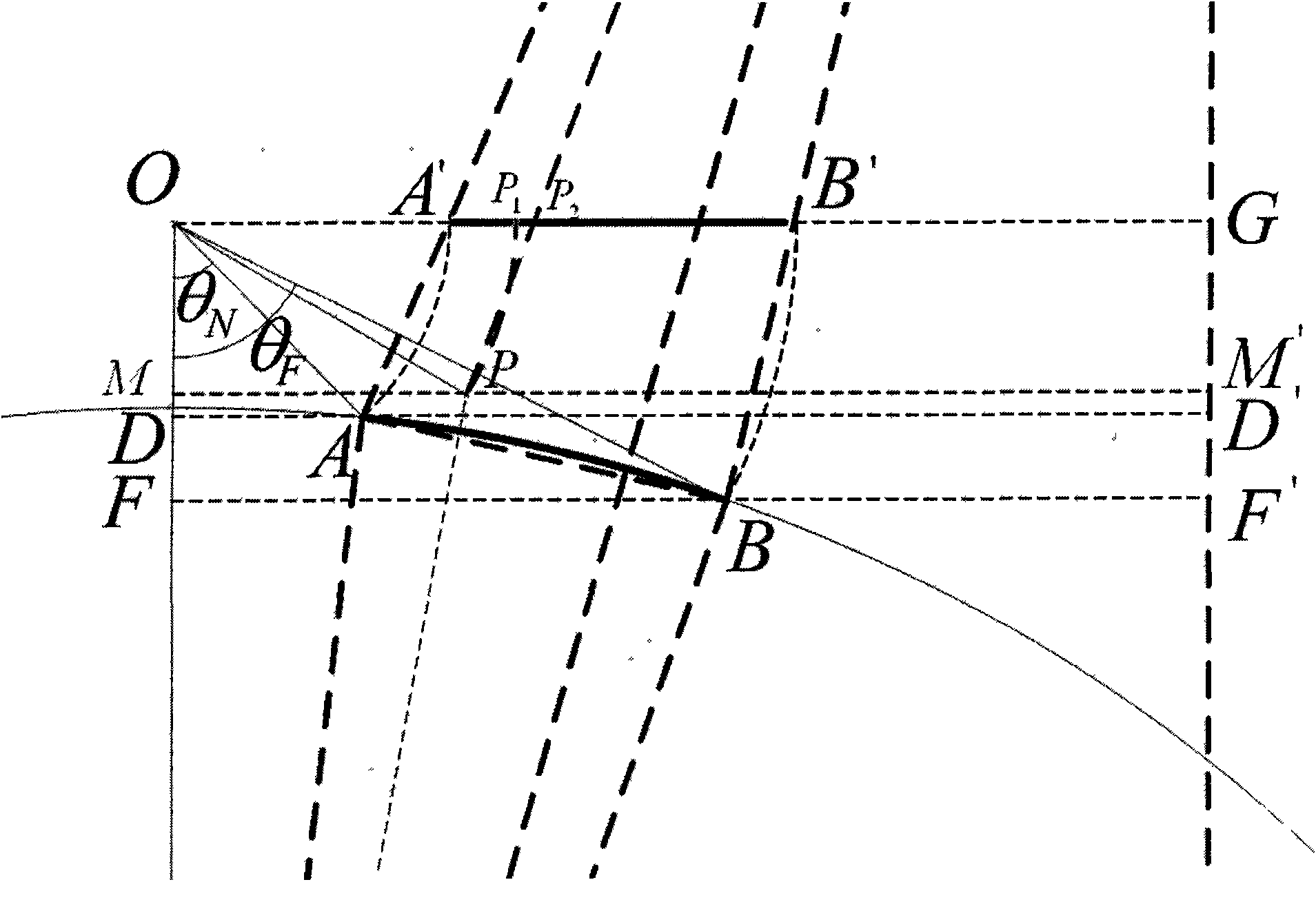

Ground laser radar reflection intensity image generation method based on central projection

Active | CN104007444A | Tags: Projection approach, Solve matching problems, Electromagnetic wave reradiation, Special data processing applications, Graphics, Point cloud

The invention discloses a method for generating ground laser radar reflection intensity images based on central projection. The method comprises the following steps. First, a ground laser radar acquires the laser point cloud of the object being surveyed. Second, with the laser source of the ground laser radar as the projection center, a central projection ray is defined through the projection center and the center of the point cloud; the plane perpendicular to this ray and passing through the cloud center serves as the projection plane, and the point cloud is projected onto it to obtain the corresponding projection points. Third, a minimum bounding rectangle of all projection points and a rectangular image are built and divided into grids with equal spacing, so that every grid cell of the image has a corresponding cell in the minimum bounding rectangle. For each grid cell of the image, the reflection intensity of the nearest projection point in the minimum bounding rectangle is converted into a gray value, which is assigned to that cell.

Owner:BEIJING UNIVERSITY OF CIVIL ENGINEERING AND ARCHITECTURE
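The three steps of CN104007444A can be sketched in pure Python. Assumptions not taken from the patent: the laser source is the origin of the point coordinates, every point lies on the positive side of the projection direction, the two grids of step three are merged into a single `cols` × `rows` grid over the bounding rectangle, and intensities are min-max scaled to 0-255. The nearest-point search is brute force, so this is only suitable for small clouds; `intensity_image` is a hypothetical name.

```python
import math


def _sub(a, b): return tuple(x - y for x, y in zip(a, b))
def _dot(a, b): return sum(x * y for x, y in zip(a, b))
def _scale(a, k): return tuple(x * k for x in a)
def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
def _unit(a): return _scale(a, 1.0 / math.sqrt(_dot(a, a)))


def intensity_image(points, cols, rows):
    """points: list of (x, y, z, intensity) with the laser source (projection centre) at the origin."""
    xyz = [p[:3] for p in points]
    centre = _scale(tuple(map(sum, zip(*xyz))), 1.0 / len(xyz))
    n = _unit(centre)  # central projection ray through the cloud centre
    helper = (0.0, 0.0, 1.0) if abs(n[2]) < 0.9 else (1.0, 0.0, 0.0)
    e1 = _unit(_cross(helper, n))  # in-plane axes of the projection plane
    e2 = _cross(n, e1)
    d = _dot(n, centre)  # projection plane: n . X = d, through the cloud centre
    proj = []
    for p, (_, _, _, inten) in zip(xyz, points):
        t = d / _dot(n, p)  # stretch the ray from the origin until it meets the plane
        q = _sub(_scale(p, t), centre)
        proj.append((_dot(q, e1), _dot(q, e2), inten))
    us = [u for u, _, _ in proj]
    vs = [v for _, v, _ in proj]
    umin, vmin = min(us), min(vs)
    du, dv = (max(us) - umin) / cols, (max(vs) - vmin) / rows
    imin = min(i for _, _, i in proj)
    span = (max(i for _, _, i in proj) - imin) or 1.0
    image = []
    for j in range(rows):
        cv = vmin + (j + 0.5) * dv  # cell centre in plane coordinates
        row = []
        for i in range(cols):
            cu = umin + (i + 0.5) * du
            _, _, inten = min(proj, key=lambda q: (q[0] - cu) ** 2 + (q[1] - cv) ** 2)
            row.append(round(255 * (inten - imin) / span))  # intensity -> gray value
        image.append(row)
    return image
```

A spatial index (for example a k-d tree) would replace the brute-force `min` for real scans with millions of points.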

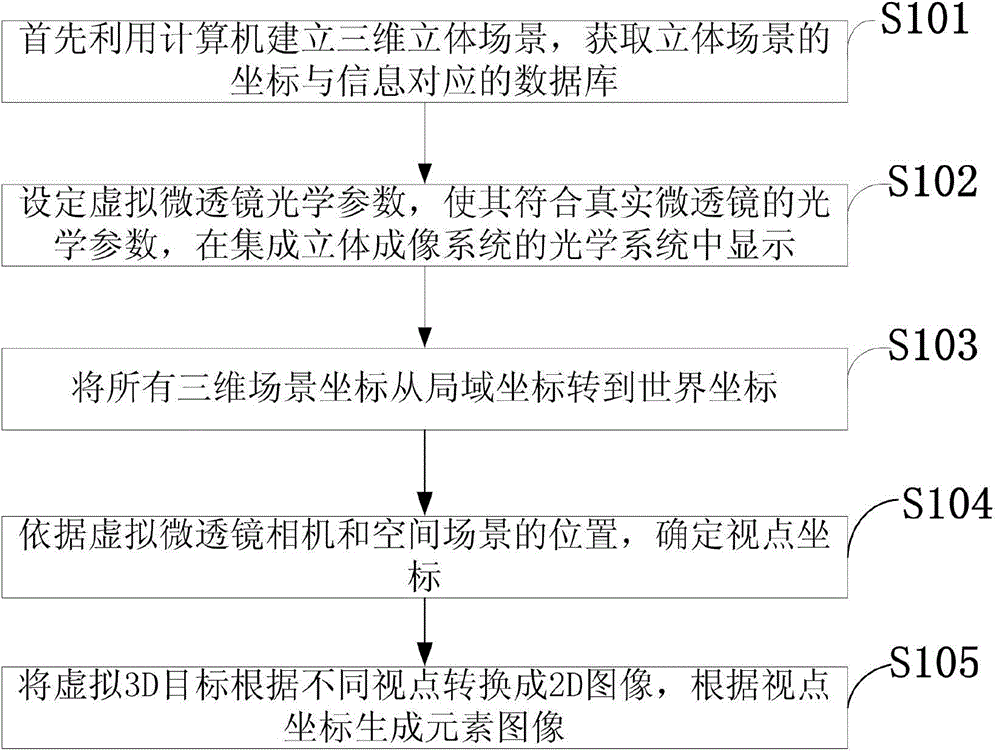

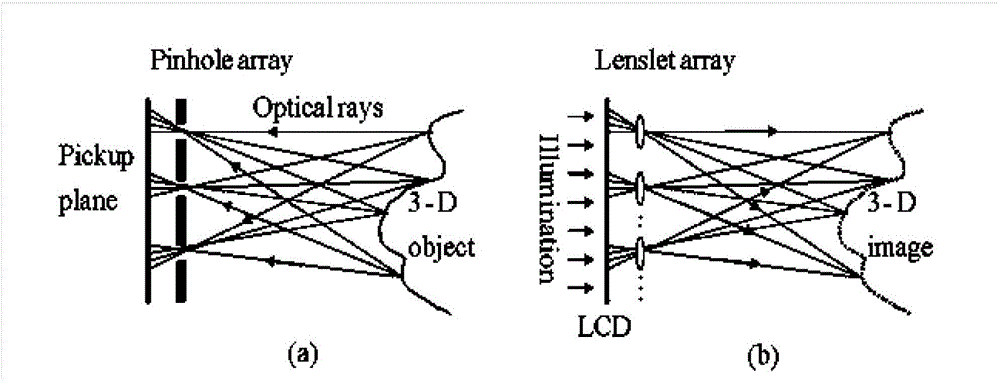

Method for generating integrated three-dimensional imaging element images on basis of central projection

The invention discloses a method for generating integrated three-dimensional imaging element images on the basis of central projection. First, a three-dimensional scene is built by computer, and a database of the scene's coordinates and information is obtained. The optical parameters of the virtual microlenses are set to match those of a real microlens and are applied in the optical system of the integrated three-dimensional imaging system. All coordinates of the three-dimensional scene are converted from local to world coordinates, and the viewpoint coordinates are determined from the position of the virtual microlens camera and the position of the spatial scene. The virtual 3D target is converted into 2D images for the different viewpoints, and the element images are generated from the viewpoint coordinates. Using geometric projection and mapping, the method forms virtual microlens-array element images by computer. The resulting element images have a wider viewing-angle range, higher resolution, and greater depth of field; the interference among element image arrays obtained with physical microlens arrays is eliminated, and clear integrated three-dimensional images are reconstructed.

Owner:CHANGCHUN UNIV OF SCI & TECH
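The viewpoint-based projection in the element-image method above can be illustrated by treating each virtual microlens as a pinhole camera. Under the hypothetical geometry in the docstring, similar triangles give the element-image coordinate u = cx - g·(X - cx)/Z for a lens centred at cx, which is what the sketch computes for every lens. The names and geometry are assumptions, not taken from the patent.

```python
def element_image_points(point, lens_centres, gap):
    """Project one scene point through each microlens, modelled as a pinhole.

    Assumed geometry (hypothetical): lens array in the plane z = 0, scene at z > 0,
    element-image plane at z = -gap behind the lenses.
    """
    X, Y, Z = point
    out = []
    for cx, cy in lens_centres:
        # The ray through the pinhole continues to z = -gap, so the image is
        # inverted and scaled by gap / Z about the lens centre.
        out.append((cx - gap * (X - cx) / Z, cy - gap * (Y - cy) / Z))
    return out
```

Running this for every scene point and rasterising the results under each lens yields that lens's element image; the inversion about each lens centre is why integral-imaging element images appear flipped.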

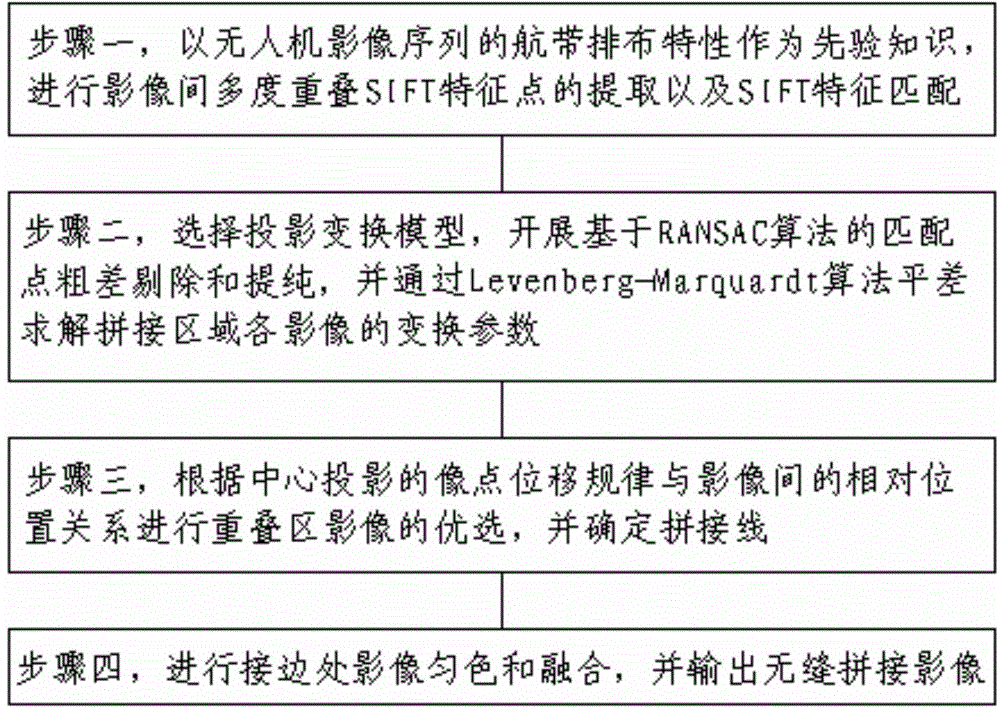

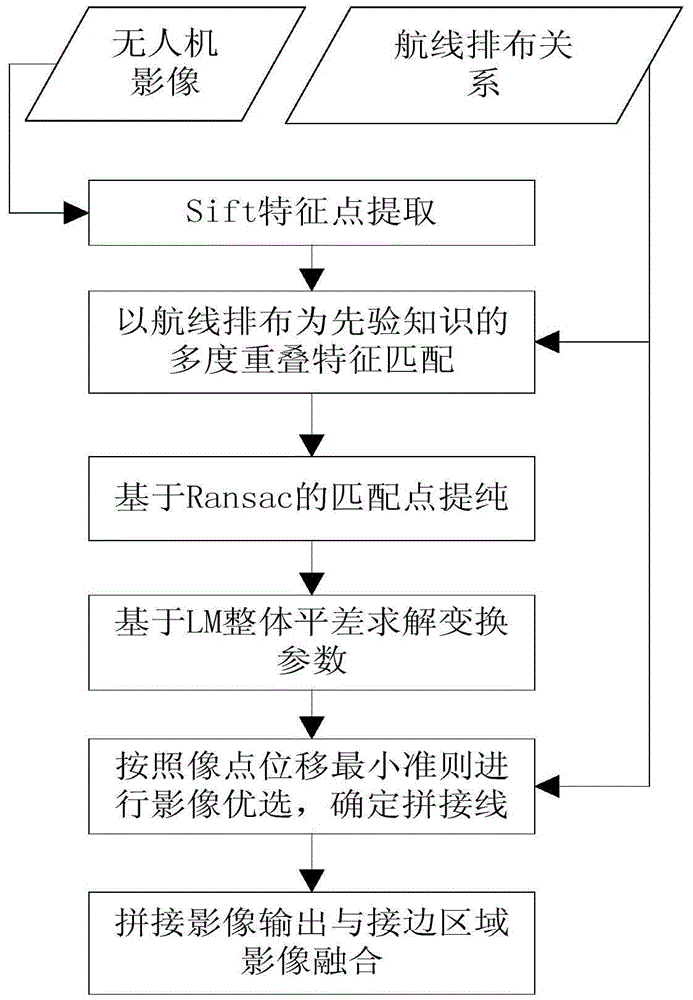

Large-area complex-terrain-region unmanned plane sequence image rapid seamless splicing method

Inactive | CN104156968A | Tags: Avoid blind matching, Narrow search, Image analysis, Levenberg–Marquardt algorithm, Terrain

Provided is a rapid seamless splicing (mosaicking) method for unmanned aerial vehicle (UAV) image sequences over large complex-terrain areas. First, using the flight-strip arrangement of the UAV image sequence as prior knowledge, SIFT feature points are extracted and matched across multiple overlapping images. Next, gross errors are removed from the matched points with the random sample consensus (RANSAC) algorithm, and the transformation parameters of each image in the spliced region are solved by adjustment using the Levenberg–Marquardt algorithm. Then, the image used in each overlapping region is selected according to the image-point displacement law of central projection and the relative positions of the images, and the seamlines are determined. Finally, color balancing and blending are applied along the seams and the spliced image is output, achieving seamless splicing of large numbers of UAV images. The method improves the efficiency of SIFT feature extraction, preserves the geometric accuracy of the spliced images, and eliminates small color differences on the two sides of each seamline, yielding mosaics with natural color transitions and good continuity of ground objects and landforms.

Owner:SHANDONG LINYI TOBACCO
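The central projection image-point displacement law invoked in CN104156968A is, for a near-vertical frame image, the relief displacement d = r·h/H: a point at height h above the datum is shifted radially by an amount proportional to its distance r from the nadir, where H is the flying height. A simple way to exploit it when choosing which overlapping image to use (a hypothetical simplification, not the patent's exact rule) is to take each ground point from the image whose nadir is closest, since displacement is smallest there.

```python
def relief_displacement(r, h, flying_height):
    """Radial image displacement of a point at height h (vertical image, central projection)."""
    return r * h / flying_height


def pick_source_image(ground_xy, image_centres):
    """Index of the overlapping image whose nadir is closest to the ground point,
    i.e. where central-projection relief displacement is smallest."""
    gx, gy = ground_xy
    return min(range(len(image_centres)),
               key=lambda k: (image_centres[k][0] - gx) ** 2 + (image_centres[k][1] - gy) ** 2)
```

With this rule the seamline between two images falls on the perpendicular bisector of their nadir points, i.e. the mosaic is partitioned like a Voronoi diagram of the image centres.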

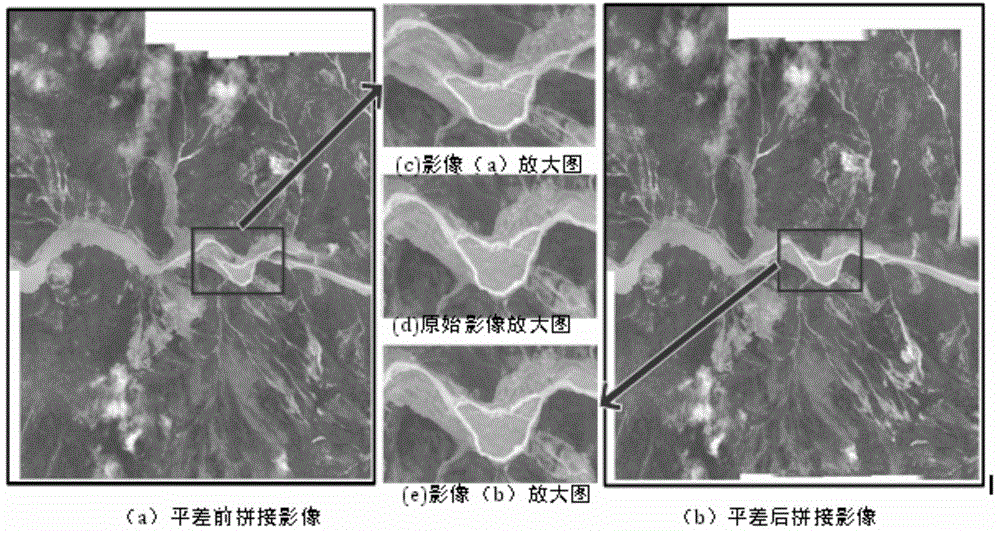

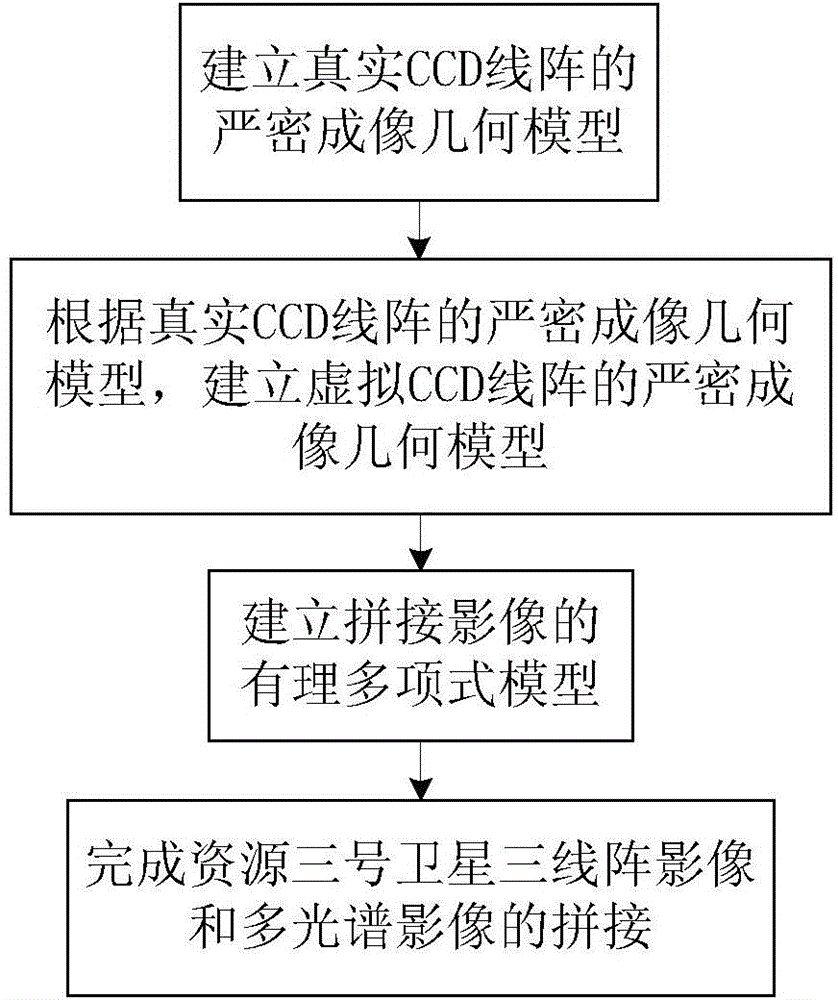

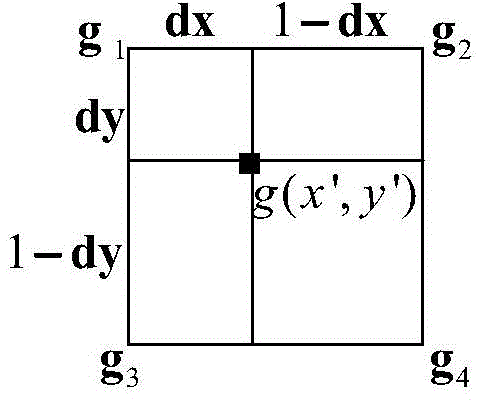

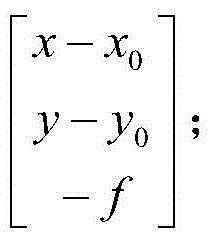

Method for splicing ZY3 satellite three-line-scanner image and multispectral image

Active | CN103914808A | Tags: Facilitate subsequent geometry processing, Easy to handle geometry, Geometric image transformation, Imaging processing, Imaging modalities

The invention relates to a method for splicing ZY3 satellite three-line-scanner images and multispectral images. The method includes building a precise imaging geometric model of the real CCD linear array, building a precise imaging geometric model of a virtual CCD linear array from the real-array model, building a rational polynomial model of the spliced images, and completing the splicing of the ZY3 three-line-scanner and multispectral images. With this inner field-of-view splicing scheme based on a virtual CCD linear array, a distortion-free CCD array is constructed on the focal plane, and the images from the several original CCDs are reimaged according to the line central projection imaging method, achieving seamless line-central-projection splicing of the CCD images. The method can be widely applied to ZY3 satellite image processing.

Owner:SATELLITE SURVEYING & MAPPING APPL CENT (SASMAC), NAT ADMINISTRATION OF SURVEYING MAPPING & GEOINFORMATION OF CHINA (NASG)

Geometric rough-correction method for an airborne multispectral scanner without attitude information

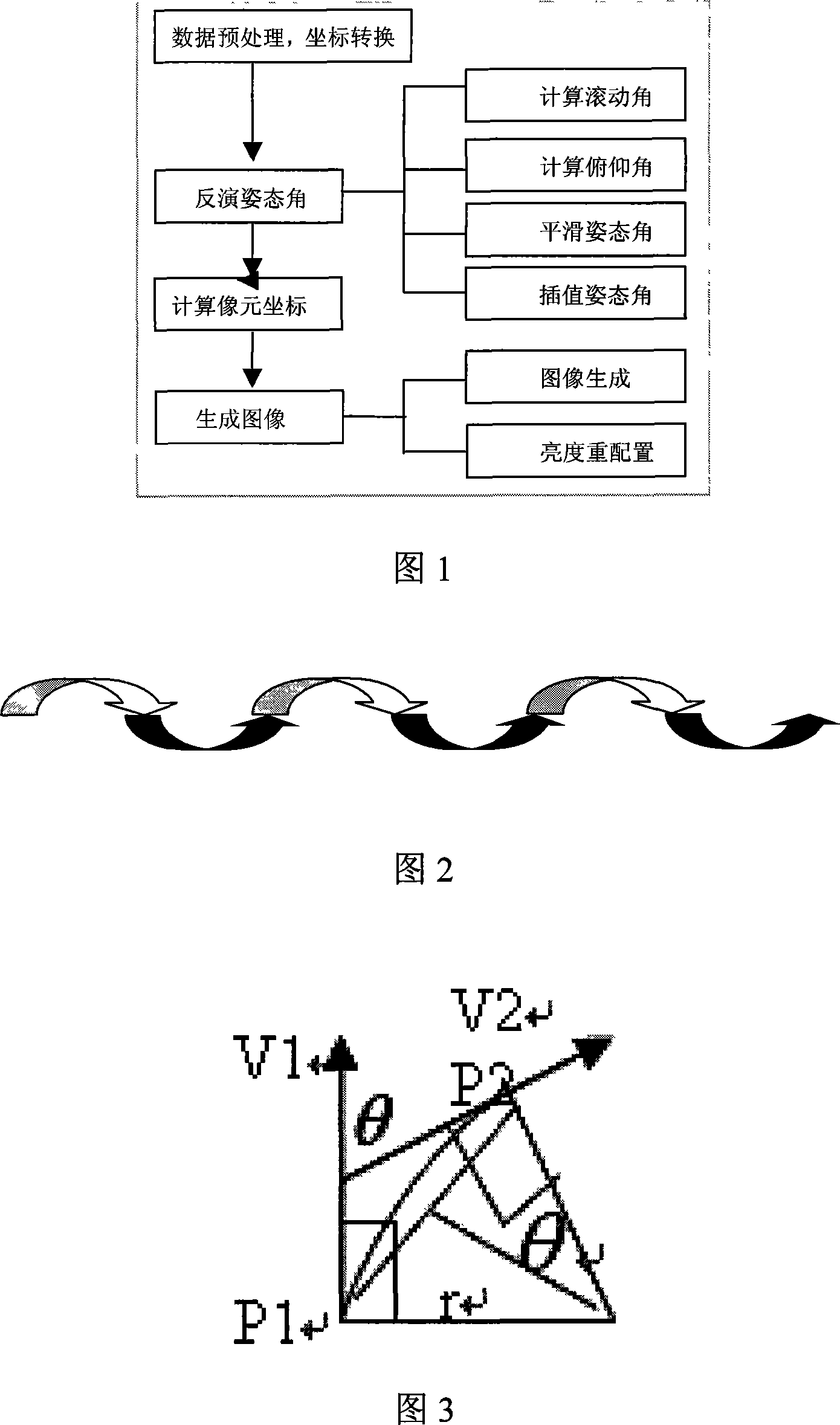

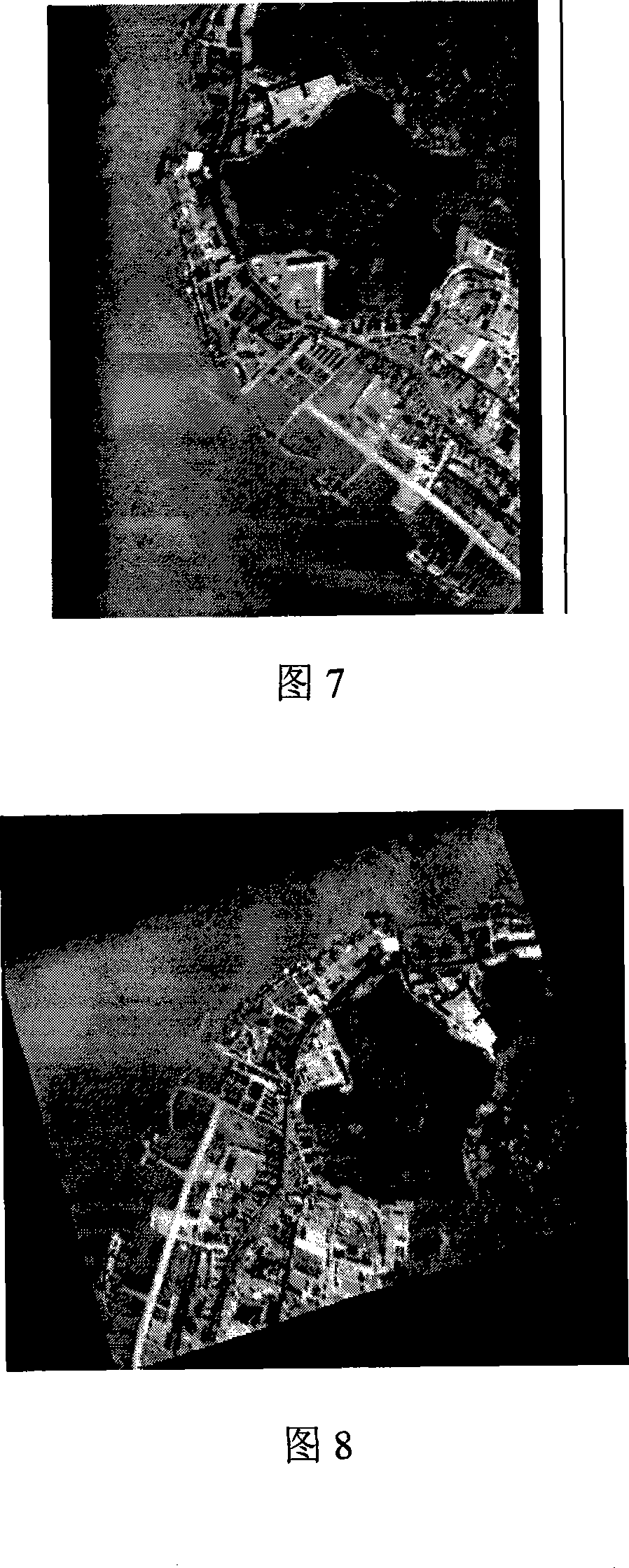

Inactive | CN101114022A | Tags: Improve geographic accuracy, Flexible access to, Using optical means, Electromagnetic wave reradiation, Aviation, Vertical plane

The invention discloses a geometric rough-correction method for airborne multispectral scanners when no attitude information is available. The steps are: 1) the multispectral scanner data undergo coordinate conversion, in which values measured in the WGS-84 ground coordinate system are converted to a Gauss plane Cartesian coordinate system; 2) according to an ideal flight model, the roll angle is simulated using a one-second mode; 3) a curve is fitted to the height data in the vertical plane, and the flight pitch angle at each updated data point is calculated from the tangent direction of the curve; 4) the point coordinates are obtained with the central projection imaging equation; 5) the coordinates of the other points in the same scan line are obtained from the flight height, the scanning angle, and the instantaneous scanning angle according to the scanning mode; 6) the rough-correction image is produced by the direct method. The invention improves the geographic accuracy of airborne remote sensing, advances remote sensing under conditions where no attitude information is available, and improves timeliness, so that airborne remote sensing can be better applied to economic production and people's livelihoods.

Owner:SECOND INST OF OCEANOGRAPHY MNR
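Step 5 of CN101114022A derives the remaining points of a scan line from flight height and scan angle. Over flat terrain, a whiskbroom scanner looking at scan angle θ (plus roll φ) reaches the ground at a signed cross-track distance H·tan(θ + φ) from nadir; the sketch applies that relation and rotates the offset by the aircraft heading. Flat terrain, zero pitch, and the function signature are assumptions made for illustration.

```python
import math


def scan_line_ground_points(nadir_e, nadir_n, height, heading_deg, roll_deg, scan_angles_deg):
    """Flat-terrain (east, north) ground coordinates of the pixels in one scan line.

    Hypothetical simplification: the scanner sweeps perpendicular to the flight
    direction, terrain is flat at height 0, and pitch is ignored.
    """
    psi = math.radians(heading_deg)  # heading measured clockwise from north
    # Unit vector pointing to the right of the flight direction (cross-track).
    right_e, right_n = math.cos(psi), -math.sin(psi)
    pts = []
    for a in scan_angles_deg:
        offset = height * math.tan(math.radians(a + roll_deg))  # signed cross-track distance
        pts.append((nadir_e + offset * right_e, nadir_n + offset * right_n))
    return pts
```

Positive scan angles fall to the right of the track in this convention; a scanner whose angles are signed the other way only needs the sign of `offset` flipped.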

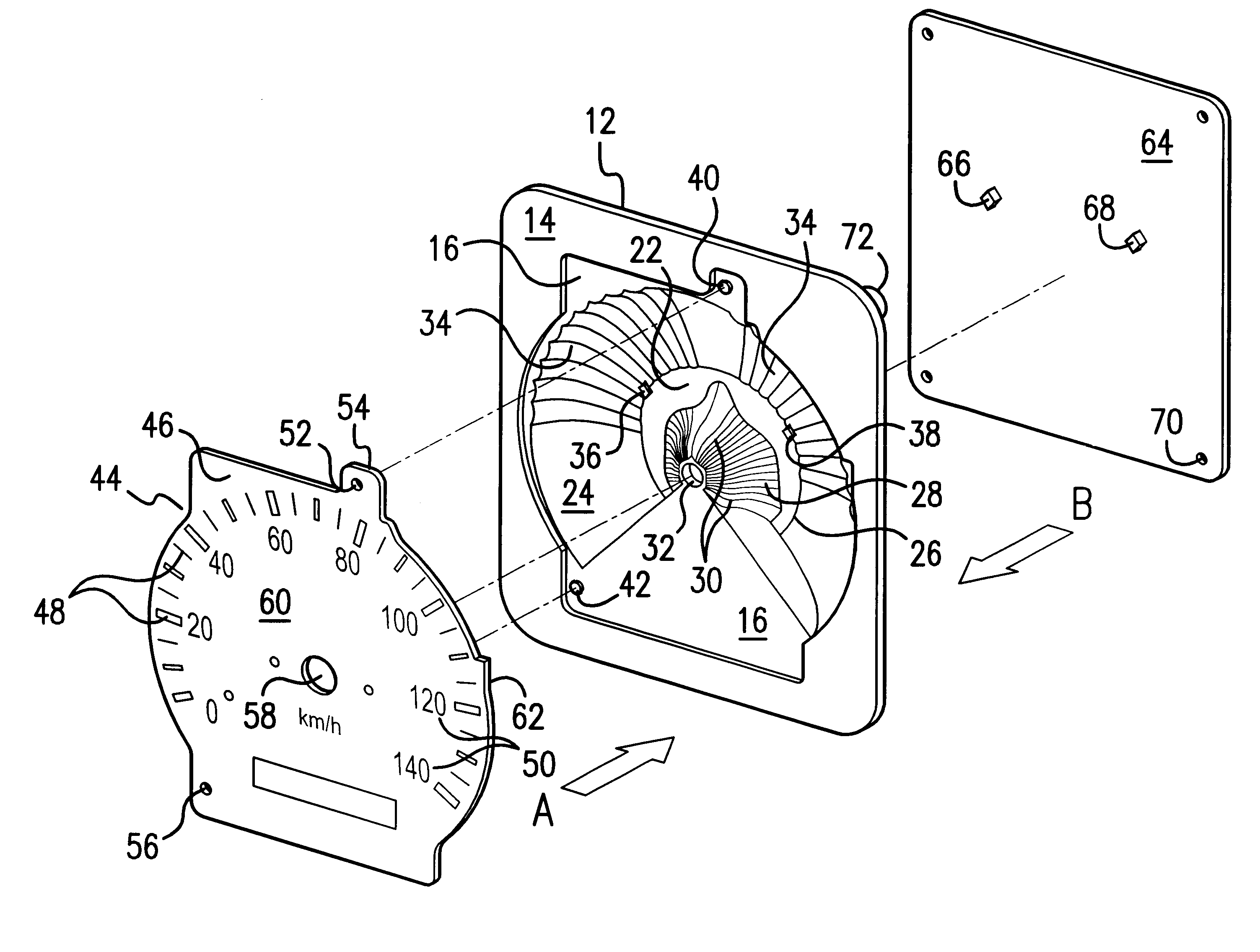

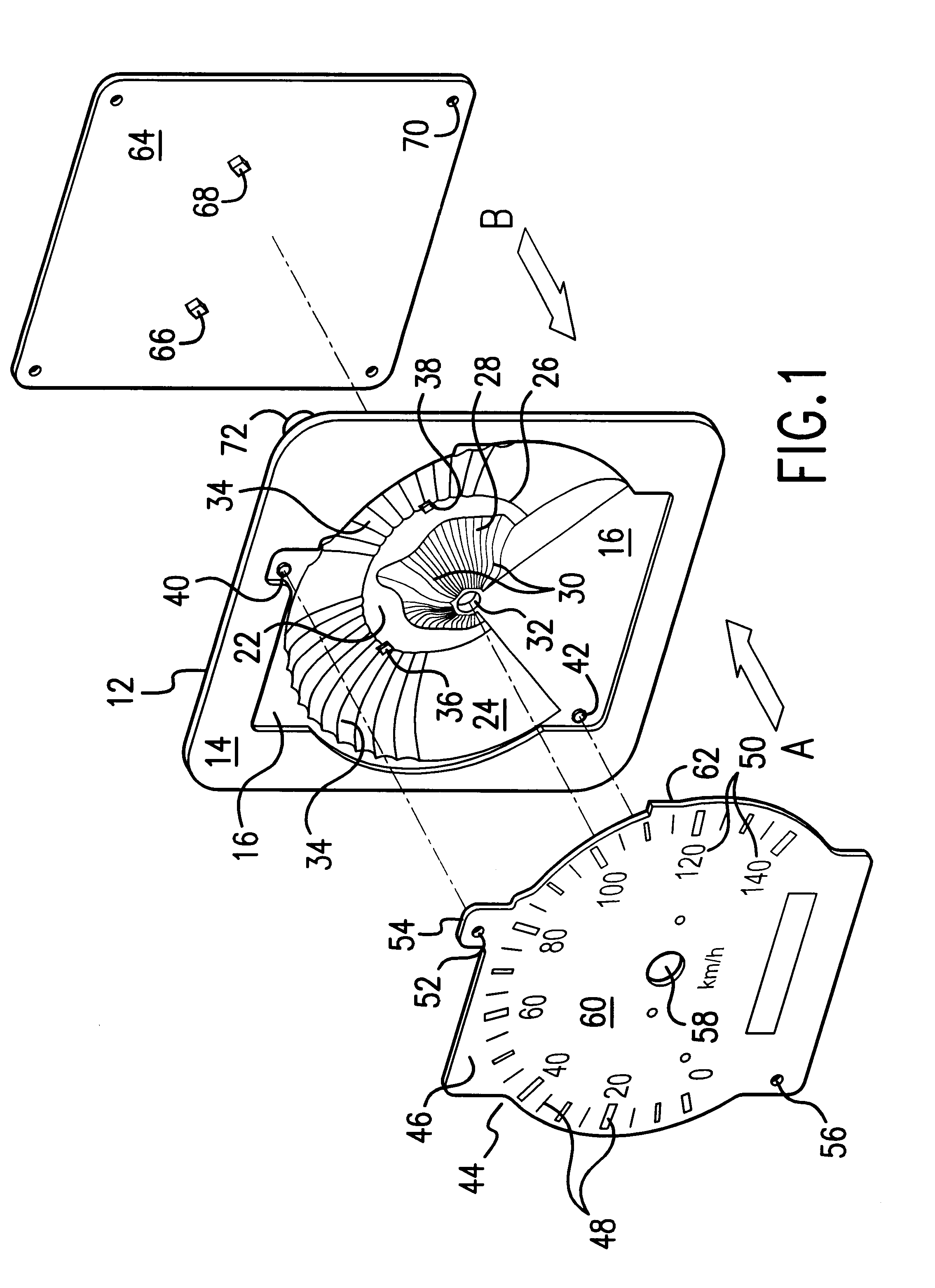

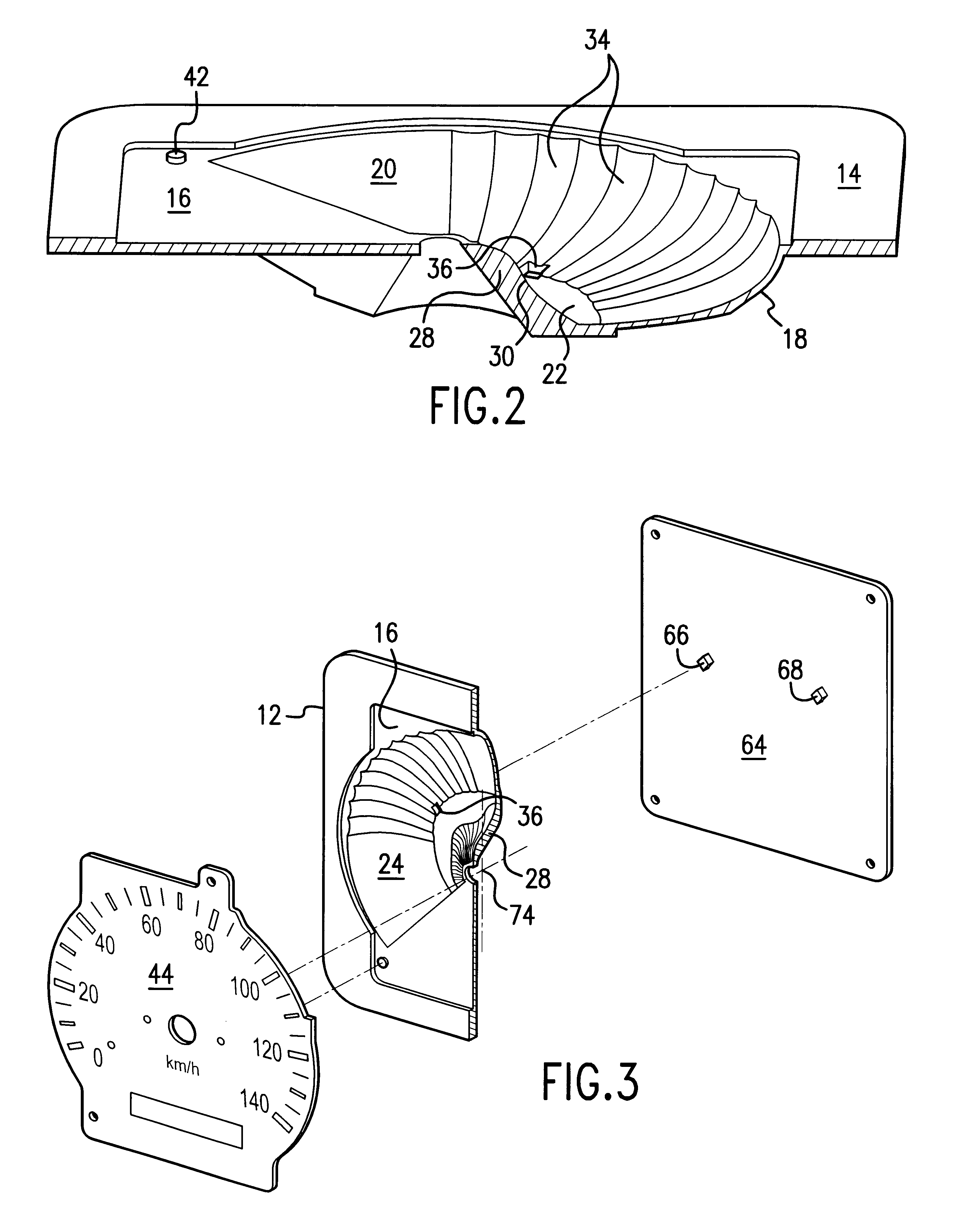

Instrument cluster reflector

Inactive | US6508562B1 | Tags: Inexpensively and efficiently backlit, Few parts, Measurement apparatus components, Dashboard lighting devices, Flute, Central projection

A reflector for backlighting an indicating instrument has two groups of flutes arranged for evenly distributing light and reducing bright spots. A plate has a recessed portion for receiving the indicating instrument and a semi-bowl-shaped compartment extending away from the plate and opening to the indicating instrument. The compartment has a base with a central projection formed by parabolic flutes and a semi-circular wall connecting the base with the recessed portion of the plate. The semi-circular wall has an inner surface facing the parabolic flutes. The inner surface has flutes extending in a radial direction from the base. Two apertures are positioned in the compartment across a boundary between the base and semi-circular wall. The apertures each receive a light emitting diode mounted on a printed circuit board when the board is assembled on the reflector. The parabolic flutes distribute the light from the light emitting diodes to evenly backlight the indicating instrument. The radial flutes diffuse the light to prevent hot or bright spots from developing under the indicating instrument. The light is further scattered by a chrome coating applied on a surface of the indicating instrument facing the compartment.

Owner:YAZAKI NORTH AMERICA

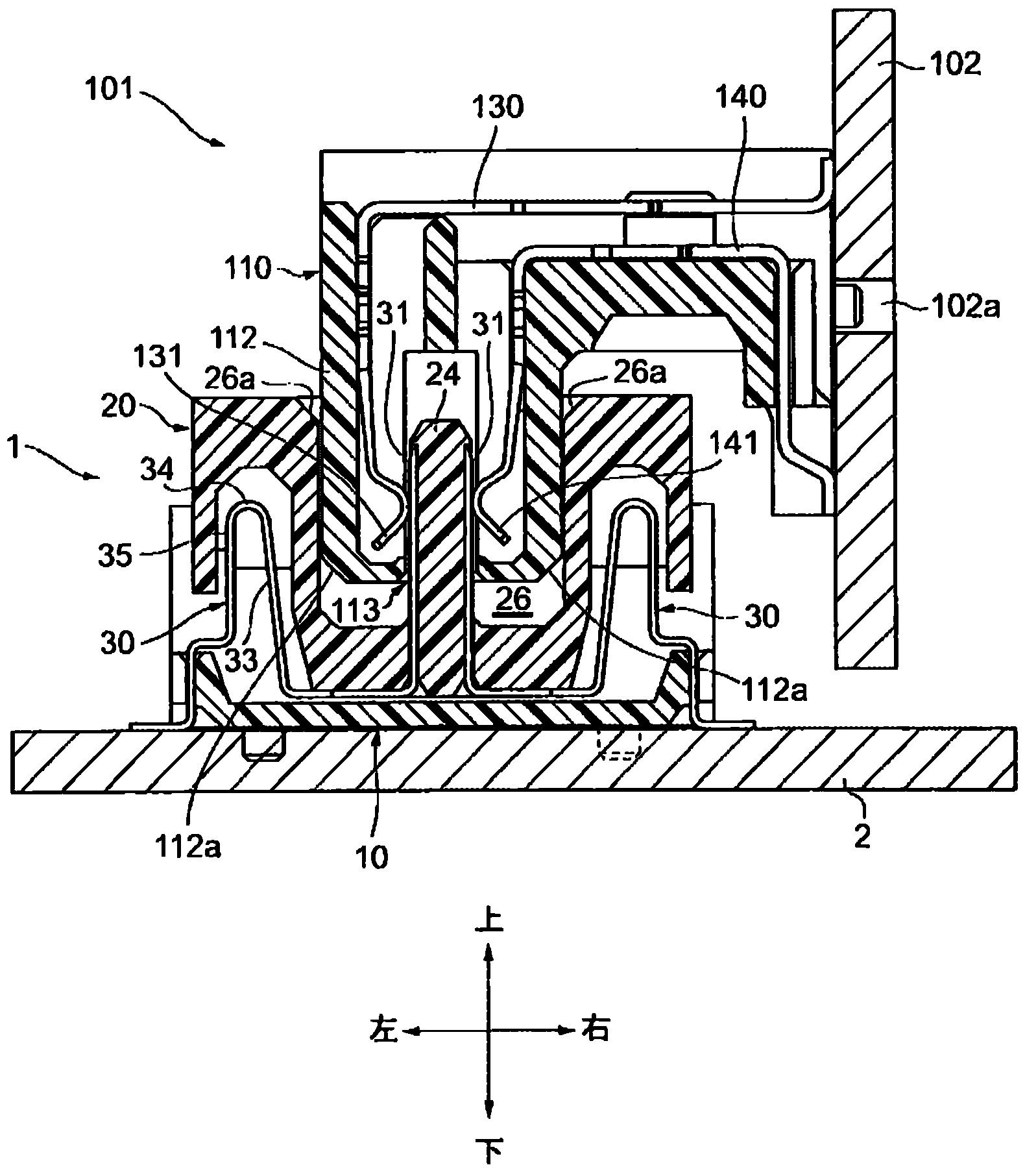

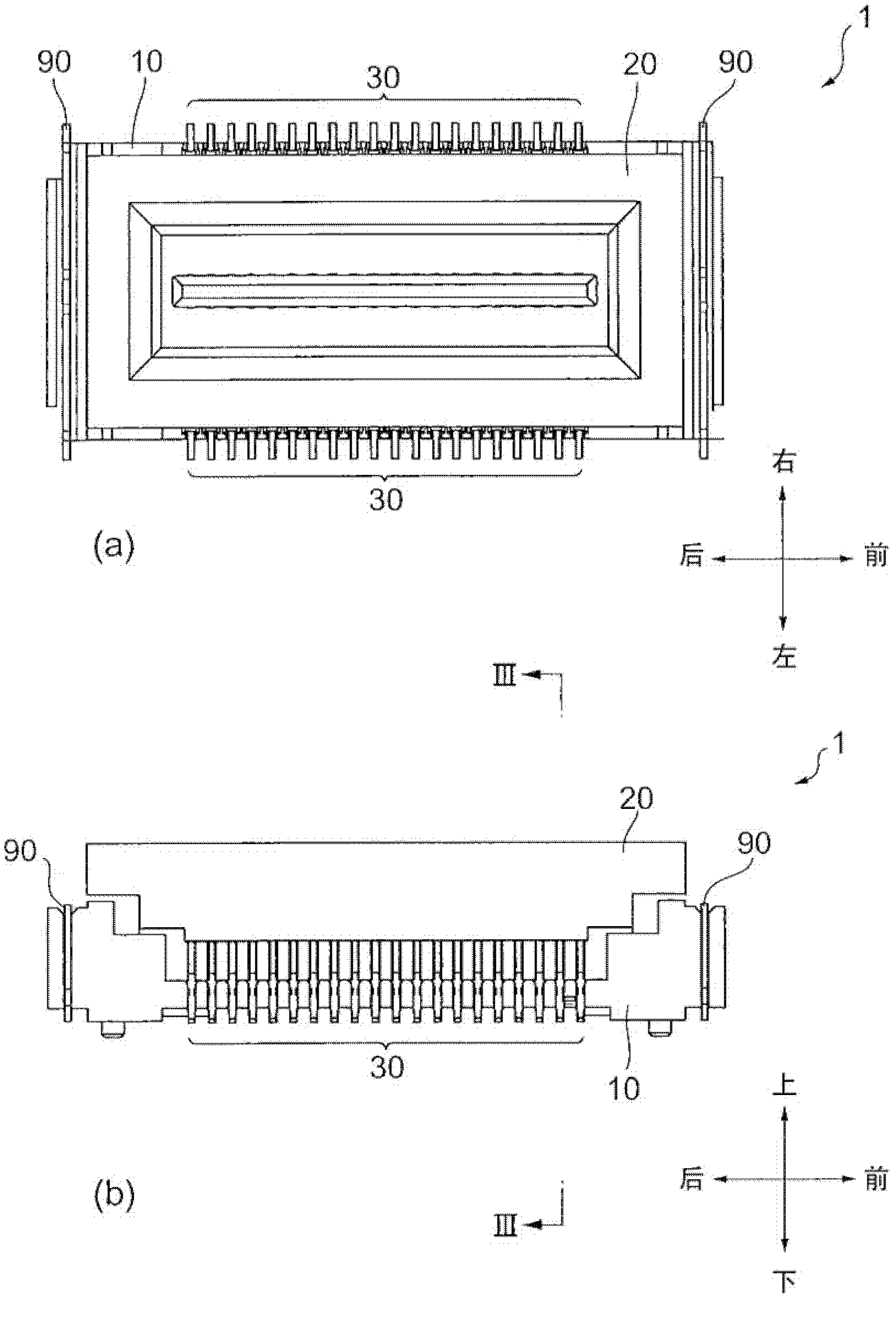

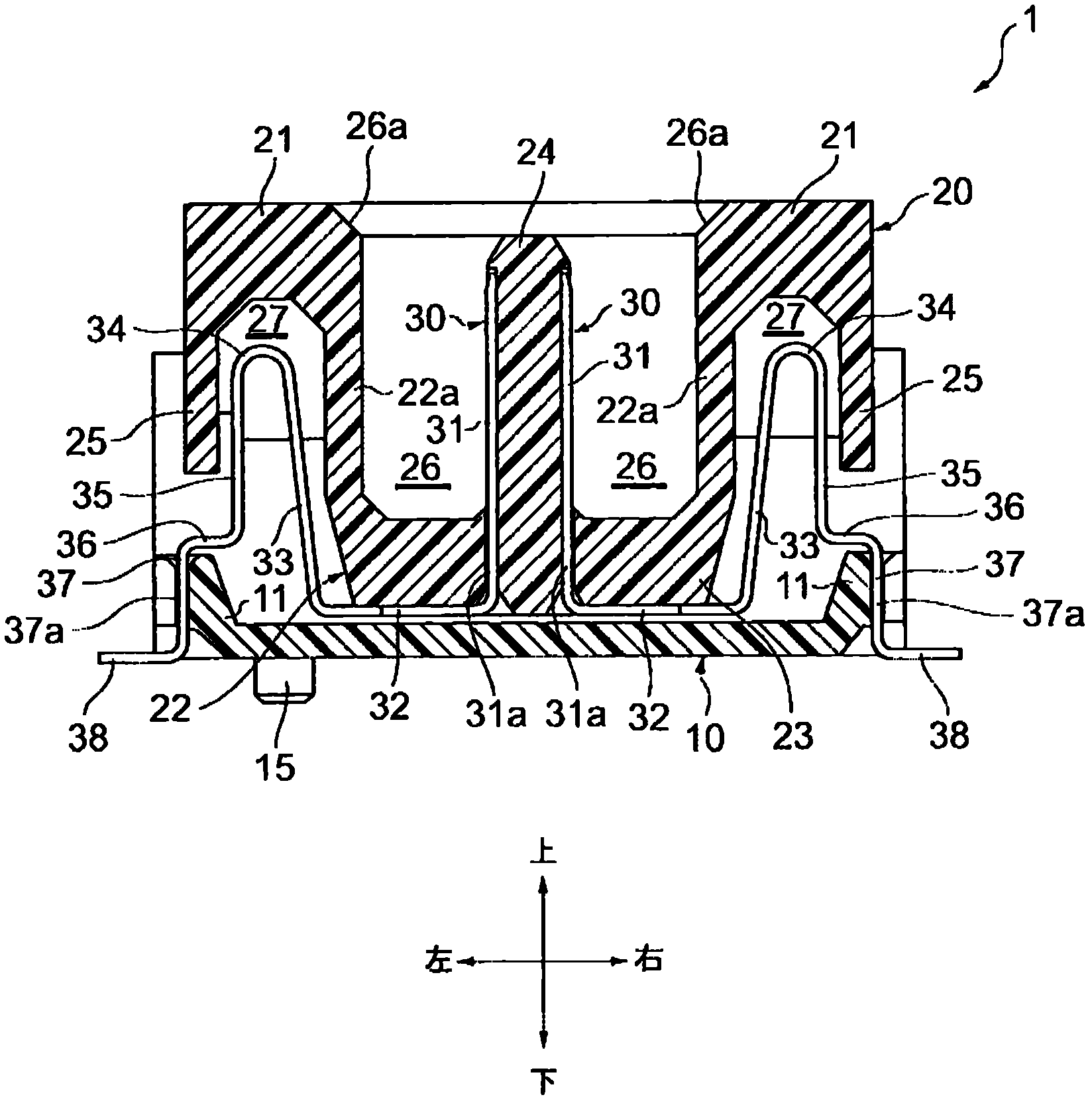

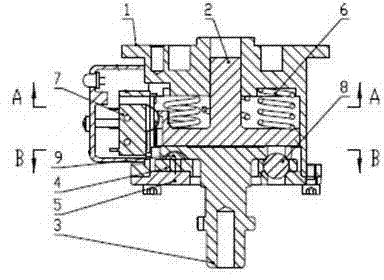

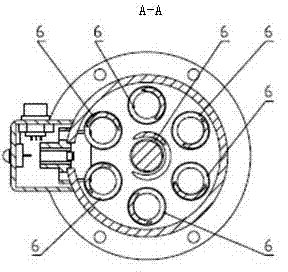

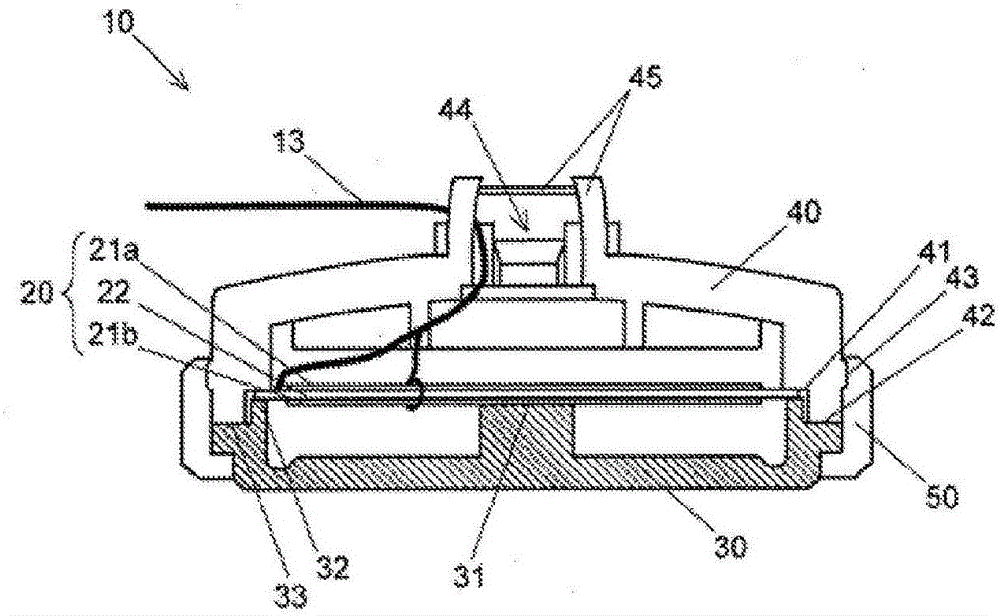

Floating type connector

Active | CN102623841A | Tags: Easy to install, Avoid plastic deformation, Coupling contact members, Central projection, Engineering

Provided is a floating type connector, comprising a movable substrate (20) having an accepting recess (26), a fixed substrate (10) loading the movable substrate (20) in a free-moving manner, and a connecting member (30), wherein the connecting member (30) comprises a lead portion (38) exposed from the lower surface of the fixed substrate (10), a contact portion (31) located inside the accepting recess (26), and an intermediate portion for connecting the lead portion (38) and the contact portion (31), with the intermediate portion having a curving elastic portion capable of being elastically deformed; the movable substrate (20) comprises a base (21), a central projection portion (22), and an outboard limiting wall (25); the curving elastic portion elastically deforms inside an accepting space (27) of the connecting member (30), thereby making the movable substrate (20) freely move relative to the fixed substrate (10); and the curving elastic portion abuts against the central projection portion (22) / outboard limiting wall (25), thus to prevent the movable substrate (20) from moving beyond the allowed range.

Owner:KEZ

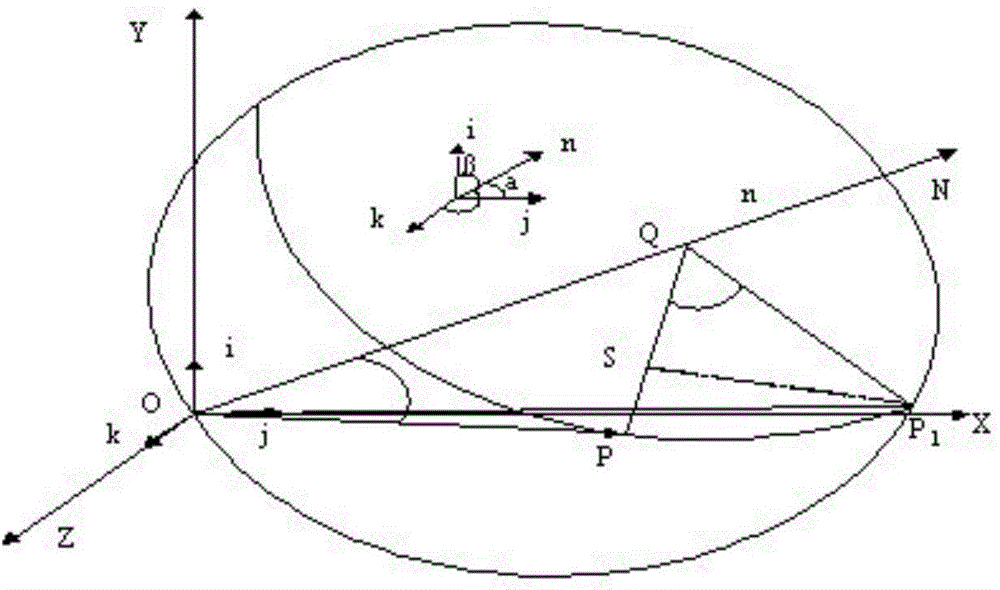

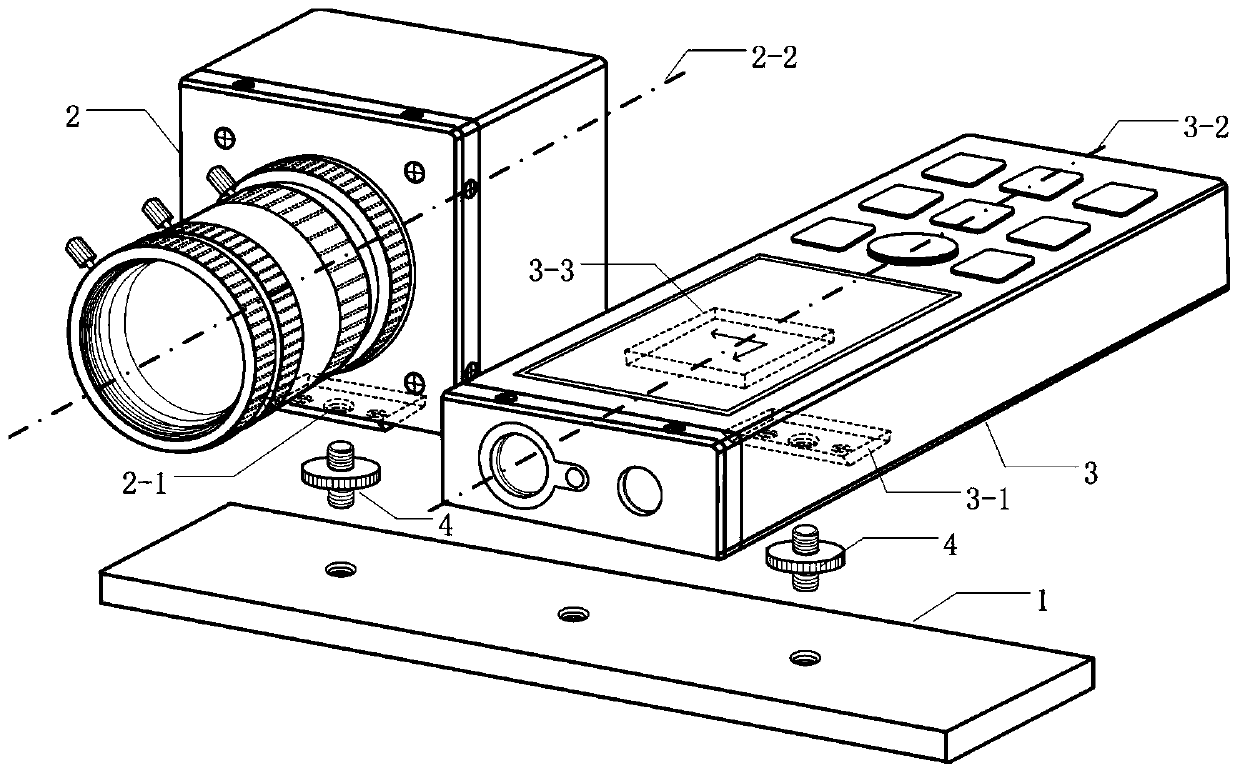

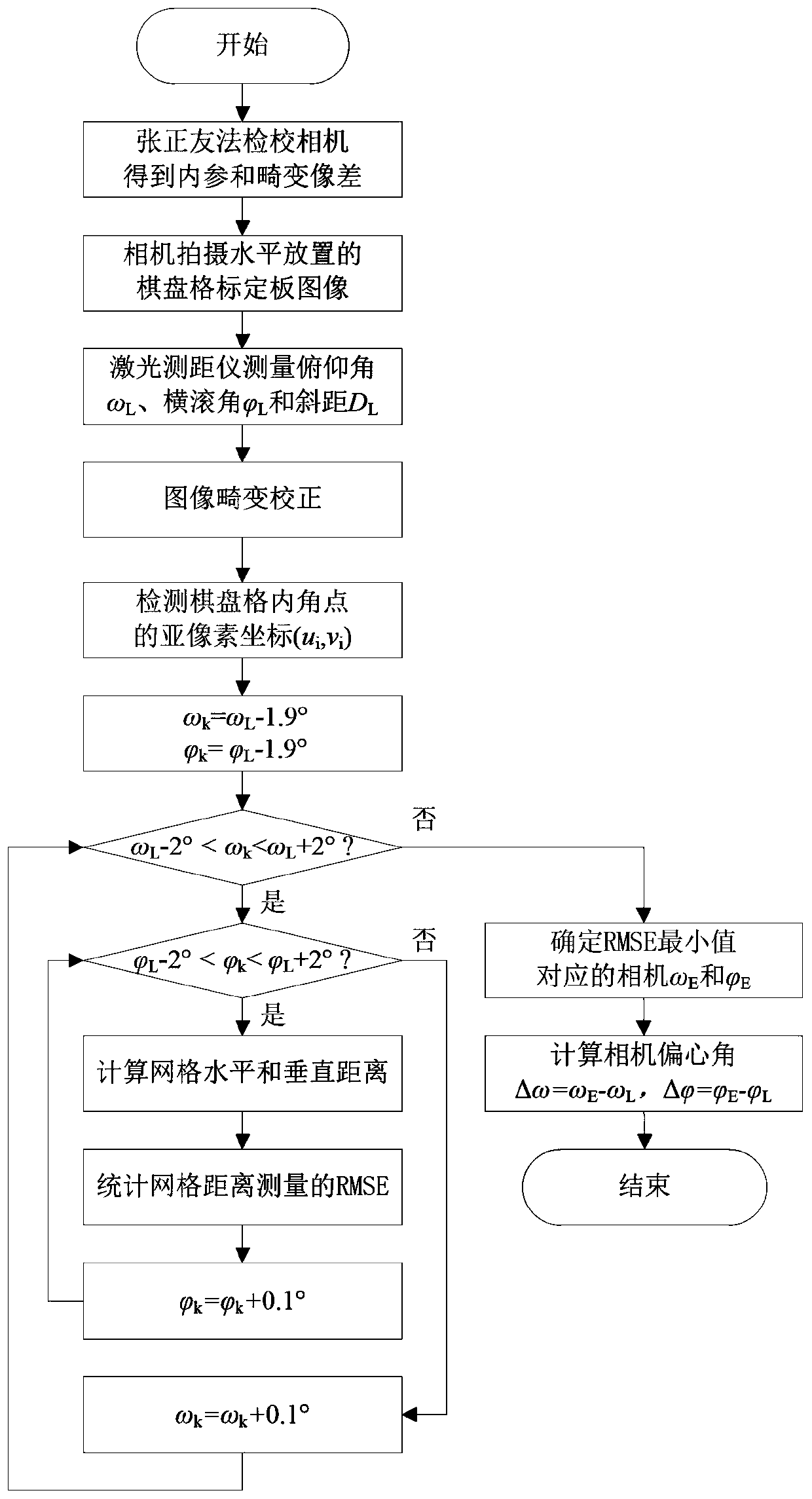

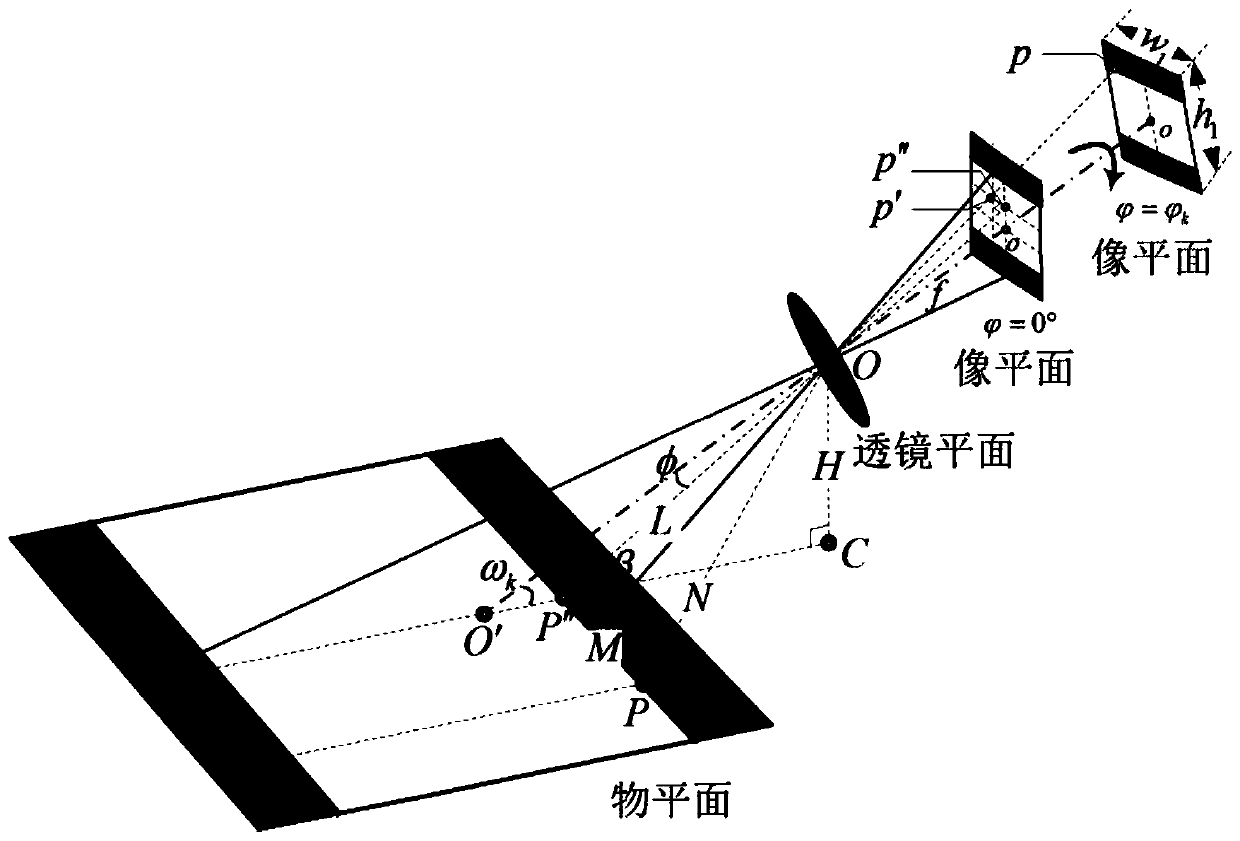

Monocular vision plane distance measuring method free of photo-control

Active | CN110057295A | Tags: Reduce hardware costs, Reduce operational complexity, Using optical means, Nonlinear distortion, Object point

The invention discloses a monocular-vision plane distance measurement method that needs no photo-control points. The method comprises three stages. In the measurement system integration stage, a camera and a laser range finder are rigidly connected to form a directly oriented vision measurement system. In the calibration stage, the integrated system is calibrated to determine the camera's internal parameters, its distortion parameters, and the eccentric angle between the camera and the laser range finder, and the camera's nonlinear distortion, measured elevation, and attitude are corrected. In the plane distance measurement stage, an "image-distance" transformation between the image plane and the object plane is established for an oblique viewing angle from the central projection imaging principle, i.e., the collinearity of image point, optical center, and object point, finally realizing monocular plane distance measurement without photo-control. The method has low hardware cost and operational complexity. It greatly reduces field workload, since measuring points can be laid out in a few minutes without placing photo-control points on the plane to be measured, and it is general because it does not rely on prior knowledge of the scene.

Owner:HOHAI UNIV
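The "image-distance" transformation of CN110057295A rests on collinearity: the object point lies on the ray from the optical center through the image point. A minimal sketch of that idea intersects the ray with a flat object plane. Assumptions (not from the patent): the camera height above the plane and the downward tilt are known, there is no roll, distortion has already been removed, and the focal length and principal point are in pixels. The function names are hypothetical.

```python
import math


def pixel_to_ground(u, v, f, cx, cy, height, tilt_deg):
    """Intersect the ray through pixel (u, v) with a flat ground plane.

    Hypothetical setup: camera at `height` above the plane, optical axis pitched
    down by tilt_deg, no roll. Returns (lateral, forward) coordinates relative to
    the point directly below the camera.
    """
    a = math.radians(tilt_deg)
    dx, dy = (u - cx) / f, (v - cy) / f     # normalised image coordinates (y down)
    down = dy * math.cos(a) + math.sin(a)   # downward component of the ray direction
    if down <= 0:
        raise ValueError("ray does not hit the ground plane")
    t = height / down                       # ray parameter at the ground plane
    return t * dx, t * (math.cos(a) - dy * math.sin(a))


def ground_distance(p, q):
    """Planar distance between two ground points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])
```

The distance between two image points is then `ground_distance(pixel_to_ground(...), pixel_to_ground(...))`; in the patent the height and attitude come from the laser range finder and calibration, whereas here they are simply passed in.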

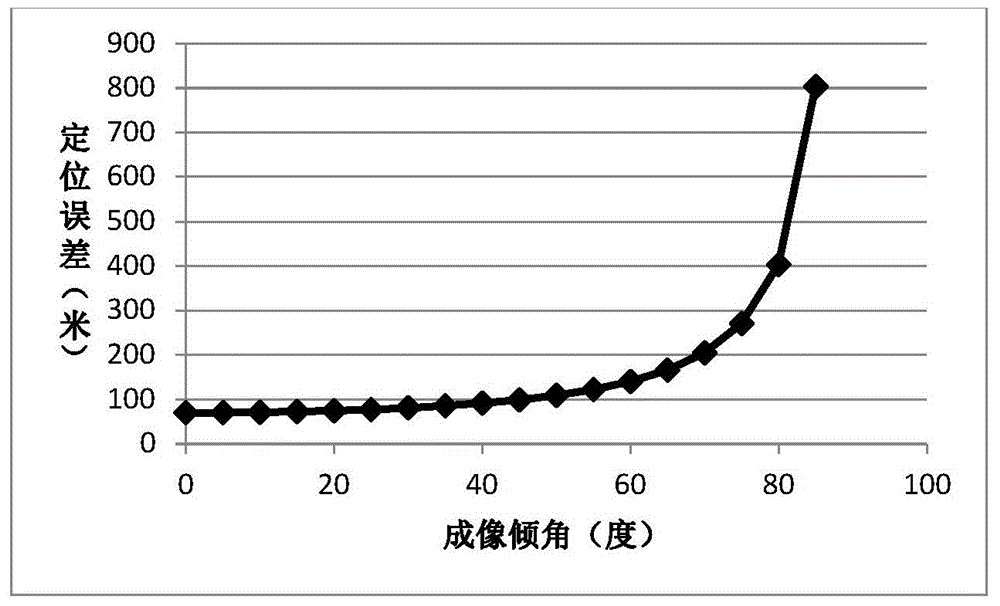

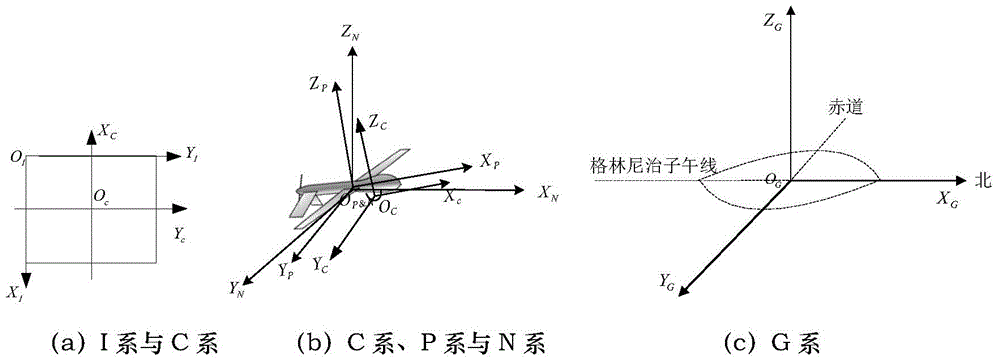

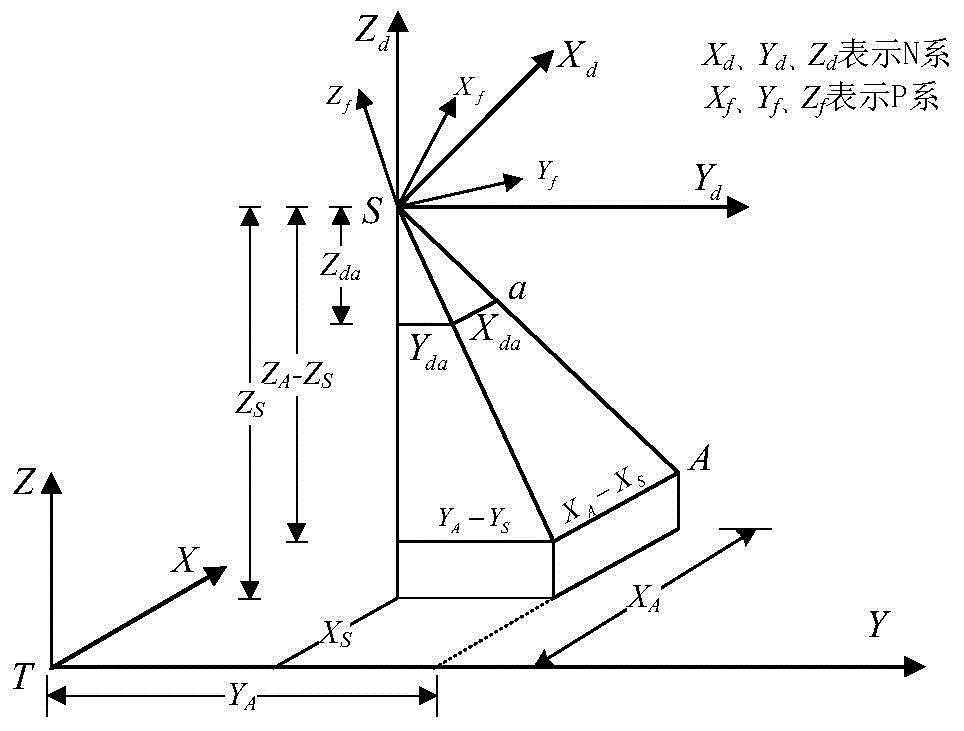

Unmanned aerial vehicle visible light and infrared image target positioning method under large squint angle

Active | CN105004354A | Tags: Improve target positioning accuracy, Small amount of calculation, Picture interpretation, Central projection, Visible spectrum

The invention discloses a target positioning method for unmanned aerial vehicle (UAV) visible-light and infrared images under a large squint angle. First, targets are located in the visible-light and infrared images using the imaging model; second, the characteristics of the target positioning error under various influencing factors are extracted and represented; third, the target positioning error is predicted and compensated for varying heights and large squint angles. Under large squint angles, the method can effectively improve UAV target positioning precision. The positioning and error-compensation computation is light enough to meet the real-time requirements of airborne processing. The method is suitable for target positioning in any images that conform to the central projection imaging model, such as visible-light and infrared images.

Owner:BEIHANG UNIV
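Why a large squint angle hurts positioning can be seen from the simplest flat-earth version of the central projection model: with UAV height h and line-of-sight depression angle θ, the ground range is h/tan θ, so a small angle error δθ produces a range error of roughly h·δθ/sin²θ. The sketch below is an illustration under these assumptions (flat terrain, known azimuth measured clockwise from north), not the patent's error model; the names are hypothetical.

```python
import math


def locate_target(east, north, height, azimuth_deg, depression_deg):
    """Flat-earth target position from UAV position and line-of-sight angles."""
    dep = math.radians(depression_deg)
    ground_range = height / math.tan(dep)  # horizontal distance to the target
    az = math.radians(azimuth_deg)          # clockwise from north
    return east + ground_range * math.sin(az), north + ground_range * math.cos(az)


def range_error(height, depression_deg, angle_error_deg):
    """First-order ground-range error caused by a small depression-angle error."""
    dep = math.radians(depression_deg)
    return height / math.sin(dep) ** 2 * math.radians(angle_error_deg)
```

At 1000 m height, a 0.1° angle error costs about 2.3 m of range at a 60° depression angle but roughly 58 m at 10°, which is the regime where error prediction and compensation matter most.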

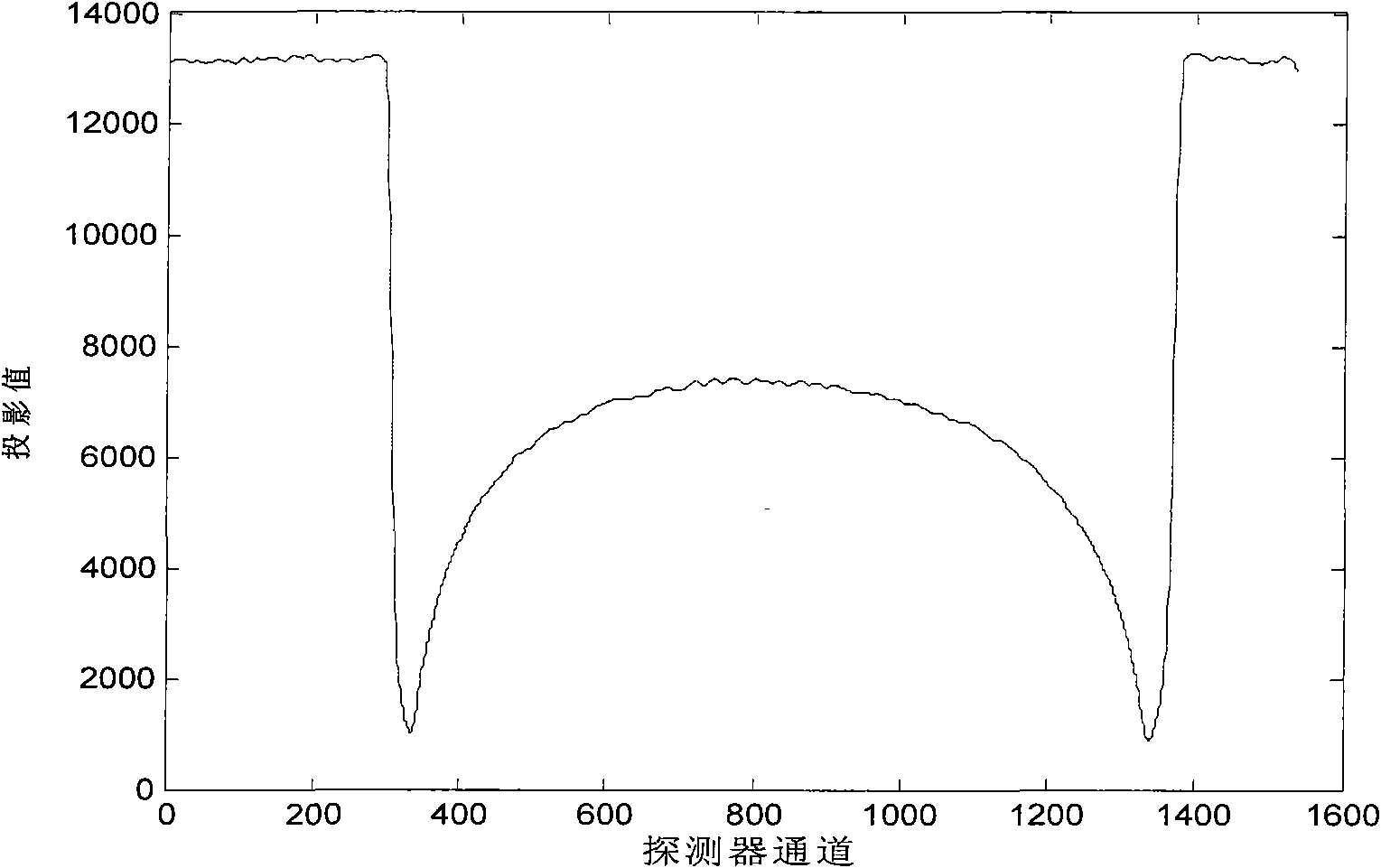

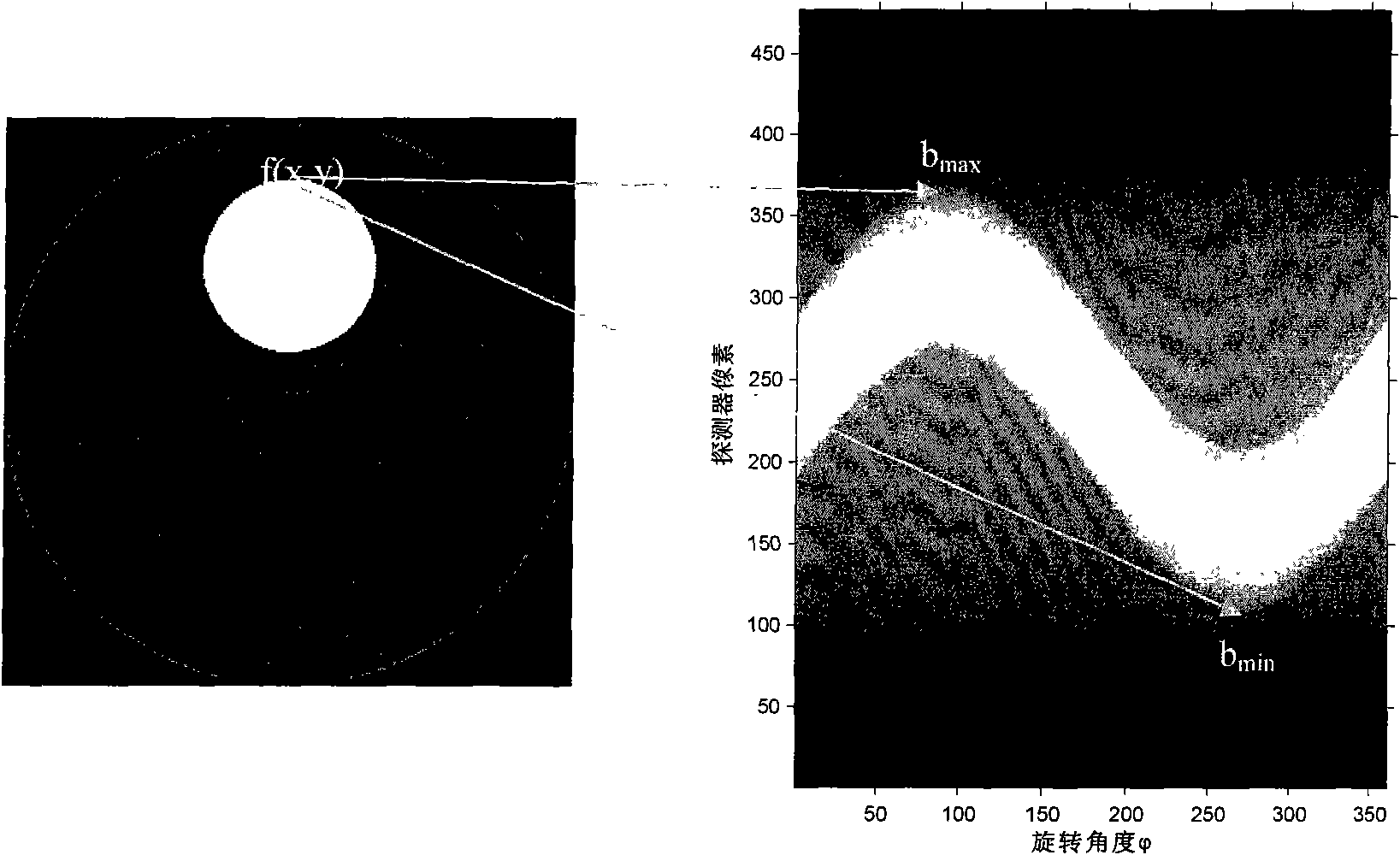

Automatic calibration method for CT projection center

Inactive | CN101584587A | Tags: Extend working life, Reduced measurement time, Computerised tomographs, Tomography, Central projection, Detector array

The invention provides an automatic calibration method for the CT projection center, comprising the following steps: 1) the CT system scans the measured object through a full rotation to obtain its original projection data in each detector unit of the detector array at different angles; 2) the original projection data are processed to find the positions, on the detector array, of the projection points of the object's edges; and 3) the CT projection center is calculated from these edge projection positions, and the original projection data are corrected to obtain the final two-dimensional tomographic data. The method calibrates the CT central projection position without a dedicated phantom and without moving the measured object. Its calibration steps can be built into a reconstruction preprocessing program that runs automatically, which saves measurement time and extends the effective service life of the radiographic sources and detectors.

Owner:INST OF PROCESS ENG CHINESE ACAD OF SCI
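Steps 2 and 3 of CN101584587A can be sketched for the simplest case. Assuming parallel-beam geometry and views spread evenly over a full rotation, the midpoints of the object's shadow at angles θ and θ + 180° average exactly to the rotation centre, so averaging all shadow midpoints estimates the CT projection centre without a phantom. The threshold-based edge detector and the function names are hypothetical, and fan-beam systems need a different relation.

```python
def edge_positions(profile, threshold):
    """First and last detector index whose value exceeds the threshold."""
    idx = [i for i, val in enumerate(profile) if val > threshold]
    if not idx:
        raise ValueError("object not visible in this projection")
    return idx[0], idx[-1]


def rotation_centre(sinogram, threshold):
    """Estimate the projection (rotation) centre in detector units.

    Assumes parallel-beam geometry and views spread uniformly over 360 degrees,
    so the shadow midpoints oscillate symmetrically about the centre.
    """
    mids = []
    for profile in sinogram:  # one detector profile per view angle
        left, right = edge_positions(profile, threshold)
        mids.append(0.5 * (left + right))
    return sum(mids) / len(mids)
```

Shifting every projection by the difference between the detector's nominal centre and this estimate before reconstruction is one way to apply the correction.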

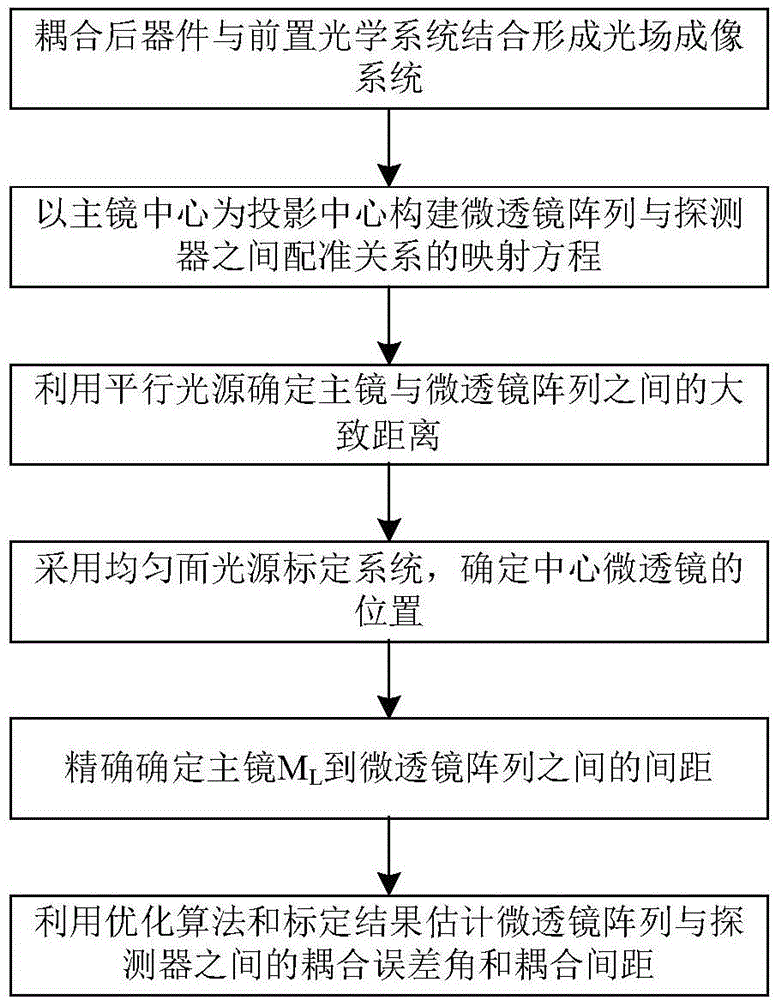

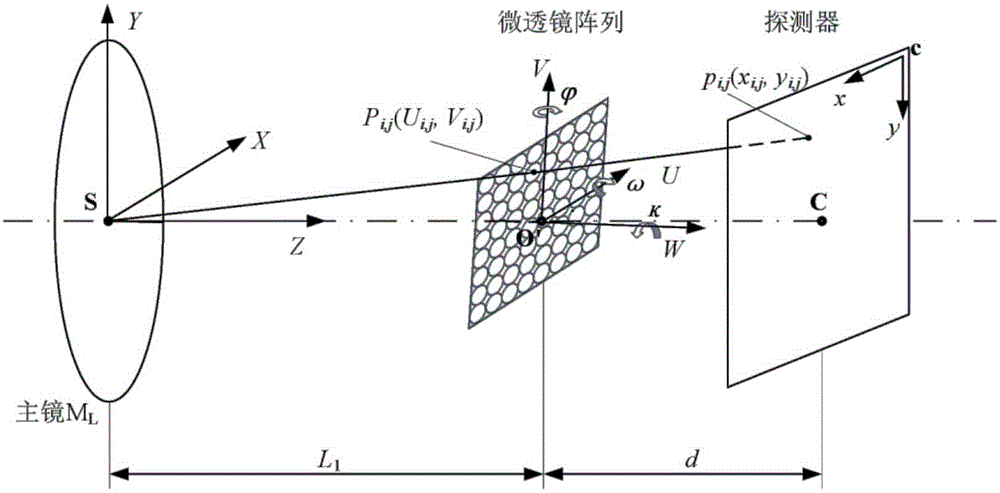

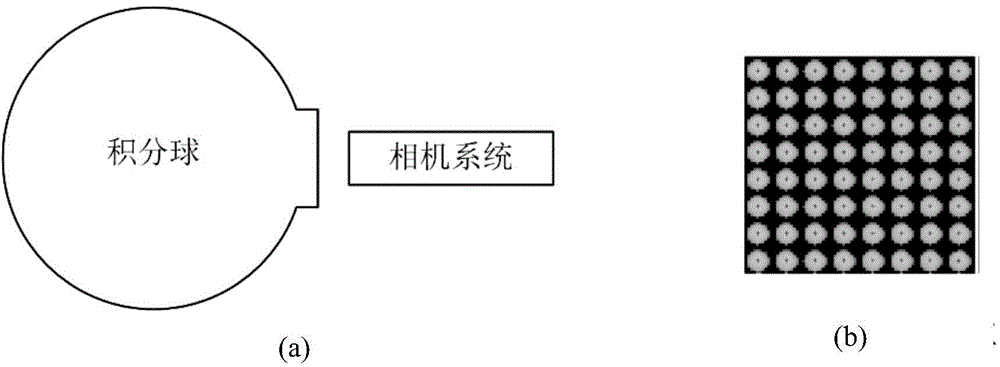

Calibration method of coupling position relationship between micro lens array and detector

Active | CN104613871A | Tags: Achieve precise calibration, Easy to operate, Using optical means, Device form, Central projection

The invention discloses a method for calibrating the coupling position relationship between a microlens array and a detector. The device formed by coupling the microlens array to the detector is combined with a front optical system into a light field imaging system; a mapping equation between the microlens centers and points p(i,j) on the detector plane is built on the central projection principle; a parallel light source is used to determine the approximate distance between the main lens and the microlens array; a uniform area light source is used to calibrate the light field imaging system and determine the actual coordinates of the points p(i,j) on the detector plane; the exact value of L1 is determined; and an optimization algorithm estimates the coupling errors between the microlens array and the detector, namely the angles phi, omega and k and the distance d. The method only needs a rough estimate of the position of the microlens array relative to the front optical system, can calibrate parameters such as the distance and rotation angle between the microlens array and the detector, is simple to use, and is convenient in practice.

Owner:BEIHANG UNIV
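The central projection mapping in CN104613871A can be illustrated with the usual light-field geometry: the image centre under each microlens is where the ray from the main-lens centre through that microlens centre meets the detector, which scales the microlens position by (L1 + d)/L1. The sketch assumes a square microlens grid with pitch `pitch`, a main-lens centre at the origin, and only an in-plane rotation κ as the coupling error; all names are hypothetical.

```python
import math


def microlens_image_centre(ci, cj, pitch, L1, d, kappa_deg=0.0):
    """Detector position of the image centre under microlens (ci, cj).

    Hypothetical model: main-lens centre at the origin, microlens array at distance L1,
    detector at distance d behind the array, microlens grid rotated by kappa about the axis.
    """
    k = math.radians(kappa_deg)
    # Microlens centre in the array plane (square grid, possibly rotated).
    x = pitch * (ci * math.cos(k) - cj * math.sin(k))
    y = pitch * (ci * math.sin(k) + cj * math.cos(k))
    scale = (L1 + d) / L1  # central projection from the main-lens centre onto the detector
    return x * scale, y * scale
```

Fitting centres measured under uniform illumination to such a model (for example by least squares over L1, d and κ) is one way to realise the optimisation step; the patent's error model also includes the tilt angles phi and omega, which this sketch omits.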

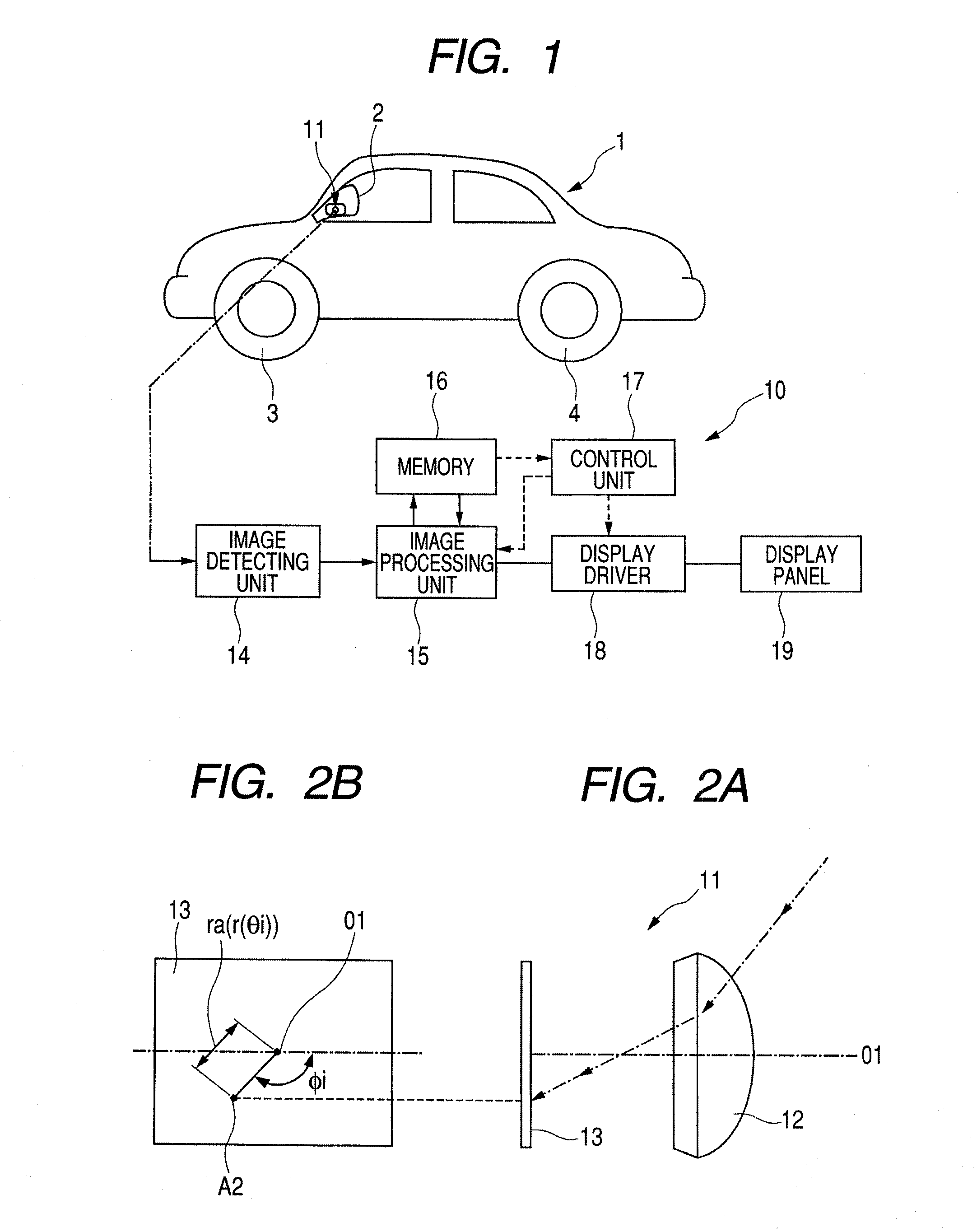

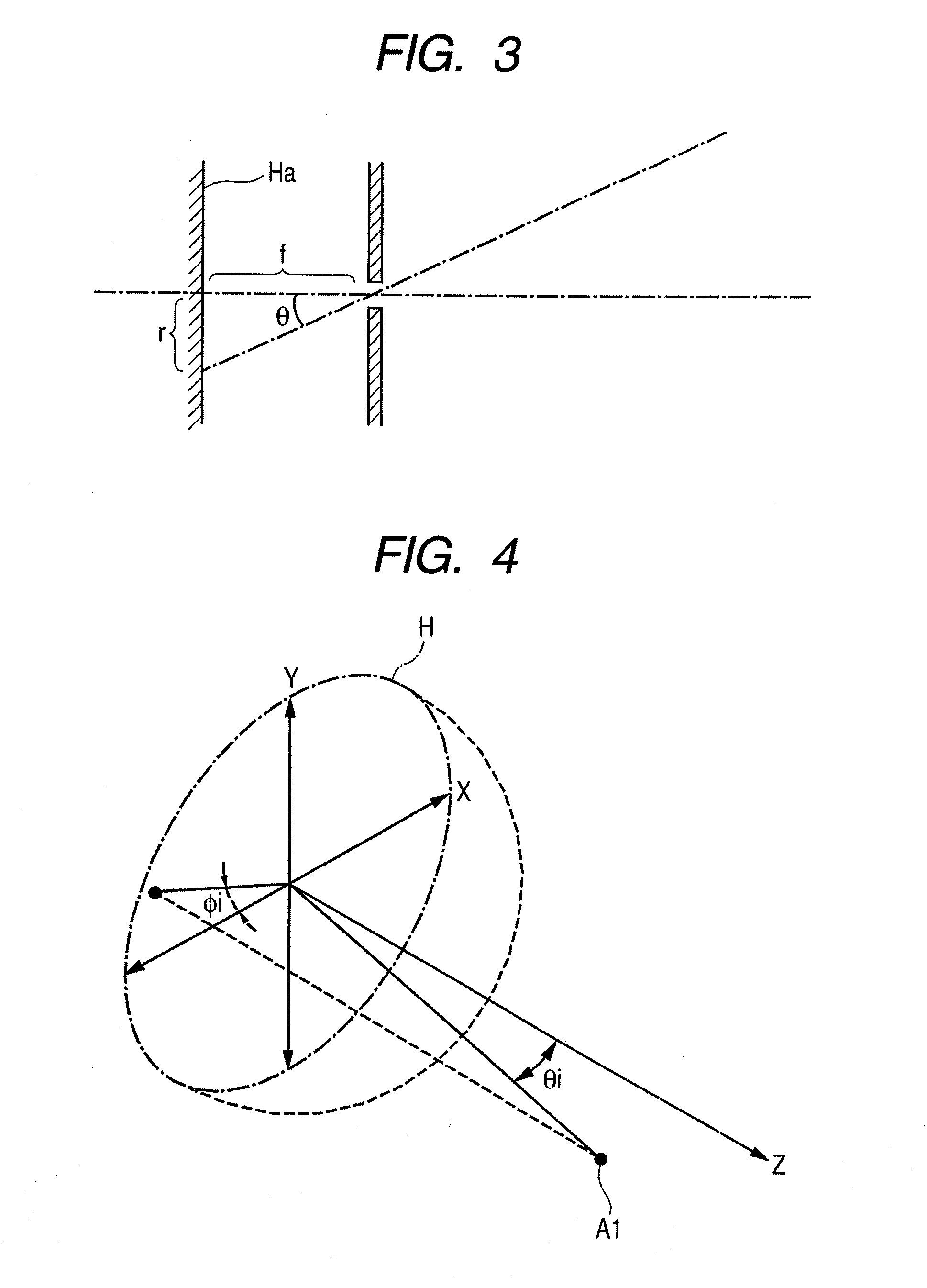

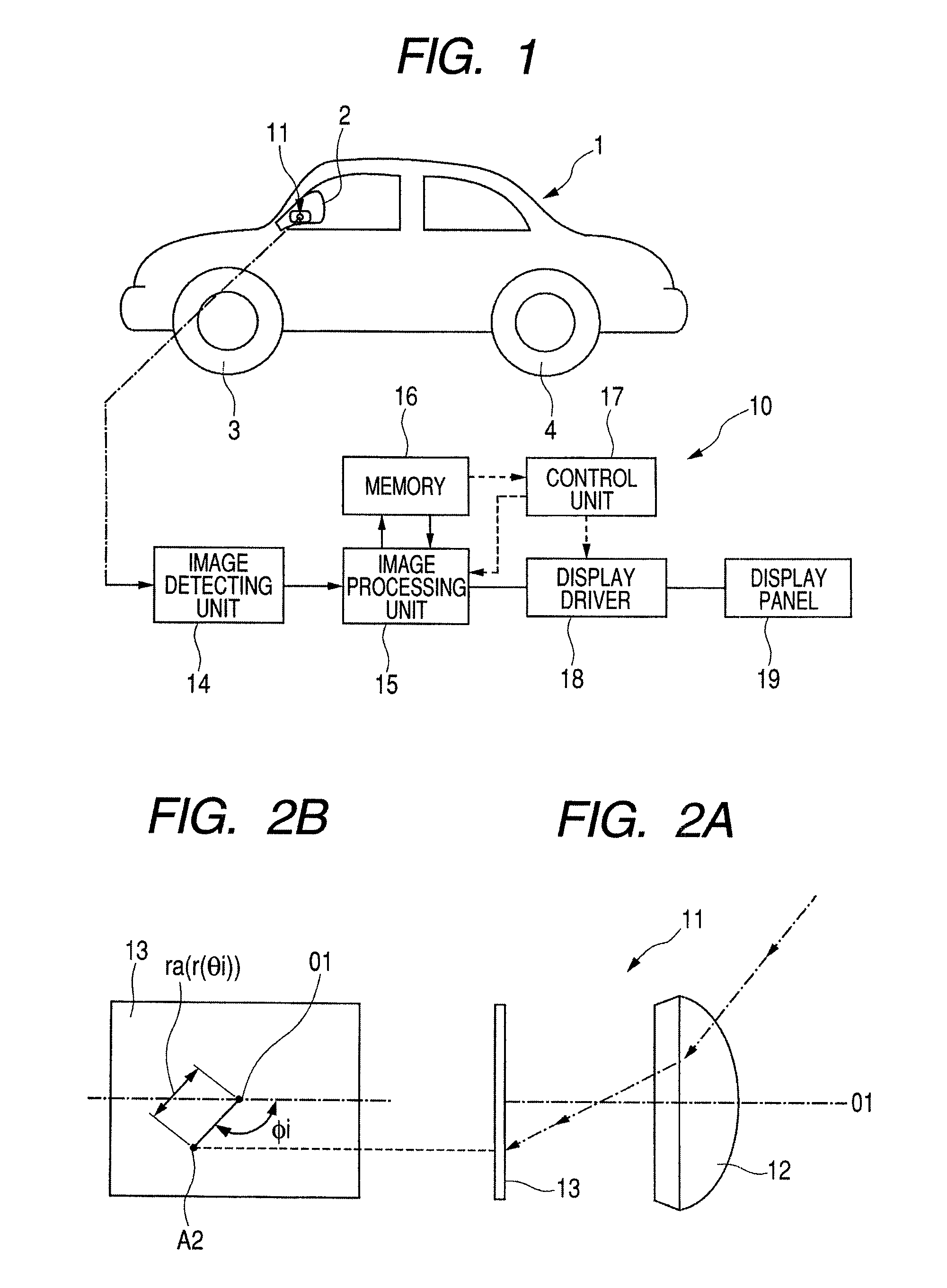

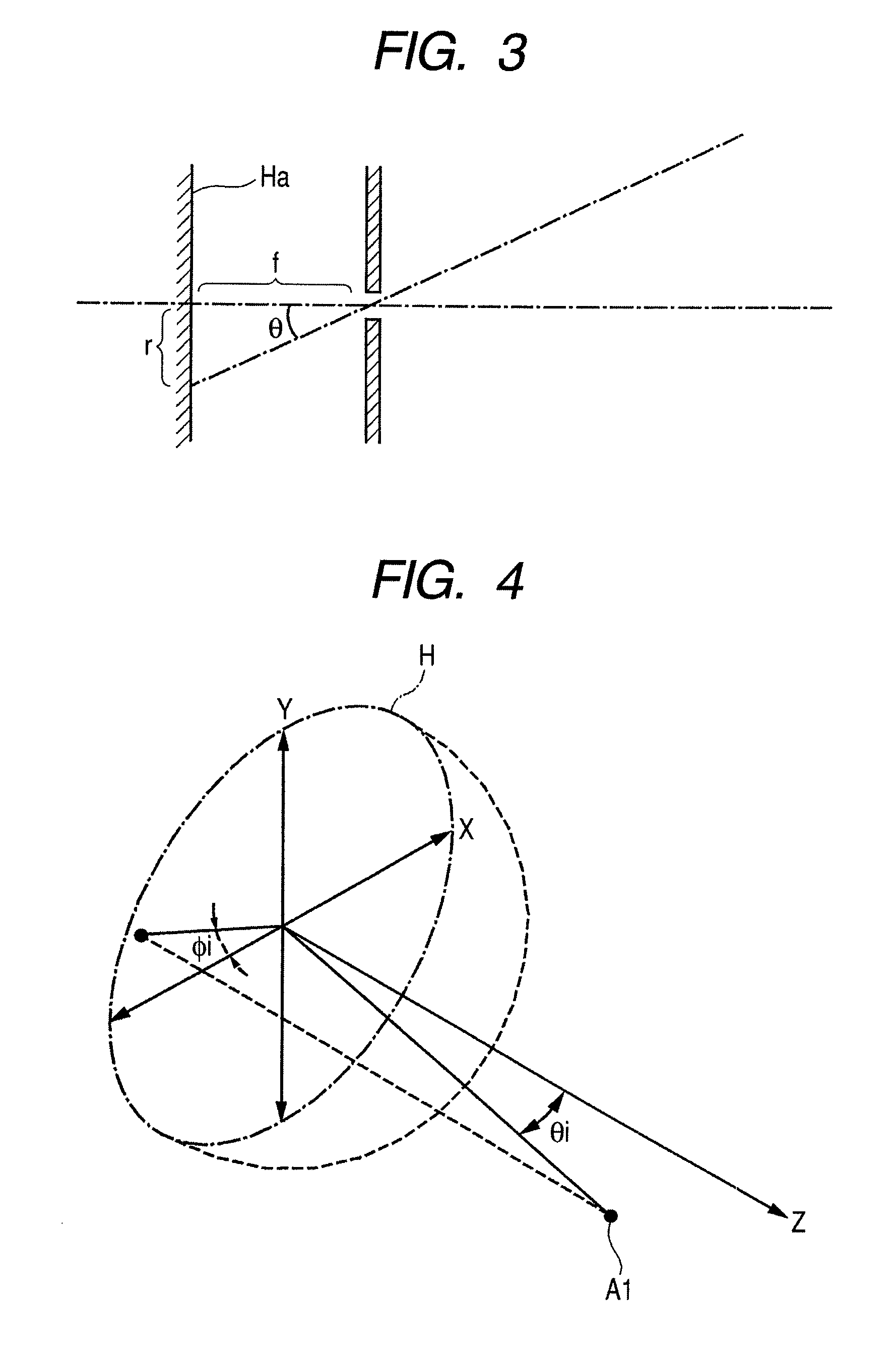

Imaging apparatus

Active | US20090128686A1 | Tags: Enhance captured image, Small distortion, Television system details, Geometric image transformation, Visibility, Imaging processing

Disclosed is an imaging apparatus that enlarges part of an image captured by the imaging device of a fisheye camera and displays the enlarged image on a display screen with little distortion. The apparatus enlarges a section of the fisheye image and displays it such that the enlargement ratio varies from point to point within the section. The image processing uses a change function that varies the enlargement ratio of the field angle depending on position, and converts the selected part of the captured image into a central projection image to generate the display image. The change function makes it possible to generate a display image with high visibility.

Owner:ALPS ALPINE CO LTD
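Converting part of a fisheye image into a central projection image, as in US20090128686A1, is usually done backwards: for each output pixel, find the fisheye pixel it should come from. The sketch assumes an equidistant fisheye model (r = f·θ) and a shared optical axis; real lenses need their calibrated projection model, and the function name is hypothetical.

```python
import math


def perspective_to_fisheye(x, y, f_out, f_fish):
    """Map an output (central-projection) pixel offset to an equidistant-fisheye offset.

    x, y: offsets from the output image centre; f_out, f_fish in pixels.
    Assumes the fisheye follows r = f_fish * theta (hypothetical lens model).
    """
    r_out = math.hypot(x, y)
    if r_out == 0:
        return 0.0, 0.0
    theta = math.atan(r_out / f_out)  # central projection: r_out = f_out * tan(theta)
    r_src = f_fish * theta            # equidistant fisheye: r = f_fish * theta
    return x * r_src / r_out, y * r_src / r_out  # same azimuth, new radius
```

Keeping `f_out` constant, as here, gives an ordinary undistorted perspective view; the patent's change function instead lets the enlargement ratio vary with position within the section.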

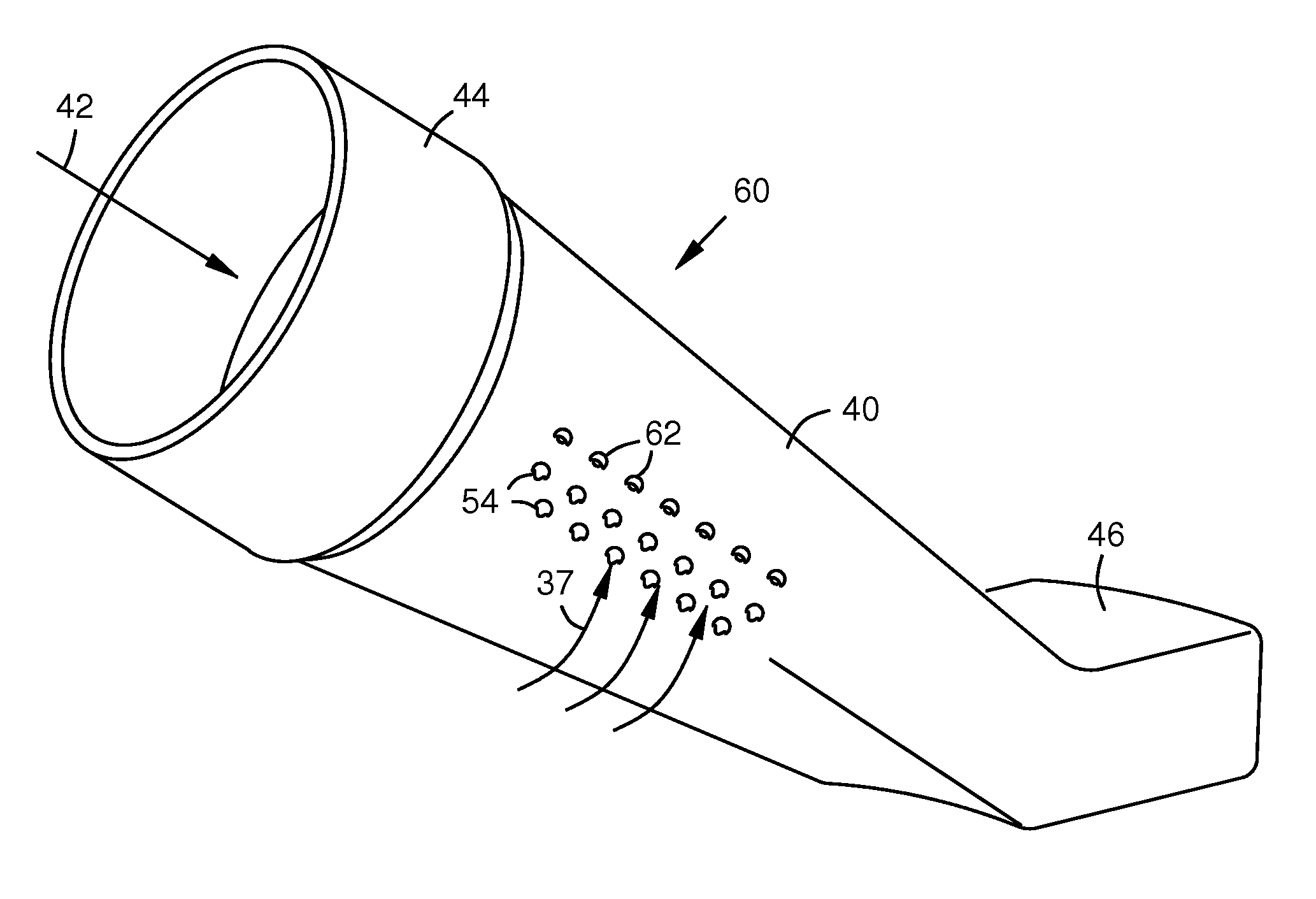

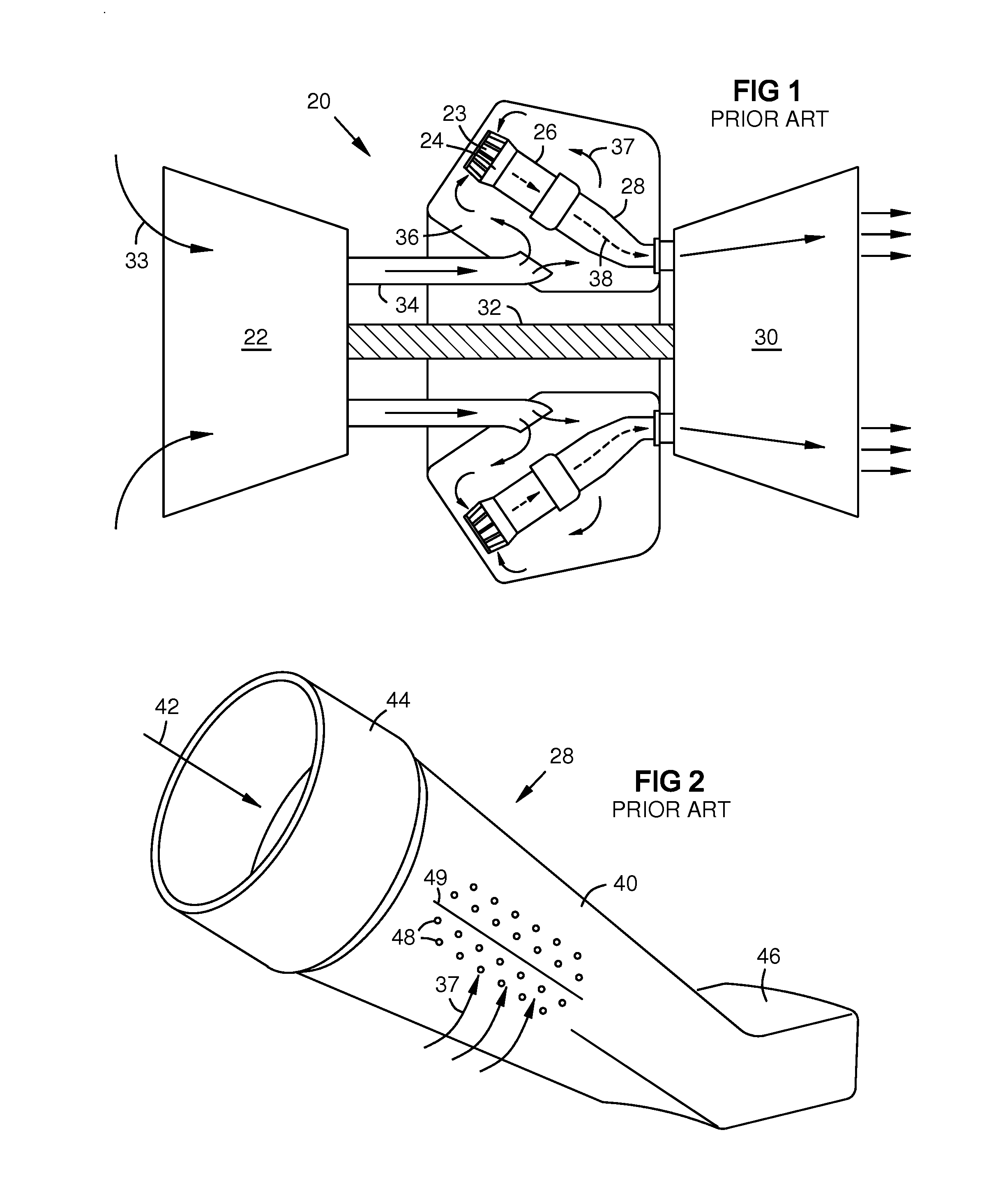

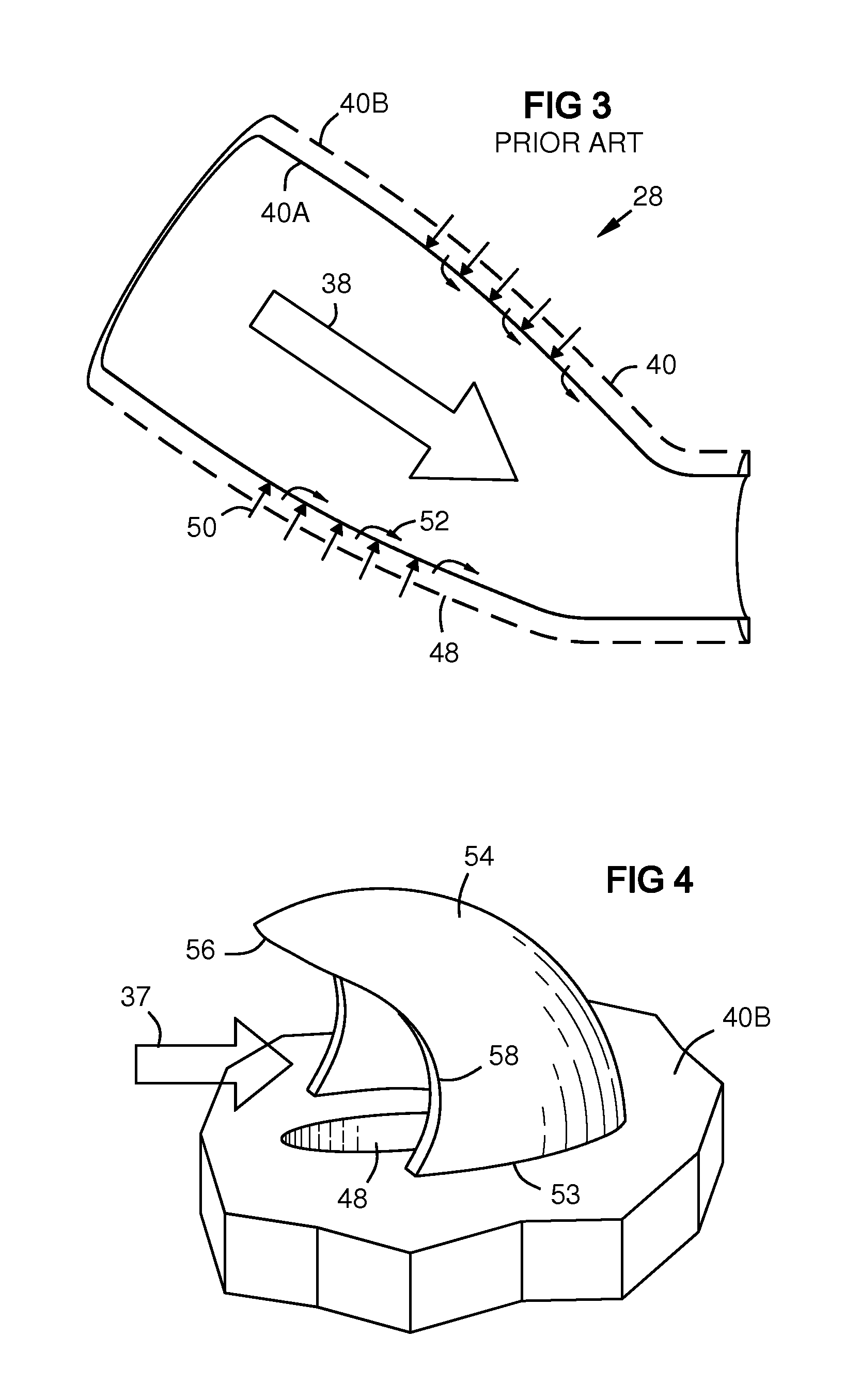

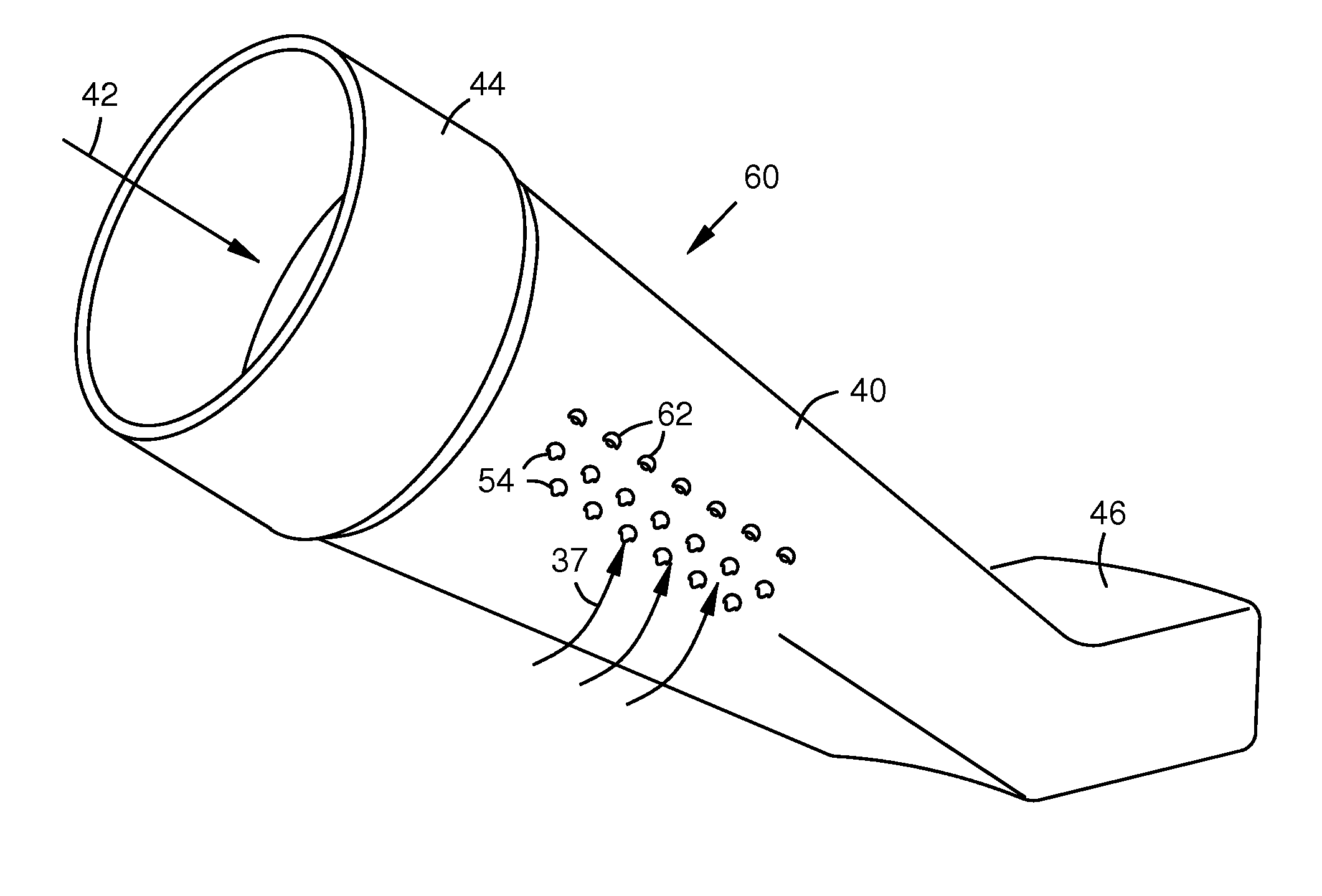

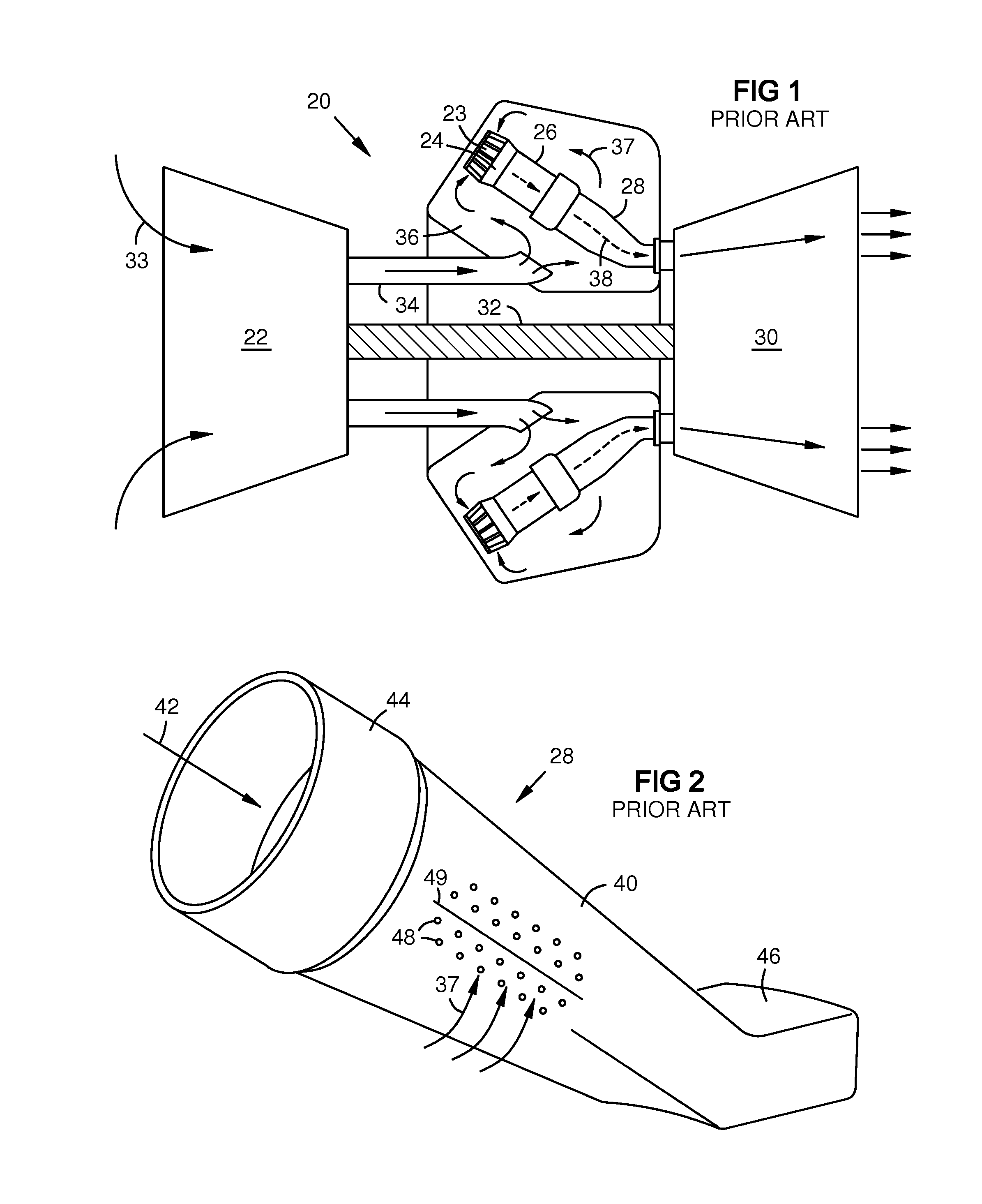

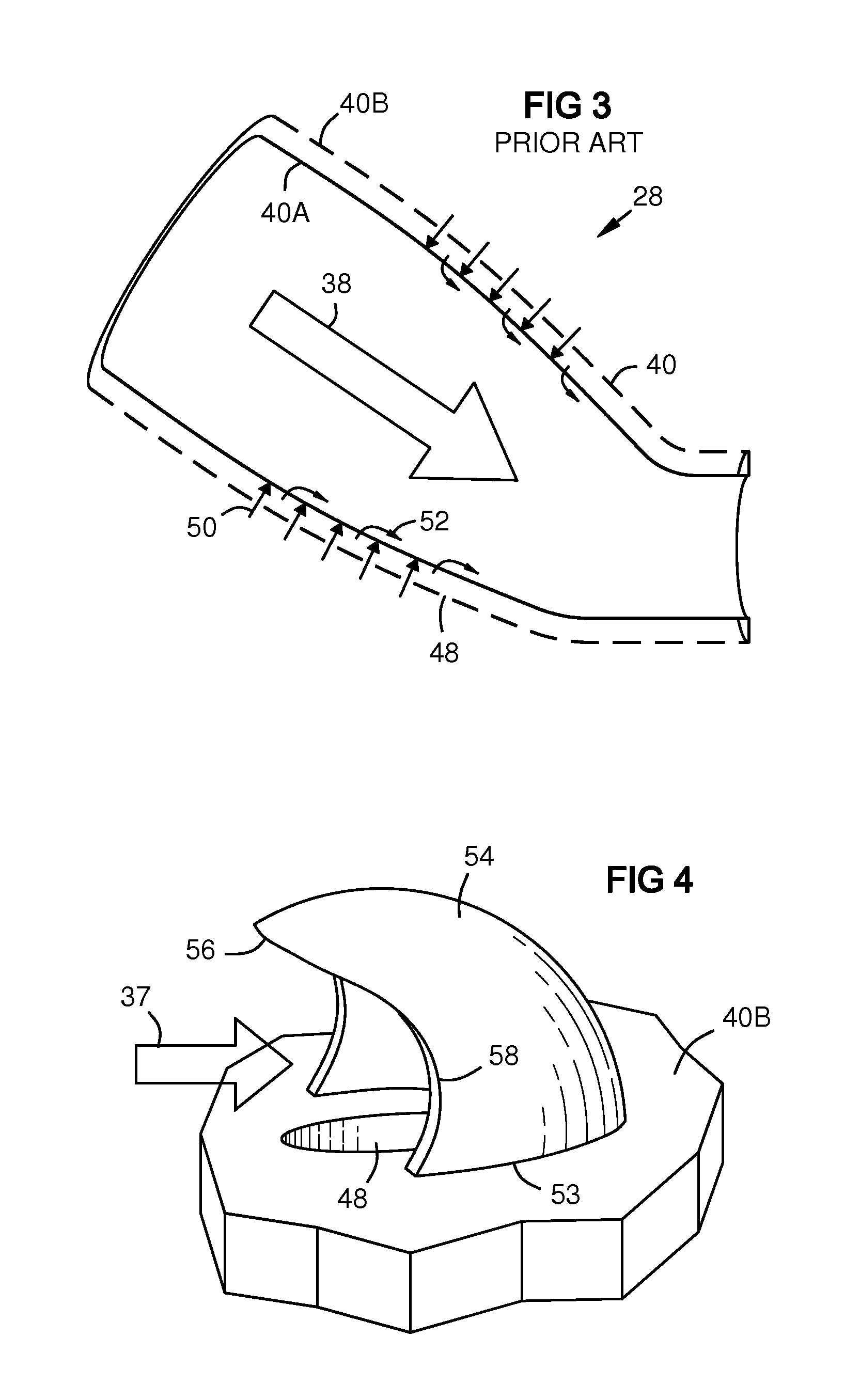

Turbine combustion system cooling scoop

A scoop (54) over a coolant inlet hole (48) in an outer wall (40B) of a double-walled tubular structure (40A, 40B) of a gas turbine engine component (26, 28). The scoop redirects a coolant flow (37) into the hole. The leading edge (56, 58) of the scoop has a central projection (56) or tongue that overhangs the coolant inlet hole, and a curved undercut (58) on each side of the tongue between the tongue and a generally C-shaped or generally U-shaped attachment base (53) of the scoop. A partial scoop (62) may be cooperatively positioned with the scoop (54).

Owner:SIEMENS ENERGY INC

Anti-collision device of welding robot

Inactive | CN103537830A | Tags: Avoid damage, Accurate return, Welding/cutting auxiliary devices, Auxiliary welding devices, Central projection, Engineering

The invention relates to an anti-collision device for a welding robot. The device comprises a base connected to the working end of the robot and a connecting shaft connected to the top of the welding gun; the base has a through hole at its center. A disc-shaped spring fixing plate with a central projection is arranged between the base and the connecting shaft: its lower end face fits against the upper end face of the connecting shaft, the projection extends into the through hole of the base, and a plurality of compressed springs are mounted on the projection and around the plate face. One side of the spring fixing plate has a support point in contact with a sensor that is mounted on the same side and connected to the robot controller. The device is well designed, simple in structure, and easy to mount. It effectively prevents damage to the welding gun and the robot in a collision and lets the welding gun return accurately to its position when the robot backs off, avoiding the need for recalibration or reprogramming; it also improves the safety and stability of equipment operation and reduces operating cost.

Owner:NANJING PANDA ELECTRONICS +1

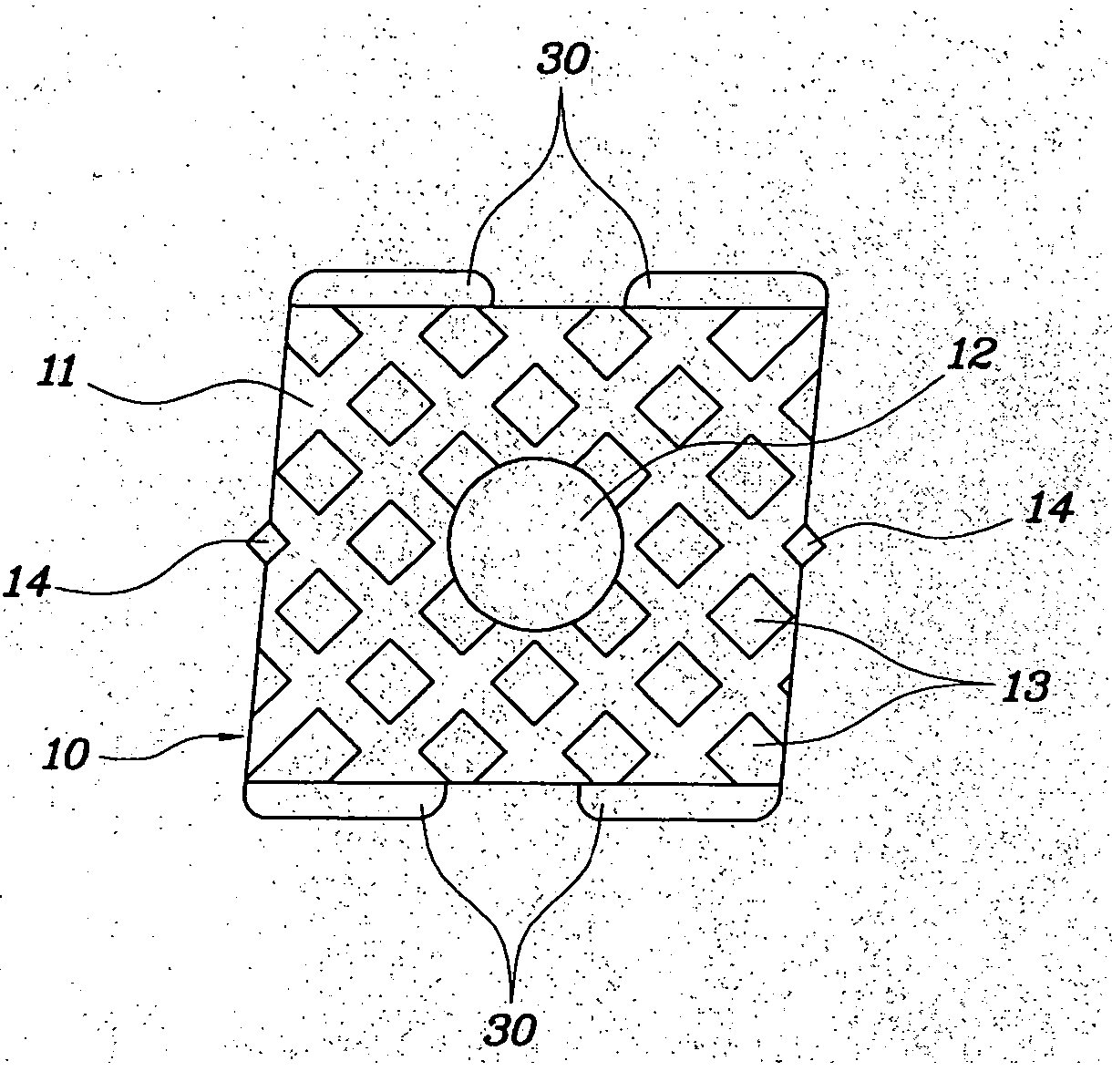

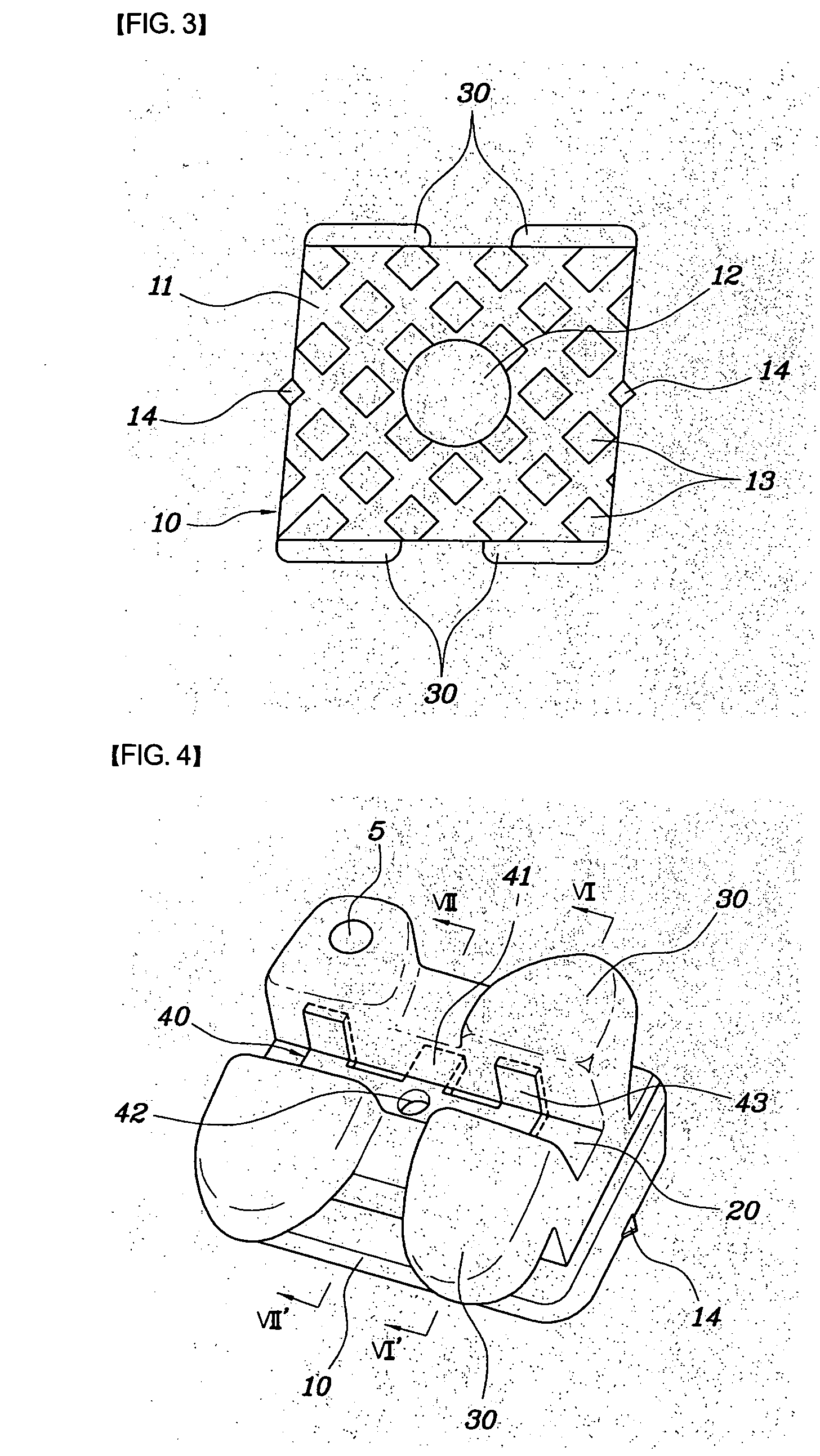

Orthodontic bracket base and bracket using the base

Active | US20060263736A1 | Tags: Inhibition release, Improve adhesion, Brackets, Dental tools, Adhesive, Central projection

Disclosed is an orthodontic bracket that adheres well to a tooth surface and can be easily separated from it after orthodontic treatment is finished. The base of the bracket comprises an adhesion surface brought into close contact with the tooth surface; a central projection at the center of the adhesion surface; and a plurality of surrounding projections arranged at regular intervals around the central projection and made of a material that makes the adhesive's bond to the tooth surface stronger at the surrounding projections than at the central projection.

Owner:MOON SEUNG SOO

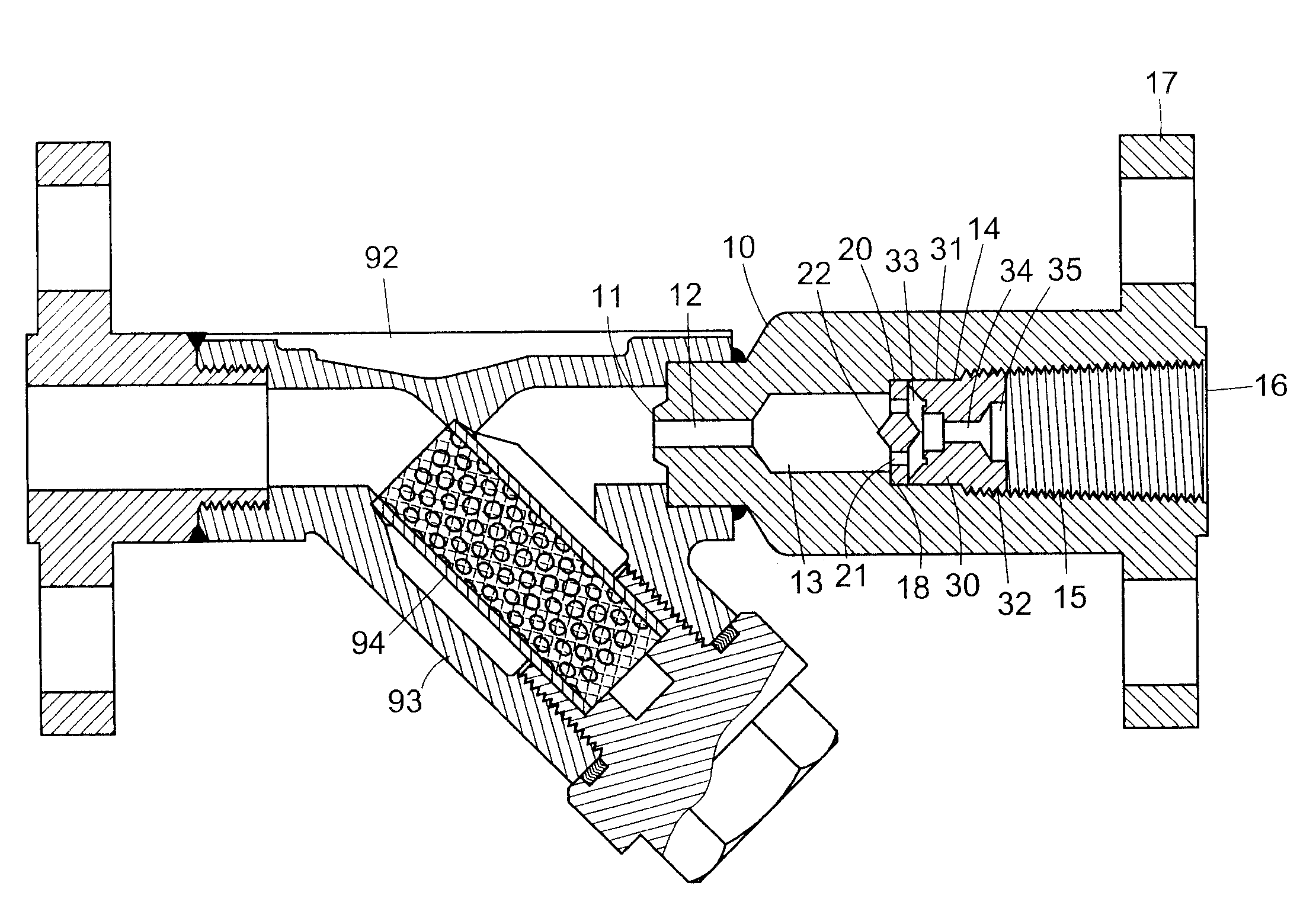

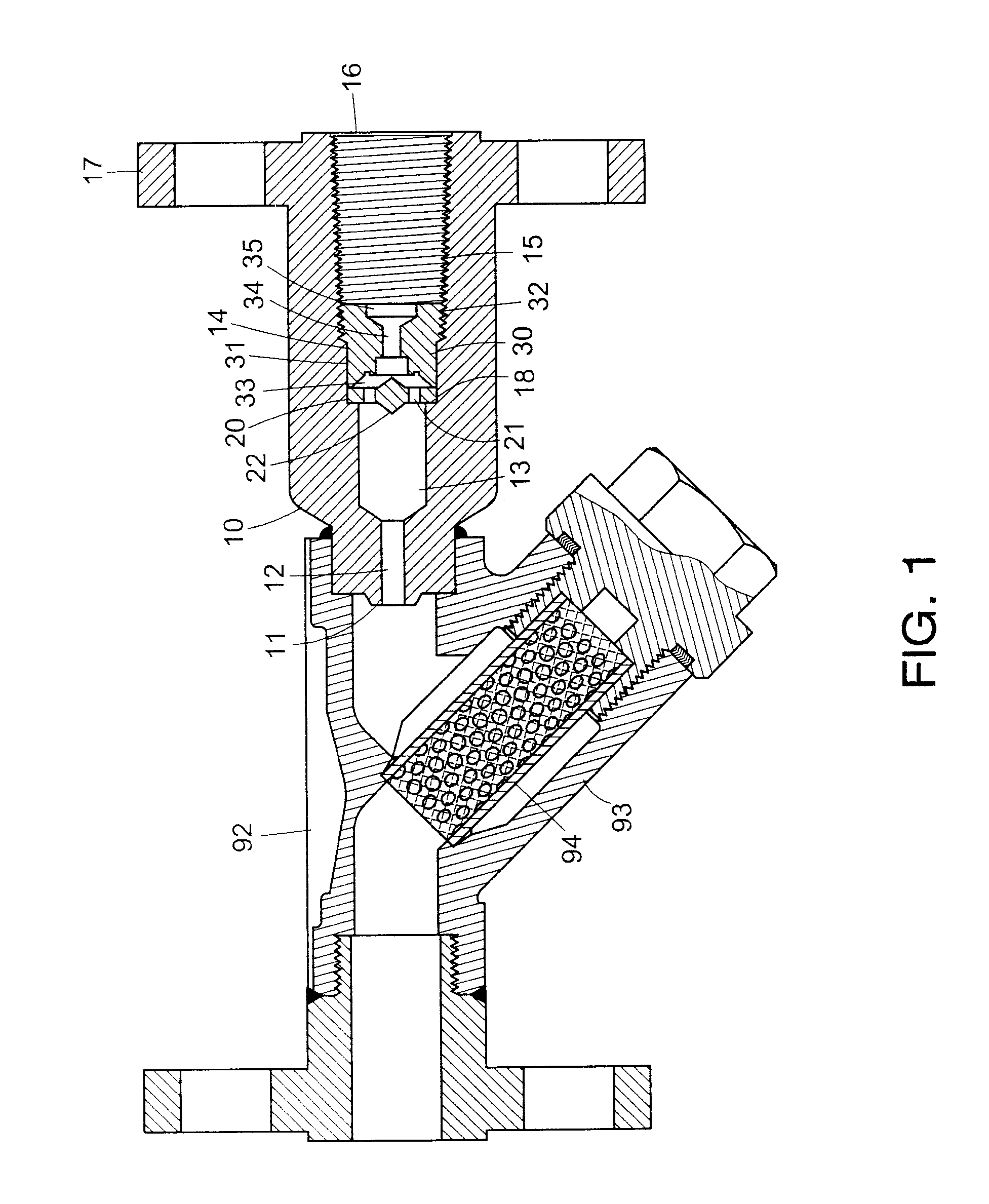

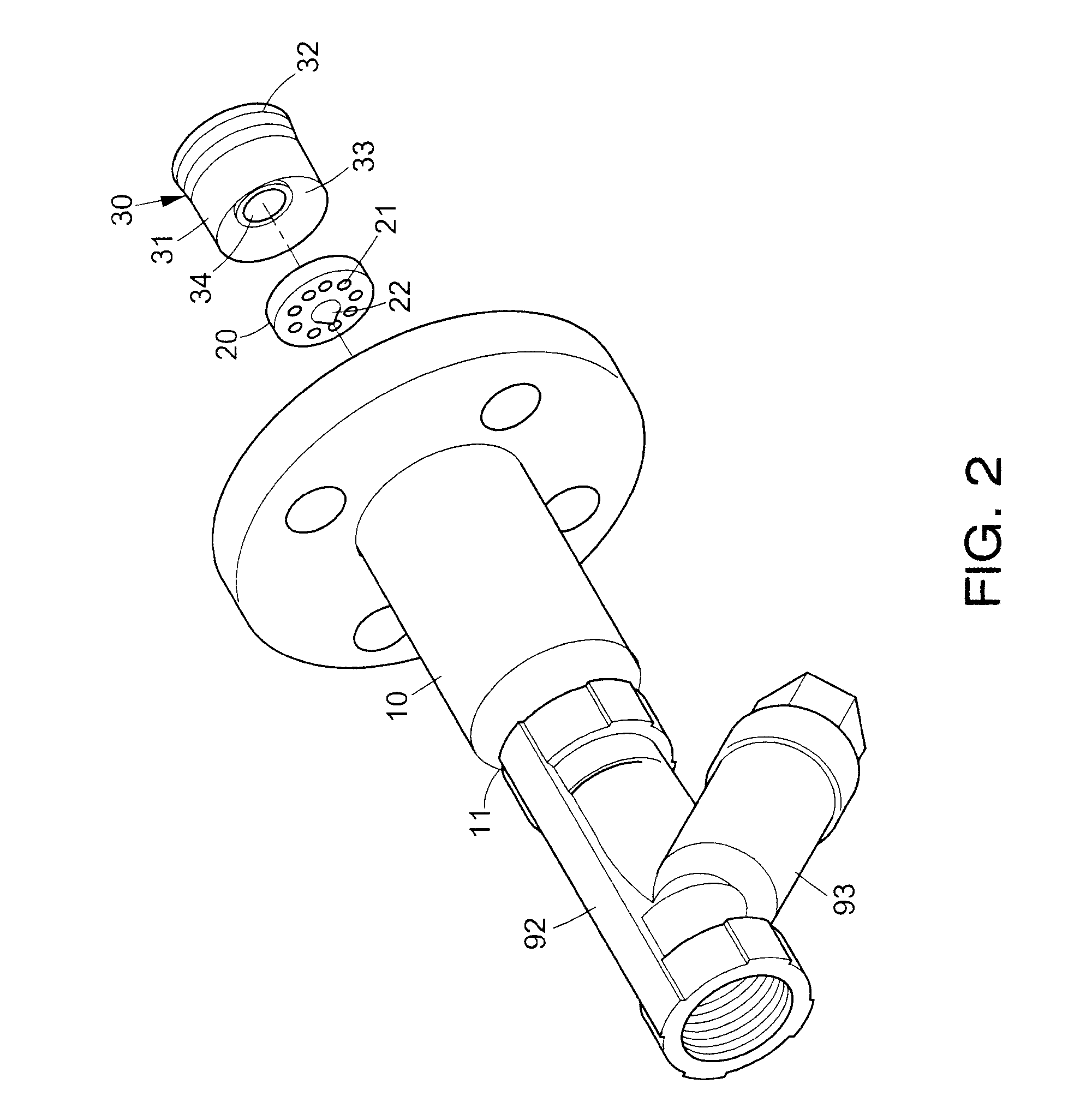

Steam trap with capillary action based blocking arrangement

A steam trap is provided to remove condensed water from a steam pipe, to one end of which the trap is connected. In one embodiment, the trap includes a hollow body having an outlet and an inlet adapted to be secured to the pipe end, the body defining a staged internal space; a blocking member secured in the space and comprising a central projection on each surface and a plurality of apertures around the projections; and an abutment member behind the blocking member. Condensate can pass through the apertures, while steam is substantially blocked by them owing to capillary action.

Owner:CHIANG YING CHUAN

Strict collinearity equation model of satellite-borne SAR image

Inactive | CN101571593A | Satisfy the ground positioning | Radio wave reradiation/reflection | Central projection | Computer vision

The invention discloses a strict collinearity equation model for satellite-borne SAR images. The model is derived for an Earth ellipsoid model: a virtual projection center is constructed at each azimuth position of the SAR image, and the derivation yields a method for computing the exterior orientation parameters and equivalent focal length of the virtual projection-center sensor, together with a method for correcting a slant-range image into a central projection image. The method addresses two problems: (1) the approximate collinearity equation model is not rigorous; and (2) ground control points are required for image geocoding when the sensor's exterior orientation parameters are unknown.
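The collinearity condition behind this model states that the projection center, an image point and its ground point lie on one straight line. For an ordinary frame (central projection) sensor it is commonly written x = −f·(m₁·d)/(m₃·d), y = −f·(m₂·d)/(m₃·d), where d is the offset from the projection center to the ground point and m₁, m₂, m₃ are the rows of the rotation matrix. The Python sketch below implements only this textbook frame-camera form; it does not reproduce the patent's SAR-specific derivation of the virtual projection center or equivalent focal length, and the rotation convention and sample numbers are assumptions.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def collinearity_project(ground: Tuple[float, float, float],
                         center: Tuple[float, float, float],
                         rotation: Matrix3,
                         focal_length: float) -> Tuple[float, float]:
    """Project a ground point through a projection center with the classical collinearity equations.

    Assumed convention: `rotation` maps object-space offsets into the camera frame,
    with the camera z-axis pointing away from the scene.
    """
    # Offset from the projection center to the ground point.
    d = [g - c for g, c in zip(ground, center)]
    # Rotate the offset into the camera frame: (u, v, w) = R * d.
    u, v, w = (sum(rotation[i][j] * d[j] for j in range(3)) for i in range(3))
    if w == 0:
        raise ValueError("point lies in the plane of the projection center")
    x = -focal_length * u / w
    y = -focal_length * v / w
    return x, y


identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
# Hypothetical nadir view: center 1000 m above the ground point's datum, focal length 0.15 m.
print(collinearity_project((100.0, 50.0, 0.0), (0.0, 0.0, 1000.0), identity, 0.15))
```

With an identity rotation (a vertical view), the equations reduce to the familiar scale relation x = f·(X − Xs)/H, where H is the height of the projection center above the point.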

Owner:BEIHANG UNIV

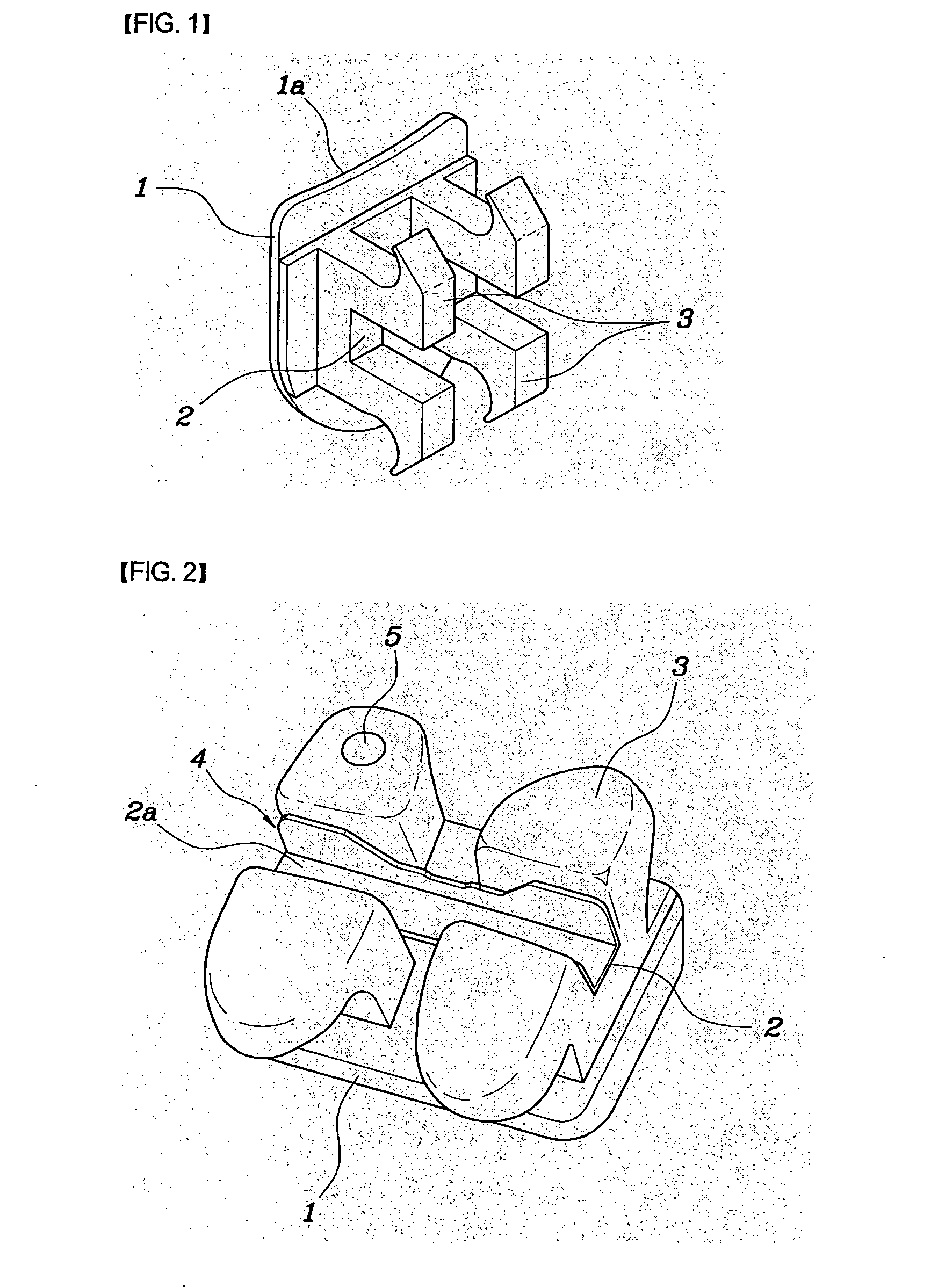

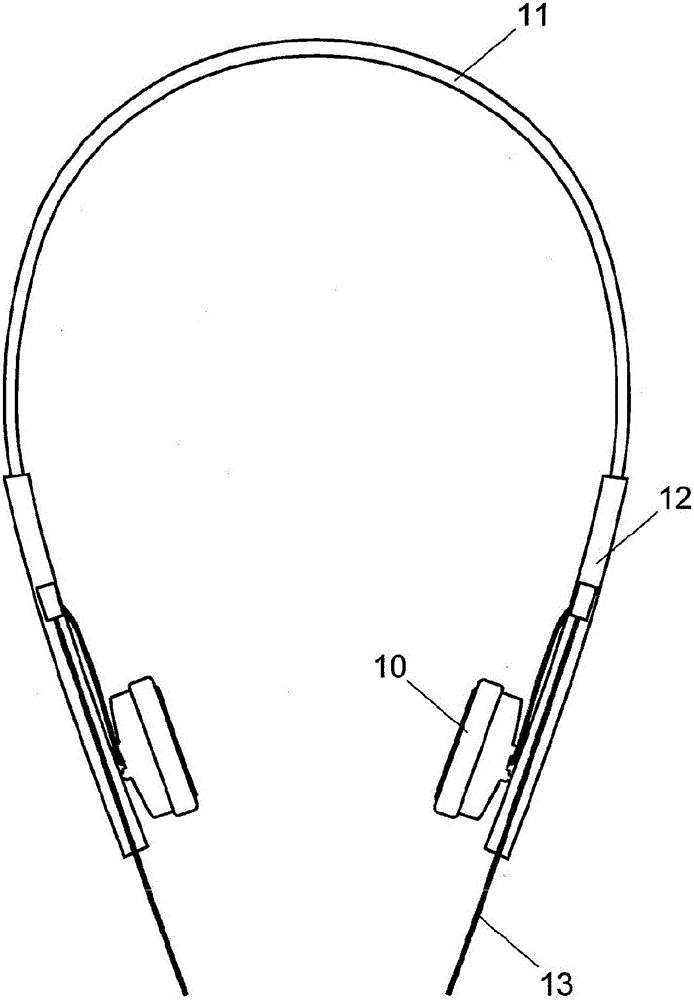

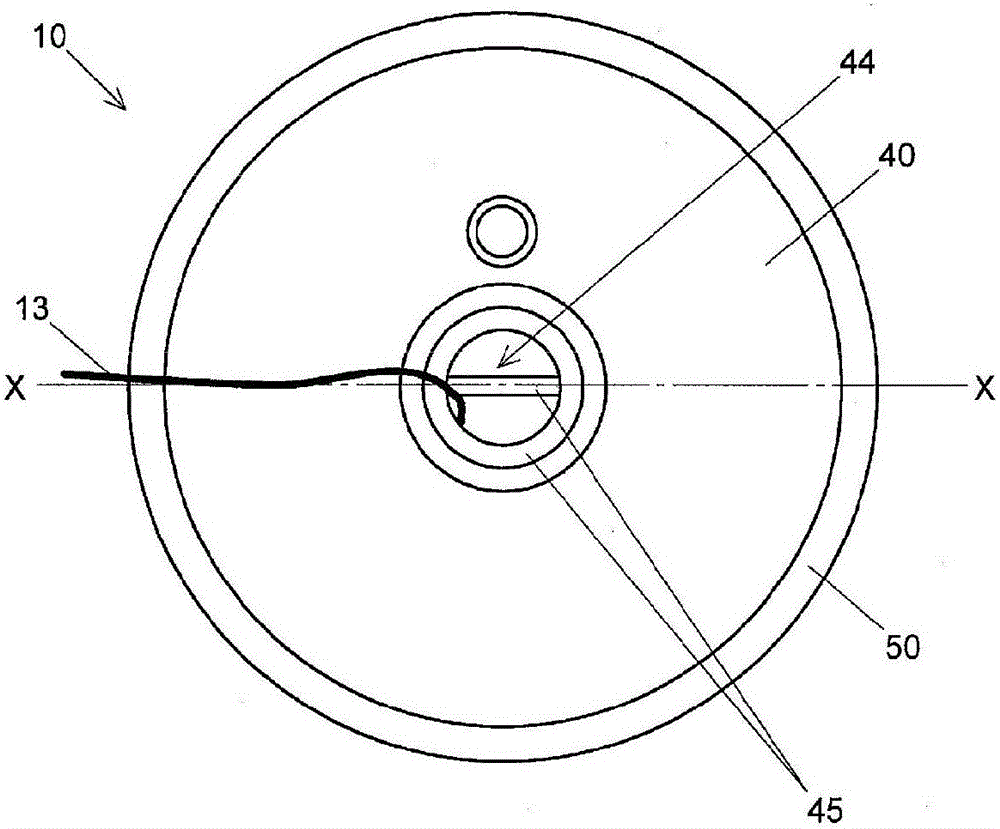

Bone-conduction speaker

Active | CN105745941A | Headphones for stereophonic communication | Bone conduction transducer hearing devices | Electricity | Central projection

The bone-conduction speaker includes a plate-shaped piezoelectric vibration element (20) whose circumferential portion is fixed, on one side, to a circumferential edge projection (32) of a vibrator (30), while its center contacts a central projection (31) of the vibrator (30). The other side of the element (20) is covered by a resin back case (40). The central projection (31) touches the center of the piezoelectric vibration element (20), where the vibration produced by converting sound signals has its largest amplitude, so the vibrator (30) picks up vibrations efficiently. Because no vibration damper is used, the vibration is conducted efficiently and without absorption. The resin back case (40) absorbs the air vibration generated in the space between the piezoelectric vibration element (20) and the back case (40), which suppresses sound leakage to the outside and reduces acoustic feedback.

Owner:唐迎 (Tang Ying)

Imaging apparatus

Active | US8134608B2 | Easy to capture | Reducing the other portions | Television system details | Geometric image transformation | Visibility | Imaging processing

Disclosed is an imaging apparatus capable of enlarging a portion of an image captured by the imaging device of a fisheye camera and displaying the enlarged image on a display screen with little distortion. The apparatus enlarges a section of the fisheye image and displays it so that the enlargement ratio differs from point to point within the section. The image processing method uses a change function that varies the enlargement ratio of the field angle depending on position, and converts the selected portion of the captured image into a central projection image to generate the display image. The change function makes it possible to generate a display image with high visibility.
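The core of such a conversion is an inverse mapping: for each point of the central projection (perspective) display image, find where it falls in the fisheye image. The sketch below assumes an equidistant fisheye model (r = f·θ), which the abstract does not specify, and uses a hypothetical `zoom` callable as a stand-in for the patent's position-dependent change function; it is not the patented method.

```python
import math
from typing import Callable, Tuple


def perspective_to_fisheye(x: float, y: float, f_persp: float, f_fish: float,
                           zoom: Callable[[float], float] = lambda r: 1.0) -> Tuple[float, float]:
    """Map a display-image point (central projection) back to a fisheye image point.

    Assumptions: coordinates are relative to the optical center; the fisheye follows
    the equidistant model r = f_fish * theta; the display uses r = f_persp * tan(theta);
    `zoom` (hypothetical, must be positive) gives the local enlargement ratio.
    """
    r_p = math.hypot(x, y)
    if r_p == 0.0:
        return 0.0, 0.0
    r_eff = r_p / zoom(r_p)             # zoom > 1 samples a narrower field angle, enlarging that region
    theta = math.atan2(r_eff, f_persp)  # field angle from the optical axis (central projection)
    r_f = f_fish * theta                # equidistant fisheye radius for that angle
    scale = r_f / r_p
    return x * scale, y * scale


# Hypothetical change function: about 2x enlargement near the center, easing to 1x at the edges.
center_zoom = lambda r: 1.0 + math.exp(-(r / 150.0) ** 2)
print(perspective_to_fisheye(300.0, 0.0, 300.0, 300.0))
print(perspective_to_fisheye(300.0, 0.0, 300.0, 300.0, zoom=center_zoom))
```

To build a display image, one would loop over the output pixels, call this mapping for each, and sample the fisheye image at the returned location (for example with bilinear interpolation).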

Owner:ALPS ALPINE CO LTD

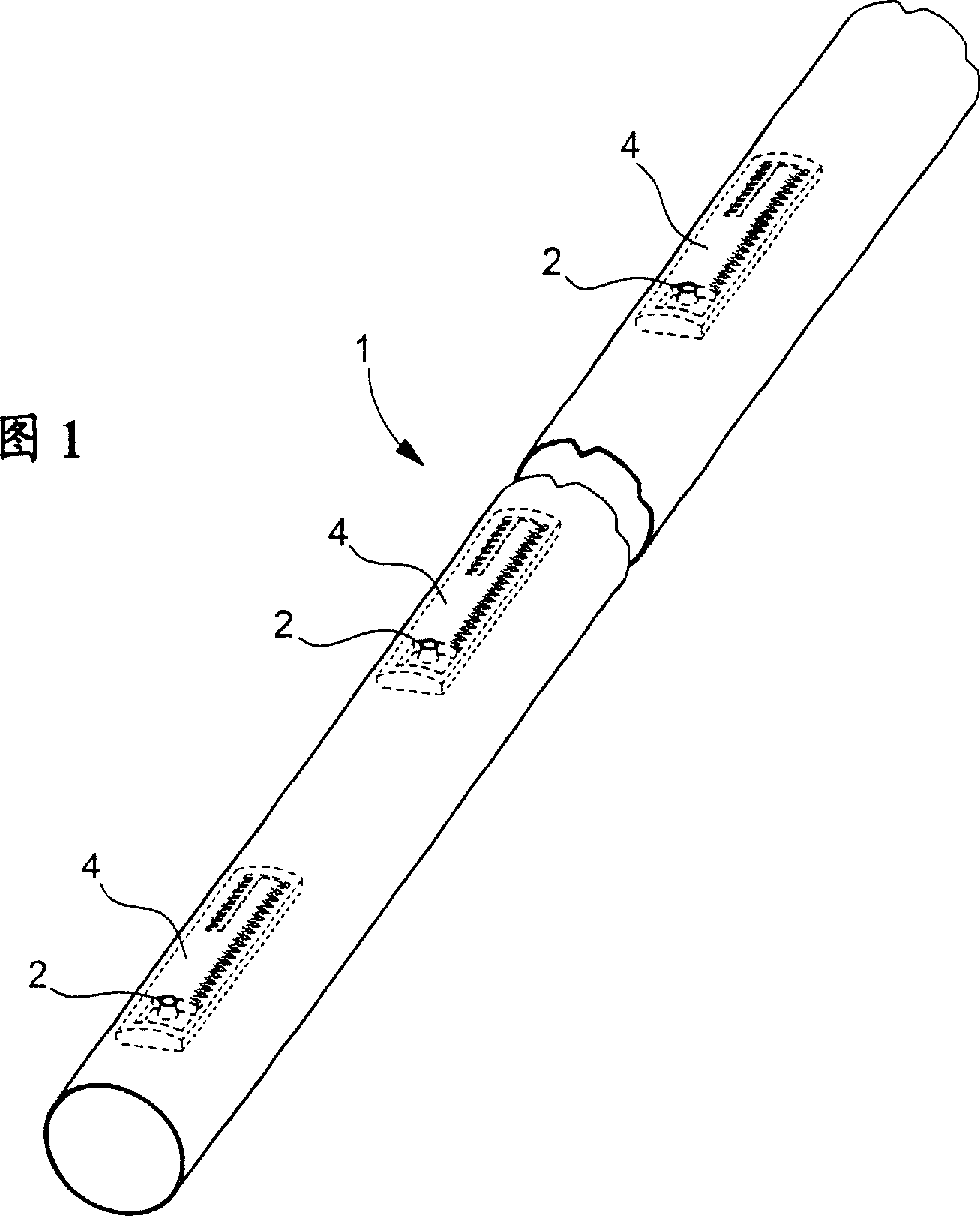

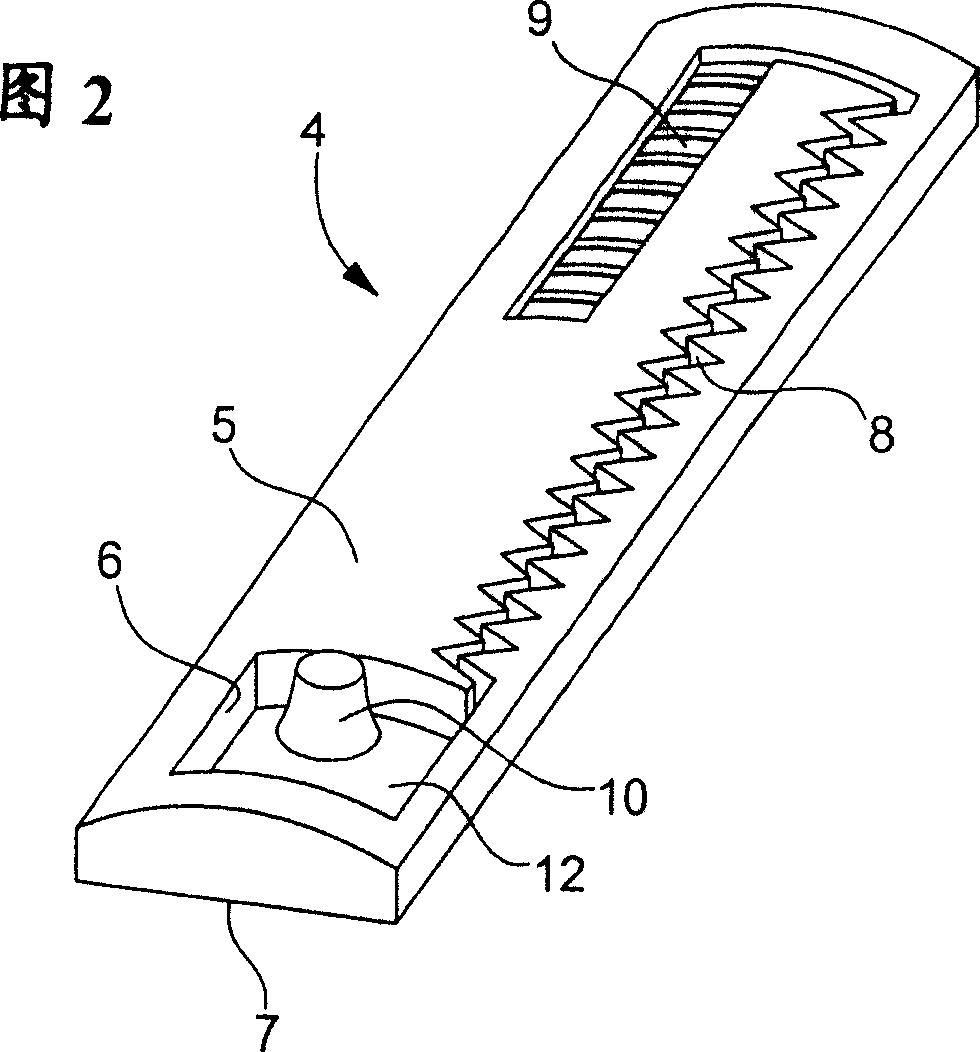

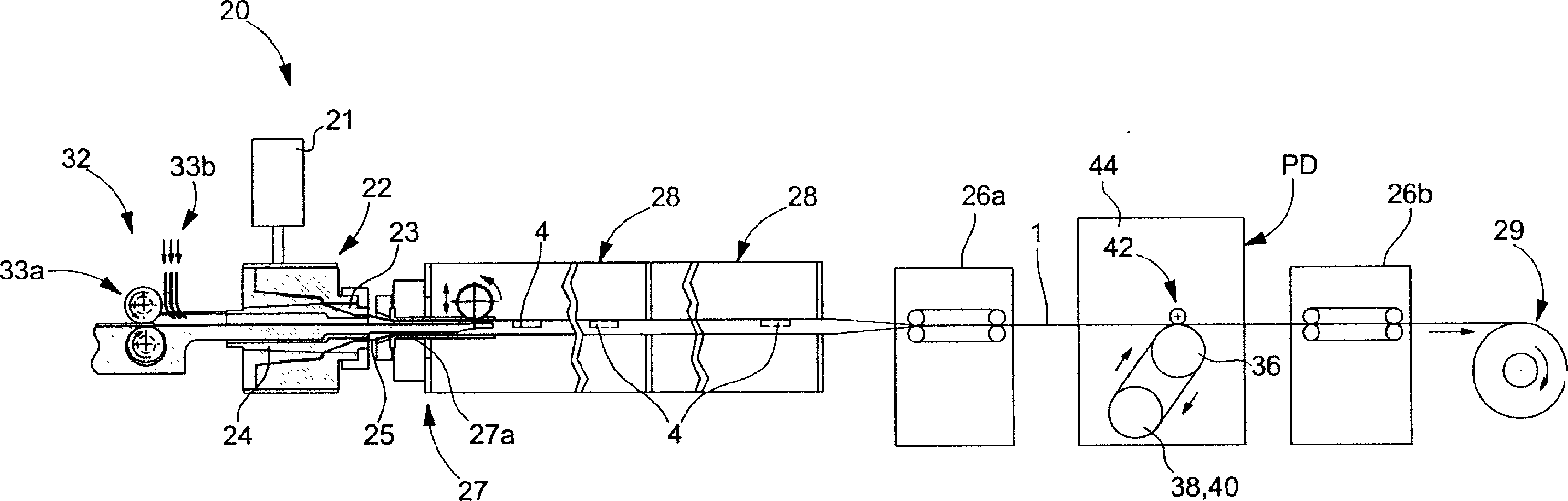

Method of fabrication of drip irrigation conduits

Inactive | CN1640236A | Increase production speed | Climate change adaptation | Watering devices | Central projection | Drip irrigation

The droplet former (4), designed to be placed at intervals inside an irrigation pipe to control the flow of water through its outlets, consists of a plastic bar (5) with a water collection chamber (10) fed by a passage (9) and a labyrinth channel (8). The bar is attached to the inner surface of the irrigation pipe, in particular by welding, so that the collection chamber is aligned with a pipe outlet. The collection chamber has a central projection that lifts the wall of the irrigation pipe from the inside so that it can be trimmed to make an outlet whose edges contact the projection, forming a valve. - An INDEPENDENT CLAIM is included for a method of manufacturing a water droplet former.

Owner:托马斯机械股份有限公司 (Thomas Machinery Co., Ltd.)

Cover for dispensing closure with pressure actuated valve

Inactive | US20050269373A1 | Prevent opening | Restrict movement | Closures | Discharging means | Central projection | Engineering

A cover for a valved dispensing closure prevents the valve from opening while the cover is on the closure. The cover's central projection keeps the flaps formed in the valve from opening, and its outer projection prevents the flexible valve head from moving or inverting. The cover may also have projections extending inward from its skirt. These projections interact with mating projections formed on the exterior of the closure, and the lower end of the skirt may sit in an annular groove in the closure, to enhance the seal between the closure and the cover. The cover may include a snap ring attached to the rest of the cover by a breakable connection to provide tamper evidence.

Owner:OBRIST CLOSURES SWITZERLAND GMBH

© 2025 PatSnap. All rights reserved.