Patented technology related to "Street scene" (319 results)

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

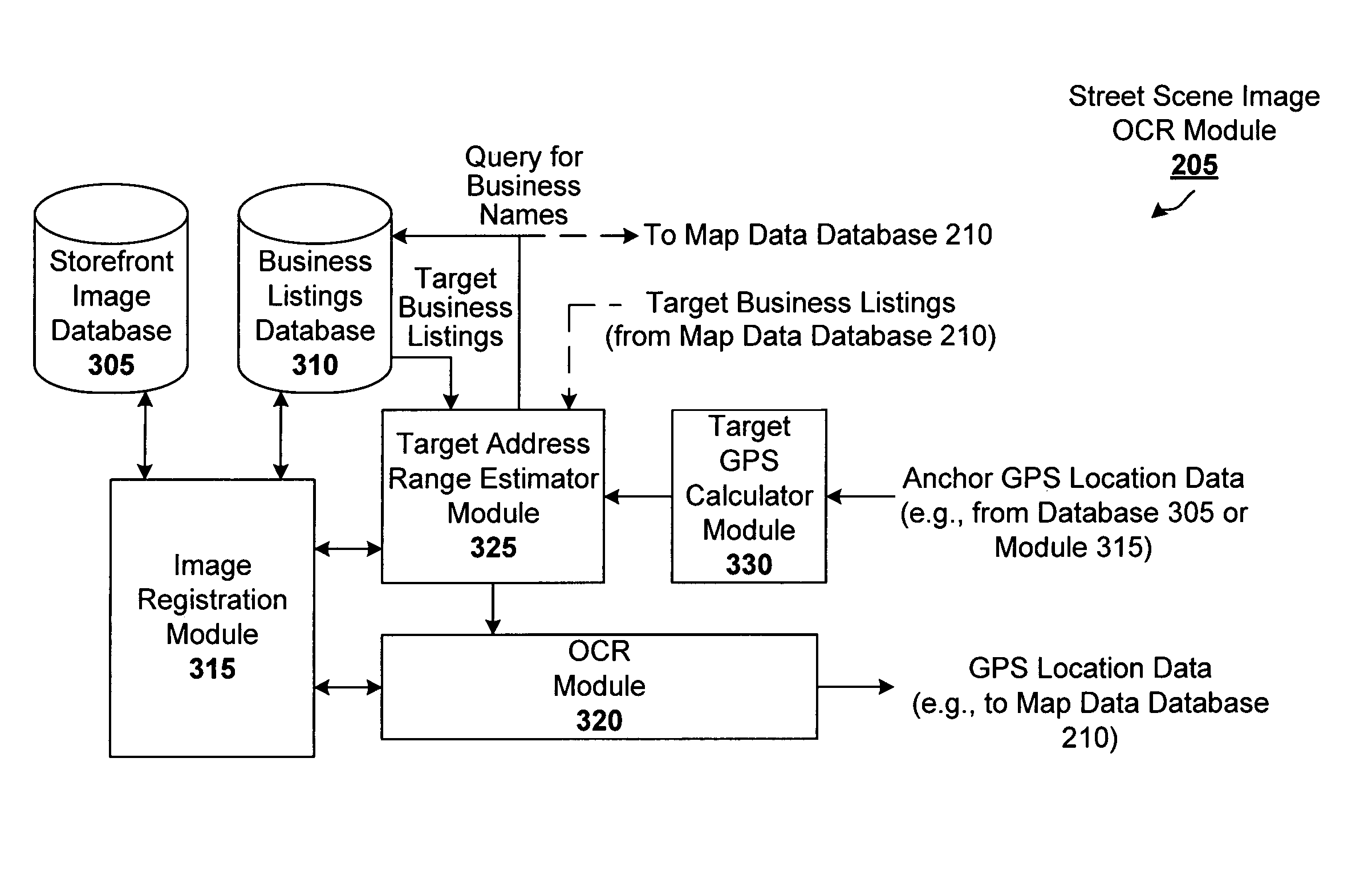

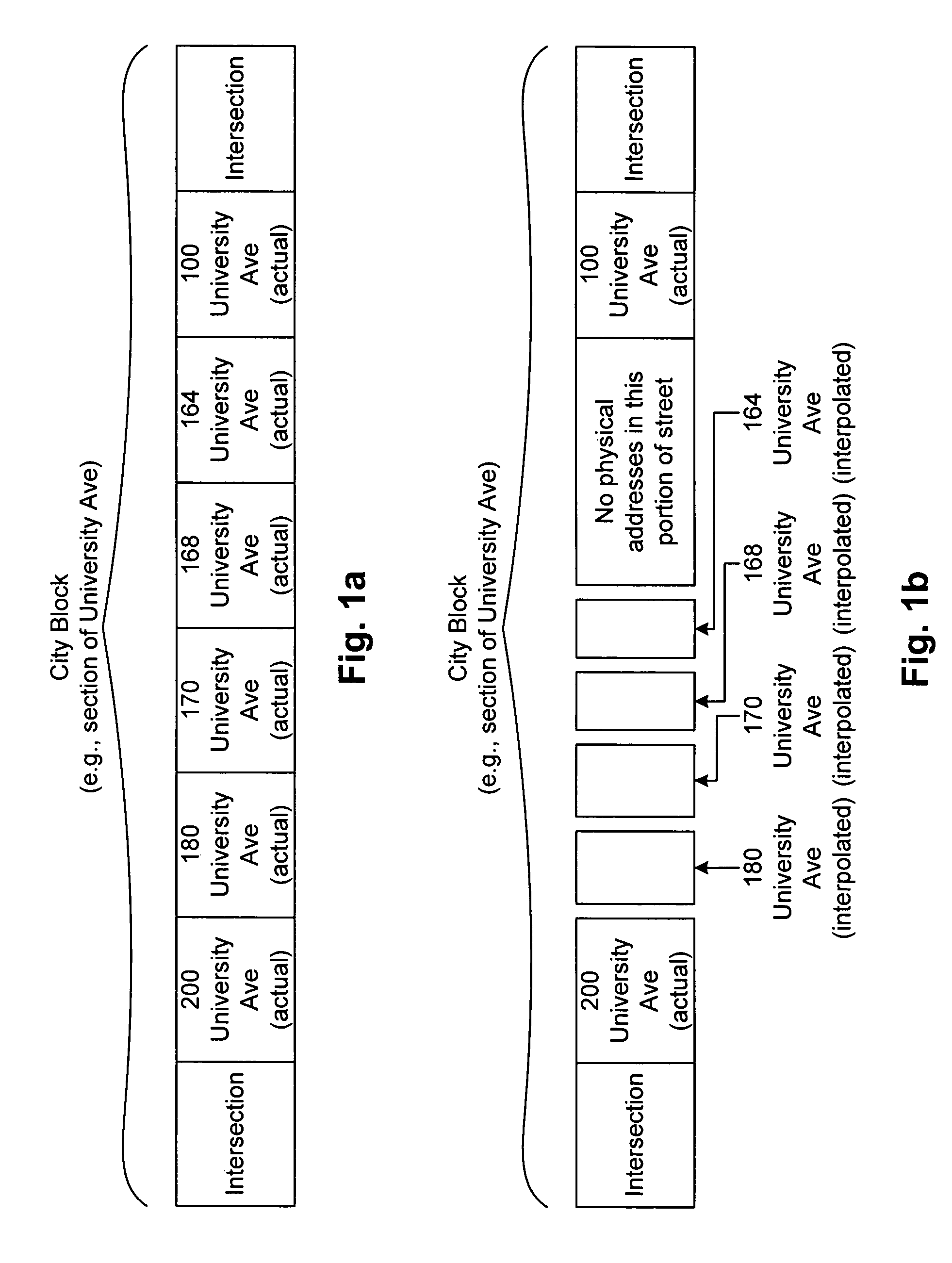

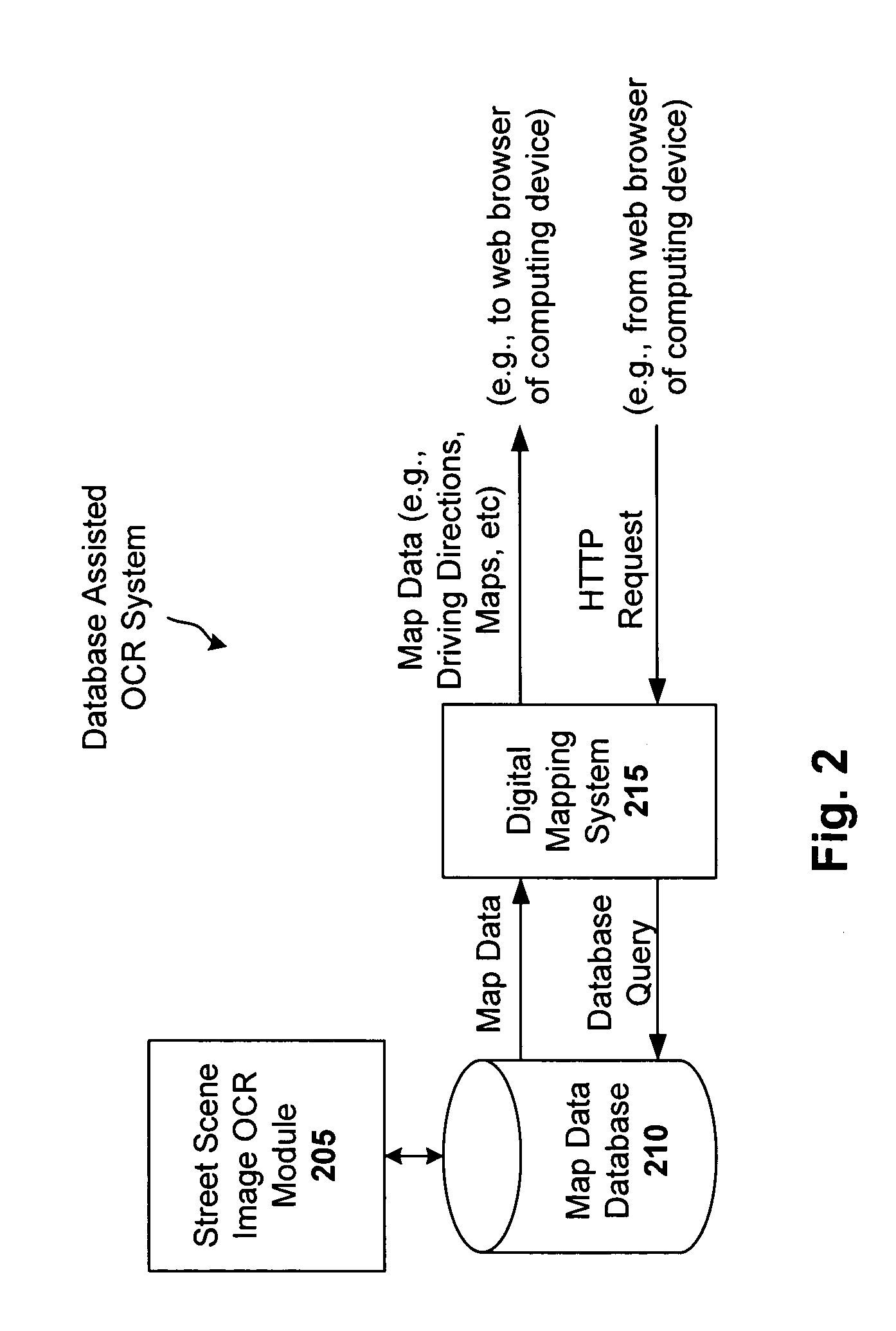

Database assisted OCR for street scenes and other images

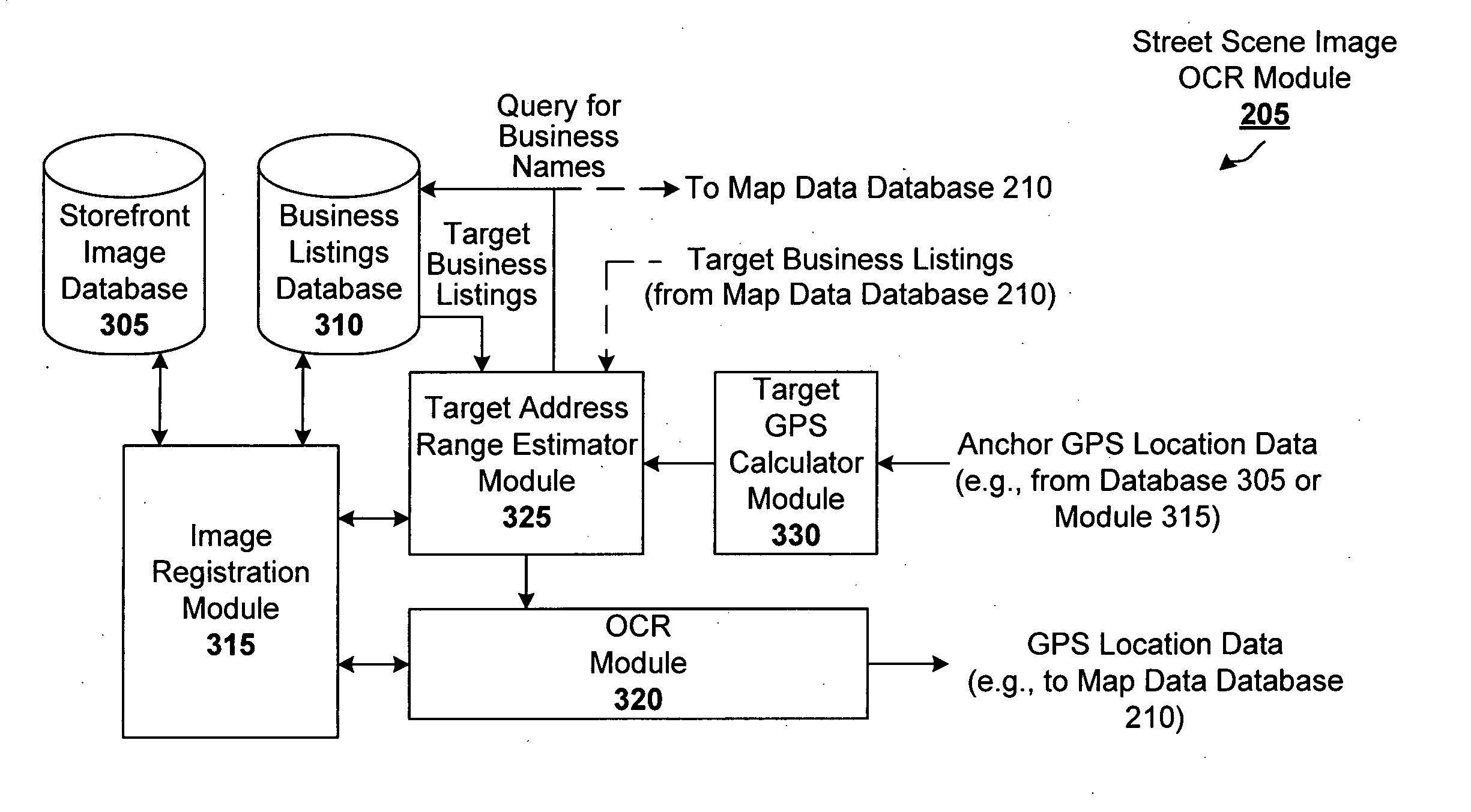

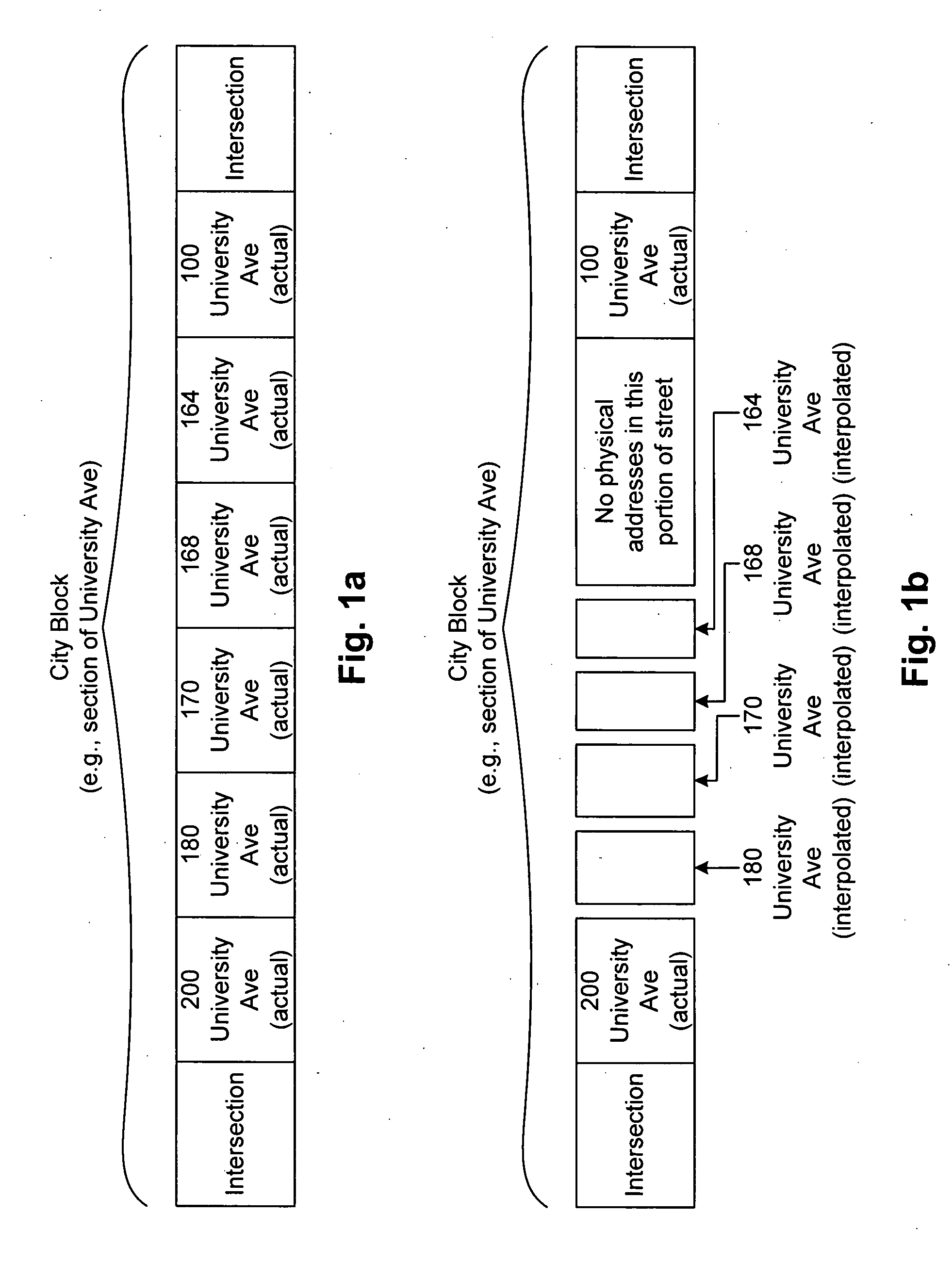

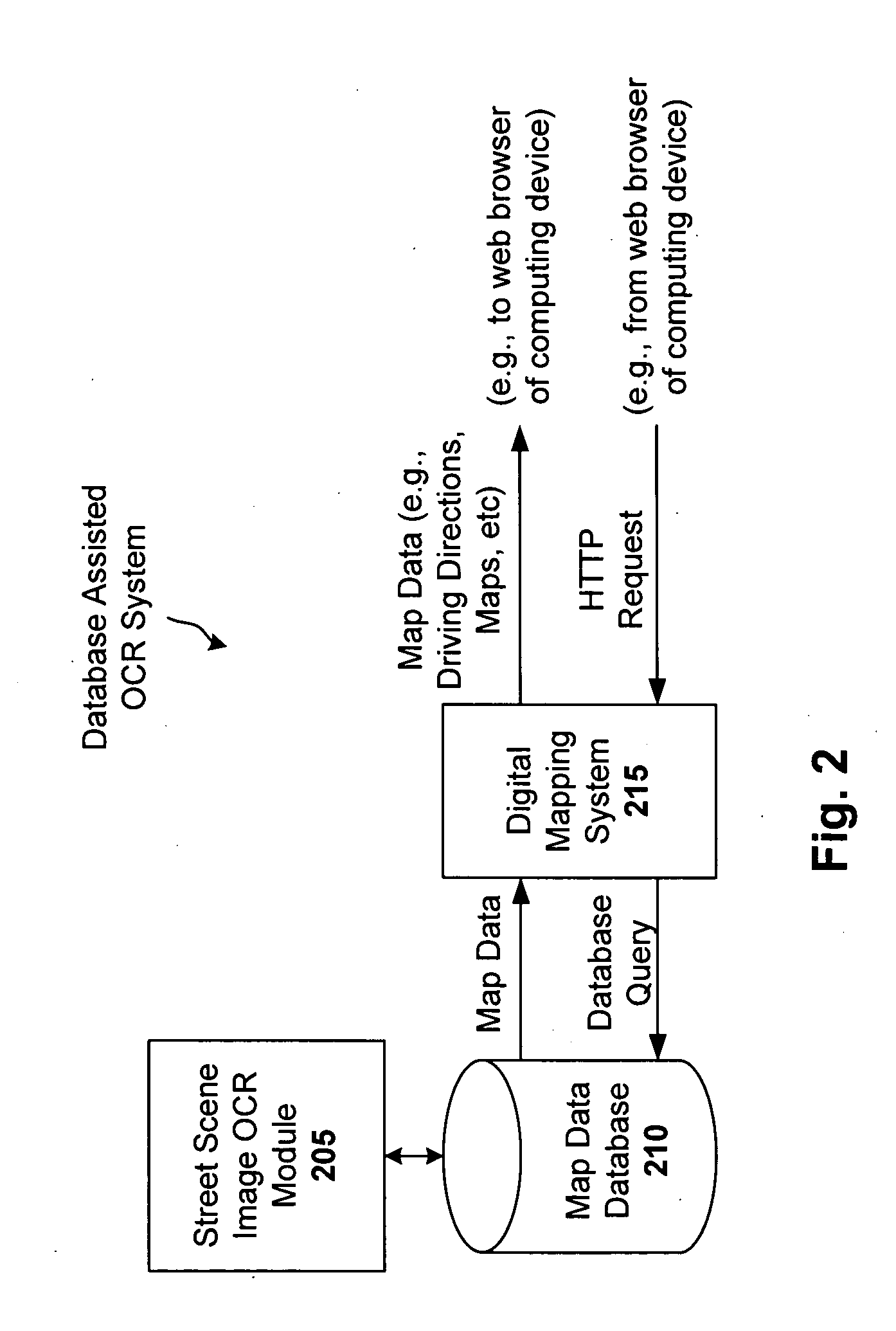

Optical character recognition (OCR) for images such as a street scene image is generally a difficult problem because of the variety of fonts, styles, colors, sizes, orientations, occlusions and partial occlusions that can be observed in the textual content of such scenes. However, a database query can provide useful information that can assist the OCR process. For instance, a query to a digital mapping database can provide information such as one or more businesses in a vicinity, the street name, and a range of possible addresses. In accordance with an embodiment of the present invention, this mapping information is used as prior information or constraints for an OCR engine that is interpreting the corresponding street scene image, resulting in much greater accuracy of the digital map data provided to the user.

Owner:GOOGLE LLC
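
One simple way to picture "mapping information used as prior information or constraints" is to snap a noisy OCR reading to the closest name in a list of nearby businesses returned by a mapping query. The sketch below is an illustration under assumptions, not the patented method: the function names, the edit-distance criterion and the `max_distance` cutoff are all hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance (insertions, deletions, substitutions) via dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute ca -> cb
        prev = curr
    return prev[-1]


def constrain_ocr(raw_text: str, nearby_names: list, max_distance: int = 3) -> str:
    """Snap raw OCR output to the closest nearby name (hypothetical policy).

    nearby_names stands in for the result of a mapping-database query.
    If no candidate is within max_distance edits, the raw text is kept.
    """
    query = raw_text.strip().lower()
    best_name, best_dist = raw_text, max_distance + 1
    for name in nearby_names:
        dist = levenshtein(query, name.lower())
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name
```

For example, `constrain_ocr("J0E'S PlZZA", ["Joe's Pizza", "Main St Deli"])` returns `"Joe's Pizza"`, because the misread `0` and `l` cost only two substitutions.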

Processing method and device for adding interest point information of map to street scene images

Active | CN103971589A | Tags: Time-consuming and laborious to solve, Easy to update, Maps/plans/charts, Special data processing applications, Street scene

The invention relates to a processing method and device for adding the interest point information of a map to street scene images. The interest point information comprises the position information of the interest points. The method includes the following steps: according to the shooting position of a street scene image, finding all interest points in the map that lie within a preset range of the shooting position and extracting their information; projecting the interest points onto the corresponding positions in the street scene image according to their position information, forming computer-identifiable marks; and adding to each computer-identifiable mark in the street scene image a corresponding interest point information label, which contains the information of the corresponding interest point. This solves the prior-art problem that interest point information has to be labeled manually, which wastes time and labor; work efficiency is improved, and the street scene images provide richer information.

Owner:TENCENT TECH (SHENZHEN) CO LTD +1
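
The range search and projection steps can be sketched for the common case of a 360-degree equirectangular panorama: keep POIs within a preset distance of the shooting position, compute the bearing from the camera to each POI, and convert that bearing into an image column. The panorama layout, the known camera heading (`heading_deg`) and the 100 m default range are assumptions; the abstract does not say how the projection is computed.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points (spherical Earth)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def initial_bearing(lat1, lon1, lat2, lon2):
    """Initial bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    x = math.sin(dlmb) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    return math.degrees(math.atan2(x, y)) % 360.0


def project_pois(camera, pois, image_width, heading_deg, max_range_m=100.0):
    """Return (name, column) marks for POIs within max_range_m of the camera.

    camera: (lat, lon) of the shooting position.
    pois: iterable of (name, lat, lon).
    Assumes a 360-degree equirectangular panorama whose column 0 faces heading_deg.
    """
    lat0, lon0 = camera
    marks = []
    for name, lat, lon in pois:
        if haversine_m(lat0, lon0, lat, lon) > max_range_m:
            continue  # outside the preset range around the shooting position
        relative = (initial_bearing(lat0, lon0, lat, lon) - heading_deg) % 360.0
        column = int(relative / 360.0 * image_width) % image_width
        marks.append((name, column))
    return marks
```

A POI due east of a north-facing camera lands a quarter of the way across the panorama; a full implementation would also estimate the vertical image position and attach the label data to each mark.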

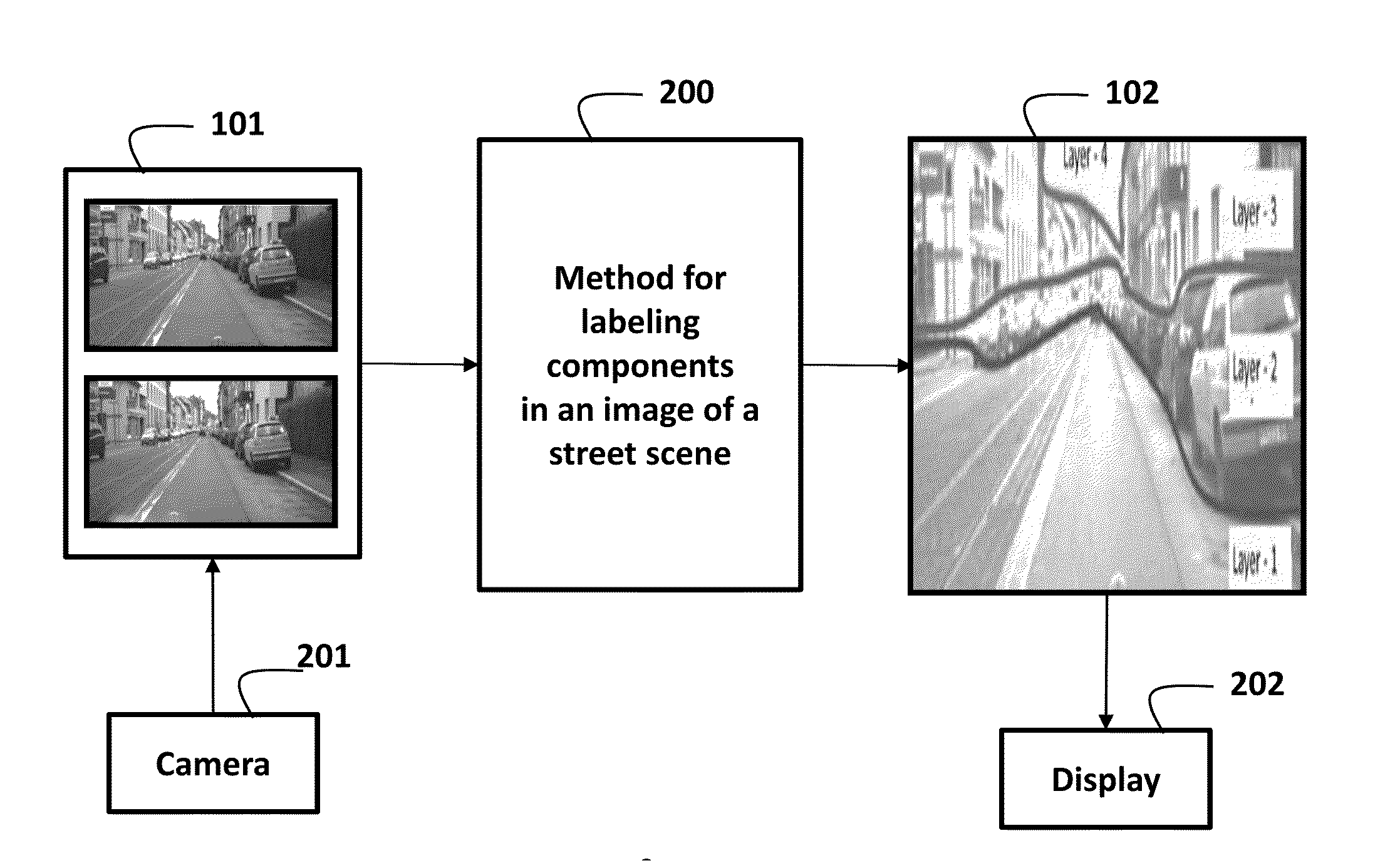

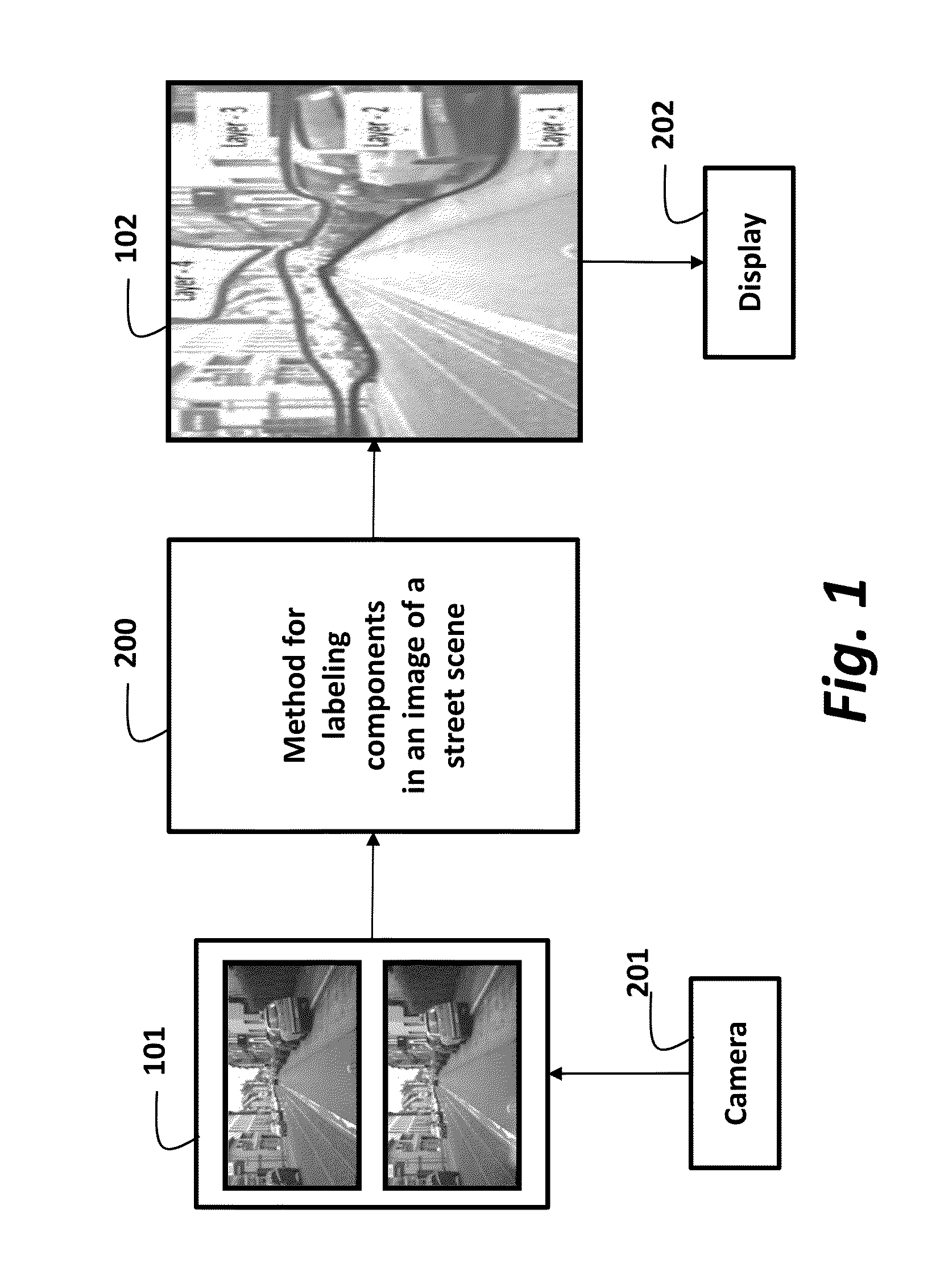

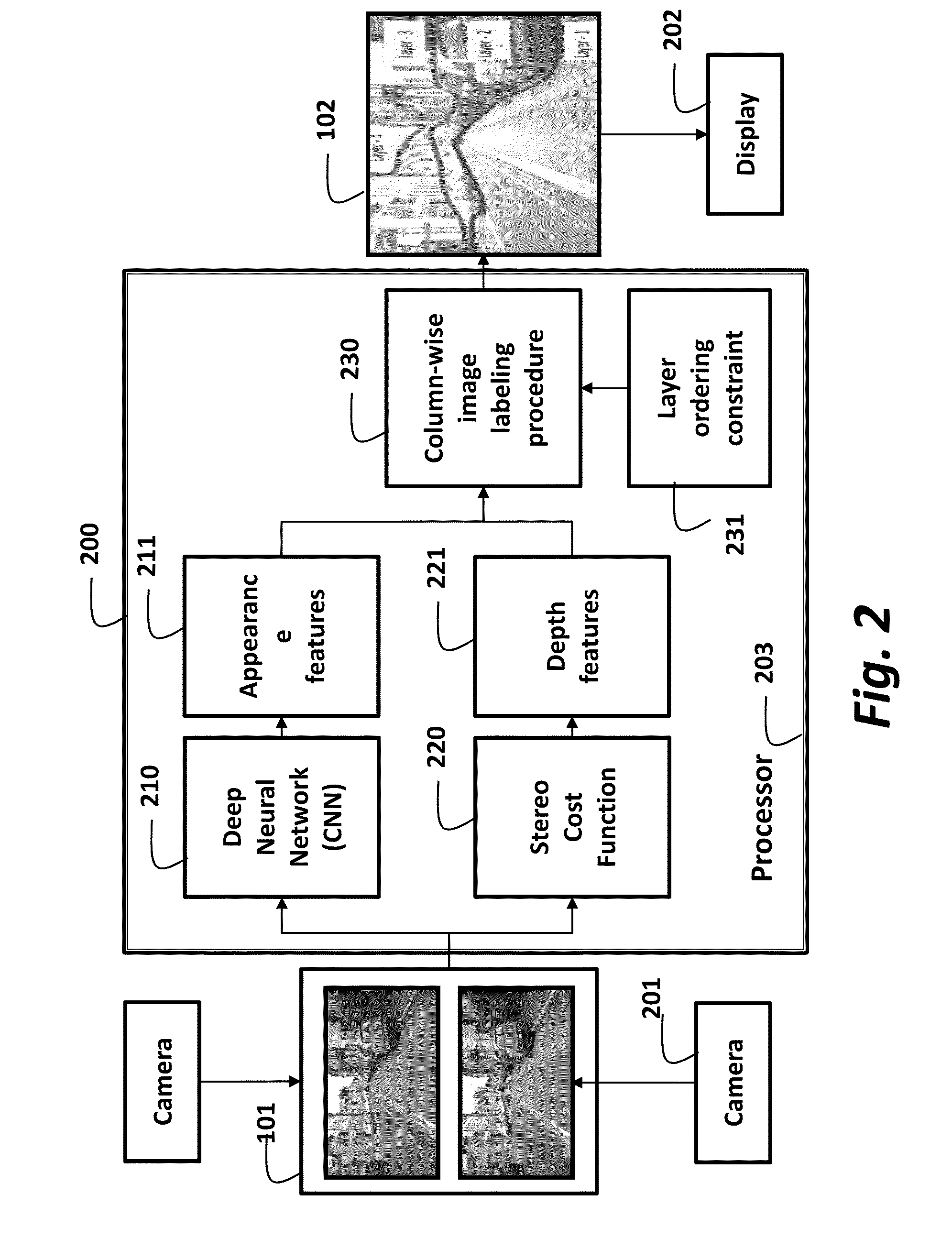

Method for Labeling Images of Street Scenes

A method labels an image of a street view by first extracting, for each pixel, an appearance feature for inferring a semantic label and a depth feature for inferring a depth label. Then, a column-wise labeling procedure is applied to the features to jointly determine the semantic label and the depth label of each pixel using the appearance feature and the depth feature, wherein the column-wise labeling procedure follows a model of the street view, and wherein each column of pixels in the image includes at most four layers.

Owner:MITSUBISHI ELECTRIC RES LAB INC
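
The sketch below does not reproduce the joint semantic/depth inference. It only makes the structural constraint concrete: reading a column from top to bottom, runs of identical labels form layers, and a valid labeling has at most four of them. The label names are hypothetical.

```python
from itertools import groupby


def column_layers(column_labels):
    """Collapse a top-to-bottom list of pixel labels into its sequence of layers."""
    return [label for label, _ in groupby(column_labels)]


def satisfies_layer_limit(label_grid, max_layers=4):
    """Check that every column of label_grid[row][col] has at most max_layers layers."""
    if not label_grid:
        return True
    for col in range(len(label_grid[0])):
        column = [row[col] for row in label_grid]
        if len(column_layers(column)) > max_layers:
            return False
    return True
```

A column reading sky, building, building, road has three layers and passes; sky, building, sky, building, road has five and violates the model.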

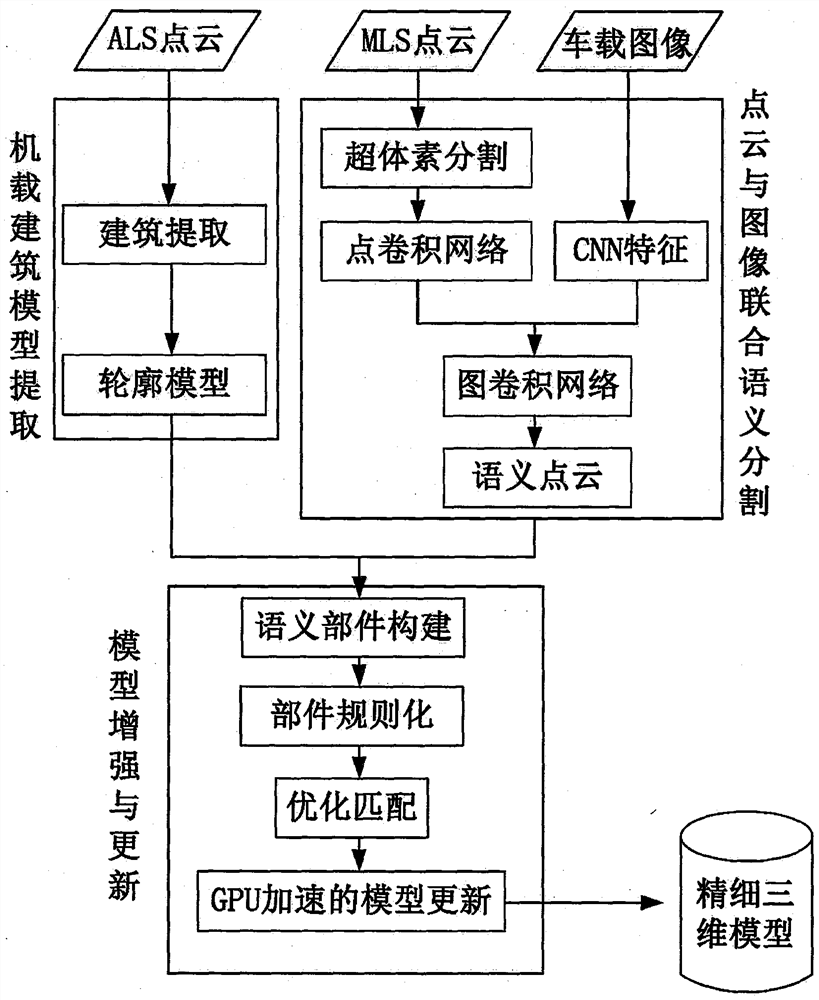

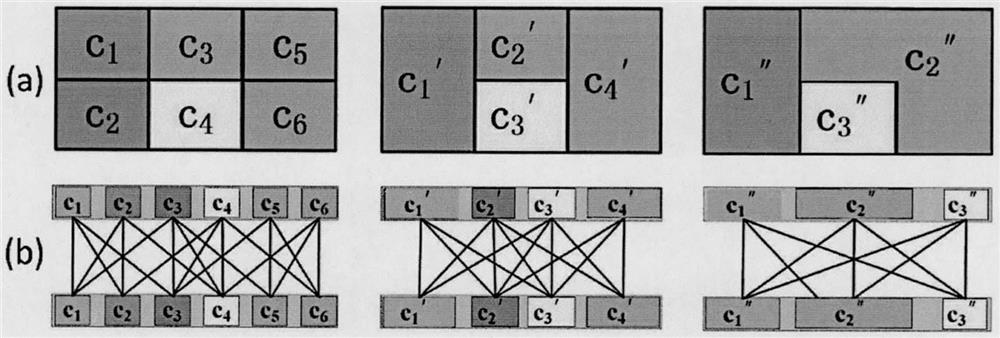

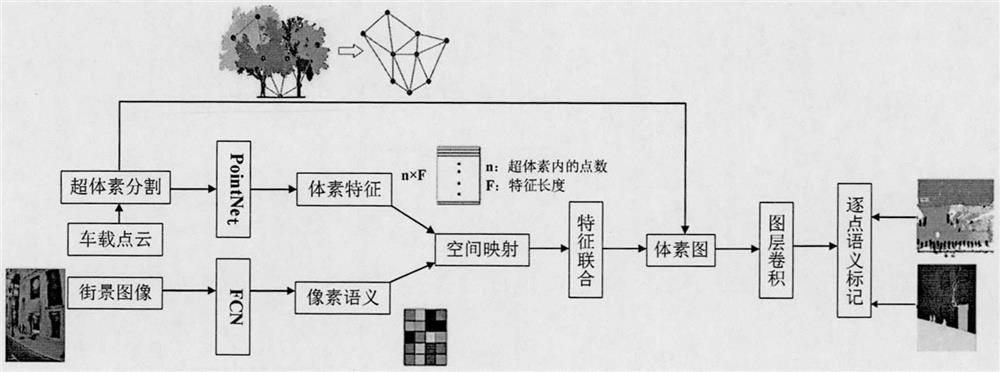

Three-dimensional building fine geometric reconstruction method integrating airborne and vehicle-mounted three-dimensional laser point clouds and streetscape images

Pending | CN111815776A | Tags: Guaranteed geometric accuracy, Efficient extraction, Internal combustion piston engines, 3D modelling, Point cloud, Model reconstruction

The invention provides a three-dimensional building fine geometric reconstruction method integrating airborne and vehicle-mounted three-dimensional laser point clouds and streetscape images. The method comprises the following steps: (1) rapid modeling based on airborne laser data; (2) semantic segmentation with a framework that combines the vehicle-mounted point cloud and the images; and (3) an automatic model enhancement algorithm fusing multi-source data. The method takes airborne laser point clouds, vehicle-mounted laser point clouds and streetscape images as its research objects and model reconstruction, enhancement and updating as its targets; it realizes joint processing of point cloud and image data from different platforms and fully exploits the fusion potential of the various data. The results are intended to improve the fusion and fine modeling framework for vehicle-mounted and airborne data, promote the development of point cloud semantic segmentation technology, and serve emerging application fields such as autonomous driving.

Owner:山东水利技师学院

Database assisted OCR for street scenes and other images

Optical character recognition (OCR) for images such as a street scene image is generally a difficult problem because of the variety of fonts, styles, colors, sizes, orientations, occlusions and partial occlusions that can be observed in the textual content of such scenes. However, a database query can provide useful information that can assist the OCR process. For instance, a query to a digital mapping database can provide information such as one or more businesses in a vicinity, the street name, and a range of possible addresses. In accordance with an embodiment of the present invention, this mapping information is used as prior information or constraints for an OCR engine that is interpreting the corresponding street scene image, resulting in much greater accuracy of the digital map data provided to the user.

Owner:GOOGLE LLC

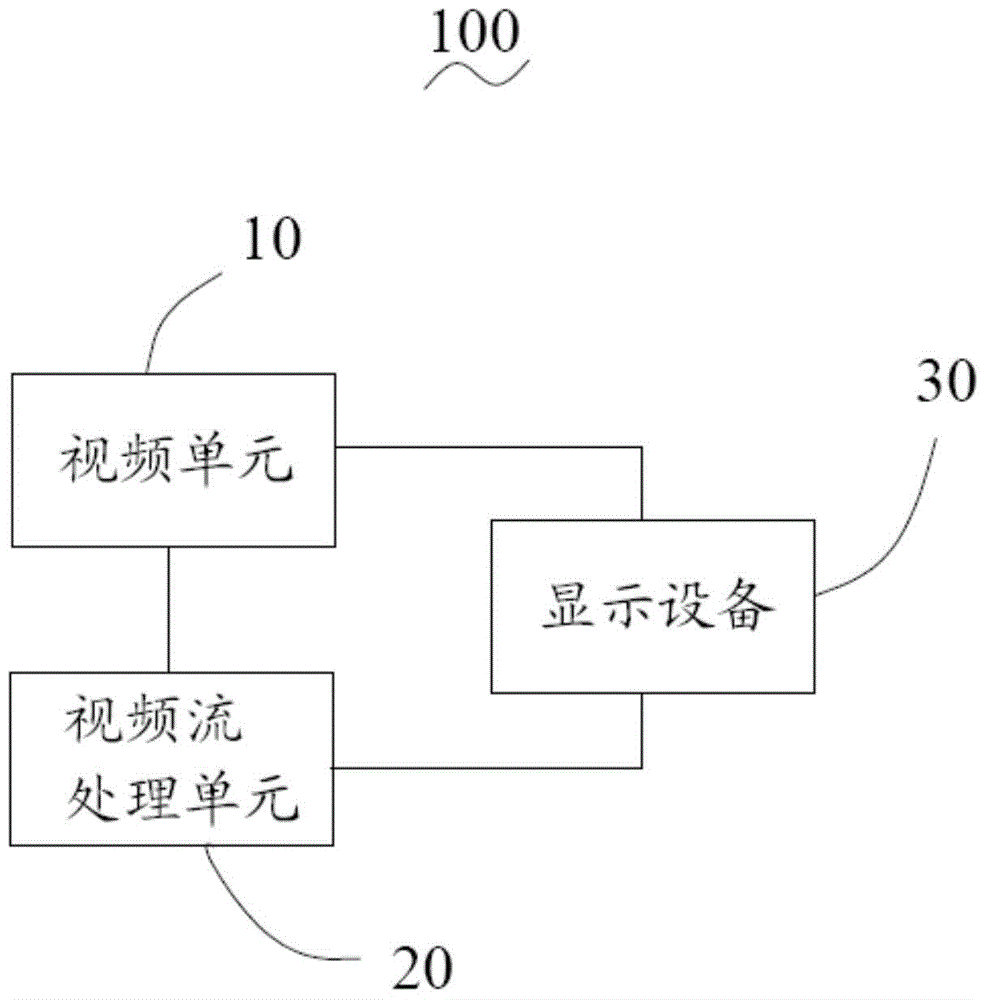

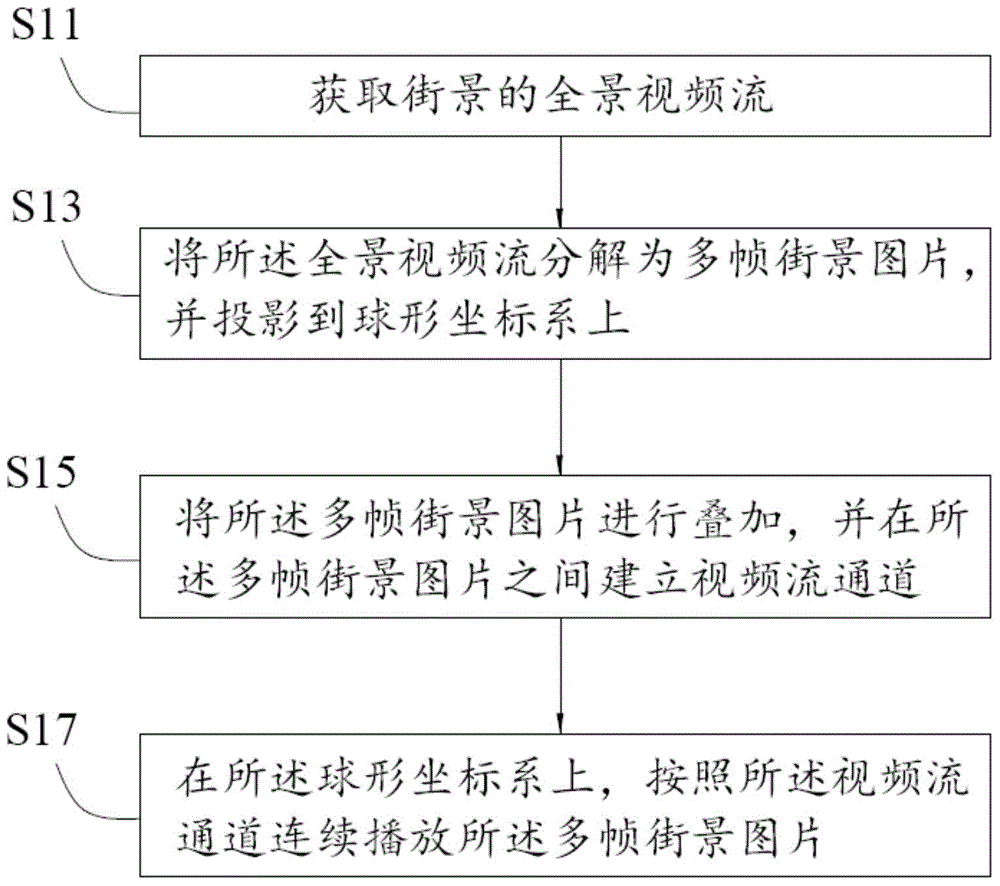

Stereo street scene video projection method and system

Active | CN103607568A | Tags: Rich relevant information, Television system details, Photogrammetry/videogrammetry, Computer graphics (images), Multiple frame

The invention provides a stereo street scene video projection method. The method comprises the following steps: S11, obtaining a panoramic video stream of a street scene; S13, decomposing the panoramic video stream into multiple frames of street scene images and projecting the images onto a spherical coordinate system; S15, superposing the multiple frames of street scene images and establishing video stream channels among them; and S17, continuously playing the multiple frames of street scene images on the spherical coordinate system according to the video stream channels. The invention further provides a stereo street scene video projection system. With the method and system, real-time images can be obtained simply with a camera, without specially taking panoramic photographs of specific places; the latest data can be monitored, updated and obtained without periodic surveying and mapping; and panoramic photographs are replaced by camera video, which contains much richer information.

Owner:SHENZHEN INST OF ADVANCED TECH
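
Step S13 projects decoded frames onto a spherical coordinate system. Assuming the panoramic frames use the common equirectangular layout (the abstract does not state the format), each pixel maps to a longitude/latitude pair and hence to a point on the unit sphere. The axis convention below is an assumption.

```python
import math


def pixel_to_sphere(u, v, width, height):
    """Map continuous image coordinates (u, v) of an equirectangular frame to a
    unit vector (x, y, z). Longitude runs from -pi (u = 0) to +pi (u = width);
    latitude runs from +pi/2 (v = 0) to -pi/2 (v = height)."""
    lon = (u / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v / height) * math.pi
    return (math.cos(lat) * math.cos(lon),
            math.cos(lat) * math.sin(lon),
            math.sin(lat))


def frame_to_sphere(width, height):
    """Sphere coordinates for the centre of every pixel in one decoded frame."""
    return [[pixel_to_sphere(c + 0.5, r + 0.5, width, height) for c in range(width)]
            for r in range(height)]
```

Applying `frame_to_sphere` to each frame of the decomposed stream gives the per-pixel sphere positions on which consecutive frames can be superposed and played back.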

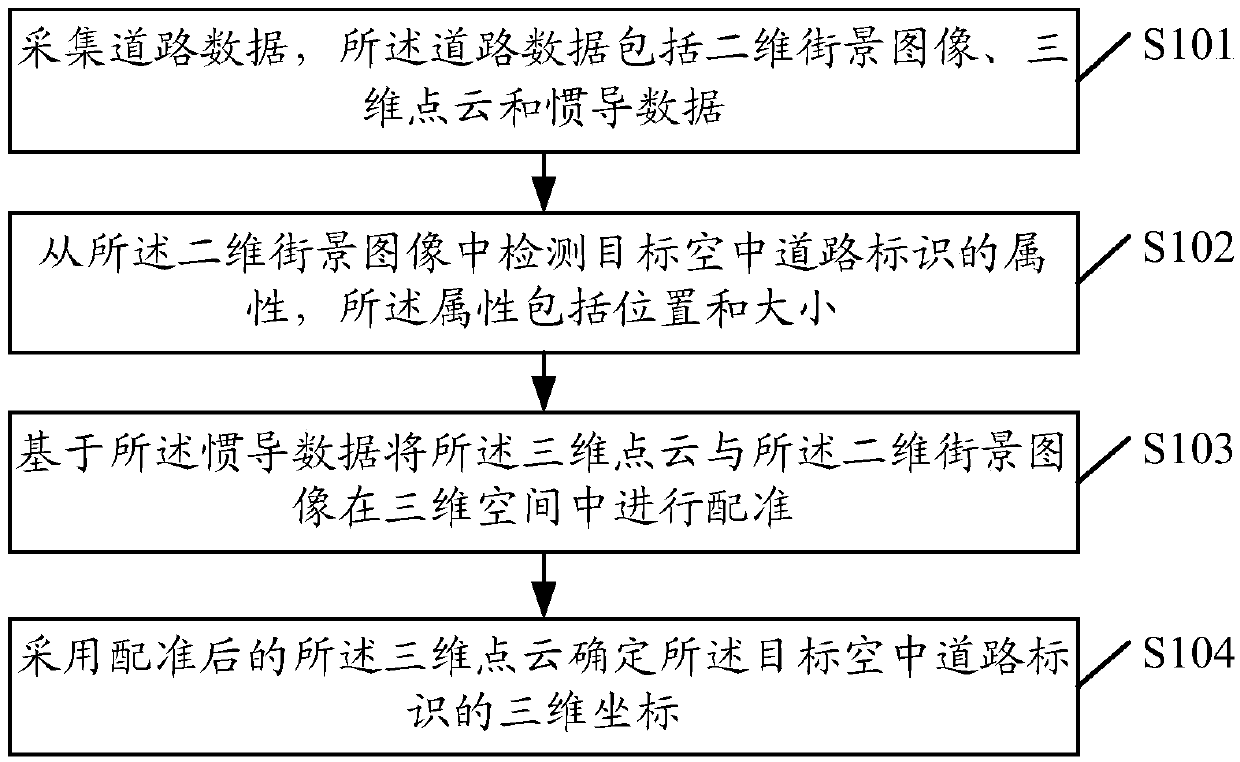

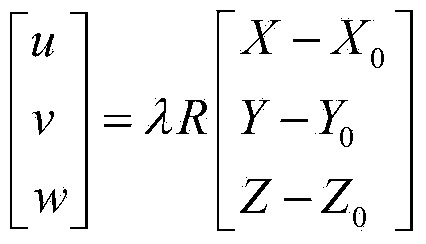

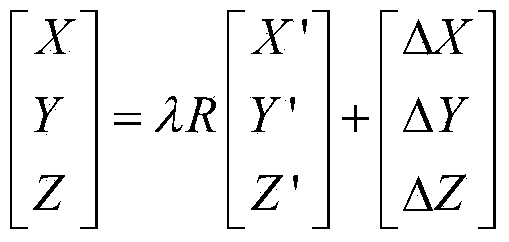

Data processing method, data processing device and terminal

Active | CN105512646A | Tags: Improve accuracy, Improve practicality, Character and pattern recognition, Point cloud, Three-dimensional space

An embodiment of the invention provides a data processing method, a data processing device and a terminal. The data processing method comprises the following steps: collecting road data, wherein the road data comprise a two-dimensional street scene image, a three-dimensional point cloud and inertial data; detecting the attributes of a target overhead road sign, including its position and size, in the two-dimensional street scene image; registering the three-dimensional point cloud and the two-dimensional street scene image in three-dimensional space based on the inertial data; and determining the three-dimensional coordinates of the target overhead road sign by using the registered three-dimensional point cloud. The method can detect overhead road signs from the two-dimensional street scene image, increases the precision of the detection result, and improves the practicality of the data processing.

Owner:TENCENT TECH (SHENZHEN) CO LTD
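
After registration, one simple way to obtain the sign's 3D coordinates is to project every point into the image with a pinhole camera model, keep those that fall inside the detected 2D box, and average them. The pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), the camera-frame convention and the plain averaging rule are assumptions; a practical system would also reject background points behind the sign.

```python
def project_point(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (x right, y down, z forward)."""
    x, y, z = point
    if z <= 0:
        return None  # behind the camera, cannot appear in the image
    return fx * x / z + cx, fy * y / z + cy


def sign_position(points, box, fx, fy, cx, cy):
    """Mean 3D position of registered points whose projection lies in box.

    box: (u0, v0, u1, v1) pixel bounds of the detected sign.
    Returns None when no point projects into the box.
    """
    u0, v0, u1, v1 = box
    inside = []
    for p in points:
        uv = project_point(p, fx, fy, cx, cy)
        if uv is not None and u0 <= uv[0] <= u1 and v0 <= uv[1] <= v1:
            inside.append(p)
    if not inside:
        return None
    n = len(inside)
    return tuple(sum(p[i] for p in inside) / n for i in range(3))
```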

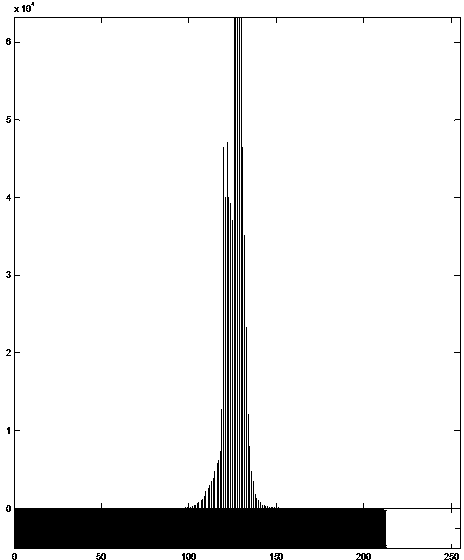

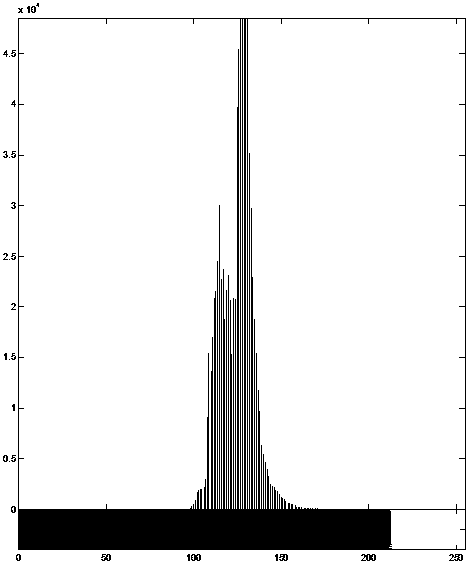

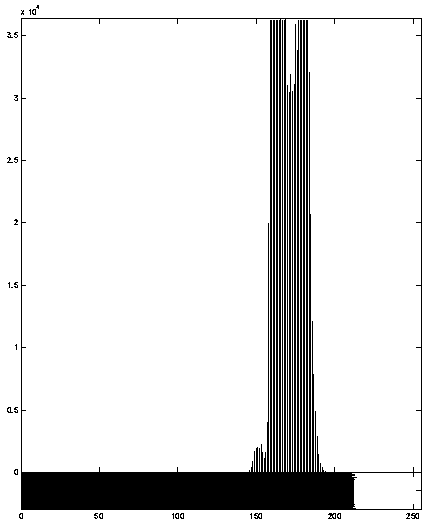

Method for detecting color cast of video image based on Lab chrominance space

Inactive | CN103402117A | Tags: True reflection of color cast, Color signal processing circuits, Television systems, Computer graphics (images), Gradation

The invention relates to a method for detecting the color cast of a video image based on the Lab chrominance space. The method comprises the following steps: a) converting the image from the RGB (red, green, blue) color space into the Lab chrominance space; b) calculating color cast factors. The invention provides a new color cast detection factor, which is successfully used to detect the color cast of images. First, the image is converted from the original RGB color space into the Lab chrominance space; then, because the spatial gray-level histogram distributions of the a and b chrominance components differ between a normal image without color cast and an abnormal image with color cast, the mean values of the histogram distributions of the a and b components are calculated respectively; finally, the color cast factors are calculated from the statistical relationship between the mean and the median of the histograms. Extensive experiments on real street view image databases show that the new color cast detection factor truly reflects the degree of color cast of images and can therefore be used for color cast detection. The method has the advantages of high detection speed and high accuracy.

Owner:湖南乐泊科技有限公司
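
The abstract does not give the factor's formula. As an assumption, the sketch uses the equivalent-circle factor K = D/M that is common in the color-cast literature: D is the distance of the mean (a, b) chroma from the neutral point, and M is the mean absolute spread of a and b around that mean. The patent's own factor, which involves the histogram median, may differ. The sRGB-to-Lab conversion uses the standard D65 formulas.

```python
import math


def srgb_to_lab(r, g, b):
    """Convert an 8-bit sRGB colour to CIE L*a*b* (D65 reference white)."""
    def to_linear(c):
        c /= 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)
    x = 0.4124 * rl + 0.3576 * gl + 0.1805 * bl
    y = 0.2126 * rl + 0.7152 * gl + 0.0722 * bl
    z = 0.0193 * rl + 0.1192 * gl + 0.9505 * bl

    def f(t):
        delta = 6.0 / 29.0
        return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)


def color_cast_factor(pixels, eps=1e-6):
    """Equivalent-circle colour cast factor K = D / M (assumed formulation).

    D: distance of the mean (a, b) from the neutral point (0, 0).
    M: combined mean absolute deviation of a and b around that mean.
    A larger K suggests a stronger, more uniform colour cast.
    """
    labs = [srgb_to_lab(*p) for p in pixels]
    n = len(labs)
    mean_a = sum(a for _, a, _ in labs) / n
    mean_b = sum(b for _, _, b in labs) / n
    dev_a = sum(abs(a - mean_a) for _, a, _ in labs) / n
    dev_b = sum(abs(b - mean_b) for _, _, b in labs) / n
    return math.hypot(mean_a, mean_b) / (math.hypot(dev_a, dev_b) + eps)
```

A set of uniformly reddish pixels yields a K well above 1, while a mix of saturated red, green and blue yields a K well below 1, since its chroma spreads widely around a mean close to neutral. A detection threshold on K would have to be tuned on real data.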

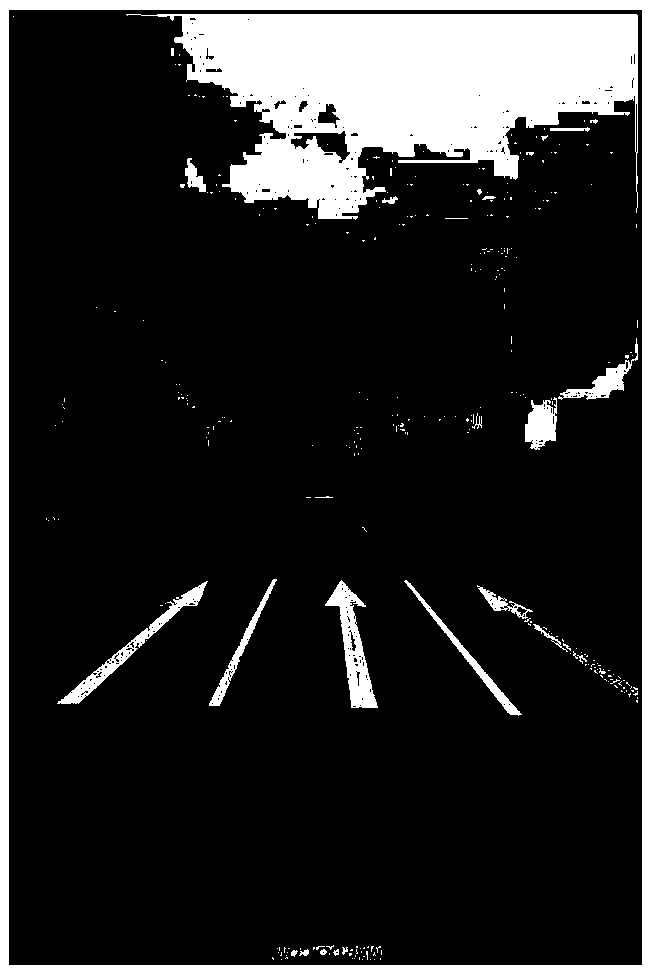

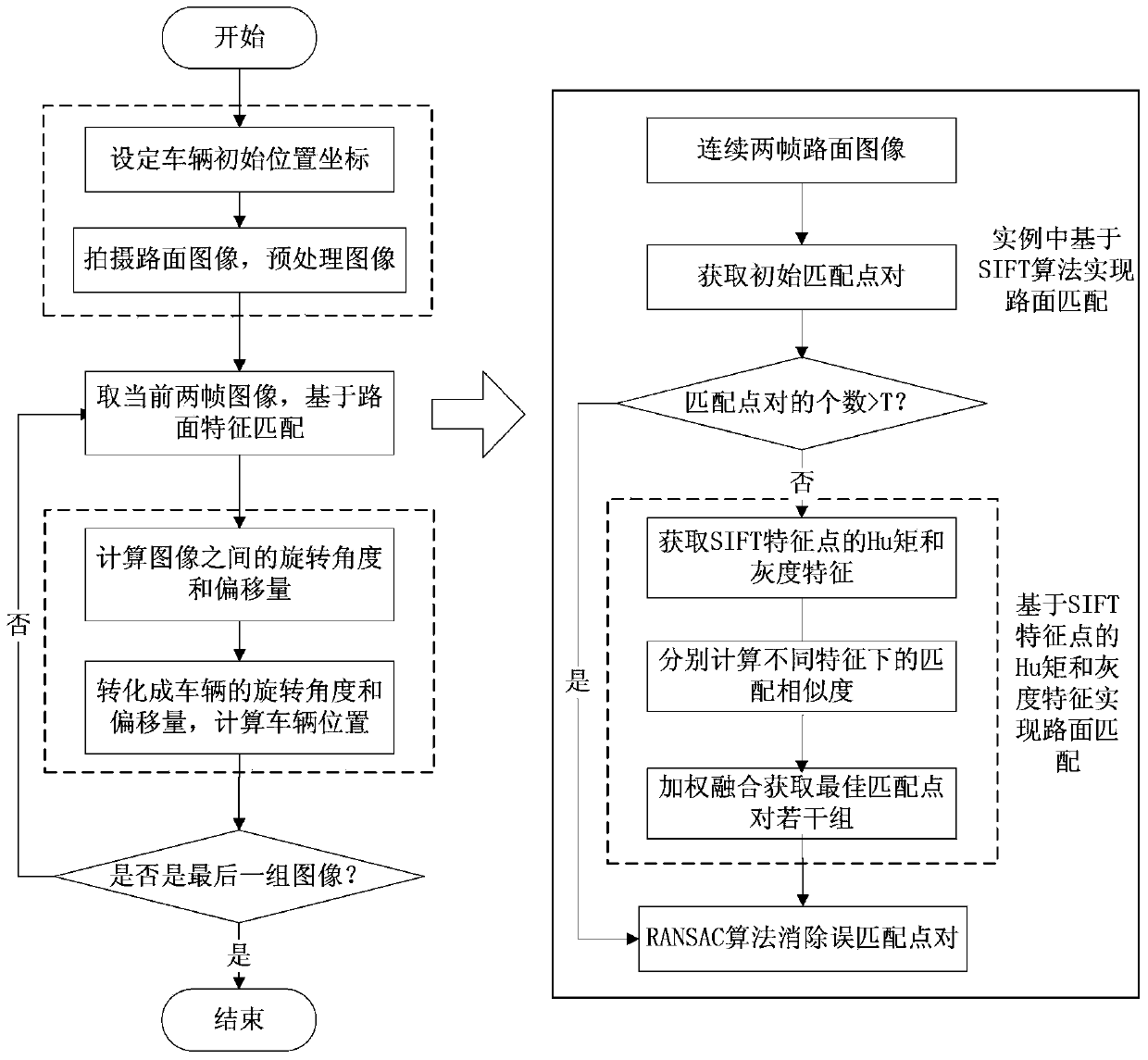

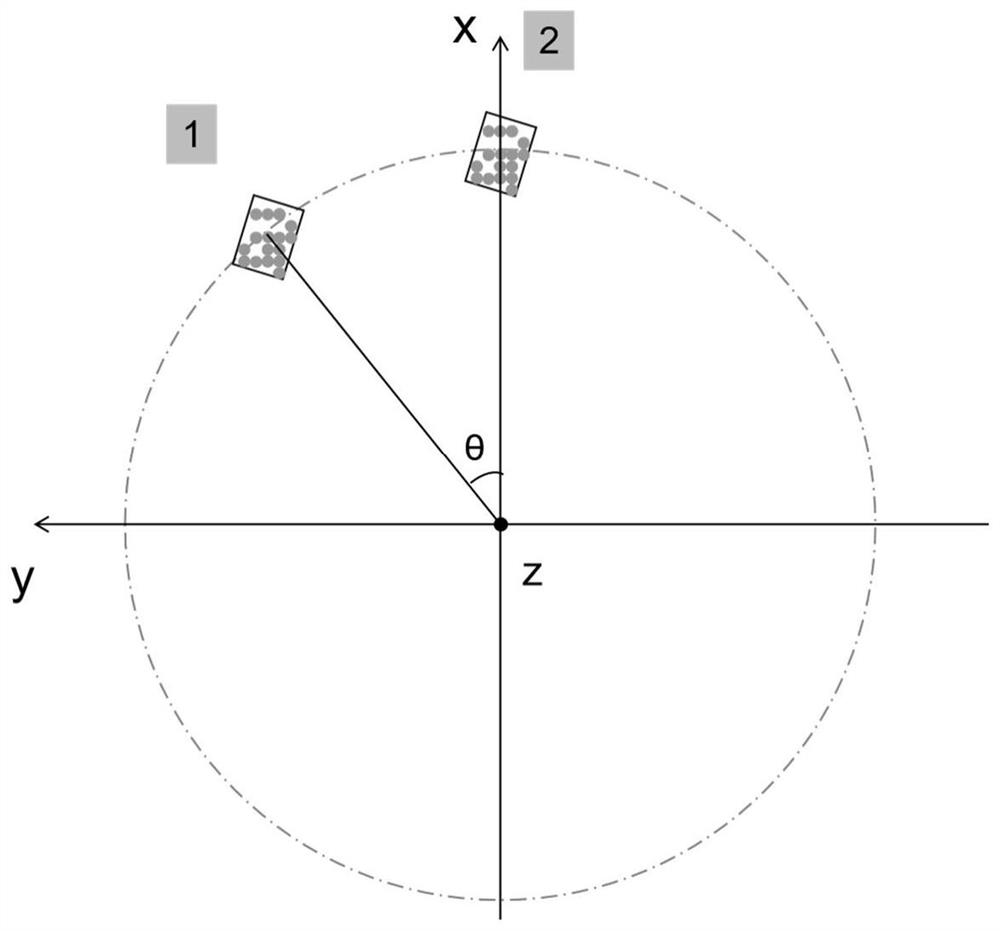

Vehicle locating method based on matching of road surface image characteristics

Active | CN103473774A | Tags: Reduce preparatory work, Guaranteed continuity, Image analysis, Computer graphics (images), Road surface

The invention discloses a vehicle locating method based on matching of road surface image characteristics. The method comprises the following steps: determining the geographical coordinates of the initial position of the vehicle; shooting road surface images in real time while the vehicle travels; performing defogging processing in sequence on the two current consecutive frames of road surface images; matching the two processed consecutive frames in real time to obtain matched point pairs; locating the vehicle according to the obtained matched point pairs; and judging whether the current two frames are the last two frames, ending the process if they are and otherwise repeating the steps. Because road surface images only need to be collected in real time while the vehicle travels, and automatic vehicle location is achieved by matching consecutive frames, the method is resistant to interference and offers high location precision. It also saves time and labor by eliminating the time-consuming and labor-intensive step, required by existing locating methods, of collecting all-round street scenes in advance.

Owner:CHANGAN UNIV
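
Locating the vehicle from matched point pairs can be sketched as dead reckoning: the mean pixel displacement between two consecutive frames is converted to metres and accumulated from the known starting position. The downward-looking camera, fixed ground resolution (`metres_per_pixel`), pure-translation motion (no rotation) and local east/north frame are all assumptions made for this sketch.

```python
def frame_displacement(pairs):
    """Mean image displacement (du, dv) of matched pairs ((u1, v1), (u2, v2))."""
    n = len(pairs)
    du = sum(p2[0] - p1[0] for p1, p2 in pairs) / n
    dv = sum(p2[1] - p1[1] for p1, p2 in pairs) / n
    return du, dv


def dead_reckon(start_xy, matched_pairs_per_step, metres_per_pixel):
    """Accumulate the vehicle track in a local east/north frame (metres).

    Assumes a downward-looking camera whose image u axis points east and whose
    v axis points south, pure translation between frames, and a fixed ground
    resolution. The road surface appears to move opposite to the vehicle.
    """
    x, y = start_xy
    track = [(x, y)]
    for pairs in matched_pairs_per_step:
        du, dv = frame_displacement(pairs)
        x -= du * metres_per_pixel  # features shift west when the vehicle moves east
        y += dv * metres_per_pixel  # features shift south when the vehicle moves north
        track.append((x, y))
    return track
```

Because errors accumulate frame by frame, the known initial geographic position anchors the track, and the local offsets would be converted back to geographic coordinates afterwards.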

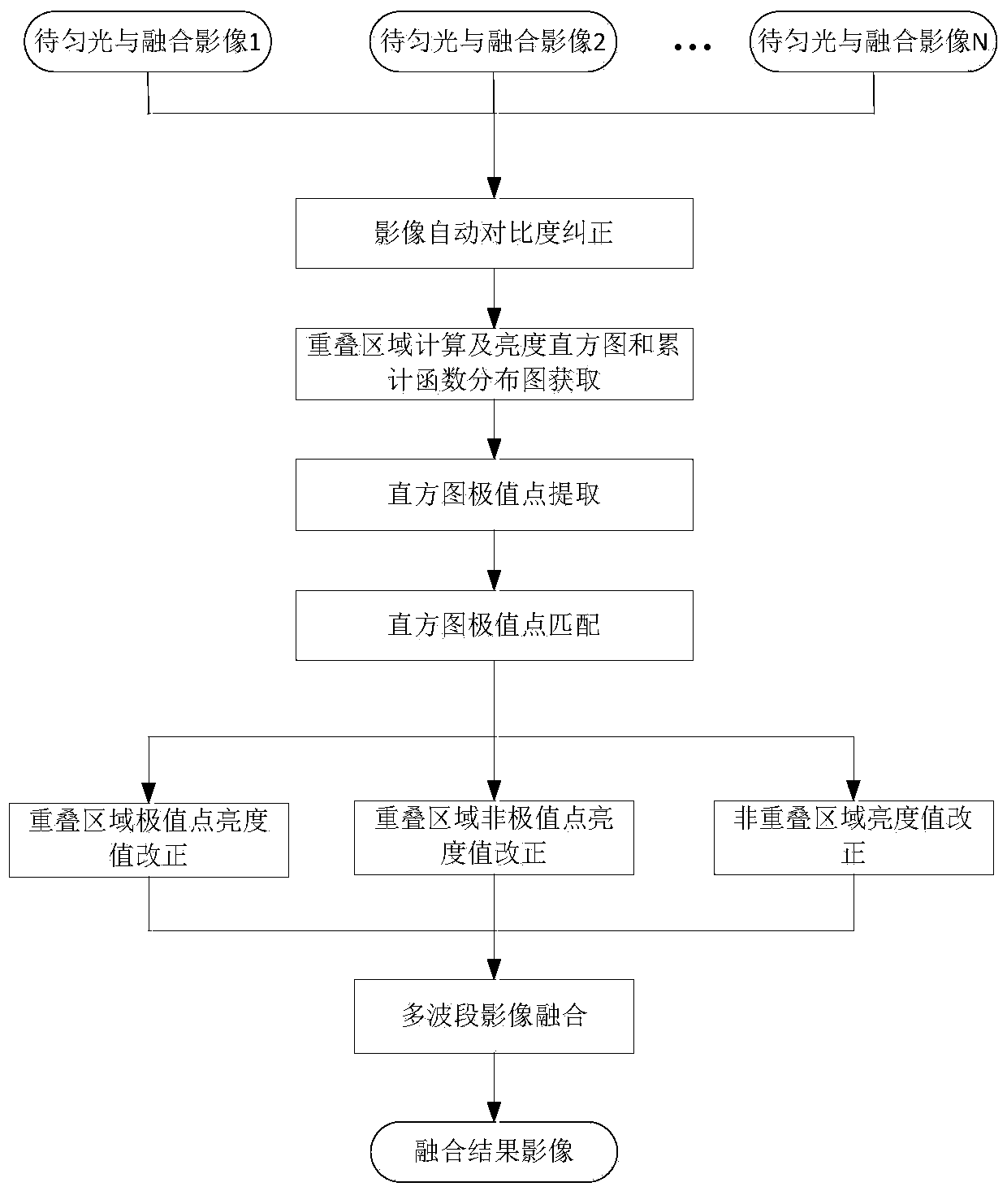

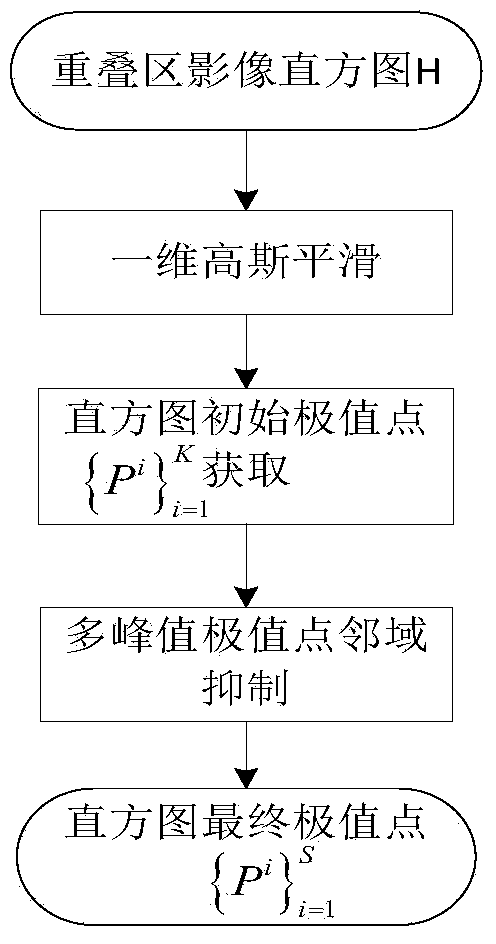

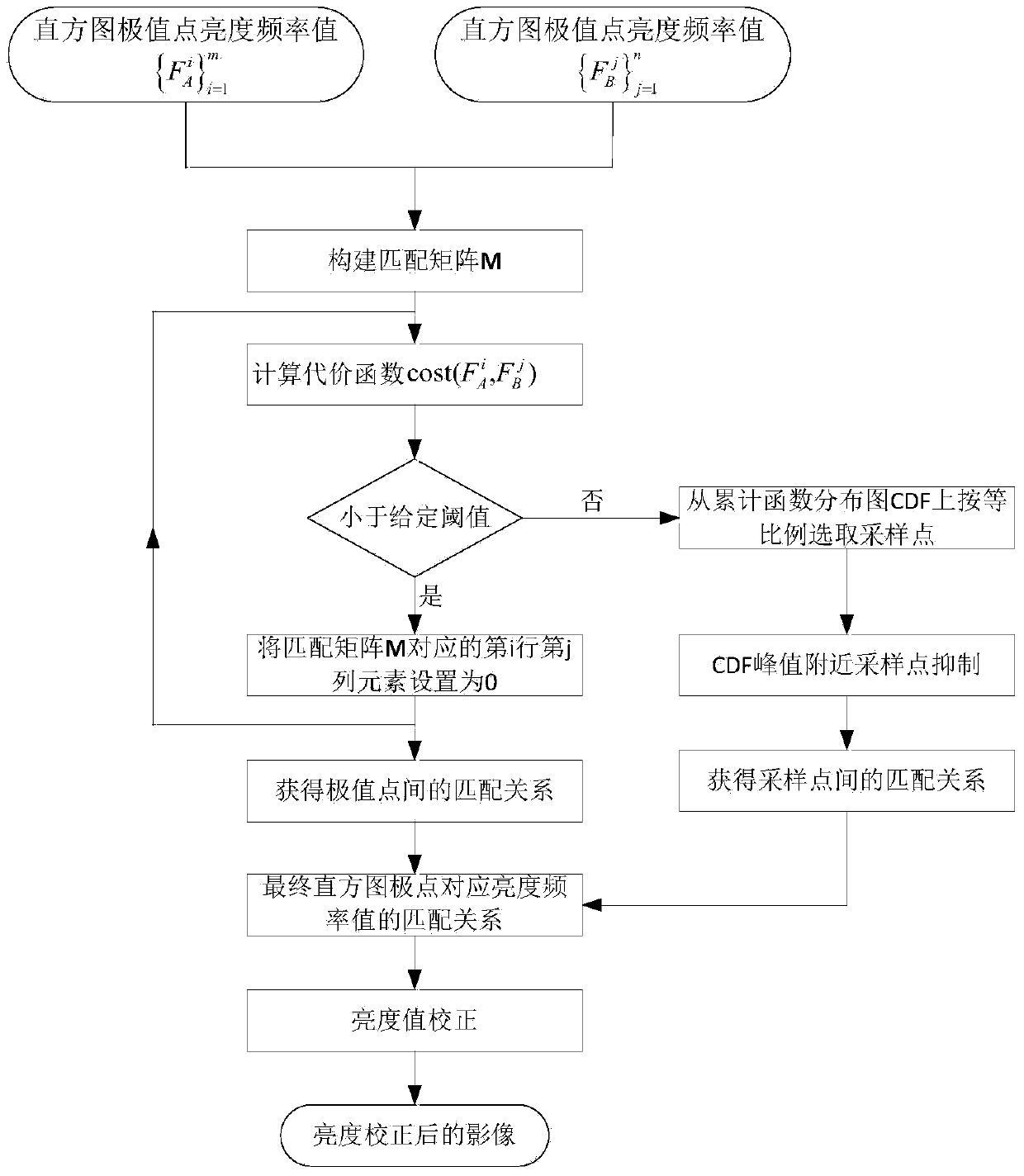

Image inking and fusing method and system based on histogram feature point registration

Active | CN104182949A | Tags: Improve color uniformity, Easy to integrate, Image enhancement, Image analysis, Point registration, Hue

The invention discloses an image inking and fusing method and system based on histogram feature point registration. The method comprises the following steps: extracting histogram extremum points according to the characteristics of the image brightness histogram, and applying one-dimensional Gaussian smoothing to suppress local noise points in the brightness histogram; meanwhile, constructing the matching relationship among the histogram extremum points by using a matching cost function to solve for a matching matrix M; and taking the matched histogram extremum points as histogram feature points. Using the histogram feature points, image brightness is corrected with the brightness values corresponding to the histogram feature points in the image overlapping region. Hue differences can be processed even in images with large viewing angles and large close-range chromatic aberration, hue differences between multiple overlapping and non-overlapping image regions can be eliminated automatically, and both local hue balance within each image and overall hue balance of the stitched streetscape panorama can be achieved.

Owner:SHENZHEN JIMUYIDA TECH CO LTD
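
The sketch below covers only the simplest pieces of this pipeline: Gaussian smoothing of a 256-bin brightness histogram, locating its peaks (used here as the extremum points), and correcting brightness with a piecewise-linear lookup table through matched peak positions. Peaks are paired by rank order, a stand-in for the patent's matching-cost optimization; `sigma` and the pairing rule are assumptions.

```python
import math


def gaussian_smooth(hist, sigma=2.0):
    """1D Gaussian smoothing of a histogram, clamping indices at the borders."""
    radius = max(1, int(3 * sigma))
    offsets = range(-radius, radius + 1)
    kernel = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in offsets]
    total = sum(kernel)
    kernel = [w / total for w in kernel]
    n = len(hist)
    return [sum(w * hist[min(max(i + k, 0), n - 1)] for k, w in zip(offsets, kernel))
            for i in range(n)]


def histogram_peaks(values):
    """Indices of local maxima (the extremum points used here as feature points)."""
    return [i for i in range(1, len(values) - 1)
            if values[i] > values[i - 1] and values[i] >= values[i + 1]]


def build_lut(src_points, dst_points, levels=256):
    """Piecewise-linear brightness LUT through (0, 0), the matched points and
    (levels - 1, levels - 1). Points are paired by rank (an assumption)."""
    pairs = sorted(zip(sorted(src_points), sorted(dst_points)))
    knots = [(0, 0)] + pairs + [(levels - 1, levels - 1)]
    lut = []
    for v in range(levels):
        for (x0, y0), (x1, y1) in zip(knots, knots[1:]):
            if x0 <= v <= x1:
                t = 0.0 if x1 == x0 else (v - x0) / (x1 - x0)
                lut.append(round(y0 + t * (y1 - y0)))
                break
    return lut
```

With peaks found in the overlap region of both images, `corrected = [lut[p] for p in pixels]` shifts the source brightness toward the reference while keeping black and white fixed.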

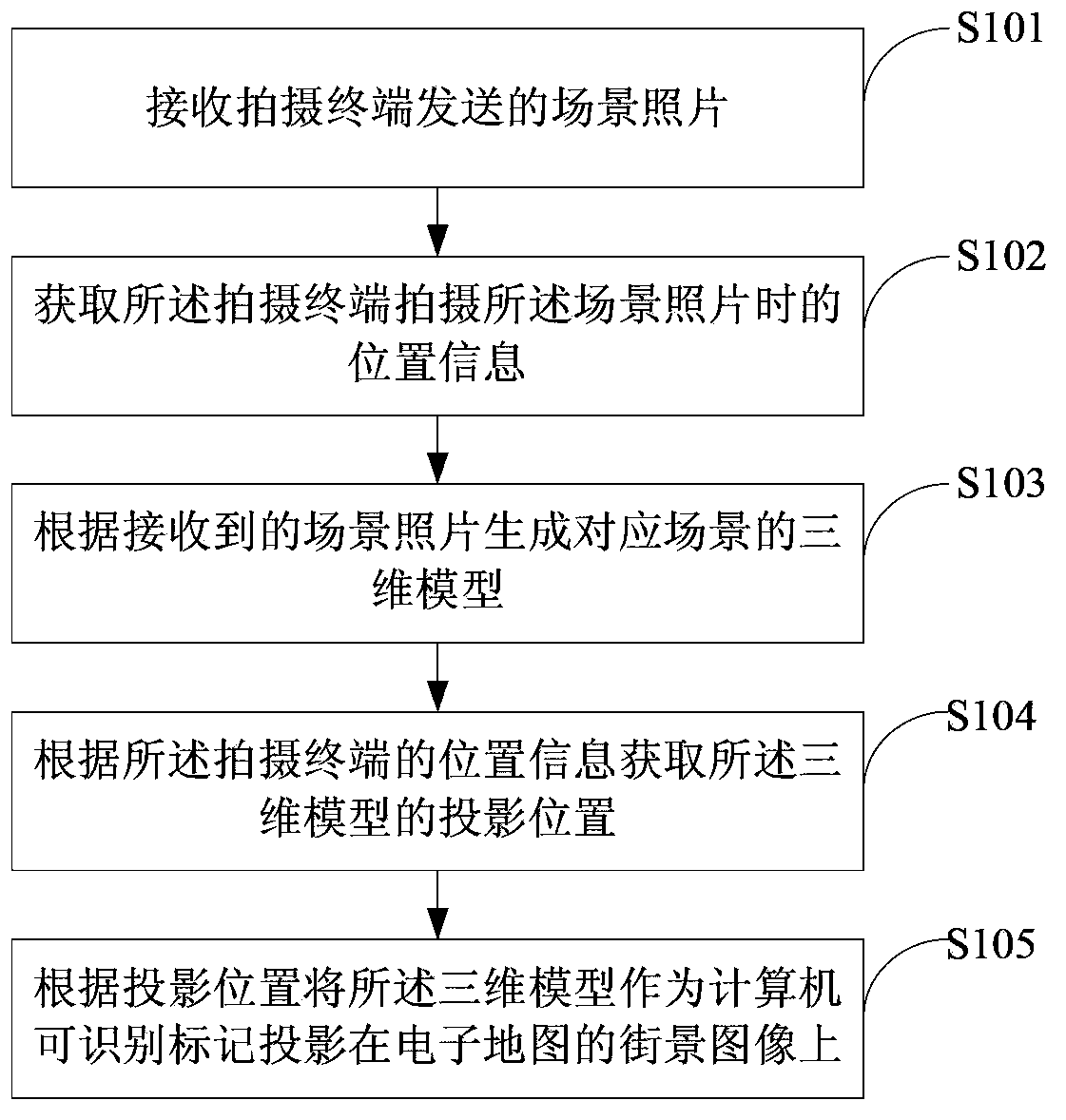

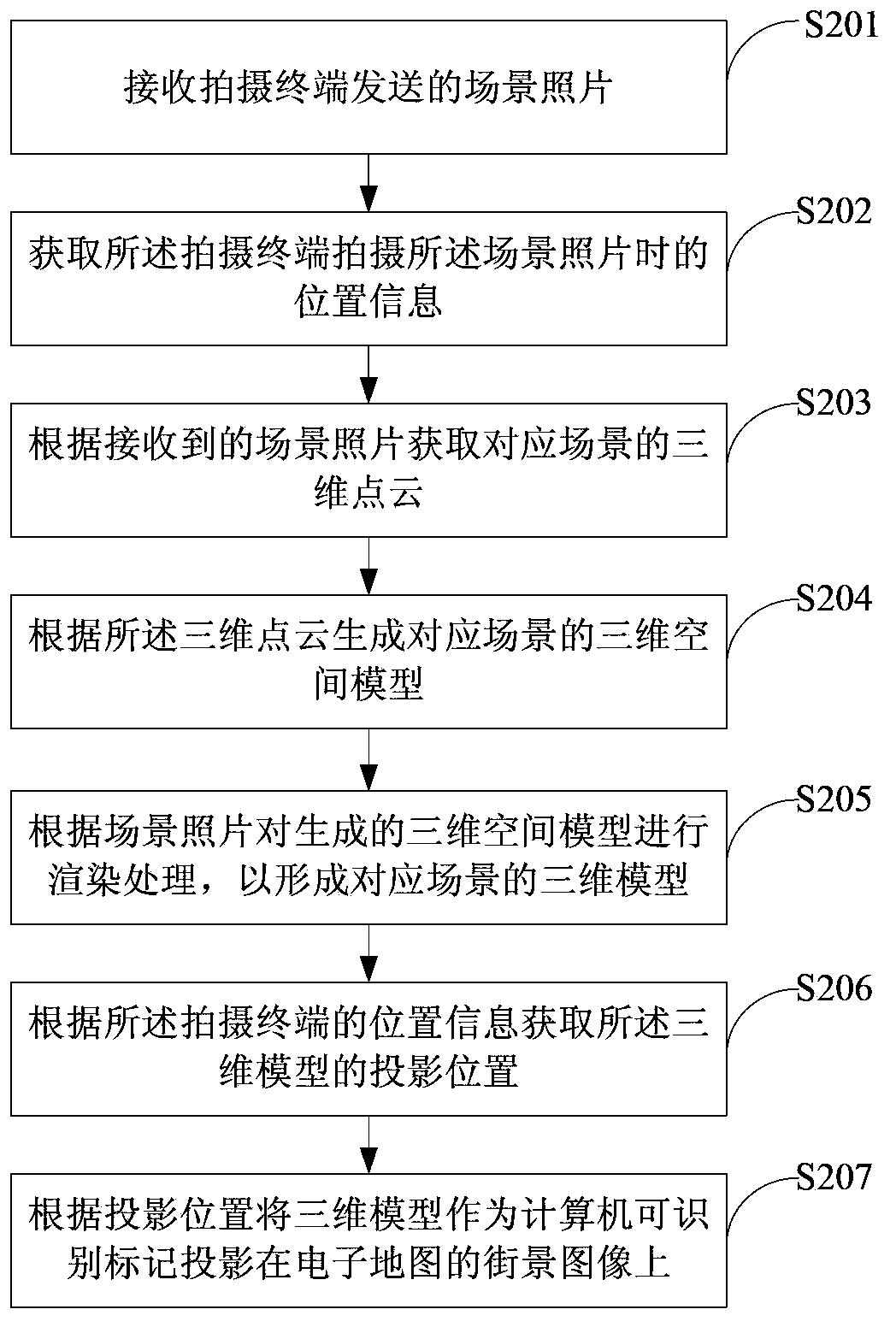

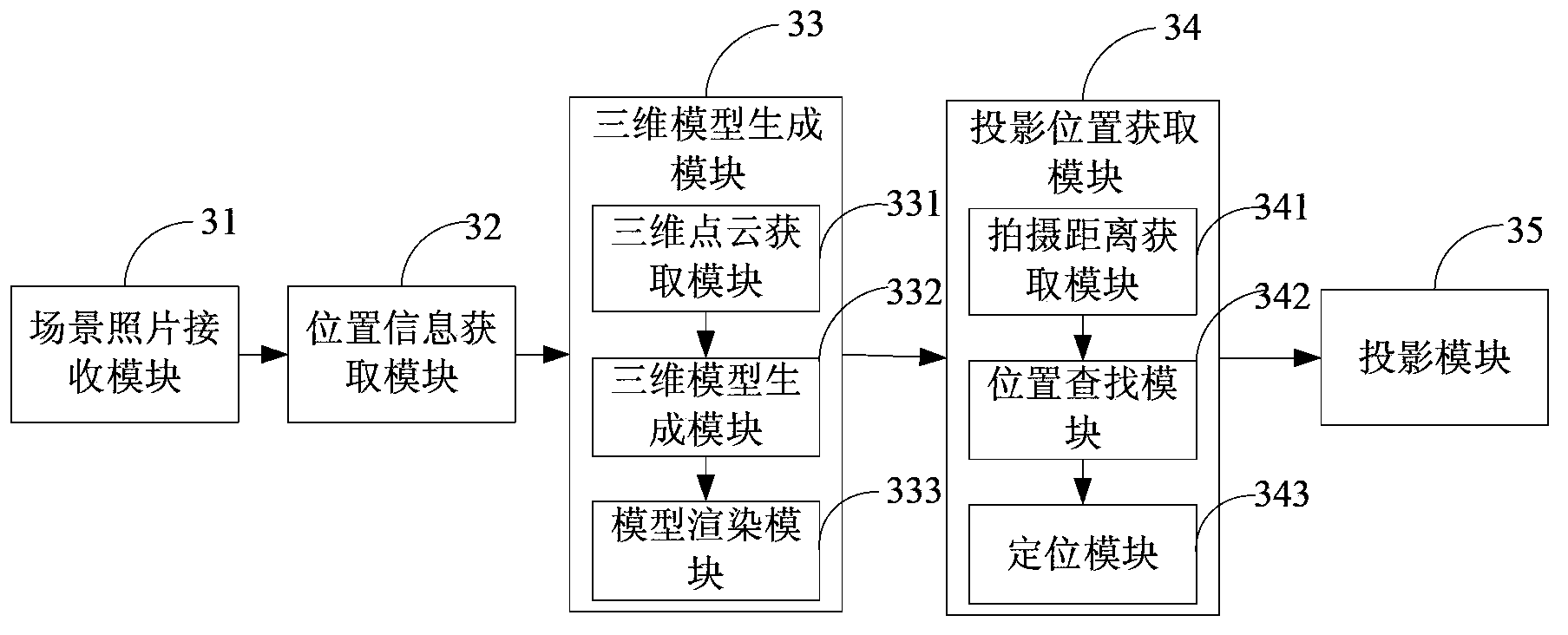

Street view generation method and server

Active | CN104050177A | Tags: Save resources, Improve operational efficiency, Geographical information databases, Special data processing applications, Computer terminal, Computer science

The invention discloses a street view generation method and a server. The method comprises the following steps: receiving a scene photograph sent by a shooting terminal, and acquiring the position information of the shooting terminal at the moment the photograph was taken; generating a three-dimensional model of the scene from the received scene photograph; acquiring the projection position of the three-dimensional model according to the position information of the shooting terminal; and projecting the three-dimensional model, as a computer-identifiable mark, onto a street scene picture of an electronic map at the projection position. The street scene pictures in the electronic map can thus be updated in real time from scene photographs uploaded by users, which makes updating efficient, greatly saves server resources and improves the operating efficiency of the server.

Owner:TENCENT TECH (SHENZHEN) CO LTD

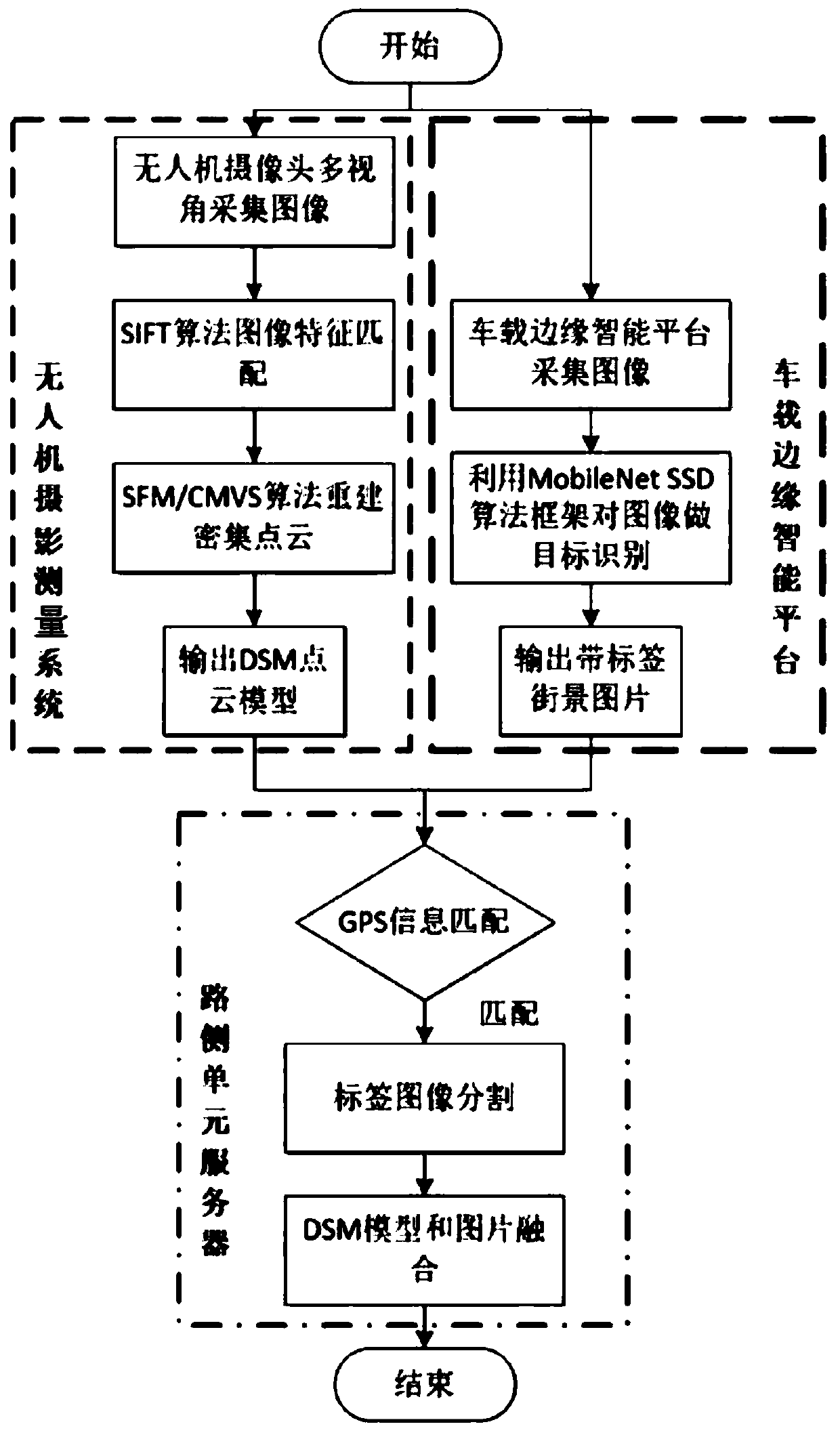

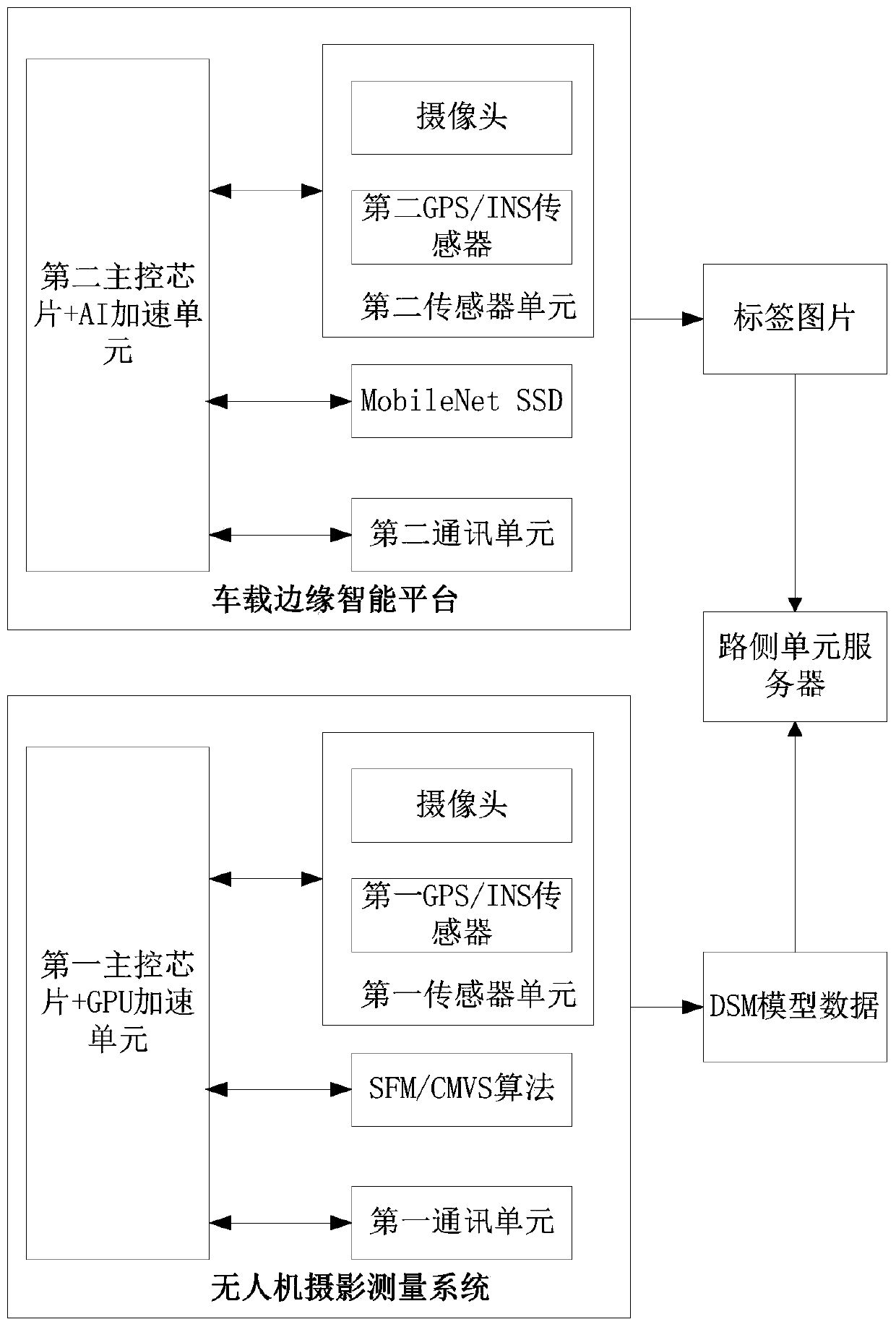

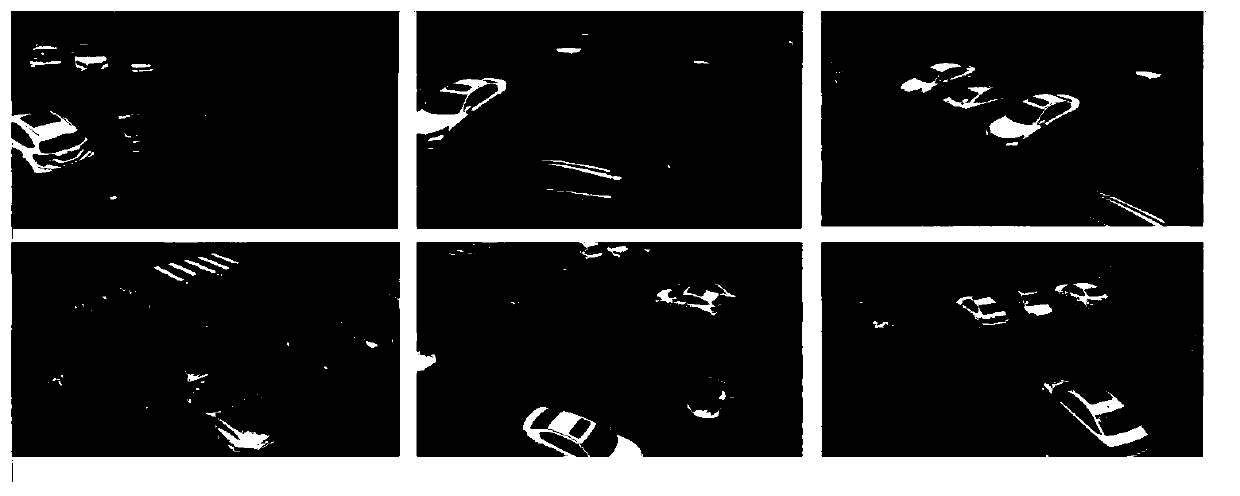

Urban streetscape 3D reconstruction method and system based on unmanned aerial vehicle and edge vehicle cooperation

Pending | CN110648389A | Tags: Overcoming shortcomings in detail reconstruction, Easy to detect, Image enhancement, Image analysis, Simulation, Data acquisition

The invention provides an urban streetscape 3D reconstruction method based on cooperation between unmanned aerial vehicles and edge vehicles. The method achieves rapid, high-precision reconstruction, urban map recognition and rapid iterative updating in large-scale urban streetscape 3D modeling. Multiple vehicles at the edge acquire streetscape pictures of dense urban areas and transmit them to a roadside unit server, forming a distributed multi-node data acquisition network. This realizes multi-view shooting and wide-range acquisition of road streetscape picture information, improves acquisition efficiency and reduces acquisition cost.

Owner:GUANGDONG UNIV OF TECH

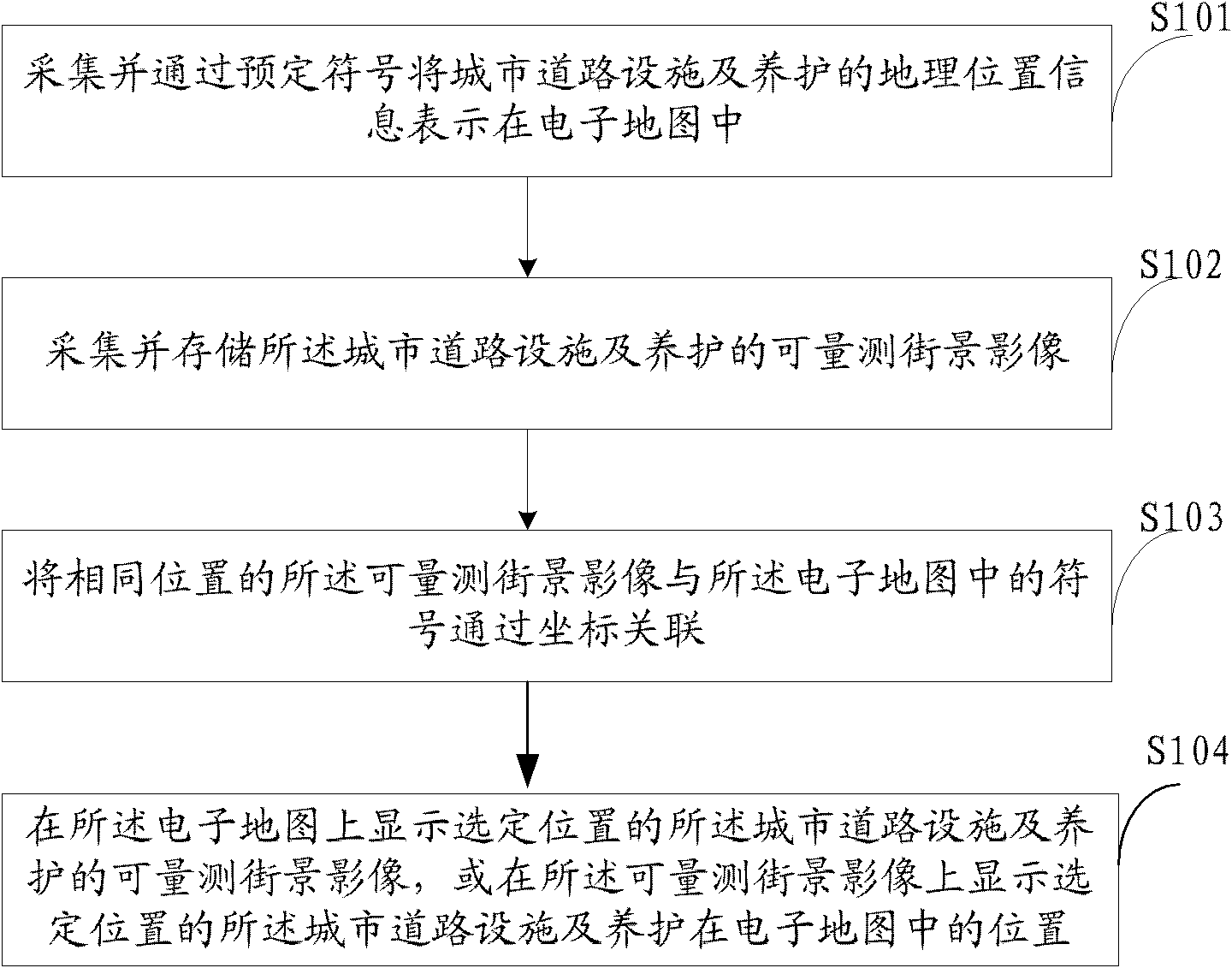

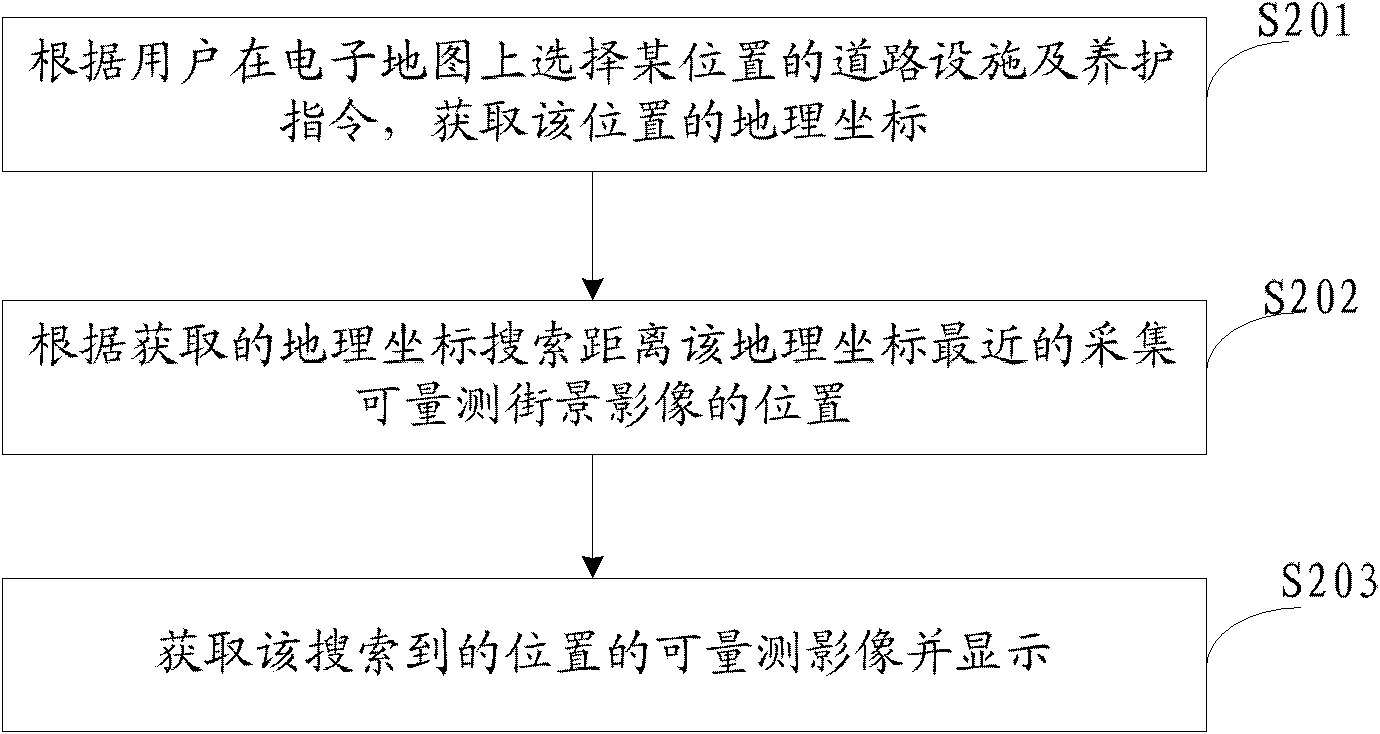

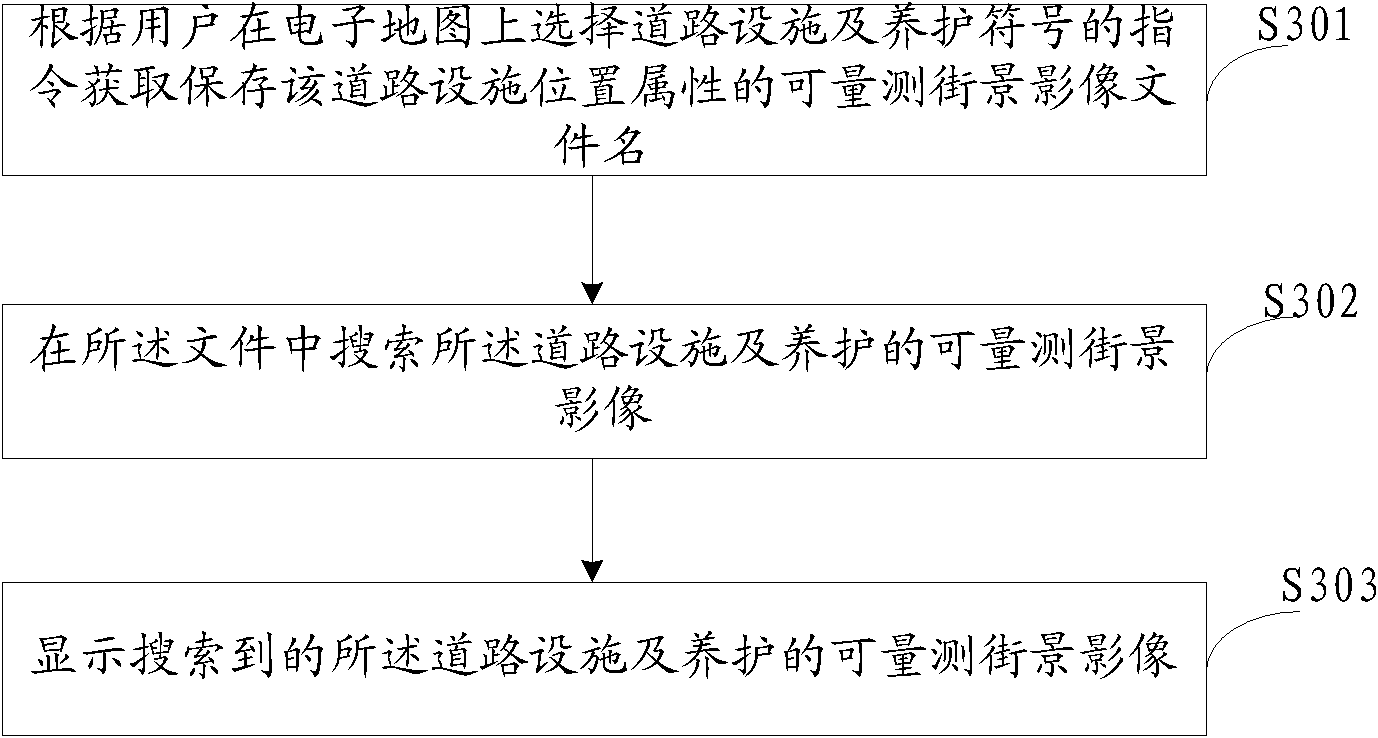

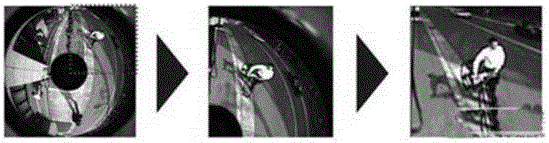

Road equipment and maintenance supervising method

The invention discloses an urban road equipment and maintenance supervising method comprising the following steps: collecting the geographic position information of urban road equipment and maintenance items and showing it on an electronic map with predetermined signs; collecting and storing measurable streetscape images of the urban road equipment and maintenance items; associating each measurable streetscape image, through its coordinates, with the sign at the same position on the electronic map; and displaying the measurable streetscape image of the road equipment or maintenance item at a selected position on the electronic map, or displaying the position of the selected item on the electronic map within the measurable streetscape image. By associating the electronic map with the measurable streetscape images and displaying those images on the electronic map, the method enables visual, measurable, fine-grained management of the various data related to road equipment and maintenance.

Owner:BEIJING UNIVERSITY OF CIVIL ENGINEERING AND ARCHITECTURE

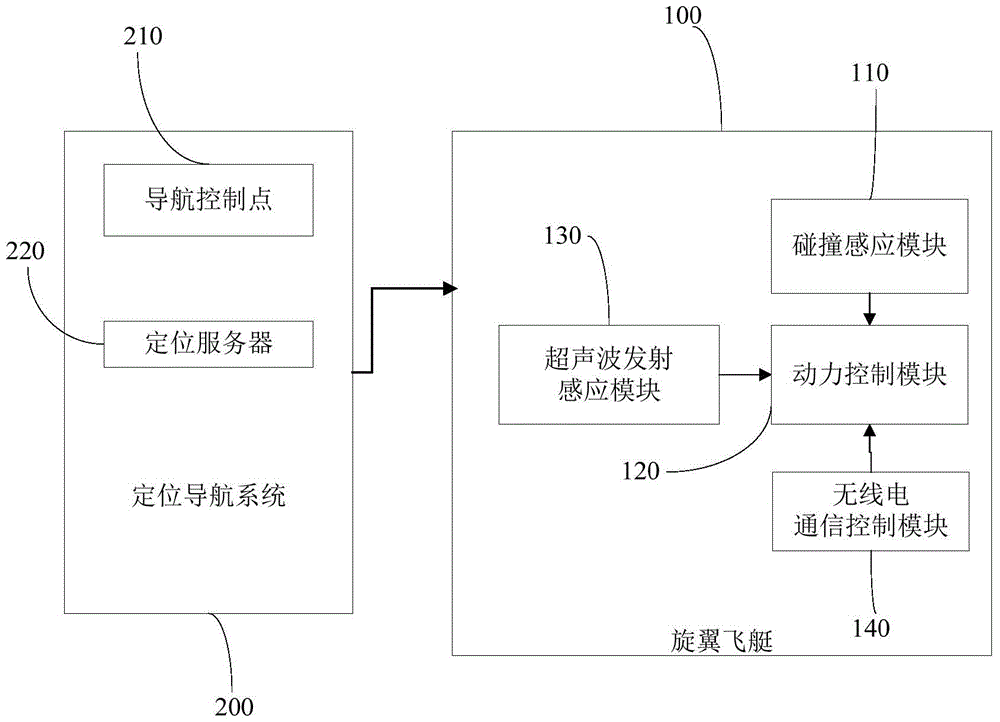

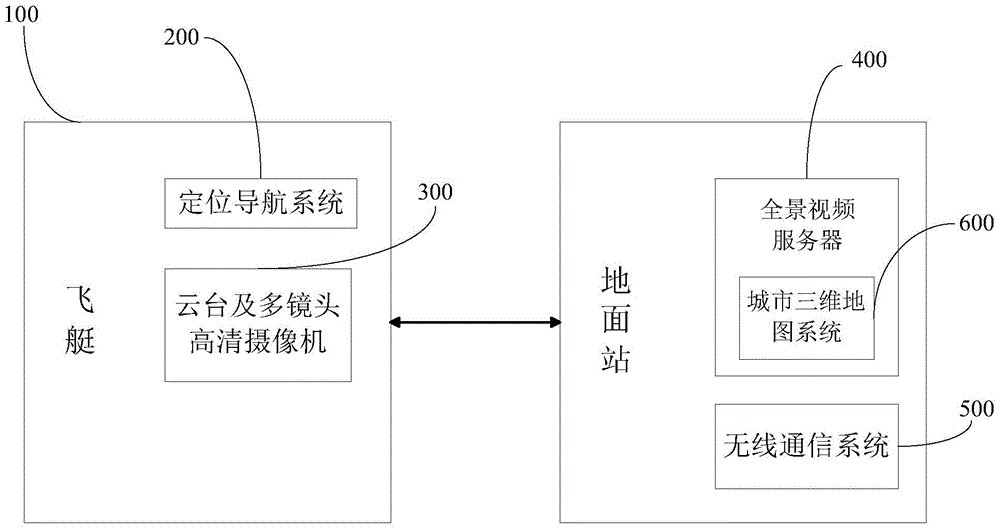

Aerial stereoscopic video streetscape system based on a navigation airship and an implementation method

Active | CN105700547A | Tags: Solve the problem of collecting updates, Change limit, Picture taking arrangements, Closed circuit television systems, Stereoscopic video, Aerial video

Provided are an aerial stereoscopic video streetscape system based on a navigation airship and an implementation method. The system acquires aerial video streetscapes in a viewing mode that combines automatic and manual flight of the navigation airship, solving the difficulty of acquiring and updating streetscape data efficiently and at low cost. A rotor airship captures aerial panoramic videos, which are divided into layers by flying height, with latitude and longitude as coordinates, forming the aerial video streetscape of a city. The system offers a wider view from a greater height, displays the three-dimensional space of the city from all directions, and effectively overcomes the limitations of current ground-level streetscapes caused by lack of roads, obstruction by buildings, ground undulation and blind spots. It can cover any area of the city from the air, replaces static streetscapes with dynamic video streetscapes, and can display real space realistically and dynamically with a large volume of data.

Owner:SHENZHEN INST OF ADVANCED TECH

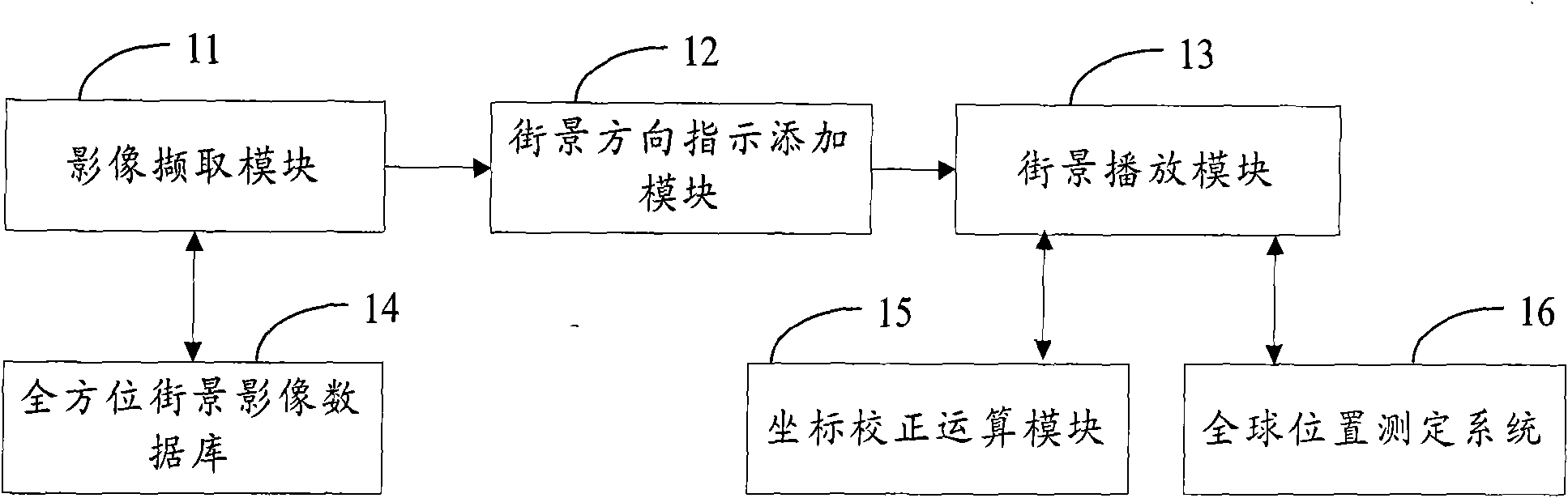

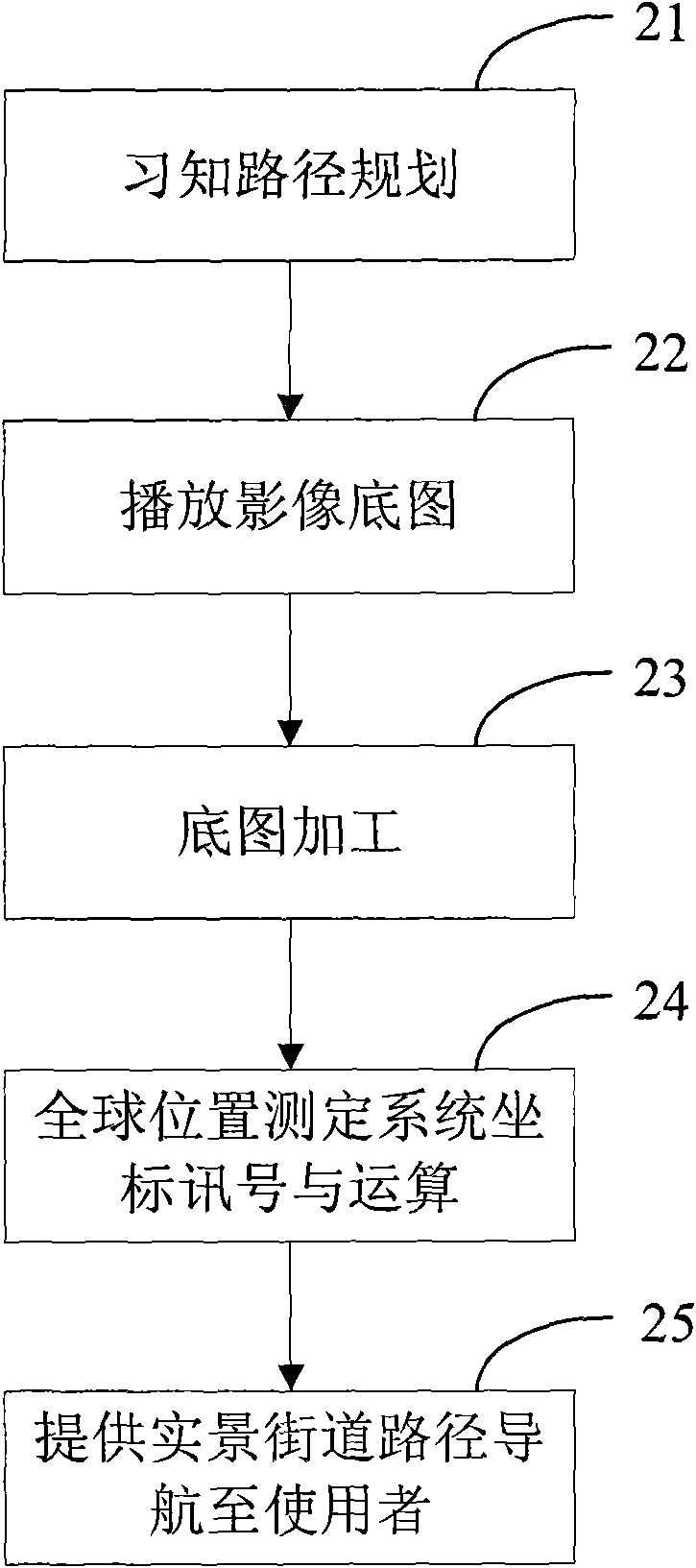

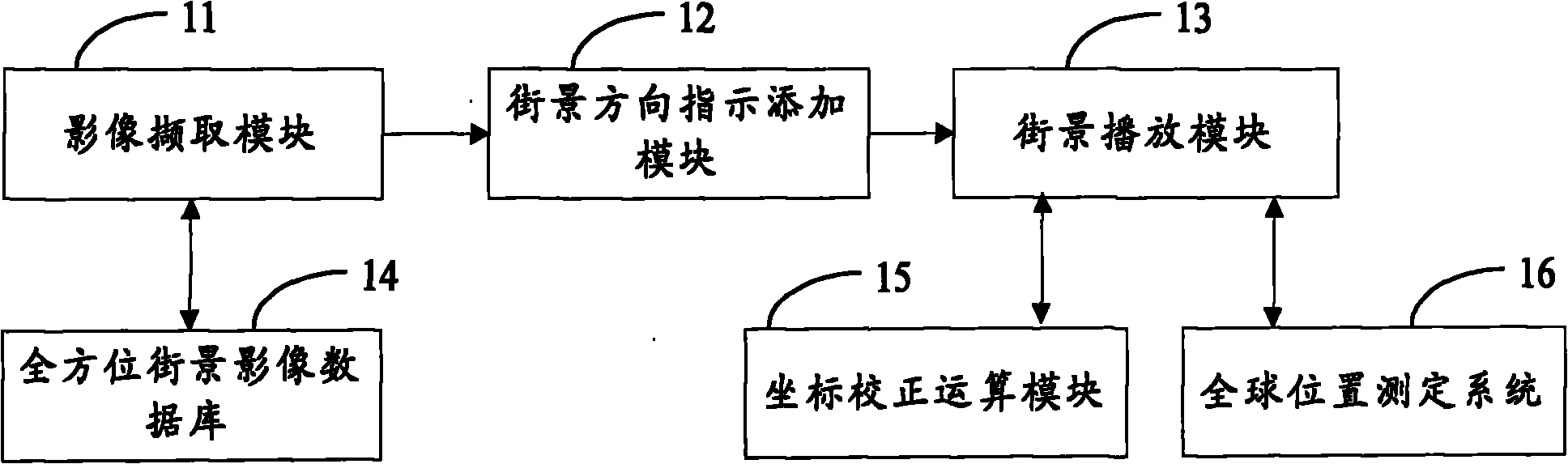

Street view dynamic navigation system and method thereof

Inactive | CN102012233A | Tags: Easy to use, Intuitive method of navigation, Instruments for road network navigation, Position fixation, Live action, Navigation system

The invention provides a street view dynamic navigation system and a method thereof. The method comprises the following steps: establishing a street view image database covering whole blocks; using an image capturing module to capture from the database the image stream for all coordinate strings along a planned path; using a street view direction indication addition module to add direction indications to the original images captured by the image capturing module; and finally using a street view playing module to play the real-time street view image stream processed by the direction indication addition module, driven by global positioning system (GPS) localization coordinate signals received in real time and combined with a coordinate calibration operation. By using street view image streaming, the invention achieves a whole-journey live-action navigation effect: the road images within the user's viewing angle are played, and the user can be guided step by step from the starting location to the destination.

Owner:CHUNGHWA TELECOM CO LTD

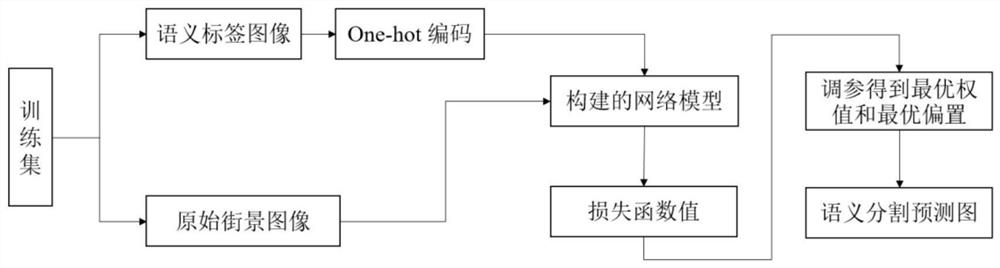

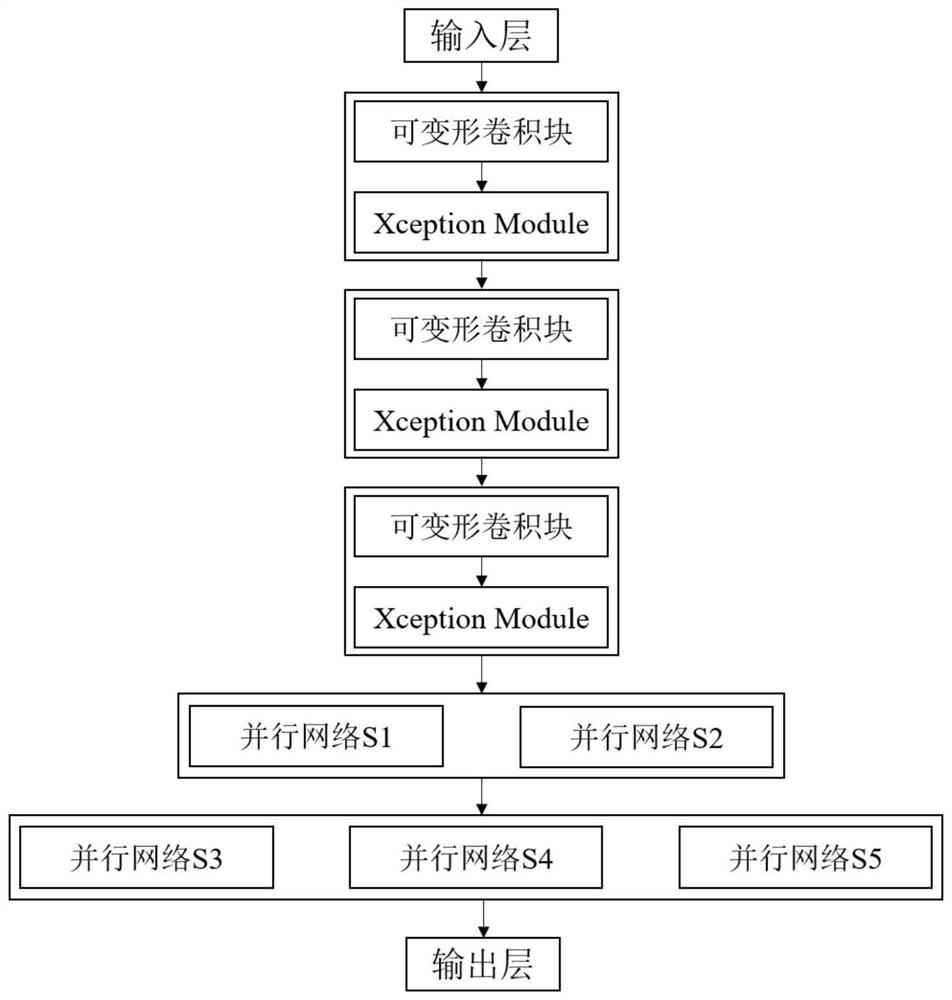

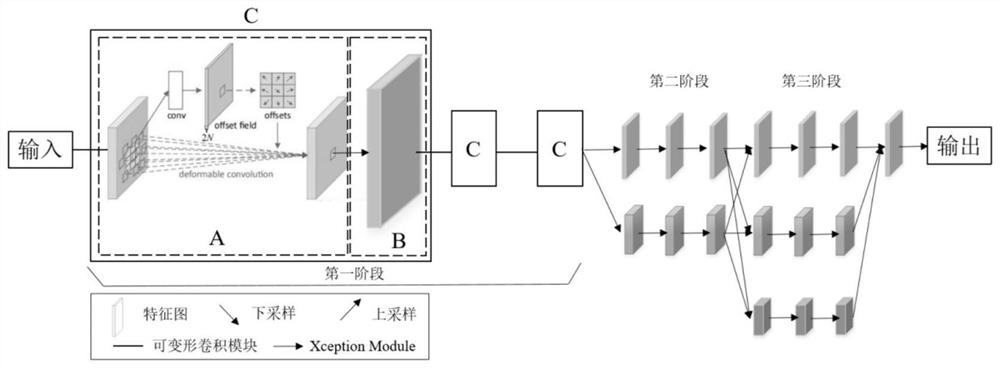

Deformable convolution fusion enhanced streetscape image semantic segmentation method

Active | CN112396607A | Tags: Few parameters, Rich semantic features, Image enhancement, Image analysis, Pattern recognition, Imaging processing

The invention discloses a deformable convolution fusion enhanced streetscape image semantic segmentation method, which comprises a training stage and a test stage. The method constructs a streetscape image semantic segmentation deep neural network model so that the network obtains more feature information about small targets while segmenting large target objects in streetscape images. This solves the problems of small-scale target loss and discontinuous segmentation in streetscape image semantic segmentation, improves the segmentation effect, makes the model more robust overall, and increases the precision of streetscape image processing.

Owner:BEIJING TECHNOLOGY AND BUSINESS UNIVERSITY +1

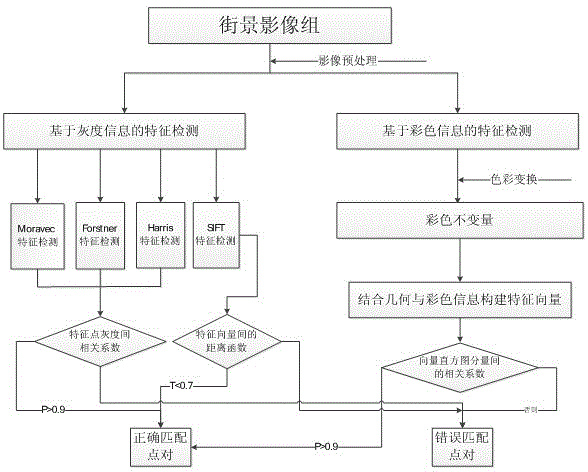

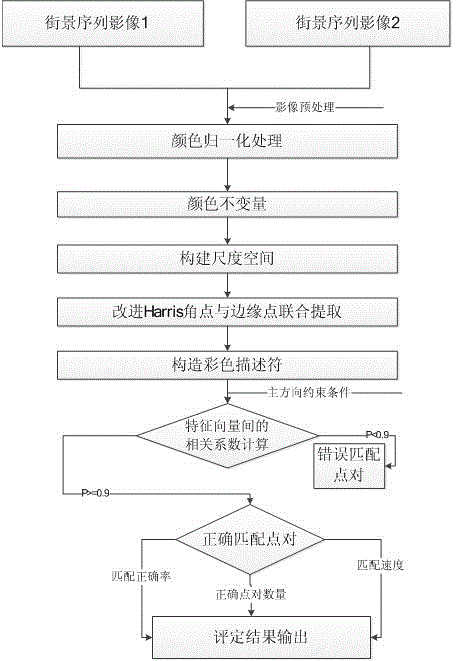

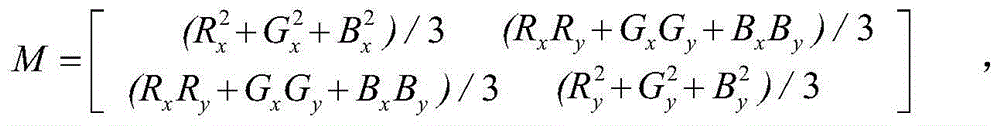

Streetscape image feature detecting and matching method based on same color scale

Inactive | CN103606170A | Tags: Accurate feature positioning, High precision, Image analysis, Feature vector, Color Scale

The invention provides an automatic streetscape image detecting and matching method based on the same color scale. The method comprises the following steps: a color invariant image is used as the input image; all color channels of the input image are processed jointly, based on scale-space theory, with an improved Harris detection algorithm to extract feature points; the color features of the feature points are added to construct a description vector, yielding a stable feature descriptor; feature vectors are compared using the correlation coefficient as the similarity measure; and a main-orientation constraint is added to the search strategy to implement image matching. The method has the advantages of accurate feature positioning, high precision, good timeliness and high data processing speed, and it is suitable for real-time detection and matching of streetscape images.

Owner:WUHAN UNIV
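
The matching stage can be sketched as follows: candidate pairs whose main orientations differ by more than a tolerance are skipped before any similarity is computed, and among the remaining candidates the descriptor with the highest correlation coefficient above a threshold is accepted. The descriptor format, `min_corr` and `max_angle` are assumptions; the color-invariant Harris detection stage is not shown.

```python
import math


def correlation(u, v):
    """Pearson correlation coefficient between two equal-length descriptors."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    du = [a - mu for a in u]
    dv = [b - mv for b in v]
    num = sum(a * b for a, b in zip(du, dv))
    den = math.sqrt(sum(a * a for a in du) * sum(b * b for b in dv))
    return num / den if den > 0 else 0.0


def angle_diff(a, b):
    """Smallest absolute difference between two angles in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def match_features(feats1, feats2, min_corr=0.8, max_angle=30.0):
    """feats = [(descriptor, main_orientation_deg), ...]; returns (i, j) matches."""
    matches = []
    for i, (d1, o1) in enumerate(feats1):
        best_j, best_c = None, min_corr
        for j, (d2, o2) in enumerate(feats2):
            if angle_diff(o1, o2) > max_angle:
                continue  # main-orientation constraint prunes the search
            c = correlation(d1, d2)
            if c > best_c:
                best_j, best_c = j, c
        if best_j is not None:
            matches.append((i, best_j))
    return matches
```

The orientation check both speeds up the search and removes pairs whose descriptors happen to correlate well despite inconsistent geometry.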

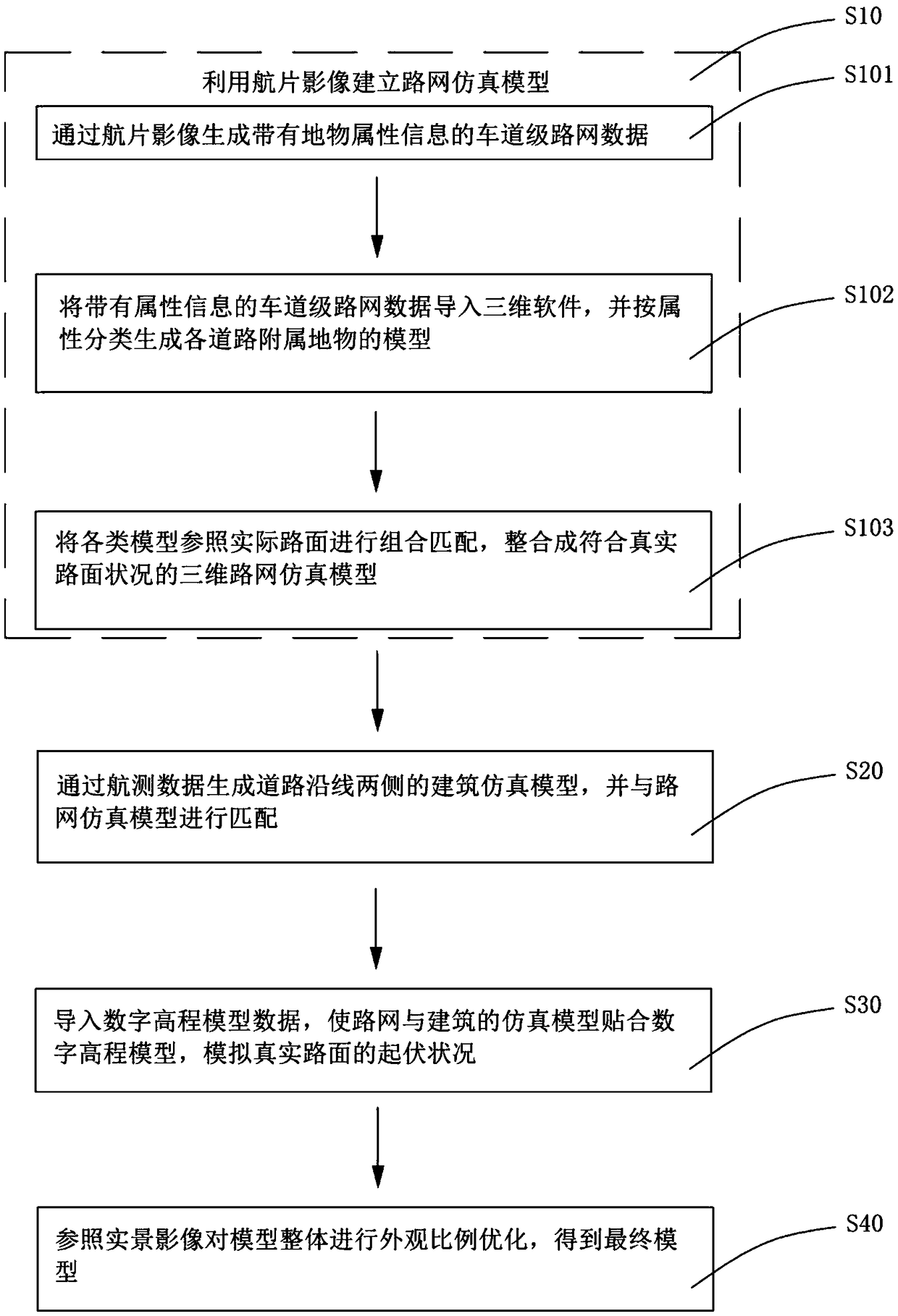

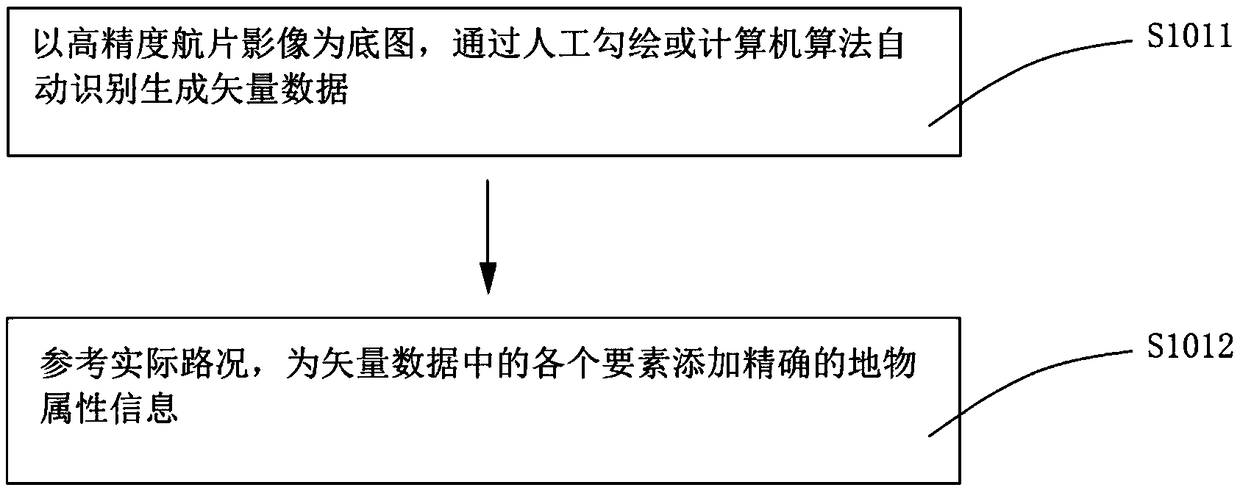

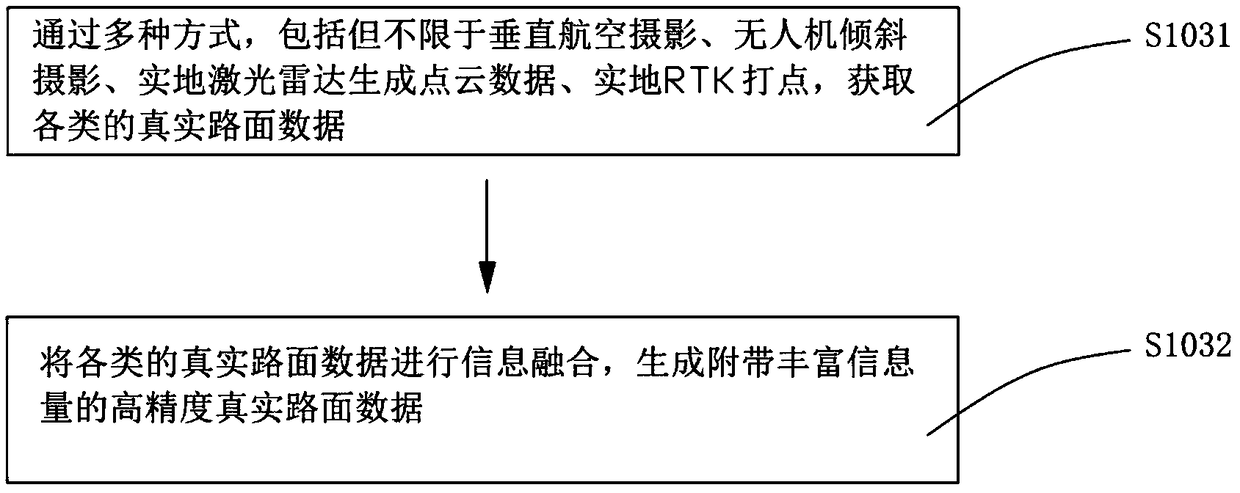

A road and building simulation modeling method based on real road surface data

Active | CN108986207A | Tags: High degree of simulation, Multiple pavement details, Geometric CAD, Internal combustion piston engines, Building simulation, Road networks

The invention provides a road and building simulation modeling method based on real road surface data, the method comprising the following steps: S10, establishing a road network simulation model from aerial photograph images; S20, generating building simulation models along the road from aerial survey data and matching them with the road network simulation model; S30, importing digital elevation model (DEM) data so that the simulation models of the road network and buildings conform to the DEM, thereby simulating the undulation of the real pavement; S40, optimizing the overall appearance proportions of the model with reference to real images to obtain the final model. Following the idea of modeling attribute information as components and matching it spatially, and by means of multi-source data fusion, the road model and the building models along the road are combined and matched with reference to the actual pavement. The resulting model shows more pavement detail, restores the shape, undulation and other details of the real street scene, and is better suited to driving-perspective simulation and other application scenarios.

Owner:广东星舆科技有限公司
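
Step S30 makes the road and building models conform to the DEM. A common building block for this is bilinear interpolation of the elevation grid at each model vertex. The sketch assumes a regular grid with at least 2 x 2 cells, origin (`x0`, `y0`) and square cells of size `cell`; these parameters and the row/column layout are hypothetical.

```python
import math


def dem_elevation(dem, x0, y0, cell, x, y):
    """Bilinearly interpolate dem[row][col] at map coordinates (x, y).

    Assumes row index increases with y, column index increases with x, and
    queries outside the grid are clamped to the nearest edge cell.
    """
    fx = (x - x0) / cell
    fy = (y - y0) / cell
    c0 = min(max(int(math.floor(fx)), 0), len(dem[0]) - 2)
    r0 = min(max(int(math.floor(fy)), 0), len(dem) - 2)
    tx = min(max(fx - c0, 0.0), 1.0)
    ty = min(max(fy - r0, 0.0), 1.0)
    top = dem[r0][c0] * (1 - tx) + dem[r0][c0 + 1] * tx
    bottom = dem[r0 + 1][c0] * (1 - tx) + dem[r0 + 1][c0 + 1] * tx
    return top * (1 - ty) + bottom * ty


def drape(vertices, dem, x0, y0, cell, offset=0.0):
    """Replace each vertex height with the terrain height plus an optional offset."""
    return [(x, y, dem_elevation(dem, x0, y0, cell, x, y) + offset)
            for x, y, _ in vertices]
```

Road vertices would be draped directly, while a building would typically be placed at the terrain height under its footprint so that its walls stay vertical.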

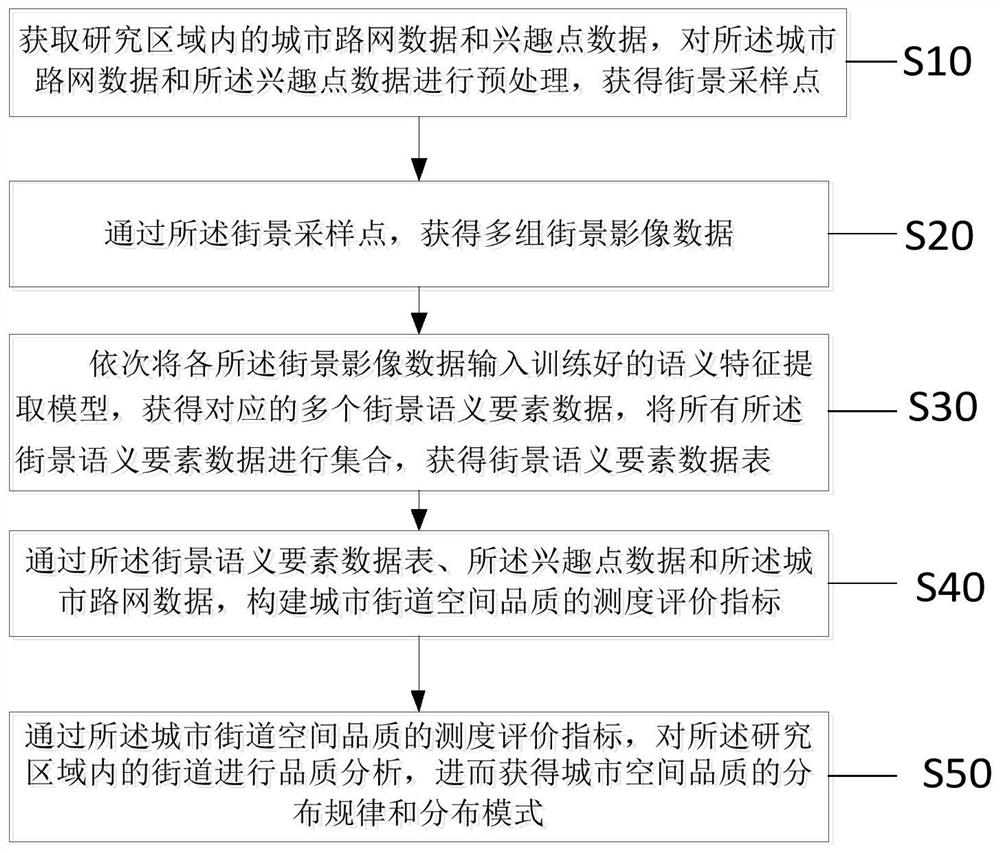

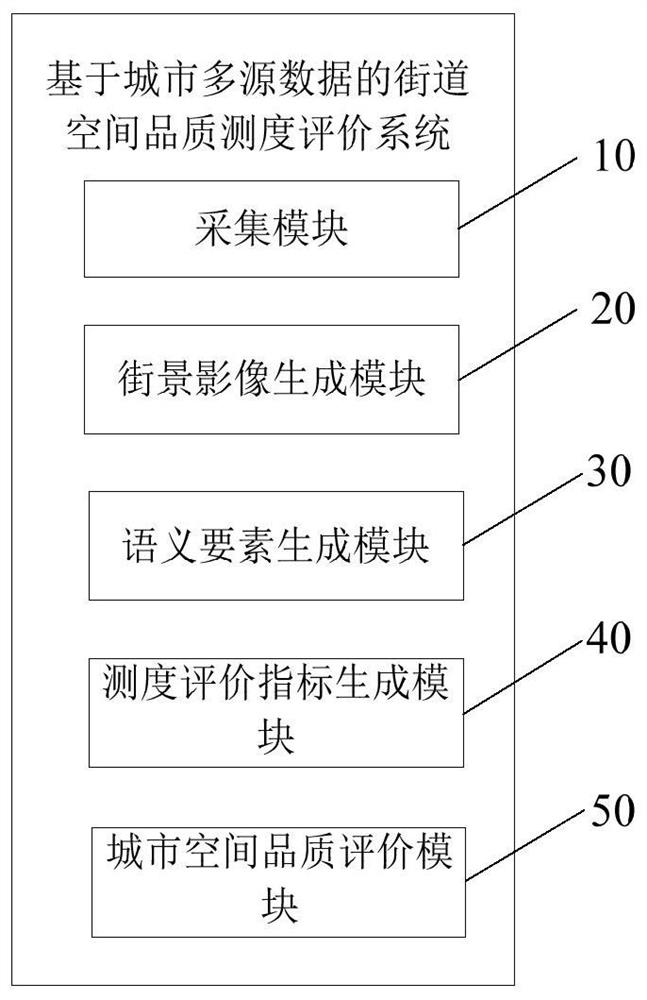

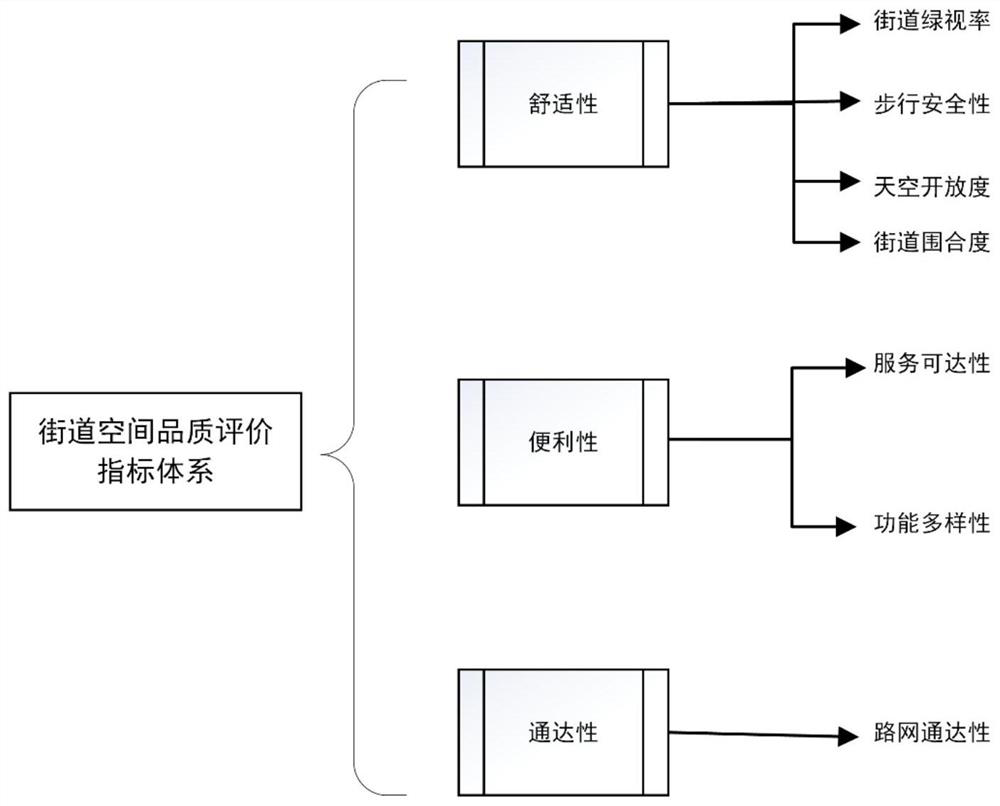

Urban multi-source data-based street space quality measurement evaluation method and system

Pending | CN112418674A | Tags: Improve accuracy, High precision, Character and pattern recognition, Resources, Multi source data, Street scene

The invention relates to the field of urban planning and provides an urban multi-source data-based street space quality measurement evaluation method, which comprises the following steps: obtaining urban road network data and interest point data for a research area, and preprocessing them to obtain street view sampling points; obtaining multiple groups of streetscape image data at the streetscape sampling points; inputting each piece of streetscape image data into a trained semantic feature extraction model to obtain a streetscape semantic element data table; constructing measurement evaluation indices of urban street space quality from the street view semantic element data table, the interest point data and the urban road network data; and obtaining the distribution rules and patterns of urban space quality from these indices. The invention studies street quality at the micro scale while extending the research scope to the macro level of the whole city, and can markedly improve the accuracy of street space quality measurement.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)
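
Street view sampling points are often generated by placing points at a fixed spacing along each road centreline. The sketch assumes roads are polylines in a projected metric coordinate system and leaves the spacing as a parameter; the patent does not state its actual preprocessing rule.

```python
import math


def sample_polyline(points, spacing):
    """Return points every `spacing` metres along a polyline, starting at its first vertex."""
    samples = [points[0]]
    carry = 0.0  # distance already travelled since the last sample
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        seg_len = math.hypot(x1 - x0, y1 - y0)
        d = spacing - carry  # distance along this segment to the next sample
        while d <= seg_len:
            t = d / seg_len
            samples.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
            d += spacing
        carry = seg_len - (d - spacing)  # leftover distance carried into the next segment
    return samples
```

Carrying the leftover distance across vertices keeps the spacing uniform around bends, so an L-shaped road 30 m then 40 m long, sampled every 50 m, gets a second point 20 m along its second leg.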

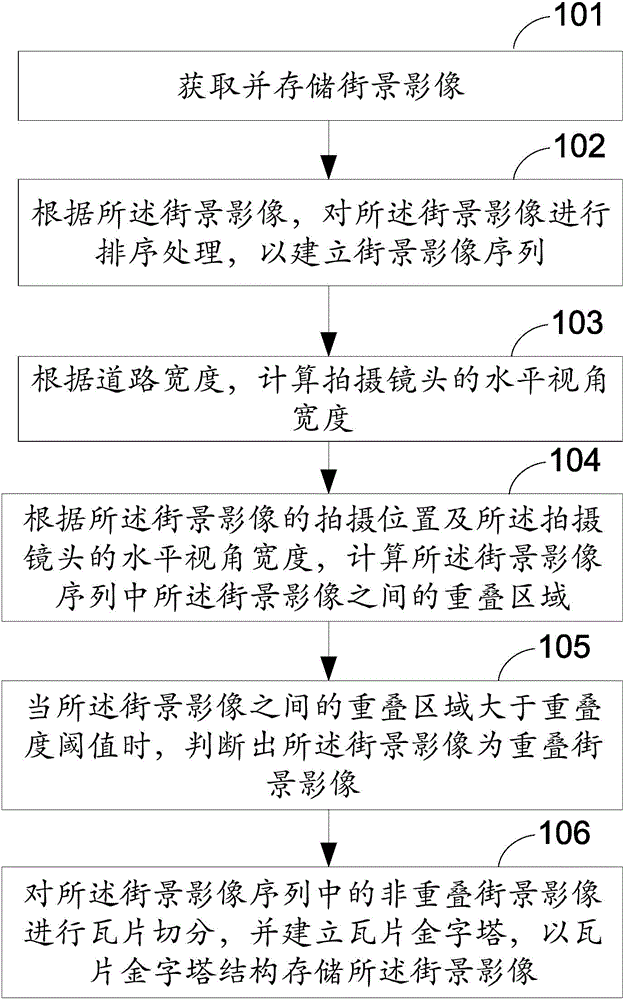

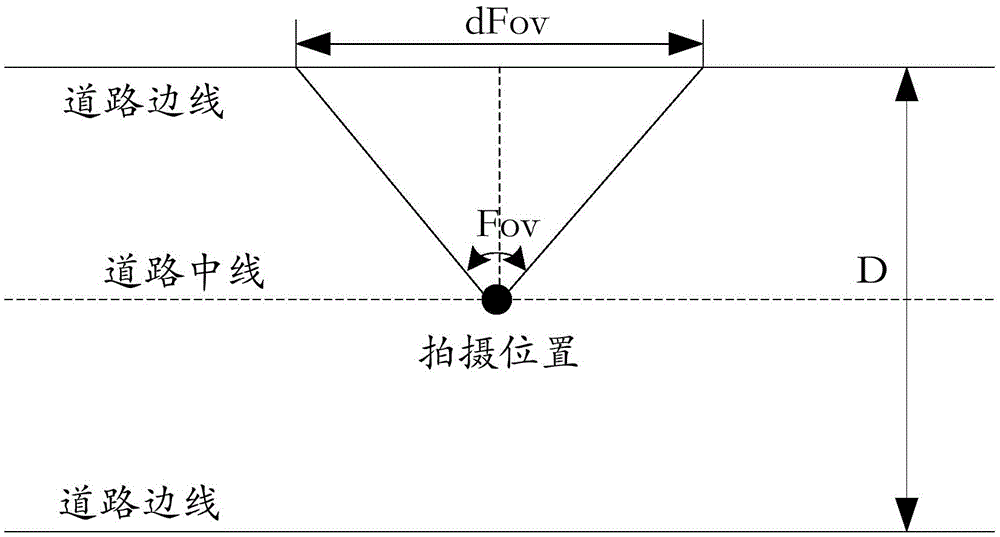

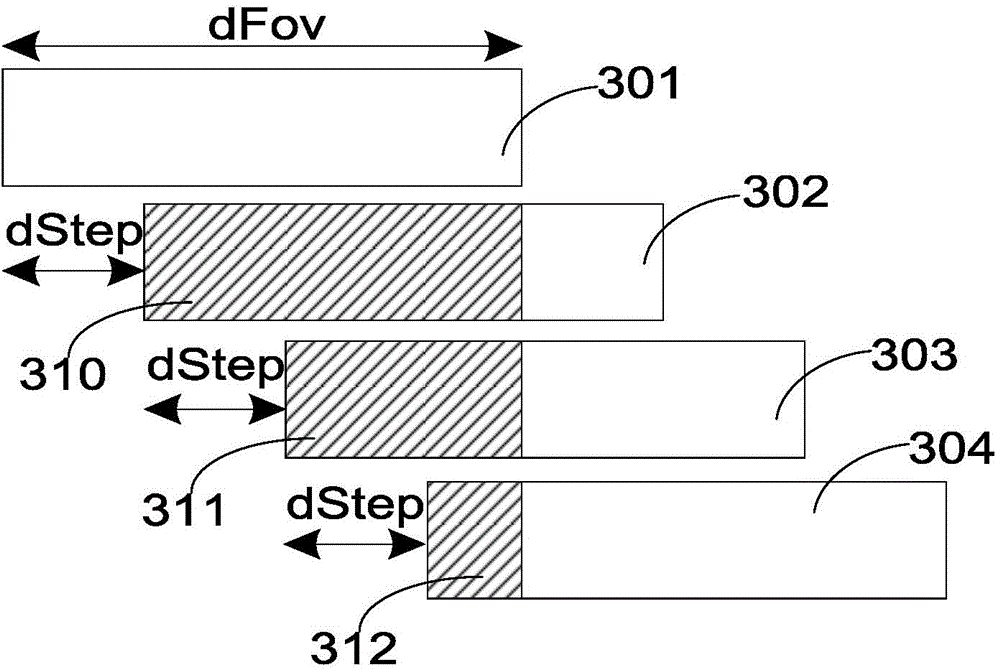

Street view image storage method and device based on mixed tile pyramids

Active | CN103914521A | Tags: Reduce overlap, Efficient browsing, Still image data retrieval, Geographical information databases, Camera lens, Surveyor

The invention relates to the technical field of surveying and mapping and discloses a street view image storage method and device based on mixed tile pyramids. The method includes: acquiring and storing street view images; sorting the street view images to establish street view image sequences; calculating the horizontal angle width of the shooting camera according to the width of the street, and calculating the overlapping regions of the street view images in each sequence according to their shooting positions and the horizontal angle width of the shooting camera; when the overlapping region of a street view image is larger than an overlap threshold, judging it to be an overlapped street view image; and performing tile division on the non-overlapped street view images in the sequences and establishing mixed tile pyramids to store the street view images. The method effectively reduces the overlap between street view images, removes data redundancy, enables efficient image browsing, and still supports accurate measurement during quick browsing.

Owner:BEIJING UNIV OF CIVIL ENG & ARCHITECTURE
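
The overlap test can be illustrated with simple geometry under a hypothetical camera model: a camera facing the facade at distance d with horizontal field of view θ covers a strip 2·d·tan(θ/2) wide, so two images shot s metres apart overlap by the fraction 1 − s / (2·d·tan(θ/2)). This model, the greedy selection and the 256-pixel tile size are assumptions rather than the patent's exact computation.

```python
import math


def footprint_width(facade_distance_m, hfov_deg):
    """Width of the facade strip covered by one image, camera facing the facade."""
    return 2.0 * facade_distance_m * math.tan(math.radians(hfov_deg) / 2.0)


def overlap_ratio(spacing_m, facade_distance_m, hfov_deg):
    """Fraction of one footprint shared with an image taken spacing_m further along."""
    return max(0.0, 1.0 - spacing_m / footprint_width(facade_distance_m, hfov_deg))


def select_non_overlapping(positions_m, facade_distance_m, hfov_deg, threshold=0.6):
    """Greedily keep images (by distance along the street) whose overlap with the
    last kept image does not exceed threshold; the rest count as overlapped."""
    kept = []
    for pos in sorted(positions_m):
        if not kept or overlap_ratio(pos - kept[-1], facade_distance_m, hfov_deg) <= threshold:
            kept.append(pos)
    return kept


def pyramid_levels(width, height, tile=256):
    """Number of pyramid levels until the whole image fits into one tile."""
    levels = 1
    while max(width, height) > tile:
        width, height = (width + 1) // 2, (height + 1) // 2
        levels += 1
    return levels
```

With a 90-degree lens 10 m from the facade, images every 5 m overlap by about 75 %, so only every second image would be kept and tiled into the pyramid.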

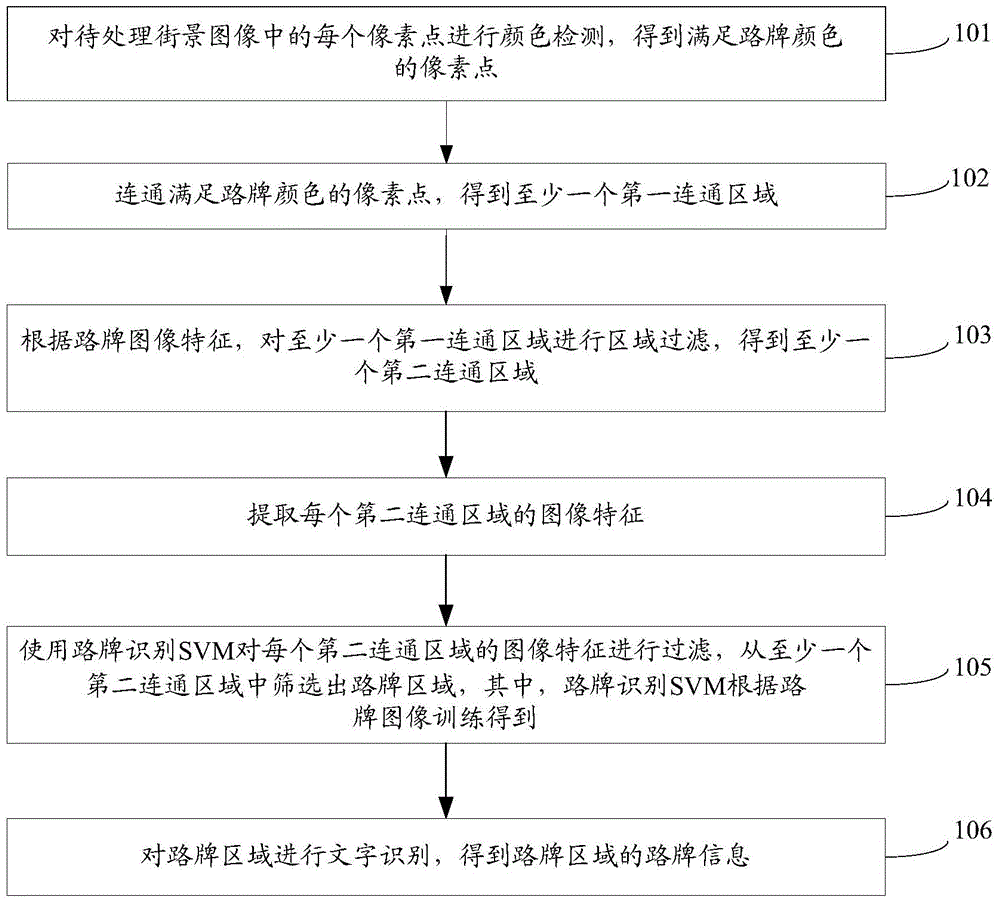

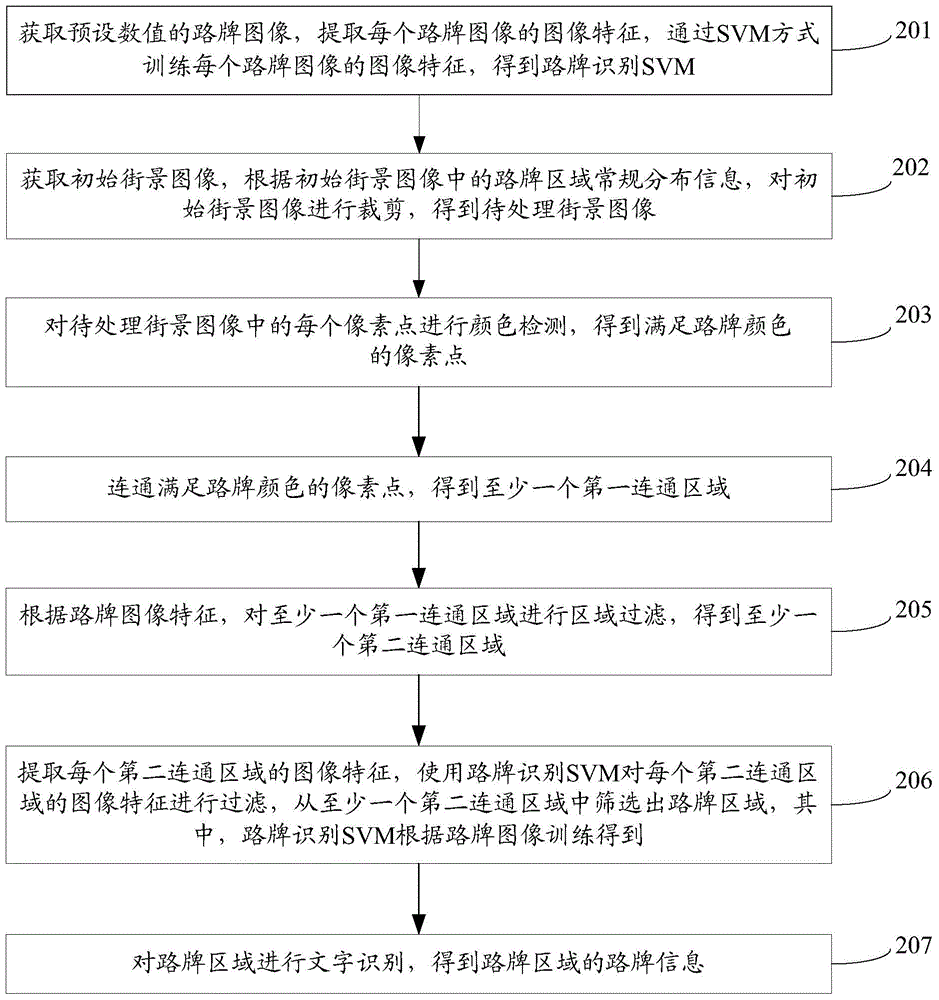

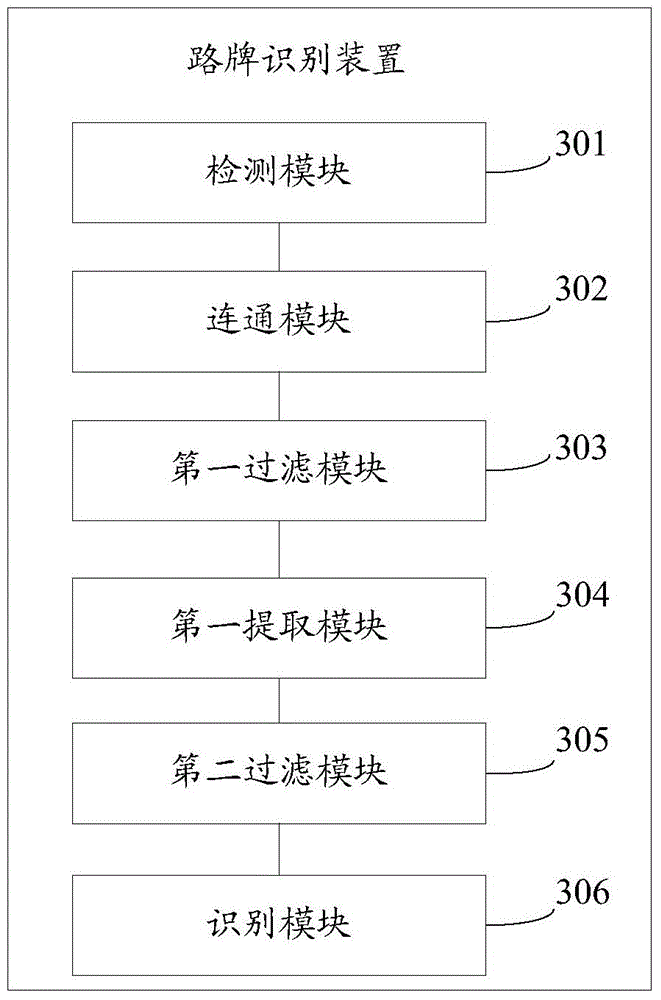

Guide board recognizing method and device

Active | CN104463105A | Tags: Reduce identification costs, Improve recognition efficiency, Image analysis, Character and pattern recognition, Support vector machine, Filtration

The invention discloses a guide board recognition method and device, which belong to the technical field of computers. The method comprises the following steps: performing color detection on each pixel of a street scene image to be processed to obtain the pixels matching the color of a guide board; connecting these pixels to obtain at least one first connected region; filtering the first connected regions by area according to the image characteristics of guide boards to obtain at least one second connected region; extracting the image features of each second connected region; classifying the image features of the second connected regions with a guide board recognition support vector machine, trained on guide board images, to screen out a guide board region from the second connected regions; and performing character recognition on the guide board region to obtain its guide board information. Because no manual intervention is needed in the recognition process, the cost of guide board recognition is reduced and recognition efficiency is improved.

Owner:TSINGHUA UNIV +1
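
The first three steps (per-pixel color detection, connecting the matching pixels into regions, and filtering the regions) can be sketched on a small RGB grid. The blue-board color rule, the 4-connectivity and the area/aspect-ratio limits are hypothetical; the SVM classification and character recognition steps are not shown.

```python
from collections import deque


def is_sign_blue(rgb):
    """Hypothetical colour rule for blue guide boards."""
    r, g, b = rgb
    return b > 120 and b > r + 40 and b > g + 20


def connected_regions(mask):
    """4-connected components of a boolean mask, as lists of (row, col)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                queue, region = deque([(r, c)]), []
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    region.append((y, x))
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                regions.append(region)
    return regions


def candidate_boards(image, min_area=4, min_aspect=1.0, max_aspect=4.0):
    """Colour detection, connected regions, then area/aspect filtering.
    Returns bounding boxes (x_min, y_min, x_max, y_max)."""
    mask = [[is_sign_blue(px) for px in row] for row in image]
    boxes = []
    for region in connected_regions(mask):
        ys = [p[0] for p in region]
        xs = [p[1] for p in region]
        h = max(ys) - min(ys) + 1
        w = max(xs) - min(xs) + 1
        if len(region) >= min_area and min_aspect <= w / h <= max_aspect:
            boxes.append((min(xs), min(ys), max(xs), max(ys)))
    return boxes
```

Isolated blue pixels and oddly shaped blobs are discarded cheaply here, so the more expensive classifier only sees plausible board-shaped regions.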

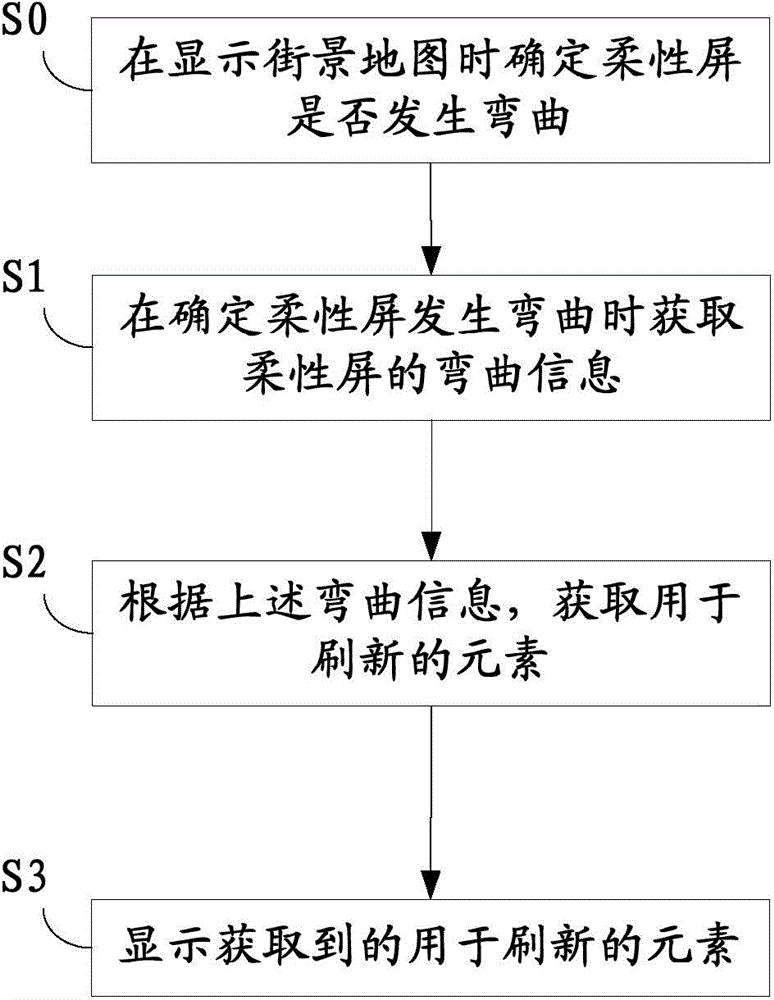

Data processing method and mobile device

Active | CN104375713A | Tags: Improve experience, Input/output processes for data processing, Mobile device, Computer science

An embodiment of the invention discloses a data processing method and a mobile device, which aim to solve the poor user experience of traditional tap-and-swipe interaction on mobile terminals with flexible screens. The method includes: determining whether the flexible screen is bent while it displays a street view map; if so, acquiring bending information of the flexible screen, including the bending direction and bending degree; acquiring, according to the bending information, the elements to be refreshed, which include at least street view pictures and POIs (points of interest); and displaying the acquired elements. When a user applies an external force to the flexible screen, its bending information can be acquired and the elements to be refreshed determined accordingly, so the bendability of the flexible screen itself becomes a means of human-machine interaction. This resolves the poor experience of traditional tap-and-swipe interaction on flexible-screen terminals.

Owner:HUAWEI DEVICE CO LTD

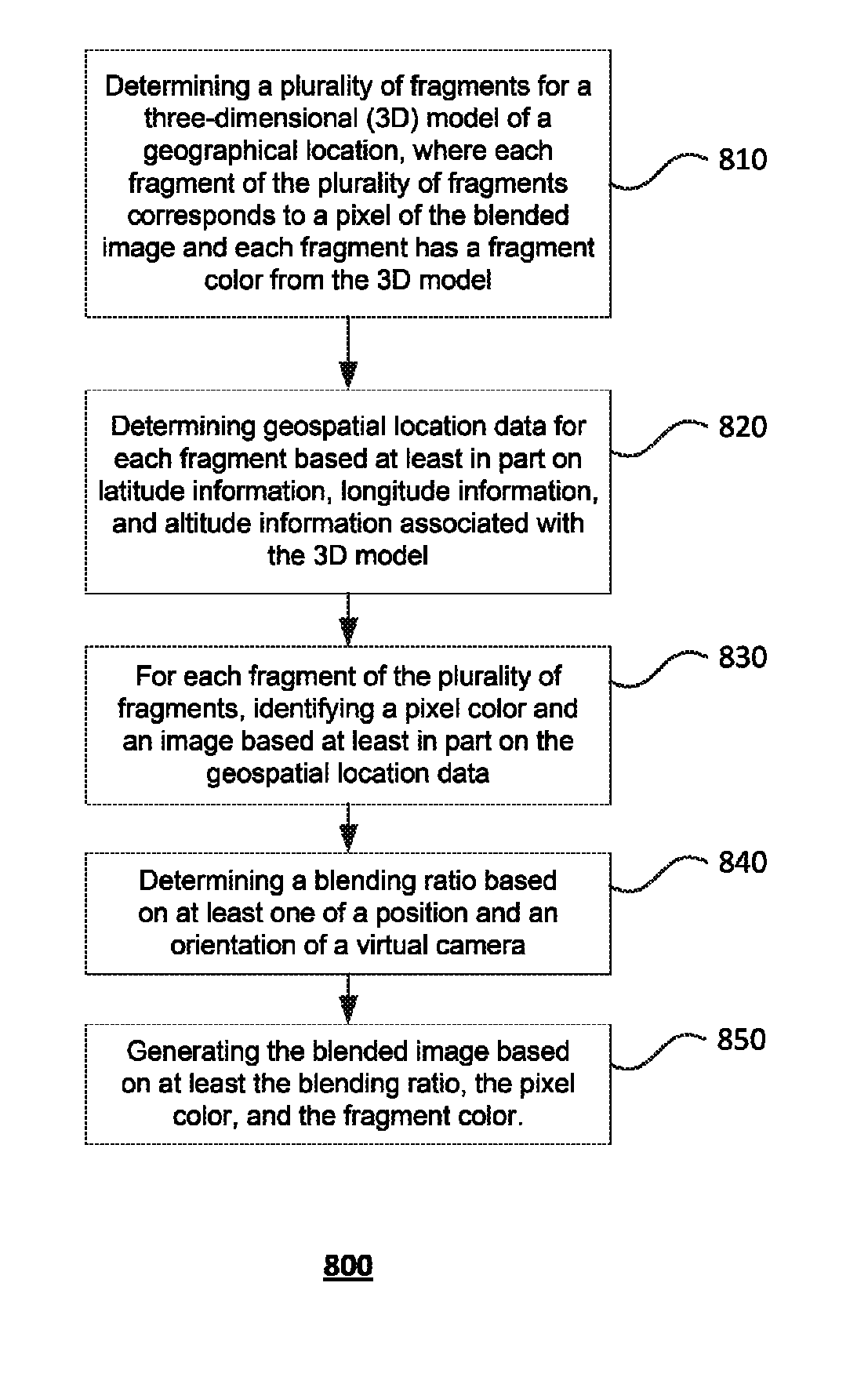

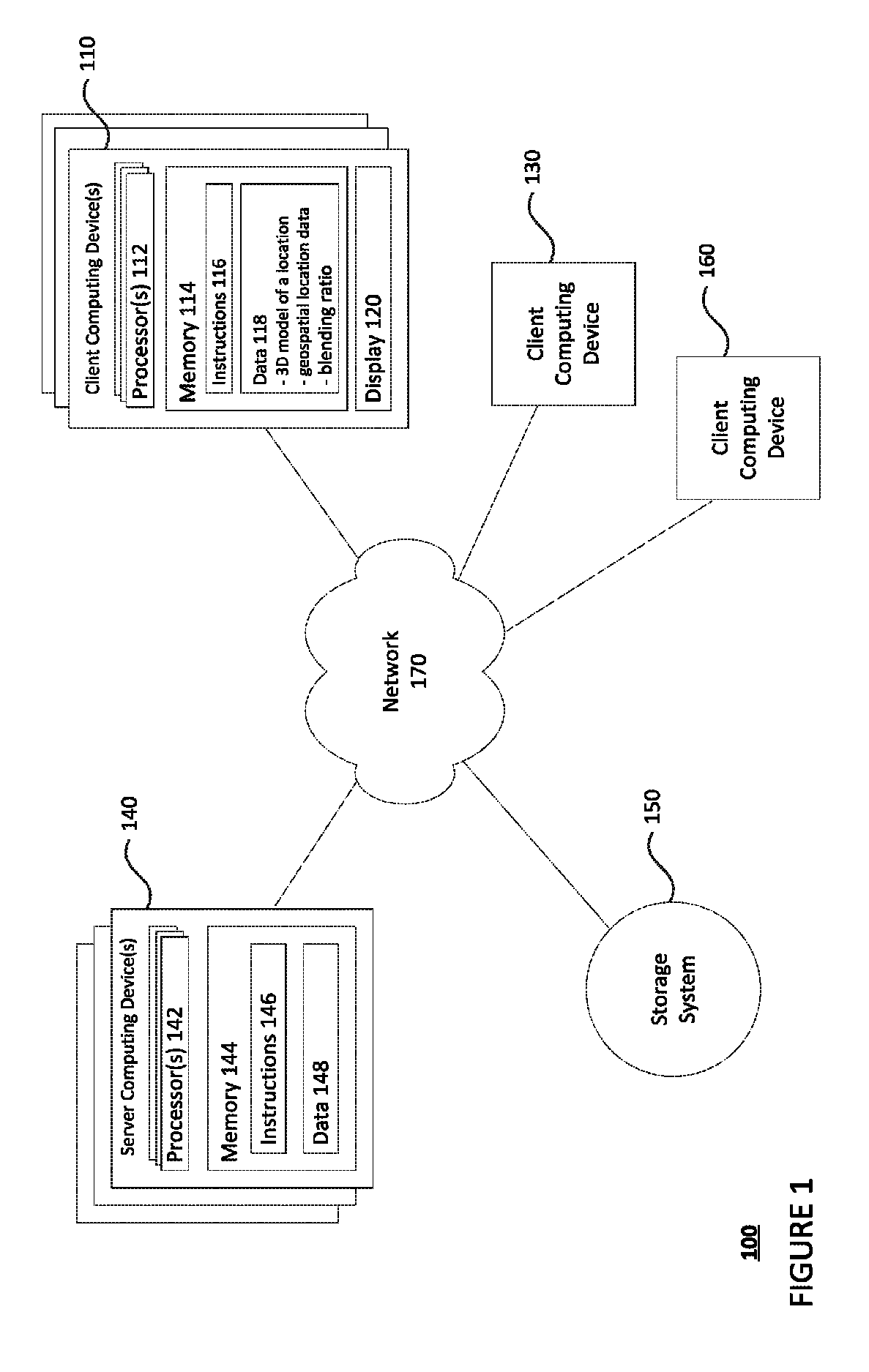

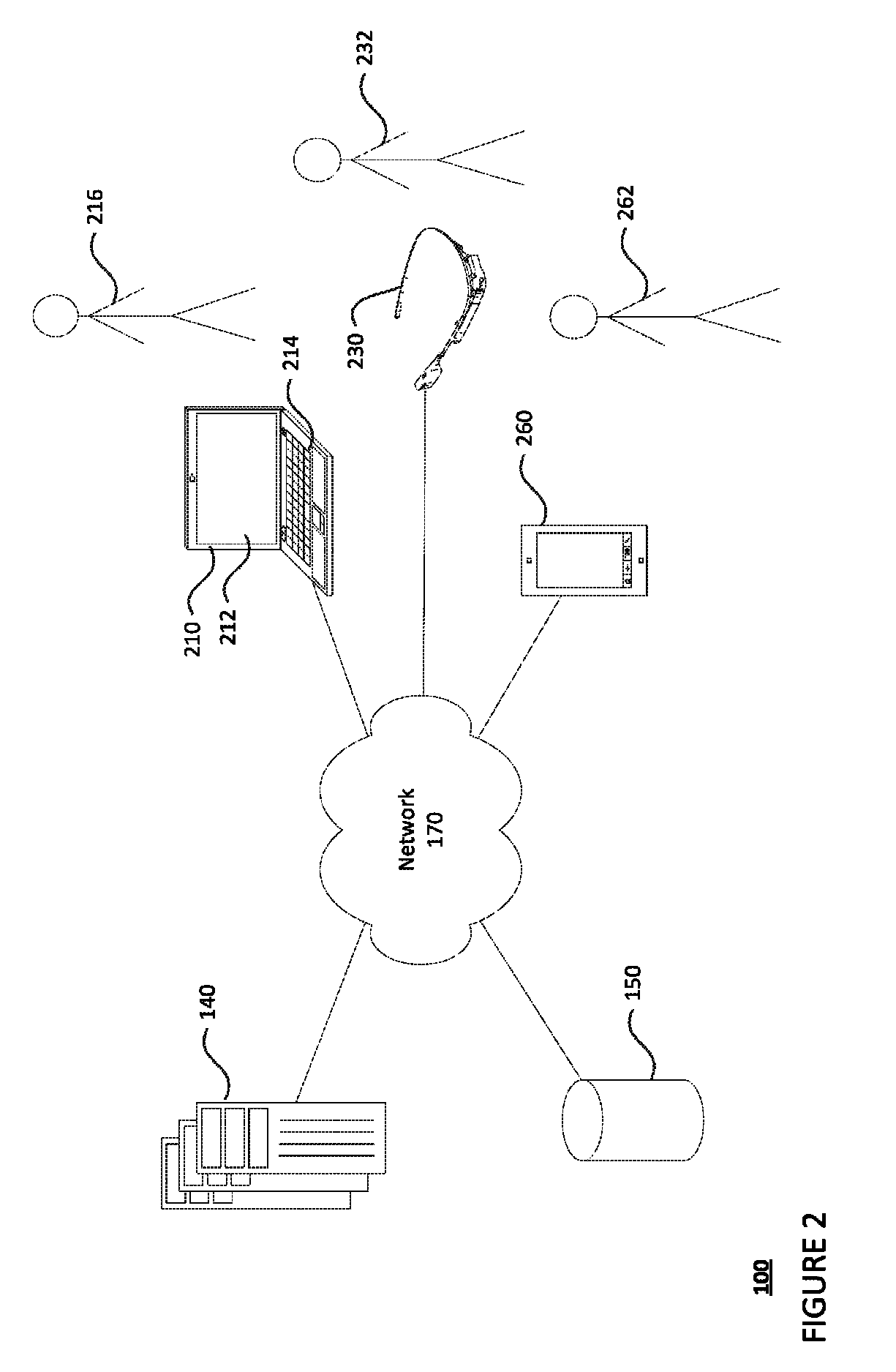

Blending between street view and earth view

In one aspect, computing device(s) may determine a plurality of fragments for a three-dimensional (3D) model of a geographical location. Each fragment of the plurality of fragments may correspond to a pixel of a blended image and each fragment has a fragment color from the 3D model. The one or more computing devices may determine geospatial location data for each fragment based at least in part on latitude information, longitude information, and altitude information associated with the 3D model. For each fragment of the plurality of fragments, the one or more computing devices may identify a pixel color and an image based at least in part on the geospatial location data, determine a blending ratio based on at least one of a position and an orientation of a virtual camera, and generate the blended image based on at least the blending ratio, the pixel color, and the fragment color.

Owner:GOOGLE LLC
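
The per-fragment blend can be sketched as a linear interpolation between the street-level pixel color and the 3D-model fragment color. Here the blending ratio is assumed to fall linearly from 1 (pure street-level imagery) at a hypothetical near altitude to 0 (pure model color) at a far altitude; the abstract says only that the ratio depends on the virtual camera's position and/or orientation.

```python
def blending_ratio(camera_altitude_m, near_m=5.0, far_m=200.0):
    """1.0 at or below near_m, 0.0 at or above far_m, linear in between."""
    if camera_altitude_m <= near_m:
        return 1.0
    if camera_altitude_m >= far_m:
        return 0.0
    return (far_m - camera_altitude_m) / (far_m - near_m)


def blend_fragment(pixel_rgb, fragment_rgb, ratio):
    """Linear blend: ratio * street-level pixel + (1 - ratio) * model fragment."""
    return tuple(round(ratio * p + (1.0 - ratio) * f)
                 for p, f in zip(pixel_rgb, fragment_rgb))
```

As the virtual camera descends toward street level, the ratio rises and the photographic imagery gradually replaces the textured model, which avoids an abrupt switch between the two views.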

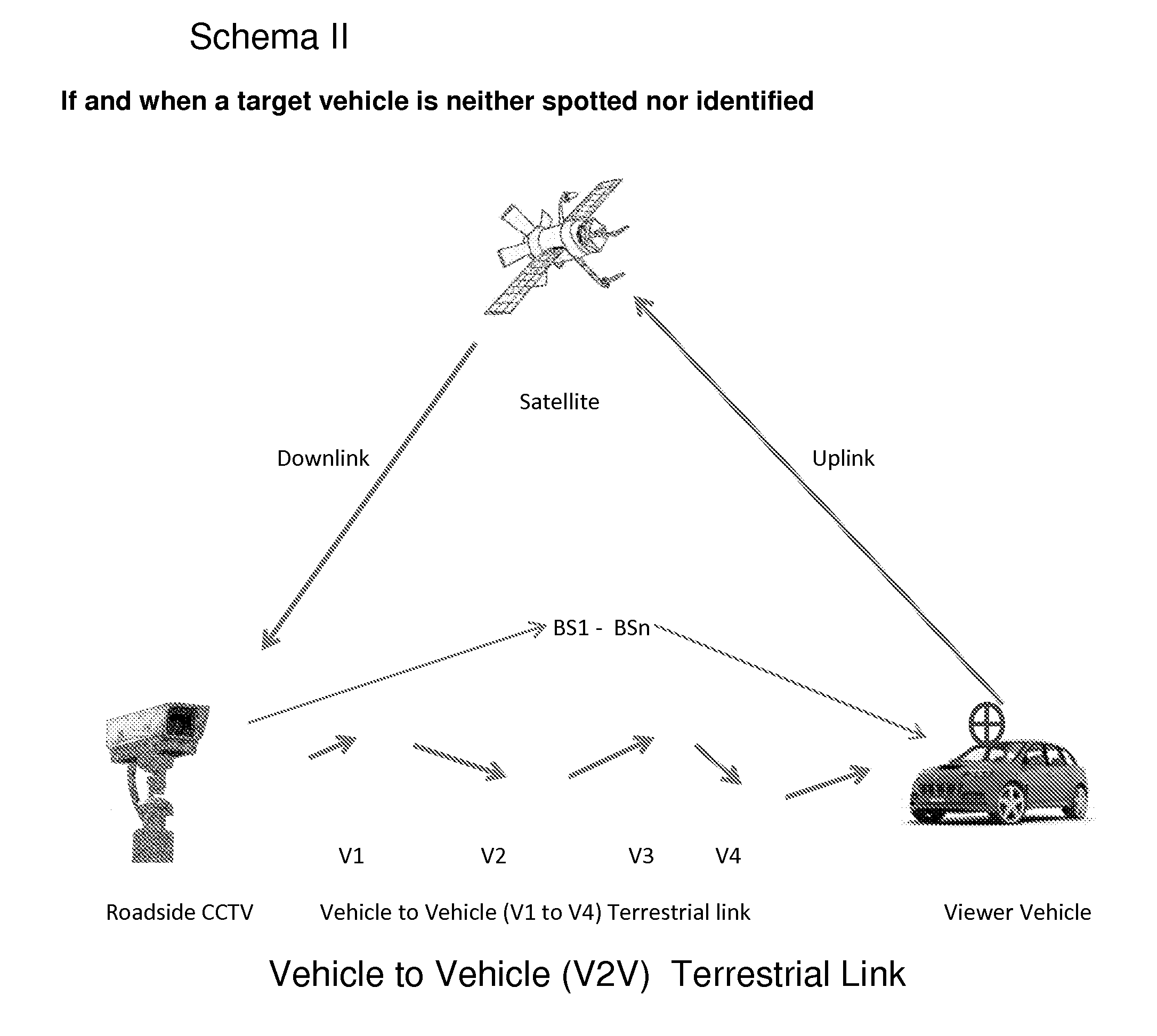

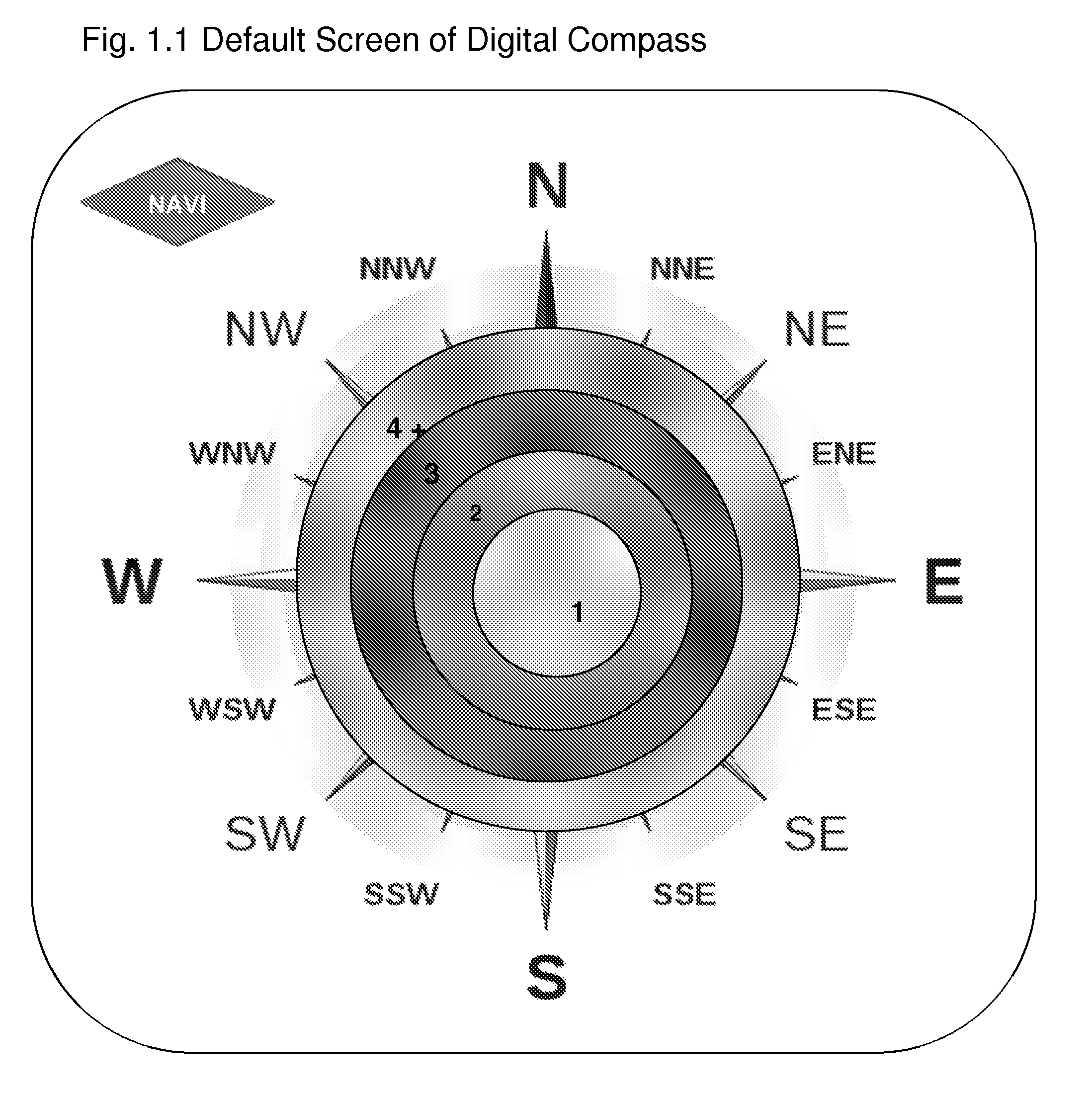

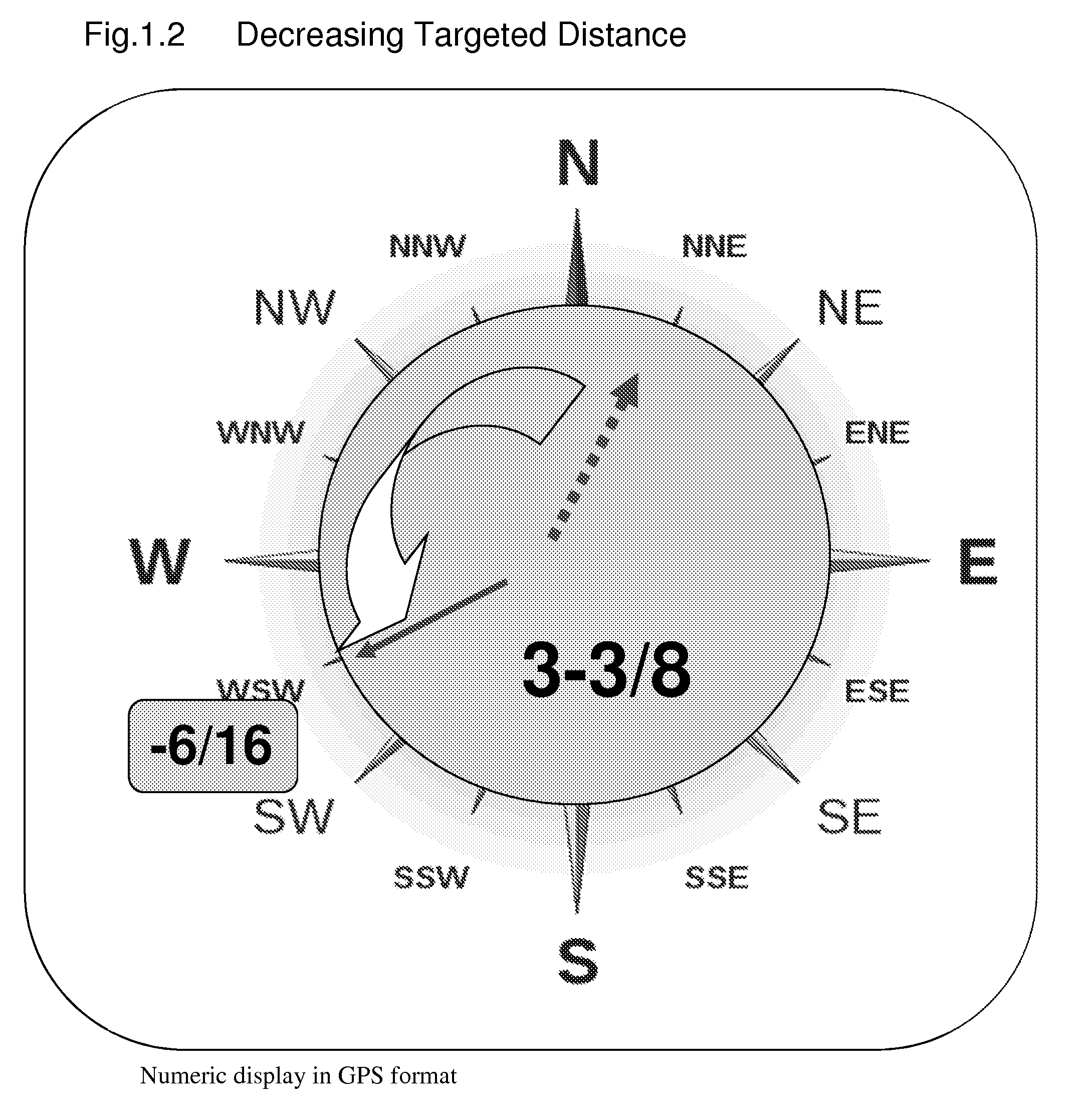

Real-Time Remote-Viewing Digital Compass

Active | US20110122244A1 | Tags: Encouraging utilization, Out of balance, GHz frequency transmission, Color television details, Tablet computer, Transceiver

Vehicle-mounted video cameras, if and when wirelessly connected via a hybrid transceiver of satellite and terrestrial links and either through a randomly-formed vehicle-to-vehicle network, or via mobile web links, may enable motorists behind the wheel to remotely see either on a navigator screen, or on a screen of mobile equipment, inclusive of handsets and tablet PCs, any real-time video images of traffic and / or street scenes, far beyond physical limits of human eyesight. In pursuing the said peer-to-peer advantages, the real-time street views targeted in any directions can be picked at the discretion of motorists, by transmitting the location-based inquiry to the targeted on-vehicle cameras, by tapping on an in-vehicle touchscreen or a mobile device screen and also by activating voice commands, if necessary. The viewable range and directions are only affected or limited by the signal strength based on the density of moving vehicles in between and the availability of interconnected roadside stationary surveillance fixtures as well as the availability of target vehicles equipped with video cameras.

Owner:ERGONOTECH
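The abstract notes that the viewable range depends on the density of vehicles in between. A minimal sketch of that idea, assuming each vehicle or roadside fixture can relay only to peers within a fixed radio range (a deliberate simplification of signal strength), is a breadth-first search over the resulting vehicle-to-vehicle graph. The function `reachable_cameras`, the planar coordinates, and the range value are illustrative assumptions, not part of the patent.

```python
import math
from collections import deque
from typing import Dict, Set, Tuple

Point = Tuple[float, float]


def reachable_cameras(positions: Dict[str, Point], source: str,
                      radio_range: float, has_camera: Set[str]) -> Set[str]:
    """Return camera-equipped nodes reachable from `source` by multi-hop relay.

    Two nodes (vehicles or roadside fixtures) are linked when their Euclidean
    distance is at most `radio_range`, standing in for adequate signal strength.
    """
    visited = {source}
    queue = deque([source])
    while queue:
        current = queue.popleft()
        cx, cy = positions[current]
        for node, (x, y) in positions.items():
            if node not in visited and math.hypot(x - cx, y - cy) <= radio_range:
                visited.add(node)
                queue.append(node)
    return {n for n in visited if n in has_camera and n != source}
```

For example, a camera 1.6 units away is reachable with a 1.0 range only while a relay vehicle sits in between; removing that relay from `positions` makes it unreachable, mirroring the dependence on vehicle density.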

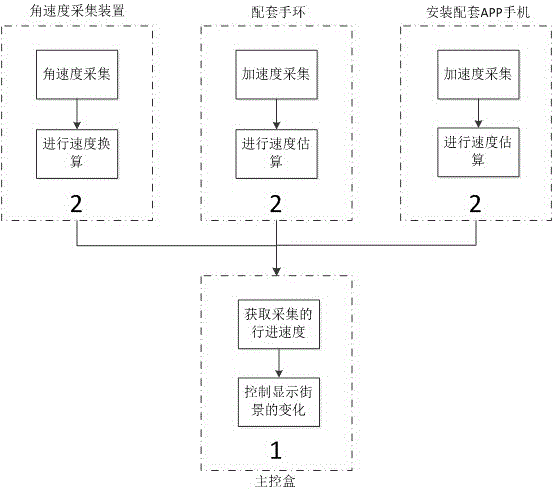

Virtual reality technology-based scenery viewing system

Inactive · CN104998376A · Reduce volume, Easy loading and unloading, Movement coordination devices, Cardiovascular exercising devices, Gyroscope, Angular velocity

The invention relates to a scenery viewing system based on virtual reality technology. The viewing equipment is built on an Android smart terminal and combines the street-view (streetscape) APIs provided by map service providers such as Tencent and Baidu with conveyor-belt equipment such as a treadmill, so that a user can enjoy the scenery along a selected route while walking or running on the belt. The system consists of a touch display screen and a speed-collecting device. The speed-collecting device can be a wireless angular-velocity sensor mounted on the conveyor belt, which measures precise instantaneous speed, or a device worn by the user that contains an accelerometer and gyroscope, such as a mobile phone or smart band, from which a reasonably accurate speed estimate is calculated. By combining streetscape maps with the conveyor-belt device, the system provides a virtual-reality scenery viewing experience. It is simple to assemble and can be deployed quickly on conveyor-belt devices such as treadmills.

Owner:FUZHOU UNIV
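For the angular-velocity sensor option, belt speed follows from v = ωr for a roller of radius r. As a minimal sketch, assuming street-view panoramas are sampled at a fixed spacing along the selected route (the abstract does not say how frames are chosen), the distance walked can be accumulated and mapped to the index of the panorama to display. `BeltTracker` and its fields are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class BeltTracker:
    roller_radius_m: float      # radius of the roller carrying the sensor
    panorama_spacing_m: float   # assumed spacing between panoramas on the route
    panorama_count: int         # number of panoramas along the route
    distance_m: float = 0.0     # distance walked so far

    def update(self, angular_velocity_rad_s: float, dt_s: float) -> int:
        """Integrate belt speed over dt and return the panorama index to show."""
        speed_m_s = angular_velocity_rad_s * self.roller_radius_m  # v = omega * r
        self.distance_m += speed_m_s * dt_s
        index = int(self.distance_m // self.panorama_spacing_m)
        return min(index, self.panorama_count - 1)  # hold the last view at route end
```

A roller of radius 0.05 m spinning at 20 rad/s moves the belt at 1 m/s, so with panoramas every 10 m the view advances roughly every ten seconds.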

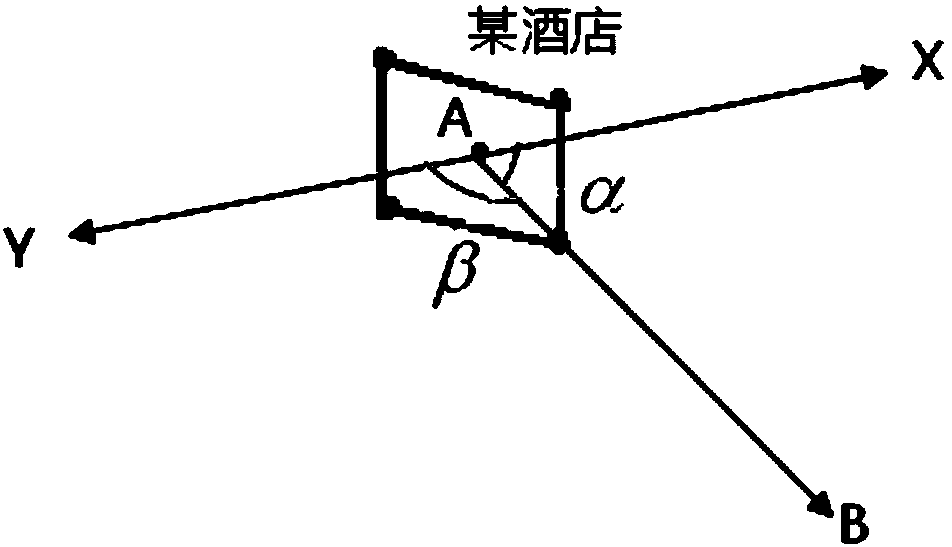

Space vector POI extracting method based on vehicle-mounted space information collection

Inactive · CN104063499A · Improve accuracy, Improve experience, Geographical information databases, Special data processing applications, Cloud point, Street scene

The invention relates to a method for extracting space-vector POIs (points of interest) based on vehicle-mounted spatial information collection. The method comprises the following steps: street scene images and laser point clouds on both sides of an urban road are acquired and matched; the positions of POI targets are selected by clicking; and space-vector POIs are acquired, i.e., POIs carrying space-vector information that includes horizontal coordinates, elevation, and orientation. The method provides users with true three-dimensional POI information and improves the accuracy of displaying and jumping to POIs.

Owner:SUPER SCENE BEIJING INFORMATION TECH
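The abstract does not state how a clicked image position is matched to the laser points. One common approach, assumed here, is to project each point into the image with a pinhole camera model and take the point whose projection lies closest to the click, preferring the nearer point when several project to the same pixel. The intrinsics (`fx`, `fy`, `cx`, `cy`), the camera-frame coordinates, and `pick_point` are illustrative assumptions; the transform to world coordinates and the orientation estimate are omitted.

```python
import math
from typing import List, Optional, Tuple

Point3 = Tuple[float, float, float]  # camera frame: x right, y down, z forward


def project(p: Point3, fx: float, fy: float,
            cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection of a camera-frame point to pixel coordinates (u, v)."""
    x, y, z = p
    if z <= 0:
        return None  # behind the camera
    return (fx * x / z + cx, fy * y / z + cy)


def pick_point(points: List[Point3], click: Tuple[float, float],
               fx: float, fy: float, cx: float, cy: float,
               max_pixel_dist: float = 5.0) -> Optional[Point3]:
    """Return the laser point projecting nearest the click (ties: smaller depth)."""
    candidates = []
    for p in points:
        uv = project(p, fx, fy, cx, cy)
        if uv is None:
            continue
        d = math.hypot(uv[0] - click[0], uv[1] - click[1])
        if d <= max_pixel_dist:
            candidates.append((d, p[2], p))
    if not candidates:
        return None
    # Smallest pixel distance first; among equals, the nearer (visible) surface.
    return min(candidates, key=lambda c: (c[0], c[1]))[2]
```

Preferring the smaller depth on ties is a simple guard against selecting a point hidden behind a storefront.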

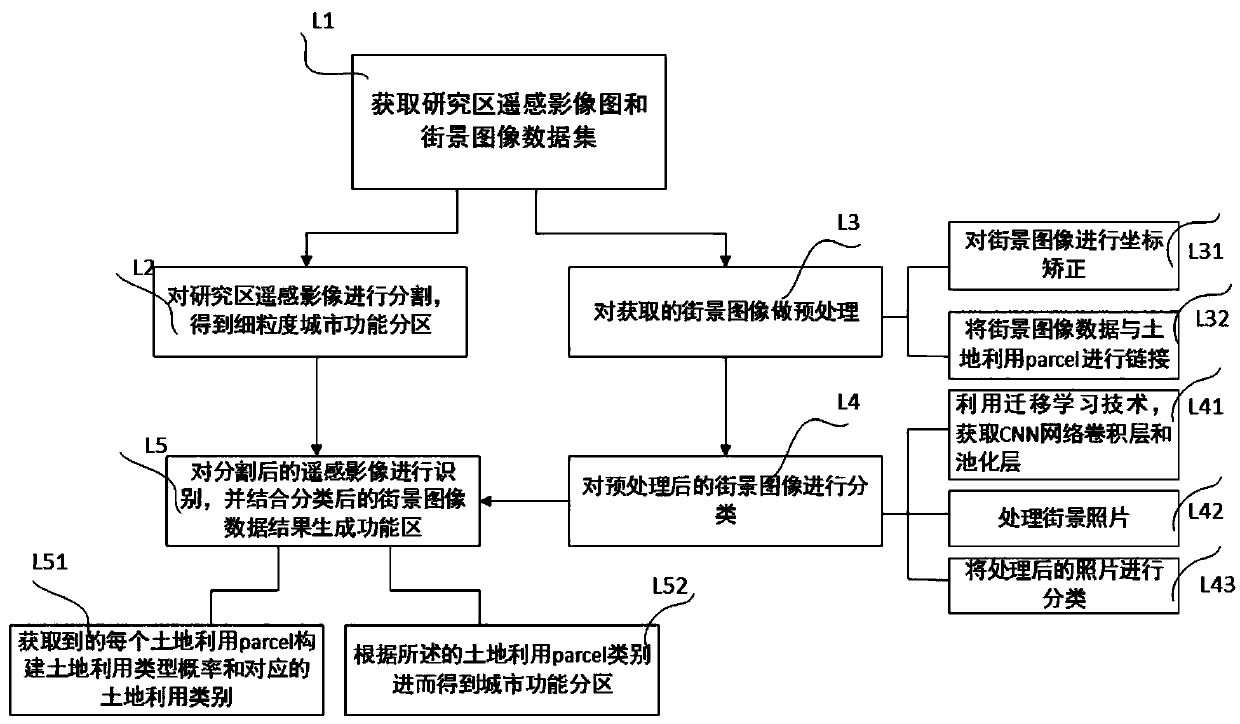

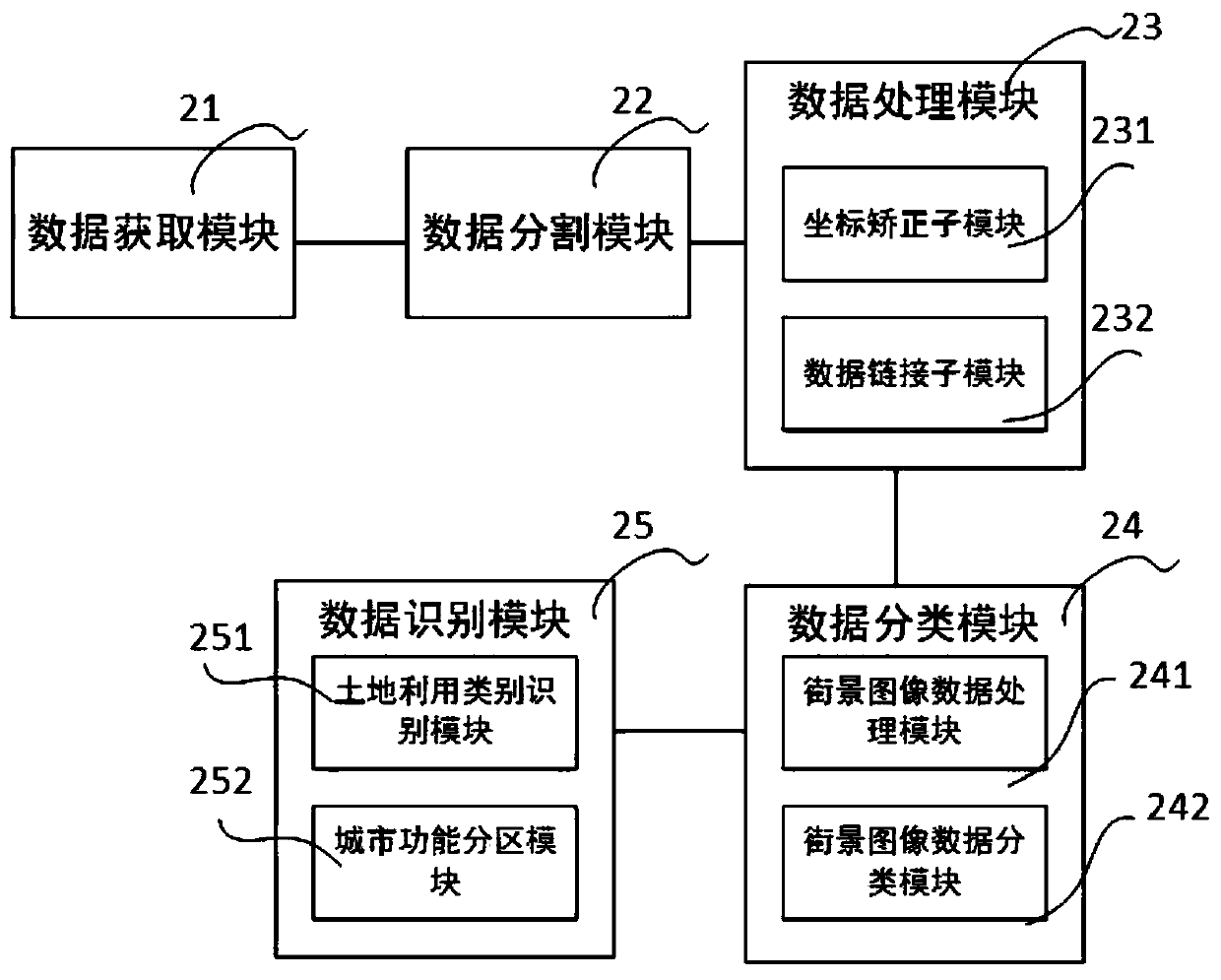

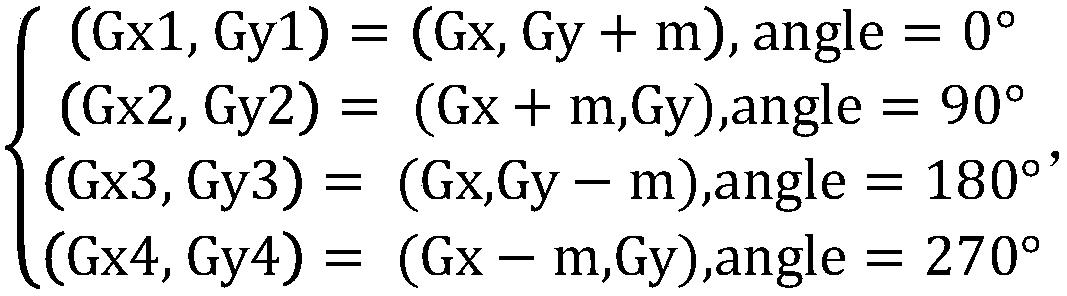

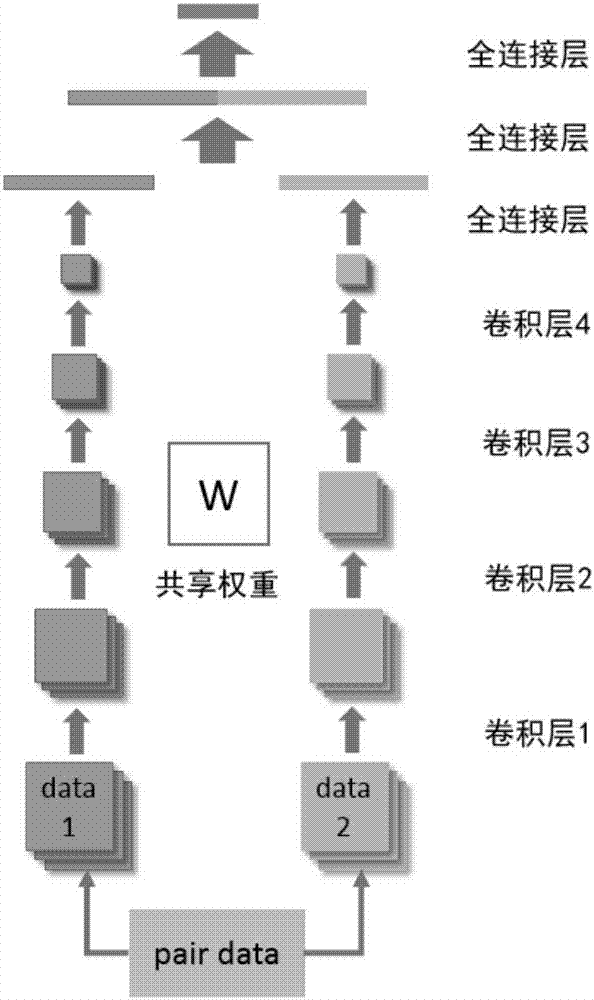

Automatic city function partitioning method and system based on streetscape data and transfer learning

Inactive · CN109657602A · Faster and more accurate classification, Fast training convergence, Image enhancement, Image analysis, Image segmentation, Urban area

The invention provides an automatic city function partitioning method and system based on streetscape data and transfer learning. Urban functional areas are identified automatically from streetscape photos using deep learning and image segmentation, and an urban functional partition map is produced automatically by combining the scene-semantic information of the streetscape photos with classified urban-area data obtained through image segmentation. The method can automatically and accurately identify fine-grained urban functional areas, which benefits urban planning and urban environment development.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)
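The step that combines photo-level scene semantics with urban-area data can be illustrated with a simple aggregation. As a minimal sketch, assuming each streetscape photo has already been assigned to an urban area and given a scene label by a classifier (the transfer-learned network itself is out of scope here), each area takes the most frequent label among its photos. `assign_area_functions` and the label names are hypothetical; the patent may combine the information differently.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def assign_area_functions(photo_labels: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Map area_id -> function label by majority vote over that area's photos.

    `photo_labels` yields (area_id, predicted_scene_label) pairs.
    Ties are broken alphabetically so the result is deterministic.
    """
    votes = defaultdict(Counter)
    for area_id, label in photo_labels:
        votes[area_id][label] += 1
    result = {}
    for area_id, counter in votes.items():
        top = max(counter.values())
        result[area_id] = min(label for label, n in counter.items() if n == top)
    return result
```

Majority voting keeps a few misclassified photos from flipping an area's label; a weighted vote using classifier confidence would be a natural refinement.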

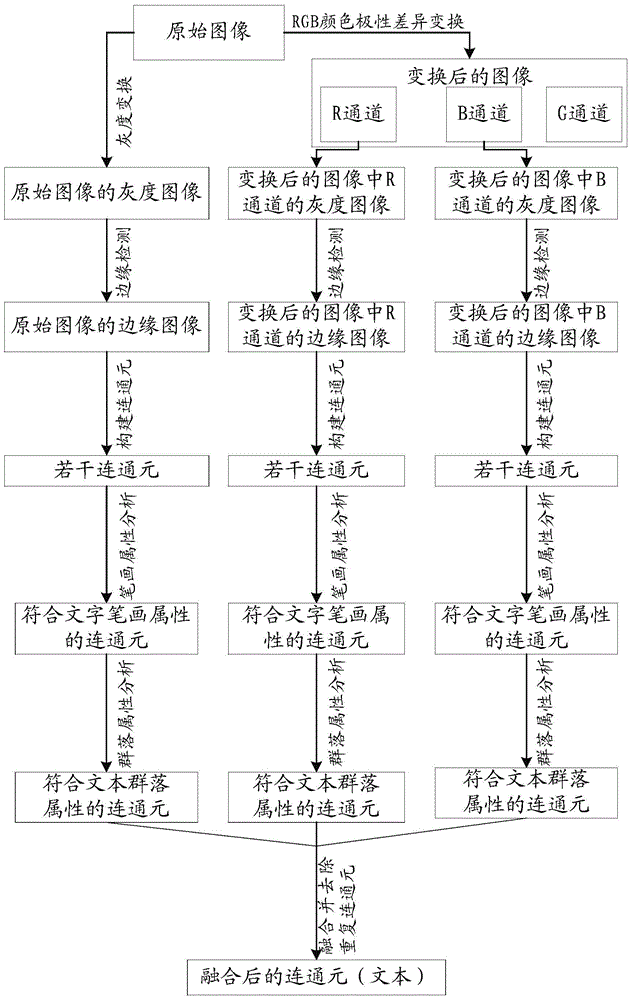

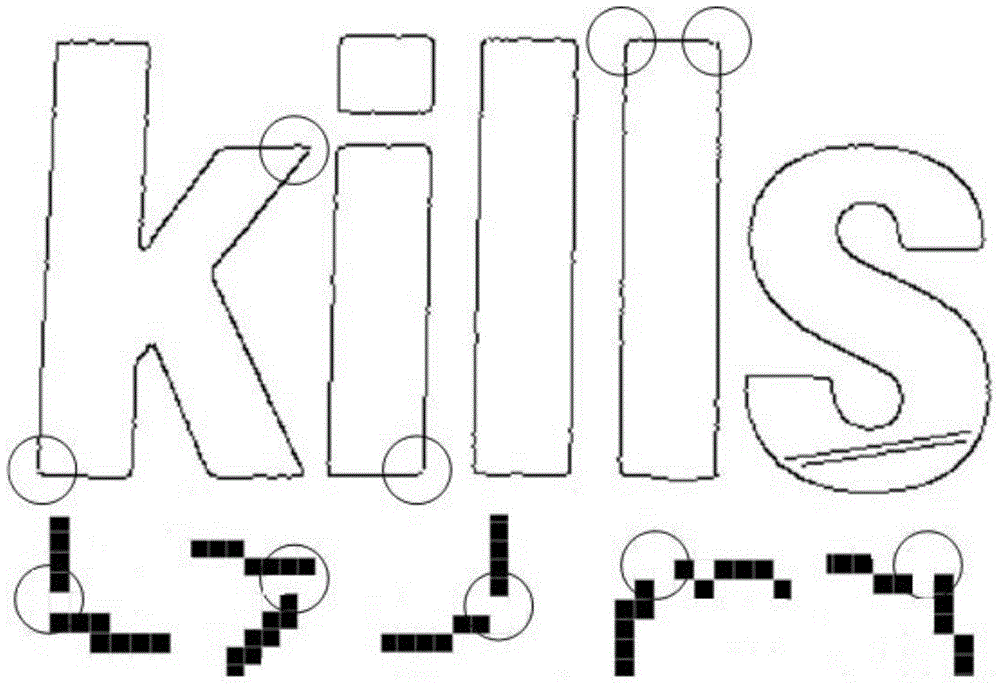

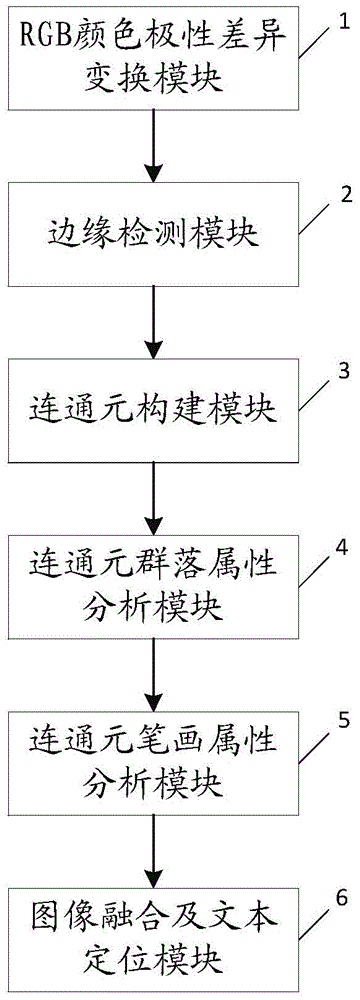

Text positioning method and system based on visual structure attribute

Active · CN104463138A · Improve recognition rate, Improve robustness, Character and pattern recognition, Video retrieval, Pattern recognition

The invention belongs to the technical field of image recognition, and particularly relates to a text positioning method and system based on visual structure attributes. Based on the visual attributes of text, color-polarity difference transformation and edge-neighborhood endpoint bonding are used to detect abundant closed edges, yielding abundant candidate connected components. These candidates are then screened by character-stroke attributes and text-cluster attributes to extract the components belonging to characters, and the final text is located through multi-channel fusion and removal of repeated connected components. The method is highly robust and adapts to mixed languages, varied font styles, arbitrary text orientations, background interference, and other difficult situations; the located text can be passed directly to OCR software, improving its recognition rate. The method and system can be applied to image and video retrieval, spam blocking, vision-assisted navigation, street view positioning, industrial equipment automation, and other fields.

Owner:SHENZHEN UNIV
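Candidate extraction and screening of connected components can be sketched generically. As a minimal sketch, assuming a binary edge or foreground mask is already available, the code labels 4-connected components and keeps those whose pixel count, bounding-box aspect ratio, and fill ratio look character-like. The thresholds are illustrative, and these simple geometric tests stand in for the patent's character-stroke and text-cluster attributes, which the abstract does not detail.

```python
from collections import deque
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right), inclusive


def connected_components(mask: List[List[int]]) -> List[Tuple[Box, int]]:
    """Label 4-connected foreground regions; return (bounding box, pixel count)."""
    rows, cols = len(mask), len(mask[0]) if mask else 0
    seen = [[False] * cols for _ in range(rows)]
    comps = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            queue, count = deque([(r, c)]), 0
            top, left, bottom, right = r, c, r, c
            while queue:
                y, x = queue.popleft()
                count += 1
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            comps.append(((top, left, bottom, right), count))
    return comps


def character_like(box: Box, count: int, min_pixels: int = 3,
                   max_aspect: float = 5.0, min_fill: float = 0.2) -> bool:
    """Heuristic screen: reject specks, very elongated boxes, and sparse regions."""
    top, left, bottom, right = box
    h, w = bottom - top + 1, right - left + 1
    aspect = max(h, w) / min(h, w)
    fill = count / (h * w)
    return count >= min_pixels and aspect <= max_aspect and fill >= min_fill
```

In practice a long horizontal rule or an isolated noise pixel is discarded by these tests, while compact blobs survive for the later grouping step.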

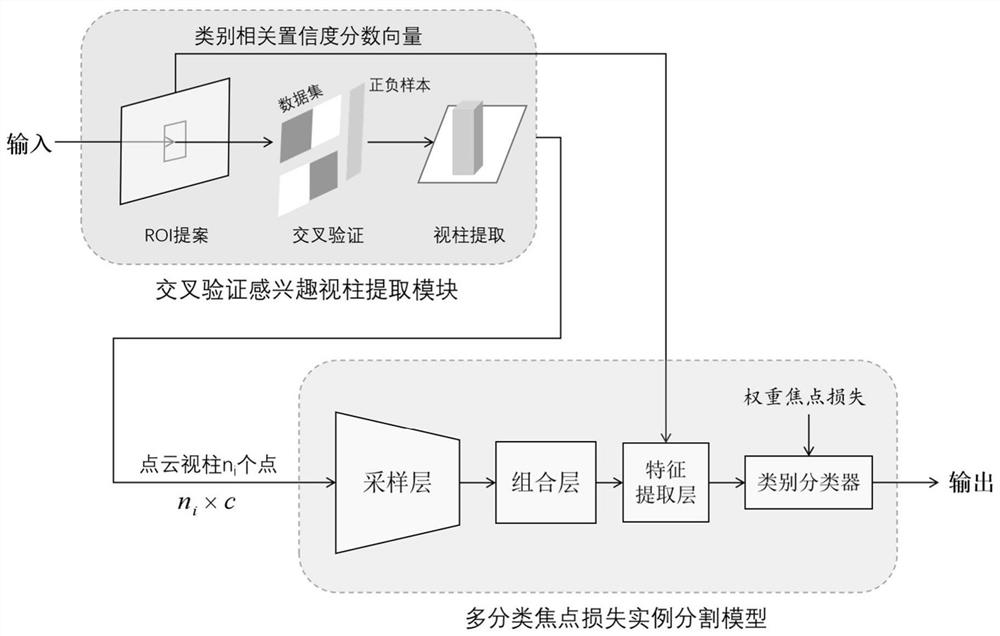

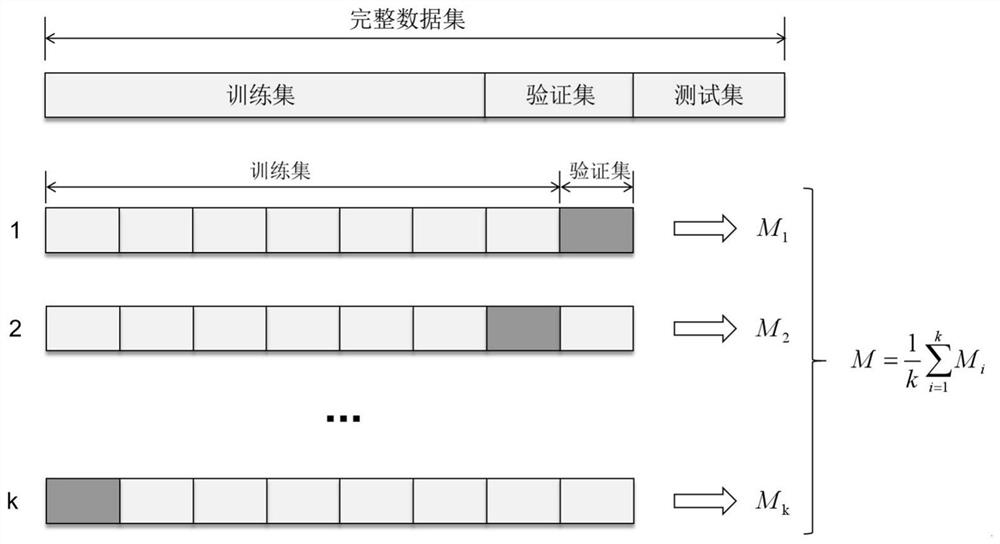

Three-dimensional point cloud data instance segmentation method and system in automatic driving scene

Pending · CN111968133A · Improve generalization ability, Improve concentration, Image enhancement, Image analysis, Point cloud, Data set

The invention provides a method and system for instance segmentation of three-dimensional point cloud data in autonomous driving scenes. The method comprises: performing preliminary recognition and division of an outdoor street scene using the spatial position information of target objects, forming point cloud visual columns for regions of interest; extracting from these visual columns both the column point clouds that contain objects and similarly distributed negative-sample background column point clouds, to form a visual-column point cloud data set; and extracting high-dimensional semantic features of the object in each visual-column point cloud while introducing a weighted multi-class focal loss function, so as to obtain the category of each point in the column and thereby achieve instance segmentation of the point cloud data. The method effectively enhances the expression of fine target features, which improves prediction on hard point cloud samples and the performance of point cloud instance segmentation in autonomous driving scenes.

Owner:SHANGHAI JIAO TONG UNIV
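The weighted multi-class focal loss named in the abstract is commonly written per point as FL = -α_c (1 - p_c)^γ log p_c, where p_c is the softmax probability of the true class c, α_c is a class weight, and γ ≥ 0 is a focusing parameter that down-weights easy, confidently classified points. A minimal pure-Python sketch follows; the default γ = 2 and any class weights passed in are illustrative assumptions, not the patent's values.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_focal_loss(logits_per_point: Sequence[Sequence[float]],
                        labels: Sequence[int],
                        class_weights: Sequence[float],
                        gamma: float = 2.0) -> float:
    """Mean of -alpha_c * (1 - p_c)**gamma * log(p_c) over all points."""
    total = 0.0
    for logits, c in zip(logits_per_point, labels):
        p_c = max(softmax(logits)[c], 1e-12)  # clamp to avoid log(0)
        total += -class_weights[c] * (1.0 - p_c) ** gamma * math.log(p_c)
    return total / len(labels)
```

With γ = 0 and all weights equal to 1 this reduces to ordinary cross-entropy; raising γ shrinks the contribution of easy points, which is how the loss shifts attention to hard samples.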

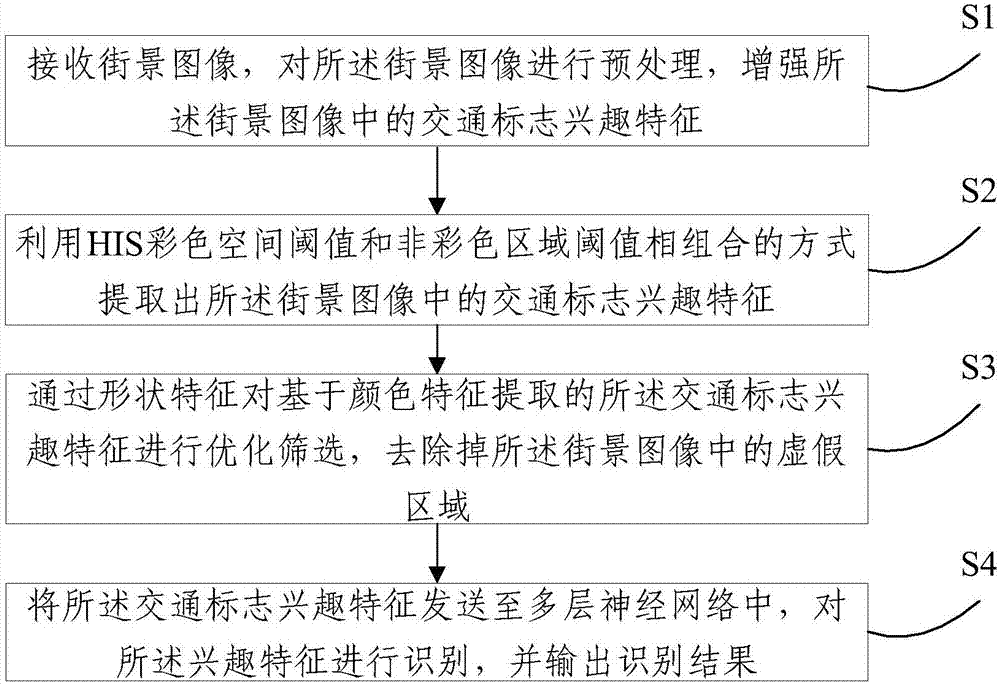

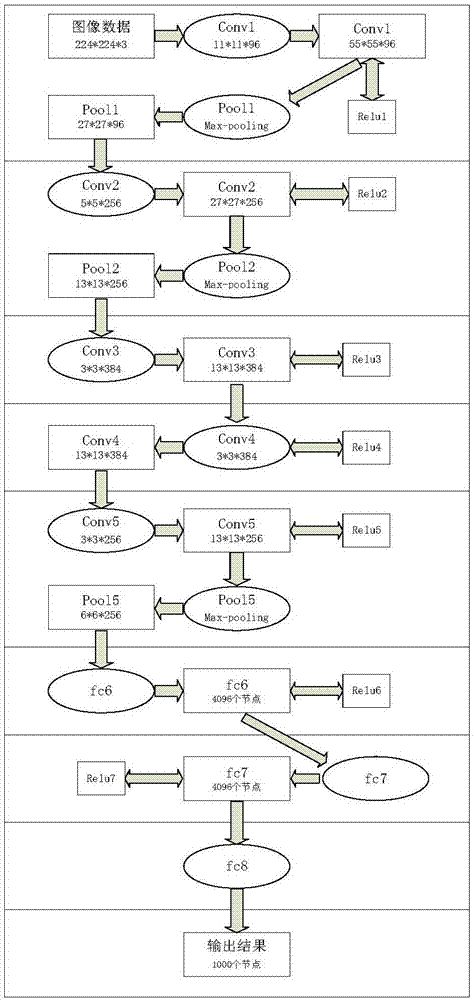

Method and system for identifying traffic signs in street image

Inactive · CN107346413A · Improve recognition rate, Reduce the impact of recognition, Image enhancement, Image analysis, Traffic sign recognition, Street scene

The invention provides a method and a system for identifying traffic signs in a street scene image. The method includes the following steps: receiving a street scene image and preprocessing it to enhance the traffic sign features of interest; extracting those features by combining an HSI color-space threshold with a non-color (achromatic) region threshold; using shape features to refine and screen the features extracted from color, removing false regions in the image; and sending the remaining features of interest to a multilayer neural network, which identifies them and outputs the recognition result. In this way, traffic signs are identified with a trained multilayer convolutional neural network, which improves the recognition rate and reduces the influence of street-scene factors on recognition.

Owner:BEIJING UNIV OF CIVIL ENG & ARCHITECTURE
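The HSI color-space step can be illustrated with the standard geometric RGB-to-HSI conversion followed by a hue and saturation test for red sign pixels. The thresholds in the hypothetical `is_red_sign_pixel` (hue within 20° of 0°, saturation at least 0.3, intensity at least 0.1) are illustrative assumptions rather than values from the patent, and the non-color region threshold and shape screening are omitted.

```python
import math
from typing import Tuple


def rgb_to_hsi(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Convert RGB in [0, 1] to (hue in degrees [0, 360), saturation, intensity)."""
    total = r + g + b
    intensity = total / 3.0
    if total == 0.0:
        return 0.0, 0.0, 0.0  # black: hue and saturation undefined
    saturation = 1.0 - 3.0 * min(r, g, b) / total
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0.0:
        return 0.0, saturation, intensity  # gray: hue undefined
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    hue = (360.0 - theta) % 360.0 if b > g else theta
    return hue, saturation, intensity


def is_red_sign_pixel(r: float, g: float, b: float, hue_tol: float = 20.0,
                      min_sat: float = 0.3, min_int: float = 0.1) -> bool:
    """Illustrative red-sign test: hue near 0 degrees, saturated, not too dark."""
    hue, sat, inten = rgb_to_hsi(r, g, b)
    near_red = hue <= hue_tol or hue >= 360.0 - hue_tol
    return near_red and sat >= min_sat and inten >= min_int
```

Separating hue from intensity is what makes HSI attractive here: a red sign in shadow keeps roughly the same hue even though its intensity drops.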

© 2025 PatSnap. All rights reserved.