67 results about how to "Reduce computing delay" patented technology

Vehicle networking communication optimization algorithm based on edge computing and Actor-Critic algorithm

Active | CN109068391A | Solve the problem of huge access volume | Improve utilization efficiency | Particular environment based services | Vehicle wireless communication service | Non orthogonal | Real-time computing

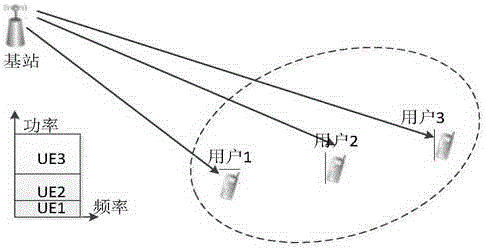

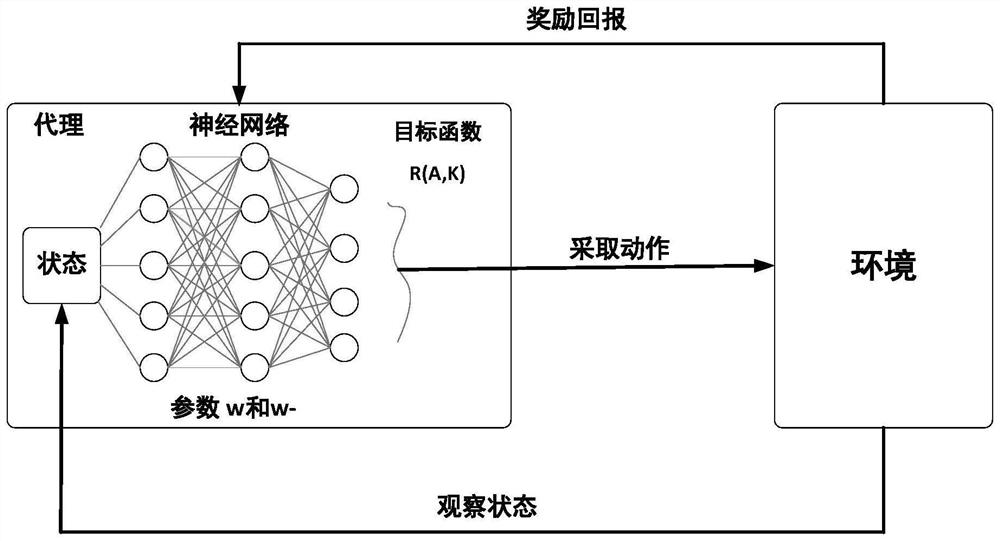

The invention relates to a method based on edge computing and the Actor-Critic algorithm. The specific steps are as follows. In the vehicle networking communication system, the user sequence is {1, 2, ..., k, ..., K}, for K users in total; the subchannel sequence is {1, 2, ..., n, ..., N}, for N subchannels; the fog access node sequence is {1, 2, ..., m, ..., M}, for M access nodes; the computing power sequence of the access nodes is {1, 2, ..., Cm, ..., cM}; and the task sequence uploaded by the users is {1, 2, ..., tk, ..., tK}, for tK tasks in total. Users connect to the vehicle networking communication system in non-orthogonal multiple access (NOMA) mode. Tasks uploaded by a user are processed by edge computing, and the results are returned to the user. The Actor-Critic algorithm is used to optimize the resource allocation and obtain the best allocation scheme. By combining non-orthogonal multiple access, edge computing and reinforcement learning, the invention effectively addresses the huge access volume in vehicle networking, reduces the time delay across the whole communication process, obtains the best resource allocation under different environments, and improves energy utilization efficiency.

Owner: QINGDAO ACADEMY OF INTELLIGENT IND

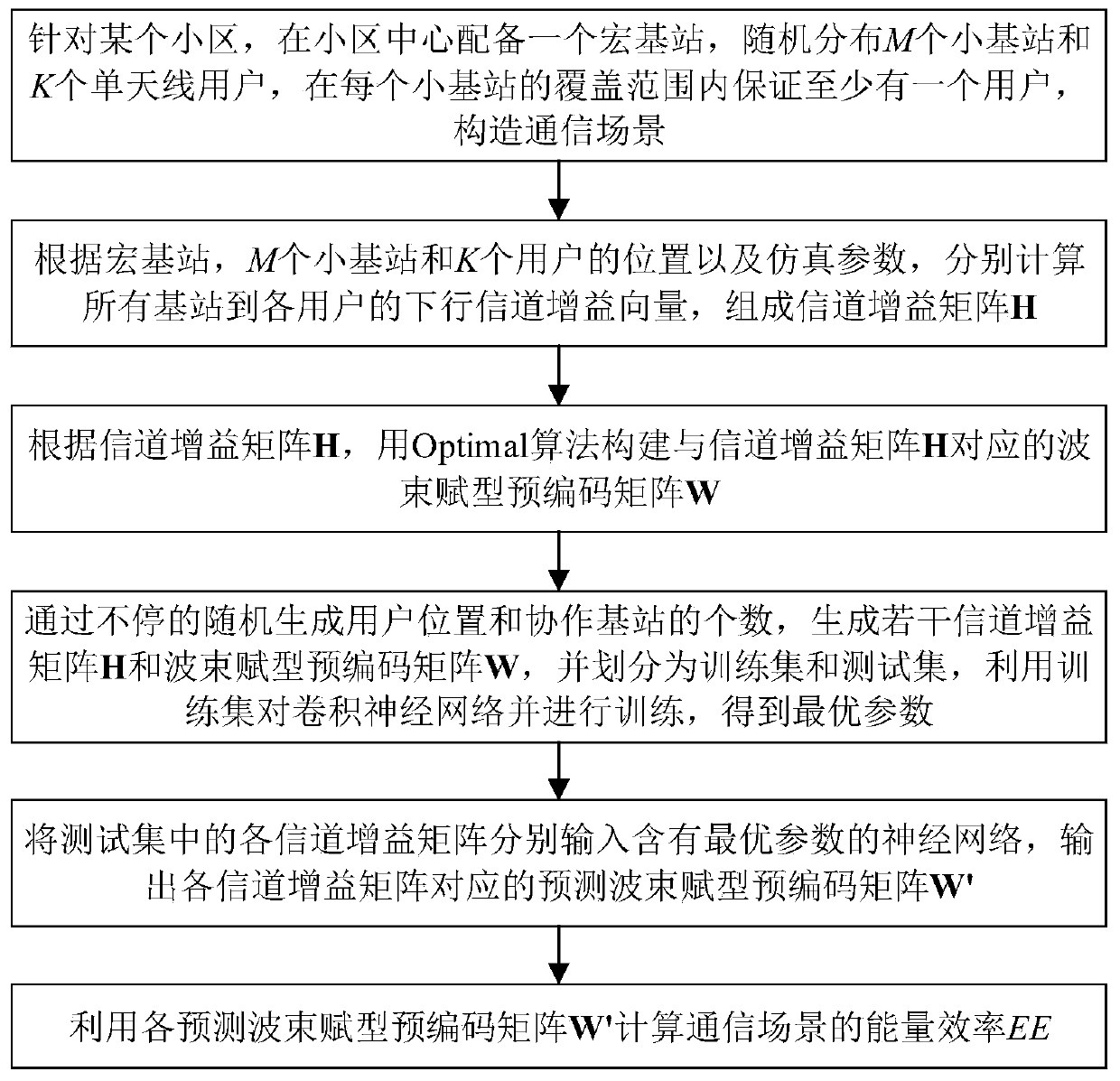

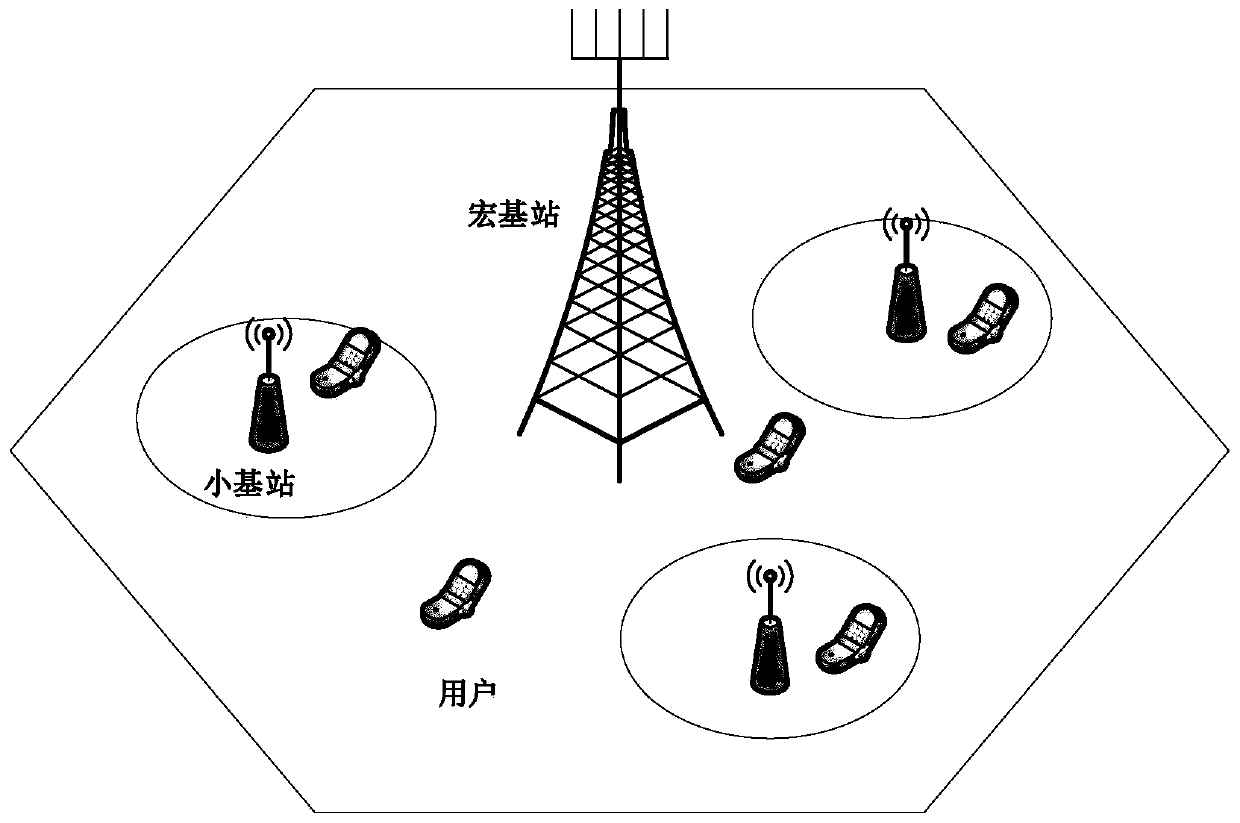

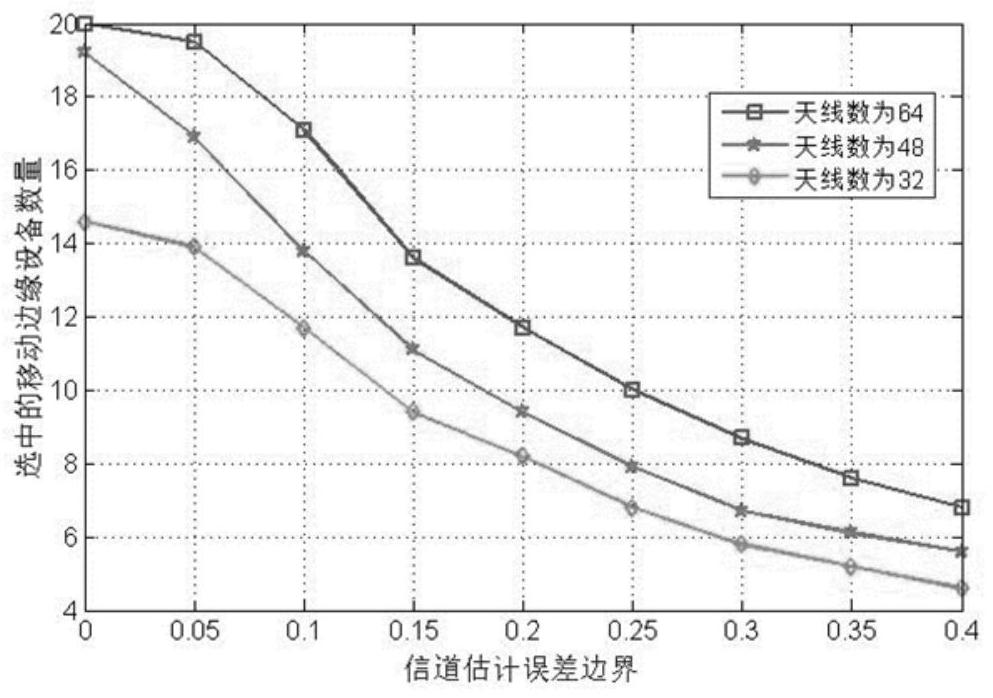

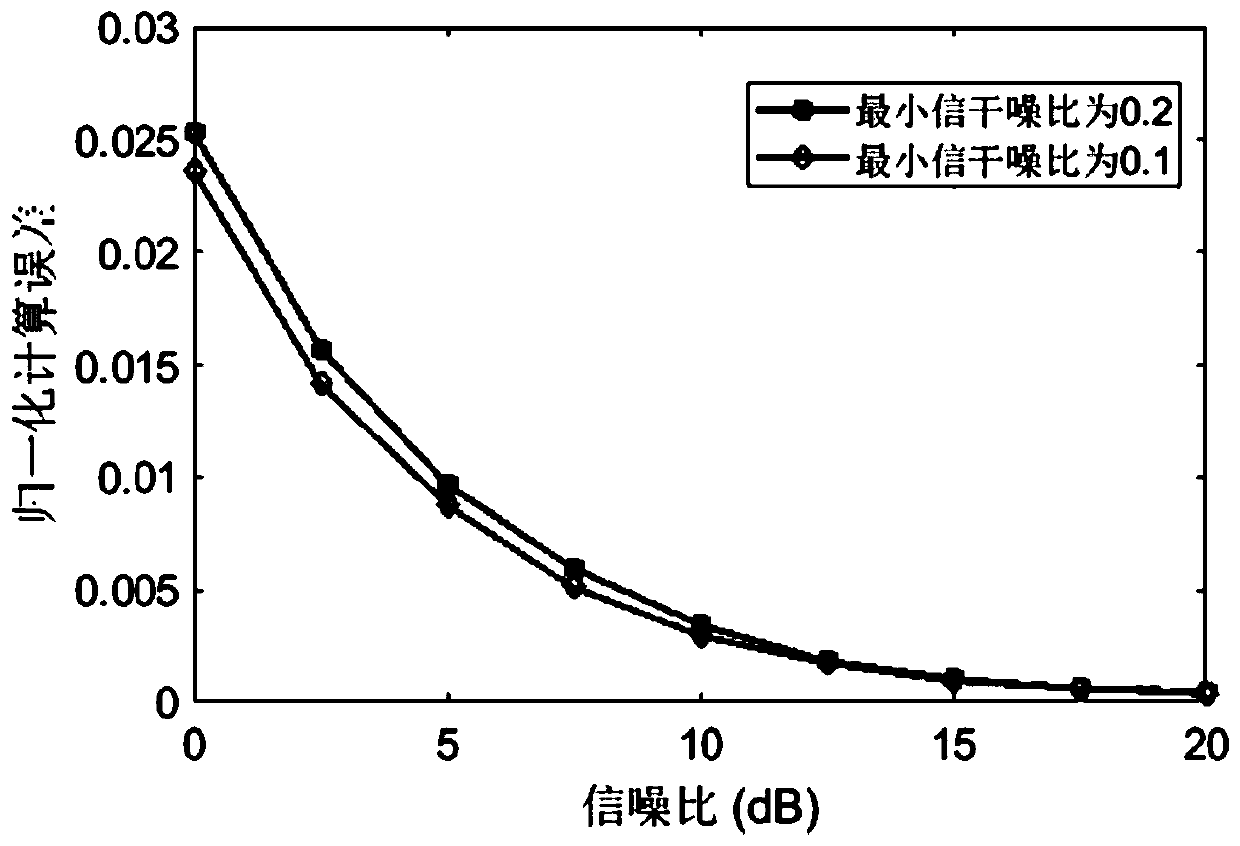

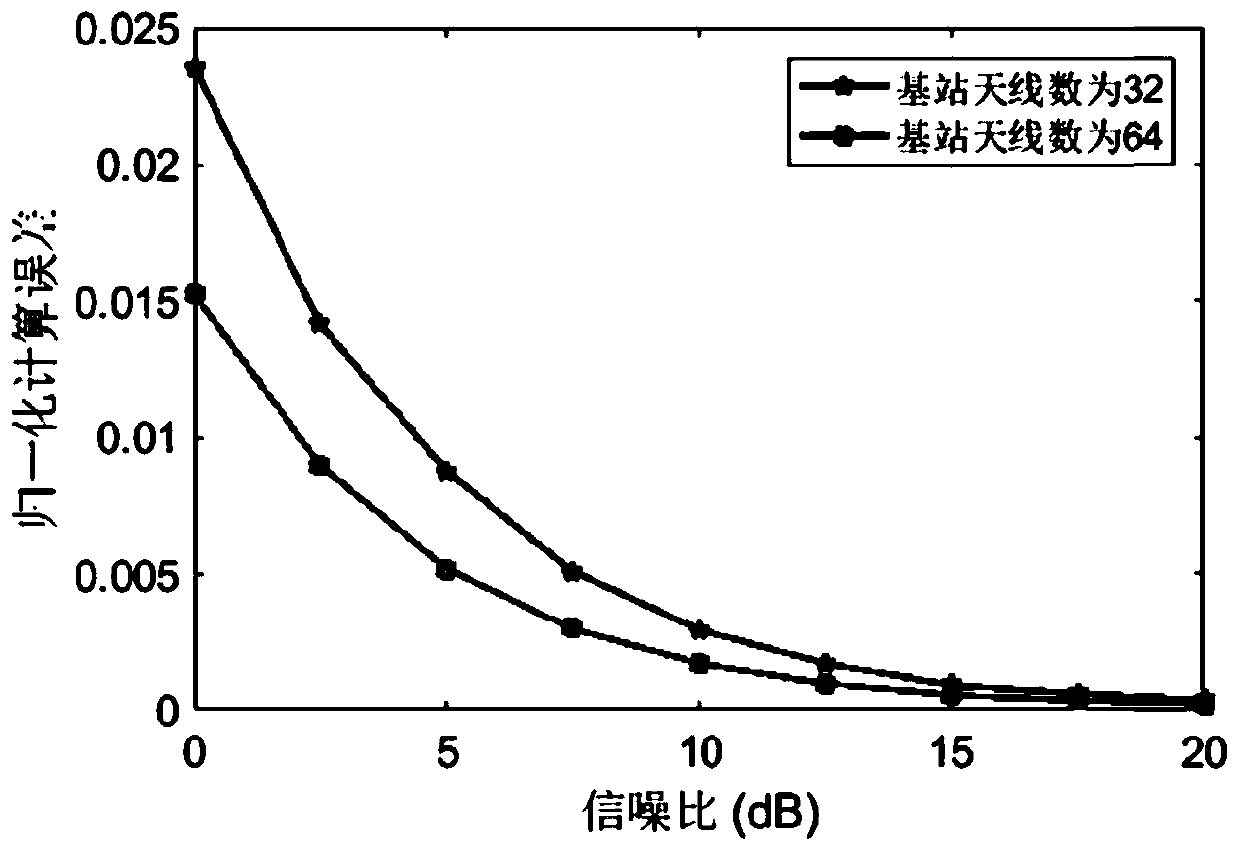

Cooperative beamforming method of large-scale MIMO system

Active | CN111181612A | Improve energy efficiency | Improve real-time performance | Spatial transmit diversity | High level techniques | Macro base stations | Transmitted power

The invention discloses a cooperative beamforming method for a large-scale MIMO system and belongs to the field of wireless communication. The method comprises: constructing a downlink large-scale MIMO heterogeneous network among a macro base station, a small base station and a user; formulating, with base station circuit power consumption taken into account, a system energy efficiency optimization problem subject to QoS and antenna transmit power constraints; exploiting the strong performance of deep learning on regression problems to fit the optimal beamforming solution from features extracted from the channel gain matrix; and training the neural network offline, so that part of the computation is moved offline. Applying the trained network requires only a small amount of linear and nonlinear computation, so the time delay is greatly reduced while the energy efficiency of the system is improved.

Owner: INNER MONGOLIA UNIVERSITY

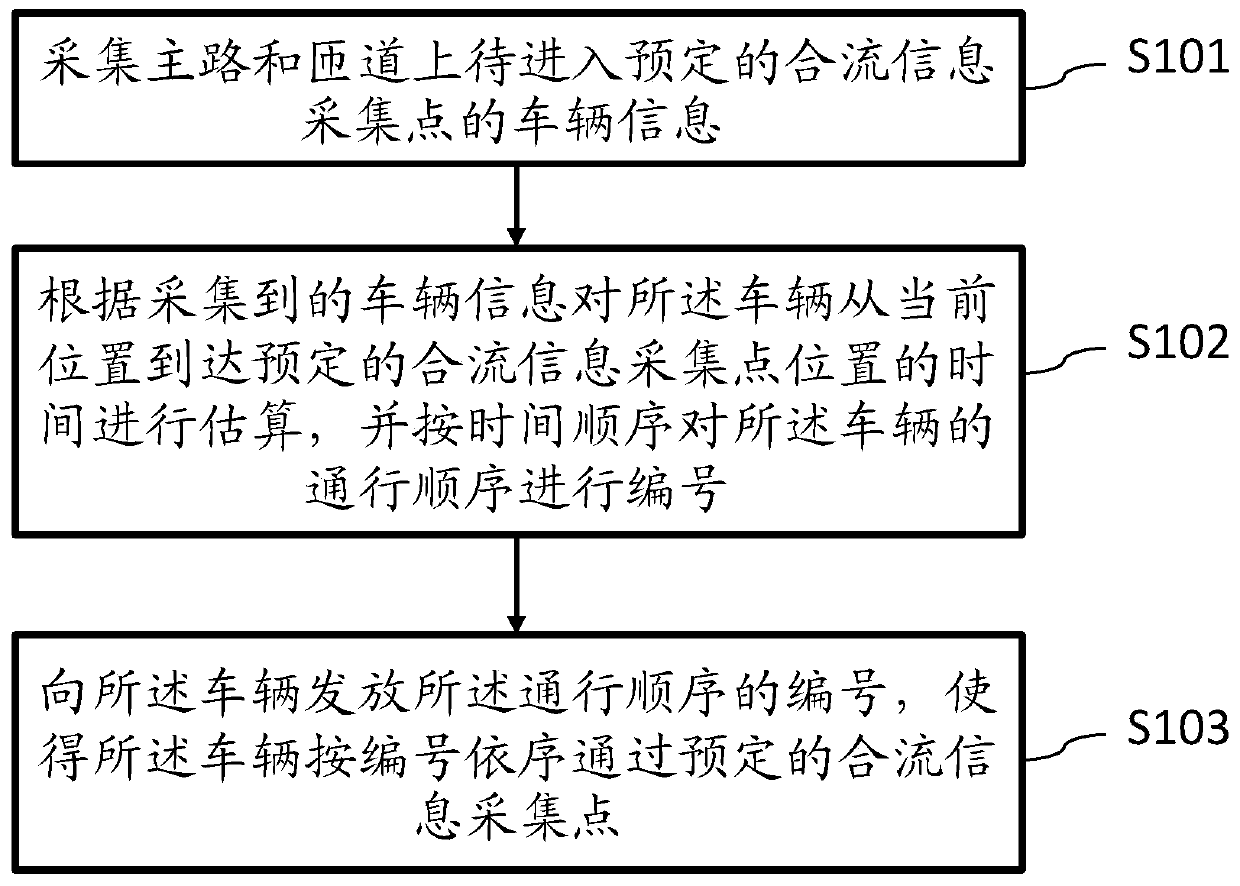

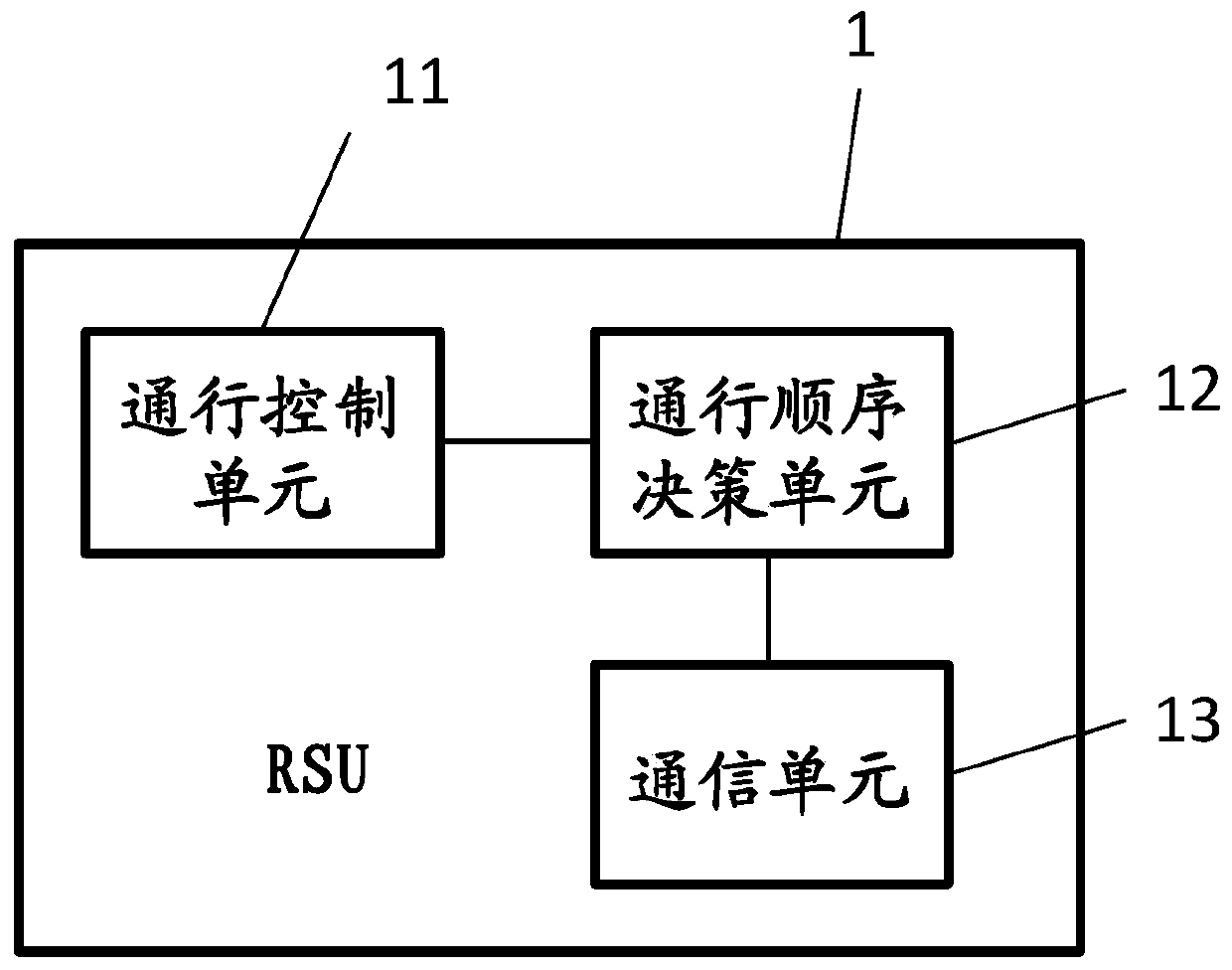

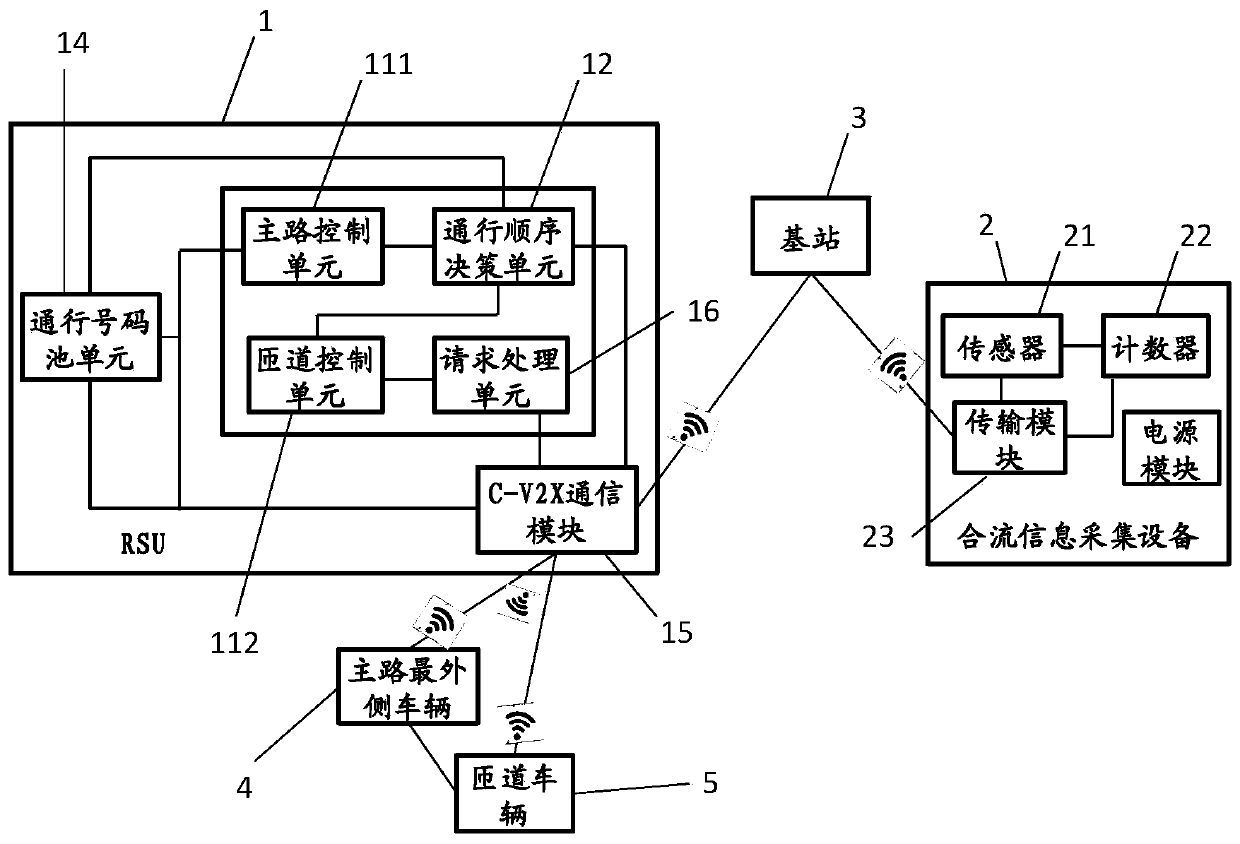

On-ramp vehicle merging management method and system

Inactive | CN110853378A | Easy to identify | Simplified import processing method | Controlling traffic signals | Transport engineering | Operations research

The invention provides an on-ramp vehicle merging management method and system. The method includes the following steps: collecting information on vehicles on the main road and the ramp that are about to reach a predetermined merging information collection point; estimating, from the collected vehicle information, the time each vehicle needs to travel from its current position to the collection point, and numbering the vehicles' traffic order by that time; and issuing each vehicle its traffic sequence number so that the vehicles pass the collection point in sequence. The method and system provide a simpler merge processing method for lane merging management, help shorten the calculation delay, allow the division of responsibilities between roadside equipment and vehicles to be clearly defined, and make it easier to identify the party responsible for an accident.

Owner: CHINA UNITED NETWORK COMM GRP CO LTD
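The ordering step in this abstract (estimate each vehicle's arrival time, then number vehicles by that time) can be sketched roughly as follows. This is only an illustration: the `Vehicle` fields, the constant-speed arrival estimate and the function name `assign_merge_order` are assumptions, not details from the patent.

```python
from dataclasses import dataclass


@dataclass
class Vehicle:
    vehicle_id: str
    distance_m: float  # distance to the merging information collection point
    speed_mps: float   # current speed, assumed constant for the estimate


def assign_merge_order(vehicles):
    """Return {vehicle_id: sequence_number}, ordered by estimated arrival time."""
    def eta(v):
        # Constant-speed estimate (an assumption); stopped vehicles sort last.
        return v.distance_m / v.speed_mps if v.speed_mps > 0 else float("inf")

    ranked = sorted(vehicles, key=eta)
    return {v.vehicle_id: n for n, v in enumerate(ranked, start=1)}


vehicles = [
    Vehicle("main-1", 120.0, 30.0),  # estimated 4.0 s
    Vehicle("ramp-1", 60.0, 20.0),   # estimated 3.0 s
    Vehicle("main-2", 200.0, 25.0),  # estimated 8.0 s
]
order = assign_merge_order(vehicles)
print(order)
```

A single sort over estimated arrival times is cheap, which fits the abstract's claim that the simplified processing shortens the calculation delay.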

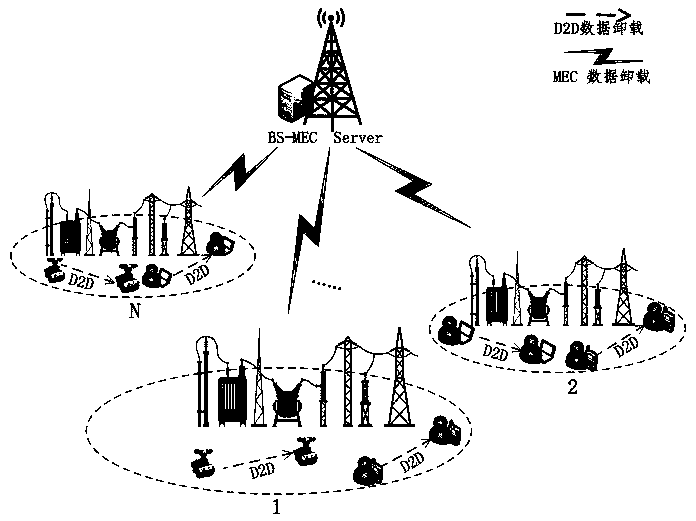

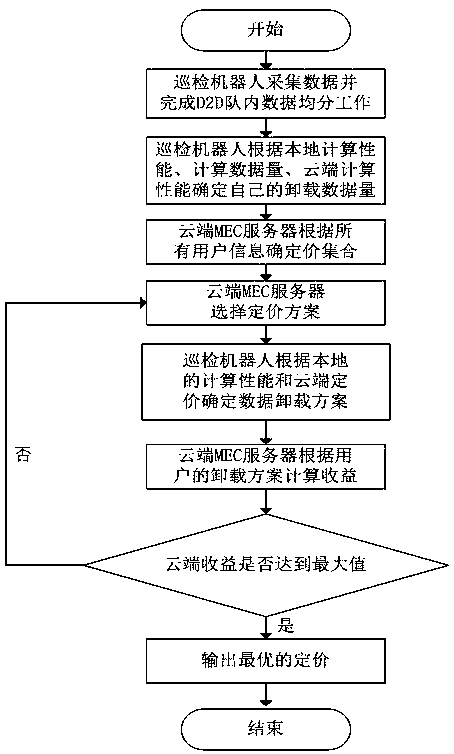

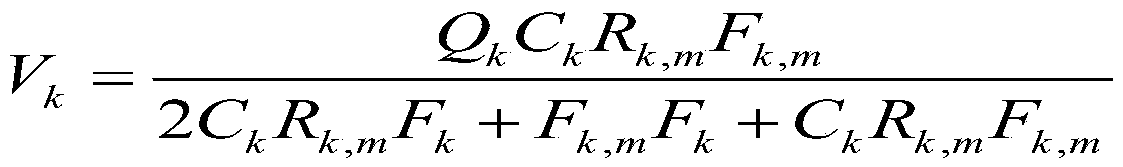

MEC pricing unloading method based on D2D communication in electric power internet of things

Active | CN111107153A | Reduce computing delay | Increase income | Transmission | Operations research | Industrial engineering

The invention relates to an MEC pricing and offloading method based on D2D communication in the electric power Internet of Things and belongs to the technical field of mobile communication. In the technical scheme, tasks in the queues are distributed evenly by means of D2D data communication; each pair of paired devices determines its data offloading amount; a pricing set is determined and sorted; the MEC server broadcasts the pricing schemes in the set one at a time, in order; the inspection equipment determines its offloading scheme and uploads it to the MEC server; the cloud server determines its income from the inspection equipment's calculation scheme and, following the income maximization principle, judges whether the pricing scheme needs to be updated; and the cloud server iterates to output an optimal pricing scheme. The method effectively reduces the average calculation delay of the inspection equipment and lets the MEC server earn some revenue. Because it accounts for the MEC server's limited computing resources, the proposed scheme better matches real scenarios.

Owner: STATE GRID JIBEI ELECTRIC POWER CO LTD TANGSHAN POWER SUPPLY CO +1

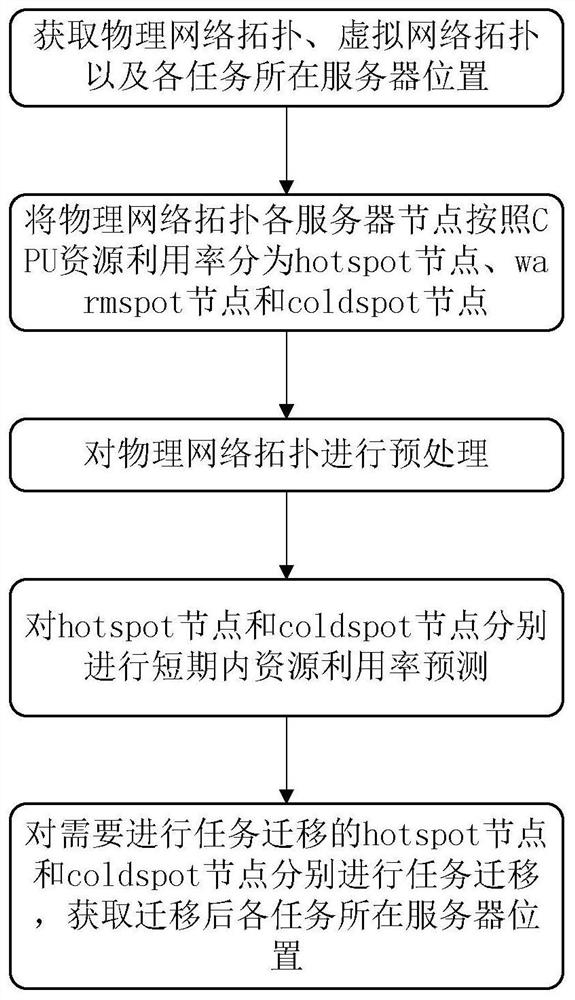

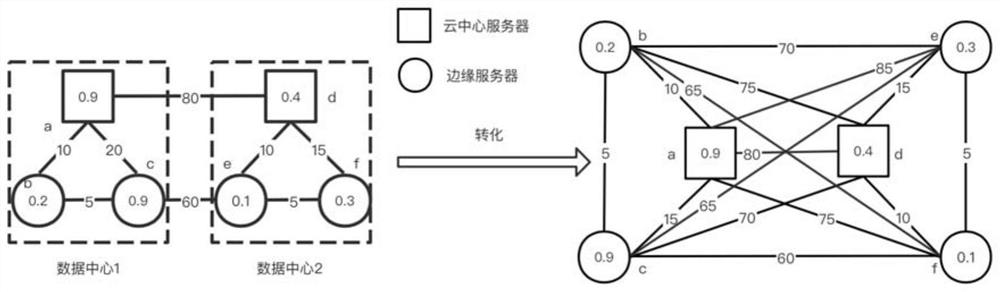

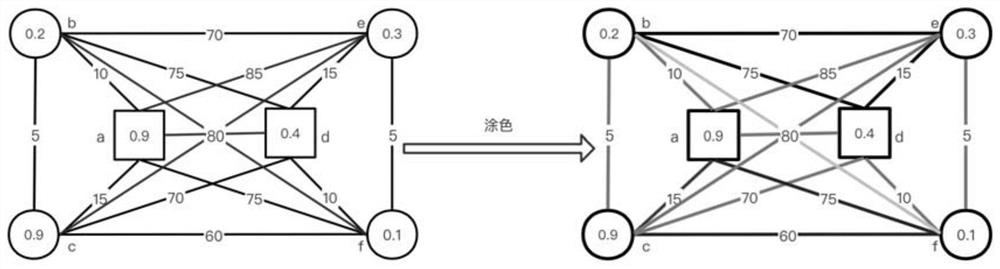

Task migration method in edge computing platform

Active | CN112087509A | Improve selection speed | Reduce overhead | Data switching networks | Computing center | Edge server

The invention discloses a task migration method in an edge computing platform, belonging to the technical field of edge computing. It is used to reduce the energy consumption overhead, communication overhead and migration overhead of task migration in the edge computing platform, as well as the combined overhead of the three. The method comprises: dividing the server nodes of a physical network topology into hotspot, warmspot and coldspot nodes according to CPU resource utilization; preprocessing the physical network topology; predicting short-term resource utilization for the hotspot nodes and the coldspot nodes respectively; and migrating tasks from the hotspot and coldspot nodes that require migration, obtaining the server on which each migrated task is placed. The method eliminates servers whose resource utilization is above the upper limit or below the lower limit, effectively reduces the system's energy consumption overhead, computing delay and data transmission pressure, makes full use of the storage and computing capabilities of the edge servers, and relieves the pressure on the cloud computing center.

Owner: HARBIN INST OF TECH
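As a rough illustration of the first step in this abstract, the sketch below labels server nodes by CPU utilization. The threshold values (0.2 and 0.8) and the function name `classify_nodes` are assumptions chosen for the example; the abstract only states that upper and lower utilization limits exist.

```python
def classify_nodes(cpu_utilization, lower=0.2, upper=0.8):
    """Label each node as hotspot, warmspot or coldspot.

    `lower` and `upper` are assumed thresholds, not values from the patent.
    """
    labels = {}
    for node, util in cpu_utilization.items():
        if util > upper:
            labels[node] = "hotspot"    # over the upper limit: shed tasks
        elif util < lower:
            labels[node] = "coldspot"   # under the lower limit: drain tasks
        else:
            labels[node] = "warmspot"   # within limits: candidate target
    return labels


labels = classify_nodes({"edge-a": 0.95, "edge-b": 0.50, "edge-c": 0.05})
print(labels)
```

Under this reading, hotspot and coldspot nodes are the migration sources, and warmspot nodes are the natural destinations.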

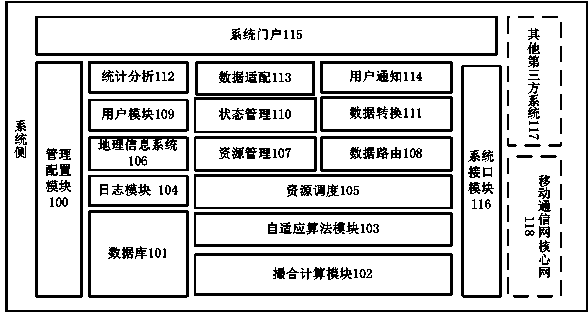

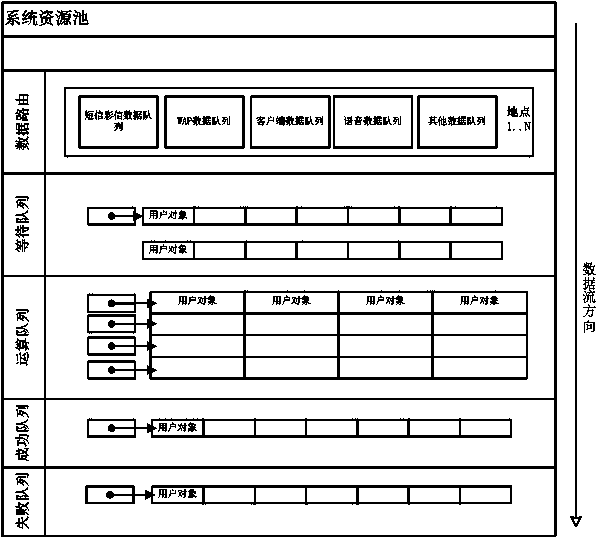

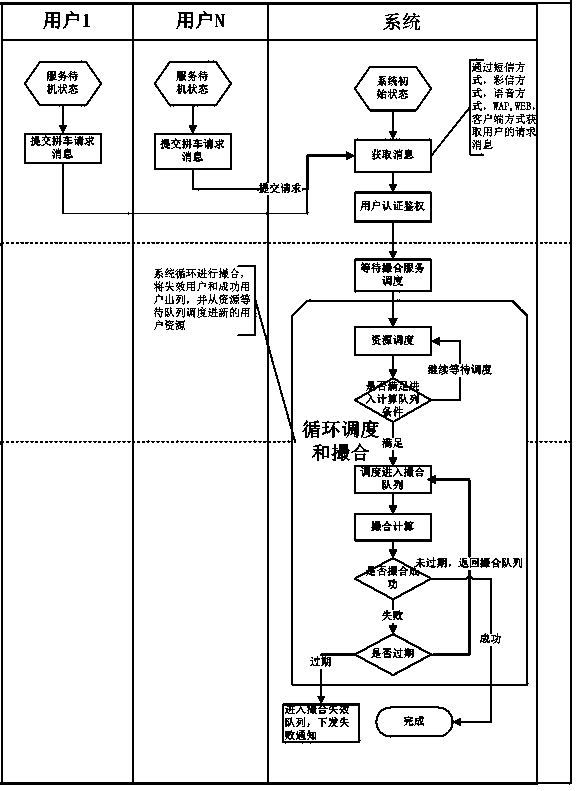

Real-time matching method

Inactive | CN103544671A | Fulfil requirements | Improve friendly experience | Data processing applications | Array data structure | Car sharing

The invention discloses a real-time matching method. The real-time matching method is implemented by a system resource management function module, a state management and maintenance function module, a matching computation function module and the like and includes enabling a system to build data models of user objects; storing the modeled user objects in a data structure of a memory; scheduling and shifting the objects and changing states of the objects by a scheduling algorithm; performing scanning and matching computation on the user objects in matching queues according to a matching computation algorithm and matching parameters; updating queue states of the user objects and counting various matching dynamic parameters of the user objects after the user objects are subjected to matching computation; performing the matching algorithm and dynamically adjusting the parameters to adjust integral user resource scheduling for a next computation matching cycle. The data structure contains the queues, lists, arrays and the like. The dynamic parameters include success rates, available system resources and the like. The real-time matching method has the advantage that a core resource scheduling and matching algorithm can be provided for the real-time computation car-sharing application system by a real-time computation matching car-sharing algorithm.

Owner: SHANGHAI BOXUN INFORMATION TECH

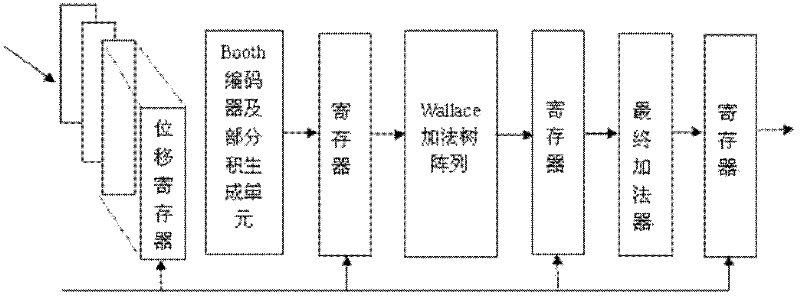

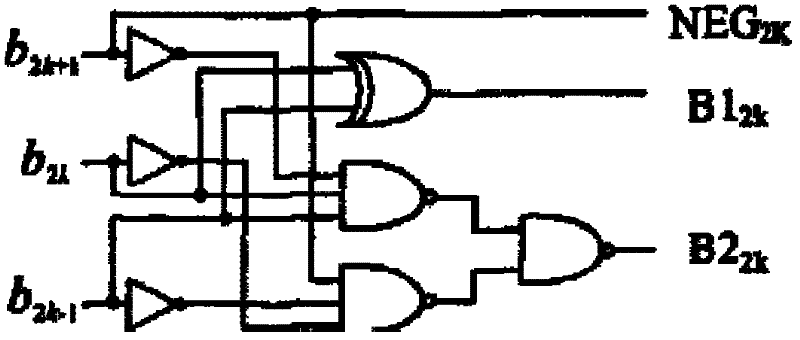

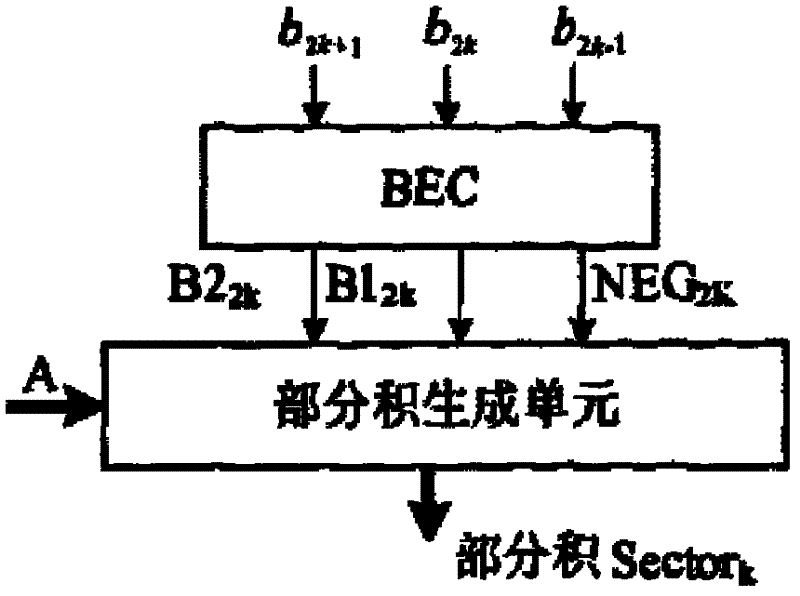

FPGA (field-programmable gate array)-based high-speed FIR (finite impulse response) digital filter

Inactive | CN102355232A | Easy to adjust | Applications for different occasions | Digital technique network | Data compression | Binary multiplier

An FPGA-based high-speed FIR digital filter is characterized in that an improved Booth coding mode and a partial product adder array module are used as the first stage of the pipeline design; a Wallace adder tree for compressing and adding 2M data is used as the second stage of the pipeline design; and a final adder is used as the third stage of the pipeline design. The order number and coefficient of the filter can be adjusted conveniently by reasonably partitioning a high-speed multiplier and combining the Wallace adder tree array through the pipeline technology, so that the FPGA-based high-speed FIR digital filter is applicable to different occasions and the operation speed is improved greatly.

Owner: BEIHANG UNIV
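The abstract does not spell out its "improved Booth coding mode". As a hedged illustration of the general idea, the sketch below uses standard radix-4 (modified) Booth recoding, which halves the number of partial products that an adder tree has to sum. The function names are hypothetical, and Python's `sum` stands in for the Wallace tree and final adder.

```python
def booth_radix4_digits(y, bits):
    """Recode a signed `bits`-wide multiplier into radix-4 digits in {-2..2}."""
    if bits % 2:
        raise ValueError("bits must be even")
    if not -(1 << (bits - 1)) <= y < (1 << (bits - 1)):
        raise ValueError("multiplier does not fit in the given width")
    u = y & ((1 << bits) - 1)  # two's-complement bit pattern
    digits = []
    prev = 0                   # implicit bit y[-1] = 0
    for i in range(0, bits, 2):
        b0 = (u >> i) & 1
        b1 = (u >> (i + 1)) & 1
        digits.append(prev + b0 - 2 * b1)  # digit for bits (2i+1, 2i, 2i-1)
        prev = b1
    return digits


def booth_multiply(x, y, bits=8):
    """Multiply x by y using bits/2 Booth partial products."""
    partial_products = [
        (d * x) * (4 ** i)
        for i, d in enumerate(booth_radix4_digits(y, bits))
    ]
    # In hardware these would be compressed by an adder tree; here we just sum.
    return sum(partial_products)


print(booth_radix4_digits(3, 8), booth_multiply(-7, 3))
```

An 8-bit multiplier yields four partial products instead of eight, which is what makes the later pipeline stages shorter.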

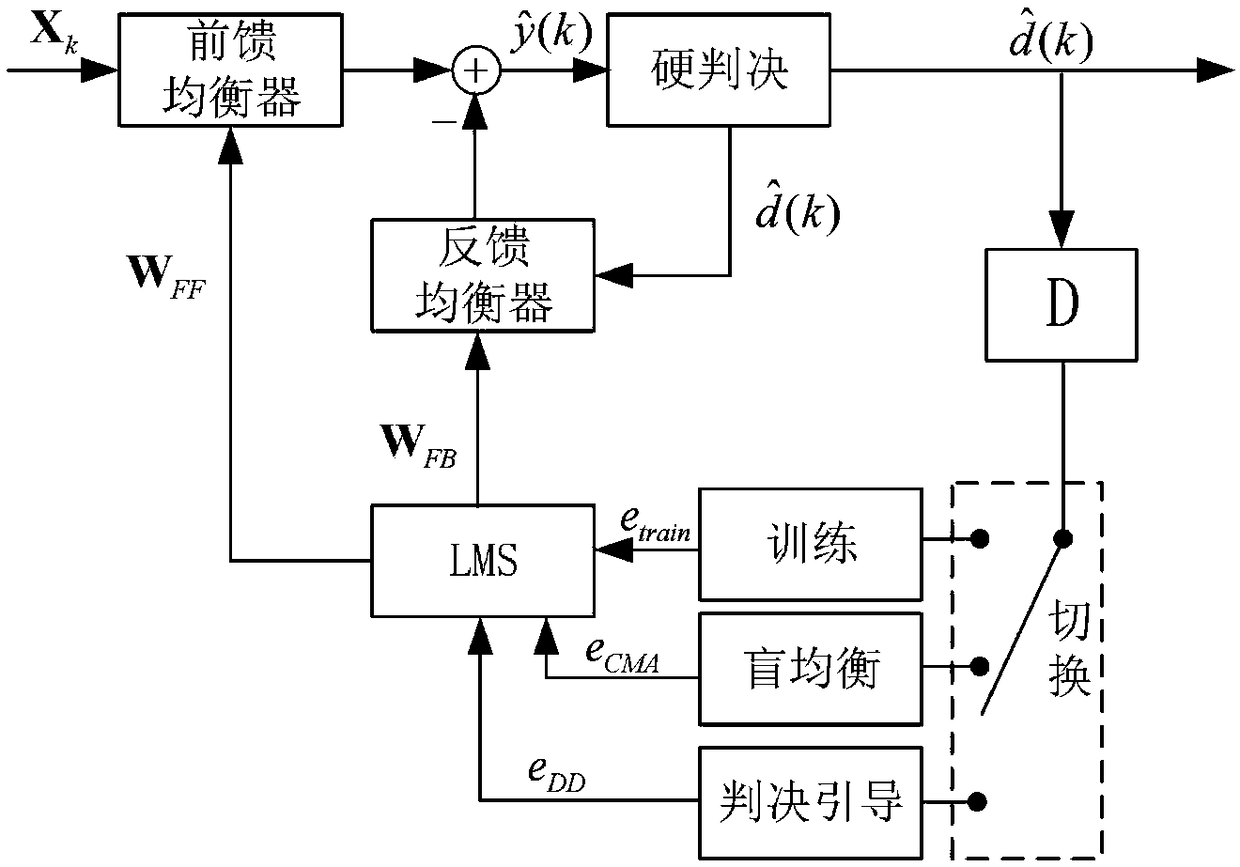

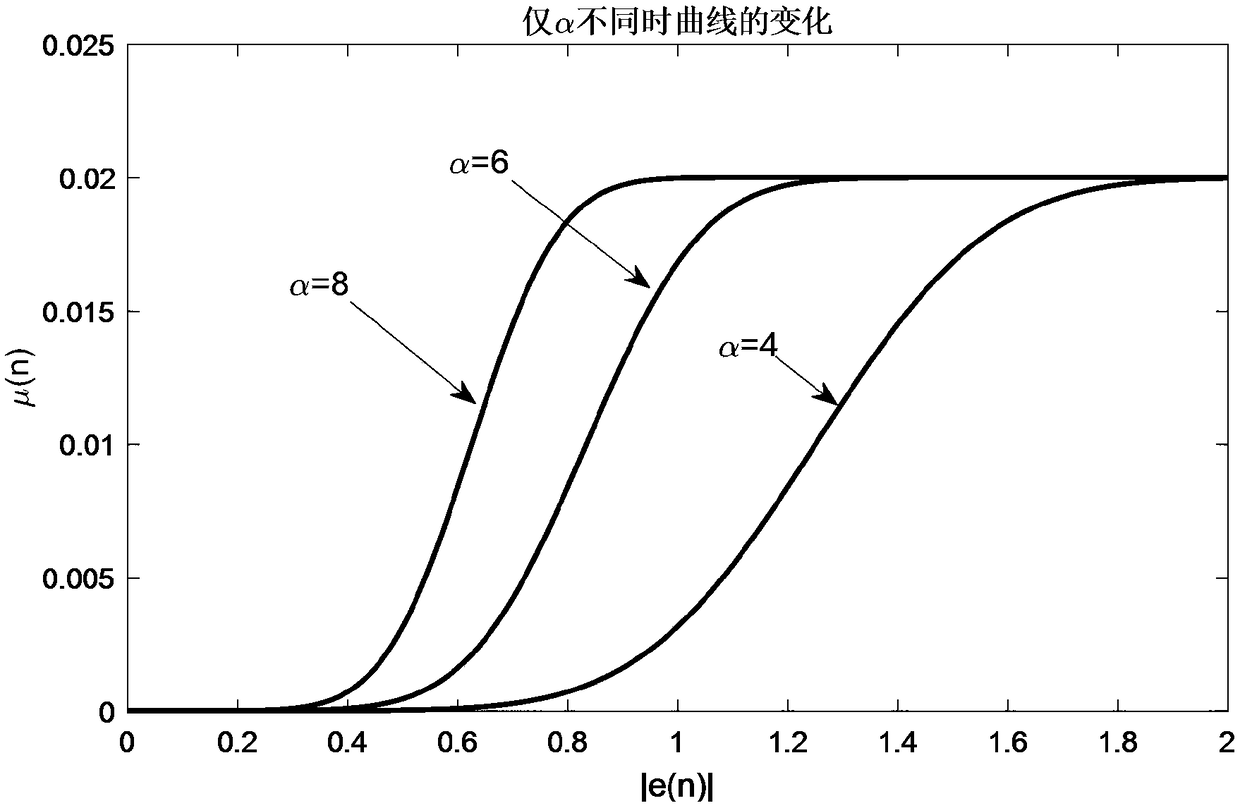

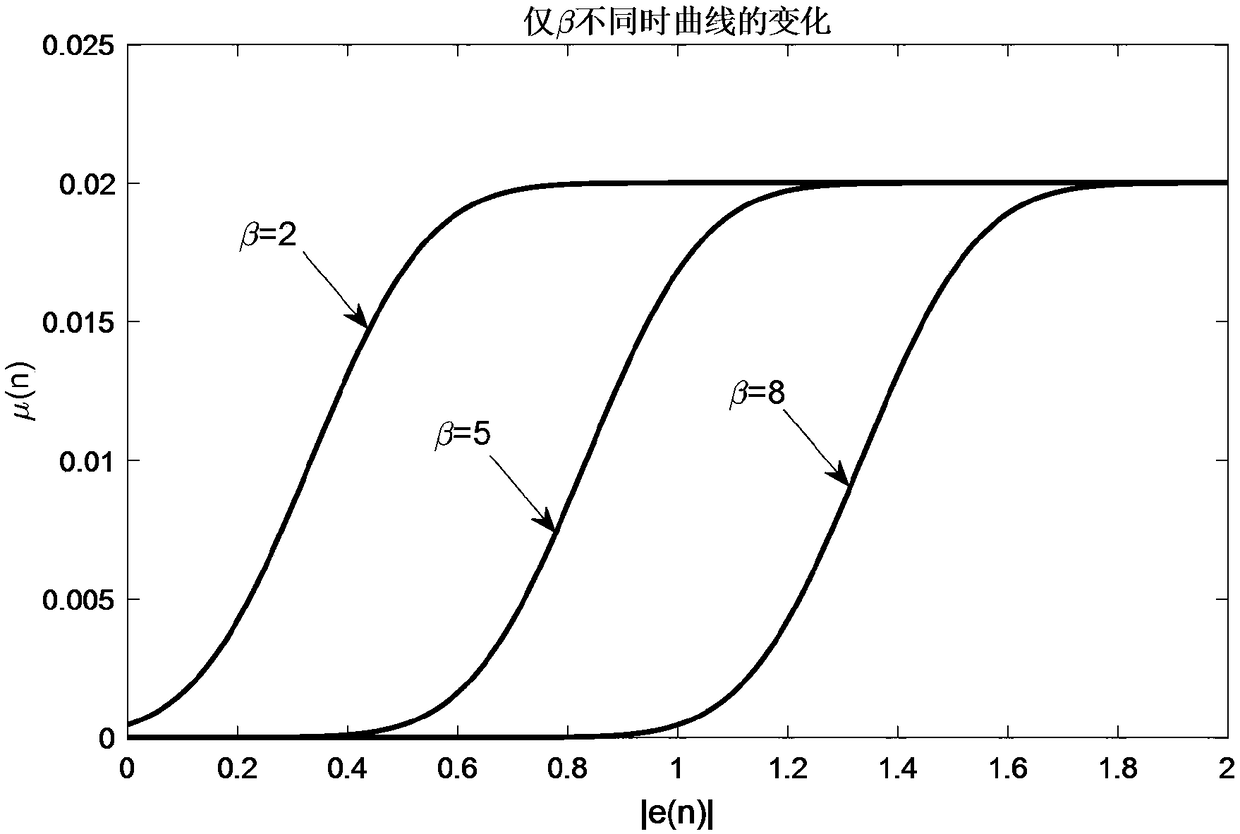

LMS algorithm processing time-delay sensitive based delayed decision feedback equalization method and system

Active | CN108712354A | Fast convergence | Small steady state error | Transmitter/receiver shaping networks | Algorithm | Equalization

The invention belongs to the field of wireless communication and discloses a delayed decision feedback equalization method and system based on a processing-delay-sensitive LMS algorithm. The system applies a decision feedback equalizer based on a variable-step LMS algorithm to the receive processing of a disturbed signal. Because a wireless communication channel is time-varying, the convergence of LMS must be accelerated further so that the equalizer can track channel changes better and in real time. The variable-step LMS algorithm uses a larger iteration step in the initial working phase to speed up convergence and a smaller step in the convergence phase to reduce steady-state error, so that the original signal can be recovered effectively. The method and system offer fast convergence, small steady-state error, low processing delay and easy implementation.

Owner: XIDIAN UNIV
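The "large step first, small step later" idea can be shown with a minimal sketch. It is not the patent's decision feedback equalizer: it is a plain LMS filter identifying a known FIR channel, and the step schedule (a fixed switch after `switch_at` updates), the step values and all names are assumptions for illustration.

```python
import random


def vss_lms(x, d, taps, mu_max=0.1, mu_min=0.01, switch_at=200):
    """Adapt `taps` weights so the filter output tracks d; returns (w, errors)."""
    w = [0.0] * taps
    errors = []
    for n in range(taps - 1, len(x)):
        window = x[n - taps + 1 : n + 1][::-1]  # x[n], x[n-1], ...
        y = sum(wi * xi for wi, xi in zip(w, window))
        e = d[n] - y
        # Larger step while starting up, smaller step once roughly converged.
        mu = mu_max if len(errors) < switch_at else mu_min
        w = [wi + mu * e * xi for wi, xi in zip(w, window)]
        errors.append(e)
    return w, errors


random.seed(0)
h = [0.8, -0.4, 0.2]  # hypothetical channel to identify
x = [random.choice((-1.0, 1.0)) for _ in range(2000)]
d = [
    sum(h[k] * x[n - k] for k in range(len(h)) if n - k >= 0)
    for n in range(len(x))
]
w, errors = vss_lms(x, d, taps=3)
print([round(wi, 3) for wi in w])
```

Practical variable-step schemes usually tie the step size to the error signal rather than to an iteration count; the fixed switch here just keeps the example short.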

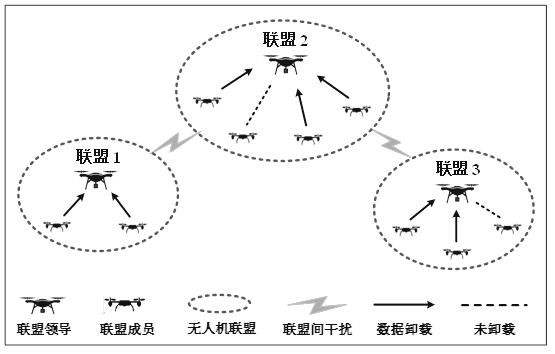

Unmanned aerial vehicle alliance network unloading model and decision calculation method

Active | CN111988792A | Reduce computing delay | Guaranteed validity | Network traffic/resource management | Design optimisation/simulation | Information transmission | Neighbor relation

The invention discloses an unmanned aerial vehicle (UAV) alliance network offloading model and decision calculation method, belonging to the technical field of wireless communication. A UAV alliance network for executing an emergency task is established; each alliance comprises an alliance leader and several alliance members. The UAV members collect information, compute and process the data, select a data offloading proportion and an information transmission channel, and send the offloaded data to the UAV leader. When the leader receives offloaded data from several members, it allocates computing resources on a first-come, first-served basis and returns the results to the alliance members. The leader obtains decision information through information interaction and selects several UAV members with non-neighbor relations to update their offloading strategies. The model is complete, its physical meaning is clear, and the designed algorithm is reasonable and effective; it is well suited to UAV network scenarios.

Owner: ARMY ENG UNIV OF PLA

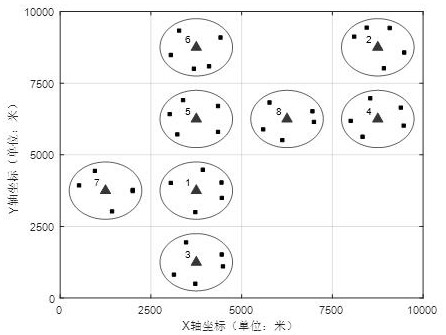

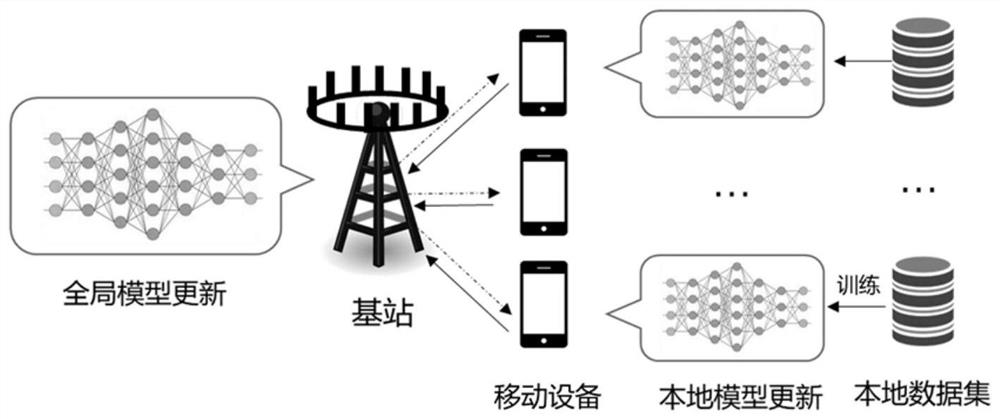

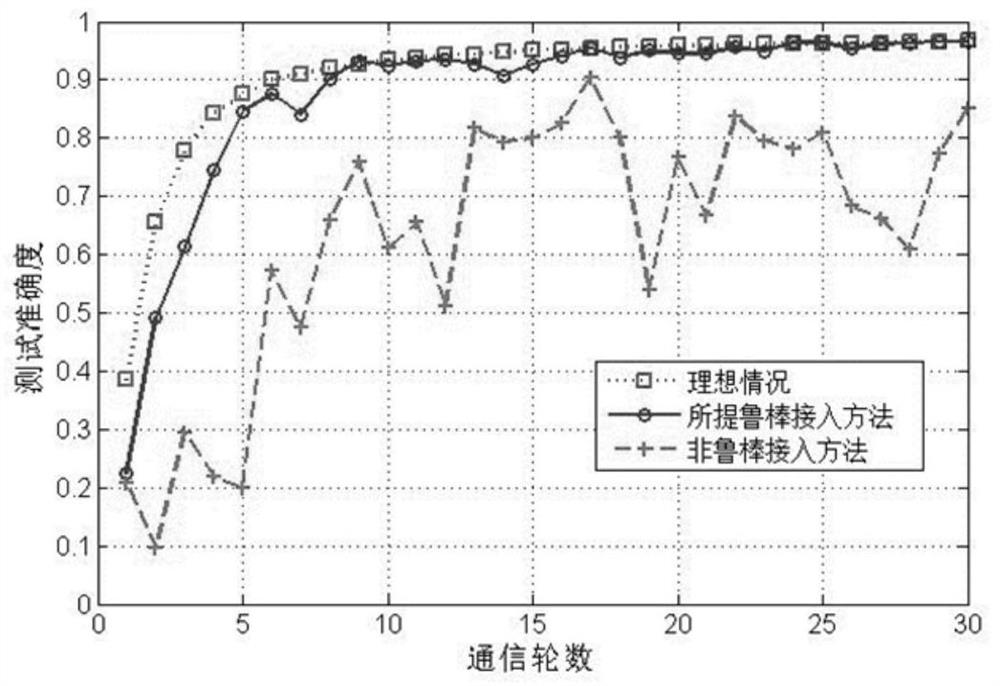

Large-scale access method for edge intelligent network

Active | CN112911608A | Improve spectral efficiency | Reduce computing delay | Channel estimation | Neural architectures | Data set | Beamforming

The invention discloses a large-scale access method for an edge intelligent network. A cell deploys a multi-antenna base station as an edge server, and a large number of mobile devices access the wireless network through the base station for federated learning. In each round of learning, the base station first selects a subset of the devices and designs a transmit beam to broadcast the global model over the downlink. Each selected device recovers the global model through its receiver, trains a new local model on its local data set, and transmits the new local model after beamforming. Exploiting the superposition property of the wireless channel, the base station directly obtains, through its receiver, an aggregated model computed over the air, and then calculates the weighted average of the aggregated model as the updated global model. The invention provides an effective large-scale access method for the edge intelligent network.

Owner: ZHEJIANG UNIV
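The final aggregation step can be illustrated with a small sketch. It ignores the wireless channel, beamforming and over-the-air superposition entirely and shows only the arithmetic of a weighted average. Weighting by local sample count is an assumption (the abstract does not say what the weights are), and `aggregate` is a hypothetical name.

```python
def aggregate(local_models, sample_counts):
    """Weighted average of equally sized parameter vectors.

    Weights are the devices' local sample counts (an assumed choice).
    """
    total = sum(sample_counts)
    dim = len(local_models[0])
    return [
        sum(n * model[j] for model, n in zip(local_models, sample_counts)) / total
        for j in range(dim)
    ]


# Two devices: one trained on 1 sample, the other on 3.
global_model = aggregate([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(global_model)
```

In the scheme described above, the weighted sum itself would arrive already superposed by the channel, so the base station would only need the final normalization.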

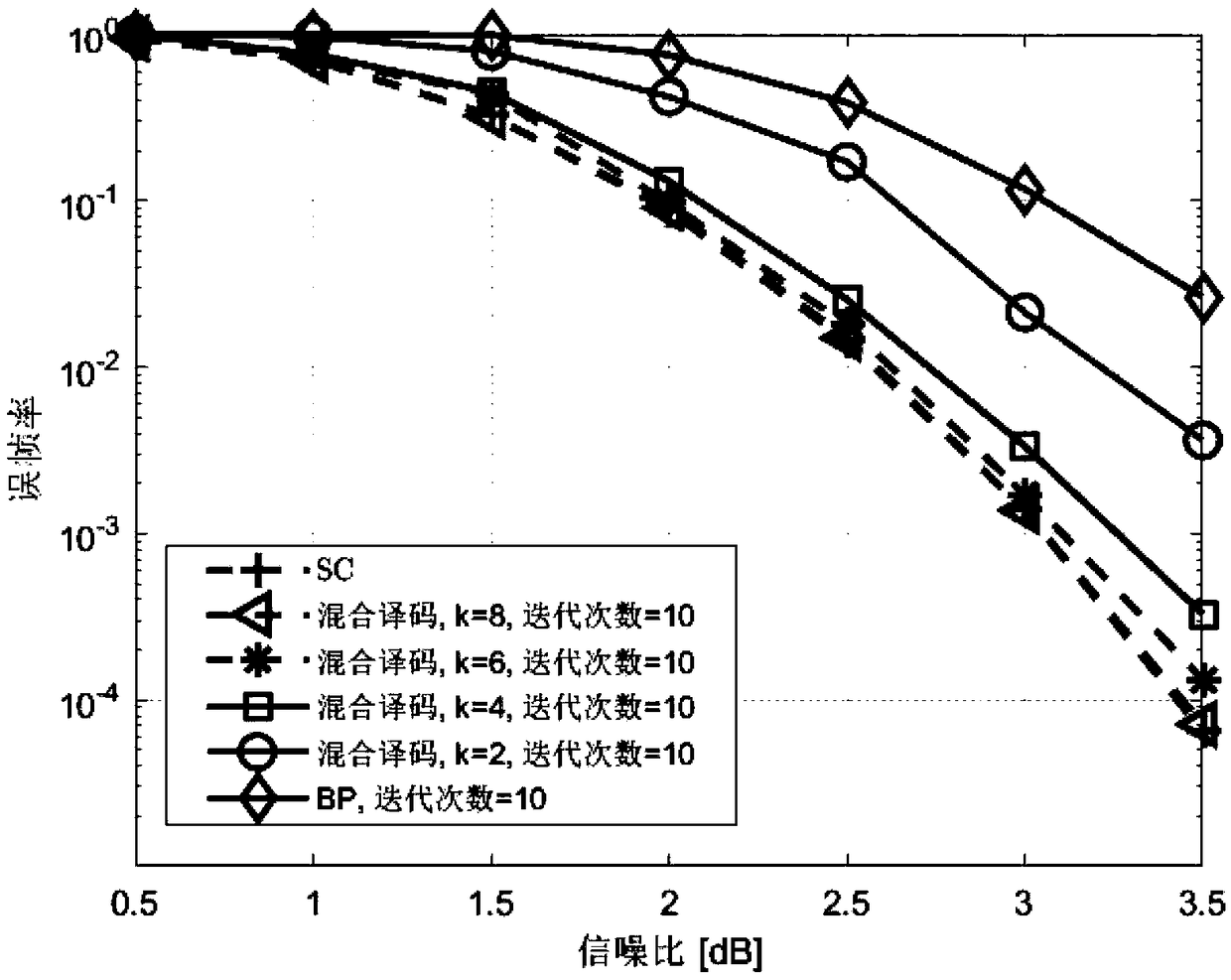

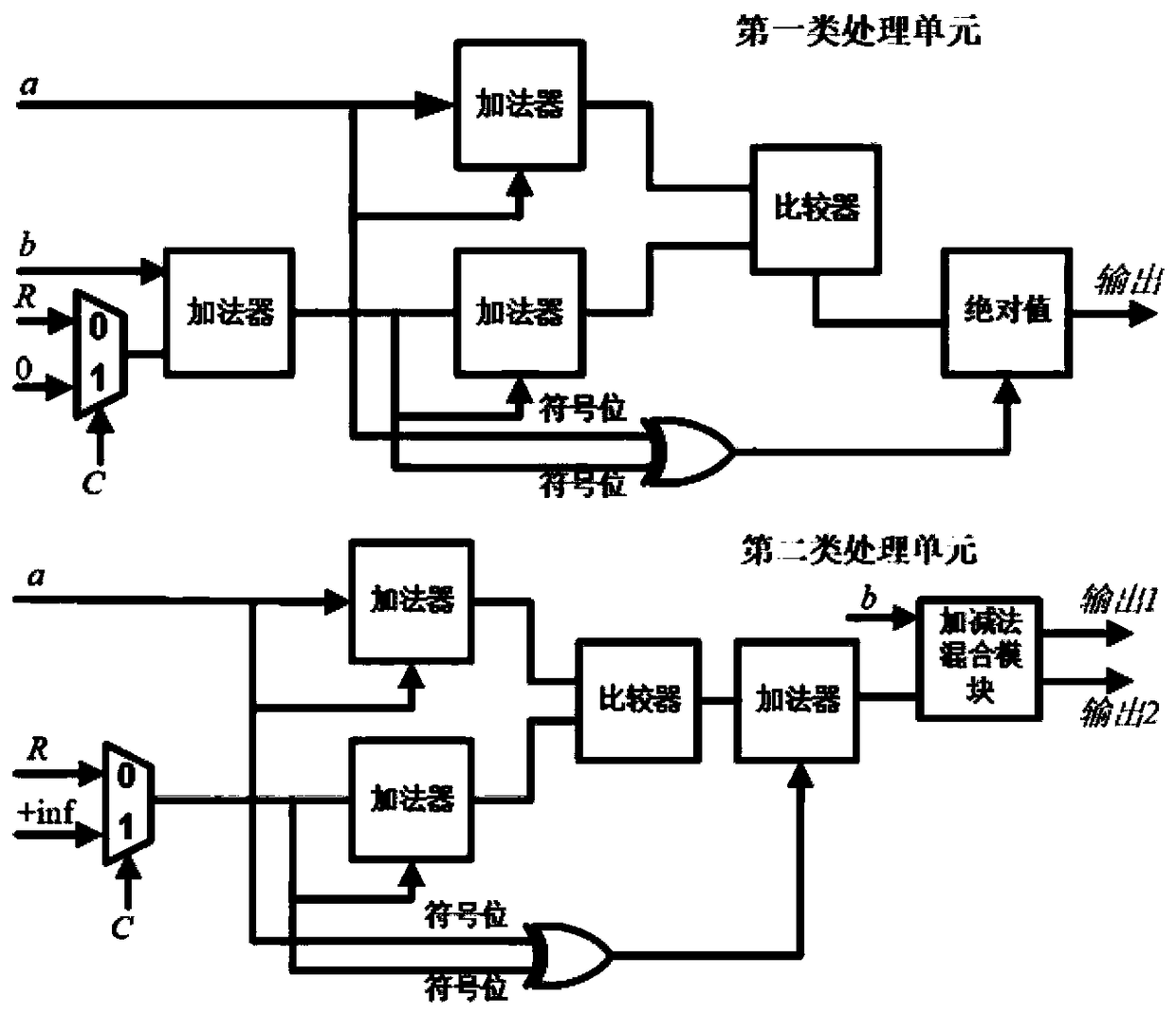

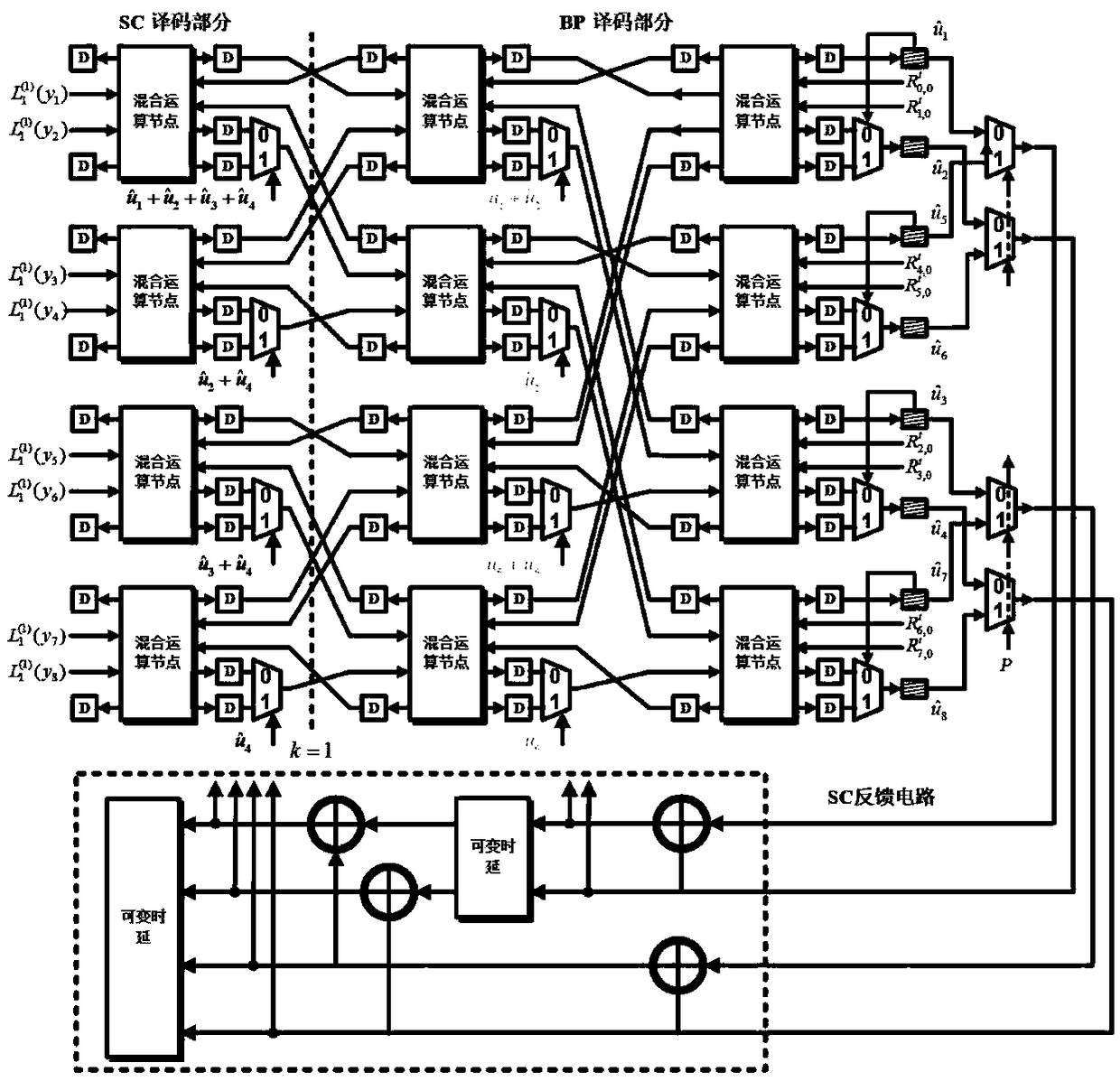

SC-BP hybrid decoding method for polar code and adjustable hardware architecture thereof

Active | CN109495116A | Improve performance | Reduce computing delay | Code conversion | Error correction/detection using linear codes | Decomposition | Computer engineering

The invention discloses an SC-BP hybrid decoding method for polar codes and an adjustable hardware architecture thereof. The method comprises: processing the input channel log-likelihood ratios through a k-order SC decoder to obtain the input log-likelihood ratios of a BP decoder, where k is the decomposition factor of the hybrid decoder; iterating on that input to obtain the output of the BP decoder; encoding the output and returning it to the SC decoder as its returned value; and having the SC decoder perform the next decoding operation according to the returned value. The method integrates the SC and BP decoding algorithms into an SC-BP hybrid decoding unit and adds pre-computation, so that, with an SC encoding feedback architecture and a hybrid decoding systolic architecture, decoding delay and performance can be balanced between those of the SC and BP algorithms and the decoder can adapt to multiple communication requirements, giving it good market application prospects.

Owner: SOUTHEAST UNIV

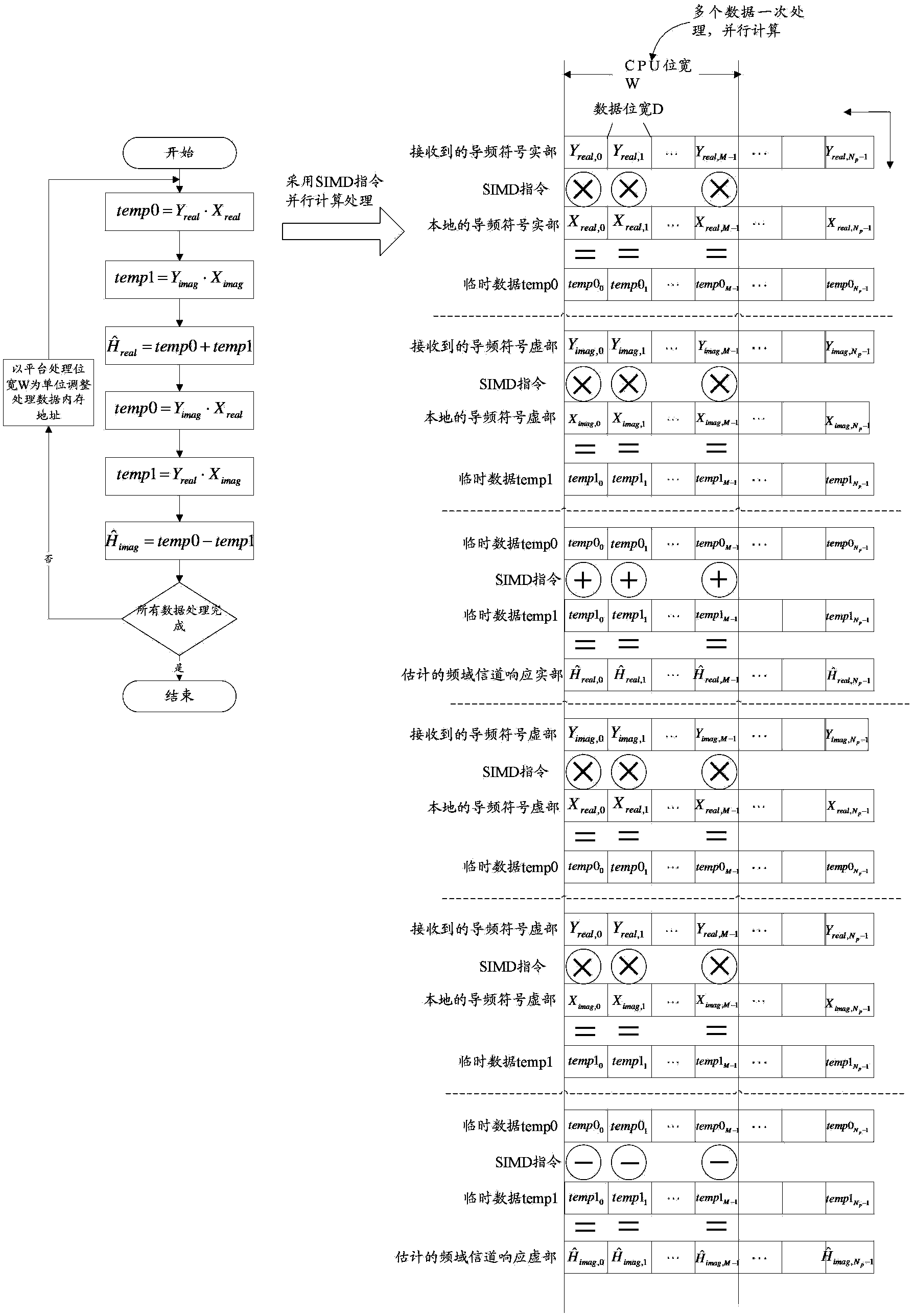

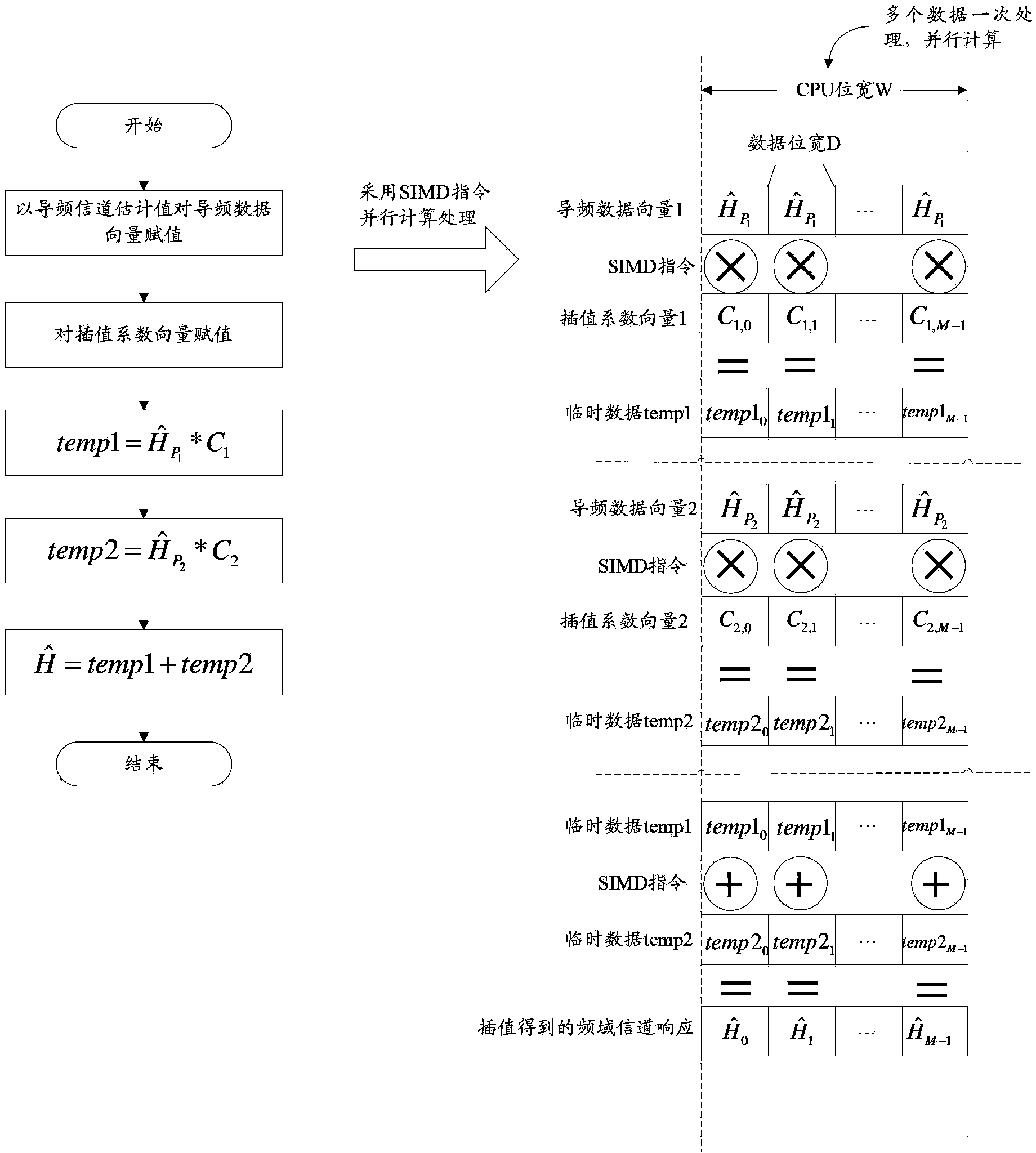

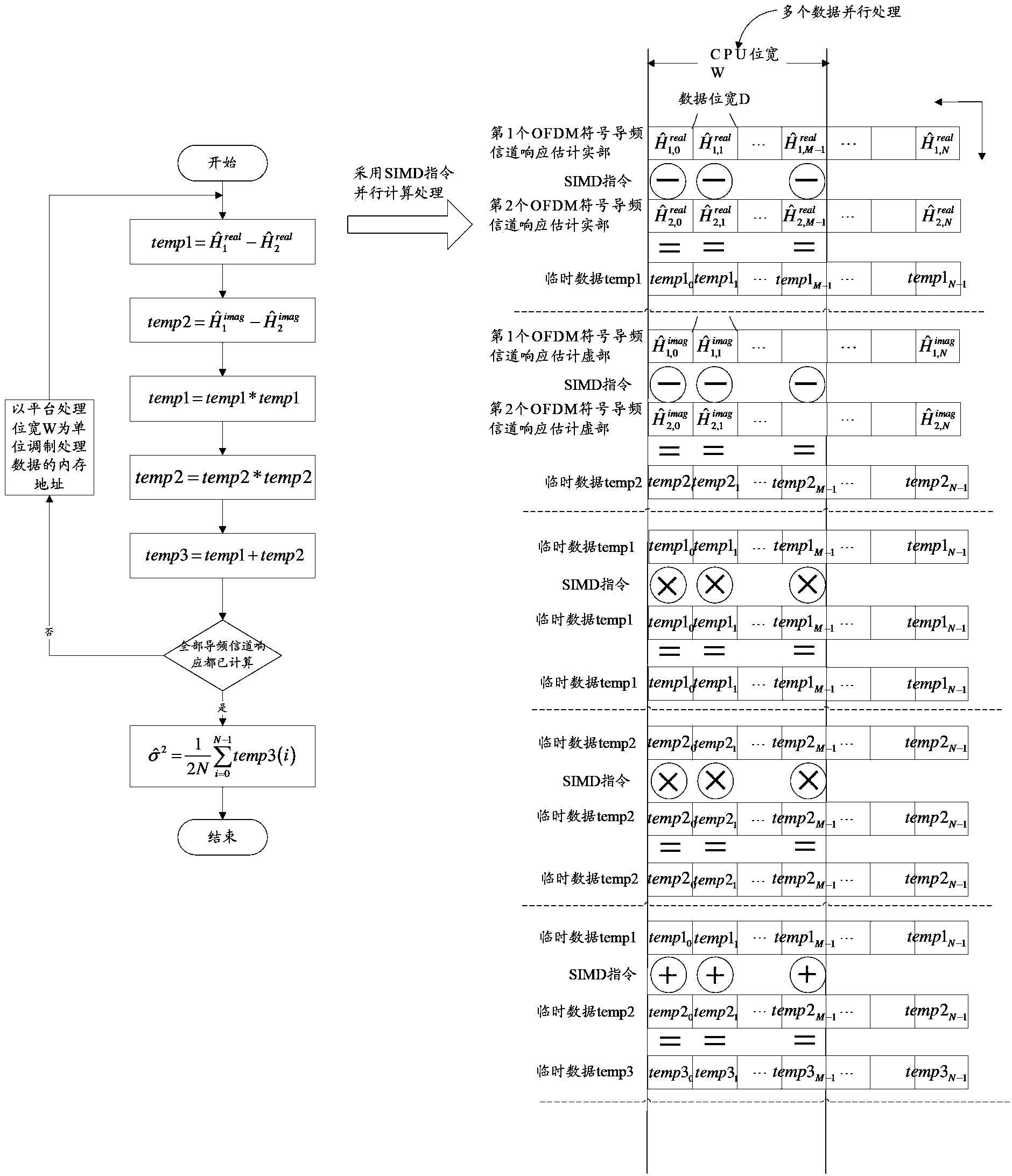

Channel estimation and frequency domain equalization method and device and general-purpose processor

Inactive | CN103916351A | Meet real-time requirements | Reduce computing delay | Multi-frequency code systems | Transmitter/receiver shaping networks | General purpose | Engineering

The invention discloses a channel estimation and frequency domain equalization method. The method comprises: performing channel estimation with SIMD instructions on multiple pilot symbols of the received OFDM symbols; and using SIMD instructions to calculate the channel estimates at the non-pilot symbol positions of the OFDM symbols from the channel estimates at the pilot symbol positions. The invention also discloses a channel estimation and frequency domain equalization device. In the technical scheme, OFDM signals are processed on a general-purpose multi-core processor and the relevant algorithms are parallelized with the SIMD instruction set, so that the calculation delay falls in inverse proportion to the degree of parallelism; processing delay is greatly reduced, and the real-time requirement of signal processing at the receiving end is met.

Owner: CHINA MOBILE COMM GRP CO LTD

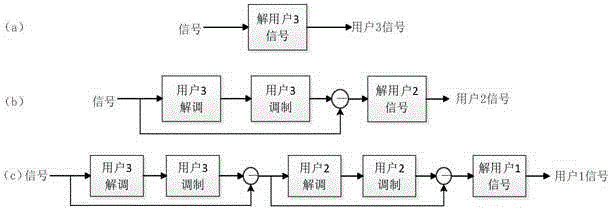

High-performance successive interference cancellation receiver based on non-orthogonal multi-access system

Active | CN106685449A | Reduce error rate | Improve correctness and reliability | Transmission | Multi access | Engineering

The invention relates to the field of mobile communication technology, and in particular to a high-performance successive interference cancellation receiver based on a non-orthogonal multi-access system. A selector switch unit, a user decoding unit and a user coding unit are added in sequence between the user demodulation unit and the user modulation unit of each of the first N-1 cancellation stages, and the output of the user coding unit is fed to the user modulation unit. The selector switch is connected to both the user decoding unit and the user modulation unit, and sends the user demodulation result it receives to one or the other. By adding these three units to a conventional successive interference cancellation receiver, the error-correcting capability of decoding and re-encoding reduces the signal error rate at each stage and improves signal correctness and reliability.

Owner: CHONGQING UNIV OF POSTS & TELECOMM

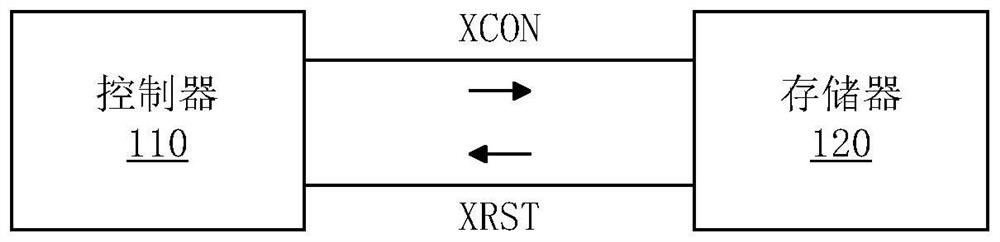

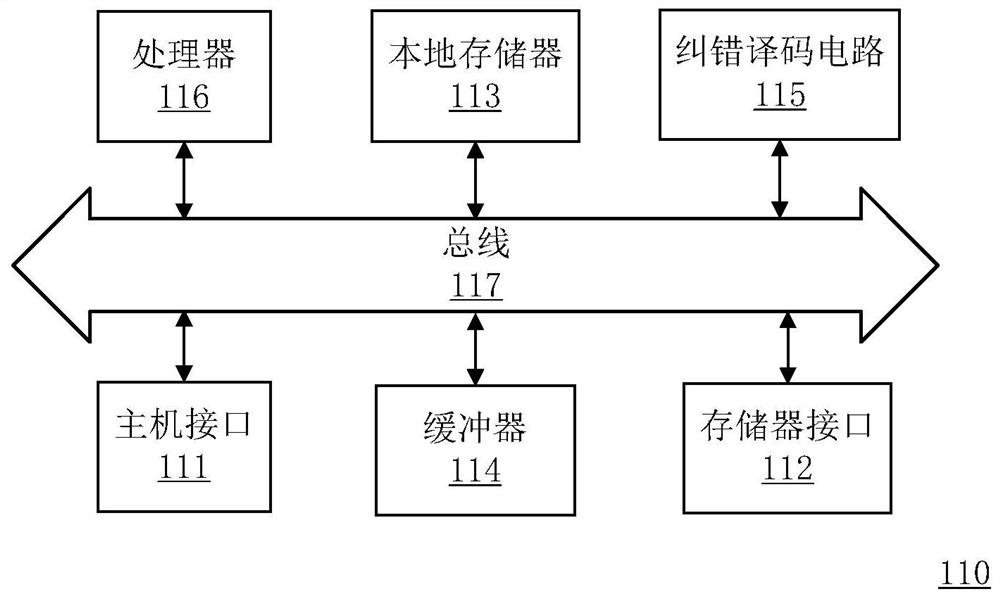

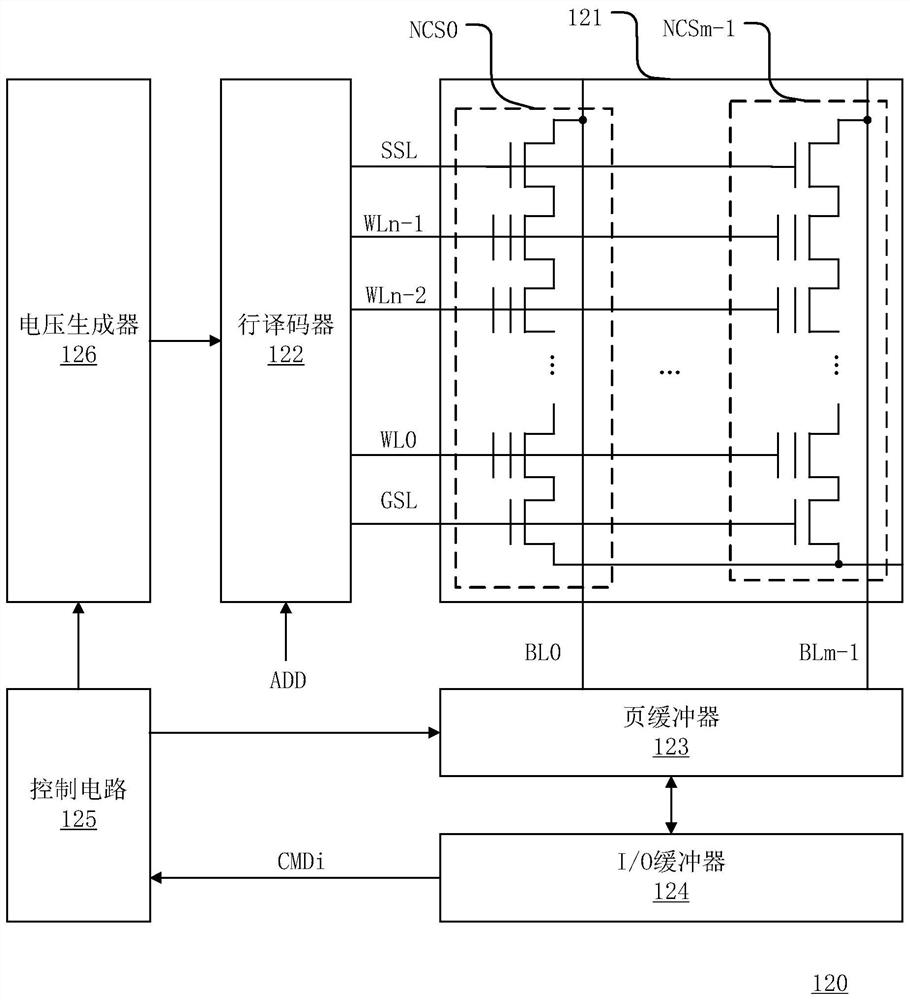

Read voltage optimization method of memory cell, controller of 3D memory and operation method of controller

Active | CN113223593A | Reduce latency | Improve reliability | Read-only memories | Redundant data error correction | Memory cell | Control theory

The invention discloses a read voltage optimization method for a memory cell. The memory cell is selected from a plurality of memory cells corresponding to a selected physical page. The method comprises: counting the memory cells with a first threshold voltage to obtain a first number; counting the memory cells with a second threshold voltage to obtain a second number; and setting an optimal read voltage based on the first number, the second number and an adjustment parameter, where different adjustment parameters are set for different data storage times, read disturb, cross temperature and program/erase counts. With the read voltage optimization method, the 3D memory controller and the controller's operation method, setting the optimal read voltage requires only a limited number of reads, which effectively reduces the delay of determining it; and because the adjustment parameters are set from factors such as read disturb and program/erase counts, the accuracy and reliability of products are improved.

Owner: MAXIO TECH HANGZHOU LTD

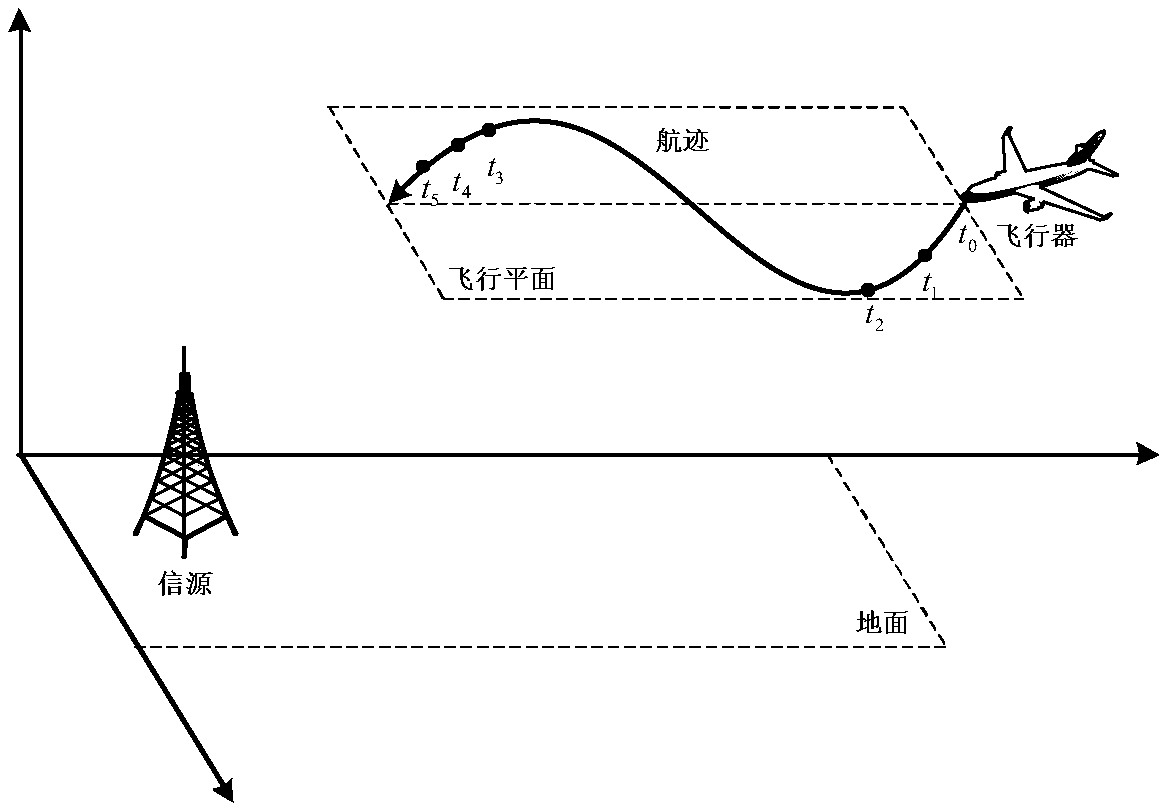

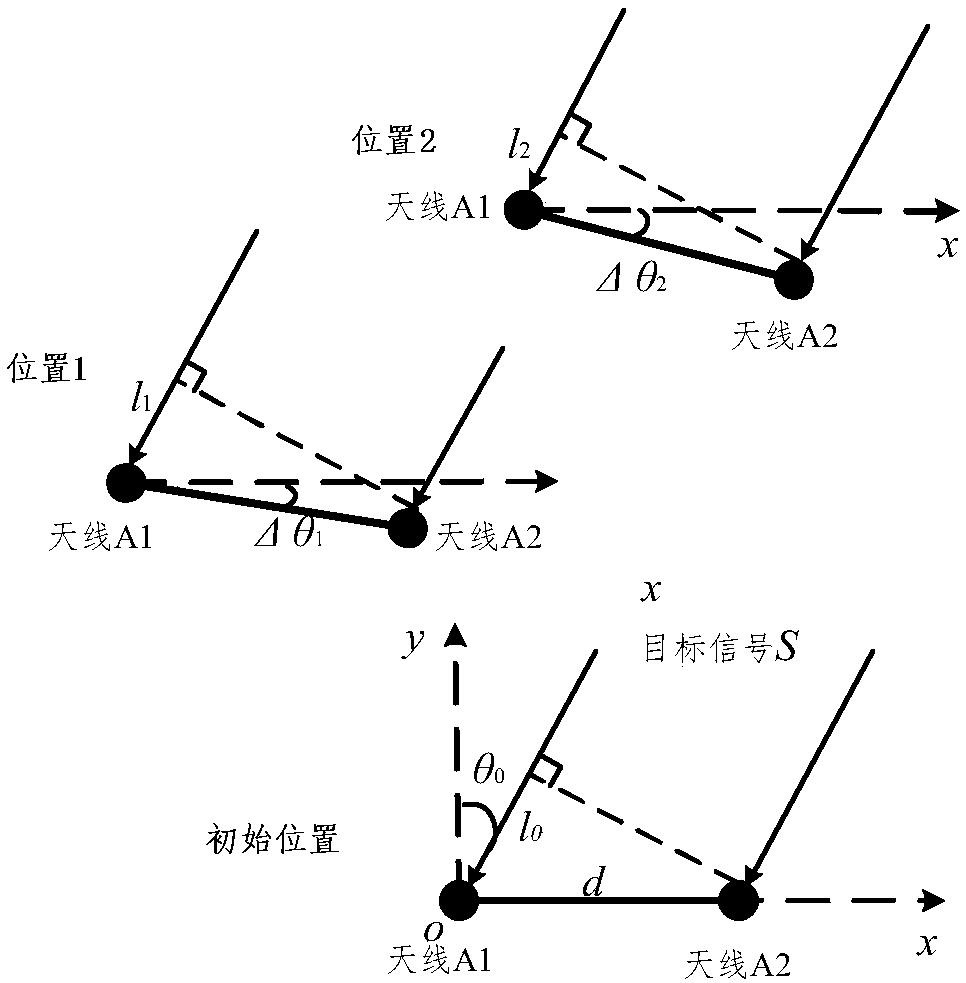

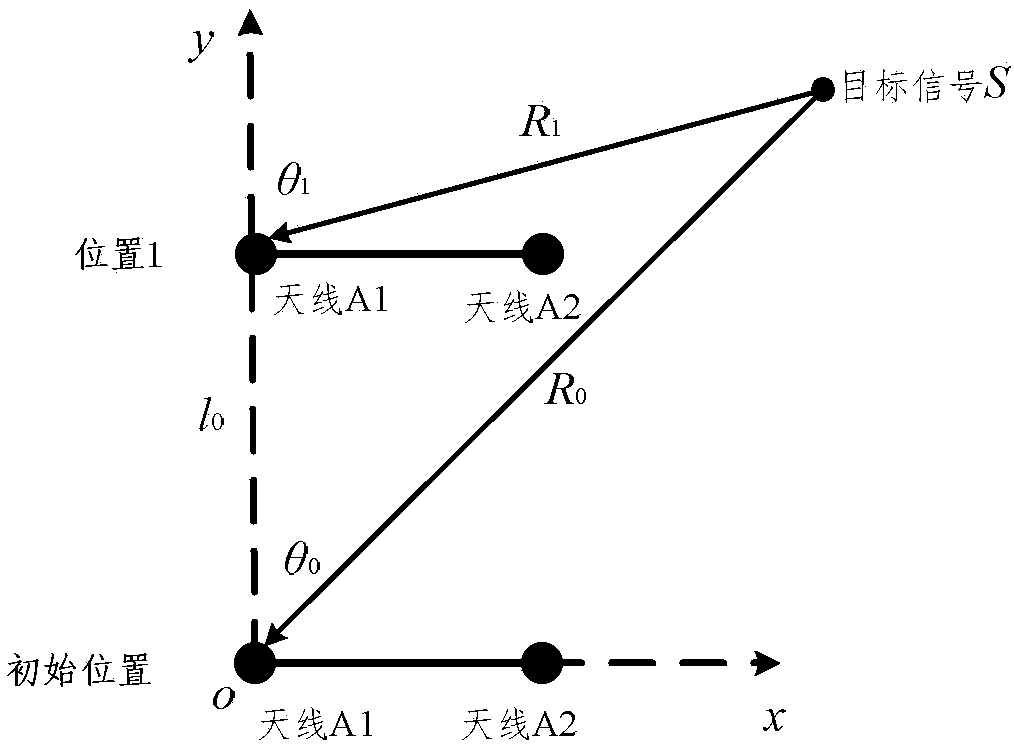

Aircraft positioning system and method based on single station

Active | CN108680941A | Realize signal location | Rapid positioning | Satellite radio beaconing | Single station | Azimuth

The invention discloses a single-station aircraft positioning system and method. The positioning system comprises an aircraft flight control module, a phase measuring module, an inertial gyroscope, a satellite navigation positioning module and a processing module. The phase measuring module comprises two antennas at different positions on the aircraft. The flight control module is configured to make the aircraft fly along a nonlinear path. The processing module includes a target signal azimuth angle calculation unit and a target signal distance calculation unit; the latter calculates the distance from the aircraft to the target signal from the target signal azimuth angle acquired by the aircraft and the aircraft position measured by the satellite navigation positioning module. The system and method need not account for the Doppler frequency offset of the received signal caused by the aircraft's motion, can achieve high-precision signal source positioning with the aircraft's existing hardware using only a single short baseline, and have great application value.

Owner: SOUTHEAST UNIV

Large-scale access method integrating calculation and communication

Active | CN110380762A | Improve spectral efficiency | Reduce computing delay | Spatial transmit diversity | Superposition coding | Access method

The invention discloses a large-scale access method integrating computation and communication. A multi-antenna base station is placed at the center of a cell, and a large number of mobile terminals access the wireless network through it. Each mobile terminal beamforms its communication signal and its computation signal separately, then transmits them after superposition coding. On the one hand, the base station exploits the superposition property of the wireless channel to directly receive the summation function obtained through over-the-air computation, and then recovers the target function through a computation receiver. On the other hand, the base station decodes each mobile terminal's communication signal through a communication receiver. The invention provides an effective large-scale access method that fuses computation and communication for the Internet of Things with large numbers of mobile terminals.

Owner: ZHEJIANG UNIV

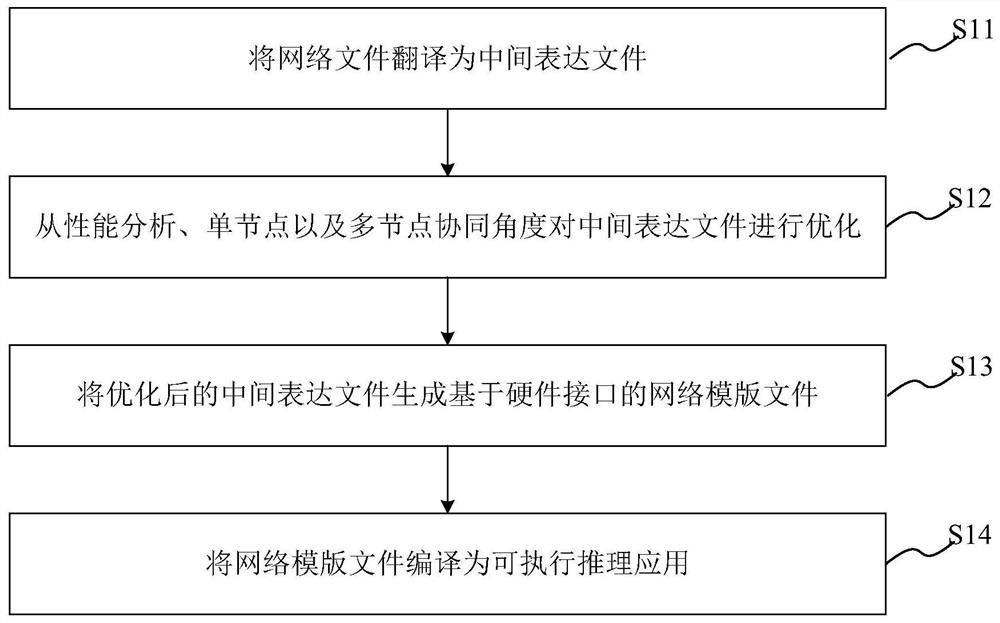

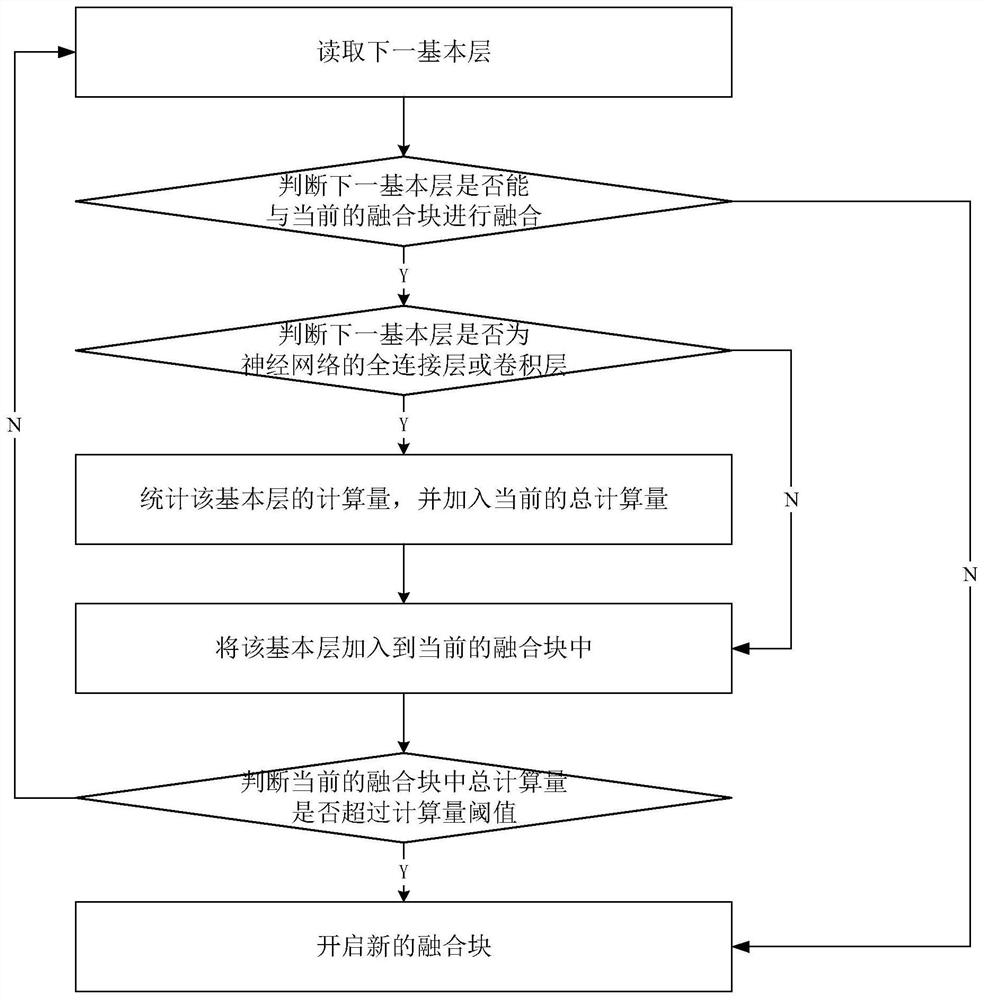

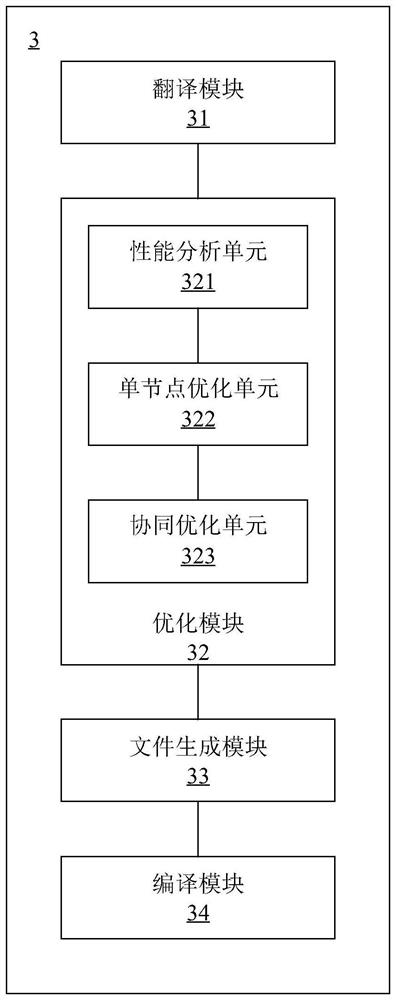

Neural network compiling method and system, computer storage medium and compiling equipment

Active | CN112529175A | Easy to debug by yourself | Parameters are easy to adjust | Neural architectures | Neural learning methods | Network output | Database

The invention provides a neural network compiling method and system, a computer storage medium and compiling equipment. The method comprises the following steps: translating a network file into an intermediate representation file; optimizing the intermediate representation file from the perspectives of performance analysis and of single-node and multi-node cooperation; generating, from the optimized intermediate representation file, a network template file based on a hardware interface; and compiling the network template file into an executable inference application. The invention aims to design and implement a compiling toolchain framework that automatically tunes parameters and generates code according to software and hardware information, the intermediate representation and a corresponding optimization algorithm, so that computation on a target chip achieves a higher calculation rate and a lower calculation delay within a shorter optimization time, without changing the network's output. Users can conveniently debug and adjust parameters themselves.

Owner: SHANGHAI JIAO TONG UNIV

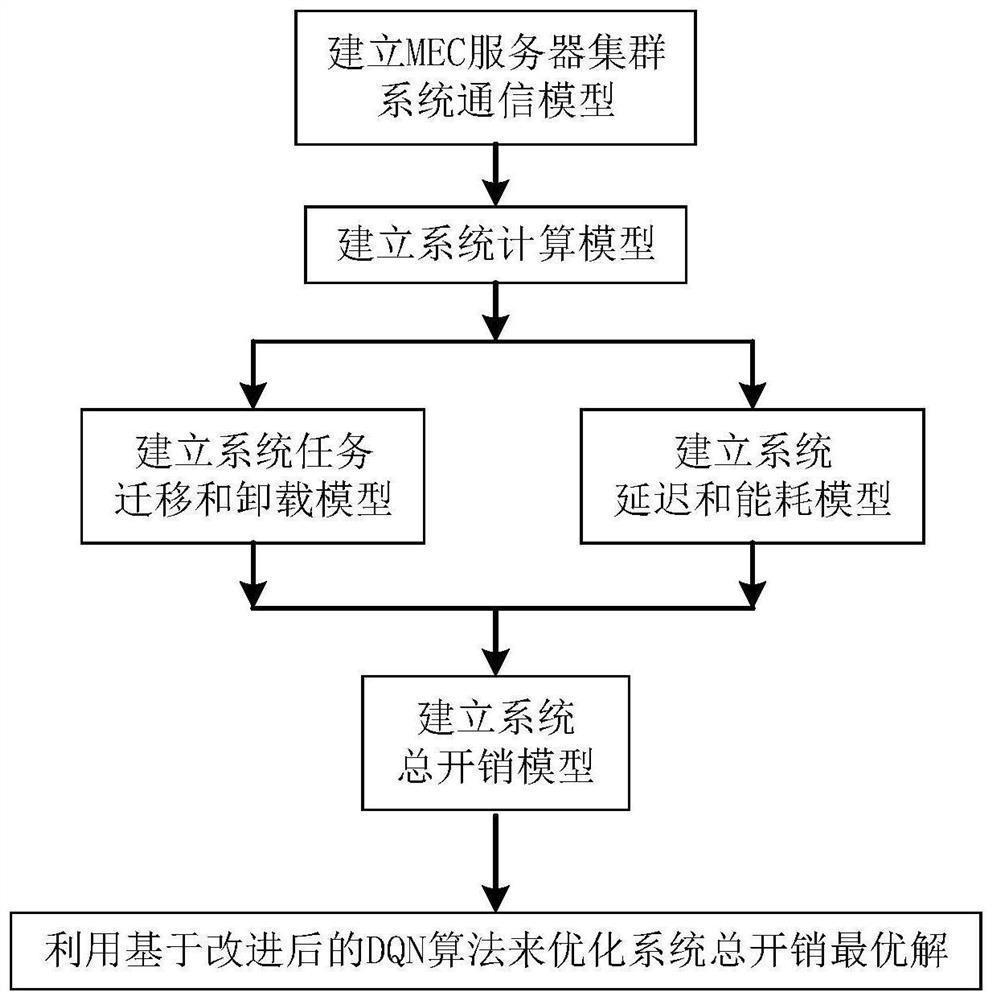

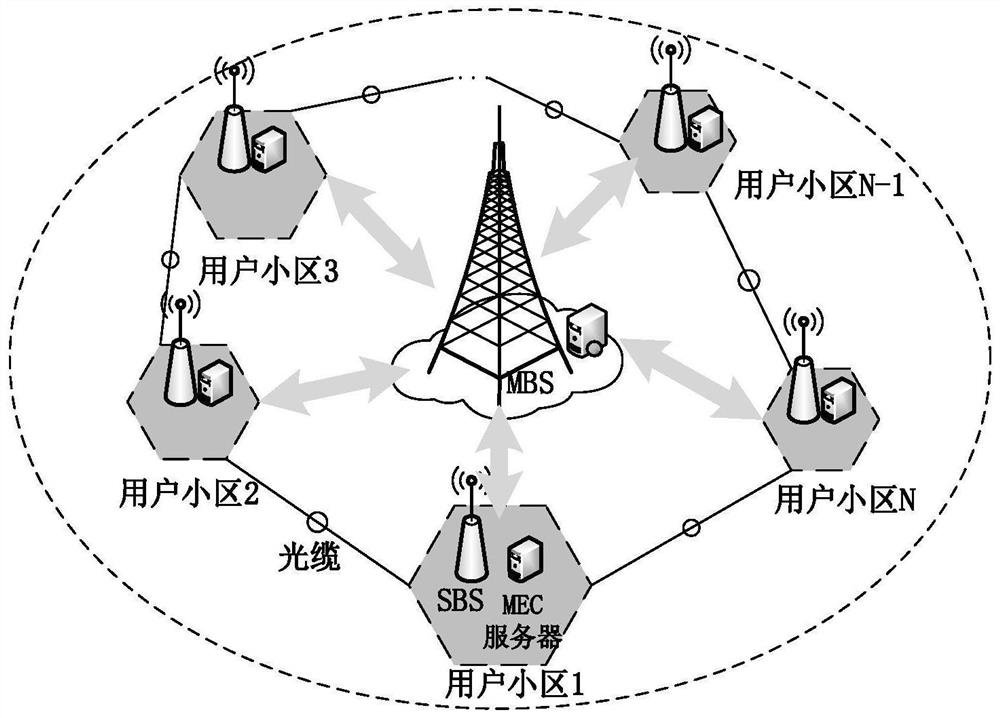

Implementation method of intelligent collaboration strategy of MEC server cluster

Pending | CN114567895A | Reduce computing delay | Reduce energy consumption | Transmission | Neural learning methods | Macro base stations | Edge computing

The invention belongs to the technical field of mobile communication and computers, and particularly relates to an implementation method of an intelligent collaboration strategy of an MEC server cluster. The method comprises the following steps: in a mobile edge network comprising N small base stations and a macro base station, configuring an MEC server for each small base station, and establishing a system communication model of an MEC server cluster; respectively establishing a delay and energy consumption model of the MEC server cluster according to congestion delay generated during task migration and calculation delay generated during task migration, and obtaining a system total overhead model; and minimizing the total overhead of all MEC server clusters, namely the total overhead of the system, and optimizing the total overhead of the system by using a deep reinforcement learning DQN algorithm to output an optimal solution. According to the method, the new intelligent collaborative model is constructed on the MEC server cluster, the optimal task migration decision of the MEC cluster is obtained by using the deep reinforcement learning algorithm, and the method can be suitable for various types of edge computing service scenes.

Owner: CHONGQING UNIV OF POSTS & TELECOMM

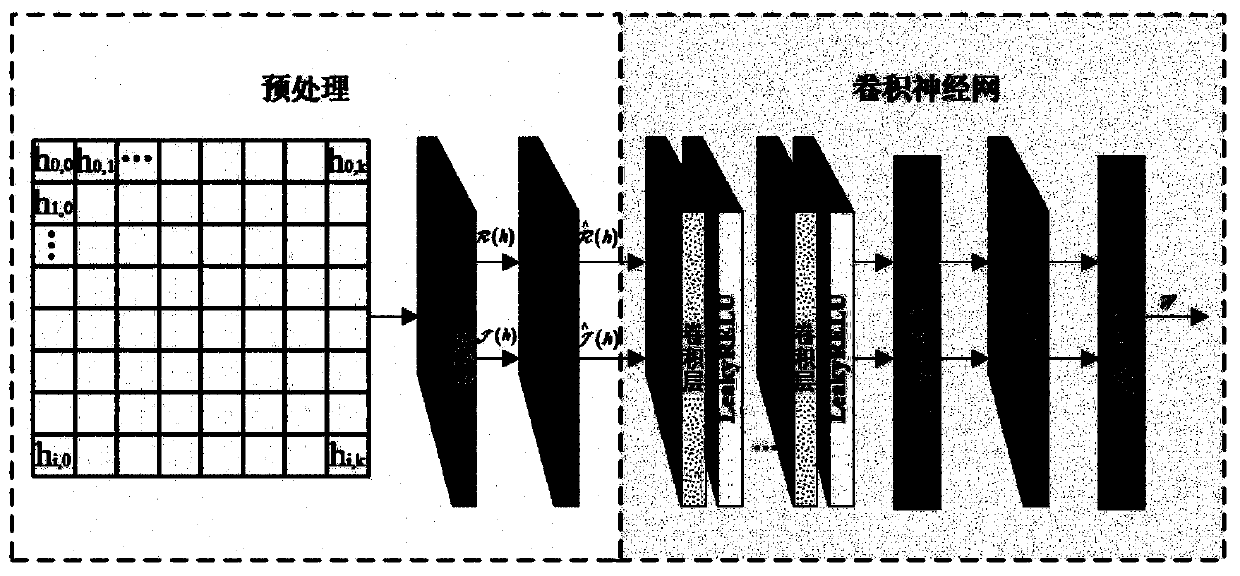

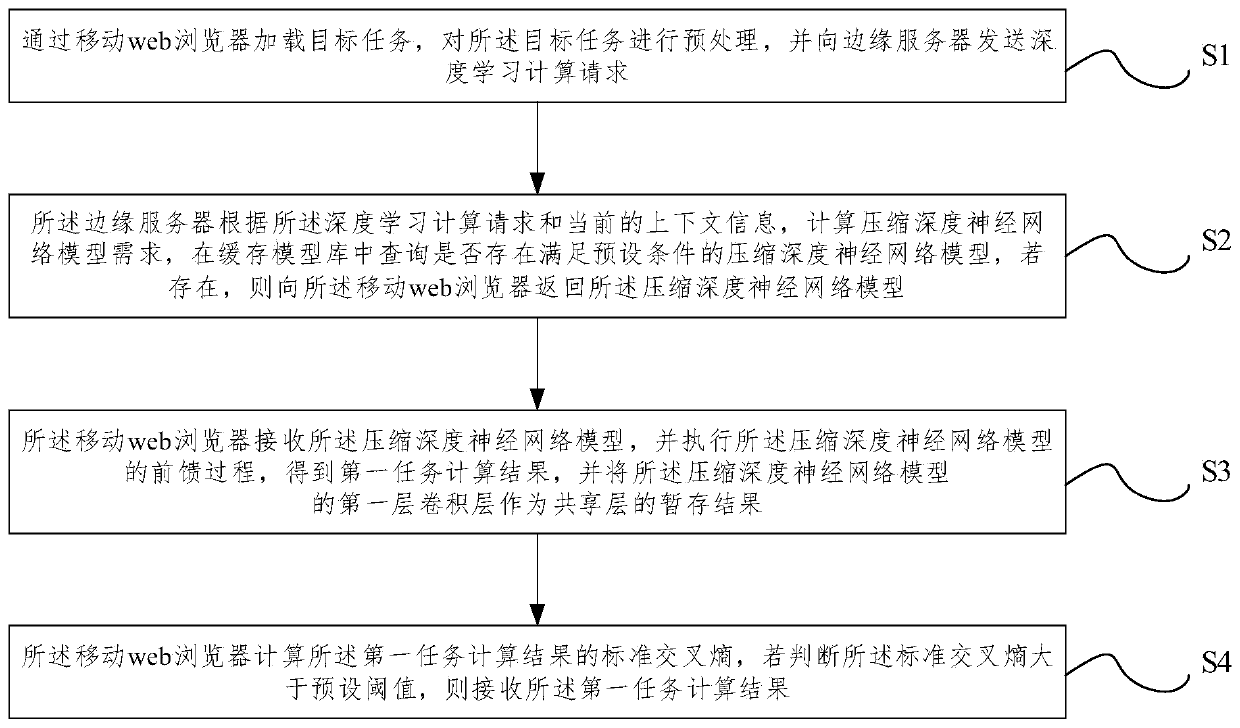

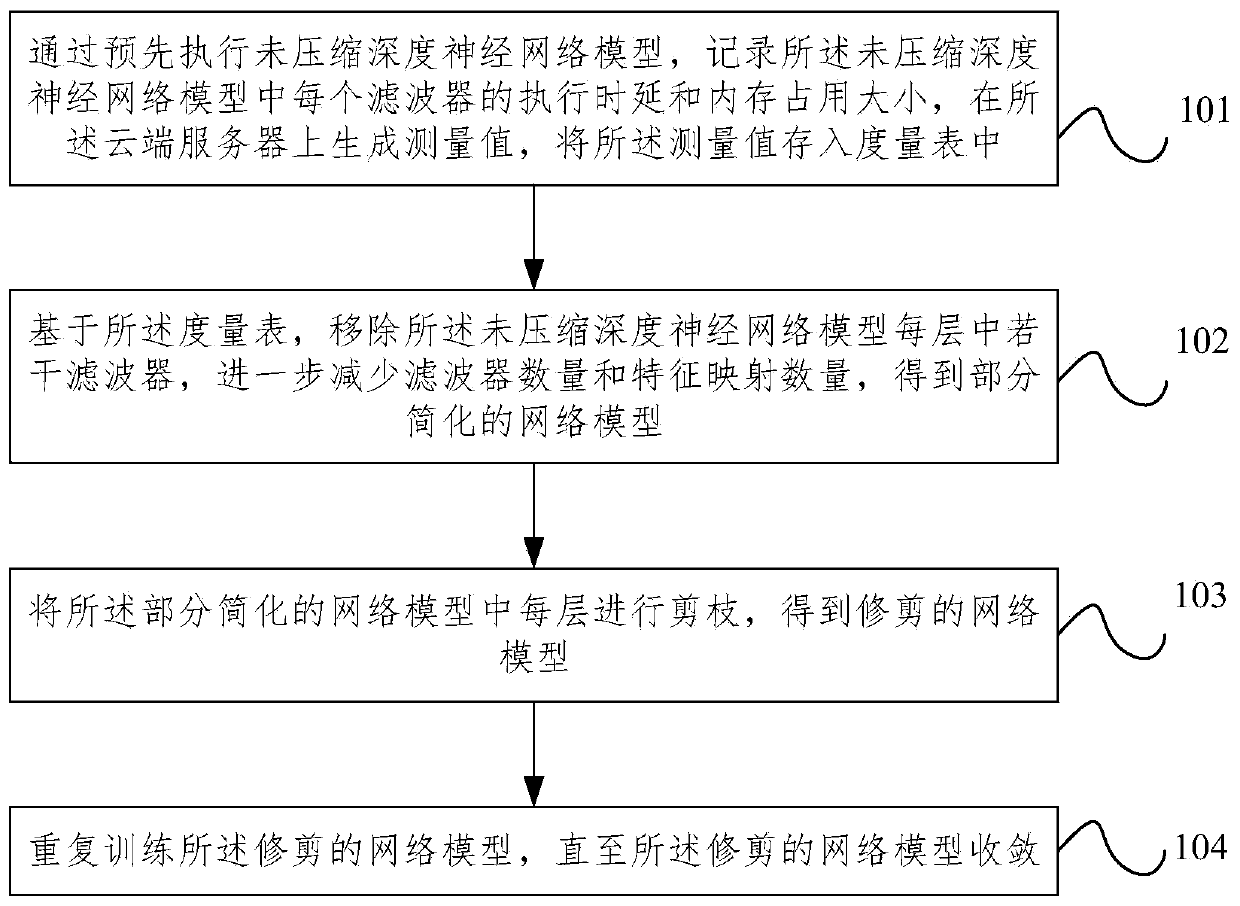

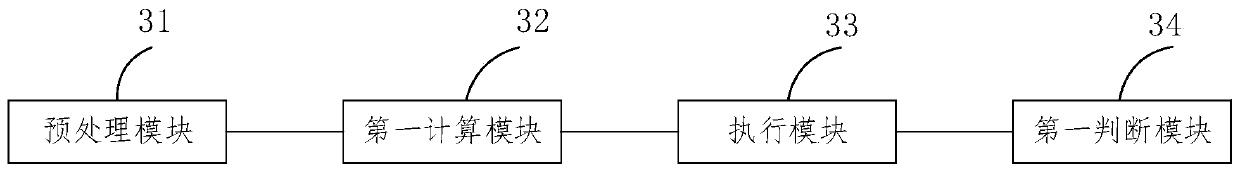

Mobile web deep learning cooperation method and system

Active | CN110795235A | Guaranteed accuracy | Accelerated Feedforward Inference | Resource allocation | Neural architectures | Edge server | Engineering

The embodiment of the invention provides a mobile web deep learning cooperation method and system. The method comprises: loading a target task through a mobile web browser, preprocessing it, and sending a deep learning calculation request to an edge server; the edge server calculating the required compressed deep neural network model from the request and the current context information, and returning a compressed model that satisfies the requirement to the mobile web browser; the mobile web browser receiving the compressed deep neural network and executing its feedforward process to obtain a first task calculation result, with the first convolutional layer temporarily stored as the shared-layer result; and the mobile web browser calculating the standard cross entropy of the first task calculation result and, if the standard cross entropy is judged to be larger than a preset threshold, accepting the first task calculation result. The mobile web deep learning cooperation provided by the embodiment effectively reduces the model's transmission delay and feedforward calculation delay.

Owner: BEIJING UNIV OF POSTS & TELECOMM

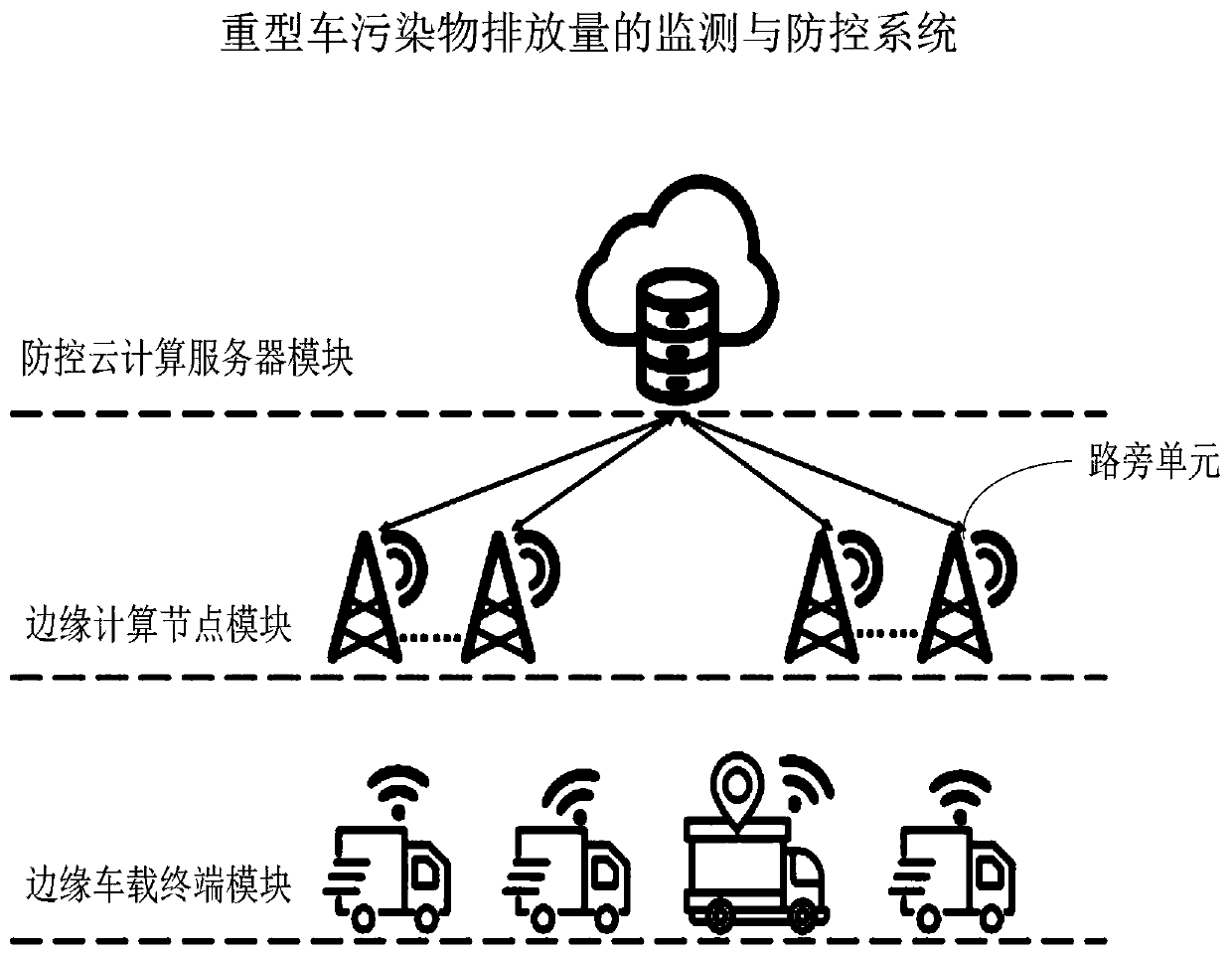

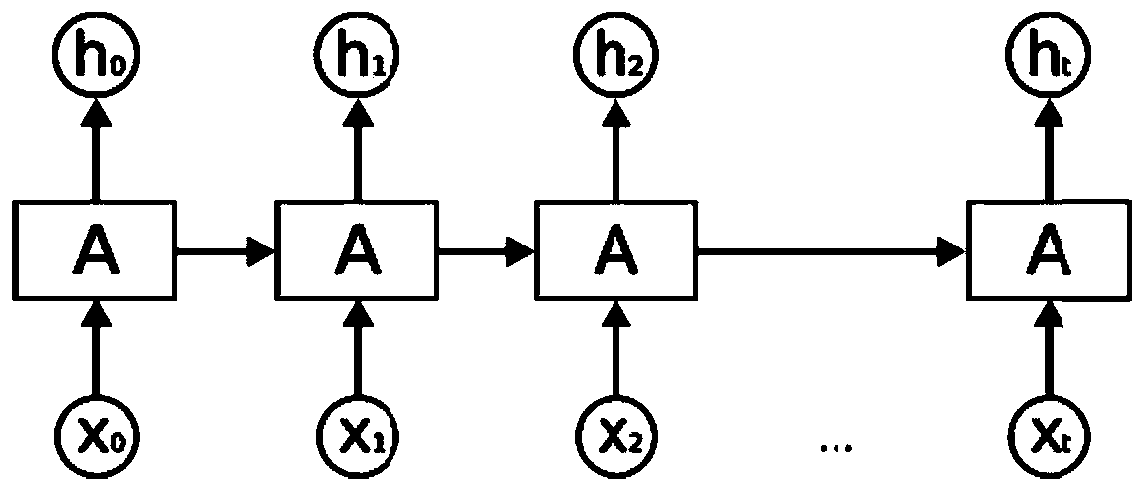

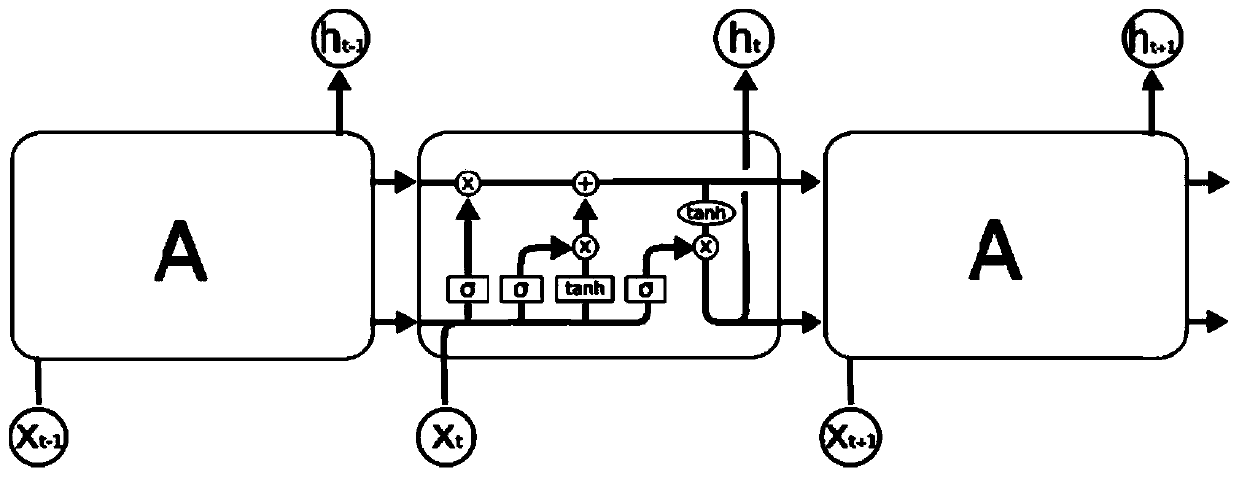

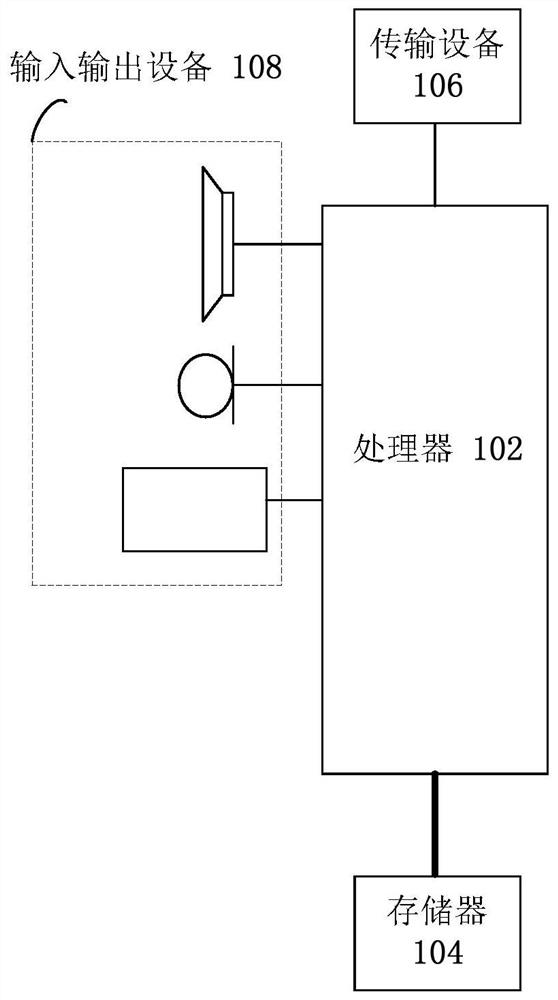

Pollutant discharge monitoring, preventing and controlling system, method and device and medium

Pending | CN111340280A | Avoid wasting | Reduce computing delay | Forecasting | Neural architectures | Edge computing | Control system

The invention discloses a heavy-duty vehicle pollutant discharge monitoring, prevention and control system, method and device, and a storage medium. The system comprises an edge vehicle-mounted terminal module, an edge computing node module and a prevention and control cloud computing server module. The cloud computing server module trains a heavy-duty vehicle pollutant discharge prediction model and sends the trained model to each roadside unit in the edge computing node module, so that each roadside unit can independently predict the pollutant discharge of heavy-duty vehicles; this effectively reduces the computing delay and improves system operating efficiency. While maintaining monitoring accuracy, the system can monitor, prevent and control heavy-duty vehicle pollutant emissions using only data from the on-board OBD equipment installed on the vehicle, which lowers the manpower and material costs of deployment, avoids wasted resources and improves working efficiency. The method is widely applicable to the field of heavy-duty vehicle pollution monitoring, prevention and control.

Owner: 宜通世纪物联网研究院(广州)有限公司

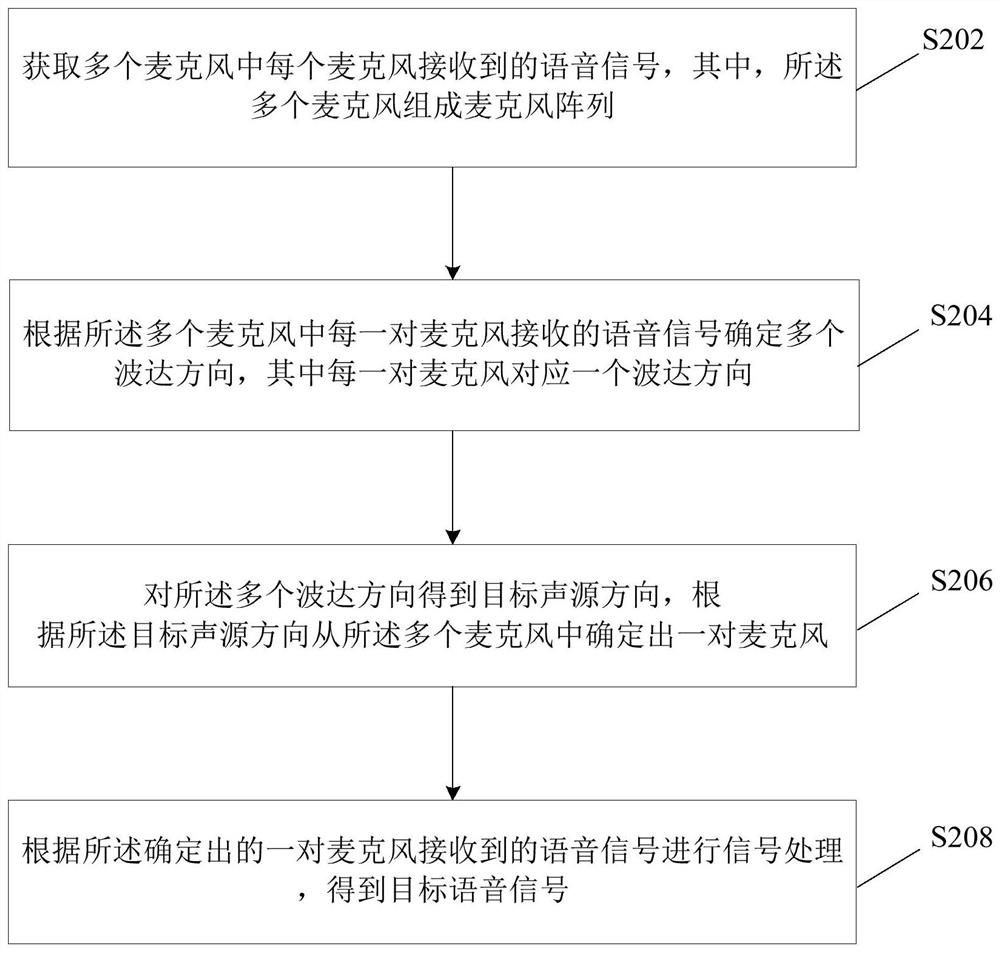

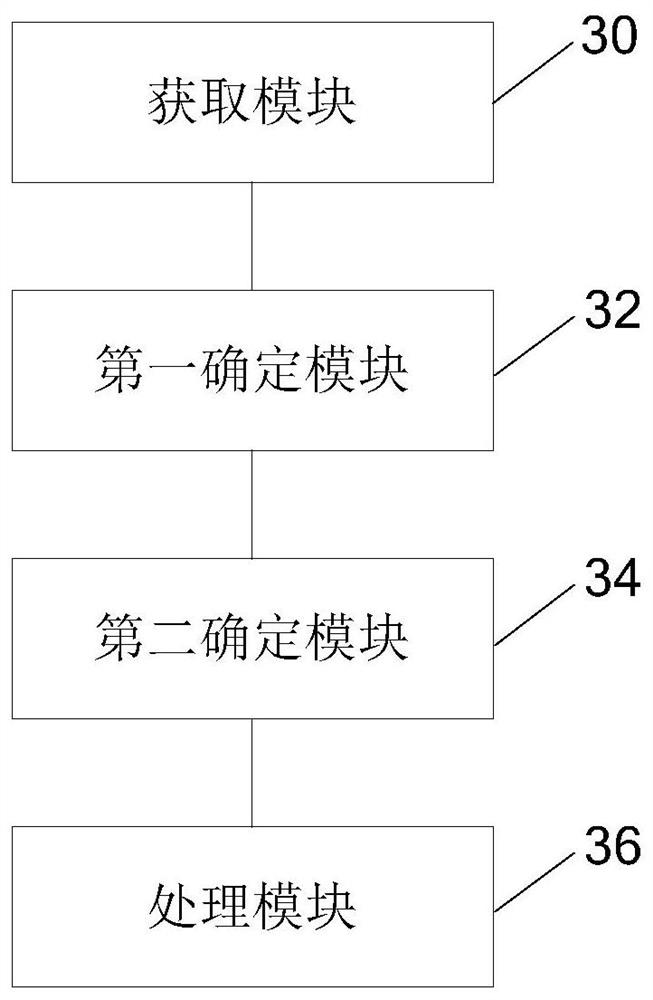

Signal processing method and device, storage medium and electronic device

Active | CN111856402A | High complexity | Reduce difficulty | Direction finders using ultrasonic/sonic/infrasonic waves | Position fixation | Computation complexity | Engineering

The invention provides a signal processing method and device, a storage medium and an electronic device. The method comprises: obtaining the voice signal received by each of a plurality of microphones that form a microphone array; determining a plurality of directions of arrival from the voice signals received by each pair of microphones, where each pair corresponds to one direction of arrival; selecting one pair of microphones from the array according to the directions of arrival; and performing signal processing on the voice signals received by the selected pair to obtain a target voice signal. The invention addresses the high computational and hardware complexity of multi-microphone voice signal processing, reducing hardware difficulty and complexity, lowering computational complexity, and achieving a relatively low calculation delay.

Owner: HAIER YOUJIA INTELLIGENT TECH BEIJING CO LTD

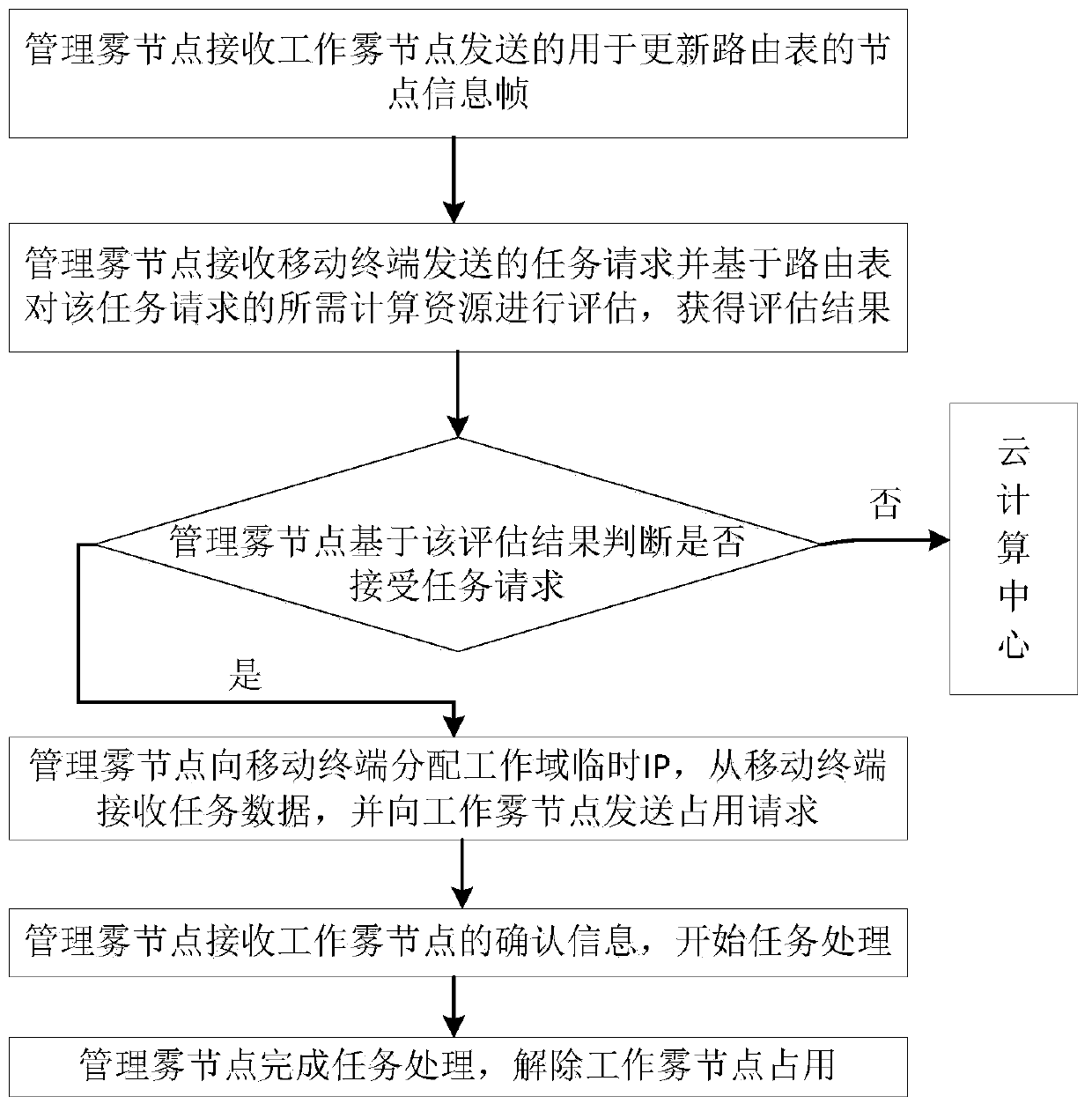

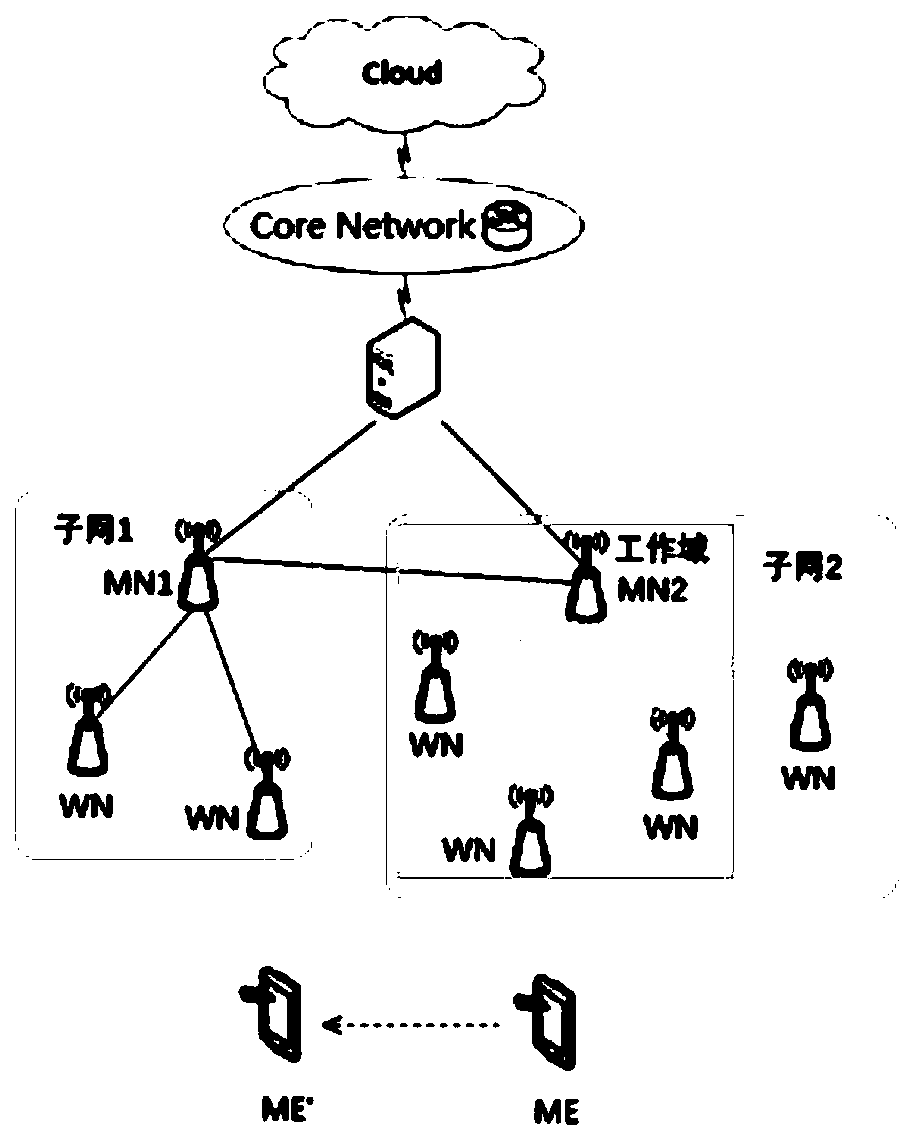

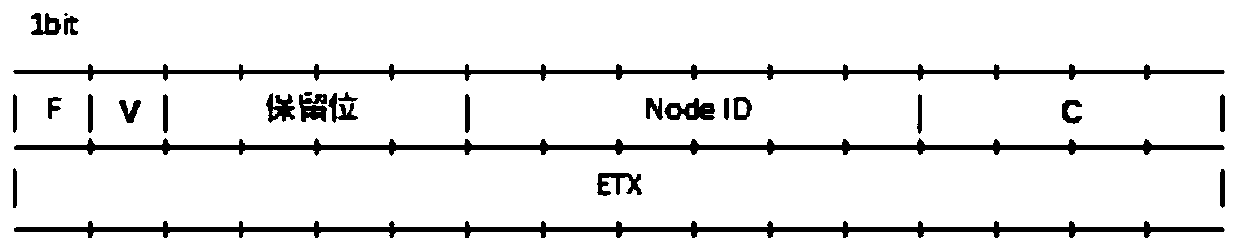

Fog node self-organizing cooperation method based on mobile IP

Active | CN111263303A | Adapt to workload changes in a timely manner | Reduce computing delay | Network traffic/resource management | Network topologies | Engineering | Workload

The invention provides a fog node self-organizing cooperation method based on mobile IP (Internet Protocol). In the method, a working fog node sends a node information frame to a management fog node at the beginning or end of work, or at regular intervals, to update the routing table. After receiving tasks, the management fog node makes a task distribution decision according to the task conditions and, as needed, combines several working fog nodes into a work domain. Once the work domain is built, the management fog node monitors the position of the mobile terminal; after leaving the subnet, the mobile terminal requests a transfer address from the management fog node of the newly entered subnet and registers with the local agent. When the task is finished, the local agent ends the occupation of the working fog nodes, releases the work domain and reclaims the IP address allocated to the mobile terminal. With this method, nodes participating in fog computing can form a distributed computing platform in real time and adapt promptly to workload changes, reducing the computing delay; a fog node work domain group with a dynamically variable topology is realized; and scalable computing resources are provided to the terminal.

Owner: BEIJING JIAOTONG UNIV

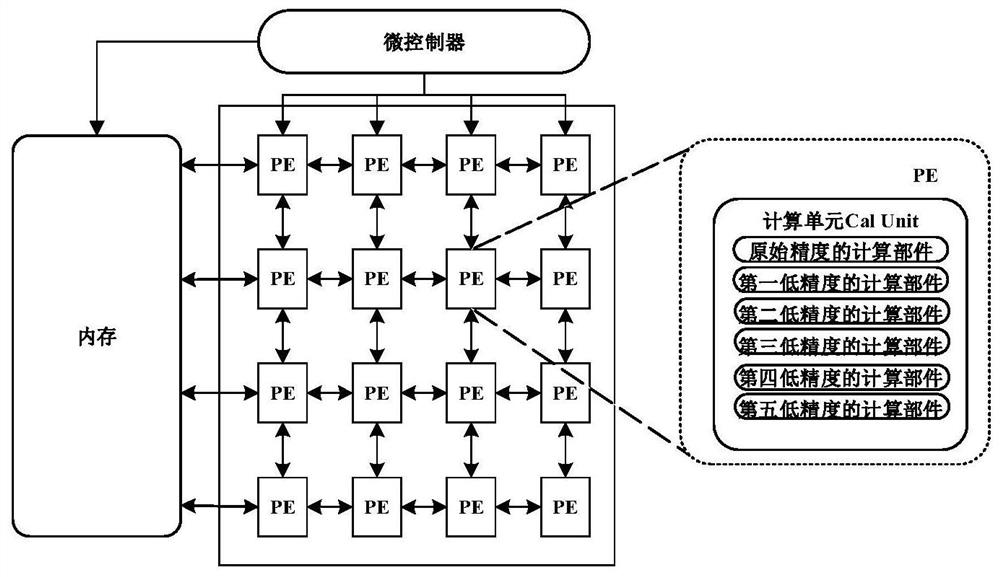

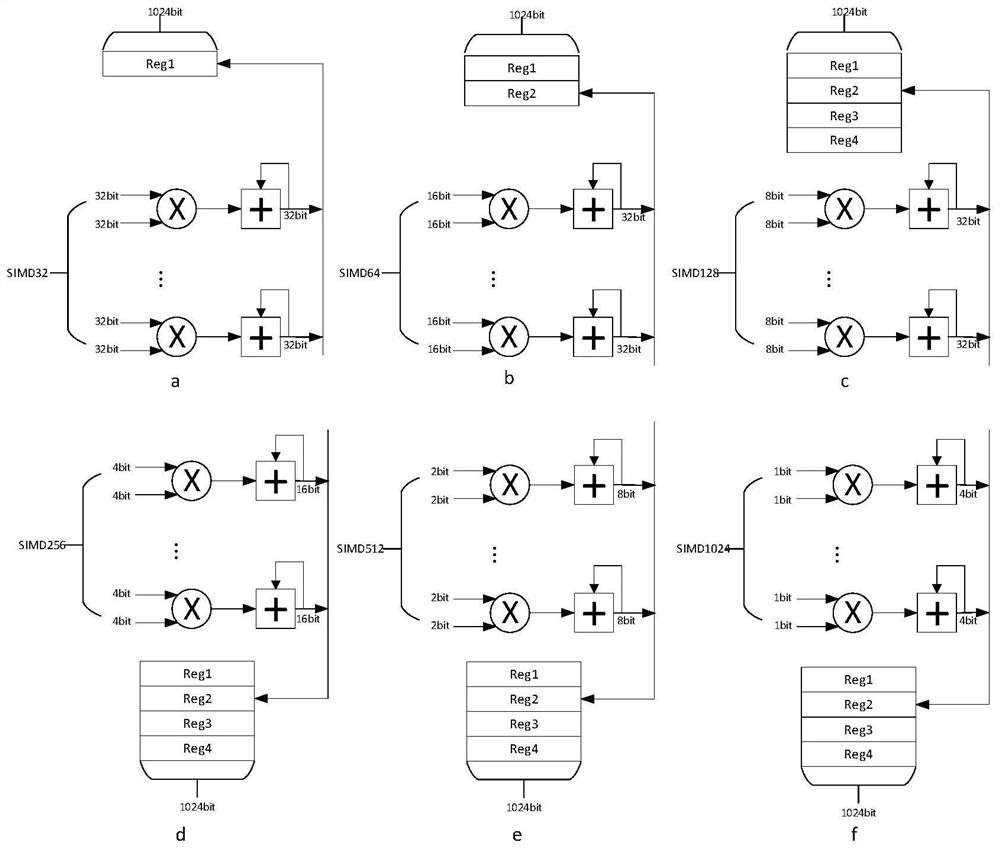

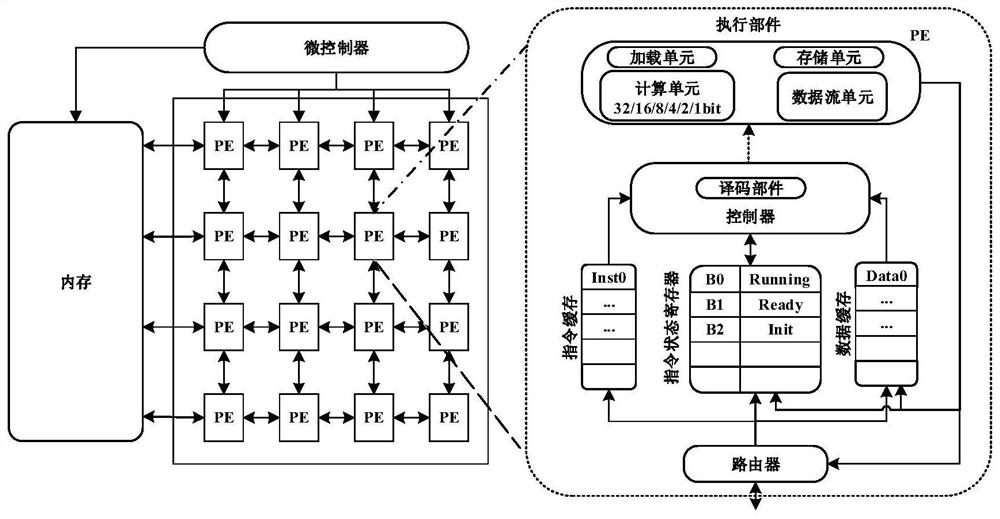

Multi-precision neural network computing device and method based on data stream architecture

Active | CN113298245A | Avoid data overflow | Reduce computing delay | Neural architectures | Physical realisation | Engineering | Convolution

The embodiment of the invention provides a multi-precision neural network computing device based on a data stream architecture. The device comprises a microcontroller and a PE array connected to the microcontroller, and each PE of the PE array is configured with a computing component of the original precision and a plurality of computing components of precision lower than the original precision. A computing component of lower precision is provided with more parallel multiply accumulators to make full use of the on-chip network bandwidth, and each low-precision computing component in each PE is provided with sufficient registers to avoid data overflow. The microcontroller is configured to respond to an acceleration request for a specific convolutional neural network by controlling the computing component in the PE array, of original or low precision, whose precision matches that of the network to execute the corresponding convolution operations and store intermediate calculation results in the corresponding registers. Convolutional neural networks of different precisions can therefore be accelerated, the computing delay and energy consumption are reduced, and the user experience is improved.

Owner:INST OF COMPUTING TECHNOLOGY - CHINESE ACAD OF SCI

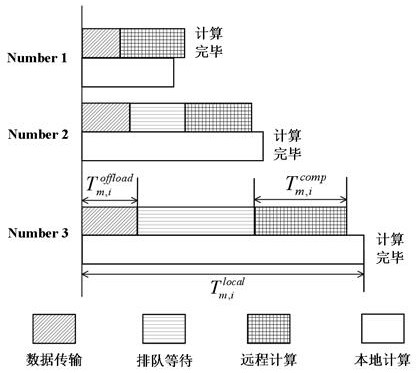

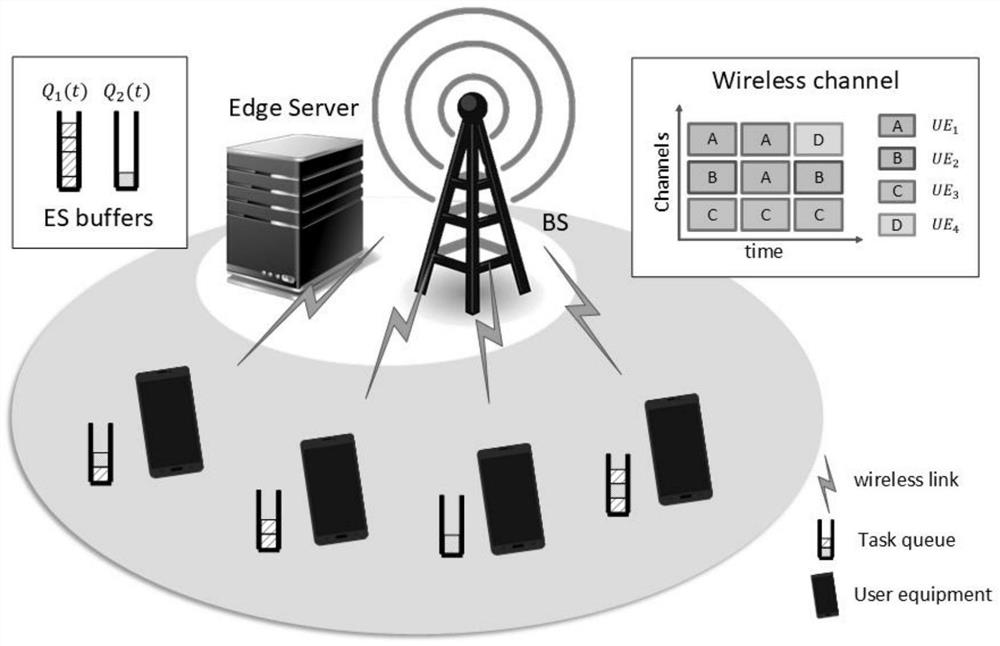

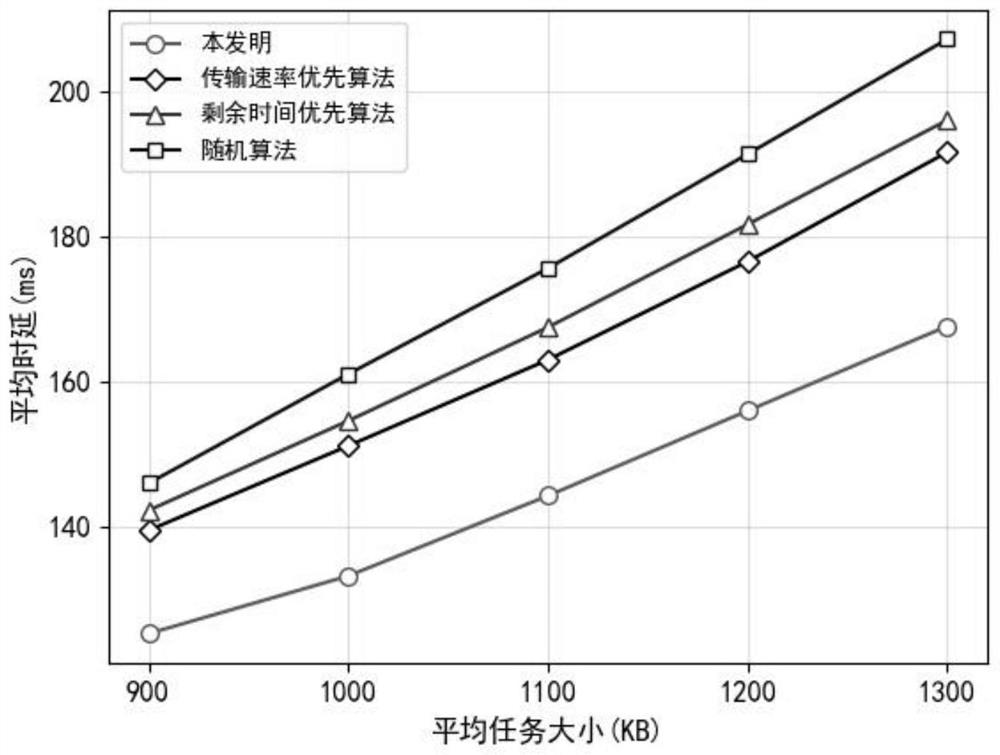

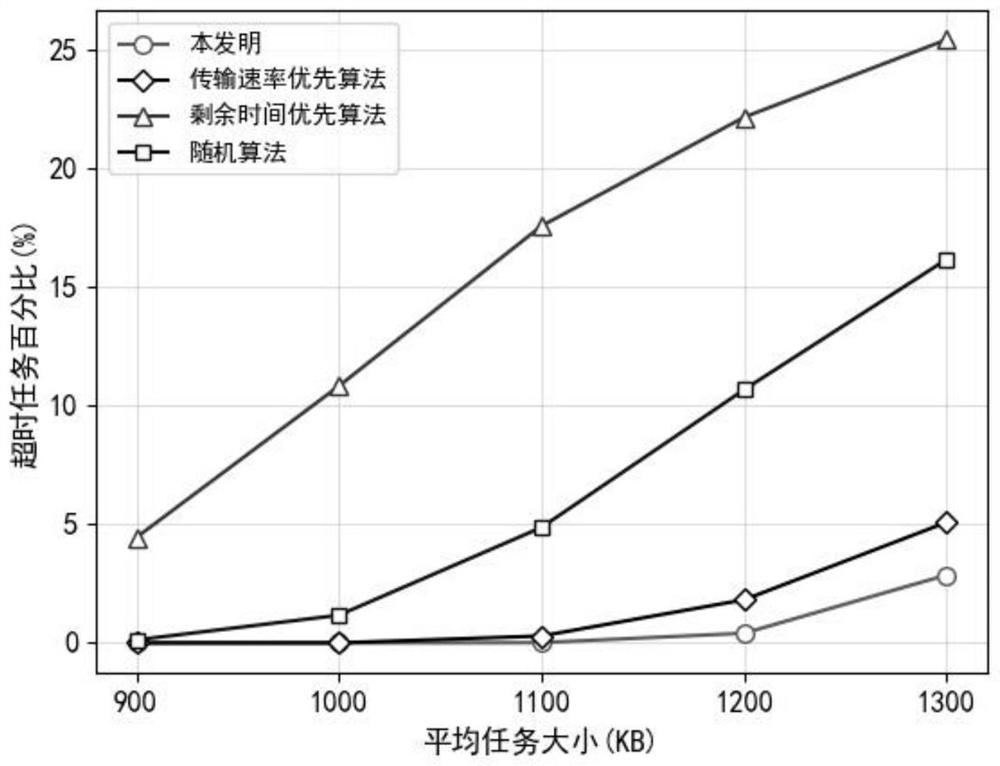

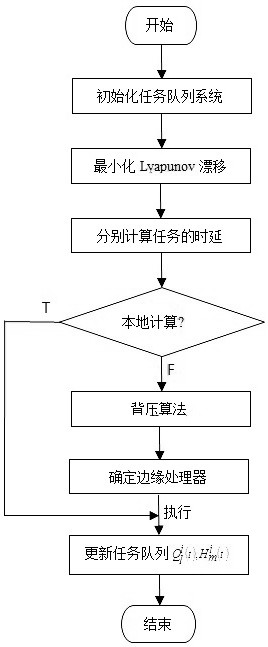

Task queue aware edge computing real-time channel allocation and task unloading method

Pending | CN114375058A | Reduce computing delay | Raise priority | Network traffic/resource management | Transmission | Lyapunov optimization | Edge computing

A task-queue-aware method for real-time channel allocation and task offloading in edge computing. The task-queue-aware problem of base station channel allocation and user task offloading is converted into a single-time-slot optimization model under the Lyapunov optimization framework. Users are then divided and merged into groups according to game theory and play a cooperative game until a stable, convergent set of groups is formed, which finally yields the channel allocation and task offloading strategy for each time slot.

Owner:SHANGHAI UNIV

Edge cloud computing unloading method for mobile medical health management

Pending | CN112527409A | Reduce computing delay | Improve QoS | Resource allocation | Interprogram communication | Real-time computing | Quality of service

The invention discloses an edge cloud computing offloading method for mobile medical health management and relates to the technical field of mobile medicine and edge computing. For the large amount of data generated by mobile medical health management, the method uses stochastic optimization to associate the computing tasks with a queue on each local device, reduces queue backlog by minimizing the Lyapunov drift, and optimizes the computing delay of the tasks. The offloading decision for each task is then determined by considering the computing delay of the task together with the task backlog of the queues on the local devices and the edge processor, thereby reducing the computing delay. The method compensates for the inherent limitations of the mobile terminal, enables quick execution of tasks and improves the quality of service for users.
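The queue-aware decision described above can be illustrated with a generic Lyapunov drift-plus-penalty rule. This is only a minimal sketch under stated assumptions, not the patented method: the abstract gives no formulas, so the delay model, the weight `V`, the one-task-per-slot arrival pattern and all function names below are hypothetical.

```python
def choose_target(task, q_local, q_edge, f_local, f_edge, tx_rate, V):
    """Pick where to run a task by minimizing V * delay + backlog * task size.

    Assumed model (not from the source): local delay is task / f_local,
    edge delay is upload time plus edge compute time.
    """
    delay_local = task / f_local
    delay_edge = task / tx_rate + task / f_edge
    cost_local = V * delay_local + q_local * task
    cost_edge = V * delay_edge + q_edge * task
    return "local" if cost_local <= cost_edge else "edge"


def update_queue(q, arrival, service):
    """Standard queue recursion: Q(t+1) = max(Q(t) - service, 0) + arrival."""
    return max(q - service, 0.0) + arrival


def simulate(tasks, f_local, f_edge, tx_rate, V):
    """Run one decision per time slot (slot length 1) and track both backlogs."""
    q_local = q_edge = 0.0
    decisions = []
    for task in tasks:
        target = choose_target(task, q_local, q_edge, f_local, f_edge, tx_rate, V)
        decisions.append(target)
        q_local = update_queue(q_local, task if target == "local" else 0.0, f_local)
        q_edge = update_queue(q_edge, task if target == "edge" else 0.0, f_edge)
    return decisions, q_local, q_edge
```

A larger `V` makes the rule favor low delay, while a smaller `V` makes it favor short queues; this is the usual trade-off in drift-plus-penalty schemes.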

Owner:HENAN UNIV OF SCI & TECH

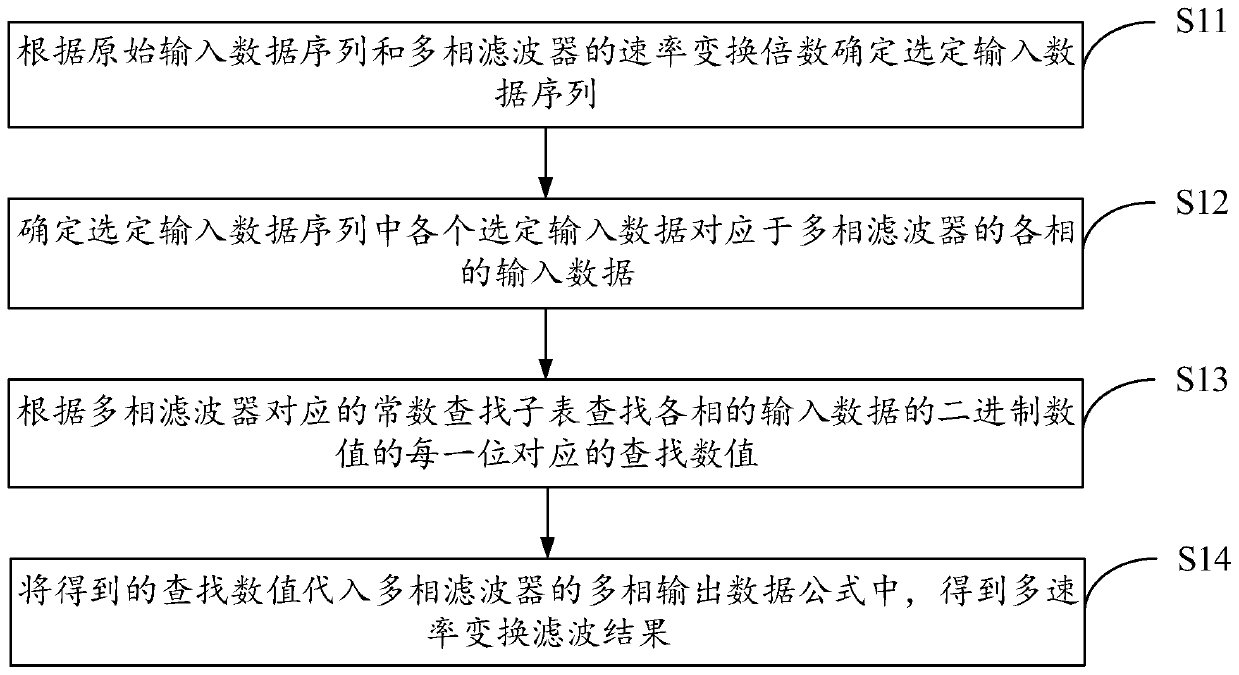

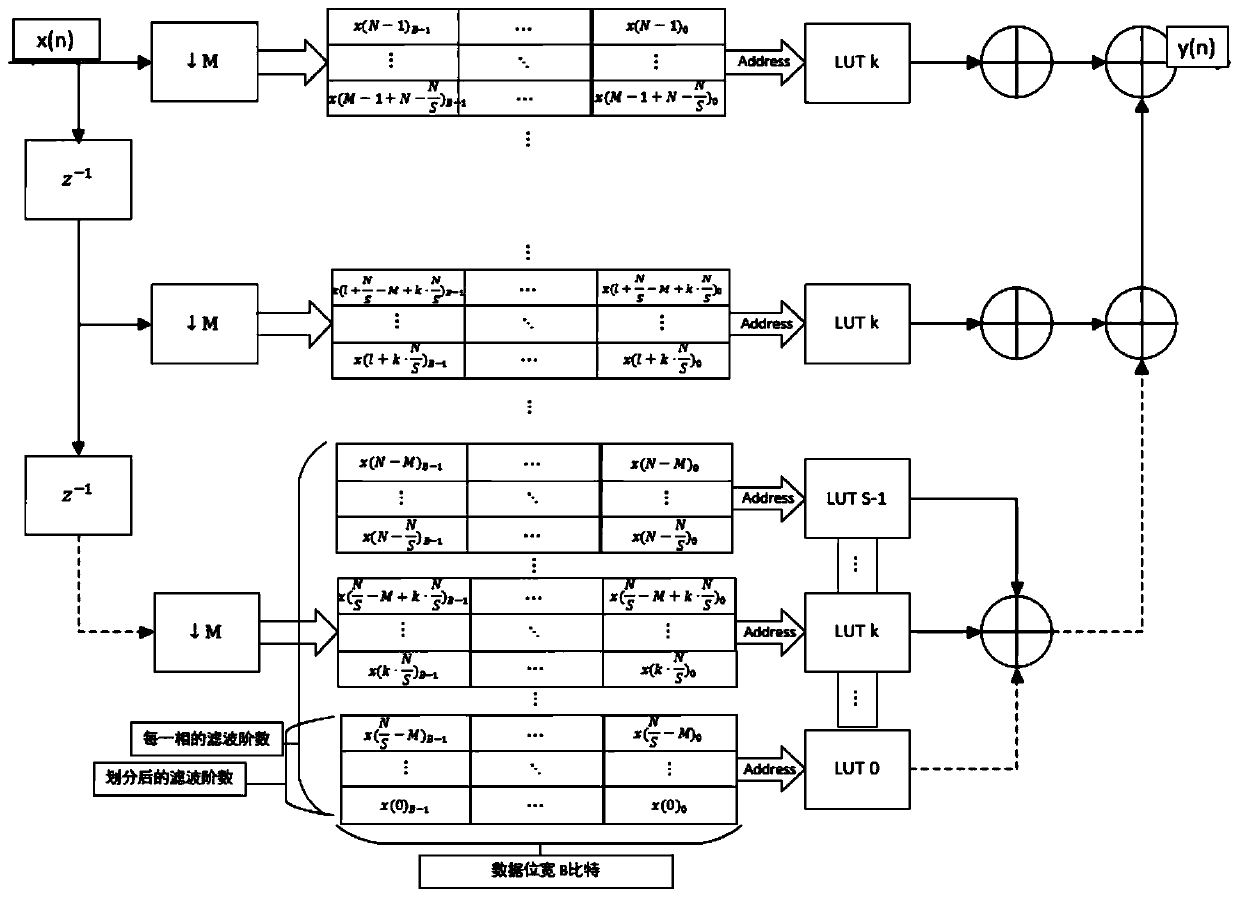

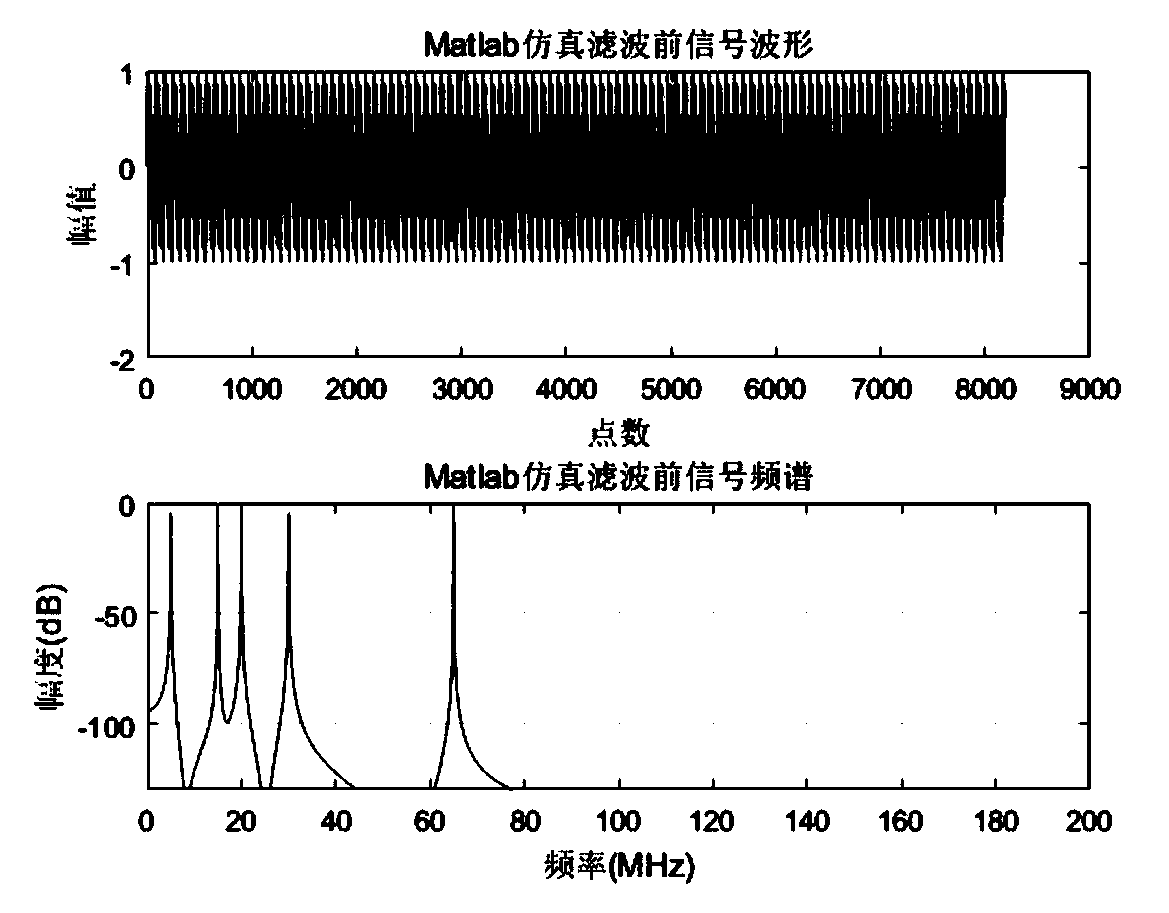

Multi-rate conversion filtering method and device

Inactive | CN110233606A | Reduce resource overhead | Reduce computing delay | Digital technique network | Data mining | Multi phase

The invention discloses a multi-rate conversion filtering method and device. The method comprises the following steps: determining a selected input data sequence according to the original input data sequence and the rate conversion multiple of a polyphase filter; determining, for each selected input datum in the selected input data sequence, the input data of each phase of the polyphase filter; looking up the value corresponding to each bit of the binary value of the input data of each phase in a constant lookup sub-table corresponding to the polyphase filter; and substituting the obtained lookup values into the polyphase output data formula of the polyphase filter to obtain the multi-rate conversion filtering result. Compared with the prior art, no multiplier is needed, so the resource cost of the programmable device can be greatly reduced. Moreover, because a table lookup method is used, part of the multiply-add operation is completed in advance and only the result needs to be fetched, which further reduces the computing delay.
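A multiplier-free, table-driven filter of this kind can be sketched with classic distributed arithmetic, used here as a stand-in because the abstract does not give the exact sub-table layout or output formula. The sketch assumes unsigned integer samples, integer coefficients and decimation as the rate conversion; the function names are hypothetical.

```python
def build_lut(coeffs):
    """Precompute LUT[addr] = sum of coeffs[i] for every bit i set in addr."""
    n = len(coeffs)
    return [sum(c for i, c in enumerate(coeffs) if (addr >> i) & 1)
            for addr in range(1 << n)]


def da_dot(samples, lut, bits):
    """Dot product of unsigned `bits`-wide samples with the LUT's coefficients,
    using only table lookups, shifts and additions (no multiplications)."""
    acc = 0
    for b in range(bits):
        addr = 0
        for i, s in enumerate(samples):
            addr |= ((s >> b) & 1) << i   # bit b of each sample forms the address
        acc += lut[addr] << b             # weight the partial sum by 2**b
    return acc


def decimate_da(x, h, M, bits):
    """Decimate x by M with FIR taps h, one lookup table per polyphase branch."""
    phases = [h[p::M] for p in range(M)]          # branch p holds h[p], h[p+M], ...
    luts = [build_lut(ph) for ph in phases]
    out = []
    for n in range(0, len(x), M):                 # only every M-th output is computed
        y = 0
        for p, ph in enumerate(phases):
            taps = [x[n - p - j * M] if n - p - j * M >= 0 else 0
                    for j in range(len(ph))]
            y += da_dot(taps, luts[p], bits)
        out.append(y)
    return out
```

For example, `decimate_da([1, 2, 3, 4, 5, 6], [1, 2, 3, -1], 2, 3)` gives the same values as direct convolution followed by keeping every second sample. Each table has `2**len(phase)` entries, so splitting the filter into phases also keeps the tables small.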

Owner:BEIJING XINWANG RUIJIE NETWORK TECH CO LTD

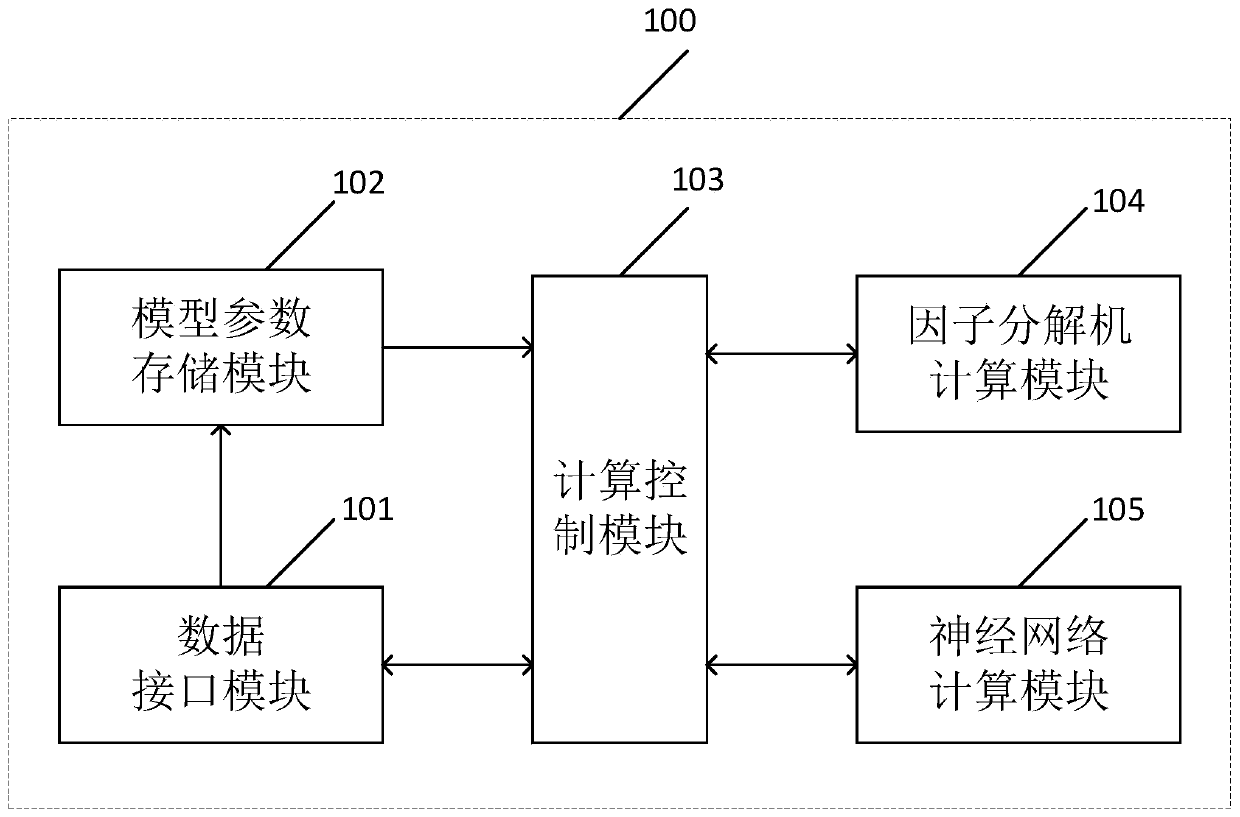

Click rate estimation system and method based on field-programmable gate array

Active | CN110399979A | Reduce computing delay | Calculation speed | Physical realisation | Neural learning methods | Model parameters | Storage model

The invention provides a click rate estimation system and method based on a field-programmable gate array. The system comprises a data interface module, a model parameter storage module, a calculation control module, a factorization machine calculation module and a neural network calculation module. The data interface module acquires the model parameters and the to-be-estimated data and outputs the click rate estimation result; the model parameter storage module stores the model parameters; the calculation control module obtains the to-be-estimated data from the data interface module and the model parameters from the model parameter storage module; the factorization machine calculation module calculates the factorization machine expression of the to-be-estimated data; the neural network calculation module calculates the neural network forward propagation result of the to-be-estimated data; and the calculation control module also obtains the click rate estimation result. According to the technical scheme of the embodiment of the invention, the computing delay of click rate estimation is reduced and the calculation speed is improved.
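The two calculation modules can be mirrored in software as a rough sketch. The abstract does not say how the factorization machine expression and the neural network output are combined, so summing them and applying a sigmoid (as in DeepFM-style models) is an assumption here, as are the ReLU activations and every function and parameter name.

```python
import math


def fm_score(x, w0, w, V):
    """Second-order factorization machine expression for one sample.

    Pairwise terms use the identity
    sum_{i<j} <v_i, v_j> x_i x_j = 0.5 * sum_f [(sum_i v_if x_i)^2 - sum_i (v_if x_i)^2].
    """
    linear = w0 + sum(wi * xi for wi, xi in zip(w, x))
    pairwise = 0.0
    for f in range(len(V[0])):
        s = sum(V[i][f] * x[i] for i in range(len(x)))
        s_sq = sum((V[i][f] * x[i]) ** 2 for i in range(len(x)))
        pairwise += 0.5 * (s * s - s_sq)
    return linear + pairwise


def mlp_score(x, layers):
    """Forward propagation: ReLU on hidden layers, linear scalar output."""
    h = list(x)
    for idx, (W, b) in enumerate(layers):
        z = [sum(wij * hj for wij, hj in zip(row, h)) + bi
             for row, bi in zip(W, b)]
        h = z if idx == len(layers) - 1 else [max(0.0, v) for v in z]
    return h[0]


def predict_ctr(x, w0, w, V, layers):
    """Assumed combination: sigmoid of FM score plus network score."""
    logit = fm_score(x, w0, w, V) + mlp_score(x, layers)
    return 1.0 / (1.0 + math.exp(-logit))
```

The two scores do not depend on each other, so they can be computed in parallel and only the final addition and sigmoid need both results.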

Owner:SHENZHEN UNIV

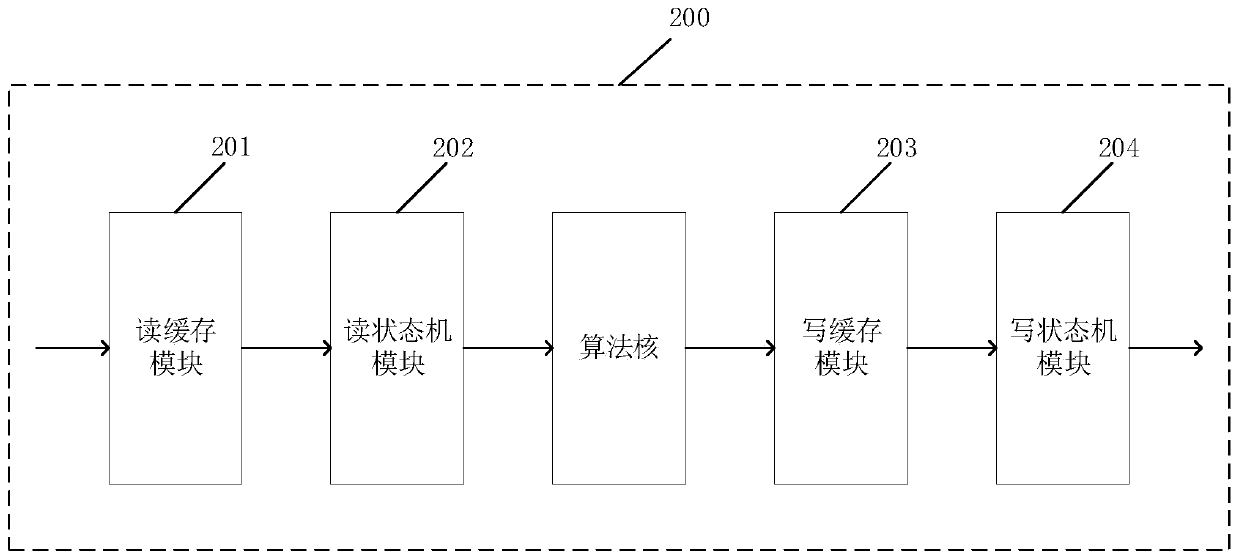

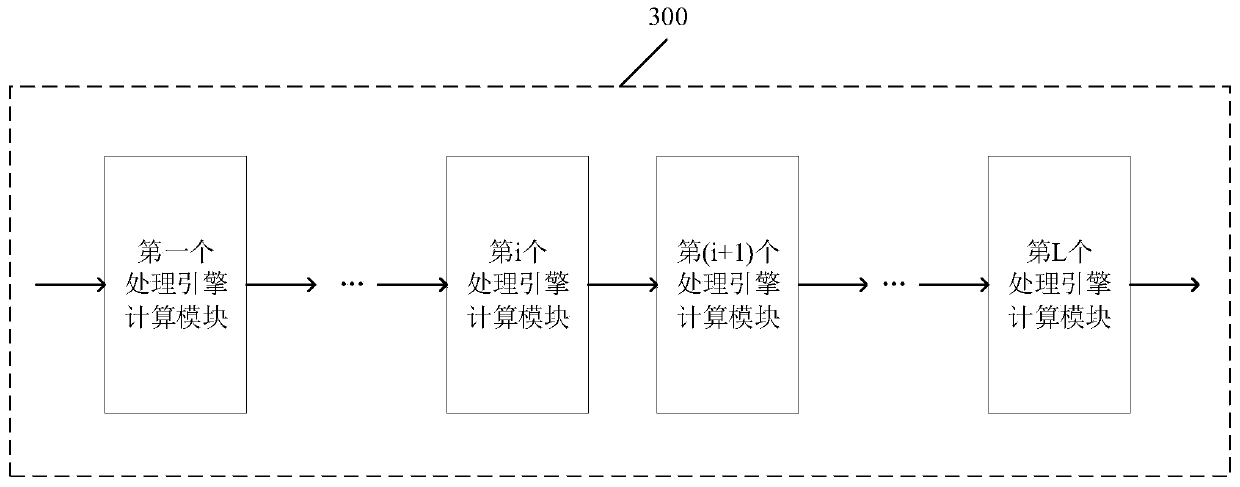

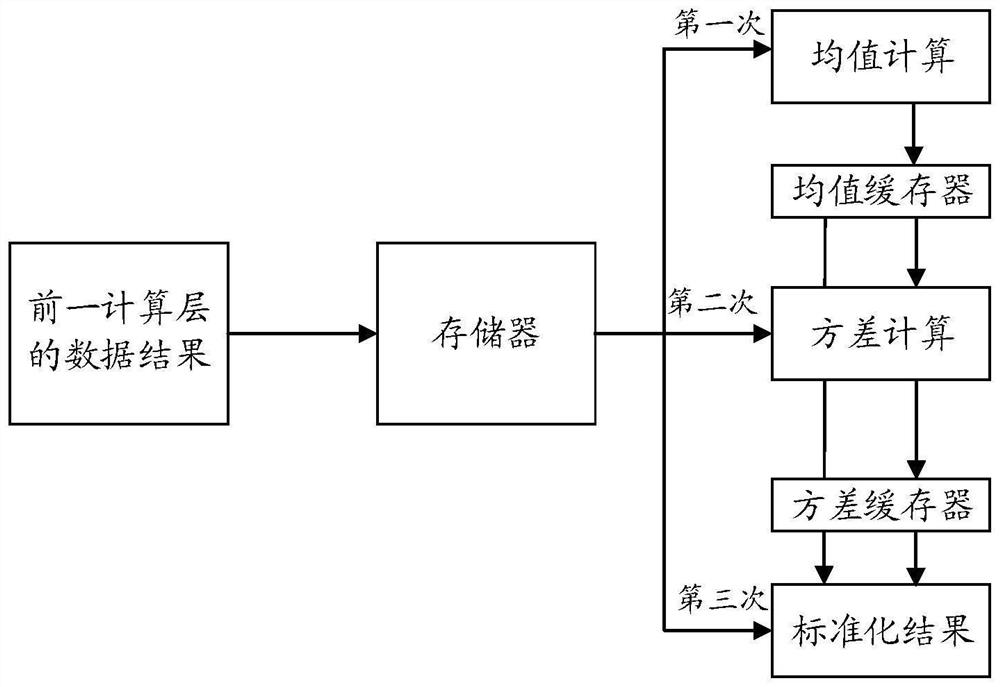

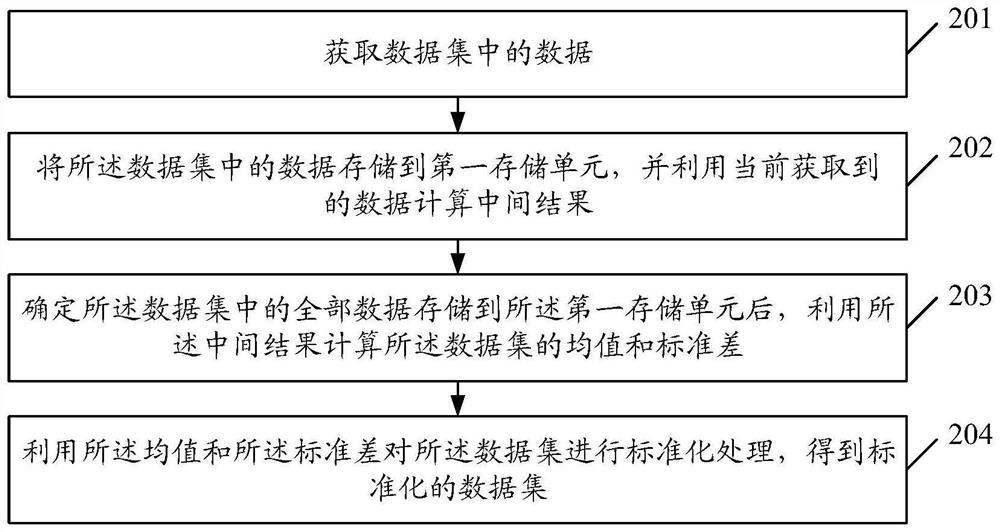

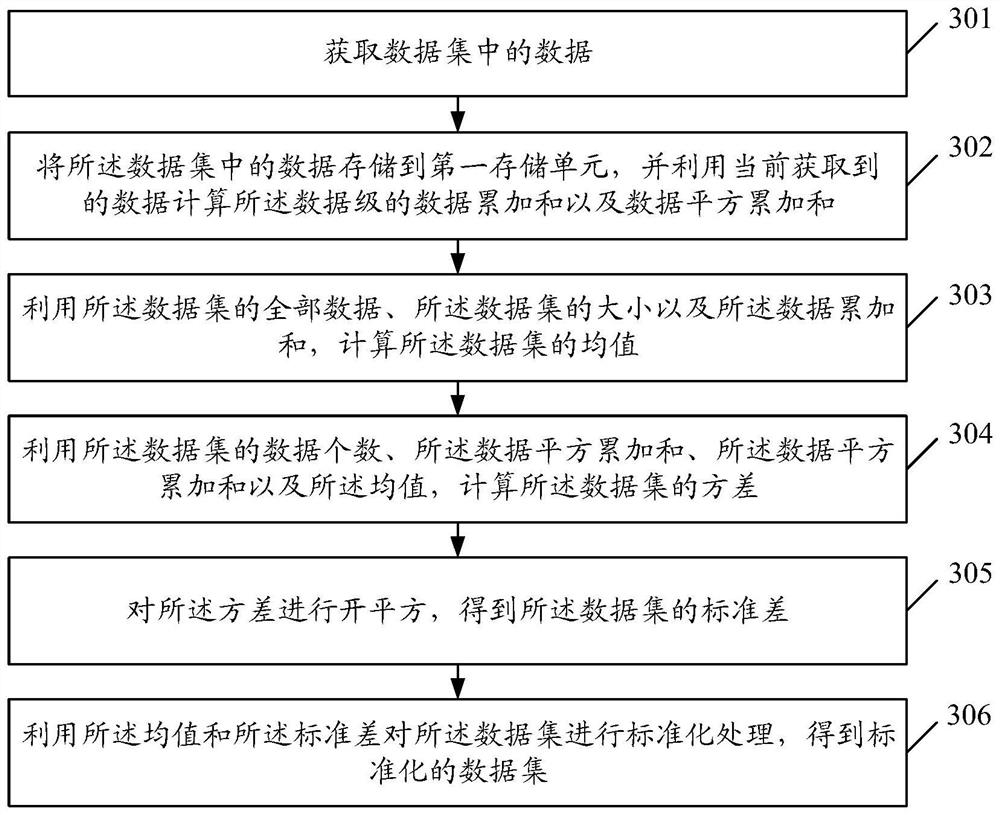

Data standardization processing method and device, electronic equipment and storage medium

Pending | CN114356235A | Reduce computing delay | Reduce computing power consumption | Database updating | Input/output to record carriers | Data set | Time delays

Embodiments of the invention disclose a data standardization processing method and apparatus, an electronic device and a storage medium. The method comprises the steps of: obtaining data in a data set; storing the data in a first storage unit while calculating an intermediate result from the currently obtained data; after determining that all data in the data set has been stored in the first storage unit, calculating the mean value and standard deviation of the data set from the intermediate result; and standardizing the data set with the mean value and standard deviation to obtain a standardized data set. Because the intermediate result used to calculate the mean value and standard deviation is updated online while the data is being stored, the mean value and standard deviation can be calculated from the intermediate result as soon as the data transmission is complete. At least one pass of reading the data back from the first storage unit is thereby avoided, which shortens the computing delay, improves calculation efficiency and reduces calculation power consumption.
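The store-while-accumulating idea can be sketched as follows. The abstract does not say what the intermediate result contains; this sketch assumes it is a running sum and a running sum of squares, and it uses the population standard deviation. The function name and the list standing in for the first storage unit are hypothetical.

```python
import math


def standardize_streaming(stream):
    """Store each value while updating running sums, then standardize."""
    buffer = []      # stands in for the "first storage unit"
    total = 0.0      # running sum of values (assumed intermediate result)
    total_sq = 0.0   # running sum of squared values (assumed intermediate result)
    for x in stream:
        buffer.append(x)
        total += x
        total_sq += x * x
    n = len(buffer)
    if n == 0:
        return []
    mean = total / n
    # Population variance from the running sums; clamp tiny negative rounding errors.
    variance = max(total_sq / n - mean * mean, 0.0)
    std = math.sqrt(variance)
    if std == 0.0:
        return [0.0] * n   # all values identical: nothing to scale
    return [(x - mean) / std for x in buffer]
```

The mean and standard deviation are available as soon as the last value arrives, so the stored data is read only once, for the final scaling. For data with a large mean relative to its spread, Welford's online update is a numerically safer choice of intermediate result.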

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

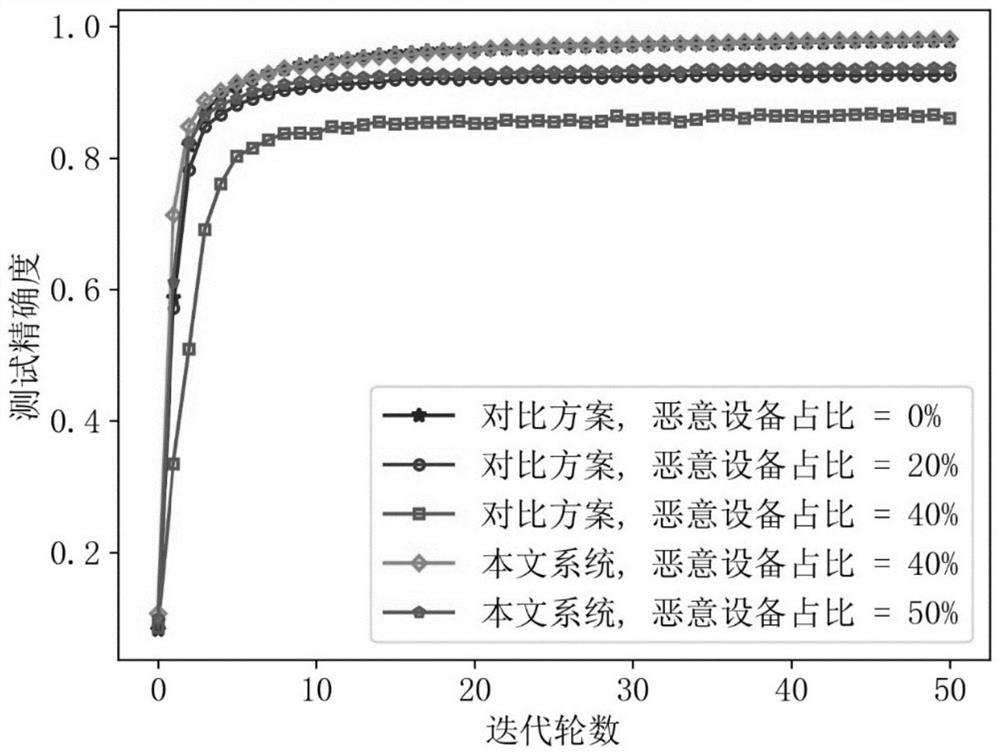

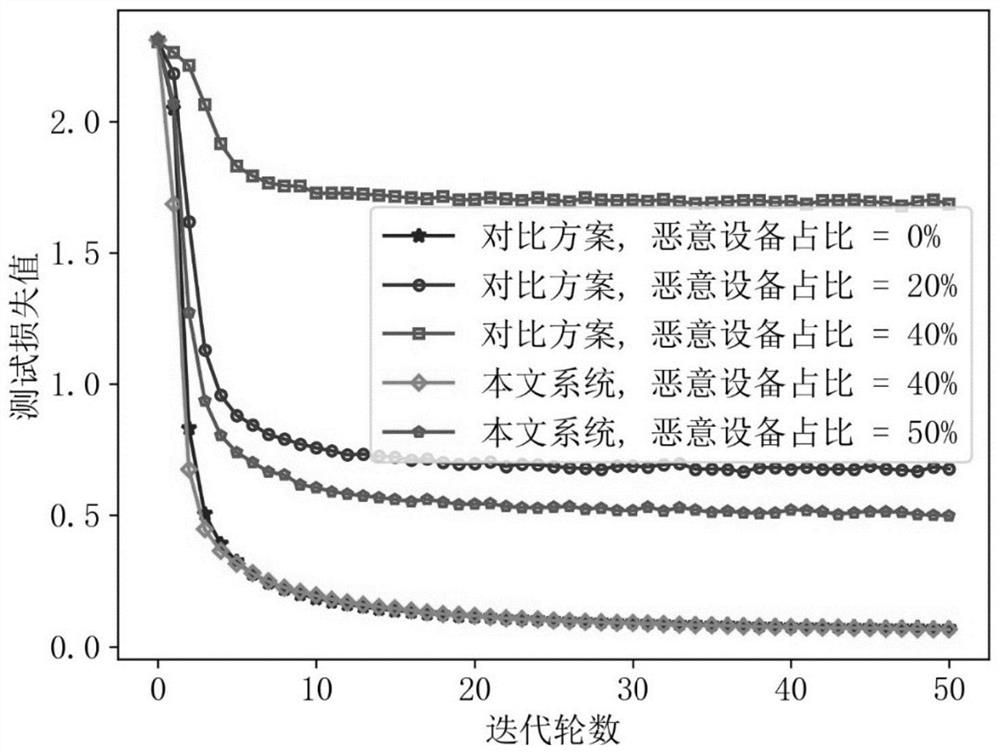

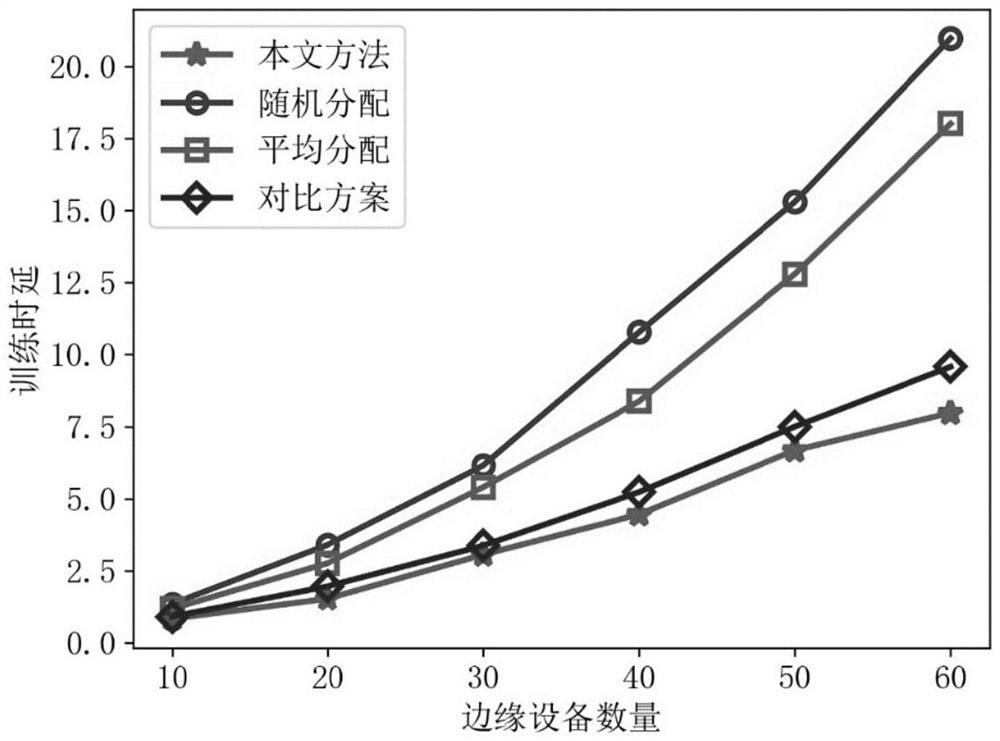

Design method of security edge federated learning system based on block chain and optimization algorithm thereof

Pending | CN114422354A | Achieve robustness | Realize intelligent distribution | Mathematical models | User identity/authority verification | Federated learning | Attack

The invention provides a design method for a blockchain-based secure edge federated learning system. The method employs a consensus mechanism based on practical Byzantine fault tolerance and a robust global model aggregation algorithm to resist attacks on federated learning training by malicious edge devices and malicious edge servers. A secure and trustworthy edge federated learning system is thereby realized, and the convergence performance of edge federated learning model training is effectively improved. To further increase the training speed of the system and reduce the delay of model training and consensus, the invention also provides a resource optimization algorithm based on deep reinforcement learning, which allocates wireless bandwidth and transmit power quickly and efficiently in a dynamic wireless channel environment and improves resource optimization efficiency. The resource utilization of the system is thereby maximized and its training delay minimized.

Owner:SHANGHAI TECH UNIV

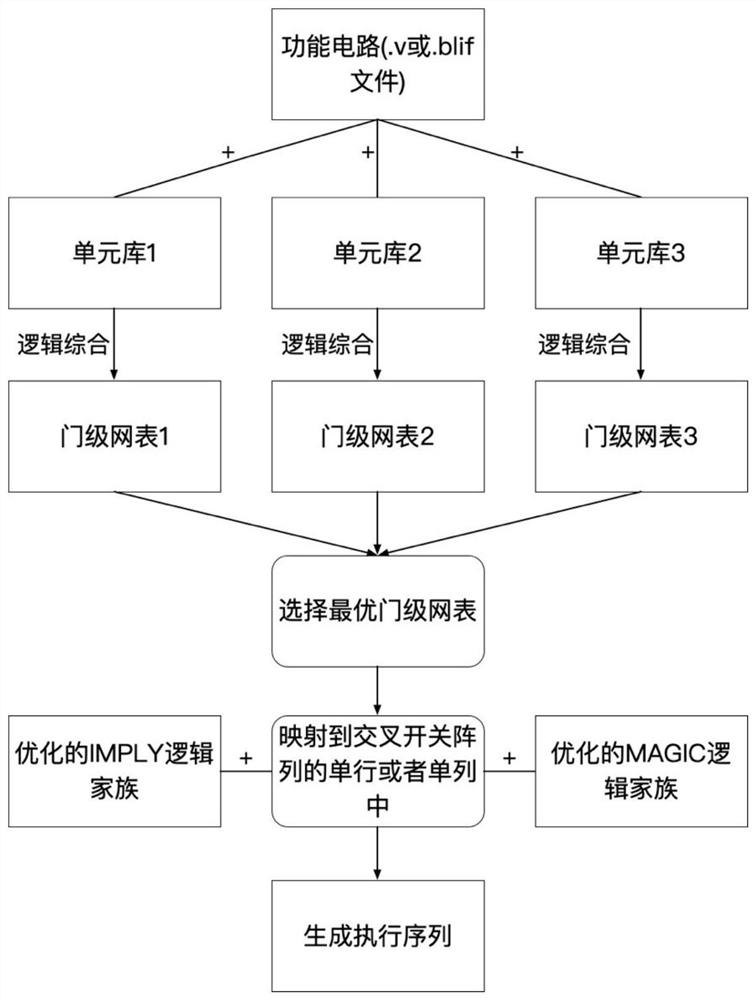

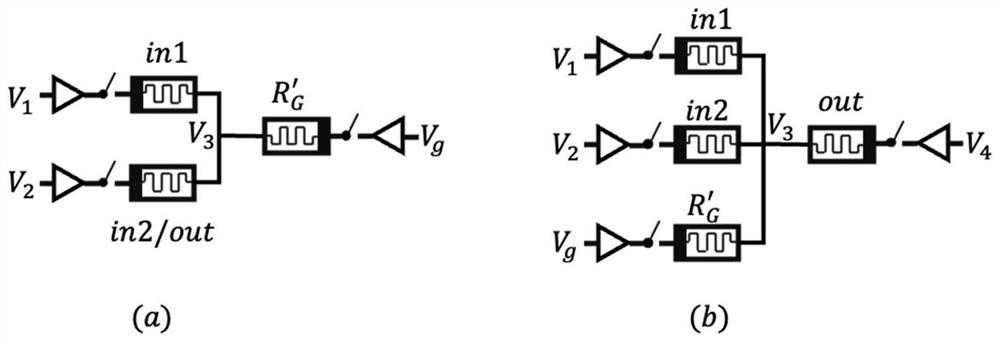

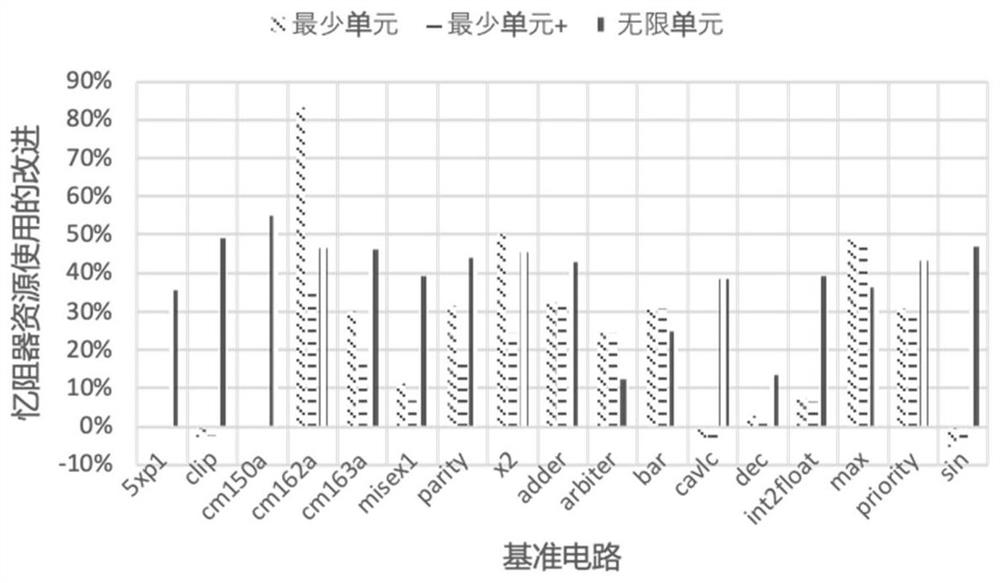

Method for improving memory logic calculation efficiency based on memristor

Pending | CN113553793A | Reduce computing delay | Improve efficiency | Constraint-based CAD | Special data processing applications | Logic family | Time delays

The invention discloses a method for improving the efficiency of in-memory logic computation based on memristors. The method combines the IMPLY logic family and the MAGIC logic family and reduces the delay and hardware resource overhead of in-memory computing by exploiting the advantages of both logic families at once. The method comprises the following steps: first, using a memristor unit in the memristor crossbar array to replace the external resistor required by an IMPLY logic family gate and to optimize the design of a MAGIC logic family gate; second, analyzing the compatibility of the improved IMPLY logic family gate and the improved MAGIC logic family gate within the same memristor crossbar array; and finally, providing an optimized mapping method that combines the two logic families. The invention is easy to operate and highly practical, and improves the efficiency of logic computation in memristor memory.
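At the purely logical level, the two gate families can be modeled as bit operations on memristor states. The sketch below shows only the well-known primitives (IMPLY overwrites its target with NOT p OR q; MAGIC NOR writes NOR into a preset output cell) and one hypothetical way to mix them. It does not model the circuit, and it is not the optimized mapping method of the invention, which the abstract does not detail.

```python
def imply(p, q):
    """IMPLY step: the target memristor q is overwritten with (NOT p) OR q."""
    return (1 - p) | q


def magic_nor(a, b):
    """MAGIC NOR step: an output memristor preset to 1 ends up holding NOR(a, b)."""
    return 1 - (a | b)


def nand_imply(p, q):
    """NAND built from IMPLY only: reset a work cell, then two IMPLY steps."""
    s = 0              # step 1: reset the work memristor to 0
    s = imply(p, s)    # step 2: s = NOT p
    s = imply(q, s)    # step 3: s = (NOT q) OR (NOT p) = NAND(p, q)
    return s


def or_mixed(a, b):
    """OR built from both families (illustrative): MAGIC NOR, then IMPLY as NOT."""
    n = magic_nor(a, b)    # n = NOR(a, b)
    return imply(n, 0)     # (NOT n) OR 0 = OR(a, b)
```

Checking all four input combinations confirms that `nand_imply` matches NAND and `or_mixed` matches OR.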

Owner:NANJING UNIV OF SCI & TECH


© 2025 PatSnap. All rights reserved.