212 results about "Program optimization" patented technology

In computer science, program optimization or software optimization is the process of modifying a software system to make some aspect of it work more efficiently or use fewer resources. In general, a computer program may be optimized so that it executes more rapidly, or to make it capable of operating with less memory storage or other resources, or draw less power.

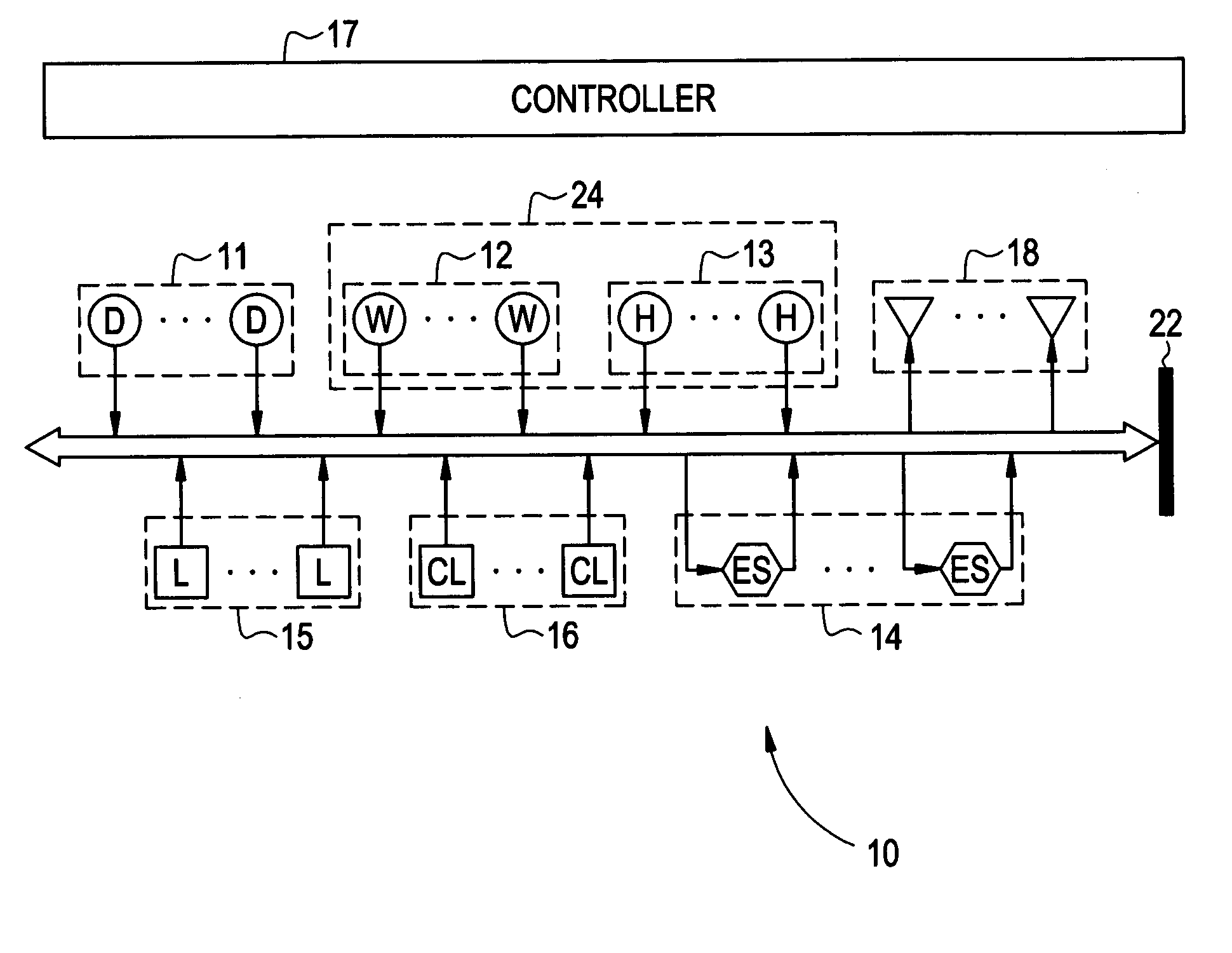

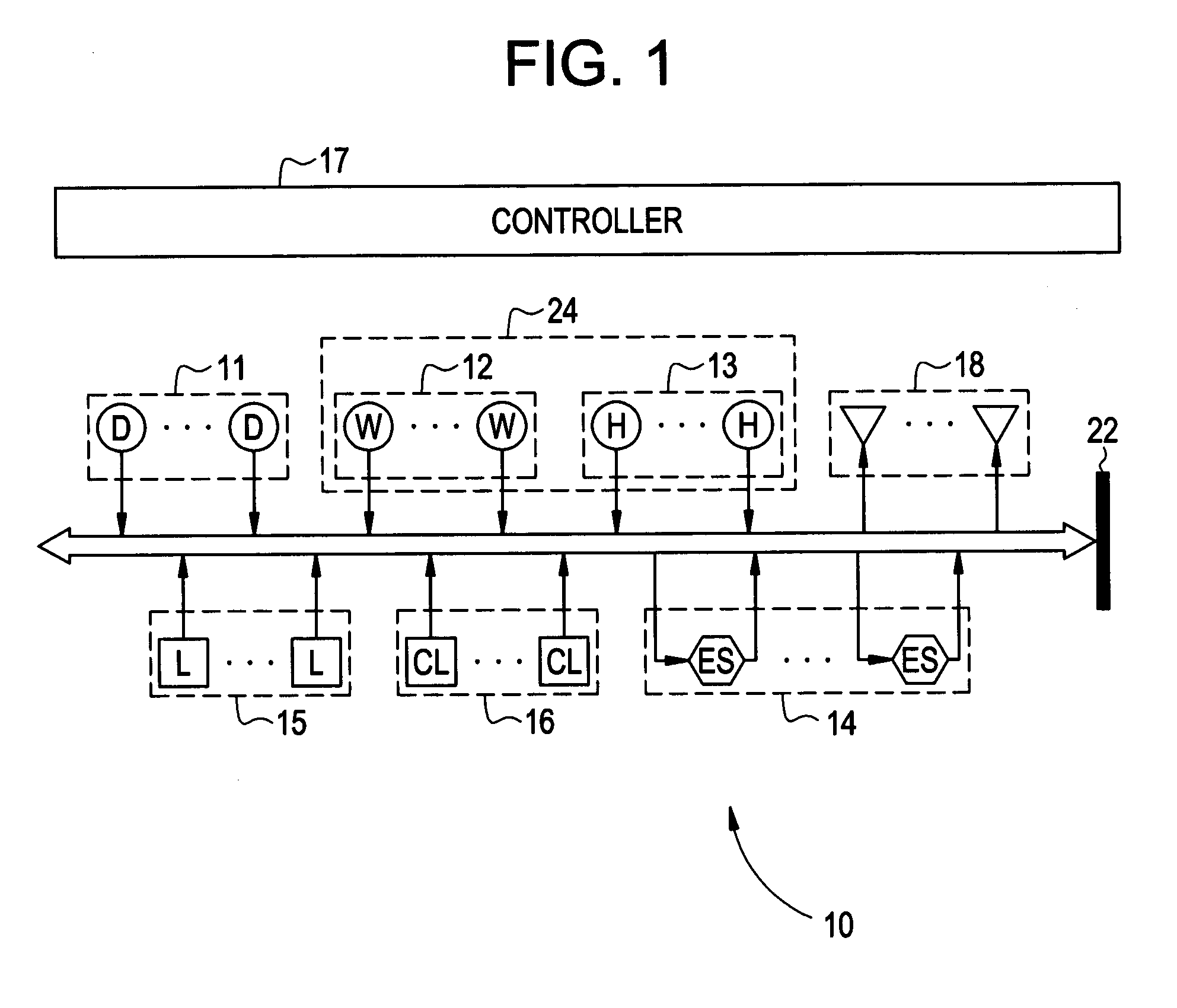

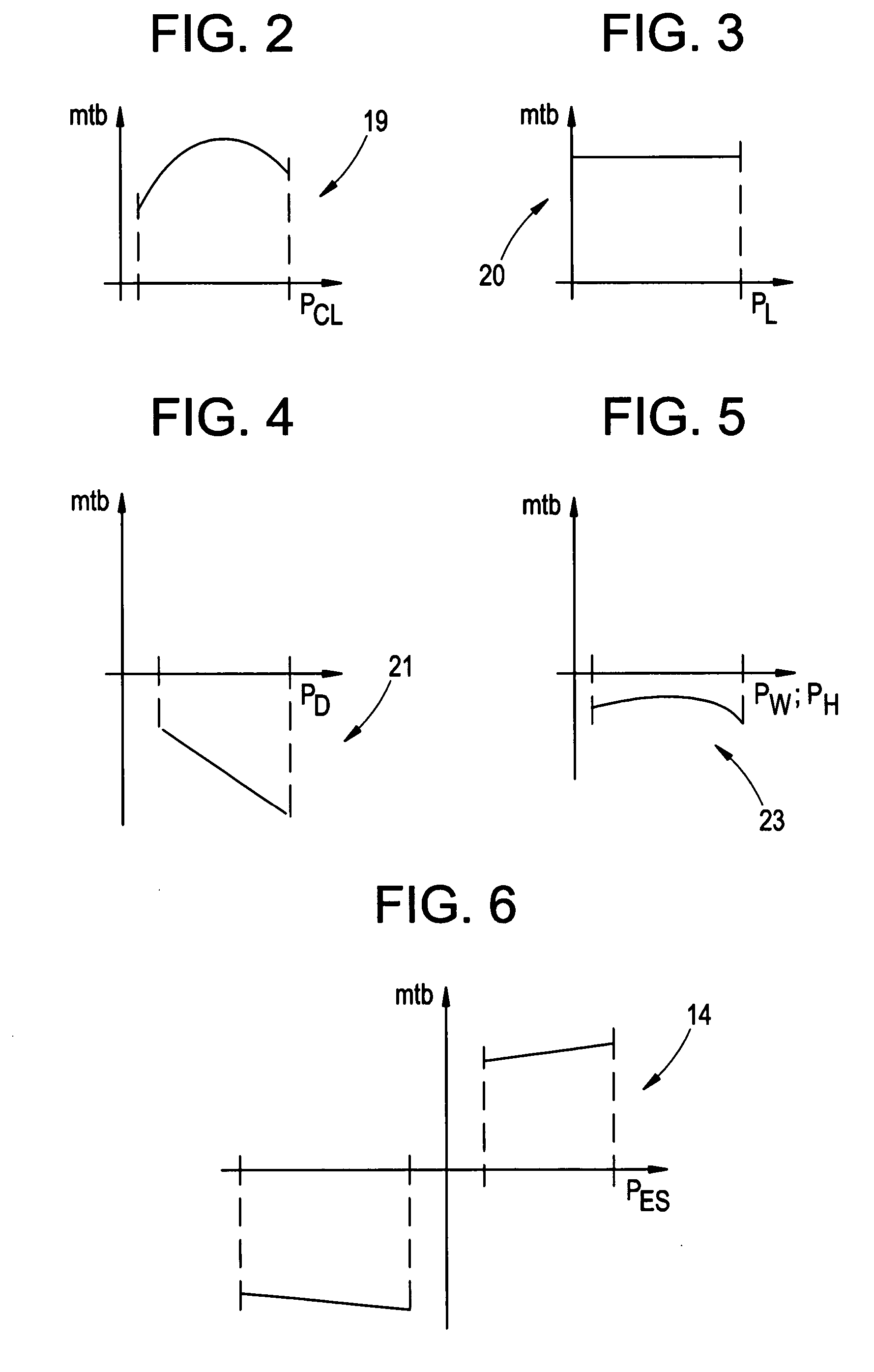

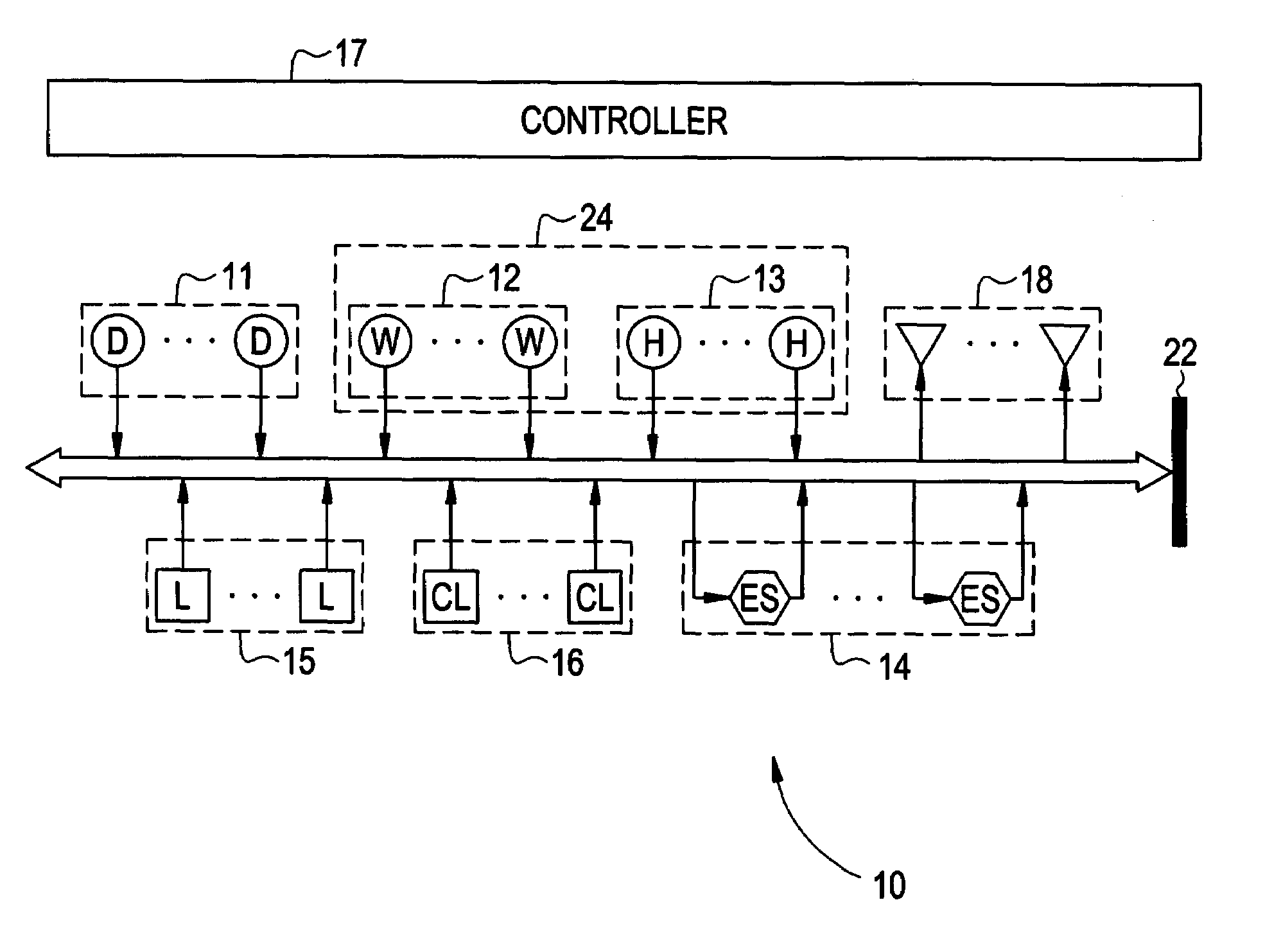

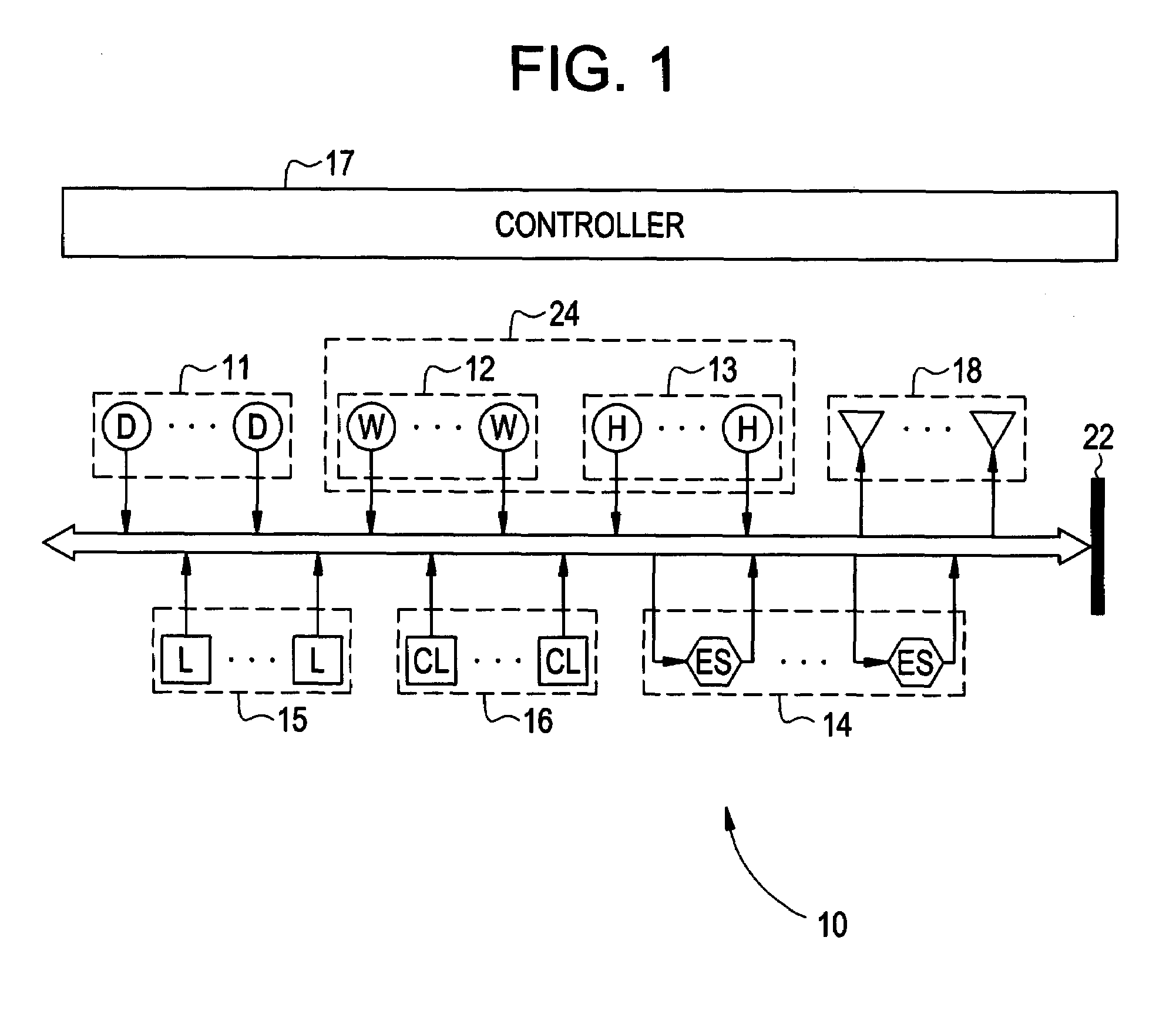

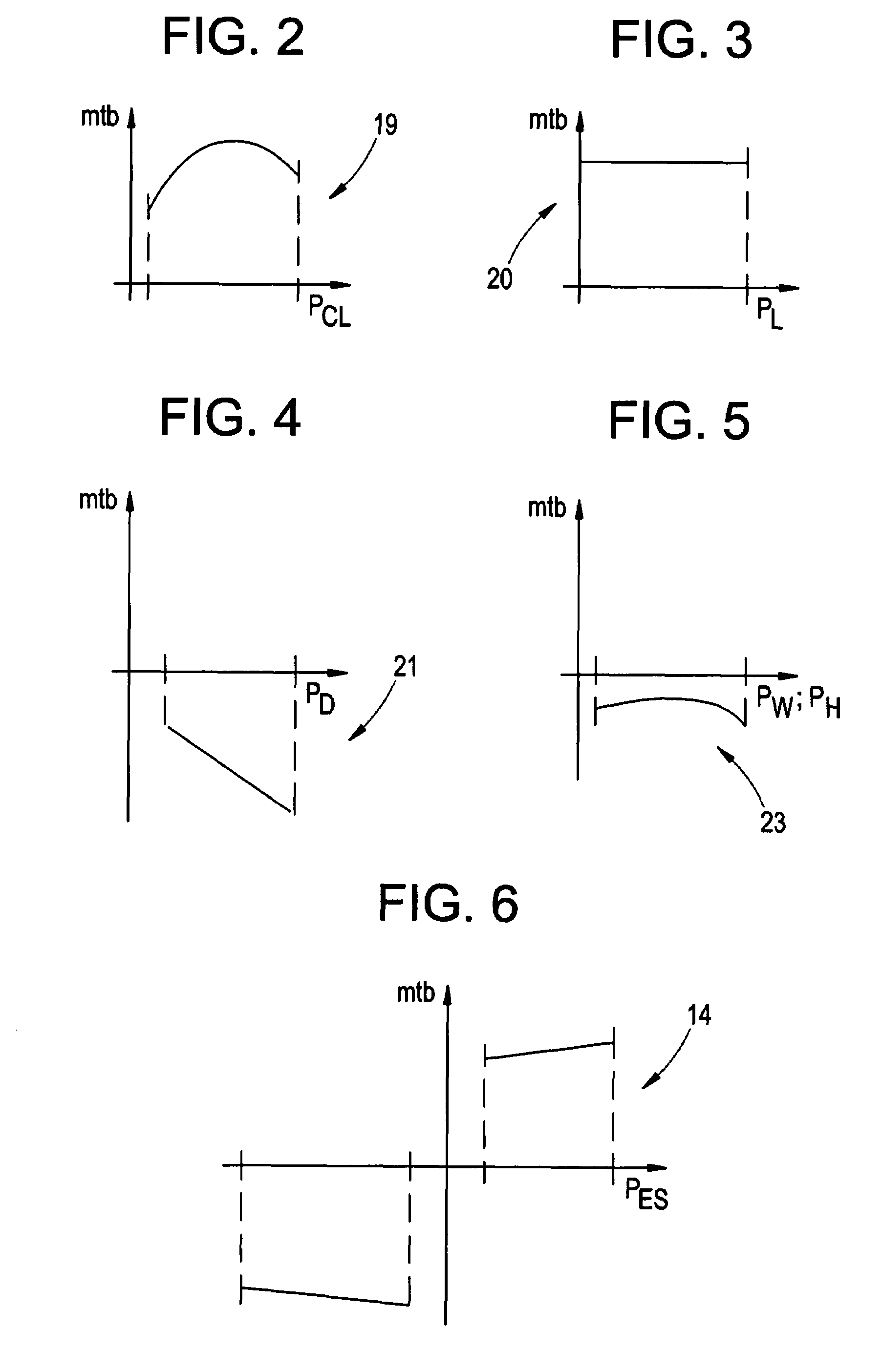

Multi-tier benefit optimization for operating the power systems including renewable and traditional generation, energy storage, and controllable loads

Active | US20070100503A1 | Maximize the benefits; Sampled-variable control systems; Mechanical power/torque control; Electric power system; Power grid

A plurality of power generating assets are connected to a power grid. The grid may, if desired, be a local grid or a utility grid. The power grid is connected to a plurality of loads, which may include controllable and non-controllable loads. Power is distributed to the loads by a controller that stores a program that optimizes the controllable loads and the power generating assets. The optimization process uses a multi-tier benefit construct.

Owner:GENERAL ELECTRIC CO

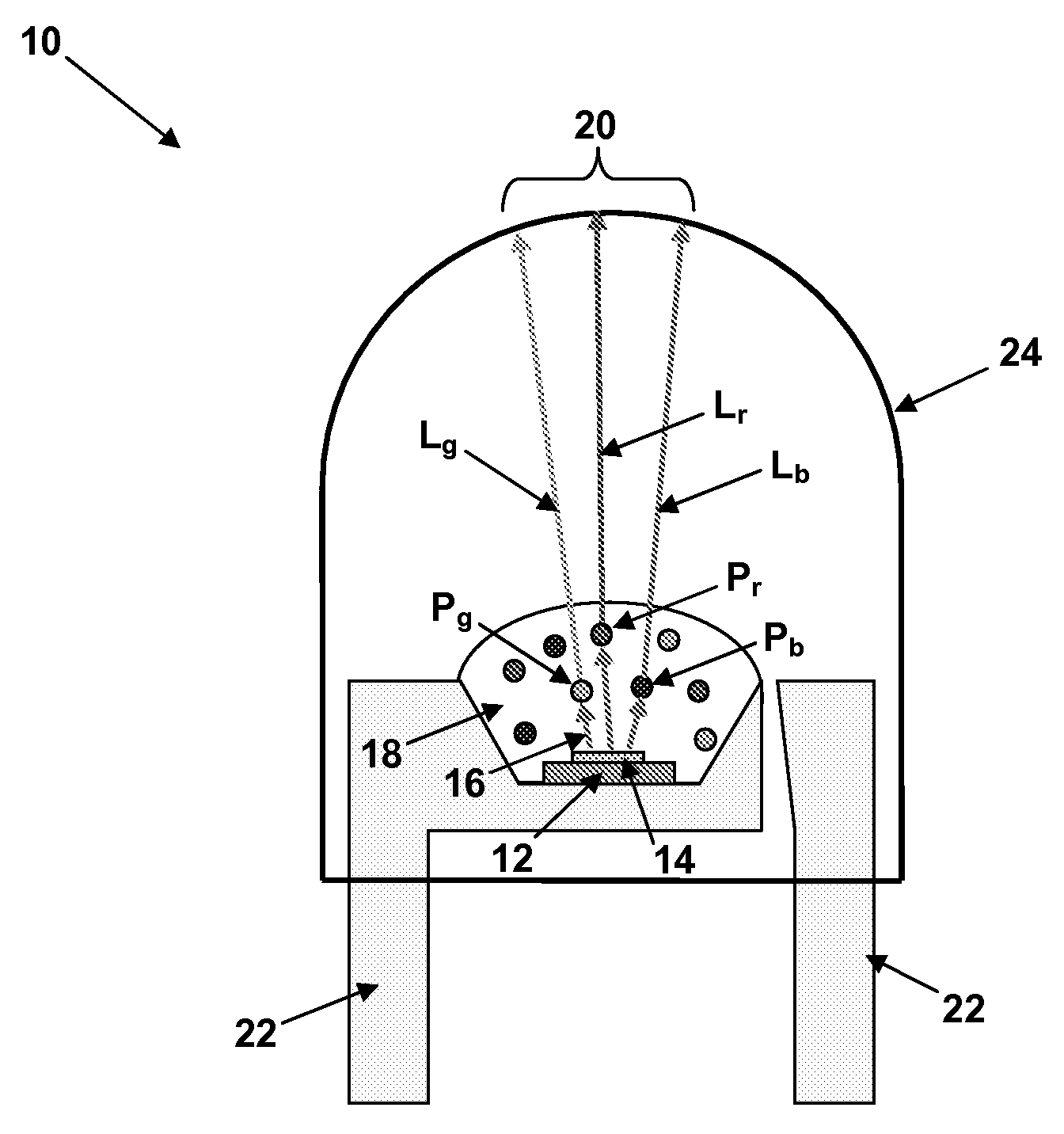

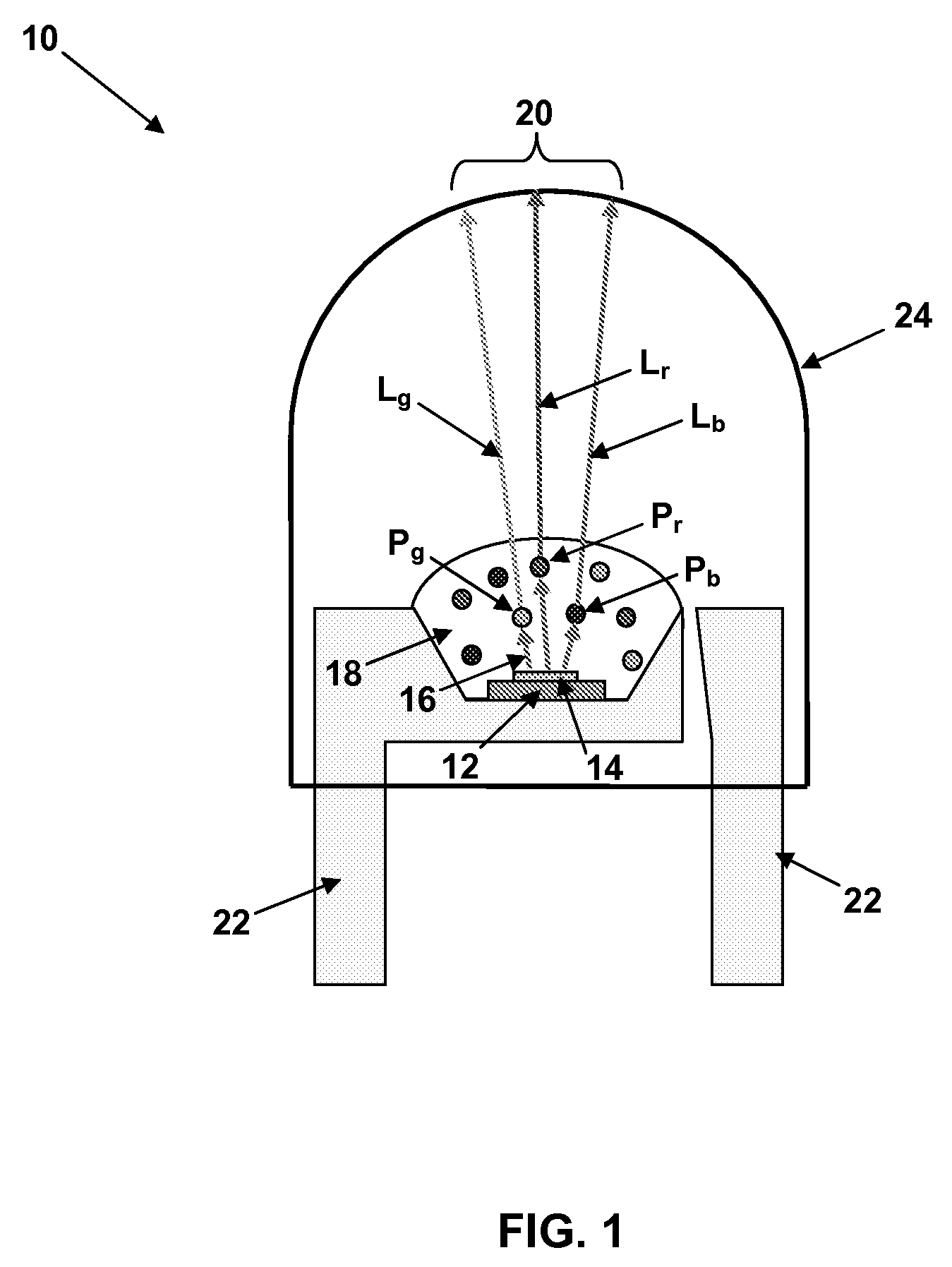

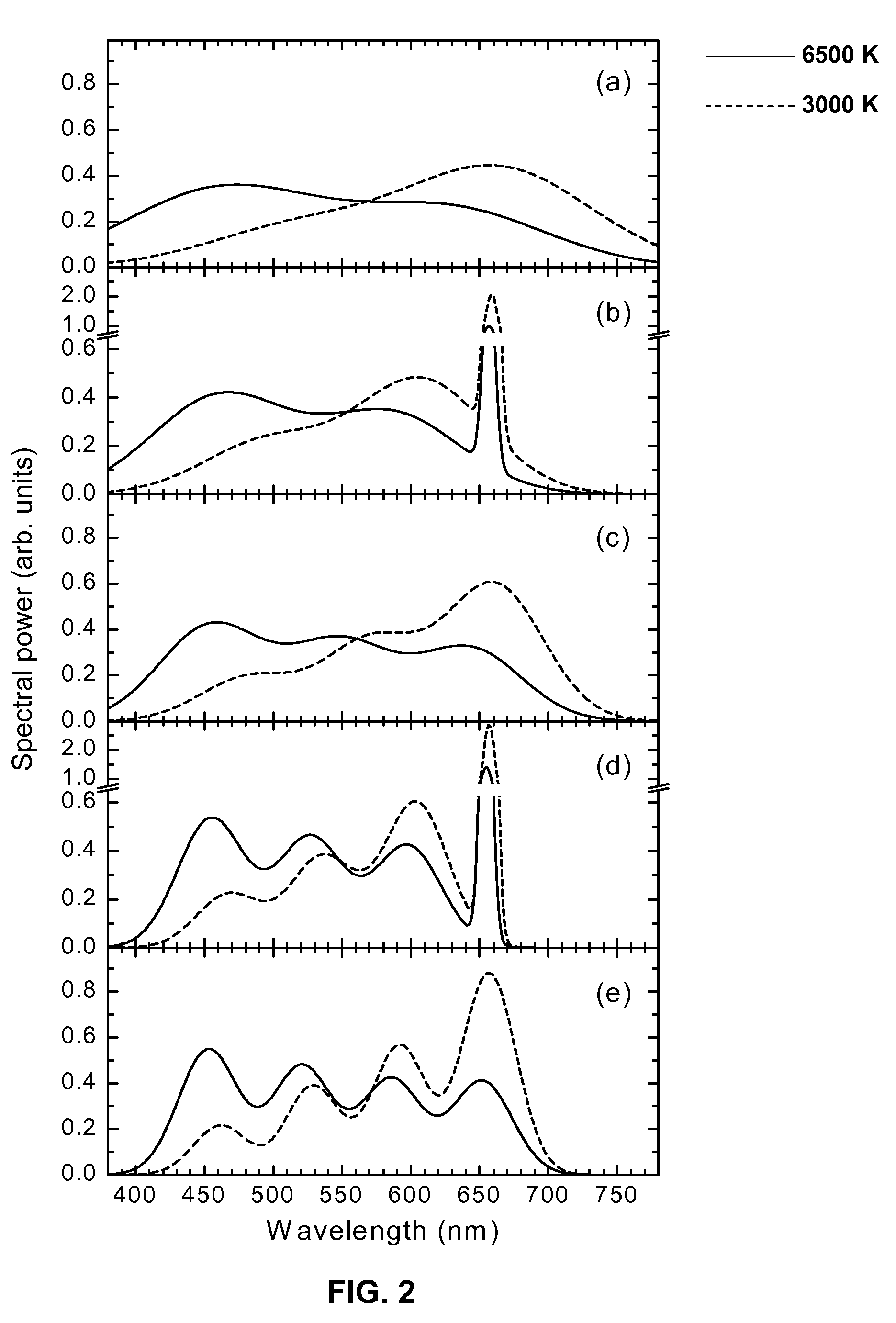

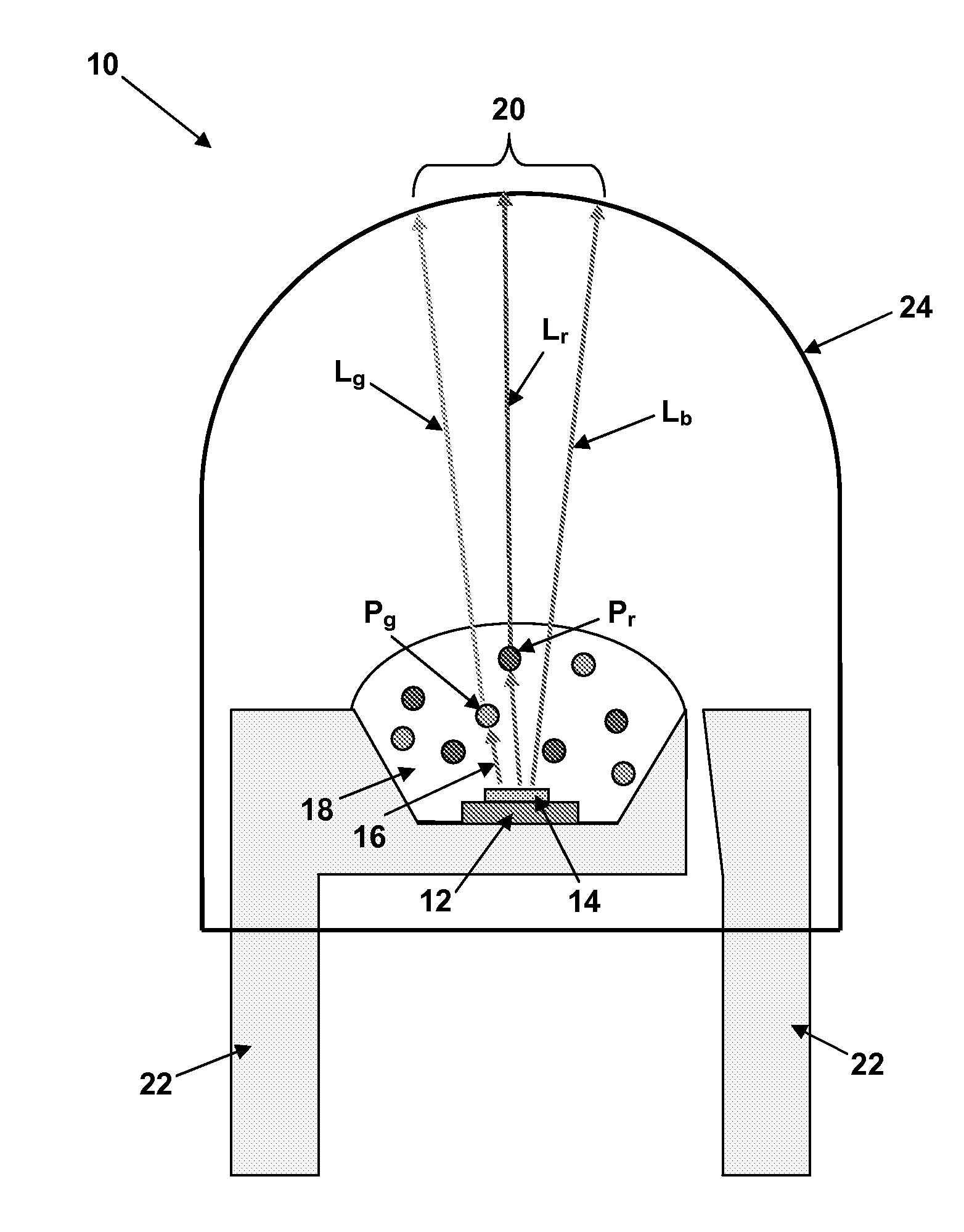

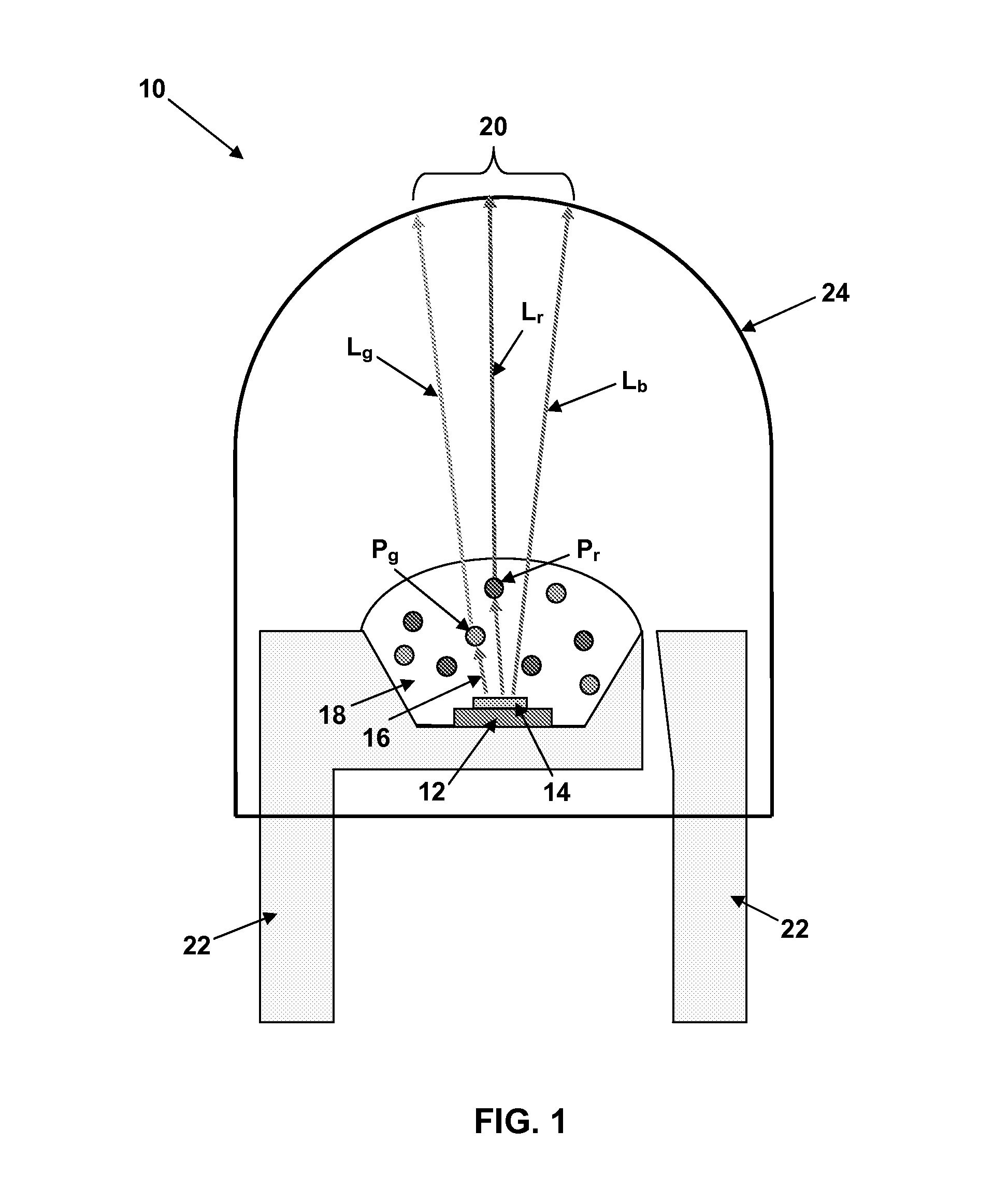

Solid-state lamps with complete conversion in phosphors for rendering an enhanced number of colors

Inactive | US20090231832A1 | Quality improvement; Spectral modifiers; Semiconductor devices; Daylight; Light-emitting diode

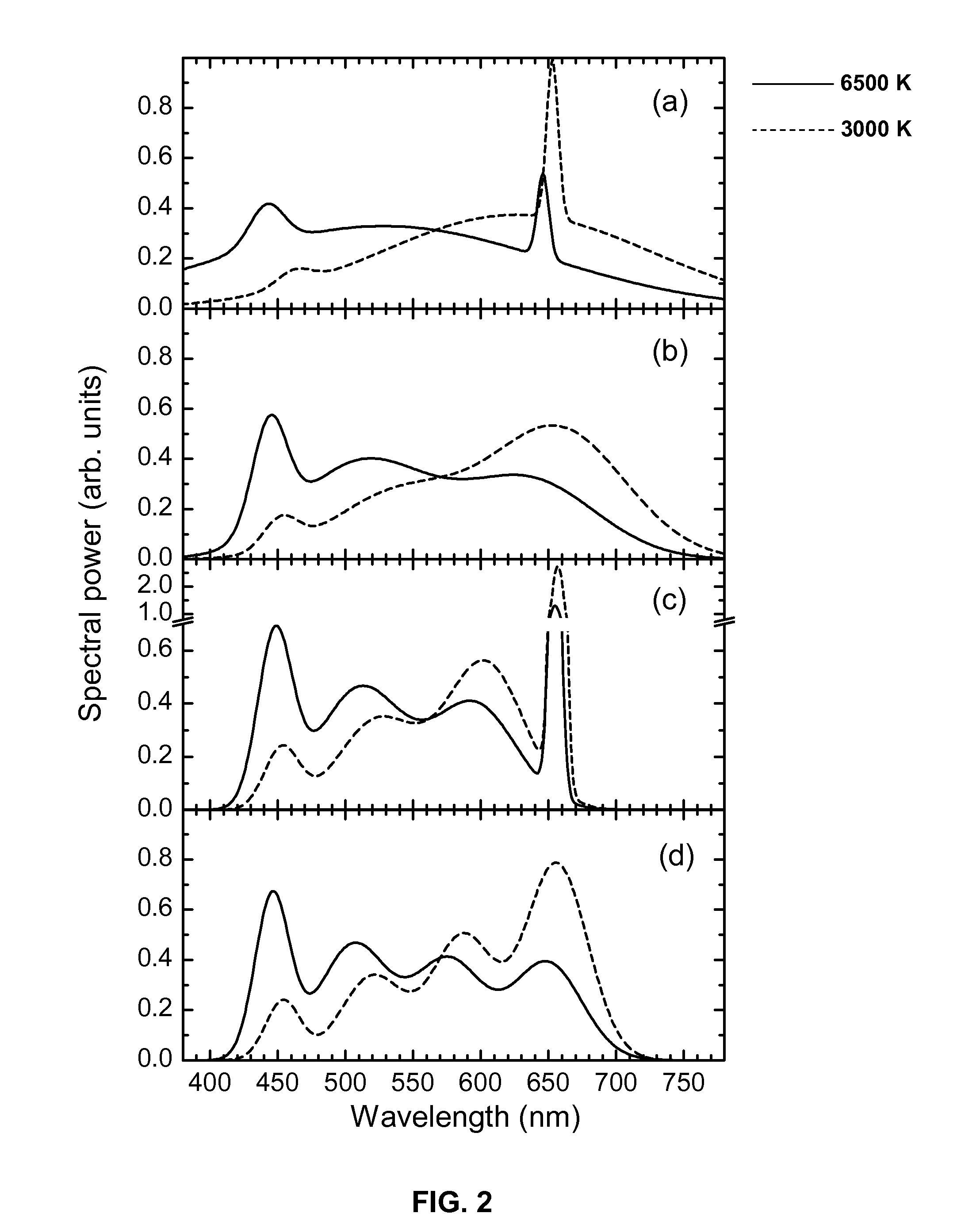

The invention relates to phosphor-conversion (PC) sources of white light, which are composed of at least two groups of emitters, such as ultraviolet (UV) light-emitting diodes (LEDs) and wide-band (WB) or narrow-band (NB) phosphors that completely absorb and convert the flux generated by the LEDs to other wavelengths, and to improving the color quality of the white light emitted by such light sources. In particular, embodiments of the present invention describe new 2-4 component combinations of peak wavelengths and bandwidths for white PC LEDs with complete conversion. These combinations are used to provide spectral power distributions that enable lighting with a considerable portion of a high number of spectrophotometrically calibrated colors rendered almost indistinguishably from a blackbody radiator or daylight illuminant, and which differ from distributions optimized using standard color-rendering assessment procedures based on a small number of test color samples.

Owner:SENSOR ELECTRONICS TECH

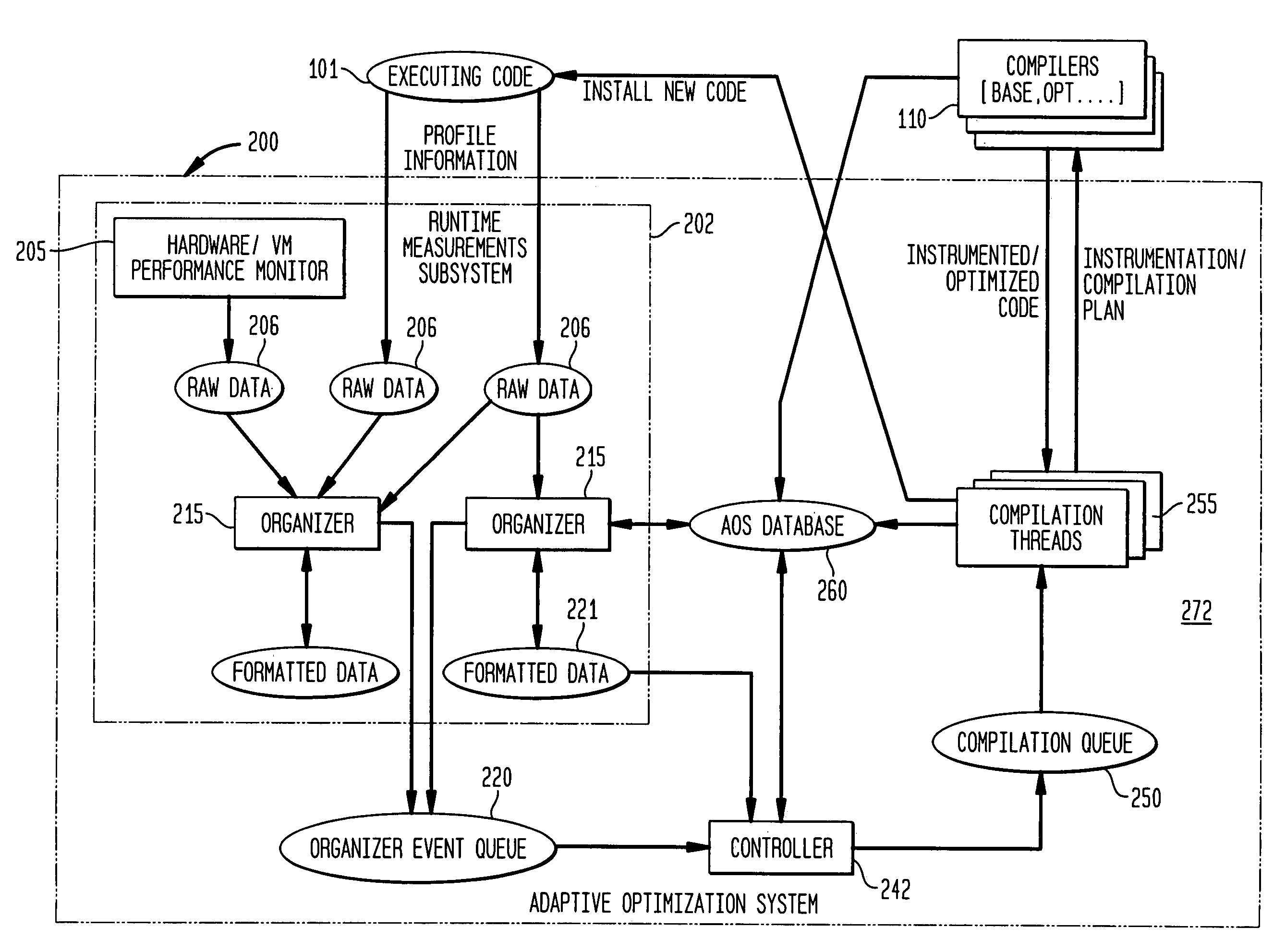

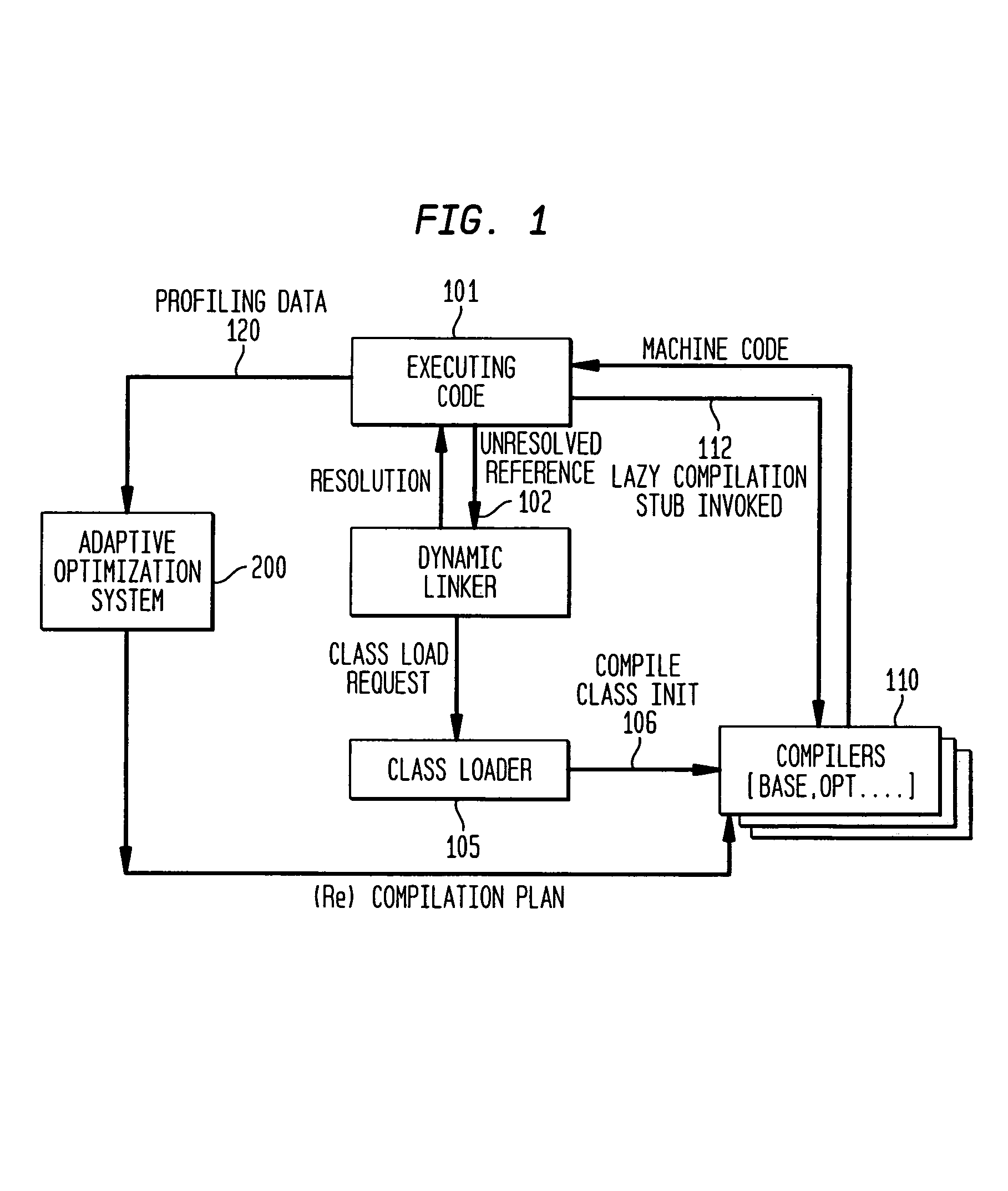

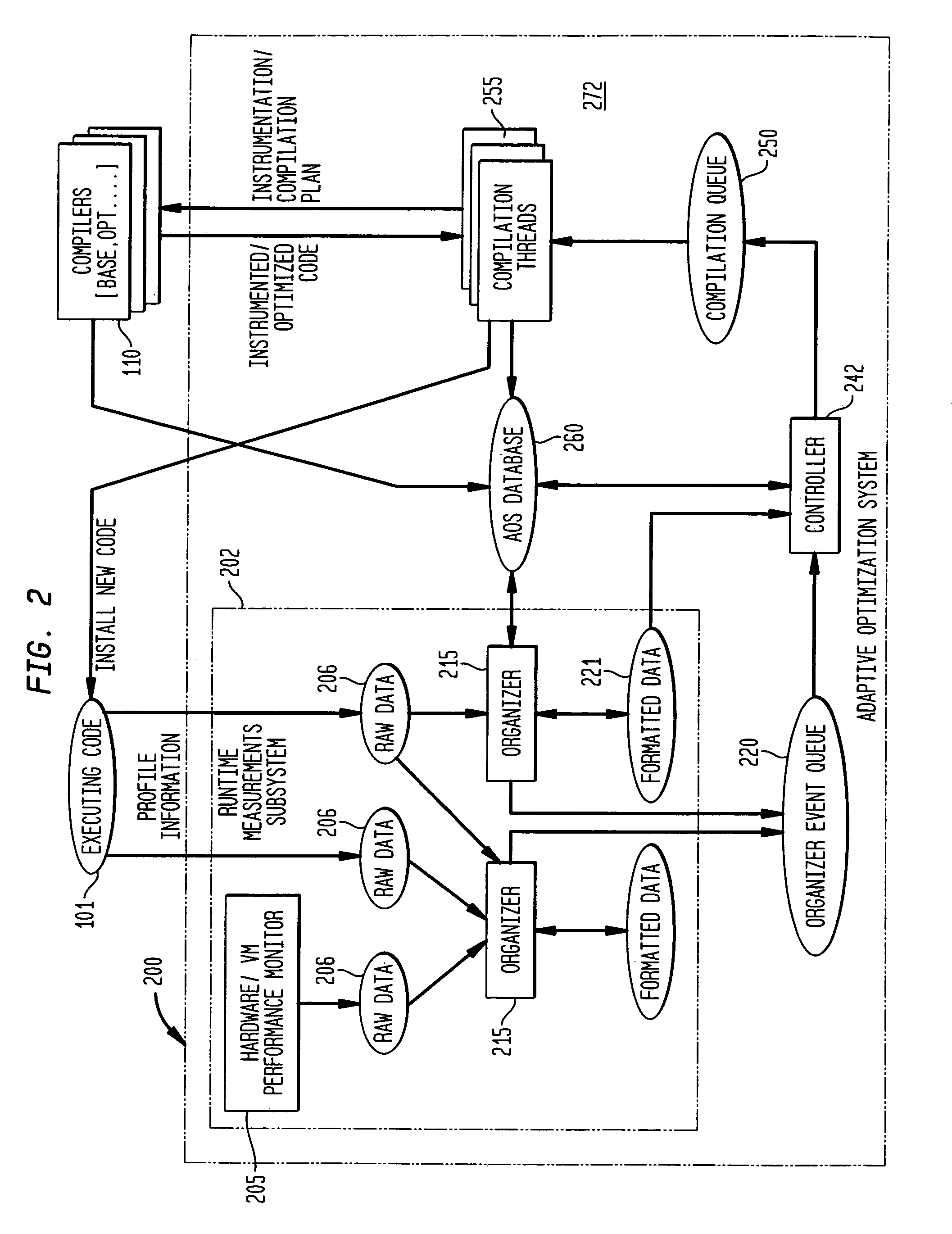

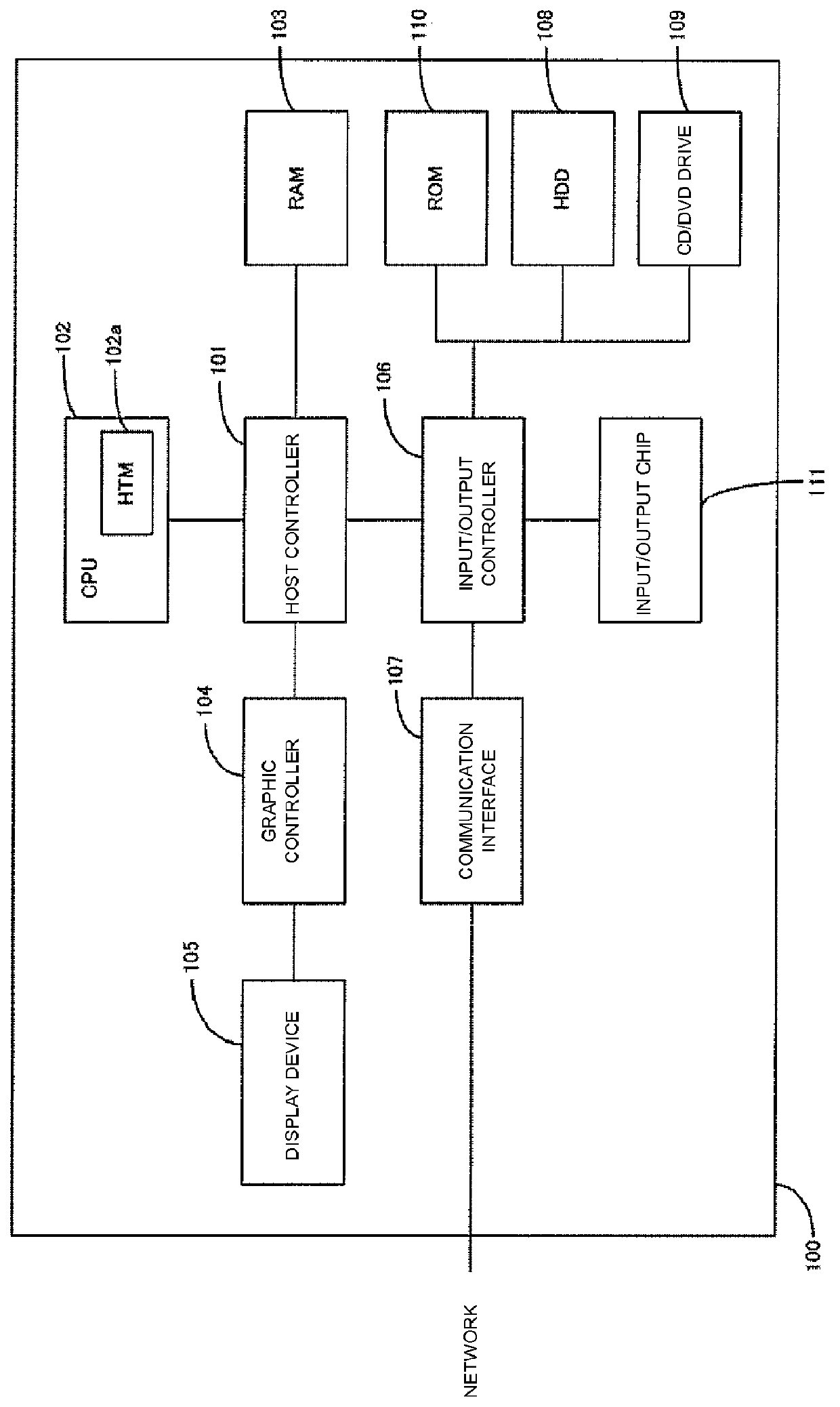

System and method for adaptively optimizing program execution by sampling at selected program points

Inactive | US6971091B1 | Improve performance; Software engineering; Software simulation/interpretation/emulation; Program planning; Parallel computing

A sampling-based system and method for adaptively optimizing a computer program executing in an execution environment that comprises one or more compiler devices for providing various levels of program optimization. The system comprises a runtime measurements sub-system for monitoring execution of the computer program to be optimized, the monitoring including obtaining raw profile data samples and characterizing the raw profile data; a controller device for receiving the characterized raw profile data from the runtime measurements sub-system and analyzing the data for determining whether a level of program optimization for the executing program is to be performed by a compiler device, the controller generating a compilation plan in accordance with a determined level of optimization; and, a recompilation sub-system for receiving a compilation plan from the controller and invoking a compiler device for performing the level of program optimization of the executing program in accordance with the compilation plan.

Owner:IBM CORP
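The controller's decision loop described above can be sketched as follows. This is a minimal Python illustration, not IBM's implementation: the sample-count thresholds in `LEVEL_THRESHOLDS`, the class name `Controller`, and the representation of a compilation plan as a list of `(method, level)` pairs are all assumptions made for the example.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical (optimization level, samples required) pairs; not from the patent.
LEVEL_THRESHOLDS = [(0, 0), (1, 10), (2, 50)]


@dataclass
class Controller:
    samples: Counter = field(default_factory=Counter)
    current_level: dict = field(default_factory=dict)

    def record_sample(self, method: str) -> None:
        """Runtime measurement: one profiling tick observed `method` executing."""
        self.samples[method] += 1

    def compilation_plan(self) -> list[tuple[str, int]]:
        """Return (method, level) pairs whose sample count justifies a higher level."""
        plan = []
        for method, count in self.samples.items():
            target = max(level for level, needed in LEVEL_THRESHOLDS if count >= needed)
            if target > self.current_level.get(method, 0):
                plan.append((method, target))
        return plan

    def recompile(self, plan: list[tuple[str, int]]) -> None:
        """Recompilation subsystem stand-in: only records the new level per method."""
        for method, level in plan:
            self.current_level[method] = level
```

Feeding the controller 60 samples for a hot method and 12 for a warm one yields a plan that promotes them to levels 2 and 1; once `recompile` applies that plan, the next plan is empty until more samples arrive.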

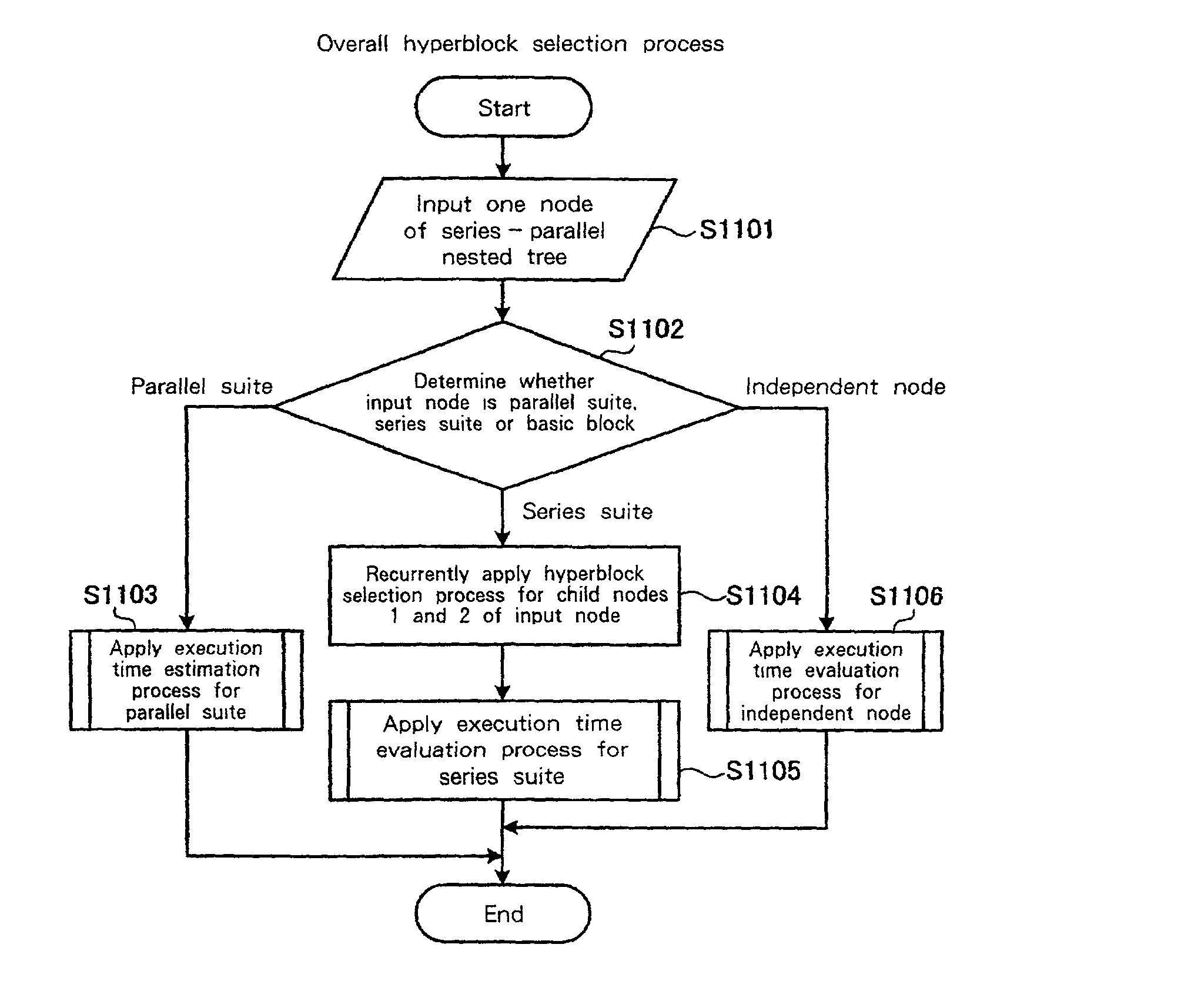

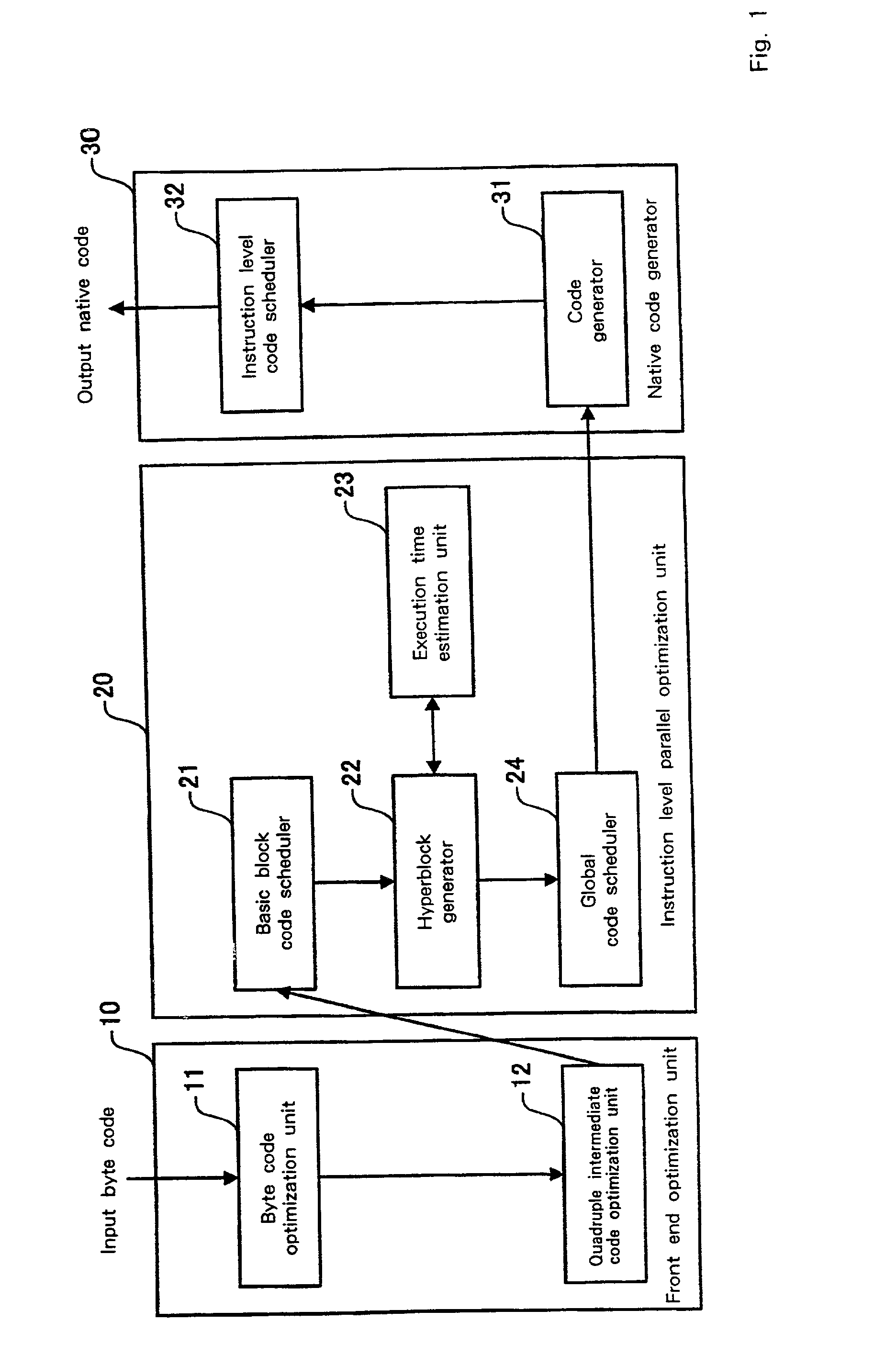

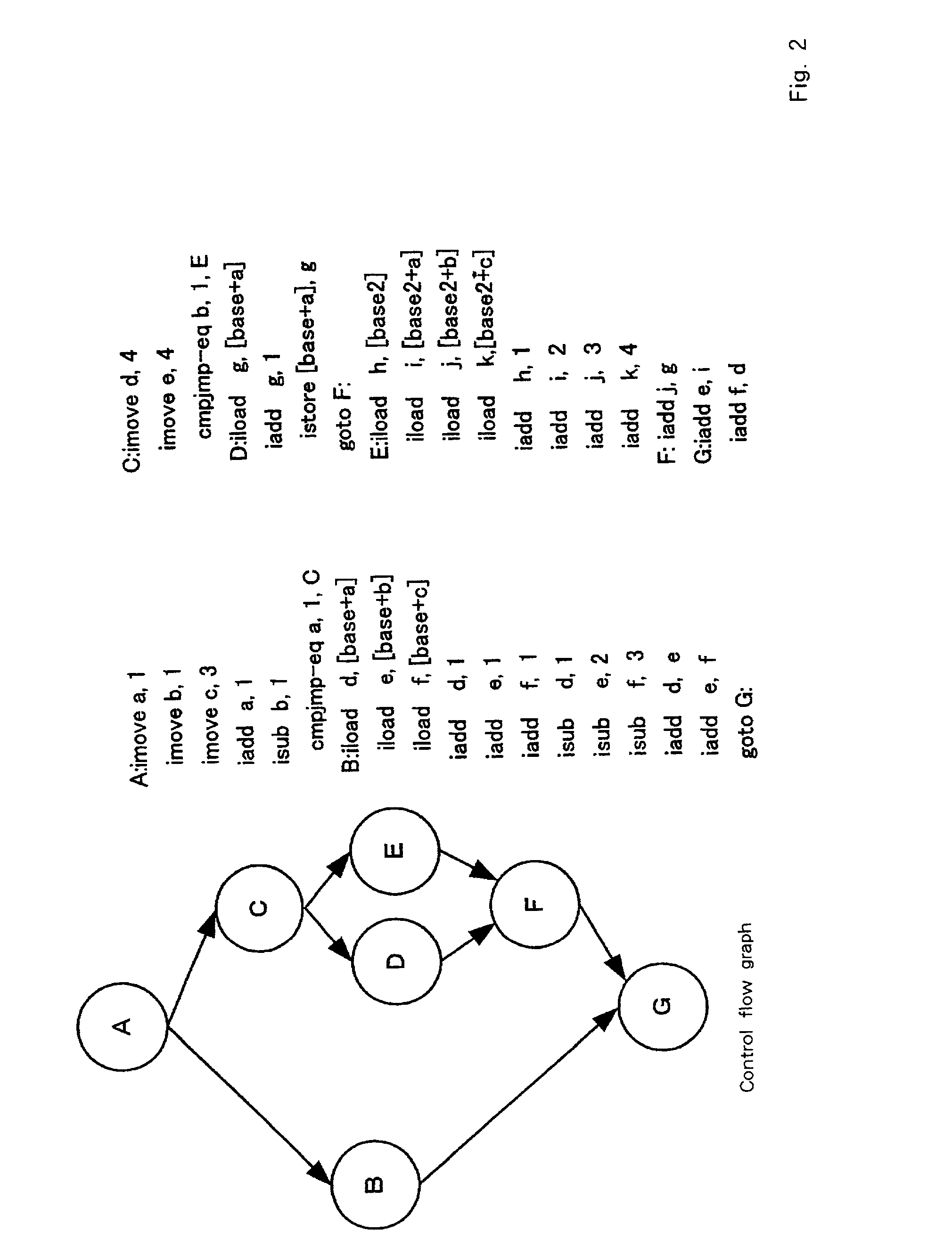

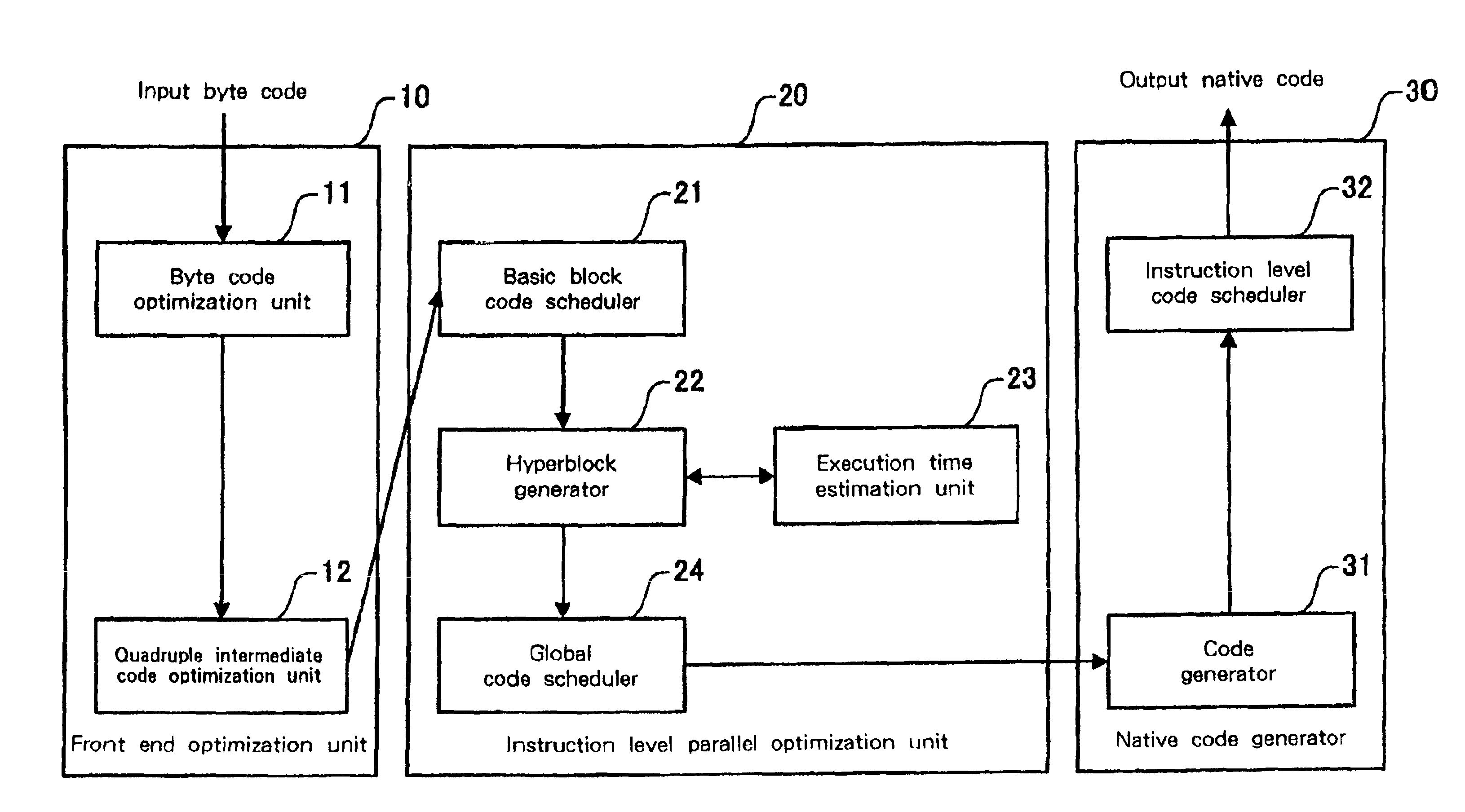

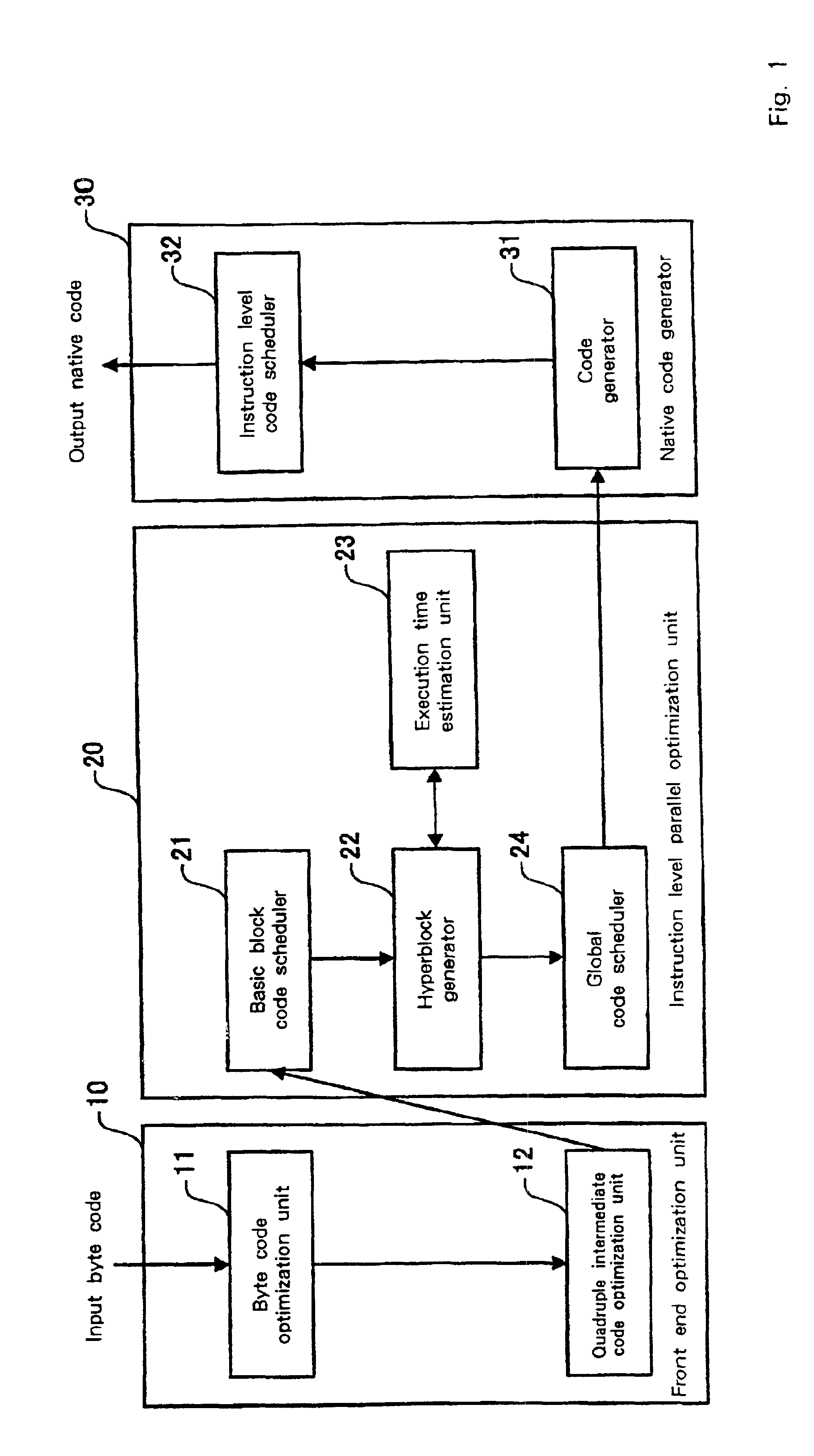

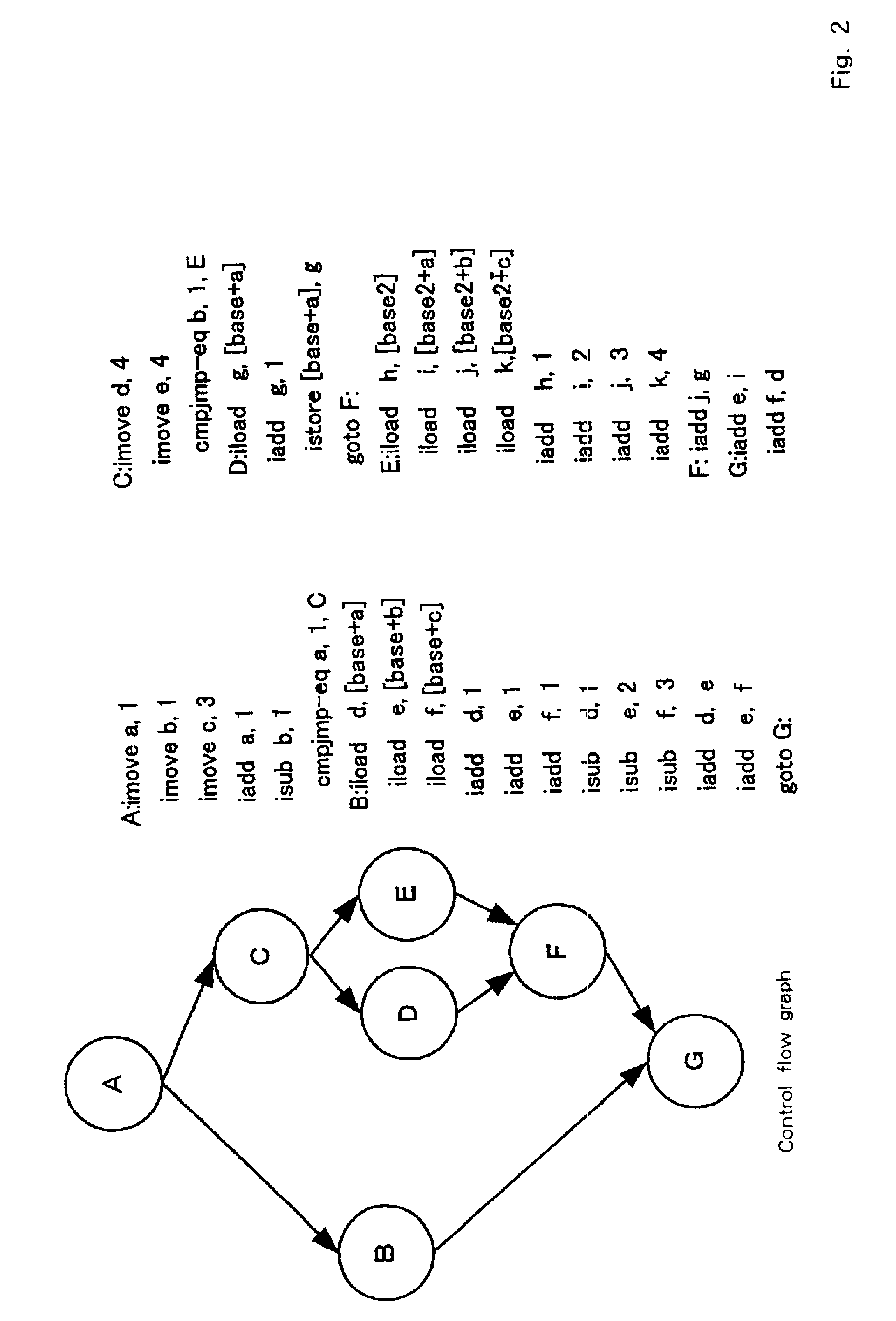

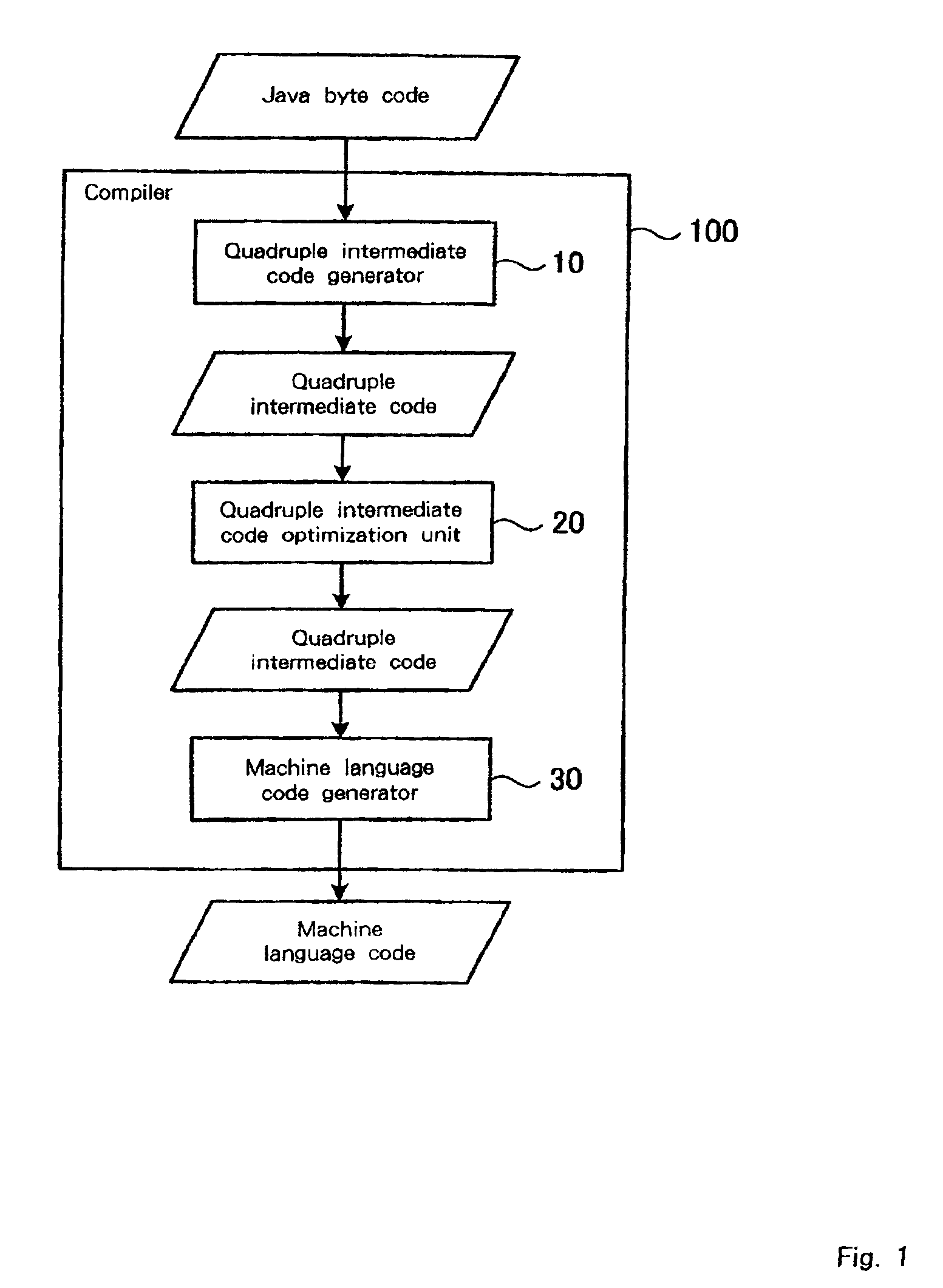

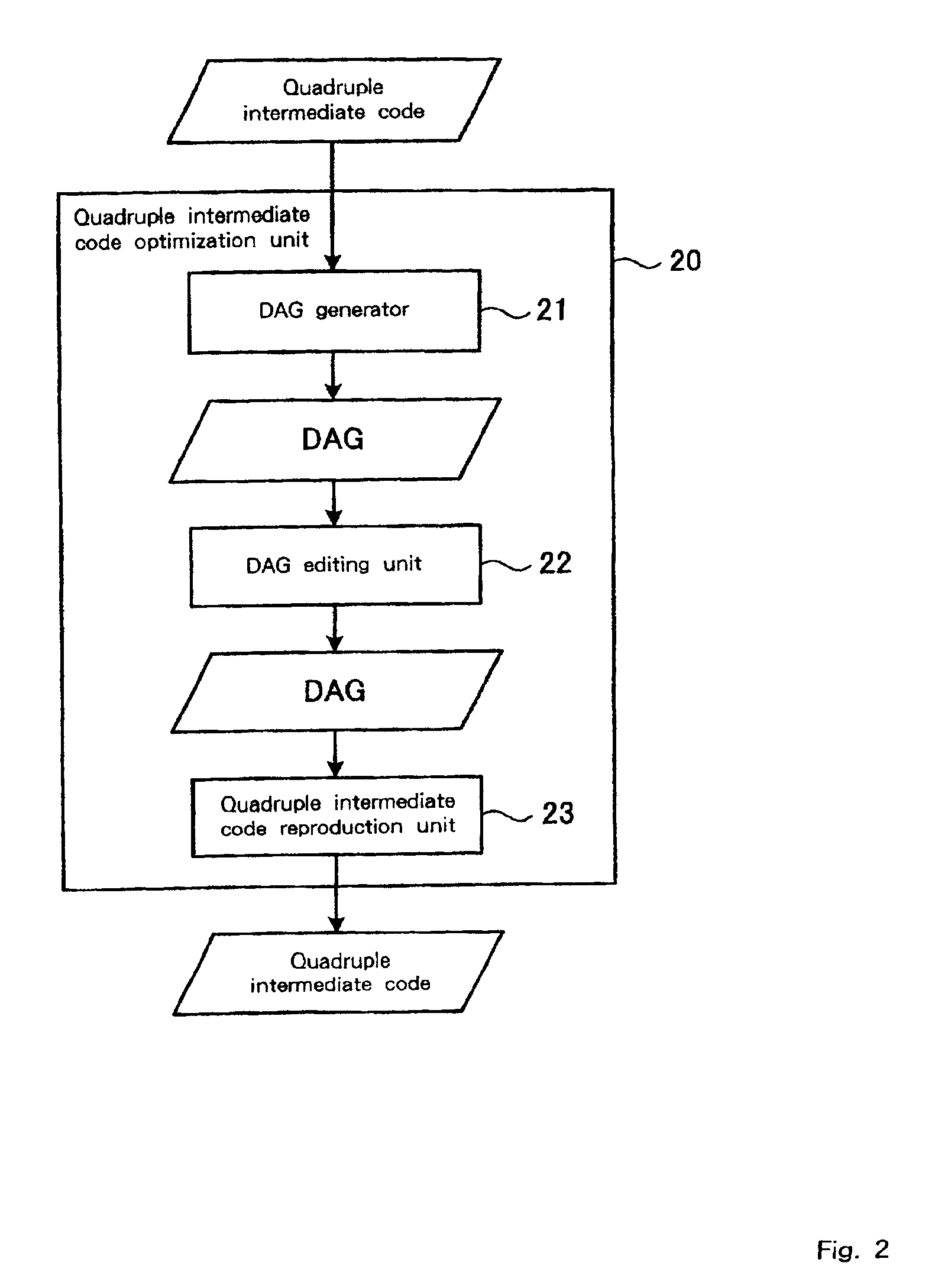

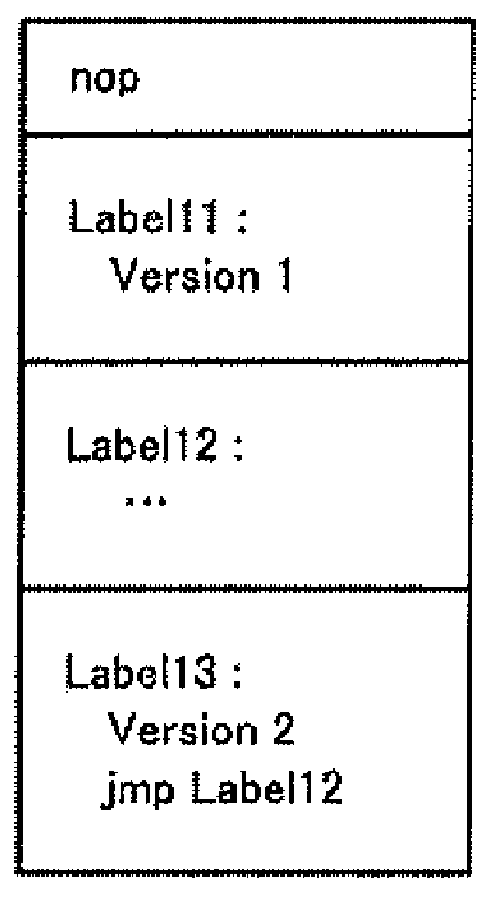

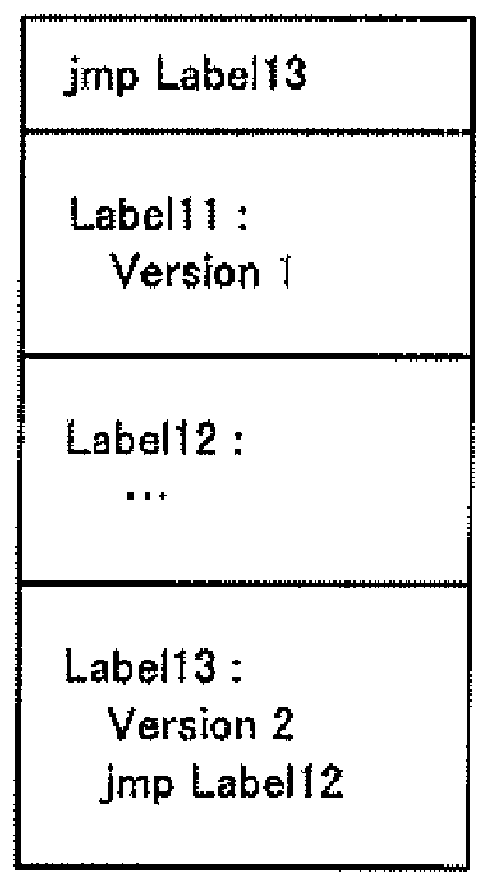

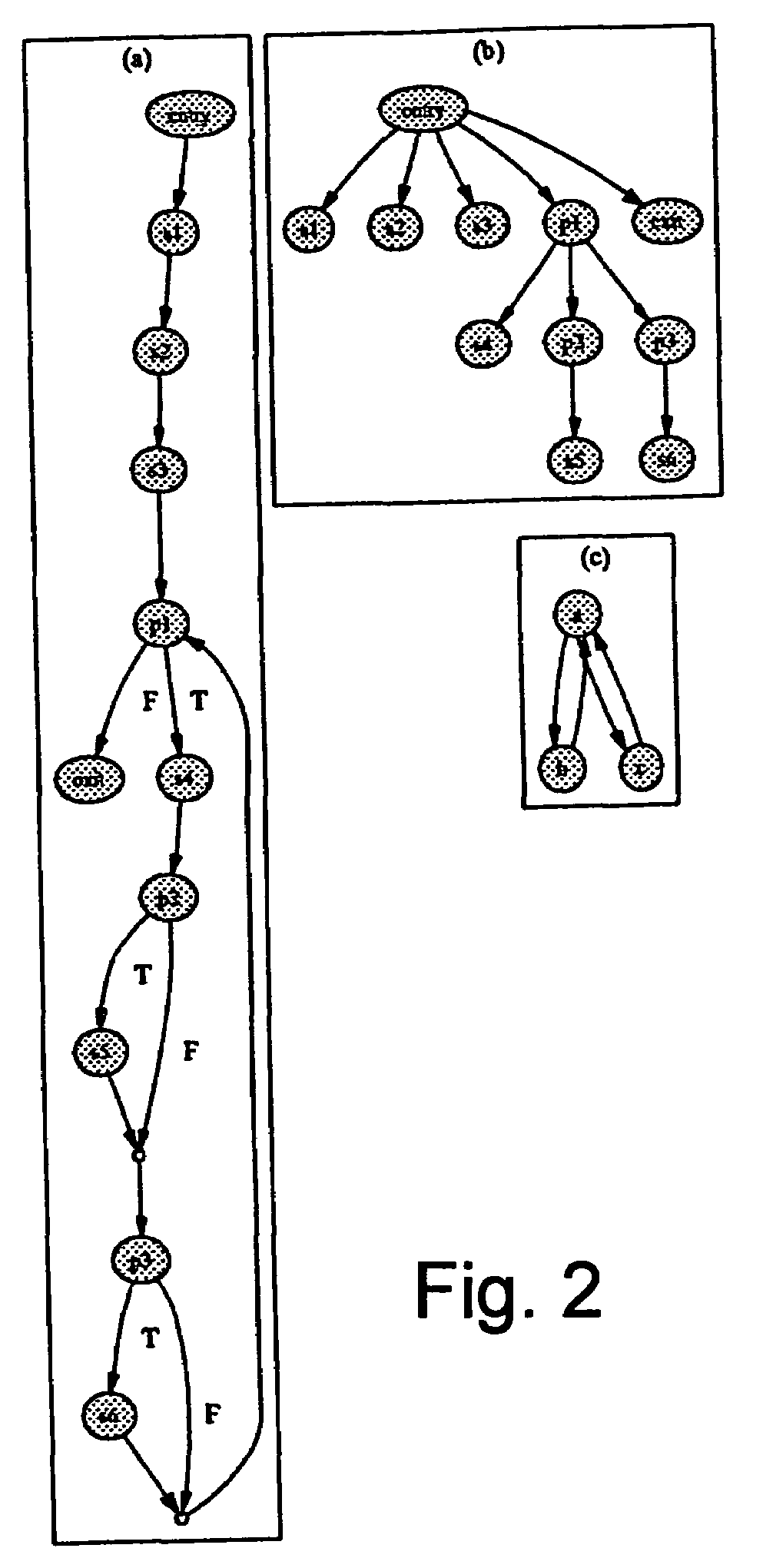

Program optimization method, and compiler using the same

Inactive | US20020095666A1 | Short processing time; Efficient execution; Software engineering; Digital computer details; Source code; Basic block

An optimization method and apparatus for converting source code for a program written in a programming language into machine language and optimizing the program employs a basic block as a unit to estimate an execution time for the program to be processed and generates a nested tree that represents the connections of the basic blocks using a nesting structure. When a conditional branch is accompanied by a node in the nested tree, the execution time estimated with basic blocks as units is used to obtain the execution time at that node both when the conditional branching portion of the program is executed directly and when it is executed in parallel. The node is then defined as a parallel execution area group when the parallel execution time is shorter, or multiple child nodes of the node are divided into multiple parallel execution areas when the execution time of the directly executed conditional branching portion is shorter.

Owner:IBM CORP
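As a rough illustration of the per-node decision, the sketch below compares an estimated sequential time against an estimated parallel time for one conditional-branch node. The cost model (a branch probability `p_then`, a fixed fork/join `overhead`, and running the condition and both arms concurrently) is an assumption for illustration; the patent's own estimation procedure may differ.

```python
from dataclasses import dataclass


@dataclass
class BranchNode:
    cond_time: float  # estimated time of the condition's basic blocks
    then_time: float  # estimated time of the taken arm
    else_time: float  # estimated time of the fall-through arm
    p_then: float     # assumed probability that the branch is taken


def sequential_time(n: BranchNode) -> float:
    # Evaluate the condition, then execute exactly one arm (expected cost).
    return n.cond_time + n.p_then * n.then_time + (1 - n.p_then) * n.else_time


def parallel_time(n: BranchNode, overhead: float) -> float:
    # Run the condition and both arms concurrently, keep the selected arm's
    # result, and pay a fixed fork/join overhead.
    return max(n.cond_time, n.then_time, n.else_time) + overhead


def choose_execution(n: BranchNode, overhead: float) -> str:
    """Mark the node as a parallel area only if that is estimated to be faster."""
    return "parallel" if parallel_time(n, overhead) < sequential_time(n) else "sequential"
```

With all three estimates at 10 time units and a 50% branch probability, the sequential estimate is 20, so a fork/join overhead of 2 favors parallel execution while an overhead of 15 does not.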

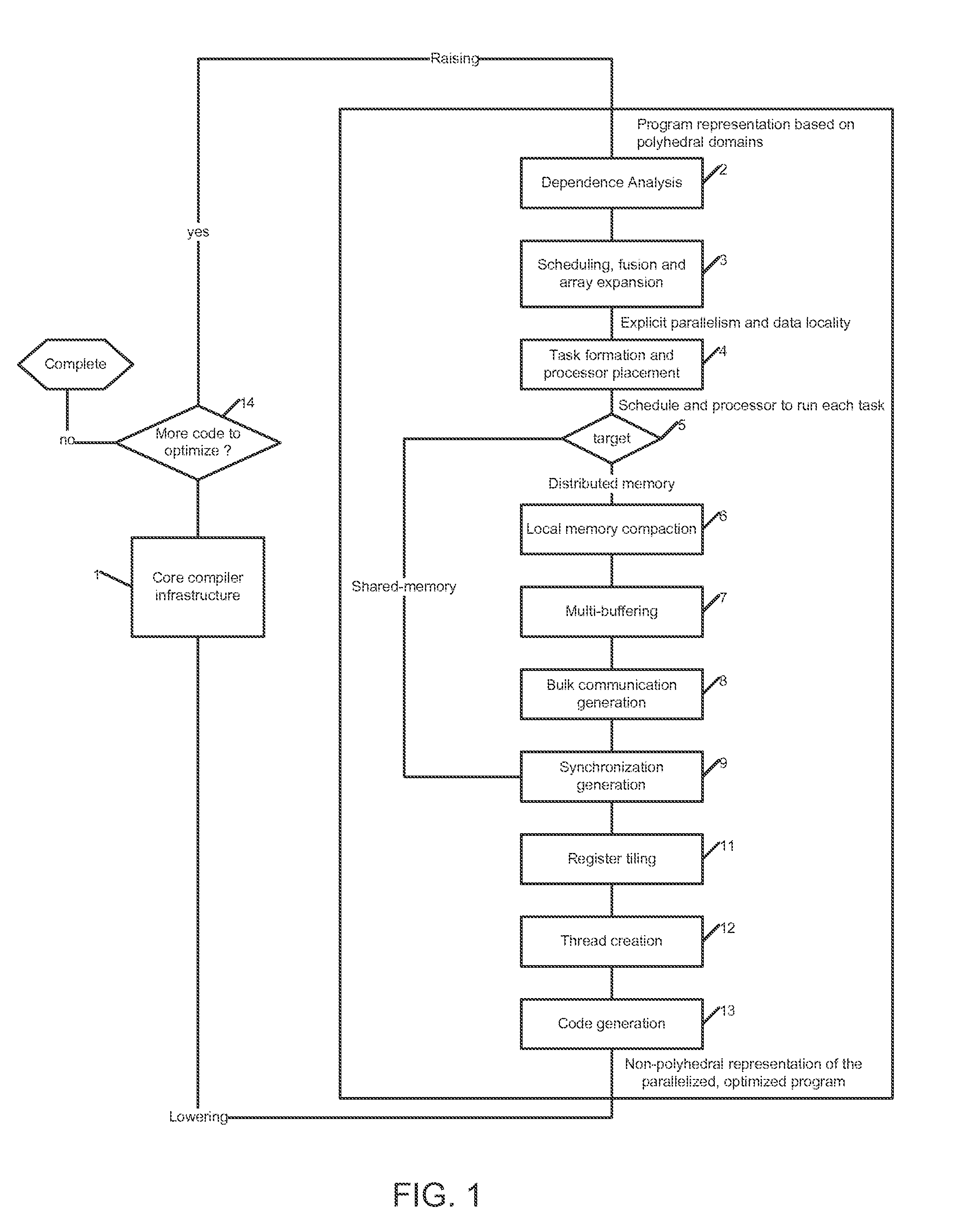

System, methods and apparatus for program optimization for multi-threaded processor architectures

Active | US20100218196A1 | Low cost; Easy to implement; Software engineering; Multiprogramming arrangements; Parallel computing; Execution unit

Owner:QUALCOMM TECHNOLOGIES INC

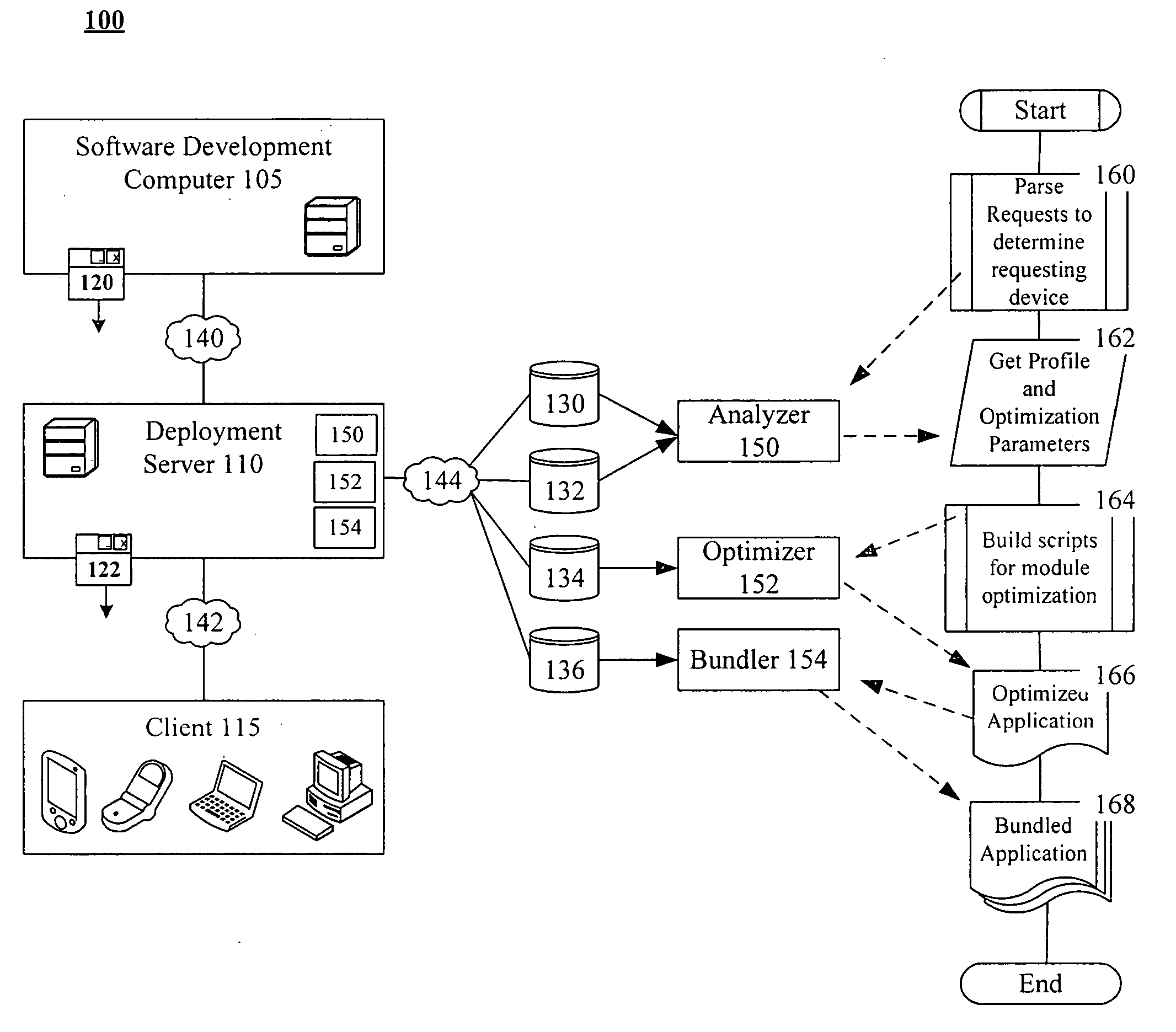

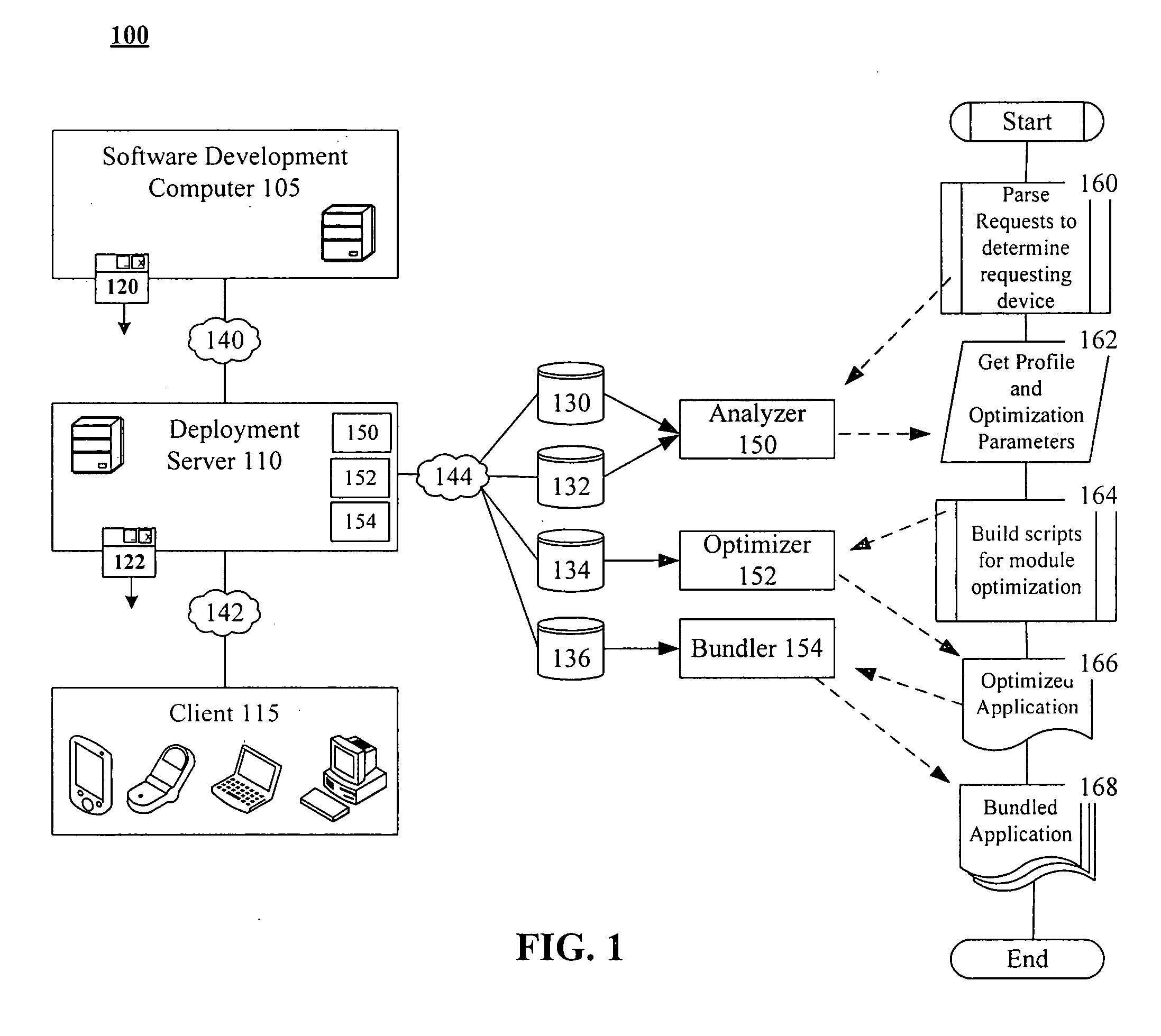

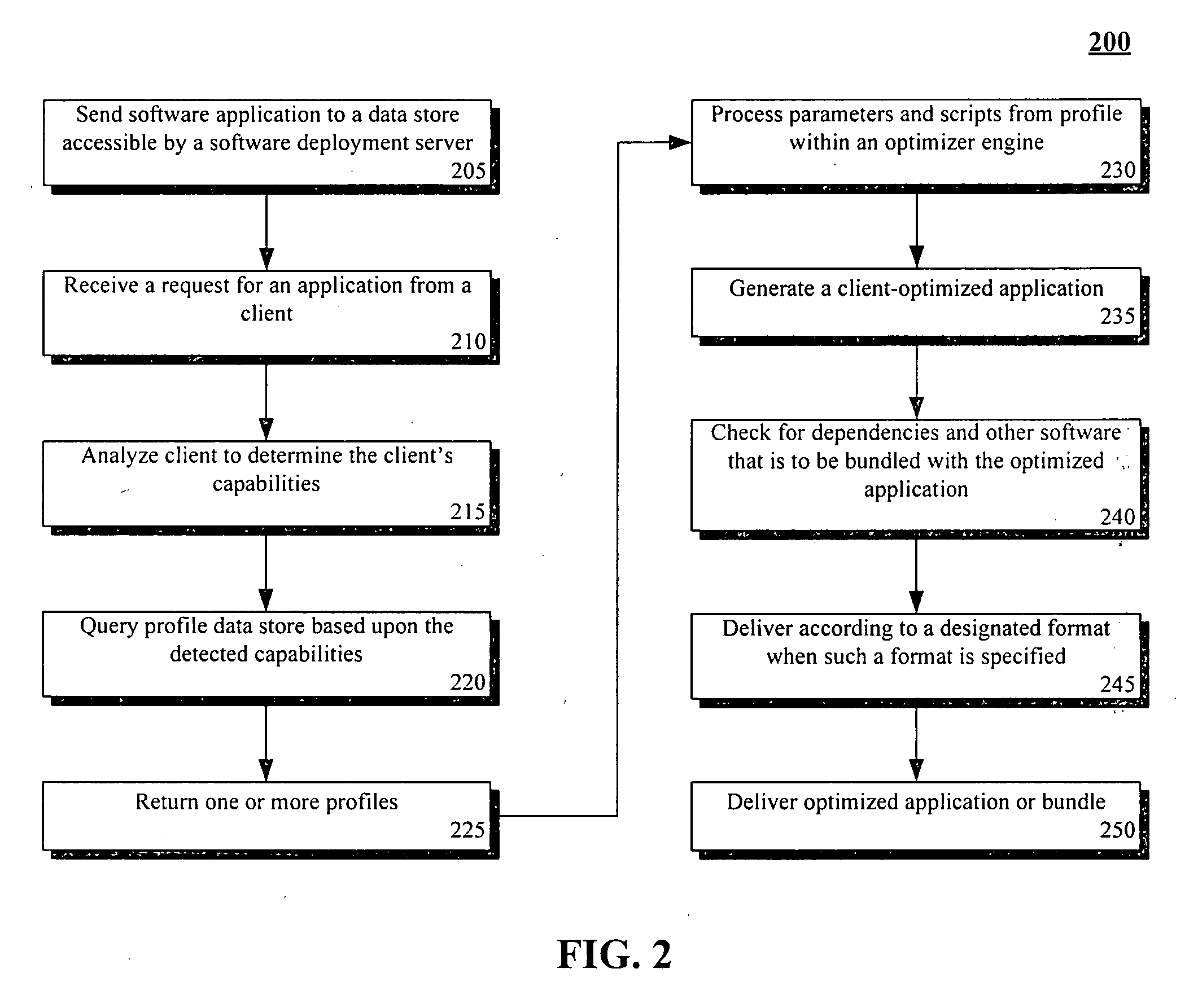

Runtime optimizing applications for a target system from within a deployment server

A deployment server can include a profile data store, a generic application data store, and an optimizer. The profile data store can contain a plurality of attributes for devices and associate different optimization parameters or optimization routines to each of the stored attributes. The generic application data store can contain at least one generic application written in a device independent fashion. The optimizer can receive application requests from an assortment of different requesting devices and can dynamically generate device-specific applications responsive to received requests. For each requesting device, the optimizer can determine attributes of a requesting device, utilize the profile data store to identify optimization parameters or optimization routines for the requesting device, and generate a device-specific application based upon data from the profile data store and based upon a generic application retrieved from the generic application data store.

Owner:IBM CORP
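The request flow described for the deployment server can be sketched as a table lookup followed by a merge. The attribute keys, optimization parameters, and the function name `build_device_app` below are hypothetical; the sketch only shows how per-attribute parameters from a profile store might be combined with a generic application into one device-specific build description.

```python
# Hypothetical profile store: (attribute, value) -> optimization parameters.
PROFILE_STORE = {
    ("screen", "small"): {"image_scale": 0.5},
    ("cpu", "low_end"): {"optimize_level": 1, "inline_threshold": 10},
    ("cpu", "high_end"): {"optimize_level": 3, "inline_threshold": 50},
}


def build_device_app(generic_app: dict, device_attrs: dict) -> dict:
    """Combine a device-independent app with parameters matched from the profile store."""
    params: dict = {}
    for attr, value in device_attrs.items():
        # Attributes with no stored profile contribute nothing.
        params.update(PROFILE_STORE.get((attr, value), {}))
    return {**generic_app, "device": dict(device_attrs), "optimizations": params}
```

Keeping the store keyed by individual attributes, rather than by whole device models, lets a new device reuse existing entries as long as its attributes are already known.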

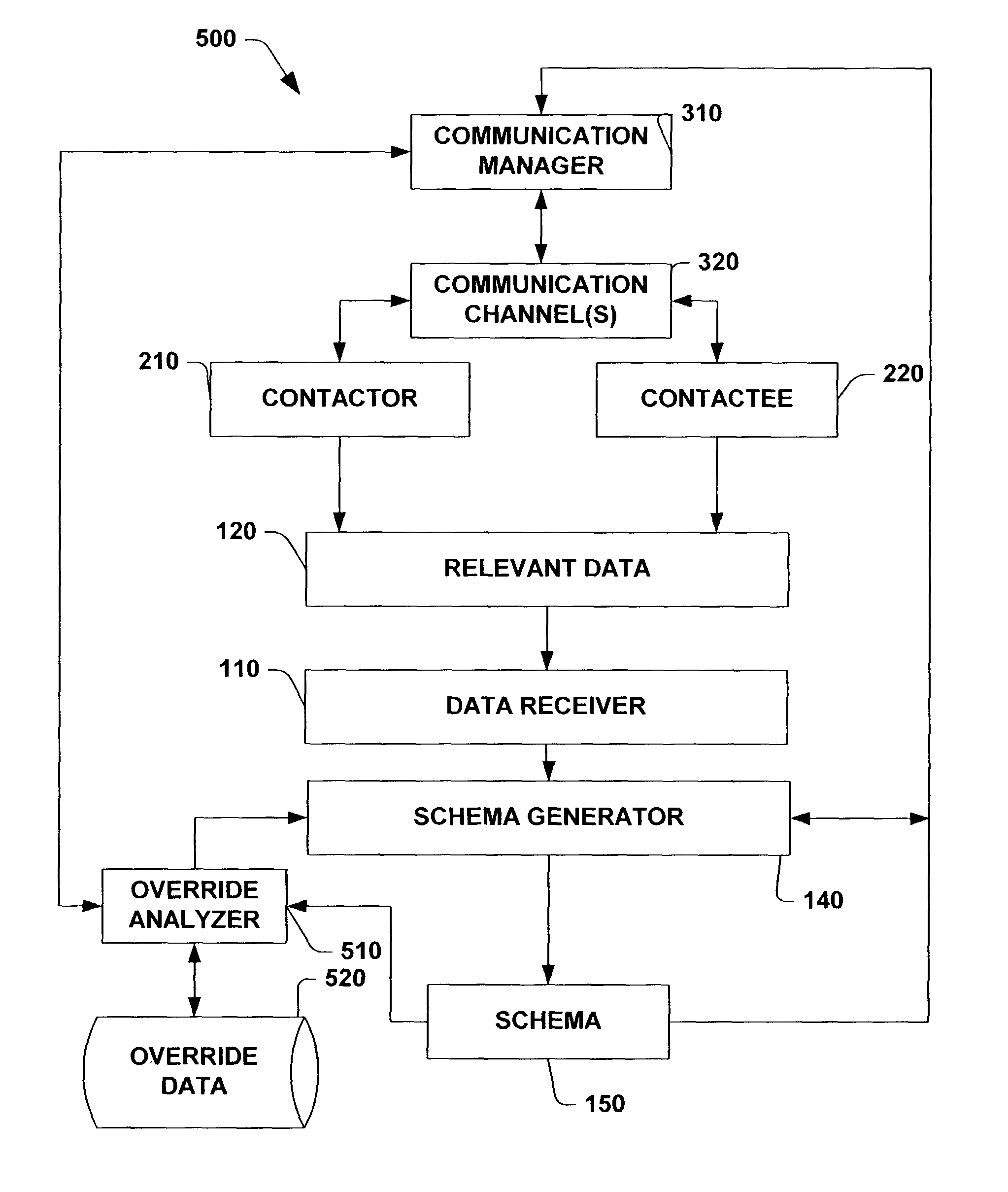

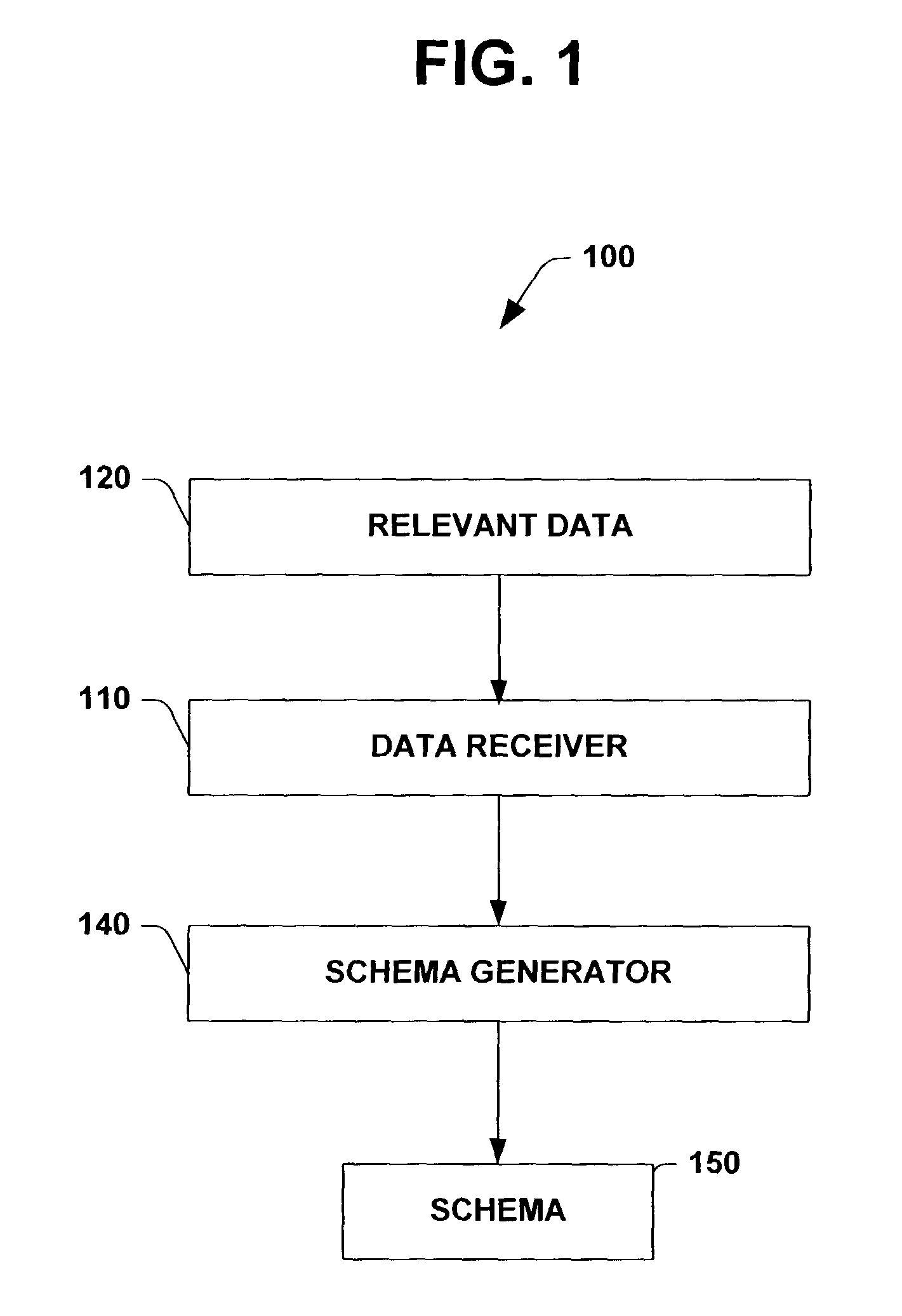

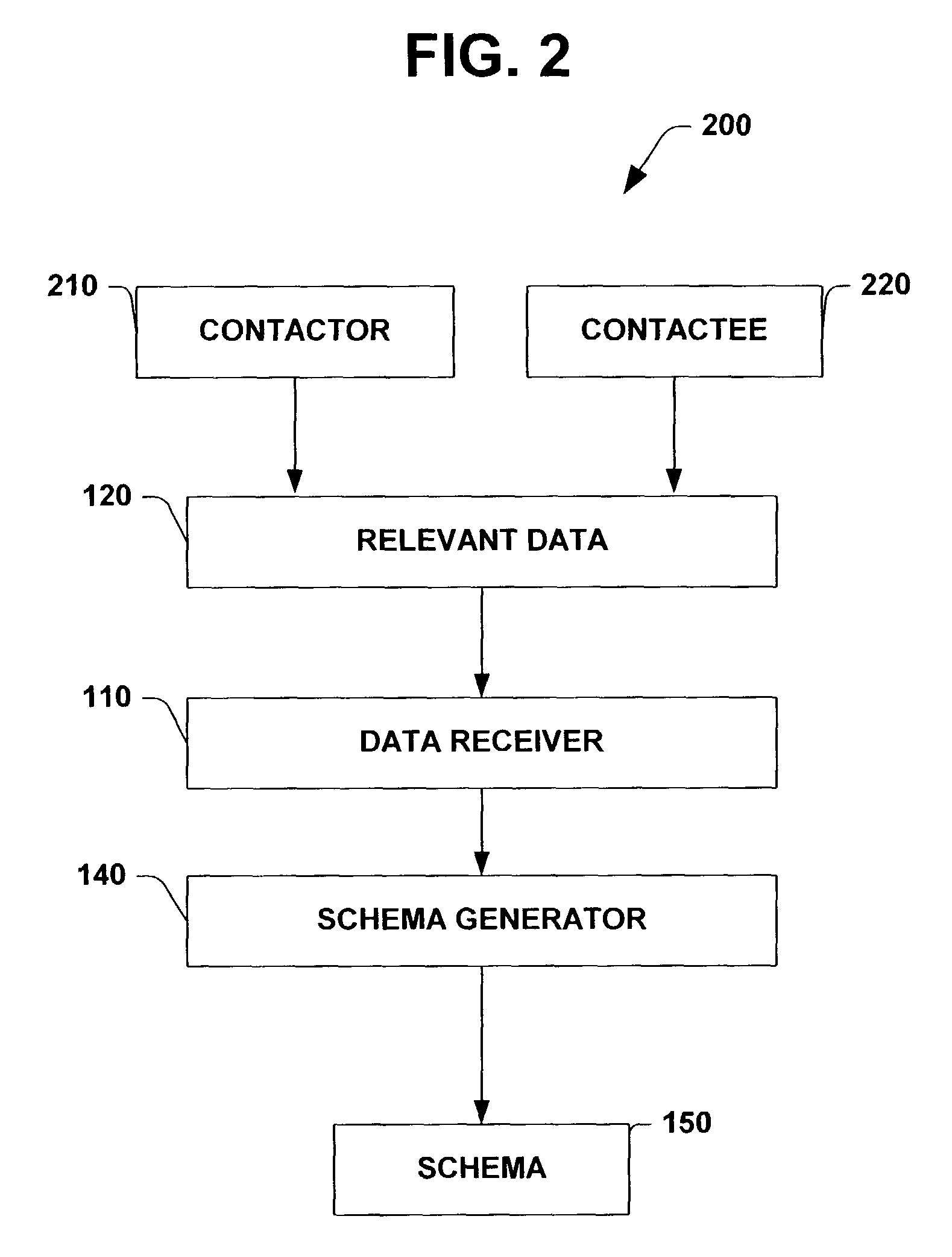

Metadata schema for interpersonal communications management systems

Inactive | US7870240B1 | Maximize expected utility; Improve utilization; Digital computer details; Resources; Communications management; Computer science

A system and method for generating, managing and accessing a schema that facilitates maximizing utility of a managed communication is provided. The system provides a computer-based system for creating, accessing and / or managing a schema employed in utility-optimizing communication management. The system includes computer components for receiving communication related data and storing such communication related data, inferences concerning such data, probabilities and / or probability distributions associated with such data in an extensible, portable, data schema. The schema can facilitate making utility optimizing communication management decisions.

Owner:MICROSOFT TECH LICENSING LLC

Program optimization method, and compiler using the same

Inactive | US6817013B2 | Short processing time; Efficient execution; Software engineering; Digital computer details; Basic block; Source code

An optimization method and apparatus for converting source code for a program written in a programming language into machine language and optimizing the program employs a basic block as a unit to estimate an execution time for the program to be processed and generates a nested tree that represents the connections of the basic blocks using a nesting structure. When a conditional branch is accompanied by a node in the nested tree, the execution time estimated with basic blocks as units is used to obtain the execution time at that node both when the conditional branching portion of the program is executed directly and when it is executed in parallel. The node is defined as a parallel execution area group when the parallel execution time is shorter, or multiple child nodes of the node are divided into multiple parallel execution areas.

Owner:INT BUSINESS MASCH CORP

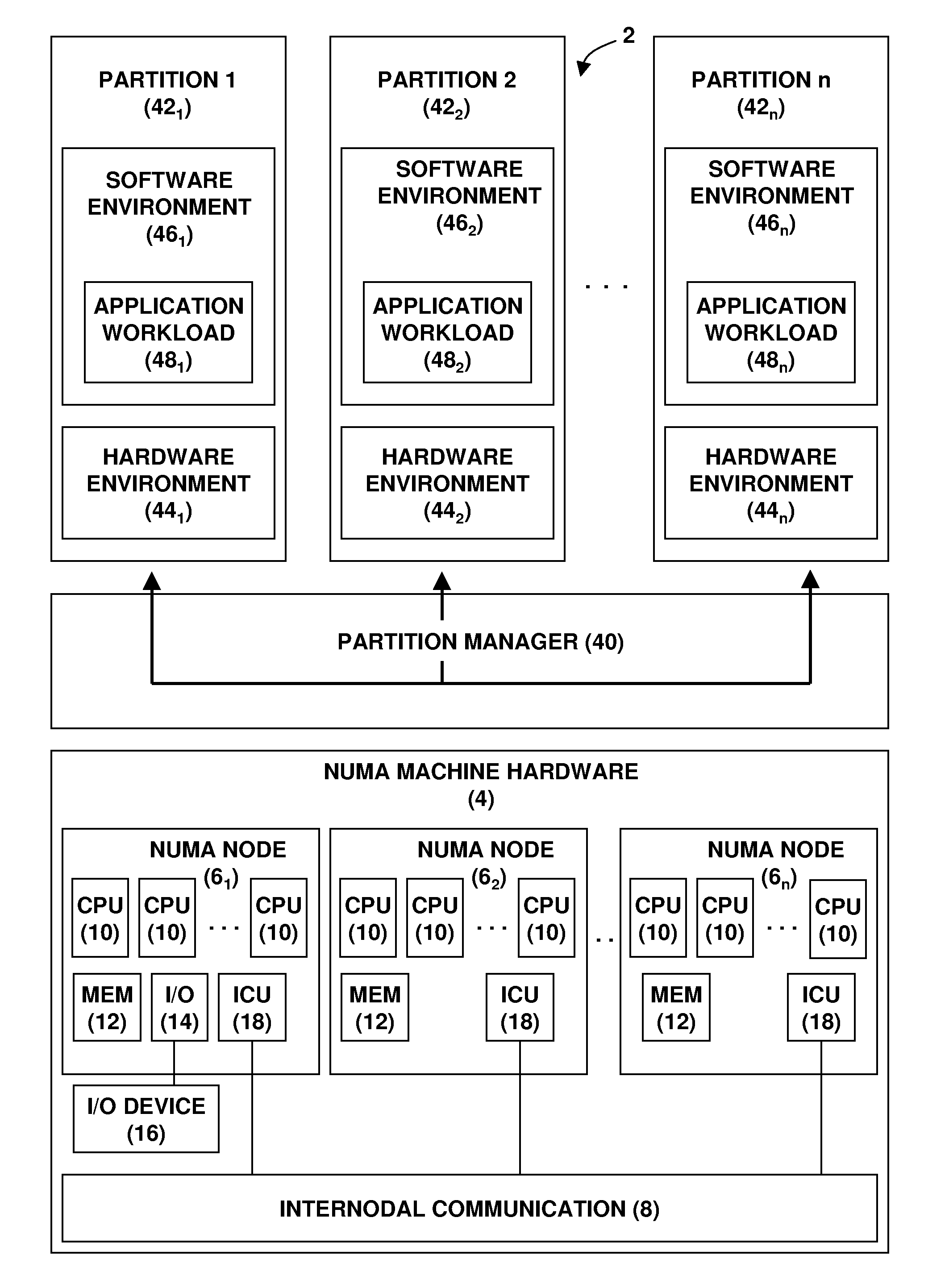

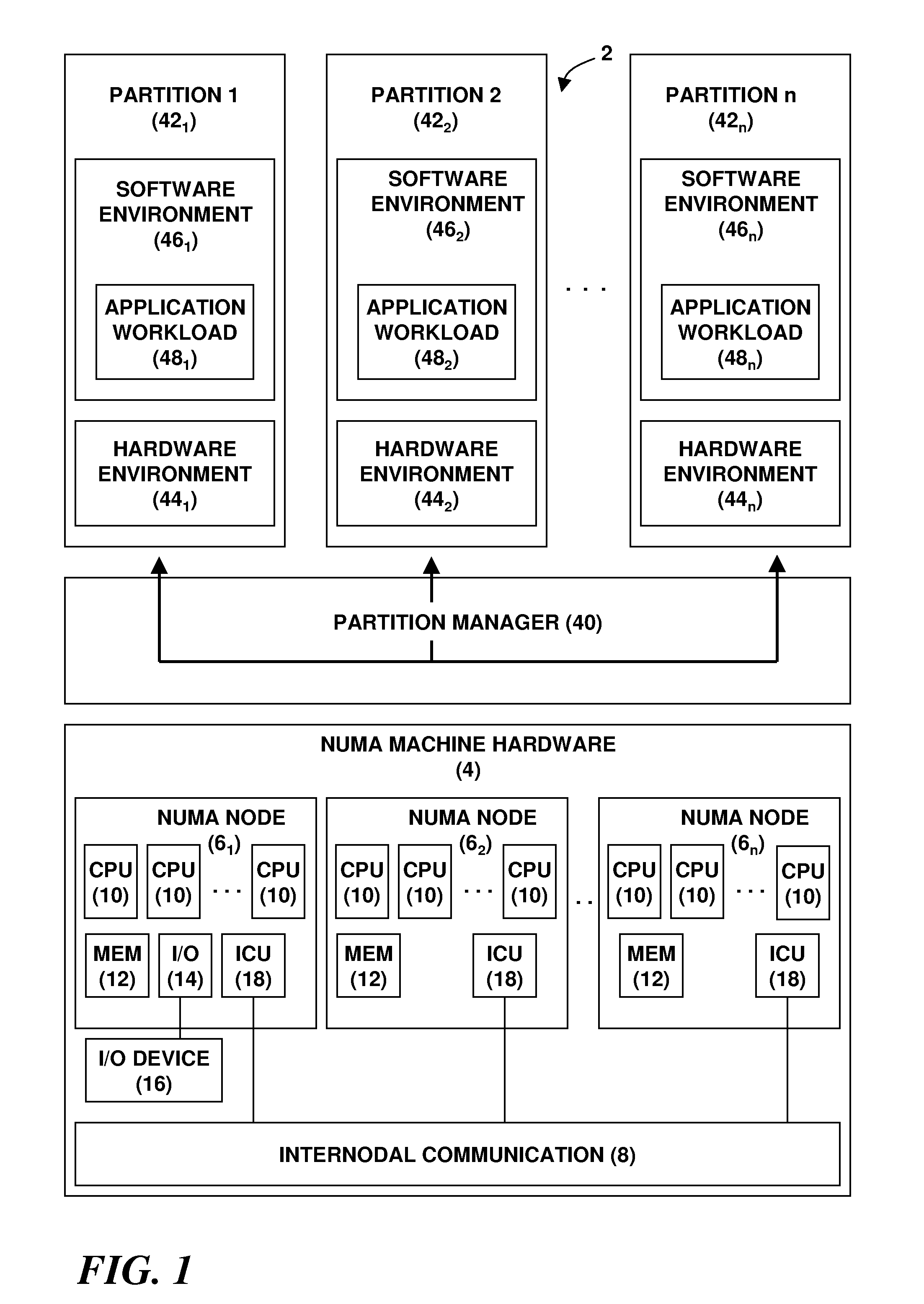

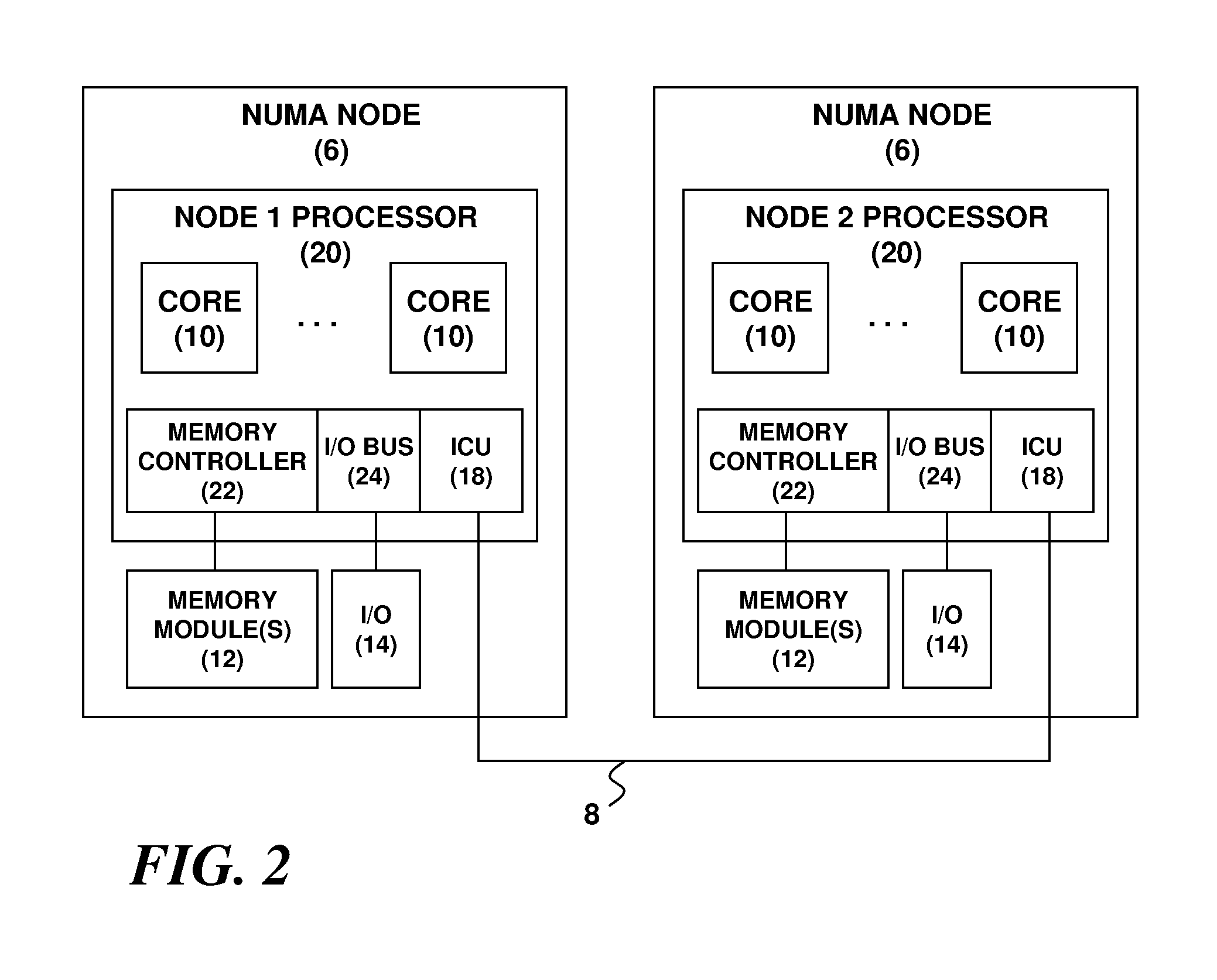

Dynamic Logical Partition Management For NUMA Machines And Clusters

Active | US20100217949A1 | Memory addressing/allocation/relocation; Program control; Telecommunications link; Parallel computing

A partitioned NUMA machine is managed so that its partition layout state is transformed dynamically based on NUMA considerations. The NUMA machine includes two or more NUMA nodes that are operatively interconnected by one or more internodal communication links. Each node includes one or more CPUs and associated memory circuitry. Two or more logical partitions each comprise a CPU and memory circuit allocation on at least one NUMA node, and each partition runs at least one associated data processing application. The partitions are managed dynamically at runtime to transform the distributed data processing machine from a first partition layout state to a second partition layout state that is optimized for the data processing applications according to whether a given partition will execute most efficiently within a single NUMA node or by spanning a node boundary. The optimization is based on access latency and bandwidth in the NUMA machine.

Owner:DAEDALUS BLUE LLC
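A simplified placement rule in the spirit of this abstract: keep a partition on one NUMA node when it fits, and span nodes only when it must. This sketch assumes hypothetical `Node` capacities and uses free CPU and memory counts as a stand-in for the latency and bandwidth model the abstract mentions, so it is illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpus: int
    free_mem_gb: int


def place_partition(nodes: list[Node], cpus: int, mem_gb: int) -> list[str]:
    """Prefer a single NUMA node; span nodes only when no single node fits."""
    fitting = [n for n in nodes if n.free_cpus >= cpus and n.free_mem_gb >= mem_gb]
    if fitting:
        # Best fit: the node left with the fewest spare CPUs, to limit fragmentation.
        best = min(fitting, key=lambda n: n.free_cpus - cpus)
        return [best.name]
    # Otherwise span nodes, largest first, so the partition touches few nodes.
    chosen, need_cpu, need_mem = [], cpus, mem_gb
    for n in sorted(nodes, key=lambda n: (n.free_cpus, n.free_mem_gb), reverse=True):
        if need_cpu <= 0 and need_mem <= 0:
            break
        chosen.append(n.name)
        need_cpu -= n.free_cpus
        need_mem -= n.free_mem_gb
    if need_cpu > 0 or need_mem > 0:
        raise ValueError("insufficient resources for this partition")
    return chosen
```

A partition needing 4 CPUs and 8 GB lands on a single 4-CPU node, while one needing 10 CPUs and 40 GB must span both nodes of a 4-CPU/16 GB plus 8-CPU/32 GB machine.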

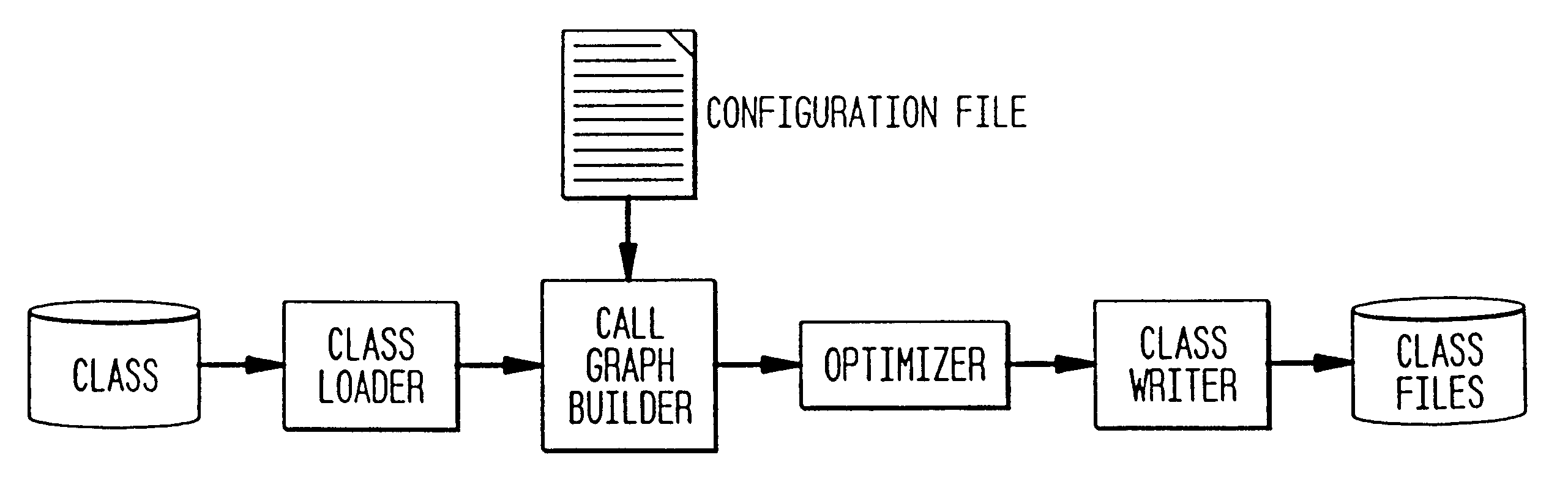

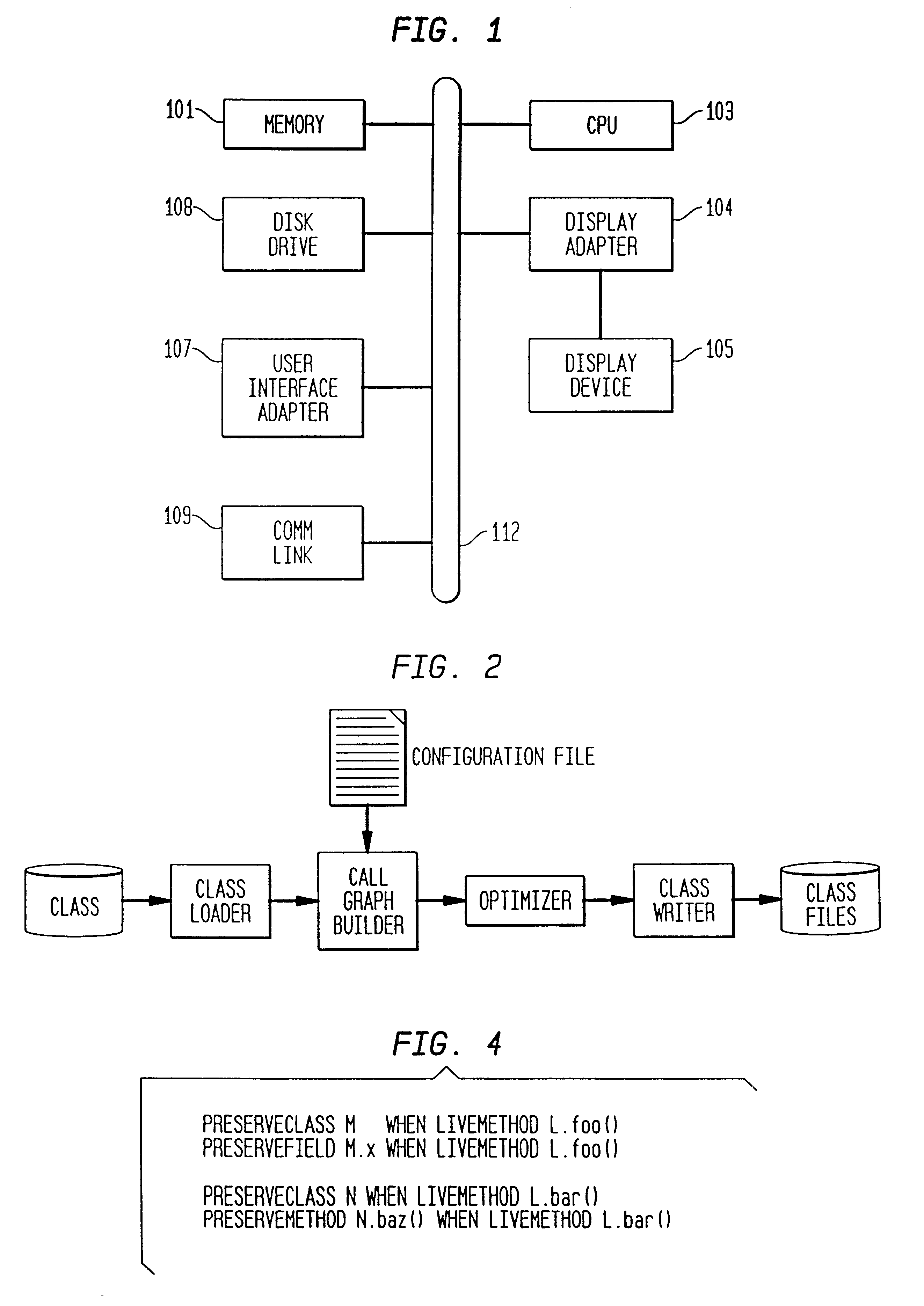

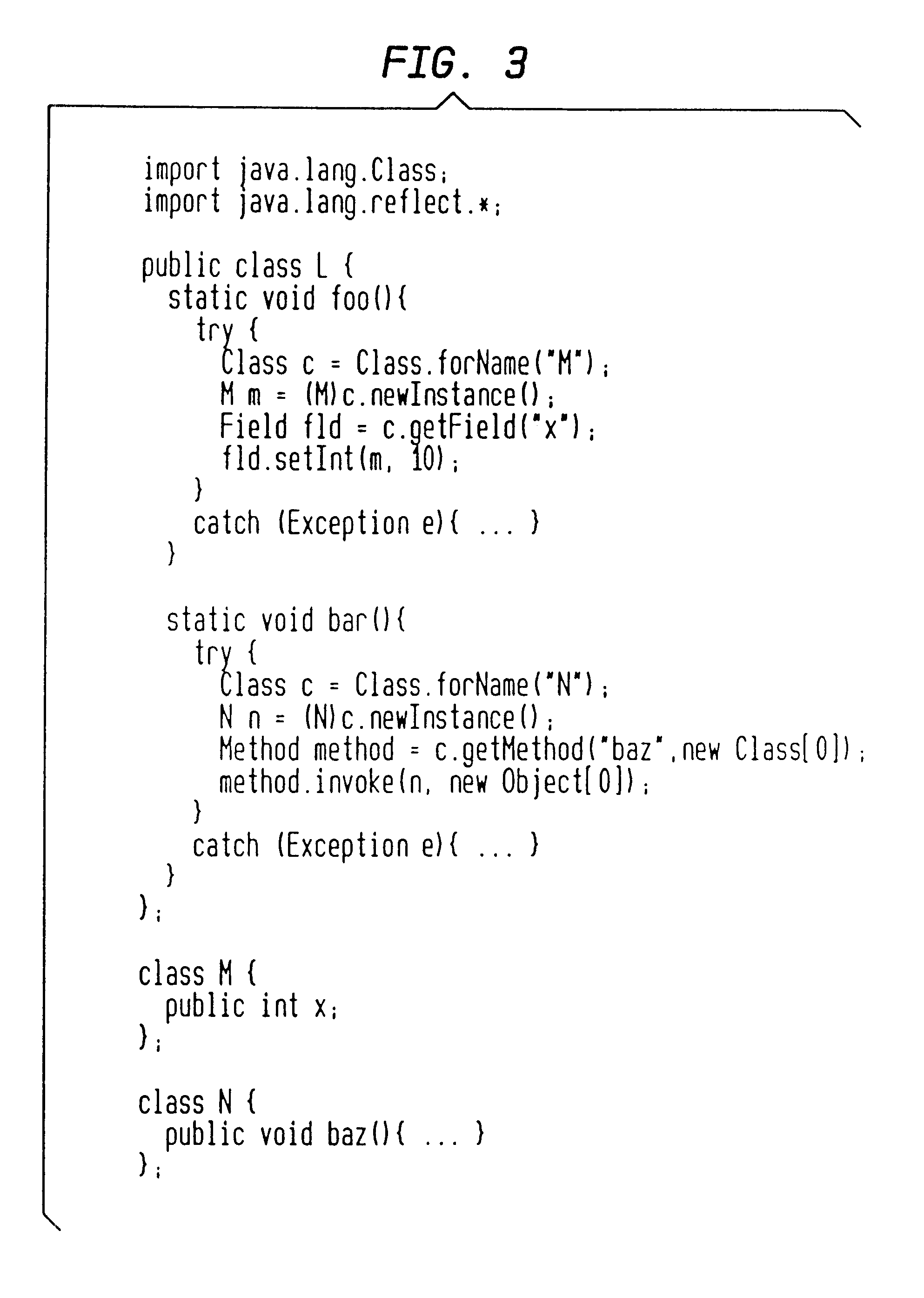

Method for accurately extracting library-based object-oriented applications

Inactive | US6546551B1 | Software engineering; Program loading/initiating; Theoretical computer science; Application software

The present invention is capable of accurately extracting multiple applications with respect to a class library. The invention relies on a configuration file for an application program and / or library, which describes how program components in the program / library should be preserved under specified conditions. The invention may be used in application extraction tools, and in tools that aim at enhancing performance using whole-program optimizations. The invention may be used as an optimization to reduce application size by eliminating unreachable methods. In the alternative, the invention may be used as a basis for optimizations that reduce execution time (e.g., by means of call devirtualization), and as a basis for tools for program understanding and debugging.

Owner:IBM CORP
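Unreachable-method elimination of the kind mentioned above is usually built on a reachability pass over the call graph. The sketch assumes a call graph given as a dictionary from method name to callees, with the configuration file reduced to a hypothetical `preserved` list (for example, methods invoked only through reflection that must be kept even though no static call reaches them).

```python
from collections import deque


def reachable_methods(call_graph: dict, entry_points, preserved=()) -> set:
    """Worklist traversal from entry points plus configuration-preserved methods."""
    seen = set()
    work = deque([*entry_points, *preserved])
    while work:
        method = work.popleft()
        if method in seen:
            continue
        seen.add(method)
        work.extend(call_graph.get(method, ()))
    return seen


def extract(call_graph: dict, entry_points, preserved=()) -> dict:
    """Keep only the methods (and their call edges) that can be reached."""
    live = reachable_methods(call_graph, entry_points, preserved)
    return {m: callees for m, callees in call_graph.items() if m in live}
```

In a graph where `main` calls `a`, `a` calls `b`, and `unused` also calls `b`, extraction from `main` keeps `main`, `a`, and `b`; `unused` is dropped because nothing reaches it.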

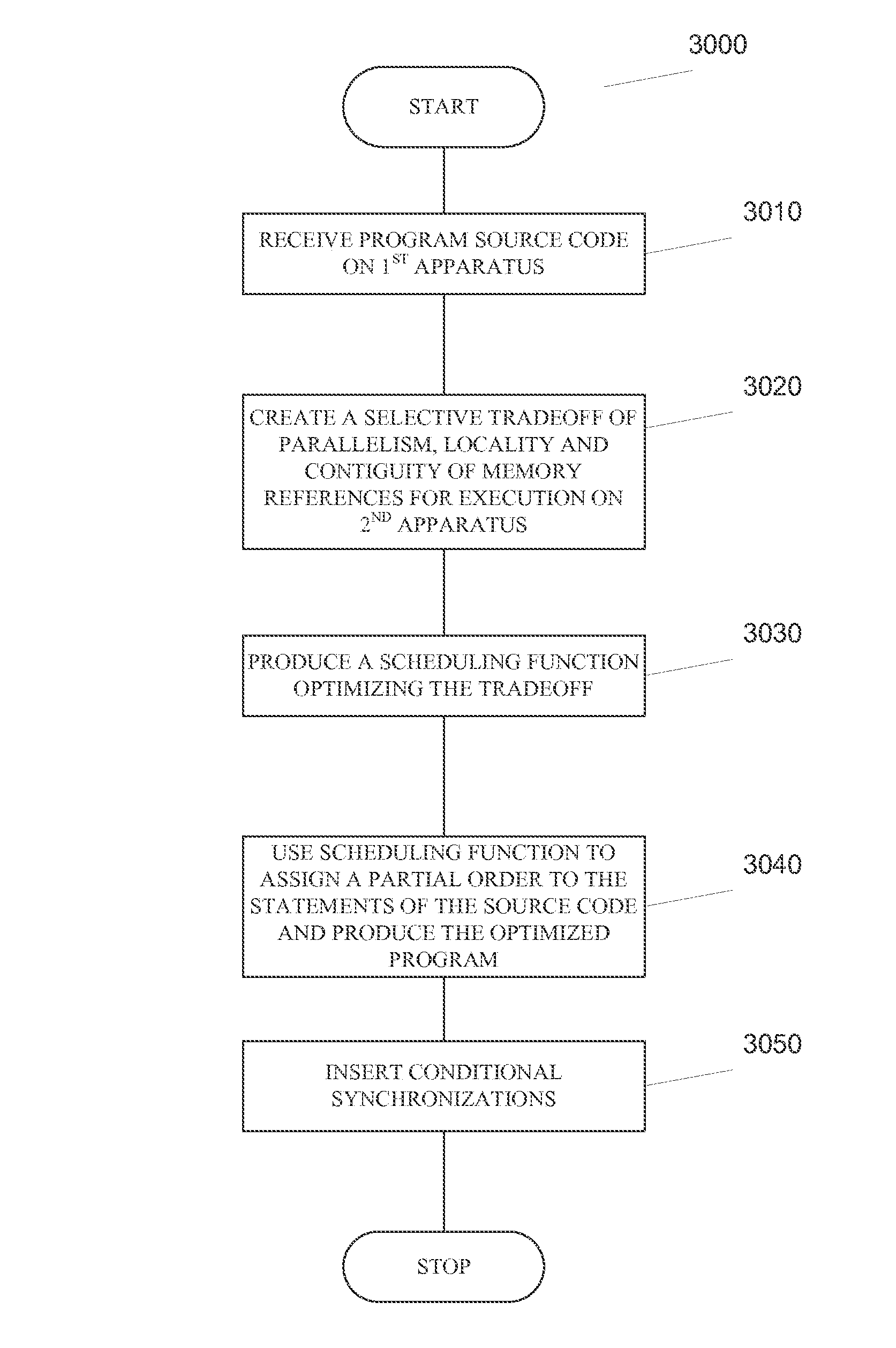

System, methods and apparatus for program optimization for multi-threaded processor architectures

Active | US8930926B2 | Low cost; Easy to implement; Software engineering; Multiprogramming arrangements; Parallel computing; Execution unit

Methods, apparatus and computer software product for source code optimization are provided. In an exemplary embodiment, a first custom computing apparatus is used to optimize the execution of source code on a second computing apparatus. In this embodiment, the first custom computing apparatus contains a memory, a storage medium and at least one processor with at least one multi-stage execution unit. The second computing apparatus contains at least two multi-stage execution units that allow for parallel execution of tasks. The first custom computing apparatus optimizes the code for parallelism, locality of operations and contiguity of memory accesses on the second computing apparatus. This Abstract is provided for the sole purpose of complying with the Abstract requirement rules. This Abstract is submitted with the explicit understanding that it will not be used to interpret or to limit the scope or the meaning of the claims.

Owner:QUALCOMM INC

Multi-tier benefit optimization for operating the power systems including renewable and traditional generation, energy storage, and controllable loads

Active | US7315769B2 | Maximize the benefits; Mechanical power/torque control; Sampled-variable control systems; Electric power system; Power grid

A plurality of power generating assets are connected to a power grid. The grid may, if desired, be a local grid or a utility grid. The power grid is connected to a plurality of loads, which may include controllable and non-controllable loads. Power is distributed to the loads by a controller that stores a program that optimizes the controllable loads and the power generating assets. The optimization process uses a multi-tier benefit construct.

Owner:GENERAL ELECTRIC CO

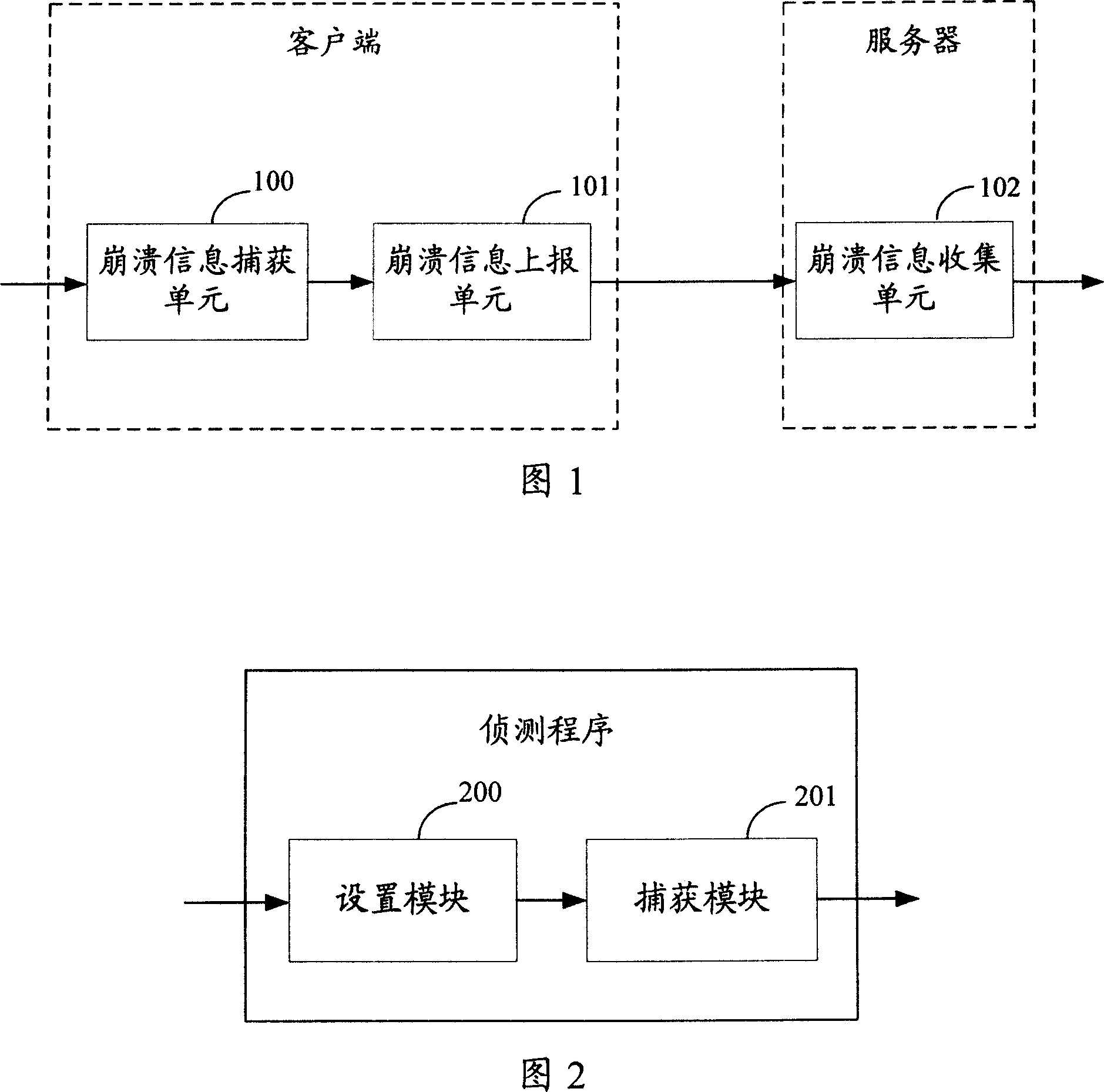

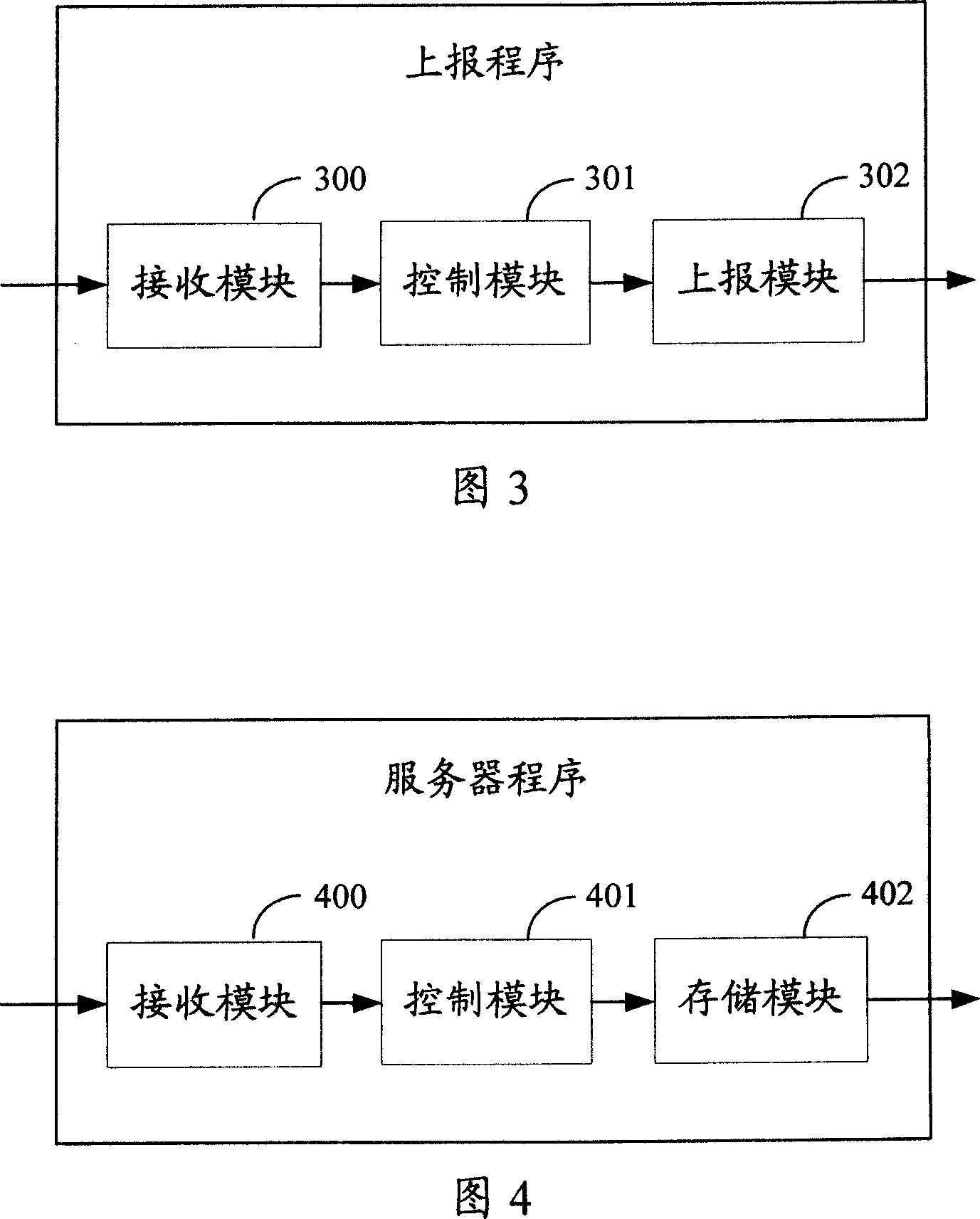

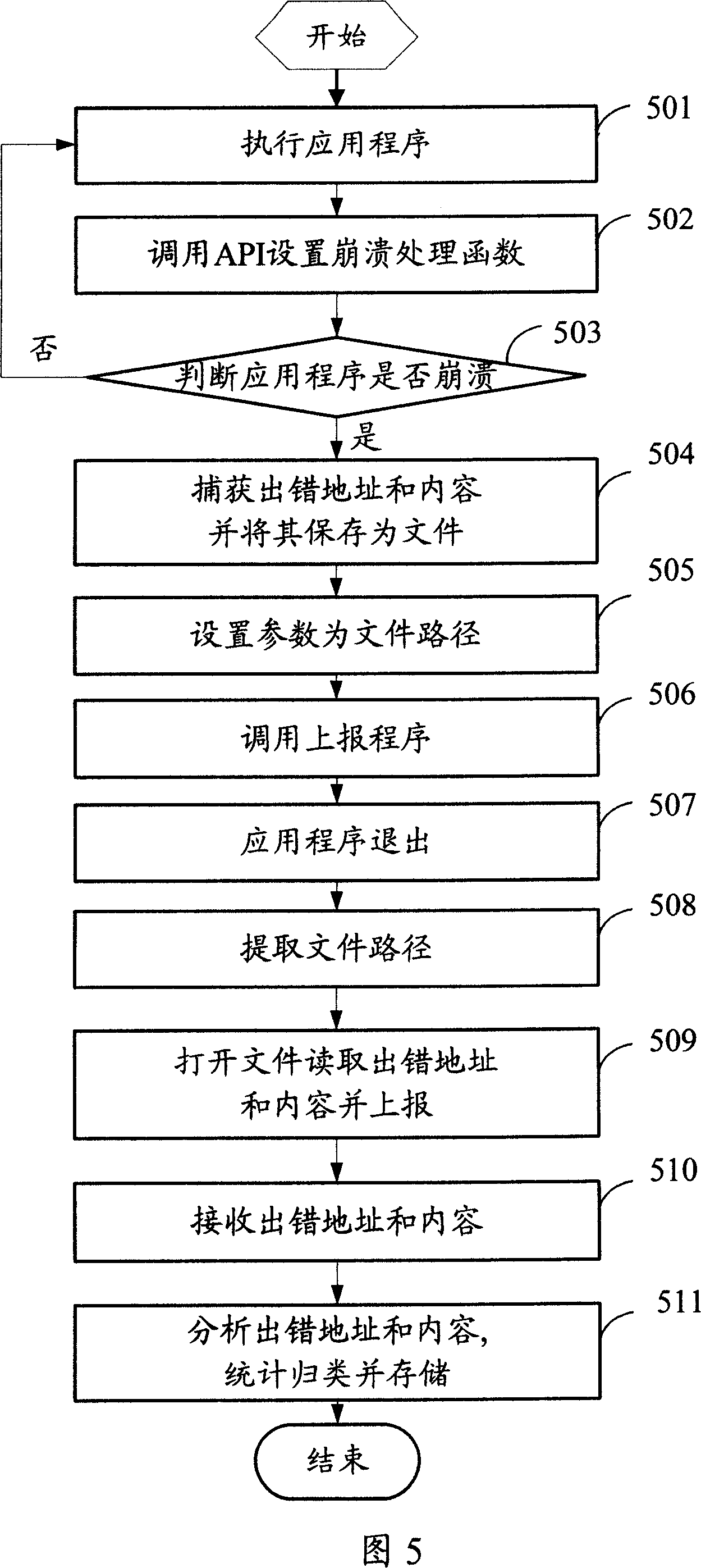

Program crashing information report method and system thereof

The invention discloses a method for reporting program crash information, which aims to solve a problem of the prior art: because program crash information is not reported, program errors are hard to collect and analyze, and programs are inconvenient to optimize. When a program crashes, the method uses a detection program to capture the error address and error message, and a reporting program sends them to a server; the server analyzes the error address and error message reported for the crashed application program, then classifies and stores the error messages according to error address. The invention also discloses a processing system for program crash information.

Owner:TENCENT TECH (SHENZHEN) CO LTD
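Server-side classification by error address can be sketched as grouping reports into buckets. The report fields `address` and `message` are assumptions; a production system would likely need to normalize addresses (for example, to module-relative offsets) before grouping, which this sketch does not do.

```python
from collections import defaultdict


def classify_crashes(reports: list[dict]) -> dict[str, list[str]]:
    """Group crash messages by error address, most frequent address first."""
    buckets: defaultdict[str, list[str]] = defaultdict(list)
    for report in reports:
        buckets[report["address"]].append(report["message"])
    # Sorting by bucket size surfaces the crashes that affect the most users.
    return dict(sorted(buckets.items(), key=lambda kv: len(kv[1]), reverse=True))
```

Ordering buckets by size is a design choice for triage: the address with the most reports is usually the first candidate for a fix.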

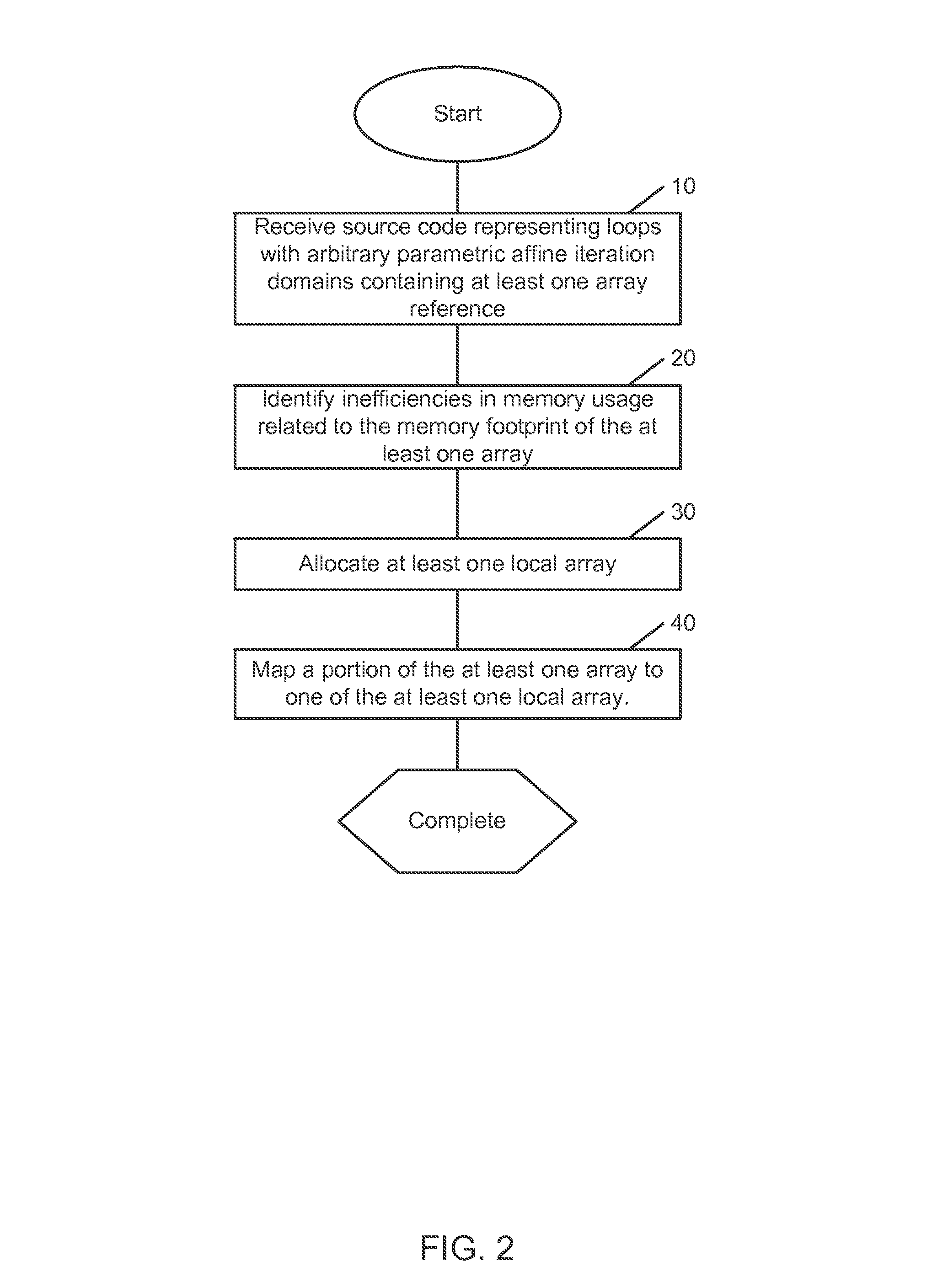

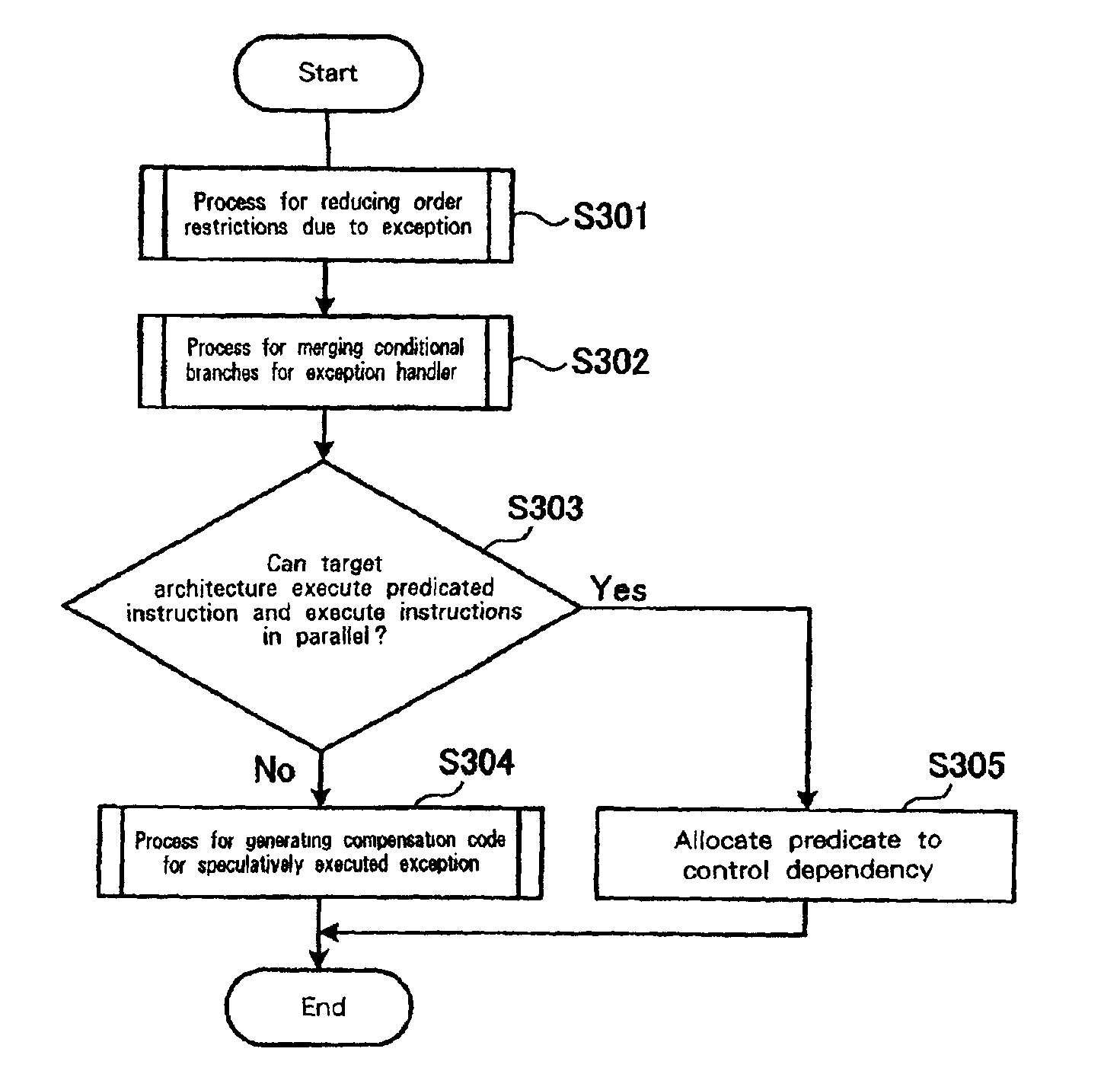

Program optimization

Inactive | US6931635B2 | Control instruction; Reduce restrictions; Software engineering; Digital computer details; Program instruction; Source code

A program optimization method for converting program source code written in a programming language into machine language includes the steps of: analyzing a target program and detecting an exception generative instruction, which may generate an exception, and exception generation detection instructions, which branch a process to an exception process when an exception occurrence condition is detected and an exception has occurred. The method also includes the steps of dividing the exception generation detection instructions into first instructions, for the detection of exception occurrence conditions, and second instructions, for branching processes to the exception process when the exception occurrence conditions are detected; and establishing dependencies among program instructions, so that when one of the exception occurrence conditions is detected the process is shifted from a first instruction to a second instruction, and so that when none of the exception occurrence conditions are detected, the process is shifted from a first instruction to an exception generative instruction.

Owner:IBM CORP

Program optimizing apparatus, program optimizing method, and program optimizing article of manufacture

Inactive | US20120159461A1 | Improve application performance; Improve performance; Software engineering; Program control; Parallel computing; Transactional memory

An apparatus having a transactional memory enabling exclusive control to execute a transaction. The apparatus includes: a first code generating unit configured to interpret a program, and generate first code in which a begin instruction to begin a transaction and an end instruction to commit the transaction are inserted before and after an instruction sequence including multiple instructions to execute designated processing in the program; a second code generating unit configured to generate second code at a predetermined timing by using the multiple instructions according to the designated processing; and a code write unit configured to overwrite the instruction sequence of the first code with the second code or to write the second code to a part of the first code in the transaction.

Owner:IBM CORP

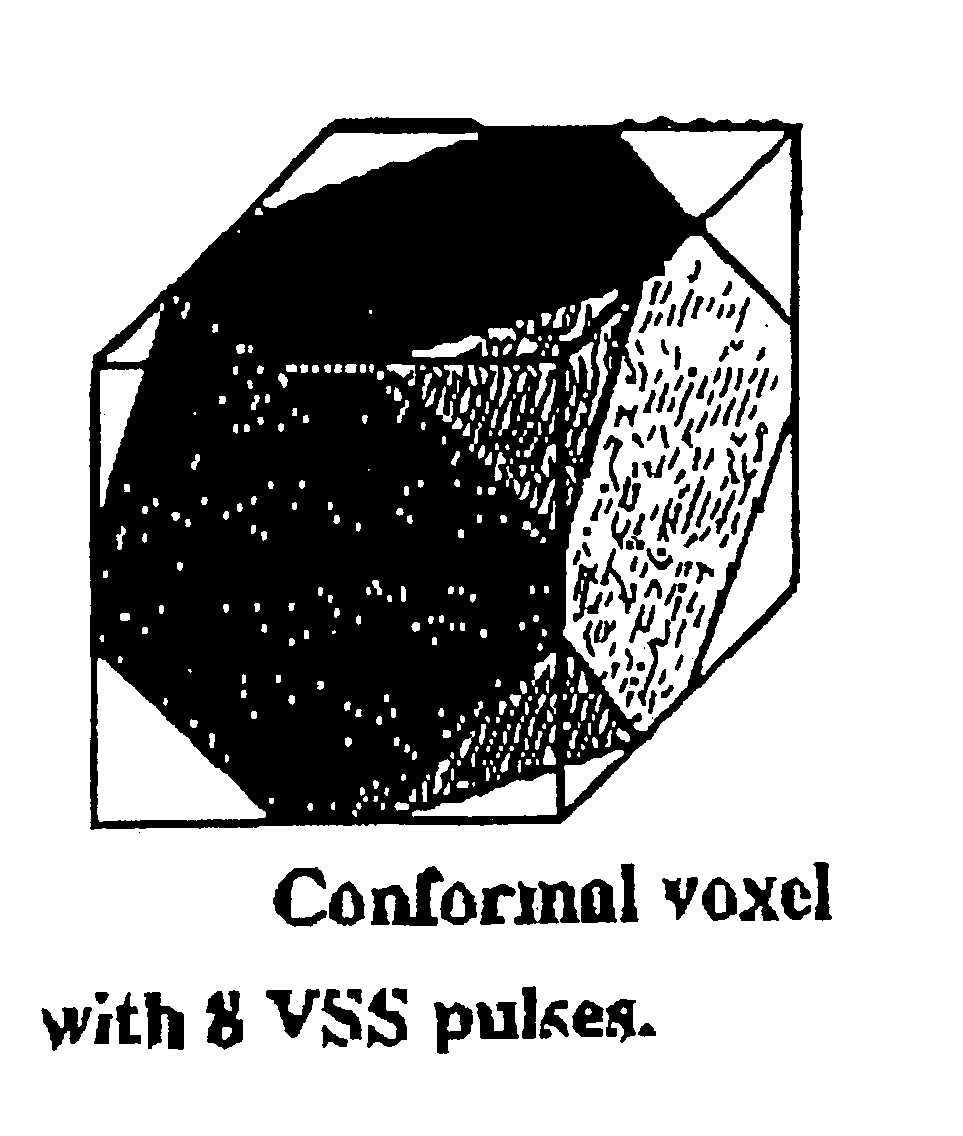

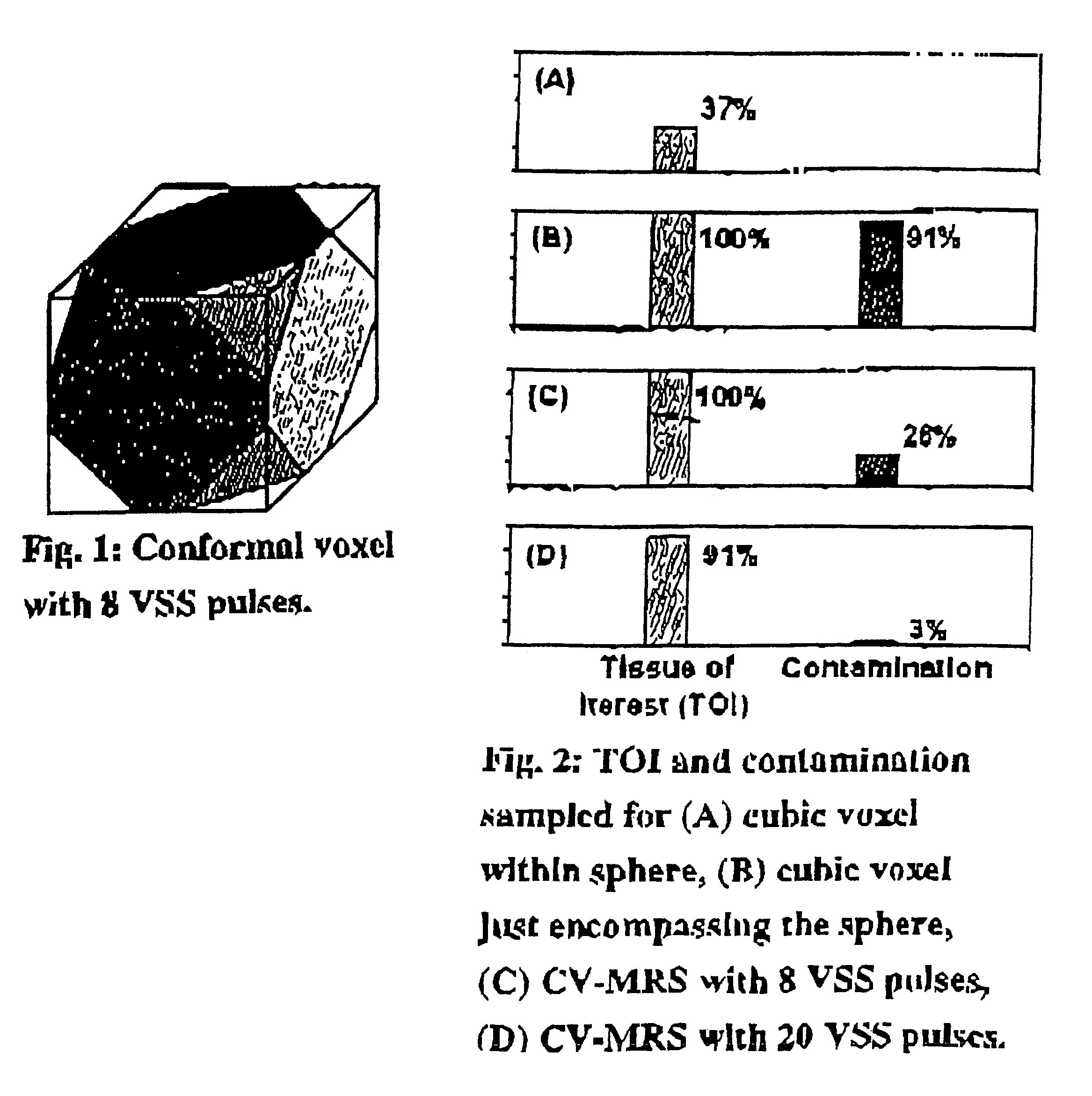

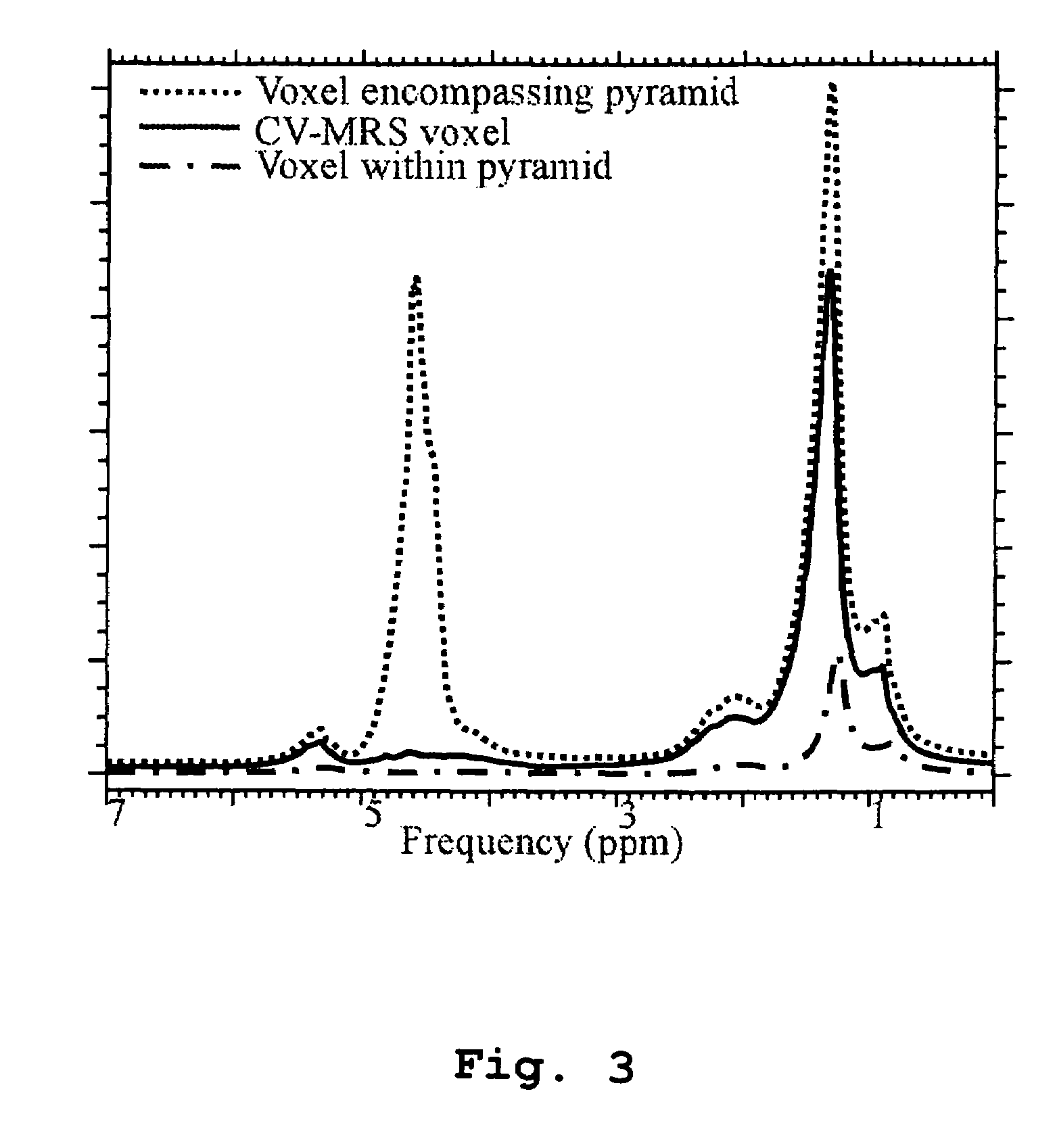

Magnetic resonance spectroscopy using a conformal voxel

Inactive | US7319784B2 | Improving prescription; Add feature; Magnetic measurements; Character and pattern recognition; Voxel; Selective excitation

The goal was to automate and optimize the shaping and positioning of a shape-specific / conformal voxel that conforms to any volume of interest, such as a cranial lesion, to allow conformal voxel magnetic resonance spectroscopy (CV-MRS). We achieved this by using a computer program that optimizes the shape, size, and location of a convex polyhedron within the volume of interest. The sides of the convex polyhedron are used to automatically prescribe the size and location of selective excitation voxels and / or spatial saturation slices. For a spherically-shaped, phantom-simulated lesion, CV-MRS increased the signal from the lesion by a factor of 2.5 compared to a voxel completely inside the lesion. CV-MRS reduces the voxel prescription time, operator subjectivity, and acquisition time.

Owner:NAT RES COUNCIL OF CANADA

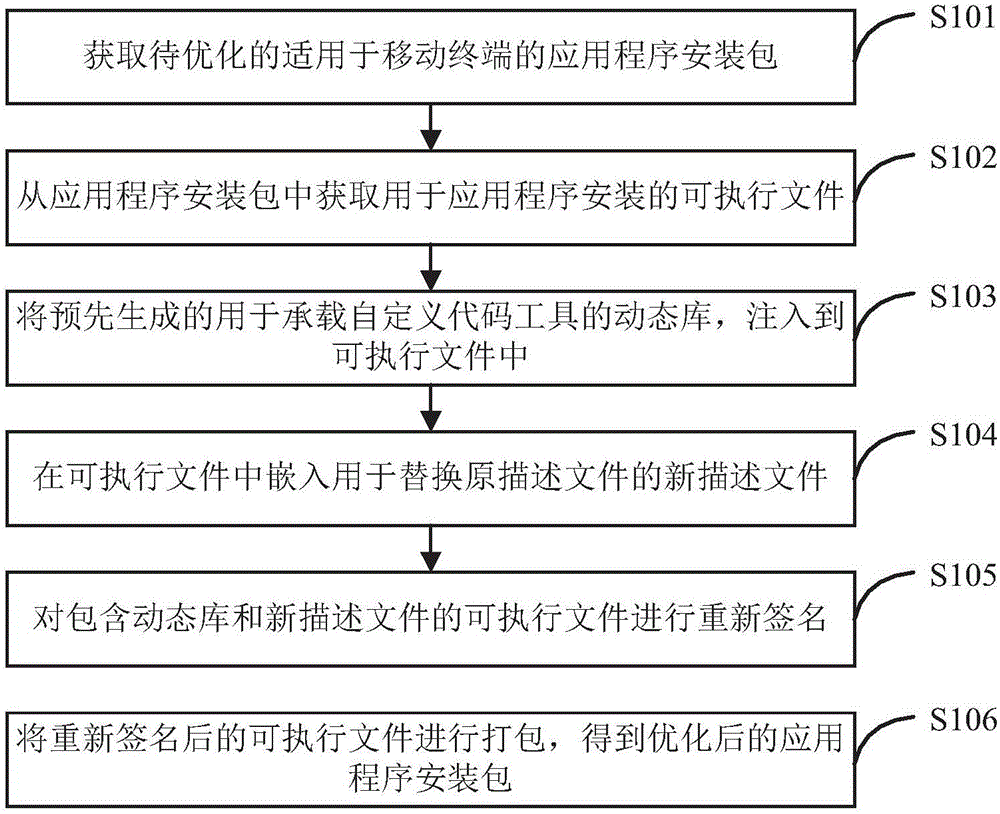

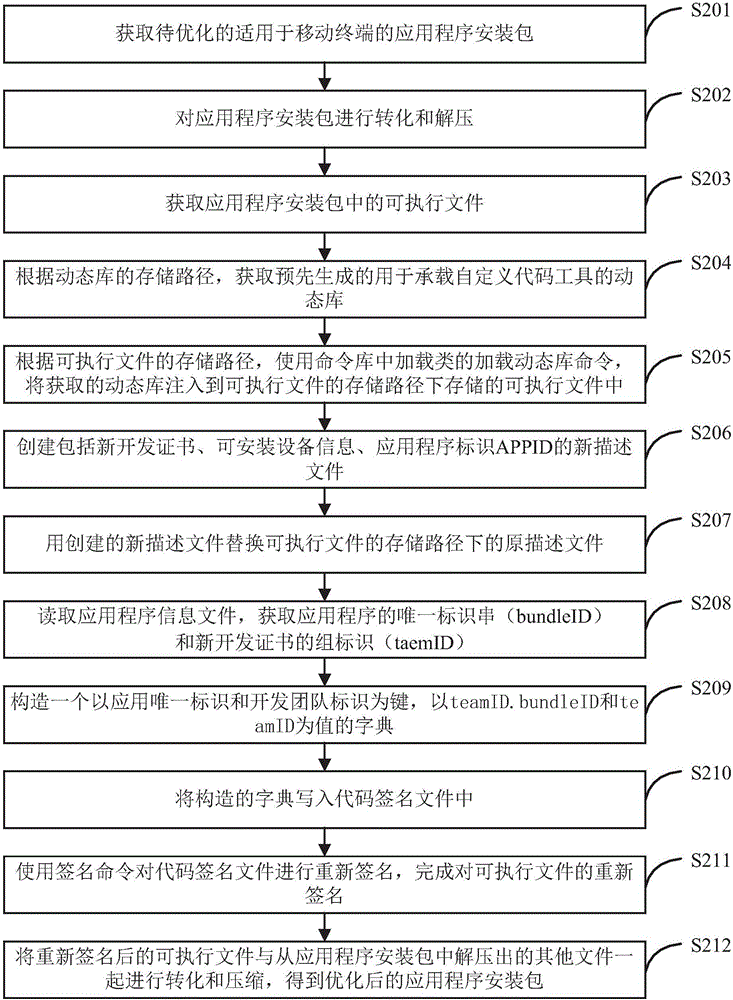

Method, device, and system for optimizing application program

Active | CN106126290A | Achieve optimization; Easy to implement; Program loading/initiating; Software deployment; Software engineering; Computer terminal

Embodiments of the invention provide a method, a device, and a system for optimizing application programs. The method comprises: obtaining a to-be-optimized application installation package for a mobile terminal; extracting from the installation package the executable file used to install the application; injecting into the executable file a pre-generated dynamic library that carries custom code tools; embedding into the executable file a new description file that replaces the original description file; re-signing the executable file that contains the dynamic library and the new description file; and packaging the re-signed executable file to obtain an optimized installation package, which is used to install the optimized application program on a mobile terminal. The method, device, and system can optimize application programs simply and conveniently, without requiring the app to be preinstalled and without complicated analysis, saving time and effort.

Owner:MICRO DREAM TECHTRONIC NETWORK TECH CHINACO

Solid-state lamps with partial conversion in phosphors for rendering an enhanced number of colors

Active | US20090261710A1 | Quality improvement; Discharge tube luminescent screens; Lamp details; Daylight; Peak value

The invention relates to phosphor-conversion (PC) sources of white light, which are composed of at least two groups of emitters, such as blue electroluminescent light-emitting diodes (LEDs) and wide-band (WB) or narrow-band (NB) phosphors that partially absorb and convert the flux generated by the LEDs to other wavelengths, and to improving the quality of the white light emitted by such light sources. In particular, embodiments of the present invention describe new 3-4 component combinations of peak wavelengths and bandwidths for white PC LEDs with partial conversion. These combinations are used to provide spectral power distributions that enable lighting with a considerable portion of a high number of spectrophotometrically calibrated colors rendered almost indistinguishably from a blackbody radiator or daylight illuminant, and which differ from distributions optimized using standard color-rendering assessment procedures based on a small number of test samples.

Owner:SENSOR ELECTRONICS TECH

Washing control optimizing method, device, electronic device and storage medium

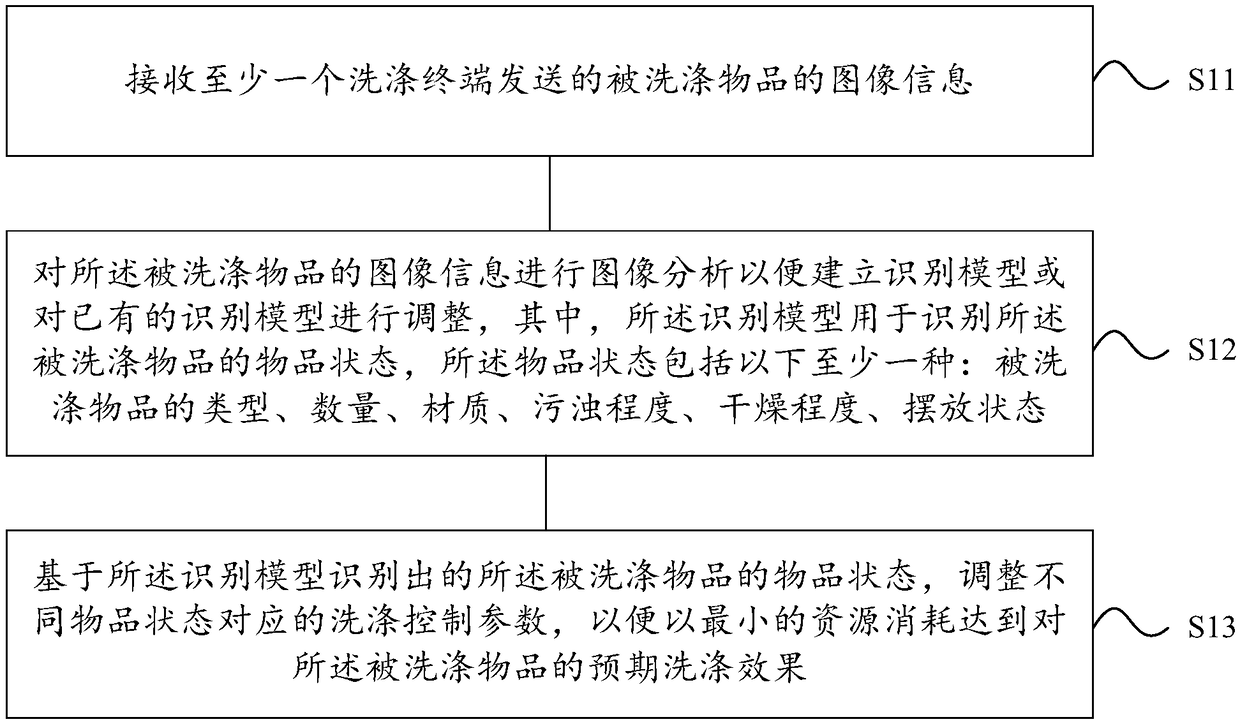

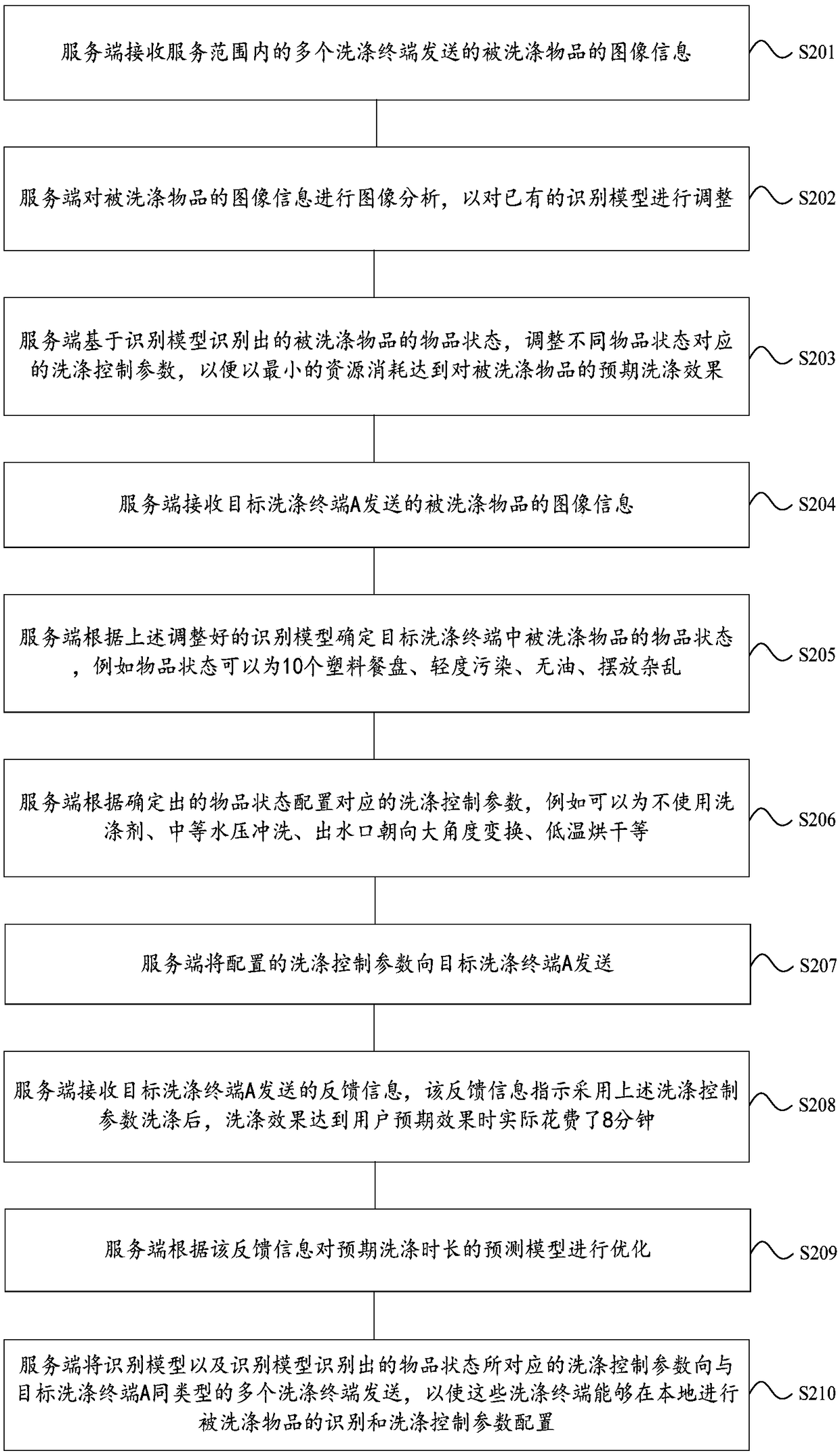

Active | CN108814501A | Avoid repetition; Improve efficiency; Tableware washing/rinsing machine details; Washing processes; Imaging analysis; Electric equipment

The embodiment of the invention discloses a washing control optimizing method, device, electronic device, and storage medium in the technical field of smart homes, which can effectively improve the efficiency and effect of optimizing washing control programs. The washing control optimizing method comprises: receiving image information of the objects to be washed, transmitted by at least one washing terminal; performing image analysis on that image information to establish identification models or adjust existing ones, wherein the identification models identify the states of the objects to be washed, and the object states comprise at least one of the following parameters: type, number, texture, degree of soiling, degree of dryness, and placement of the objects; and, based on the identification models, identifying the states of the objects to be washed and adjusting the washing control parameters corresponding to different object states so as to achieve the expected washing effect with minimal resource consumption. The method, device, electronic device, and storage medium can be applied to washing program optimization of washing equipment.

Owner:KINGSOFT

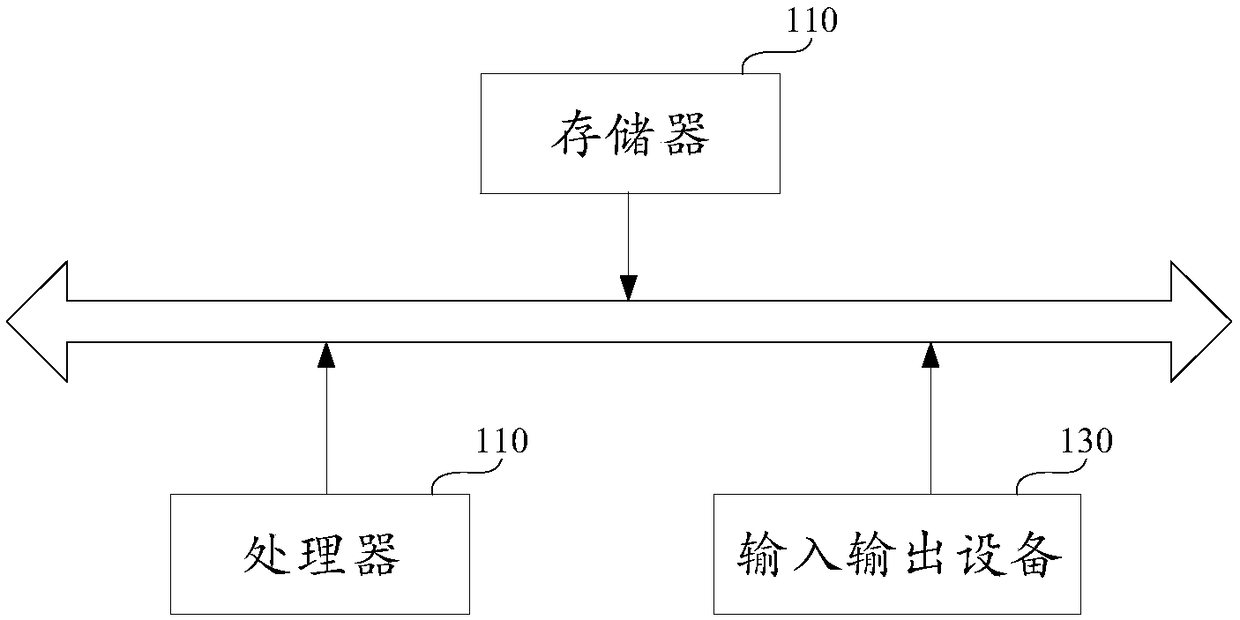

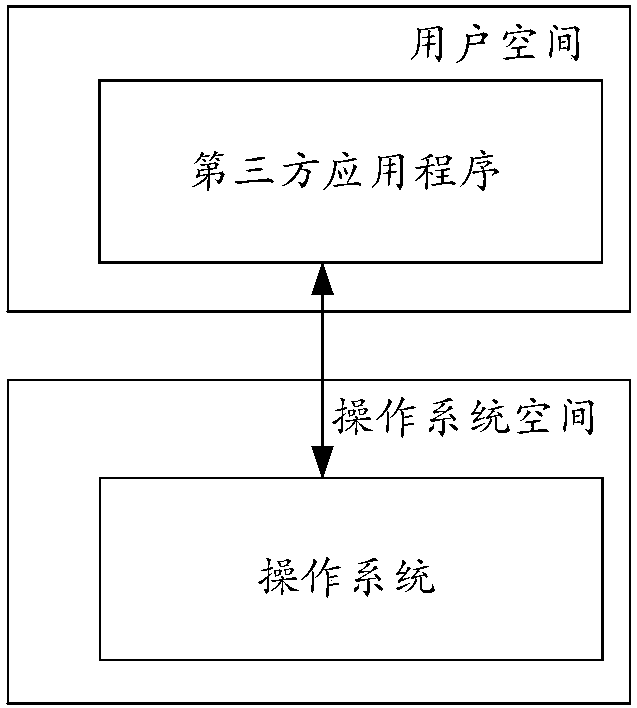

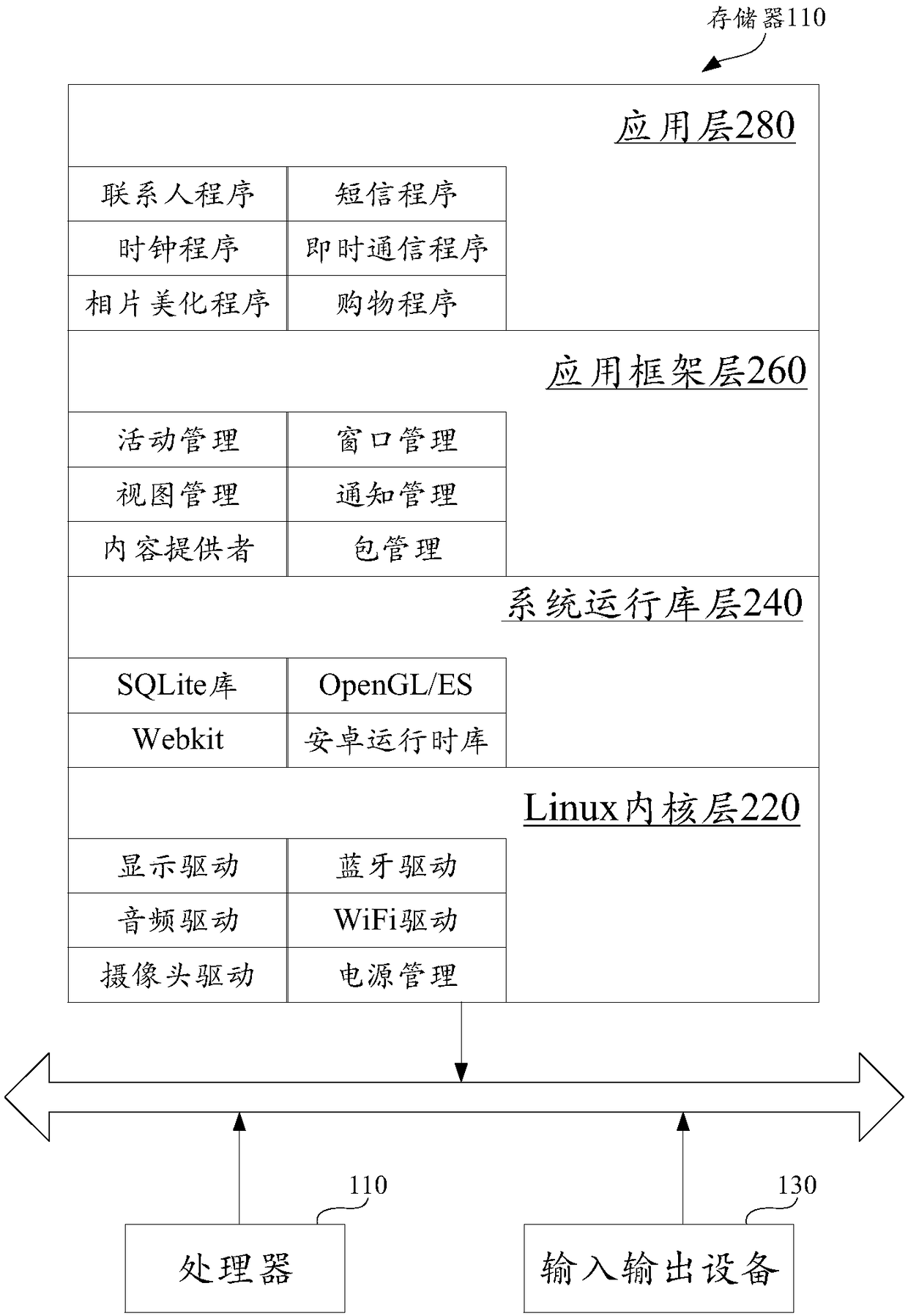

Program optimization method, device, terminal and storage medium

Active | CN108491275A | Improve running quality; Improve running fluency; Resource allocation; Digital data processing details; Hysteresis; Operational system

The embodiment of the invention discloses a program optimization method, device, terminal, and storage medium in the field of application optimization. The method comprises the steps that: an operating system sends system running information, which represents the running state of the system, to a target application program in a preset manner; the target application program receives the system running information; the target application program determines a program optimization strategy according to the system running information, the strategy being used to adjust the running strategy of the target application program; and the target application program executes the program optimization strategy. Because the target application program adjusts its own running strategy based on the system running information sent by the operating system, the method improves the running quality of the target application program in the operating system, avoids lag, frame loss, and other phenomena caused by poor hardware performance, and improves the running fluency of the application program.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
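The application-side step of mapping system running information to a running strategy might look like the following. The fields in `SystemInfo`, the thresholds, and the strategy keys are all hypothetical; the abstract does not specify which running information the operating system sends or which strategies the application adjusts.

```python
from dataclasses import dataclass


@dataclass
class SystemInfo:
    cpu_load: float       # fraction of CPU capacity in use, 0.0 to 1.0
    temperature_c: float  # device temperature in degrees Celsius
    memory_free_mb: int   # free memory reported by the OS


def choose_running_strategy(info: SystemInfo) -> dict:
    """Derive a hypothetical running strategy from OS-reported system state."""
    strategy = {"target_fps": 60, "texture_quality": "high", "prefetch": True}
    if info.cpu_load > 0.8 or info.temperature_c > 45:
        # Under CPU or thermal pressure, a lower steady frame rate avoids stutter.
        strategy["target_fps"] = 30
    if info.memory_free_mb < 512:
        # Under memory pressure, shrink assets and stop speculative loading.
        strategy["texture_quality"] = "low"
        strategy["prefetch"] = False
    return strategy
```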

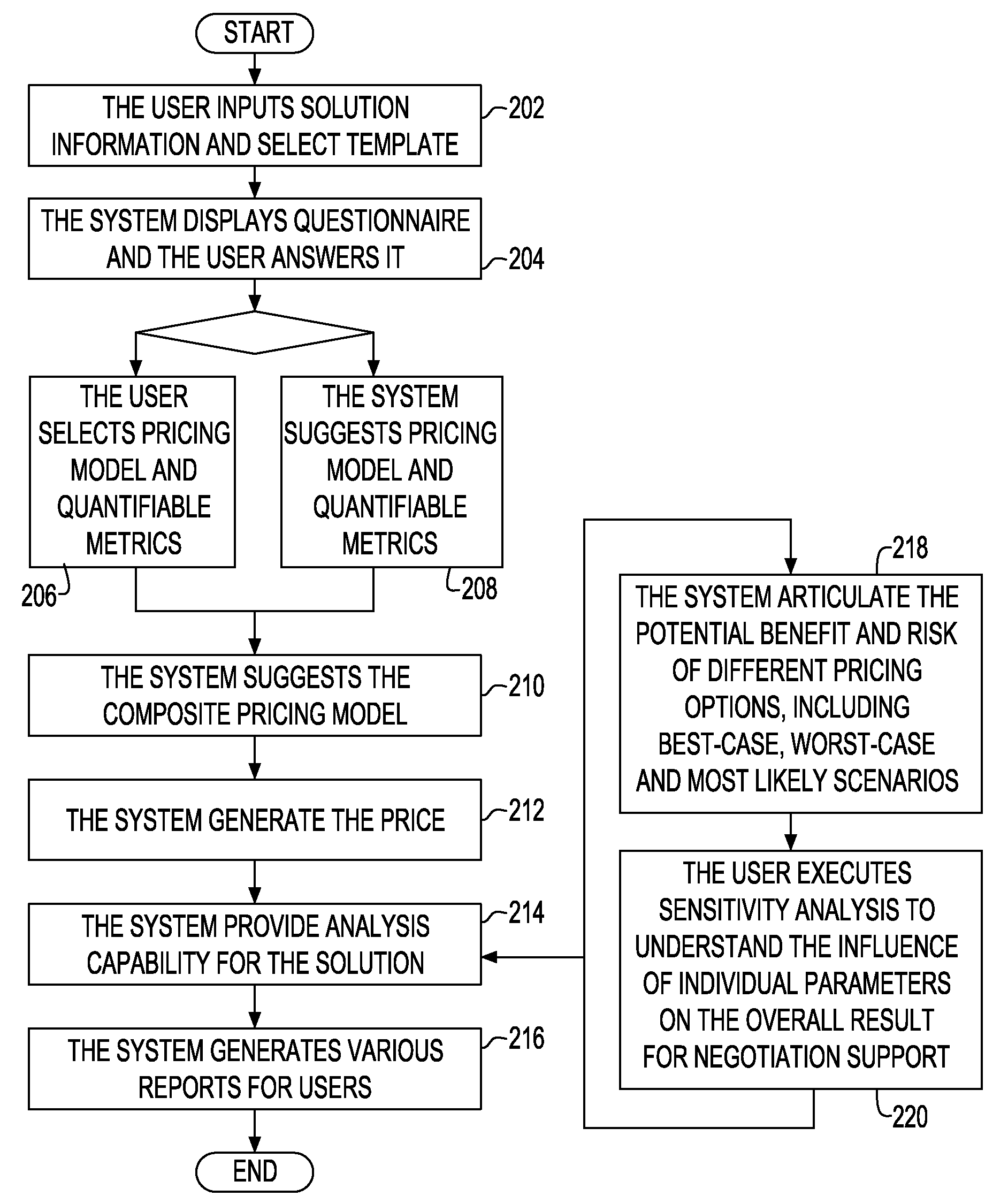

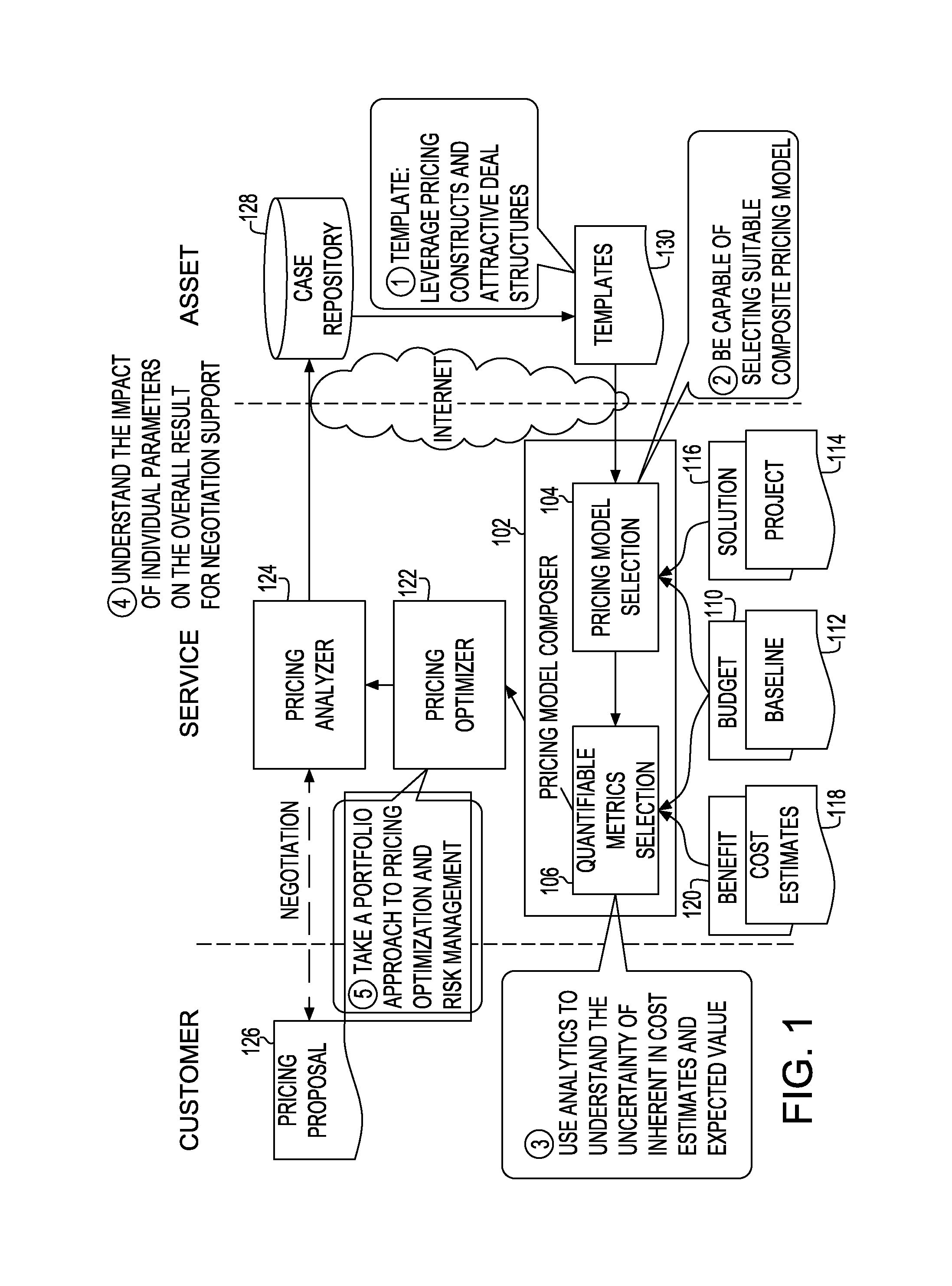

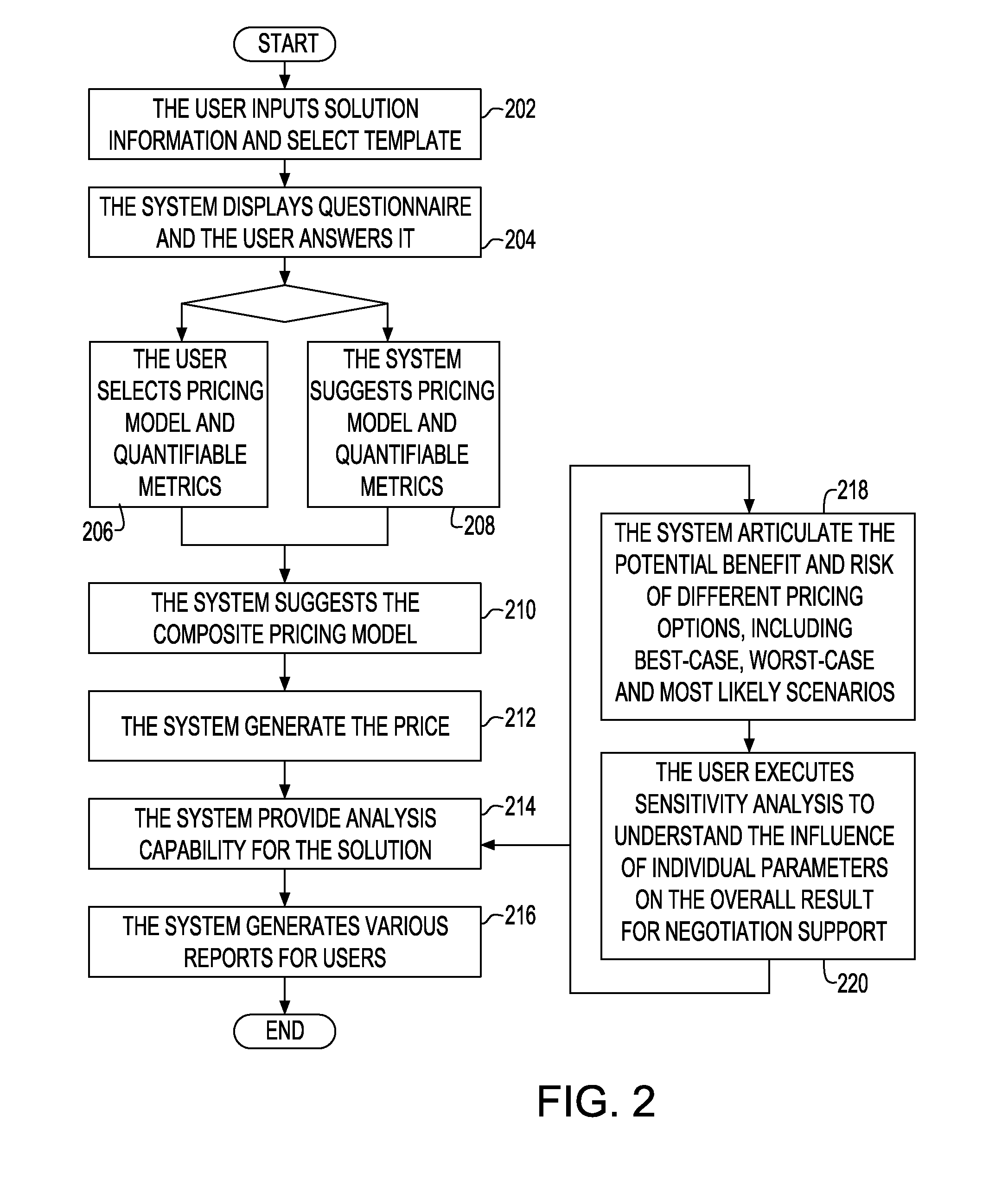

System and method for composite pricing of services to provide optimal bill schedule

System and method for service pricing optimization enables analysis of multi-phased, multi-business unit, multi-process, multi-geo / country deal structure with its parts and phases having different pricing implications, and provides a flexible composite pricing schedule optimized for both service provider and receiver by gain and risk sharing. In one aspect, elementary pricing models and pricing parameters are established and a composite pricing model is constructed based on the elementary pricing models and pricing parameters. An optimizer optimizes the composite pricing model to minimize risk and maximize one or more selected criteria. Price is generated using the optimized composite pricing model.

Owner:IBM CORP

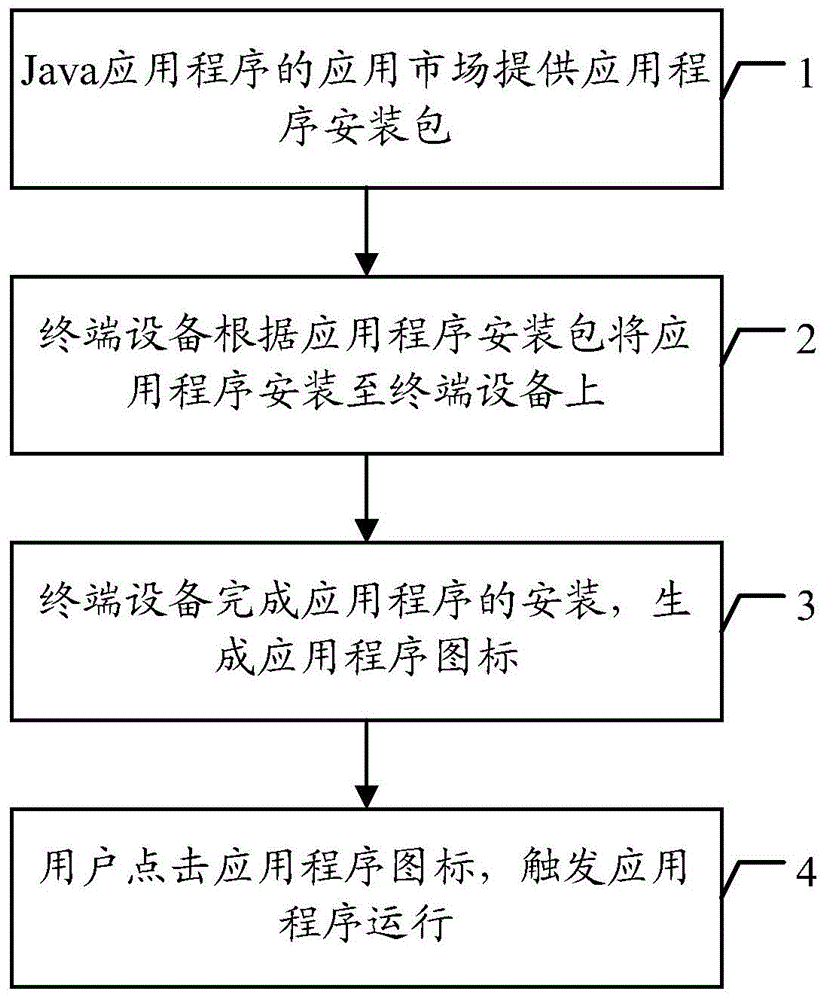

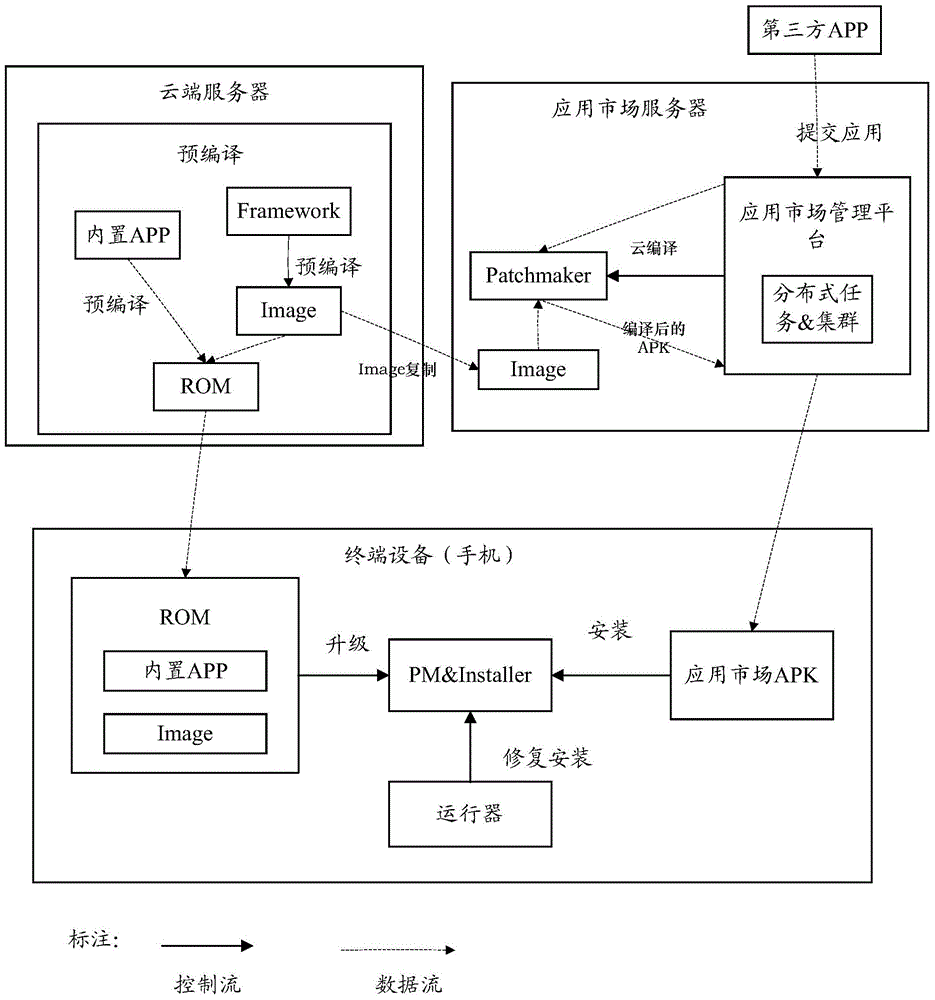

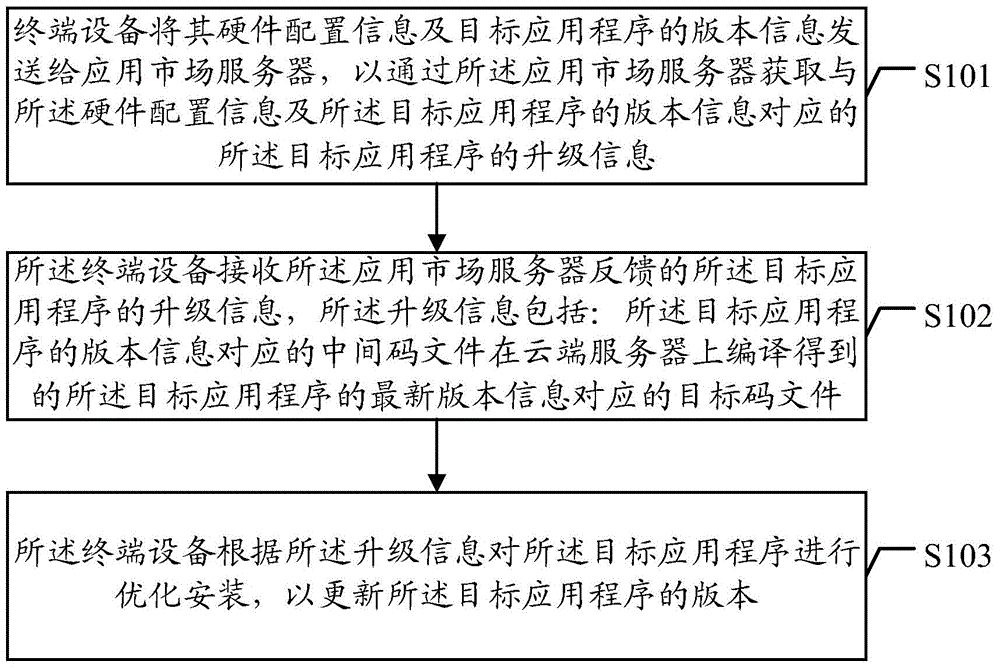

Method, device and system for realizing Java application installation via cloud compilation

Active | CN105100191A | Reduce memory space; Reduce storage space; Transmission; Software deployment; Terminal equipment; Application software

The embodiment of the invention discloses a method for realizing Java application installation via cloud compilation. The method comprises the following steps: terminal equipment transmits its hardware configuration information and the version information of a target application to an application market server, in order to obtain, through the server, upgrade information for the target application that corresponds to that hardware configuration and version information; the terminal equipment receives the upgrade information fed back by the application market server, wherein the upgrade information includes a target code file for the newest version, obtained by compiling on a cloud server an intermediate code file corresponding to the version information of the target application; and the terminal equipment performs an optimized installation of the target application according to the upgrade information in order to update the target application's version. The embodiment reduces the device memory occupied by the optimized installation of the application, increases the efficiency of that installation, and improves the user experience of application installation.

Owner:HUAWEI TECH CO LTD

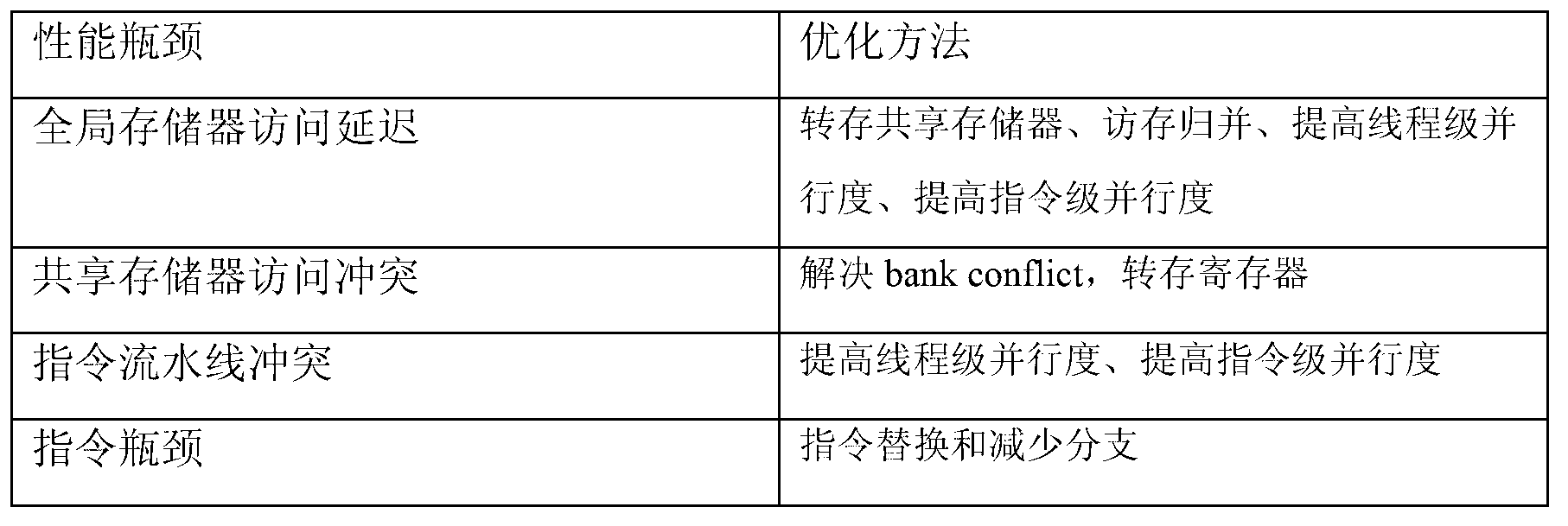

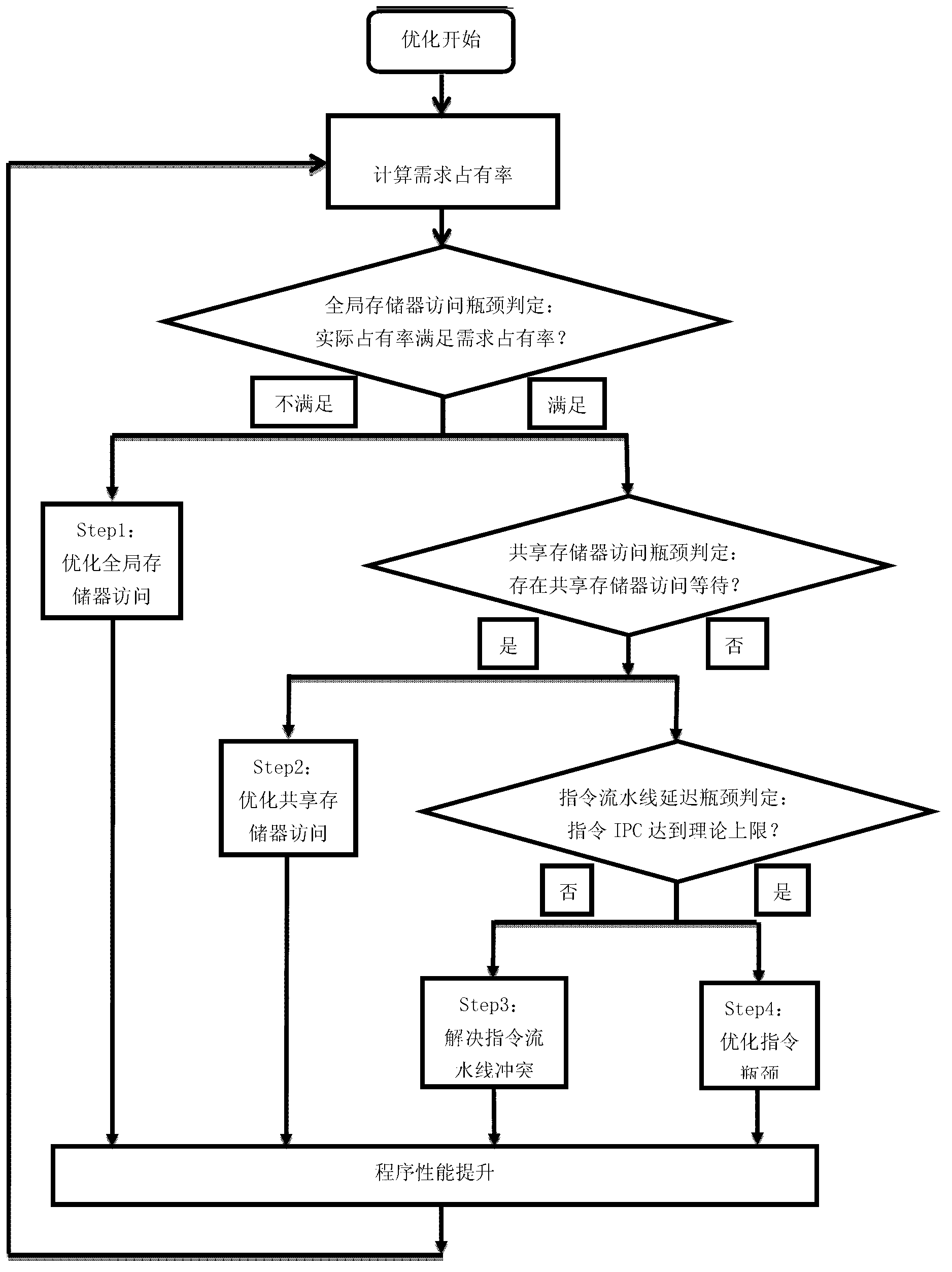

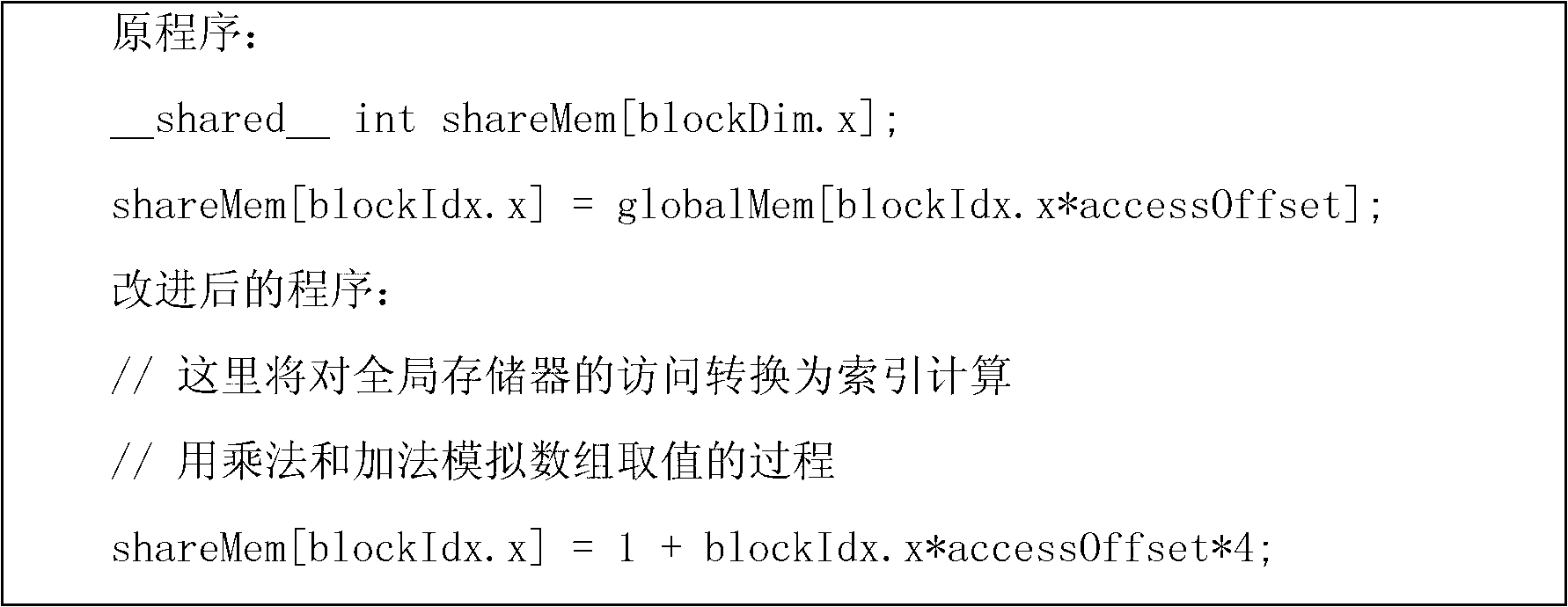

Graphics processing unit (GPU) program optimization method based on compute unified device architecture (CUDA) parallel environment

The invention relates to a graphics processing unit (GPU) program optimization method based on the compute unified device architecture (CUDA) parallel environment. The method defines the performance bottlenecks of a GPU program kernel and ranks them by level as global memory access latency, shared memory access conflicts, instruction pipeline conflicts, and instruction bottlenecks. For each bottleneck it provides a practical criterion for identifying it and a method for resolving it. Global memory access latency is optimized by moving data into shared memory, coalescing memory accesses, increasing thread-level parallelism, and increasing instruction-level parallelism. Shared memory access conflicts and instruction pipeline conflicts are optimized by resolving bank conflicts, moving data into registers, increasing thread-level parallelism, and increasing instruction-level parallelism. Instruction bottlenecks are optimized by instruction replacement and branch reduction. The method provides a basis for CUDA programming and optimization, helps programmers find the performance bottlenecks in a CUDA program, supports efficient and targeted optimization of those bottlenecks, and enables CUDA programs to exploit the computing capability of the GPU to a large extent.

Owner:北京微视威信息科技有限公司
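Bank conflicts, one of the bottlenecks listed above, can be reasoned about with simple arithmetic. The sketch assumes 32 shared-memory banks of 4-byte words and a 32-thread warp, which is typical of current NVIDIA GPUs, and computes how many distinct words the busiest bank must serve when thread `t` reads word `t * stride_words`. It is an analysis aid written for this listing, not part of the patented method.

```python
from collections import Counter

NUM_BANKS = 32  # assumed: 32 shared-memory banks, 4-byte bank width
WARP_SIZE = 32  # assumed: 32 threads per warp


def conflict_degree(stride_words: int) -> int:
    """Worst-case number of distinct words one bank serves for a strided warp read.

    Thread t reads 32-bit word t * stride_words. Requests for the same word are
    broadcast, so only distinct words that map to the same bank are serialized.
    """
    distinct_words = {t * stride_words for t in range(WARP_SIZE)}
    words_per_bank = Counter(addr % NUM_BANKS for addr in distinct_words)
    return max(words_per_bank.values())
```

A stride of 32, such as reading one column of a 32-wide tile, gives a 32-way conflict, whereas padding each row to 33 words brings it back to 1; a stride of 2 gives a 2-way conflict.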

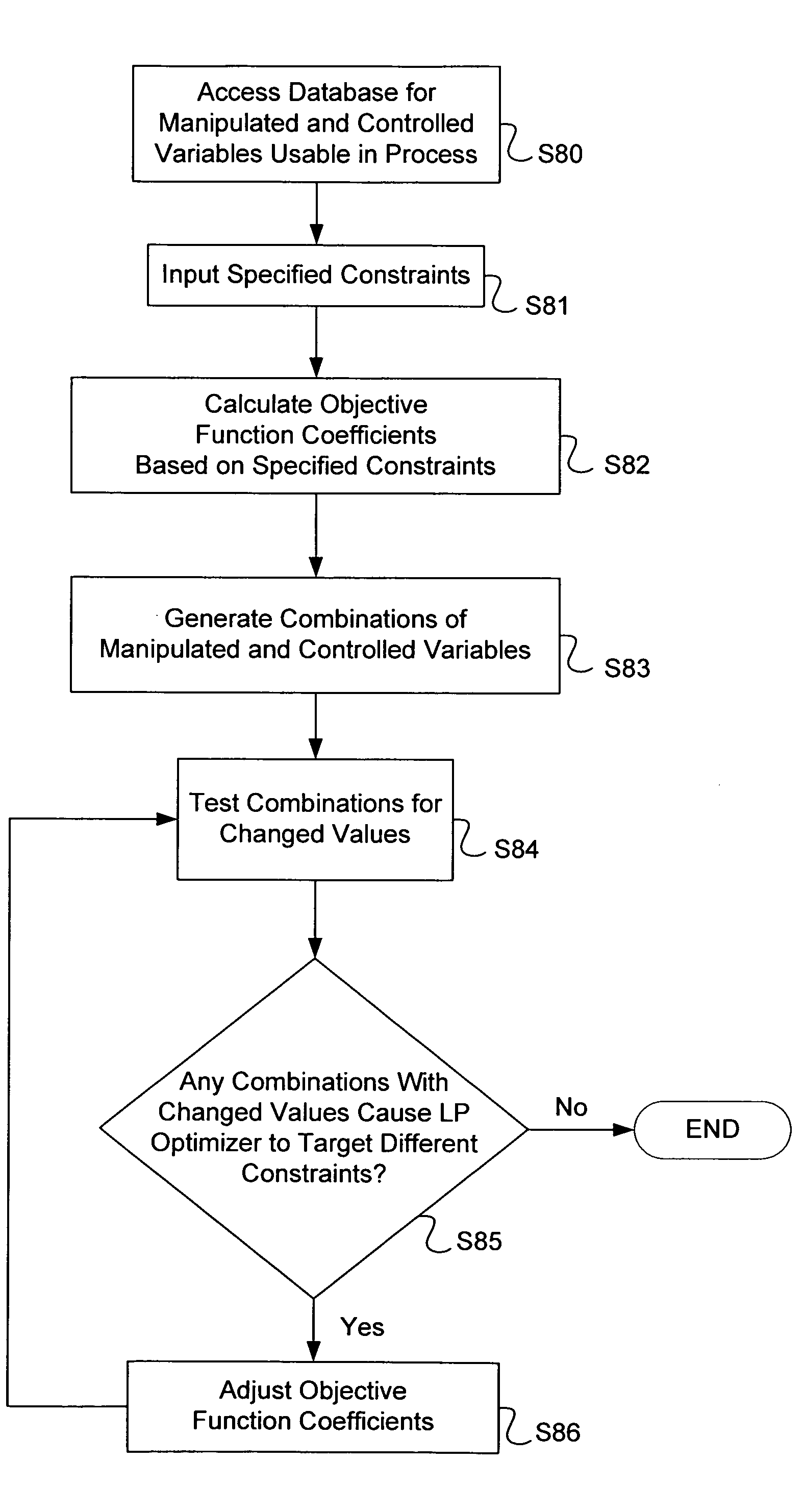

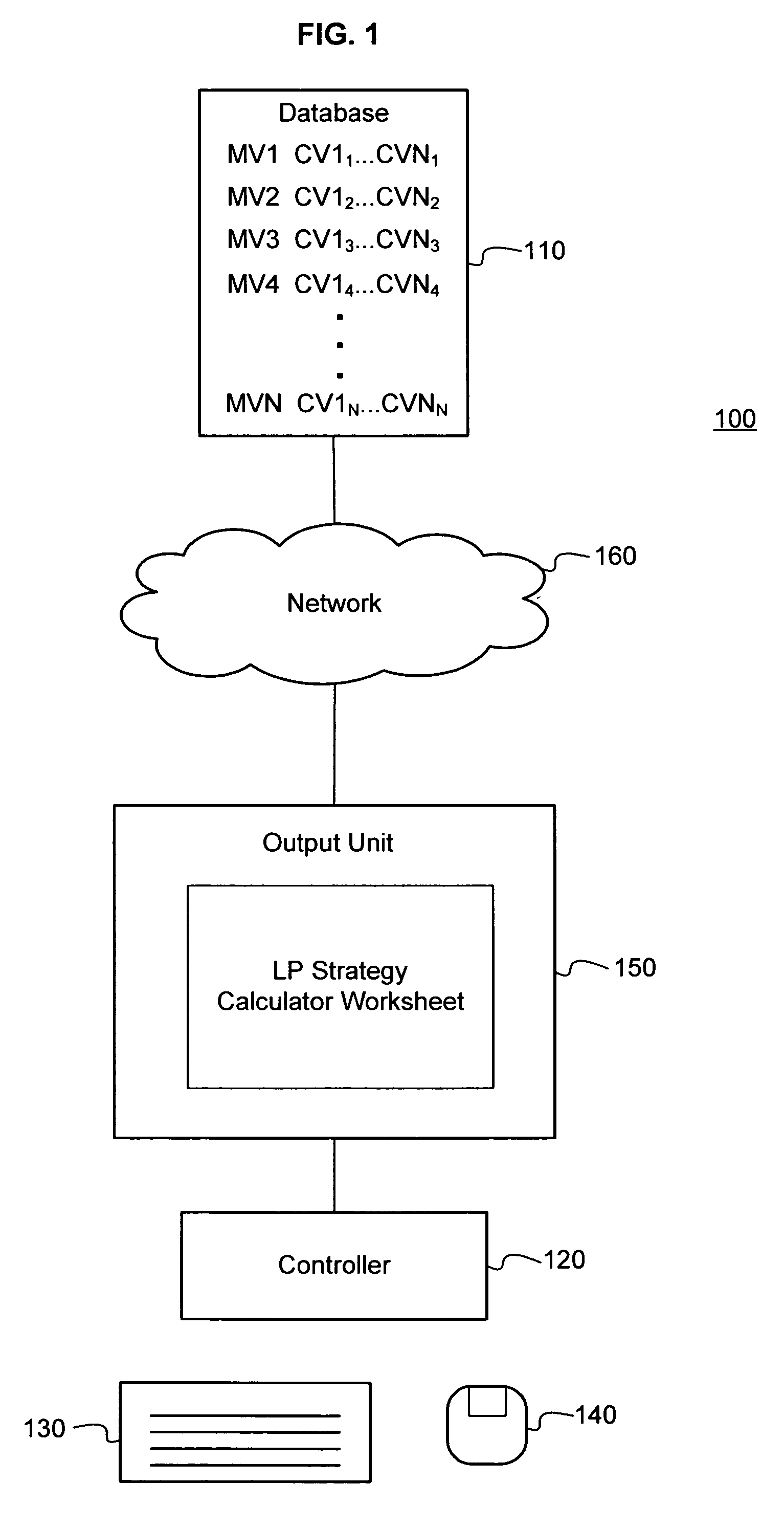

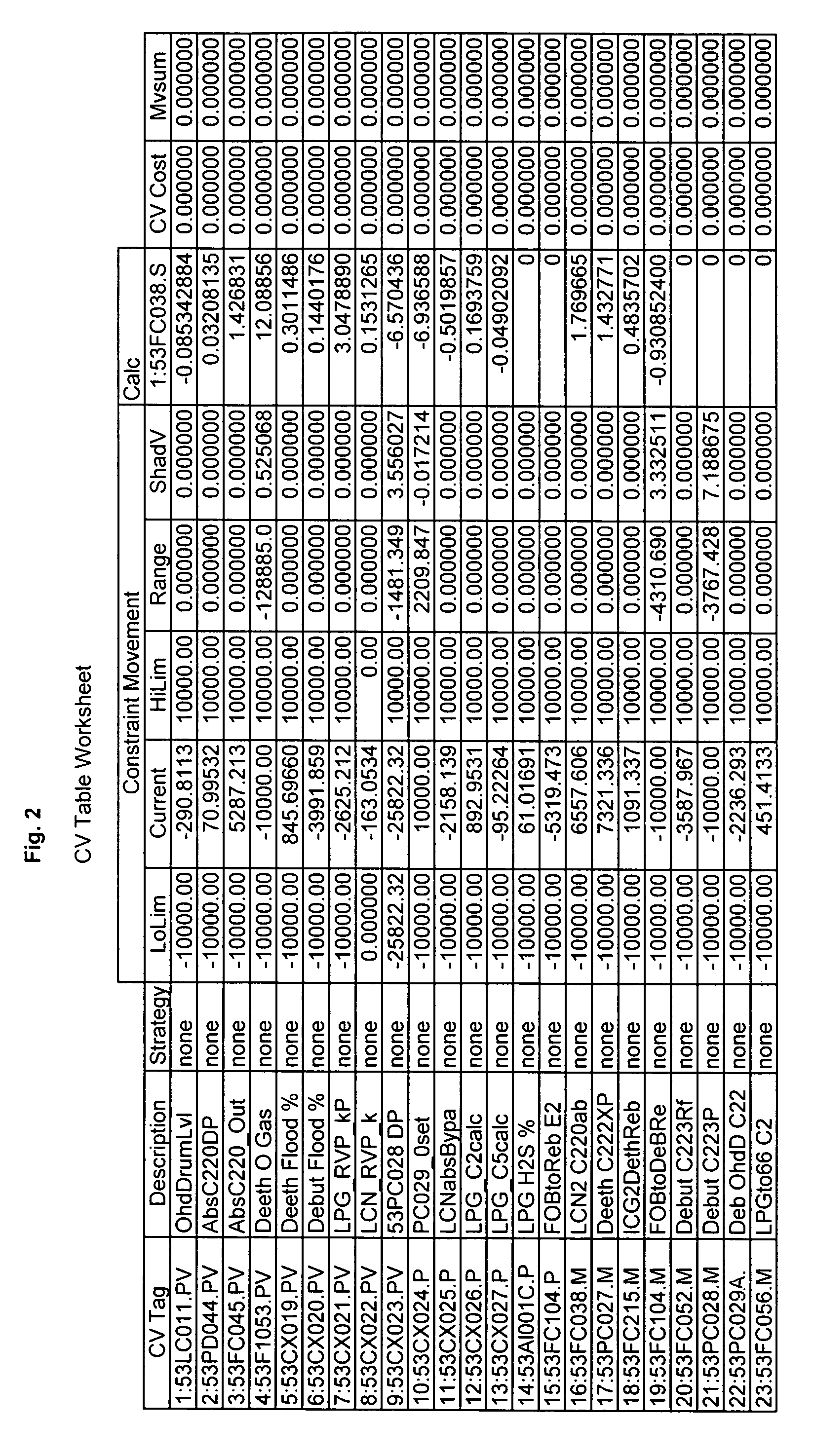

System, method and program for dynamic control and optimization of a process having manipulated and controlled variables

Inactive | US7987005B2 | Computation using non-denominational number representation; Adaptive control; Theoretical computer science; Control variable

Owner:CHEVRON USA INC

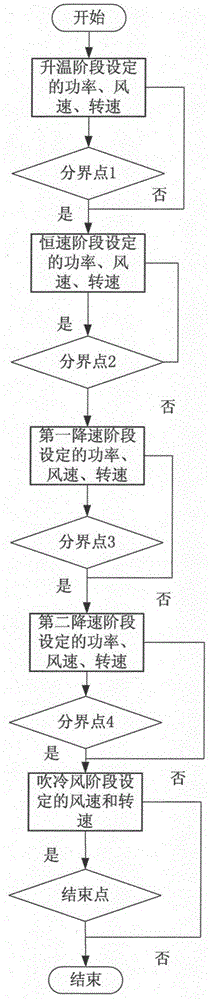

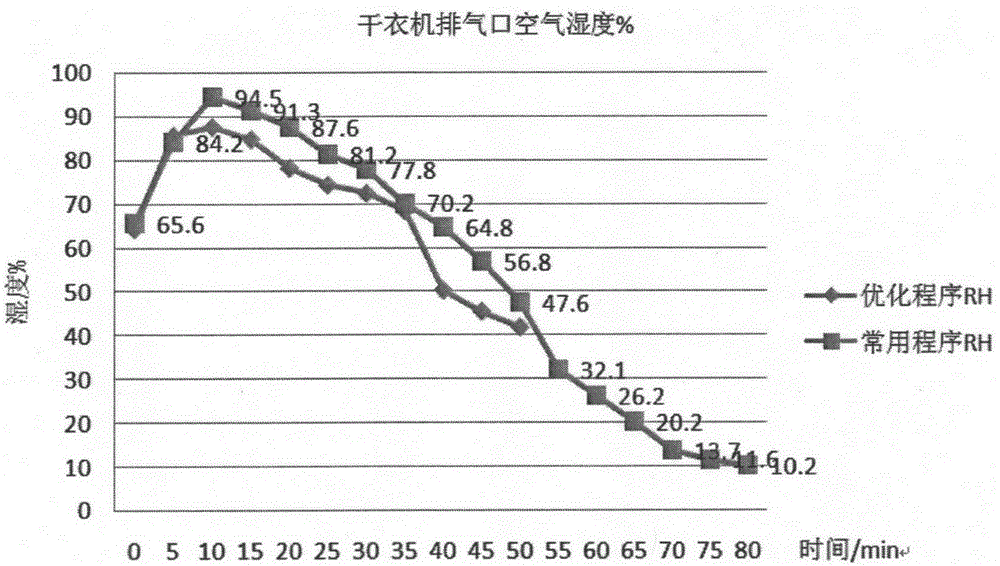

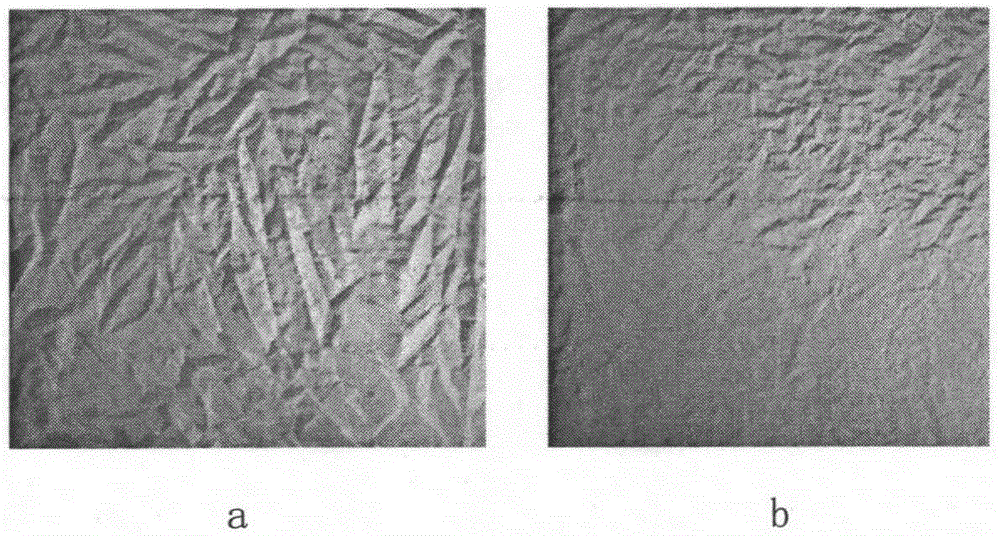

Clothes dryer drying program optimization method realizing energy saving time saving effect

Active | CN105239339A | Reduce energy consumption; Reduce manufacturing cost; Textiles and paper; Laundry driers; Process engineering; Home appliance

The invention discloses a clothes dryer drying program optimization method that saves energy and time by using the humidity at the air exhaust port as the basis for dividing the drying process into phases and adjusting parameters. The method belongs to home appliance program optimization technology and addresses the high power consumption and long drying time of existing dryers. A humidity sensor at the dryer's air exhaust port provides values for accurately determining the drying phase, and an optimal combination of drying parameters is set for each determined phase, making maximum use of the heat transfer and dehumidifying capacity of the hot drying air. The method does not require changes to the dryer's internal structure: only the humidity sensor at the air exhaust port is added, so energy and time savings are achieved without the cost of reworking the dryer's internal hardware.

Owner:DONGHUA UNIV
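Phase selection from exhaust-port humidity reduces to a threshold table. The humidity boundaries, phase names, and heater/fan settings below are invented for illustration; the abstract does not publish its phase boundaries or parameter combinations.

```python
# Hypothetical phase table: (minimum exhaust RH in %, phase name, settings),
# ordered from wettest to driest. All values are illustrative only.
PHASES = [
    (60.0, "high-moisture", {"heater_w": 2000, "fan_rpm": 2800}),
    (30.0, "mid-moisture", {"heater_w": 1600, "fan_rpm": 2500}),
    (12.0, "low-moisture", {"heater_w": 900, "fan_rpm": 2200}),
    (0.0, "finish", {"heater_w": 0, "fan_rpm": 1800}),
]


def drying_phase(exhaust_rh: float) -> tuple[str, dict]:
    """Return the phase name and settings for the current exhaust-port humidity."""
    for min_rh, name, settings in PHASES:
        if exhaust_rh >= min_rh:
            return name, settings
    # Readings below 0 % (sensor noise) are treated as the driest phase.
    _, name, settings = PHASES[-1]
    return name, settings
```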

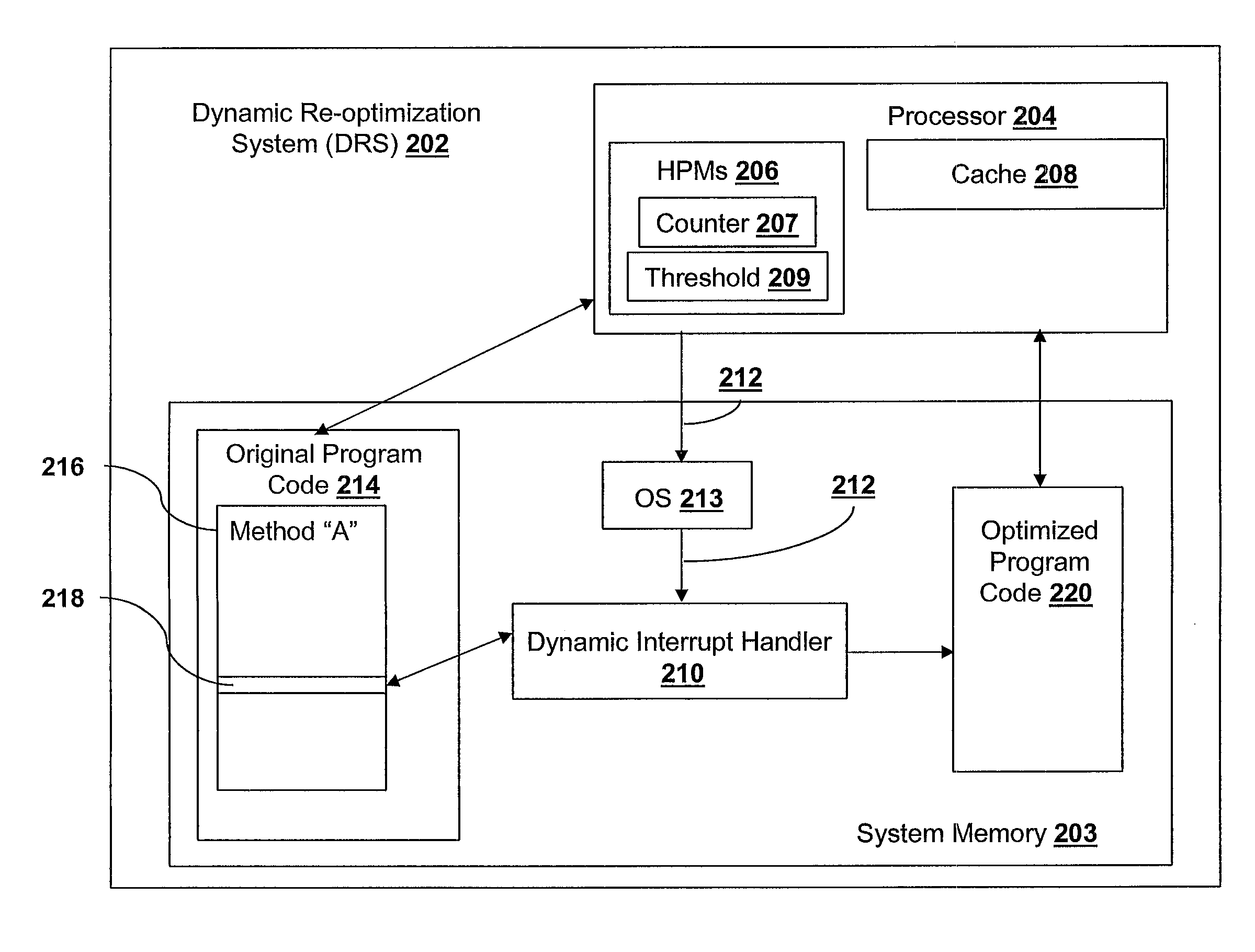

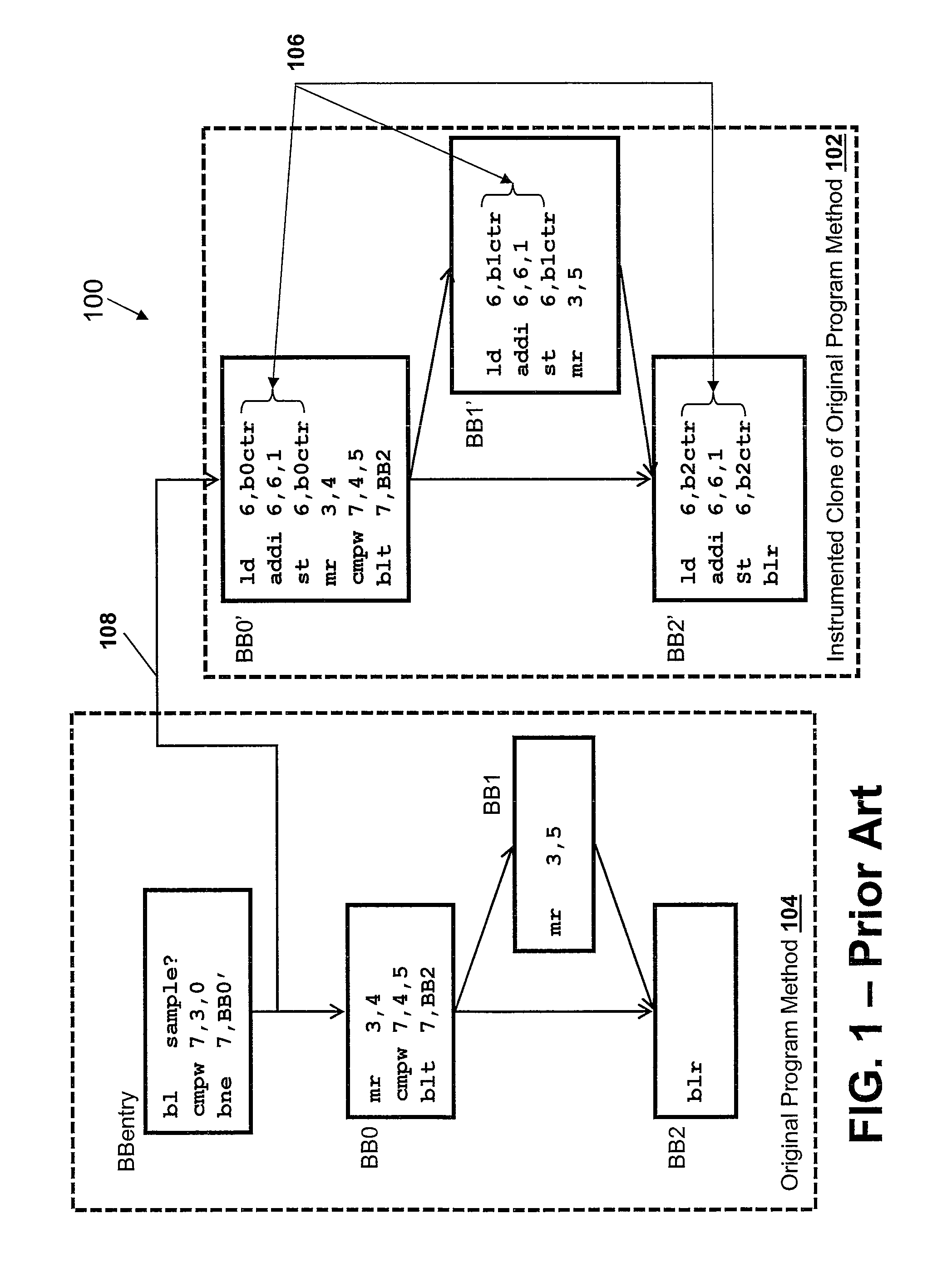

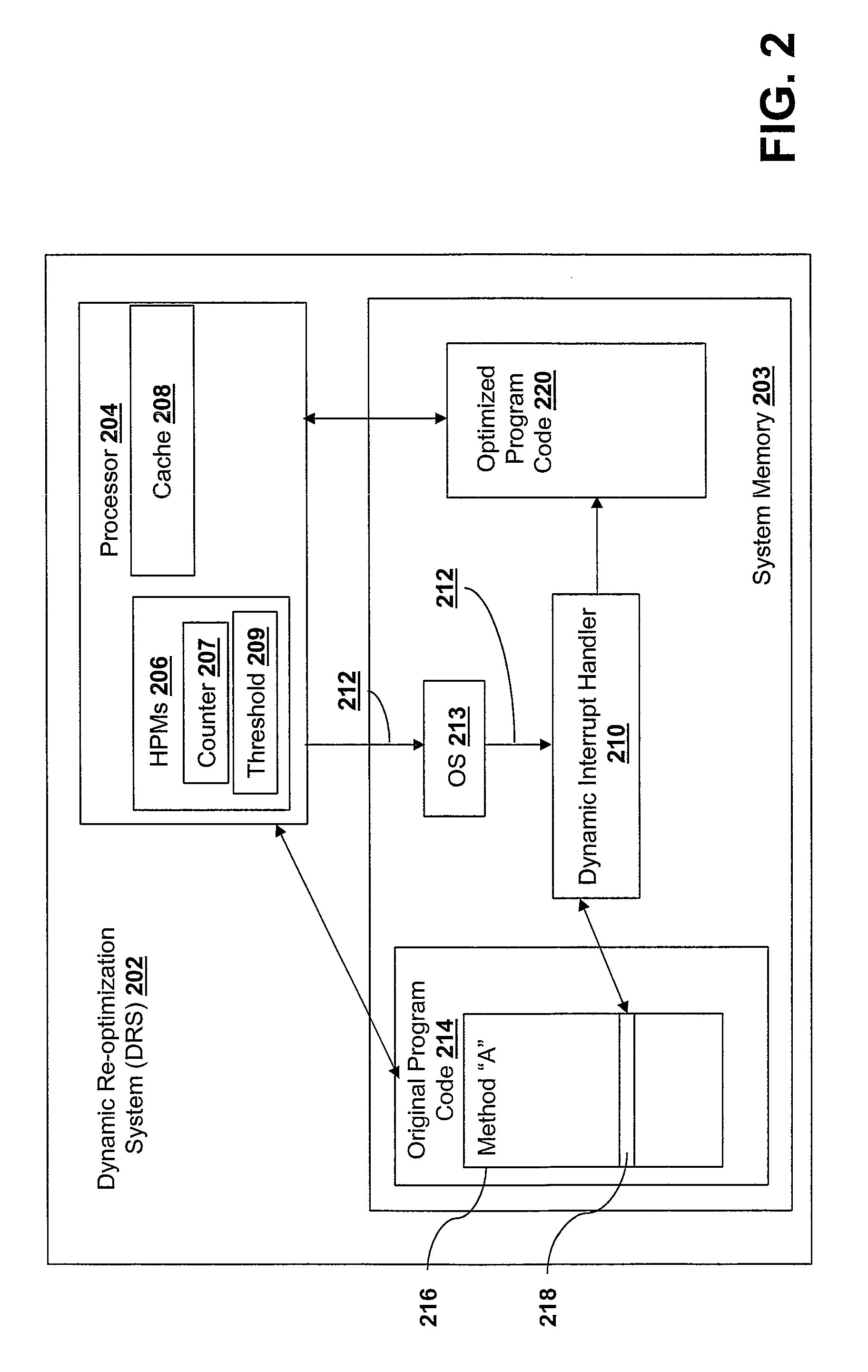

Using hardware interrupts to drive dynamic binary code recompilation

Inactive | US20090271772A1 | Specific program execution arrangements; Memory systems; Computerized system; Dynamic recompilation

A method, computer system, and computer program product for using one or more hardware interrupts to drive dynamic binary code recompilation. The execution of a plurality of instructions is monitored to detect a problematic instruction. In response to detecting the problematic instruction, a hardware interrupt is thrown to a dynamic interrupt handler. A determination is made whether a threshold for dynamic binary code recompilation is satisfied. If the threshold for dynamic code recompilation is satisfied, the dynamic interrupt handler optimizes at least one of the plurality of instructions.

Owner:IBM CORP

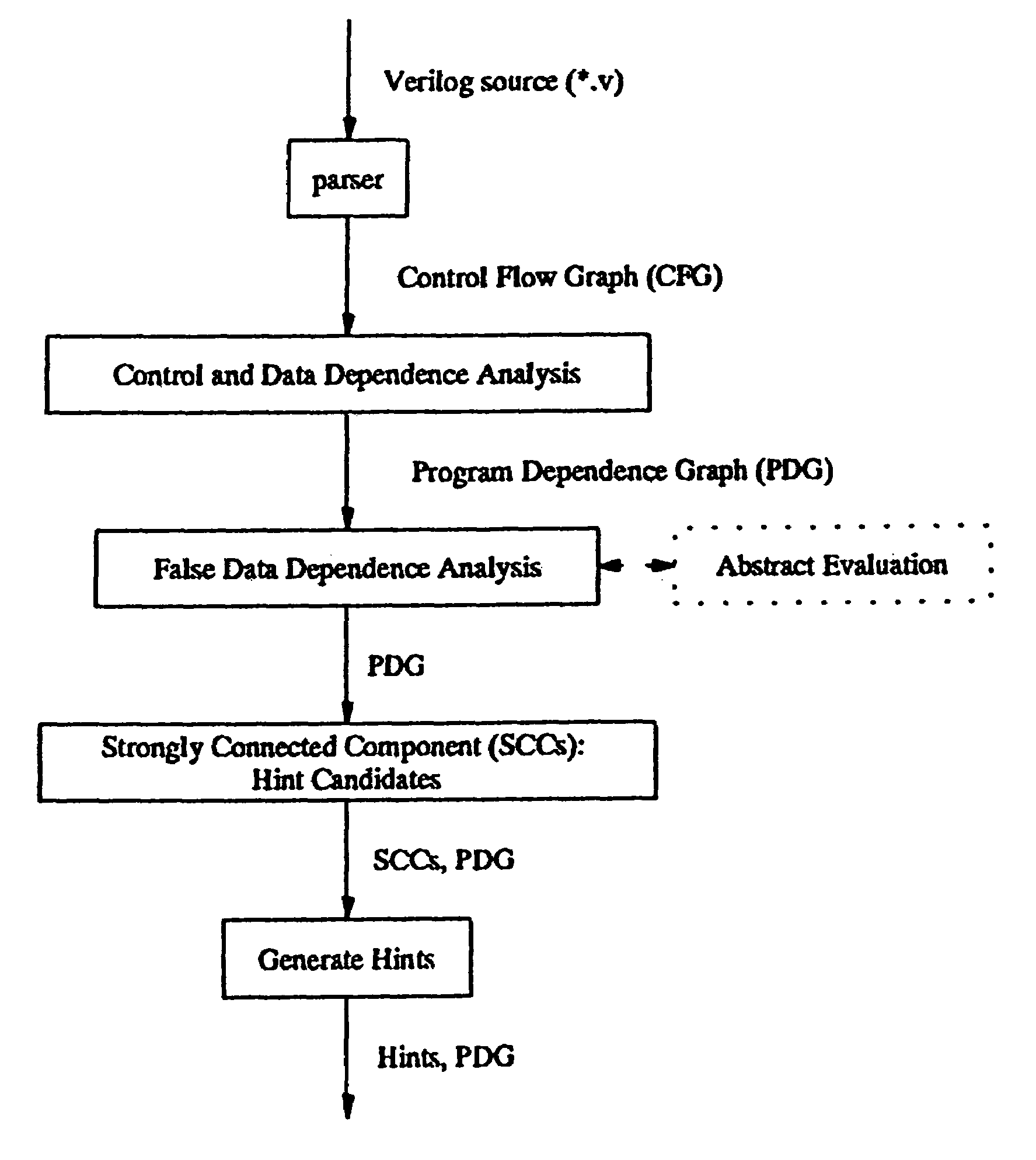

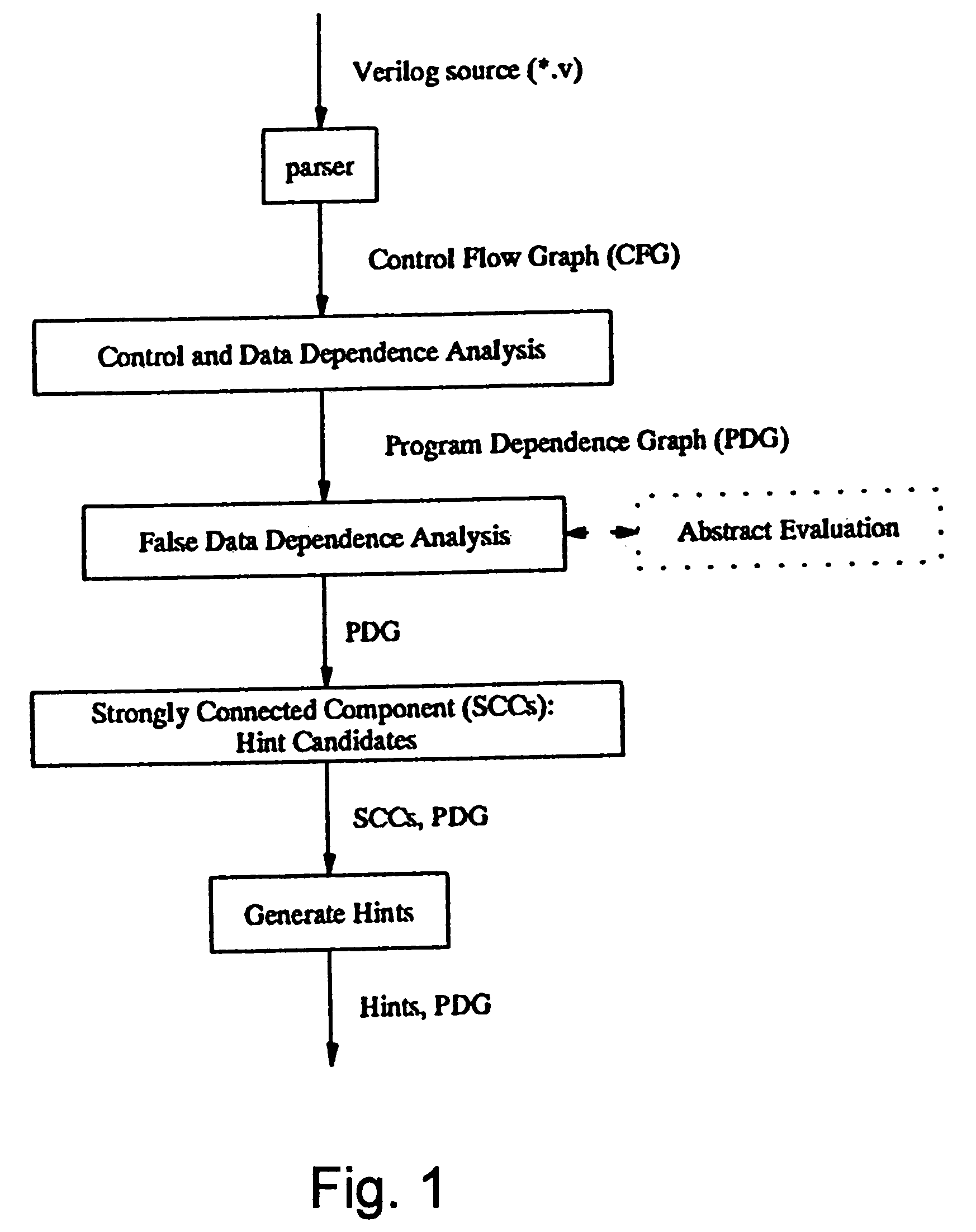

Method for optimizing integrated circuit device design and service

Inactive | US7246331B2 | CAD circuit design; Software simulation/interpretation/emulation; Computer architecture; Circuit design

Improved analysis and refinement of integrated circuit device designs and other programs is facilitated by methods in which an original program is partitioned into subprograms representing valid computational paths; each subprogram is refined when cyclic dependencies are found to exist between the variables; computational paths whose over-approximated reachable states are found to be contained in another computational path are merged; and, finally, the conjoined decision conditions of the remaining subprograms become candidate hints for program refinement.

Owner:GLOBALFOUNDRIES INC

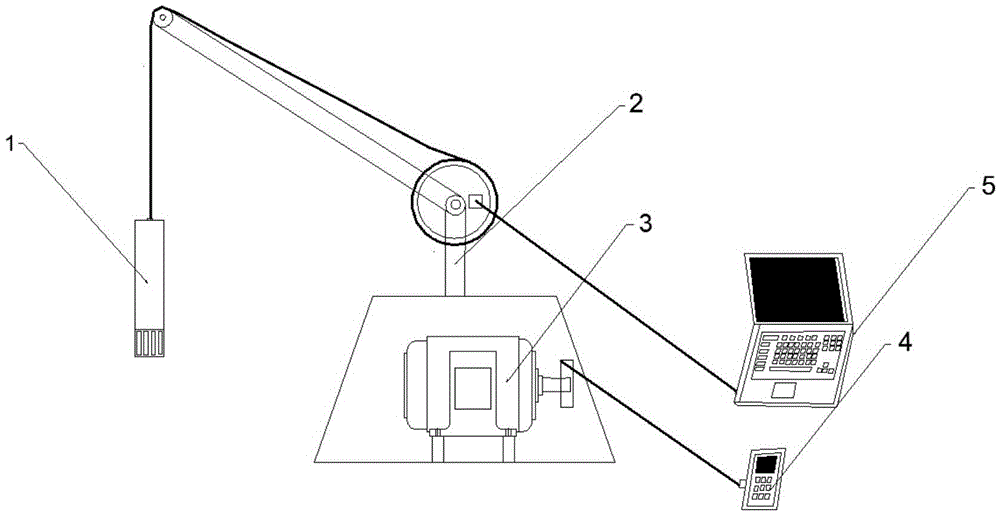

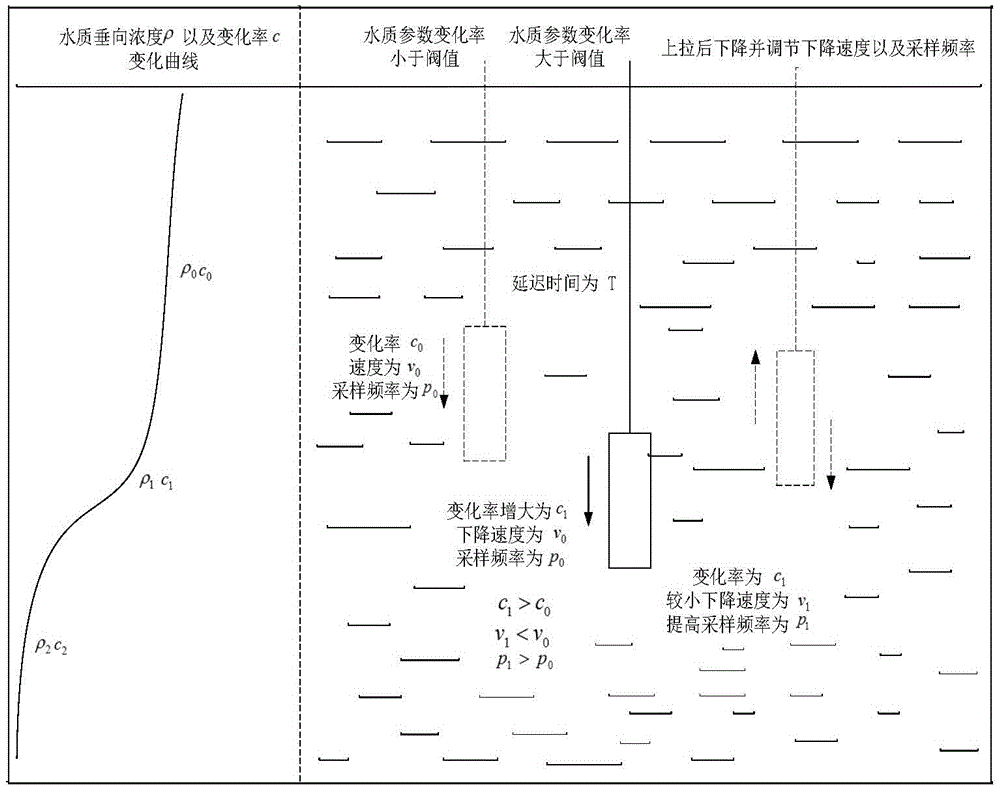

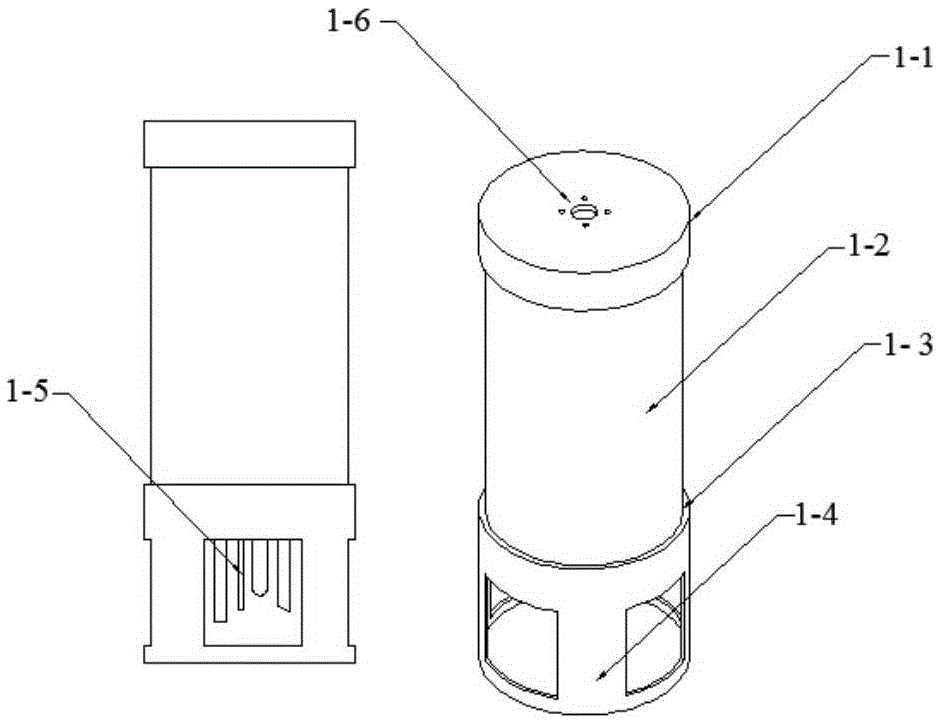

Reservoir multi-parameter water quality vertical dynamic data collecting method

Active | CN105137021A | Reduce measurement error, Efficient collection, Testing water, Microcontroller, Water quality

The invention discloses a method for collecting vertical dynamic multi-parameter water quality data in reservoirs, and belongs to the field of water quality monitoring. A control program on a computer terminal optimizes the relationship between delay and monitoring depth, reducing the error introduced by the response delay of the water quality monitoring probe. As the stepper motor of the water quality collection system lowers the probe, the computer terminal receives and analyzes, in real time, the rate of change of multiple parameters along the vertical profile at the measuring point, and adjusts the sampling frequency according to the rate of change of the reservoir water quality parameters. A single-chip microcomputer drives the stepper motor, controlling the lifting action and lowering speed of the multi-parameter data collection module so that the trend of each water quality parameter along the vertical profile is captured. The method collects reliable water quality data efficiently and can monitor water quality stratification in large, deep water bodies such as reservoirs.

Owner:DALIAN UNIV OF TECH
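
The two control ideas in the Dalian University of Technology abstract above, compensating for probe delay and sampling faster where parameters change quickly, can be sketched as follows. The constant-lag model, the fixed rate threshold, and the two sampling intervals are assumptions for illustration; the abstract does not state the actual compensation formula or regulation law.

```python
def corrected_depth(measured_depth_m: float, descent_speed_m_s: float,
                    probe_delay_s: float) -> float:
    """Depth the reading actually describes, assuming a fixed probe lag.

    While the probe descends at constant speed, a reading taken now reflects
    water from probe_delay_s seconds earlier, i.e. a shallower depth.
    """
    return max(0.0, measured_depth_m - descent_speed_m_s * probe_delay_s)


def change_rate(prev_value: float, value: float,
                prev_depth_m: float, depth_m: float) -> float:
    """Absolute change of a water-quality parameter per metre of depth."""
    dz = depth_m - prev_depth_m
    if dz == 0:
        return 0.0
    return abs(value - prev_value) / abs(dz)


def next_sampling_interval(rate: float, rate_threshold: float = 0.5,
                           fast_interval_s: float = 1.0,
                           slow_interval_s: float = 5.0) -> float:
    """Sample more often where the profile changes quickly (e.g. a thermocline)."""
    return fast_interval_s if rate > rate_threshold else slow_interval_s
```

For instance, a temperature drop from 20.0 °C to 18.0 °C between 4 m and 5 m gives a rate of 2.0 °C/m, which exceeds the hypothetical 0.5 threshold, so the next sample is taken after 1 s instead of 5 s.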

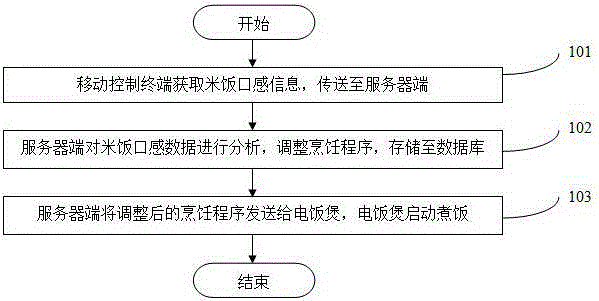

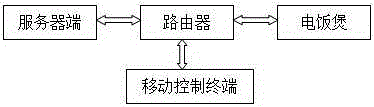

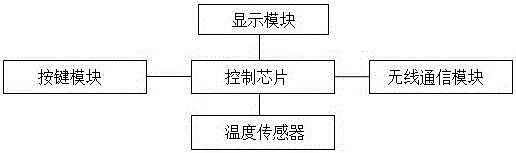

Cooking program and mouthfeel analysis push method and cloud electric rice cooker system

The invention discloses a method for analyzing and pushing cooking programs and mouthfeel, and a cloud-connected electric rice cooker system. Using a cooking-program and mouthfeel capture function, the method and system collect large volumes of usage information, cooking programs, and mouthfeel information from users of intelligent electric rice cookers, integrate and analyze the captured information as big data, and perform the data analysis on the server side. The system stores each user's cooking programs and mouthfeel settings during cooking, providing data for mouthfeel customization and cooking program optimization, so that users can continually refine their personal cooking programs and mouthfeel parameters. By analyzing the aggregated cooking data, the server side can develop more and better cooking methods for different rice varieties under different temperature curves, and provide data support for users' mouthfeel customization and cooking program operation.

Owner:SHANGHAI CHUNMI ELECTRONICS TECH CO LTD +1
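
One simple form the server-side analysis in the Chunmi abstract above could take is aggregating user mouthfeel ratings per rice variety and cooking program, then recommending the best-rated program for each variety. This is a hypothetical sketch: the record layout `(rice_type, program_id, mouthfeel_rating)` and the mean-rating criterion are assumptions, not the patented analysis.

```python
from collections import defaultdict
from statistics import mean


def best_programs(records):
    """Return {rice_type: (program_id, mean_rating)} with the highest mean rating.

    records: iterable of (rice_type, program_id, mouthfeel_rating) tuples.
    """
    ratings = defaultdict(list)
    for rice_type, program_id, rating in records:
        ratings[(rice_type, program_id)].append(rating)

    best = {}
    for (rice_type, program_id), values in ratings.items():
        avg = mean(values)
        # Keep the first program seen on ties (strict comparison).
        if rice_type not in best or avg > best[rice_type][1]:
            best[rice_type] = (program_id, avg)
    return best
```

With ratings of 4 and 5 for `("japonica", "curve_A")` and 3 for `("japonica", "curve_B")`, the sketch recommends `curve_A` for japonica with a mean of 4.5.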

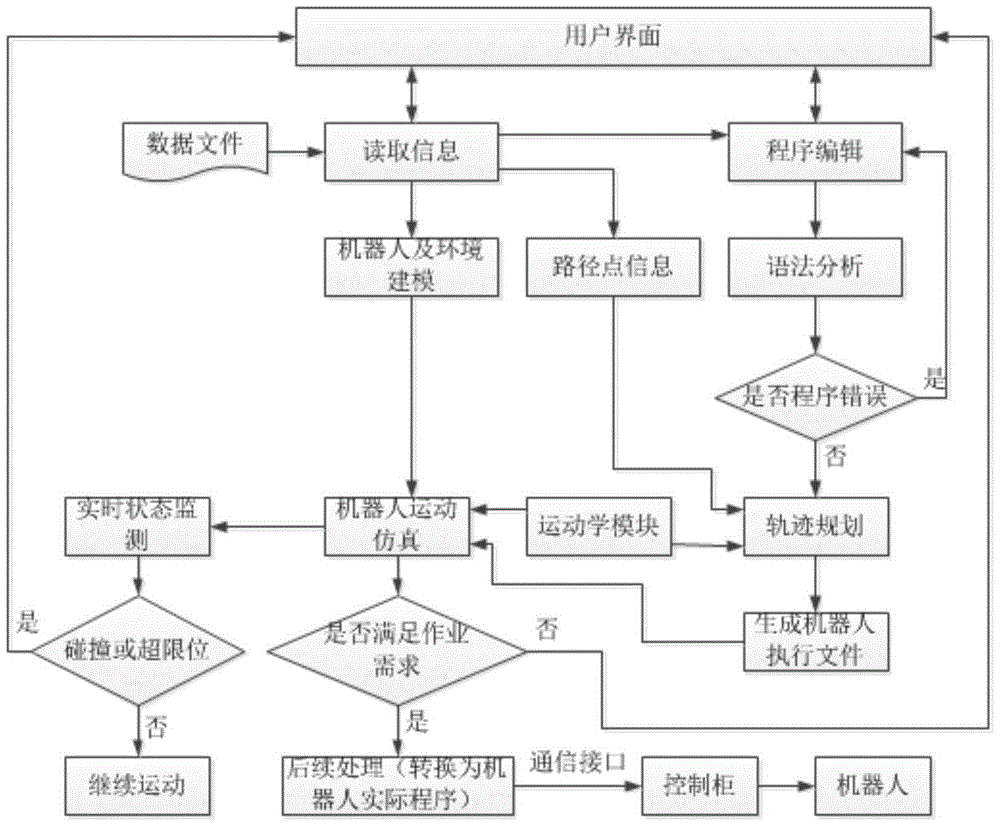

Welding robot offline programming system based on tablet computer, and offline programming method thereof

Active | CN105786483A | Easy to optimize movement, Easy to accept, Programme control, Programme-controlled manipulator, Tablet computer, Simulation

The invention discloses a tablet-computer-based offline programming system for welding robots, comprising a system modeling module, a kinematics simulation module, a trajectory planning module, an automatic programming module, a status detection module, and a communication module. The invention further discloses an offline programming method for the system. Compared with traditional online teach-in programming, the system offers the following advantages: it provides a human-machine interface based on the Linux operating system; highly controllable editing makes it easy to optimize the motion of every axis; the actual working environment is simulated, and arc welding, spot welding, and other welding functions of the robot are supported; dedicated offline and simulation techniques facilitate program optimization, and a finished program can be read and executed by the robot directly over a wireless network, which shortens on-site verification waiting time and reduces errors and unnecessary risks; its low cost makes it accessible to a wide range of users; and it is simple to operate, with good teaching results.

Owner:NANJING PANDA ELECTRONICS +2
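
As a rough picture of what the trajectory planning and automatic programming modules in the abstract above produce, the sketch below interpolates a weld seam between Cartesian waypoints at a bounded step and emits one linear-move line per point. The `LIN X… Y… Z… V…` command format, the step size, and the speed are hypothetical placeholders, not a real robot language, and kinematics simulation (joint limits, reachability) is omitted.

```python
import math


def interpolate_segment(p0, p1, max_step):
    """Points along p0->p1 (excluding p0) spaced no more than max_step apart."""
    n = max(1, math.ceil(math.dist(p0, p1) / max_step))
    return [tuple(a + (b - a) * i / n for a, b in zip(p0, p1)) for i in range(1, n + 1)]


def plan_weld_seam(waypoints, max_step=5.0):
    """Densify a polyline of (x, y, z) waypoints in millimetres."""
    path = [tuple(waypoints[0])]
    for p0, p1 in zip(waypoints, waypoints[1:]):
        path.extend(interpolate_segment(p0, p1, max_step))
    return path


def to_program(path, speed_mm_s=10.0):
    """Emit one hypothetical linear-move command per path point."""
    return [f"LIN X{x:.1f} Y{y:.1f} Z{z:.1f} V{speed_mm_s:.0f}" for x, y, z in path]
```

A straight 10 mm seam from `(0, 0, 0)` to `(10, 0, 0)` with a 5 mm step yields three commands, the middle one being `LIN X5.0 Y0.0 Z0.0 V10`.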

© 2025 PatSnap. All rights reserved.