588 results about "Exclusive-OR circuits" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

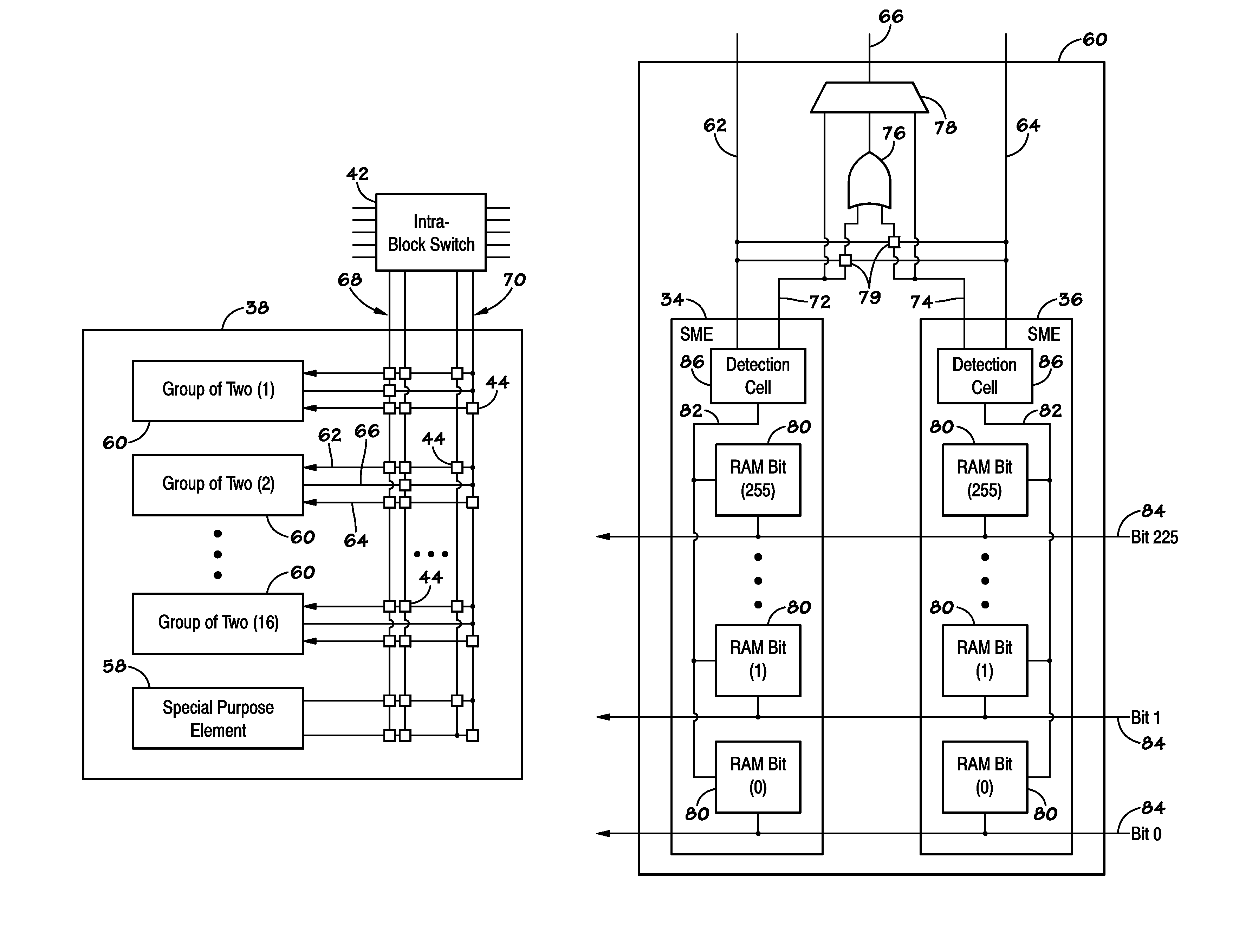

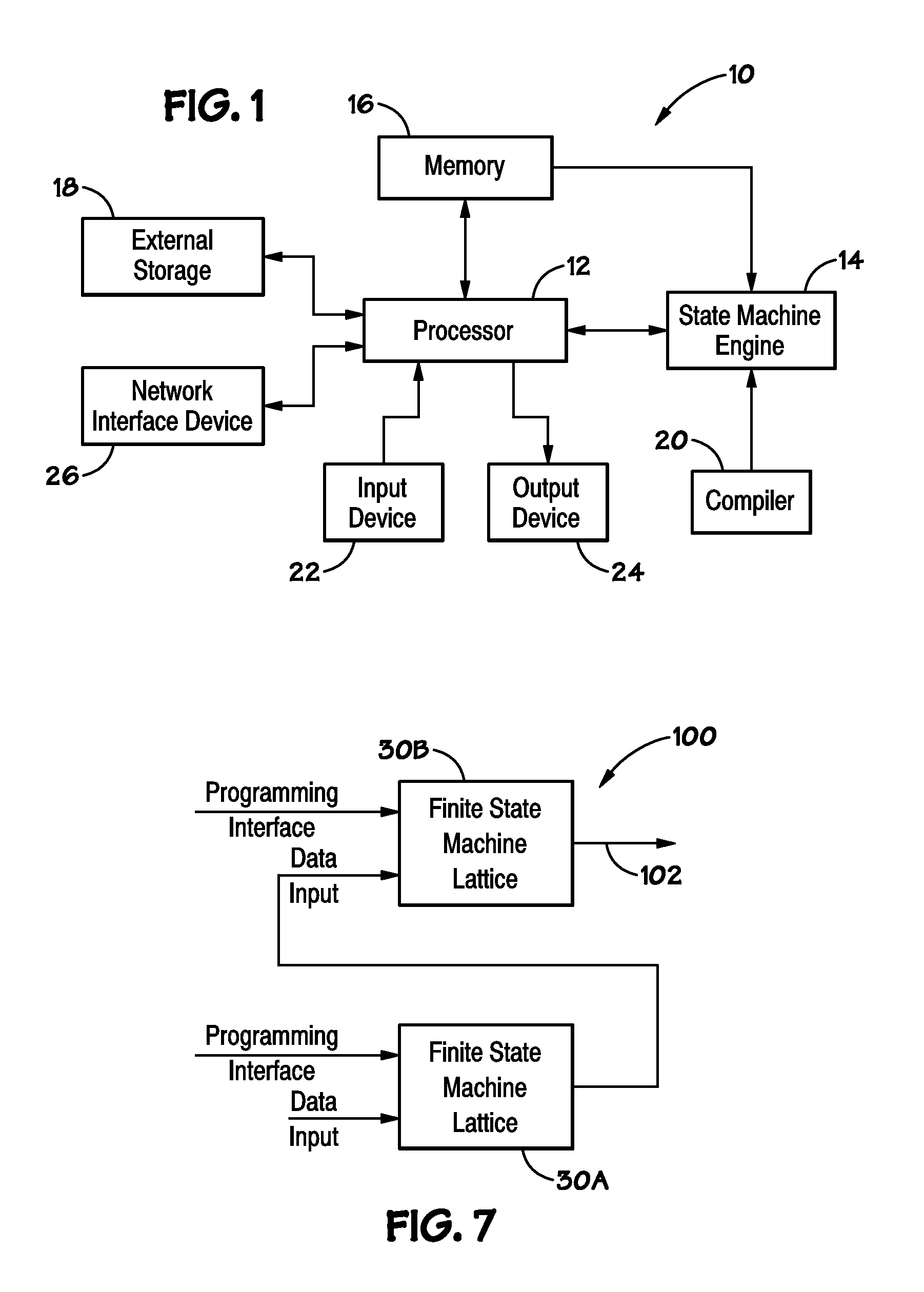

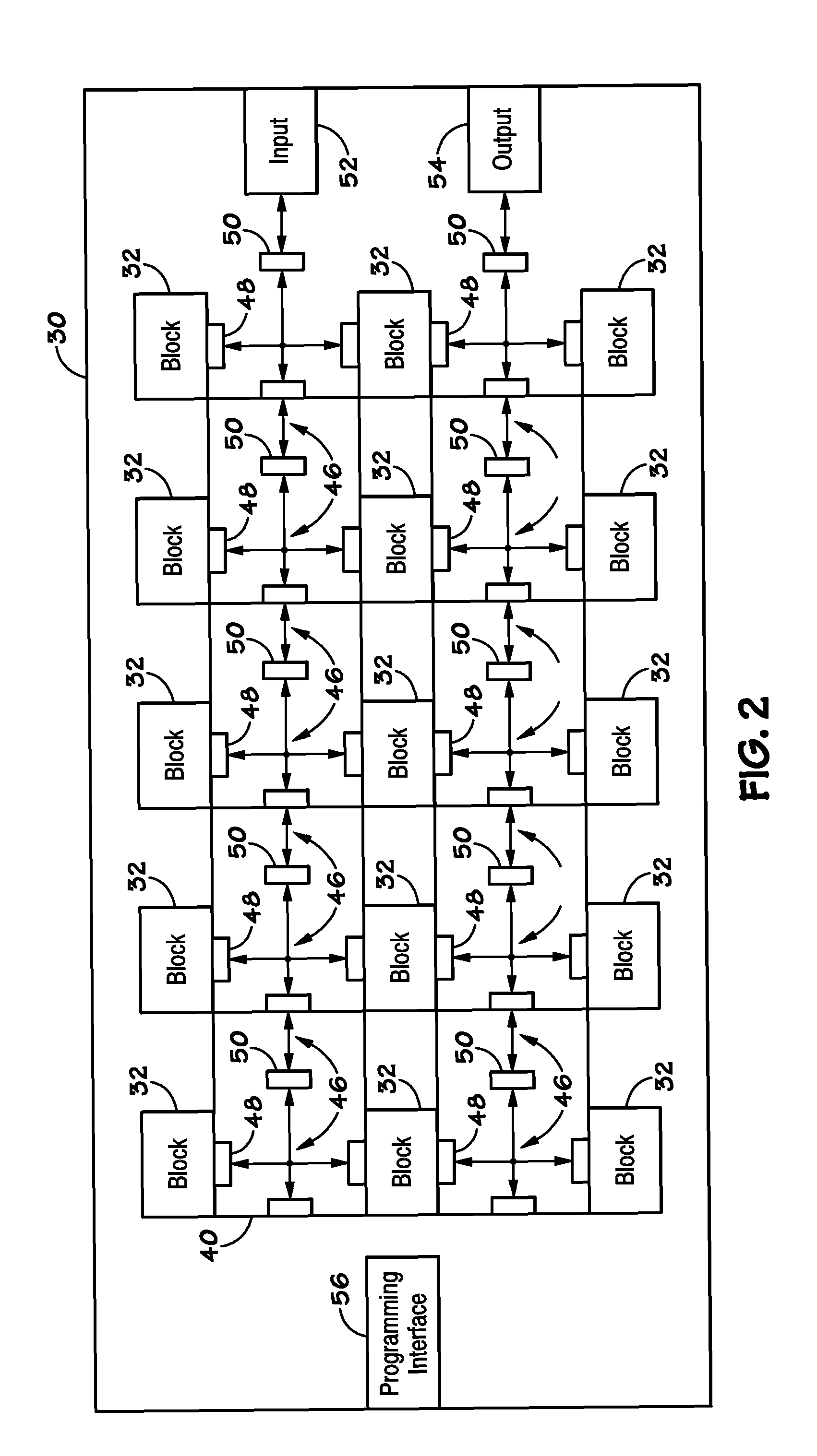

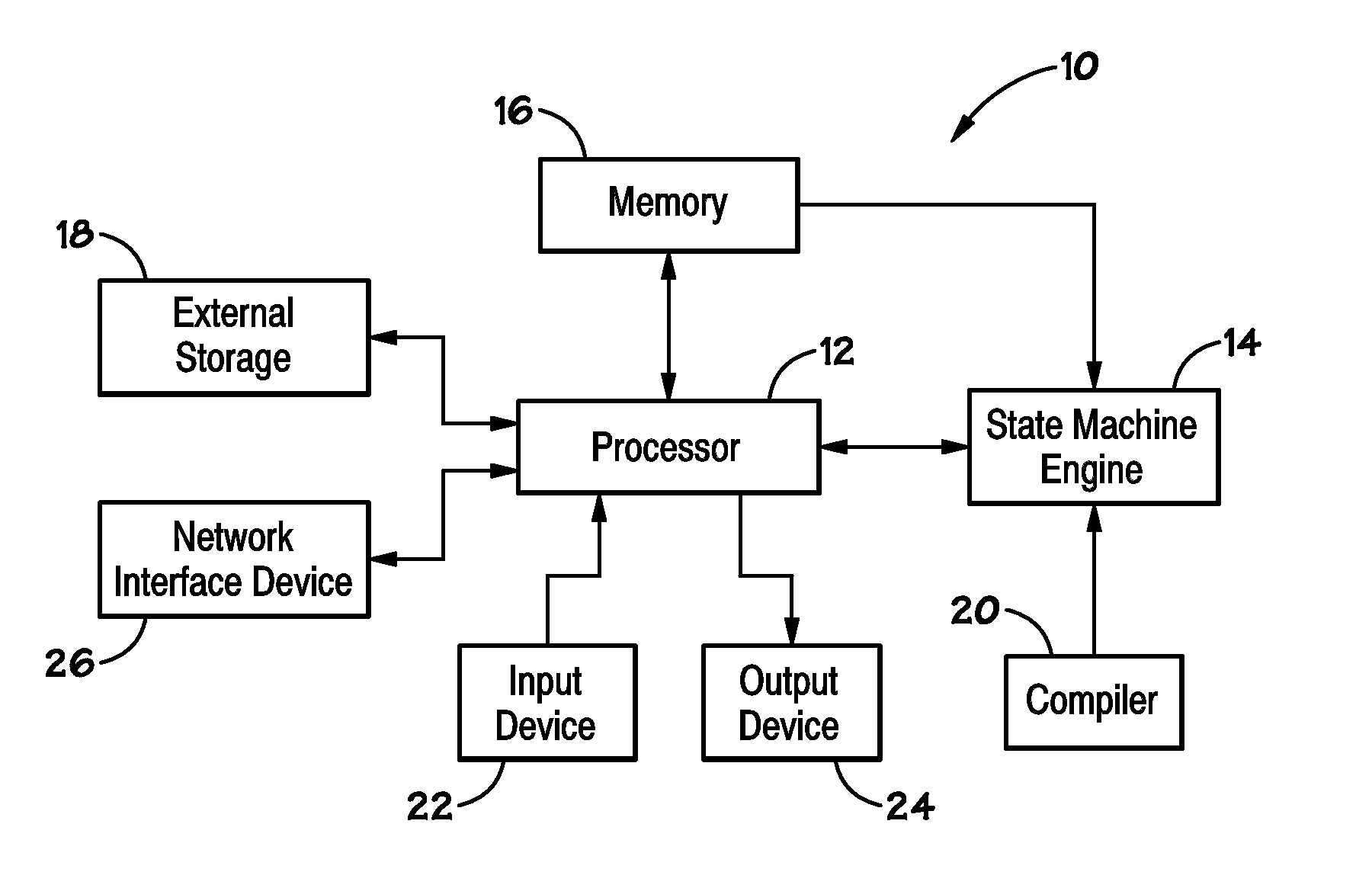

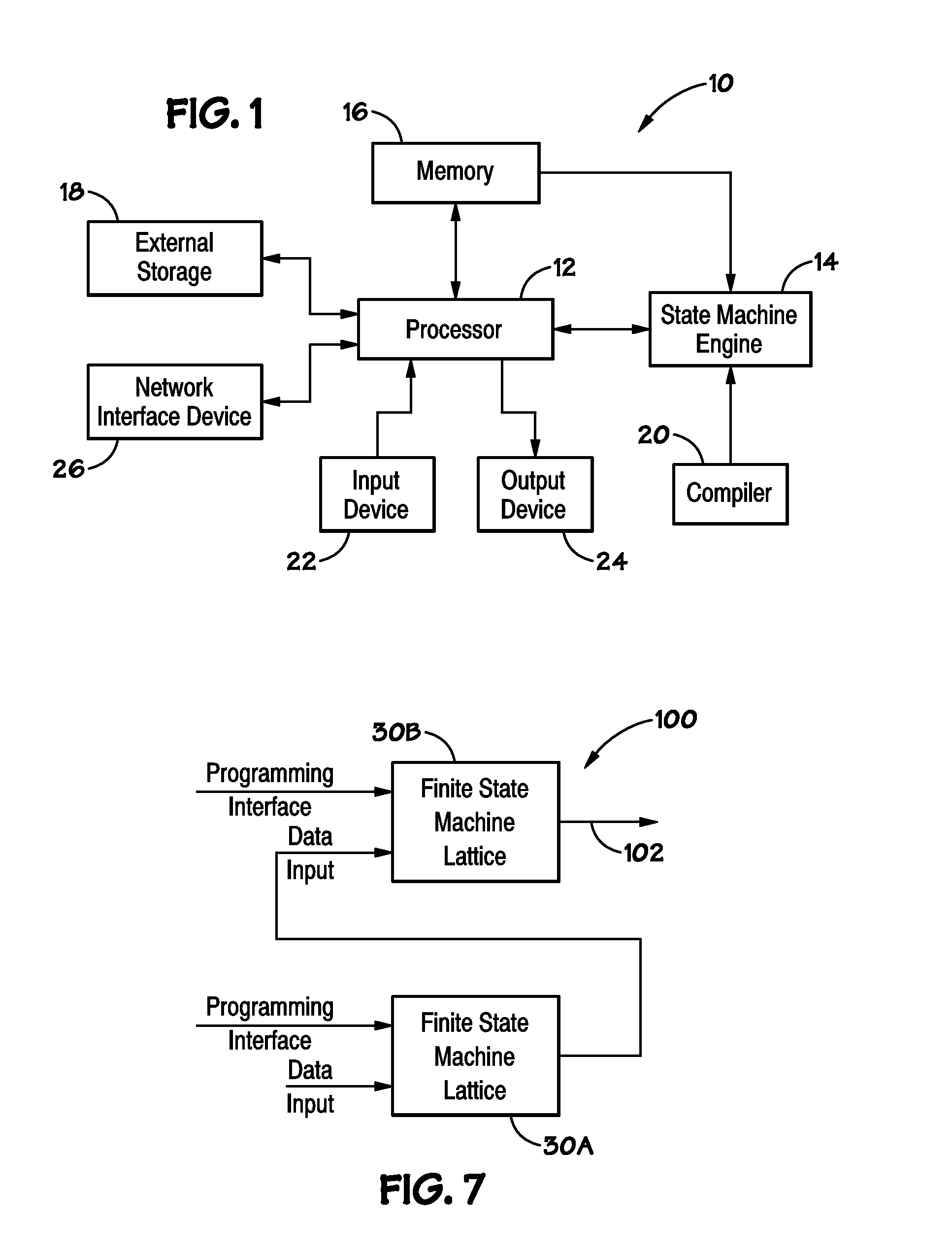

Boolean logic in a state machine lattice

Disclosed are methods and devices, among which is a device that includes a finite state machine lattice. The lattice may include a programmable Boolean logic cell that may be programmed to perform various logic functions on a data stream. The programmability includes an inversion of a first input to the Boolean logic cell, an inversion of a last output of the Boolean logic cell, and a selection of an AND gate or an OR gate as a final output of the Boolean logic cell. The Boolean logic cell also includes end-of-data circuitry configured to cause the Boolean logic cell to output only after an end-of-data signal, signifying the end of a data stream, is received at the Boolean logic cell.

Owner:MICRON TECH INC
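
The programmable options named in this abstract can be illustrated with a small behavioral model. This is only a sketch under assumptions: the class name `BooleanCell`, its field names, and the reading that the inversion applies to the first input only are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class BooleanCell:
    """Hypothetical behavioral model of a programmable Boolean logic cell."""
    invert_input: bool = False   # invert the first input (assumed reading)
    invert_output: bool = False  # invert the last output
    use_and: bool = True         # True: AND gate as final stage, False: OR gate
    wait_for_eod: bool = False   # output only once end-of-data has been seen

    def evaluate(self, inputs: Sequence[int],
                 end_of_data: bool = False) -> Optional[bool]:
        if not inputs:
            raise ValueError("at least one input is required")
        # End-of-data gating: produce nothing until the stream has ended.
        if self.wait_for_eod and not end_of_data:
            return None
        bits = [bool(b) for b in inputs]
        if self.invert_input:
            bits[0] = not bits[0]
        result = all(bits) if self.use_and else any(bits)
        if self.invert_output:
            result = not result
        return result


# AND with an inverted output behaves as NAND.
nand_cell = BooleanCell(use_and=True, invert_output=True)
```

With these settings, `nand_cell.evaluate([1, 1])` is `False`, and a cell built with `wait_for_eod=True` returns `None` until `end_of_data=True` is passed.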

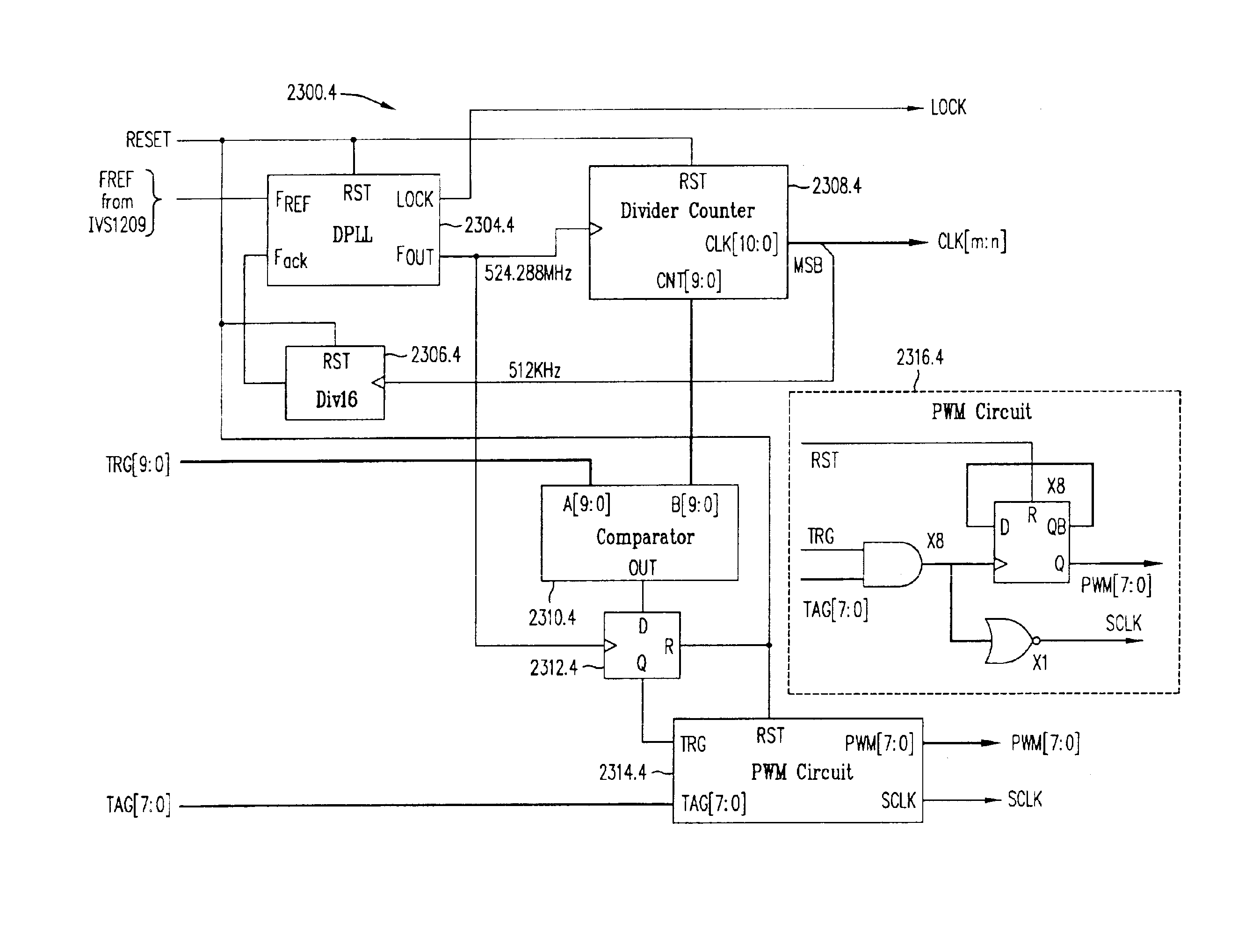

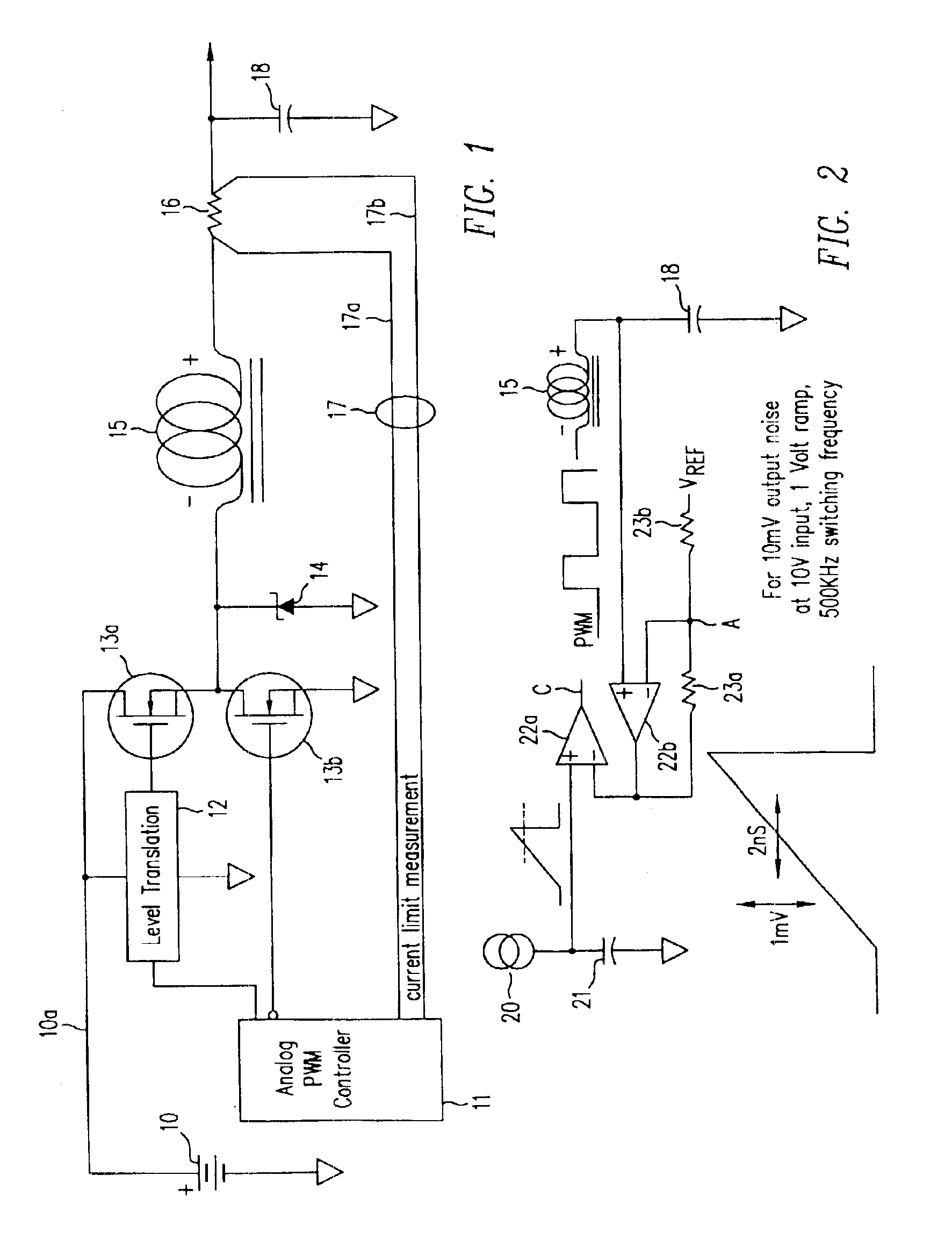

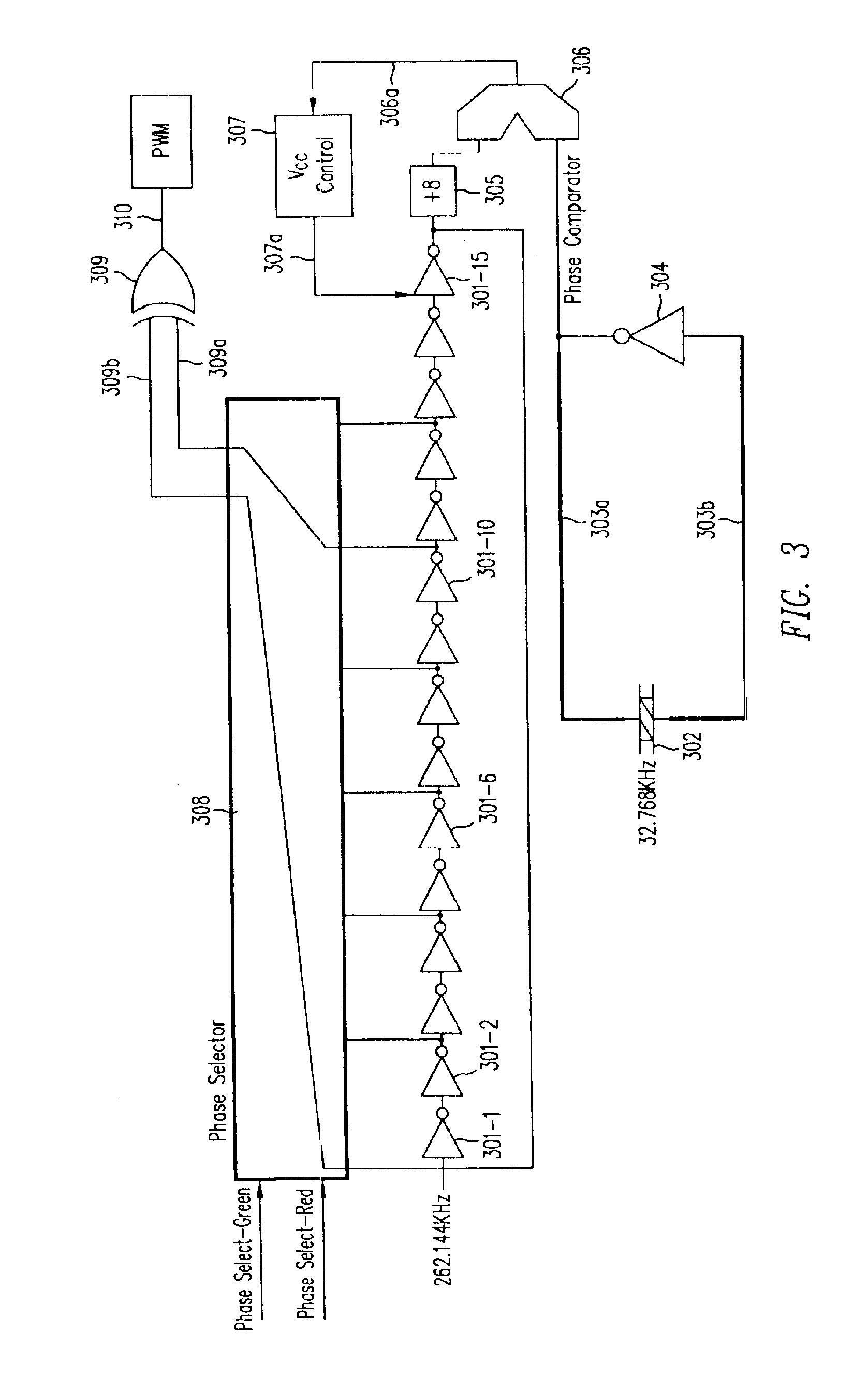

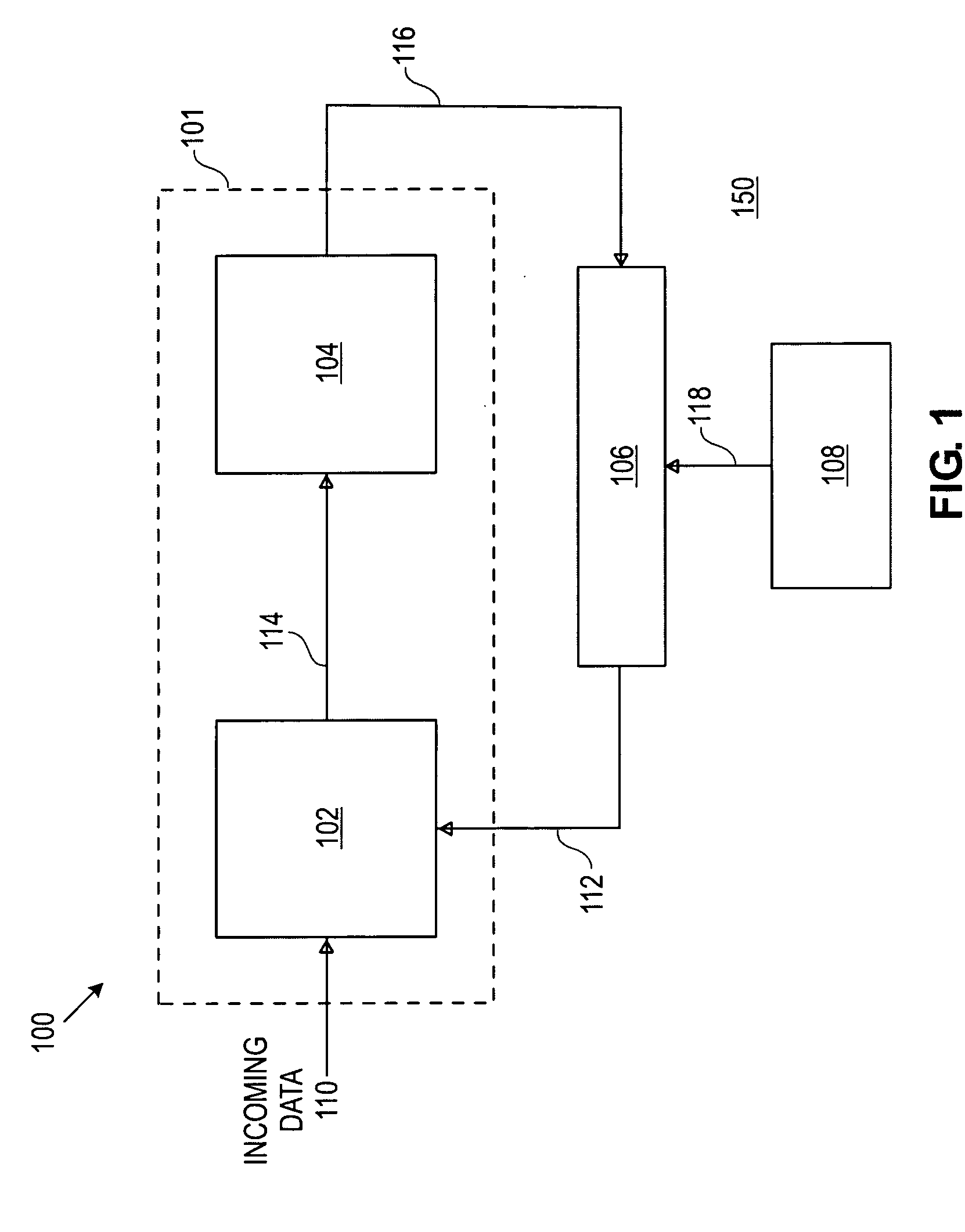

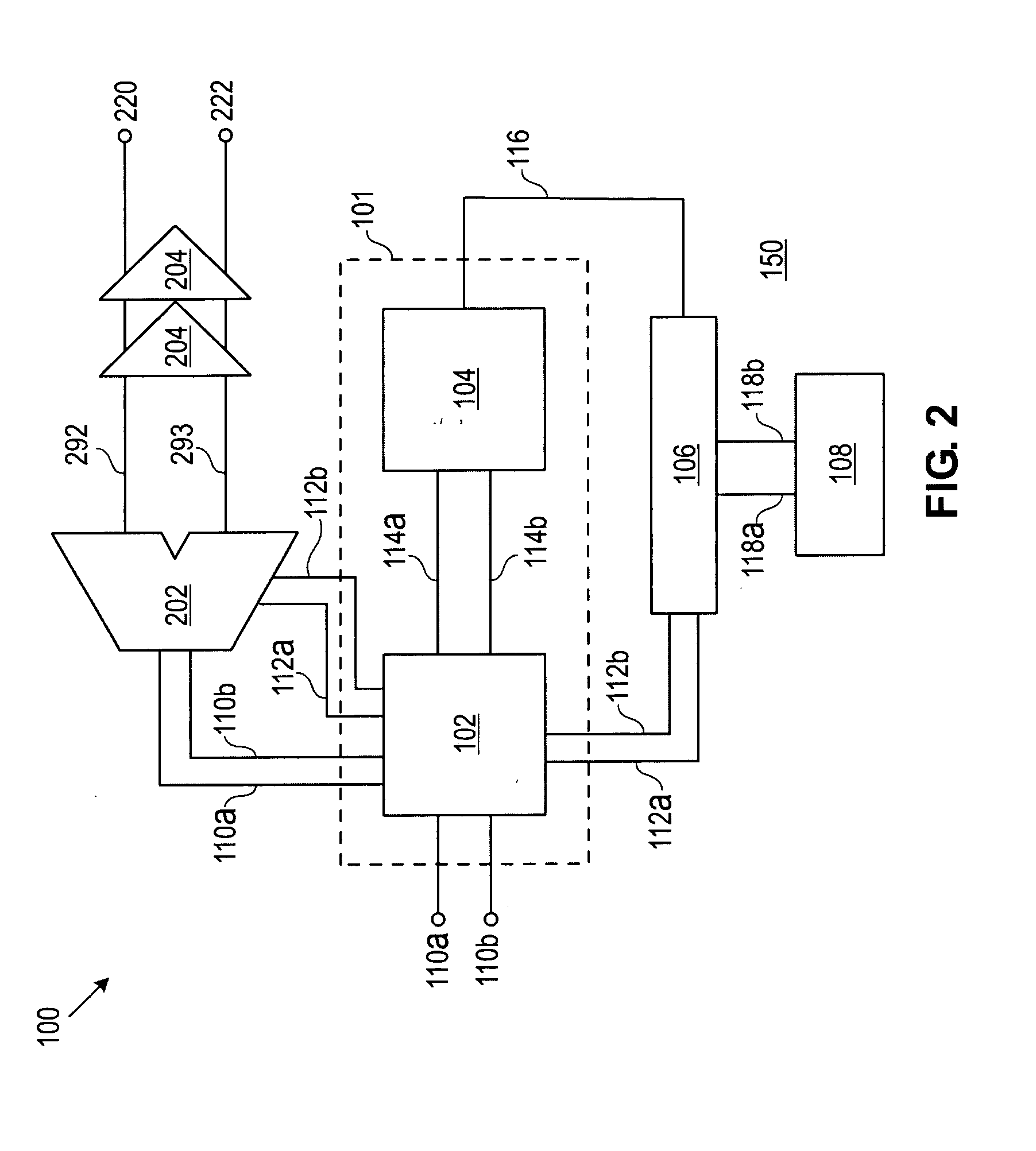

Multi-channel control methods for switched power converters

Inactive | US6912139B2 | Channel interference is avoided and minimized | Exclusive-OR circuits | Counting chain pulse counters with non-natural counting order | Voltage pulse | Transverter

Owner:EXAR CORP

Boolean logic in a state machine lattice

Disclosed are methods and devices, among which is a device that includes a finite state machine lattice. The lattice may includes a programmable Boolean logic cell that may be programmed to perform various logic functions on a data stream. The programmability includes an inversion of a first input to the Boolean logic cell, an inversion of a last output of the Boolean logic cell, and a selection of an AND gate or an OR gate as a final output of the Boolean logic cell. The Boolean logic cell also includes end of data circuitry configured to cause the Boolean logic cell to only output after an end of data signifying the end of a data stream is received at the Boolean logic cell.

Owner:MICRON TECH INC

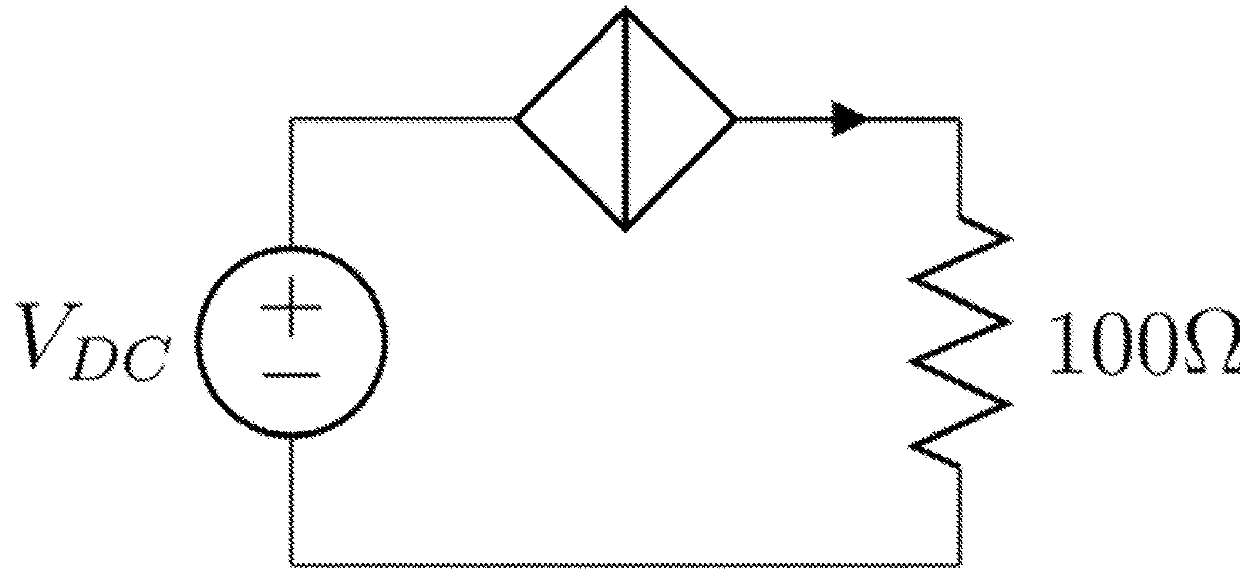

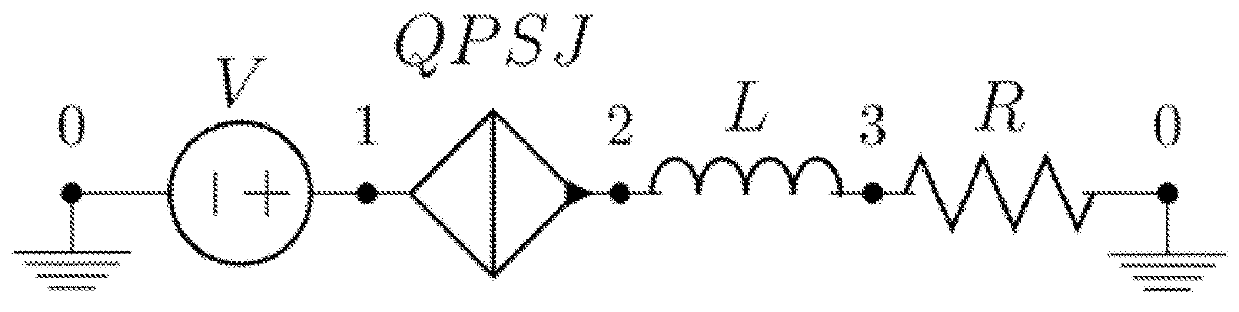

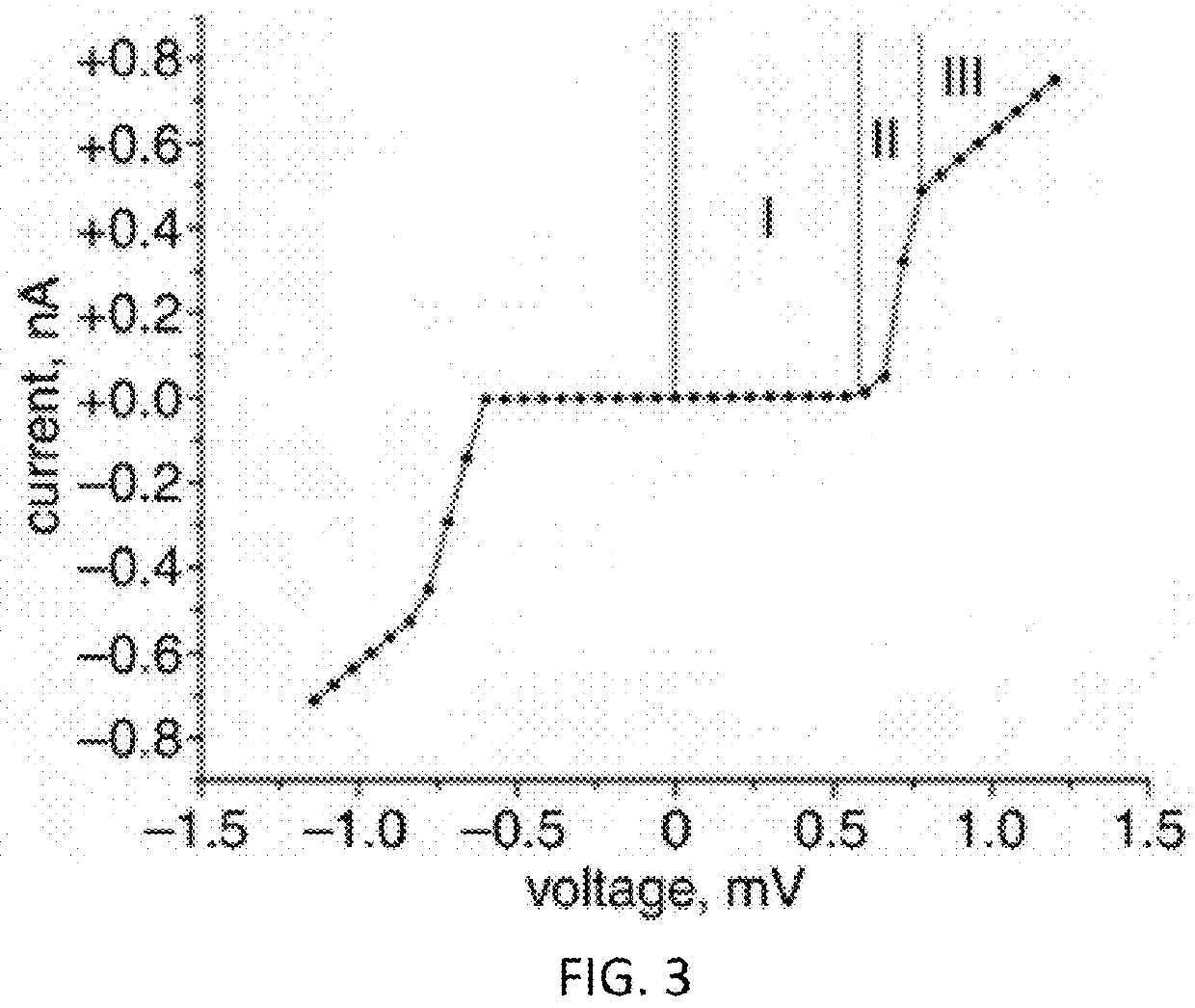

Superconducting quantum logic and applications of same

Active | US9998122B2 | Avoid flow | Exclusive-OR circuits | Pulse generation by super conductive devices | Voltage pulse | Logic cell

A superconducting logic cell includes at least one quantum phase-slip junction (QPSJ) for receiving at least one input and responsively providing at least one output, each QPSJ being configured such that when an input voltage of an input voltage pulse exceeds a critical value, a quantized charge of a Cooper electron pair tunnels across said QPSJ as an output, when the input voltage is less than the critical value, no quantized charge of the Cooper electron pair tunnels across said QPSJ as the output, where the presence and absence of the quantized charge in the form of a constant area current pulse in the output form two logic states, and the at least one QPSJ is biased with a bias voltage. The superconducting logic cell further includes at least one Josephson junction (JJ) coupled with the at least one QPSJ to perform one or more logic operations.

Owner:AUBURN UNIV

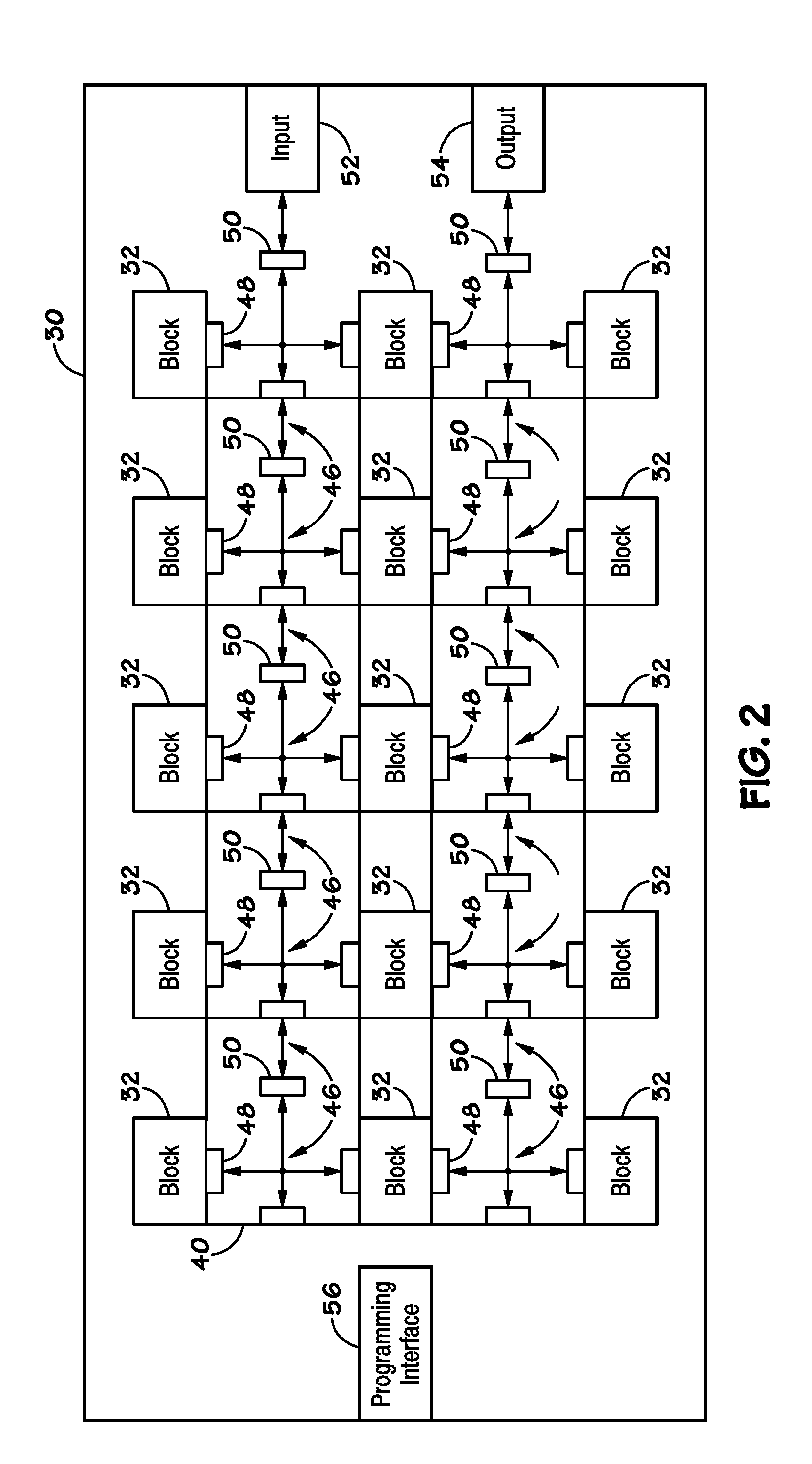

Logic drive using standard commodity programmable logic IC chips comprising non-volatile random access memory cells

Active | US20190238135A1 | Reduce Non-Recurring Engineering (NRE) expense | Lower the barrier | Exclusive-OR circuits | Semiconductor/solid-state device details | Memory chip | Non-volatile random-access memory

A multi-chip package includes a field-programmable-gate-array (FPGA) integrated-circuit (IC) chip configured to perform a logic function based on a truth table, wherein the field-programmable-gate-array (FPGA) integrated-circuit (IC) chip comprises multiple non-volatile memory cells therein configured to store multiple resulting values of the truth table, and a programmable logic block therein configured to select, in accordance with one of the combinations of its inputs, one from the resulting values into its output; and a memory chip coupling to the field-programmable-gate-array (FPGA) integrated-circuit (IC) chip, wherein a data bit width between the field-programmable-gate-array (FPGA) integrated-circuit (IC) chip and the memory chip is greater than or equal to 64.

Owner:ICOMETRUE CO LTD
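
The abstract describes a logic block that stores the resulting values of a truth table and selects one of them according to the combination of its inputs. A minimal software sketch of that look-up idea follows; `make_lut` and `xor2` are illustrative names, and the list stands in for the non-volatile memory cells.

```python
from typing import Callable, Sequence


def make_lut(truth_table: Sequence[int]) -> Callable[..., int]:
    """Build an n-input look-up table from 2**n stored result values.

    The stored values are indexed by the inputs read as a binary number,
    first input as the most significant bit (an assumed convention).
    """
    size = len(truth_table)
    if size == 0 or size & (size - 1):
        raise ValueError("truth table length must be a power of two")
    n_inputs = size.bit_length() - 1

    def lut(*inputs: int) -> int:
        if len(inputs) != n_inputs:
            raise ValueError(f"expected {n_inputs} inputs")
        index = 0
        for bit in inputs:
            index = (index << 1) | (1 if bit else 0)
        return truth_table[index]

    return lut


# A 2-input XOR expressed purely as stored truth-table values.
xor2 = make_lut([0, 1, 1, 0])
```

Changing the stored list reprograms the function without changing the selection logic, which is the property the abstract relies on.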

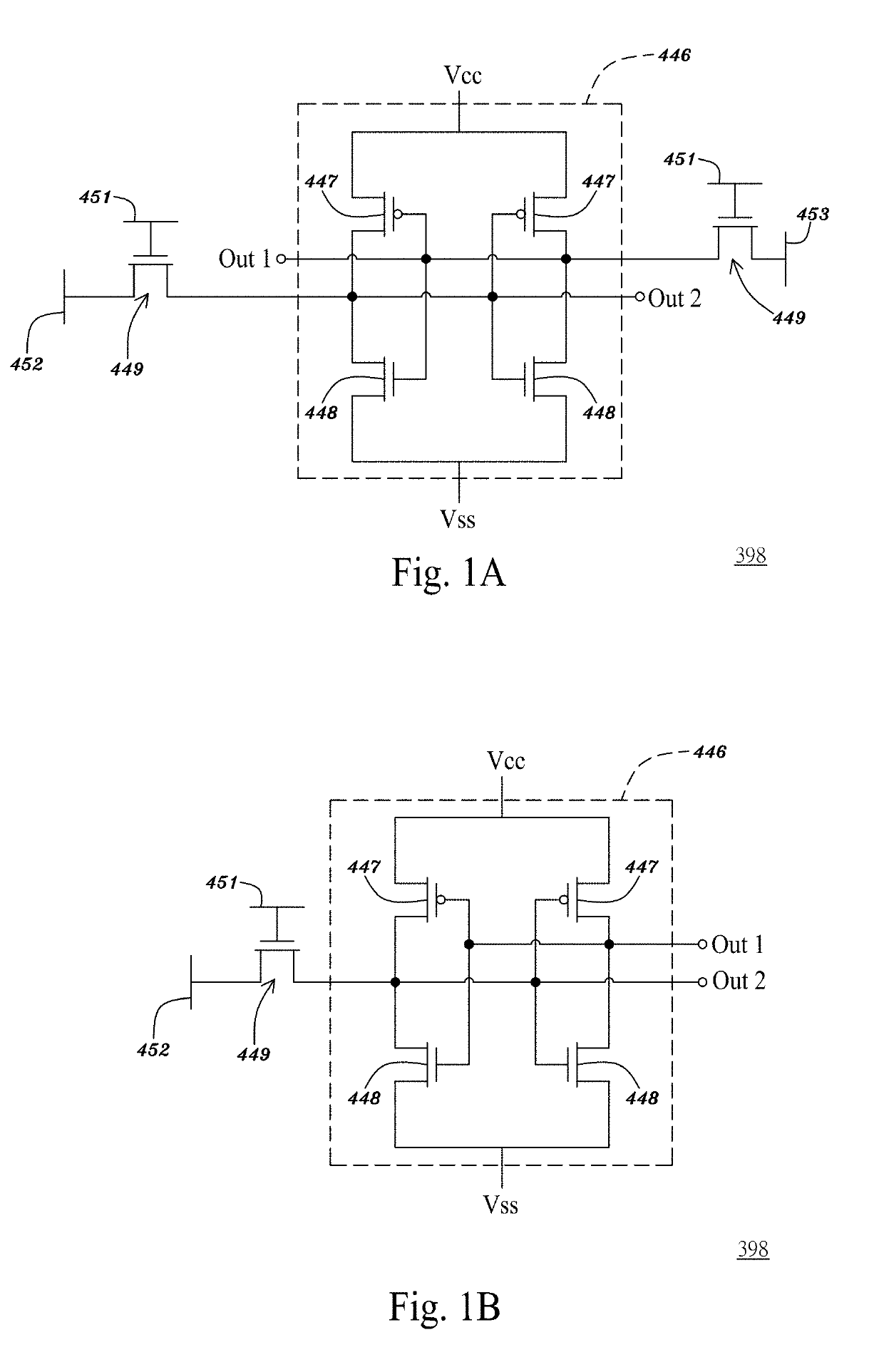

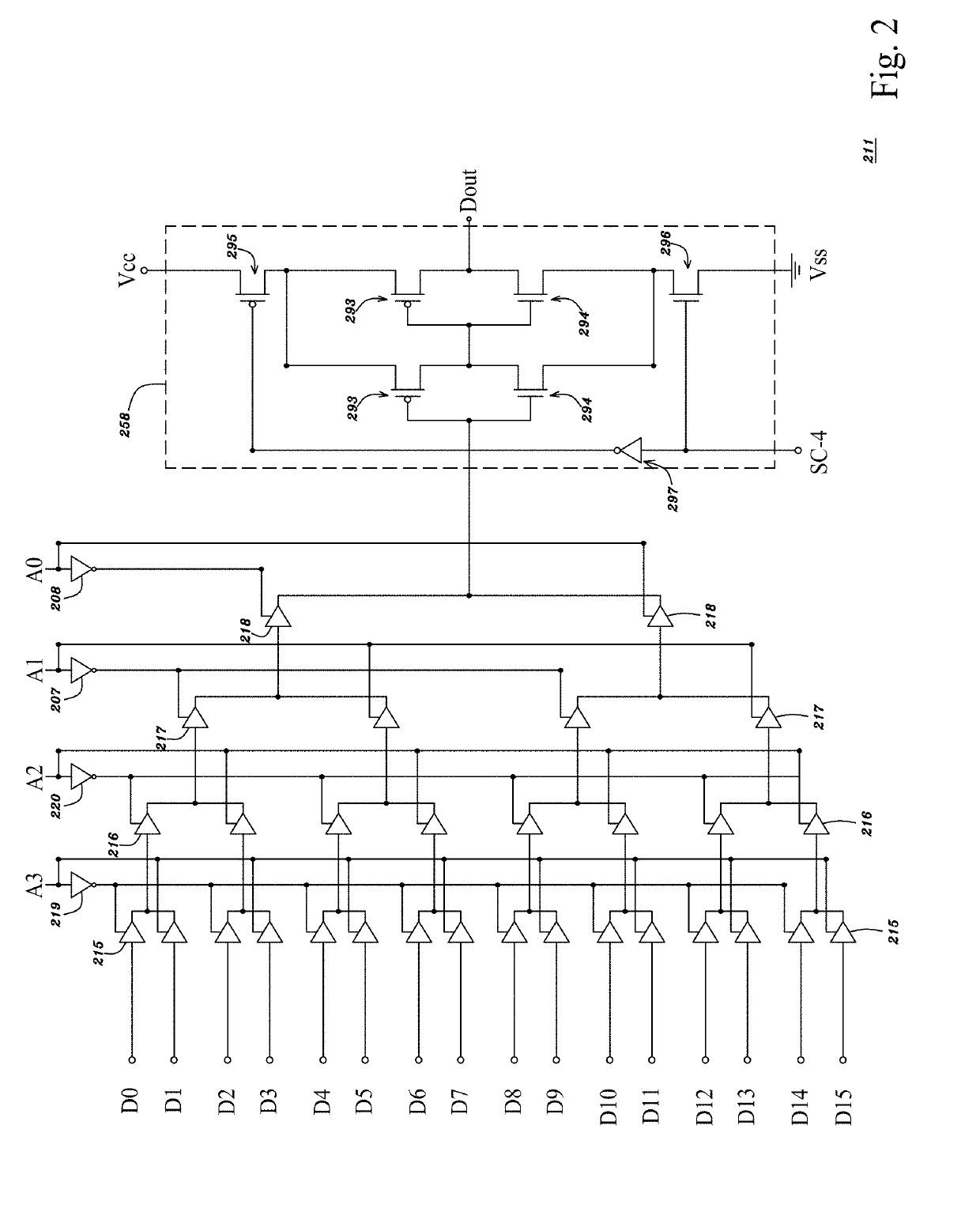

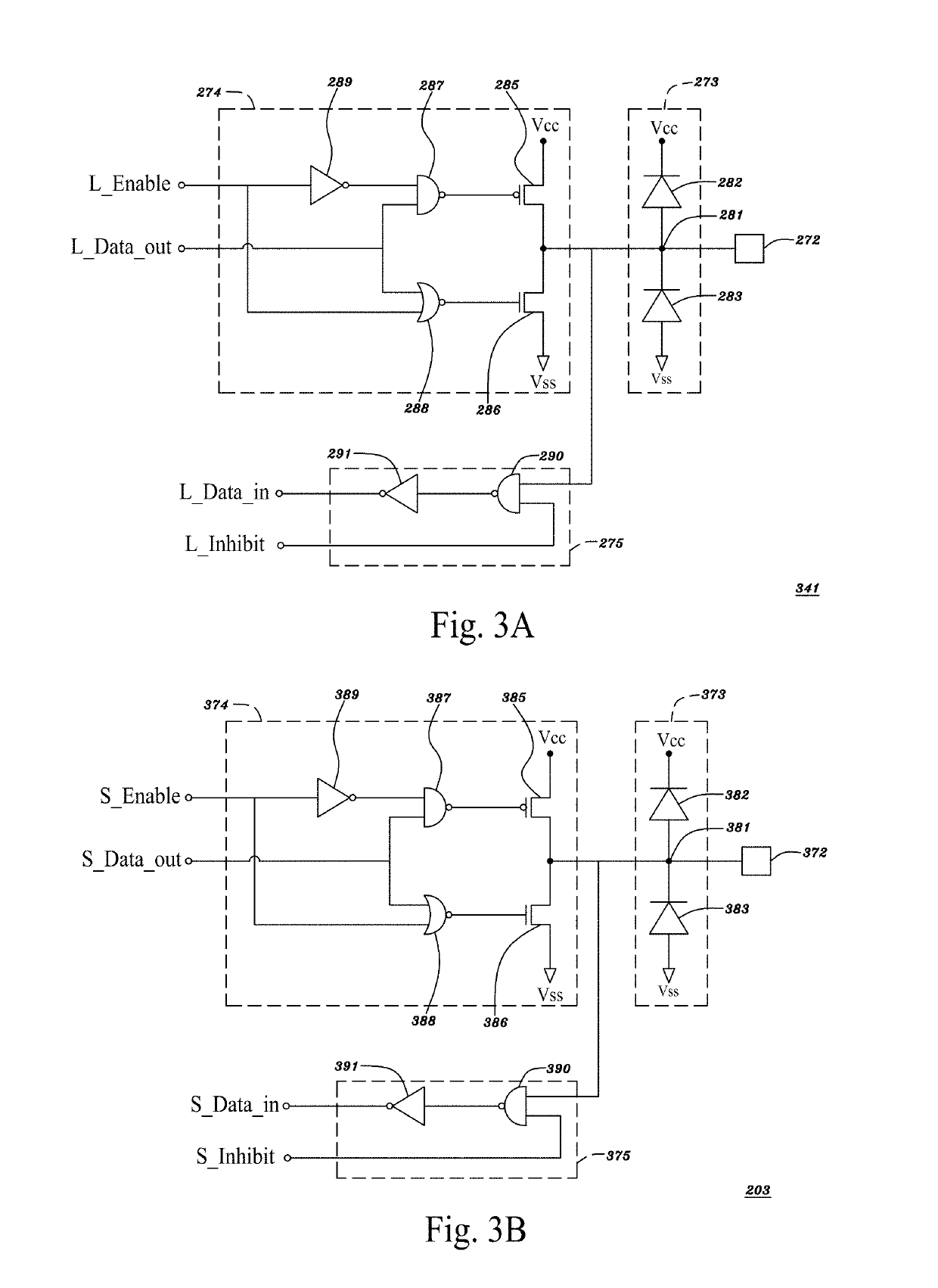

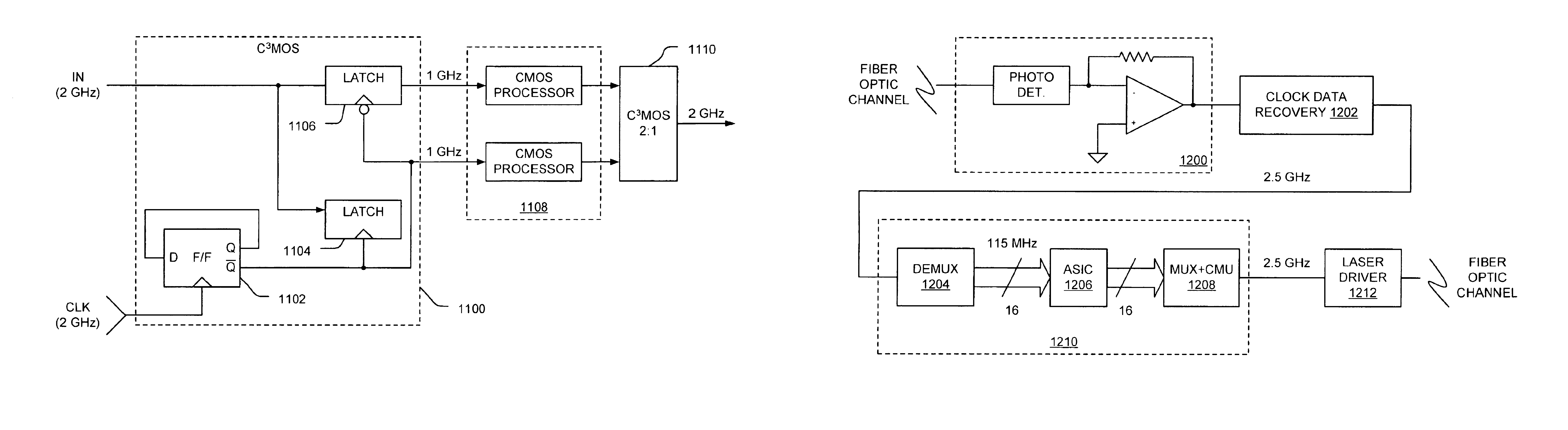

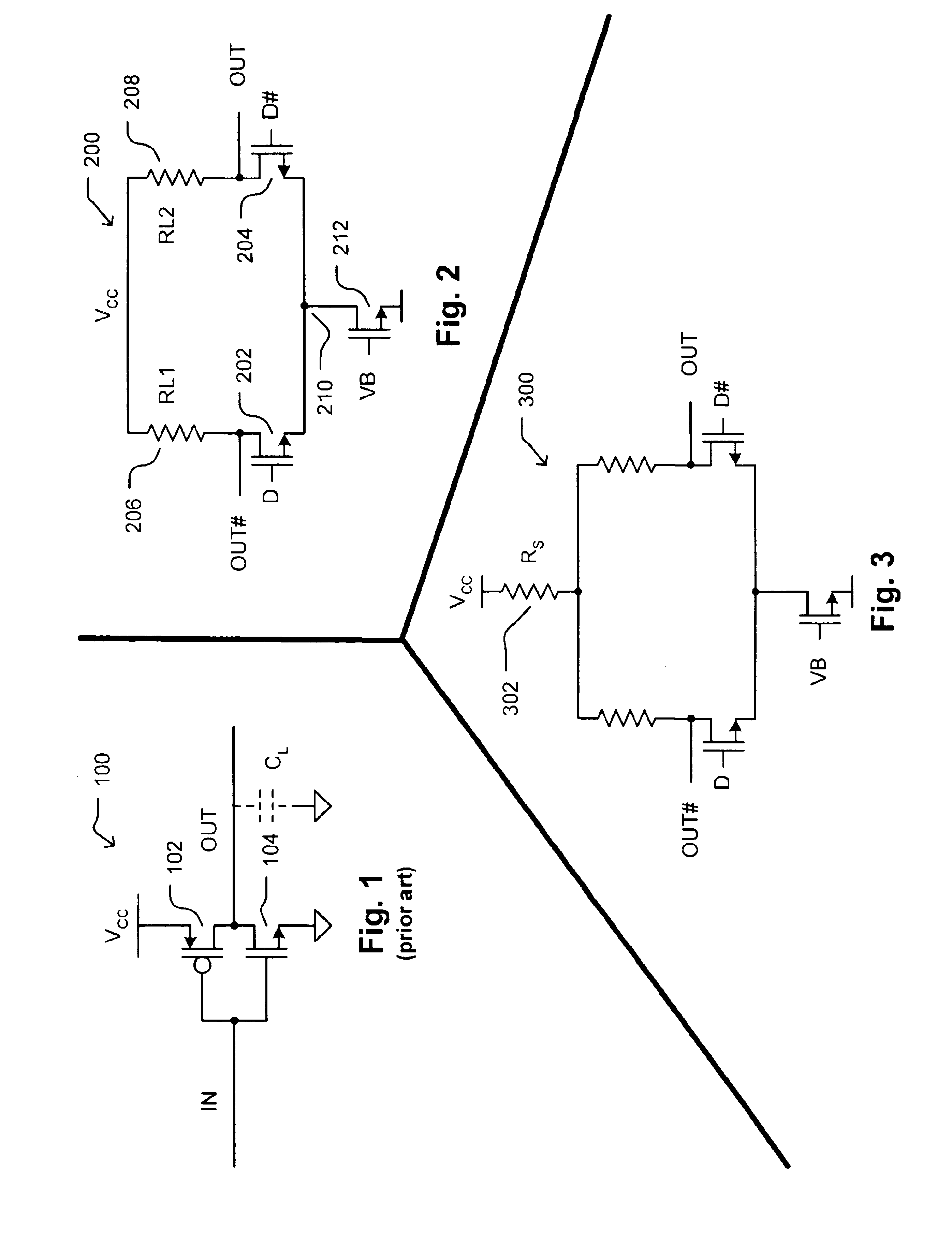

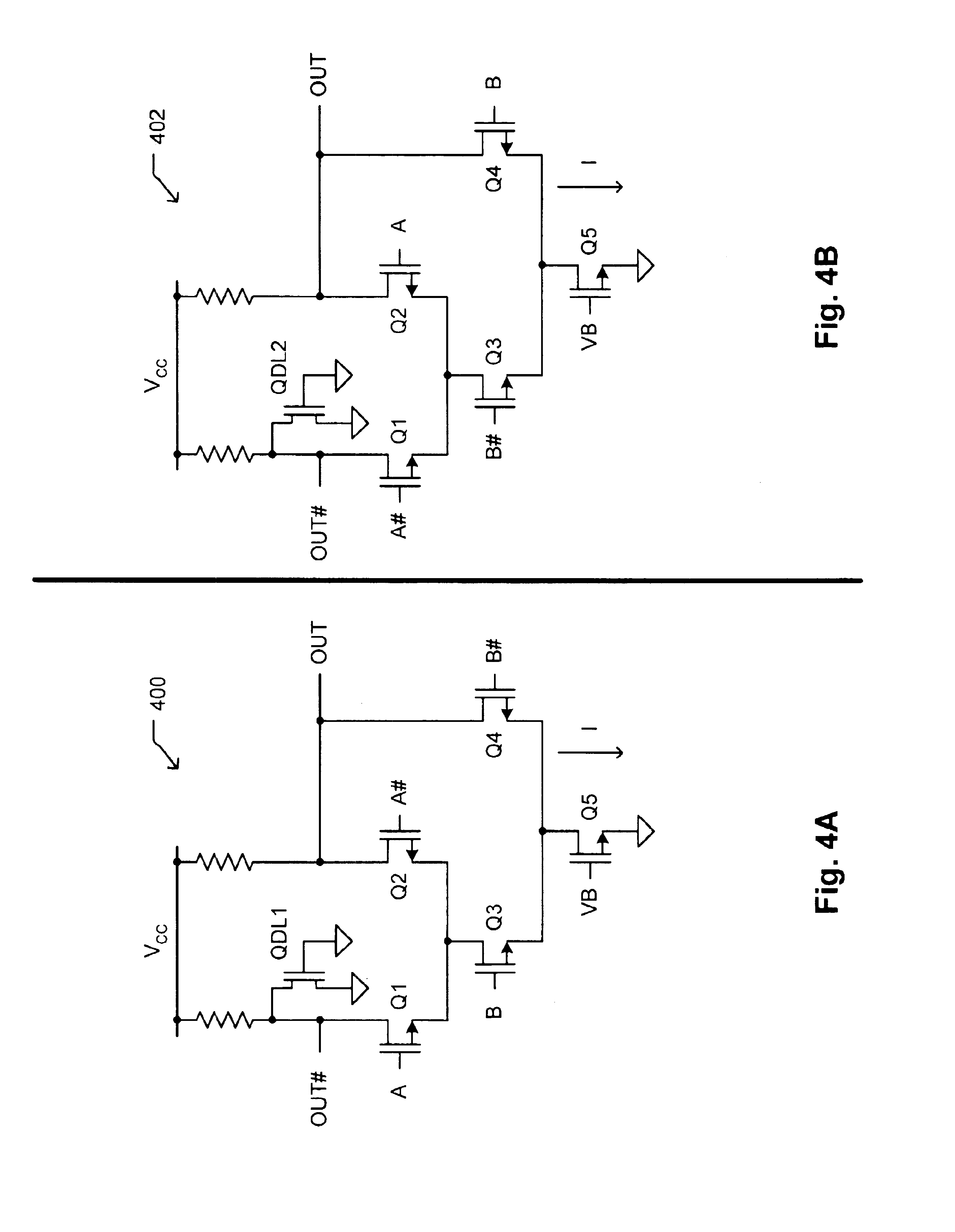

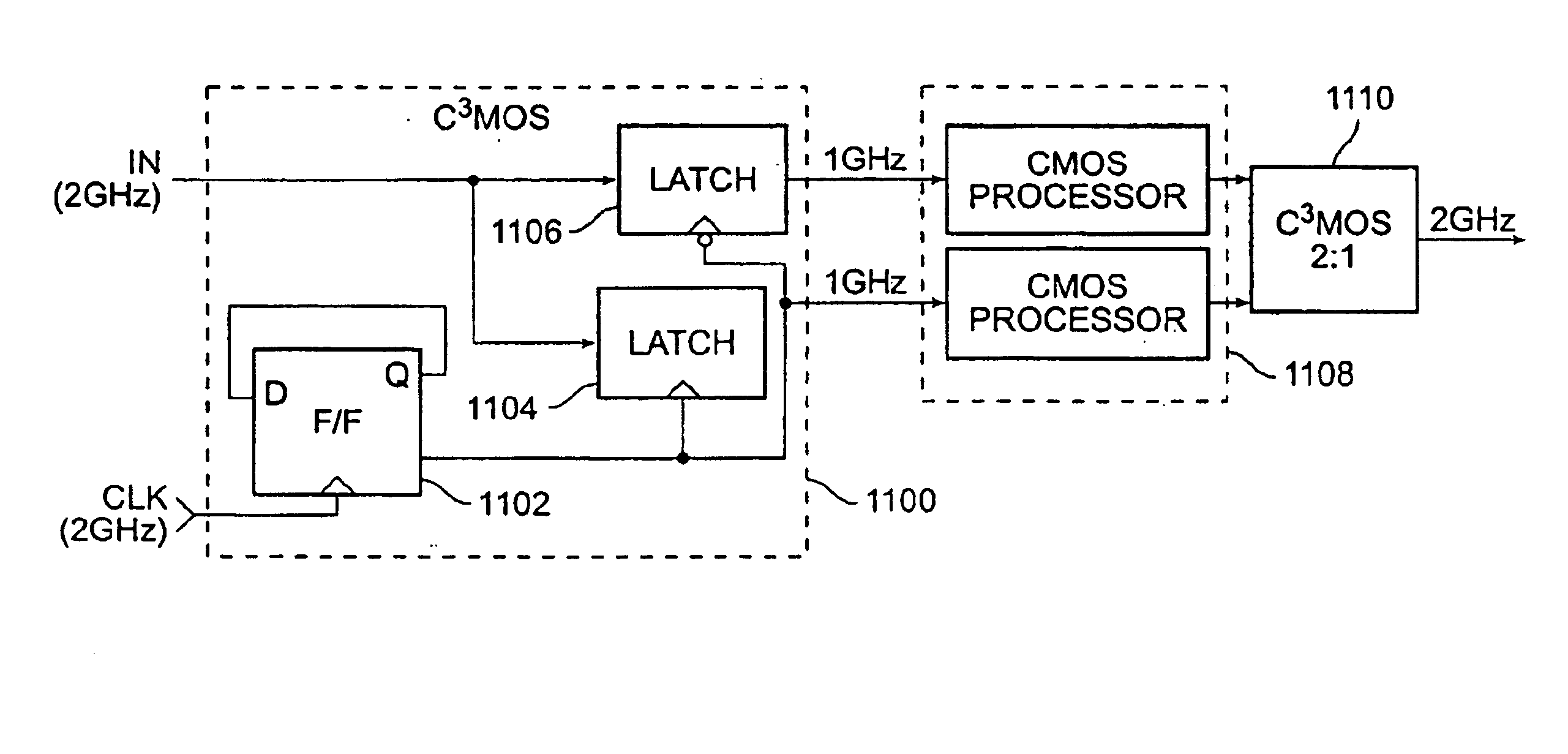

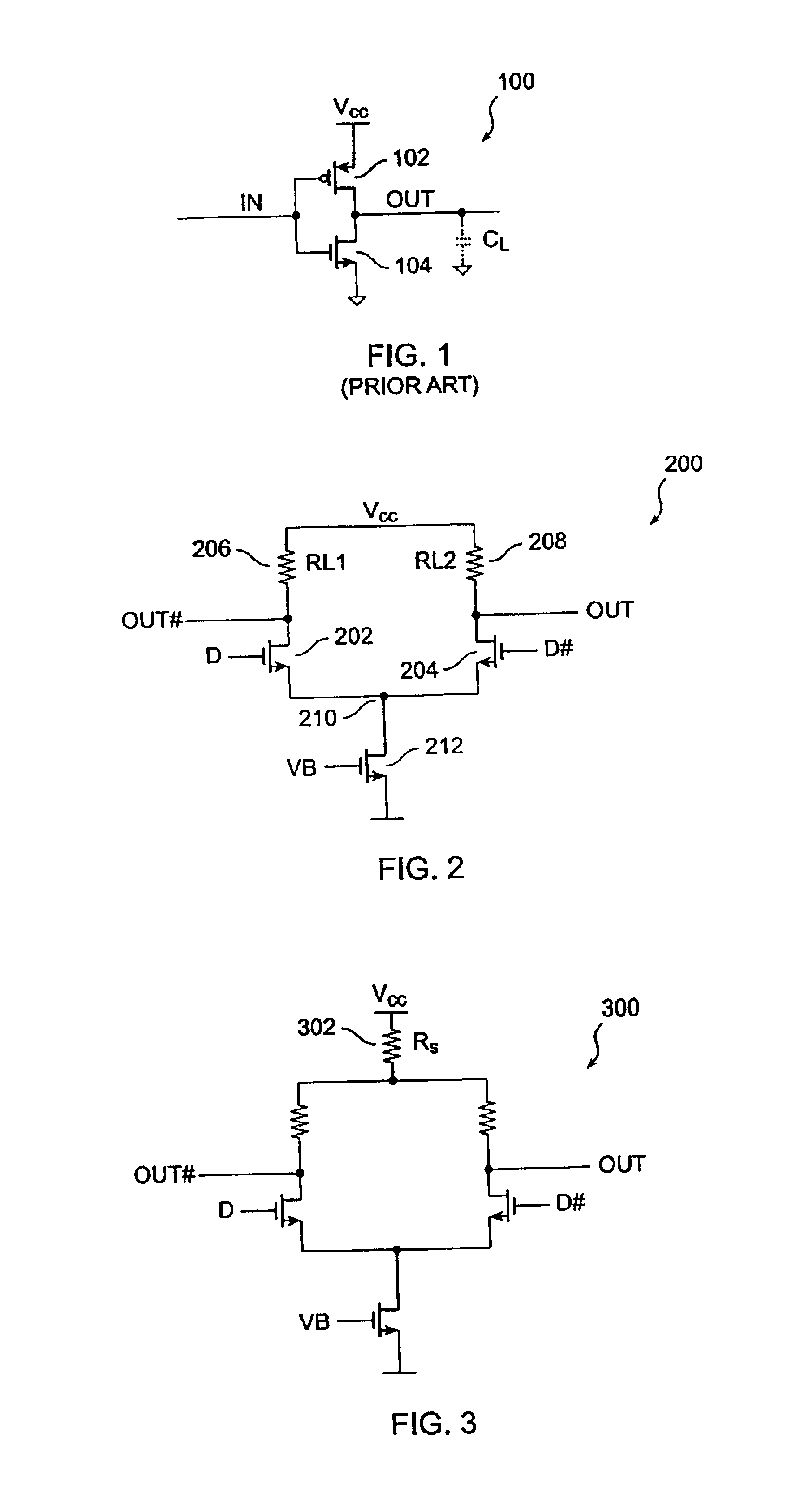

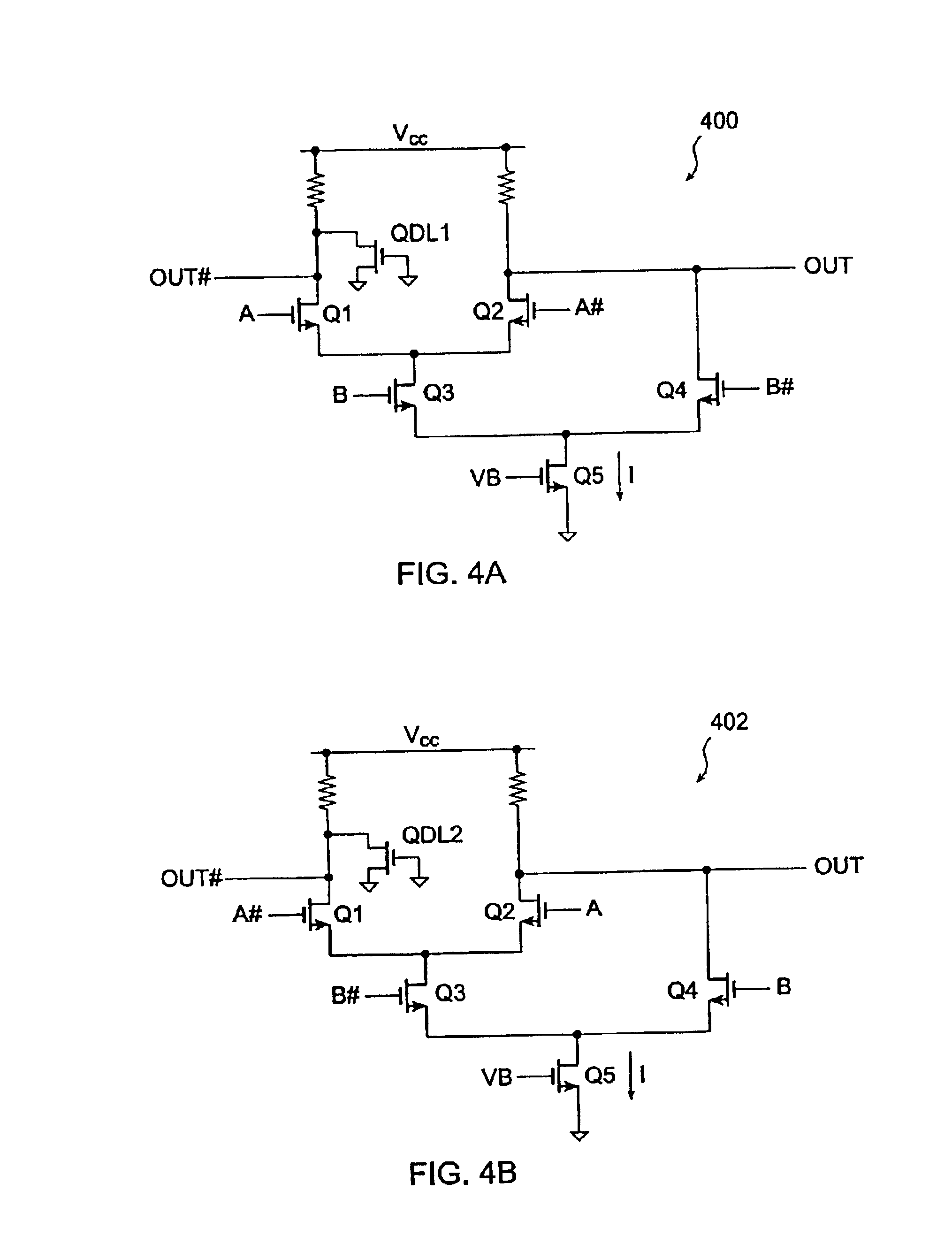

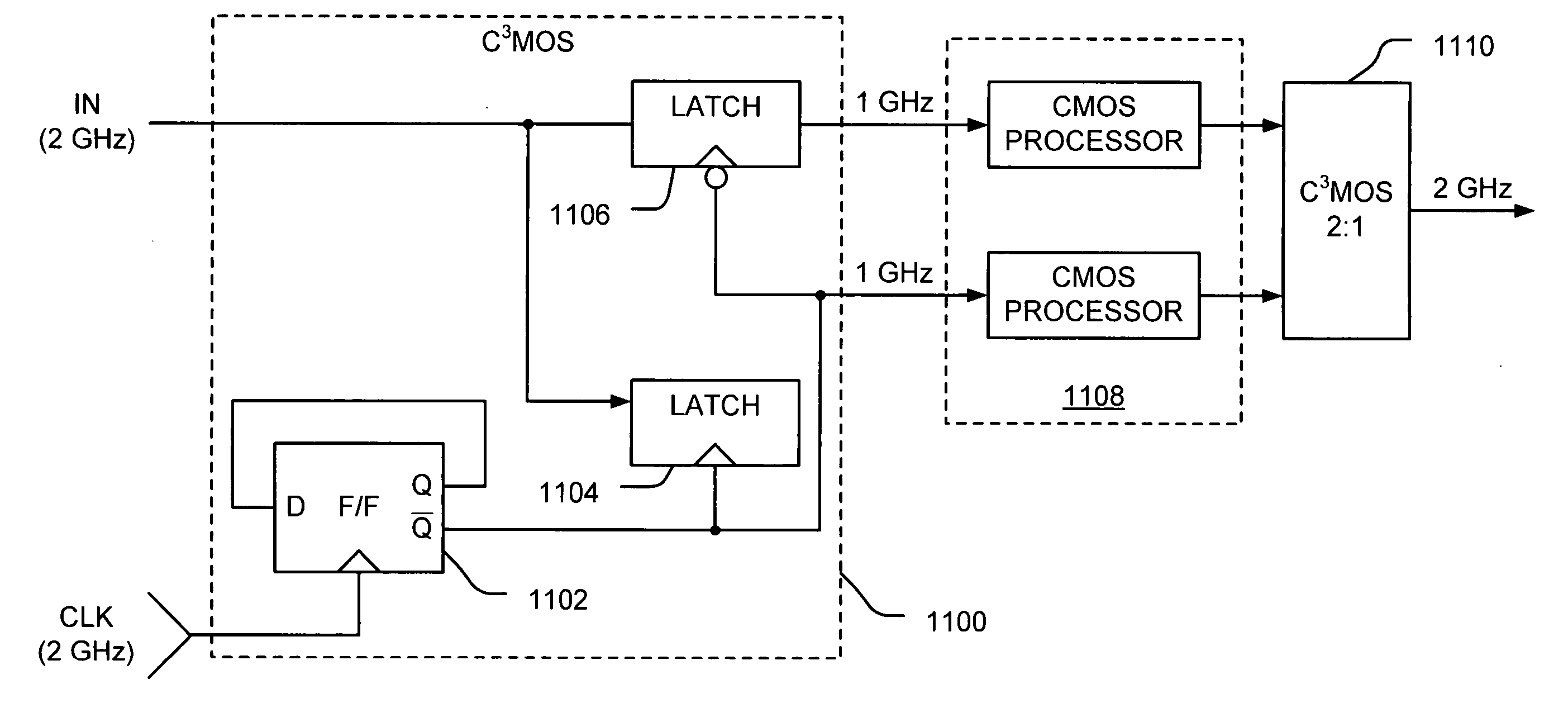

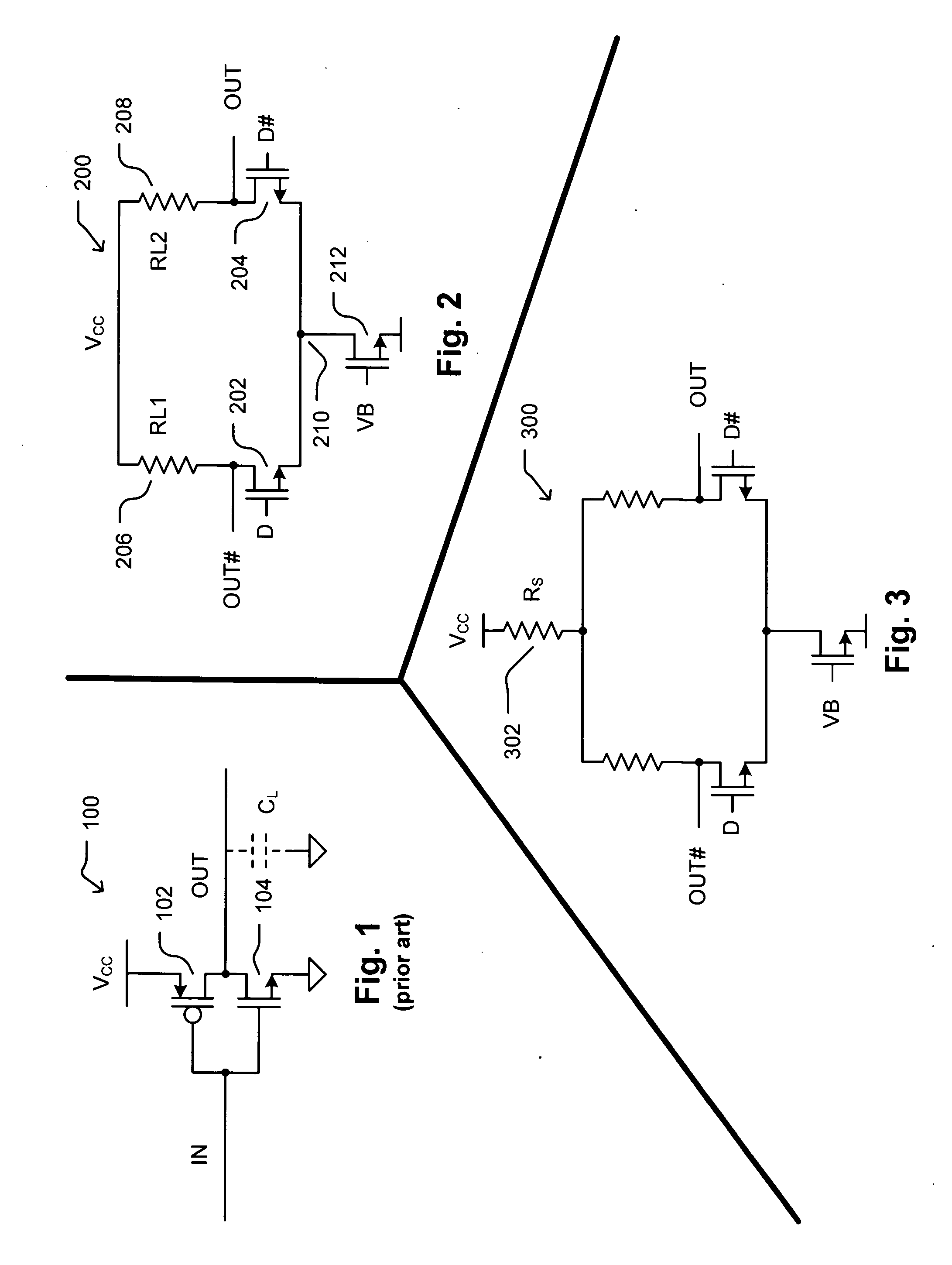

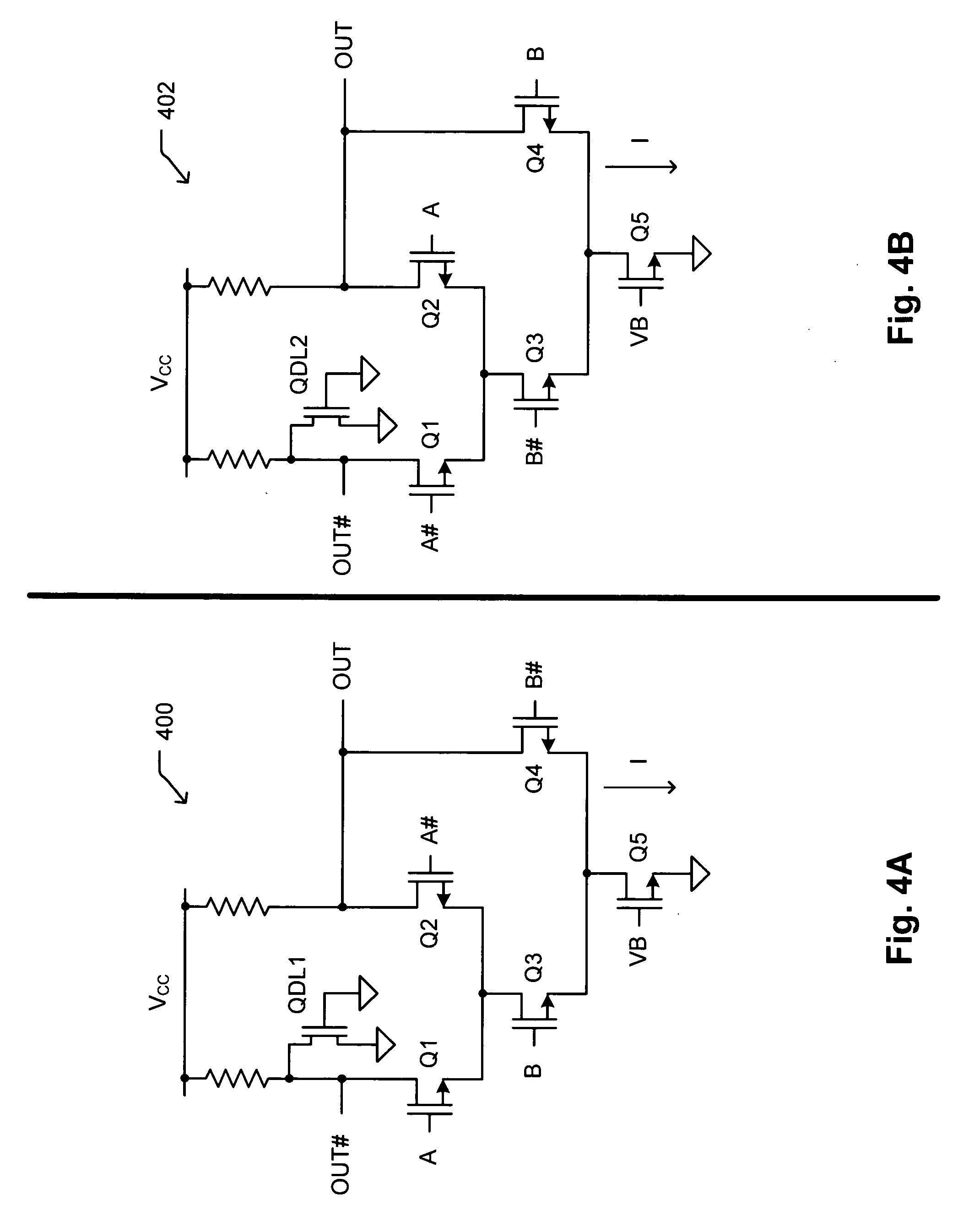

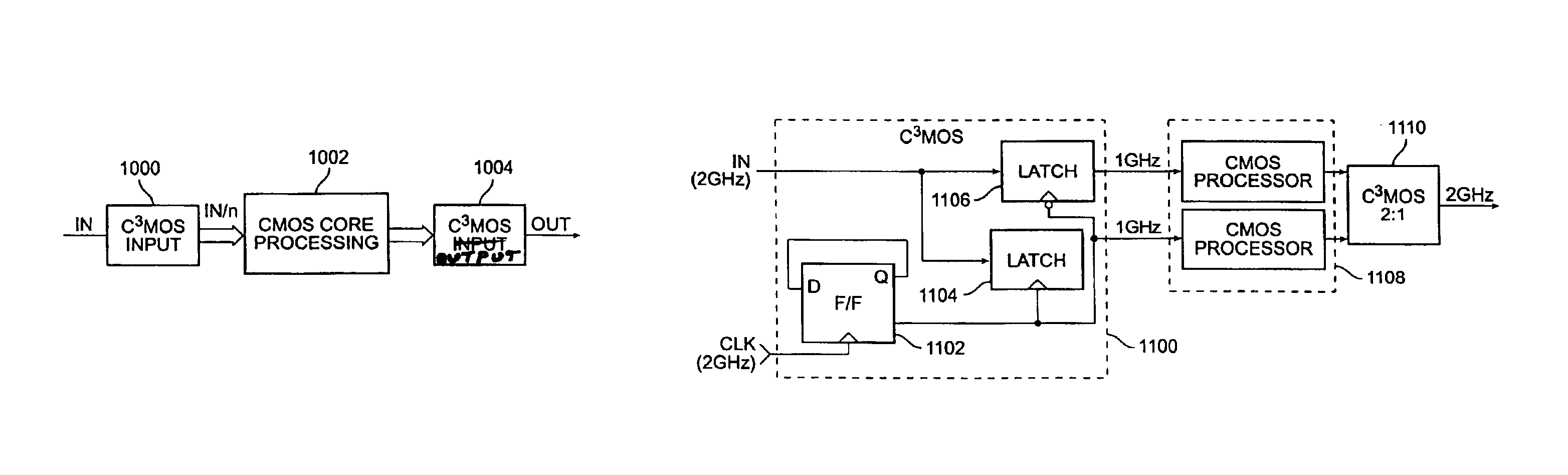

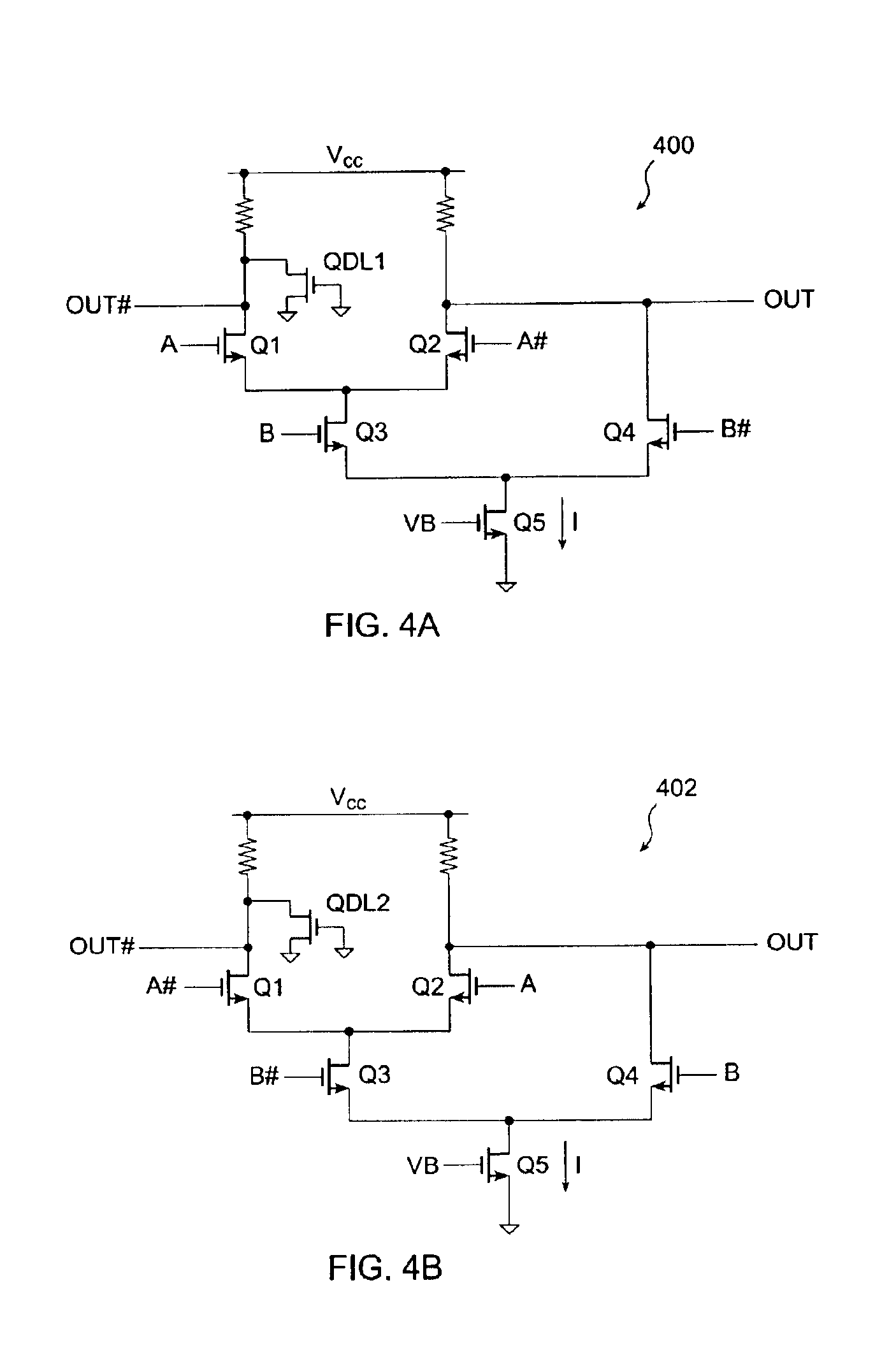

Current-controlled CMOS circuit using higher voltage supply in low voltage CMOS process

Inactive | US6982583B2 | Speed maximization | Increase circuit speed | Exclusive-OR circuits | Multiple input and output pulse circuits | Transceiver | High voltage

Various circuit techniques for implementing ultra high speed circuits use current-controlled CMOS (C3MOS) logic fabricated in conventional CMOS process technology. An entire family of logic elements including inverter/buffers, level shifters, NAND, NOR, XOR gates, latches, flip-flops and the like is implemented using C3MOS techniques. Optimum balance between power consumption and speed for each circuit application is achieved by combining high speed C3MOS logic with low power conventional CMOS logic. The combined C3MOS/CMOS logic allows greater integration of circuits such as high speed transceivers used in fiber optic communication systems. The C3MOS structure enables the use of a power supply voltage that may be larger than the voltage required by the CMOS fabrication process, further enhancing the performance of the circuit.

Owner:AVAGO TECH INT SALES PTE LTD

Current-controlled CMOS circuit using higher voltage supply in low voltage CMOS process

Inactive | US6897697B2 | Speed maximization | Dissipates static current | Exclusive-OR circuits | Electronic switching | Transceiver | Engineering

Various circuit techniques for implementing ultra high speed circuits use current-controlled CMOS (C3MOS) logic fabricated in conventional CMOS process technology. An entire family of logic elements including inverter/buffers, level shifters, NAND, NOR, XOR gates, latches, flip-flops and the like is implemented using C3MOS techniques. Optimum balance between power consumption and speed for each circuit application is achieved by combining high speed C3MOS logic with low power conventional CMOS logic. The combined C3MOS/CMOS logic allows greater integration of circuits such as high speed transceivers used in fiber optic communication systems. The C3MOS structure enables the use of a power supply voltage that may be larger than the voltage required by the CMOS fabrication process, further enhancing the performance of the circuit.

Owner:AVAGO TECH INT SALES PTE LTD

Self repairing neural network

Active | US20190042377A1 | Facilitate time borrowing | Reduce load | Exclusive-OR circuits | Semiconductor/solid-state device details | Pattern recognition | Algorithm

Some embodiments of the invention provide an integrated circuit (IC) with a defect-tolerant neural network. The neural network has one or more redundant neurons in some embodiments. After the IC is manufactured, a defective neuron in the neural network can be detected through a test procedure and then replaced by a redundant neuron (i.e., the redundant neuron can be assigned the operation of the defective neuron). The routing fabric of the neural network can be reconfigured so that it re-routes signals around the discarded, defective neuron. In some embodiments, the reconfigured routing fabric does not provide any signal to or forward any signal from the discarded, defective neuron, and instead provides signals to and forwards signals from the redundant neuron that takes the defective neuron's position in the neural network. In some embodiments that implement a neural network by re-purposing (i.e., reconfiguring) one or more individual neurons to implement neurons of multiple stages of the neural network, the IC discards a defective neuron by removing it from the pool of neurons that it configures to perform the operation(s) of neurons in one or more stages of neurons, and assigning this defective neuron's configuration(s) (i.e., its machine-trained parameter set(s)) to a redundant neuron. In some of these embodiments, the IC would re-route around the defective neuron and route to the redundant neuron, by (1) supplying machine-trained parameters and input signals (e.g., previous stage neuron outputs) to the redundant neuron instead of supplying these parameters and signals to the defective neuron, and (2) storing the output(s) of the redundant neuron instead of storing the output(s) of the defective neuron.

Owner:XCELSIS CORP

Three dimensional chip structure implementing machine trained network

Active | US20190043832A1 | Facilitate time borrowing | Reduce load | Exclusive-OR circuits | Error detection/correction | NODAL | Network on

Some embodiments provide a three-dimensional (3D) circuit structure that has two or more vertically stacked bonded layers with a machine-trained network on at least one bonded layer. As described above, each bonded layer can be an IC die or an IC wafer in some embodiments with different embodiments encompassing different combinations of wafers and dies for the different bonded layers. The machine-trained network in some embodiments includes several stages of machine-trained processing nodes with routing fabric that supplies the outputs of earlier stage nodes to drive the inputs of later stage nodes. In some embodiments, the machine-trained network is a neural network and the processing nodes are neurons of the neural network. In some embodiments, one or more parameters associated with each processing node (e.g., each neuron) are defined through machine-trained processes that define the values of these parameters in order to allow the machine-trained network (e.g., neural network) to perform particular operations (e.g., face recognition, voice recognition, etc.). For example, in some embodiments, the machine-trained parameters are weight values that are used to aggregate (e.g., to sum) several output values of several earlier stage processing nodes to produce an input value for a later stage processing node.

Owner:XCELSIS CORP

Distributed delay-locked-based clock and data recovery systems

A clock and data recovery system using a distributed variable delay line is provided. The clock and data recovery system can use a delay-locked loop methodology to align a local clock with an incoming data stream. The variable delay line can include a transmission line coupled with a plurality of variable capacitors responsive to a control voltage. The variable delay line can also have a ladder configuration of multiple LC subcircuits each having a variable impedance responsive to a control voltage.

Owner:RGT UNIV OF CALIFORNIA

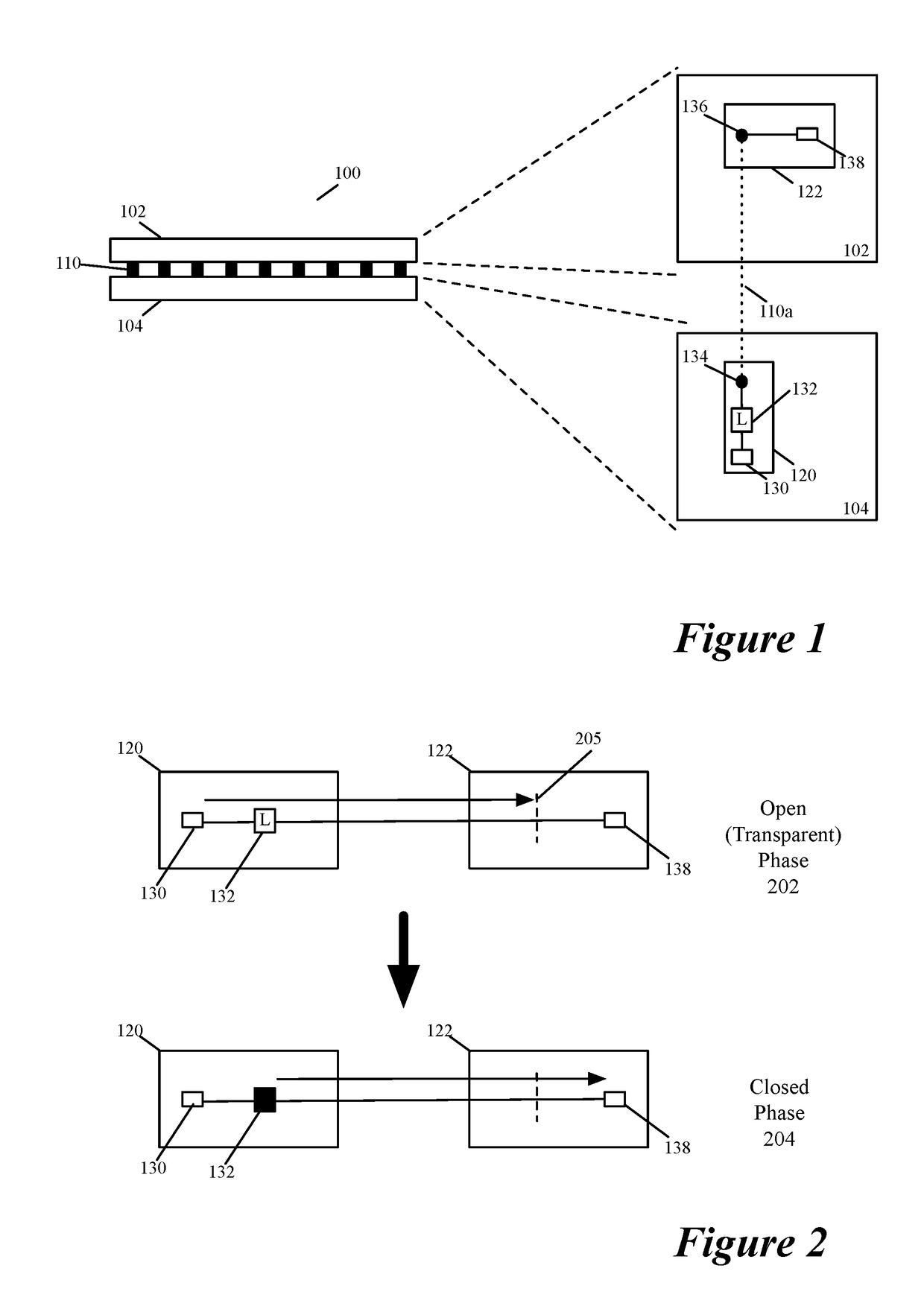

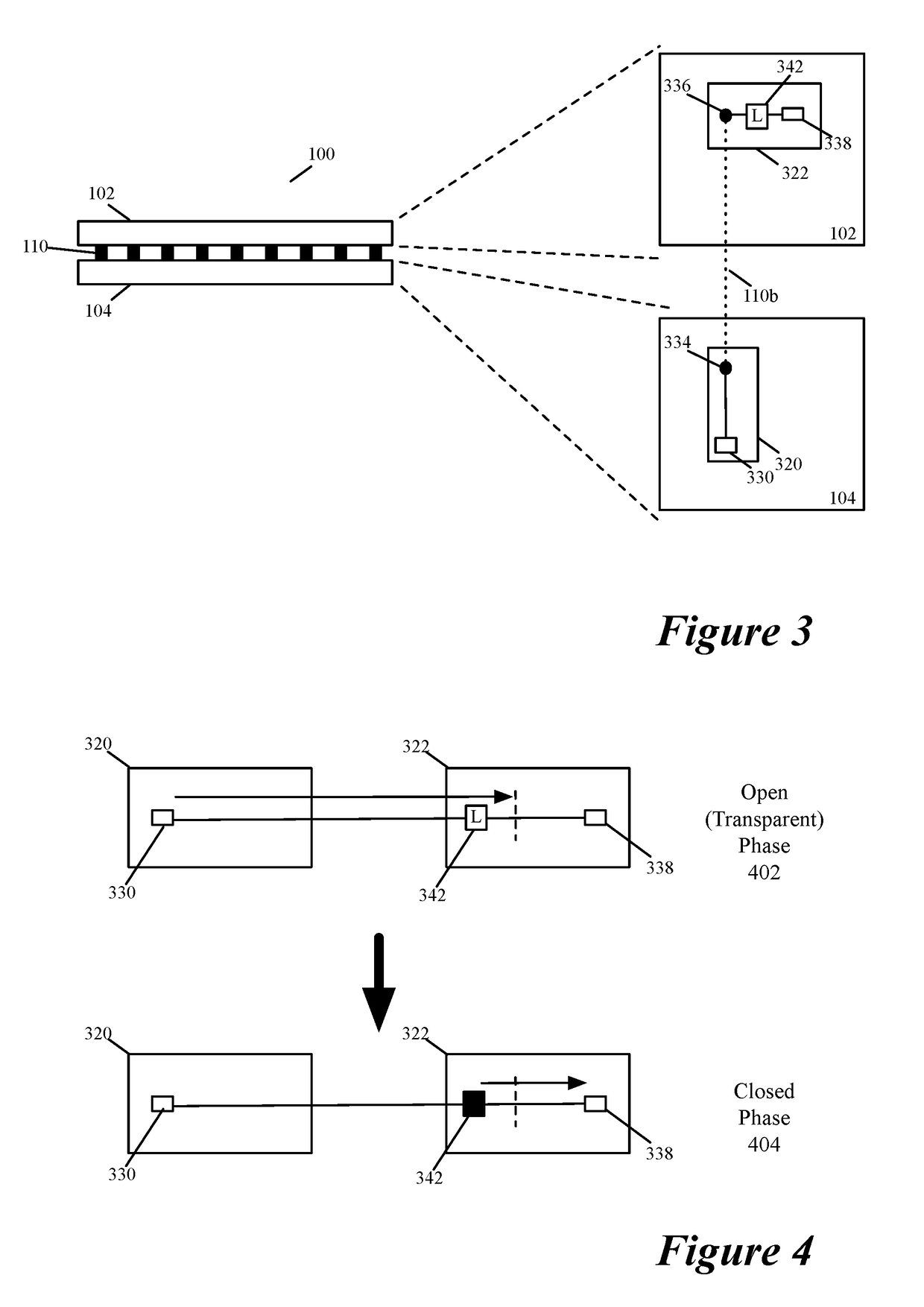

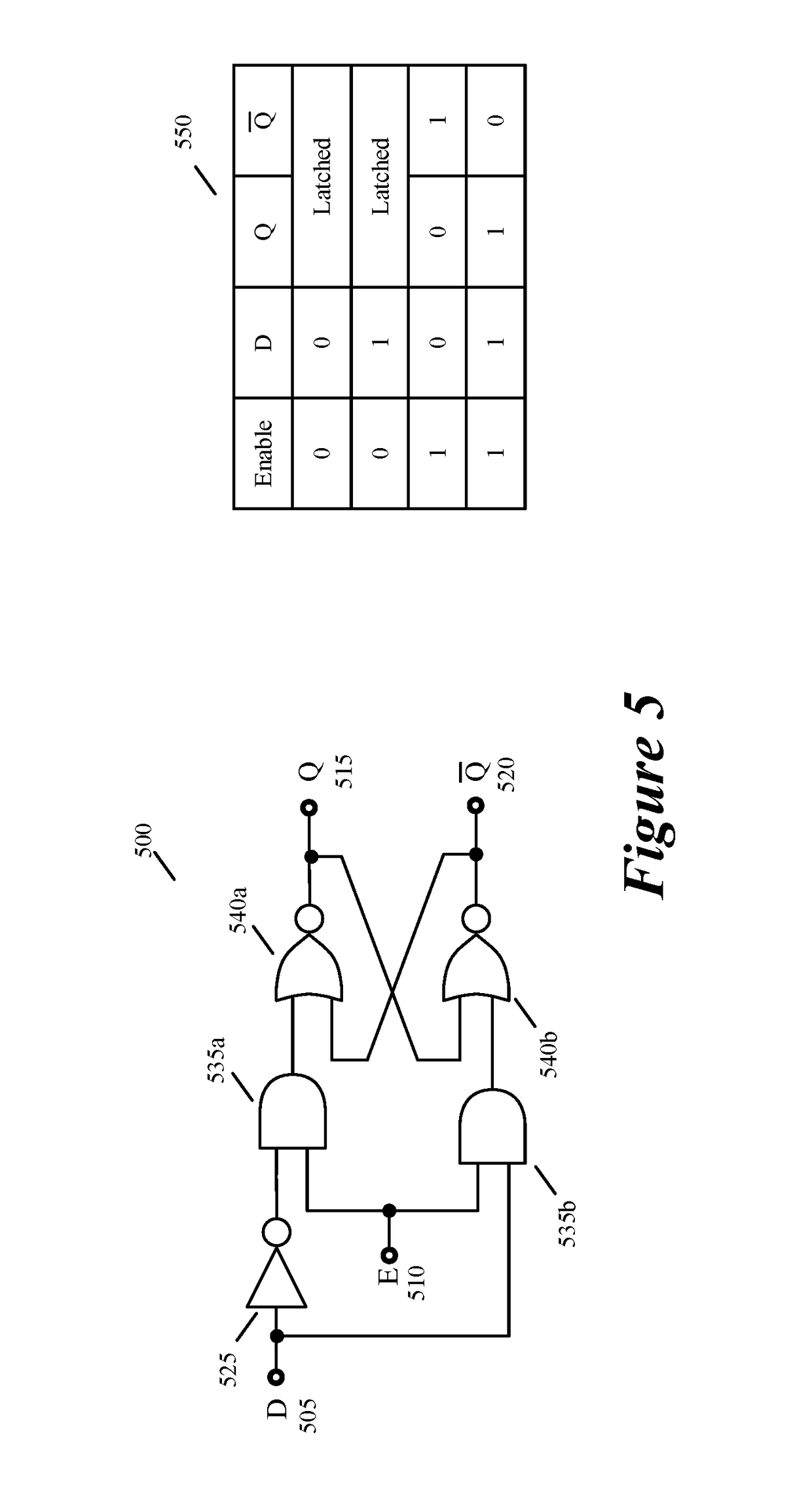

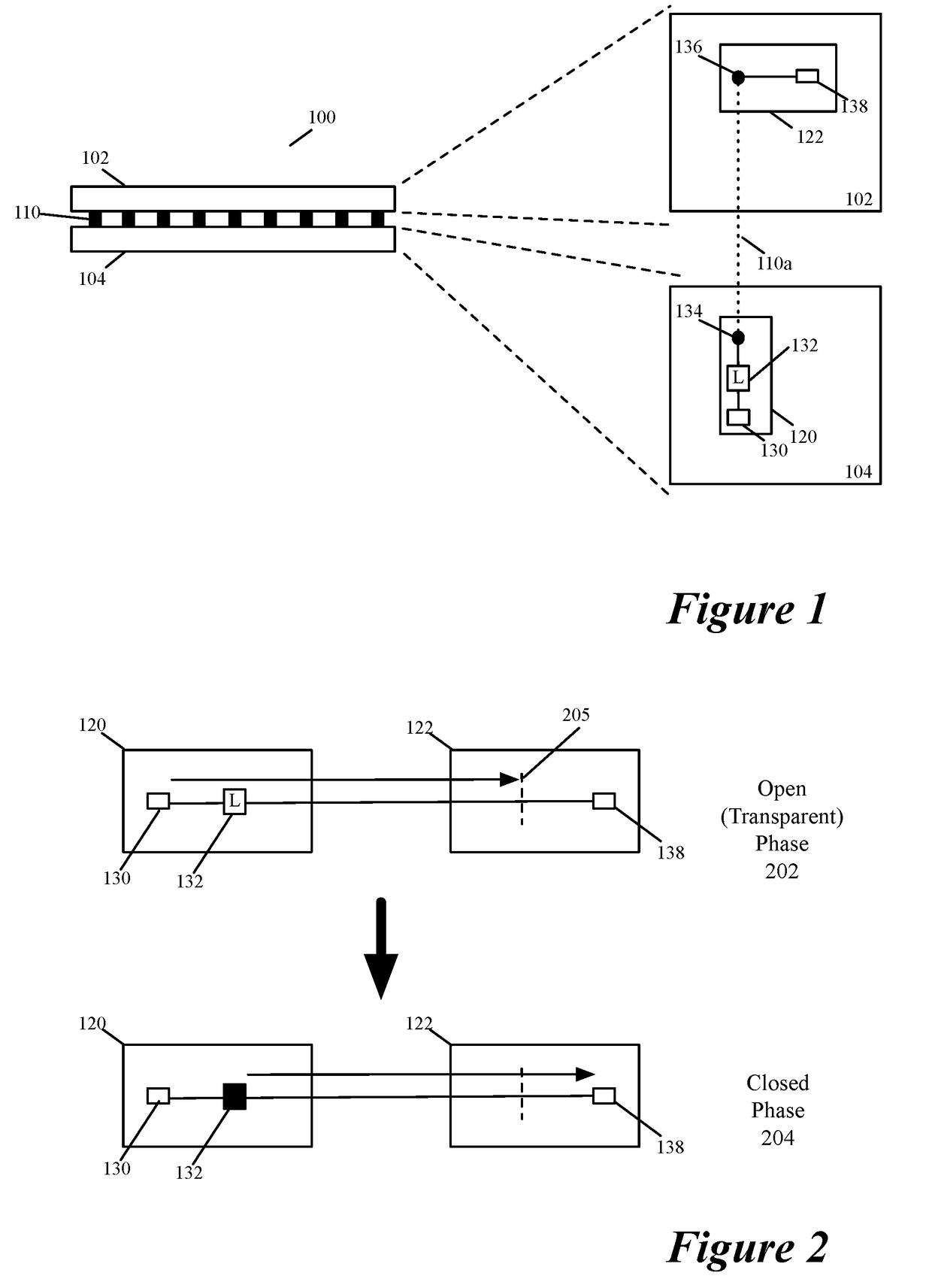

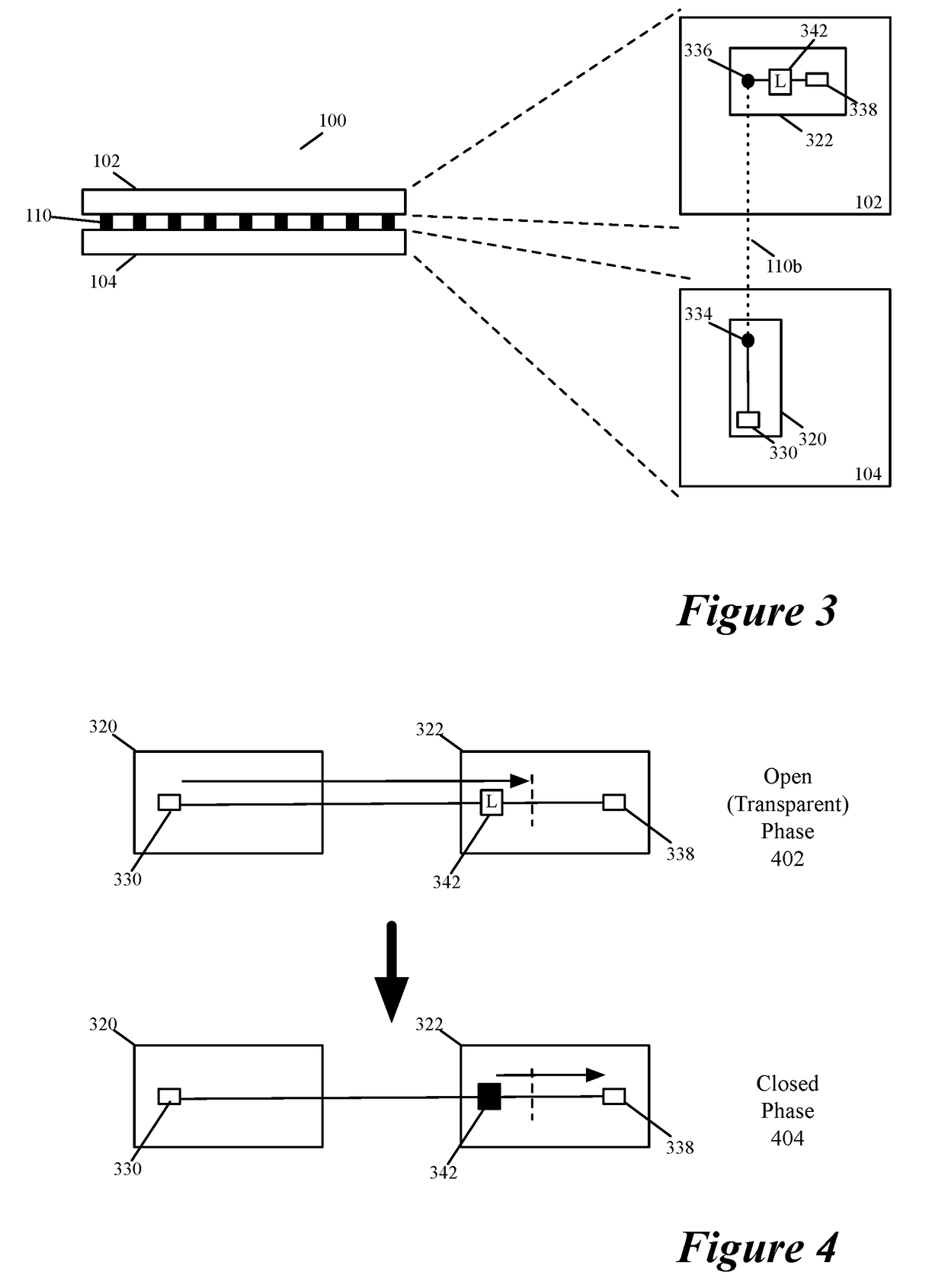

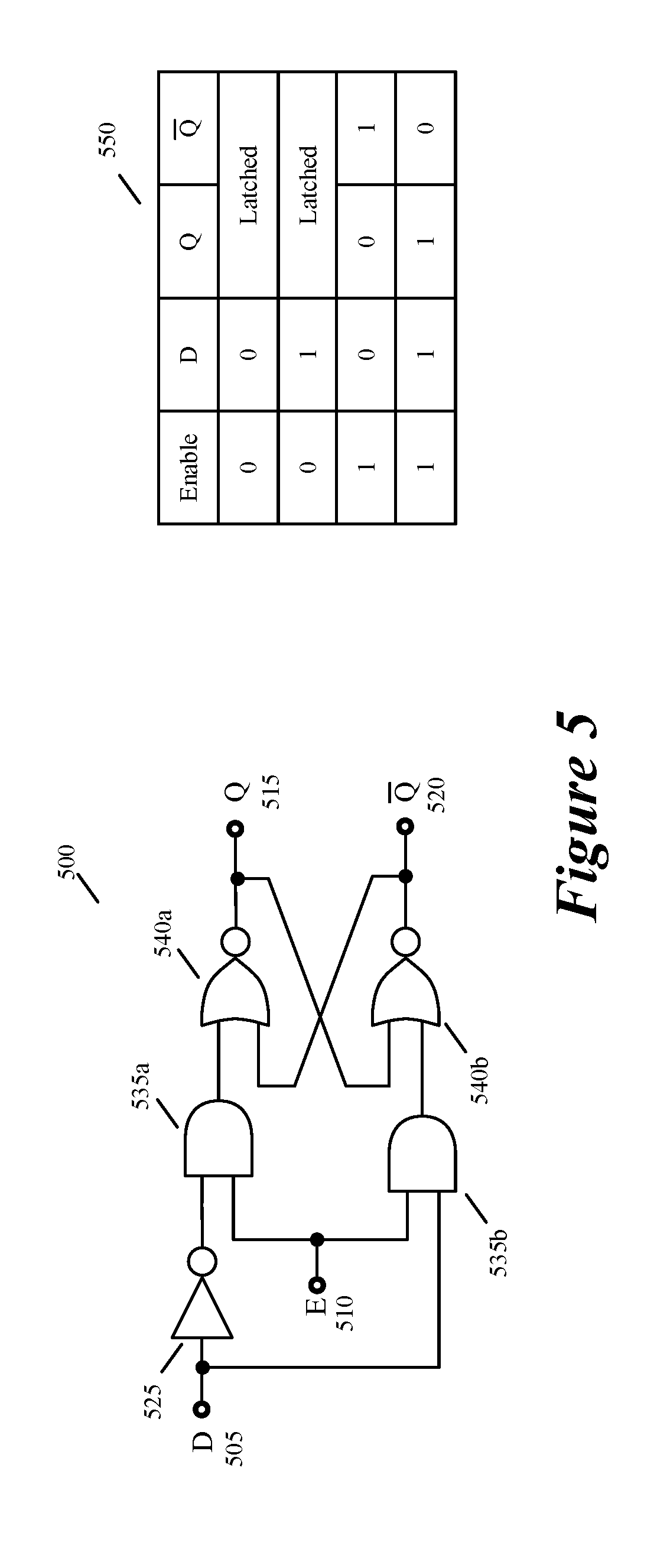

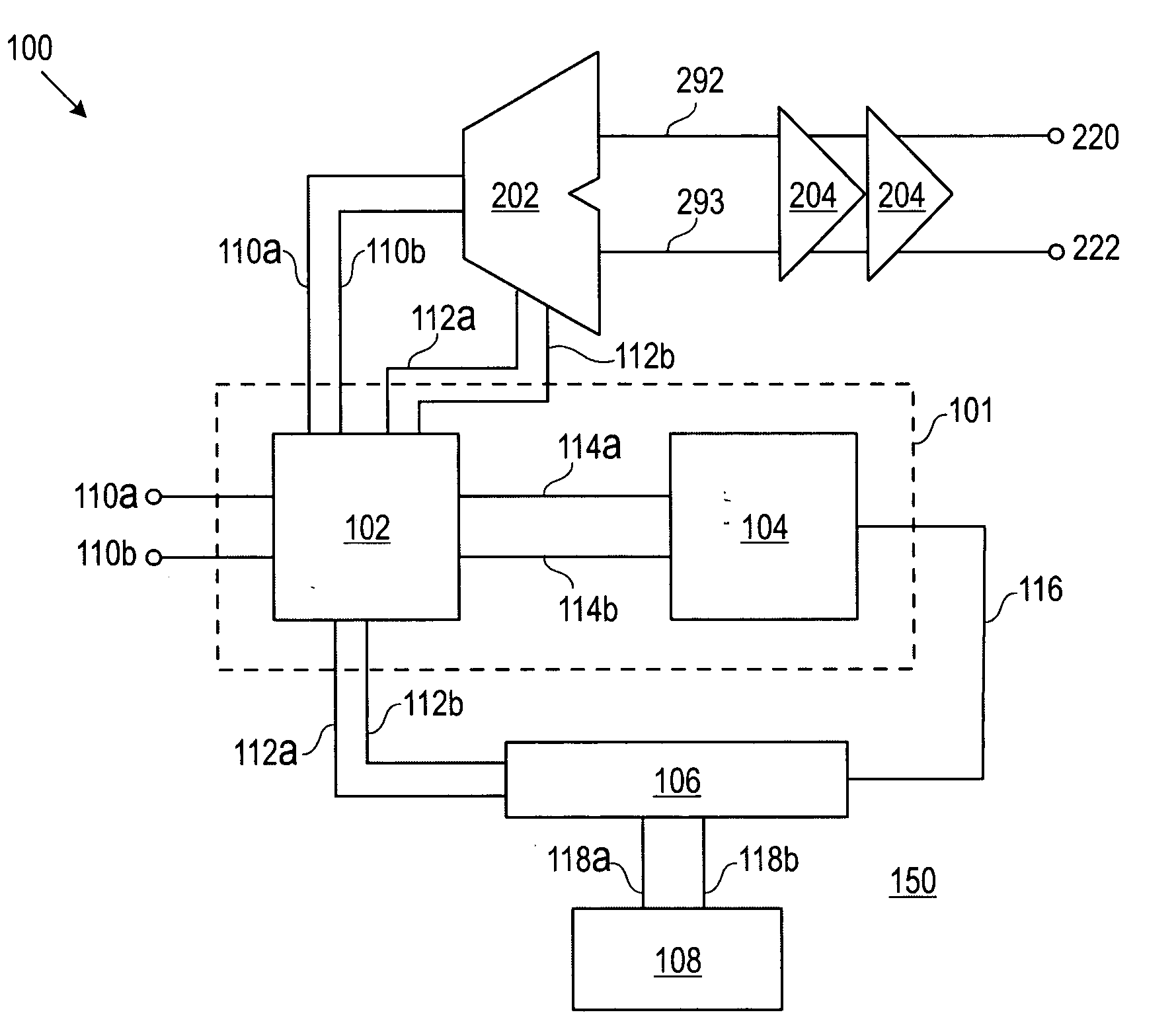

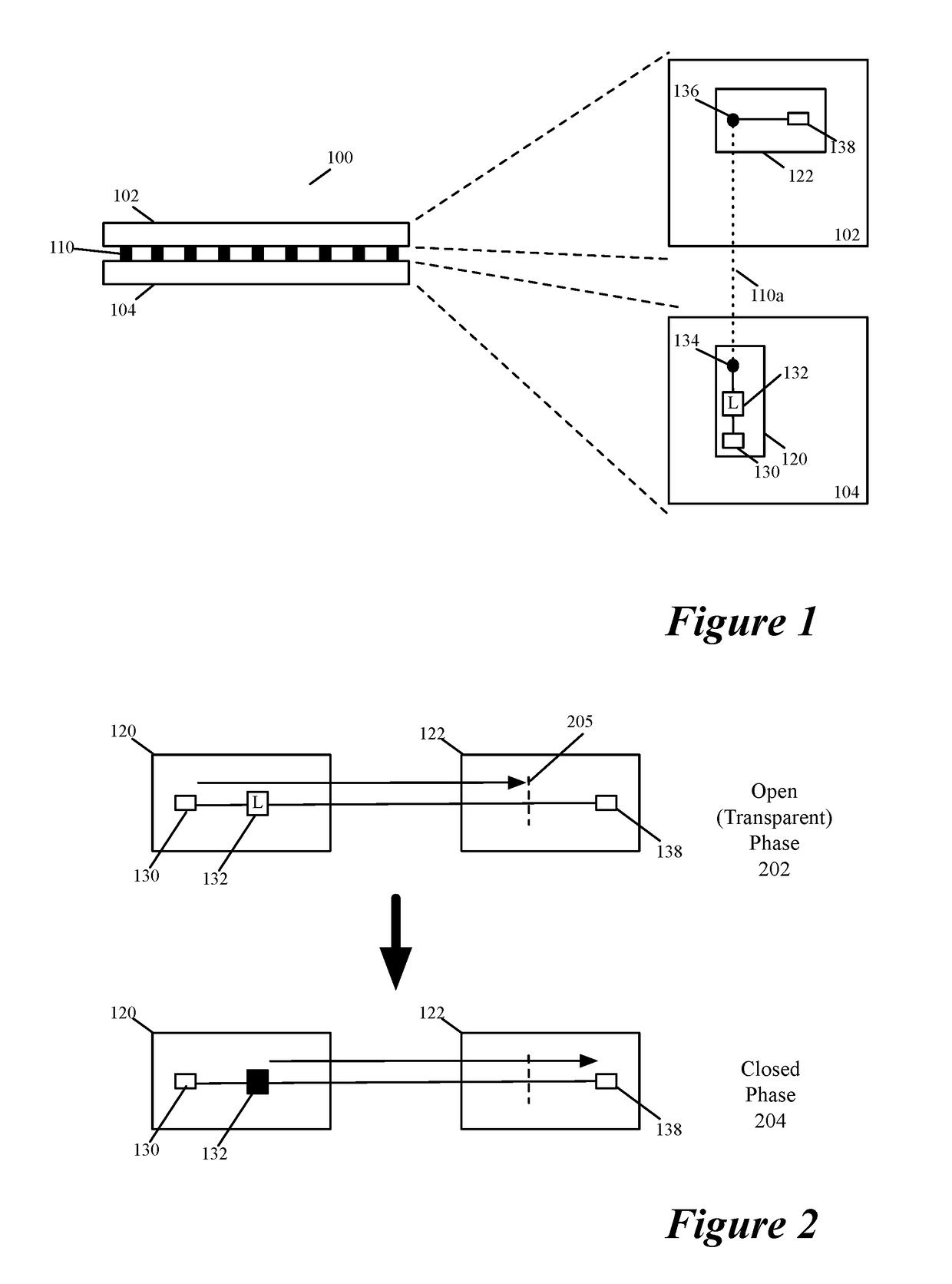

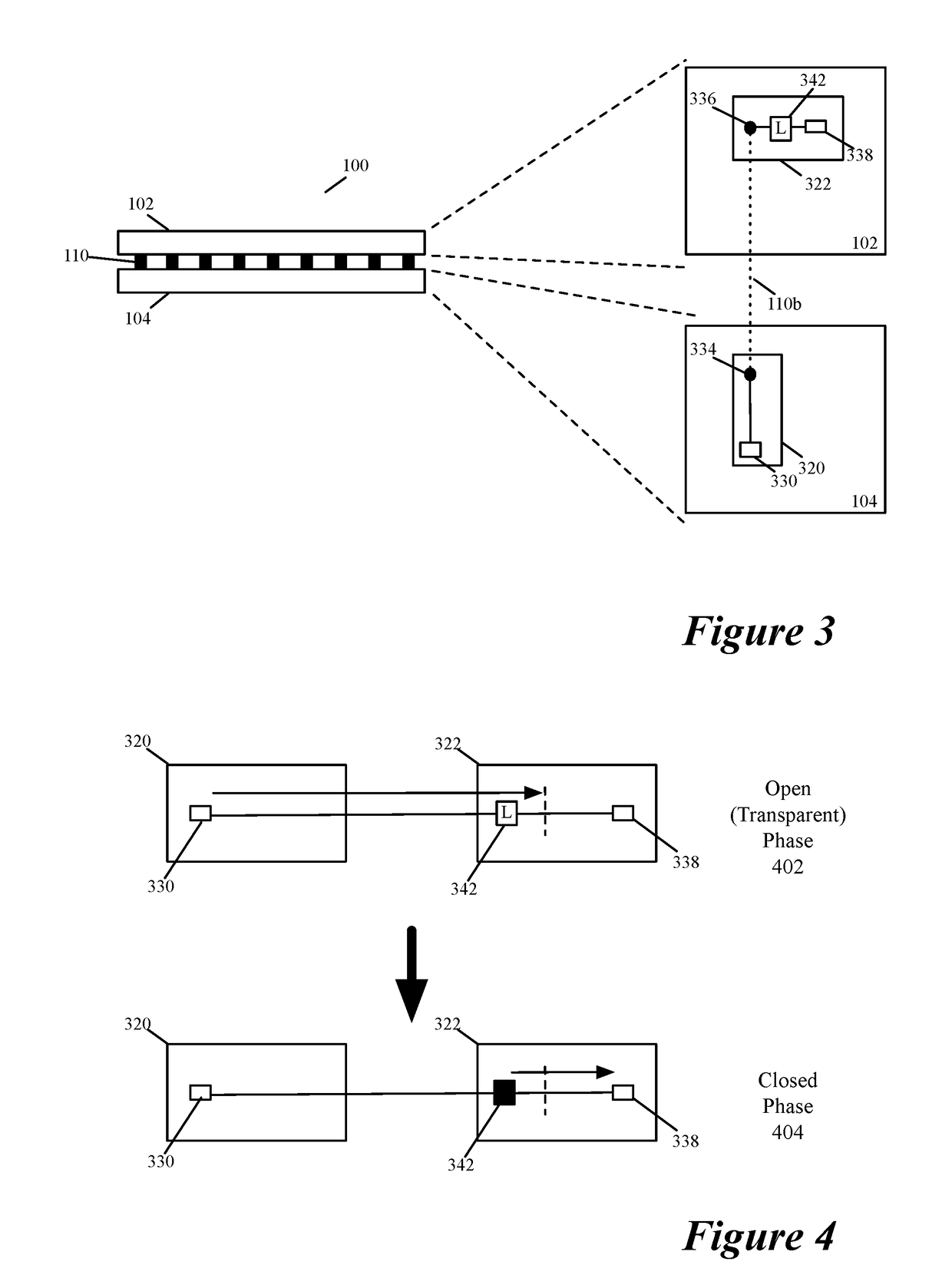

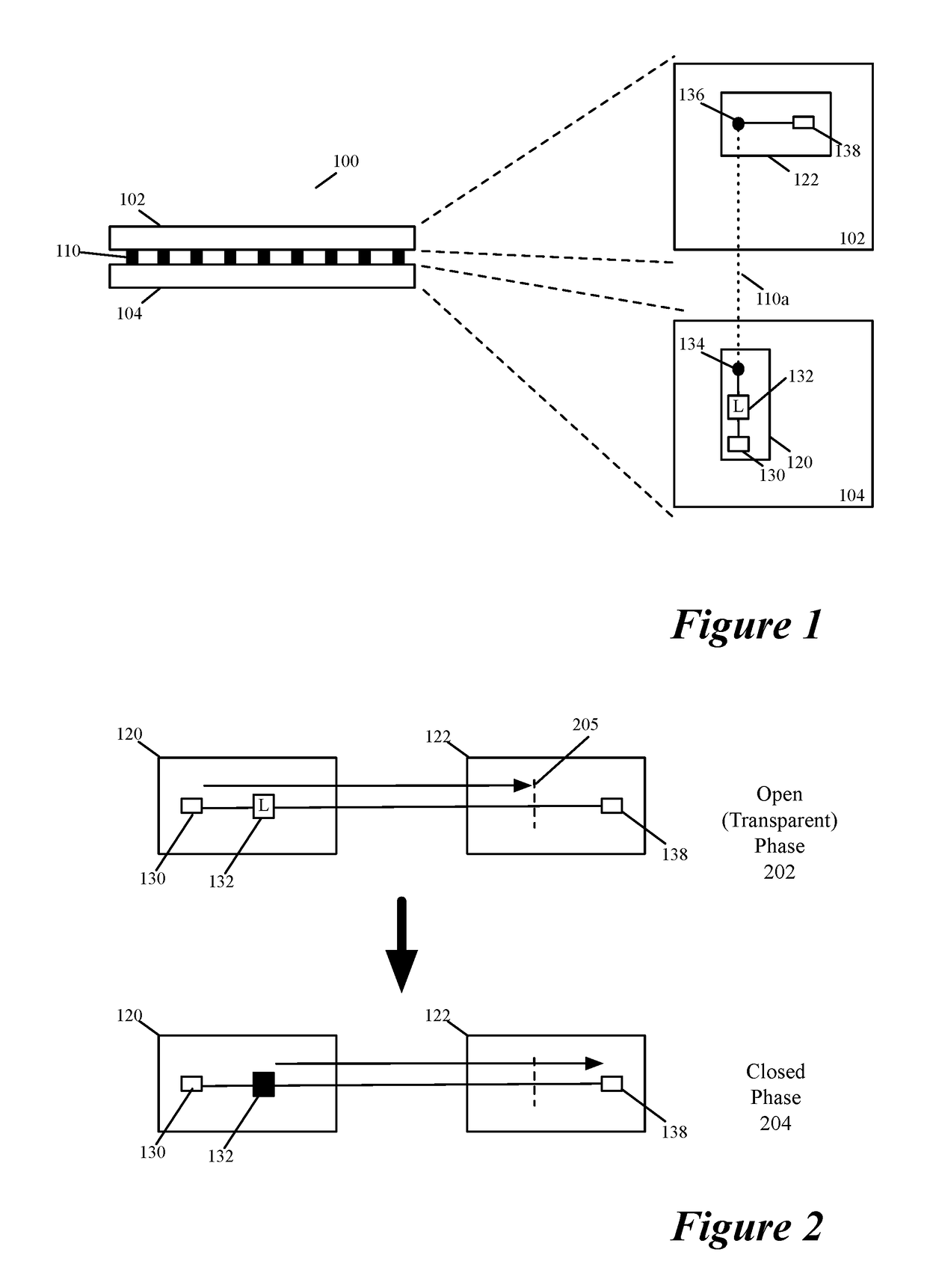

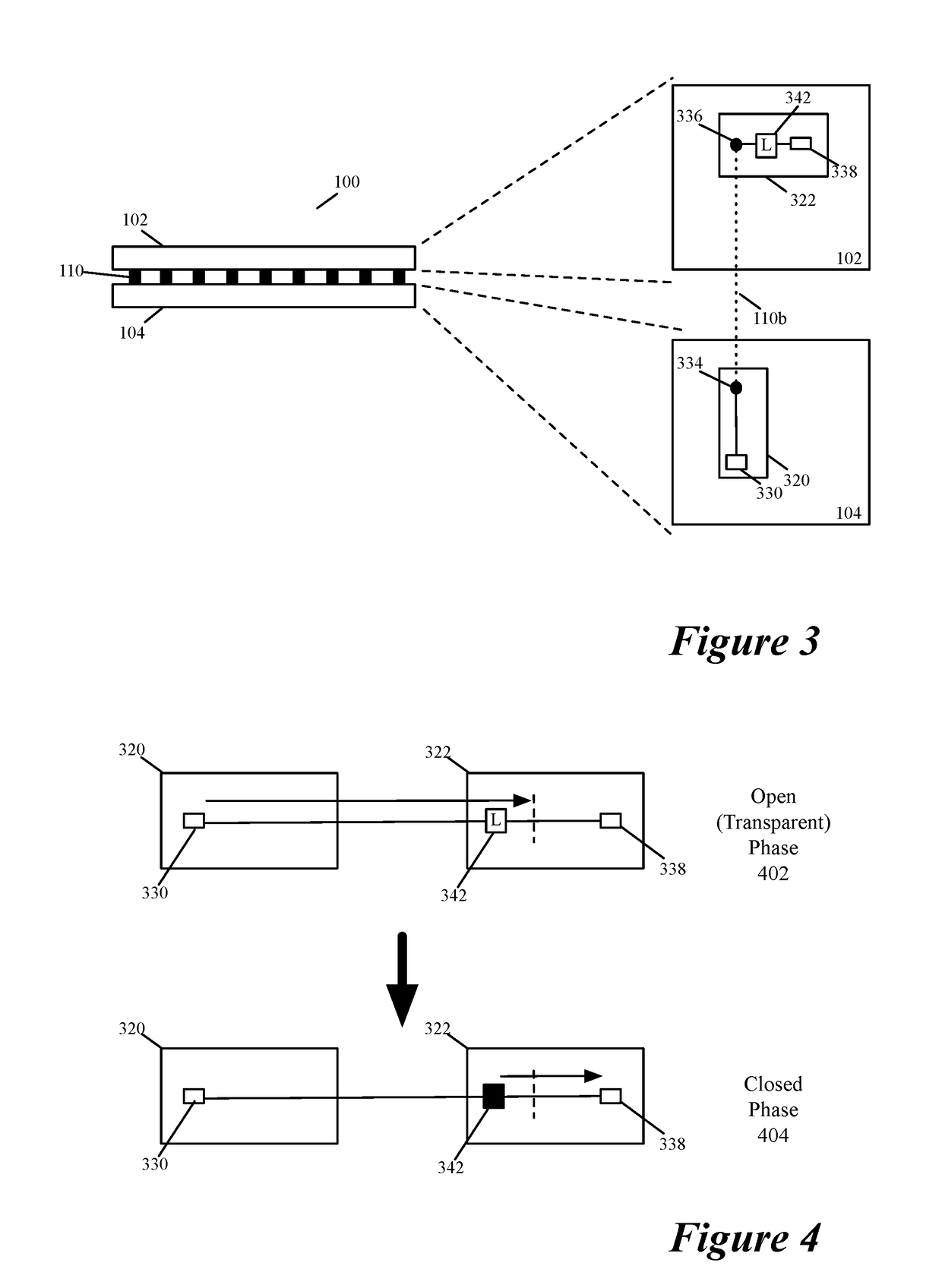

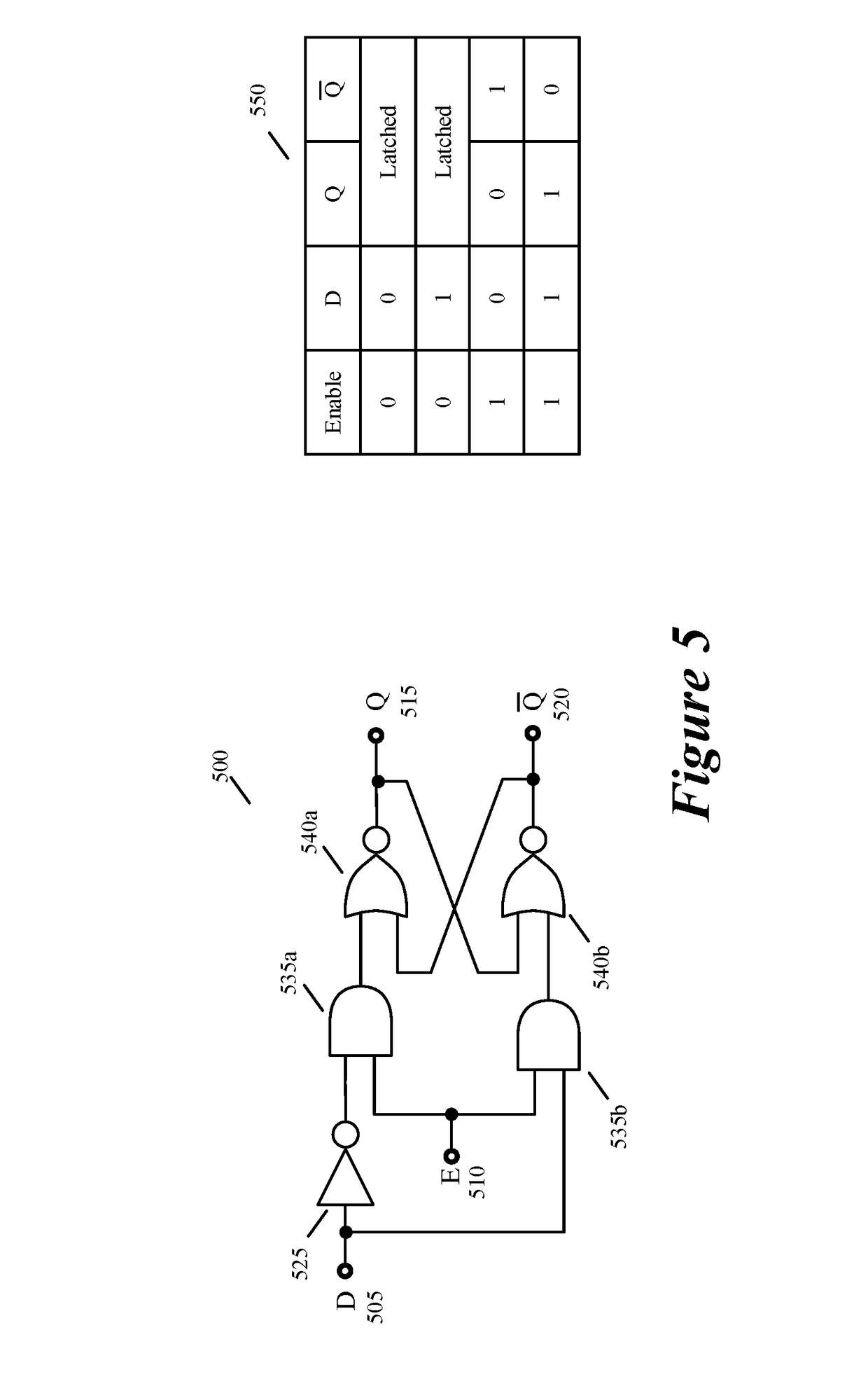

Time borrowing between layers of a three dimensional chip stack

Active | US20190042912A1 | Reduce load | Exclusive-OR circuits | Error detection/correction | Engineering | Three-dimensional integrated circuit

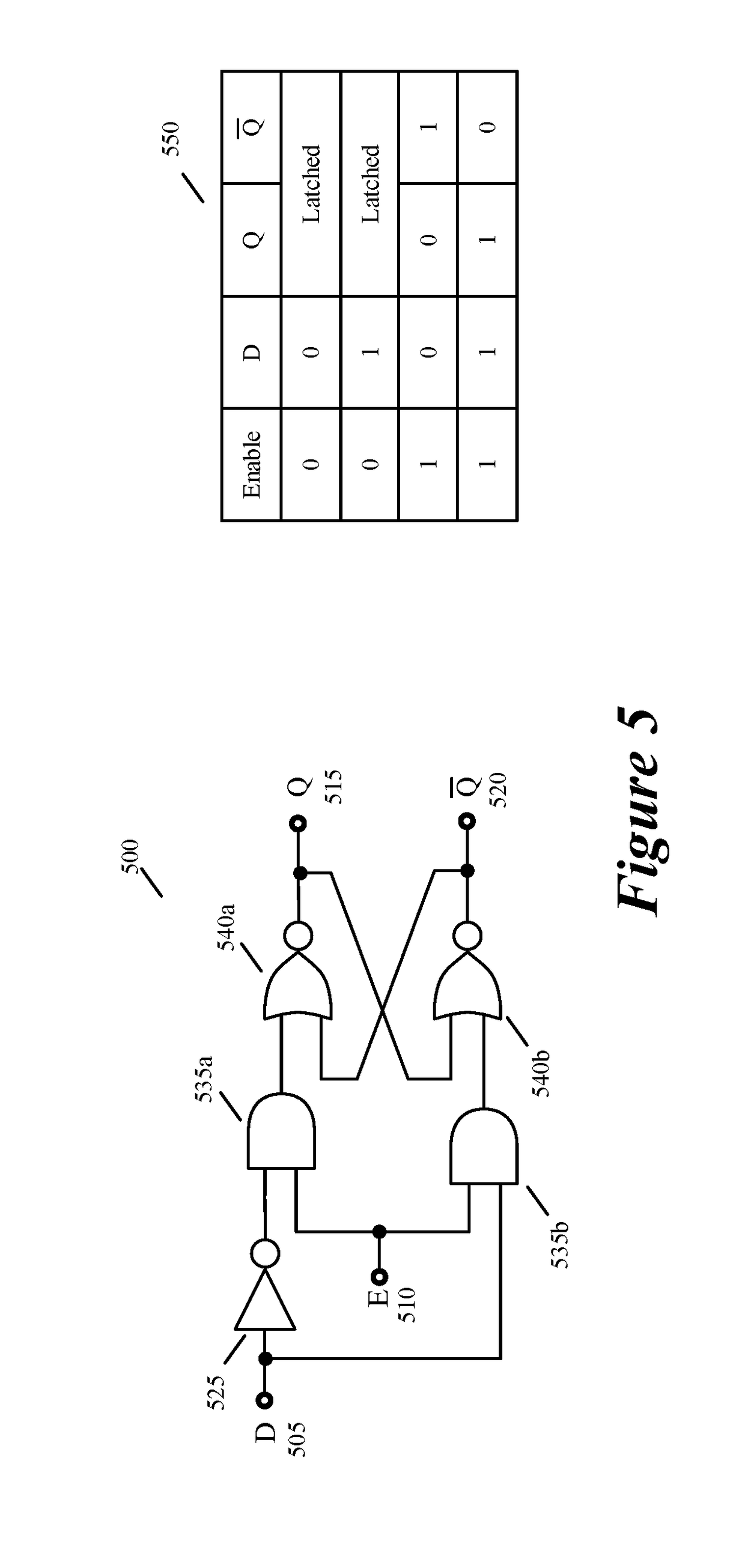

Some embodiments of the invention provide a three-dimensional (3D) circuit structure that uses latches to transfer signals between two bonded circuit layers. In some embodiments, this structure includes a first circuit partition on a first bonded layer and a second circuit partition on a second bonded layer. It also includes at least one latch to transfer signals between the first circuit partition on the first bonded layer and the second circuit partition on the second bonded layer. In some embodiments, the latch operates in (1) an open first mode that allows a signal to pass from the first circuit partition to the second circuit partition and (2) a closed second mode that maintains the signal passed through during the prior open first mode. By allowing the signal to pass through the first circuit partition to the second circuit partition during its open mode, the latch allows the signal to borrow time from a first portion of a clock cycle of the second circuit partition for a second portion of the clock cycle of the second circuit partition.

Owner:XCELSIS CORP

Avoiding forbidden data patterns in coded audio data

Inactive | US6233718B1 | Effective avoidance | Television system details | Error prevention | Information processing | Data mining

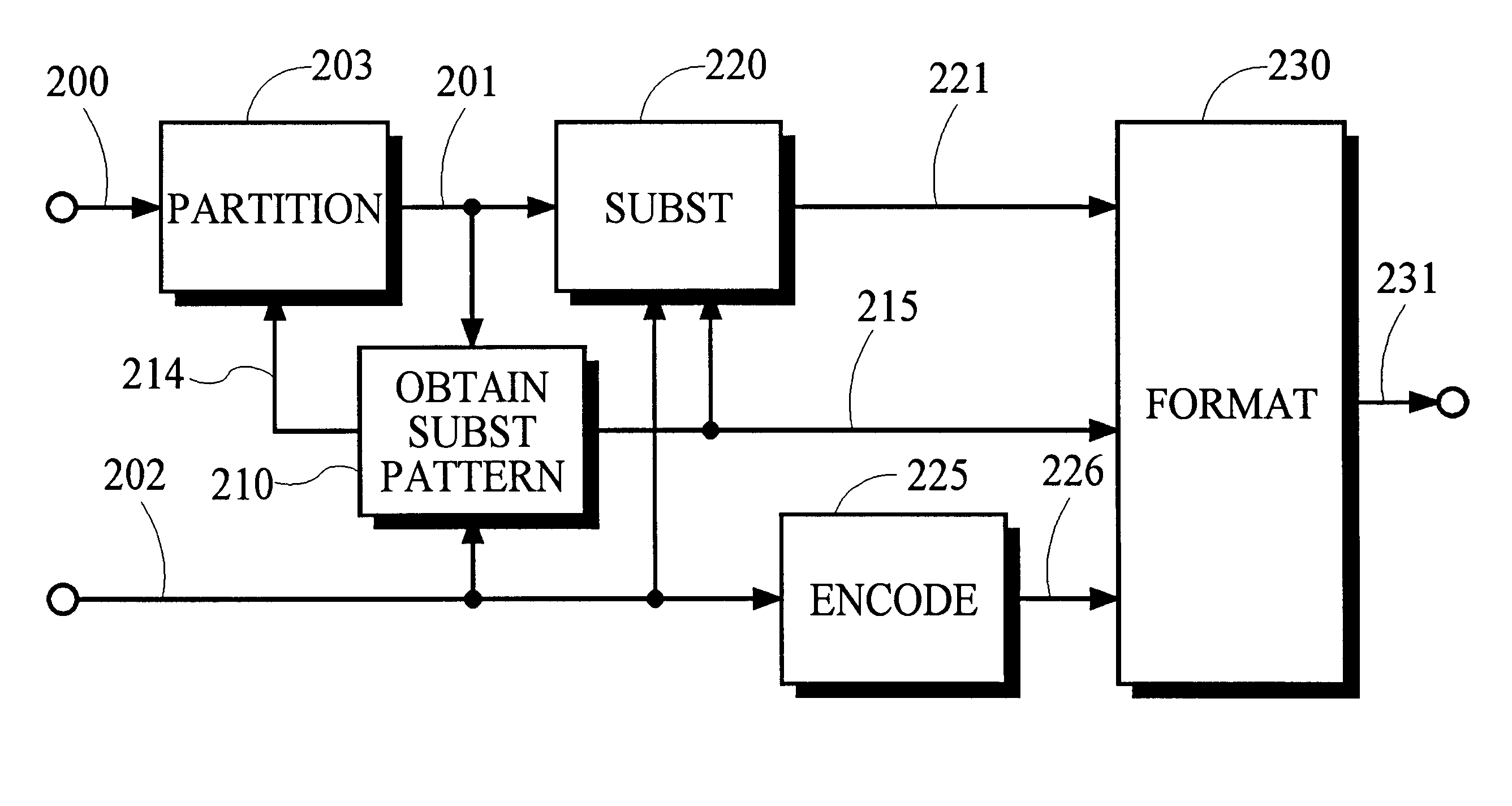

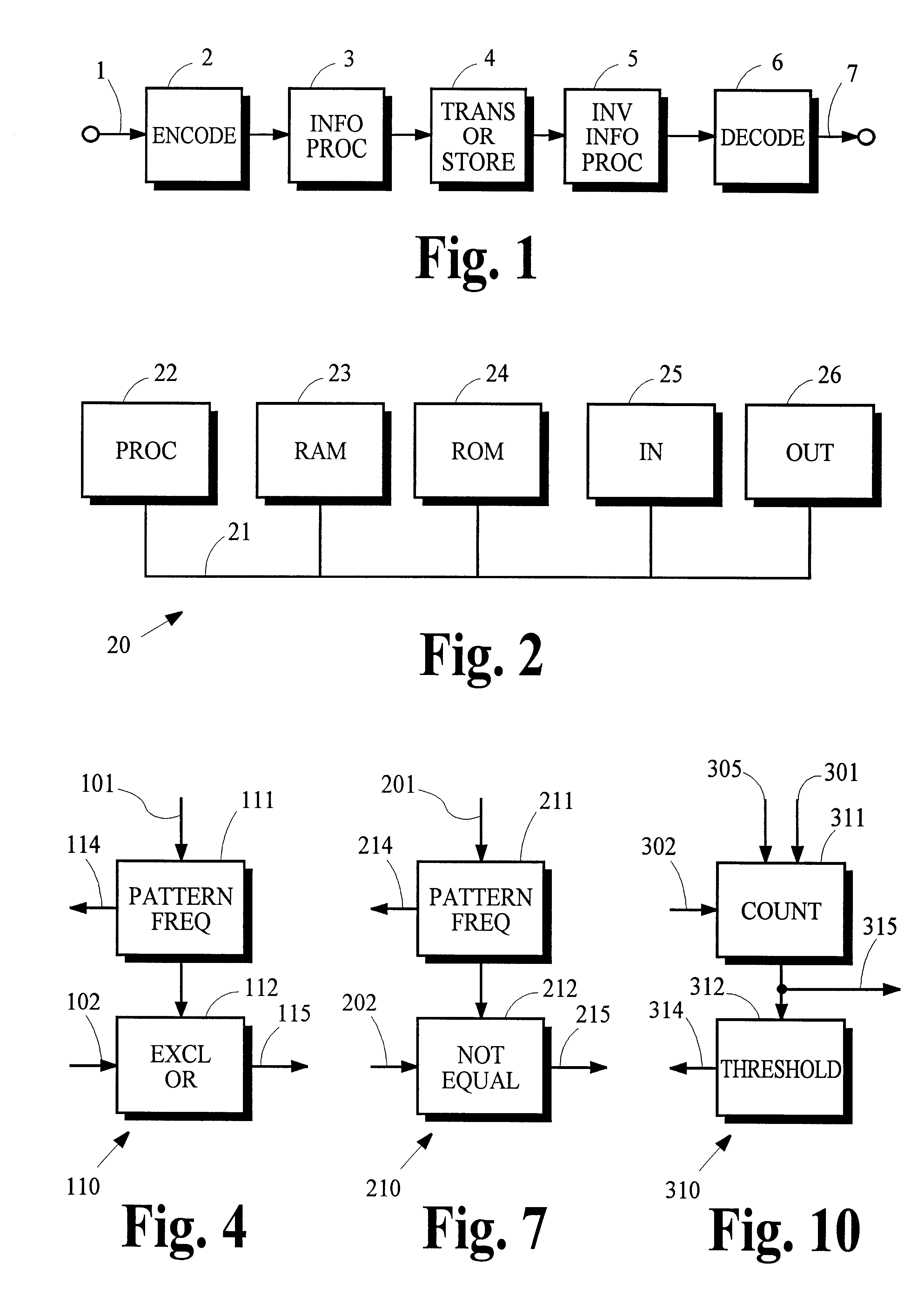

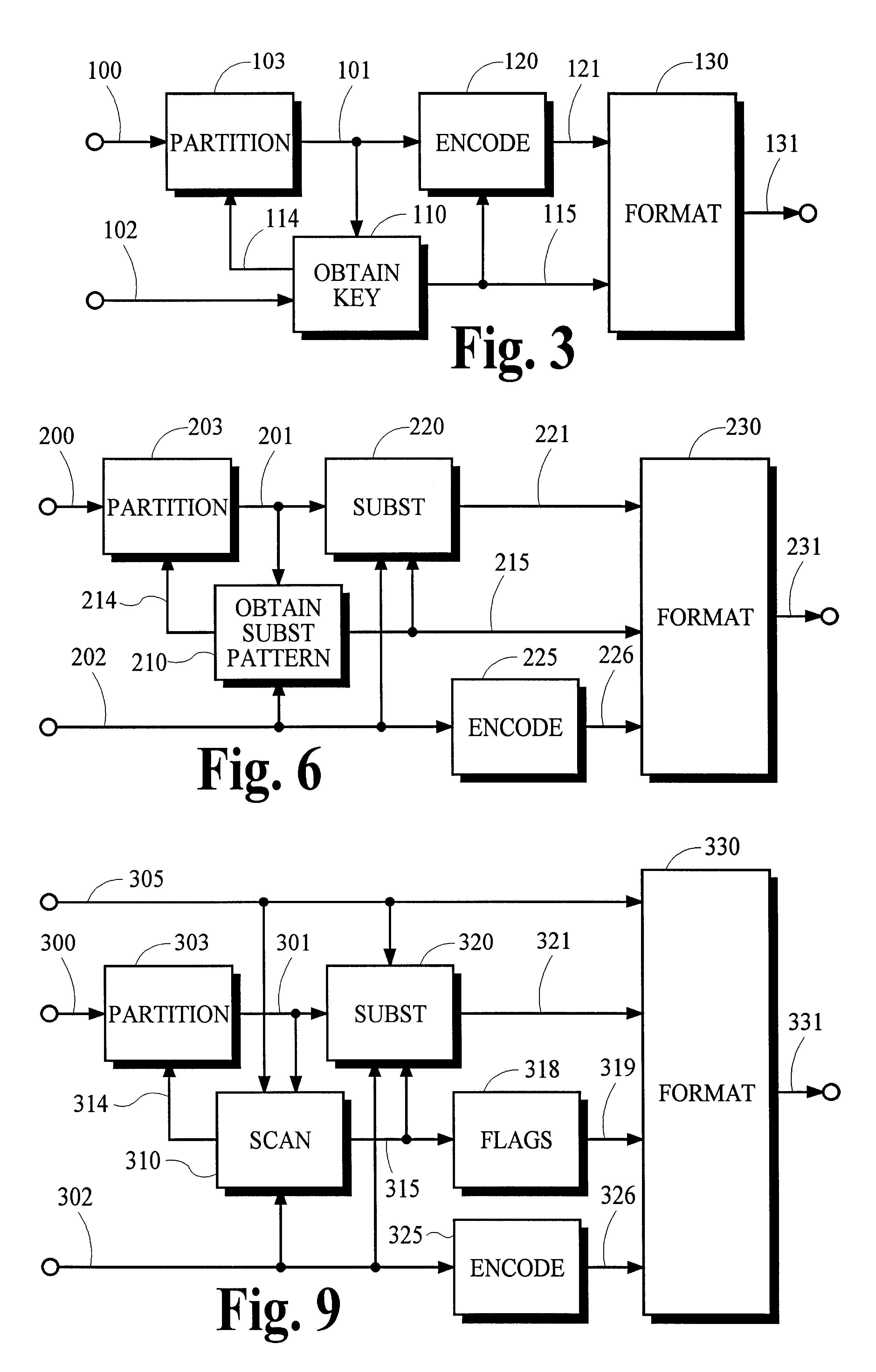

Any of several information processing techniques may be used in various information storage and transmission applications to prevent the occurrence of certain "forbidden" bit patterns. According to an encoding technique, a reversible coding process is used to generate an encoded representation of an information stream that cannot contain any forbidden data patterns. This may be accomplished by partitioning the information stream into segments and encoding each segment according to a respective encoding key that is selected such that the results of the coding process cannot contain a forbidden data pattern. According to one substitution technique, all occurrences of forbidden data patterns are replaced with permissible data patterns that do not otherwise occur in the information stream. This may be accomplished by partitioning the information stream into segments, identifying an unused data pattern in a respective segment, and carrying out the replacement of all occurrences of the forbidden data pattern in that segment. According to another substitution technique, all occurrences of a forbidden data pattern are replaced by any permissible data pattern. This may be accomplished by partitioning the information stream into segments, identifying occurrences of the substitution data pattern and the forbidden data pattern in a respective segment, constructing a flag for each occurrence, and replacing all occurrences of the forbidden data pattern in that segment with the substitution data pattern. Decoding keys, substitution data patterns, substitution flags or any other information needed to recover the original information is assembled with the modified information in a form that does not equal the forbidden data pattern.

Owner:DOLBY LAB LICENSING CORP
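
The encoding technique in this abstract (partition the stream into segments, then encode each segment with a key chosen so that the result cannot contain a forbidden pattern) can be sketched as follows. The abstract only says the coding process is reversible; using a byte-wise XOR as that process, treating the forbidden pattern as the single byte `0xFF`, and the 254-byte segment size are all assumptions made for illustration.

```python
FORBIDDEN = 0xFF    # assumed single-byte forbidden pattern
MAX_SEGMENT = 254   # short enough that a usable key always exists


def choose_key(segment: bytes, forbidden: int = FORBIDDEN) -> int:
    """Pick a key k so that no byte b in the segment gives b ^ k == forbidden.

    The key is stored next to the data, so it must not equal the
    forbidden value either.
    """
    present = set(segment)
    for key in range(256):
        if key != forbidden and (key ^ forbidden) not in present:
            return key
    raise ValueError("segment too long to guarantee a key")


def encode(data: bytes, forbidden: int = FORBIDDEN) -> bytes:
    out = bytearray()
    for start in range(0, len(data), MAX_SEGMENT):
        segment = data[start:start + MAX_SEGMENT]
        key = choose_key(segment, forbidden)
        out.append(key)                       # decoding key travels with the data
        out.extend(b ^ key for b in segment)  # XOR is its own inverse
    return bytes(out)


def decode(encoded: bytes) -> bytes:
    out = bytearray()
    pos = 0
    while pos < len(encoded):
        key = encoded[pos]
        segment = encoded[pos + 1:pos + 1 + MAX_SEGMENT]
        out.extend(b ^ key for b in segment)
        pos += 1 + MAX_SEGMENT
    return bytes(out)
```

A key always exists here because a 254-byte segment rules out at most 254 key values and the forbidden value rules out one more, leaving at least one of the 256 candidates. Multi-byte forbidden patterns, which could straddle segment boundaries, are outside this sketch.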

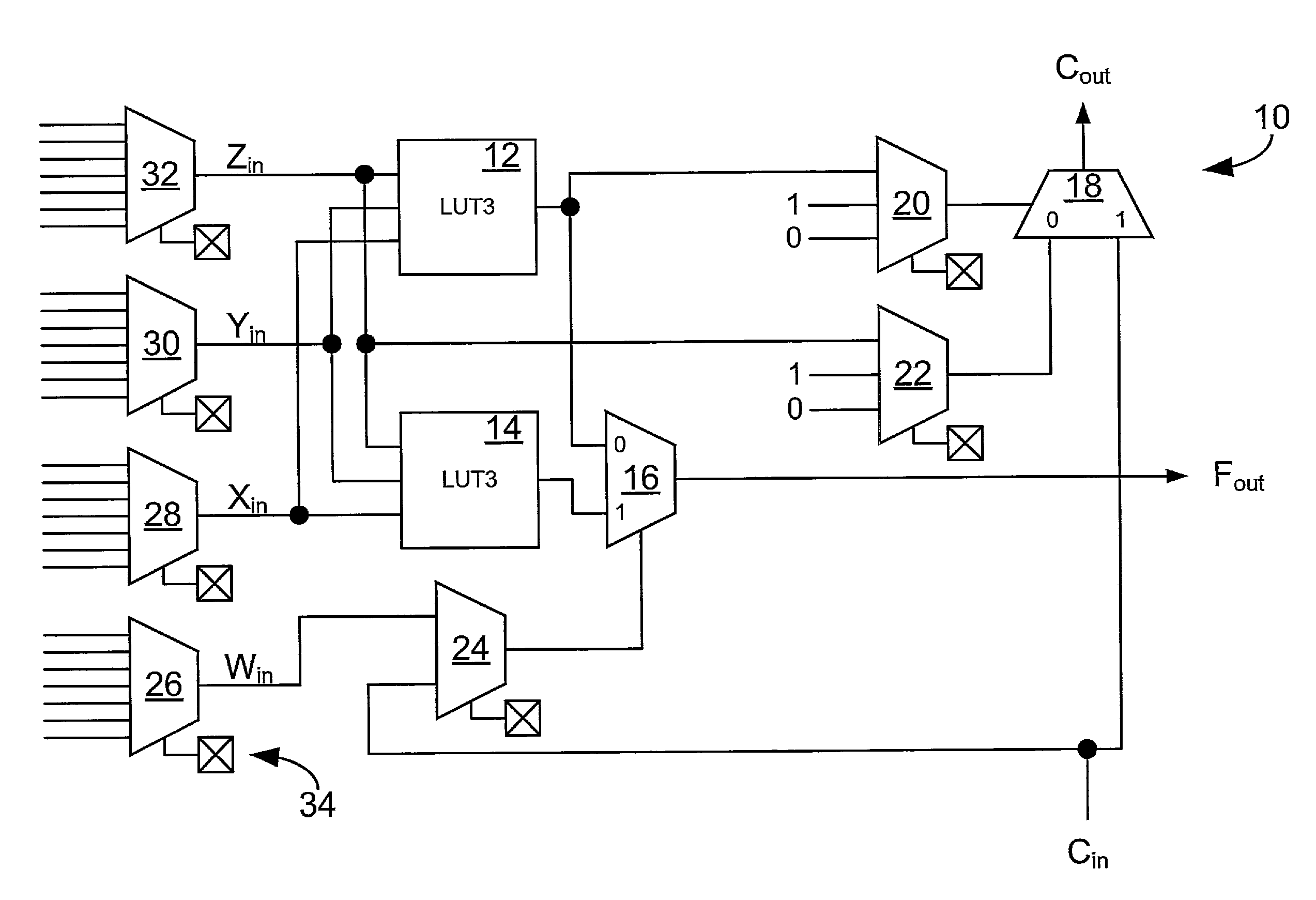

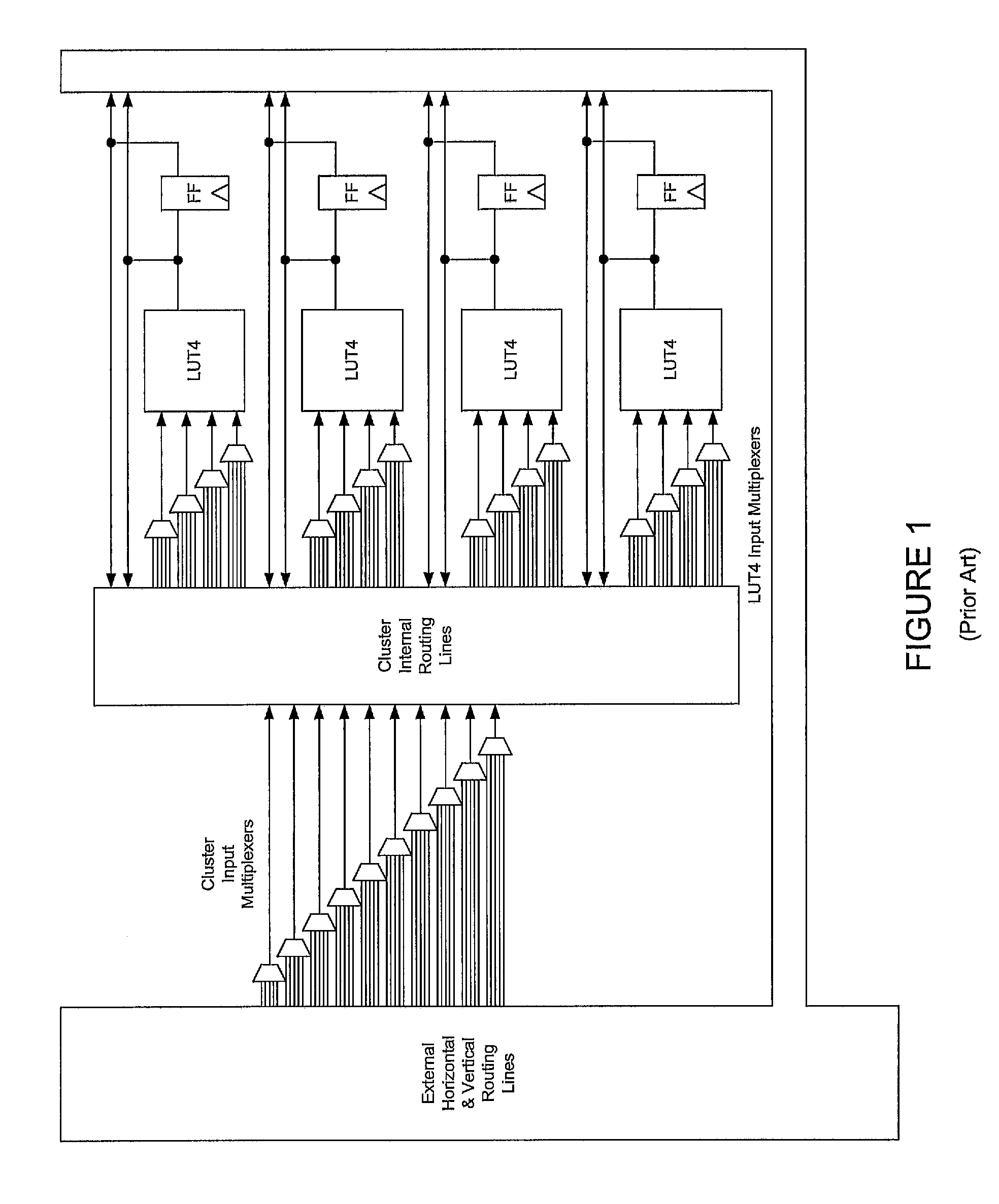

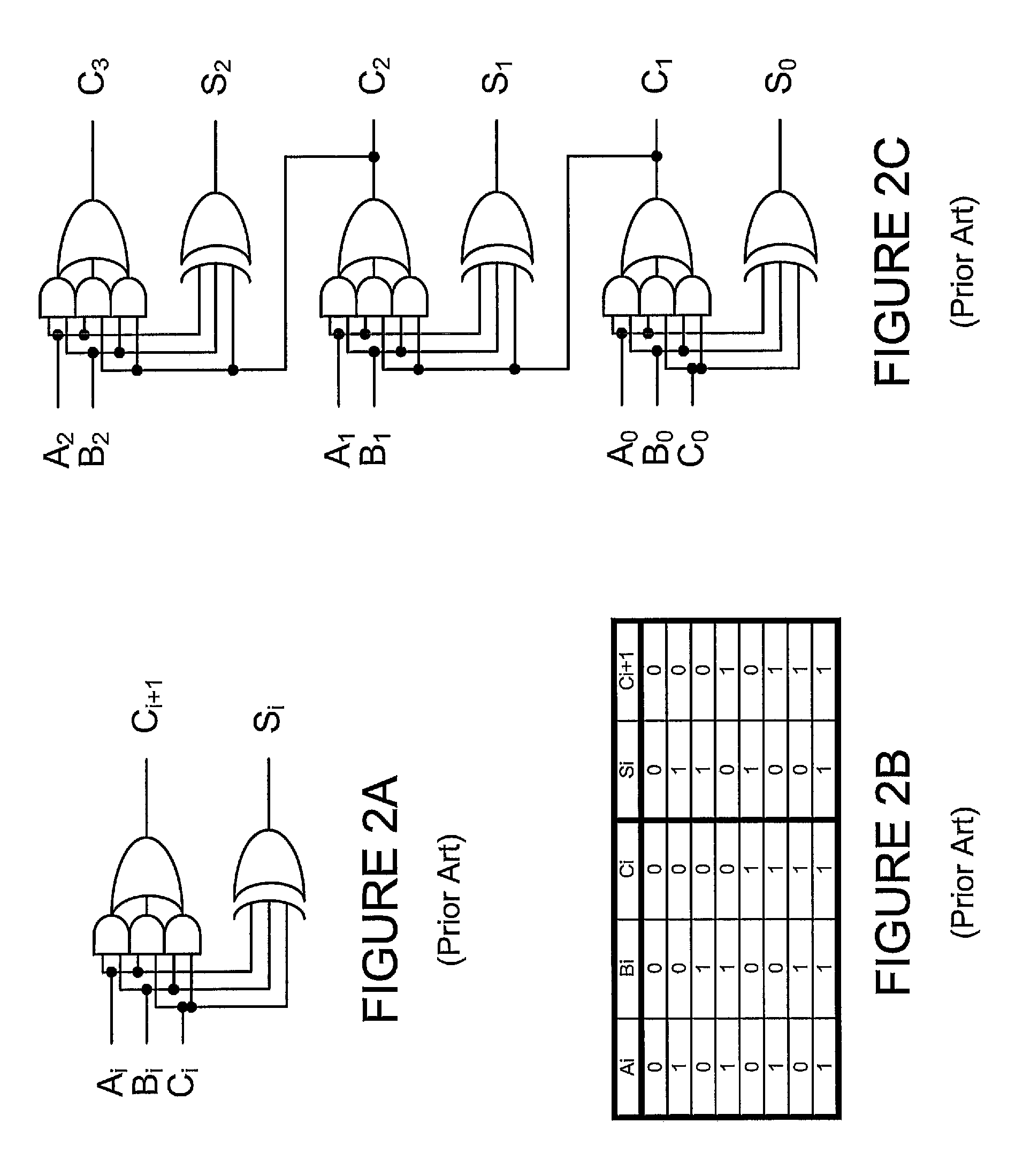

Flexible carry scheme for field programmable gate arrays

Inactive | US7663400B1 | Exclusive-OR circuits | Computations using contact-making devices | Least significant bit | Computer module

A fast, flexible carry scheme for use in clustered field programmable gate array architectures is described. Each cluster has a cluster carry input node; a cluster carry output node; a cluster carry output circuit having an output coupled to the cluster carry output node, a first input coupled to the cluster carry input node, and a second input; and a plurality of logic modules each comprising a logic function generator circuit coupled to a carry circuit. The logic modules are coupled in a series carry arrangement between the cluster carry input node and the second input of the cluster carry output circuit such that the least significant bit of an arithmetic logic circuit can be programmably placed in any of the logic modules.

Owner:MICROSEMI SOC

Three dimensional circuit implementing machine trained network

Active | US20190042929A1 | Facilitate time borrowing | Reduce load | Exclusive-OR circuits | Error detection/correction | Nerve network | Network on

Some embodiments provide a three-dimensional (3D) circuit structure that has two or more vertically stacked bonded layers with a machine-trained network on at least one bonded layer. As described above, each bonded layer can be an IC die or an IC wafer in some embodiments with different embodiments encompassing different combinations of wafers and dies for the different bonded layers. The machine-trained network in some embodiments includes several stages of machine-trained processing nodes with routing fabric that supplies the outputs of earlier stage nodes to drive the inputs of later stage nodes. In some embodiments, the machine-trained network is a neural network and the processing nodes are neurons of the neural network. In some embodiments, one or more parameters associated with each processing node (e.g., each neuron) are defined through machine-trained processes that define the values of these parameters in order to allow the machine-trained network (e.g., neural network) to perform particular operations (e.g., face recognition, voice recognition, etc.). For example, in some embodiments, the machine-trained parameters are weight values that are used to aggregate (e.g., to sum) several output values of several earlier stage processing nodes to produce an input value for a later stage processing node.

Owner:XCELSIS CORP

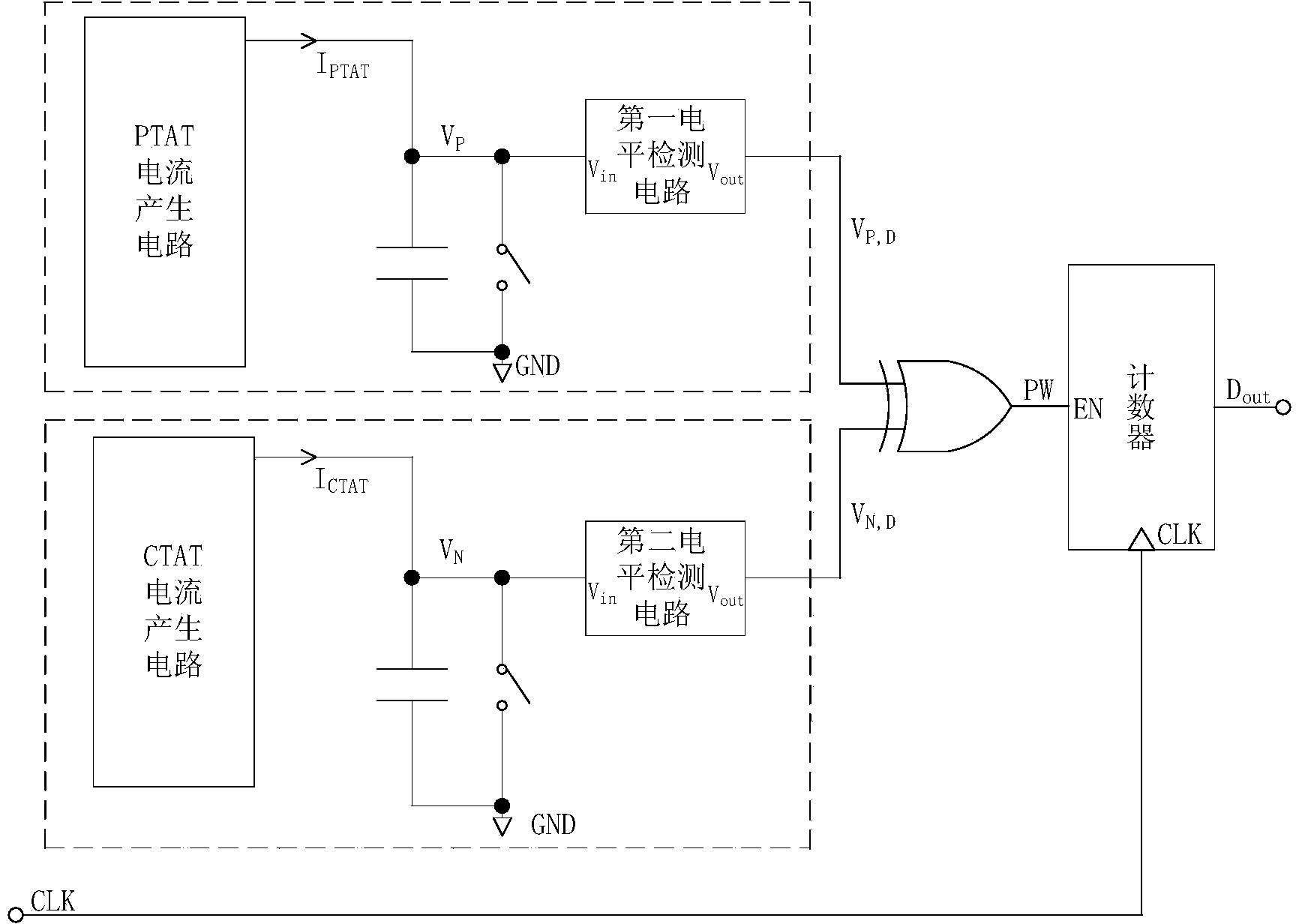

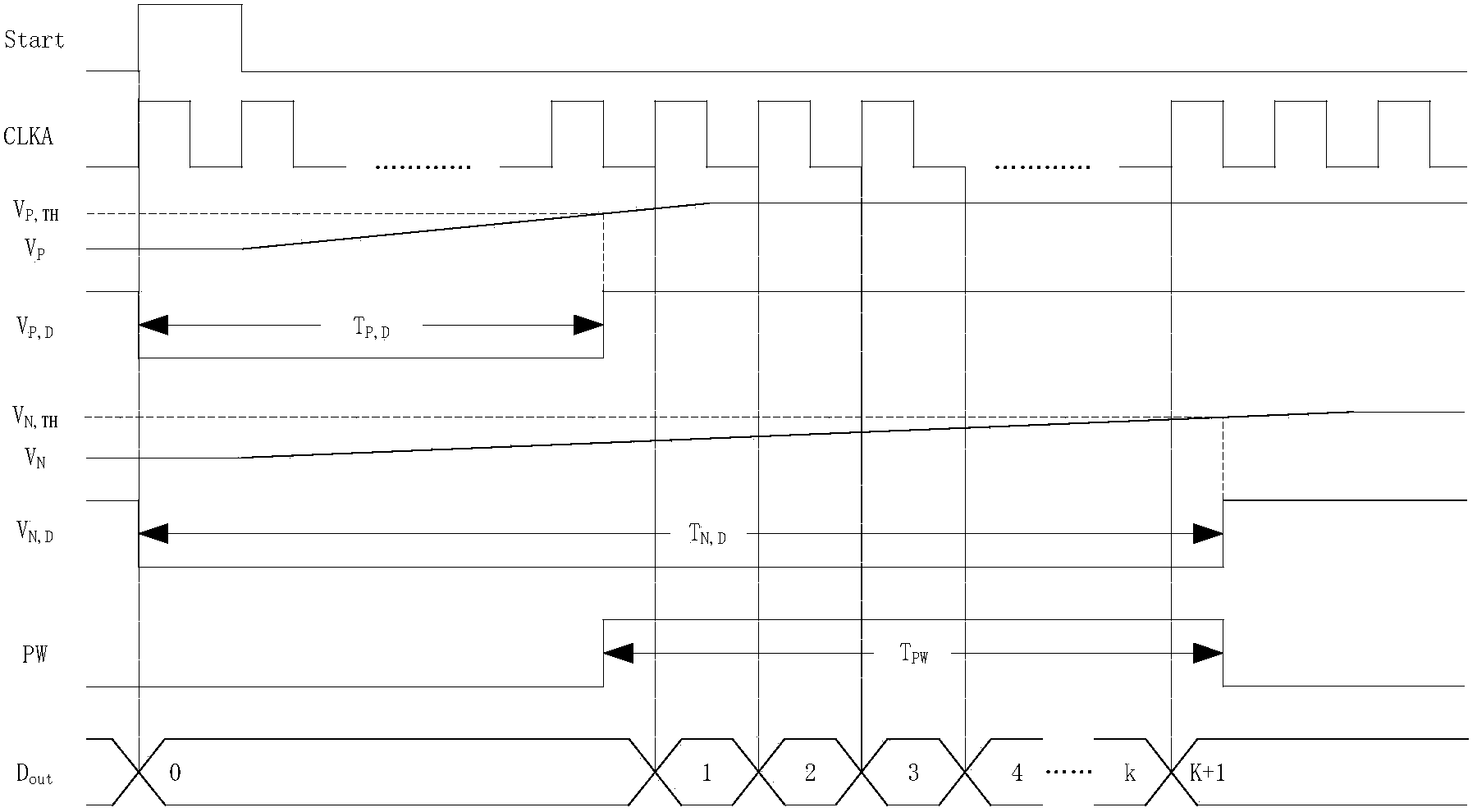

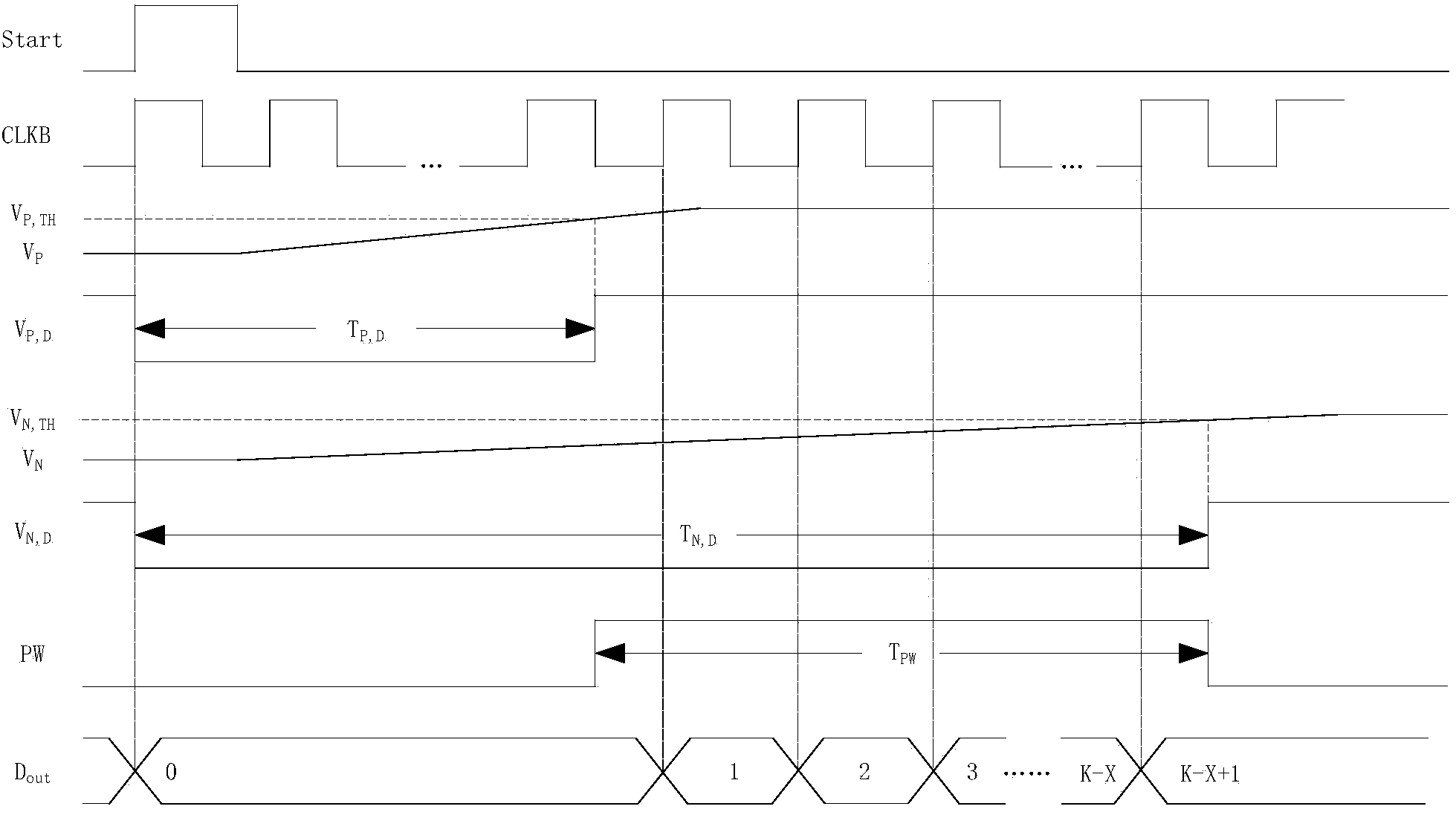

Time domain integrated temperature sensor

The invention provides a time domain integrated temperature sensor. A shaped clock signal controls the charging time of a capacitor, so that the capacitor generates a charging delay signal related to the input clock period. In this control mode, a PTAT current path charging the capacitor produces one delay signal, and a CTAT current path charging the capacitor in the same mode produces another. An XOR operation on the two delay signals generates a pulse signal whose width depends on both temperature and the input clock period. The pulse width is quantized using the same input clock signal, so the dependence on the input clock period cancels completely in the quantized result; that is, the output value of the temperature sensor is independent of the period of the input clock signal. This solves the problem of existing time domain integrated temperature sensors, whose readings are inconsistent because they vary with the clock period, and improves the precision of the time domain integrated temperature sensor to a certain extent.

Owner:EXCELIO TECH SHENZHEN

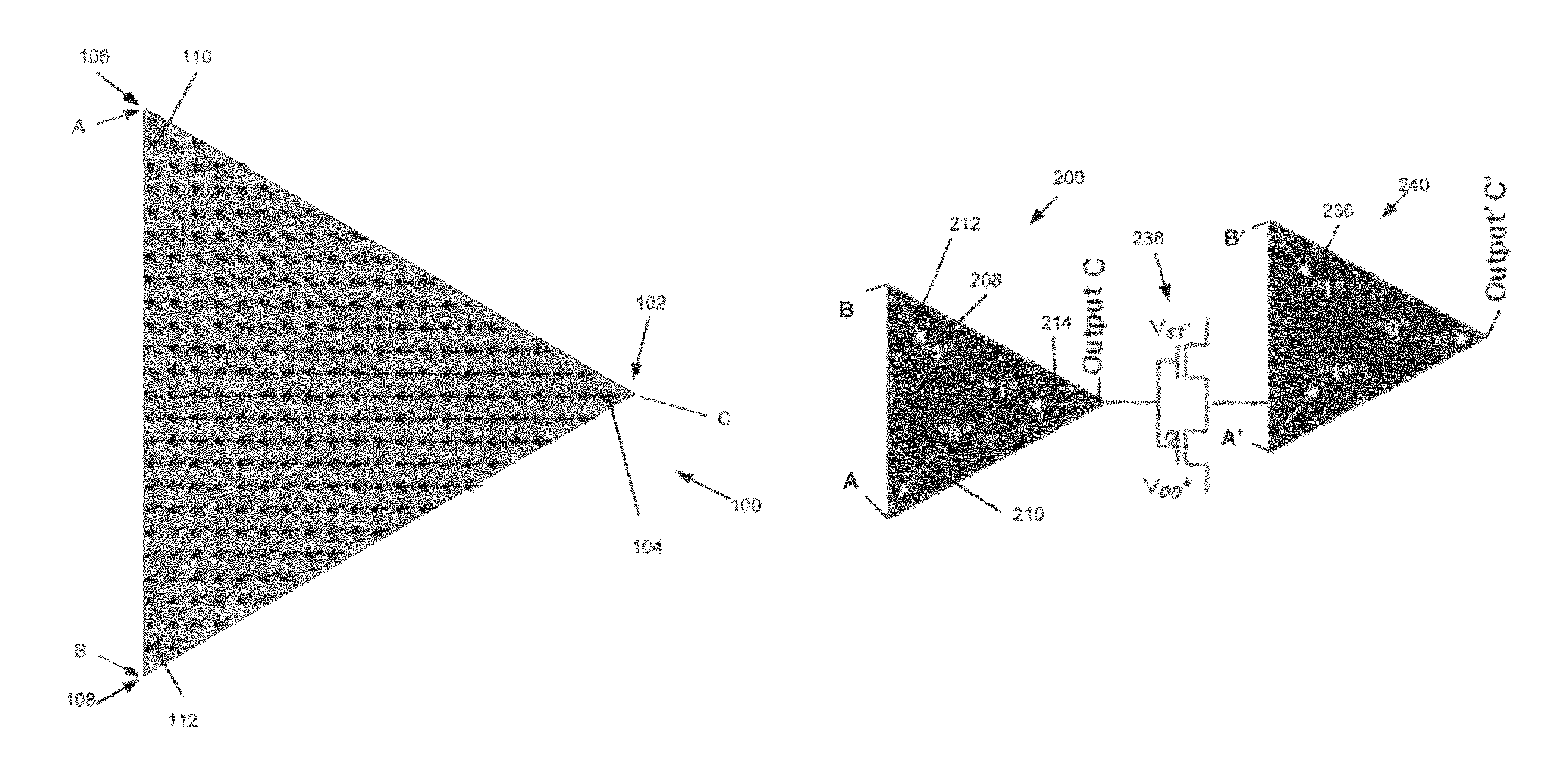

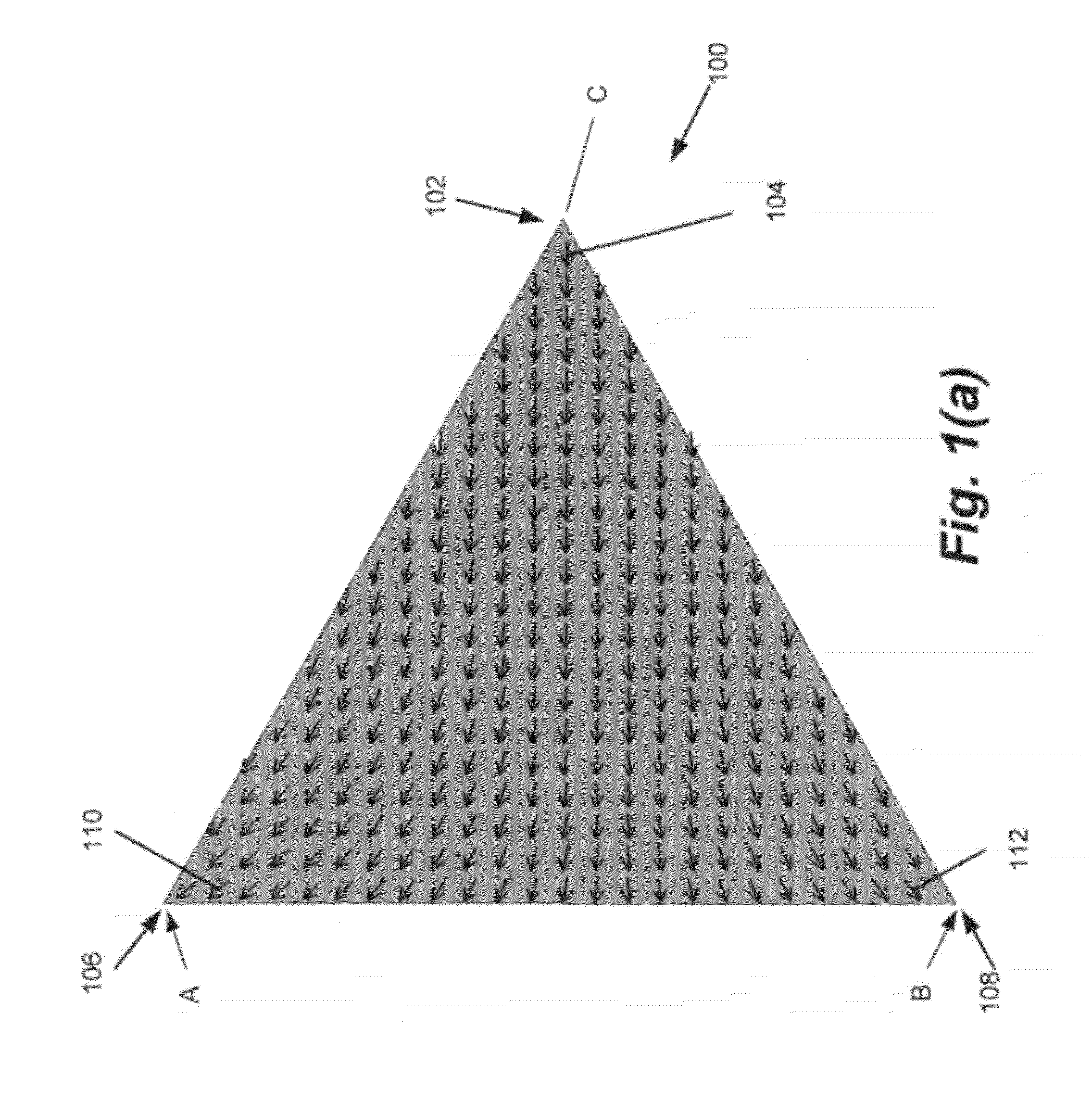

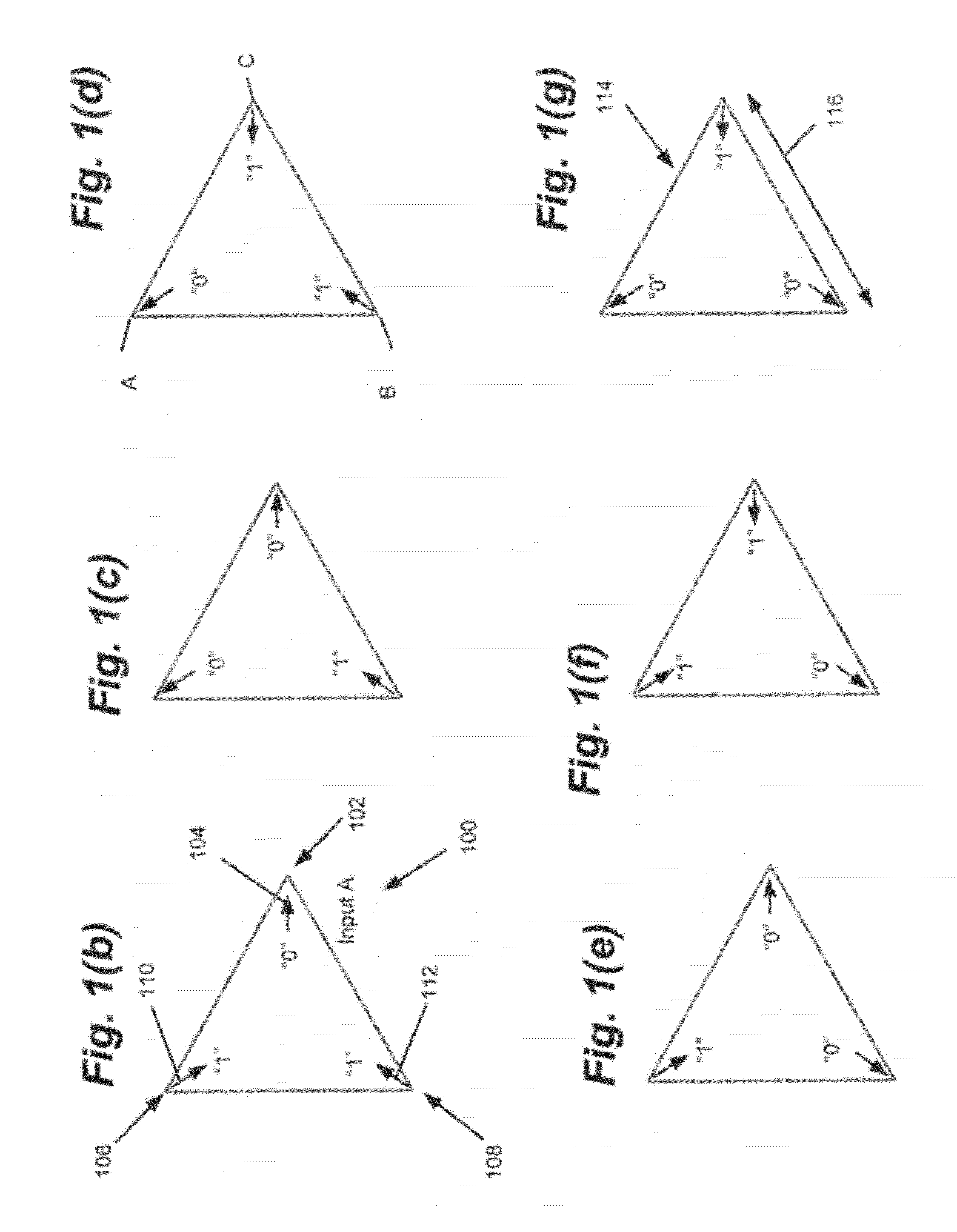

Spin transfer torque triad for non-volatile logic gates

Inactive | US8198919B1 | Exclusive-OR circuits | Computation using non-contact making devices | Magnetic anisotropy | Spin-transfer torque

A non-volatile logic gate, including a magnetic material having a shape induced magnetic anisotropy, wherein a shape of the magnetic material has a first vertex, a second vertex, and a third vertex and supports a single magnetic domain; regions of the magnetic material including a first input region adjacent the first vertex, a second input region adjacent the second vertex, and an output region adjacent a third vertex; the first input region for receiving a first logic input to the logic gate, the second input region for receiving a second logic input to the logic gate, and the output region for outputting at least one logic output of the logic gate; and the shape induced magnetic anisotropy determining at least part of a truth table for the logic gate, so that the logic gate produces the at least one logic output from the logic inputs using the shape.

Owner:RGT UNIV OF CALIFORNIA

Current-controlled CMOS logic family

Various circuit techniques for implementing ultra high speed circuits use current-controlled CMOS (C3MOS) logic fabricated in conventional CMOS process technology. An entire family of logic elements including inverter/buffers, level shifters, NAND, NOR, XOR gates, latches, flip-flops and the like is implemented using C3MOS techniques. Optimum balance between power consumption and speed for each circuit application is achieved by combining high speed C3MOS logic with low power conventional CMOS logic. The combined C3MOS/CMOS logic allows greater integration of circuits such as high speed transceivers used in fiber optic communication systems.

Owner:PITNEY BOWES INC +1

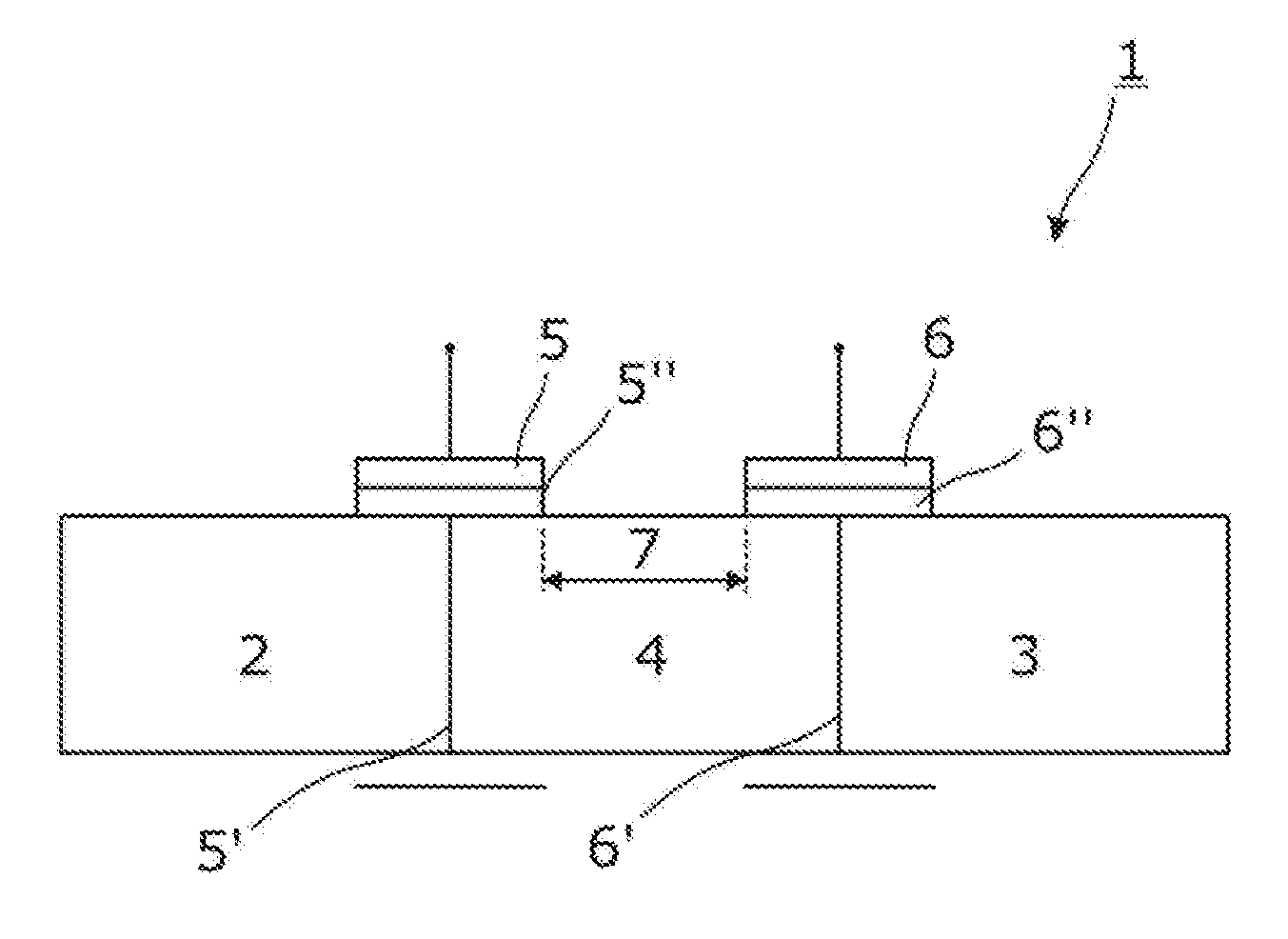

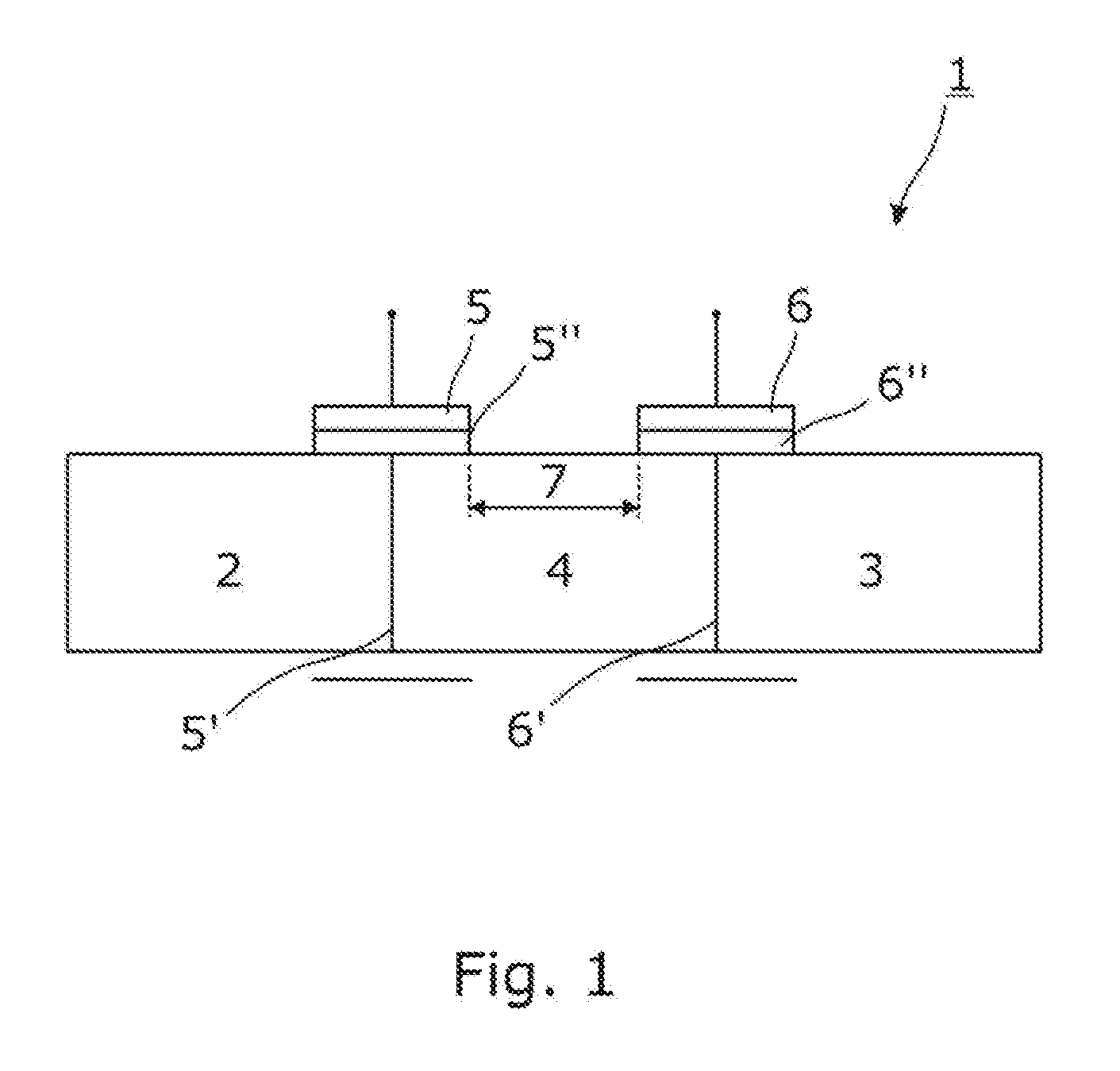

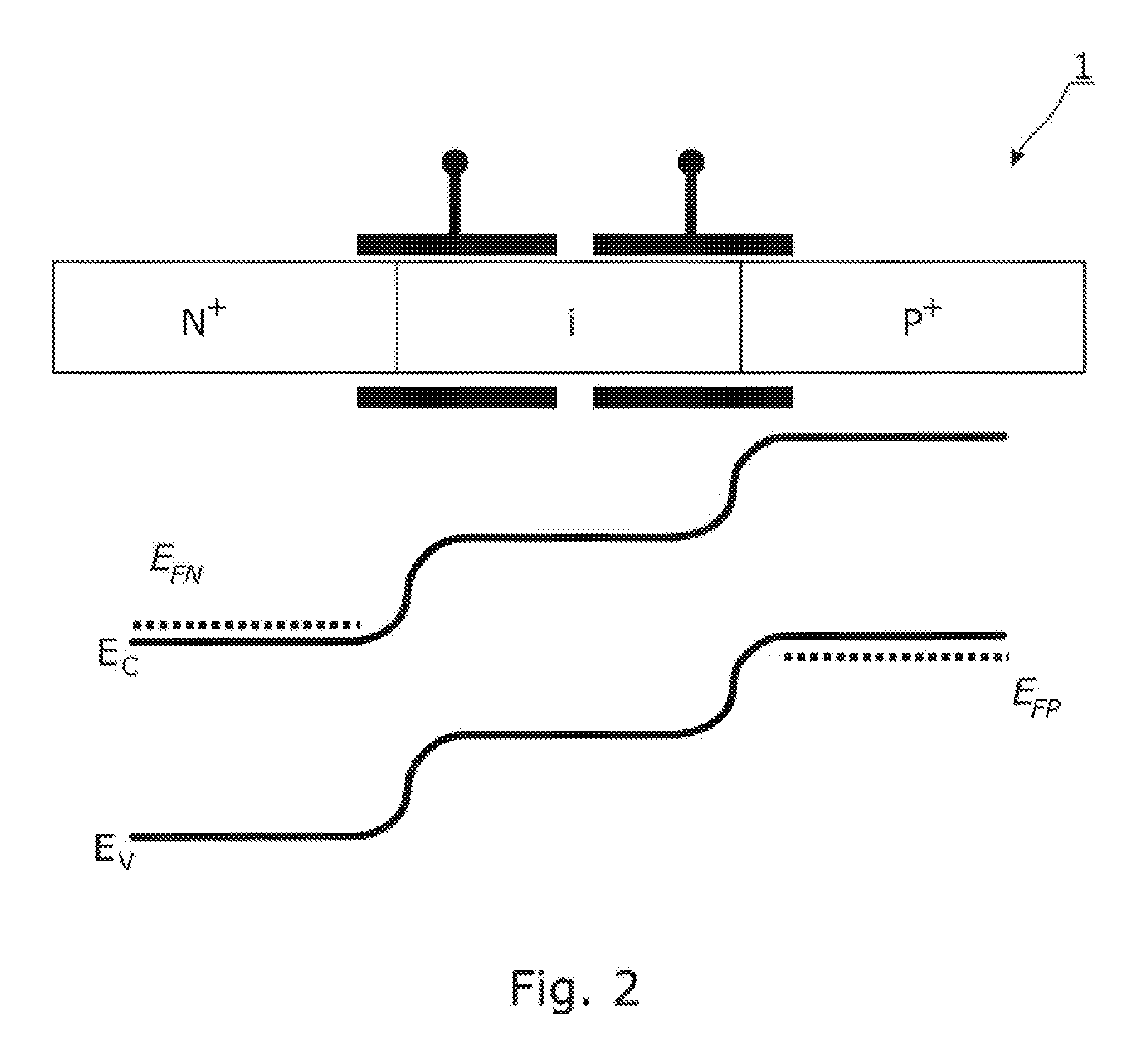

Tunnel field-effect transistor

Active | US20130021061A1 | Improve device performance | Inhibition feature | Exclusive-OR circuits | Computation using non-contact making devices | Semiconductor materials | Engineering

A tunnel field-effect transistor including at least: a source region including a corresponding source semiconductor material; a drain region including a corresponding drain semiconductor material, and a channel region including a corresponding channel semiconductor material, which is arranged between the source region and the drain region. The tunnel field-effect transistor further includes at least: a source-channel gate electrode provided on an interface between the source region and the channel region; an insulator corresponding to the source-channel gate electrode that is provided between the source-channel gate electrode and the interface between the source region and the channel region; a drain-channel gate electrode provided on an interface between the drain region and the channel region; and an insulator corresponding to the drain-channel gate electrode that is provided between the drain-channel gate electrode and the interface between the drain region and the channel region.

Owner:GLOBALFOUNDRIES US INC

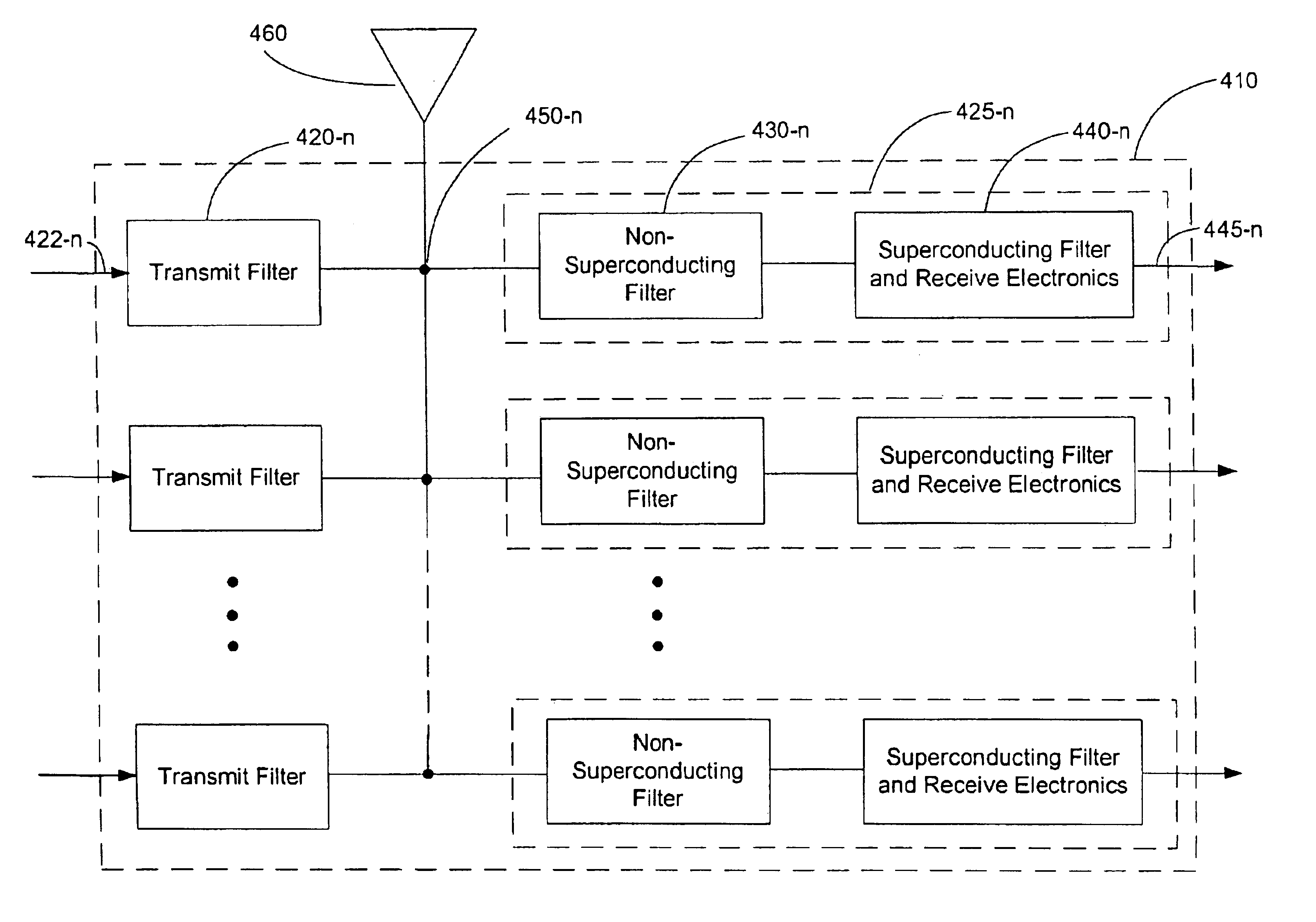

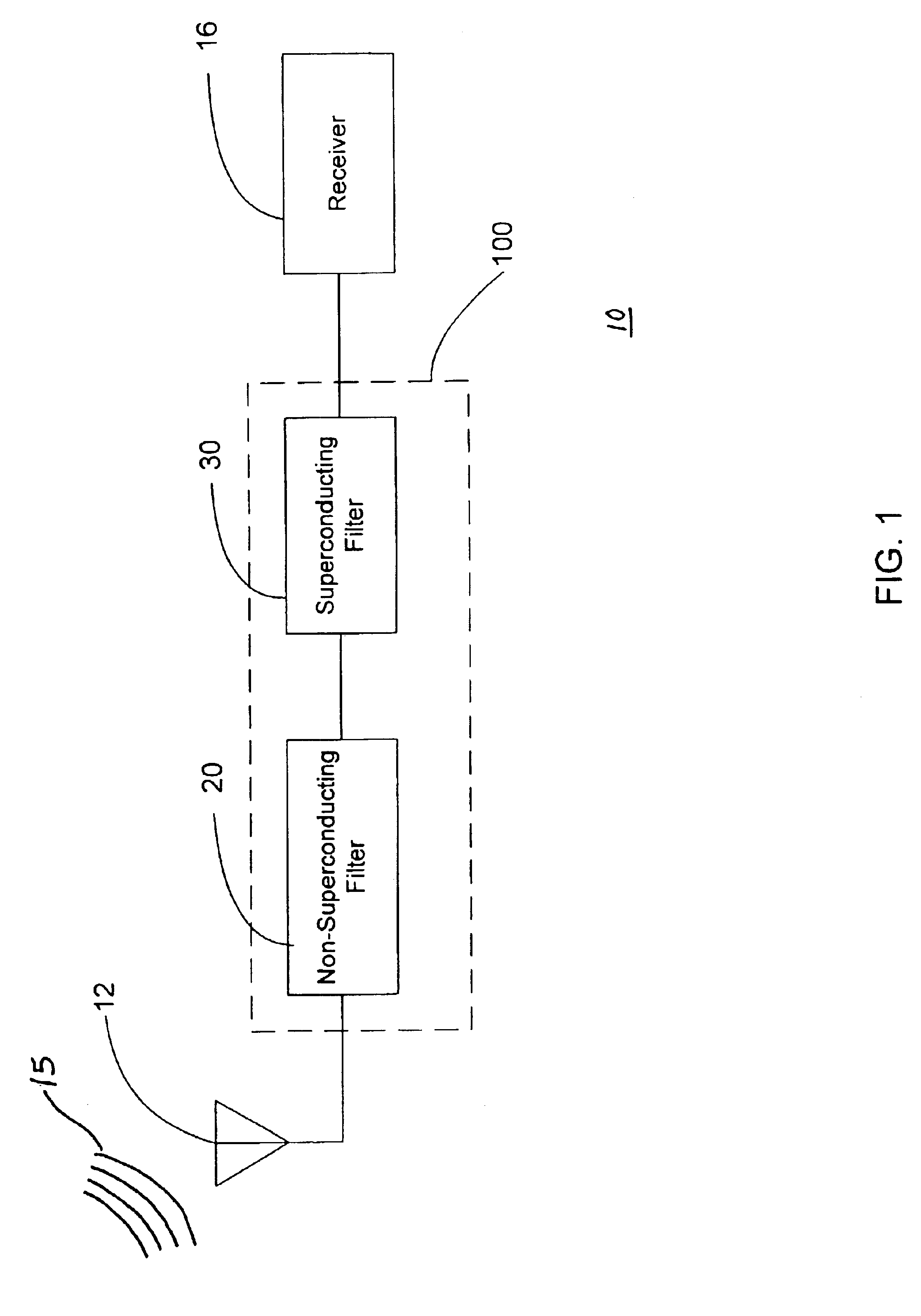

Filter network combining non-superconducting and superconducting filters

Inactive | US6933748B2 | Improve frequency selectivity | High frequency | Exclusive-OR circuits | Computation using non-contact making devices | Electronics | Physics

A filter network designed for providing high frequency selectivity with a high degree of reliability and availability. The filter network comprises a superconducting filter and a non-superconducting filter, or a combination thereof to form multiplexers. A receive side of the non-superconducting filter pre-filters received RF signals before inputting them to the superconducting filter. The non-superconducting filter is constructed and arranged to pass RF signals having a frequency within a first pass band to the superconducting filter. The superconducting device is constructed and arranged to exhibit a high-degree of frequency selectivity in further narrowing the received RF signals. Other aspects are directed to the arrangement, construction, and uses of the same structures to accomplish different but similar goals. In a multiplexed configuration, various combinations of transmit filters are used to enable the use of a common antenna with the receive side electronics, which may be located at the top of the antenna tower or in the base station.

Owner:SUPERCONDUCTOR TECHNOLOGIES INC

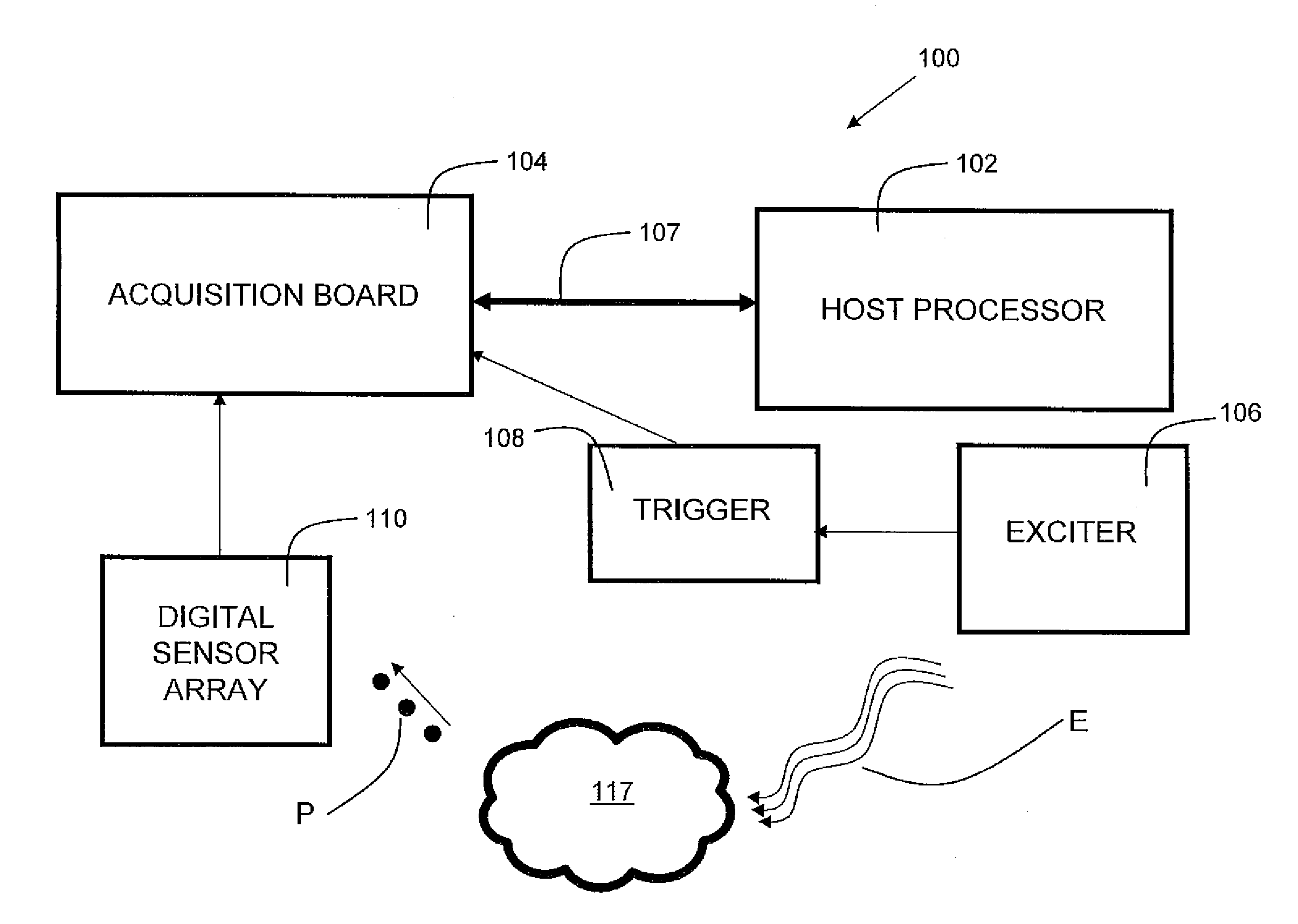

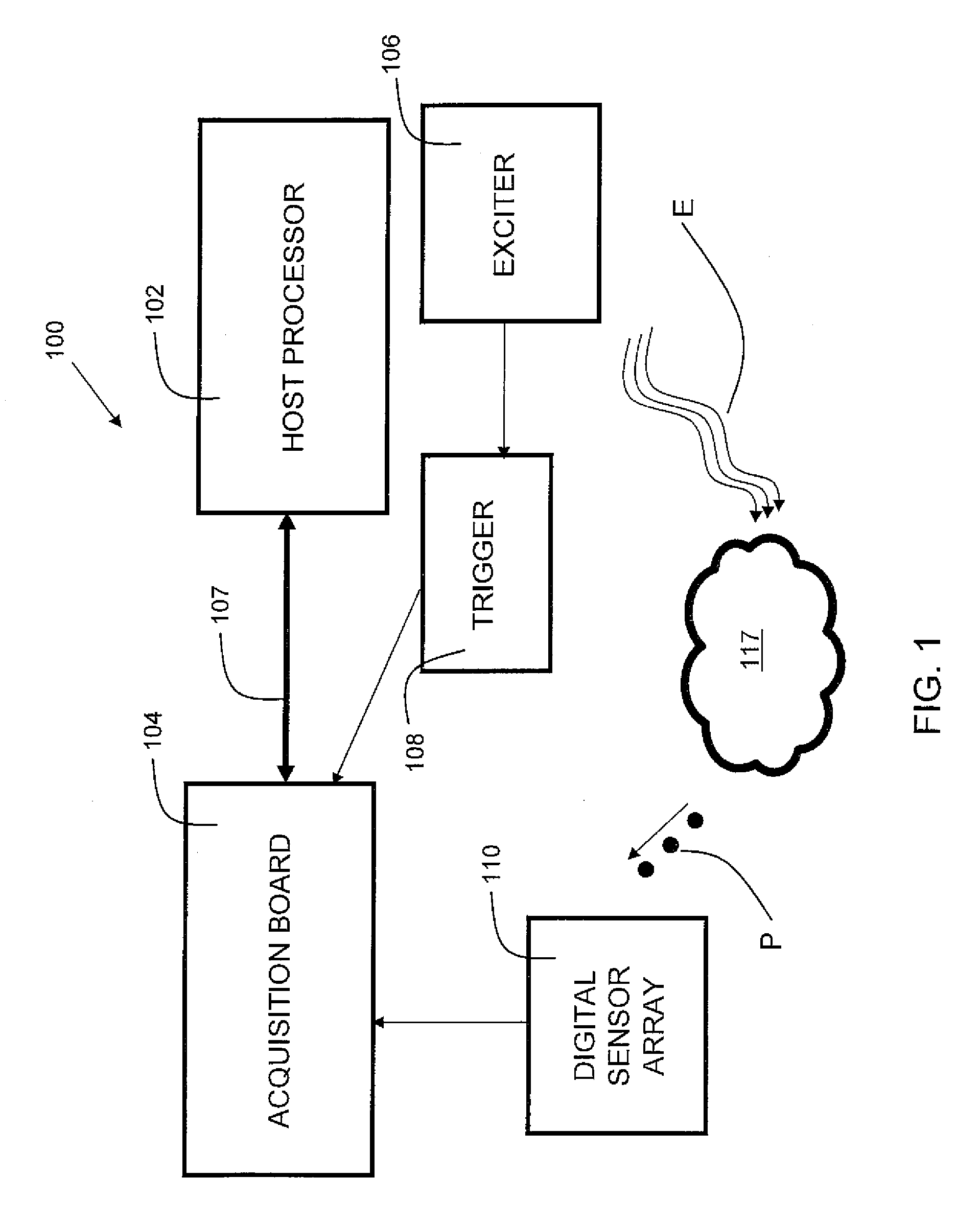

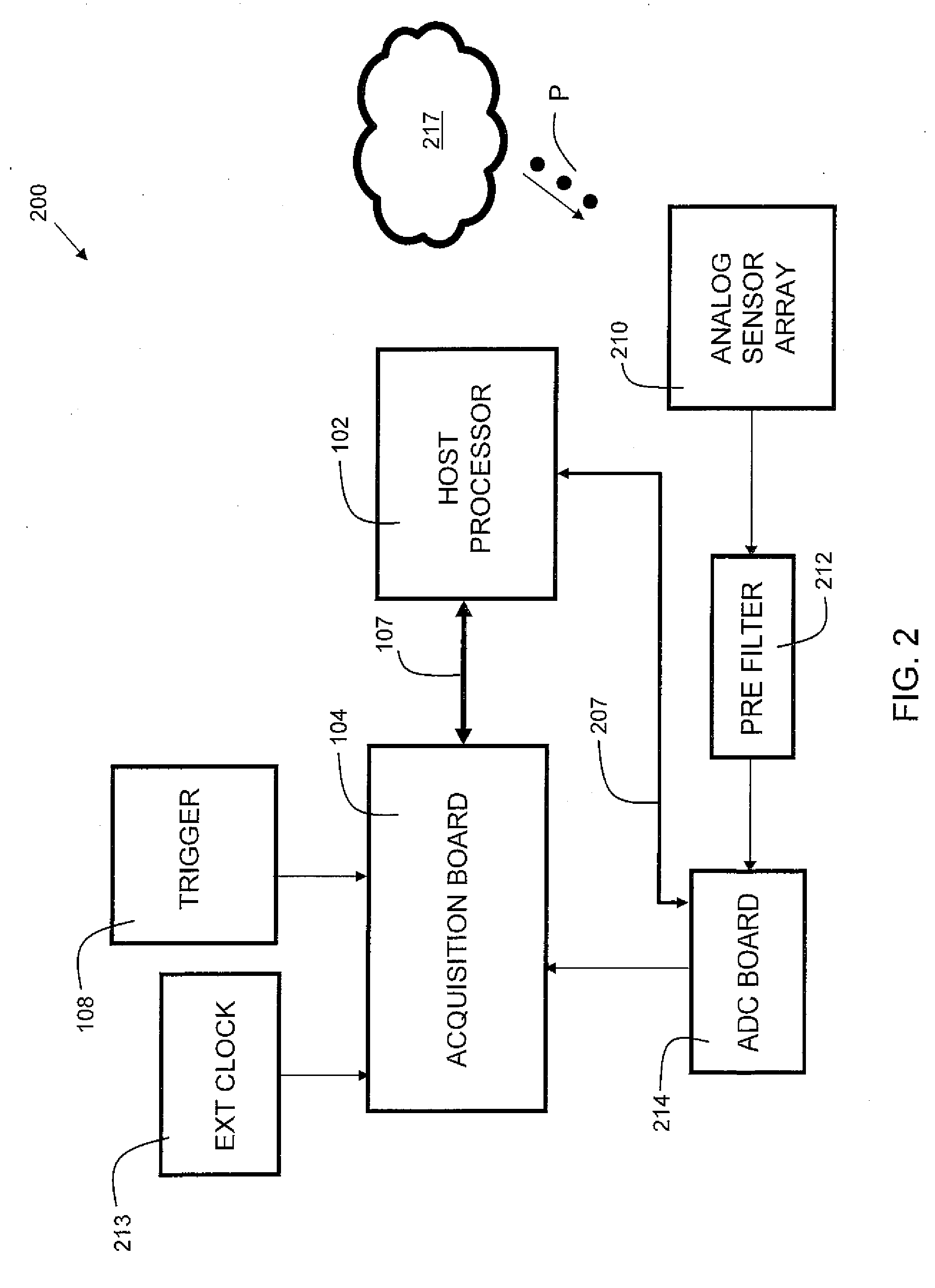

Low Cost Multi-Channel Data Acquisition System

Active | US20110178774A1 | Detection more | Optimization | Exclusive-OR circuits | Wave based measurement systems | Physical laboratory | Data acquisition

Embodiments of the present invention provide an inexpensive and fast pulse characterization platform capable of real time operation, suitable for acquisition of single-photon data. Embodiments of the present invention include both a digital multi-channel data acquisition instrument and an analog pulse acquisition instrument suitable for a wide range of applications in physics laboratories. An FPGA performs multi-channel acquisition in real time, time stamps single events, and determines if the events fit a predetermined signature, which causes the events to be categorized as a coincidence. The indications of coincidences are then communicated to a host computer for further processing as desired.

Owner:GOVERNMENT OF THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SEC OF COMMERCE THE NAT INST OF STANDARDS & TECHNOLOGY +1

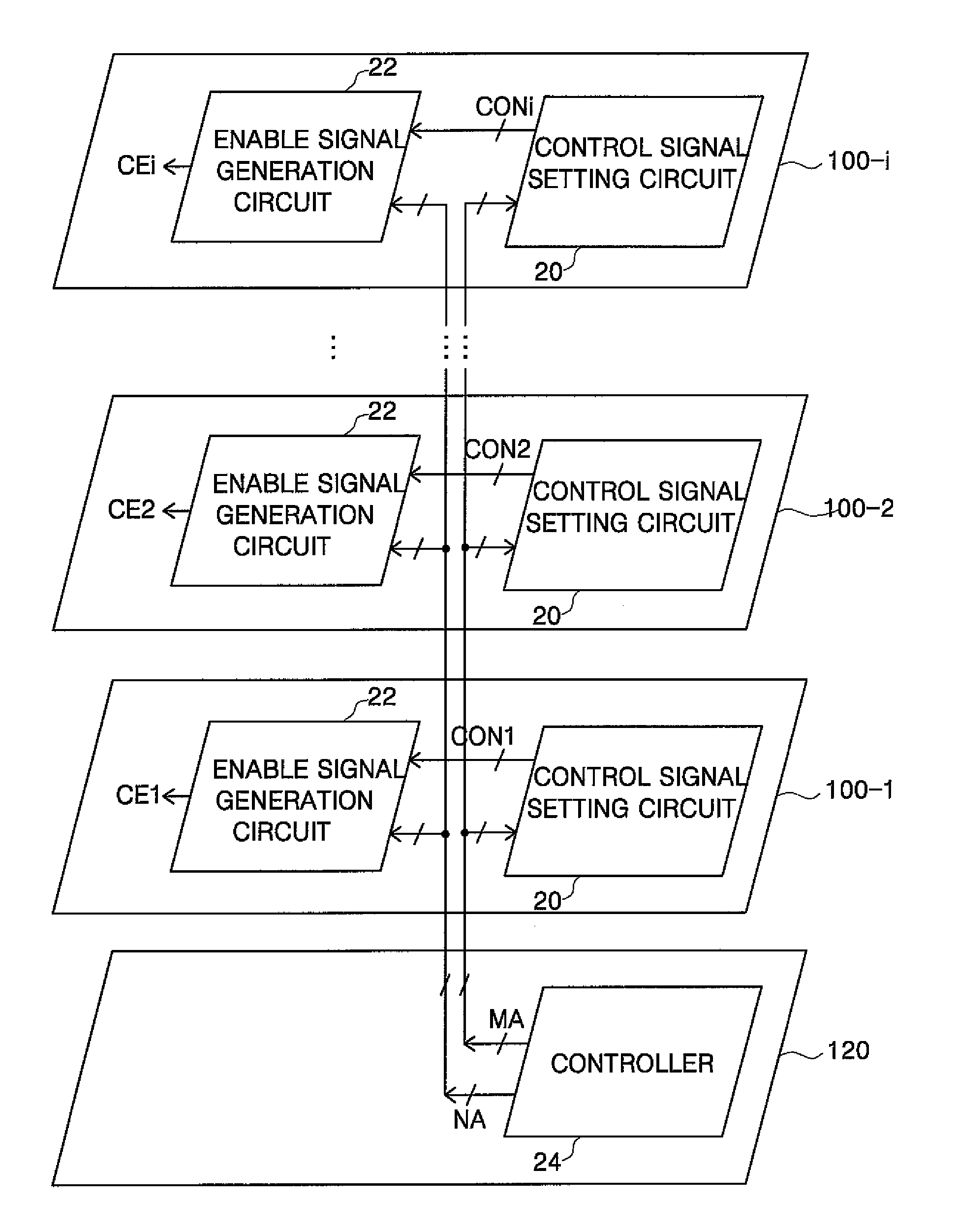

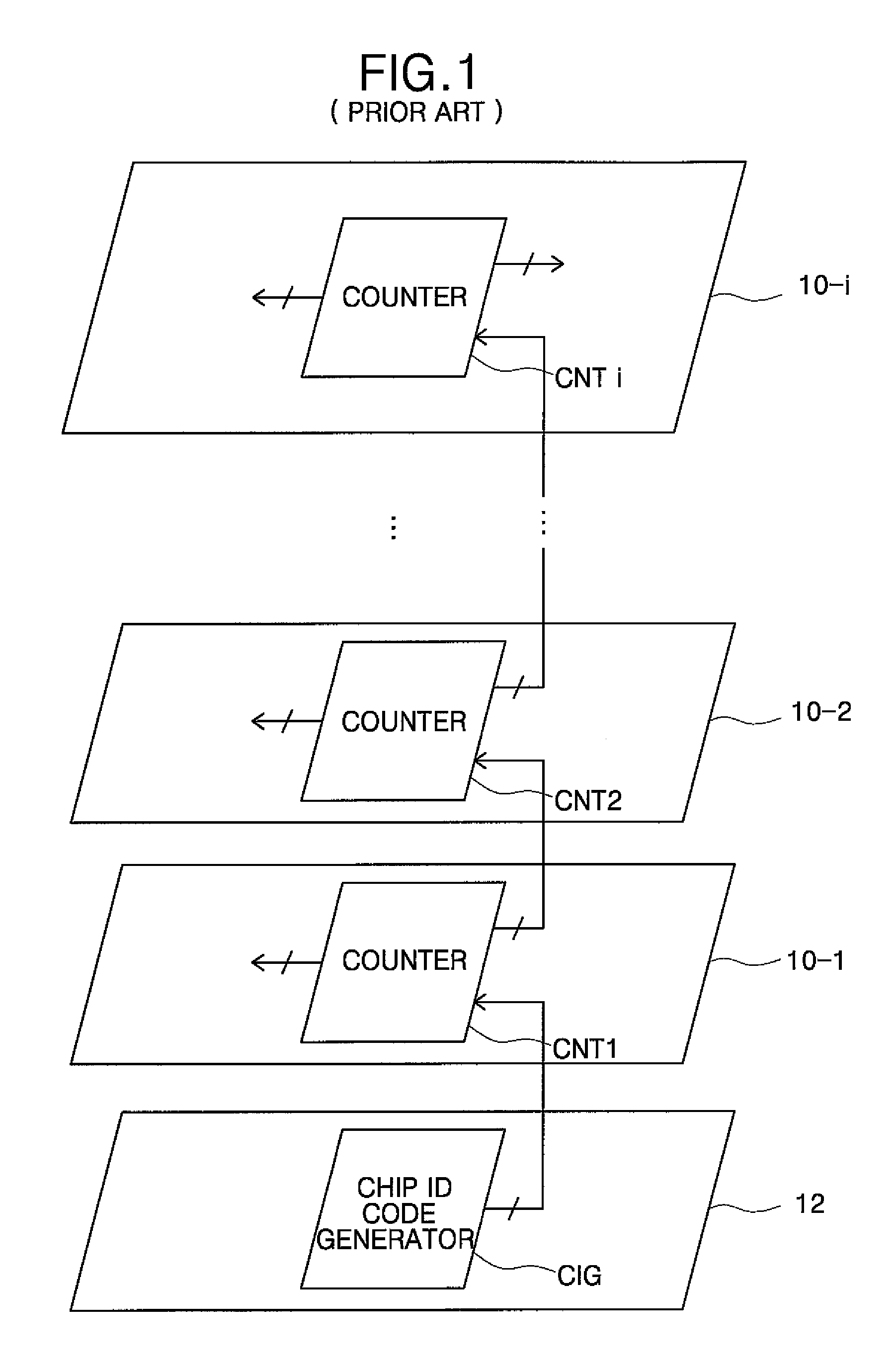

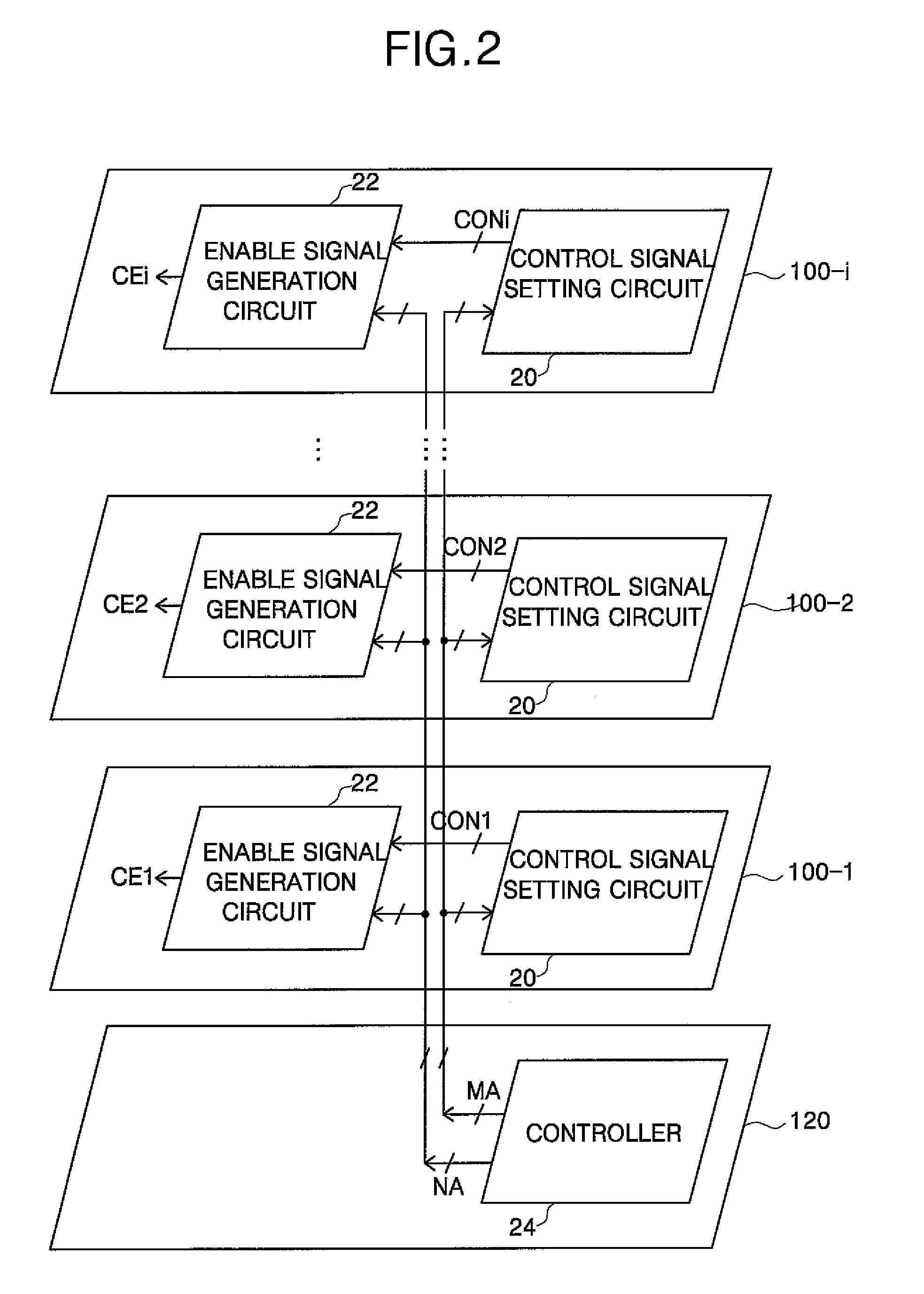

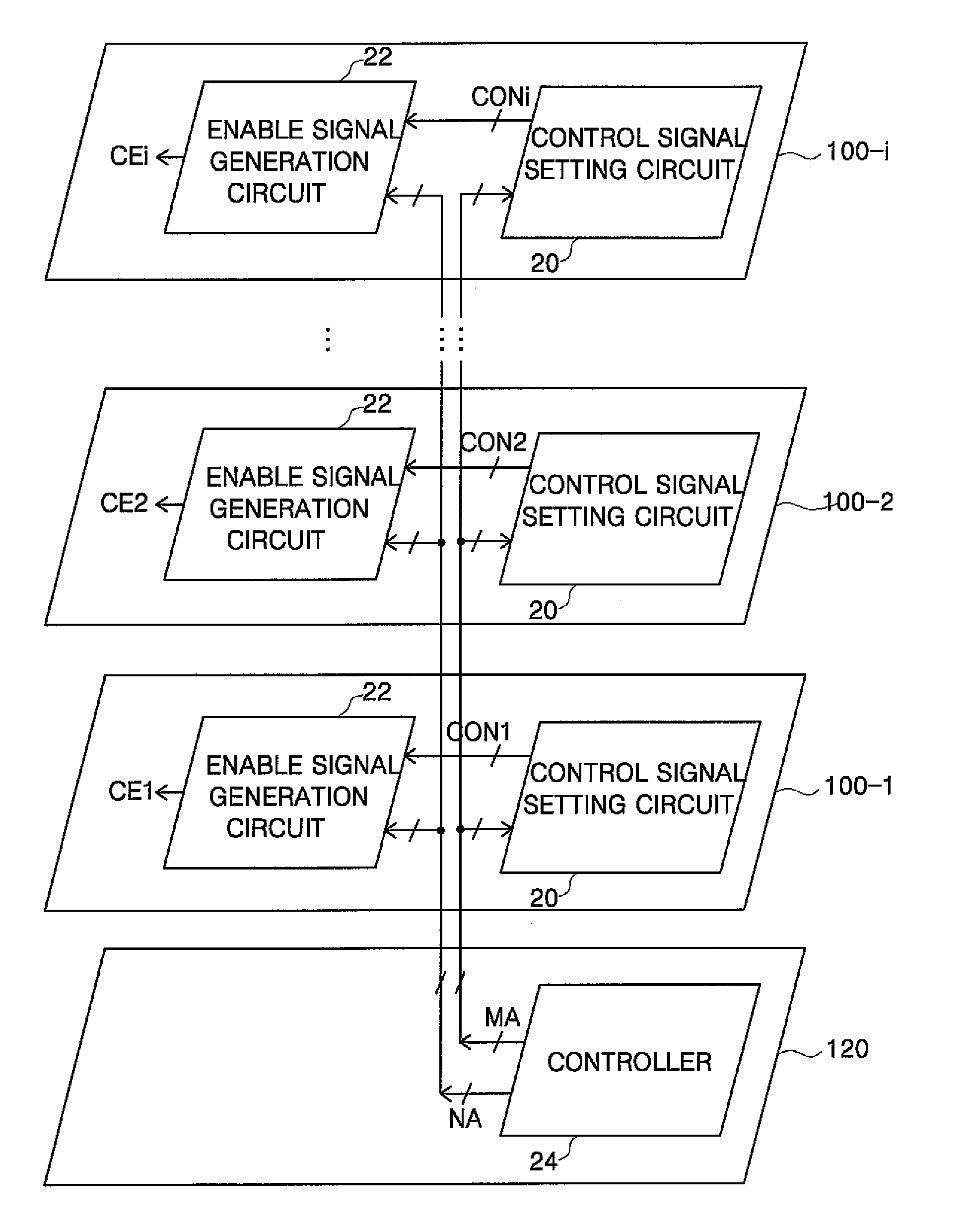

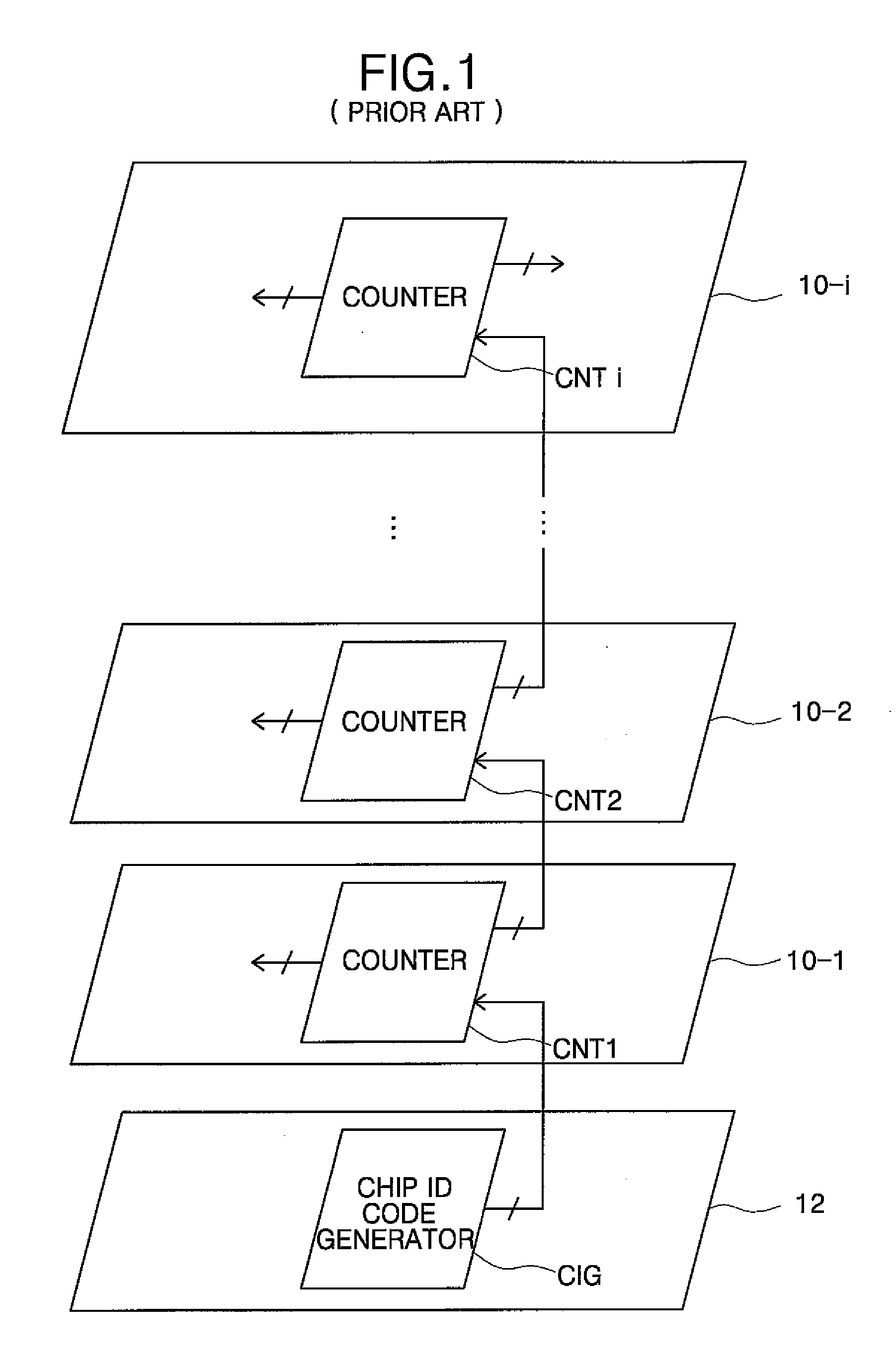

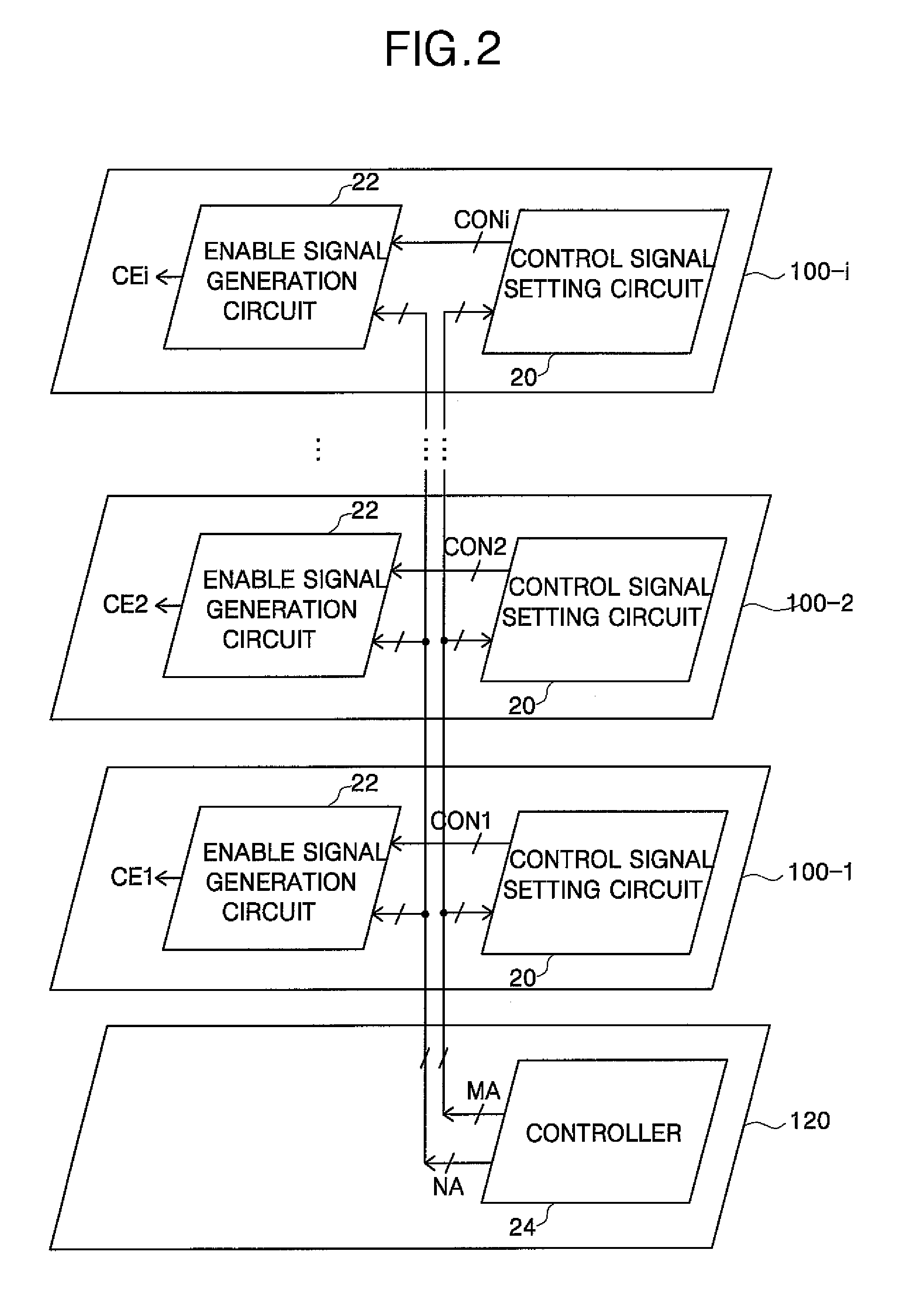

Semiconductor memory device and method of generating chip enable signal thereof

Active | US7633785B2 | Simple circuit configuration | Exclusive-OR circuits | Computation using non-contact making devices | Memory chip | Control signal

Provided are a semiconductor memory device and a method of generating a chip enable signal thereof. The device includes a plurality of memory chips and an interface chip that are stacked. Each of the memory chips includes a control signal setting unit, which sets input signals applied to first and second input nodes as less significant 2-bit control signals of n-bit control signals, performs a logic AND operation on the less significant 2-bit control signals to generate AND operated signals, performs a logic XOR operation on each of the AND operated signals and each bit signal of more significant n−2-bit input signals applied to third to n-th input nodes to set the n−2-bit control signals, outputs the signal applied to the second input node through a first output node, inverts the signal applied to the first input node to output the inverted signal through a second output node, and outputs the more significant n−2-bit input signals through third through n-th output nodes, respectively. The first through n-th output nodes of one of two adjacent memory chips are respectively connected to the first through n-th input nodes of the other of the two adjacent memory chips.

Owner:SAMSUNG ELECTRONICS CO LTD

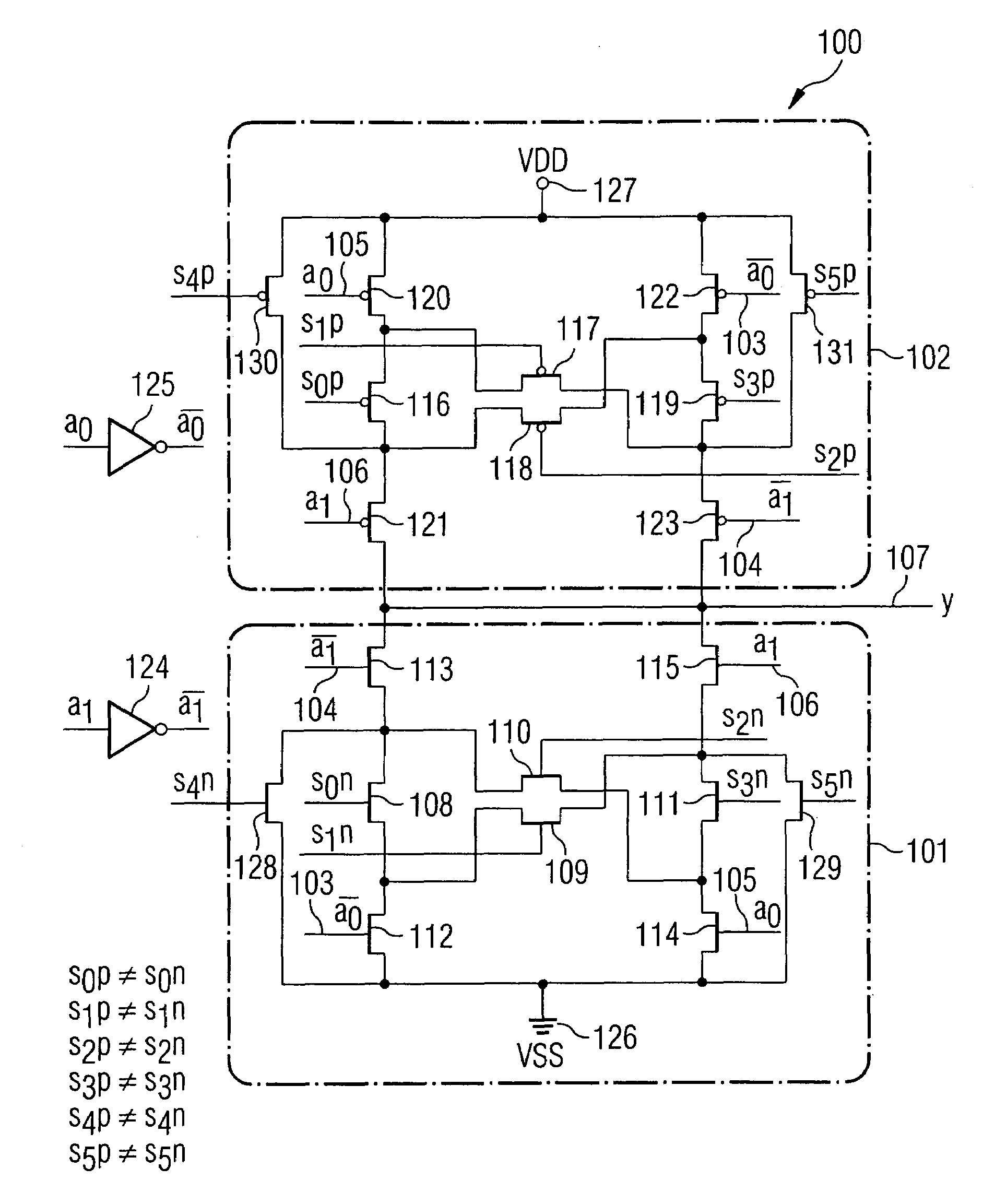

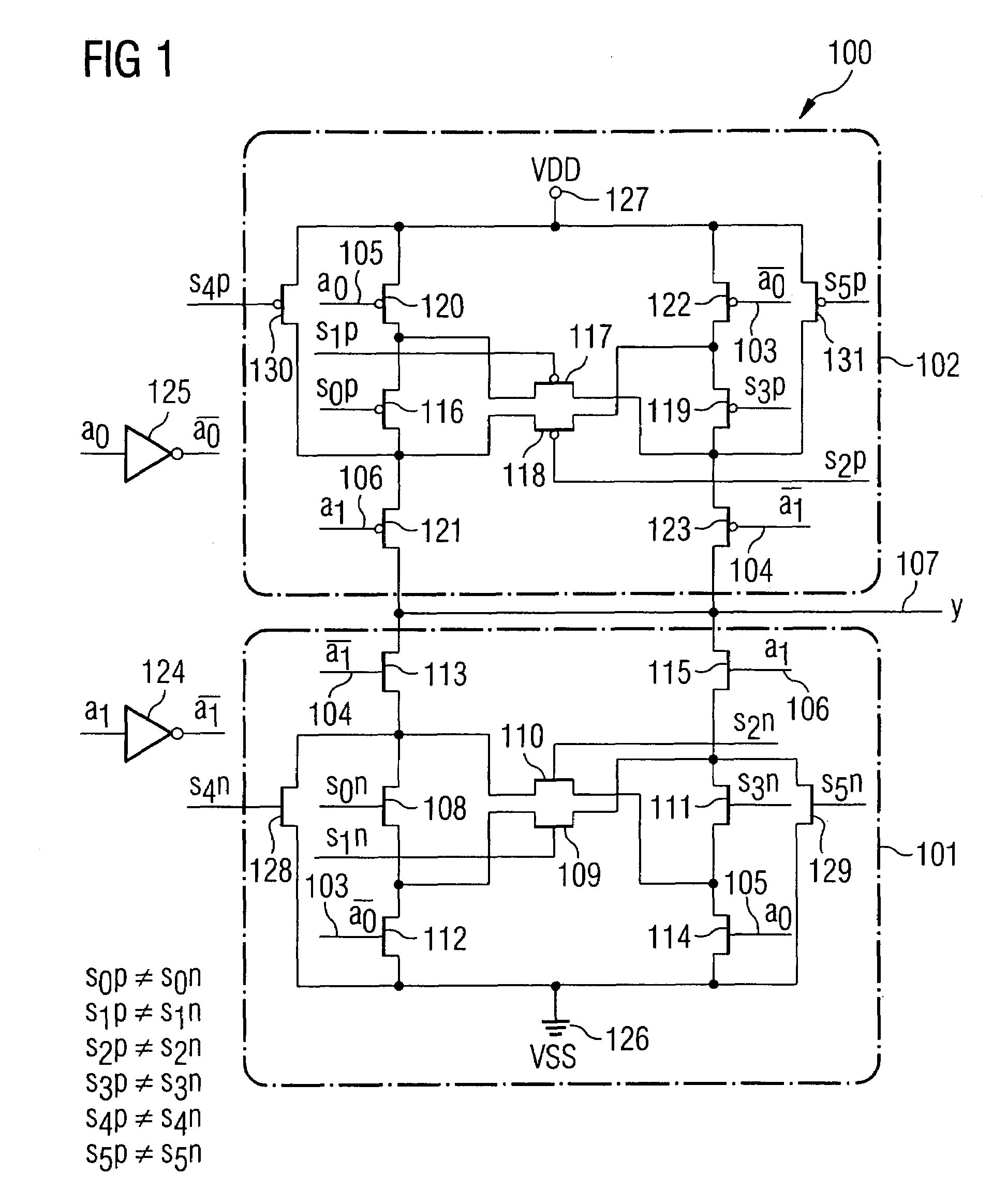

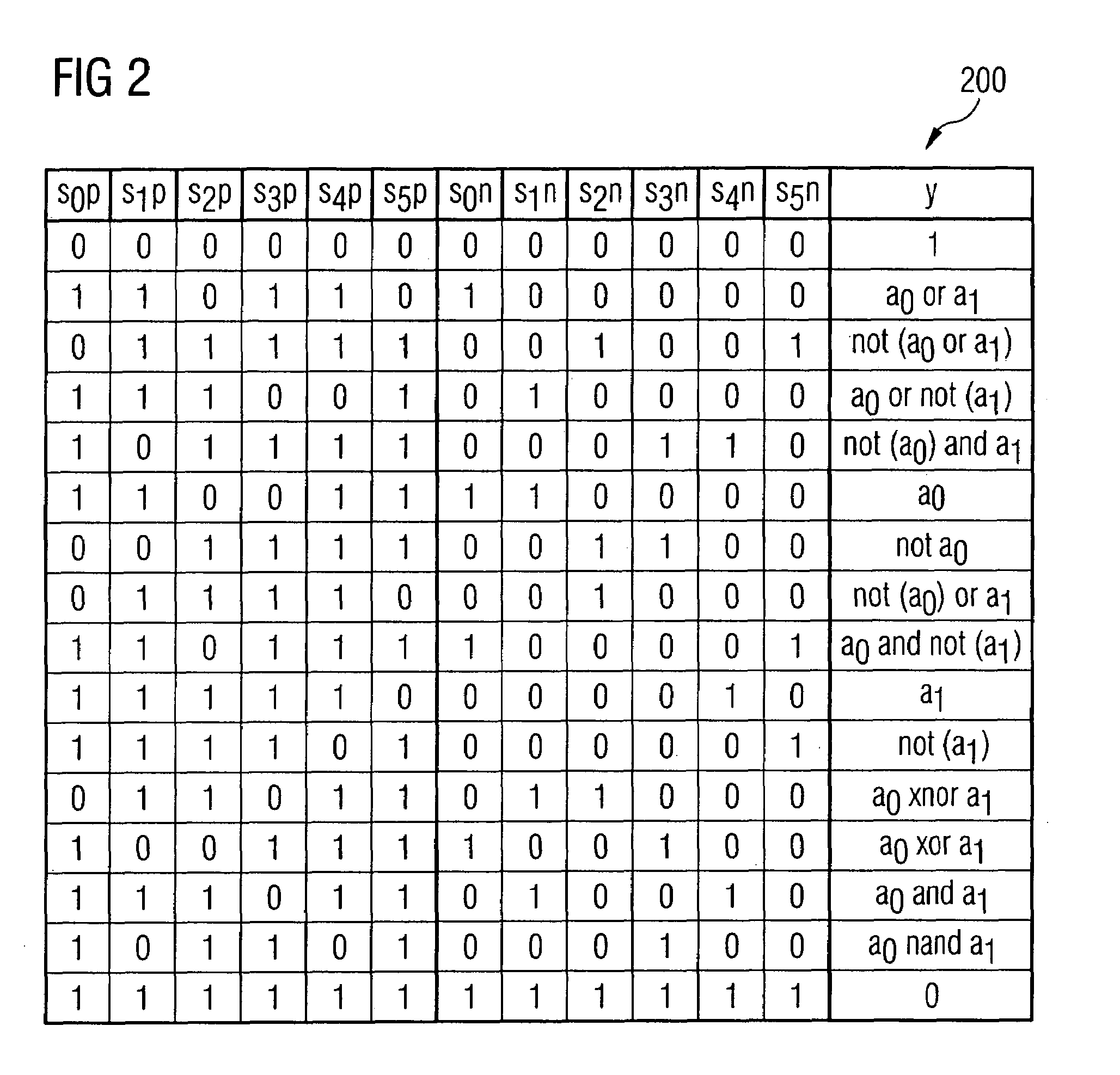

Logic basic cell, logic basic cell arrangement and logic device

Inactive | US7279936B2 | Exclusive-OR circuits | Computation using non-contact making devices | Theoretical computer science | Data signal

A logic basic cell, a logic basic cell arrangement, and a logic device. A logic basic cell is provided for forming a logic combination of two data signals in accordance with a logic function that can be selected by means of a plurality of logic selection elements, having four data signal inputs, to which two data signals and the logically complementary data signals thereof can be applied, and having six logic selection elements between the data signal inputs. At a data signal output, the logic combination of the two data signals in accordance with the logic function selected by means of the logic selection elements can be provided as output signal.

Owner:INFINEON TECH AG

Semiconductor memory device and method of generating chip enable signal thereof

Active | US20090015291A1 | Simple circuit configuration | Exclusive-OR circuits | Computation using non-contact making devices | Memory chip | Control signal

Provided are a semiconductor memory device and a method of generating a chip enable signal thereof. The device includes a plurality of memory chips and an interface chip that are stacked. Each of the memory chips includes a control signal setting unit, which sets input signals applied to first and second input nodes as less significant 2-bit control signals of n-bit control signals, performs a logic AND operation on the less significant 2-bit control signals to generate AND operated signals, performs a logic XOR operation on each of the AND operated signals and each bit signal of more significant n−2-bit input signals applied to third to n-th input nodes to set the n−2-bit control signals, outputs the signal applied to the second input node through a first output node, inverts the signal applied to the first input node to output the inverted signal through a second output node, and outputs the more significant n−2-bit input signals through third through n-th output nodes, respectively. The first through n-th output nodes of one of two adjacent memory chips are respectively connected to the first through n-th input nodes of the other of the two adjacent memory chips.

Owner:SAMSUNG ELECTRONICS CO LTD

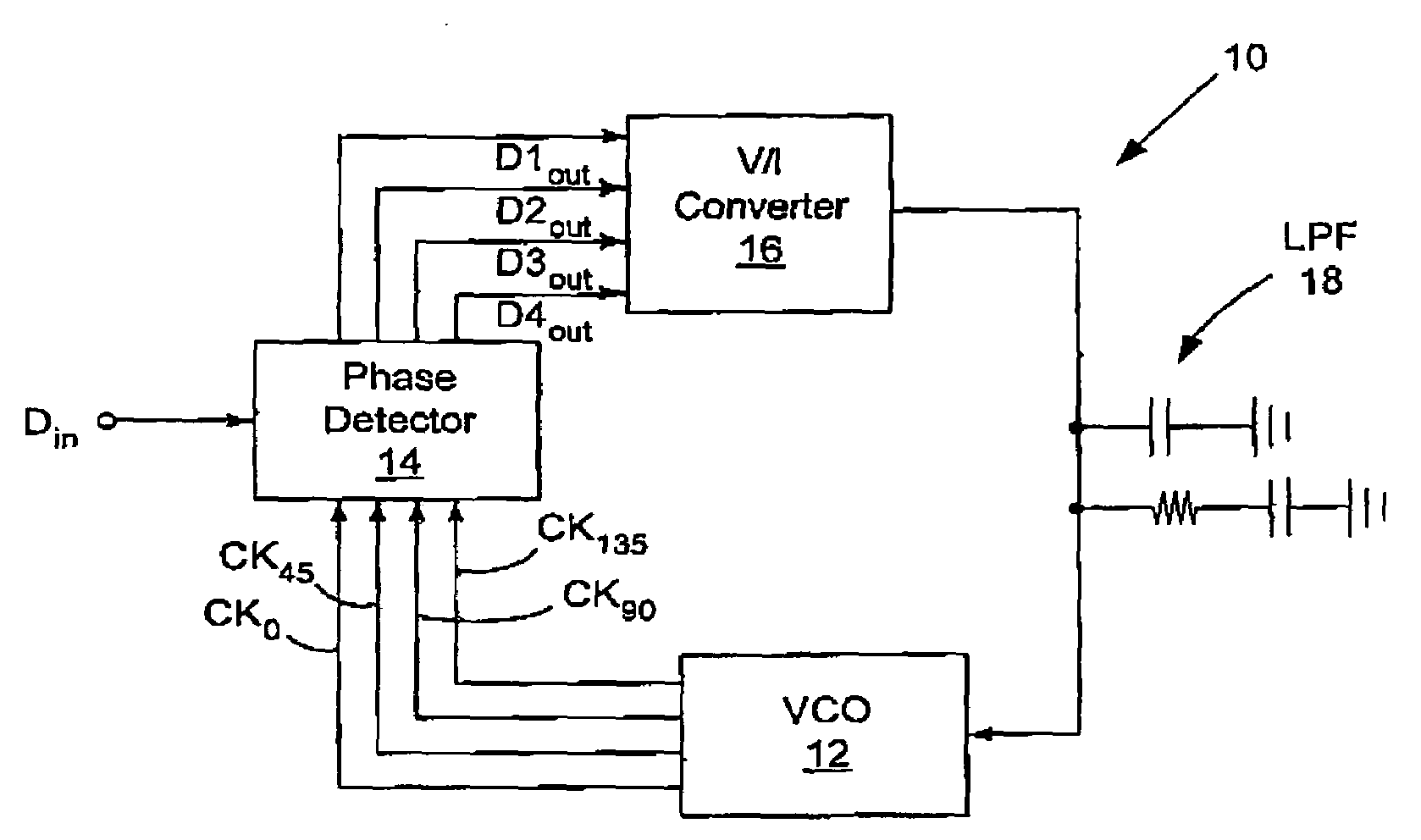

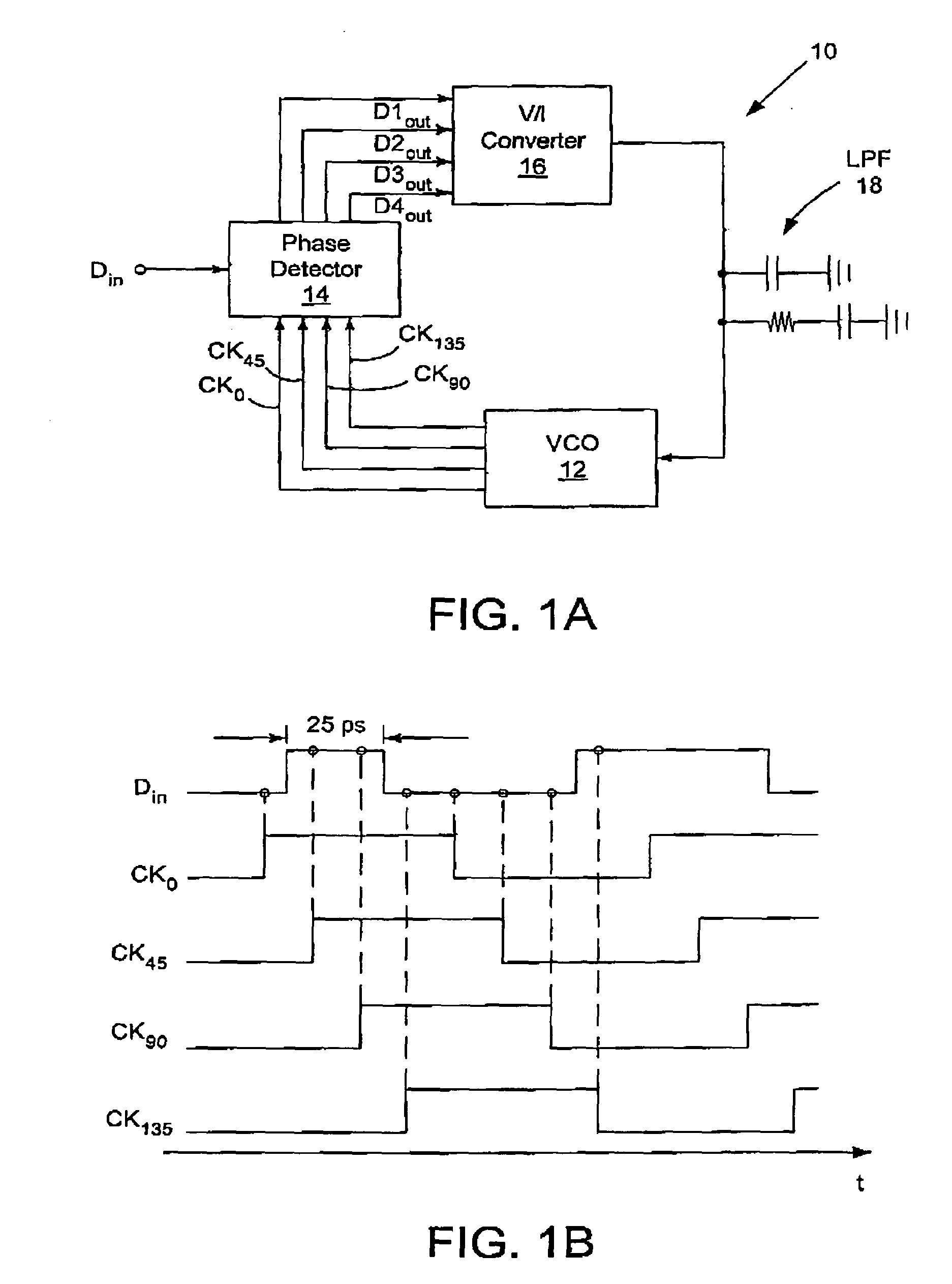

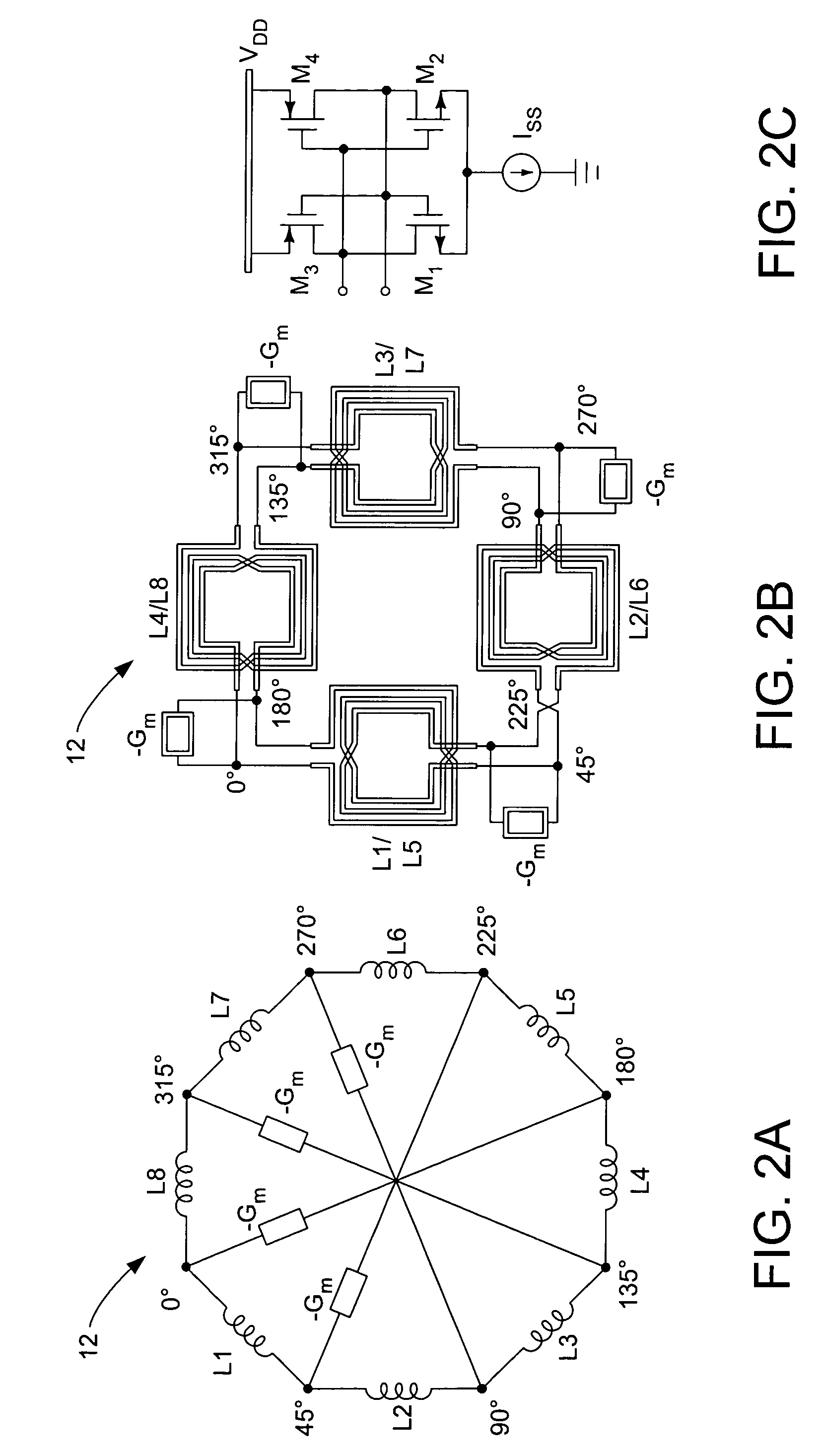

High-speed clock and data recovery circuit

A 40-Gb/s clock and data recovery (CDR) circuit incorporates a quarter-rate phase detector and a multi-phase voltage controlled oscillator to re-time and de-multiplex a 40-Gb/s input data signal into four 10-Gb/s output data signals. The circuit is fabricated in 0.18 μm CMOS technology.

Owner:RGT UNIV OF CALIFORNIA

Current-controlled CMOS logic family

Inactive | US6900670B2 | Speed maximization | Dissipates static current | Transistor | Exclusive-OR circuits | Ultra high speed | Transceiver

Various circuit techniques for implementing ultra high speed circuits use current-controlled CMOS (C3MOS) logic fabricated in conventional CMOS process technology. An entire family of logic elements including inverter/buffers, level shifters, NAND, NOR, XOR gates, latches, flip-flops and the like is implemented using C3MOS techniques. Optimum balance between power consumption and speed for each circuit application is achieved by combining high speed C3MOS logic with low power conventional CMOS logic. The combined C3MOS/CMOS logic allows greater integration of circuits such as high speed transceivers used in fiber optic communication systems.

Owner:AVAGO TECH INT SALES PTE LTD

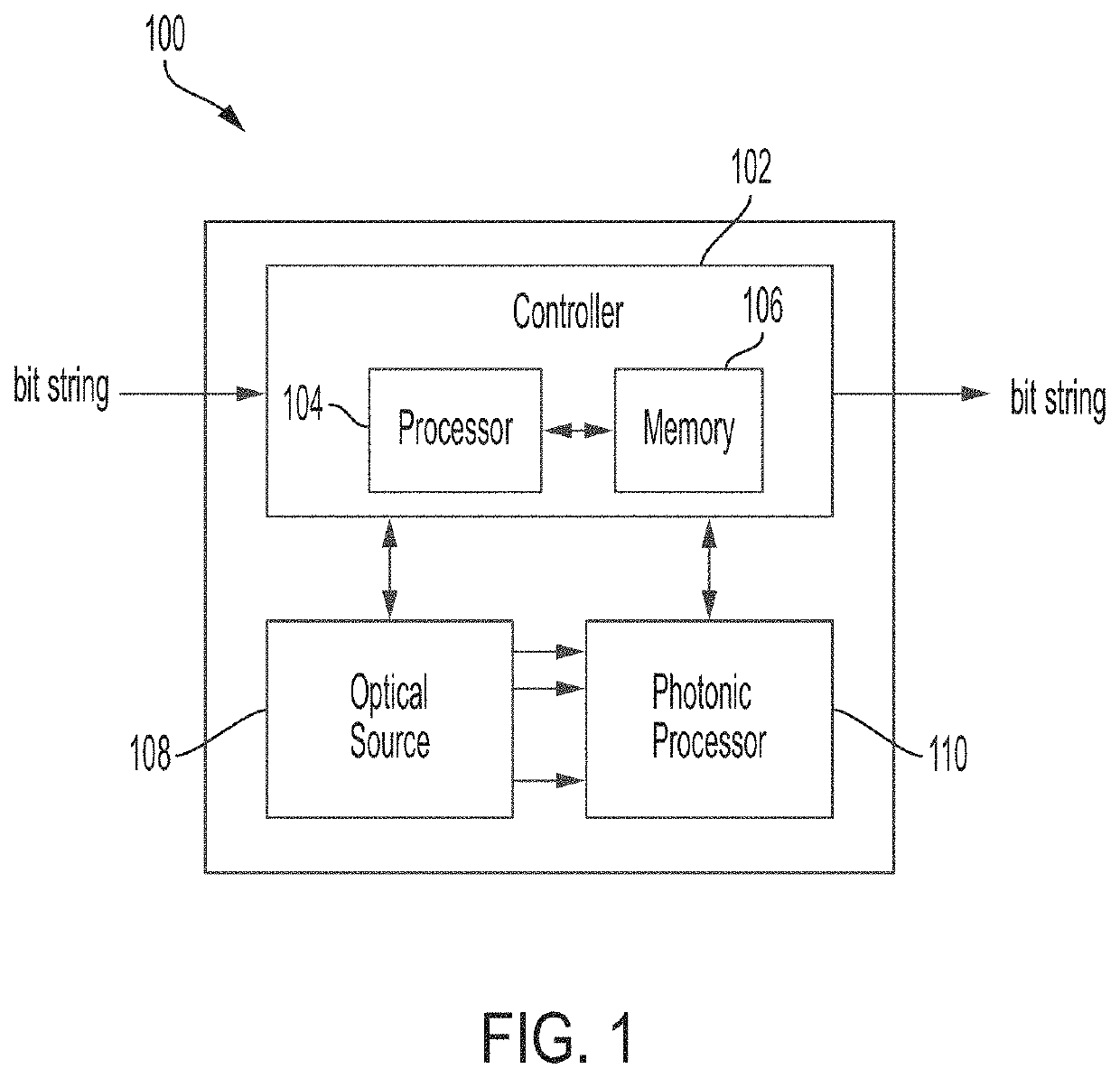

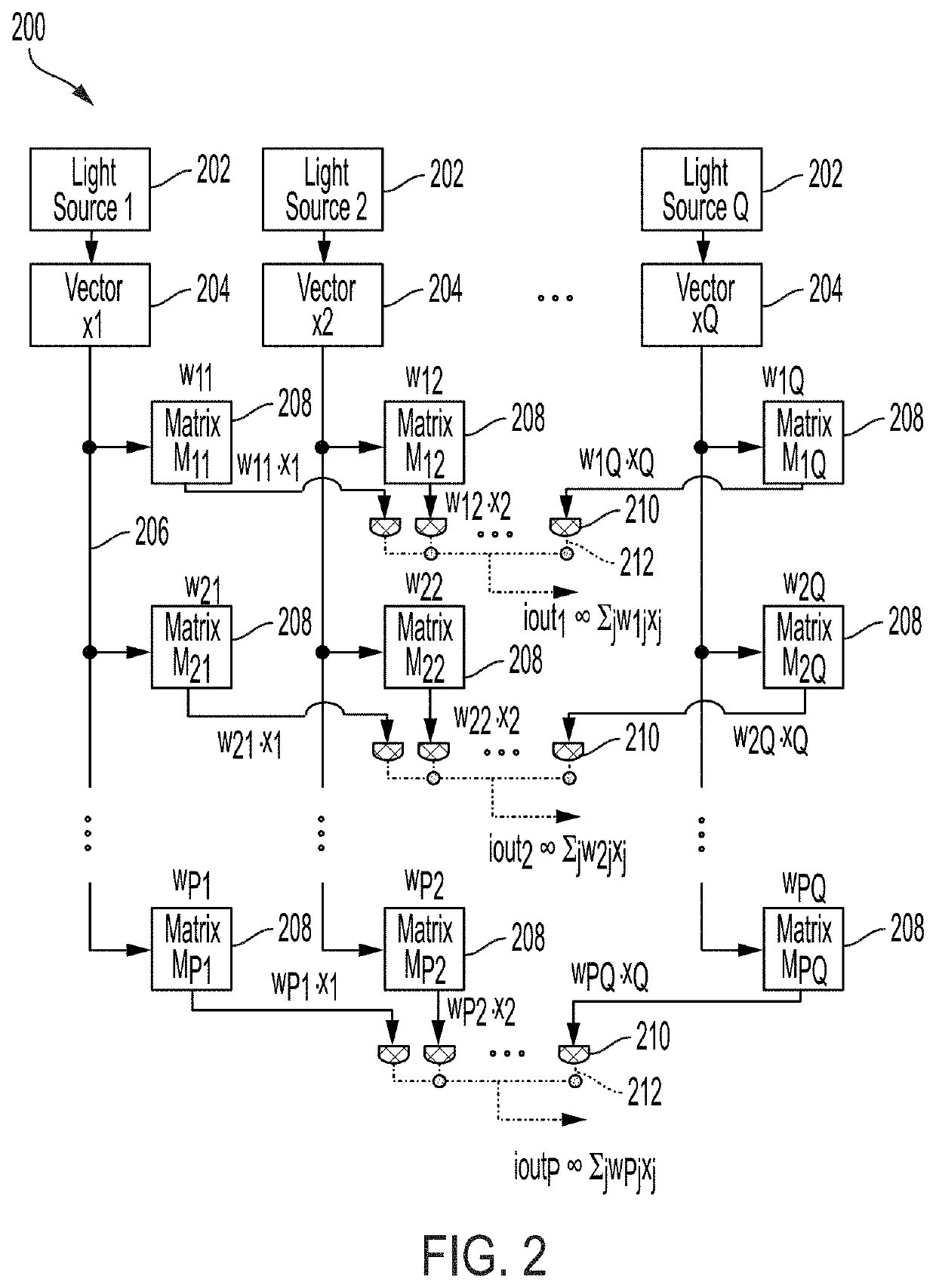

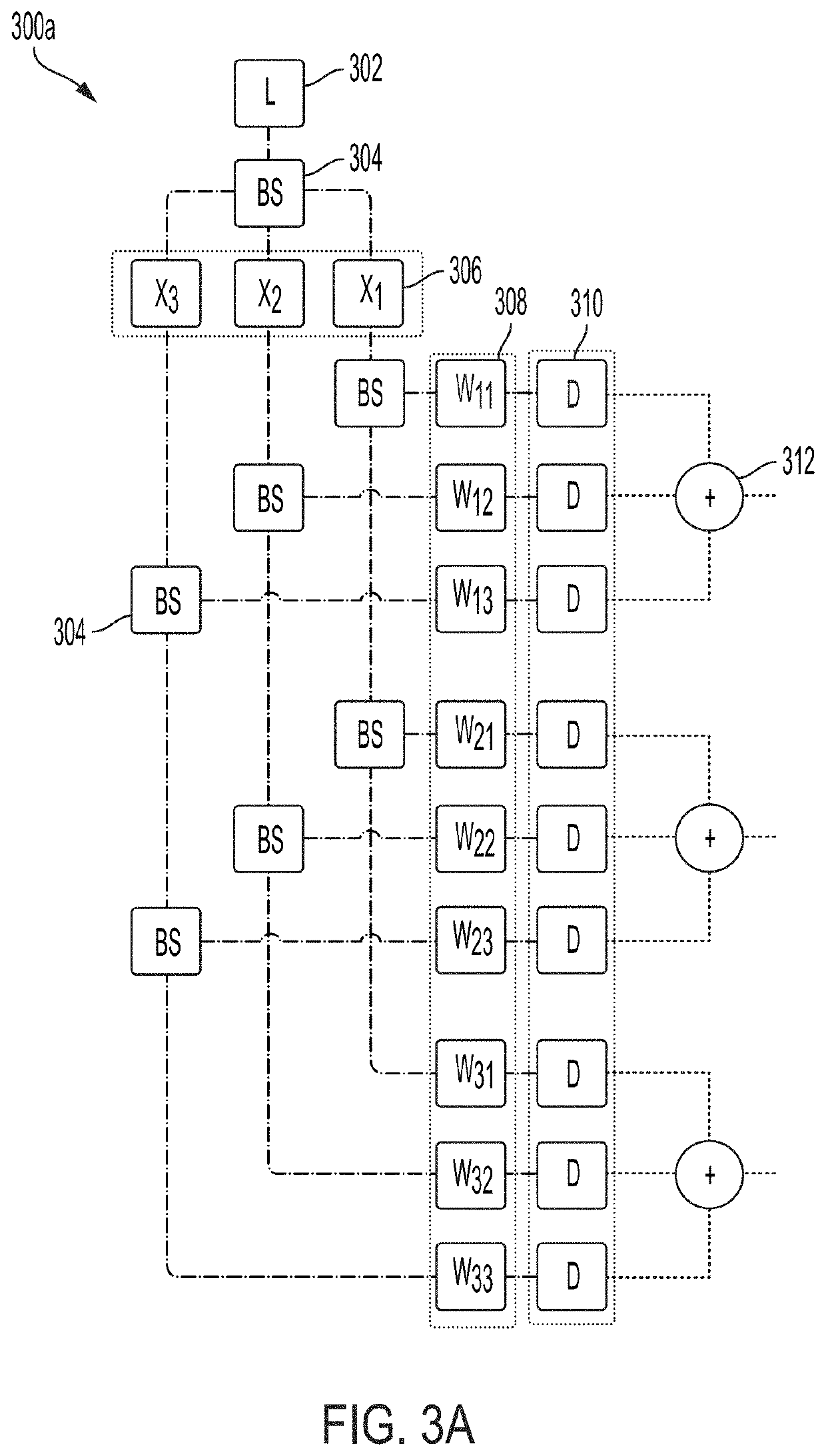

Systems and methods for analog computing using a linear photonic processor

Active | US20210036783A1 | Exclusive-OR circuits | Power supply for data processing | Analogue computation | Vector element

Systems and methods for performing signed matrix operations using a linear photonic processor are provided. The linear photonic processor is formed as an array of first amplitude modulators and second amplitude modulators, the first amplitude modulators configured to encode elements of a vector into first optical signals and the second amplitude modulators configured to encode a product between the vector elements and matrix elements into second optical signals. An apparatus may be used to implement a signed value of an output of the linear processor. The linear photonic processor may be configured to perform matrix-vector and/or matrix-matrix operations.

Owner:LIGHTMATTER INC

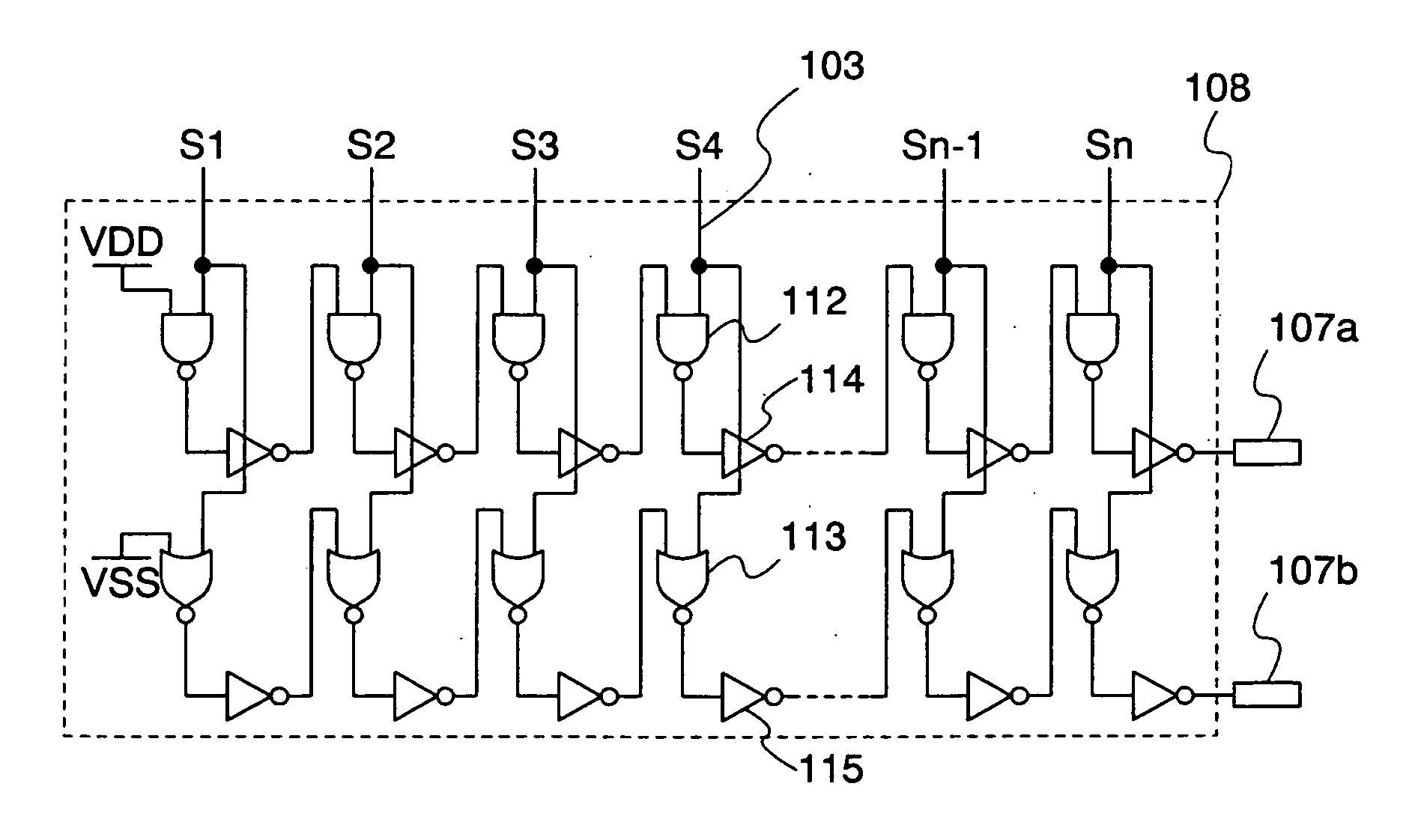

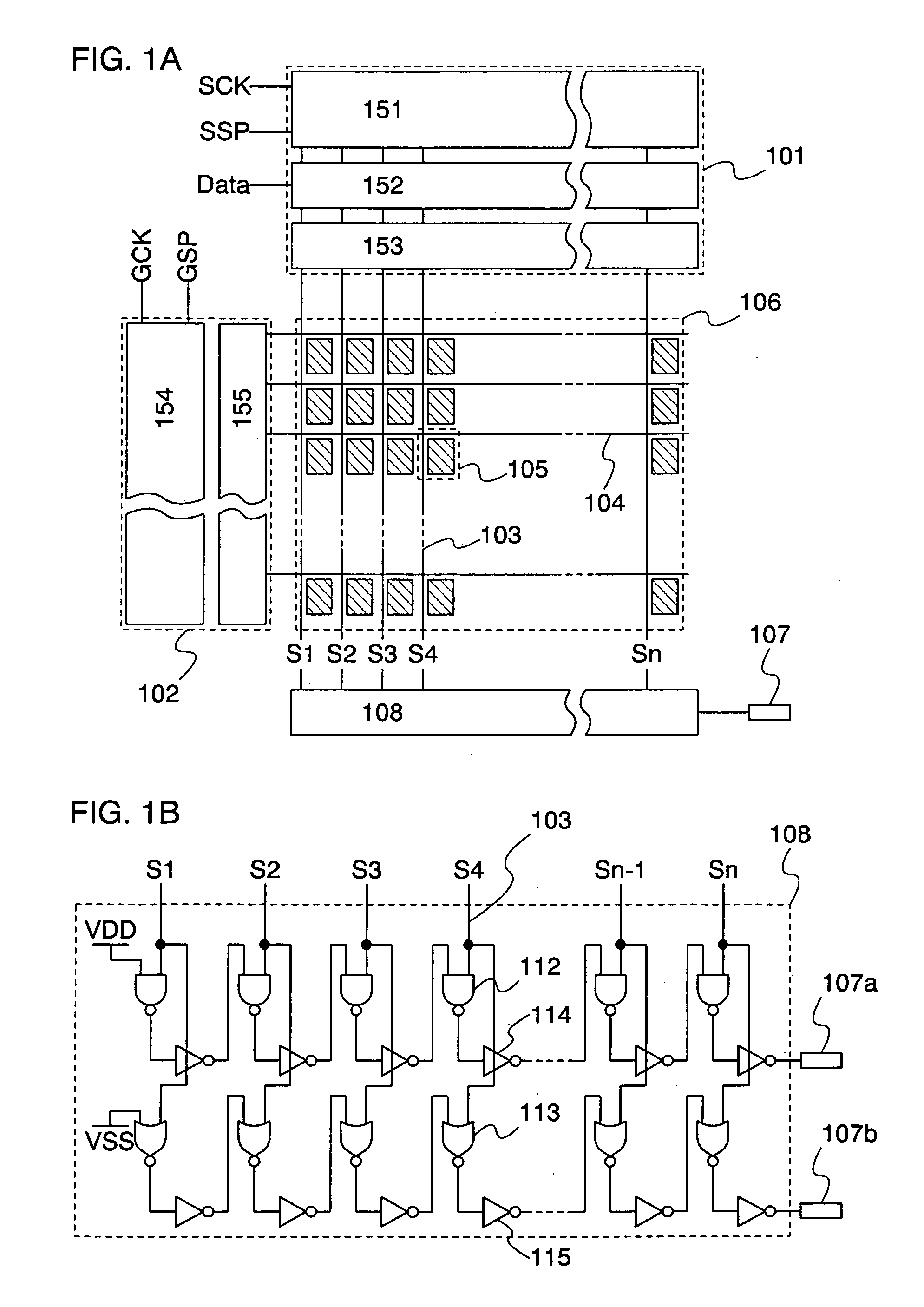

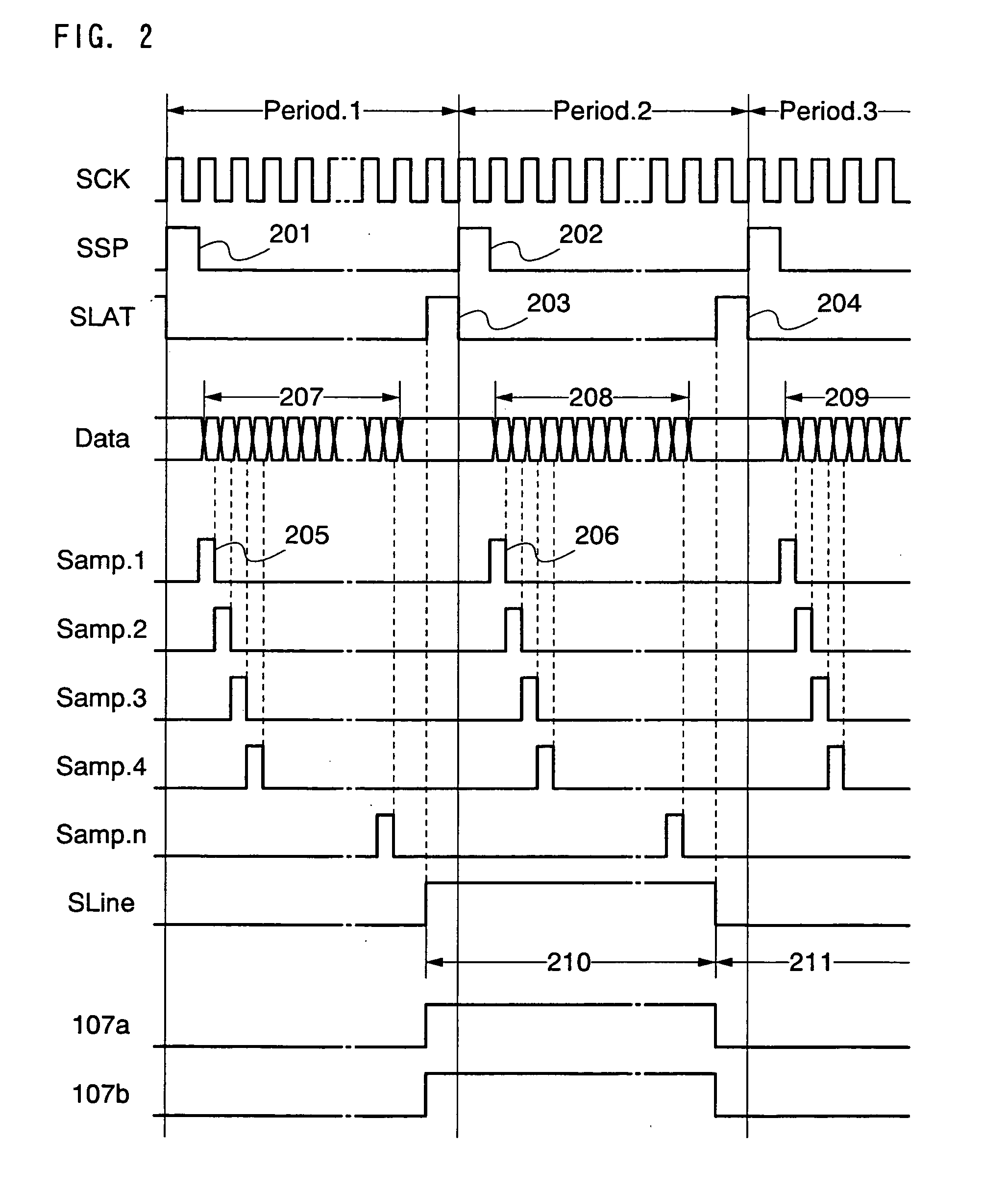

Circuit for inspecting semiconductor device and inspecting method

Inactive | US20050035805A1 | Effective Quality Inspection | Efficient for of display device | Reliability increasing modifications | Exclusive-OR circuits | Device material | Engineering

The inspecting circuit is configured with a plurality of NAND circuits connected in series through a plurality of inverters, and a plurality of NOR circuits connected in series through the plurality of inverters. Each of a plurality of source signal lines provided in a pixel portion is connected to one input terminal of a NAND circuit and of a NOR circuit, and the inspection output is obtained from the final stages of the series-connected NAND circuits and NOR circuits. In this manner, an inspecting circuit capable of determining a defect simply and accurately by using a small-scale circuit, and a method thereof, are provided.

Owner:SEMICON ENERGY LAB CO LTD
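
Logically, a NAND followed by an inverter acts as an AND, and a NOR followed by an inverter acts as an OR, so the two series chains in this abstract can be read as reducing all source signal lines to two outputs. The sketch below models only that logical reading; the function names and the exact chain topology are assumptions, not details confirmed by the patent.

```python
from typing import Iterable, Tuple


def nand(a: bool, b: bool) -> bool:
    return not (a and b)


def nor(a: bool, b: bool) -> bool:
    return not (a or b)


def inspect_lines(levels: Iterable[bool]) -> Tuple[bool, bool]:
    """Return (and_chain_output, or_chain_output) for the given line levels.

    Each stage feeds the previous stage's inverted NAND/NOR result and one
    source line into the next gate (an assumed wiring).
    """
    and_chain = True    # neutral start value for the AND-like chain
    or_chain = False    # neutral start value for the OR-like chain
    for level in levels:
        and_chain = not nand(and_chain, bool(level))
        or_chain = not nor(or_chain, bool(level))
    return and_chain, or_chain
```

Under this reading, driving every line high should give `(True, True)` and driving every line low should give `(False, False)`; a line stuck at the wrong level shows up as a mismatch in one of the two outputs.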

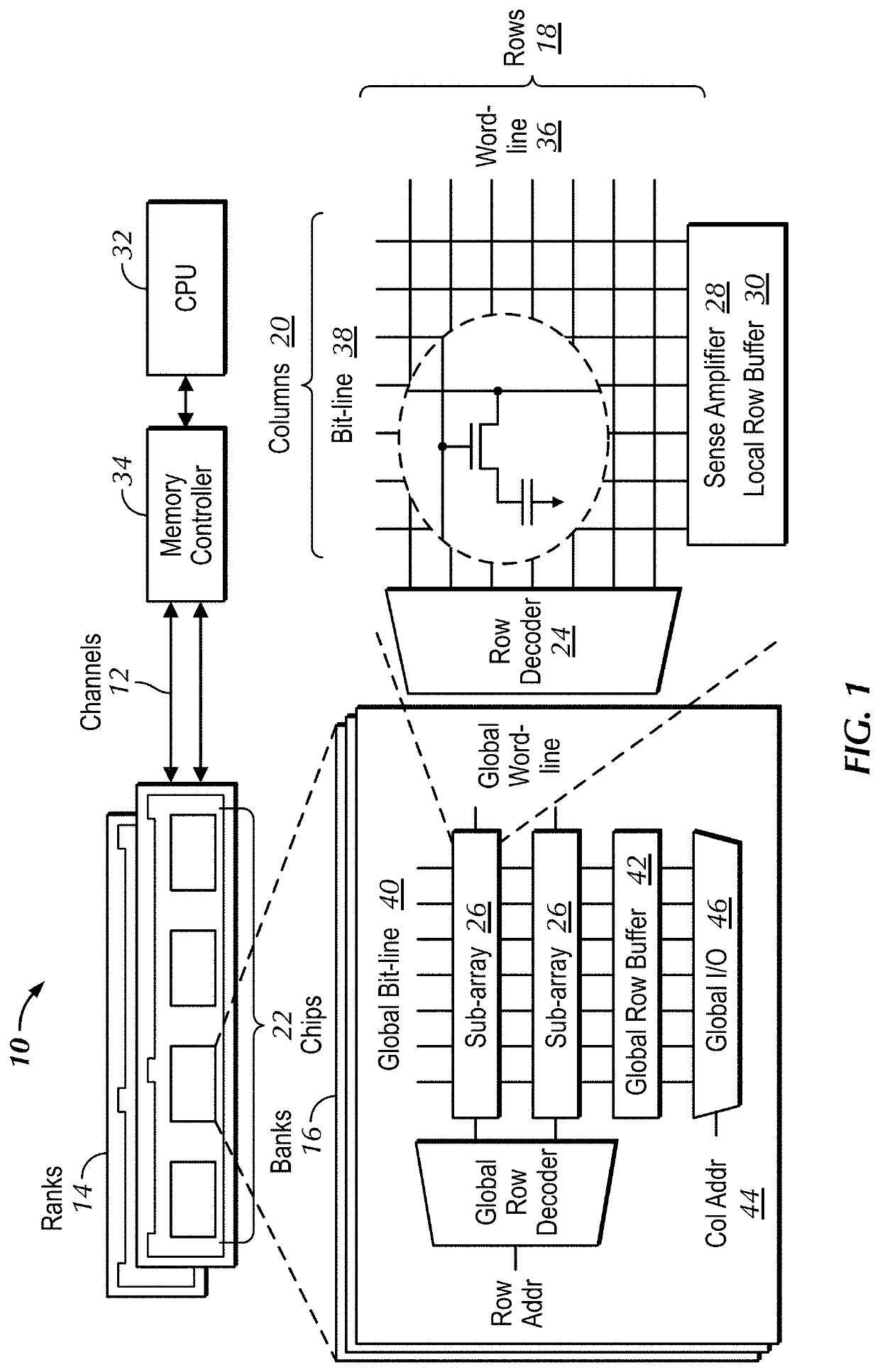

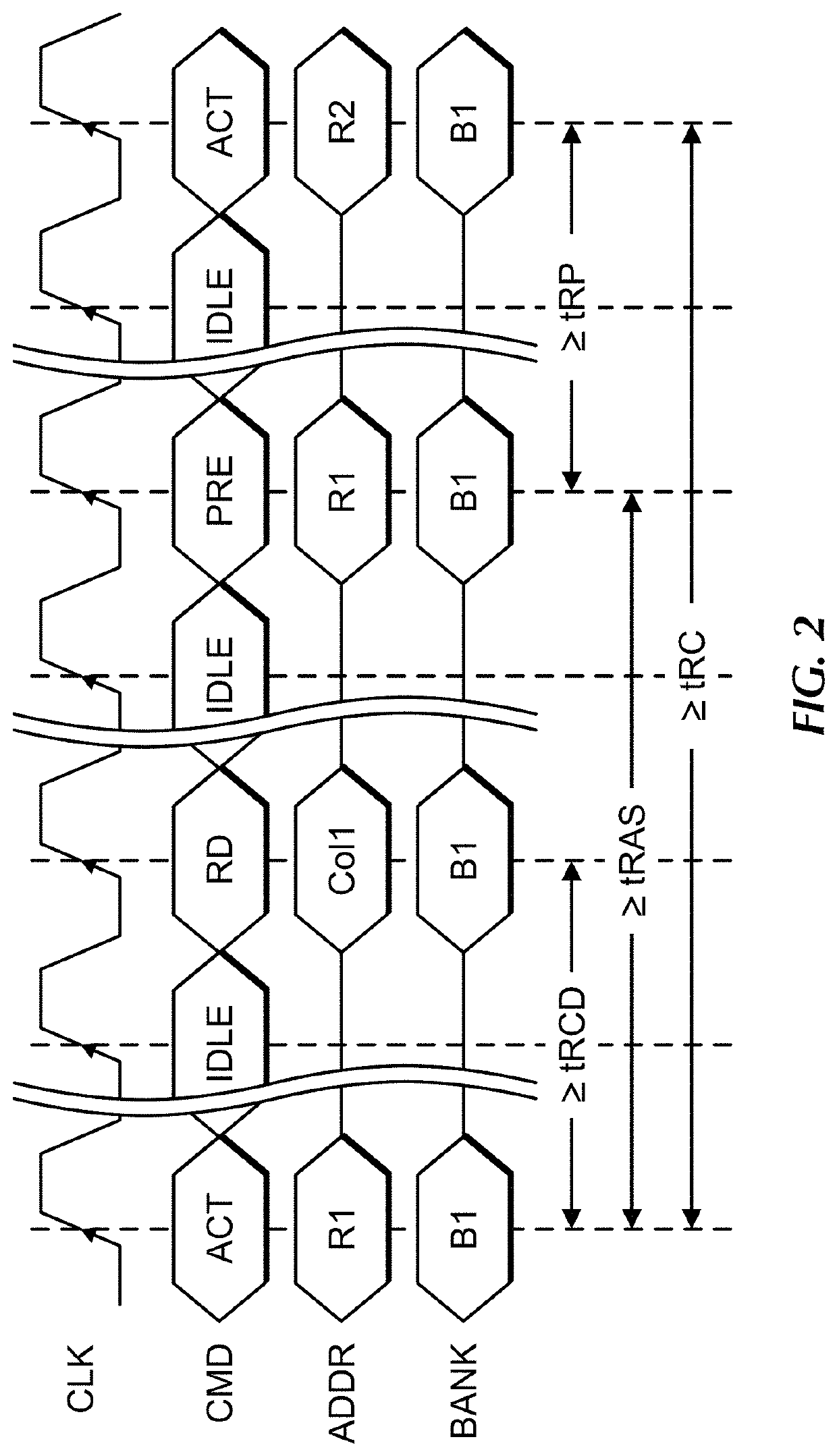

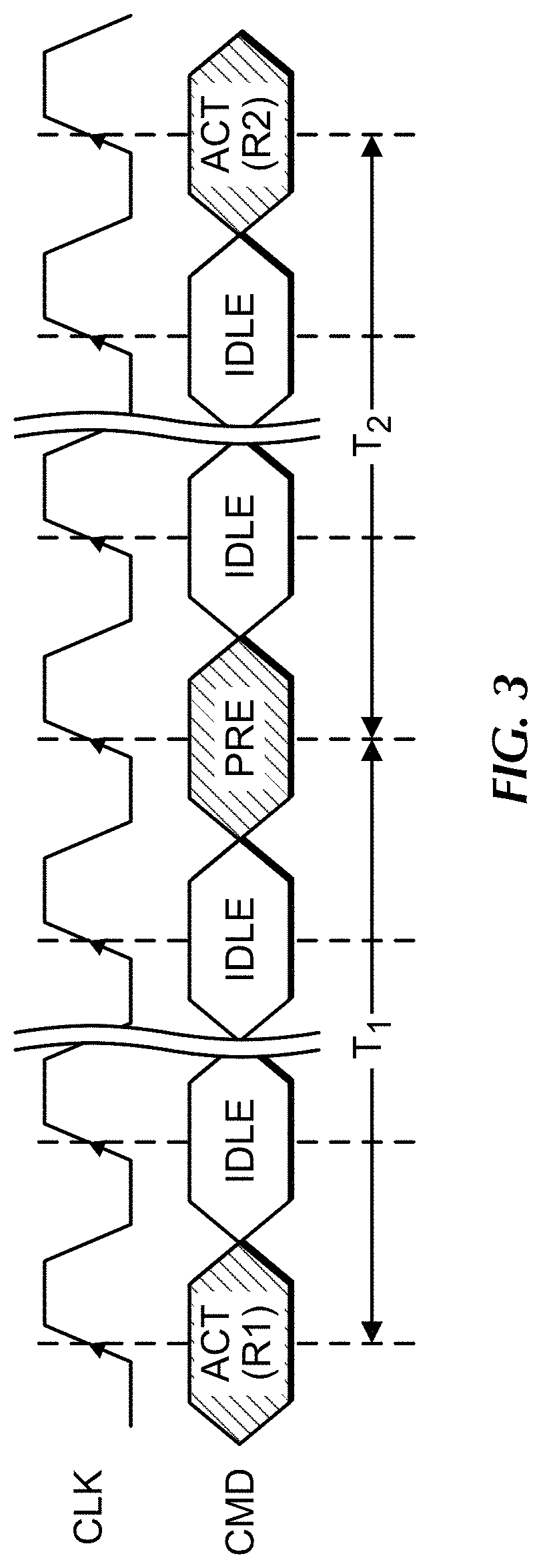

System and method for in-memory compute

According to various embodiments, an in-memory computation system is disclosed. The system includes a dynamic random access memory (DRAM) module. The system further includes a memory controller configured to violate a timing specification for the DRAM module and activate multiple rows of the DRAM module in rapid succession to enable bit-line charge sharing.

Owner:THE TRUSTEES FOR PRINCETON UNIV

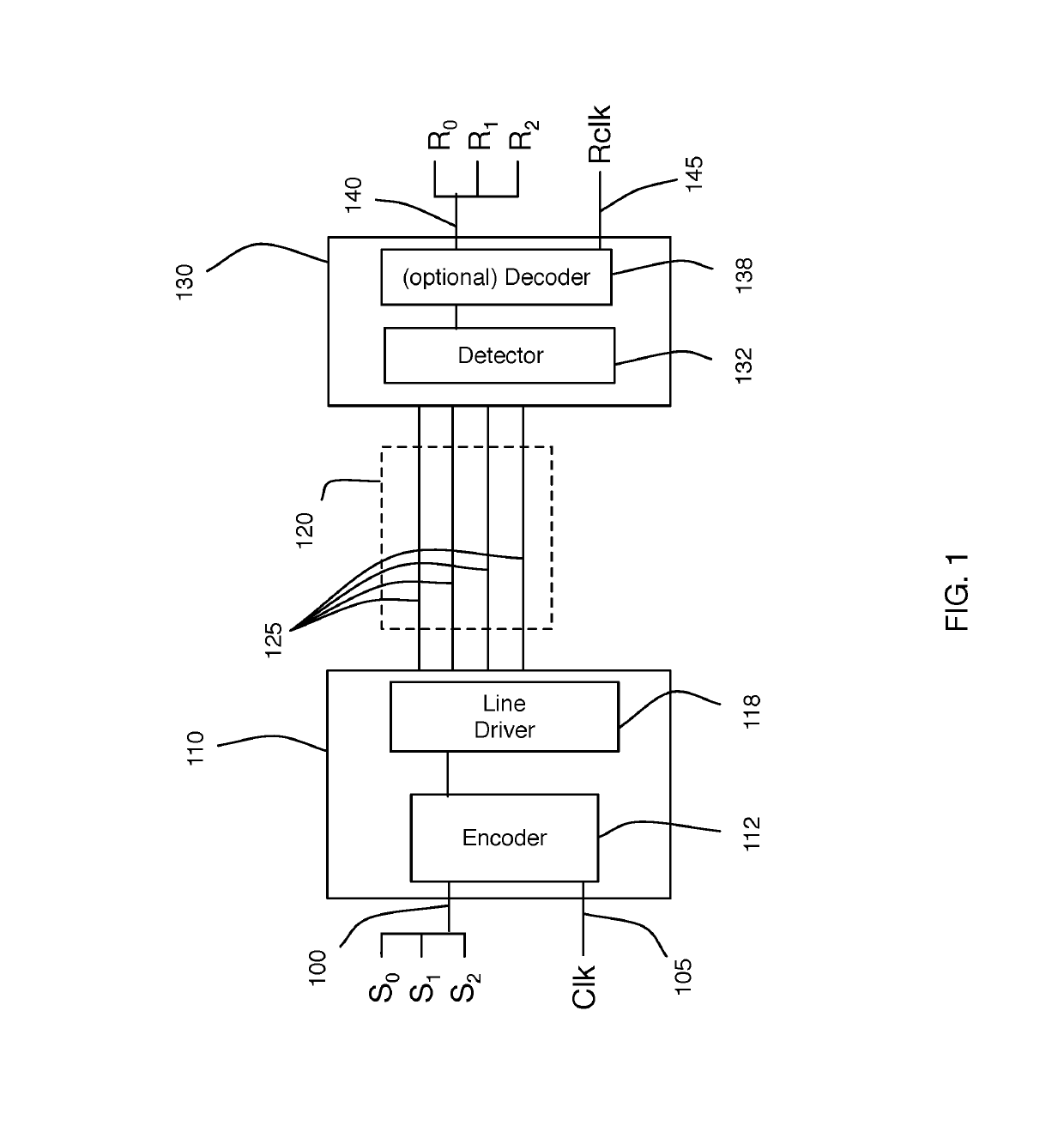

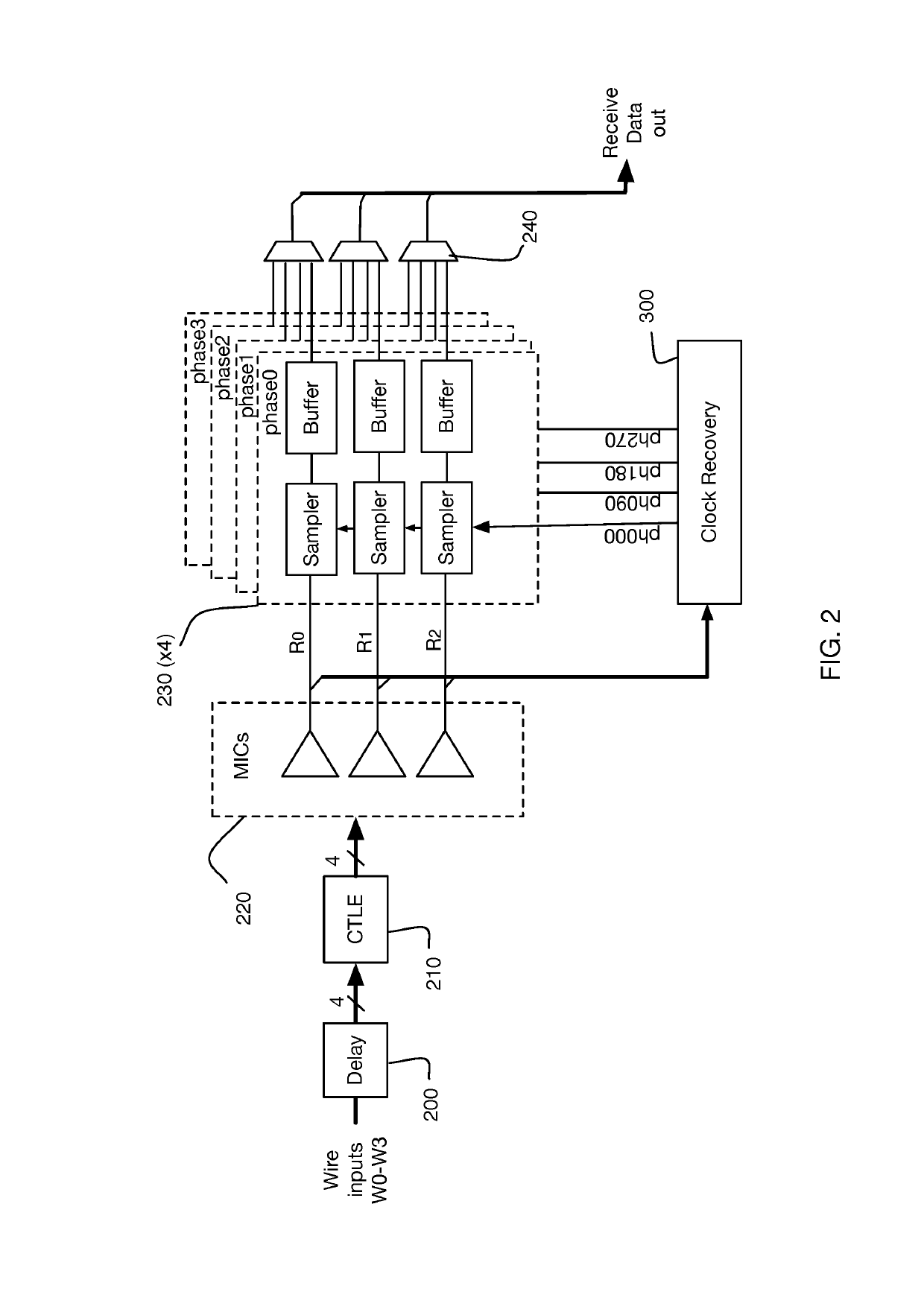

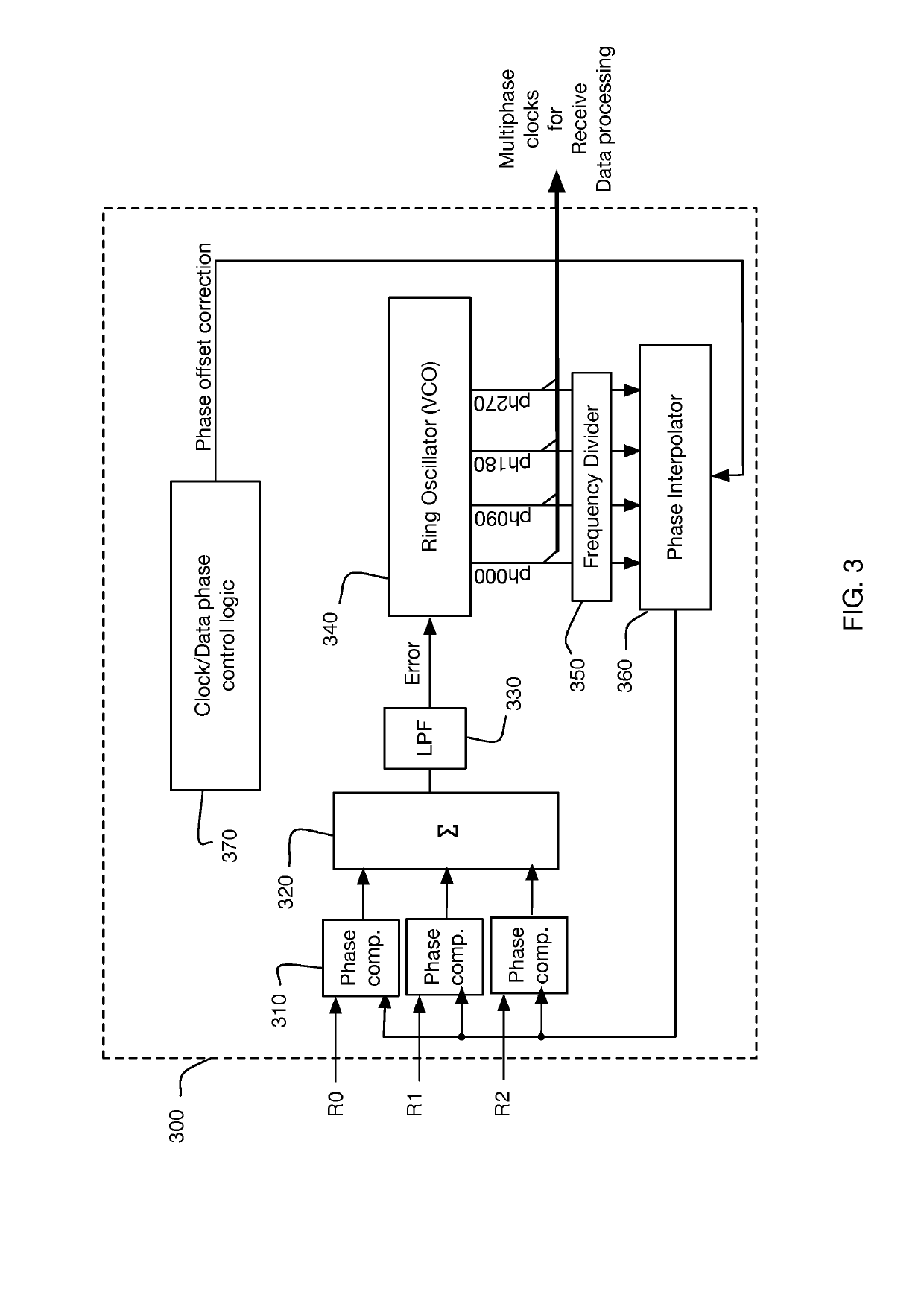

Method and system for calibrating multi-wire skew

Active | US10243614B1 | Reliable detection | Accurate interpretation | Exclusive-OR circuits | Channel dividing arrangements | Multi input | Engineering

Methods and systems are described for receiving, over a plurality of consecutive signaling intervals, a plurality of codewords, each codeword received as a plurality of symbols via wires of a multi-wire bus, the plurality of symbols received at a plurality of multi-input comparators (MICs), wherein each symbol is received by at least two MICs, generating, for each codeword, a corresponding linear combination of the received symbols, generating a plurality of composite skew measurement signals over the plurality of consecutive signaling intervals, each composite skew measurement signal based on samples of one or more linear combinations, and updating wire-specific skew values of the wires of the multi-wire bus, wherein one or more wire-specific skew values are updated according to composite skew measurement signals associated with linear combinations formed by at least two different MICs.

Owner:KANDOU LABS

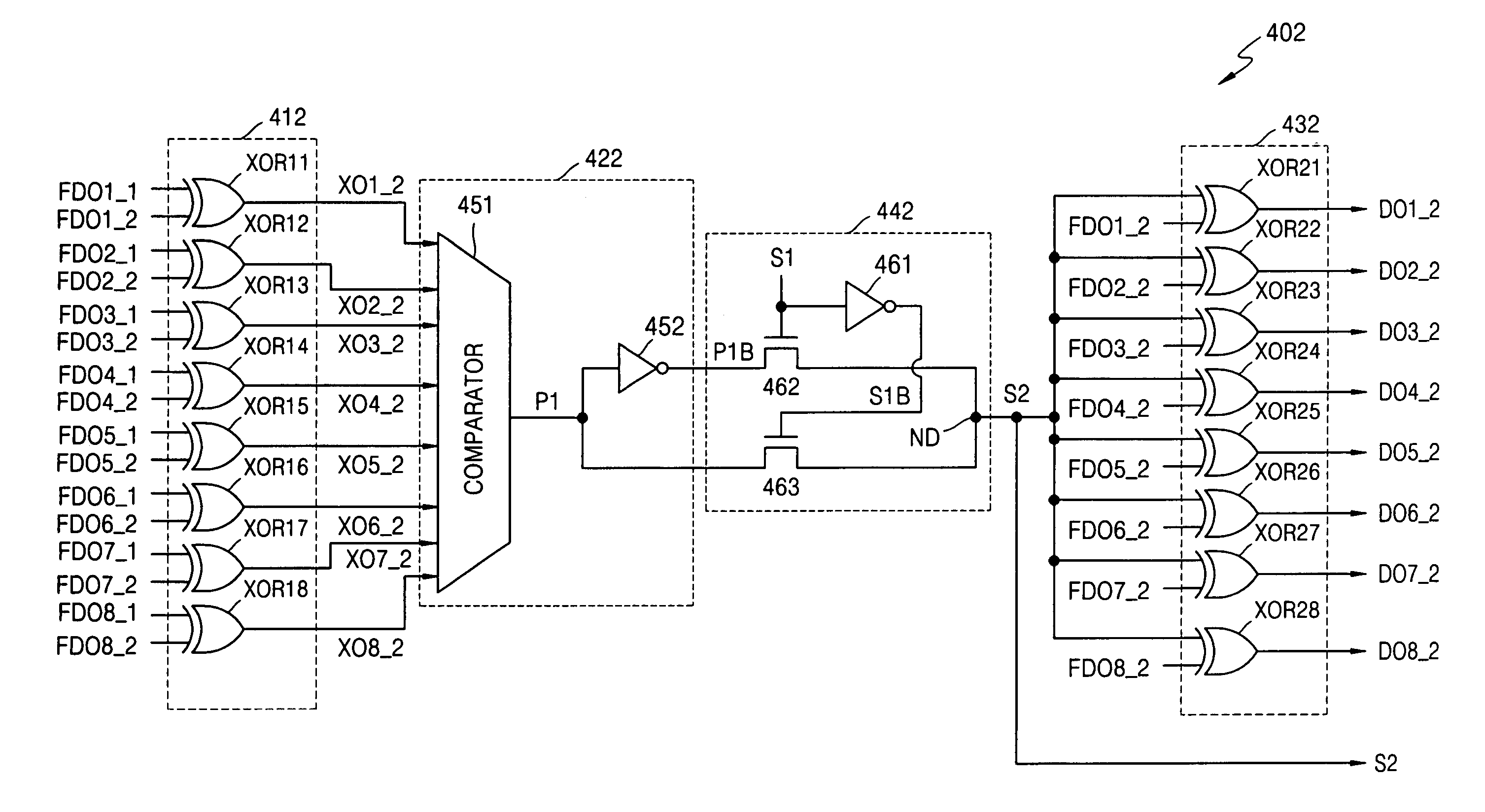

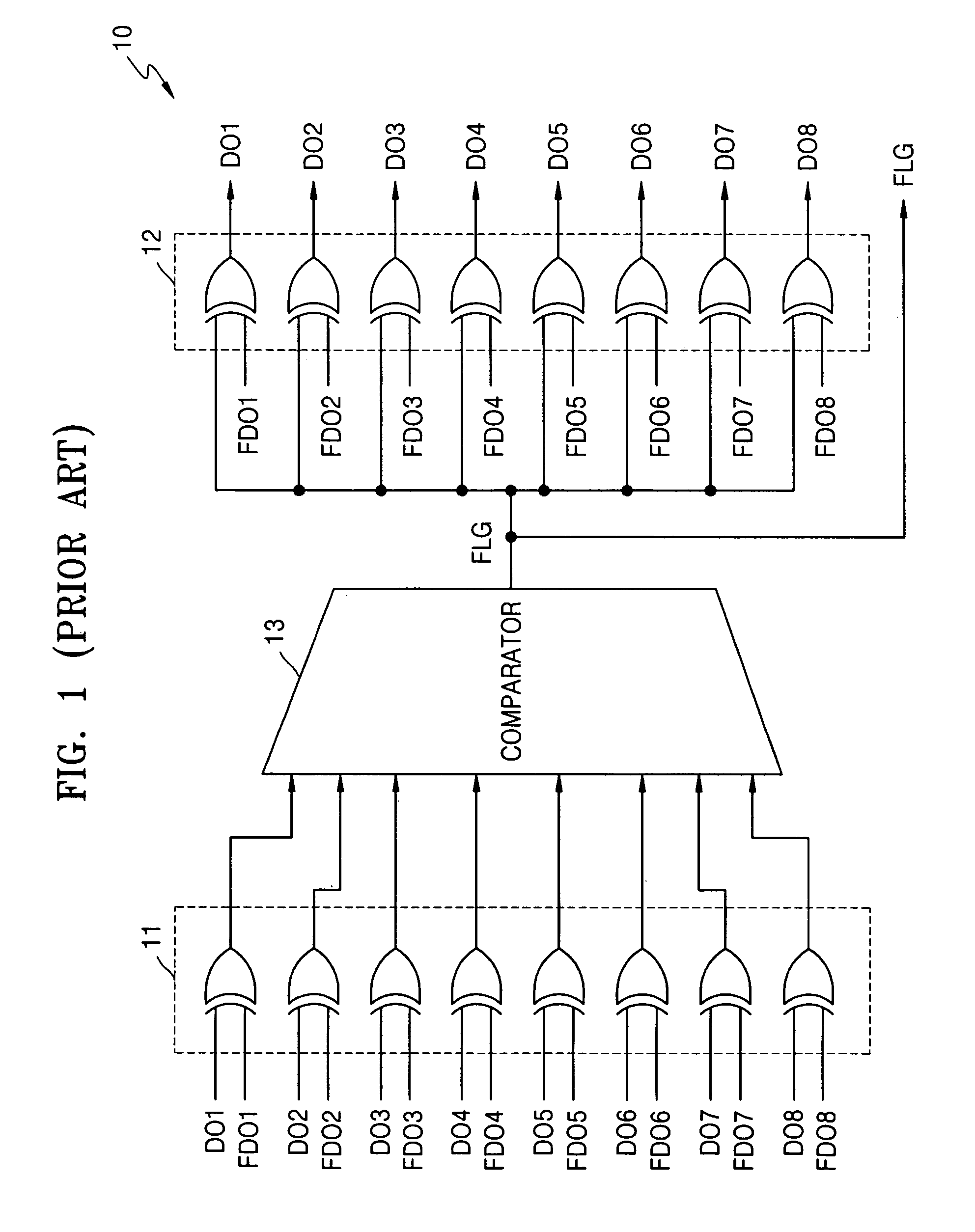

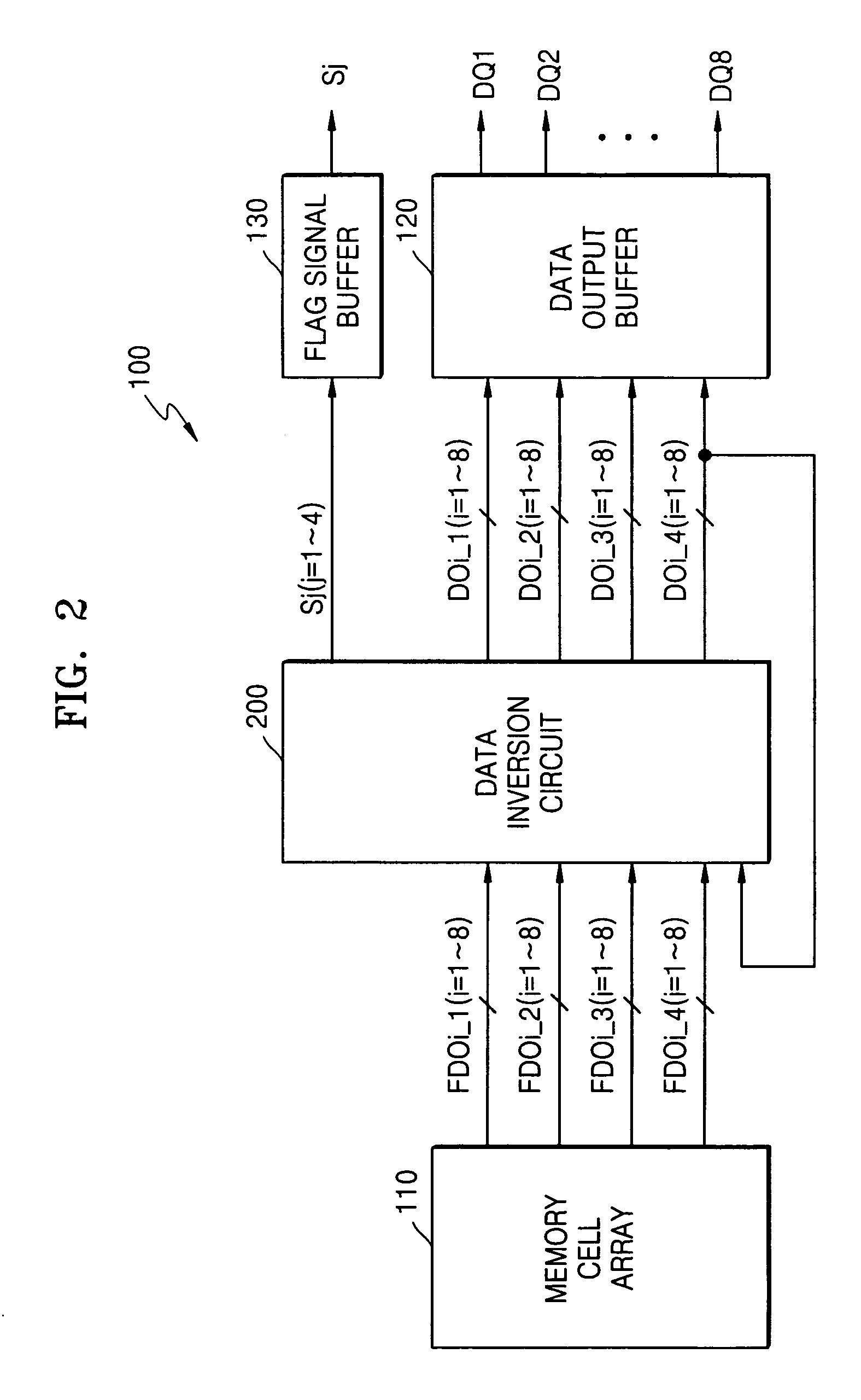

Integrated circuit devices having data inversion circuits therein with multi-bit prefetch structures and methods of operating same

Inactive | US6992506B2 | Reduce simultaneous switching noise | Reduce noise | Exclusive-OR circuits | Error prevention | Computer science | Integrated circuit

Integrated circuit devices include data inversion circuits therein that are configured to evaluate at least first and second ordered groups of input data in parallel with an ordered group of output data previously generated by the data inversion circuit. The data inversion circuit is further configured to generate inverted versions of the first and second ordered groups of input data as versions of the first and second ordered groups of data in parallel at outputs thereof whenever a number of bit differences between the first ordered group of input data and the ordered group of output data is greater than one-half a size of the first ordered group of input data and a number of bit differences between the second ordered group of input data and the version of the first ordered group of input data is greater than one-half a size of the second ordered group of input data, respectively.

Owner:SAMSUNG ELECTRONICS CO LTD
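
The inversion rule in this abstract (invert a group when it differs from the previously output group in more than half of its bits) can be sketched in software. The abstract describes evaluating two prefetched groups in parallel; the loop below applies the same rule sequentially, which is a simplification. The function names, the 8-bit default width, and the explicit inversion flag are assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def dbi_encode(words: Sequence[int], width: int = 8,
               previous: int = 0) -> List[Tuple[int, bool]]:
    """Return (output_word, inverted_flag) pairs for each input word."""
    mask = (1 << width) - 1
    encoded = []
    for word in words:
        word &= mask
        # XOR marks the bit positions that would toggle on the bus.
        differences = bin(word ^ previous).count("1")
        invert = 2 * differences > width   # more than one-half of the group
        out = (~word & mask) if invert else word
        encoded.append((out, invert))
        previous = out                     # next group compares to what was sent
    return encoded


def dbi_decode(encoded: Sequence[Tuple[int, bool]],
               width: int = 8) -> List[int]:
    mask = (1 << width) - 1
    return [(~out & mask) if inverted else out for out, inverted in encoded]
```

Because an inverted group differs from the previous output in `width - differences` bits, no transition in the encoded stream toggles more than half of the lines, which is how this kind of scheme limits simultaneous switching.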
