928 results about "Machine vision system" patented technology

A machine vision system primarily enables a computer to recognize and evaluate images. It is similar to voice recognition technology, but uses images instead. A machine vision system typically consists of digital cameras and back-end image processing hardware and software.

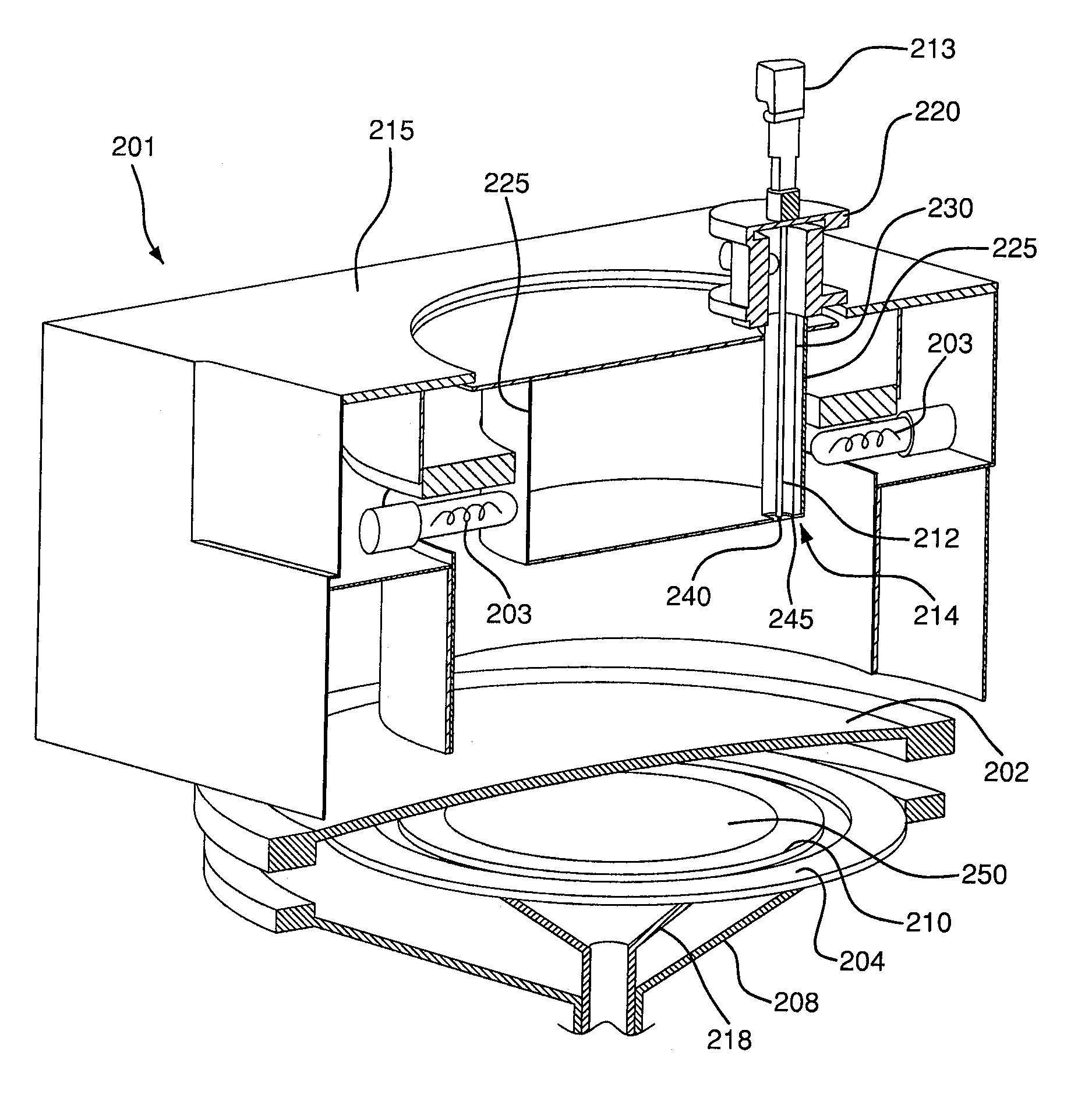

Semiconductor process chamber vision and monitoring system

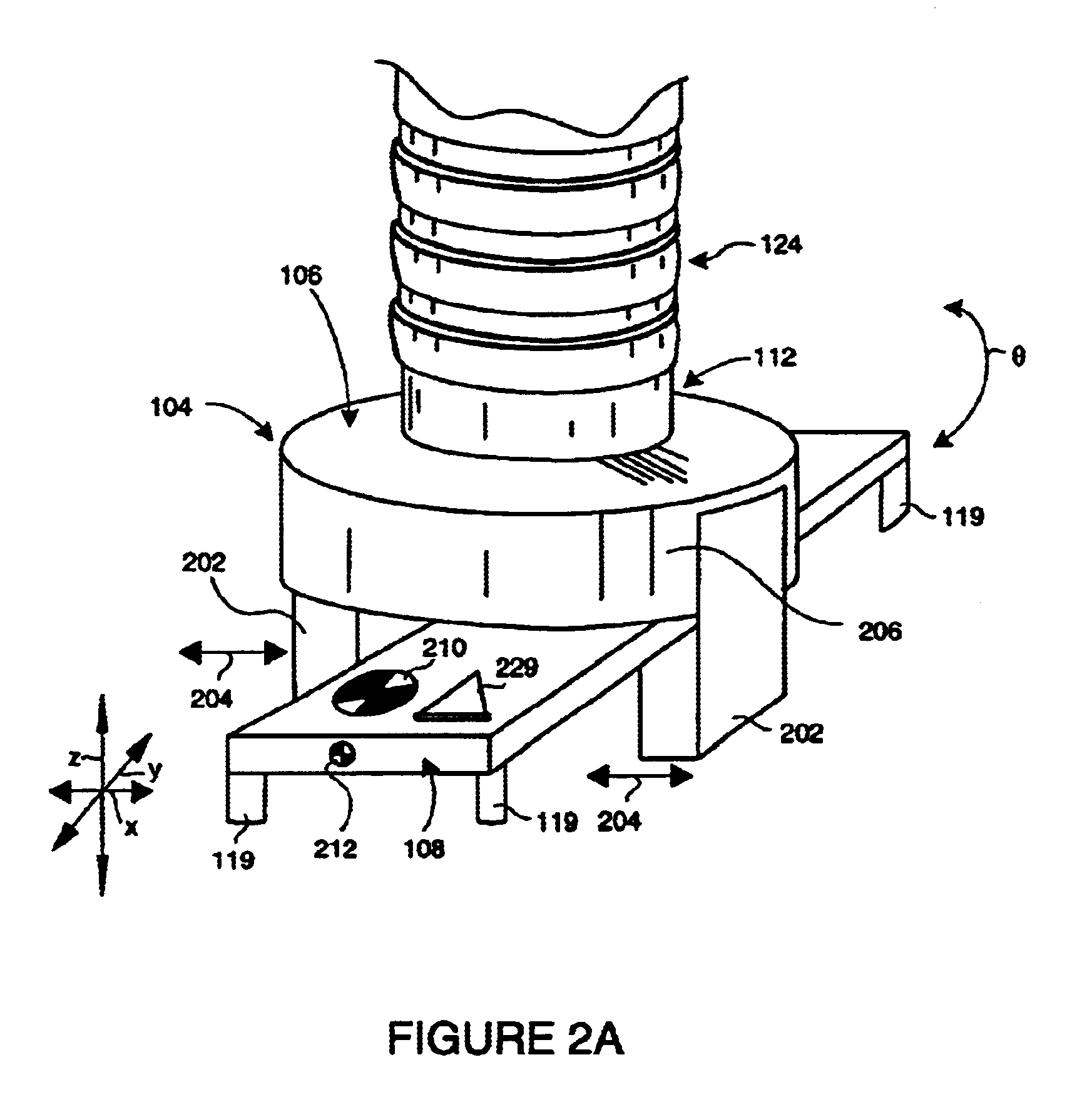

A system for monitoring a process inside a high-temperature semiconductor process chamber by capturing images is disclosed. Images are captured by a camera through a borescope, which is protected from the high temperatures by a reflective sheath and an infrared (IR) cut-off filter. Images can be viewed on a monitor, recorded by a video recording device, and processed by a machine vision system. The system can monitor the susceptor, a substrate on the susceptor, and the surrounding structures. Deviations of the substrate from its preferred geometry, and deviations of the susceptor and the substrate from their preferred positions, can be detected, and actions based on these detections can be taken to improve the performance of the process. Illuminating the substrate with a laser to detect deviations in substrate geometry and position is also disclosed.

Owner:APPLIED MATERIALS INC

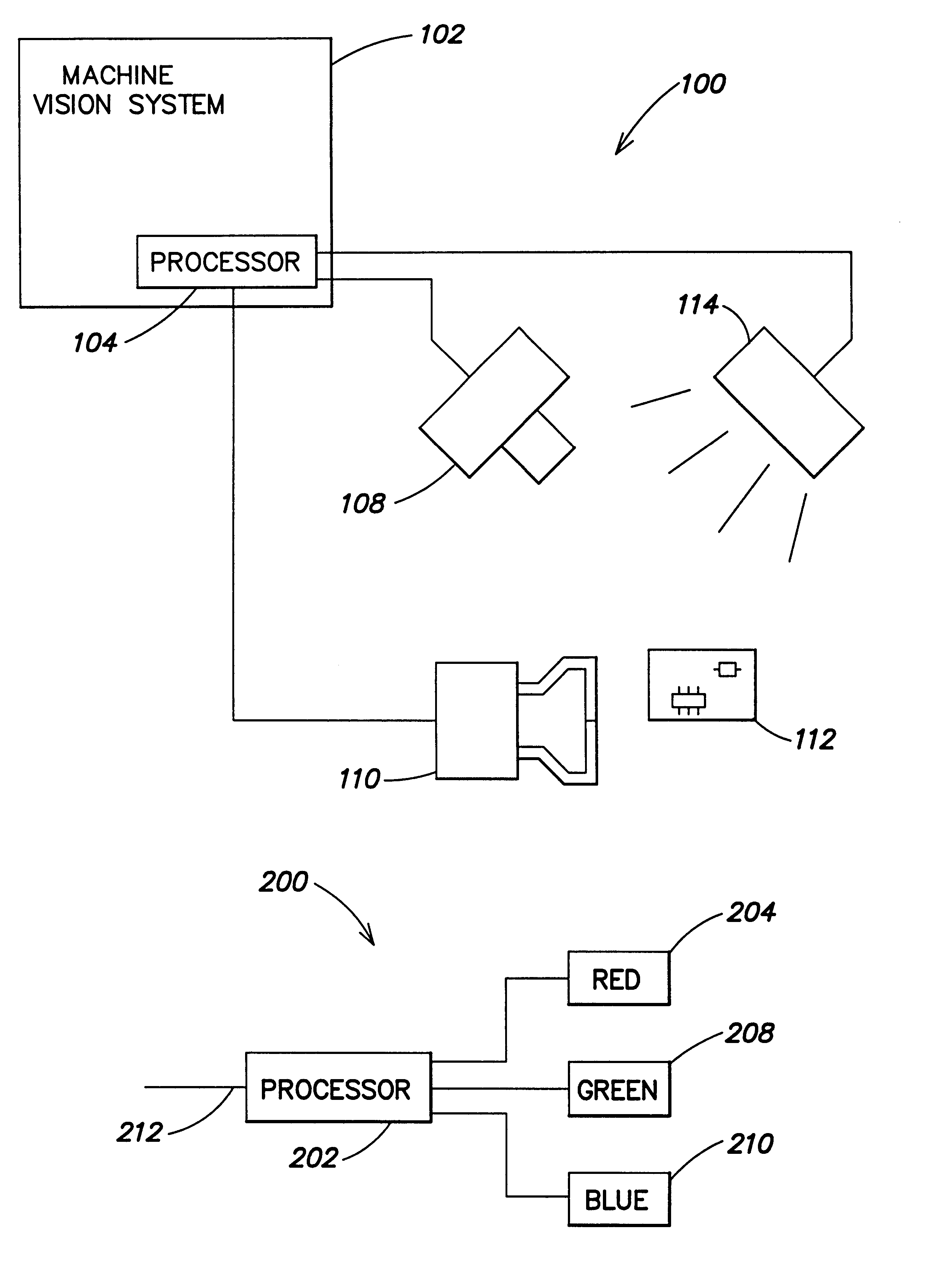

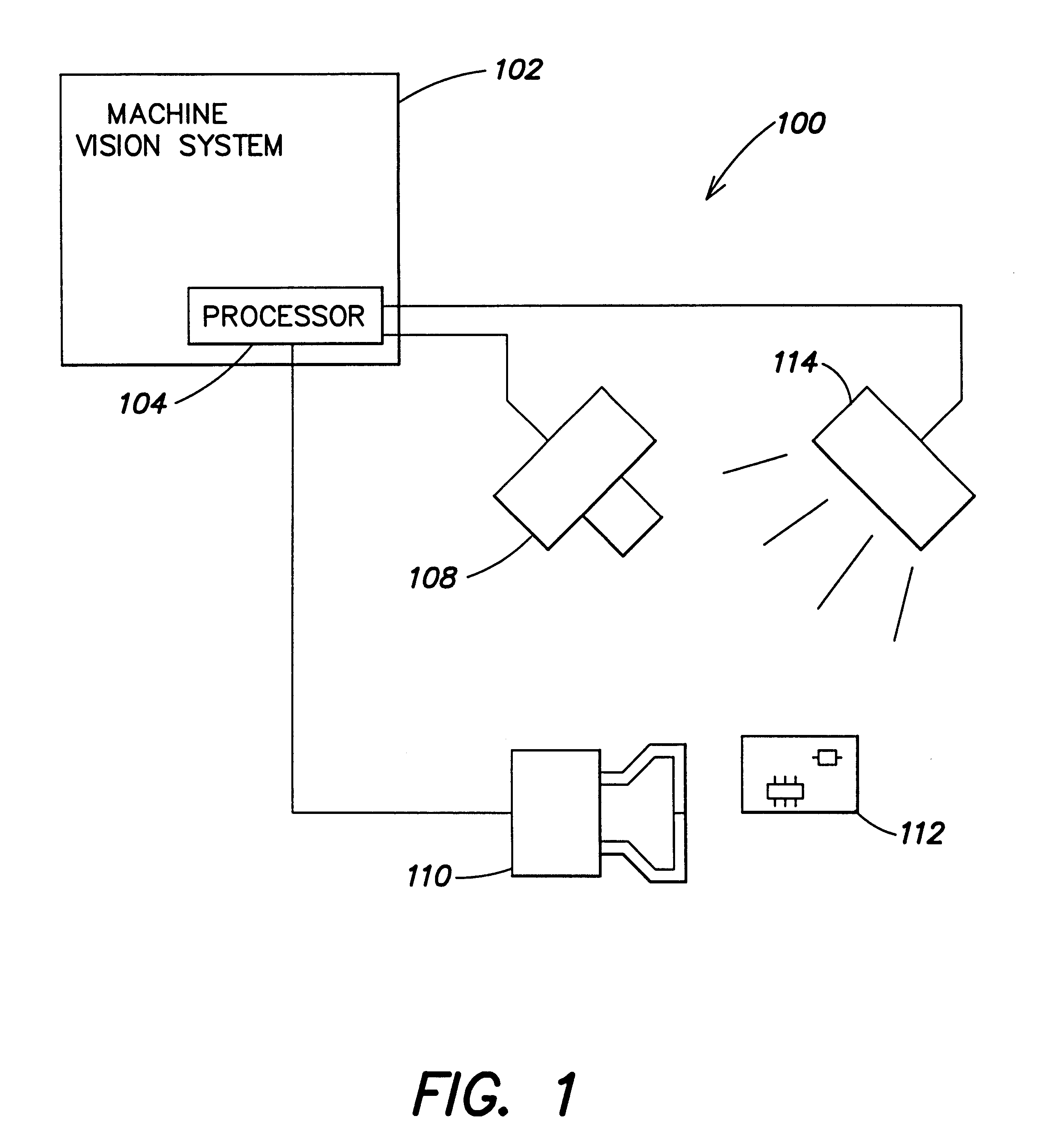

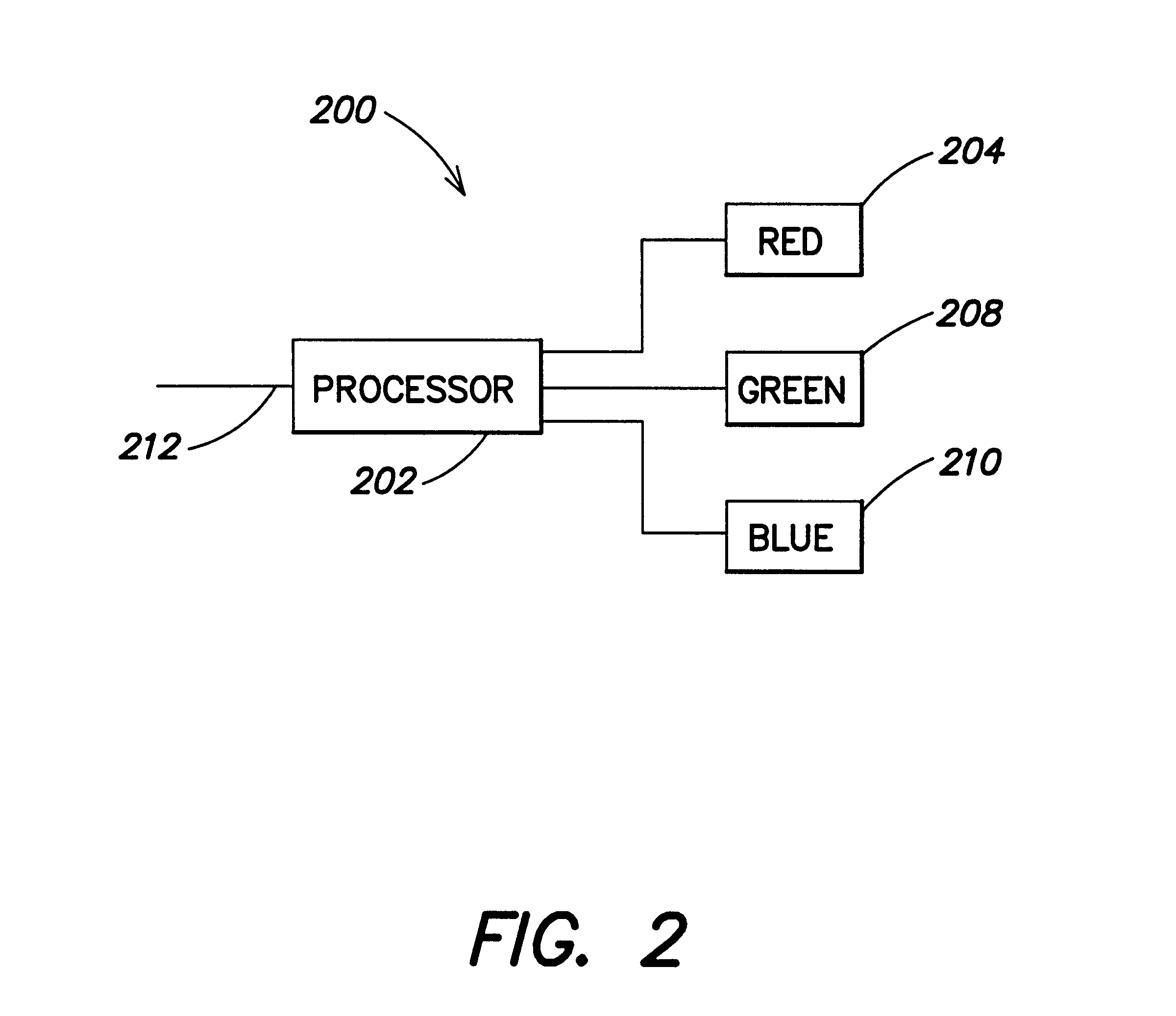

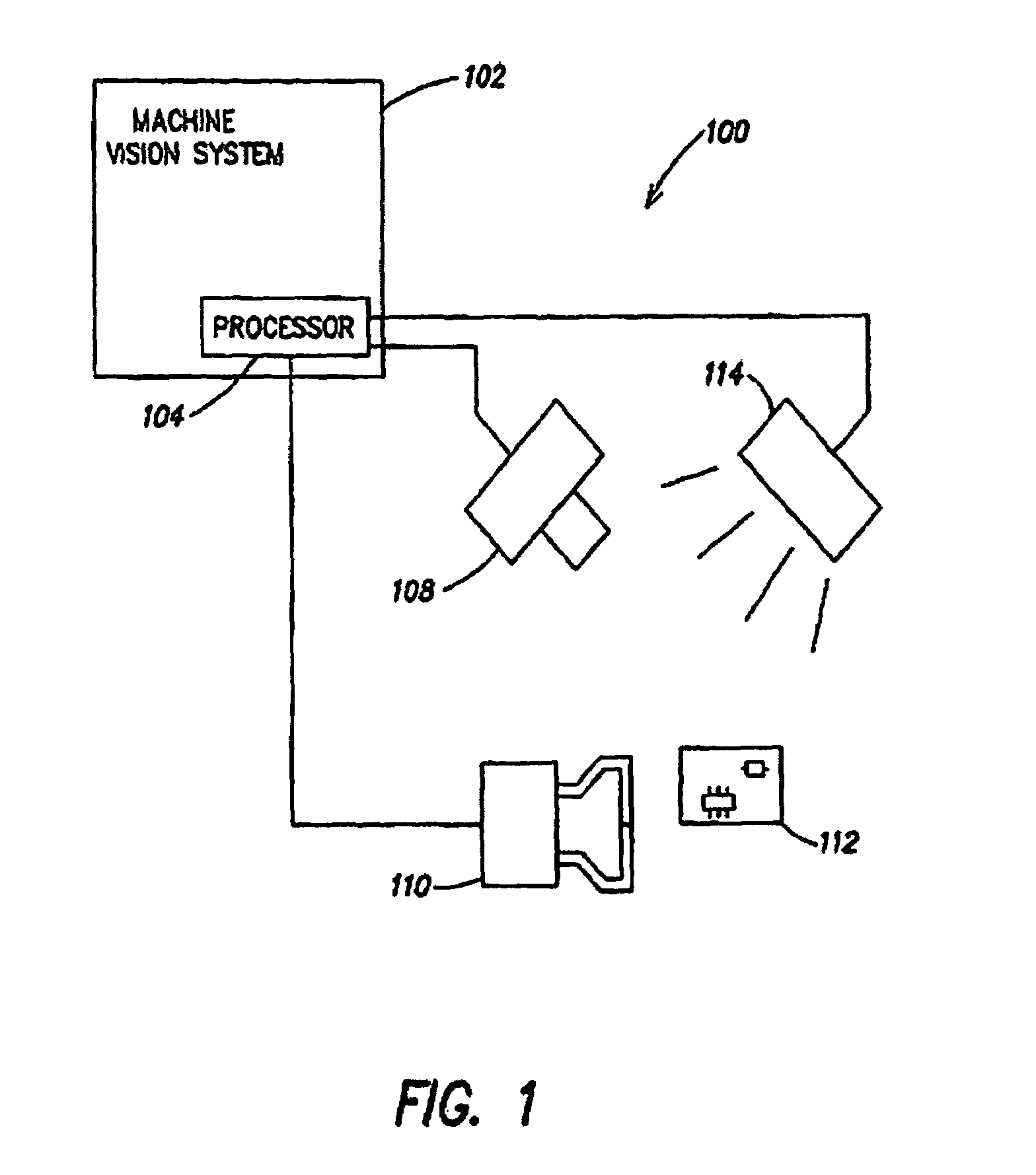

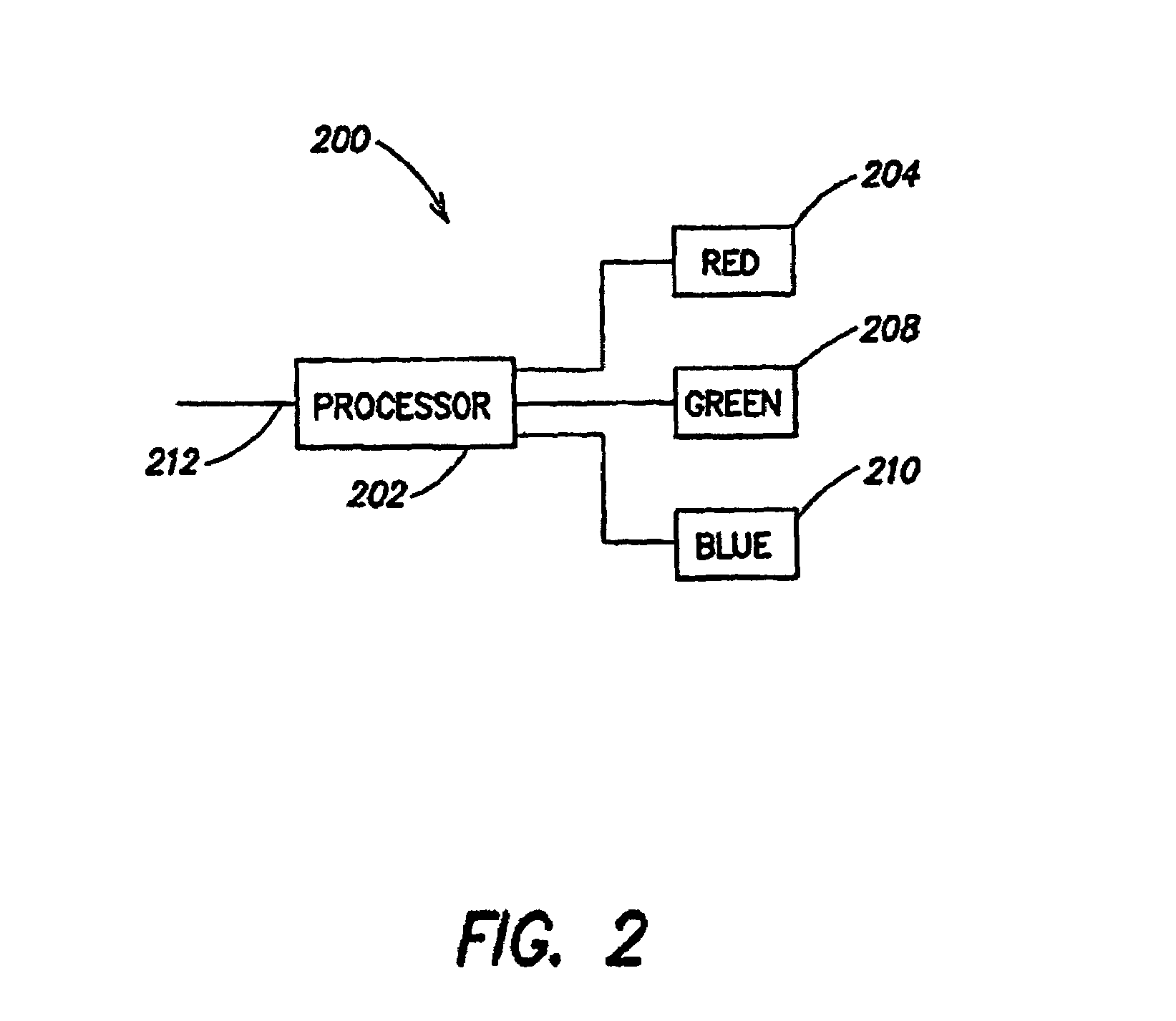

Systems and methods for providing illumination in machine vision systems

Inactive | US6624597B2 | Electroluminescent light sources; Material analysis by optical means; Effect light; Engineering

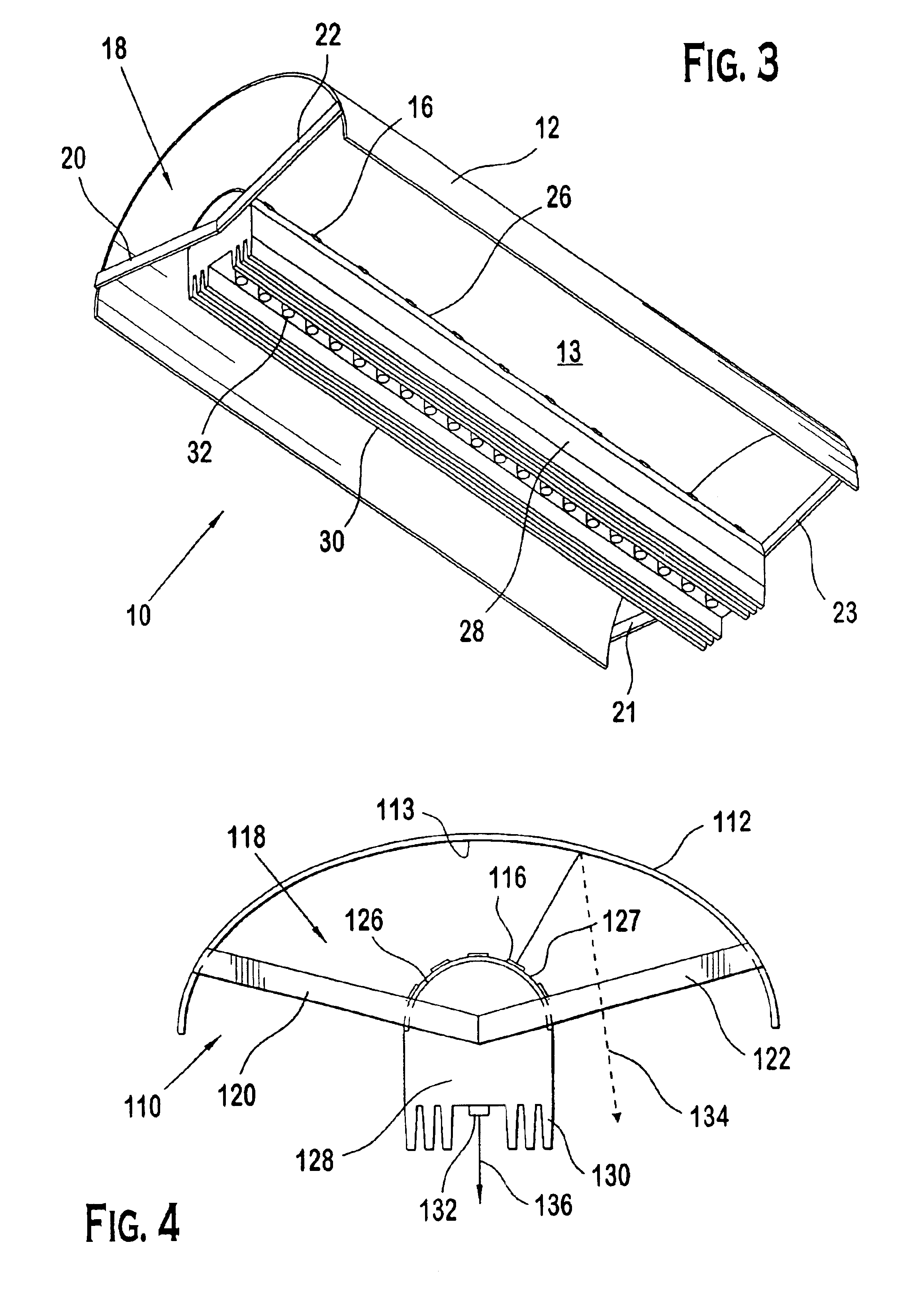

One embodiment is a lighting system associated with a machine vision system. The machine vision system may direct lighting control commands to the lighting system to change the illumination conditions provided to an object. A vision system may also be provided and associated with the machine vision system such that the vision system views and captures an image(s) of the object when lit by the lighting system. The machine vision system may direct the lighting system to change the illumination conditions and then capture the image.

Owner:PHILIPS LIGHTING NORTH AMERICA CORPORATION

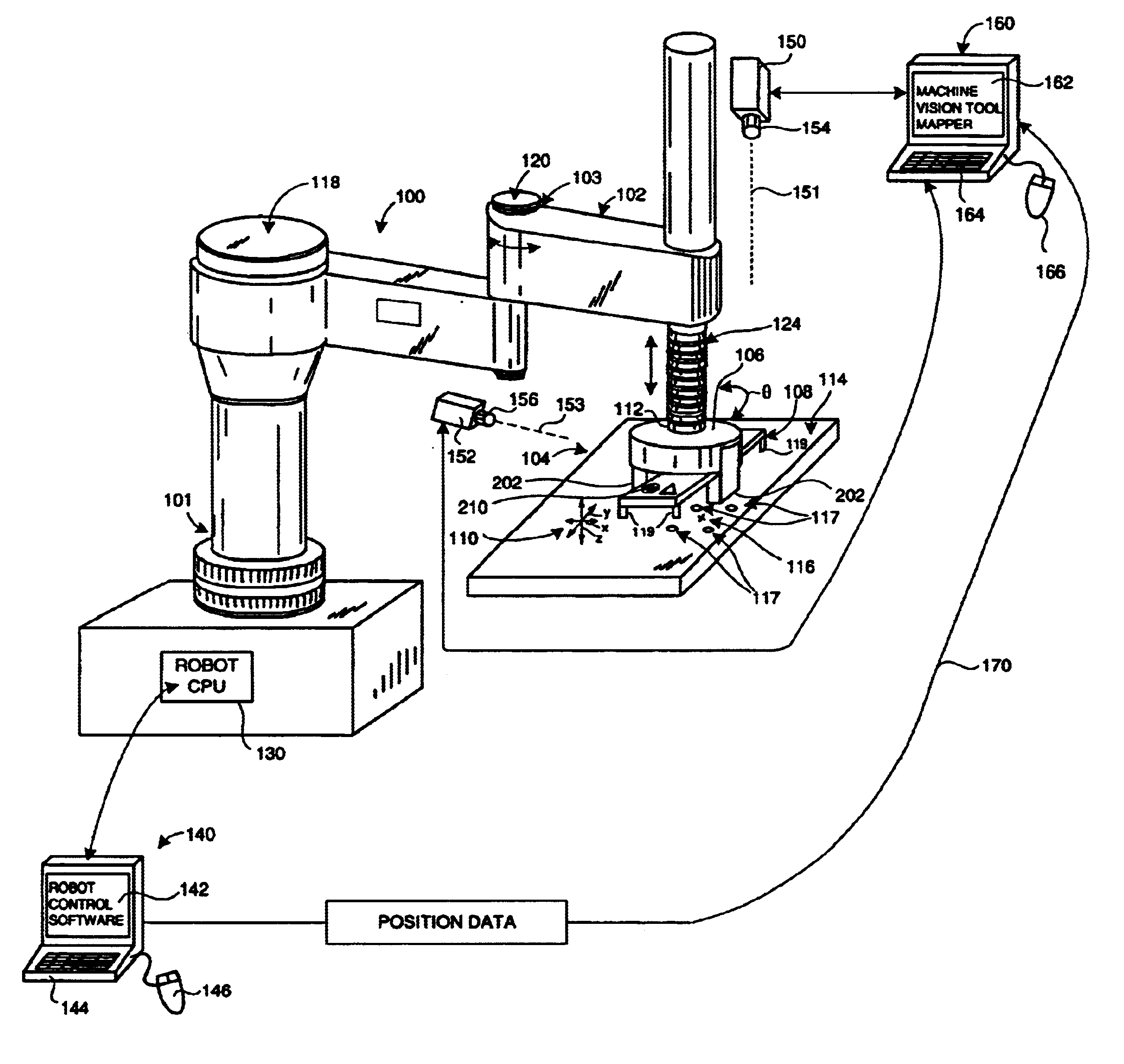

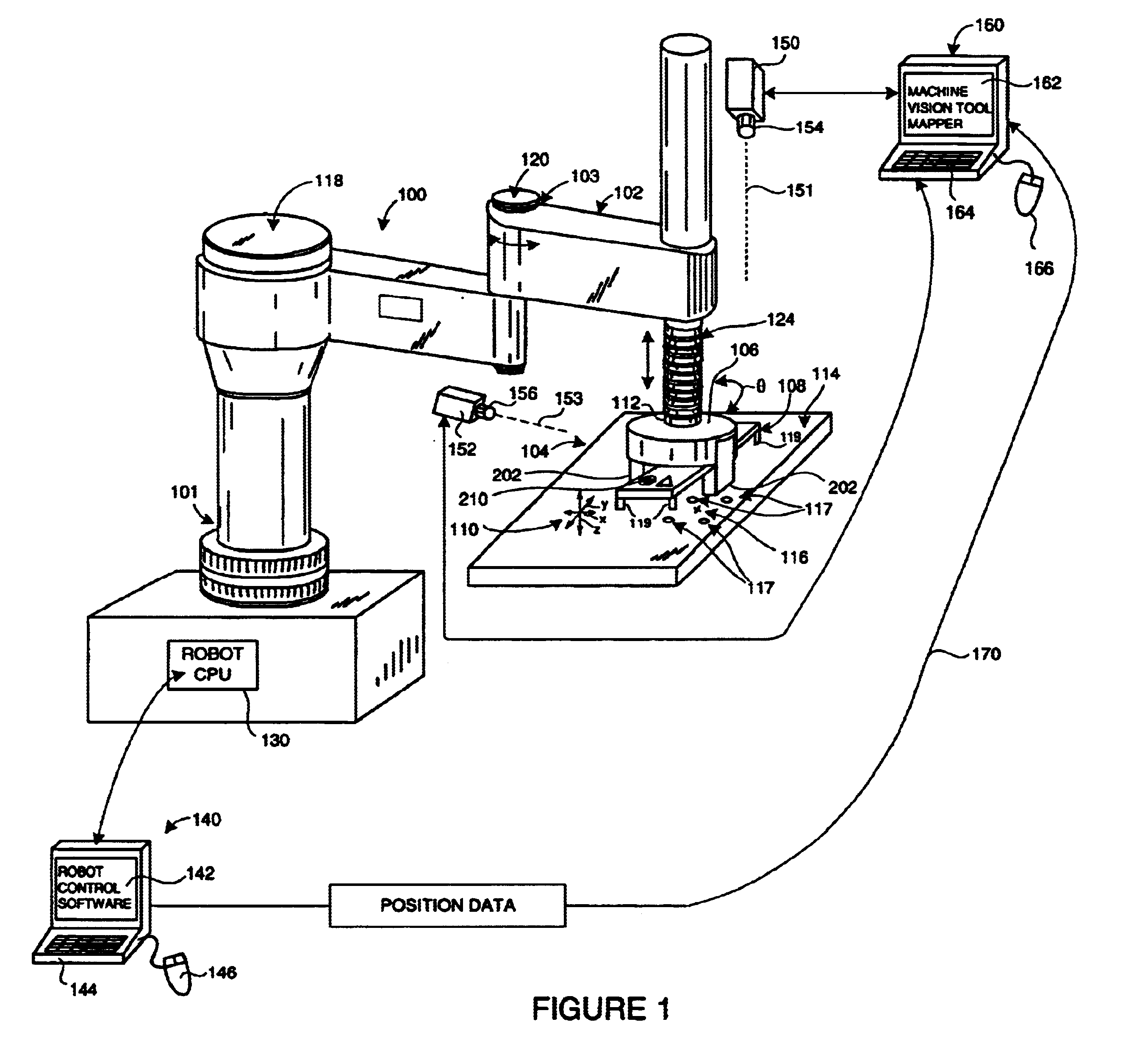

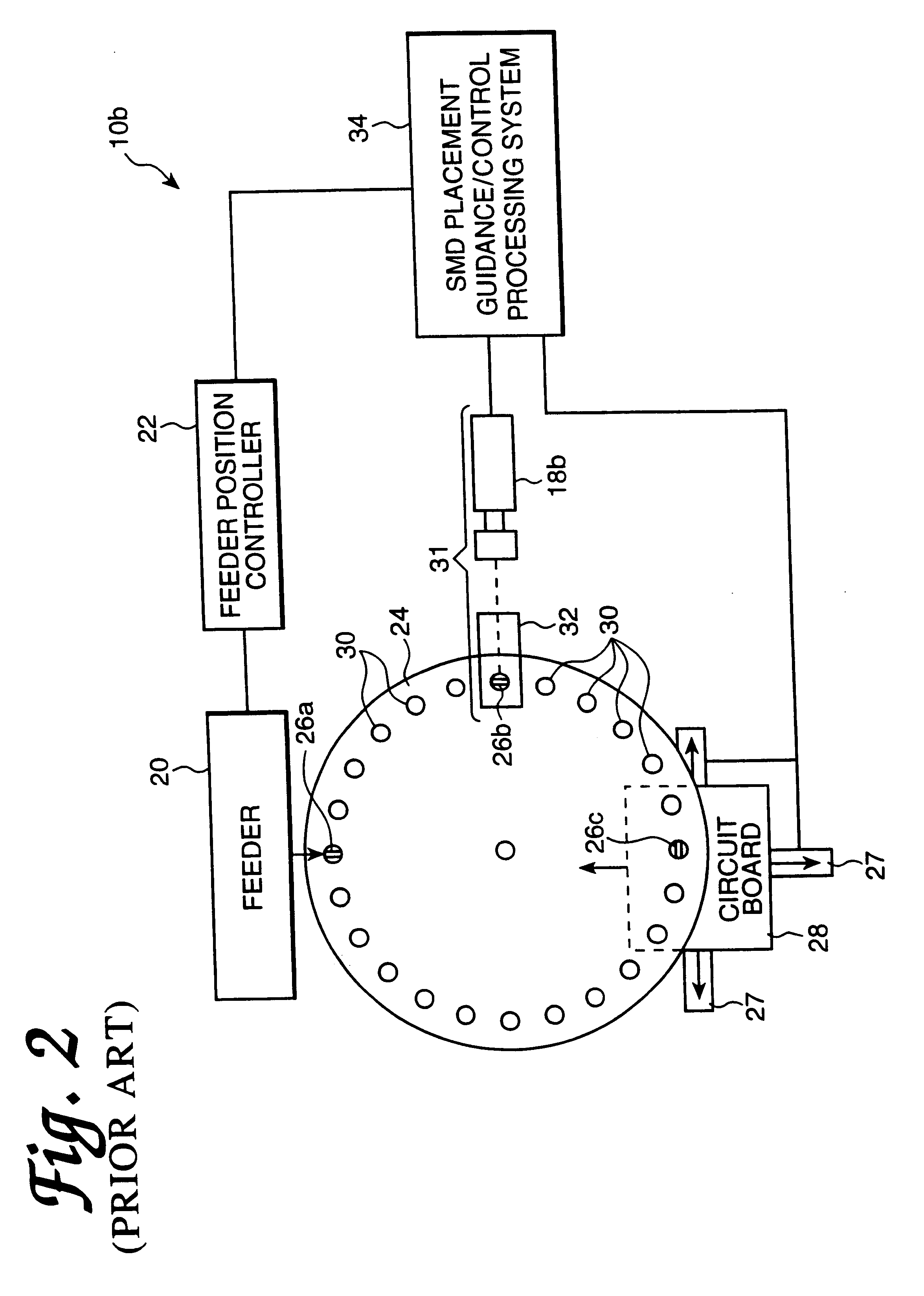

System and method for servoing robots based upon workpieces with fiducial marks using machine vision

Inactive | US6681151B1 | Sure easy; Located reliably; Programme-controlled manipulator; Photometry using reference value; Engineering; Degrees of freedom

A system and method for servoing robots using fiducial marks and machine vision provides a machine vision system having a machine vision search tool that is adapted to register a pattern, namely a trained fiducial mark, that is transformed by at least two translational degrees of freedom and at least one non-translational degree of freedom. The fiducial is provided on a workpiece carried by an end effector of a robot operating within a work area. When the workpiece enters an area of interest within the field of view of a camera of the machine vision system, the tool recognizes the fiducial based upon a previously trained and calibrated image stored within the tool. The machine vision system derives the location of the workpiece from the viewed location of the fiducial and compares the location of the found fiducial with a desired location for the fiducial. The desired location can be based upon a standard or desired position of the workpiece. If the found location differs from the desired location, the difference is calculated with respect to each of the translational axes and the rotation. The difference can then be transformed into robot-based coordinates and provided to the robot controller, and the workpiece movement is adjusted based upon the difference. Fiducial location and adjustment continue until the workpiece is located at the desired position with minimum error.

Owner:COGNEX TECH & INVESTMENT
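
The correction loop described above (compare found and desired fiducial poses, convert the difference to robot coordinates, move, repeat) can be sketched in Python. This is a minimal illustration under stated assumptions, not Cognex's implementation: the `Pose2D` type, the calibration modeled as a single rotation plus a uniform scale, the `gain` and `tol` values, and the simulated robot that executes each command exactly are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    theta: float  # rotation in radians


def pose_error(found: Pose2D, desired: Pose2D) -> Pose2D:
    """Difference (desired - found) along each translational axis and in rotation."""
    d = desired.theta - found.theta
    dtheta = math.atan2(math.sin(d), math.cos(d))  # wrap angle into (-pi, pi]
    return Pose2D(desired.x - found.x, desired.y - found.y, dtheta)


def camera_to_robot(err: Pose2D, cam_rotation: float, scale: float) -> Pose2D:
    """Map an image-space correction into robot-base coordinates.

    Hypothetical calibration: one in-plane rotation plus a uniform scale (e.g. mm per pixel).
    """
    c, s = math.cos(cam_rotation), math.sin(cam_rotation)
    return Pose2D(scale * (c * err.x - s * err.y),
                  scale * (s * err.x + c * err.y),
                  err.theta)


def servo(found: Pose2D, desired: Pose2D, cam_rotation=0.0, scale=1.0,
          gain=0.5, tol=1e-3, max_iter=100):
    """Correct repeatedly until the fiducial is within tol of the desired pose.

    Returns (iterations, list of robot-coordinate commands that were issued).
    """
    pose = Pose2D(found.x, found.y, found.theta)
    commands = []
    for i in range(max_iter):
        err = pose_error(pose, desired)
        if max(abs(err.x), abs(err.y), abs(err.theta)) < tol:
            return i, commands
        step = Pose2D(gain * err.x, gain * err.y, gain * err.theta)
        commands.append(camera_to_robot(step, cam_rotation, scale))  # to robot controller
        # Simulation only: assume the robot executes each command exactly,
        # so the fiducial moves by `step` in image coordinates.
        pose = Pose2D(pose.x + step.x, pose.y + step.y, pose.theta + step.theta)
    raise RuntimeError("fiducial did not converge to the desired location")
```

Applying only a fraction of the error per step (`gain` below 1) is one common way to keep such a loop from overshooting; the abstract does not specify a control law.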

Integrating LED illumination system for machine vision systems

Inactive | US6871993B2 | Efficiently focus; Point-like light source; Portable electric lighting; LED array; Optoelectronics

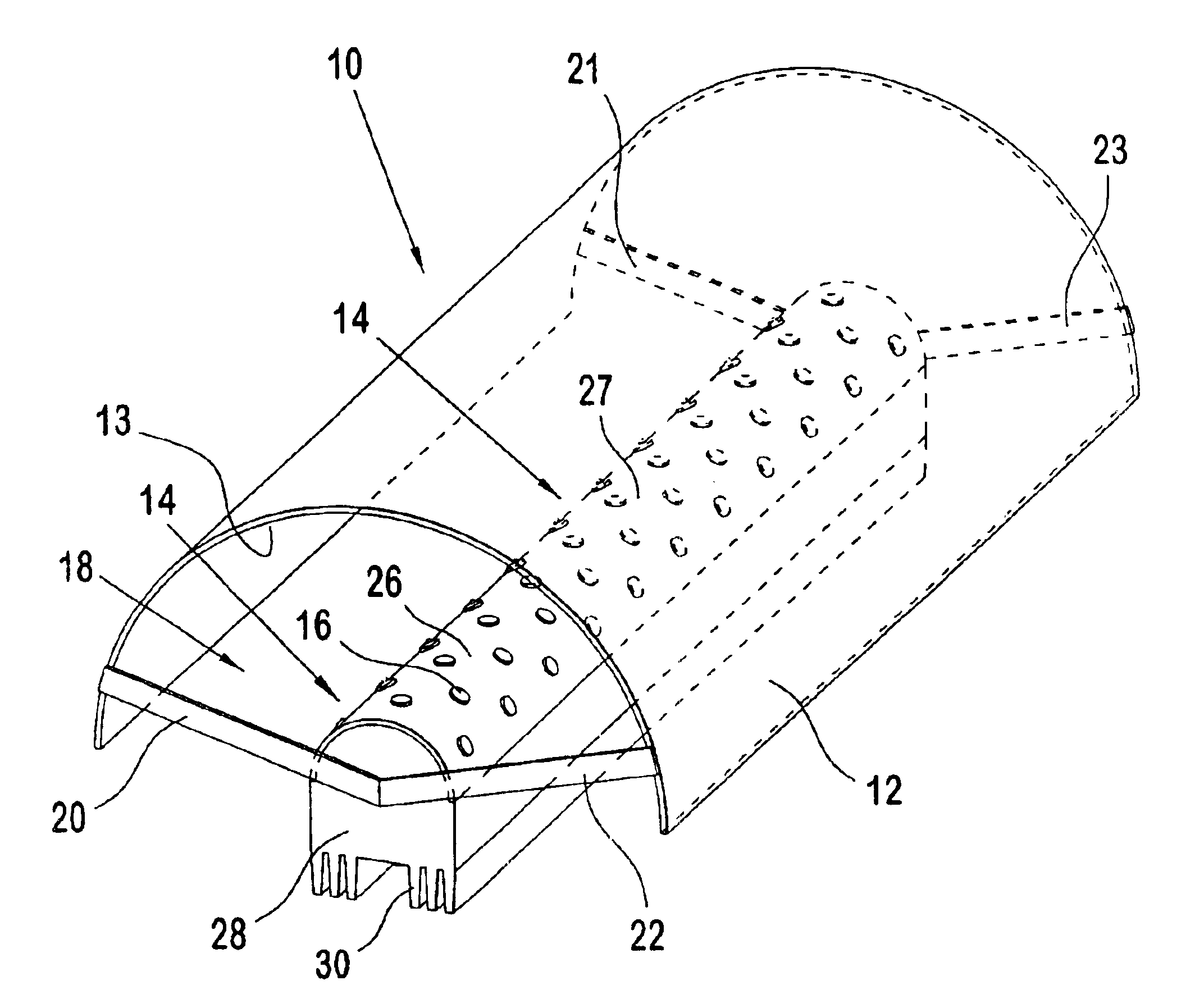

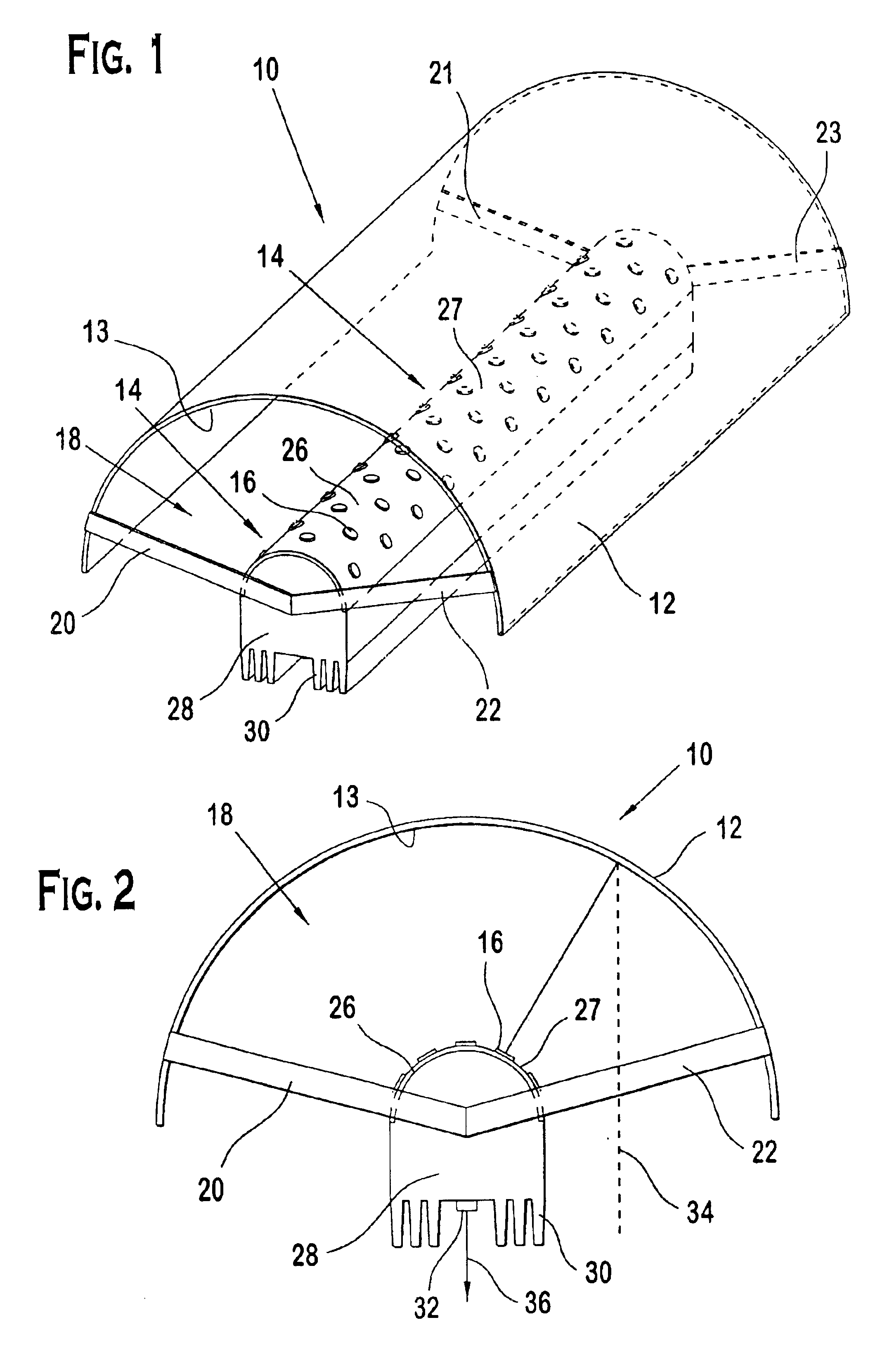

A system for focusing light on an illumination area. The system includes a reflector having a focusing reflective surface and a focal region and an LED array having a plurality of LEDs located within the focal region. Each of the plurality of LEDs in the LED array is positioned to emit light toward the focusing reflective surface. The focusing reflective surface reflects light from each of the plurality of LEDs of the LED array toward the illumination area.

Owner:DATALOGIC AUTOMATION

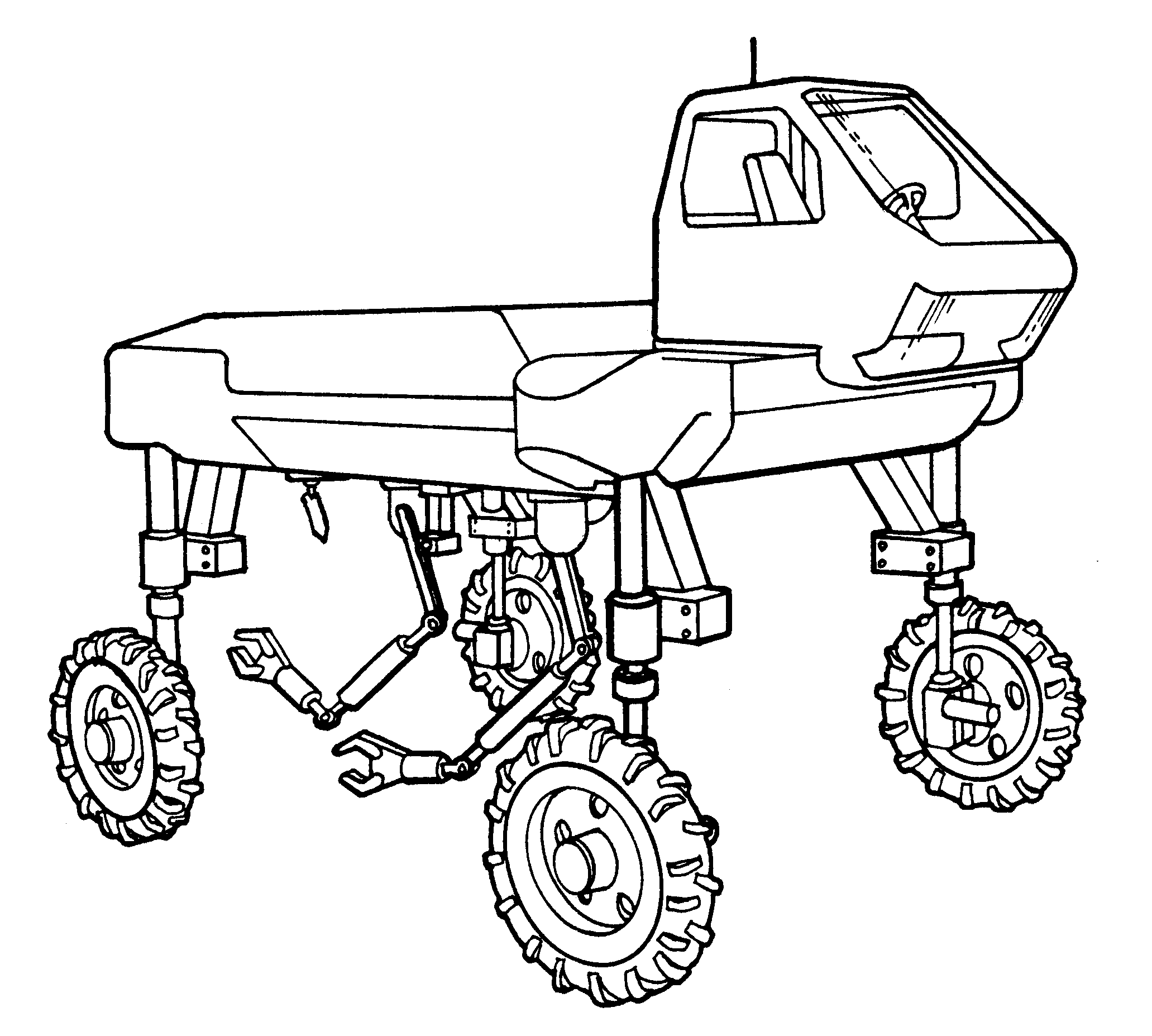

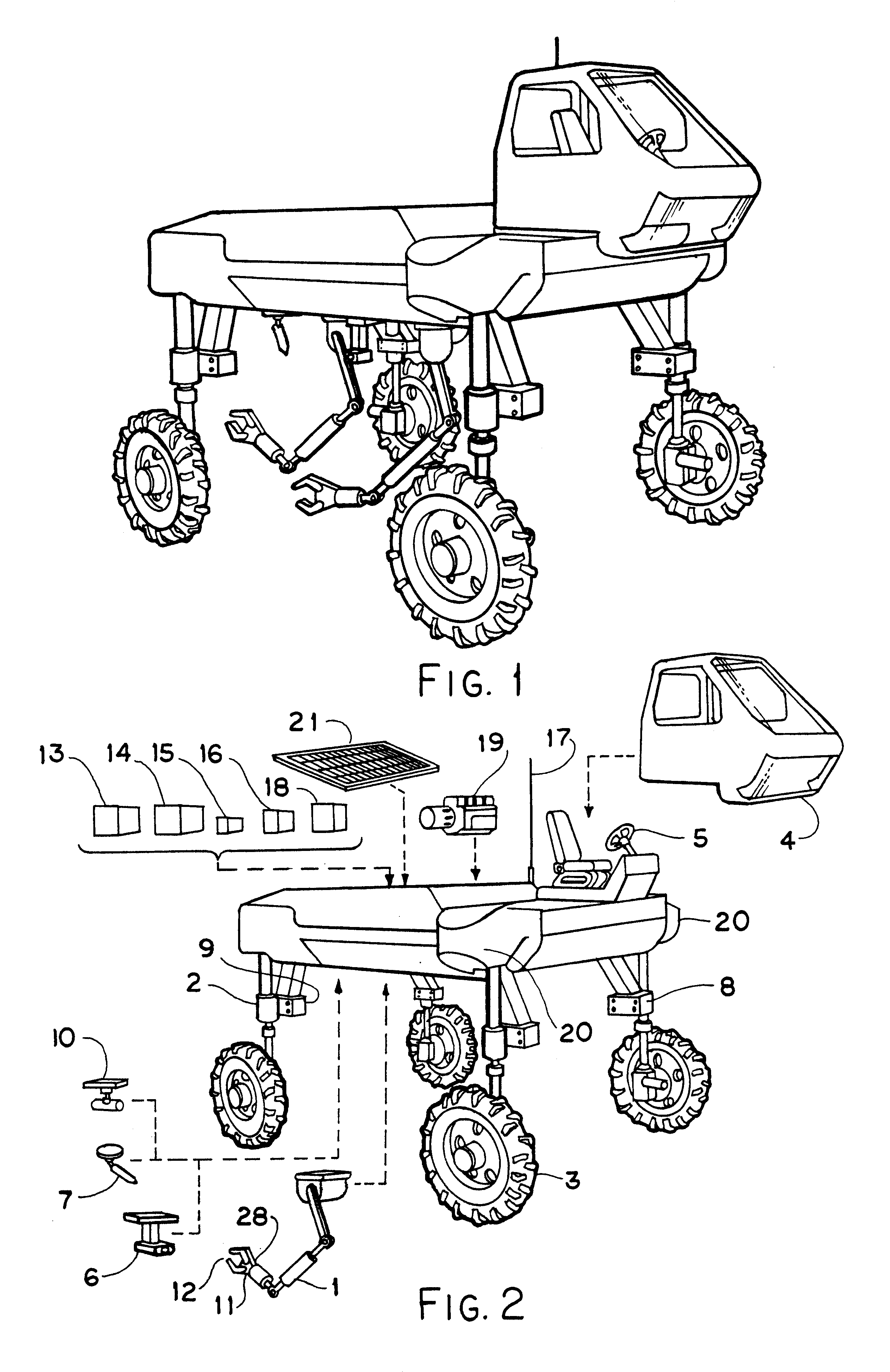

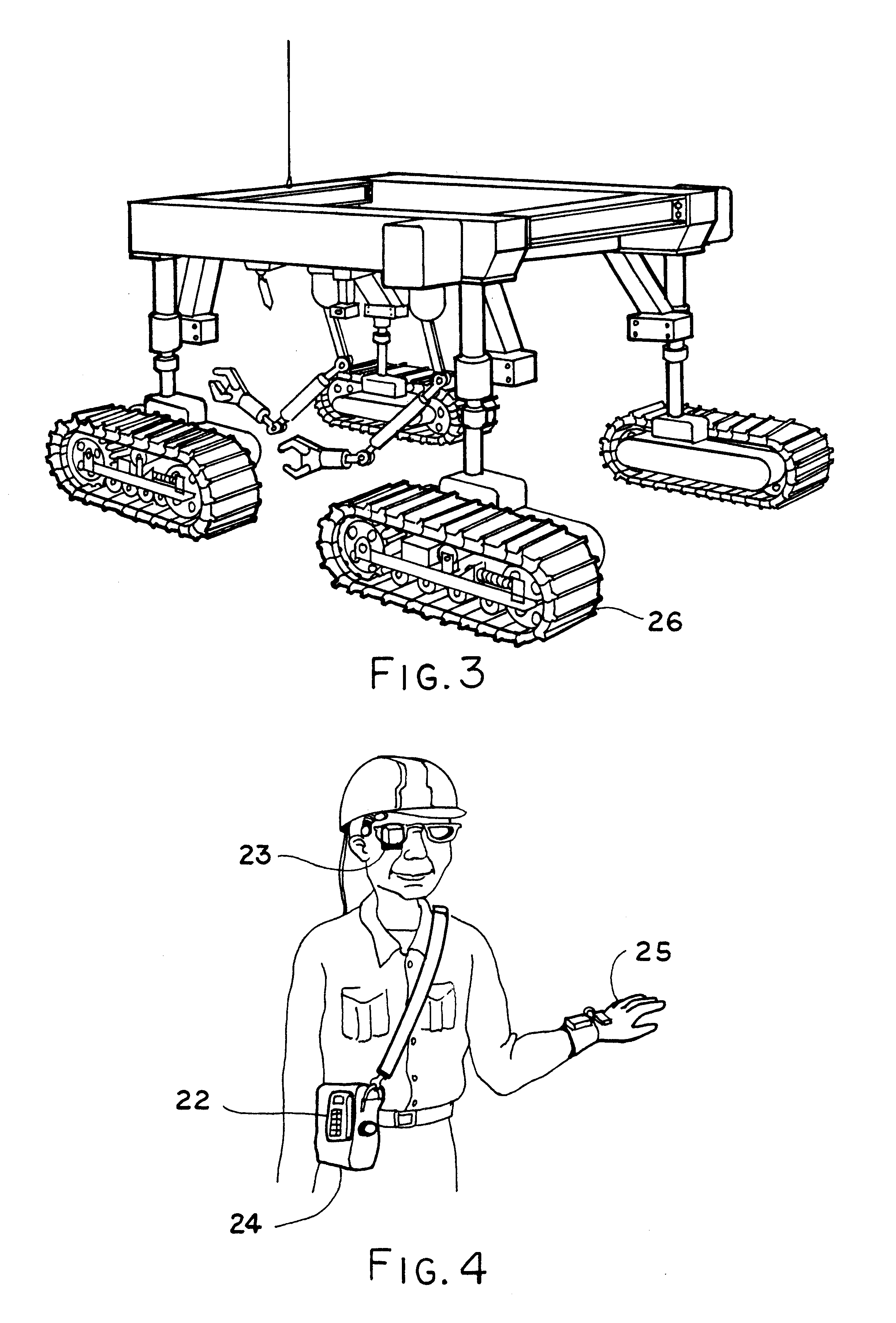

Flexible agricultural automation

Inactive | US6671582B1 | High precision; Quality improvement; Optical radiation measurement; Position fixation; Agricultural engineering; Assembly line

Agricultural operations are automated by applying flexible manufacturing software, robotics and sensing techniques to agriculture. In manufacturing operations that use flexible machining and flexible assembly robots, workpieces flow through a fixed set of workstations on an assembly line. Machine vision systems, laser-based raster devices, radar, touch sensors, photocells, and other sensing methods are located at the different stations, and flexible robot armatures and the like operate on the workpieces. This flexible agricultural automation turns that concept inside out, moving software-programmable workstations through farm fields on mobile robots that can sense their environment and respond to it flexibly. The agricultural automation will make it possible for large-scale farming to adopt labor-intensive practices that are currently practical only for small-scale farming, improving land-use efficiency while dramatically lowering labor costs.

Owner:HANLEY BRIAN P

Systems and methods for providing illumination in machine vision systems

Inactive | US7042172B2 | Photoelectric discharge tubes; Electric light circuit arrangement; Ophthalmology; Lighting system

A lighting system associated with a machine vision system. The machine vision system may direct lighting control commands to the lighting system to change the illumination conditions provided to an object. A vision system may also be provided and associated with the machine vision system such that the vision system views and captures an image(s) of the object when lit by the lighting system. The machine vision system may direct the lighting system to change the illumination conditions and then capture the image.

Owner:SIGNIFY NORTH AMERICA CORP

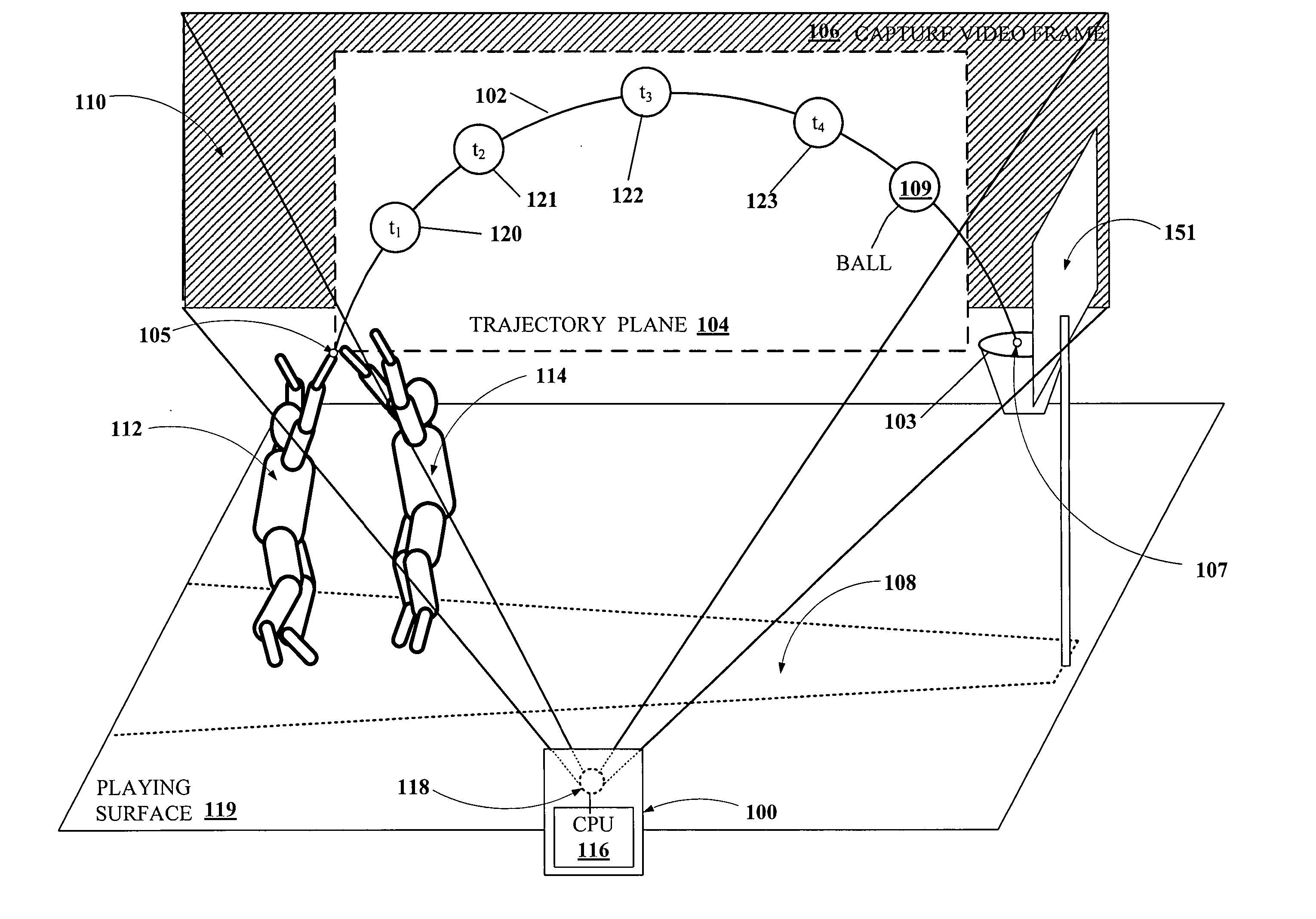

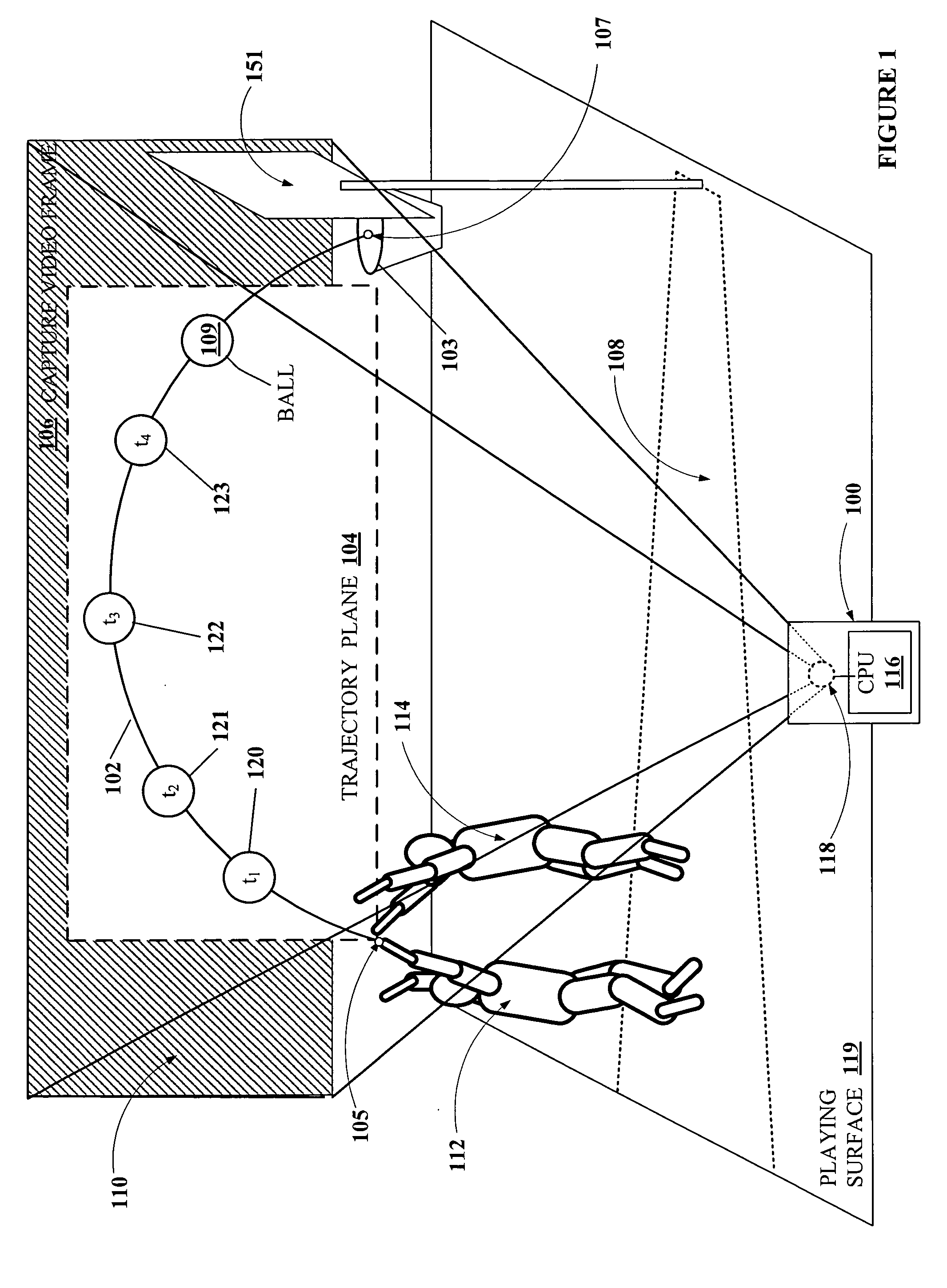

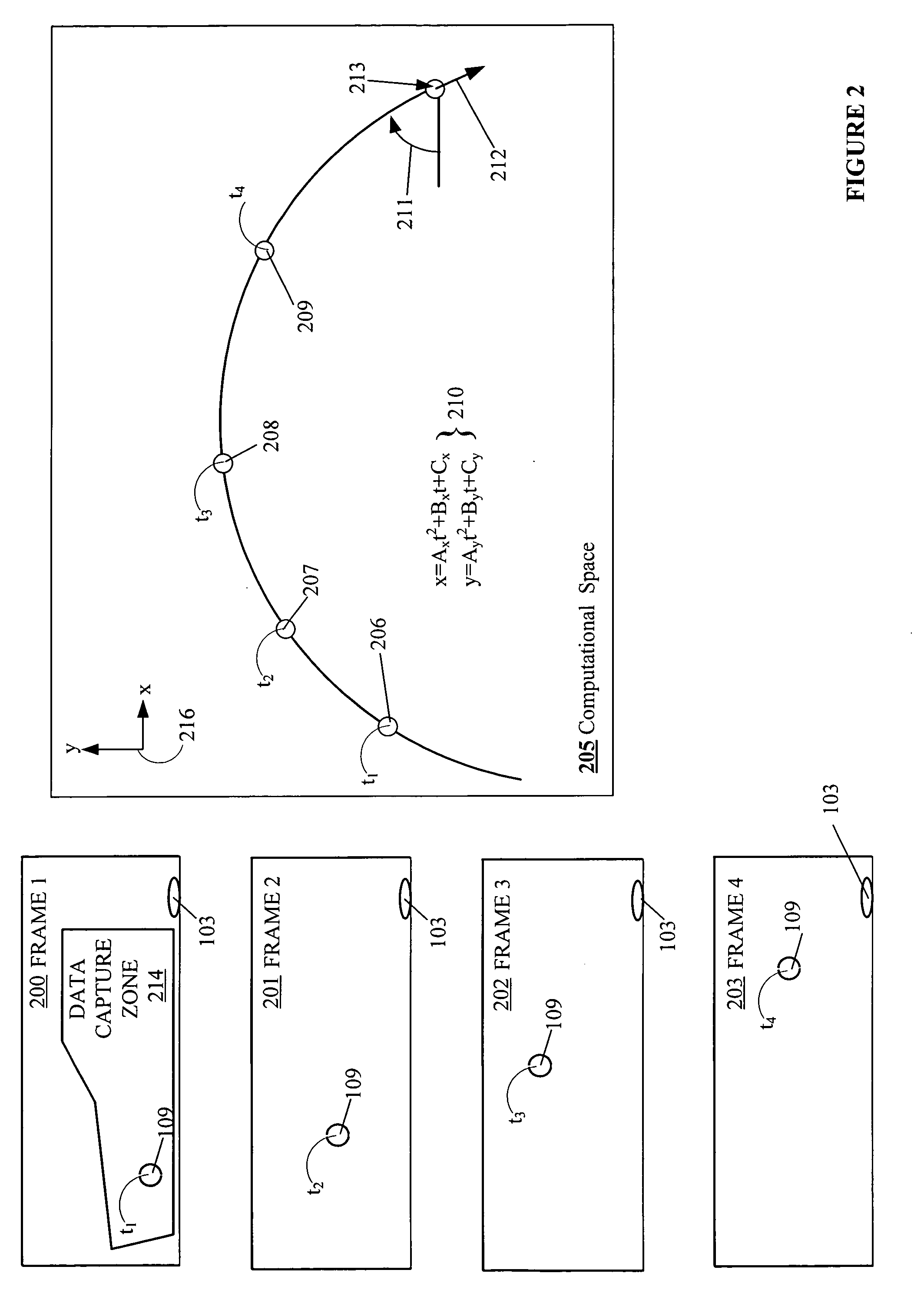

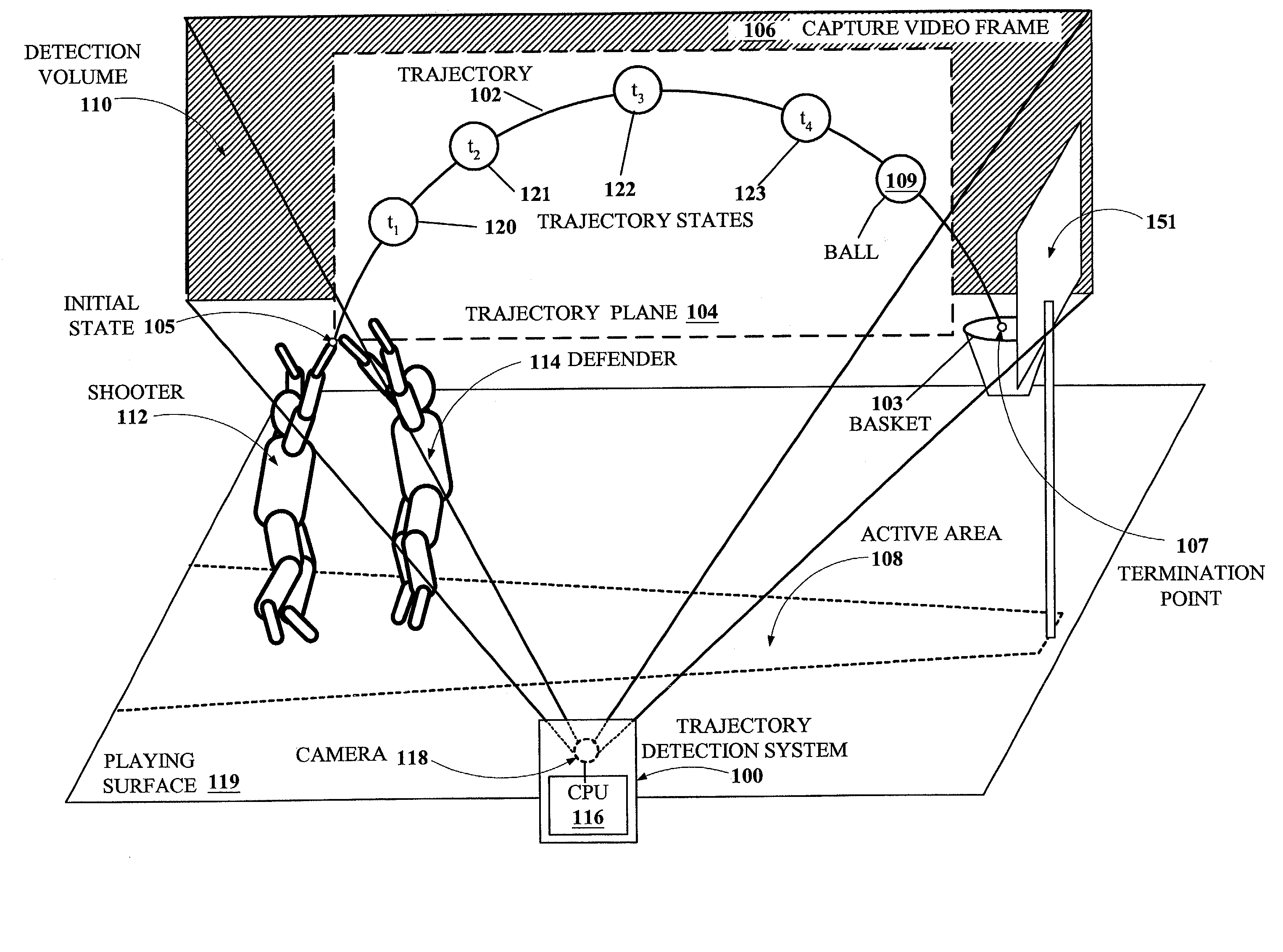

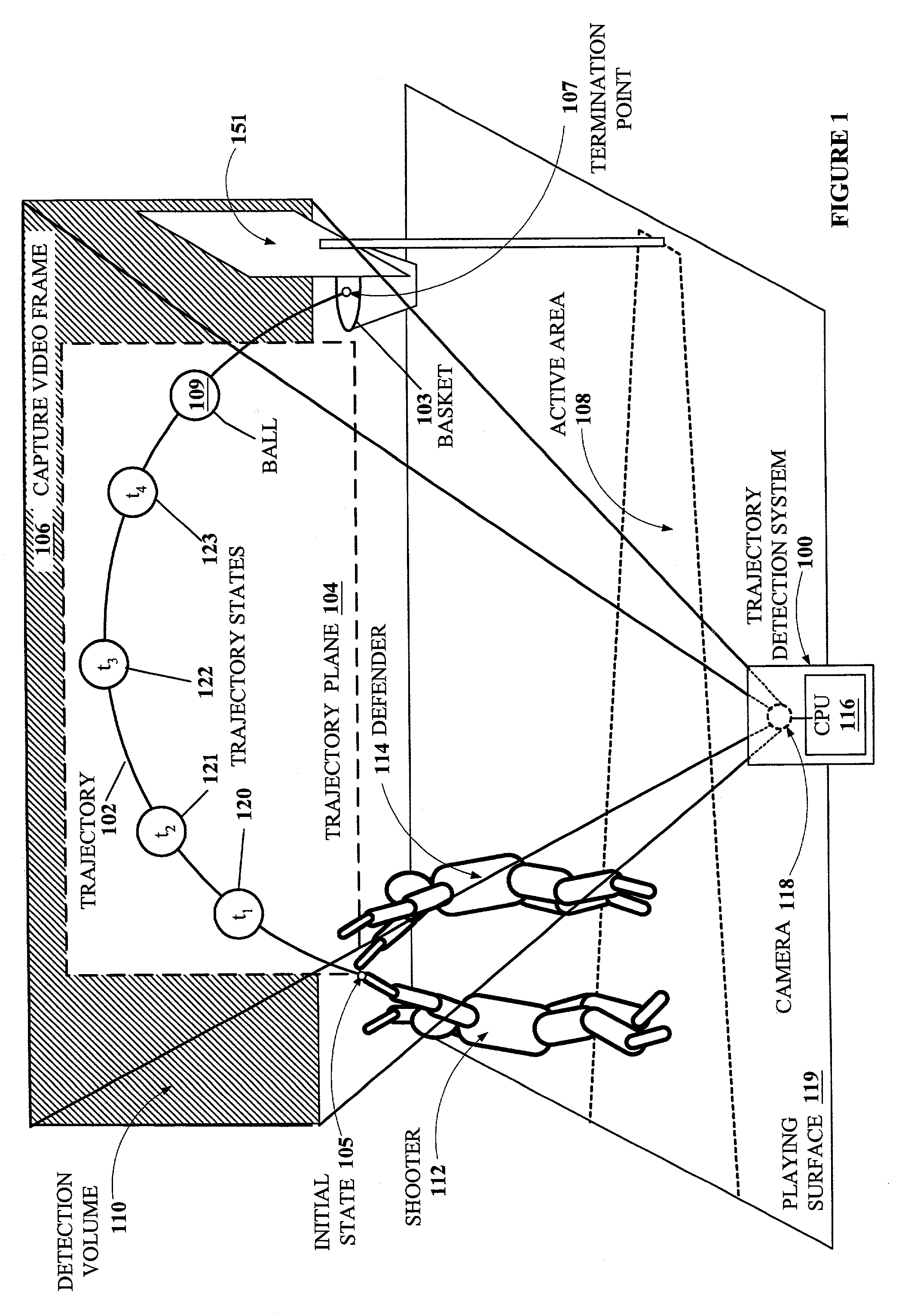

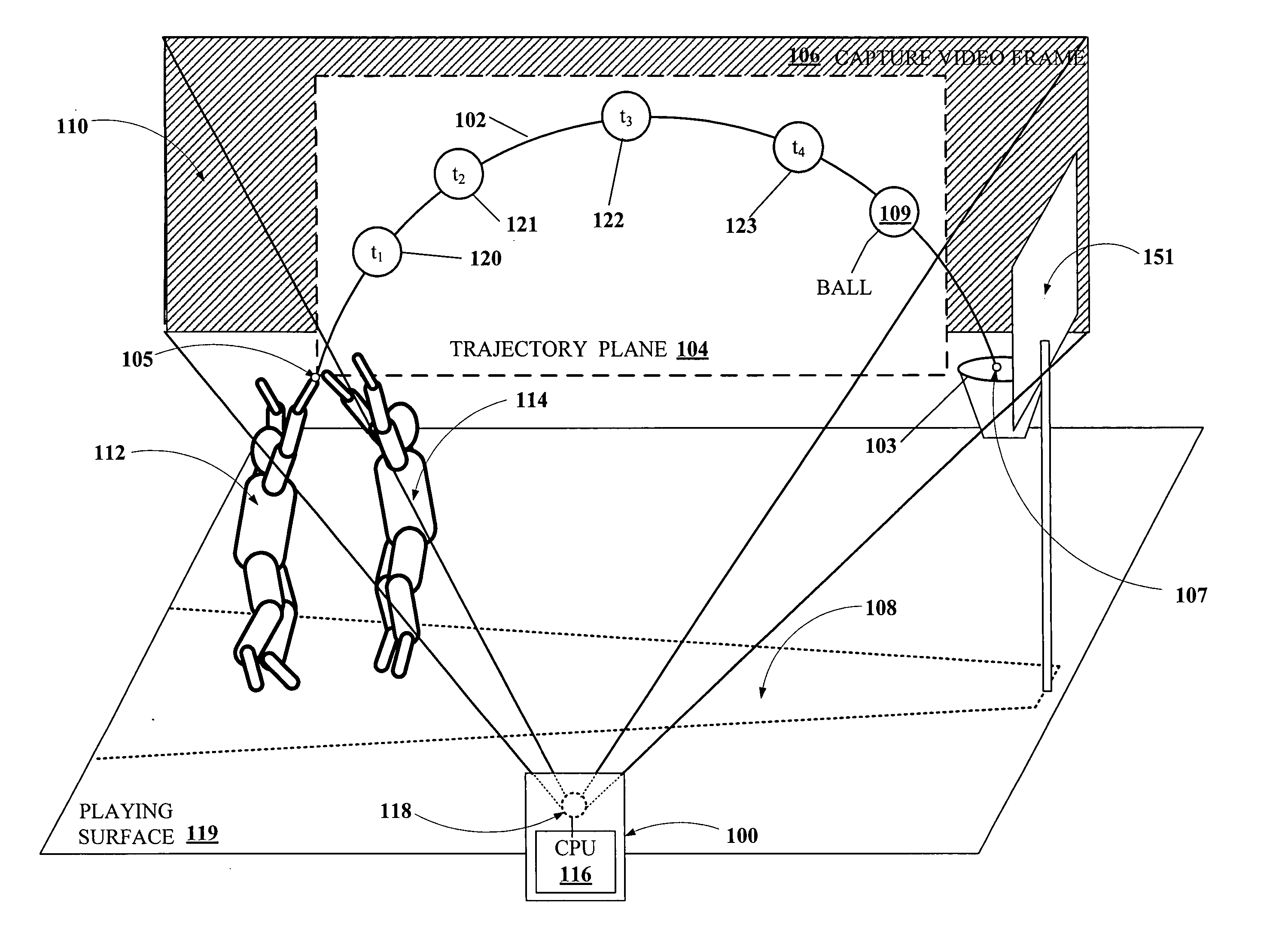

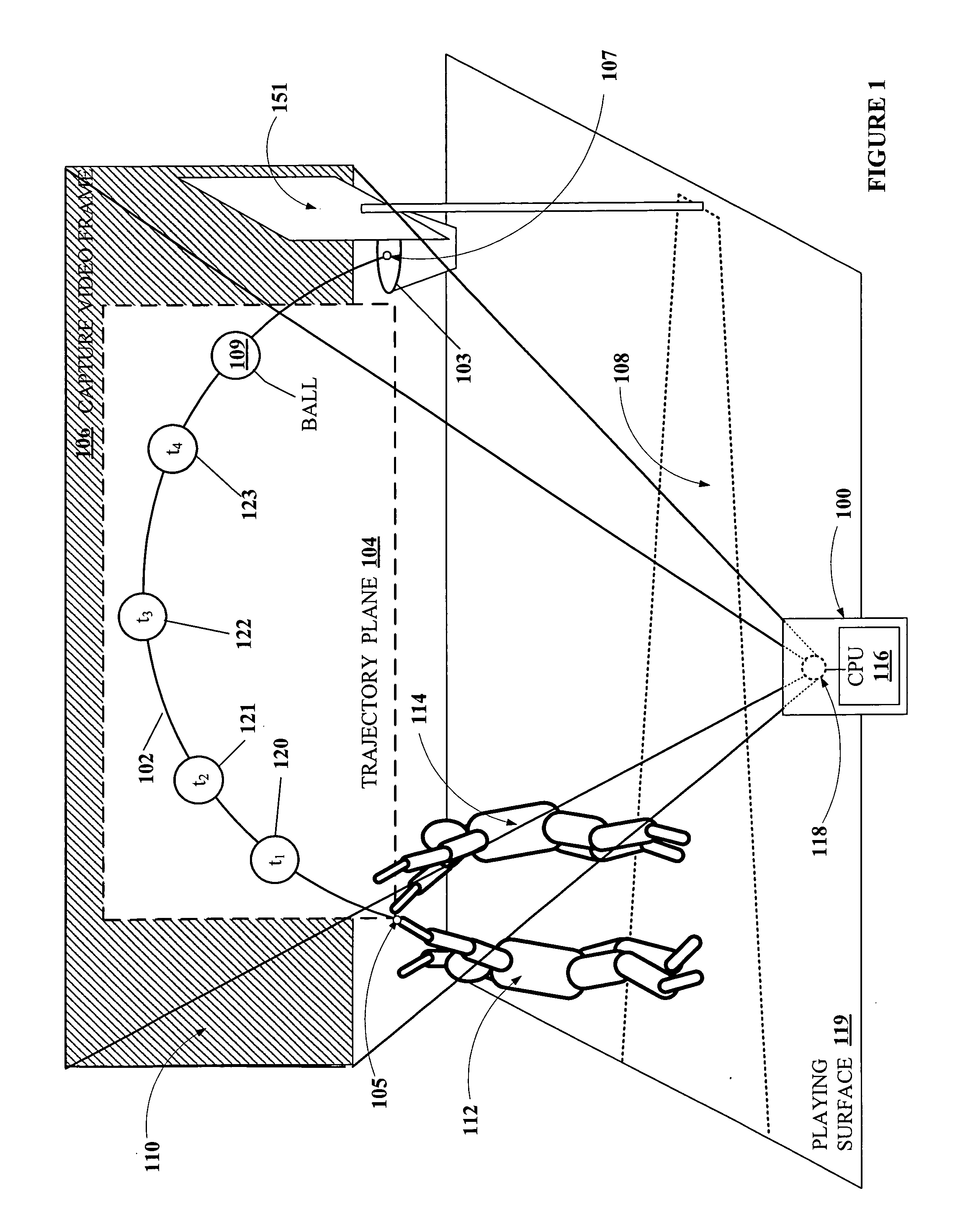

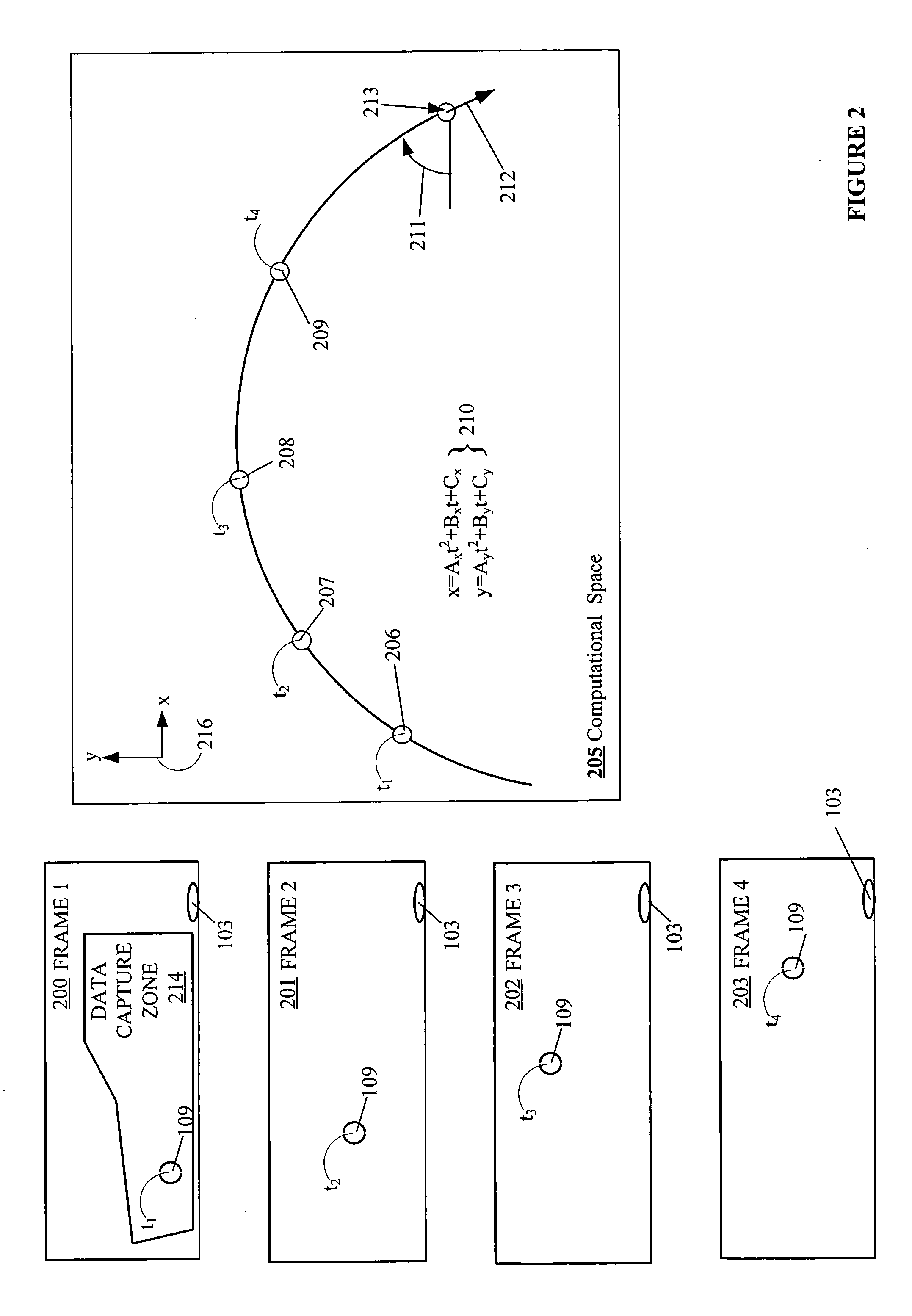

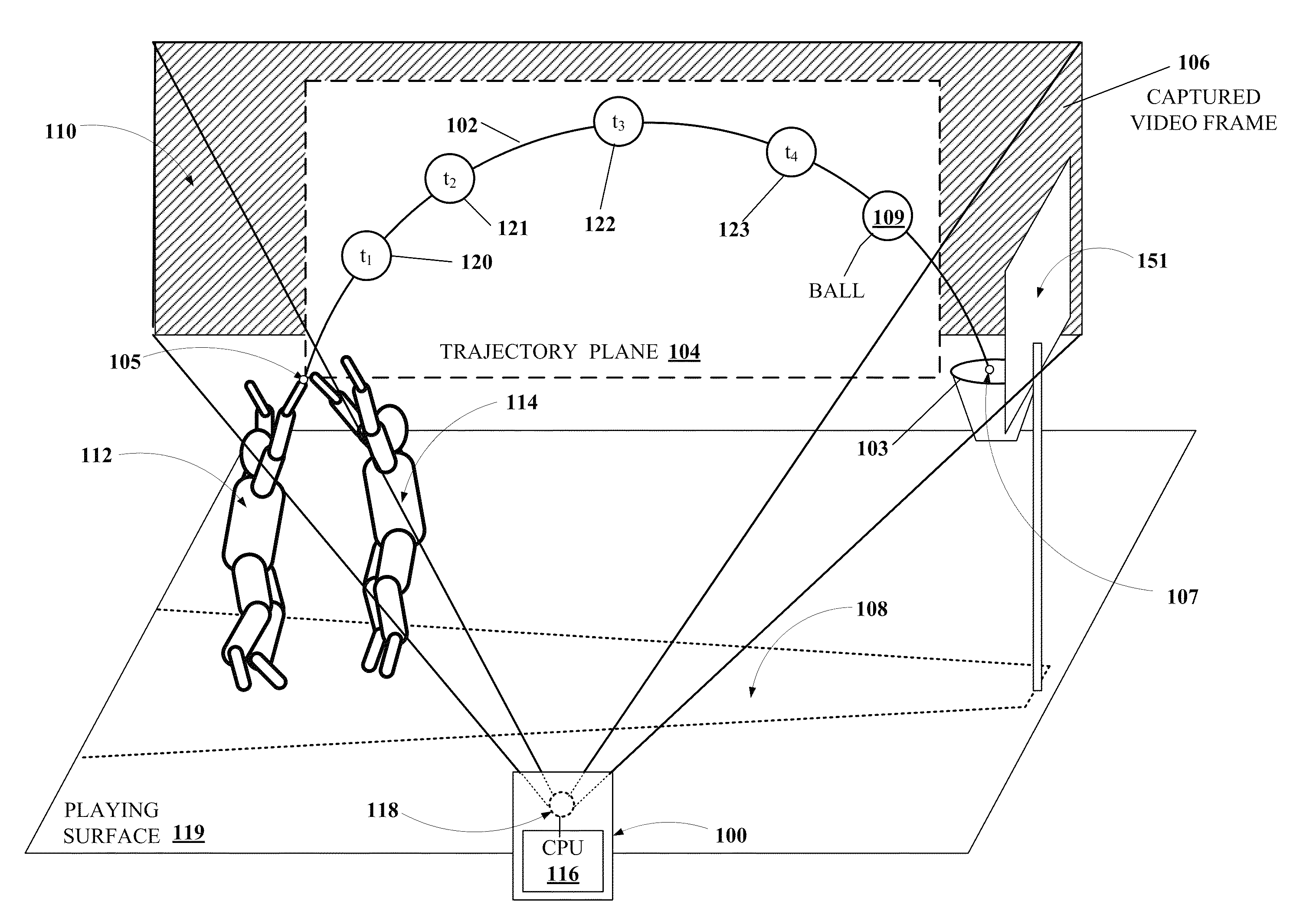

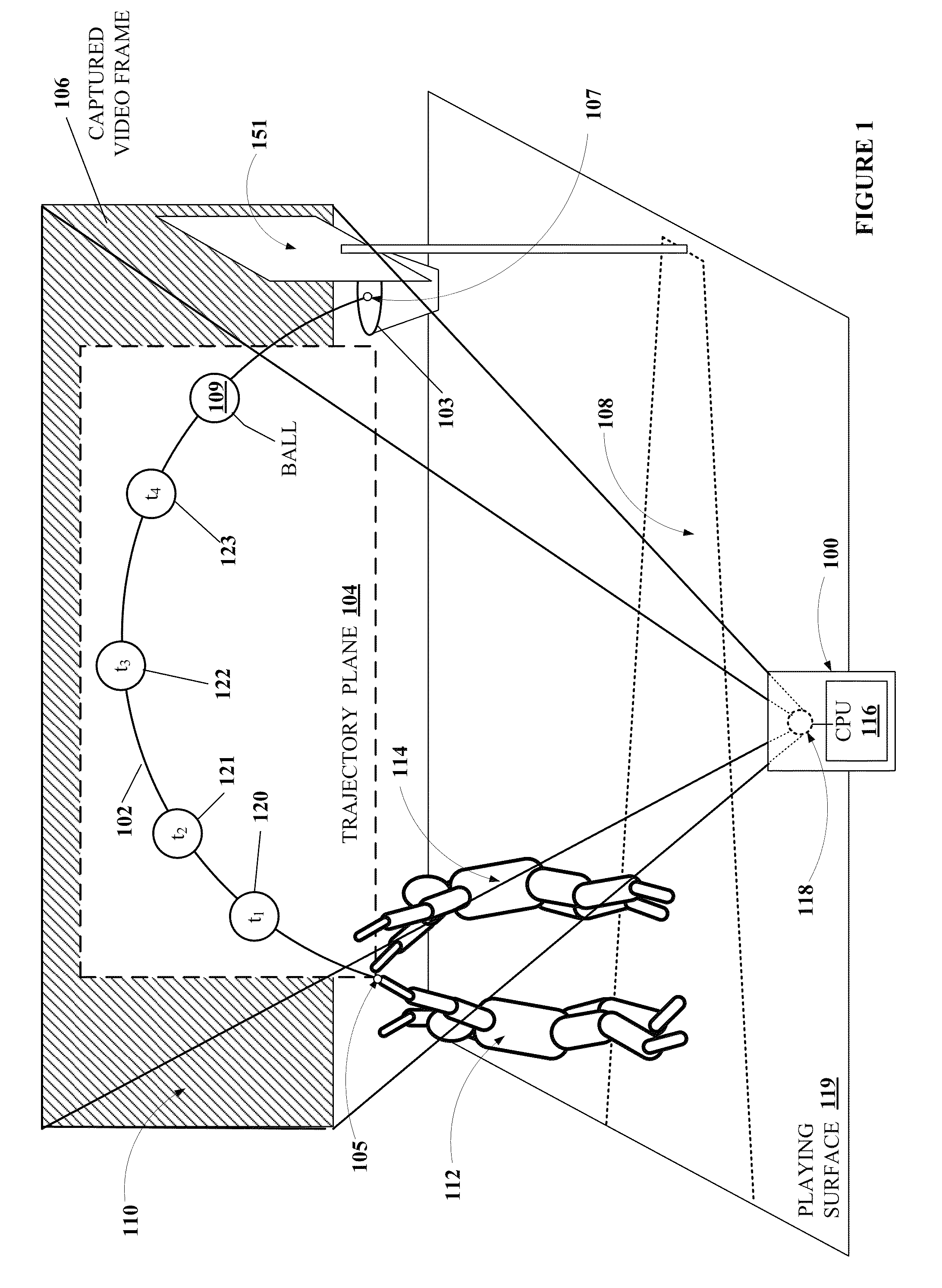

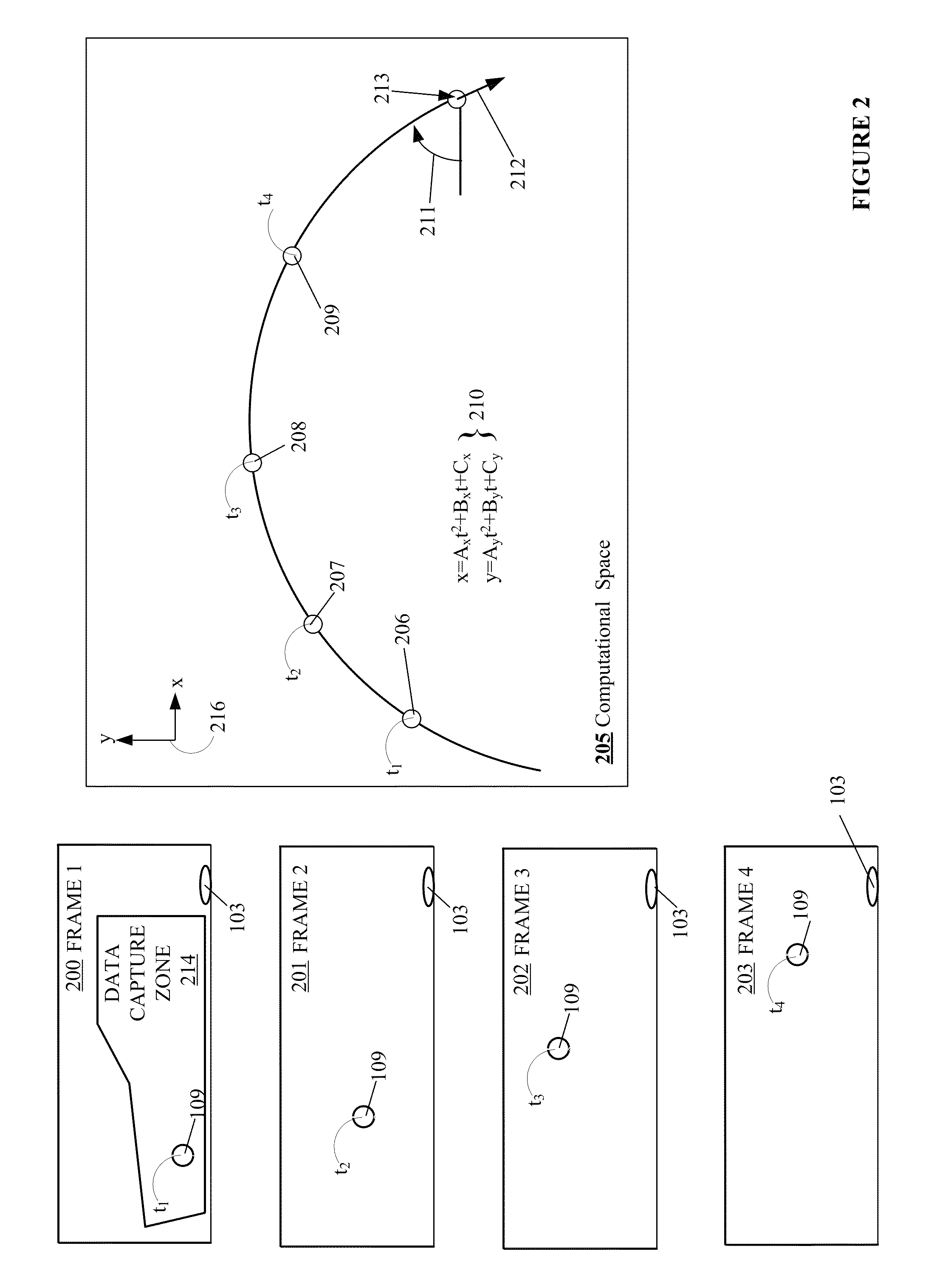

Trajectory detection and feedback system

Active | US20070026975A1 | Improve consistency; Improve performance; Ski bindings; Gymnastic exercising; Free flight; Medicine

A disclosed device provides a trajectory detection and feedback system. The system is capable of detecting one or more moving objects in free flight, analyzing a trajectory of each object and providing immediate feedback information to a human that has launched the object into flight, and/or one or more observers in the area. The feedback information may include one or more trajectory parameters that the human may use to evaluate their skill at sending the object along a desired trajectory. In a particular embodiment, a non-intrusive machine vision system that remotely detects trajectories of moving objects may be used to evaluate trajectory parameters for a basketball shot at a basketball hoop by a player. The feedback information, such as a trajectory entry angle into the basketball hoop and/or an entry velocity into the hoop for the shot, may be output to the player in an auditory format using a sound projection device. The system may be operable to be set up and to operate in a substantially autonomous manner. After the system has evaluated a plurality of shots by the player, the system may provide 1) a diagnosis of their shot consistency, 2) a prediction for improvement based upon improving their shot consistency and 3) a prescription of actions for improving their consistency.

Owner:PILLAR VISION
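
One way to obtain the entry angle and entry velocity mentioned above is to fit a ballistic model to the tracked ball positions and evaluate it where the ball descends through rim height. The sketch below assumes the vision system already supplies timestamped (t, x, y) positions in meters in a vertical plane, ignores air drag and spin, and uses a rim height of 3.05 m (the standard 10 ft hoop) only as an example; the abstract does not describe Pillar Vision's actual computation.

```python
import math

import numpy as np


def entry_parameters(t, x, y, rim_height):
    """Return (entry angle in degrees below horizontal, entry speed) at rim height.

    Assumes drag-free ballistic flight: x(t) is linear and y(t) is quadratic in time.
    """
    t, x, y = (np.asarray(a, dtype=float) for a in (t, x, y))
    vx, _ = np.polyfit(t, x, 1)          # x(t) ~ vx*t + x0
    a, b, c = np.polyfit(t, y, 2)        # y(t) ~ a*t**2 + b*t + c, with a ~ -g/2
    roots = np.roots([a, b, c - rim_height])
    real = roots[np.isreal(roots)].real
    if real.size == 0:
        raise ValueError("trajectory never reaches rim height")
    t_entry = real.max()                 # later crossing lies on the descending branch
    vy = 2 * a * t_entry + b             # vertical velocity at entry (negative when falling)
    angle = math.degrees(math.atan2(-vy, abs(vx)))
    return angle, math.hypot(vx, vy)
```

Fitting all tracked points rather than differencing the last two frames makes the estimate less sensitive to per-frame detection noise, at the cost of assuming a clean parabolic arc.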

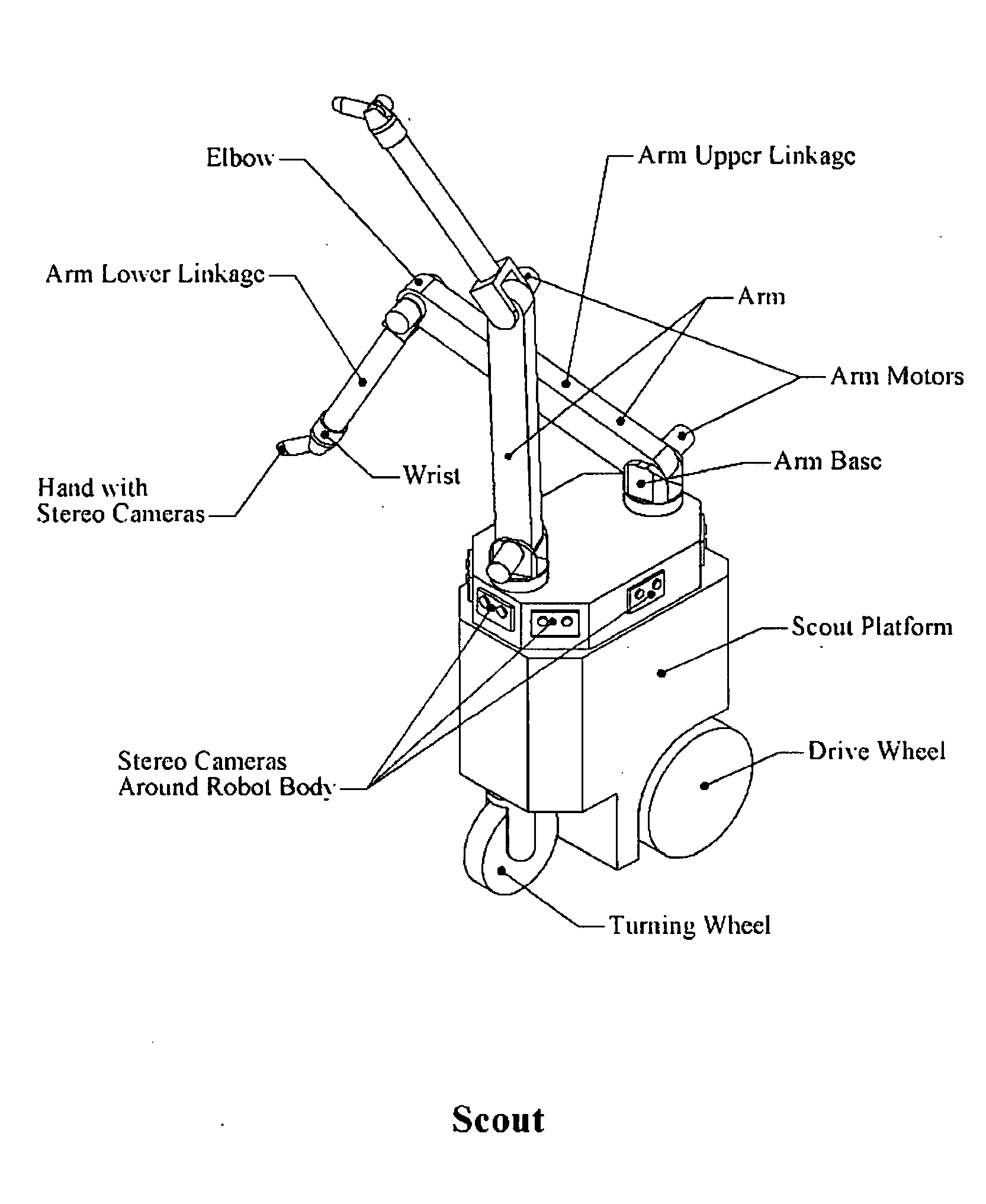

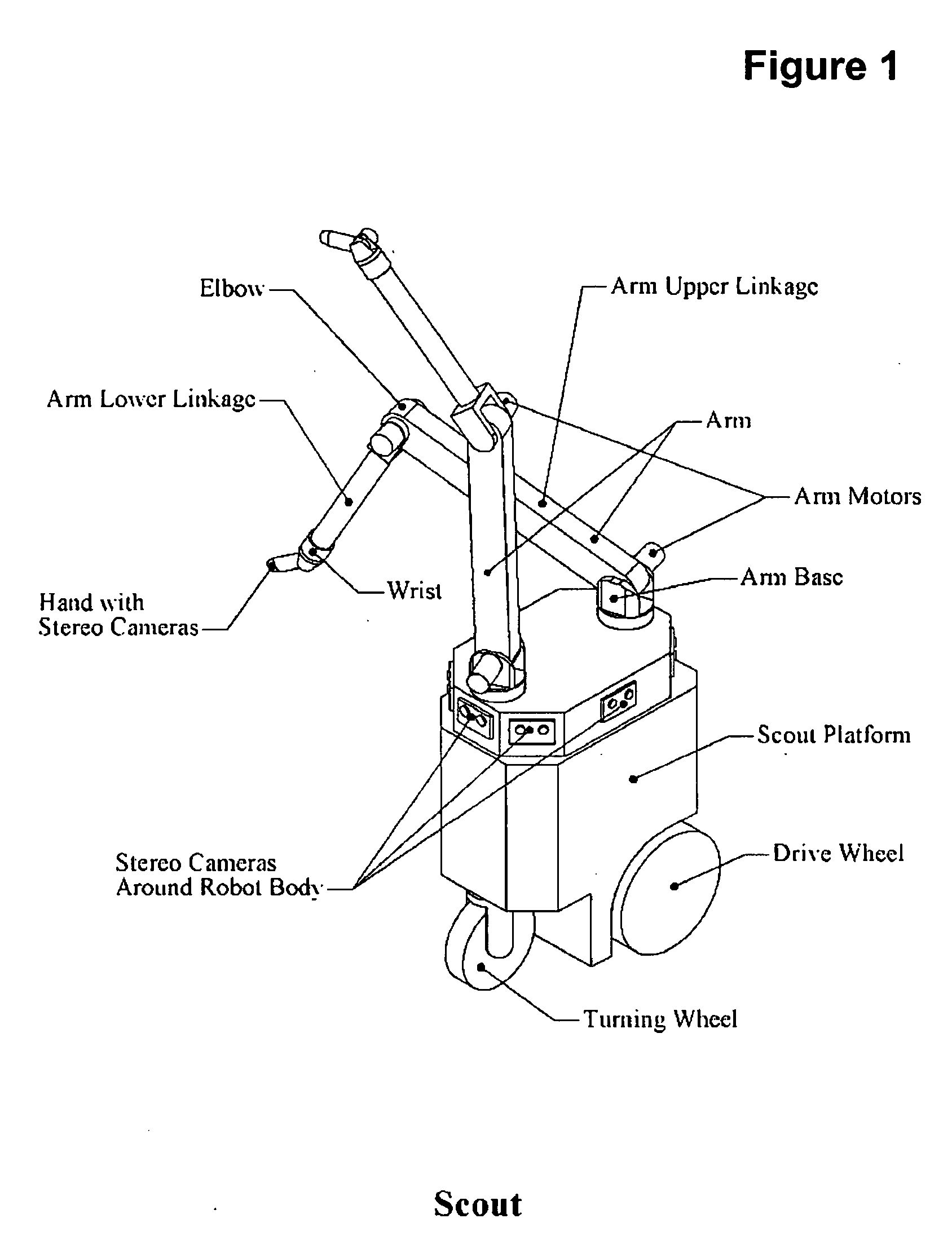

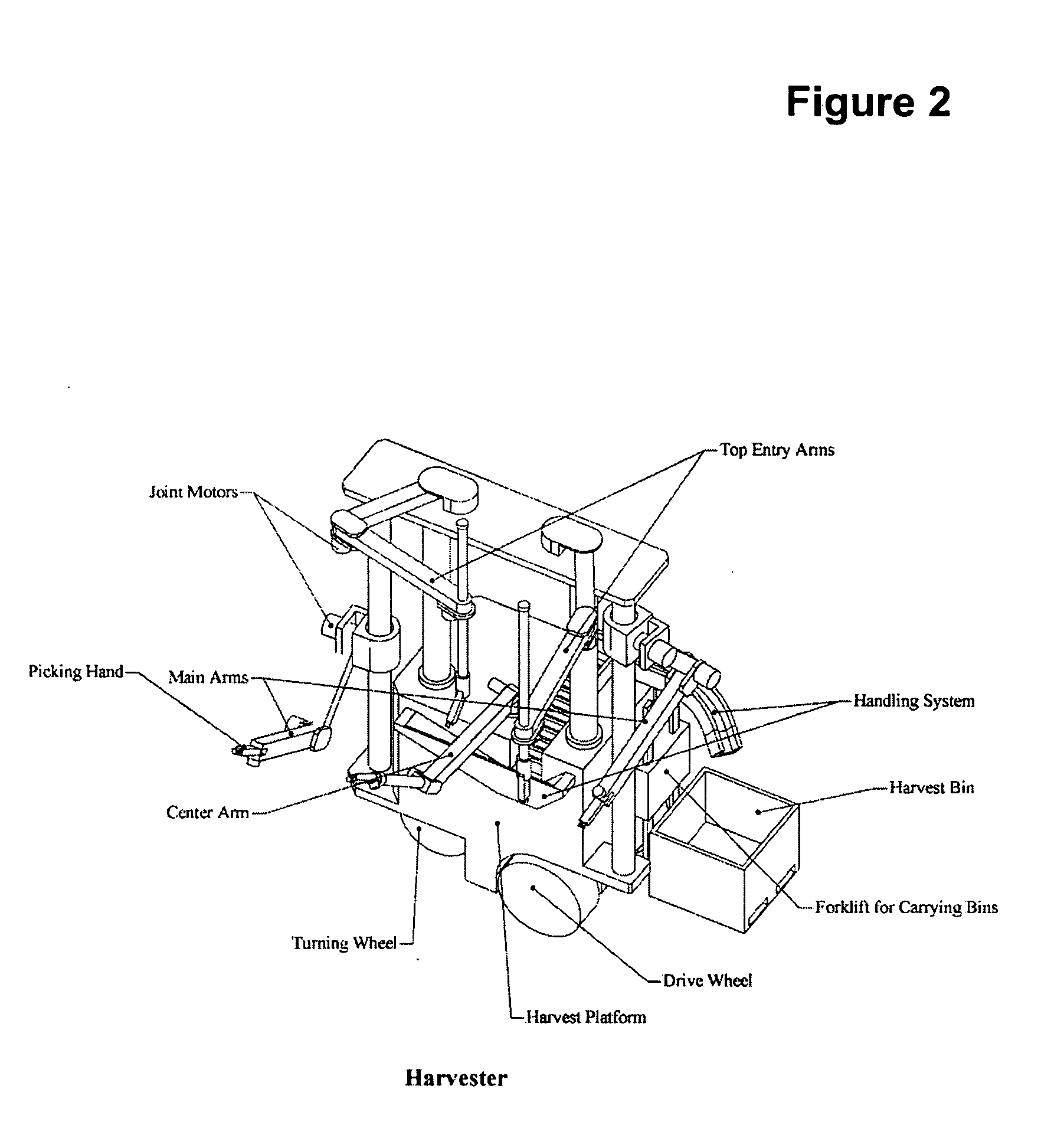

Robot mechanical picker system and method

Inactive | US20050126144A1 | Improve efficiency; Low cost; Analogue computers for traffic; Mowers; Robot planning; Simulation

Embodiments of the invention comprise a system and method that enable robotic harvesting of agricultural crops. One approach for automating the harvesting of fresh fruits and vegetables is to use a robot comprising a machine-vision system containing rugged solid-state digital cameras to identify and locate the fruit on each tree, coupled with a picking system to perform the picking. In one embodiment of the invention, a robot moves through a field first to “map” the field to determine plant locations, the number and size of fruit on the plants and the approximate positions of the fruit on each plant. A robot employed in this embodiment may comprise a GPS sensor to simplify the mapping process. At least one camera on at least one arm of a robot may be mounted in an appropriately shaped protective enclosure so that the camera can be physically moved into the canopy of the plant if necessary to map fruit locations from inside the canopy. Once the map of the fruit is complete for a field, the robot can plan and implement an efficient picking plan for itself or another robot. In one embodiment of the invention, a scout robot or harvest robot determines a picking plan in advance of picking a tree. This may be done if the map is finished hours, days or weeks before a robot is scheduled to harvest, or if the selected picking-plan algorithm requires significant computational time and cannot be run in “real time” by the harvesting robot as it picks the field. If the selected picking algorithm is less computationally intensive, the harvester may calculate the plan as it harvests. The system harvests according to the selected picking plan. The picking plan may be generated in the scout robot, the harvest robot or on a server. The elements of the system may be configured to communicate with one another using wireless communications technologies.

Owner:VISION ROBOTICS
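
The abstract leaves the picking-plan algorithm open, noting only that it may be computed in advance or in real time depending on its computational cost. As a hedged illustration of a cheap plan that a harvester could compute while picking, here is a greedy nearest-neighbor ordering of mapped fruit positions; the 3-D coordinate tuples and the heuristic itself are assumptions, not the patented method.

```python
import math


def greedy_picking_plan(start, fruits):
    """Order fruit positions by always moving to the nearest unpicked fruit."""
    remaining = list(fruits)
    plan, current = [], start
    while remaining:
        nearest = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nearest)
        plan.append(nearest)
        current = nearest
    return plan


def plan_length(start, plan):
    """Total straight-line travel distance of the picking arm for a plan."""
    points = [start, *plan]
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))
```

Greedy ordering runs in O(n²) time and is not optimal; more expensive planners are the kind the abstract suggests computing ahead of time on a scout robot or server.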

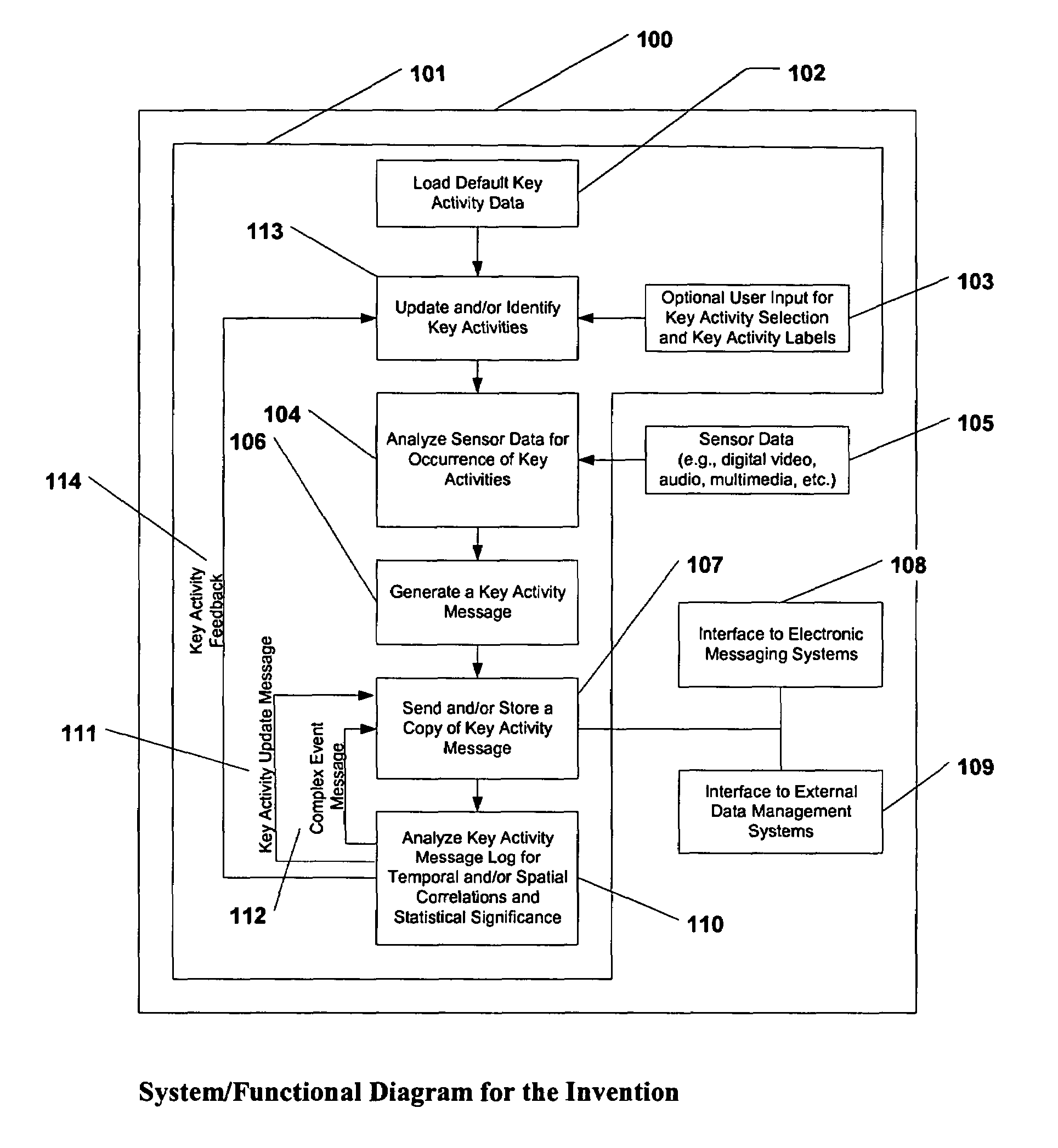

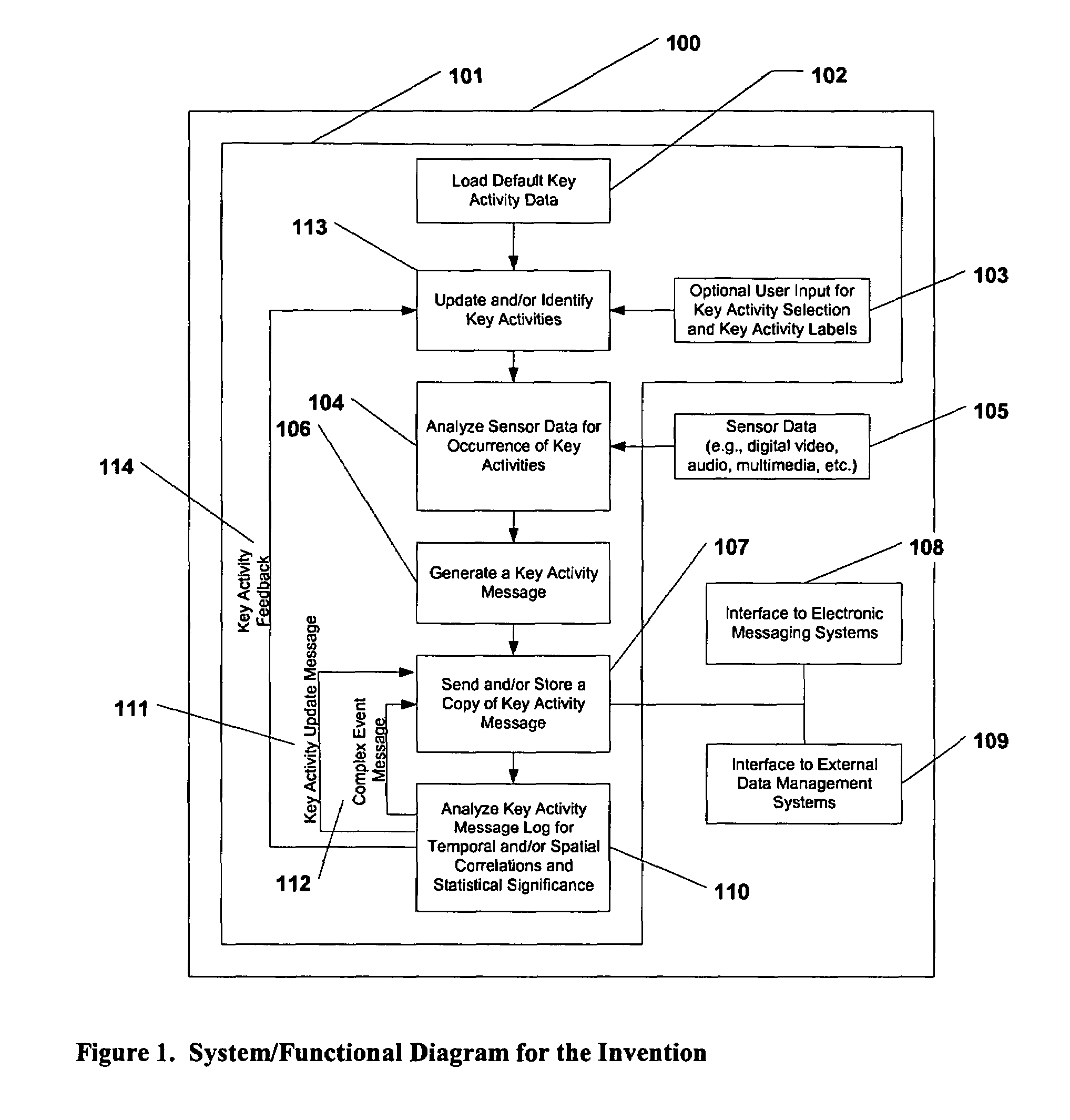

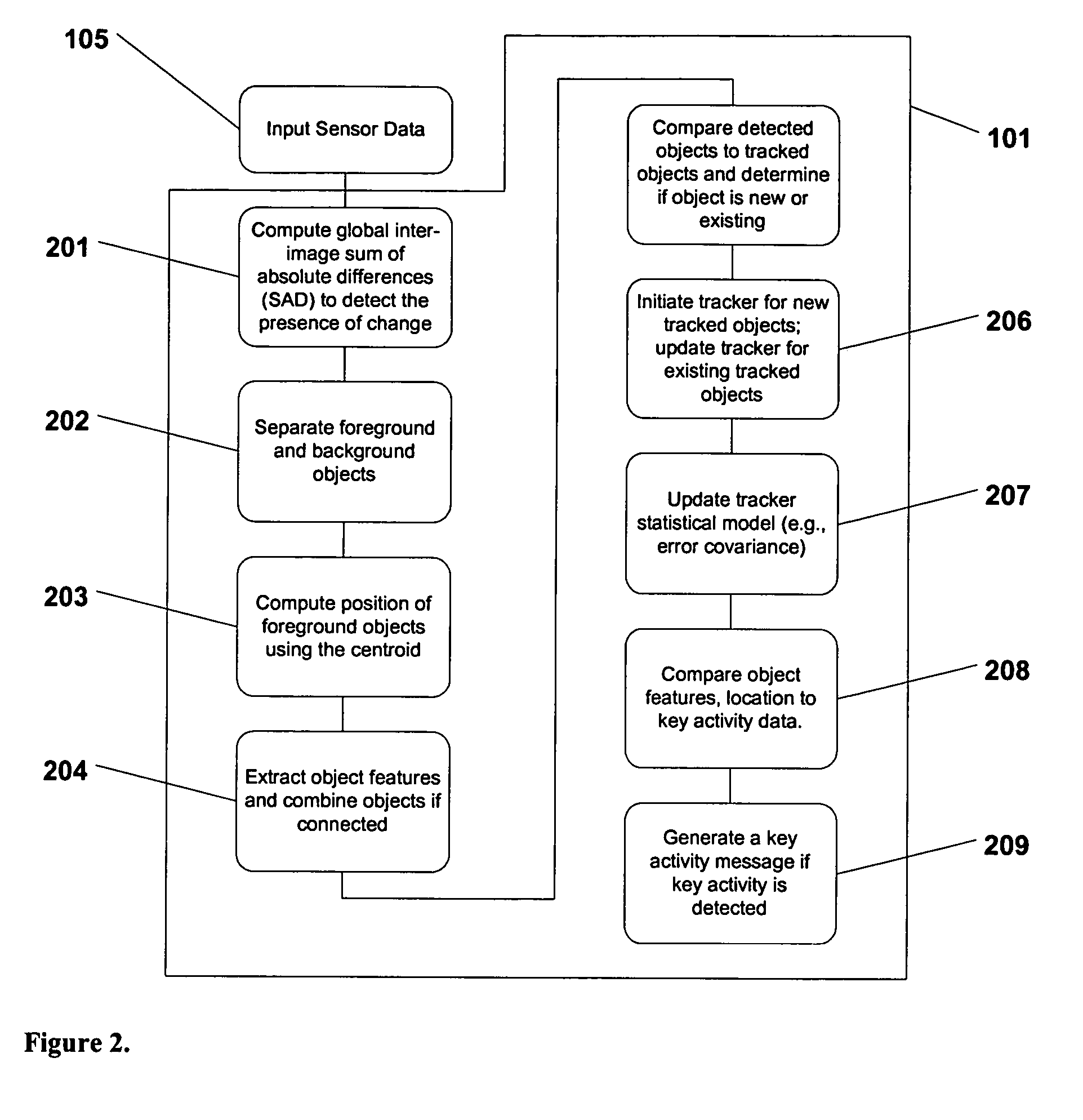

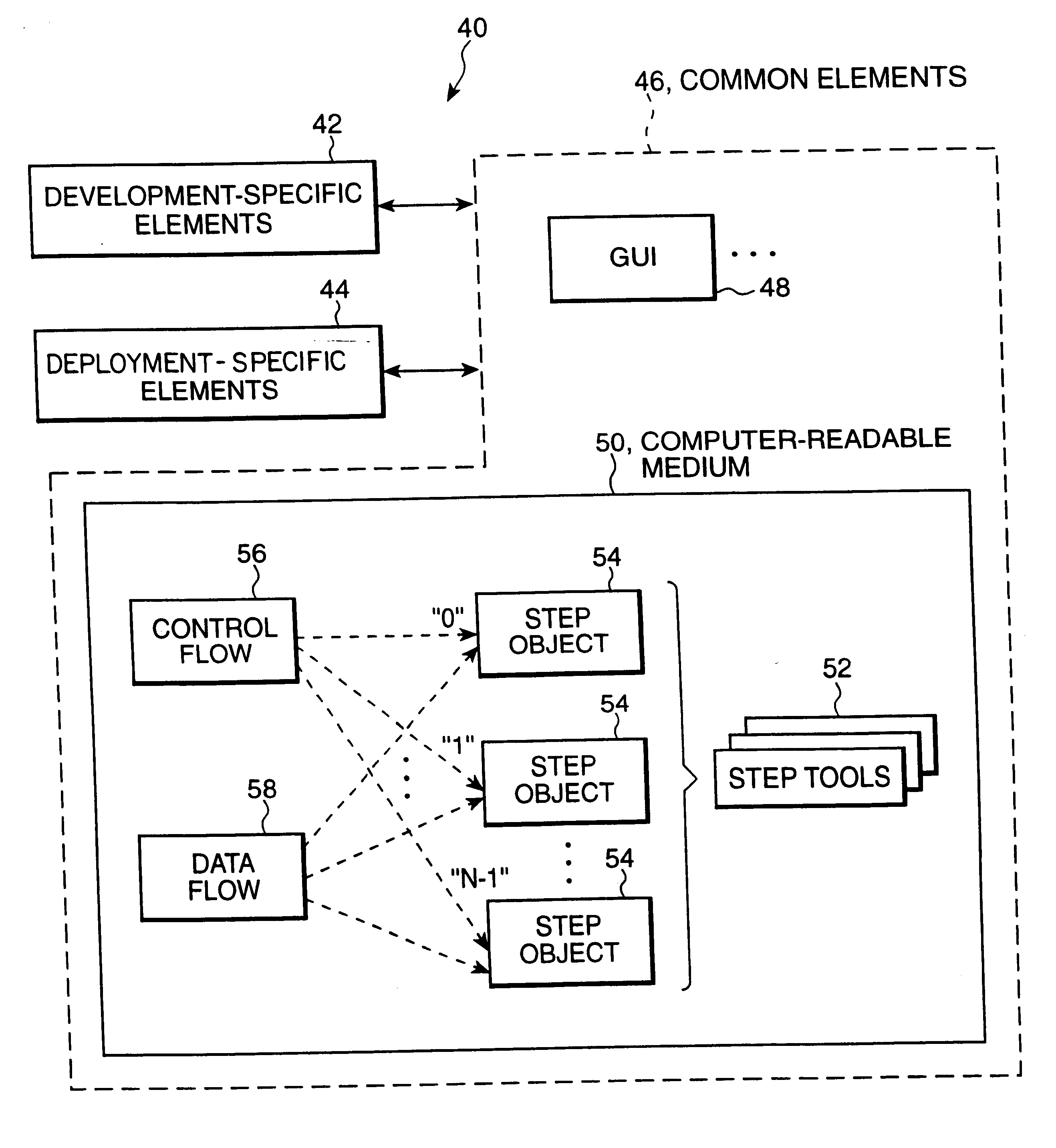

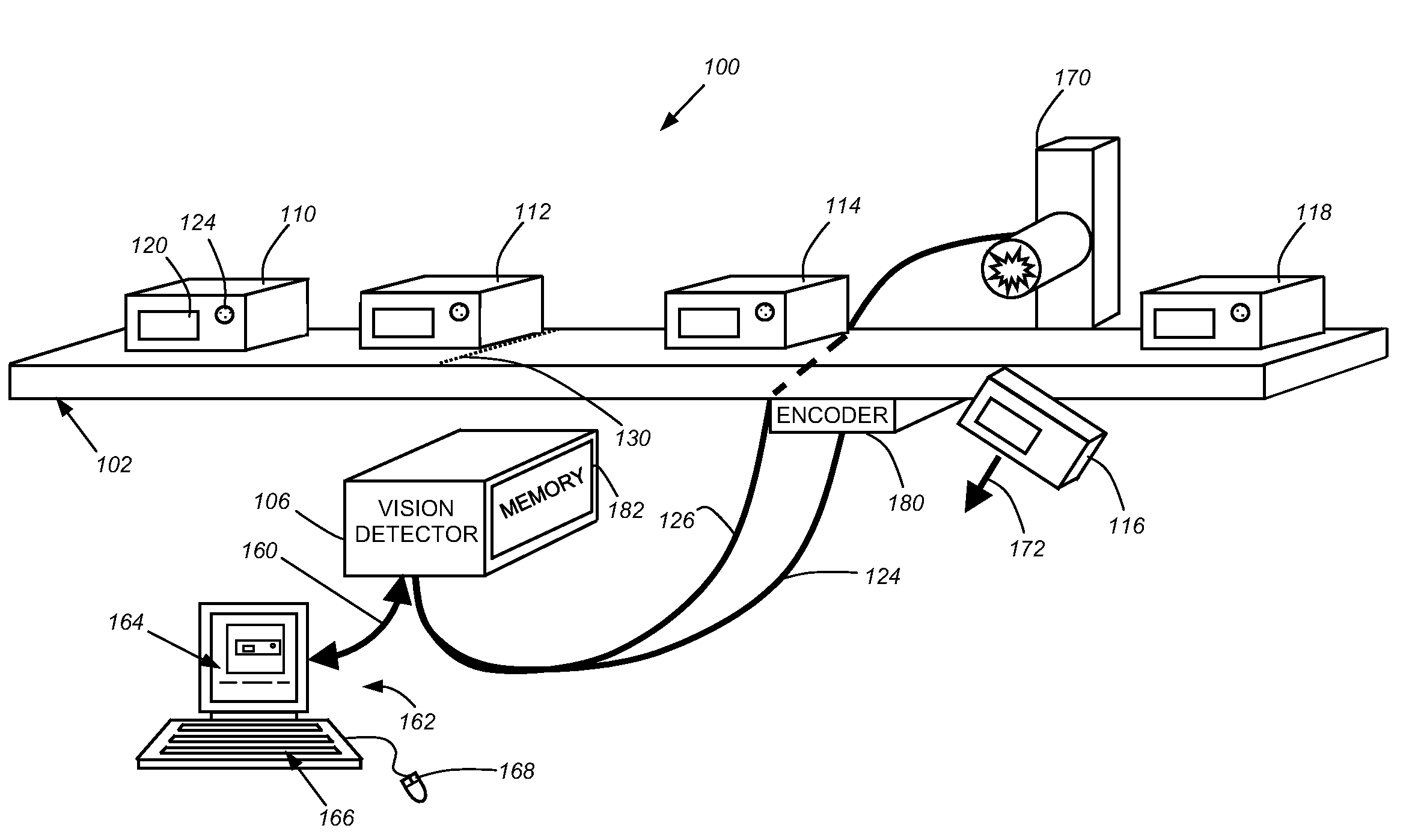

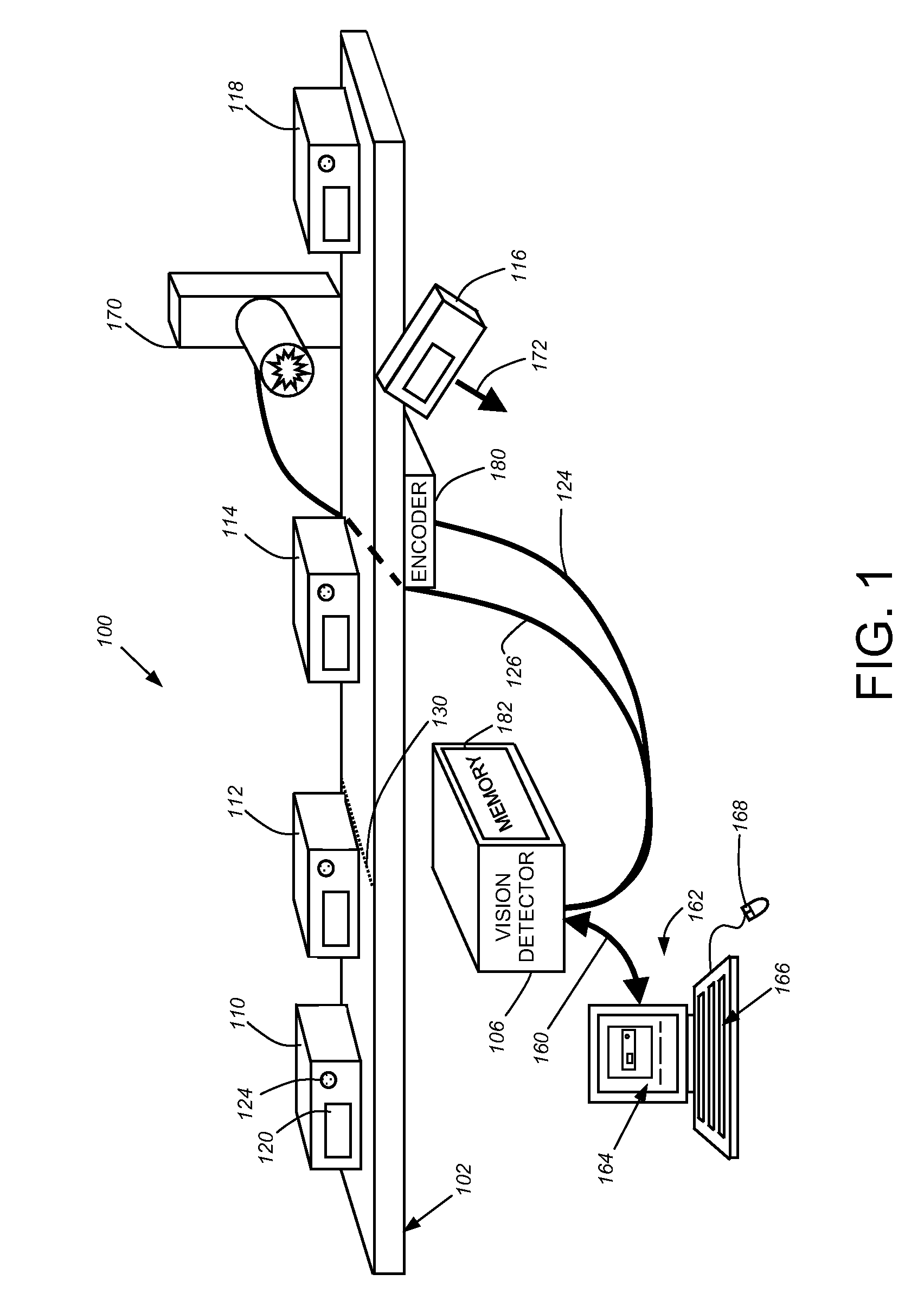

Machine vision system for enterprise management

Active | US7617167B2 | Eliminate need; Digital data processing details; Position fixation; Knowledge extraction; Enterprise data management

Owner:DIVERSIFIED INNOVATIONS FUND LLLP

Systems and methods for providing illumination in machine vision systems

Inactive | US20040113568A1 | Photoelectric discharge tubes; Electric light circuit arrangement; Effect light; Engineering

A lighting system associated with a machine vision system. The machine vision system may direct lighting control commands to the lighting system to change the illumination conditions provided to an object. A vision system may also be provided and associated with the machine vision system such that the vision system views and captures an image(s) of the object when lit by the lighting system. The machine vision system may direct the lighting system to change the illumination conditions and then capture the image.

Owner:SIGNIFY NORTH AMERICA CORP

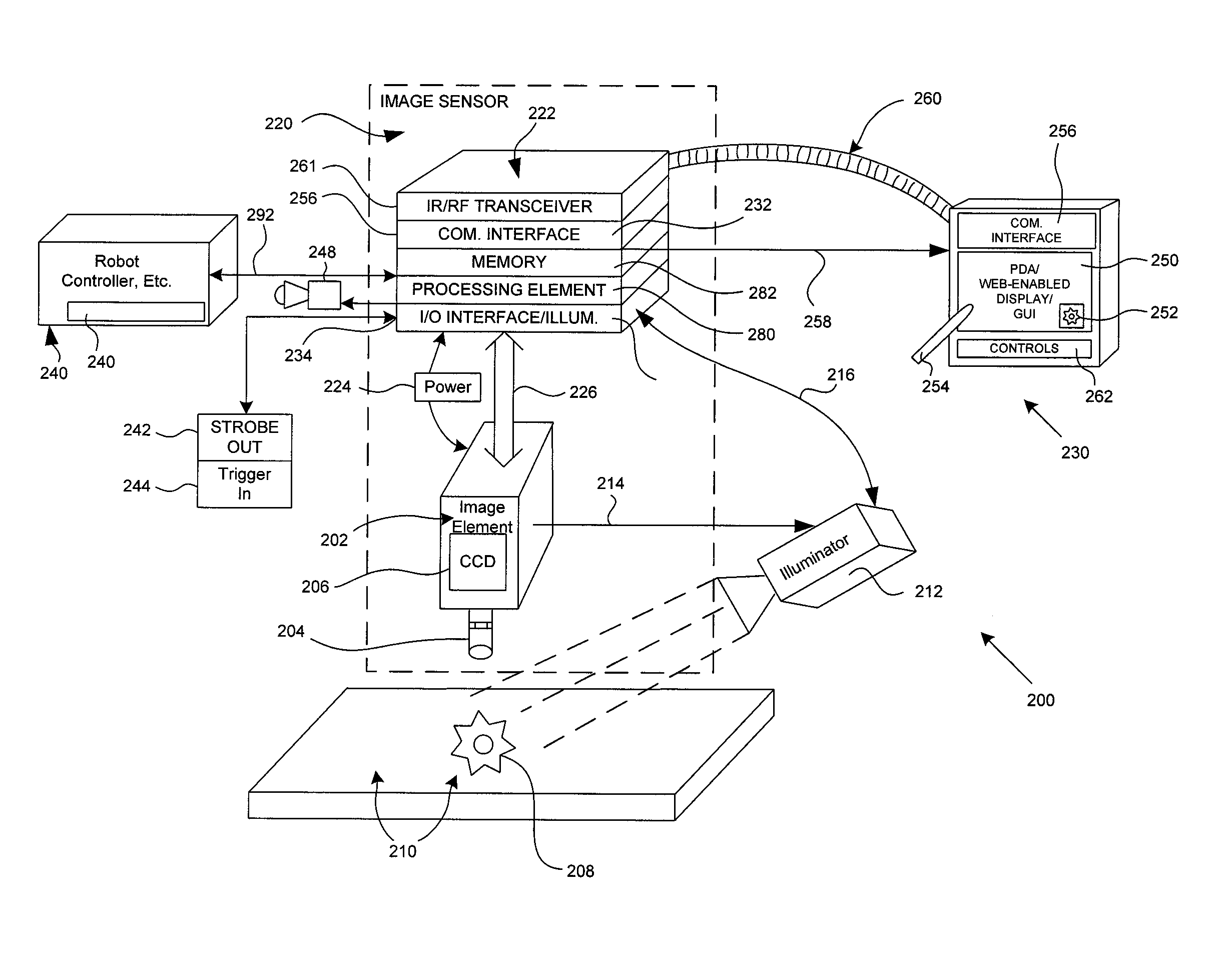

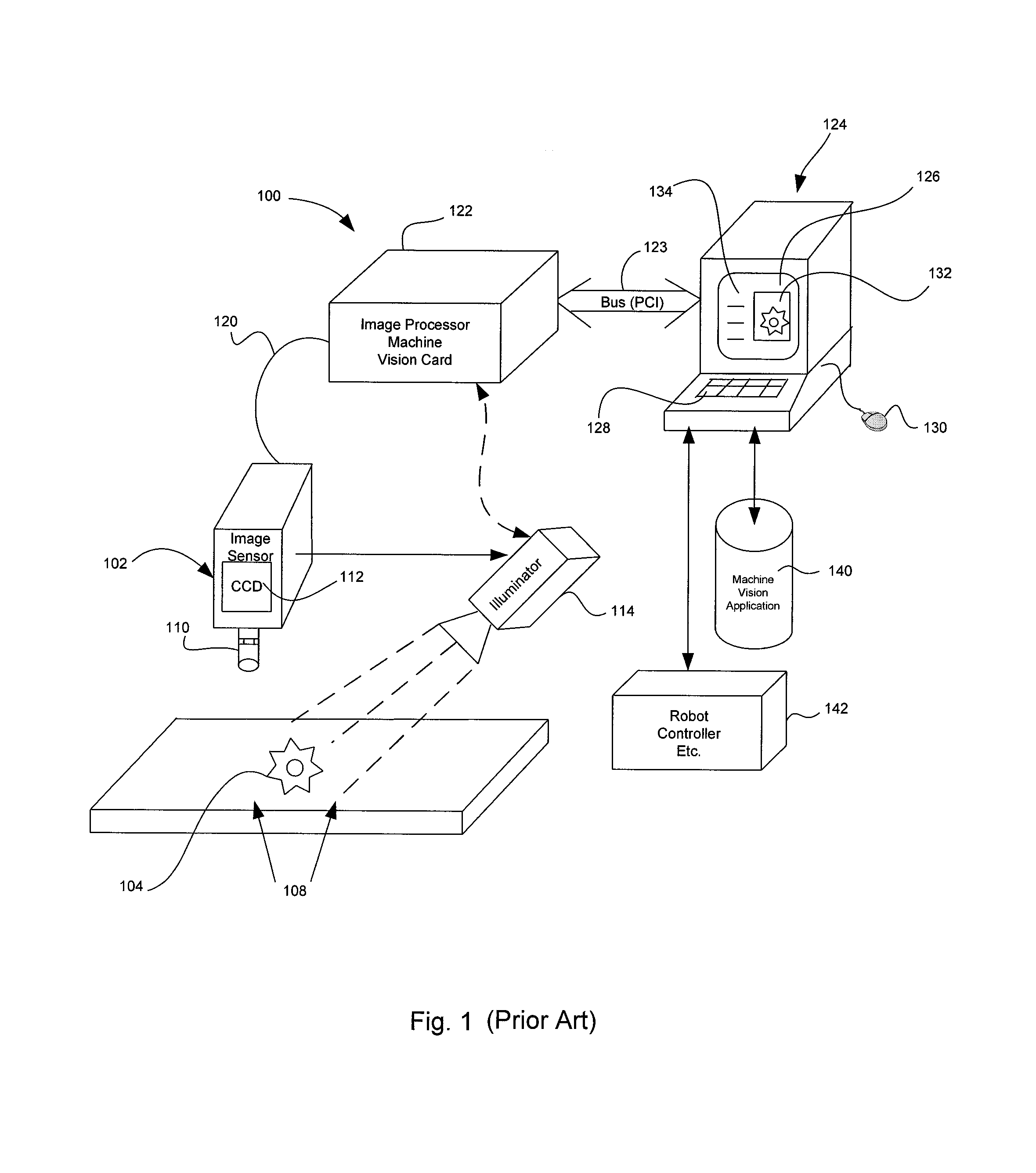

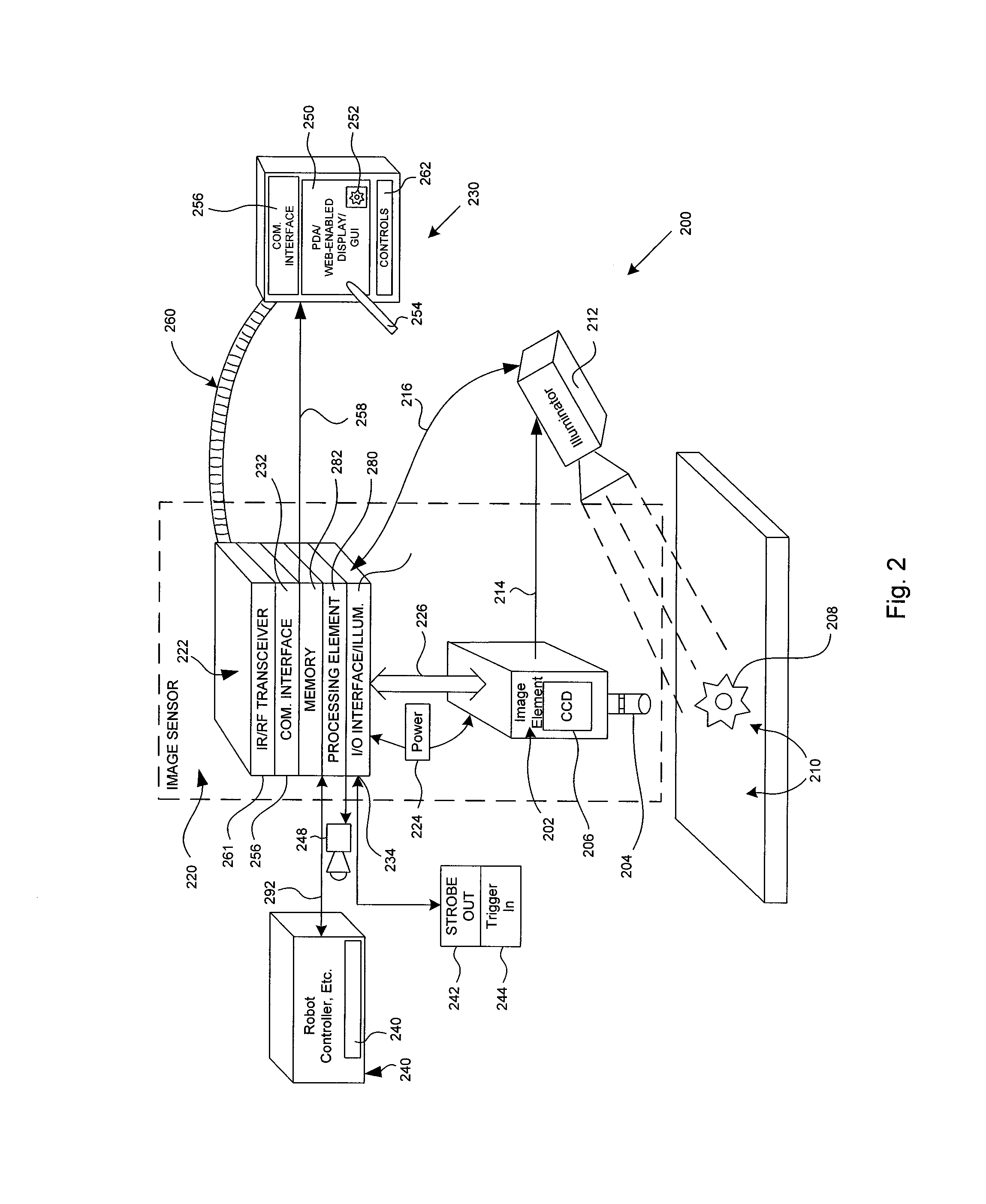

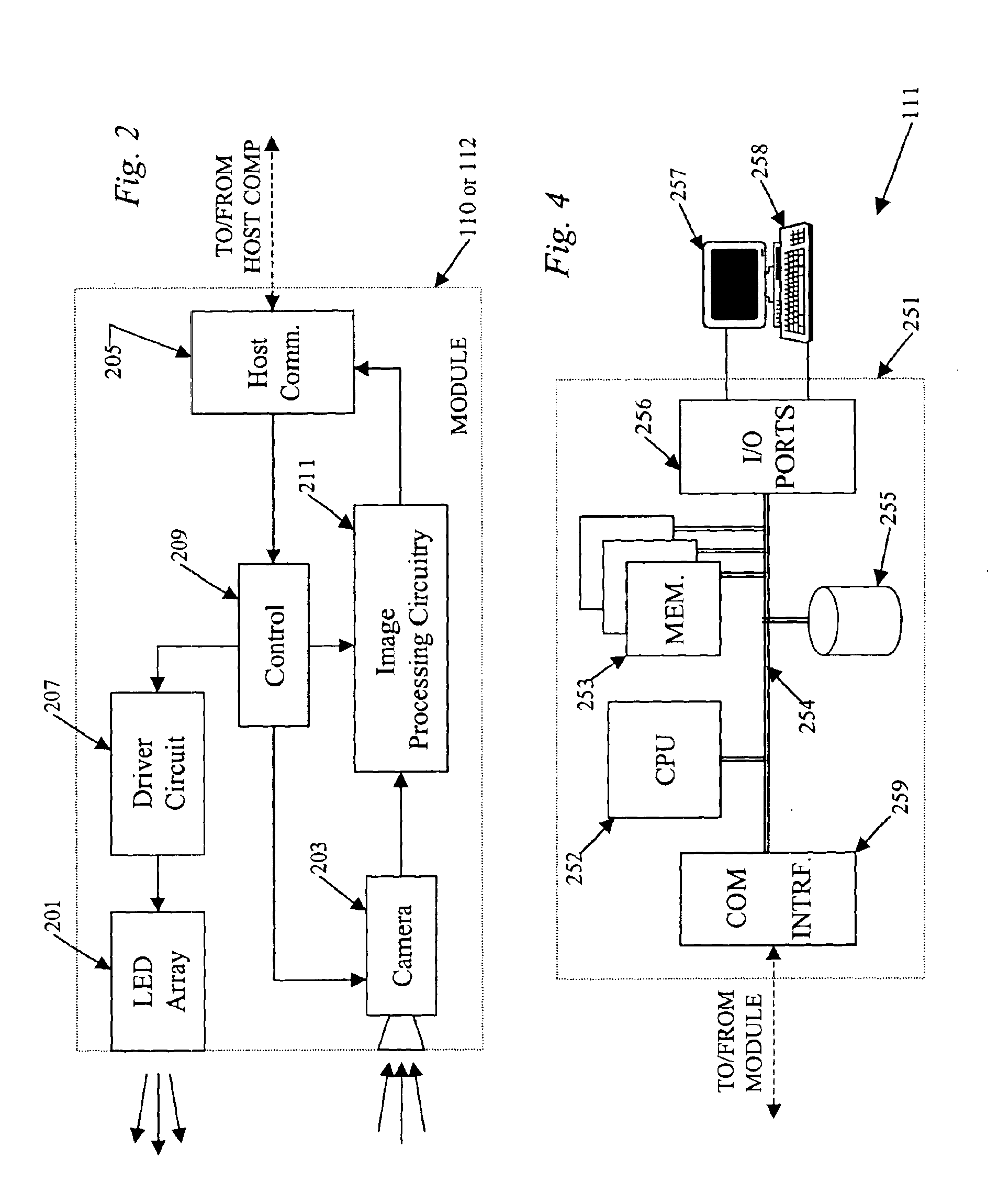

Human/machine interface for a machine vision sensor and method for installing and operating the same

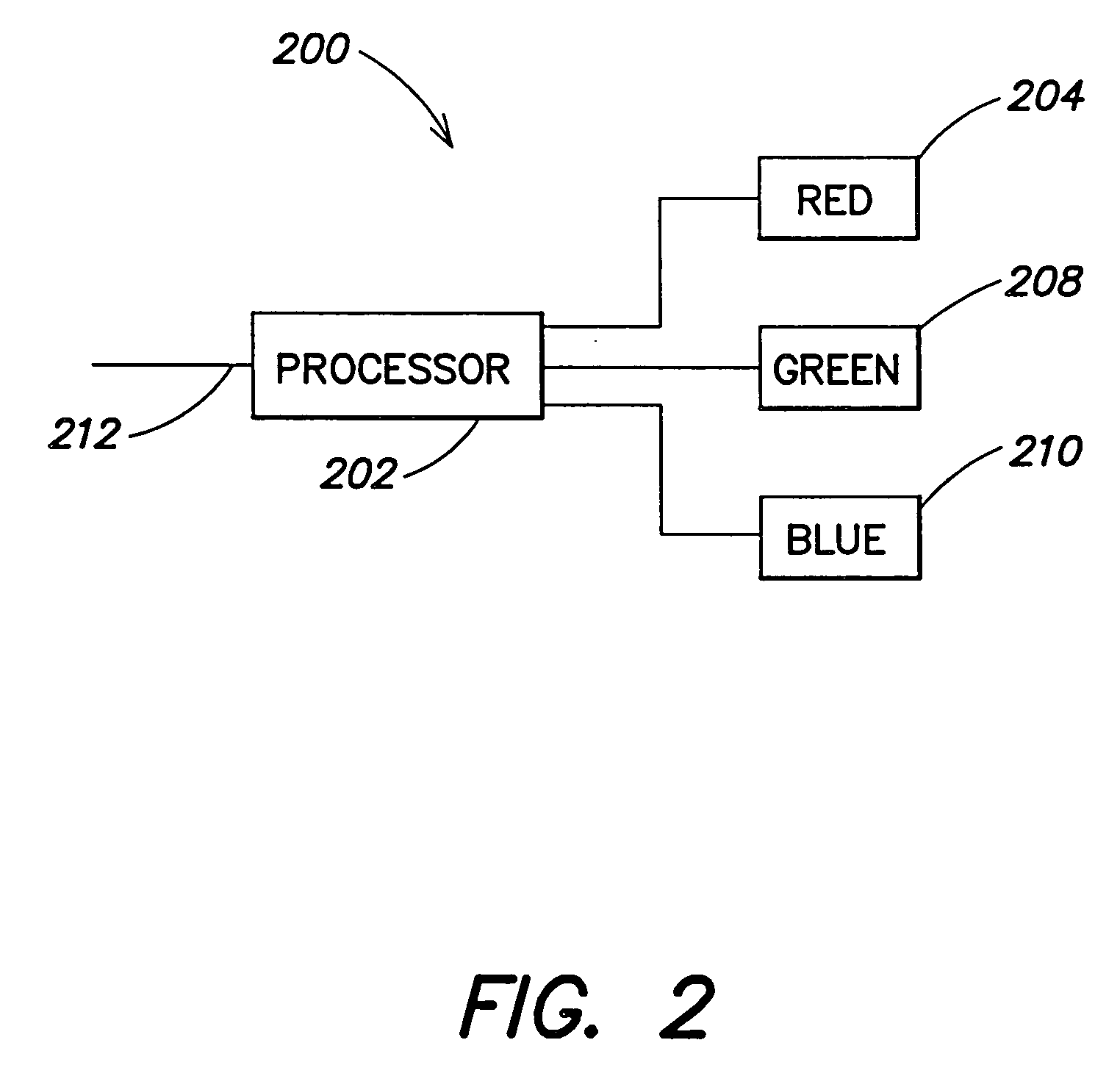

Inactive | US7305114B2 | Overcome disadvantages; High resolution; Programme control; Character and pattern recognition; Telecommunications link; Hand held

This invention overcomes the disadvantages of the prior art by providing a human/machine interface (HMI) for use with machine vision systems (MVSs) that provides the machine vision processing functionality at the sensor end of the system, and uses a communication interface to exchange control, image and analysis information with a standardized, preferably portable device that can be removed from the MVS during runtime. In an illustrative embodiment, this portable device can be a web-browser-equipped computer (handheld, laptop or fixed PC) or a Personal Digital Assistant (PDA). The communication interface on the sensor end of the system is adapted to communicate over a cable or a wireless link (for example infrared (IR) or radio frequency (RF)) with a corresponding communication interface in the portable device. An image processor at the sensor end formats the data sent over the interconnect or link so that it is delivered at a data rate and resolution (pixel count) appropriate to the portable device.

Owner:COGNEX TECH & INVESTMENT

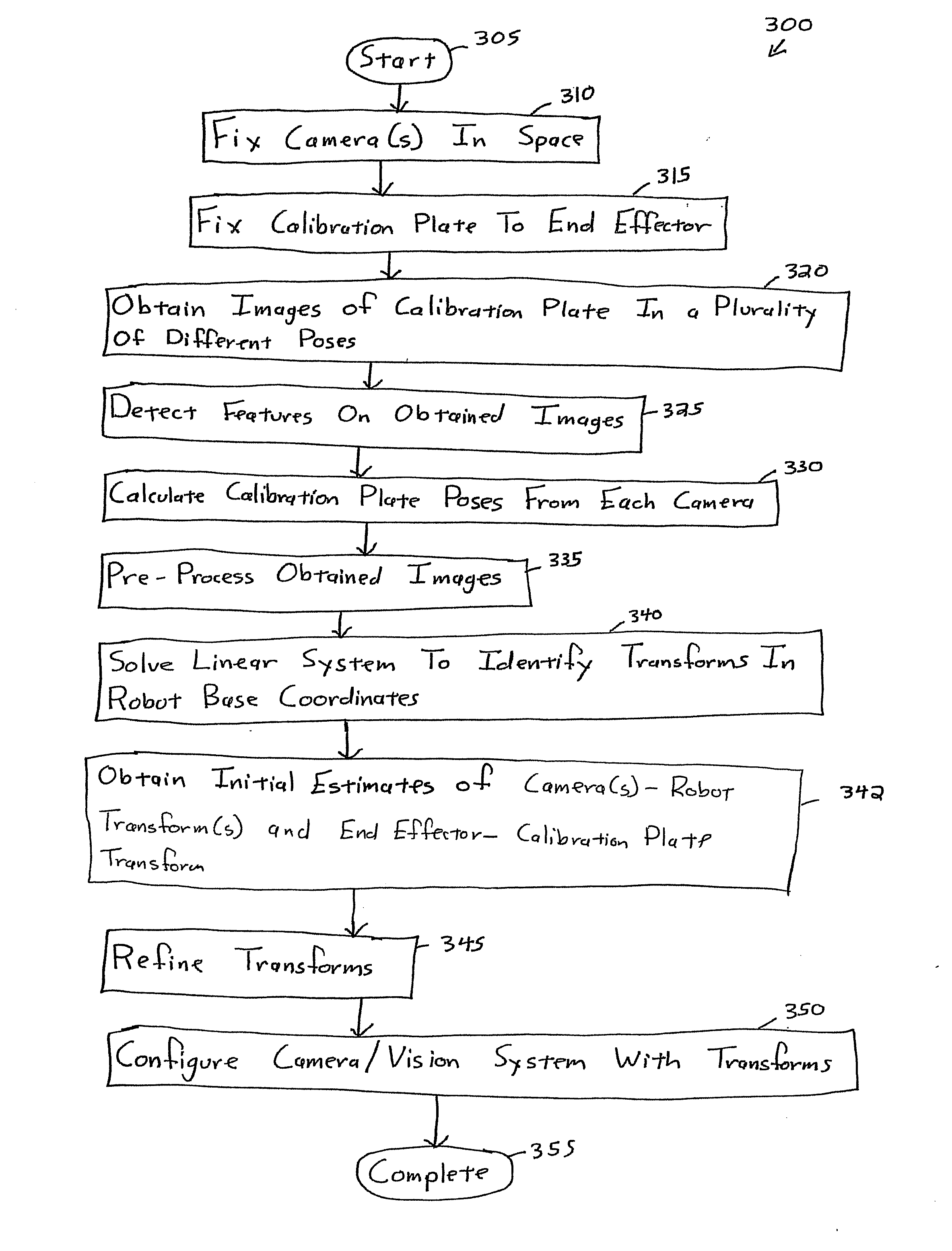

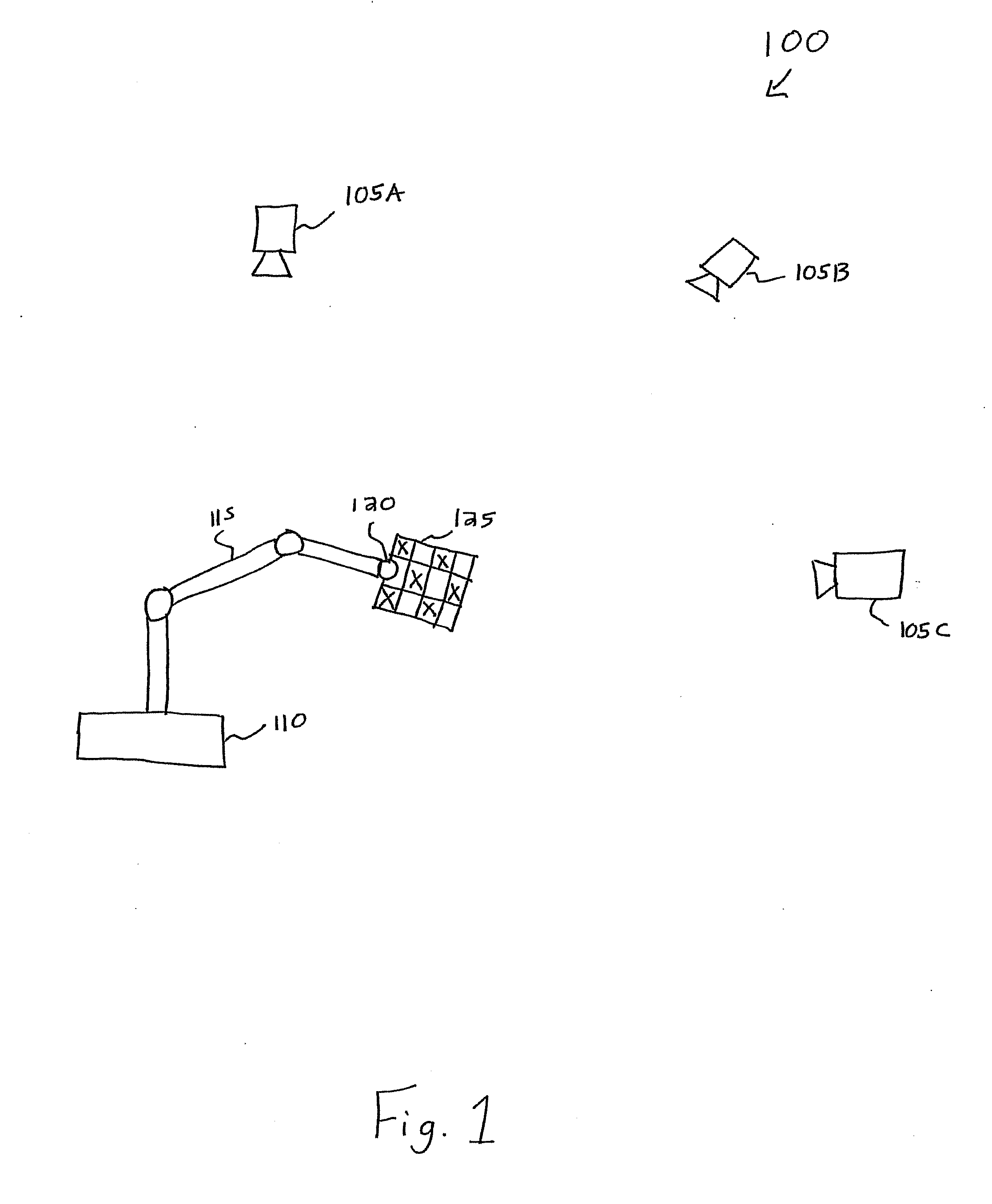

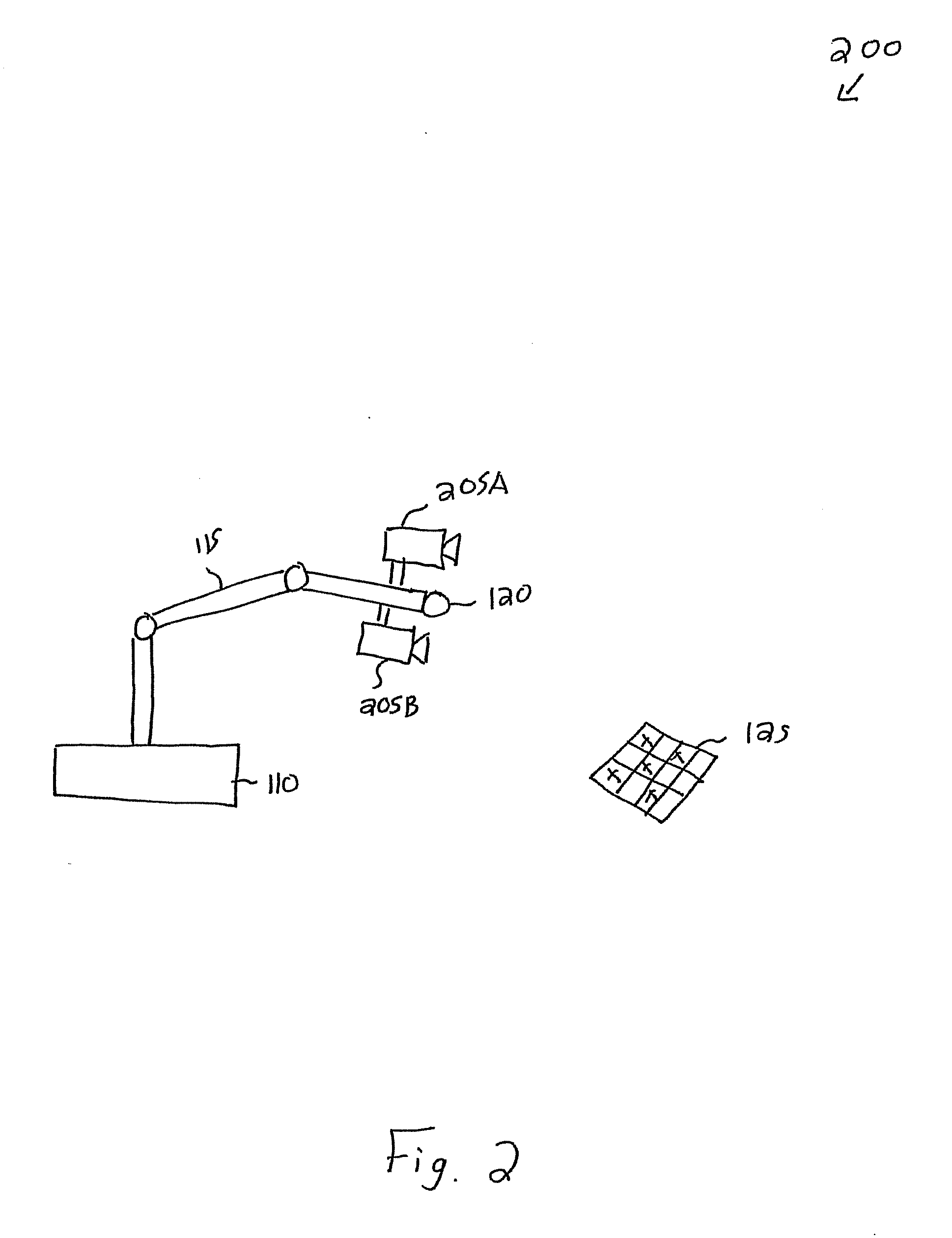

System and method for robust calibration between a machine vision system and a robot

Active | US20110280472A1 | Programme control; Programme-controlled manipulator; Engineering; Robot control system

A system and method for robustly calibrating a vision system and a robot is provided. The system and method enables a plurality of cameras to be calibrated into a robot base coordinate system to enable a machine vision / robot control system to accurately identify the location of objects of interest within robot base coordinates.

Owner:COGNEX CORP
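
The abstract does not give the calibration math. A common starting point for mapping one fixed camera's pixel coordinates into robot base coordinates on a work plane is a least-squares 2-D affine fit over point correspondences collected by moving the robot to known positions. The sketch below adds a simple outlier-rejection loop as one possible reading of "robust"; the planar affine model, the `threshold` value, and the function names are assumptions, and a multi-camera, 3-D calibration like the one claimed would need a fuller model.

```python
import numpy as np


def fit_affine(pixels, robot_xy):
    """Least-squares 2-D affine map: [x_r, y_r] = [u, v, 1] @ params (params is 3x2)."""
    design = np.hstack([pixels, np.ones((len(pixels), 1))])
    params, *_ = np.linalg.lstsq(design, robot_xy, rcond=None)
    return params


def residuals(params, pixels, robot_xy):
    """Euclidean fit error for each correspondence, in robot units."""
    design = np.hstack([pixels, np.ones((len(pixels), 1))])
    return np.linalg.norm(design @ params - robot_xy, axis=1)


def calibrate(pixels, robot_xy, threshold=0.5):
    """Fit, then repeatedly drop the worst correspondence while it exceeds threshold.

    Returns (params, indices of the correspondences that were kept).
    """
    pixels = np.asarray(pixels, dtype=float)
    robot_xy = np.asarray(robot_xy, dtype=float)
    keep = np.arange(len(pixels))
    while True:
        params = fit_affine(pixels[keep], robot_xy[keep])
        err = residuals(params, pixels[keep], robot_xy[keep])
        worst = int(np.argmax(err))
        # Stop when everything fits, or when too few points remain for an affine fit.
        if err[worst] <= threshold or len(keep) <= 3:
            return params, keep
        keep = np.delete(keep, worst)
```

A pixel (u, v) is then mapped with `np.array([u, v, 1.0]) @ params`. Removing only the single worst point per pass avoids discarding good points that a gross outlier has temporarily pulled off the fit.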

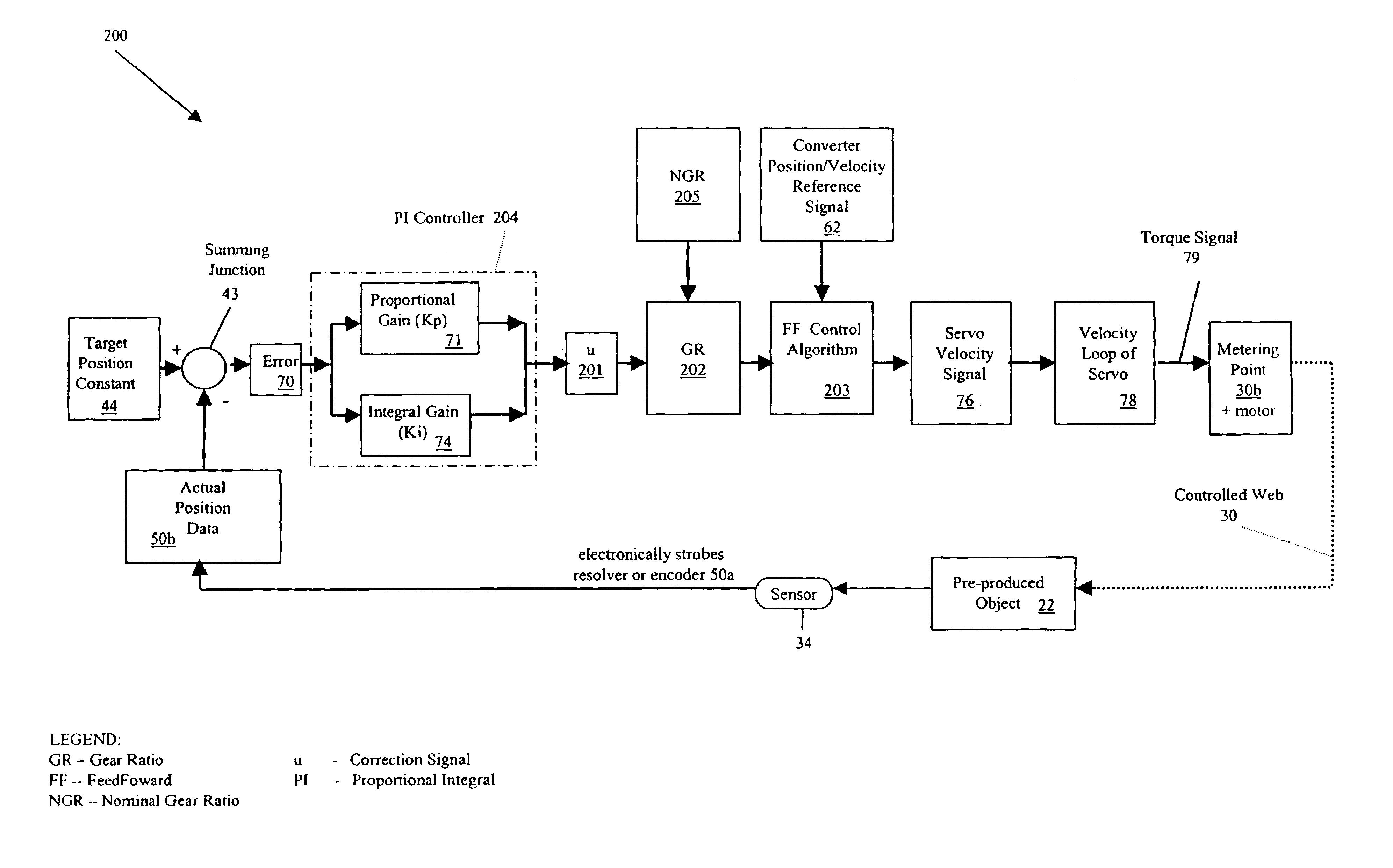

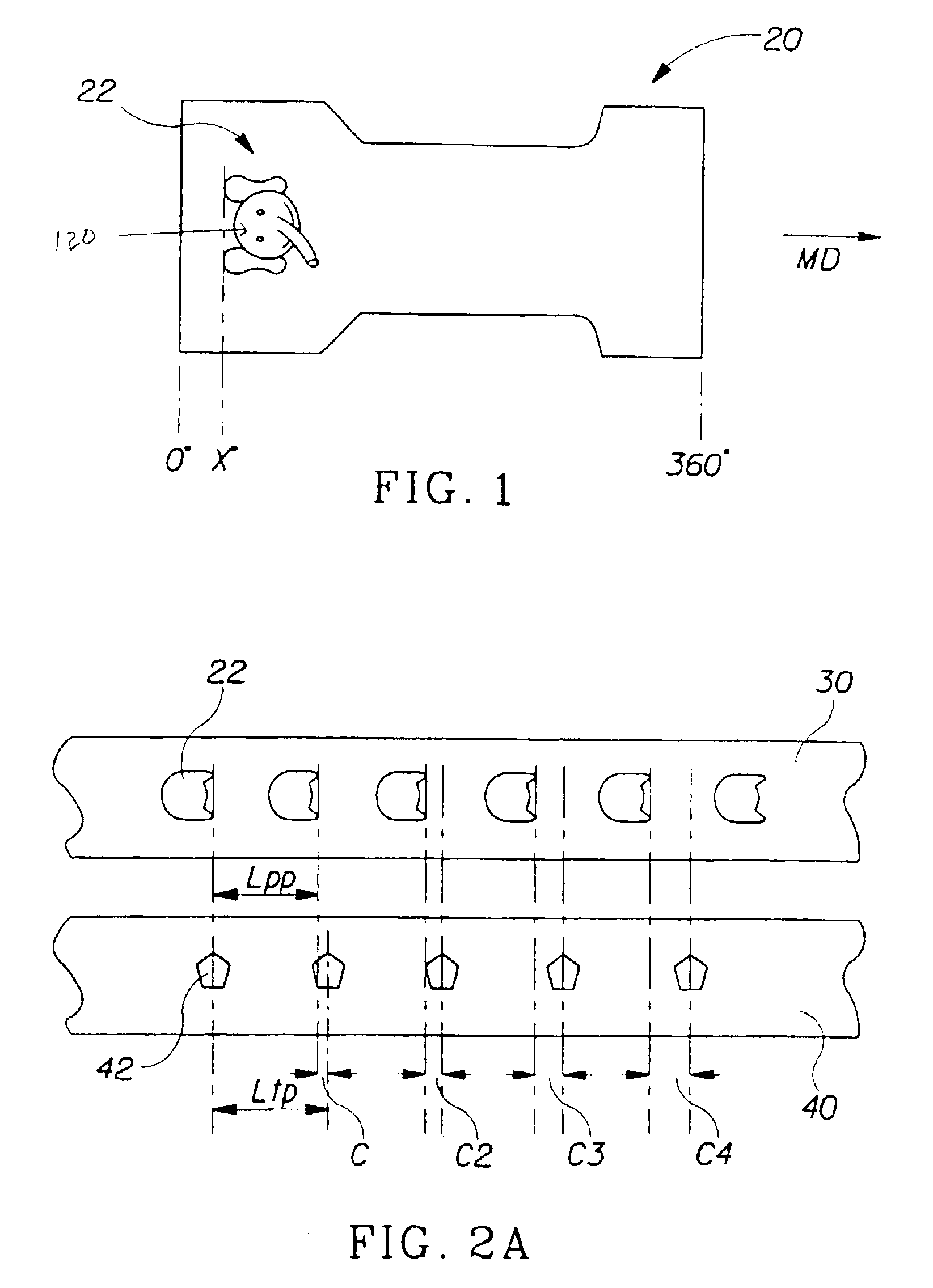

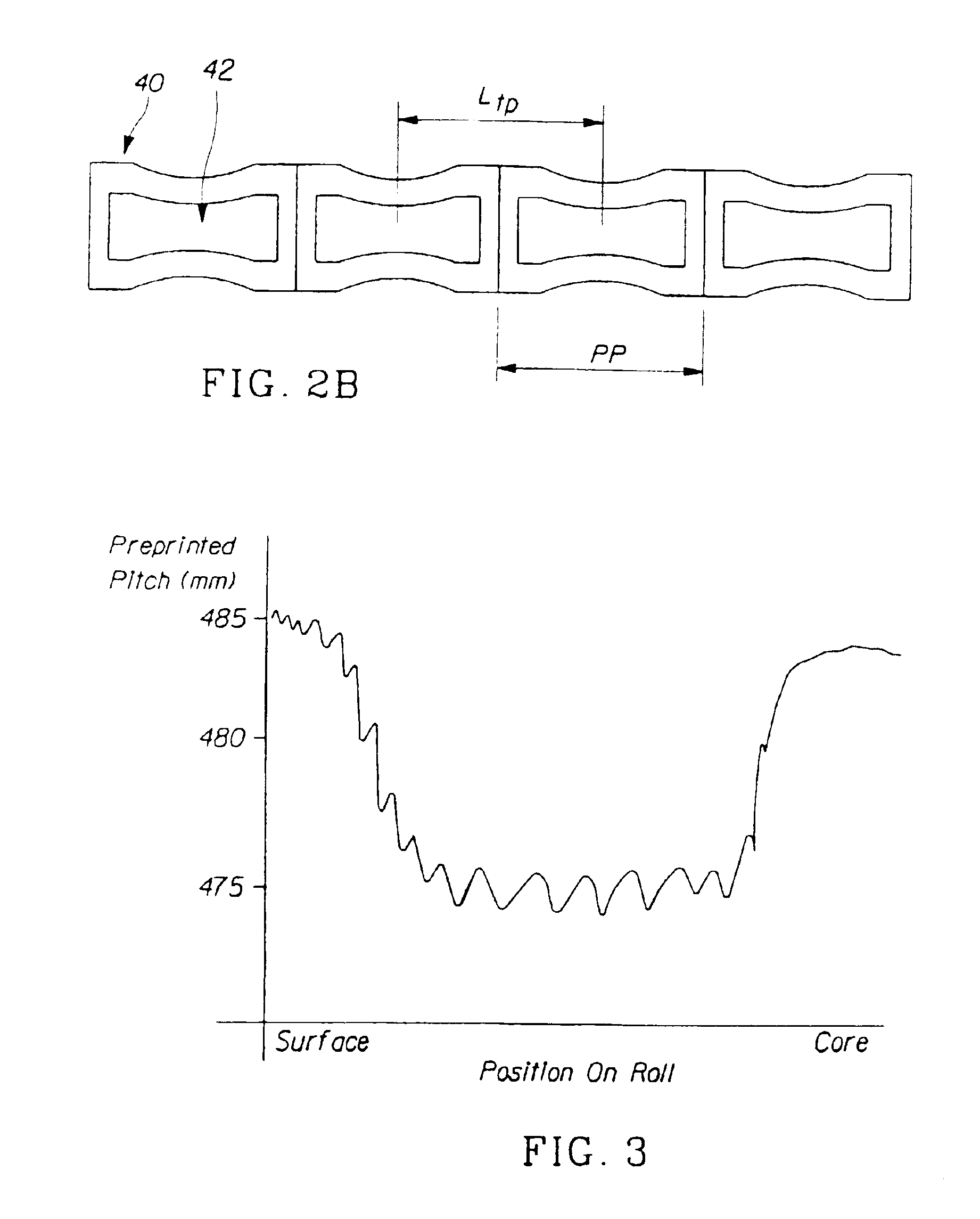

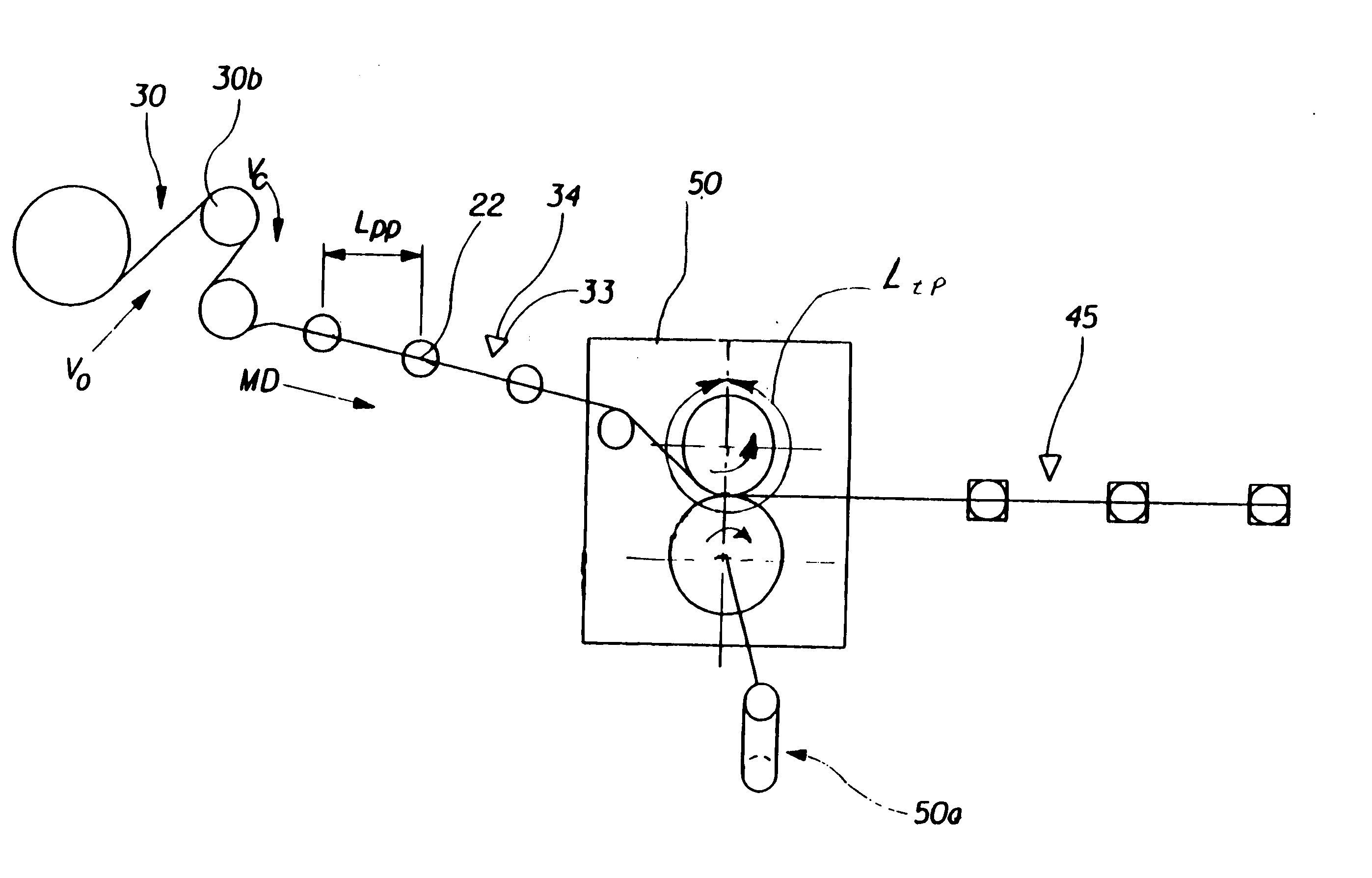

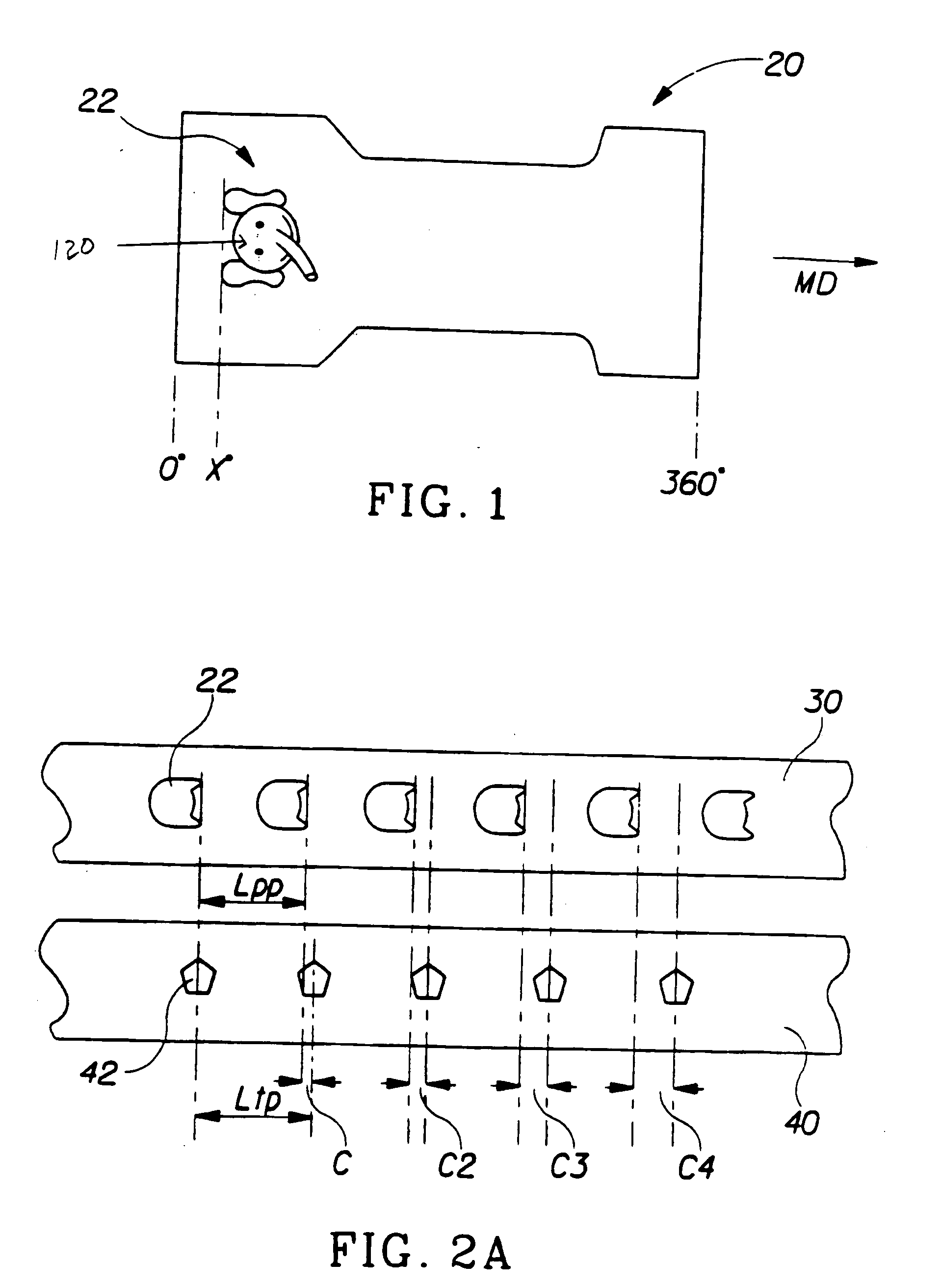

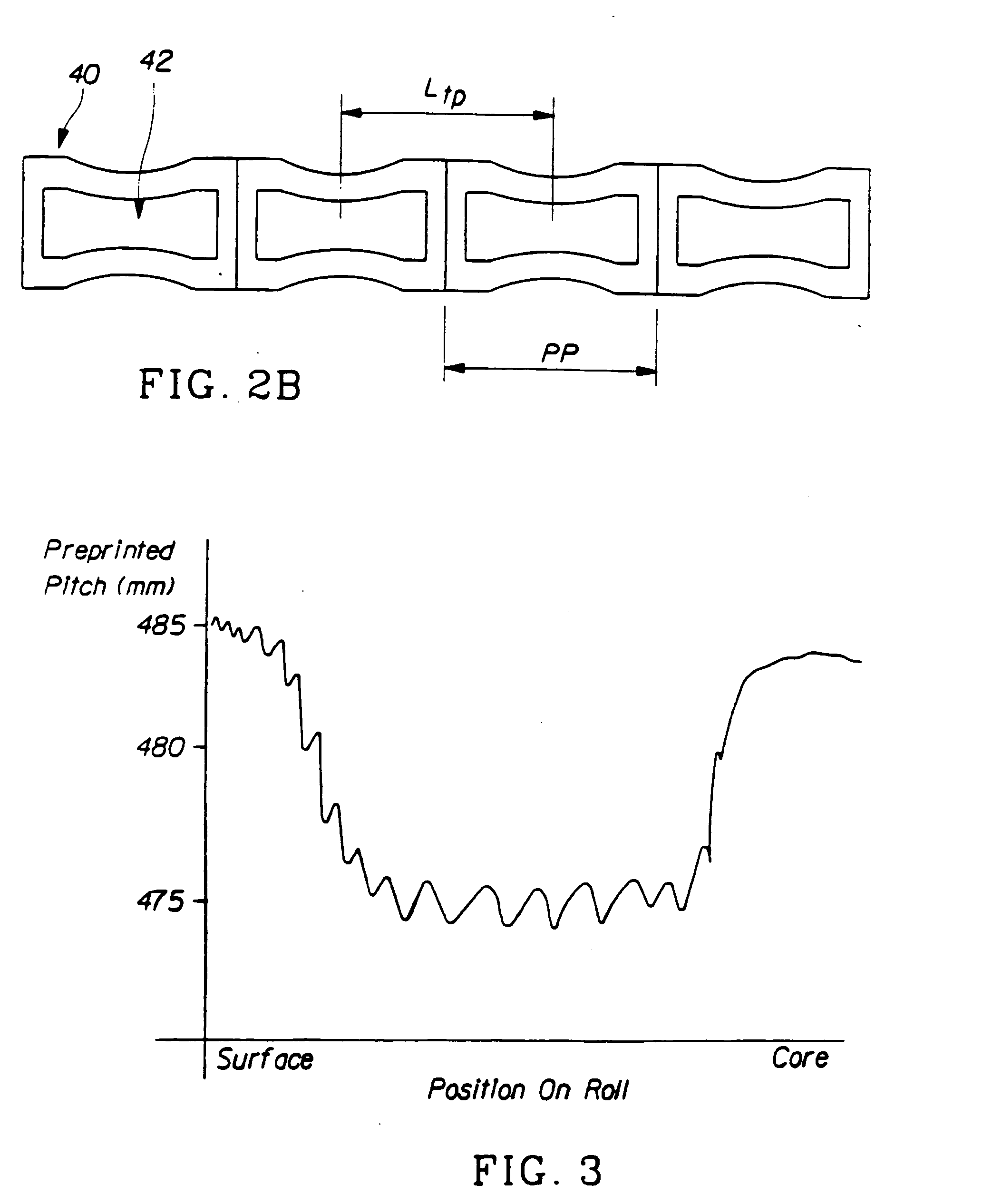

Method and system for registering pre-produced webs with variable pitch length

A new machine control method and system for registering pre-produced webs into a converting line producing disposable absorbent articles such as diapers, pants, feminine hygiene articles or a component thereof. The pre-produced web can include a multiplicity of pre-produced objects spaced on the web at a pitch interval in the web direction. The pre-produced web is manipulated so that each pre-produced object on the web is registered in relation to a constant target position. The present invention includes three embodiments, the first of which is expressed as a generic claim. The first embodiment includes a closed-loop feedback registration system; the second and third embodiments additionally include an open-loop feedforward phasing system. In addition, the third embodiment uses a machine vision system to recognize any element of a complex pre-produced object (e.g., colorful graphics).

Owner:THE PROCTER & GAMBLE COMPANY

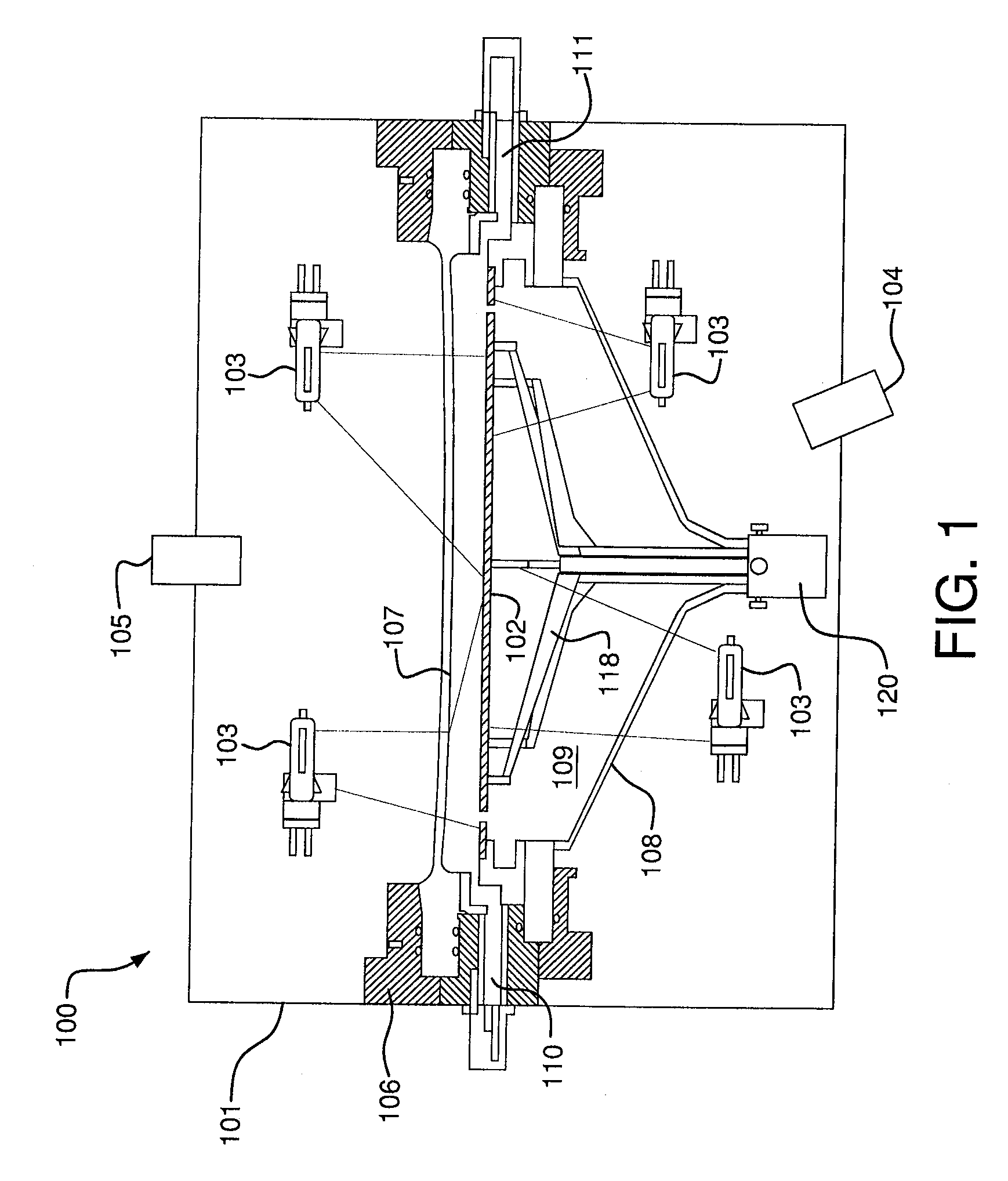

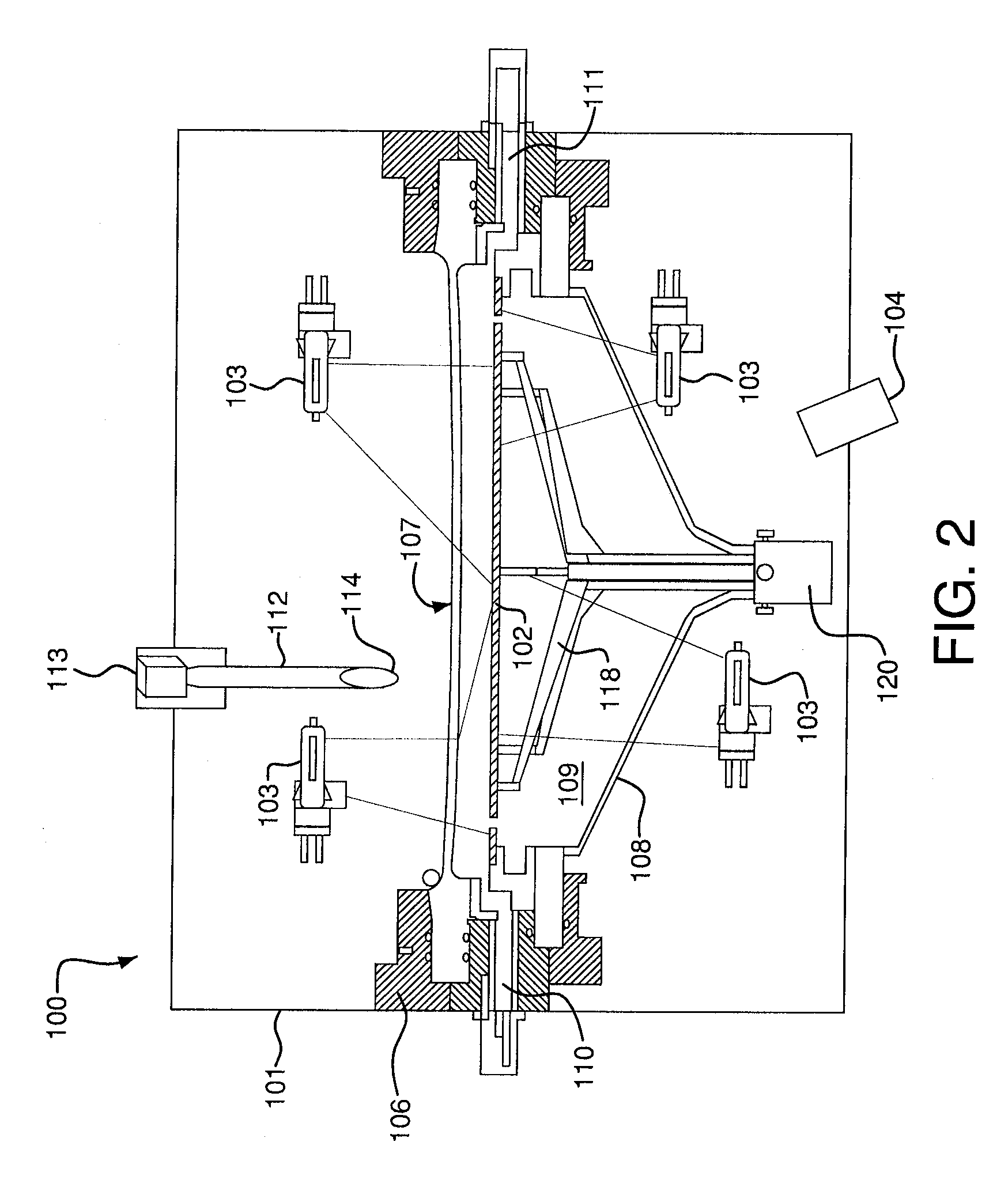

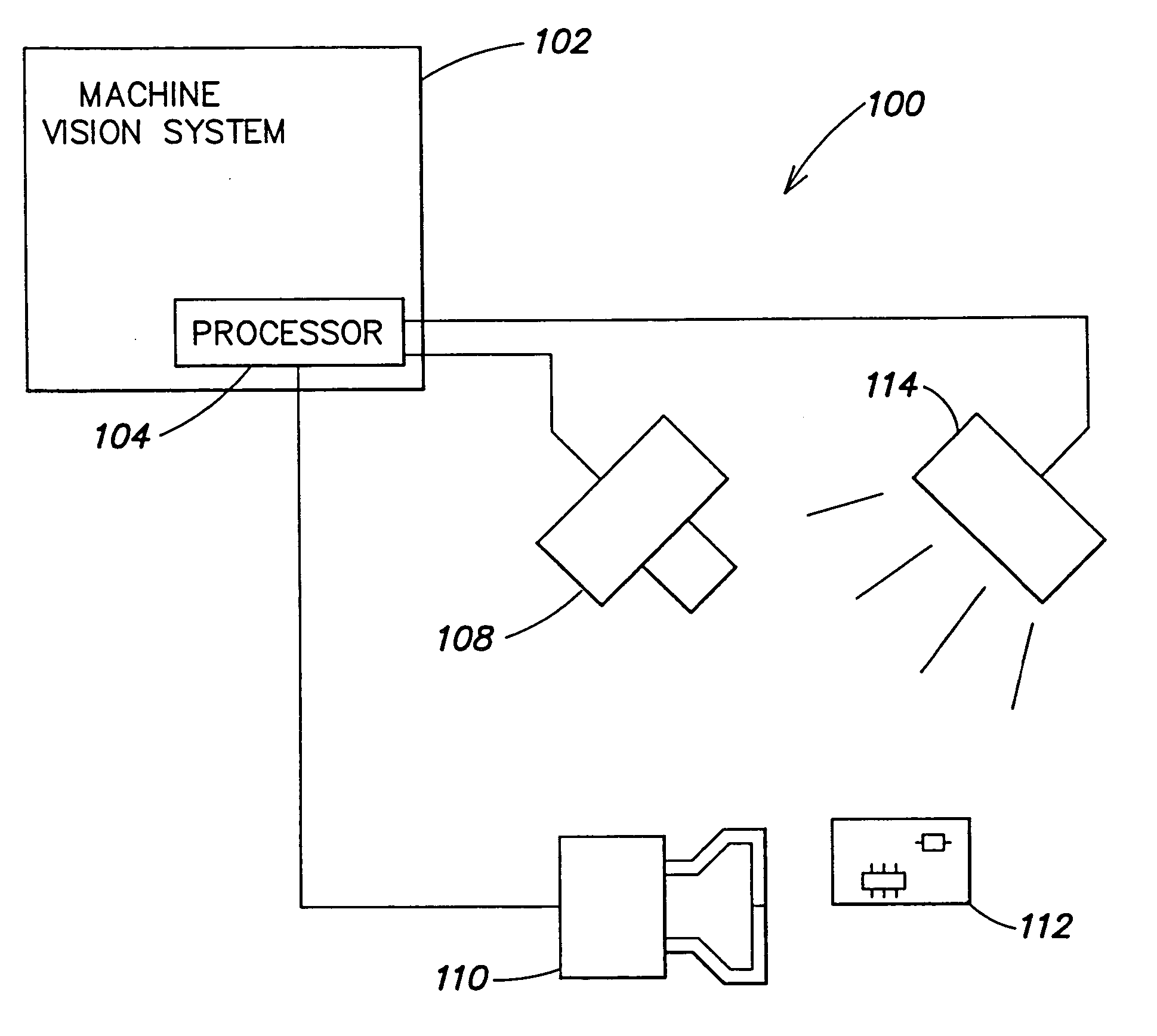

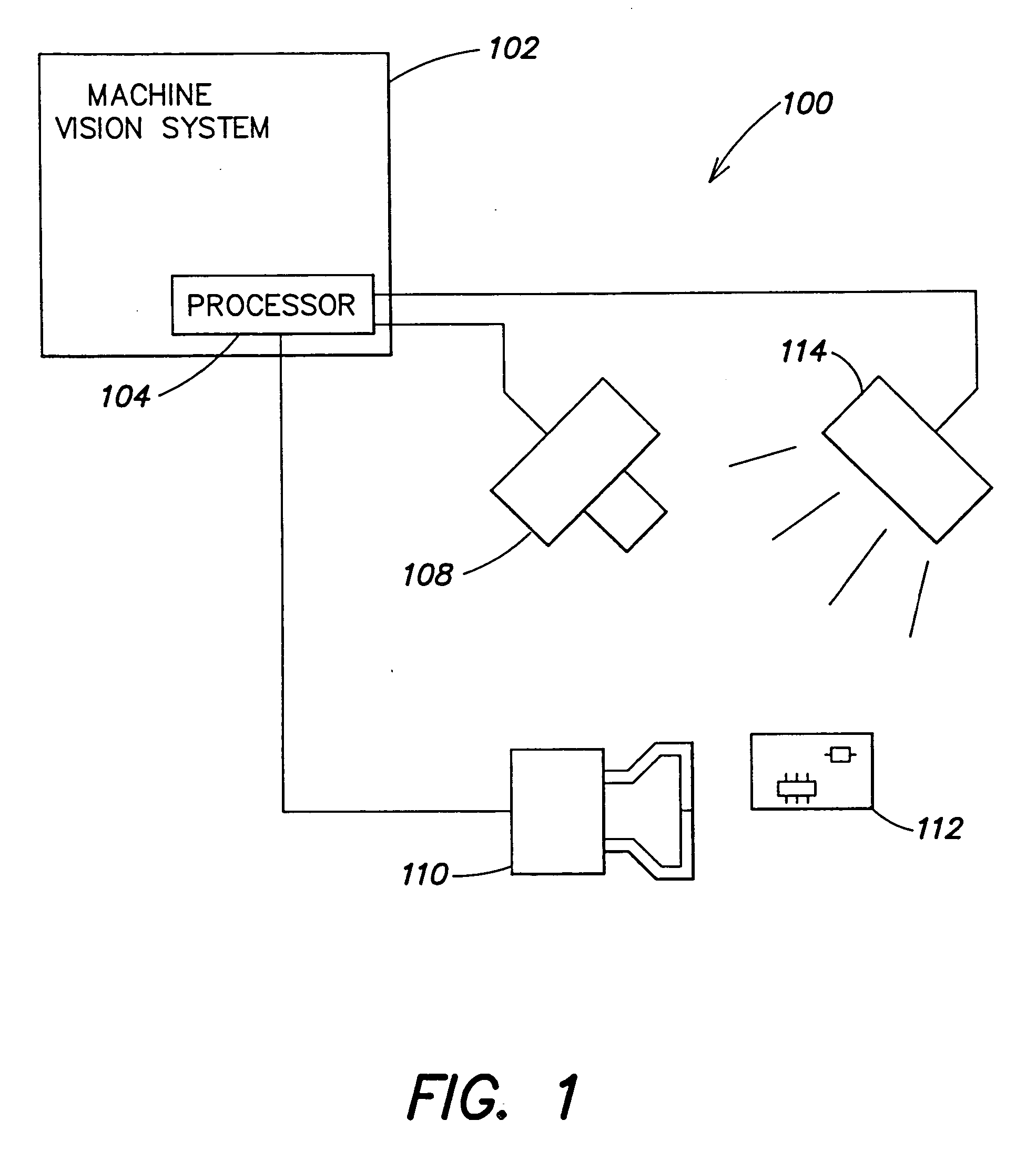

Trajectory detection and feedback system

Active | US7094164B2 | Improve consistency; Improve performance; Indoor games; Gymnastic exercising; Free flight; Medicine

A trajectory detection and feedback system is described. The system is capable of detecting one or more moving objects in free flight, analyzing a trajectory of each object and providing immediate feedback information to a human that has launched the object into flight, and/or one or more observers in the area. The feedback information may include one or more trajectory parameters that the human may use to evaluate their skill at sending the object along a desired trajectory. A non-intrusive machine vision system may be used to evaluate trajectory parameters for a basketball shot at a basketball hoop by a player. After the system has evaluated a plurality of shots by the player, the system may provide 1) a diagnosis of their shot consistency, 2) a prediction for improvement based upon improving their shot consistency and 3) a prescription of actions for improving their consistency.

Owner:PILLAR VISION

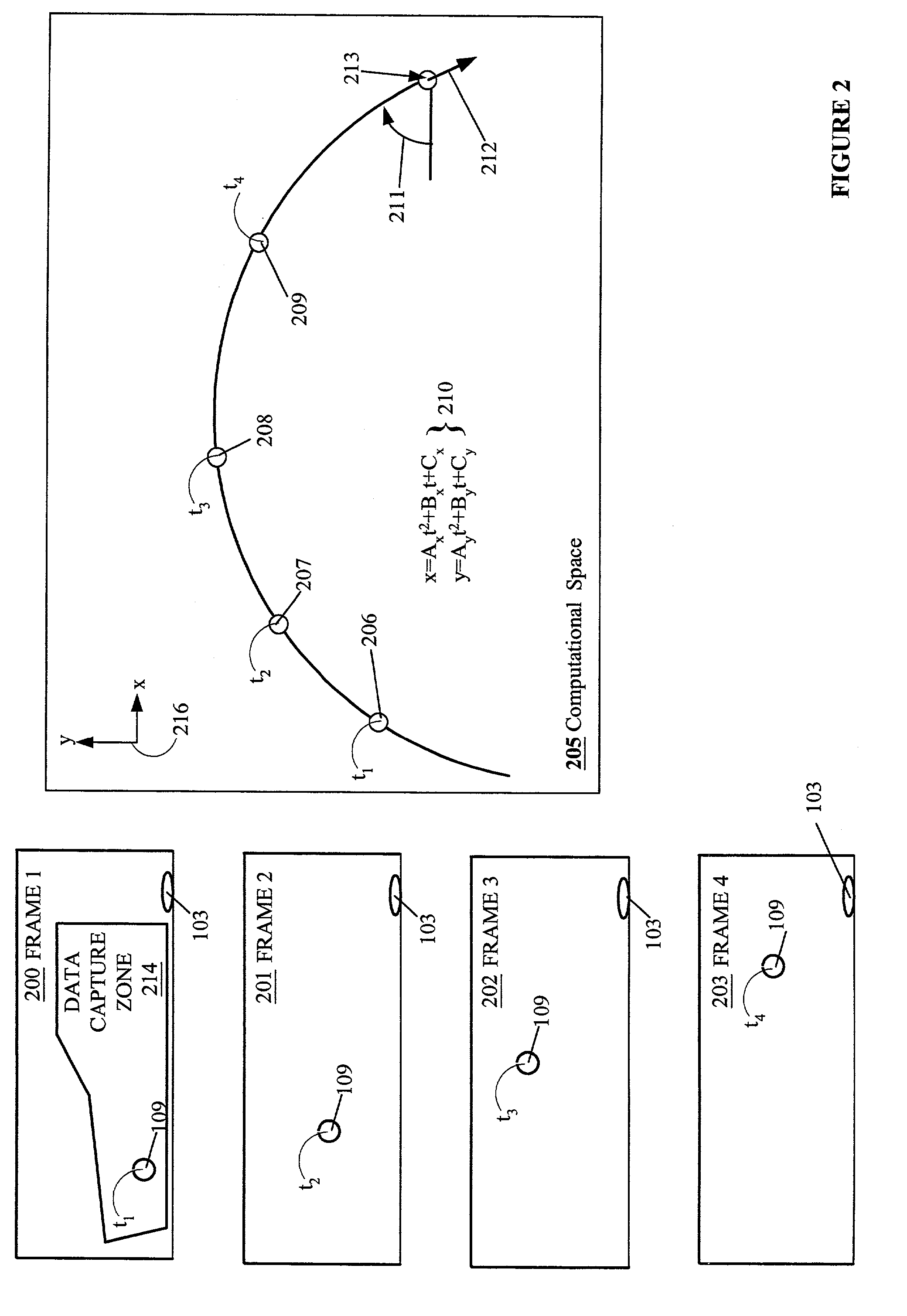

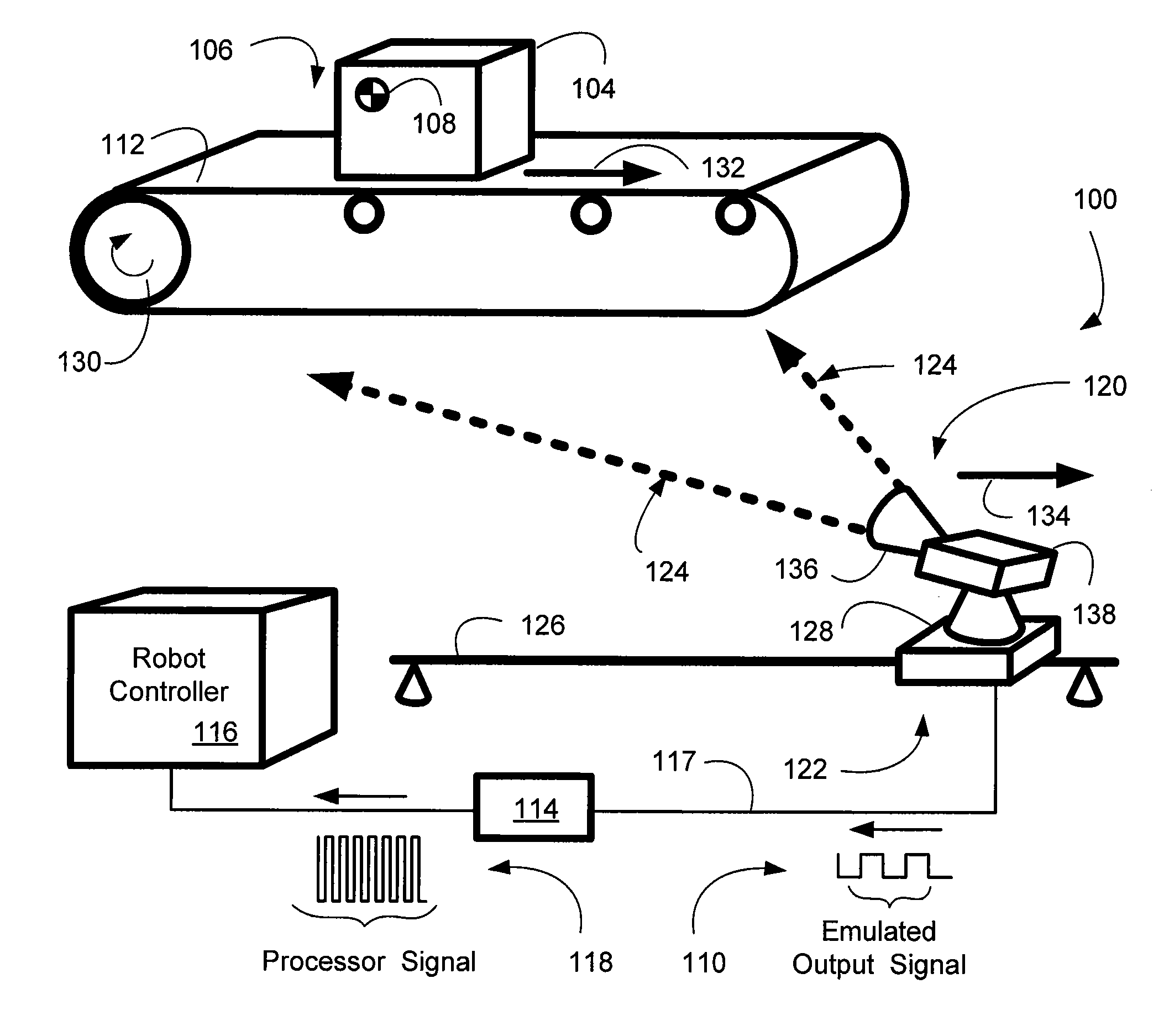

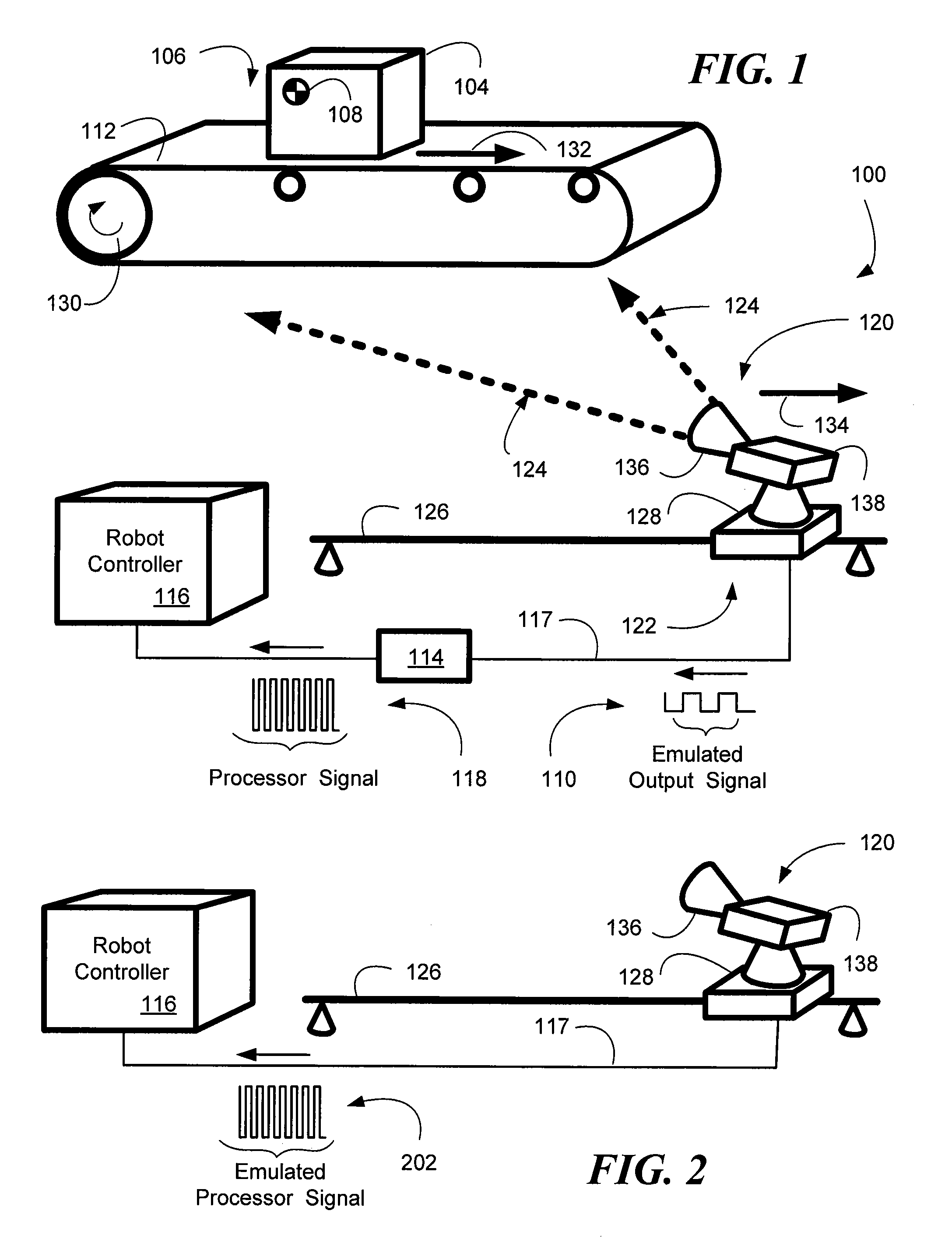

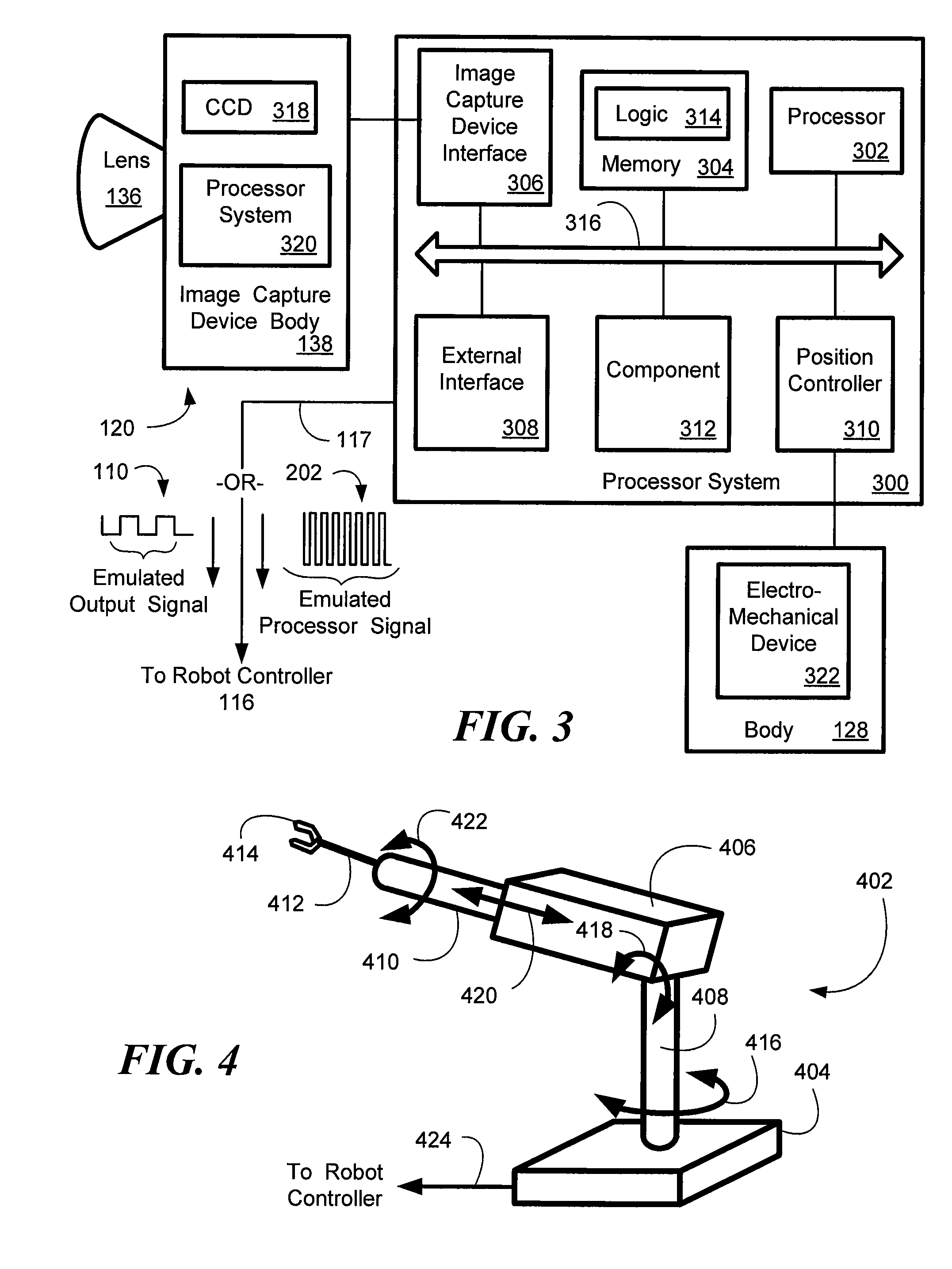

System and method of visual tracking

Inactive | US20070073439A1 | Eliminate dependencies; Problem can be addressed; Programme control; Programme-controlled manipulator; Digital data; Robotics

A machine-vision system, method and article useful in the field of robotics are disclosed. One embodiment produces signals that emulate the output of an encoder, based on captured images of an object, which may be in motion. One embodiment provides digital data directly to a robot controller without the use of an intermediary transceiver such as an encoder interface card. One embodiment predicts or determines the occurrence of an occlusion and moves the camera and/or the object accordingly.

Owner:BRAINTECH
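
To illustrate producing signals that emulate an encoder from captured images, the sketch below converts per-frame tracked positions into cumulative encoder counts and the matching two-channel quadrature state. The `counts_per_mm` resolution and the choice to quantize against the first frame (so rounding errors do not accumulate) are assumptions for illustration, not details from the Braintech filing.

```python
def emulate_encoder(positions_mm, counts_per_mm):
    """Map tracked object positions (one per frame) to cumulative encoder counts.

    Counts are computed relative to the first frame rather than summed frame to frame,
    so quantization error does not build up over a long run.
    """
    origin = positions_mm[0]
    return [round((p - origin) * counts_per_mm) for p in positions_mm]


# Quadrature A/B levels indexed by count mod 4. Stepping forward through this table
# makes channel A lead channel B; stepping backward reverses the phase relationship.
_QUADRATURE = [(0, 0), (1, 0), (1, 1), (0, 1)]


def quadrature_state(count):
    """A/B output levels an incremental encoder would show at this count."""
    return _QUADRATURE[count % 4]
```

Python's `%` returns a non-negative result for negative counts, so reverse motion past the origin still indexes the table correctly.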

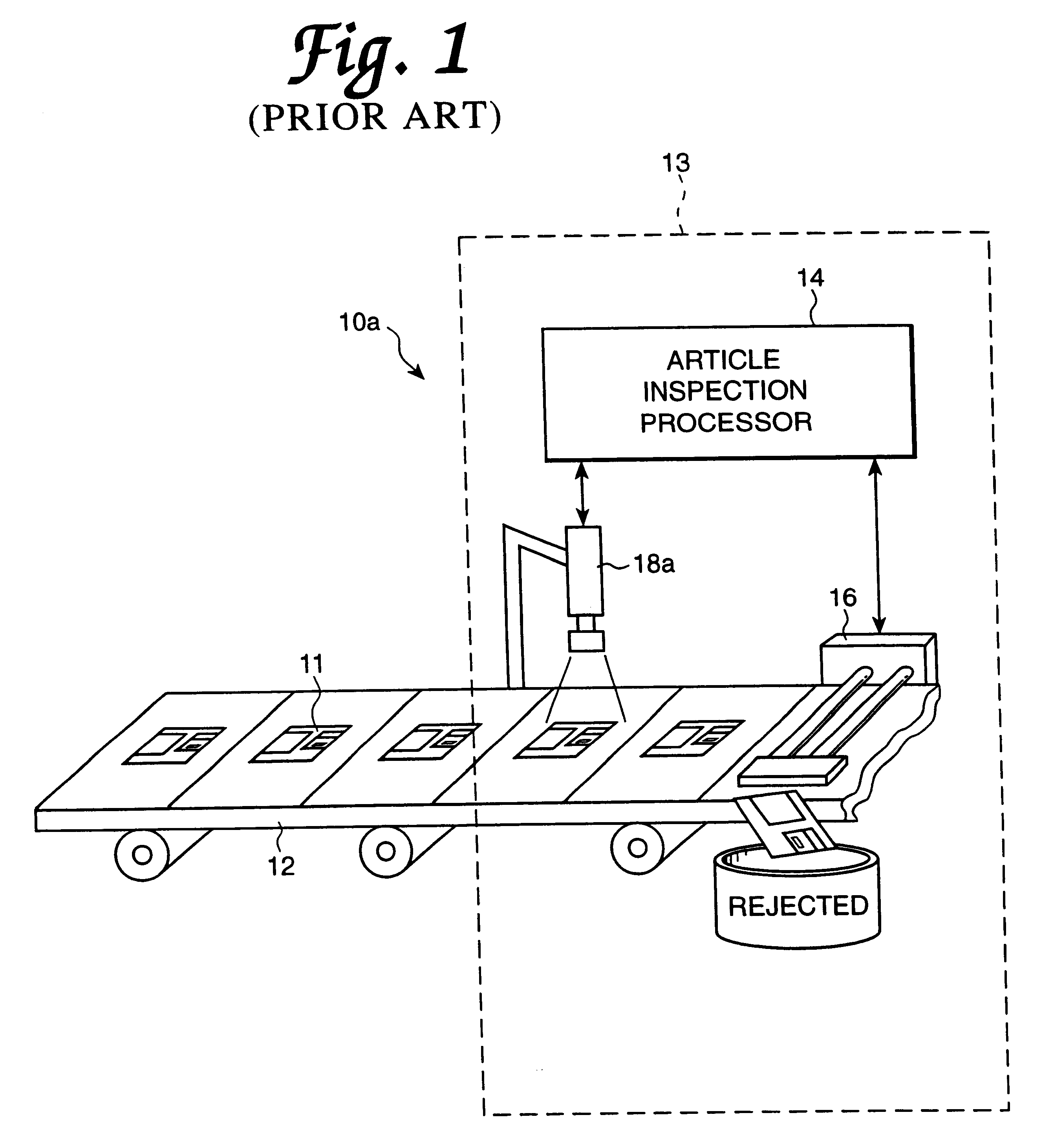

Machine vision system for identifying and assessing features of an article

An improved vision system is provided for identifying and assessing features of an article. Systems are provided for developing feature assessment programs which, when deployed, may inspect parts and/or provide position information for guiding automated manipulation of such parts. The improved system is easy to use and facilitates the development of versatile and flexible article assessment programs. In one aspect, the system comprises a set of step tools from which a set of step objects is instantiated. The set of step tools may comprise machine vision step objects that comprise routines for processing an image of the article to provide article feature information. A control flow data structure and a data flow data structure may each be provided. The control flow data structure charts a flow of control among the step objects. The data flow data structure includes a data flow connection that provides access to portions of the data flow data structure so that a data source for a given step object can be individually accessed and/or individually defined.

Owner:COGNEX CORP

Trajectory detection and feedback system

Active | US20070026974A1 | Improve consistency; Improve performance; Ski bindings; Gymnastic exercising; Free flight; Medicine

A disclosed device provides a trajectory detection and feedback system. The system is capable of detecting one or more moving objects in free flight, analyzing a trajectory of each object and providing immediate feedback information to a human that has launched the object into flight, and/or one or more observers in the area. The feedback information may include one or more trajectory parameters that the human may use to evaluate their skill at sending the object along a desired trajectory. In a particular embodiment, a non-intrusive machine vision system that remotely detects trajectories of moving objects may be used to evaluate trajectory parameters for a basketball shot at a basketball hoop by a player. The feedback information, such as a trajectory entry angle into the basketball hoop and/or an entry velocity into the hoop for the shot, may be output to the player in an auditory format using a sound projection device. The system may be operable to be set up and to operate in a substantially autonomous manner. After the system has evaluated a plurality of shots by the player, the system may provide 1) a diagnosis of their shot consistency, 2) a prediction for improvement based upon improving their shot consistency and 3) a prescription of actions for improving their consistency.

Owner:PILLAR VISION

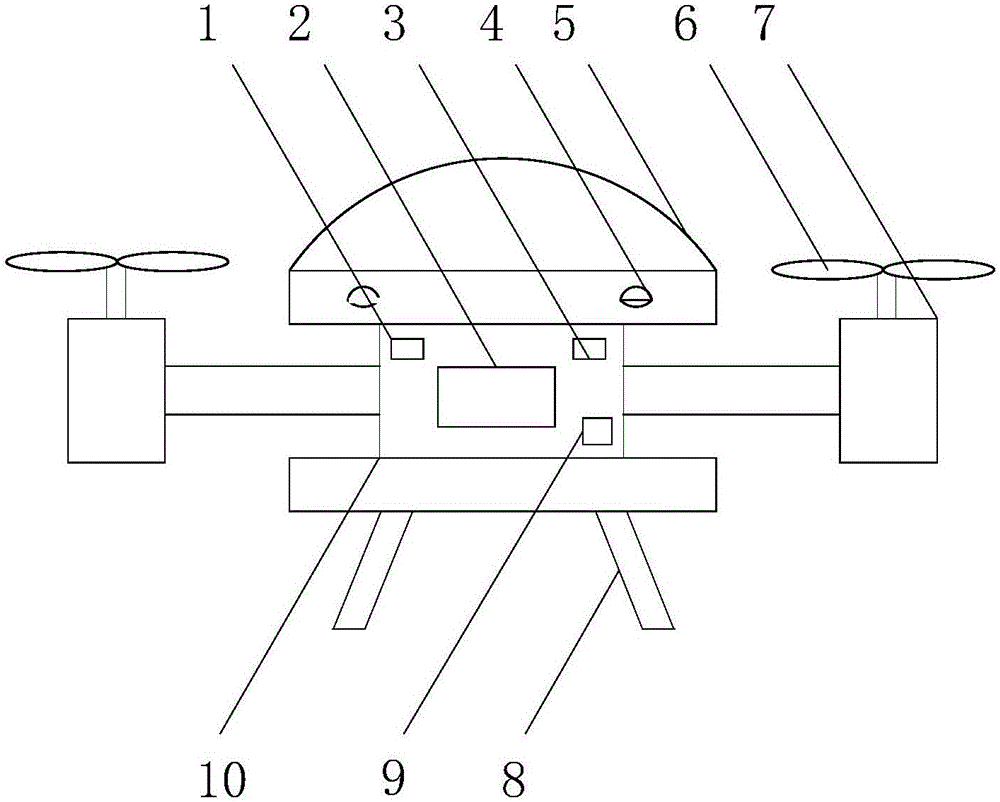

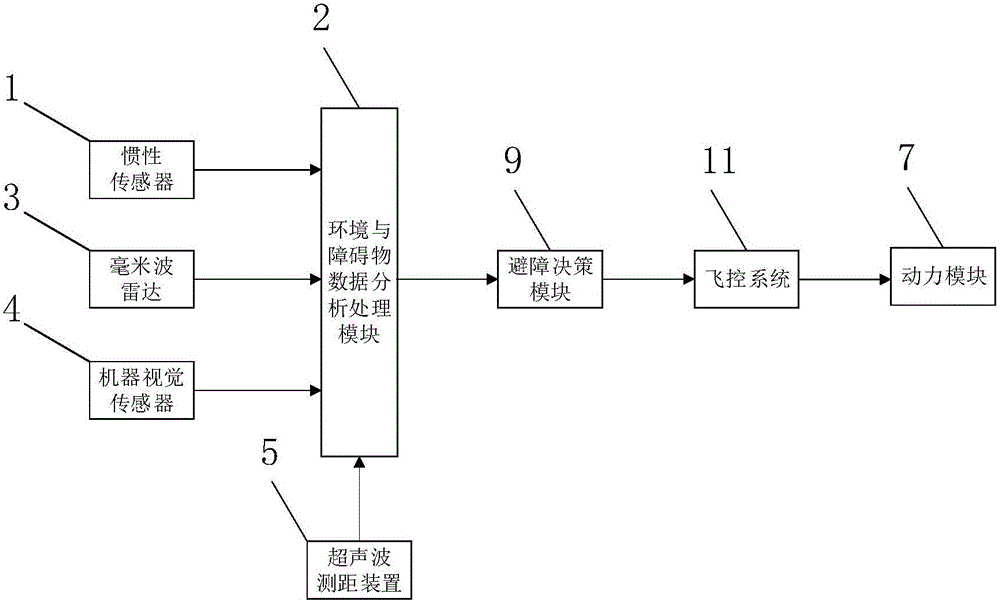

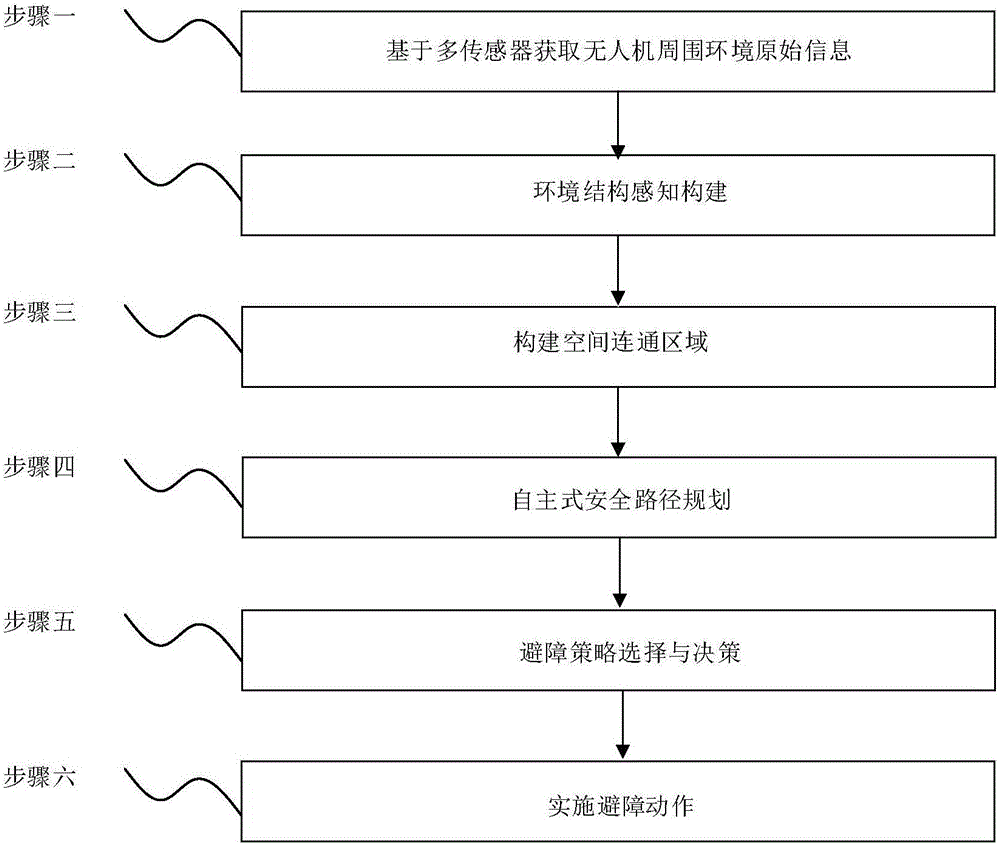

Multi-sensor fusion-based autonomous obstacle avoidance unmanned aerial vehicle system and control method

Active | CN105892489A | Avoid collision; Improve real-time detection; Position/course control in three dimensions; Multi sensor; Binocular distance

The invention discloses a multi-sensor fusion-based autonomous obstacle avoidance unmanned aerial vehicle (UAV) system and a control method. The system includes three modules. An environment information real-time detection module detects the surrounding environment in real time using multi-sensor fusion and transmits the detected information to an obstacle data analysis processing module. The obstacle data analysis processing module constructs a perception of the structure of the surrounding environment from the received information in order to identify obstacles. An obstacle avoidance decision-making module then determines an avoidance decision from the output of the obstacle data analysis processing module, and the flight control system drives the power modules to carry out the avoidance. Binocular machine vision systems are arranged around the body of the UAV so that 3D space reconstruction can be achieved, and an ultrasonic device and a millimeter-wave radar facing the direction of travel are used in cooperation with them, making the obstacle avoidance method more comprehensive. The system offers highly real-time obstacle detection, a long visual detection range and high resolution.

Owner:STATE GRID INTELLIGENCE TECH CO LTD
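
The fusion step can be illustrated with the standard pinhole stereo relation Z = f·B/d (depth from focal length in pixels, baseline, and disparity in pixels), combined with ultrasonic and millimeter-wave radar ranges by taking the most conservative (nearest) valid reading. The fusion rule, the decision thresholds, and the action names below are hypothetical choices for illustration; the abstract does not specify them.

```python
import math


def stereo_depth(focal_px, baseline_m, disparity_px):
    """Pinhole stereo depth Z = f * B / d; zero or negative disparity means no range."""
    if disparity_px <= 0:
        return math.inf
    return focal_px * baseline_m / disparity_px


def fused_range(readings_m):
    """Conservative fusion: the nearest valid reading from any sensor (None = no return)."""
    valid = [r for r in readings_m if r is not None and r > 0]
    return min(valid) if valid else math.inf


def avoidance_decision(range_m, stop_m=2.0, replan_m=5.0):
    """Hypothetical decision rule for the obstacle avoidance decision-making module."""
    if range_m < stop_m:
        return "hover"
    if range_m < replan_m:
        return "replan"
    return "continue"
```

Taking the minimum favors safety over availability: a single sensor reporting a close obstacle is enough to trigger avoidance, which suits the complementary short-range ultrasonic and long-range radar pairing described above.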

Trajectory detection and feedback system

Inactive | US8622832B2 | Improve consistency; Improve performance; Gymnastic exercising; Character and pattern recognition; Free flight; Simulation

A disclosed device provides a trajectory detection and feedback system. The system is capable of detecting one or more moving objects in free flight, analyzing a trajectory of each object and providing immediate feedback information to a human that has launched the object into flight, and/or one or more observers in the area. In a particular embodiment, a non-intrusive machine vision system that remotely detects trajectories of moving objects may be used to evaluate trajectory parameters for a basketball shot at a basketball hoop by a player. The feedback information, such as a trajectory entry angle into the basketball hoop and/or an entry velocity into the hoop for the shot, may be output to the player in an auditory format using a sound projection device. The system may be operable to be set up and to operate in a substantially autonomous manner.

Owner:PILLAR VISION

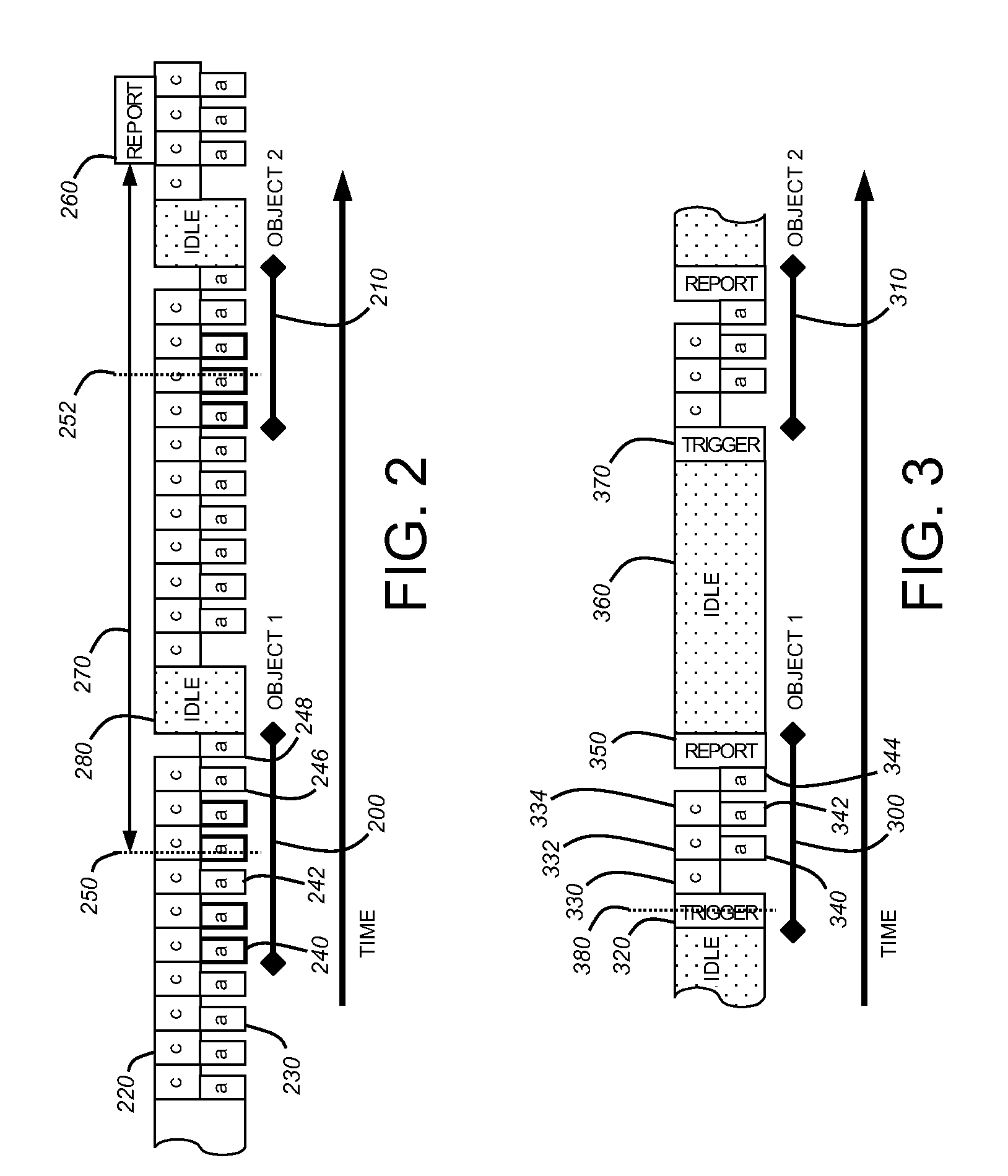

Human-machine-interface and method for manipulating data in a machine vision system

Inactive | US20070146491A1 | Easy to set up; Increase speed; Television system details; Image analysis; Human–machine interface; Thumbnail

This invention provides a Graphical User Interface (GUI) that operates in connection with a machine vision detector or other machine vision system and has a highly intuitive, industrial-machine-like appearance and layout. The GUI includes a central image frame window surrounded by panes containing buttons and specific interface components that the user employs in each step of the machine vision system setup and run procedure. One pane allows the user to view and manipulate a recorded filmstrip of image thumbnails taken in sequence, and applies specialized highlighting (colors or patterns) to the filmstrip to indicate useful information about the underlying images. The system is set up and run using a sequential series of buttons or switches that the user activates in turn to perform each of the steps needed to connect to a vision system, train the system to recognize or detect objects/parts, configure the logic that handles recognition/detection signals, set up system outputs based upon the logical results, and finally run the programmed system in real time. Logic is programmed in a programming window that uses a ladder logic arrangement. The programming window includes a thumbnail window that displays an image from the filmstrip, focused on the locations in the image (and in the underlying viewed object/part) where the selected contact element is provided.

Owner:COGNEX CORP

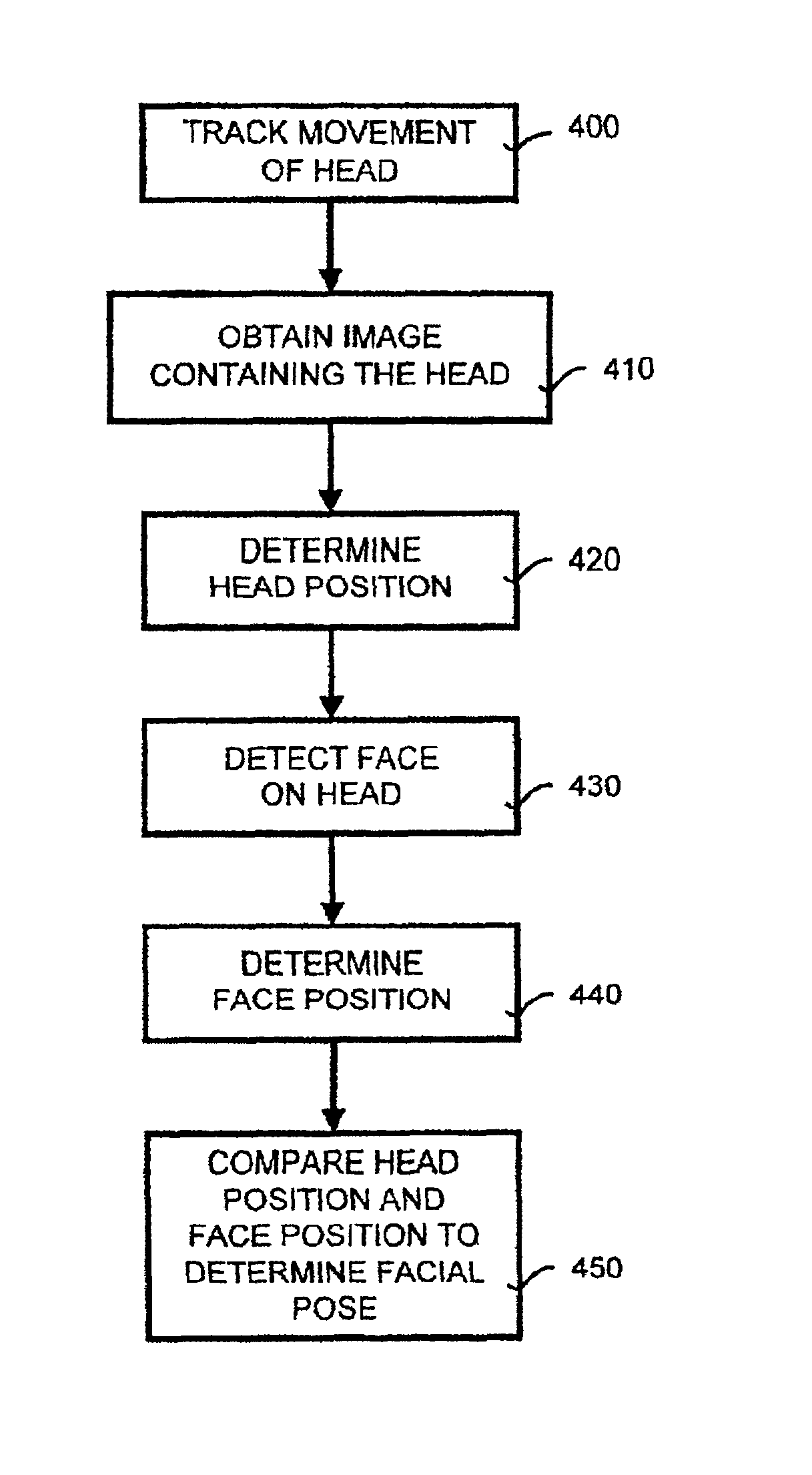

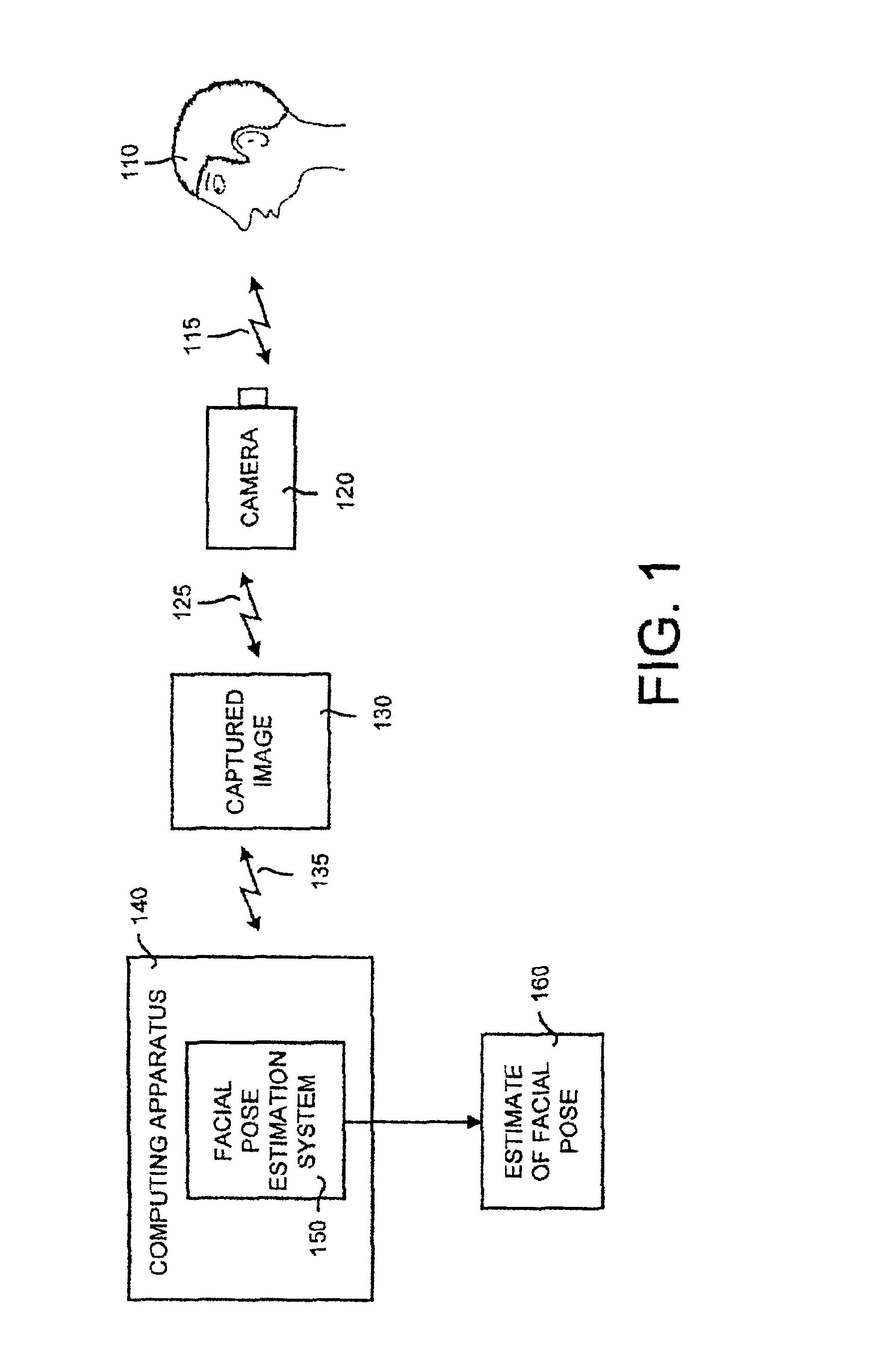

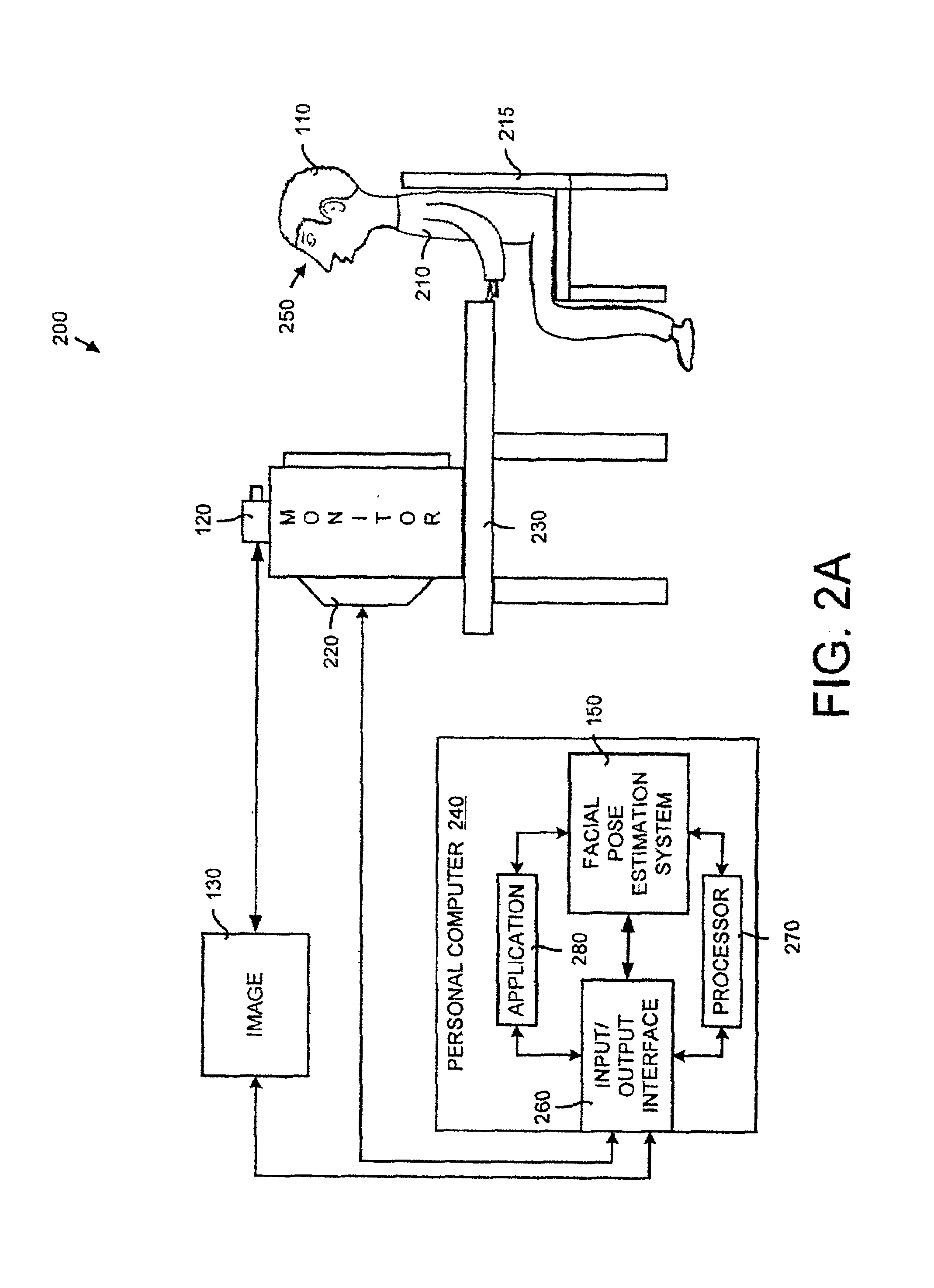

Machine vision system and method for estimating and tracking facial pose

The present invention includes a system and method for estimating and tracking the orientation of a user's face by combining head tracking and face detection techniques. The orientation of the face, or facial pose, can be expressed in terms of the pitch, roll and yaw of the user's head. Facial pose information can be used, for example, to ascertain in which direction the user is looking. In general, the facial pose estimation method obtains a position of the head and a position of the face and compares the two to obtain the facial pose. In particular, a camera is used to obtain an image containing the user's head. Any movement of the user's head is tracked and the head position is determined. A face is then detected on the head and the face position is determined. The head and face positions are then compared.

Owner:MICROSOFT TECH LICENSING LLC
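
As a rough illustration of comparing head and face positions, the sketch below treats the tracked head as a sphere of known image radius and converts the face center's offset from the head center into yaw and pitch angles. This spherical-head model is an assumption made for the example, not Microsoft's method, and it does not estimate roll.

```python
import math


def facial_pose(head_center, head_radius, face_center):
    """Estimate (yaw, pitch) in degrees from the face center's offset within the head.

    Hypothetical model: the head is a sphere of radius head_radius (pixels) and the
    face center lies on its surface, so offset / radius = sin(angle).
    Image y grows downward, so a face above the head center gives positive pitch.
    """
    dx = (face_center[0] - head_center[0]) / head_radius
    dy = (face_center[1] - head_center[1]) / head_radius
    dx = max(-1.0, min(1.0, dx))  # clamp against tracking noise before asin
    dy = max(-1.0, min(1.0, dy))
    yaw = math.degrees(math.asin(dx))
    pitch = math.degrees(math.asin(-dy))
    return yaw, pitch
```

Because the head tracker and the face detector are independent, noise in either can push the ratio slightly past ±1; the clamp keeps `asin` defined in that case.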

Method and system for registering pre-produced webs with variable pitch length

A new machine control method and system for registering pre-produced webs into a converting line producing disposable absorbent articles such as diapers, pull-ups, feminine hygiene articles or a component thereof. The pre-produced webs can include a multiplicity of pre-produced objects spaced on the web at a pitch interval in the web direction. The pre-produced web is manipulated so that each pre-produced object on the web is registered in relation to a target position. The present invention includes five embodiments, the first of which is expressed as a generic claim. The first embodiment includes a closed-loop feedback registration system; the second and third embodiments additionally include an open-loop feedforward control system; the fourth and fifth embodiments additionally include an automatic phasing system. In addition, the third embodiment uses a machine vision system to recognize any element of a complex pre-produced object (e.g., colorful graphics).

Owner:THE PROCTER & GAMBLE COMPANY
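
The closed-loop feedback registration of the first embodiment can be sketched as a proportional controller acting on the registration error, with the error wrapped to the nearest pitch so that an object slightly late is not mistaken for one almost a full pitch early. The gain, the millimeter units, and the simulation are assumptions; the feedforward and automatic phasing systems of the other embodiments are not modeled.

```python
def registration_error(measured_mm, target_mm, pitch_mm):
    """Signed offset of an object from its target, wrapped into [-pitch/2, +pitch/2)."""
    return (measured_mm - target_mm + pitch_mm / 2) % pitch_mm - pitch_mm / 2


def simulate_registration(offset_mm, target_mm, pitch_mm, gain=0.4, cycles=20):
    """Apply a proportional correction once per pitch and record the error each cycle.

    Simulation only: assumes each correction is applied exactly and the web does not drift.
    """
    errors = []
    position = offset_mm
    for _ in range(cycles):
        err = registration_error(position, target_mm, pitch_mm)
        errors.append(err)
        position -= gain * err
    return errors
```

Because `pitch_mm` is passed on every call, a pitch measured for each object could be supplied when the pitch length varies along the web.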

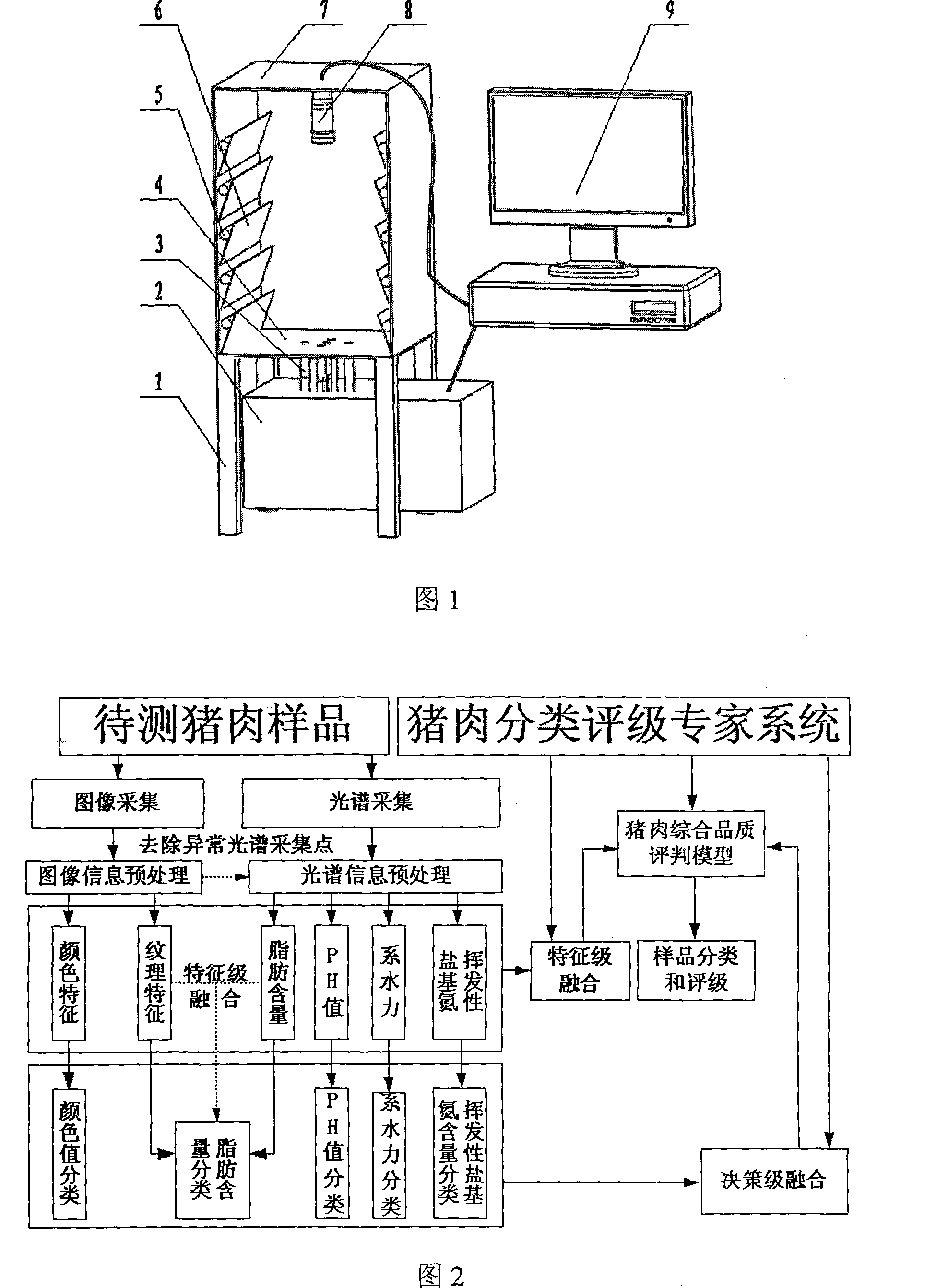

Method and apparatus for nondestructively testing food synthetic quality

Active | CN101251526A | Fast NDT; The detection process is fast; Material analysis by optical means; Testing food; Food material; Food classification

The invention discloses a method and device for non-destructive inspection of the comprehensive quality of food. A machine vision system acquires image information reflecting characteristics of the inspected object such as color, texture, size and shape, and a spectroscopic detection system obtains spectral information reflecting physical and chemical indexes of the sample such as moisture, sugar, protein, lipid and pH value. The acquired image and spectral information are preprocessed at the data level and fused at the feature level or the decision level; combined with a food classification and grading expert system built for the purpose, this yields a comprehensive quality grade for the inspected object. By using both optical image information and spectral information to inspect the external appearance and internal quality of food, the invention enables quick, convenient, non-destructive and objective inspection of comprehensive food quality. The method and device can be widely used to classify food materials, monitor food processing and grade food, helping to ensure food quality and supporting high-quality, low-cost food.

Owner:ZHEJIANG UNIV
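
Decision-level fusion, one of the options named above, can be illustrated by combining a quality score from the image branch with one from the spectral branch and mapping the result to a grade. The weights, the [0, 1] score scale, and the grade thresholds below are hypothetical placeholders, not values from the Zhejiang University filing; in practice they would come from the expert system's calibration.

```python
def fuse_scores(image_score, spectral_score, w_image=0.4):
    """Decision-level fusion: weighted average of two branch scores in [0, 1]."""
    if not 0.0 <= w_image <= 1.0:
        raise ValueError("w_image must lie in [0, 1]")
    return w_image * image_score + (1.0 - w_image) * spectral_score


# Hypothetical grade boundaries, checked from best to worst.
GRADES = ((0.8, "A"), (0.6, "B"), (0.4, "C"))


def grade(score, grades=GRADES):
    """Map a fused score to the first grade whose threshold it meets."""
    for threshold, label in grades:
        if score >= threshold:
            return label
    return "reject"
```

Feature-level fusion, the other option mentioned, would instead concatenate image and spectral features before a single classifier, which can capture interactions that a weighted average of separate decisions cannot.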

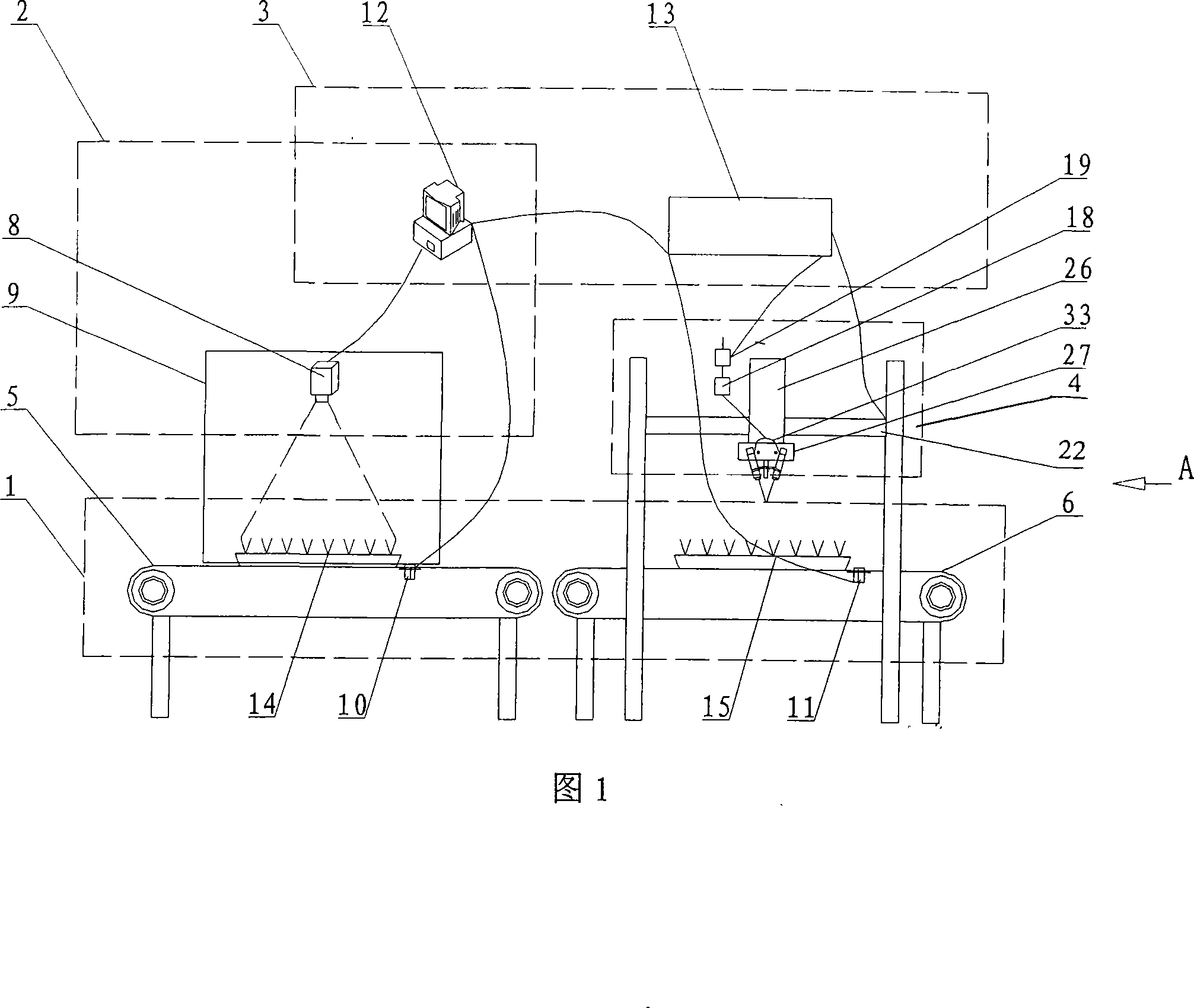

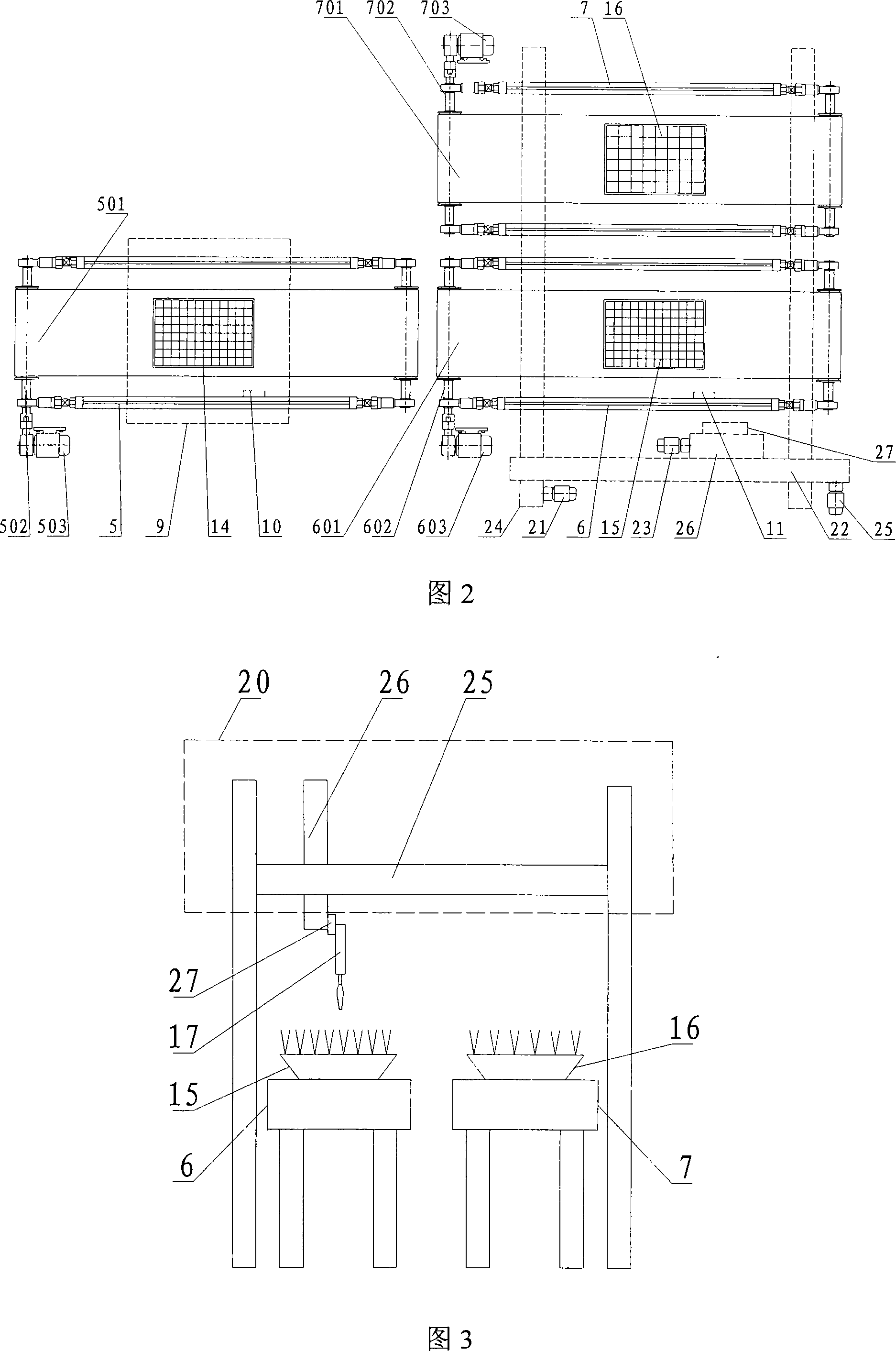

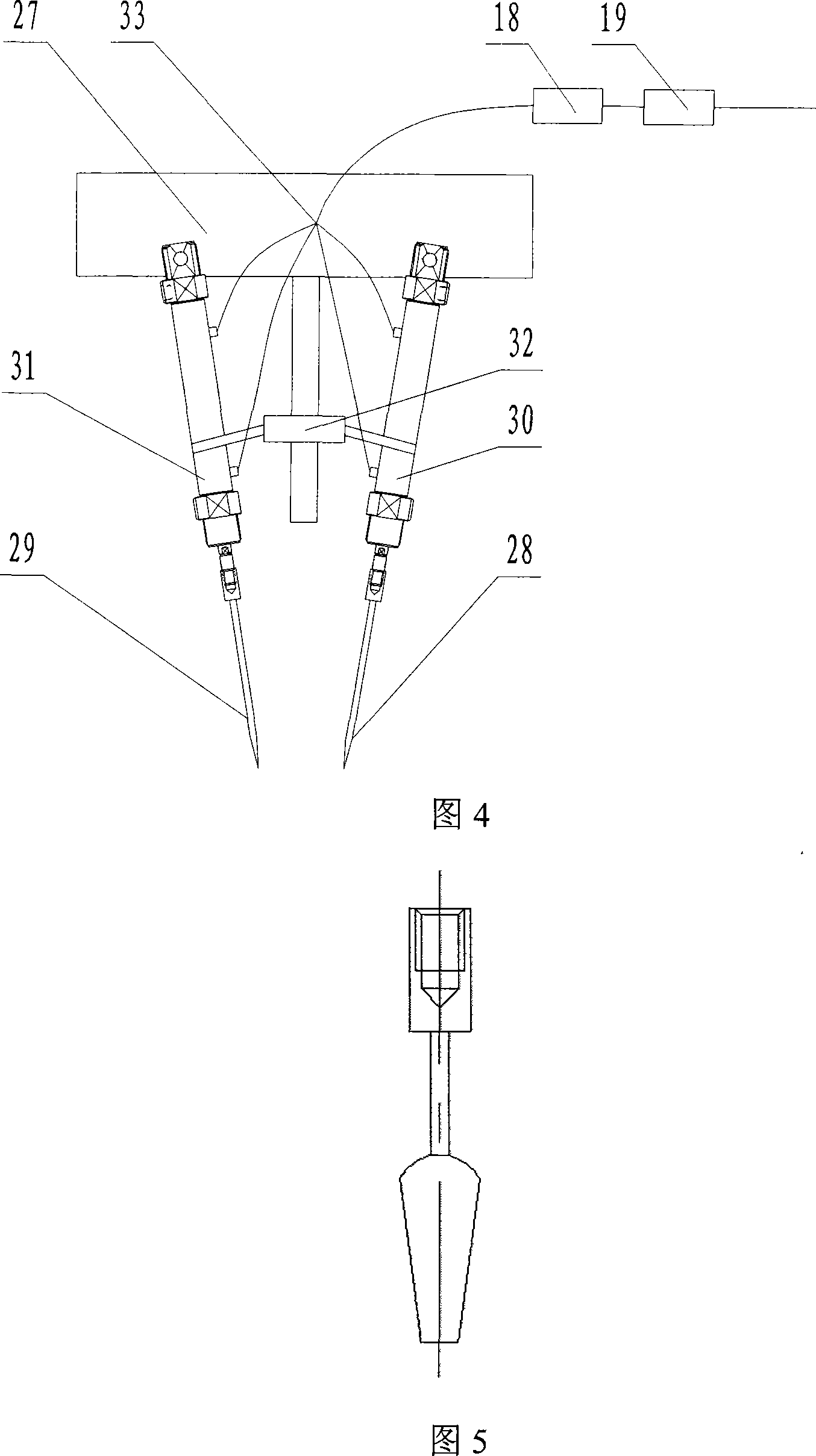

Seedling replanting system based on machine vision

Inactive | CN101180928A | Realize automatic transplanting; Increase the level of automation; Programme-controlled manipulator; Transplanting; Color image; Relevant information

The invention discloses a seedling transplanting system based on machine vision, consisting of seedling conveying, machine vision identification, control, and transplanting components. A conveyor belt in the seedling conveying component carries seedling trays to the transplanting position and automatically moves them on once transplanting is complete. Color images obtained by the machine vision system are used to measure several visible growth indicators, such as seedling size and number of leaves, in order to judge comprehensively whether each seedling is suitable for transplanting and to determine its location; the computer transmits this location information to the control system over an RS-232 serial interface. The PLC of the control system then sends commands to an end effector to transplant the seedlings automatically. The end effector uses shovel-shaped fingers driven by a linear cylinder, and adjusting the finger angle accommodates seedling trays of different sizes. The invention thus uses the vision system to obtain seedling growth and location information and performs transplanting under computer and PLC control.

Owner:ZHEJIANG UNIV
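
The appearance check described above can be sketched with the excess-green index (2G - R - B), a common way to segment plant pixels in a color image, followed by simple suitability rules. The threshold, the minimum leaf area and leaf count, and the plain-text `PICK` command format sent toward the PLC are all hypothetical; the abstract does not specify them, and leaf counting itself is assumed to be done by a separate step.

```python
from dataclasses import dataclass

import numpy as np


def green_area(rgb, threshold=20):
    """Count plant pixels in an RGB image (H x W x 3, uint8) using excess green 2G - R - B."""
    rgb = rgb.astype(np.int32)  # avoid uint8 overflow when computing 2 * G
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return int(np.count_nonzero(2 * g - r - b > threshold))


@dataclass
class Seedling:
    cell: tuple          # (row, col) position in the seedling tray
    leaf_area_px: int    # e.g. green_area() of the cell's image region
    leaf_count: int      # assumed to come from a separate leaf-counting step


def transplant_targets(seedlings, min_area=400, min_leaves=2):
    """Return tray cells whose seedlings meet the (hypothetical) suitability rules."""
    return [s.cell for s in seedlings
            if s.leaf_area_px >= min_area and s.leaf_count >= min_leaves]


def encode_command(cell):
    """Hypothetical ASCII command for the RS-232 link to the control system."""
    row, col = cell
    return f"PICK {row} {col}\r\n".encode("ascii")
```

Casting to a signed type before computing the index matters: with raw `uint8` arithmetic, `2 * g` wraps around for bright green pixels and the segmentation silently fails.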

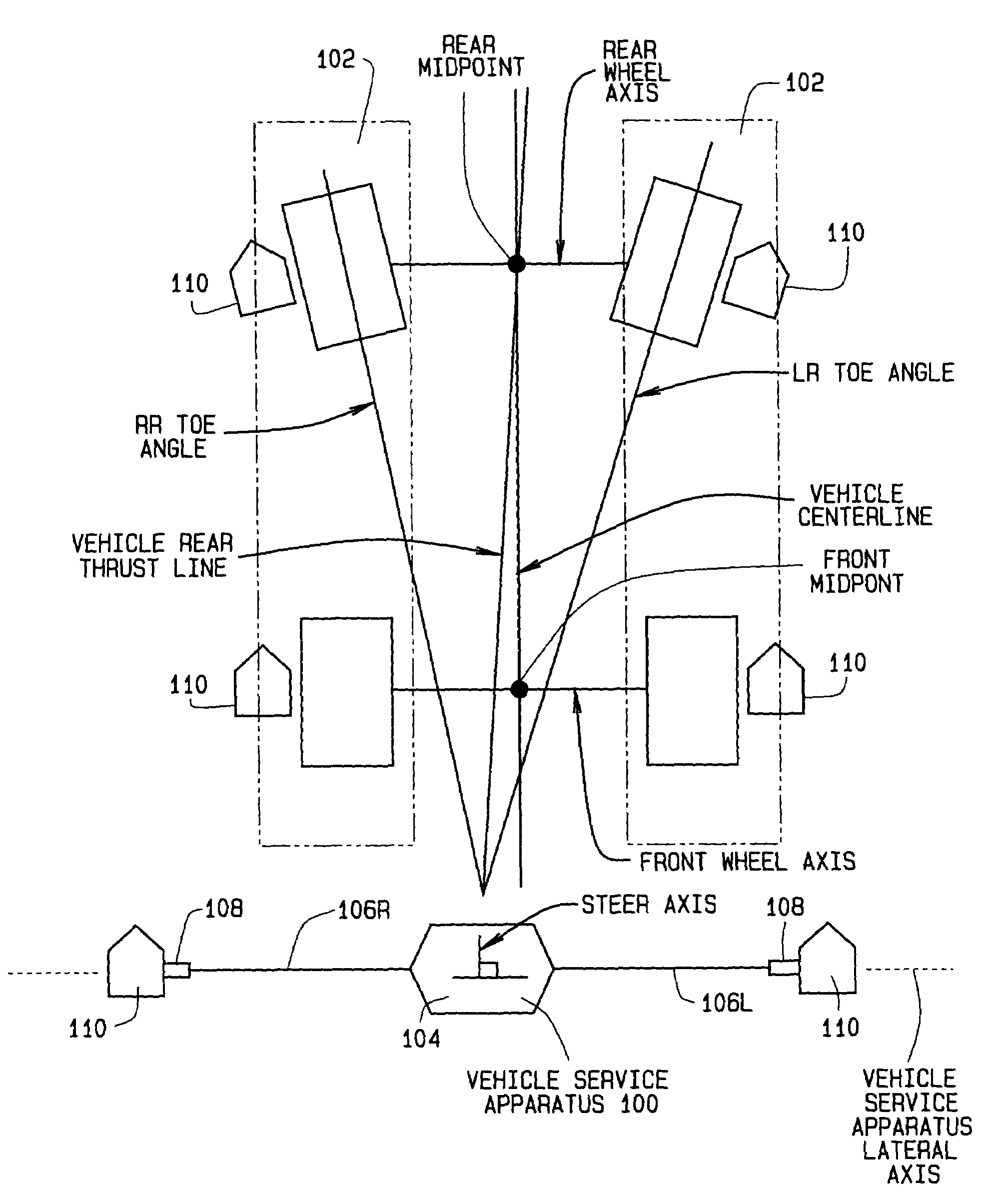

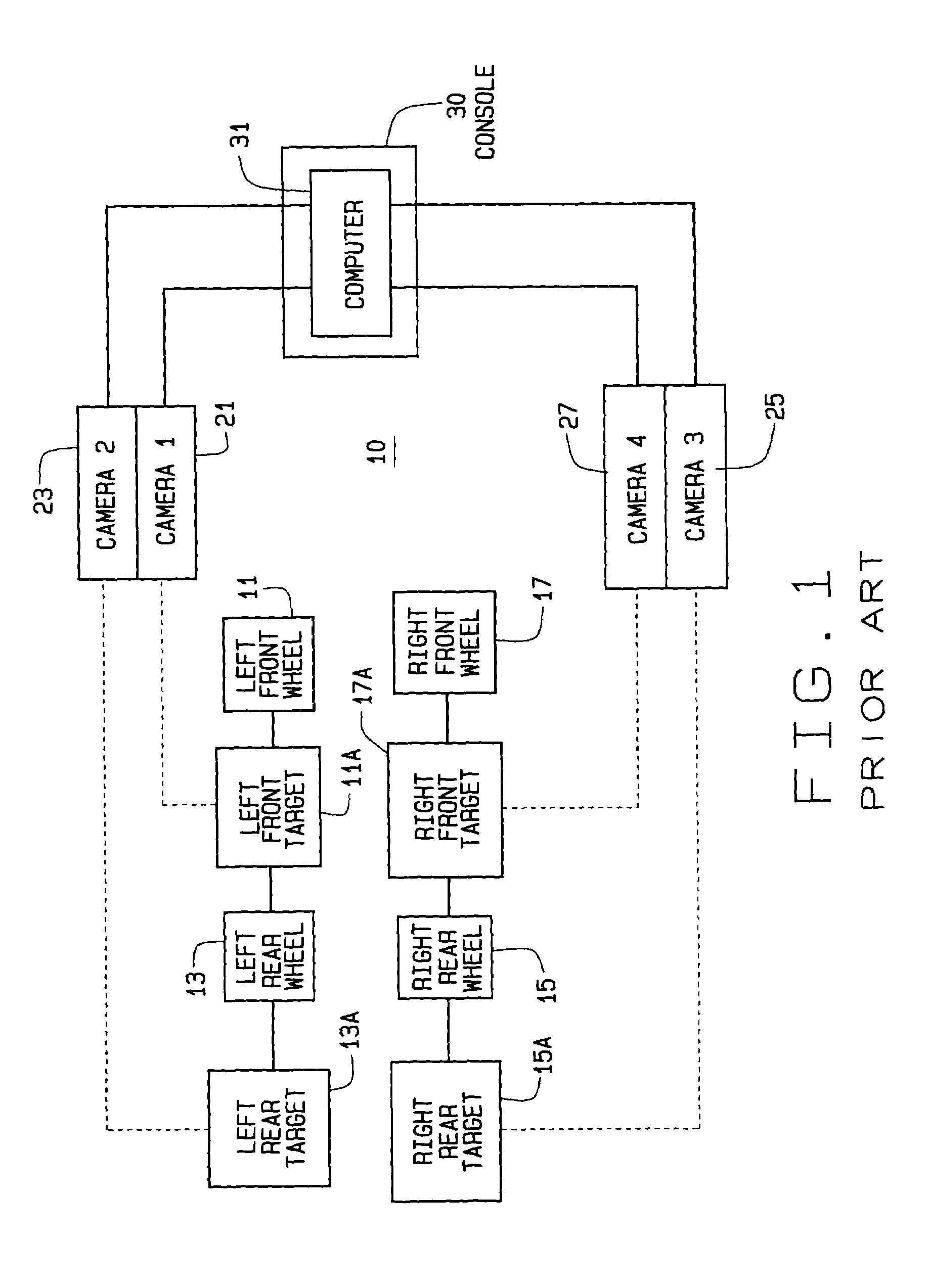

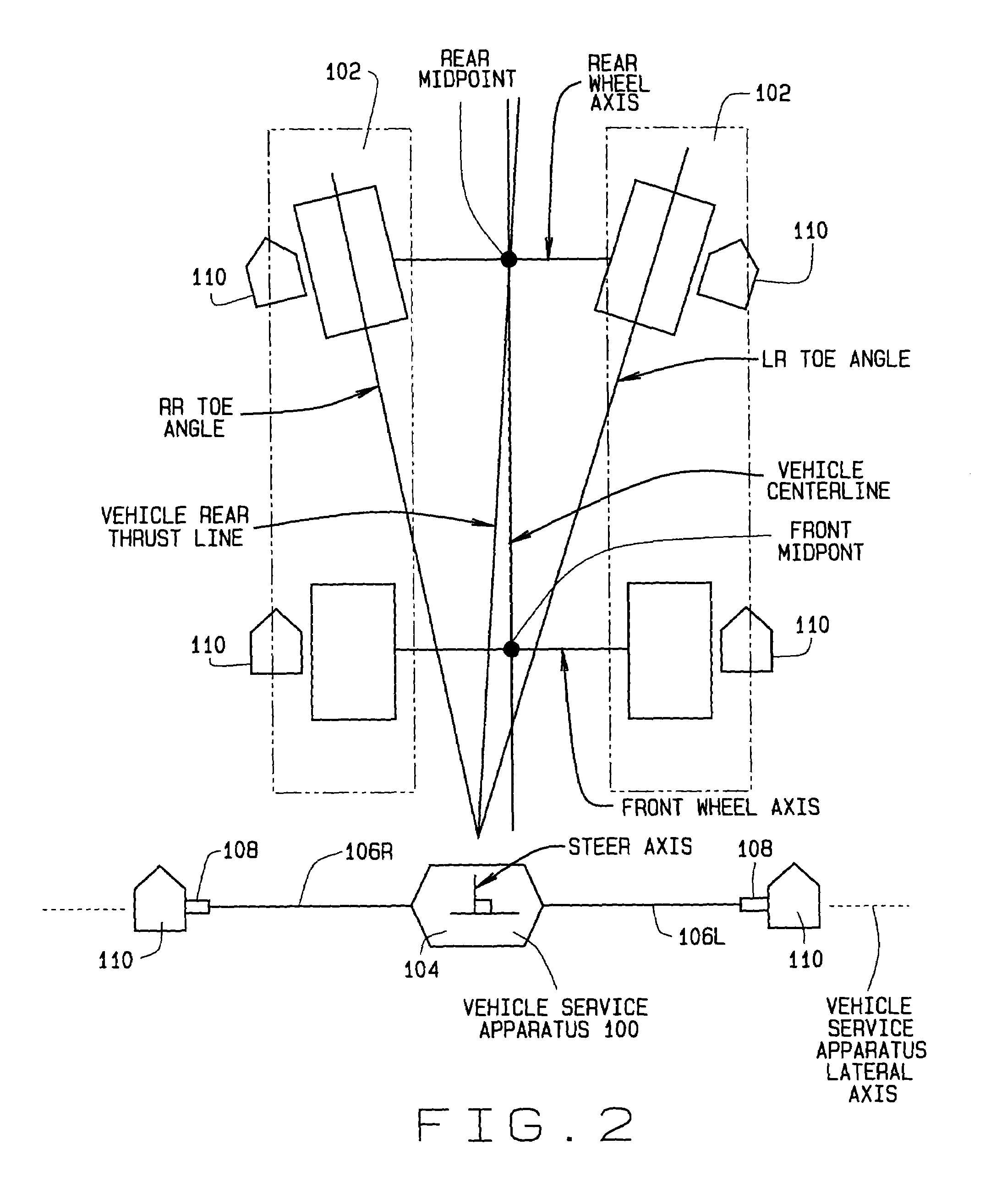

Method and apparatus for guiding placement of vehicle service fixtures

Active | US7382913B2 | Easy to install; Angles/taper measurements; Image enhancement; Servicing equipment; Visual perception

A machine vision system is configured to facilitate placement of a vehicle service apparatus relative to an associated vehicle. The machine vision system is configured to utilize images of optical targets received from one or more cameras to guide the placement of the vehicle service apparatus relative to the associated vehicle.

Owner:HUNTER ENG

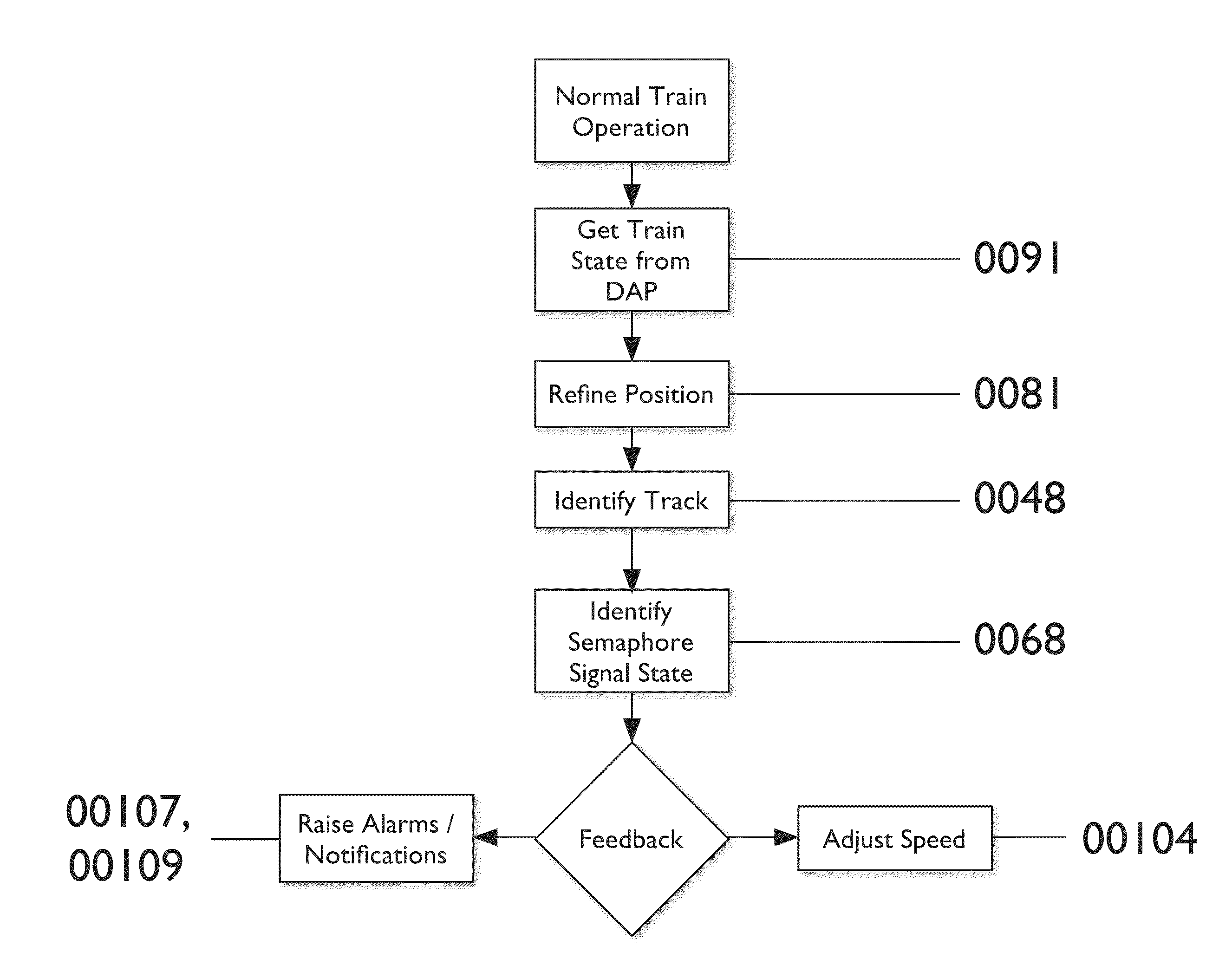

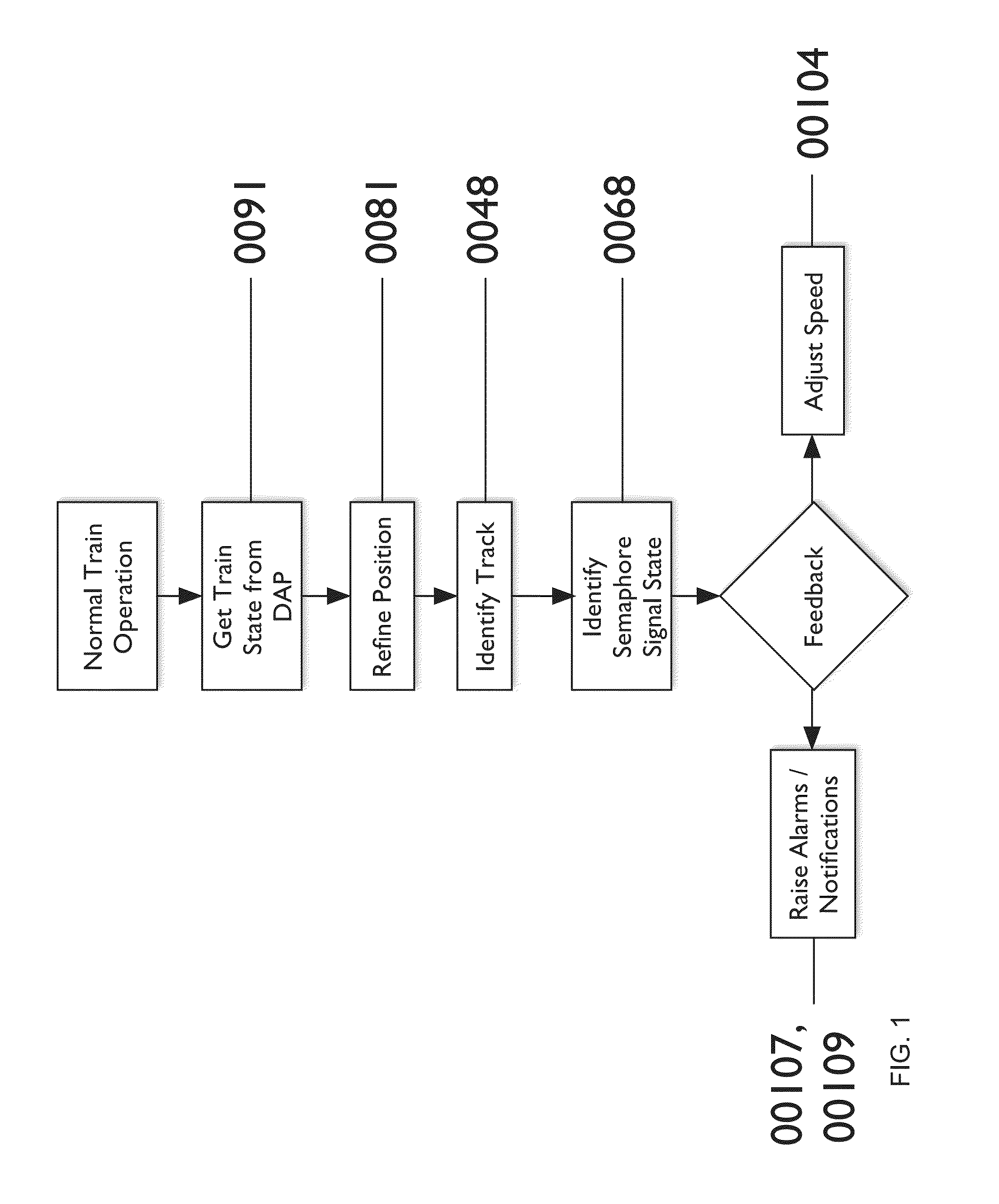

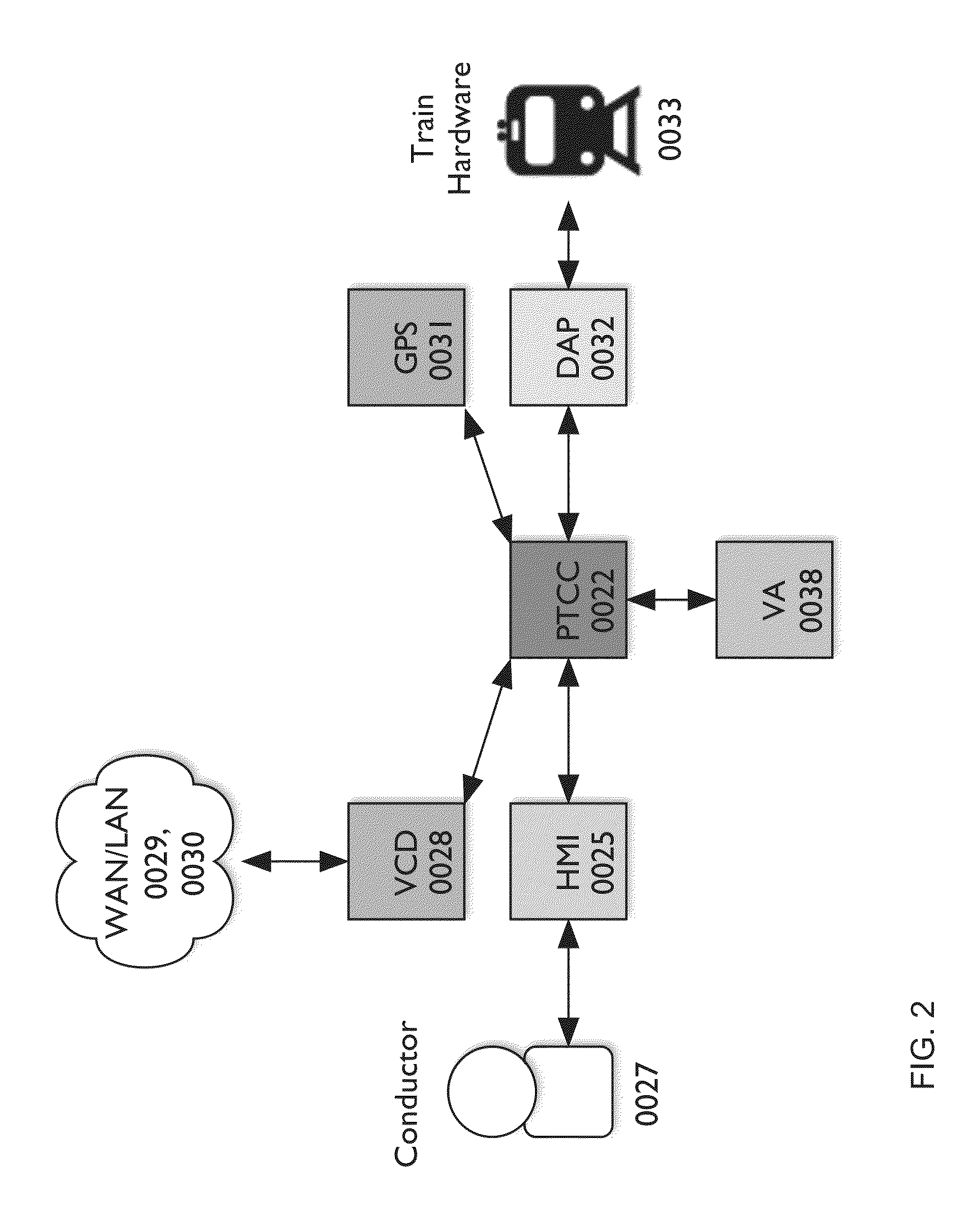

Real time machine vision system for train control and protection

Active | US20160121912A1 | Ensure safety; Automatic systems; Digital data processing details; Mobile vehicle; Visual perception

A system, method, and apparatus are disclosed for a machine vision system that incorporates hardware and / or software, remote databases, and algorithms to map assets, evaluate railroad track conditions, and accurately determine the position of a moving vehicle on a railroad track. One benefit of the invention is the possibility of real-time processing of sensor data for guiding operation of the moving vehicle.

Owner:CONDOR ACQUISITION SUB II INC

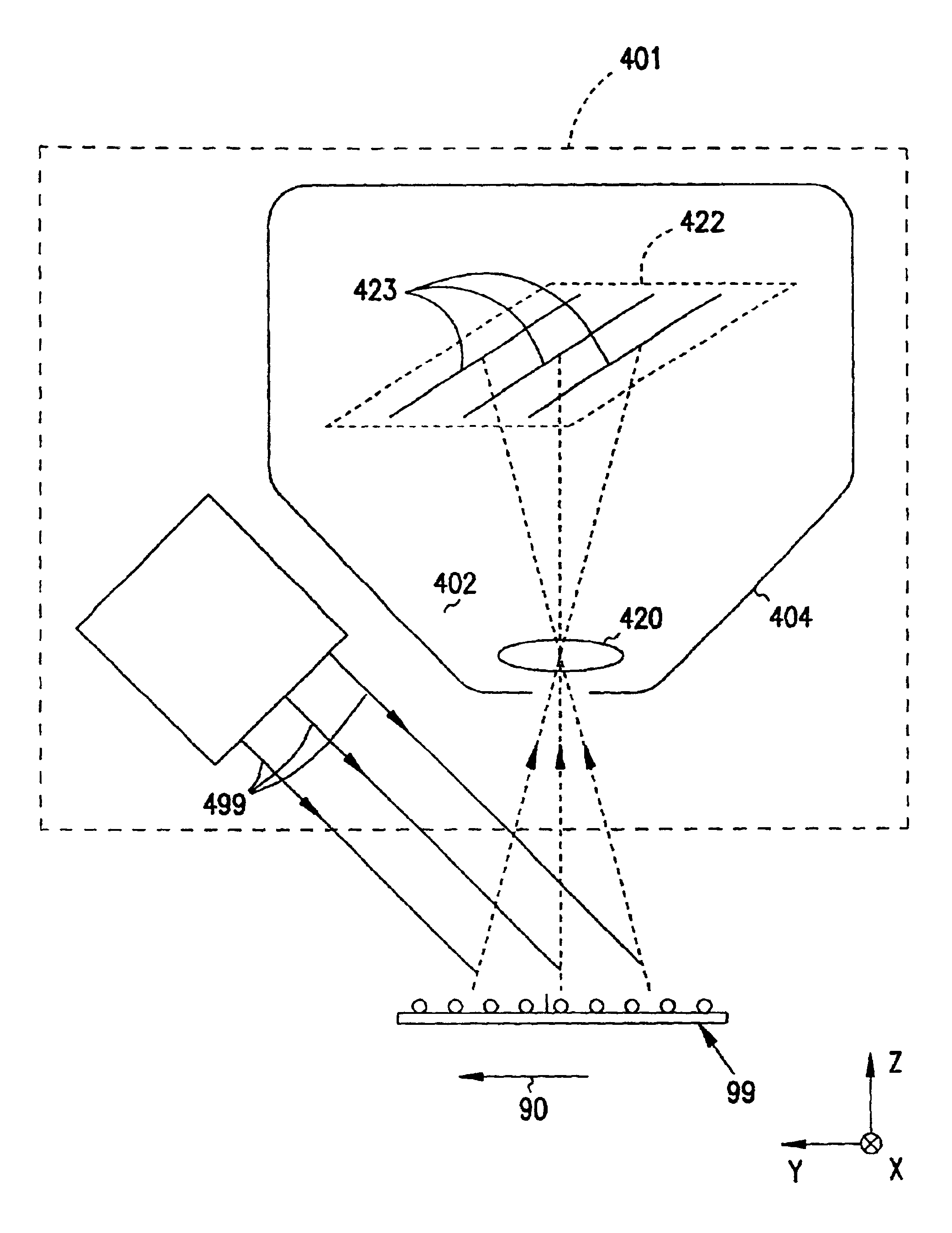

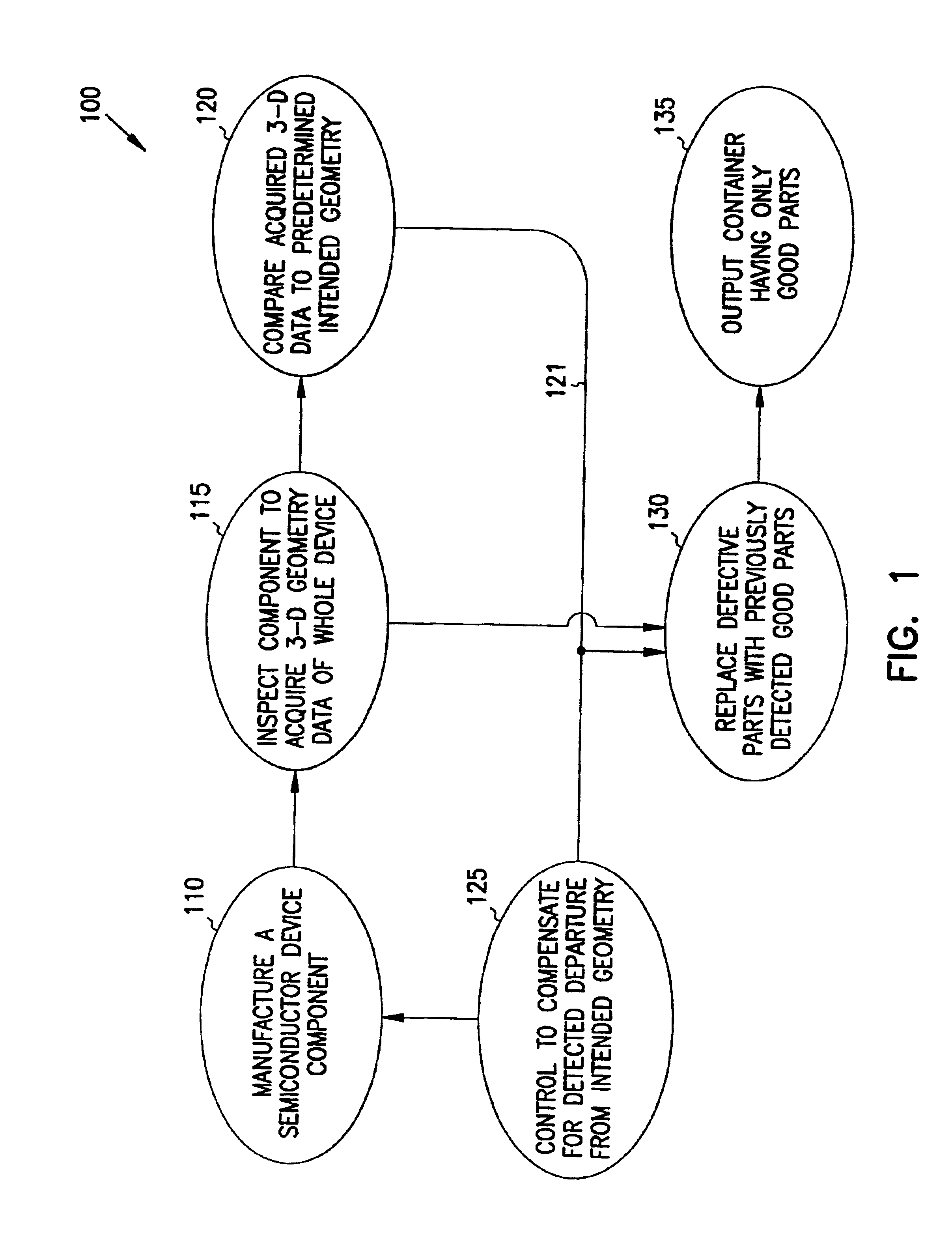

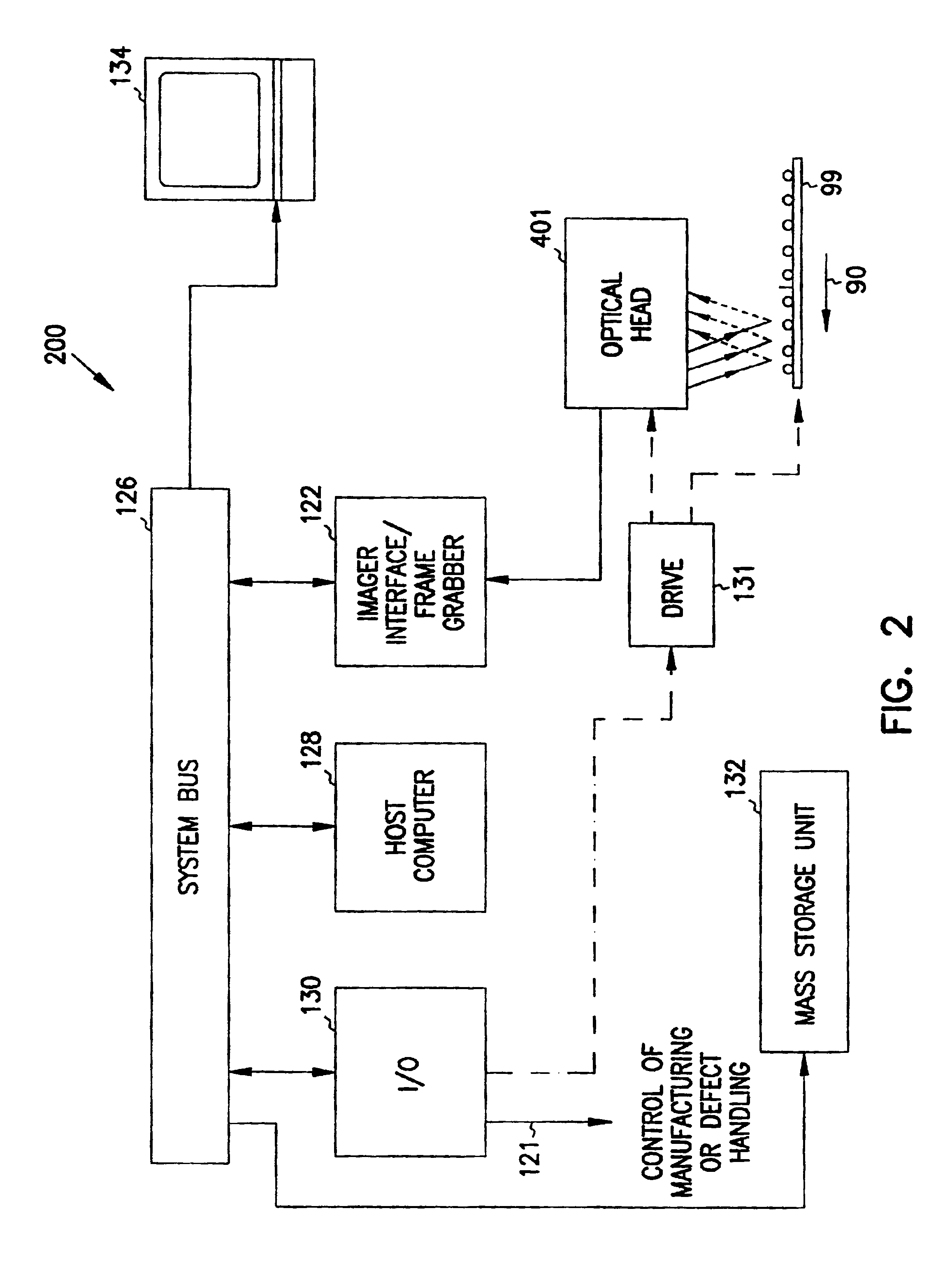

Imaging for a machine-vision system

Inactive | US6956963B2 | Prevents thermal drift; Low variability; Image analysis; Semiconductor/solid-state device manufacturing; Image detection; Semiconductor fab

Manufacturing lines include inspection systems for monitoring the quality of parts produced. Manufacturing lines for making semiconductor devices generally inspect each fabricated part. The information obtained is used to fix manufacturing problems in the semiconductor fab plant. A machine-vision system for inspecting devices includes a light source for propagating light to the device and an image detector that receives light from the device. Also included is a light sensor assembly for receiving a portion of the light from the light source. The light sensor assembly produces an output signal responsive to the intensity of the light received at the light sensor assembly. A controller controls the amount of light received by the image detector to a desired intensity range in response to the output from the light sensor. The image detector may include an array of imaging pixels. The imaging system may also include a memory device which stores correction values for at least one of the pixels in the array of imaging pixels. To minimize or control thermal drift of signals output from an array of imaging pixels, the machine-vision system may also include a cooling element attached to the imaging device. The light source for propagating light to the device may be strobed. The image detector that receives light from the device remains in a fixed position with respect to the strobed light source. A translation element moves the strobed light source and image detector with respect to the device. The strobed light may be alternated between a first and second level.

Owner:ISMECA SEMICONDUCTOR HOLDING SA
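Two mechanisms in the Ismeca abstract can be illustrated with a short sketch: light-sensor feedback and stored per-pixel correction values. The sketch makes two hypothetical assumptions. First, the light reaching the detector is proportional to the reference sensor reading multiplied by the strobe duration. Second, each pixel's correction is an offset plus a gain. The function names and limits are illustrative only and do not describe the patented controller.

```python
from typing import List, Sequence


def strobe_duration_us(sensor_reading: float, target_dose: float,
                       min_us: float = 1.0, max_us: float = 500.0) -> float:
    """Pick a strobe duration (microseconds) so reading * duration ~= target_dose.

    Assumption: detector exposure is proportional to the reference light
    sensor's reading multiplied by the strobe duration.
    """
    if sensor_reading <= 0:
        return max_us  # no measurable light: fall back to the longest strobe
    duration = target_dose / sensor_reading
    # Clamp to the (hypothetical) hardware limits of the strobe driver.
    return max(min_us, min(max_us, duration))


def correct_pixels(raw: Sequence[float], offsets: Sequence[float],
                   gains: Sequence[float]) -> List[float]:
    """Apply stored per-pixel correction values as (raw - offset) * gain."""
    if not (len(raw) == len(offsets) == len(gains)):
        raise ValueError("raw, offsets and gains must have the same length")
    return [(r - o) * g for r, o, g in zip(raw, offsets, gains)]


print(strobe_duration_us(2.0, target_dose=100.0))        # 50.0
print(correct_pixels([110, 120], [10, 20], [1.0, 0.5]))  # [100.0, 50.0]
```

Clamping keeps a very dim or very bright reference reading from demanding a strobe the hardware cannot produce. In that case the image simply falls outside the target range, rather than the controller failing.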

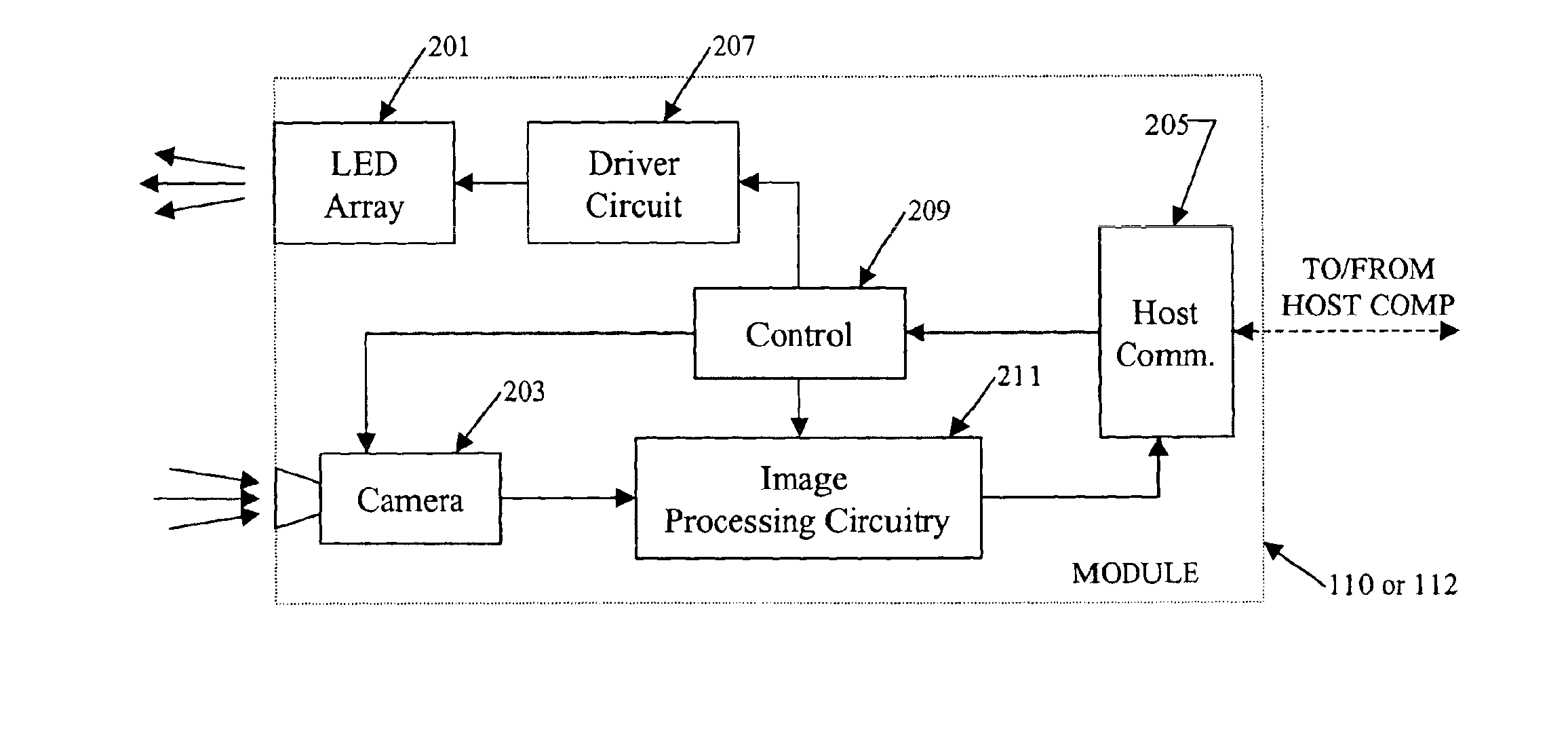

Gradient calculating camera board

Inactive · US6871409B2 · Reduce bandwidth requirements · Not require as complex (or expensive) hardware · Angles/taper measurements · Angle measurement · Camera control · Visual perception

In a machine vision system that relies on computer processing of image data, an imaging module incorporates the image sensor as well as pre-processing circuitry, for example for performing background subtraction and/or gradient calculation. The pre-processing circuitry may also compress the image information. The host computer receives the pre-processed image data and performs all other calculations needed to complete the machine vision application, for example determining one or more wheel alignment parameters of a subject vehicle. In a disclosed example for wheel alignment, the module also includes illumination elements, and the module circuitry provides the associated camera control. The background subtraction, gradient calculation and associated compression require simpler, less expensive circuitry than typical image pre-processing boards. Yet pre-processing at the imaging module substantially reduces the processing burden on the host computer compared with machine vision implementations that stream image data directly to the host computer.

Owner:SNAP ON INC
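The pre-processing split in the Snap-on abstract can be sketched in plain Python: background subtraction and gradient calculation run in the imaging module, and everything else runs on the host. The sketch rests on three assumptions. The background is a frame captured with the module's illumination off. The gradient is a simple central difference. The "compression" is a hypothetical sparse list of above-threshold edge pixels, not the encoding the patent actually uses.

```python
from typing import List, Tuple

Image = List[List[int]]


def subtract_background(lit: Image, dark: Image) -> Image:
    """Subtract a frame taken with illumination off, clamping negatives to zero."""
    return [[max(0, a - b) for a, b in zip(row_lit, row_dark)]
            for row_lit, row_dark in zip(lit, dark)]


def gradients(img: Image) -> Tuple[Image, Image]:
    """Central-difference gradients (not divided by 2); border pixels stay zero."""
    h, w = len(img), len(img[0])
    gx = [[0] * w for _ in range(h)]
    gy = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx[y][x] = img[y][x + 1] - img[y][x - 1]
            gy[y][x] = img[y + 1][x] - img[y - 1][x]
    return gx, gy


def sparse_edges(gx: Image, gy: Image, threshold: int) -> List[Tuple[int, int, int, int]]:
    """Hypothetical compression: keep (x, y, gx, gy) only where |gradient| >= threshold."""
    return [(x, y, gx[y][x], gy[y][x])
            for y in range(len(gx)) for x in range(len(gx[0]))
            if gx[y][x] ** 2 + gy[y][x] ** 2 >= threshold ** 2]


lit = [[5, 5, 5, 5], [5, 5, 45, 45], [5, 5, 5, 5]]
dark = [[5] * 4 for _ in range(3)]
gx, gy = gradients(subtract_background(lit, dark))
print(sparse_edges(gx, gy, threshold=10))  # [(1, 1, 40, 0), (2, 1, 40, 0)]
```

On this toy frame, only two edge entries would be sent to the host instead of twelve pixel values. This illustrates why doing these steps in the module can lighten the host's load.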

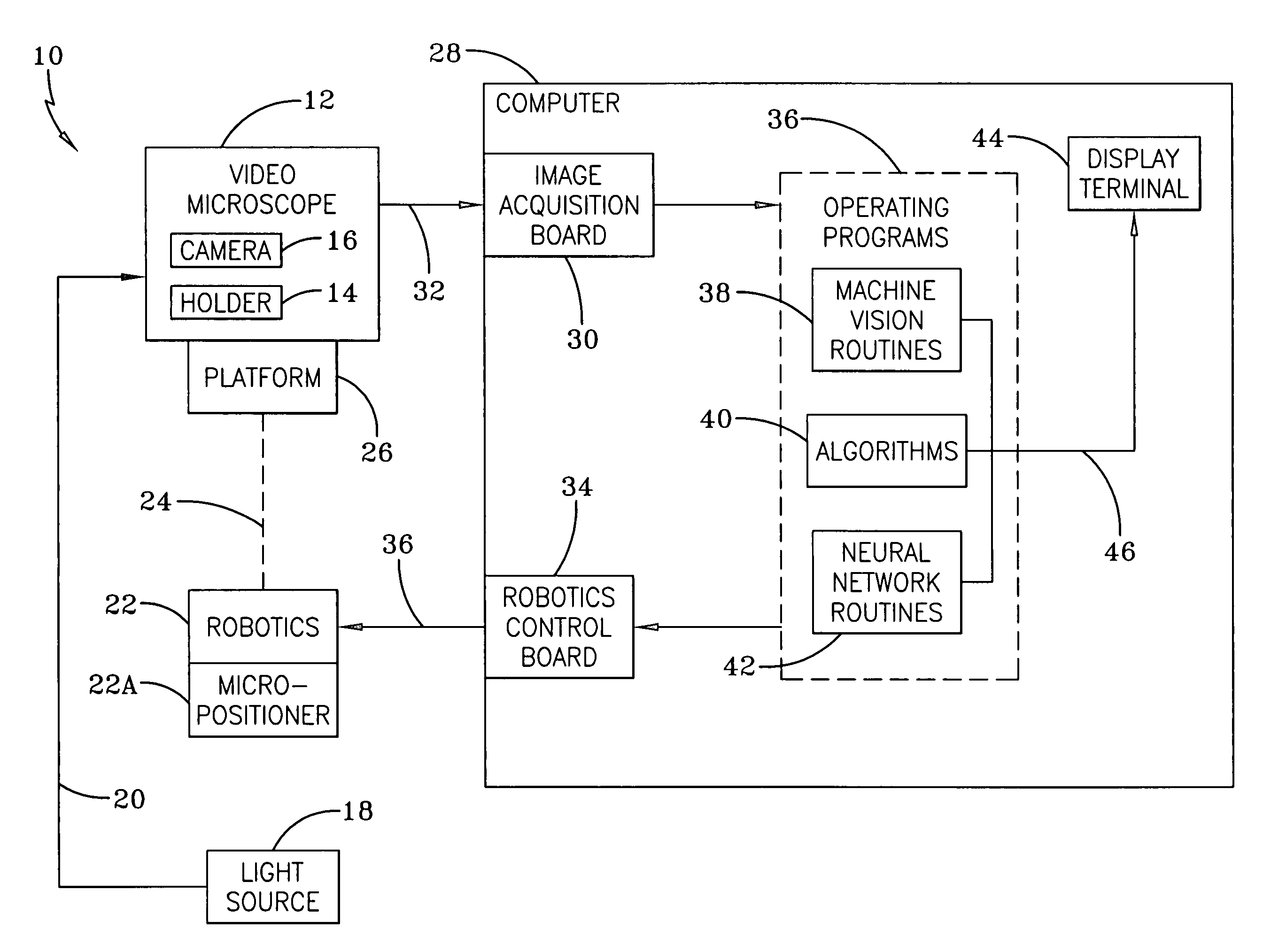

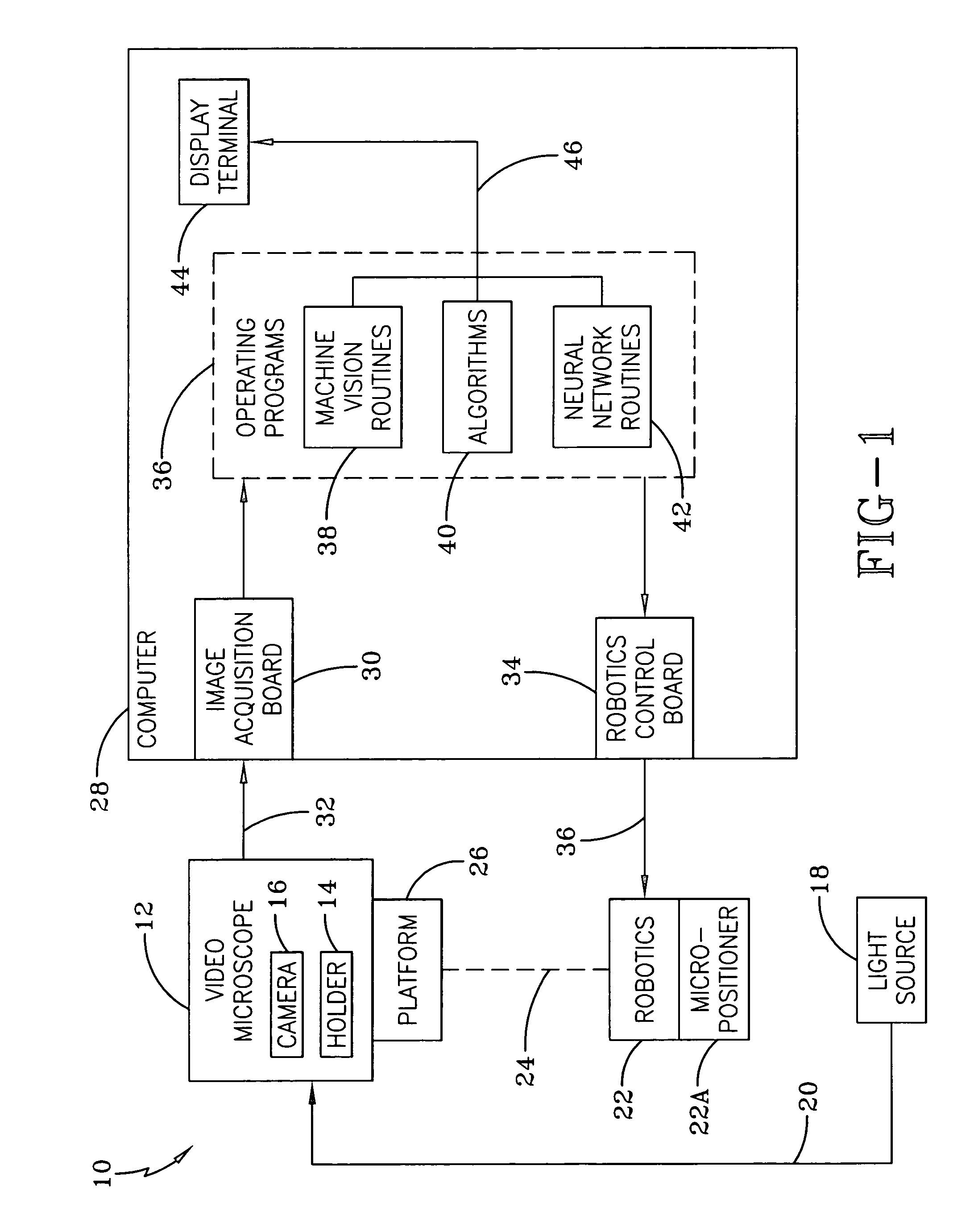

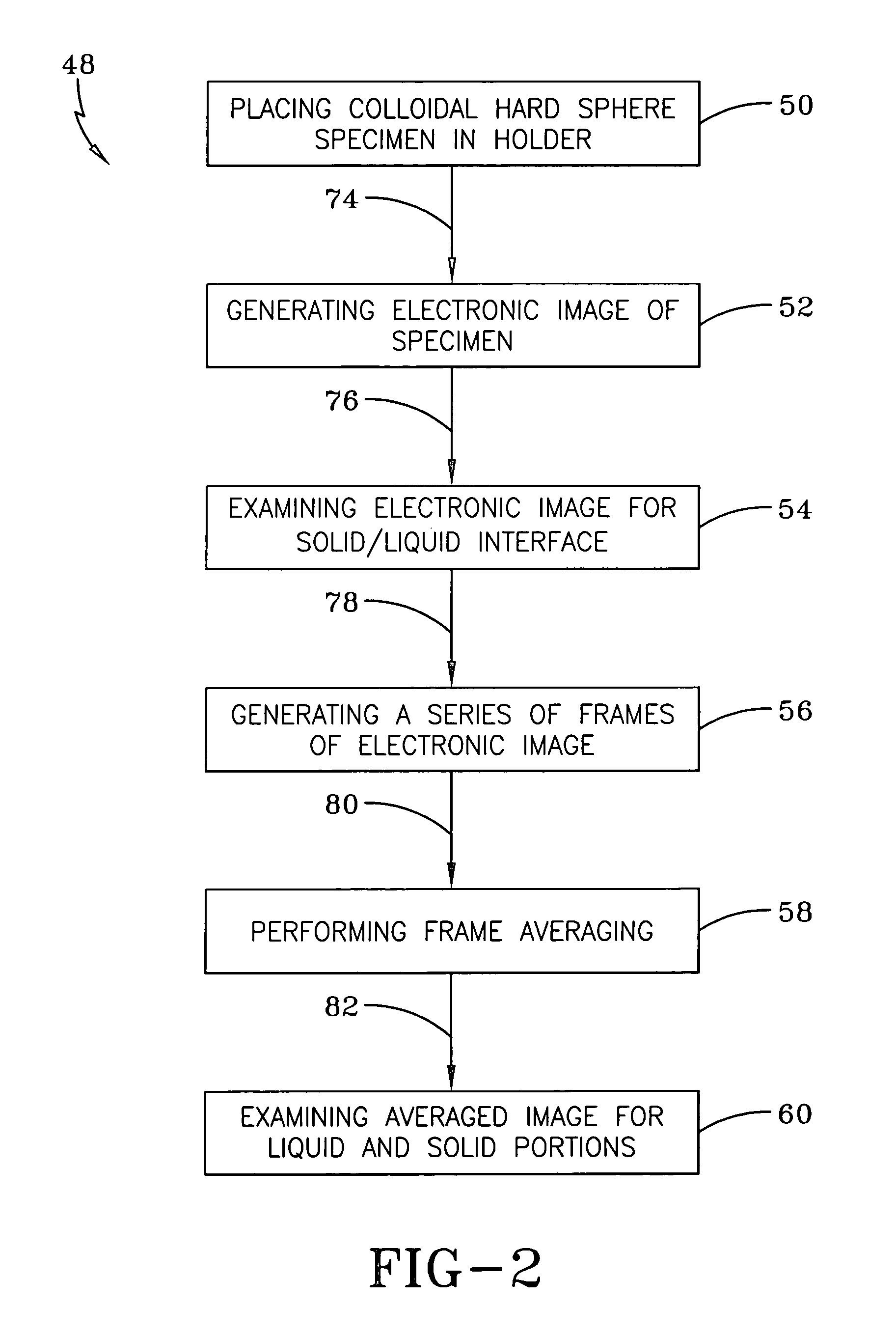

Identification of cells with a compact microscope imaging system with intelligent controls

A Compact Microscope Imaging System (CMIS) with intelligent controls is disclosed. It provides techniques for scanning, identifying, detecting and tracking microscopic changes in selected characteristics or features of various surfaces, including, but not limited to, cells, spheres, and manufactured products subject to difficult-to-see imperfections. Applications of the invention include colloidal hard-sphere experiments, biological cell detection for patch clamping, cell movement and tracking, and defect identification in products such as semiconductor devices, where surface damage can be significant but difficult to detect. The CMIS is a machine vision system that combines intelligent image processing with remote control capabilities; it can auto-focus on a microscope sample, automatically scan an image, and perform machine vision analysis on multiple samples simultaneously.

Owner:NASA
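The CMIS abstract mentions auto-focus but does not name a focus criterion. A common contrast-based approach is sketched here as an assumption, not as the CMIS method: score each image in a focus stack with the Brenner gradient and pick the sharpest z position. The `stack` layout, which maps each z position to a 2-D image, is hypothetical.

```python
from typing import Dict, List

Image = List[List[float]]


def brenner_focus(img: Image) -> float:
    """Brenner focus measure: sum of squared differences between pixels two columns apart."""
    return sum((row[x + 2] - row[x]) ** 2
               for row in img for x in range(len(row) - 2))


def best_focus(stack: Dict[float, Image]) -> float:
    """Return the z position whose image scores highest (sharpest)."""
    if not stack:
        raise ValueError("focus stack is empty")
    return max(stack, key=lambda z: brenner_focus(stack[z]))


stack = {
    0.0: [[1, 2, 3, 4]],    # gentle ramp: blurred edge, score 8
    5.0: [[0, 0, 9, 9]],    # abrupt step: sharp edge, score 162
    10.0: [[2, 2, 2, 2]],   # flat: no detail, score 0
}
print(best_focus(stack))  # 5.0
```

An exhaustive scan like this is easy to follow. A practical autofocus loop would more likely search coarse-to-fine or hill-climb to limit the number of stage moves.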

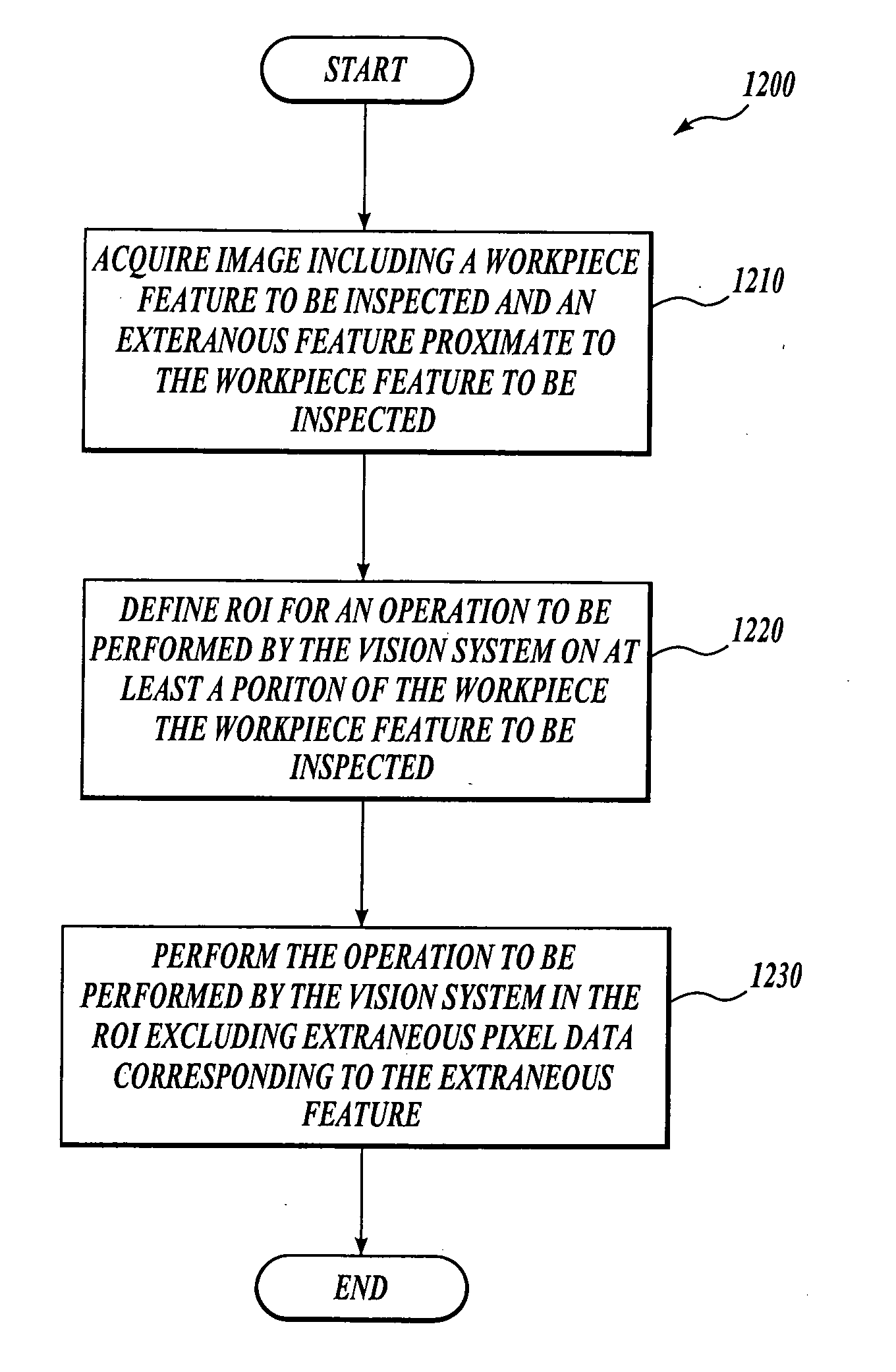

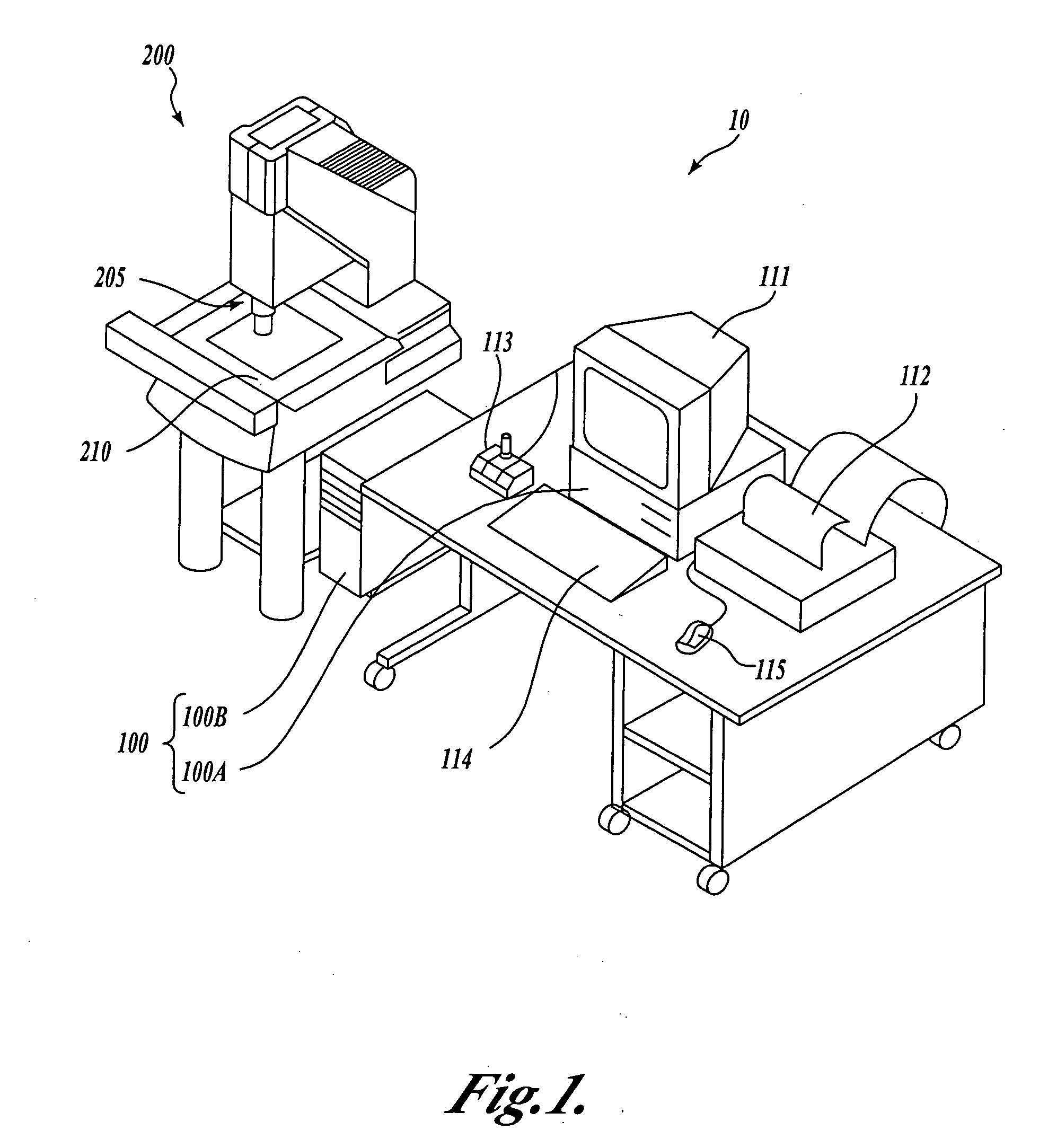

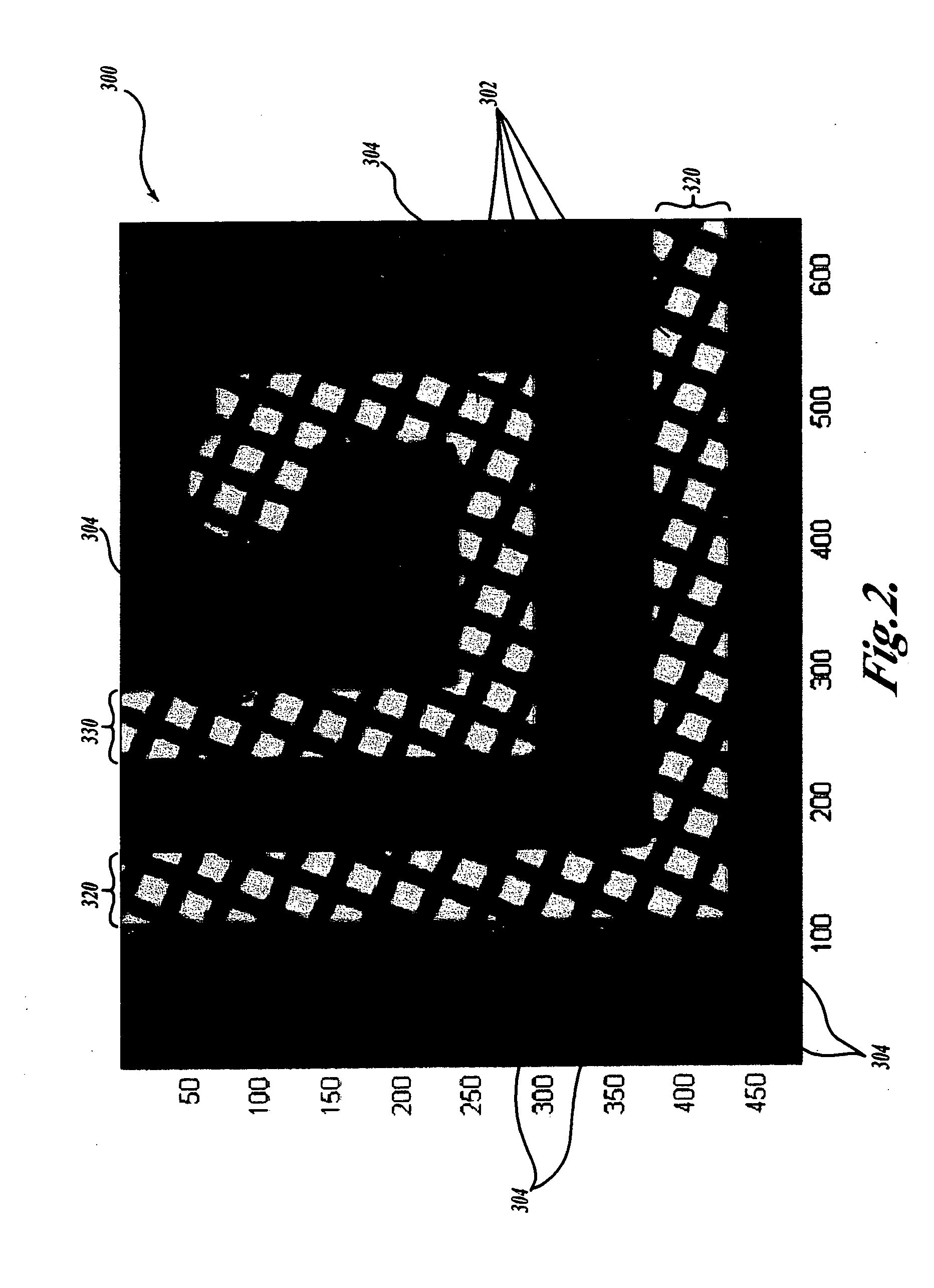

System and method for excluding extraneous features from inspection operations performed by a machine vision inspection system

Active · US20050213807A1 · Prevent proliferation · Easy maintenance · Image enhancement · Image analysis · Metrology · Imaging Feature

Systems and methods are provided for excluding extraneous image features from various inspection or control operations of a machine vision metrology and inspection system. The extraneous image features may be in close proximity to other image features to be inspected. One aspect of various embodiments of the invention is that no filtering or other image modification is performed on the “non-excluded” original image data in the region of the feature to be inspected. Another aspect is that a region of interest associated with a video tool provided by the user interface of the machine vision system can encompass a region or regions of the feature to be inspected as well as regions containing excluded data. This makes the video tool easy to use and robust against reasonably expected variations in the spacing between the features to be inspected and the extraneous image features. In various embodiments, the operations that exclude extraneous image features are concentrated in the operations that define the region of interest, so that the feature measuring or characterizing operations of the machine vision system operate similarly whether or not the associated region of interest contains excluded data. Various user interface features and methods are provided for implementing and using these exclusion operations when the machine vision system is operated in a learning or training mode used to create part programs for repeated automatic workpiece inspection. The invention is particularly useful for inspecting flat panel display screen masks whose features to be inspected are occluded.

Owner:MITUTOYO CORP
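The central idea of the Mitutoyo abstract is that excluded pixels are skipped rather than filtered, so the measuring operation sees unmodified original data. A minimal sketch along a single scan line might look like this. It assumes a boolean exclusion mask produced by the region-of-interest step. `find_edge` is a hypothetical helper, not Mitutoyo's video tool.

```python
from typing import Optional, Sequence


def find_edge(profile: Sequence[float], excluded: Sequence[bool]) -> Optional[int]:
    """Index i of the strongest step between profile[i] and profile[i + 1].

    Steps that touch an excluded pixel are skipped; the profile itself is never
    filtered or modified. Returns None if no usable step is found.
    """
    if len(profile) != len(excluded):
        raise ValueError("profile and excluded must have the same length")
    best_i: Optional[int] = None
    best_step = 0.0
    for i in range(len(profile) - 1):
        if excluded[i] or excluded[i + 1]:
            continue  # extraneous feature: ignore it, do not alter the data
        step = abs(profile[i + 1] - profile[i])
        if step > best_step:
            best_i, best_step = i, step
    return best_i


# The mask covers indices 1-4 around a bright extraneous feature;
# the real edge lies between indices 5 and 6.
profile = [10, 10, 250, 250, 10, 10, 90, 90]
mask = [False, True, True, True, True, False, False, False]
print(find_edge(profile, [False] * len(profile)))  # 1 (extraneous feature wins)
print(find_edge(profile, mask))                    # 5 (real edge)
```

Exclusion lives entirely in the mask, so the edge-finding loop runs the same way whether or not the mask excludes anything. This mirrors the separation between region-of-interest definition and measurement that the abstract describes.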

© 2025 PatSnap. All rights reserved.