40 results about How to "Reduce data bandwidth" patented technology

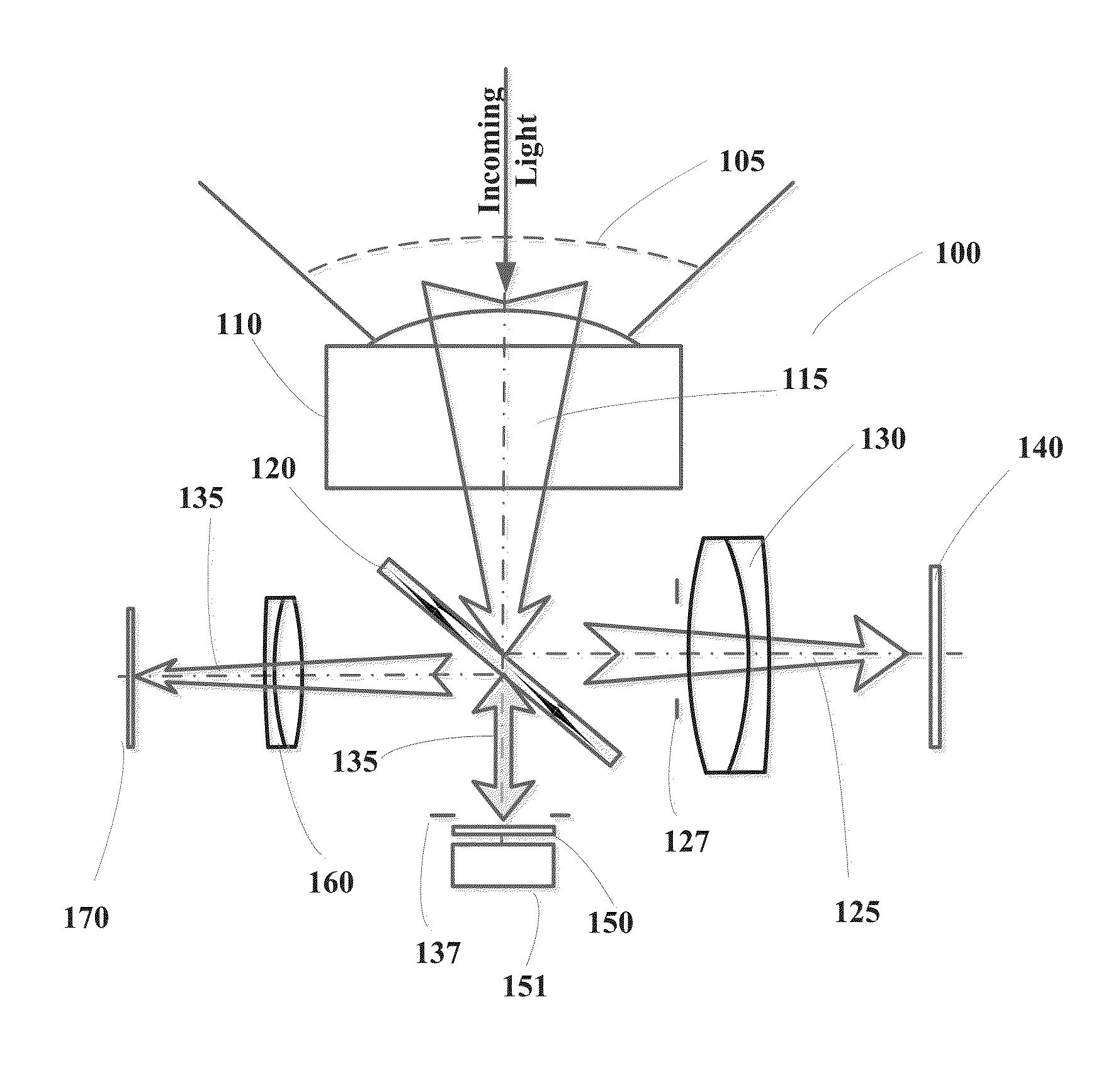

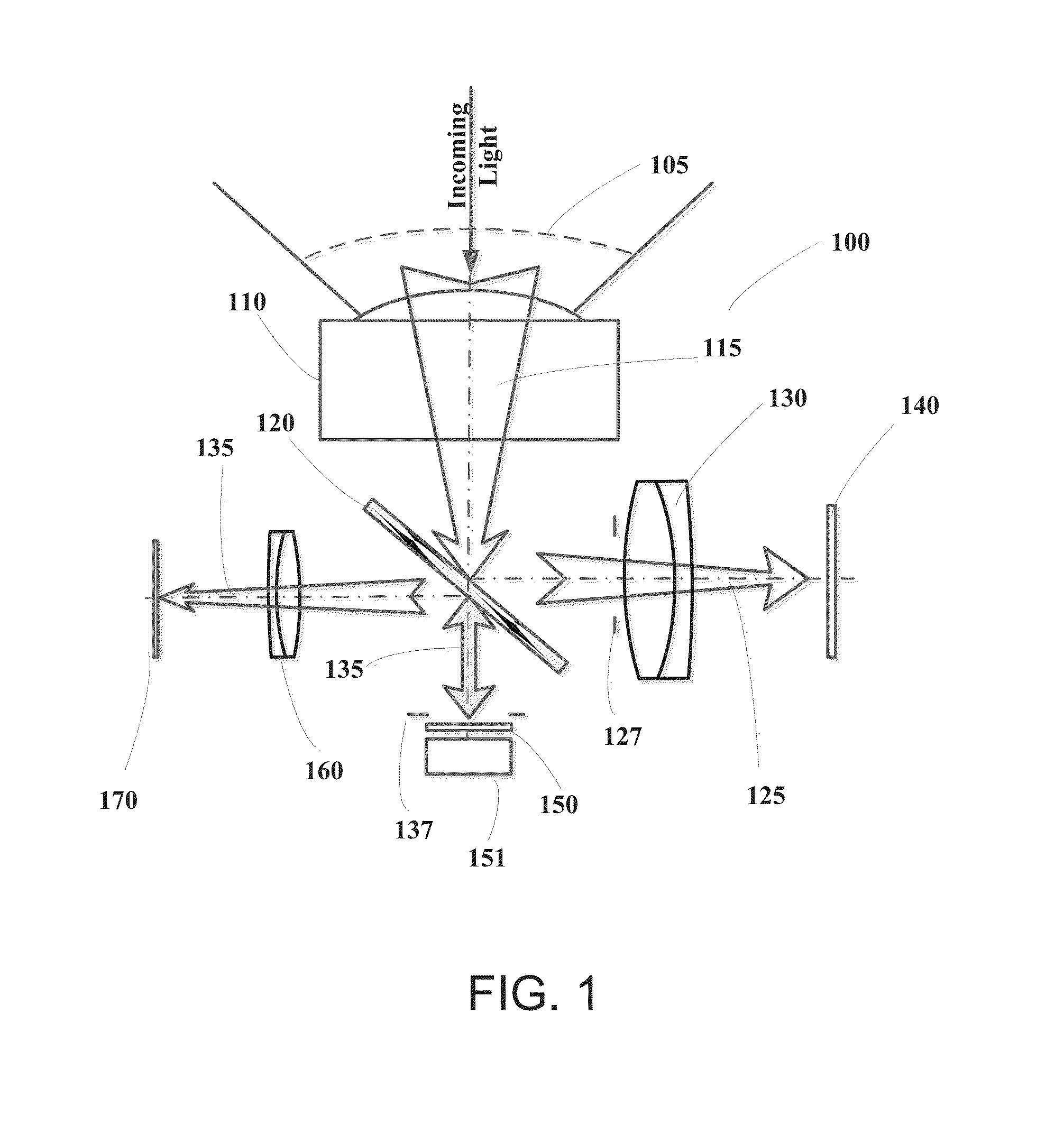

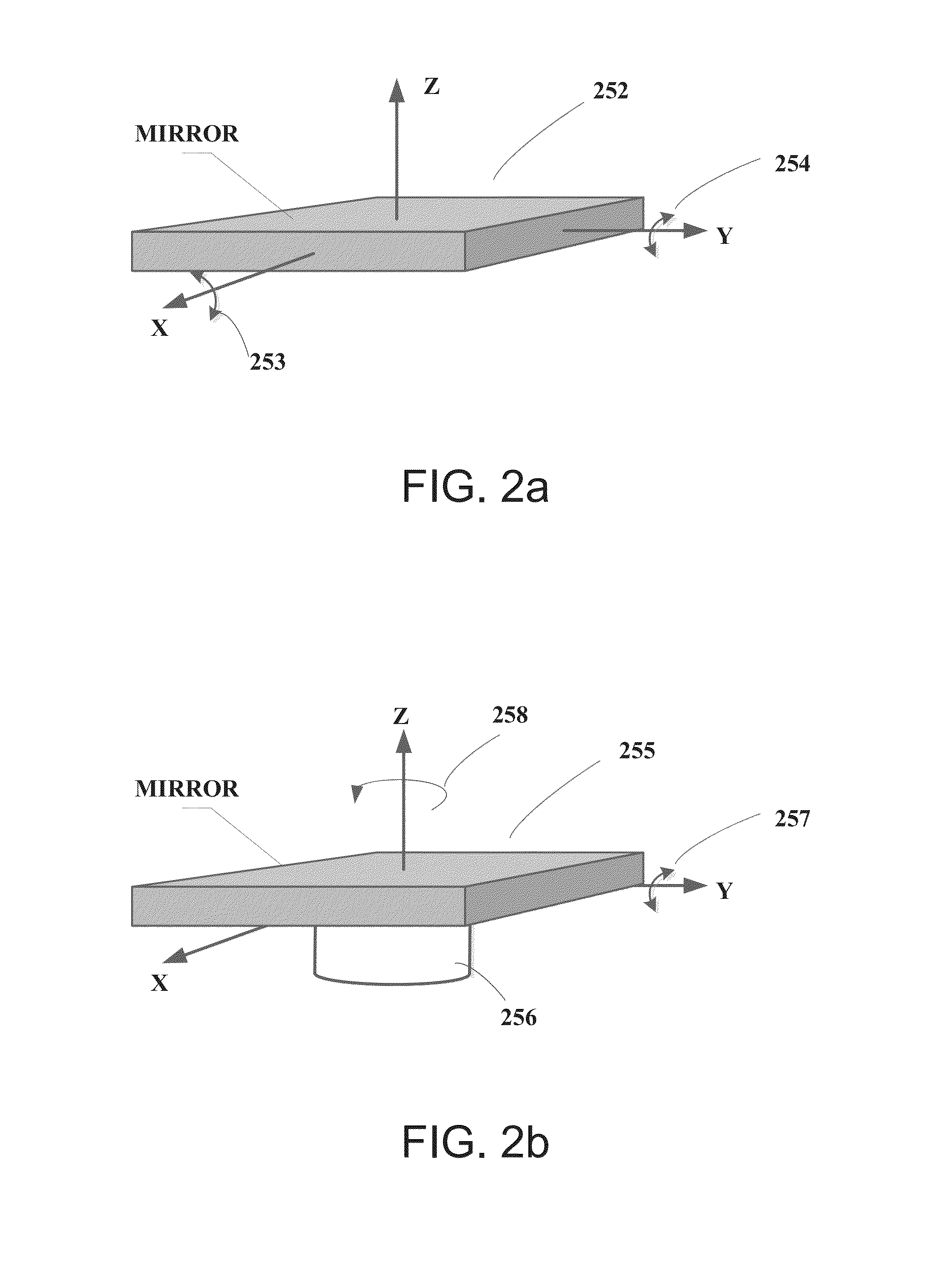

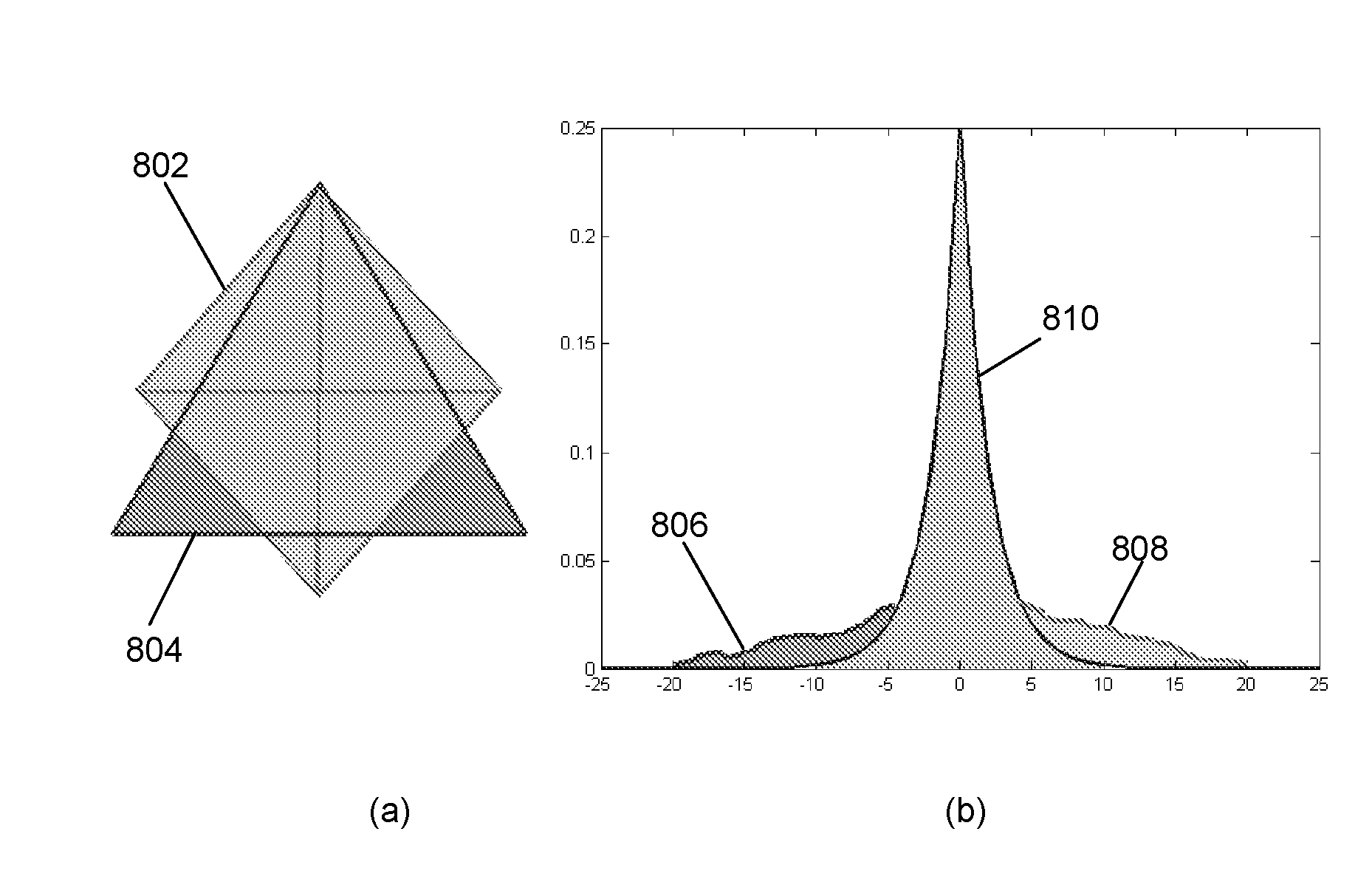

Wide-field of view (FOV) imaging devices with active foveation capability

Active | US20140218468A1 | High resolution | Increase frame rate | Television system details | Prisms | Wide field | Foveated imaging

The present invention comprises a foveated imaging system capable of capturing a wide field of view image and a foveated image, where the foveated image is a controllable region of interest of the wide field of view image.

Owner:MAGIC LEAP INC

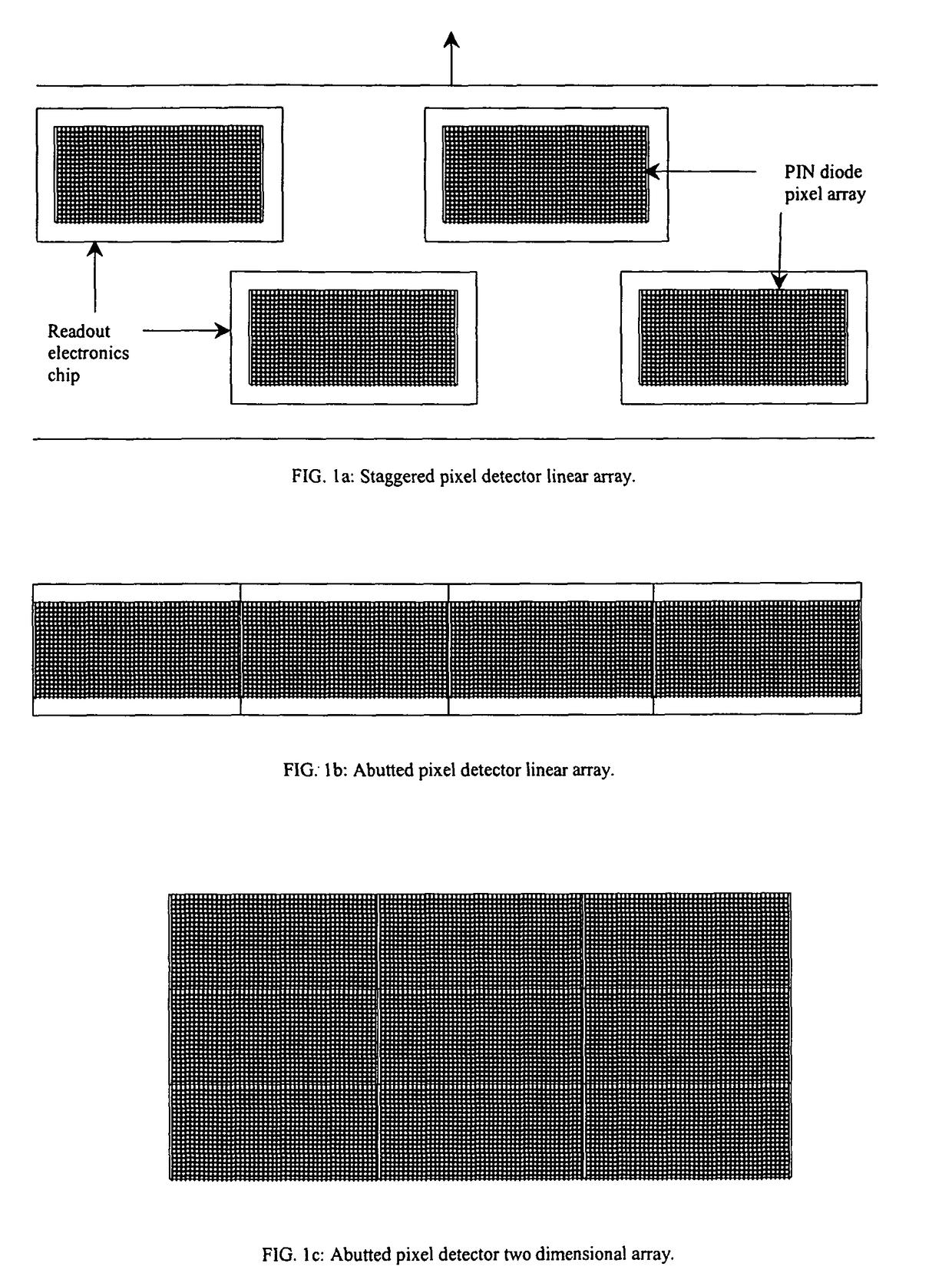

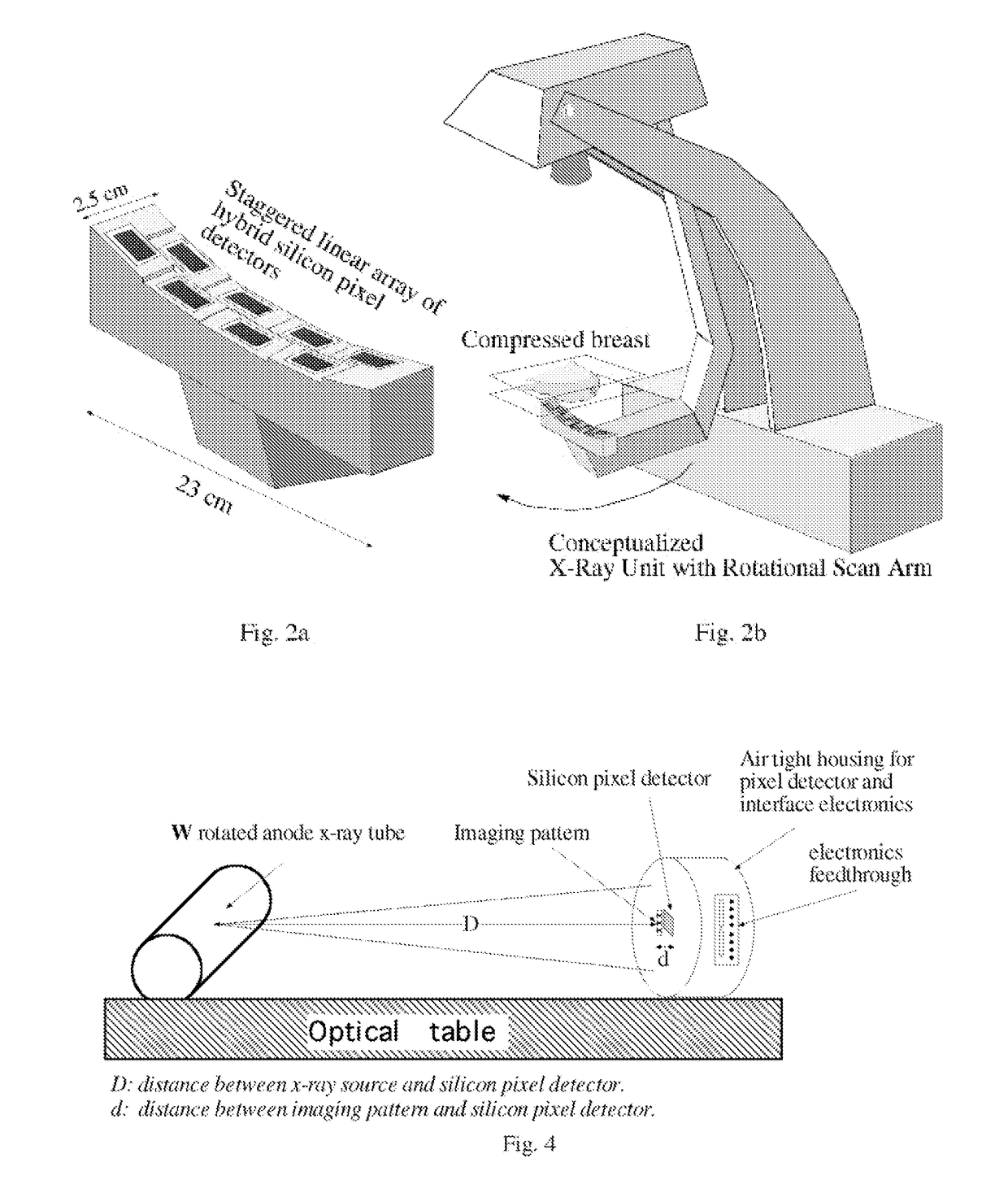

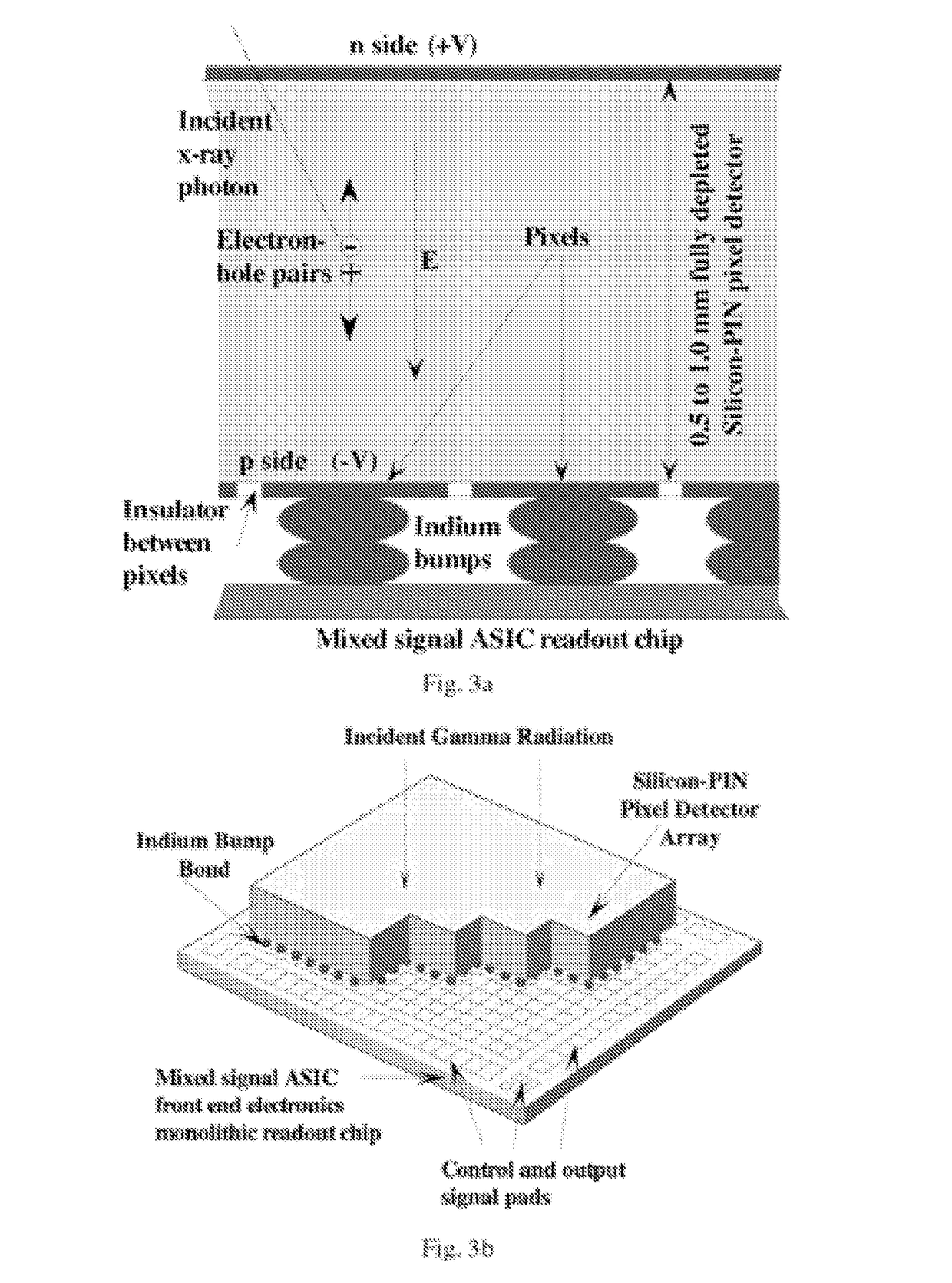

High resolution digital imaging apparatus

Inactive | US8120683B1 | Improve quantum efficiency | Improve the immunity | Television system details | Television system scanning details | Digital imaging | Data acquisition

An integrated application specific integrated circuit having a detection layer, a time delayed integration capability, data acquisition electronics, and a readout function is provided for detecting breast cancer in women. The detection layer receives x-ray radiation and converts the received energy to electron pairs, one of which is received by pixels. The time delay integration is on the chip and a part of the readout architecture. The detector may be a hybrid silicon detector (SiPD), a CdZnTe detector, or a GaAs detector.

Owner:NOVA R&D

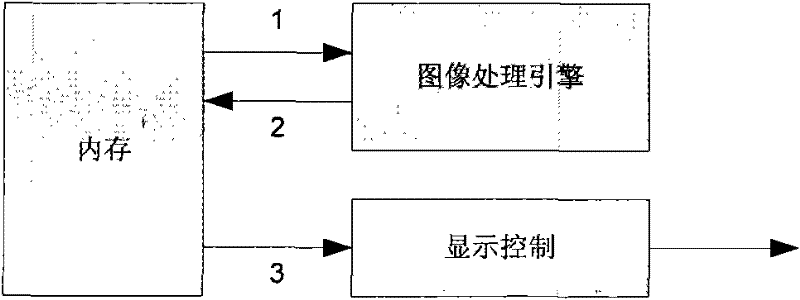

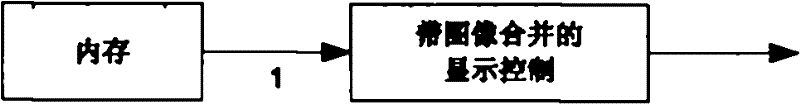

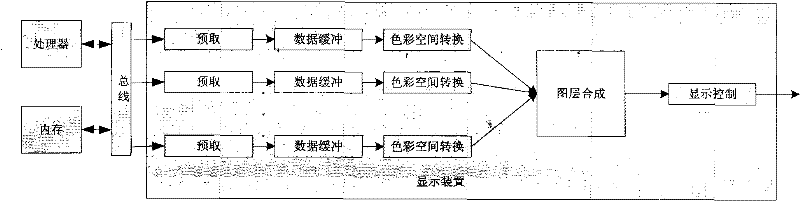

A method and a device for image composition display of multi-layer and multi-format input

Inactive | CN102184720A | Reduce access | Image synthesis in real time | Cathode-ray tube indicators | Computer science

The invention discloses a method and a device for image composition display of multi-layer and multi-format input. The method and the device first perform composition anticipation for each layer through a prefetching module, read data according to the anticipation results, and merge the layers pixel by pixel through an image merging module during display. Through the intelligent anticipation of the prefetching module, they significantly reduce the data bandwidth demanded by image composition.

Owner:SHANGHAI INFOTM MICROELECTRONICS
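The abstract does not spell out how the prefetching module anticipates composition. One plausible reading is that it decides, before any pixel data is read, which layers can actually contribute to each output pixel. The sketch below illustrates that reading only; the `Layer` fields, the per-scanline model and the `opaque` flag are assumptions made for illustration, not details from the patent.

```python
from collections import namedtuple

# Hypothetical layer description for one scanline: the layer covers the
# half-open pixel span [x0, x1); `opaque` means it fully hides layers below.
Layer = namedtuple("Layer", "x0 x1 opaque bytes_per_pixel")

def layers_to_fetch(layers, x):
    """Indices of layers (listed bottom to top) that must be read for pixel x."""
    needed = []
    for idx in range(len(layers) - 1, -1, -1):  # walk from the top layer down
        layer = layers[idx]
        if layer.x0 <= x < layer.x1:
            needed.append(idx)
            if layer.opaque:  # nothing below this layer is visible
                break
    return needed[::-1]

def scanline_bytes(layers, width, prefetch=True):
    """Bytes read from memory for one scanline, with or without anticipation."""
    total = 0
    for x in range(width):
        if prefetch:
            idxs = layers_to_fetch(layers, x)
        else:  # naive composition reads every layer that covers the pixel
            idxs = [i for i, l in enumerate(layers) if l.x0 <= x < l.x1]
        total += sum(layers[i].bytes_per_pixel for i in idxs)
    return total
```

With a full-width opaque background and two overlapping layers on top, skipping the hidden pixels lowers the bytes read per scanline; the saving grows with the amount of opaque overlap.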

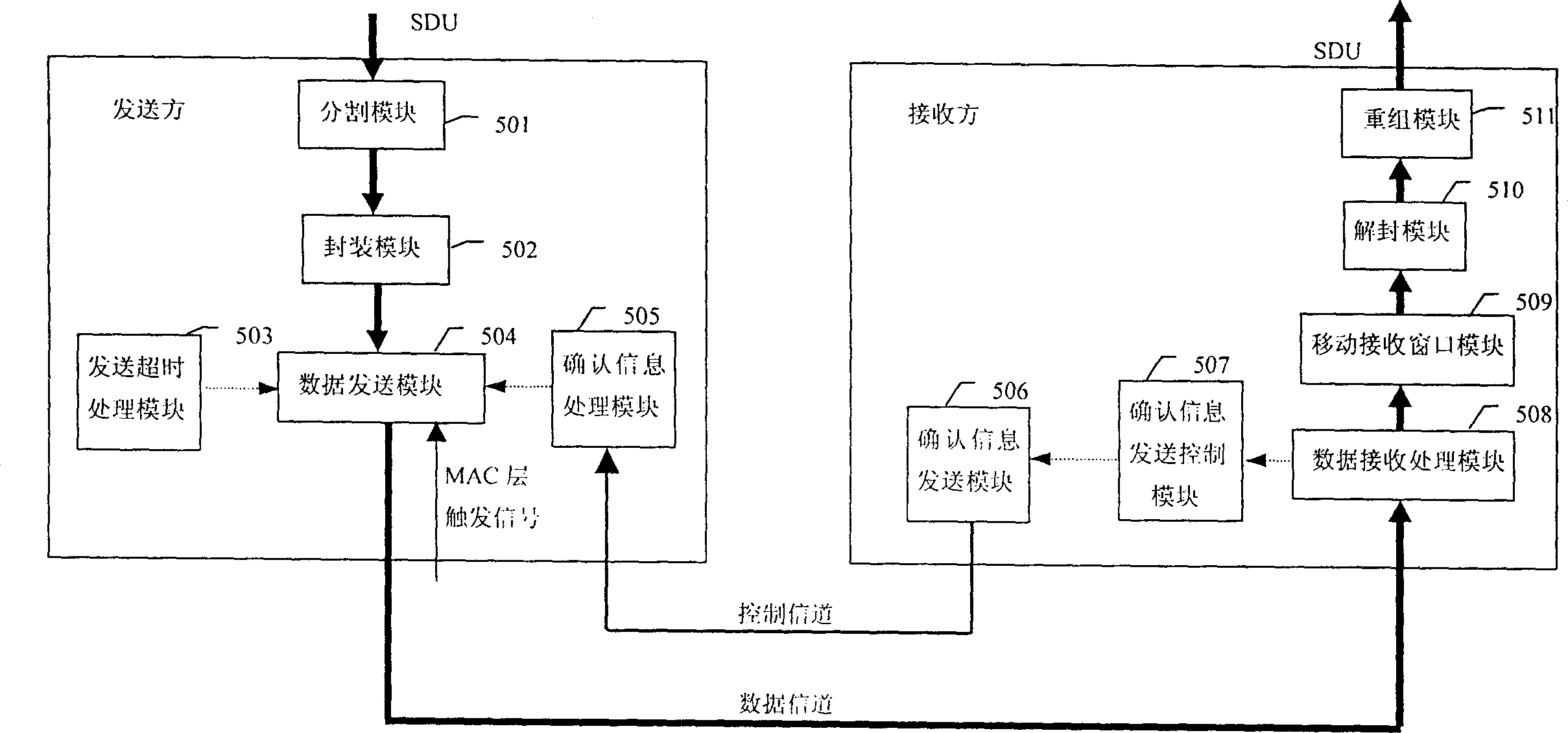

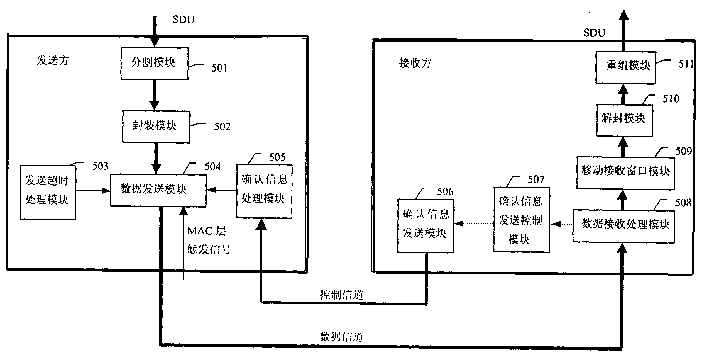

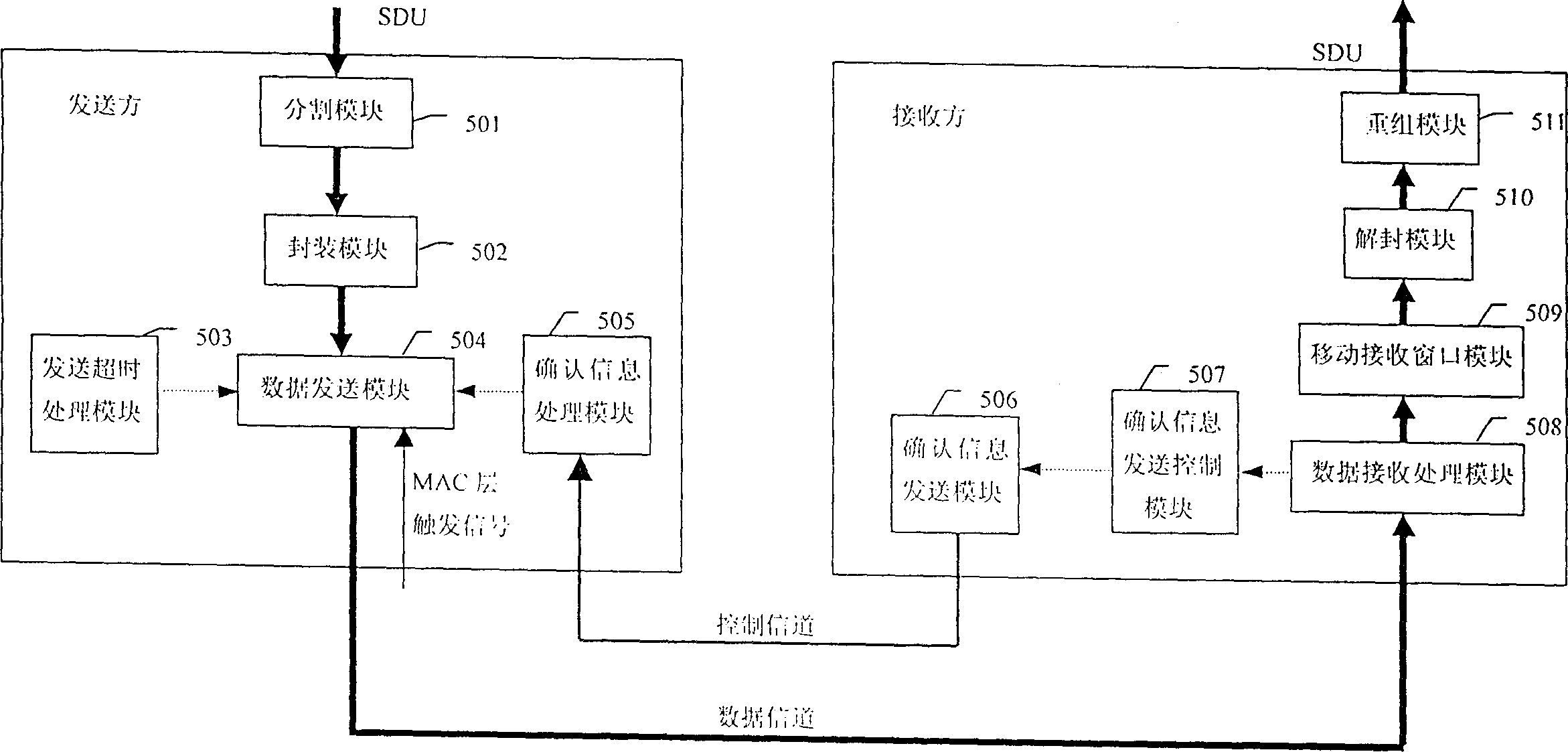

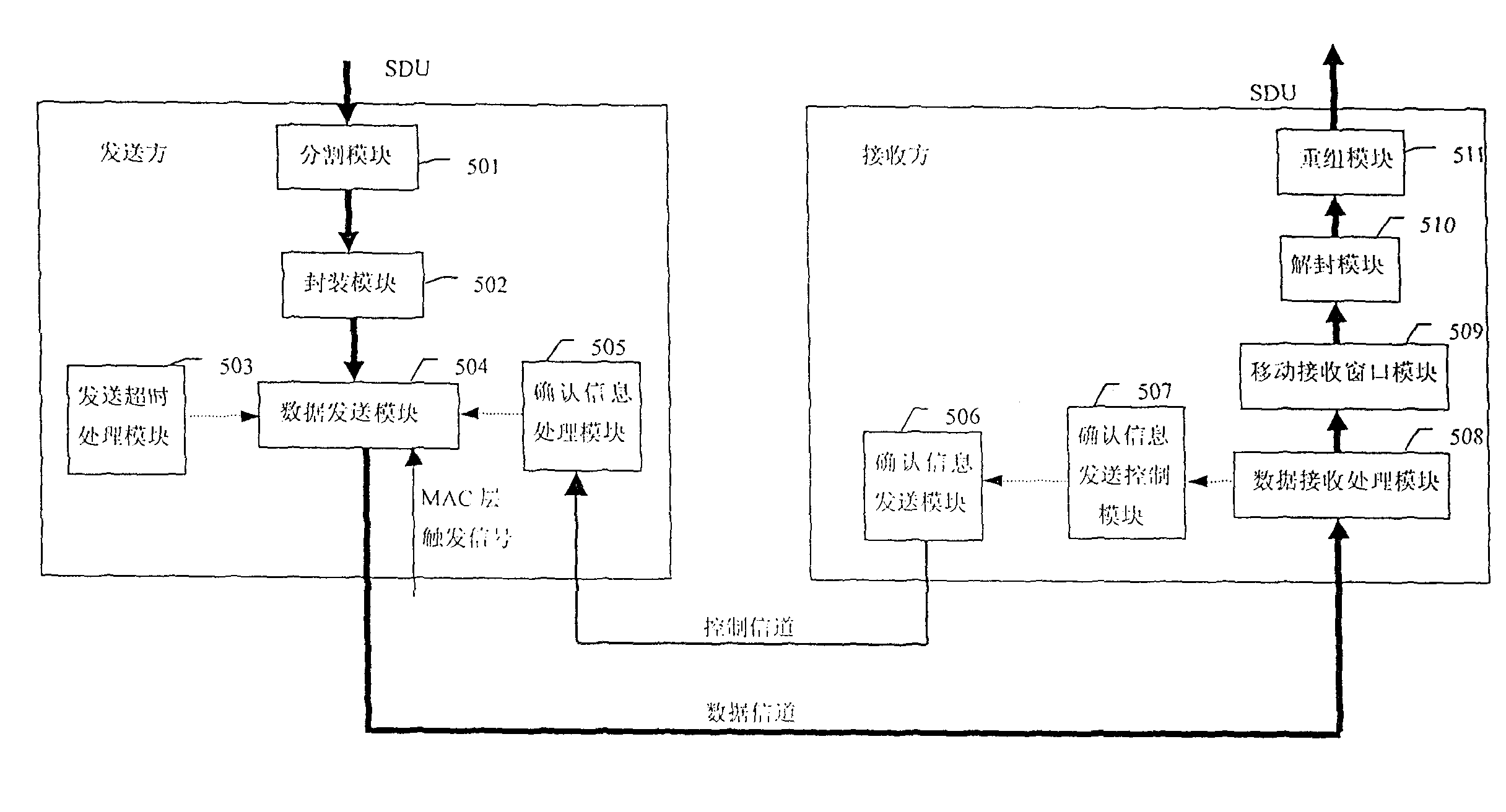

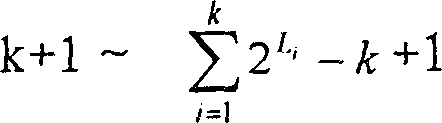

ARQ mechanism able to automatically request retransmissions for multiple rejections

Inactive | CN1379557A | Support bi-directional transmission | Improve confirmation efficiency | Error prevention/detection by using return channel | Network traffic/resource management | Computer hardware | Communications system

This invention relates to an ARQ mechanism for data link layer error control in a wireless data communication system. It turns an unreliable wireless physical link into a reliable logical data link, providing reliable data transmission for the network layer. Compared with existing ARQ mechanisms, this invention greatly increases the acknowledgement rate without reducing the throughput rate, and greatly lowers the data bandwidth used for acknowledgements, so that more bandwidth is available for sending reverse-direction data in support of two-way data transmission. Using a fixed-length bitmap and a relative offset, and according to the distribution of erroneous data PDUs, the invention automatically selects the acknowledgement type with the highest acknowledgement efficiency to acknowledge the data PDUs in the reception window that most need acknowledgement.

Owner:武汉汉网高技术有限公司
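To make the idea of choosing an acknowledgement type concrete, here is a deliberately simplified sketch. It compares two encodings of the missing PDUs: an explicit list of sequence numbers, and one base sequence number plus a fixed-length bitmap of relative offsets. The field widths (`SEQ_BITS`, `BITMAP_LEN`), the two candidate types and the cost rule are all assumptions for illustration; the patent's actual acknowledgement formats are not given in the abstract, and sequence-number wraparound is ignored.

```python
SEQ_BITS = 12     # assumed width of a PDU sequence number
BITMAP_LEN = 16   # assumed fixed bitmap length

def encode_bitmap(missing, base):
    """Bit i is set when PDU number base + i is missing."""
    bits = 0
    for seq in missing:
        offset = seq - base
        if 0 <= offset < BITMAP_LEN:
            bits |= 1 << offset
    return bits

def choose_ack(missing):
    """Return (type, payload, cost_in_bits) for the cheaper encoding."""
    missing = sorted(missing)
    if not missing:
        return ("cumulative", None, SEQ_BITS)
    base = missing[0]
    list_cost = SEQ_BITS * len(missing)
    bitmap_cost = SEQ_BITS + BITMAP_LEN
    fits = all(seq - base < BITMAP_LEN for seq in missing)
    if fits and bitmap_cost < list_cost:
        return ("bitmap", (base, encode_bitmap(missing, base)), bitmap_cost)
    return ("list", missing, list_cost)
```

Clustered losses favour the bitmap form, while a few widely scattered losses are cheaper to report as a list, which is the kind of distribution-dependent choice the abstract describes.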

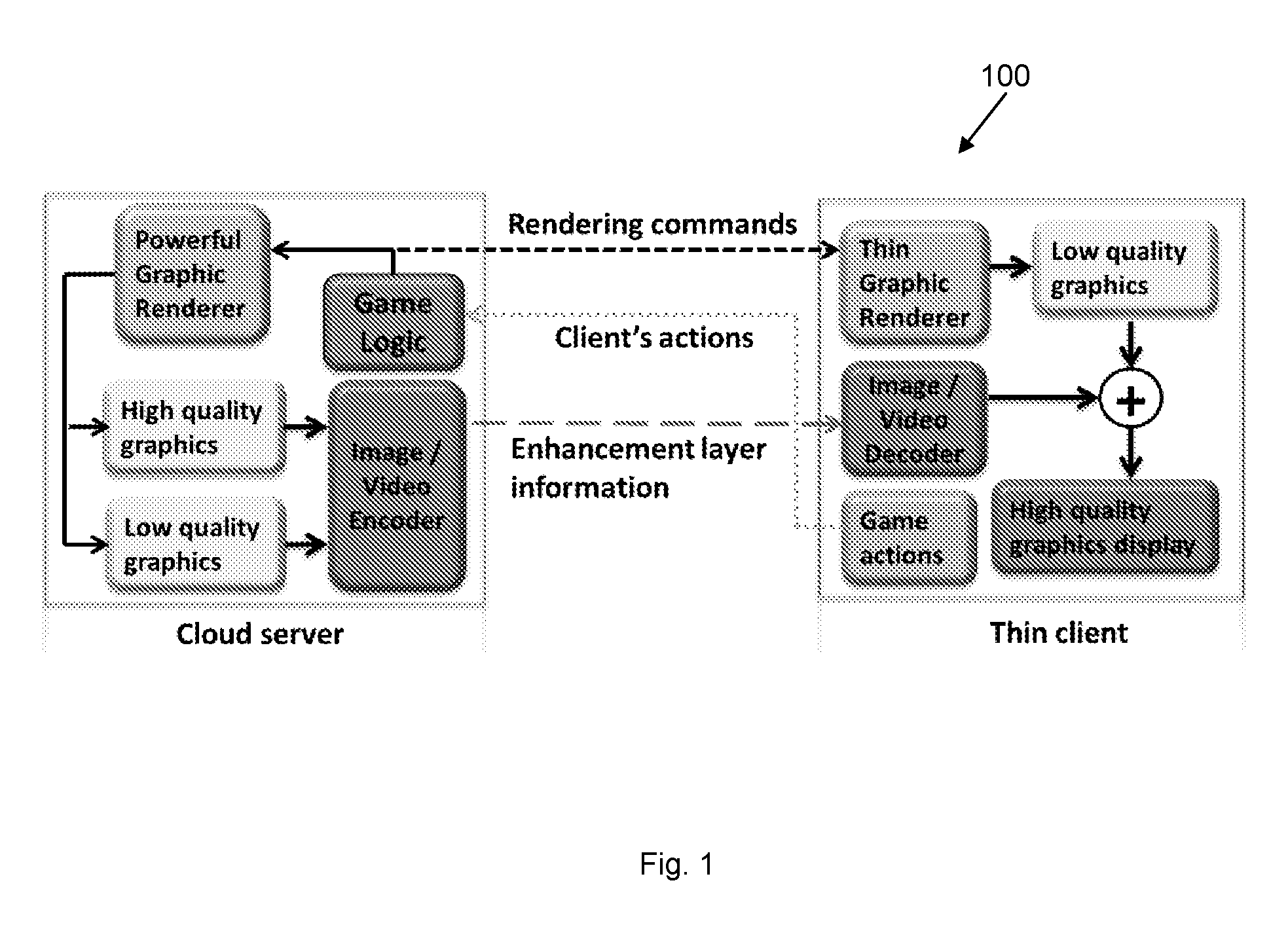

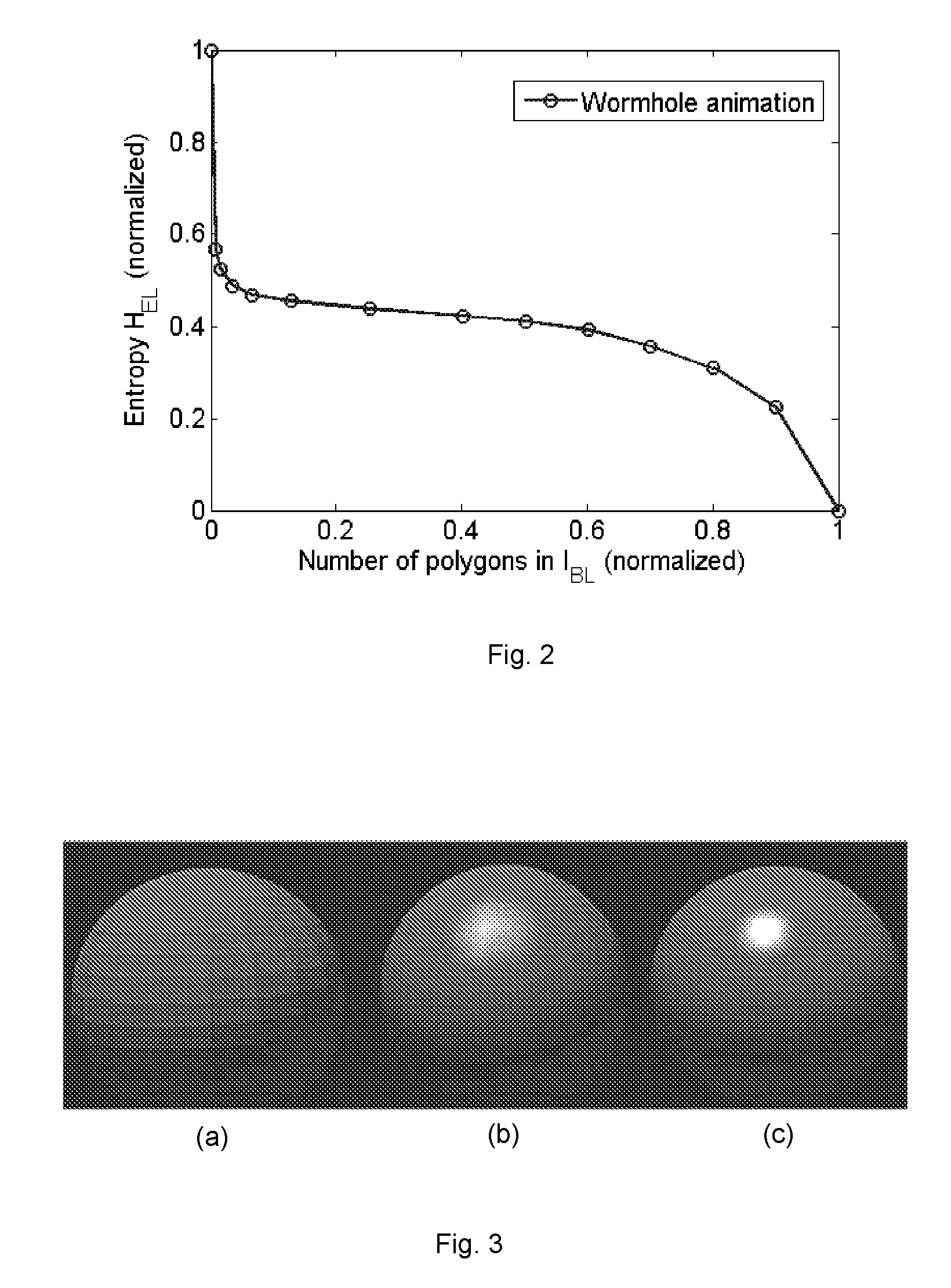

A method and apparatus for reducing data bandwidth between a cloud server and a thin client

Inactive | US20160330493A1 | Reduce data bandwidth | Attenuation bandwidth | Image data processing details | Video games | Thin client | Cloud server

The present invention relates to a method for reducing data bandwidth between a cloud server and a thin client. The method comprises: rendering a base layer image or video stream at the thin client, transmitting an enhancement layer image or video stream from the cloud server to the thin client, displaying a composite layer image or video stream on the thin client, the composite layer being based on the base layer and the enhancement layer.

Owner:SINGAPORE UNIVERSITY OF TECHNOLOGY AND DESIGN
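The base-plus-enhancement split can be sketched with plain pixel arithmetic. In this illustration the enhancement layer is assumed to be the per-pixel difference between the server's full-quality frame and the base frame the thin client renders itself; the abstract only says the composite is "based on" both layers, so the residual scheme and both function names are hypothetical.

```python
def enhancement_layer(full, base):
    """Server side: per-pixel residual between the full frame and the base frame."""
    return [[f - b for f, b in zip(full_row, base_row)]
            for full_row, base_row in zip(full, base)]

def composite(base, enhancement):
    """Client side: add the received residual to the locally rendered base frame."""
    return [[min(255, max(0, b + e)) for b, e in zip(base_row, enh_row)]
            for base_row, enh_row in zip(base, enhancement)]
```

When the client's base rendering is close to the full frame, most residual values are zero or small, so the transmitted layer should compress far better than the full frame would.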

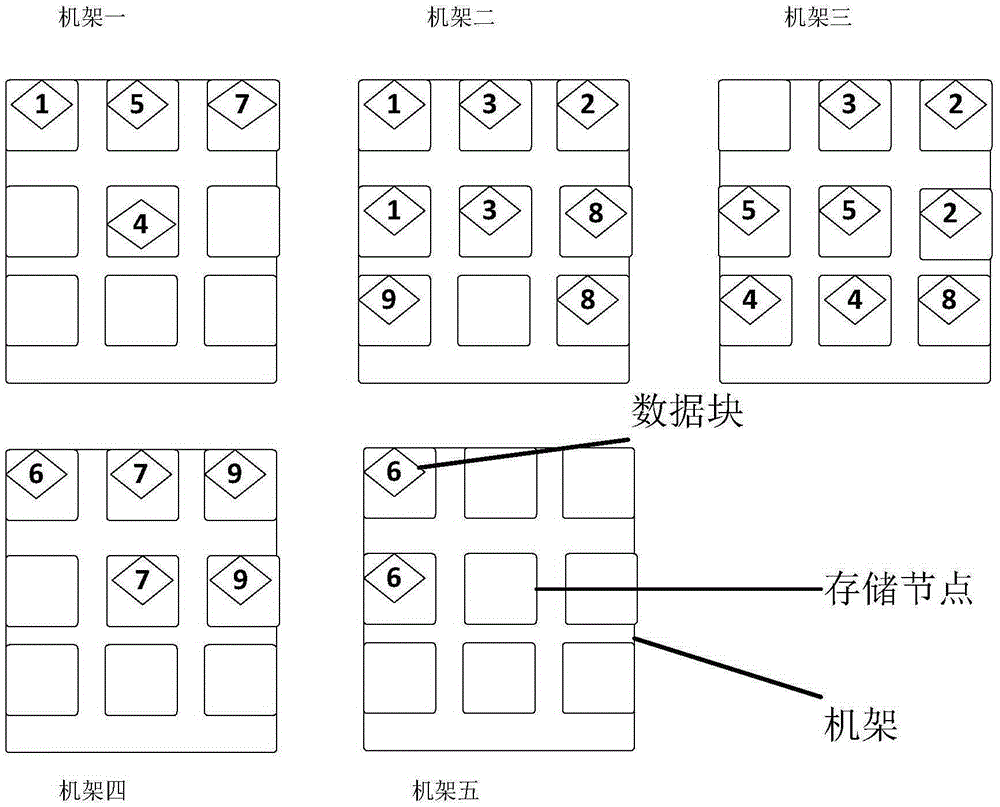

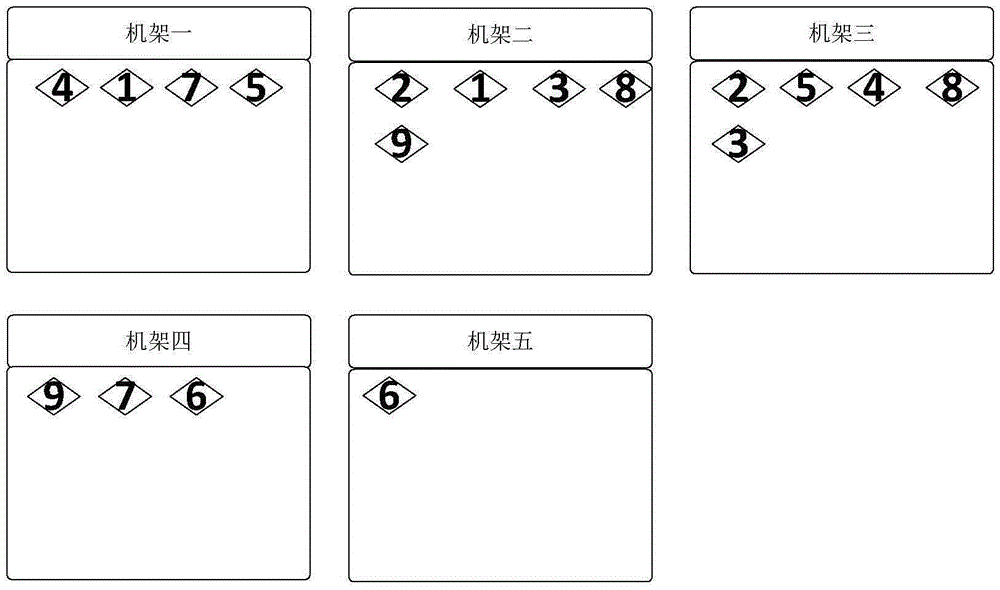

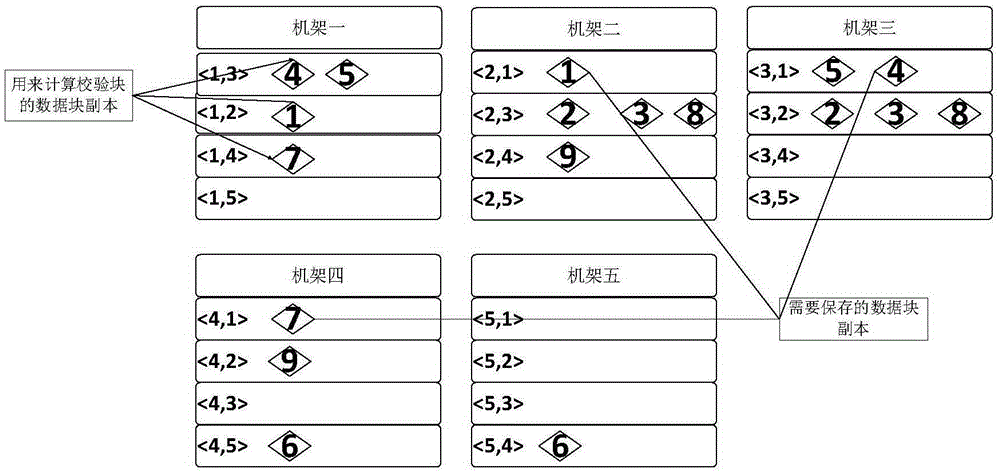

Distributed type encoding method based on dynamic band configuration

Active | CN105302500A | Reduced conversion time | Reduce data downloads | Input/output to record carriers | Quality of service | Fault tolerance

The invention discloses a distributed encoding method based on dynamic band configuration. The method comprises the following steps: acquiring data block information from a general control node and dynamically constructing a data band according to that information; persistently storing the construction information of the data band and distributing the verification data block calculation tasks; deleting a redundant data node; and redistributing any data band that is not completely distributed. Compared with the traditional approach of constructing a data band from contiguous data blocks, dynamic construction reduces the amount of data downloaded across racks or nodes during conversion while preserving the fault tolerance of the system's data blocks. It shortens the time needed to convert data from three-copy storage to erasure-code storage, reduces the data bandwidth used during conversion, and improves the service quality and performance of the distributed system.

Owner:UNIV OF SCI & TECH OF CHINA

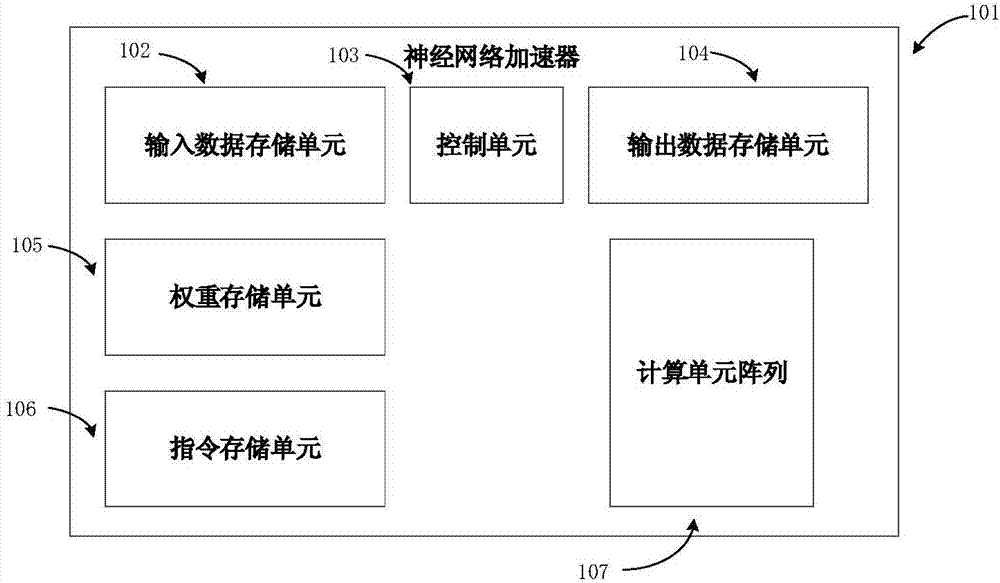

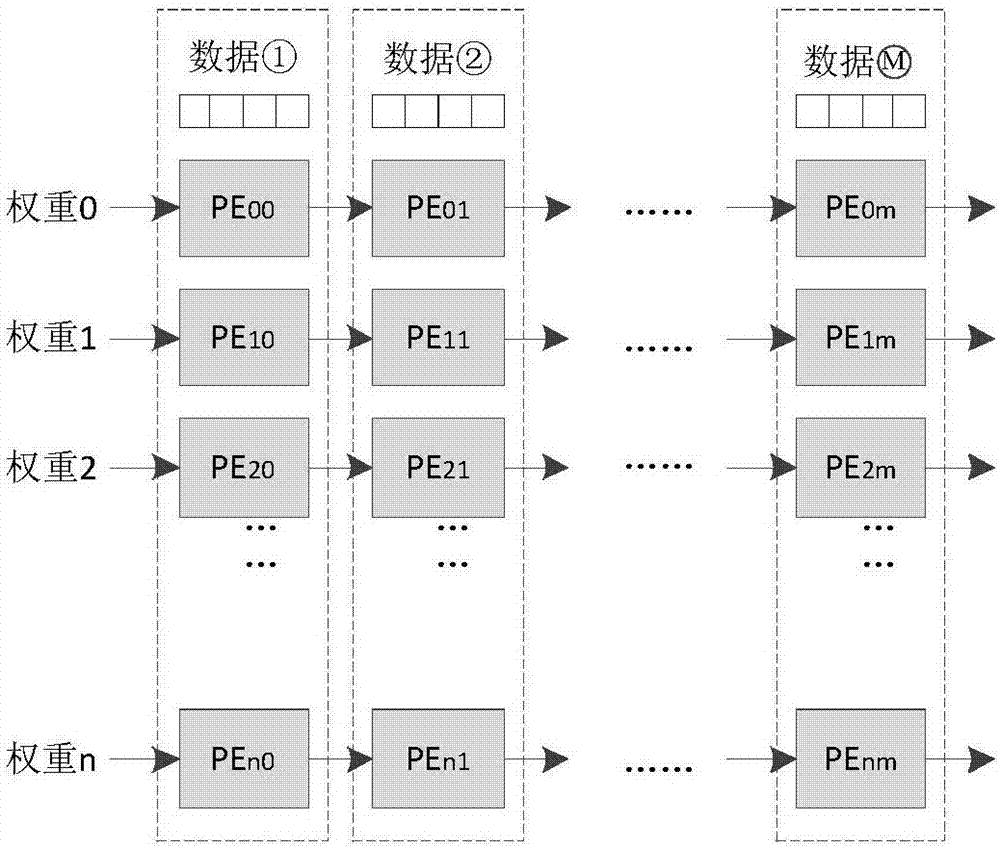

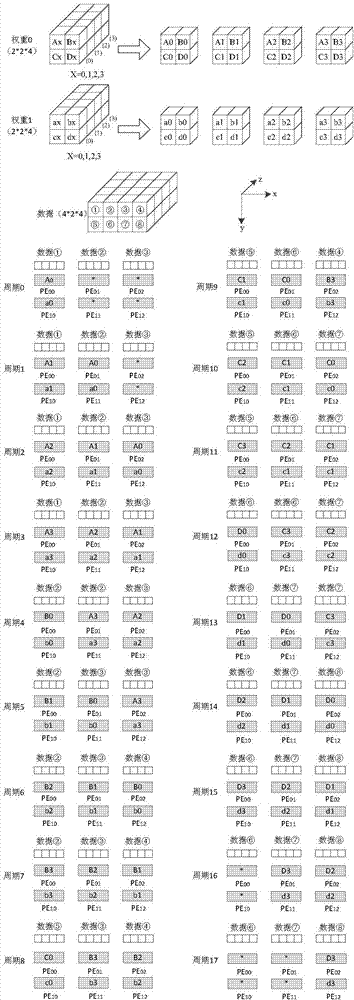

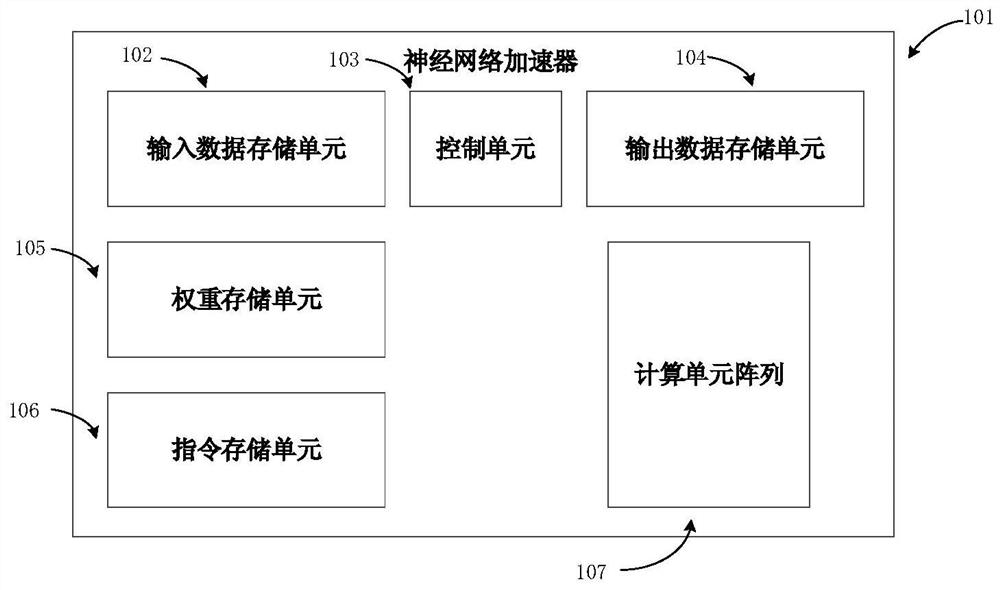

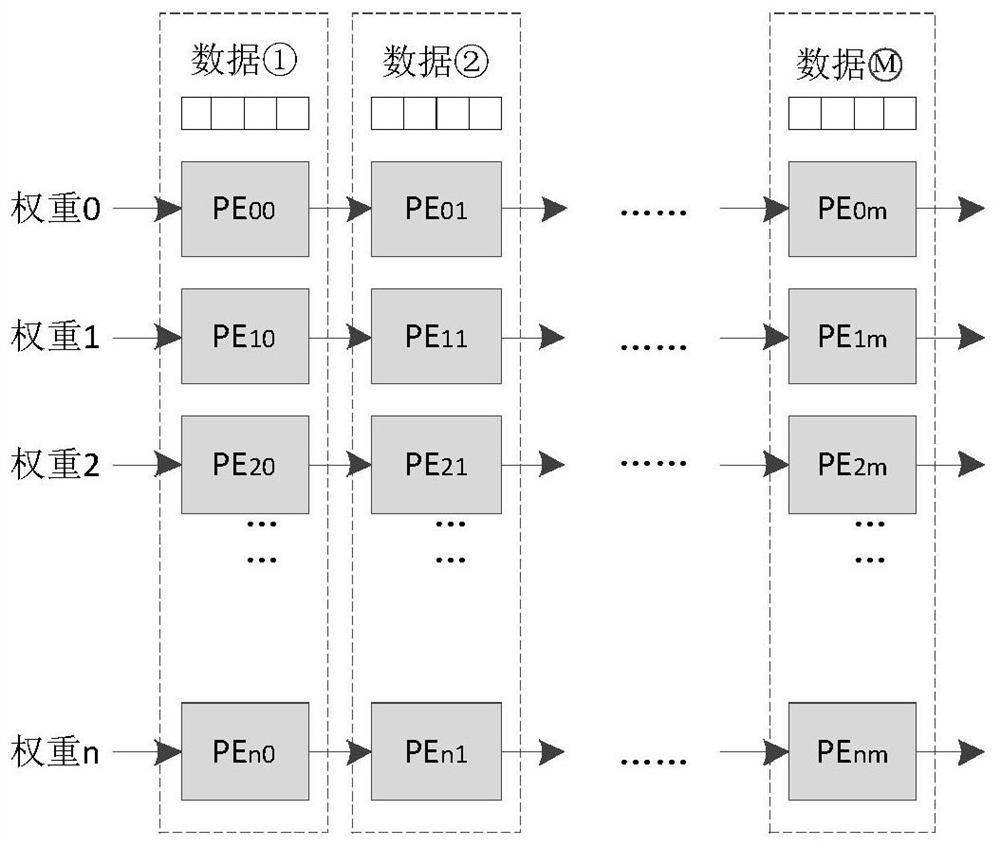

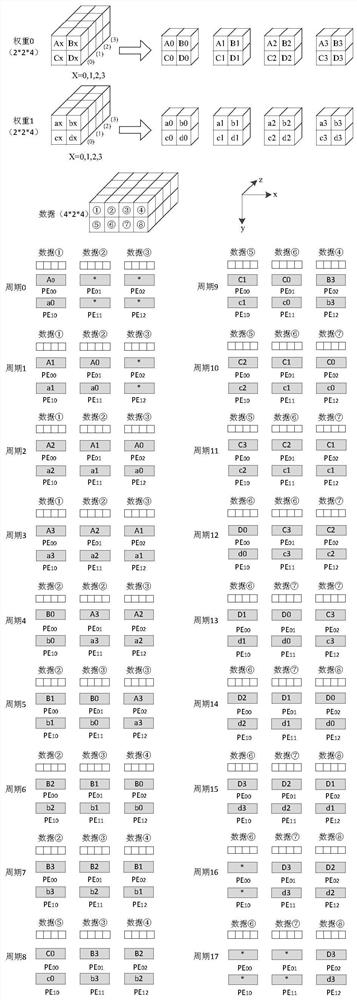

Neural network processor based on efficient multiplex data stream, and design method

Active | CN107085562A | Reduced on-chip data bandwidth | Improve data sharing rate | Energy efficient computing | Architecture with single central processing unit | Hardware acceleration | Data sharing

The invention puts forward a neural network processor based on an efficient multiplexed data stream, and a design method, and relates to the technical field of hardware acceleration of neural network model calculation. The processor comprises at least one storage unit, at least one calculation unit and a control unit, wherein the at least one storage unit is used for storing operation instructions and arithmetic data; the at least one calculation unit is used for executing neural network calculation; and the control unit is connected with the at least one storage unit and the at least one calculation unit, obtains the operation instructions stored by the at least one storage unit, and parses them to control the at least one calculation unit, wherein the arithmetic data takes the form of the efficient multiplexed data stream. Because the processor adopts the efficient multiplexed data stream during neural network processing, weights and data only need to be loaded into one row of calculation units in the calculation unit array each time, which lowers on-chip data bandwidth, raises the data sharing rate and improves energy efficiency.

Owner:INST OF COMPUTING TECHNOLOGY - CHINESE ACAD OF SCI

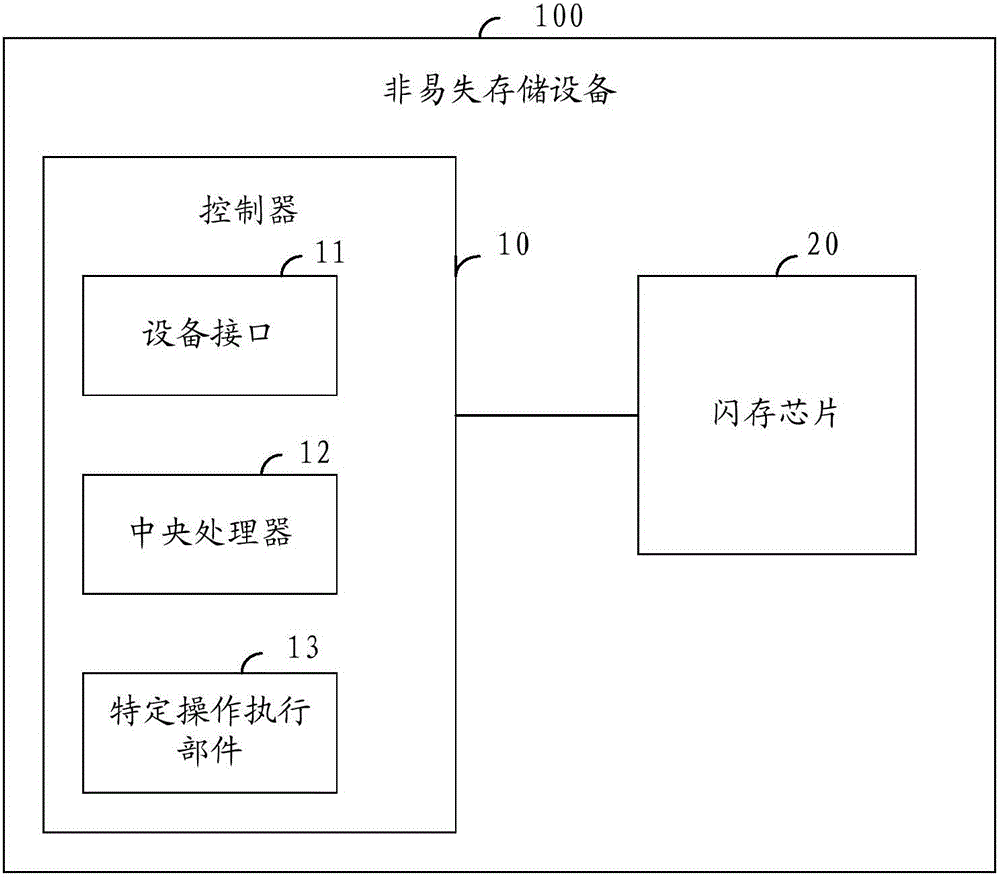

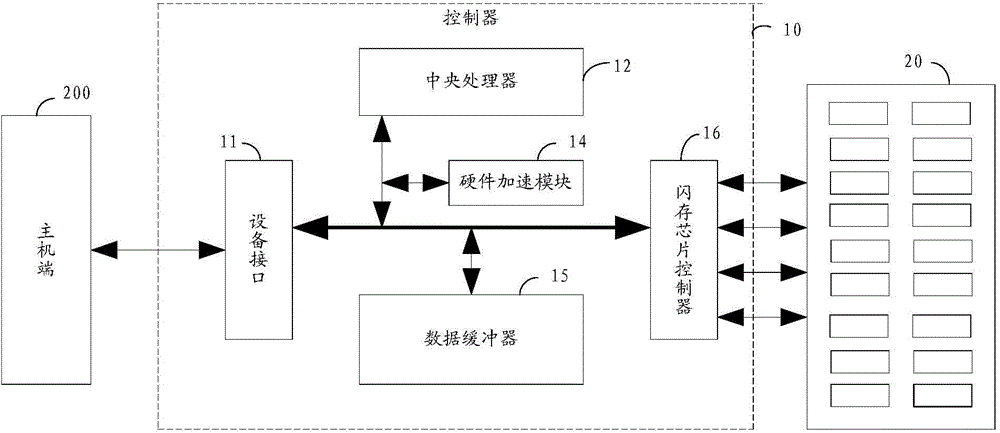

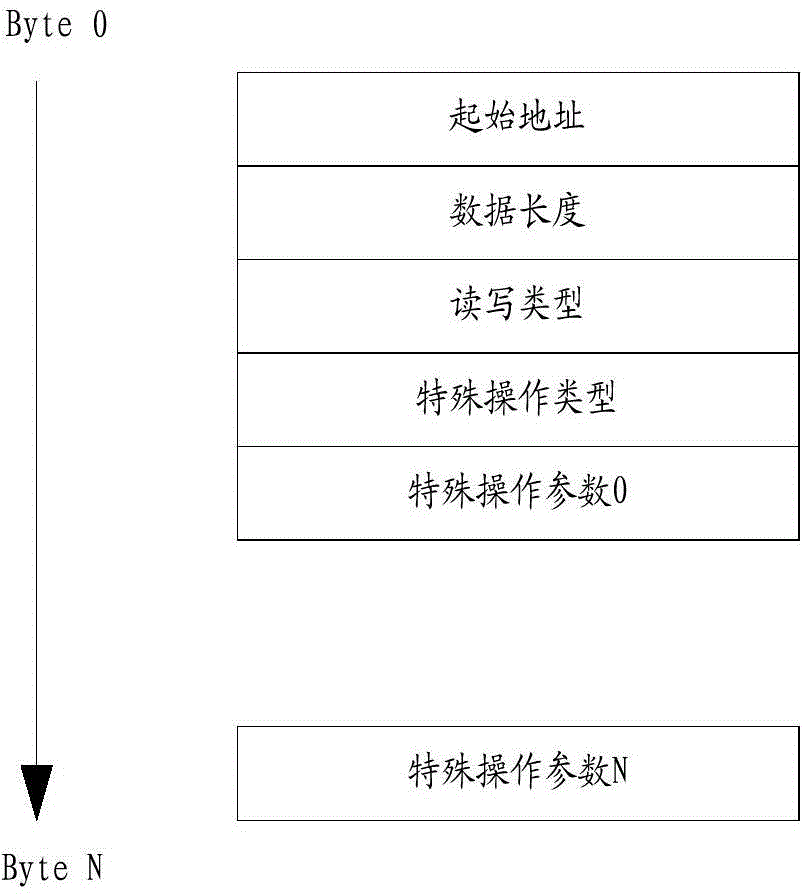

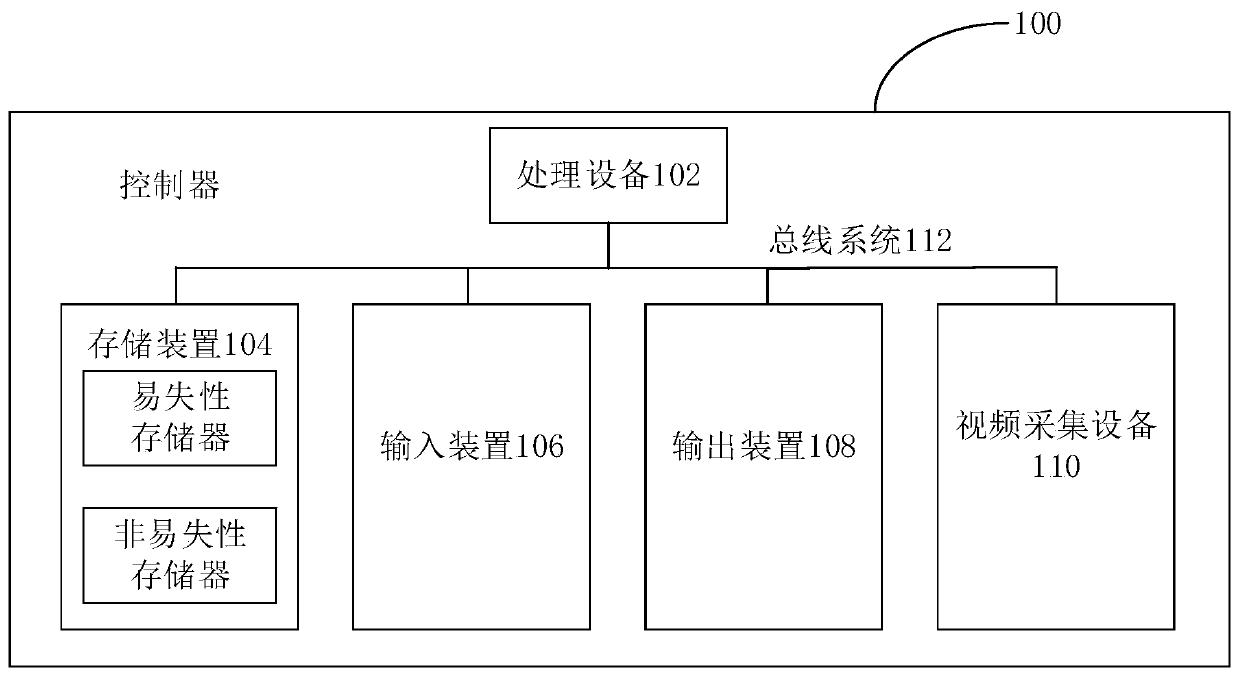

Nonvolatile storage equipment and method of carrying out data manipulation therethrough

Inactive | CN103955440A | Reduce data bandwidth | Increase profit | Electric digital data processing | Data content | Cpu load

The invention provides nonvolatile storage equipment and a method of carrying out data manipulation therethrough. The method comprises the following steps of receiving specific operating commands which are transmitted from a host end and are other data manipulation commands except for read-write operating commands; converting the specific operating commands into the read-write operating commands of a nonvolatile storage device, and reading or writing data from or into the nonvolatile storage device according to the read-write operating commands; carrying out specific operations corresponding to the specific operating commands on the read or write data stream, and returning operating result data to the host end. Except for read and write operations, other operations are carried out on the data through the nonvolatile storage equipment, so that a data processing method and a data processing flow are changed; the operating command of a data content is completed by utilizing the processing capacity of a chip controller in the nonvolatile storage equipment, so that the data bandwidth is effectively saved, the utilization rate of the bandwidth of a port is increased, and the load of a CPU (Central Processing Unit) on the host end is reduced. In the specification, a flash is taken as an embodiment of the nonvolatile storage device, but the right of the invention is not considered to be limited to the flash.

Owner:RAMAXEL TECH SHENZHEN

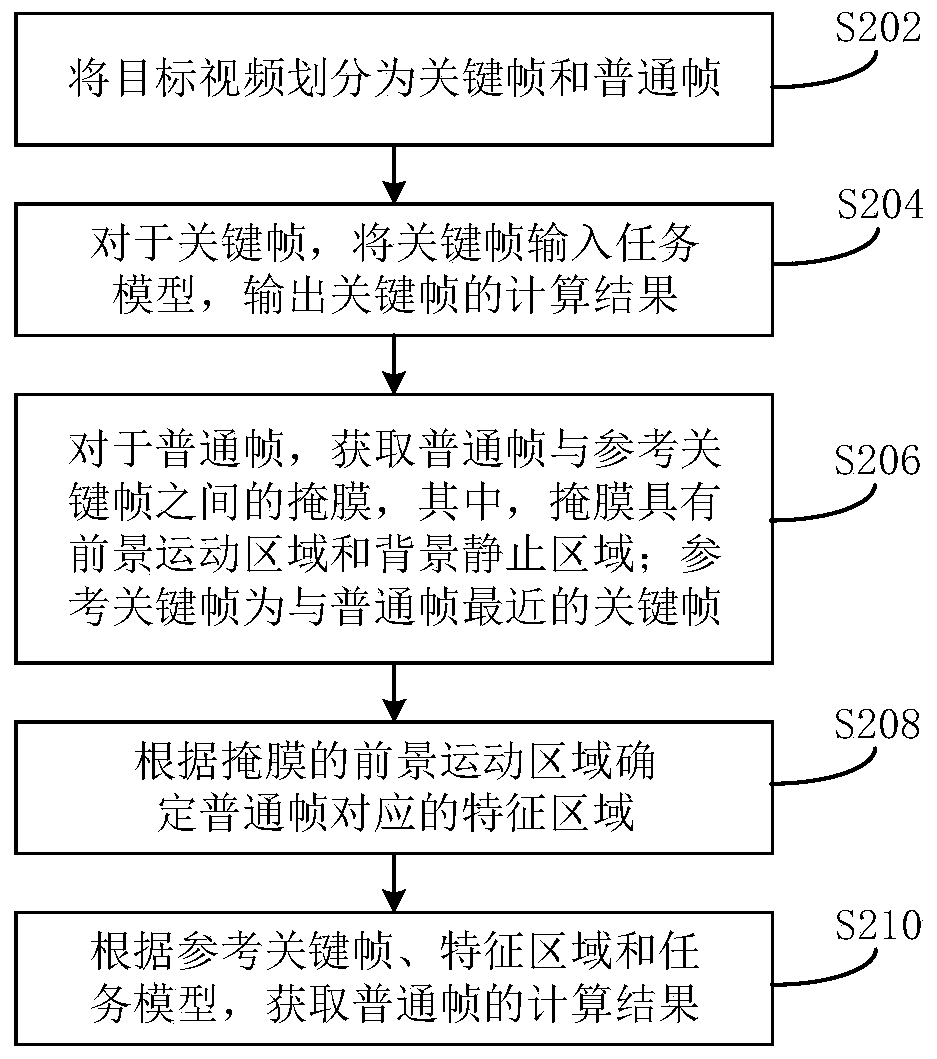

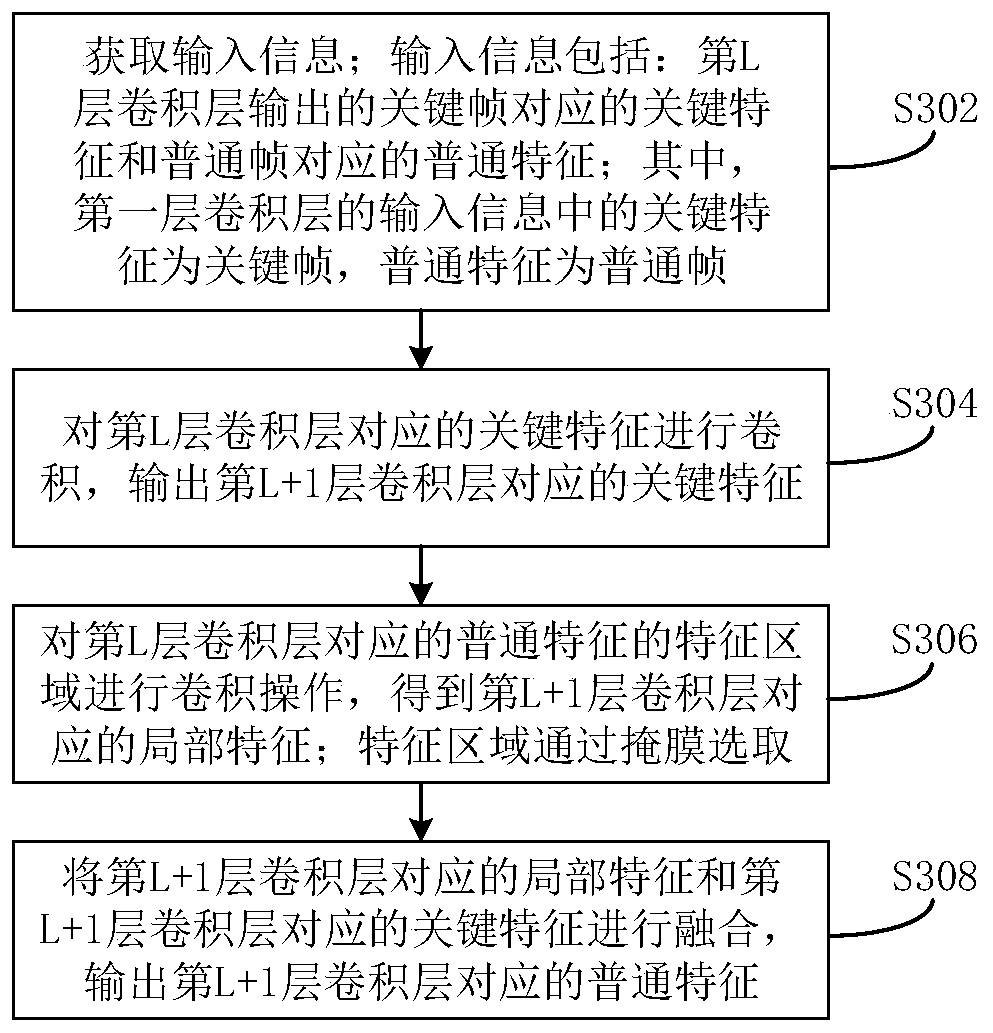

Video data processing method and device and electronic system

Pending | CN110956219A | Small amount of calculation | Reduce data bandwidth and power consumption | Character and pattern recognition | Engineering | Convolution

The invention provides a video data processing method and device and an electronic system. The video data processing method comprises the following steps: dividing a target video into key frames and common frames; for a key frame, inputting the key frame into the task model and outputting a calculation result for the key frame; for a common frame, obtaining a mask between the common frame and the reference key frame, wherein the mask has a foreground motion area and a background static area, and the reference key frame is the key frame closest to the common frame; determining a feature region corresponding to the common frame according to the foreground motion area of the mask; and obtaining a calculation result for the common frame according to the reference key frame, the feature region and the task model. Because common frames are not input into the task model in their entirety and only the feature region is input, the calculation amount of the neural network, the data bandwidth and the power consumption can all be reduced, and real-time calculation can be achieved without degrading the convolution calculation result.

Owner:MEGVII BEIJINGTECH CO LTD
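The abstract does not say how the mask between a common frame and its reference key frame is obtained. The sketch below assumes simple thresholded frame differencing and takes the feature region to be the bounding box of the foreground pixels; both choices, and the `threshold` value, are illustrative assumptions rather than the patented method.

```python
def motion_mask(frame, key_frame, threshold=10):
    """True marks foreground motion, False marks static background (assumed rule)."""
    return [[abs(p - k) > threshold for p, k in zip(frame_row, key_row)]
            for frame_row, key_row in zip(frame, key_frame)]

def feature_region(mask):
    """Inclusive bounding box (top, left, bottom, right) of the foreground, or None."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None  # nothing moved: the key-frame result can be reused as is
    cols = [c for row in mask for c, moved in enumerate(row) if moved]
    return (min(rows), min(cols), max(rows), max(cols))
```

Only the pixels inside the returned box would then be passed to the task model, which is where the reduction in computation and data movement would come from.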

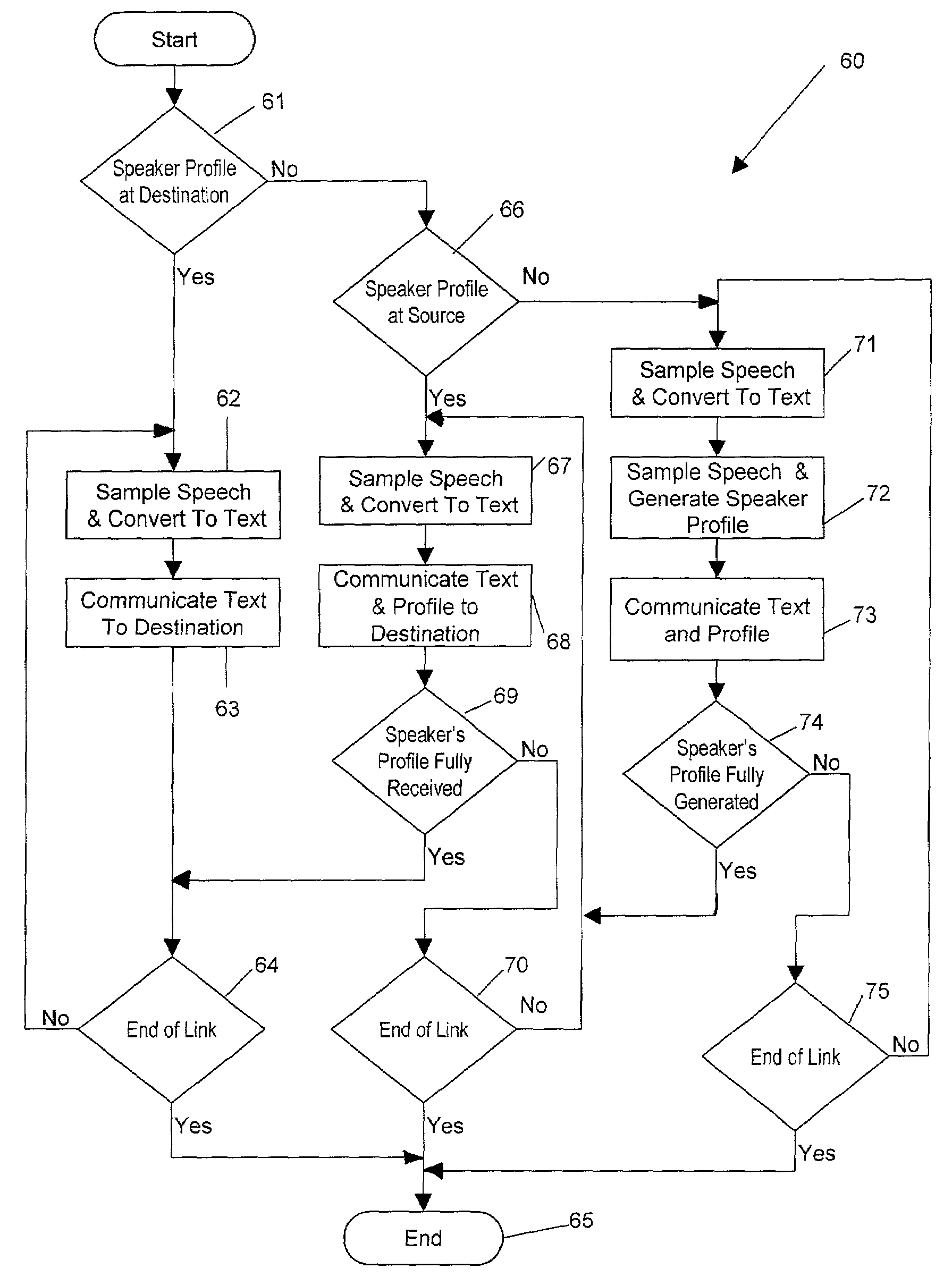

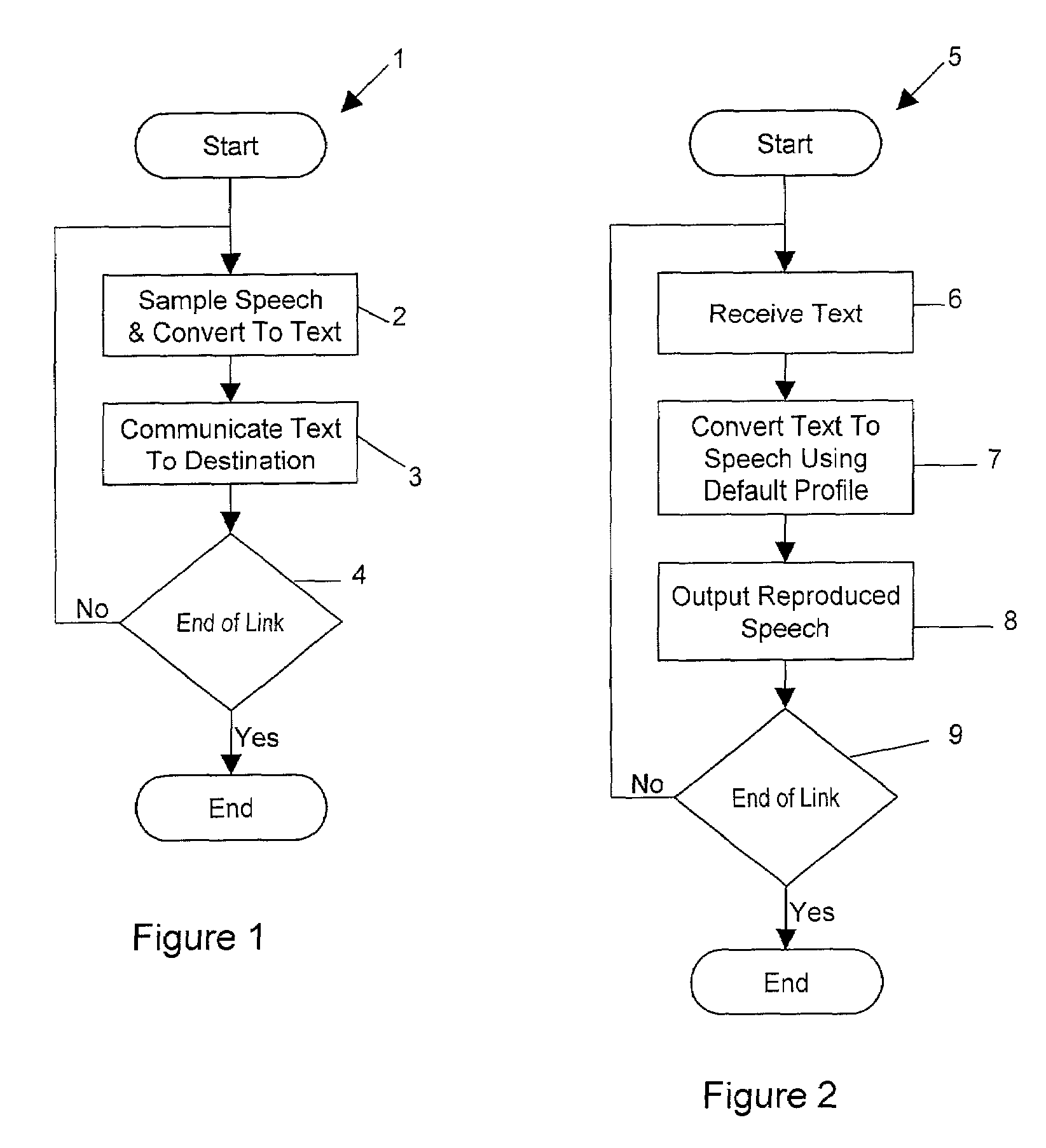

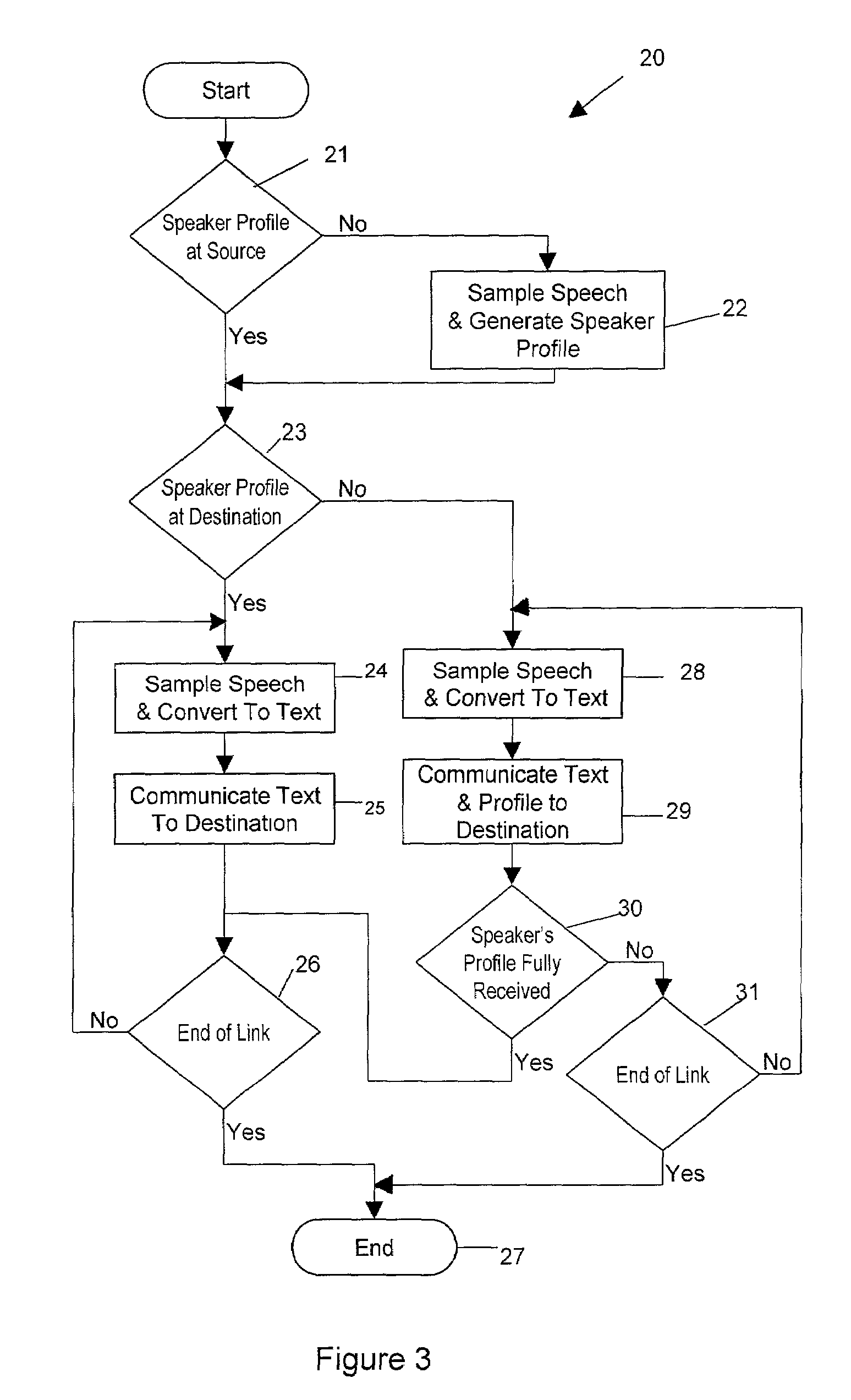

Speech transfer over packet networks using very low digital data bandwidths

Active | US7177801B2 | Improve call quality | Attenuation bandwidth | Speech recognition | Transmission | Digital data | Telecommunications link

A method of communicating speech across a communication link using very low digital data bandwidth is disclosed, having the steps of: translating speech into text at a source terminal; communicating the text across the communication link to a destination terminal; and translating the text into reproduced speech at the destination terminal. In a preferred embodiment, a speech profile corresponding to the speaker is used to reproduce the speech at the destination terminal so that the reproduced speech more closely approximates the original speech of the speaker. A default voice profile is used to recreate speech when a user profile is unavailable. User specific profiles can be created during training prior to communication or can be created during communication from actual speech. The user profiles can be updated to improve accuracy of recognition and to enhance reproduction of speech. The updated user profiles are transmitted to the destination terminals as needed.

Owner:TELOGY NETWORKS
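A rough calculation shows why sending recognised text instead of audio needs so little bandwidth. The speaking rate, characters per word and bits per character below are assumed round numbers, not figures from the patent; the 64 kbit/s reference is the standard G.711 PCM telephony rate.

```python
G711_BITS_PER_SECOND = 64_000  # standard G.711 PCM telephony bitrate

def text_bitrate(words_per_minute=150, chars_per_word=6, bits_per_char=8):
    """Bits per second needed to send speech as uncompressed text (assumed rates)."""
    return words_per_minute * chars_per_word * bits_per_char / 60

def reduction_factor():
    """How many times smaller the text stream is than a G.711 audio stream."""
    return G711_BITS_PER_SECOND / text_bitrate()
```

Under these assumptions the text stream needs about 120 bit/s, several hundred times less than the audio stream, before accounting for the occasional transfer of speaker profiles.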

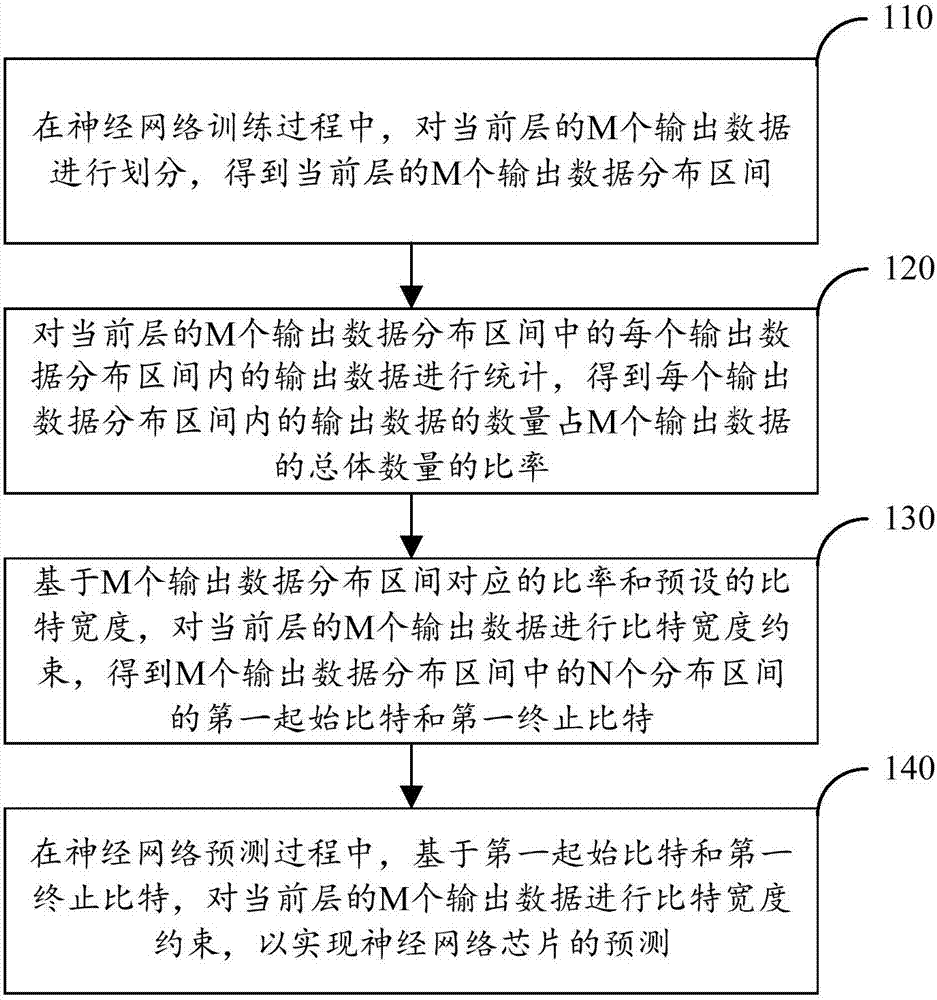

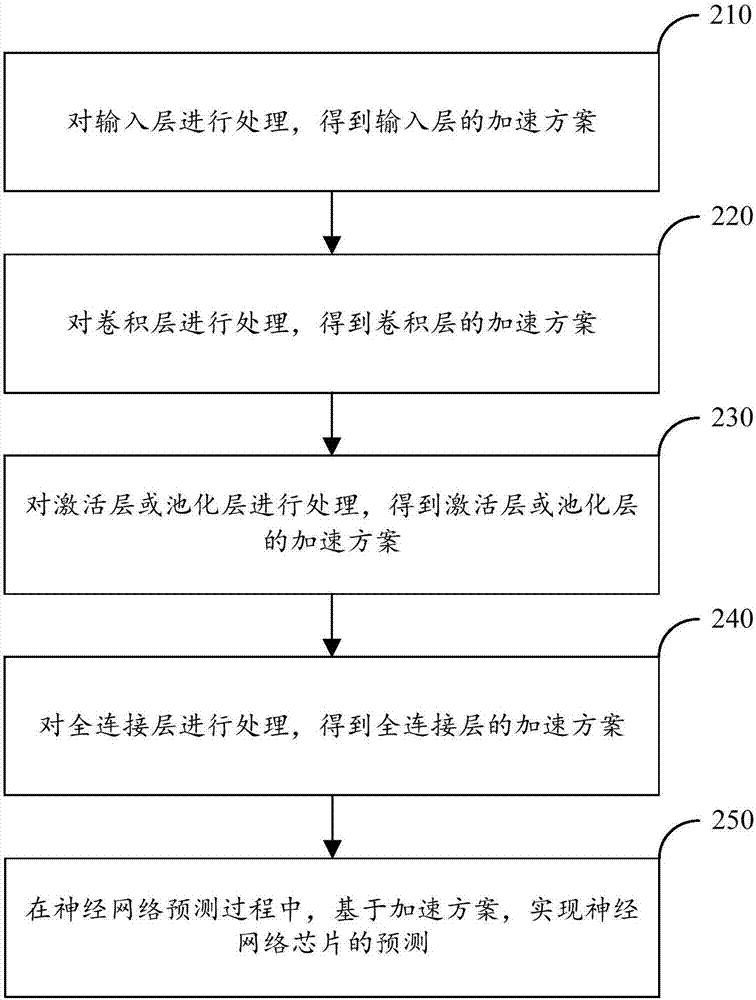

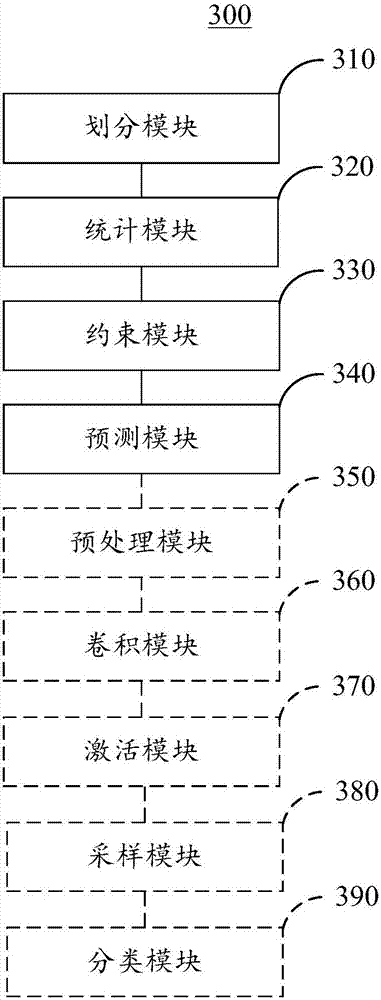

Prediction method applied to neural network chip and prediction apparatus thereof

Active | CN107292458A | Achieve forecast | Reduce data bandwidth | Forecasting | Neural architectures | Computer science | Prediction methods

The invention provides a prediction method applied to a neural network chip and an apparatus thereof, a server and a readable storage medium. The method comprises the following steps of dividing M output data of a current layer and acquiring M output data distribution intervals of the current layer; carrying out statistics on the output data in each output data distribution interval of the M output data distribution intervals and acquiring a ratio of a number of the output data in each output data distribution interval and a total number of the M output data; based on the ratio corresponding to the M output data distribution intervals and a preset bit width, carrying out a bit width constraint on the M output data and acquiring a first initial bit and a first termination bit of N distribution intervals of the M output data distribution intervals; and based on the first initial bit and the first termination bit, carrying out bit width constraint on the M output data of the current layer so as to realize prediction of the neural network chip. In the invention, a data bandwidth is reduced and calculating efficiency is further increased.

Owner:上海中星微莘庄人工智能芯片有限公司

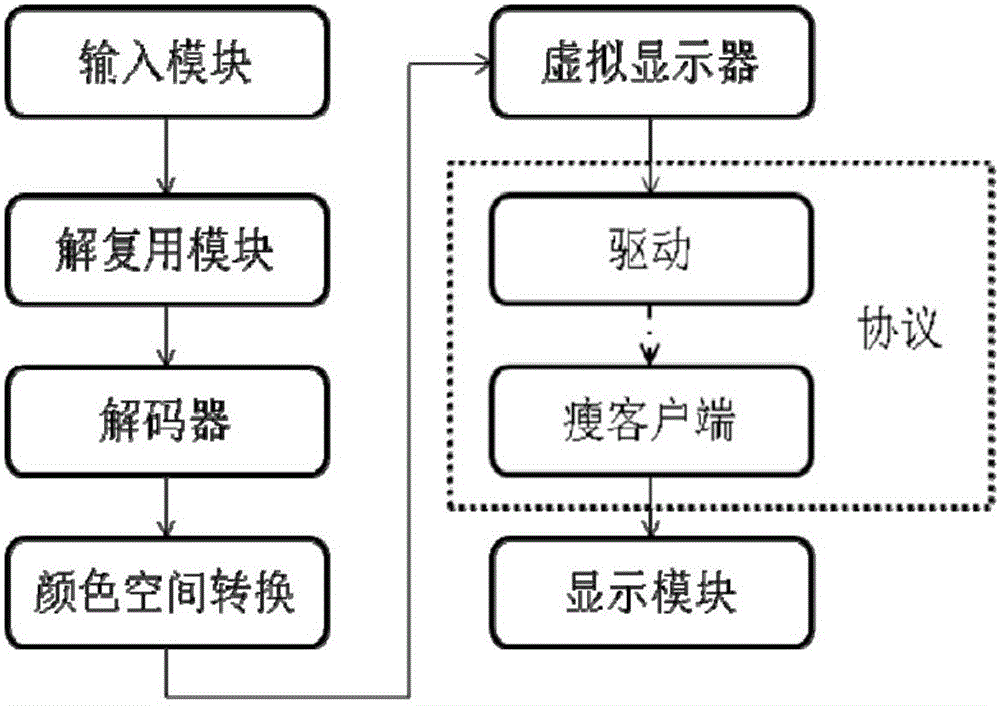

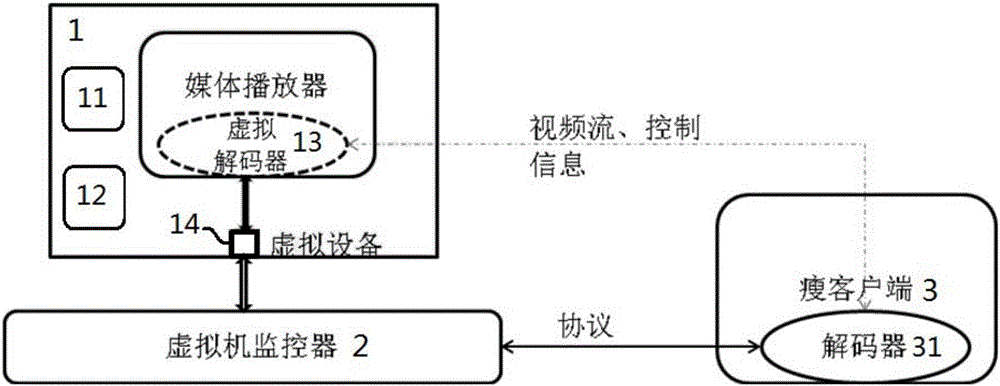

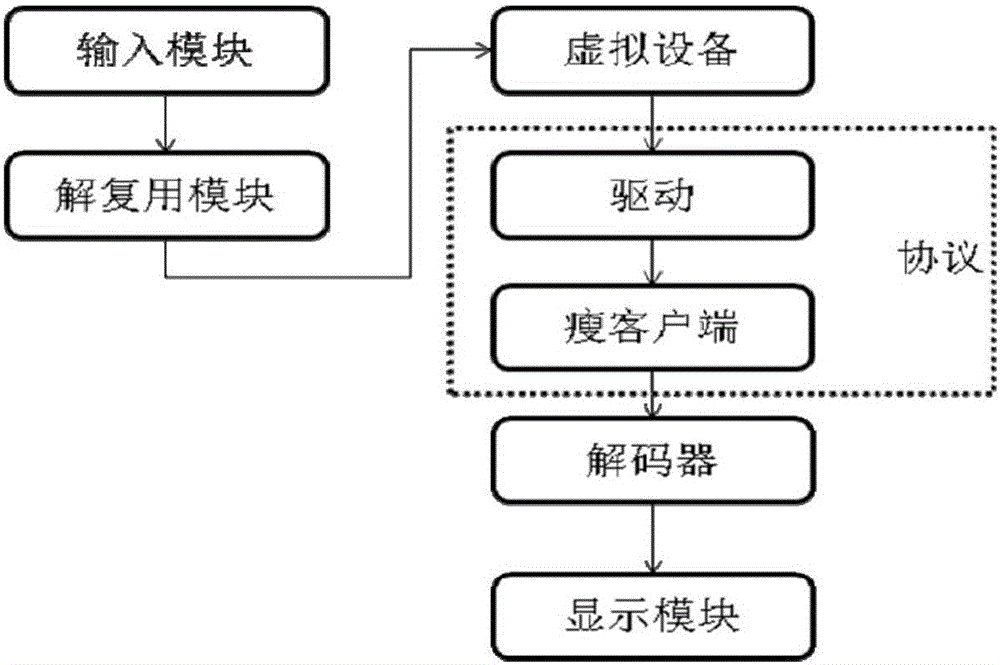

Video redirection method and system based on remote desktop presentation protocol

Inactive | CN106210865A | Reduce CPU consumption | Improve experience | Selective content distribution | Computer hardware | Remote desktop

The invention relates to a video redirection method and system based on a remote desktop presentation protocol. The method comprises the following steps: acquiring a video file from a media player and separating it into its code streams; sending a decoding request for the video stream to a thin client through a constructed virtual decoder, which acts as a proxy for the local decoder in the thin client; and checking whether a decodable response is received from the thin client. If it is, the video stream and the playing parameters are sent to the thin client through the remote desktop presentation protocol, to be decoded and displayed there; if it is not, decoding is performed by the media player. Compared with the prior art, the method effectively reduces the bandwidth and computation load on the server and improves the user experience of watching high-definition video.

Owner:东莞市正讯实业投资有限公司

Split type portable electronic equipment and method for optimizing communication using progressive transmission

Inactive | CN101064887A | Performance leap | Improve the constrained environment | Network traffic/resource management | Radio/inductive link selection arrangements | Original data | Kinesis

The invention discloses a detachable portable device and a design scheme that optimizes data communication using progressive transmission. The scheme comprises a detachable host and terminal that cooperate through wireless communication; the communication modes include, but are not limited to, optimizing the transmitted original data by progressive transmission. The invention is applicable to portable electronic devices such as mobile phones, personal digital assistants, media players, digital cameras, video cameras and portable computers. Separating the host and the terminal of a portable electronic device through wireless communication changes the biokinetic (ergonomic) constraints on the device and gives it strong processing capability, flexible portability and comfortable handheld operation. Progressive transmission reduces the transmission of invalid data, achieving data communication that saves data bandwidth, improves data timeliness and preserves data accuracy.

Owner:蔡强

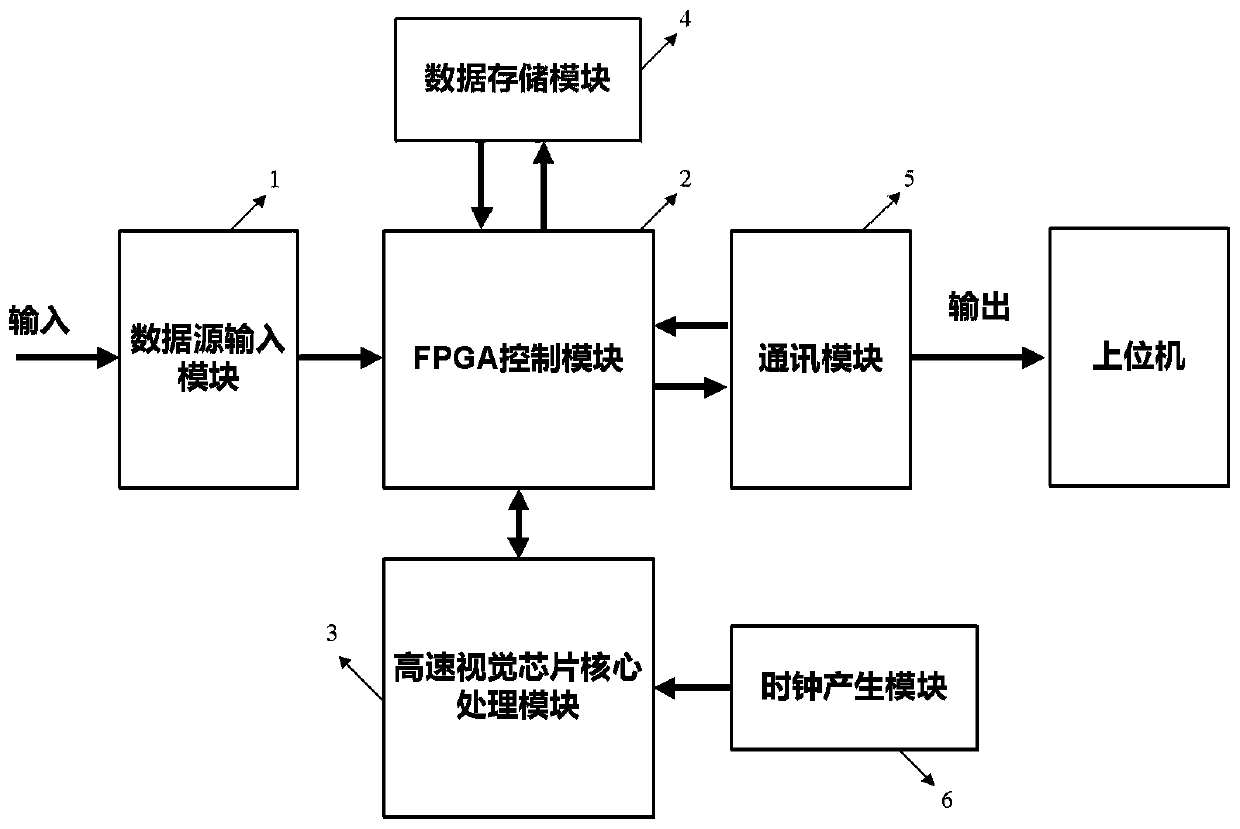

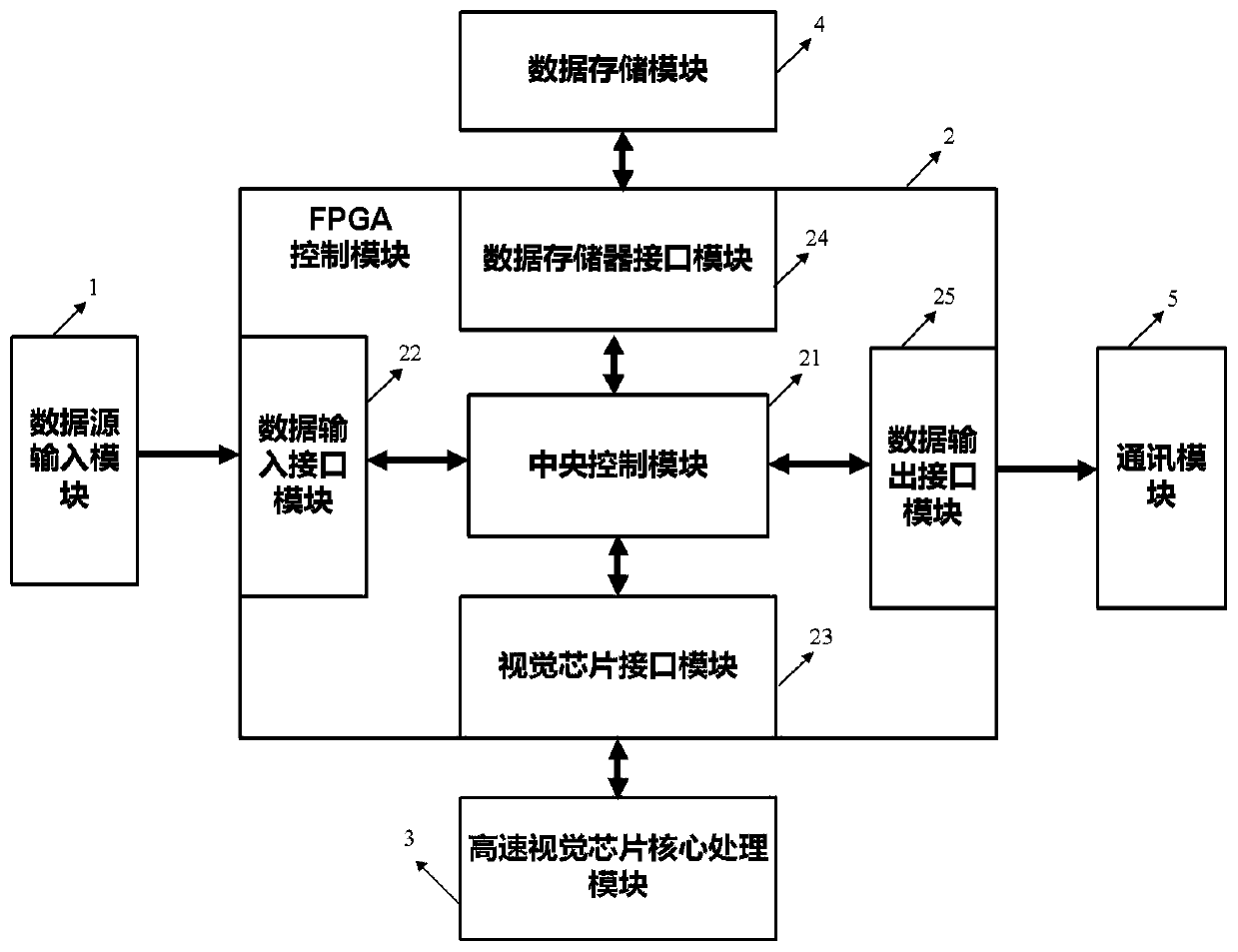

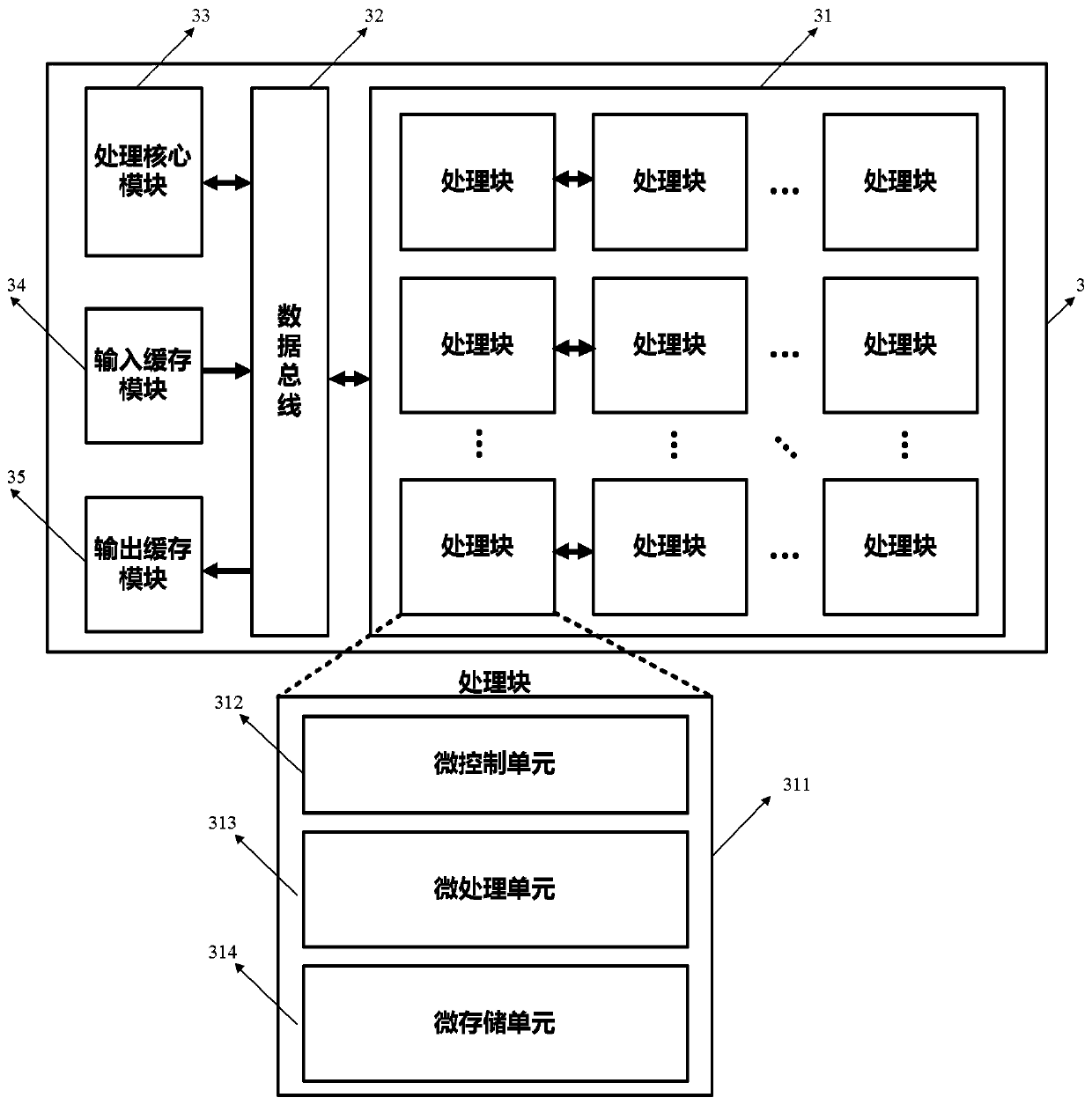

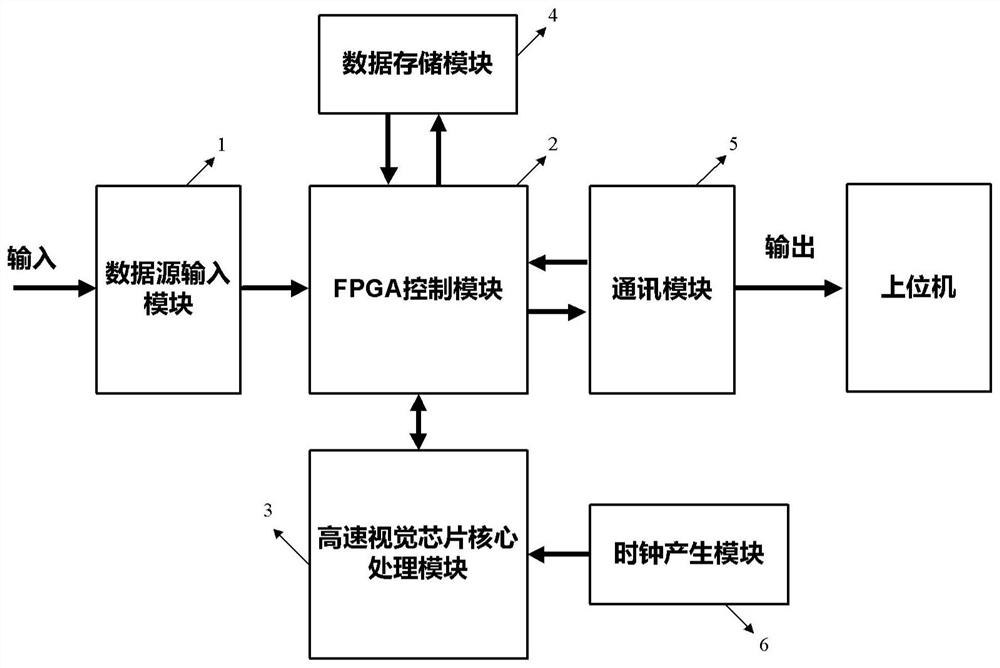

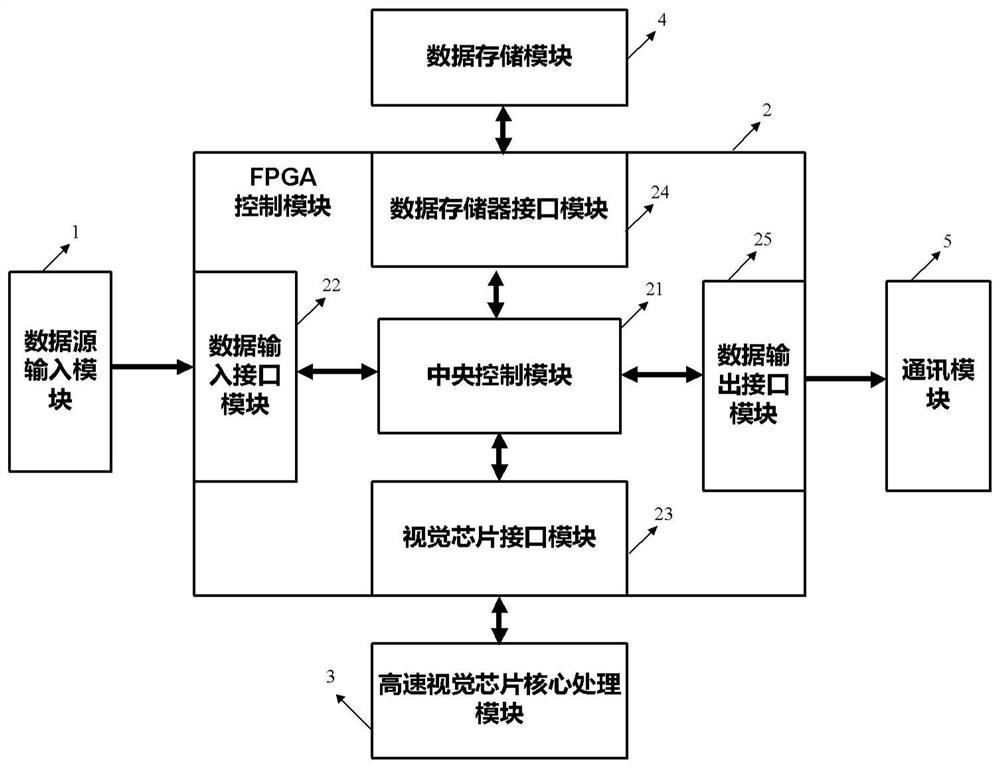

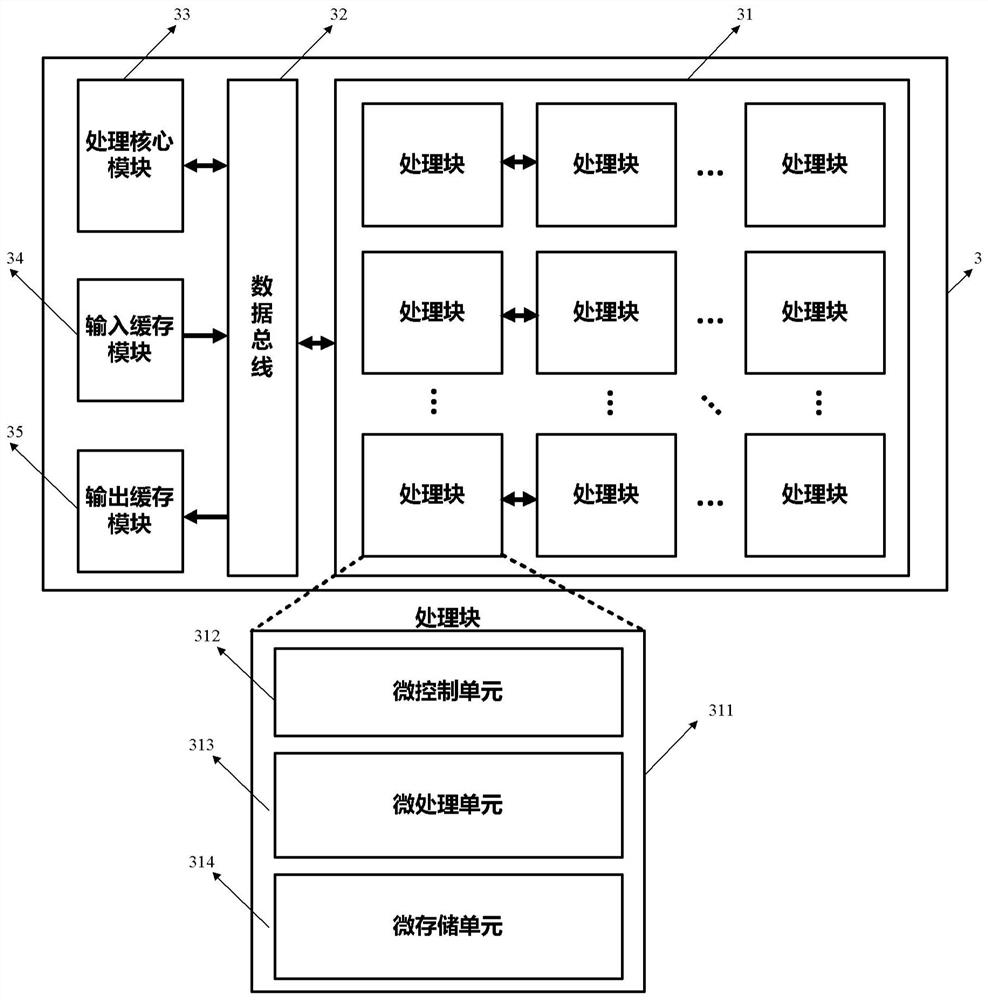

A large-scale image data processing system and method

Active | CN109741237A | Simple structure | Reduce power consumption | Processor architectures/configuration | Image resolution | High energy

The invention discloses a large-scale image data processing system and method. The system comprises a high-speed visual chip core processing module, which carries out hierarchical, multi-stage, parallel deep learning algorithm processing on input image data and comprises a processing core module and a processing block array. The processing block array comprises M * N connected, configurable processing blocks, where M and N are positive integers, and each processing block comprises a micro-processing unit array. The processing core module handles connection configuration, operation control and state monitoring of the whole processing block array, and can perform global image data processing. Each processing block in the processing block array processes images of different resolutions in parallel. The micro-processing unit array comprises P micro-processing units, where P is a positive integer, and performs parallel processing of pixel-level data. The system combines simple structure, low power consumption, high energy efficiency, high data throughput and high target identification precision.

Owner:INST OF SEMICONDUCTORS - CHINESE ACAD OF SCI

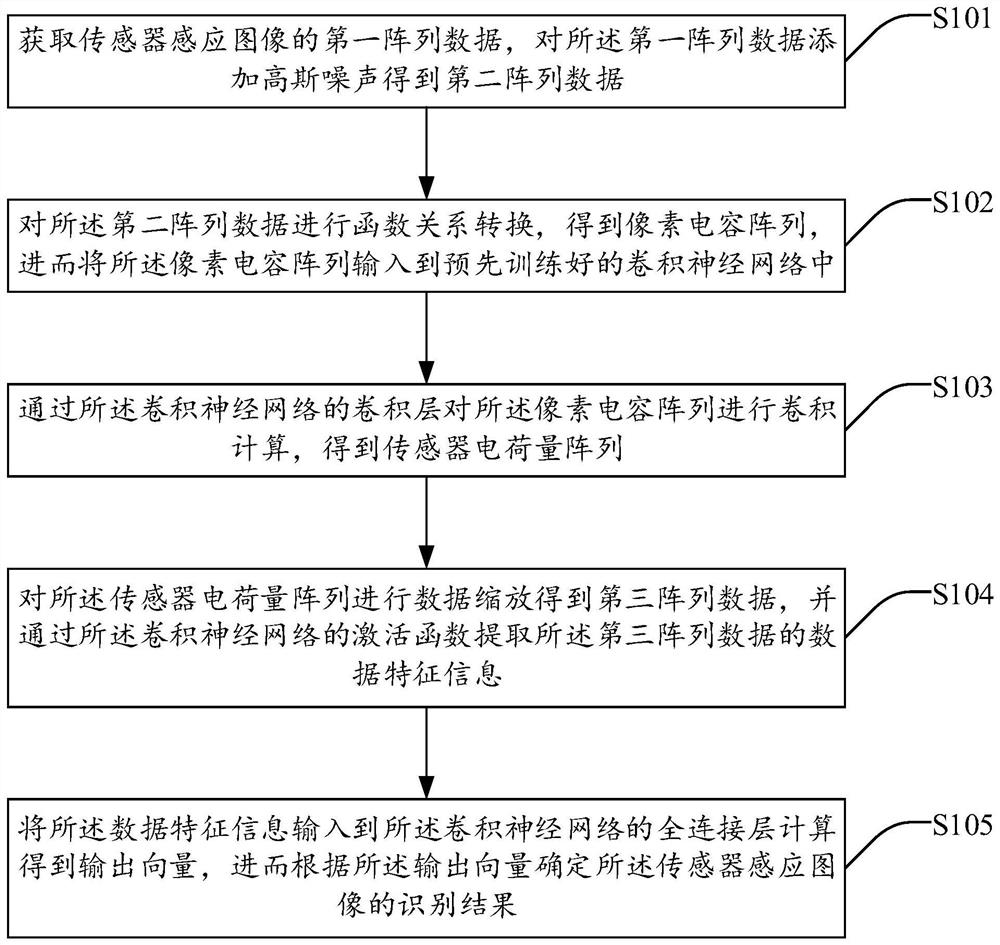

Sensor sensing image recognition method, system and device and storage medium

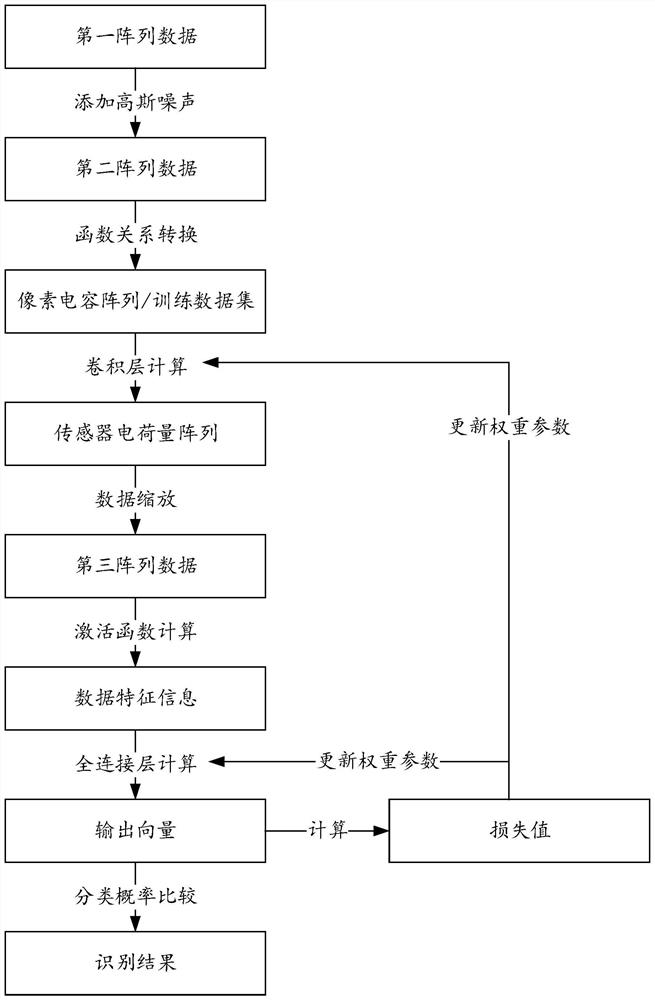

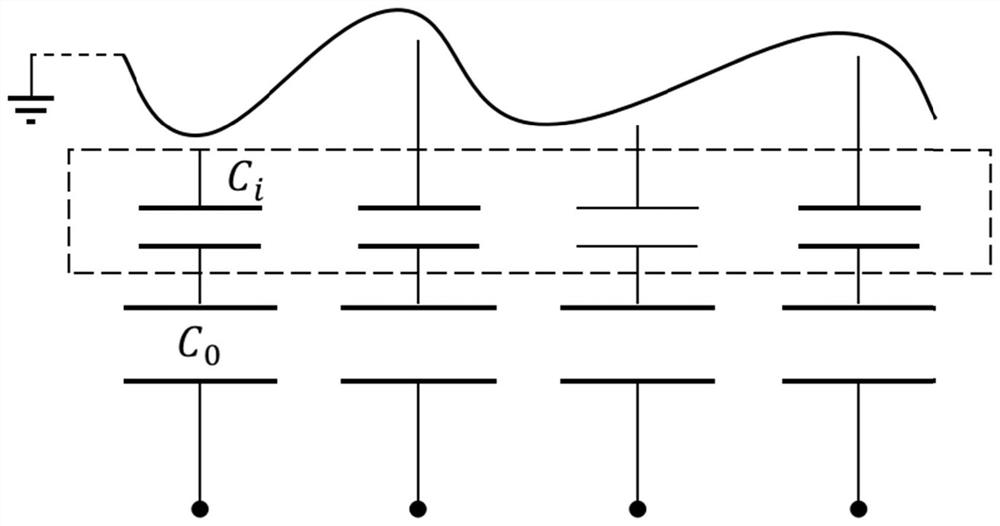

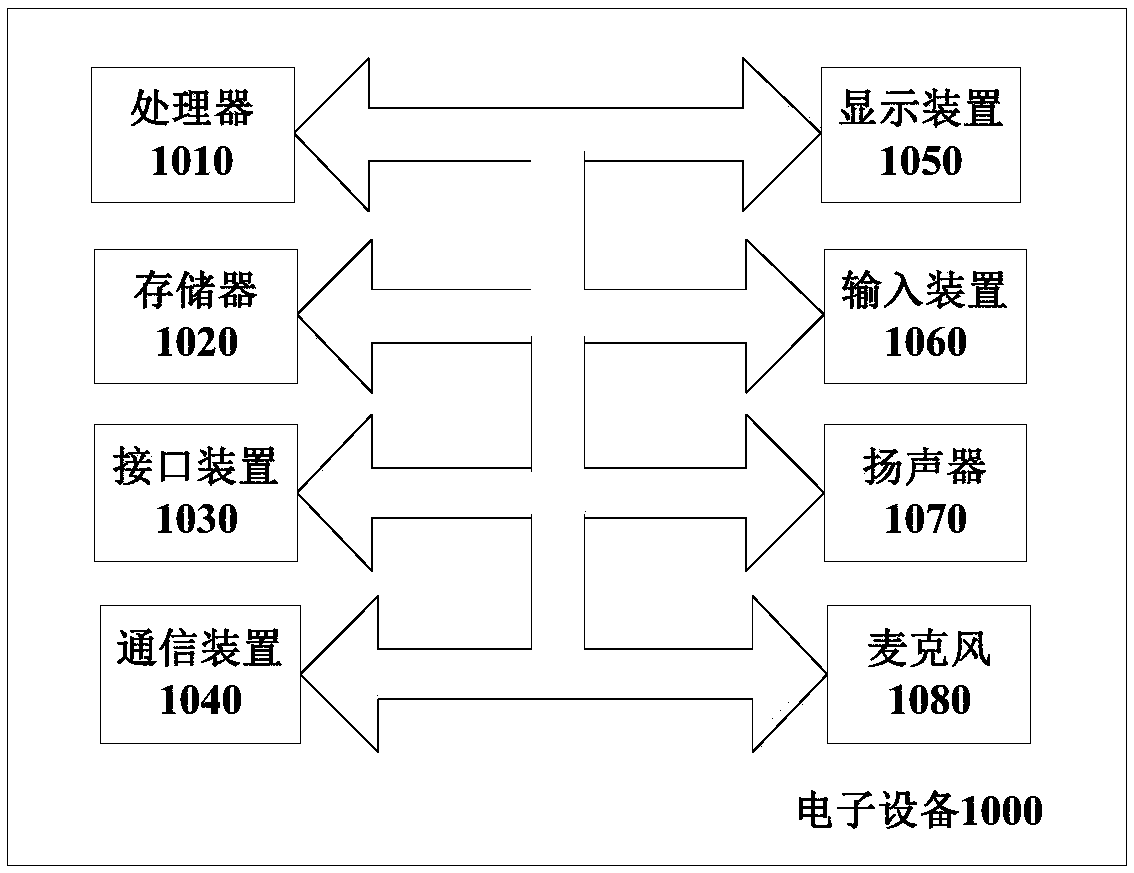

Pending | CN114187484A | Quick identification | Improve recognition efficiency | Neural architectures | Neural learning methods | Activation function | Neural network nn

The invention discloses a sensor sensing image recognition method, system and device and a storage medium, and the method comprises the steps: obtaining first array data of a sensor sensing image, and adding Gaussian noise to the first array data to obtain second array data; performing function relation conversion on the second array data to obtain a pixel capacitor array, and inputting the pixel capacitor array into a pre-trained convolutional neural network; performing convolution calculation on the pixel capacitor array through a convolution layer of the convolutional neural network to obtain a sensor charge quantity array; performing data scaling on the sensor charge quantity array to obtain third array data, and extracting data feature information of the third array data through an activation function of the convolutional neural network; and inputting the data feature information into a full connection layer of the convolutional neural network, calculating to obtain an output vector, and determining a recognition result of the sensor sensing image according to the output vector. The method improves the recognition efficiency of the sensor sensing image, and can be widely applied to the technical field of artificial intelligence.

Owner:SUN YAT SEN UNIV

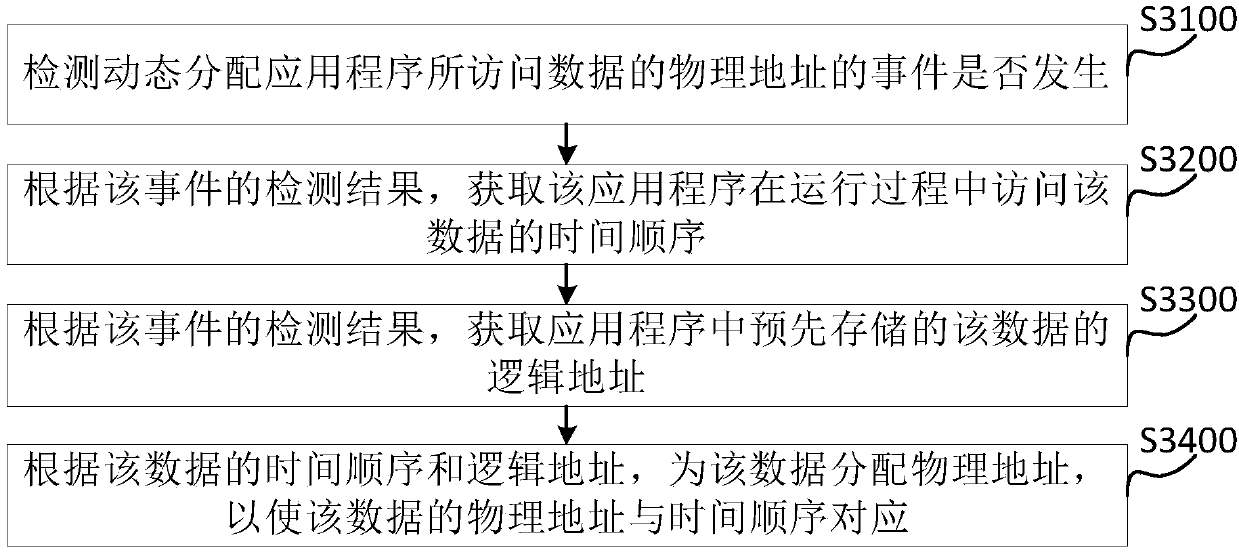

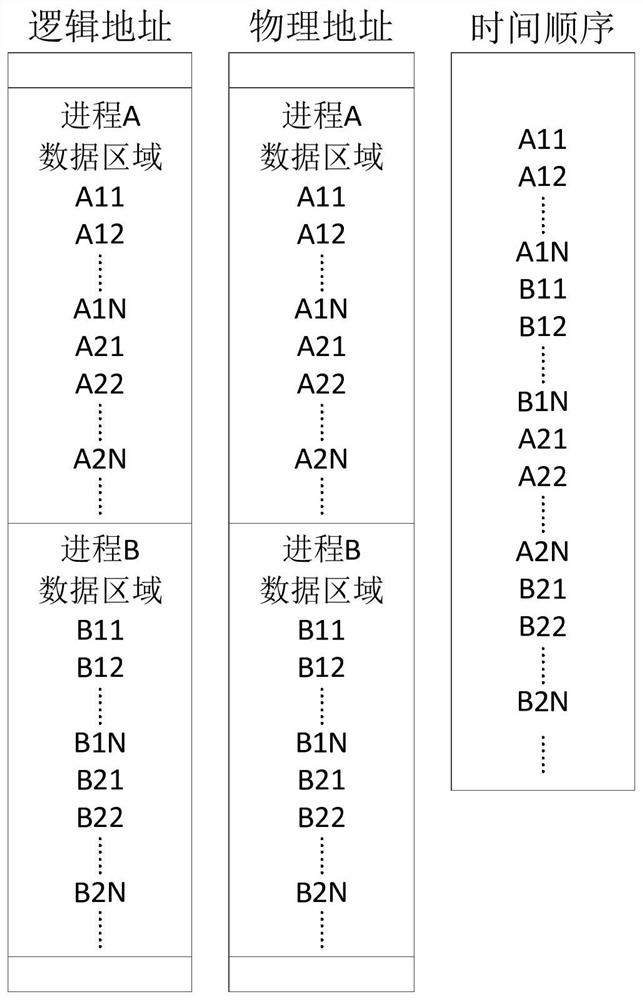

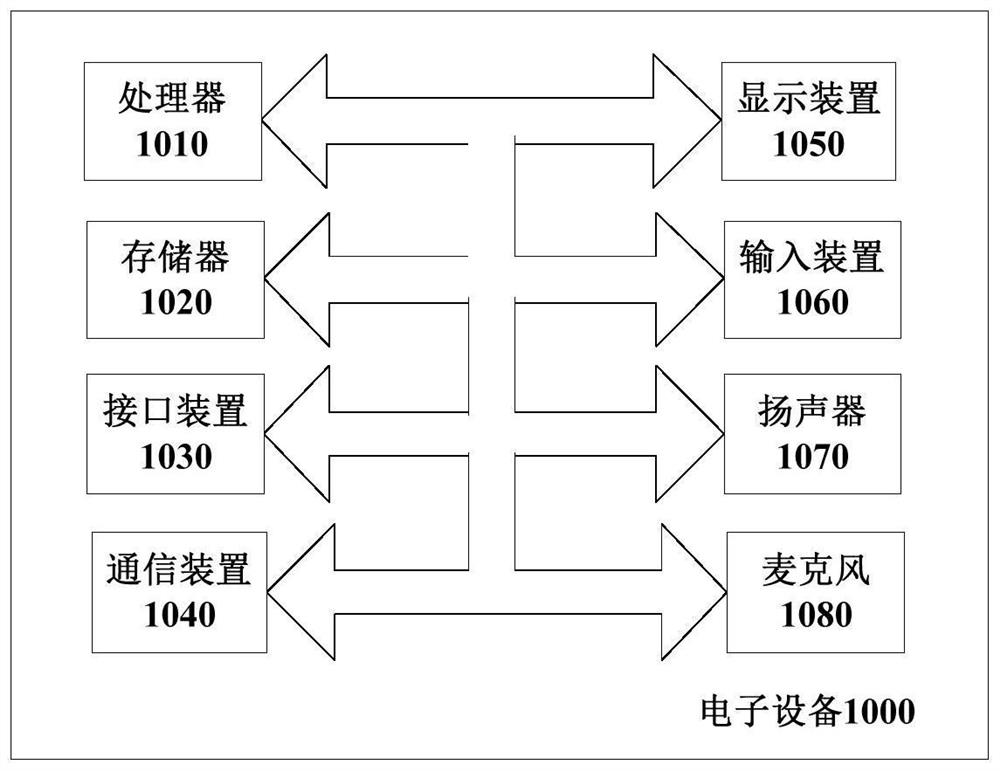

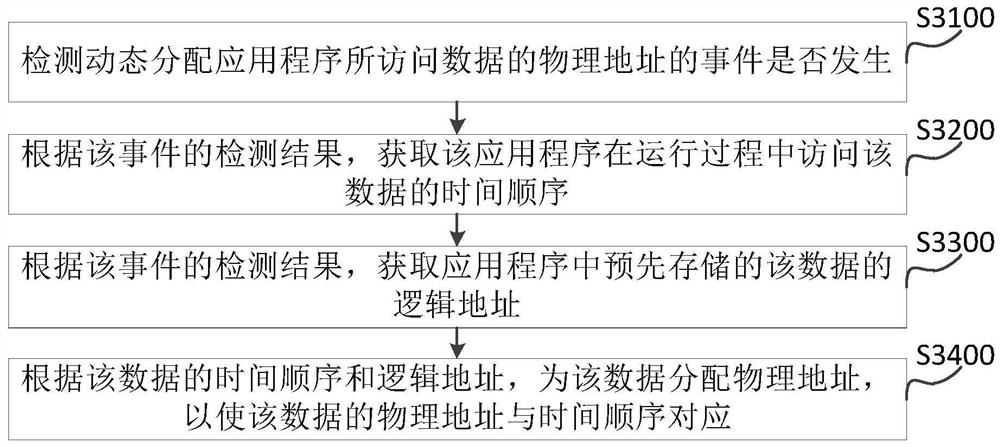

Method and device for dynamically allocating physical address, and electronic device

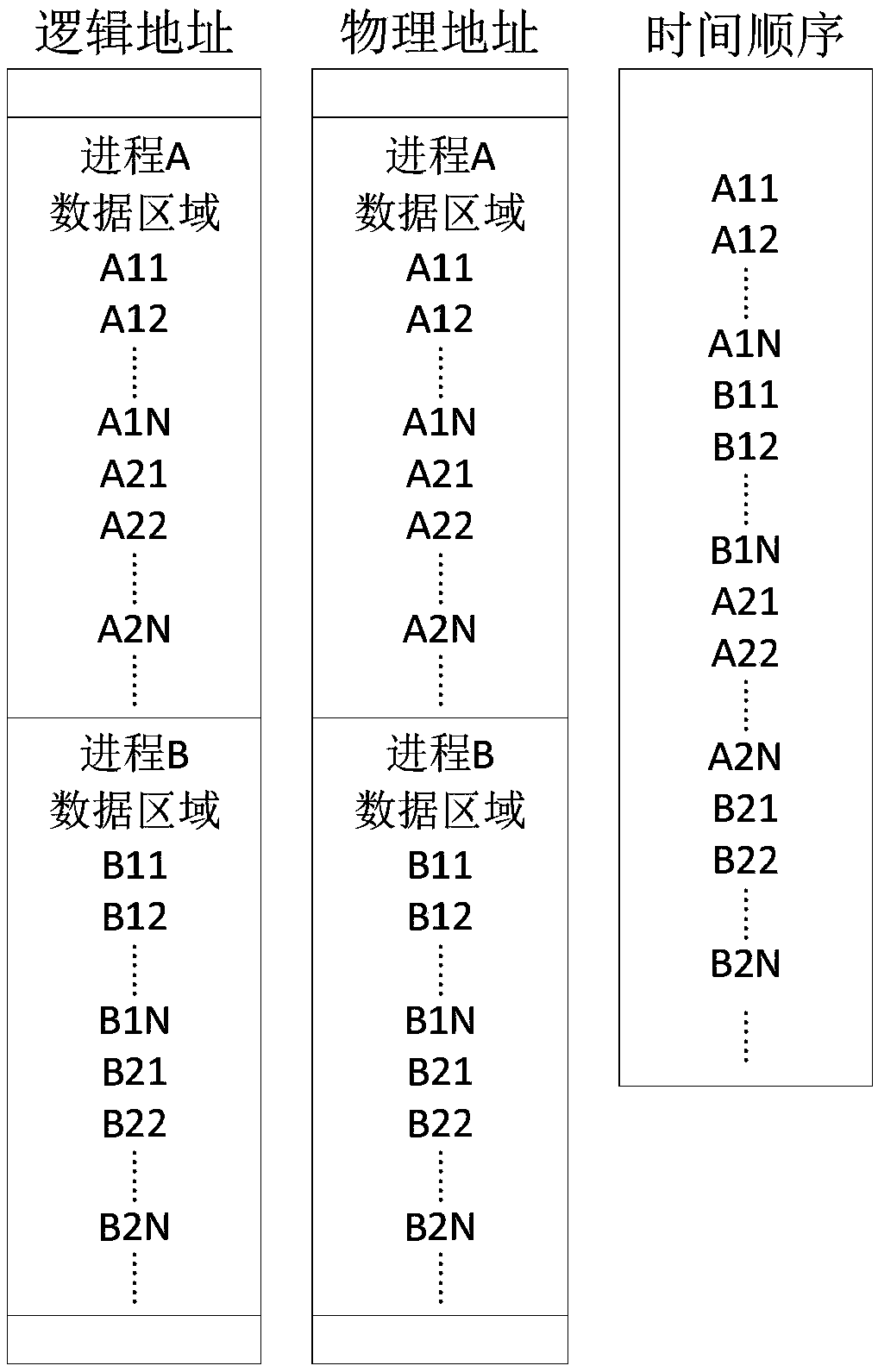

Active | CN109542798A | Increase workload | Increase the efficiency of accessing memory | Memory addressing/allocation/relocation | Time ratio | Chronological time

The invention discloses a method and device for dynamically allocating a physical address, and an electronic device. The method comprises the following steps: detecting whether an event that triggers dynamic allocation of physical addresses for data accessed by an application program has occurred; according to the detection result, obtaining the time order in which the data is accessed while the application program runs; according to the detection result, obtaining the logical addresses of the data, stored in advance in the application program; and, according to the time order and the logical addresses of the data, assigning physical addresses to the data so that the physical addresses correspond to the time order. As a result, the proportion of time during which the system accesses storage at contiguous physical addresses increases while the application runs, which improves the efficiency of the system's storage access.

Owner:WEIFANG GOERTEK MICROELECTRONICS CO LTD
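The core of the allocation step, assigning physical addresses so that they follow access order, can be sketched as below. The trace format, the `base` address and the fixed `block` size are assumptions for illustration; the abstract does not describe how the access order is recorded or how large each allocation unit is.

```python
def allocate(access_trace, base=0x1000, block=64):
    """Map logical addresses to physical addresses in first-access order.

    access_trace: logical addresses in the order the application touches them.
    Returns {logical_address: physical_address}; data accessed one after another
    ends up in adjacent physical blocks.
    """
    mapping = {}
    for logical in access_trace:
        if logical not in mapping:  # only the first access fixes the position
            mapping[logical] = base + len(mapping) * block
    return mapping
```

For a trace such as `[0x9000, 0x2000, 0x9000, 0x5000]`, the three distinct logical addresses land in three consecutive physical blocks, so replaying the same access pattern walks through storage sequentially.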

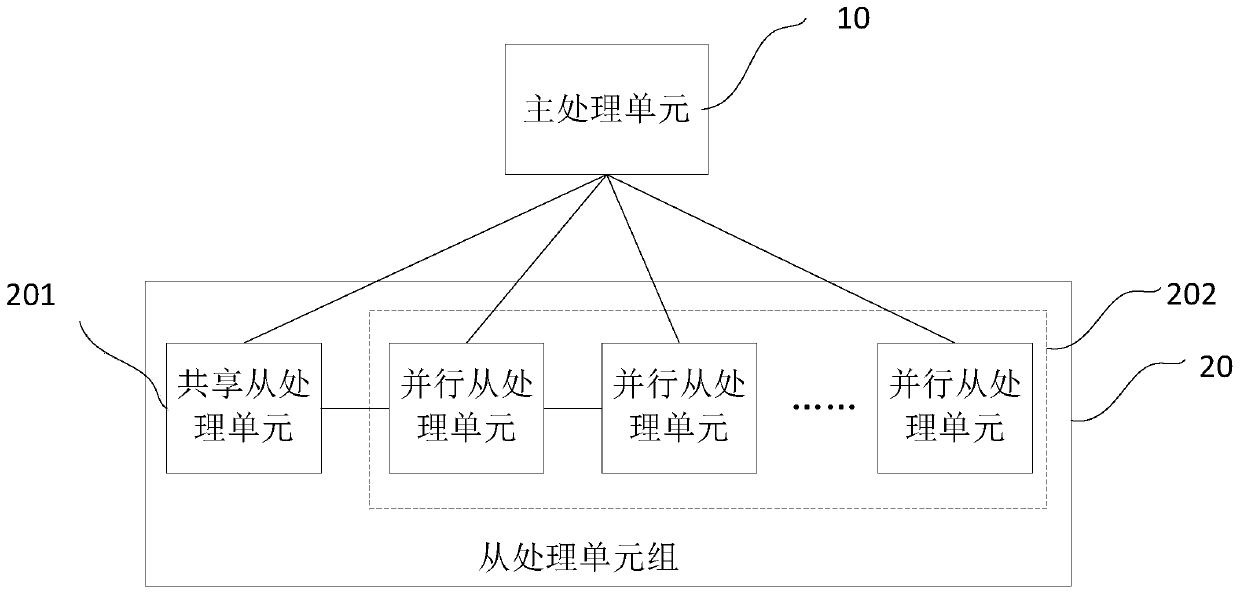

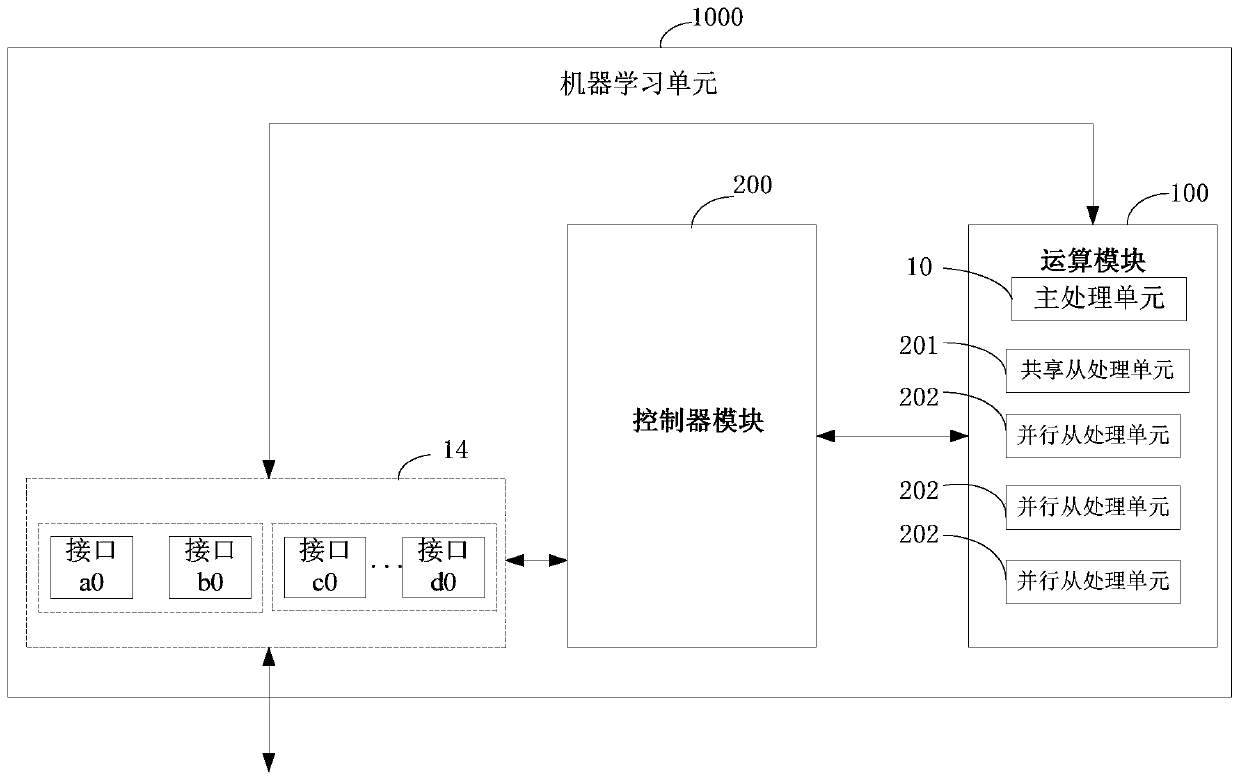

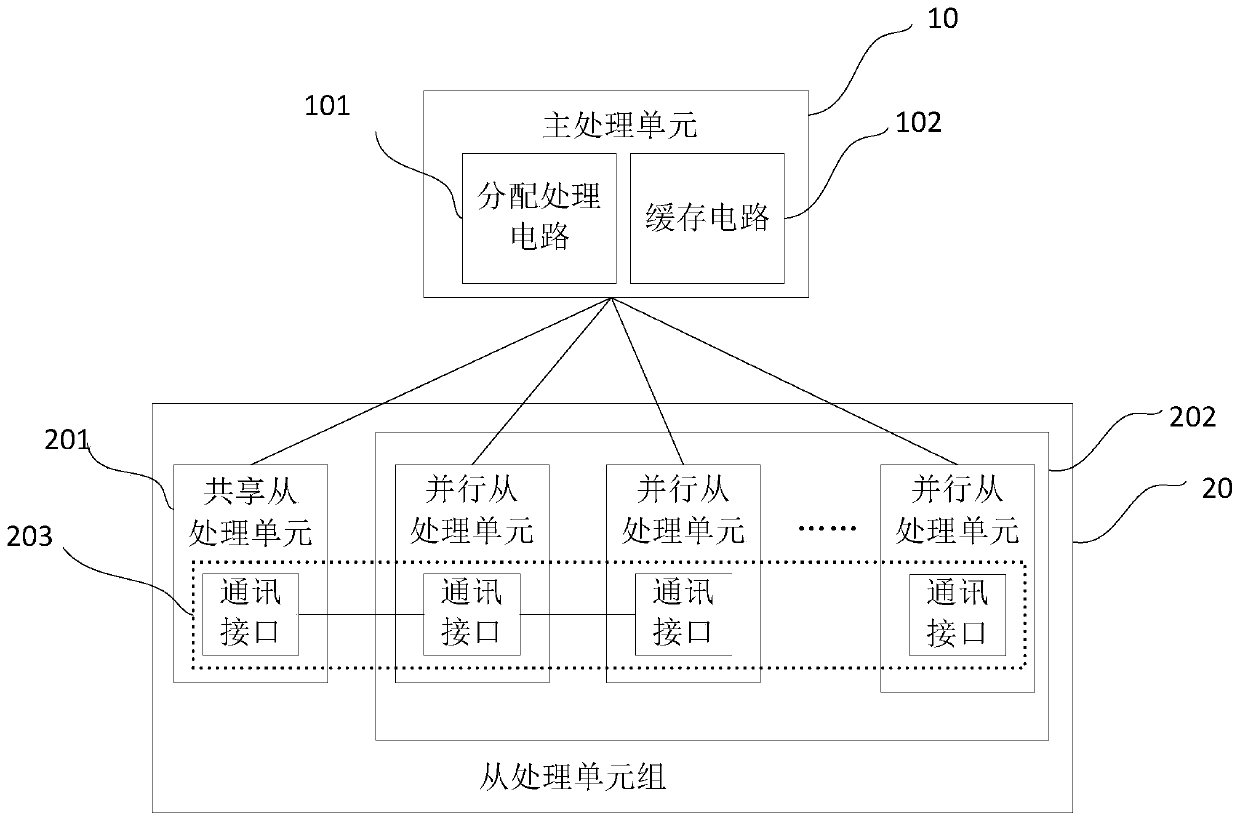

Data processing device and related product

Active | CN111381882A | Reduce overhead | Reduce data bandwidth | Concurrent instruction execution | Physical realisation | Main processing unit | Computer architecture

The invention relates to a data processing device and a related product. The system comprises a master processing unit and at least one slave processing unit group, and each slave processing unit group comprises a shared slave processing unit and at least one parallel slave processing unit. The master processing unit is used for sending shared data to the shared slave processing units and sending parallel computing data to the parallel slave processing units. The shared slave processing unit transmits the shared data to each parallel slave processing unit, and the parallel slave processing units respectively receive parallel computing data in two clock periods and transmit the parallel computing data to other parallel slave processing units step by step. According to the data processing device and the related product provided in the invention, the machine learning data is split into the shared data and the parallel computing data, and data interaction between the master processing unit and the slave processing units is realized through two clock periods. This reduces the data bandwidth occupied by data interaction between the master processing unit and the slave processing units, and further reduces the hardware overhead of a machine learning chip for transmission.

Owner:SHANGHAI CAMBRICON INFORMATION TECH CO LTD

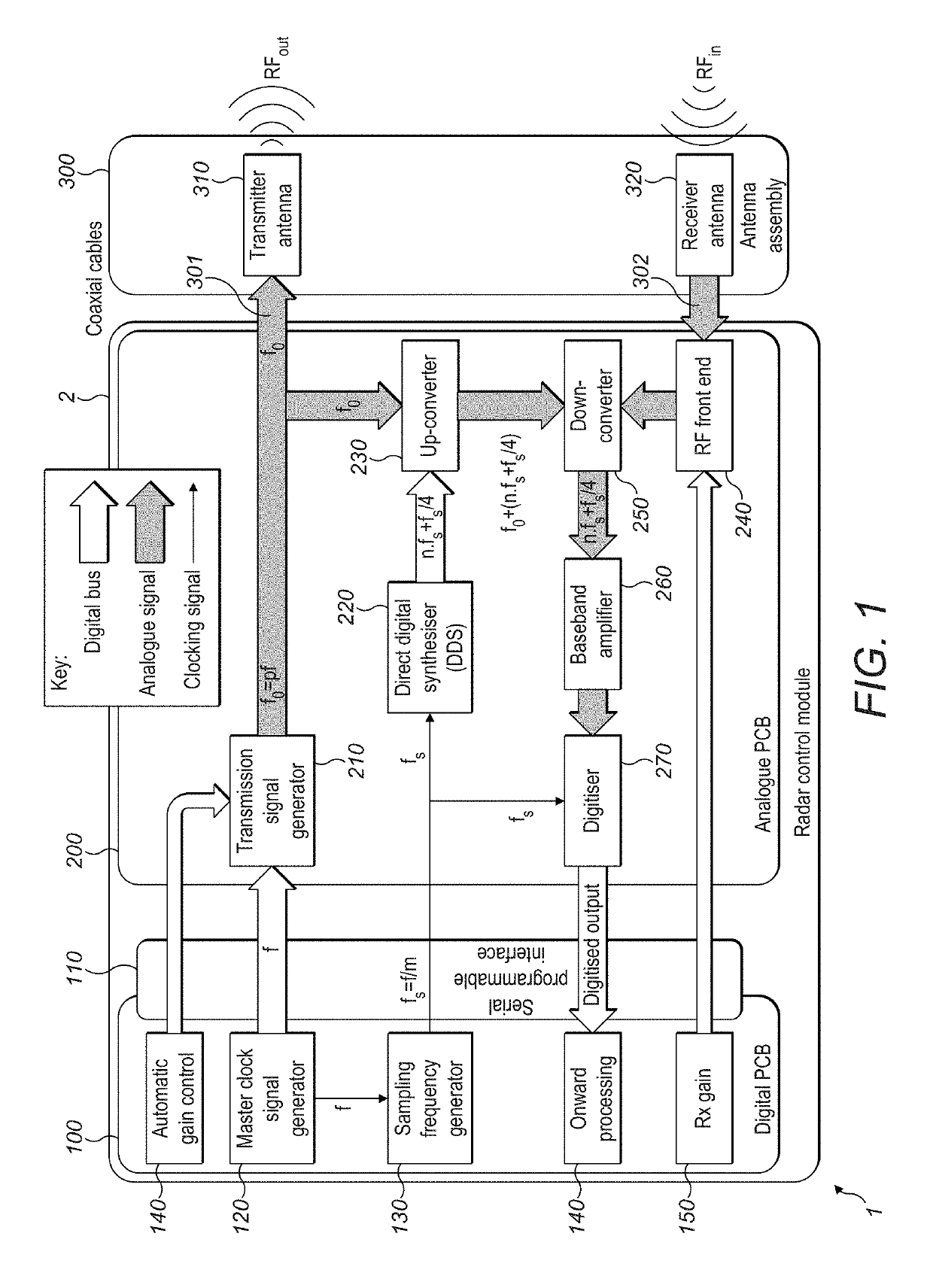

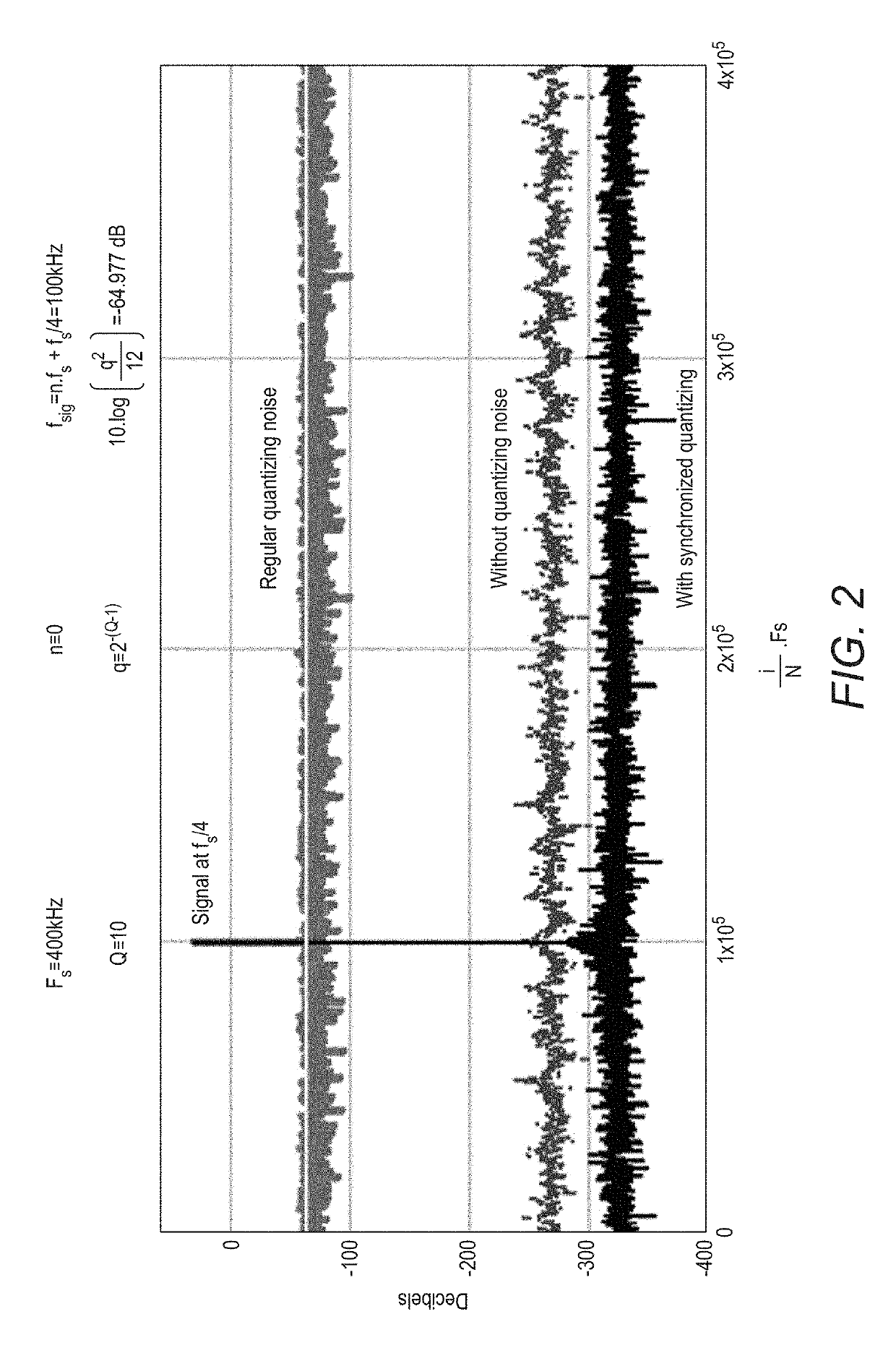

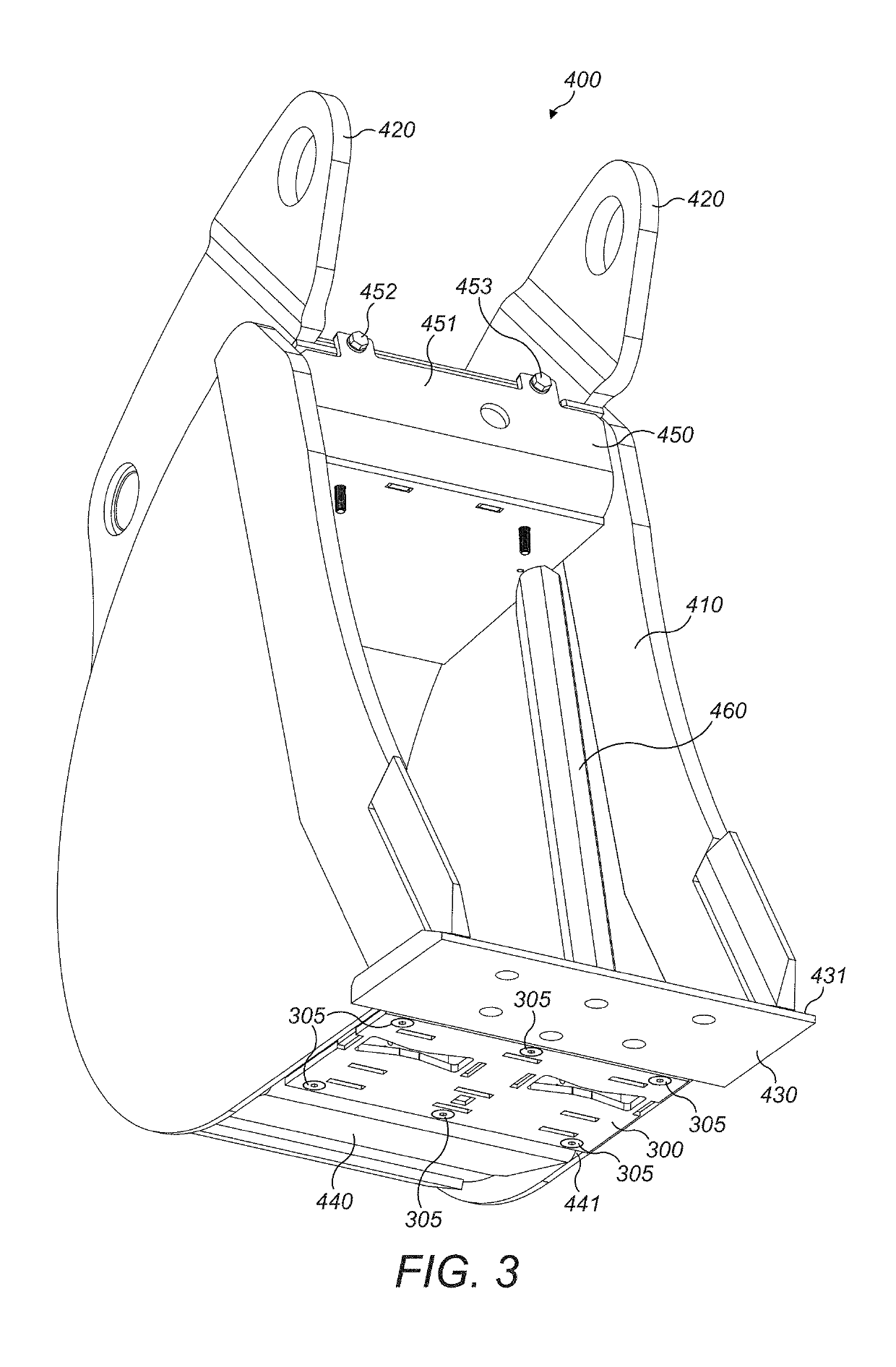

Radar System For Detecting Profiles Of Objects, Particularly In A Vicinity Of A Machine Work Tool

Active | US20190129001A1 | Low bandwidth | Reduce the sampling frequency | Mechanical machines/dredgers | Gain control | Frequency changer | Data stream

A radar system is disclosed for detecting profiles of objects, particularly in a vicinity of a machine work tool. The radar system uses a direct digital synthesiser to generate an intermediate frequency off-set frequency. It also uses an up-converter comprising a quadrature mixer, single-side mixer or complex mixer to add the off-set frequency to the transmitted frequency. It further uses a down-converter in the receive path driven by the off-set frequency as a local oscillator. The radar system enables received information to be transferred to the intermediate frequency. This in turn can be sampled synchronously in such a way as to provide a complex data stream carrying amplitude and phase information. The radar system is implementable with a single transmit channel and a single receive channel.

Owner:RODRADAR LTD

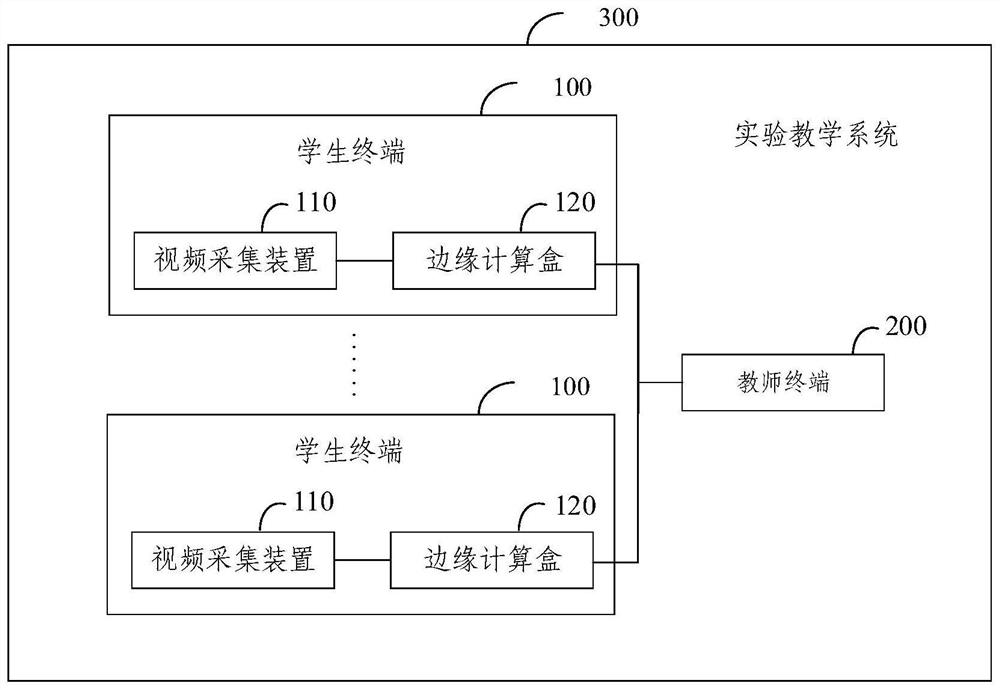

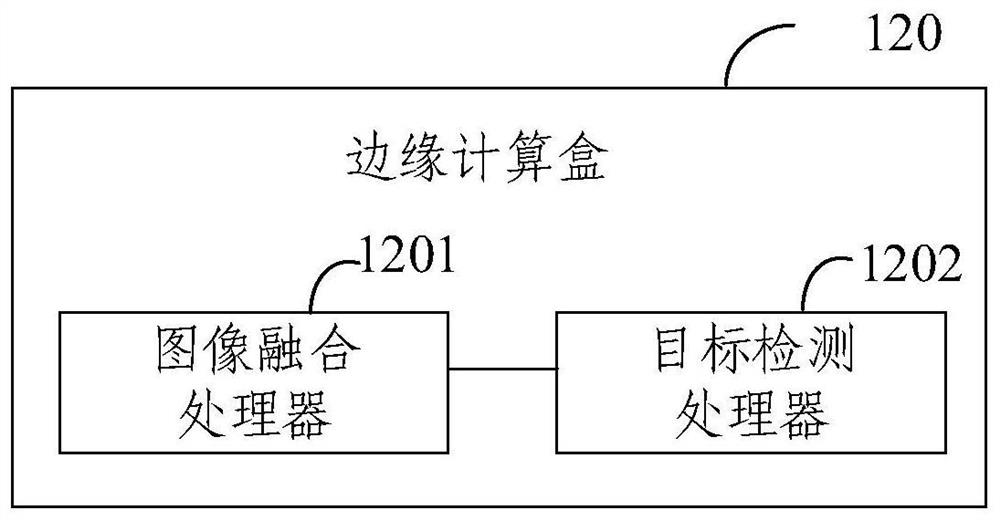

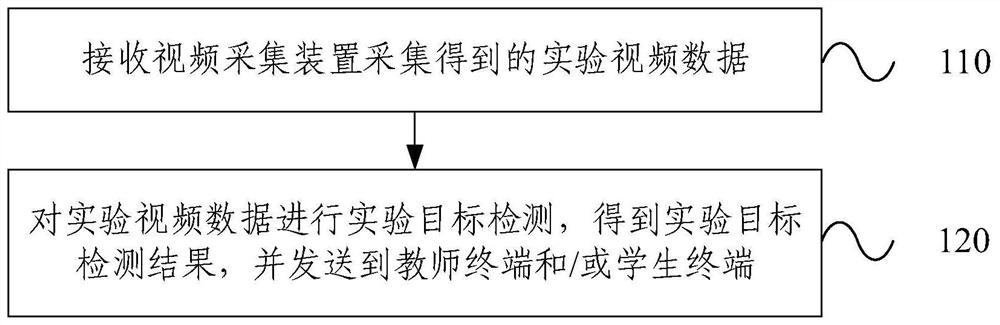

Experiment teaching system and method

Inactive | CN112735198A | Lower latency | Reduce data volume | Television system details | Resource allocation | Algorithm | Data transmission time

The invention relates to the technical field of computers, and provides an experiment teaching system and method, and the system comprises a teacher terminal and at least two student terminals. Each student terminal comprises an edge calculation box and a video acquisition device; the video signal output end of each video acquisition device is electrically connected with the calculation data input end of corresponding edge calculation box, and the video acquisition device is used for acquiring experimental video data; the calculation data output end of the edge calculation box is electrically connected with the detection result input end of the teacher terminal, and the edge calculation box is used for carrying out experiment target detection on the experiment video data to obtain an experiment target detection result; and the teacher terminal is used for receiving an experiment target detection result of each student terminal. According to the system and the method provided by the invention, the data transmission time and the data processing time are saved, the time delay of the whole experiment teaching system is reduced, and the teaching efficiency and the teaching quality are improved.

Owner:DEEPBLUE TECH (SHANGHAI) CO LTD

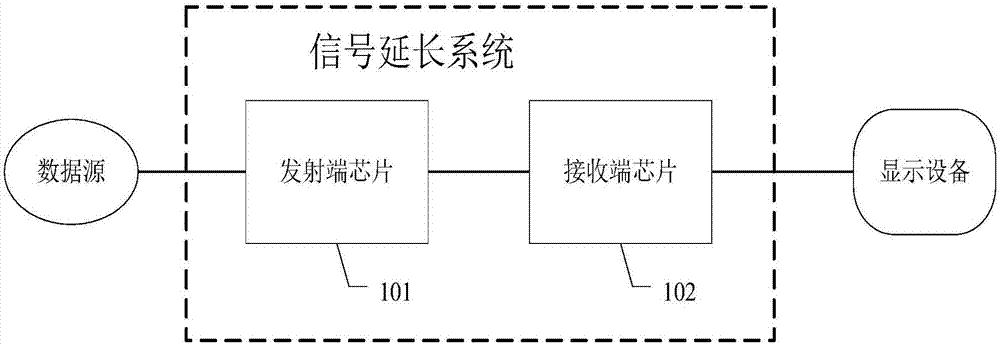

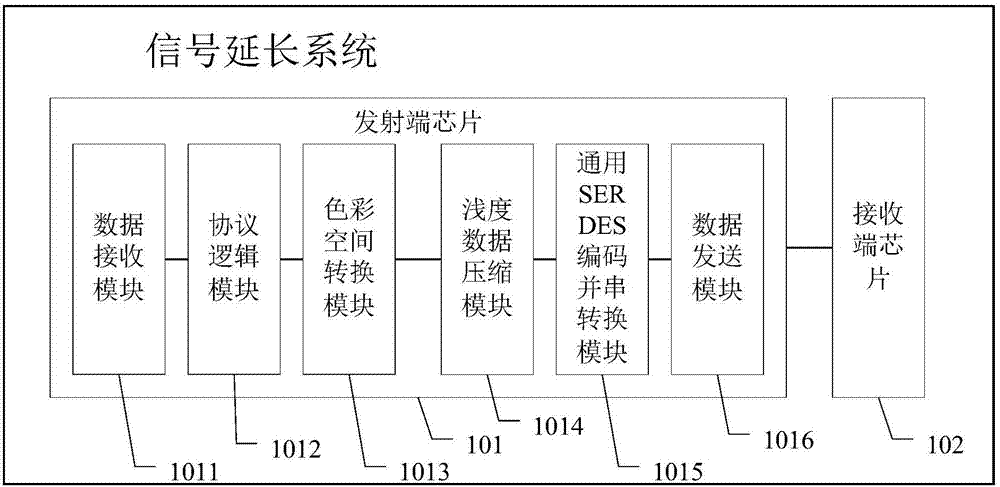

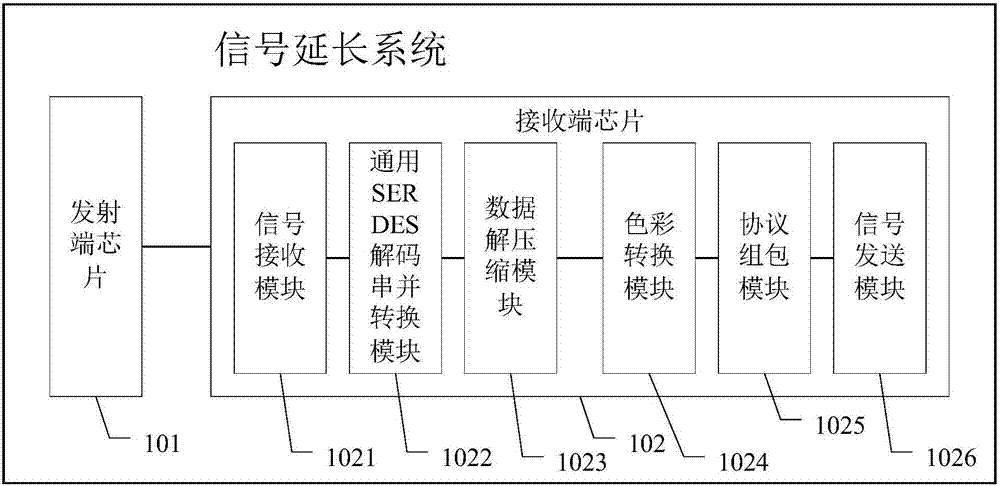

Signal prolonging method and system

Active | CN107249113A | Long transmission distance | Does not affect image quality | Color signal processing circuits | Digital video signal modification | Computer hardware | Display device

The present invention provides a signal prolonging system. The system comprises a transmitting end chip and a receiving end chip connected with the transmitting end chip; the transmitting end chip is used for receiving high-definition video data, sequentially performing color space conversion processing, shallowness compression processing and parallel-to-serial encoding processing on the high-definition video data, and sending the high-definition video data to the receiving end chip; and the receiving end chip is used for receiving the high-definition video data sent by the transmitting end chip, performing parallel-to-serial encoding processing, shallowness compression processing and color space conversion processing on the received high-definition video data and outputting the high-definition video data to a display device for playing the high-definition video data. With the signal prolonging system adopted, the transmission distance of the high-definition video data can be prolonged.

Owner:SHENZHEN LONTIUM SEMICON TECH CO LTD

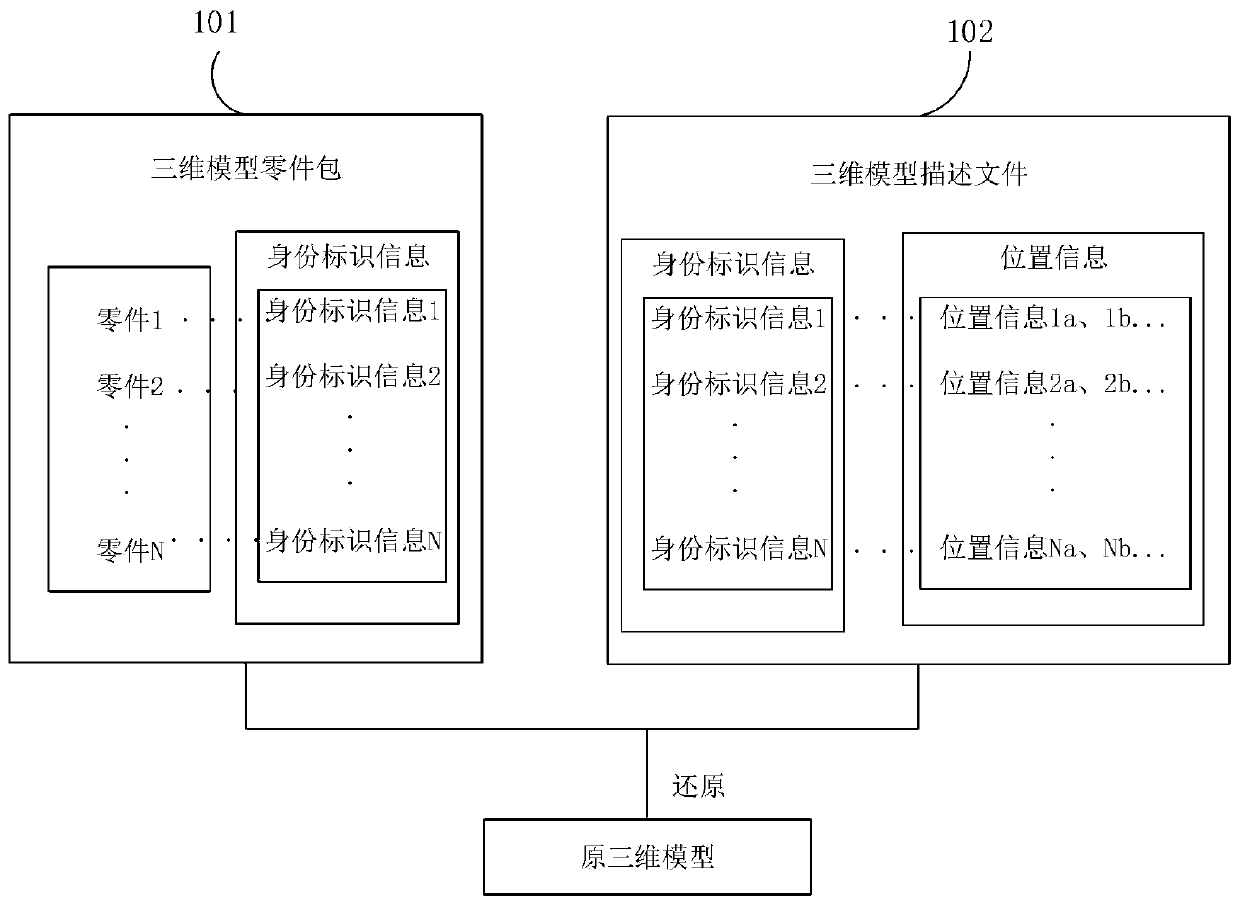

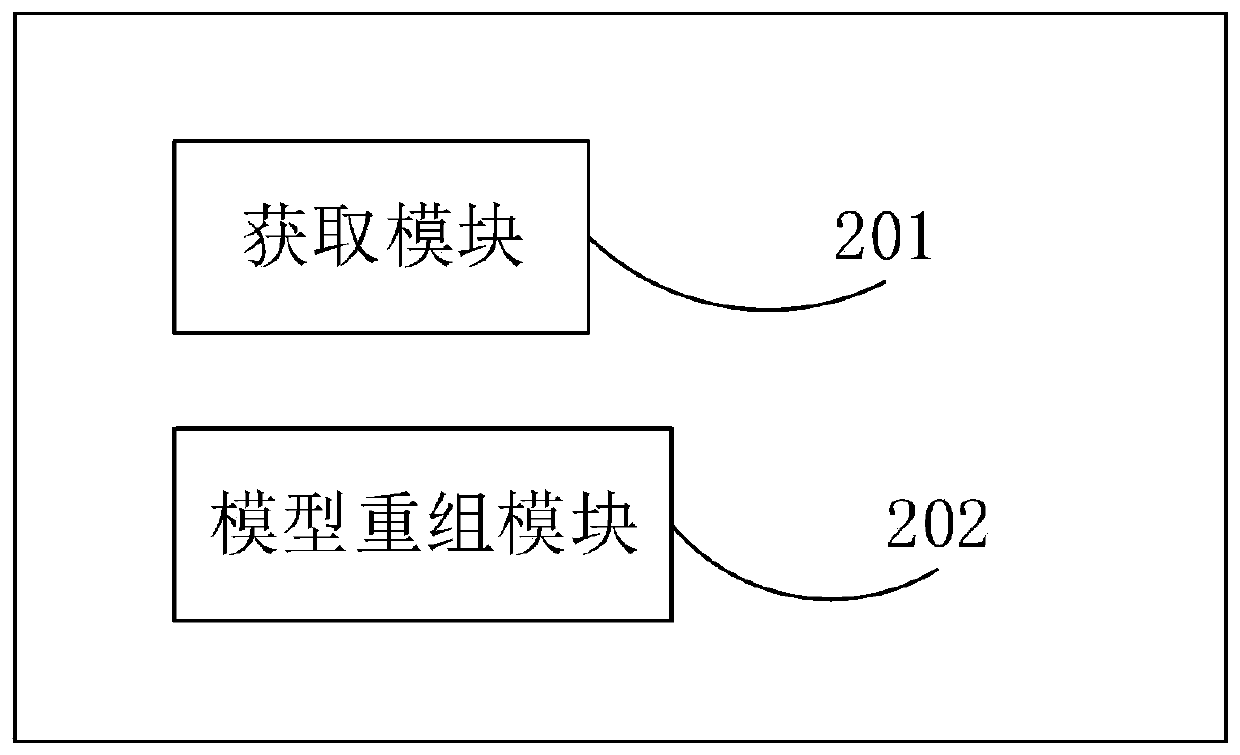

Three-dimensional model loading method and device and electronic equipment

Pending | CN110610538A | Improve loading efficiency | Reduce size | 3D modelling | Identity recognition | Computer science

The invention discloses a three-dimensional model loading method. The method comprises the following steps: establishing a correspondence between each part in a generated three-dimensional model part package and each part's position information in a three-dimensional model description file by using the identity recognition information of the part; and restoring each part in the three-dimensional model part package to its corresponding position in the three-dimensional model to obtain a displayable original three-dimensional model. The generated three-dimensional model part package comprises the different parts disassembled from the three-dimensional model and the identity recognition information set for each part. The three-dimensional model description file comprises the identity recognition information of each part and the position information of the corresponding part in the three-dimensional model. The disclosed method reduces the size of the three-dimensional model part package and improves model loading efficiency. The invention further discloses a three-dimensional model loading device, a three-dimensional model splitting method and device, a three-dimensional model splitting and loading method and system, and electronic equipment.

Owner:长江工程监理咨询有限公司(湖北)
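The restore step described above amounts to a lookup keyed by each part's identity information. The sketch below assumes the part package is a mapping from part ID to geometry and the description file is a list of (part ID, position) records; these data shapes, and the idea that one stored part may be placed at several positions, are illustrative assumptions.

```python
def restore_model(part_package, description):
    """Rebuild the displayable model as a list of (geometry, position) instances.

    part_package: {part_id: geometry}, each distinct part stored once.
    description:  [(part_id, position), ...] taken from the description file.
    """
    return [(part_package[part_id], position) for part_id, position in description]

def package_saving(part_package, description):
    """Number of geometry copies avoided by storing each distinct part once."""
    return len(description) - len(part_package)
```

If a model places the same bolt at two positions, the package carries the bolt geometry once and the description file carries two position records, which is one way the package size could shrink.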

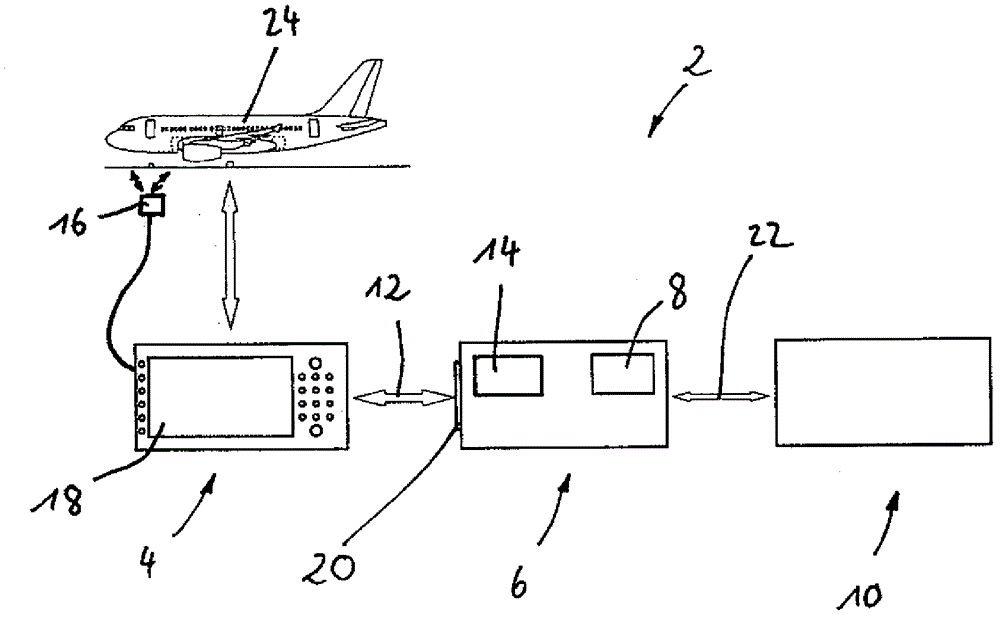

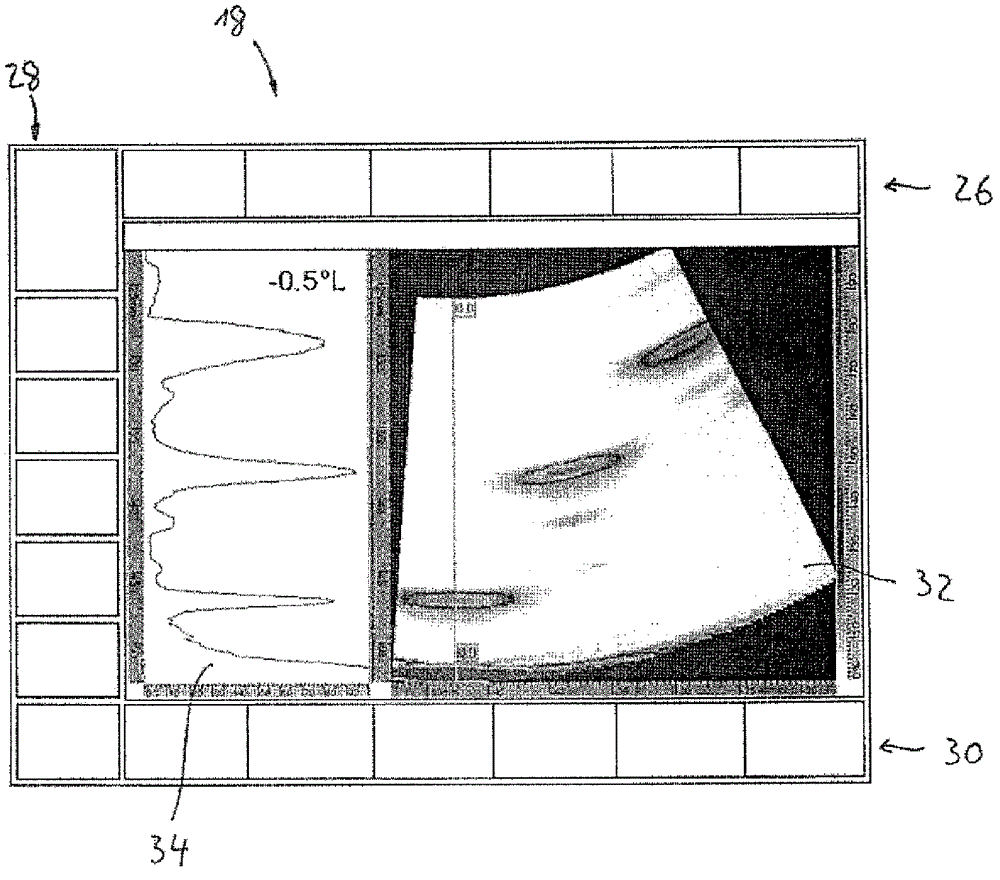

Remote measurement system and method for carrying out a test method on a remote object

Inactive | CN102640191B | Reduce data bandwidth | Registering/indicating working of vehicles | Computer hardware | Communication unit

A remote measuring system includes a test apparatus connected to a sensor for testing an object, a communication unit, and a remote operating unit. Control signals may be sent from this operating unit to the communication unit to operate the test apparatus. A data processing unit performs a compression process of a first flow of test data emanating from the test apparatus and sends a second flow of test data to the operating unit. The data processing unit performs a quantisation process, which may be controlled through control signals at the operating unit. A remotely located expert may thus receive a direct and qualified impression of the procedure and the inspection results and give advice on correct procedures or on changing the manner of the recording. By obtaining support from experts, time is saved and the transfer of know-how and the use of experts is improved.

Owner:AIRBUS OPERATIONS GMBH
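A controllable quantisation step of the kind mentioned in the abstract can be illustrated with uniform scalar quantisation, where the remote operator picks the number of bits per sample. The value range, the uniform step and the function names are assumptions; the patent abstract does not specify the quantiser.

```python
def quantise(samples, bits, lo=0.0, hi=1.0):
    """Map each sample in [lo, hi] to an integer code of `bits` bits (clipping outliers)."""
    levels = (1 << bits) - 1
    return [round((min(max(s, lo), hi) - lo) / (hi - lo) * levels) for s in samples]

def dequantise(codes, bits, lo=0.0, hi=1.0):
    """Approximate reconstruction of the samples at the operating unit."""
    levels = (1 << bits) - 1
    return [lo + c / levels * (hi - lo) for c in codes]
```

Lowering `bits` shrinks the second flow of test data at the cost of coarser values, so an operator could trade link bandwidth against measurement detail during the test.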

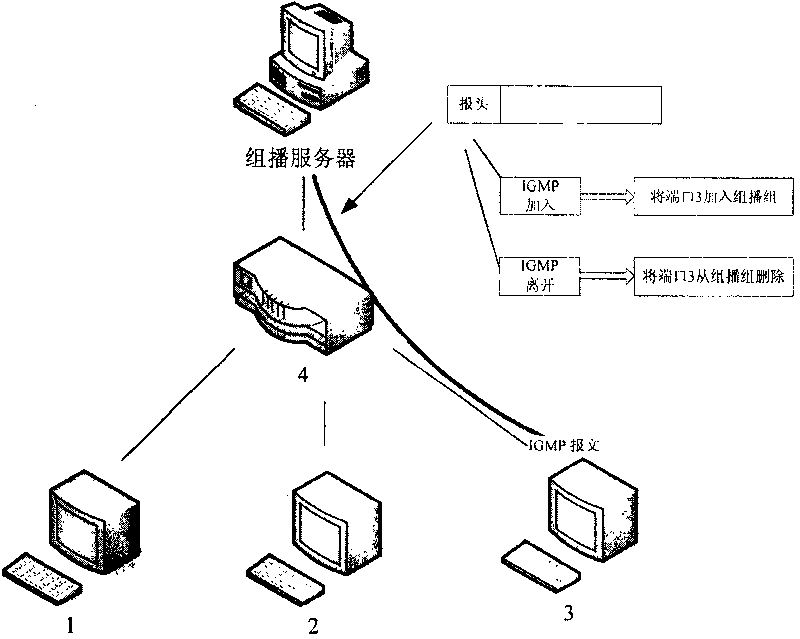

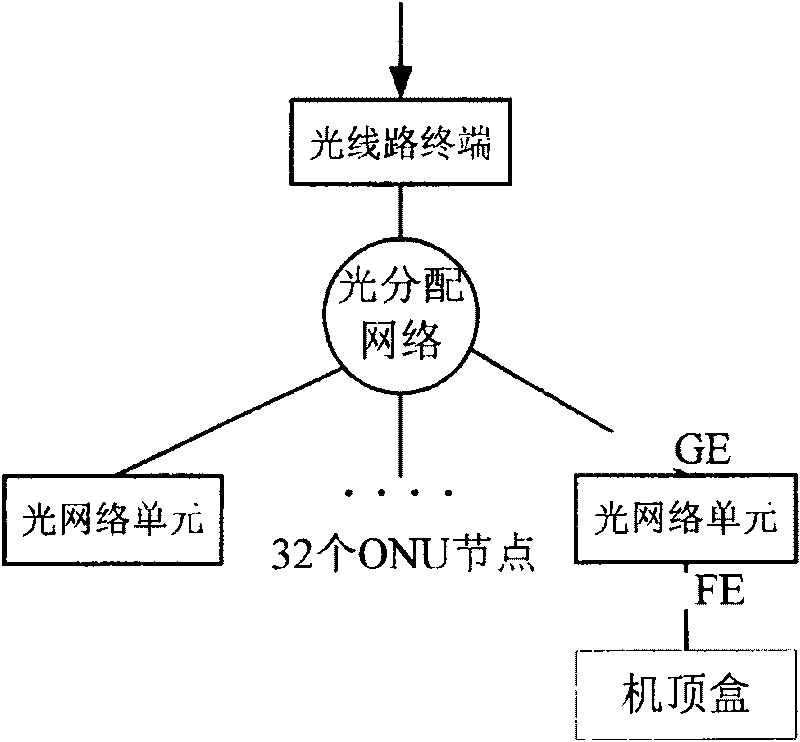

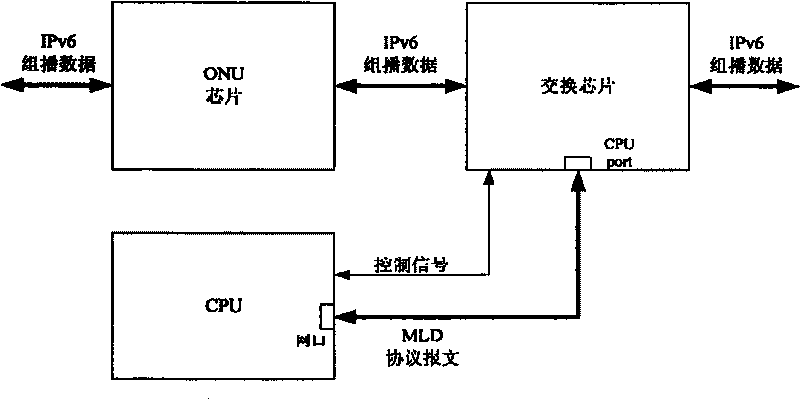

Apparatus and method for implementing IPV6 multicast filtering on EPON using hardware extended mode

Inactive | CN101098287B | Low cost | Reduce data bandwidth | Data switching networks | Securing communication | Embedded system | IPv6

The invention provides a device for realizing IPV6 multicast filter on EPON via hardware expansion and a relative method, wherein the device comprises an ONU chip, an exchange chip, and a MAC controller chip. The ONU chip comprises an embedded CPU and a control interface. The exchange chip comprises a CPU port and a control port, while the CPU port is connected with the MAC controller chip, the exchange chip is used to capture a MLD protocol report to be sent to the MAC controller chip. The MAC controller chip is used to send a received report to the embedded CPU of the ONU chip, while the embedded CPU is used to analyze the MLD protocol report and build multicast filter list, to realize IPV6 multicast filter. The invention via expanding MAC controller chip realizes MLD Snooping function on ONU chip, to reduce cost, which can realize IPV6 multicast filter on exchange chip, and realize IPv6 multicast filter on ONU chip, thereby saving UNI port descending data bandwidth of ONU chip.

Owner:SHANGHAI BROADBAND TECH
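The multicast filter list built from snooped MLD messages can be pictured as a table from group address to the set of ports that asked for it. The sketch below is a software illustration only: the class and method names are hypothetical, the table lives in a Python dictionary rather than in the exchange chip, and only MLD Report and Done messages are modelled.

```python
from collections import defaultdict

class MulticastFilter:
    """Group-to-ports table maintained from snooped MLD Report and Done messages."""

    def __init__(self):
        self.table = defaultdict(set)

    def on_report(self, group, port):
        """A listener on `port` reported interest in `group`."""
        self.table[group].add(port)

    def on_done(self, group, port):
        """The listener on `port` left `group`; drop empty groups."""
        self.table[group].discard(port)
        if not self.table[group]:
            del self.table[group]

    def egress_ports(self, group):
        """Ports that should receive traffic for `group` (none if nobody joined)."""
        return sorted(self.table.get(group, ()))
```

Traffic for a group with no registered ports is simply not forwarded, which is how filtering would save downstream bandwidth on the user-facing ports.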

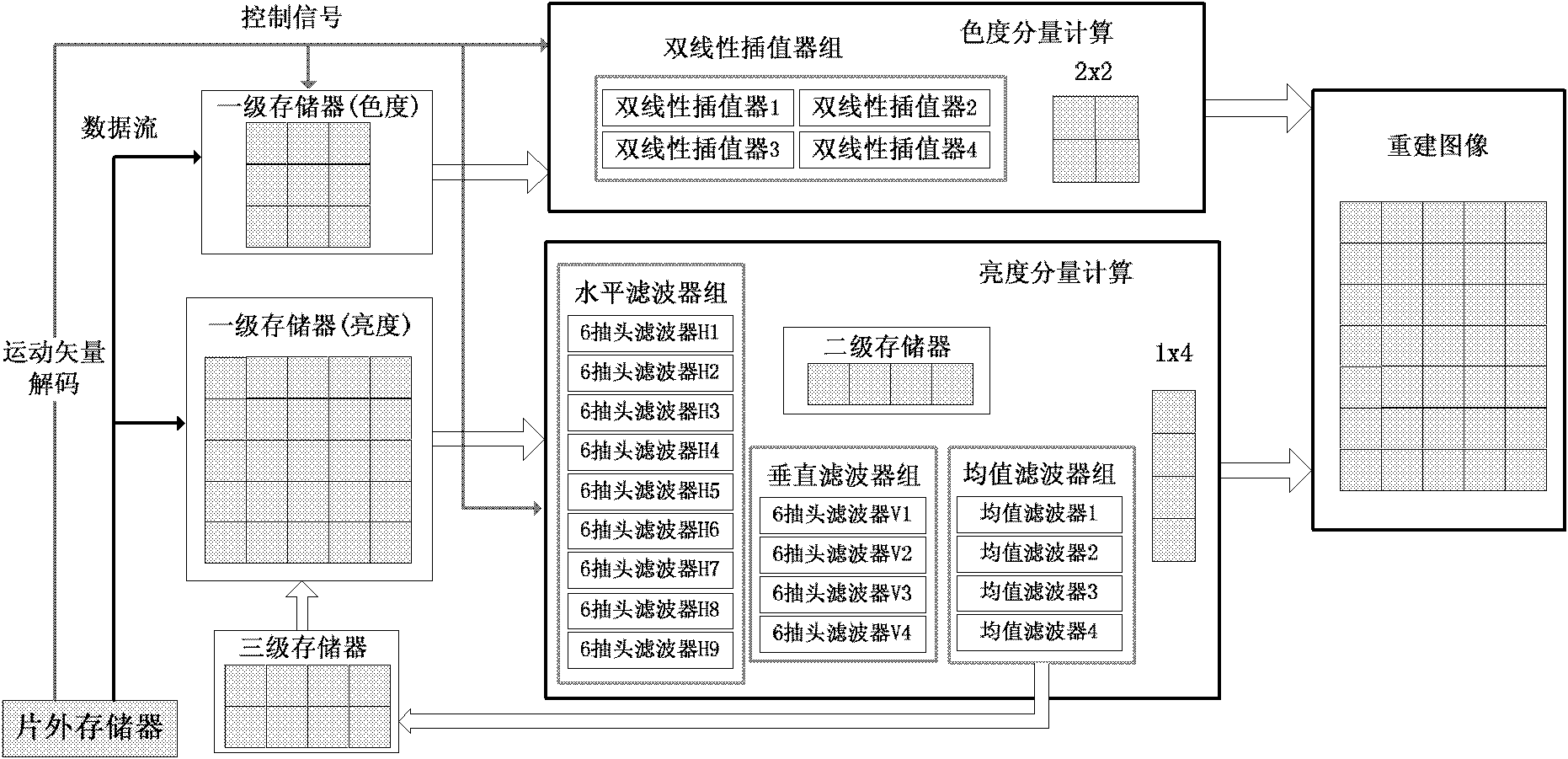

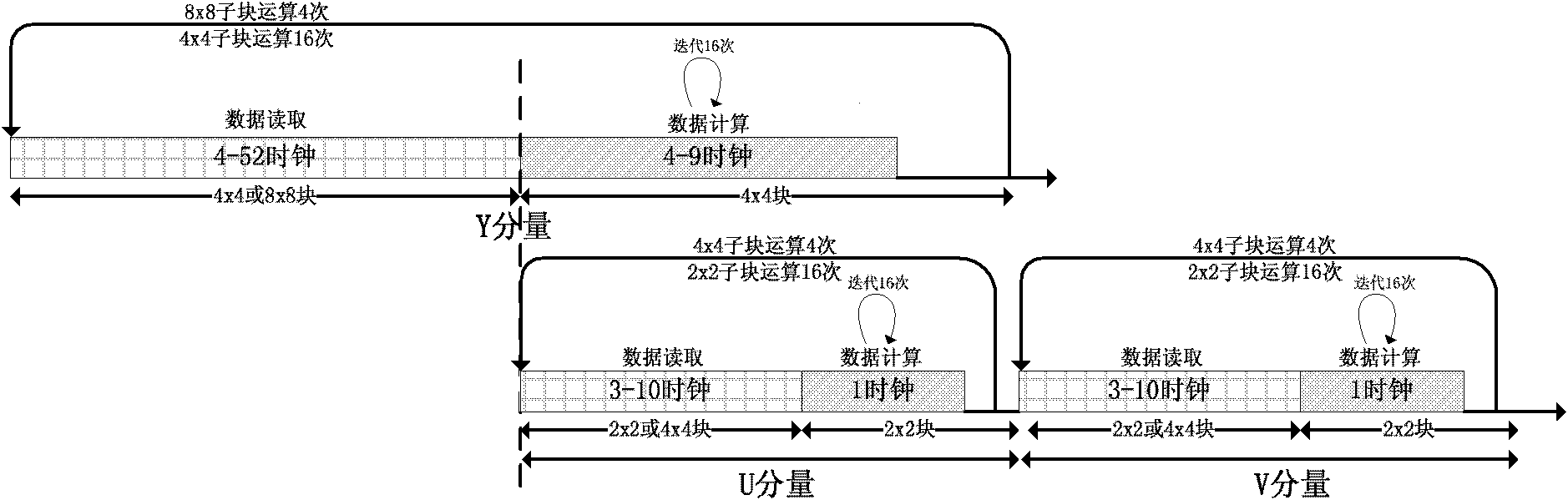

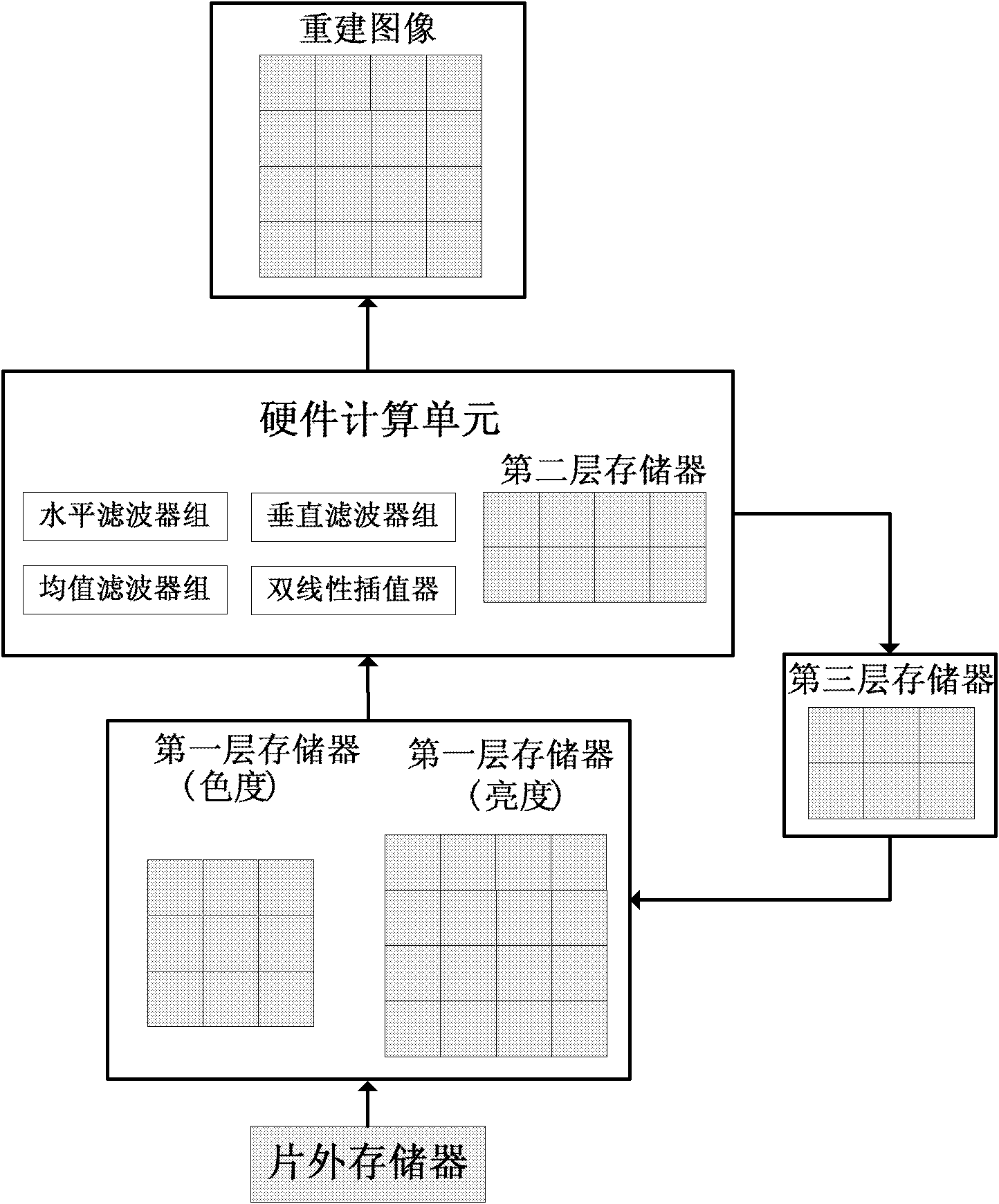

VLSI (Very Large Scale Integration) structure design method for parallel flowing motion compensating filter

Inactive | CN101888554B | Reduce access | Spend less time | Brightness and chrominance signal processing circuits | Television systems | Parallel computing | Very-large-scale integration

The invention discloses a VLSI (Very Large Scale Integration) structure design method for a parallel pipelined motion compensating filter. By calculating the brightness and chroma pixels of the motion compensation module in parallel and adopting internal pipelined processing of adaptive blocks, the method reduces the processing time of the whole decoding and improves system throughput. By adopting a layered design for the on-chip memory and further optimizing the access pattern, it reduces accesses to off-chip memory. By adopting data multiplexing of reference pixels and a macro-subblock update technique, it significantly reduces the time spent reading data and lowers the data reading bandwidth, thereby improving the decoding efficiency and performance of the decoder.

Owner:XI AN JIAOTONG UNIV

Large-scale image data processing system and method

ActiveCN109741237BImprove energy efficiencyImprove data throughputProcessor architectures/configurationHigh energyLevel data

The invention discloses a large-scale image data processing system and method. The system comprises a high-speed vision chip core processing module that performs hierarchical, multi-stage parallel deep learning processing on input image data. The module comprises a processing core module and a processing block array. The processing block array comprises M * N connected, configurable processing blocks, where M and N are positive integers, and each processing block comprises a micro-processing unit array. The processing core module handles connection configuration, operation control, and state monitoring for the whole processing block array, and can perform global image data processing. Each processing block in the array processes images of different resolutions in parallel. Each micro-processing unit array comprises P micro-processing units, where P is a positive integer, and performs parallel processing of pixel-level data. The system combines a simple structure, low power consumption, high energy efficiency, high data throughput, and high target identification precision.

Owner:INST OF SEMICONDUCTORS - CHINESE ACAD OF SCI

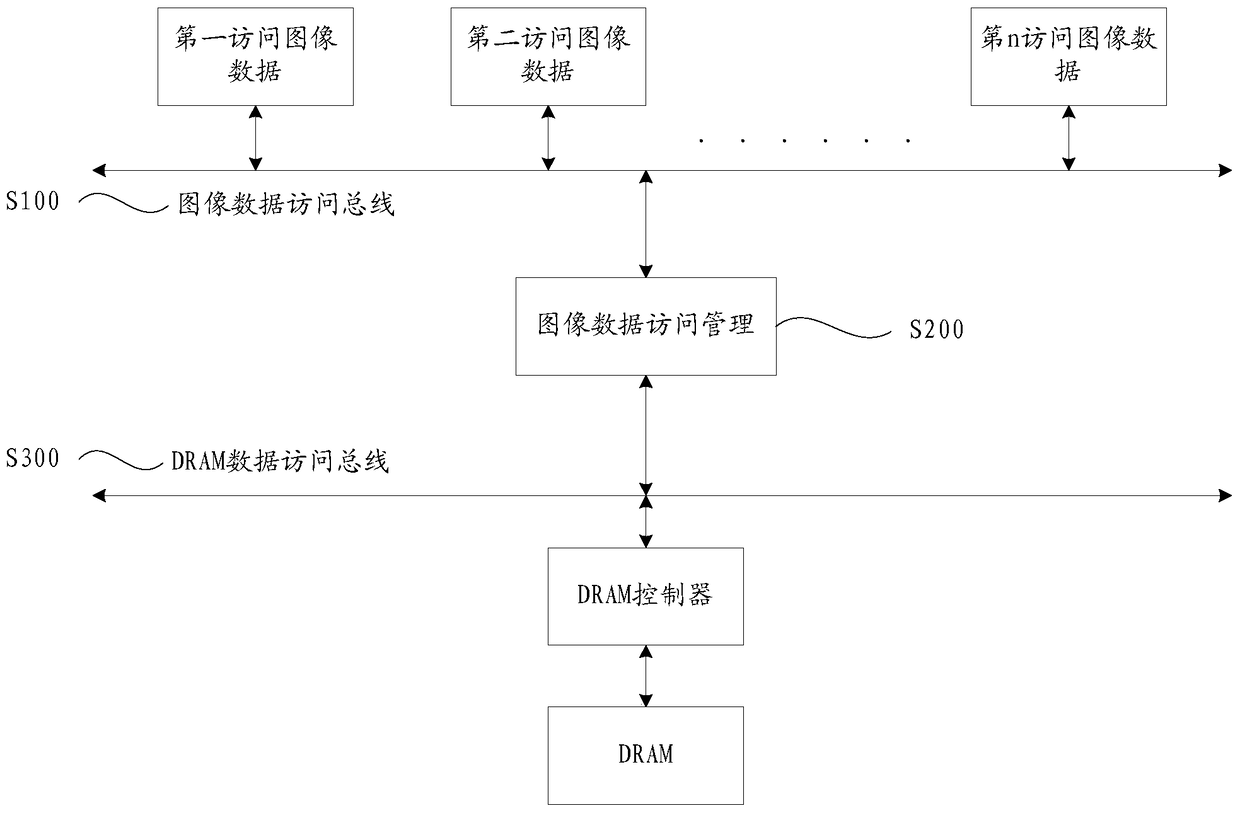

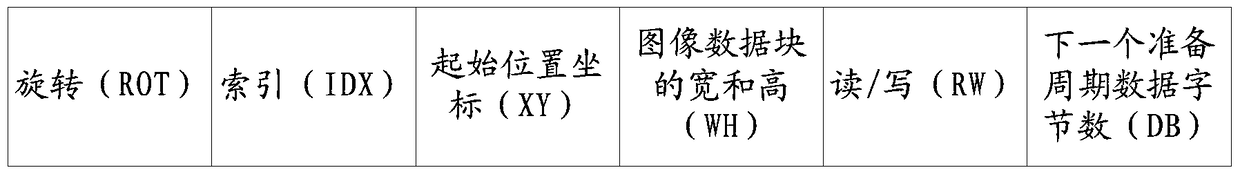

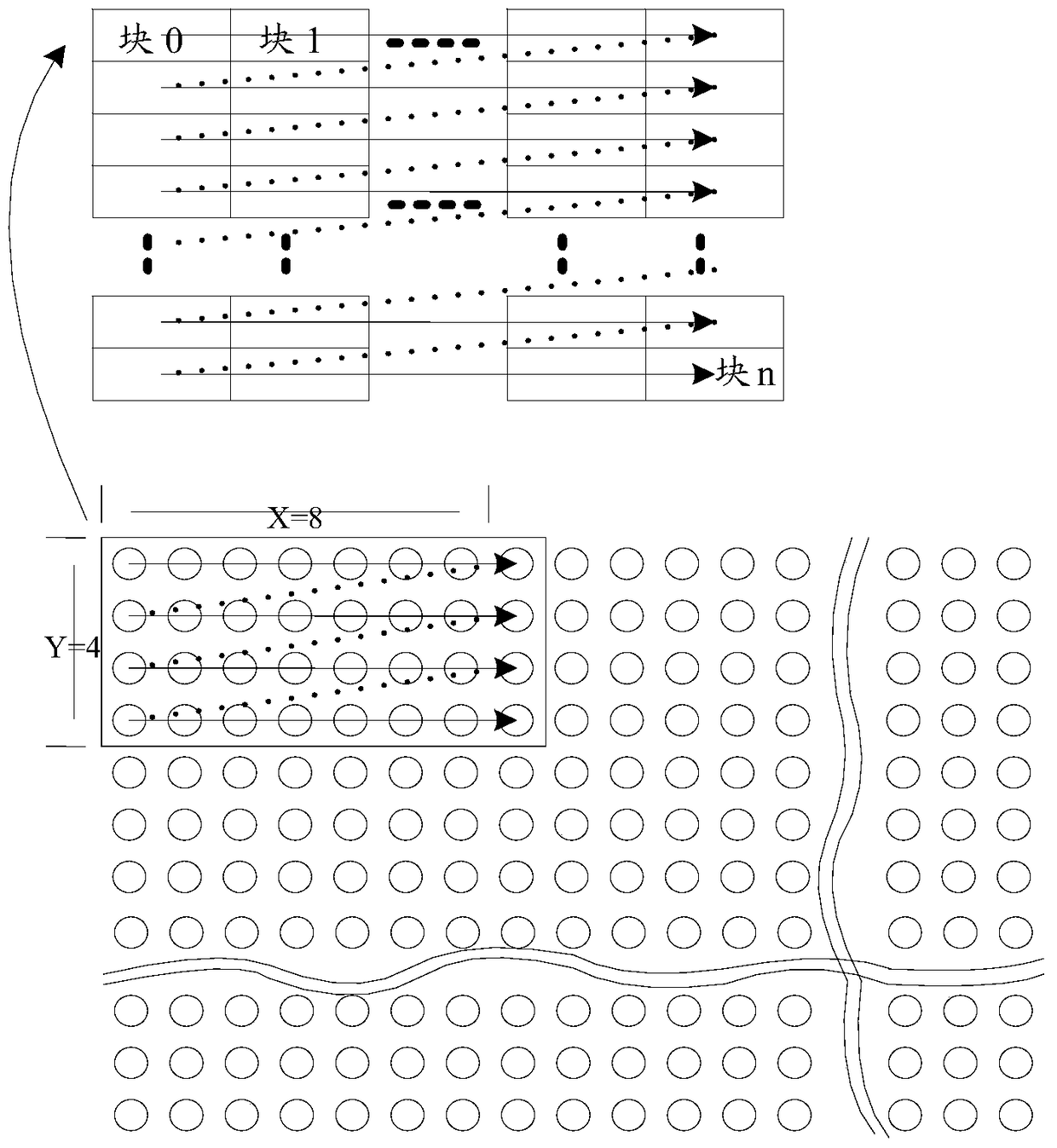

Image data processing method and system in DRAM

ActiveCN104899824BSave storage spaceReduce data bandwidthImage memory managementImaging dataOperating system

The present invention provides a processing method and system for image data in a DRAM. The method comprises the following steps: sending, by a GBUS, a request command for reading or writing image data; receiving, by a GDAM, the request command from the GBUS, converting it into a request signal suited to the DRAM controller, and sending the request signal to a DBUS; and receiving, by the DBUS, the request signal from the GDAM and processing the image data in the DRAM through the DRAM controller. The method completes the reading and writing of image data in the DRAM, makes that data more convenient to access, and makes efficient use of the DRAM: it aims to use as little DRAM storage space and data bandwidth as possible while keeping DRAM access efficiency as high as possible.

Owner:ALLWINNER TECH CO LTD

Method, device and electronic equipment for dynamically allocating physical addresses

ActiveCN109542798BIncrease workloadIncrease the efficiency of accessing memoryMemory adressing/allocation/relocationApplication procedureData storing

The invention discloses a method and device for dynamically allocating a physical address, and an electronic device. The method comprises the following steps: detecting whether an event of dynamically allocating a physical address for data accessed by an application program occurs; according to the detection result, obtaining the time order in which data is accessed while the application program runs; according to the detection result, obtaining the logical addresses of the data stored in advance in the application program; and, according to the time order and the logical addresses, assigning physical addresses to the data so that the physical addresses correspond to the time order. As a result, while the application runs, the system spends a larger proportion of its time accessing storage at contiguous physical addresses, which increases the efficiency of storage access.

Owner:WEIFANG GOERTEK MICROELECTRONICS CO LTD
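
The allocation idea in the abstract above (physical addresses that follow access order) can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the patented method: the function name `assign_physical_addresses`, the per-block `sizes` table, the `base` address, and the tie-break by logical address are all hypothetical.

```python
def assign_physical_addresses(first_access_time, sizes, base=0):
    """Lay out data blocks contiguously in the order they are first accessed.

    first_access_time: dict mapping logical address -> first access timestamp
    sizes:             dict mapping logical address -> block size in bytes
    base:              first physical address available (assumed parameter)
    Returns a dict mapping logical address -> assigned physical address.
    """
    mapping = {}
    next_free = base
    # Earlier-accessed blocks get lower physical addresses; ties are broken
    # by logical address so the result is deterministic.
    order = sorted(first_access_time, key=lambda a: (first_access_time[a], a))
    for logical in order:
        mapping[logical] = next_free
        next_free += sizes[logical]
    return mapping
```

With such a layout, a run that touches the blocks in the recorded order walks through increasing, contiguous physical addresses.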

A Neural Network Processor and Design Method Based on Efficient Multiplexing Data Stream

ActiveCN107085562BReduce data bandwidthImprove data sharing rateArchitecture with single central processing unitEnergy efficient computingData streamEngineering

The invention proposes a neural network processor based on an efficient multiplexed data stream, together with a design method, and relates to the technical field of hardware acceleration for neural network model computation. The processor comprises at least one storage unit, at least one calculation unit, and a control unit. The at least one storage unit stores operation instructions and arithmetic data; the at least one calculation unit executes neural network calculations; and the control unit is connected to the at least one storage unit and the at least one calculation unit, obtains the operation instructions stored in the at least one storage unit, and parses them to control the at least one calculation unit. The arithmetic data takes the form of the efficient multiplexed data stream. Because the processor uses this data stream during neural network processing, weights and data need to be loaded into only one row of calculation units in the calculation unit array each time, which lowers on-chip data bandwidth, raises the data sharing rate, and improves energy efficiency.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

ARQ mechanism able to automatically request retransmissions for multiple rejections

InactiveCN1165129CSupport bi-directional transmissionImprove confirmation efficiencyError prevention/detection by using return channelNetwork traffic/resource managementComputer hardwareCommunications system

This invention relates to an ARQ mechanism for data link layer error control in a wireless data communication system. It turns the unreliable wireless physical link into a reliable logical data link, providing reliable data transmission for the network layer. Compared with existing ARQ mechanisms, this invention greatly increases acknowledgement efficiency without reducing throughput and greatly lowers the data bandwidth used for acknowledgements, so more bandwidth is available for sending reverse-direction data in support of two-way data transmission. Using a fixed-length bitmap and a relative offset, and based on the distribution of erroneous data PDUs, the invention automatically selects the acknowledgement type with the highest acknowledgement efficiency to confirm the data PDUs in the reception window that most need acknowledgement.

Owner:武汉汉网高技术有限公司
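
The abstract above mentions a fixed-length bitmap positioned by a relative offset but does not state how the position is chosen. The sketch below shows one assumed selection rule, purely as an illustration: slide the bitmap across the reception window and keep the offset that covers the most missing PDUs. The function name `best_bitmap_ack` and all parameters are hypothetical.

```python
def best_bitmap_ack(missing, window_start, window_size, bitmap_len):
    """Choose where to place a fixed-length NACK bitmap in the window.

    missing:      iterable of sequence numbers of PDUs not yet received
    window_start: first sequence number of the reception window
    window_size:  number of sequence numbers in the window
    bitmap_len:   fixed bitmap length in bits
    Returns (offset, bitmap); bitmap[i] == 1 means PDU
    window_start + offset + i is missing. Assumed rule: the first offset
    covering the largest number of missing PDUs wins.
    """
    missing = set(missing)
    best_offset, best_count = 0, -1
    for offset in range(max(1, window_size - bitmap_len + 1)):
        lo = window_start + offset
        count = sum(1 for sn in range(lo, lo + bitmap_len) if sn in missing)
        if count > best_count:
            best_offset, best_count = offset, count
    lo = window_start + best_offset
    bitmap = [1 if sn in missing else 0 for sn in range(lo, lo + bitmap_len)]
    return best_offset, bitmap
```

One short bitmap plus an offset can then report a cluster of losses in a single acknowledgement instead of one message per lost PDU.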

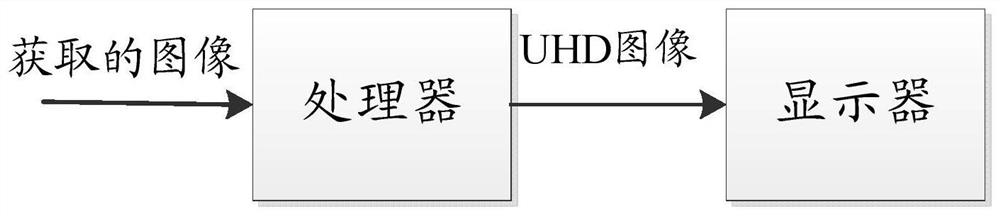

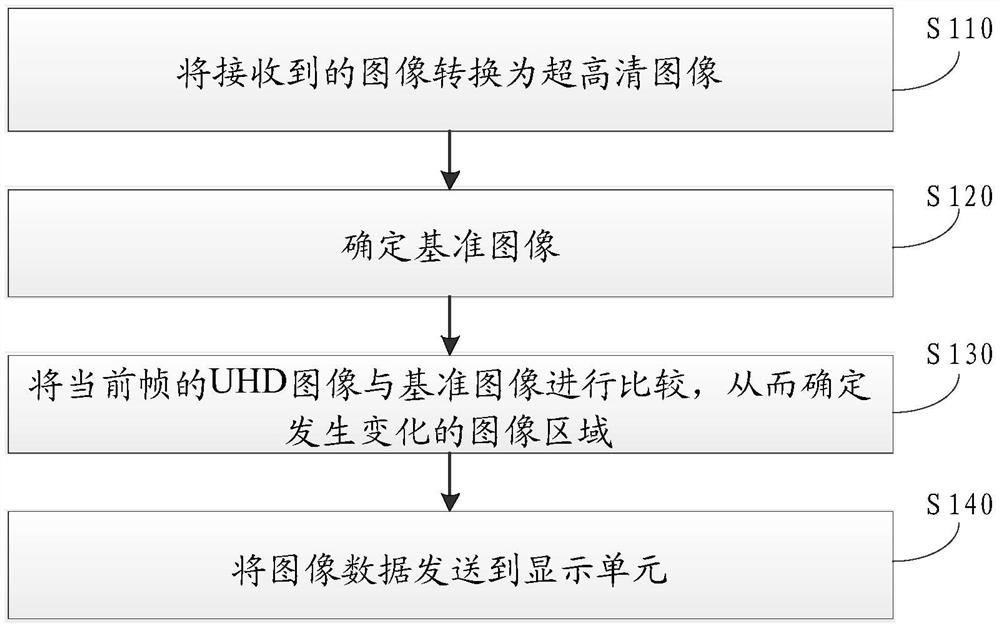

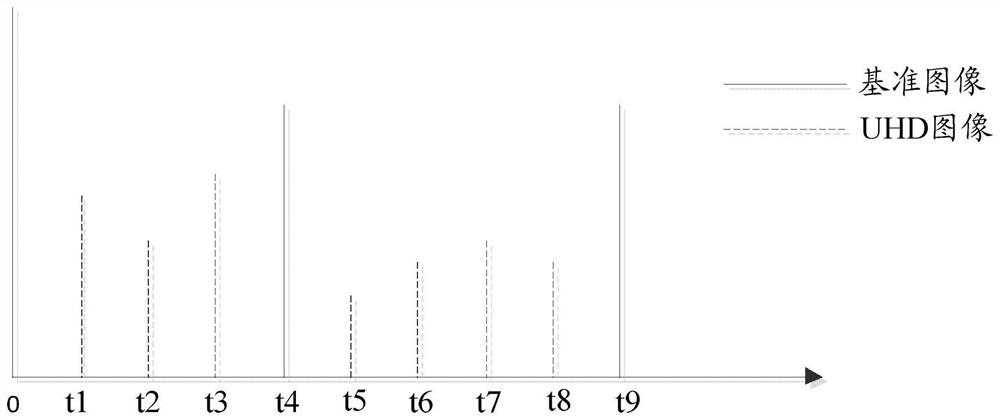

Image processing method, device and system

ActiveCN108156409BReduce bandwidth requirementsReduce the amount of data transferredVideo signal spatial resolution conversionConversion with high definition standardImaging processingRadiology

The invention provides an image processing method, device and system. The image processing method comprises: converting received images into ultra-high-definition images; determining a reference image from the converted ultra-high-definition image and sending it to a display unit for display; comparing the current-frame ultra-high-definition image with the reference image to determine the image area that has changed relative to the reference image; and sending the image data for that area to the display unit so that the display unit can update the currently displayed reference image. The method reduces the amount of data transmitted, lowers the data bandwidth requirement, and increases the refresh rate.

Owner:HANGZHOU HIKVISION DIGITAL TECH
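
The compare-and-update step in the abstract above resembles a changed-region (dirty rectangle) update. A minimal sketch follows, assuming frames are equally sized lists of pixel rows and that a single bounding box is sent; the function names `changed_region`, `extract`, and `apply_update` are hypothetical, and the abstract does not say how the changed area is represented.

```python
def changed_region(reference, current):
    """Bounding box (top, left, bottom, right), inclusive, of all pixels
    that differ between the two frames, or None if they are identical."""
    rows = [r for r, (a, b) in enumerate(zip(reference, current)) if a != b]
    if not rows:
        return None
    cols = [
        c
        for r in rows
        for c, (p, q) in enumerate(zip(reference[r], current[r]))
        if p != q
    ]
    return rows[0], min(cols), rows[-1], max(cols)


def extract(frame, box):
    """Sender side: cut out only the pixels inside the bounding box."""
    top, left, bottom, right = box
    return [row[left:right + 1] for row in frame[top:bottom + 1]]


def apply_update(reference, box, patch):
    """Display side: overwrite the boxed area of the shown reference image."""
    top, left, _, _ = box
    for dr, row in enumerate(patch):
        reference[top + dr][left:left + len(row)] = row
```

Only the patch and its box cross the link, so the transmitted data scales with the size of the change rather than the full frame.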


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com