160 patent results for "Mobile robot navigation"

For any mobile device, the ability to navigate in its environment is important. Avoiding dangerous situations such as collisions and unsafe conditions (temperature, radiation, exposure to weather, etc.) comes first, but if the robot has a purpose that relates to specific places in its environment, it must also be able to find those places. This article presents an overview of navigation as a skill, identifies the basic building blocks of a robot navigation system and the main types of navigation systems, and takes a closer look at their components.

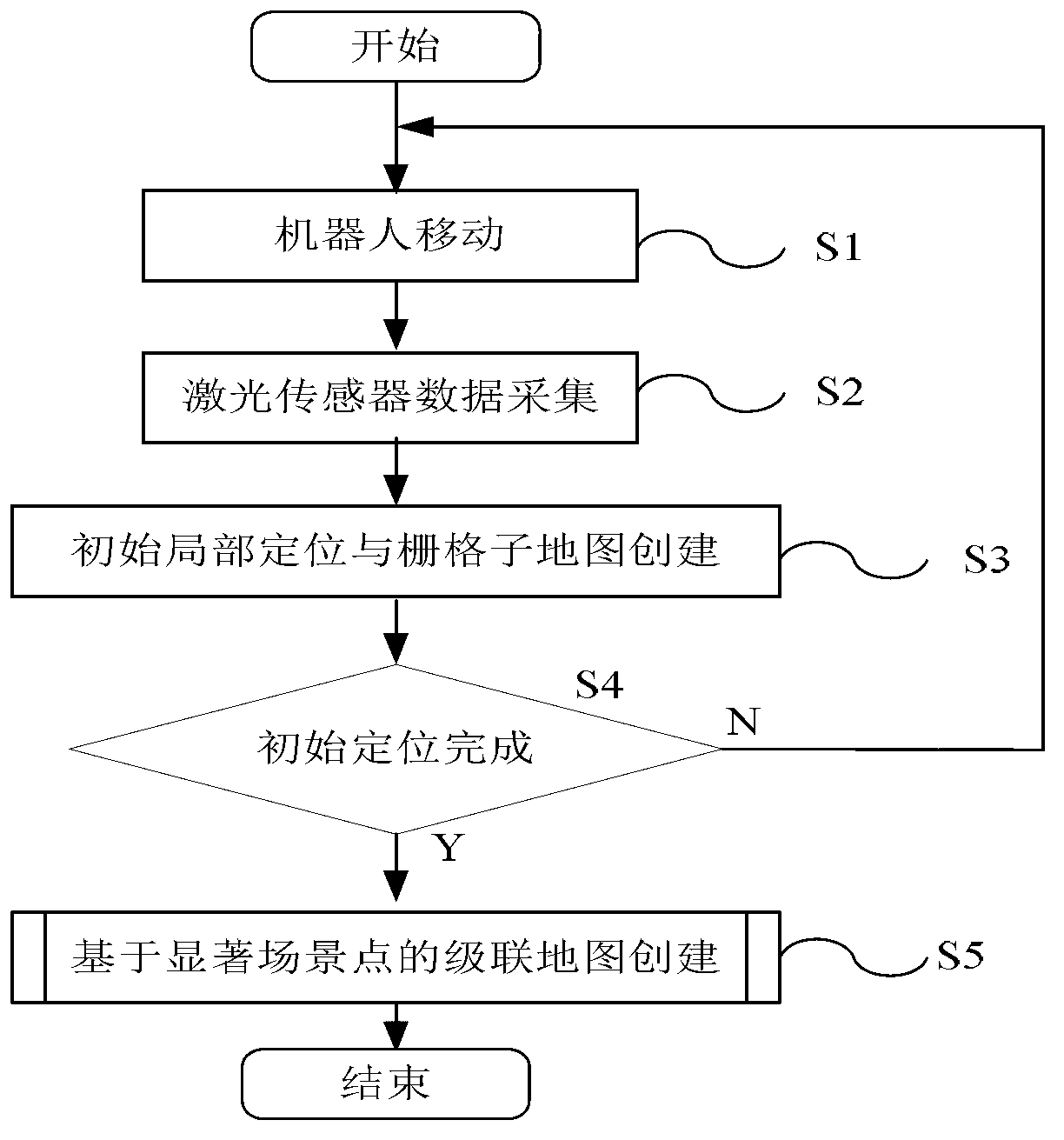

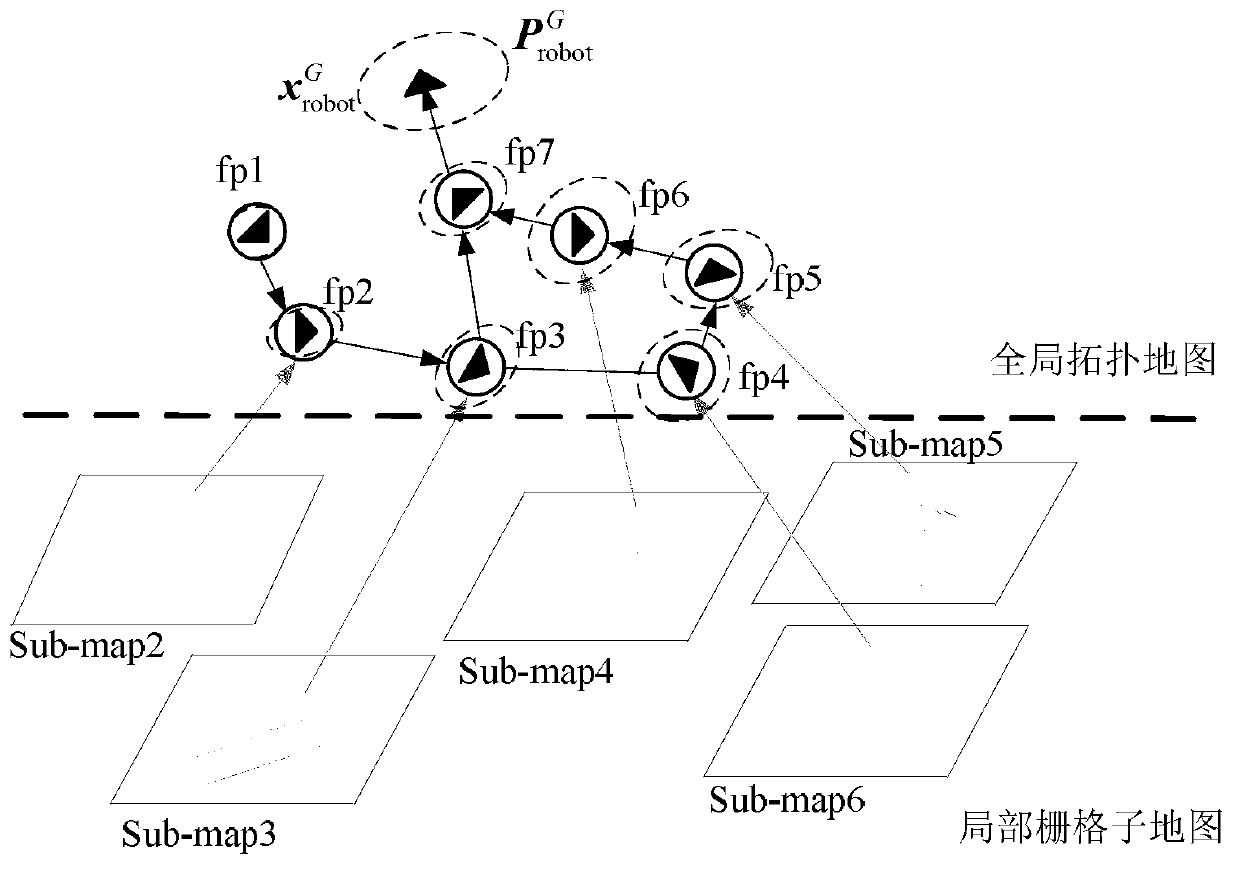

Mobile robot cascading map building method based on remarkable scenic spot detection

Status: Inactive | Publication: CN103278170A | Tags: Realize online creation; Solve the key problems of automatic segmentation; Instruments for road network navigation; Image analysis; Computer science; Mobile robot navigation

The invention relates to the technical field of mobile robot navigation and discloses a mobile robot cascading map building method based on remarkable scenic spot (salient scene) detection. The method comprises the steps of: 1) detecting, online, natural-scene landmarks corresponding to salient scenes in the image data collected by the mobile robot's sensors, and generating a topological node in the global map for each; 2) updating the pose of the mobile robot and a local grid map; and 3) building a global topological map with the salient scenes as topological nodes, and optimizing the topological structure with a weighted scan-matching method and a relaxation method, based on loop-closure detection on the robot's trajectory, to ensure the global consistency of the topological map. The method is applicable to autonomous path planning and navigation for various mobile robots in large indoor environments, such as multiple rooms and corridors.

Owner:SOUTHEAST UNIV

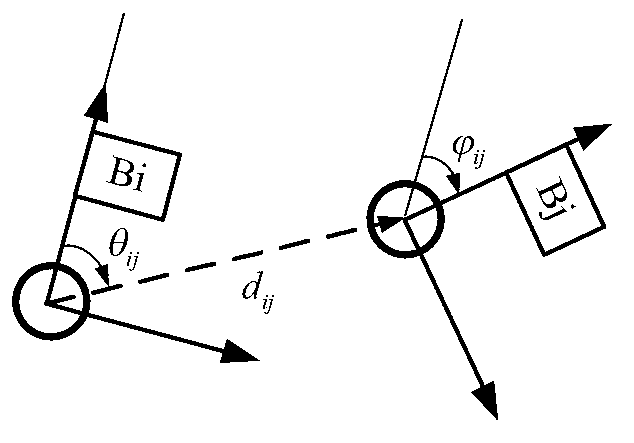

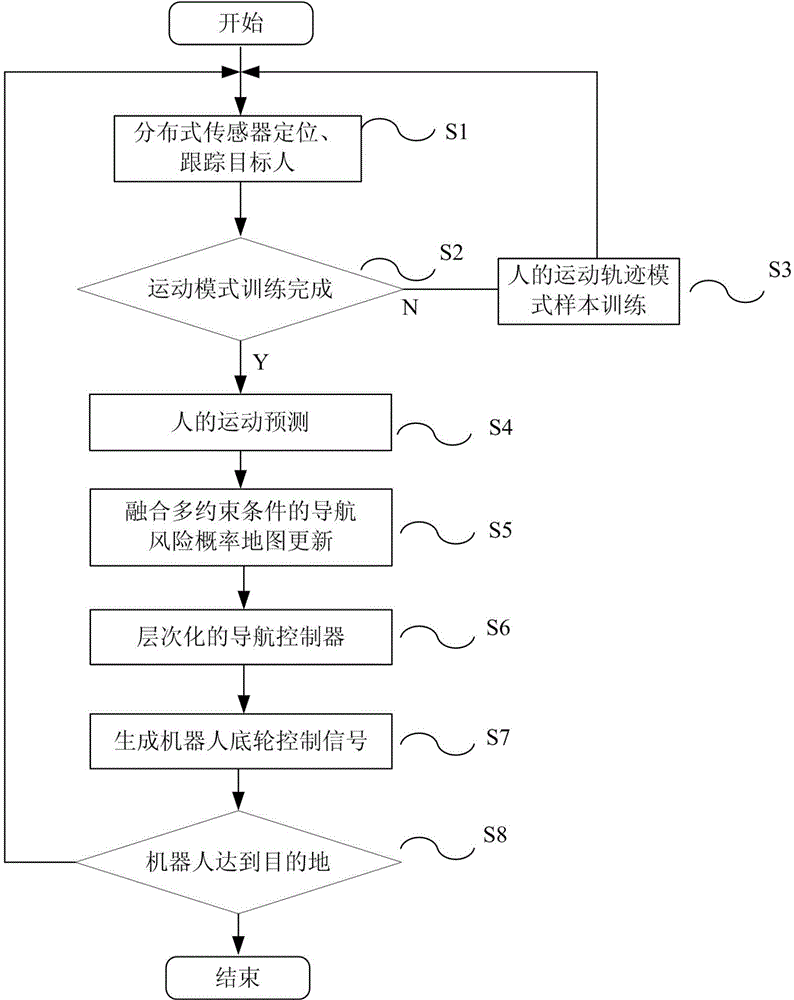

Service mobile robot navigation method in dynamic environment

Status: Inactive | Publication: CN103558856A | Tags: Ensure predictability; Improve security; Position/course control in two dimensions; Location tracking; Laser sensor

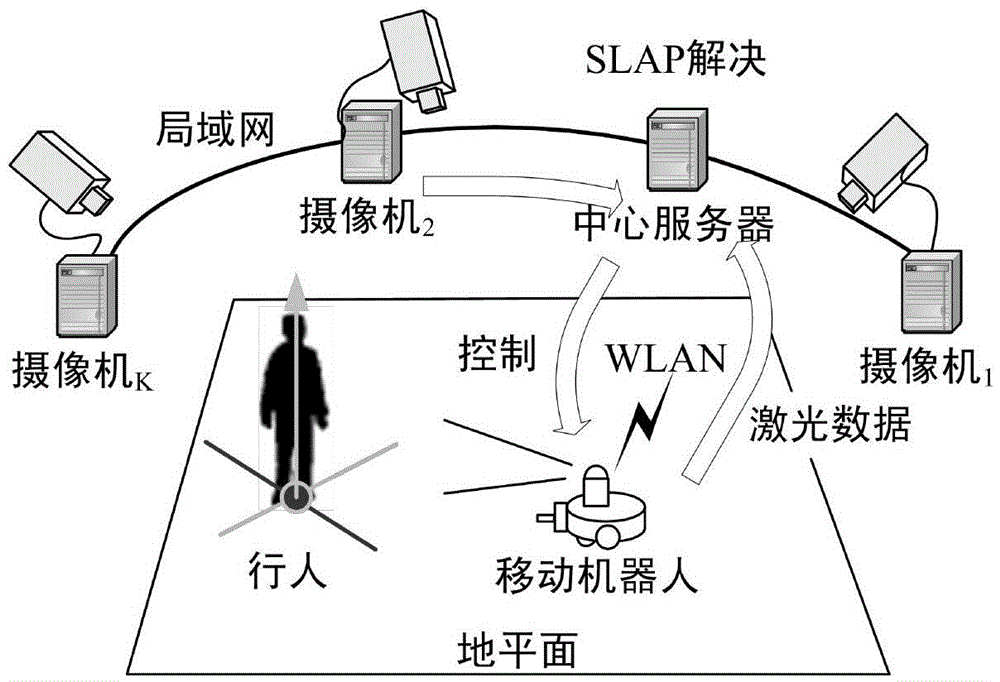

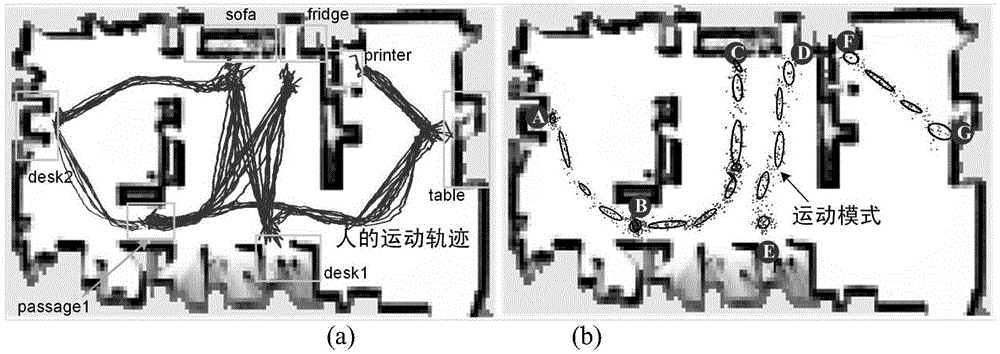

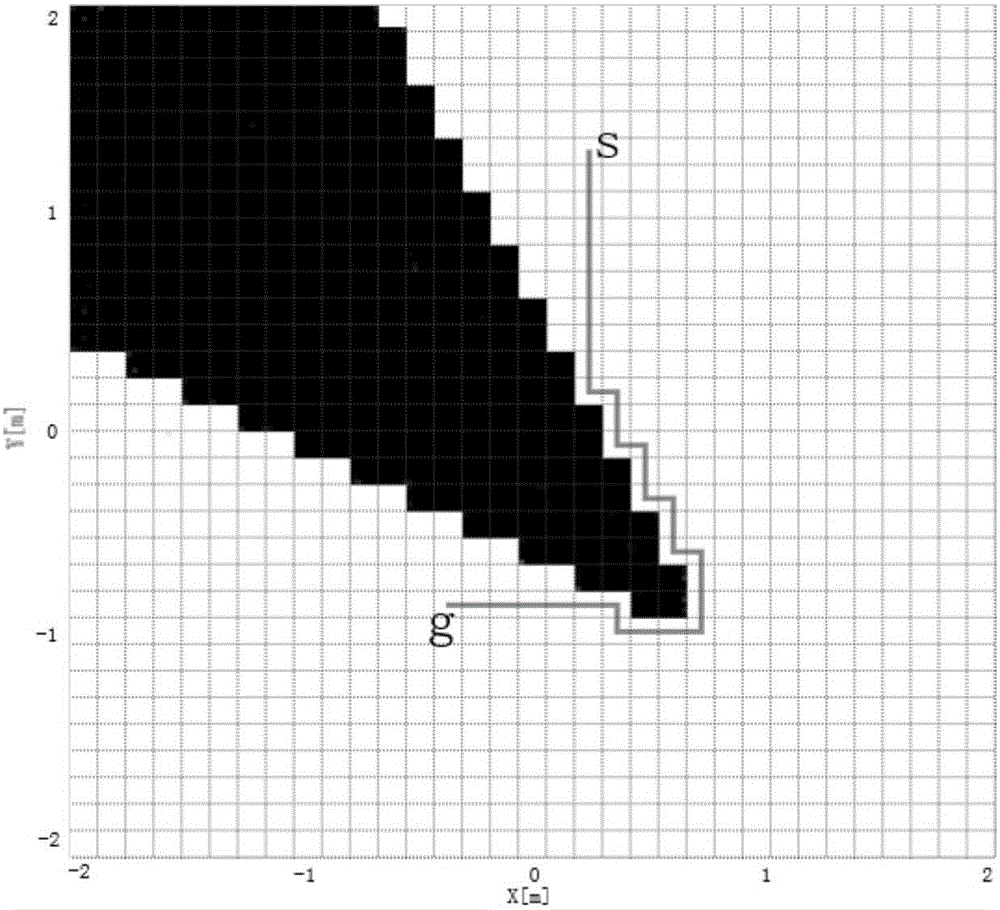

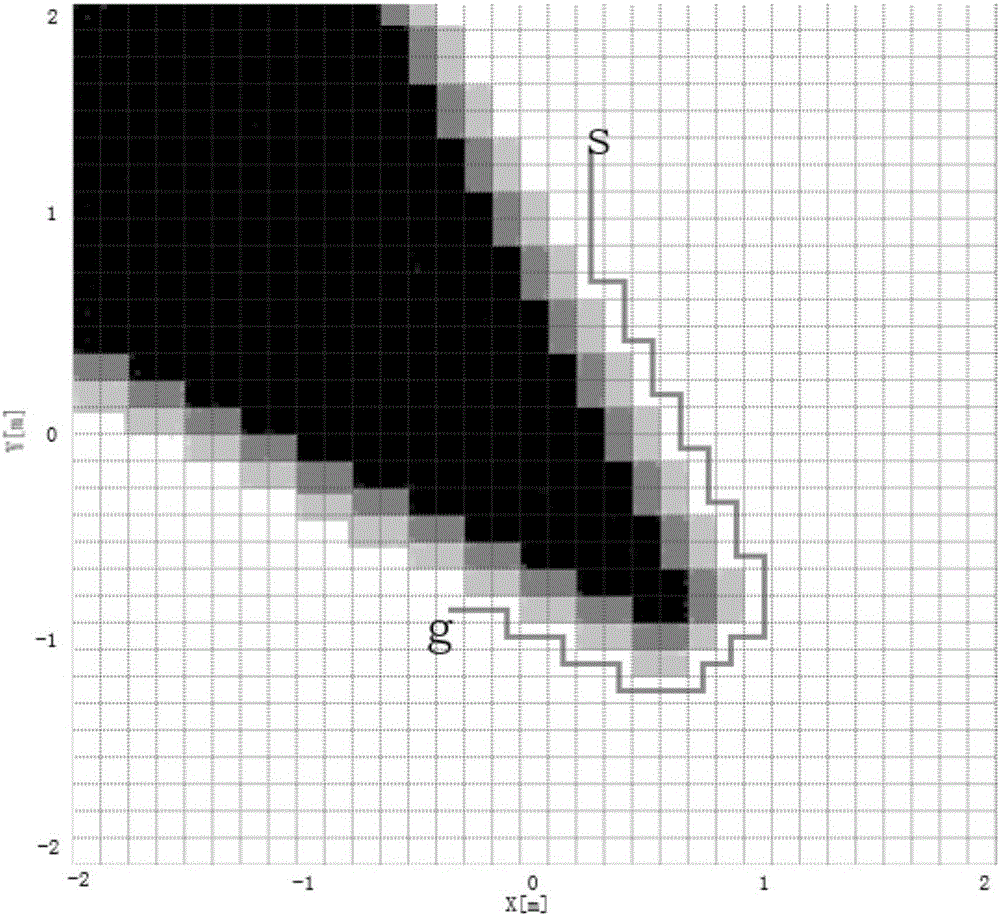

The invention relates to the technical field of mobile robot autonomous navigation and discloses a service mobile robot navigation method in a dynamic environment. The method includes the following steps: first, people are tracked in an indoor environment using multiple global cameras and the robot's on-board laser sensors; second, the movement patterns of people at a specific indoor site are learned from collected samples, and people's movement trends are predicted; third, the current and predicted positions of people are merged with a raster map of the static obstacles in the environment to generate a navigation risk probability map; fourth, a robot navigation motion controller with a hierarchical structure (global route planning plus local obstacle-avoidance control) controls the robot's navigation behavior, ensuring safe and efficient navigation in a complex dynamic environment where the robot coexists with people.

Owner:SOUTHEAST UNIV
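
The risk-map step above can be sketched as follows. This is a minimal illustration, not the patented method. It assumes that each person's current and predicted positions are already available in grid coordinates. It spreads risk around each position with a Gaussian kernel and takes the per-cell maximum with the static obstacle grid. The function name `risk_map`, the kernel width `sigma` and the down-weighting factor `predicted_weight` are hypothetical choices.

```python
import math


def risk_map(static_grid, people, sigma=1.0, predicted_weight=0.7):
    """Merge a static occupancy grid with current and predicted person positions.

    static_grid: list of rows, 1 = static obstacle, 0 = free.
    people: list of ((cx, cy), (px, py)) tuples in grid coordinates (x = column,
            y = row) giving each person's current and predicted position
            (a hypothetical input format).
    Returns a grid of risk values in [0, 1].
    """
    rows, cols = len(static_grid), len(static_grid[0])
    risk = [[float(static_grid[r][c]) for c in range(cols)] for r in range(rows)]
    for current, predicted in people:
        # Predicted positions are less certain, so they contribute less risk.
        for (x0, y0), weight in ((current, 1.0), (predicted, predicted_weight)):
            for r in range(rows):
                for c in range(cols):
                    d2 = (c - x0) ** 2 + (r - y0) ** 2
                    value = weight * math.exp(-d2 / (2.0 * sigma ** 2))
                    # Keep the highest risk contributed by any source.
                    risk[r][c] = max(risk[r][c], value)
    return risk
```

Taking the maximum rather than the sum keeps every value in [0, 1], so the map can be read directly as a risk probability.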

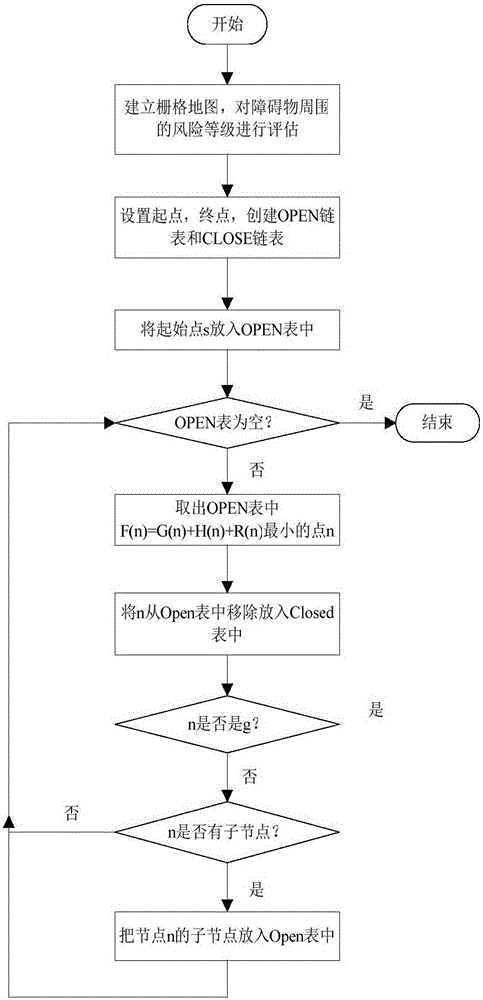

AGV path tracking and obstacle avoiding coordination method based on A* extraction guide point

The invention discloses an AGV path tracking and obstacle avoidance coordination method based on guide points extracted from an A* path, and relates to the field of mobile robot navigation. The method coordinates path tracking with obstacle avoidance. Its steps are: planning a safe global path; building an initial grid map from the environment information; evaluating the risk level of the nodes surrounding each obstacle with a risk evaluation function R(n); obtaining a new safety grid map containing risk regions; and extracting key path points from the planned global path. To coordinate path tracking and obstacle avoidance, the method uses a dynamic window approach based on a laser sensor for obstacle avoidance, takes the key path points as guide points, and updates the guide points as it goes.

Owner:XIAMEN UNIV
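
The following is a minimal sketch of the global planning and key-point extraction steps. It assumes a 4-connected occupancy grid and uses a per-cell risk value in [0, 1] as an extra step cost. The patent's risk function R(n) is not specified here, so `risk_weight` and the cost form `1 + risk_weight * risk` are hypothetical. Key points are taken to be the cells where the path changes direction, which is one plausible reading of "key path point".

```python
import heapq


def astar(grid, risk, start, goal, risk_weight=5.0):
    """4-connected A* on a grid of (row, col) cells; grid[r][c] == 1 is blocked.

    Step cost is 1 + risk_weight * risk[r][c], a hypothetical stand-in for R(n).
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(p):
        # Manhattan distance is admissible because every step costs at least 1.
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])

    open_heap = [(h(start), 0.0, start)]
    came_from = {start: None}
    g = {start: 0.0}
    while open_heap:
        _, cost, node = heapq.heappop(open_heap)
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if cost > g[node]:
            continue  # stale heap entry superseded by a cheaper one
        r, c = node
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1.0 + risk_weight * risk[nr][nc]
                if new_cost < g.get((nr, nc), float("inf")):
                    g[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = node
                    heapq.heappush(open_heap, (new_cost + h((nr, nc)), new_cost, (nr, nc)))
    return None


def key_points(path):
    """Keep the start, the goal and every cell where the path changes direction."""
    if len(path) < 3:
        return list(path)
    keys = [path[0]]
    for prev, cur, nxt in zip(path, path[1:], path[2:]):
        if (cur[0] - prev[0], cur[1] - prev[1]) != (nxt[0] - cur[0], nxt[1] - cur[1]):
            keys.append(cur)
    keys.append(path[-1])
    return keys
```

The resulting key points would then serve as successive guide points for a local planner such as the dynamic window approach.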

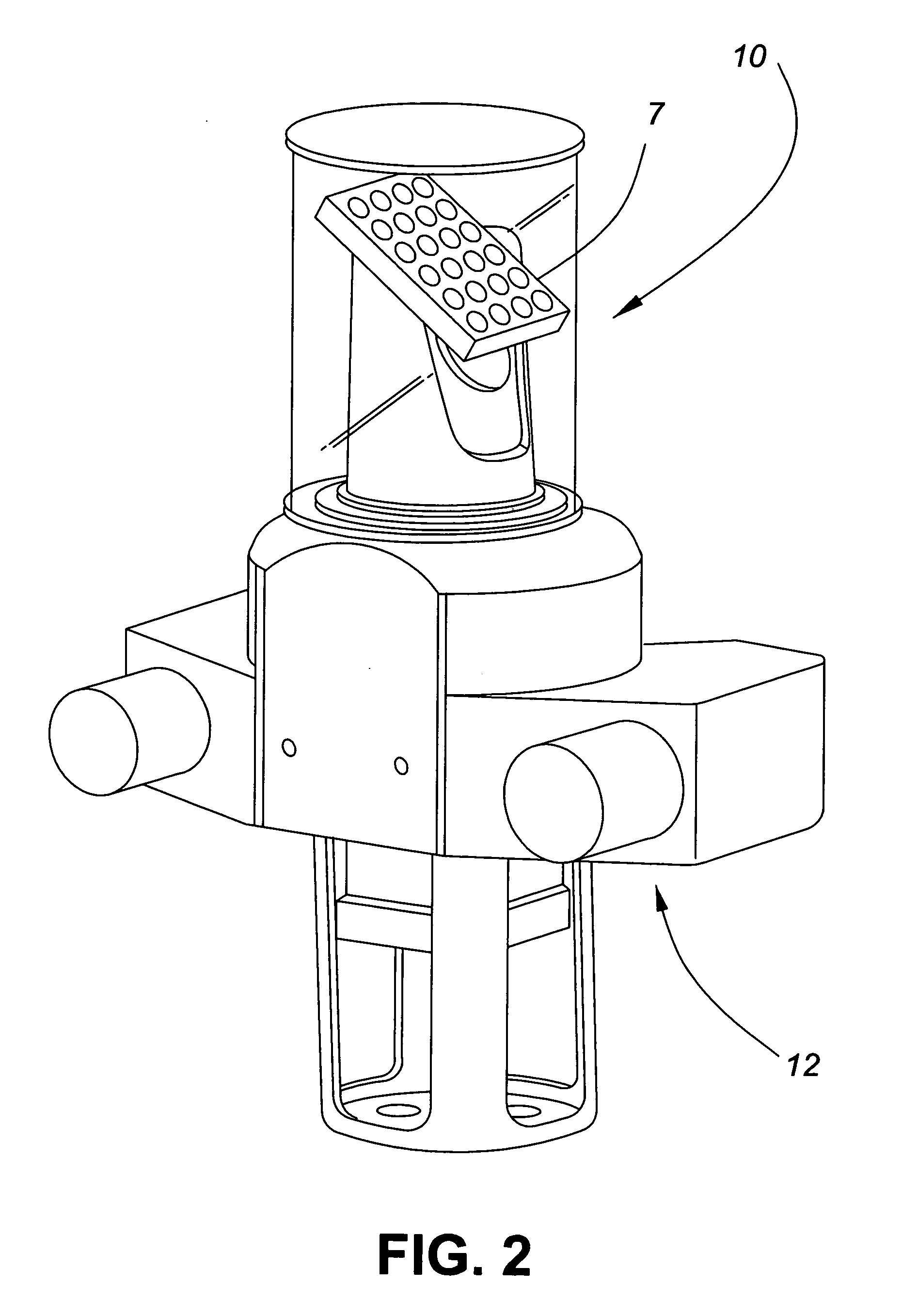

Volumetric sensor for mobile robotics

A volumetric sensor for mobile robot navigation, used to avoid obstacles in the robot's path, includes a laser volumetric sensor on a platform. The laser and detector are directed at a tiltable mirror inside a cylinder that a motor rotates through 360°, and a rotatable cam in the cylinder tilts the mirror, producing a laser scan and distance measurements of obstacles near the robot. The platform also holds a stereo camera, which a motor rotates, to provide distance measurements to more remote objects.

Owner:HER MAJESTY THE QUEEN AS REPRESENTED BY THE MINIST OF NAT DEFENCE OF HER MAJESTYS CANADIAN GOVERNMENT

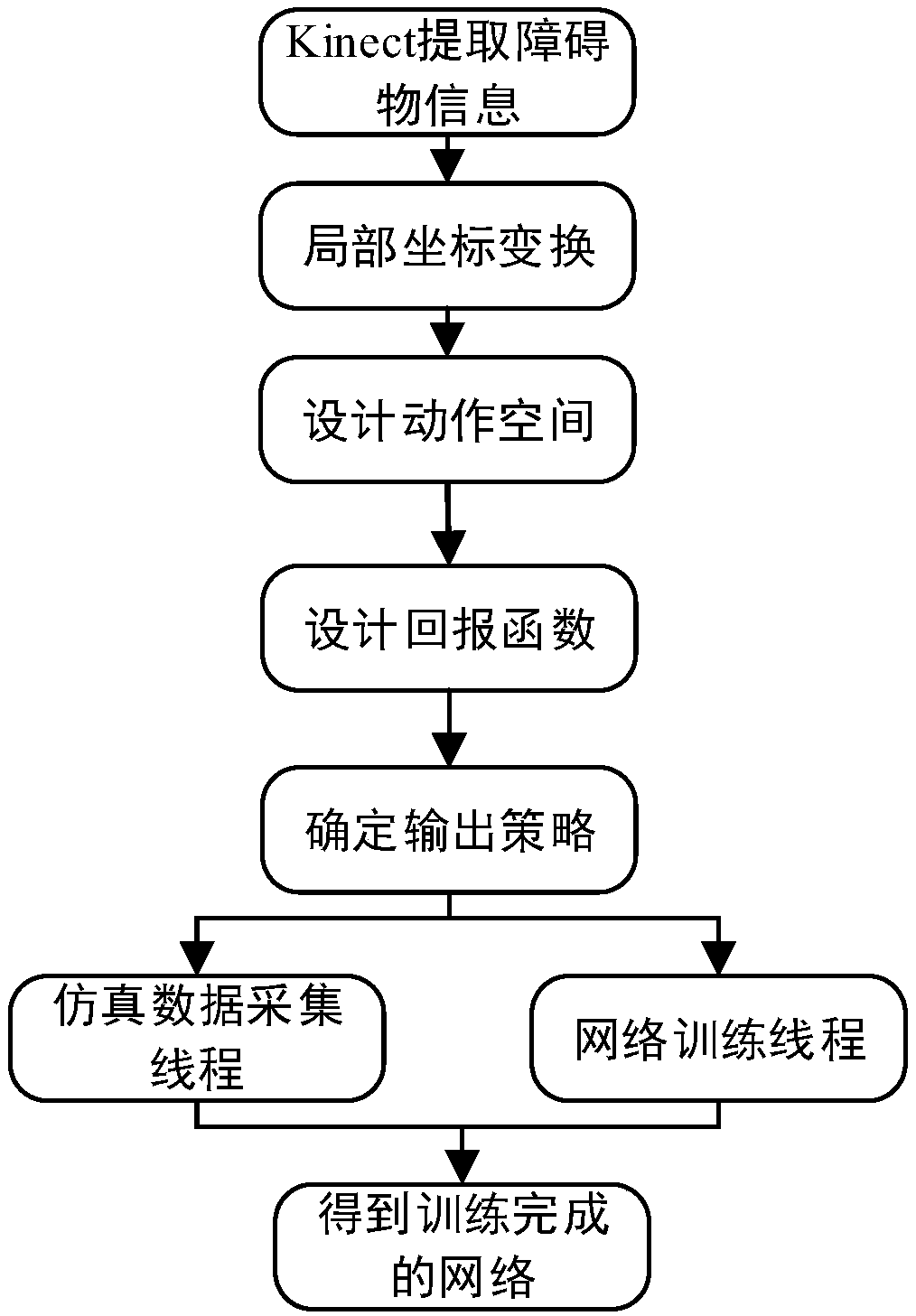

Mobile robot obstacle avoidance method based on DoubleDQN network and deep reinforcement learning

Status: Active | Publication: CN109407676A | Tags: Overcoming success rate; Overcoming the problem of high response latency; Neural architectures; Position/course control in two dimensions; Data acquisition; Simulation

The invention, which belongs to the technical field of mobile robot navigation, provides a mobile robot obstacle avoidance method based on a Double DQN network and deep reinforcement learning. It addresses the long response delay, long training time and low obstacle avoidance success rate of existing deep-reinforcement-learning obstacle avoidance methods. A special decision action space and reward function are designed. Mobile robot trajectory data collection and Double DQN network training run in parallel on two threads, which effectively improves training efficiency and solves the long-training-time problem. The Double DQN network provides an unbiased estimate of the action value, which avoids falling into local optima and solves the low success rate and high response delay of existing methods. Compared with the prior art, network training time is shortened to below 20% of that of the prior art, while a 100% obstacle avoidance success rate is maintained. The method can be applied in the technical field of mobile robot navigation.

Owner:HARBIN INST OF TECH +1
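
The core of Double DQN is how the learning target is formed. The online network selects the next action and the target network evaluates it, which reduces the overestimation bias of plain DQN. The sketch below computes those targets for a batch of transitions, using plain Python lists in place of network outputs. The patent's action space, reward function and two-thread training scheme are not modeled, and the function name is hypothetical.

```python
def double_dqn_targets(rewards, dones, q_online_next, q_target_next, gamma=0.99):
    """Double DQN targets for a batch of transitions.

    y = r                                                  if the episode ended
    y = r + gamma * Q_target(s', argmax_a Q_online(s', a))  otherwise

    q_online_next[i] / q_target_next[i]: per-action values for the next state
    of transition i, from the online and target networks respectively.
    """
    targets = []
    for r, done, q_on, q_tg in zip(rewards, dones, q_online_next, q_target_next):
        if done:
            targets.append(r)  # no bootstrapping past a terminal state
            continue
        # Action selection uses the online network...
        best_action = max(range(len(q_on)), key=lambda a: q_on[a])
        # ...while action evaluation uses the target network.
        targets.append(r + gamma * q_tg[best_action])
    return targets
```

Plain DQN would instead use `max(q_target_next[i])` for both selection and evaluation, and that is where the overestimation comes from.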

Mobile robot navigation method based on binocular camera and two-dimensional laser radar

Status: Active | Publication: CN108663681A | Tags: Achieve integration; Navigational calculation instruments; Electromagnetic wave reradiation; Route planning; Heuristic (computer science)

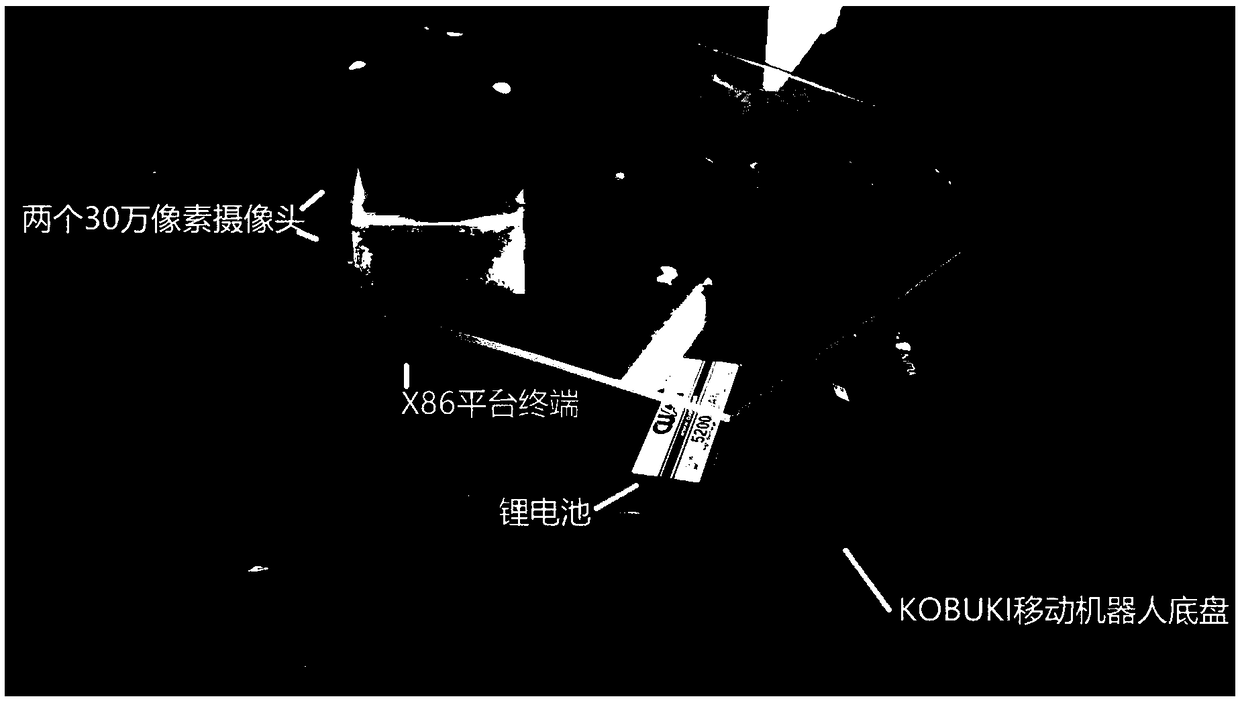

The invention discloses a mobile robot navigation method based on a binocular camera and a two-dimensional laser radar (lidar). The method includes the following steps: building a two-dimensional grid map model, a mobile robot pose model and a laser radar data model; establishing a joint calibration model of the binocular camera and the laser radar; and carrying out route planning and obstacle avoidance navigation with the Dijkstra and A-Star (A*) algorithms. A triangle joint calibration method determines the relative position and orientation of the binocular camera and the two-dimensional laser radar. In a known environment represented by a two-dimensional grid map, the binocular camera detects obstacles above and below the laser radar's scanning plane and maps them into the environment map. Shortest-path search with heuristic optimization then yields an obstacle-free navigation path, along which the mobile robot reaches its destination.

Owner:SOUTH CHINA UNIV OF TECH
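
The key idea of fusing the binocular camera with the 2D lidar is that obstacles outside the lidar's scan plane are still written into the grid map that Dijkstra or A* searches. The sketch below is minimal and assumes the stereo points are already triangulated into the robot frame in metres, with the joint calibration applied. All thresholds (`plane_tolerance`, `floor_margin`, `robot_height`), the function name and the map origin at (0, 0) are hypothetical.

```python
import math


def mark_camera_obstacles(grid, points, pose, resolution, laser_height,
                          plane_tolerance=0.05, floor_margin=0.02, robot_height=1.2):
    """Project stereo-camera points that the 2D lidar cannot see into an occupancy grid.

    points: (x, y, z) in the robot frame, metres (x forward, y left, z up).
    pose: robot (x, y, theta) in the map frame; grid cell (row, col) covers
          map y in [row*res, (row+1)*res) and x in [col*res, (col+1)*res).
    Only points above the floor and below the robot's height, but outside the
    lidar scan plane, are added. Returns the list of newly marked cells.
    """
    rx, ry, rtheta = pose
    cos_t, sin_t = math.cos(rtheta), math.sin(rtheta)
    marked = []
    for x, y, z in points:
        in_scan_plane = abs(z - laser_height) <= plane_tolerance  # lidar already sees it
        relevant_height = floor_margin < z < robot_height          # could hit the robot
        if in_scan_plane or not relevant_height:
            continue
        # Rotate and translate the point from the robot frame into the map frame.
        mx = rx + cos_t * x - sin_t * y
        my = ry + sin_t * x + cos_t * y
        col, row = int(math.floor(mx / resolution)), int(math.floor(my / resolution))
        if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
            grid[row][col] = 1
            marked.append((row, col))
    return marked
```

After this step, the unchanged shortest-path planner automatically avoids table tops, overhangs and low objects that the lidar plane misses.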

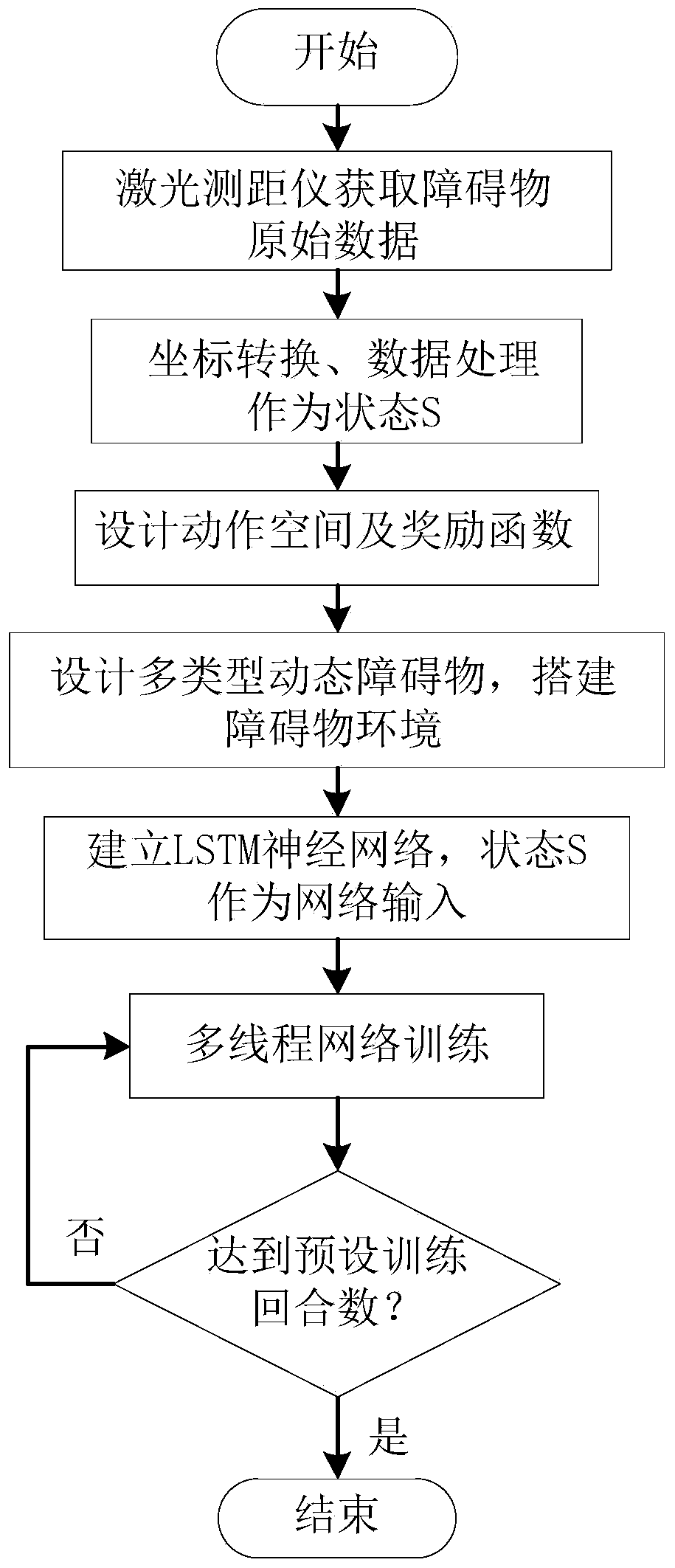

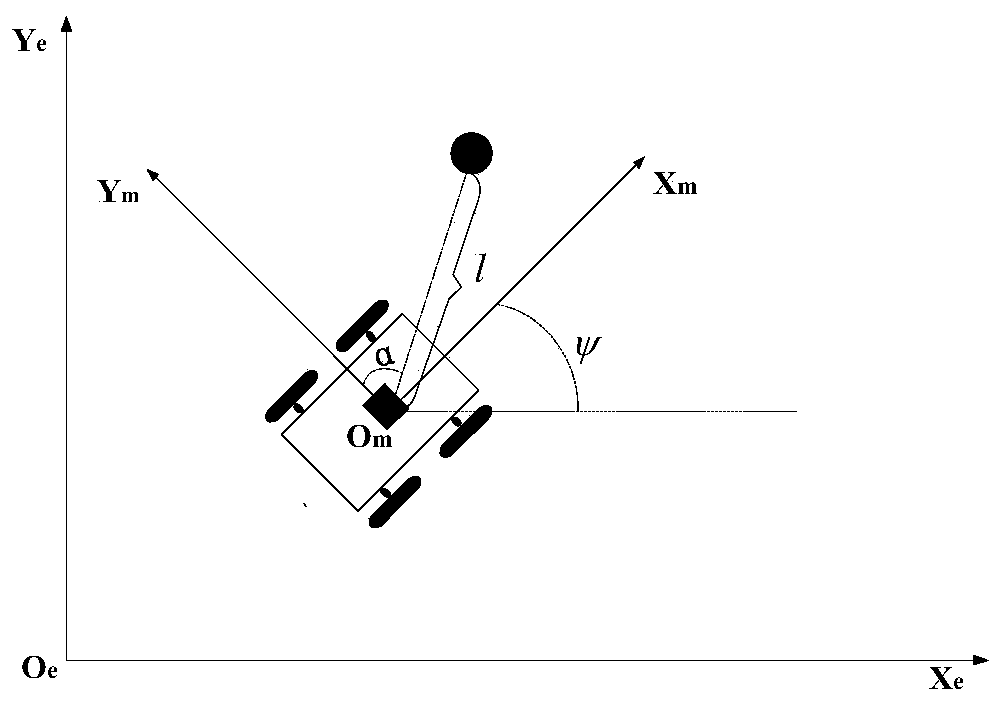

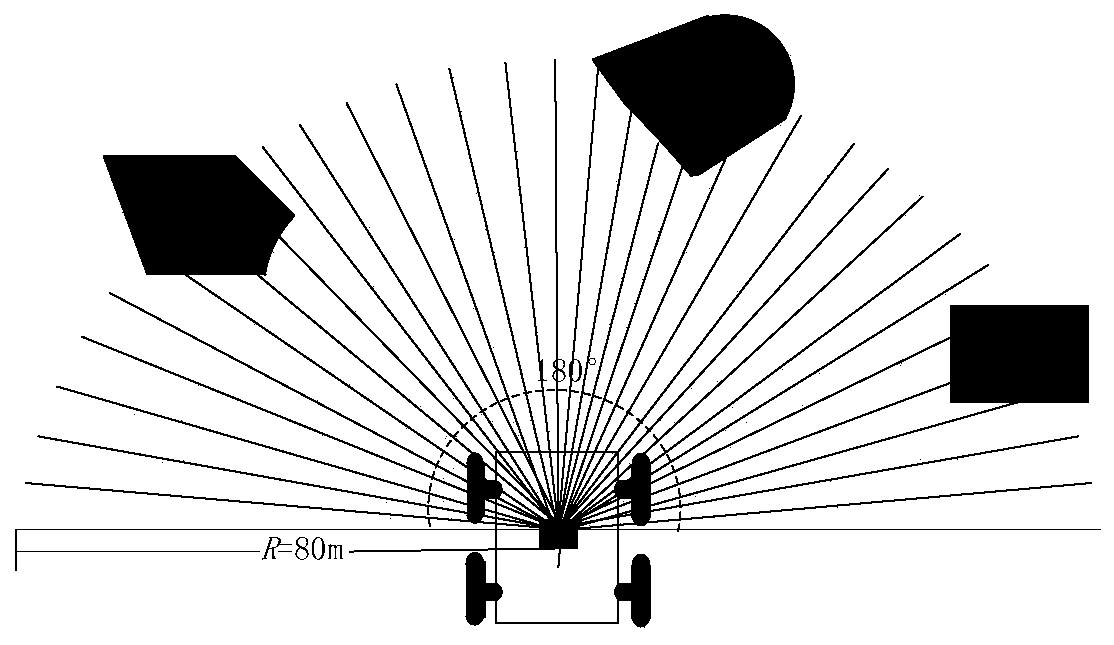

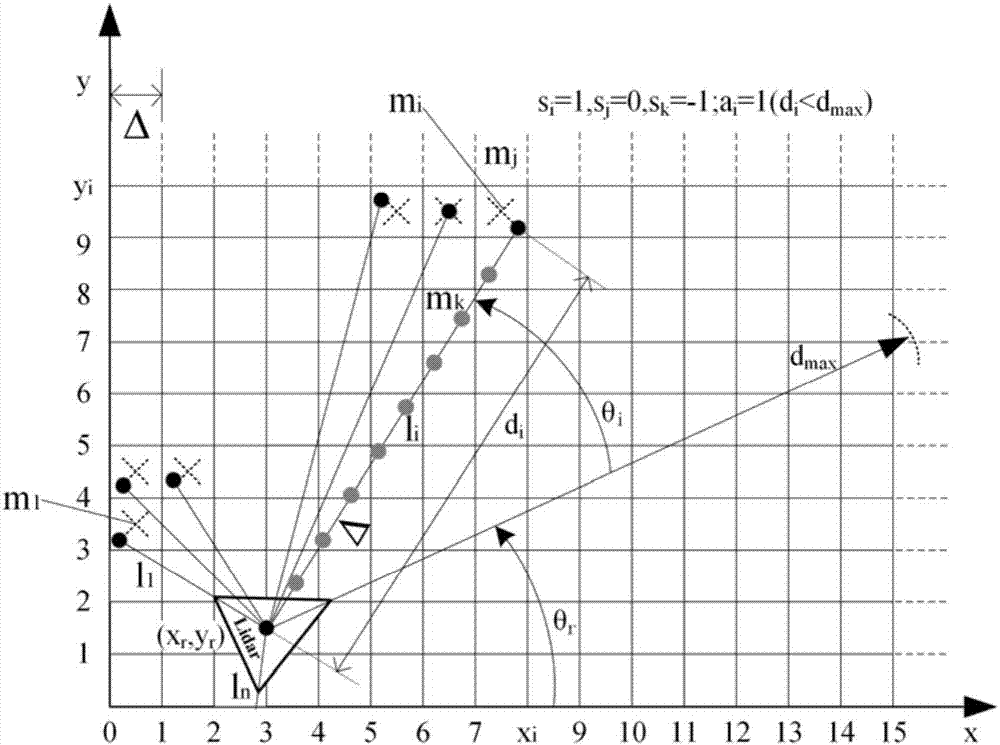

Collision avoidance planning method for mobile robots based on deep reinforcement learning in dynamic environment

Status: Active | Publication: CN110632931A | Tags: Adaptable; Improve smoothness; Position/course control in two dimensions; Algorithm convergence; Study methods

The invention discloses a collision avoidance planning method for mobile robots based on deep reinforcement learning in a dynamic environment, and belongs to the technical field of mobile robot navigation. The method includes the following steps: collecting raw data with a laser rangefinder, processing the raw data as the input of a neural network, and building an LSTM neural network; then, through the A3C algorithm, the neural network outputs corresponding parameters, which are processed to obtain the robot's action at each step. The scheme does not need to model the environment and is therefore better suited to environments with unknown obstacles. It adopts an actor-critic framework and a temporal-difference algorithm, which suit a continuous action space while keeping variance low, and it learns while training. The scheme designs a continuous action space with a heading-angle limit and uses 4 threads for parallel learning and training. Compared with general deep reinforcement learning methods, this greatly shortens learning and training time, reduces sample correlation, and guarantees high utilization of the exploration space and diverse exploration strategies. As a result, algorithm convergence, stability and the obstacle avoidance success rate are improved.

Owner:HARBIN ENG UNIV
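
Actor-critic methods such as A3C train both heads from discounted n-step returns, and the advantage (return minus the critic's value estimate) weights the policy update. The sketch below computes both for a single rollout. It illustrates the standard A3C target for a rollout whose rewards and critic values are known; it does not model the patent's LSTM network, heading-angle limit or 4-thread setup. The function name is hypothetical.

```python
def n_step_returns_and_advantages(rewards, values, bootstrap_value, gamma=0.99):
    """Discounted n-step returns and advantages for one actor-critic rollout.

    rewards[t], values[t]: reward and critic estimate V(s_t) for each step.
    bootstrap_value: V(s_T) for the state after the last step (0.0 if the
    episode ended there).
    Returns (returns, advantages) with advantage_t = R_t - V(s_t).
    """
    returns = [0.0] * len(rewards)
    running = bootstrap_value
    # Accumulate backwards: R_t = r_t + gamma * R_{t+1}.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    advantages = [ret - v for ret, v in zip(returns, values)]
    return returns, advantages
```

The critic is regressed toward `returns`, and the actor's log-probabilities are scaled by `advantages`. Each worker thread would compute these independently before applying its gradients.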

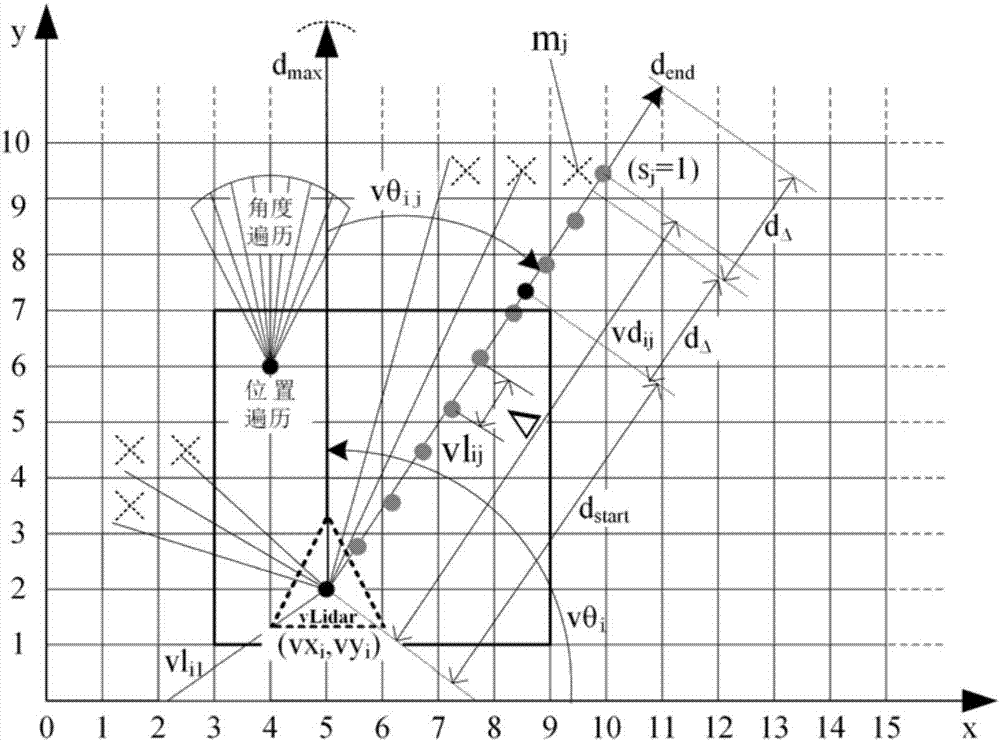

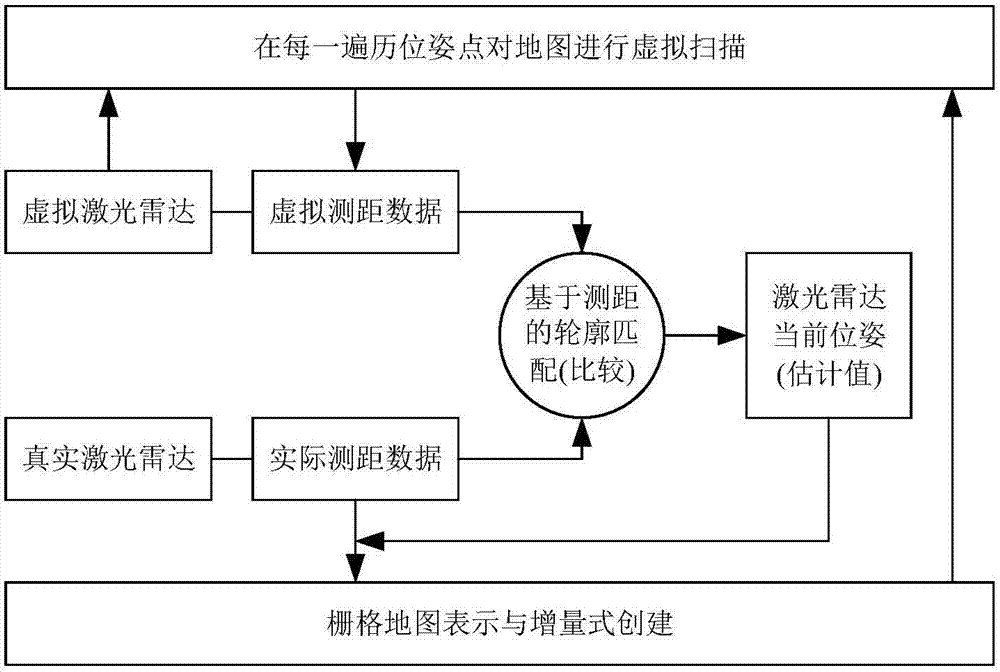

Virtual scanning and ranging matching-based AGV laser SLAM method

Status: Inactive | Publication: CN107239076A | Tags: Improve real-time performance; Increase flexibility; Position/course control in two dimensions; Triangulation; Artificial intelligence

The invention discloses an AGV laser SLAM method based on virtual scanning and ranging matching, relating to mobile robot navigation and positioning. The method comprises a grid map representation and construction method, a virtual scan-matching positioning method, and a method for improving the algorithm's real-time performance. Following a contour-traversal matching principle, it scans the map with a virtual laser radar at each traversed position. It then compares the virtual scan data directly with the current laser radar data to find the optimal position of the AGV robot, and builds the map incrementally. Most existing laser SLAM algorithms target low-precision sensors, cannot fully guarantee the stability of filtering, estimation and optimization or the positioning accuracy, and do not easily meet the requirements of industrial AGV robots. In addition, navigation based on reflector boards and the triangulation principle requires preliminary construction and calibration. By using multi-GPU parallel processing and changing the initial propulsion position of the virtual ranging, the method addresses these problems and improves flexibility, reliability and precision.

Owner:仲训昱 (Zhong Xunyu)
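
The virtual-scan matching idea can be illustrated with a brute-force search. The sketch ray-casts a simulated scan from each candidate pose through the occupancy grid and keeps the pose whose simulated ranges best match the measured ones. It is simplified and rests on several assumptions: a fixed list of candidate poses, squared range error as the score, fixed-step ray marching, and map edges treated as obstacles. The patent's contour-traversal order, incremental map building and multi-GPU parallelism are not shown, and the function names are hypothetical.

```python
import math


def virtual_scan(grid, pose, angles, resolution, max_range, step=None):
    """Ray-march from pose (x, y, theta) through an occupancy grid (1 = occupied).

    Returns the simulated range for each beam angle (relative to theta).
    Leaving the map counts as a hit; beams that hit nothing return max_range.
    """
    step = step or resolution / 2.0
    x0, y0, theta = pose
    ranges = []
    for a in angles:
        dx, dy = math.cos(theta + a), math.sin(theta + a)
        r, hit = 0.0, max_range
        while r < max_range:
            col = int(math.floor((x0 + r * dx) / resolution))
            row = int(math.floor((y0 + r * dy) / resolution))
            if not (0 <= row < len(grid) and 0 <= col < len(grid[0])) or grid[row][col]:
                hit = r
                break
            r += step
        ranges.append(hit)
    return ranges


def best_pose(grid, candidates, angles, measured, resolution, max_range):
    """Pick the candidate pose whose virtual scan best matches the measured scan."""
    def error(pose):
        simulated = virtual_scan(grid, pose, angles, resolution, max_range)
        return sum((s - m) ** 2 for s, m in zip(simulated, measured))
    return min(candidates, key=error)
```

Each candidate is scored independently, which is why this kind of search parallelizes naturally across GPU threads.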

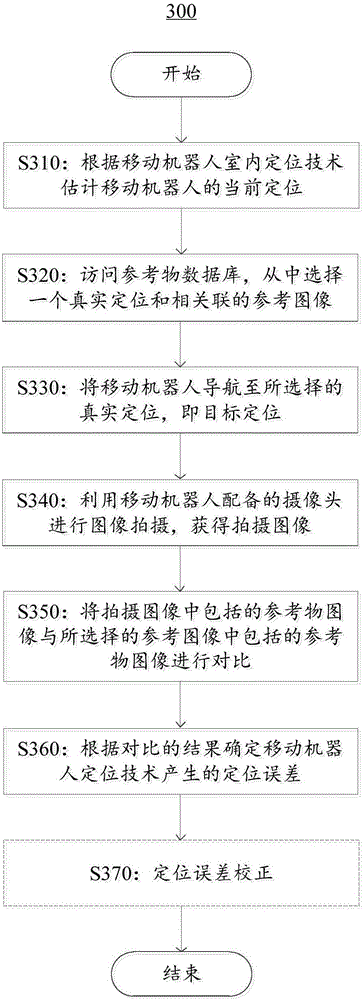

Method and device applied to indoor location of mobile robot

Status: Active | Publication: CN105865451A | Tags: Timely detection of positioning errors; Image analysis; Navigational calculation instruments; Reference image; Positioning technology

The embodiment of the invention relates to a method and a device for indoor positioning of a mobile robot. The method comprises the following steps: estimating the current location of the mobile robot with its indoor positioning technique; accessing a reference object database and selecting a real location and the associated reference images from it; navigating the mobile robot to the selected real location; capturing images with a camera fitted to the mobile robot; comparing the reference objects in the captured images with those in the selected reference images; and determining, from the comparison results, the location errors produced by the mobile robot's indoor positioning technique. In this way, positioning errors can be detected in time and the mobile robot's indoor positioning can be improved.

Owner:SMART DYNAMICS CO LTD

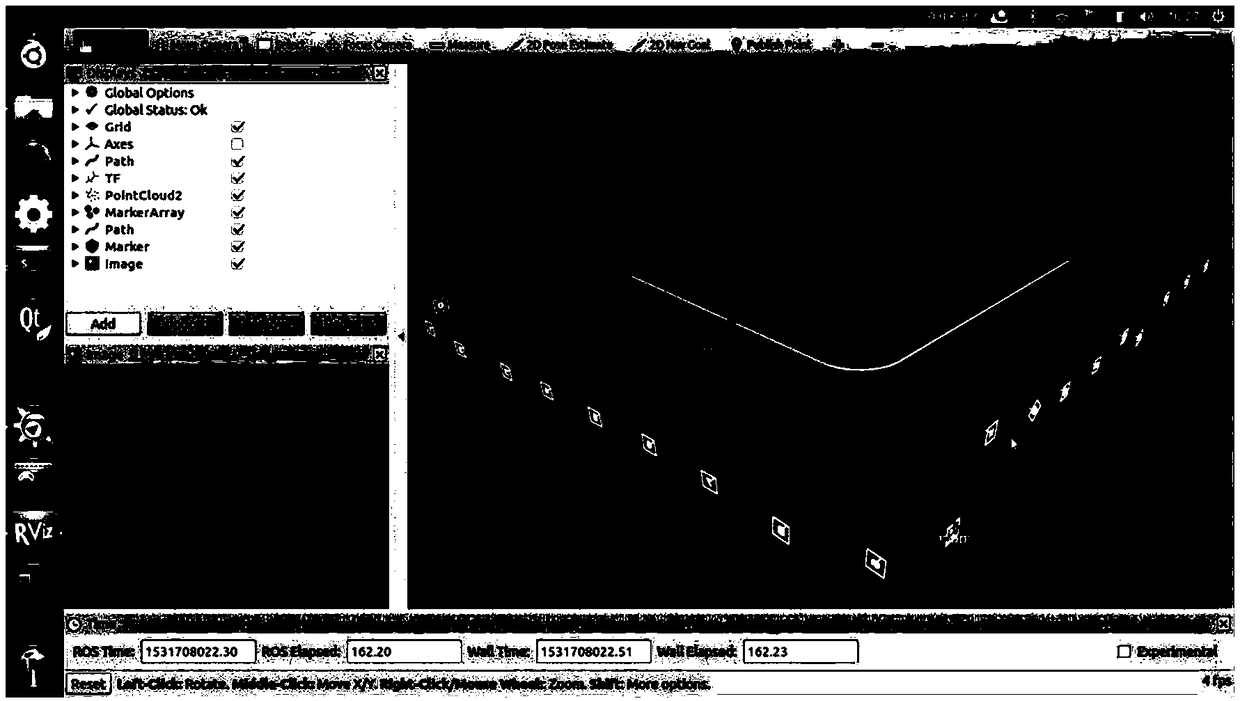

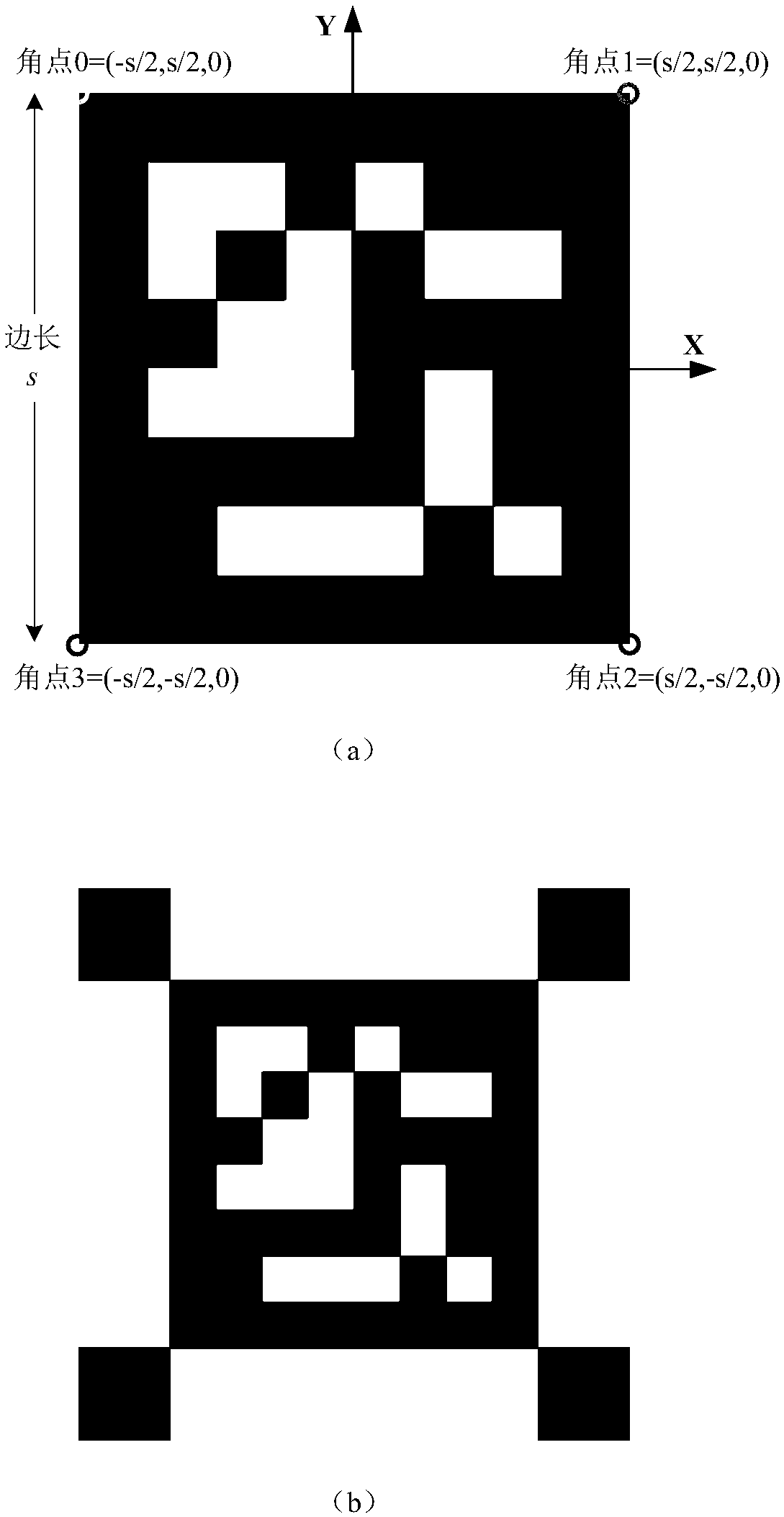

Mobile robot visual positioning and navigating method based on ArUco code

Status: Inactive | Publication: CN109374003A | Tags: Reasonable design; Good effect; Navigational calculation instruments; Three-dimensional space; Mobile device

The invention discloses a mobile robot visual positioning and navigation method based on ArUco codes, belonging to the fields of computer-vision positioning and mobile robot navigation. The method comprises the following steps: map creation; route creation; and positioning and navigation. It overcomes the drawbacks of traditional visual navigation, such as strong dependence on auxiliary physical conditions, high environmental requirements and inflexibility. It also extends visual navigation, traditionally suitable only for ground mobile robots, to three-dimensional space, which makes it well suited to positioning and navigating unmanned aerial vehicles. The method offers high precision, high flexibility and a wide application range. It can be used for autonomous positioning and navigation of intelligent mobile equipment, such as mobile robots and unmanned aerial vehicles, in indoor environments, widening the range of application scenarios.

Owner:SHANDONG UNIV OF SCI & TECH
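
Once a marker's pose in the map is known from the map-creation step, a single detection gives the camera's pose by chaining transforms: T_map_camera = T_map_marker * inverse(T_camera_marker). The sketch below does this in the plane with SE(2) poses (x, y, theta). A real ArUco detector (for example, OpenCV's aruco module) returns a full 6-DoF pose, so reducing it to the plane is an assumption made here for brevity. The function names are hypothetical.

```python
import math


def compose(a, b):
    """Compose two SE(2) poses (x, y, theta): returns a * b (apply b, then a)."""
    ax, ay, at = a
    bx, by, bt = b
    return (ax + math.cos(at) * bx - math.sin(at) * by,
            ay + math.sin(at) * bx + math.cos(at) * by,
            math.atan2(math.sin(at + bt), math.cos(at + bt)))  # wrap to (-pi, pi]


def invert(p):
    """Inverse of an SE(2) pose: rotation R^T and translation -R^T t."""
    x, y, t = p
    return (-math.cos(t) * x - math.sin(t) * y,
            math.sin(t) * x - math.cos(t) * y,
            -t)


def camera_pose_in_map(marker_in_map, marker_in_camera):
    """T_map_camera = T_map_marker * inverse(T_camera_marker)."""
    return compose(marker_in_map, invert(marker_in_camera))
```

For example, suppose a marker is stored at map pose (2, 3, pi/2) and is seen 1 m straight ahead. The camera is then placed at (2, 2), facing the same direction as the marker's stored heading.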

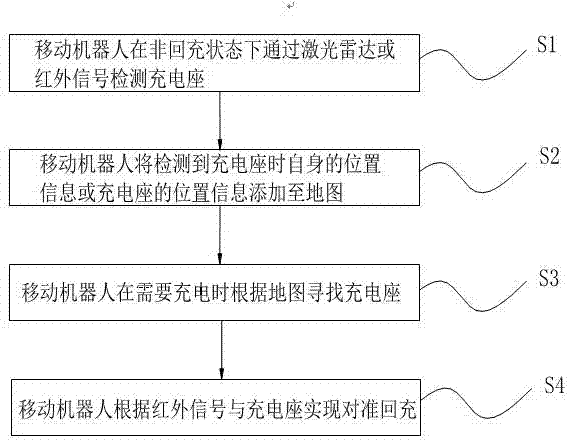

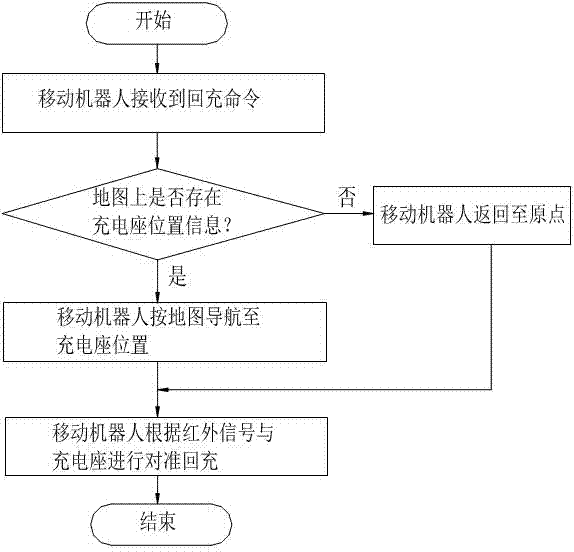

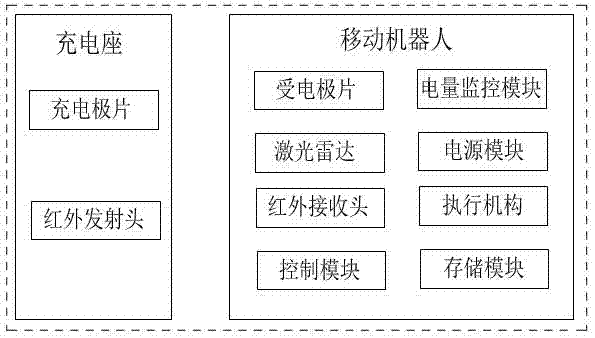

Charging method and charging system of mobile robot

Status: Pending | Publication: CN107124014A | Tags: Quick navigation; Recharge quickly; Batteries circuit arrangements; Electric power; Radar; Engineering

The invention discloses a charging method for a mobile robot. The method comprises the following steps: S1, when the mobile robot is not returning to charge, it detects the charging seat by laser radar or an infrared signal; S2, once the charging seat is detected, the mobile robot adds either its own position at the time of detection or the position of the charging seat to its map; S3, the mobile robot locates the charging seat according to the map; and S4, the mobile robot docks with the charging seat, guided by an infrared return-to-charge signal. Because the position is recorded in the map when the charging signal is detected, the robot can look up the charging seat in the map when it needs to recharge. It then navigates quickly to the vicinity of the seat and aligns using infrared positioning, so the method offers fast return-to-charge and high alignment accuracy. The invention also discloses a charging system for the mobile robot.

Owner:SHEN ZHEN 3IROBOTICS CO LTD

Multi-grid value navigation method based on robot pose and application thereof

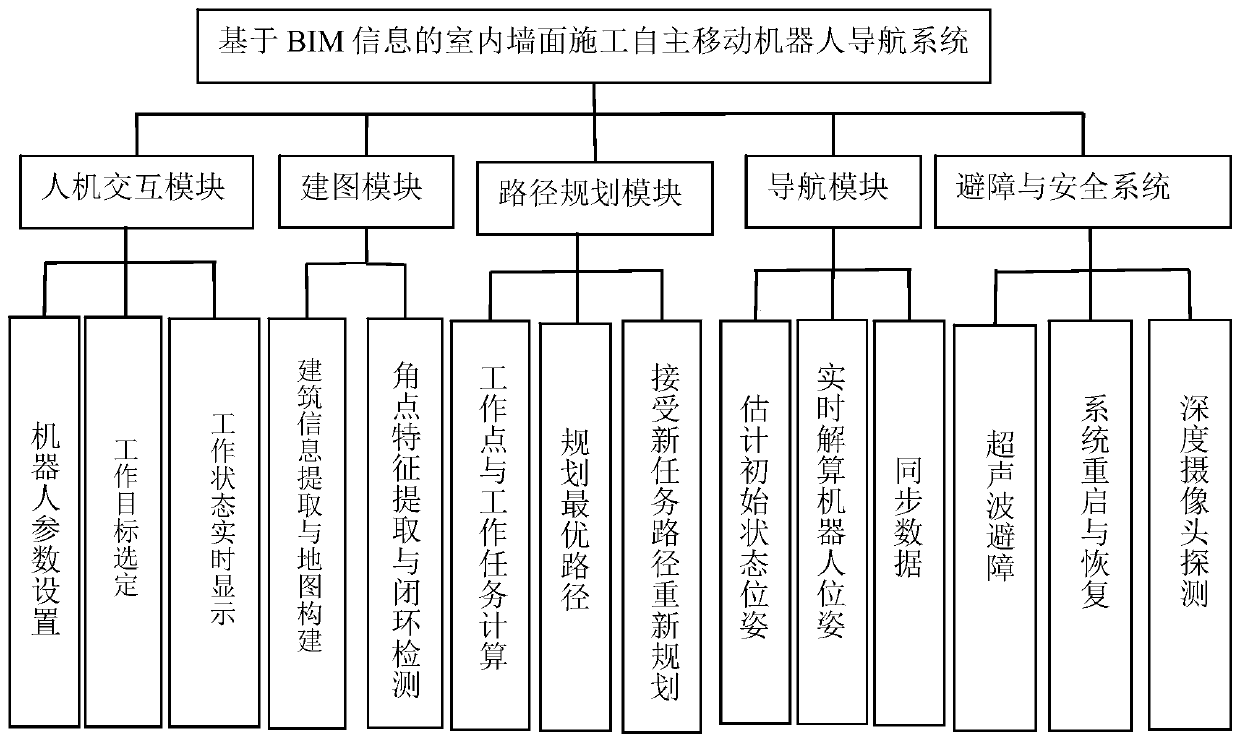

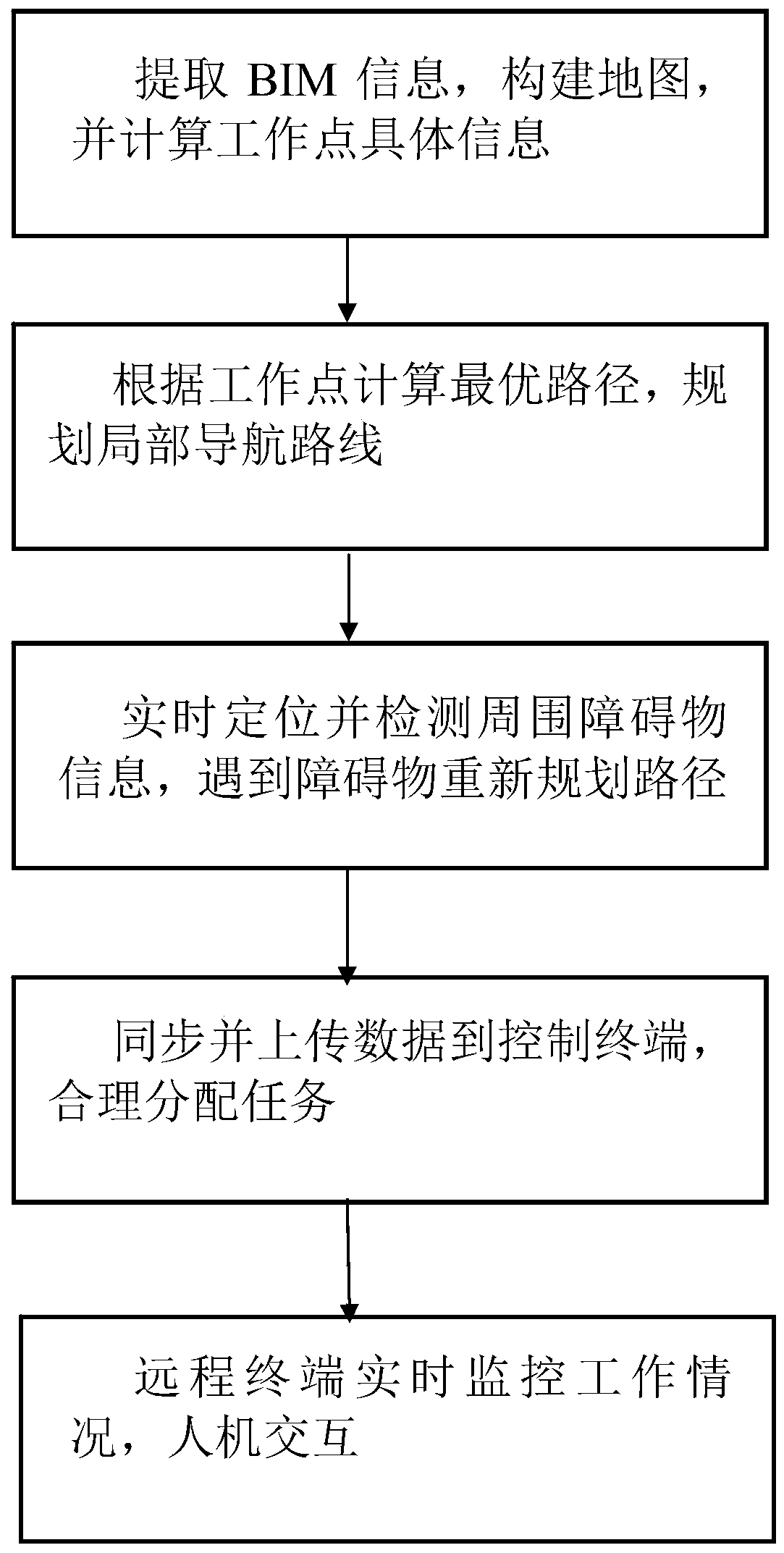

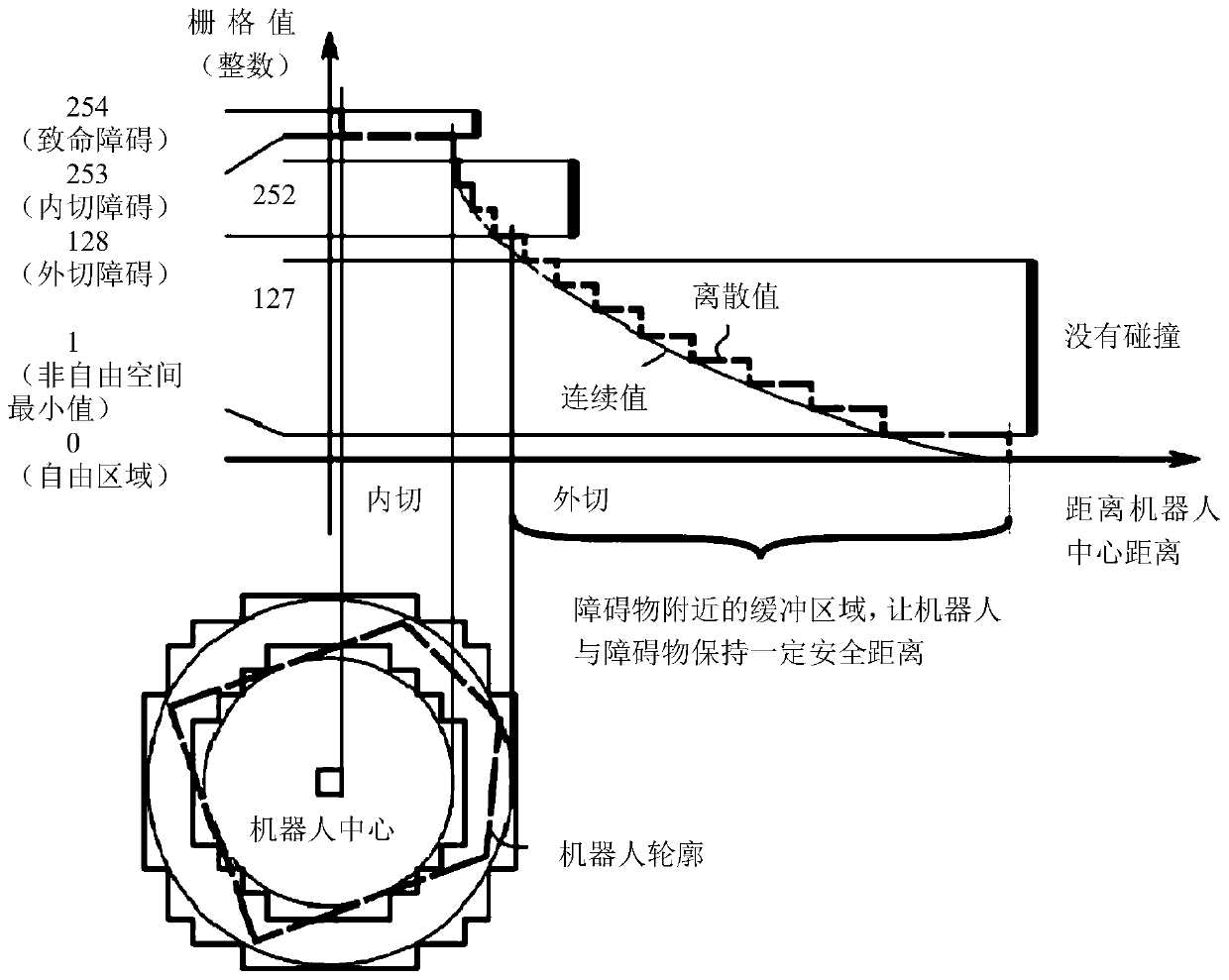

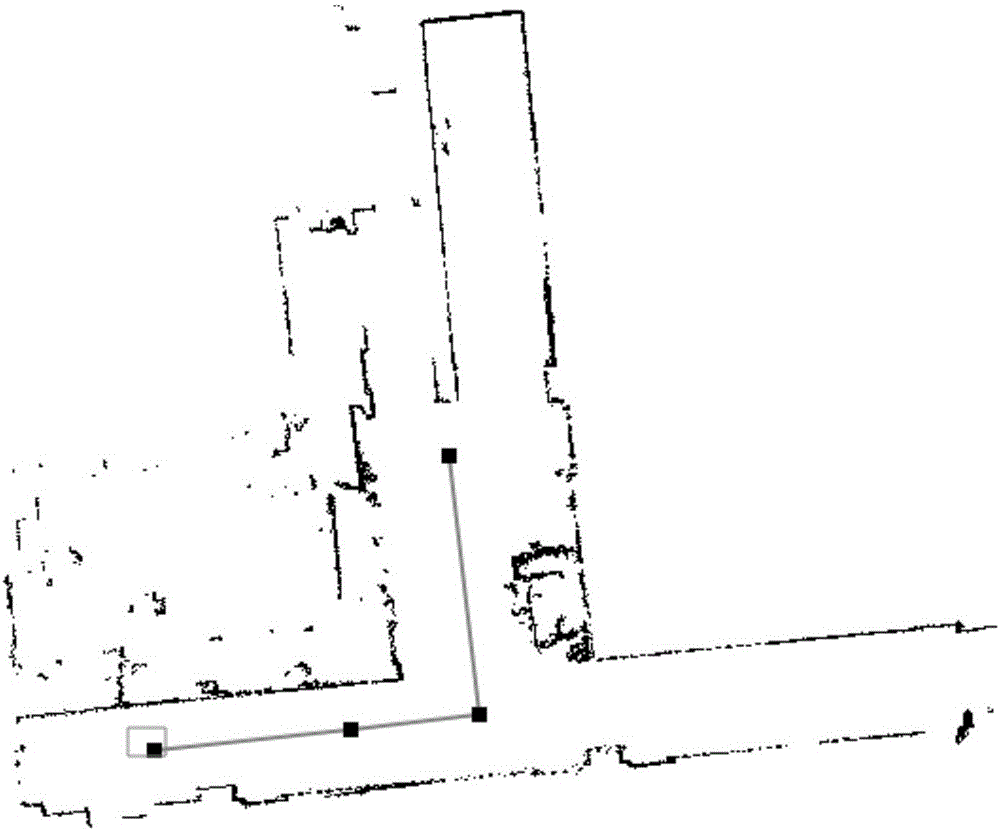

Status: Active | Publication: CN109916393A | Tags: Expand the passable area; Improve connectivity; Navigation by speed/acceleration measurements; Electromagnetic wave reradiation; Work task; Computer science

The invention discloses a multi-grid value navigation method based on the robot pose, and an application thereof, and belongs to the technical field of robot navigation. The method expands the robot's accessible area in the grid map and enhances the connectivity of the topological map. The application is an indoor wall-construction autonomous mobile robot navigation method based on BIM information. Working points are calculated from the extracted BIM information. According to the positions of the working points and the order of the work tasks, the multi-grid value navigation method sets local navigation routes between adjacent working points. The robot is positioned in real time, obstacles around the indoor wall-construction autonomous mobile robot are detected, the grid map is updated, and the local navigation routes between adjacent working points are re-planned on the updated grid map. The method improves navigation efficiency and accuracy.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

Mobile robot navigation control method based on laser data

Status: Inactive | Publication: CN106681320A | Tags: Create fast; Accurate Navigation Control; Position/course control in two dimensions; Short path algorithm; Simulation

The invention discloses a mobile robot navigation control method based on laser data, comprising the following steps: 1, adding and setting a path in a global map; 2, acquiring real-time positioning information of the mobile robot through an iterative matching algorithm; 3, planning the shortest path for the mobile robot's navigation with a shortest-path algorithm; 4, driving the mobile robot along the planned path through left/right wheel differential speed control; 5, making the mobile robot park accurately at a target parking point on the path through deceleration control. The method's advantages are simple data acquisition without additional landmarks, low cost and high positioning precision, and it allows the mobile robot to be autonomously positioned and navigated in both indoor and outdoor environments.

Owner:ZHEJIANG UNIV
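
Steps 4 and 5 rely on two standard relations. Differential-drive kinematics split a desired linear speed v and yaw rate omega into left and right wheel speeds. A constant-deceleration profile limits speed to sqrt(2 a d), so the robot can still stop within the remaining distance d. The sketch below is minimal: the function names and parameter values are hypothetical, and the patent does not state which deceleration law it uses.

```python
import math


def wheel_speeds(v, omega, track_width):
    """Convert body linear speed v (m/s) and yaw rate omega (rad/s) into
    (left, right) wheel speeds for a differential-drive base.
    track_width is the distance between the two wheels in metres."""
    half = track_width / 2.0
    return v - omega * half, v + omega * half


def approach_speed(distance_to_stop, v_max, decel):
    """Largest speed from which the robot can still stop within
    distance_to_stop at constant deceleration decel: v = sqrt(2 * a * d),
    capped at v_max. Negative distances (overshoot) give zero speed."""
    return min(v_max, math.sqrt(2.0 * decel * max(distance_to_stop, 0.0)))
```

Far from the parking point, the speed cap `v_max` dominates. Within v_max^2 / (2a) of the target, the square-root profile takes over and brings the robot to rest at the point.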

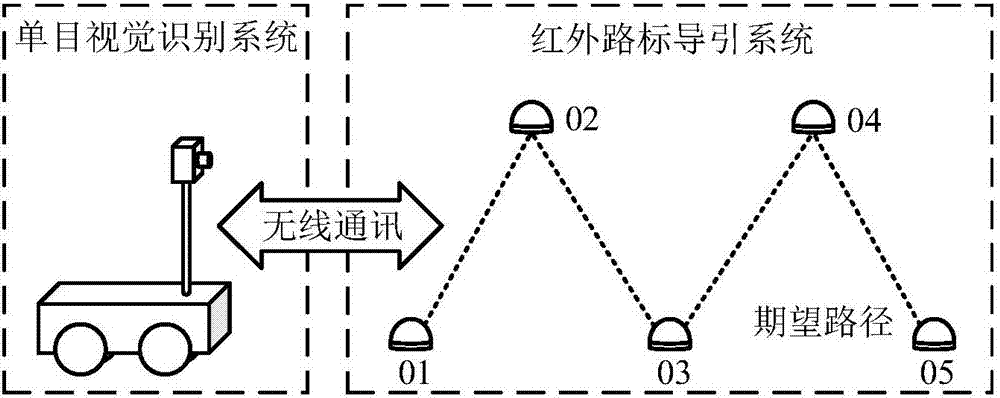

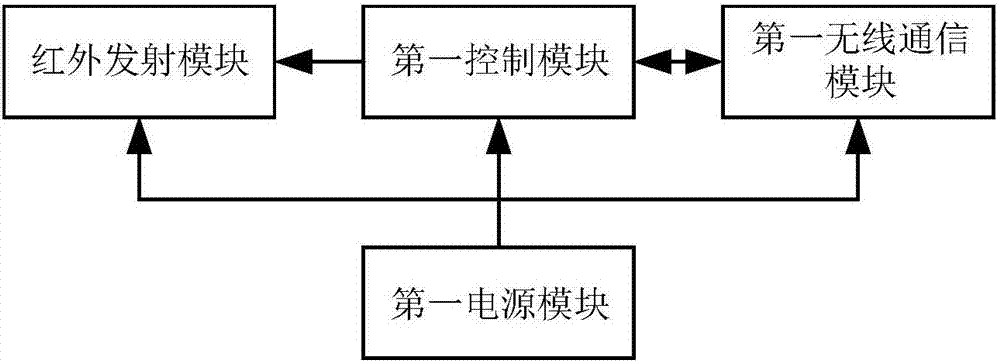

Indoor mobile robot navigation system and method based on infrared landmarks

Status: Active | Publication: CN107450540A | Tags: Wide application conditions; Reduce computing pressure; Position/course control in two dimensions; Vehicles; Guidance system; Imaging processing

The invention discloses an indoor mobile robot navigation system and method based on infrared landmarks. A monocular vision identification system captures an image of the lit infrared landmark in an infrared landmark guidance system and calculates, through image processing, the position of the indoor mobile robot relative to that landmark. The robot is then driven toward the landmark according to this relative position. After the robot enters the set coverage area of the current landmark, the monocular vision identification system instructs the current landmark to stop emitting light and the next landmark to start. It then moves the robot toward the next landmark according to that landmark's position, and so on. The robot stops when it reaches the set coverage area of the last landmark.

Owner:SHANDONG UNIV

Mobile Robot Navigation

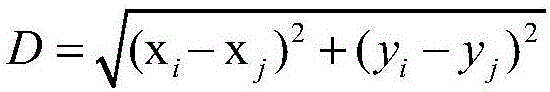

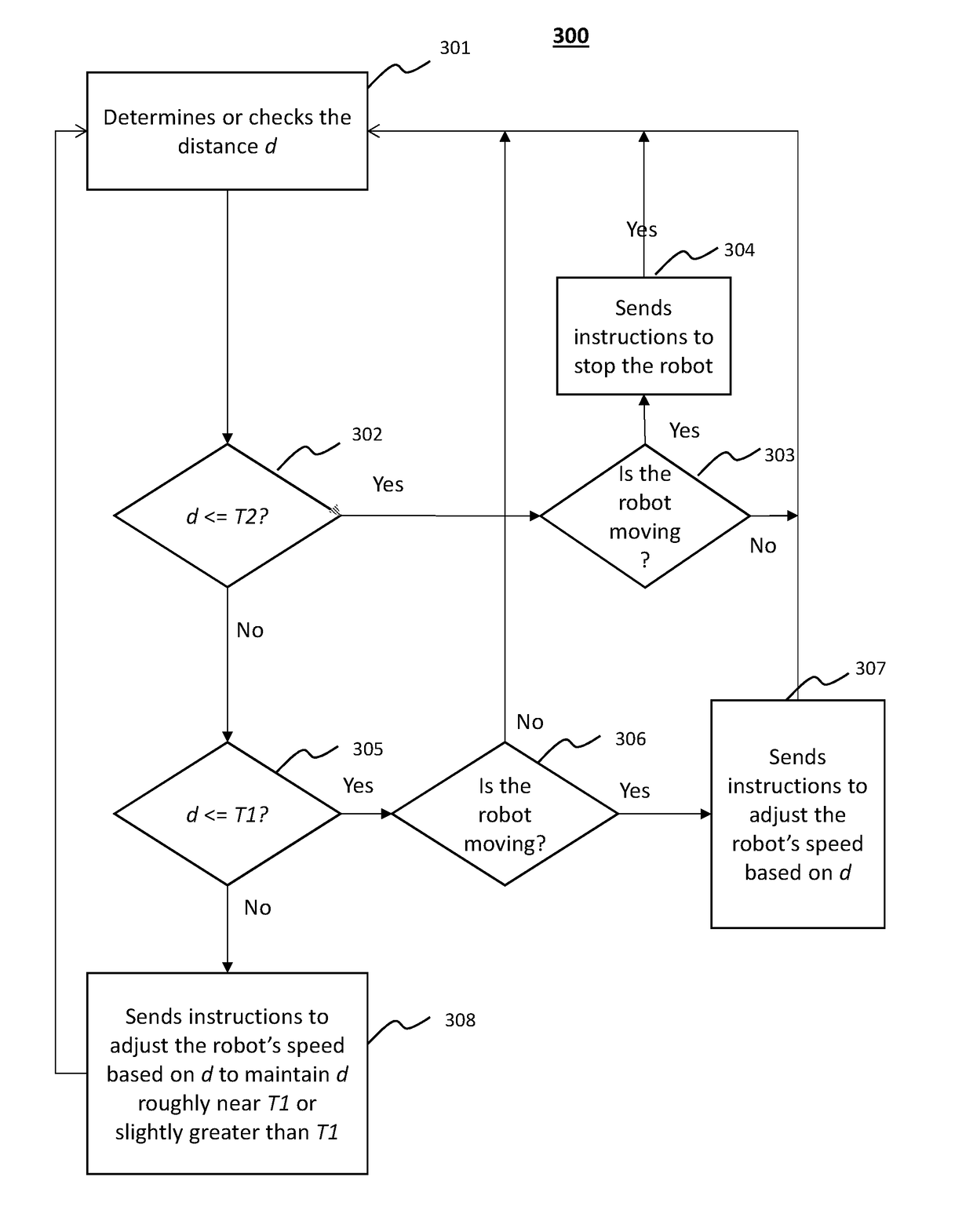

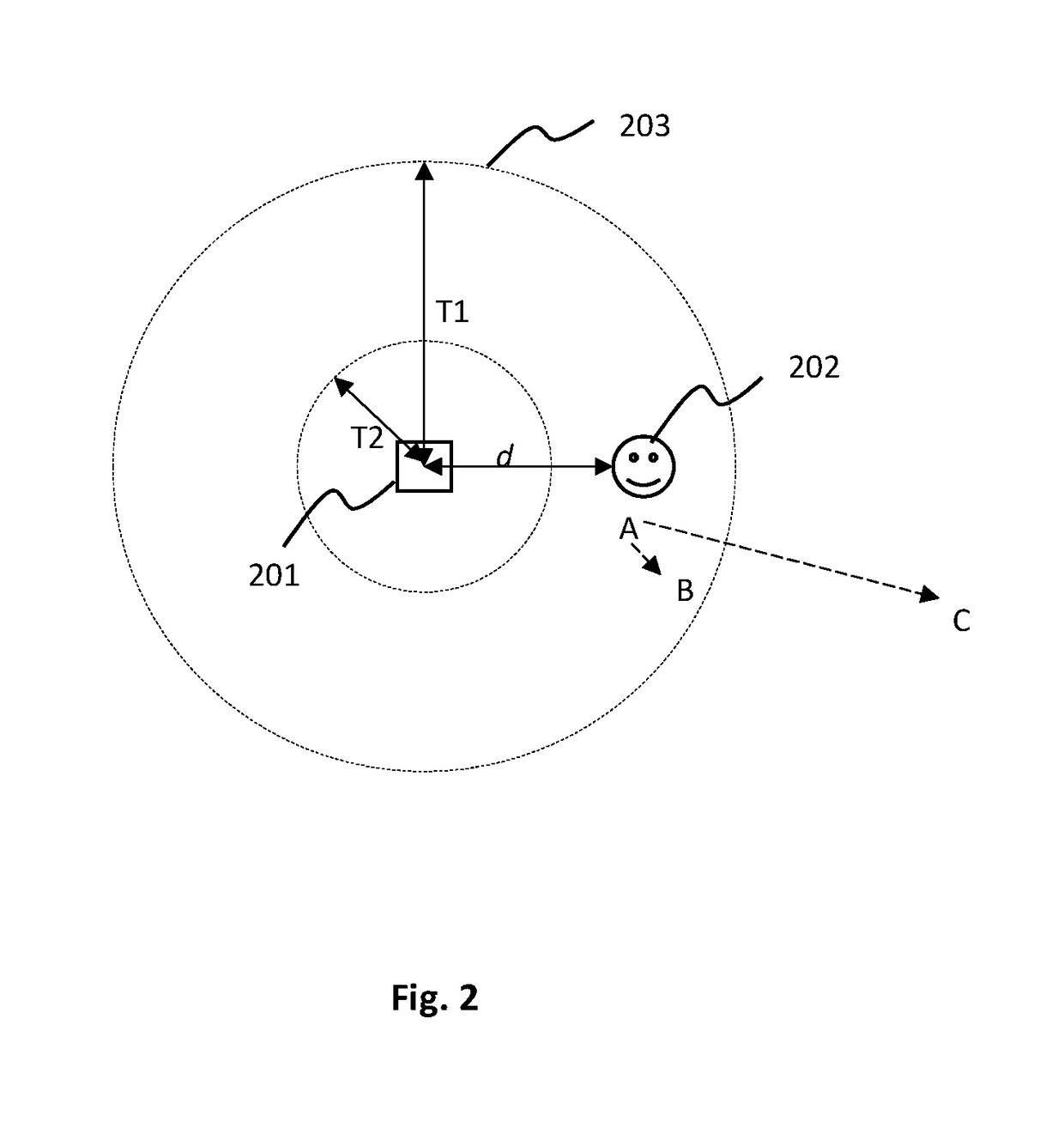

Status: Inactive | Publication: US20170368691A1 | Tags: Avoids following and reacting; Programme-controlled manipulator; Robot; Engineering; Double threshold

A double-threshold mechanism is used for implementing the follow-me function of a mobile robot. Initially, a user comes to a mobile robot and turns on its follow-me function. Then, the mobile robot is in the follow-me mode or can simply be described as following the user. When the user moves away from the robot, the robot determines whether the distance between itself and the user exceeds a first distance threshold. If so, the robot starts moving to follow the user. Otherwise, the robot stays put. While following the user's movement, the robot continues to monitor the distance between itself and the user. When the robot determines that the distance between them is less than a second distance threshold—because the user has slowed down or stopped, for example—the robot stops moving. The second distance threshold is lower than the first distance threshold.

Owner:DILILI LABS INC
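
The double-threshold behavior described above is a hysteresis rule, which can be sketched as a small state machine. The class name and the distance values in the usage note are illustrative assumptions; the abstract specifies only that the second threshold is lower than the first.

```python
class FollowMe:
    """Double-threshold (hysteresis) follow logic.

    start_distance is the first threshold: exceed it to start following.
    stop_distance is the second, lower threshold: drop below it to stop.
    """

    def __init__(self, start_distance, stop_distance):
        if stop_distance >= start_distance:
            raise ValueError("the second threshold must be lower than the first")
        self.start_distance = start_distance
        self.stop_distance = stop_distance
        self.moving = False

    def update(self, distance):
        """Feed one robot-to-user distance reading; return True if the robot should move."""
        if not self.moving and distance > self.start_distance:
            self.moving = True   # user has moved far enough away: start following
        elif self.moving and distance < self.stop_distance:
            self.moving = False  # user slowed down or stopped: stop moving
        return self.moving
```

Take thresholds of 1.5 m and 1.0 m. A reading of 1.3 m keeps the robot moving if it is already following and keeps it still if it is not. This prevents start/stop chatter when the user hovers around a single threshold.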

Construction method for mixed map for navigation of mobile robots

Status: Active | Publication: CN105225604A | Tags: Increase probability; Reduce probability; Maps/plans/charts; Computer science; Visual perception

Owner:SHANTOU UNIV

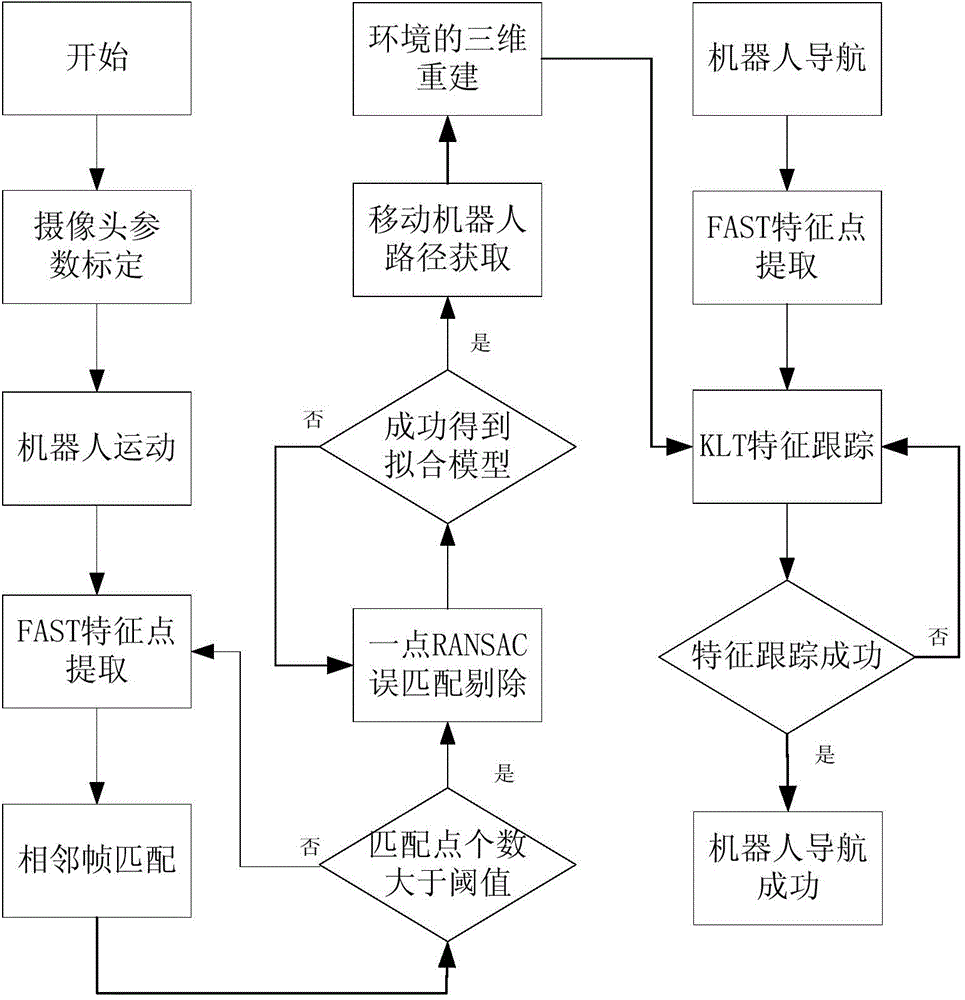

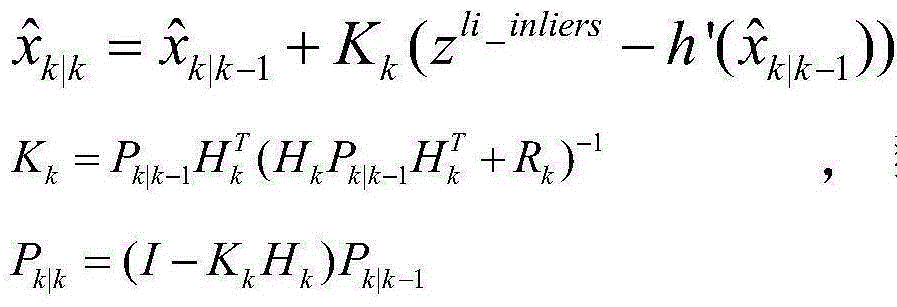

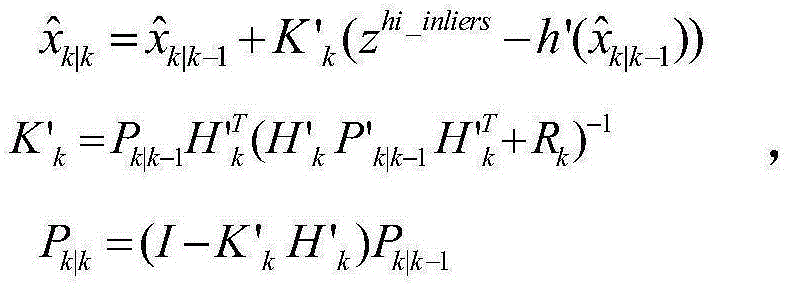

Mobile robot navigation method based on one point RANSAC and FAST algorithm

Status: Active | Publication: CN104881029A | Tags: Improve real-time performance; Reduce the number of iterations; Position/course control in two dimensions; Prior information; Fast algorithm

The invention provides a mobile robot navigation method based on the one-point RANSAC and FAST algorithms. The robot uses the FAST corner extraction algorithm to collect information about the surrounding environment through its on-board camera. During matching, the one-point RANSAC algorithm, combined with extended Kalman filtering, removes false matches, and the resulting matched points are used for three-dimensional environment reconstruction and map creation. Prior-art methods that use RANSAC require too many iterations. This method makes full use of the prior information obtained in the filtering stage, which effectively reduces the number of iterations while keeping the algorithm robust, so that feature matching is achieved. This solves the poor real-time performance and robustness of traditional monocular-vision mobile robot navigation and the excessive iteration count of RANSAC.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
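
The standard RANSAC bound shows why a one-point hypothesis reduces iterations. Suppose the inlier ratio is w and we want, with confidence p, to draw at least one sample of s matches that are all inliers. Then about N = log(1 - p) / log(1 - w^s) iterations are needed. The sketch evaluates that bound; the confidence level and inlier ratio used below are illustrative, not values from the patent, and the function name is hypothetical.

```python
import math


def ransac_iterations(inlier_ratio, sample_size, confidence=0.99):
    """Iterations needed so that, with probability `confidence`, at least one
    random minimal sample contains only inliers:
        N = log(1 - p) / log(1 - w**s)
    """
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - inlier_ratio ** sample_size))
```

With w = 0.5 and p = 0.99, a one-point sample (s = 1) needs 7 iterations, while a five-point sample (s = 5) needs 146. The EKF prior is what makes a single match sufficient to form a hypothesis in the one-point variant.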

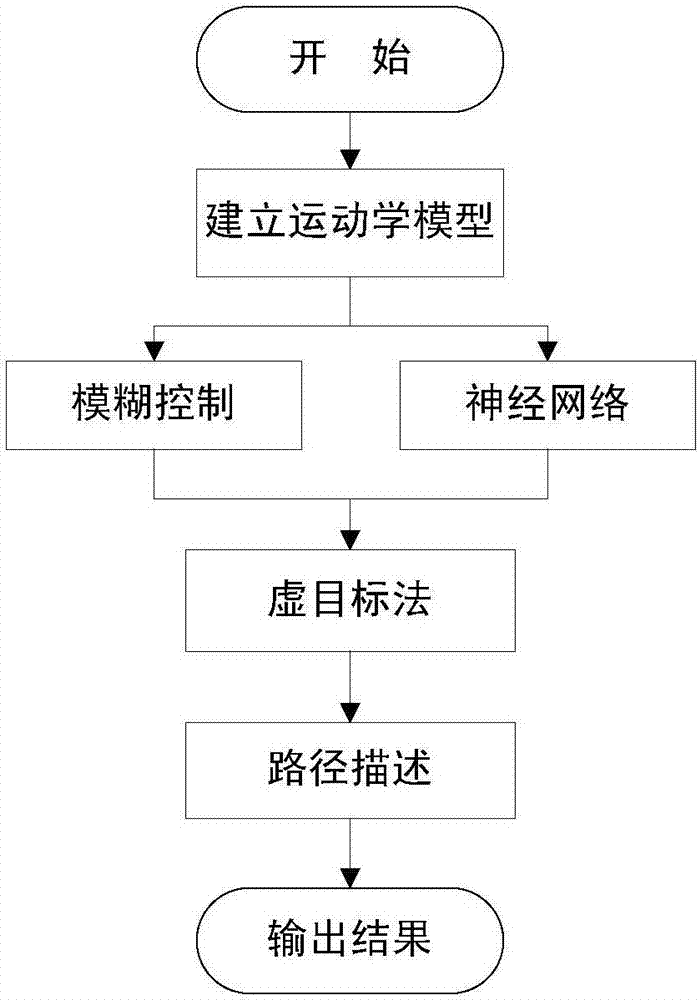

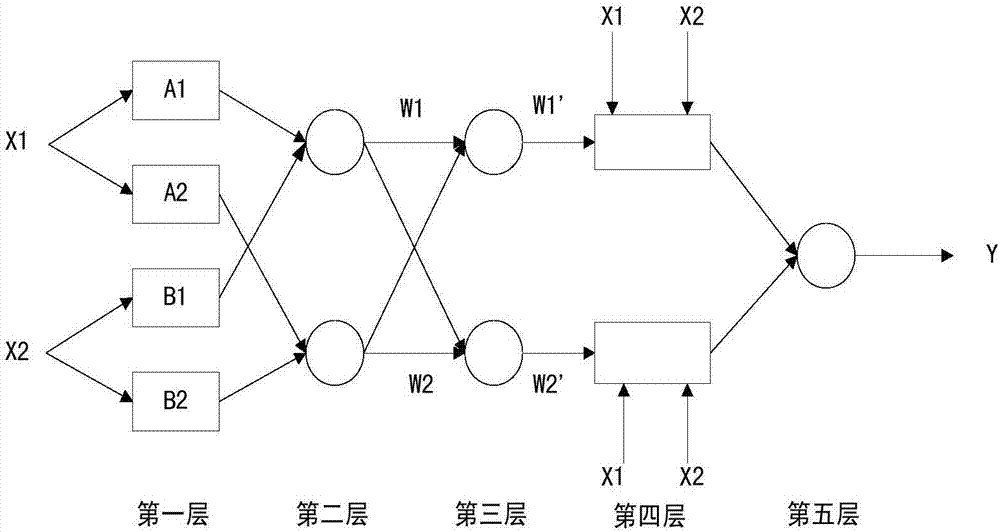

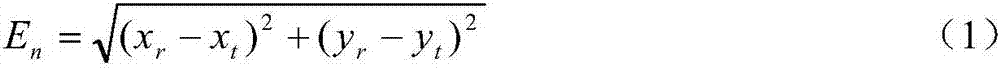

Robot path planning method based on ANFIS fuzzy neural network

Status: Inactive | Publication: CN107168324A | Tags: Reduce logical reasoning workload; Get out of the trap state; Position/course control in two dimensions; Vehicles; Takagi sugeno; Simulation

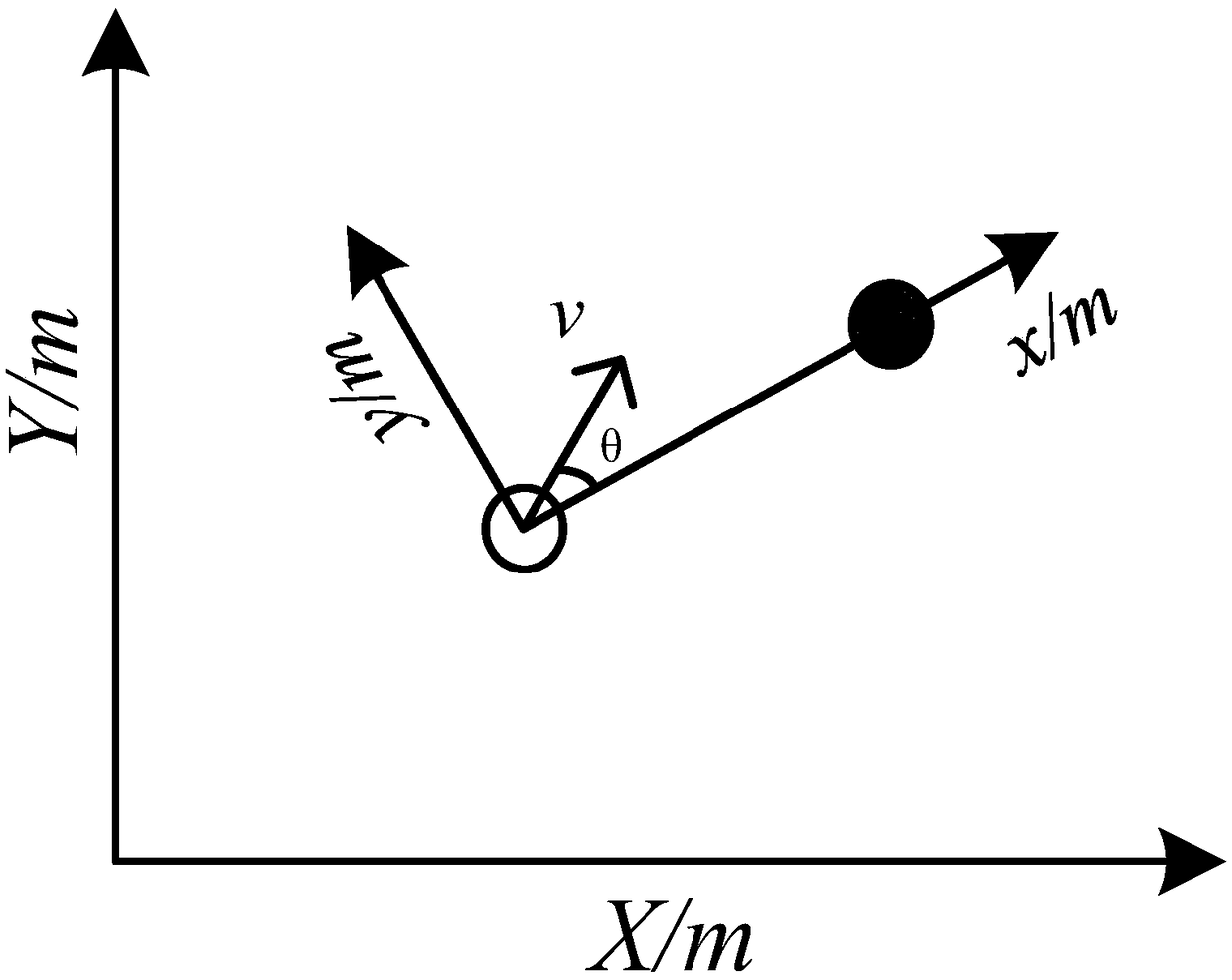

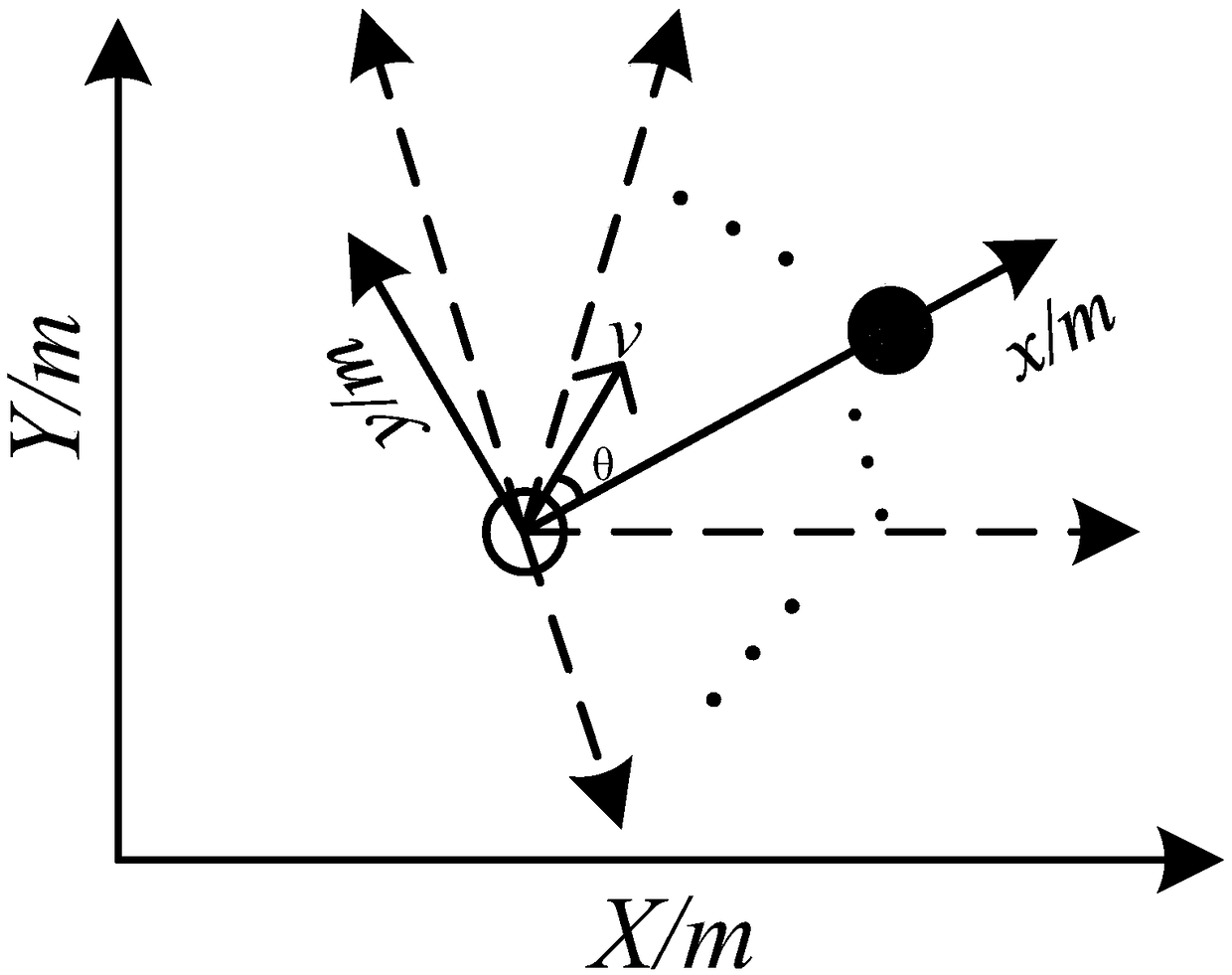

The invention discloses a robot path planning method based on an ANFIS fuzzy neural network. It mainly solves the problems of repeated back-and-forth motion in complex trap paths and of path redundancy in conventional reactive navigation. The method comprises the following steps: first, establishing a kinematic model of the mobile robot; building a mobile robot navigation controller based on a fuzzy neural network, combining the autonomous learning ability of neural networks with the reasoning ability of fuzzy theory; constructing a Takagi-Sugeno fuzzy inference system on an adaptive fuzzy neural network structure and using it as the reference model for the robot's local reactive control; having the fuzzy neural network controller output the offset angle and running speed in real time and adjust the robot's moving direction online, so that the robot adjusts its speed automatically and approaches the goal without collision; and, through an improved virtual target method, selecting an optimal path that lets the robot escape a trapped state.

Owner:CHINA UNIV OF MINING & TECH
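
ANFIS tunes a first-order Takagi-Sugeno system. Such a system computes each rule's firing strength from its input memberships and returns the strength-weighted average of linear rule outputs. The sketch below shows only that inference step, using Gaussian memberships and a product t-norm. The inputs (for example, obstacle distance and goal bearing), the rule base and the parameters are hypothetical. In ANFIS, the membership and consequent parameters would be learned rather than hand-set.

```python
import math


def gaussian(x, center, sigma):
    """Gaussian membership degree of x."""
    return math.exp(-((x - center) ** 2) / (2.0 * sigma ** 2))


def ts_inference(inputs, rules):
    """First-order Takagi-Sugeno inference.

    Each rule is (antecedents, coefficients):
      antecedents: list of (center, sigma) Gaussian memberships, one per input;
      coefficients: [c0, c1, ..., cn] for the linear consequent
                    f = c0 + c1*x1 + ... + cn*xn.
    Returns the firing-strength-weighted average of the rule consequents.
    """
    num, den = 0.0, 0.0
    for antecedents, coeffs in rules:
        w = 1.0
        for x, (c, s) in zip(inputs, antecedents):
            w *= gaussian(x, c, s)  # product t-norm gives the firing strength
        f = coeffs[0] + sum(ci * xi for ci, xi in zip(coeffs[1:], inputs))
        num += w * f
        den += w
    return num / den if den > 0 else 0.0
```

In a navigation controller, one such system per output could produce the offset angle and another the running speed.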

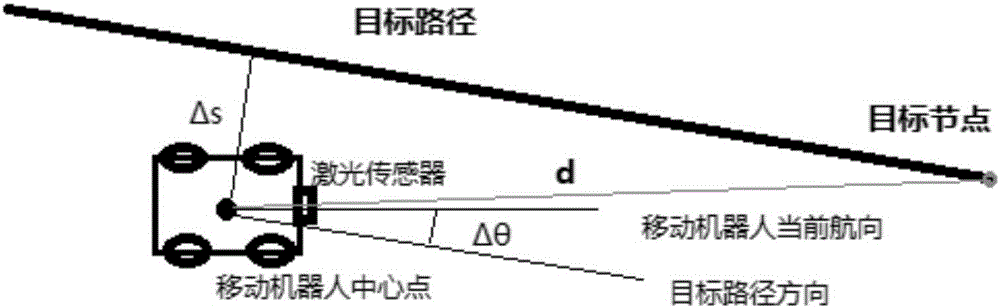

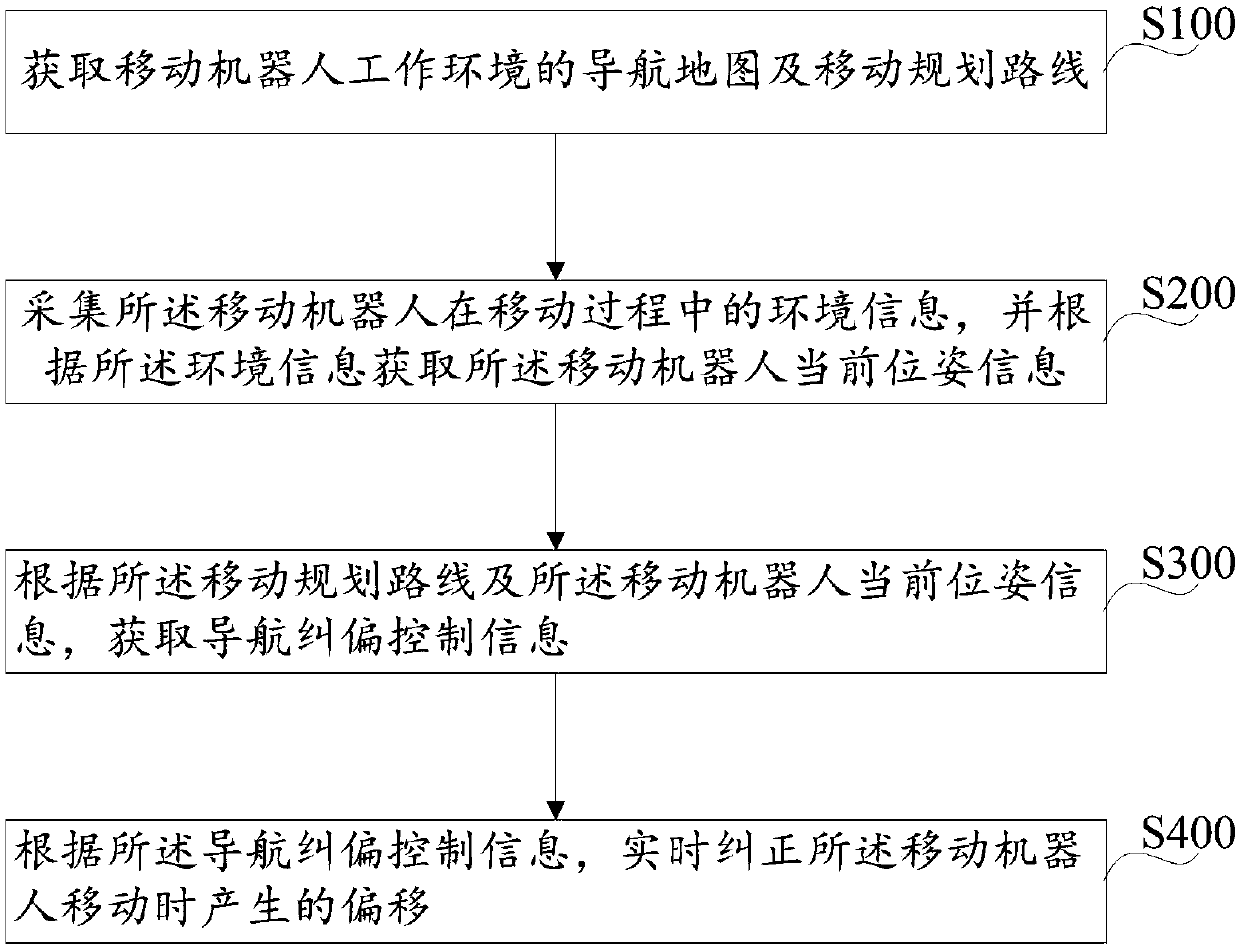

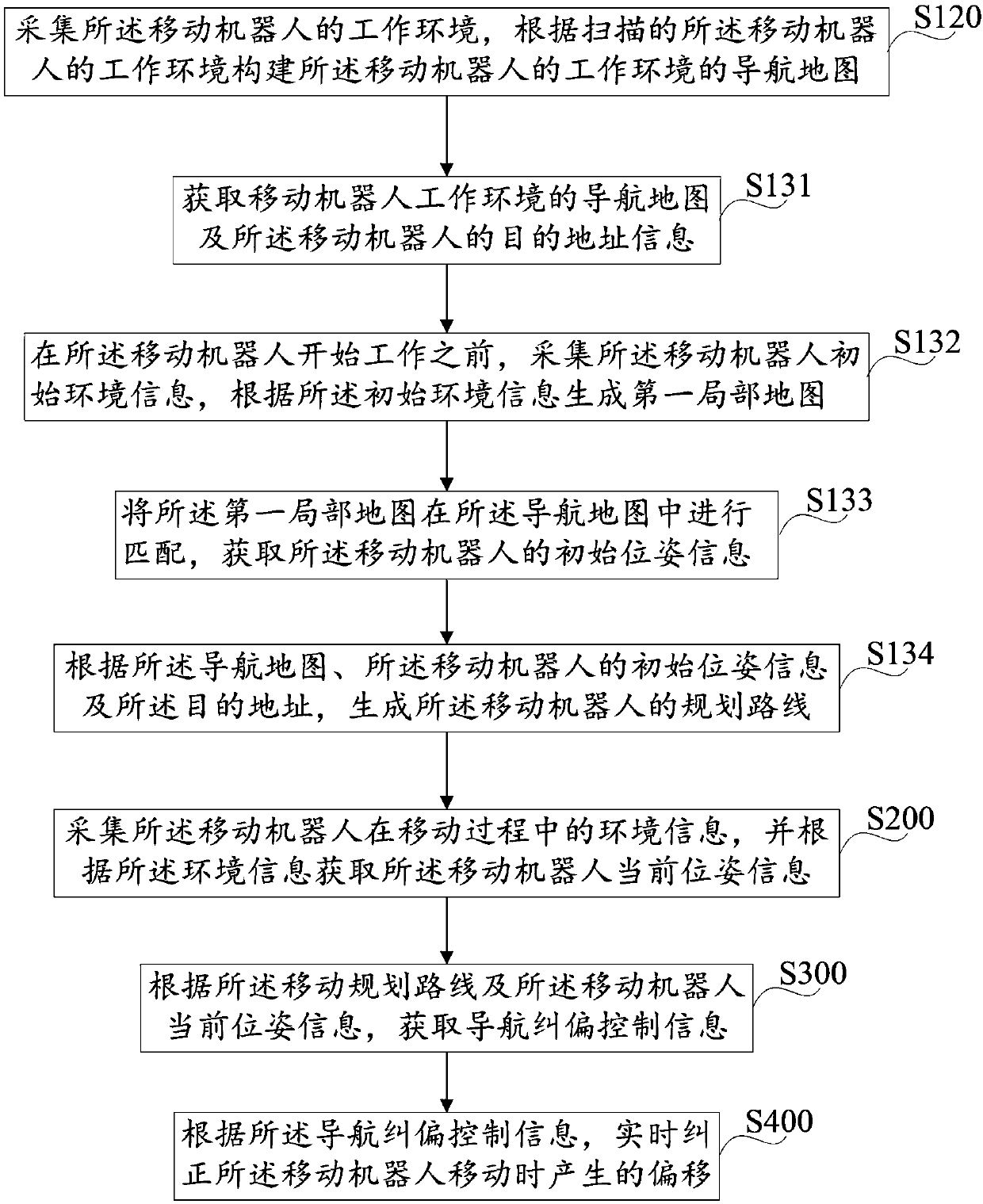

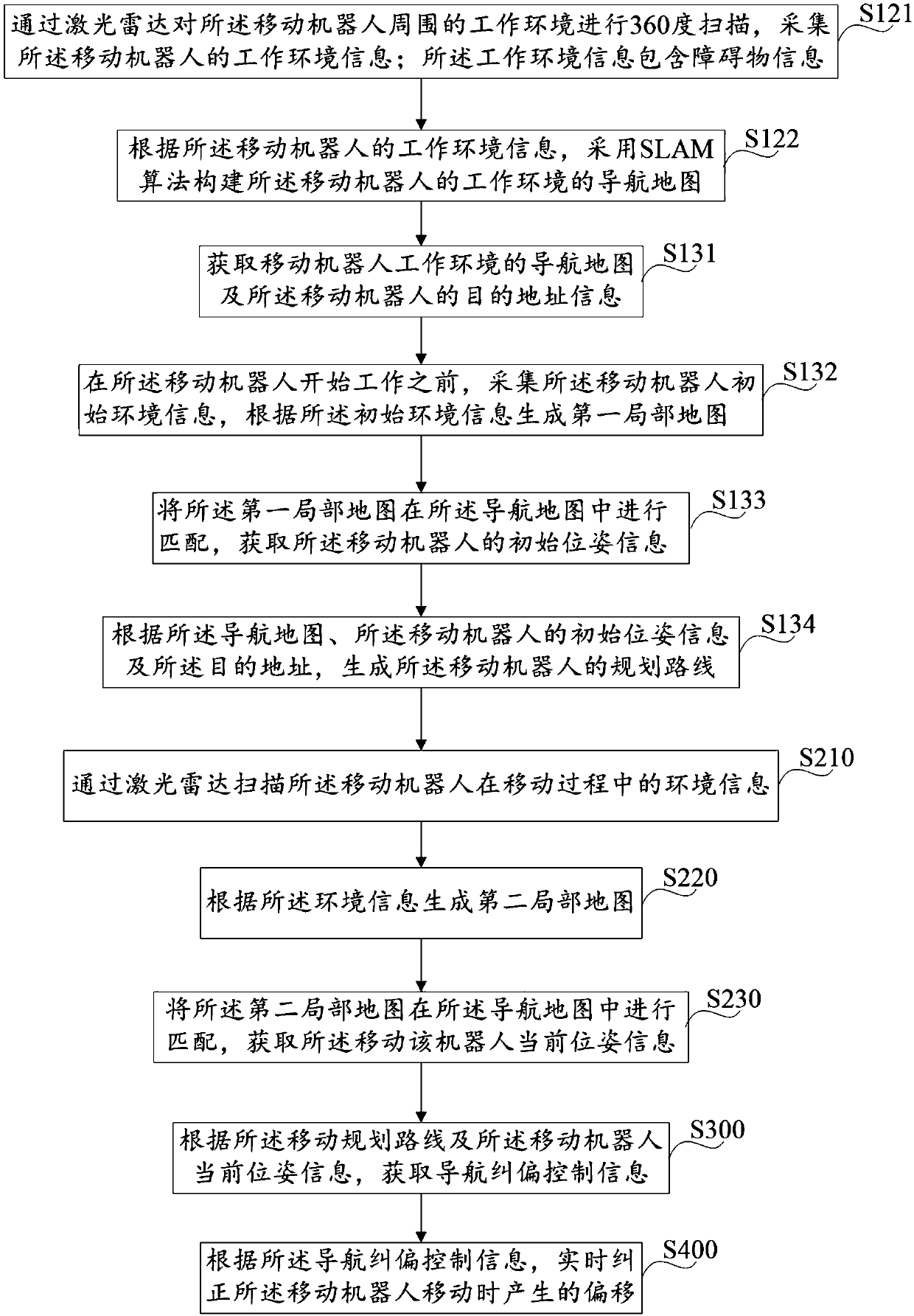

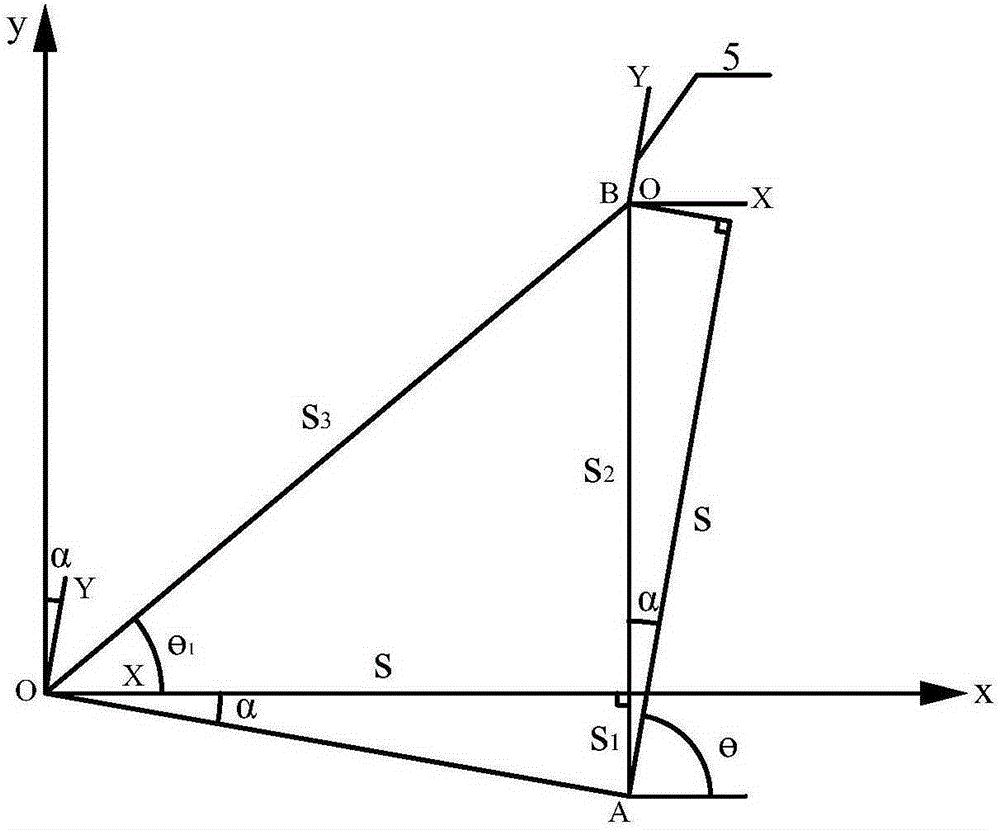

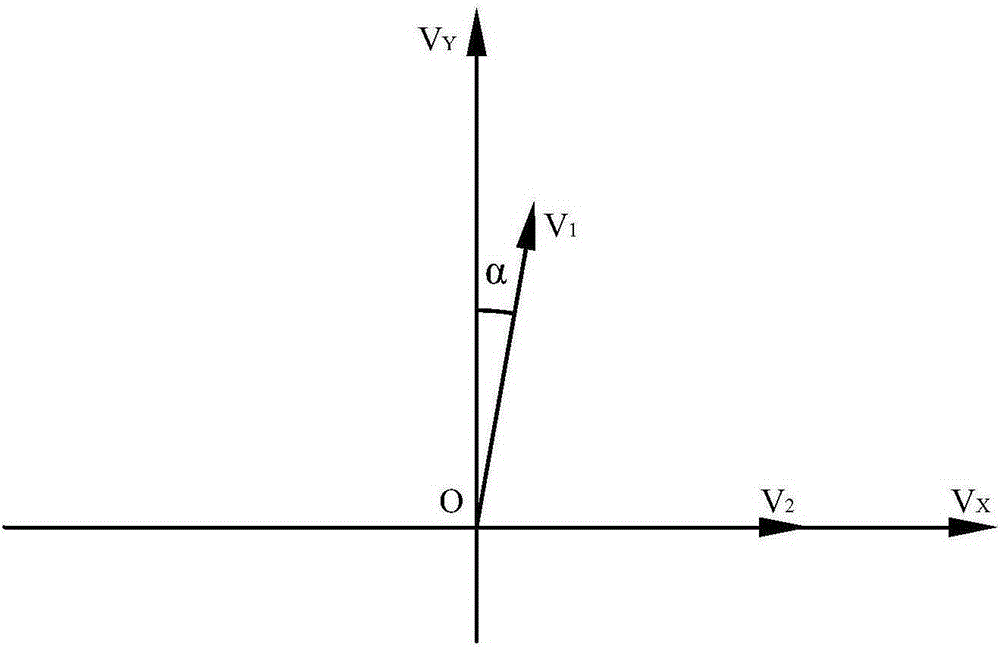

Mobile robot navigation offset correction method and device

Status: Inactive | Publication: CN107918391A | Tags: Stable control; Improve navigation accuracy; Position/course control in two dimensions; Vehicles; Device type; Mobile navigation

The invention discloses a mobile robot navigation offset correction method comprising the following steps: obtaining a navigation map of the mobile robot's working environment and the robot's planned movement routes; collecting environment information while the mobile robot moves, and obtaining the robot's current pose from that information; obtaining navigation offset correction control information from the planned route and the current pose; and correcting the robot's offset in real time according to that control information. The invention also discloses a mobile robot navigation offset correction device. The method and device correct the mobile robot's offset while it moves, so that it reaches its destination precisely. They are simple and practical, low in cost, and high in precision.

Owner:台州市吉吉知识产权运营有限公司 (Taizhou Jiji Intellectual Property Operation Co., Ltd.)
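
One common way to turn "current pose versus planned route" into a correction is to measure the signed lateral offset and the heading error relative to the active route segment, then feed them back proportionally. This is a sketch of that idea, not the patent's control law. The gains `k_lateral` and `k_heading` and the function names are hypothetical.

```python
import math


def path_offset(pose, seg_start, seg_end):
    """Signed lateral offset (left of the segment is positive) and heading
    error of pose (x, y, theta) relative to the segment seg_start -> seg_end."""
    x, y, theta = pose
    (x1, y1), (x2, y2) = seg_start, seg_end
    seg_heading = math.atan2(y2 - y1, x2 - x1)
    length = math.hypot(x2 - x1, y2 - y1)
    # 2D cross product of the segment direction with the vector to the robot,
    # divided by the segment length, gives the perpendicular distance.
    lateral = ((x2 - x1) * (y - y1) - (y2 - y1) * (x - x1)) / length
    # Wrap the heading difference into (-pi, pi].
    heading_error = math.atan2(math.sin(theta - seg_heading), math.cos(theta - seg_heading))
    return lateral, heading_error


def correction_yaw_rate(lateral, heading_error, k_lateral=1.0, k_heading=2.0):
    """Proportional correction: steer back toward the line and align with it."""
    return -k_lateral * lateral - k_heading * heading_error
```

A robot 0.5 m to the right of the route and already turned 0.1 rad to the left gets a yaw rate of 0.5 - 0.2 = 0.3 rad/s with these gains. The heading term damps the correction so the robot does not overshoot the line.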

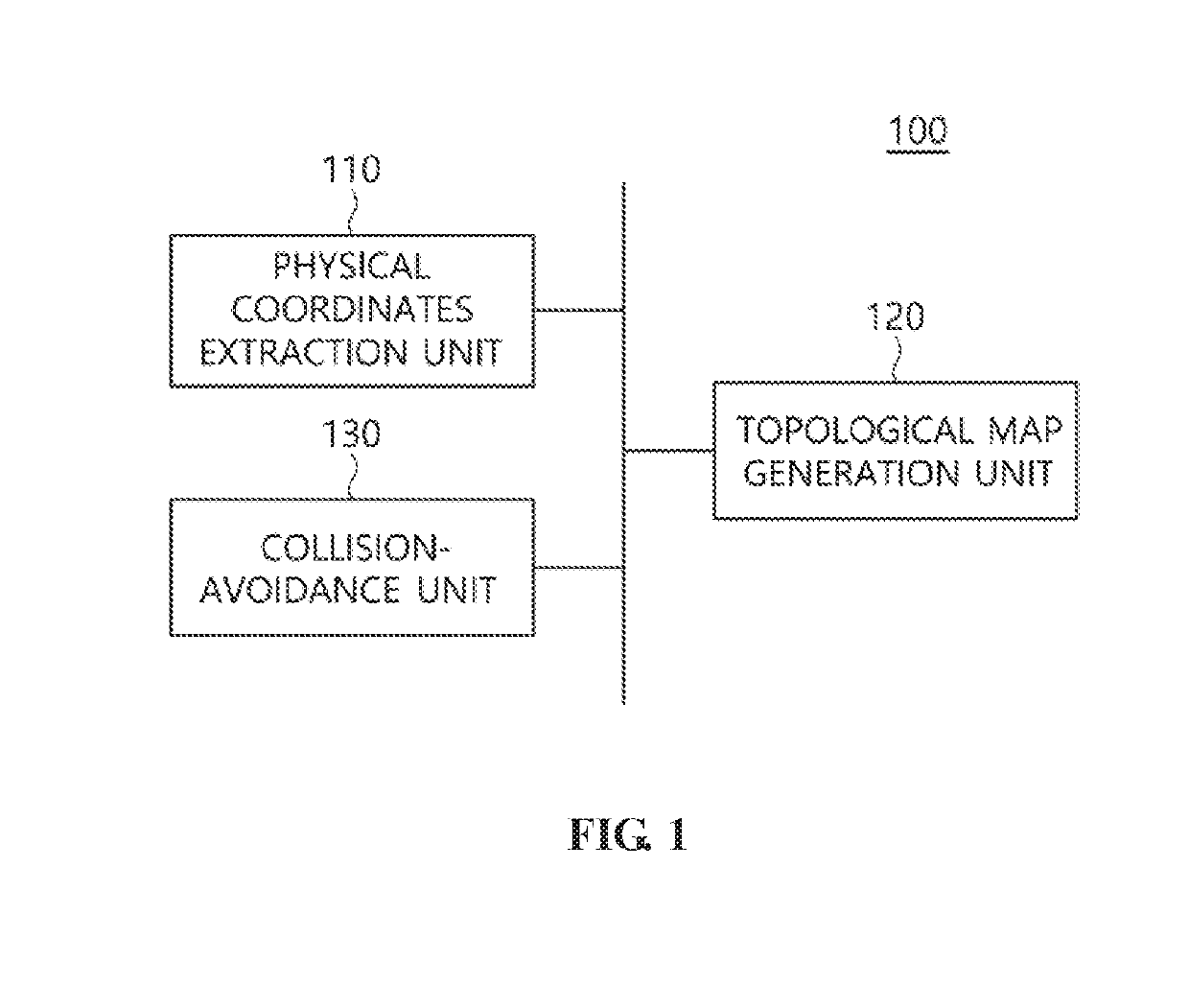

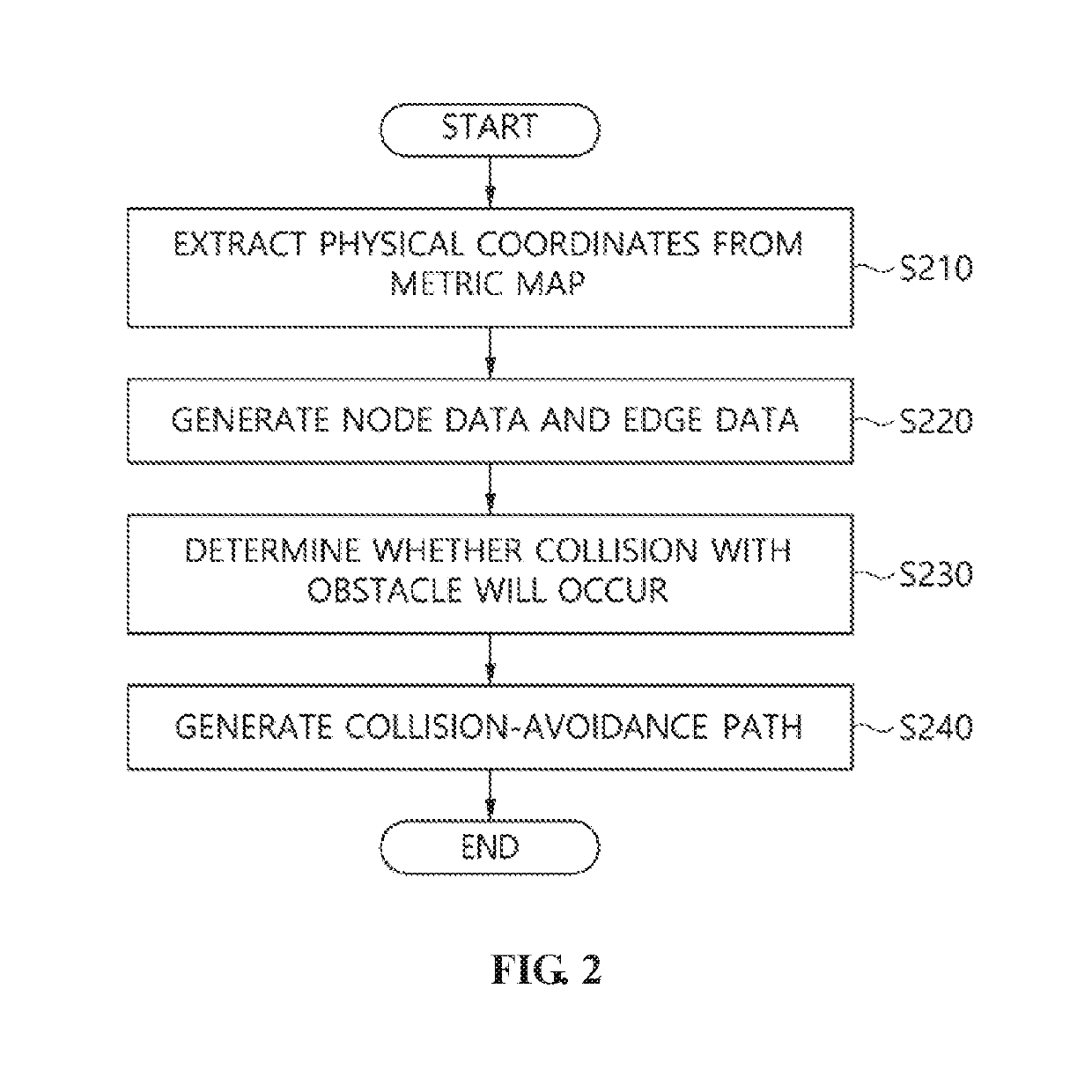

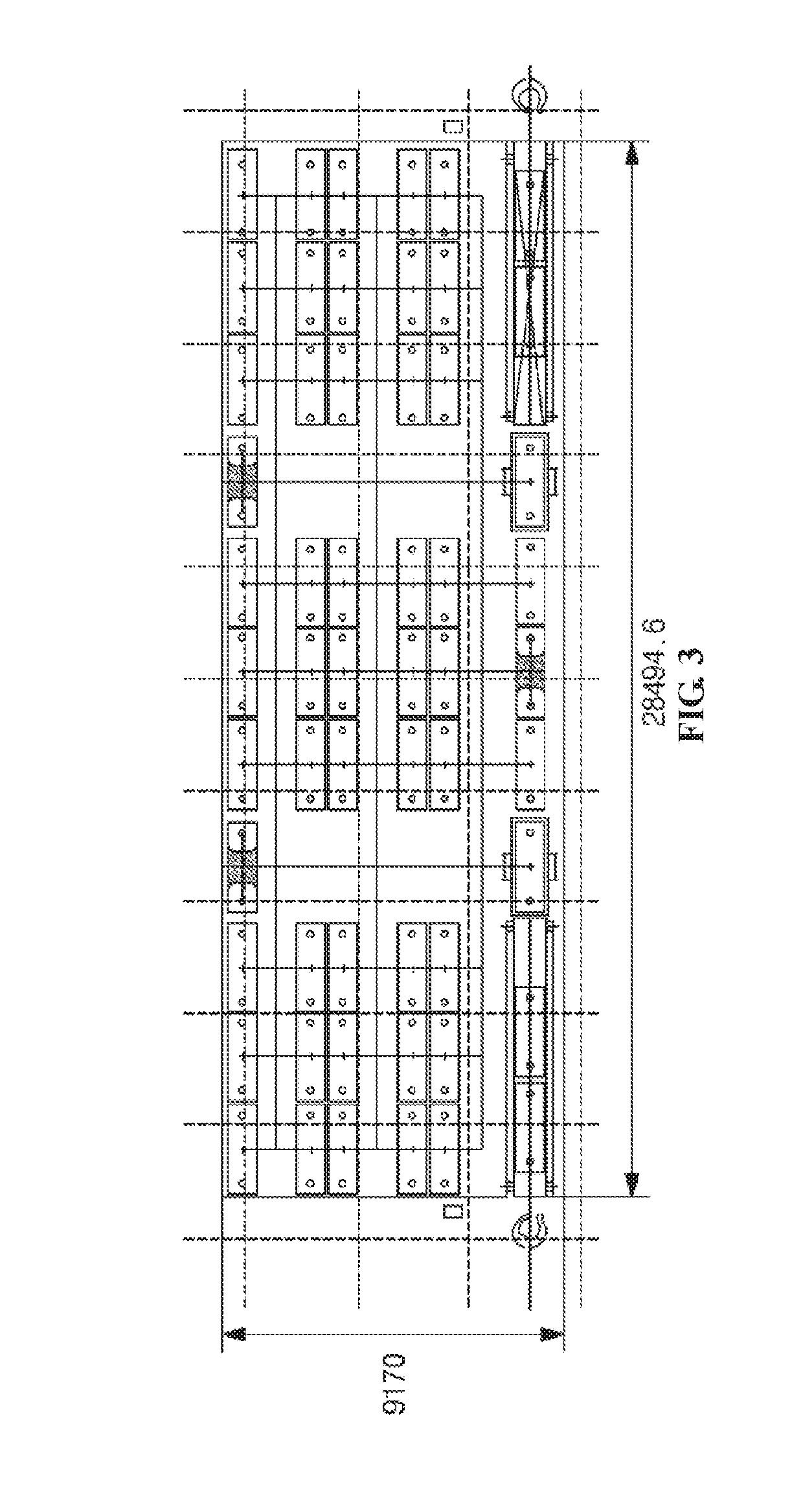

Topological map generation apparatus for navigation of robot and method thereof

Status: Active | Publication: US20190310653A1 | Tags: Navigation safety; Accurate detection; Programme-controlled manipulator; Image generation; Computer science; Mobile robot navigation

Disclosed herein are an apparatus and method for generating a topological map for navigation of a robot. The method for generating a topological map for navigation of a robot, performed by the apparatus for building the topological map for the navigation of the robot, includes calculating the physical size of a single pixel on a metric map of a space in which a mobile robot is to navigate, extracting the physical coordinates of the pixel on the metric map, building node data and edge data for the navigation of the mobile robot using the physical coordinates, and generating a topological map for the navigation of the mobile robot based on the built node data and the built edge data.

Owner:ELECTRONICS & TELECOMM RES INST
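
The pixel-size and physical-coordinate steps, together with the node/edge construction, can be sketched as below. The sketch makes three assumptions: the map image's physical width is known, image rows grow downward while map y grows upward, and edges are weighted by Euclidean distance. The function names and these conventions are assumptions, not details from the patent.

```python
import math


def pixel_size(map_width_m, map_width_px):
    """Physical size (metres) covered by one pixel of the metric map."""
    return map_width_m / map_width_px


def pixel_to_world(row, col, size, height_px, origin=(0.0, 0.0)):
    """Centre of pixel (row, col) in metres. Image rows grow downward, so the
    row index is flipped to make y grow upward (an assumed map convention)."""
    x = origin[0] + (col + 0.5) * size
    y = origin[1] + (height_px - row - 0.5) * size
    return x, y


def build_topological_map(node_pixels, edge_pairs, size, height_px):
    """Node data: id -> physical (x, y). Edge data: (a, b) -> Euclidean length."""
    nodes = {nid: pixel_to_world(r, c, size, height_px)
             for nid, (r, c) in node_pixels.items()}
    edges = {}
    for a, b in edge_pairs:
        (xa, ya), (xb, yb) = nodes[a], nodes[b]
        edges[(a, b)] = math.hypot(xb - xa, yb - ya)
    return nodes, edges
```

Storing physical rather than pixel coordinates lets the same topological map be used with maps of different resolutions, and the edge lengths can be used directly as path-search costs.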

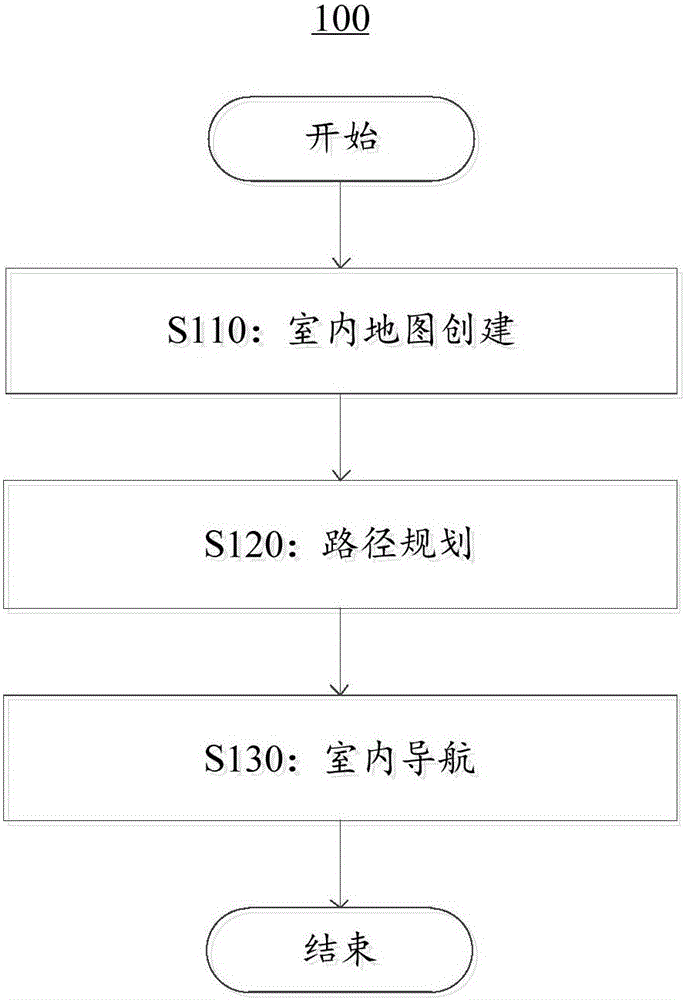

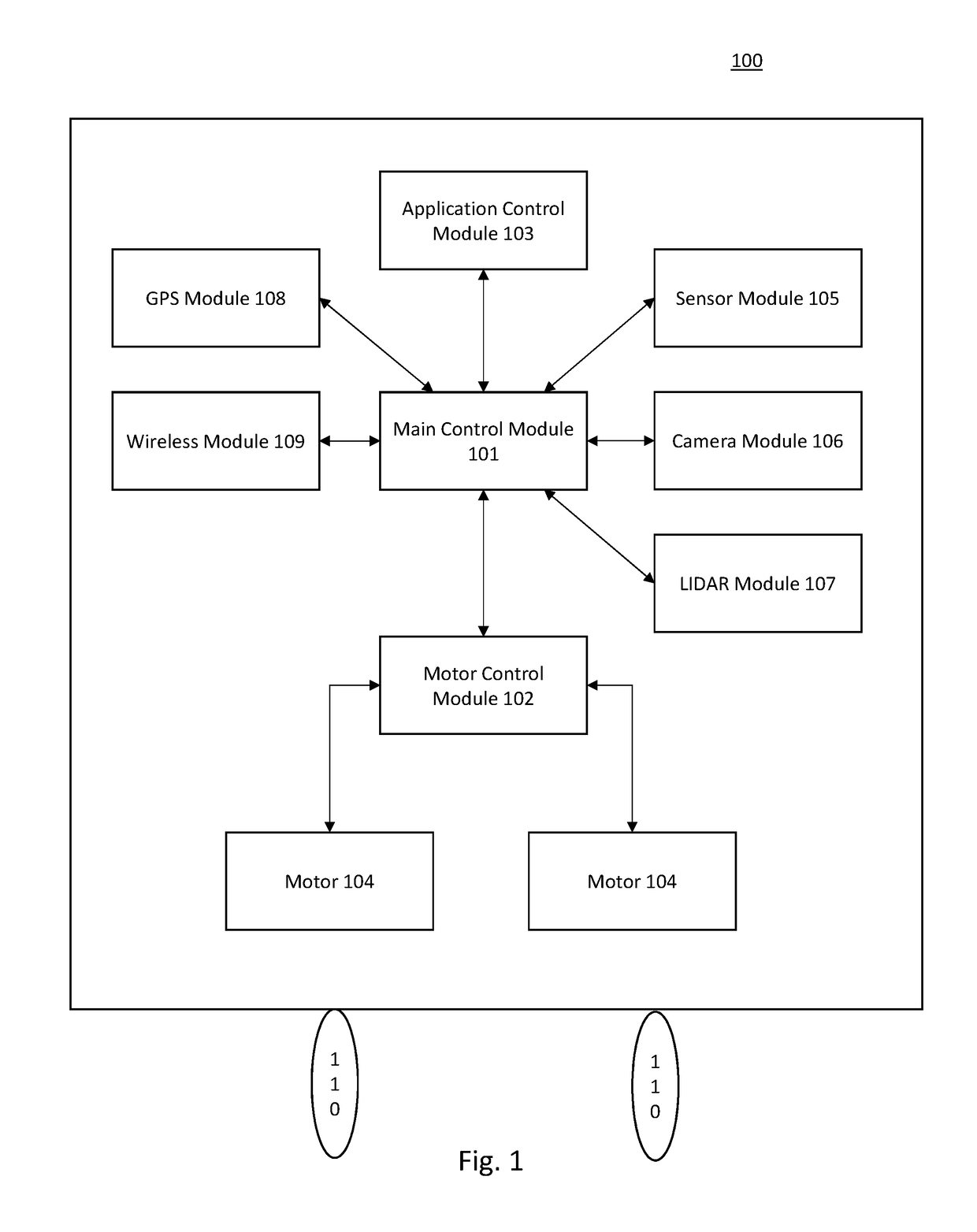

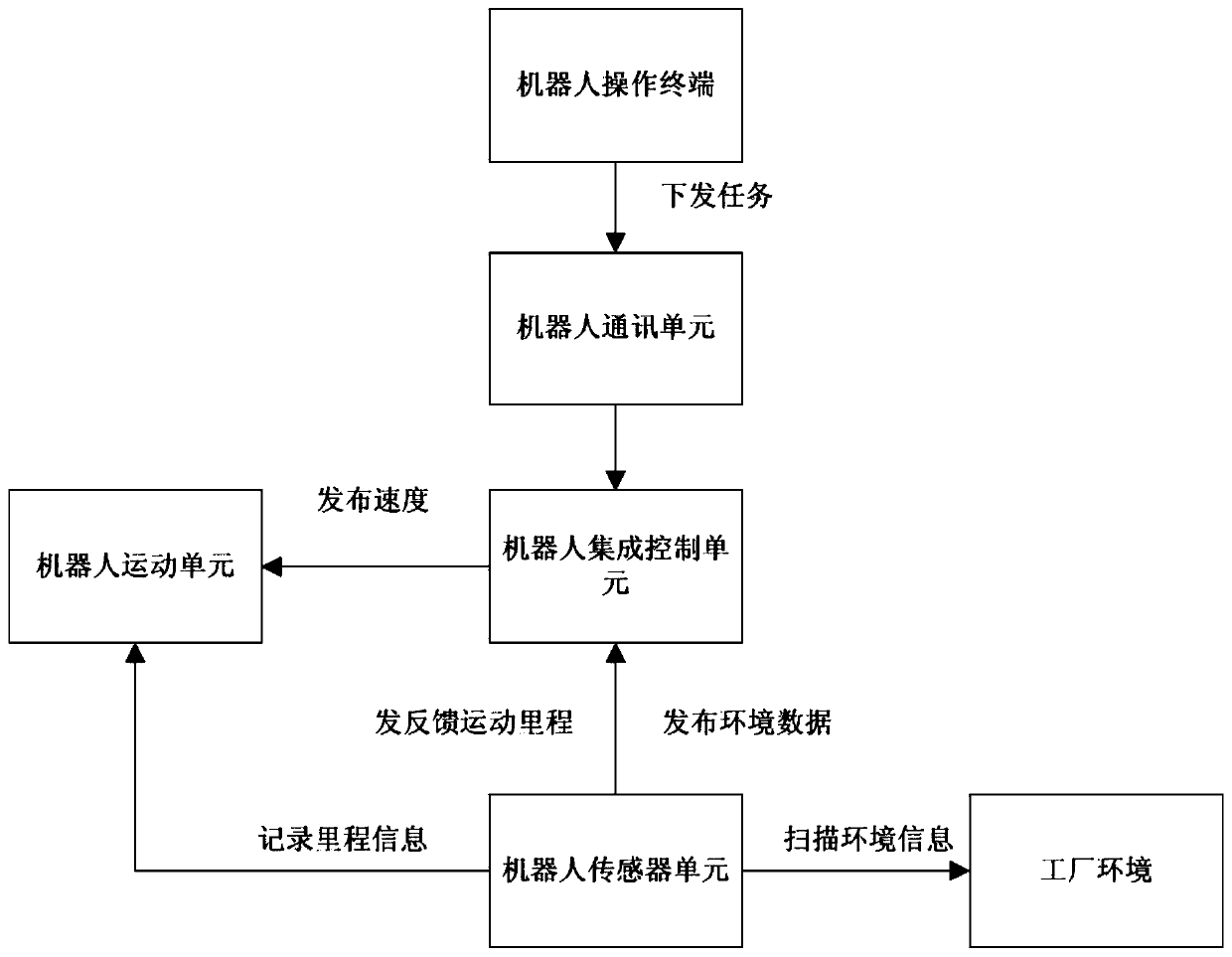

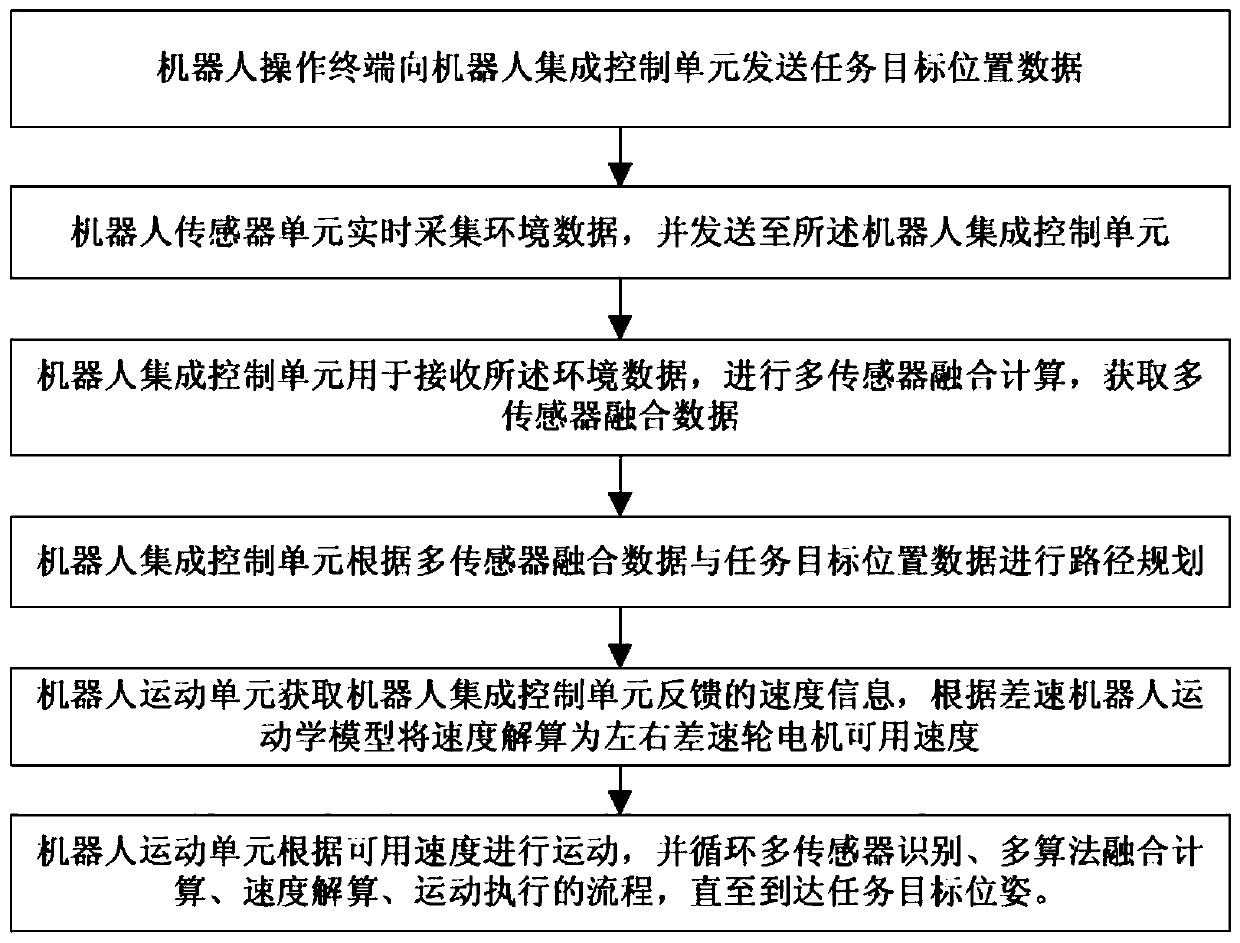

Mobile robot navigation system and method

Status: Inactive | Publication: CN111123925A | Tags: Achieving autonomous mobility; Make up for the error defect; Position/course control in two dimensions; Vehicles; Multiple sensor; Control cell

The invention discloses a navigation system for a mobile robot. The system comprises a robot operation terminal that sends task target pose data to a robot integrated control unit; a robot sensor unit that acquires environmental data in real time; and the robot integrated control unit, which receives the environmental data, performs multi-sensor fusion to obtain fused data, and plans a path from the fused data and the task target pose data. The invention also provides a corresponding navigation method for the mobile robot. It combines artificial intelligence algorithms, intelligent perception, operation execution and similar technologies to achieve autonomous movement, accurate positioning and safe cooperation of the robot. Operation is trackless and requires no modification of the existing environment. The invention overcomes the error limitations of a single sensor and a single algorithm and greatly improves the robot's positioning precision and safety, helping to ensure efficient and safe production and transportation in complex factories.

Owner:TIANJIN LIANHUI OIL GAS TECH CO LTD

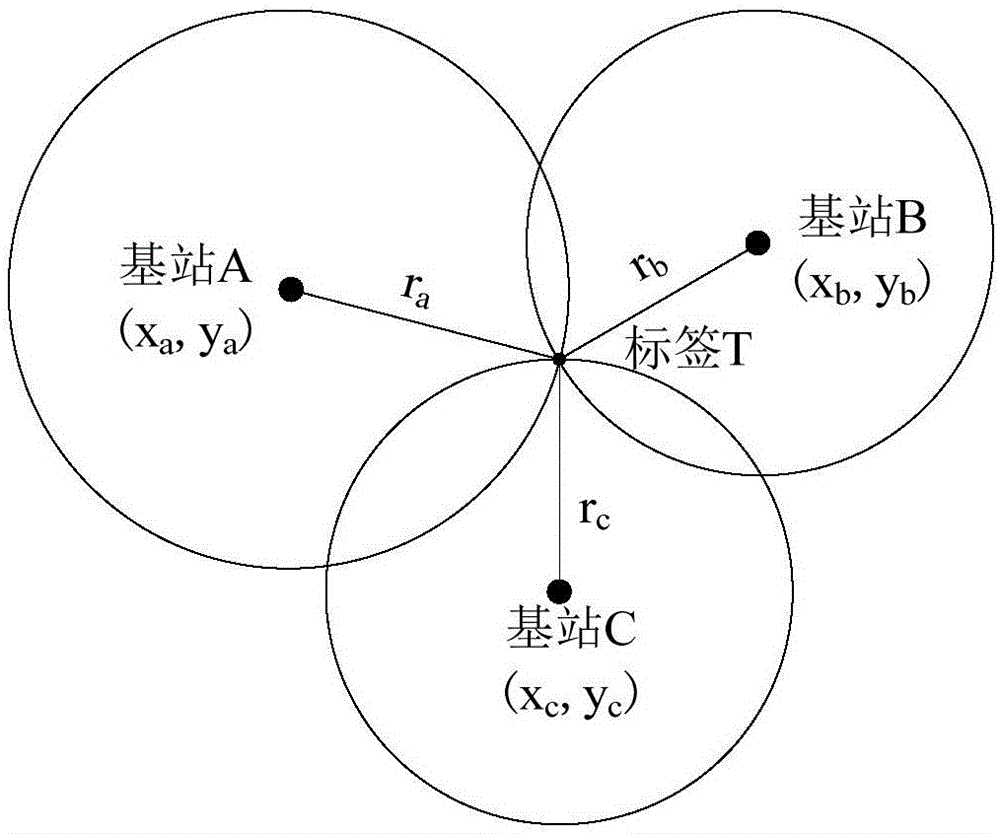

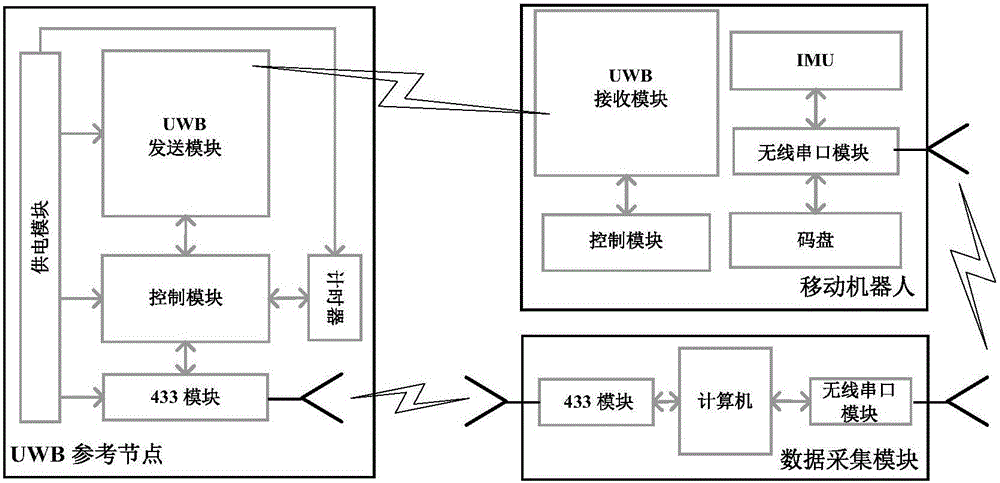

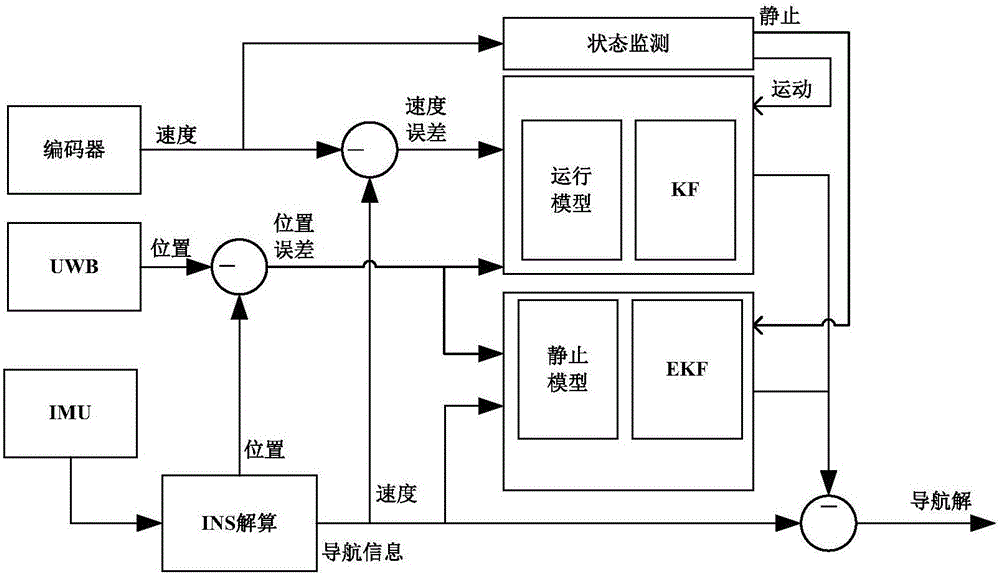

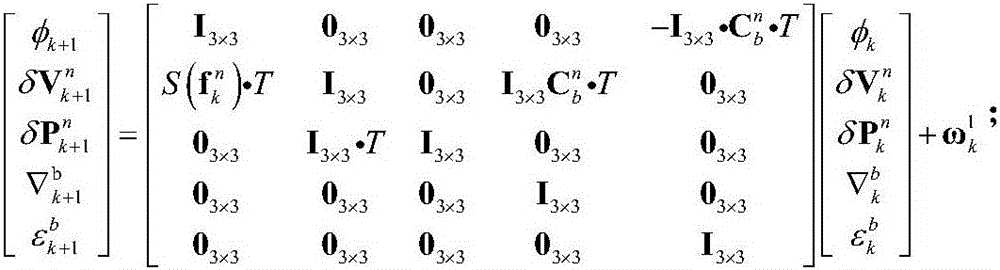

Mobile robot IMU/UWB/code disc loose combination navigation system and method adopting multi-mode description

Status: Active | Publication: CN106052684A | Tags: Navigational calculation instruments; Navigation by speed/acceleration measurements; Data acquisition; Calculation error

The invention discloses a mobile robot IMU/UWB/code disc (wheel encoder) loosely coupled navigation system and method using multi-mode description. The system comprises a UWB reference node, a mobile robot and a data acquisition module. The UWB reference node communicates with the mobile robot and the data acquisition module, and the mobile robot communicates with the data acquisition module. The UWB reference node measures the distance between itself and the mobile robot. The robot's motion state is judged from the speed measured by the code disc fixed on the robot, and the calculation error is estimated separately for each motion state. The system and method meet the requirements for high-precision positioning and orientation in indoor mobile robot navigation and can be used to position the mobile robot with high precision indoors.

Owner:UNIV OF JINAN
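
The abstract does not say how UWB ranges become a position. One standard option is linear least-squares trilateration from three or more anchors, sketched below under that assumption. The function name is hypothetical, and the IMU and encoder parts of the loosely coupled fusion are not shown.

```python
def trilaterate(anchors, ranges):
    """Least-squares 2D position from ranges to three or more fixed UWB anchors.

    Subtracting the first range equation (x - x0)^2 + (y - y0)^2 = r0^2 from
    the others removes the quadratic terms, leaving a linear system
    A [x, y]^T = b, which is solved here through the 2x2 normal equations.
    """
    (x0, y0), r0 = anchors[0], ranges[0]
    rows, rhs = [], []
    for (xi, yi), ri in zip(anchors[1:], ranges[1:]):
        rows.append((2.0 * (xi - x0), 2.0 * (yi - y0)))
        rhs.append(r0 ** 2 - ri ** 2 + xi ** 2 - x0 ** 2 + yi ** 2 - y0 ** 2)
    # Normal equations (A^T A) p = A^T b.
    a11 = sum(a * a for a, _ in rows)
    a12 = sum(a * b for a, b in rows)
    a22 = sum(b * b for _, b in rows)
    b1 = sum(a * r for (a, _), r in zip(rows, rhs))
    b2 = sum(b * r for (_, b), r in zip(rows, rhs))
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is not observable")
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)
```

In a loosely coupled design, a fix like this would be one measurement fed to a filter alongside IMU and encoder dead reckoning, rather than the final position.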

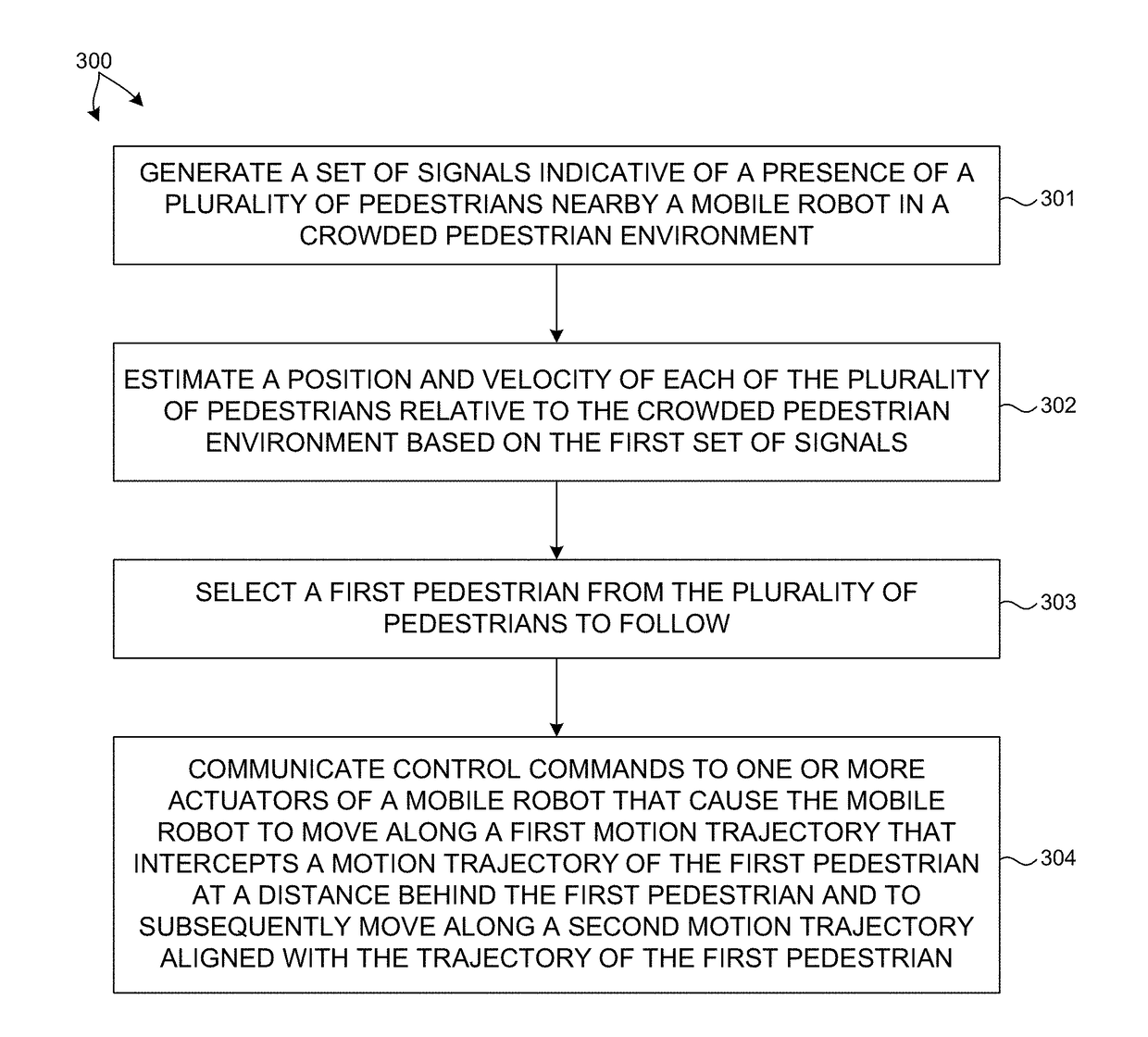

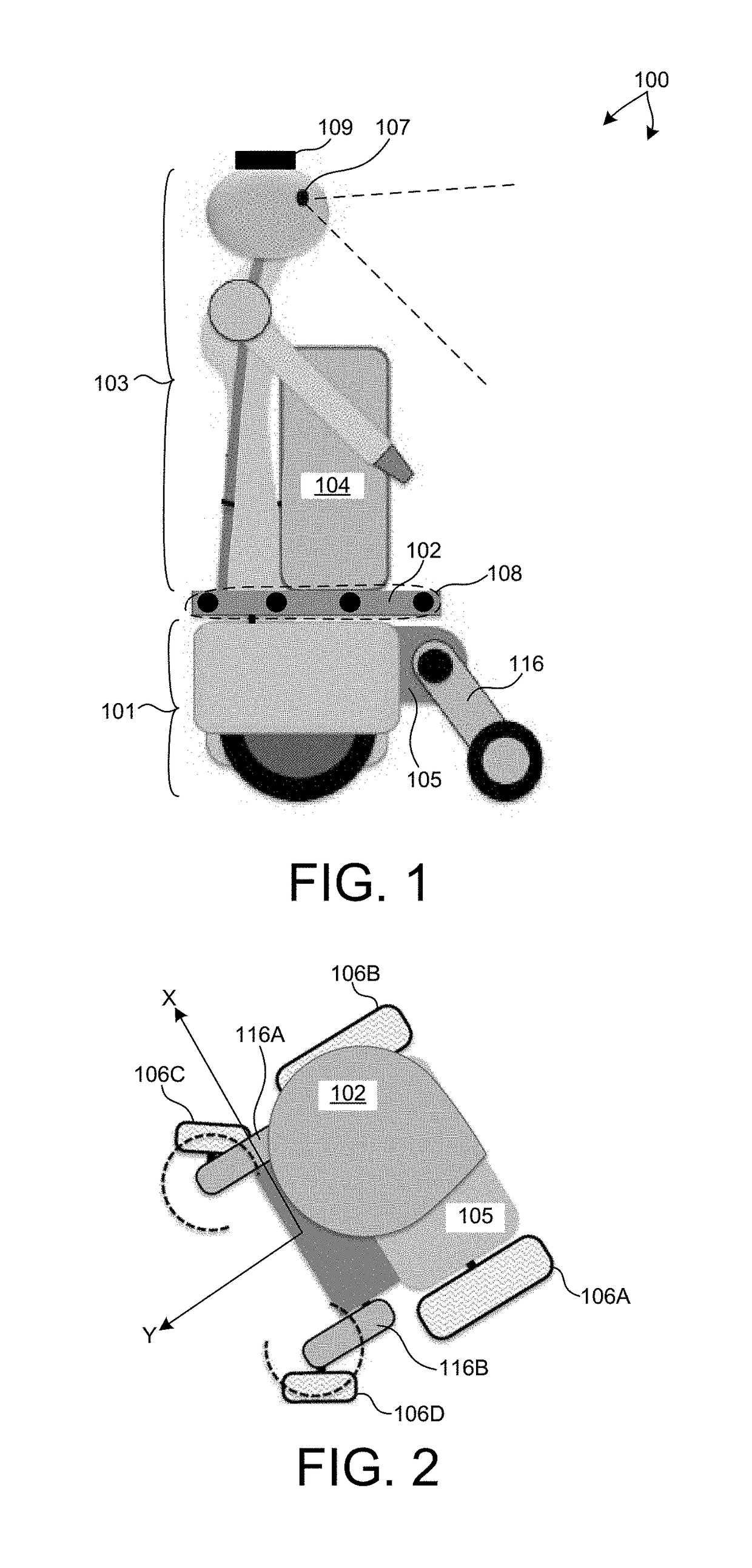

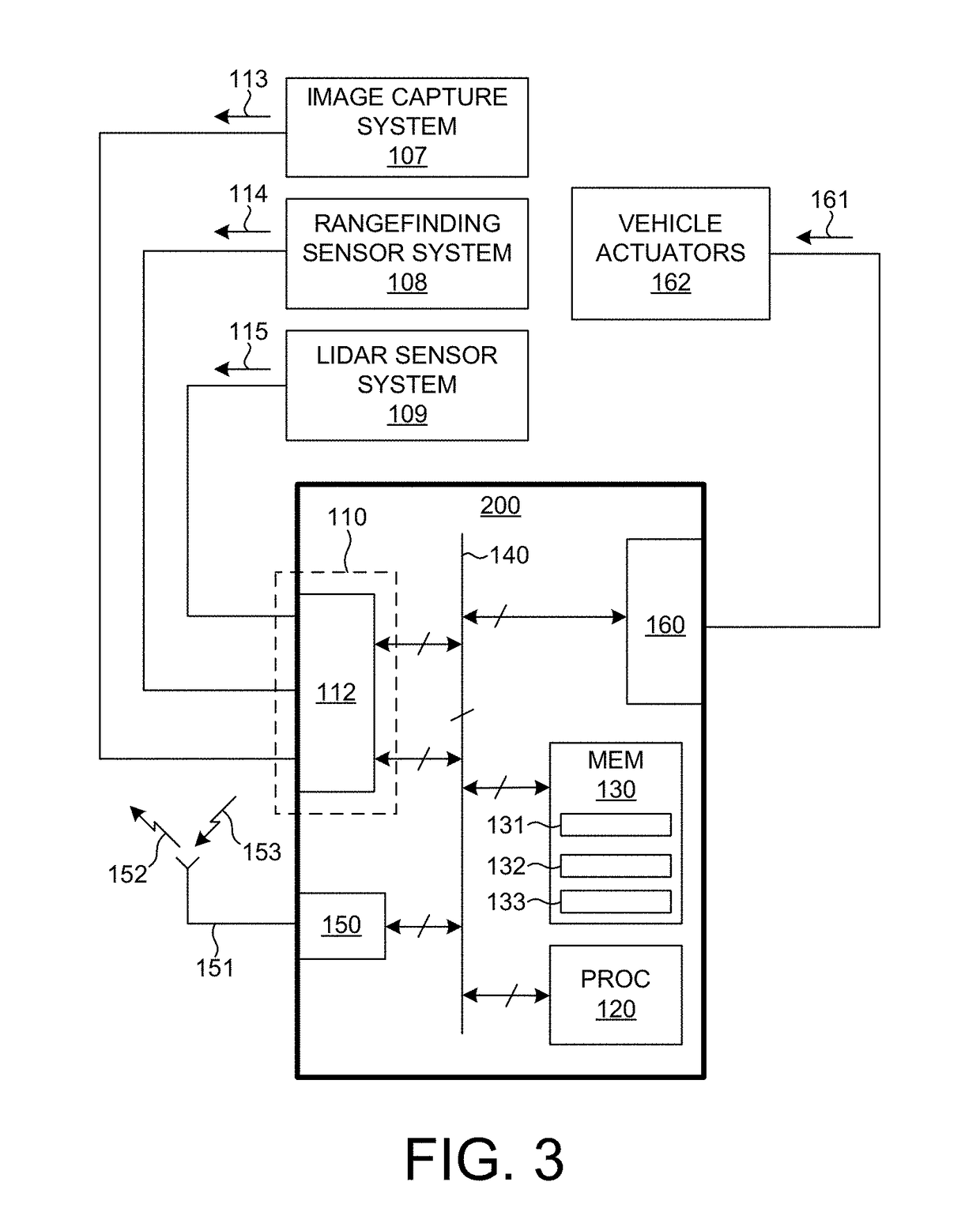

Navigation Of Mobile Robots Based On Passenger Following

Status: Active | Publication: US20180129217A1 | Tags: Avoid collision; Position/course control in two dimensions; Vehicles; Simulation; Mobile robot navigation

Methods and systems for navigating a mobile robot through a crowded pedestrian environment by selecting and following a particular pedestrian are described herein. In one aspect, a navigation model directs a mobile robot to follow a pedestrian based on the position and velocity of nearby pedestrians and the current and desired positions of the mobile robot in the service environment. The mobile robot advances toward its desired destination by following the selected pedestrian. By repeatedly sampling the positions and velocities of nearby pedestrians and the current location, the navigation model directs the mobile robot toward the endpoint location. In some examples, the mobile robot selects and follows a sequence of different pedestrians to navigate to the desired endpoint location. In a further aspect, the navigation model determines whether following a particular pedestrian will lead to a collision with another pedestrian. If so, the navigation model selects another pedestrian to follow.

Owner:BOSTON INCUBATOR CENT LLC +1

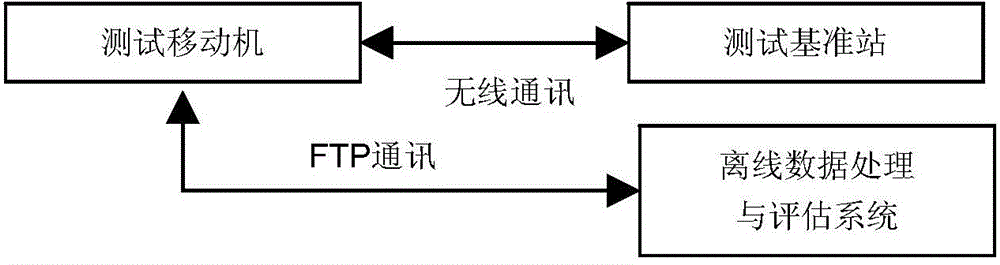

Navigation and positioning performance testing device and method for autonomous mobile robot

Status: Inactive | Publication: CN105387872A | Tags: Improve test accuracy; Improve dynamic performance; Measurement devices; Transmission systems; Data acquisition; Engineering

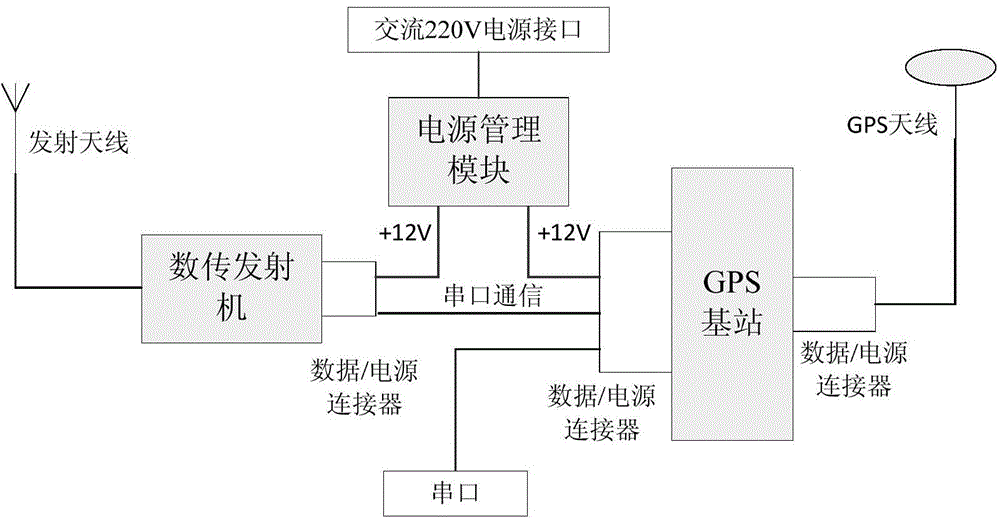

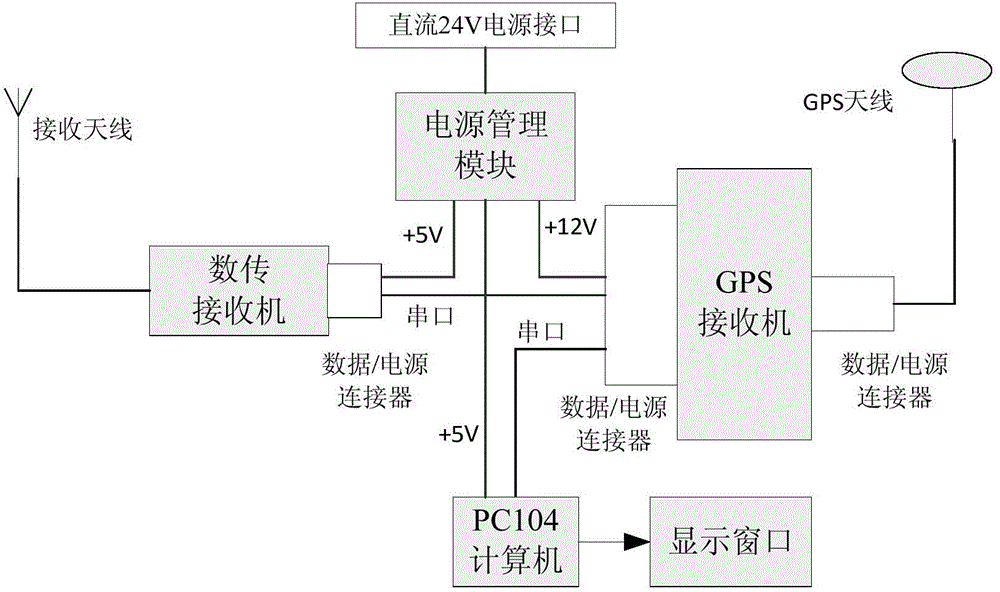

The invention discloses a navigation and positioning performance testing device and method for an autonomous mobile robot. The device comprises a testing base station, a mobile station and an off-line data analysis and processing system. The testing base station consists of a differential GPS base station, a data transmission transmitter and a power management module. The mobile station consists of a GPS receiver module, a data transmission receiver, an on-line data acquisition and processing system and a power management module. Together, the testing base station and the mobile station form high-precision motion-trajectory testing hardware that records the robot's motion path data in real time. In the method, the robot under test performs strict linear motion, in-place rotation about its center and Z-shaped motion, and follows a test track constructed according to the international standard ISO 3888-1. The off-line data analysis and processing system computes statistics on the robot's position information to assess its navigation and positioning performance. The device and method offer high test precision, good dynamic performance and other advantages.

Owner:SHENYANG INST OF AUTOMATION - CHINESE ACAD OF SCI
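
As an illustration of the kind of offline statistics such a test rig might compute, the following Python sketch measures how far a recorded straight-line run deviates from the commanded line. It is a hedged example, not the patent's analysis method: it assumes the differential-GPS fixes have already been converted to a local planar frame in meters (for example east/north), covers only the straight-line run, and uses RMS and maximum cross-track error as plausible metrics that the abstract does not itself specify.

```python
import math


def cross_track_errors(points, start, end):
    """Signed perpendicular distance (m) of each recorded point from the line start->end.

    Positive values lie to the left of the direction of travel.
    """
    (ax, ay), (bx, by) = start, end
    length = math.hypot(bx - ax, by - ay)
    if length == 0.0:
        raise ValueError("start and end must differ")
    # 2-D cross product (end - start) x (p - start), normalized by the line length.
    return [((bx - ax) * (py - ay) - (by - ay) * (px - ax)) / length for px, py in points]


def straight_line_stats(points, start, end):
    """RMS and maximum absolute deviation from the commanded straight line."""
    errs = cross_track_errors(points, start, end)
    rms = math.sqrt(sum(e * e for e in errs) / len(errs))
    return {"rms_m": rms, "max_abs_m": max(abs(e) for e in errs)}
```

For example, a run from (0, 0) to (10, 0) whose recorded points wander 0.1 m to either side yields an RMS and maximum deviation of about 0.1 m.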

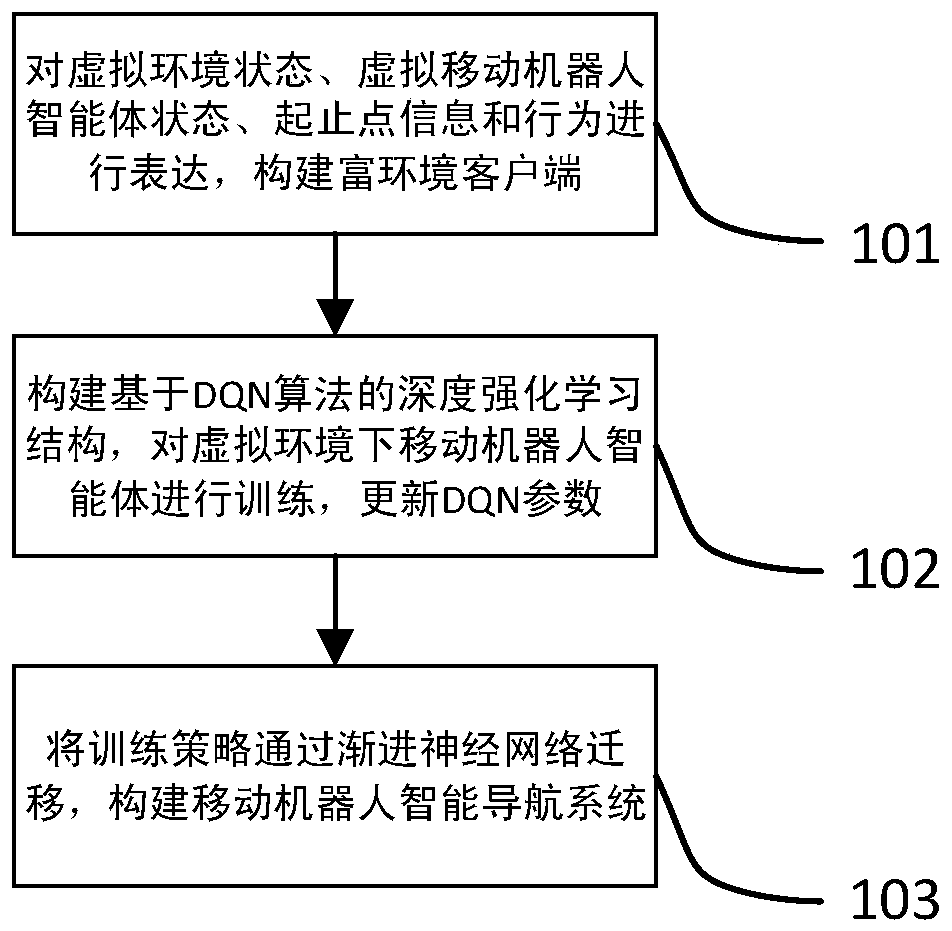

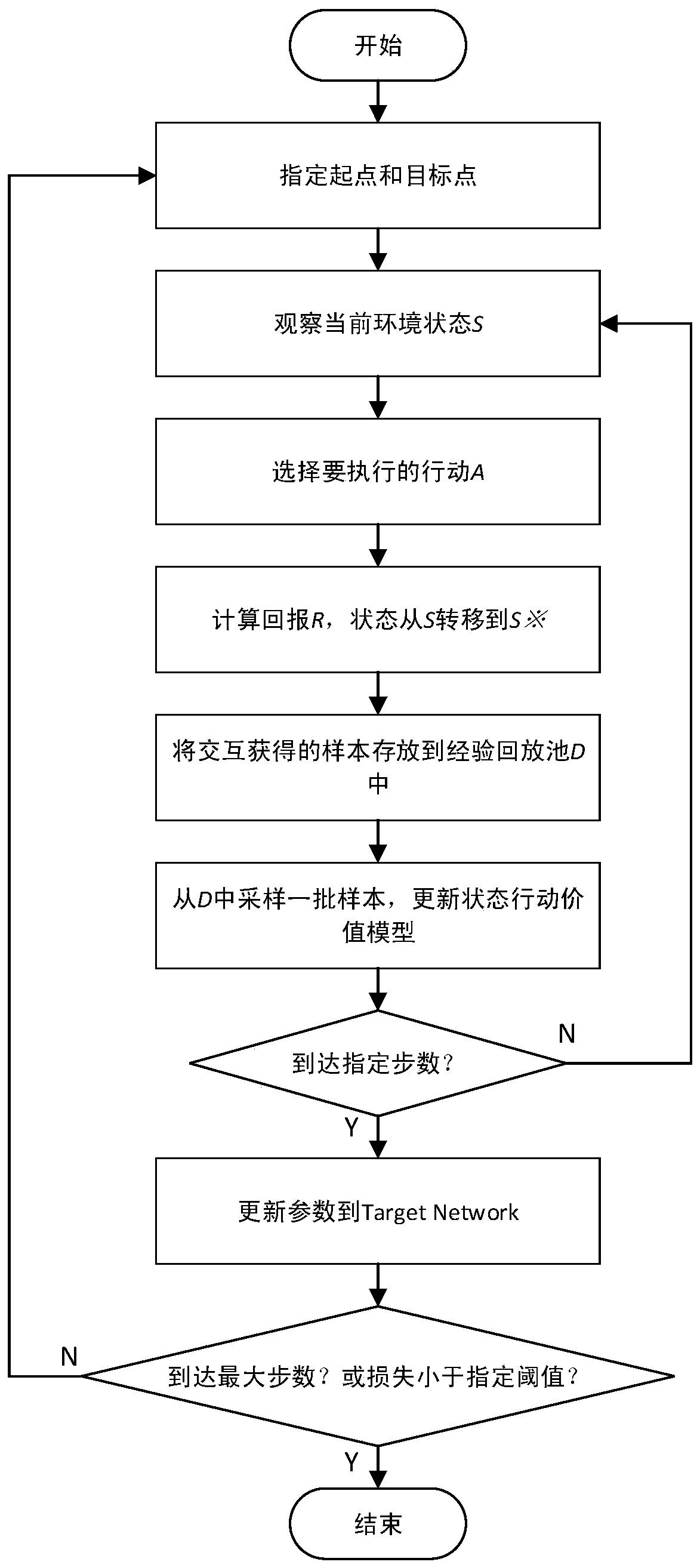

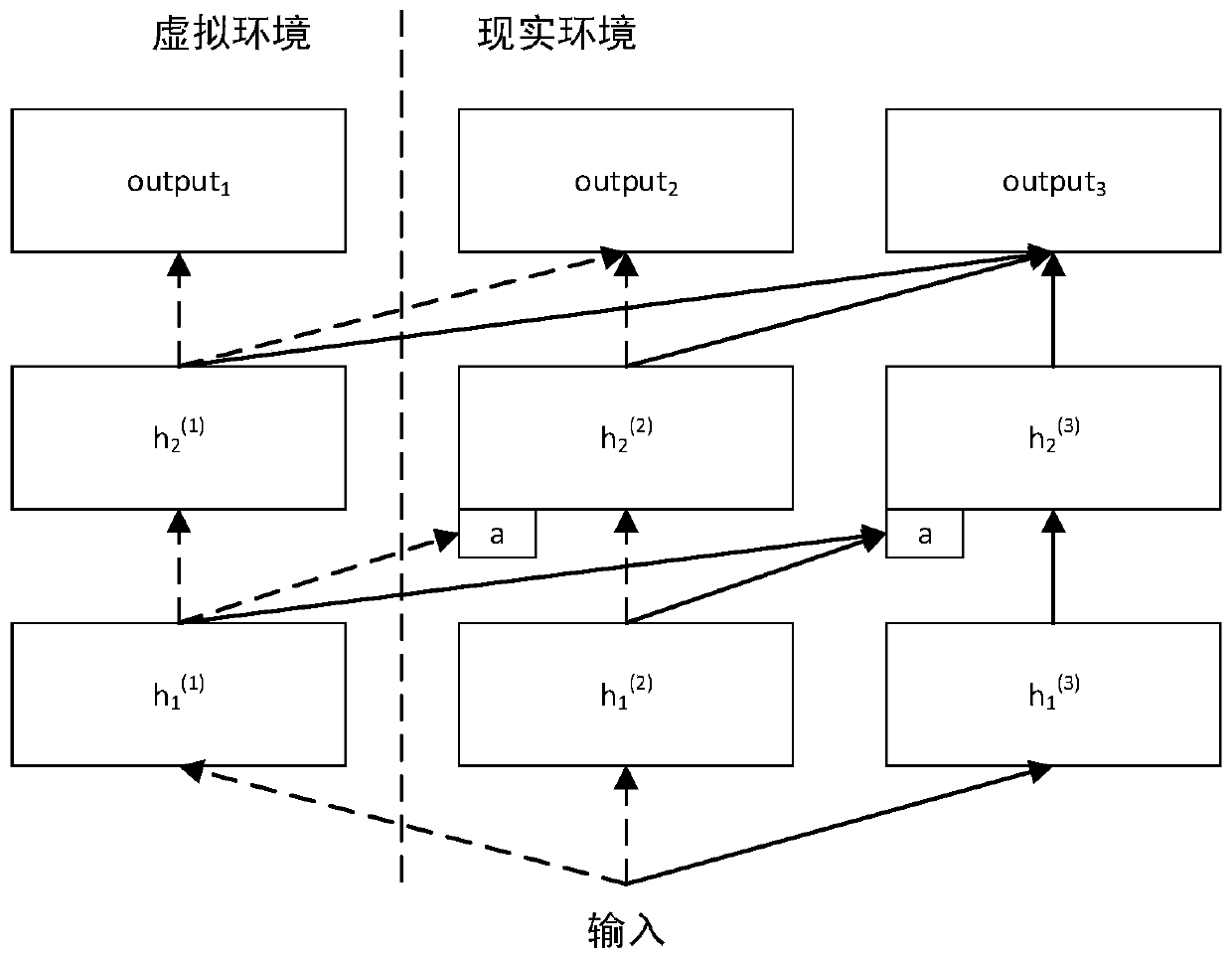

Method for establishing autonomous mobile robot navigation system through virtual environment

The invention discloses a method for building a navigation system for a mobile robot in an unknown environment using deep reinforcement learning. First, the virtual environment, the virtual state of the mobile robot agent, the start and goal point information, and the agent's actions are represented. Next, a deep reinforcement learning architecture based on the DQN (Deep Q-Network) algorithm is constructed, the mobile robot agent is trained in the virtual environment, and the DQN network parameters are updated. Finally, the trained policy is transferred through a progressive neural network to construct the mobile robot's intelligent navigation system. The method offers relatively good flexibility and generality, and provides a complete one-stop workflow for building a navigation system for a mobile robot.

Owner:DONGHUA UNIV
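
The DQN training step mentioned above rests on two small pieces that can be sketched in plain Python: the temporal-difference target and the epsilon-greedy behavior policy. This is a hedged illustration of the standard DQN update, not the method's actual network, reward design, or action set, none of which the abstract specifies. Q-values are passed in as plain lists standing in for network outputs, and the mean-squared loss is one common choice (a Huber loss is also widely used). In a full DQN implementation, `next_q_target` would come from a periodically synchronized target network and the transitions from a replay buffer.

```python
import random


def dqn_targets(rewards, next_q_target, dones, gamma=0.99):
    """TD targets: y = r + gamma * max_a' Q_target(s', a'), or just r at episode end."""
    return [r if done else r + gamma * max(q_next)
            for r, q_next, done in zip(rewards, next_q_target, dones)]


def td_loss(q_pred, targets):
    """Mean squared error between Q(s, a) for the actions taken and the TD targets."""
    return sum((q - y) ** 2 for q, y in zip(q_pred, targets)) / len(targets)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Explore with probability epsilon; otherwise pick the action with the highest Q."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

Annealing `epsilon` from near 1 toward a small value during training is the usual way to shift the agent from exploration to exploitation.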

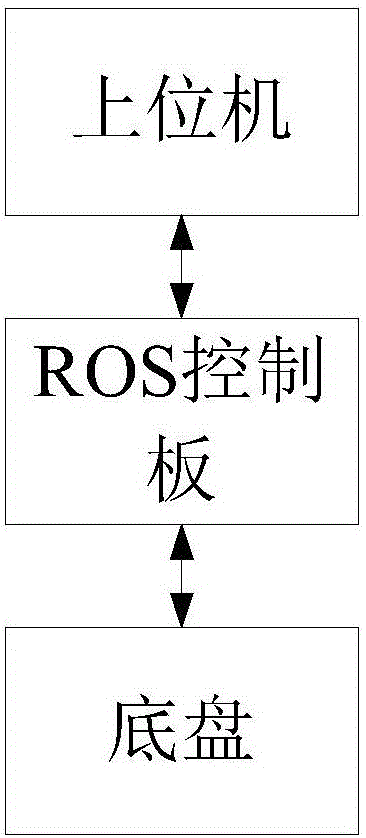

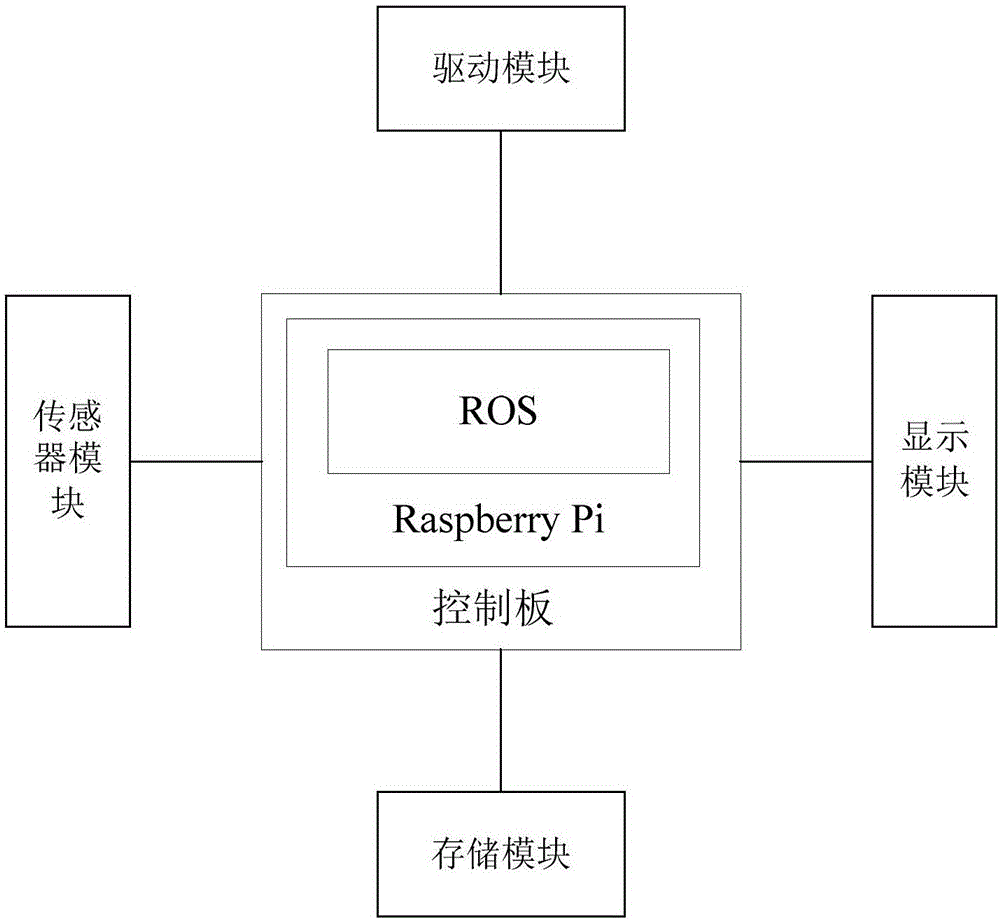

Mobile robot navigation method and system

Inactive | CN106842230A | Achieve positioning | Realize the navigation function | Optical rangefinders | Navigation by speed/acceleration measurements | Source code | Mobile robot navigation

The invention discloses a mobile robot navigation method comprising the following steps: step 100, a Raspberry Pi control board is installed on the chassis of the mobile robot; step 200, the low-level driver files of ROS (Robot Operating System) are ported; step 300, driver files specific to the mobile robot are ported; step 400, the navigation algorithm source code is compiled. With this arrangement, the robot navigation algorithm can be conveniently modified or updated.

Owner:SHENZHEN QIANHAI YYD ROBOT CO LTD

Wheel type mobile robot navigation method based on IHDR self-learning frame

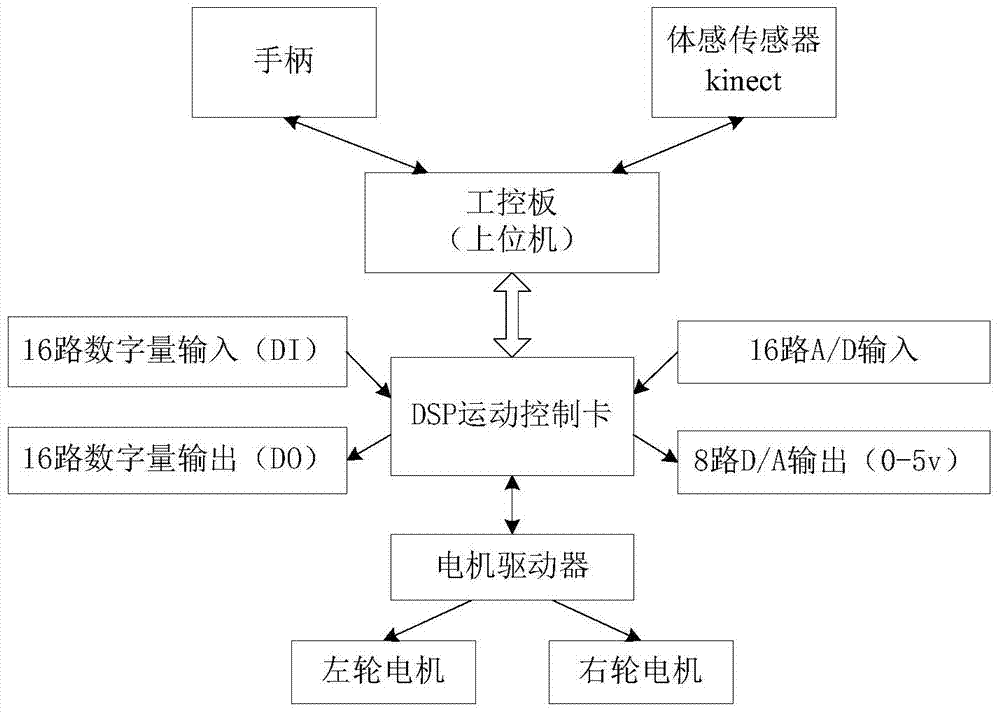

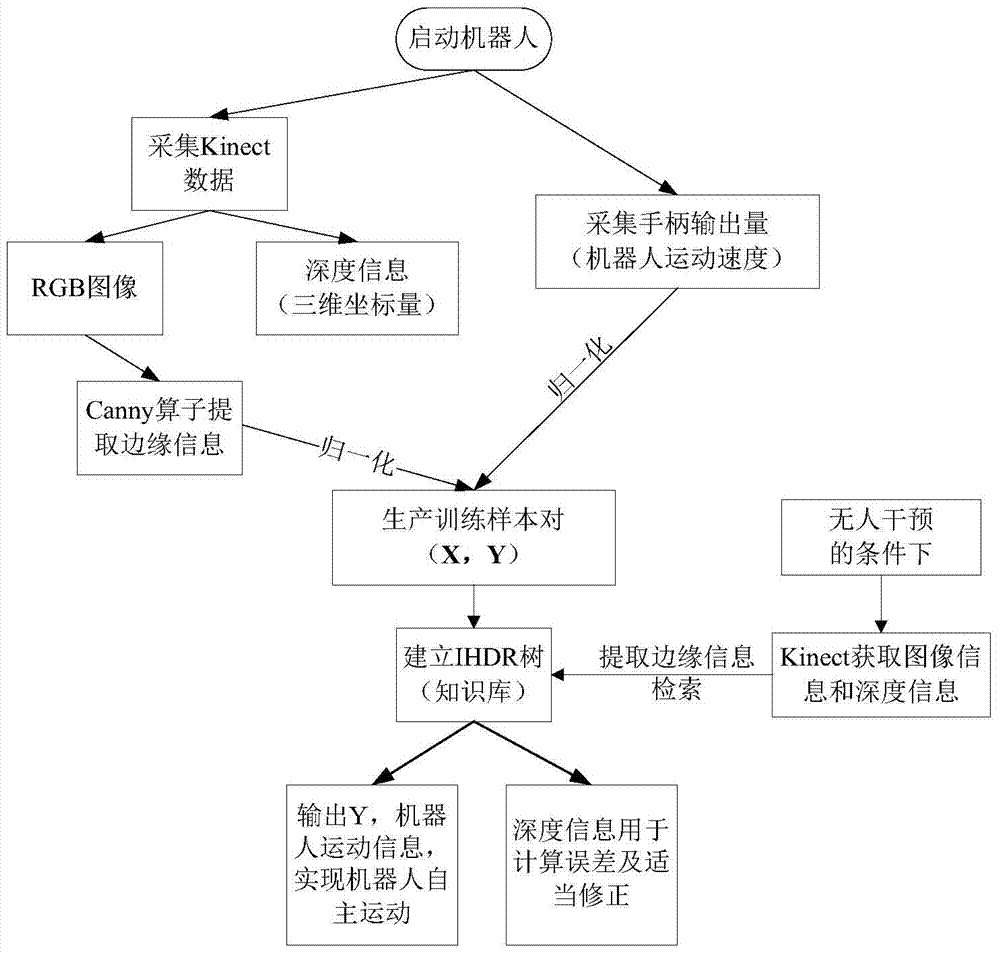

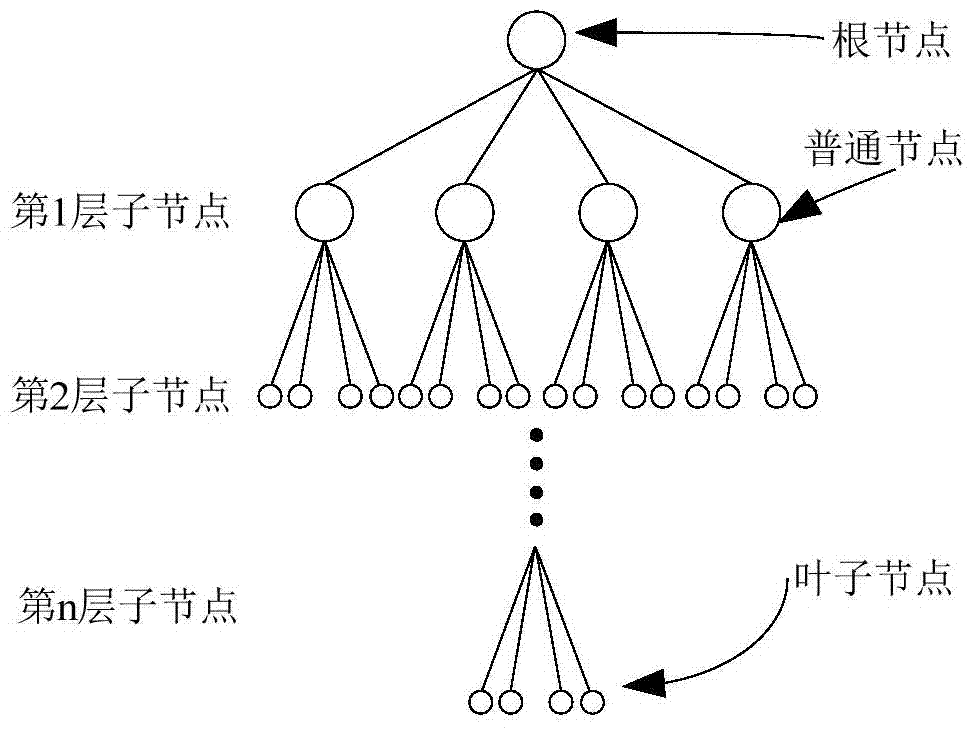

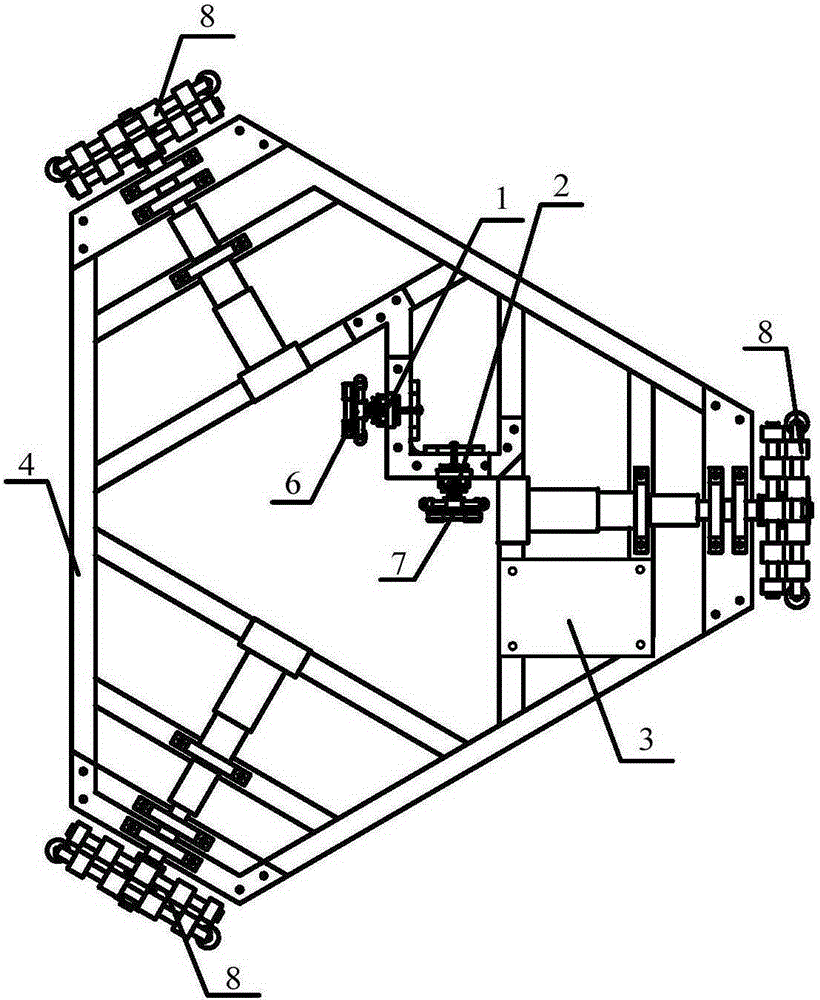

Active | CN104850120A | High degree of intelligence | Prevent buildup | Position/course control in two dimensions | Self navigation | Mathematical model

The invention relates to a navigation method for a wheeled mobile robot based on an IHDR (Incremental Hierarchical Discriminant Regression) self-learning framework. First, an operator guides the robot along a route; during this process, the robot records current image information from a Kinect sensor and the velocity values issued from a handheld controller in real time. Next, the IHDR algorithm establishes a mapping from image inputs to velocity outputs, and the large volume of acquired data is stored in the robot as a tree-structured "knowledge" base. Finally, without human intervention, the robot searches the knowledge base using the current image information and thereby navigates on its own. The method is claimed to let the robot reason in a human-like way and to exhibit cognitive ability: rather than relying on an explicit mathematical model, as conventional approaches do, it achieves navigation with a higher degree of intelligence.

Owner:WUHAN UNIV OF SCI & TECH
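
The learn-by-demonstration mapping described above (image in, velocity out, retrieved from stored experience) can be sketched in Python. This is deliberately a much simpler stand-in, not IHDR itself: a flat k-nearest-neighbor search over recorded samples replaces IHDR's incrementally built hierarchical tree, which is what gives IHDR fast retrieval over large data sets. The image features are assumed to be plain numeric vectors produced by some hypothetical upstream feature extractor, and each command is assumed to be a `(linear_v, angular_w)` pair.

```python
import math


class DemonstrationMemory:
    """Flat store of (image feature vector, velocity command) pairs from guided runs.

    Simplified stand-in for IHDR: a linear nearest-neighbor search replaces
    IHDR's incrementally built hierarchical tree.
    """

    def __init__(self):
        self.features = []
        self.commands = []

    def add(self, feature, command):
        """Record one demonstration sample, e.g. command = (linear_v, angular_w)."""
        self.features.append(tuple(feature))
        self.commands.append(tuple(command))

    def query(self, feature, k=1):
        """Average the commands of the k stored samples nearest to `feature`."""
        if not self.features:
            raise ValueError("memory is empty")
        ranked = sorted(range(len(self.features)),
                        key=lambda i: math.dist(self.features[i], feature))
        nearest = [self.commands[i] for i in ranked[:k]]
        return tuple(sum(c[j] for c in nearest) / len(nearest)
                     for j in range(len(nearest[0])))
```

During the guided phase the robot would call `add` for every frame; during autonomous operation it would call `query` on each new frame and send the returned velocities to the drive.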

Navigation and positioning method for indoor mobile robot

Active | CN105300378A | Improve stability | Easy to implement | Navigation by speed/acceleration measurements | Control system | GPS navigation

The invention provides a navigation and positioning method for an indoor mobile robot. Positioning uses two positioning encoder wheels, whose axes are non-parallel and may be set at any angle to each other, together with a single-axis fiber-optic gyroscope. The encoder wheels are mounted on the indoor mobile robot, the positioning data are transmitted directly to the control system in real time, and the control system processes the encoder feedback with a positioning algorithm to determine the robot's coordinates. In the embodiment built on these encoder wheels and the single-axis fiber-optic gyroscope, two optical encoders fitted with omnidirectional wheels are mounted perpendicular to each other. Because this measuring device is mechanical, it is simpler, easier to implement, and more stable than approaches based on electronic components, such as wireless sensor networks or GPS (Global Positioning System) navigation and positioning systems. After about five rounds of experimental tuning, the robot's positioning error was kept within 0.5%, giving high positioning accuracy.

Owner:HARBIN ENG UNIV
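
The encoder-plus-gyroscope positioning described above amounts to dead reckoning, which the following Python sketch illustrates under stated assumptions; it is not the patent's own positioning algorithm. Each omni-wheel encoder is assumed to measure the robot's displacement along its rolling direction, mounted at angle `a1` or `a2` relative to the robot's forward axis, and the wheels are assumed to sit where robot rotation does not add to their readings (or that this rotational component has already been subtracted). The gyroscope supplies the heading. The patent's perpendicular embodiment corresponds to `a2 - a1 = pi/2`.

```python
import math


def body_displacement(d1, d2, a1, a2):
    """Recover the robot's body-frame displacement (dx, dy) from two encoder wheels.

    Each encoder measures d_i = dx*cos(a_i) + dy*sin(a_i); the 2x2 system is
    solved by Cramer's rule, which requires non-parallel wheel axes.
    """
    det = math.cos(a1) * math.sin(a2) - math.sin(a1) * math.cos(a2)  # = sin(a2 - a1)
    if abs(det) < 1e-9:
        raise ValueError("encoder axes must not be parallel")
    dx = (d1 * math.sin(a2) - d2 * math.sin(a1)) / det
    dy = (d2 * math.cos(a1) - d1 * math.cos(a2)) / det
    return dx, dy


def wrap(angle):
    """Wrap an angle into [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def dead_reckon(samples, a1, a2, x=0.0, y=0.0, heading=0.0):
    """Integrate (d1, d2, gyro_heading) samples into a world-frame pose (x, y, heading)."""
    for d1, d2, gyro_heading in samples:
        dx, dy = body_displacement(d1, d2, a1, a2)
        # Rotate with the mid-step heading; wrap() keeps the average valid across +/-pi.
        mid = heading + 0.5 * wrap(gyro_heading - heading)
        x += dx * math.cos(mid) - dy * math.sin(mid)
        y += dx * math.sin(mid) + dy * math.cos(mid)
        heading = gyro_heading
    return x, y, heading
```

Taking the heading from the gyroscope rather than from differences between wheel readings is what lets two encoders suffice: the encoders resolve translation and the gyroscope resolves rotation.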

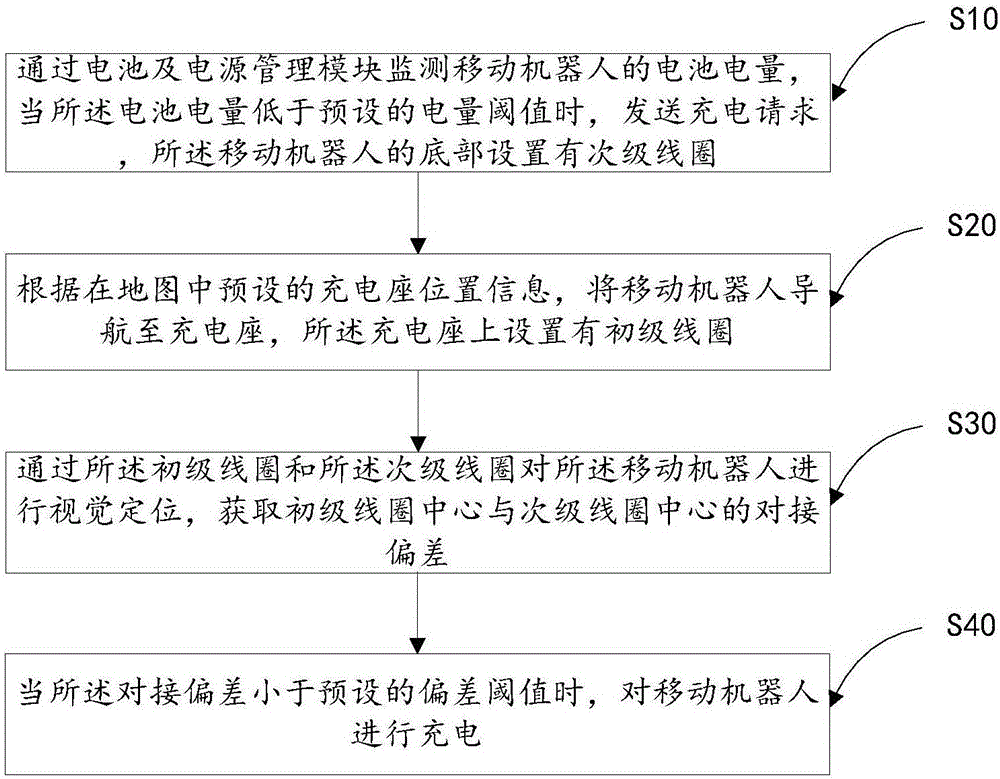

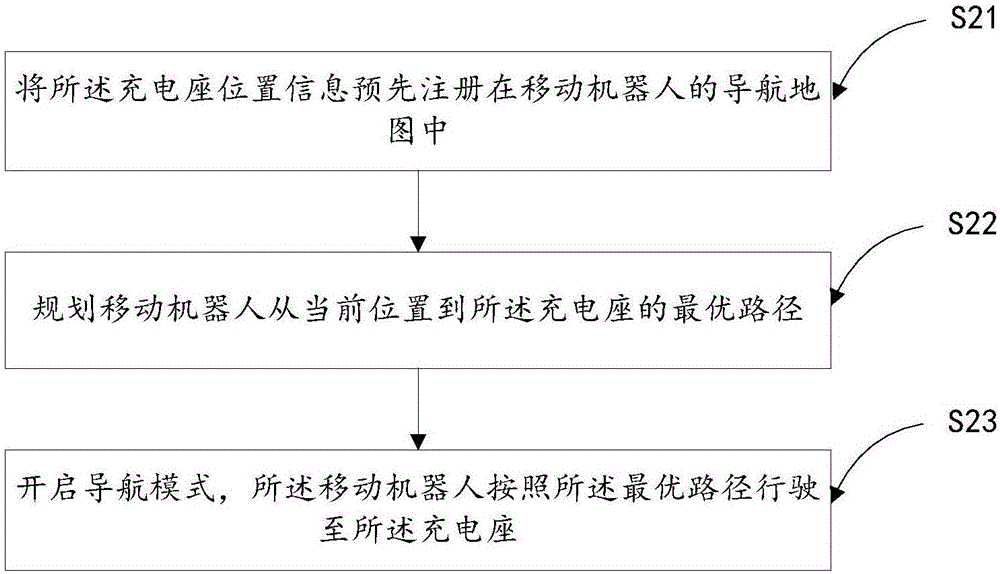

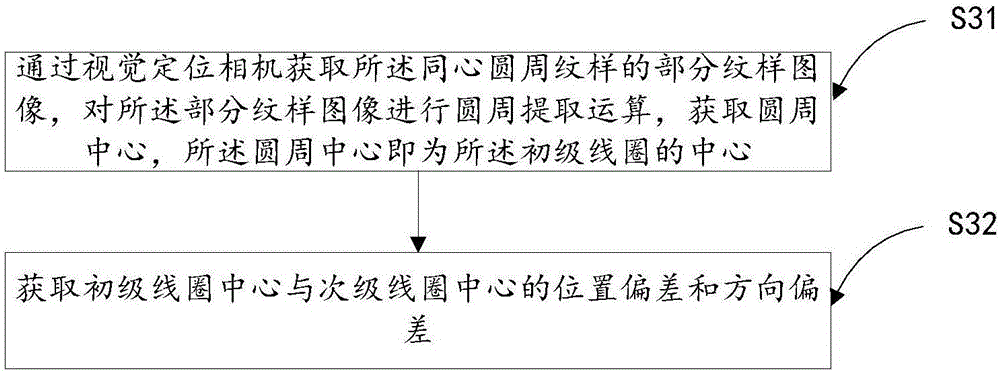

Wireless charging method and device for mobile robot

Inactive | CN106787266A | No manual operation | Improve charging efficiency | Electric power | Arrangements for several simultaneous batteries | Supply management | Engineering

The invention relates to the field of intelligent control and discloses a wireless charging method and device for a mobile robot. A secondary coil is mounted on the bottom of the mobile robot, and a primary coil is mounted on the charging base. The method comprises the following steps: the robot's battery level is monitored by a battery and power supply management module; when the battery level falls below a preset threshold, a charging request is issued; the robot navigates to the charging base using the base position preset in its map; the robot is then localized visually to measure the docking offset between the primary and secondary coils; and when the docking offset is smaller than a preset offset threshold, the robot is charged. Navigation provides coarse positioning and a vision camera provides precise positioning, so the robot charges wirelessly and automatically without manual operation, which improves charging efficiency.

Owner:SHENZHEN SMART SECURITY & SURVEILLANCE SERVICE ROBOT CO LTD
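
The charging sequence above maps naturally onto a small state machine, sketched here in Python. The mode names and the threshold values `low_pct`, `max_offset_mm`, and `full_pct` are hypothetical, and the return from charging to work once the battery is full is an assumption, since the abstract does not describe undocking.

```python
from enum import Enum, auto


class Mode(Enum):
    WORKING = auto()
    NAVIGATING = auto()  # coarse positioning: drive to the base position stored in the map
    ALIGNING = auto()    # fine positioning: vision measures the coil docking offset
    CHARGING = auto()


def next_mode(mode, battery_pct, at_base, coil_offset_mm,
              low_pct=20.0, max_offset_mm=10.0, full_pct=95.0):
    """One transition of a hypothetical charging supervisor."""
    if mode is Mode.WORKING and battery_pct < low_pct:
        return Mode.NAVIGATING  # battery below threshold: issue the charging request
    if mode is Mode.NAVIGATING and at_base:
        return Mode.ALIGNING
    if mode is Mode.ALIGNING and coil_offset_mm is not None and coil_offset_mm < max_offset_mm:
        return Mode.CHARGING    # primary and secondary coils are aligned closely enough
    if mode is Mode.CHARGING and battery_pct >= full_pct:
        return Mode.WORKING     # assumption: resume work once charged
    return mode                 # otherwise stay, e.g. keep adjusting while ALIGNING
```

Keeping coarse navigation and vision-based alignment as separate modes mirrors the two-stage positioning the abstract describes: the map only needs to bring the robot near the base, and the camera closes the remaining gap.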

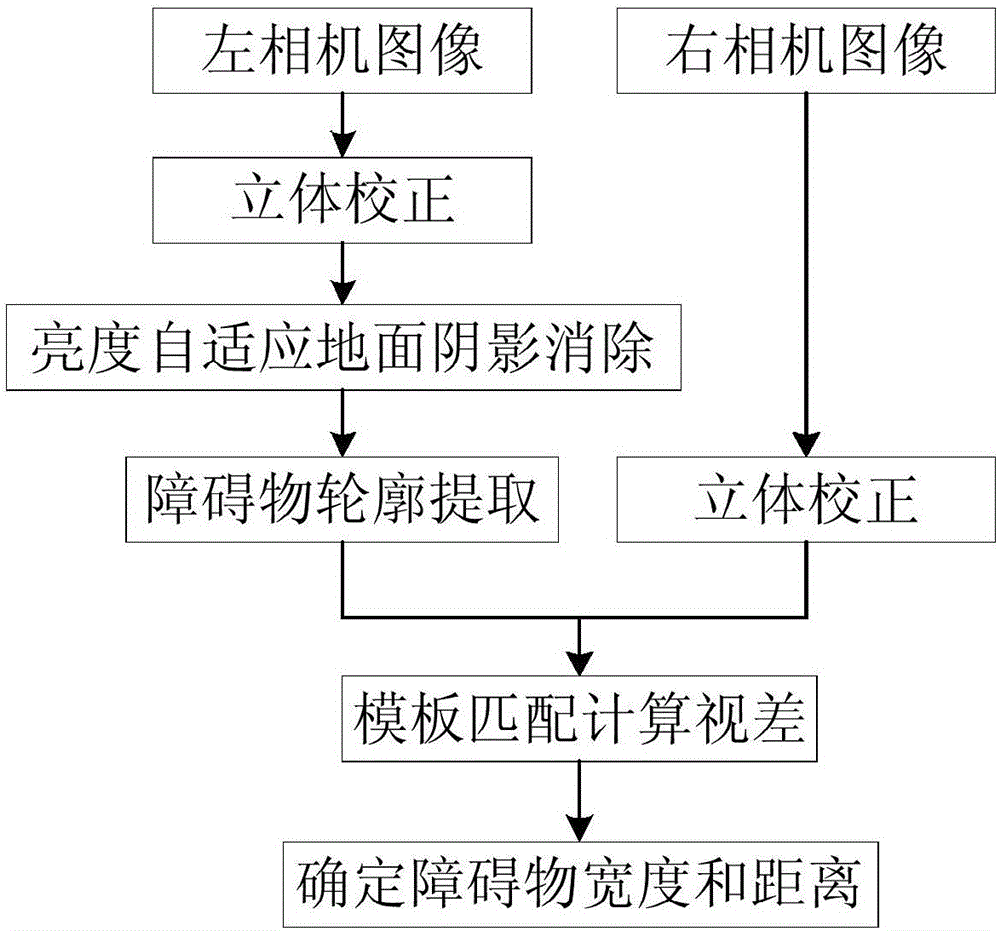

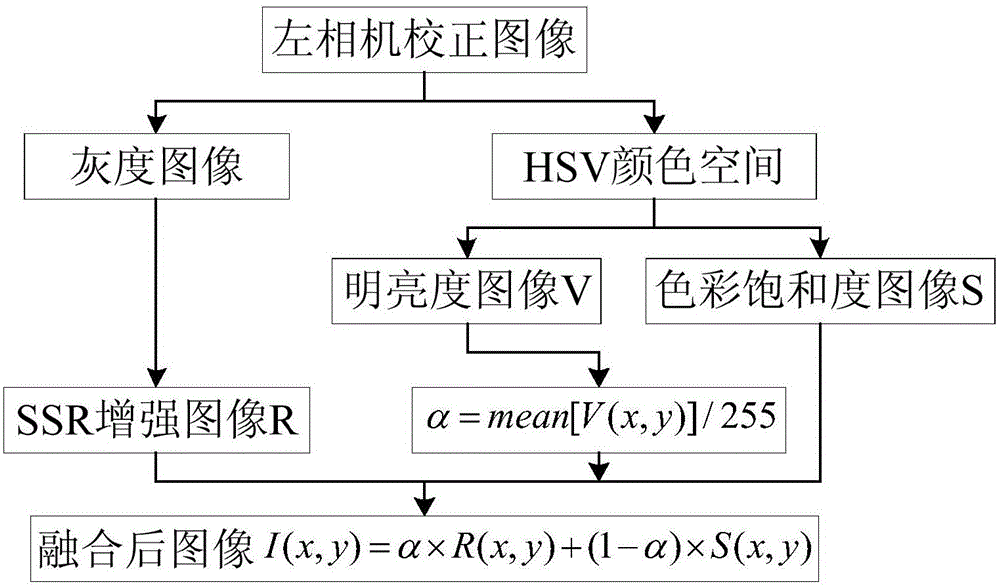

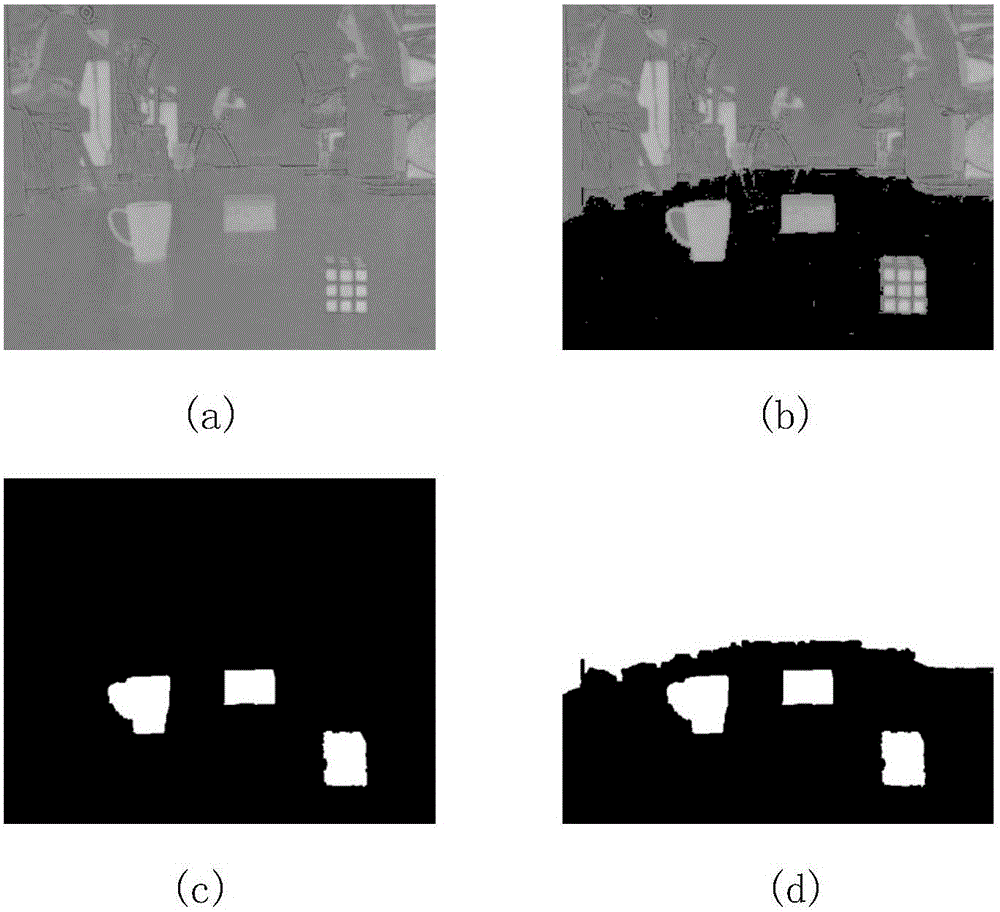

Binocular vision-based method and apparatus for detecting barrier in indoor shadow environment

Active | CN106650701A | Easy extraction | Get it quickly and accurately | Optical rangefinders | Character and pattern recognition | Parallax | Camera image

The invention discloses a binocular (stereo) vision method for detecting obstacles in indoor environments with shadows. Using a binocular vision system, the method comprises the following steps: (1) remove shadows, extract the color saturation of the original image, fuse it with the shadow-removed image, and use ambient brightness information to adaptively increase the grayscale contrast between the floor and obstacles; (2) fill the floor region of the fused image with a seed-fill algorithm, then obtain the obstacle region through threshold segmentation followed by erosion and dilation; and (3) using the extracted obstacle region as a template, search for its match in the right camera image, compute the disparity of the region's center point, and from it calculate the center point's three-dimensional coordinates and the obstacle's width and distance. The invention also discloses a corresponding binocular vision apparatus for detecting obstacles in indoor shadowed environments. The method and apparatus can extract obstacles completely in shadowed indoor environments, are simple and efficient, offer good real-time performance and relatively high precision, and are suitable for mobile robot navigation and obstacle avoidance.

Owner:SOUTH CHINA UNIV OF TECH
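
Step (3) of the method above relies on standard stereo triangulation, which the following Python sketch illustrates. It assumes a rectified stereo pair, a pinhole camera model with focal length `focal_px` in pixels and principal point (`cx`, `cy`), and a known baseline `baseline_m`; these names and any numeric values are illustrative, not taken from the patent. The template-matching step that locates the obstacle center in the right image (`u_right`) is not shown.

```python
def triangulate(u_left, v_left, u_right, focal_px, baseline_m, cx, cy):
    """3-D point (m, left-camera frame) from a matched pixel in a rectified stereo pair."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline_m / disparity  # depth: Z = f * B / d
    x = (u_left - cx) * z / focal_px       # lateral offset from the optical axis
    y = (v_left - cy) * z / focal_px       # vertical offset from the optical axis
    return x, y, z


def obstacle_width_m(width_px, depth_m, focal_px):
    """Metric width of an obstacle spanning width_px pixels at the given depth."""
    return width_px * depth_m / focal_px
```

The obstacle's distance can then be taken either as the forward range `z` or as the straight-line range `math.hypot(x, y, z)`, depending on what the avoidance planner expects.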

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com