53 results about how to "improve rebuild quality" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

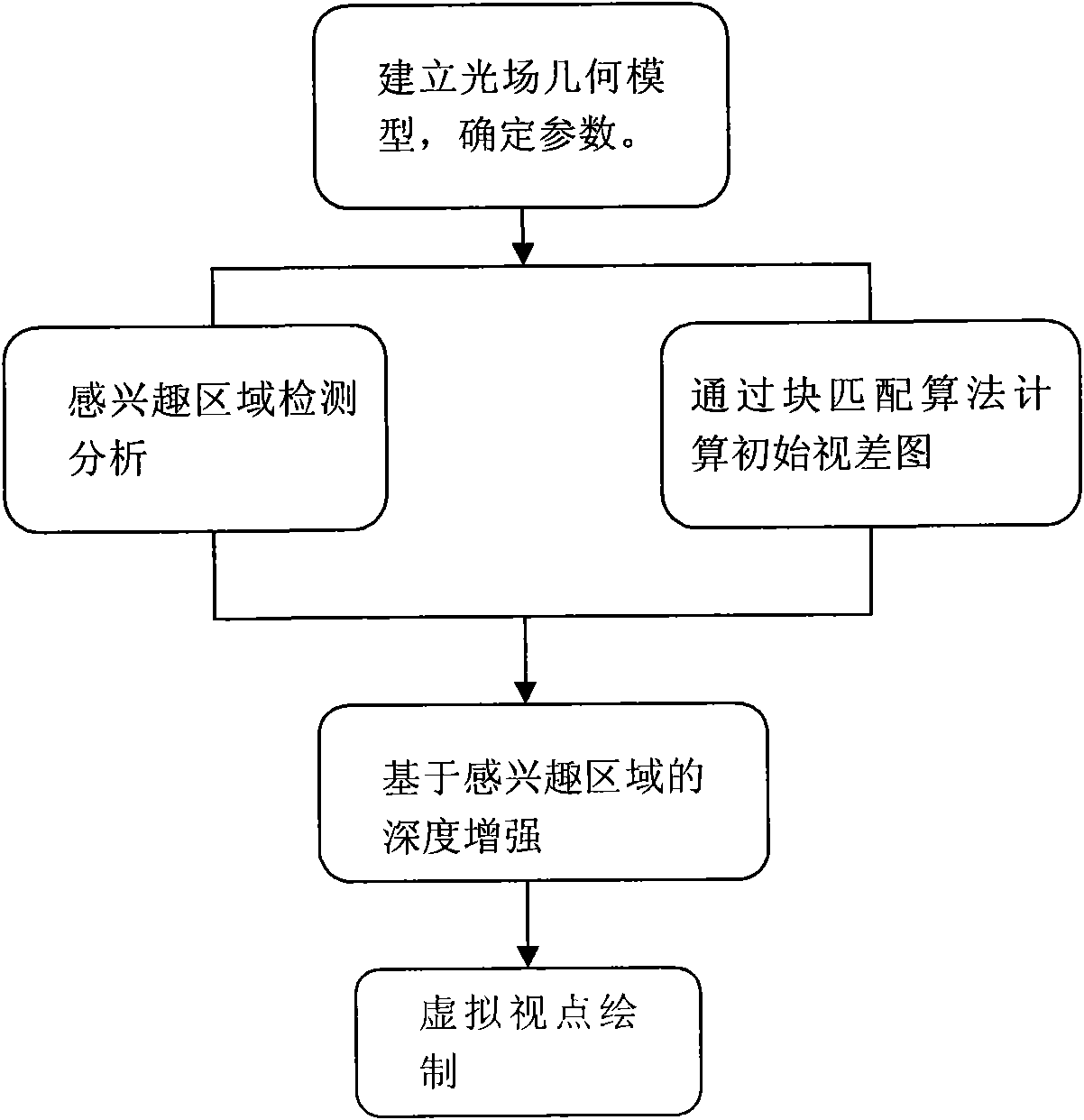

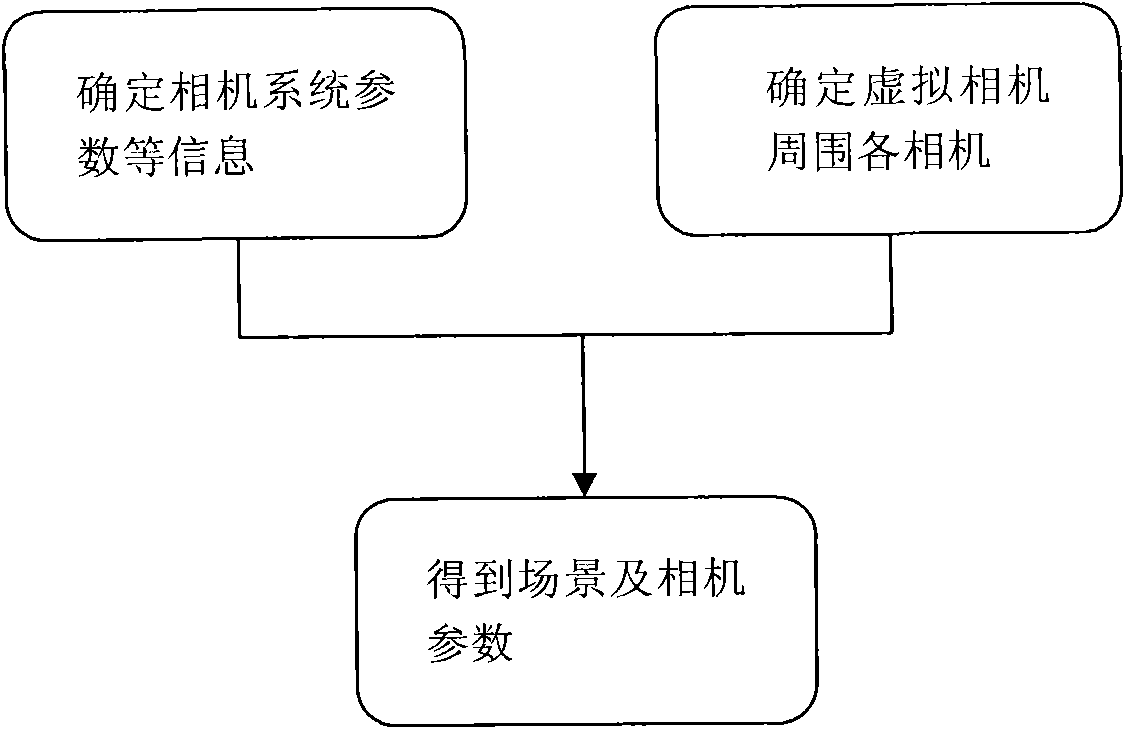

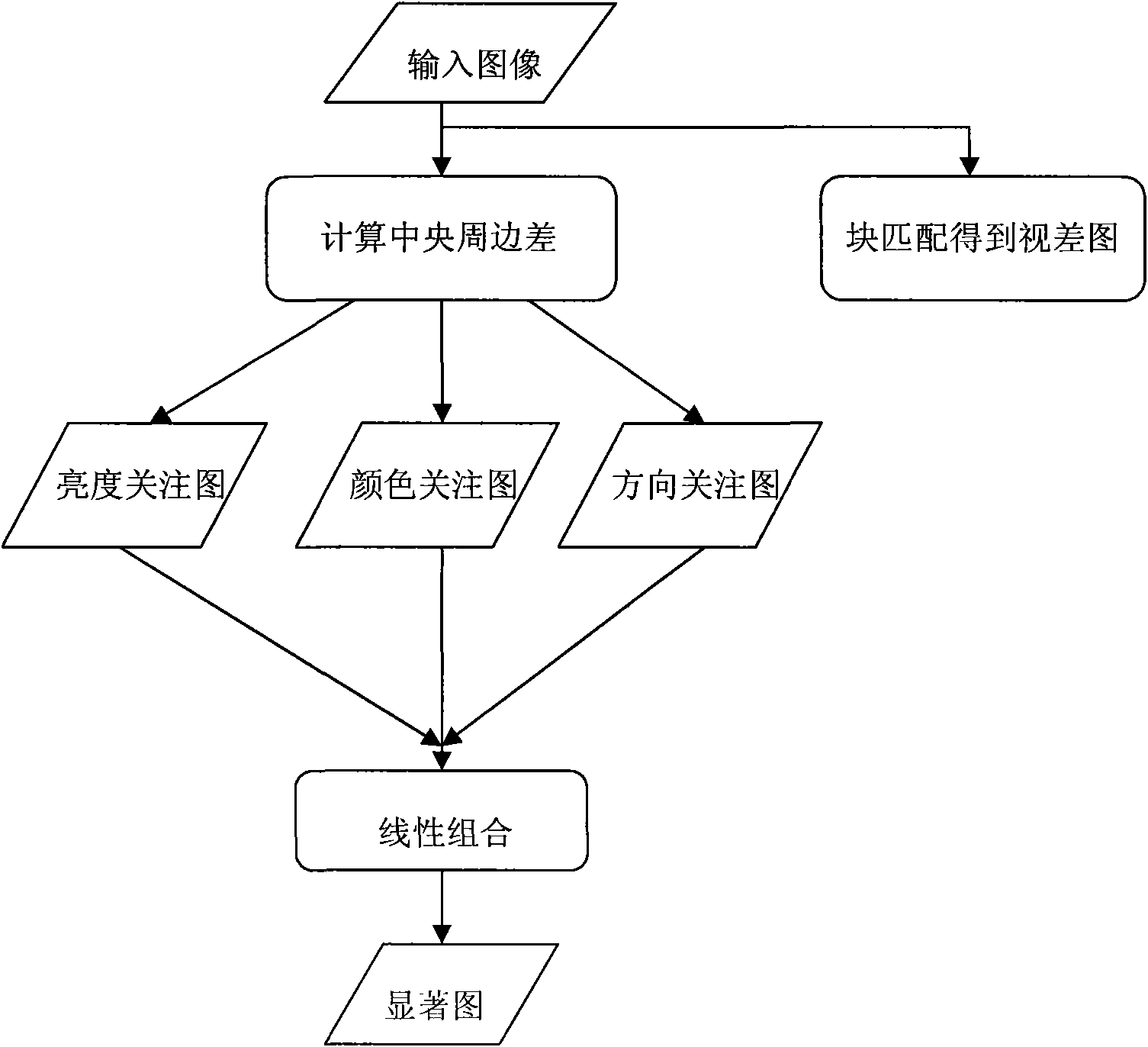

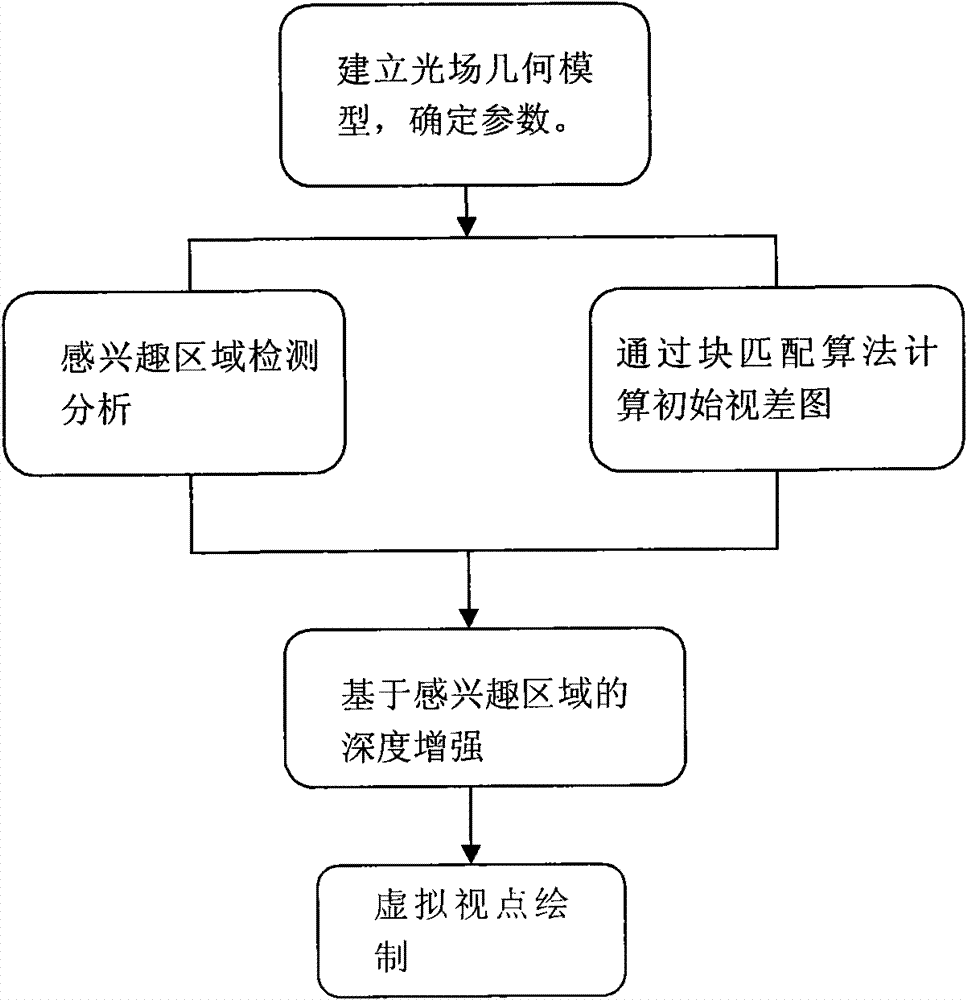

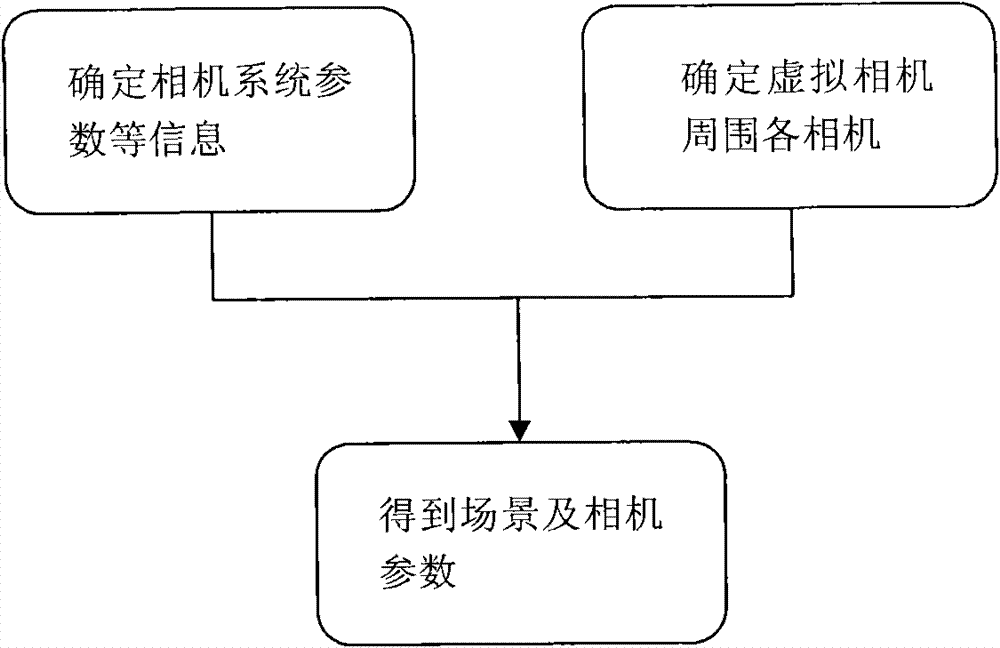

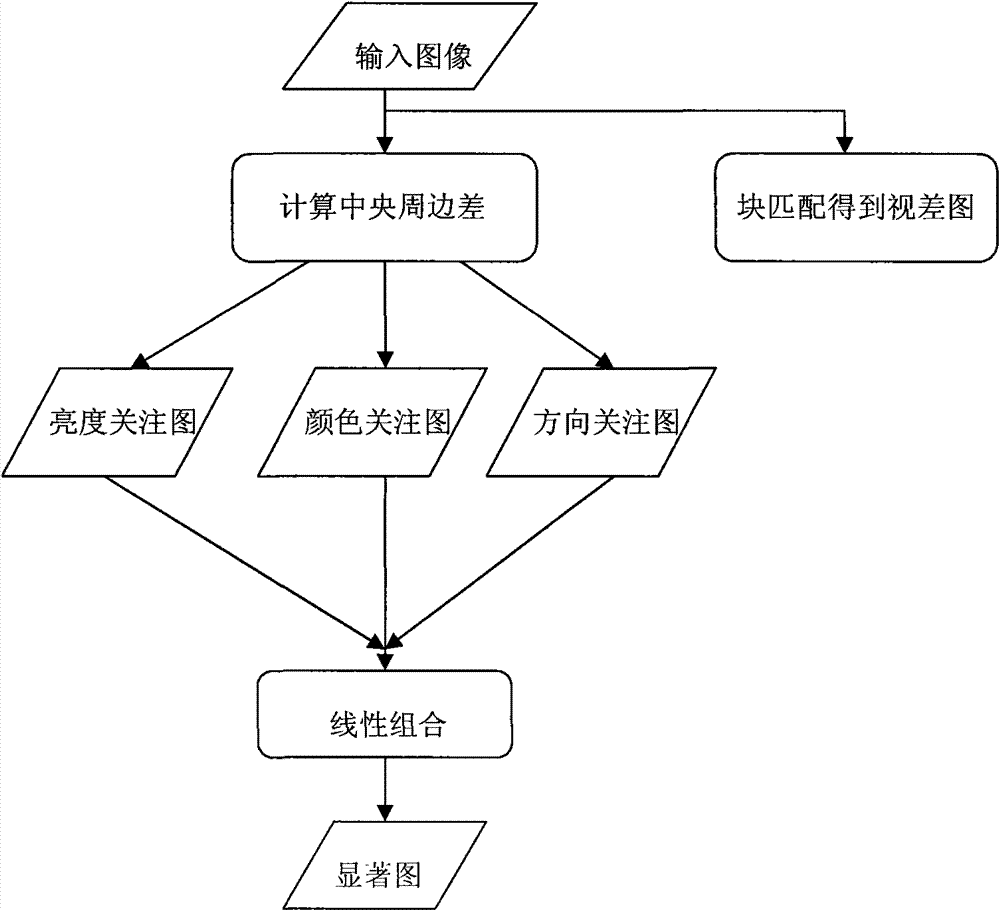

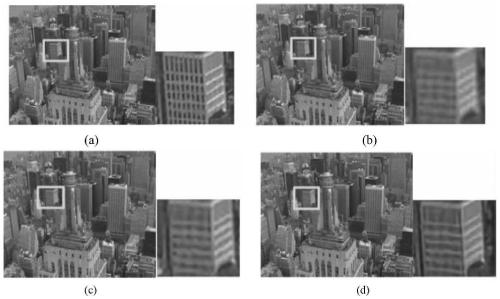

Method for rendering viewpoints by reinforcing the region of interest

Active | CN101883291A | Reduce computational complexity | Easy to implement | Image analysis | Stereoscopic systems | Viewpoints | Collection system

The invention provides a method for rendering viewpoints by reinforcing a region of interest, comprising the following steps: for the acquisition mode of a light field camera, first establishing a geometric camera model of that acquisition mode from the parameters of the acquisition system and the geometric information of the scene; then computing the region of interest and using it to reinforce the original sparse depth map; and finally performing light field rendering with the reinforced depth map, according to the camera parameters and the scene geometry, to obtain a new viewpoint image. Tests indicate that the method achieves good viewpoint reconstruction quality.

Owner:SHANGHAI UNIV

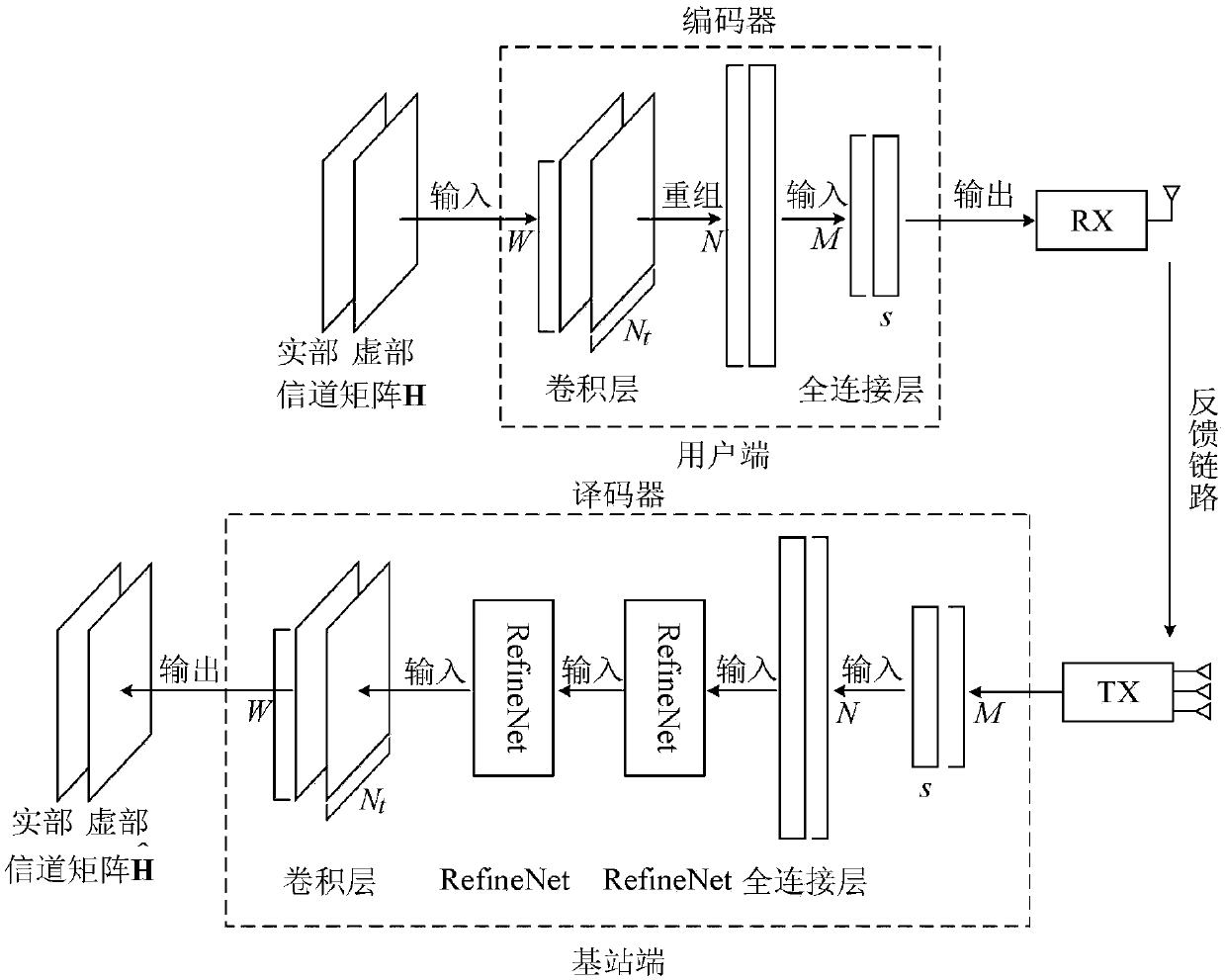

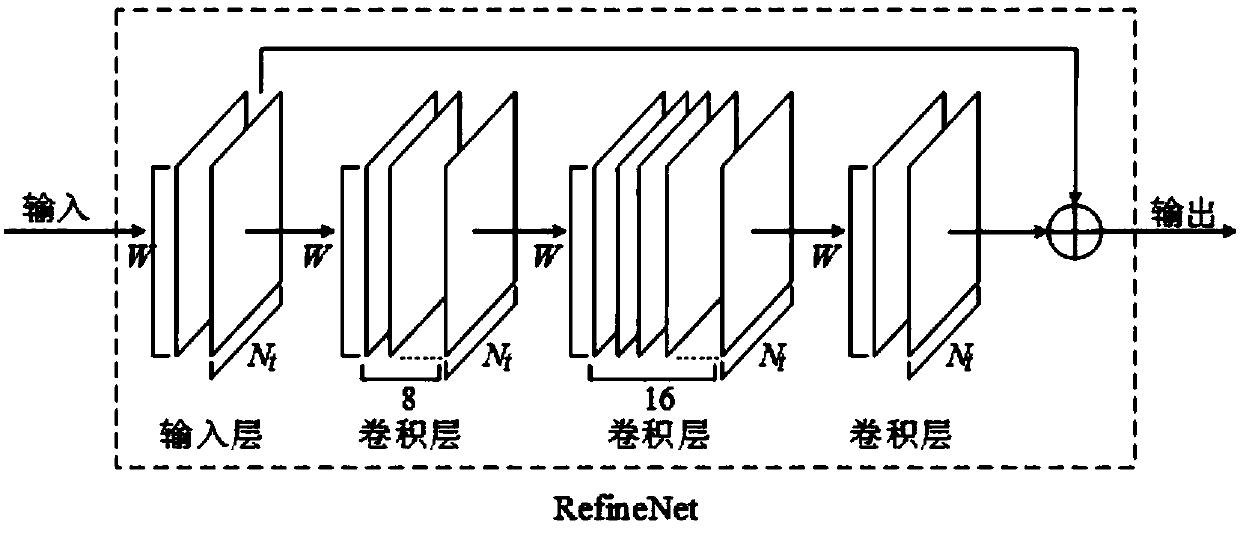

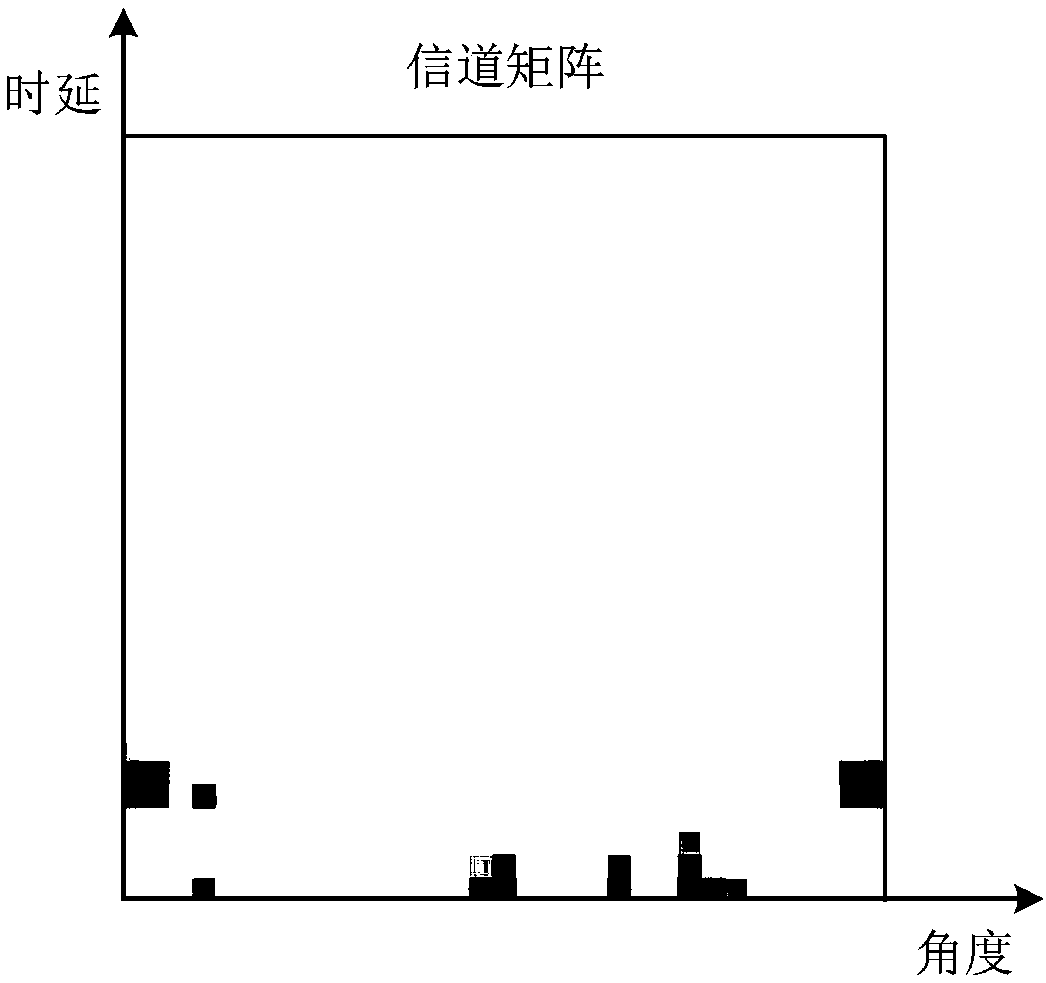

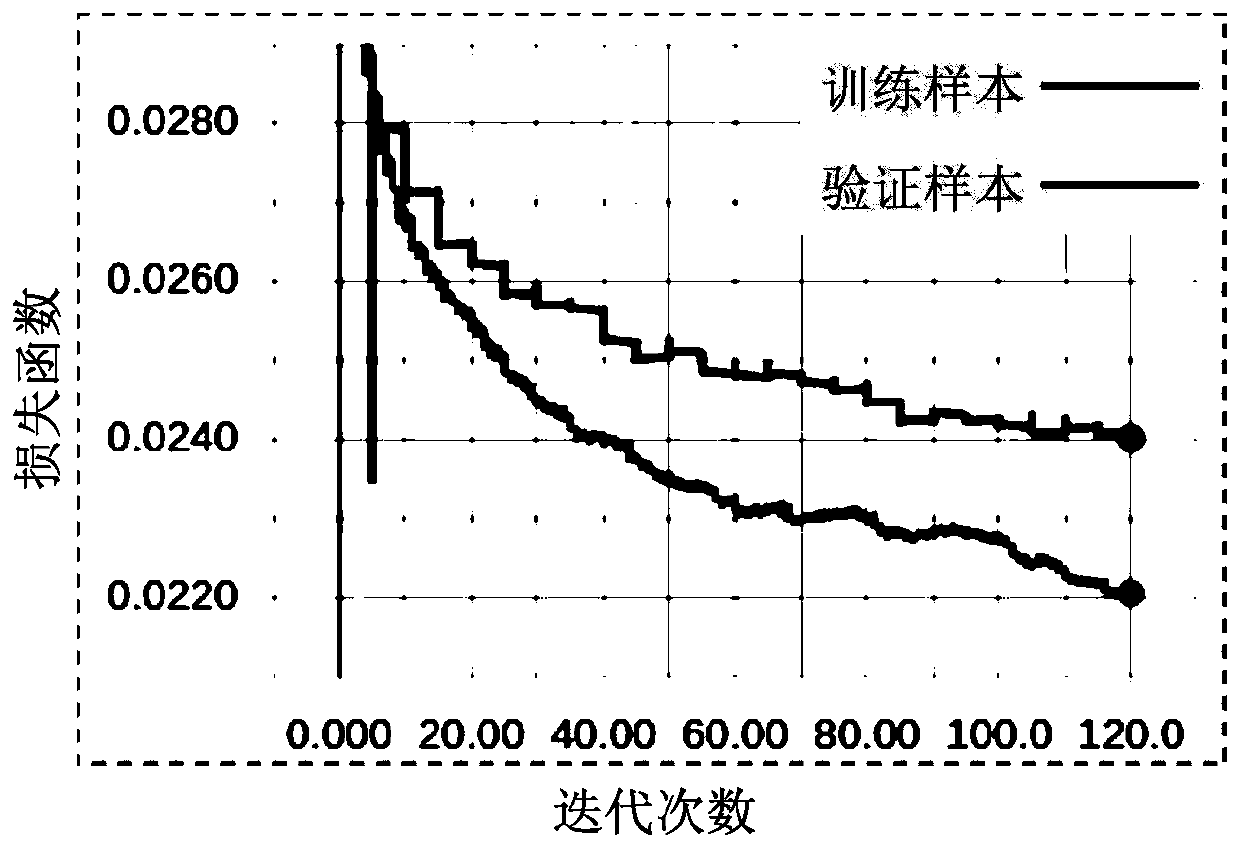

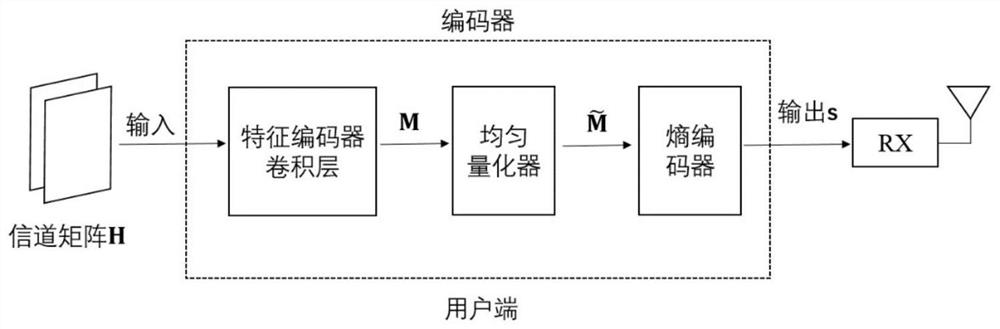

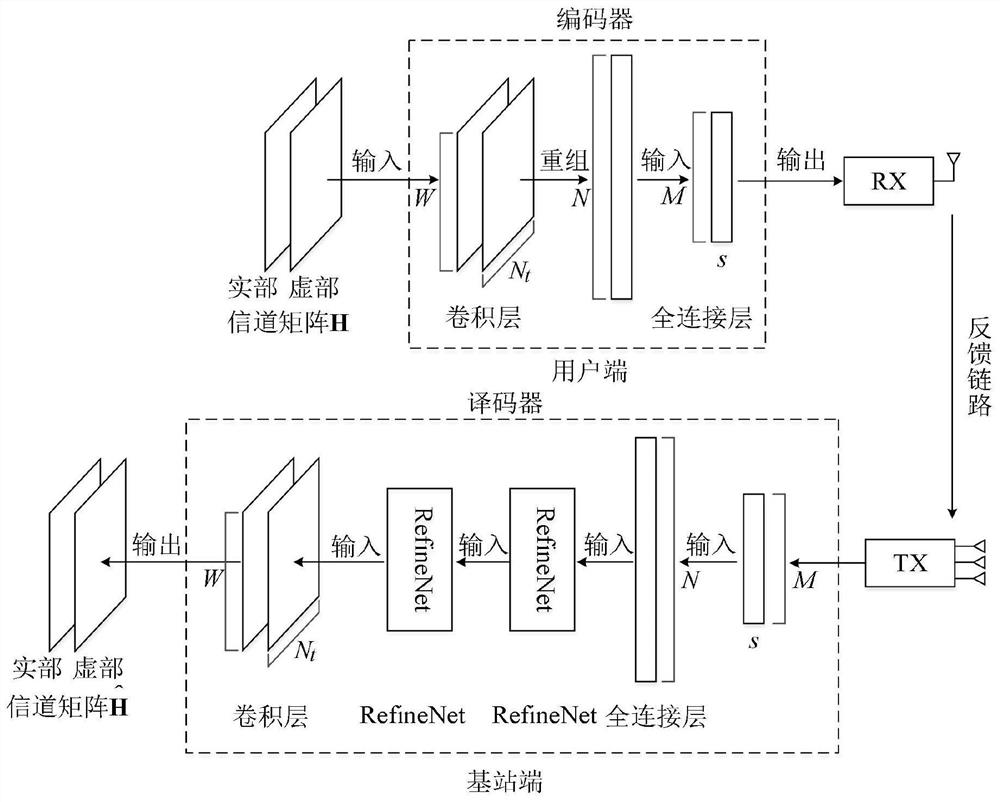

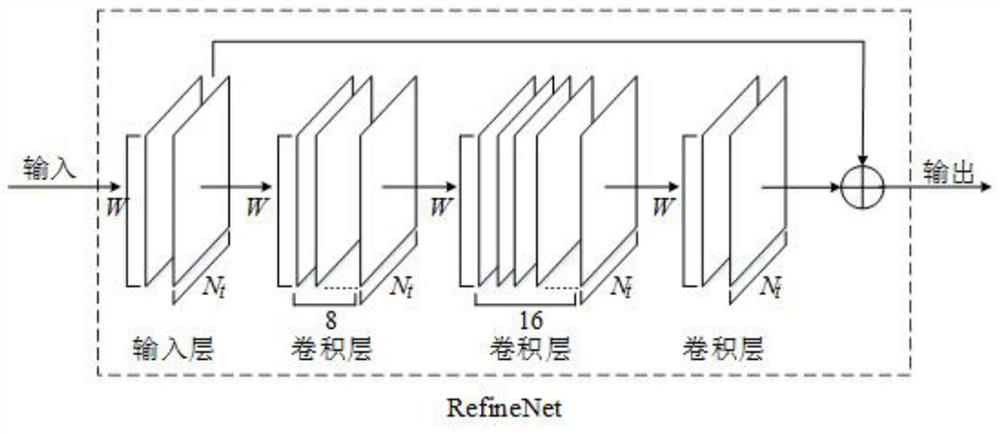

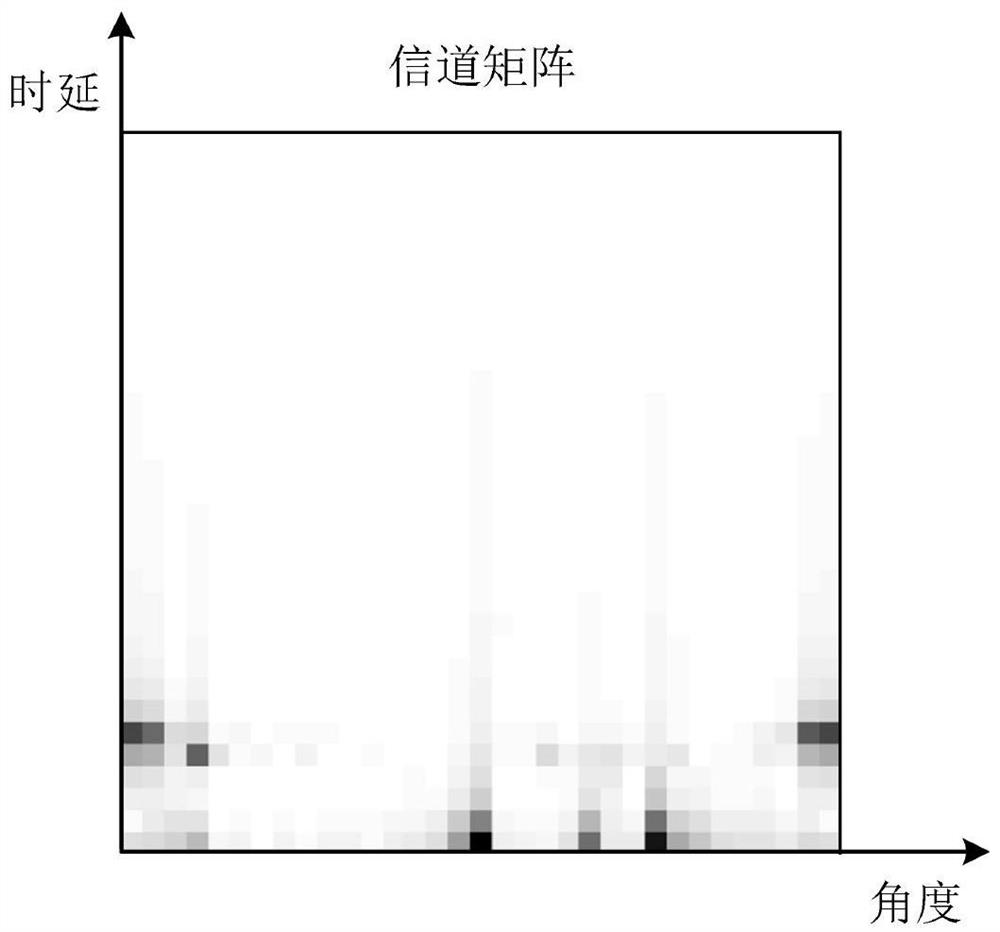

Large-scale MIMO channel state information feedback method based on deep learning

Active | CN108390706A | High speed | Improve rebuild quality | Spatial transmit diversity | Fourier transform on finite groups | Model parameters

The invention discloses a large-scale MIMO channel state information (CSI) feedback method based on deep learning. The method comprises the following steps: first, applying a two-dimensional discrete Fourier transform (DFT) at the user side to the spatial-frequency-domain channel matrix H̃ of the MIMO CSI, yielding a channel matrix H that is sparse in the angular-delay domain; second, constructing a model, CsiNet, comprising an encoder and a decoder, where the encoder sits at the user side and encodes H into lower-dimensional codewords, and the decoder sits at the base station and reconstructs an estimate Ĥ of the original channel matrix from the codewords; third, training CsiNet to obtain the model parameters; fourth, applying a two-dimensional inverse DFT to the reconstructed matrix Ĥ output by CsiNet, recovering the reconstruction of the original spatial-frequency-domain channel matrix H̃; and finally, using the trained CsiNet for compressed sensing and reconstruction of channel information. The method reduces large-scale MIMO CSI feedback overhead and achieves very high channel reconstruction quality and speed.

Owner:SOUTHEAST UNIV
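
The DFT pre- and post-processing around CsiNet can be sketched with NumPy. This is a minimal sketch under stated assumptions: the channel is a subcarriers x antennas array, `np.fft.fft2` with unitary normalization stands in for the angle/delay DFT matrices, and truncating to the first few delay rows is an added assumption not stated in the abstract. The encoder/decoder network itself is omitted.

```python
import numpy as np

def to_angular_delay(h_sf: np.ndarray) -> np.ndarray:
    """2-D DFT of a spatial-frequency channel matrix (subcarriers x antennas)."""
    # norm="ortho" makes the transform unitary, so channel energy is preserved.
    return np.fft.fft2(h_sf, norm="ortho")

def to_spatial_frequency(h_ad: np.ndarray) -> np.ndarray:
    """Inverse 2-D DFT back to the spatial-frequency domain."""
    return np.fft.ifft2(h_ad, norm="ortho")

def truncate_delay(h_ad: np.ndarray, keep_rows: int) -> np.ndarray:
    """Zero all but the first `keep_rows` delay taps (assumption: they hold the energy)."""
    out = np.zeros_like(h_ad)
    out[:keep_rows] = h_ad[:keep_rows]
    return out

# Example: a synthetic channel whose energy sits in the first 4 delay taps.
rng = np.random.default_rng(0)
n_sub, n_ant, taps = 32, 8, 4
h_ad_true = np.zeros((n_sub, n_ant), dtype=complex)
h_ad_true[:taps] = rng.standard_normal((taps, n_ant)) + 1j * rng.standard_normal((taps, n_ant))
h_sf = to_spatial_frequency(h_ad_true)               # what the user side observes
h_ad = truncate_delay(to_angular_delay(h_sf), taps)  # compact input for an encoder
h_sf_rec = to_spatial_frequency(h_ad)                # base-station-side recovery
```

Because the transform is unitary, any error the network makes in the angular-delay domain maps to an error of the same energy in the spatial-frequency domain.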

Reconstruction method for image super-resolution

Active | CN102360498A | Good edge properties | Good removal effect | Image enhancement | Imaging processing | Image resolution

The invention discloses a reconstruction method for image super-resolution, comprising the following steps: (1) inputting a sequence of m low-resolution frames of the same scene, each represented as a vector; (2) upsampling all low-resolution images by bilinear interpolation to obtain initial high-resolution estimates; (3) substituting the initial estimates into an iterative formula and iterating; (4) checking whether the convergence condition is met and, if so, stopping and outputting the high-resolution image. The method has broad application prospects in video and image processing, medical imaging, remote sensing, and similar fields.

Owner:CHINA INFORMATION CONSULTING & DESIGNING INST CO LTD
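
The abstract does not give the iterative formula, so the sketch below swaps in iterative back-projection (IBP), a common choice for this bilinear-initialise-then-iterate structure. Assumptions: the frames are already registered, the degradation is s x s block averaging, and the residual is back-projected by pixel replication; none of these come from the patent.

```python
import numpy as np

def downsample(x: np.ndarray, s: int) -> np.ndarray:
    """Assumed imaging model: average each s x s block."""
    h, w = x.shape
    return x.reshape(h // s, s, w // s, s).mean(axis=(1, 3))

def bilinear_upsample(y: np.ndarray, s: int) -> np.ndarray:
    """Separable bilinear interpolation (edges clamped by np.interp)."""
    h, w = y.shape
    rows = (np.arange(h * s) + 0.5) / s - 0.5  # output pixel centres in input coordinates
    cols = (np.arange(w * s) + 0.5) / s - 0.5
    tmp = np.array([np.interp(cols, np.arange(w), r) for r in y])         # (h, w*s)
    return np.array([np.interp(rows, np.arange(h), c) for c in tmp.T]).T  # (h*s, w*s)

def replicate_upsample(y: np.ndarray, s: int) -> np.ndarray:
    """Back-projection operator: copy each pixel into an s x s block."""
    return np.repeat(np.repeat(y, s, axis=0), s, axis=1)

def iterative_sr(frames, s, beta=0.5, tol=1e-8, max_iter=200):
    """IBP over m registered low-resolution frames (a stand-in iterative formula)."""
    x = bilinear_upsample(np.mean(frames, axis=0), s)  # step (2): initial estimate
    for _ in range(max_iter):                          # step (3): iterate
        residual = np.mean([f - downsample(x, s) for f in frames], axis=0)
        if np.linalg.norm(residual) < tol:             # step (4): convergence test
            break
        x = x + beta * replicate_upsample(residual, s)
    return x
```

With block averaging, `downsample(replicate_upsample(r, s), s) == r`, so each iteration shrinks the residual by a factor of `1 - beta`; the loop therefore converges for any `0 < beta < 2`.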

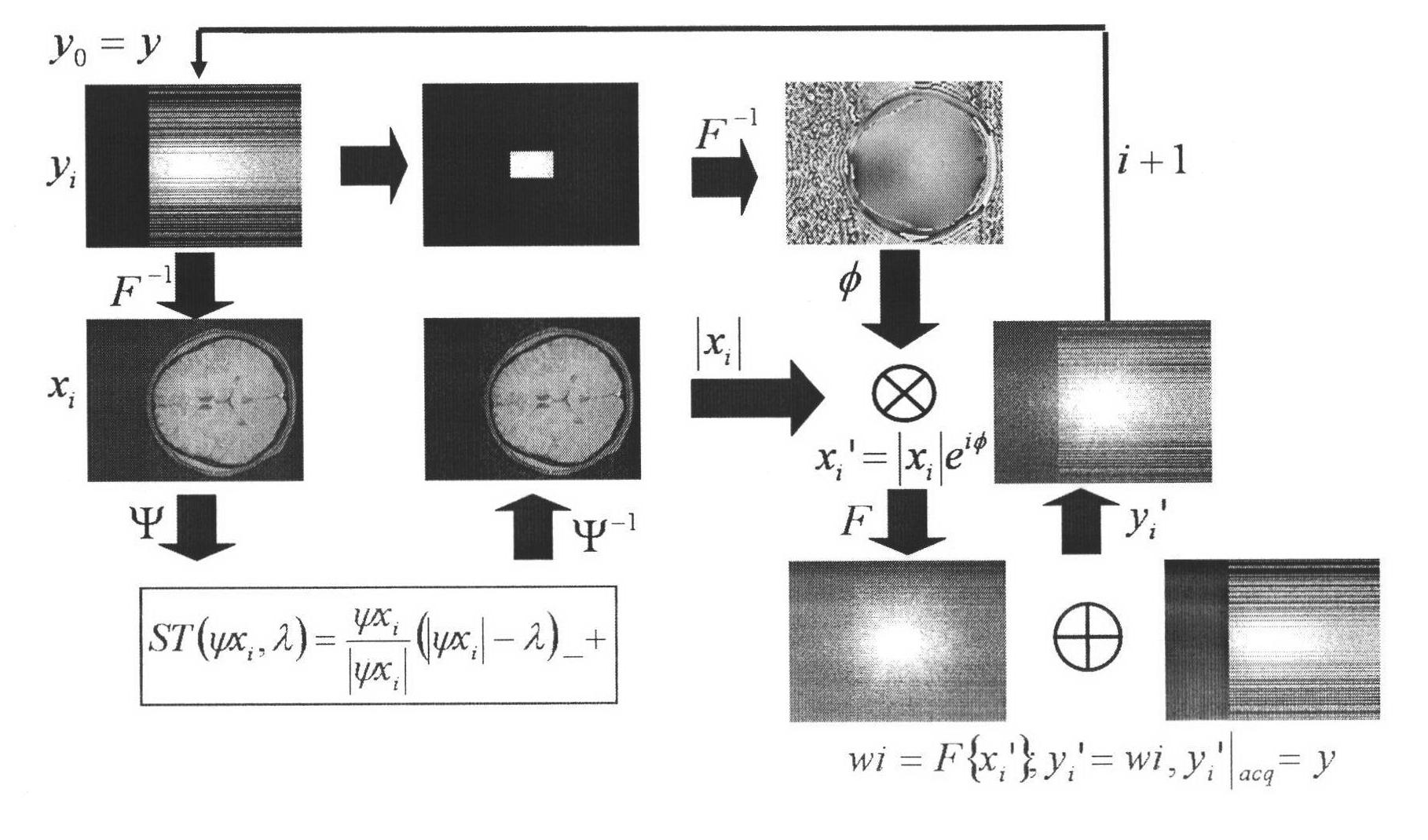

Partial echo compressed sensing-based quick magnetic resonance imaging method

Inactive | CN101975935A | Reduce acquisition time | Reduce echo time | Magnetic measurements | Low speed | Data acquisition

The invention discloses a fast magnetic resonance imaging (MRI) method based on partial-echo compressed sensing, addressing the low speed and high hardware cost of conventional imaging methods. The method comprises the following steps: acquiring partial-echo data with random variable density, i.e., sampling the central region of k-space densely and the periphery of k-space randomly and sparsely to generate a two-dimensional random mask; applying that mask at every data point to be acquired along the frequency-encoding axis to form a three-dimensional random mask, and acquiring k-space data according to it; reconstructing by projection onto convex sets (POCS) with soft-threshold denoising in the wavelet domain; and performing nonlinear reconstruction by minimizing the L1 norm of a finite-difference transform, i.e., sparsely transforming the image-space signal x, defining the optimization objective, and solving it. By combining partial-echo and compressed sensing techniques in MRI data acquisition, the method shortens both the echo time and the data acquisition time.

Owner:HANGZHOU DIANZI UNIV
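
The sampling pattern described above (dense centre, random sparse periphery, replicated along the frequency-encoding axis, partial echo) can be sketched as a boolean mask. This is a minimal sketch, not the patented mask: the power-law density, the fully sampled centre radius, and the 5/8 partial-echo fraction are all illustrative assumptions.

```python
import numpy as np

def sampling_mask_3d(nx, ny, nz, center_radius=0.1, decay=2.0, echo_frac=0.625, seed=0):
    """Boolean k-space mask of shape (nx readout, ny, nz phase-encode).

    Assumptions (not from the patent): sampling probability (1 - r)**decay,
    a fully sampled disc of normalised radius `center_radius`, and partial echo
    modelled as skipping the first (1 - echo_frac) of each readout.
    """
    rng = np.random.default_rng(seed)
    ky = np.linspace(-1, 1, ny)[:, None]
    kz = np.linspace(-1, 1, nz)[None, :]
    r = np.sqrt(ky ** 2 + kz ** 2) / np.sqrt(2)  # 0 at the centre, 1 at the corners
    prob = (1 - r) ** decay
    prob[r <= center_radius] = 1.0               # dense centre of k-space
    mask2d = rng.random((ny, nz)) < prob         # 2-D random variable-density mask
    readout = np.zeros(nx, dtype=bool)
    readout[int(round(nx * (1 - echo_frac))):] = True  # partial echo along readout
    # The same 2-D mask is applied at every acquired frequency-encoding point.
    return readout[:, None, None] & mask2d[None, :, :]
```

Multiplying fully sampled k-space by this mask gives the undersampled data that the POCS and L1-minimization reconstruction steps would then work from.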

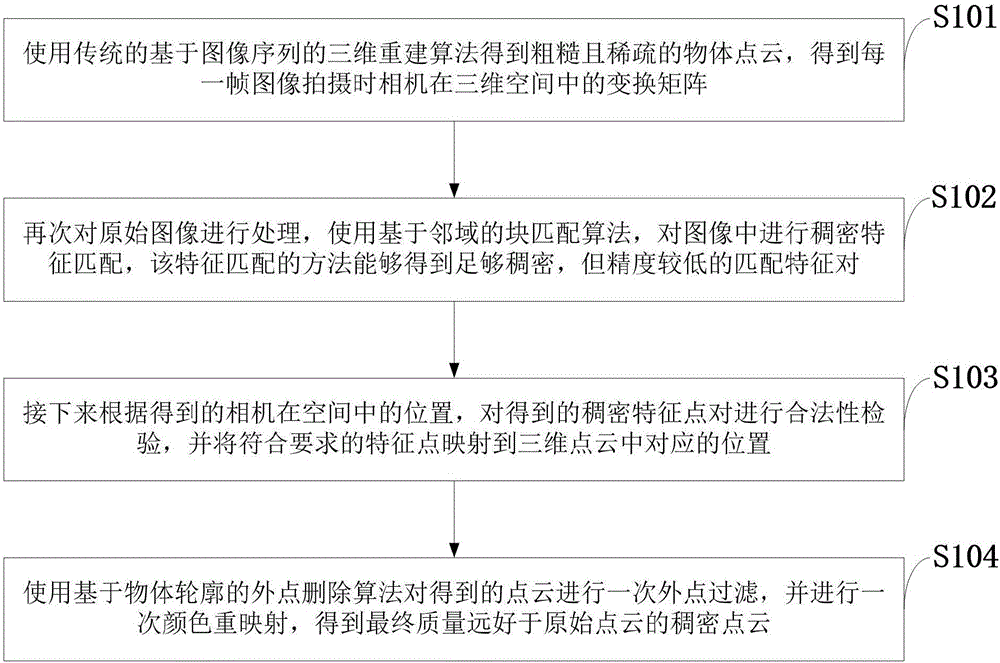

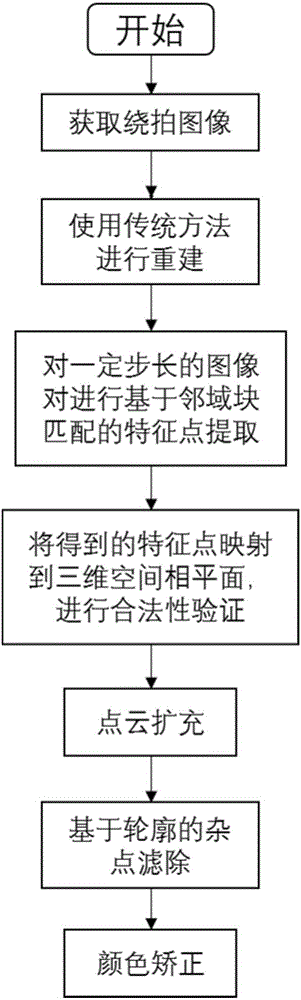

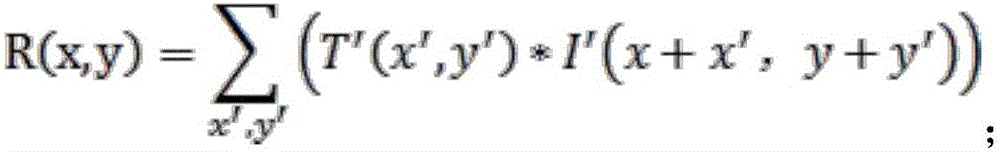

Method of improving density of three-dimensional reconstructed point cloud based on neighborhood block matching

Active | CN106683173A | Quality improvement | High density | Image analysis | 3D modelling | Point cloud | Three-dimensional space

Owner:Shaanxi Xiandian Tongyuan Information Technology Co., Ltd. (陕西仙电同圆信息科技有限公司)

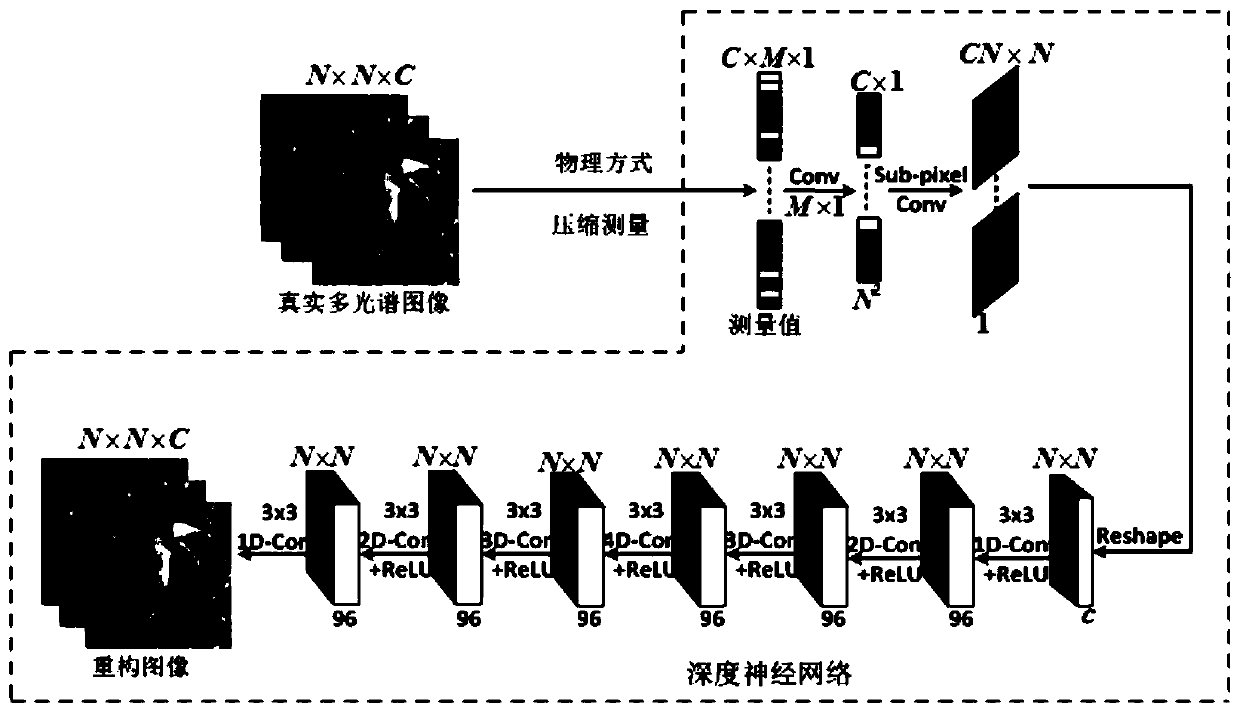

Multispectral single-pixel imaging deep learning image reconstruction method

Active | CN110175971A | Downsampling | Short rebuild time | Image enhancement | Image analysis | Reconstruction method | Multispectral image

The invention provides a deep-learning image reconstruction method for multispectral single-pixel imaging, comprising a measurement process and a reconstruction process. In the measurement process, coding patterns encode the target scene, and a multispectral detector records the light intensities at different wavelengths. After multispectral single-pixel detection has been realized physically, a deep-neural-network reconstruction recovers the original signal X from all detection signals Y_c. The network consists of a linear mapping network and a convolutional neural network: the C measurement vectors are concatenated column-wise into a new matrix Y′, which the linear mapping network takes as input for a preliminary linear processing step; the convolutional neural network then fuses information across channels, and finally the image X to be observed is reconstructed. The scheme addresses the high algorithmic complexity, long reconstruction time, and high sampling-rate requirement of prior methods.

Owner:DALIAN MARITIME UNIVERSITY
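
The measurement model and the column-wise stacking into Y′ can be sketched in NumPy. Assumptions: every spectral band shares the same pattern matrix `phi` (M patterns x N pixels), the scene is flattened to an N x C array, and the learned linear mapping layer is replaced by a pseudo-inverse purely for illustration; the CNN fusion stage is omitted.

```python
import numpy as np

def measure(x_bands: np.ndarray, phi: np.ndarray) -> np.ndarray:
    """x_bands: (N, C) scene, one column per wavelength; returns Y' of shape (M, C)."""
    # Each column is Y_c = phi @ X_c, so stacking columns is just phi @ X.
    return phi @ x_bands

def linear_mapping(y_stack: np.ndarray, phi: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the learned linear layer: pseudo-inverse mapping."""
    return np.linalg.pinv(phi) @ y_stack
```

In the fully sampled case (M = N, invertible `phi`) the stand-in recovers the scene exactly; at M < N it only gives the coarse estimate that, in the patented scheme, the convolutional network would refine.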

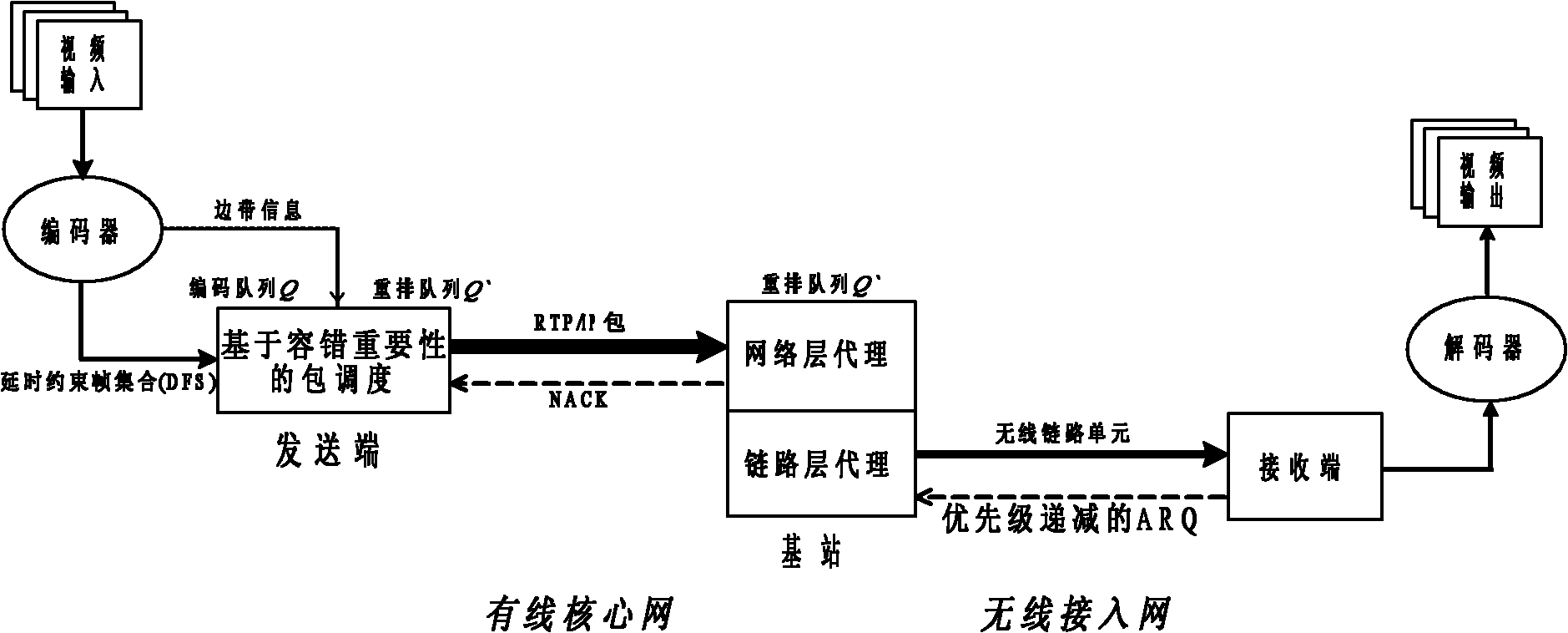

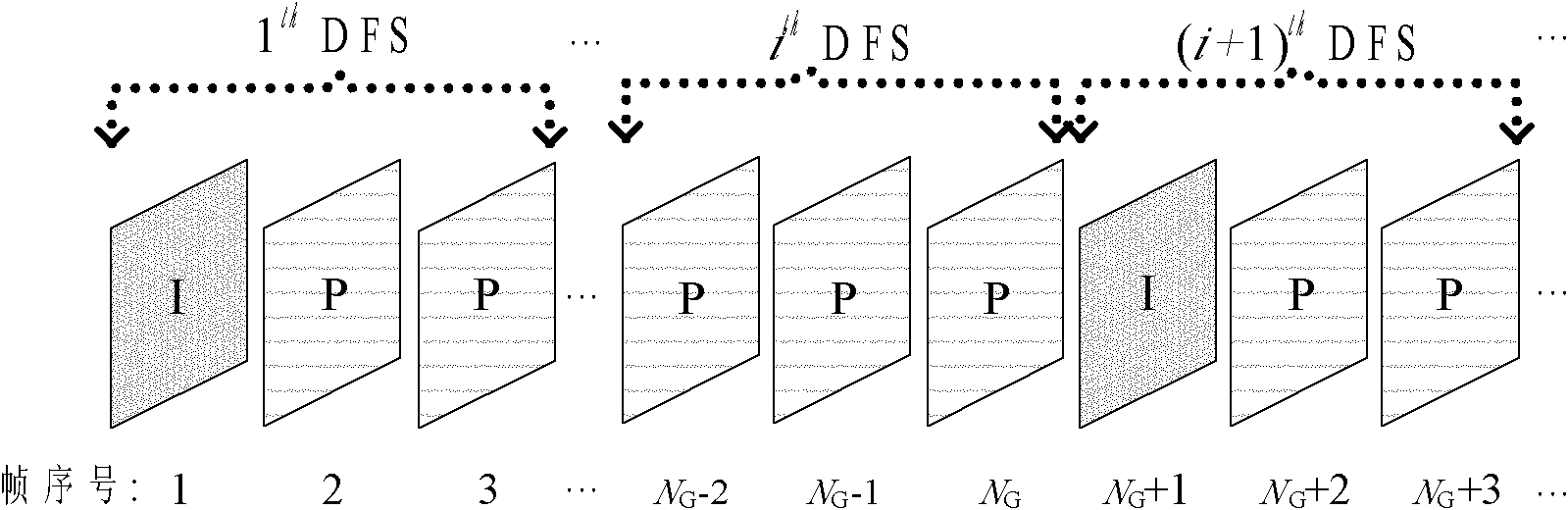

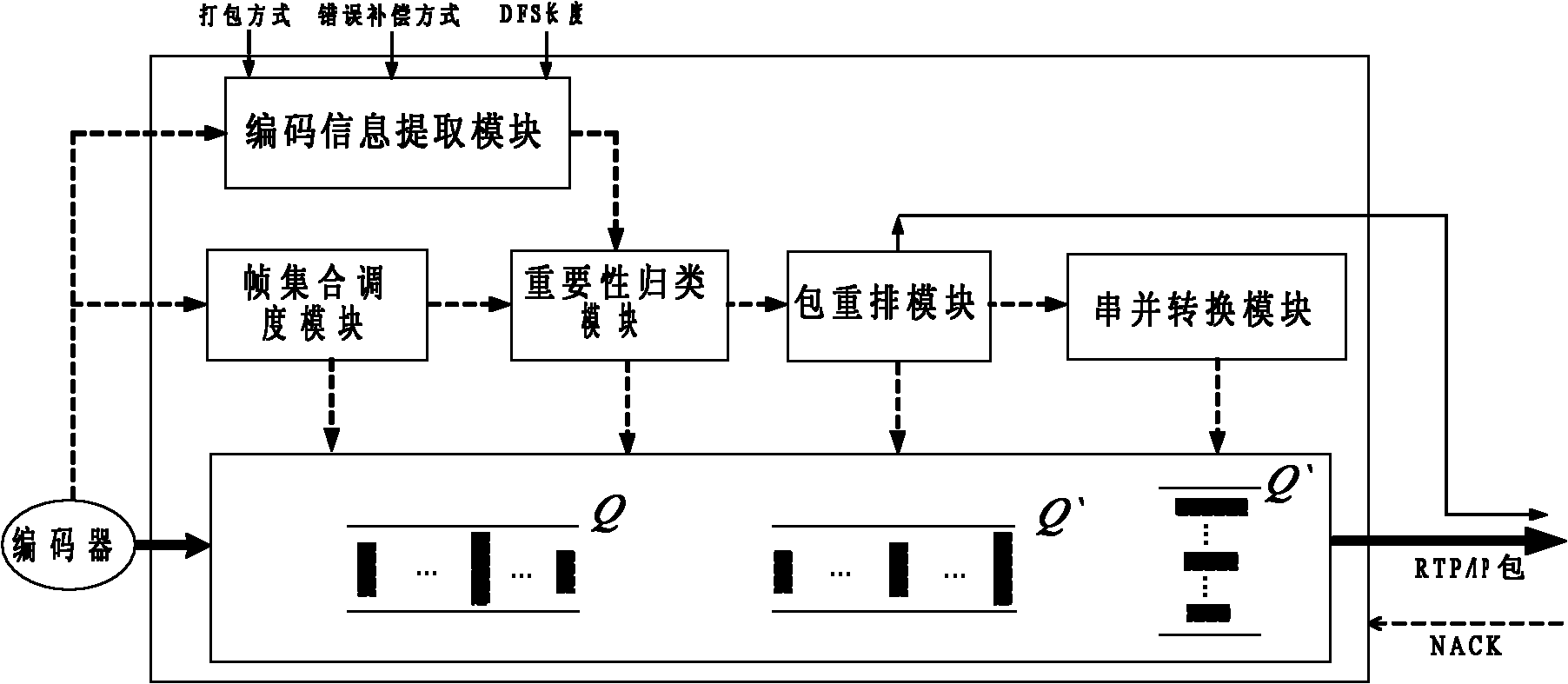

Cross-node controlled online video stream selective retransmission method

Inactive | CN101938341A | Improve rebuild quality | Implement priority transmission | Error prevention/detection by using return channel | Pulse modulation television signal transmission | Packet scheduling | Computer science

The invention relates to a cross-node controlled selective retransmission method for online video streams, comprising the following steps: when a single online-encoded video stream enters the transmitting end, it is divided, according to start-up and transmission delay limits, into successive delay-constrained frame sets (DFS), which serve as the basic units of selective retransmission; with the transmitting end as the first communication node, the importance level of each IP (Internet Protocol) packet within a DFS is determined by a fault-tolerant importance measure, and the IP packets are selectively retransmitted through packet scheduling based on that importance; and, building on these packet importance levels, with the base station as the second communication node, wireless link units are selectively retransmitted in a priority-descending ARQ (Automatic Repeat reQuest) mode. Under delay and bandwidth constraints, the invention combines the priority transmission mechanisms of the main communication nodes, realizes importance classification and selective retransmission at the wireless link unit level, strengthens error control for online video streams, and effectively improves the reconstruction quality of received video.

Owner:DONGHUA UNIV
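
The two transmitting-end steps (grouping frames into delay-constrained frame sets, then retransmitting by importance) can be sketched as follows. This is a hypothetical illustration: the importance scores are assumed to be precomputed, a fixed time window stands in for the delay limits, and a greedy byte budget stands in for the bandwidth constraint; the base-station ARQ stage is not modelled.

```python
from dataclasses import dataclass

@dataclass
class Packet:
    seq: int
    importance: float  # assumed precomputed fault-tolerant importance score
    size: int          # bytes

def split_into_dfs(frame_times, window):
    """Group frame indices into frame sets spanning `window` seconds each."""
    groups = {}
    for i, t in enumerate(frame_times):
        groups.setdefault(int(t // window), []).append(i)
    return list(groups.values())

def schedule_retransmissions(lost, budget_bytes):
    """Greedy: resend the most important lost packets that still fit the budget."""
    chosen, remaining = [], budget_bytes
    # Highest importance first; ties broken by sequence number.
    for pkt in sorted(lost, key=lambda p: (-p.importance, p.seq)):
        if pkt.size <= remaining:
            chosen.append(pkt.seq)
            remaining -= pkt.size
    return chosen
```

For example, with a 1200-byte budget and lost packets of importance 0.2, 0.9, 0.5 and 0.9, only the two 0.9 packets are resent if the others no longer fit.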

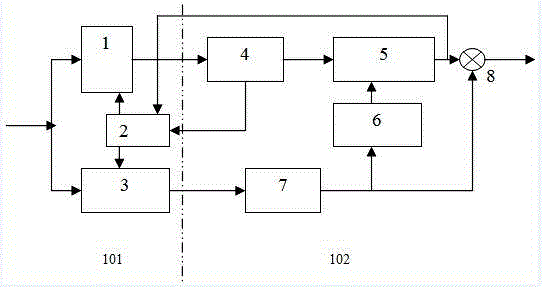

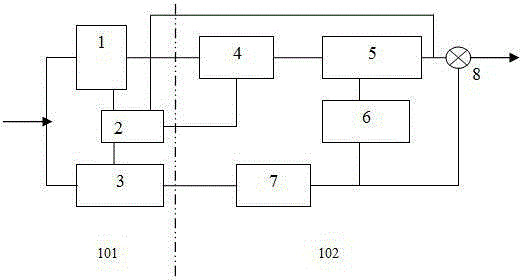

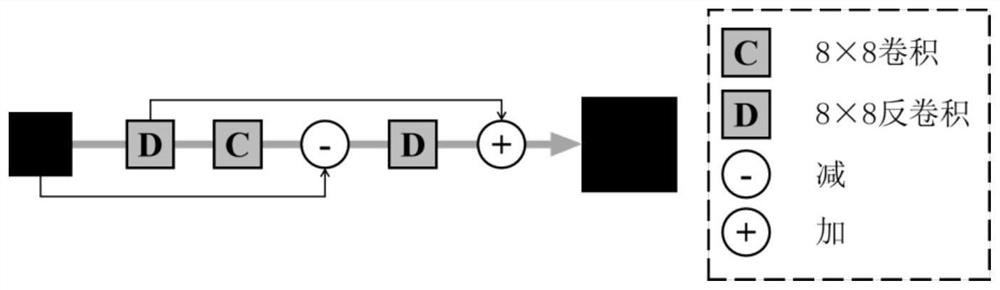

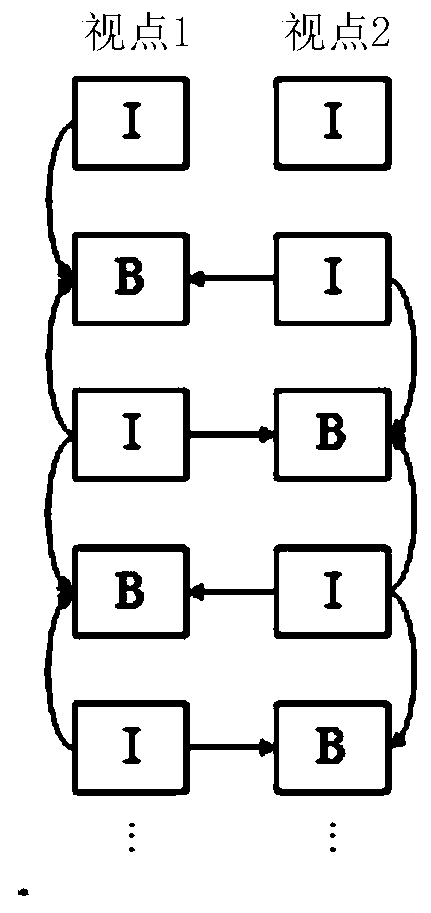

Distributed video compressed sensing coding technical method

Active | CN105338357A | Encoding operation complexity is low | Improve rebuild quality | Digital video signal modification | Compressed sensing | Video encoding

Distributed compressed sensing coding of video signals is the basis and key for efficient video transmission. To address accurate acquisition of side information and the reconstruction algorithm in distributed compressed sensing coding, side information is obtained by motion search and homography-compensated temporal interpolation; once the decoder receives the coset index, it decodes by fusing the side information. In joint decoding and reconstruction, a Huber-Markov random field prior probability model is introduced to impose smoothness constraints on the decoded pixels, yielding better reconstruction quality. This compressed-sensing-based distributed video coding and decoding method follows the "independent encoding, joint decoding" paradigm, shifting complexity from the encoder to the decoder; encoding complexity is therefore low, which makes the method suitable for resource-constrained video encoders in wireless networks.

Owner:SHENGLONG ELECTRIC
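
The smoothing effect of a Huber-Markov prior comes from its potential function: quadratic for small pixel differences, linear for large ones, so genuine edges are penalised far less than under a Gaussian prior. A minimal sketch, assuming first-order horizontal and vertical neighbour differences and a threshold `t` (the patent's exact clique structure and threshold are not given):

```python
import numpy as np

def huber(u, t):
    """Huber potential: u**2 for |u| <= t, 2*t*|u| - t**2 beyond (edge-preserving)."""
    a = np.abs(u)
    return np.where(a <= t, u ** 2, 2 * t * a - t ** 2)

def hmrf_energy(img, t):
    """Prior energy summed over horizontal and vertical neighbour differences."""
    dx = np.diff(img, axis=1)
    dy = np.diff(img, axis=0)
    return float(huber(dx, t).sum() + huber(dy, t).sum())
```

A step of height 10 with `t = 1` costs 19 under this potential instead of 100 under a quadratic one, which is why adding the energy as a regulariser smooths noise while keeping edges.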

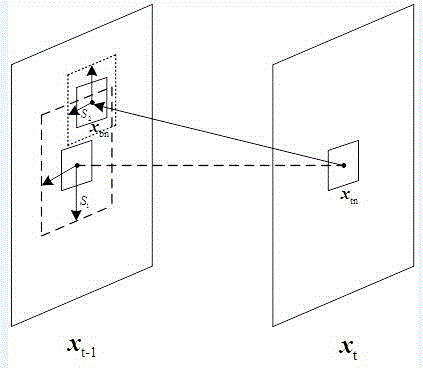

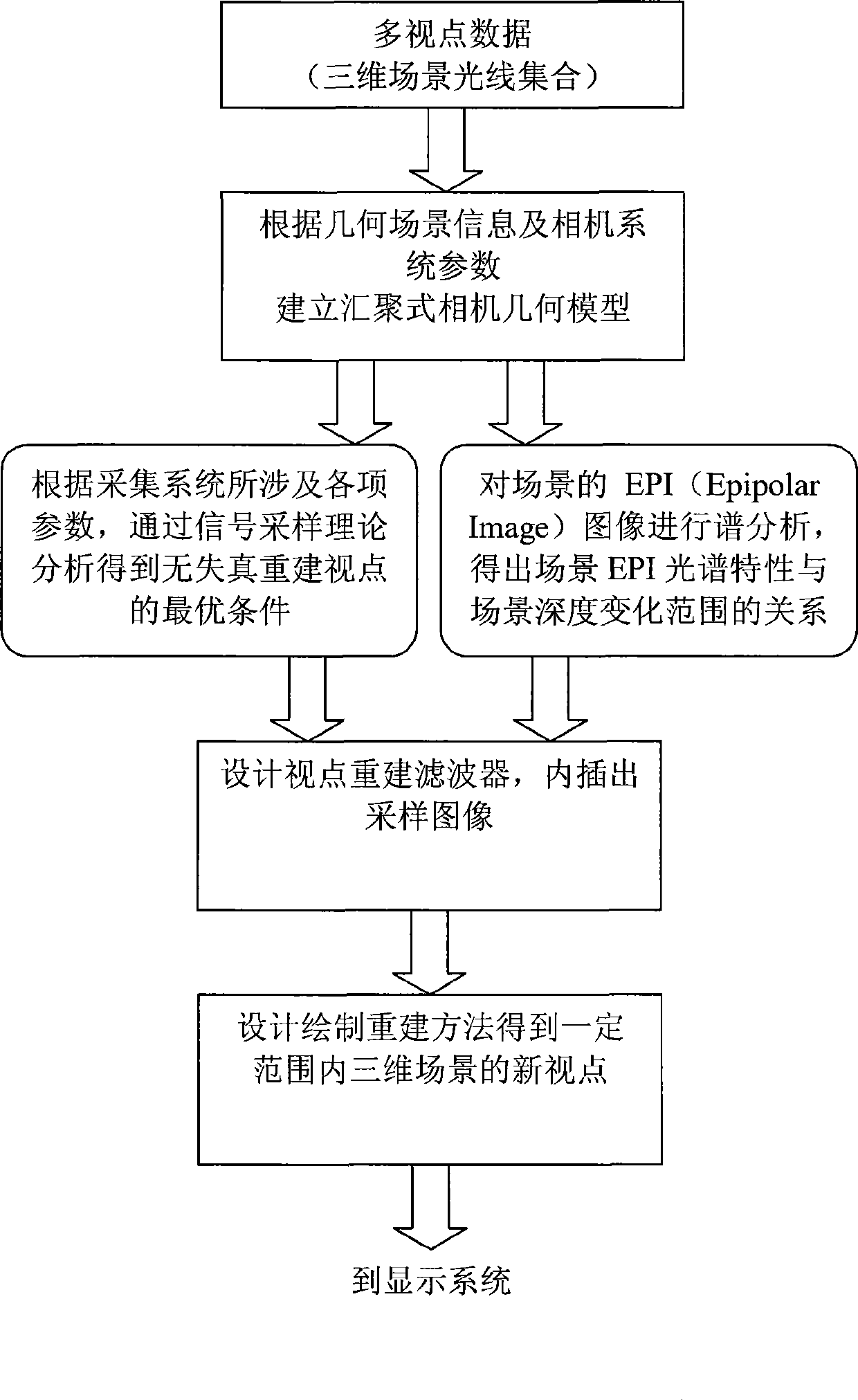

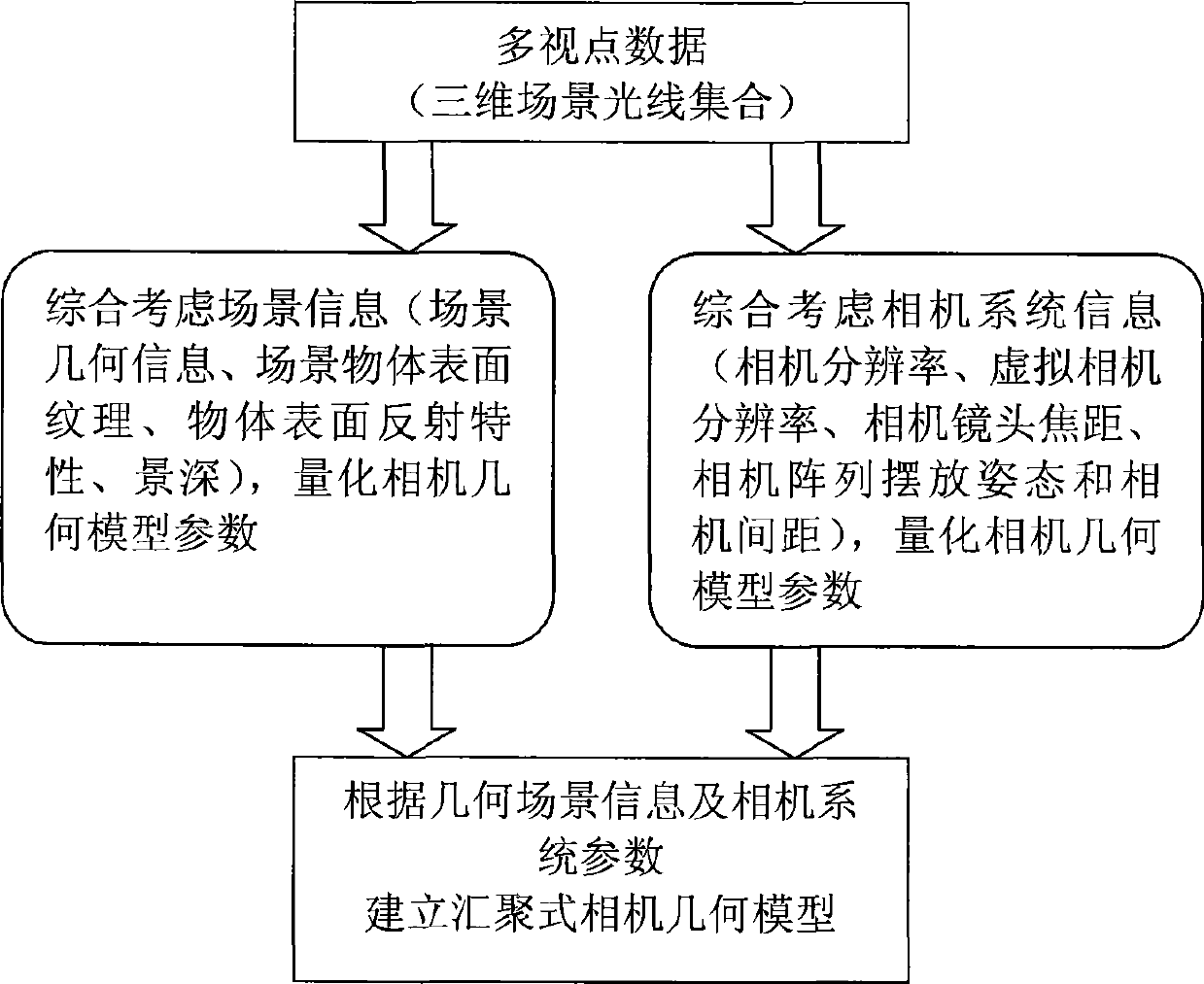

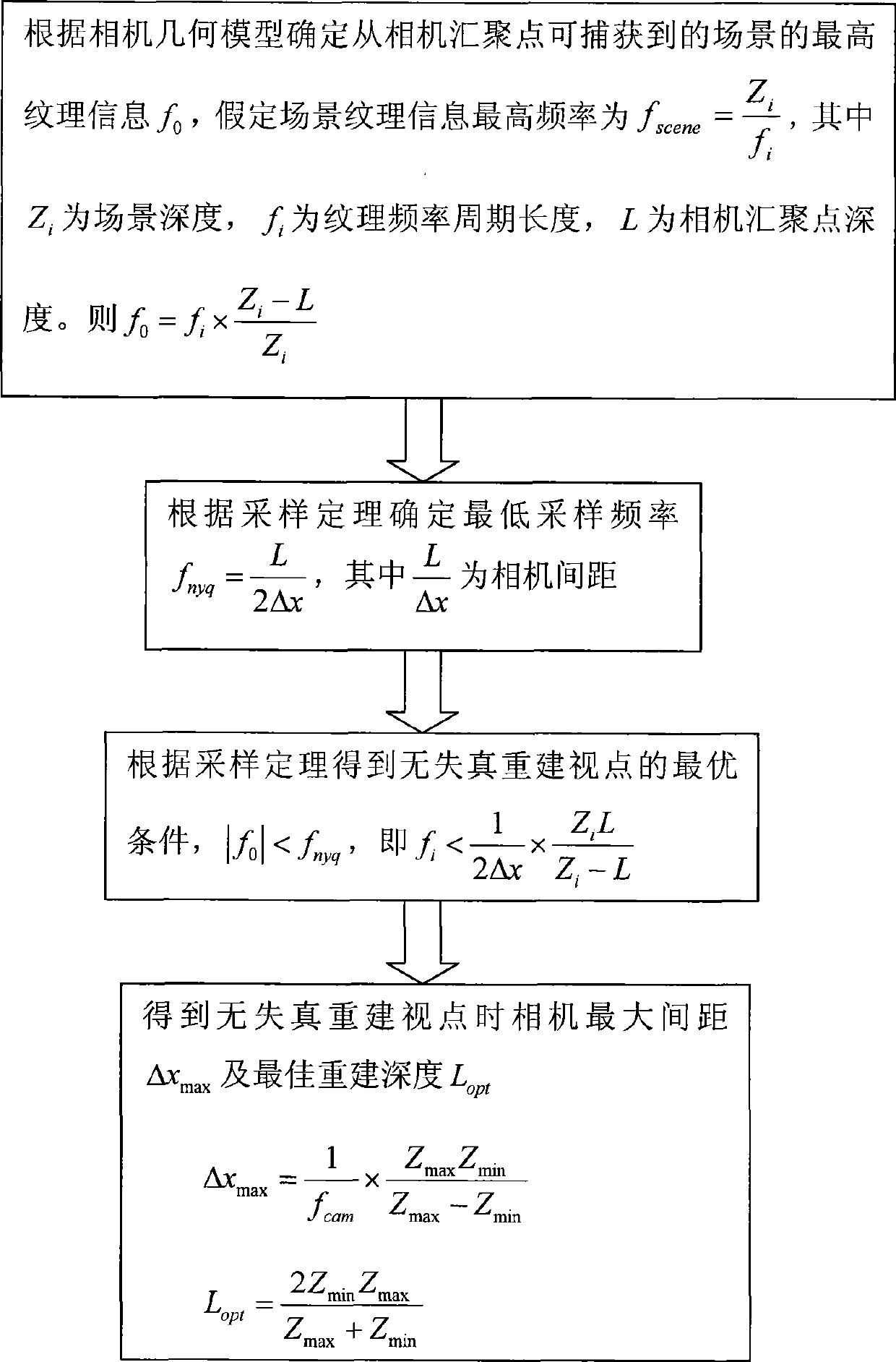

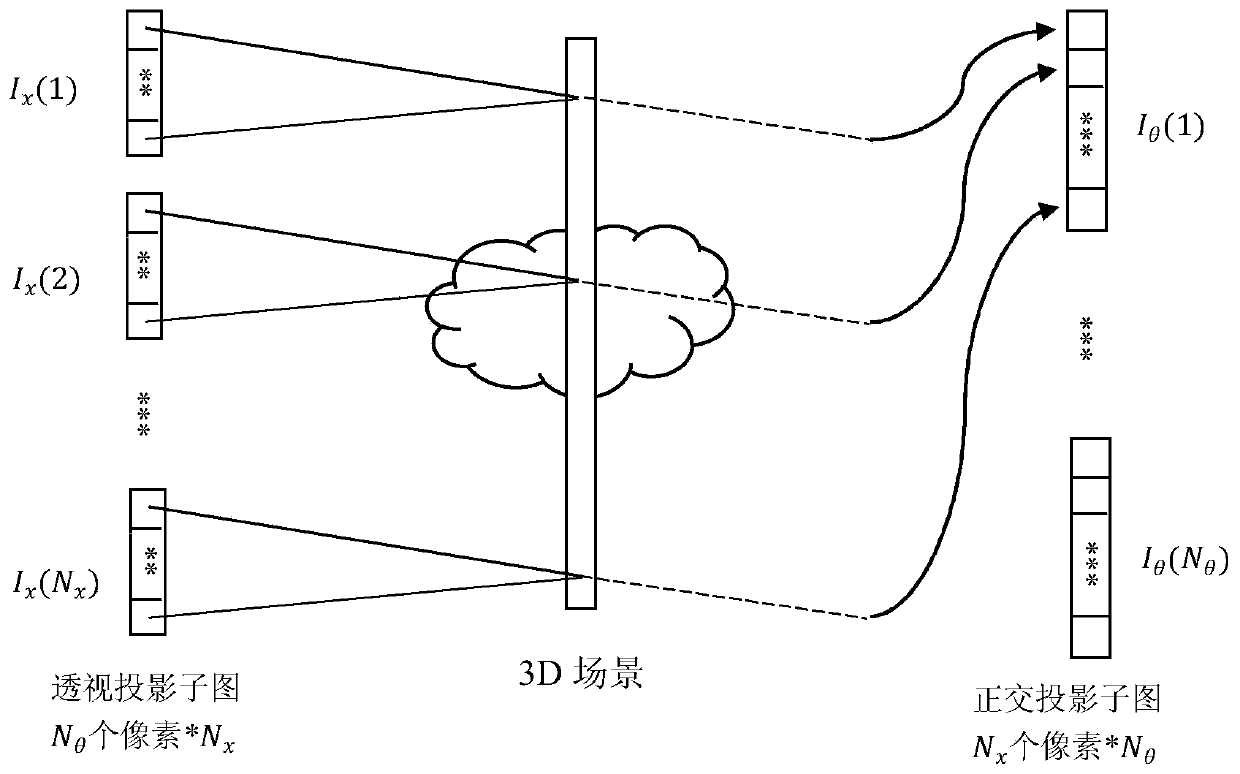

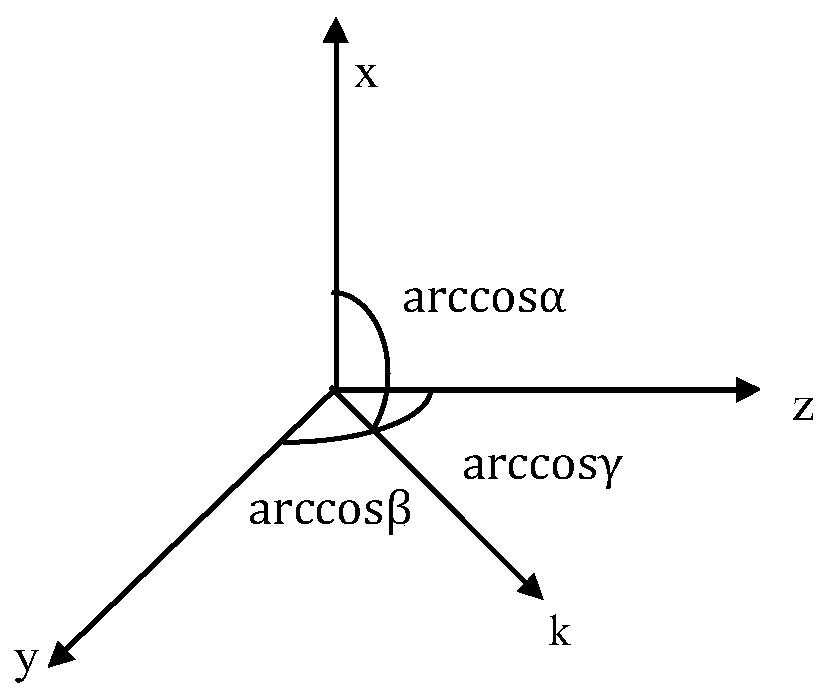

Novel viewpoint reconstruction method for multi-viewpoint acquisition/display systems with convergent cameras

Inactive | CN101369348A | Reduced number of samples | Easy to implement | 3D-image rendering | 3D modelling | Reconstruction filter | Collection system

The invention relates to a novel viewpoint reconstruction method for a multi-viewpoint acquisition/display system using convergent cameras. Based on the convergent camera acquisition mode, a geometric model of the convergent camera is first built; all parameters of the acquisition system are considered together, and the optimal condition for distortion-free viewpoint reconstruction is derived through sampling-theory analysis. Spectral analysis of the scene's epipolar-plane image (EPI) then yields the relation between the EPI spectral characteristics and the scene depth range. On this basis, the number of sample images is determined, a viewpoint reconstruction filter is designed, sample images for the reconstructed viewpoint are generated by interpolation, and finally novel viewpoints of a 3D scene within a certain range are obtained through the convergent-model rendering and reconstruction method. Experiments on both a simulated system and a physical system with similar parameters yielded excellent reconstruction quality. The invention also offers a reference for other camera array types and viewpoint reconstruction systems.

Owner:SHANGHAI UNIV

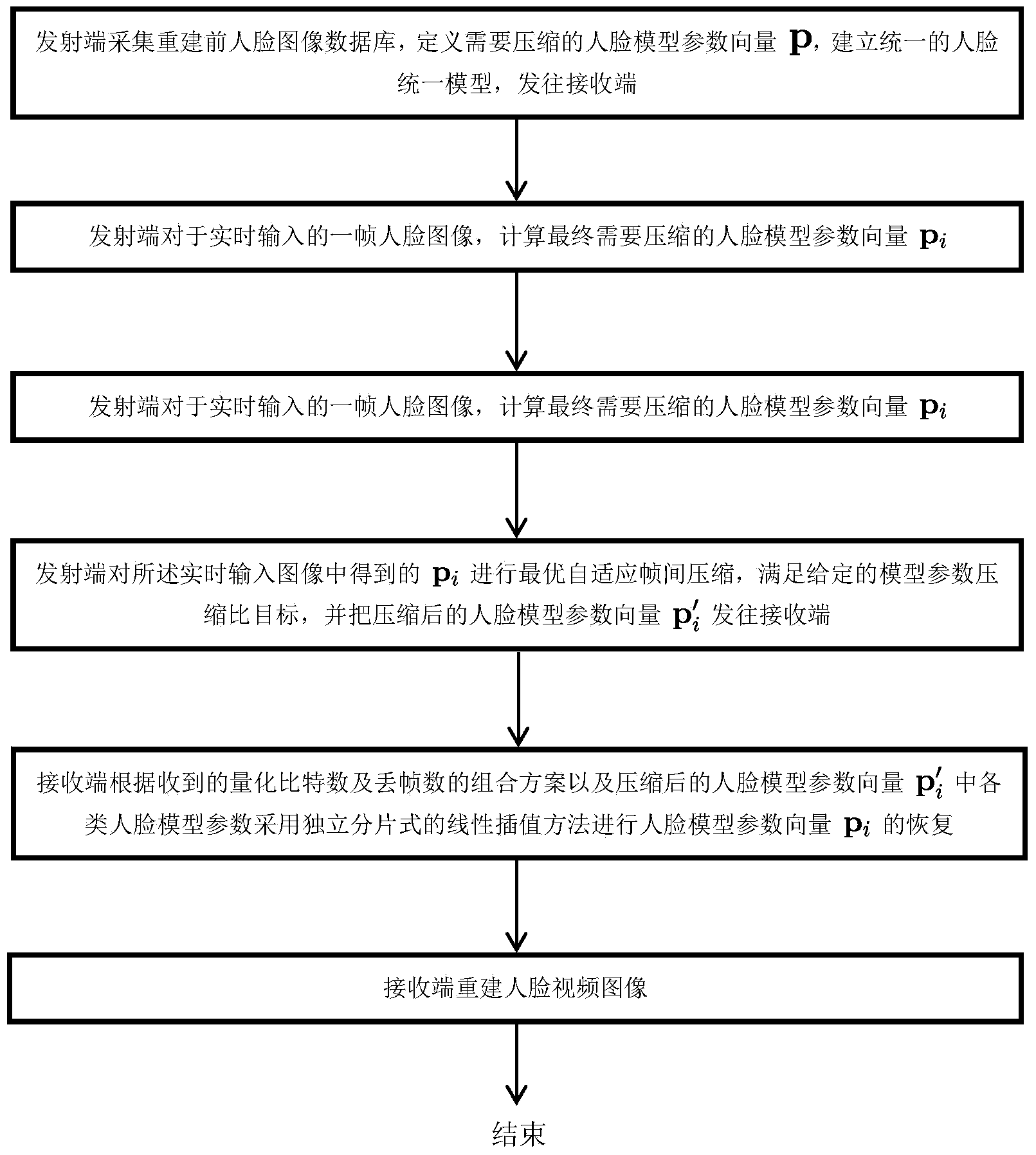

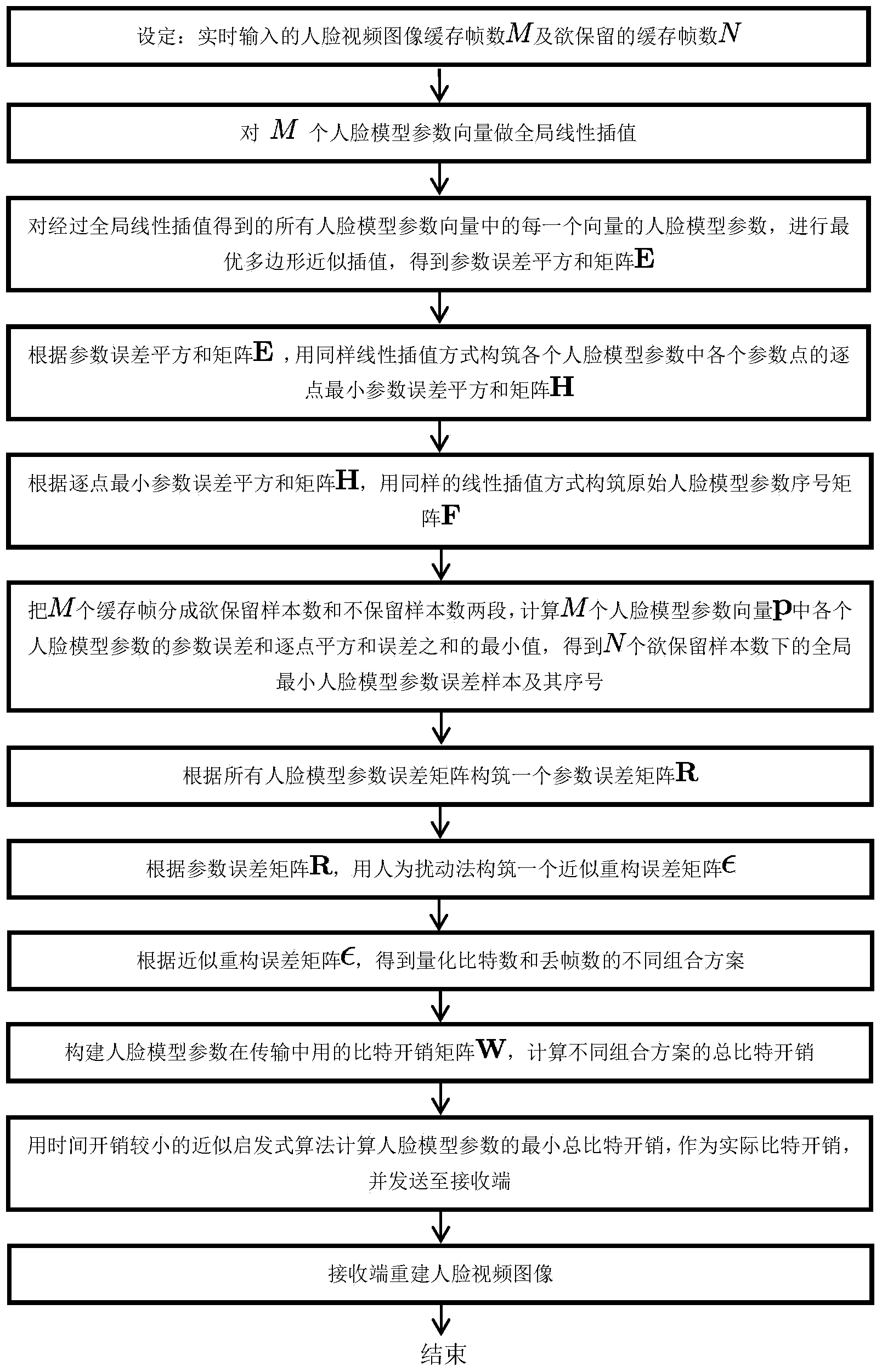

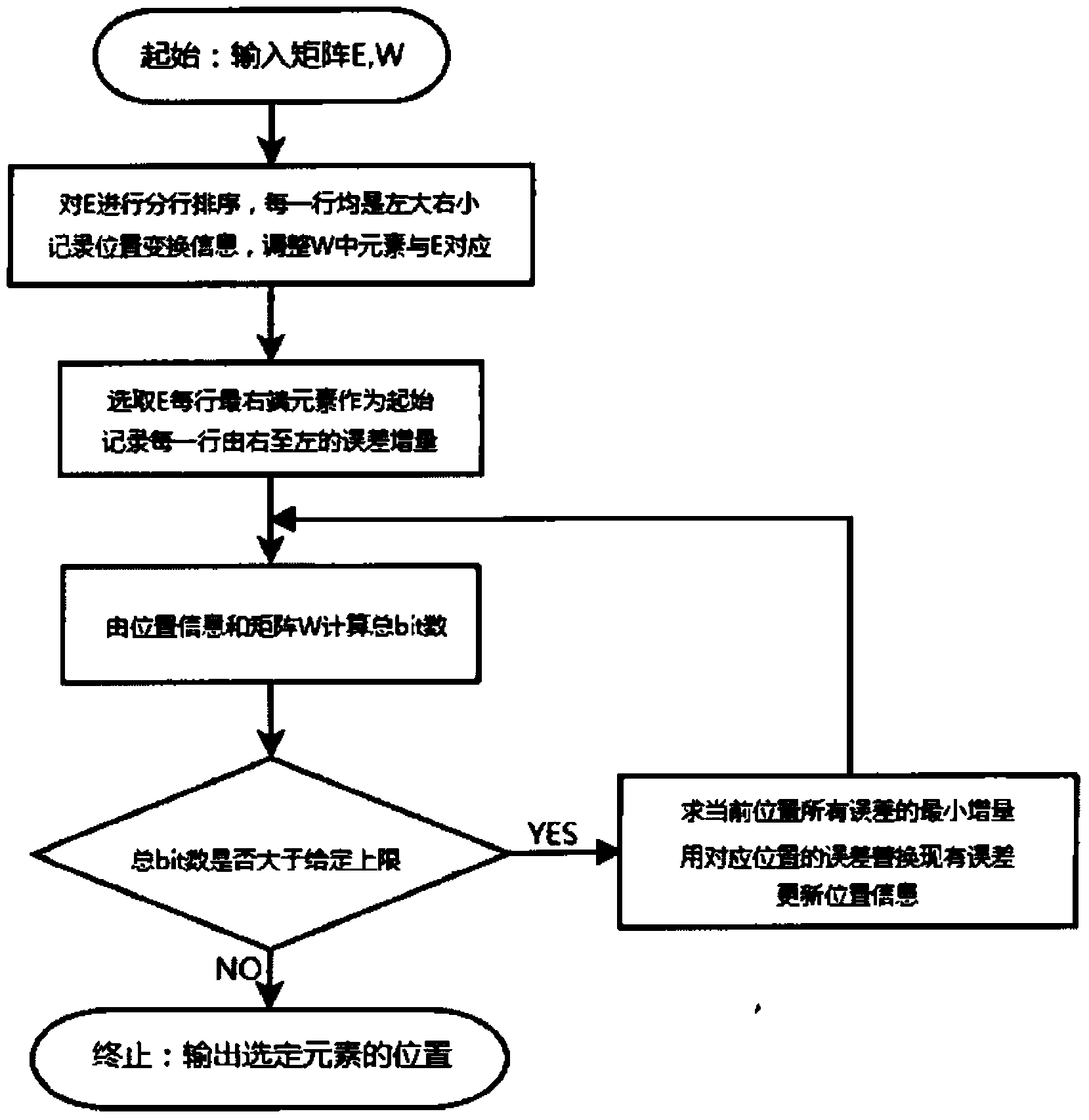

Face video compression method

Active | CN104023216A | Increase the compression ratio | Improve utilization efficiency | Character and pattern recognition | Television systems | Pattern recognition | Data compression

The invention discloses a face video compression method in the field of face video data compression for multimedia communication. At the sending end, a face model locates and parametrically characterizes the face in the video, yielding a face model parameter vector composed of an illumination parameter vector, a pose parameter vector, and a combined shape-and-appearance parameter vector. Given the number of parameter frames to retain, the method minimizes the global parameter error subject to the total model-parameter bit count staying below a given upper limit, and sends the parameter vectors, after optimal adaptive inter-frame compression, to the receiving end. There, independent piecewise linear interpolation restores the face model parameter vectors, and the original image is recovered from the computed face shape and the restored face appearance. The method greatly increases the face video compression ratio while ensuring optimal reconstruction quality, removes temporal redundancy in the face video to the greatest extent, and thus improves communication resource utilization.

Owner:TSINGHUA UNIV
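
Receiver-side restoration by independent piecewise linear interpolation is straightforward to sketch: each parameter dimension is interpolated on its own between the retained frames. The key-frame selection below is a greedy heuristic swapped in for the patent's constrained global optimisation, and the parameter trajectories are synthetic.

```python
import numpy as np

def restore(key_idx, key_params, n_frames):
    """Rebuild every frame by interpolating each parameter dimension independently."""
    t = np.arange(n_frames)
    return np.stack([np.interp(t, key_idx, key_params[:, d])
                     for d in range(key_params.shape[1])], axis=1)

def select_keyframes(params, n_keep):
    """Greedy stand-in for the optimal selection (requires 2 <= n_keep <= len(params))."""
    n = len(params)
    keys = [0, n - 1]  # endpoints are always retained
    while len(keys) < n_keep:
        ks = sorted(keys)
        err = np.linalg.norm(params - restore(ks, params[ks], n), axis=1)
        err[ks] = -1.0  # never pick a frame twice
        keys.append(int(np.argmax(err)))  # add the worst-reconstructed frame
    return sorted(keys)
```

For a trajectory with a single kink, three retained frames (the two endpoints and the kink) are enough to restore every frame exactly.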

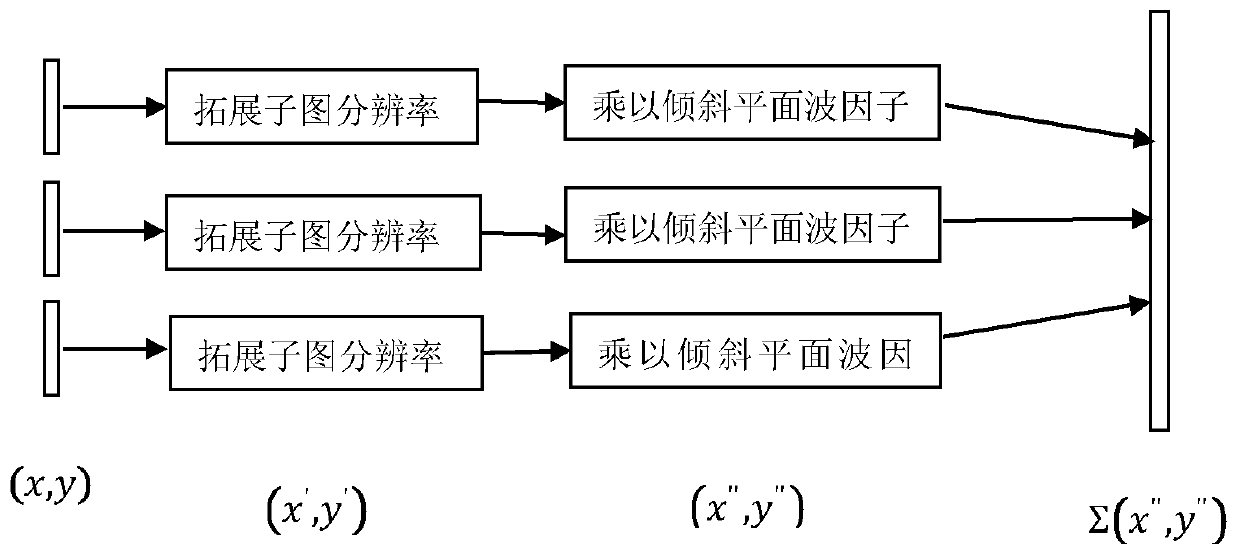

Complex amplitude hologram algorithm based on light field

Pending | CN110738727A | Good reconstruction | Improve the sense of plaid | Image enhancement | Image analysis | Frequency domain | Virtual camera

The invention discloses a light-field-based complex amplitude hologram algorithm. First, a virtual camera photographs a three-dimensional object from multiple angles to obtain a series of sub-images. Then either a tilt phase factor corresponding to each sub-image's angle is applied and the results are summed to obtain a complex amplitude hologram, or the frequency spectrum of each sub-image is shifted in frequency-domain space by an amount corresponding to its shooting angle and the shifted sub-images are summed to obtain the hologram. Finally, a complex amplitude modulation device modulates the hologram to reconstruct the three-dimensional object. The algorithm reconstructs a three-dimensional light field closer to a natural scene, speeds up hologram computation, and simplifies the reconstruction optical path.

Owner:SOUTHEAST UNIV
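
The two alternatives in the abstract (tilt phase in the spatial domain, or spectrum shift in the frequency domain) are equivalent by the DFT shift theorem. The sketch below checks this in NumPy under one assumption: each sub-image's angle has been converted to an integer frequency shift (kx, ky), e.g. k ≈ N·p·sin θ / λ for N samples of pitch p at wavelength λ, rounded to an integer.

```python
import numpy as np

def tilt_phase(shape, kx, ky):
    """Linear phase exp(i*2*pi*(kx*x/nx + ky*y/ny)) for integer frequency shifts."""
    ny, nx = shape
    y, x = np.mgrid[0:ny, 0:nx]
    return np.exp(2j * np.pi * (kx * x / nx + ky * y / ny))

def hologram_by_tilt(sub_images, shifts):
    """Sum of sub-images, each multiplied by its angle-dependent tilt phase."""
    return sum(img * tilt_phase(img.shape, kx, ky) for img, (kx, ky) in zip(sub_images, shifts))

def hologram_by_spectrum_shift(sub_images, shifts):
    """Same hologram built by circularly shifting each sub-image's spectrum."""
    total = 0
    for img, (kx, ky) in zip(sub_images, shifts):
        spec = np.fft.fft2(img)
        total = total + np.fft.ifft2(np.roll(spec, shift=(ky, kx), axis=(0, 1)))
    return total
```

The spectrum-shift route is the natural choice when sub-images are already held in the frequency domain; the tilt-phase route avoids FFTs entirely.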

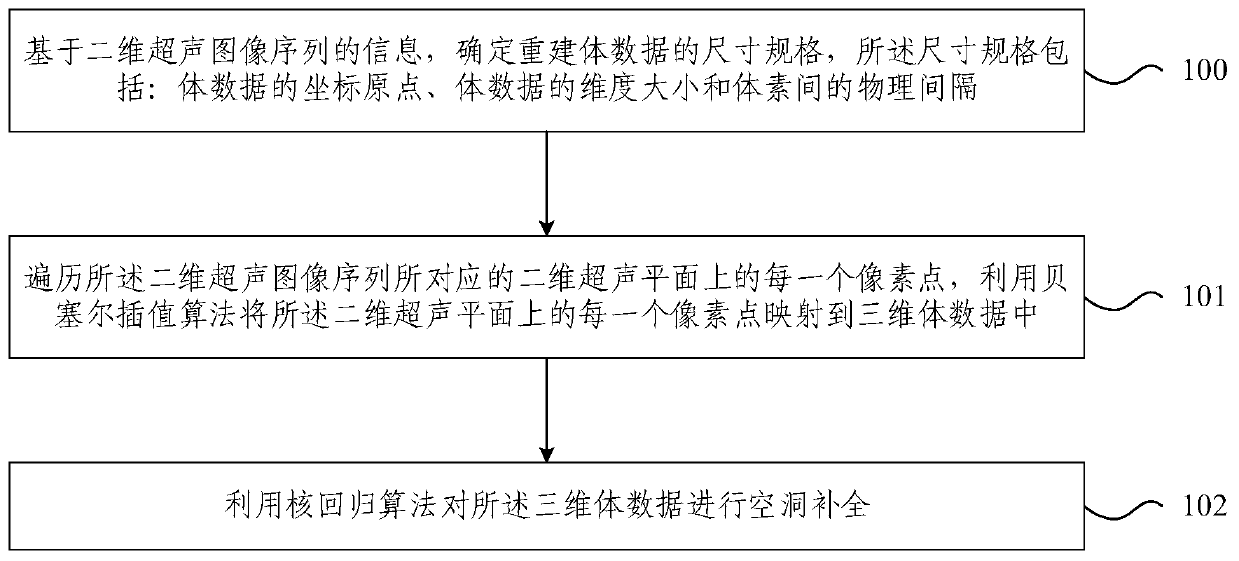

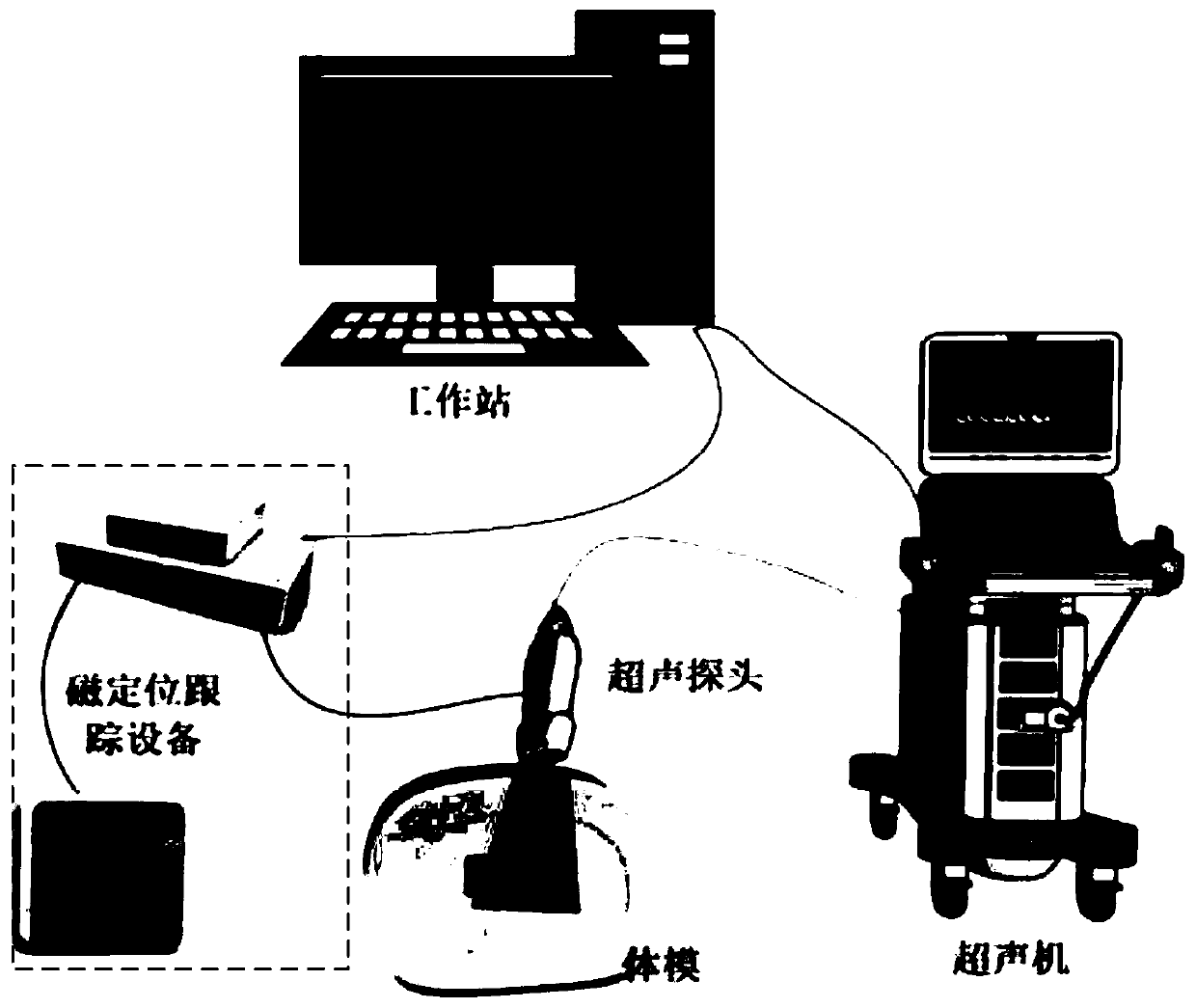

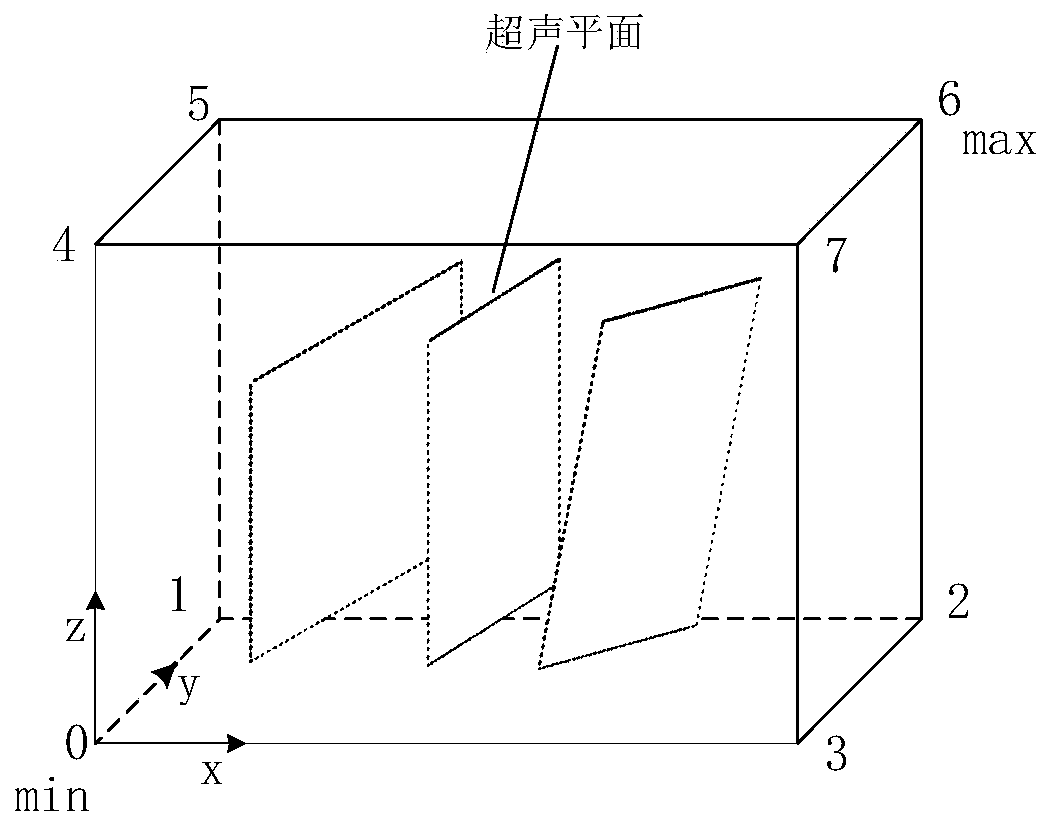

Ultrasonic three-dimensional reconstruction method and device

Inactive | CN110251231A | Improve rebuild quality | Surgical navigation systems | Computer-aided planning/modelling | Reconstruction method | Voxel

An embodiment of the invention provides an ultrasonic three-dimensional reconstruction method and device. The method comprises: determining the geometry of the reconstruction volume, namely the coordinate origin of the volume data, its dimensions, and the physical spacing between voxels; traversing every pixel of the two-dimensional ultrasound plane corresponding to each image in a two-dimensional ultrasound image sequence, mapping each pixel into the three-dimensional volume with a Bessel interpolation algorithm, and interpolating; and filling holes in the volume with a kernel regression algorithm. By combining Bessel interpolation with kernel regression, the method and device effectively improve the quality of three-dimensional reconstruction.

Owner:ARIEMEDI SCI SHIJIAZHUANG CO LTD
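
The volume set-up and hole-filling stages can be sketched as follows. Assumptions: pixel positions are already transformed to world coordinates (probe tracking is out of scope), nearest-voxel averaging is swapped in for the patent's Bessel interpolation, and the kernel regression is taken to be a Gaussian-weighted Nadaraya-Watson estimate over filled neighbours.

```python
import numpy as np

def insert_pixels(points, values, origin, spacing, dims):
    """Average pixel values into their nearest voxel; return volume and fill mask."""
    idx = np.rint((points - origin) / spacing).astype(int)  # world -> voxel index
    inside = np.all((idx >= 0) & (idx < np.asarray(dims)), axis=1)
    acc, cnt = np.zeros(dims), np.zeros(dims)
    np.add.at(acc, tuple(idx[inside].T), values[inside])
    np.add.at(cnt, tuple(idx[inside].T), 1.0)
    vol = np.divide(acc, cnt, out=np.zeros(dims), where=cnt > 0)
    return vol, cnt > 0

def fill_holes(vol, filled, sigma=1.0, radius=2):
    """Nadaraya-Watson kernel regression over filled voxels near each hole."""
    r = np.arange(-radius, radius + 1)
    offs = np.stack(np.meshgrid(r, r, r, indexing="ij"), axis=-1).reshape(-1, 3)
    weights = np.exp(-np.sum(offs ** 2, axis=1) / (2 * sigma ** 2))
    shape = np.asarray(vol.shape)
    out = vol.copy()
    for hole in np.argwhere(~filled):
        nb = hole + offs
        ok = np.all((nb >= 0) & (nb < shape), axis=1)
        nb, w = nb[ok], weights[ok]
        known = filled[tuple(nb.T)]
        if known.any():  # holes with no filled neighbour in range stay empty
            out[tuple(hole)] = np.sum(w[known] * vol[tuple(nb[known].T)]) / np.sum(w[known])
    return out
```

Only originally filled voxels act as regression samples, so the result does not depend on the order in which holes are visited.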

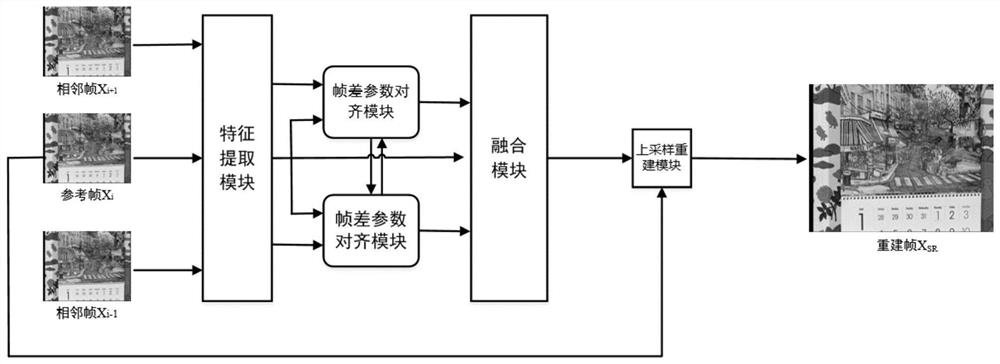

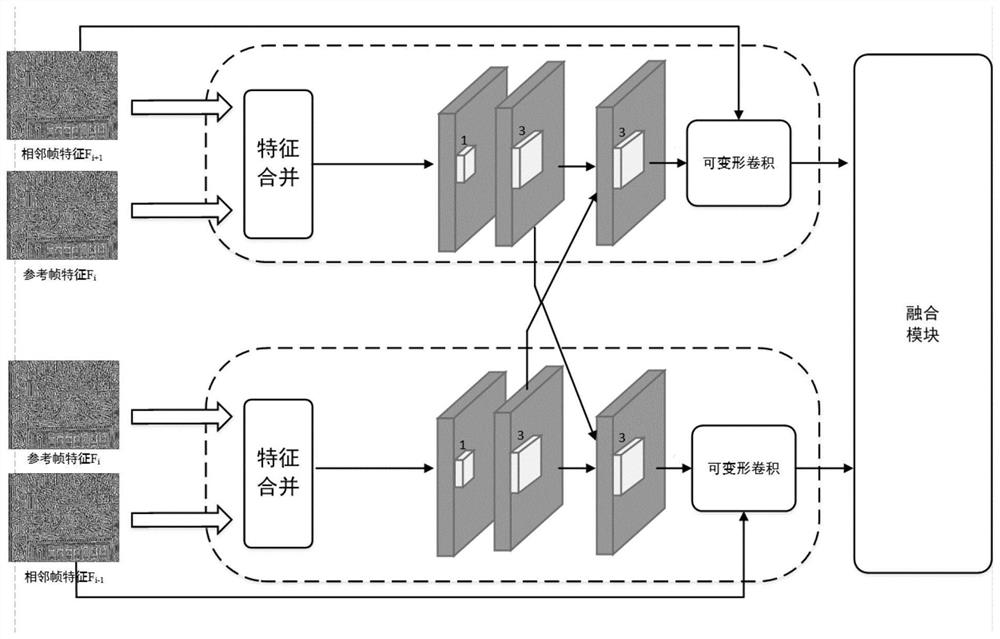

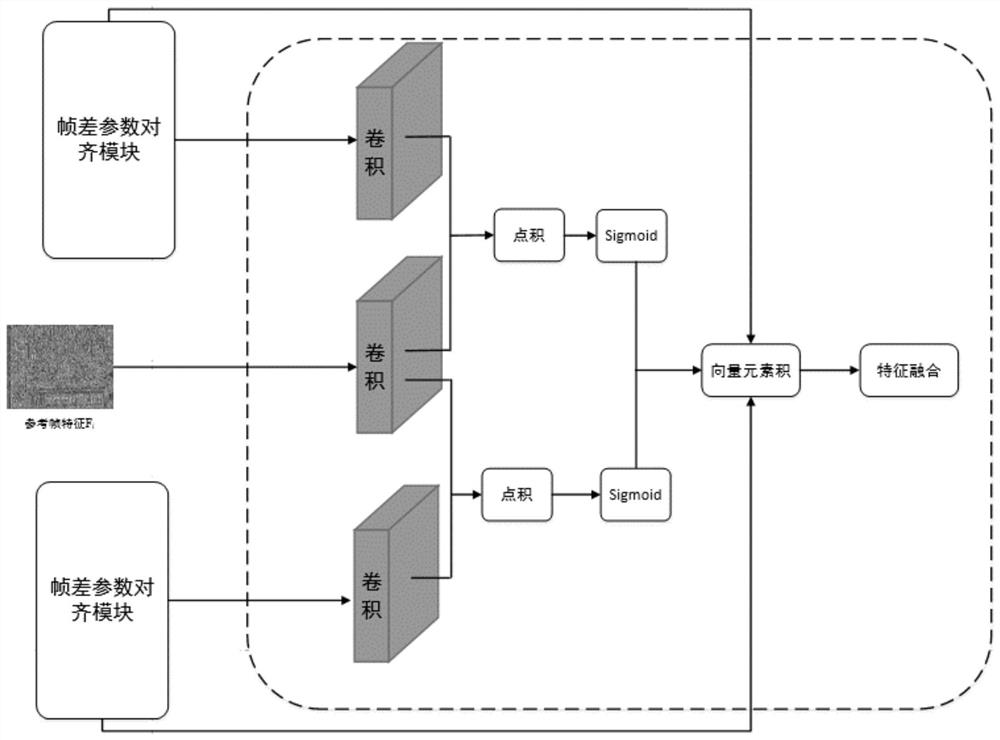

Super-resolution reconstruction method for real-time video session service

Active | CN113205456A | Rebuild fast | Improve rebuild quality | Image enhancement | Geometric image transformation | Reconstruction method | Engineering

The invention provides a super-resolution reconstruction method for real-time video session services, in the technical field of digital image processing. Each super-resolution module is redesigned: the feature extraction module uses coarse-to-fine feature extraction with residual connections to speed up extraction; deformable convolution is introduced into video super-resolution reconstruction, and, following the idea of a recurrent neural network, a frame-difference learning module is dynamically optimized to obtain optimal alignment parameters, which then guide the deformable convolution in the alignment step; a correlation-enhancing feature fusion network fuses the features of adjacent frames; finally, a reconstruction module is built on the idea of information distillation, with an upsampling reconstruction module in which information distillation blocks extract additional edge and texture features that are added to the upsampled reference frame to produce the final high-resolution video frame. The method reconstructs quickly and with good quality.

Owner:NORTHEASTERN UNIV

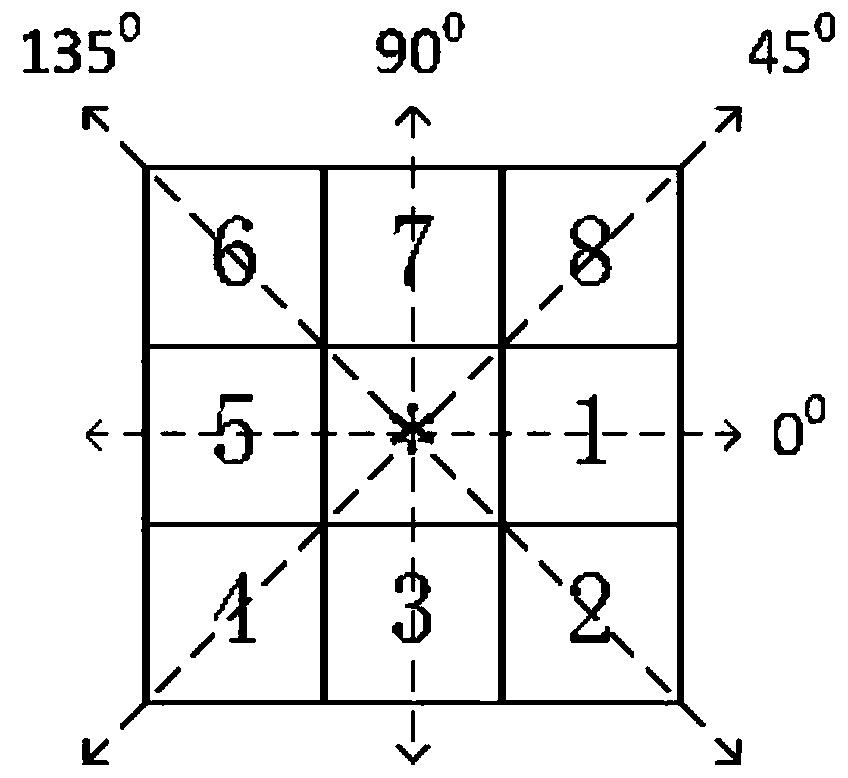

Adaptive block compressive sensing reconstruction method

Inactive | CN103559695A | Eliminate blocking | Reduce rebuild time | Image enhancement | Imaging processing | Feature extraction

The invention belongs to the field of image processing and relates to an adaptive block compressive sensing reconstruction method for feature extraction and identification of target images. The method comprises the following steps: defining initial parameters; dividing the image into sub-blocks of size A; computing the energy E of each sub-block and, according to a preset energy threshold T, classifying it as a background sub-block or a target sub-block; re-partitioning the background and target regions into blocks; acquiring measurements and reconstructing the background and target regions at the same sampling rate; and combining the reconstructed target and background regions into the reconstructed original image. Because the image is split into background and target regions by energy and each region uses a different blocking scheme, blocking artifacts in the target region are avoided and better reconstruction quality is obtained in less reconstruction time.

Owner:HARBIN ENG UNIV
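
The energy-based classification and the equal-rate block measurement can be sketched in NumPy. Assumptions: "energy" is taken as the sum of squared pixel values per block, blocks are square and non-overlapping, and a scaled Gaussian matrix stands in for the measurement matrix; the region-specific re-blocking and the reconstruction solver are omitted.

```python
import numpy as np

def to_blocks(img, block):
    """Split an image into non-overlapping block x block tiles (edges cropped)."""
    hb, wb = img.shape[0] // block, img.shape[1] // block
    tiles = img[:hb * block, :wb * block].reshape(hb, block, wb, block)
    return tiles.transpose(0, 2, 1, 3)  # (hb, wb, block, block)

def classify_blocks(img, block, threshold):
    """Energy E = sum of squares per tile (assumed definition); True marks a target block."""
    energy = (to_blocks(img, block).astype(float) ** 2).sum(axis=(2, 3))
    return energy, energy > threshold

def measure_blocks(img, block, rate, seed=0):
    """Block-CS measurements with one Gaussian matrix at a single sampling rate."""
    n = block * block
    m = max(1, int(round(rate * n)))
    phi = np.random.default_rng(seed).standard_normal((m, n)) / np.sqrt(m)
    vectors = to_blocks(img, block).reshape(-1, n)  # one row per tile
    return phi, vectors @ phi.T                     # (n_tiles, m) measurements
```

The boolean map from `classify_blocks` is what would drive the separate blocking schemes for target and background regions.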

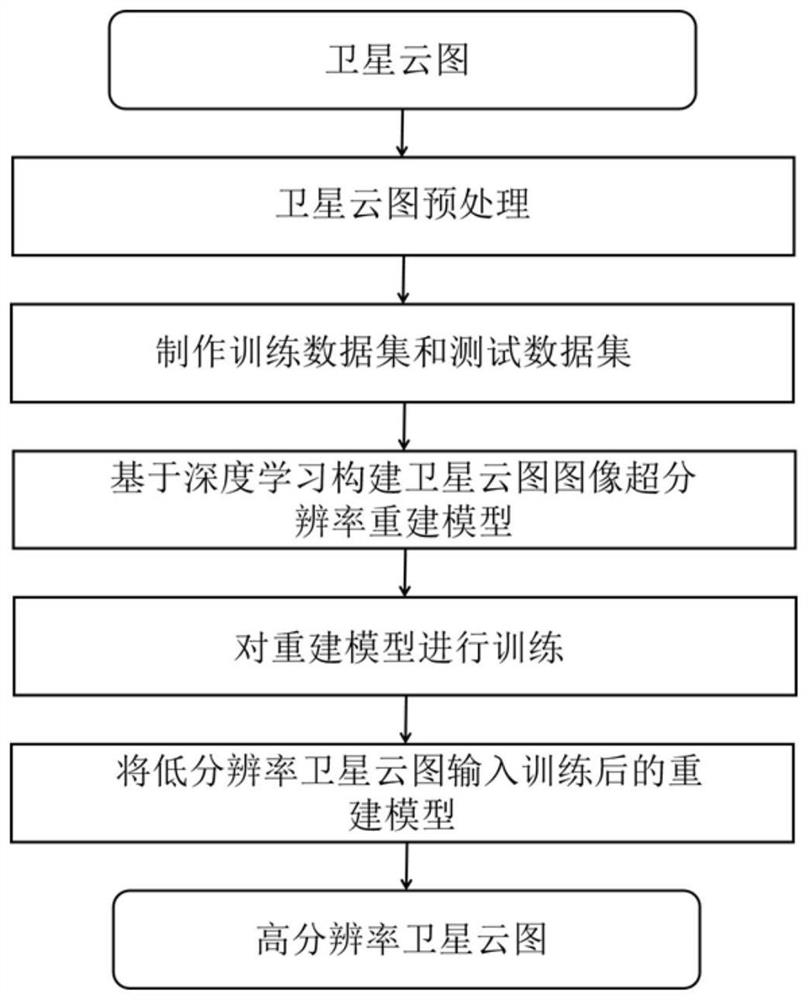

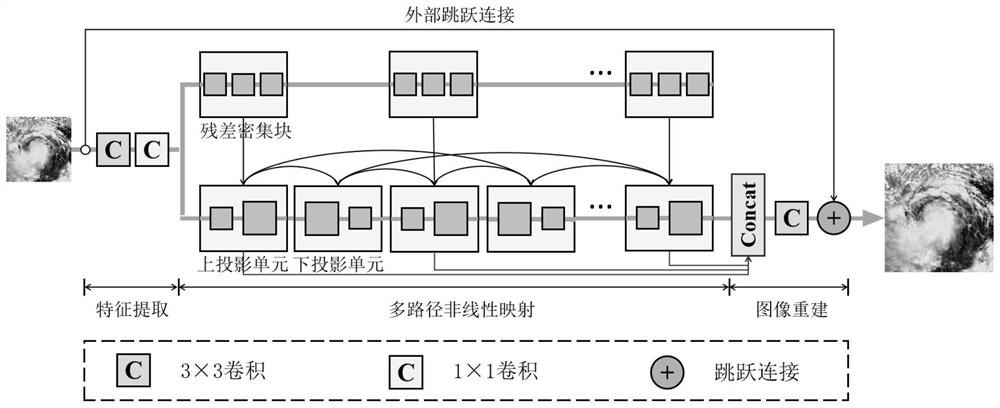

Satellite cloud picture super-resolution reconstruction method based on deep learning

Active | CN111861884A | Improve signal-to-noise ratio | Simple structure | Image enhancement | Image analysis | Computational science | Data set

The invention discloses a deep-learning-based super-resolution reconstruction method for satellite cloud images, comprising: producing and preprocessing satellite cloud images to obtain a high-resolution satellite cloud image dataset; dividing that dataset into a training set and a test set for modeling; constructing and training a deep-learning super-resolution reconstruction model for satellite cloud images; and feeding a low-resolution satellite cloud image into the trained model to obtain a high-resolution one. The deep learning approach produces accurate, detailed high-resolution reconstructions that are more accurate and more generally applicable than those of traditional methods, and allows high-resolution satellite cloud images to be obtained from low-resolution ones, improving the practicality of the method.

Owner:NANJING UNIV OF INFORMATION SCI & TECH

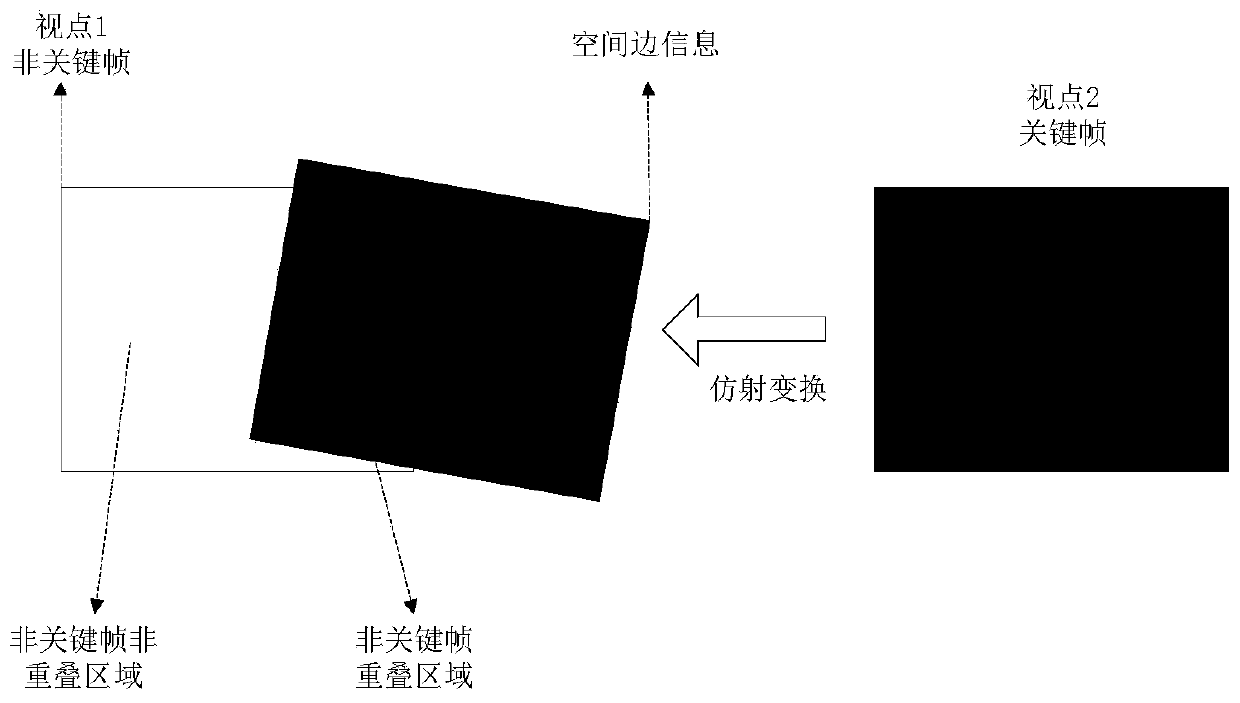

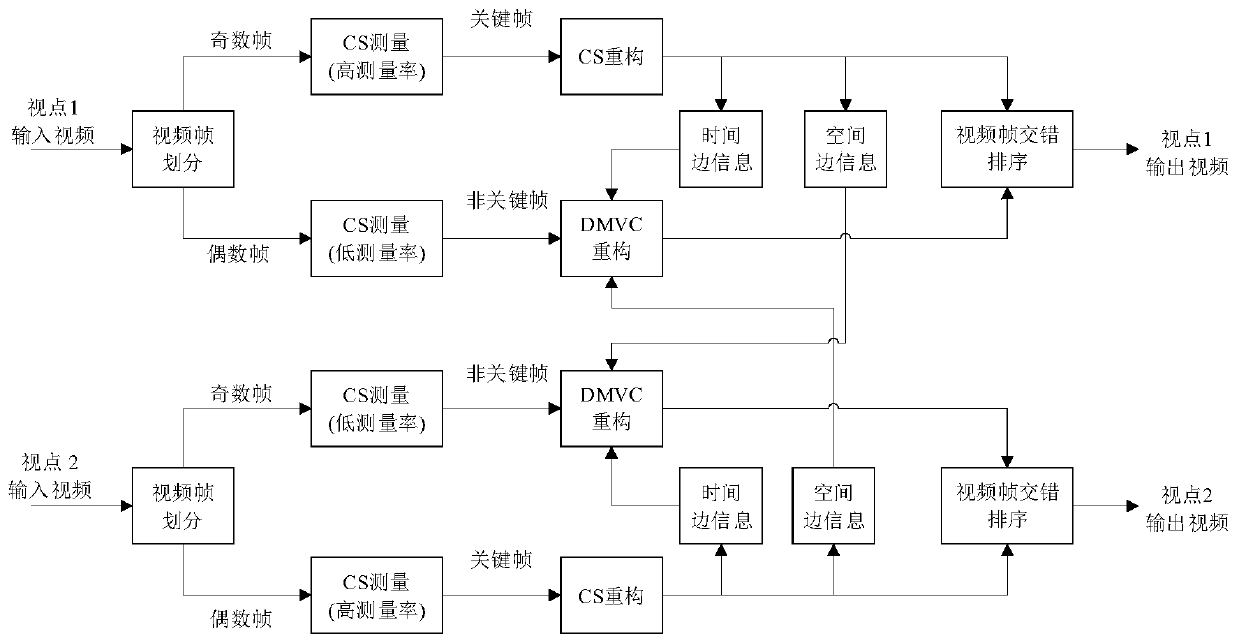

Distributed multi-view video compression sampling reconstruction method combining space-time side information

Active | CN110392258A | Improve rebuild quality | Digital video signal modification | Spatial correlation | Viewpoints

The invention relates to a distributed multi-view video compressive sampling reconstruction method that combines spatio-temporal side information, aimed at low-complexity video acquisition and coding when the computing and storage capacities of the nodes in a distributed multi-view video acquisition network are limited. Consecutive frames within the same viewpoint are temporally correlated, and frames from adjacent viewpoints at the same instant are spatially correlated; the temporal and spatial side information derived from these correlations is characterized by sparsity constraints on the differences between the side information and the current frame, which yields a reconstruction optimization model for non-key frames in multi-view video. Finally, the model is solved with the FISTA strategy, producing non-key frames with relatively good reconstruction quality.

Owner:WUHAN UNIV
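
A sparsity constraint on the difference between the current frame and its side information leads to a problem of the form min_x ½‖y − Φx‖² + λ‖x − s‖₁, which FISTA solves with a shifted soft-threshold. The sketch below assumes a single side-information vector `s` (the patent combines temporal and spatial side information, whose exact weighting is not given) and operates on vectorised frames.

```python
import numpy as np

def soft(v, t):
    """Soft-thresholding, the proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def fista_side_info(y, phi, s, lam, n_iter=200):
    """FISTA for 0.5*||y - phi x||^2 + lam*||x - s||_1 (s = side information)."""
    L = np.linalg.norm(phi, 2) ** 2  # Lipschitz constant of the gradient
    x = s.copy()
    z = x.copy()
    t = 1.0
    for _ in range(n_iter):
        v = z - phi.T @ (phi @ z - y) / L          # gradient step on the data term
        x_new = s + soft(v - s, lam / L)           # prox of the shifted L1 term
        t_new = (1 + np.sqrt(1 + 4 * t * t)) / 2
        z = x_new + ((t - 1) / t_new) * (x_new - x)  # Nesterov momentum
        x, t = x_new, t_new
    return x
```

With `phi` equal to the identity, the minimiser has the closed form `s + soft(y - s, lam)`, which gives a quick correctness check.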

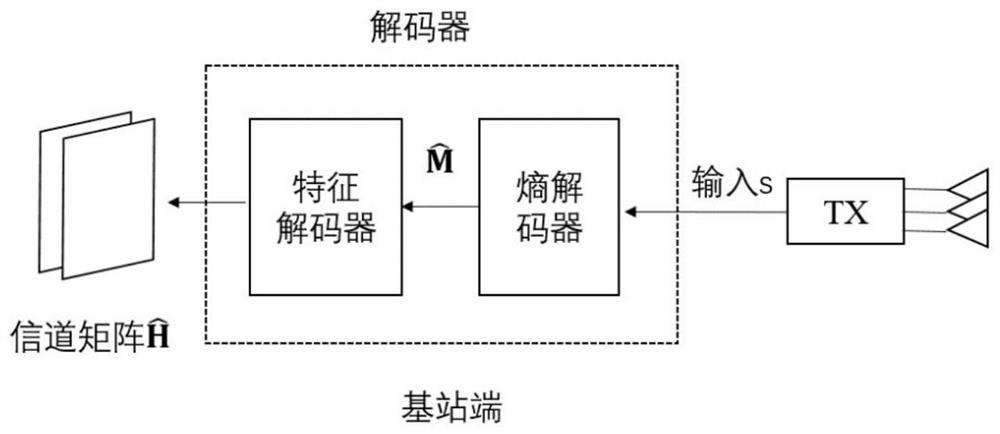

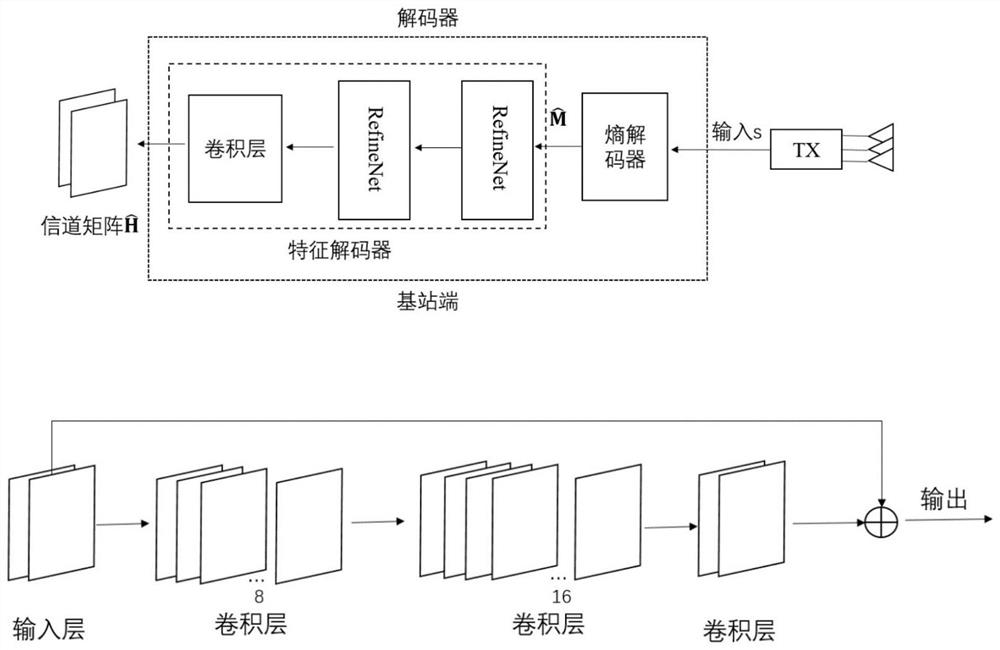

Channel state information feedback method based on deep learning and entropy coding

Active | CN113098804A | Improve rebuild quality | Implement feedback | Baseband system details | Radio transmission | Stream | Matrix estimation

The invention discloses a channel state information (CSI) feedback method based on deep learning and entropy coding. At the user side, the channel matrix of the MIMO CSI is preprocessed and key matrix elements are selected to reduce computation, giving the channel matrix H actually used for feedback; a model combining a deep learning feature encoder with entropy encoding is built at the user side to encode H into a binary bit stream. At the base station, a model combining a deep learning feature decoder with entropy decoding reconstructs an estimate of the original channel matrix from the bit stream. The model is trained to obtain the model parameters and the reconstructed channel matrix, and the trained model is finally applied to compressed sensing and reconstruction of channel information. The invention reduces large-scale MIMO CSI feedback overhead.

Owner:SOUTHEAST UNIV
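
Between the feature encoder and the bit stream sits a quantise-then-entropy-code stage. The sketch below only estimates what that stage costs. Assumptions: uniform scalar quantisation with a hypothetical step size, and the empirical Shannon entropy of the symbols used as the bit count an ideal entropy coder would approach; no actual coder is implemented.

```python
import numpy as np

def quantize(features, step):
    """Uniform scalar quantisation of encoder outputs to integer symbols."""
    return np.round(np.asarray(features) / step).astype(int)

def empirical_entropy_bits(symbols):
    """Shannon entropy (bits per symbol) of the observed symbol histogram."""
    _, counts = np.unique(symbols, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def estimated_stream_bits(symbols):
    """Approximate length an ideal entropy coder would approach for this stream."""
    return empirical_entropy_bits(symbols) * np.size(symbols)
```

A larger step lowers the entropy, and thus the feedback overhead, at the cost of a coarser channel reconstruction; that trade-off is what joint training of encoder and entropy model balances.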

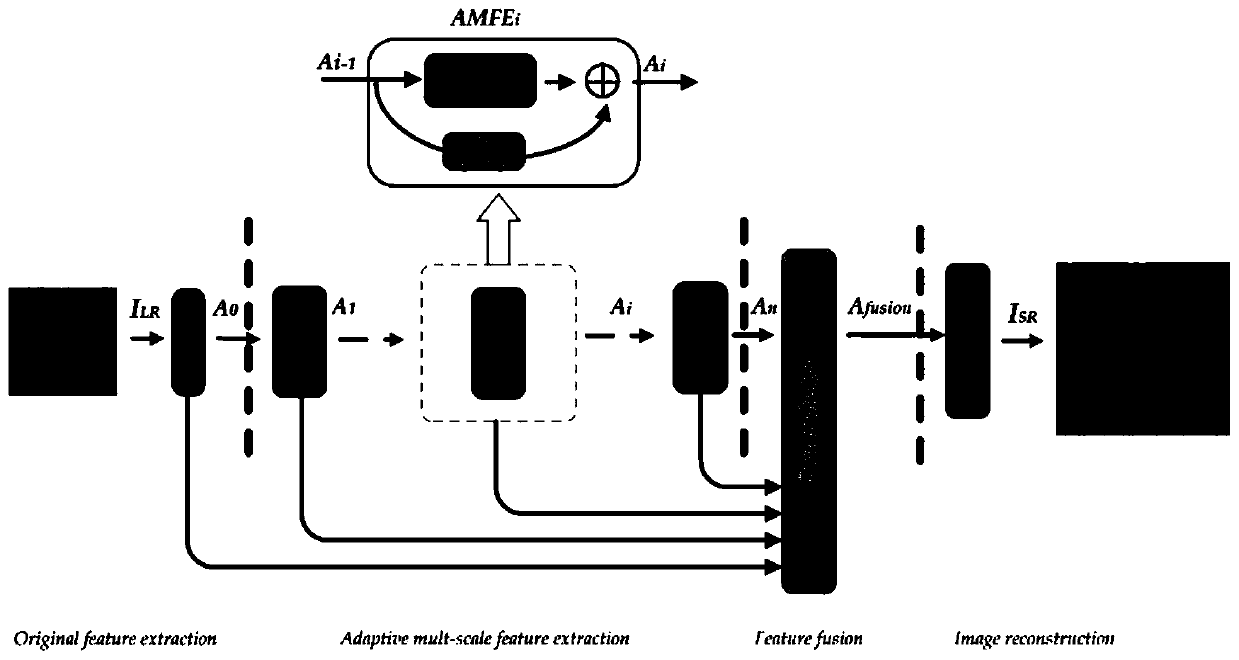

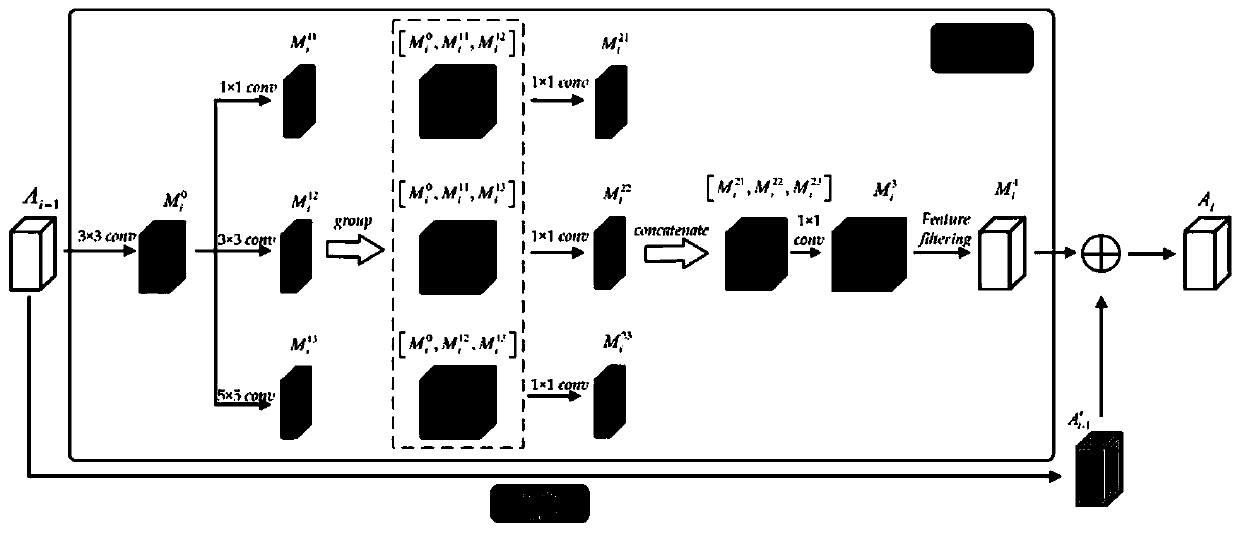

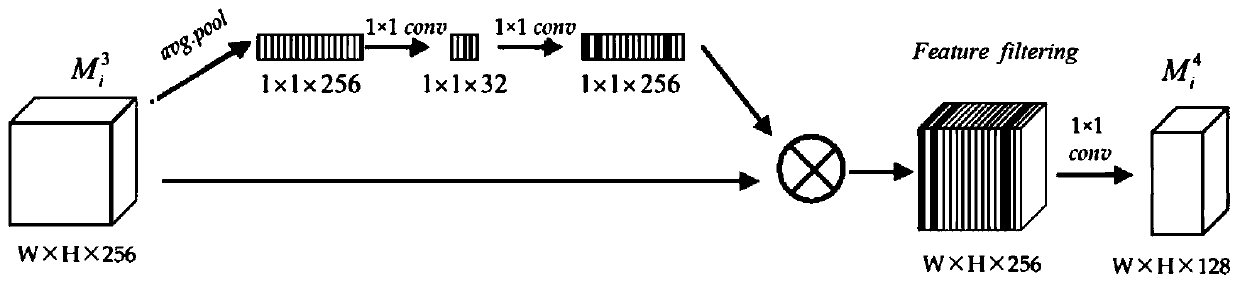

Remote sensing image super-resolution method based on multi-scale feature adaptive fusion network

Pending | CN111414988A | Improve rebuild quality | Reduce redundant information | Geometric image transformation | Character and pattern recognition | Self adaptive | Image resolution

The invention relates to a remote sensing image super-resolution method based on a multi-scale feature adaptive fusion network, comprising: 1) convolving the original low-resolution remote sensing image with a filter to extract an original feature map; 2) extracting adaptive multi-scale features from the original feature map through n cascaded adaptive multi-scale feature extraction (AMFE) modules to obtain an adaptive multi-scale feature map; 3) superposing the original and adaptive multi-scale feature maps and convolving the result with a filter for feature dimension reduction and fusion; and 4) obtaining the final super-resolved remote sensing image by sub-pixel convolution. The method adaptively fuses multi-scale feature information of the remote sensing image, efficiently reconstructs its high-resolution detail, and improves super-resolution reconstruction.

Owner:HUBEI UNIV OF TECH
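
Step 4 relies on sub-pixel convolution: a convolution produces C·r² channels at low resolution, and a pixel-shuffle rearranges them into a C-channel image r times larger. The sketch covers only the rearrangement, with the channel ordering assumed to follow the common convention `out[c, h*r + i, w*r + j] = in[c*r*r + i*r + j, h, w]`; the preceding convolution is omitted.

```python
import numpy as np

def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange (C*r*r, H, W) feature maps into a (C, H*r, W*r) image."""
    c_r2, h, w = x.shape
    if c_r2 % (r * r):
        raise ValueError("channel count must be divisible by r*r")
    c = c_r2 // (r * r)
    # (c, i, j, h, w) -> (c, h, i, w, j) -> rows h*r + i, columns w*r + j
    return x.reshape(c, r, r, h, w).transpose(0, 3, 1, 4, 2).reshape(c, h * r, w * r)
```

Because all upsampling happens in this final rearrangement, the convolutions before it run at low resolution, which is the usual reason for choosing sub-pixel convolution over interpolating first.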

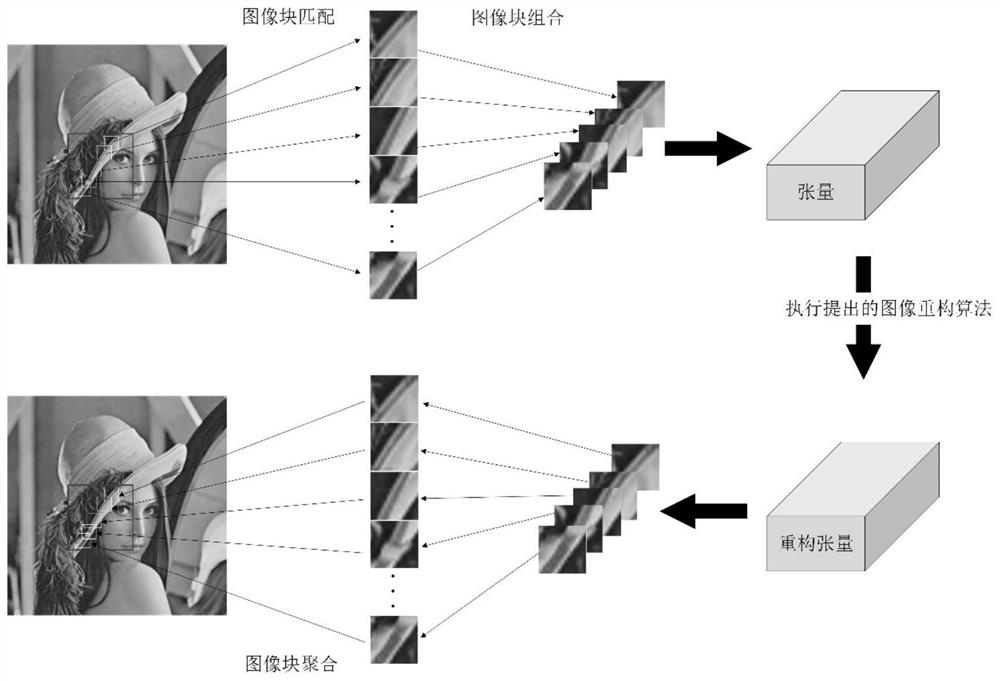

Compressed sensing image and video recovery method based on tensor approximation and space-time correlation

Pending | CN112541965A | High quality recovery | Quality improvement | Image enhancement | Image analysis | Image recovery | Image map

The invention discloses a compressed sensing image and video recovery method based on tensor approximation and spatio-temporal correlation, in the technical field of video and image processing. The method comprises: S1, material reading: reading the material and splitting it into frames to form independent images; S2, image blocking: dividing each image from S1 into small image blocks; S3, recovery processing: performing initial recovery on the small blocks using tensor approximation; and S4, image reconstruction: reconstructing the initially recovered image to obtain good-quality image blocks. The method provides a new recovery model for natural images and videos and achieves high-quality recovery; by extracting similar blocks, the model makes full use of local self-similarity and accounts for low-rank structure, further improving the quality of the reconstructed image.

Owner:STATE GRID CHONGQING ELECTRIC POWER CO ELECTRIC POWER RES INST +1
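
The similar-block grouping and low-rank idea can be sketched with a matrix simplification: patches similar to a reference are stacked as columns and approximated by a truncated SVD. This replaces the patent's tensor approximation with plain matrix low-rank approximation, and the patch size, stride, group size, and rank are illustrative assumptions.

```python
import numpy as np

def extract_patches(img, p, stride):
    """All p x p patches on a regular grid with the given stride."""
    h, w = img.shape
    return [img[i:i + p, j:j + p] for i in range(0, h - p + 1, stride)
            for j in range(0, w - p + 1, stride)]

def group_similar(patches, ref, k):
    """Indices of the k patches closest to patches[ref] and their stacked columns."""
    d = np.array([np.sum((q - patches[ref]) ** 2) for q in patches])
    order = np.argsort(d)[:k]
    return order, np.stack([patches[i].ravel() for i in order], axis=1)

def low_rank(mat, rank):
    """Best rank-`rank` approximation (Eckart-Young) via truncated SVD."""
    u, s, vt = np.linalg.svd(mat, full_matrices=False)
    return (u[:, :rank] * s[:rank]) @ vt[:rank]
```

Since similar patches make the group matrix approximately low-rank, truncating the SVD suppresses noise and measurement artifacts while keeping the shared structure.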

Method for rendering viewpoints by reinforcing the region of interest

Active | CN101883291B | Reduce computational complexity | Easy to implement | Image analysis | Stereoscopic systems | Viewpoints | Collection system

The invention provides a method for rendering viewpoints by reinforcing a region of interest, comprising the following steps: for the acquisition mode of a light field camera, first establishing a geometric camera model of that acquisition mode from the parameters of the acquisition system and the geometric information of the scene; then computing the region of interest and using it to reinforce the original sparse depth map; and finally performing light field rendering with the reinforced depth map, according to the camera parameters and the scene geometry, to obtain a new viewpoint image. Tests indicate that the method achieves good viewpoint reconstruction quality.

Owner:SHANGHAI UNIV

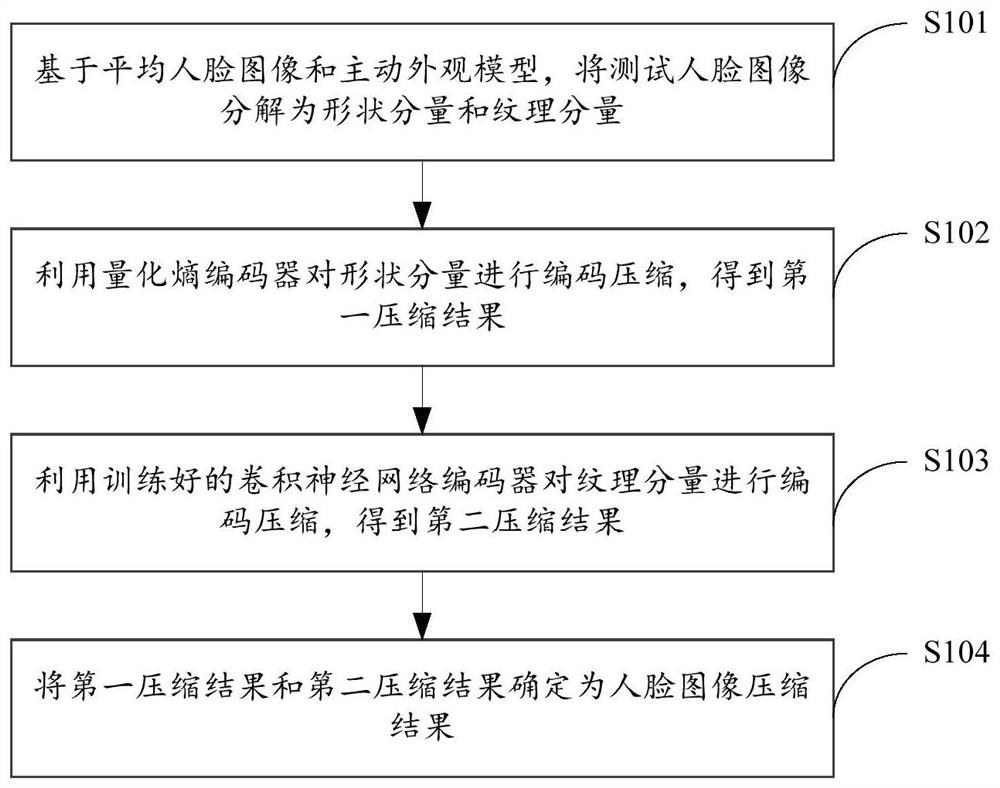

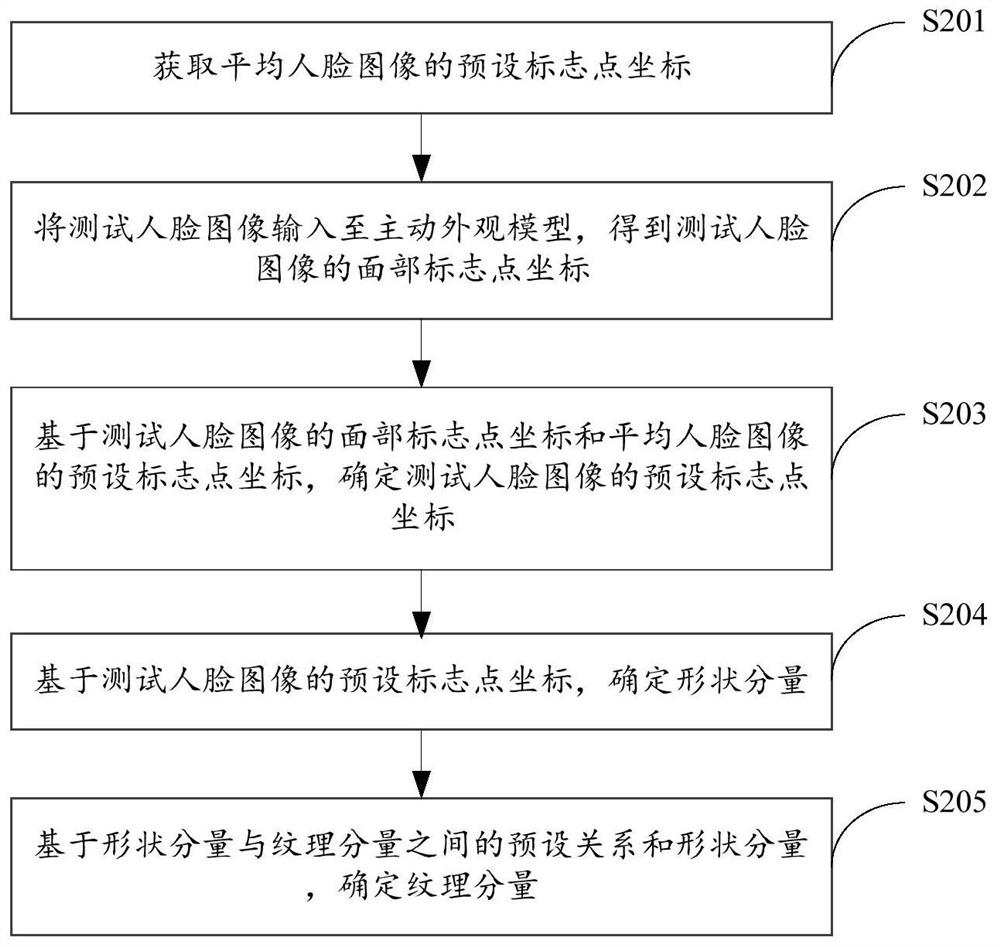

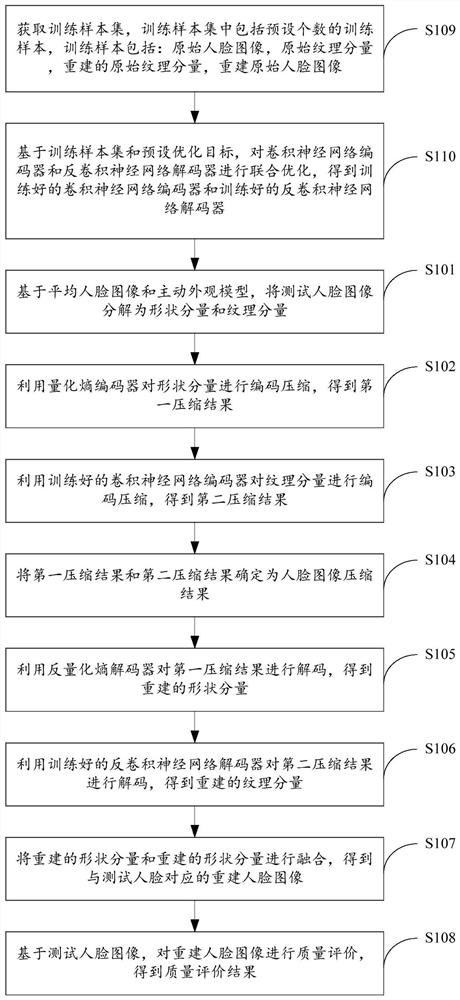

Face image compression method and device based on deep learning

Active | CN112053408A | Guaranteed reconstruction quality | Improve compression efficiency | Image enhancement | Image analysis | Quantization (image processing) | Imaging processing

The invention provides a deep-learning-based face image compression method and device in the field of image processing. The method decomposes a test face image into a shape component and a texture component based on an average face image and an active appearance model; encodes and compresses the shape component with a quantization entropy encoder to obtain a first compression result; encodes and compresses the texture component with a trained convolutional neural network encoder to obtain a second compression result; and takes the first and second compression results together as the face image compression result. Using the average face image and the active appearance model as prior knowledge, and compressing the texture component with the trained CNN encoder, yields a second compression result represented by low-dimensional features; this reduces the redundancy of the texture component and improves face image compression efficiency.

Owner:TSINGHUA UNIV

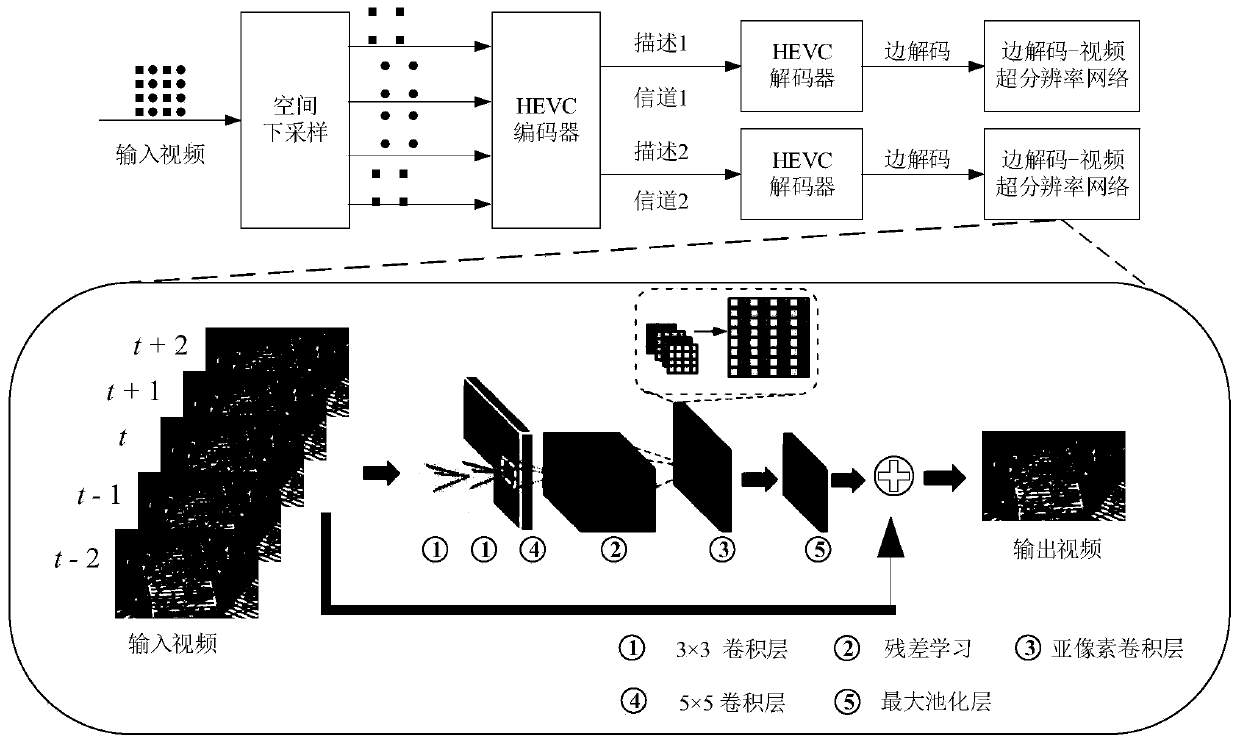

Multiple description coding high-quality edge reconstruction method based on spatial downsampling

Active | CN111510721A | Improve video quality | Improve reconstruction quality | Digital video signal modification | Data set | Feature extraction

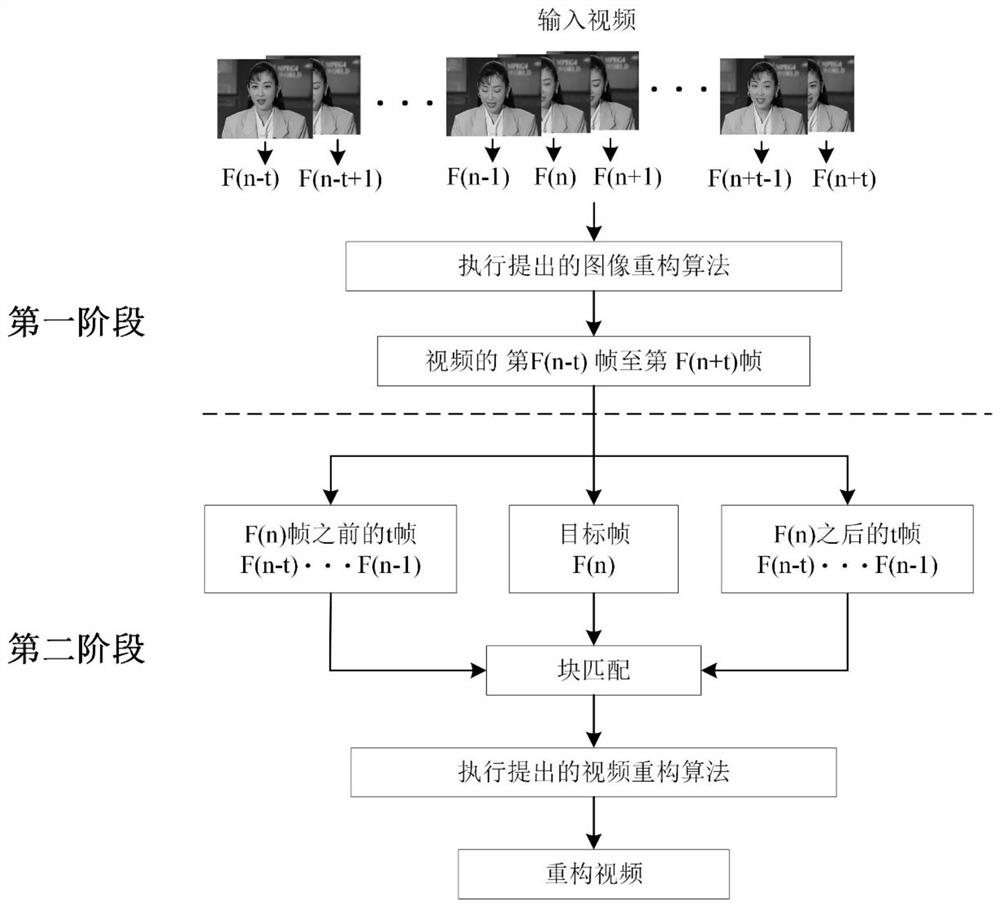

The invention provides a high-quality edge reconstruction method for multiple description coding based on spatial downsampling, comprising: building a dataset by selecting a video, splitting it into two descriptions through spatial downsampling, encoding and decoding it at a set quantization parameter (QP) value, and using the decoded video and the corresponding original video as the training set; and training an SD-VSRnet network, which takes every five frames of video as input, performs feature extraction, high-frequency detail recovery, and pixel rearrangement in sequence, adds a skip connection with the input middle frame to obtain reconstructed frames, and reconstructs frame by frame to produce the final reconstructed video. The invention builds a high-quality edge reconstruction dataset suited to spatial-downsampling multiple description coding, tests a video super-resolution network separately at four QP values, and effectively improves the reconstruction quality of edge-decoded video across compression levels.

Owner:HUAQIAO UNIVERSITY
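
The dataset construction starts from splitting each frame into two descriptions by spatial downsampling. The sketch below assumes a column-parity split (the abstract does not say rows or columns) and uses simple linear interpolation as a placeholder for what SD-VSRnet learns when only one description arrives.

```python
import numpy as np

def split_descriptions(frame):
    """Spatial downsampling into two descriptions: even and odd columns (assumed)."""
    return frame[:, 0::2], frame[:, 1::2]

def central_decode(d0, d1):
    """Both descriptions received: interleave the columns back."""
    out = np.empty((d0.shape[0], d0.shape[1] + d1.shape[1]), dtype=np.result_type(d0, d1))
    out[:, 0::2] = d0
    out[:, 1::2] = d1
    return out

def side_decode_even(d0, width):
    """Only the even description received: fill odd columns by linear interpolation."""
    out = np.empty((d0.shape[0], width), dtype=float)
    out[:, 0::2] = d0
    n_odd = width // 2
    left = d0[:, :n_odd]
    # Right neighbours; the last even column is replicated at the border.
    right = np.concatenate([d0[:, 1:], d0[:, -1:]], axis=1)[:, :n_odd]
    out[:, 1::2] = (left + right) / 2
    return out
```

Pairs of (single-description decode, original frame) built this way are the kind of training input and target a learned side reconstructor would be fitted to.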

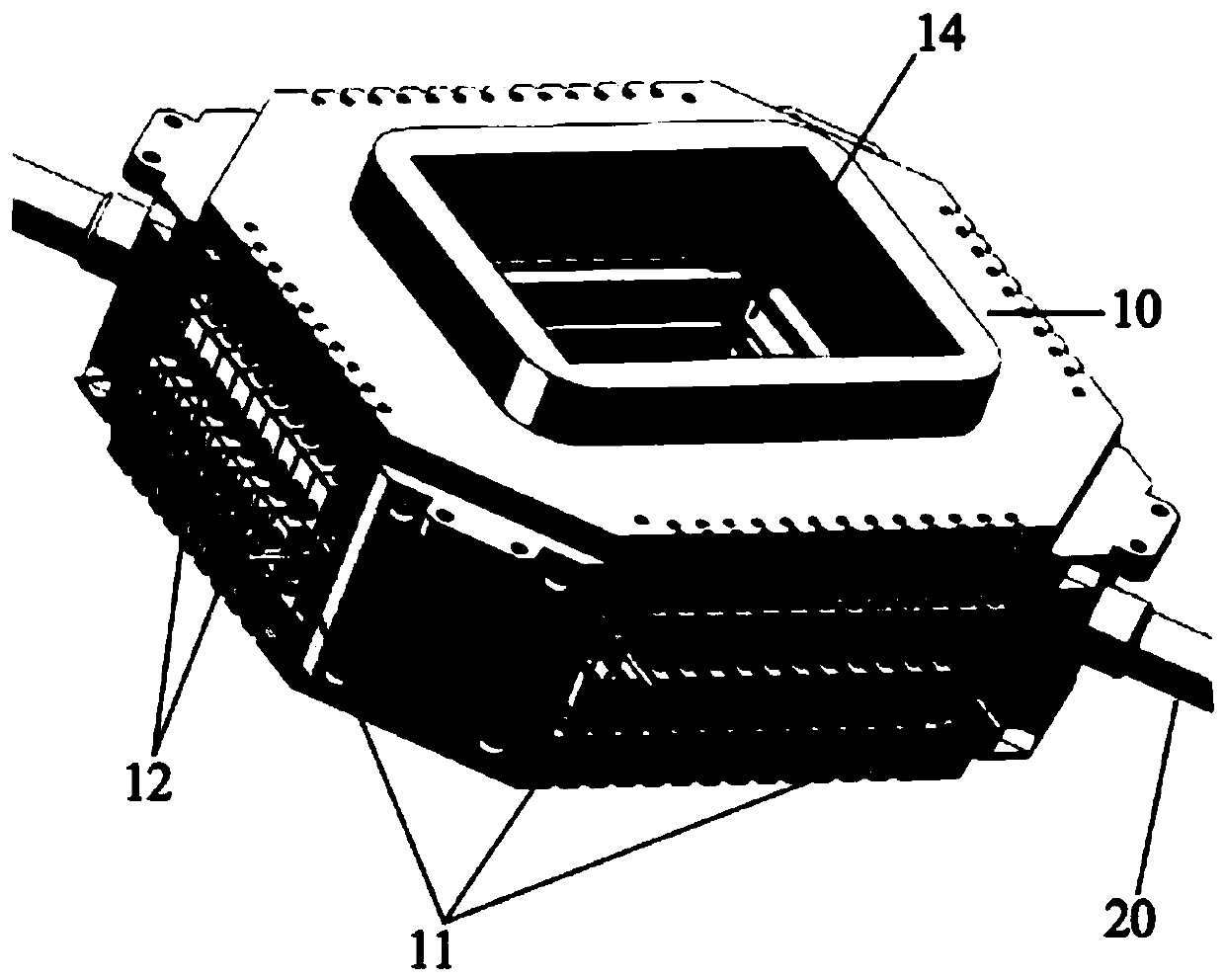

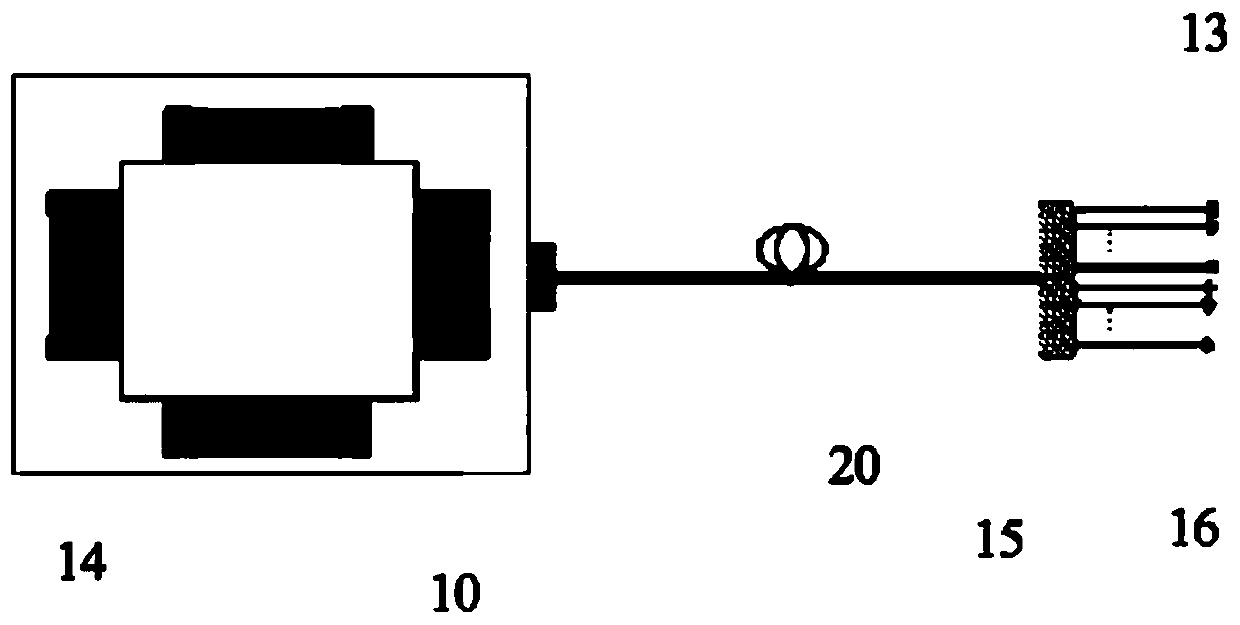

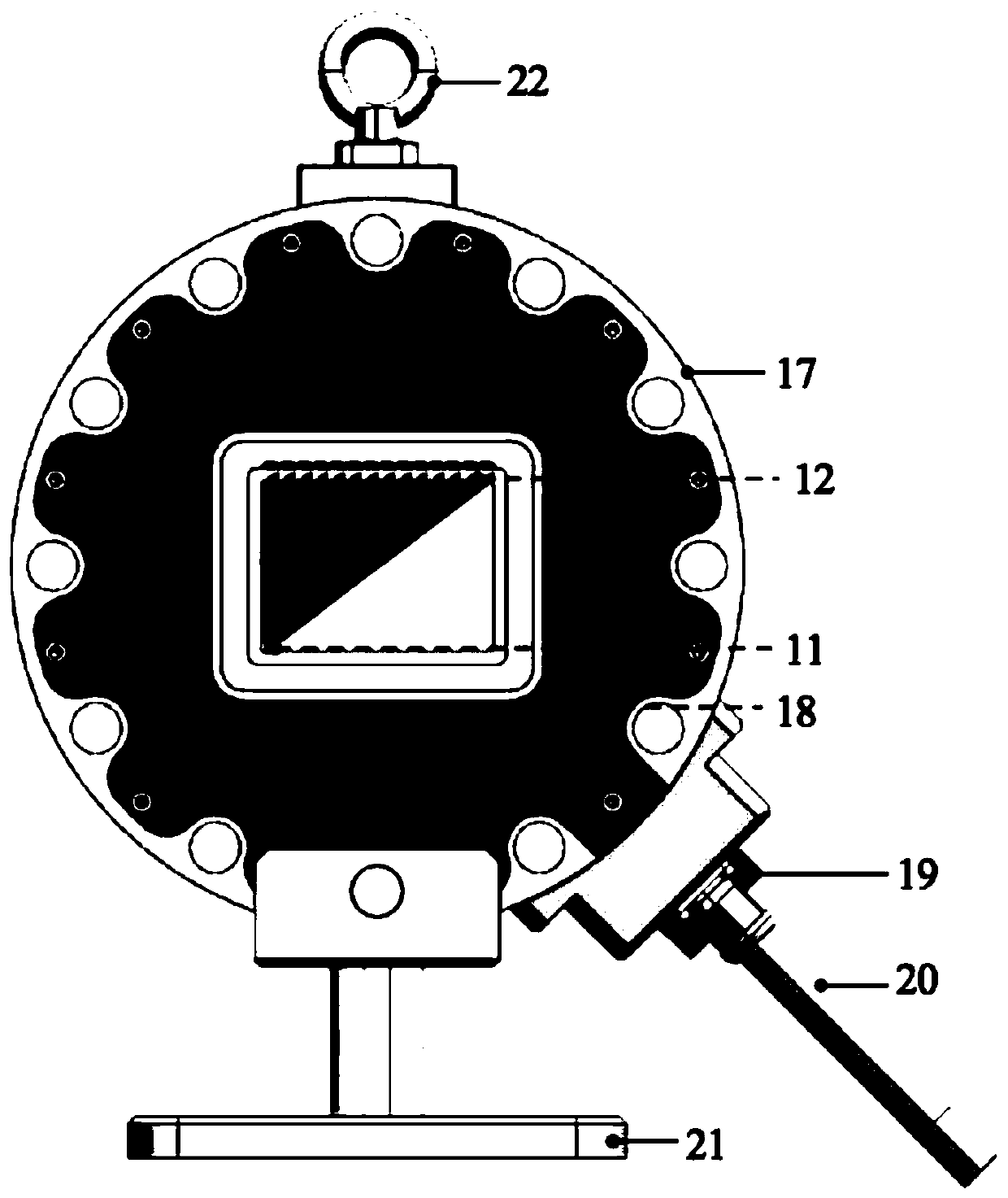

Measuring device for measuring gas parameters of combustion field

Pending | CN111141524A | Increased deployment count and coverage | High reconstruction resolution | Internal-combustion engine testing | Single-mode optical fiber | Optical measurements

The invention discloses a device for measuring gas parameters of a combustion field, in the technical field of optical flow-field measurement, which addresses the poor measurement results caused by insufficient light distribution in the flow field in the prior art. The device comprises a measuring ring; each laser emitting unit corresponds to a laser receiving unit group, and each such pair is mounted on opposite side walls of the measuring ring; each laser receiving unit group comprises several laser receiving units arranged in rows; each single-mode optical fiber corresponds to a laser emitting unit, and each first multimode optical fiber corresponds to a laser receiving unit. The single-mode fiber delivers a laser beam to the laser emitting unit, which converts it into a fan-shaped beam; each laser receiving unit collects the part of the fan-shaped beam falling on it and couples it into the corresponding first multimode fiber. The device is used to measure gas parameters in flow fields.

Owner:PLA PEOPLES LIBERATION ARMY OF CHINA STRATEGIC SUPPORT FORCE AEROSPACE ENG UNIV +1
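The coverage benefit of the device above comes from each emitter lighting a whole group of receivers on the opposite wall, so the number of measured beam paths is the number of emitters times the receivers per group. A minimal geometric sketch, assuming a circular measuring ring with evenly spaced emitters and each receiver group centred on the diametrically opposite point (the abstract gives neither the ring shape nor the spacing), enumerates those paths; `fan_beam_rays` and `group_half_span` are hypothetical names.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Ray = Tuple[Point, Point]


def fan_beam_rays(n_emitters: int, receivers_per_group: int, radius: float = 1.0,
                  group_half_span: float = math.radians(30)) -> List[Ray]:
    """Return (emitter, receiver) endpoints for every beam path through the ring.

    Emitters are spaced evenly around a circular ring (an assumption); each emitter's
    receiver group is centred on the diametrically opposite wall and spans
    +/- group_half_span, measured as an angle from the ring centre.
    """
    rays: List[Ray] = []
    for e in range(n_emitters):
        theta = 2 * math.pi * e / n_emitters
        src = (radius * math.cos(theta), radius * math.sin(theta))
        for k in range(receivers_per_group):
            if receivers_per_group == 1:
                offset = 0.0
            else:
                # spread the receivers evenly from -span to +span
                offset = group_half_span * (2 * k / (receivers_per_group - 1) - 1)
            phi = theta + math.pi + offset  # receiver on the opposite side wall
            dst = (radius * math.cos(phi), radius * math.sin(phi))
            rays.append((src, dst))
    return rays
```

For example, 8 emitters with 16 receivers per group give 128 distinct paths through the flow field, which is the kind of sampling a tomographic reconstruction of gas parameters would consume.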

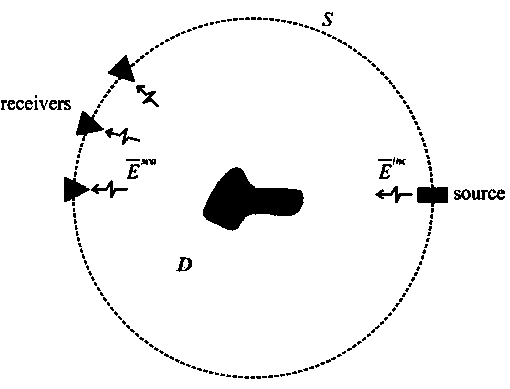

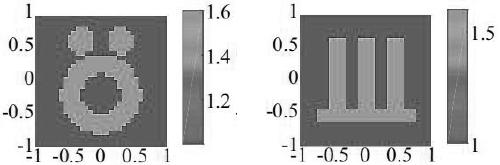

Hybrid input method for solving electromagnetic inverse scattering problem based on deep learning

Active | CN111488549A | Achieve reconstruction, Improve rebuild quality, Neural architectures, Neural learning methods, Computational physics, Statistical physics

The invention discloses a hybrid input method for solving the electromagnetic inverse scattering problem based on deep learning, comprising the following steps: (1) obtain quantitative information about the unknown scatterer, including its contrast, with a quantitative inversion method; (2) obtain qualitative information about the unknown scatterer with a qualitative inversion method: an indicator function defined on the domain of interest determines whether each sampling point lies inside or outside the scatterer, and from it a set of normalized values is obtained that indicates, for each sampling point, whether it lies inside the unknown scatterer; (3) multiply the normalized values point-wise by the contrast values and convert the product into combined dielectric constant values; (4) train a neural network with the combined dielectric constant values as its input and the true dielectric constant of the scatterer as its target output.

Owner:HANGZHOU DIANZI UNIV
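Step (3) of the method above can be sketched in a few lines. The abstract does not specify either the normalization or the contrast-to-permittivity conversion, so this sketch assumes min-max normalization of the indicator values and a free-space background with real-valued contrast defined as chi = eps_r - 1; `normalize` and `hybrid_input` are hypothetical names.

```python
from typing import List


def normalize(indicator: List[float]) -> List[float]:
    """Min-max normalize indicator values to [0, 1] (assumed normalization)."""
    lo, hi = min(indicator), max(indicator)
    if hi == lo:
        return [0.0 for _ in indicator]
    return [(v - lo) / (hi - lo) for v in indicator]


def hybrid_input(contrast: List[float], indicator: List[float]) -> List[float]:
    """Point-multiply the normalized qualitative indicator with the quantitative
    contrast, then map to relative permittivity assuming chi = eps_r - 1."""
    weights = normalize(indicator)
    return [1.0 + w * chi for w, chi in zip(weights, contrast)]
```

Sampling points the qualitative method places outside the scatterer (weight near 0) are pulled back to the background permittivity of 1, which suppresses artefacts in the quantitative estimate before it is fed to the network.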

Reconstruction method for image super-resolution

Active | CN102360498B | Good edge properties, Good removal effect, Image enhancement, Imaging processing, Image resolution

The invention discloses a reconstruction method for image super-resolution, comprising the following steps: (1) input a sequence of m low-resolution frames of the same scene, each represented as a vector; (2) interpolate all low-resolution images bilinearly to obtain initial estimates of the high-resolution image; (3) substitute the initial estimates into an iterative formula and iterate; (4) check whether the convergence condition is met, and if so, stop iterating and output the high-resolution image. The method has broad application prospects in fields such as video and image processing, medical imaging, and remote sensing.

Owner:CHINA INFORMATION CONSULTING & DESIGNING INST CO LTD
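The abstract above does not give its iterative formula, so the following is only a 1-D sketch of the overall loop using a swapped-in technique: gradient-descent back-projection on a hypothetical imaging model (shift, 2-tap box blur, decimation by 2). Linear interpolation of the first frame stands in for the bilinear initial estimate, and the loop stops when the data-fit cost drops below a tolerance. `observe`, `back_project`, `linear_upsample`, and `super_resolve` are hypothetical names; the default `step=1.0` is chosen for the two-frame case (shifts 0 and 1) and would need to shrink for more frames.

```python
from typing import List, Tuple


def observe(x: List[float], shift: int) -> List[float]:
    """Hypothetical imaging model: shift by `shift` HR samples, box blur, decimate by 2."""
    n = len(x)
    return [(x[min(2 * i + shift, n - 1)] + x[min(2 * i + shift + 1, n - 1)]) / 2
            for i in range(n // 2)]


def back_project(residual: List[float], shift: int, n: int) -> List[float]:
    """Transpose of `observe`: spread each LR residual onto the HR samples it came from."""
    g = [0.0] * n
    for i, r in enumerate(residual):
        for j in (min(2 * i + shift, n - 1), min(2 * i + shift + 1, n - 1)):
            g[j] += r / 2
    return g


def linear_upsample(y: List[float]) -> List[float]:
    """1-D analogue of the bilinear initial estimate (step 2)."""
    out: List[float] = []
    for i, v in enumerate(y):
        nxt = y[i + 1] if i + 1 < len(y) else v
        out.extend([v, (v + nxt) / 2])
    return out


def super_resolve(frames: List[Tuple[int, List[float]]], step: float = 1.0,
                  tol: float = 1e-8, max_iter: int = 500) -> Tuple[List[float], List[float]]:
    """frames: (shift, LR samples) pairs; the first frame is assumed to have shift 0."""
    x = linear_upsample(frames[0][1])
    n = len(x)
    history: List[float] = []
    for _ in range(max_iter):
        grad = [0.0] * n
        cost = 0.0
        for shift, y in frames:
            r = [yi - pi for yi, pi in zip(y, observe(x, shift))]
            cost += 0.5 * sum(v * v for v in r)
            for j, g in enumerate(back_project(r, shift, n)):
                grad[j] += g
        history.append(cost)
        if cost < tol:  # convergence test (step 4)
            break
        x = [xi + step * gi for xi, gi in zip(x, grad)]  # iterative update (step 3)
    return x, history
```

Because the step is below 2 divided by the largest eigenvalue of the stacked model's normal matrix, the recorded cost never increases from one iteration to the next.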

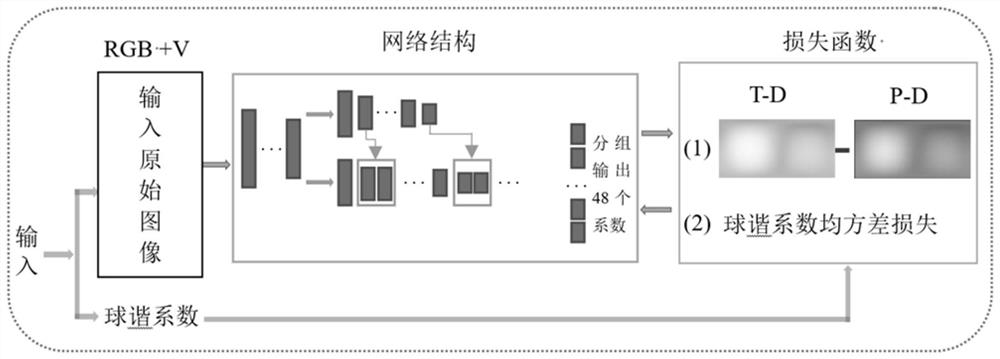

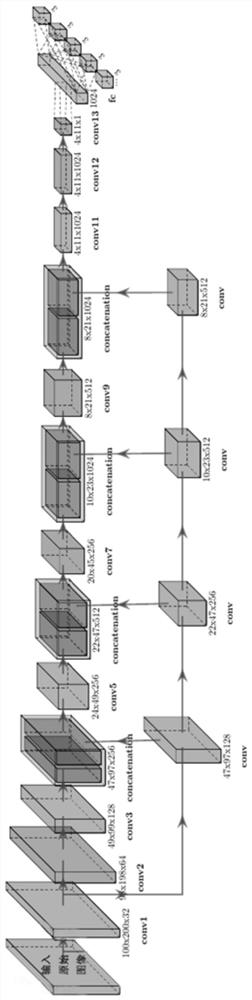

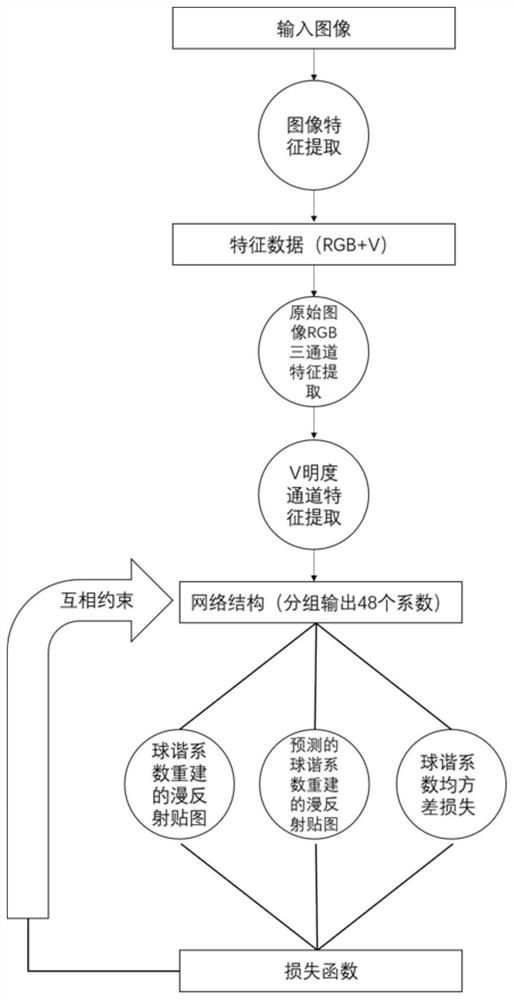

High-precision image information extraction method based on low dynamic range

Pending | CN111915533A | Improve forecast accuracy, Improve reconstruction quality, Image enhancement, Image analysis, Feature extraction, Computational physics

The invention relates to a high-precision image information extraction method based on a low dynamic range, comprising the following steps: (1) extract image features by taking the three RGB channels of the original image and the V (brightness) channel of its HSV color space; (2) use a fully convolutional neural network to output the coefficients in groups, adding a shortcut structure that fuses high-level and low-level features, and finally output 48 spherical harmonic coefficients in total, divided into 16 groups of three values that represent the R, G, and B channel components; (3) define a spherical harmonic coefficient loss function, computed as the mean squared error over the 48 coefficients, and a diffuse reflection map loss function; (4) use the two loss functions from step (3) to apply feedback constraints to the fully convolutional network.

Owner:SHANGHAI GOLDEN BRIDGE INFOTECH CO LTD
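The output layout in steps (2) and (3) above can be sketched as follows. Sixteen coefficients per color channel matches spherical harmonic bands l = 0 to 3, since 1 + 3 + 5 + 7 = 16. This sketch assumes the network emits the 48 values group by group with R, G, B contiguous inside each group; the weighted sum in `total_loss` is a hypothetical way of combining the two losses, because the abstract does not give the weighting or the diffuse reflection map loss itself.

```python
from typing import List, Sequence

NUM_GROUPS = 16  # (l_max + 1)^2 with l_max = 3
CHANNELS = 3     # R, G, B components within each group


def group_coefficients(flat: Sequence[float]) -> List[List[float]]:
    """Split a flat 48-value output into 16 groups of (R, G, B)."""
    if len(flat) != NUM_GROUPS * CHANNELS:
        raise ValueError(f"expected {NUM_GROUPS * CHANNELS} coefficients, got {len(flat)}")
    return [list(flat[g * CHANNELS:(g + 1) * CHANNELS]) for g in range(NUM_GROUPS)]


def sh_mse_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error over all 48 spherical harmonic coefficients."""
    p, t = group_coefficients(pred), group_coefficients(target)
    total = sum((a - b) ** 2 for pg, tg in zip(p, t) for a, b in zip(pg, tg))
    return total / (NUM_GROUPS * CHANNELS)


def total_loss(sh_loss: float, diffuse_loss: float, weight: float = 1.0) -> float:
    """Hypothetical weighted sum of the two losses used for feedback (step 4)."""
    return sh_loss + weight * diffuse_loss
```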

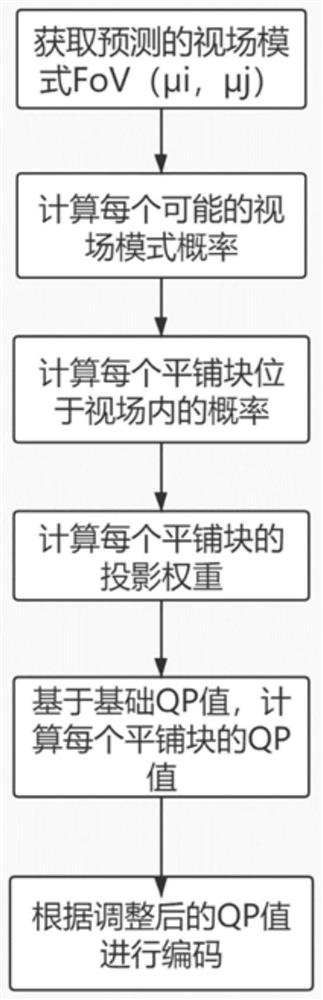

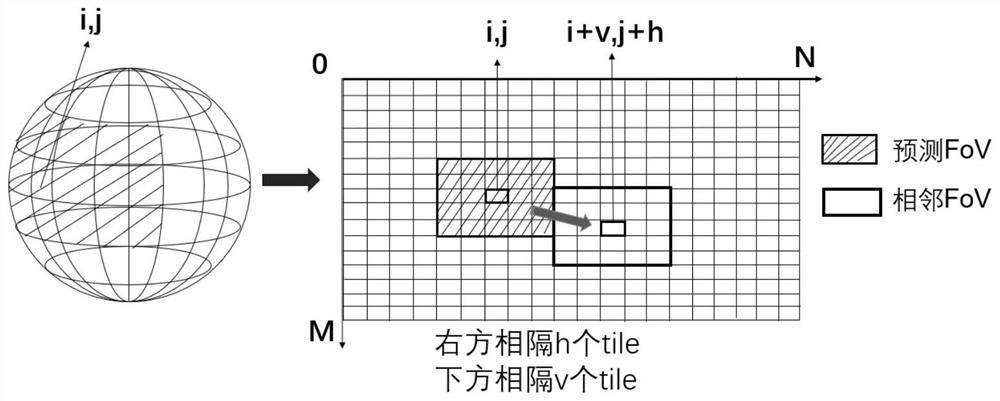

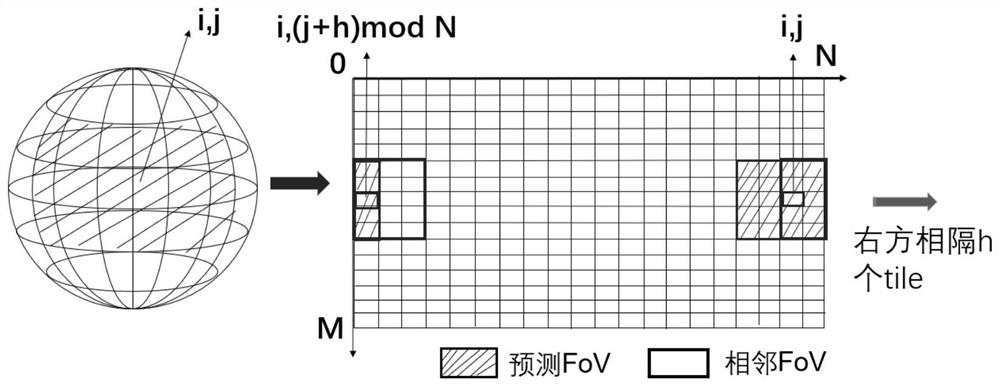

Panoramic video coding optimization algorithm based on user field of view

Active | CN113395505A | Improve rebuild quality, Digital video signal modification, Stereoscopic systems, Algorithm, Field of view

The invention discloses a panoramic video coding optimization algorithm based on the user's field of view (FoV), comprising the following steps: (1) obtain the predicted FoV mode FoV(μi, μj); (2) from FoV(μi, μj), generate the two-dimensional Gaussian distribution probability of each FoV(i, j) in the current frame; (3) obtain the cumulative probability that tile (i, j) falls within the n×m FoV modes, i.e., the probability that the tile is observed; (4) compute the projection weight of tile (i, j) with the projection transformation formula of the ERP (equirectangular projection) format; (5) compute corrected λ and QP values from the resulting observation probability and projection weight; (6) encode with the resulting tile-level quantization parameter selection scheme. Because the method explicitly accounts for coding quality in the region the user is watching, it achieves better reconstruction quality at a lower bit cost.

Owner:HOHAI UNIV
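Steps (2) to (5) above can be sketched on a small tile grid. The abstract gives none of the formulas, so every one here is an assumption: a separable Gaussian over FoV centre positions with horizontal wrap-around, a FoV window of (2·half_h+1)×(2·half_w+1) tiles, the cosine-of-latitude ERP area weight, λ divided by the tile's importance relative to the frame mean, and the HM-style mapping QP = 4.2005·ln(λ) + 13.7122 clipped to [0, 51]. All function names are hypothetical, and the grid should be wider than the FoV window (m > 2·half_w + 1) to avoid double counting at the wrap.

```python
import math
from typing import List

Grid = List[List[float]]


def gaussian_fov_prob(n: int, m: int, mu_i: float, mu_j: float,
                      sigma_i: float = 1.0, sigma_j: float = 1.0) -> Grid:
    """Step 2: probability that the FoV is centred on each of the n x m tile positions."""
    raw = []
    for a in range(n):
        row = []
        for b in range(m):
            db = min(abs(b - mu_j), m - abs(b - mu_j))  # longitude wraps around in ERP
            row.append(math.exp(-((a - mu_i) ** 2) / (2 * sigma_i ** 2)
                                - db ** 2 / (2 * sigma_j ** 2)))
        raw.append(row)
    total = sum(map(sum, raw))
    return [[v / total for v in row] for row in raw]


def observation_prob(fov_prob: Grid, half_h: int = 1, half_w: int = 1) -> Grid:
    """Step 3: P(tile observed) = sum over FoV modes of P(mode) * [tile inside that FoV]."""
    n, m = len(fov_prob), len(fov_prob[0])
    obs = [[0.0] * m for _ in range(n)]
    for a in range(n):
        for b in range(m):
            for i in range(max(0, a - half_h), min(n, a + half_h + 1)):
                for dj in range(-half_w, half_w + 1):
                    obs[i][(b + dj) % m] += fov_prob[a][b]
    return obs


def erp_weight(i: int, n: int) -> float:
    """Step 4: ERP area weight for tile row i (cosine of the row-centre latitude)."""
    return math.cos((i + 0.5 - n / 2) * math.pi / n)


def tile_qps(obs: Grid, base_lambda: float, eps: float = 1e-3) -> List[List[int]]:
    """Step 5: scale lambda by relative tile importance, then map lambda to QP."""
    n, m = len(obs), len(obs[0])
    w = [[obs[i][j] * erp_weight(i, n) for j in range(m)] for i in range(n)]
    mean_w = sum(map(sum, w)) / (n * m)
    qps = []
    for i in range(n):
        row = []
        for j in range(m):
            lam = base_lambda / max(w[i][j] / mean_w, eps)
            qp = round(4.2005 * math.log(lam) + 13.7122)
            row.append(min(51, max(0, qp)))
        qps.append(row)
    return qps
```

Tiles likely to be watched and near the equator receive a smaller λ and therefore a lower QP (more bits), while tiles near the poles or behind the viewer are quantized more coarsely.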

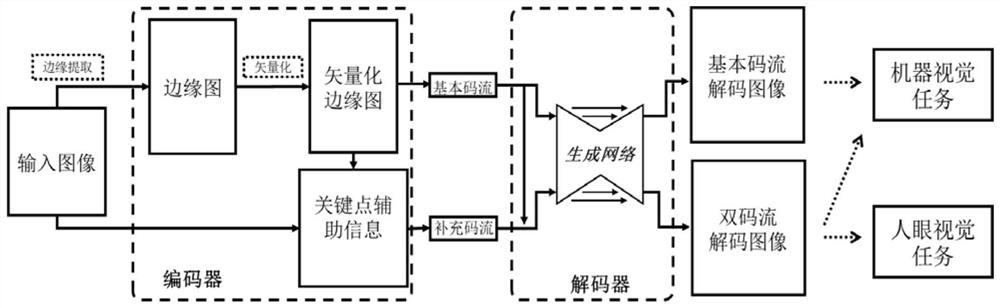

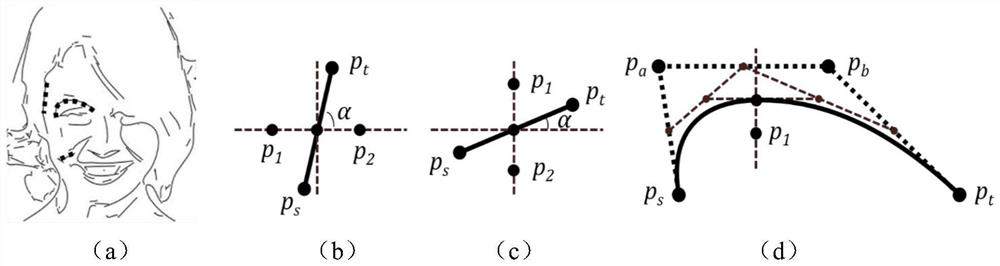

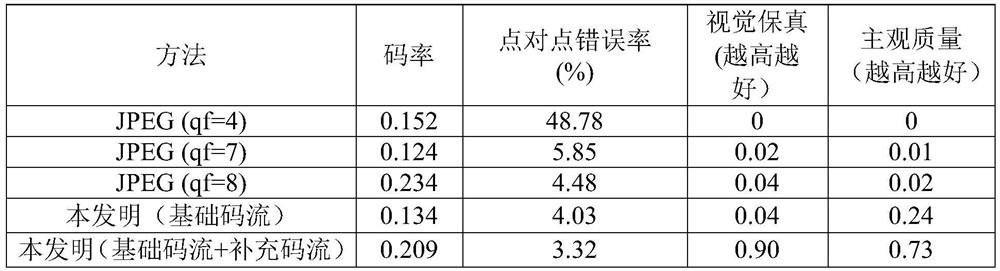

Extensible man-machine cooperation image coding method and coding system

Active | CN113132755A | Guaranteed Visual Quality, Guaranteed performance, Digital video signal modification, Selective content distribution, Sample image, Entropy encoding

The invention discloses an extensible man-machine collaborative image coding method and coding system. The method comprises: extracting the edge map of each sample image and vectorizing it as a compact representation that drives machine vision tasks; extracting key points from the vectorized edge map as auxiliary information; losslessly compressing the compact representation and the auxiliary information separately by entropy coding to obtain two code streams; preliminarily decoding the two code streams to recover the edge map and the auxiliary information; feeding the decoded edge map and auxiliary information into a generative neural network and running a forward pass; computing a loss between the network output and the corresponding original image and back-propagating it to update the network weights until the network converges, which yields a two-stream decoder; and, for an image to be processed, obtaining its edge map and auxiliary information, encoding and compressing them into two code streams, and having the two-stream decoder decode the received streams and reconstruct the image.

Owner:PEKING UNIV
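The encoder side of the method above (compact edge representation, key points as auxiliary information, two independent lossless streams) can be sketched without the generative decoder network. This sketch makes several substitutions that the abstract does not specify: a thresholded forward-difference gradient stands in for the edge extractor, curve endpoints stand in for the key points, vectorization is skipped, and Python's `zlib` stands in for the entropy coder. `edge_map`, `endpoints`, `encode`, and `decode` are hypothetical names.

```python
import json
import zlib
from typing import List, Tuple

Image = List[List[int]]


def edge_map(img: Image, threshold: int = 50) -> Image:
    """Binary edge map from forward-difference gradient magnitude (simplified)."""
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = img[y][min(x + 1, w - 1)] - img[y][x]
            gy = img[min(y + 1, h - 1)][x] - img[y][x]
            if abs(gx) + abs(gy) >= threshold:
                edges[y][x] = 1
    return edges


def endpoints(edges: Image) -> List[Tuple[int, int]]:
    """Hypothetical key points: edge pixels with exactly one 8-connected edge neighbour."""
    h, w = len(edges), len(edges[0])
    pts = []
    for y in range(h):
        for x in range(w):
            if not edges[y][x]:
                continue
            nb = sum(edges[yy][xx] for yy in range(max(0, y - 1), min(h, y + 2))
                     for xx in range(max(0, x - 1), min(w, x + 2))) - 1
            if nb == 1:
                pts.append((y, x))
    return pts


def encode(edges: Image, keypoints: List[Tuple[int, int]]) -> Tuple[bytes, bytes]:
    """Two independent lossless streams (zlib stands in for the entropy coder)."""
    bits = "".join(str(b) for row in edges for b in row)
    s1 = zlib.compress(f"{len(edges)}x{len(edges[0])}:{bits}".encode())
    s2 = zlib.compress(json.dumps(keypoints).encode())
    return s1, s2


def decode(s1: bytes, s2: bytes) -> Tuple[Image, List[Tuple[int, int]]]:
    """Preliminary decoding: recover the edge map and the key points exactly."""
    header, bits = zlib.decompress(s1).decode().split(":")
    h, w = map(int, header.split("x"))
    edges = [[int(bits[y * w + x]) for x in range(w)] for y in range(h)]
    keypoints = [tuple(p) for p in json.loads(zlib.decompress(s2))]
    return edges, keypoints
```

In the patented system the decoded edge map and key points would then be passed to the trained generative network to reconstruct the image.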

A Large-Scale MIMO Channel State Information Feedback Method Based on Deep Learning

Active | CN108390706B | High speed, Improve rebuild quality, Spatial transmit diversity, Theoretical computer science, Channel state information feedback

The invention discloses a large-scale MIMO channel state information (CSI) feedback method based on deep learning. The method comprises the following steps: first, on the user side, apply a two-dimensional discrete Fourier transform (DFT) to the channel matrix H̃ of the MIMO CSI in the spatial-frequency domain to obtain a channel matrix H that is sparse in the angular-delay domain; second, construct a model CsiNet comprising an encoder and a decoder, where the encoder, on the user side, encodes the channel matrix H into lower-dimensional codewords, and the decoder, on the base station side, reconstructs an estimate Ĥ of the original channel matrix from the codewords; third, train CsiNet to obtain the model parameters; fourth, apply a two-dimensional inverse DFT to the reconstructed channel matrix Ĥ output by CsiNet to recover the reconstructed value of the original channel matrix H̃ in the spatial-frequency domain; finally, use the trained CsiNet for compressed sensing and reconstruction of channel information. The method reduces the CSI feedback overhead of large-scale MIMO while achieving very high channel reconstruction quality and speed.

Owner:SOUTHEAST UNIV
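The first and fourth steps of the method above (2-D DFT into the angular-delay domain and 2-D inverse DFT back) can be sketched in pure Python; the CsiNet encoder and decoder themselves are not shown. This sketch assumes the standard unscaled forward DFT along both axes (the patent's exact sign and scaling convention may differ) and adds a hypothetical truncation step that keeps only the first delay rows, on the assumption that later delay taps are near zero in a sparse channel. `dft2`, `truncate`, and `pad` are hypothetical names.

```python
import cmath
from typing import List

Matrix = List[List[complex]]


def dft_matrix(n: int, inverse: bool = False) -> Matrix:
    """n x n DFT matrix; the inverse carries the 1/n normalization."""
    sign = 1 if inverse else -1
    scale = 1 / n if inverse else 1
    return [[scale * cmath.exp(sign * 2j * cmath.pi * r * c / n) for c in range(n)]
            for r in range(n)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def dft2(x: Matrix, inverse: bool = False) -> Matrix:
    """2-D (inverse) DFT: transform columns (subcarriers) and rows (antennas)."""
    left = dft_matrix(len(x), inverse)
    right = dft_matrix(len(x[0]), inverse)  # DFT matrix is symmetric, so right-multiply works
    return matmul(matmul(left, x), right)


def truncate(h_ad: Matrix, keep_rows: int) -> Matrix:
    """Keep only the first delay rows (assumed to carry almost all the energy)."""
    return [row[:] for row in h_ad[:keep_rows]]


def pad(h_trunc: Matrix, total_rows: int) -> Matrix:
    """Zero-fill the discarded delay rows before the inverse transform."""
    cols = len(h_trunc[0])
    return [row[:] for row in h_trunc] + [[0j] * cols for _ in range(total_rows - len(h_trunc))]
```

In a full pipeline the truncated matrix `truncate(dft2(h_sf), k)` is what the user-side encoder would compress, and `dft2(pad(h_hat, n), inverse=True)` would map the decoder output back to the spatial-frequency domain.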

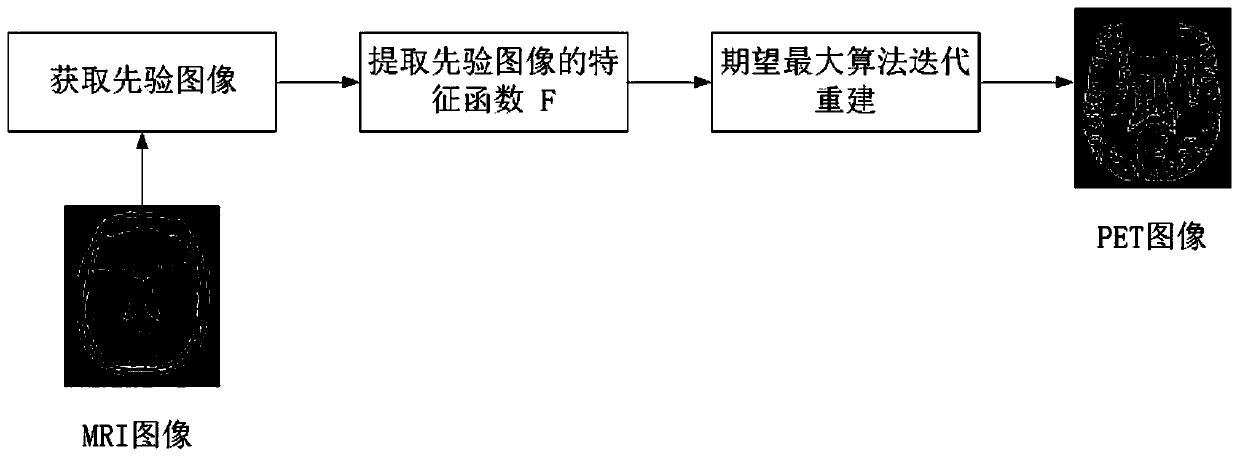

PET reconstruction method based on anatomical prior information

Pending | CN111402357A | Improve signal-to-noise ratio, Improve rebuild quality, Reconstruction from projection, Expectation–maximization algorithm, Kernel method

A PET reconstruction method based on anatomical prior information, relating to medical PET imaging technology, comprises the following steps: (1) obtain a prior image; (2) extract a function F that represents anatomical features in a high-dimensional space; (3) select a radial Gaussian kernel function; (4) incorporate the kernel method into the expectation-maximization (EM) iterative reconstruction framework to represent the PET image during reconstruction; (5) obtain the final reconstruction result. By adding prior information extracted from a high-spatial-resolution anatomical image to a machine-learning-based kernel reconstruction process, the method obtains better PET image reconstruction quality and improves both the image signal-to-noise ratio and quantitative analysis.

Owner:广州互云医院管理有限公司 (Guangzhou Huyun Hospital Management Co., Ltd.)
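Steps (3) and (4) above follow the general kernel EM idea: the image is written as x = Kα, where K is a radial Gaussian kernel matrix built from anatomical feature vectors, and the ordinary ML-EM update is applied to the coefficients α with effective system matrix PK. The following is a minimal sketch of that general technique on toy matrices, not the patent's implementation; the feature vectors, σ, system matrix P, and all function names are assumptions. Because it is an EM algorithm with a positive system matrix, the Poisson log-likelihood it records should never decrease.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def gaussian_kernel(features: List[List[float]], sigma: float) -> Matrix:
    """Step 3: radial Gaussian kernel K[j][l] = exp(-||f_j - f_l||^2 / (2 sigma^2))."""
    n = len(features)
    return [[math.exp(-sum((a - b) ** 2 for a, b in zip(features[j], features[l]))
                      / (2 * sigma ** 2)) for l in range(n)] for j in range(n)]


def matvec(a: Matrix, v: List[float]) -> List[float]:
    return [sum(r * x for r, x in zip(row, v)) for row in a]


def kernel_em(y: List[float], P: Matrix, K: Matrix,
              iters: int = 50) -> Tuple[List[float], List[float]]:
    """Step 4: ML-EM on kernel coefficients alpha, with the image x = K alpha.
    alpha <- alpha / (A^T 1) * A^T (y / (A alpha)), where A = P K."""
    A = [[sum(P[i][k] * K[k][j] for k in range(len(K))) for j in range(len(K[0]))]
         for i in range(len(P))]
    At = [list(col) for col in zip(*A)]
    sens = matvec(At, [1.0] * len(y))  # sensitivity image A^T 1
    alpha = [1.0] * len(K)
    loglik: List[float] = []
    for _ in range(iters):
        proj = matvec(A, alpha)  # forward projection of the current estimate
        loglik.append(sum(yi * math.log(pi) - pi for yi, pi in zip(y, proj)))
        back = matvec(At, [yi / pi for yi, pi in zip(y, proj)])
        alpha = [a * b / s for a, b, s in zip(alpha, back, sens)]
    return matvec(K, alpha), loglik
```

Voxels with similar anatomical features share kernel weight, so the reconstructed activity is smoothed within anatomical regions rather than across their boundaries.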

© 2025 PatSnap. All rights reserved.