139 results for "Brain control" patented technology

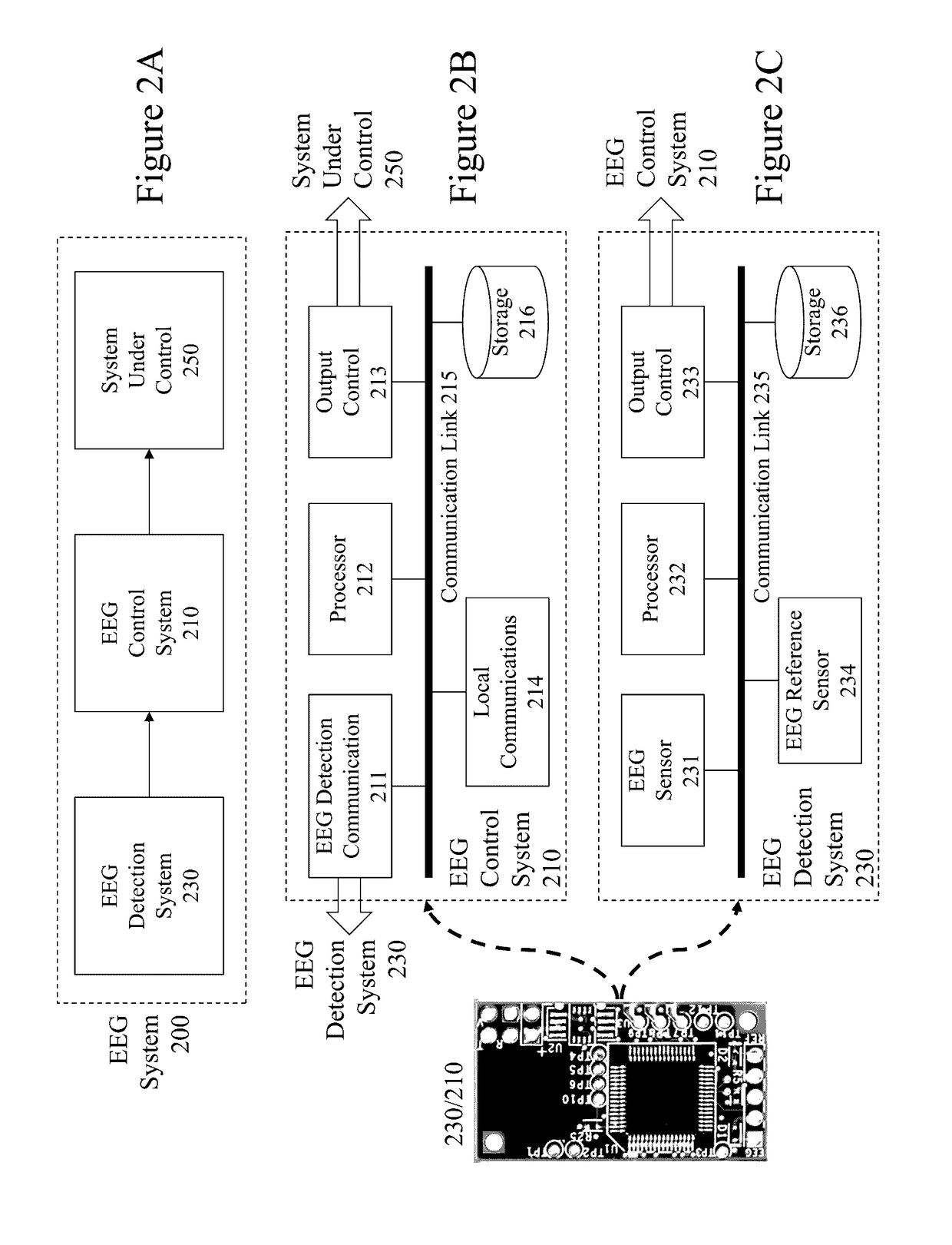

Methods and devices for brain activity monitoring supporting mental state development and training

Inactive | US20140316230A1 | Extensive analysis | Mitigate such drawback | Electroencephalography | Medical automated diagnosis | EEG device | EEG data

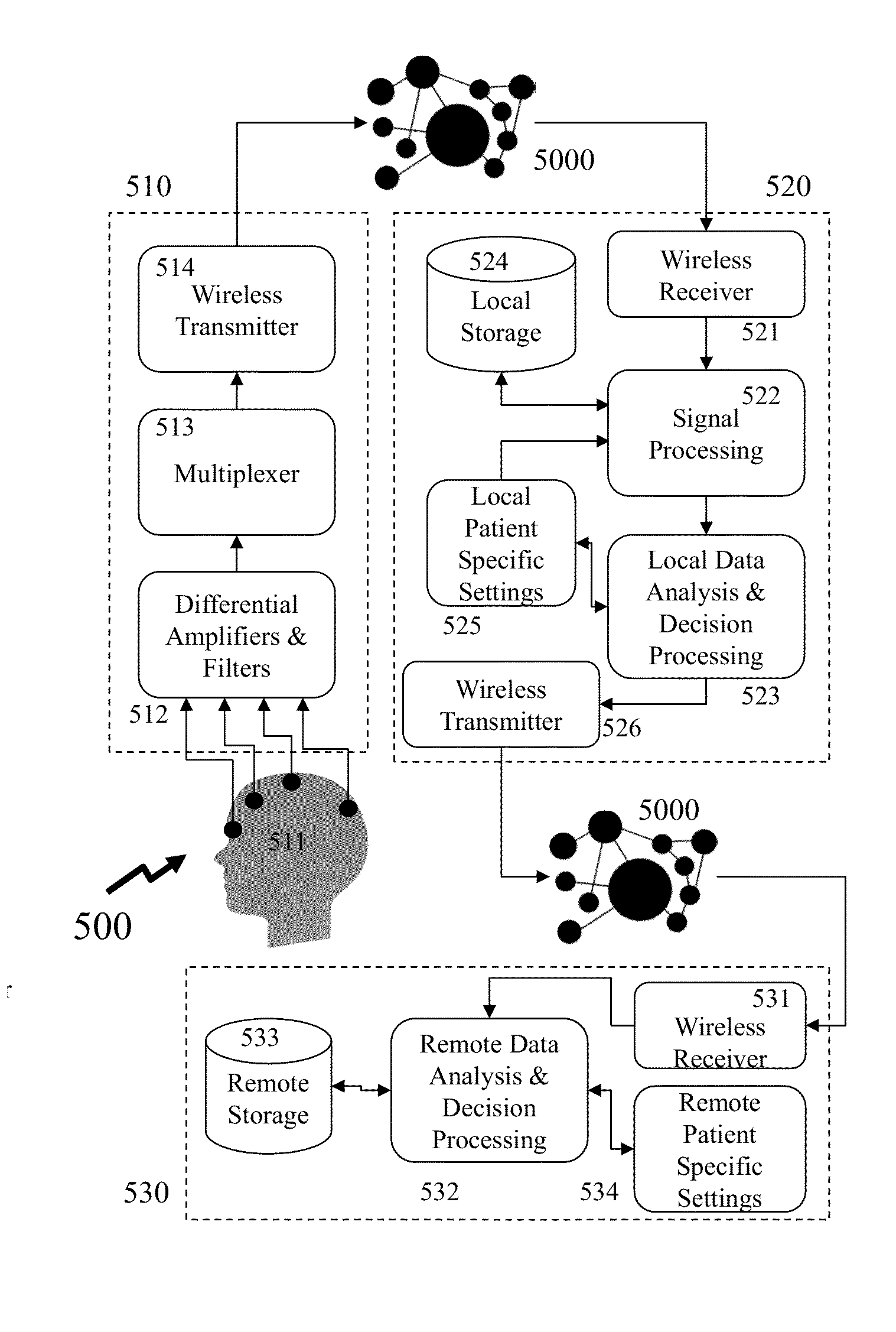

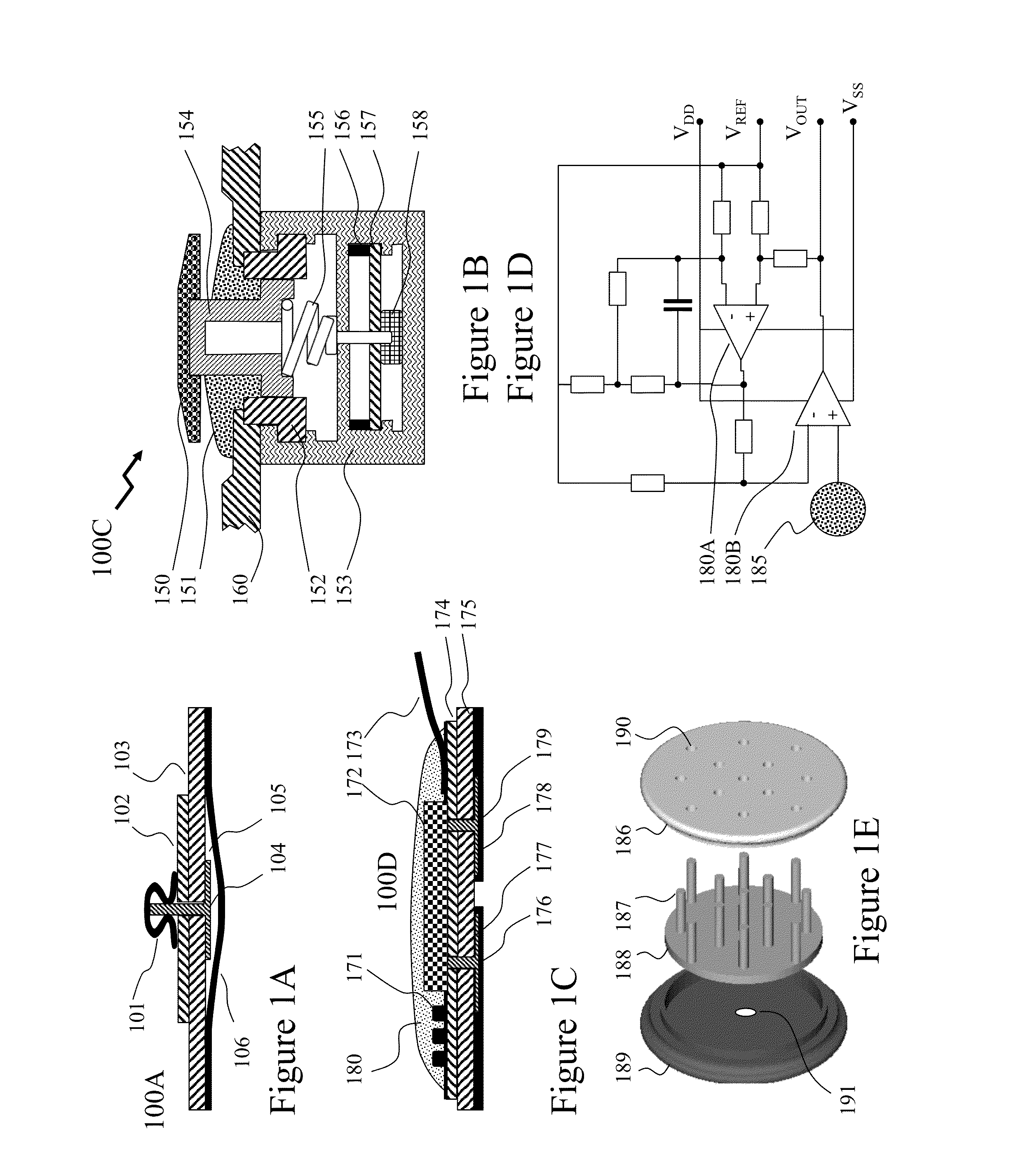

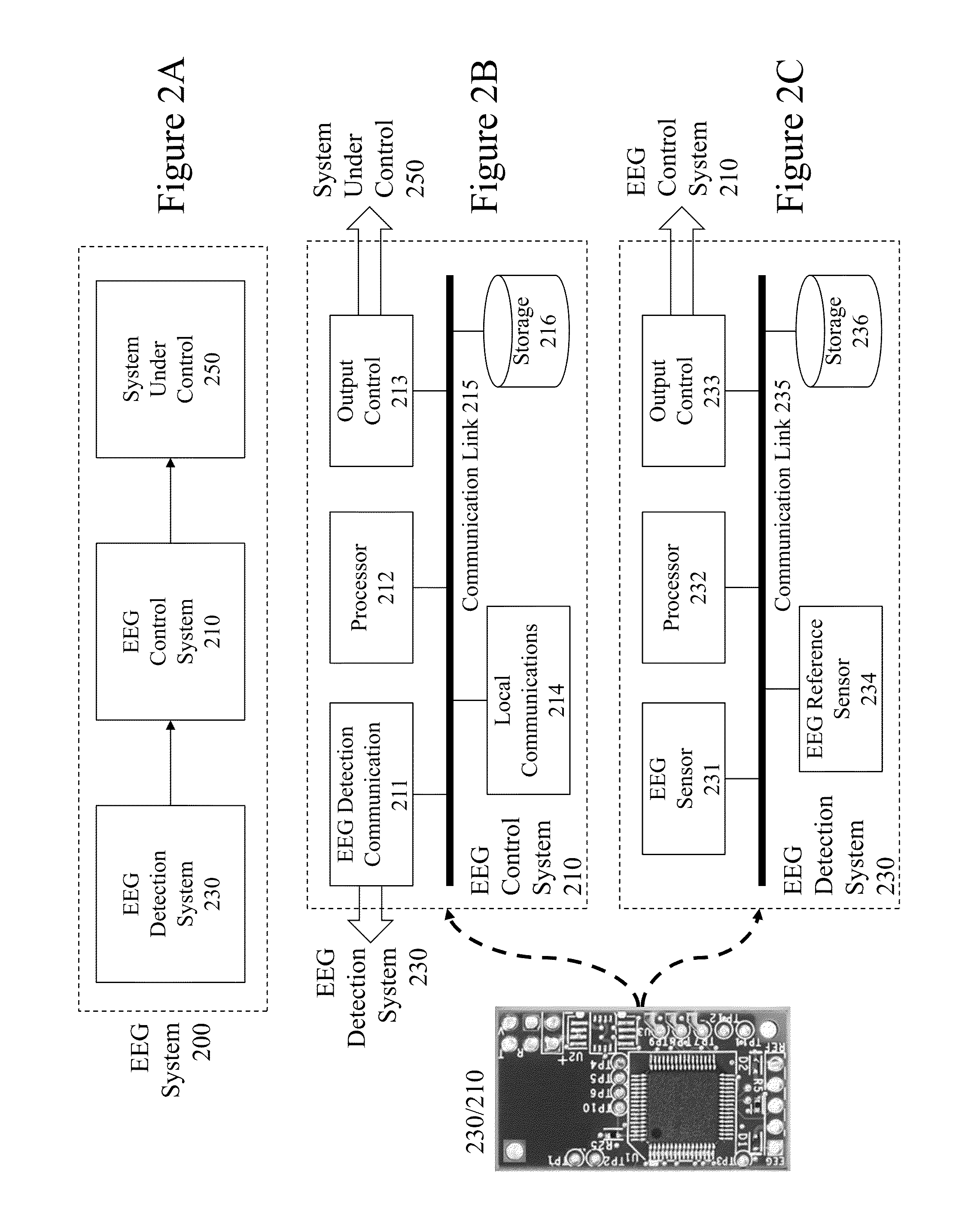

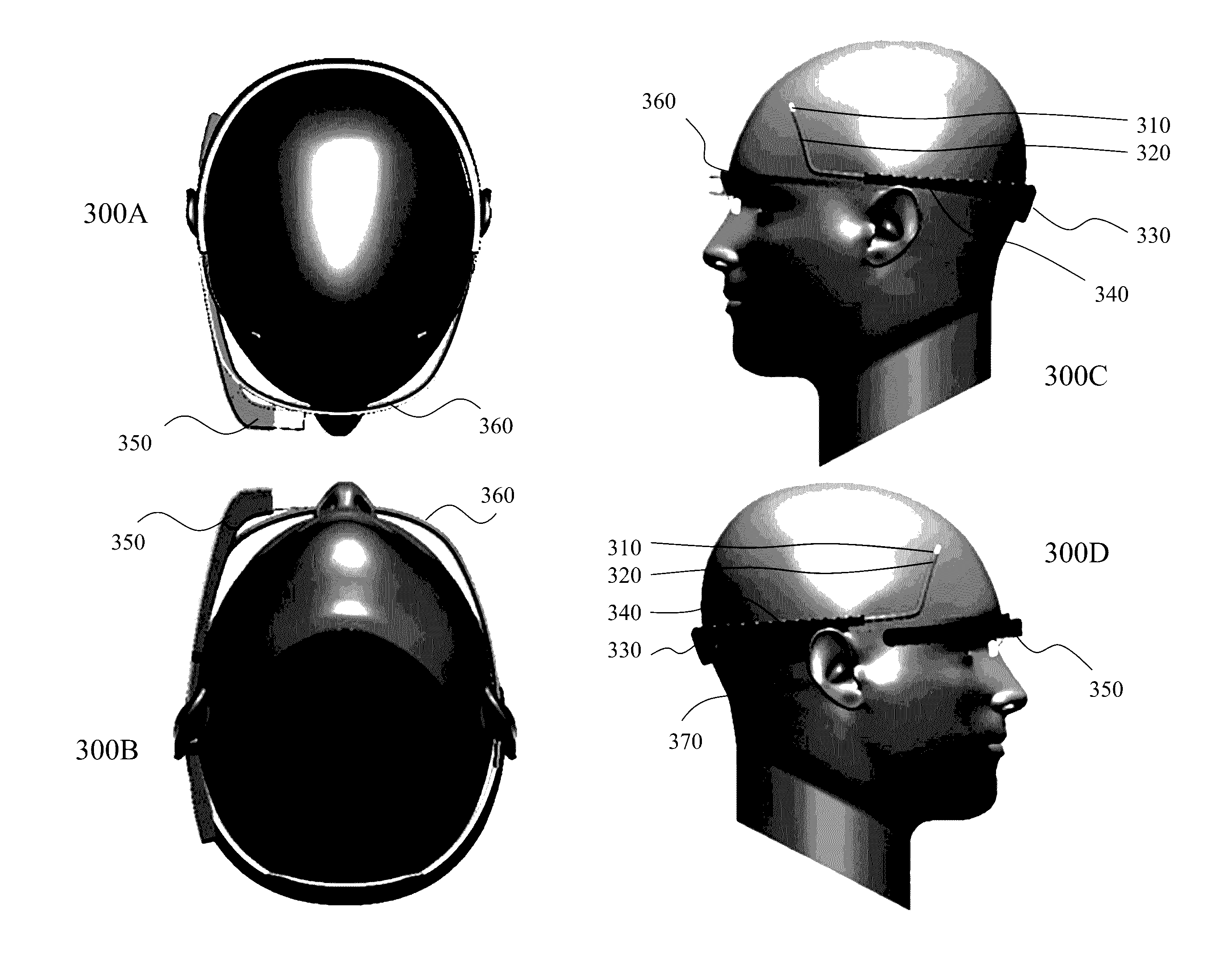

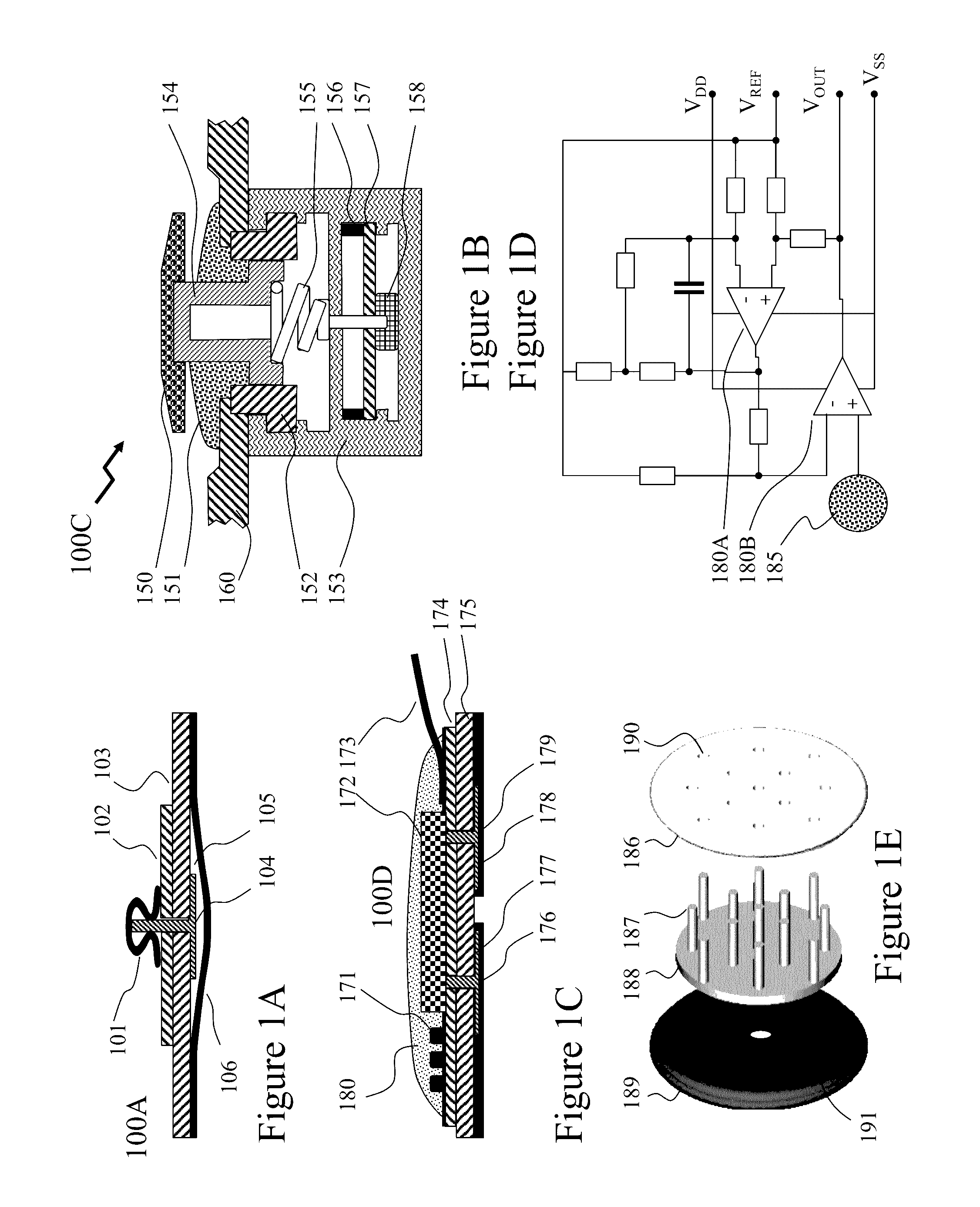

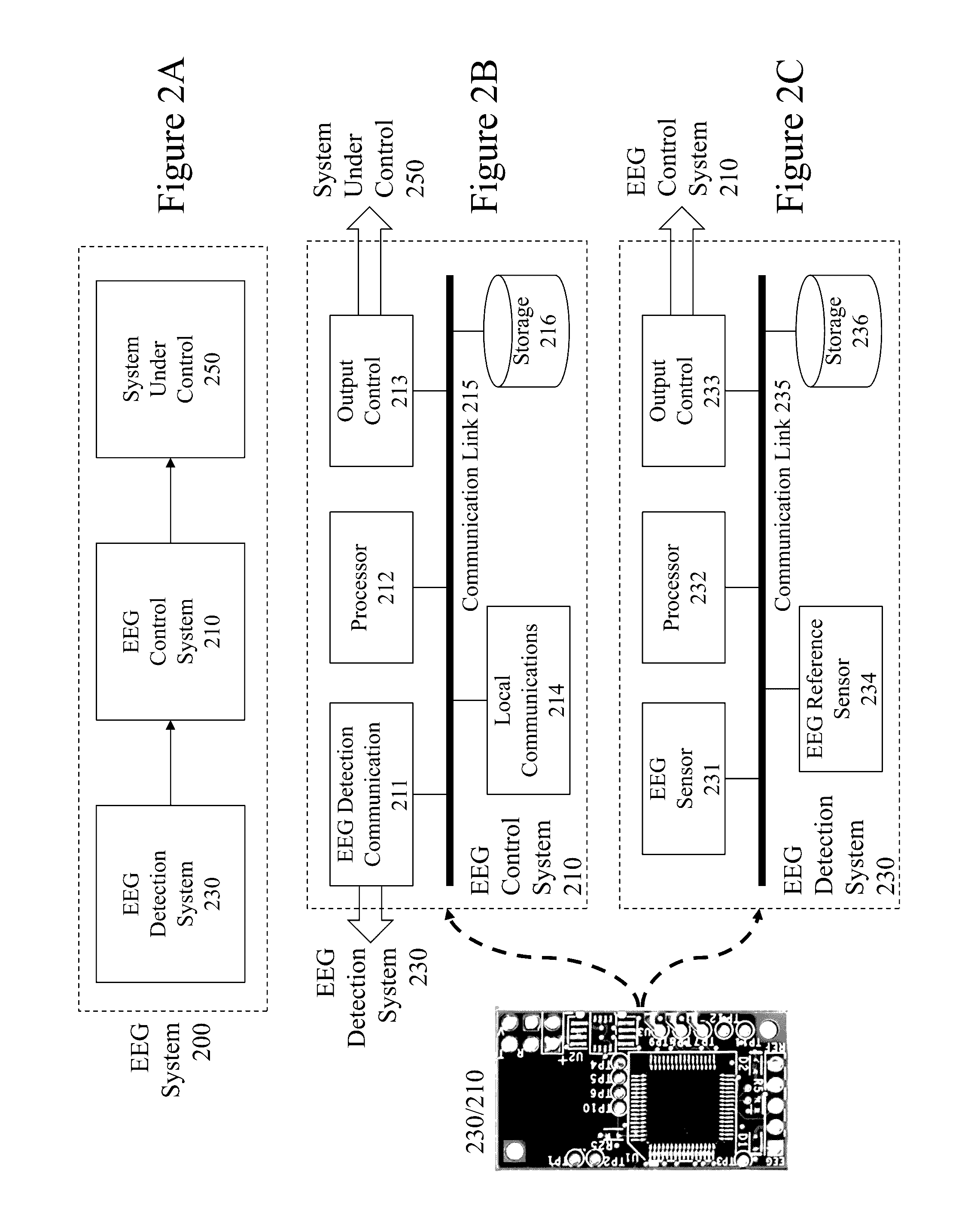

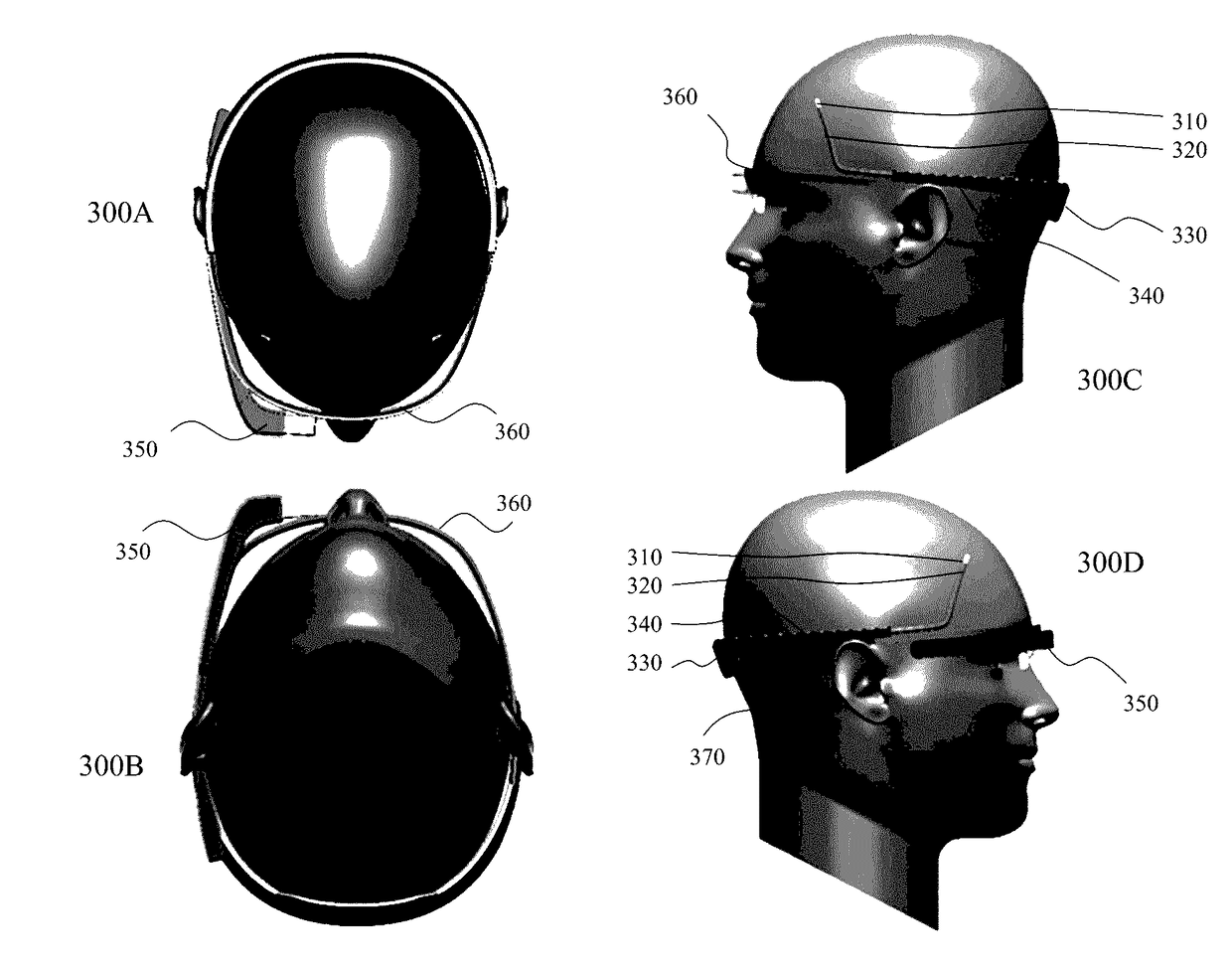

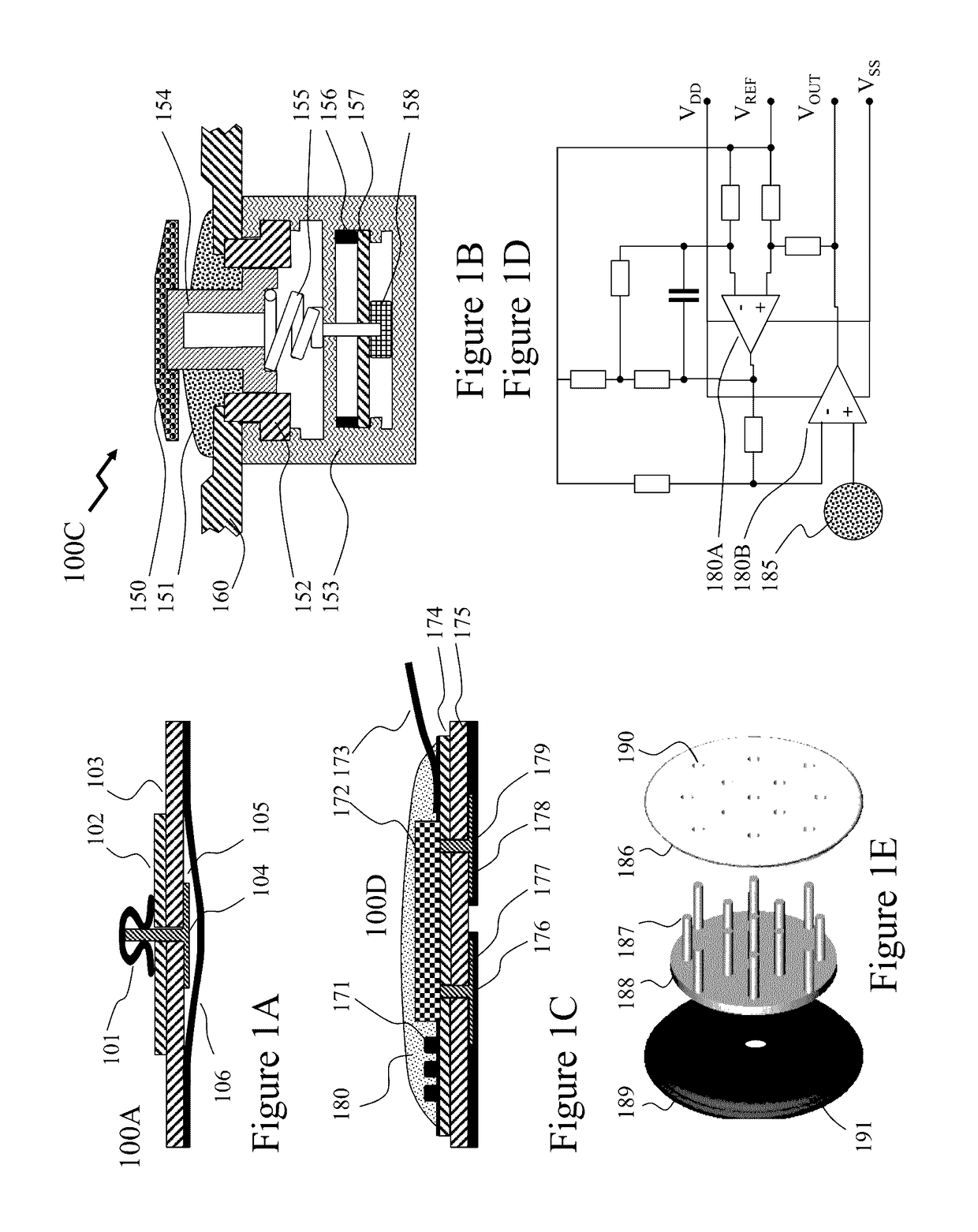

With the explosive spread of portable electronic devices (PEDs), recent work on consumer EEG devices has sought to exploit their advantages, including local wireless interfacing, portability, and a low-cost, high-performance electronics platform on which to run processing algorithms. However, most development still focuses on brain-controlled video games that are nearly identical to those created for earlier, more stationary consumer EEG devices, and personal EEG is treated as a novelty or toy. According to embodiments of the invention, the inventors have established new technologies and solutions that address these limitations of the prior art and provide benefits including, but not limited to, global acquisition and storage of raw and processed EEG data, development interfaces for expanding and re-analyzing acquired EEG data, integration with other user data not derived from EEG, and long-term wearability.

Owner:PERSONAL NEURO DEVICES

Methods and devices for brain activity monitoring supporting mental state development and training

Inactive | US9532748B2 | Mitigate such drawback | Electroencephalography | Medical automated diagnosis | EEG device | EEG data


Owner:PERSONAL NEURO DEVICES

Methods and devices for brain activity monitoring supporting mental state development and training

Inactive | US20170071495A1 | Mitigate such drawback | Electroencephalography | Medical automated diagnosis | EEG device | EEG data


Owner:PERSONAL NEURO DEVICES

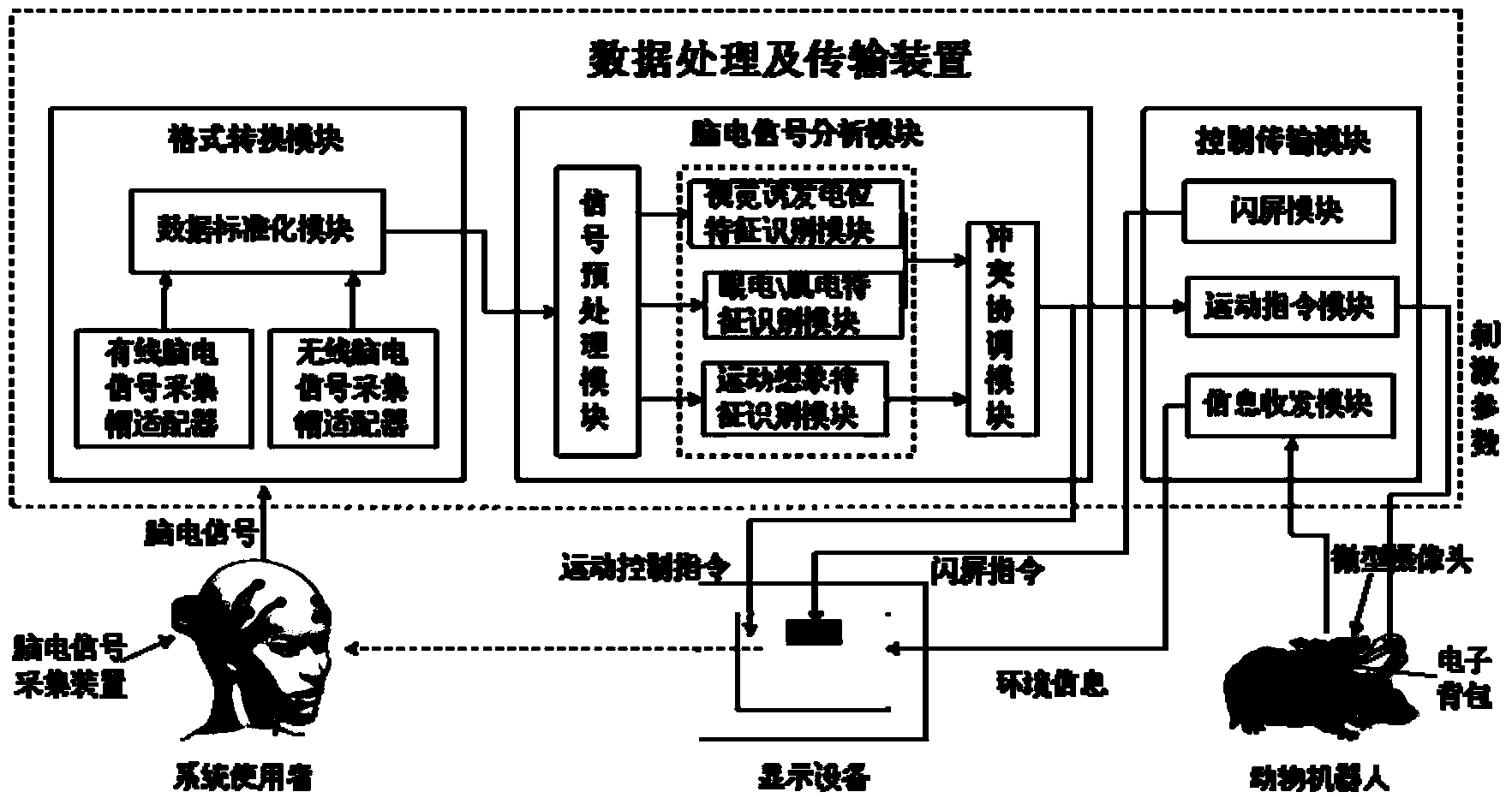

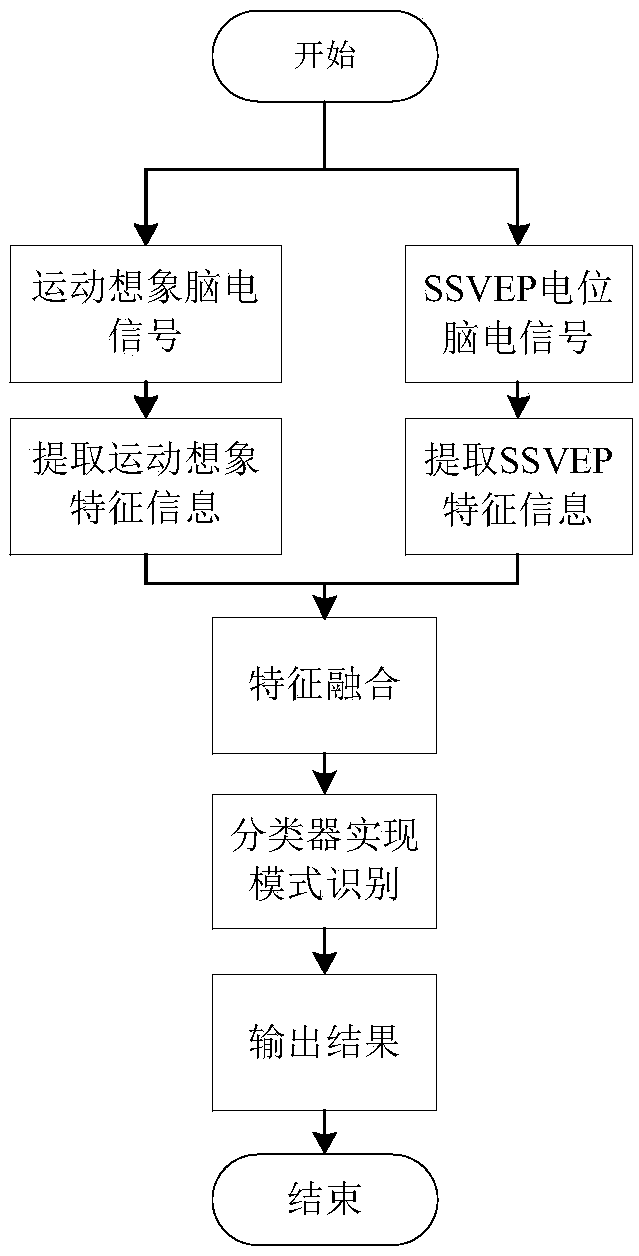

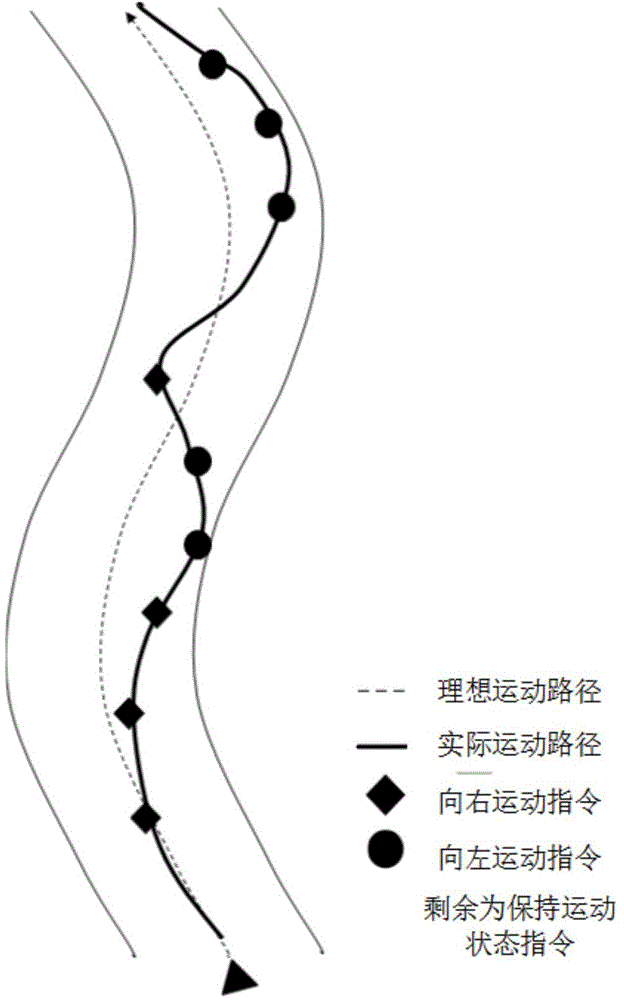

Brain-controlling animal robot system and brain-controlling method of animal robot

Active | CN103885445A | Improve real-time performance | Improve reliability | Position/course control in two dimensions | Robotic systems | Electricity

The invention discloses a brain-controlled animal robot system and a method for controlling an animal robot by brain signals. EEG signals are collected and processed to generate corresponding control instructions, which are used to steer the animal robot; a brain-to-brain paradigm with two hybrid modes allows the animal robot to be controlled effectively. The system and method offer two control modes: a hybrid mode based on electrooculographic/electromyographic features together with motor imagery features of the EEG, and a hybrid mode based on visual evoked potential features together with motor imagery features. A suitable mode is selected according to the user's state, which substantially improves the real-time performance and reliability of control. The system can be applied to exploration of unknown environments, research on brain function mechanisms, brain-to-brain network communication, and daily assistance and entertainment for people with disabilities.

Owner:Zhejiang Zheda Xitou Brain-Computer Intelligence Technology Co., Ltd. (浙江浙大西投脑机智能科技有限公司)

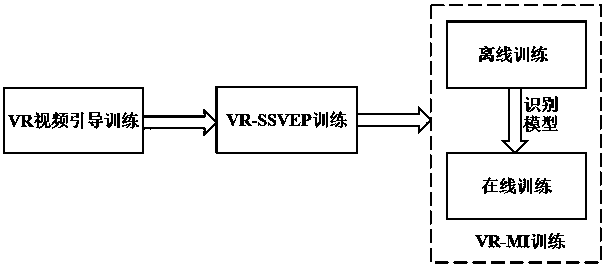

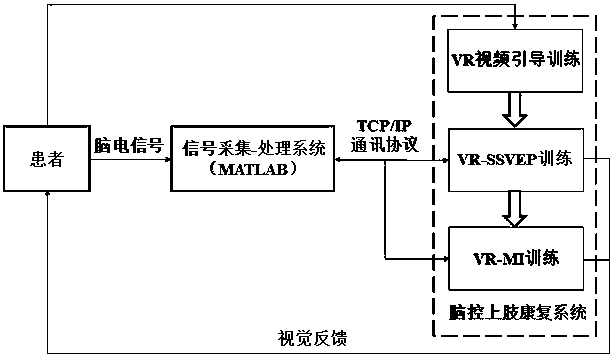

Three-stage brain-controlled upper limb rehabilitation method combining steady-state visual evoked potential and mental imagery

Inactive | CN108597584A | Improve security | Improve immersion effect | Medical simulation | Mental therapies | Patient need | Upper limb rehabilitation

The invention relates to a three-stage brain-controlled upper limb rehabilitation method combining steady-state visual evoked potentials (SSVEP) and mental imagery (MI). The method comprises the following steps: (1) first stage, VR video guidance training: the patient becomes familiar with the upper limb rehabilitation movements through guided VR videos; (2) second stage, VR-SSVEP training: the patient concentrates on pictures that represent different upper limb movements and flicker at specific frequencies, the patient's EEG signals are collected in real time to analyze the patient's intentions, and visual feedback is given through VR animation so that the patient learns to concentrate; and (3) third stage, VR-MI training: during offline training, EEG signals are collected while the patient imagines movements of the left and right upper limbs, and an MI intention recognition model is built. During online training, the patient's MI EEG signals are analyzed with this model, the patient's movement intentions are recognized, and the movements of a 3D character in the interface are controlled in real time, so that MI promotes remodeling of the central nervous system. The method is highly immersive, enables active rehabilitation that proceeds step by step, and offers a new approach to upper limb rehabilitation for stroke patients.
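The VR-SSVEP stage has to decide which flickering picture the patient is looking at. The abstract does not specify the detector; a common approach is to compare EEG power at each candidate flicker frequency and its harmonics. The sketch below does this for one channel with the Goertzel algorithm. The function names, sampling rate, and stimulus frequencies are illustrative assumptions, not details from the patent.

```python
import math
from typing import Sequence


def goertzel_power(samples: Sequence[float], fs: float, freq: float) -> float:
    """Spectral power of `samples` at the DFT bin nearest `freq` (Hz), via Goertzel."""
    n = len(samples)
    k = round(n * freq / fs)  # index of the nearest DFT bin
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def detect_ssvep(samples: Sequence[float], fs: float,
                 stim_freqs: Sequence[float], harmonics: int = 2) -> float:
    """Return the stimulus frequency with the largest summed harmonic power."""
    def score(f: float) -> float:
        return sum(goertzel_power(samples, fs, h * f) for h in range(1, harmonics + 1))
    return max(stim_freqs, key=score)


# Synthetic check: 2 s of a 10 Hz response sampled at 250 Hz (hypothetical values).
fs = 250.0
signal = [math.sin(2 * math.pi * 10.0 * i / fs) for i in range(500)]
print(detect_ssvep(signal, fs, [8.0, 10.0, 12.0, 15.0]))  # 10.0
```

Summing the second harmonic is a common design choice because SSVEP responses often carry energy at multiples of the stimulus frequency; multichannel methods such as canonical correlation analysis are a frequent alternative.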

Owner:SHANGHAI UNIV

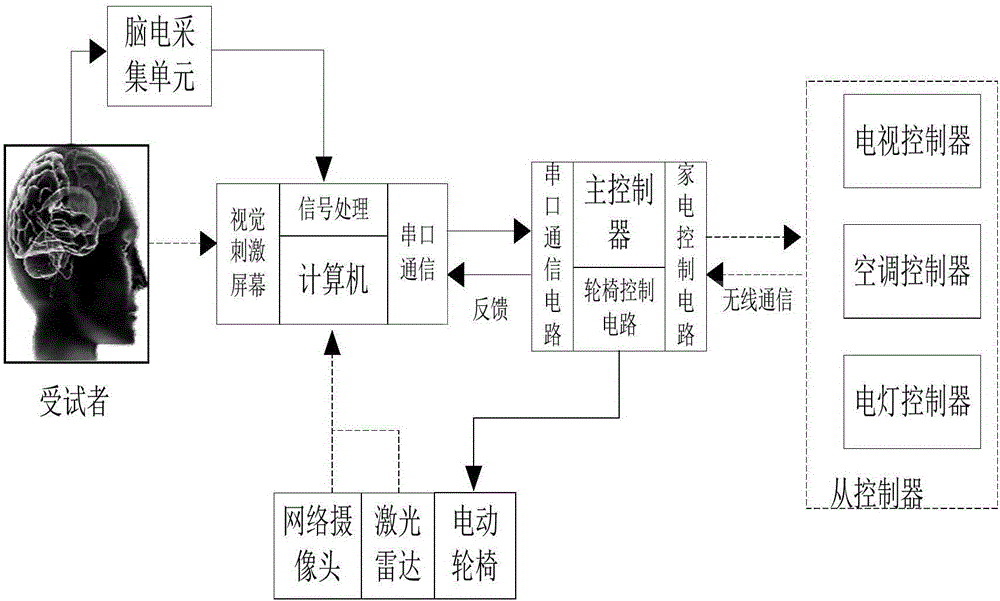

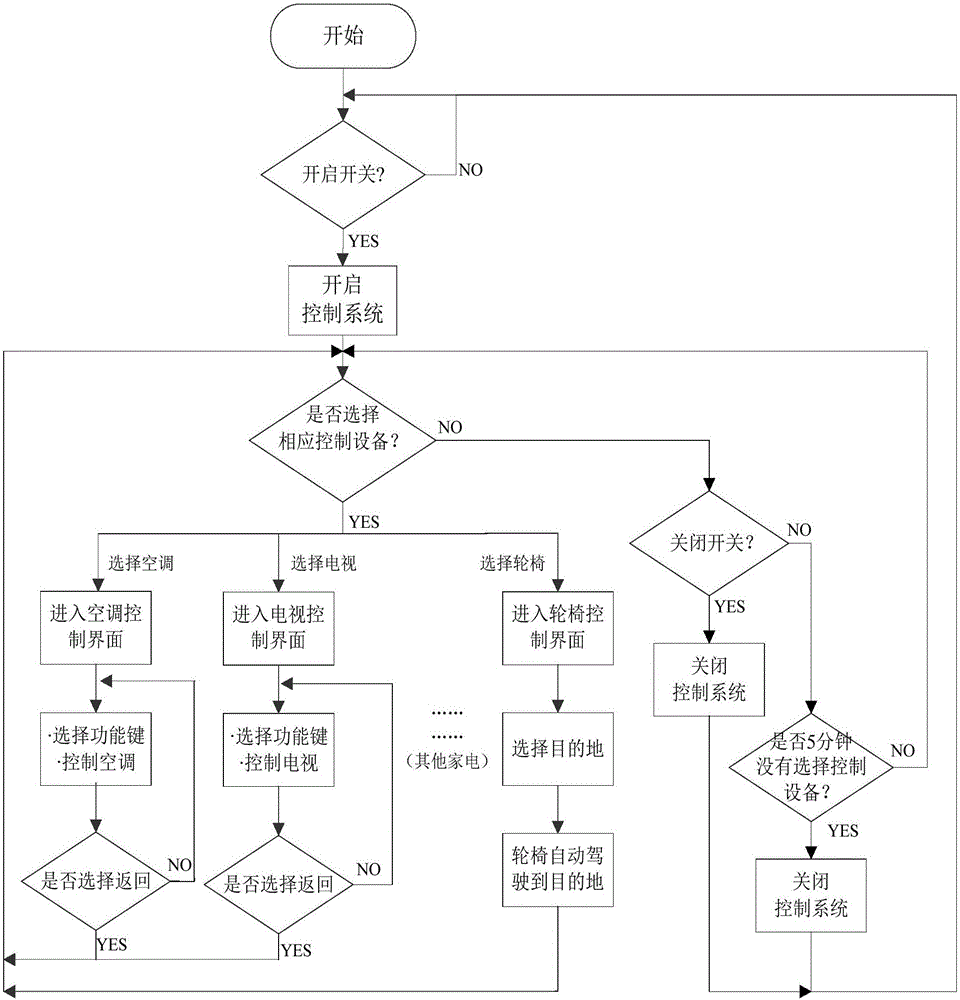

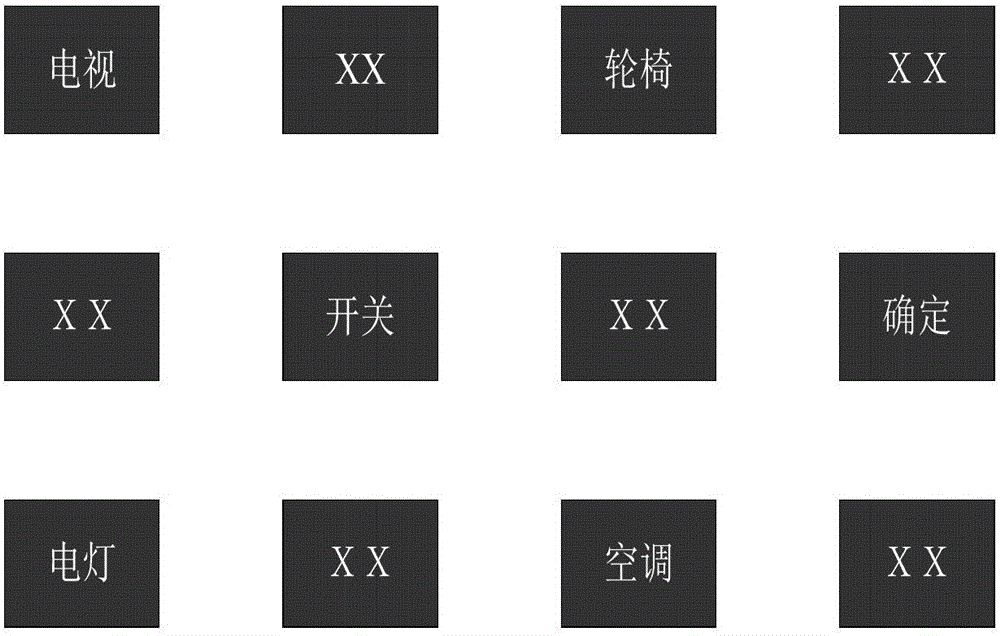

Brain computer interface-based self-adaptive home environment control device and control method thereof

Active | CN106020470A | Easy to use | Improve the quality of life | Programme control | Input/output for user-computer interaction | Life quality | Brain computer interfacing

The invention discloses a self-adaptive home environment control device based on a brain-computer interface, and a control method for it. The device comprises a visual stimulation screen, an EEG acquisition unit, a computer, a master controller, and a slave controller, and has two parts: brain-controlled home appliances and a brain-controlled wheelchair. Patients with severe paralysis (for example, from spinocerebellar ataxia or amyotrophic lateral sclerosis) can move around indoors in the wheelchair and, while seated in it, control home appliances such as a television, an air conditioner, and electric lights. The device performs stably and can effectively improve the self-care ability and quality of life of severely paralyzed patients.

Owner:South China Brain Control (Guangdong) Intelligent Technology Co., Ltd. (华南脑控(广东)智能科技有限公司)

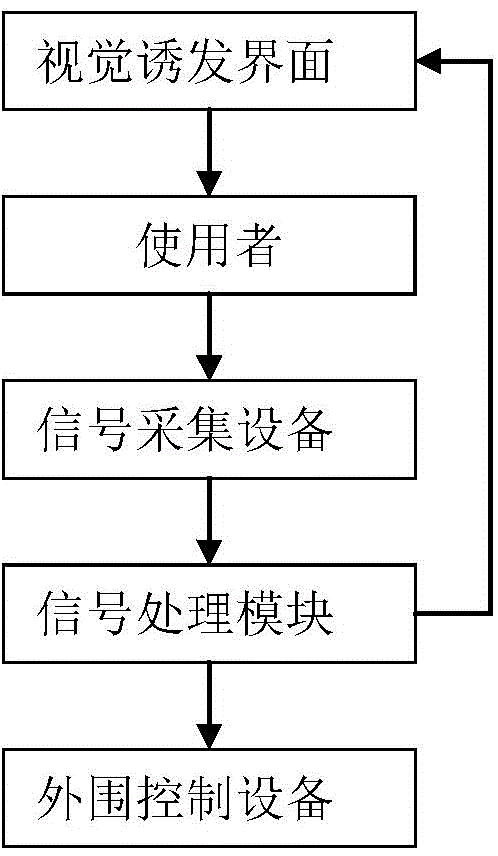

Assistance system for disabled people based on brain control mobile eye and control method for assistance system

Active | CN104799984A | Improve security | Reduction of consecutive stimulus | Input/output for user-computer interaction | Invalid friendly devices | Real time analysis | Interaction interface

The invention discloses an assistance system for disabled people based on a brain-controlled mobile eye, and a control method for the system. The system comprises visual stimulation equipment, signal acquisition equipment, a signal processing module, and peripheral control equipment. The visual stimulation equipment provides the user interaction interface for the whole system, through which the user issues commands. The signal acquisition equipment records the electrical activity of the brain at the scalp, converts the analog signal to digital, and transmits it to the signal processing module, which analyzes the data in real time and controls the experimental procedure. The peripheral control equipment comprises the mobile eye and a wheelchair; the mobile eye consists of an intelligent trolley and a camera pan-tilt mount on the trolley. The peripheral equipment moves according to control instructions sent by the signal processing module. The system and method can improve the safety of wheelchair control.

Owner:EAST CHINA UNIV OF SCI & TECH

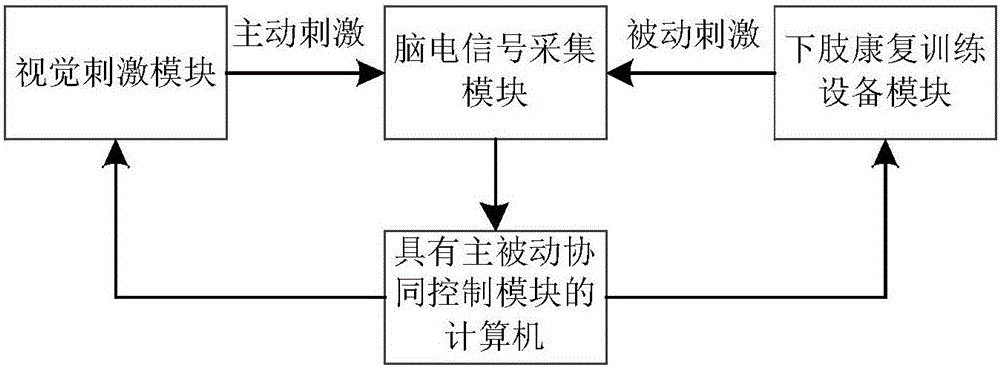

Visual motion evoked brain-controlled lower limb active and passive cooperative rehabilitation training system

Active | CN105853140A | Not easy to cause visual fatigue | Strong evoked EEG signal | Gymnastic exercising | Chiropractic devices | Physical medicine and rehabilitation | Closed loop

The invention discloses a brain-controlled lower limb rehabilitation training system that combines active and passive training and is driven by visual motion evoked potentials. The system comprises a visual stimulation module whose output is connected to the first input of an EEG acquisition module; the second input of the EEG acquisition module is connected to the output of a lower limb rehabilitation training module; and the output of the EEG acquisition module is connected to the input of a computer running an active-passive cooperative control module. The computer's first output is connected to the input of the visual stimulation module, and its second output to the input of the lower limb rehabilitation training module. The system provides active stimulation of the motor control nerves and passive stimulation of the motor perception nerves, establishing a closed-loop neural bypass that promotes neural reorganization and reconstruction. Because training is driven by the patient's own intention, it is also more engaging, which encourages the patient to participate actively.

Owner:Xi'an Zhentai Intelligent Technology Co., Ltd. (西安臻泰智能科技有限公司)

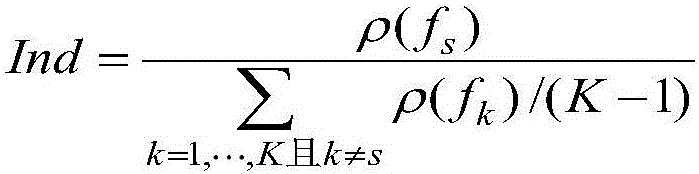

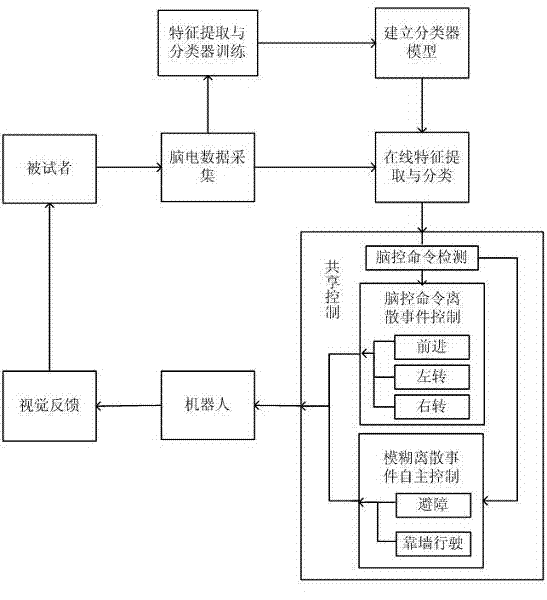

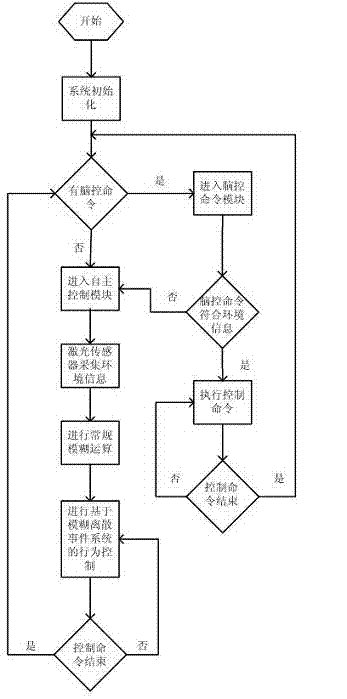

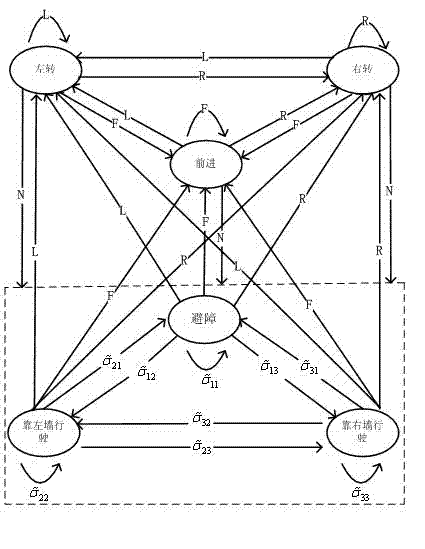

Fuzzy discrete event shared control method of brain-controlled robotic system

Inactive | CN103116279A | Improve adaptability | Make up for low transmission rate, high recognition error rate, control delay and other problems | Adaptive control | Robotic systems | Information transmission

The invention belongs to the field of brain-machine interfaces and provides a fuzzy discrete event shared control method for a brain-controlled robotic system. The method combines human brain control commands with autonomous robot control based on a fuzzy discrete event system: motor imagery EEG is classified online and used as the highest-priority control command to make the robot move forward, turn left, or turn right. When no brain control command is present, the autonomous control module runs: it fuzzifies the robot's autonomous control states of obstacle avoidance and wall following, and builds the fuzzy discrete event system from quantities such as the size of obstacles on the route and their distance. Through this shared control, the method compensates for the brain-machine interface's low information transfer rate, high recognition error rate, and control delay, and strengthens the robot's adaptability in complex environments.
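The priority rule in this abstract, where a decoded motor imagery command overrides the robot and autonomous behavior runs only when no command is present, can be sketched as follows. The fuzzy discrete event system itself is not reproduced: the autonomous branch is a hypothetical single fuzzy membership (`near_degree`) with a simple turn-away rule, and all distances and thresholds are assumed values.

```python
from typing import Optional

# Commands the motor imagery decoder can emit, per the abstract.
BRAIN_COMMANDS = {"forward", "left", "right"}


def near_degree(distance_m: float, near: float = 0.3, far: float = 1.0) -> float:
    """Hypothetical fuzzy membership of 'obstacle is near': 1 at <= near, 0 at >= far."""
    if distance_m <= near:
        return 1.0
    if distance_m >= far:
        return 0.0
    return (far - distance_m) / (far - near)


def autonomous_command(front_m: float, left_m: float, right_m: float) -> str:
    """Hypothetical autonomous rule: if the front is 'near enough', turn toward more room."""
    if near_degree(front_m) >= 0.5:
        return "left" if left_m > right_m else "right"
    return "forward"


def shared_control(brain_cmd: Optional[str], front_m: float,
                   left_m: float, right_m: float) -> str:
    """A recognized brain command always wins; otherwise fall back to autonomy."""
    if brain_cmd in BRAIN_COMMANDS:
        return brain_cmd
    return autonomous_command(front_m, left_m, right_m)


print(shared_control(None, 0.2, 0.5, 2.0))    # right (autonomous avoidance)
print(shared_control("left", 0.2, 0.5, 2.0))  # left (brain command has priority)
```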

Owner:DALIAN UNIV OF TECH

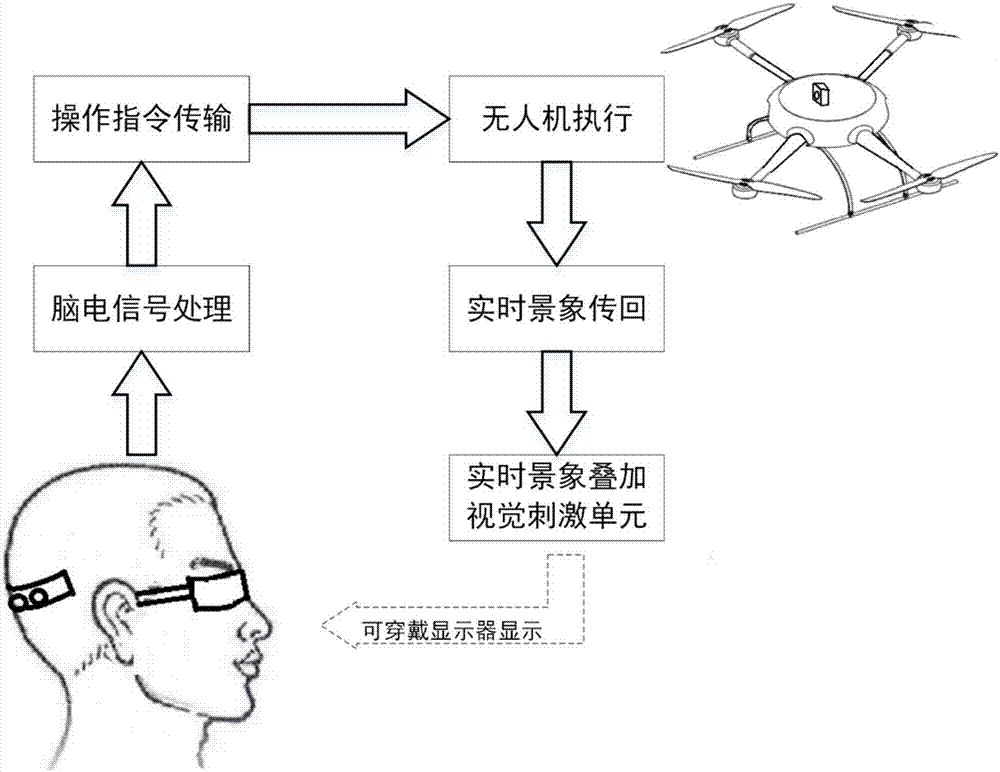

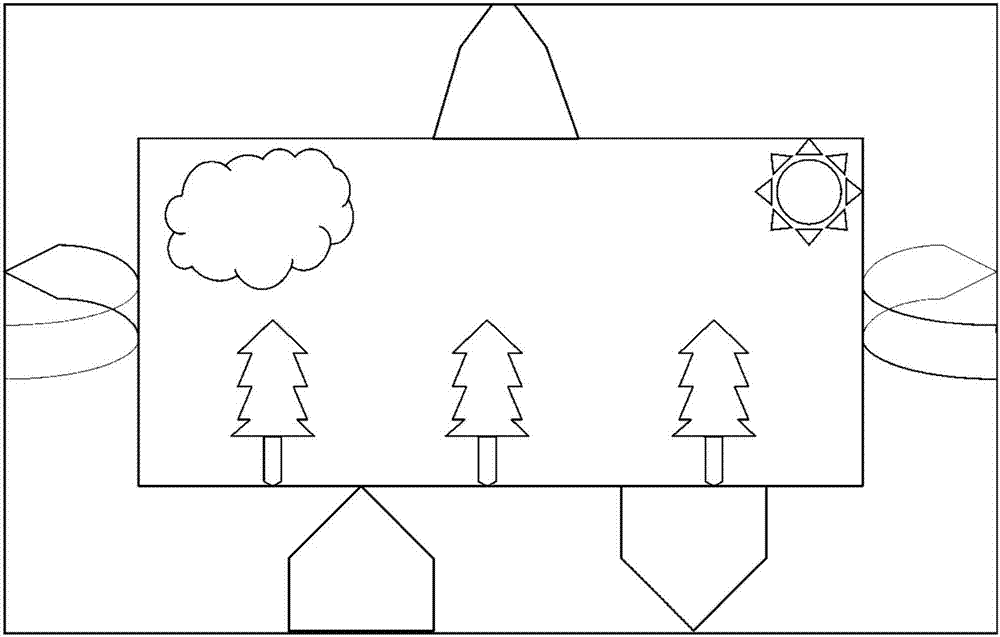

Wearable display-based asynchronous type brain-controlled unmanned aerial vehicle system

Inactive | CN107168346A | Strong flickering | Strong fatigue | Input/output for user-computer interaction | Attitude control | Brain computer interfacing | Superimposition

The present invention discloses an asynchronous brain-controlled unmanned aerial vehicle (UAV) system based on a wearable display. The wearable display shows a group of visual stimulation units that flicker at different frequencies, each corresponding to a different UAV operation instruction. When the user gazes at a stimulation unit, the resulting EEG signals are processed to identify which unit the user is gazing at and therefore which instruction is intended; the recognition result is sent to the UAV for execution. Real-time scene images from the UAV are transmitted to the ground, superimposed on the stimulation units, and shown on the wearable display, which gives the user first-person-view feedback and improves the portability of the steady-state visual evoked potential (SSVEP) brain-computer interface. Because the system decodes asynchronously and makes full use of the idle state, it greatly reduces the operating burden and fatigue of controlling flight through a brain-computer interface and gives the user a freer flight experience.
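Asynchronous operation means the decoder must also be able to report "no command" when the user is not gazing at any target. The abstract does not give the idle-state criterion; the sketch below assumes a simple ratio test between the best and second-best stimulus scores, with hypothetical command names and threshold.

```python
from typing import Dict, Optional


def async_decode(scores: Dict[str, float], ratio_threshold: float = 2.0) -> Optional[str]:
    """Return the command whose score clearly dominates, or None for the idle state.

    `scores` maps each UAV command to a detection score, for example SSVEP power
    at that unit's flicker frequency. The ratio test is an assumed criterion.
    """
    if len(scores) < 2:
        raise ValueError("need at least two candidate commands")
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    (best_cmd, best), (_, second) = ranked[0], ranked[1]
    if second <= 0:
        return best_cmd if best > 0 else None
    return best_cmd if best / second >= ratio_threshold else None


print(async_decode({"ascend": 9.0, "descend": 2.0, "left": 1.0}))  # ascend
print(async_decode({"ascend": 3.0, "descend": 2.5}))               # None (idle)
```

Returning `None` rather than the top-scoring command is what keeps the UAV from acting while the user simply watches the video feed.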

Owner:SHANGHAI JIAO TONG UNIV

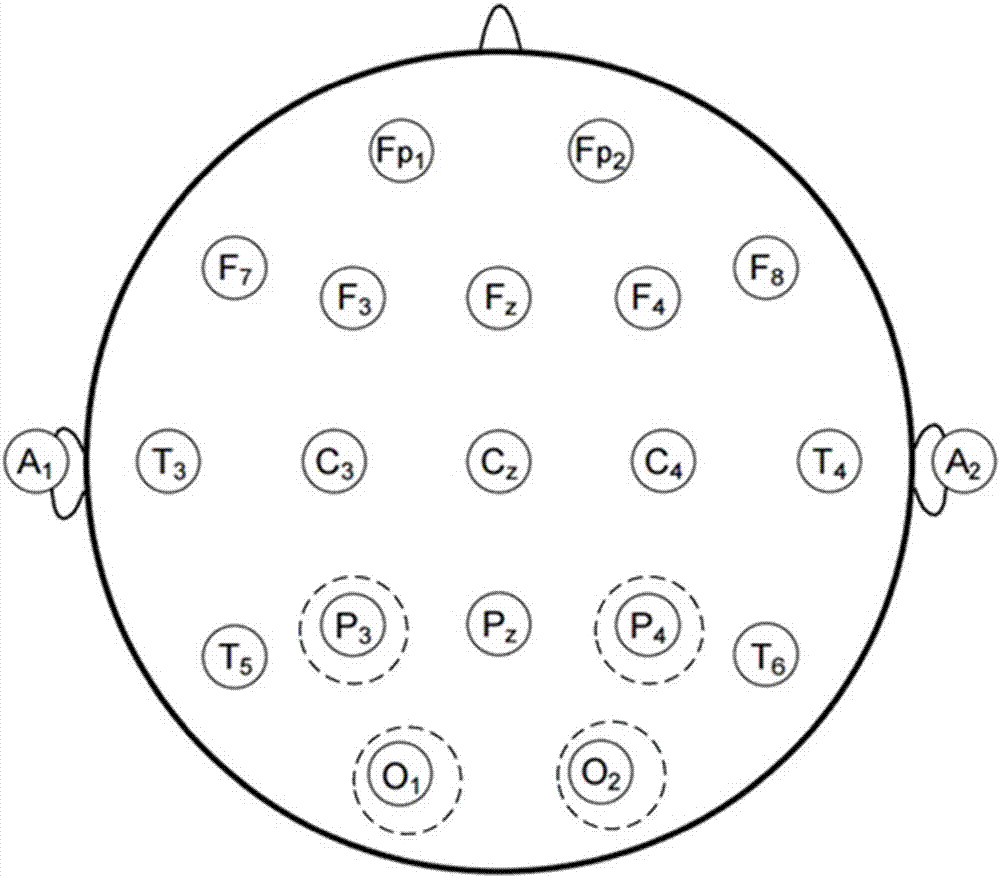

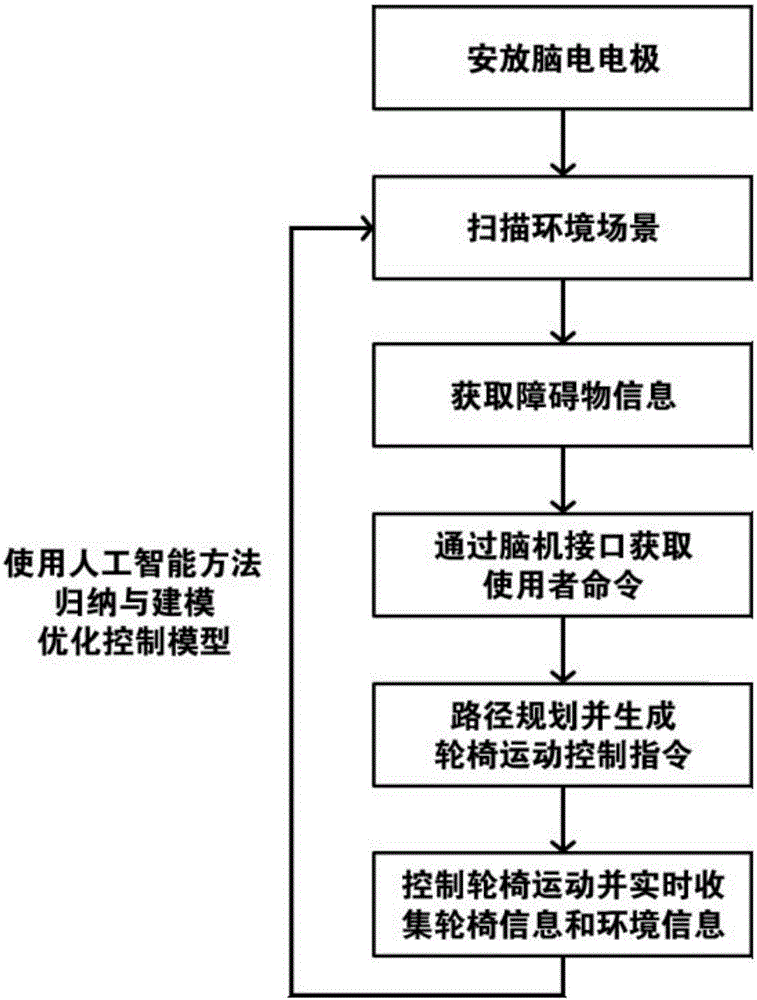

Intelligent wheelchair control method based on brain-computer interface and artificial intelligence

Active | CN106726209A | Safe and effective control | Control's smart wheelchair controls are safe and effective | Wheelchairs/patient conveyance | Brain computer interfacing | Habit

The invention relates to an intelligent wheelchair control method based on a brain-computer interface and artificial intelligence. Measuring electrodes are placed on the user's scalp and the user's EEG signals are acquired over a wireless or wired link. The user's motion control intentions are obtained through the brain-computer interface. An environment map is built from LIDAR, and obstacle information is obtained by ultrasonic ranging or a camera; obstacles are avoided and a motion path is planned. The wheelchair's movement is then controlled, with a machine learning method providing self-adaptive, self-learning feedback during motion control. The method provides safe and effective wheelchair control and adapts its control output to the user's control habits, without requiring cameras or other sensors to be installed in the operating environment. Because coordinated AI control relies only on the cameras and sensors on the wheelchair itself, the application scope of brain-controlled intelligent wheelchairs is expanded.

Owner:INST OF BIOMEDICAL ENG CHINESE ACAD OF MEDICAL SCI

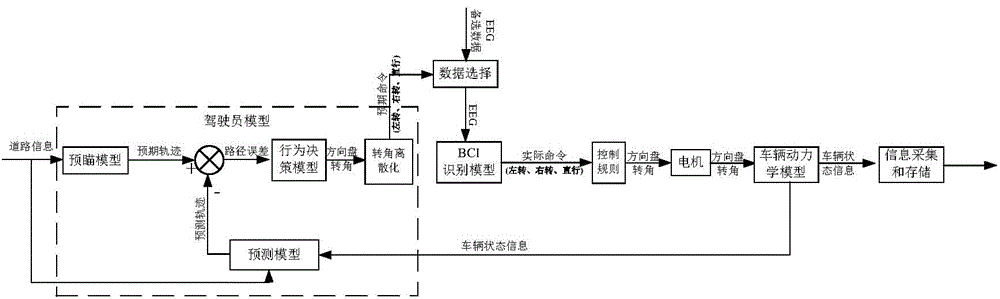

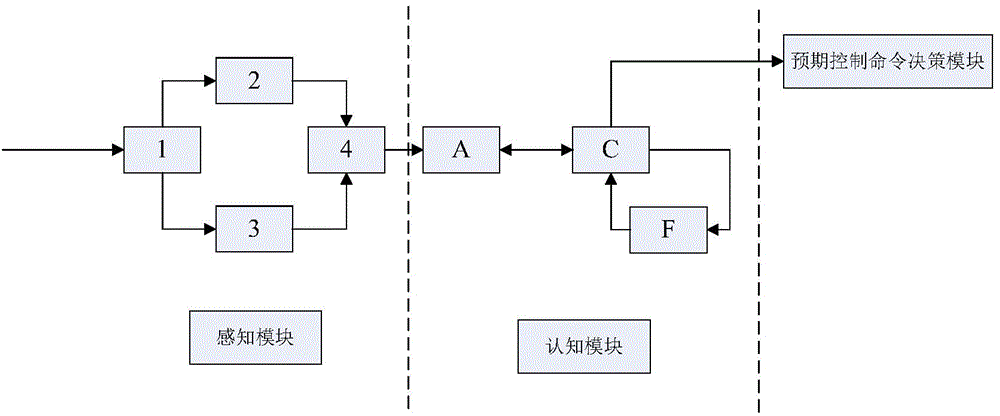

Method for designing brain-computer interface parameters and kinetic parameters of brain controlled vehicle based on human-vehicle-road model

Active | CN104462716A | Reduce waste | Shorten the development cycle | Special data processing applications | Brain computer interfacing | Simulation

The invention provides a method for designing the brain-computer interface parameters and the dynamic parameters of a brain-controlled vehicle based on a human-vehicle-road model. The method is mainly applied to designing brain-controlled vehicle dynamics parameters and recognition model parameters and to testing brain-controlled driving performance. Using a virtual simulation platform, it tests candidate vehicle dynamics parameters and brain-computer interface parameters while taking the driver's driving characteristics into account. The corresponding system comprises the human-vehicle-road model and an information storage module; the model comprises a brain-control driver model, a BCI recognition model, a control rule, an actuator model, a vehicle model, and a virtual road environment module. The system simulates driving a vehicle by brain control: by varying the vehicle's dynamic parameters and the recognition model's parameters, it obtains and analyzes the vehicle state response, optimizes both sets of parameters, and provides a basis for personalized brain-controlled vehicle design.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

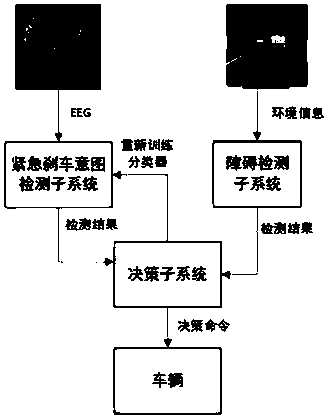

Emergency state detection system fused with brain electrical signal and environmental information

Active | CN108693973A | Improve security | Improve driving experience | Input/output for user-computer interaction | Automatic initiations | Automatic control | Human–robot interaction

The invention relates to an emergency state detection system that fuses EEG signals with environmental information, and a related computation method, aimed at improving the safety and driving experience of brain-controlled vehicles during automated driving. The system identifies an emergency state by combining EEG-based detection of emergency braking intention with environment-based obstacle detection, and then makes the vehicle brake in an emergency. The system and method are important for improving the safety of brain-controlled driving and automated driving, and represent a comprehensive application spanning vehicle design, human-computer interaction, cognitive neuroscience, and automatic control.
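The abstract combines EEG-based brake-intention detection with obstacle detection but does not state the fusion rule. A minimal sketch, assuming the EEG decoder outputs a probability and the environment channel is reduced to time-to-collision, requires both to agree before braking; the thresholds and parameter names are hypothetical.

```python
import math


def emergency_brake(p_brake_intent: float, obstacle_dist_m: float, speed_mps: float,
                    p_threshold: float = 0.7, ttc_threshold_s: float = 2.0) -> bool:
    """Hypothetical AND-fusion of an EEG brake-intent score and an obstacle check.

    p_brake_intent: decoder's probability that the driver intends to brake.
    The environment flags an emergency when time-to-collision
    (distance / speed) falls below ttc_threshold_s.
    """
    ttc = obstacle_dist_m / speed_mps if speed_mps > 0 else math.inf
    return p_brake_intent >= p_threshold and ttc < ttc_threshold_s


print(emergency_brake(0.9, 10.0, 10.0))  # True: intent detected, TTC = 1 s
print(emergency_brake(0.9, 50.0, 10.0))  # False: TTC = 5 s, no imminent obstacle
```

Requiring agreement suppresses false alarms from the EEG decoder; an OR rule would instead favor sensitivity at the cost of more unnecessary stops.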

Owner:BEIJING INSTITUTE OF TECHNOLOGY

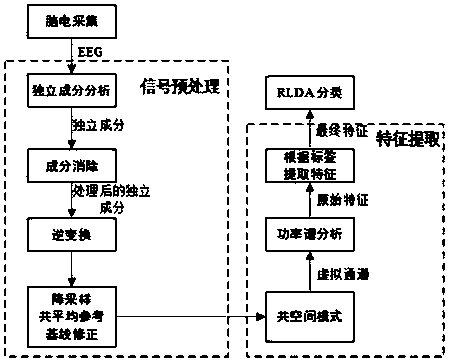

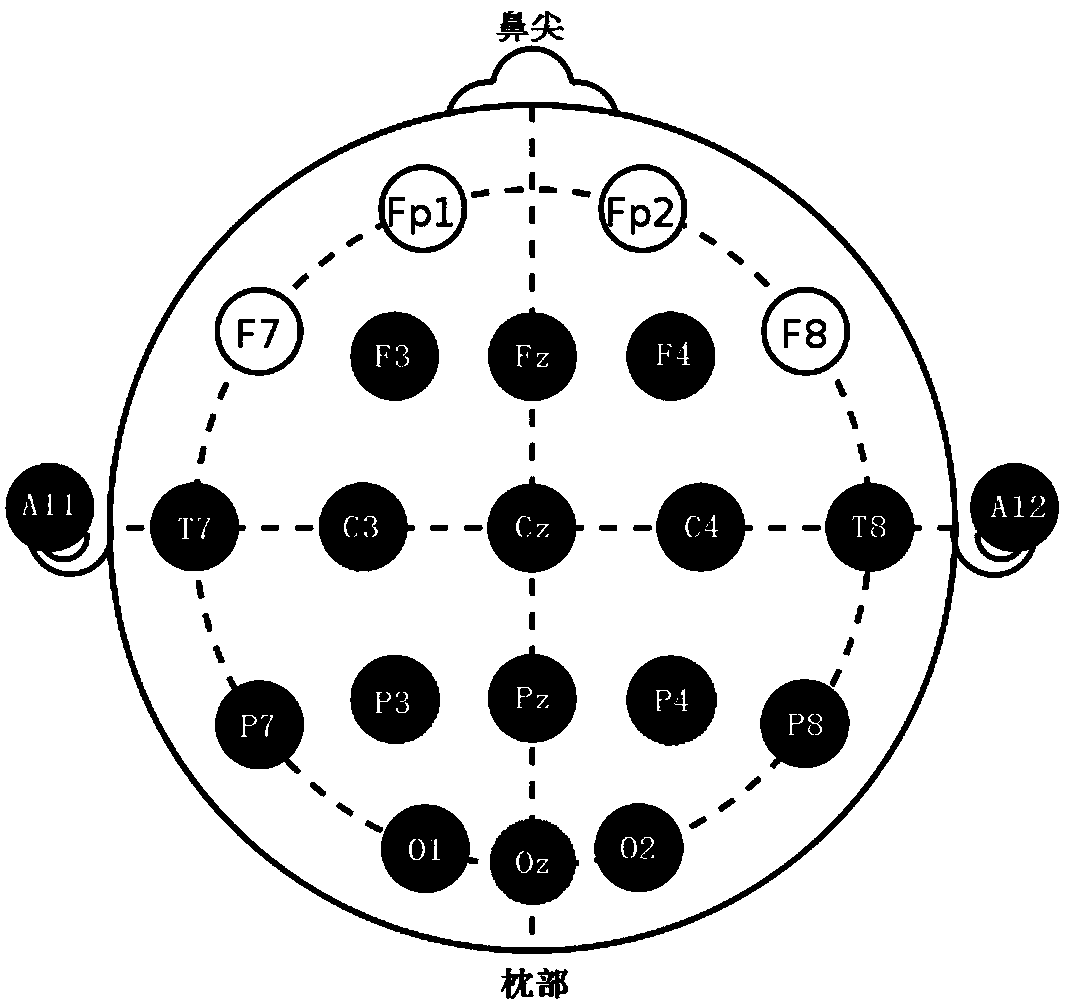

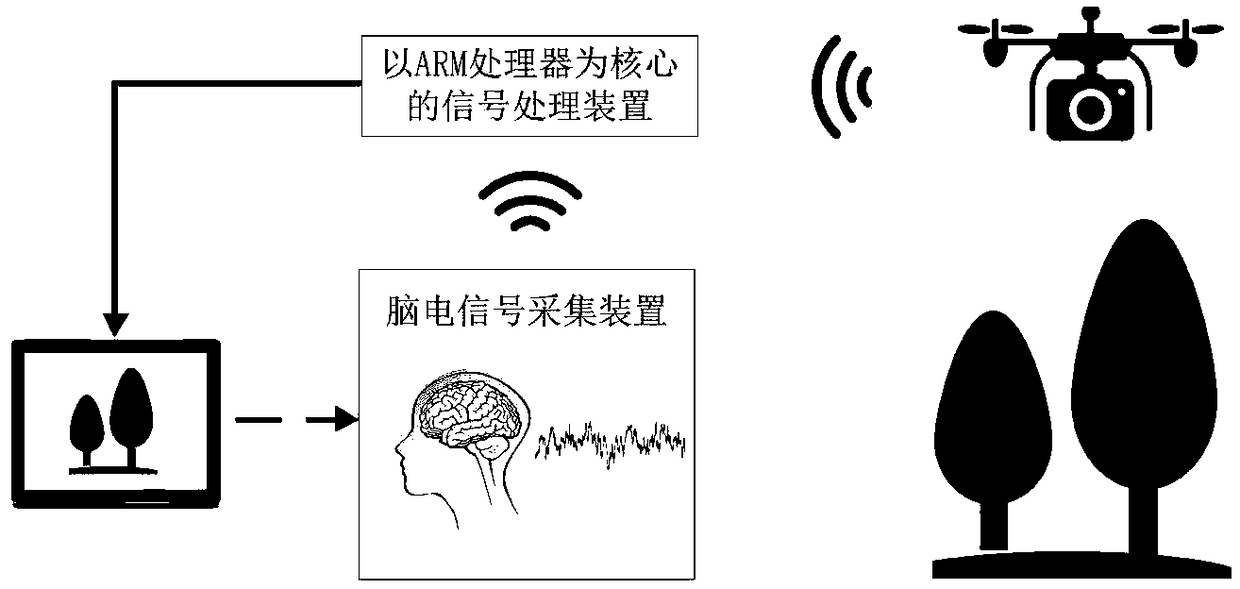

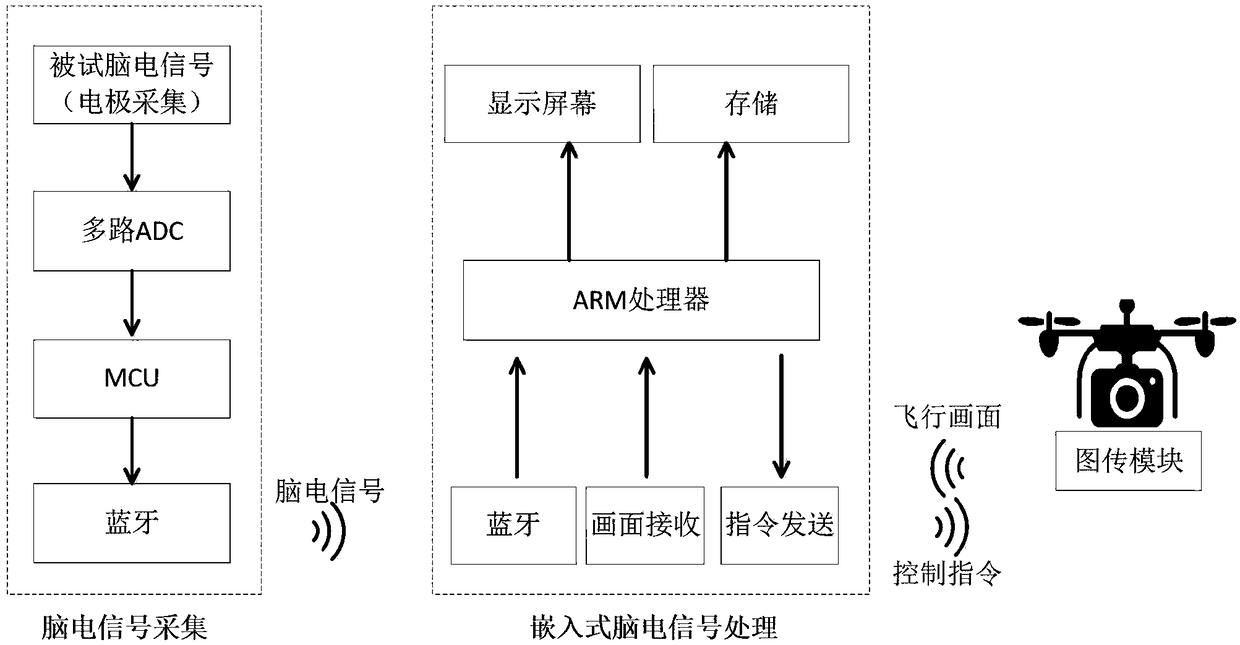

Motion-imagination-based portable brain-controlled unmanned aerial vehicle system and control method thereof

Pending | CN108762303A | Reduce volume | Reduce power consumption | Input/output for user-computer interaction | Graph reading | Systems design | Brain computer interfacing

The invention, which belongs to the field of brain-computer interfaces, relates to a portable brain-controlled unmanned aerial vehicle system based on motor imagery, and a control method for it. The system consists of an EEG signal acquisition device, an embedded EEG signal processing device, and a flight unit. The acquisition device uses a high-precision, highly integrated analog front end and analog-to-digital conversion chip, which lowers the power consumption of the acquisition module, simplifies the system design, and improves the signal-to-noise ratio; good signal processing also allows the acquisition device to be made substantially smaller. The acquisition device is integrated into the occipital part of an electrode cap and transmits data over Bluetooth, so that the EEG collection hardware and the electrode cap form a single self-contained unit, substantially improving user comfort and flexibility.
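Motor imagery decoding typically exploits event-related desynchronization: imagining a hand movement reduces mu-band (about 8 to 12 Hz) power over the opposite hemisphere. The abstract does not describe the decoder, so the sketch below is an assumption: it compares mu power at electrodes C3 and C4 using a naive DFT and a hypothetical lateralization margin. How the resulting labels map to flight commands is not stated in the source.

```python
import math
from typing import Sequence


def band_power(x: Sequence[float], fs: float, lo: float = 8.0, hi: float = 12.0) -> float:
    """Sum of DFT power over bins whose frequency lies in [lo, hi] Hz (naive DFT)."""
    n = len(x)
    total = 0.0
    for k in range(1, n // 2):
        if lo <= k * fs / n <= hi:
            re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
            im = sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
            total += re * re + im * im
    return total


def classify_mi(c3: Sequence[float], c4: Sequence[float], fs: float,
                margin: float = 0.1) -> str:
    """Label a trial 'left', 'right' or 'rest' from mu-band lateralization.

    Lower mu power at C3 (left hemisphere) suggests right-hand imagery,
    and lower power at C4 suggests left-hand imagery.
    """
    p3, p4 = band_power(c3, fs), band_power(c4, fs)
    if p3 + p4 == 0:
        return "rest"
    index = (p4 - p3) / (p4 + p3)
    if index > margin:
        return "right"
    if index < -margin:
        return "left"
    return "rest"


# Hypothetical 1 s trial at 128 Hz: 10 Hz mu rhythm attenuated over C3.
fs = 128.0
mu = [math.sin(2 * math.pi * 10.0 * i / fs) for i in range(128)]
print(classify_mi([0.3 * v for v in mu], mu, fs))  # right
```

A naive DFT keeps the example dependency-free; an embedded processor of the kind the abstract describes would more likely use an FFT or band-pass filtering, typically followed by a trained classifier.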

Owner:CHONGQING UNIV OF POSTS & TELECOMM

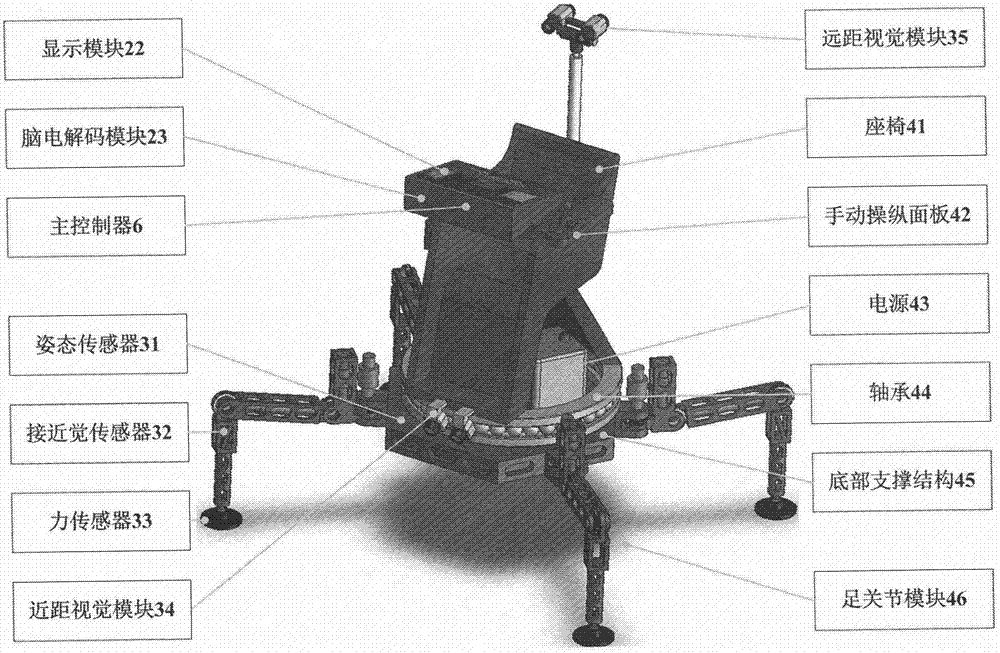

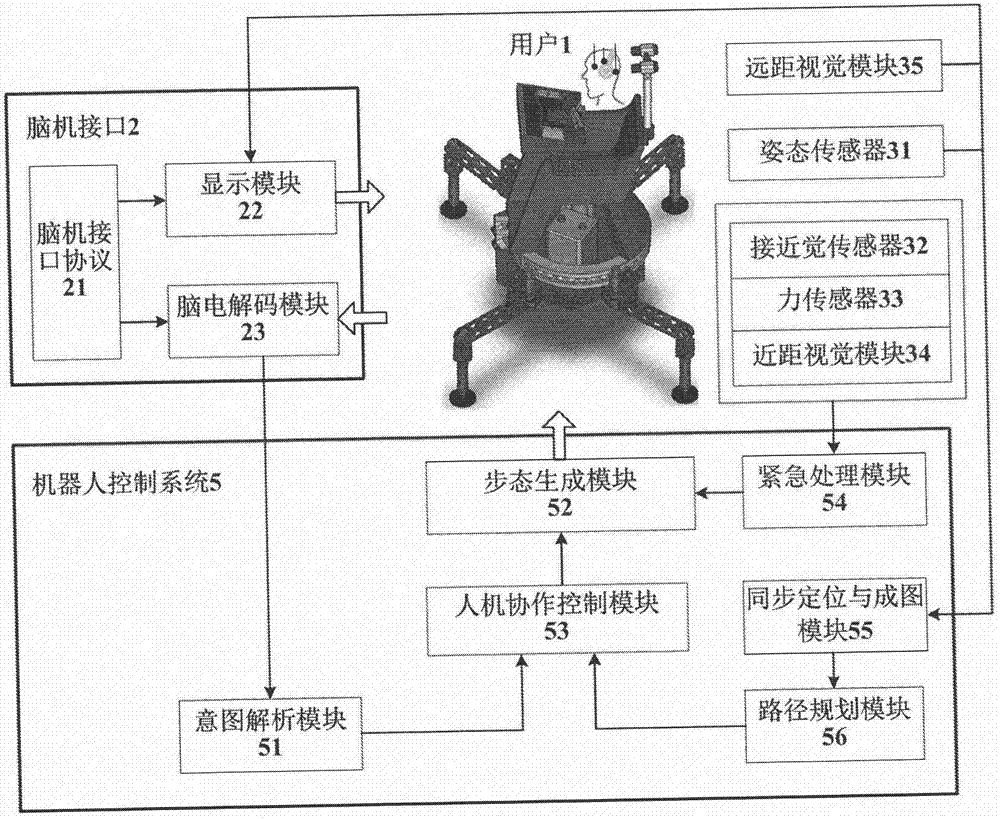

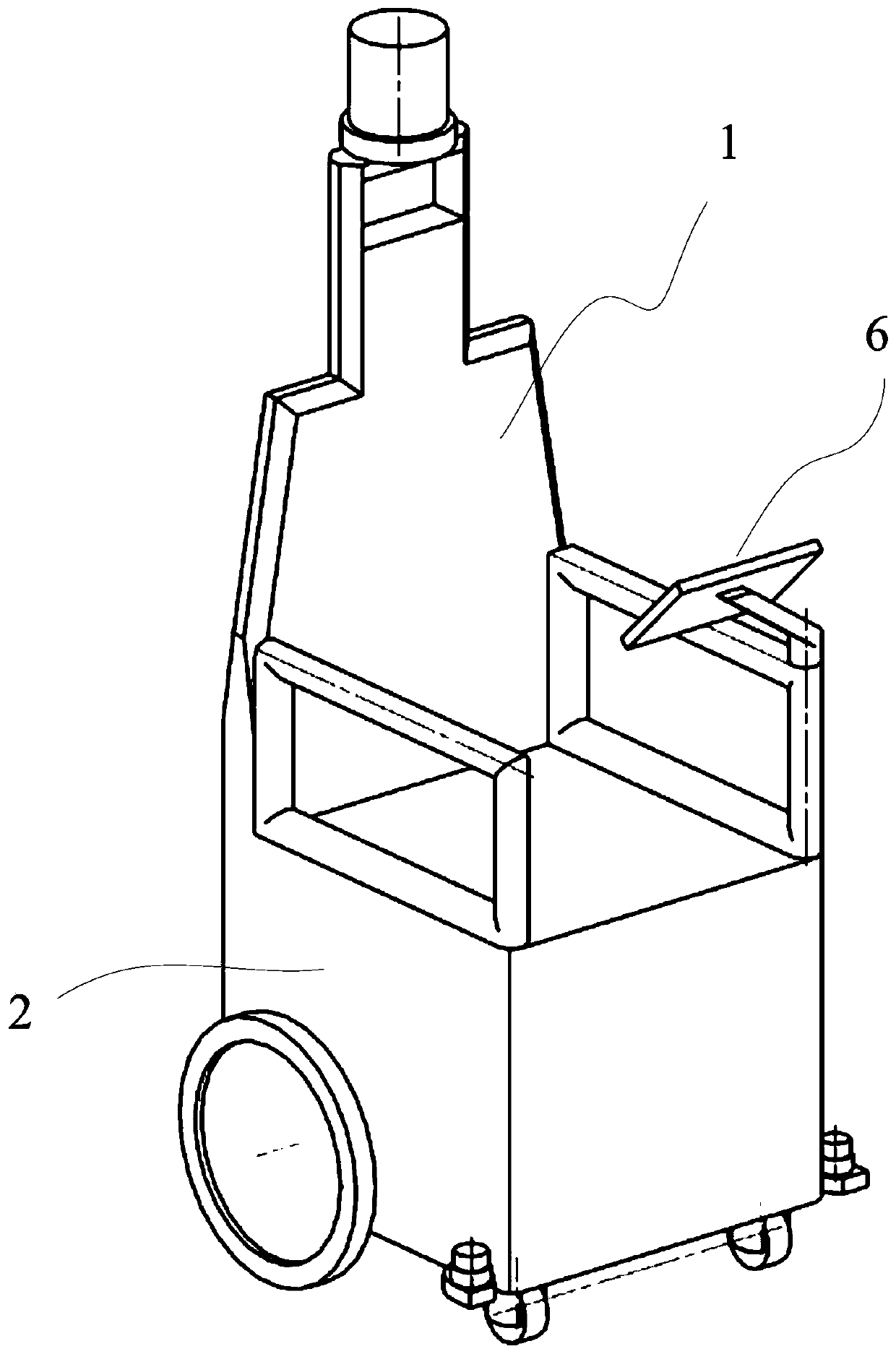

Walking chair device controlled by electroencephalogram signals and control system thereof

The invention relates to a novel legged mobility assistance device using a brain-machine interface, and its control system. The system can be operated with a limited set of EEG signal types, adapts to uneven terrain, and helps the user navigate and move safely and reliably. It comprises a walking chair robot and a brain-machine interface. The user is responsible for stating the task; the walking chair robot is responsible for detailed planning and execution; and the brain-machine interface decodes the user's intended decision from the EEG signals and handles communication between the user and the robot. Structurally, the walking chair robot is divided into an upper and a lower part: the lower part is a four-legged locomotion system and the upper part is a rotatable chair platform. The user can operate the walking chair by brain control while sitting on the platform, or control it remotely through the brain-machine interface. The walking chair also provides a conventional joystick for patients who can still use their hands. The control system performs human-machine cooperative control with safety as its first objective: an intention-solving module interprets the user's high-level intention, which is satisfied as far as possible while safety is guaranteed.

Owner:SHANGHAI UNIV

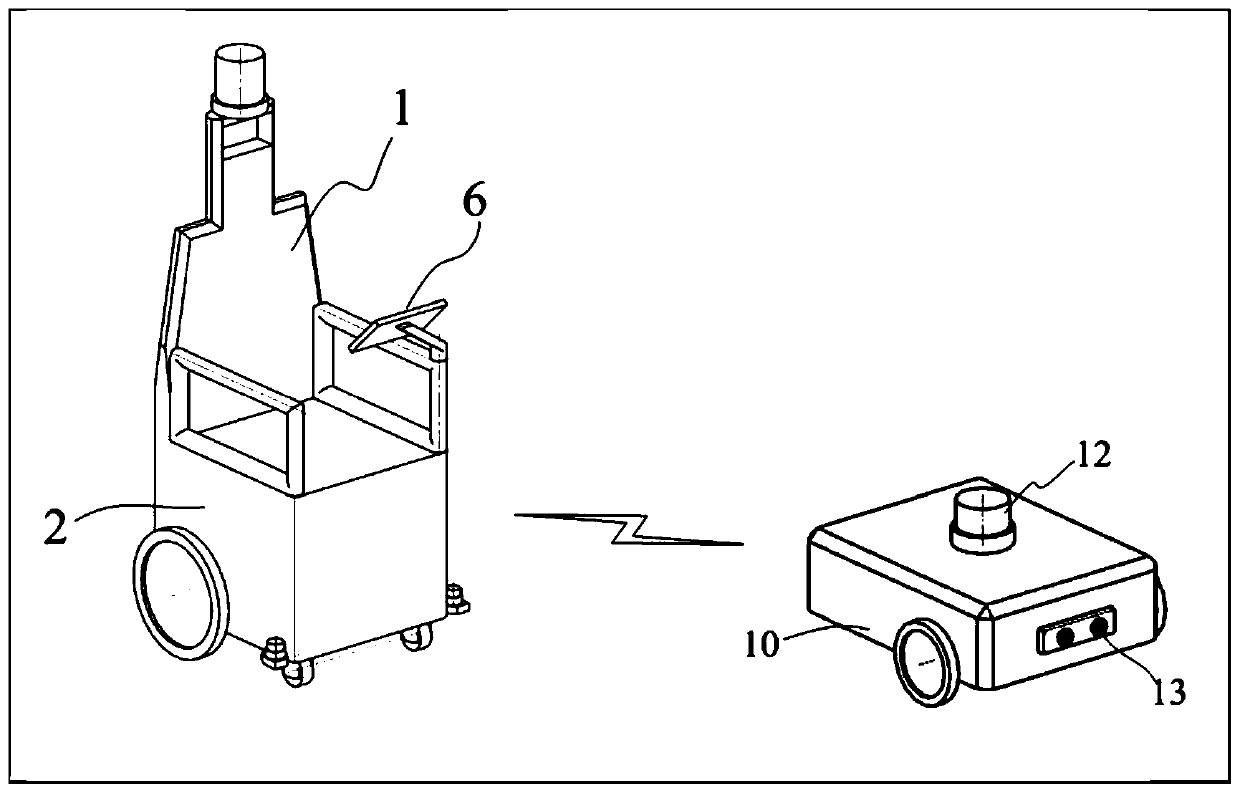

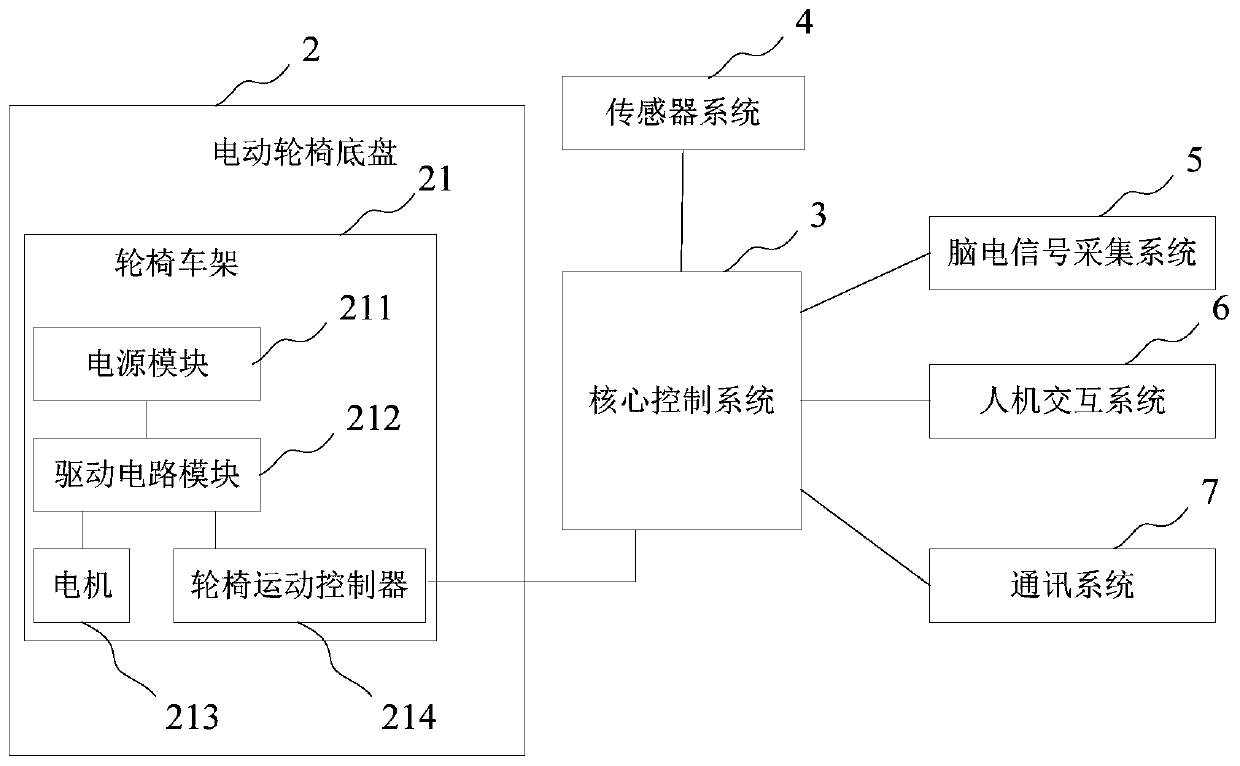

Combined brain control and autopilot wheelchair with detection device and control method thereof

Active | CN109966064A | Avoid staying | Improved autonomous driving safety | Wheelchairs/patient conveyance | Diagnostic recording/measuring | Wheelchair | Electroencephalography

The invention discloses a combined brain-controlled and autopilot wheelchair with a detection device, and a control method for it. The system comprises a combined brain-controlled and autopilot electric wheelchair and an autonomous detection robot that communicate with each other. The detection robot travels ahead of the wheelchair along the path it is about to take, so that information about the road ahead is acquired in advance: key map nodes such as intersections are recognized and reported early, the road information is presented to the wheelchair user ahead of time, and the wheelchair's trafficability is improved. The user can therefore select a path in advance through EEG, the driving task can continue without interruption, the wheelchair avoids stopping at complex key nodes such as intersections, and the safety of autonomous driving is improved.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

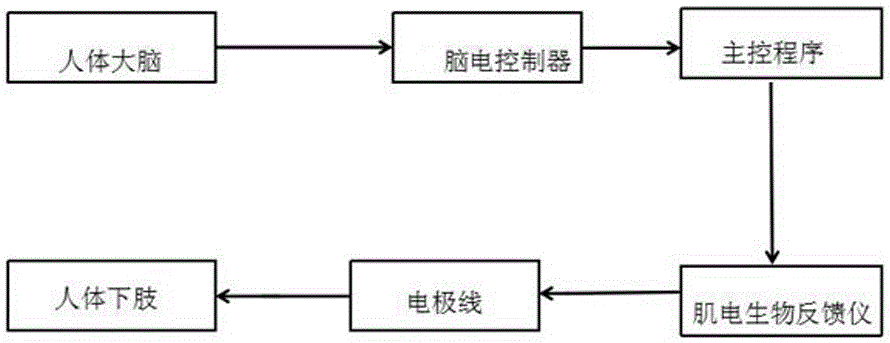

Brain-controlled active lower limb medical rehabilitation training system

Inactive | CN106237510A | Motor performance improvement and recovery | Electrotherapy | Artificial respiration | Human body | Electromyographic biofeedback

The invention discloses a brain-controlled active lower limb medical rehabilitation training system comprising an EEG controller, brain-control software installed on a computer, and an electromyographic (EMG) biofeedback device, which are interconnected over 3G or 4G wireless links. The EEG controller comprises a wearable EEG sensor and a wireless communicator. The EMG biofeedback device comprises a main unit and an electrode lead that is electrically connected to the main unit and can be attached to the user's lower limb. The invention also discloses a method of using the system: the system collects the patient's brain signals, performs feature analysis, recognizes the lower limb movement intention of a patient with motor dysfunction, and drives the EMG biofeedback device to stimulate the lower limb muscles to complete the corresponding movement, thereby effectively improving and restoring the patient's motor function.

Owner:Shandong Haitian Intelligent Engineering Co., Ltd. (山东海天智能工程有限公司)

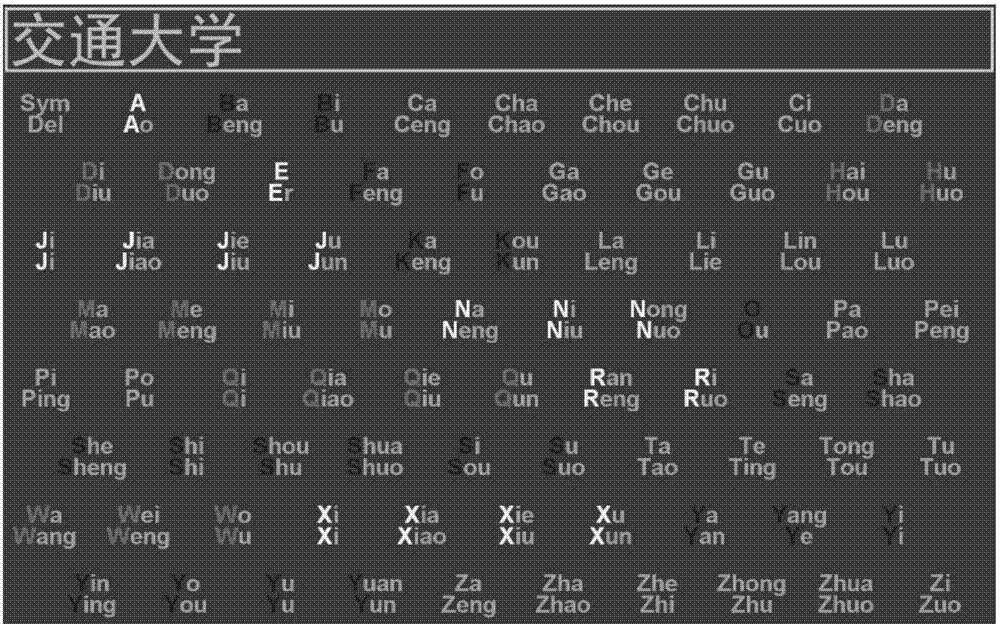

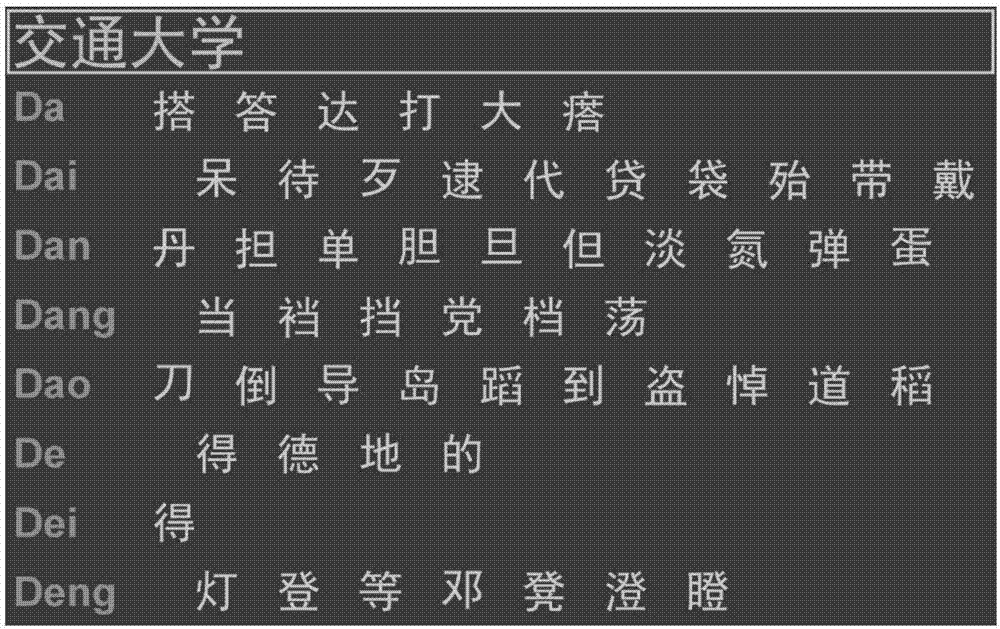

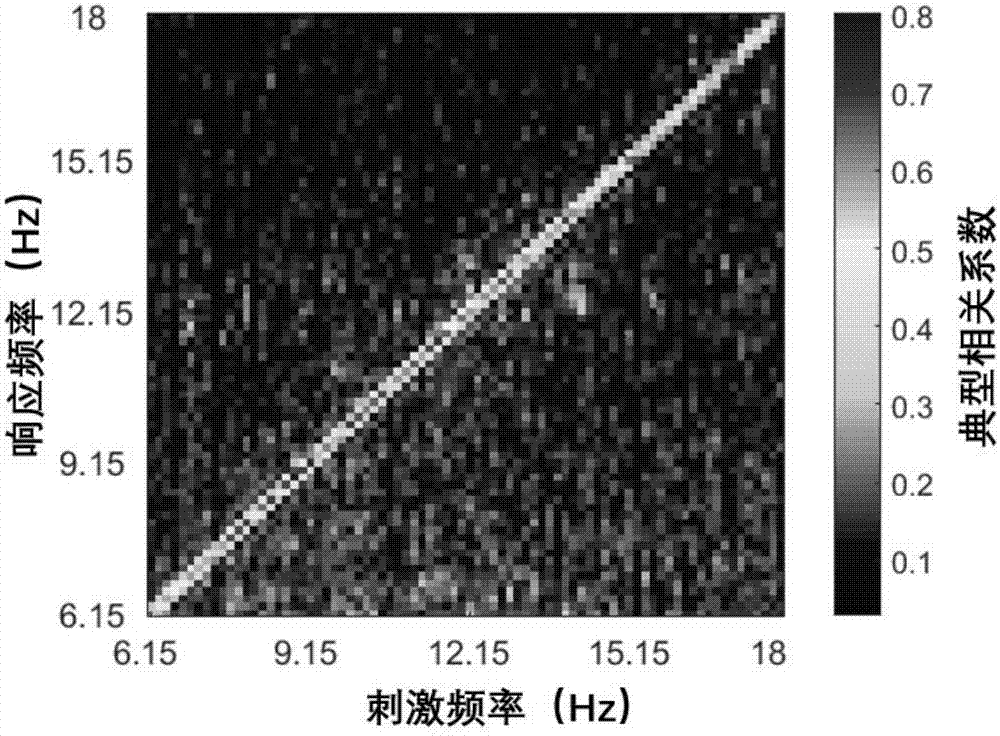

Efficient brain-controlled Chinese input method based on movement vision evoked potential

Active | CN107390869A | Improve comfort | Easy to operate | Input/output for user-computer interaction | Graph reading | Data treatment | Human–computer interaction

The invention provides an efficient brain-controlled Chinese input method based on motion visual evoked potentials. Chinese characters are entered in two steps using a pronunciation-then-shape spelling scheme, with steady-state motion visual evoked potentials serving as the brain-machine interface. In use, electrodes are first placed on the user's head; when the user views the motion visual stimulation of the interactive interface, an EEG response is evoked, which is picked up by the electrodes and the EEG acquisition module to yield the EEG signal. A data processing and control module analyzes the received EEG signal to obtain a recognition result and drives the user interface to display the corresponding character. The method markedly reduces operation steps and time, is simple and comfortable to use, achieves efficient brain-controlled input of Chinese characters from a large character library, can help patients with movement disorders communicate in daily life, and has good practical prospects.
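The two-step scheme narrows the candidate set twice, so with m options at the first step and up to k candidates at the second, two selections can address up to m × k characters. A toy sketch, assuming step one picks a pinyin syllable and step two picks a character from that syllable's candidates (the patent's shape-based second step is not described in enough detail to reproduce); the lexicon and function name are hypothetical.

```python
# Hypothetical mini-lexicon: pinyin syllable -> candidate characters.
LEXICON = {
    "ma": ["妈", "麻", "马", "骂"],   # mother, hemp, horse, scold
    "shi": ["是", "十", "时", "事"],  # is, ten, time, matter
}


def two_step_select(first_target: int, second_target: int) -> str:
    """Map two decoded target indices to one character.

    Step 1: the menu lists syllables (sorted); the first selection picks one.
    Step 2: the menu lists that syllable's candidates; the second selection picks one.
    """
    syllables = sorted(LEXICON)  # step-1 menu: ["ma", "shi"]
    syllable = syllables[first_target]
    return LEXICON[syllable][second_target]


print(two_step_select(1, 0))  # 是
print(two_step_select(0, 2))  # 马
```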

Owner:XI AN JIAOTONG UNIV

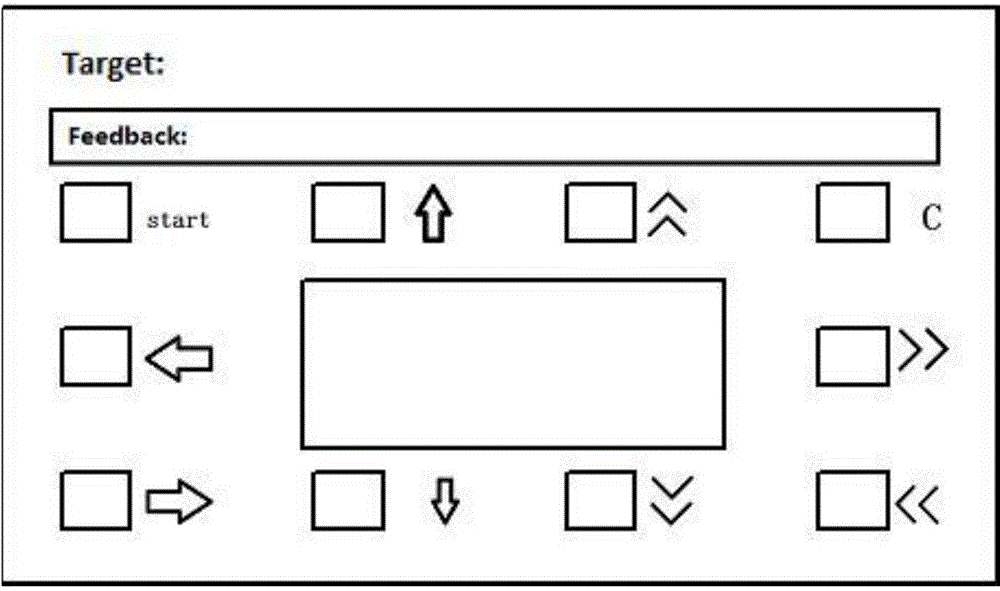

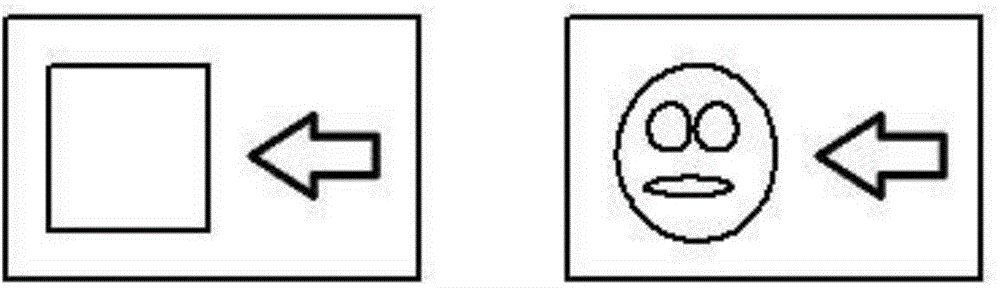

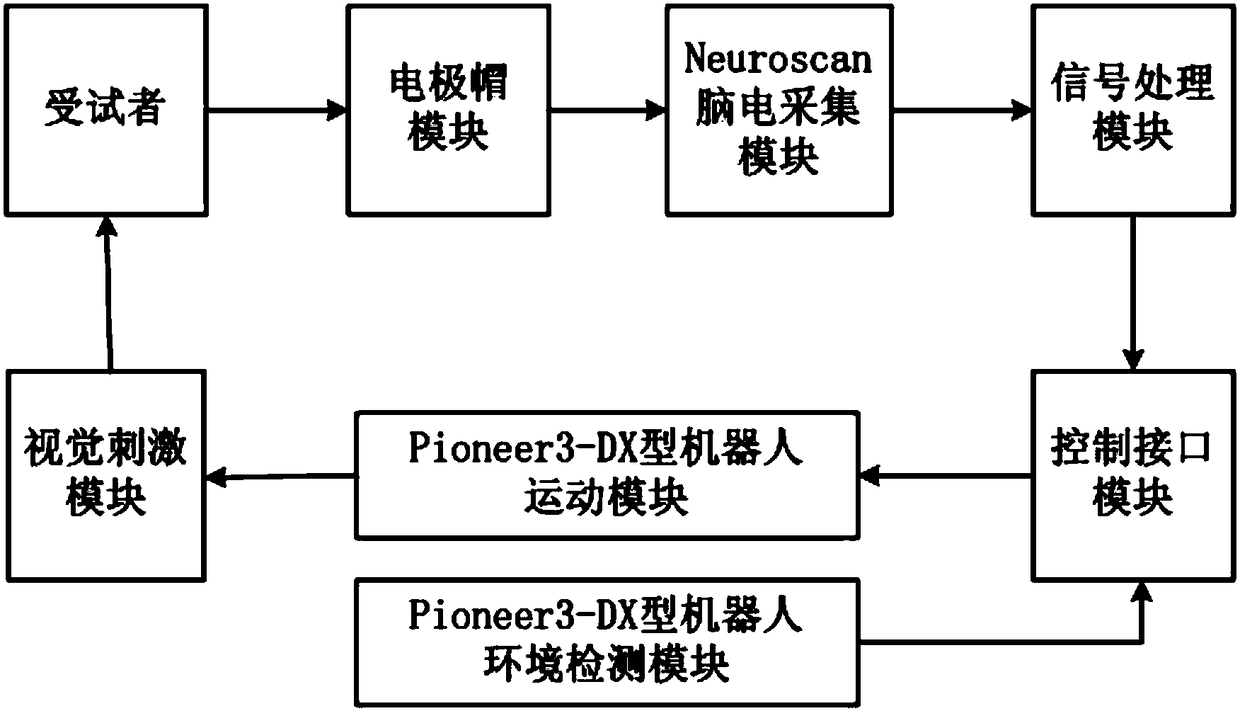

P300-based brain-controlled robot system and realization method thereof

Active | CN108415554A | The meaning is clear | Improve applicability | Input/output for user-computer interaction | Graph reading | Brain computer interfacing | Automatic control

The invention belongs to the technical field of brain-computer interfaces and robot control, and provides a P300-based brain-controlled robot system and a method for implementing it. The system comprises a visual stimulation module, the subject, an electrode cap module, a Neuroscan EEG acquisition module, a signal processing module, a control interface module, and a Pioneer3-DX robot motion module, connected in sequence; the Pioneer3-DX robot motion module is also connected back to the visual stimulation module, and the control interface is further connected to a Pioneer3-DX robot environment detection module. Graphic symbols are used as a new type of stimulus, and the brain-computer interface attributes are combined and improved, so that a higher-amplitude P300 component is evoked and the system's transfer rate is increased. In addition, by combining brain-computer interface technology with automatic control technology, brain control and autonomous robot control are shared interactively in an asynchronous control mode, enabling the robot to move forward, move backward, turn left, turn right, and stand still, which makes the system more stable and faster.
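P300 systems usually identify the attended symbol by averaging the EEG epochs that follow each symbol's flashes and looking for the positive deflection roughly 300 ms after onset. The sketch below uses the simplest such score, the mean amplitude in an assumed 250 to 500 ms window of the averaged single-channel epoch; practical systems typically use a trained classifier instead, and the window, names, and data here are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple


def p300_select(epochs: Dict[str, List[List[float]]], fs: float,
                window_s: Tuple[float, float] = (0.25, 0.5)) -> str:
    """Pick the stimulus whose averaged epoch is most positive in the P300 window.

    epochs[stim] holds one single-channel epoch per flash of that stimulus,
    each starting at stimulus onset and sampled at fs Hz.
    """
    start, stop = int(window_s[0] * fs), int(window_s[1] * fs)

    def score(trials: List[List[float]]) -> float:
        avg = [mean(col) for col in zip(*trials)]  # average across repetitions
        return mean(avg[start:stop])

    return max(epochs, key=lambda stim: score(epochs[stim]))


# Hypothetical data at 100 Hz: only "forward" shows a deflection at 250-500 ms.
fs = 100.0
flat = [0.0] * 80
bump = [5.0 if 25 <= i < 50 else 0.0 for i in range(80)]
epochs = {"forward": [bump, bump], "left": [flat, flat], "stop": [flat, flat]}
print(p300_select(epochs, fs))  # forward
```

Averaging across repetitions is what makes the small P300 visible above background EEG, which is also why the number of repetitions trades accuracy against selection speed.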

Owner:DALIAN UNIV OF TECH

Brain-controlled input method based on steady state visual evoked potential and motor imagination

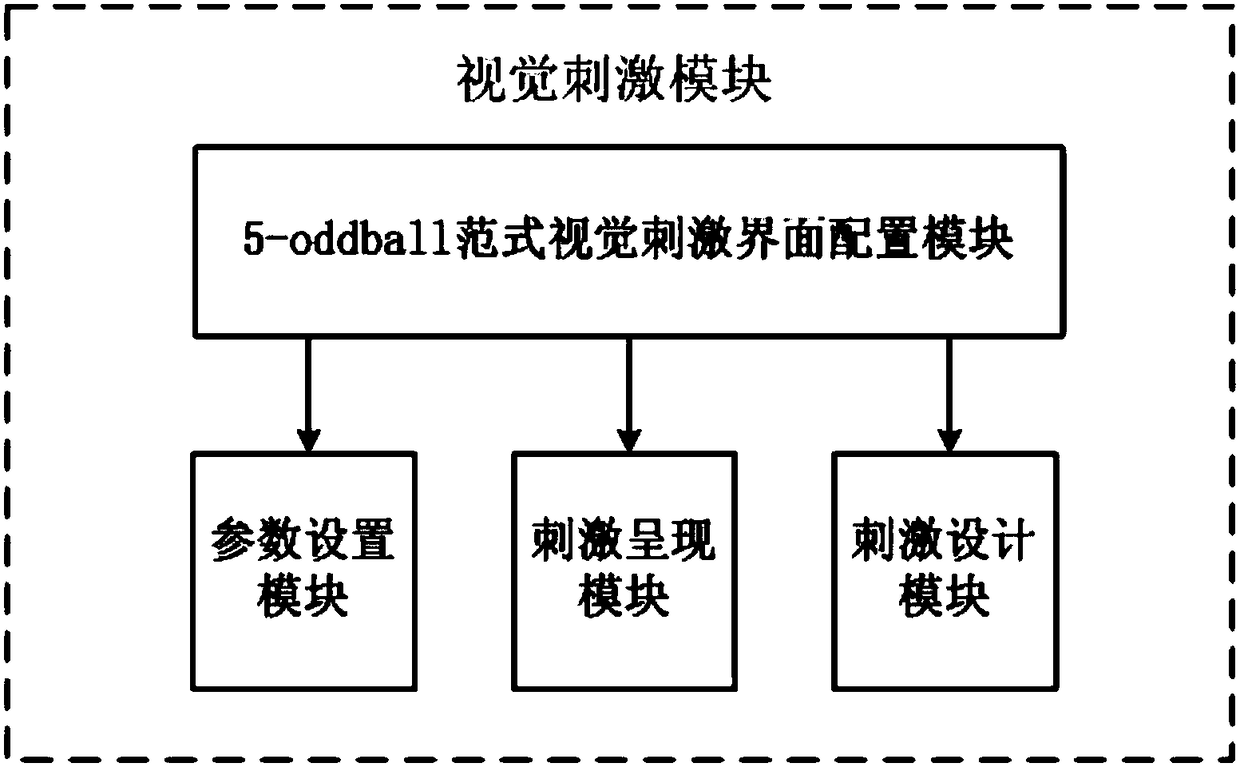

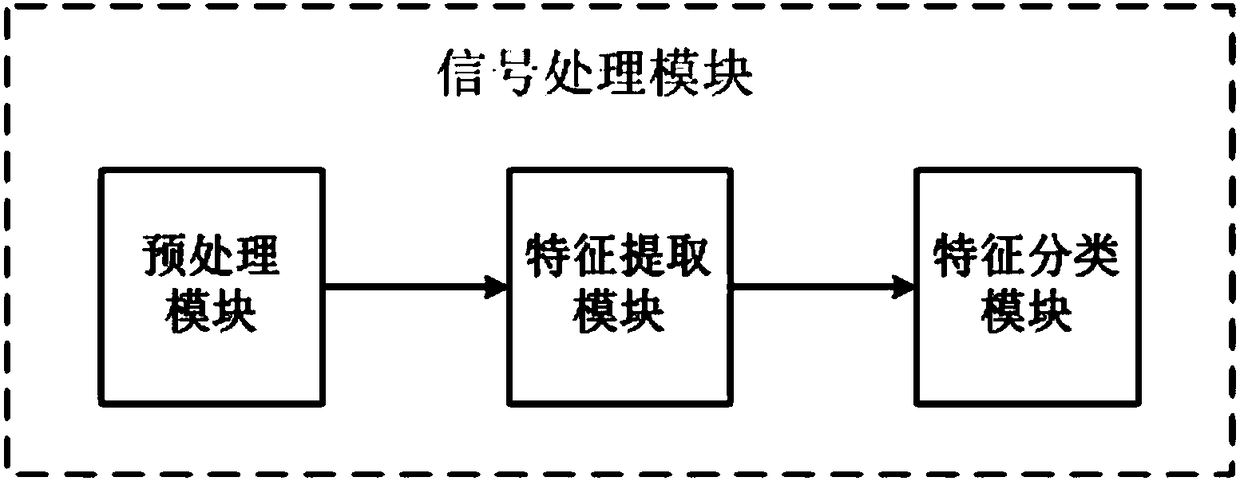

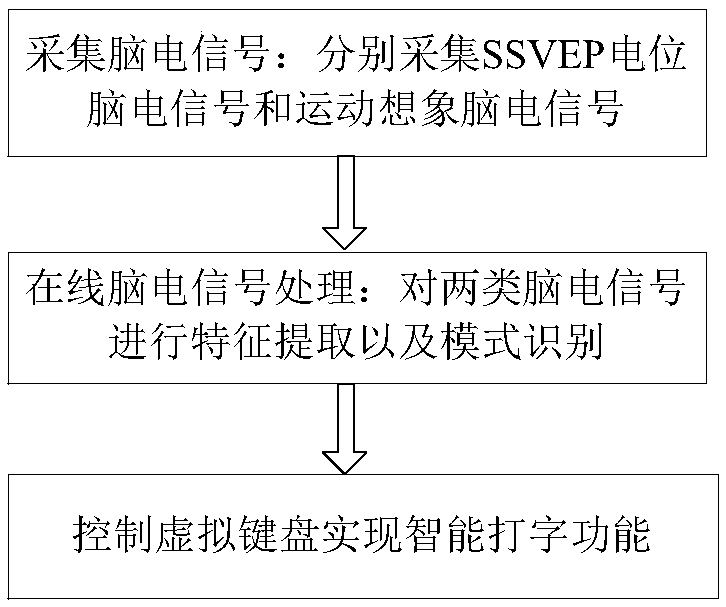

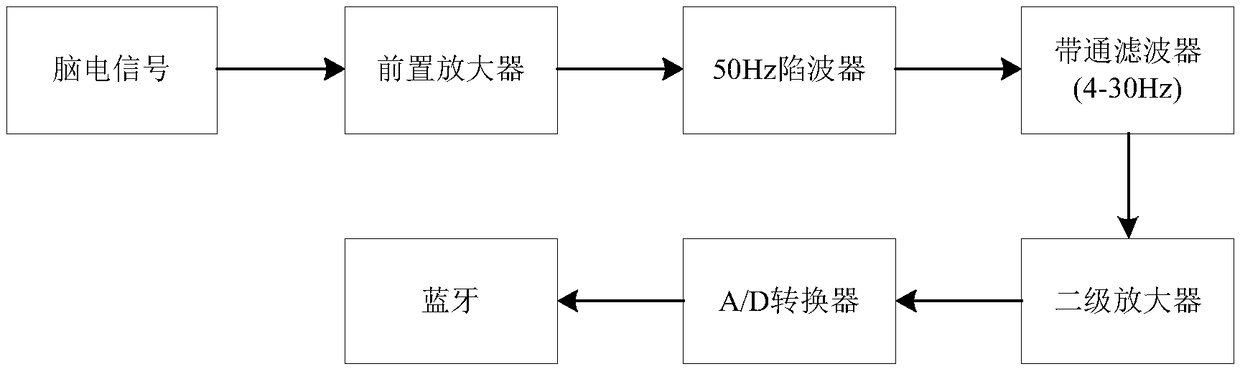

Inactive | CN109471530A | Realize smart typing function | Realize the function of shift left and right | Input/output for user-computer interaction | Character and pattern recognition | Hand movements | Visual perception

The invention discloses a brain-controlled input method based on steady-state visual evoked potentials (SSVEP) and motor imagery, to overcome the narrow, limited functionality of existing control methods. The method comprises the following steps: 1) EEG signal collection: (1) EEG signal generation; (2) EEG signal acquisition; 2) online EEG signal processing; 3) control of a virtual keyboard to provide intelligent typing. The virtual keyboard interface is divided into: (1) a display interface, comprising (a) a character display area that shows the entered characters and (b) a vocabulary association area, which can optionally be enabled and lets users quickly select words related to the entered characters; and (2) a visual evoked input interface, in which all 13 flashing keys can stimulate the brain to produce SSVEPs. Movement among the virtual keys is controlled by imagined left- and right-hand movements, and a virtual key is selected by fixating on it so that the cerebral cortex produces an SSVEP response.
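The division of labor in this abstract, motor imagery to move among keys and SSVEP to select one, can be modeled as a small state machine. The sketch assumes that left/right imagery pages a 13-key window across a larger key set and that the SSVEP decoder returns the index of the fixated key; the class name, the alphabet, and the paging behavior are hypothetical.

```python
import string


class HybridKeyboard:
    """Hypothetical model: MI pages a 13-key window; SSVEP selects a key in it."""

    def __init__(self, keys: str = string.ascii_lowercase, window: int = 13):
        self.keys = keys
        self.window = window
        self.offset = 0  # index of the first visible key
        self.text = ""   # contents of the character display area

    def visible(self) -> str:
        return self.keys[self.offset:self.offset + self.window]

    def on_motor_imagery(self, side: str) -> None:
        """Left/right hand imagery shifts the visible window by one page."""
        if side not in ("left", "right"):
            raise ValueError("side must be 'left' or 'right'")
        step = self.window if side == "right" else -self.window
        max_offset = max(len(self.keys) - self.window, 0)
        self.offset = min(max(self.offset + step, 0), max_offset)

    def on_ssvep(self, index: int) -> None:
        """The SSVEP decoder reports which flashing key (0-based) was fixated."""
        self.text += self.visible()[index]


kb = HybridKeyboard()
kb.on_ssvep(7)                 # selects "h" from "abcdefghijklm"
kb.on_motor_imagery("right")   # window becomes "nopqrstuvwxyz"
kb.on_ssvep(1)                 # selects "o"
print(kb.text)  # ho
```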

Owner:JILIN UNIV

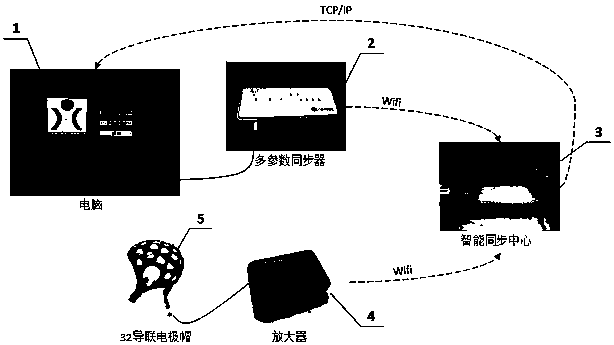

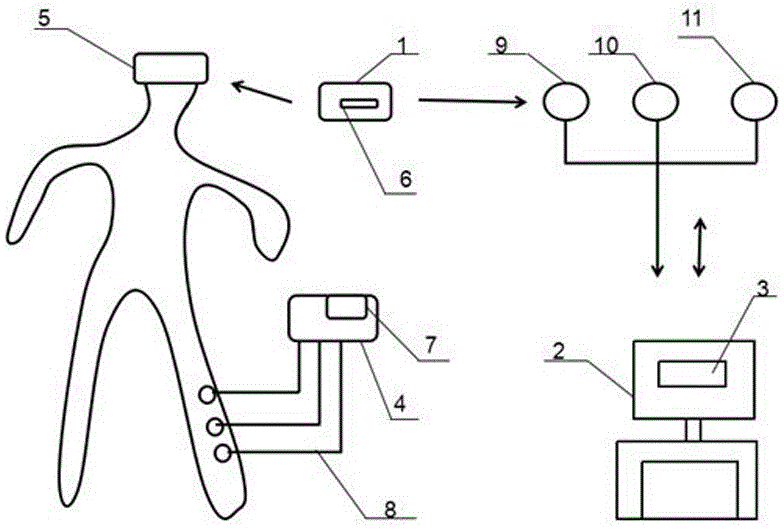

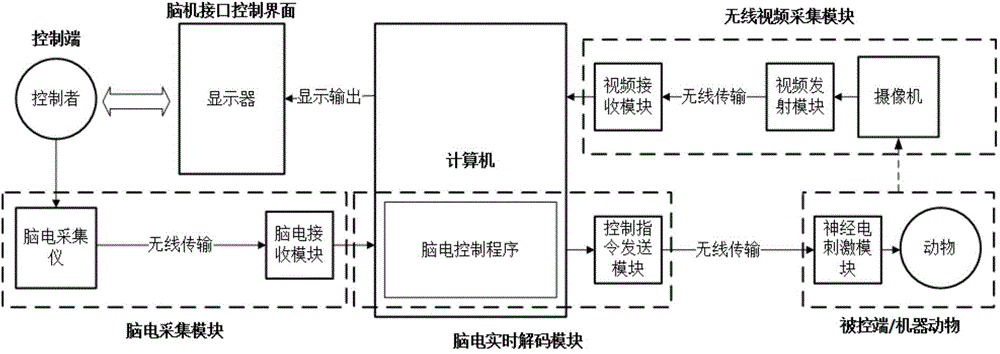

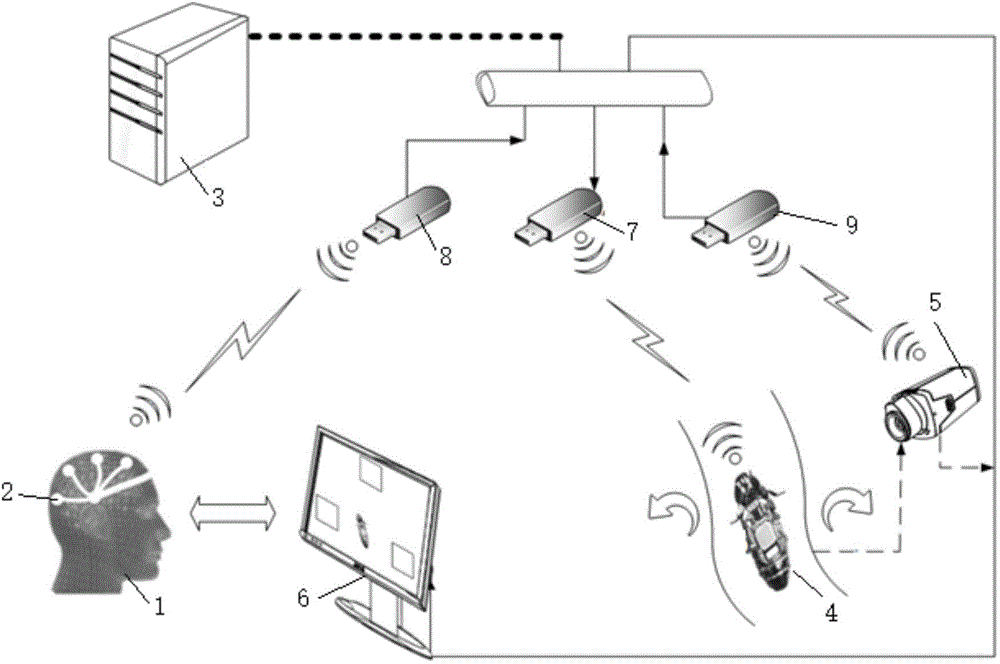

System and method for realizing allogeneic biological control by brain-brain interface

Inactive | CN104524689A | Reconstruction of body function | Invalid friendly devices | Artificial respiration | Electricity | Brain computer interfacing

The invention relates to a system for realizing allogeneic biological control through a brain-brain interface. The system comprises a wireless video acquisition module, a real-time brain-computer interface control interface, a wireless EEG acquisition module, a real-time EEG decoding module, a robotic animal, and a human controller. The wireless video acquisition module monitors the robotic animal's current movement and transmits it wirelessly to the control interface; the controller observes the animal's movement state on the control interface and expresses a control intention through a visual stimulation pattern. The wireless EEG acquisition module collects the EEG signal and sends it wirelessly to the real-time decoding module on a computer, which decodes it in real time, generates a control instruction for the robotic animal, and sends the instruction to the animal's electrical nerve stimulation module. That module delivers a sequence of weak electrical stimulation pulses to the animal's nerves according to the instruction, thereby controlling the animal's direction of movement. The system thus realizes brain control of another organism.

Owner:SHANGHAI JIAO TONG UNIV

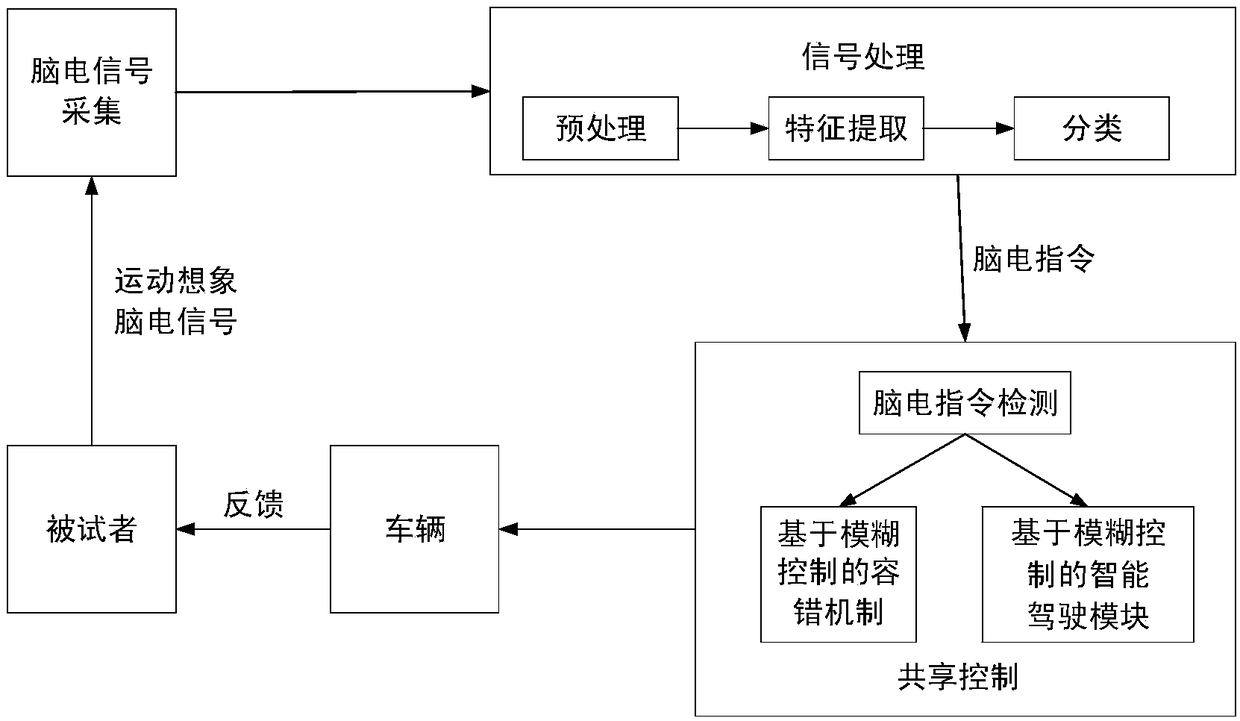

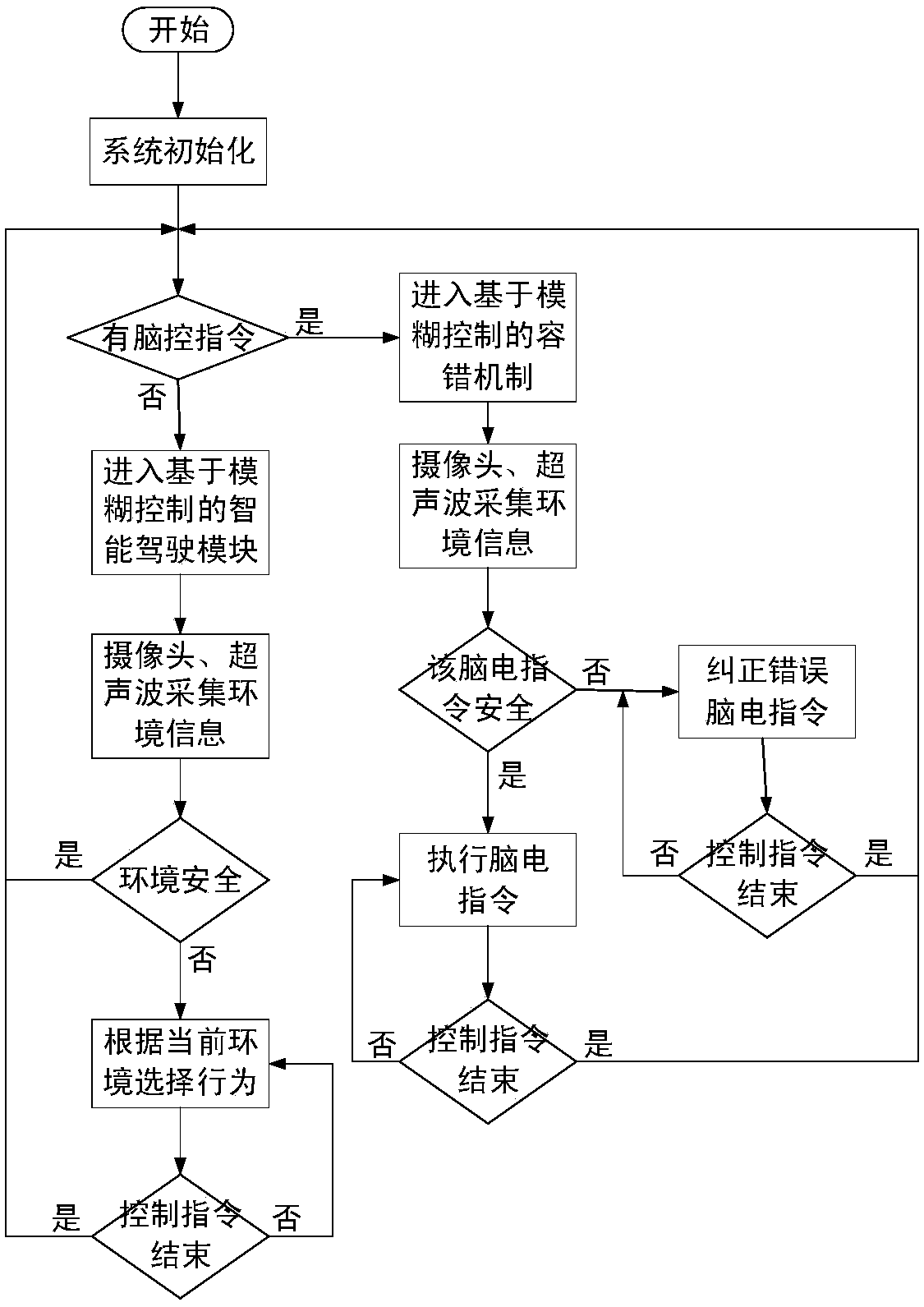

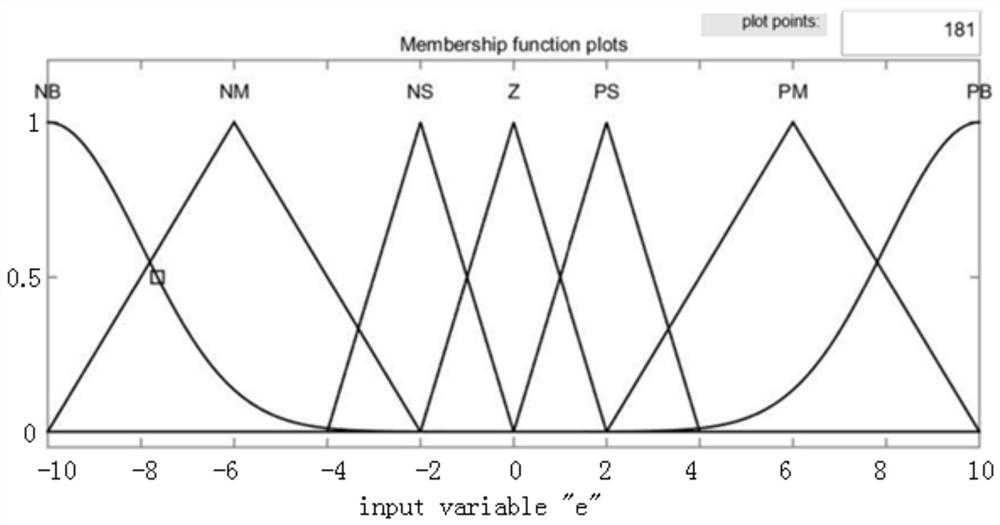

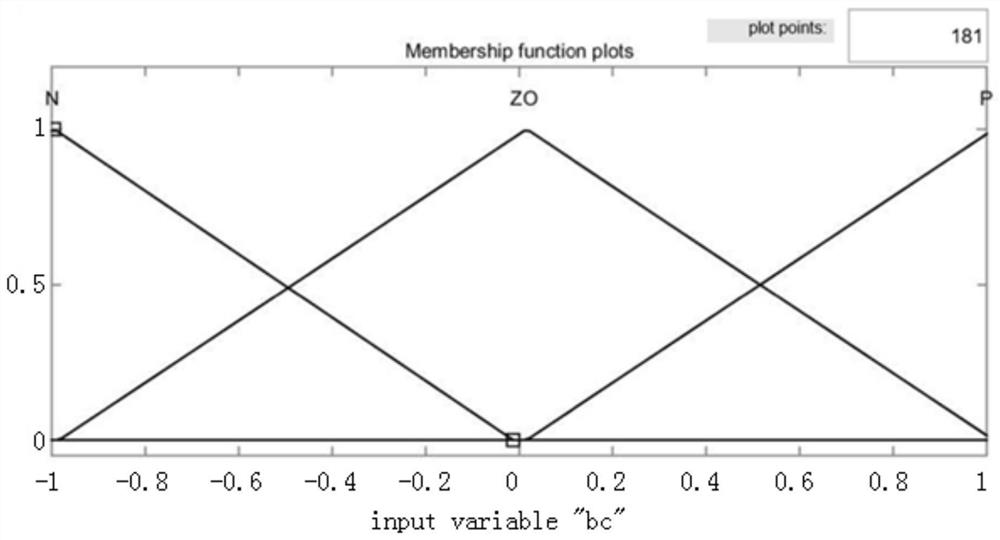

Brain-controlled vehicle sharing control method based on fuzzy control

Active | CN108491071A | Improve security; Make up identification; Input/output for user-computer interaction; Character and pattern recognition; Brain computer interfacing; Control engineering

The present invention relates to a brain-controlled vehicle shared control method based on fuzzy control. While the vehicle is driving, it switches between two fuzzy-control-based mechanisms. When a motor imagery EEG command is identified online, the vehicle enters a fault-tolerant mechanism. When no motor imagery EEG command is identified, it enters an intelligent driving mechanism.

The method can correct erroneous EEG commands and lets the vehicle supervise itself autonomously when no EEG command is present. This compensates for known weaknesses of brain-computer interfaces, such as high recognition error rates, poor real-time performance, and a limited number of commands. As a result, the safety of brain-controlled vehicles in unknown environments is greatly improved.

Owner:SOUTHEAST UNIV
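
The switching between the two mechanisms can be sketched as follows. This is a simplified illustration, not the patent's controller. The membership ranges, the `confidence` and `obstacle_dist` inputs, the 0.5 acceptance cut-off, and the lane-keeping fallback are all hypothetical. The fuzzy part is reduced to two ramp memberships combined with the min operator (fuzzy AND).

```python
def ramp_up(x, lo, hi):
    """Membership rising linearly from 0 at `lo` to 1 at `hi`."""
    if x <= lo:
        return 0.0
    if x >= hi:
        return 1.0
    return (x - lo) / (hi - lo)

def autonomous_command(lateral_offset):
    """Hypothetical intelligent-driving fallback: steer back toward the lane center.

    `lateral_offset` is in meters, positive when the vehicle is left of center.
    """
    if lateral_offset > 0.3:
        return "right"
    if lateral_offset < -0.3:
        return "left"
    return "straight"

def shared_control(eeg_cmd, confidence, obstacle_dist, lateral_offset):
    """Return (command, mode) for one control cycle."""
    if eeg_cmd is None:
        # No motor imagery command identified: intelligent driving mechanism.
        return autonomous_command(lateral_offset), "autonomous"
    # Fault-tolerant mechanism: fuzzy AND (min) of "decoder is confident"
    # and "the commanded side is clear of obstacles" (hypothetical inputs).
    acceptance = min(ramp_up(confidence, 0.5, 0.9),
                     ramp_up(obstacle_dist, 1.0, 3.0))
    if acceptance >= 0.5:
        return eeg_cmd, "brain"
    # Command judged unreliable or unsafe: correct it with the fallback.
    return autonomous_command(lateral_offset), "corrected"
```

Because the acceptance degree uses min, a command passes only when both conditions are reasonably satisfied. A confident but unsafe command is corrected, and so is a safe but doubtful one.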

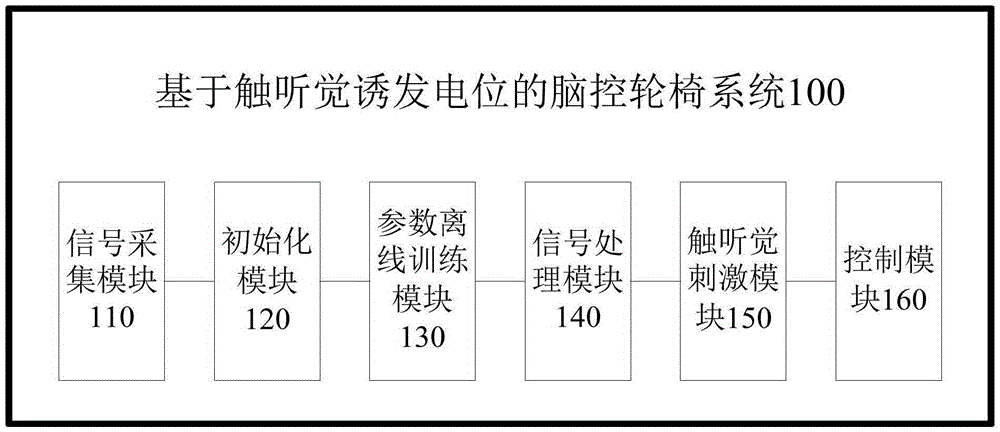

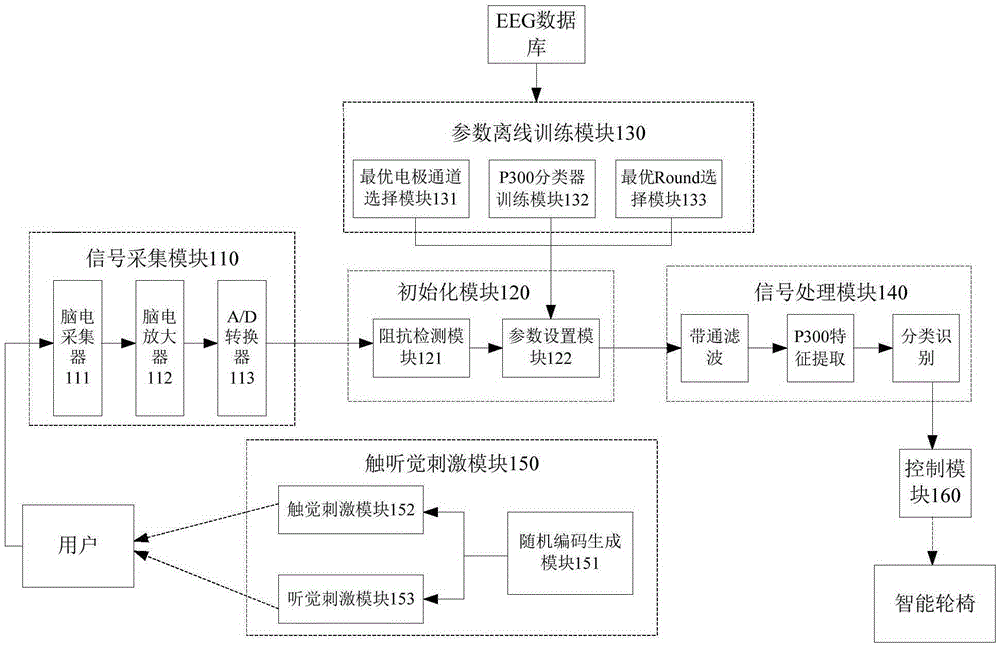

Brain control wheelchair system based on touch and auditory evoked potential

Active | CN105411580A | Quick start to use; Achieve mind control; Diagnostic signal processing; Character and pattern recognition; Brain control; Signal acquisition

The invention provides a brain-controlled wheelchair system based on tactile and auditory evoked potentials. The system comprises a signal acquisition module, an initialization module, an offline parameter training module, a signal processing module, a tactile and auditory stimulation module, and a control module. Each module works as follows:

- The signal acquisition module collects and preprocesses the user's electroencephalogram (EEG) signals.
- The initialization module checks the electrode resistance and sets the parameters for electrode positions, the optimal number of rounds, and the P300 classifier.
- The offline parameter training module determines the optimal electrode channels and the optimal number of rounds, and trains the P300 classifier.
- The signal processing module extracts and recognizes P300 features.
- The tactile and auditory stimulation module applies random bimodal (tactile and auditory) stimulation to the user to evoke the P300 potential in the EEG signal.
- The control module generates a control instruction from the P300 potential, converts it into a voltage signal, and drives the wheelchair to perform the corresponding operation.

The system is simple in principle, easy to obtain, and offers high control accuracy, and it can improve operating efficiency.

Owner:SCI RES TRAINING CENT FOR CHINESE ASTRONAUTS
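
The round-based P300 selection can be illustrated with a short sketch. Epochs from all stimulation rounds are averaged per stimulus, scored with a linear classifier, and the highest-scoring stimulus becomes the command. The linear weights, the stimulus names, and the feature layout are hypothetical, because the abstract does not say which classifier the system uses. The voltage conversion step is also omitted.

```python
from collections import defaultdict

def select_target(epochs, weights, bias=0.0):
    """Average P300 epochs per stimulus across rounds and pick the best-scoring one.

    epochs: list of (stimulus_id, feature_vector) from all stimulation rounds.
    weights: hypothetical linear classifier weights, one per feature.
    Returns (best_stimulus, {stimulus: score}).
    """
    sums = defaultdict(lambda: [0.0] * len(weights))
    counts = defaultdict(int)
    for stim, feats in epochs:
        acc = sums[stim]
        for j, value in enumerate(feats):
            acc[j] += value
        counts[stim] += 1
    scores = {}
    for stim, acc in sums.items():
        # Averaging suppresses noise that is not time-locked to the stimulus.
        avg = [value / counts[stim] for value in acc]
        scores[stim] = sum(w * a for w, a in zip(weights, avg)) + bias
    best = max(scores, key=scores.get)
    return best, scores
```

More rounds give a cleaner average but a slower command. This trade-off is presumably why the system tunes an optimal number of rounds offline.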

Intention priority fuzzy fusion control method for brain-controlled vehicle

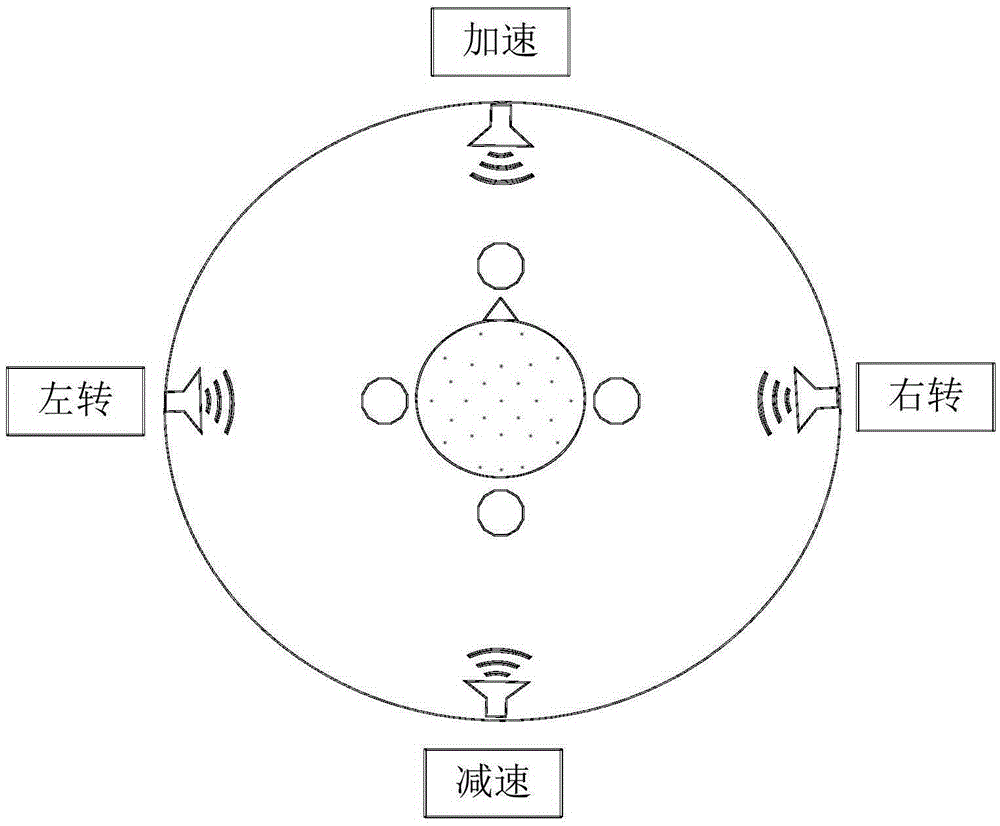

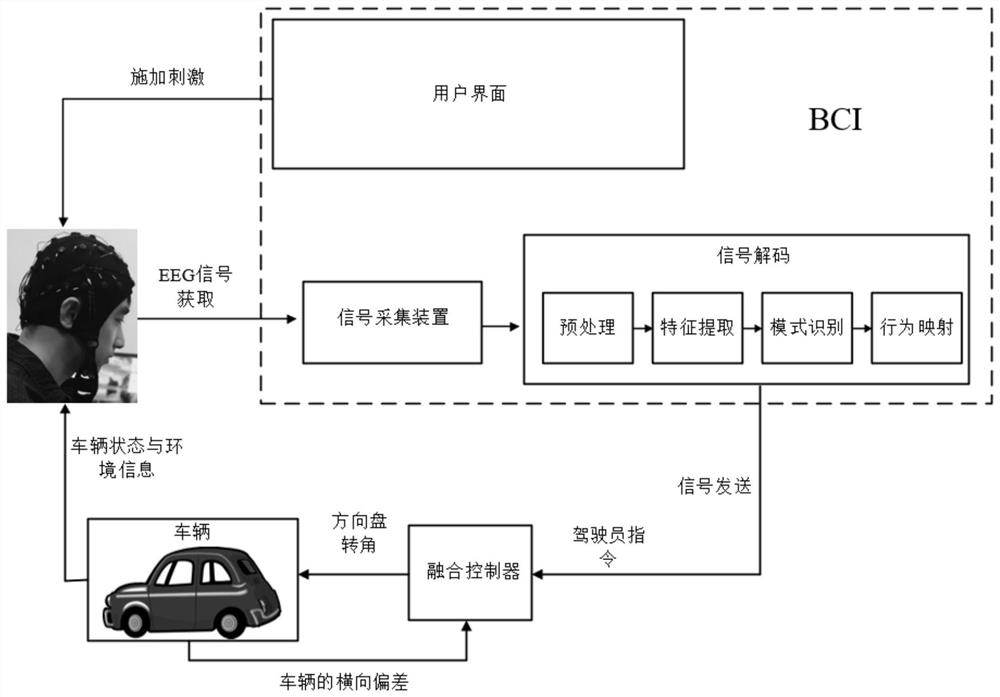

Pending | CN112000087A | Avoid modeling problems; Avoiding the Problem of Modeling the Decision Process; Position/course control in two dimensions; Vehicles; Driver/operator; Automatic control

The invention discloses an intention-priority fuzzy fusion control method for a brain-controlled vehicle. The method comprises the following steps:

1. The brain-controlled driver decides on a brain-control command based on the vehicle state and environment information. The command is one of turning the steering wheel left, turning it right, or holding it steady.
2. According to the type of brain-computer interface used, the corresponding stimulation, such as a rotation paradigm picture, is presented to the driver through a user interface.
3. The acquired electroencephalogram signals are decoded, and the decoded signal is fed as one input to a fuzzy brain-control fusion controller.
4. The controller combines the brain-control command with the current vehicle state in real time. It then decides whether and how to execute the command, following fuzzy rules built on the principle of respecting the driver's subjective intention as much as possible.
5. The controller outputs a steering-wheel angle and sends it to the vehicle.

The method achieves cooperative control between automatic control and brain control, which improves the performance of the brain-controlled system.

Owner:TIANJIN UNIV
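
One way to picture an intention-priority fuzzy fusion controller is a zero-order Sugeno rule base. In this picture, the driver's command sets the steering direction, and the vehicle state only scales how much of it is executed. The sketch makes several assumptions: a single hypothetical state input (distance in meters to the road edge on the commanded side), three hypothetical rules, and a hypothetical 30 degree maximum angle. The patent's actual inputs and rule base are not given in the abstract.

```python
MAX_ANGLE = 30.0  # hypothetical full steering angle in degrees

def ramp_down(x, lo, hi):
    """Membership of 1 below `lo`, 0 above `hi`, linear in between."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)

def tri(x, a, b, c):
    """Triangular membership with feet at `a` and `c` and peak at `b`."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)

def fuse_steering(brain_cmd, edge_dist):
    """Steering angle in degrees (left positive) from a brain command and edge distance."""
    direction = {"left": 1.0, "right": -1.0, "keep": 0.0}[brain_cmd]
    # Fuzzify the hypothetical state input.
    near = ramp_down(edge_dist, 0.5, 1.5)
    medium = tri(edge_dist, 0.5, 1.5, 2.5)
    far = 1.0 - ramp_down(edge_dist, 1.5, 2.5)
    # Rules: near -> suppress, medium -> half angle, far -> full angle.
    rules = [(near, 0.0), (medium, 0.5), (far, 1.0)]
    total = sum(mu for mu, _ in rules)
    # Weighted-average defuzzification of the rule outputs.
    fraction = sum(mu * out for mu, out in rules) / total if total > 0 else 0.0
    return direction * MAX_ANGLE * fraction
```

Because the rules only scale the magnitude, the output never reverses the driver's chosen direction. This is one reading of "respecting subjective ideas as much as possible".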

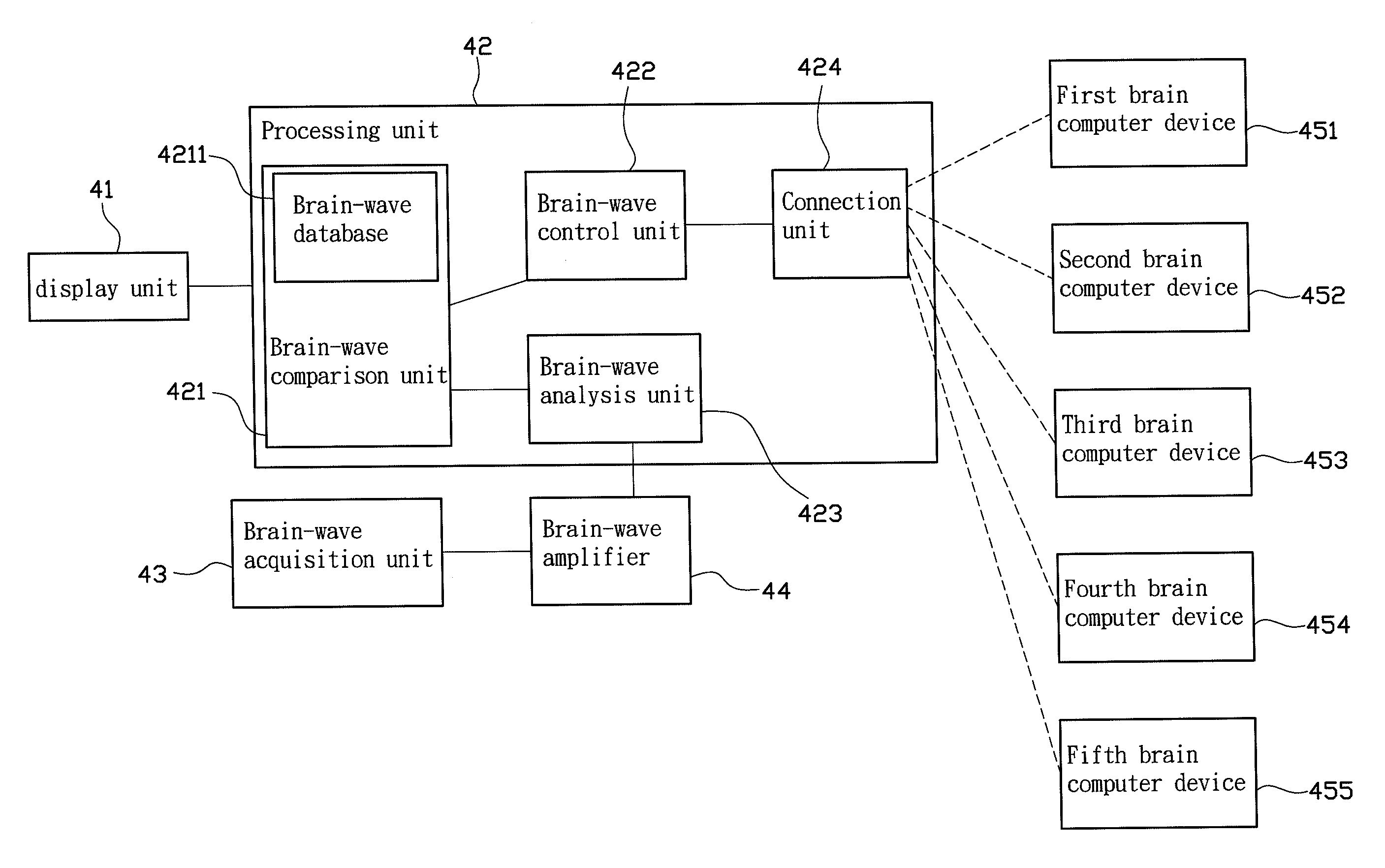

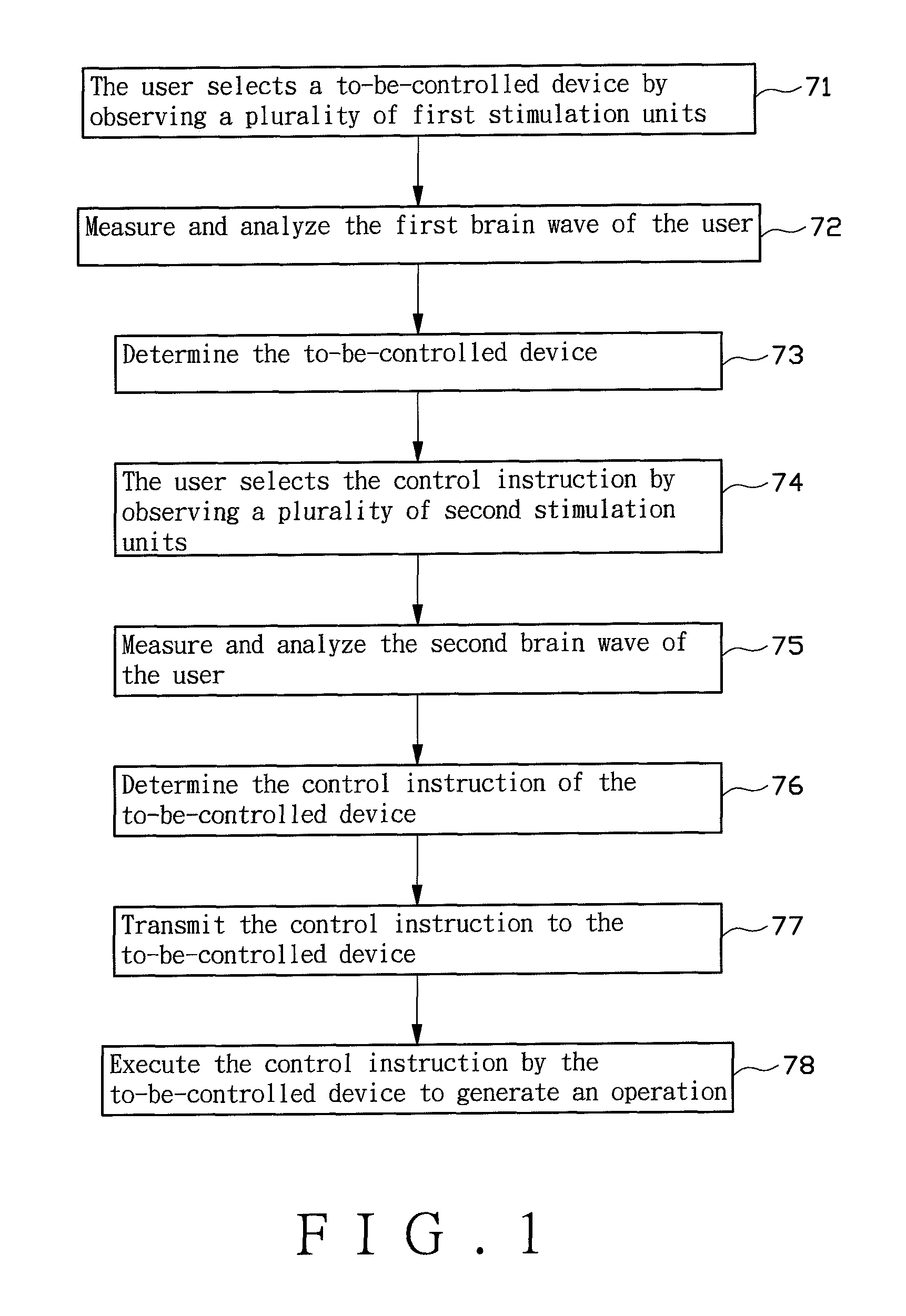

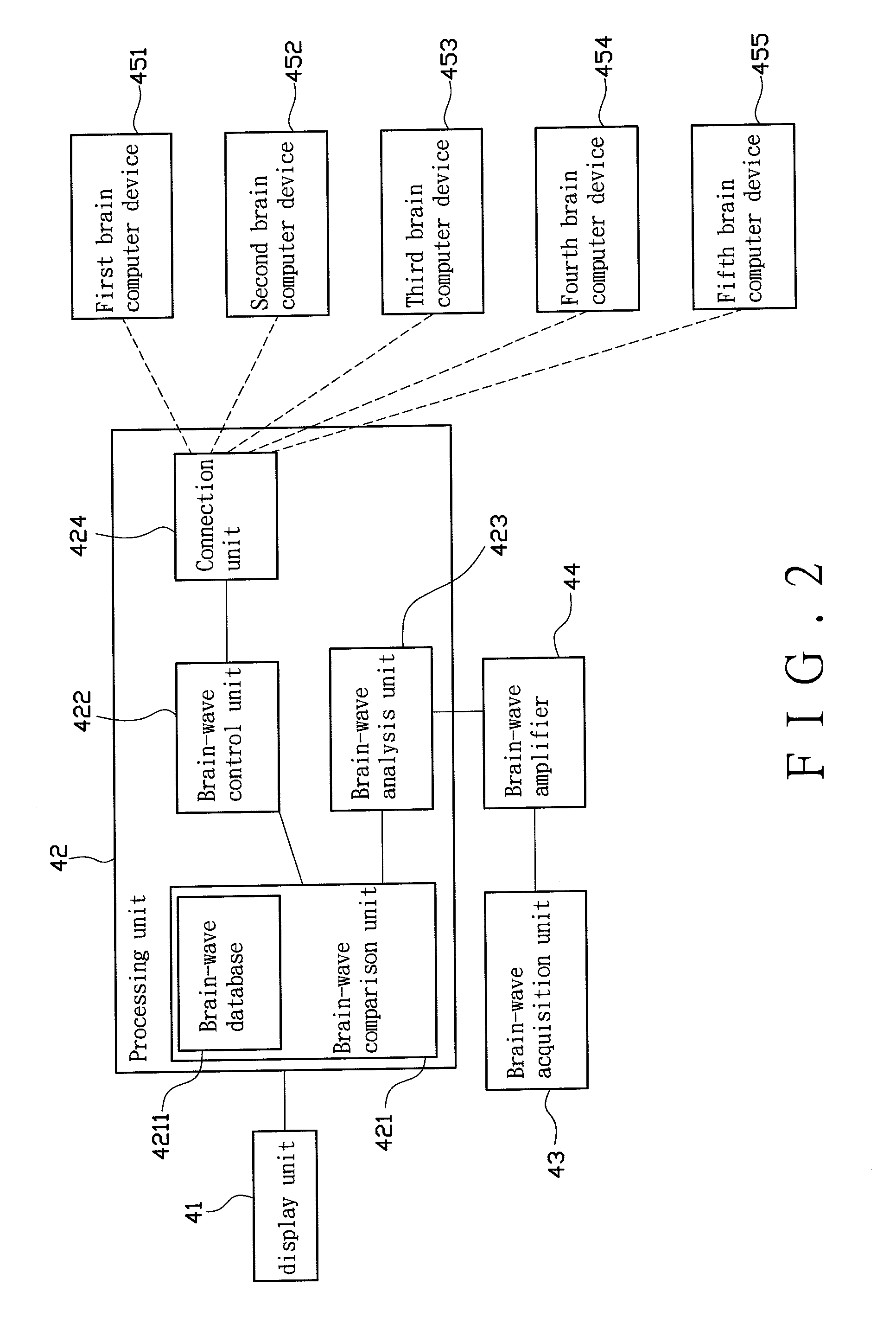

Platform and method for BCI control

Inactive | US9092055B2 | Improve accuracy; Input/output for user-computer interaction; Computer control; Engineering; Visual perception

A BCI control method is used to control a plurality of brain-controlled devices, each of which can execute an operation by itself. A brain-wave control platform supplies a first signal and a second signal, which visually evoke the user's first and second brain waves, respectively. Using the first brain wave, the platform selects one of the devices as the device to be controlled. The second brain wave then directs that device to complete an operation.

Owner:SOUTHERN TAIWAN UNIVERSITY OF TECHNOLOGY
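
The two-stage flow behaves like a small state machine: the first brain wave selects a device, and the second selects an operation for it. The following minimal sketch assumes that the visual-evoked classification has already produced a stage number and a label for each brain wave. The class name, device names, and operation sets are hypothetical.

```python
class BrainWavePlatform:
    """Stage 1 picks a device; stage 2 sends an operation to the picked device."""

    def __init__(self, devices):
        self.devices = devices    # device name -> set of supported operations
        self.target = None        # device selected by the first brain wave, if any

    def handle(self, stage, label):
        """Process one decoded brain wave; return (device, operation) when complete."""
        if stage == 1:
            if label not in self.devices:
                raise ValueError(f"unknown device {label!r}")
            self.target = label
            return None
        if stage == 2:
            if self.target is None:
                raise RuntimeError("no device selected yet")
            if label not in self.devices[self.target]:
                raise ValueError(f"{self.target} does not support {label!r}")
            command, self.target = (self.target, label), None  # reset for the next cycle
            return command
        raise ValueError("stage must be 1 or 2")
```

Resetting `target` after each completed command means every operation requires a fresh device selection. A stray second-stage brain wave is therefore rejected rather than sent to the last device.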

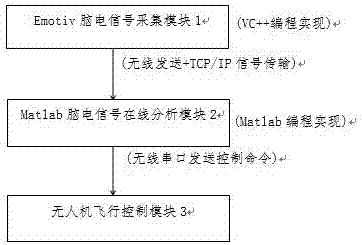

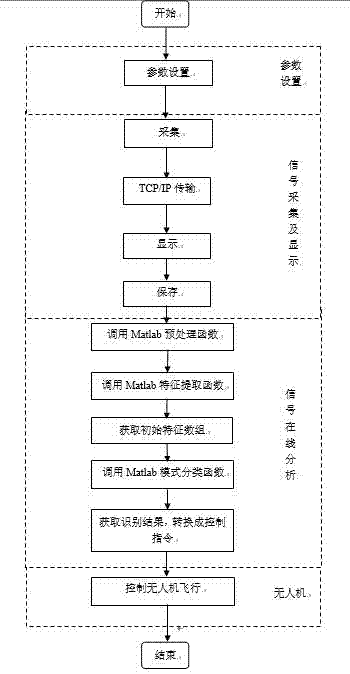

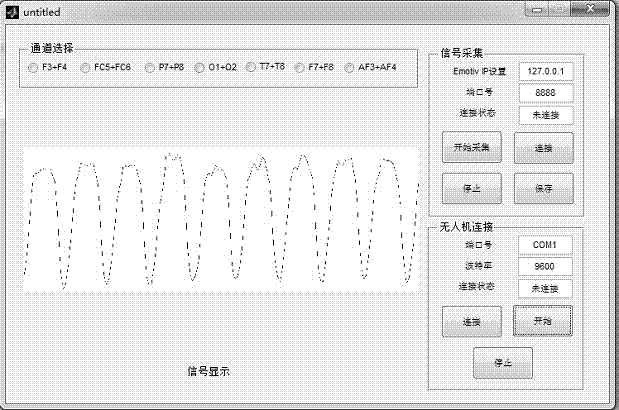

Emotiv brain-controlled unmanned aerial vehicle system and method based on VC++ and Matlab mixed programming

Active | CN106940593A | Real-time recognition; Friendly interface; Input/output for user-computer interaction; Graph reading; Brain computer interfacing; Mixed programming

The invention relates to an Emotiv brain-controlled unmanned aerial vehicle (UAV) system and method based on VC++ and Matlab mixed programming. The system comprises an Emotiv electroencephalogram (EEG) signal acquisition module (1), a Matlab EEG signal online analysis module (2), and a UAV flight control module (3). The modules work together as follows:

- The Emotiv EEG signal acquisition module (1) is VC++ acquisition software. It acquires the EEG signal the test subject produces to control the UAV, transmits it to a computer, and forwards it to the Matlab EEG signal online analysis module (2) over TCP/IP.
- The acquired EEG signal is preprocessed, its features are extracted and classified, and its effective components are analyzed and converted into a control command.
- The control command is sent to the UAV flight control module (3) over a wireless serial link. On receiving the command, the UAV performs takeoff, landing, leftward flight, or rightward flight.

The system and method are implemented with VC++ and Matlab mixed programming. The resulting combination of pattern recognition method, hardware, and software application can be conveniently embedded into a brain-computer interface and lays a foundation for online analysis and practical application of brain-computer interfaces.

Owner:Shanghai Shaonao Sensing Technology Co., Ltd. (上海韶脑传感技术有限公司)
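
The data path in this system has two links. EEG samples are streamed over TCP/IP from the acquisition software to the analysis module, and commands go to the flight controller over a wireless serial port. The sketch below uses a hypothetical framing scheme, not the one used in the patent: a big-endian header (4-byte sequence number, 2-byte sample count) followed by float32 samples. A helper reads the frame back, and hypothetical one-byte flight commands are written to any object with a `write()` method, such as a pyserial `Serial` port.

```python
import io
import socket
import struct

HEADER = struct.Struct(">IH")  # hypothetical header: uint32 sequence number, uint16 sample count

def encode_frame(seq, samples):
    """Serialize one block of EEG samples as header + big-endian float32 values."""
    return HEADER.pack(seq, len(samples)) + struct.pack(f">{len(samples)}f", *samples)

def recv_exact(sock, n):
    """Read exactly n bytes from a stream socket."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-frame")
        buf.extend(chunk)
    return bytes(buf)

def read_frame(sock):
    """Receive one frame and return (seq, list_of_samples)."""
    seq, count = HEADER.unpack(recv_exact(sock, HEADER.size))
    samples = struct.unpack(f">{count}f", recv_exact(sock, 4 * count))
    return seq, list(samples)

# Hypothetical one-byte commands for the UAV flight control module.
UAV_COMMANDS = {"takeoff": b"T", "land": b"L", "left": b"A", "right": b"D"}

def send_uav_command(port, label):
    """Write the command byte for `label` to a serial-like object with write()."""
    port.write(UAV_COMMANDS[label])

if __name__ == "__main__":
    tx, rx = socket.socketpair()          # stands in for the TCP/IP link
    tx.sendall(encode_frame(1, [0.5, -1.25, 3.0]))
    print(read_frame(rx))
    tx.close()
    rx.close()
    port = io.BytesIO()                   # stands in for the serial port
    send_uav_command(port, "takeoff")
    print(port.getvalue())
```

Looping in `recv_exact` matters because TCP delivers a byte stream. A single `recv` call may return only part of a frame.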

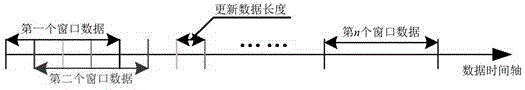

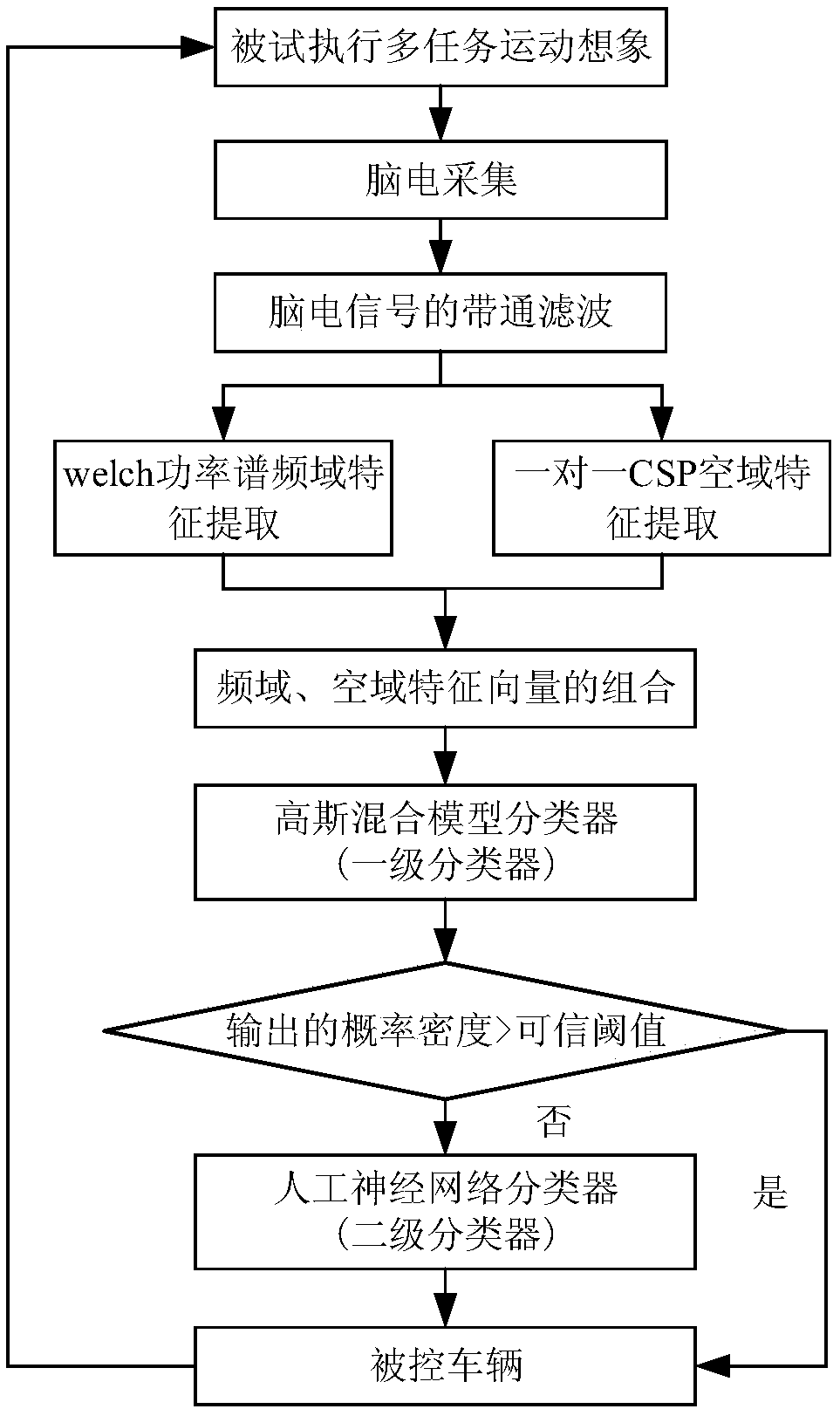

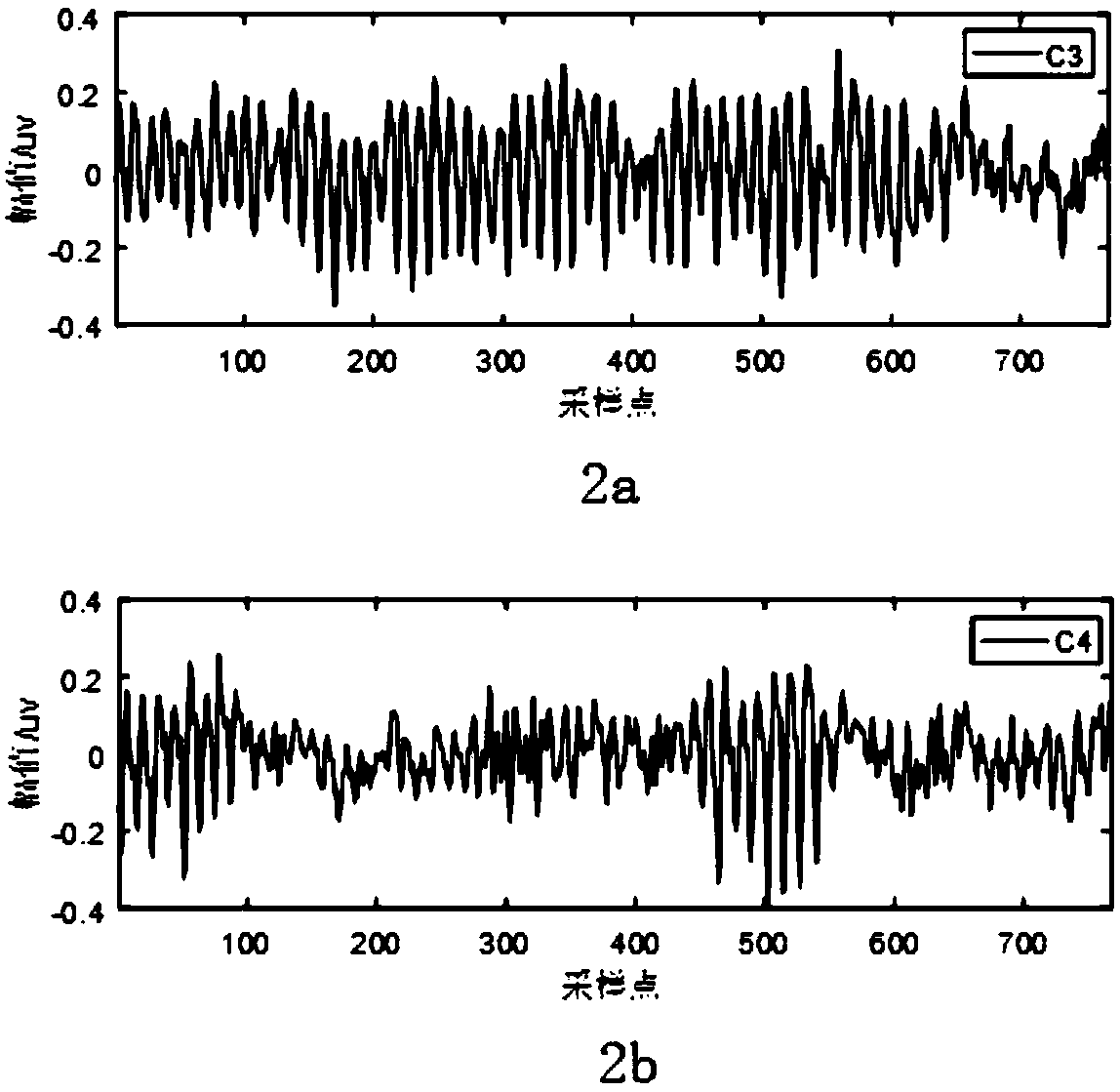

A multi-task motor imagination EEG feature extraction and pattern recognition method for vehicle control

Active | CN109299647A | Keep statistical information; Improve real-time performance; Character and pattern recognition; Control devices; Vehicle driving; EEG feature

The invention relates to a multi-task motor imagery EEG feature extraction and pattern recognition method for vehicle control. The method proceeds as follows:

1. Multi-task motor imagery EEG signals are collected with an EEG amplifier and transmitted to a PC.
2. Combined frequency-domain and spatial features of the motor imagery EEG are extracted using the Welch power spectrum and a one-versus-one common spatial pattern (CSP) algorithm.
3. Multiple GMM classifiers are constructed, one for each class in the training data.
4. The original EEG signal is passed through the GMM classifiers, and its probability density is compared with a preset confidence threshold. Samples below the threshold are classified a second time by an artificial neural network.
5. The final classification result is transmitted to the vehicle over a wireless serial link to move the vehicle in real time.

The invention uses the Welch power spectrum and CSP to extract motion-imagery-related frequency-domain and spatial-domain features, followed by a two-level GMM and artificial neural network classifier. This effectively improves the real-time performance of vehicle control and the safety of vehicle driving, and lays a foundation for the practical application of brain-controlled vehicles.

Owner:SOUTHEAST UNIV
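
The two-level decision rule can be sketched in pure Python: accept the GMM label when its density clears the confidence threshold, otherwise classify the sample again with a second model. To keep the sketch short, it makes two substitutions, both assumptions. Each class GMM is reduced to a single diagonal Gaussian, and the artificial neural network is replaced by a nearest-centroid classifier. The sketch also assumes that feature extraction (Welch spectrum and CSP) has already produced the feature vectors. The threshold is a hypothetical log-density value.

```python
import math

def fit_diag_gaussian(rows):
    """Mean and variance per feature (a one-component, diagonal stand-in for a GMM)."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    var = [sum((r[j] - mean[j]) ** 2 for r in rows) / n + 1e-6 for j in range(d)]
    return mean, var

def log_density(x, mean, var):
    """Log of the diagonal Gaussian density at x."""
    return -0.5 * sum(math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                      for xi, m, v in zip(x, mean, var))

def nearest_centroid(models):
    """Hypothetical second-level classifier, used here in place of the ANN."""
    def classify(x):
        return min(models, key=lambda c: sum((xi - m) ** 2
                                             for xi, m in zip(x, models[c][0])))
    return classify

def two_level_classify(x, models, threshold, fallback):
    """First level: best class density; below `threshold`, defer to `fallback`."""
    scores = {c: log_density(x, mean, var) for c, (mean, var) in models.items()}
    best = max(scores, key=scores.get)
    if scores[best] >= threshold:
        return best, "gmm"
    return fallback(x), "fallback"
```

The threshold trades speed for accuracy. Raising it sends more samples to the second, typically slower, stage.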

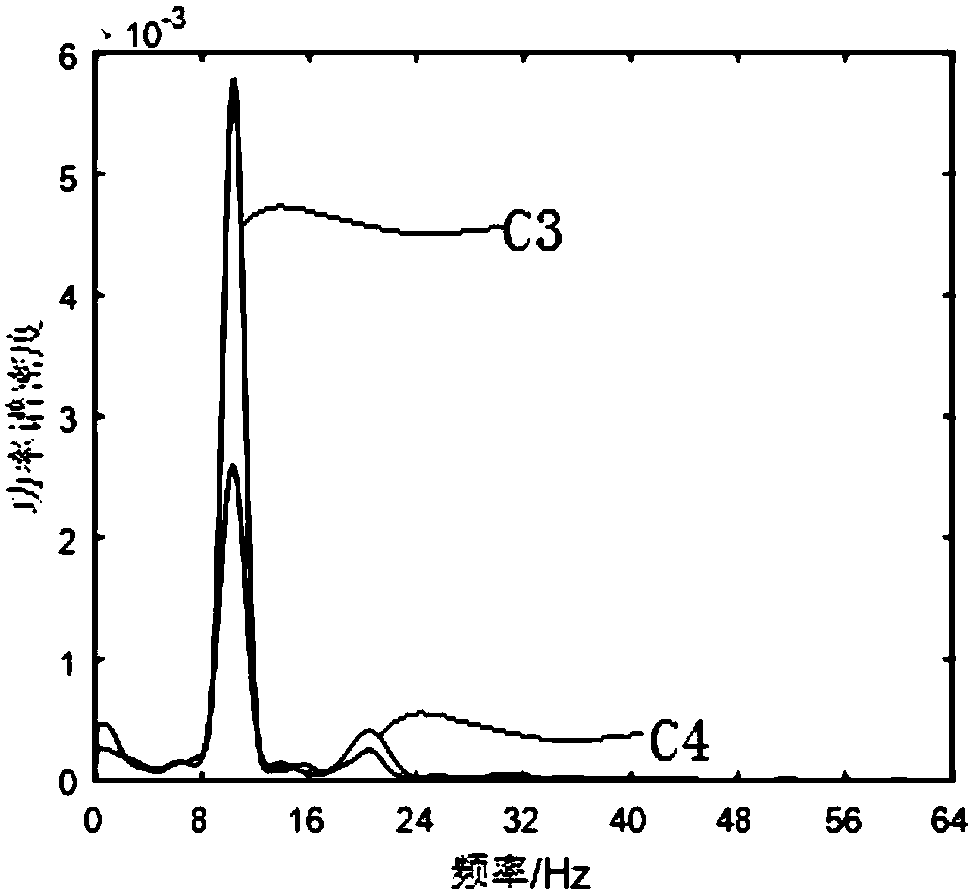

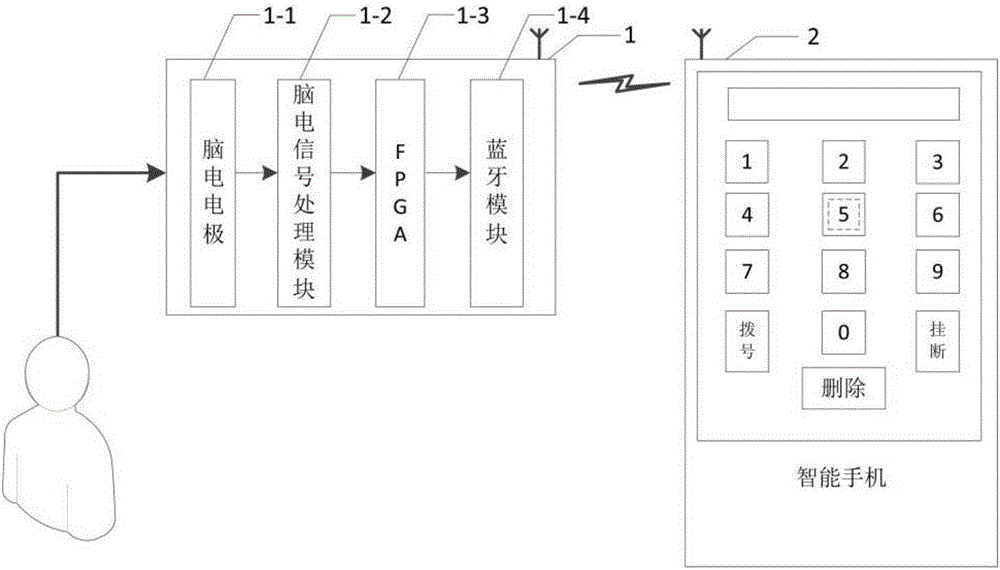

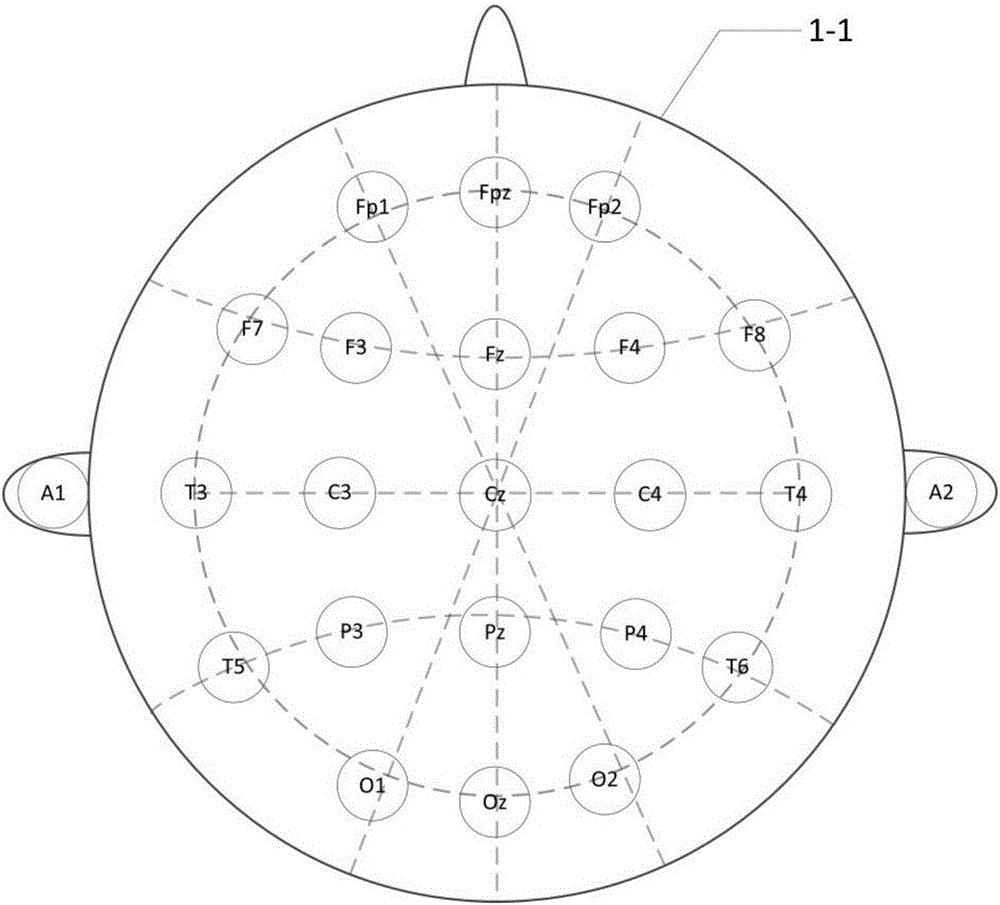

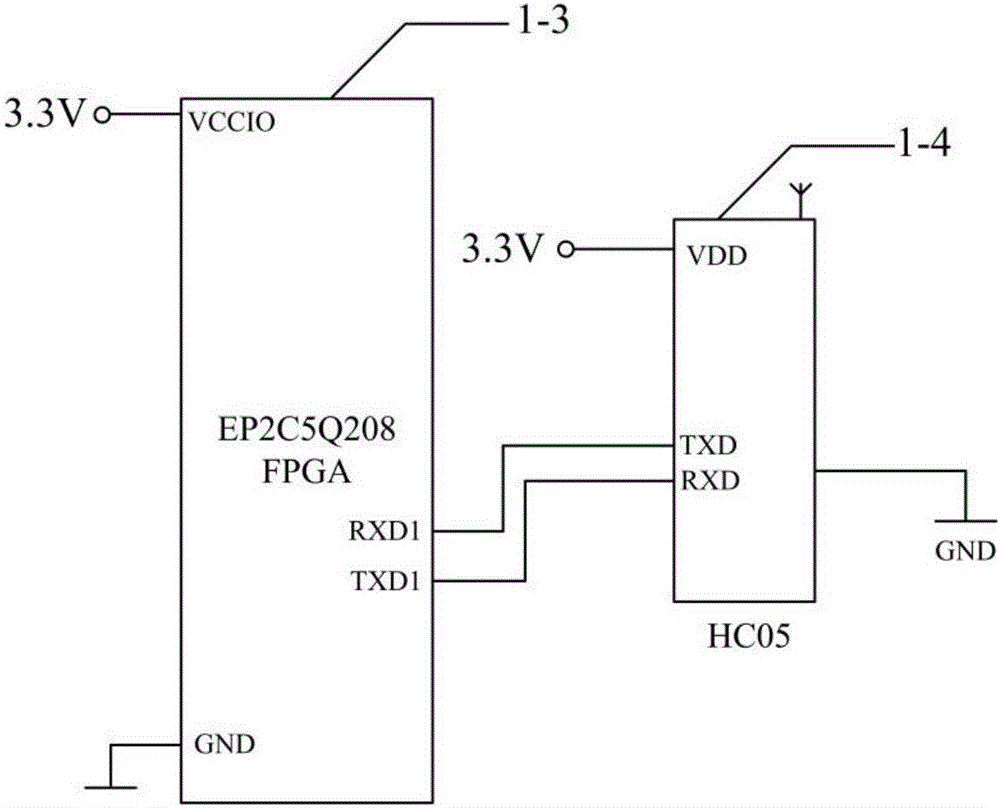

Brain-controlled mobile phone dialing control device and control method

Pending | CN106066697A | To achieve the purpose of remote control mobile phone equipment; Input/output for user-computer interaction; Substation equipment; Microcontroller; Computer module

The invention belongs to the field of brain-machine interface technology. It relates to a brain-controlled mobile phone dialing control device and control method, addressing the problem that special populations cannot conveniently use mobile phones.

The control device comprises an EEG helmet and a controlled end. The EEG helmet comprises EEG electrodes, an EEG signal processing module, an FPGA microcontroller, and a Bluetooth module. The controlled end comprises a smartphone.

The control method comprises the following steps:

1. Initialize the control device.
2. Pair the EEG helmet with the smartphone over Bluetooth.
3. Receive the EEG signal with the EEG electrodes.
4. Amplify the received EEG signal with the EEG signal processing module, perform A/D conversion, and send the result to the FPGA microcontroller.
5. Send the EEG signal from the FPGA microcontroller to the smartphone.
6. Receive the EEG signal on the smartphone, open software A to decode it, and execute the corresponding function on the smartphone.

Owner:JILIN UNIV

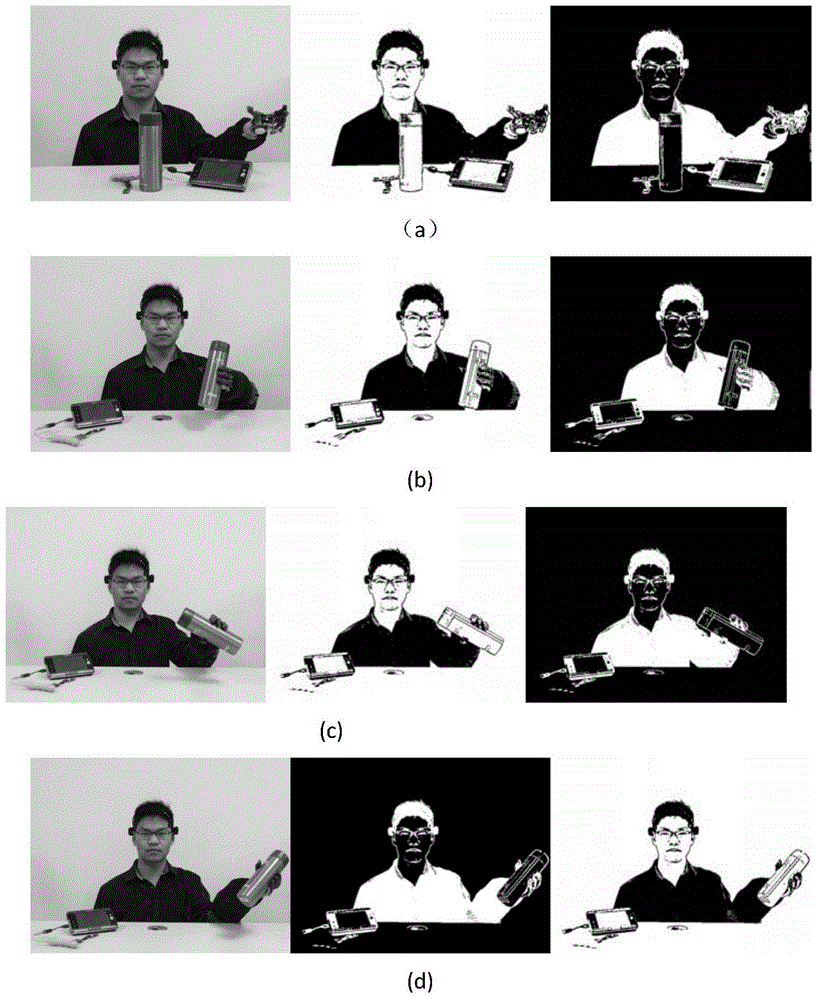

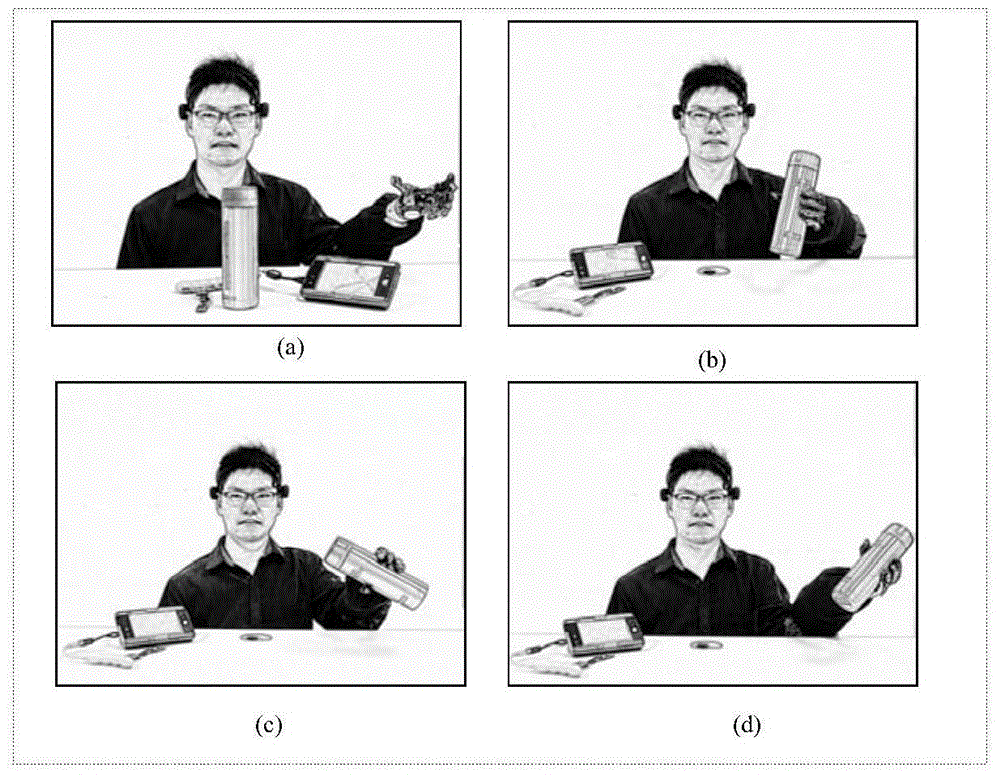

Serial multi-mode brain control method for smooth grabbing operation of artificial hand

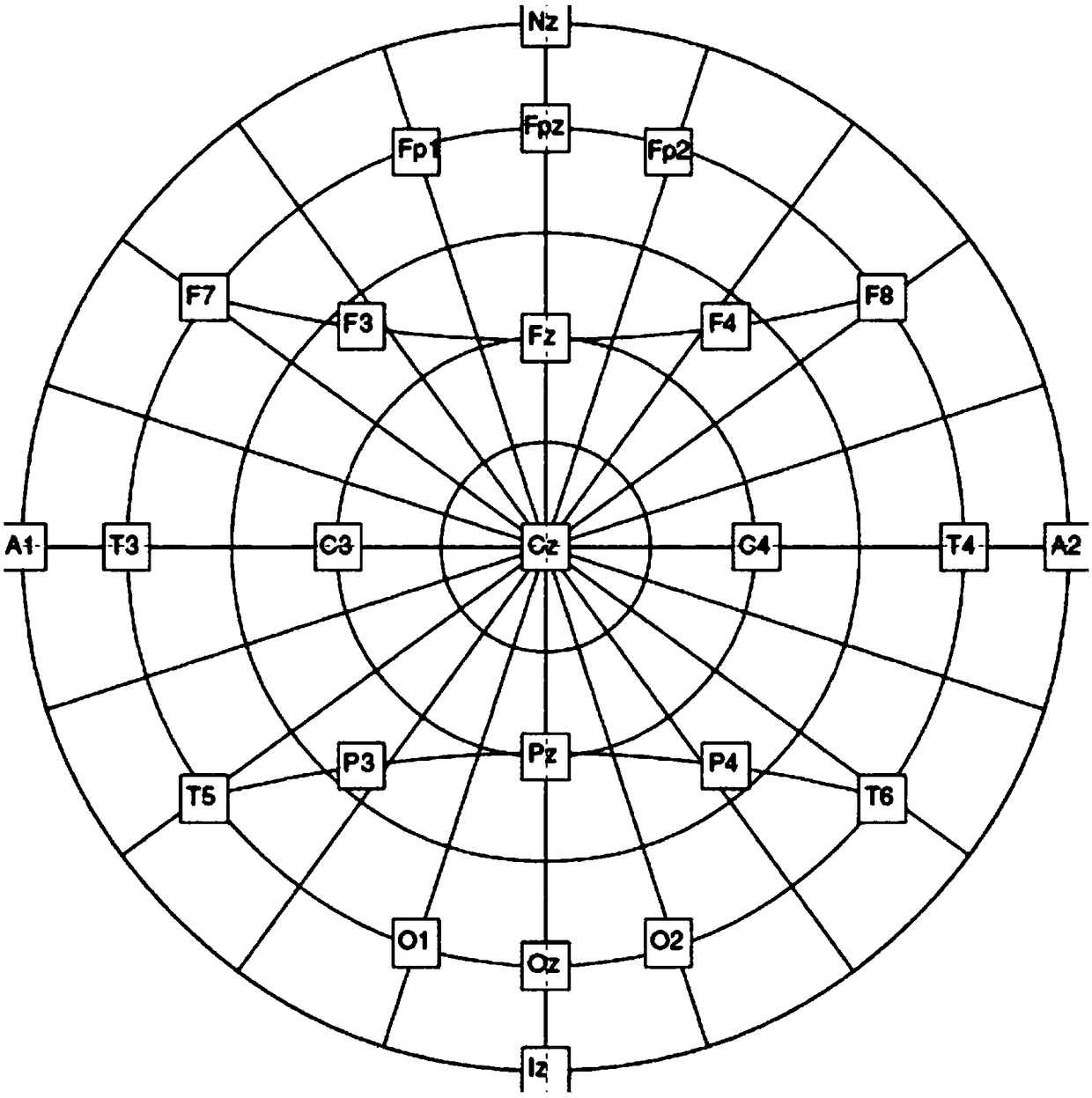

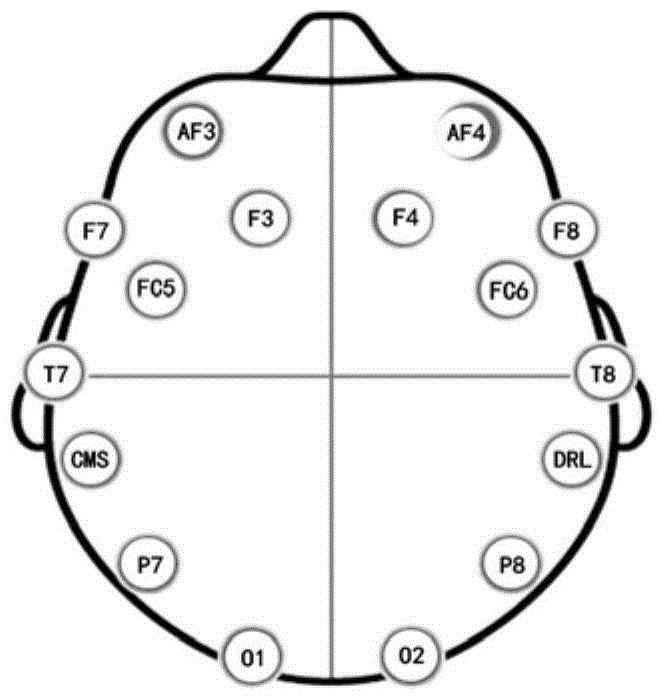

Active | CN105708586A | Improve information transfer rate; Improve accuracy; Prosthesis; Band-pass filter; Frontal cortex

The invention discloses a serial multi-mode brain control method for smooth grasping by an artificial hand. The method comprises the following steps:

1. While the subject performs active expression driving and scene-based automatic visual evoking, EEG acquisition equipment collects signals from channels F7 and F8 over the lateral frontal cortex and channels O1 and O2 over the occipital area. The signals are preprocessed by amplification and band-pass filtering.
2. Signal processing equipment extracts time-frequency-domain feature values from the preprocessed signals.
3. Following a serial control method, the method identifies two things: the basic artificial-hand action type corresponding to the EEG signals under each of four active expression drives, and the time-frequency feature values of the basic action processes for the EEG signals evoked by four different scene-based visual pictures.
4. Based on this result, the artificial hand is controlled to complete four basic actions.

Owner:XI AN JIAOTONG UNIV

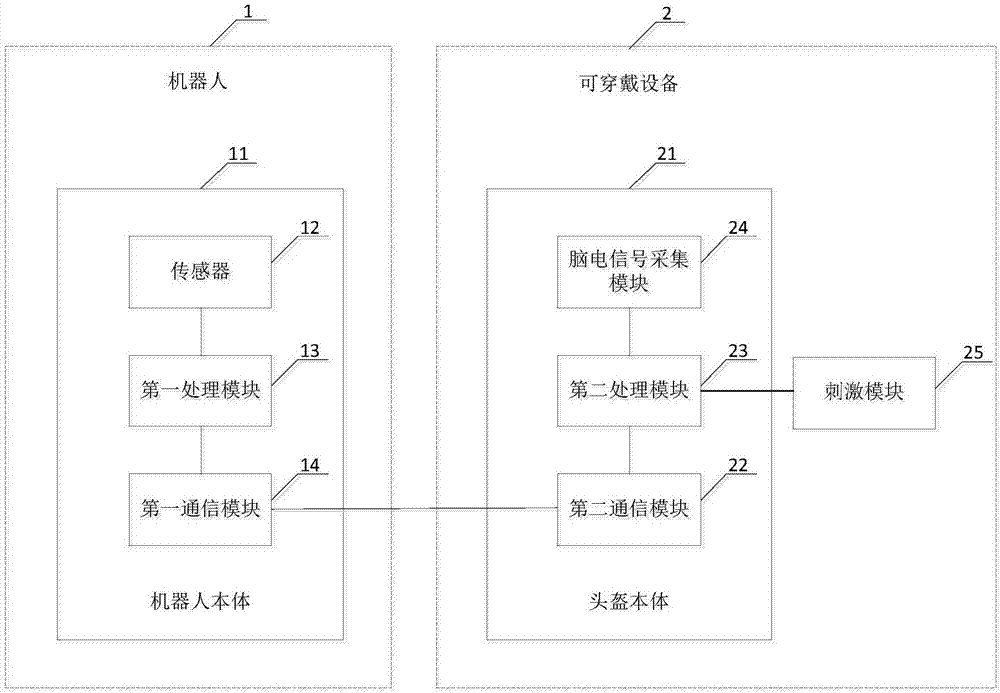

Holographic brain-controlled robot system

Inactive | CN106873780A | Realization of brain control; Input/output for user-computer interaction; Alarms; Brain computer interfacing; Simulation

The invention discloses a holographic brain-controlled robot system comprising a robot and a wearable device. The robot comprises a robot body, together with a sensor, a first processing module, and a first communication module mounted on the body. The wearable device comprises a stimulation module, a helmet body, a second processing module and a second communication module mounted on the helmet body, and an electroencephalogram (EEG) signal collection module for collecting EEG signals.

The system is a mature holographic brain-controlled robot system that combines brain-computer interface technology with intelligent robot control technology. The robot also uses its sensor to feed information about its environment back to the wearable device. Through the wearable device and its stimulation module, the user can perceive changes in the robot's environment, and brain control over the robot is achieved.

Owner:GUANGDONG UNIV OF TECH

© 2025 PatSnap. All rights reserved.