115 results about "Computational RAM" patented technology

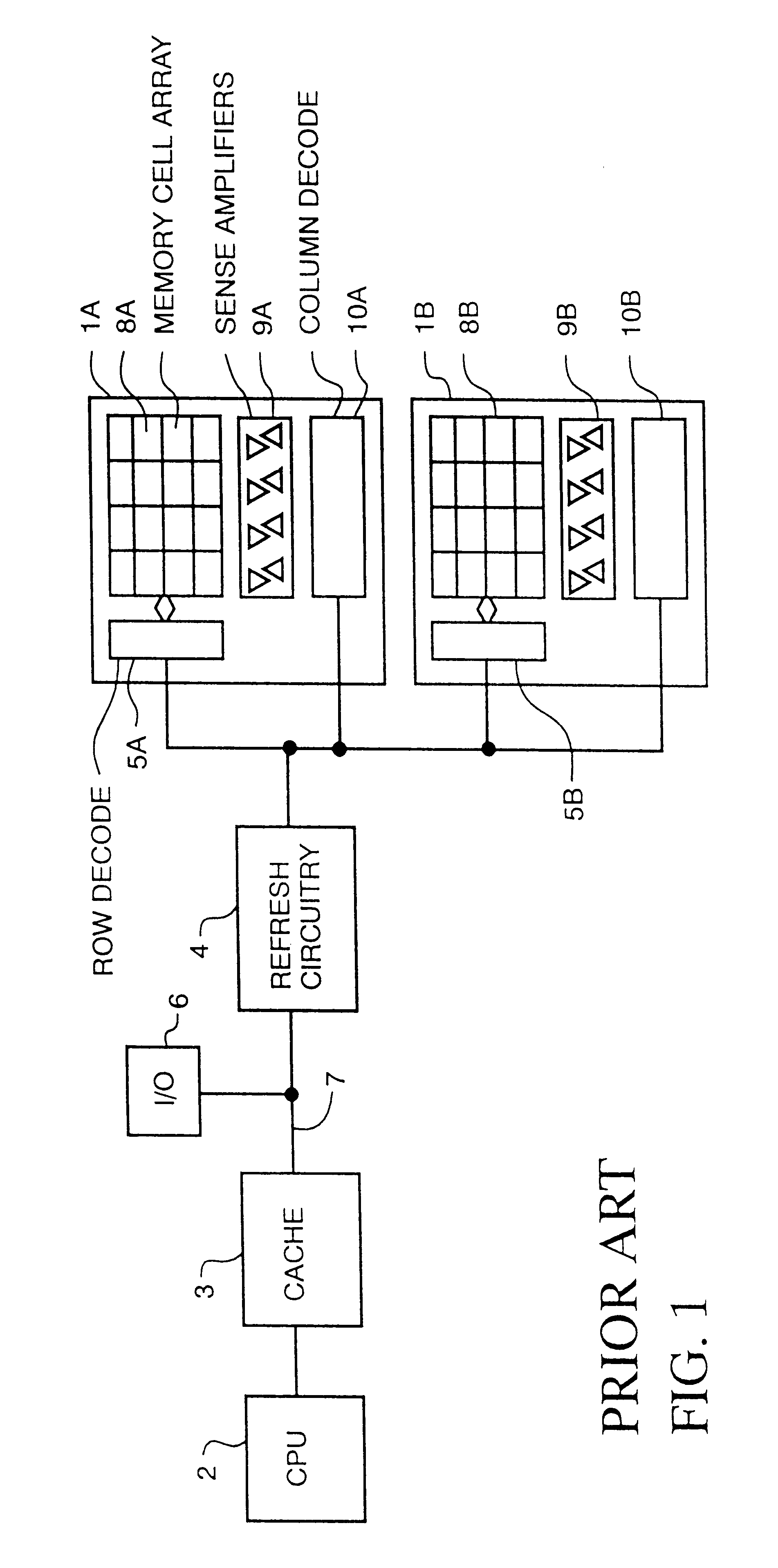

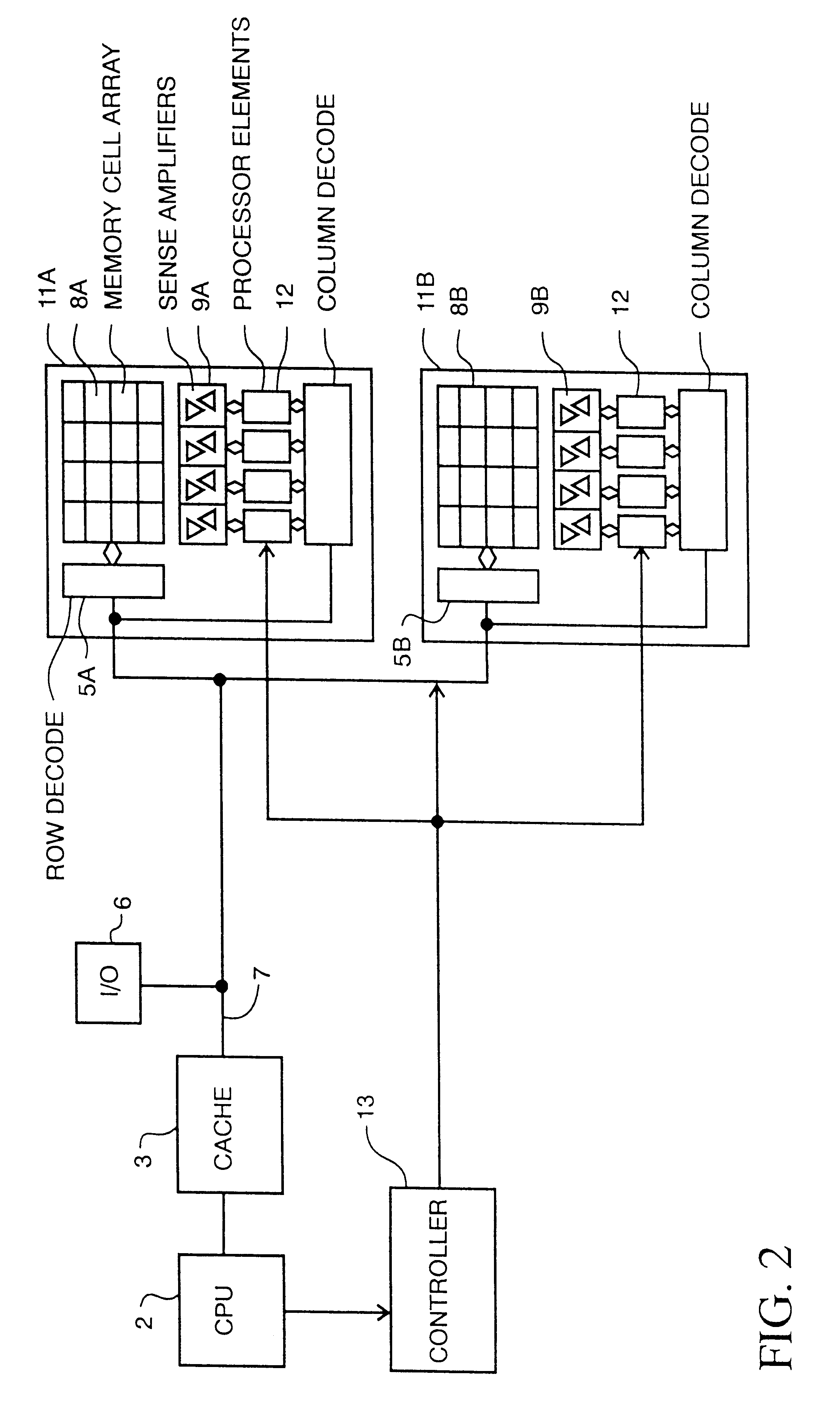

Computational RAM (C-RAM) is random-access memory with processing elements integrated on the same chip. This allows C-RAM to be used as a SIMD computer. It can also make more efficient use of the memory bandwidth available within a memory chip.

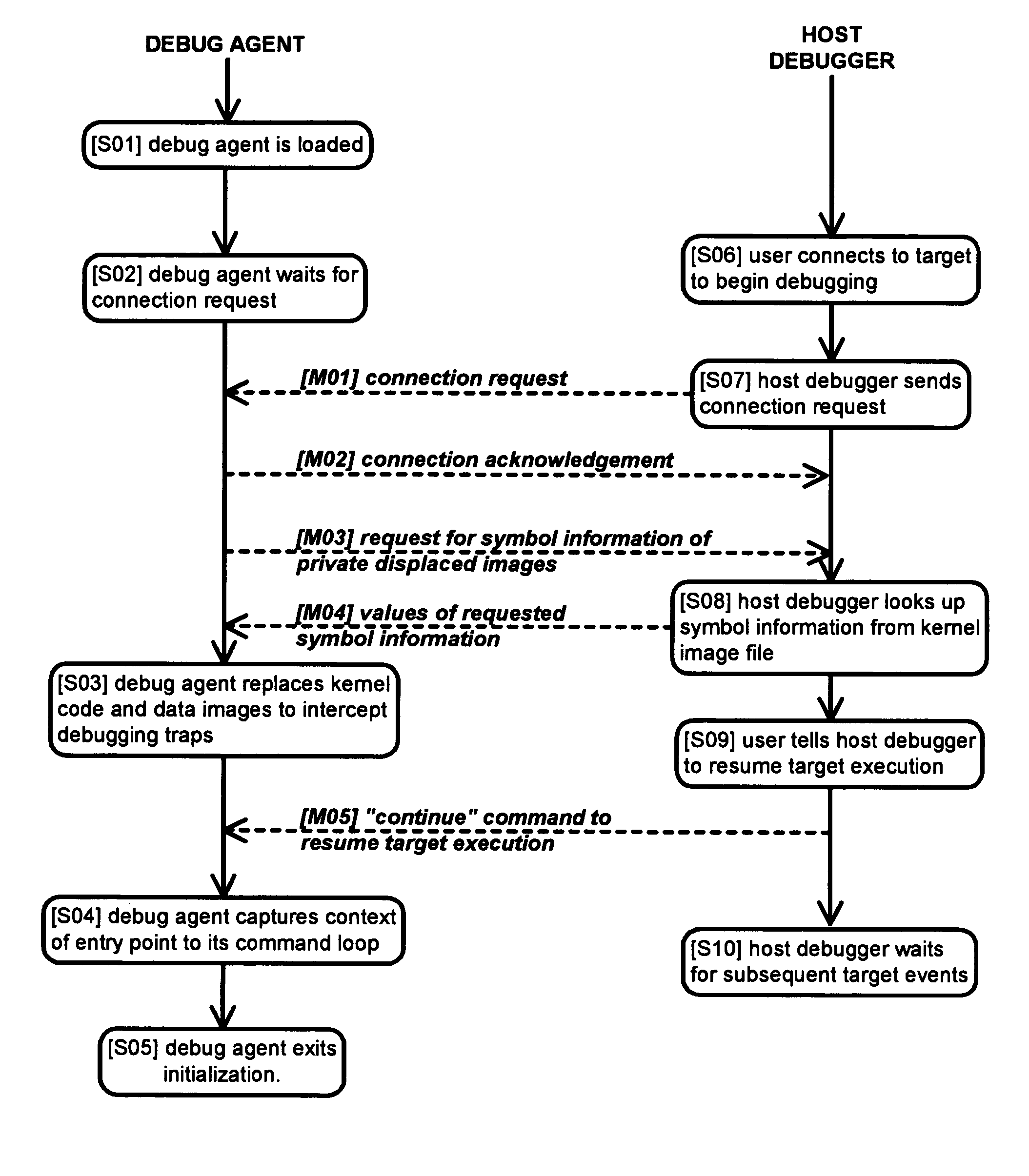

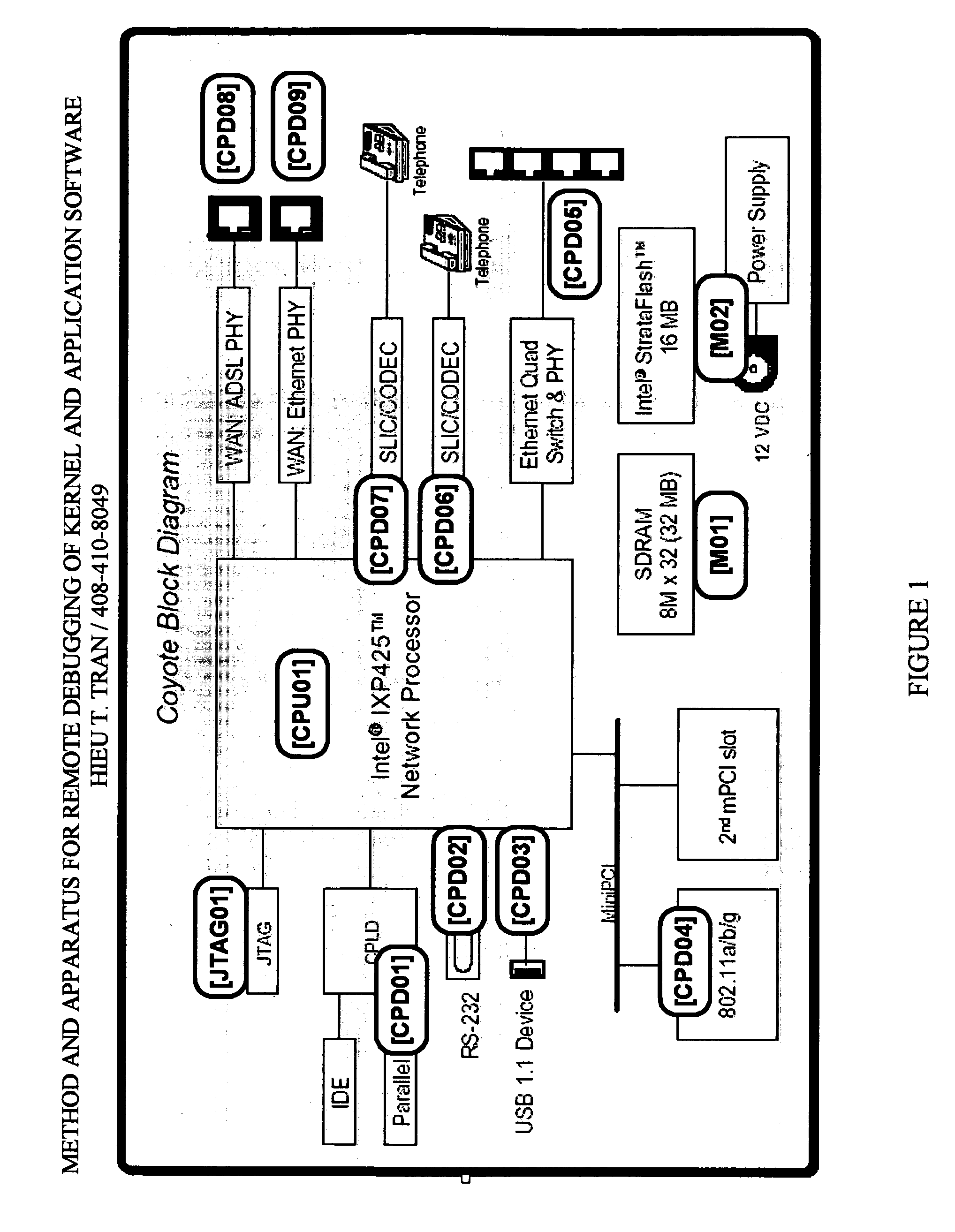

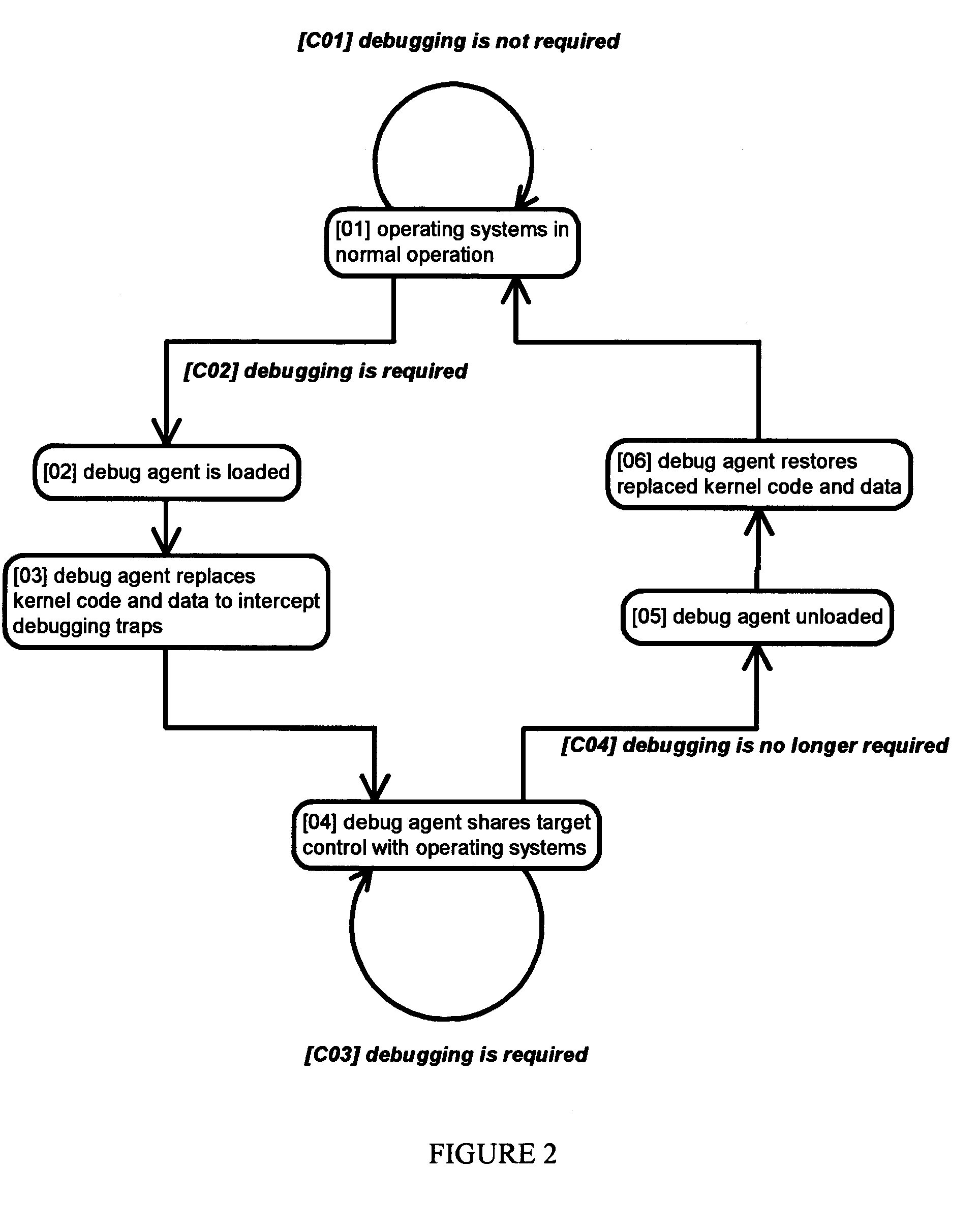

Method and apparatus for remote debugging of kernel and application software

Inactive | US20050216895A1 | Modified dynamically | Error detection/correction | Specific program execution arrangements | Operational system | System call

A method and apparatus for debugging OS kernel and application software that does not require a hardware probe; can debug both user-mode programs and a significant body of the OS kernel code; allows the OS to continue servicing exceptions while debugging; leverages the OS's built-in device drivers for communication devices to communicate with the host debugger; and can debug a production version of the OS kernel. When debugging is required, the running OS kernel dynamically loads a software-based debug agent on demand; the debug agent dynamically modifies the running production OS kernel code and data to intercept debugging traps and provide run control. To support debugging of loadable modules, the debug agent implements techniques to intercept the OS module-loading system call, set breakpoints in the loaded module's initialization function, calculate the start address of the debugged module in memory, and asynchronously put the system under debug. By structuring the command loop to execute in non-exception mode, and devising a process to transfer execution from the debug agent's exception handler to its command loop and back, the debug agent can communicate with the host debugger using interrupt-driven input/output devices while allowing the system to service interrupts under debug.

Owner:TRAN HIEU TRUNG

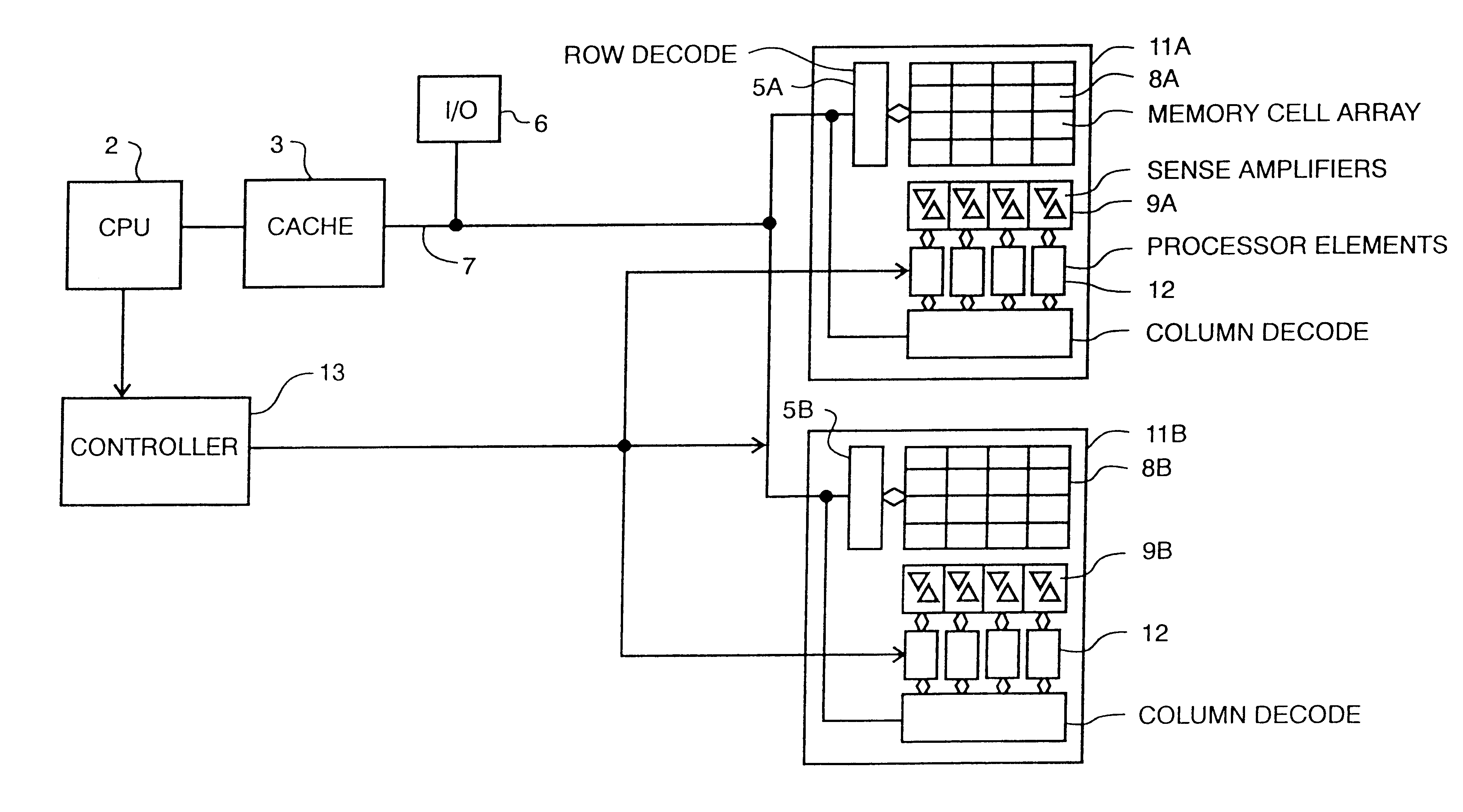

Memory device with multiple processors having parallel access to the same memory area

Inactive | US6279088B1 | Energy efficient ICT | Operational speed enhancement | Arithmetic logic unit | Read-modify-write

A digital computer performs read-modify-write (RMW) processing on each bit of a row of memory in parallel, in one operation cycle, comprising: (a) addressing a memory, (b) reading each bit of a row of data from the memory in parallel, (c) performing the same computational operation on each bit of the data in parallel, using an arithmetic logic unit (ALU) in a dedicated processing element, and (d) writing the result of the operation back into the original memory location for each bit in the row.

Owner:SATECH GRP A B LLC
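
The four steps (a) to (d) in the abstract above can be modeled in a few lines of Python. This is only an illustrative sketch: the function name `rmw_row`, the list-of-lists memory model, and the example operation are assumptions made here, and the loop runs sequentially where the hardware described would act on every bit of the row in the same cycle.

```python
from typing import Callable, List


def rmw_row(memory: List[List[int]], row: int, op: Callable[[int], int]) -> None:
    """Read-modify-write one row, applying the same 1-bit operation to every bit."""
    bits = memory[row]                   # (a) address the row, (b) read all of its bits
    result = [op(b) & 1 for b in bits]   # (c) same operation on each bit (one ALU per bit in hardware)
    memory[row] = result                 # (d) write the results back to the same row


# Hypothetical 2-row, 4-bit-wide memory.
memory = [[0, 1, 1, 0], [1, 1, 0, 0]]
rmw_row(memory, 0, lambda b: b ^ 1)      # invert every bit of row 0
```

Only the addressed row changes; the other rows are left untouched.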

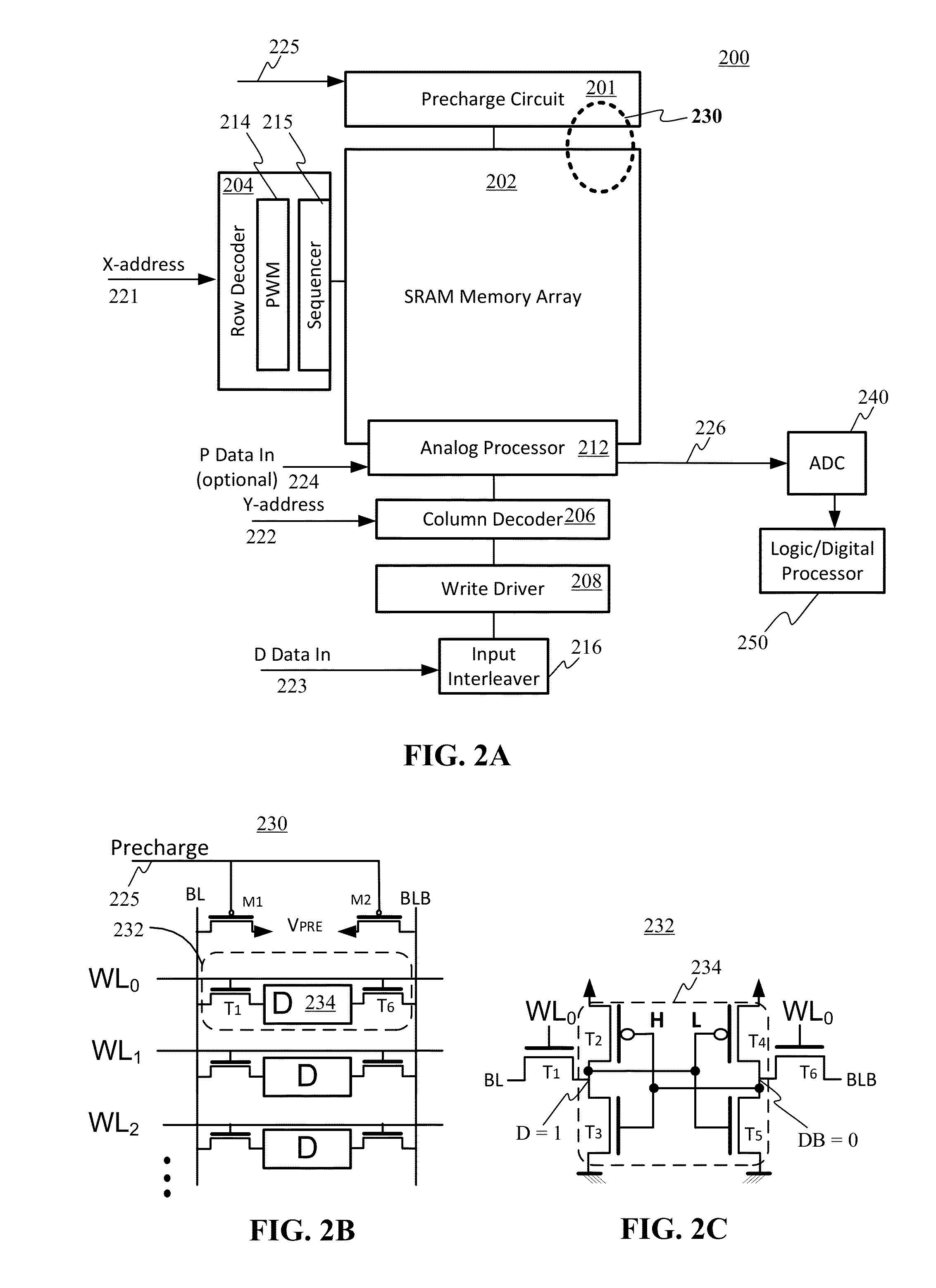

Compute memory

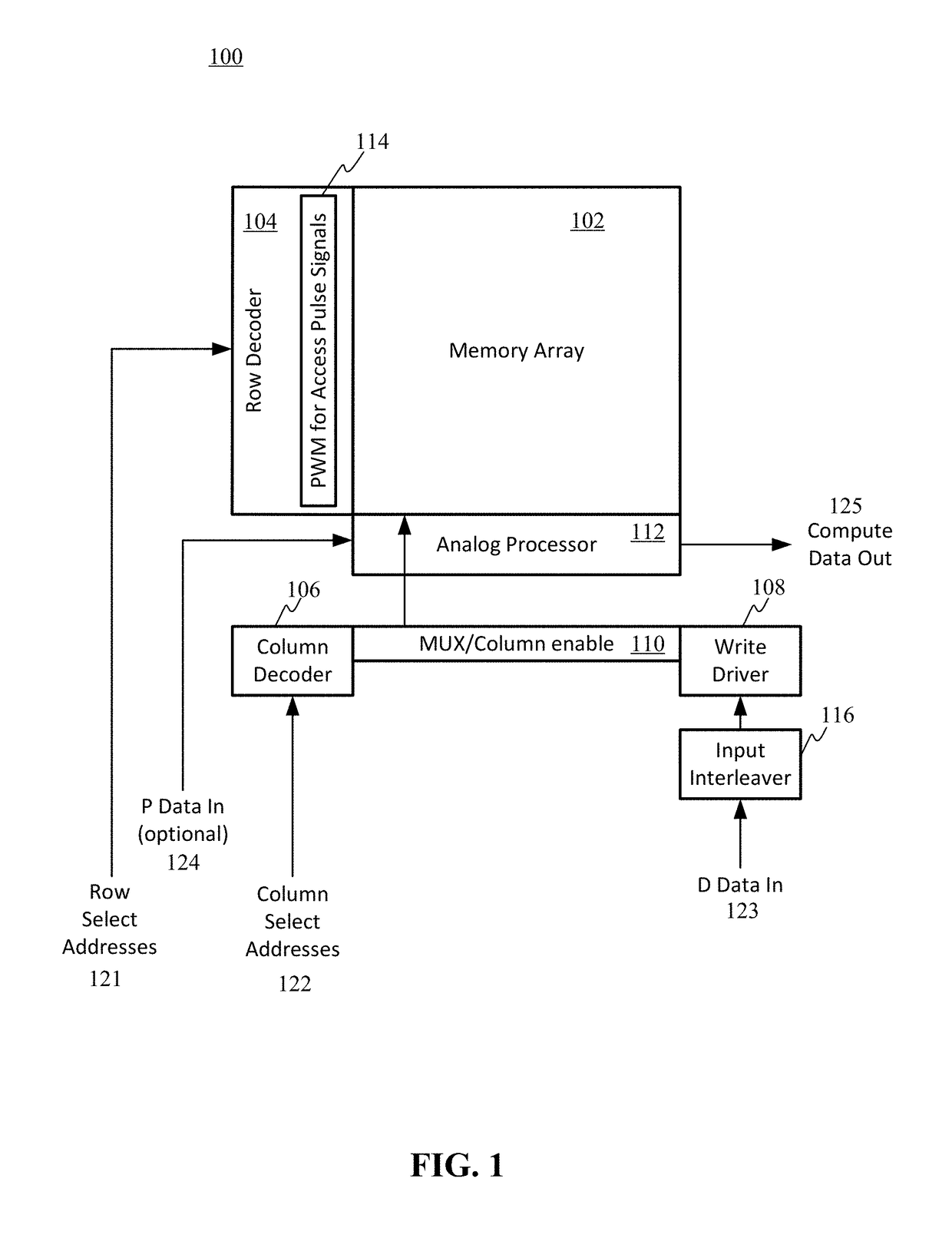

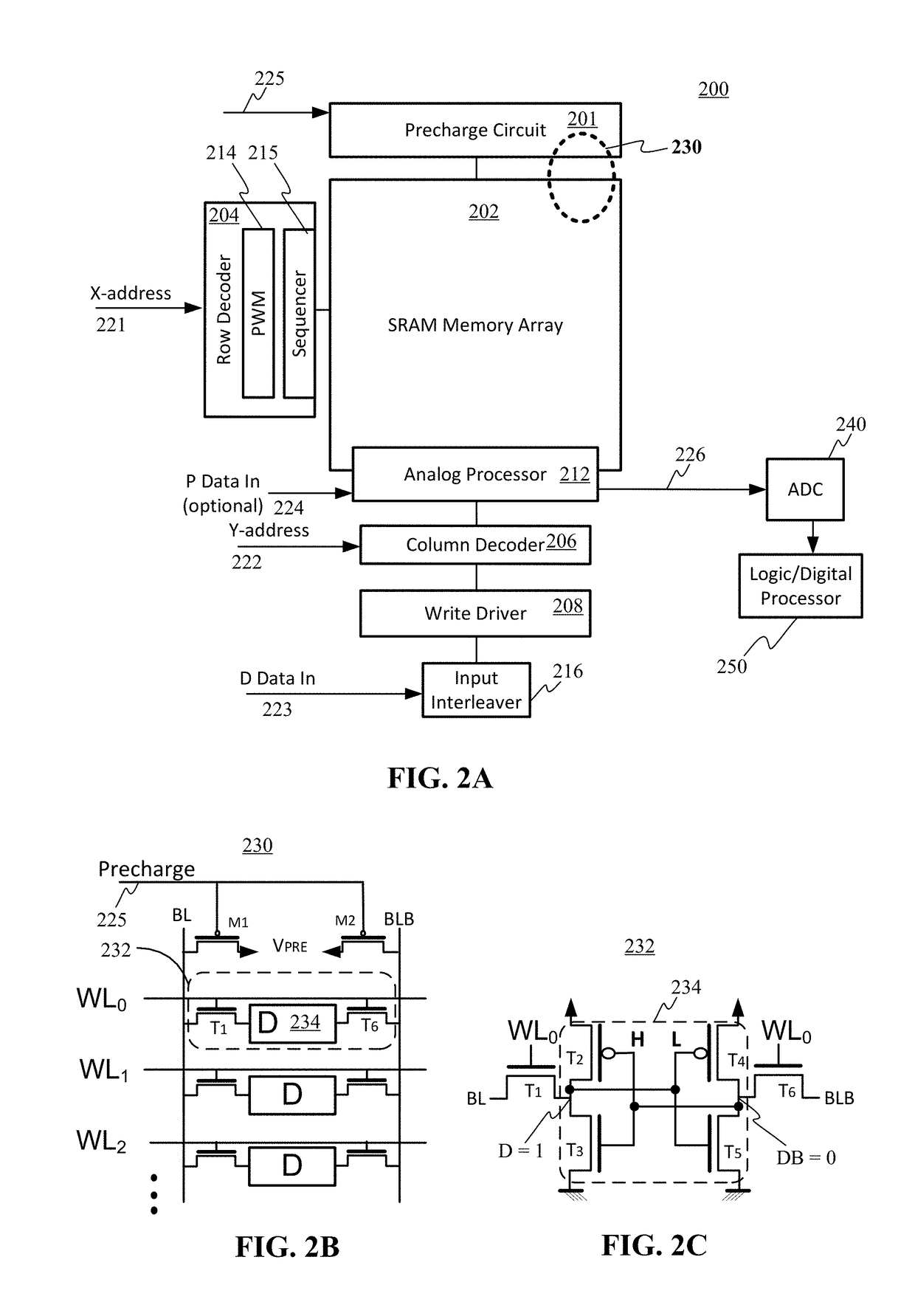

Active | US20160232951A1 | Achieve energy efficiency | Reduce delays | Digital storage | Analog signal processing | Bit plane

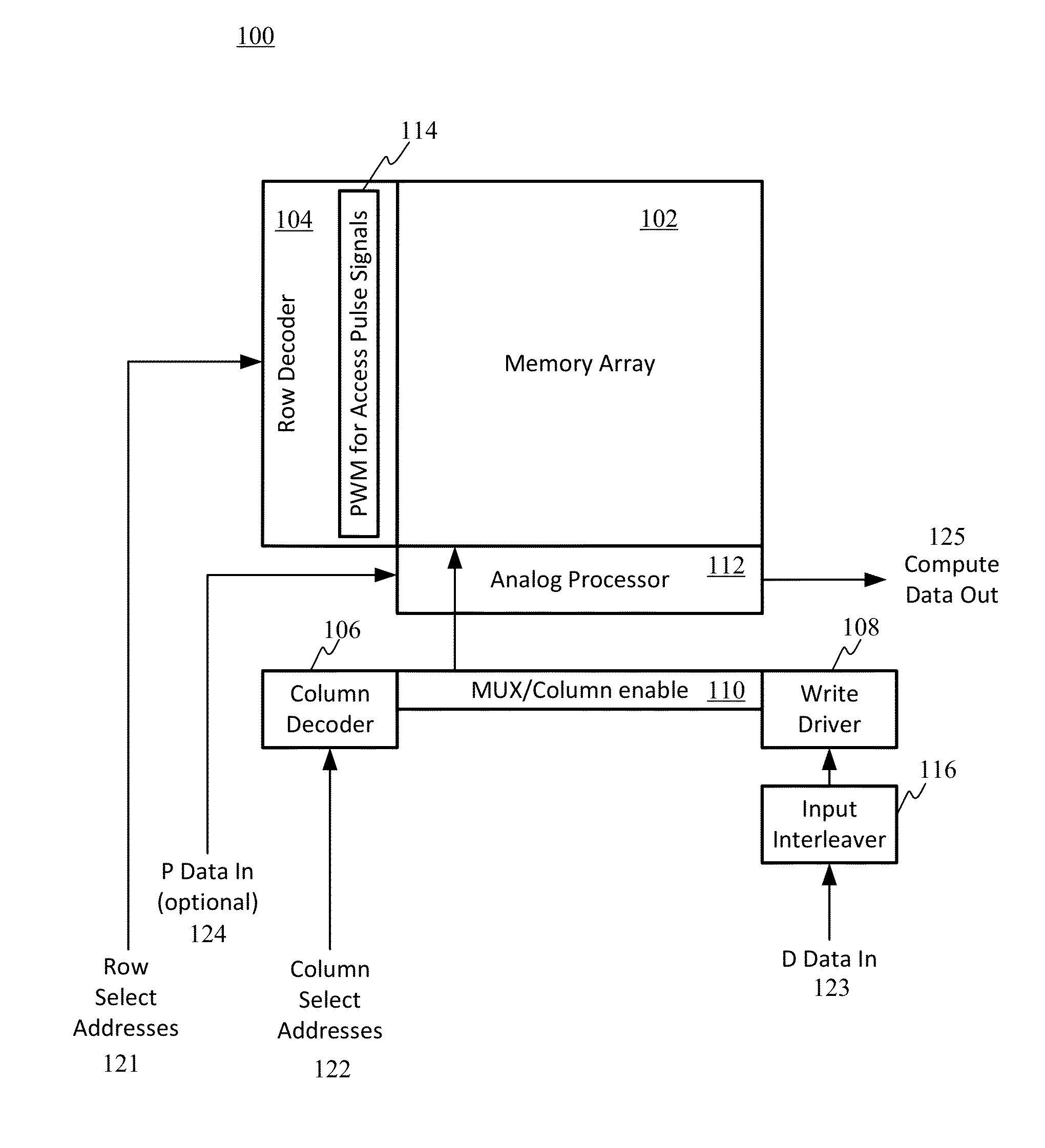

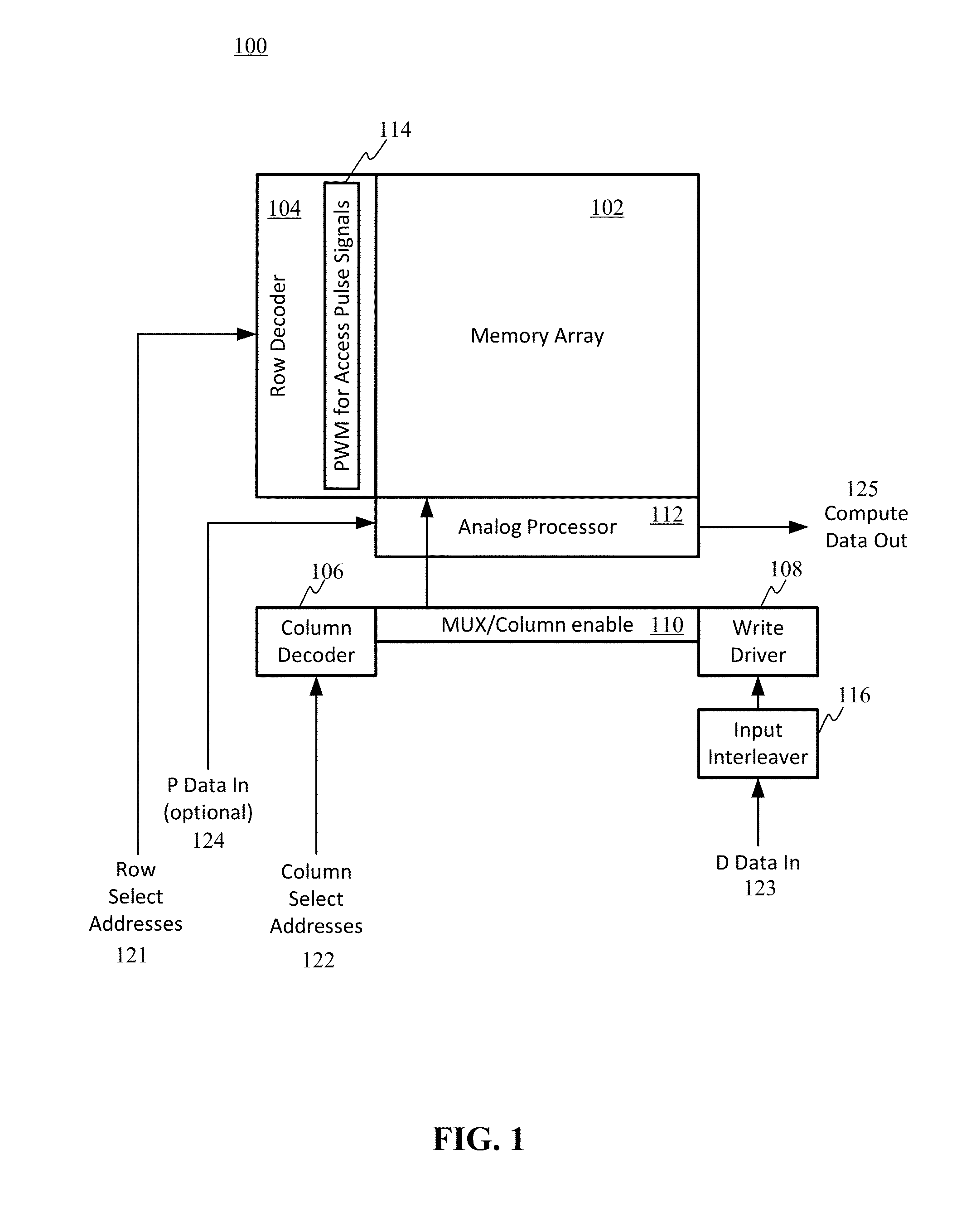

A compute memory system can include a memory array and a controller that generates N-ary weighted (e.g., binary weighted) access pulses for a set of word lines during a single read operation. This multi-row read generates a charge on a bit line representing a word stored in a column of the memory array. The compute memory system further includes an embedded analog signal processor stage through which voltages from bit lines can be processed in the analog domain. Data is written into the memory array in a manner that stores words in columns instead of the traditional row configuration.

Owner:THE BOARD OF TRUSTEES OF THE UNIV OF ILLINOIS
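
As a rough numerical illustration of the multi-row read described above, the sketch below stores each word down a column and sums the cell values with binary weights, so that each bit line ends up with a value proportional to its stored word. The function name `multi_row_read` and the use of integers in place of analog charge are assumptions made here for illustration; the actual circuit works with pulse widths and bit-line voltages.

```python
from typing import List


def multi_row_read(cells: List[List[int]], weights: List[int]) -> List[int]:
    """Return one accumulated value per bit line (column).

    cells[r][c] is the bit stored at word line r, bit line c.
    weights[r] models the weighted access pulse applied to word line r.
    """
    n_cols = len(cells[0])
    return [
        sum(w * row[c] for w, row in zip(weights, cells))
        for c in range(n_cols)
    ]


# Rows are bit positions (most significant first); columns hold the stored words.
cells = [
    [1, 0, 1],  # bit 2
    [0, 1, 1],  # bit 1
    [1, 1, 0],  # bit 0
]
weights = [4, 2, 1]  # binary-weighted pulses
values = multi_row_read(cells, weights)  # the columns store 5, 3 and 6
```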

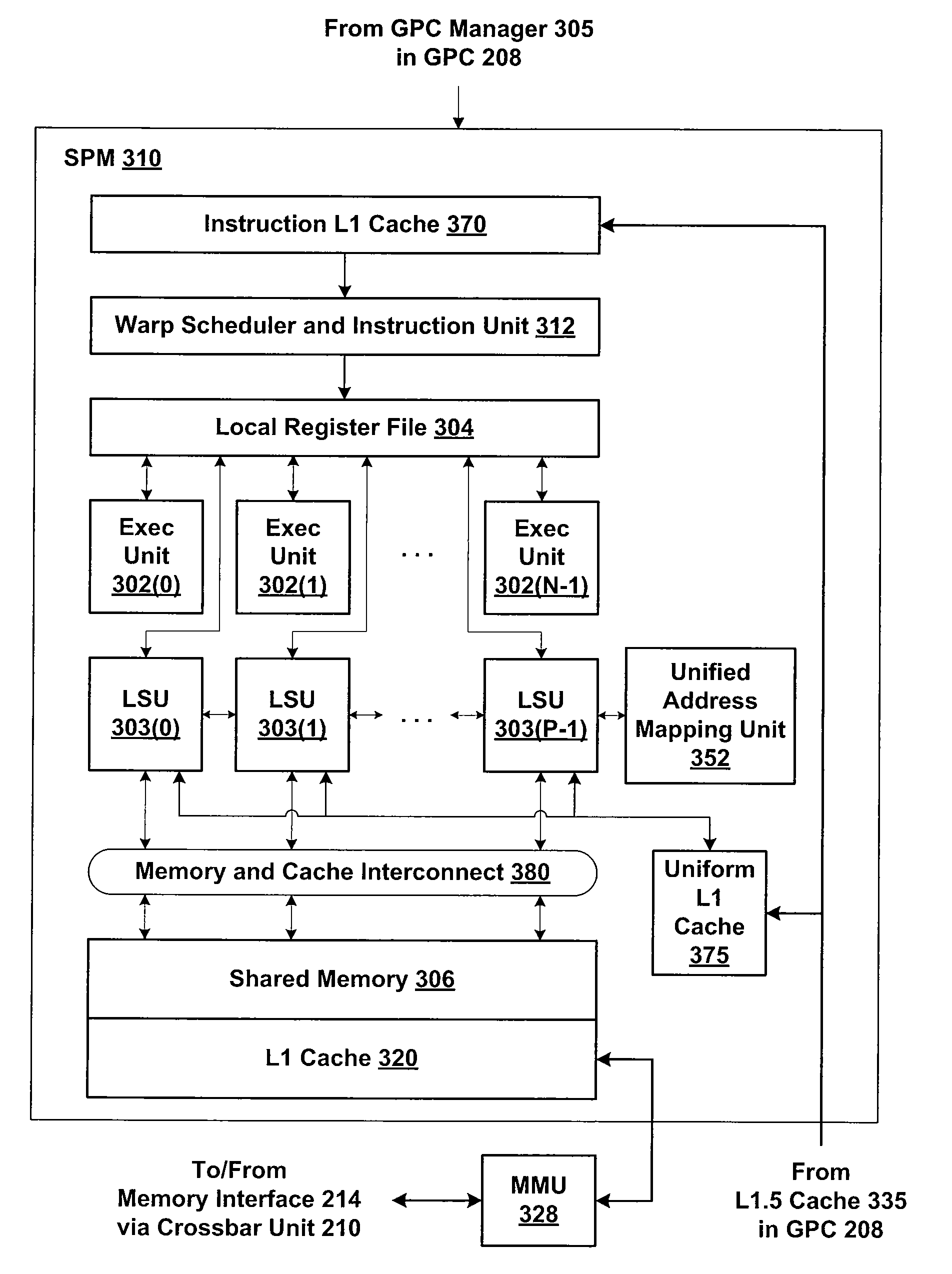

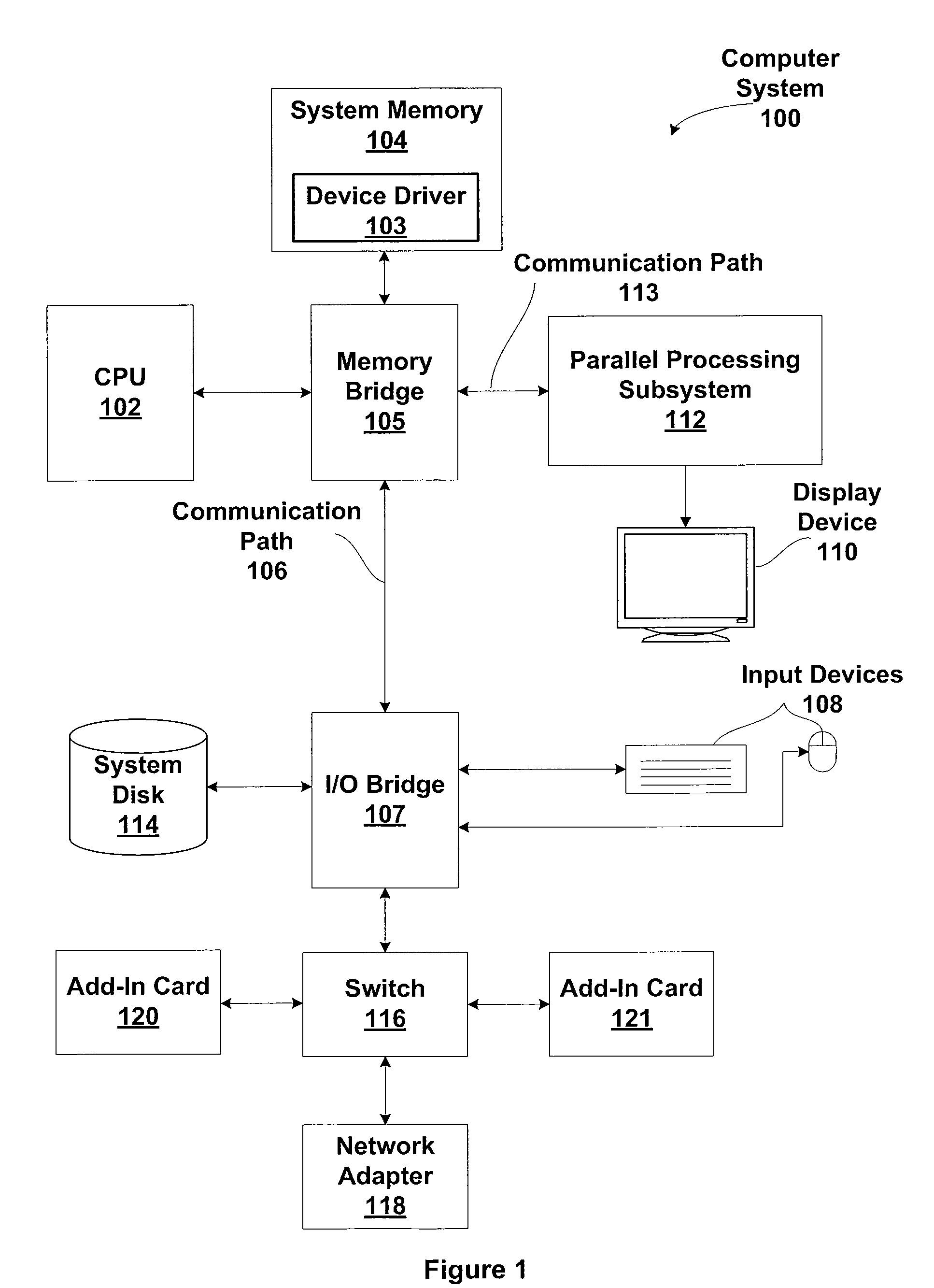

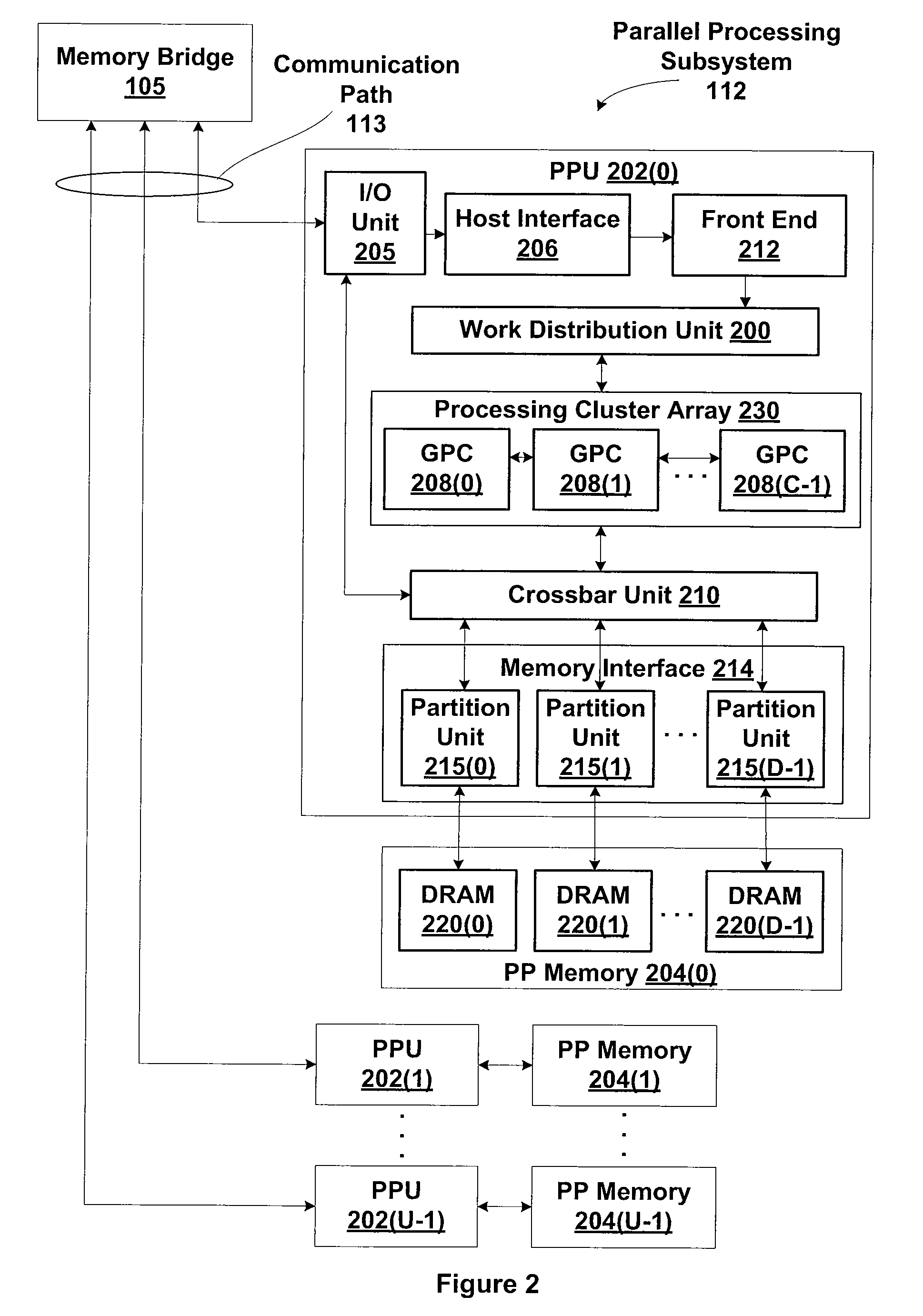

Efficient implementation of arrays of structures on SIMT and SIMD architectures

Active | US20120089792A1 | Improve processing efficiency | Processor architectures/configuration | Program control | Access method | Joint Implementation

One embodiment of the present invention sets forth a technique providing an optimized way to allocate and access memory across a plurality of thread / data lanes. Specifically, the device driver receives an instruction targeted to a memory set up as an array of structures of arrays. The device driver computes an address within the memory using information about the number of thread / data lanes and parameters from the instruction itself. The result is a memory allocation and access approach where the device driver properly computes the target address in the memory. Advantageously, processing efficiency is improved where memory in a parallel processing subsystem is internally stored and accessed as an array of structures of arrays, proportional to the SIMT / SIMD group width (the number of threads or lanes per execution group).

Owner:NVIDIA CORP
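
To make the "array of structures of arrays" layout concrete, here is a minimal sketch of one common way to compute a byte offset in such a layout. The formula, the function name `aosoa_offset`, and the default 4-byte element size are assumptions chosen for illustration and are not taken from the patent; the only idea borrowed from the abstract is that the address depends on the group width (number of threads or lanes) and on parameters of the access.

```python
def aosoa_offset(index: int, field: int, num_fields: int,
                 group_width: int, elem_size: int = 4) -> int:
    """Byte offset of `field` of structure `index` in an AoSoA layout.

    Structures are grouped into blocks of `group_width`. Inside a block,
    each field is stored as a contiguous run of `group_width` elements,
    so adjacent lanes reading the same field touch adjacent addresses.
    """
    block, lane = divmod(index, group_width)
    return ((block * num_fields + field) * group_width + lane) * elem_size


# Hypothetical case: 3 fields per structure, 4 lanes per group, 4-byte elements.
first_lane = aosoa_offset(0, 0, num_fields=3, group_width=4)
next_lane = aosoa_offset(1, 0, num_fields=3, group_width=4)
next_field = aosoa_offset(0, 1, num_fields=3, group_width=4)
next_block = aosoa_offset(4, 0, num_fields=3, group_width=4)
```

With this layout, lanes 0 to 3 reading field 0 access four consecutive elements, which is the access pattern that SIMT and SIMD memory systems typically handle best.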

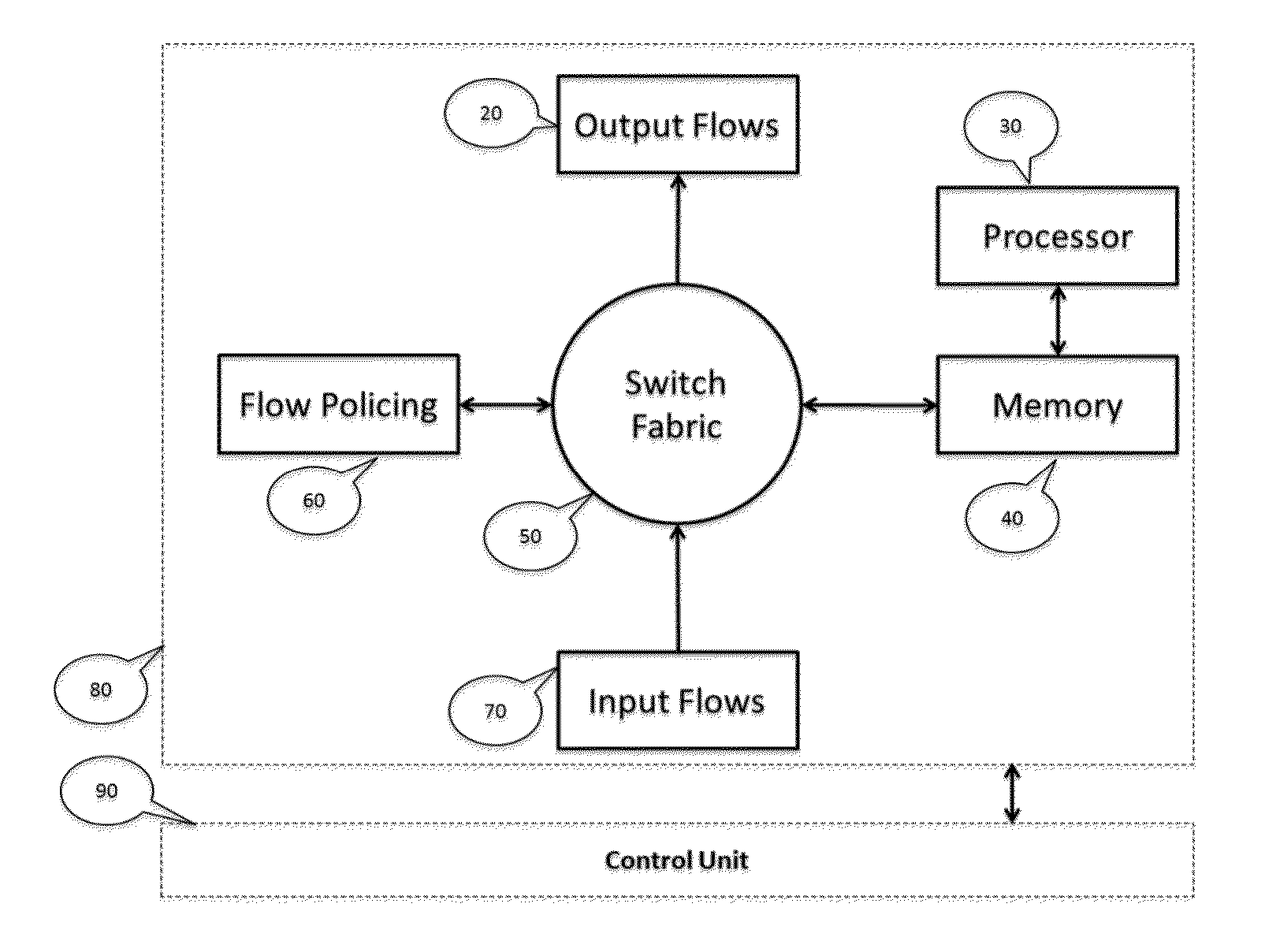

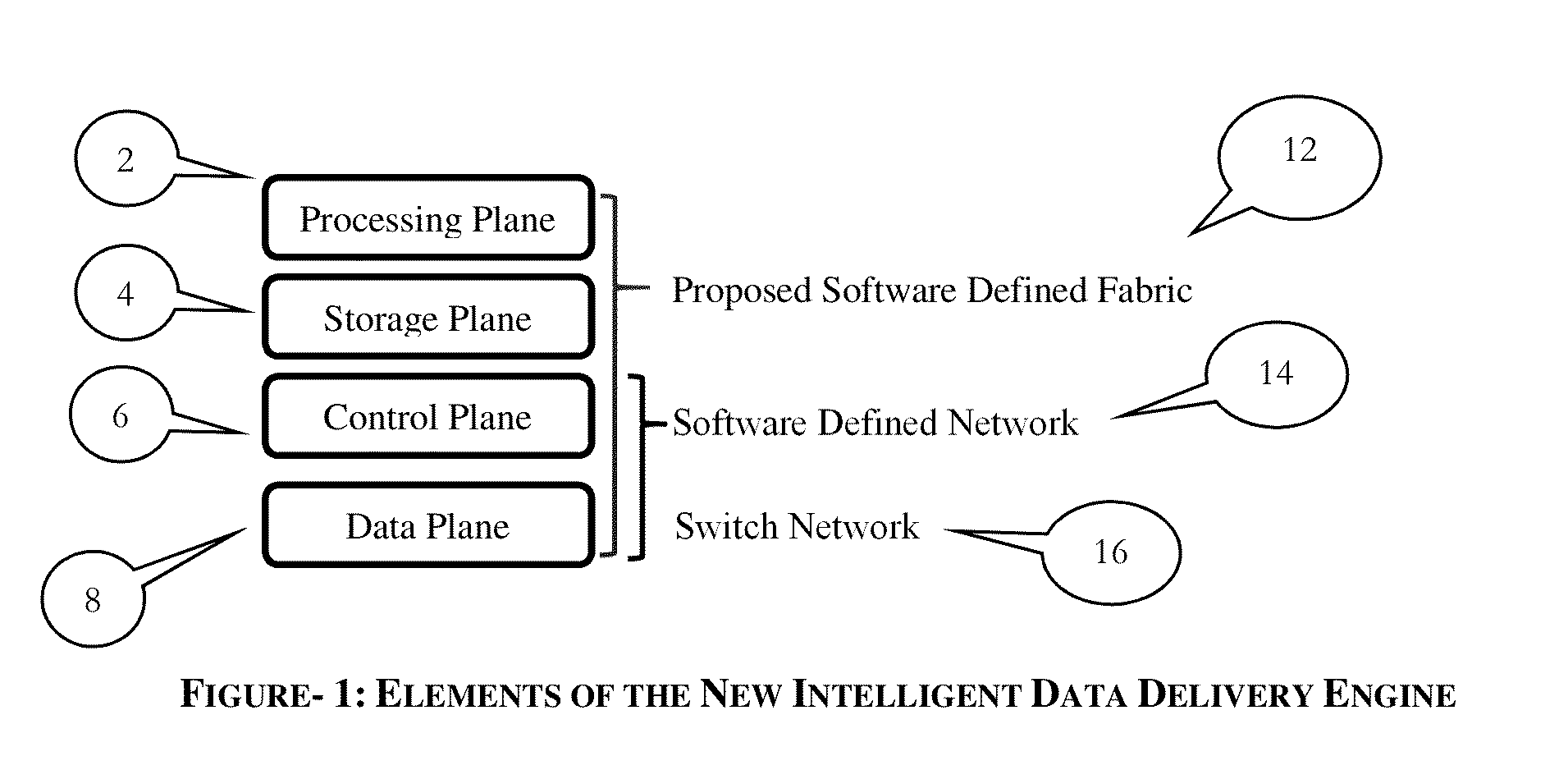

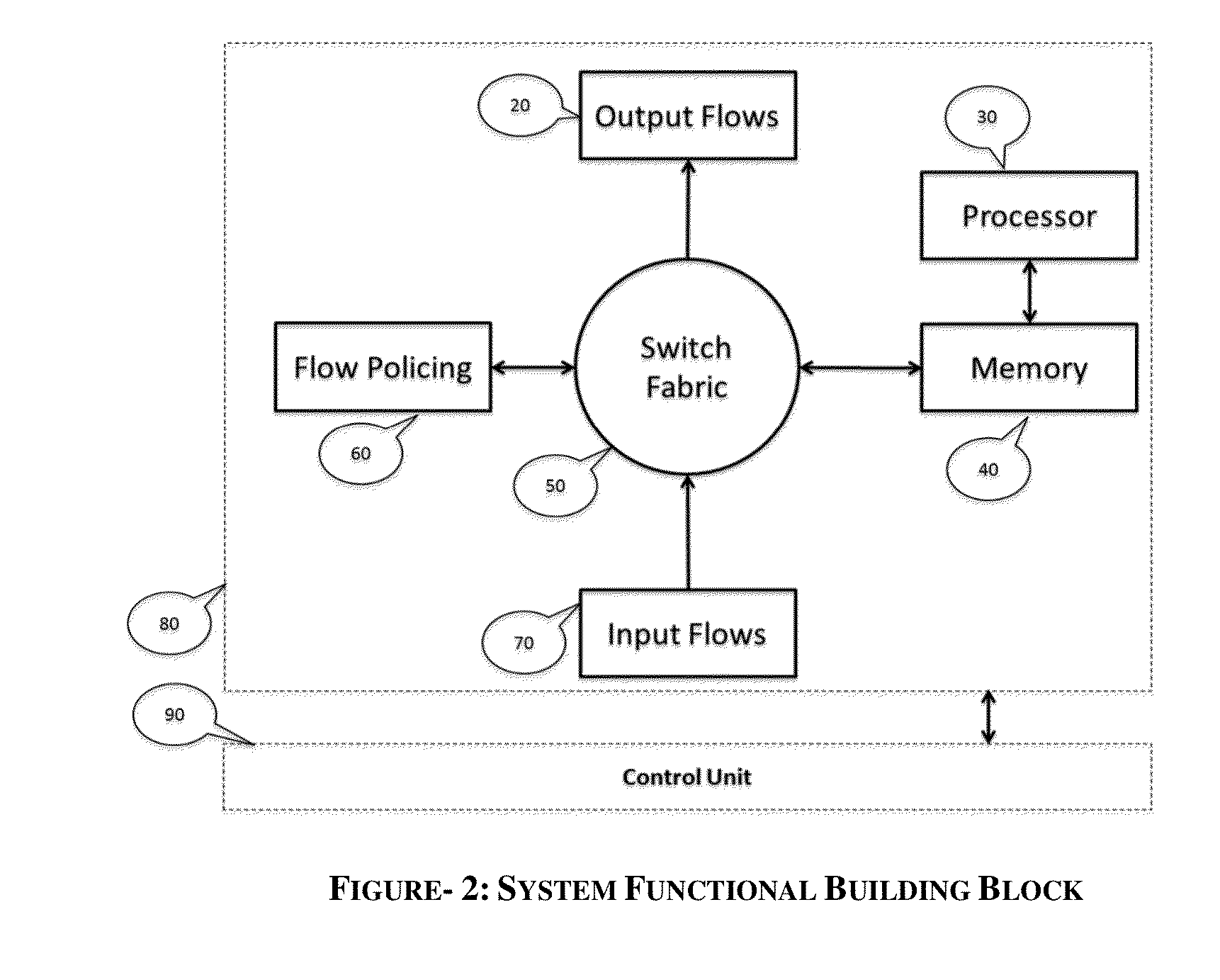

Programmable switching engine with storage, analytic and processing capabilities

Inactive | US20150063349A1 | Good variety | Easy to manage | Multiplex system selection arrangements | Circuit switching systems | Structure of Management Information | Multi dimensional

An improvement to the prior-art extends an intelligent solution beyond simple IP packet switching. It intersects with computing, analytics, storage and performs delivery diversity in an efficient intelligent manner. A flexible programmable network is enabled that can store, time shift, deliver, process, analyze, map, optimize and switch flows at hardware speed. Multi-layer functions are enabled in the same node by scaling for diversified data delivery, scheduling, storing, and processing at much lower cost to enable multi-dimensional optimization options and time shift delivery, protocol optimization, traffic profiling, load balancing, and traffic classification and traffic engineering. An integrated high performance flexible switching fabric has integrated computing, memory storage, programmable control, integrated self-organizing flow control and switching.

Owner:ARDALAN SHAHAB +1

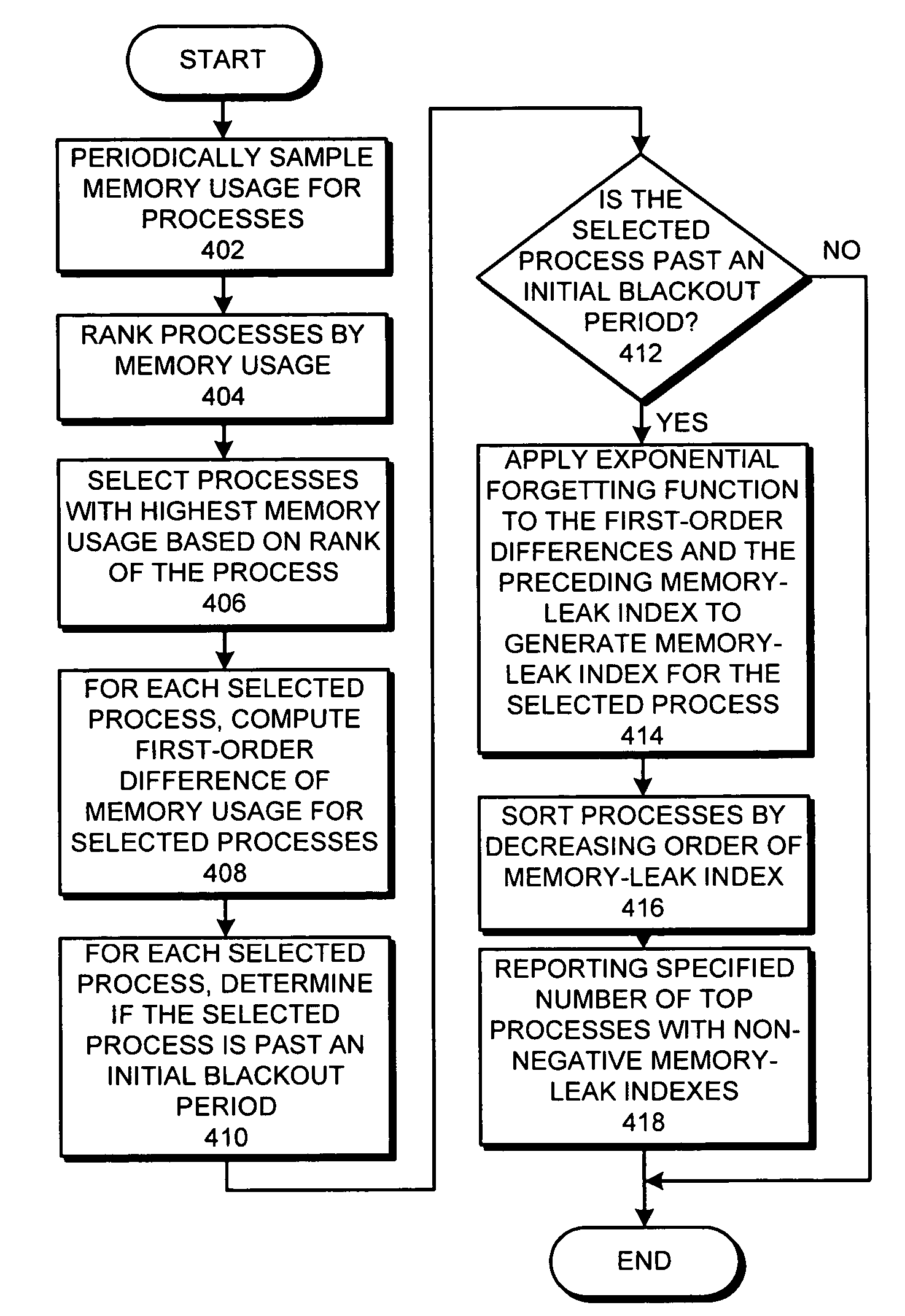

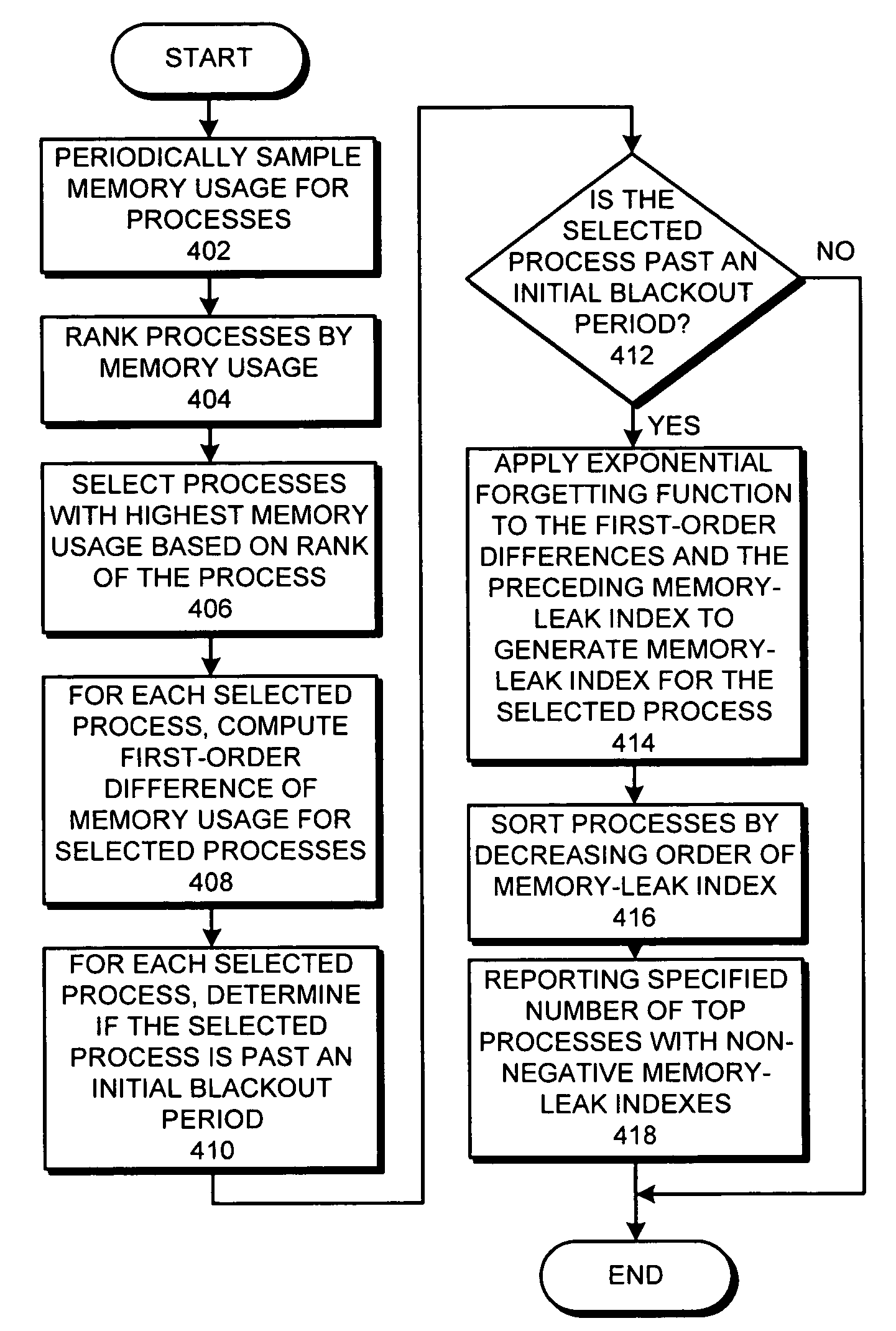

Method and apparatus for detecting memory leaks in computer systems

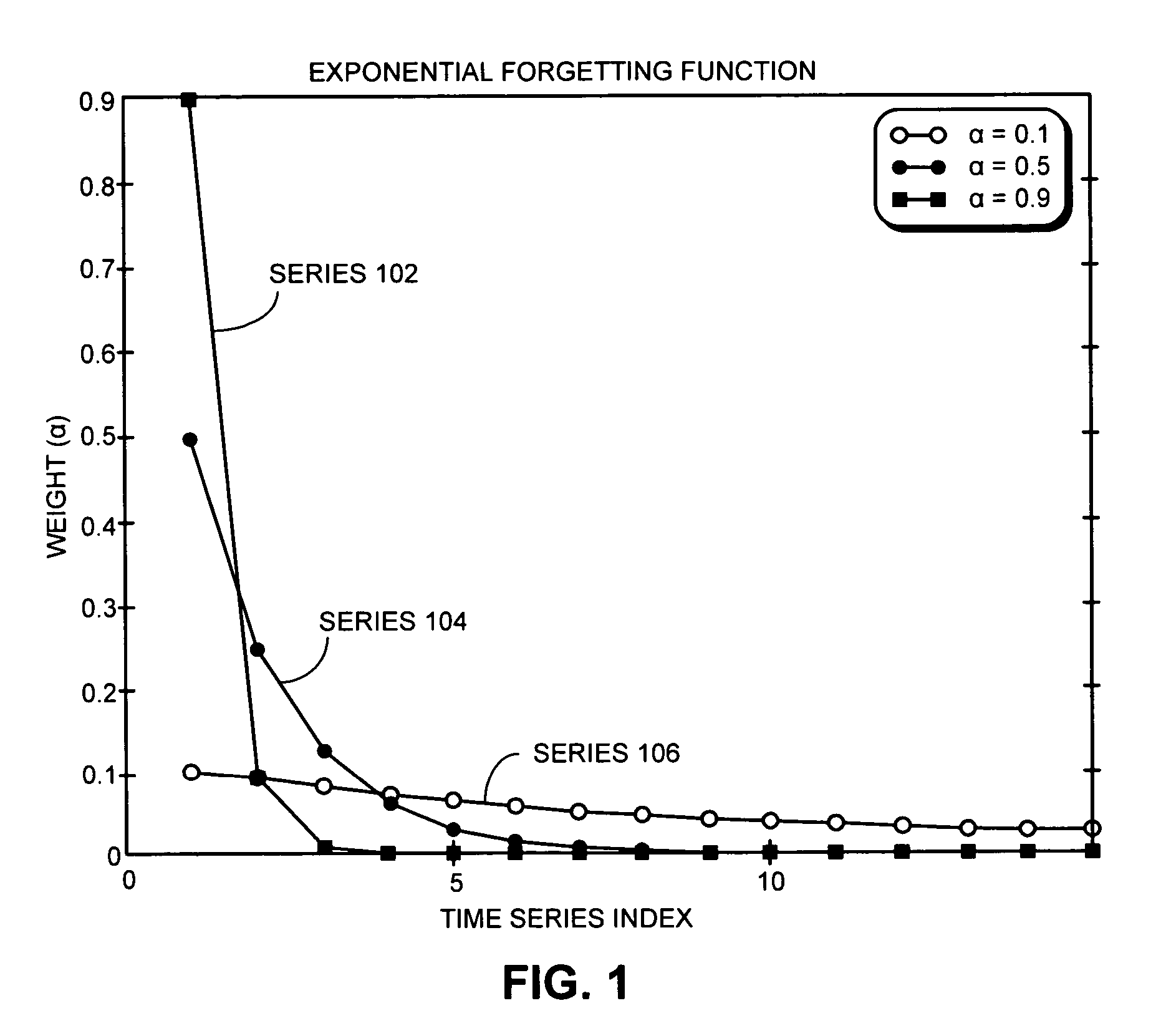

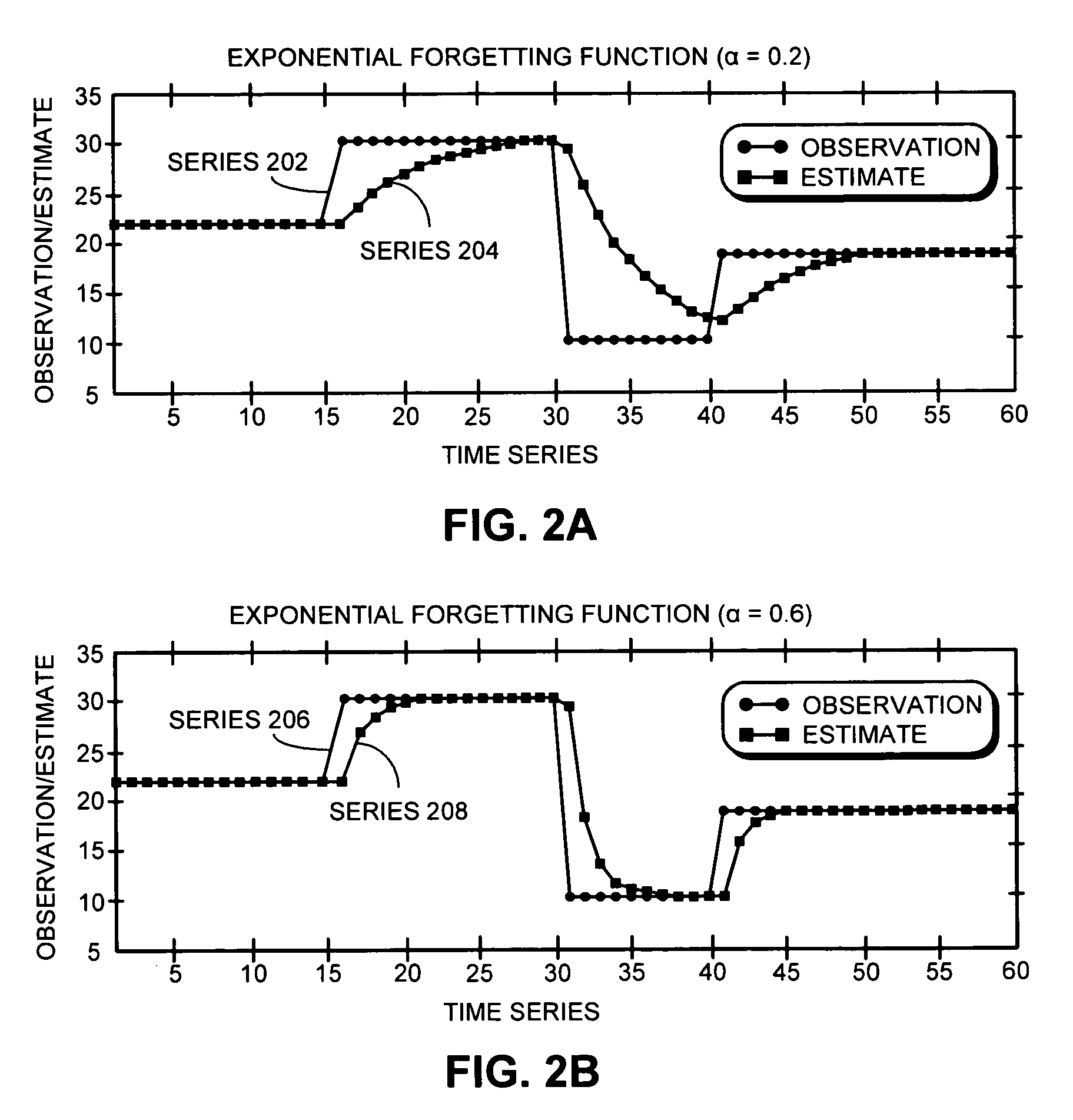

Active | US7716648B2 | Discard past memory usage values more quickly | Discard past memory usage values | Error detection/correction | Specific program execution arrangements | Ranking | Computerized system

A system that identifies processes with a memory leak in a computer system. During operation, the system periodically samples memory usage for processes running on the computer system. The system then ranks the processes by memory usage and selects a specified number of processes with highest memory usage based on the ranking. For each selected process, the system computes a first-order difference of memory usage by taking a difference between the memory usage at a current sampling time and the memory usage at an immediately preceding sampling time. The system then generates a memory-leak index based on the first-order difference and a preceding memory-leak index computed at the immediately preceding sampling time.

Owner:ORACLE INT CORP
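
The sampling loop described in the abstract can be sketched as follows. The abstract does not give the formula that combines the first-order difference with the preceding index, so the exponentially weighted update below, the smoothing factor `alpha`, and the function name `update_leak_indices` are all assumptions made here for illustration.

```python
from typing import Dict


def update_leak_indices(prev_usage: Dict[str, float],
                        curr_usage: Dict[str, float],
                        prev_index: Dict[str, float],
                        top_n: int = 2,
                        alpha: float = 0.5) -> Dict[str, float]:
    """Compute a leak index for the top_n processes by current memory usage."""
    # Rank processes by current usage and keep the largest consumers.
    ranked = sorted(curr_usage, key=curr_usage.get, reverse=True)[:top_n]
    new_index = {}
    for pid in ranked:
        # First-order difference between this sample and the previous one.
        diff = curr_usage[pid] - prev_usage.get(pid, curr_usage[pid])
        # Assumed update rule: blend the previous index with the new difference.
        new_index[pid] = alpha * prev_index.get(pid, 0.0) + (1 - alpha) * diff
    return new_index


# Hypothetical samples (memory usage in MB), taken at three sampling times.
s0 = {"a": 100, "b": 50, "c": 10}
s1 = {"a": 120, "b": 50, "c": 12}
s2 = {"a": 140, "b": 50, "c": 12}
idx1 = update_leak_indices(s0, s1, {})
idx2 = update_leak_indices(s1, s2, idx1)
```

A process whose usage keeps growing (here `"a"`) accumulates a rising index, while a stable process (`"b"`) stays at zero.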

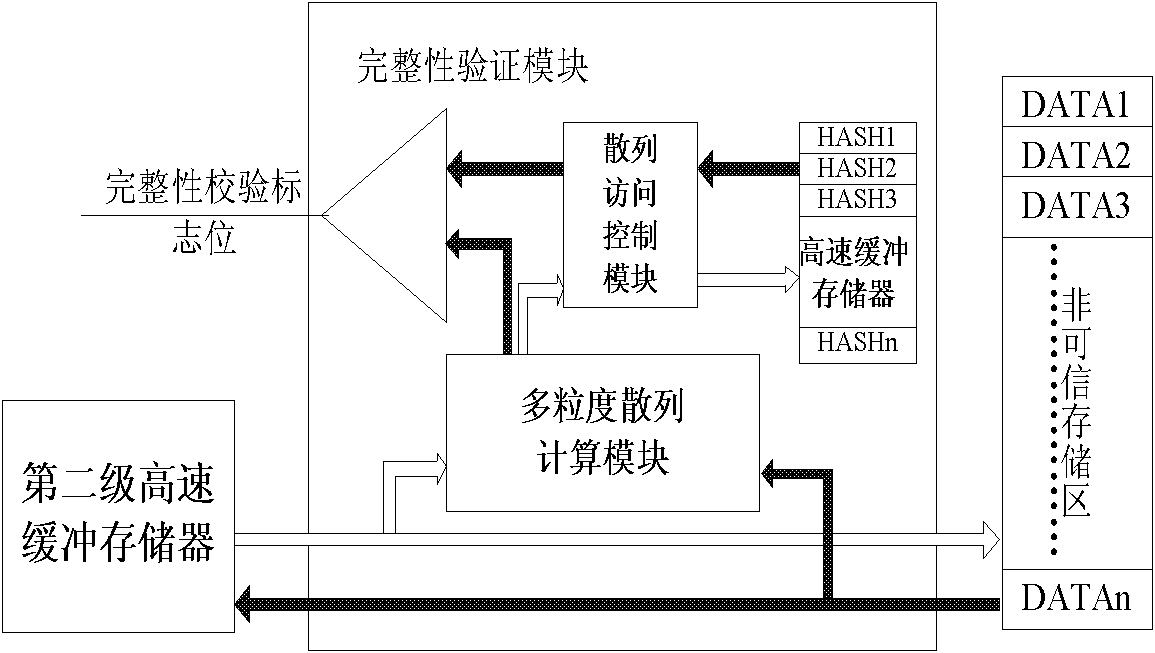

Data integrity verification method suitable for embedded processor

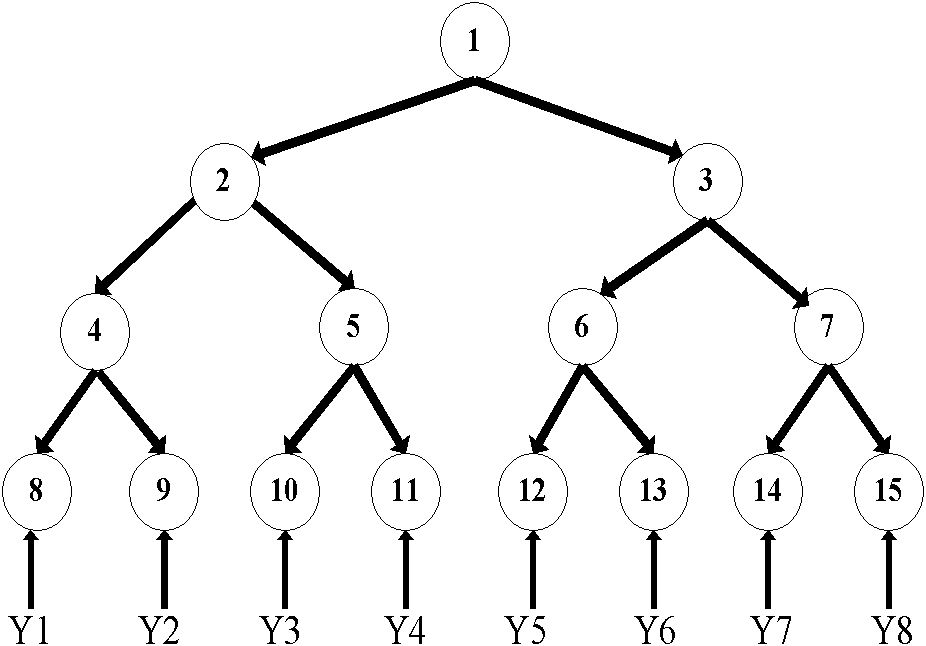

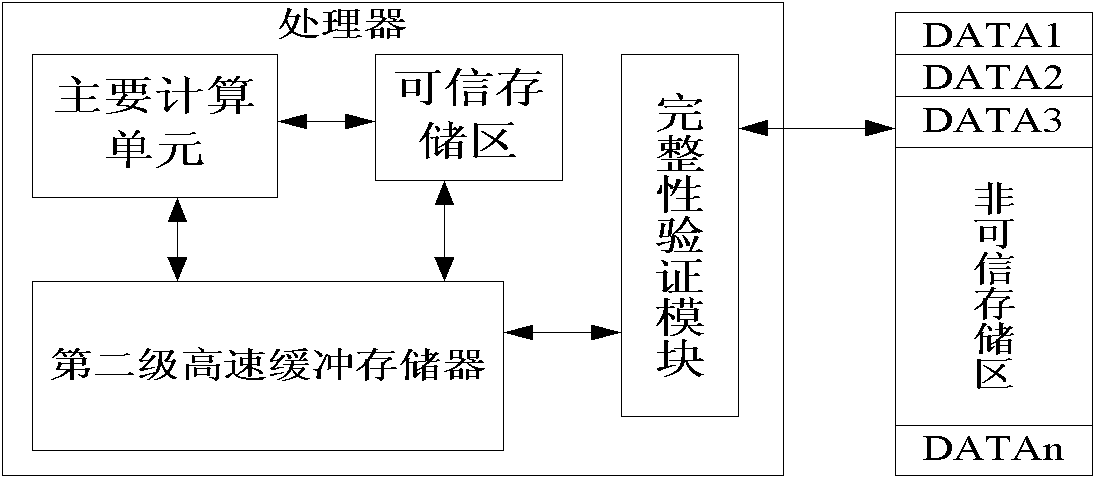

Inactive | CN101853190A | Improve hit rate | Improve verification effect | Error detection/correction | Countermeasure | Data integrity

The invention discloses a data integrity verification method suitable for an embedded processor. The method comprises a multi-granularity hash computation method, an address conversion method and a hash node access control method. The multi-granularity hash computation method generates a multi-granularity Merkle tree that is cached in a hash cache, and computes the hash value of a data block in memory when the corresponding tree node is missing from the cache. The address conversion method provides a unique address for each node. The hash node access control method handles access to the nodes of each hash tree, applying different strategies for read misses and for write operations. Because of the multi-granularity hash computation method, the hash tree generated by the data integrity verification method has fewer nodes and layers, which reduces memory space and hardware area overhead, shortens initialization time and improves performance.

Owner:HUAZHONG UNIV OF SCI & TECH
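
For readers unfamiliar with Merkle trees, the sketch below computes the root of a plain binary Merkle tree over memory blocks using SHA-256. It does not implement the multi-granularity scheme, the hash cache, or the address conversion described in the abstract; the function name `merkle_root`, the choice of SHA-256, and the rule of duplicating the last node on odd levels are assumptions made here for illustration.

```python
import hashlib
from typing import List


def merkle_root(blocks: List[bytes]) -> bytes:
    """Root hash of a binary Merkle tree over a non-empty list of data blocks."""
    if not blocks:
        raise ValueError("at least one block is required")
    # Leaf level: hash every data block.
    level = [hashlib.sha256(b).digest() for b in blocks]
    while len(level) > 1:
        if len(level) % 2:               # odd count: duplicate the last node
            level.append(level[-1])
        # Parent = hash of the two concatenated child hashes.
        level = [
            hashlib.sha256(level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0]


# Hypothetical memory blocks; tampering with any block changes the root.
blocks = [b"block-0", b"block-1", b"block-2"]
root = merkle_root(blocks)
tampered_root = merkle_root([b"block-0", b"block-X", b"block-2"])
```

Verification then amounts to recomputing the root (or a path to it) and comparing it with a trusted copy.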

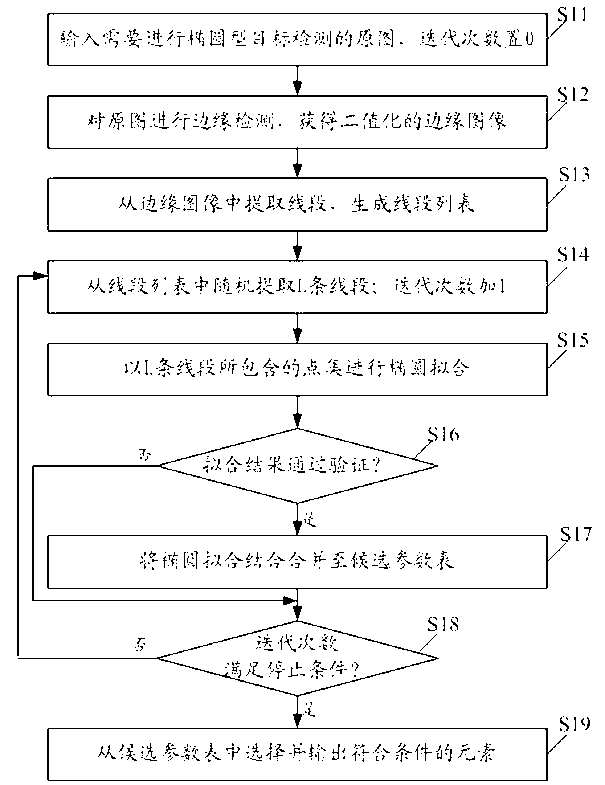

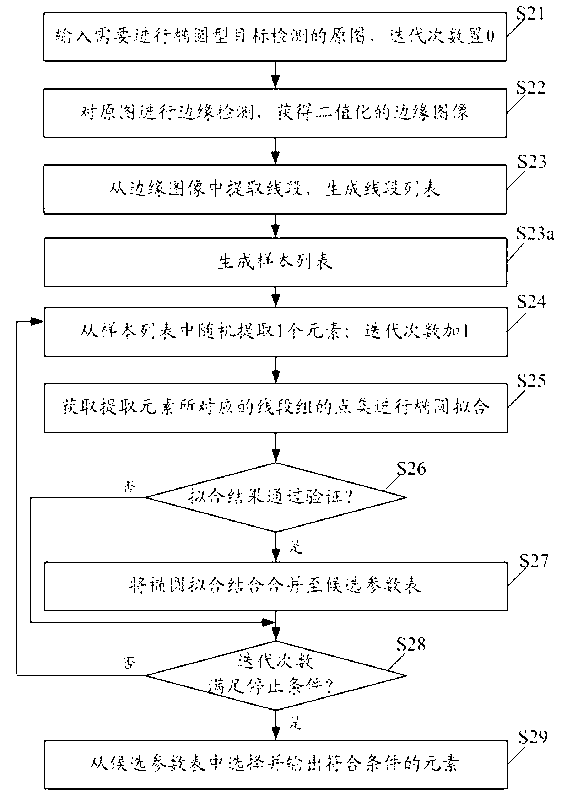

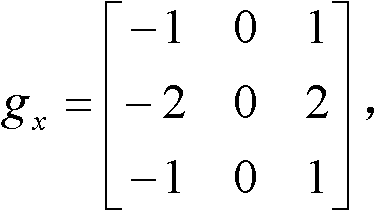

Method and system for detecting elliptical target in image

Inactive | CN103020626A | Fast operation | Computational memory requirements are small | Character and pattern recognition | Pattern recognition | Ellipse

The invention discloses a method and a system for detecting an elliptical target in an image. The method comprises the following steps of: A. giving an image, and obtaining an edge image by using an edge detection or ridge detection algorithm; B. extracting line segments from the edge image, and generating a line segment list; C. randomly extracting L line segments from the line segment list, and carrying out ellipse fitting by using the L line segments, and combining an ellipse fitting result into a candidate parameter list when the ellipse fitting result passes validation; and D. repeating the step C till a stop condition is met, and selecting and outputting ellipse candidate elements being in line with conditions from the candidate parameter list. The method and the system have the advantages of fast operating rate, low requirement on computation memory, and high detection accuracy.

Owner:SHENZHEN LANDWIND IND

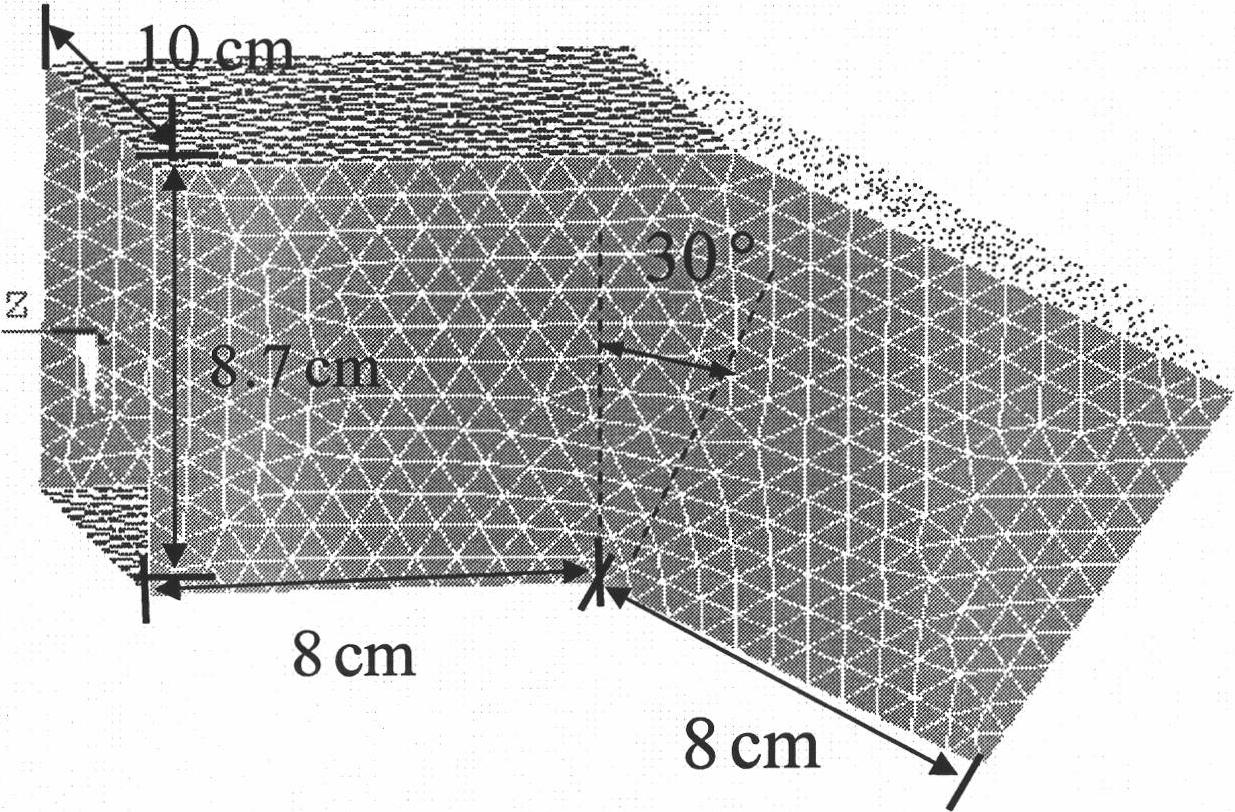

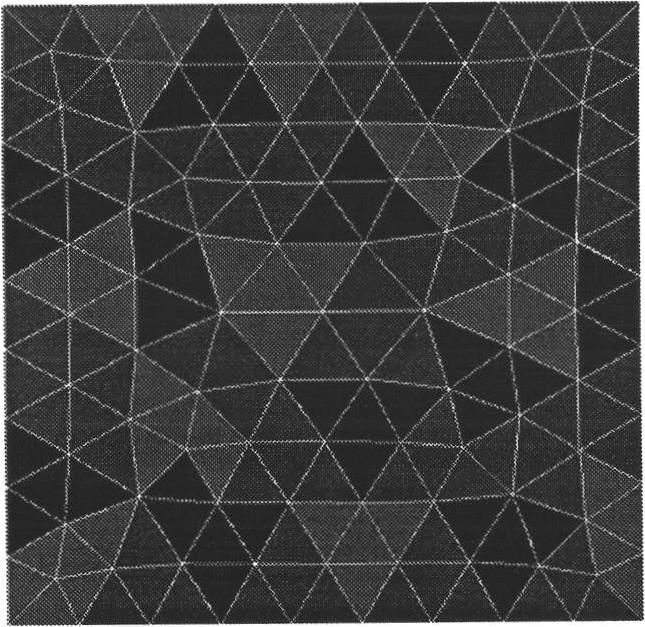

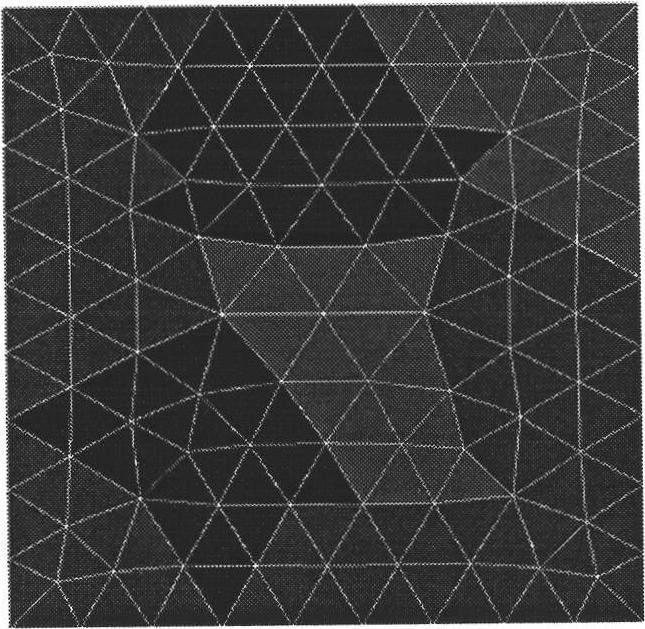

Multi-resolution precondition method for analyzing aerial radiation and electromagnetic scattering

Active | CN102156764A | Good manners | Iterative convergence is fast | Special data processing applications | Low demand | Electromagnetic shielding

The invention discloses a multi-resolution precondition method for analyzing aerial radiation and electromagnetic scattering problems in electromagnetic simulation. The method is a method for generating a multi-resolution basis function by using a geometrical mode on a laminar grid constructed in a grid aggregation mode and further generating multi-resolution preconditions, wherein the multi-resolution basis function is formed by linear combination of a classical vector triangle basis function (RWG), and can be conveniently applied to the conventional moment method electromagnetic simulation program to effectively improve the behavior of a matrix formed in the moment method electromagnetic simulation process so as to realize acceleration of the iterative solution process of a matrix equation and fulfill the purpose of accelerating the moment method electromagnetic simulation process. Meanwhile, the multi-resolution pre-processing technology can also be conveniently combined with a quick algorithm such as a quick multi-pole algorithm. The method has the advantages of short calculation time and capability of ensuring high precision of the program and low demand of a computing memory, and can effectively improve the computing efficiency of the conventional electromagnetic simulation.

Owner:NANJING UNIV OF SCI & TECH

Memory management method, printing control device and printing apparatus

Inactive | US6052200A | Visual representation by photographic printing | Other printing apparatus | Data transformation | Computer graphics (images)

A memory management method for use in a printing process in which a time required for rendering is calculated, for managing printing data in units of band, calculating a size of the printing data in a memory, managing plural band rasters, varying the number of band rasters to be managed in the memory, converting an image to data for management, determining in accordance with a memory state whether or not data conversion is performed, and calculating a data size in the memory after the data conversion.

Owner:CANON KK

Method and apparatus for detecting memory leaks in computer systems

Active | US20070033365A1 | Significant value | Quickly discarded | Error detection/correction | Specific program execution arrangements | Parallel computing | Ranking

A system that identifies processes with a memory leak in a computer system. During operation, the system periodically samples memory usage for processes running on the computer system. The system then ranks the processes by memory usage and selects a specified number of processes with highest memory usage based on the ranking. For each selected process, the system computes a first-order difference of memory usage by taking a difference between the memory usage at a current sampling time and the memory usage at an immediately preceding sampling time. The system then generates a memory-leak index based on the first-order difference and a preceding memory-leak index computed at the immediately preceding sampling time.

Owner:ORACLE INT CORP

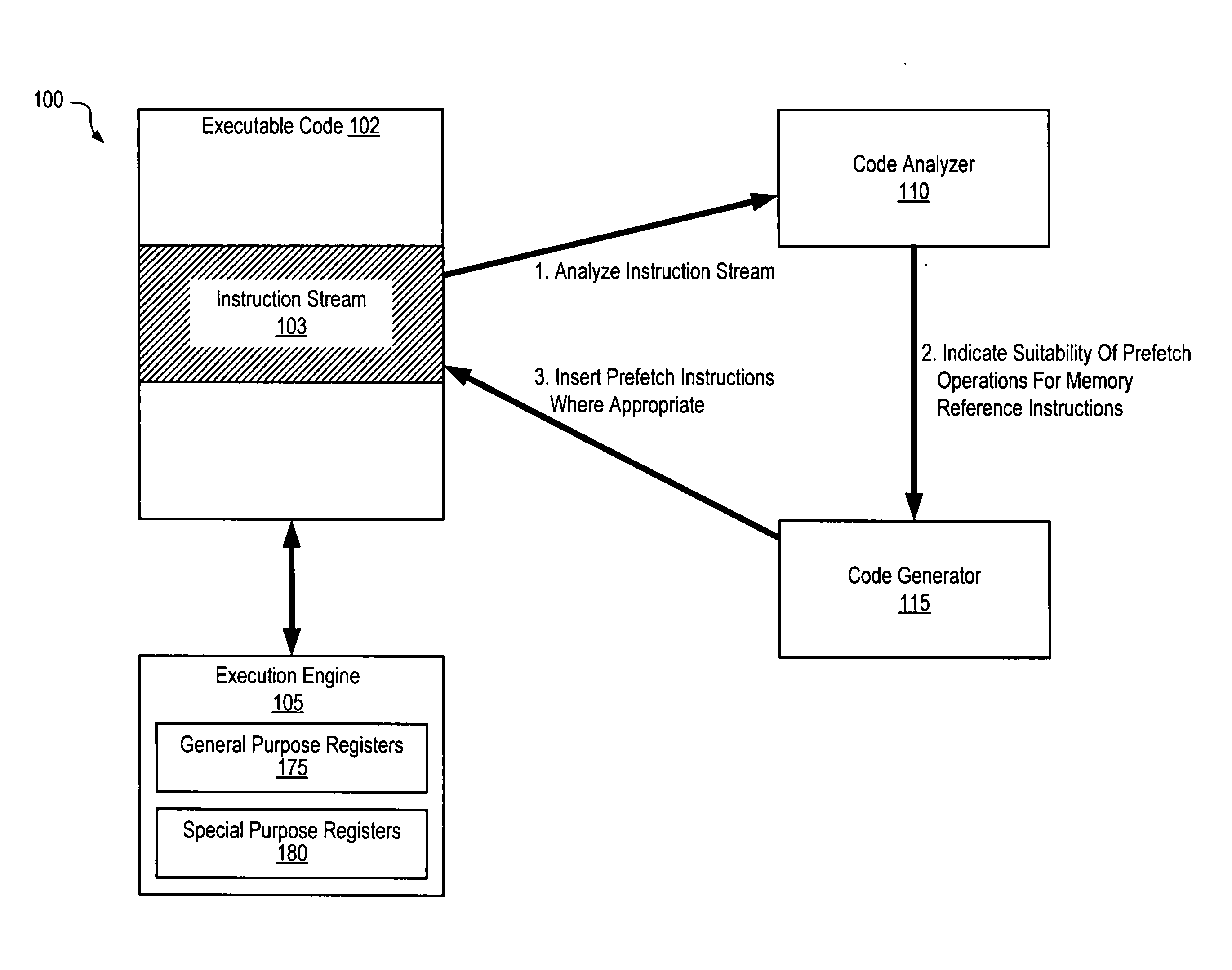

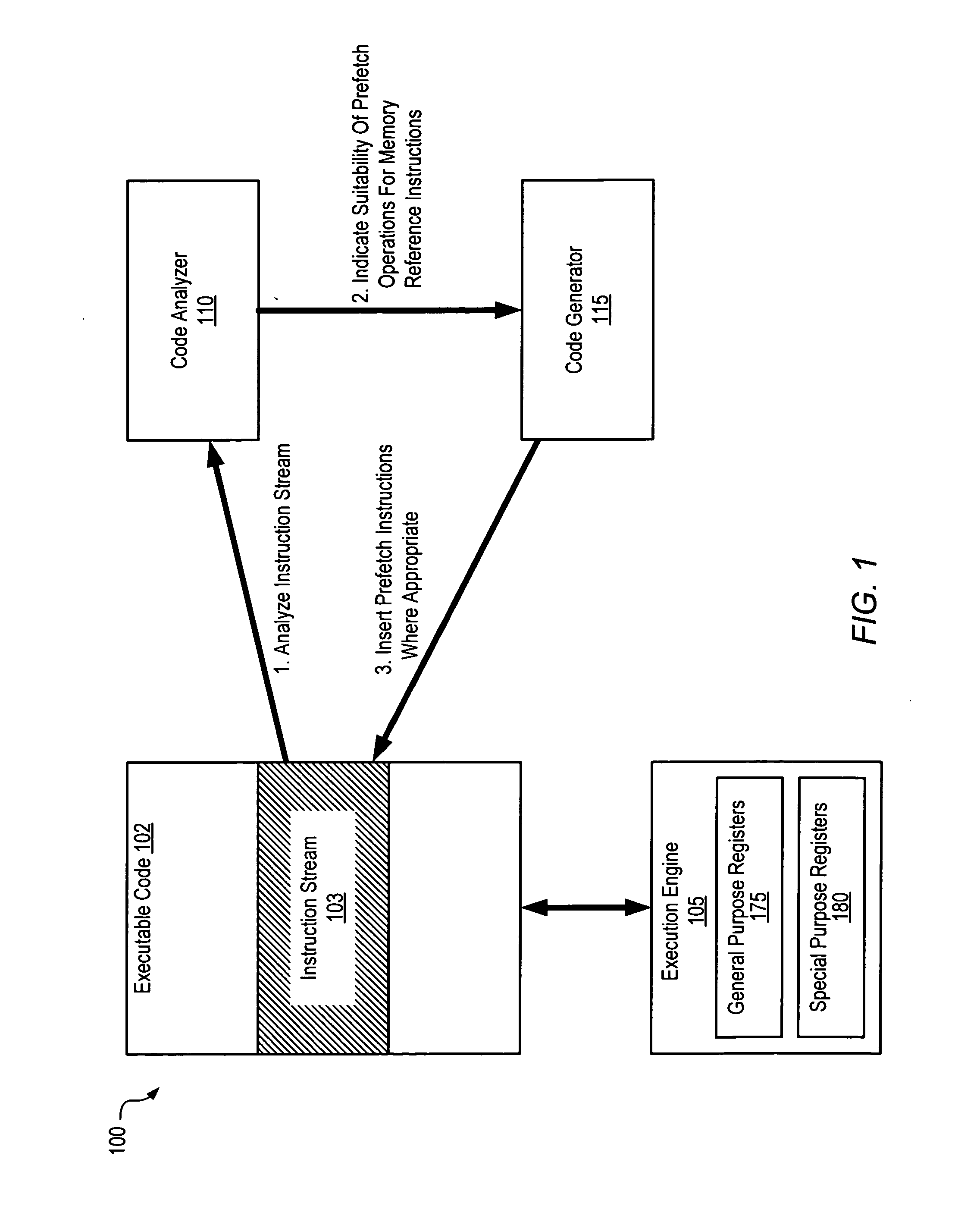

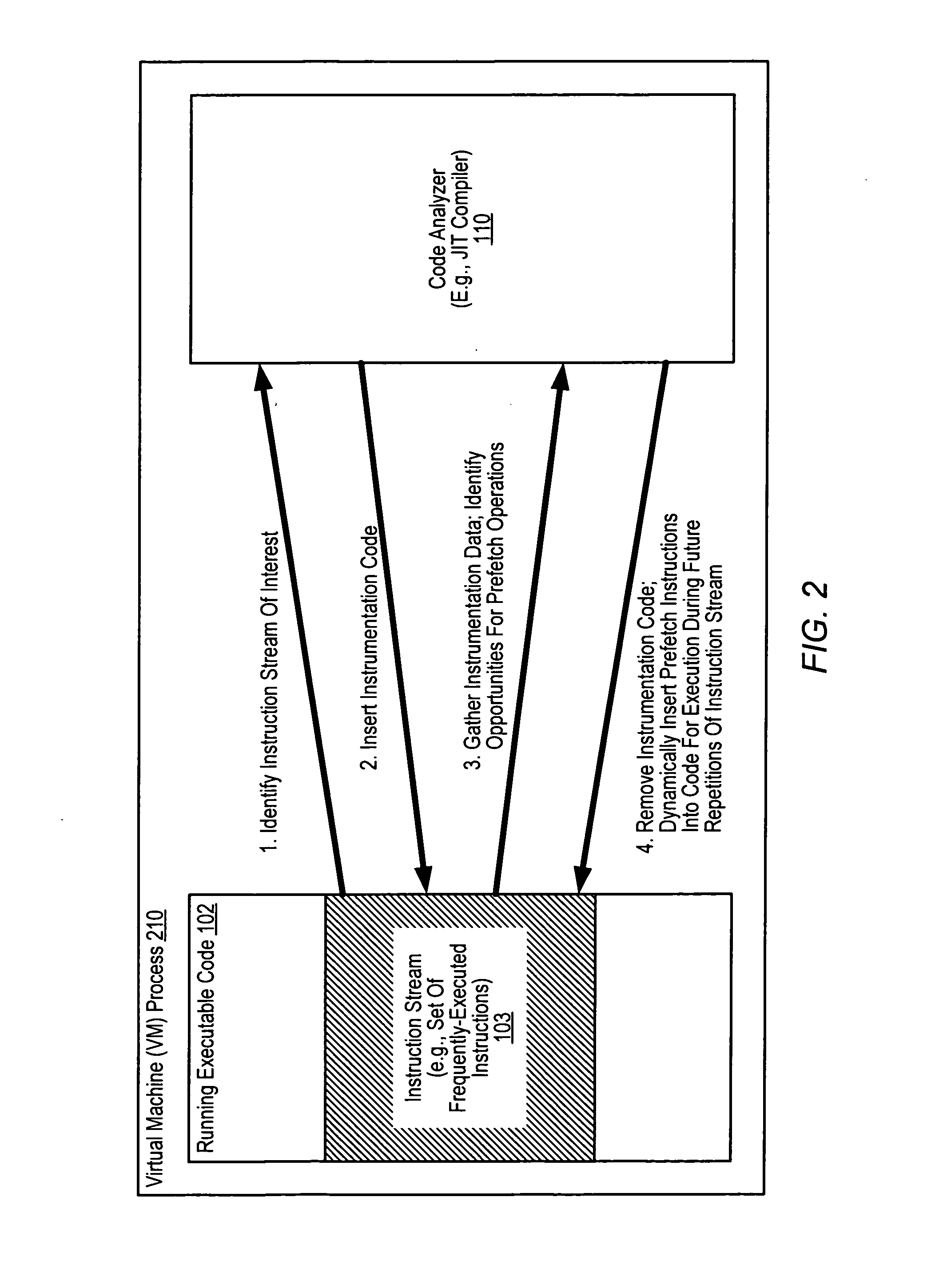

Binary code instrumentation to reduce effective memory latency

Active | US20070226703A1 | Reduce effective memory latency | Preventing processor stall | Memory architecture accessing/allocation | Specific program execution arrangements | Memory address | Program instruction

A system for binary code instrumentation to reduce effective memory latency comprises a processor and memory coupled to the processor. The memory comprises program instructions executable by the processor to implement a code analyzer configured to analyze an instruction stream of compiled code executable at an execution engine to identify, for a given memory reference instruction in the stream that references data at a memory address calculated during an execution of the instruction stream, an earliest point in time during the execution at which sufficient data is available at the execution engine to calculate the memory address. The code analyzer generates an indication of whether the given memory reference instruction is suitable for a prefetch operation based on a difference in time between the earliest point in time and a time at which the given memory reference instruction is executed during the execution.

Owner:ORACLE INT CORP

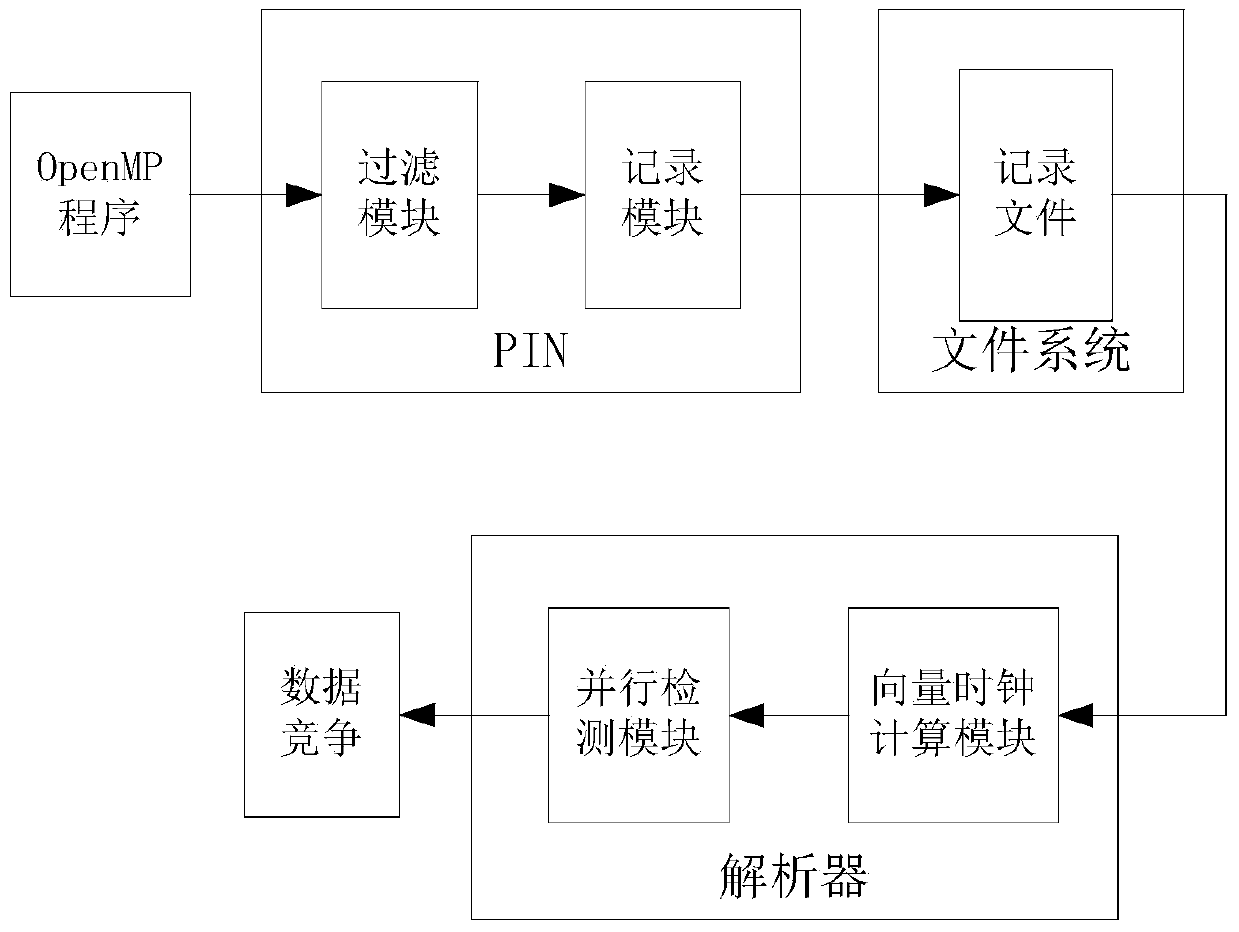

Synchrony relation based parallel dynamic data race detection system

Inactive | CN103729291A | Save space | Reduce data volume | Software testing/debugging | Specific program execution arrangements | Parallel dynamics | Filtration

The invention discloses a synchrony relation based parallel dynamic data race detection system which comprises a filter module, a recording module, a vector clock computation module and a parallel detection module. The filter module is responsible for monitoring memory access operation of each thread of a to-be-detected program in the running process and filtering redundant memory accesses and accesses with data races impossibly occurring, and rest accesses after filtration are written in record files corresponding to the threads by the recording module. The vector clock computation module reads out the memory access records of the program from the record files, and computes vector clocks of the memory accesses. The parallel detection module partitions a detection task by the vector clocks into small tasks to be distributed to multiple worker threads, and is responsible for summarizing detection results. The system only records the memory accesses with the races possibly occurring and merges and compresses access intervals, so that the records are greatly decreased; meanwhile, a data race detection algorithm is high in degree of parallelism, and multi-core hardware can be fully utilized to realize detection acceleration.

Owner:HUAZHONG UNIV OF SCI & TECH
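
The vector-clock comparison at the heart of this kind of detector can be sketched briefly. The record fields (`thread`, `addr`, `write`, `clock`) and the function names below are hypothetical, chosen only for illustration; the abstract does not specify the record format or how the detection task is partitioned across worker threads.

```python
from typing import Dict, Tuple

Clock = Tuple[int, ...]


def happens_before(a: Clock, b: Clock) -> bool:
    """True if clock a is ordered strictly before clock b."""
    return a != b and all(x <= y for x, y in zip(a, b))


def is_race(first: Dict, second: Dict) -> bool:
    """Two accesses race if they come from different threads, touch the same
    address, include at least one write, and are not ordered by happens-before."""
    if first["thread"] == second["thread"]:
        return False
    if first["addr"] != second["addr"]:
        return False
    if not (first["write"] or second["write"]):
        return False
    a, b = first["clock"], second["clock"]
    return not happens_before(a, b) and not happens_before(b, a)


# Hypothetical access records for a two-thread program.
write = {"thread": 0, "addr": 0x10, "write": True, "clock": (1, 0)}
read_unsynced = {"thread": 1, "addr": 0x10, "write": False, "clock": (0, 1)}
read_synced = {"thread": 1, "addr": 0x10, "write": False, "clock": (1, 2)}
```

Here `read_unsynced` is concurrent with the write and is reported as a race, while `read_synced` has already observed the writer's clock and is not.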

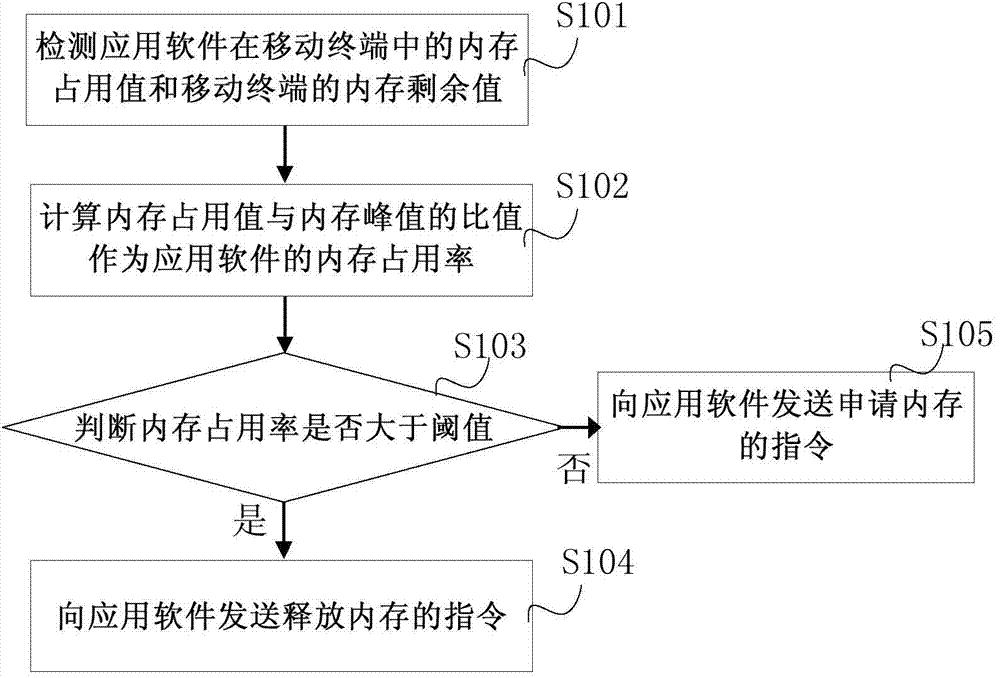

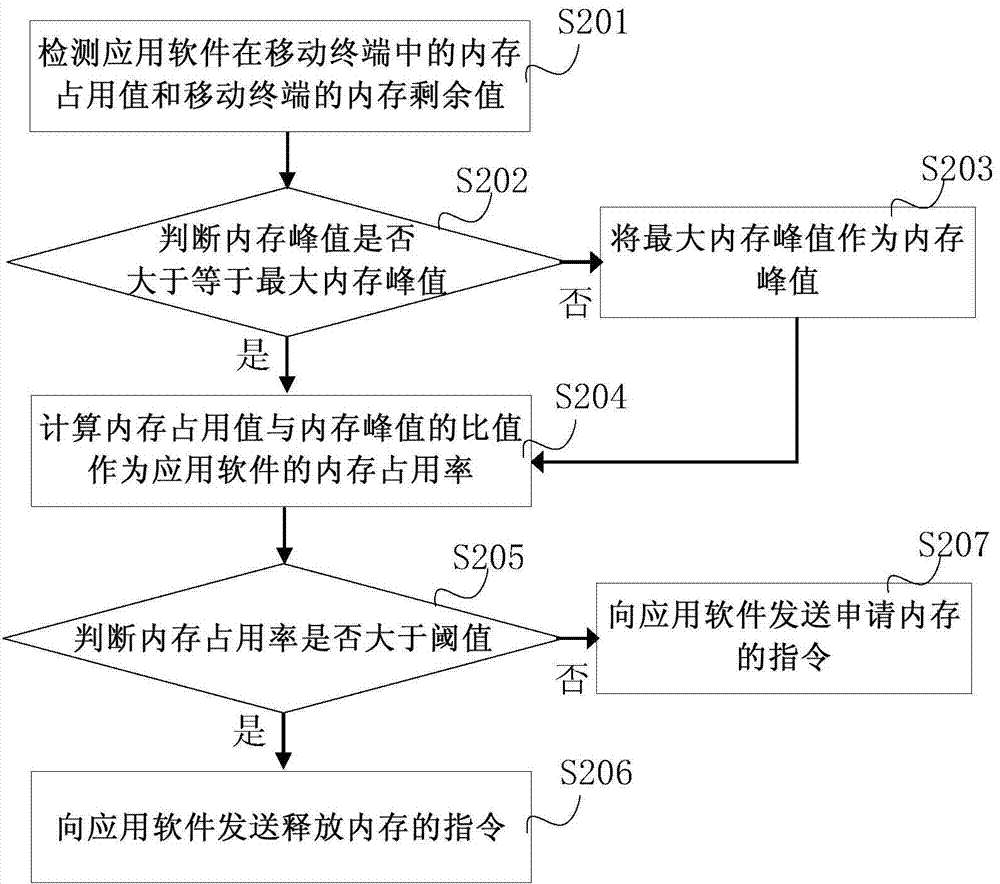

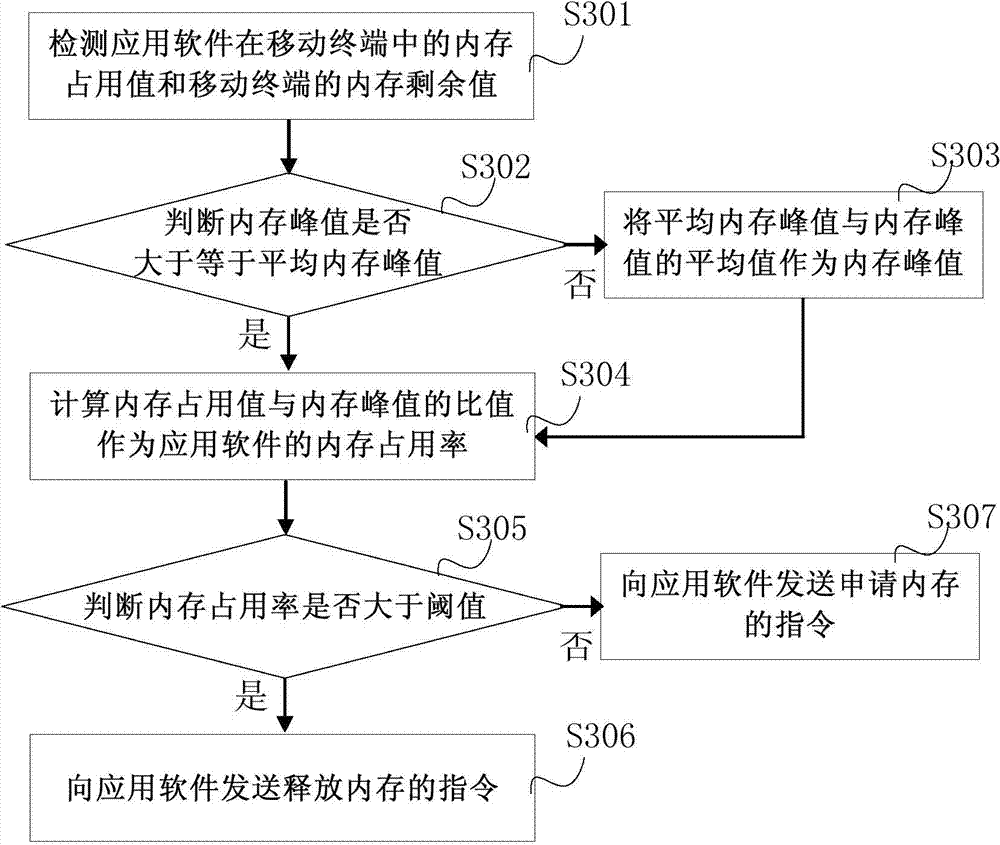

Memory usage feedback method and device

Active | CN103927230A | Guaranteed uptime | Increase profit | Resource allocation | Memory footprint | Application software

Embodiments of the invention disclose a memory usage feedback method and device. The method includes: detecting memory usage value of application software in a mobile terminal and memory residual value in the mobile terminal; calculating a ratio of the memory usage value to a memory peak as memory usage of the application software; judging whether the memory usage is larger than a threshold or not; if yes, transmitting a memory release command to the application software; if not, transmitting a memory applying command to the application software. The memory peak is a sum of the memory usage value and the memory residual value. The memory usage feedback method and device according to the scheme provided by the embodiments has the advantages that stable operation of the application software in the mobile terminal can be guaranteed, utilization rate of memory by the application software is increased, and user experience is improved.

Owner:ALIBABA (CHINA) CO LTD
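
The decision rule in the abstract reduces to a single ratio test, sketched below. The function name `memory_feedback`, the command strings, and the default threshold of 0.8 are assumptions made here for illustration; the abstract does not state a threshold value.

```python
def memory_feedback(usage: float, residual: float, threshold: float = 0.8) -> str:
    """Return the command to send to the application.

    usage:    memory currently used by the application
    residual: memory still free on the device
    The memory peak is defined as usage + residual, as in the abstract.
    """
    peak = usage + residual
    if peak <= 0:
        raise ValueError("usage + residual must be positive")
    ratio = usage / peak
    # Above the threshold the application is asked to release memory;
    # otherwise it is told that it may apply for more.
    return "release" if ratio > threshold else "apply"


# Hypothetical readings in MB.
high = memory_feedback(usage=900, residual=100)   # ratio 0.9
low = memory_feedback(usage=300, residual=700)    # ratio 0.3
```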

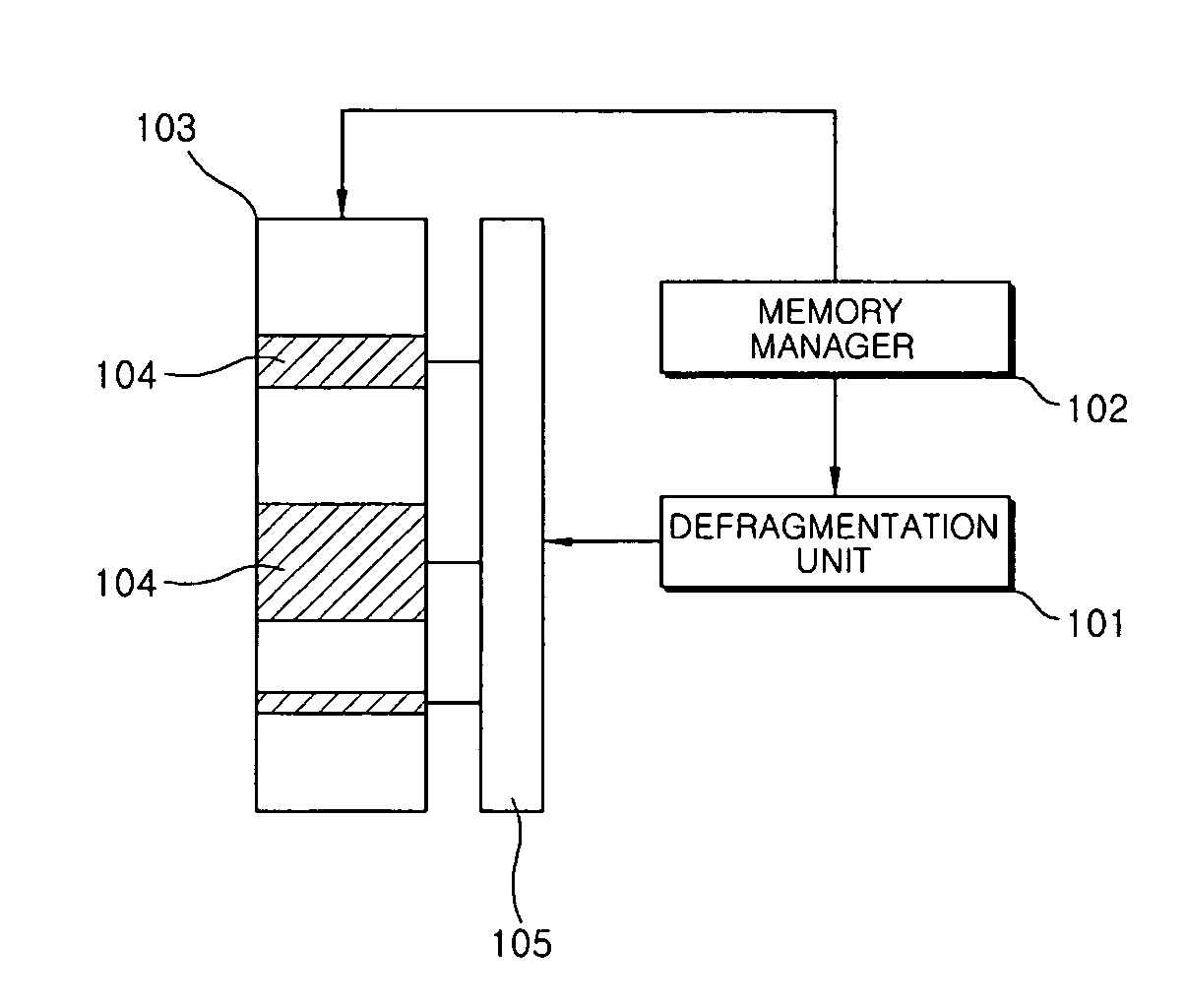

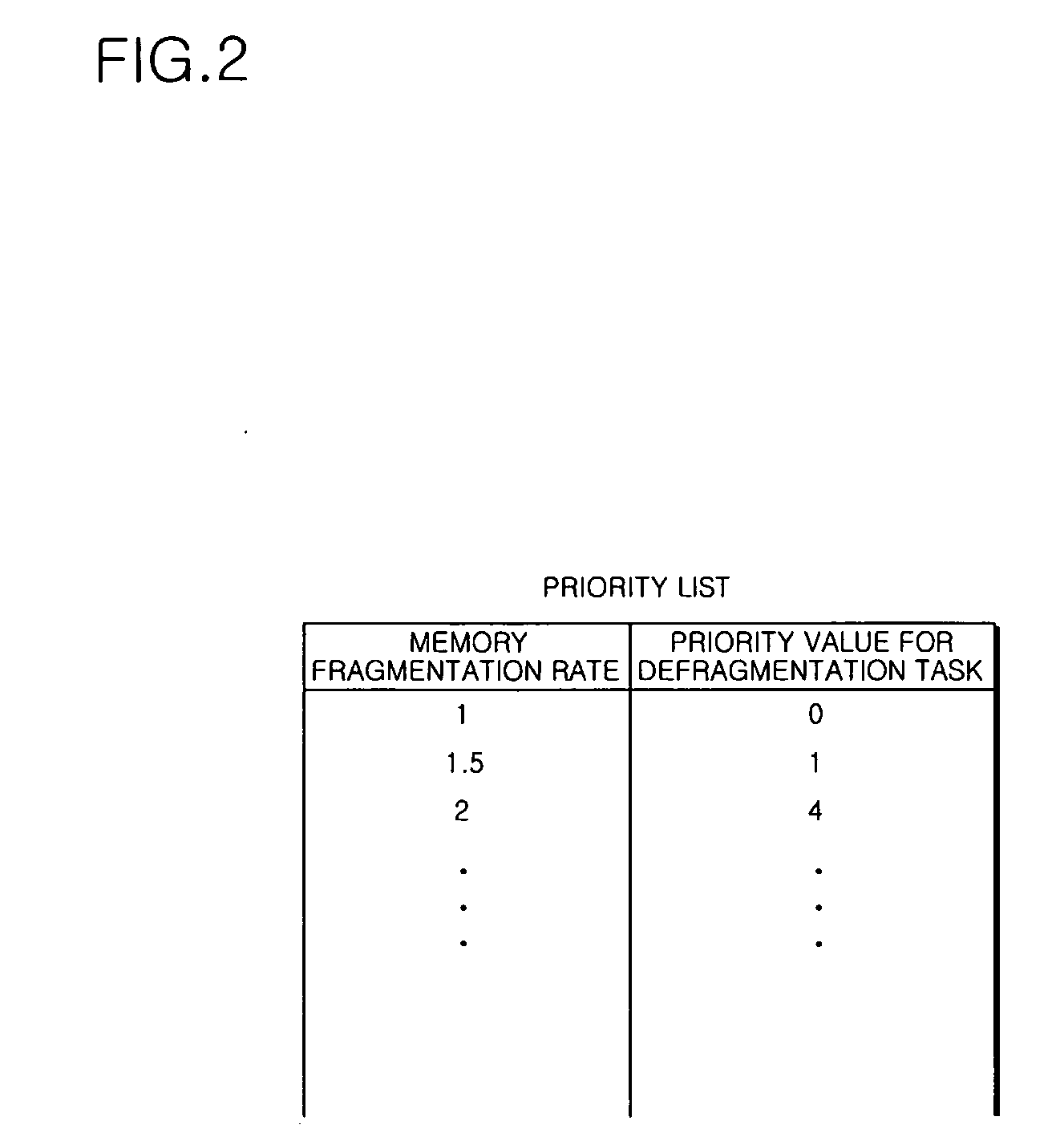

Apparatus for managing memory in real-time embedded system and method of allocating, deallocating and managing memory in real-time embedded system

An apparatus for managing memory in a real-time embedded system and a method of allocating, deallocating and managing memory in a real-time embedded system. The apparatus includes a defragmentation unit that performs a defragmentation task according to a predetermined priority to collect together memory fragments, and a memory manager that allocates or deallocates a predetermined area of memory upon request of a task and calculates a memory fragmentation rate of the memory to determine a priority of the defragmentation task. The method of managing memory in a real-time embedded system includes determining whether the conditions under which the memory is used vary and, if the conditions vary, calculating a memory fragmentation rate of the memory to determine a priority of the defragmentation task according to the memory fragmentation rate.

Owner:SAMSUNG ELECTRONICS CO LTD

Method for operating semiconductor device and semiconductor system

Active | US20160306567A1 | Flexible use | Memory architecture accessing/allocation | Input/output to record carriers | Computer hardware | Device material

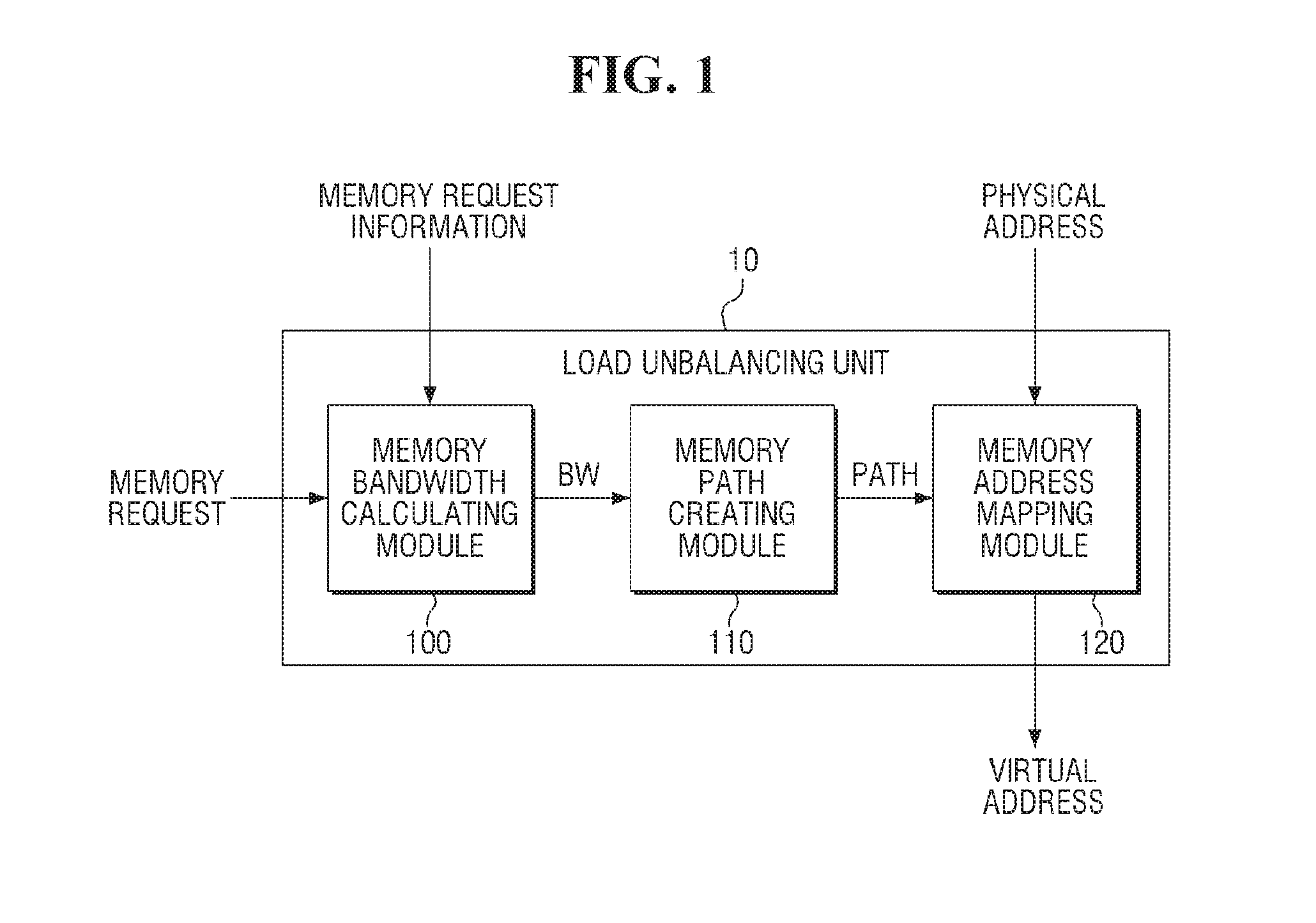

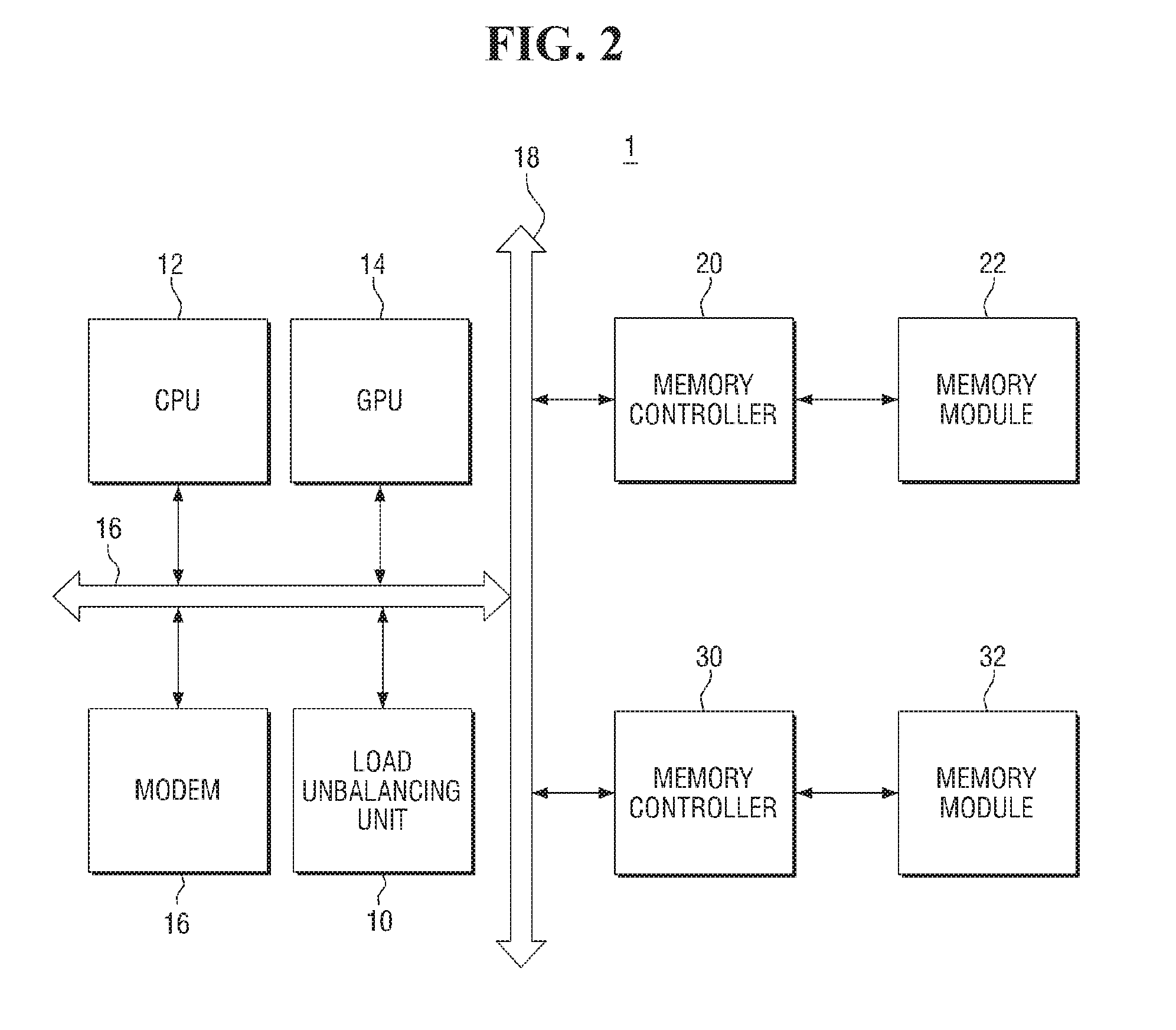

A method for operating a semiconductor device includes receiving a memory request for a memory; calculating a memory bandwidth such that the memory bandwidth is at least large enough to support allocation of the memory in accordance with the memory request; creating a memory path for accessing the memory using a memory hierarchical structure wherein a memory region that corresponds to the memory path is a memory region that is allocated to support the memory bandwidth; and performing memory interleaving with respect to the memory region that corresponds to the memory path.

Owner:SAMSUNG ELECTRONICS CO LTD

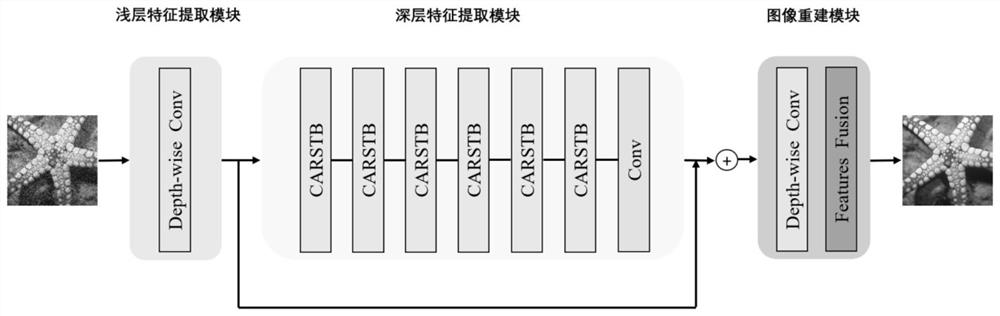

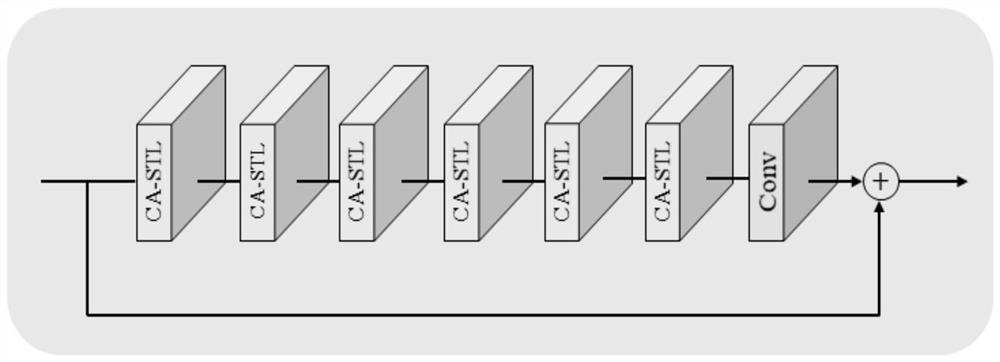

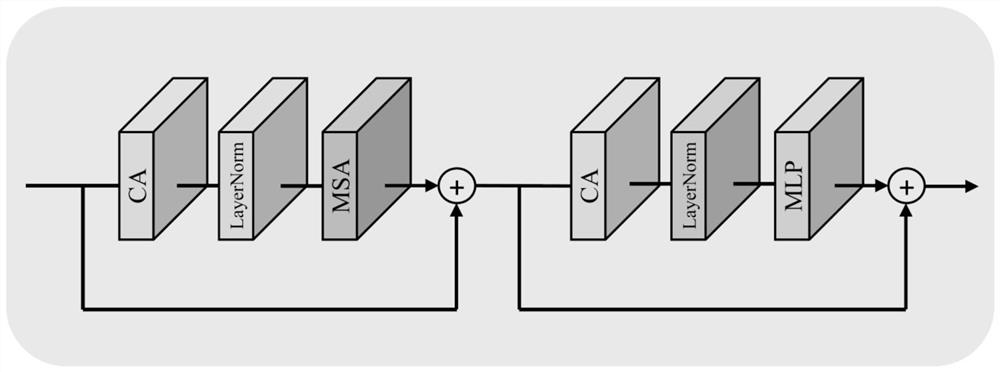

Swin-Transform image denoising method and system based on channel attention

Active | CN114140353A | Overcome high computational memory | Overcoming time consuming | Image enhancement | Image analysis | Image denoising | Network model

The invention relates to a Swin-Transform image denoising method and system based on channel attention. According to the method, a noisy image is input into a trained and optimized denoising network model. A shallow feature extraction network in the model first extracts shallow feature information, such as noise and channels, from the noisy image. The extracted shallow feature information is then input into a deep feature extraction network in the model to obtain deep feature information, and the shallow and deep feature information are input into a reconstruction network of the model for feature fusion to obtain a clean image. This addresses the problems that, in the prior art, image denoising methods based on deep convolutional neural networks tend to lose details of the input noisy image and incur high computation memory and time costs.

Owner:SUZHOU UNIV

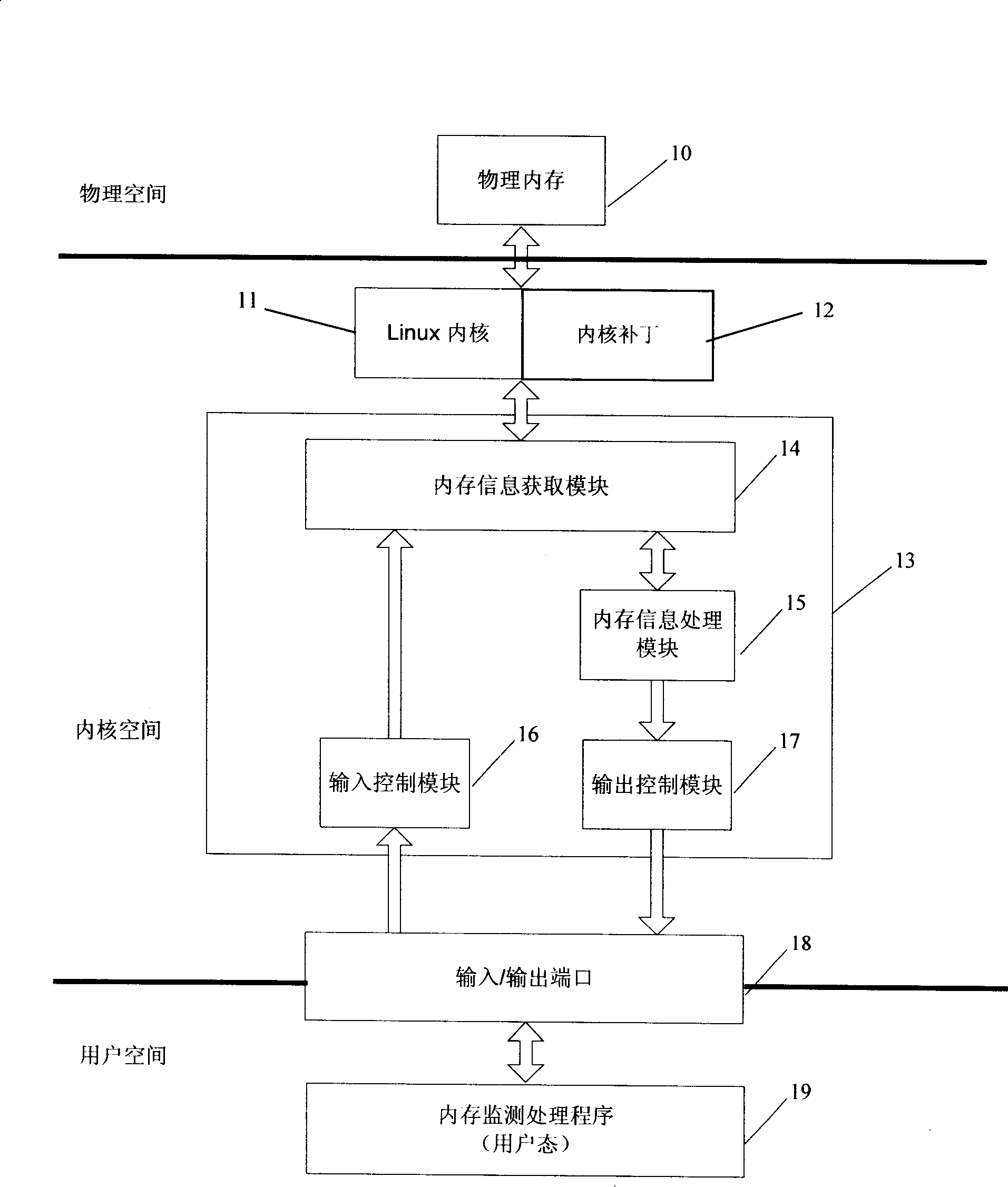

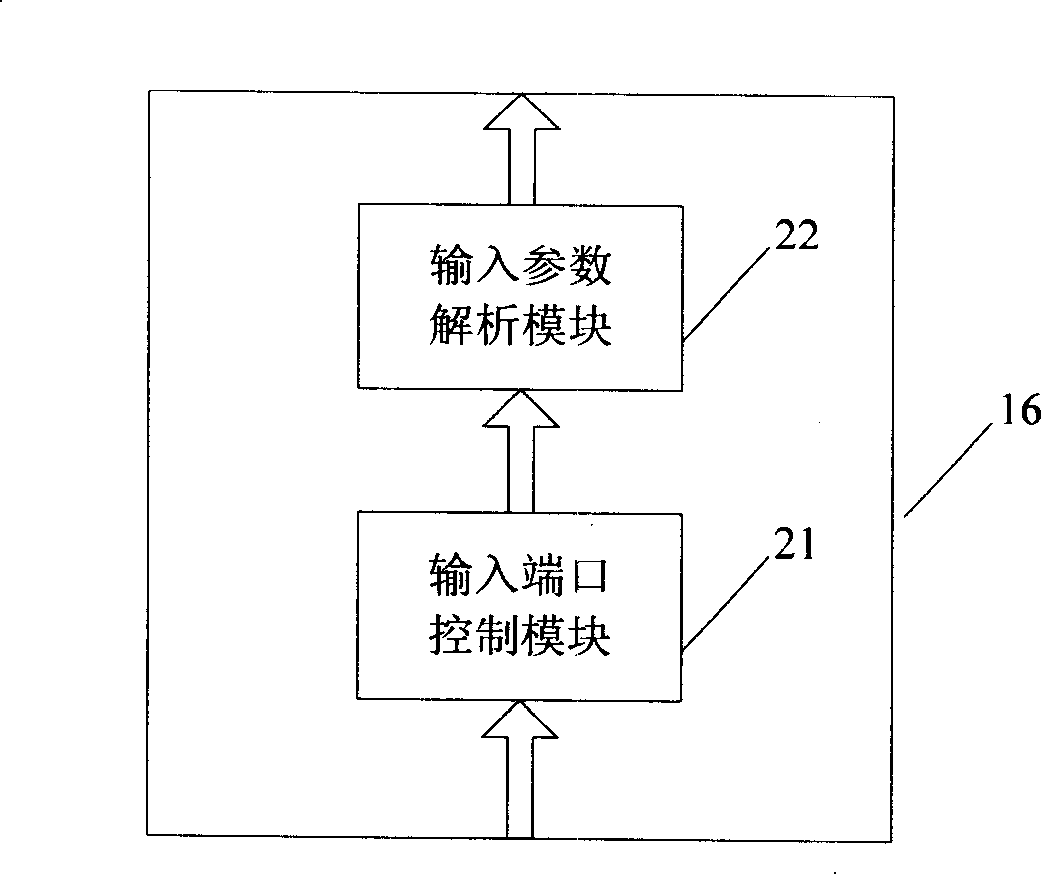

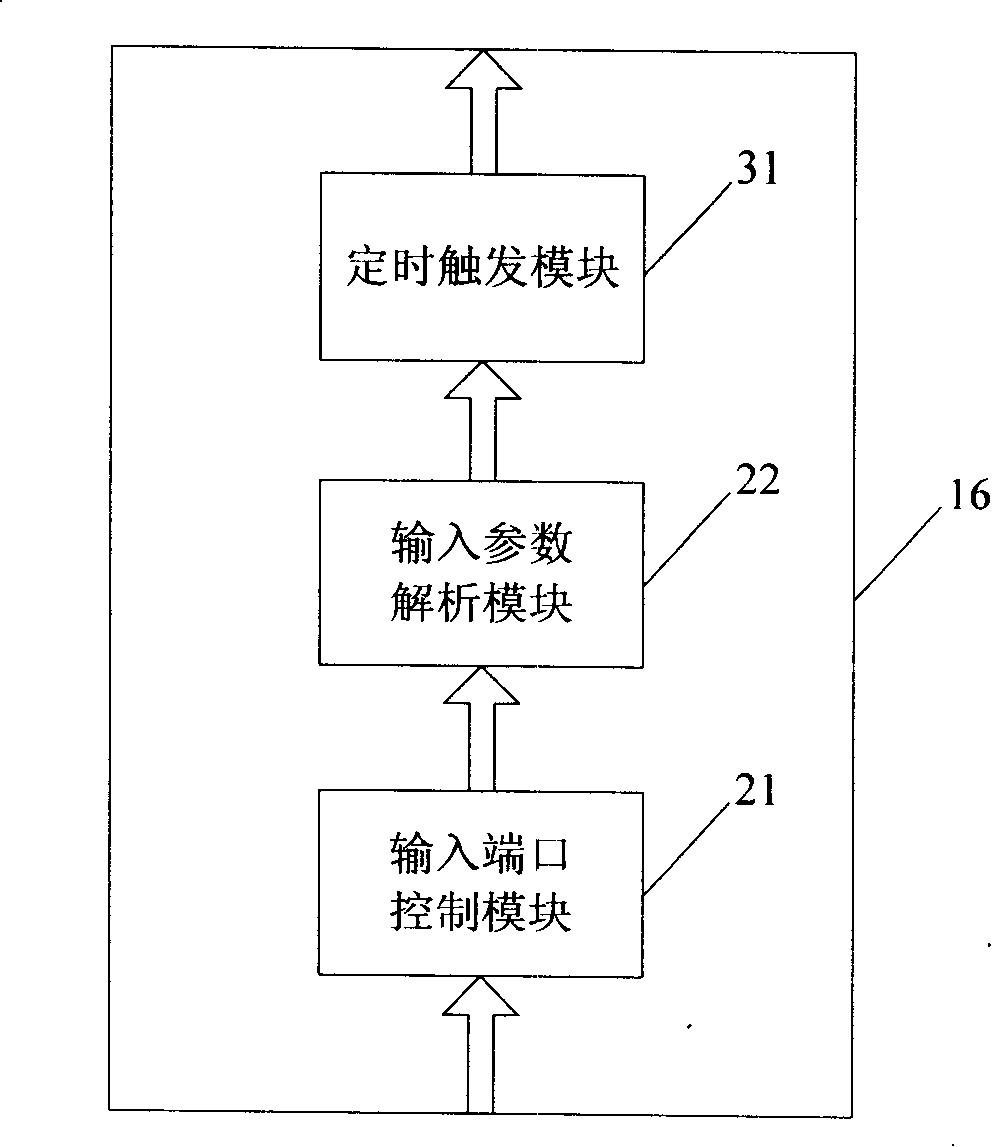

Physical memory information monitoring system of Linux platform

Inactive | CN101221527A | Realize monitoring | Improve compatibility | Hardware monitoring | Fragmentation rate | GNU/Linux

The invention discloses a Linux platform physical memory information monitoring system, which can monitor use conditions of physical memories under a Linux platform and optimize use of Linux platform physical memory resources. By adoption of the means of kernel patching and a memory monitoring processing module, static monitoring and dynamic monitoring of overall conditions and detailed use conditions of the physical memories in the Linux platform can be performed and monitoring of the following physical memory information in the Linux platform is realized, for example, acquisition of the number of distributive designated stage memory blocks, acquisition of memory details to compute external fragmentation rate of the memories and so on, thereby the invention has good compatibility and suitability. The system of the invention is particularly suitable for an embedded type Linux platform, wherein, various memory resources are limited but complex memory operations are needed to be provided.

Owner:上海宇梦通信科技有限公司

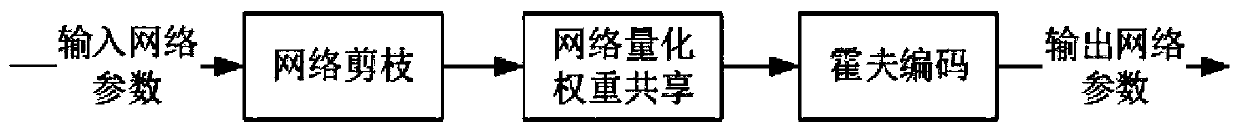

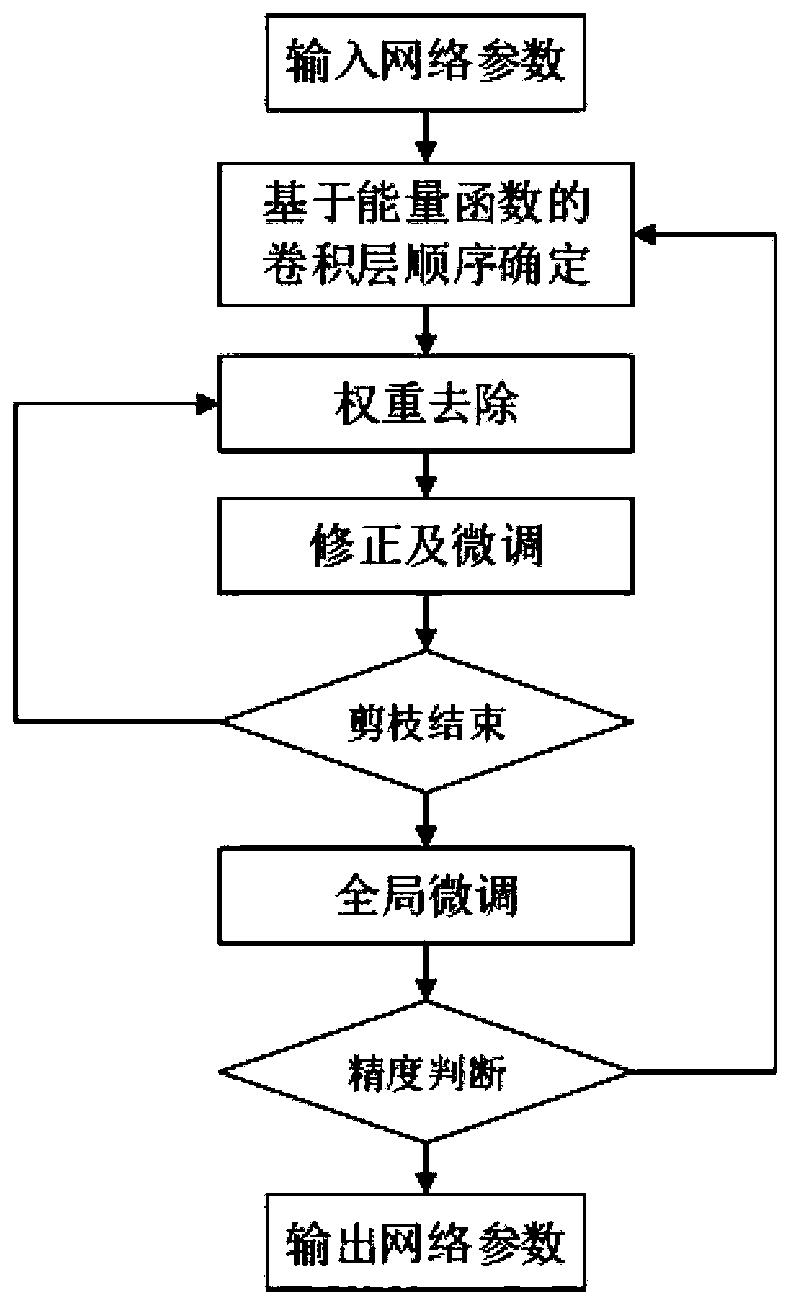

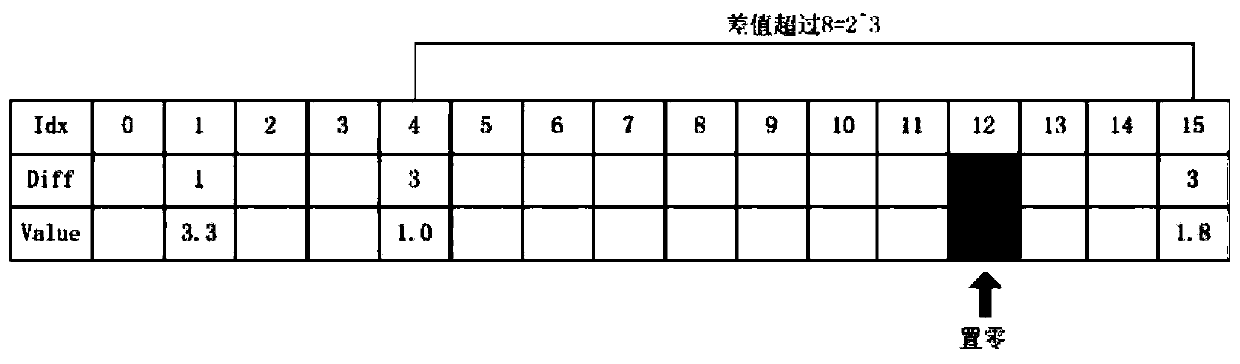

A compression method of a deep neural network

Inactive | CN109726799A | Reduce storage | Increase the compression ratio | Neural architectures | Neural learning methods | Pruning | Deep neural networks

The invention discloses a compression method for a deep neural network, which comprises the following steps: network parameter trimming, in which the network is trimmed through pruning, redundant connections are deleted, and the connections carrying the most information are retained; trained quantization and weight sharing, in which the weights are quantized, multiple connections share the same weight, and the effective weights and their indices are stored; and obtaining a compressed network by using Huffman coding and the biased distribution of the effective weights. According to the method, the precision of the compressed network is improved through modified pruning, weight sharing and similar techniques, the calculation memory space is greatly reduced, and the running speed is greatly increased. The calculation amount and memory footprint of a large-scale network are therefore effectively reduced, so that the network can run effectively on limited hardware.

Owner:SICHUAN UNIV

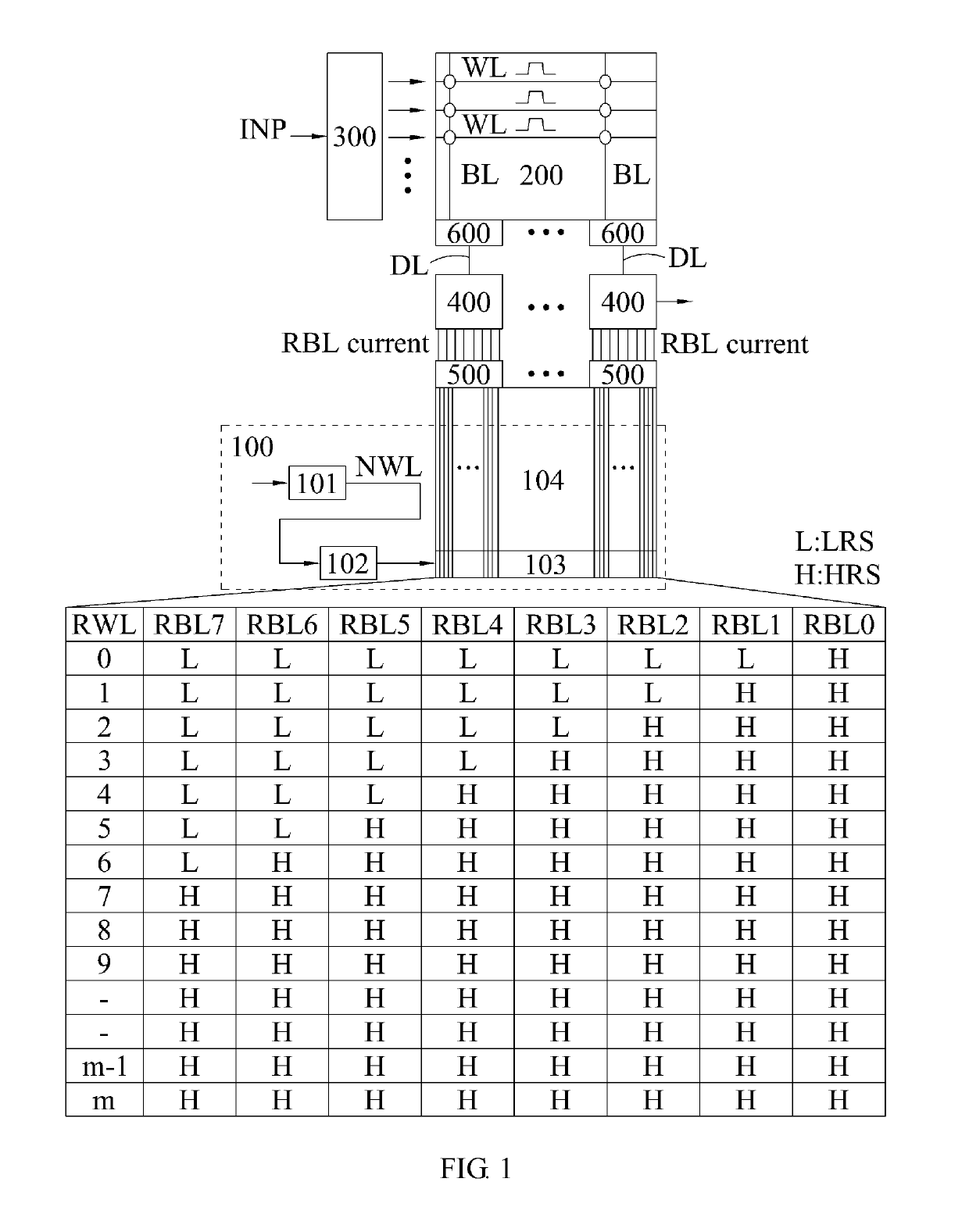

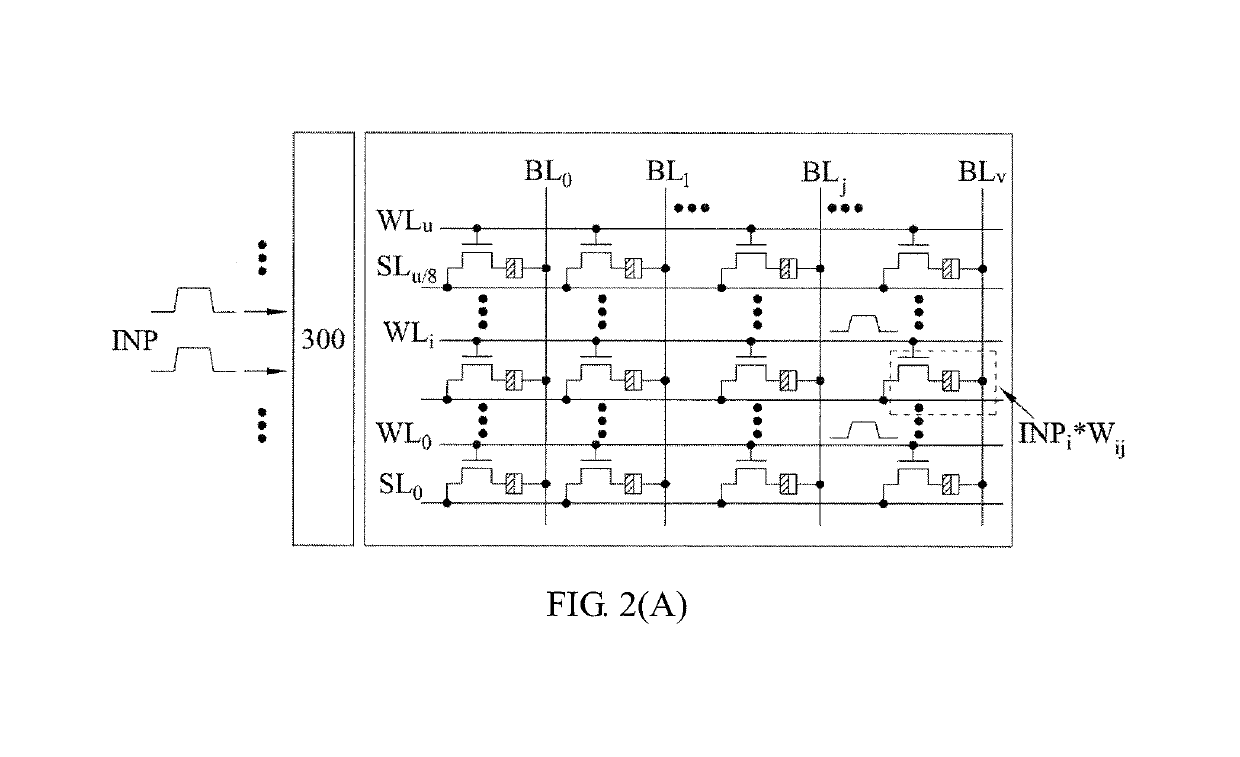

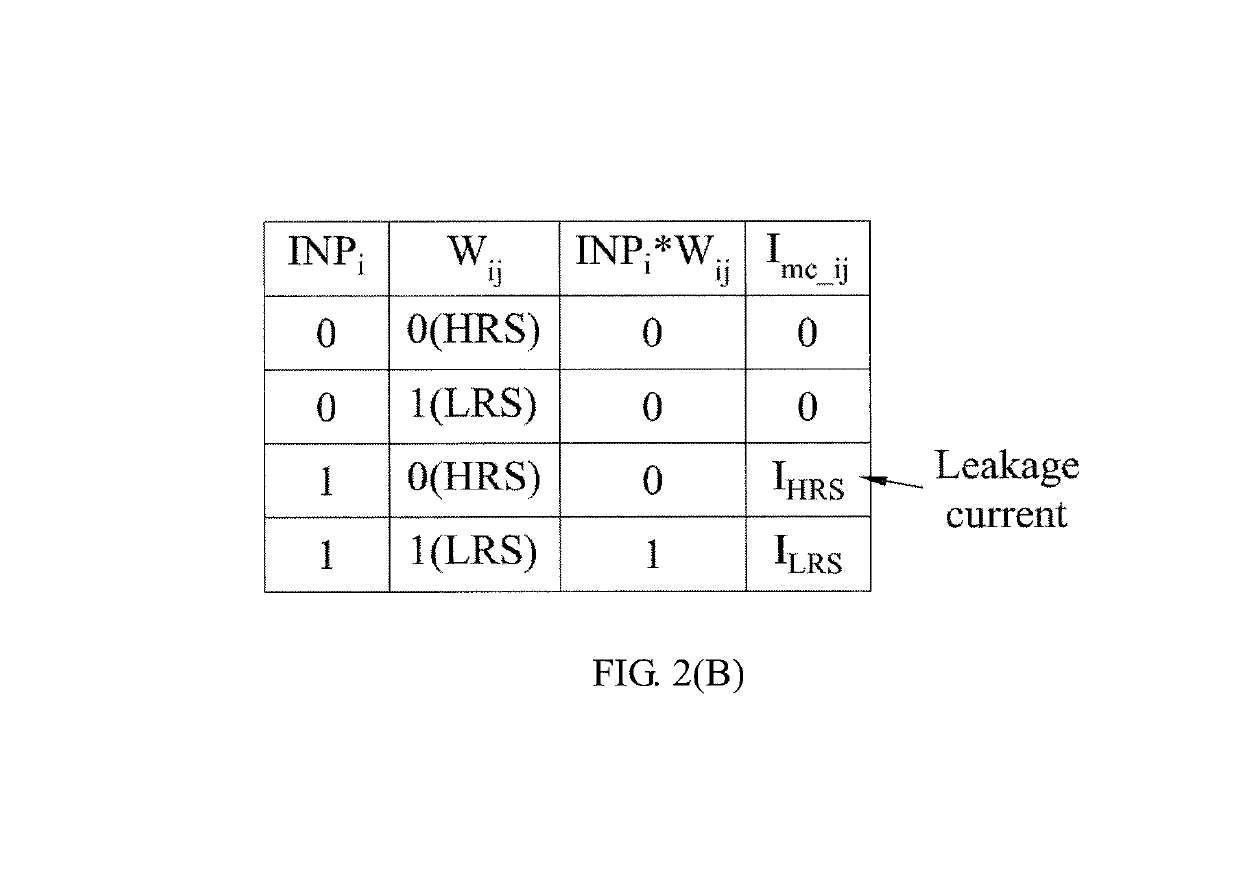

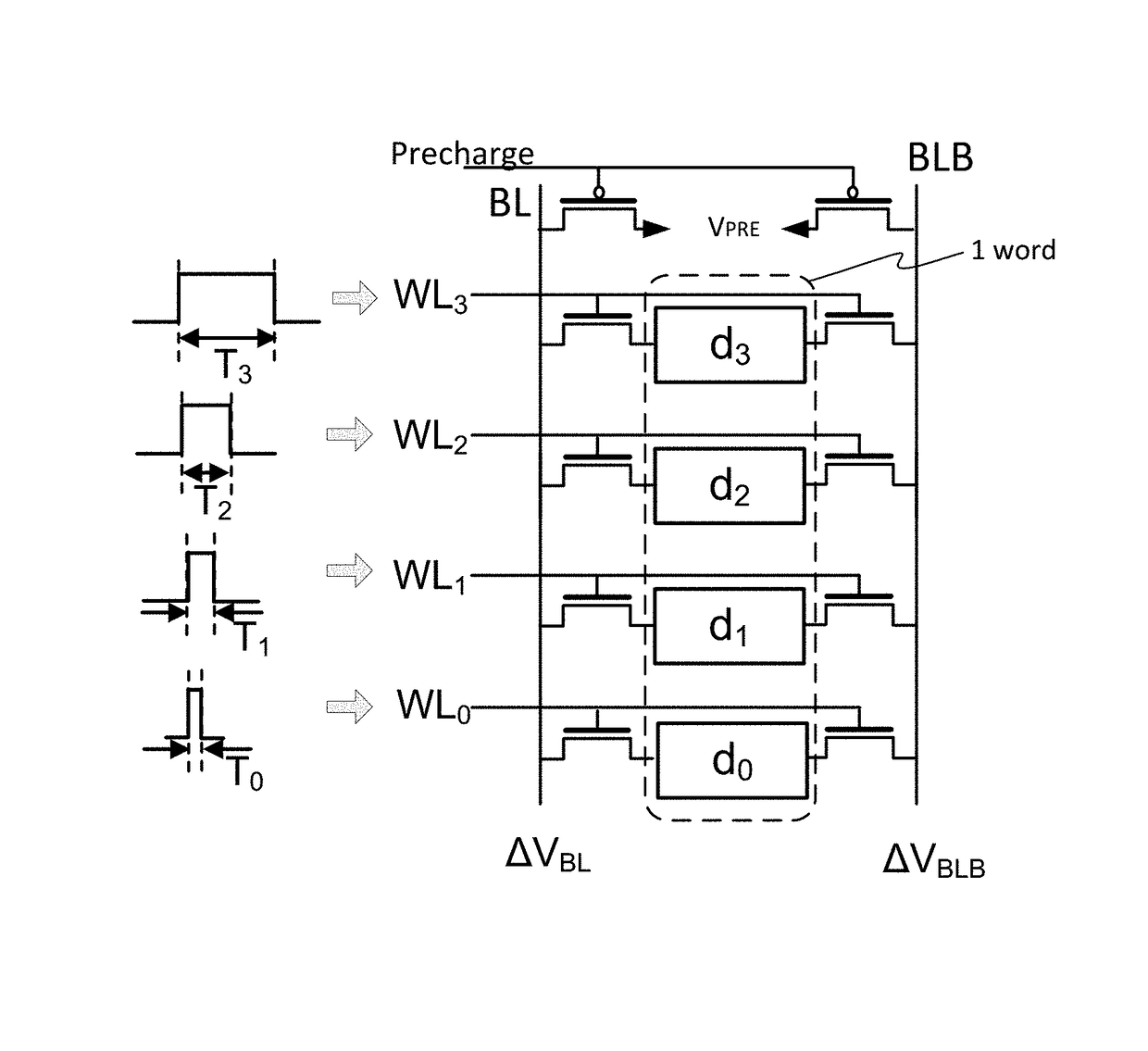

Input-pattern aware reference generation system and computing-in-memory system including the same

Active | US10340003B1 | Increase sensing margin | Improve sensing yield | Digital data processing details | Digital storage | Electricity | Control circuit

An input-pattern aware reference generation system for a memory cell array having a plurality of word lines crossing a plurality of bit lines includes an input counting circuit, a reference array, and a reference word line control circuit. The input counting circuit receives the input signal of the memory cell array, discovers input activated word lines according to the input signal and generates a number signal representing a number of the input activated word lines. The reference array includes a plurality of reference memory cells storing a predetermined set of weights. The reference word line control circuit is electrically connected between the input counting circuit and the reference array. Moreover, the reference word line control circuit controls the reference array to generate a plurality of reference signals being able to distinguish candidates of the computational result of the bit lines in the memory cell array.

Owner:NATIONAL TSING HUA UNIVERSITY

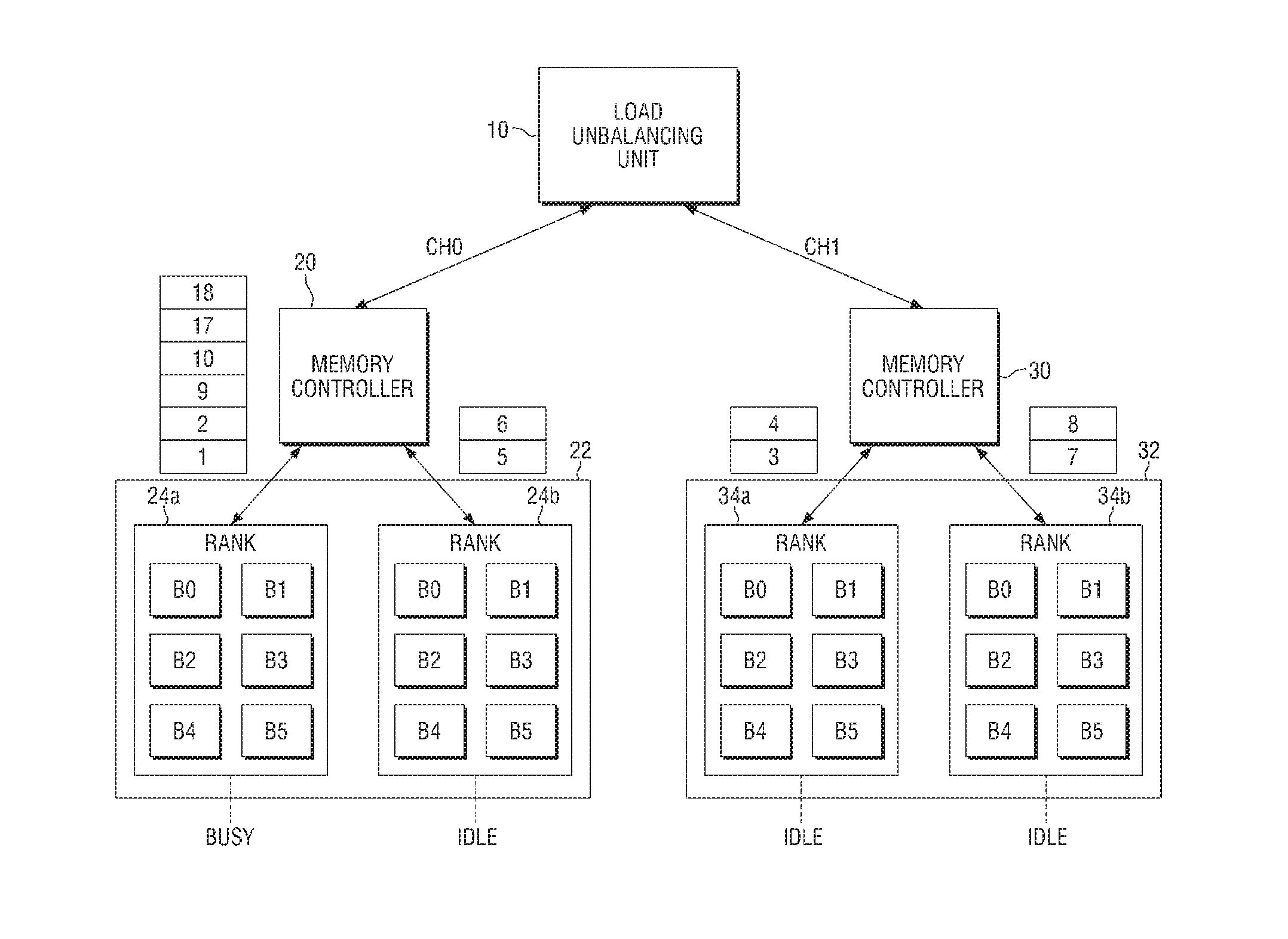

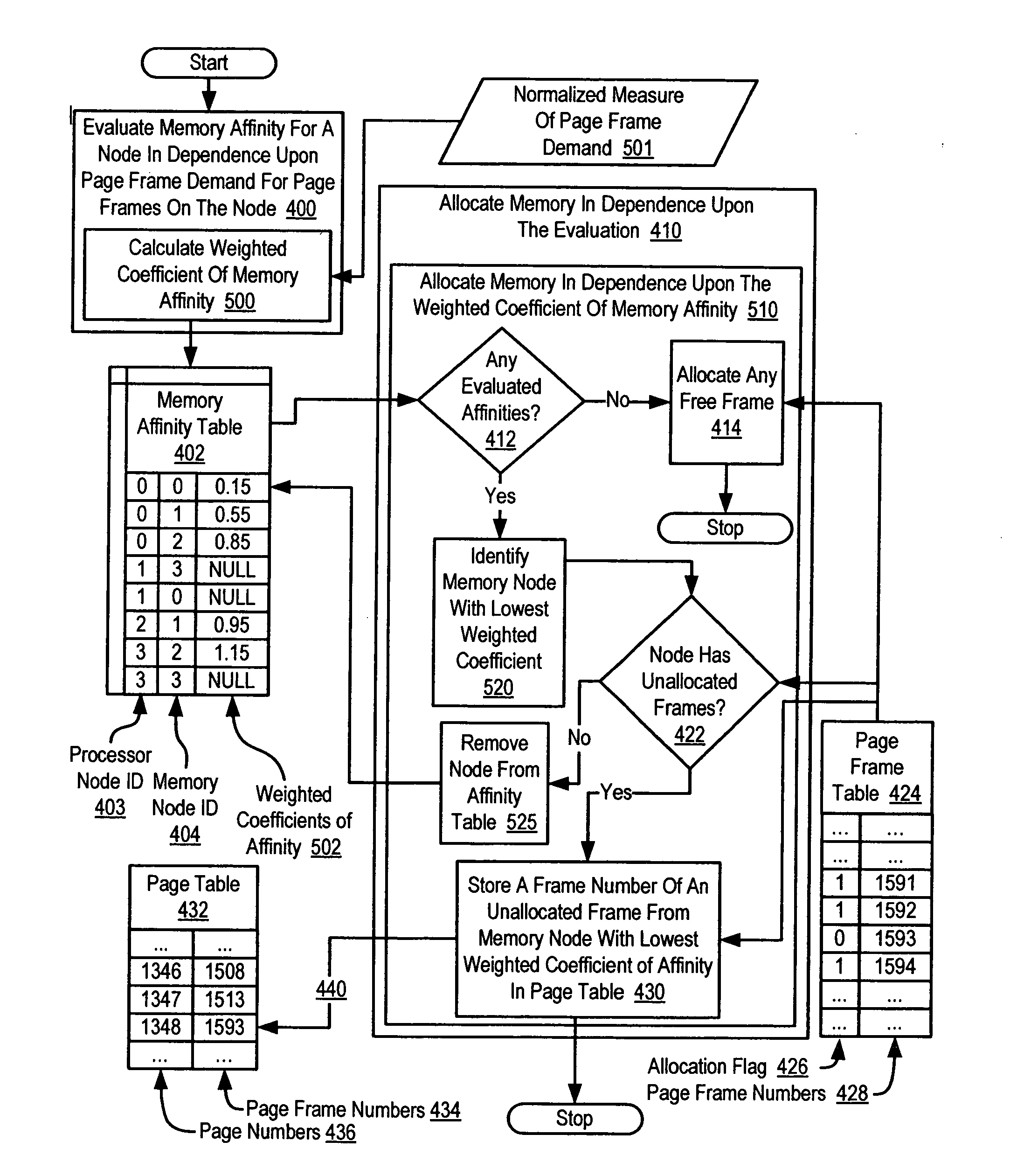

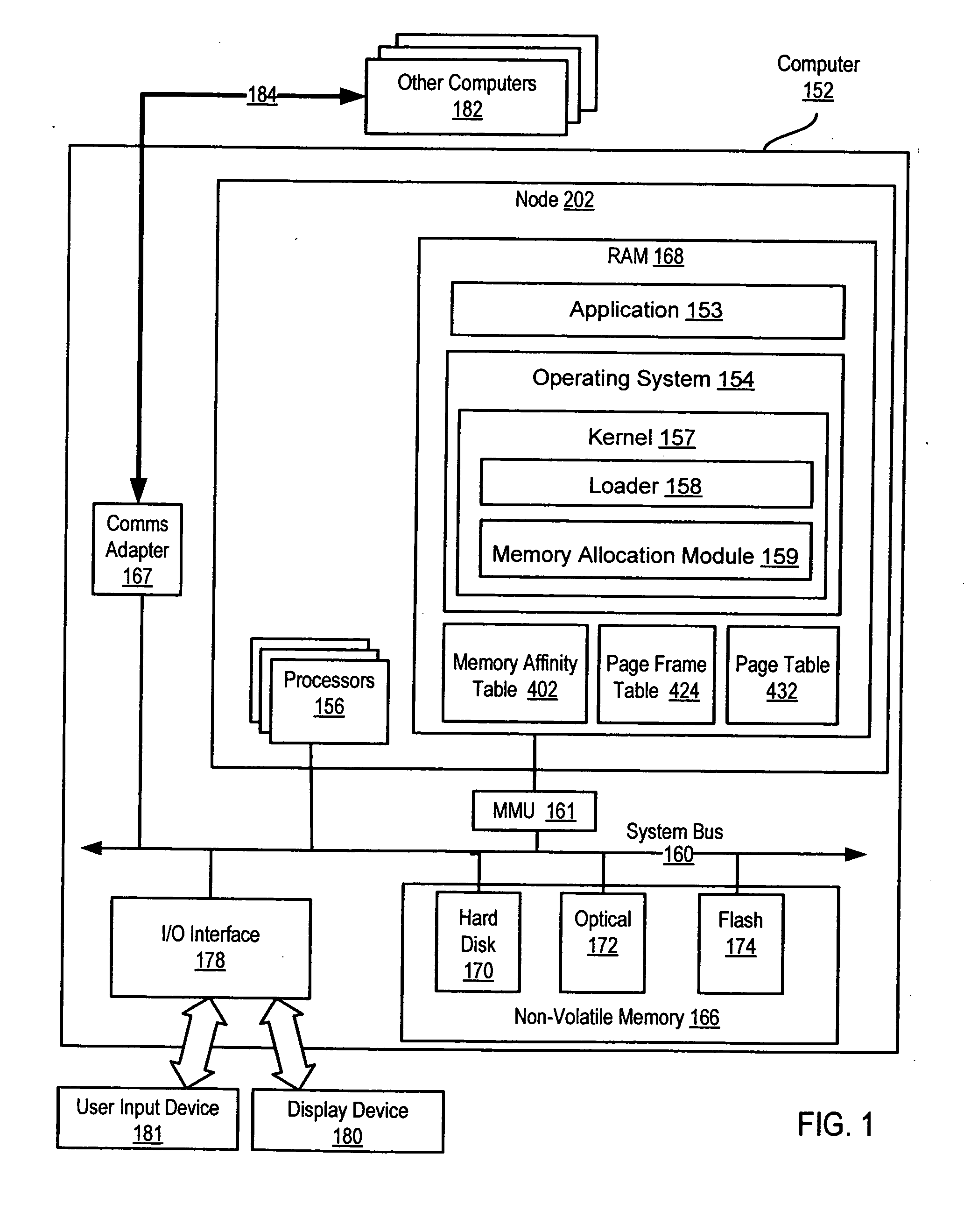

Memory allocation in a multi-node computer

Active | US20070073992A1 | Reduce risk | Memory addressing/allocation/relocation | Program control | Weight coefficient | Parallel computing

Evaluating memory allocation in a multi-node computer including calculating, in dependence upon a normalized measure of page frame demand, a weighted coefficient of memory affinity, the weighted coefficient representing desirability of allocating memory from the node, and allocating memory may include allocating memory in dependence upon the weighted coefficient of memory affinity.

Owner:IBM CORP

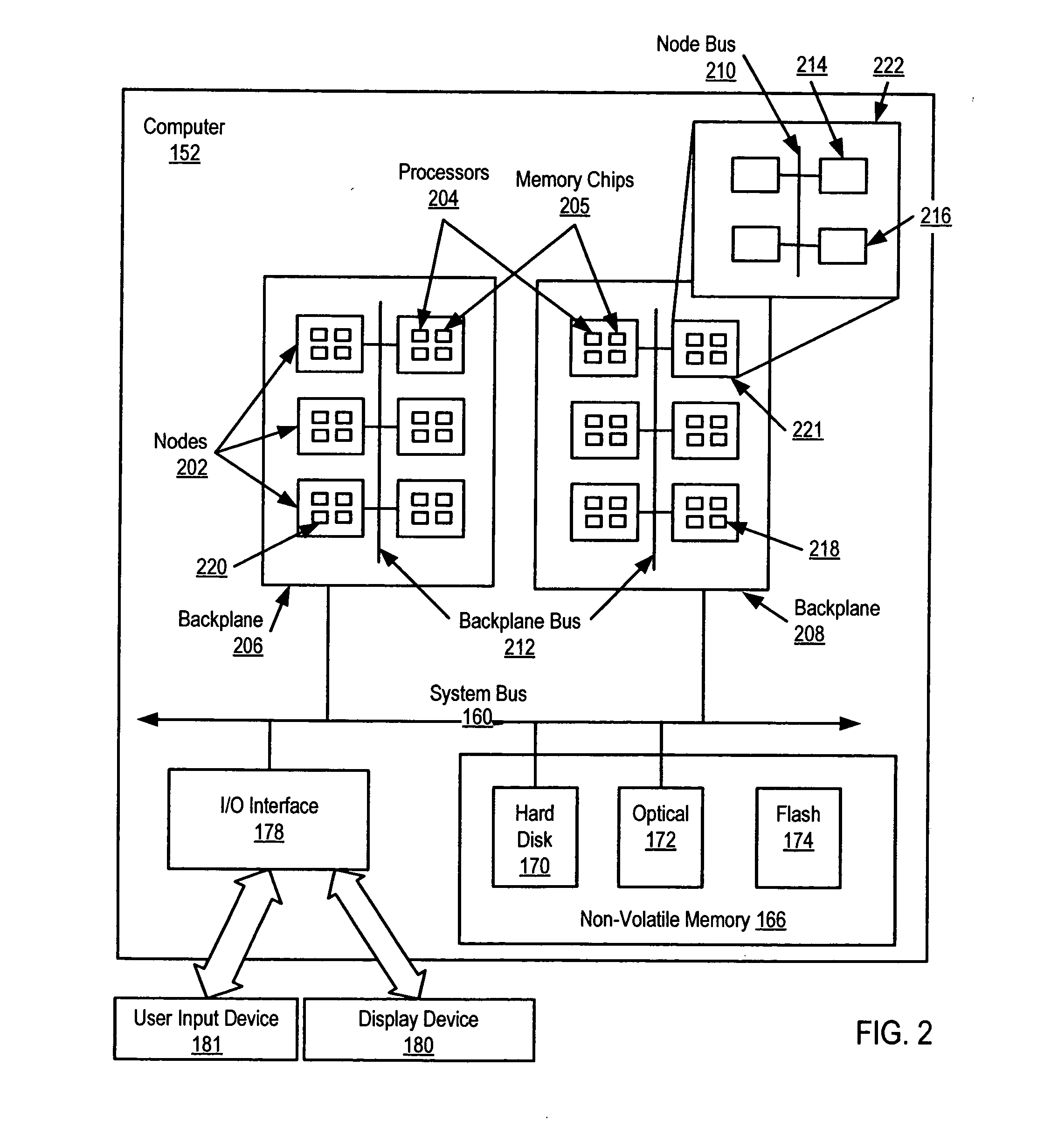

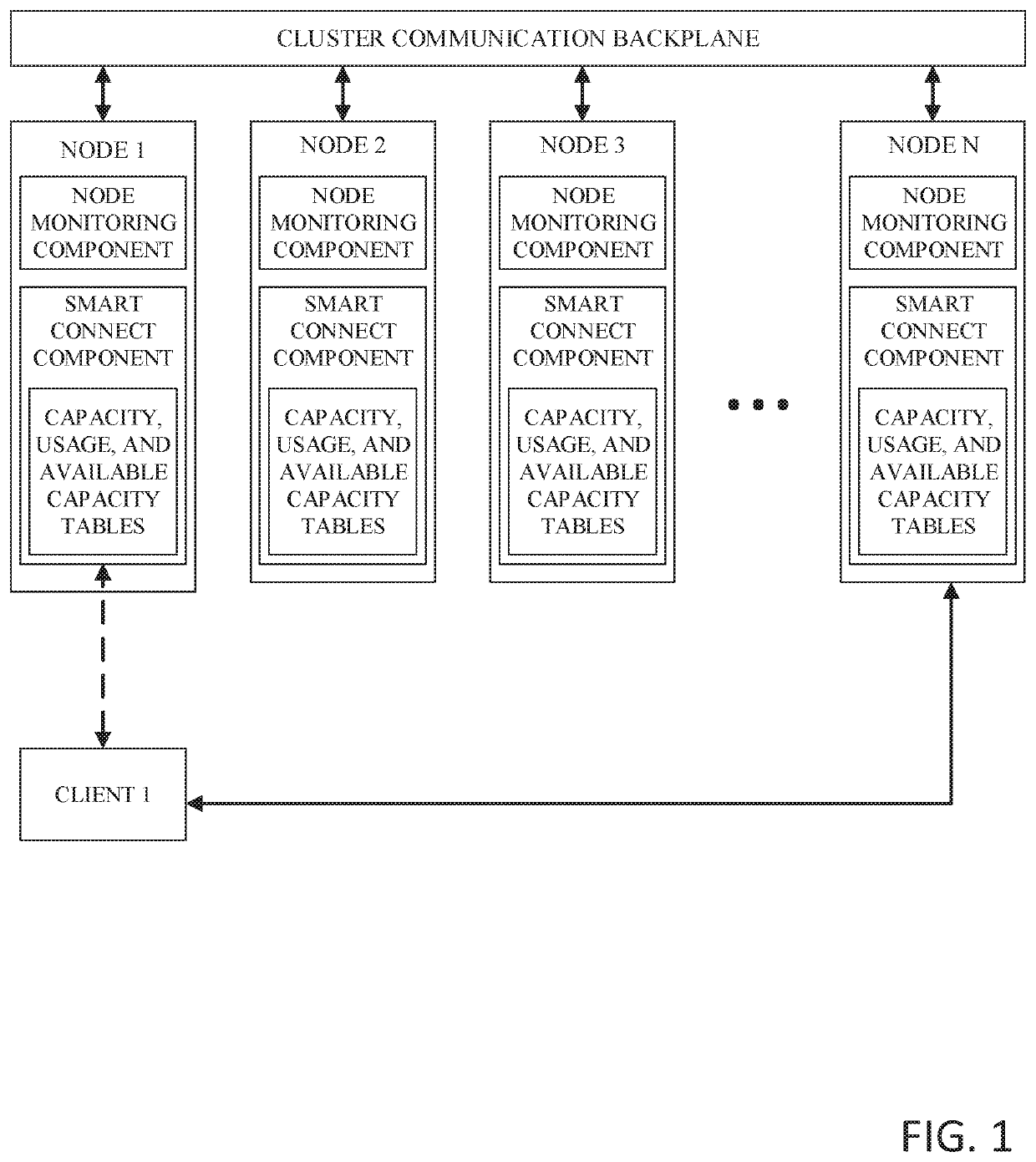

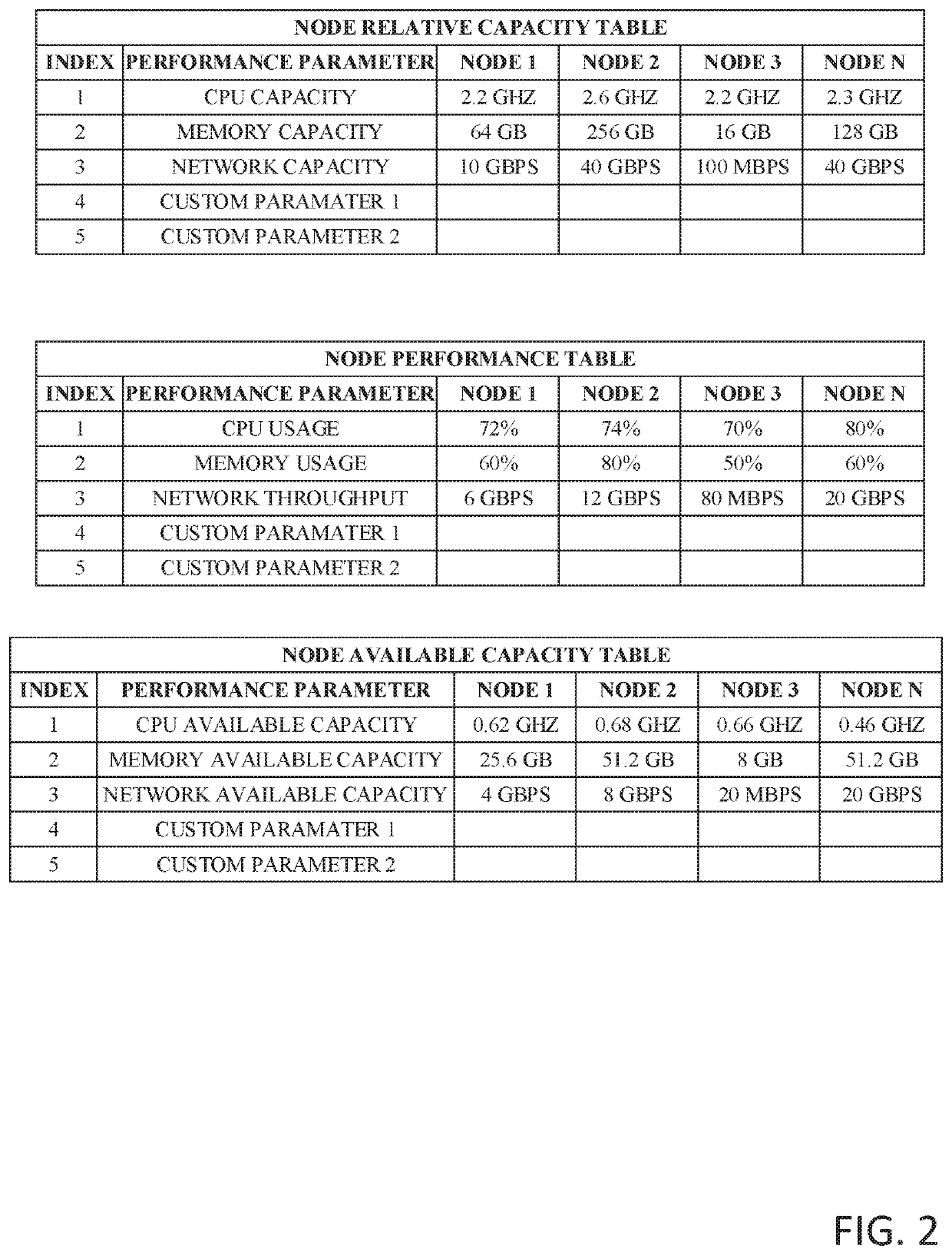

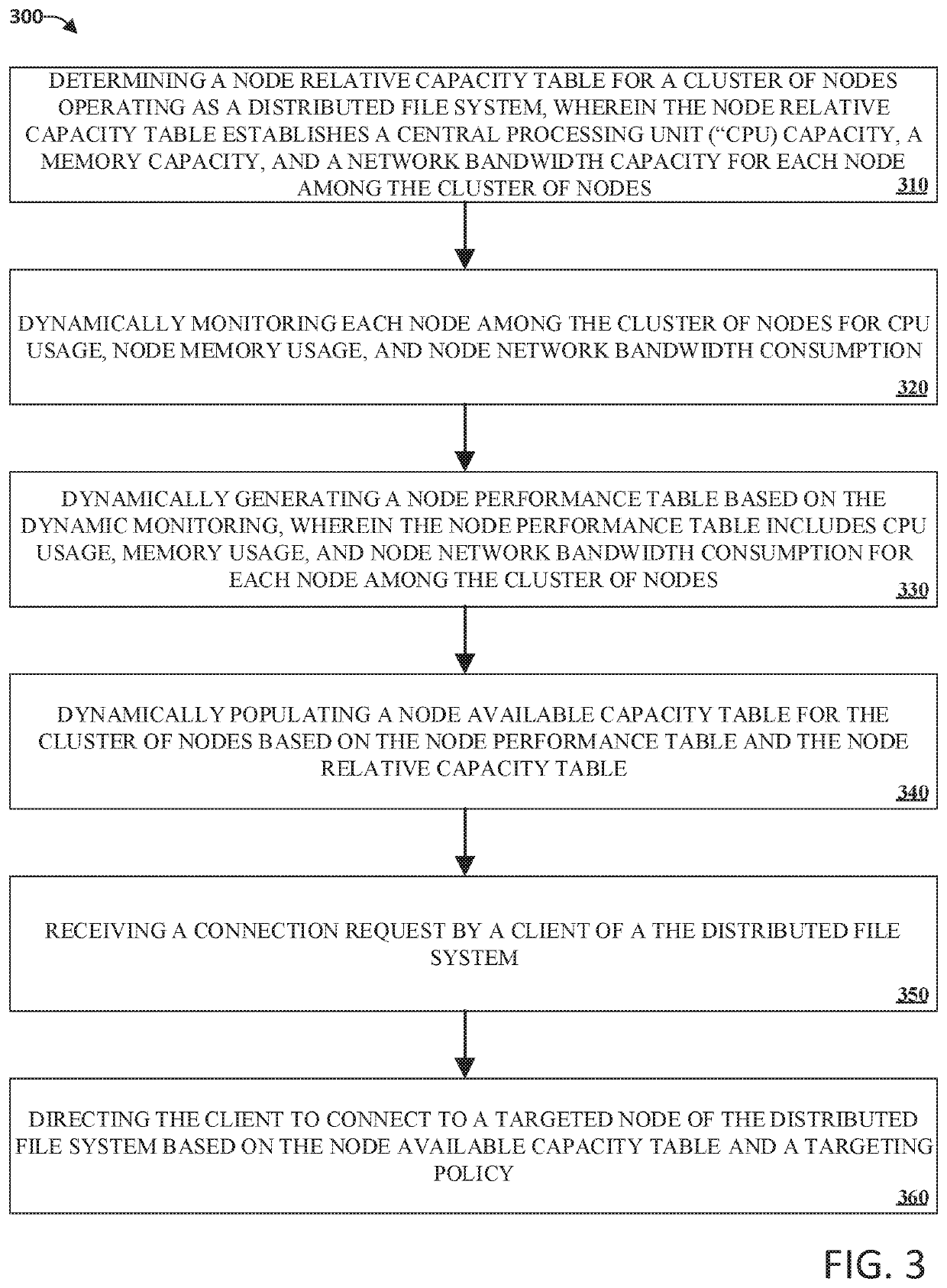

Distributed file system load balancing based on available node capacity

Inactive | US20200042608A1 | Well formed | Special data processing applications | Computer network | Distributed File System

Implementations are provided herein for optimizing the usage of cluster resources in a cluster of nodes operating as a distributed file system. A node relative capacity table can be generated that inventories the total capacity of each node within the cluster of nodes. Each node can then be dynamically monitored for usage of node resources. A node available capacity table can be dynamically populated with the amount of available capacity each node has for compute, memory usage, and network bandwidth. When clients connect to the distributed file system, they can be directed to have their requests serviced by nodes with greater available capacity based on policy.

Owner:EMC IP HLDG CO LLC

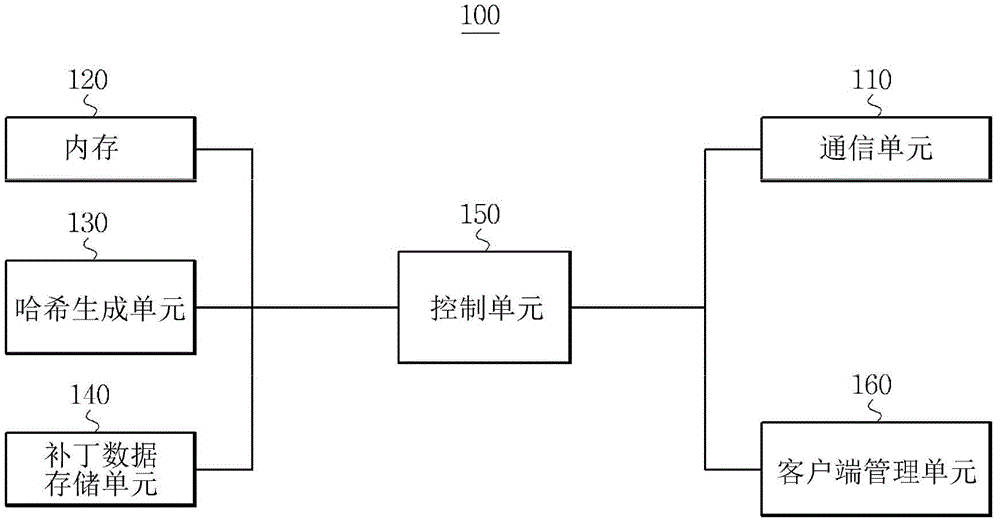

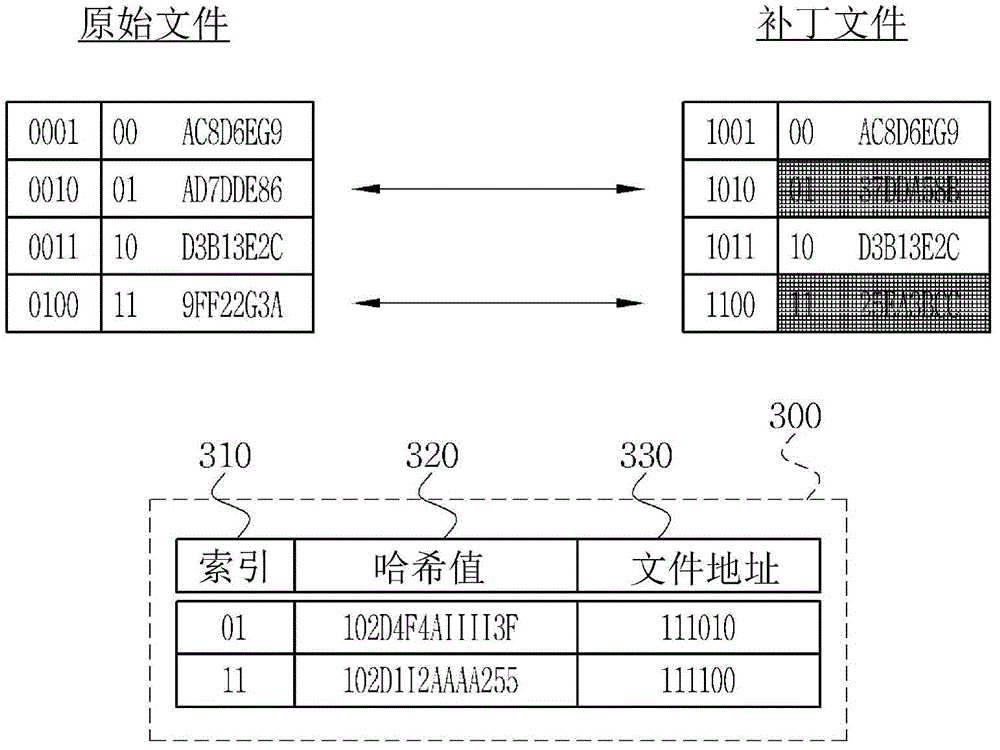

Patch method using RAM (random-access memory) and temporary memory, patch server, and client

Inactive | CN102945170A | Program control using stored programs | Multiple digital computer combinations | Random access memory | Client-side

The invention provides a patch method using RAM and temporary memory, a patch server, and a client. The patch method is executed in a patch client that can be connected to the patch server and that comprises a memory device and RAM. The patch method comprises the following steps: a) accessing the patch server and receiving the patch data from the patch server; b) calculating the available space of the RAM; c) when the patch data is smaller than or equal to the available space of the RAM, executing the patching operation in the available space of the RAM; d) when the patch data is larger than the available space of the RAM, allocating temporary memory on the memory device with a capacity corresponding to that of the patch data, and executing the patching operation using the allocated temporary memory.

Owner:NEOWIZ GAMES CO LTD

Compute memory

Active | US9697877B2 | Reduce width | Achieve energy efficiency | Digital storage | Analog signal processing | Bit plane

A compute memory system can include a memory array and a controller that generates N-ary weighted (e.g., binary weighted) access pulses for a set of word lines during a single read operation. This multi-row read generates a charge on a bit line representing a word stored in a column of the memory array. The compute memory system further includes an embedded analog signal processor stage through which voltages from bit lines can be processed in the analog domain. Data is written into the memory array in a manner that stores words in columns instead of the traditional row configuration.

Owner:THE BOARD OF TRUSTEES OF THE UNIV OF ILLINOIS

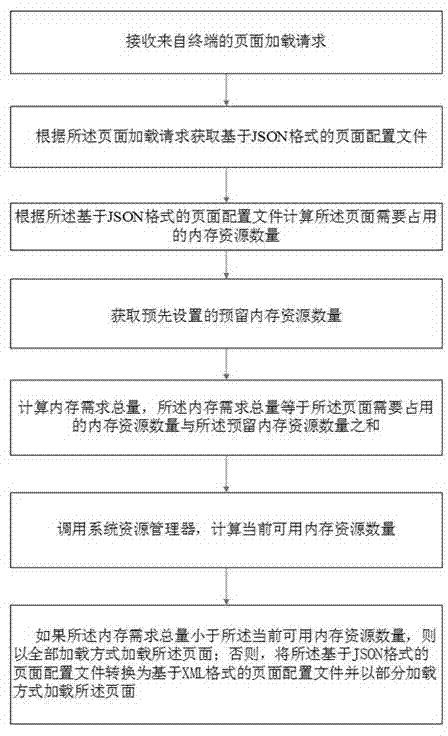

Page generating and display method based on JSON (JavaScript Object Notation) format

Active | CN104765760A | Reduce the chance of crashing | Small granularity | Special data processing applications | Granularity | XML

The invention discloses a page generating and display method based on the JSON (JavaScript Object Notation) format. The method comprises the following steps: receiving a page loading request; obtaining a page configuration file in JSON format; calculating the quantity of memory resources the page will occupy; obtaining the quantity of preset reserved memory resources; calculating the total memory requirement; calculating the quantity of currently available memory resources; and, if the total memory requirement is smaller than the quantity of currently available memory resources, loading the page in full loading mode, otherwise converting the JSON page configuration file into a page configuration file in XML (Extensible Markup Language) format and loading the page in partial loading mode. The method adapts to different memory conditions, so the probability of a system crash is reduced; memory resources are judged at a relatively fine granularity, so the estimate of actually available memory is relatively accurate; the actual page loading speed is increased; and the limitation that the JSON format cannot be partially loaded is overcome.

Owner:深圳微迅信息科技有限公司

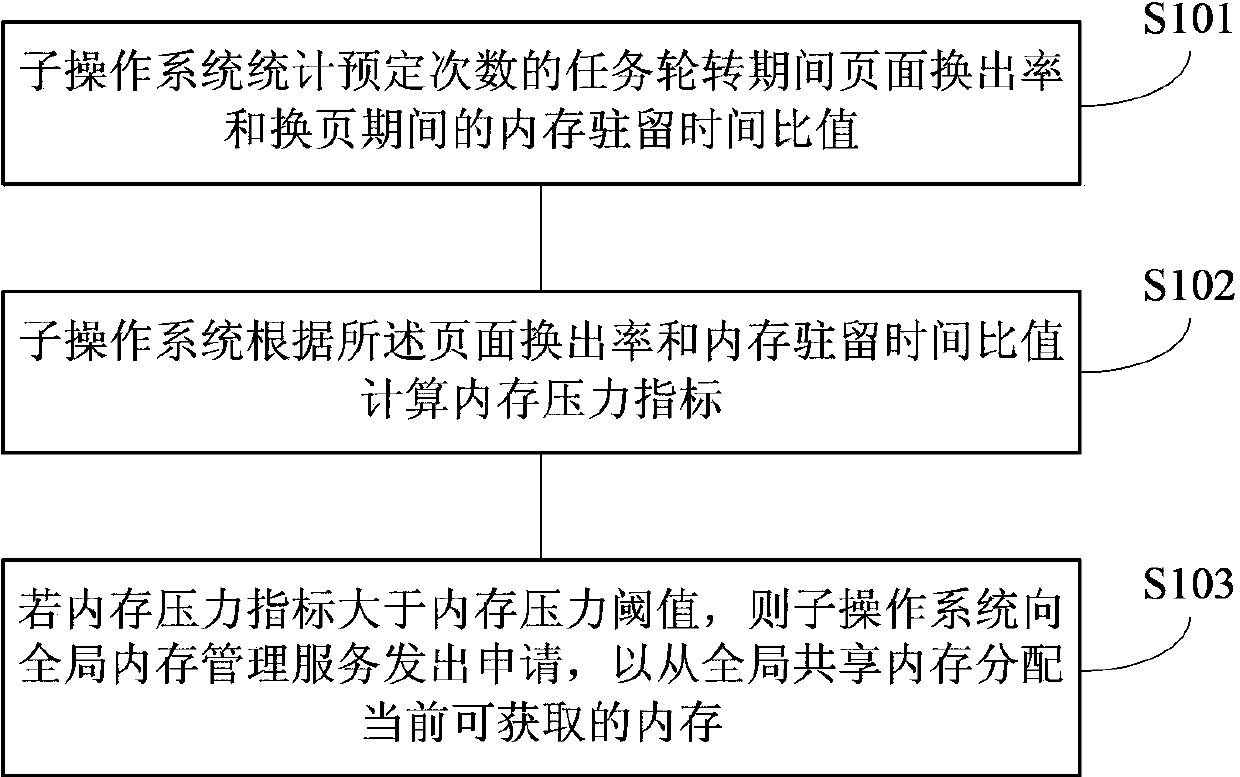

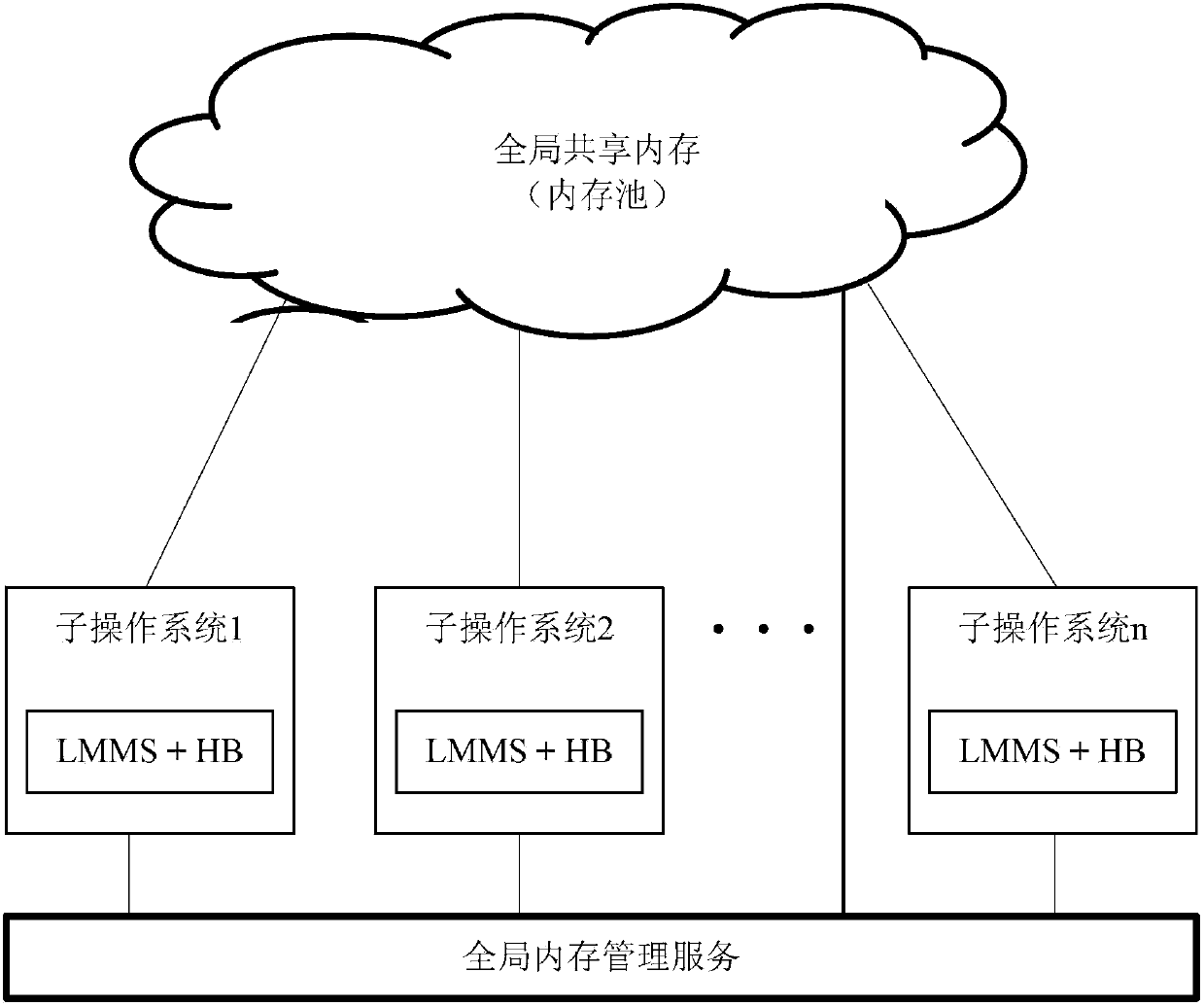

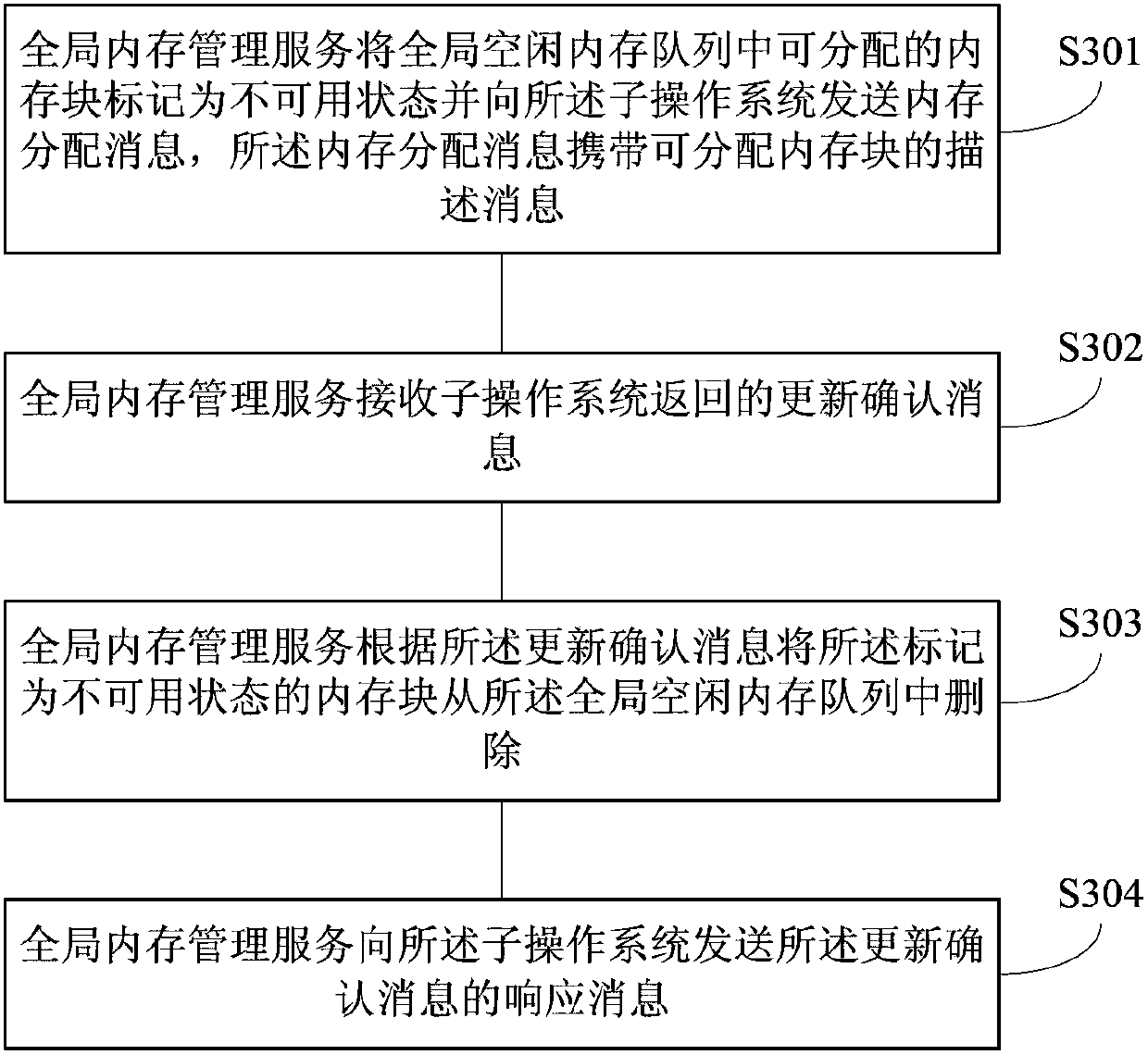

Global memory sharing method, global memory sharing device and communication system

Active | CN103870333A | Reduce complexity | Improve performance | Resource allocation | Memory addressing/allocation/relocation | Extensibility | Operational system

An embodiment of the invention provides a global memory sharing method, a global memory sharing device and a communication system to improve independence of sub-operation systems and reduce the pressure of a global memory management service. The method includes the steps: counting the page swap-out rate in the rotation period of tasks in predetermined times and the memory residence time ratio in the page swapping period by the sub-operation systems; calculating memory pressure indexes according to the page swap-out rate and the memory residence time ratio by the sub-operation systems; issuing application to the global memory management service by the sub-operation systems if the memory pressure indexes are larger than a memory pressure threshold. Under the architecture of a multi-operation system, the sub-operation systems can finish more adaptive work, global memory management service complexity is reduced, and system performances are improved. Besides, as the sub-operation systems can independently apply for and release memories, the utilization rate of a global memory can be increased, and the architecture of the multi-operation system has better expandability.

Owner:HUAWEI TECH CO LTD +1

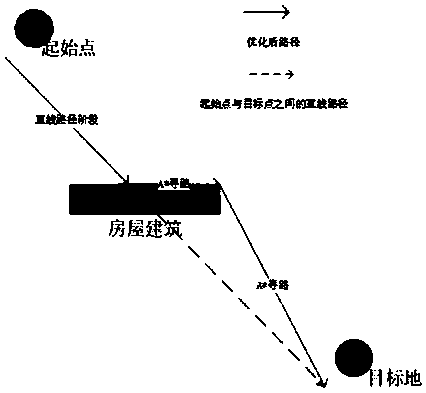

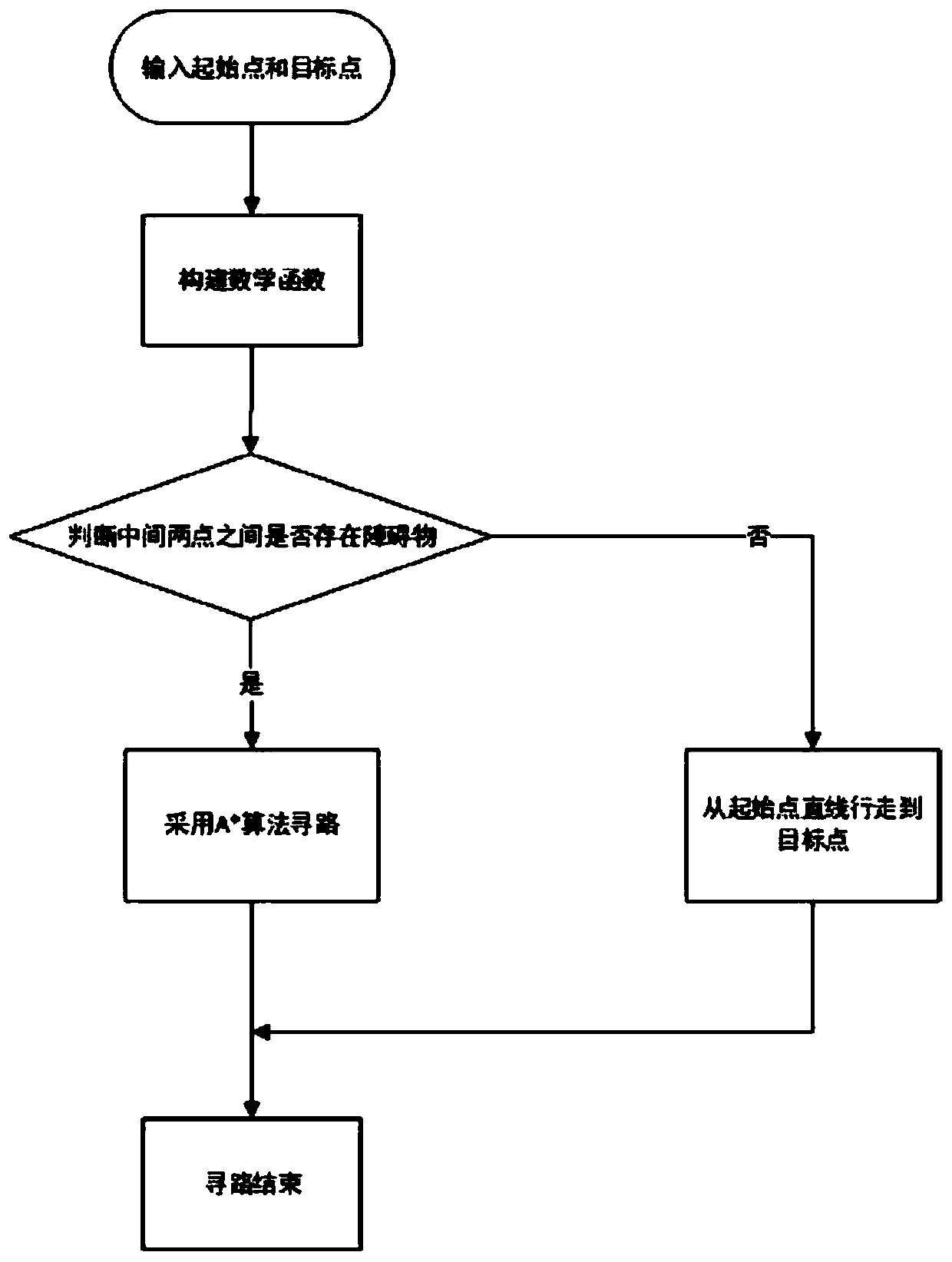

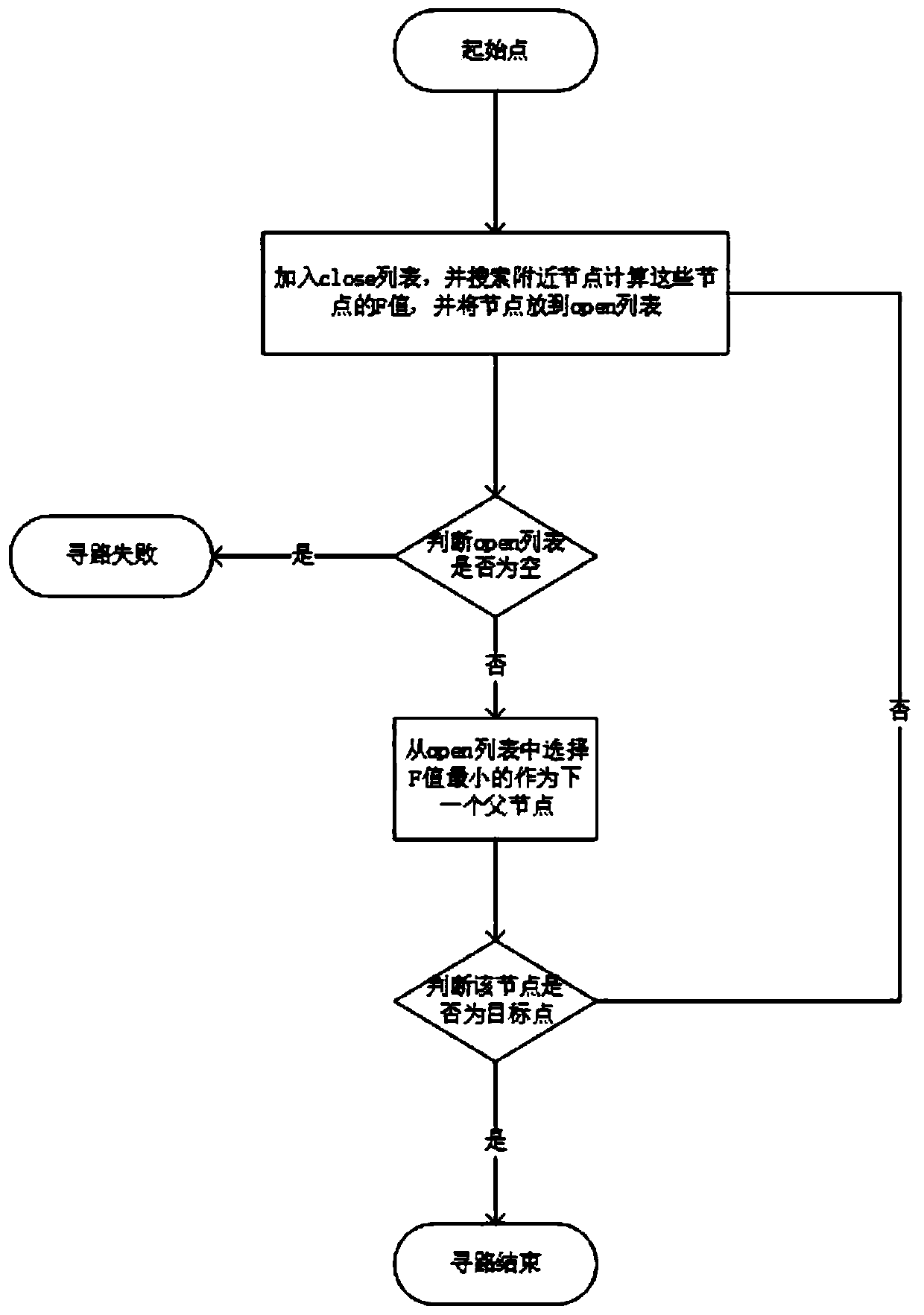

Path finding method based on A star optimization algorithm

Active | CN111289005A | Resolution time | Solve the problem of computing memory | Instruments for road network navigation | Position/course control in two dimensions | Algorithm | Theoretical computer science

The invention provides a path finding method based on an optimized A star algorithm. The method comprises: giving a grid chart of a map, determining the position coordinates of the obstacle points, and determining the coordinates of a starting point and a target point; constructing a linear function between the starting point and the target point, solving the key points where the straight line intersects the grid, then solving the related adjacent nodes, and judging whether any of these nodes coincide with an obstacle point. If no node coincides with an obstacle point, the optimal path is the straight line between the starting point and the target point of the automobile. If an obstacle point is encountered, the A star algorithm is called internally: non-obstacle nodes around the point are put into an open list; whether the open list is empty is checked; the point with the smallest f value is taken from the open list as the next step of path finding; and whether that point is the target point is checked. If so, path finding succeeds and the algorithm ends; otherwise the point is set as the current point and the path finding process continues. By preprocessing the obstacles, the method shortens the node search time and reduces the calculation memory.

Owner:JIANGSU UNIV OF TECH
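
A minimal sketch of the idea in the abstract above: try the straight line first, and fall back to A star only when the line hits an obstacle. Several details are assumptions rather than the patented method. Bresenham's line algorithm stands in for the "linear function and grid intersection" step, the fallback runs A star from the starting point over the whole grid instead of only around the blocking point, and the function names, 4-connected moves and Manhattan heuristic are illustrative choices.

```python
import heapq


def bresenham(a, b):
    """Grid cells on the straight line from a to b (stand-in for the line test)."""
    (x0, y0), (x1, y1) = a, b
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    cells = []
    while True:
        cells.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def a_star(start, goal, obstacles, width, height):
    """Plain A star on a 4-connected grid with a Manhattan heuristic."""
    def h(p):
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f, g, node)
    best_g = {start: 0}
    came_from = {}
    closed = set()
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)  # node with the smallest f value
        if cur == goal:
            path = [cur]
            while cur in came_from:
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        if cur in closed:
            continue
        closed.add(cur)
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (cur[0] + dx, cur[1] + dy)
            if not (0 <= nxt[0] < width and 0 <= nxt[1] < height):
                continue
            if nxt in obstacles:
                continue
            if g + 1 < best_g.get(nxt, float("inf")):
                best_g[nxt] = g + 1
                came_from[nxt] = cur
                heapq.heappush(open_heap, (g + 1 + h(nxt), g + 1, nxt))
    return None  # open list exhausted: no path exists


def find_path(start, goal, obstacles, width, height):
    """Return the straight line if it is obstacle-free, otherwise run A star."""
    line = bresenham(start, goal)
    if not any(cell in obstacles for cell in line):
        return line
    return a_star(start, goal, obstacles, width, height)
```

Note that the straight-line result may contain diagonal steps while the A star fallback here moves only along grid axes, so the two branches return paths of different shapes.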

Method for testing power consumption of memory

Inactive | CN104317683A | Good choice | Easy to operate | Detecting faulty computer hardware | Electrical resistance and conductance | Test power

The invention discloses a method for testing the power consumption of a memory. The method comprises the following steps: connecting a resistor in series with the memory by using a memory increasing card; measuring the current passing through the memory bank by means of the resistor; multiplying the current by the VDD (supply voltage) of the memory to accurately calculate the power consumption of the memory; and, meanwhile, calculating the power consumption in different states by running a memory stress program on the system. Compared with the traditional method for testing the power consumption of a memory, this method is more accurate, can measure the power consumption of the memory in different states, and allows the power consumption of memories from all manufacturers to be compared side by side, which helps customers choose. The method is simple and feasible to operate, is highly practical, and has good prospects for popularization and use.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
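
The arithmetic behind the abstract above is short enough to write out. The sketch assumes the current is obtained from the voltage drop across the series resistor via Ohm's law, which the abstract implies but does not spell out; the function names and all sample readings are hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence


def memory_power_watts(v_shunt: float, r_shunt: float, vdd: float) -> float:
    """Power = current through the series resistor times the memory VDD.

    ASSUMPTION: the current is derived from the measured voltage drop
    across the resistor (I = V / R).
    """
    if r_shunt <= 0:
        raise ValueError("shunt resistance must be positive")
    current = v_shunt / r_shunt
    return current * vdd


def power_by_state(
    readings: Dict[str, Sequence[float]], r_shunt: float, vdd: float
) -> Dict[str, float]:
    """Average power per workload state, e.g. idle versus a stress run."""
    return {
        state: memory_power_watts(mean(drops), r_shunt, vdd)
        for state, drops in readings.items()
    }


if __name__ == "__main__":
    # Made-up voltage drops (volts) across a 10 milliohm resistor, VDD = 1.2 V.
    samples = {"idle": [0.005, 0.005], "stress": [0.020, 0.020]}
    print(power_by_state(samples, r_shunt=0.01, vdd=1.2))
```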

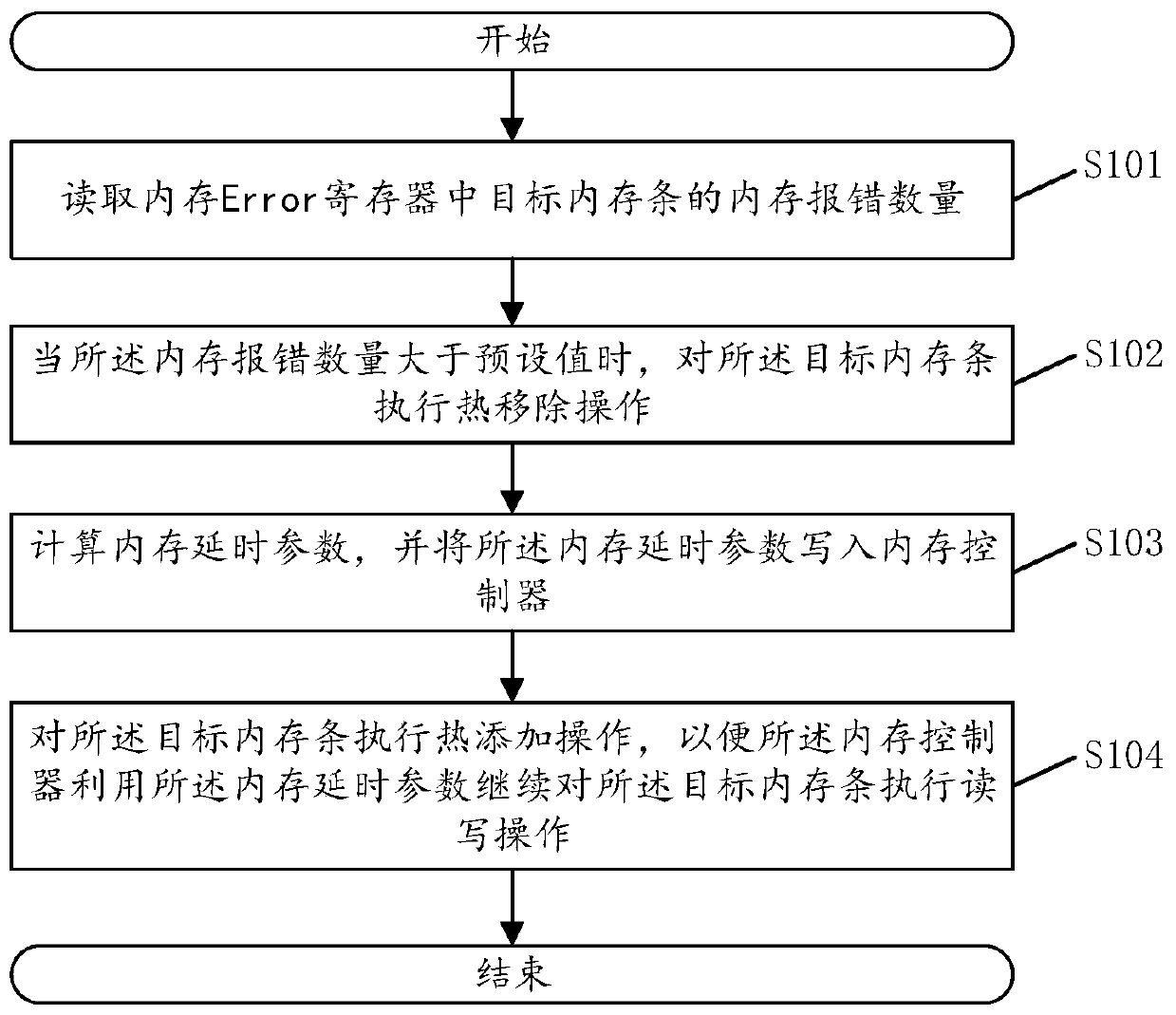

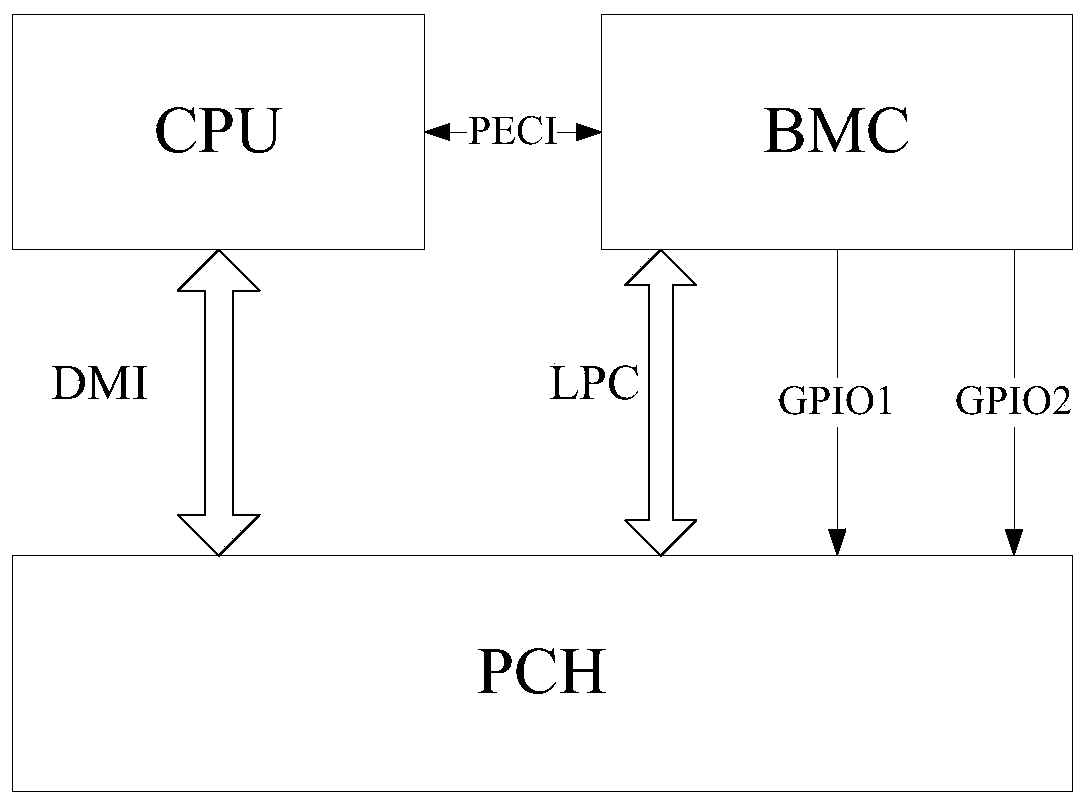

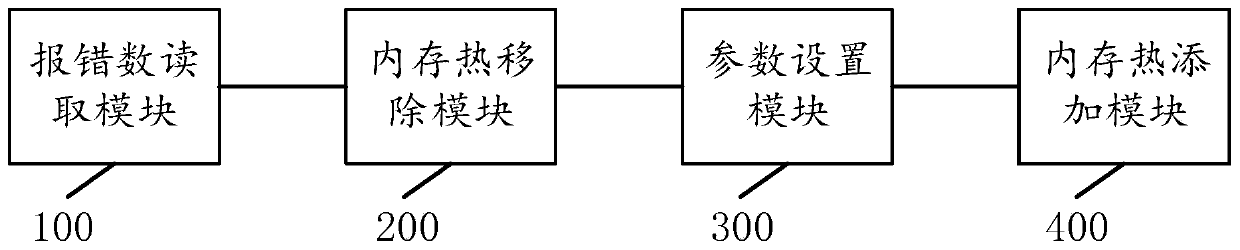

Memory exception processing method and system, electronic equipment and storage medium

Inactive | CN111143104A | Reduced number of memory errors | Reduce memory read and write errors | Non-redundant fault processing | Static storage | Computer hardware | Memory bank

The invention discloses a memory exception processing method. The processing method comprises the steps of: reading the memory error count of a target memory bank from a memory error register; when the memory error count is greater than a preset value, executing a hot removal operation on the target memory bank; calculating a memory delay parameter and writing it into a memory controller, wherein the memory delay parameter is the waiting time after the memory controller directs the target memory bank to receive a read-write command; and executing a hot addition operation on the target memory bank, so that the memory controller continues to execute read-write operations on the target memory bank using the memory delay parameter. The invention can reduce the error rate of memory reads and writes. The invention furthermore discloses a memory exception processing system, electronic equipment and a storage medium, which have the same beneficial effects.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD
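
The control flow in the abstract above can be illustrated with a small simulation. Everything here is hypothetical: `FakeMemoryController` is an in-memory stand-in and not a real hardware or firmware interface, and because the abstract does not say how the delay parameter is calculated, the sketch simply adds a fixed step to the current value.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class FakeMemoryController:
    """Hypothetical stand-in for the error register and memory controller."""
    error_counts: Dict[str, int]
    delay_cycles: Dict[str, int]
    online: Set[str]
    log: List[Tuple] = field(default_factory=list)

    def hot_remove(self, bank: str) -> None:
        self.online.discard(bank)
        self.log.append(("hot_remove", bank))

    def write_delay(self, bank: str, cycles: int) -> None:
        self.delay_cycles[bank] = cycles
        self.log.append(("write_delay", bank, cycles))

    def hot_add(self, bank: str) -> None:
        self.online.add(bank)
        self.log.append(("hot_add", bank))


def handle_memory_errors(
    ctrl: FakeMemoryController, bank: str, threshold: int, step: int = 1
) -> bool:
    """Hot-remove, relax timing, hot-add. Returns True if action was taken."""
    if ctrl.error_counts.get(bank, 0) <= threshold:
        return False
    ctrl.hot_remove(bank)
    # ASSUMPTION: the new delay is the old delay plus a fixed step.
    ctrl.write_delay(bank, ctrl.delay_cycles.get(bank, 0) + step)
    ctrl.hot_add(bank)
    return True


if __name__ == "__main__":
    ctrl = FakeMemoryController({"DIMM0": 12}, {"DIMM0": 3}, {"DIMM0"})
    handle_memory_errors(ctrl, "DIMM0", threshold=10)
    print(ctrl.log)
```

The ordering matters: the delay parameter is written while the bank is offline, so the bank comes back under the relaxed timing.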

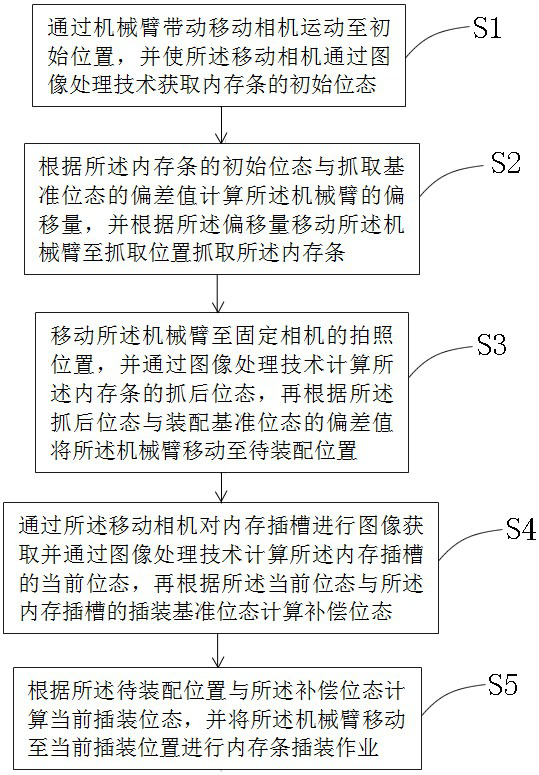

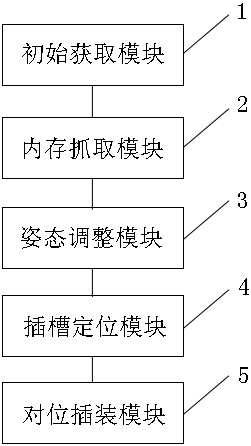

Memory alignment plugging method and system based on machine vision, equipment and storage medium

Inactive | CN111815634A | Improve work efficiency | Accurate | Image enhancement | Image analysis | Computer hardware | Mobile camera

The invention discloses a memory alignment plugging method and system based on machine vision, equipment and a storage medium. The method comprises the steps of: driving a mobile camera to an initial position with a mechanical arm, and obtaining the initial state of a memory bank; moving the mechanical arm to a grabbing position to grab the memory bank according to the deviation between the initial state of the memory bank and a grabbing reference state; moving the mechanical arm to the photographing position of a fixed camera, calculating the post-grabbing state of the memory bank, and moving the mechanical arm to a position to be assembled according to the deviation between the post-grabbing state of the memory bank and an assembling reference state; acquiring an image of a memory slot, calculating the current state of the memory slot, and calculating a compensation state from the current state of the memory slot and an insertion reference state of the memory slot; and calculating a current plugging mounting state from the position to be assembled and the compensation state, and moving the mechanical arm to the current plugging mounting position to perform the memory bank plugging mounting operation. The method, system, equipment and storage medium of the invention can improve the efficiency of the memory plugging mounting operation, ensure accurate alignment of the memory bank and the memory slot, and prevent collisions during the plugging mounting operation.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD
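
The last two steps of the abstract above (compute a compensation state from the slot, then combine it with the position to be assembled) can be sketched as simple pose arithmetic. This is a simplification under stated assumptions: a state is taken to be a planar pose `(x, y, theta)`, deviations are combined component by component rather than with full rigid-body transforms, and the `Pose` type and function names are hypothetical.

```python
from typing import NamedTuple


class Pose(NamedTuple):
    """Hypothetical planar state: position in millimetres, angle in degrees."""
    x: float
    y: float
    theta: float


def deviation(current: Pose, reference: Pose) -> Pose:
    """Component-wise difference between a measured state and its reference."""
    return Pose(
        current.x - reference.x,
        current.y - reference.y,
        current.theta - reference.theta,
    )


def apply_offset(pose: Pose, offset: Pose) -> Pose:
    """Shift a pose by an offset (ASSUMPTION: small angles, no frame change)."""
    return Pose(pose.x + offset.x, pose.y + offset.y, pose.theta + offset.theta)


def plugging_pose(assembly_pose: Pose, slot_current: Pose, slot_reference: Pose) -> Pose:
    """Position to be assembled, corrected by the slot's compensation state."""
    compensation = deviation(slot_current, slot_reference)
    return apply_offset(assembly_pose, compensation)


if __name__ == "__main__":
    # Made-up values: the slot sits 0.5 mm off in x and is rotated by 1 degree.
    target = plugging_pose(
        Pose(100.0, 50.0, 0.0), Pose(10.5, 20.0, 1.0), Pose(10.0, 20.0, 0.0)
    )
    print(target)
```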

© 2025 PatSnap. All rights reserved.