80 results about "Bayesian optimization algorithm" patented technology

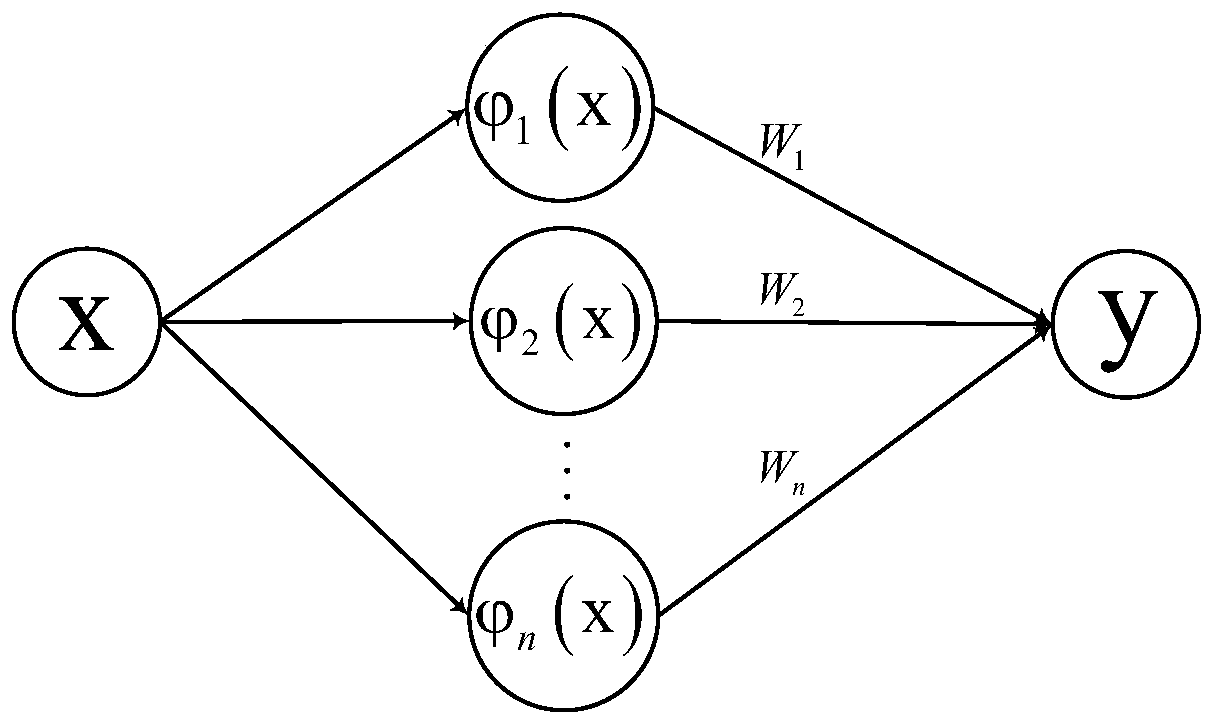

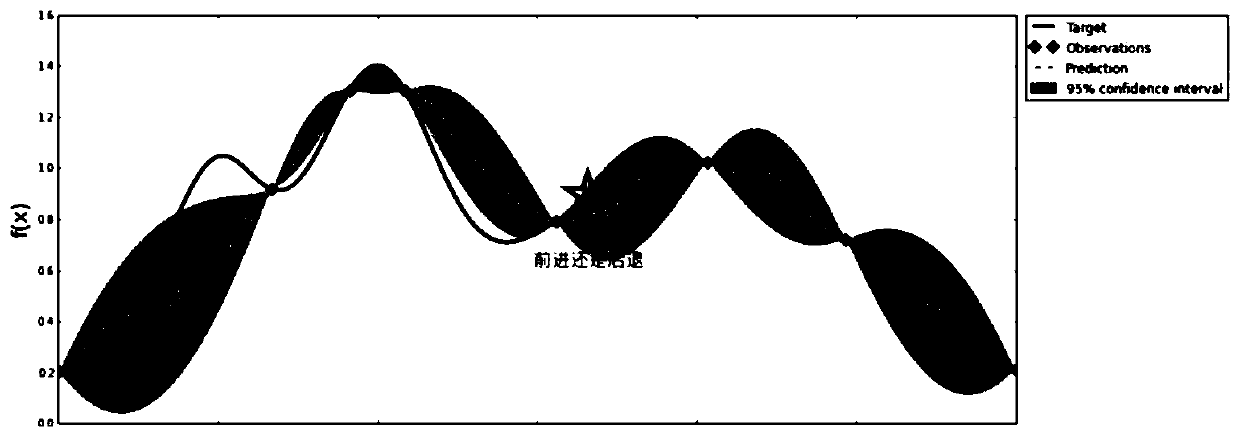

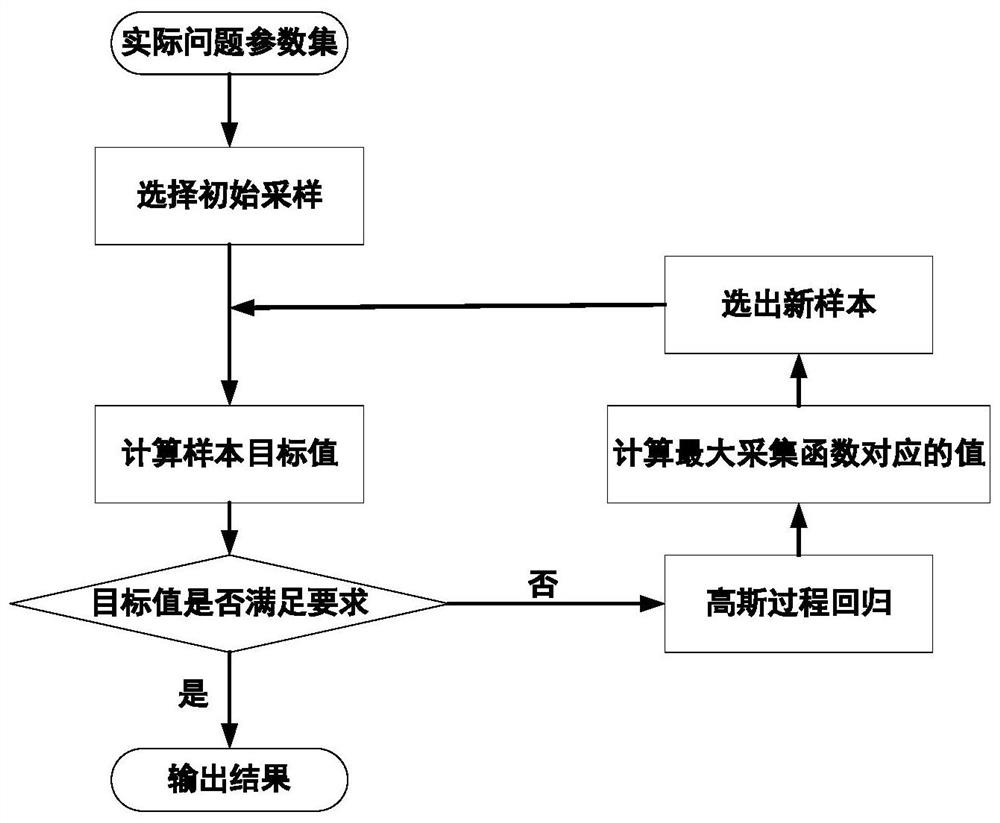

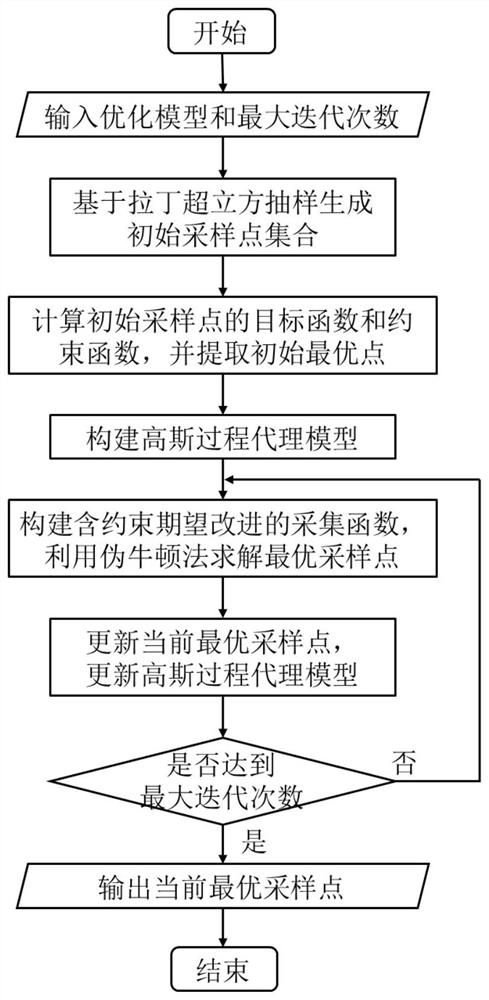

Bayesian optimization belongs to a class of optimization algorithms called sequential model-based optimization (SMBO) algorithms. These algorithms use previous observations of the loss to determine the next (most promising) point to sample. The algorithm can roughly be outlined as follows.
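A minimal sketch of such an SMBO loop, assuming a one-dimensional search interval, a Gaussian-process surrogate with an RBF kernel, and expected improvement as the acquisition function. None of these choices come from the page itself, and the names `smbo_minimize` and `toy_loss` are hypothetical.

```python
import math
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two 1-D arrays of points."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_new, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    k = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_s = rbf_kernel(x_obs, x_new)
    solved = np.linalg.solve(k, k_s)             # K^{-1} K_*
    mean = solved.T @ y_obs
    var = 1.0 - np.sum(k_s * solved, axis=0)     # prior variance is 1
    return mean, np.sqrt(np.clip(var, 1e-12, None))

def expected_improvement(mean, std, best):
    """Expected improvement over the best loss seen so far (minimization)."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf

def smbo_minimize(loss, low, high, n_init=3, n_iter=15, seed=0):
    """Fit surrogate, maximize acquisition, evaluate the loss, repeat."""
    rng = np.random.default_rng(seed)
    x_obs = rng.uniform(low, high, size=n_init)  # initial random observations
    y_obs = np.array([loss(x) for x in x_obs])
    candidates = np.linspace(low, high, 201)     # assumed candidate grid
    for _ in range(n_iter):
        offset = y_obs.mean()                    # center for the zero-mean GP
        mean, std = gp_posterior(x_obs, y_obs - offset, candidates)
        ei = expected_improvement(mean + offset, std, y_obs.min())
        x_next = candidates[np.argmax(ei)]       # next point to sample
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, loss(x_next))
    best = np.argmin(y_obs)
    return x_obs[best], y_obs[best]

def toy_loss(x):
    """Hypothetical stand-in for an expensive validation loss."""
    return (x - 0.3) ** 2

best_x, best_y = smbo_minimize(toy_loss, 0.0, 1.0)
```

In practice the loss would be something expensive, such as a cross-validated model error, which is why each new sample is chosen from the surrogate rather than by exhaustive search.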

Deep neural network multi-task hyper-parameter optimization method and device

Inactive | CN110443364A | Achieve optimization | The overall calculation is small | Neural architectures | Neural learning methods | Bayesian optimization algorithm | Radial basis function neural

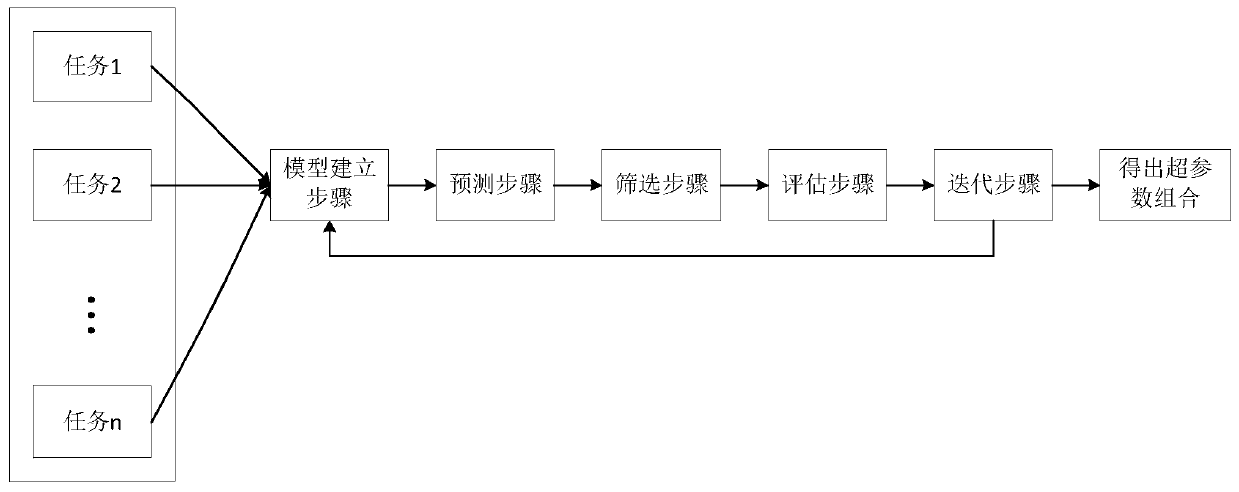

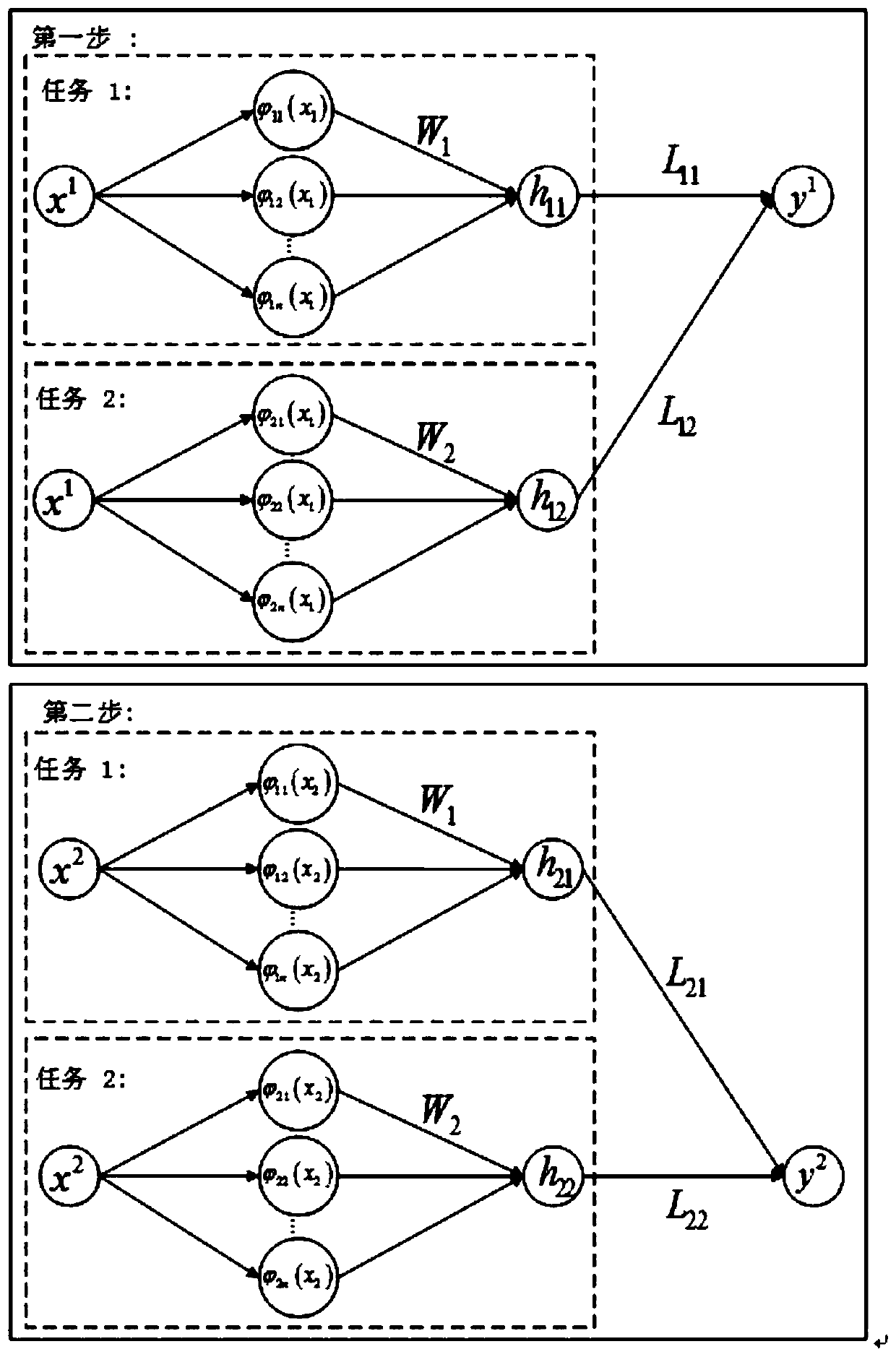

The invention discloses a deep neural network multi-task hyper-parameter optimization method. The method comprises: first, performing model training on the data training set of each task to obtain a multi-task learning network model; second, predicting all points in an unknown region and screening candidate points from the prediction results; finally, evaluating the screened candidate points, adding the candidate points and their target function values to the data training set, and then building the model, predicting, screening and evaluating again, and so on until the maximum number of iterations is reached. The candidate point with the maximum target function value in the data training set is then selected as the hyper-parameter combination of each task in the multi-task learning network model. In this method, the Gaussian model is replaced by a radial basis function neural network model, which is combined with multi-task learning and applied within the Bayesian optimization algorithm to realize hyper-parameter optimization, so that the computational cost of hyper-parameter optimization is greatly reduced. The invention further discloses an electronic device and a storage medium.

Owner:SHENZHEN UNIV

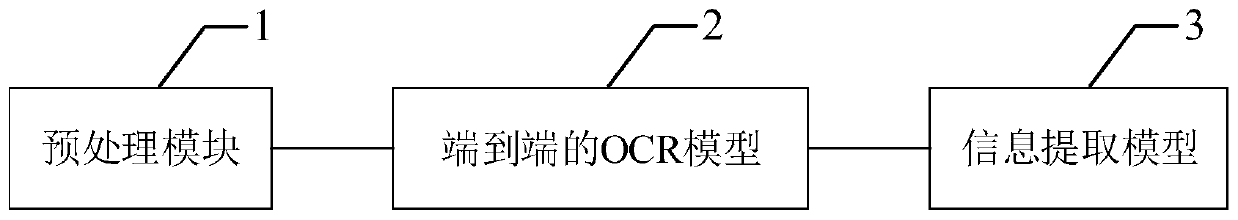

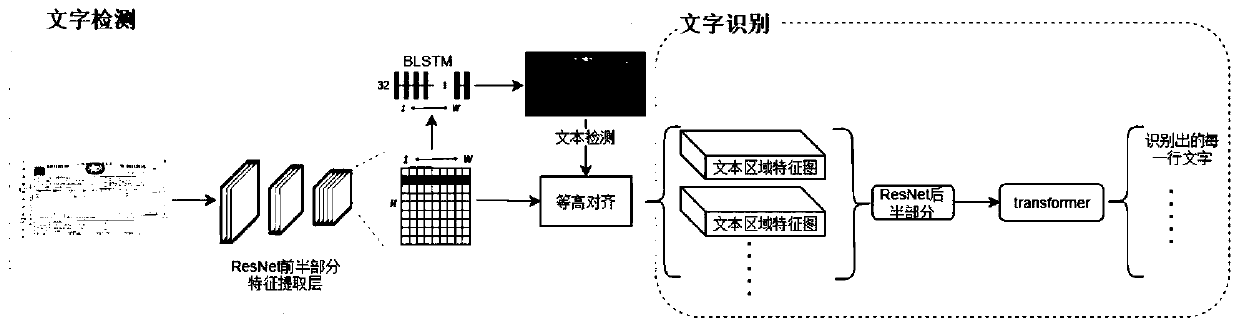

Discrete picture file information extraction system and method based on deep learning

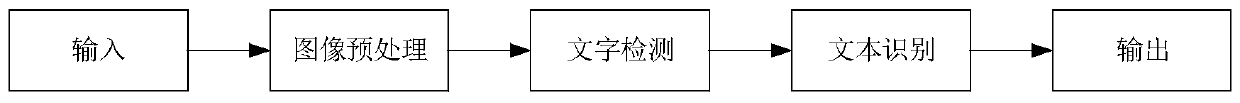

The invention discloses a discrete picture file information extraction system and method based on deep learning. An end-to-end OCR model combines text detection with text recognition so that the image processing layers of a convolutional neural network are shared, improving both calculation speed and recognition quality. For text detection, a bidirectional LSTM is used and the text area is accurately segmented according to the file layout during recognition, which addresses problems of existing OCR technology: noise being recognized as characters, garbled output for charts, and wrongly detected lines. For text recognition, a ResNet is combined with a Transformer encoder-decoder so that both written and handwritten text can be accurately recognized. Combining the end-to-end OCR model with an information extraction model reduces information loss and improves the accuracy of information extraction. The Bayesian optimization algorithm is used to automate training and parameter tuning of the information extraction model, so that a user can customize the desired information extraction model without understanding machine learning.

Owner:朱跃飞 +1

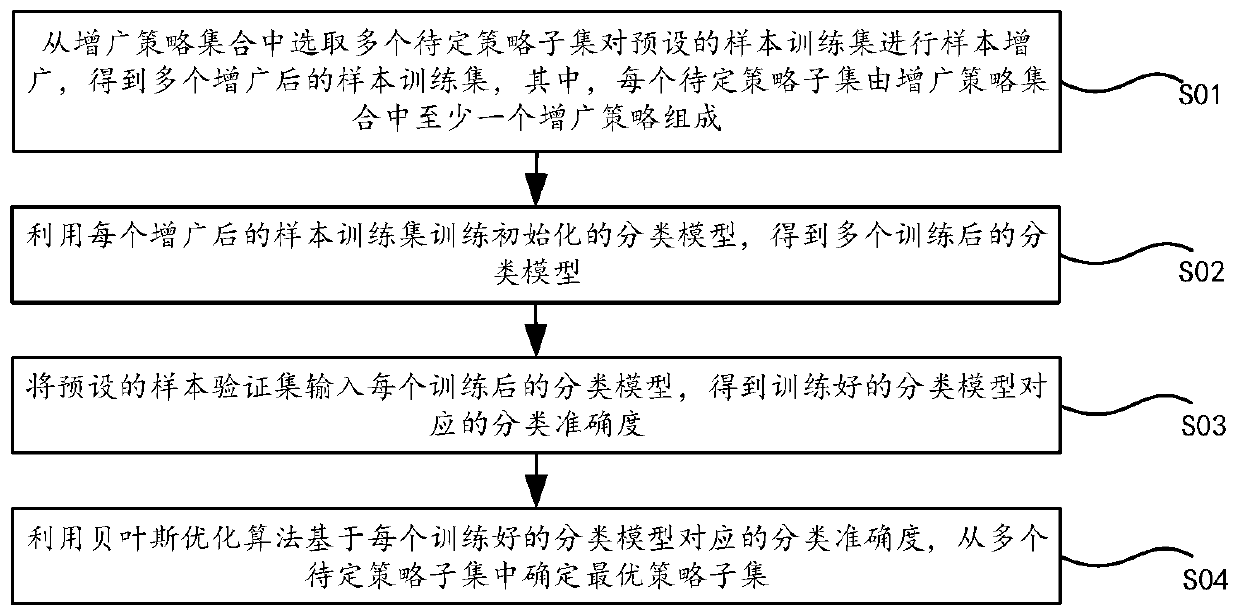

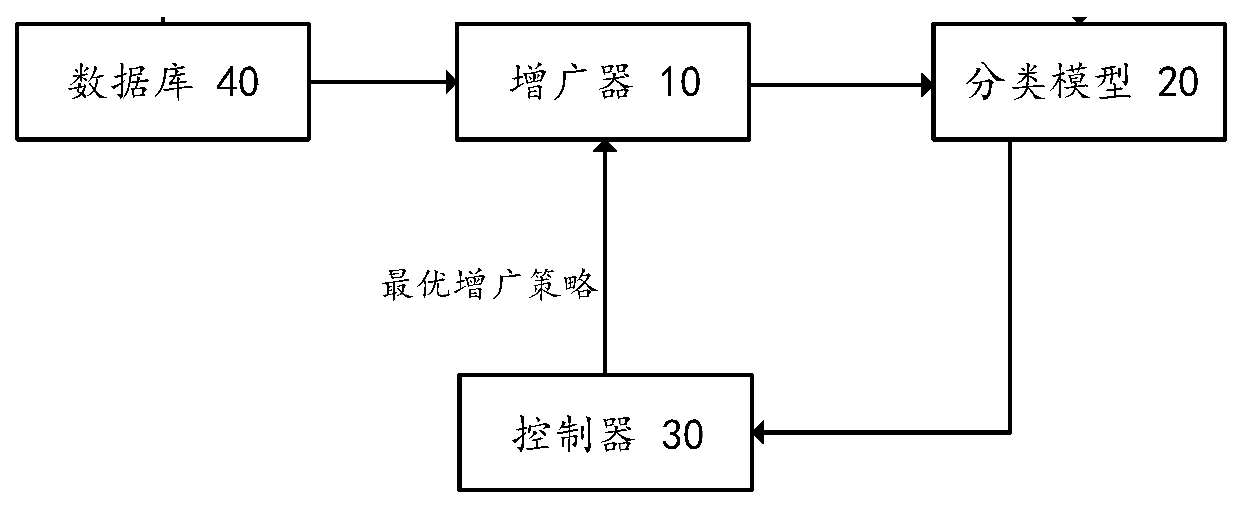

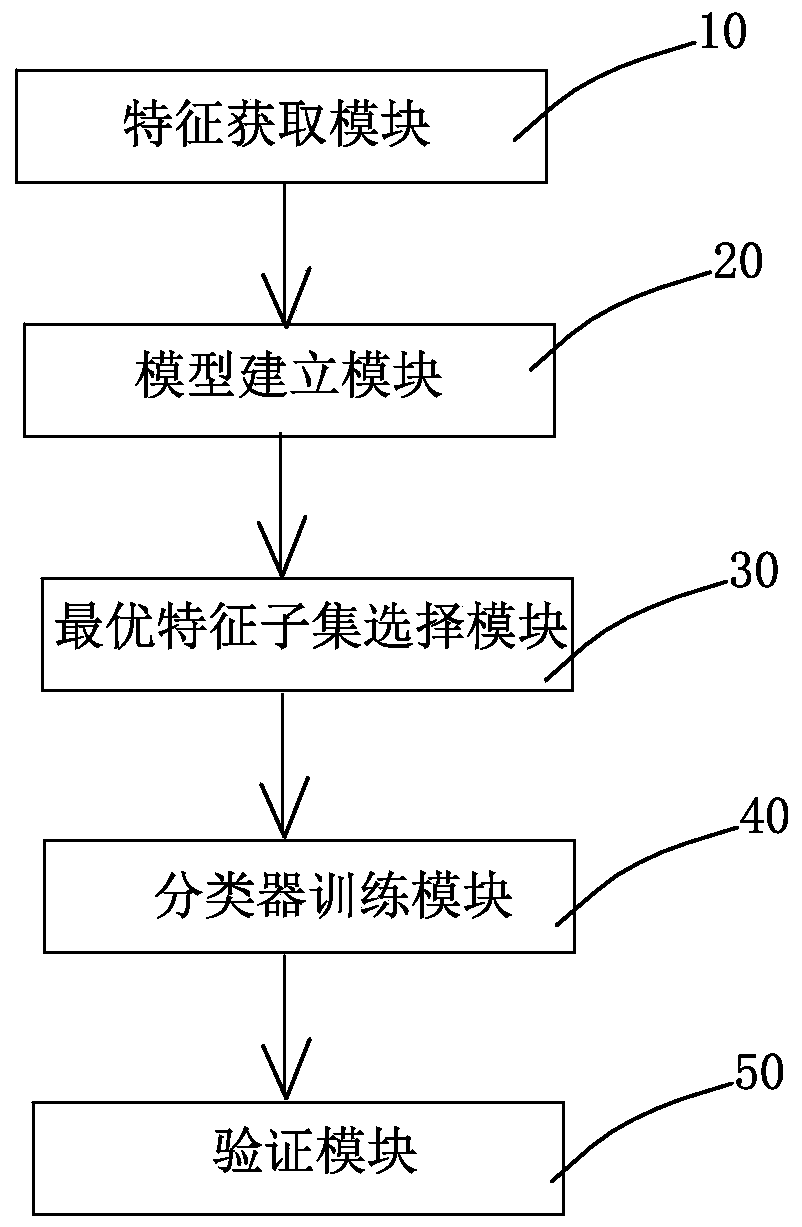

Image data augmentation strategy selection method and system

Pending | CN111275129A | Improve selection efficiency | Character and pattern recognition | Neural architectures | Bayesian optimization algorithm | Machine learning

The embodiment of the invention provides an image data augmentation strategy selection method and system, and relates to the technical field of artificial intelligence, and the method comprises the steps: selecting a plurality of to-be-determined strategy subsets from an augmentation strategy set, carrying out the sample augmentation of a preset sample training set, and obtaining a plurality of augmented sample training sets; training the initialized classification model by using each augmented sample training set to obtain a plurality of trained classification models; inputting a preset sample verification set into each trained classification model to obtain classification accuracy corresponding to the trained classification model; and determining an optimal strategy subset from the plurality of undetermined strategy subsets by using a Bayesian optimization algorithm based on the classification accuracy corresponding to each trained classification model. According to the technical scheme provided by the embodiment of the invention, the problem that which augmentation strategy is most effective to the current type of image sample is difficult to determine can be solved.

Owner:PING AN TECH (SHENZHEN) CO LTD

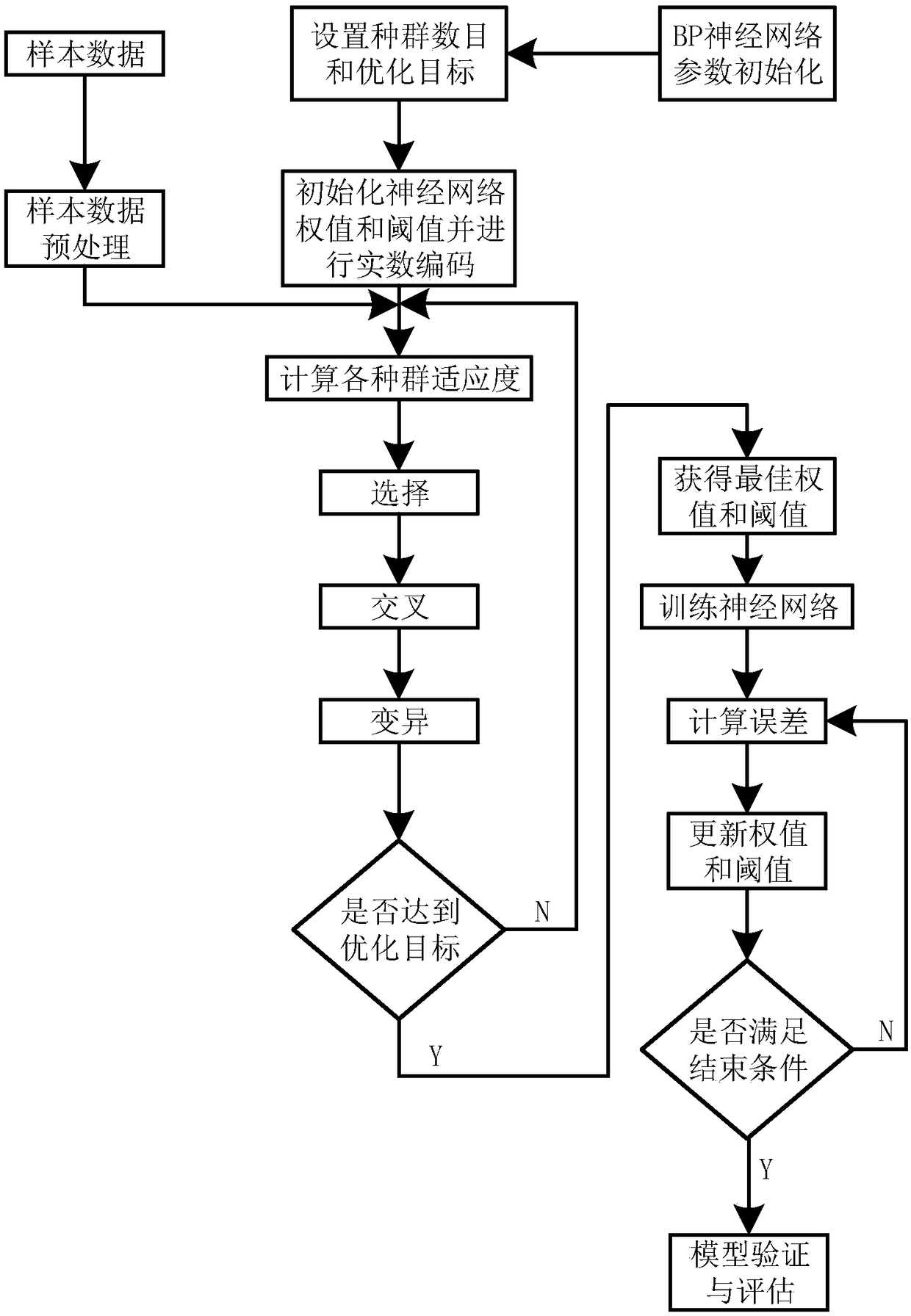

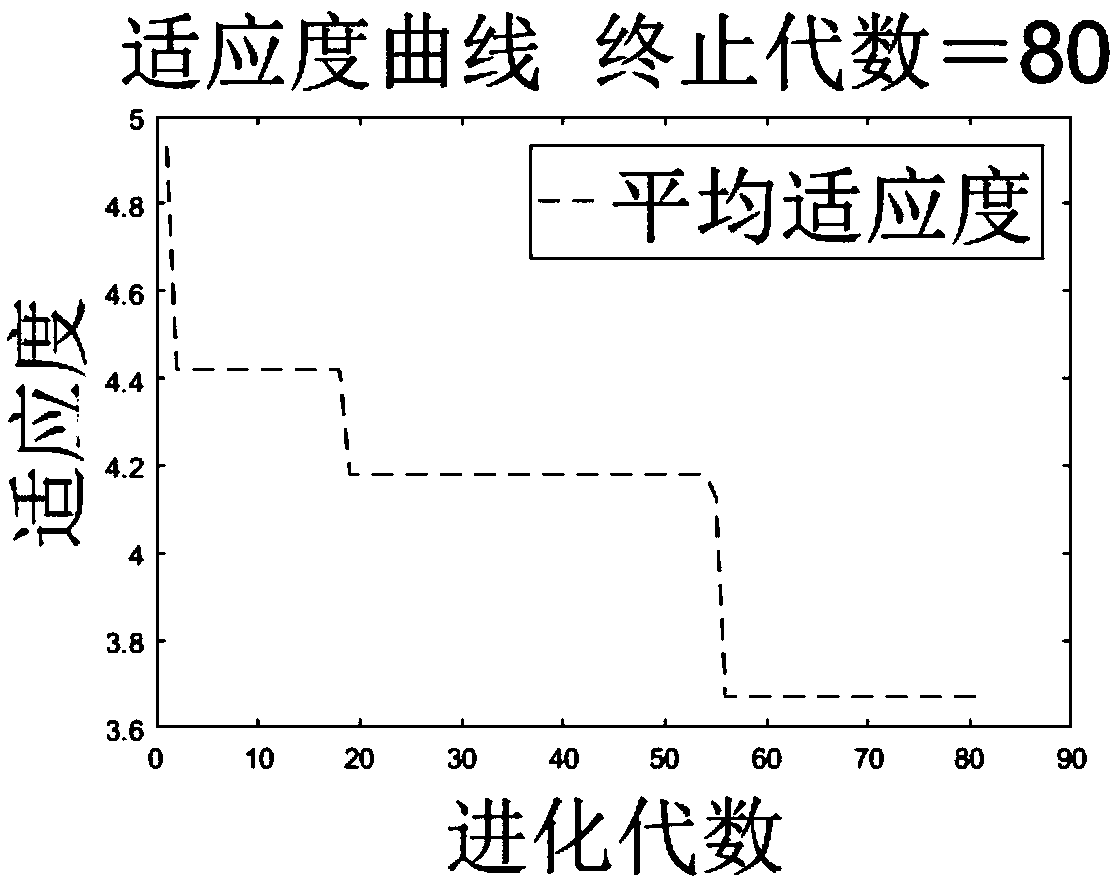

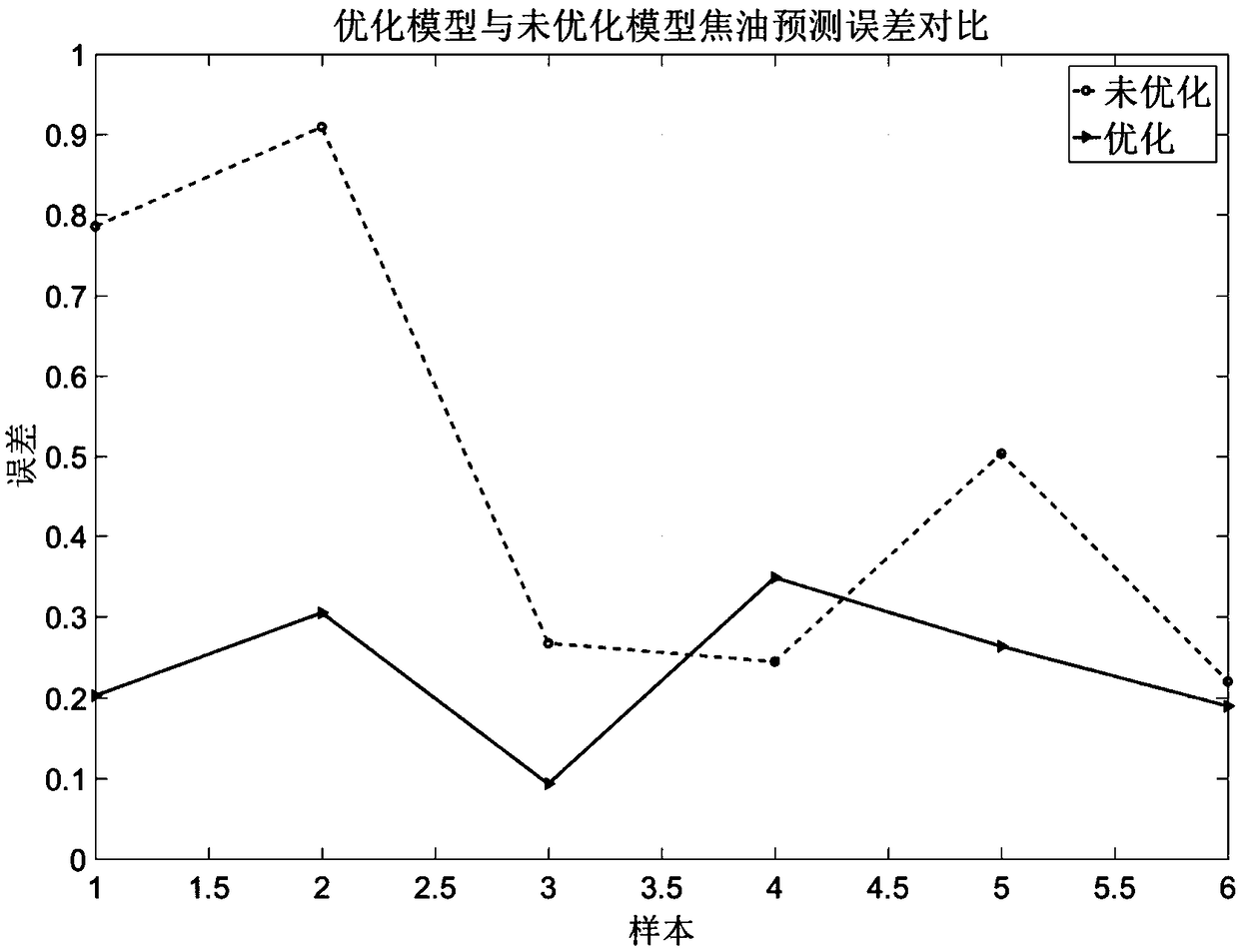

Prediction model of cigarette material and mainstream smoke composition based on genetic algorithm optimized neural network

A prediction model of cigarette material and mainstream smoke composition based on a genetic-algorithm-optimized neural network comprises the following steps: sample pretreatment; obtaining the optimal weights and threshold parameters of the neural network with a genetic algorithm; and constructing and training the neural network from the optimal weights and thresholds obtained by the genetic algorithm. The trained neural network model is then verified, and its effect in practical application is evaluated. Compared with a neural network without an optimization algorithm, the genetic-algorithm-based model first uses the genetic algorithm to select the weights and thresholds that minimize the model error and uses them as the initial parameters for training, which can keep the model from falling into a local optimum and thereby missing the global optimum.

Owner:HUBEI CHINA TOBACCO IND

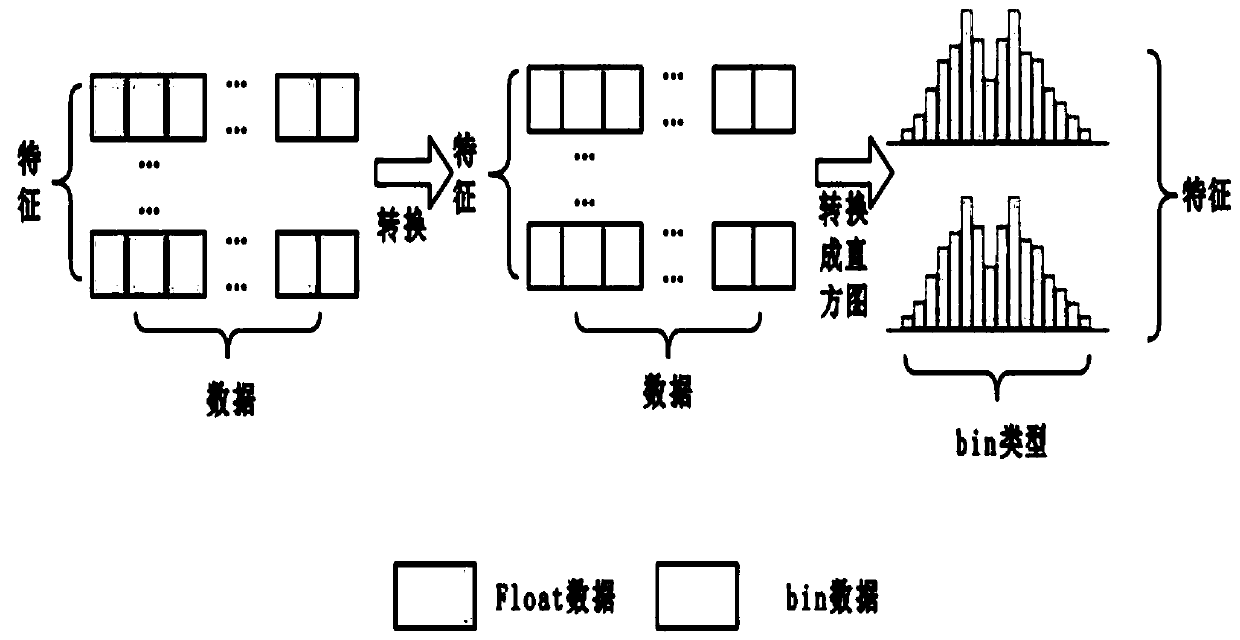

LightGBM fault diagnosis method based on improved Bayesian optimization

Active | CN110413494A | Reduce computational complexity | Improve performance | Hardware monitoring | Character and pattern recognition | Bayesian optimization algorithm | Algorithm

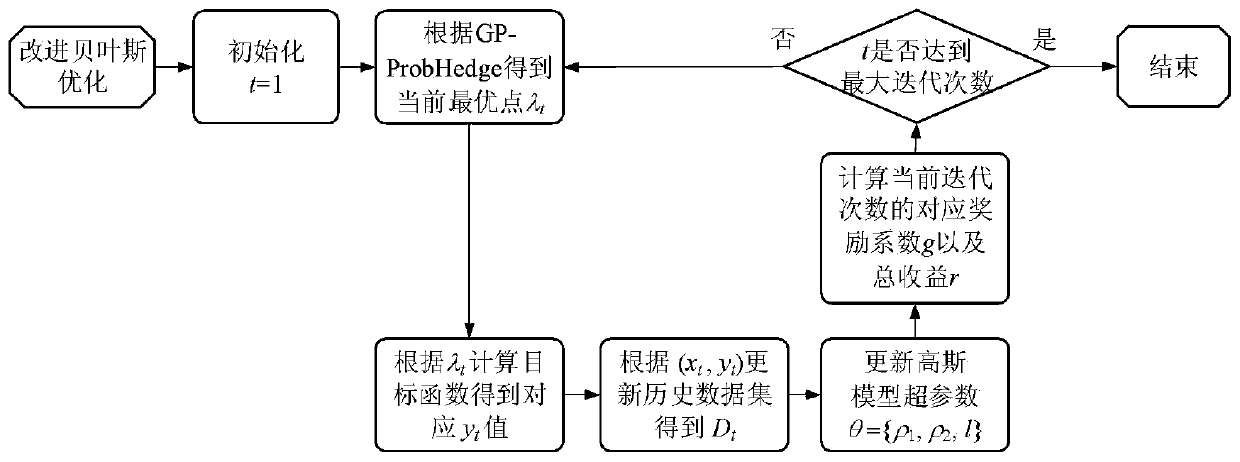

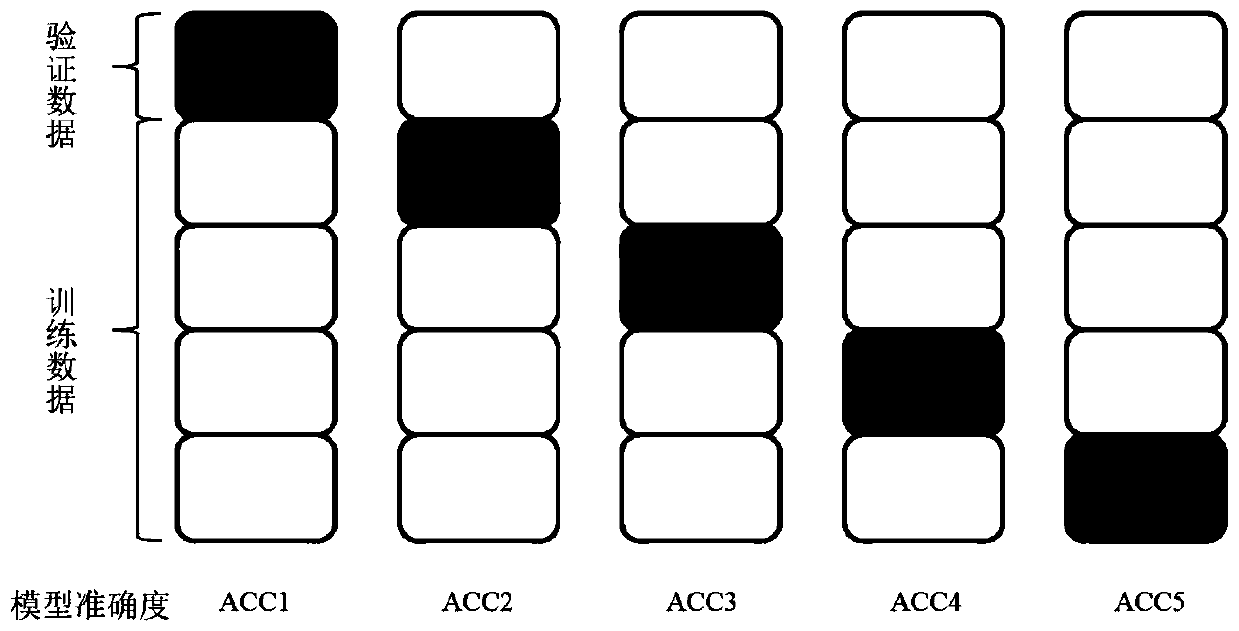

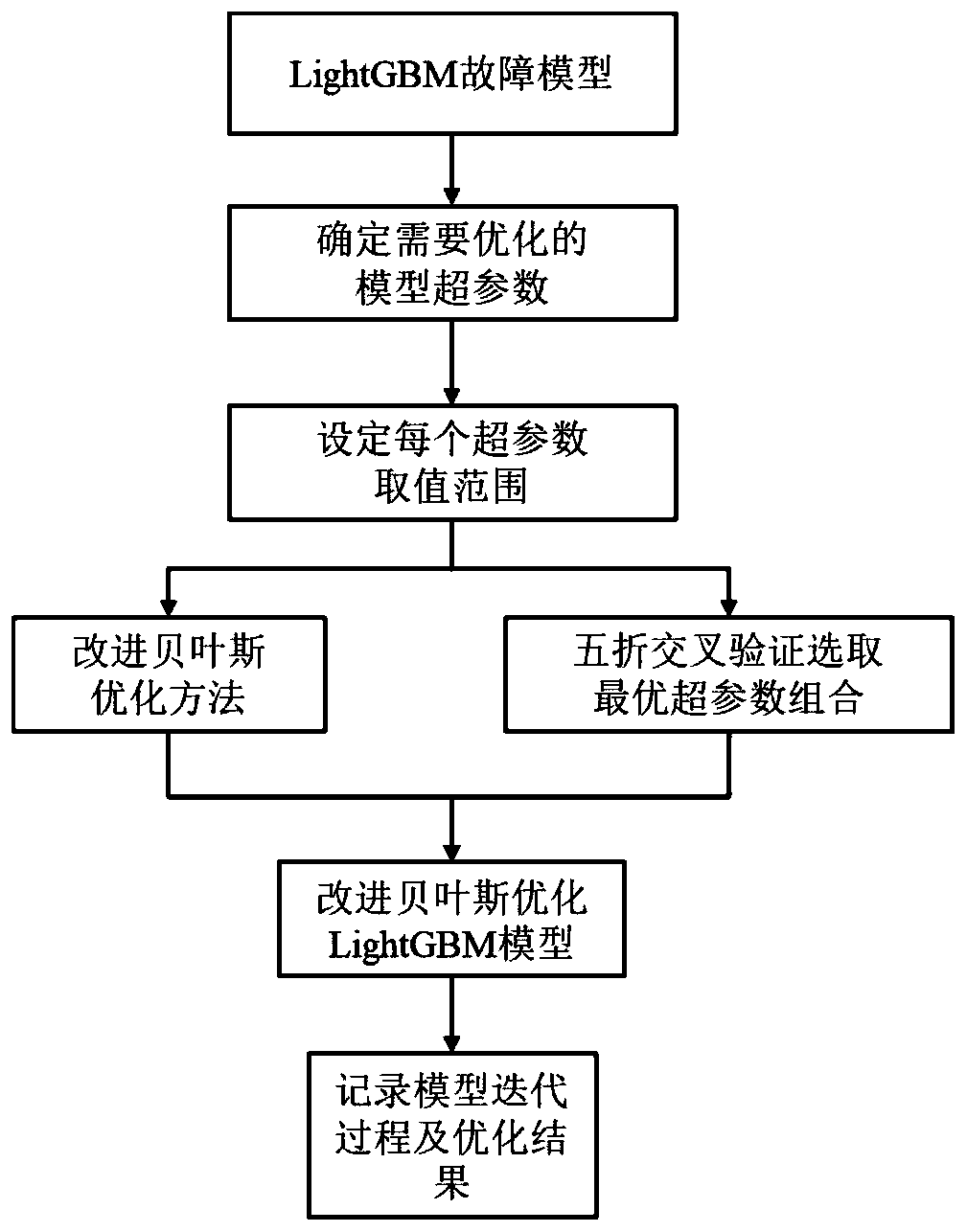

The invention discloses a LightGBM fault diagnosis method based on improved Bayesian optimization. The method comprises the following steps: 1) determining the hyper-parameters of the LightGBM model that need to be optimized and their value ranges; 2) improving the Bayesian optimization algorithm to obtain an improved Bayesian optimization algorithm, GP-ProbHedge; 3) selecting the optimal hyper-parameter combination of the fault diagnosis model using the method of step 2) together with five-fold cross validation; and 4) constructing the improved Bayesian optimization LightGBM fault diagnosis model and giving the model iteration process and the optimization result. Compared with the prior art, the invention provides an improved Bayesian optimization algorithm for selecting the parameters of a fault model: by improving both the acquisition function of the traditional Bayesian optimization algorithm and the covariance function of its Gaussian process, an improved Bayesian optimization LightGBM fault diagnosis method is obtained, with which equipment faults are diagnosed and predicted.

Owner:ZHEJIANG UNIV OF TECH

Aerial power component image classification method based on knowledge transfer learning

Active | CN110472545A | Increase width | Improve classification effect | Scene recognition | Neural architectures | Nerve network | Algorithm

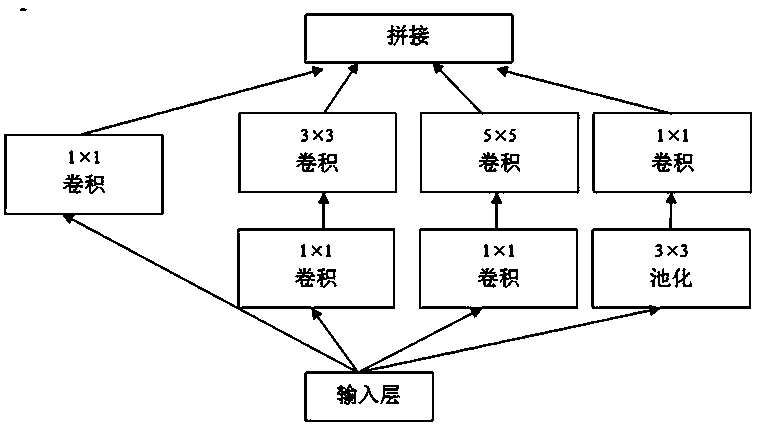

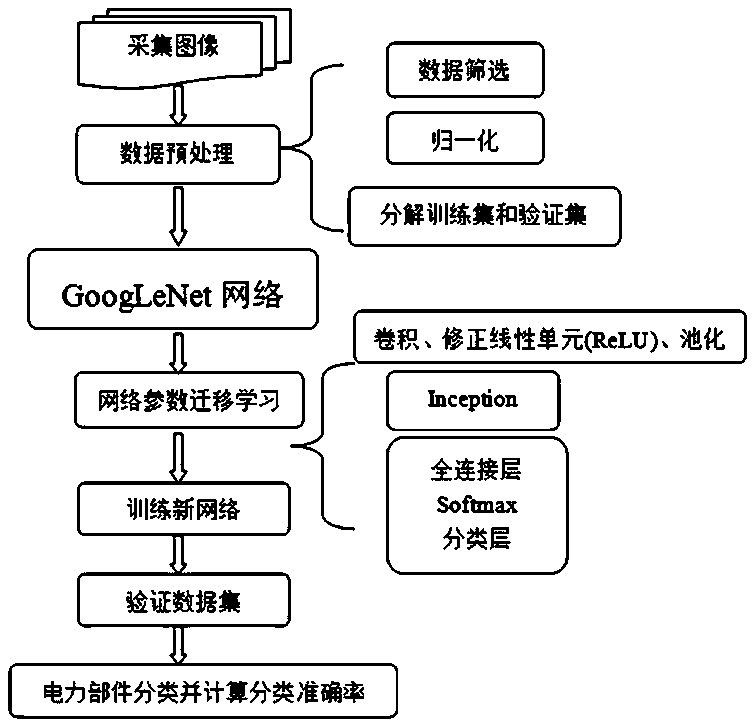

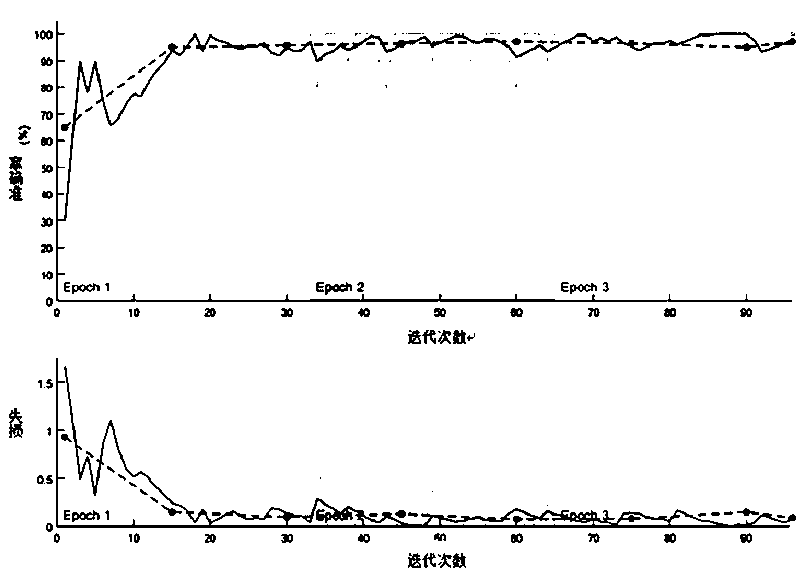

The invention relates to the combination of deep learning and machine vision in artificial intelligence, and in particular to an aerial power component image classification method based on knowledge transfer learning. The method comprises the following steps: establishing a GoogLeNet convolutional neural network; adjusting and optimizing the network by replacing its last three layers with a fully connected layer, a softmax layer and a classification output layer, and carrying out optimization setting; when training the network, obtaining the network parameters by combining multiple simulation experiments with a Bayesian optimization algorithm; performing normalization preprocessing on the acquired power component images, inputting the images into the new deep convolutional neural network obtained in step 2 for learning, and classifying them by type, such as insulators, hardware fittings and towers; and verifying the method through simulation experiments.

Owner:ZHONGBEI UNIV

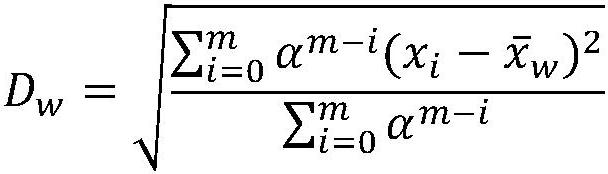

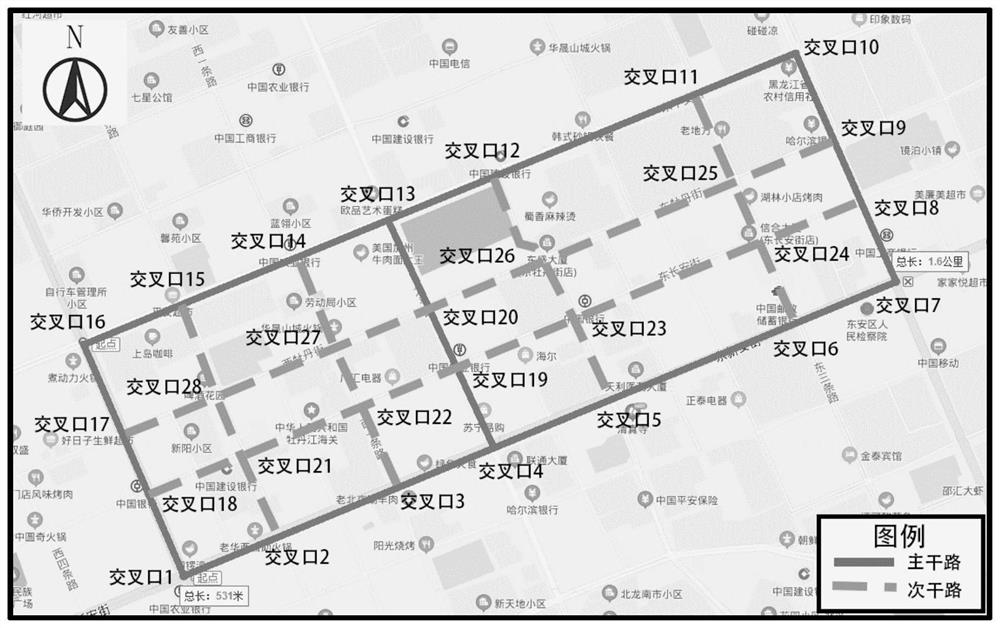

Short-term traffic flow prediction method and system based on Bayesian optimization

Inactive | CN111192453A | Improve generalization ability | Improve forecast accuracy | Mathematical models | Detection of traffic movement | Streaming data | Algorithm

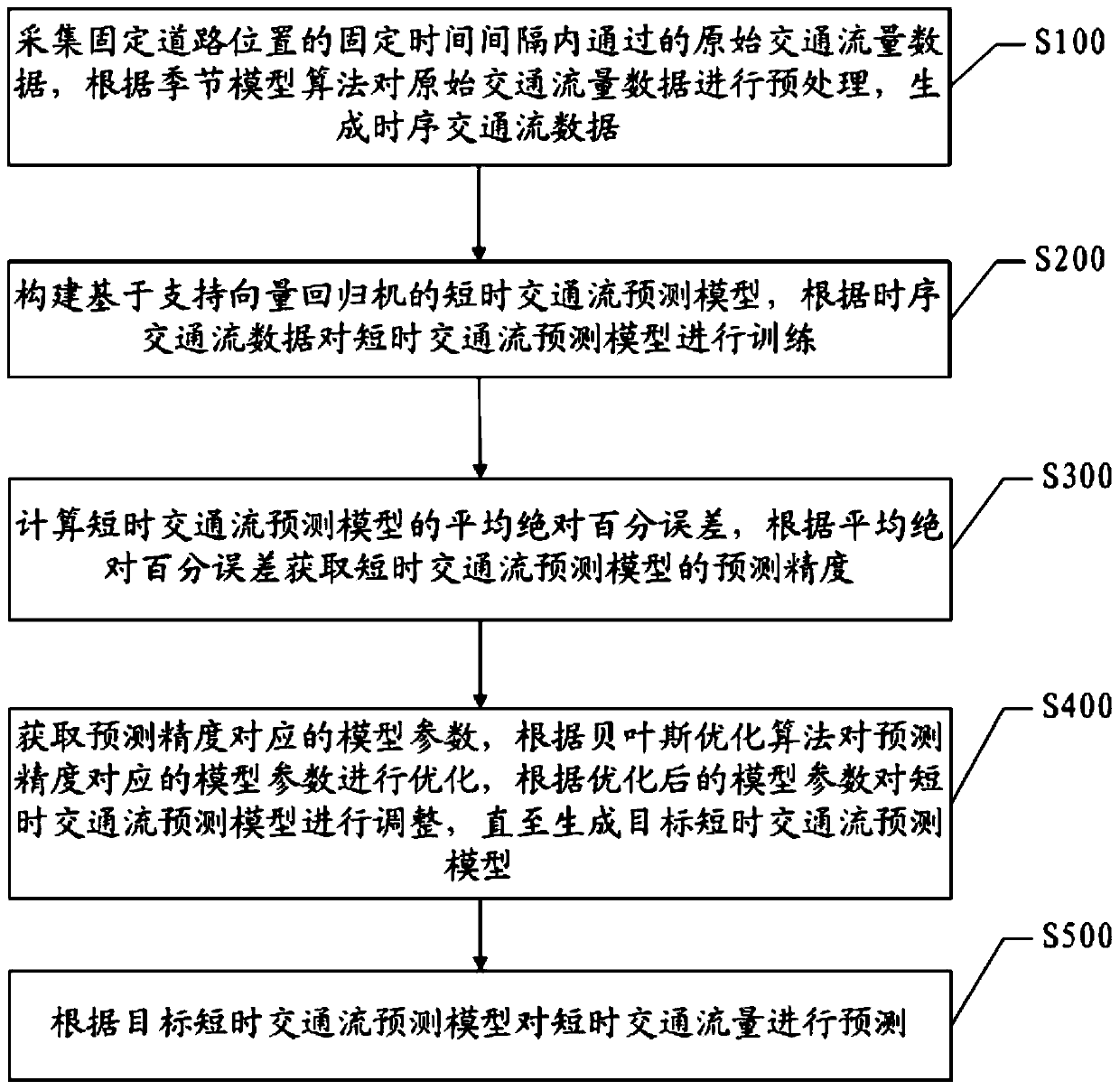

The embodiment of the invention discloses a short-term traffic flow prediction method and system based on Bayesian optimization. The method comprises the steps of: collecting original traffic flow data passing a fixed road position in a fixed time interval, and preprocessing the original data with a seasonal model algorithm to generate time-series traffic flow data; constructing a short-term traffic flow prediction model based on a support vector regression machine, and training the model; calculating the mean absolute percentage error of the model, and deriving the prediction precision of the model from that error; optimizing the model parameters corresponding to the prediction precision with a Bayesian optimization algorithm until a target short-term traffic flow prediction model is generated; and predicting the short-term traffic flow with the target short-term traffic flow prediction model. According to the embodiment of the invention, the generalization ability of the short-term traffic flow prediction model is improved, the prediction precision is improved, and convenience is provided for intelligent traffic.
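As an illustration of the error measure named in this abstract, the following sketch computes the mean absolute percentage error (MAPE) with NumPy. The function name and the sample traffic counts are made up for the example and are not taken from the patent.

```python
import numpy as np

def mean_absolute_percentage_error(actual, predicted):
    """MAPE in percent: mean of |actual - predicted| / |actual| times 100."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    if np.any(actual == 0):
        # The ratio is undefined for zero actual values.
        raise ValueError("MAPE is undefined when an actual value is zero")
    return float(np.mean(np.abs((actual - predicted) / actual)) * 100.0)

# Hypothetical vehicle counts per interval (illustrative values only).
observed_flow = [120.0, 80.0, 100.0]
predicted_flow = [108.0, 88.0, 100.0]
mape = mean_absolute_percentage_error(observed_flow, predicted_flow)
```

A lower MAPE means higher prediction precision, so a Bayesian optimizer tuning the regression model's parameters could use this value directly as the loss to minimize.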

Owner:SHENZHEN MAPGOO TECH

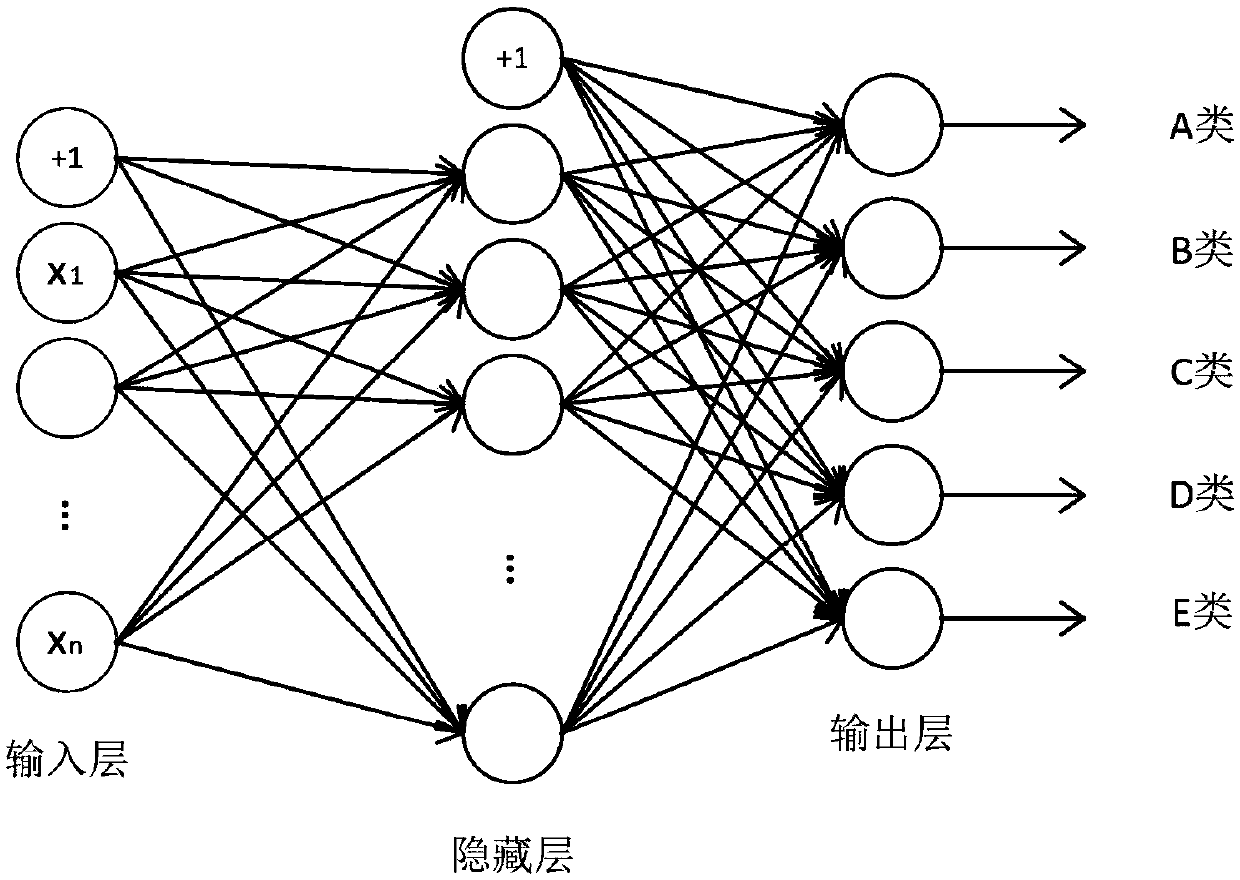

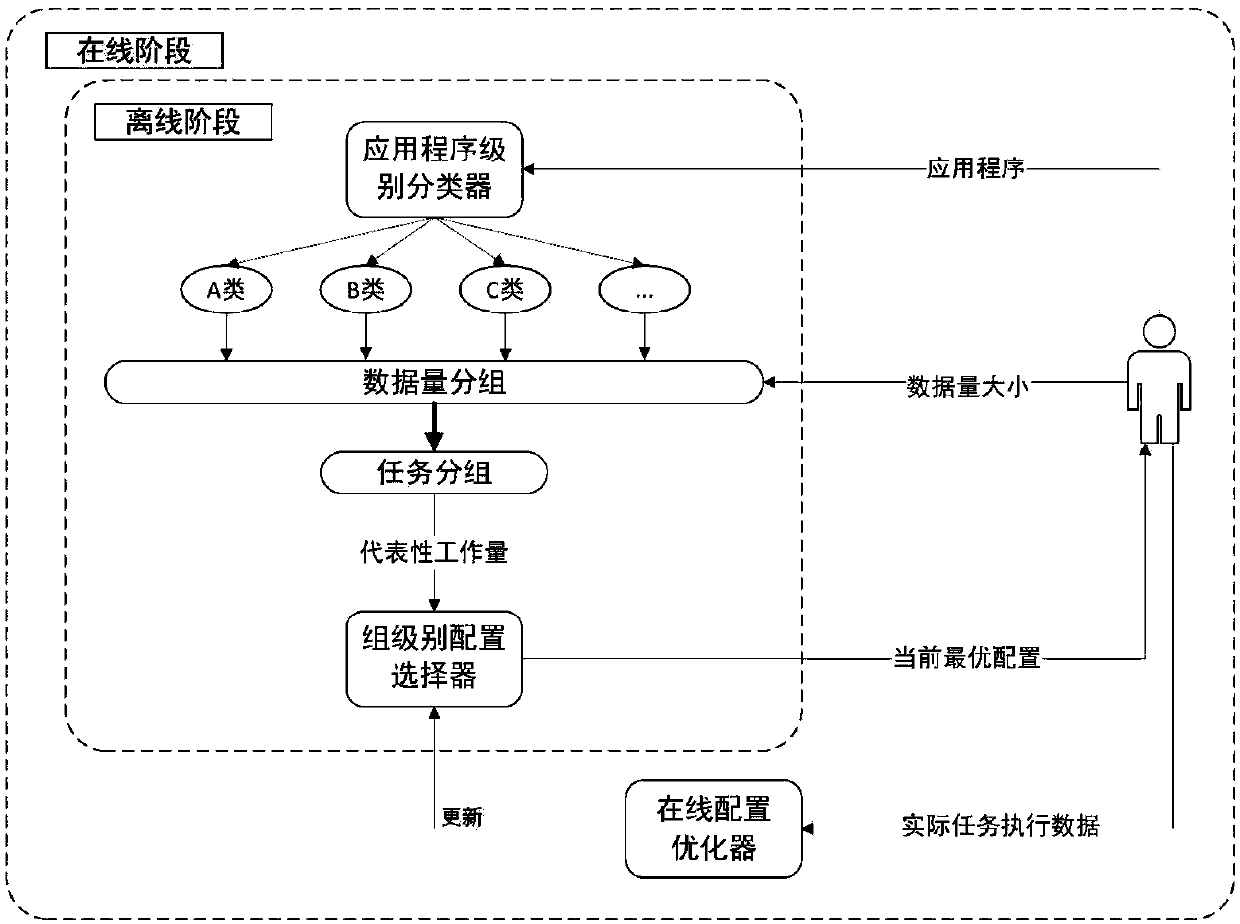

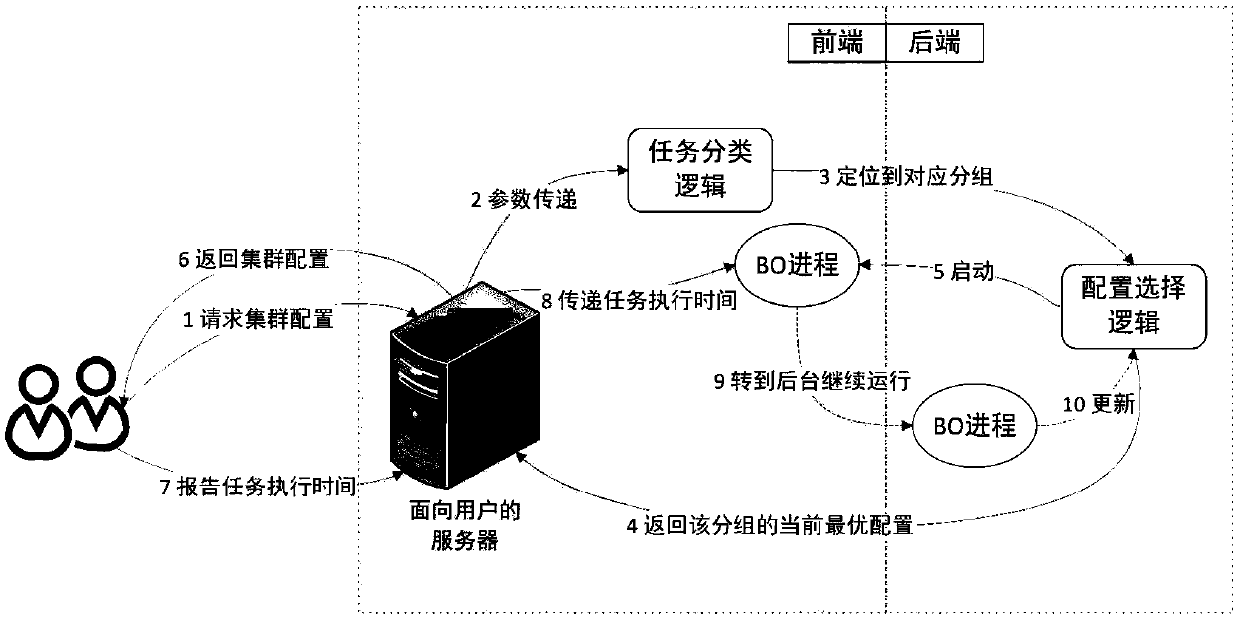

Big data cluster self-adaptive resource scheduling method based on cloud platform

Active | CN110390345A | Ensure resource utilization | Solve the problem of reasonable selection of cluster configuration | Character and pattern recognition | Transmission | Resource utilization | Configuration selection

The invention belongs to the technical field of computing, and relates to a big data cluster adaptive resource scheduling method based on a cloud platform. The method comprises the steps that in the big data analysis task classification analysis stage, CPU and I / O characteristics of big data analysis tasks are preliminarily analyzed through a neural network classifier; in the initial stage of configuration of a small number of sample clusters, the optimal configuration is rapidly obtained by means of a Bayesian optimization algorithm; in the cluster configuration online optimization stage, iterative optimization of a selection strategy is configured; and in a configuration selection stage in which sufficient samples have time limitation, execution time of the big data analysis task is predicted under different configurations based on a non-negative least square method, and optimal configuration is selected under the condition of time limitation. The method can solve the problem of reasonable selection of cluster configuration for running big data analysis tasks on the cloud platform, and guarantees the resource utilization rate of the cloud platform while guaranteeing the task execution efficiency.

Owner:FUDAN UNIV

Runoff probability prediction method and system based on deep learning

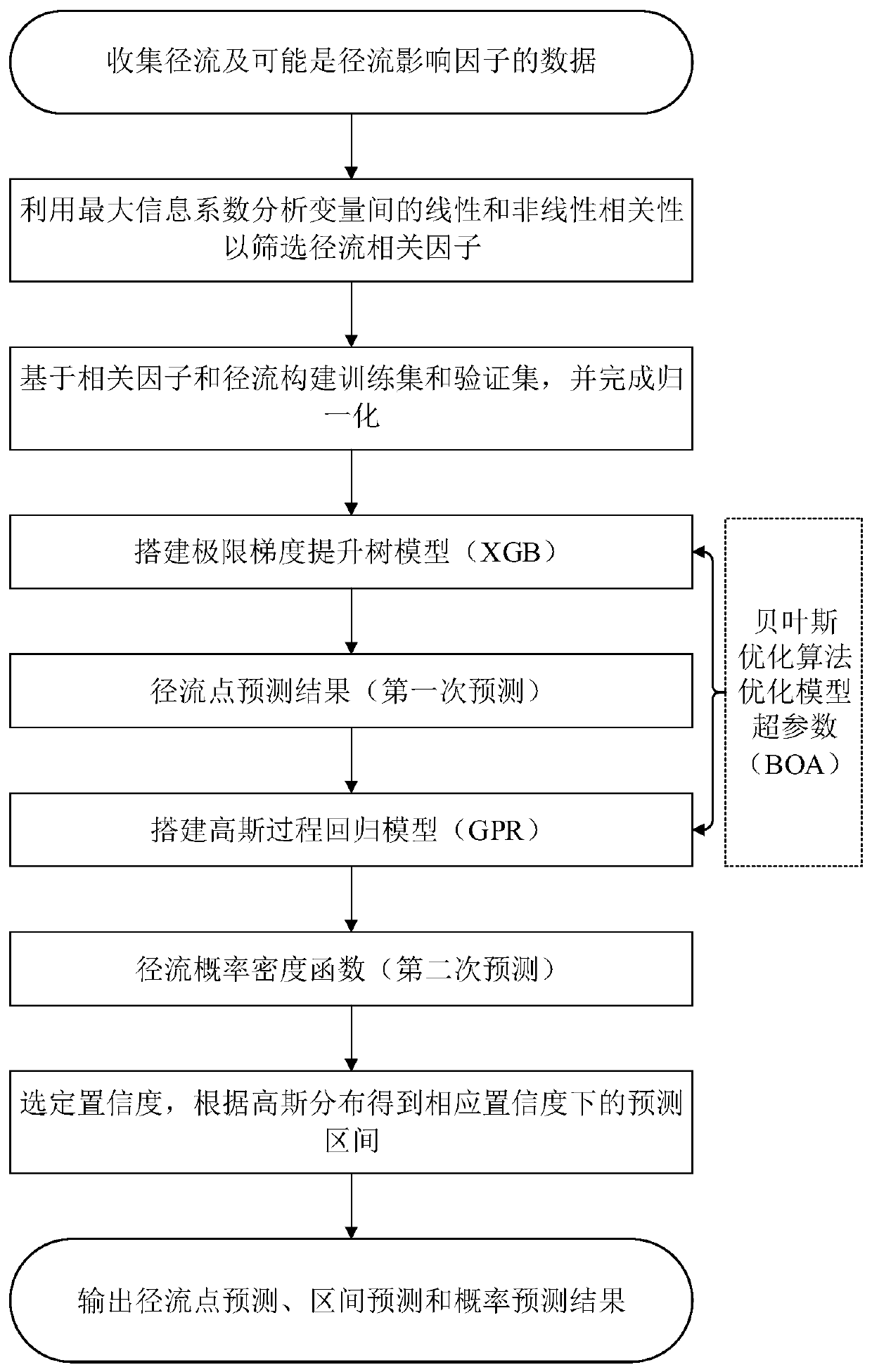

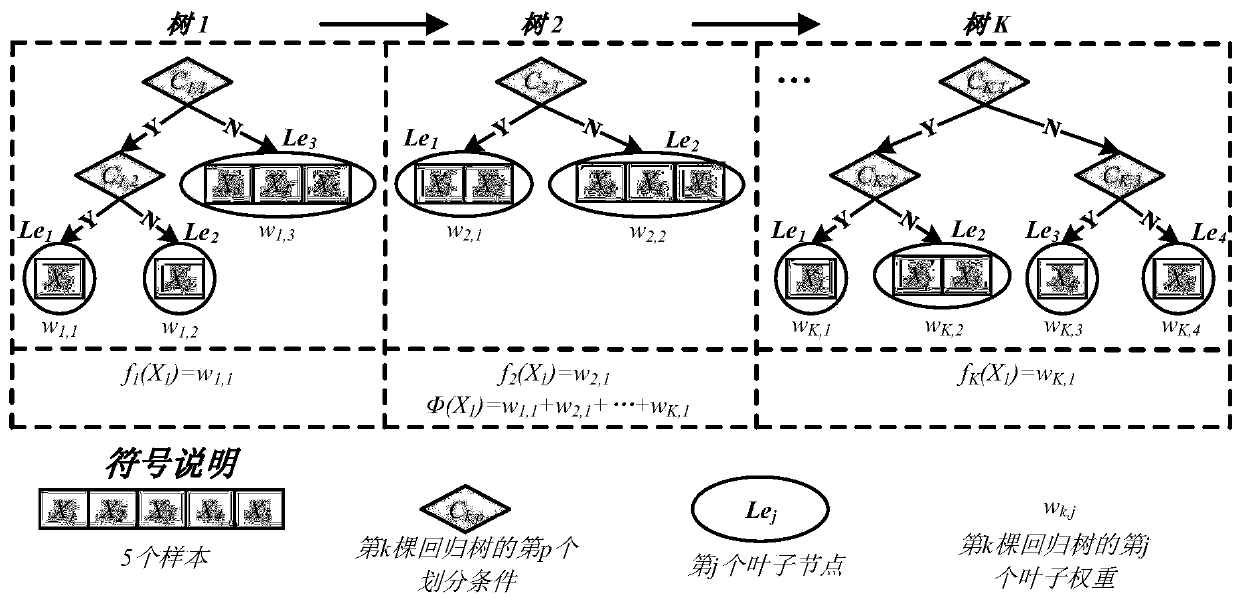

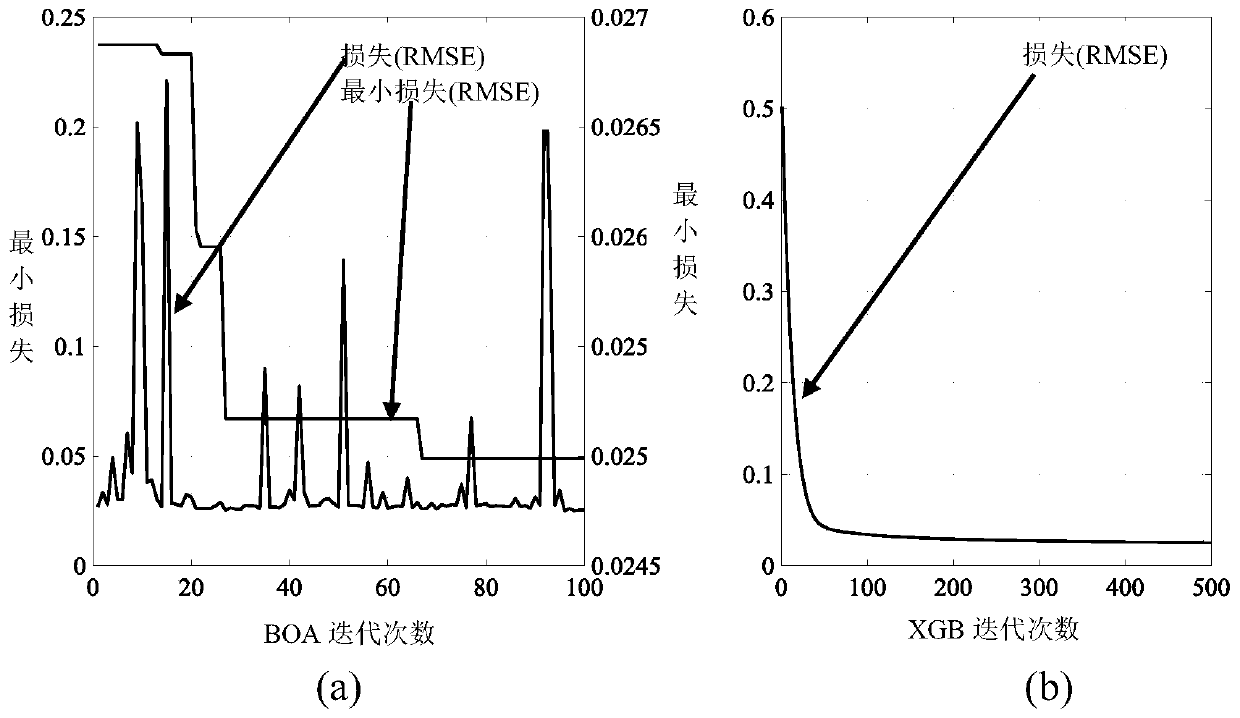

Pending | CN110969290A | Effective identification and extraction | Improve forecast accuracy | Forecasting | Complex mathematical operations | Confidence metric | Engineering

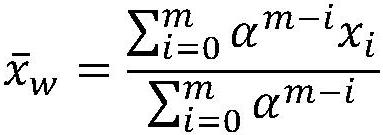

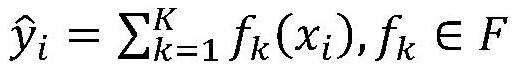

The invention belongs to the technical field of runoff prediction and discloses a runoff probability prediction method and system based on deep learning. The method comprises the steps of: using the maximal information coefficient to analyze the linear and nonlinear correlation between variables, so as to screen runoff correlation factors; building an extreme gradient boosting tree (XGB) model on the basis of the correlation analysis, and inputting the runoff correlation factors into the trained XGB model to complete runoff point prediction; inputting the point prediction result from the XGB model into a GPR model for a secondary prediction that yields a runoff probability prediction result; selecting a confidence level and obtaining the runoff interval prediction result at that confidence level from the Gaussian distribution; and optimizing the hyper-parameters of the XGB model and the GPR model with a Bayesian optimization algorithm. The method can provide a high-precision runoff point prediction, an appropriate runoff prediction interval and a reliable runoff probability prediction distribution, and plays a crucial role in the utilization of water resources and in reservoir scheduling.
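The interval step can be illustrated with a short sketch: given a Gaussian predictive mean and standard deviation (as a GPR model would supply), a central interval at a chosen confidence level follows from the normal quantile. The function name and the numbers are illustrative assumptions, not values from the patent.

```python
from statistics import NormalDist

def gaussian_interval(mean, std, confidence):
    """Central prediction interval of a Gaussian at the given confidence."""
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    # Two-sided quantile, e.g. about 1.96 for a confidence of 0.95.
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    return mean - z * std, mean + z * std

# Hypothetical predictive mean and standard deviation for one time step.
lower, upper = gaussian_interval(mean=250.0, std=20.0, confidence=0.95)
```

Raising the confidence level widens the interval, which is the trade-off behind choosing an "appropriate" prediction interval.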

Owner:国家能源集团湖南巫水水电开发有限公司 +1

Water chilling unit fault diagnosis method and system based on Bayesian optimization LightGBM, and medium

Active | CN113792762A | Deterioration in operation | Improve reliability | Space heating and ventilation safety systems | Lighting and heating apparatus | Bayesian optimization algorithm | Engineering

The invention discloses a water chilling unit fault diagnosis method and system based on Bayesian optimization LightGBM, and a medium. The method comprises the following steps: collecting and storing on-site historical data of a water chilling unit through a sensor; preprocessing the historical data; performing feature selection by using a two-step method combining an embedding method and a recursive feature elimination method; using the historical data subjected to data preprocessing and feature selection for training a LightGBM model, combining a Bayesian optimization algorithm with a ten-fold cross validation mode to determine an optimal hyper-parameter combination of the LightGBM model, and then obtaining a trained LightGBM diagnosis model; and preprocessing the real-time operation data and inputting the data into the diagnosis model to obtain a water chilling unit fault diagnosis result. Parameters of the diagnosis model can be rapidly determined, the operation state of the water chilling unit can be rapidly and accurately evaluated, key fault features can be extracted, and the method is used for guiding engineering practice and strengthening on-site operation maintenance of the water chilling unit.

Owner:SOUTH CHINA UNIV OF TECH

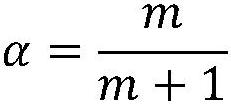

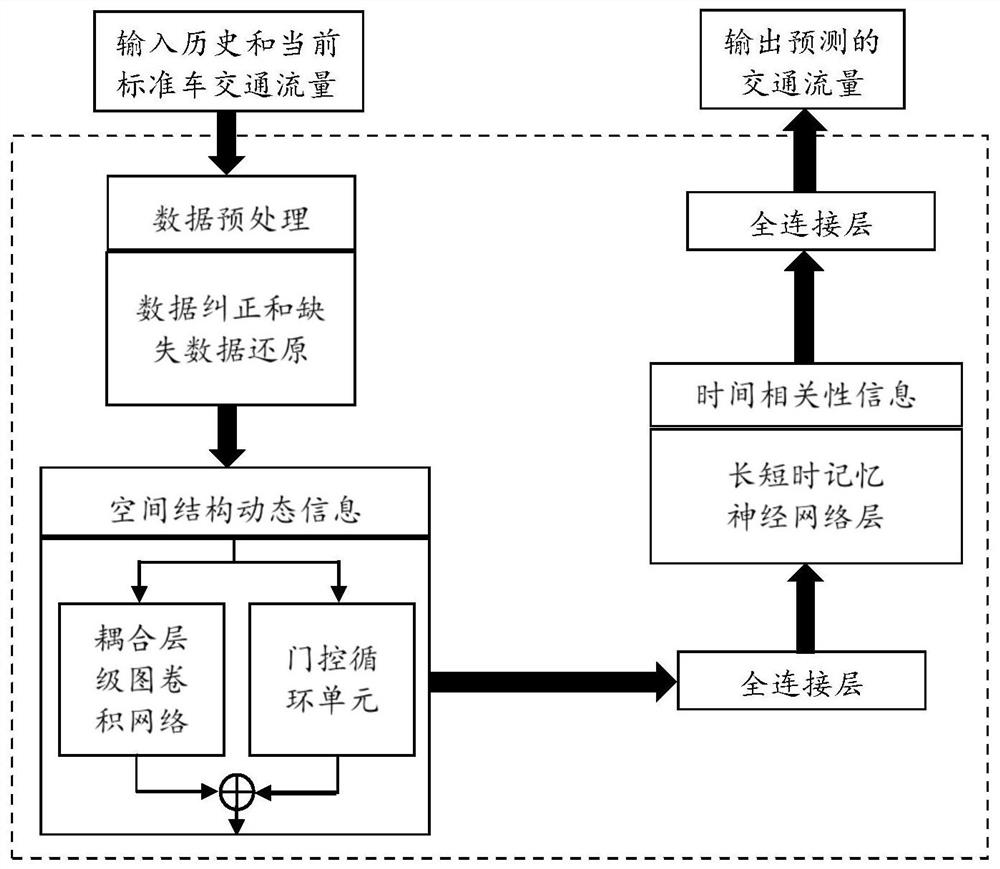

Adaptive full-chain urban area network signal control optimization method

Active | CN113538910A | Good effect | Achieve interaction | Controlling traffic signals | Internal combustion piston engines | Prediction algorithms | Area network

The invention belongs to the technical field of ITS intelligent traffic systems, and particularly relates to an adaptive full-chain urban area network signal control optimization method. Traffic flow parameters are collected by utilizing a machine vision technology, traffic flow prediction is carried out based on a pre-trained traffic flow prediction algorithm by using obtained data, a microscopic traffic simulation model is constructed according to predicted traffic flow data, an original signal timing scheme and traffic network basic data, and a network-level signal optimization model is constructed. Active optimization is carried out on the network-level signal optimization model by adopting a Bayesian optimization algorithm so as to obtain an optimal signal timing scheme of the target area network. The method has good integration, an inner and outer circulation feedback closed loop is formed, an inner and outer circulation feedback mechanism can achieve interaction of a network signal optimization model and a microscopic traffic simulation model, it can be guaranteed that an optimization result scheme adapts to dynamic changes of the external environment, and then instantaneous dynamic optimization and long-term steady-state optimization are achieved.

Owner:李丹丹

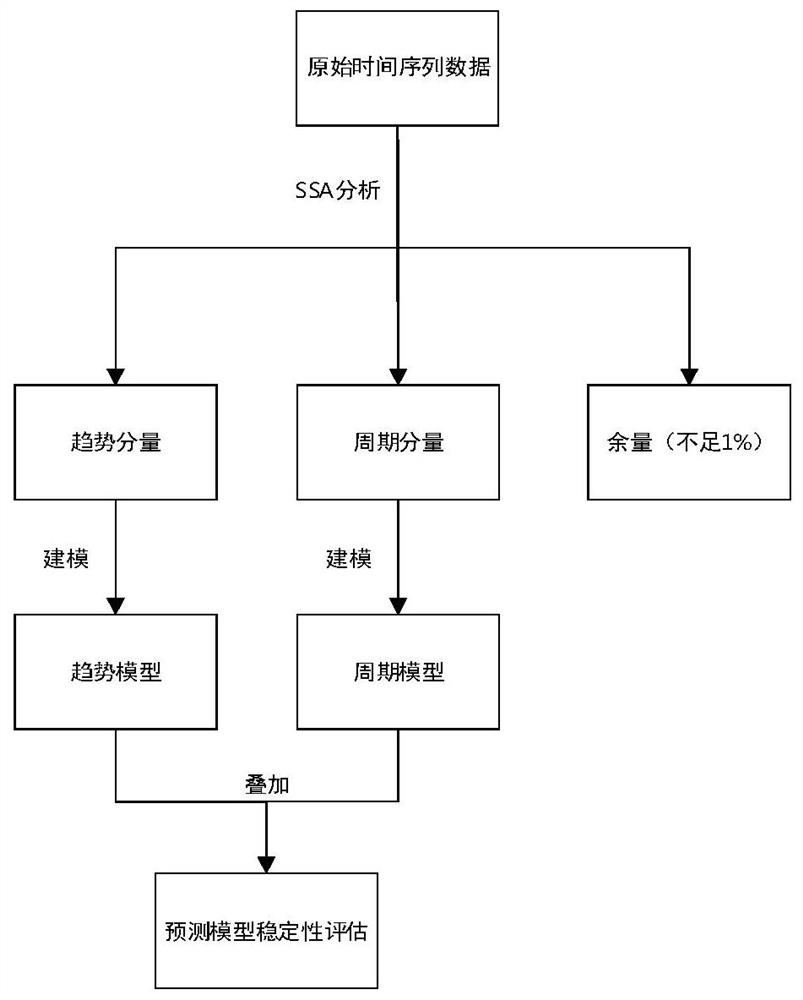

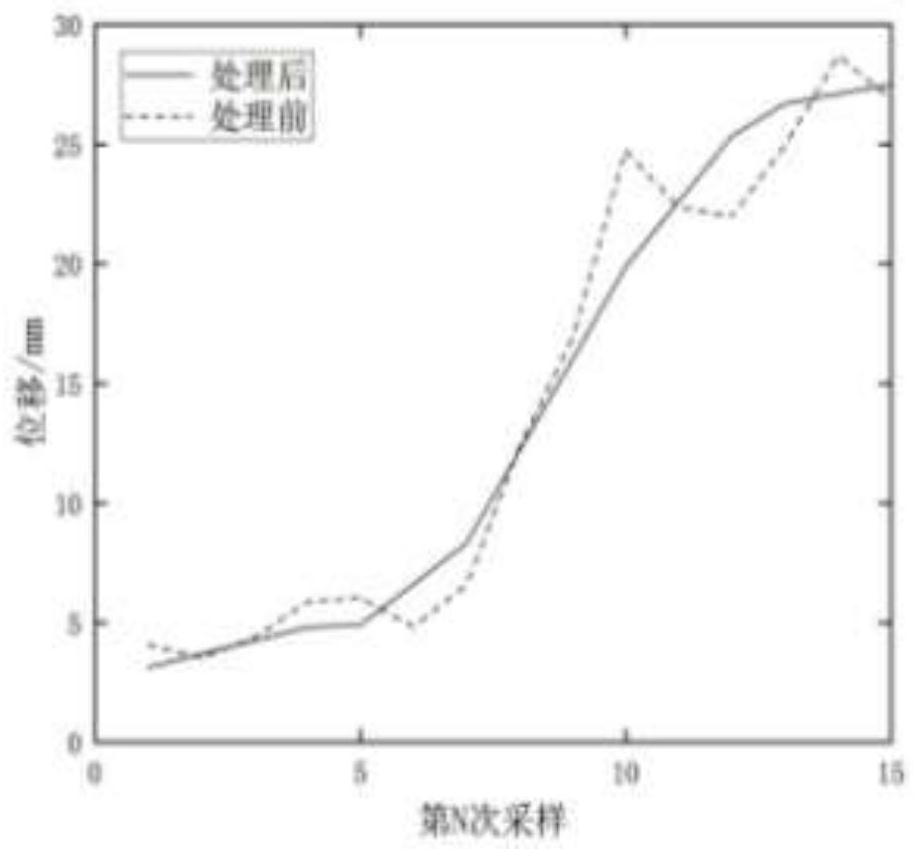

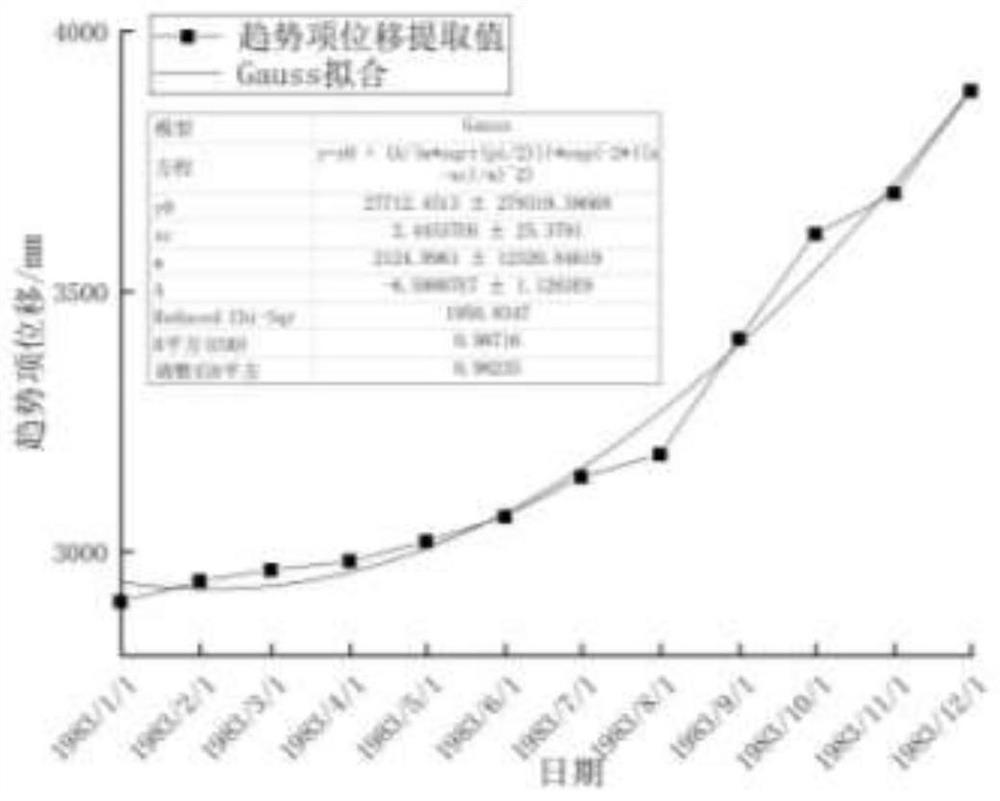

Singular spectrum analysis-based landslide mass displacement prediction method

Pending | CN112270229A | Improve forecast accuracy | Improve generalization ability | Character and pattern recognition | Neural architectures | Algorithm | Engineering

The invention discloses a singular spectrum analysis-based landslide mass displacement prediction method. The method is specifically implemented according to the following steps of performing data preprocessing on a time sequence by utilizing a spectral decomposition theory and an embedded reconstruction theory of singular spectrum analysis to obtain the accumulated landslide displacement data; removing the trend term displacement from the accumulated displacement to obtain the periodic term displacement; adopting Gaussian fitting to perform fitting prediction on the trend term displacement; selecting influence factors from the predicted trend term displacement by adopting a rapid multi-principal-component parallel extraction algorithm, and selecting the LSTM model related parameters by utilizing a Bayesian optimization algorithm; constructing a training set, a verification set and a prediction set, and establishing an LSTM network model to predict the periodic item displacement; and according to a time sequence decomposition principle, superposing the predicted values of the displacement sub-sequences to obtain a final predicted value of the displacement, thereby finishing the landslide body displacement prediction method. According to the present invention, the problem that multi-source heterogeneous influence factors are difficult to fuse for collaborative and dynamic forecasting in the prior art, is solved.

Owner:XI'AN POLYTECHNIC UNIVERSITY

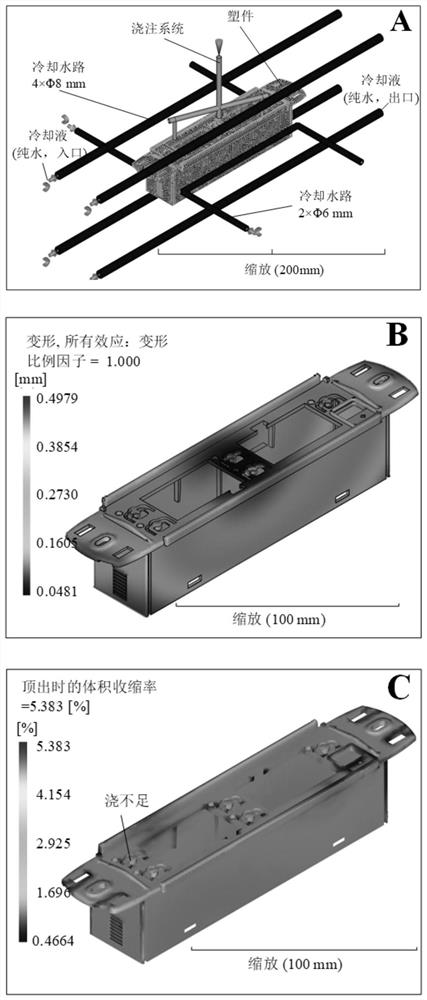

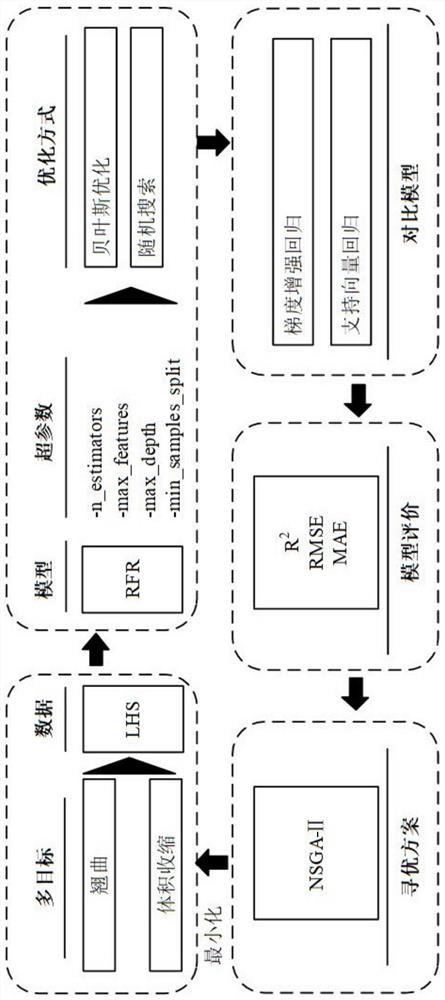

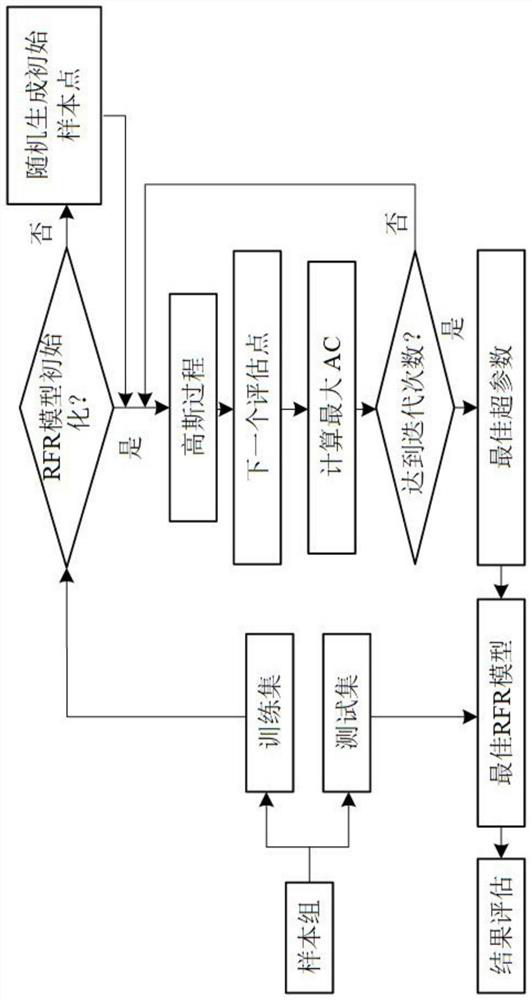

Thin-wall plastic part injection molding process parameter multi-objective optimization method

Pending | CN112101630A | Reduce warpage | Reduce volume | Forecasting | Design optimisation/simulation | Volumetric shrinkage | Gaussian process

The invention discloses a multi-objective optimization method for the injection molding process parameters of thin-wall plastic parts. It takes minimum warping and minimum volume shrinkage as the two optimization objectives and combines Moldflow simulation software with Latin hypercube sampling (LHS). The method comprises the following steps: first, constructing a mathematical relationship between the injection molding process parameters and the two optimization objectives using random forest regression (RFR) on the basis of the LHS samples; second, establishing a Bayesian optimization algorithm (BO) with a Gaussian process (GP) as the probabilistic surrogate model and probability of improvement (PI) as the acquisition function, and optimizing the hyper-parameters of the RFR with BO to construct a BO-RFR model; finally, carrying out multi-objective optimization on the BO-RFR model with NSGA-II to obtain the optimal injection molding process parameters. Finite element simulation and physical tests show that the optimization method can greatly reduce the warping and volume shrinkage rate of the thin-wall plastic part.
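For reference, the PI acquisition function scores a candidate by the probability that its surrogate prediction beats the best value found so far. The sketch below assumes a minimization convention and a Gaussian prediction at each candidate. The margin `xi` and the function name are assumptions for illustration and are not described in the patent.

```python
from statistics import NormalDist

def probability_of_improvement(mean, std, best, xi=0.0):
    """P(f(x) < best - xi) under a Gaussian prediction N(mean, std^2)."""
    if std <= 0.0:
        # Degenerate prediction: improvement is either certain or impossible.
        return 1.0 if mean < best - xi else 0.0
    return NormalDist().cdf((best - xi - mean) / std)

# A candidate predicted exactly at the incumbent has a 50% chance to improve.
pi_at_incumbent = probability_of_improvement(mean=1.0, std=0.5, best=1.0)
# A candidate predicted one standard deviation below the incumbent.
pi_one_sigma = probability_of_improvement(mean=0.5, std=0.5, best=1.0)
```

The optimizer evaluates the candidate with the largest PI next. A positive `xi` demands a larger improvement and so pushes the search toward less explored regions.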

Owner:XUZHOU NORMAL UNIVERSITY

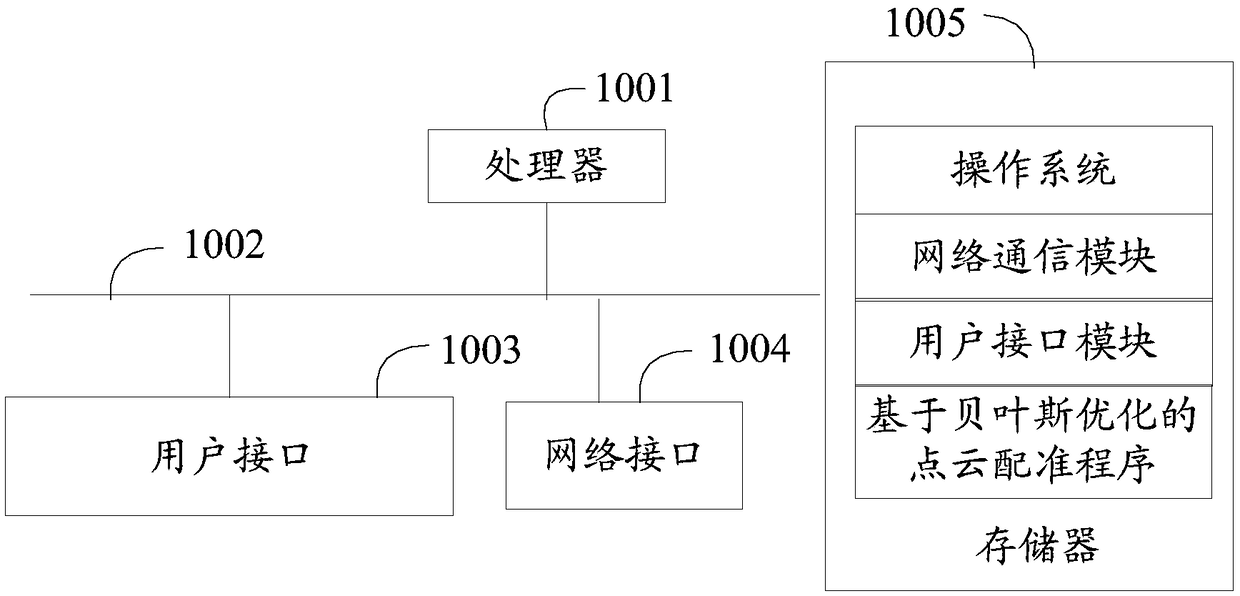

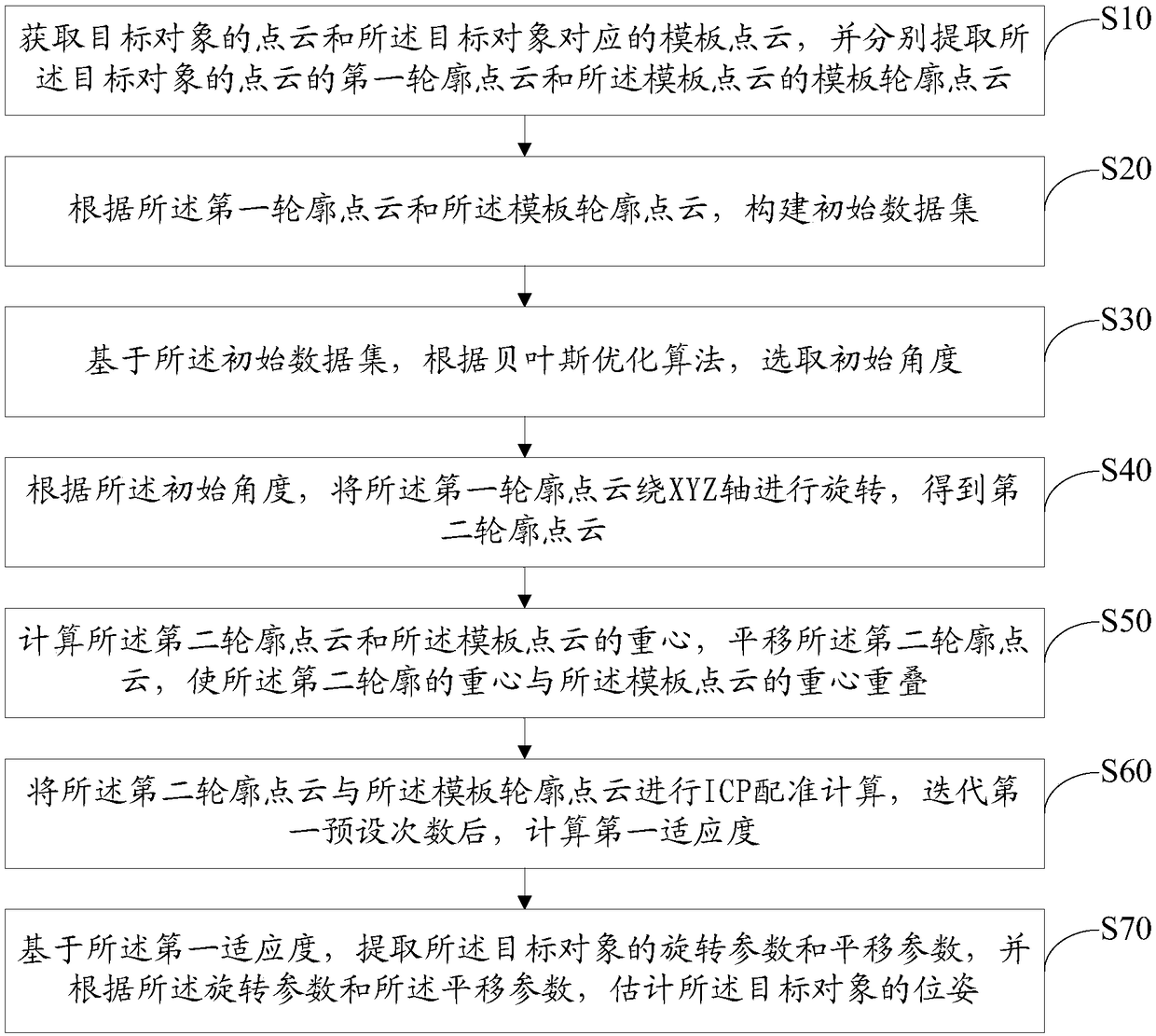

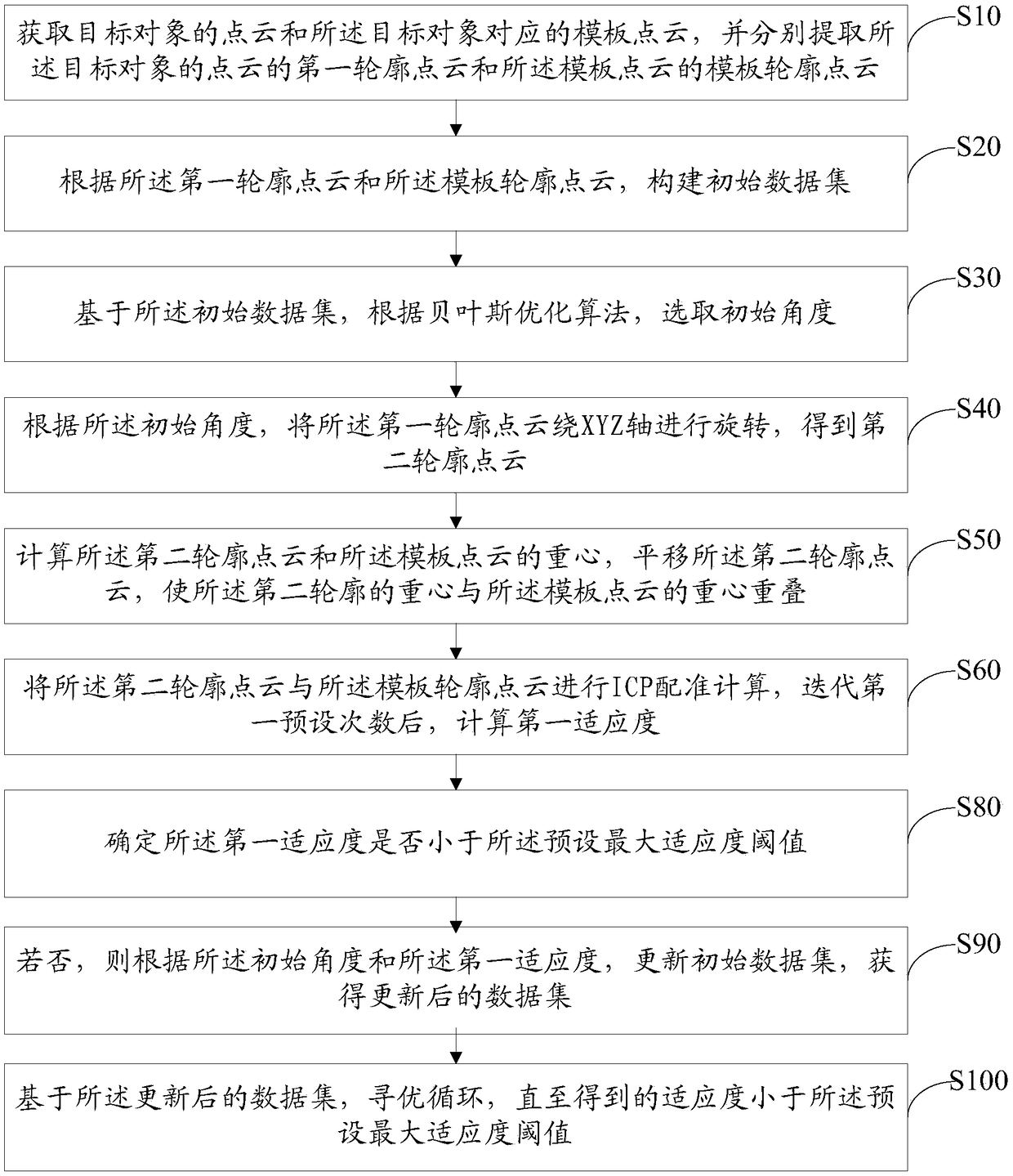

Point cloud registration method, system and readable storage medium based on Bayesian optimization

The invention discloses a point cloud registration method based on Bayesian optimization, a system and a readable storage medium. The method comprises the following steps: obtaining a contour point cloud of a target object and a template contour point cloud; building the initial data set; selecting an initial angle with the Bayesian optimization algorithm; rotating and translating the contour point cloud according to the initial angle to obtain a new contour point cloud; registering the new contour point cloud against the template contour point cloud with ICP to obtain a fitness; and extracting a rotation parameter and a translation parameter of the target object based on the fitness to estimate the pose of the target object. The invention uses the contour point cloud of the target object for registration and optimizes with the Bayesian optimization algorithm to find a relatively optimal initial position and posture of the target object, so that the robot can adjust its picking action accordingly and quickly complete the picking of the target object.

Owner:深圳一步智造科技有限公司

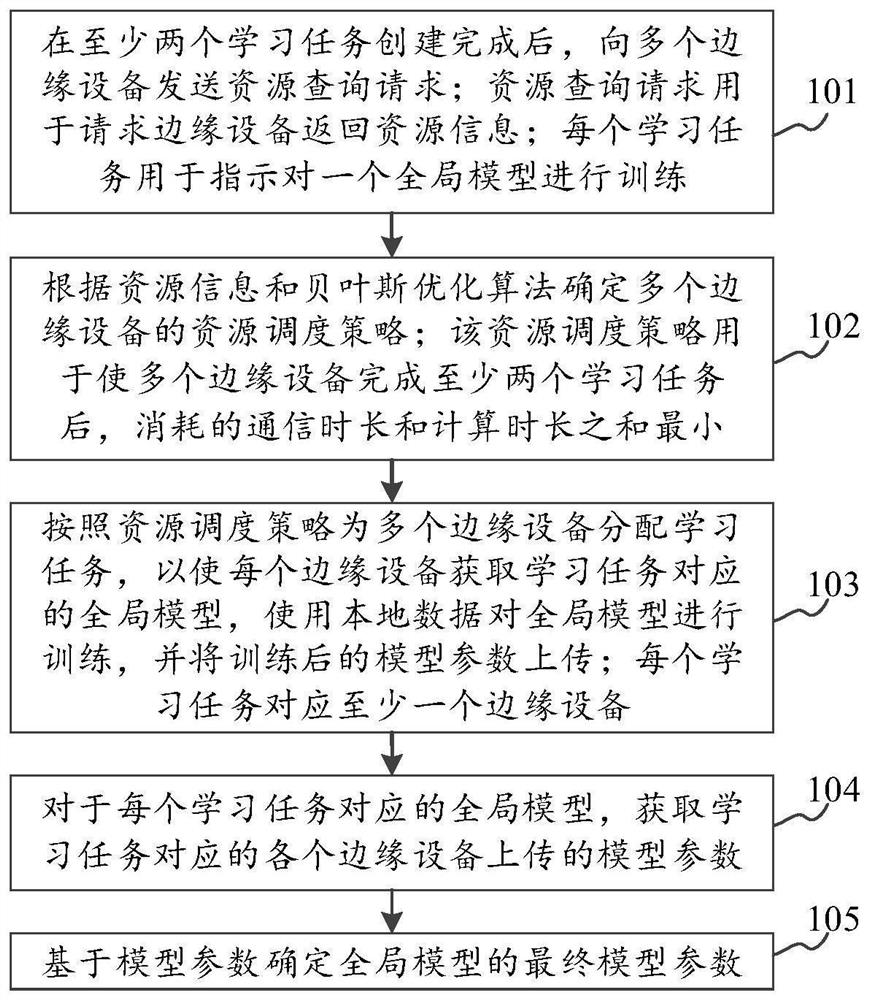

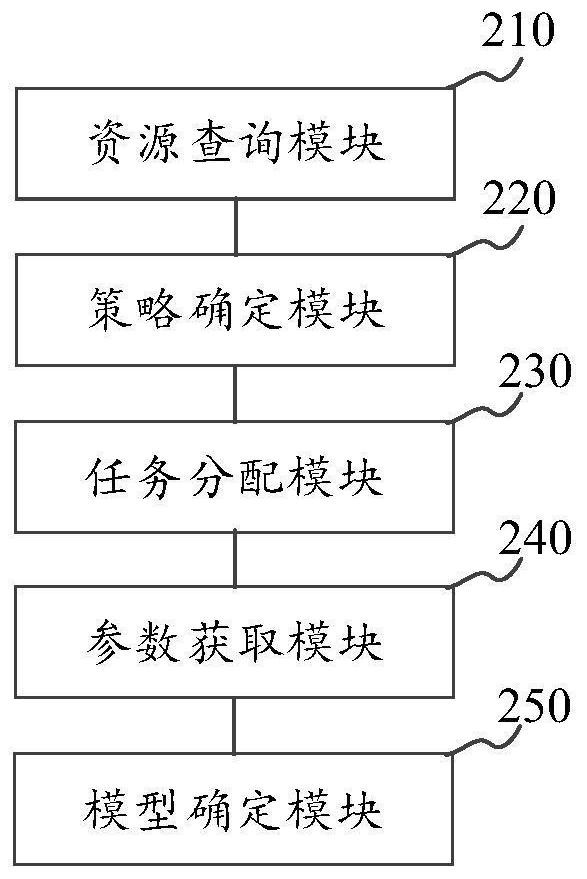

Multi-task federated learning method and device for edge devices

Pending | CN113094181A | Improve learning efficiency | Solve the problem of low learning efficiency | Mathematical models | Resource allocation | Bayesian optimization algorithm | Resource information

The invention relates to a multi-task federated learning method and device for edge devices, and belongs to the technical field of computers. The method comprises the following steps: after at least two learning tasks are created, sending a resource query request to multiple edge devices; determining a resource scheduling strategy for the edge devices from the resource information returned by the query and a Bayesian optimization algorithm; distributing the learning tasks to the edge devices according to the resource scheduling strategy; for the global model corresponding to each learning task, obtaining the model parameters uploaded by each edge device assigned to that task; and determining the final model parameters of the global model from the uploaded model parameters. This addresses the problem that, when multiple learning tasks exist, device resources cannot be reasonably scheduled and federated learning efficiency is therefore low. By minimizing the sum of the completion durations of the submitted learning tasks, the multiple learning tasks converge as quickly as possible and multi-task learning efficiency is improved.

Owner:苏州联电能源发展有限公司

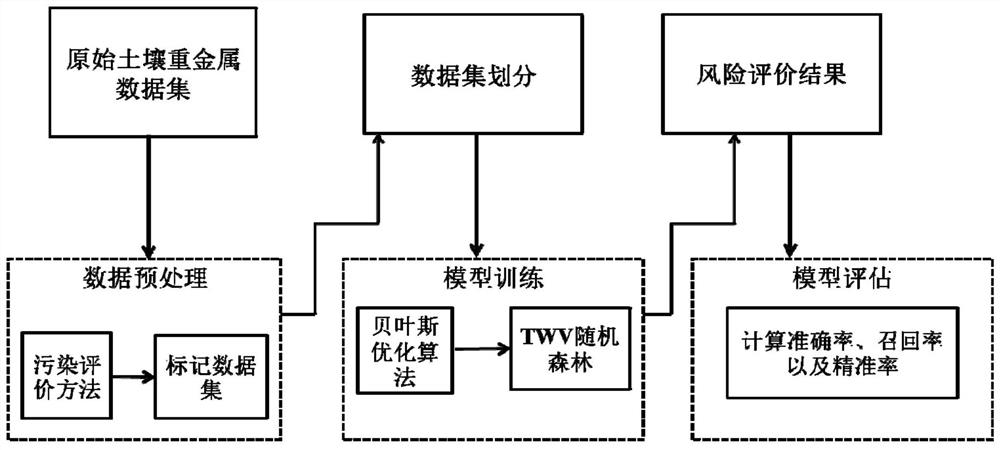

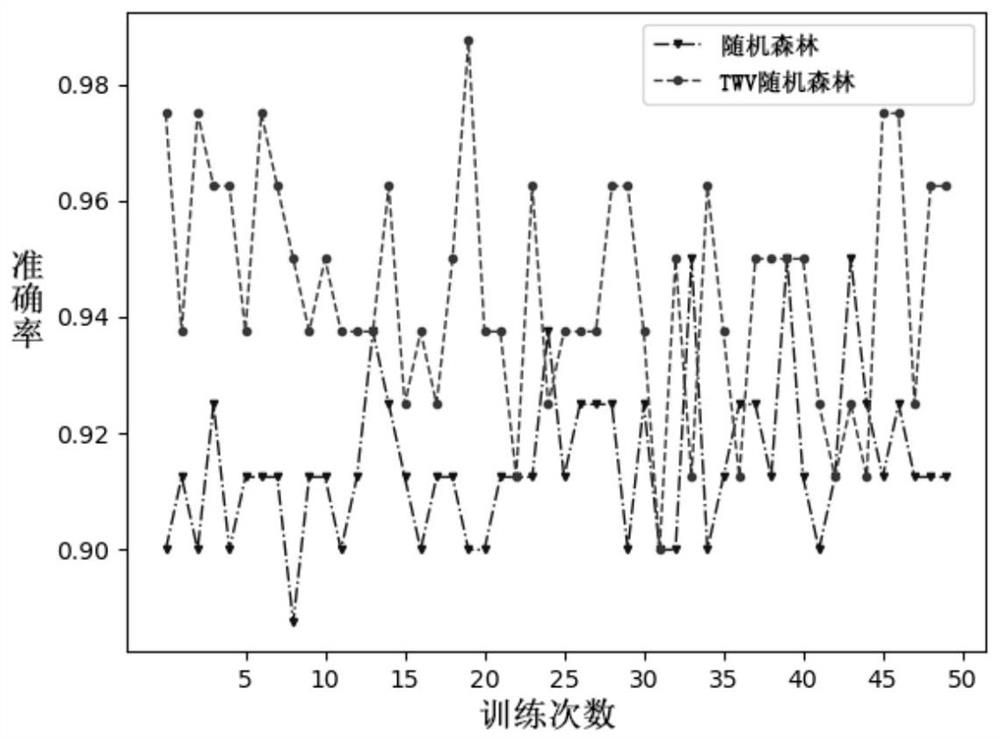

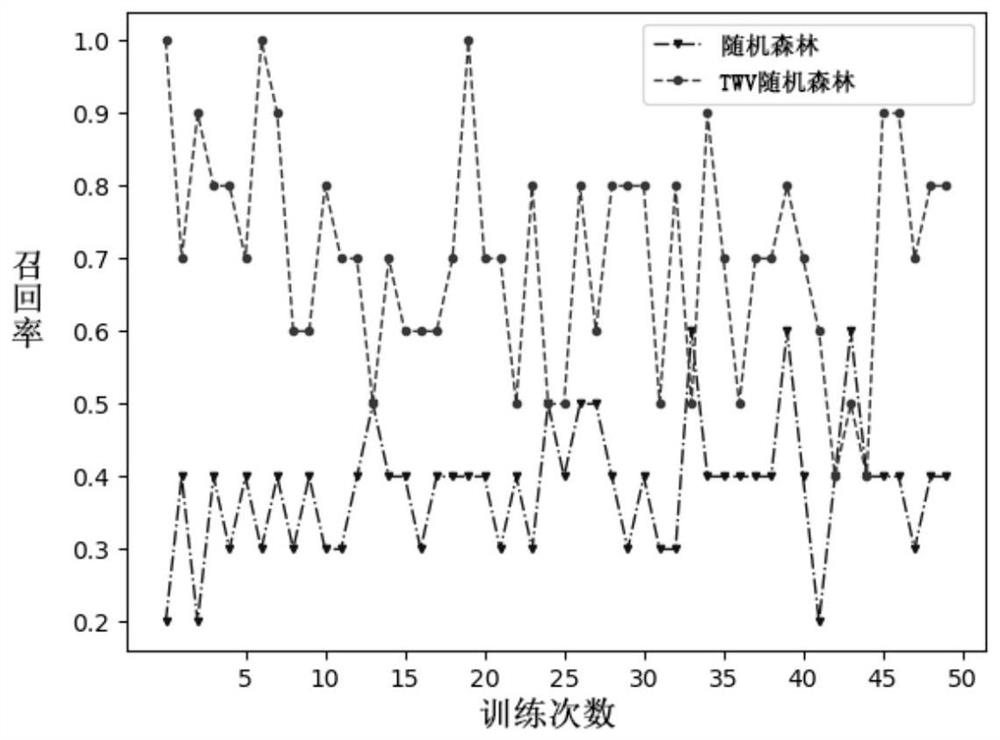

Credibility-based random forest soil heavy metal risk evaluation method and system

Pending | CN112633733A | Improve classification accuracy | High precision | Artificial life | Resources | Data set | Original data

The invention provides a credibility-based random forest soil heavy metal risk evaluation method and system. The method comprises the steps of: data preprocessing, in which an original data set is preprocessed to obtain an unlabeled data set; data labeling, in which a soil pollution evaluation method is used to mark whether each sample in the data set carries a pollution risk; data set division, in which stratified sampling is performed according to the proportions of the different categories to divide the data into a training set and a test set; model training, in which a random forest algorithm based on true-positive-rate weighted voting learns from the training set to obtain a risk evaluation model, the test set is input into the model to obtain a risk evaluation result, and a Bayesian optimization algorithm, with accuracy as the optimization target, searches for the parameter combination with the highest accuracy; and carrying out soil heavy metal risk evaluation with the trained model. The method improves the recall of minority-class samples on the unbalanced data set and can accurately distinguish whether a sample carries a pollution risk.

Owner:WUHAN POLYTECHNIC UNIVERSITY

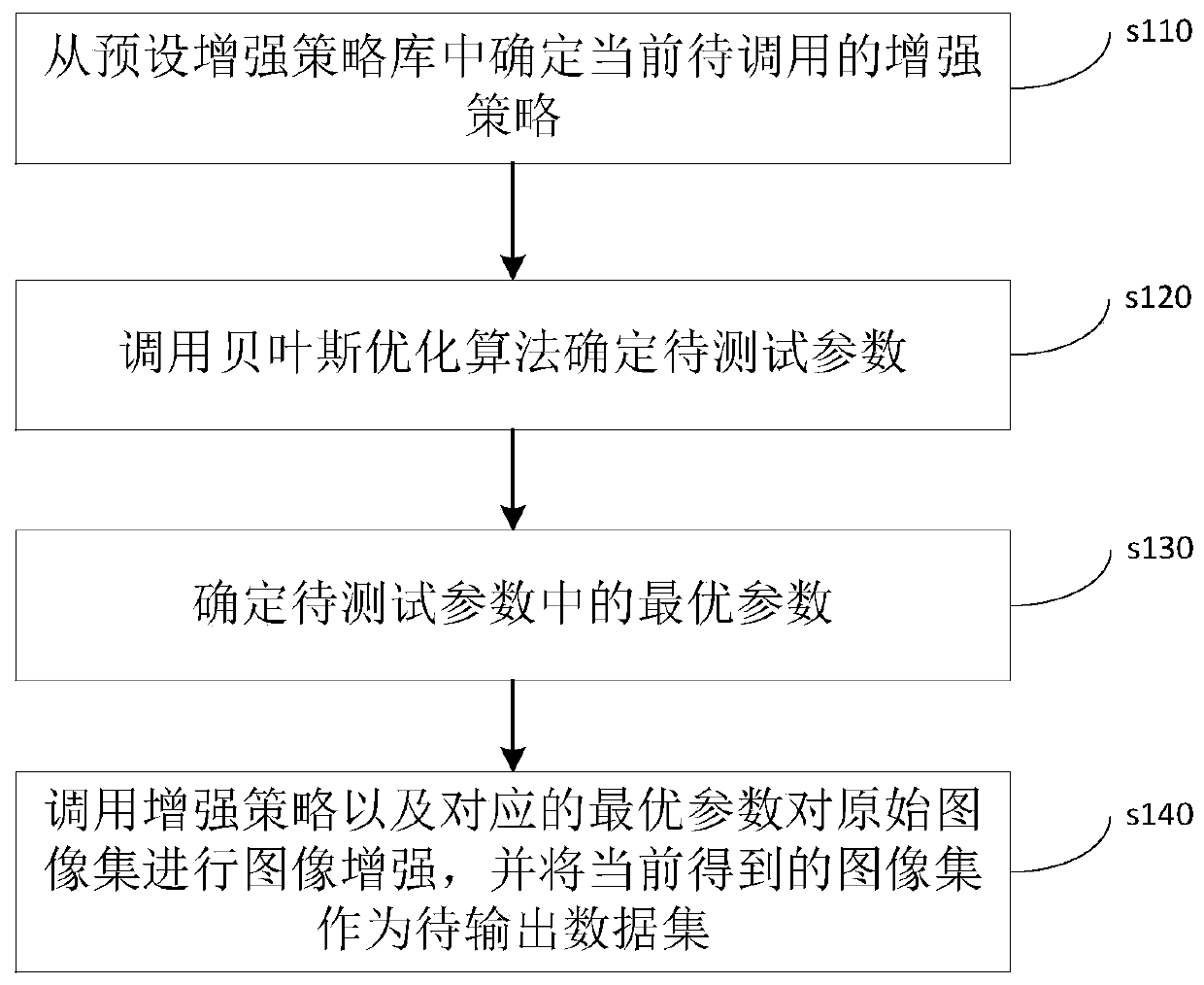

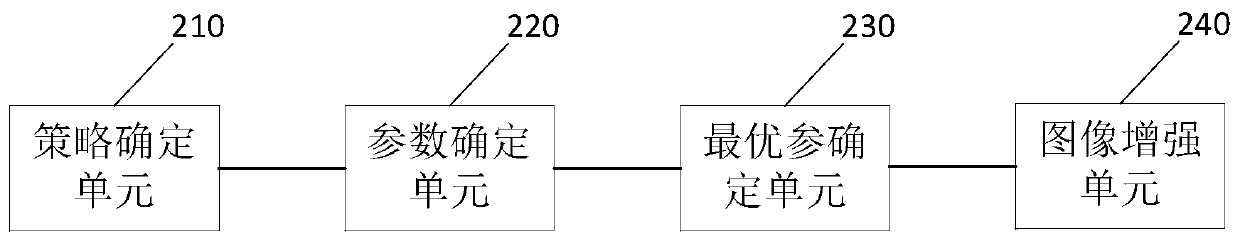

Image set expansion method, device and apparatus and readable storage medium

Active | CN110378419A | Solve the shortage | Improve usability | Character and pattern recognition | Performance enhancement | Data set

The invention discloses an image set expansion method. The method comprises the steps of: determining the enhancement strategy currently to be called from a preset enhancement strategy library; calling a Bayesian optimization algorithm to determine the parameters to be tested, the algorithm quickly identifying, among the countless possible call parameters of the enhancement strategy, several parameters with excellent enhancement performance; and determining the optimal parameters among the parameters to be tested, namely those with the best enhancement effect. The enhancement strategy is then called with the optimal parameters to perform image enhancement on the original image set. Because the selected enhancement strategy parameters perform well, high availability of the generated images can be ensured, so the resulting image set can be used directly as the data set to be output without spending excessive resources on image validity verification. The problem of an insufficient number of images can thus be solved quickly and the implementation cost reduced. The invention further discloses an image set expansion device and apparatus and a readable storage medium, which have the same beneficial effects.

Owner:GUANGDONG INSPUR BIG DATA RES CO LTD

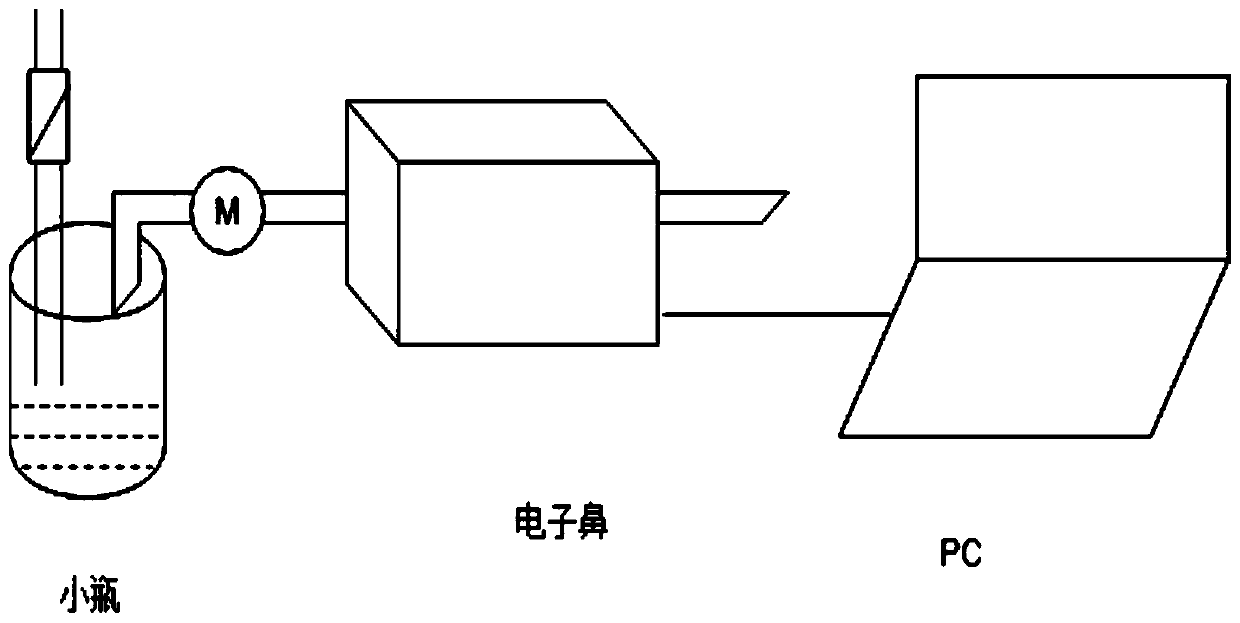

Grape wine classification method based on Bayesian optimization and electronic nose

Inactive | CN110705641A | Simple process | Quick search results | Character and pattern recognition | Bayesian optimization algorithm | Global optimization problem

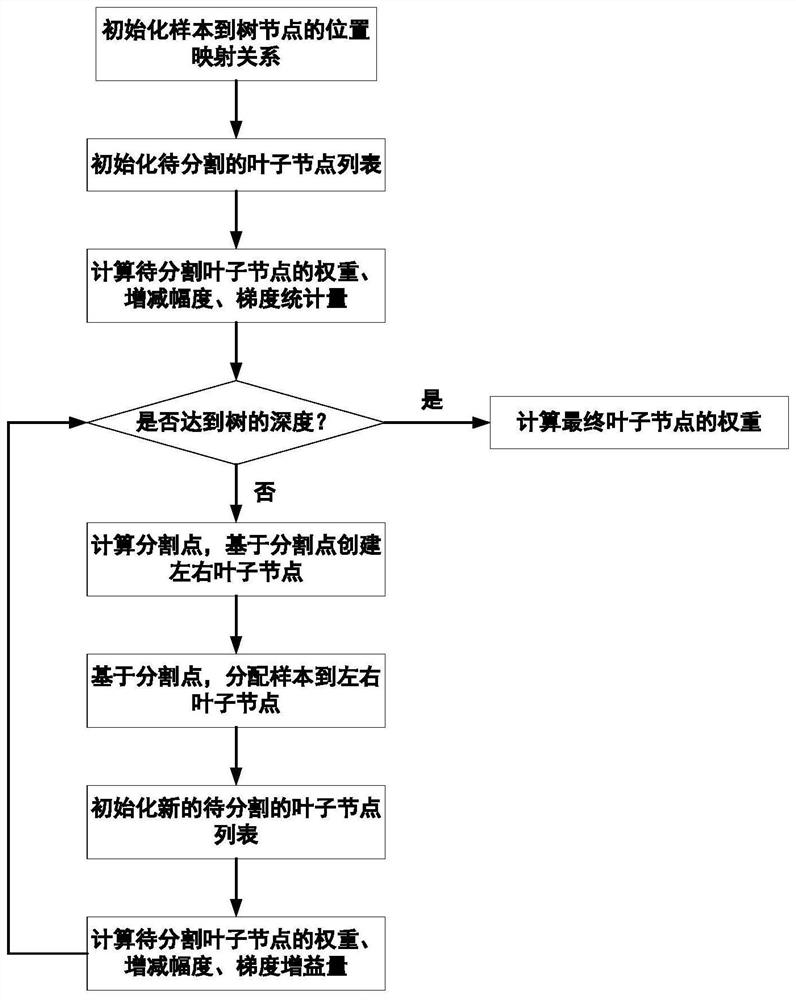

The invention relates to a grape wine classification method based on Bayesian optimization and an electronic nose. The method comprises the following steps: S1, employing the LightGBM algorithm with leaf-wise tree building, in which, at each step of tree building, the leaf with the maximum splitting gain is found among all current leaves and split, and this is repeated; LightGBM uses a maximum tree depth to prune the tree and avoid overfitting; S2, building a Bayesian optimization algorithm; S3, building a BO-LightGBM model, in which the hyper-parameters of LightGBM are tuned automatically by the Bayesian hyper-parameter optimization algorithm. Bayesian optimization replaces the complex objective function with a probability model and introduces a prior over the target to be optimized into that model, so that unnecessary sampling is effectively reduced. The advantage of Bayesian optimization is that it chooses the next evaluation point by constructing and exploiting a probability model of the function to be optimized; it achieves state-of-the-art results on some global optimization problems and is a better solution for hyper-parameter optimization.

Owner:HEBEI UNIV OF TECH

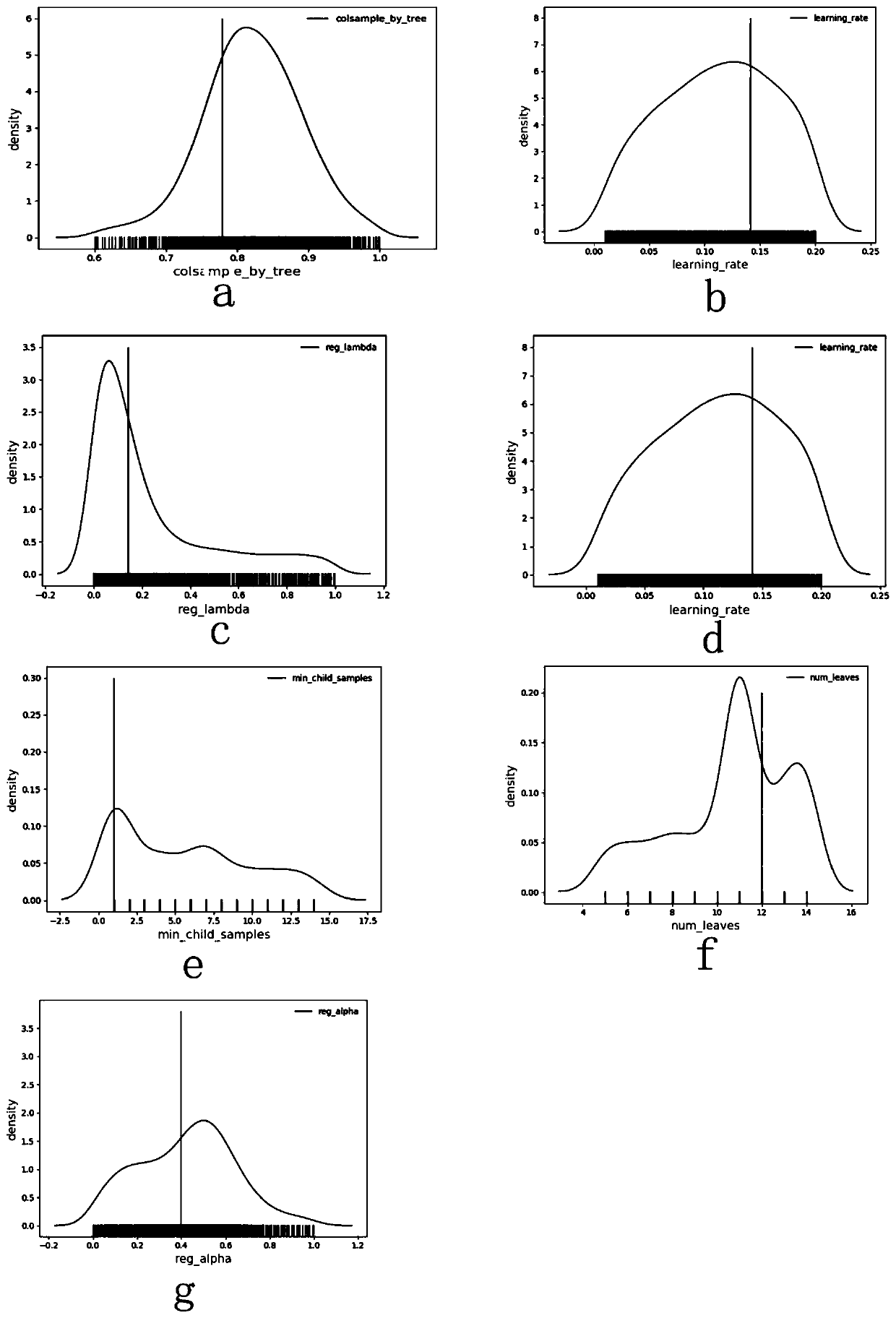

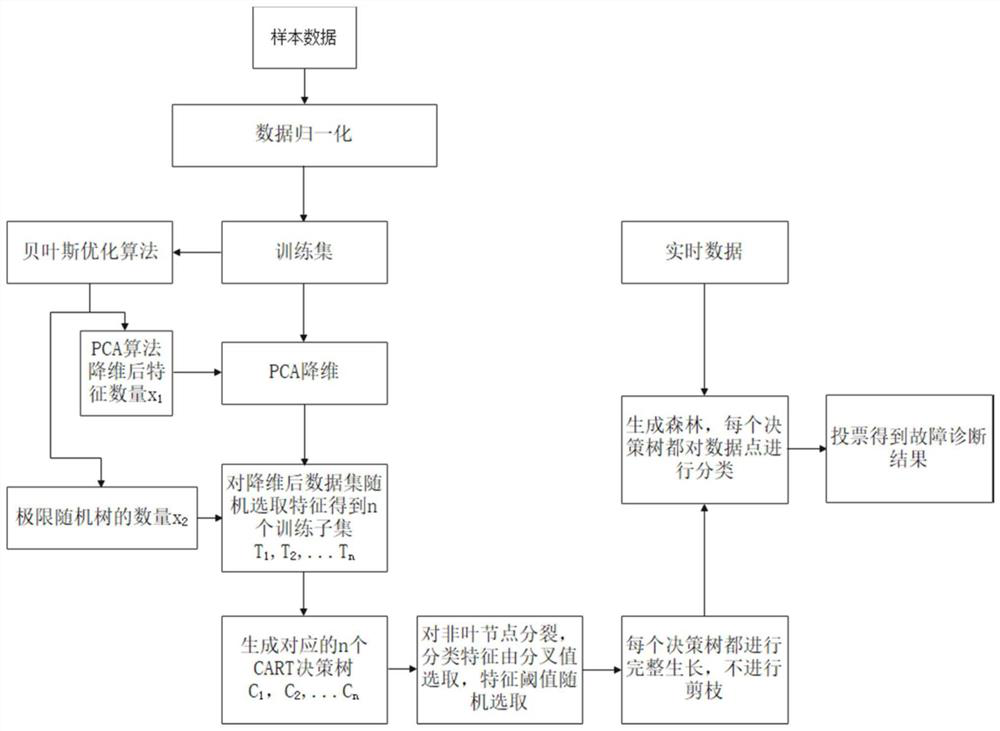

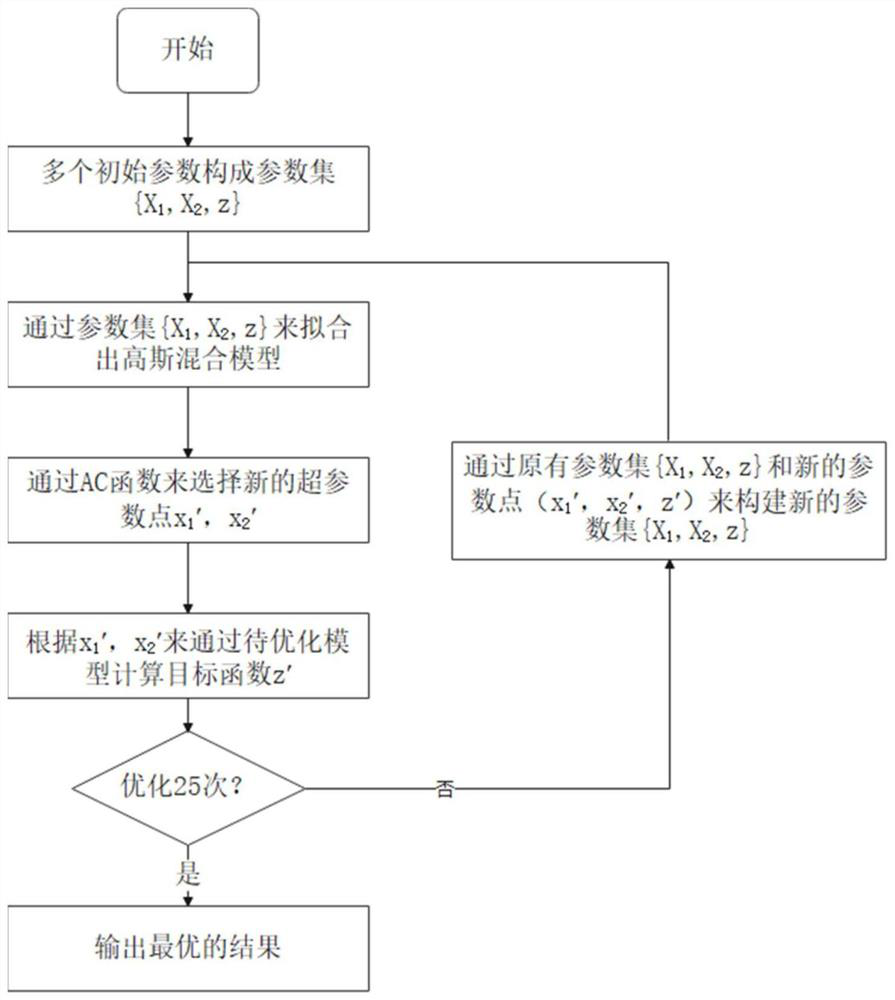

Air conditioner fault diagnosis method based on Bayesian optimization PCA-extreme random tree

Active | CN113177594A | Guaranteed training speed | High speed | Space heating and ventilation safety systems | Lighting and heating apparatus | Real-time data | Algorithm

The invention discloses an air conditioner fault diagnosis method based on a Bayesian optimization PCA-extreme random tree. The method comprises the following steps: 1) acquiring operation data of an air conditioner under normal conditions and under different faults, and normalizing the data; 2) reducing the dimensionality of the normalized data with the PCA algorithm and using the result as the input of an ExtraTree model; 3) establishing an extreme random tree classification model, training and testing the classifier, and obtaining a PCA-extreme random tree fault diagnosis model for the air conditioner; 4) using a Bayesian optimization algorithm to optimize the number of features after PCA dimension reduction and the number of CART decision trees in the PCA-extreme random tree fault diagnosis model, to obtain the optimal values of both; and 5) using the optimal feature number and CART decision tree number as parameters of the PCA-extreme random tree model, training on the samples to obtain the final fault diagnosis model, and then using that model to diagnose real-time data.

Owner:ZHEJIANG UNIV

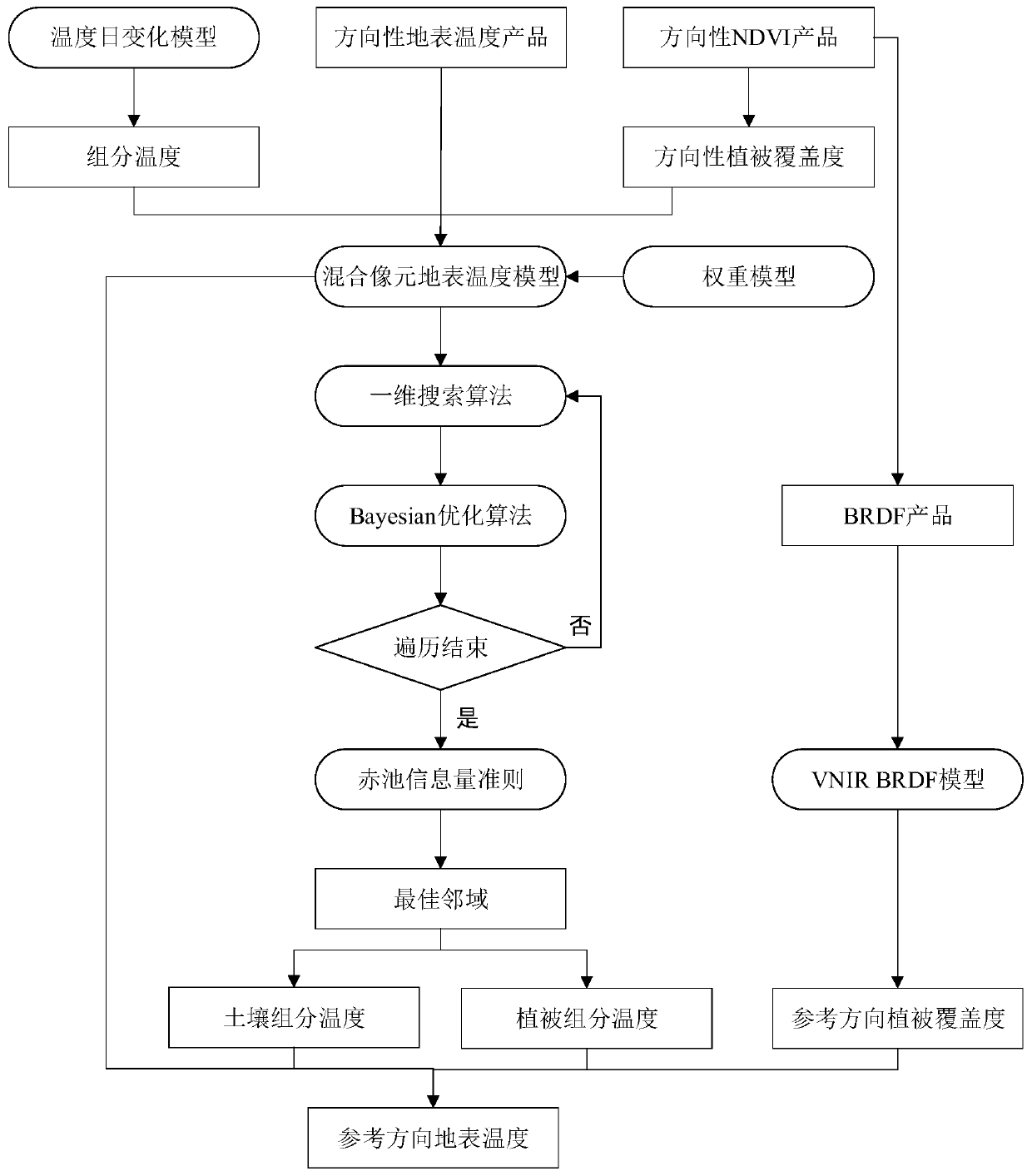

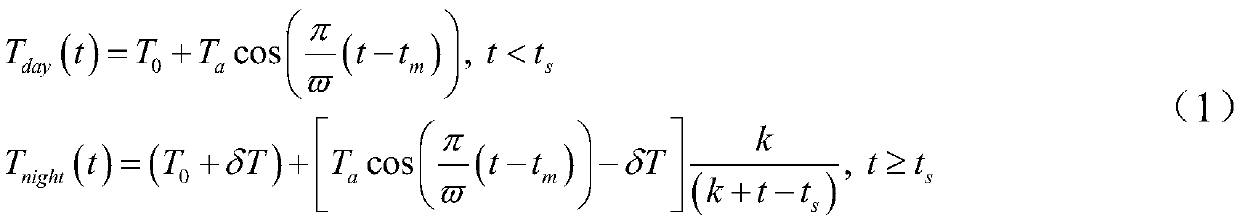

Earth surface temperature angle normalization method based on component temperature

Inactive | CN110659450A | Practical | Complex mathematical operations | Bayesian optimization algorithm | Bidirectional reflectance distribution function

The invention discloses a component temperature-based earth surface temperature angle normalization method. The method comprises the following steps: describing the component temperatures of vegetation and soil with a diurnal temperature change model; constructing a mixed pixel surface temperature model by combining a directional surface temperature product and a directional preparation index product; solving the mixed pixel surface temperature model with a Bayesian optimization algorithm, obtaining an optimal neighborhood size based on the Akaike information criterion, and obtaining the component temperatures of vegetation and soil; and obtaining the vegetation coverage in the reference direction using a bidirectional reflectance distribution function, then substituting the component temperatures and the vegetation coverage into the mixed pixel surface temperature model to obtain the surface temperature in the reference direction, thereby completing the angle normalization. The method starts from the physical mechanism of the surface temperature: previous research based on the difference between illuminated and shadowed components is replaced by an approach based on the difference between vegetation and soil components, and high-precision angle normalization of the surface temperature is carried out on the basis of the component temperatures.

Owner:KUNMING UNIV OF SCI & TECH

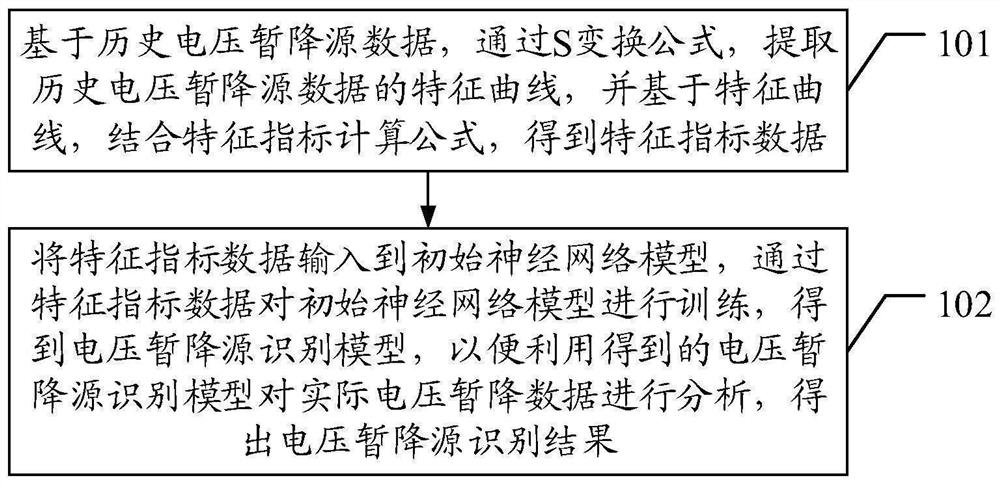

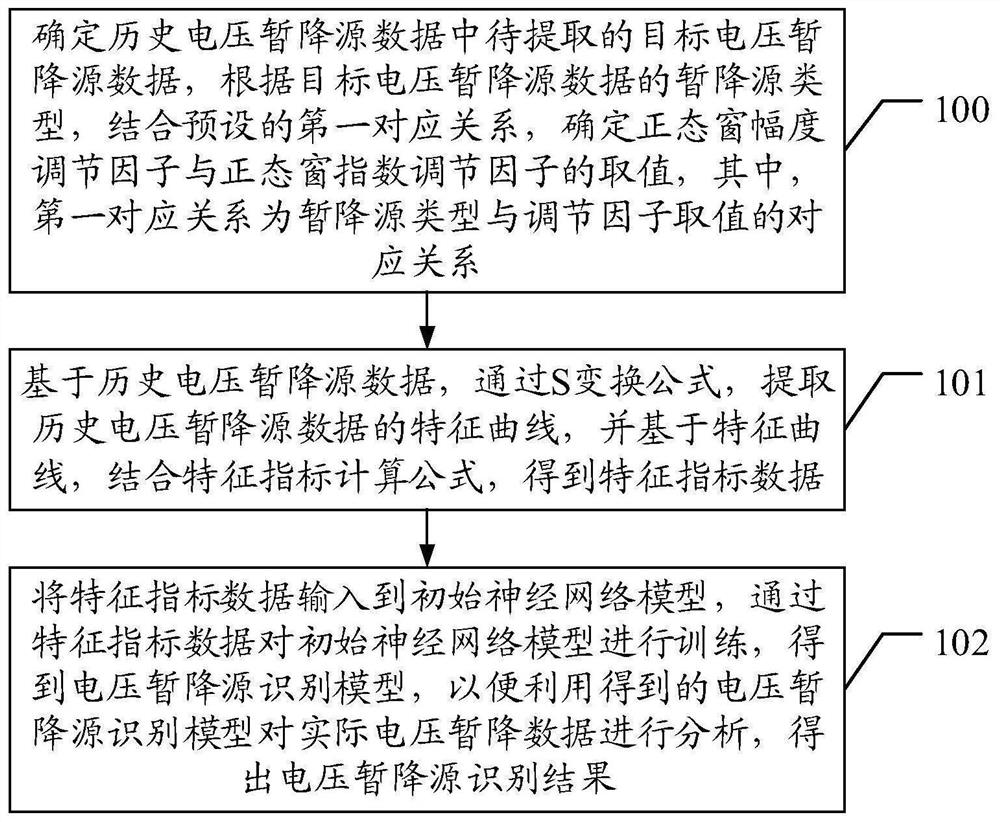

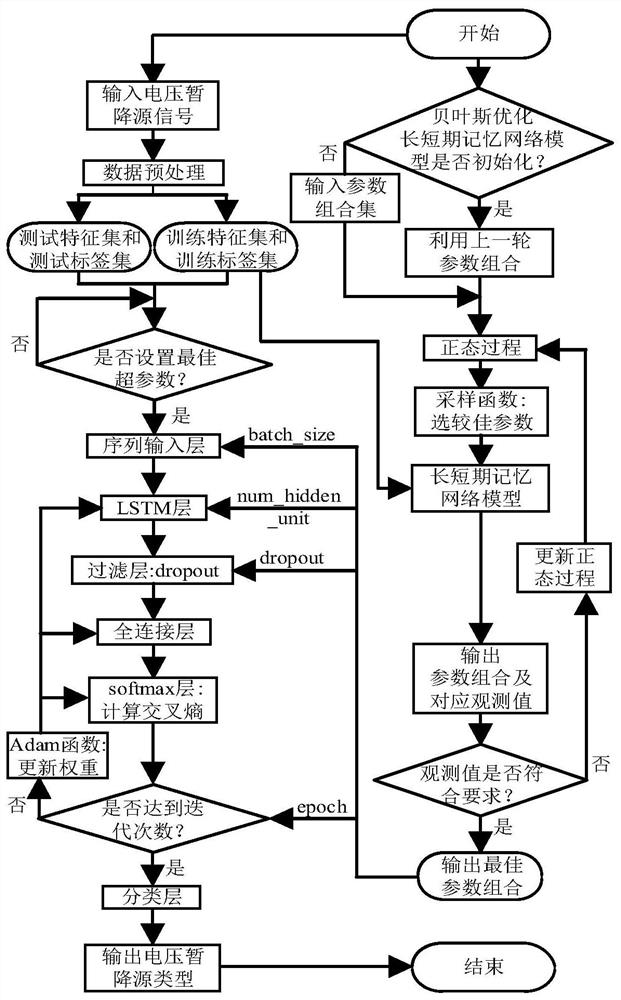

Voltage sag source recognition model construction method and device, terminal and medium

Pending | CN113743504A | Avoid inefficiency | Improve accuracy | Data processing applications | Character and pattern recognition | Algorithm | Engineering

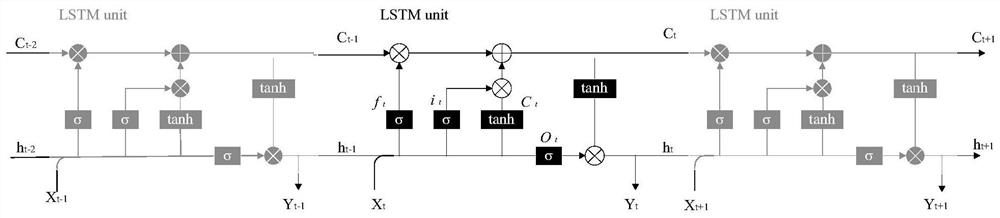

The invention discloses a voltage sag source recognition model construction method and device, a terminal and a medium. The method comprises the steps of: extracting feature curves from historical voltage sag source data through the S-transform, and obtaining feature index data from the feature curves using a feature index calculation formula; inputting the feature index data into an initial neural network model, namely an LSTM network constructed with hyper-parameters obtained through a Bayesian optimization algorithm, and training it on the feature index data; and analyzing actual voltage sag data using the trained voltage sag source recognition model. In this method, the Bayesian optimization algorithm optimizes the hyper-parameters of the long short-term memory (LSTM) network model, and the optimal values are set in the network, which avoids the low efficiency of manual parameter tuning and effectively improves the accuracy with which the network identifies different voltage sag sources.

Owner:GUANGZHOU POWER SUPPLY BUREAU GUANGDONG POWER GRID CO LTD

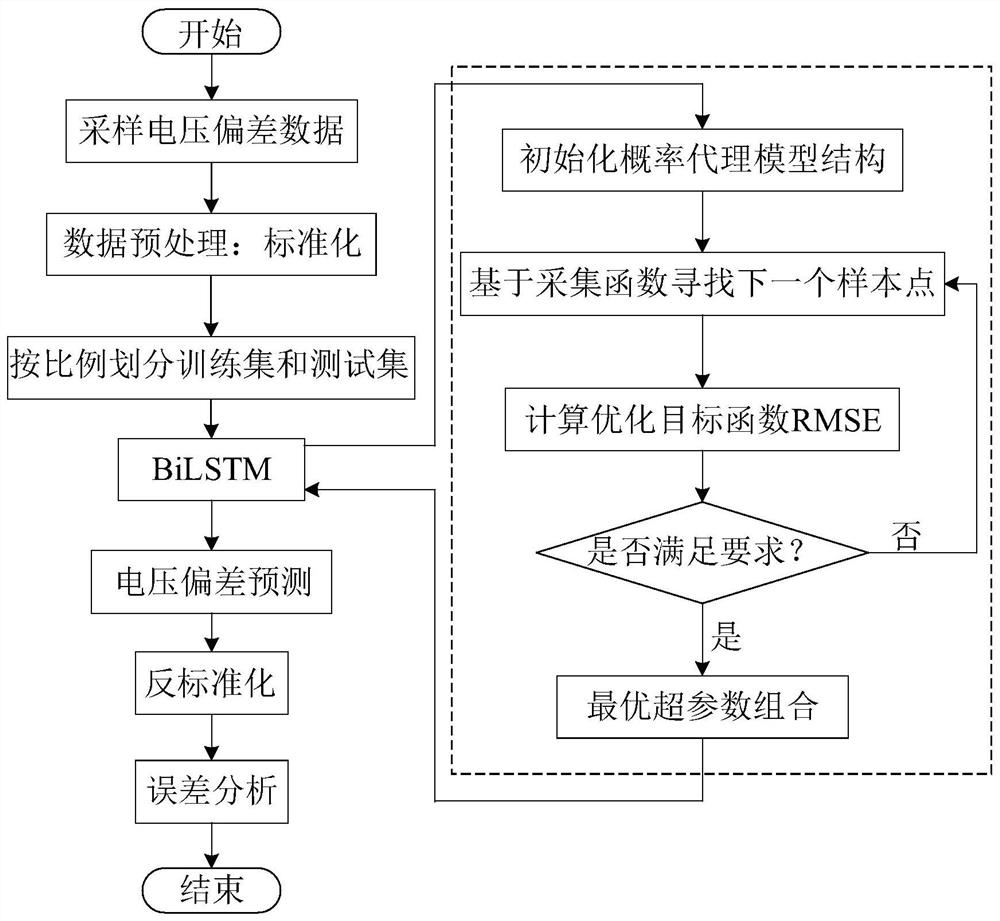

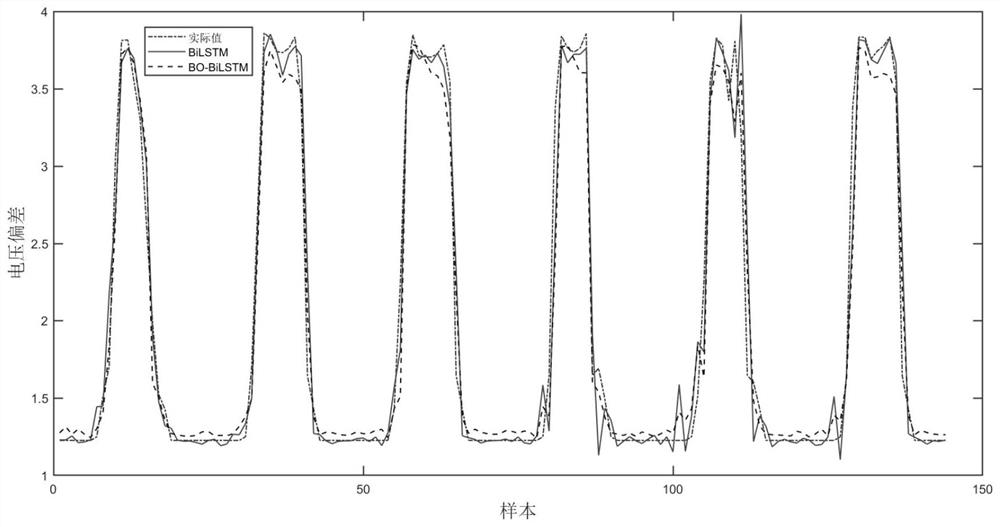

BiLSTM voltage deviation prediction method based on Bayesian optimization

Pending | CN113554148A | Improve generalization ability | High precision | Mathematical models | Forecasting | Data set | Engineering

The invention discloses a BiLSTM voltage deviation prediction method based on Bayesian optimization. The method comprises: applying z-score (standard deviation) standardization to a voltage deviation time series data set and splitting the data by proportion into a training set and a verification set; training a BiLSTM voltage deviation prediction model on the preprocessed training set; inputting the verification set into the trained model to obtain predicted voltage deviation values, and then inverting the standardization; taking the root-mean-square error as the objective function for hyper-parameter optimization, and optimizing the hyper-parameters of the BiLSTM model with a Bayesian optimization algorithm to obtain an optimal hyper-parameter combination; and constructing the final BiLSTM voltage deviation prediction model with the optimal hyper-parameter combination and using it to predict the voltage deviation time series data. The method offers high precision and reliable predictions.

Owner:NANJING UNIV OF SCI & TECH
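
The preprocessing and the objective function described in the abstract above can be sketched in a few lines. This is a minimal illustration only: the toy series, the 80/20 split, and the choice to compute the mean and standard deviation on the training portion are assumptions, and a naive persistence forecast stands in for the BiLSTM.

```python
import math

def split_by_ratio(series, train_ratio=0.8):
    """Chronological split into training and verification sets."""
    cut = int(len(series) * train_ratio)
    return series[:cut], series[cut:]

def fit_standardizer(values):
    """Mean and (population) standard deviation of the given values."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(var)

def standardize(values, mean, std):
    return [(v - mean) / std for v in values]

def inverse_standardize(values, mean, std):
    return [v * std + mean for v in values]

def rmse(actual, predicted):
    """Root-mean-square error, the objective minimized by the optimizer."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

# Toy voltage deviation series (hypothetical values).
series = [0.5, 0.7, 0.6, 0.9, 1.1, 1.0, 0.8, 0.4, 0.3, 0.6]
train, valid = split_by_ratio(series, 0.8)
mean, std = fit_standardizer(train)
valid_scaled = standardize(valid, mean, std)

# Stand-in for the BiLSTM: repeat the last standardized training value.
last_scaled = standardize([train[-1]], mean, std)[0]
pred_scaled = [last_scaled] * len(valid)

# Invert the standardization before scoring, so the error is in original units.
pred = inverse_standardize(pred_scaled, mean, std)
objective = rmse(valid, pred)
```

A Bayesian optimizer would call this scoring step once per hyper-parameter combination, retraining the model each time, and keep the combination with the lowest `objective`.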

Fatigue prediction method based on Bayesian optimization XGBoost algorithm

Inactive | CN111814880A | High degree of compliance | Improve fatigue prediction accuracy | Health-index calculation | Character and pattern recognition | Algorithm | Bayesian optimization algorithm

The invention provides a fatigue prediction method based on a Bayesian-optimized XGBoost algorithm. The method comprises the following steps: 1, obtaining physiological signal data of a tester in a movement fatigue state through a signal collection instrument, and storing the physiological signal data; 2, eliminating abnormal data with the RANSAC algorithm; 3, resampling the minority class with the SMOTE oversampling algorithm to solve the class imbalance problem; 4, performing fatigue prediction with the XGBoost model by inputting the processed sample data into the model for classification; and 5, optimizing the XGBoost model with a Bayesian optimization algorithm. Through the above steps, a fatigue prediction process is realized, and the intelligence and accuracy of exercise fatigue recognition are improved.

Owner:BEIHANG UNIV
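
Step 3 of the abstract above relies on SMOTE, which creates synthetic minority samples by interpolating between a minority sample and one of its nearest minority neighbours. The sketch below shows that core idea only. The sample values, the neighbour count `k`, and the fixed seed are illustrative assumptions, not details taken from the patent.

```python
import random

def smote(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points, each on the segment between a minority
    sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by squared Euclidean distance to base.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic

# Hypothetical minority-class feature vectors.
minority = [[1.0, 1.0], [1.2, 0.9], [0.9, 1.3], [1.1, 1.1]]
new_points = smote(minority, n_new=6)
```

Because every synthetic point is a convex combination of two real minority samples, it stays inside the region the minority class already occupies, which is the property that distinguishes SMOTE from simple duplication or random noise.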

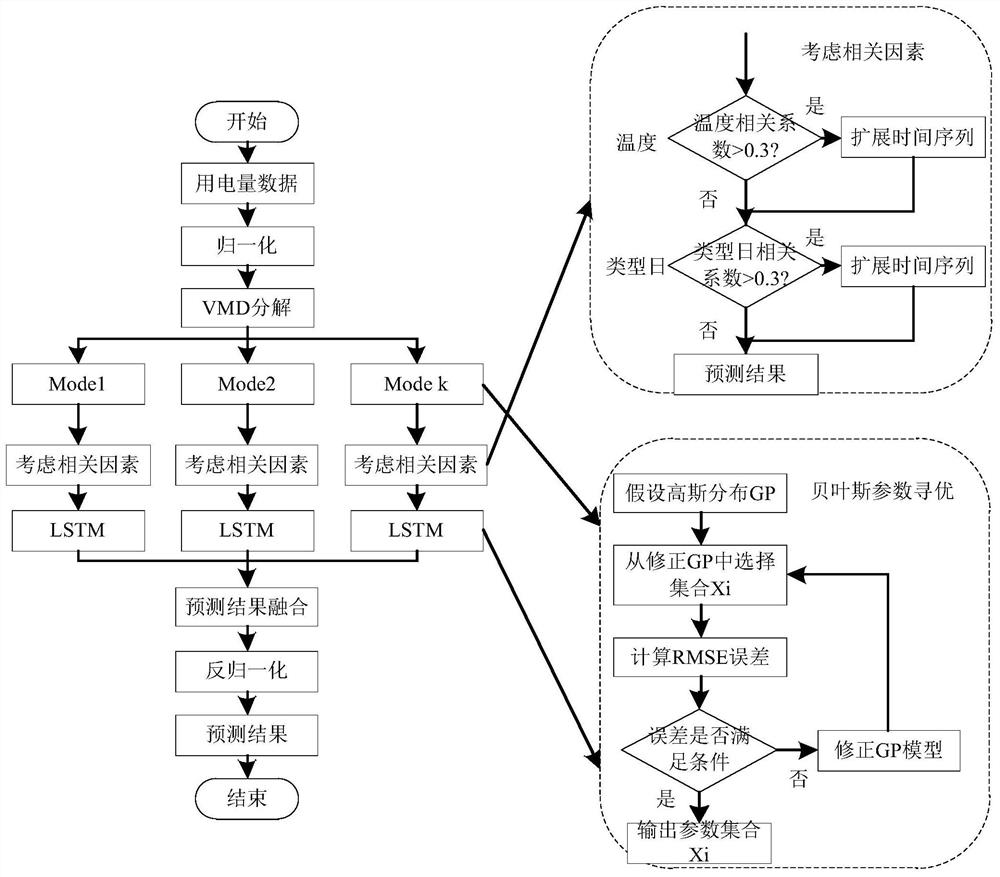

Daily power consumption prediction method based on VMD decomposition and LSTM network

Pending | CN112651543A | Improve forecast accuracy | Describe well | Relational databases | Forecasting | Original data | Engineering

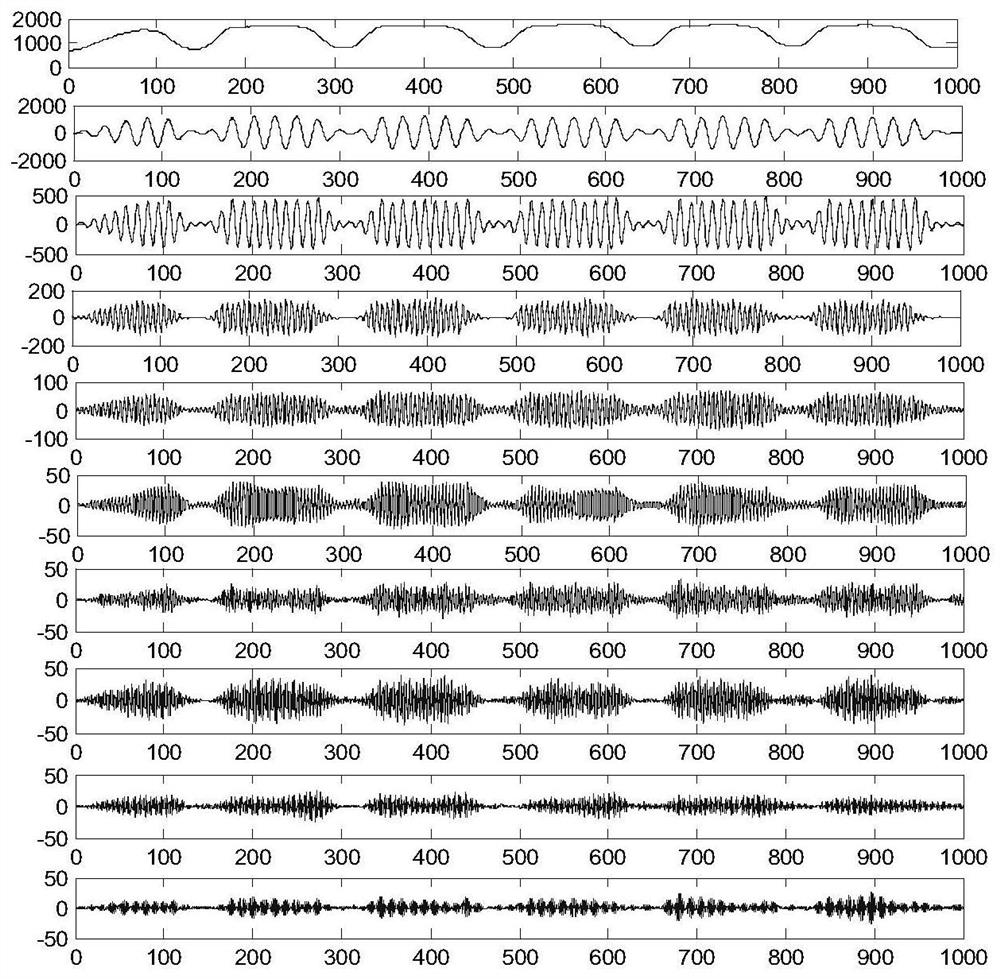

The invention relates to a daily power consumption prediction method based on VMD decomposition and an LSTM network. The method comprises the steps: carrying out variational modal decomposition on preprocessed data, wherein a modal number K is optimized through a Bayesian optimization algorithm; expanding related influence factors of the electricity consumption sequence data, wherein mapping parameters between the original data and the mapping data are obtained through optimization of a Bayesian optimization algorithm; dividing the expanded data of the related influence factors into a training set, a verification set and a test set; training each sub-mode through an LSTM model, and calculating a root-mean-square error through comparison of the test set and the verification set; reconstructing and reversely normalizing the prediction result, and determining whether a termination condition is met or not; and inputting the optimized mapping parameters into an LSTM model, using the training set and the verification set as new training data, performing reconstruction and reverse normalization on test data, and outputting a prediction result. The relation between the key factors and the power consumption sequence can be accurately described, and the daily power consumption prediction precision is improved.

Owner:SHENYANG INST OF ENG

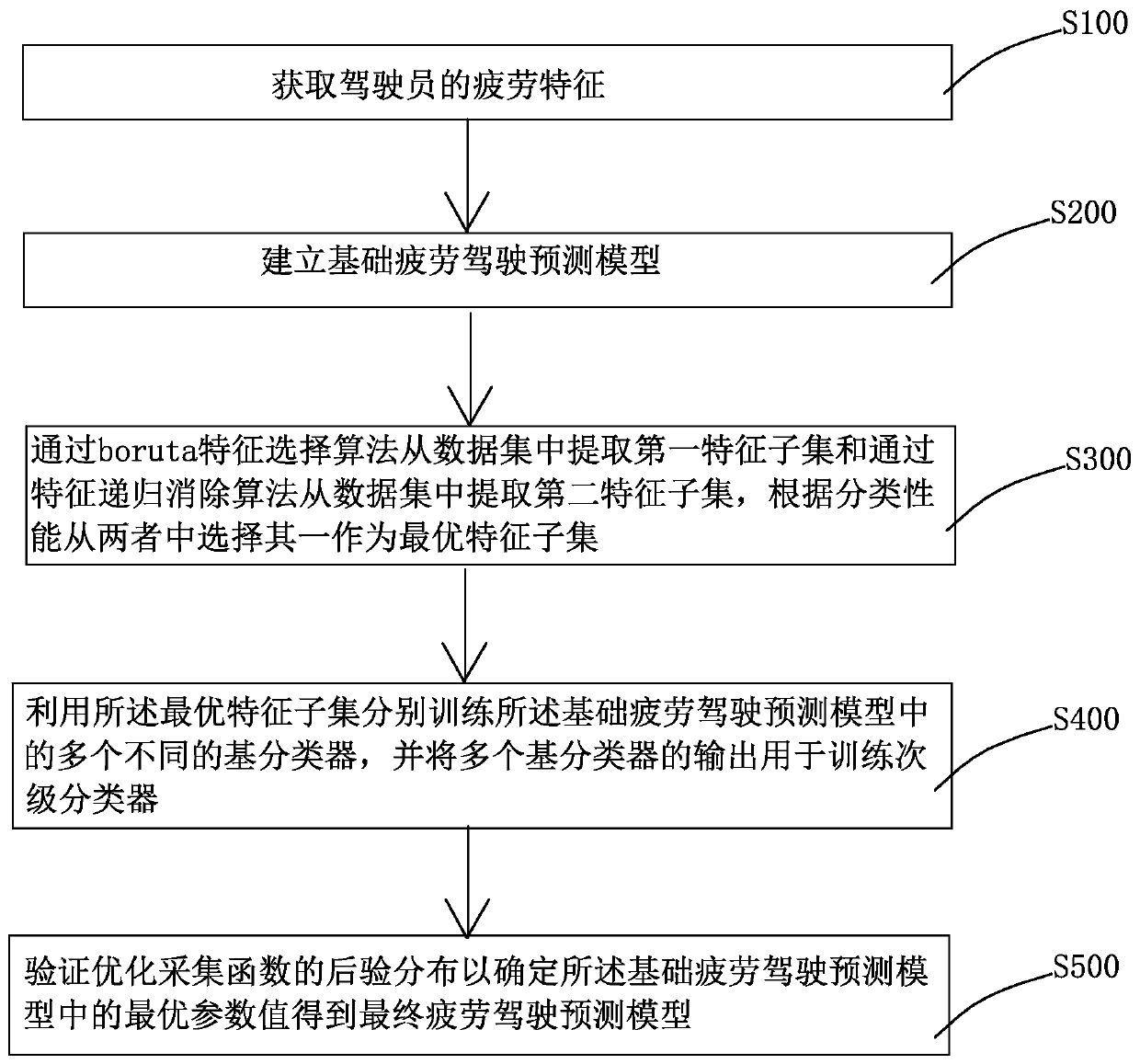

Fatigue driving prediction model construction method and device and storage medium

Active | CN111444657A | Avoid duplication | Avoid data breaches | Character and pattern recognition | Design optimisation/simulation | Driver/operator | Bayesian optimization algorithm

The invention discloses a fatigue driving prediction model construction method and device, and a storage medium. The construction method comprises the steps of: obtaining fatigue features of a driver; establishing a basic fatigue driving prediction model; selecting an optimal feature subset through the Boruta feature selection algorithm and a recursive feature elimination algorithm; training a plurality of base classifiers on the optimal feature subset, and training a secondary classifier on the outputs of the base classifiers to obtain a classification result; and optimizing the model through parameter optimization. The training process is optimized, and the problems of repeated calculation and of data leakage between the training set and the test set are avoided. After the classifiers are trained, the predictions of the different classifiers are aggregated, so that the final model has better generalization and robustness. The Bayesian optimization algorithm is used for model hyper-parameter tuning: hyper-parameters are adjusted in fewer iterations, and the model performance is further improved.

Owner:WUYI UNIV
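
The abstract above describes a two-level (stacked) classifier and mentions avoiding data leakage, but it does not say how. One common approach, assumed here, is to feed the secondary classifier only out-of-fold predictions, so that no base prediction comes from a model that saw that sample during training. The threshold classifier and the two-feature data below are hypothetical toys chosen to keep the sketch short.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, holdout_idx) pairs; every sample is held out exactly once."""
    for fold in range(k):
        holdout = list(range(fold, n_samples, k))
        held = set(holdout)
        train = [i for i in range(n_samples) if i not in held]
        yield train, holdout

def fit_threshold(xs, ys):
    """Toy base classifier: a threshold halfway between the two class means."""
    mean0 = sum(x for x, y in zip(xs, ys) if y == 0) / ys.count(0)
    mean1 = sum(x for x, y in zip(xs, ys) if y == 1) / ys.count(1)
    return (mean0 + mean1) / 2.0, mean1 >= mean0

def predict_threshold(model, x):
    threshold, one_is_high = model
    return int((x > threshold) == one_is_high)

def out_of_fold_column(xs, ys, k):
    """Prediction for each sample from a model that never saw that sample."""
    column = [None] * len(xs)
    for train, holdout in k_fold_indices(len(xs), k):
        model = fit_threshold([xs[i] for i in train], [ys[i] for i in train])
        for i in holdout:
            column[i] = predict_threshold(model, xs[i])
    return column

# Hypothetical fatigue features: one rises with fatigue, the other falls.
feature_a = [0.1, 0.9, 0.2, 0.8, 0.3, 0.7, 0.15, 0.85]
feature_b = [5.0, 1.0, 4.5, 1.5, 4.0, 2.0, 4.8, 1.2]
labels = [0, 1, 0, 1, 0, 1, 0, 1]

# One column of out-of-fold predictions per base classifier.
meta_features = list(zip(out_of_fold_column(feature_a, labels, 4),
                         out_of_fold_column(feature_b, labels, 4)))
```

The rows of `meta_features`, paired with `labels`, would form the training set of the secondary classifier, which is not implemented here.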

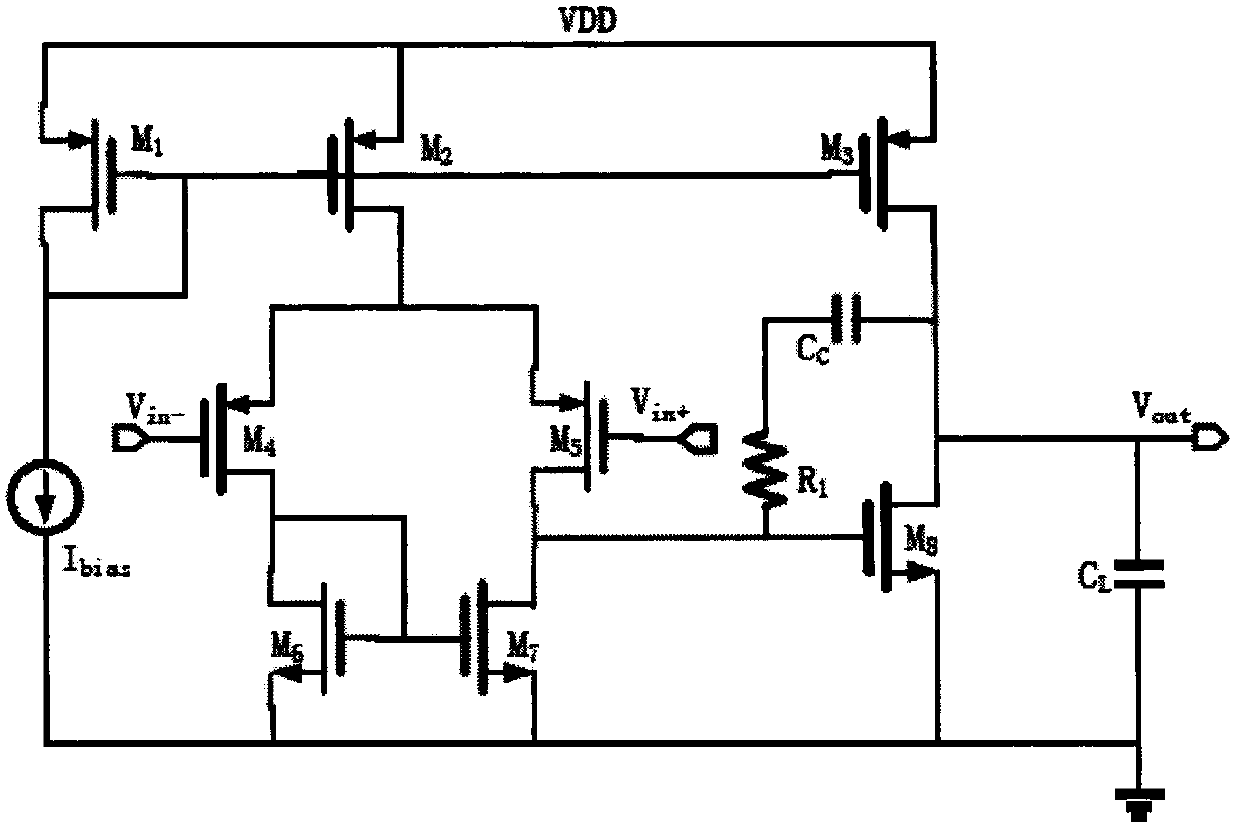

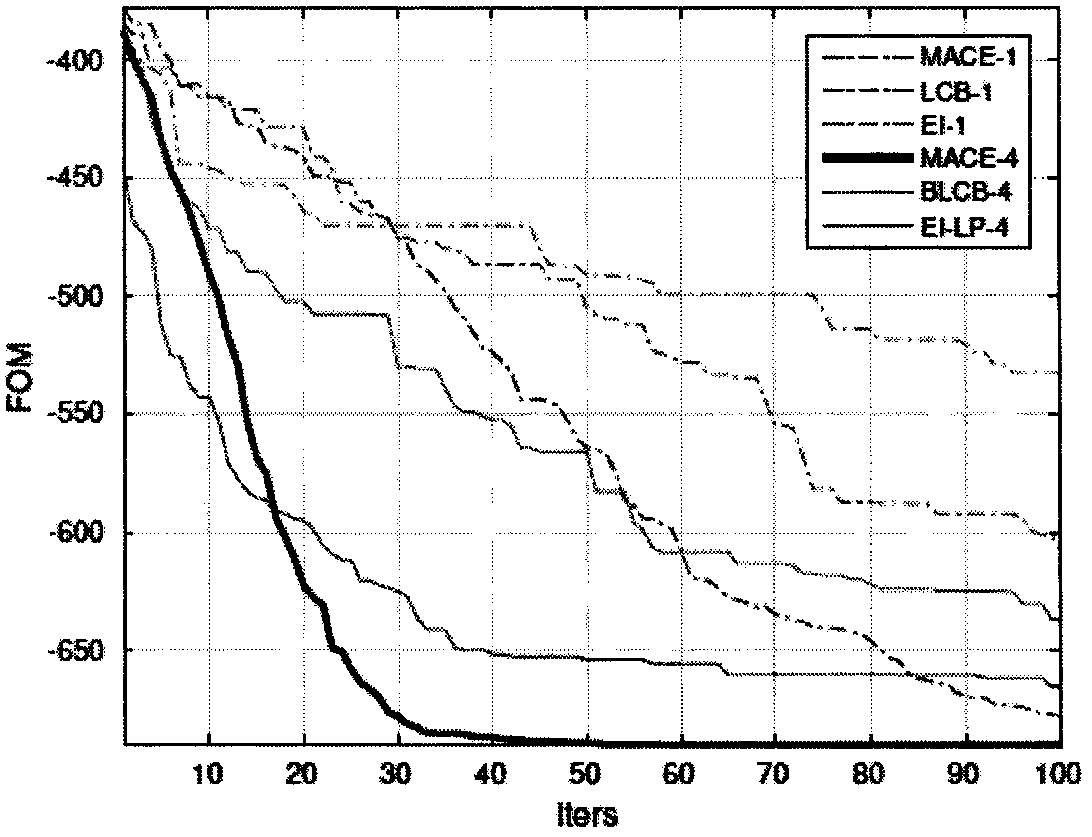

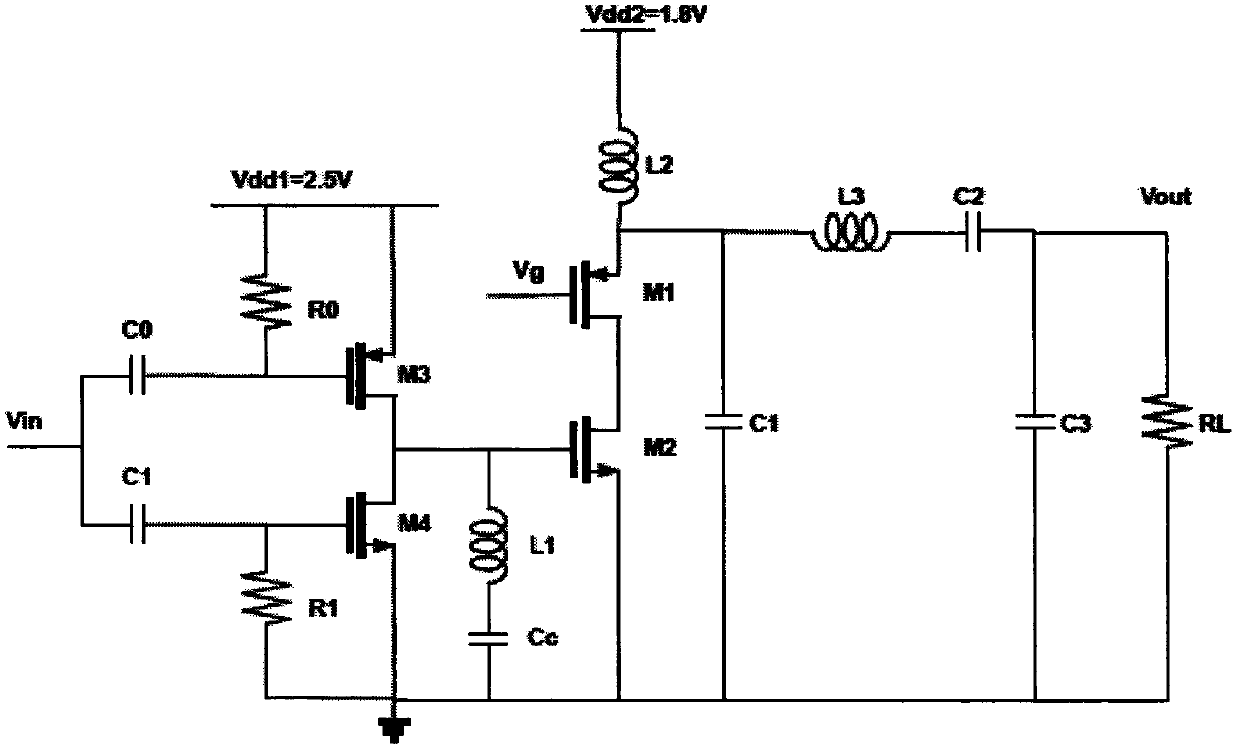

Analog circuit optimization algorithm based on multi-objective acquisition function integrated parallel Bayesian optimization

Pending | CN110750948A | Improve optimization effect | Easy to optimize | Design optimisation/simulation | CAD circuit design | Analog circuit design | Bayesian optimization algorithm

The invention belongs to the field of automatic optimization of analog circuit design parameters in integrated circuit design, and in particular relates to a circuit optimization method based on a Gaussian process model that uses a batch Bayesian optimization algorithm. In each iteration, a Gaussian process model is first constructed, several acquisition functions are then built from that model, multi-objective optimization is carried out on the acquisition functions to obtain their Pareto front, and a plurality of points for circuit simulation are selected from the Pareto front. The method can greatly reduce the number of circuit simulations needed during optimization and yields analog circuit design parameters that meet the performance requirements, while the parallel optimization technique further accelerates circuit optimization.

Owner:FUDAN UNIV
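
The selection step in the abstract above can be illustrated with a small sketch. The abstract does not name the acquisition functions, so expected improvement (EI), probability of improvement (PI), and the lower confidence bound (LCB) are assumed here. The posterior means and standard deviations are hypothetical, and a finite candidate list is filtered for non-dominated points instead of running a multi-objective optimizer over the continuous design space.

```python
import math

def norm_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def norm_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def acquisitions(mu, sigma, best, kappa=2.0):
    """Return (EI, PI, -LCB) for a minimization problem; all three are maximized."""
    z = (best - mu) / sigma
    ei = (best - mu) * norm_cdf(z) + sigma * norm_pdf(z)
    pi = norm_cdf(z)
    neg_lcb = -(mu - kappa * sigma)
    return (ei, pi, neg_lcb)

def dominates(a, b):
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(scores):
    """Indices of the candidates that no other candidate dominates."""
    return [i for i, s in enumerate(scores)
            if not any(dominates(t, s) for j, t in enumerate(scores) if j != i)]

# Hypothetical Gaussian process posterior (mean, std) at five candidate designs.
posterior = [(0.2, 0.05), (0.5, 0.60), (0.9, 0.10), (0.3, 0.30), (1.0, 0.05)]
best_so_far = 0.4  # lowest objective value simulated so far

scores = [acquisitions(m, s, best_so_far) for m, s in posterior]
front = pareto_front(scores)  # candidates to simulate in parallel
```

Points on the front represent different trade-offs between exploiting a confident low mean and exploring an uncertain region, which is what lets one iteration supply a whole batch of simulations.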

Hyper-parameter determination method, apparatus and device

Active | CN108921207A | Improve determination efficiency | Reduce workload | Character and pattern recognition | Array data structure | Data set

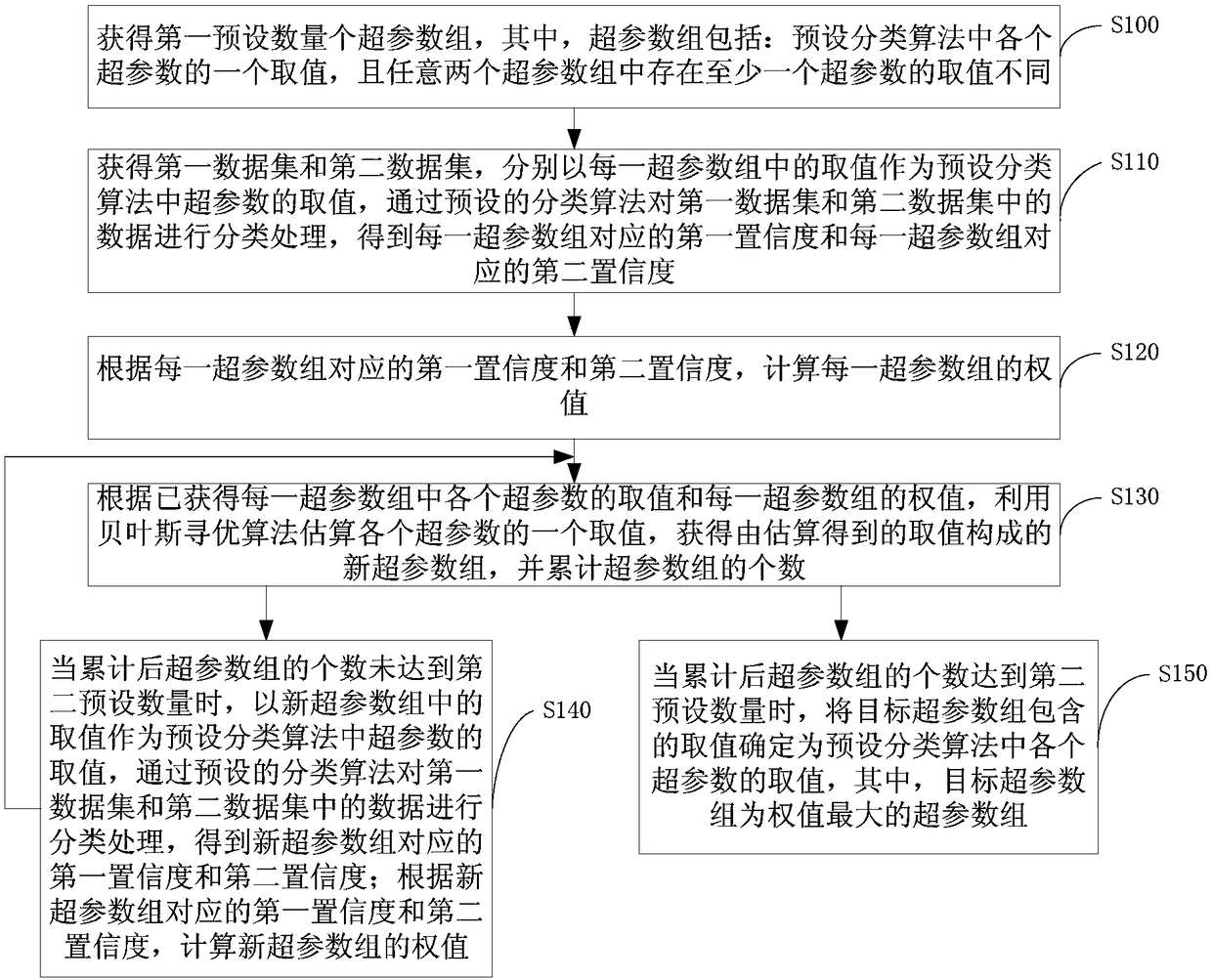

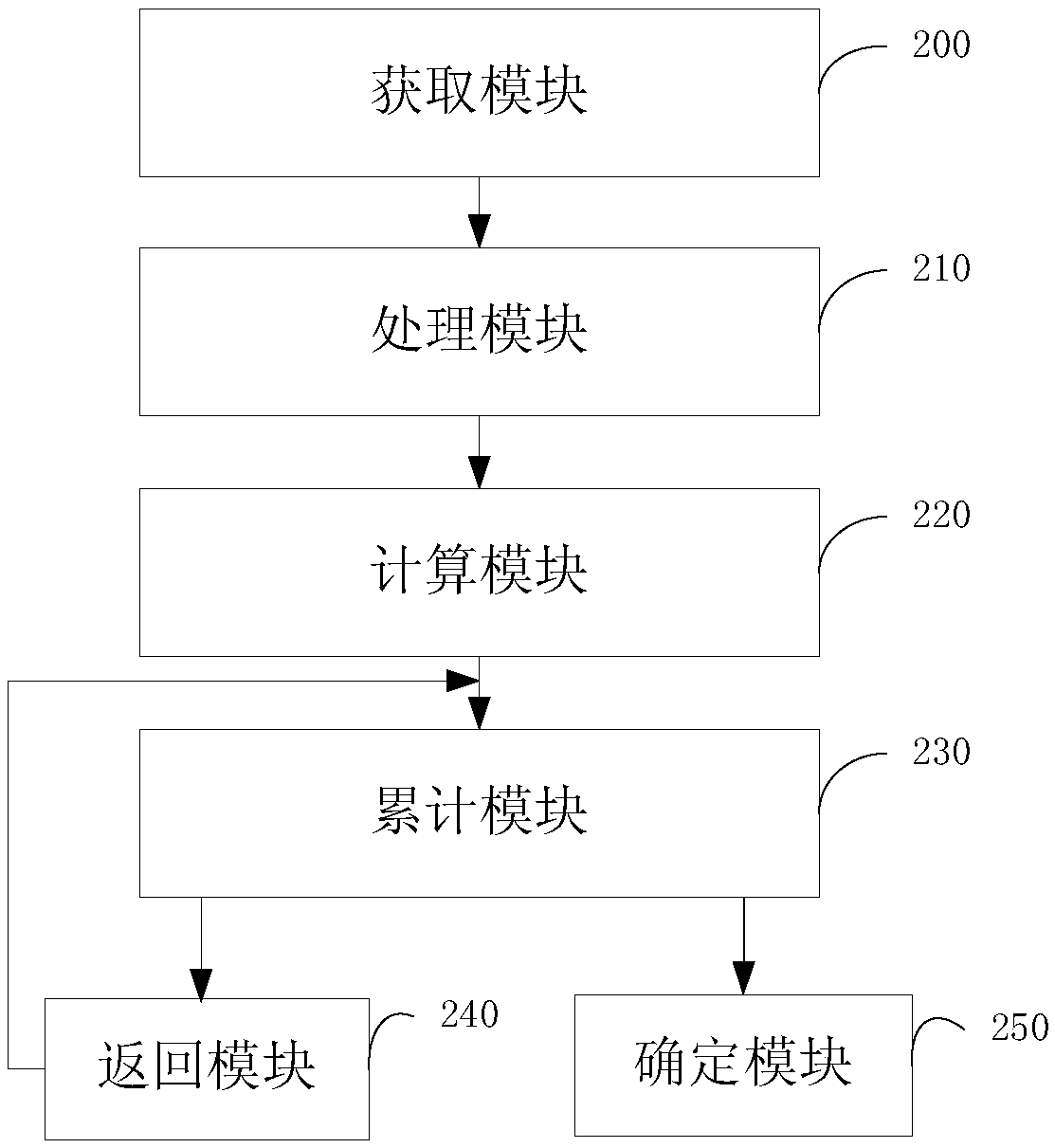

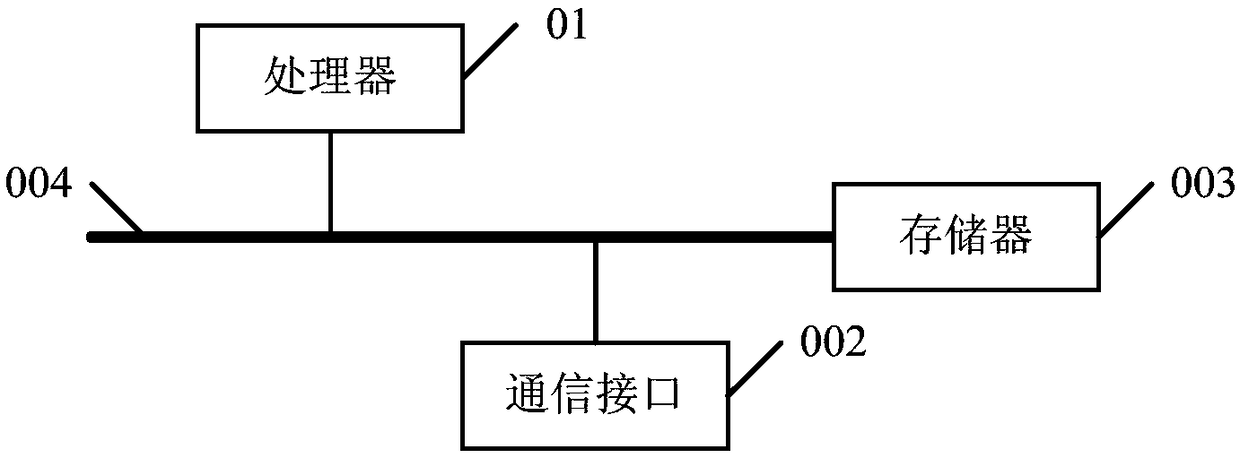

Embodiments of the invention provide a hyper-parameter determination method, apparatus and device. The method comprises the steps of obtaining a first preset number of hyper-parameter sets; obtaining a first data set and a second data set, and by taking values in each hyper-parameter set as values of hyper-parameters in a preset classification algorithm, performing classification processing on data in the first data set and the second data set, thereby obtaining a first confidence degree and a second confidence degree corresponding to each hyper-parameter set; calculating a weight value of each hyper-parameter set according to the confidence degree corresponding to each hyper-parameter set; according to the obtained values in each hyper-parameter set and weight value of each hyper-parameter set, estimating a value of each hyper-parameter by utilizing a Bayesian optimization algorithm, obtaining new hyper-parameter sets and accumulating the number of the hyper-parameter sets; and taking the values in the hyper-parameter set with the maximum weight value as the values of the hyper-parameters in the preset classification algorithm when the accumulated number of the hyper-parameter sets reaches a second preset number. By applying the method provided by the embodiment of the invention, the hyper-parameter determination efficiency can be improved.

Owner:中诚信征信有限公司

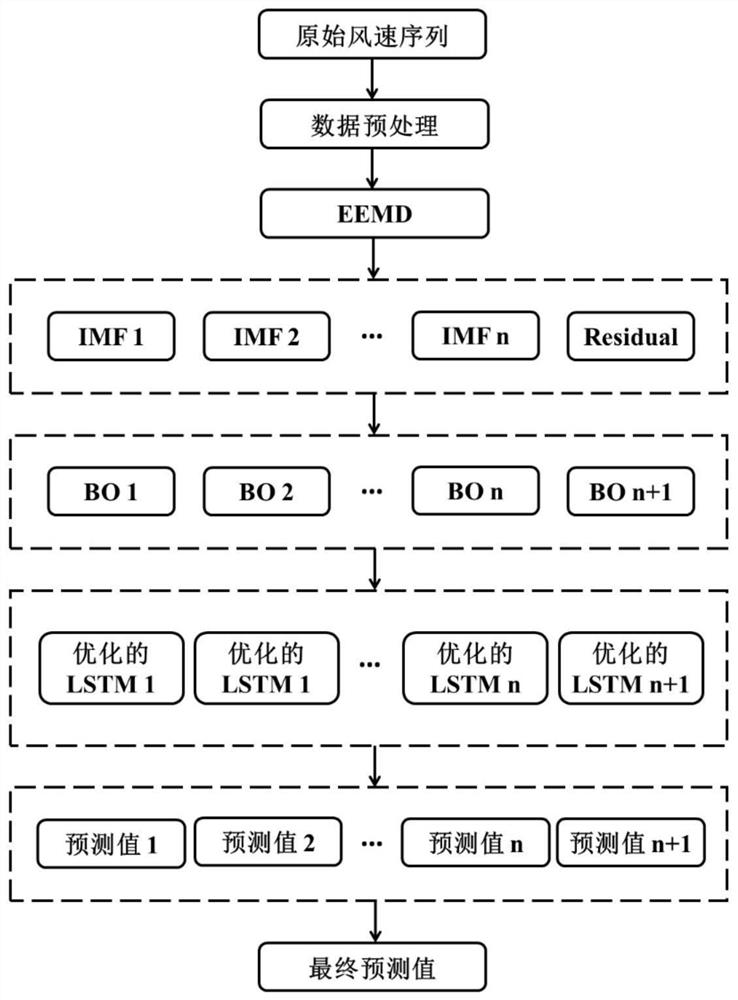

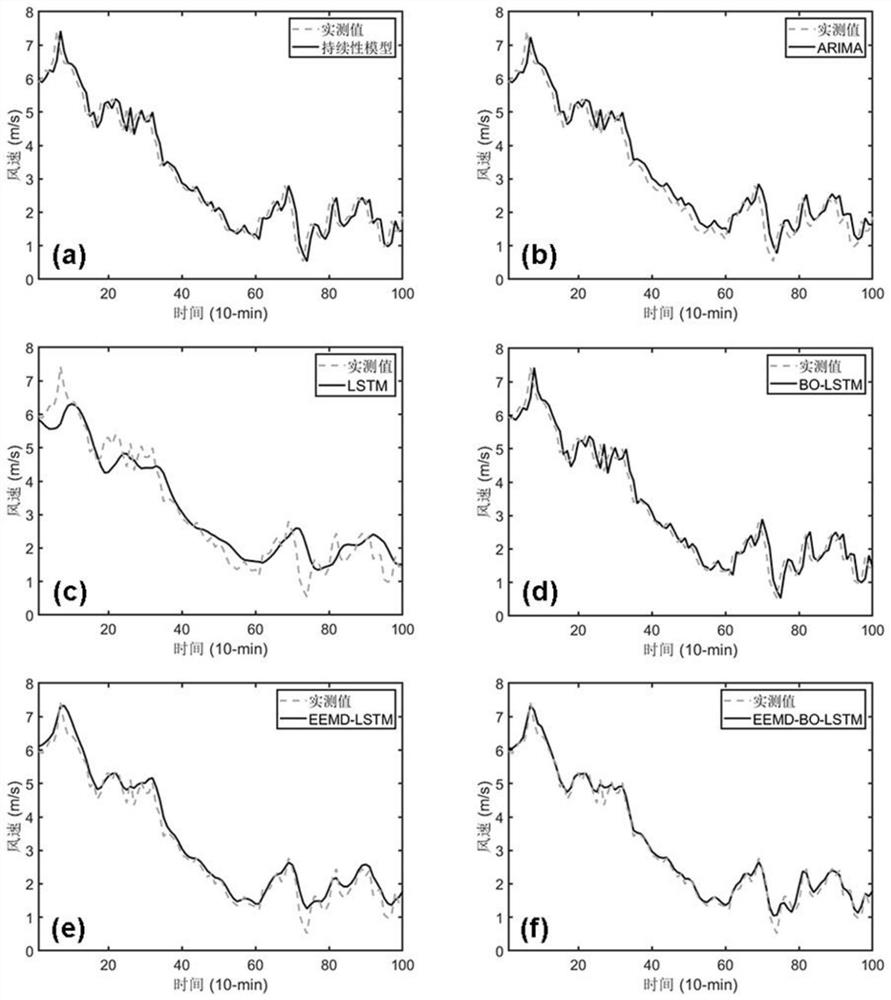

Wind speed prediction method based on hybrid neural network model

Active | CN111695724A | Fitting long-term dependent features | Reduce the difficulty of forecasting | Climate change adaptation | Forecasting | Bayesian optimization algorithm | Engineering

The invention discloses a wind speed prediction method based on a hybrid neural network model. The method comprises the following steps: carrying out ensemble empirical mode decomposition (EEMD) on the original wind speed time series data; establishing a long short-term memory (LSTM) neural network to predict each component signal obtained from the decomposition; tuning the hyper-parameters of the LSTM network with a Bayesian optimization algorithm; and combining the predictions of the component signals into a final prediction result. The method decomposes a random, non-stationary short-term wind speed time series into more steadily varying time series, and tunes the hyper-parameters of the LSTM network automatically, so that the prediction error is greatly reduced and the prediction precision is improved. The method can be applied to short-term wind speed prediction and provides a powerful tool for intelligent operation and maintenance of wind power generation networks.

Owner:ZHEJIANG UNIV

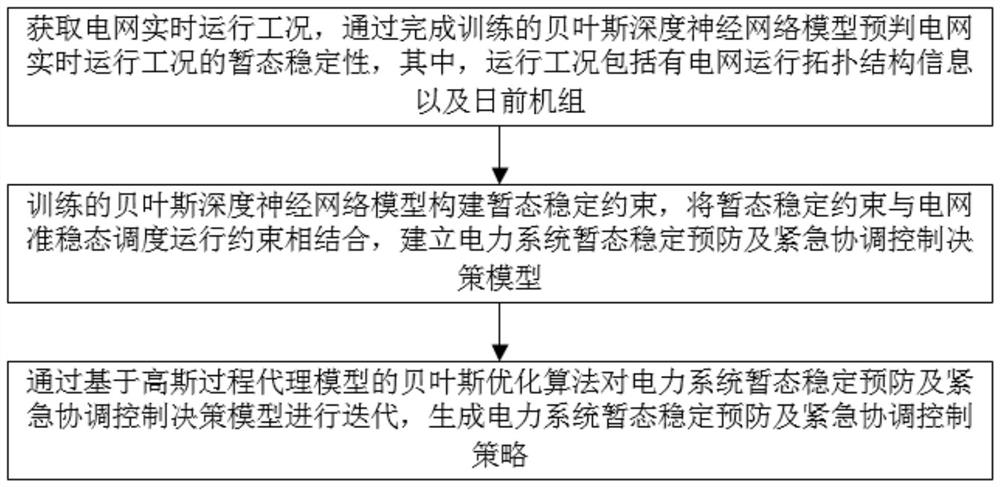

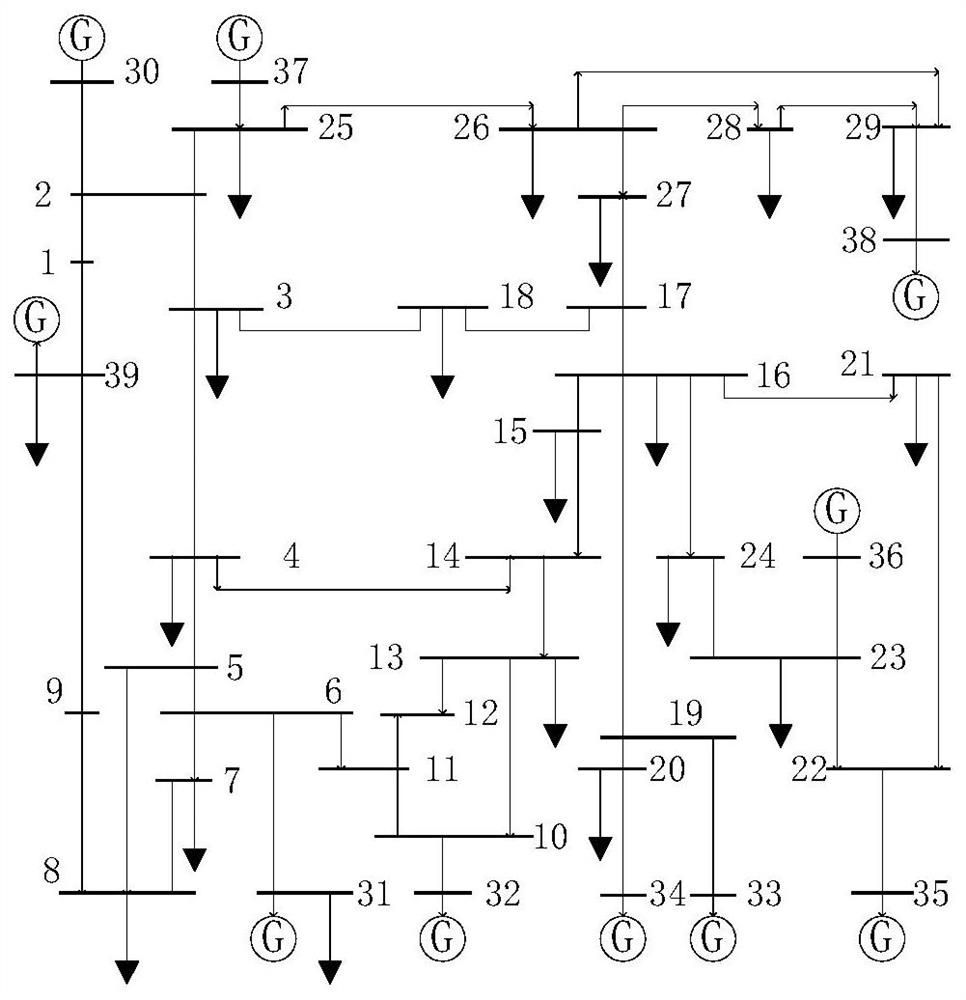

Power system transient stability prevention and emergency coordination control auxiliary decision-making method

Pending | CN113591379A | Improve operational safety | Real-time monitoring of dynamic security status | Contingency dealing ac circuit arrangements | Design optimisation/simulation | Decision model | Power-system automation

The invention discloses a power system transient stability prevention and emergency coordination control auxiliary decision-making method, and relates to the technical field of power system automation. The method comprises the steps of: obtaining the real-time operation condition of a power grid, and pre-judging its transient stability through a trained Bayesian deep neural network model, wherein the operation condition comprises power grid operation topological structure information and a day-ahead unit; establishing transient stability constraints from the trained Bayesian deep neural network model, combining them with the power grid quasi-steady-state scheduling operation constraints, and establishing a power system transient stability prevention and emergency coordination control decision model; and iterating the decision model through a Bayesian optimization algorithm based on a Gaussian process surrogate model to generate a power system transient stability prevention and emergency coordination control strategy. The method can monitor the dynamic safety state of the power grid in real time and effectively improves the operation safety level of the power system.

Owner:SICHUAN UNIV

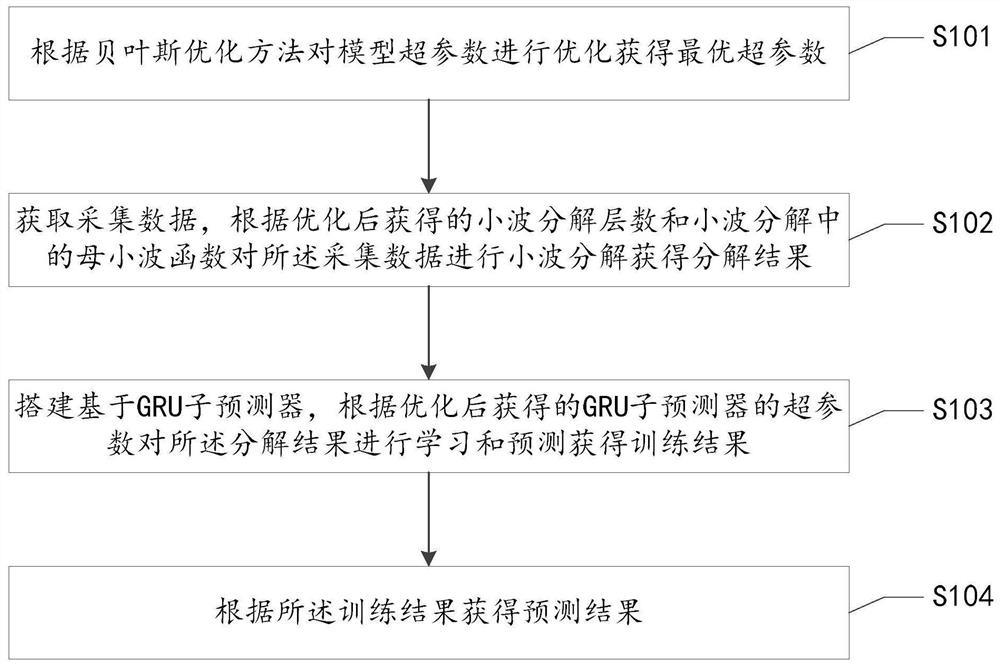

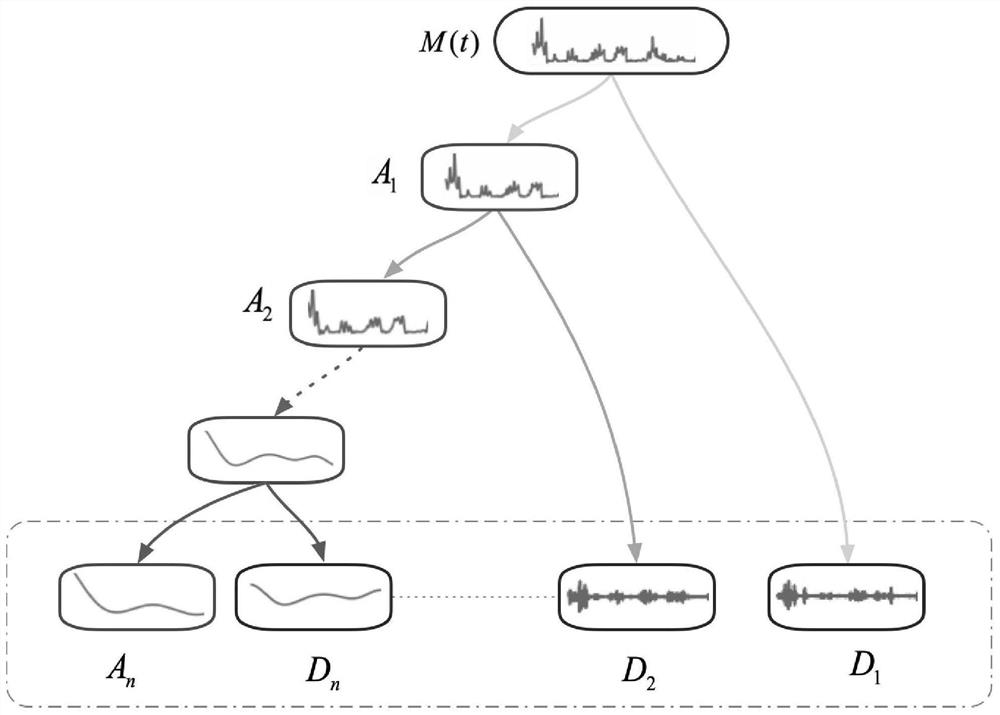

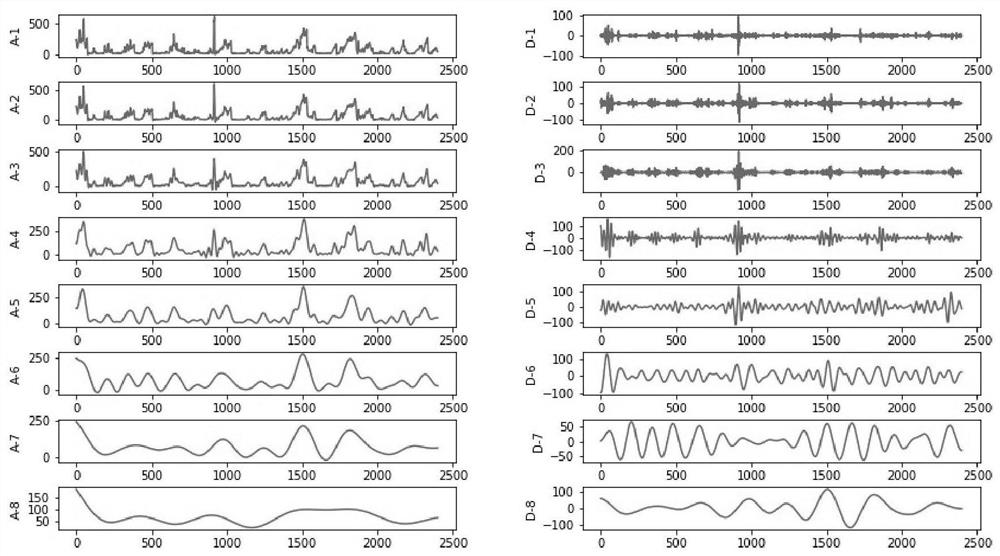

Time sequence prediction method and device based on Bayesian optimization and wavelet decomposition

Pending | CN111859264A | Reduce complexity | Improve accuracy | Mathematical models | Neural architectures | Bayesian optimization algorithm | Wavelet decomposition

The invention provides a time sequence prediction method based on Bayesian optimization and wavelet decomposition. The method comprises: optimizing the model hyper-parameters with a Bayesian optimization method to obtain optimal hyper-parameters, which comprise the number of wavelet decomposition layers, the mother wavelet function used in the wavelet decomposition, and the hyper-parameters of a GRU sub-predictor; acquiring the collected data and performing wavelet decomposition on it with the optimized number of decomposition layers and the optimized mother wavelet function to obtain a decomposition result; building a GRU-based sub-predictor, and learning and predicting the decomposition result with the optimized hyper-parameters of the GRU-based sub-predictor to obtain a training result; and obtaining a prediction result according to the training result. The Bayesian optimization algorithm is used for optimizing the hyper-parameters, and the method has very high accuracy in a long-term time sequence prediction task.

Owner:BEIJING TECHNOLOGY AND BUSINESS UNIVERSITY
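
The decomposition step in the abstract above can be sketched with the Haar wavelet, the simplest mother wavelet. In the patent the mother wavelet and the number of layers are chosen by Bayesian optimization. Both are fixed here as assumptions, the input series is a hypothetical example, and the GRU sub-predictors are not implemented.

```python
import math

def haar_step(signal):
    """One level of the orthonormal Haar transform: (approximation, detail)."""
    s = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail

def haar_decompose(signal, levels):
    """Repeatedly split the approximation; details are returned finest level first."""
    details = []
    approx = list(signal)
    for _ in range(levels):
        approx, d = haar_step(approx)
        details.append(d)
    return approx, details

def haar_reconstruct(approx, details):
    """Invert haar_decompose, starting from the coarsest level."""
    s = math.sqrt(2.0)
    current = list(approx)
    for d in reversed(details):
        rebuilt = []
        for a, b in zip(current, d):
            rebuilt.extend([(a + b) / s, (a - b) / s])
        current = rebuilt
    return current

# Hypothetical series; its length must be divisible by 2 ** levels.
series = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]
approx, details = haar_decompose(series, levels=2)
restored = haar_reconstruct(approx, details)
```

Each of the returned sub-series (`approx` and every entry of `details`) would be handed to its own sub-predictor, and the exact reconstruction shows why forecasts of the components can be recombined into a forecast of the original series.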

