42 results about "Speed up exploration" patented technology

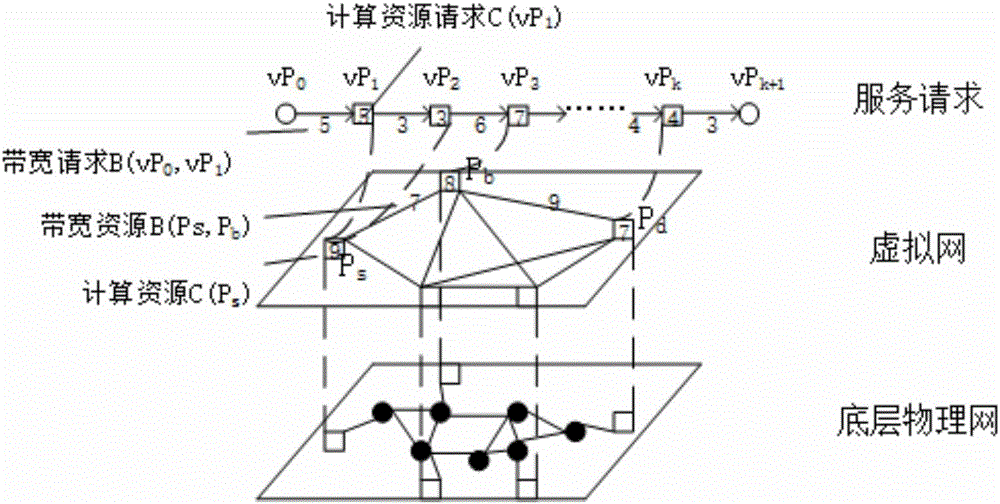

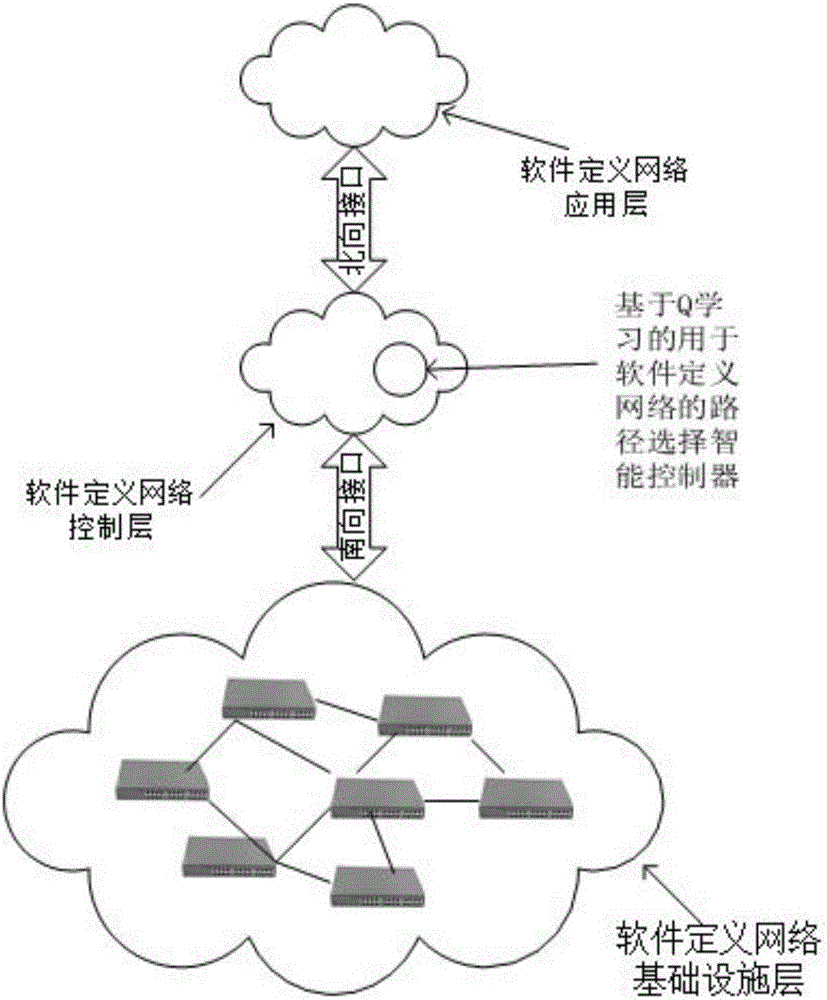

Path selection method for software defined network based on Q learning

Active | CN106411749A | Short forwarding path | Shorten the time | Data switching networks | Computer terminal | Q-learning

The invention discloses a path selection method for a software-defined network based on Q-learning. A software-defined network infrastructure layer receives a service request, constructs a virtual network, and allocates a suitable network path to complete the service request. The method is characterized in that the suitable network path is acquired by Q-learning: (1) setting a plurality of service nodes P on the constructed virtual network and allocating corresponding bandwidth resources to each service node; (2) decomposing the received service request into available actions a, and attempting to select a path that can reach a terminal according to an ε-greedy policy; (3) summarizing the recorded data as a Q-value table and updating the table; and (4) finding the suitable path from the data recorded in the Q-value table. The method can find a network path that has a short forwarding path, low time consumption, and low bandwidth occupation and that suits dynamic and complex networks, while satisfying as many other service requests as possible.

Owner:JIANGSU ELECTRIC POWER CO
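
The four steps in the Q-learning abstract above can be illustrated with a minimal tabular sketch. It is not the patented method: the topology `GRAPH`, the reward values (10 for reaching the terminal, -1 per hop), and the hyperparameters are all assumptions chosen for illustration, and bandwidth allocation is left out entirely.

```python
import random

# Hypothetical topology: node -> directly reachable neighbours.
GRAPH = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D", "E"],
    "D": ["B", "C", "E"],
    "E": [],  # terminal node
}

def q_learning_path(graph, start, goal, episodes=2000, alpha=0.5,
                    gamma=0.9, epsilon=0.3, seed=0):
    """Learn a Q-value table over (node, next_node) and read out a greedy path."""
    rng = random.Random(seed)
    q = {(u, v): 0.0 for u in graph for v in graph[u]}
    for _ in range(episodes):
        node = start
        for _ in range(4 * len(graph)):  # cap on hops per episode
            if node == goal:
                break
            actions = graph[node]
            # epsilon-greedy: explore with probability epsilon, else exploit.
            if rng.random() < epsilon:
                nxt = rng.choice(actions)
            else:
                nxt = max(actions, key=lambda v: q[(node, v)])
            # Assumed reward: bonus at the terminal, unit cost per hop.
            reward = 10.0 if nxt == goal else -1.0
            future = 0.0 if nxt == goal else max(q[(nxt, w)] for w in graph[nxt])
            q[(node, nxt)] += alpha * (reward + gamma * future - q[(node, nxt)])
            node = nxt
    # Step (4): follow the highest Q value from the start node.
    path = [start]
    while path[-1] != goal and len(path) <= len(graph):
        here = path[-1]
        path.append(max(graph[here], key=lambda v: q[(here, v)]))
    return path, q

path, q_table = q_learning_path(GRAPH, "A", "E")
print(path)
```

Because every hop costs the same in this sketch, the greedy read-out favours the route with the fewest hops; a bandwidth-aware reward would replace the constant -1.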

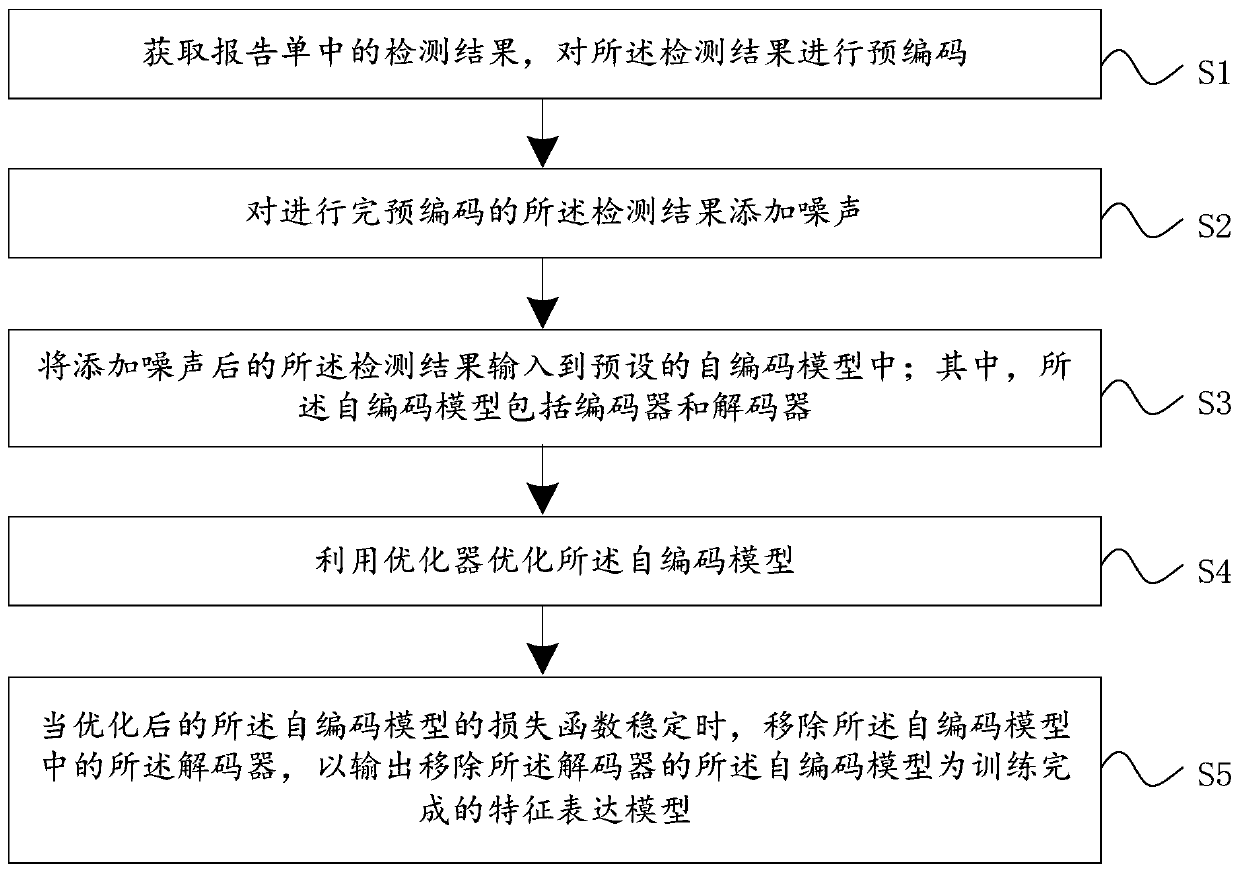

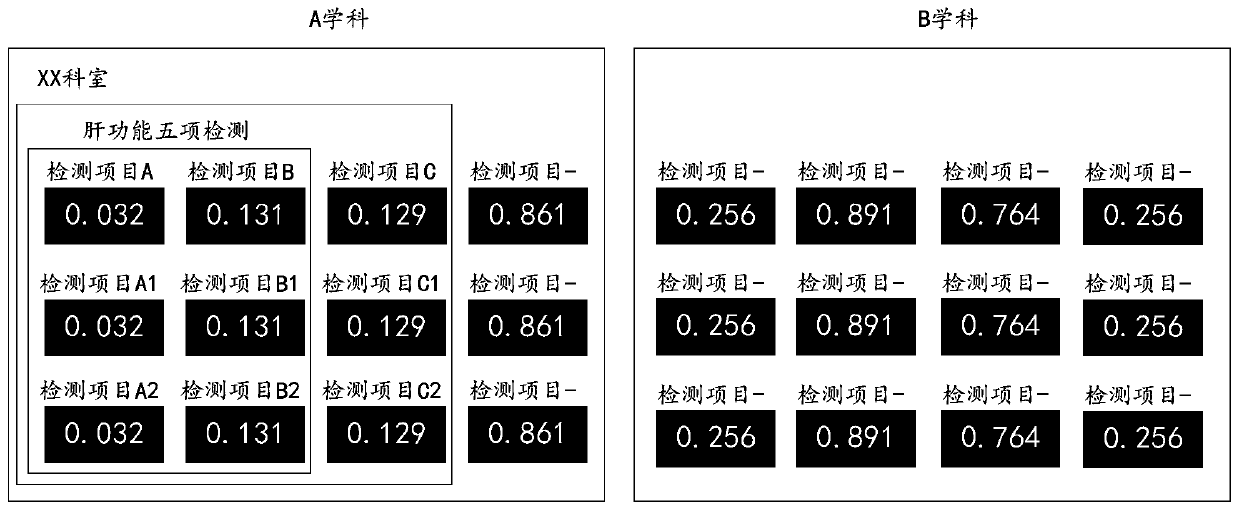

Self-encoding model training method, device and equipment and storage medium

Pending | CN110910982A | Speed up exploration | Solve the characteristics | Digital data information retrieval | Special data processing applications | Algorithm | Engineering

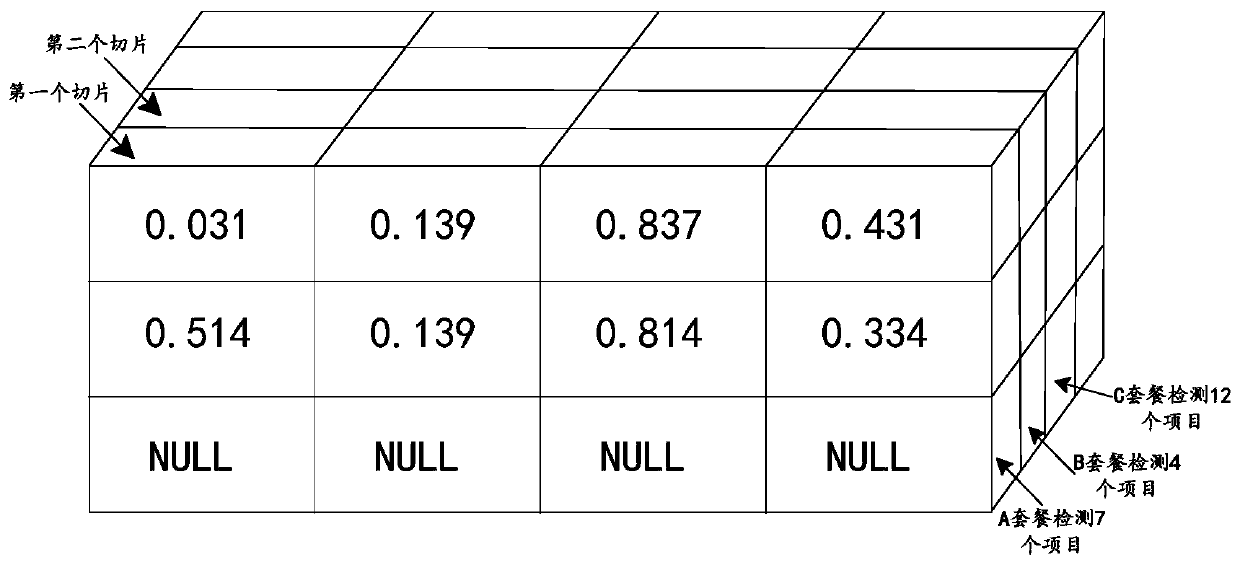

The invention discloses a self-encoding (autoencoder) model training method comprising the following steps: obtaining a detection result from a report and pre-encoding the detection result; adding noise to the pre-encoded detection result; inputting the noisy detection result into a preset self-encoding model, wherein the self-encoding model comprises an encoder and a decoder; optimizing the self-encoding model with an optimizer; and, when the loss function of the optimized self-encoding model is stable, removing the decoder and outputting the remaining model as a trained feature expression model. The invention further discloses a self-encoding model training device, equipment, and a computer storage medium. With the embodiment of the invention, the trained feature expression model improves the exploration of the report detection result space and solves problems such as the incomplete coverage and low efficiency of manually constructed feature variables for medical examination report detection results.

Owner:GUANGZHOU KINGMED DIAGNOSTICS CENT

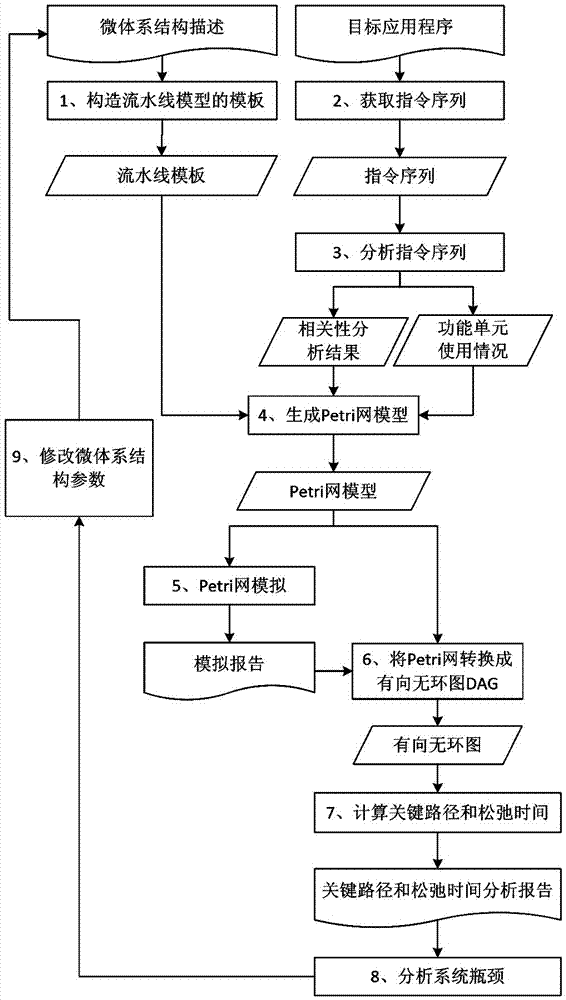

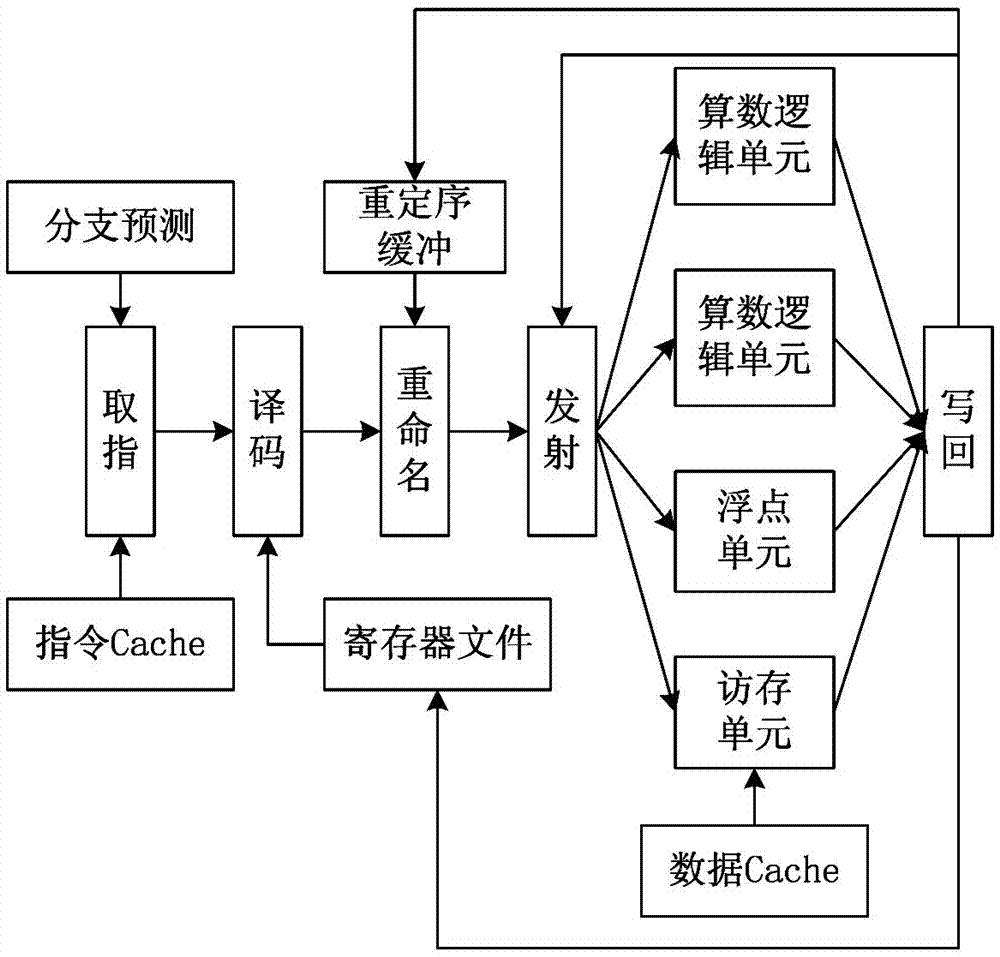

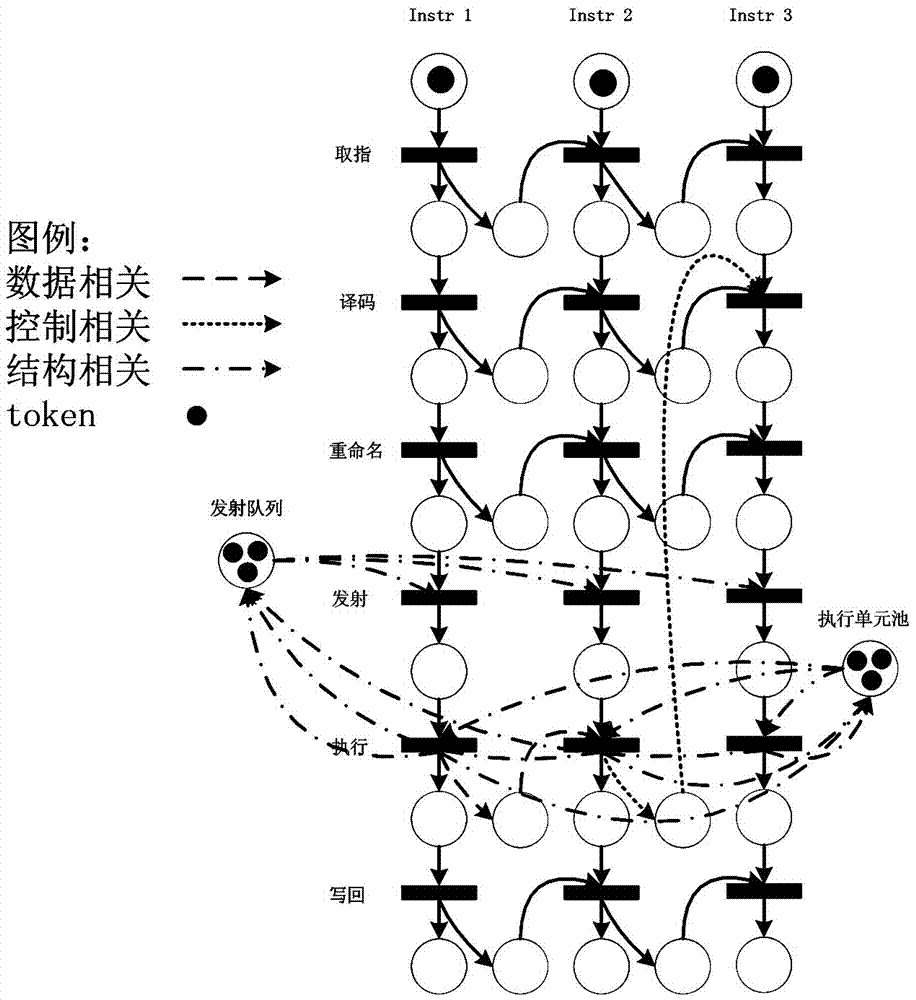

Microprocessor micro system structure parameter optimization method based on Petri network

Active | CN104361182A | Rapid modeling | High precision | Special data processing applications | Specific program execution arrangements | NODAL | Parallel computing

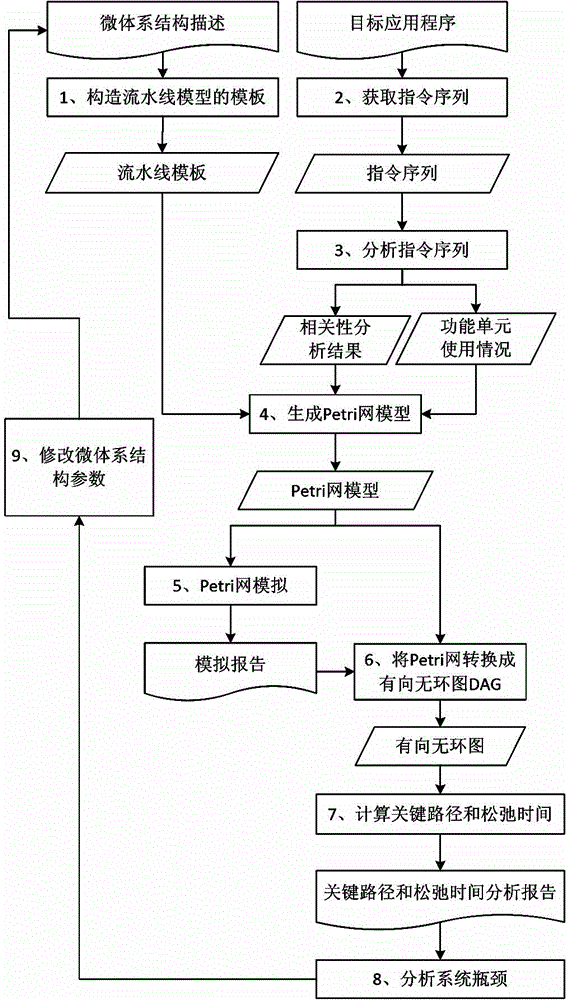

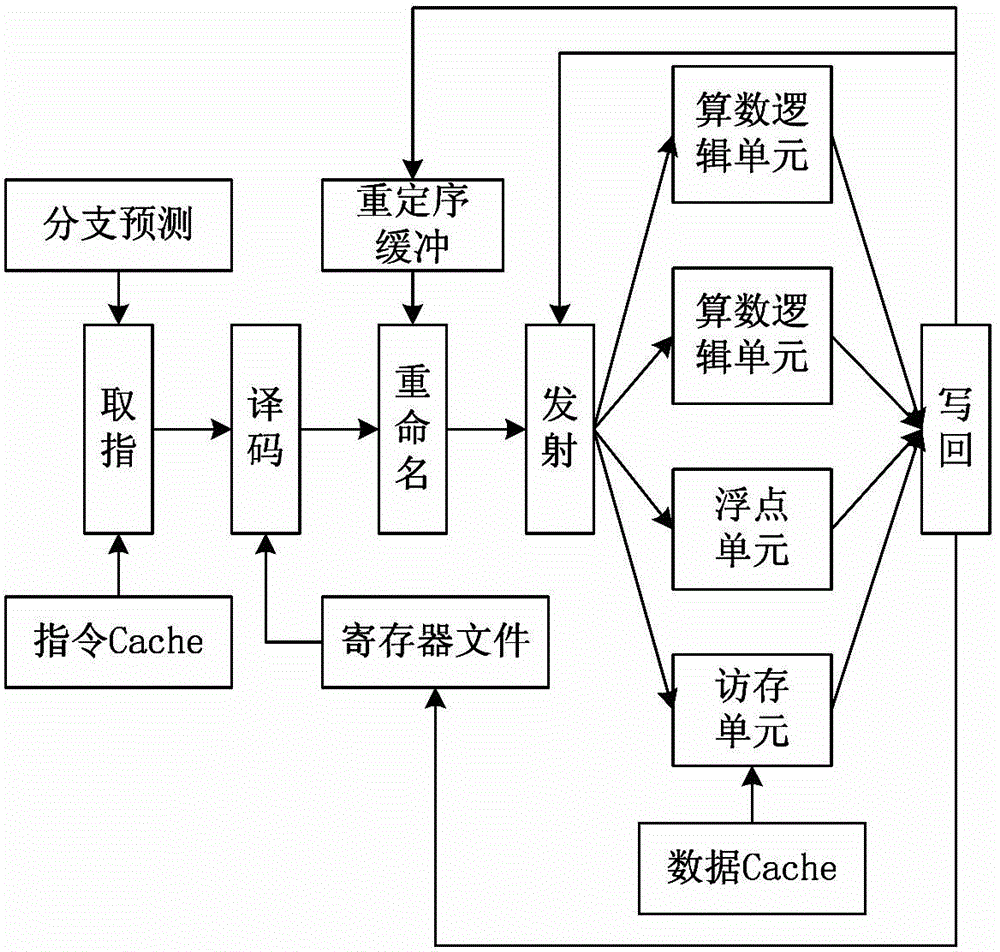

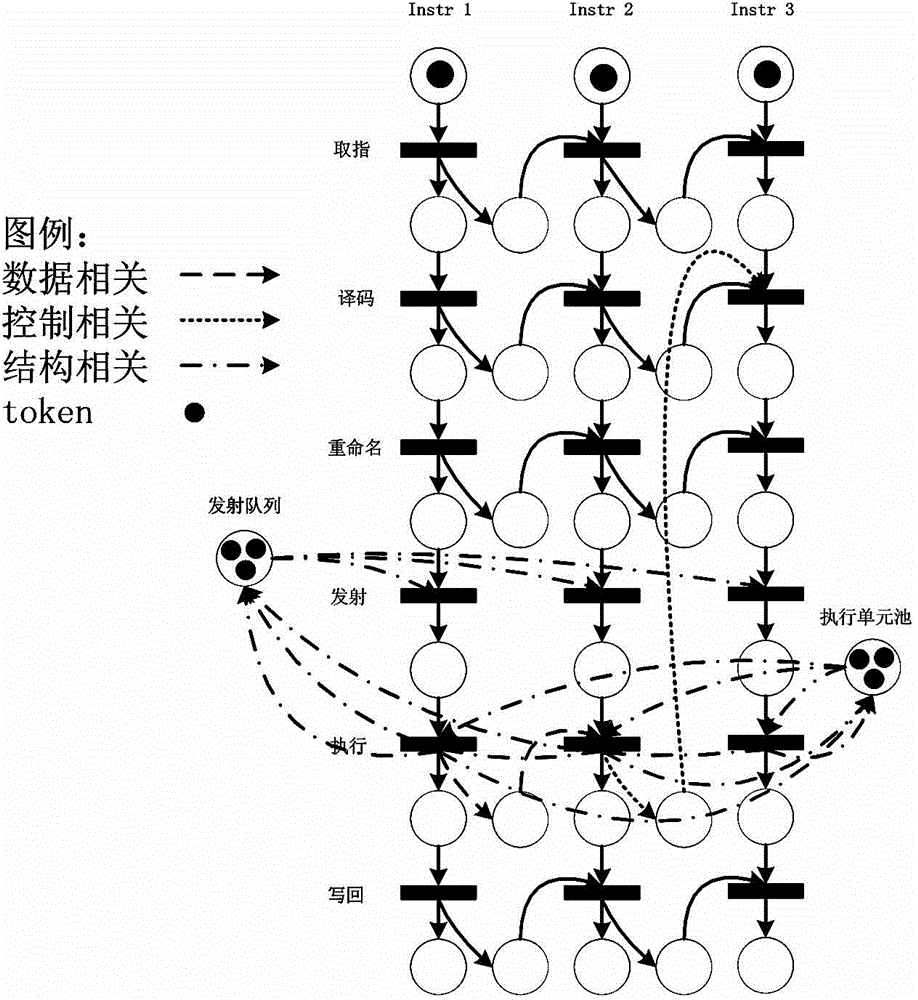

The invention discloses a microprocessor microarchitecture parameter optimization method based on a Petri net. The method comprises the following steps: a pipeline model template is built on the basis of a colored Petri net; the instruction sequence of a target application program is obtained; relevant information between the instructions and the functional unit types is obtained; a colored Petri net model of the target program running under the current parameter configuration is generated; a Petri net simulation tool runs the simulation and generates a simulation report; a corresponding directed acyclic graph is generated from the colored Petri net model according to the simulation report; the critical path of the directed acyclic graph and the nodes on it are calculated; the release time of each incoming edge of each node is calculated; the performance bottleneck or power consumption bottleneck of the microprocessor running the target application program under the current microarchitecture parameter configuration is analyzed; and, if optimization is required, the microarchitecture parameters are adjusted. The method offers high prediction reliability and precision, covers a wide range of the design space, has lower optimization algorithm complexity, and optimizes quickly and efficiently.

Owner:NAT UNIV OF DEFENSE TECH

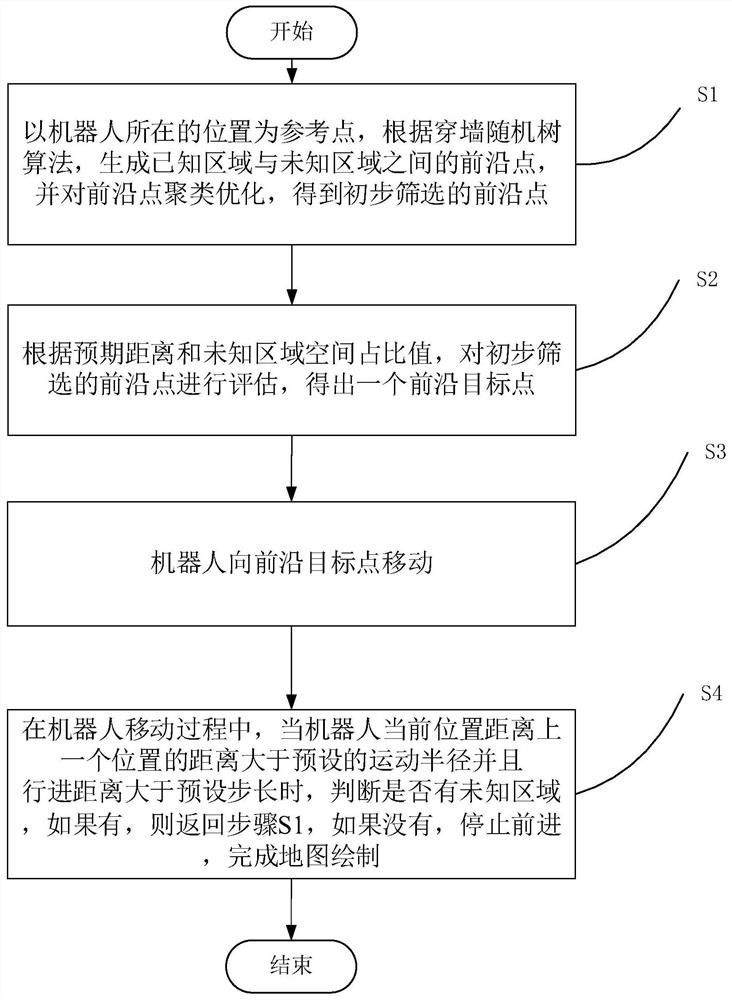

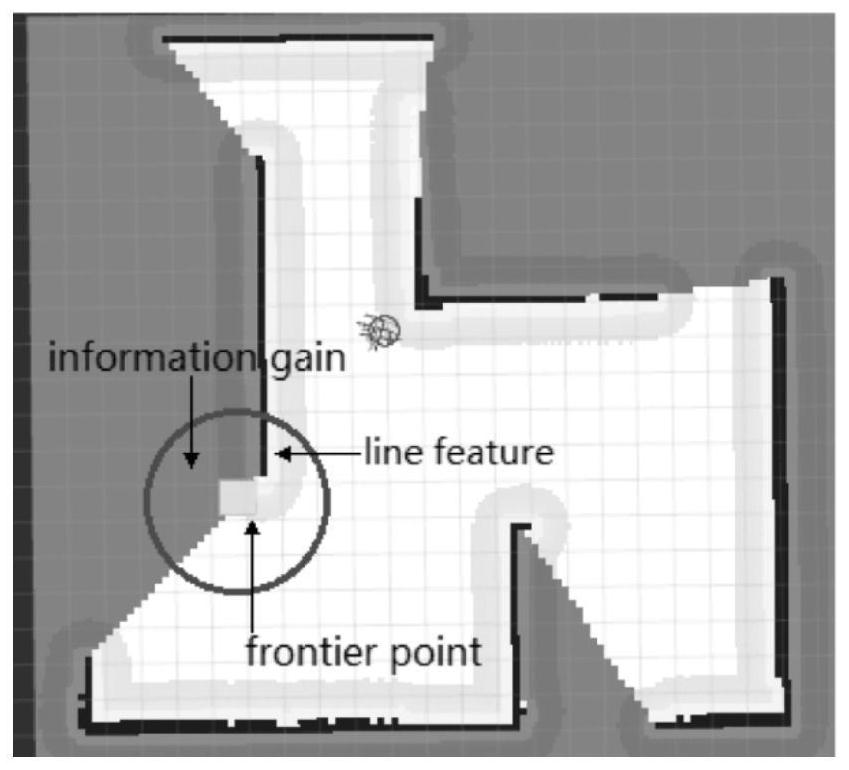

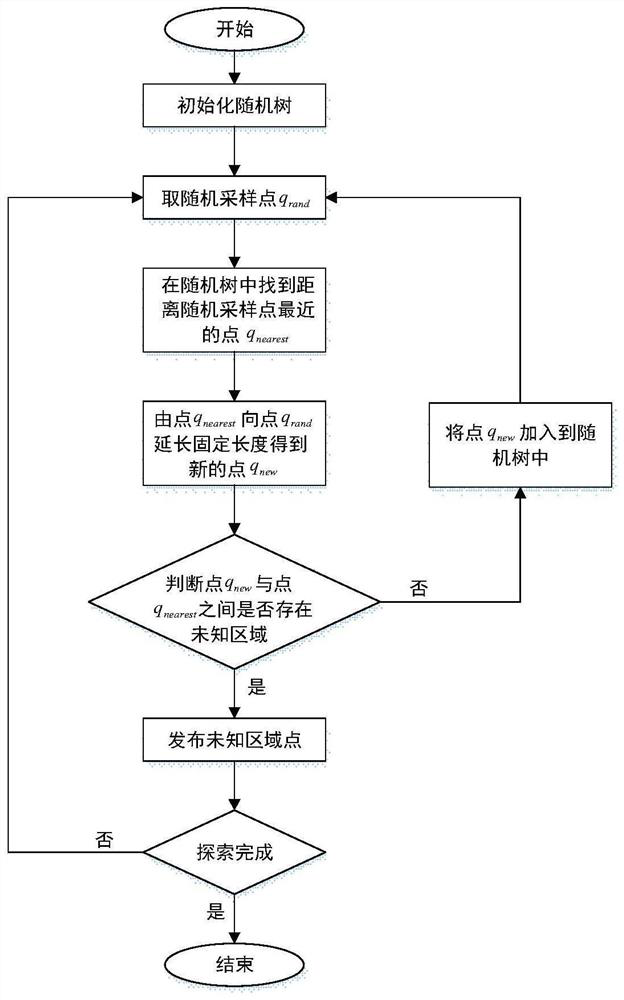

Robot autonomous mapping method in strange environment

Active | CN111638526A | Improve growing conditions | Fast growth | Navigation instruments | Electromagnetic wave reradiation | Simulation | Computer vision

The invention discloses a robot autonomous mapping method for an unknown environment, in the field of robot autonomous exploration. The method comprises the following steps: S1, generating leading edge (frontier) points between the known region and the unknown region according to a through-wall random tree algorithm, and clustering the leading edge points to obtain preliminarily screened leading edge points; S2, evaluating the preliminarily screened leading edge points to obtain a leading edge target point; S3, moving the robot towards the leading edge target point; and S4, when the distance between the current position of the robot and its previous position is greater than a preset movement radius, or the advancing distance is greater than a preset step length, judging whether an unknown region still exists; if so, returning to S1, and if not, stopping to finish the map. Compared with existing random tree exploration strategies, the method relaxes the growth conditions of the random tree and sets a refresh frequency, so that leading edge points near the robot can be explored more quickly.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
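
To make the notion of a leading edge (frontier) point in the entry above concrete, here is a minimal grid-based sketch. It swaps in a plain occupancy-grid scan for the random tree detection described in the abstract, and the cell codes, the 4-neighbour rule, and the nearest-point evaluation are assumptions made for illustration only.

```python
UNKNOWN, FREE, OCCUPIED = -1, 0, 1  # assumed cell codes

def frontier_cells(grid):
    """Return free cells that touch at least one unknown cell (4-neighbourhood)."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers

def nearest_frontier(frontiers, robot):
    """Simplified stand-in for step S2: pick the frontier closest to the robot."""
    return min(frontiers,
               key=lambda p: abs(p[0] - robot[0]) + abs(p[1] - robot[1]),
               default=None)

grid = [
    [FREE, FREE,     UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE,     FREE],
]
frontiers = frontier_cells(grid)
target = nearest_frontier(frontiers, robot=(2, 0))
print(frontiers, target)
```

The loop in steps S1 to S4 would repeat this detection after each move and stop once `frontier_cells` returns an empty list.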

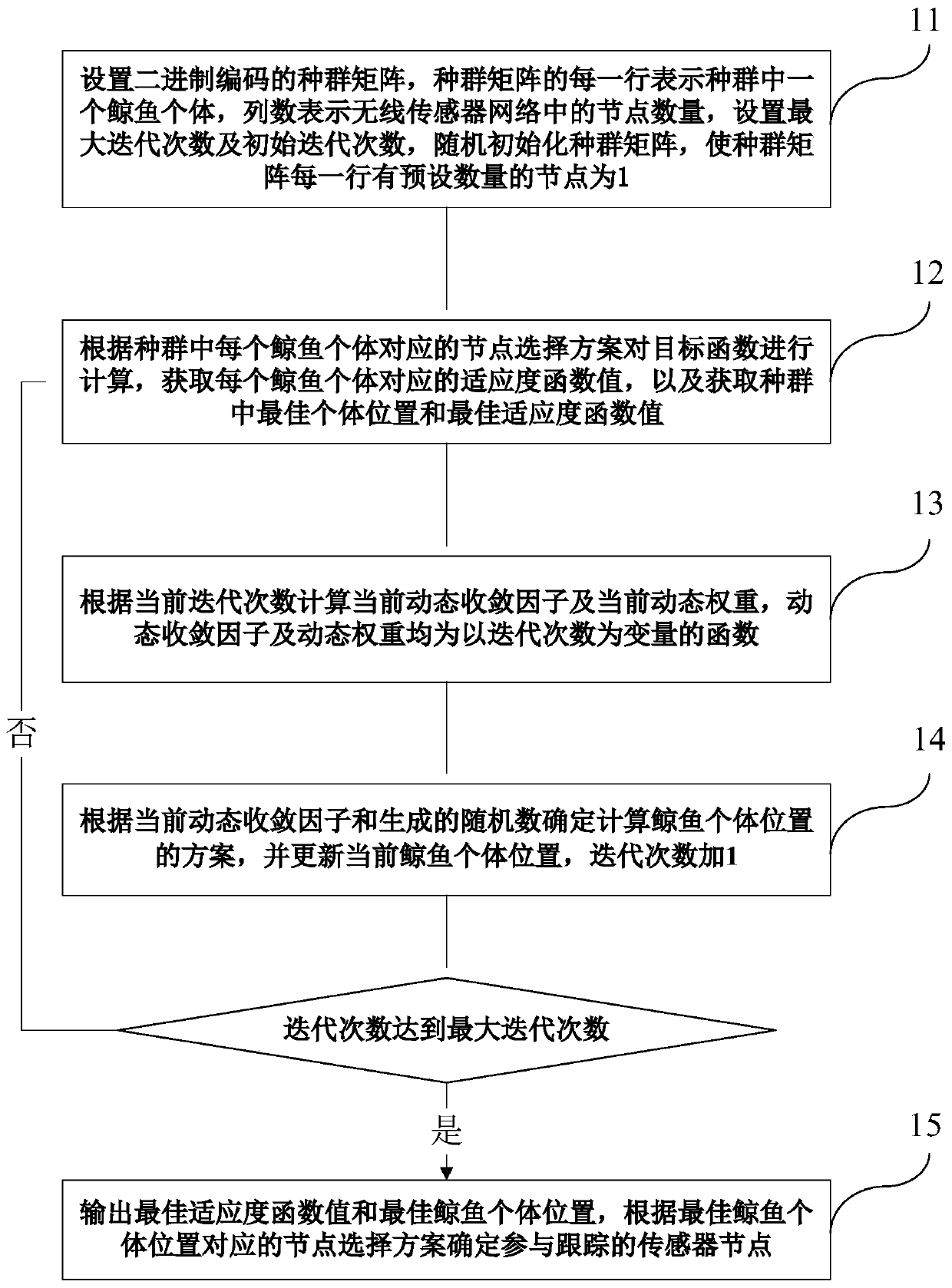

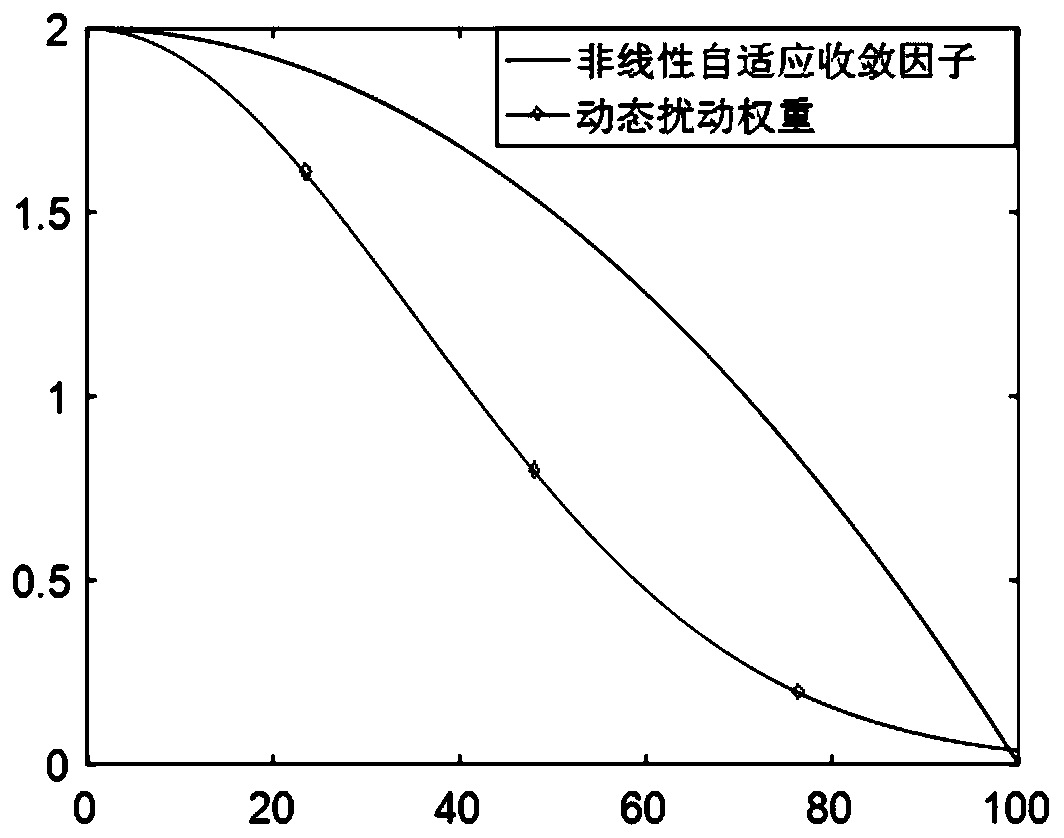

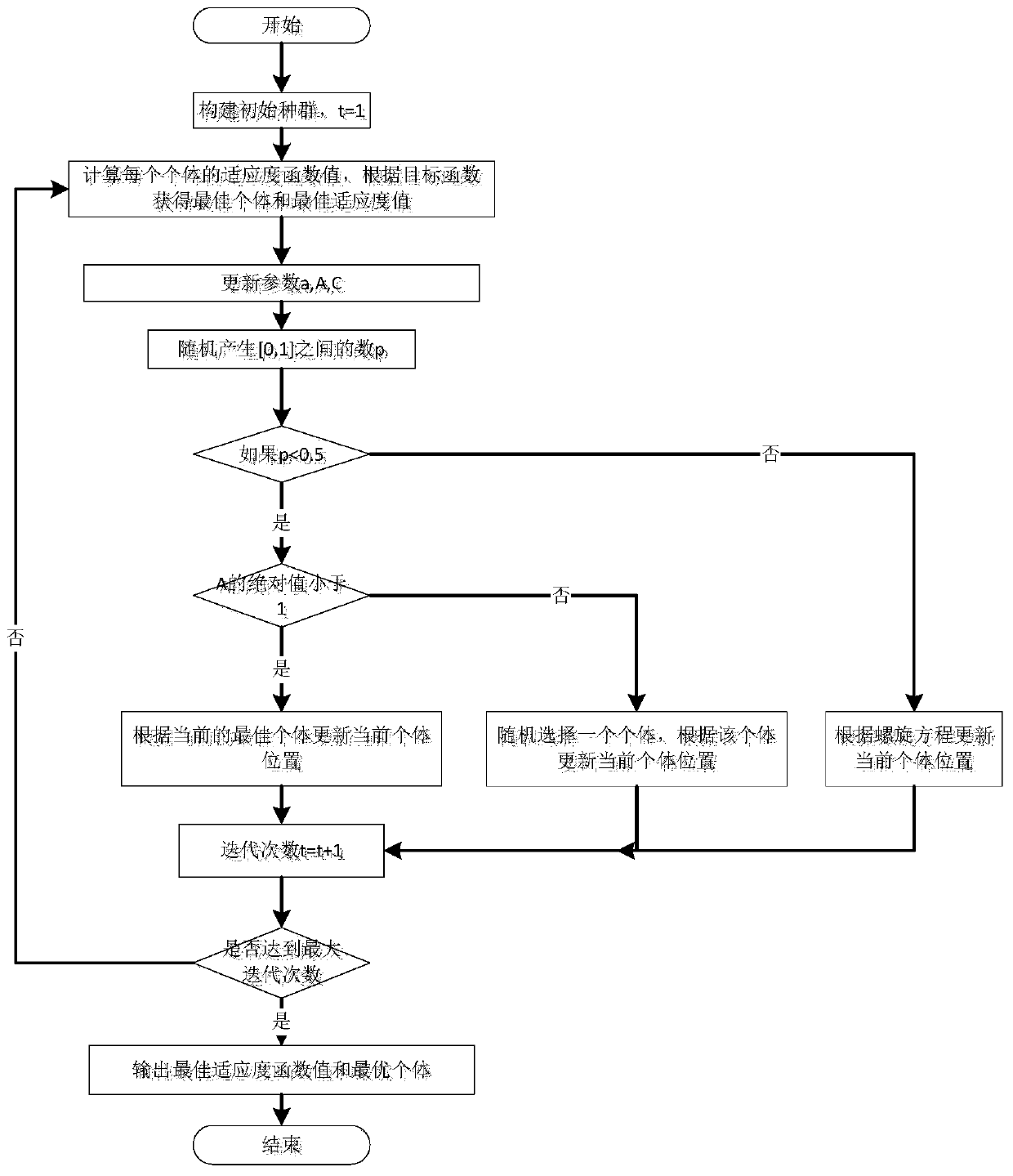

Network node selection method and system based on whale optimization algorithm and storage medium

Active | CN110996287A | Improve global search performance | Guaranteed search speed | Particular environment based services | Algorithm | Wireless sensor networking

The invention discloses a network node selection method and system based on a whale optimization algorithm, and a storage medium. The method comprises the following steps: setting a binary-coded population matrix, setting the maximum number of iterations and the initial number of iterations, and randomly initializing the population matrix; calculating the objective function for the node selection scheme corresponding to each whale individual in the population to obtain the optimal individual position and the optimal fitness value in the population; calculating a current dynamic convergence factor and a current dynamic weight according to the current number of iterations; determining the scheme for calculating the whale individual position according to the current dynamic convergence factor and a generated random number, and updating the current whale individual position and the number of iterations; if the number of iterations reaches the maximum, returning the optimal whale individual position and determining the sensor nodes that participate in tracking from it; otherwise, repeating the computation. The invention can improve tracking precision and real-time performance during target tracking in a wireless sensor network.

Owner:SHANGHAI UNIV OF ENG SCI

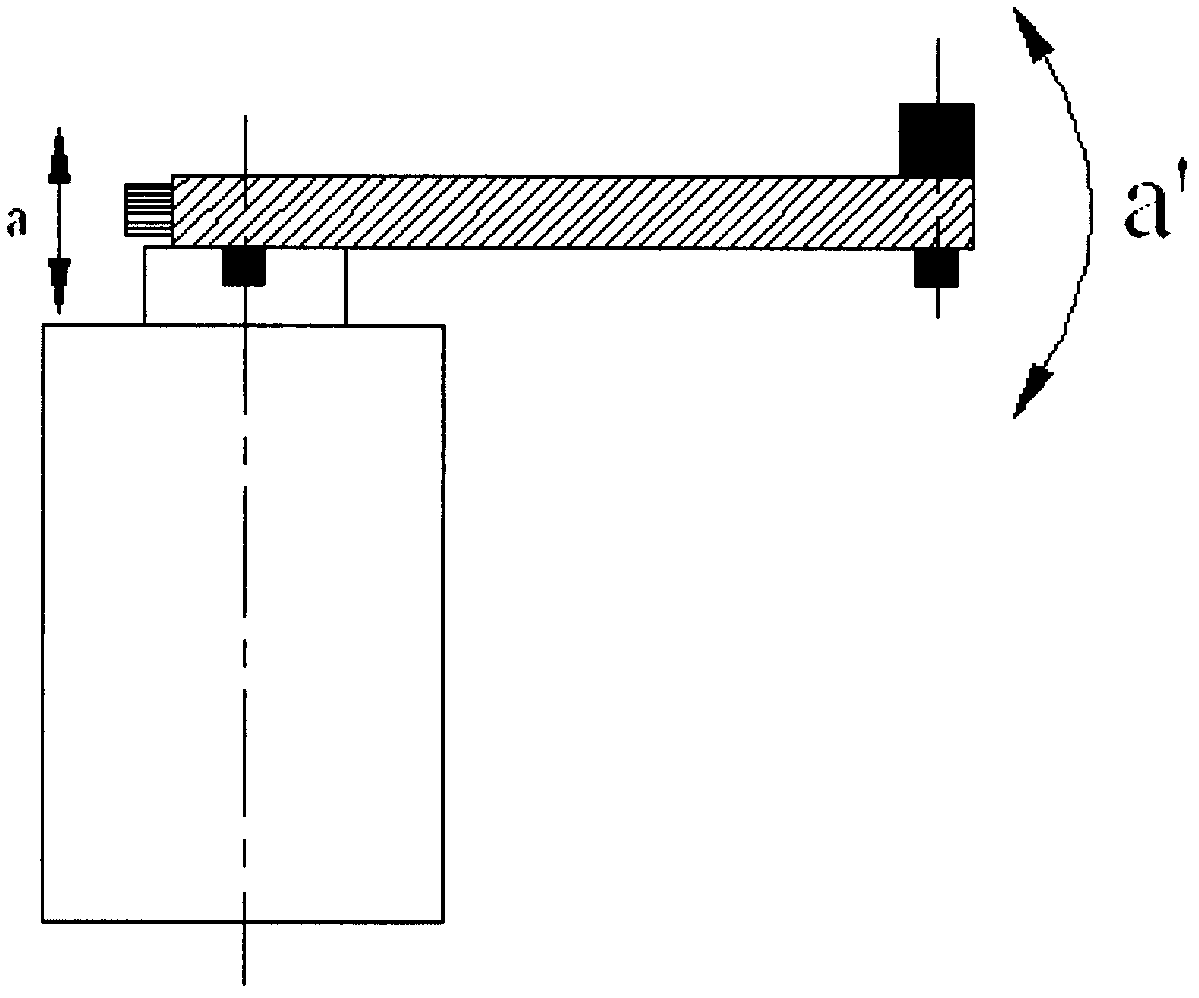

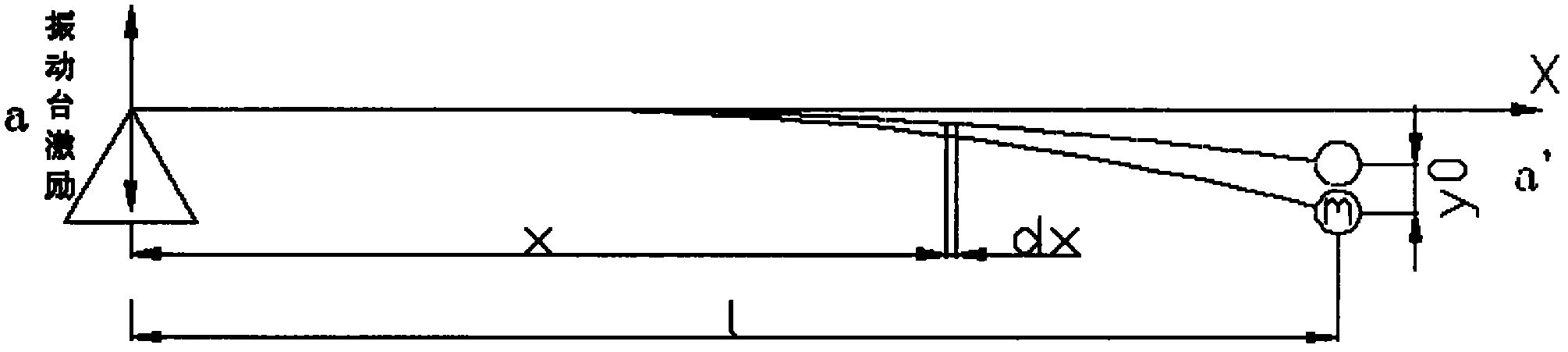

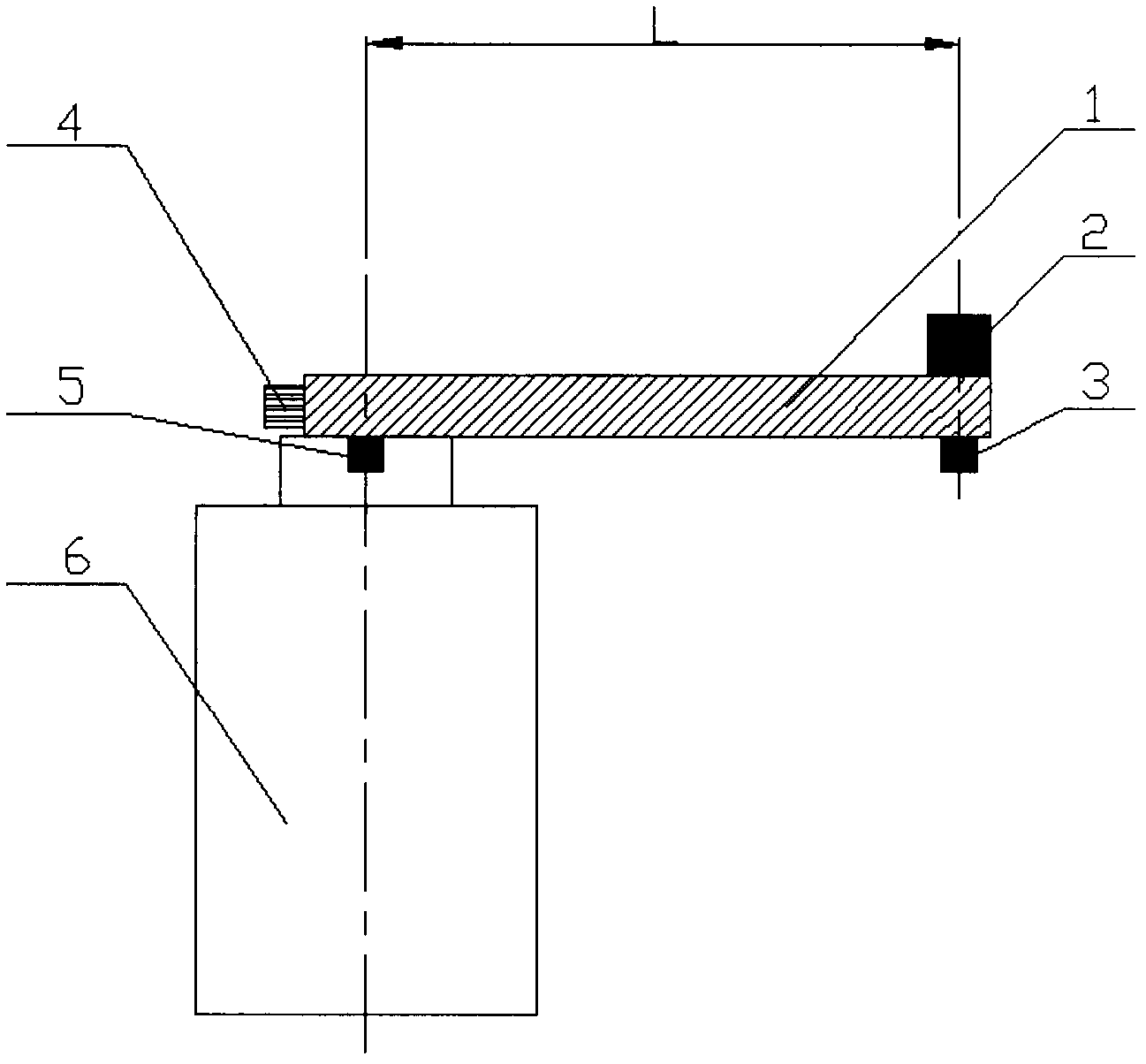

Method for testing vibration acceleration of sensor at 100g or above

Inactive | CN103134583A | Exploration of Vibration Limits Broadened | Speed up exploration | Subsonic/sonic/ultrasonic wave measurement | Cantilevered beam | Vibration acceleration

The invention provides a method for testing the vibration acceleration of a sensor at 100g or above. The method can broaden the exploration of vibration limits in engineering to about 500g, and the test frequency can be adjusted by changing the material and length of a cantilever to shift its resonant frequency. In the technical scheme, the cantilever is machined from metal material, the sensor under test serves as the mass block, and the cantilever and mass block are rigidly connected to a vibration platform. The vibration platform then applies a vibration acceleration smaller than 100g at one end, so that the sensor under test, which serves as the reference acceleration test point, reaches an acceleration above 100g at the resonant frequency. When the frequency applied by the vibration platform is close or equal to the natural frequency of the cantilever and mass block, the mass block resonates, and the acceleration of the vibration platform is amplified several times at that point.

Owner:CHENGDU KAITIAN ELECTRONICS
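
The amplification described in the entry above can be estimated with the textbook single-degree-of-freedom model of a base-excited mass on a massless cantilever. This is a sketch under assumptions the abstract does not state: the beam mass is neglected, the damping ratio is a guessed value, and the example dimensions are hypothetical.

```python
import math

def natural_frequency_hz(youngs_modulus, second_moment, length, tip_mass):
    """Cantilever with a tip mass, beam mass neglected: k = 3EI / L^3."""
    stiffness = 3.0 * youngs_modulus * second_moment / length ** 3
    return math.sqrt(stiffness / tip_mass) / (2.0 * math.pi)

def transmissibility(freq_ratio, damping_ratio):
    """Ratio of mass acceleration to base acceleration for base excitation."""
    a = (2.0 * damping_ratio * freq_ratio) ** 2
    return math.sqrt((1.0 + a) / ((1.0 - freq_ratio ** 2) ** 2 + a))

# Hypothetical steel beam: 20 mm x 5 mm section, tip mass 50 g.
E = 200e9                      # Pa
I = 0.02 * 0.005 ** 3 / 12.0   # m^4
f_short = natural_frequency_hz(E, I, length=0.10, tip_mass=0.05)
f_long = natural_frequency_hz(E, I, length=0.15, tip_mass=0.05)

# At resonance (freq_ratio = 1) with an assumed 5 % damping ratio.
gain = transmissibility(1.0, 0.05)
print(f_short, f_long, gain, 20.0 * gain)  # last value: response to a 20g base input
```

With these assumed numbers the gain at resonance is roughly 10, so a base input well below 100g can produce a response above 100g at the sensor; a longer or softer beam lowers the frequency at which this happens.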

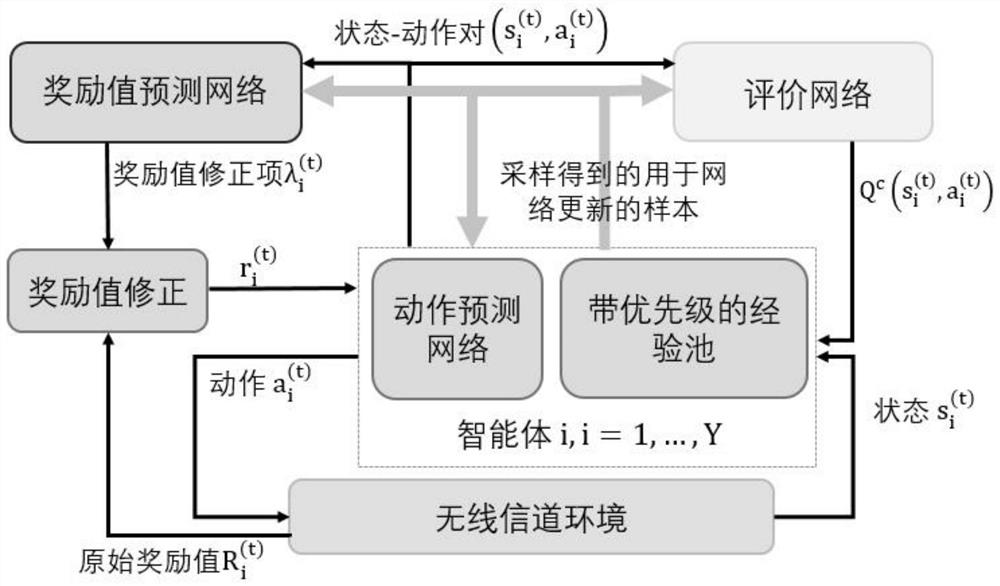

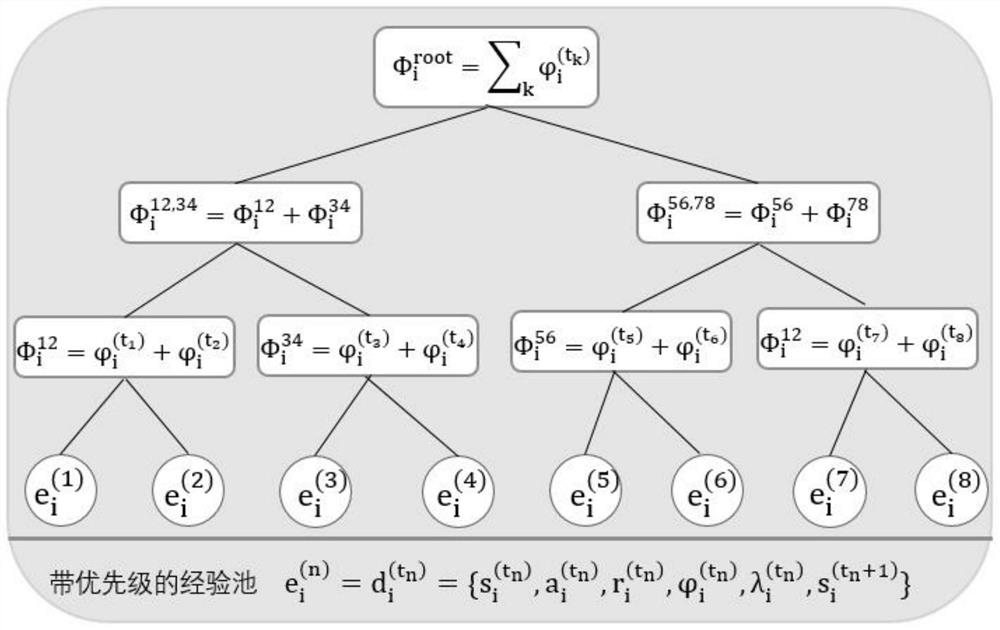

MU-MISO hybrid precoding design method based on multi-agent deep reinforcement learning

Active | CN112260733A | Speed up exploration | Raise priority | Radio transmission | Neural architectures | Channel state information | Computational simulation

The invention discloses an MU-MISO hybrid precoding design method based on multi-agent deep reinforcement learning, suitable for a downlink communication system. A base station constructs a plurality of deep reinforcement learning agents for calculating an analog precoding matrix; each agent comprises an action prediction network and a prioritized experience pool, and the agents share a centralized reward value prediction network and a centralized evaluation network to cooperatively explore an analog precoding strategy. The method comprises the following steps: the base station acquires channel state information of a plurality of users, inputs the user channel information into the constructed agents, and outputs a corresponding analog precoding matrix; and a digital precoding matrix containing the digital precoding vector of each user is calculated through zero-forcing precoding and a water-filling algorithm. The method can effectively address the high design complexity and poor achievable rate of hybrid precoding in a large-scale MIMO system, and is relatively robust to the channel environment.

Owner:SOUTHEAST UNIV

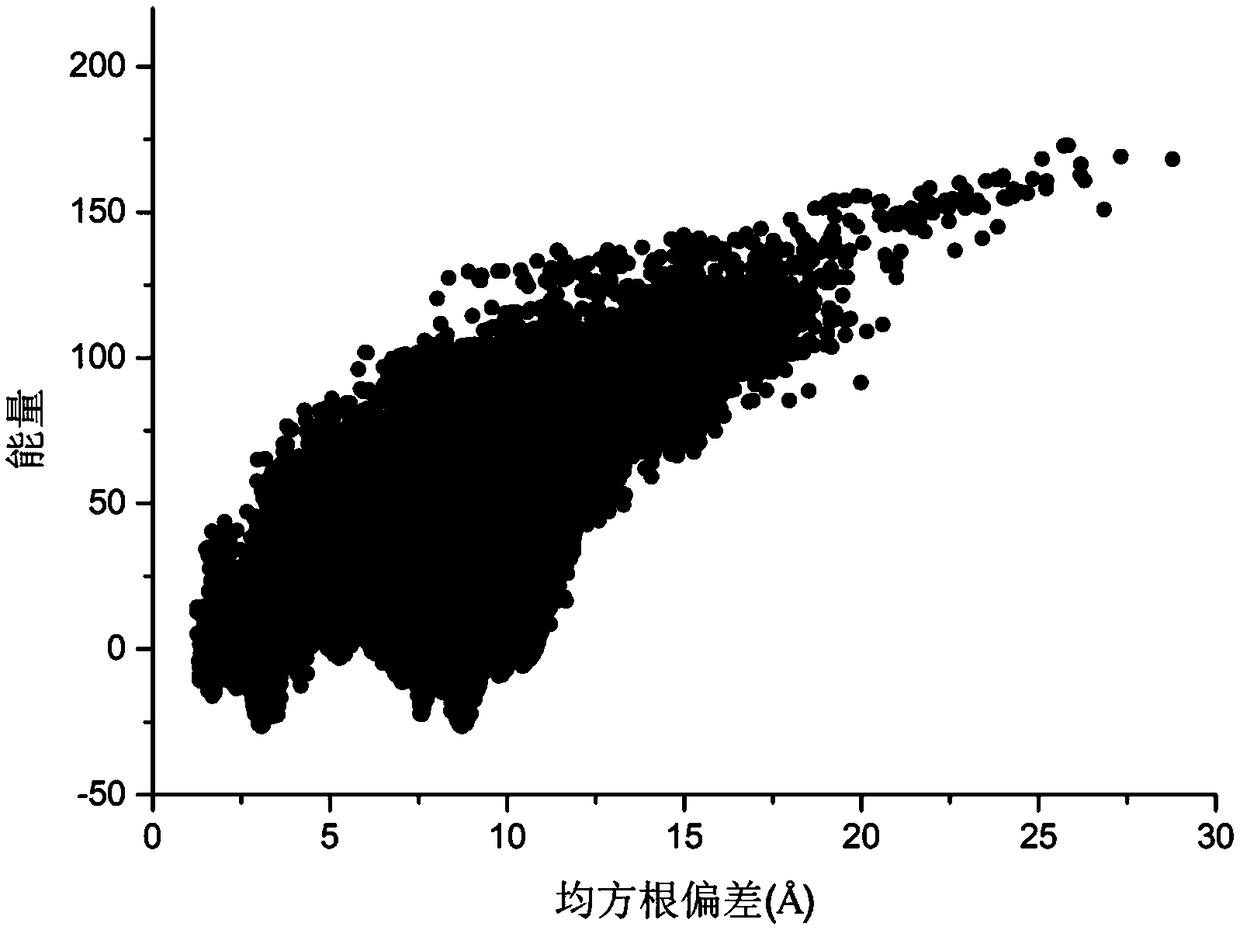

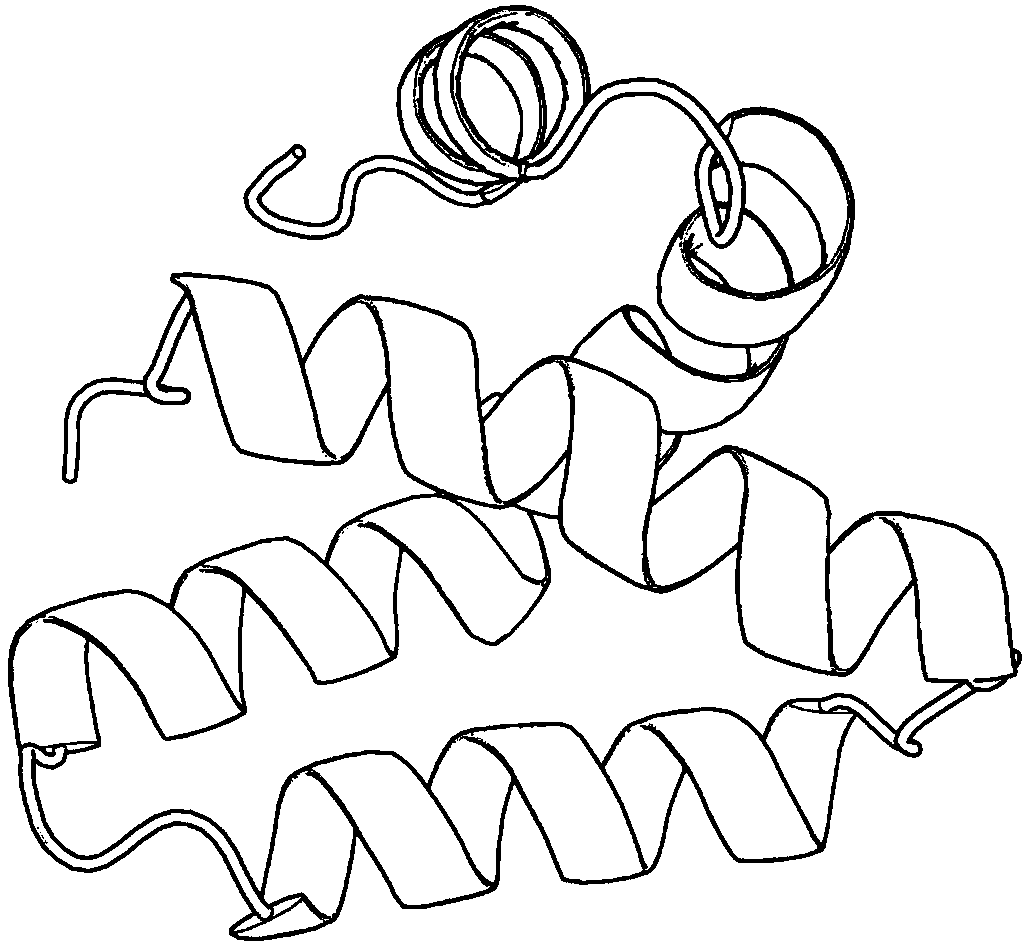

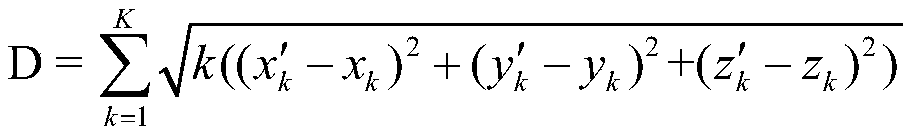

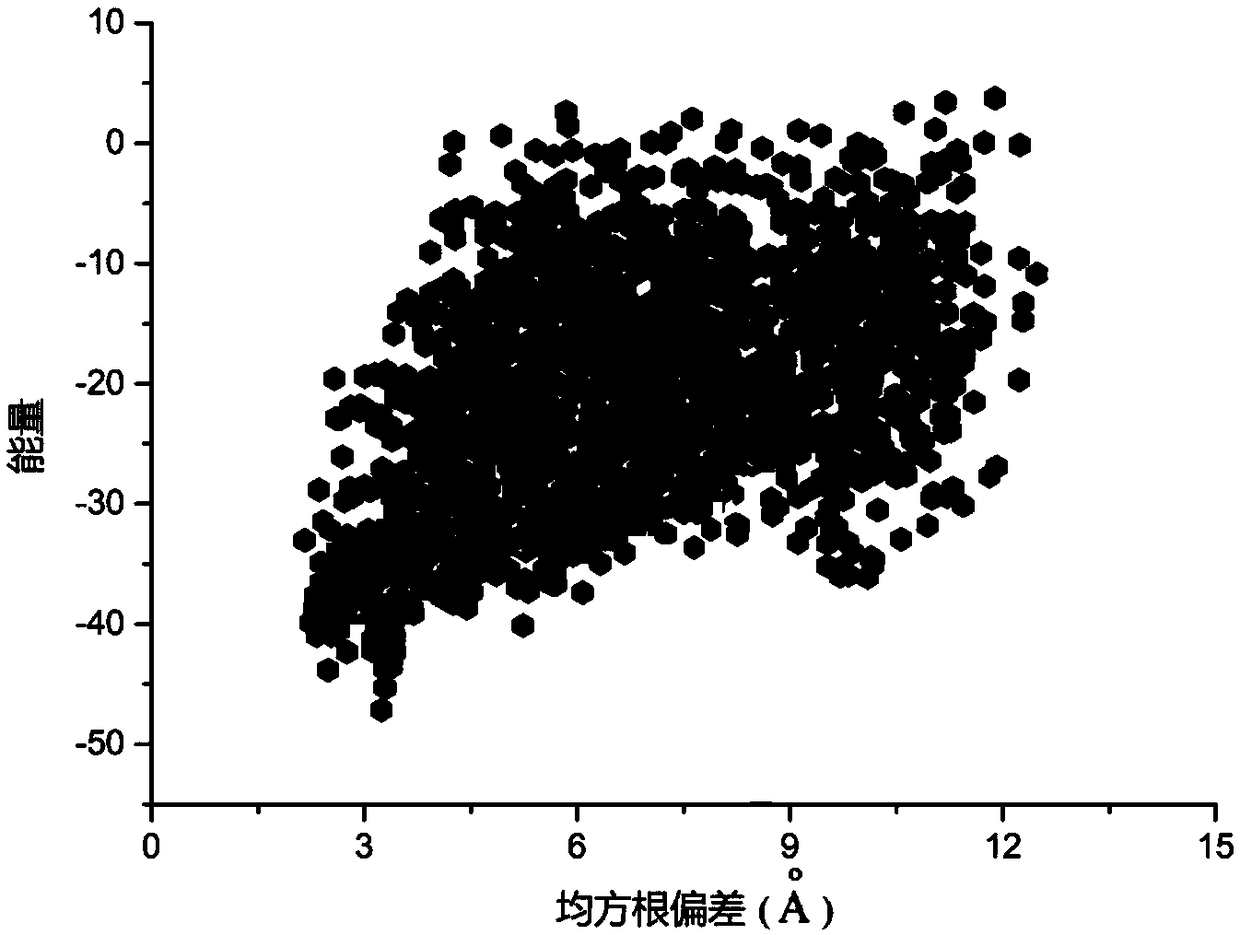

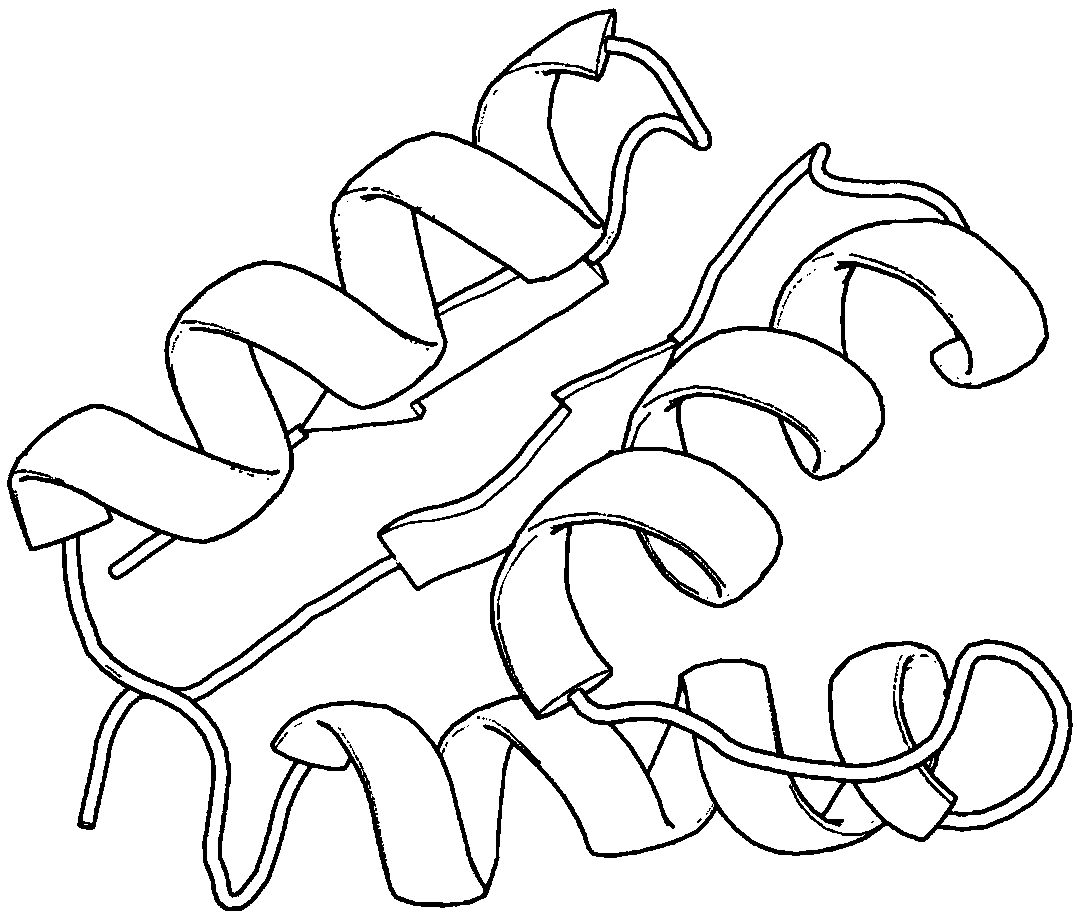

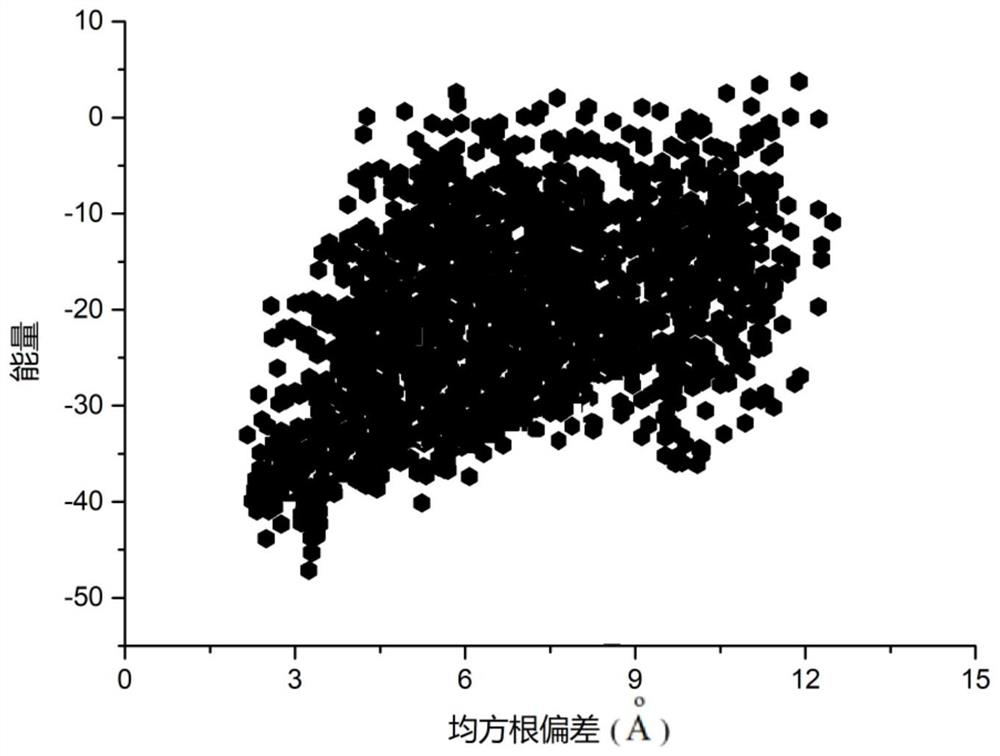

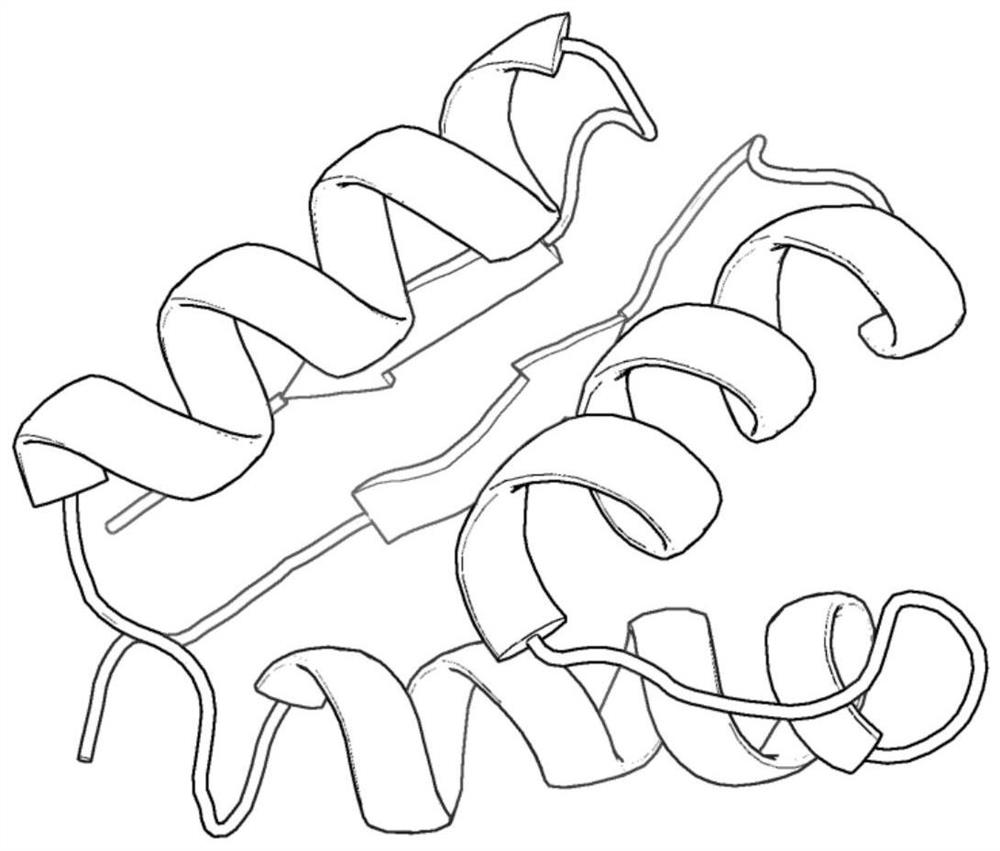

Protein conformation space optimization method based on differential evolution local disturbance

Active | CN109360596A | Speed up exploration | Improve exploration ability | Artificial life | Molecular structures | Differential evolution | Fine-tuning

A protein conformation space optimization method based on differential evolution local disturbance is disclosed. Under the framework of a differential evolution algorithm, information exchange between individuals in a population is used to enhance the exploration ability of the algorithm. At the same time, the differential evolution algorithm is used to fine-tune the loop region and increase the diversity of loop region structures, so that exploration of the loop region is further enhanced on the basis of an existing structure, and the overall exploration efficiency and prediction precision are increased. The method achieves high prediction precision.

Owner:ZHEJIANG UNIV OF TECH

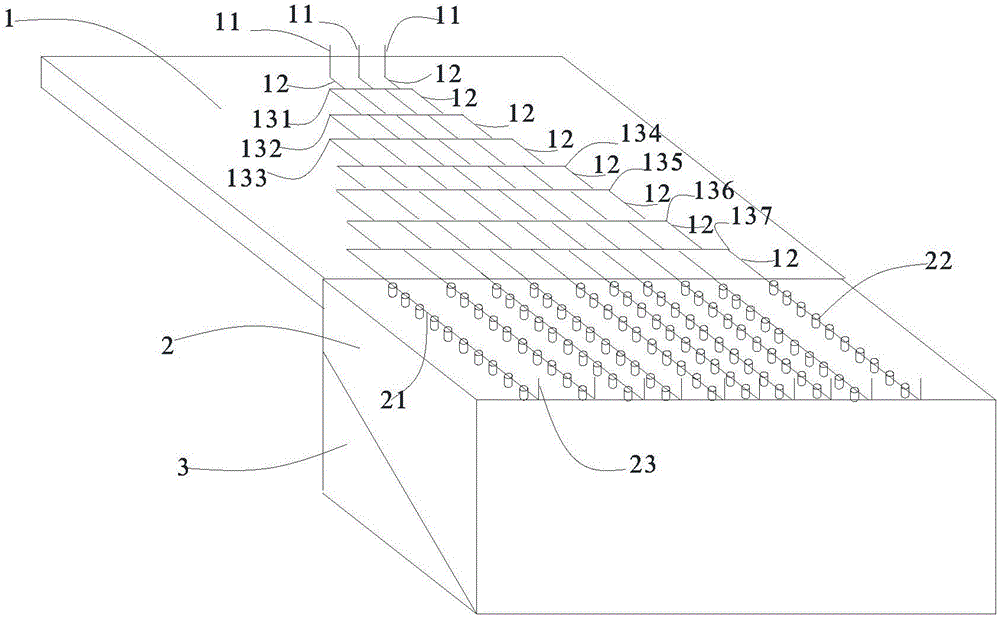

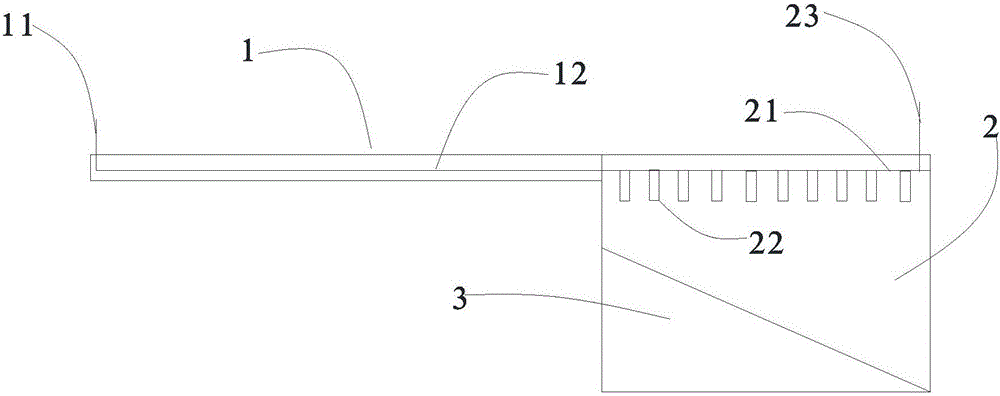

Device for synthesising metal matrix powder material and high-flux synthesis method thereof

Owner:TIANJIN UNIV

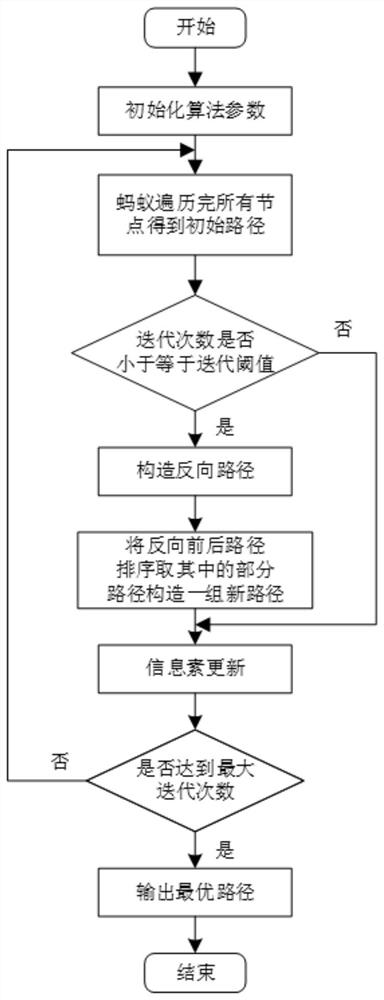

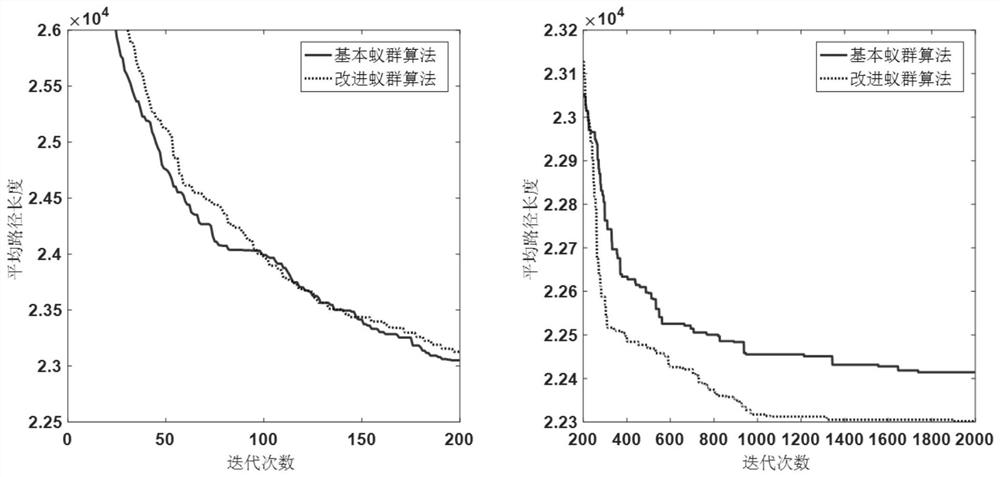

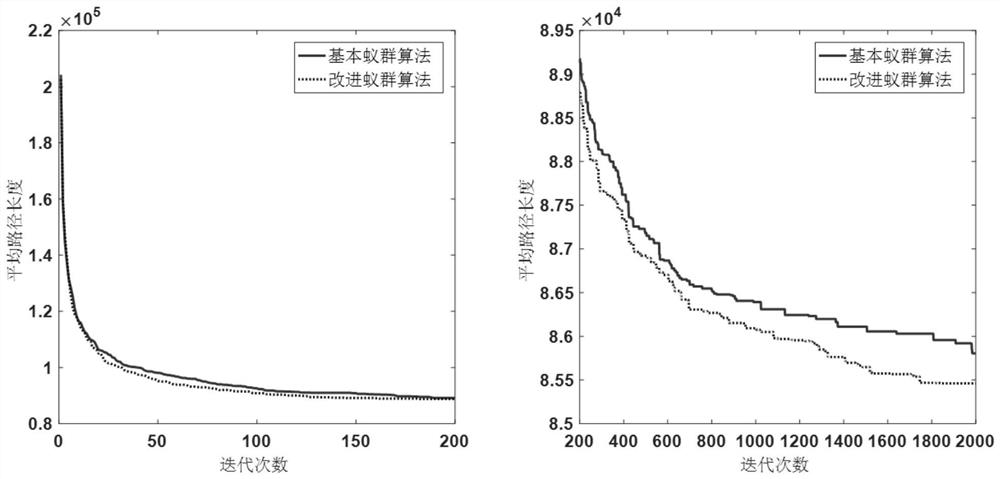

Ant colony algorithm optimization method based on reverse learning

Pending | CN111695668A | Increase exploration | Reduce running time | Artificial life | Ant colony | Travelling salesman problem

The invention relates to an ant colony algorithm optimization method based on reverse learning, used for solving the traveling salesman problem. The improvement of the algorithm mainly comprises the following points: 1, after an initial path is solved, reversing the serial number of each city in the initial path to construct a reverse path; 2, sorting the initial paths and the reverse paths by length in ascending order and taking part of the paths to form a new group of paths; and 3, setting an iteration threshold: if the current iteration count has not reached the threshold, pheromone updating is carried out on the new group of paths; otherwise, it is carried out on the initial paths. The method improves the pheromone updating of the basic ant colony algorithm: in the early stage of iteration, reverse learning is introduced to construct reverse paths that participate in pheromone updating, so that the search range of the ants is expanded, the ants are prevented from falling into local extrema, and exploration and exploitation of the solution space are balanced.

Owner:XUZHOU NORMAL UNIVERSITY
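
The three points in the reverse-learning entry above can be sketched as follows. The abstract does not define how a city serial number is reversed; this sketch assumes the common opposition rule `n - 1 - city` for 0-based indices. The function names, the `keep` parameter, and the example coordinates are hypothetical.

```python
import math

def tour_length(tour, coords):
    """Length of a closed tour over 2-D city coordinates."""
    return sum(math.dist(coords[tour[i]], coords[tour[(i + 1) % len(tour)]])
               for i in range(len(tour)))

def reverse_path(tour, n_cities):
    """Assumed reversal rule: city index c becomes n_cities - 1 - c."""
    return [n_cities - 1 - city for city in tour]

def paths_for_pheromone_update(initial_paths, coords, keep, iteration, threshold):
    """Before the threshold, update on the best of initial + reverse paths."""
    if iteration >= threshold:
        return list(initial_paths)
    candidates = initial_paths + [reverse_path(p, len(coords)) for p in initial_paths]
    candidates.sort(key=lambda p: tour_length(p, coords))
    return candidates[:keep]

coords = [(0, 0), (1, 0), (1, 1), (0, 1)]   # unit square
initial = [[0, 2, 1, 3], [0, 1, 2, 3]]
early = paths_for_pheromone_update(initial, coords, keep=2, iteration=0, threshold=10)
late = paths_for_pheromone_update(initial, coords, keep=2, iteration=10, threshold=10)
print(early, late)
```

In a full ant colony loop, the returned paths would be the ones that deposit pheromone in that iteration.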

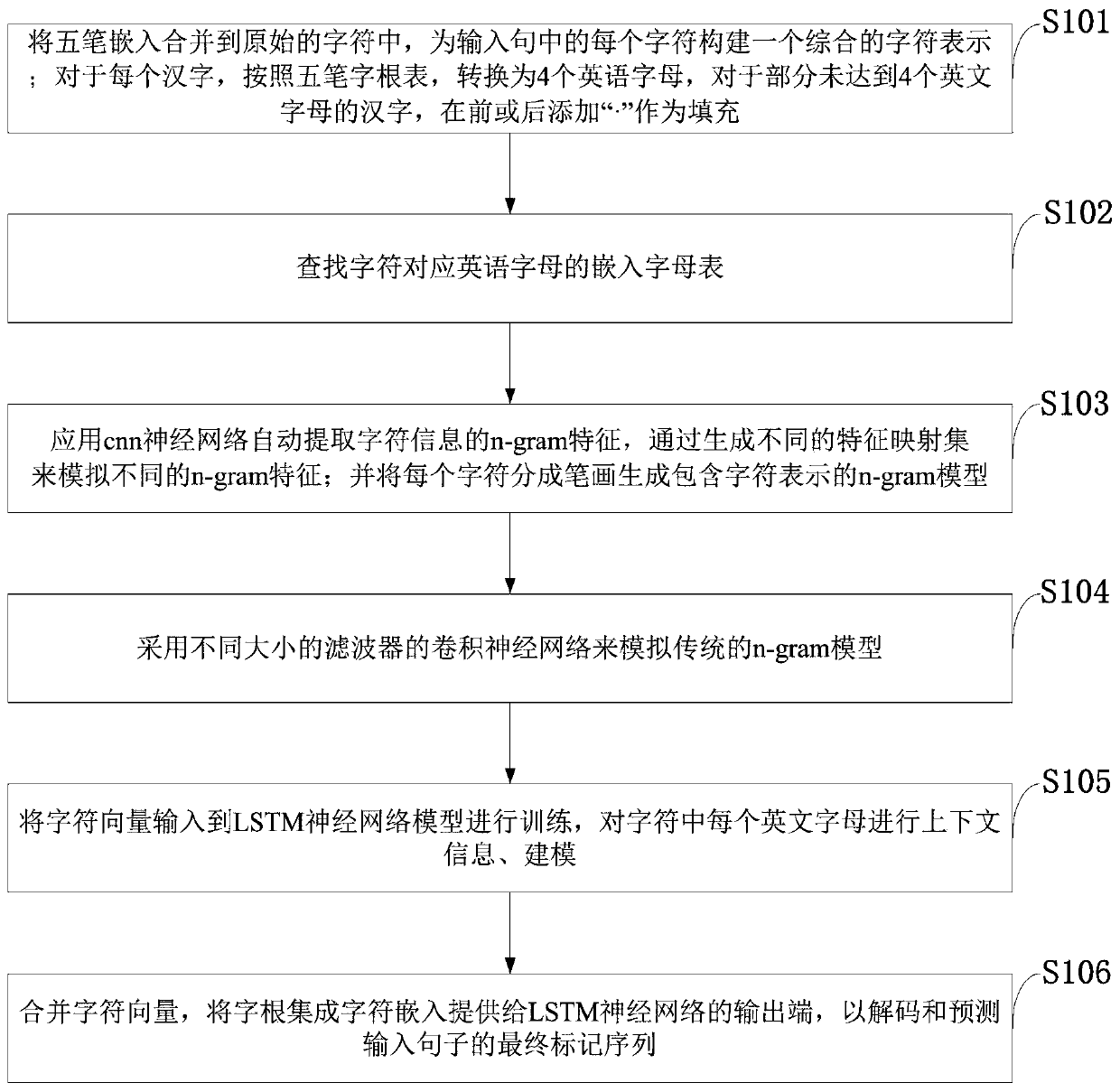

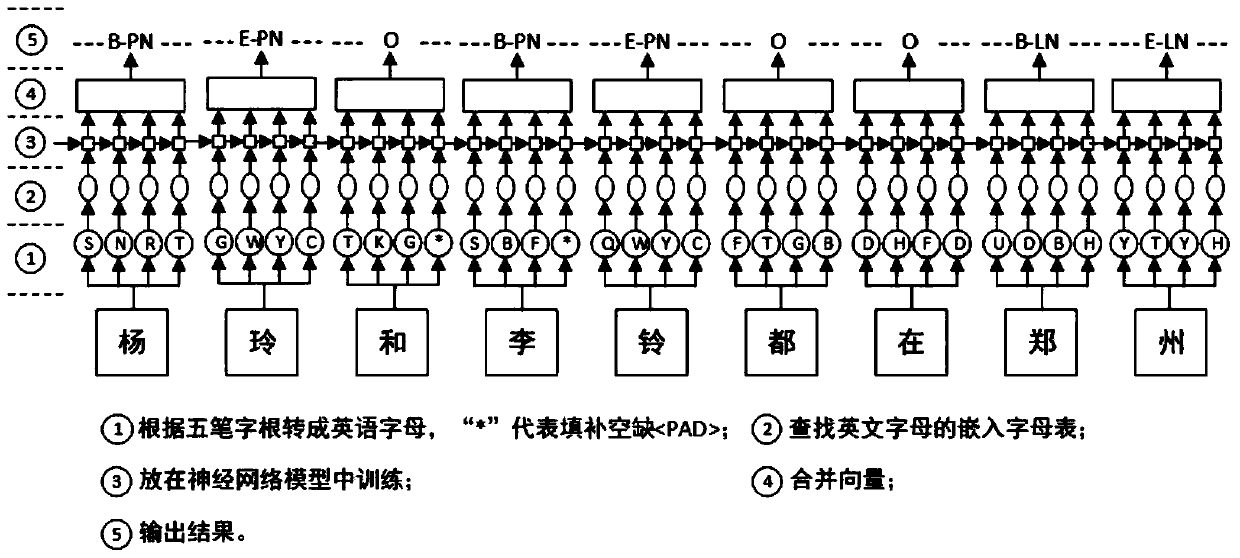

Unregistered word identification method and system using five-stroke character root deep learning

Pending | CN110287483A | Improve the performance of identifying unregistered words | Solve the sparse problem | Character and pattern recognition | Natural language data processing | Part of speech | Word identification

The invention belongs to the technical field of natural language data processing, and discloses an unregistered word identification method and system using five-stroke character root deep learning. The method comprises the steps of converting each Chinese character into four English letters according to a five-stroke character root table; then using the embedded vectors of these letter codes as model input, together with the embedded vectors corresponding to the words in a corpus, to train a neural network model; and finally having the model output the most similar vocabulary vector from the existing corpus, which serves as an important basis for identifying unregistered words. Because Chinese words with similar radicals mostly share the same part of speech and have similar five-stroke codes, the invention provides a neural network entity identification method based on five-stroke roots that improves the performance of identifying unregistered words. Because word vectors based on deep learning are used to represent the words, the sparsity problem of the high-dimensional vector space is solved, and the method is simpler and more effective.

Owner:GUANGDONG POLYTECHNIC NORMAL UNIV

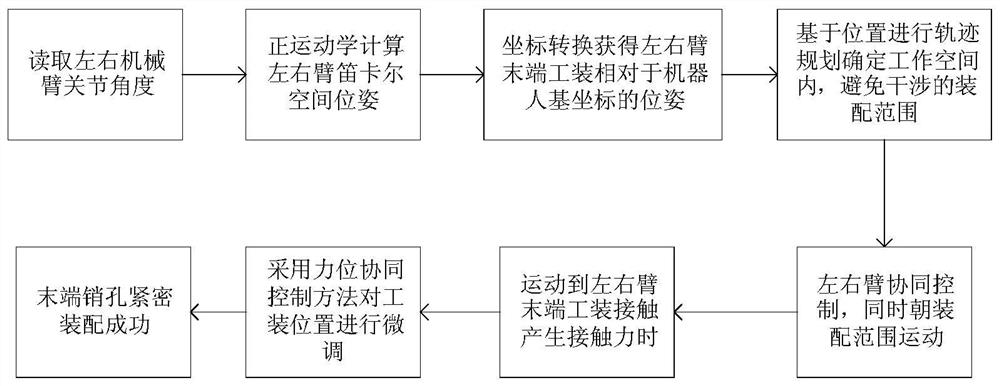

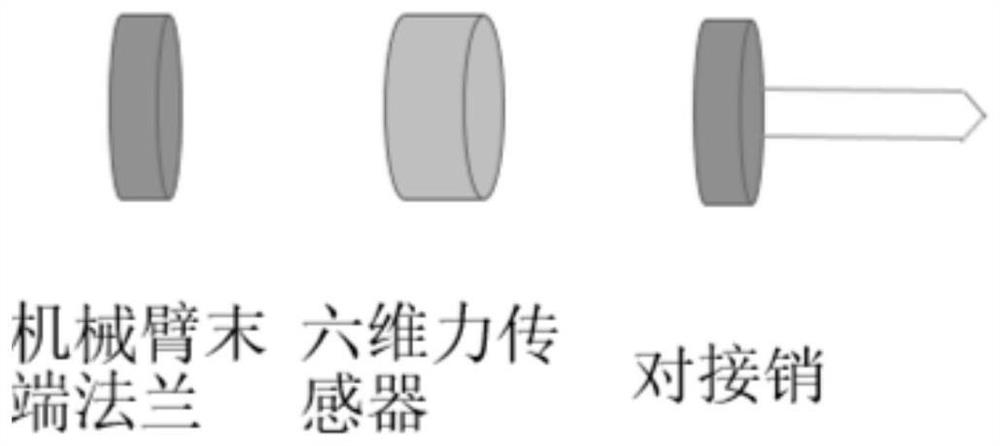

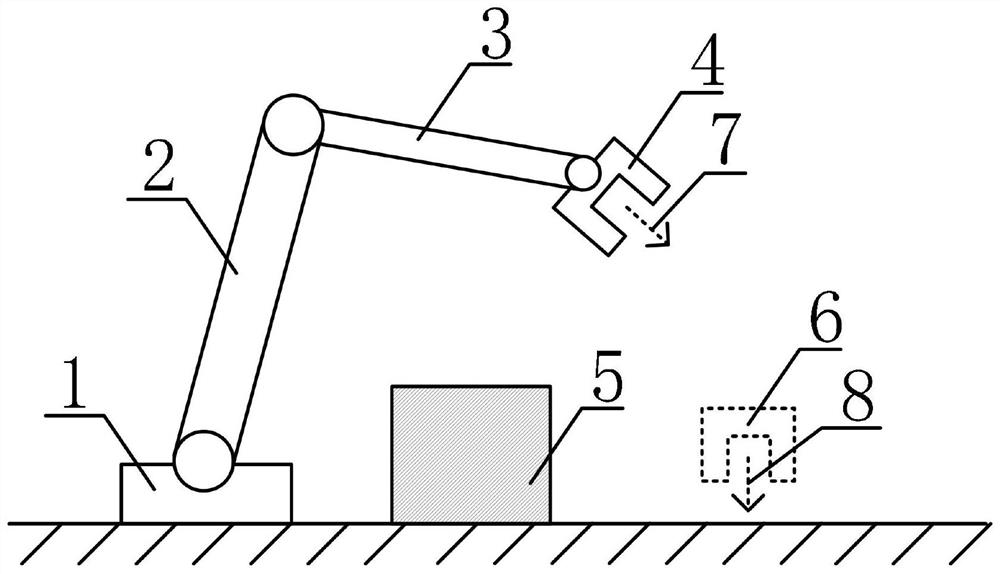

Two-arm robot precise assembly method based on six-dimensional force sensor

Active | CN113547506A | Easy fine-tuning | Assembly precision | Programme-controlled manipulator | Total factory control | Control system | Control engineering

The invention discloses a two-arm robot precise assembly method based on a six-dimensional force sensor. The method comprises the following steps: a robot controller reads all joint angles of the left and right mechanical arms; the Cartesian space poses of the left and right mechanical arms are obtained; from these poses, the Cartesian space poses of the pin and hole workpieces at the ends of the left and right arms relative to the robot base coordinates are obtained through coordinate conversion; planned paths for the pin and hole at the ends of the left and right arms are obtained through a random tree method based on fast potential energy exploration, the planned paths having a short movement distance, short assembly time, low mechanical arm energy consumption, and no interference; and, following the planned paths, a control method based on a learning variable impedance control system controls the left and right mechanical arms to assemble compliantly. The method can plan a high-quality path and achieves compliant assembly.

Owner:BEIJING RES INST OF PRECISE MECHATRONICS CONTROLS

Semantic-enhanced large-scale multi-element graph simplified visualization method

Active | CN109766478A | Efficient extraction | Efficient expression | Data processing applications | Other databases indexing | Modularity | Multi dimensional

The invention discloses a semantic-enhanced simplified visualization method for large-scale multi-element graphs, comprising the following steps: establishing a large-scale multi-element graph and extracting its hierarchical structure; constructing a multi-scale community set according to the hierarchical structure by using the attributes of the graph, which include modularity and multi-dimensional attribute information entropy; constructing a multi-level force-directed layout for the multi-scale community set according to the hierarchical structure, and displaying the semantic expression of the communities through mapping; and using the mapped communities to obtain a hierarchical view and an attribute Sankey view, and performing visual analysis of the large-scale multi-element graph with the multi-level force-directed layout, the hierarchical view, and the attribute Sankey view. The method can effectively simplify the visual expression of a large-scale multi-element graph, allows the association structure and semantic composition of such graphs in different application fields to be analyzed rapidly, and is highly practical.

Owner:ZHEJIANG UNIV OF FINANCE & ECONOMICS

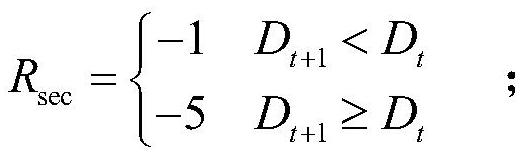

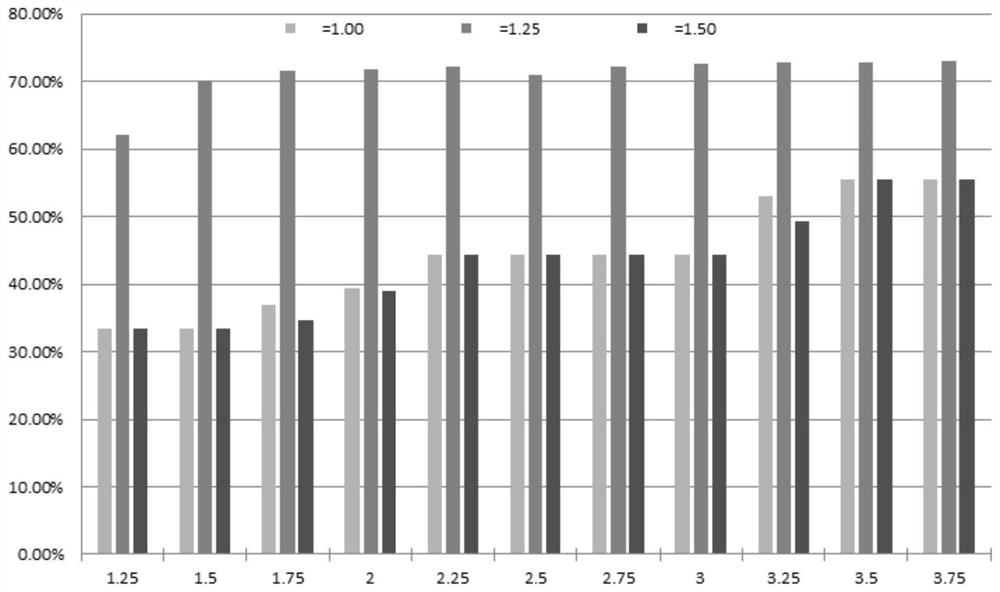

Industrial robot-oriented reinforcement learning reward value calculation method

Active | CN114851184A | Speed up exploration | Avoid the problem of not being able to meet actual production needs | Programme-controlled manipulator | Total factory control | Control engineering | Industrial robotics

The invention discloses an industrial robot-oriented reinforcement learning reward value calculation method. The method comprises the following steps: S1, initializing the state parameters of an industrial robot; S2, calculating a pose reward value for the end effector of the industrial robot; S3, calculating a collision reward value for the industrial robot; S4, calculating an exploration reward value for the industrial robot; and S5, calculating a target reward value. The method divides the workspace into an area near the target and an area away from it, so that the end of the industrial robot can approach the target position quickly in the early stage and adjust to a proper posture while closing in on the target position in the later stage, which accelerates the exploration process. Various kinds of state information (position, posture, collision, and the like) are considered together, which solves the problem that the postures along the finally planned motion track cannot meet actual production requirements.

Owner:GUANGDONG POLYTECHNIC NORMAL UNIV

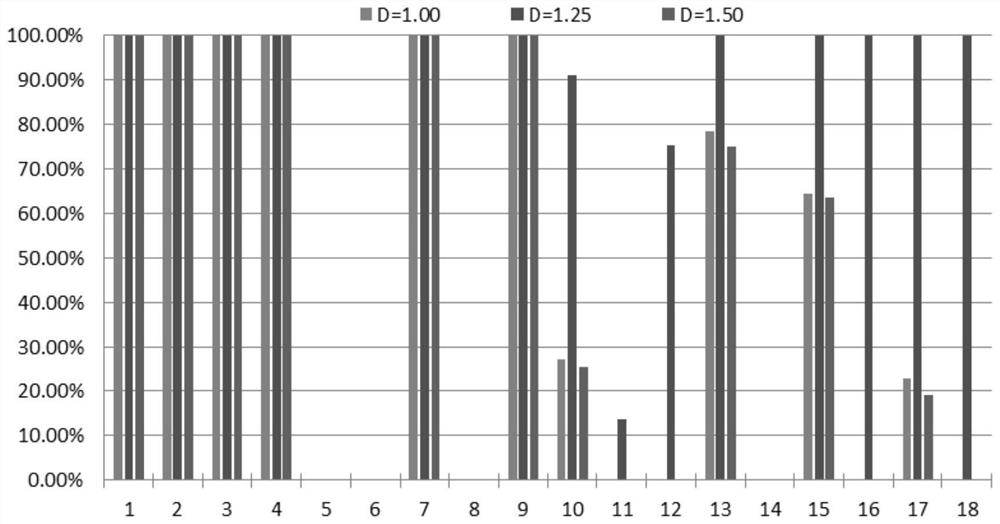

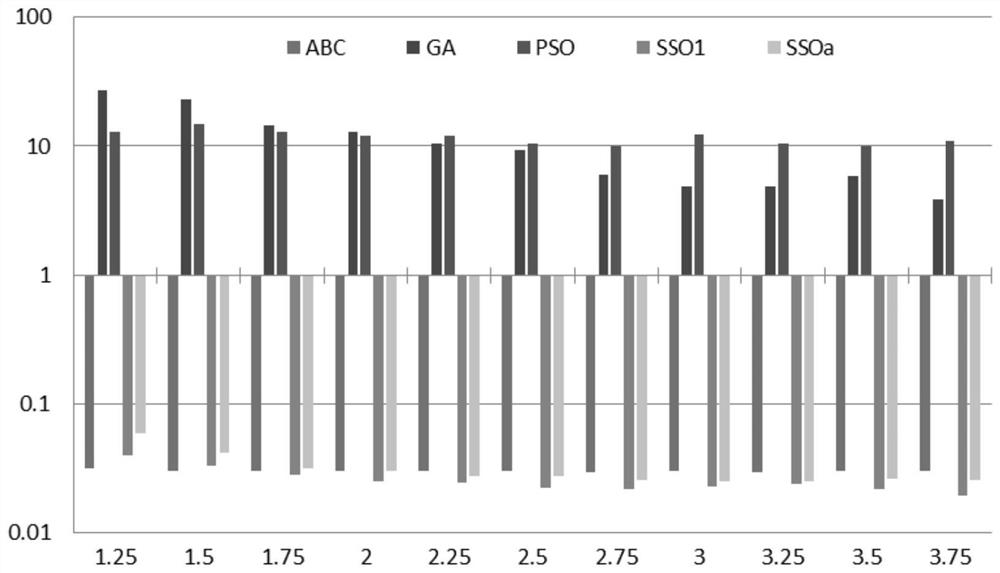

Harmonic group optimization method and application thereof

The invention provides a harmonic group optimization method and an application thereof. It proposes a new univariate update mechanism (UM1) and a new harmonic step length strategy (HSS) to improve continuous simplified swarm optimization (SSO). UM1 and HSS balance the exploration and exploitation capability of continuous SSO on high-dimensional, multivariable, multi-modal continuous numerical benchmark functions. Unlike the update in SSO, which updates all variables, UM1 only needs to update one variable; within UM1, HSS enhances exploitation by reducing the step length according to a harmonic sequence. Numerical experiments on 18 high-dimensional functions confirm the efficiency of the proposed method. The method improves the exploration and exploitation performance of traditional ABC and SSO, strikes a better balance between exploration and exploitation, has a wide application range, and can greatly improve the classification and prediction precision of newly obtained big data supported by an artificial neural network, a support vector machine, and the like.

Owner:FOSHAN UNIVERSITY
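
The two ideas named in the entry above, updating a single variable per move and shrinking the step along a harmonic sequence, can be illustrated with a simple greedy search. This is not SSO or the patented UM1/HSS: the acceptance rule, the stage length, and all parameter names are assumptions, and the abstract does not give the actual update equations.

```python
import random

def harmonic_step(base_step, stage):
    """Step fraction for stage 1, 2, 3, ...: base, base/2, base/3, ..."""
    return base_step / stage

def one_variable_search(f, x0, bounds, iterations=2000, stage_length=200,
                        base_step=0.5, seed=0):
    """Greedy search that perturbs exactly one variable per iteration."""
    rng = random.Random(seed)
    x, fx = list(x0), f(x0)
    for it in range(iterations):
        stage = it // stage_length + 1
        step = harmonic_step(base_step, stage)
        i = rng.randrange(len(x))              # the single variable to update
        lo, hi = bounds[i]
        candidate = x[:]
        moved = x[i] + rng.uniform(-1.0, 1.0) * step * (hi - lo)
        candidate[i] = min(hi, max(lo, moved))  # clamp to the search range
        fc = f(candidate)
        if fc <= fx:                            # keep only non-worsening moves
            x, fx = candidate, fc
    return x, fx

def sphere(x):
    return sum(v * v for v in x)

start = [3.0, -2.0, 4.0]
best_x, best_f = one_variable_search(sphere, start, bounds=[(-5.0, 5.0)] * 3)
print(best_f)
```

Large early steps favour exploration of the range, while the later, smaller steps refine the current best point, which is the balance the abstract attributes to the harmonic step length.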

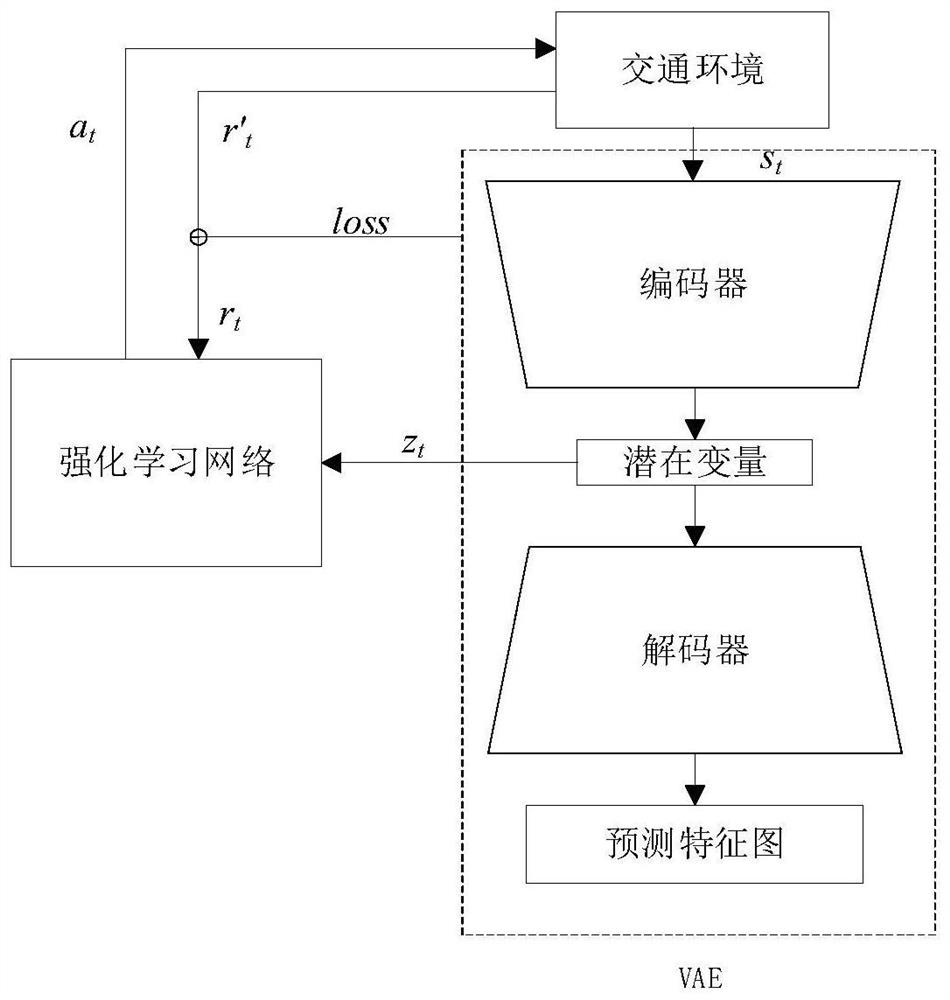

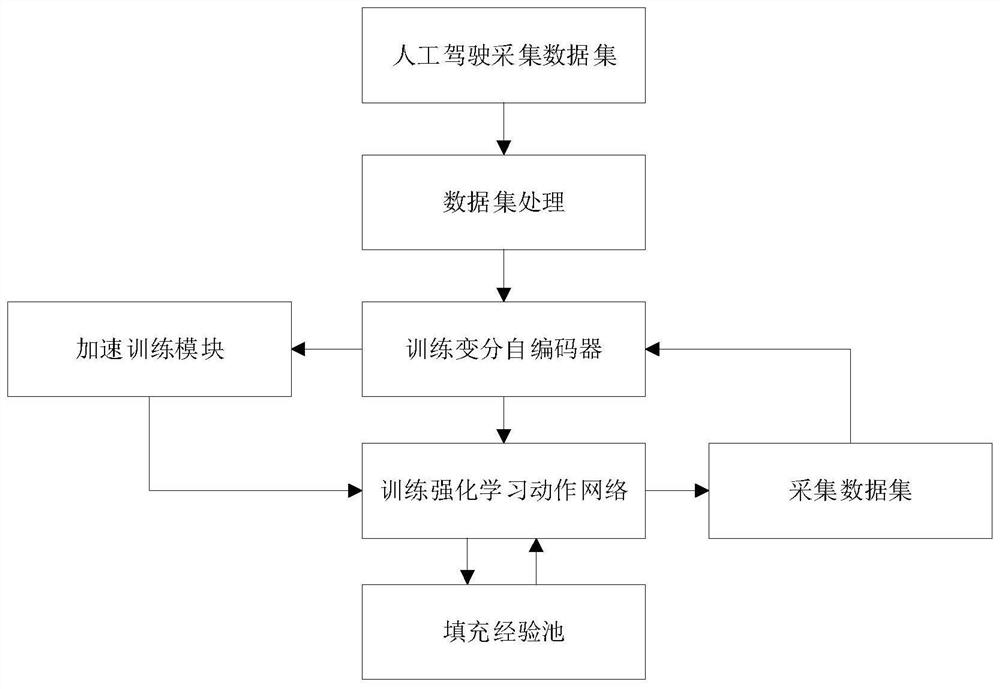

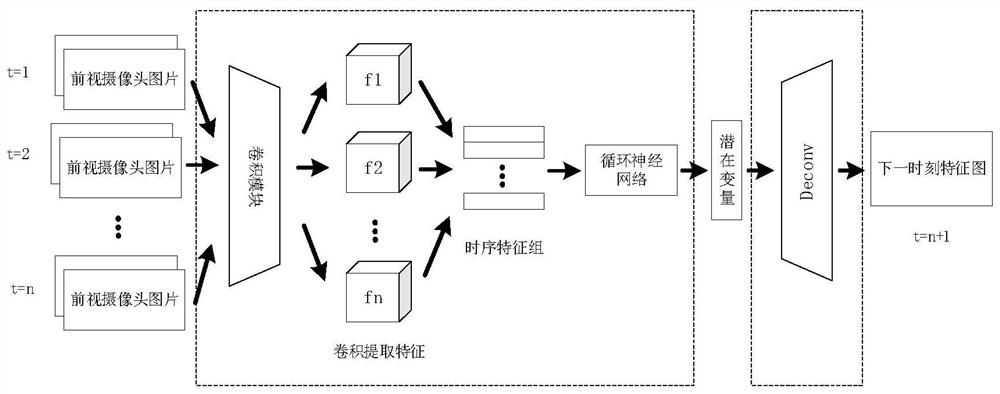

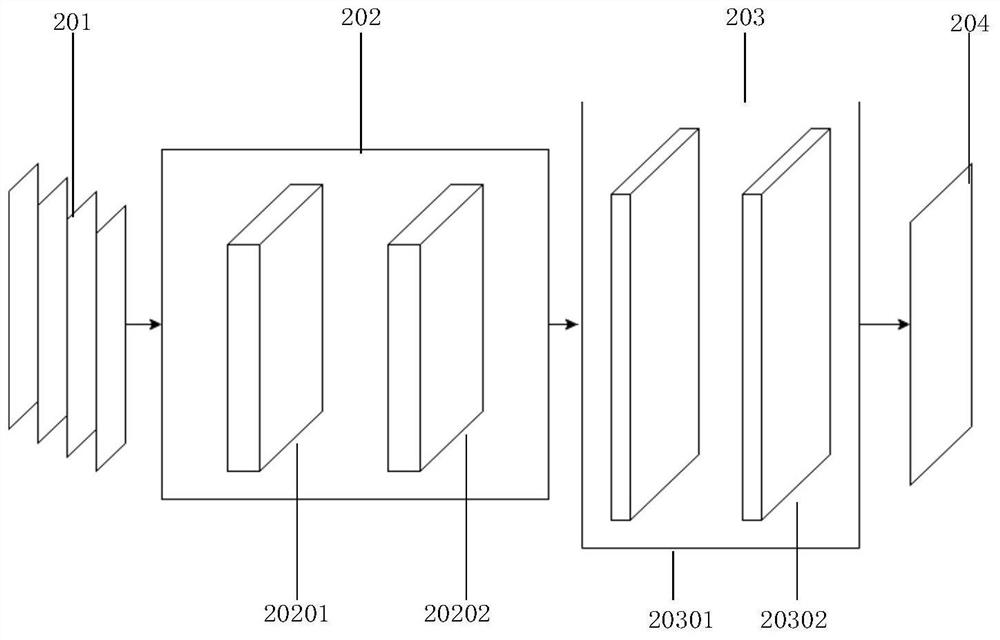

Automatic driving controller and training method based on variational auto-encoder and reinforcement learning

Pending | CN112801273A | Fast convergence | Solve the problem of too large state space | Ensemble learning | Neural architectures | Multiple sensor | Engineering

The invention discloses an automatic driving controller and a training method based on a variational auto-encoder and reinforcement learning. The variational auto-encoder extracts surrounding traffic environment information; its encoder combines a convolutional neural network and a recurrent neural network, so that information from multiple sensors and historical environment information is extracted effectively and information loss is avoided. The reinforcement learning network is trained using the latent variable obtained from the variational auto-encoder's dimension reduction as its state, which solves the problem that the state space of the reinforcement learning part is too large. An additional reward constructed from the loss function of the variational auto-encoder accelerates the agent's exploration of unfamiliar state space and improves the exploration rate and learning rate of reinforcement learning.

Owner:JIANGSU UNIV

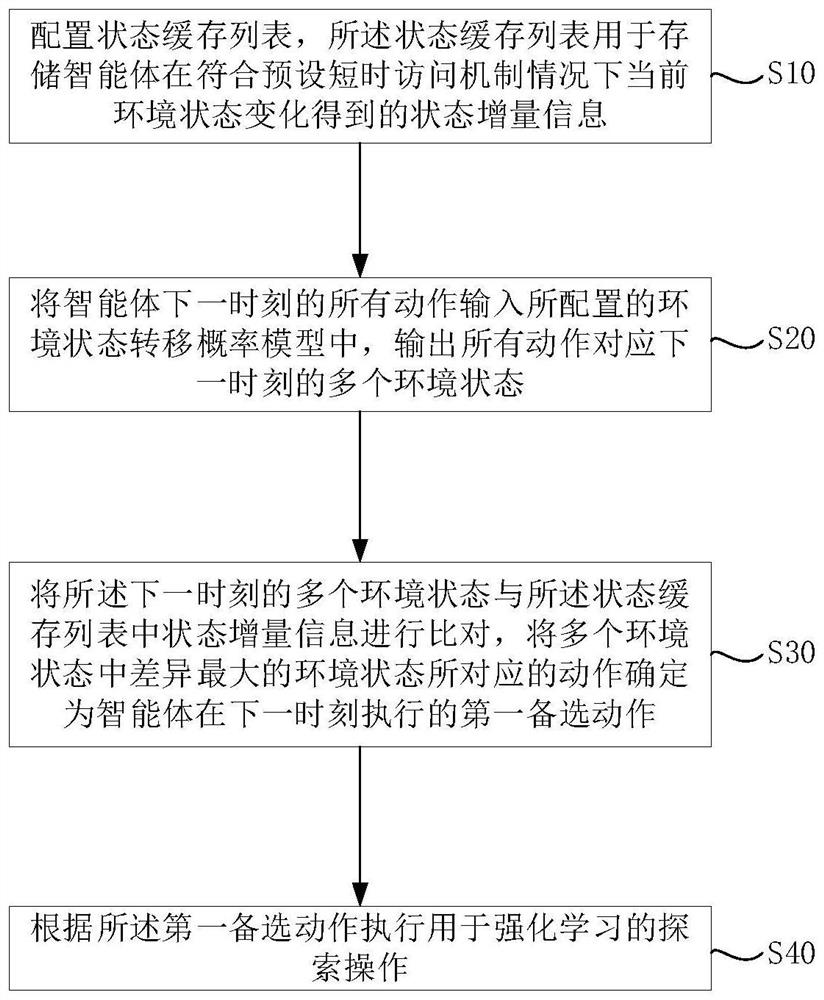

Reinforced learning method and device based on short-time access mechanism and storage medium

Pending | CN111898727A | Speed up exploration | Explore guide | Neural architectures | Neural learning methods | Engineering | Data mining

The invention relates to a reinforcement learning method and device based on a short-time access mechanism, and a storage medium. The method comprises the steps of: configuring a state cache list, which stores the state increment information obtained from changes of the agent's current environment state when the agent meets a preset short-time access mechanism; inputting all actions available to the agent at the next moment into an environment state transition probability model, and outputting the environment states that correspond to those actions at the next moment; comparing these next-moment environment states with the state increment information in the state cache list, and taking the action whose environment state differs most as the first alternative action for the agent at the next moment; and executing the exploration operation of reinforcement learning according to the first alternative action. With the state cache list, repeated exploration of already explored environment states is avoided; with the environment state transition probability model, the agent's exploration of unknown states is strengthened and guided, and learning efficiency is effectively improved.

Owner:TSINGHUA UNIV
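
The selection rule in the entry above, choosing the action whose predicted next state differs most from recently cached states, can be sketched on a toy grid. Several simplifications are assumed here: the cache holds plain states rather than state increments, the transition model is deterministic instead of probabilistic, and "difference" is taken to be the Manhattan distance to the closest cached state.

```python
from collections import deque

# Hypothetical action set for a 2-D grid world.
ACTIONS = {"left": (-1, 0), "right": (1, 0), "up": (0, 1), "down": (0, -1)}

def predict_next(state, action):
    """Stand-in for the environment state transition model."""
    dx, dy = ACTIONS[action]
    return (state[0] + dx, state[1] + dy)

def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def most_novel_action(state, state_cache, model=predict_next):
    """Pick the action whose predicted state is farthest from every cached state."""
    def novelty(action):
        nxt = model(state, action)
        return min((manhattan(nxt, seen) for seen in state_cache),
                   default=float("inf"))
    return max(ACTIONS, key=novelty)

# Short-term cache of recently visited states; old entries drop out automatically.
cache = deque([(0, 1), (1, 0), (1, 2), (1, 1)], maxlen=8)
choice = most_novel_action((1, 1), cache)
print(choice)
```

The bounded `deque` plays the role of the short-time cache: only recent visits suppress an action, so older regions can be revisited later.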

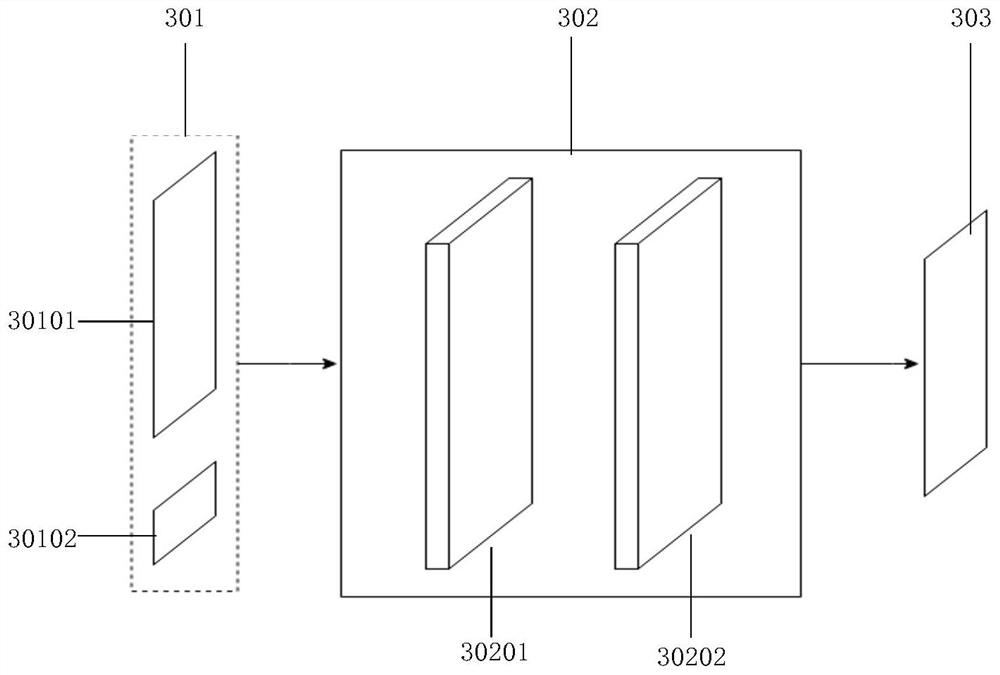

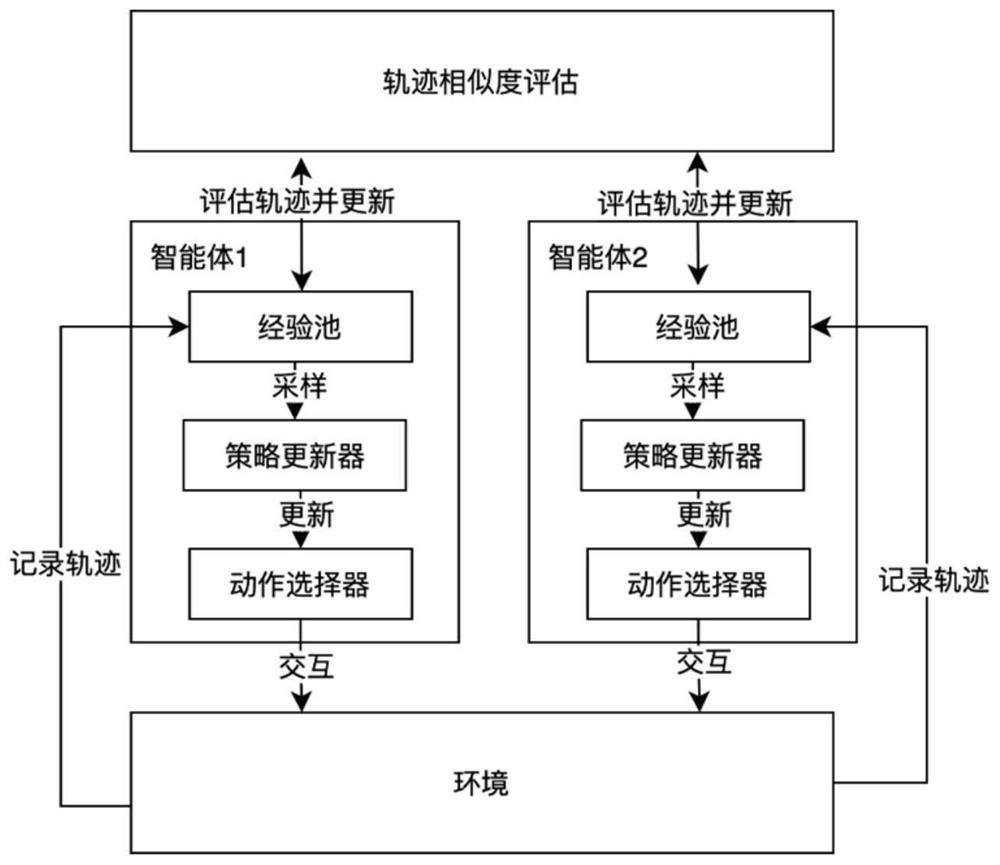

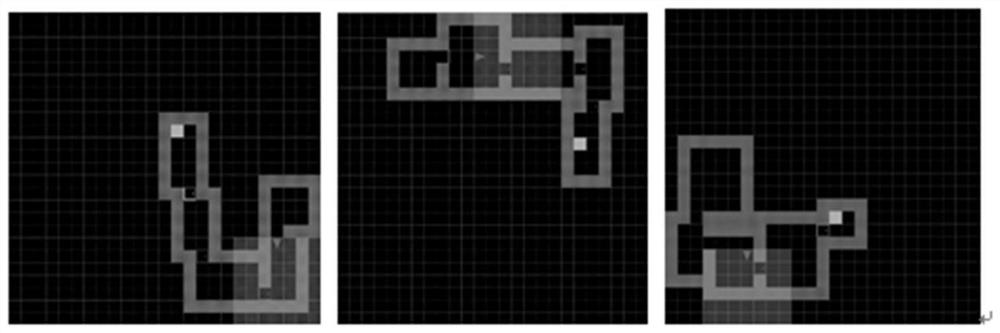

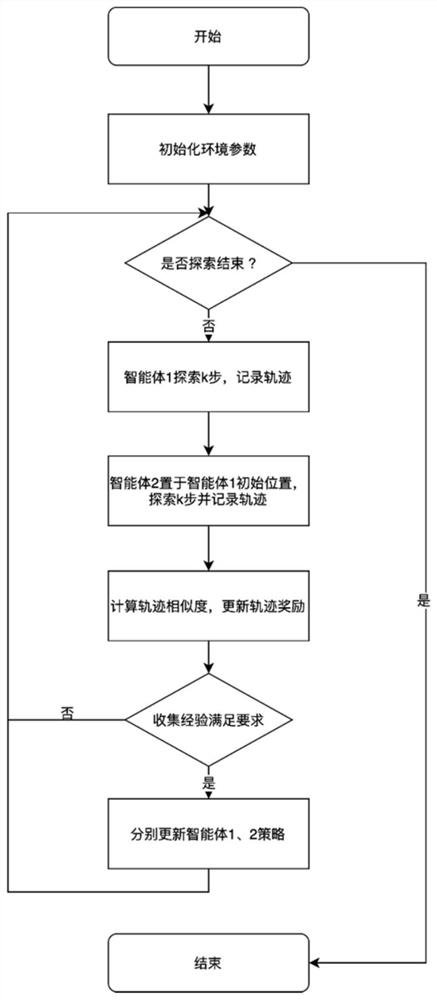

Robot path exploration method based on double-agent competitive reinforcement learning

Pending | CN114372520A | Remove random noise | Improve robustness | Biological neural network models | Character and pattern recognition | Decision model | Engineering

The invention relates to a robot path exploration method based on double-agent competitive reinforcement learning, comprising the following steps: S1, constructing a Markov decision model and initializing the agents and experience pools; S2, recording the current state st of agent Agent1, exploring k steps, and recording the current track sequence to experience pool Buffer1; S3, placing agent Agent2 at state st, exploring k steps, and recording the current track sequence to experience pool Buffer2; S4, taking the similarity between the two exploration tracks as an additional reward for Agent1, and its opposite number as an additional reward for Agent2; S5, updating the strategies of Agent1 and Agent2 when the amount of data in the experience pools meets the requirement; S6, repeating steps S2-S5 until Agent1 reaches the target state or exceeds the set time tlimit; and S7, repeating steps S1-S6 until the set number of training episodes is completed. Compared with the prior art, the agent explores more effectively, training is faster, samples are used more efficiently, random noise is effectively eliminated, and robustness is higher.

Owner:TONGJI UNIV
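
Step S4 in the entry above can be sketched as a pair of opposite-signed bonus rewards. The abstract does not say how track similarity is measured; this sketch assumes the Jaccard overlap of visited states, and the `scale` parameter and the example tracks are hypothetical.

```python
def trajectory_similarity(track_a, track_b):
    """Assumed similarity: shared visited states over all visited states."""
    a, b = set(track_a), set(track_b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def extra_rewards(track_1, track_2, scale=1.0):
    """Agent1 receives the similarity, Agent2 receives its opposite number."""
    sim = trajectory_similarity(track_1, track_2)
    return scale * sim, -scale * sim

# Two hypothetical k = 3 step tracks starting from the same state (0, 0).
track_1 = [(0, 0), (0, 1), (0, 2)]
track_2 = [(0, 0), (1, 0), (0, 1)]
bonus_1, bonus_2 = extra_rewards(track_1, track_2)
print(bonus_1, bonus_2)
```

The two bonuses always sum to zero, which is what makes the extra reward term competitive between the agents.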

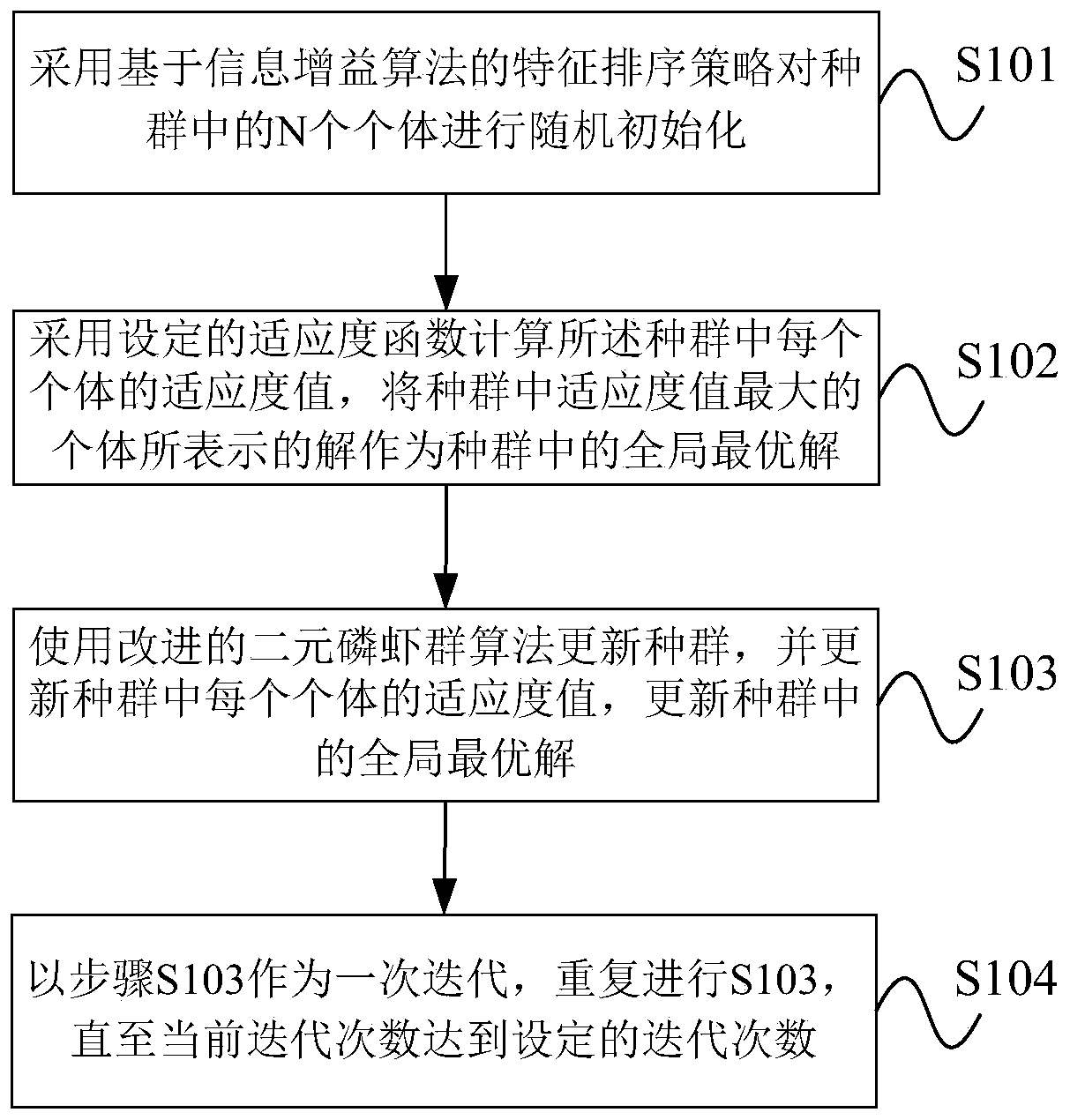

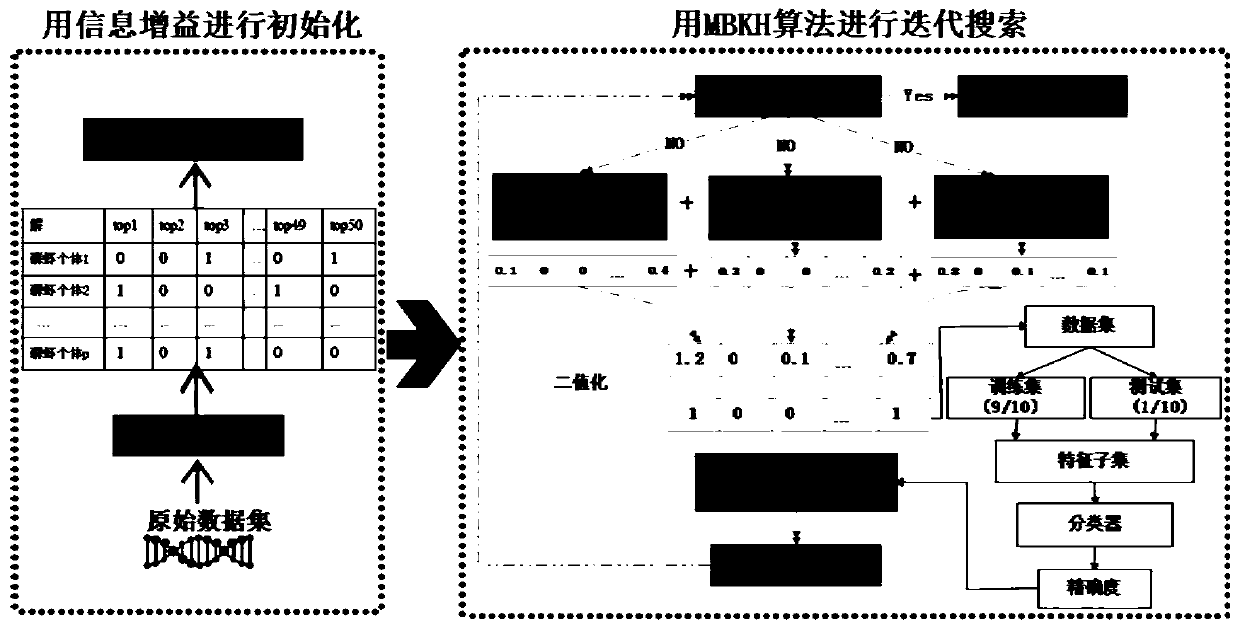

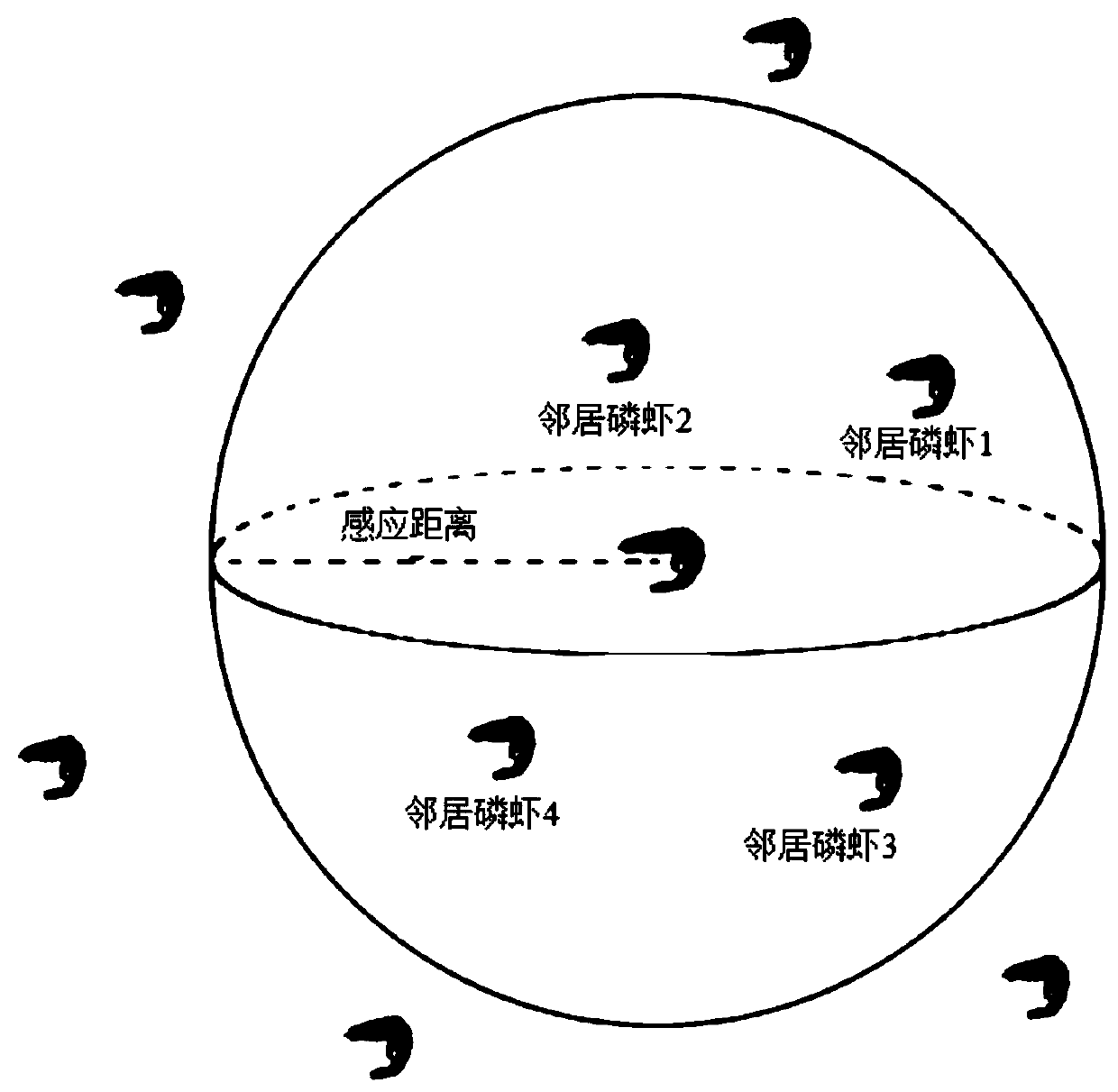

Effective hybrid feature selection method based on improved binary krill swarm algorithm and information gain algorithm

Active | CN110837884A | Speed up exploration | Improve performance | Artificial life | Hybridisation | Information gain | Expression gene

The invention provides an effective hybrid feature selection method based on an improved binary krill swarm algorithm and an information gain algorithm. The algorithm comprises the following steps: step 1, randomly initializing N individuals in a population using a feature sorting strategy based on the information gain algorithm; step 2, calculating the fitness value of each individual in the population with a set fitness function, and taking the solution expressed by the individual with the maximum fitness value as the global optimal solution of the population; step 3, updating the population with the improved binary krill swarm algorithm, updating the fitness value of each individual, and updating the global optimal solution; and step 4, treating step 3 as one iteration and repeating it until the current number of iterations reaches the set number. Verified by 10-fold cross-validation on nine public biomedical data sets, the method effectively reduces the number of gene expression levels and obtains higher classification accuracy than other feature selection methods.

Owner:HENAN UNIVERSITY
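
The feature sorting in step 1 of the entry above relies on information gain, which can be computed for discrete features as the label entropy minus the conditional entropy given the feature. The sketch below covers only this ranking; how the patent turns the ranking into initial krill individuals is not stated, and the feature names and data are made up.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy in bits of a list of discrete labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total)
                for n in Counter(labels).values())

def information_gain(feature, labels):
    """H(labels) minus the entropy of labels within each feature value."""
    total = len(labels)
    groups = {}
    for value, label in zip(feature, labels):
        groups.setdefault(value, []).append(label)
    conditional = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - conditional

def rank_features(columns, labels):
    """Feature names sorted from highest to lowest information gain."""
    gains = {name: information_gain(col, labels) for name, col in columns.items()}
    return sorted(gains, key=gains.get, reverse=True)

# Hypothetical discretised expression levels for three genes, four samples.
labels = [0, 0, 1, 1]
columns = {"g1": [0, 0, 1, 1], "g2": [0, 1, 0, 1], "g3": [0, 0, 0, 1]}
ranking = rank_features(columns, labels)
print(ranking)
```

A ranking like this could bias the random initialization toward informative features, for example by giving top-ranked features a higher chance of being switched on in each binary individual.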

Protein two-phase conformational space optimization method based on dihedral angle entropy

Active | CN108804868A | Reduce adverse effects | Speed up exploration | Special data processing applications | Dihedral angle | Metapopulation

The invention discloses a protein two-phase conformational space optimization method based on dihedral angle entropy. First, the first and second phases of the Rosetta protocol are used to initialize a population. In the exploration phase, the third phase of the Rosetta protocol is iterated to perform a large-range conformational search, and the change of the dihedral angle entropy in each generation of the population is used as the criterion for phase switching. In the enhancement phase, random local disturbance is applied to the dihedral angles of residues in the loop region on the basis of the fourth phase of the Rosetta protocol, and conformational updates are guided by introducing dihedral angle energy in combination with the Rosetta score3 energy function, which reduces the adverse influence of the imprecise energy function while enhancing loop region exploration. The method achieves high prediction precision.

Owner:ZHEJIANG UNIV OF TECH

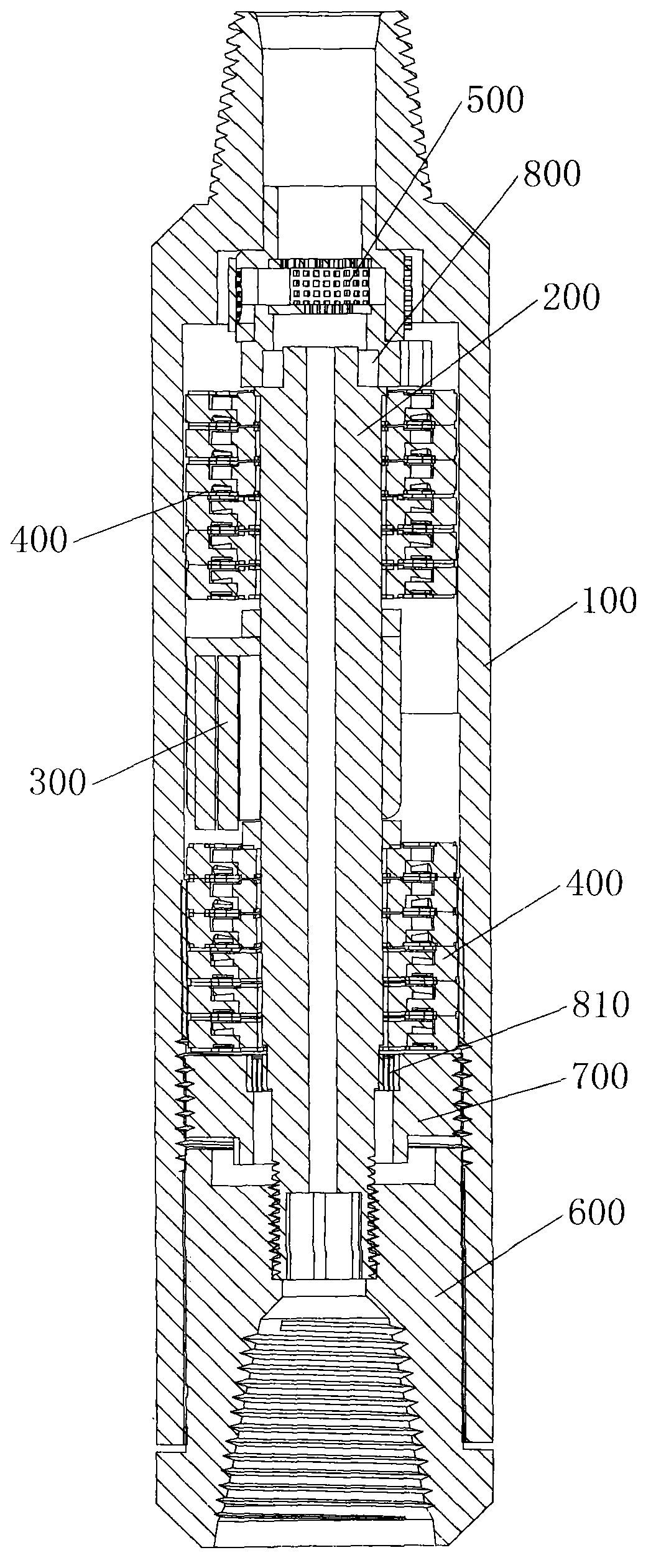

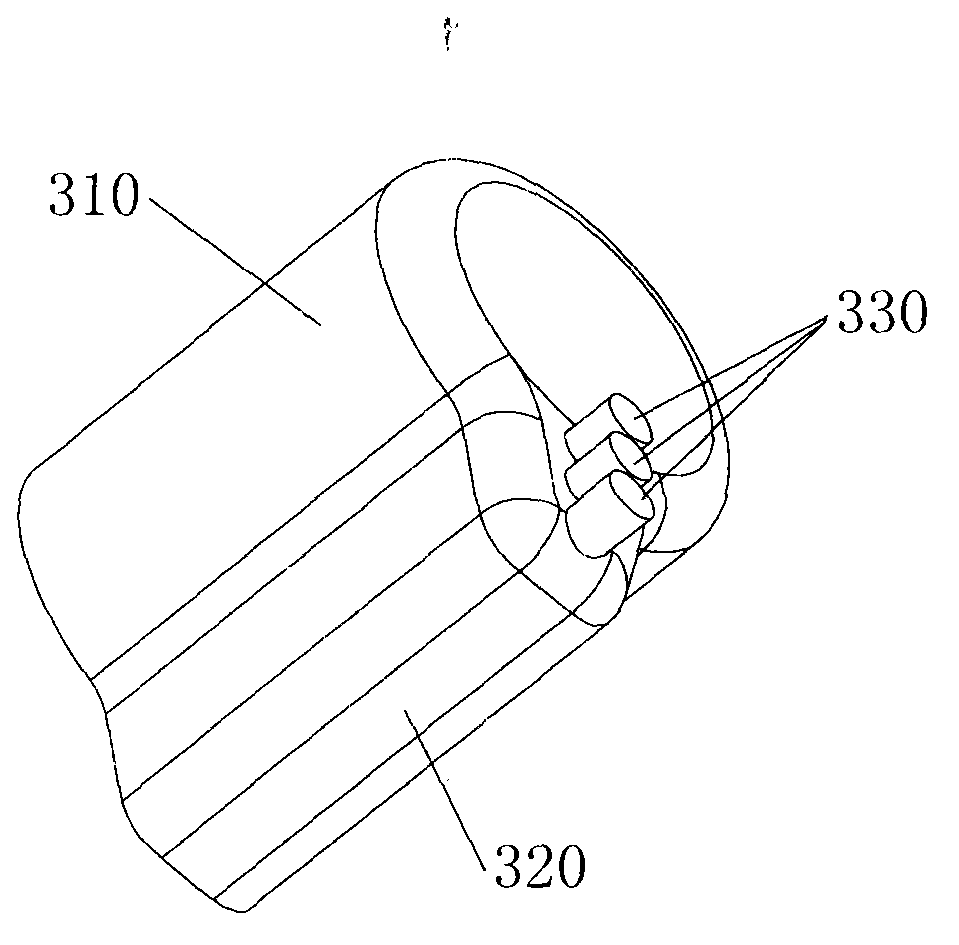

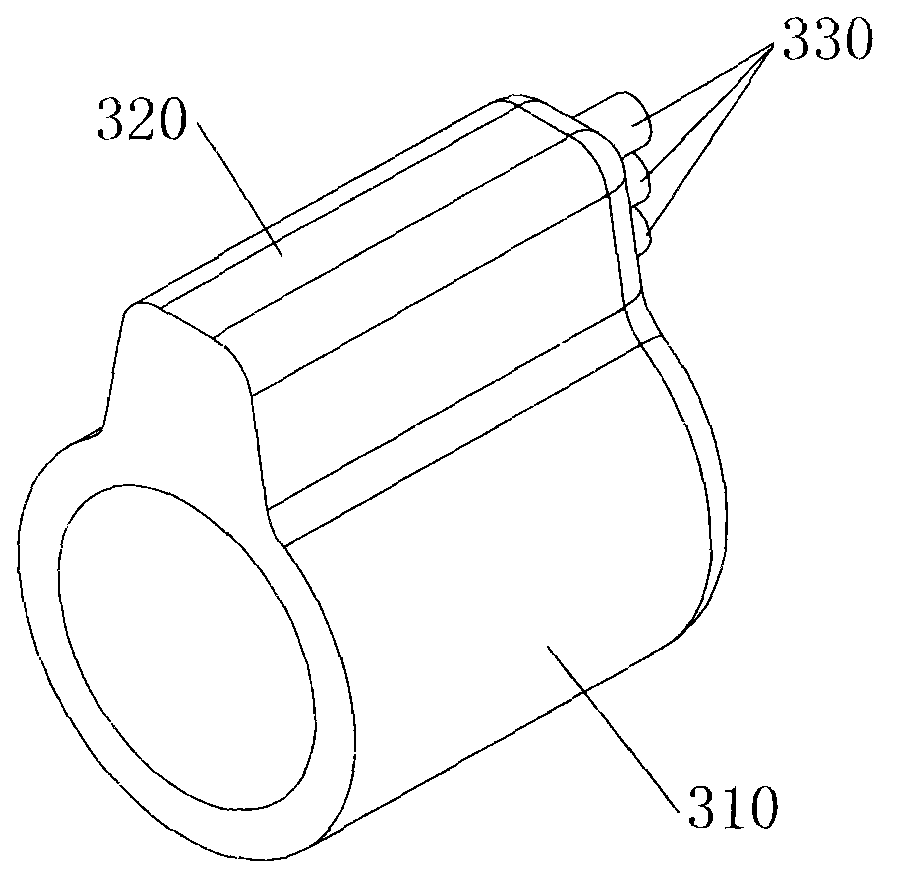

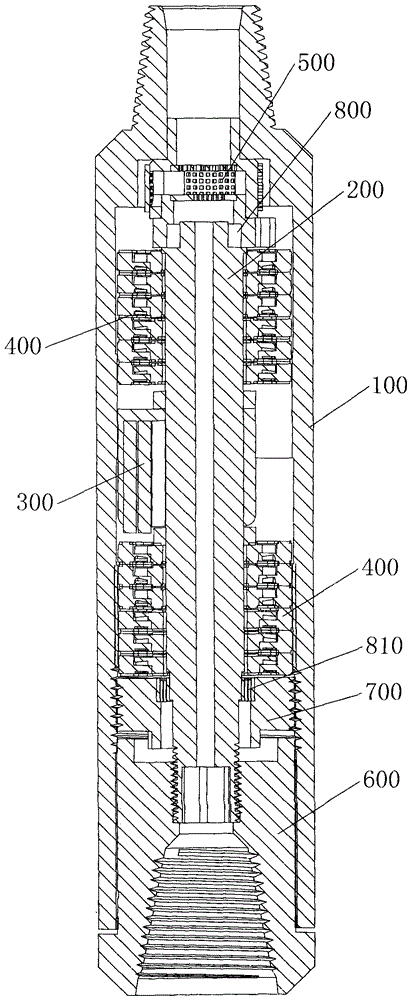

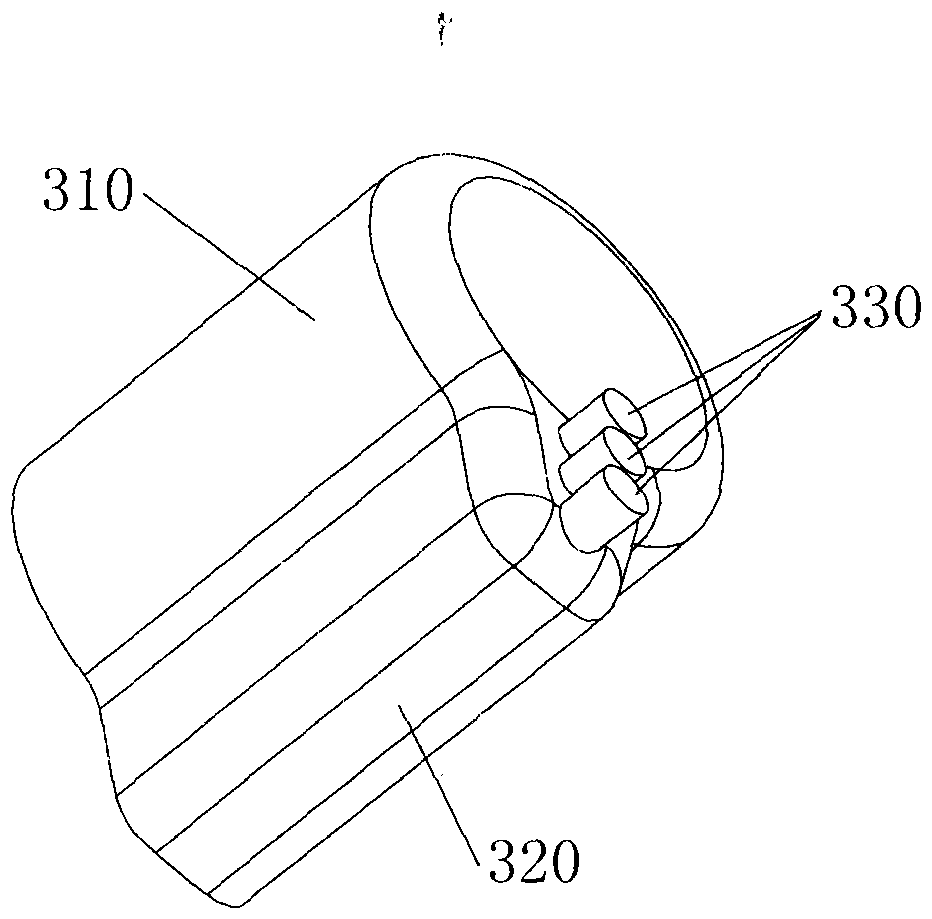

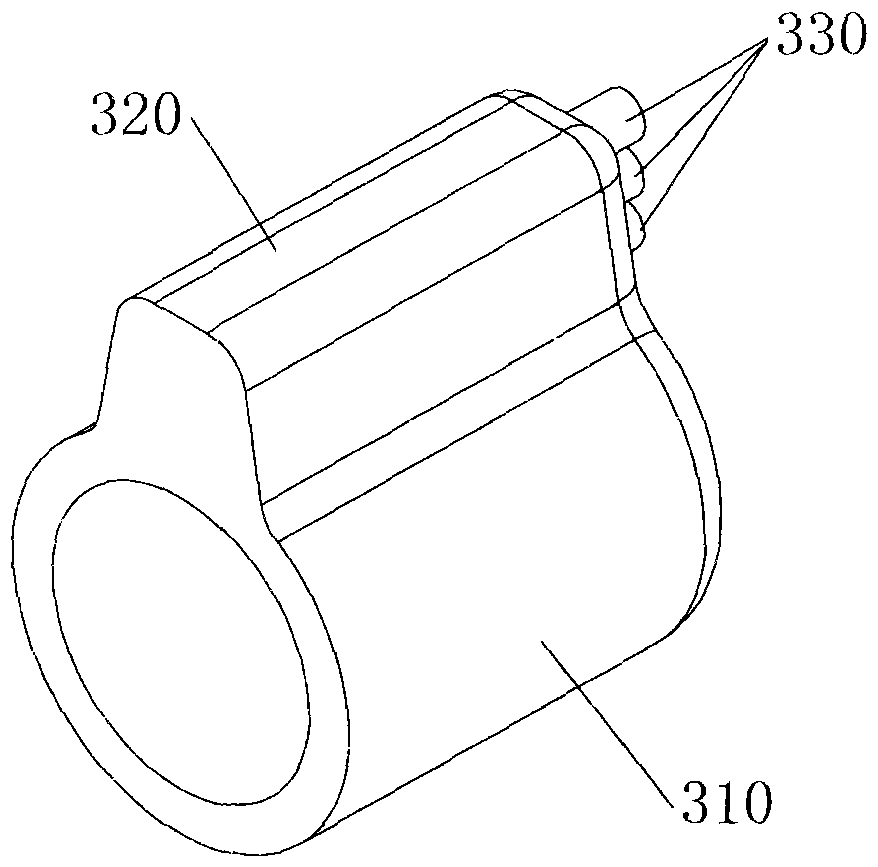

Downhole motor

Active | CN103061957A | Strong two-way power | Speed up exploration | Hydro energy generation | Borehole drives | Mechanical energy | Turbine

The invention provides a downhole motor comprising a casing, a mandrel, a motive eccentric weight, and at least one turbine assembly. The mandrel is pivotally mounted in the casing and passes through the turbine assemblies, the motive eccentric weight is mounted on the mandrel, and the turbine assemblies are mounted in the casing. The hydraulic energy of the drilling fluid is converted into mechanical energy via the turbine assemblies and a liquid-carrying mandrel assembly, and the downhole motor is the first able to supply power to a drill from both the liquid-carrying mandrel assembly and the turbine assemblies. Since bi-directional power is supplied to the downhole drill, drilling speed is increased, drilling footage is deepened, drilling accidents are reduced, the shaft building period is shortened, and exploration for oil and gas is accelerated.

Owner:深圳市阿特拉能源技术有限公司

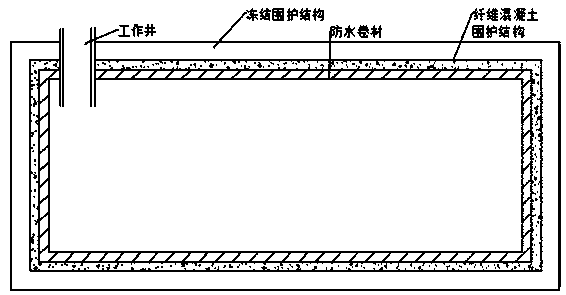

Oil storage device capable of achieving freezing through freezing pipes and construction method thereof

Inactive | CN109322325A | Good value for money | Control firmness | Artificial islands | Protective foundation | Rebar | High pressure

The invention discloses a construction method of an oil storage device that achieves freezing through freezing pipes. The construction method comprises the following steps: an appropriate geographic position is selected, a main body structure is constructed from reinforcing steel bars, and a fiber concrete enclosing structure is formed from high-pressure jet grouting fiber cement; a working well is dug; freezing pipes are inserted around the periphery of the fiber concrete enclosing structure; a refrigerant is introduced into the freezing pipes to freeze the soil, forming frozen soil with high strength and good sealing performance; the deep-stratum soil is dug away to form an oil storage space; the inner side of the fiber concrete enclosing structure is lined with waterproof roll material, and the soil layer above the oil storage space is reinforced through high-pressure jetting; and hydraulic oil is injected under high pressure and the space is sealed. The invention further discloses the oil storage device that achieves freezing through the freezing pipes. The problems of excessive cost and explosion caused by storage in deep-layer soil in the prior art are solved; implementation is convenient, adaptability is good, safety and reliability are high, and no pollution is produced.

Owner:HAINAN UNIVERSITY

Optimization method of microprocessor microarchitecture parameters based on Petri net

Active · CN104361182B · High precision · Speed up exploration · Special data processing applications · Specific program execution arrangements · Parallel computing · Release time

The invention discloses a method for optimizing microprocessor microarchitecture parameters based on a Petri net. The method comprises the following steps: a template of a pipeline model is built on the basis of a colored Petri net; the instruction sequence of a target application program is obtained, and the correlation information between the instructions and the functional unit types is obtained; a colored Petri net model of the target program running under the current parameter configuration is generated; a Petri net simulation tool is used to simulate the model and generate a simulation report; a corresponding directed acyclic graph is generated from the colored Petri net model according to the simulation report; the critical path of the directed acyclic graph and the nodes it passes through are calculated; the release time of each incoming edge of each node is calculated; the performance bottleneck or power consumption bottleneck of the microprocessor running the target application program under the current microarchitecture parameter configuration is analyzed; and, if optimization is required, the microarchitecture parameters are adjusted. The method offers high prediction reliability and precision, covers a wide range of the design space, has lower algorithmic complexity, and optimizes quickly and efficiently.

Owner:NAT UNIV OF DEFENSE TECH
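
The critical-path step in the abstract above can be illustrated with a minimal sketch. It assumes the directed acyclic graph is given as weighted edges `(source, target, latency)`; the function name `critical_path` and the edge format are hypothetical and are not taken from the patent.

```python
from collections import defaultdict, deque

def critical_path(edges):
    """Return (length, path) of the longest path in a weighted DAG.

    edges: iterable of (u, v, weight) tuples (hypothetical input format).
    """
    succ = defaultdict(list)
    indeg = defaultdict(int)
    nodes = set()
    for u, v, w in edges:
        succ[u].append((v, w))
        indeg[v] += 1
        nodes.update((u, v))

    # Kahn's algorithm: process nodes in topological order.
    queue = deque(sorted(n for n in nodes if indeg[n] == 0))
    dist = {n: 0 for n in nodes}     # longest distance found so far
    pred = {n: None for n in nodes}  # predecessor on that longest path
    visited = 0
    while queue:
        u = queue.popleft()
        visited += 1
        for v, w in succ[u]:
            if dist[u] + w > dist[v]:
                dist[v] = dist[u] + w
                pred[v] = u
            indeg[v] -= 1
            if indeg[v] == 0:
                queue.append(v)
    if visited != len(nodes):
        raise ValueError("graph contains a cycle")

    # Walk back from the node with the largest distance.
    node = max(nodes, key=lambda n: dist[n])
    length, path = dist[node], []
    while node is not None:
        path.append(node)
        node = pred[node]
    return length, path[::-1]

# Illustrative pipeline stages with made-up latencies.
example_edges = [
    ("fetch", "decode", 1),
    ("decode", "alu", 1),
    ("decode", "mem", 3),
    ("alu", "wb", 1),
    ("mem", "wb", 1),
]
length, path = critical_path(example_edges)
```

Nodes on the returned path are the candidates for bottleneck analysis; how the patent derives edge weights from the simulation report is not specified here.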

Downhole engine

Active · CN103061957B · Strong two-way power · Speed up exploration · Hydro energy generation · Borehole drives · Mechanical energy · Engineering

The invention provides a downhole motor comprising a casing, a mandrel, a motive eccentric weight, and at least one turbine assembly. The mandrel is pivotally mounted in the casing and passes through the turbine assemblies; the motive eccentric weight is mounted on the mandrel, and the turbine assemblies are mounted in the casing. The hydraulic energy of the drilling fluid is converted into mechanical energy by the turbine assemblies and a liquid-carrying mandrel assembly, so the downhole motor can, for the first time, power a drill from both the liquid-carrying mandrel assembly and the turbine assemblies. Because bi-directional power is supplied to the downhole drill, drilling speed is increased, drilling footage is deepened, drilling accidents are reduced, the shaft building period is shortened, and oil and gas exploration is accelerated.

Owner:深圳市阿特拉能源技术有限公司

A two-stage protein conformational space optimization method based on dihedral angle entropy

Active · CN108804868B · Reduce adverse effects · Speed up exploration · Instruments · Molecular structures · Three stage · Computational physics

A two-stage protein conformational space optimization method based on dihedral angle entropy. First, the first and second stages of the Rosetta protocol are used to initialize the population. In the exploration stage, the third stage of the Rosetta protocol is iterated to search a wide range of conformations, and the change in the dihedral angle entropy of each generation of the population serves as the criterion for stage switching. The enhancement stage builds on the fourth stage of the Rosetta protocol: it applies random local perturbations to the dihedral angles of the residues in the loop region and introduces a dihedral angle energy which, combined with the Rosetta score3 energy function, guides the conformational update, enhancing the exploration of the loop region and reducing the adverse effects of an inaccurate energy function. The invention provides a two-stage protein conformational space optimization method based on dihedral angle entropy with high prediction accuracy.

Owner:ZHEJIANG UNIV OF TECH
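
One way to picture the entropy-based switching criterion is the sketch below. It assumes a histogram-based Shannon entropy over the dihedral angles of each residue across the population, and a switch once the entropy change between generations falls below a tolerance. The binning, the averaging over residues, the tolerance, and all function names are assumptions made for illustration; the abstract does not give the patent's exact formula.

```python
import math

def dihedral_entropy(angles, bins=12):
    """Shannon entropy (in nats) of angles in degrees, binned over [-180, 180)."""
    counts = [0] * bins
    width = 360.0 / bins
    for a in angles:
        idx = int(((a + 180.0) % 360.0) // width)
        counts[min(idx, bins - 1)] += 1  # guard against float rounding at the edge
    total = len(angles)
    return -sum((c / total) * math.log(c / total) for c in counts if c)

def population_entropy(population, bins=12):
    """Mean per-residue entropy.

    population: list of conformations, each a list with one dihedral
    angle per residue (hypothetical representation).
    """
    n_res = len(population[0])
    per_residue = [
        dihedral_entropy([conf[i] for conf in population], bins)
        for i in range(n_res)
    ]
    return sum(per_residue) / n_res

def should_switch(entropy_history, tol=0.01):
    """Assumed rule: switch stages once the entropy change is below tol."""
    return (
        len(entropy_history) >= 2
        and abs(entropy_history[-1] - entropy_history[-2]) < tol
    )
```

A population whose conformations all share the same angles has zero entropy, while a population spread evenly over the bins reaches the maximum `log(bins)`.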

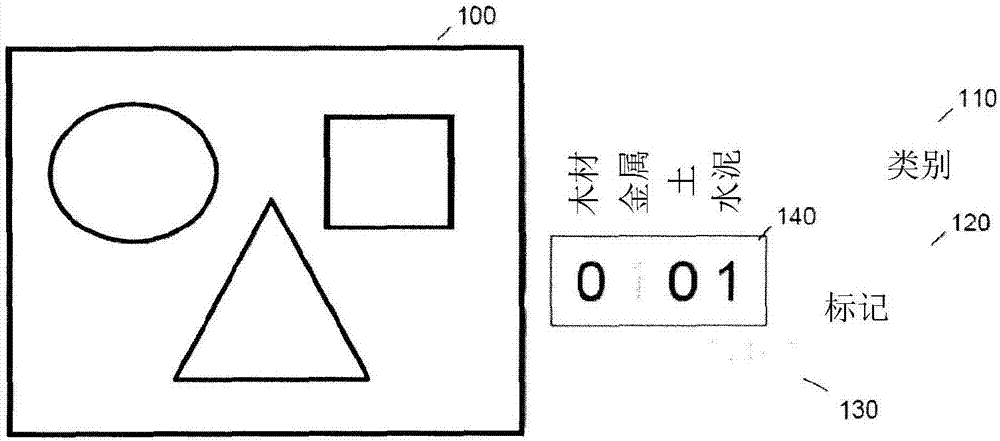

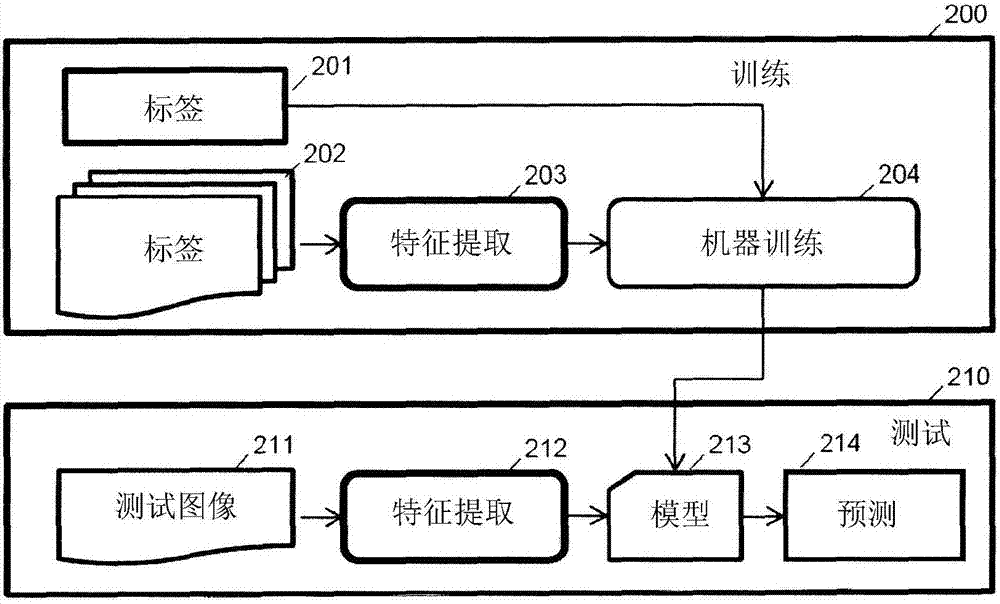

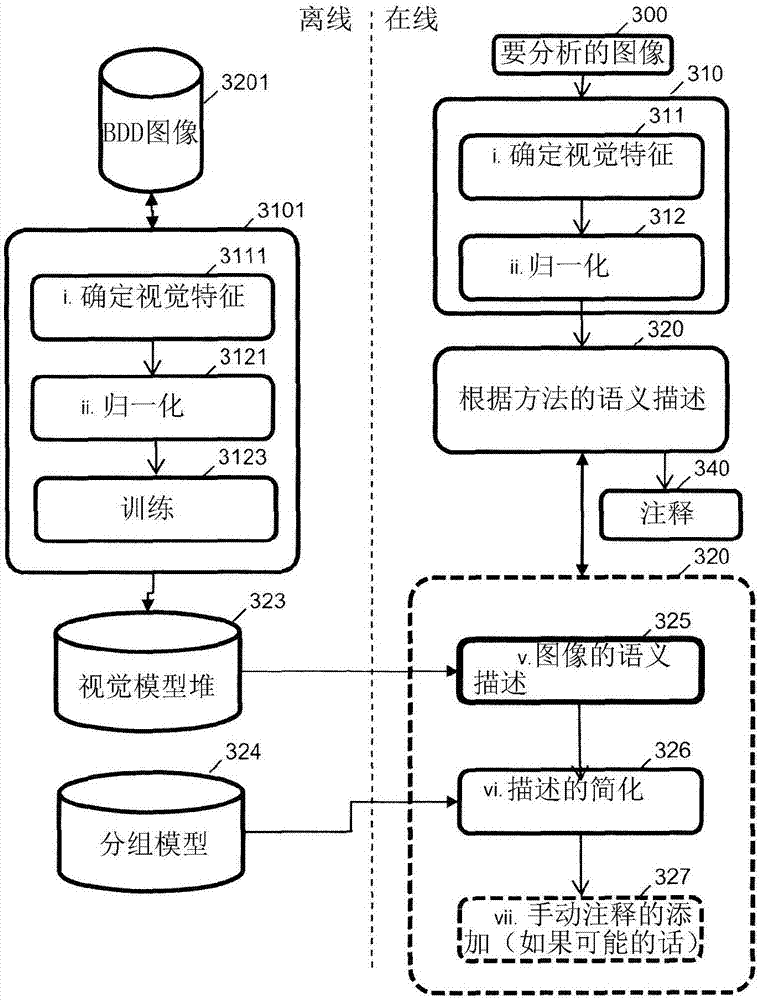

Semantic representation of the content of an image

Inactive · CN107430604A · Allow for diverse representations · No performance loss · Character and pattern recognition · Vectoral format still image data · Manual annotation · Semantic representation

The invention discloses a computer-implemented method for semantically describing the content of an image, comprising the steps of receiving a signature associated with the image and receiving a plurality of groups of initial visual concepts. The method is characterised by the steps of expressing the signature of the image as a vector whose components refer to the groups of initial visual concepts, and modifying the signature by applying a filtering rule to the components of the vector. Developments describe, in particular, filtering rules that involve filtering by thresholds and/or by intra-group or inter-group order statistics, partitioning techniques including the visual similarity of the images and/or the semantic similarity of the concepts, and the optional addition of manual annotations to the semantic description of the image. The advantages of the method in terms of sparse and diversified semantic representation are presented.

Owner:COMMISSARIAT A LENERGIE ATOMIQUE ET AUX ENERGIES ALTERNATIVES
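
As a rough illustration of the filtering rules mentioned above, the sketch below combines a score threshold with an intra-group order statistic (keep the top `top_k` concepts per group). The dictionary-based signature, the group layout, and the name `filter_signature` are hypothetical; the patent may define its rules differently.

```python
def filter_signature(signature, groups, threshold=0.0, top_k=1):
    """Sparsify a concept signature.

    signature: dict mapping concept name -> score.
    groups: dict mapping group name -> list of concept names.
    Within each group, keep at most top_k concepts whose score is at
    least threshold; every other component is set to 0.0.
    """
    filtered = {concept: 0.0 for concept in signature}
    for concepts in groups.values():
        candidates = [
            c for c in concepts
            if c in signature and signature[c] >= threshold
        ]
        candidates.sort(key=lambda c: signature[c], reverse=True)
        for c in candidates[:top_k]:
            filtered[c] = signature[c]
    return filtered

# Illustrative scores only.
signature = {"cat": 0.9, "dog": 0.4, "car": 0.2, "bus": 0.05}
groups = {"animals": ["cat", "dog"], "vehicles": ["car", "bus"]}
sparse = filter_signature(signature, groups, threshold=0.1, top_k=1)
```

Keeping one winner per group yields a signature that is sparse yet still covers several concept groups, which is the kind of diversified representation the abstract describes.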

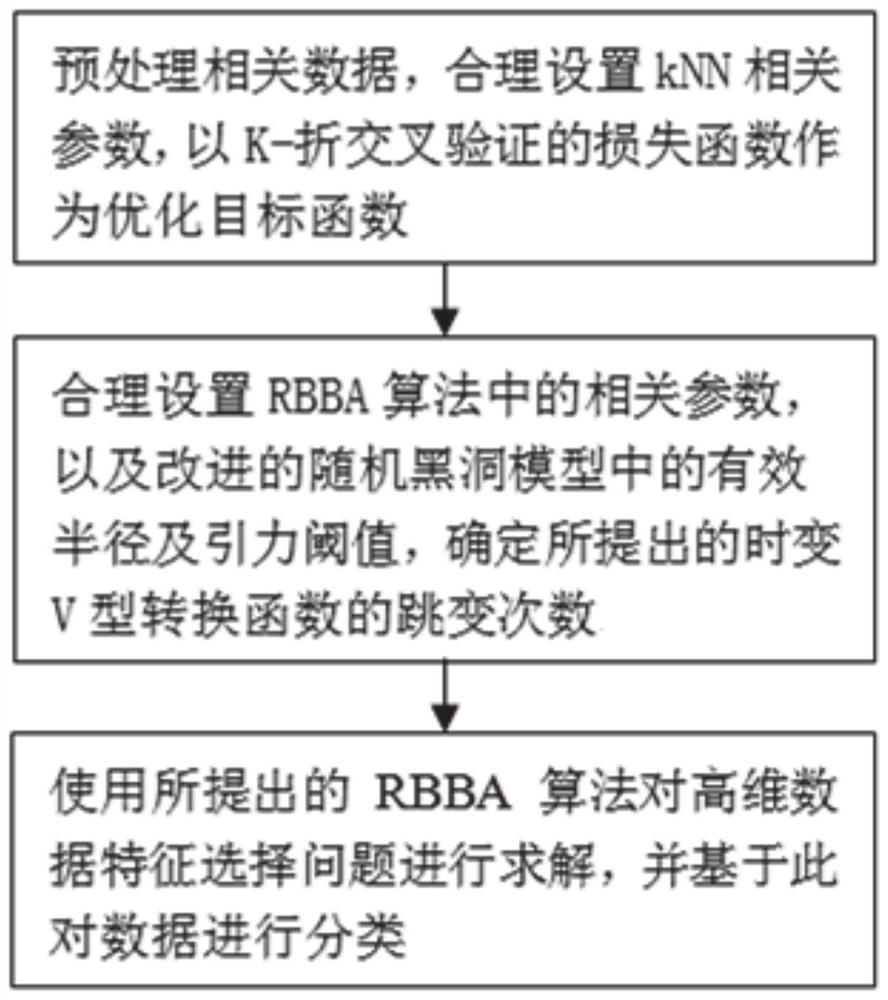

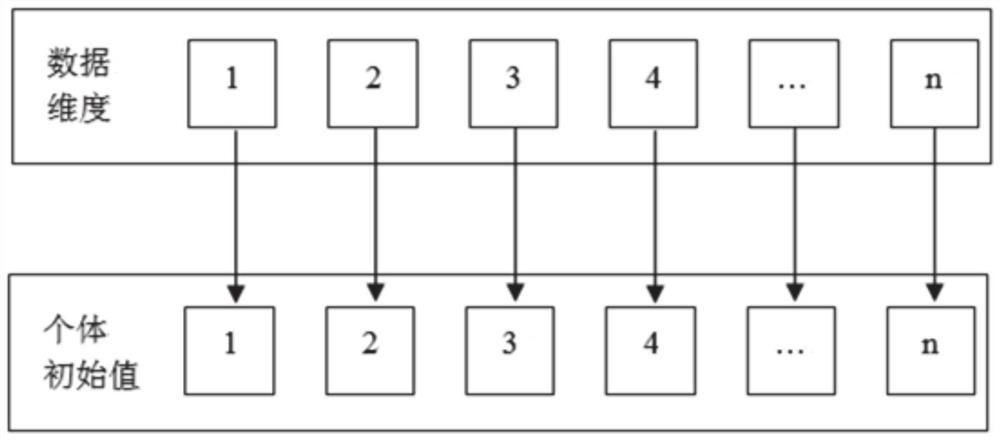

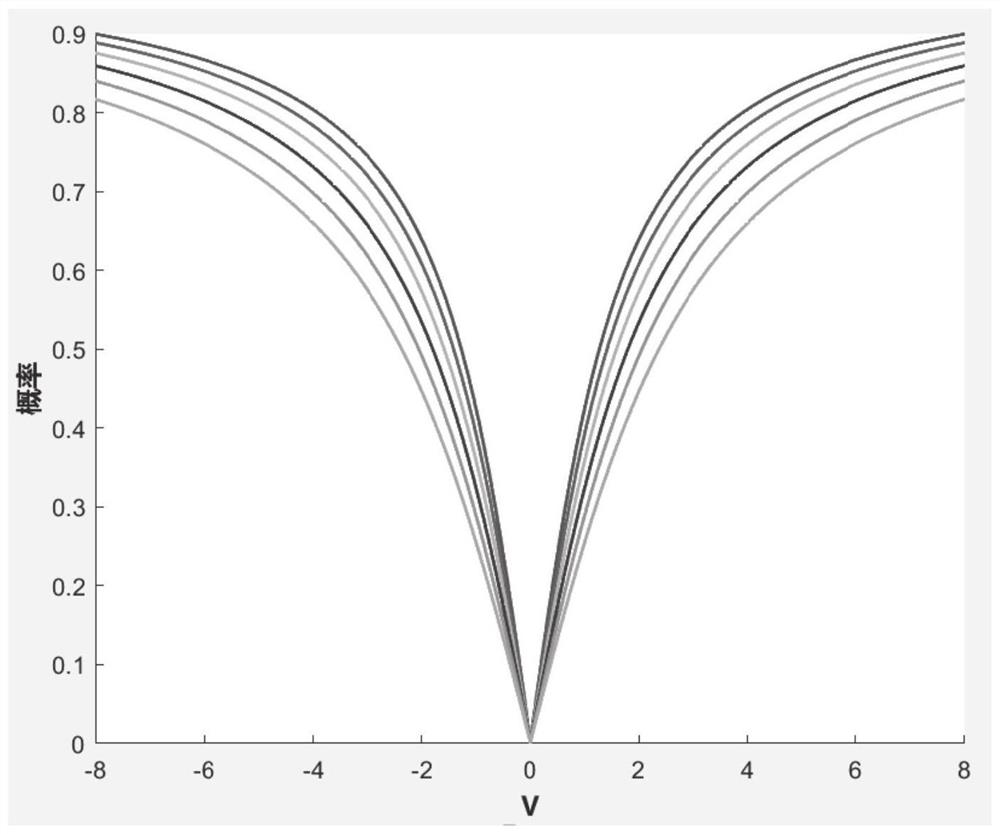

Ionized layer high-dimensional data feature selection method based on improved BBA algorithm

Active · CN113076695A · Choose accurately · Rich in features · Character and pattern recognition · Artificial life · Binary bat algorithm · Algorithm

The invention discloses an ionized layer high-dimensional data feature selection method based on an improved BBA algorithm. The method comprises the following steps: acquiring ionized layer data; taking a dimension classification loss function as a target function; adopting an improved BBA algorithm to solve the target function, wherein the improved BBA algorithm comprises the following steps: after the individual speed is updated in a single dimension, mapping from a continuous space to a discrete space is conducted on the updated individual speed according to a time-varying V-shaped conversion function; and determining a target dimension after solving, and performing dimension reduction processing on the ionized layer data according to the target dimension to obtain ionized layer features corresponding to the target dimension. A random black hole model is introduced, a time-varying V-shaped conversion function is provided to improve a BBA algorithm, after ionized layer high-dimensional data is subjected to dimension reduction based on an improved discrete binary bat algorithm, a minimized feature subset is generated, the data error rate is reduced, the dimension classification precision is improved, and accurate ionized layer data features are selected.

Owner:武汉鑫卓雅科技发展有限公司
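
The mapping from a continuous velocity to a discrete bit can be sketched as follows. The abstract does not give the form of the time-varying V-shaped conversion function, so this example assumes `|tanh(v / tau)|` with a parameter `tau` that shrinks linearly over the iterations; `tau_start`, `tau_end`, and both function names are hypothetical. The bit-flip rule is the usual convention for V-shaped transfer functions in binary swarm algorithms.

```python
import math
import random

def v_transfer(velocity, t, t_max, tau_start=4.0, tau_end=1.0):
    """Assumed time-varying V-shaped transfer function with values in [0, 1].

    tau shrinks from tau_start to tau_end, so the same velocity gives a
    higher flip probability late in the run than early on.
    """
    tau = tau_start + (tau_end - tau_start) * t / t_max
    return abs(math.tanh(velocity / tau))

def update_bit(bit, velocity, t, t_max, rng=random):
    """Flip the bit with probability v_transfer(...); otherwise keep it."""
    if rng.random() < v_transfer(velocity, t, t_max):
        return 1 - bit
    return bit
```

With a V-shaped function, a velocity near zero leaves the selected feature subset unchanged, while a large velocity in either direction makes a flip likely.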

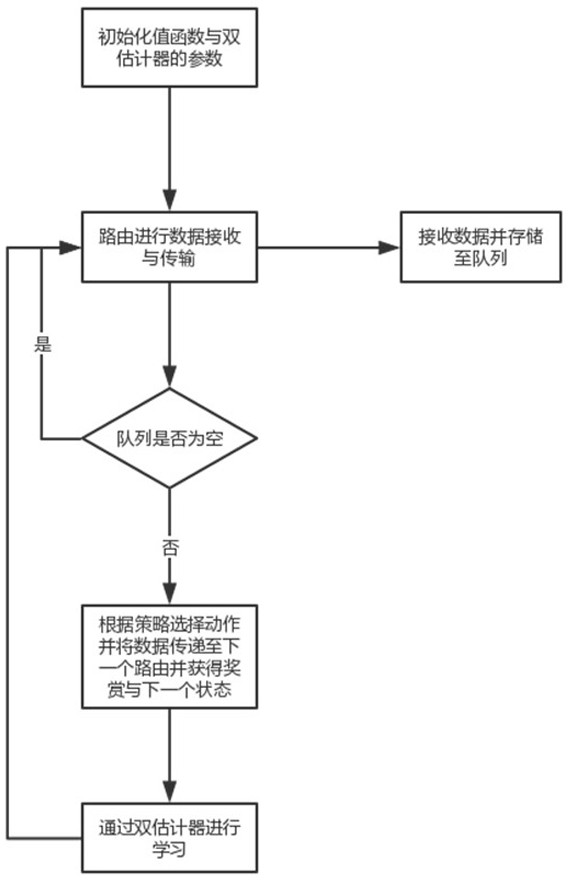

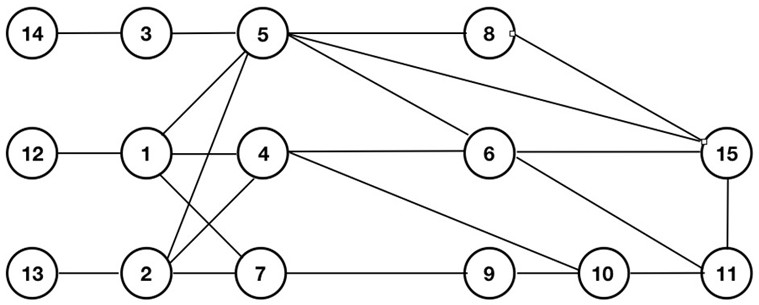

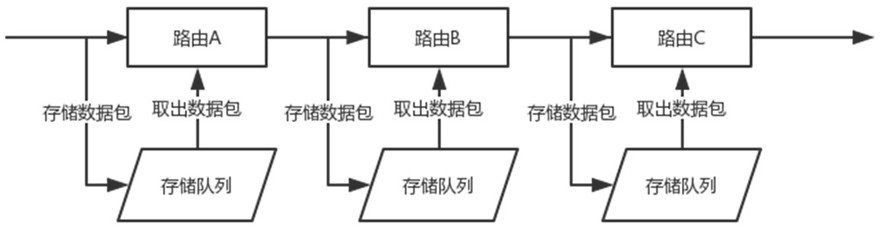

A Dynamic Routing Algorithm Based on Dual Estimators

Active · CN108737266B · Reduce congestion · The value function is accurate · Data switching networks · Selection system · Adaptive routing

The invention discloses a dynamic routing selection method based on dual estimators. Each routing node has a data transmission system, a queue storage system, and a routing selection system. The method includes the following steps: (1) acquire environmental information; (2) set initial values and provide two estimators, where the parameters of each estimator include the current state, the action, the initialized value function, and the reward information; (3) in each time step, every routing node in the network performs data transmission and data reception simultaneously; (4) to select a route during transmission, obtain a random number; when the random number is greater than a threshold, select the optimal action according to the value function of the estimator, otherwise select an action at random; (5) update the value function through the dual estimators; (6) repeat steps (3) to (5) for each time step. The invention reduces network congestion, can learn a better strategy at a lower cost, improves network performance, and can deal effectively with route selection in a highly random network.

Owner:JIANGSU ELECTRIC POWER CO
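
Steps (4) and (5) resemble epsilon-greedy action selection combined with a double Q-learning update, so a minimal sketch under that assumption is given below. The class `DoubleQRouter`, the parameters `alpha`, `gamma`, and `epsilon`, and the reward convention are hypothetical; the source does not state the threshold value or the exact update rule.

```python
import random
from collections import defaultdict

class DoubleQRouter:
    """Sketch of one routing node that keeps two value estimators."""

    def __init__(self, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.qa = defaultdict(float)  # estimator A: (state, action) -> value
        self.qb = defaultdict(float)  # estimator B
        self.rng = random.Random(seed)

    def choose(self, state, actions):
        # Exploit when the random number exceeds the (assumed) threshold.
        if self.rng.random() > self.epsilon:
            return max(
                actions,
                key=lambda a: self.qa[(state, a)] + self.qb[(state, a)],
            )
        return self.rng.choice(actions)

    def update(self, state, action, reward, next_state, next_actions):
        # Randomly pick which estimator learns; the other evaluates.
        if self.rng.random() < 0.5:
            learn, evaluate = self.qa, self.qb
        else:
            learn, evaluate = self.qb, self.qa
        if next_actions:
            best = max(next_actions, key=lambda a: learn[(next_state, a)])
            target = reward + self.gamma * evaluate[(next_state, best)]
        else:
            target = reward  # packet delivered, no further hop
        learn[(state, action)] += self.alpha * (target - learn[(state, action)])

# Usage: state is the packet's destination, actions are neighbor nodes.
router = DoubleQRouter(epsilon=0.1)
hop = router.choose("dest-7", ["n1", "n2", "n3"])
router.update("dest-7", hop, reward=-1.0, next_state="dest-7", next_actions=["n4"])
```

Selecting the best next action with one estimator and evaluating it with the other is what reduces the overestimation bias of single-estimator Q-learning, which fits the "accurate value function" benefit claimed for this patent.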

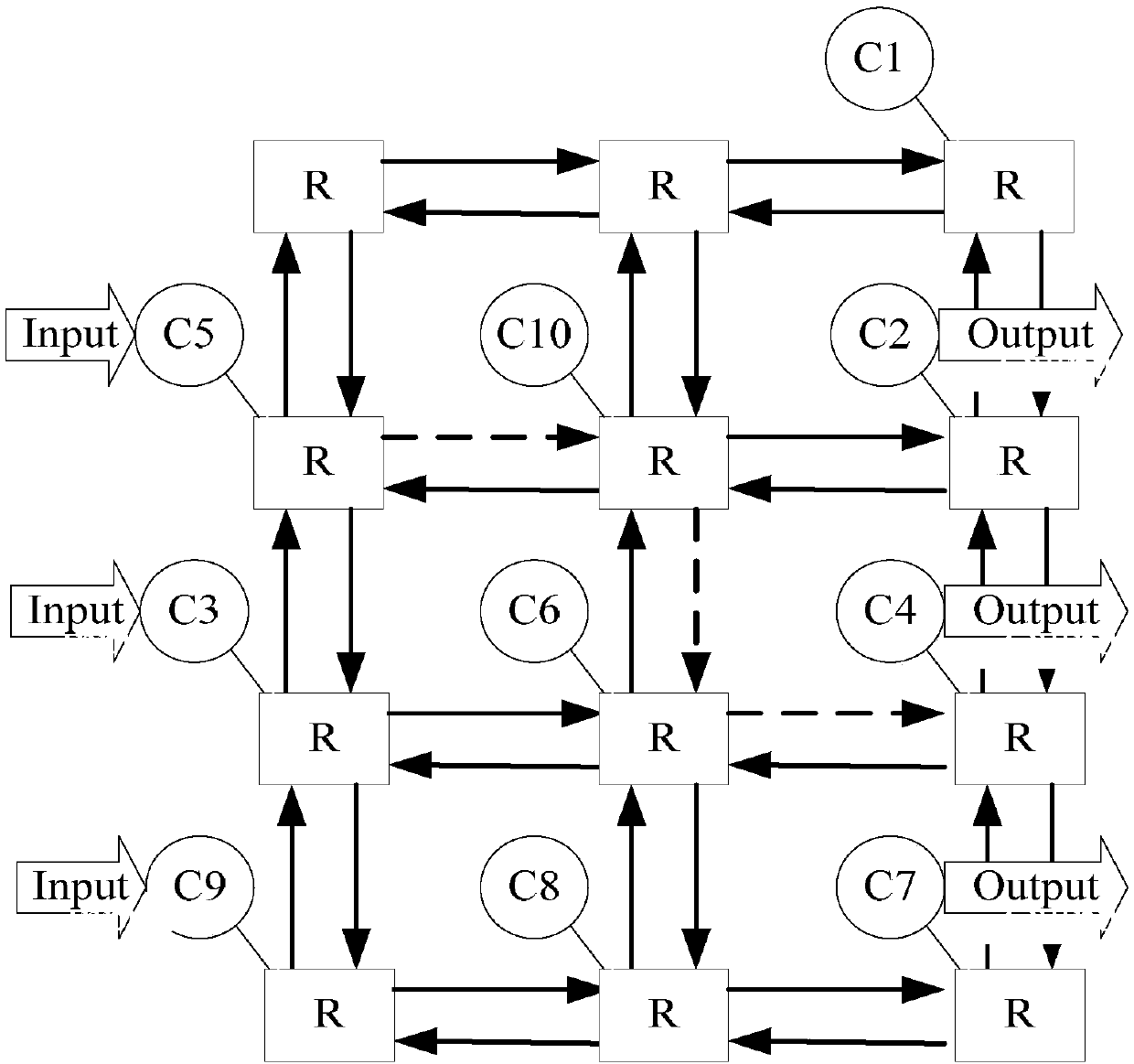

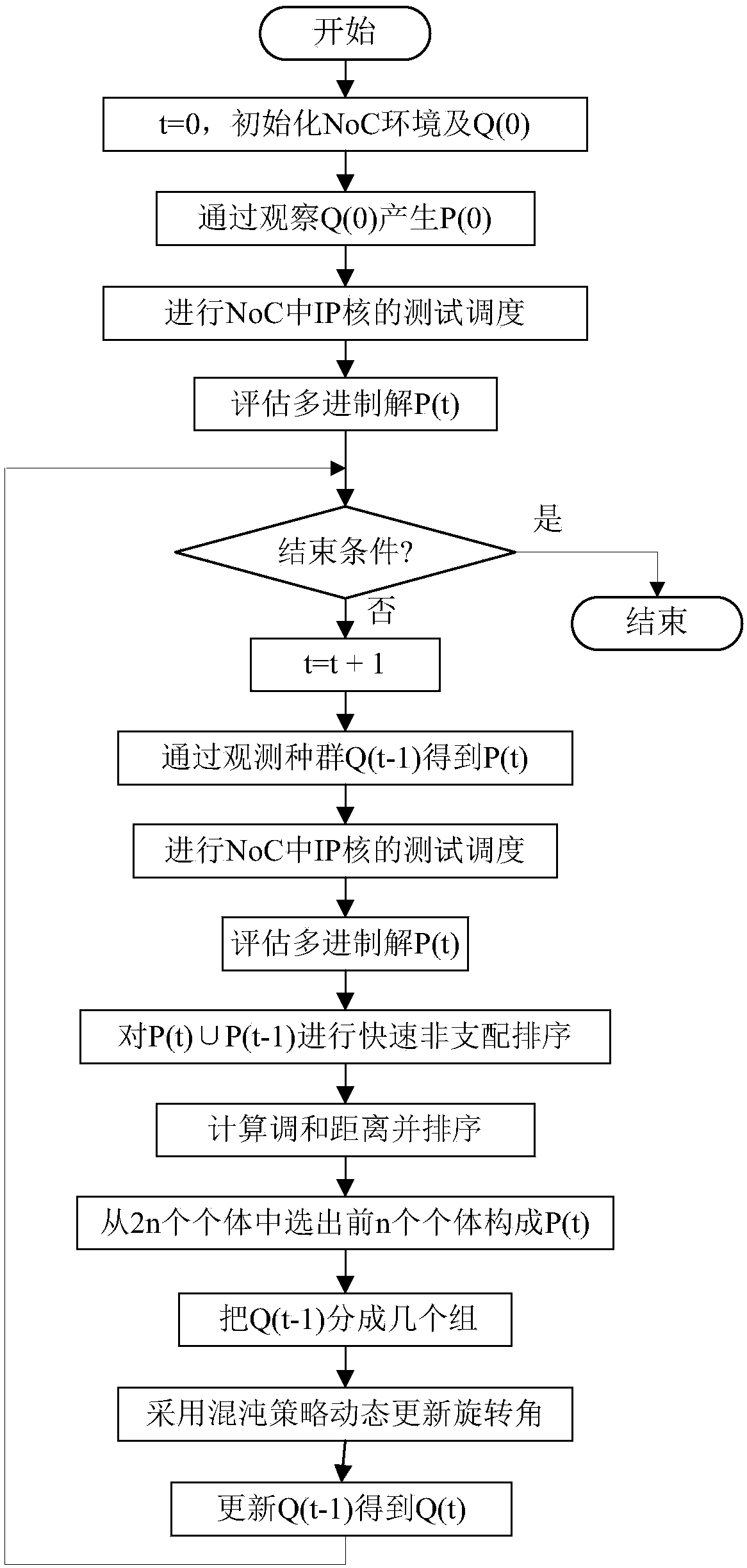

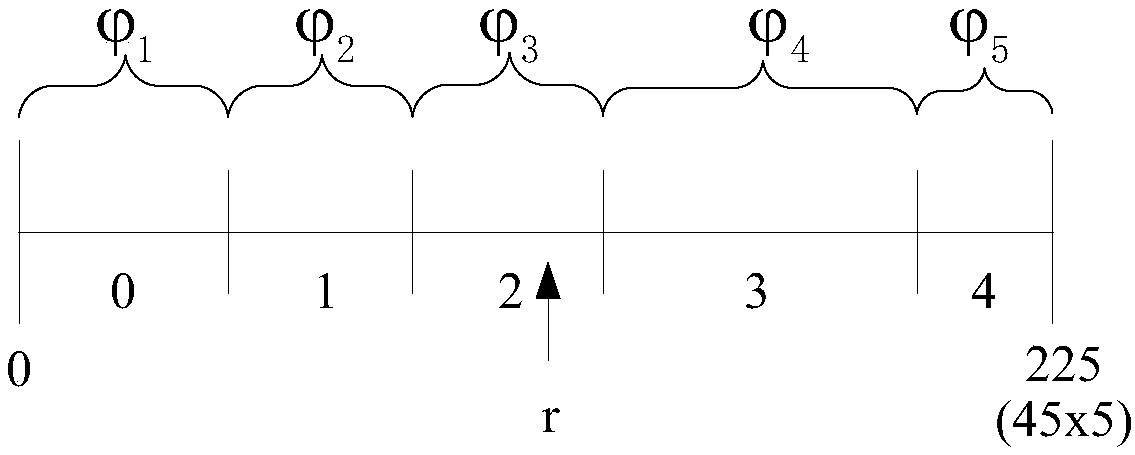

A multi-objective NoC test planning optimization method

Active · CN106526450B · Fast convergence · Speed up exploration · Electronic circuit testing · Test efficiency · Computer science

The invention discloses a multi-objective NoC test planning optimization method. The method tests the IP cores in an NoC using a parallel test method that reuses the NoC as the test access mechanism, saving test resources and improving test efficiency. Building on a quantum multi-objective evolutionary algorithm, the method replaces binary probability amplitude coding with multi-ary probability angle coding, which suits the NoC test planning problem better. It replaces the crowding distance with a harmonic distance, which balances the degree of crowding better. It also uses a chaotic strategy to update the rotation angle dynamically, balancing the exploration and exploitation capabilities of the algorithm.

Owner:GUILIN UNIV OF ELECTRONIC TECH
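
To make the harmonic distance idea concrete, the sketch below computes the harmonic mean of the distances from one solution to its `k` nearest neighbors in objective space. Both this definition and the function name `harmonic_distance` are assumptions based on the common "harmonic average distance" diversity measure; the abstract does not spell out the patent's formula.

```python
import math

def harmonic_distance(point, others, k=2):
    """Harmonic mean of the distances to the k nearest neighbors.

    point: tuple of objective values; others: the remaining solutions.
    Returns 0.0 when there are no neighbors or a neighbor coincides
    with the point (maximally crowded).
    """
    dists = sorted(math.dist(point, o) for o in others)[:k]
    if not dists or dists[0] == 0.0:
        return 0.0
    return len(dists) / sum(1.0 / d for d in dists)

# Illustrative objective vectors, e.g. (test time, power).
score = harmonic_distance((0.0, 0.0), [(1.0, 0.0), (0.0, 2.0), (5.0, 5.0)], k=2)
```

Because the harmonic mean is dominated by the smallest distance, a solution with one very close neighbor scores low even if its other neighbors are far away, which makes it a stricter crowding measure than an arithmetic average.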

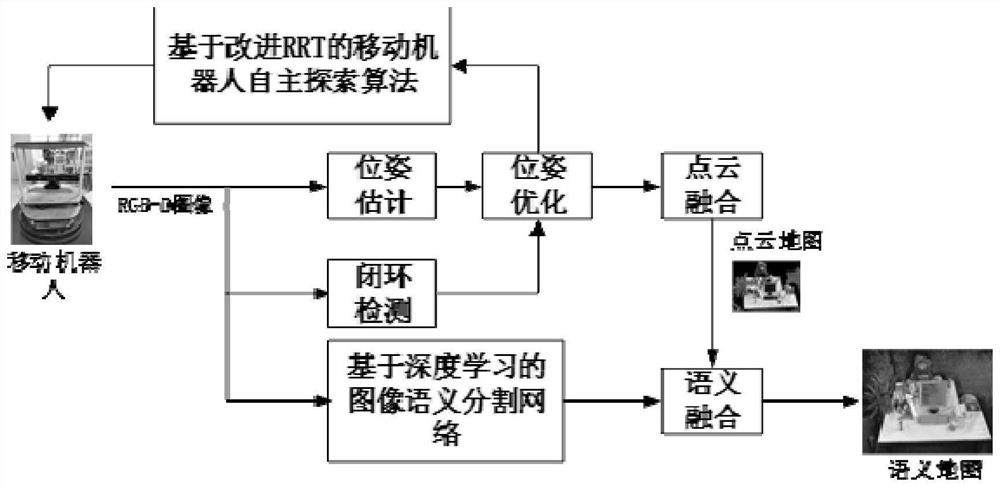

Autonomous exploration type semantic map construction method and system

Pending · CN114839975A · Speed up exploration · Increase autonomy · Position/course control in two dimensions · Pattern recognition · RGB image

The invention discloses an autonomous exploration type semantic map construction method and system. The method comprises the following steps: an unknown environment is explored autonomously using a robot autonomous exploration algorithm based on an improved rapidly-exploring random tree, with a global random tree and a local random tree used for global and local frontier point detection during exploration; once the frontier points are obtained, they are clustered, the frontier point with the maximum gain is selected as the optimal target point, and the robot is controlled to reach the optimal target point along the optimal path; an image sequence of the current scene is collected while the robot moves and the position of the robot is continuously updated, and when the optimal target point changes, the path is re-planned and the robot is controlled to reach the new optimal target point. The RGB images collected during autonomous exploration are semantically segmented, and each RGB image is combined with its corresponding depth map as input to the semantic map construction system, completing the autonomous semantic map construction task and improving the autonomy and intelligence of the robot.

Owner:XIAN UNIV OF TECH
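
The clustering and target-selection step can be sketched as follows. The example assumes 2D frontier points, a simple greedy radius-based clustering, and a gain defined as cluster size minus a weighted travel distance. The gain function, the clustering rule, `radius`, `cost_weight`, and all function names are hypothetical stand-ins, not the patent's definitions.

```python
import math

def centroid(cluster):
    """Mean position of a list of (x, y) points."""
    n = len(cluster)
    return (sum(x for x, _ in cluster) / n, sum(y for _, y in cluster) / n)

def cluster_frontiers(points, radius=1.0):
    """Greedy clustering: join the first cluster whose centroid is within radius."""
    clusters = []
    for p in points:
        for c in clusters:
            if math.dist(p, centroid(c)) <= radius:
                c.append(p)
                break
        else:
            clusters.append([p])
    return clusters

def best_target(robot, points, radius=1.0, cost_weight=0.5):
    """Return the cluster centroid with the highest assumed gain, or None."""
    best, best_gain = None, -math.inf
    for c in cluster_frontiers(points, radius):
        center = centroid(c)
        # Assumed gain: information (cluster size) minus travel cost.
        gain = len(c) - cost_weight * math.dist(robot, center)
        if gain > best_gain:
            best, best_gain = center, gain
    return best

# Illustrative frontier points around a robot at the origin.
target = best_target((0.0, 0.0), [(1.0, 0.0), (1.2, 0.0), (1.1, 0.1), (10.0, 10.0)])
```

Calling `best_target` again whenever new frontier points arrive mirrors the re-planning described in the abstract when the optimal target point changes.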

© 2025 PatSnap. All rights reserved.