2296 results about "Efficient measurement" patented technology

Methods of determining concentration of glucose

Inactive | US7058437B2 | Lower the volume | Precise process | Microbiological testing/measurement | Material analysis by electric/magnetic means | Analyte | Medicine

A region of skin, other than the fingertips, is stimulated. After stimulation, an opening is created in the skin (e.g., by lancing the skin) to cause a flow of body fluid from the region. At least a portion of this body fluid is transported to a testing device where the concentration of analyte (e.g., glucose) in the body fluid is then determined. It is found that the stimulation of the skin provides results that are generally closer to the results of measurements from the fingertips, the traditional site for obtaining body fluid for analyte testing.

Owner:ABBOTT DIABETES CARE INC

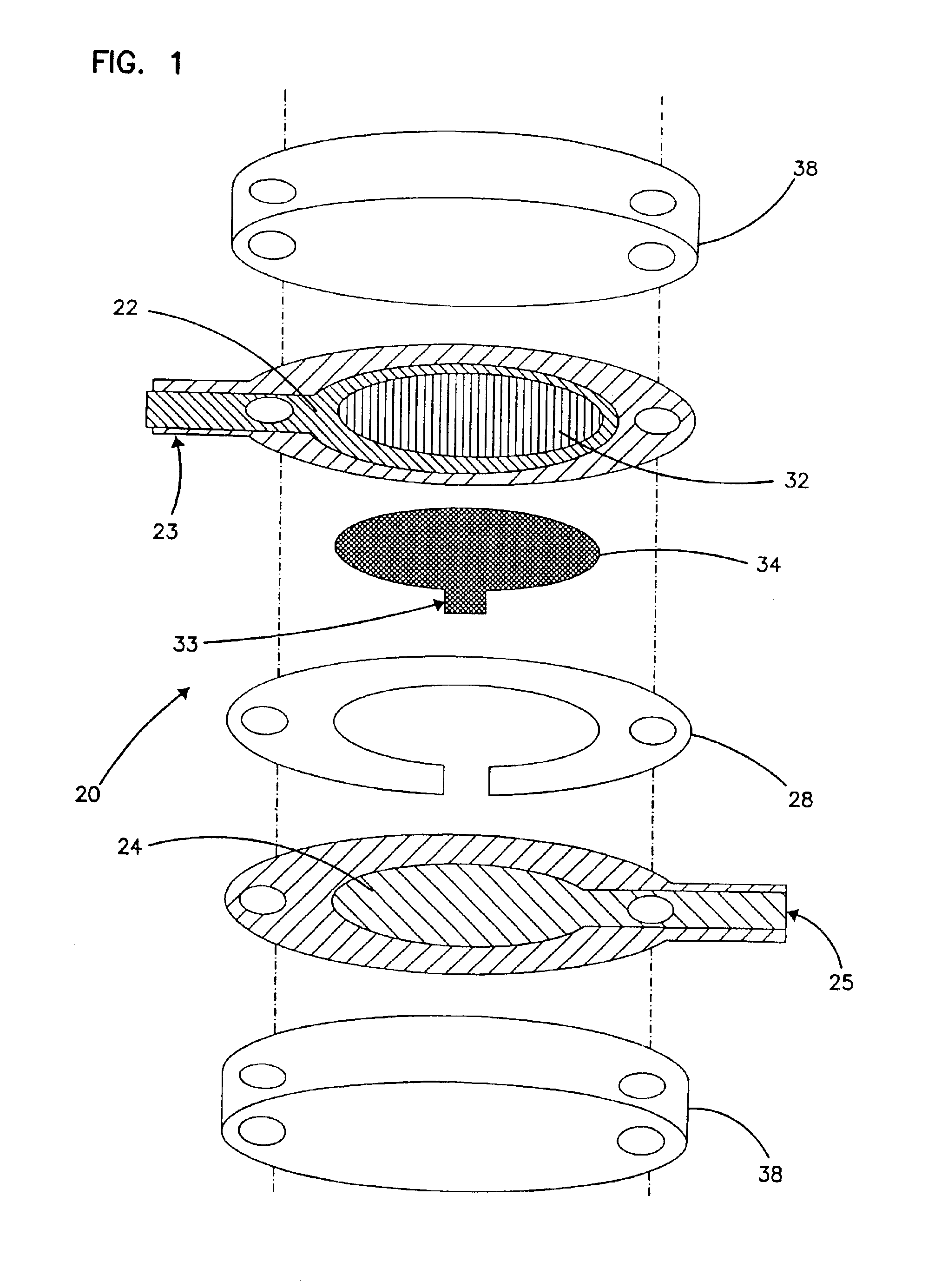

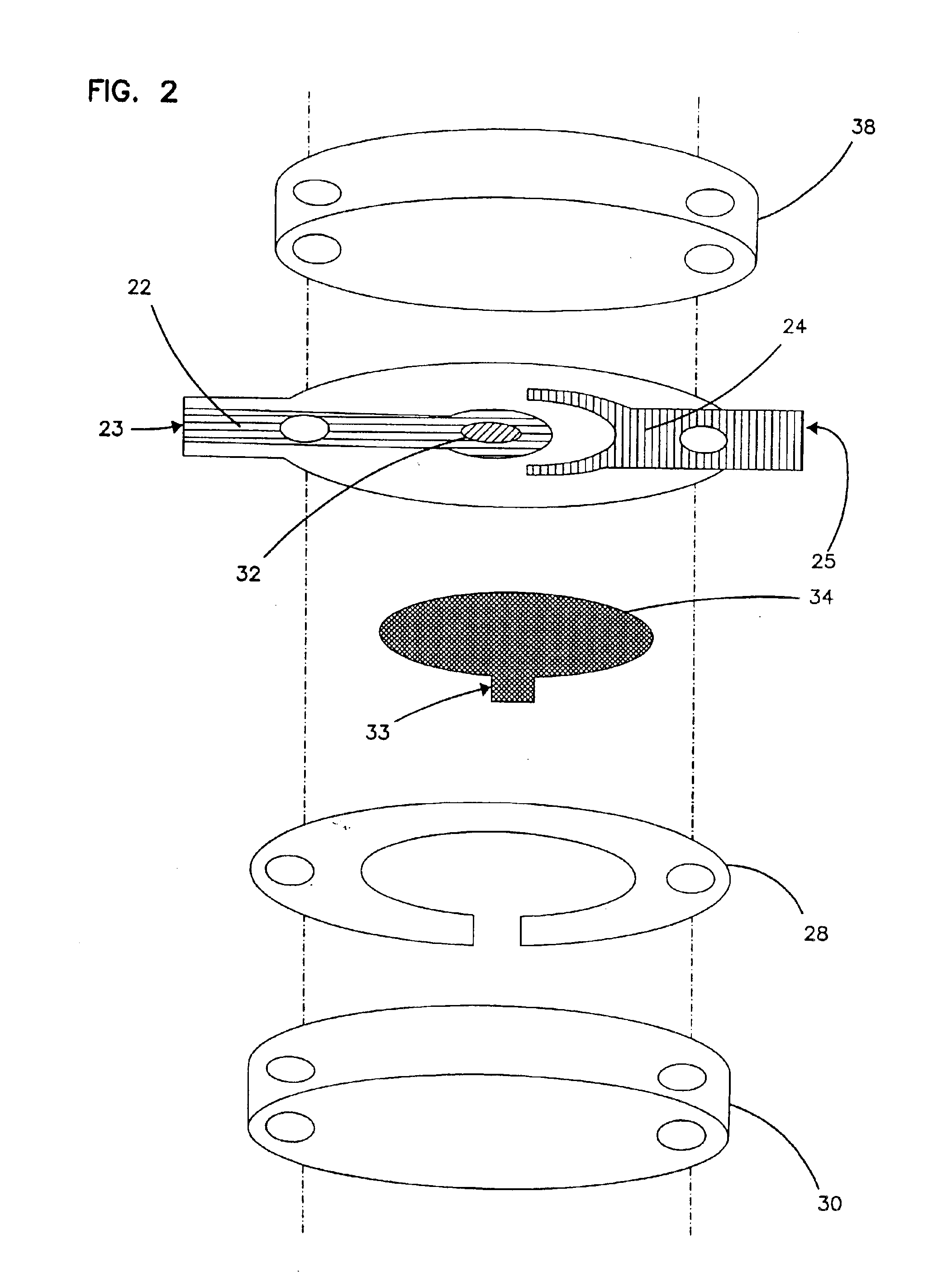

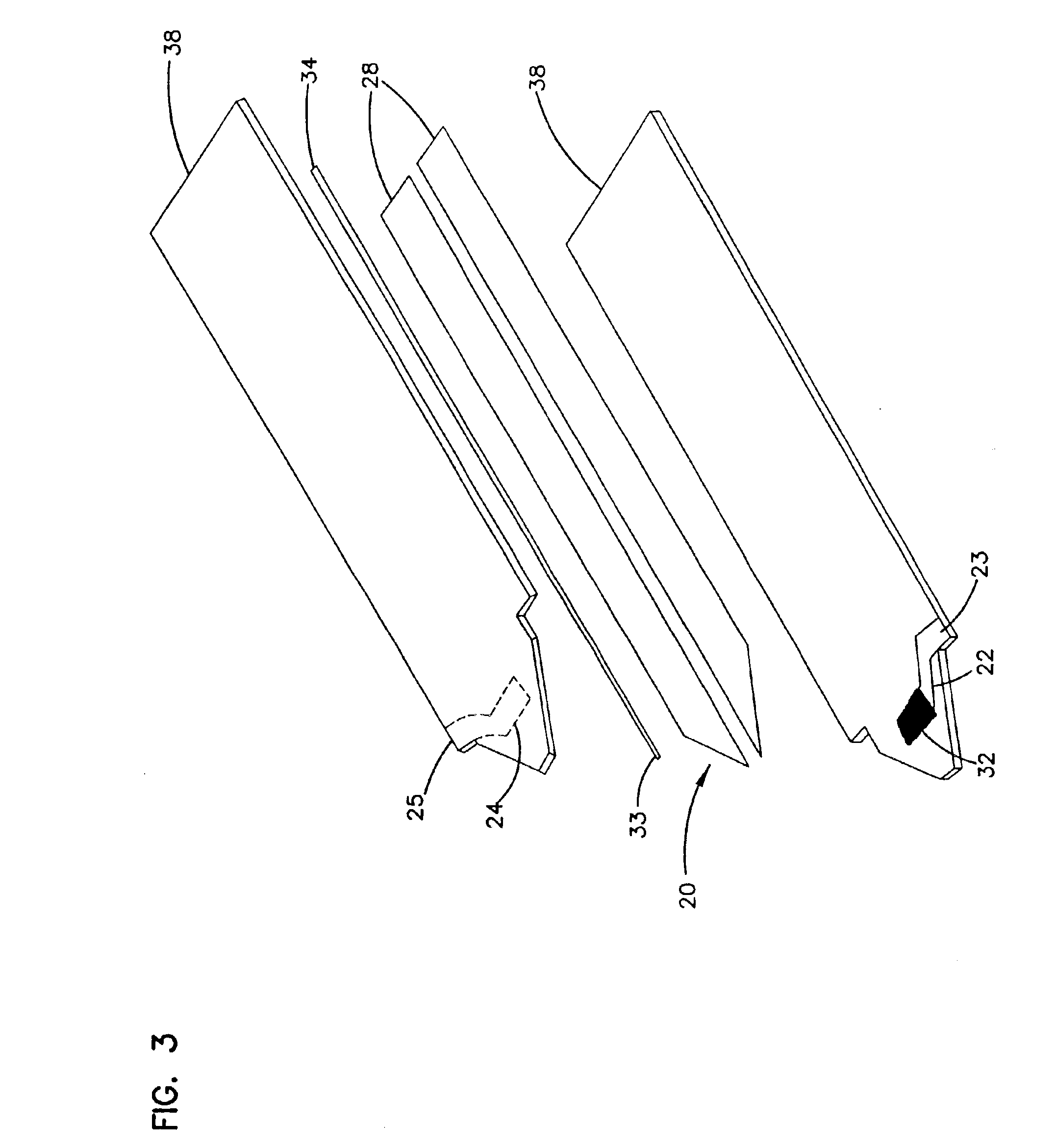

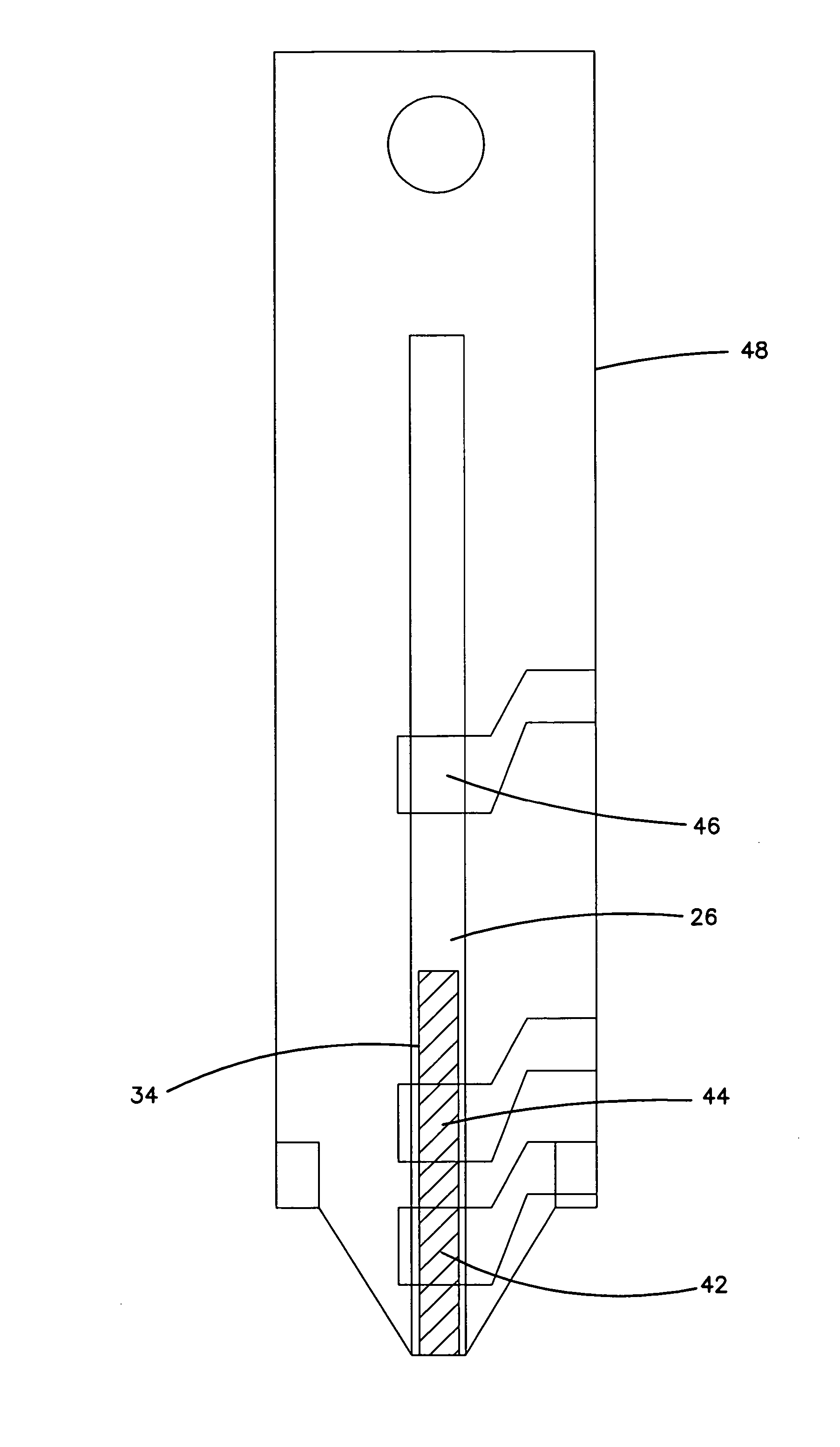

Small volume in vitro analyte sensor with diffusible or non-leachable redox mediator

Inactive | US20060025662A1 | Lower the volume | Accurate and efficient measurement | Microbiological testing/measurement | Material analysis by electric/magnetic means | Analyte | Redox mediator

A region of skin, other than the fingertips, is stimulated. After stimulation, an opening is created in the skin (e.g., by lancing the skin) to cause a flow of body fluid from the region. At least a portion of this body fluid is transported to a testing device where the concentration of analyte (e.g., glucose) in the body fluid is then determined. It is found that the stimulation of the skin provides results that are generally closer to the results of measurements from the fingertips, the traditional site for obtaining body fluid for analyte testing.

Owner:ABBOTT DIABETES CARE INC

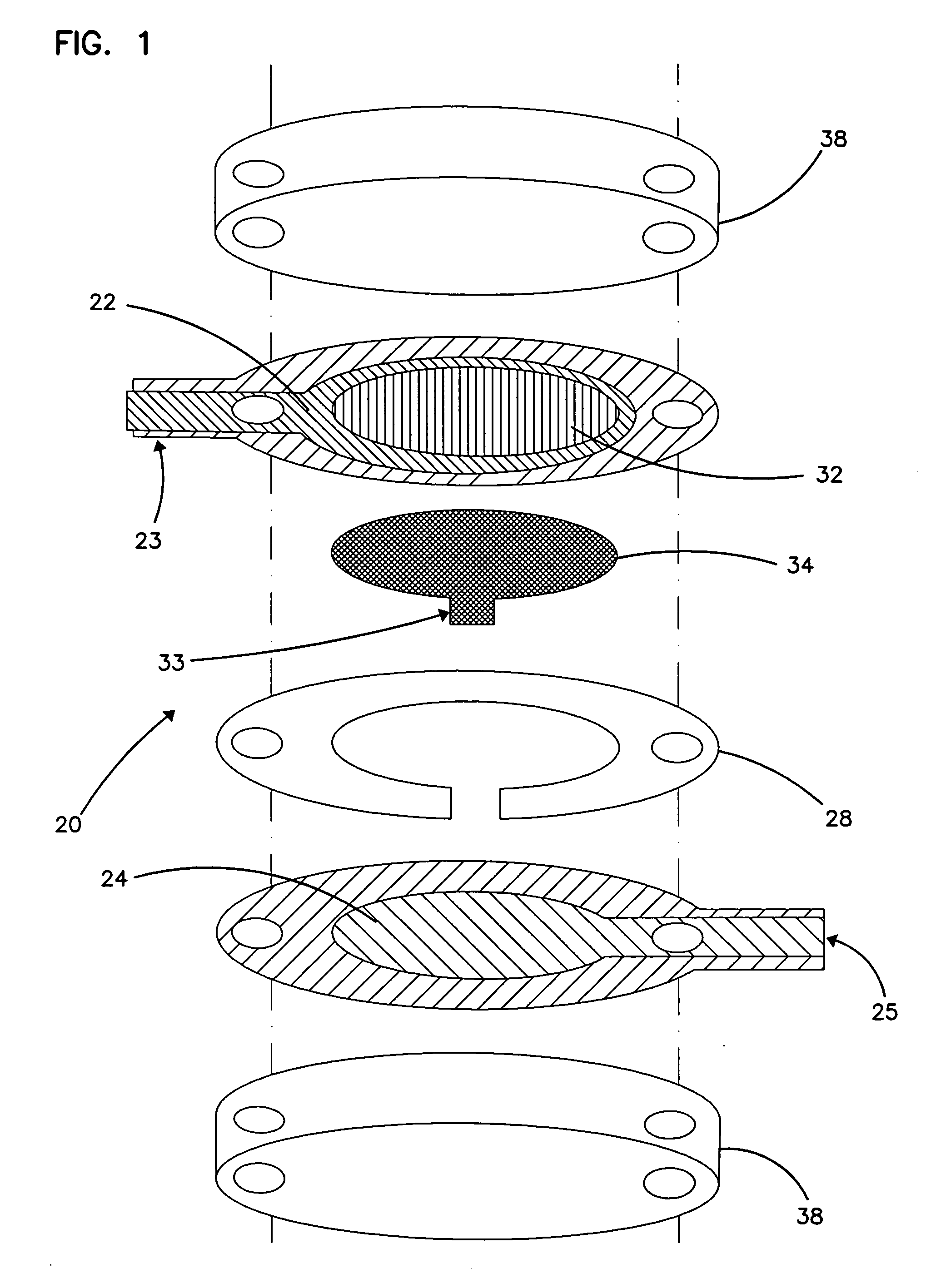

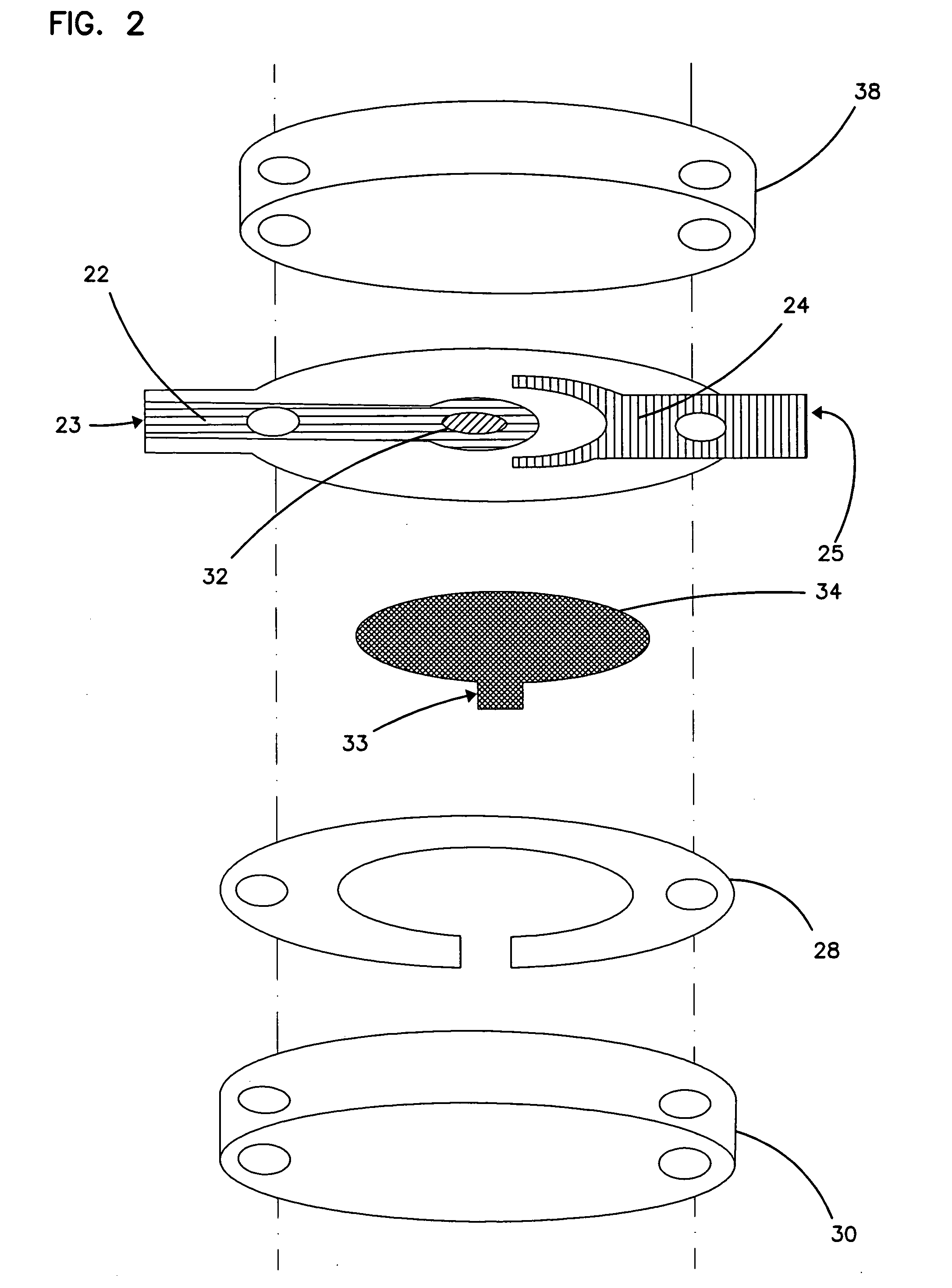

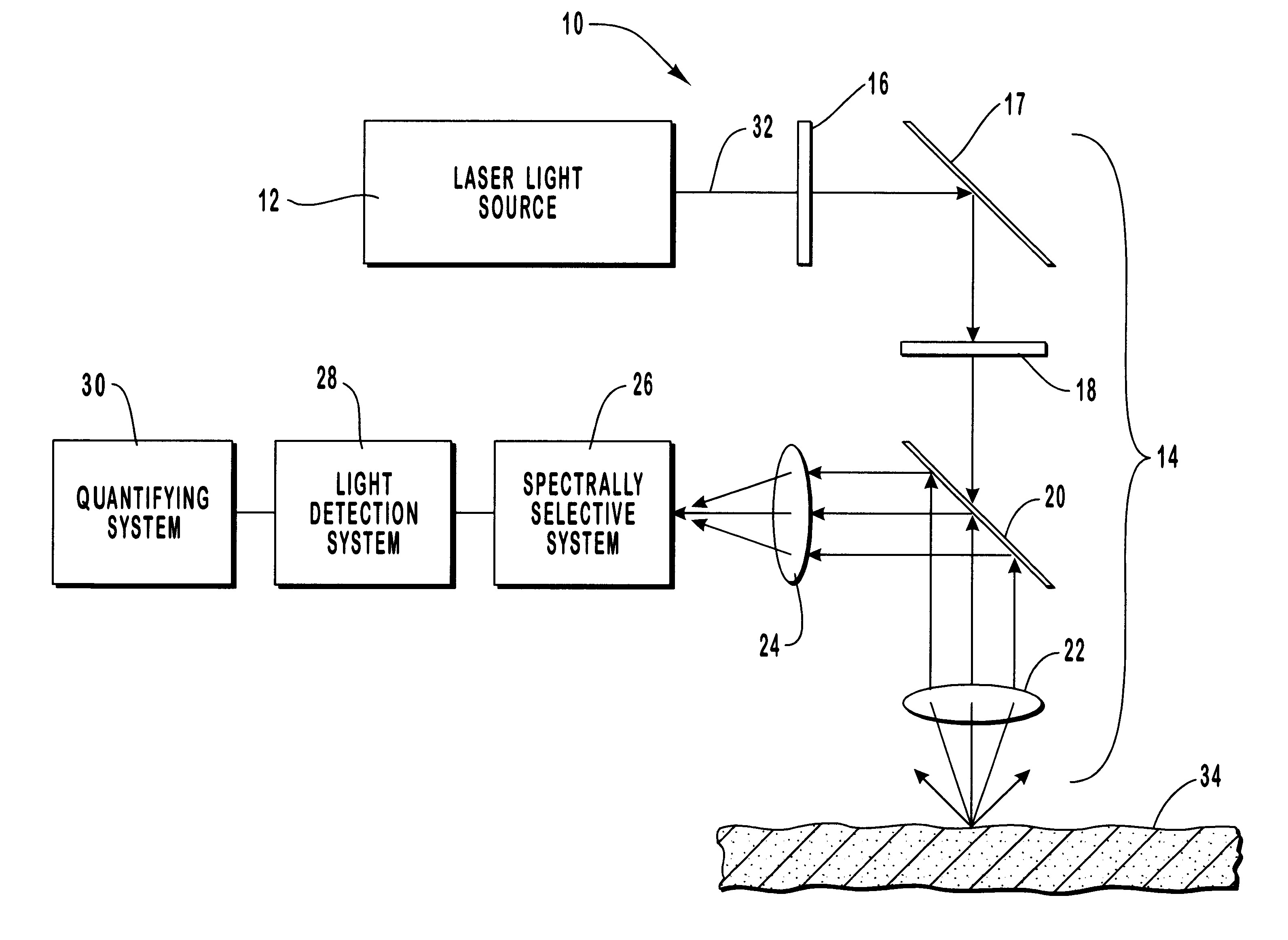

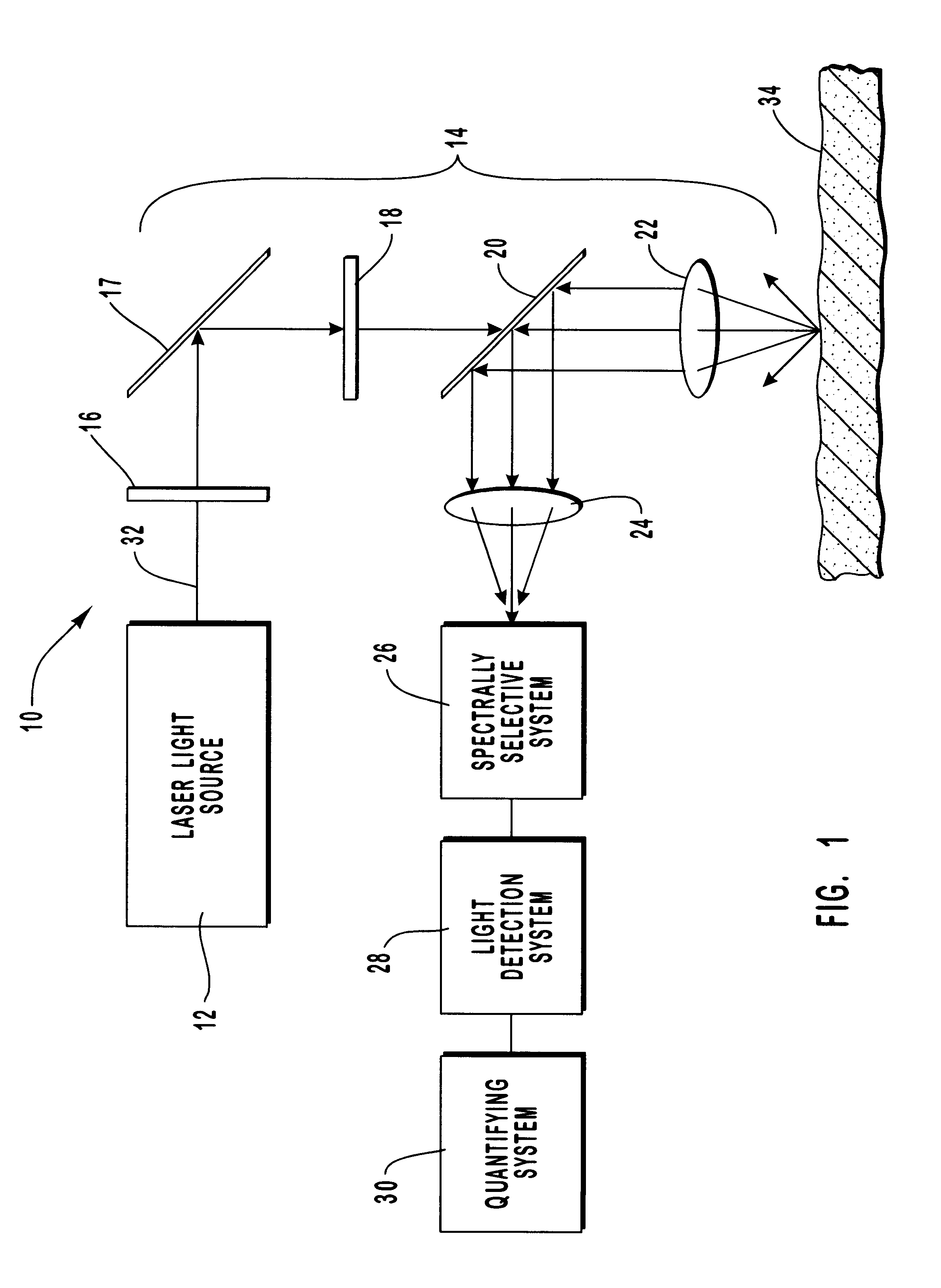

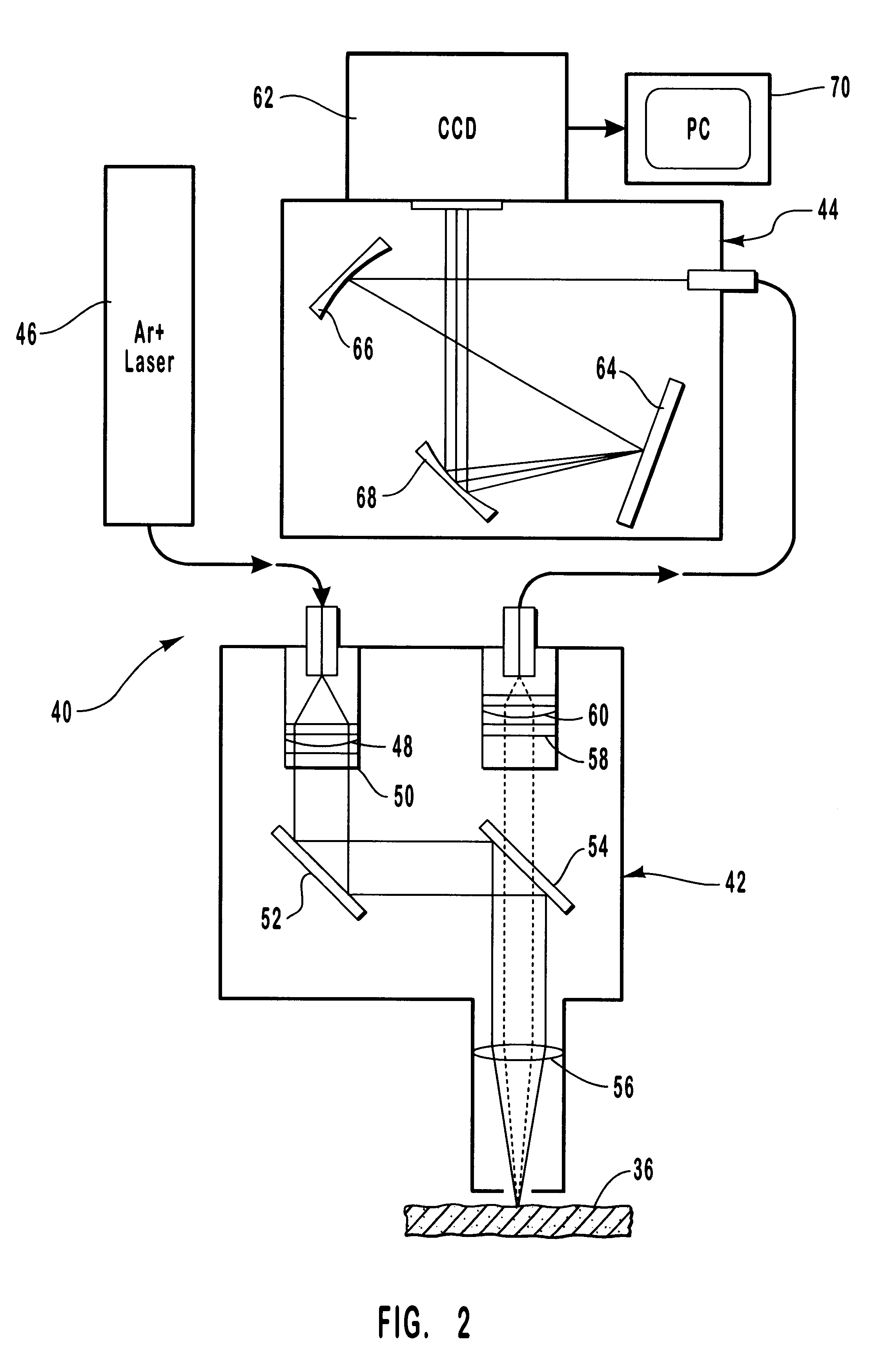

Method and apparatus for noninvasive measurement of carotenoids and related chemical substances in biological tissue

Inactive | US6205354B1 | Rapid and noninvasive and quantitative measurement | Risk | Radiation pyrometry | Surgery | Resonance Raman spectroscopy | Antioxidant

A method and apparatus are provided for the determination of levels of carotenoids and similar chemical compounds in biological tissue such as living skin. The method and apparatus provide a noninvasive, rapid, accurate, and safe determination of carotenoid levels which in turn can provide diagnostic information regarding cancer risk, or can be a marker for conditions where carotenoids or other antioxidant compounds may provide diagnostic information. Such early diagnostic information allows for the possibility of preventative intervention. The method and apparatus utilize the technique of resonance Raman spectroscopy to measure the levels of carotenoids and similar substances in tissue. In this technique, laser light is directed upon the area of tissue which is of interest. A small fraction of the scattered light is scattered inelastically, producing the carotenoid Raman signal which is at a different frequency than the incident laser light, and the Raman signal is collected, filtered, and measured. The resulting Raman signal can be analyzed such that the background fluorescence signal is subtracted and the results displayed and compared with known calibration standards.

Owner:UNIV OF UTAH RES FOUND

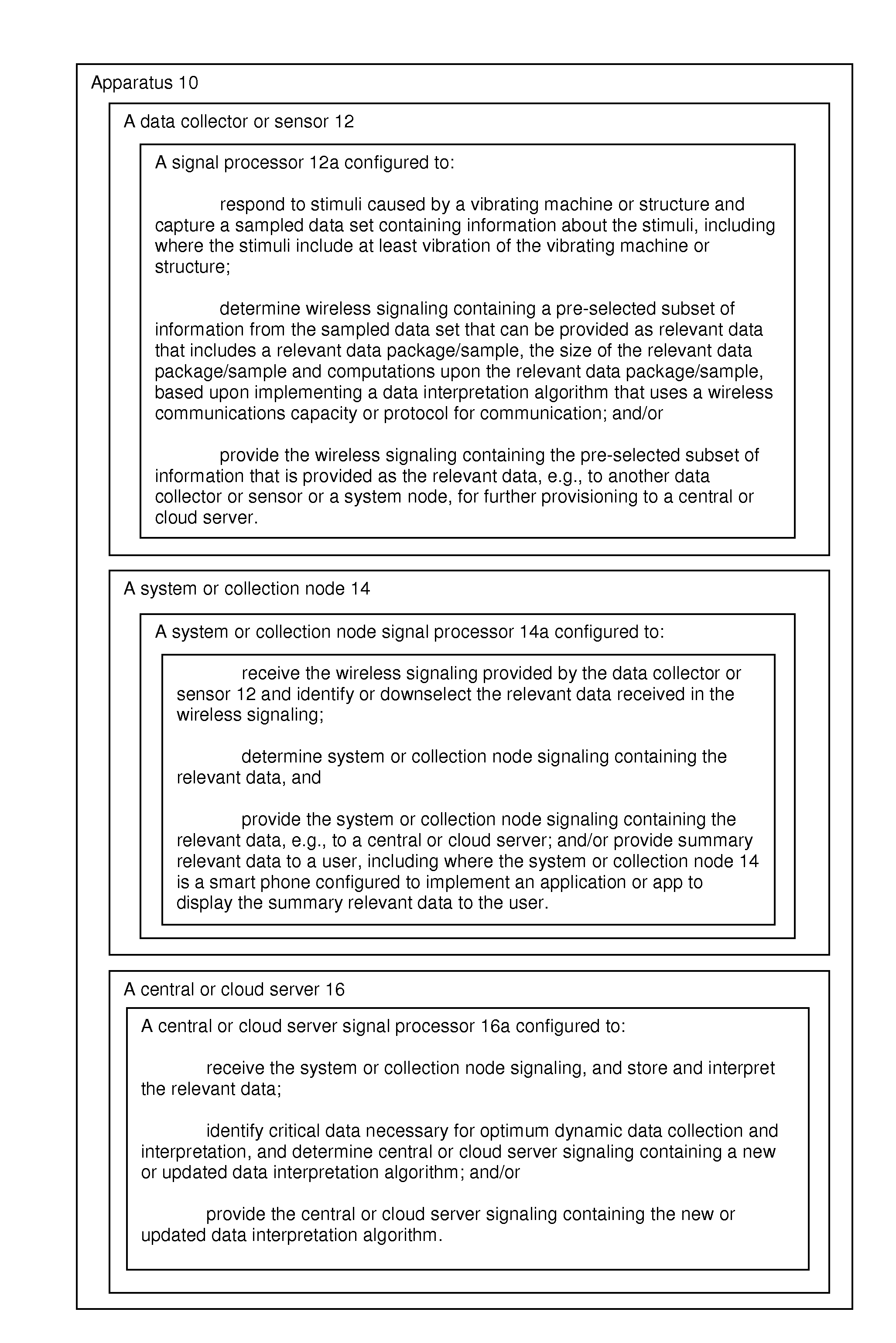

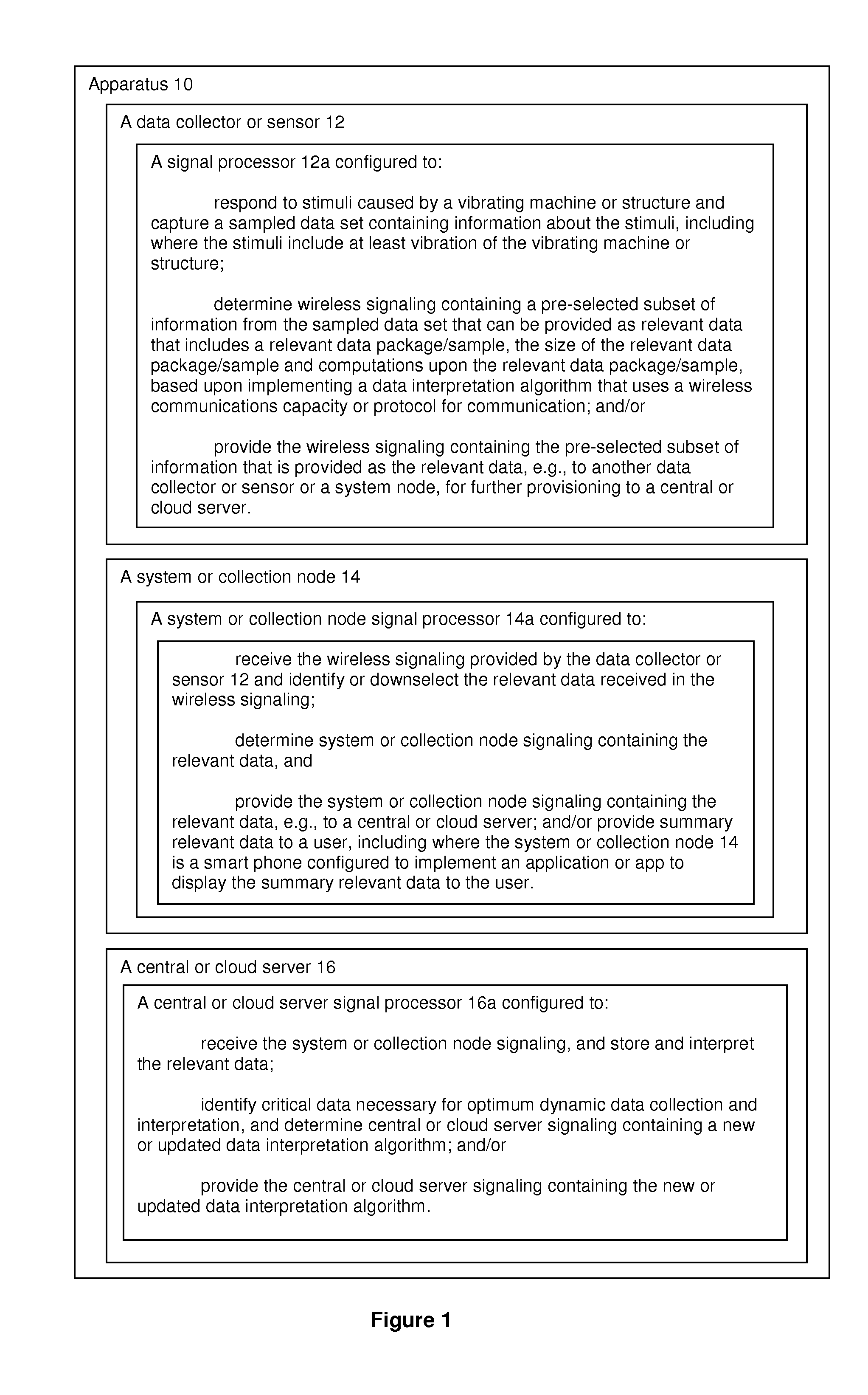

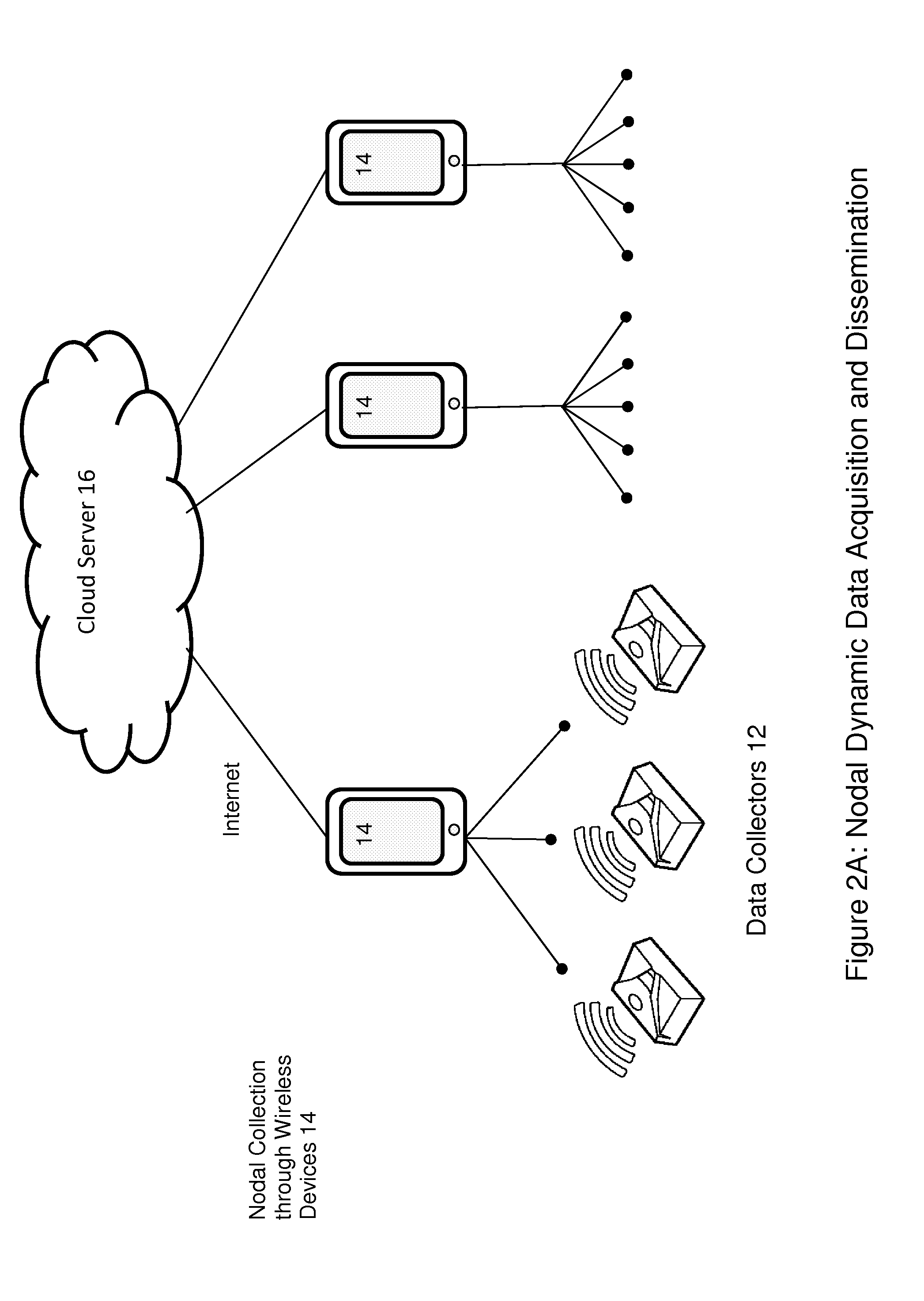

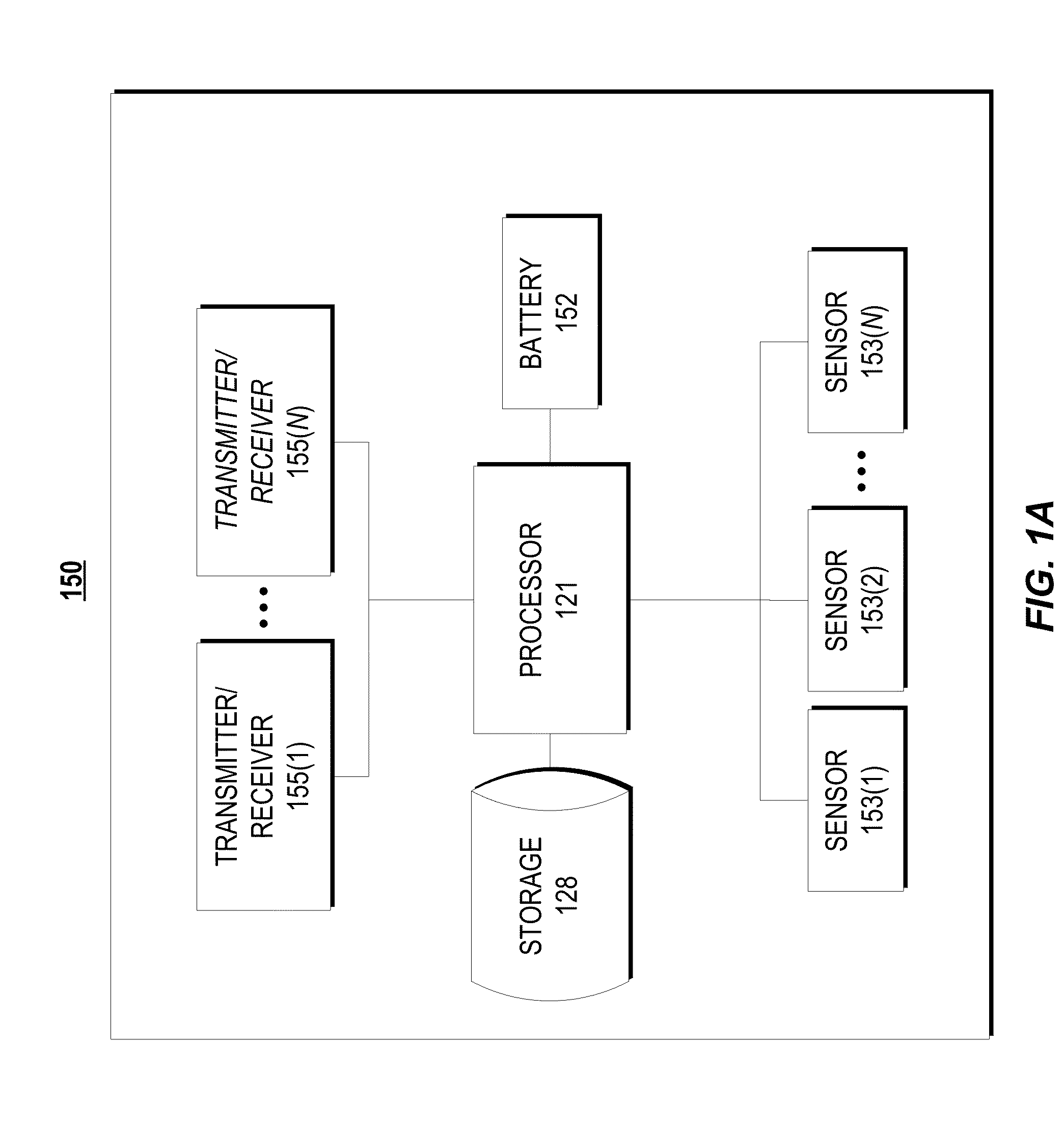

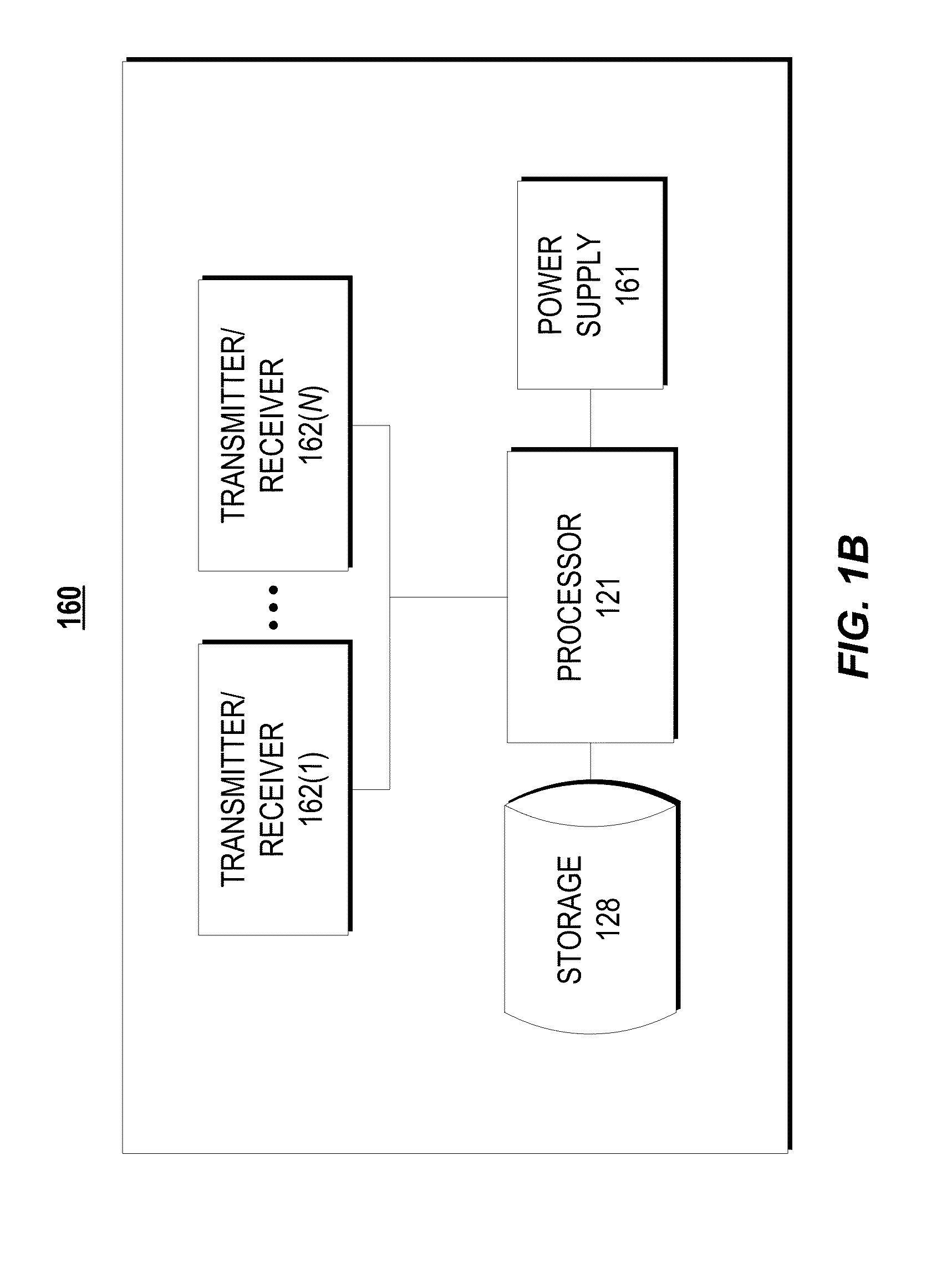

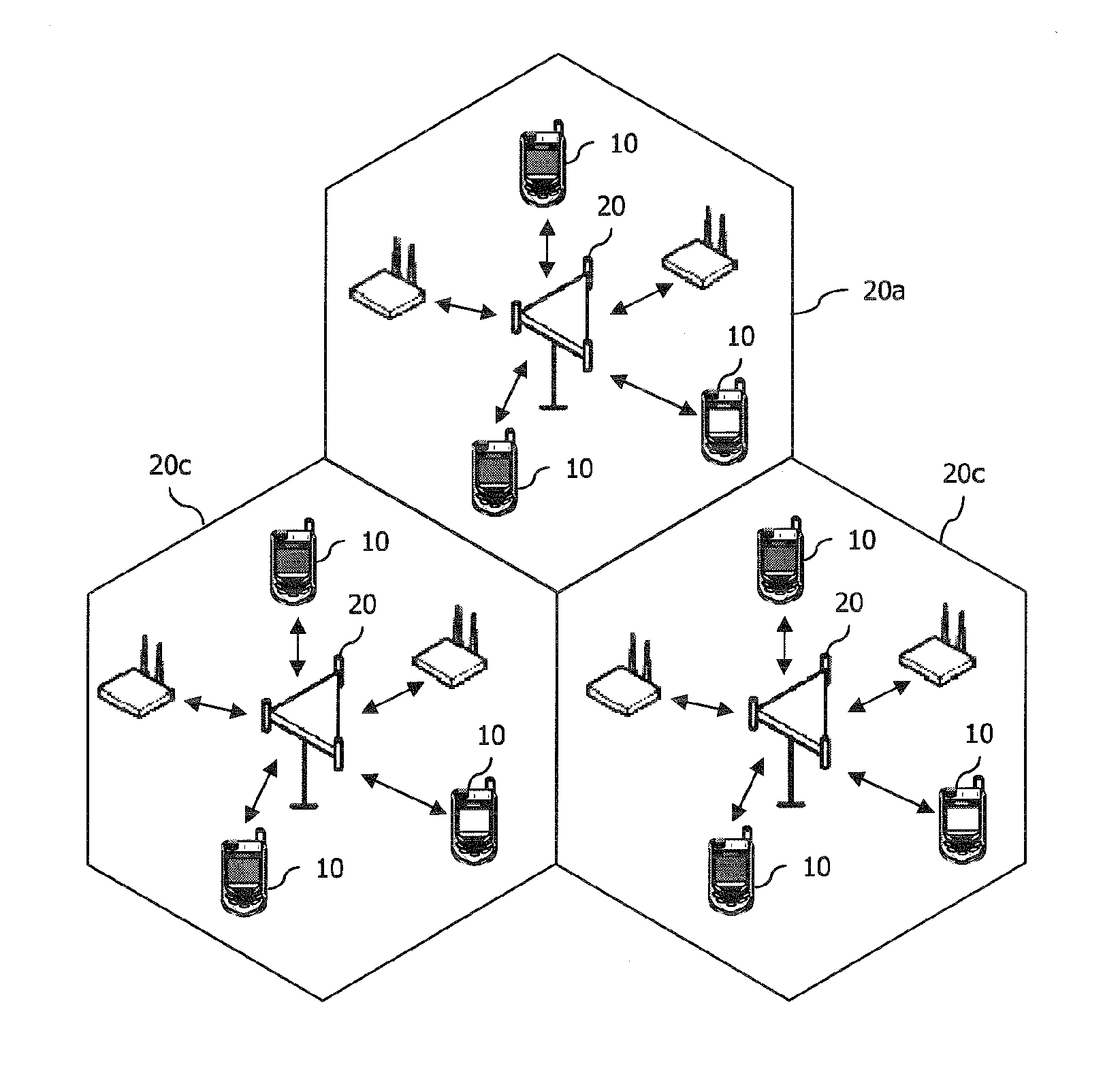

Nodal dynamic data acquisition and dissemination

Active | US20160301991A1 | Efficient measurement | Consistent measurement | Vibration measurement in solids | Transmission systems | NODAL | Data set

Apparatus is provided having a signal processor configured to: respond to stimuli caused by a vibrating machine or structure and capture a sampled data set containing information about the stimuli, including where the stimuli include at least vibration of the vibrating machine or structure; and determine wireless signaling containing a pre-selected subset of information from the sampled data set that can be provided as relevant data that includes a relevant data package / sample, the size of the relevant data package / sample, and computations upon the relevant data package / sample, based upon implementing a data interpretation algorithm that uses a wireless communication capacity or protocol for communication. The apparatus may include a data collector or sensor having the signal processor arranged therein and configured for coupling to the vibrating machine or structure to be monitored.

Owner:ITT MFG ENTERPRISES LLC

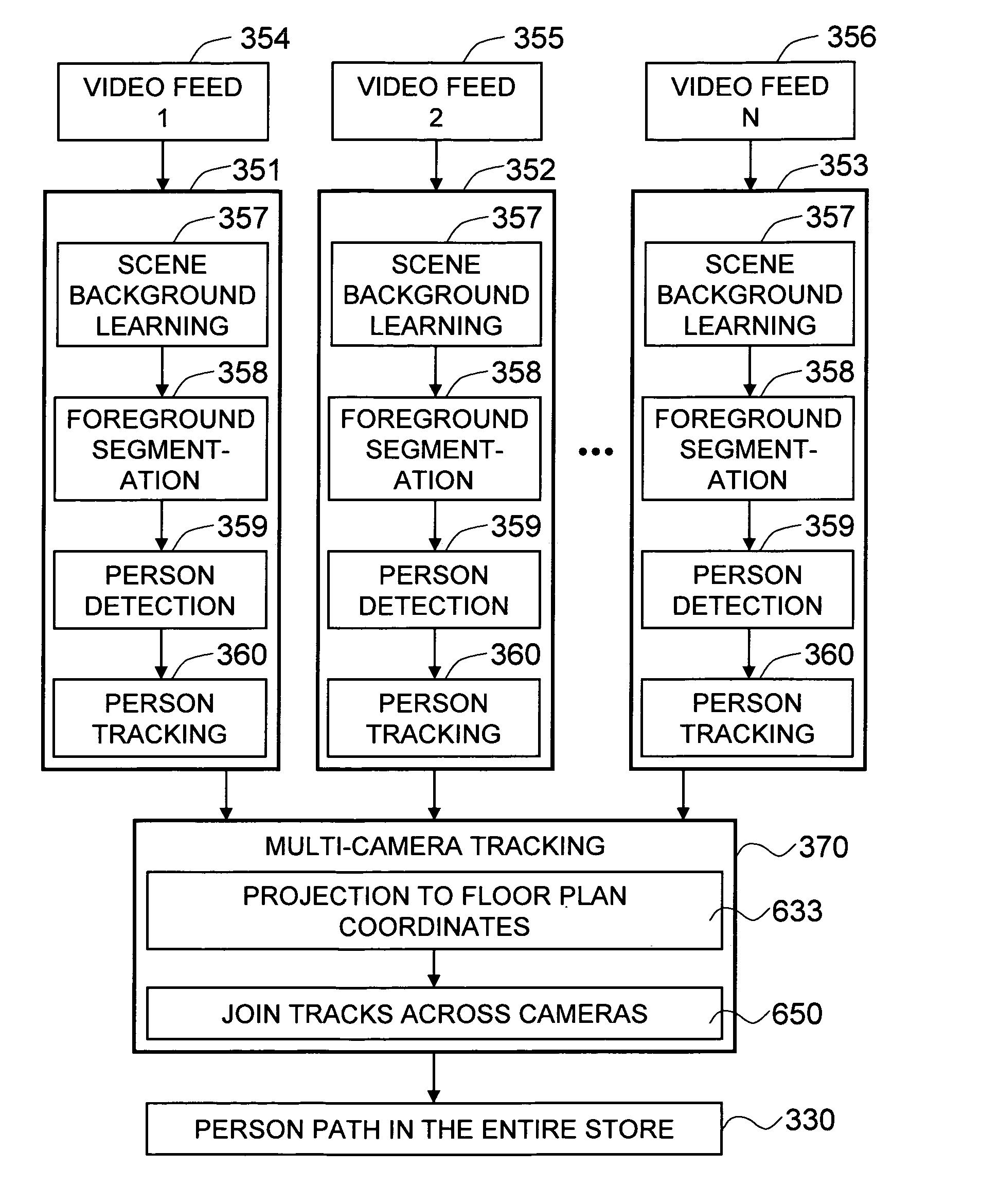

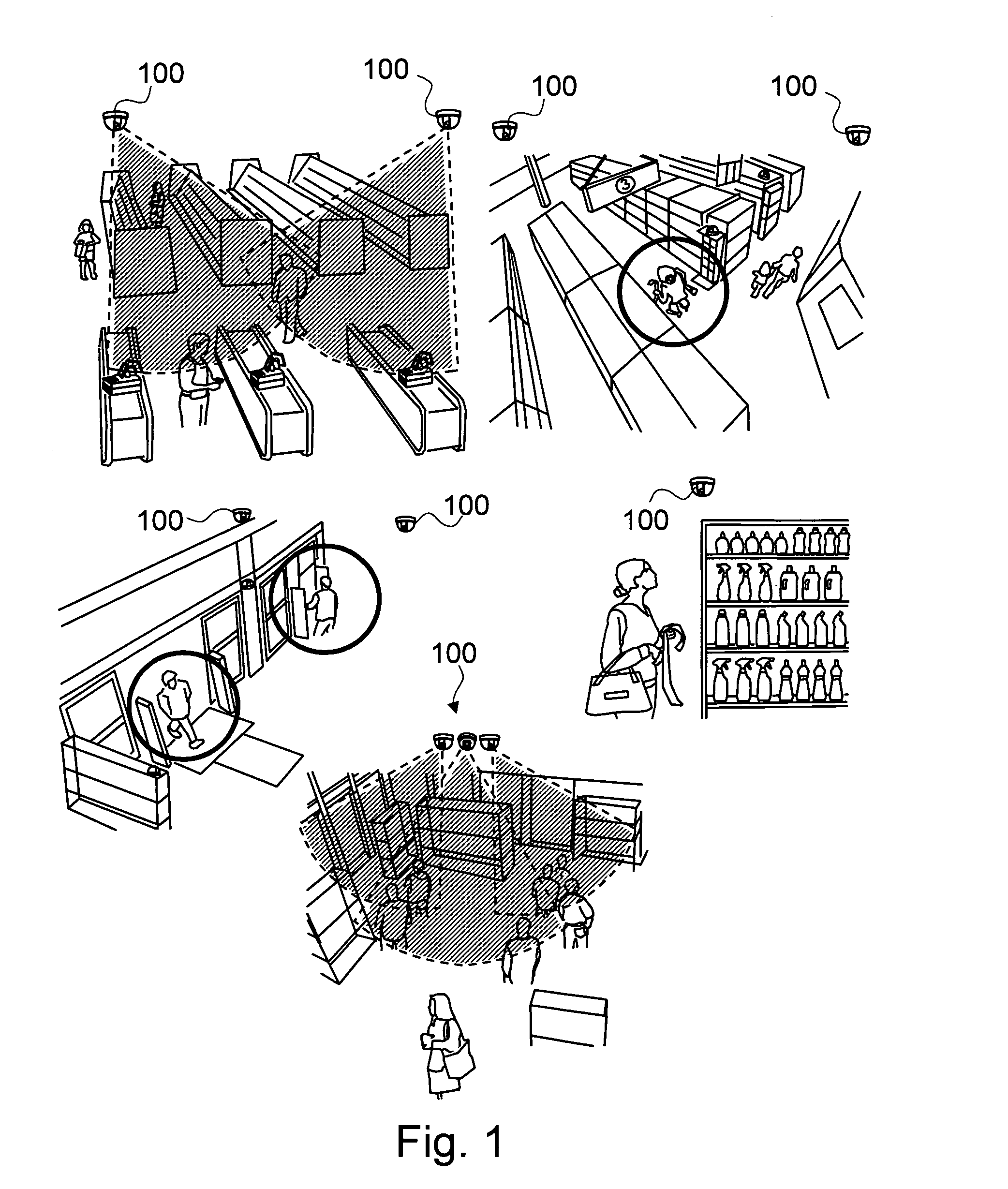

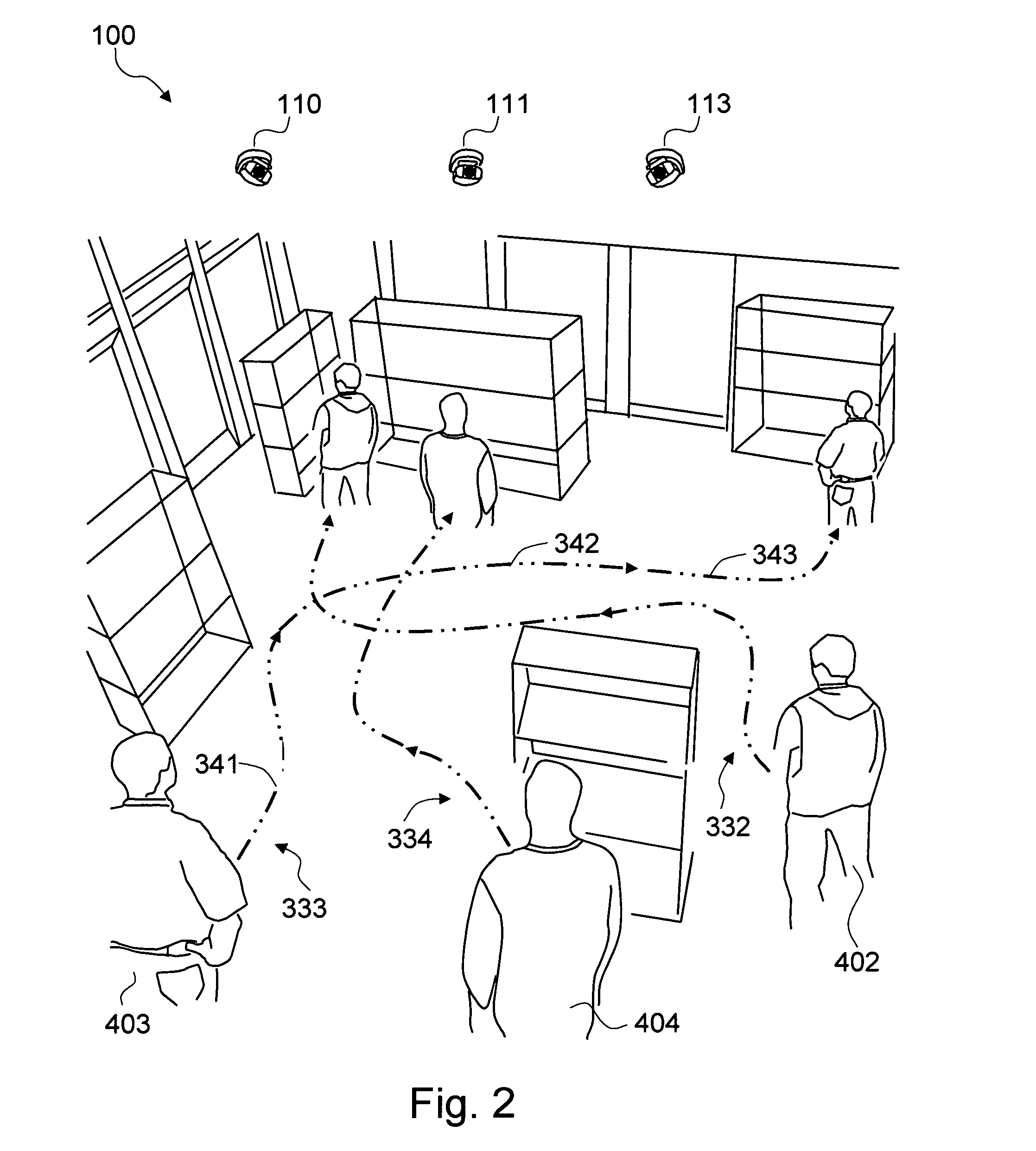

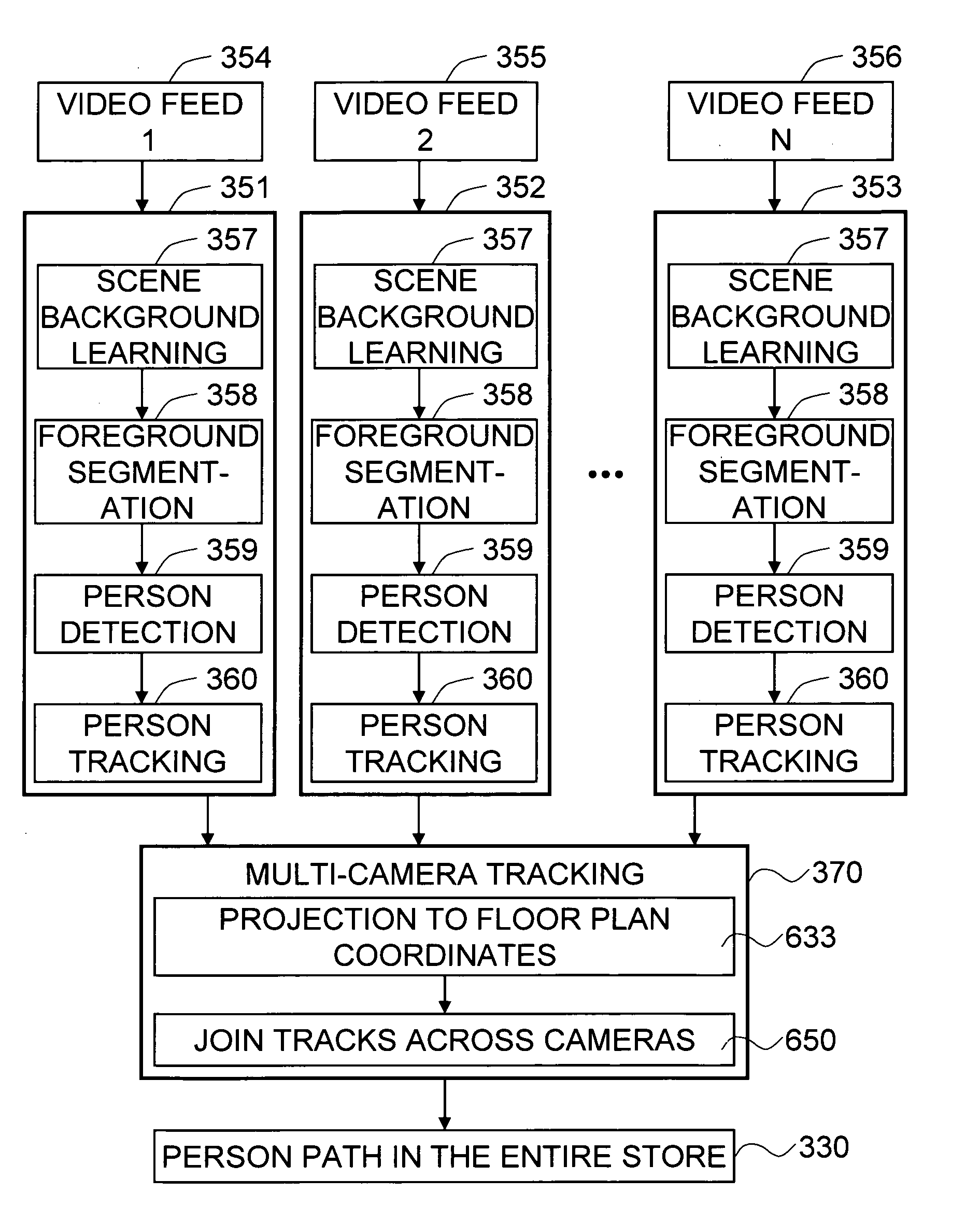

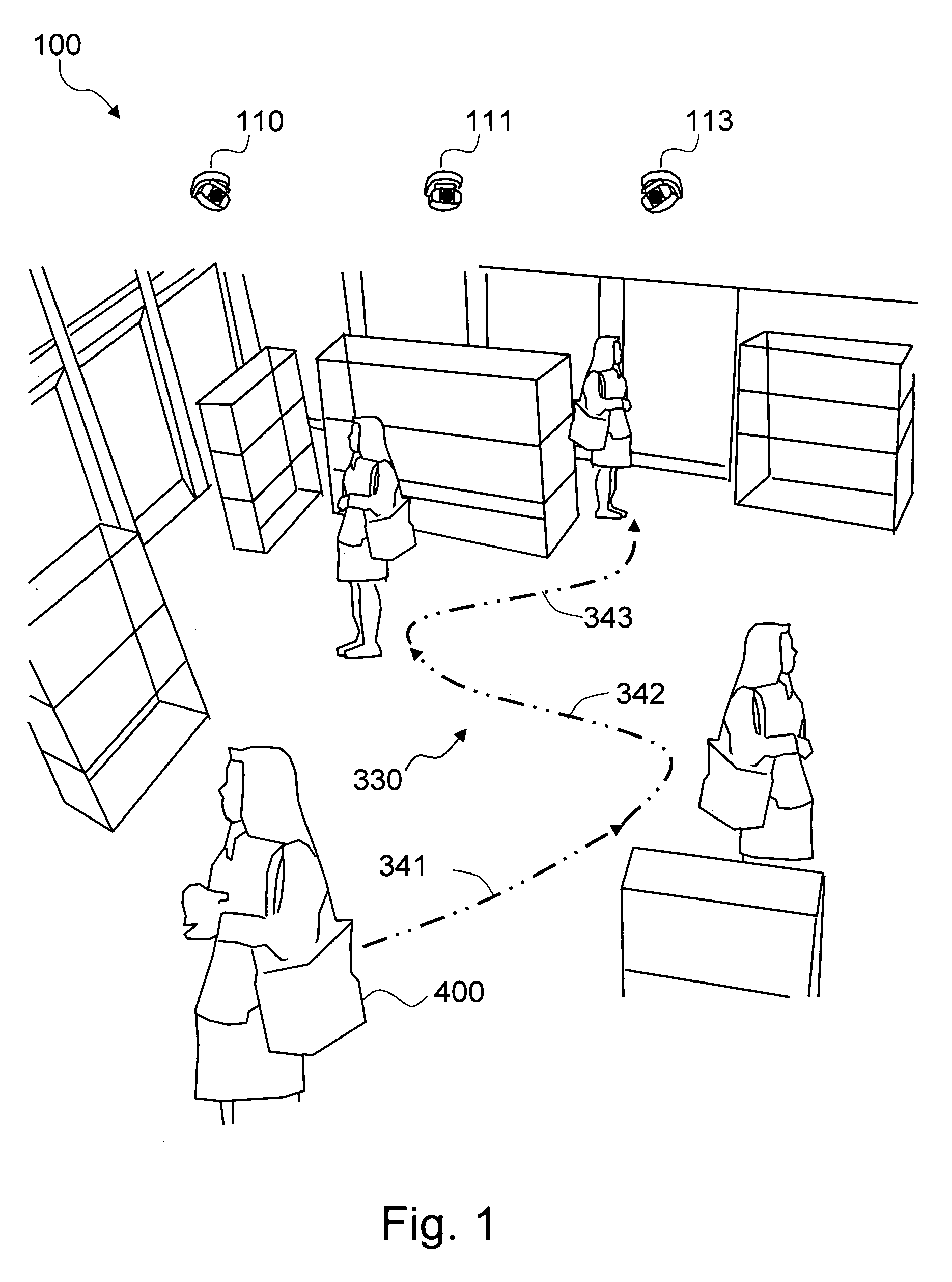

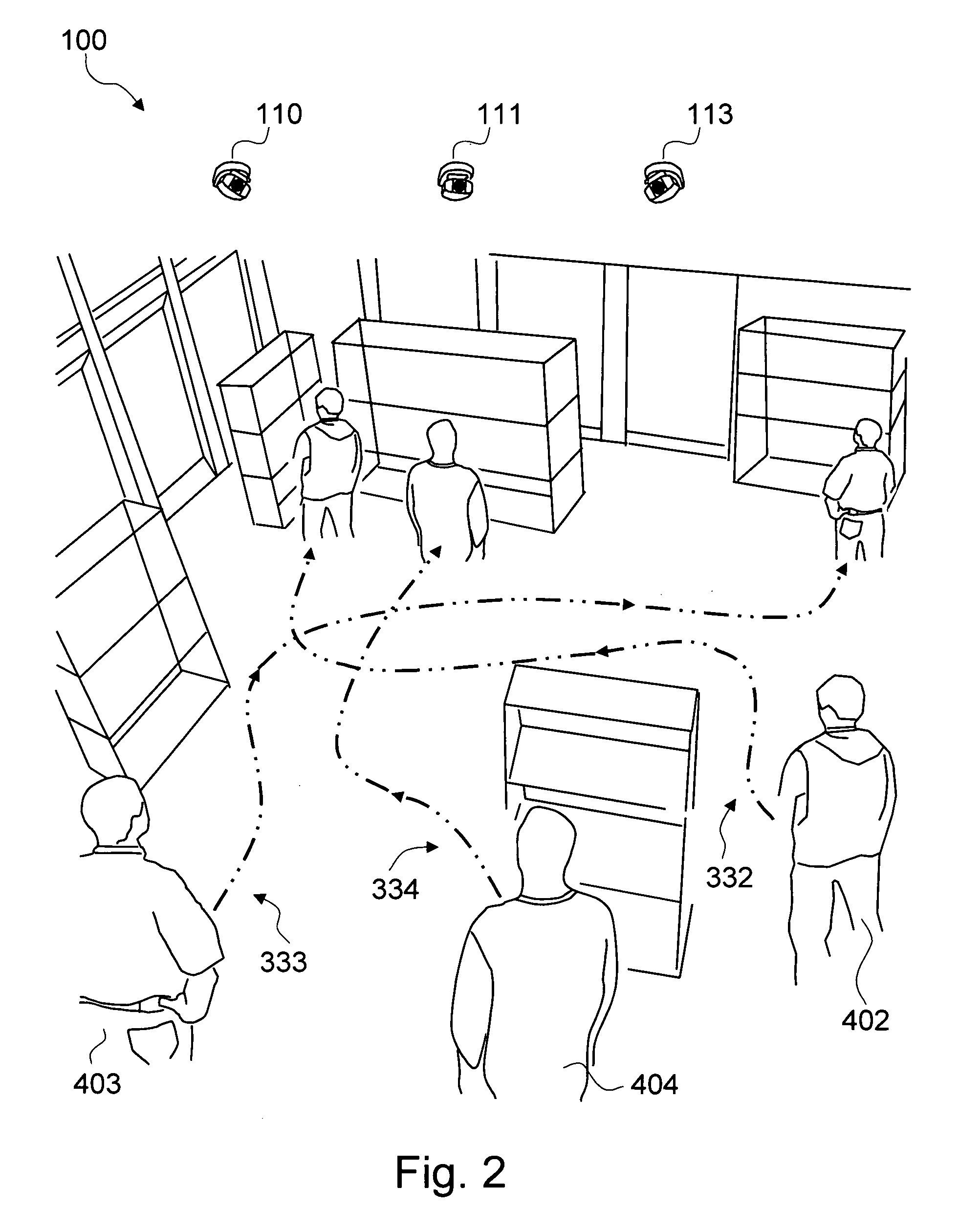

Method and system for analyzing shopping behavior using multiple sensor tracking

Active | US8009863B1 | Efficient measurement | Deep insight behavior | Image enhancement | Image analysis | Outcome measurements | Information analysis

The present invention is a method and system for automatically analyzing the behavior of a person and a plurality of persons in a physical space based on measurement of the trip of the person and the plurality of persons on input images. The present invention captures a plurality of input images of the person by a plurality of means for capturing images, such as cameras. The plurality of input images is processed in order to track the person in each field of view of the plurality of means for capturing images. The present invention measures the information for the trip of the person in the physical space based on the processed results from the plurality of tracks and analyzes the behavior of the person based on the trip information. The trip information can comprise coordinates of the person's position and temporal attributes, such as trip time and trip length, for the plurality of tracks. The physical space may be a retail space, and the person may be a customer in the retail space. The trip information can provide key measurements as a foundation for the behavior analysis of the customer along the entire shopping trip, from entrance to checkout, that deliver deeper insights about the customer behavior. The focus of the present invention is given to the automatic behavior analytics applications based upon the trip from the extracted video, where the exemplary behavior analysis comprises map generation as visualization of the behavior, quantitative category measurement, dominant path measurement, category correlation measurement, and category sequence measurement.

Owner:VIDEOMINING CORP

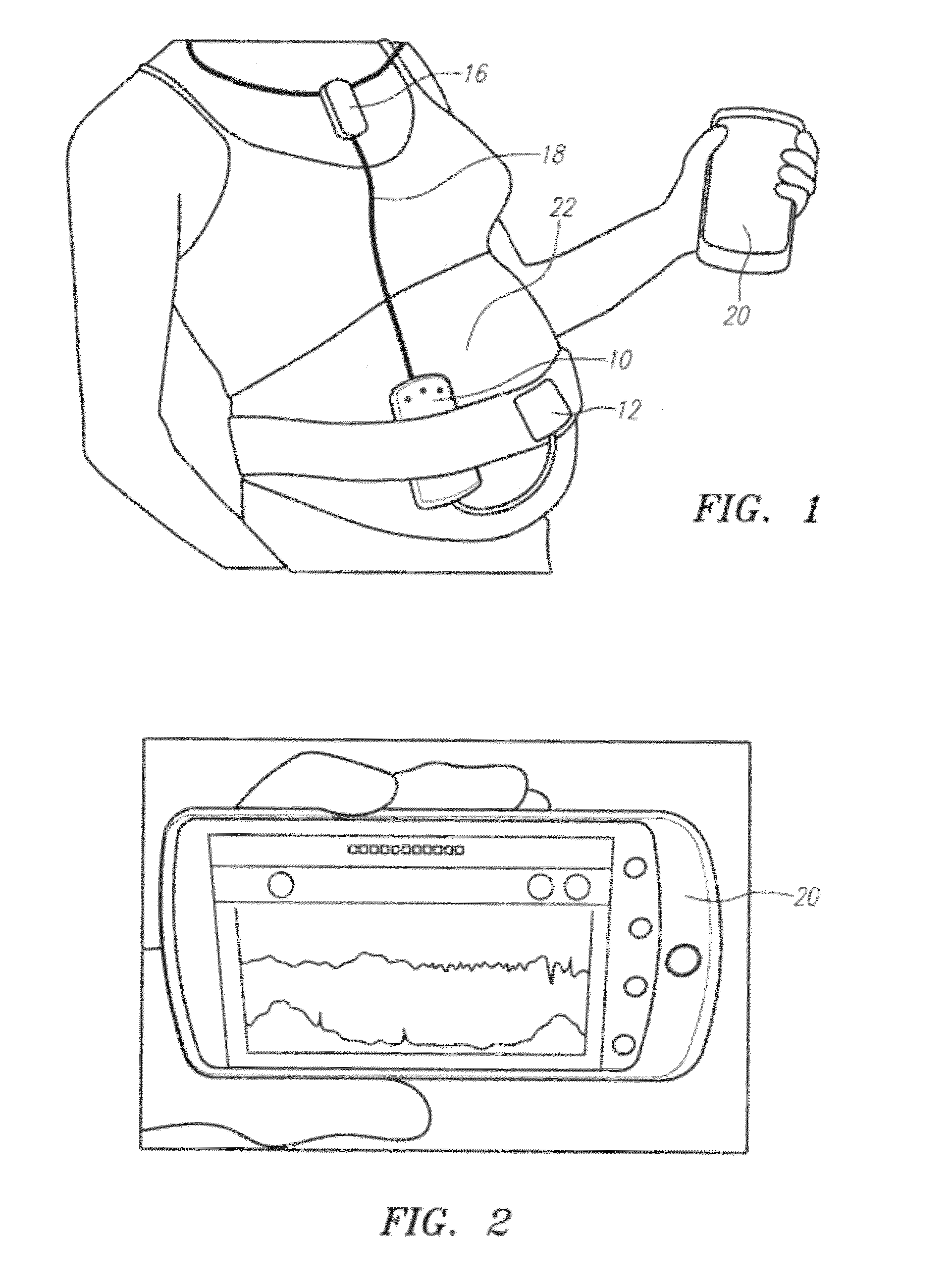

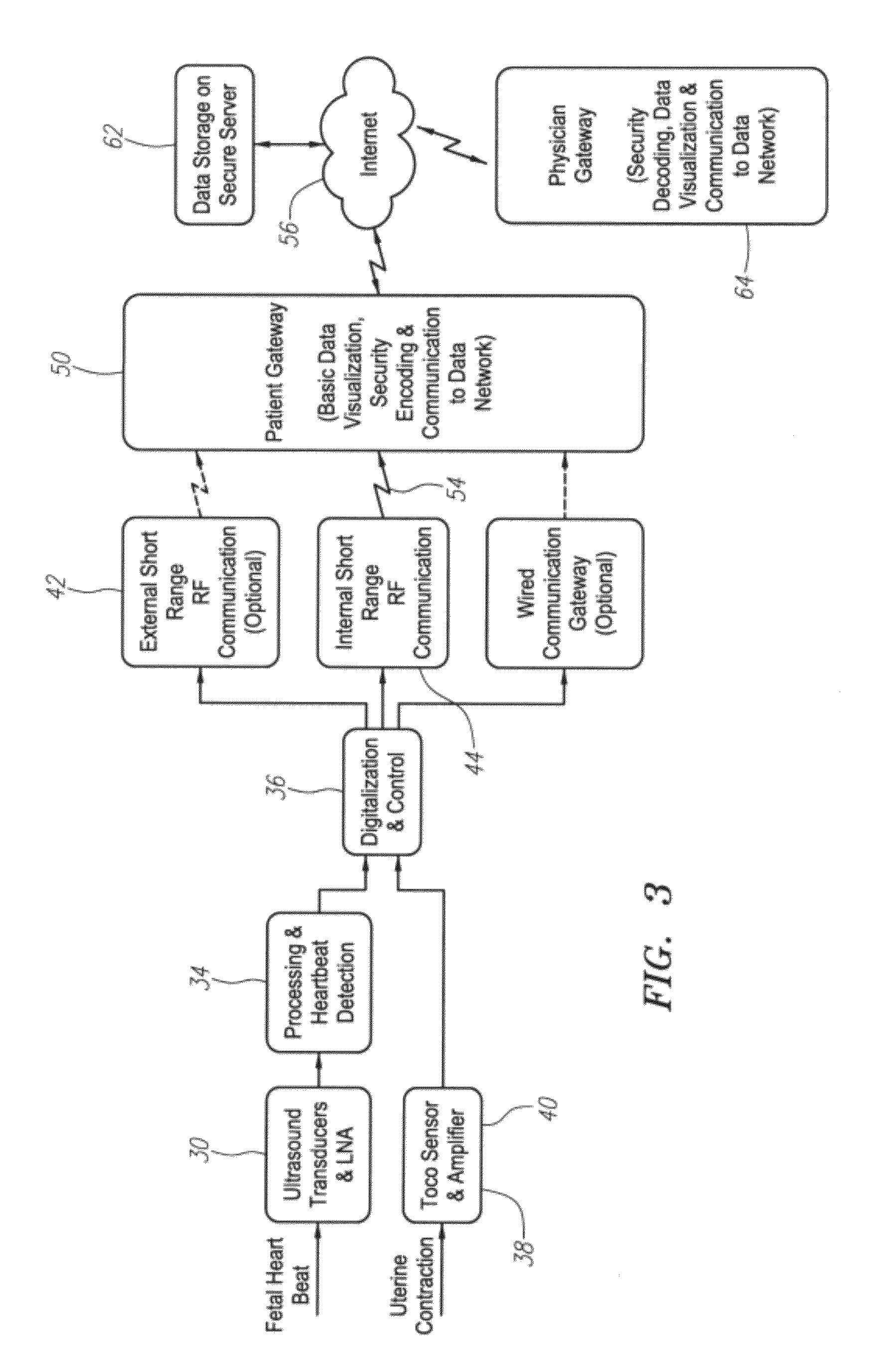
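The temporal and spatial trip attributes named in the abstract above (trip time and trip length) can be illustrated with a minimal sketch. It assumes a track has already been extracted and joined across cameras, and that it is a time-ordered list of `(timestamp, x, y)` samples in physical-space coordinates. The `Sample` type and both function names are hypothetical and are not taken from the patent.

```python
import math
from typing import List, Tuple

# One track sample: (timestamp in seconds, x, y) in physical-space coordinates.
# This layout is an assumption made for illustration.
Sample = Tuple[float, float, float]


def trip_time(track: List[Sample]) -> float:
    """Elapsed time between the first and last sample of a time-ordered track."""
    if len(track) < 2:
        return 0.0
    return track[-1][0] - track[0][0]


def trip_length(track: List[Sample]) -> float:
    """Sum of straight-line distances between consecutive positions."""
    return sum(
        math.hypot(x2 - x1, y2 - y1)
        for (_, x1, y1), (_, x2, y2) in zip(track, track[1:])
    )


# Example: a shopper moves from (0, 0) to (3, 4), then on to (3, 10).
track = [(0.0, 0.0, 0.0), (2.0, 3.0, 4.0), (5.0, 3.0, 10.0)]
duration = trip_time(track)
length = trip_length(track)
```

Summing segment lengths is only one possible definition of trip length; a real system would likely smooth tracking noise first.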

Wireless fetal monitoring system

Inactive | US20120232398A1 | Low cost | Poor outcome | Electromyography | Heart/pulse rate measurement devices | Engineering | Patient monitor

A wireless fetal and maternal monitoring system includes a fetal sensor unit adapted to receive signals indicative of a fetal heartbeat, the sensor optionally utilizing a Doppler ultrasound sensor. A short-range transmission unit sends the signals indicative of fetal heartbeat to a gateway unit, either directly or via an auxiliary communications unit, in which case the electrical coupling between the short-range transmission unit and the auxiliary communications unit is via a wired connection. The system includes a contraction actuator actuatable upon a maternal uterine contraction, which optionally is an EMG sensor. A gateway device provides for data visualization and data securitization. The gateway device provides for remote transmission of information through a data communication network. A server adapted to receive the information from the gateway device serves to store and process the data, and an interface system permits remote patient monitoring.

Owner:GARY & MARY WEST HEALTH INST

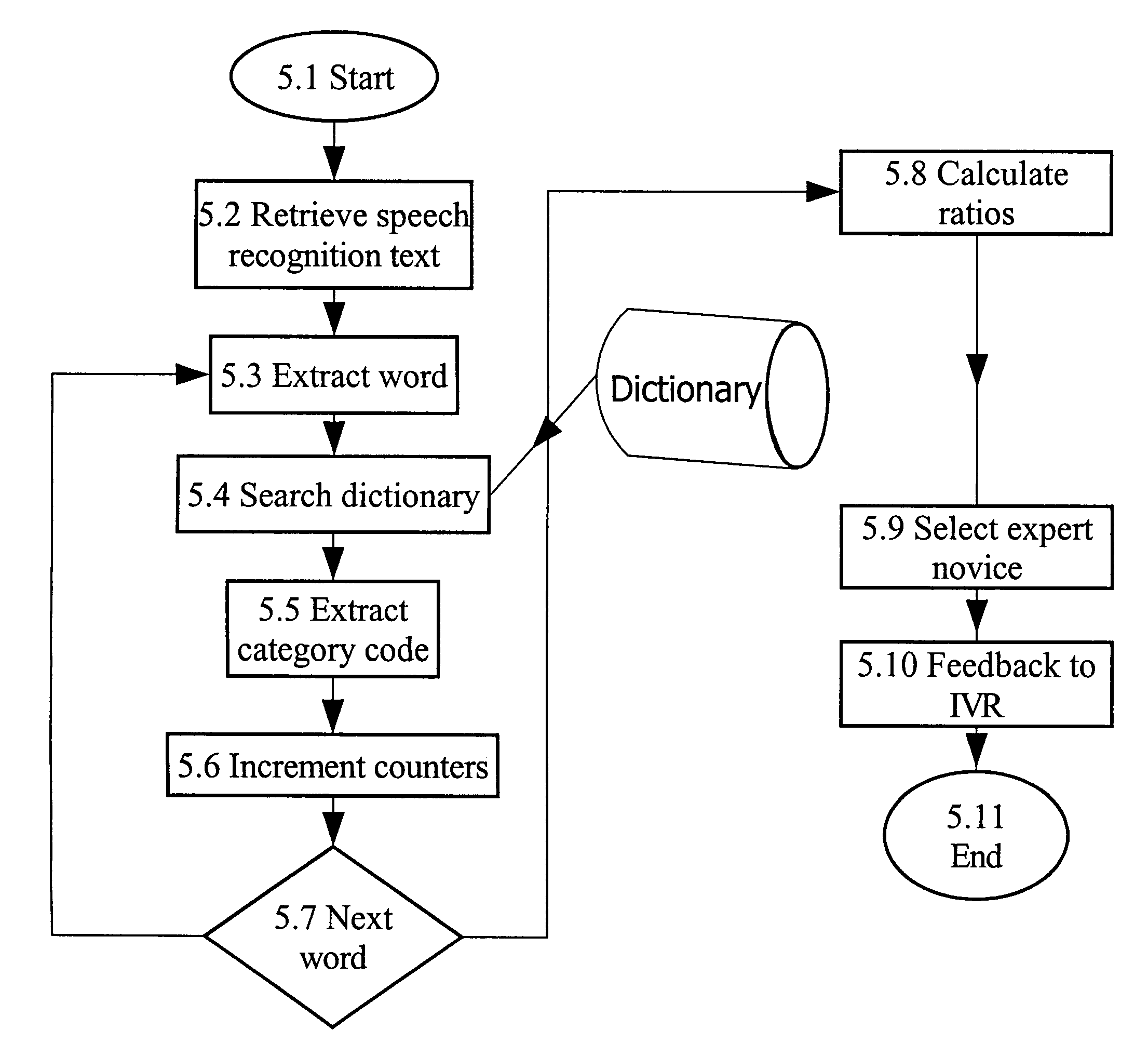

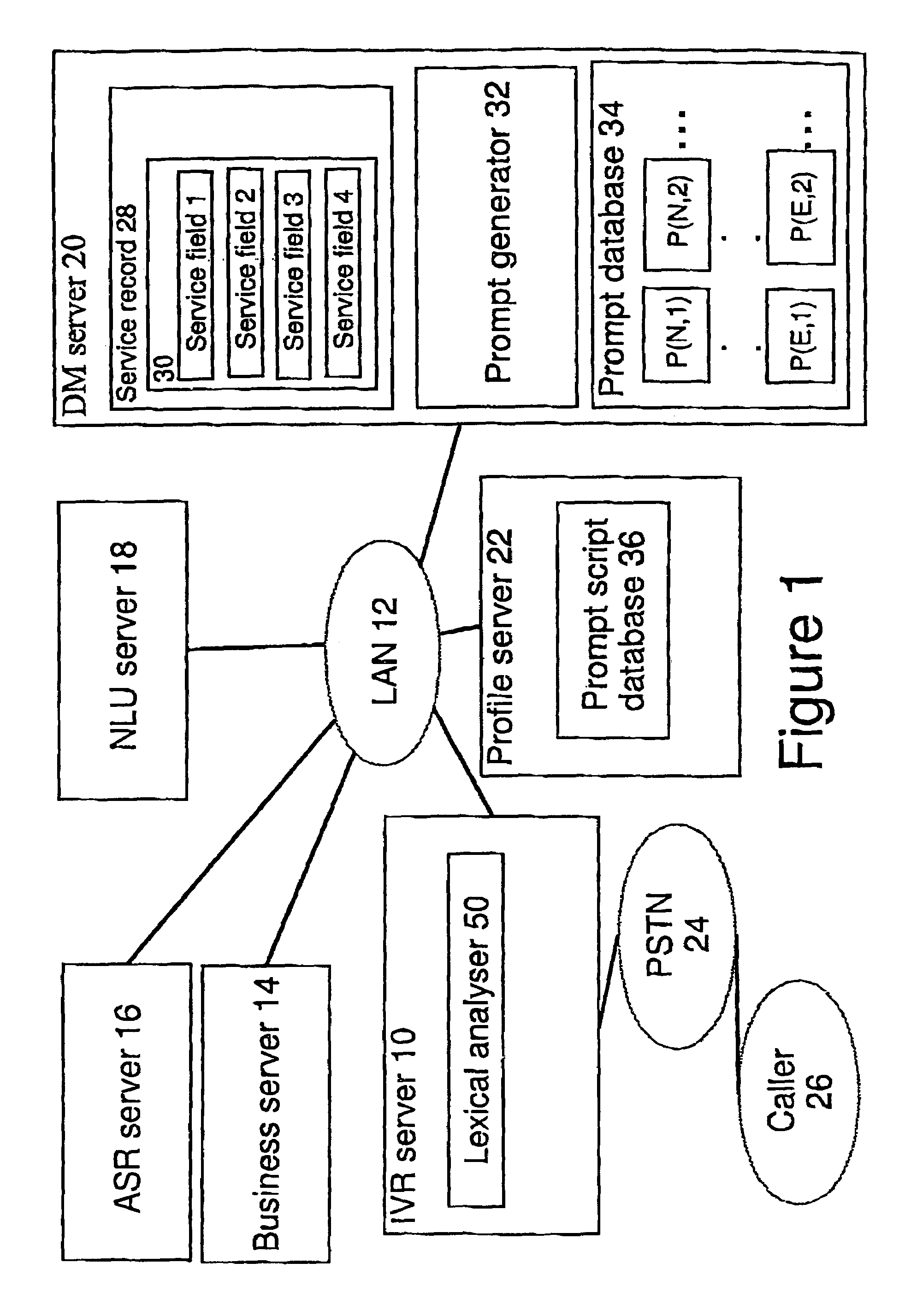

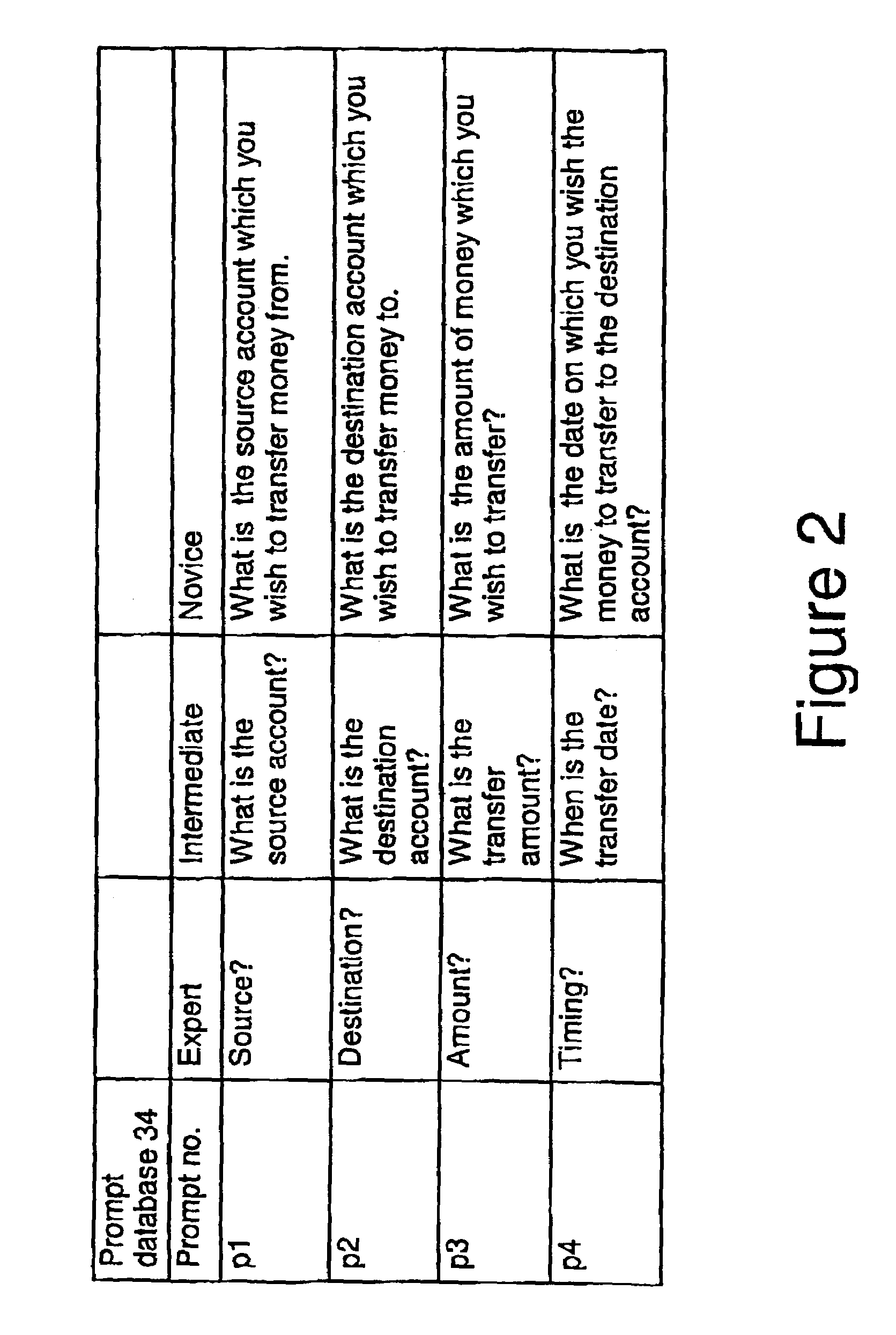

Interactive voice response system

Inactive | US6944592B1 | Efficient implementation | Efficient measurement | Automatic exchanges | Speech recognition | Statistical analysis | Interactive voice response system

An interactive voice response system statistically analyses word usage in speech recognition results to select prompts for use in the interaction. A received voice signal is converted to text, and factors such as context and task word ratios and word rate are calculated. These factors are used to make dialogue decisions as to whether to use expert, intermediate, or novice prompts, depending on whether the factor falls below, inside, or above a threshold range.

Owner:NUANCE COMM INC

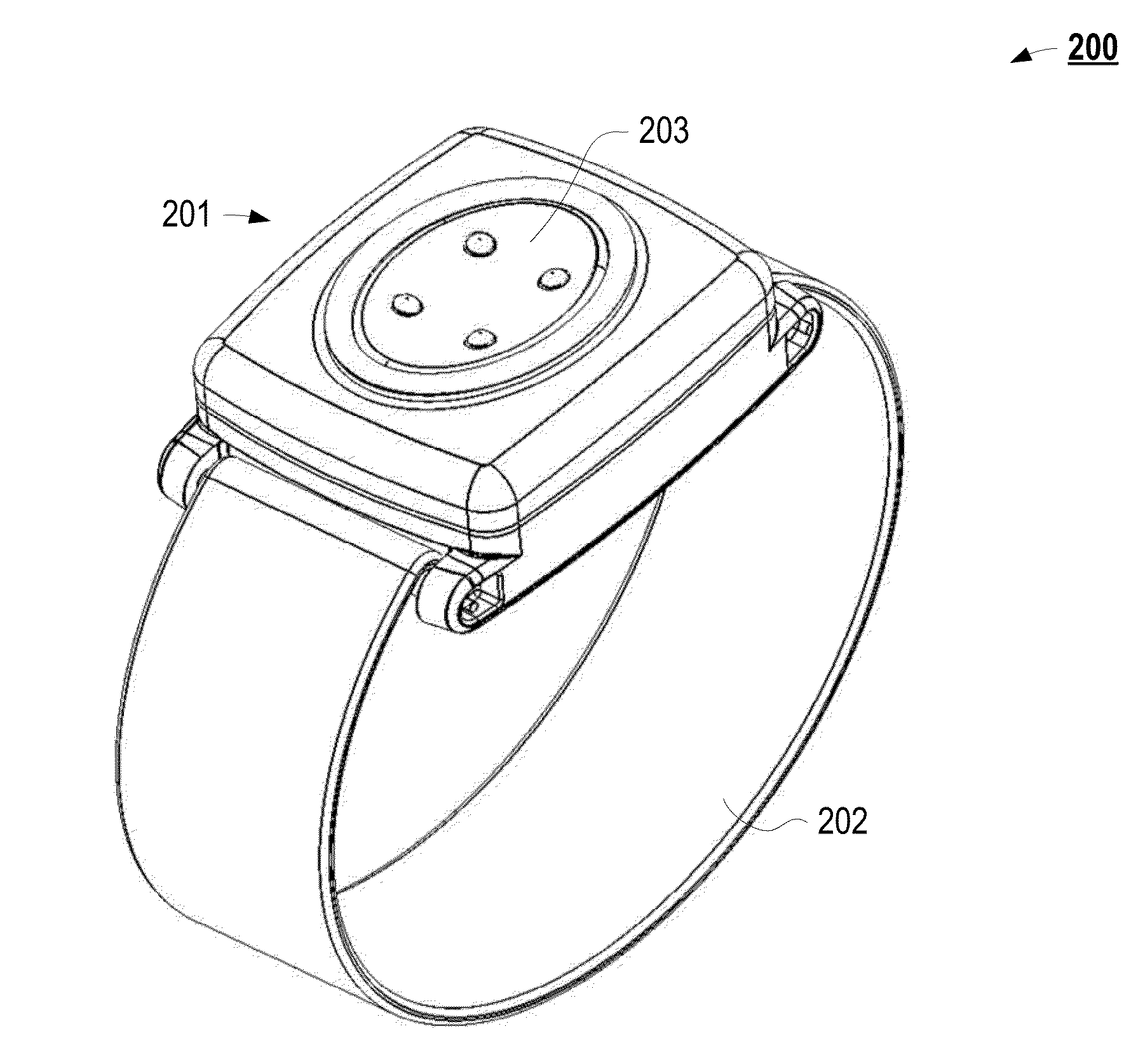
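The threshold-range decision described in the abstract above can be sketched as follows. This is a minimal illustration and not the patented method. The `ThresholdRange` type, the function names, and the numeric bounds are hypothetical. The mapping (below the range gives expert prompts, inside gives intermediate, above gives novice) is an assumption read from the order in which the abstract lists the two triples.

```python
from dataclasses import dataclass


@dataclass
class ThresholdRange:
    """Hypothetical lower and upper bounds for one factor."""
    low: float
    high: float


def word_rate(transcript: str, duration_s: float) -> float:
    """Words per second in a recognized utterance."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return len(transcript.split()) / duration_s


def select_prompt_level(factor: float, rng: ThresholdRange) -> str:
    """Map a factor to a prompt level.

    Assumed mapping: below the range -> expert, inside -> intermediate,
    above -> novice. The abstract does not state this explicitly.
    """
    if factor < rng.low:
        return "expert"
    if factor > rng.high:
        return "novice"
    return "intermediate"


# Example: five recognized words spoken over two seconds.
rate = word_rate("pay my phone bill please", 2.0)
level = select_prompt_level(rate, ThresholdRange(low=1.0, high=3.0))
```

The same `select_prompt_level` function could be applied to other factors, such as a task word ratio, each with its own range.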

Power efficient system and method for measuring physical activity in resource constrained devices

Active | US20150057967A1 | Without compromising battery life | Efficient measurement | Amplifier modifications to reduce noise influence | Inertial sensors | Power efficient | Engineering

The systems and methods described herein relate to the power efficient measurement of physical activity with a wearable sensor. More particularly, the systems and methods described herein enable continuous activity tracking without compromising battery life. In some implementations, the wearable sensor detects movement and enters predefined power states responsive to the type of movement detected.

Owner:EVERYFIT

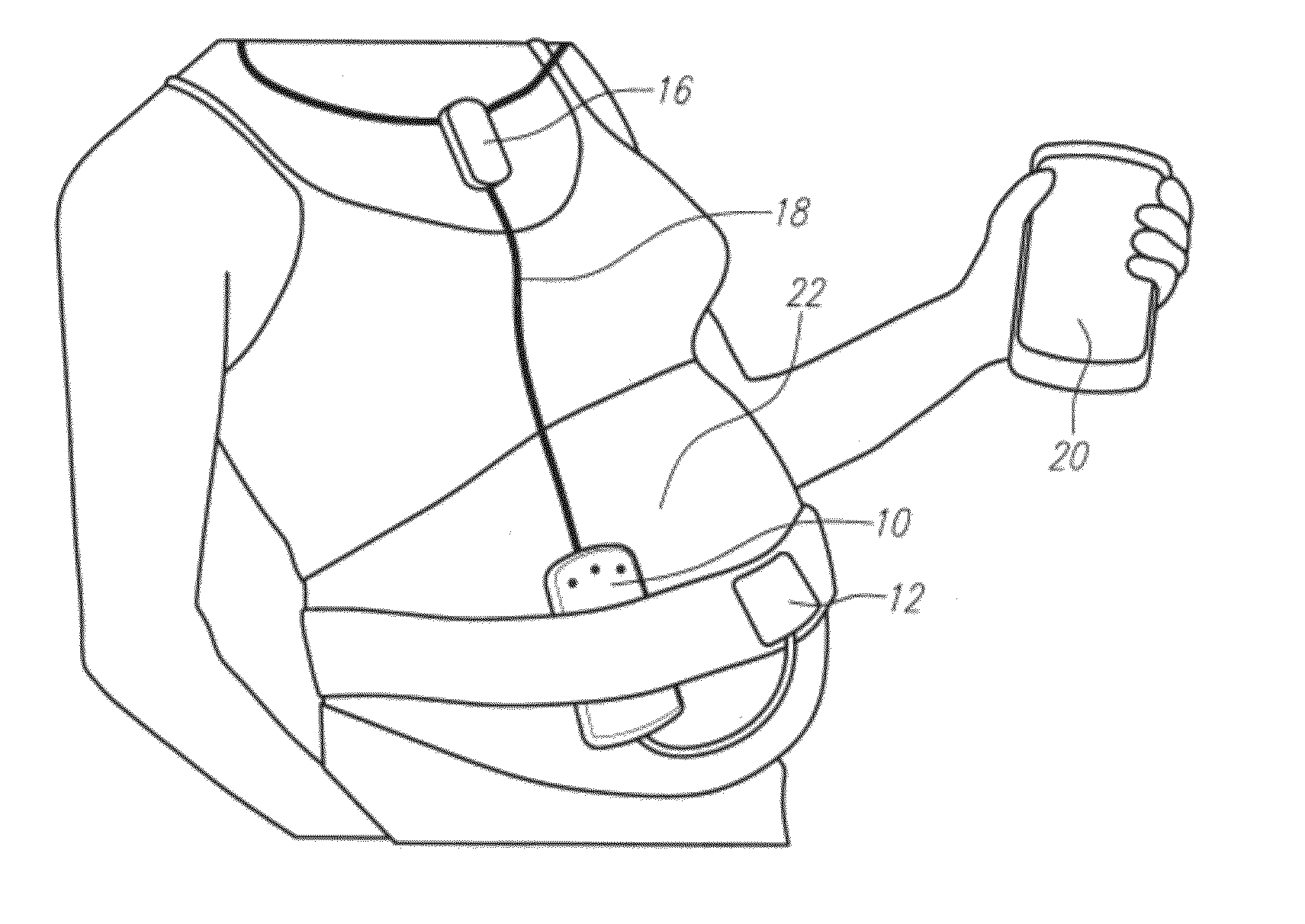

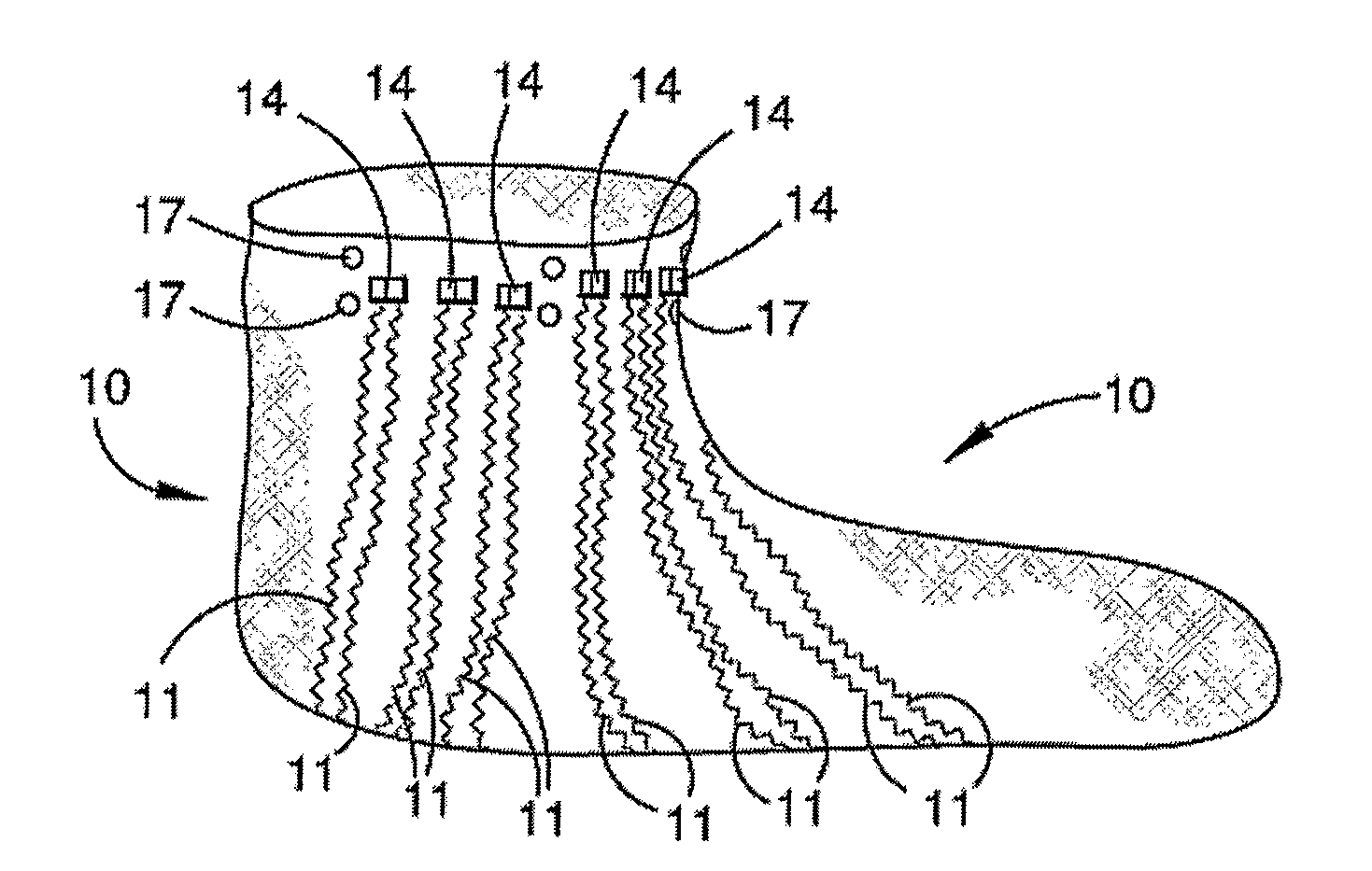

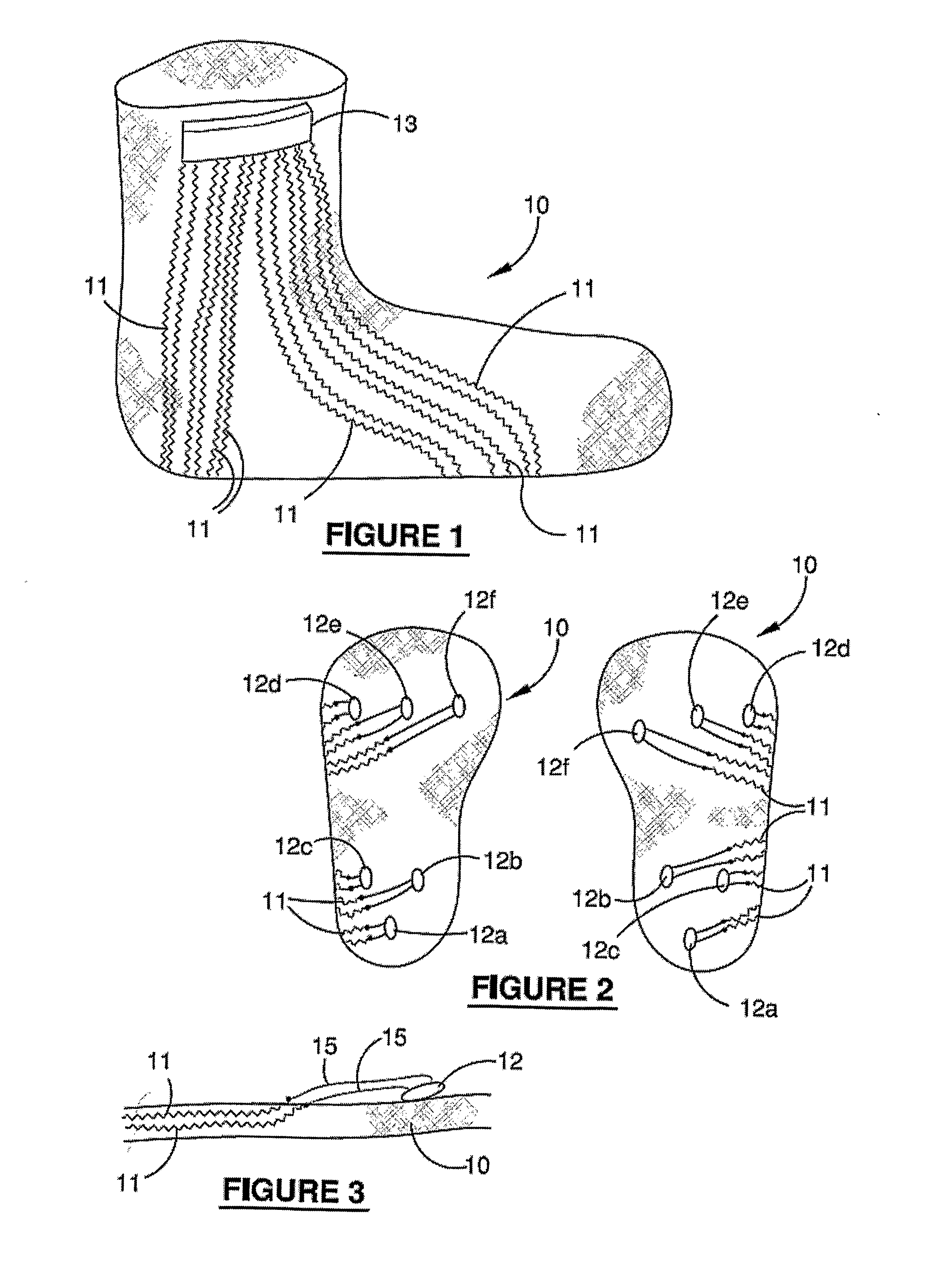

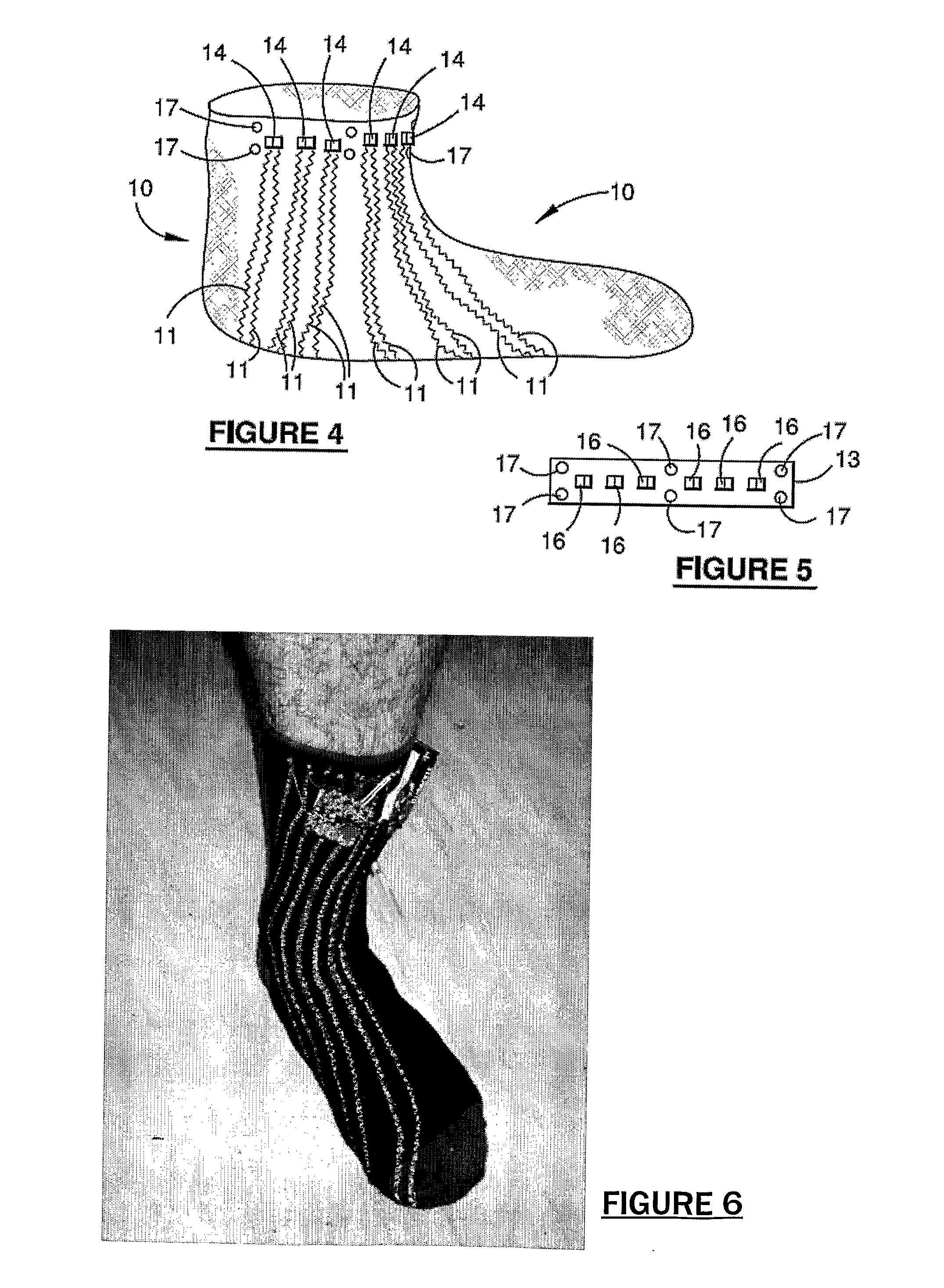
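The idea of entering a predefined power state according to the type of movement detected can be sketched as a small lookup. Everything concrete here is an assumption: the three movement types, the three power states, the thresholds, and the use of accelerometer magnitude in units of g are all hypothetical and do not come from the patent.

```python
from enum import Enum
from typing import List


class PowerState(Enum):
    SLEEP = "sleep"
    LOW_POWER = "low_power"
    ACTIVE = "active"


# Hypothetical thresholds on mean deviation from 1 g (gravity at rest).
STILL_MAX_G = 0.05
LIGHT_MAX_G = 0.5


def classify_movement(magnitudes_g: List[float]) -> str:
    """Label a window of acceleration magnitudes as still, light, or vigorous."""
    if not magnitudes_g:
        return "still"
    activity = sum(abs(m - 1.0) for m in magnitudes_g) / len(magnitudes_g)
    if activity <= STILL_MAX_G:
        return "still"
    if activity <= LIGHT_MAX_G:
        return "light"
    return "vigorous"


# Predefined power state for each movement type (assumed mapping).
STATE_FOR_MOVEMENT = {
    "still": PowerState.SLEEP,
    "light": PowerState.LOW_POWER,
    "vigorous": PowerState.ACTIVE,
}


def next_power_state(magnitudes_g: List[float]) -> PowerState:
    """Choose the power state for the movement type seen in this window."""
    return STATE_FOR_MOVEMENT[classify_movement(magnitudes_g)]
```

For example, `next_power_state([1.0, 1.01, 0.99])` selects the sleep state under these assumed thresholds, since the wearer is effectively motionless.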

System, garment and method

Active | US20110015498A1 | Level of customization | Same compression | Person identification | Sensors | Buttocks | External pressure

The present invention relates to a system and garment that incorporates sensors that can be used for measuring or monitoring pressure or forces in feet, the stumps of limbs of an amputee that are fitted with prosthetic devices, or any other parts of the body that are subject to forces such as the buttock while seated or when external pressure inducing devices are employed, such as for example, pressure bandages. The invention also relates to a method to monitor or diagnose any foot or limb related activity for recreational, sporting, military or medical reasons and is particularly aimed at the treatment of neuropathic or other degenerating conditions.

Owner:SENSORIA

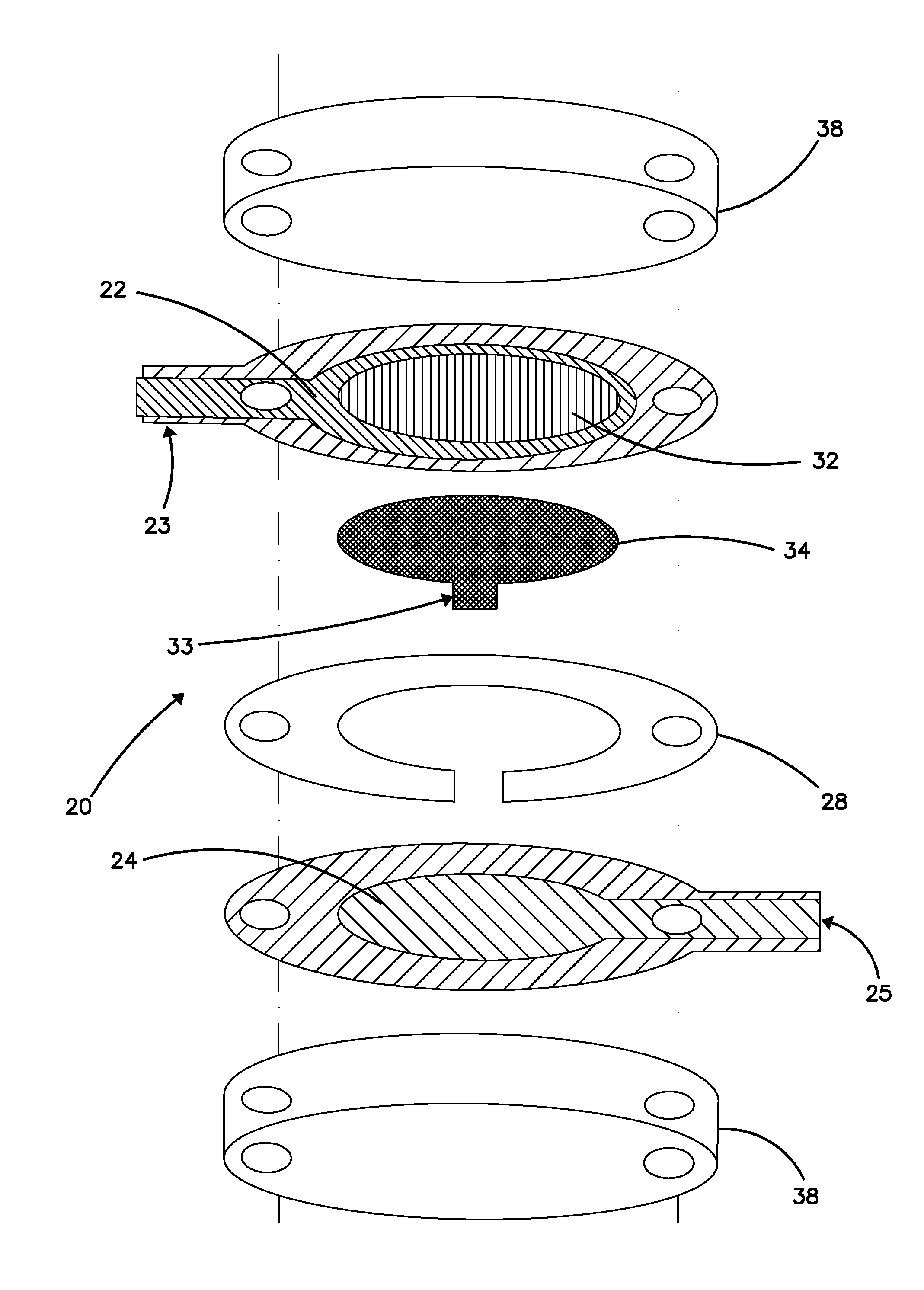

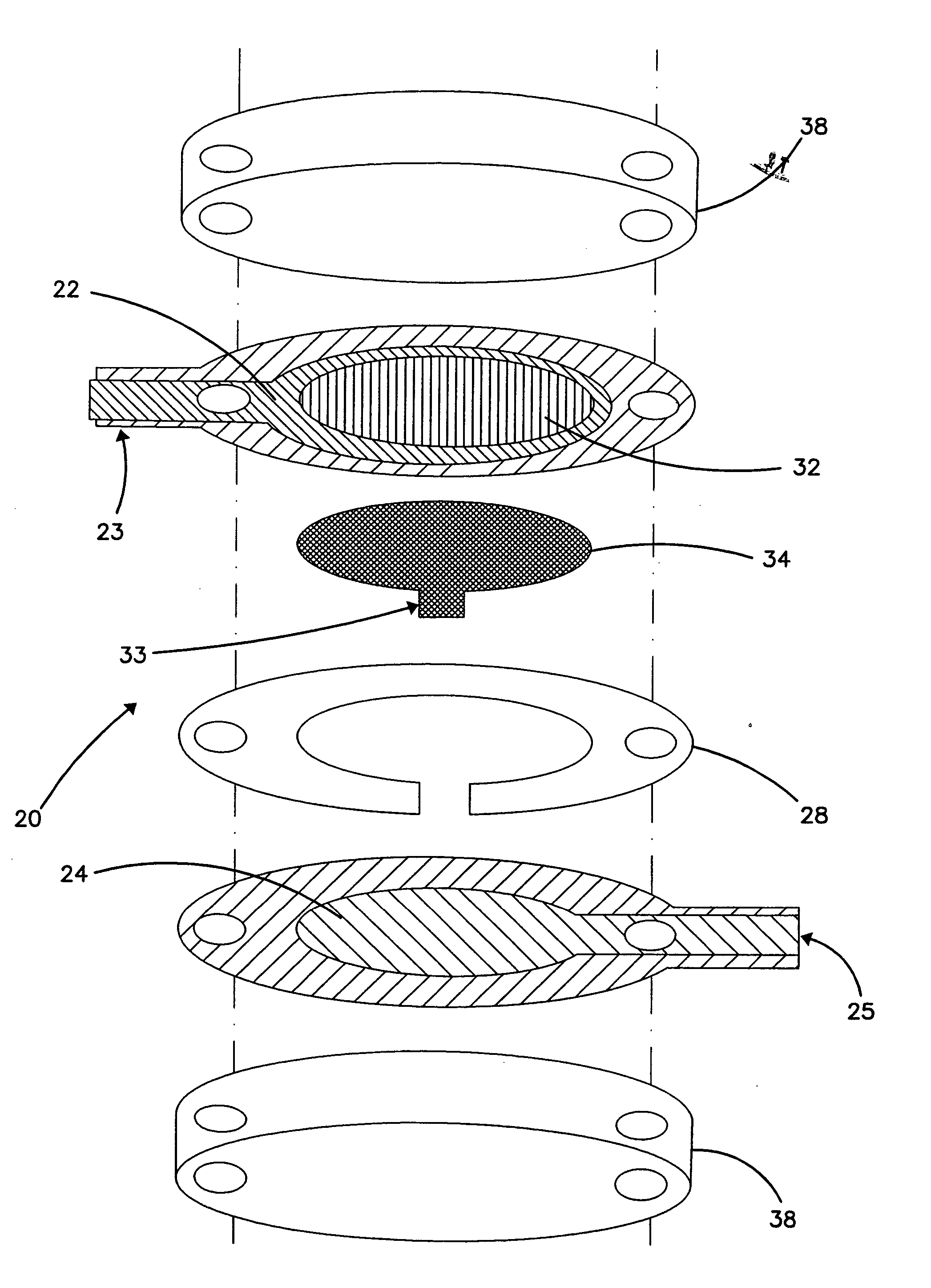

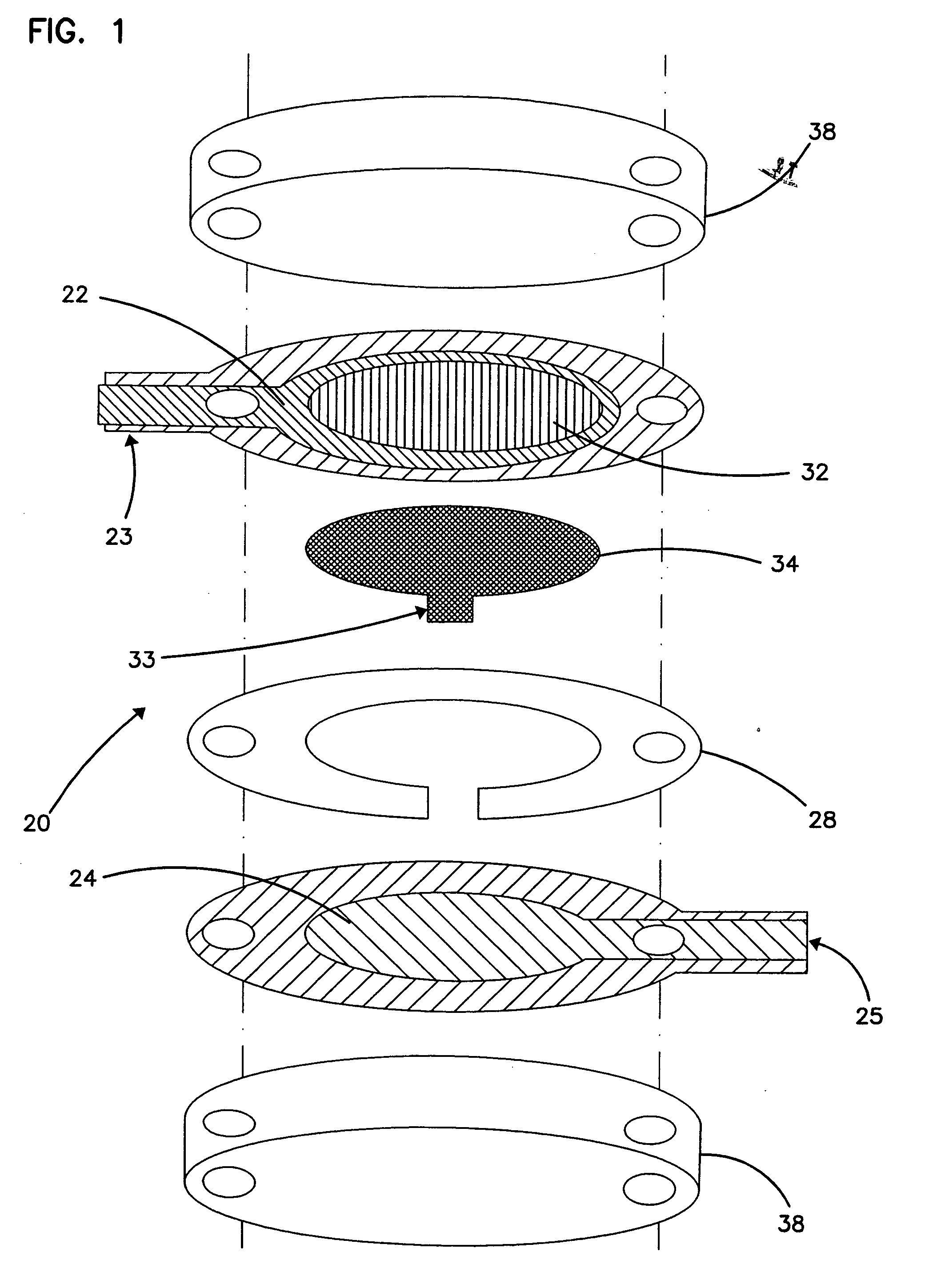

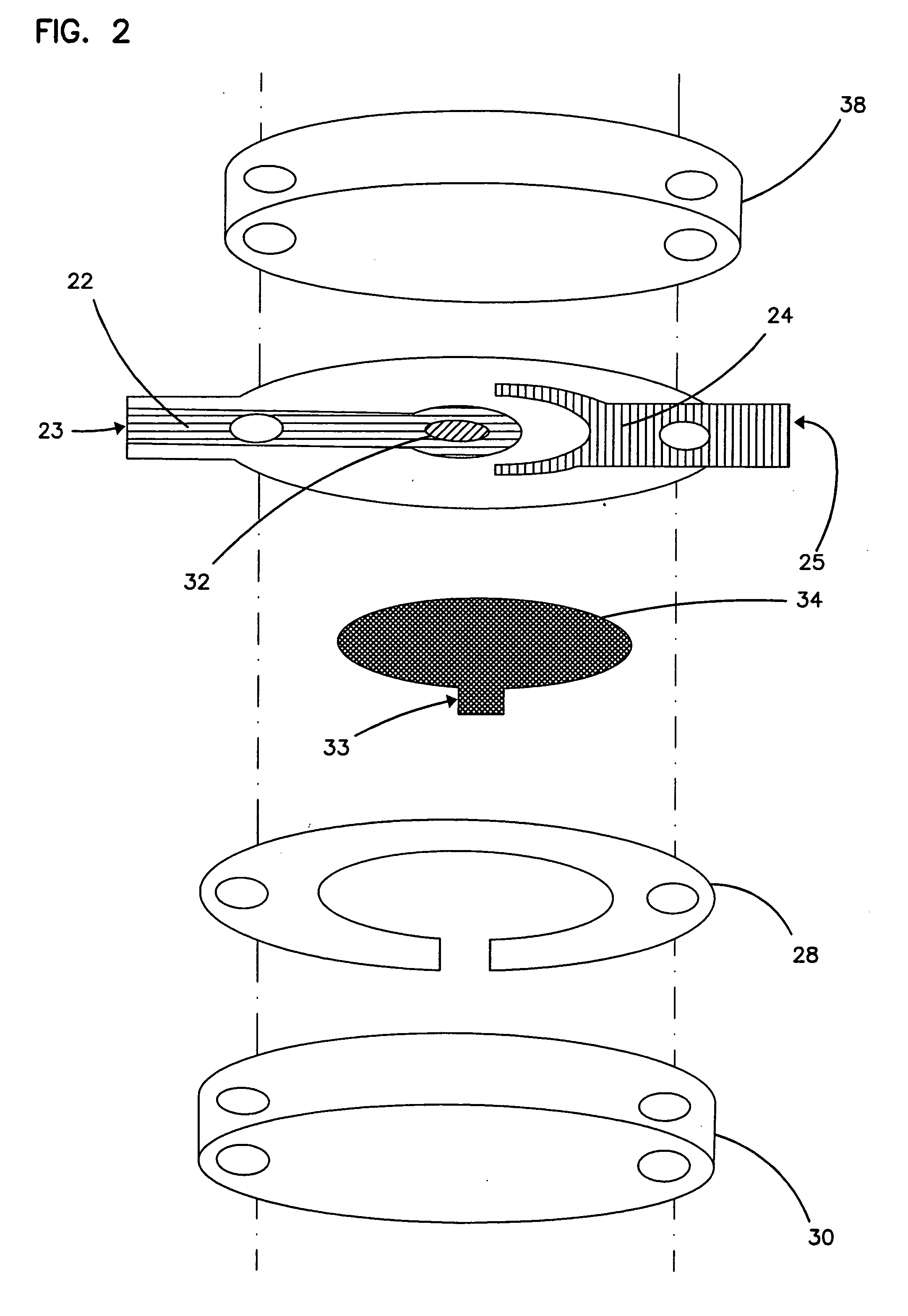

Small volume in vitro analyte sensor

Inactive | US20060169599A1 | Lower the volume | Precise process | Immobilised enzymes | Bioreactor/fermenter combinations | Electrolysis | Electron transfer

A sensor utilizing a non-leachable or diffusible redox mediator is described. The sensor includes a sample chamber to hold a sample in electrolytic contact with a working electrode, and in at least some instances, the sensor also contains a non-leachable or a diffusible second electron transfer agent. The sensor and / or the methods used produce a sensor signal in response to the analyte that can be distinguished from a background signal caused by the mediator. The invention can be used to determine the concentration of a biomolecule, such as glucose or lactate, in a biological fluid, such as blood or serum, using techniques such as coulometry, amperometry, and potentiometry. An enzyme capable of catalyzing the electrooxidation or electroreduction of the biomolecule is typically provided as a second electron transfer agent.

Owner:ABBOTT DIABETES CARE INC

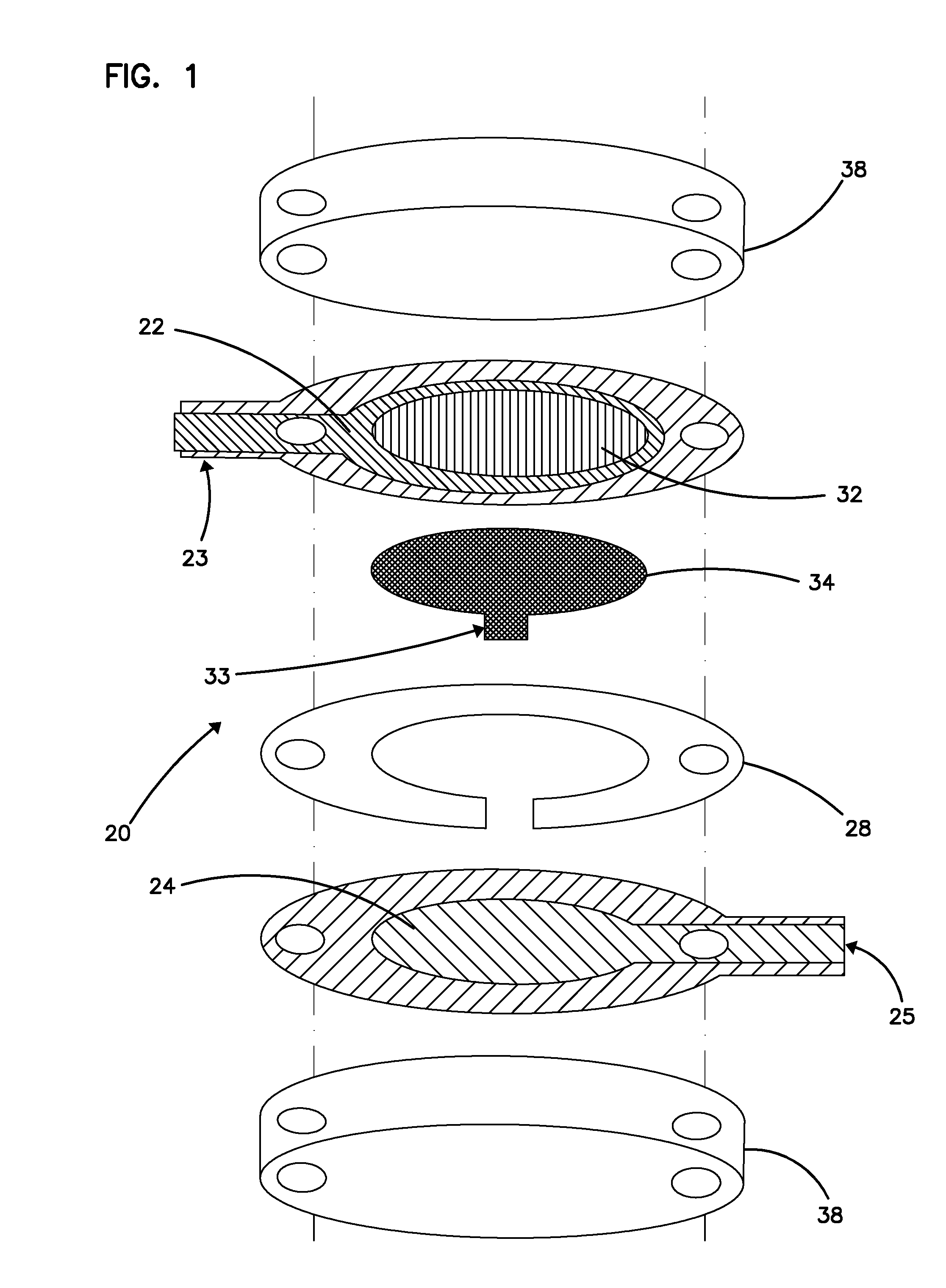

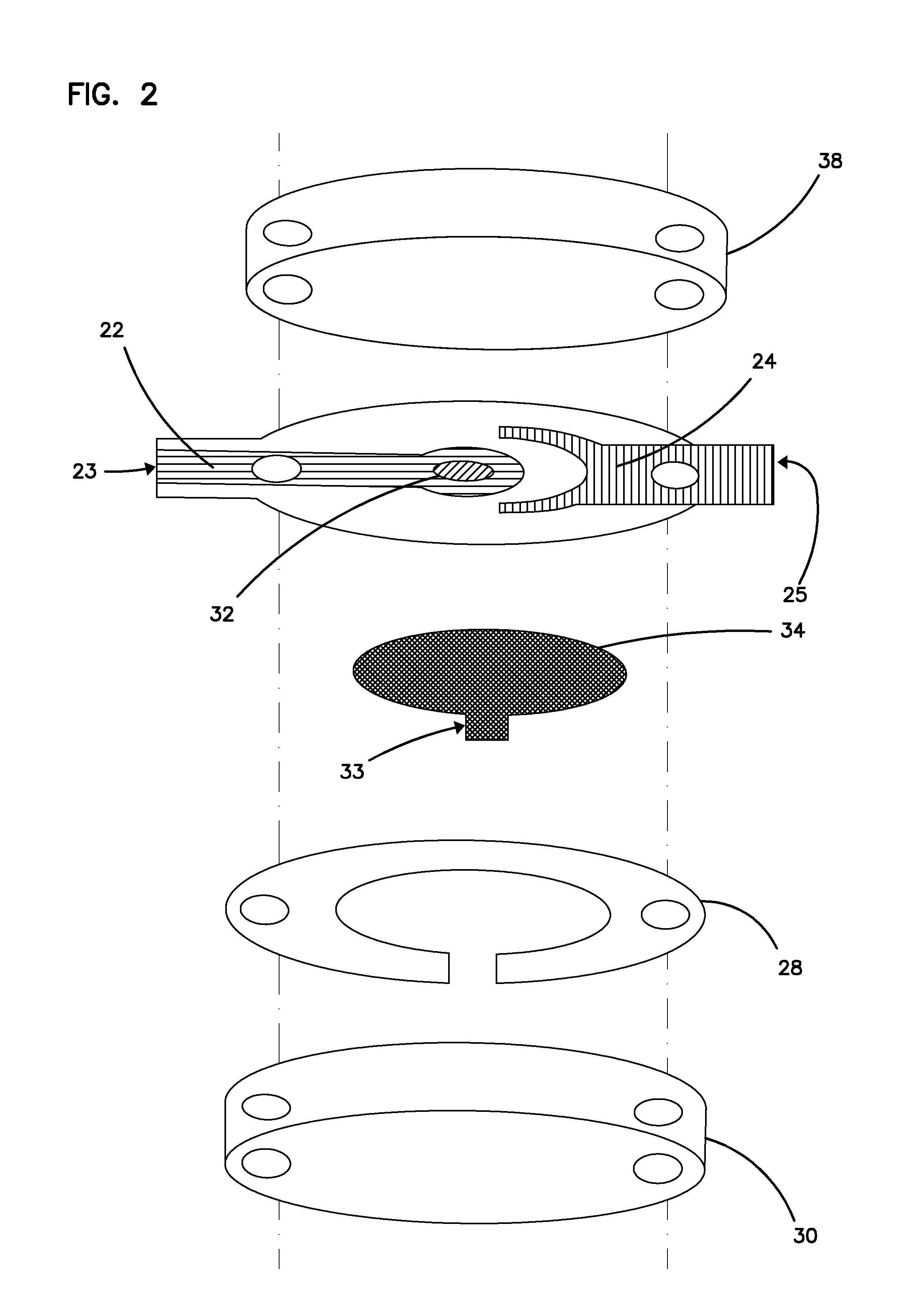

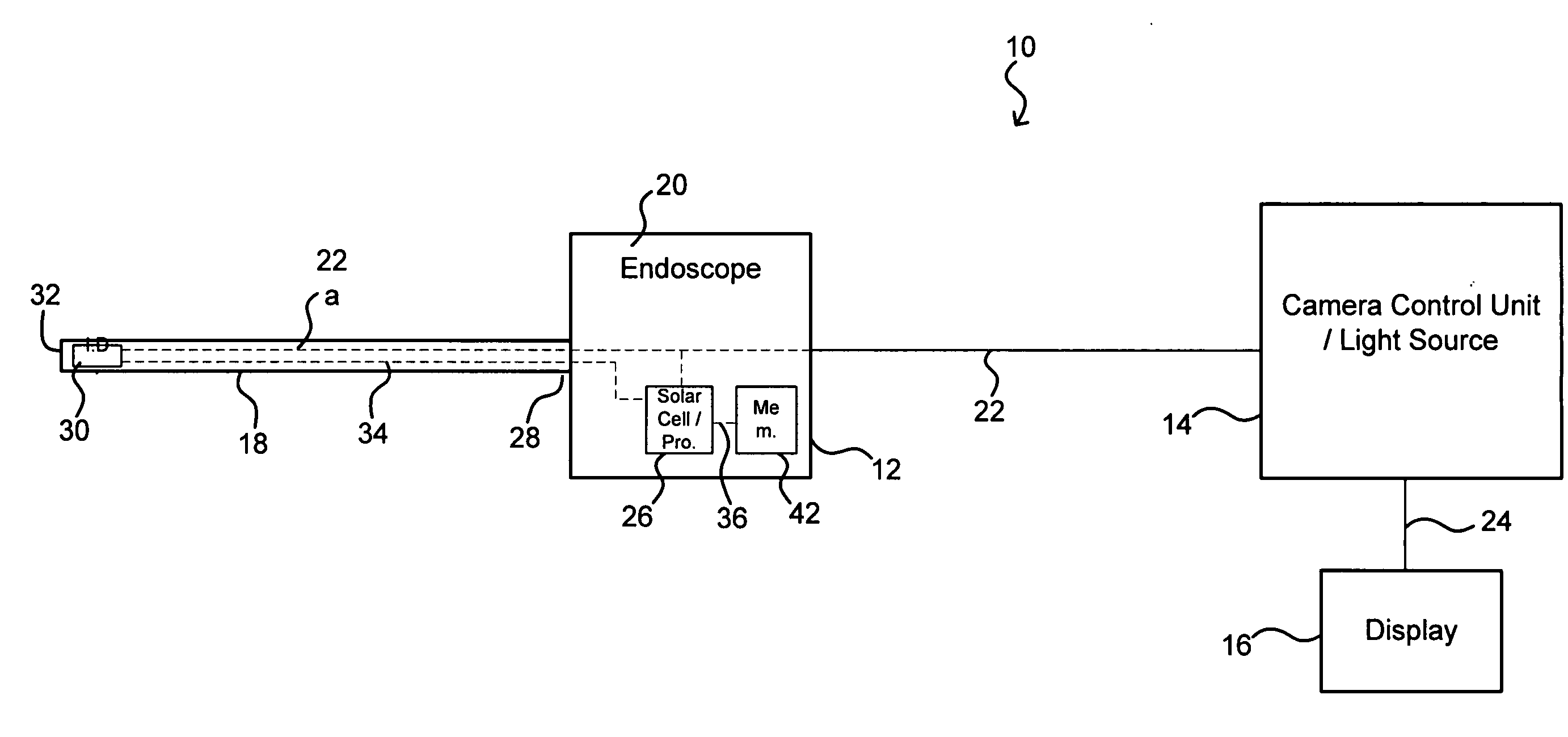

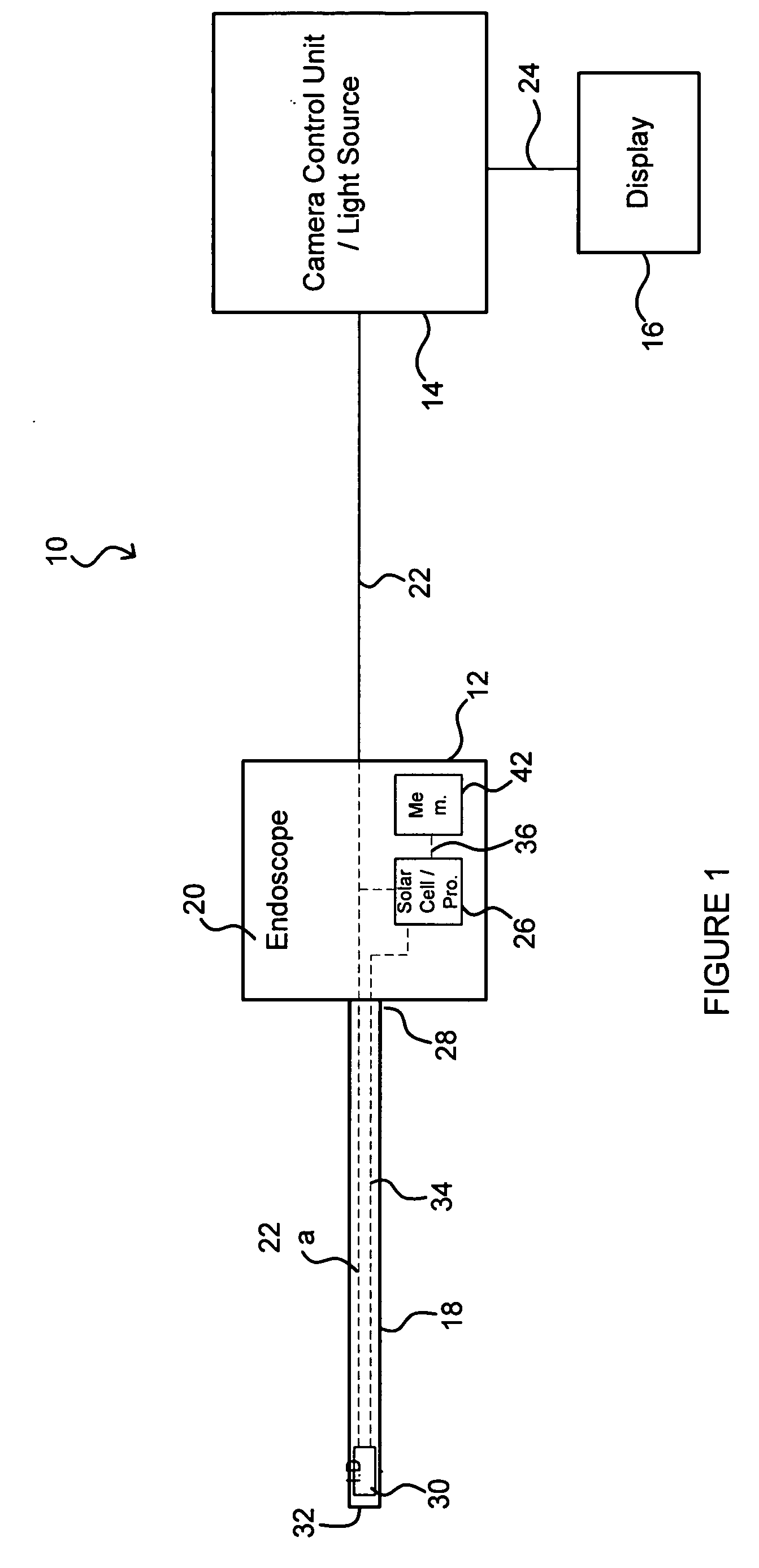

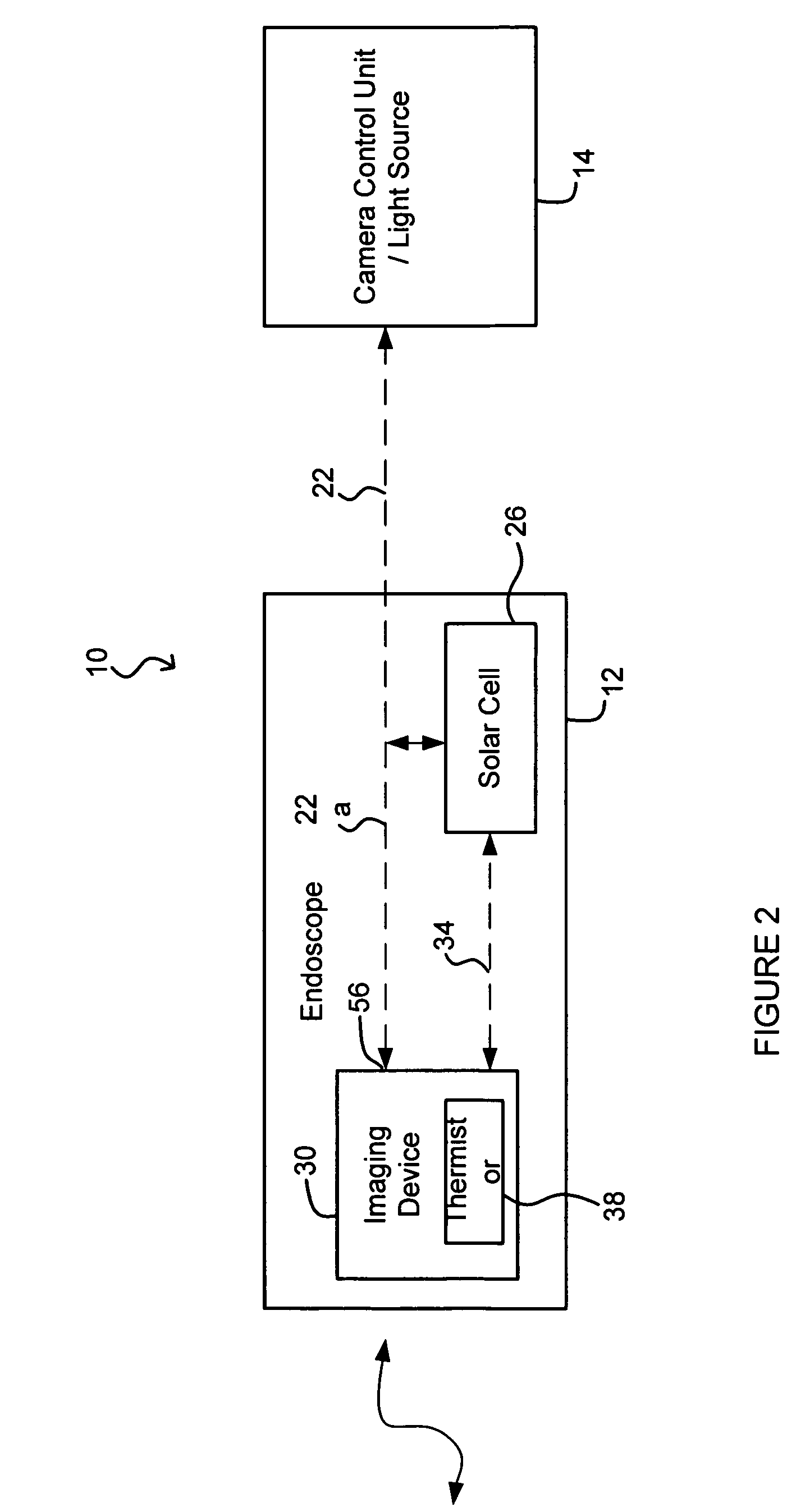

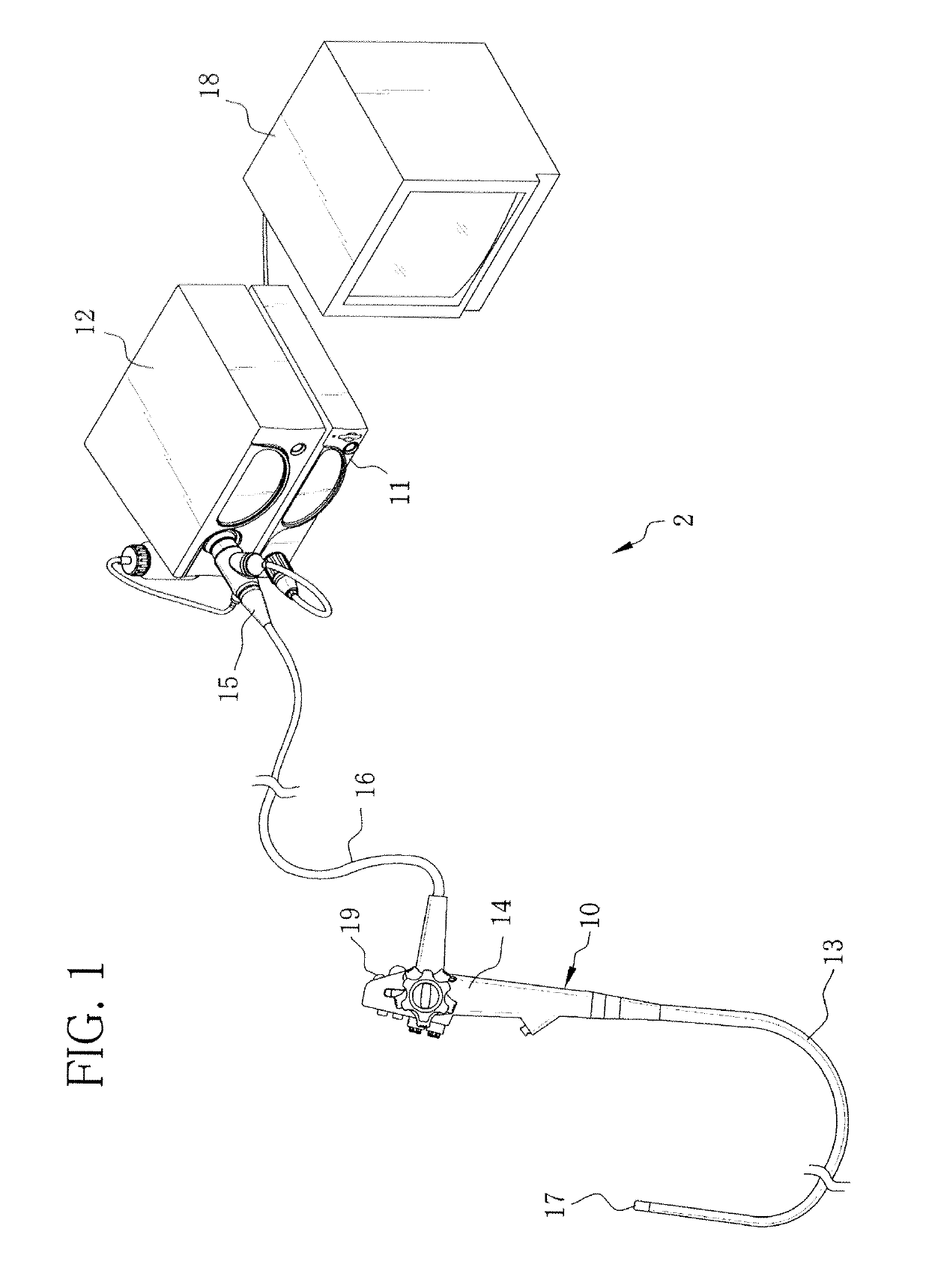

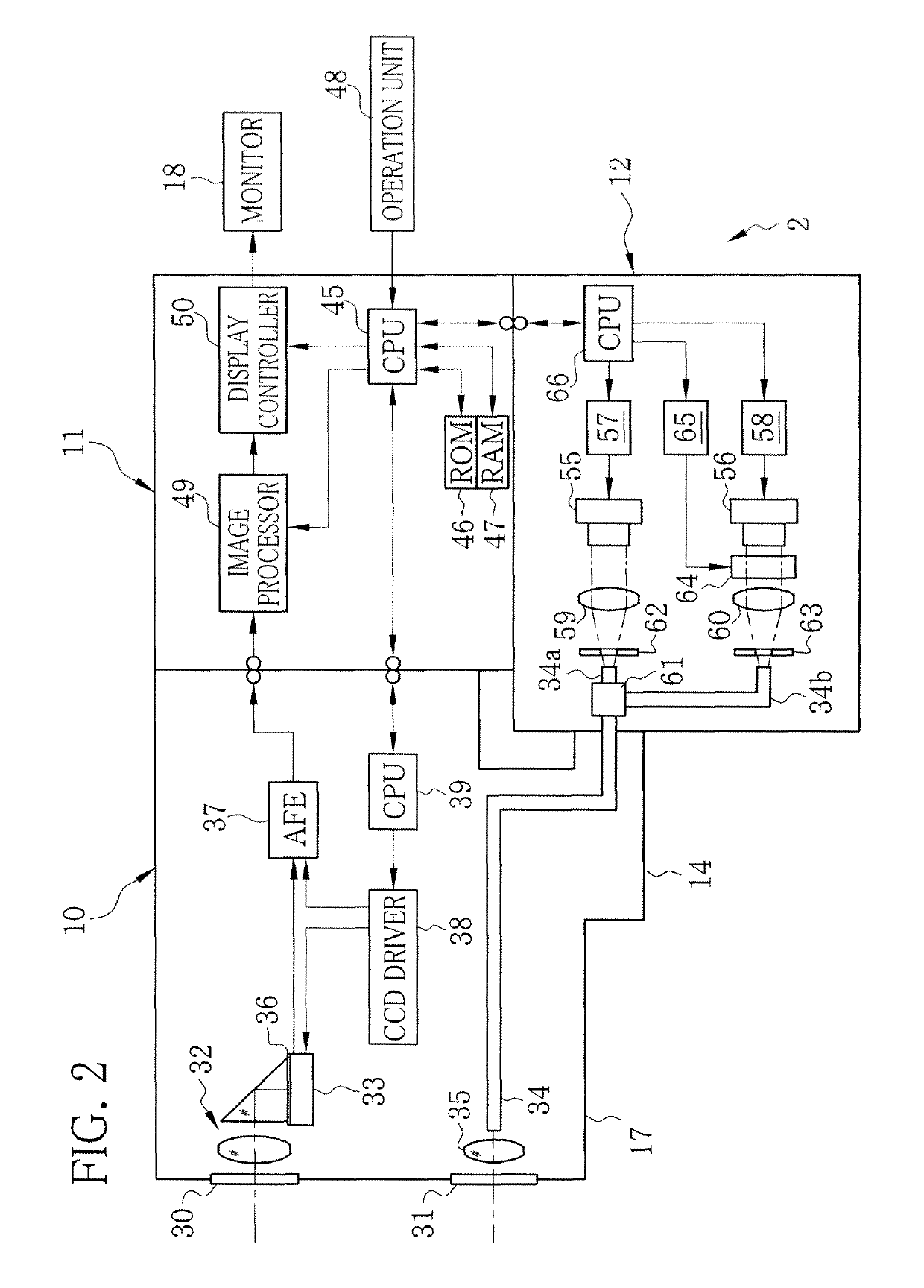

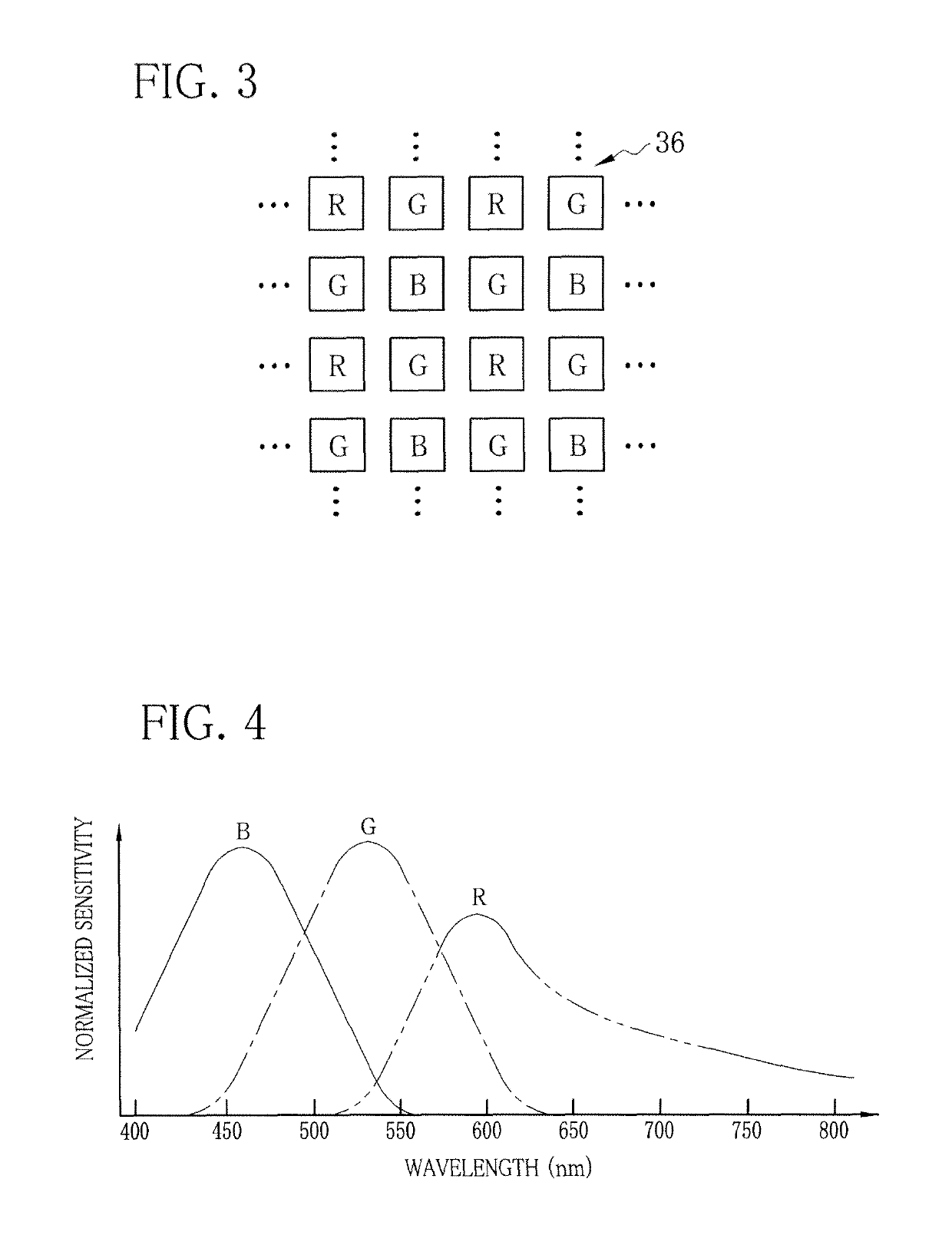

Optically coupled endoscope with microchip

Inactive | US20070282165A1 | Improve the level of | Efficient measurement | Surgery | Endoscopes | Camera control | Camera control unit

An endoscope device provides electrical isolation between the endoscope and a camera control unit coupled to the endoscope. The endoscope uses an optical channel to transmit illuminating light to the endoscope and to transmit image data generated by an imaging device to the camera control unit. In this manner, only an optical connection between the endoscope and the camera control unit exists. Alternatively, a wireless channel for transmission of the image data and any command signals may be utilized.

Owner:KARL STORZ ENDOVISION INC

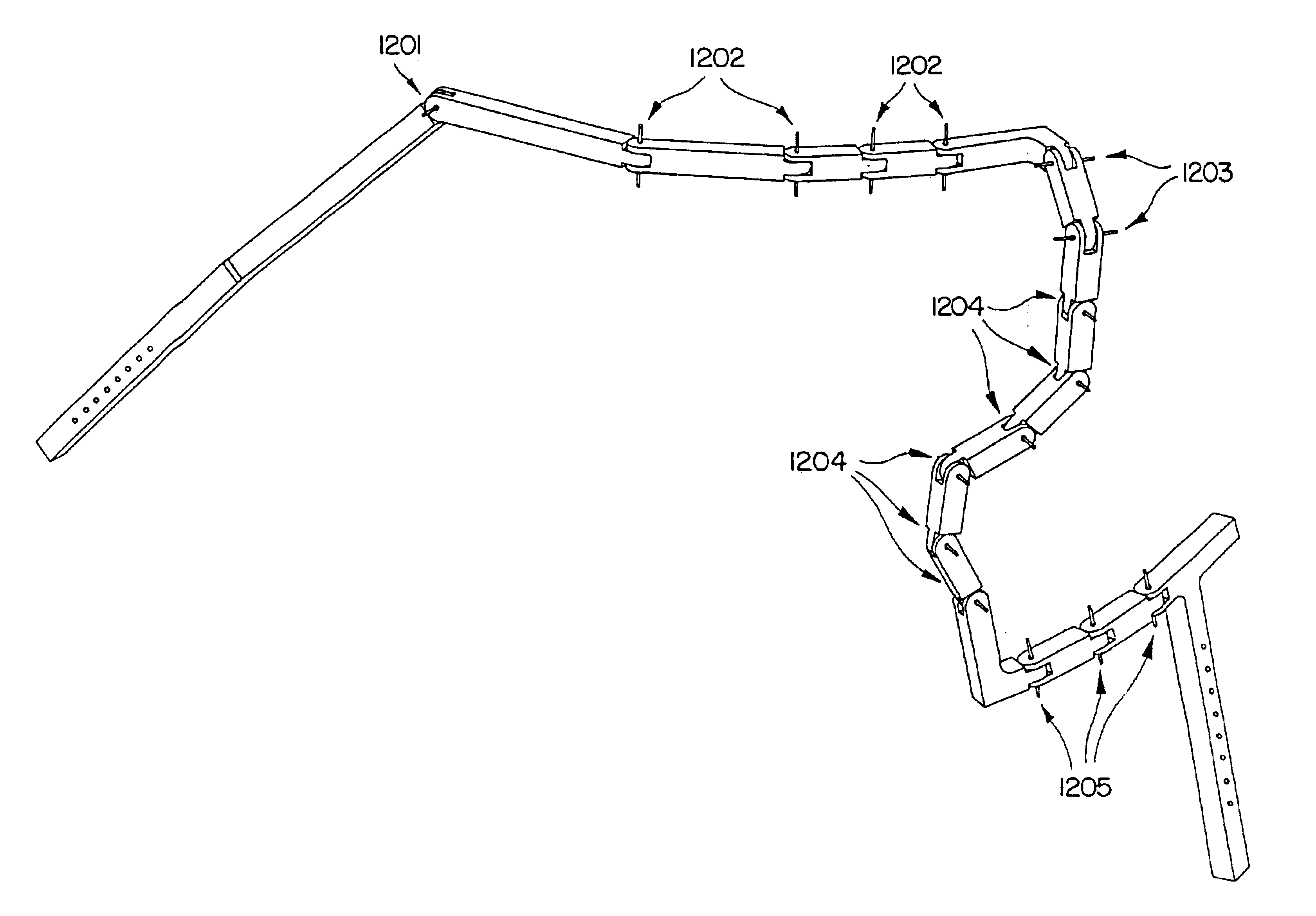

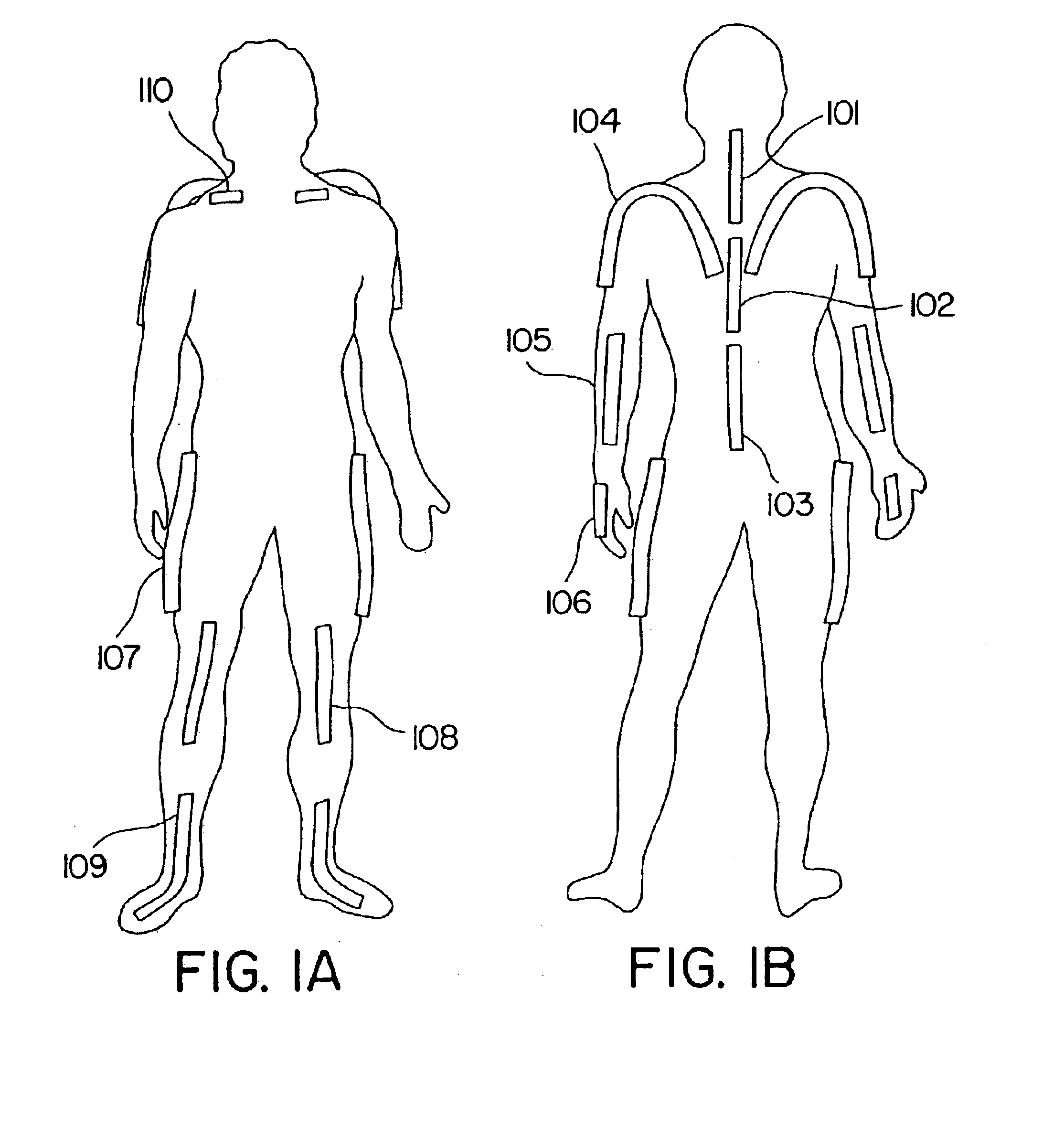

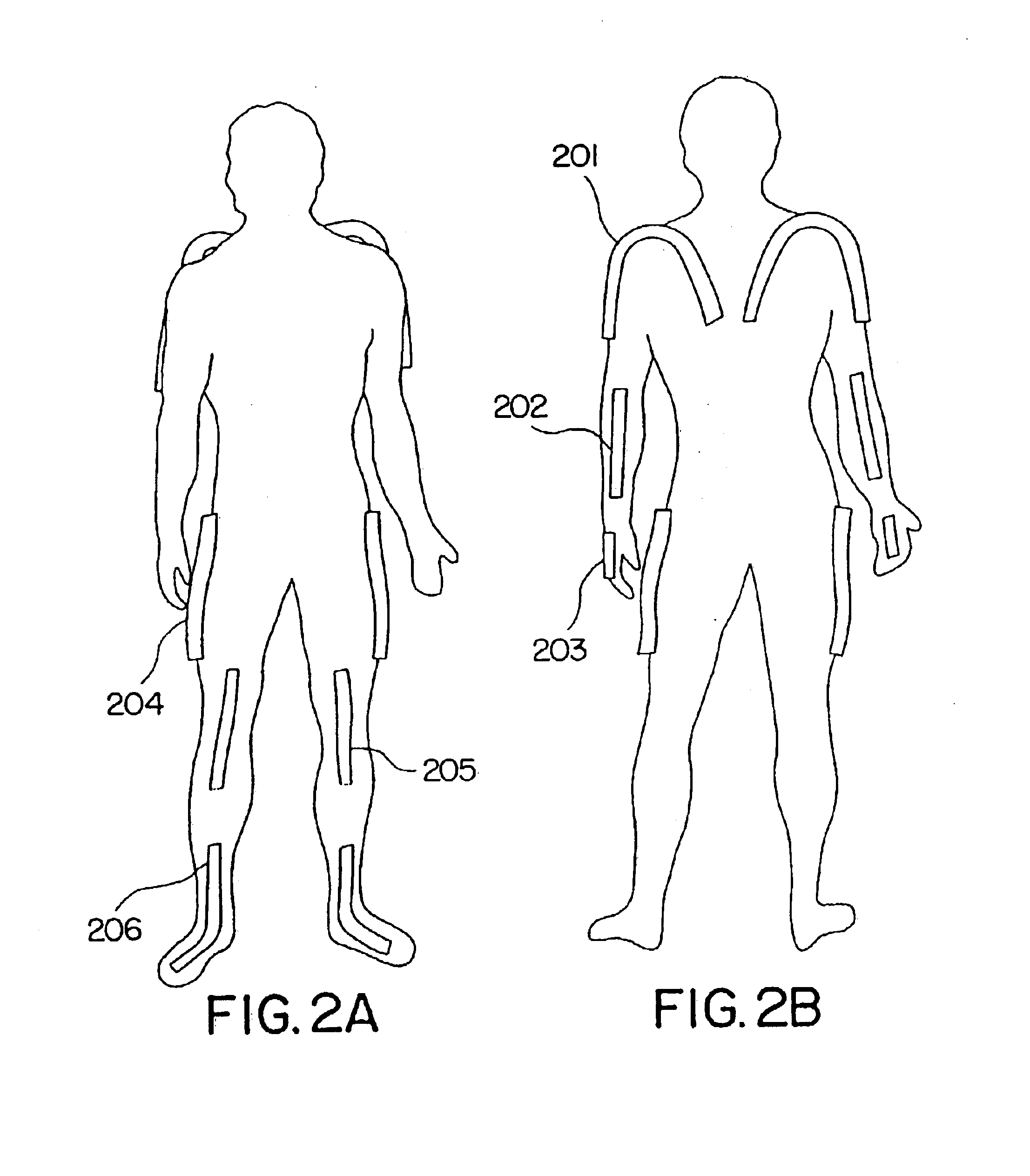

Goniometer-based body-tracking device

Inactive | US7070571B2 | Binding can be eliminated | Reduce resistance | Input/output for user-computer interaction | Strain gauge | Human body | Biomechanics

A sensing system is provided for measuring various joints of a human body for applications for performance animation, biomechanical studies and general motion capture. One sensing device of the system is a linkage-based sensing structure comprising rigid links interconnected by revolute joints, where each joint angle is measured by a resistive bend sensor or other convenient goniometer. Such a linkage-based sensing structure is typically used for measuring joints of the body, such as the shoulders, hips, neck, back and forearm, which have more than a single rotary degree of freedom of movement. In one embodiment of the linkage-based sensing structure, a single long resistive bend sensor measures the angle of more than one revolute joint. A second sensing device of the sensing system comprises a flat, flexible resistive bend sensor guided by a channel on an elastic garment.

Owner:IMMERSION CORPORATION

Method and system for automatic analysis of the trip of people in a retail space using multiple cameras

Active | US8098888B1 | Easy to handle | Deep insight | Character and pattern recognition | Physical space | Trip length

The present invention is a method and system for automatically determining the trip of people in a physical space, such as retail space, by capturing a plurality of input images of the people by a plurality of means for capturing images, processing the plurality of input images in order to track the people in each field of view of the plurality of means for capturing images, mapping the trip on to the coordinates of the physical space, joining the plurality of tracks across the multiple fields of view of the plurality of means for capturing images, and finding information for the trip of the people based on the processed results from the plurality of tracks. The trip information can comprise coordinates of the people's position and temporal attributes, such as trip time and trip length, for the plurality of tracks. The physical space may be a retail space, and the people may be customers in the retail space. The trip information can provide key measurements along the entire shopping trip, from entrance to checkout, that deliver deeper insights about the trip as a whole.

Owner:VIDEOMINING CORP

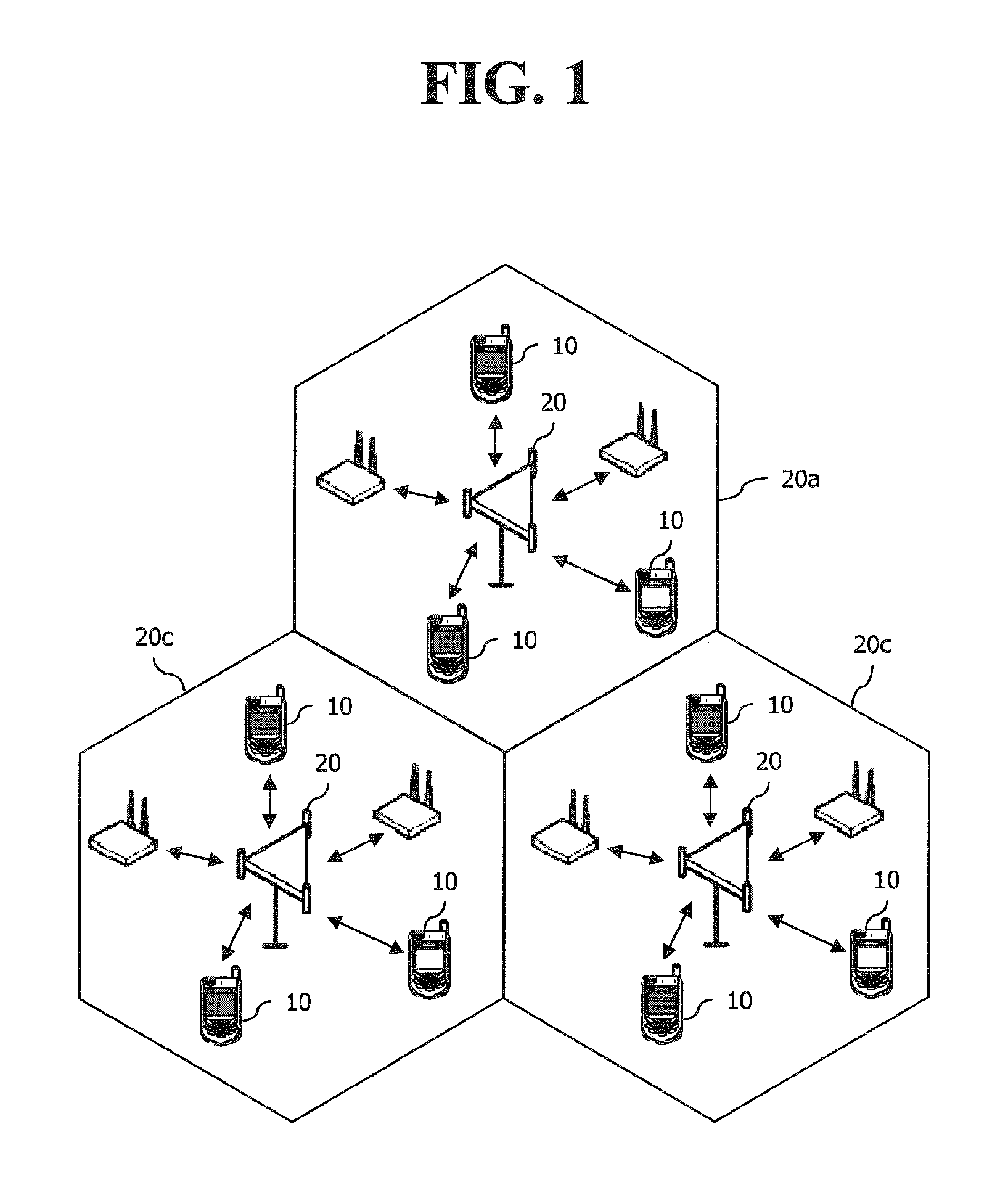

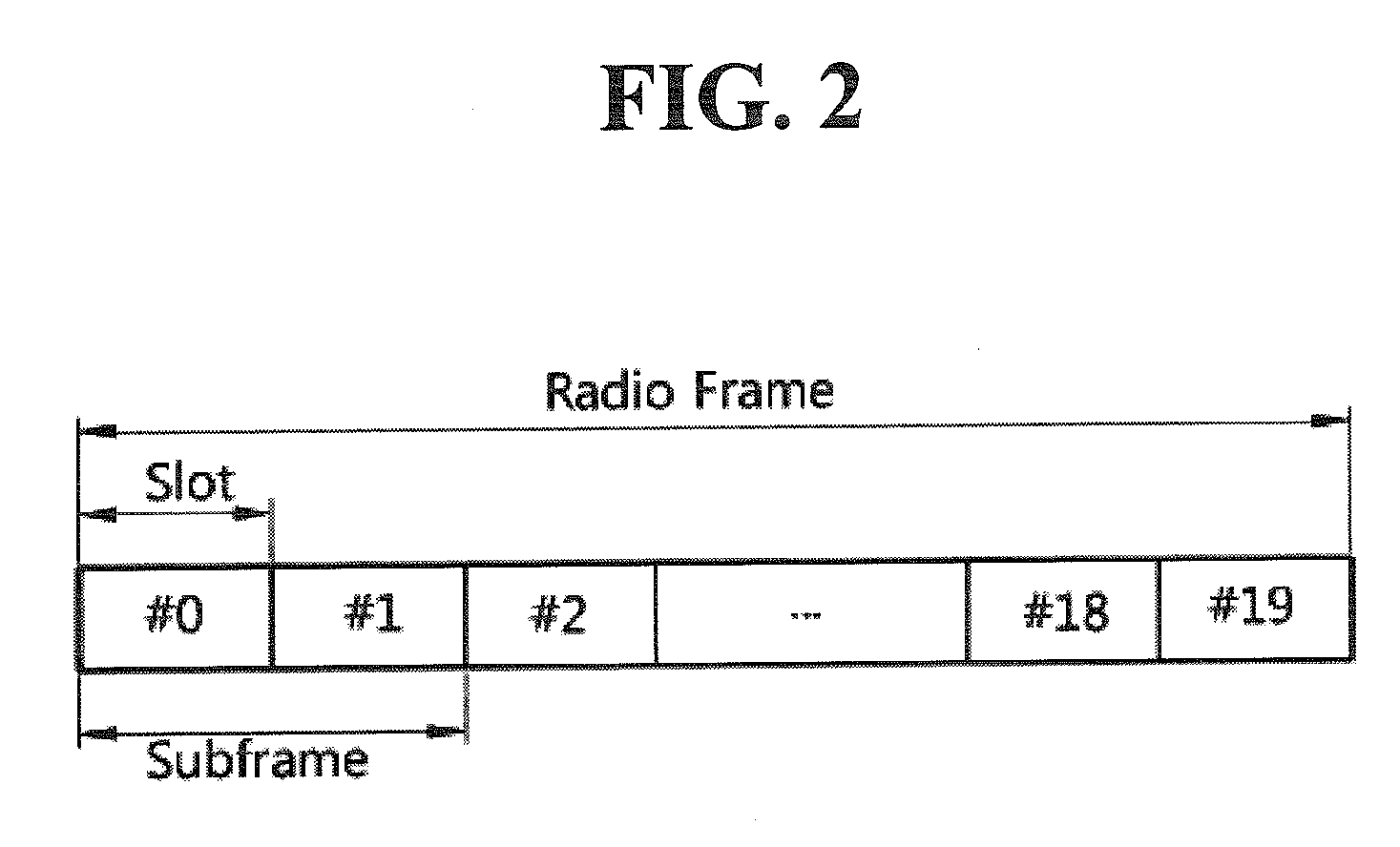

Method of performing cell measurement and method of providing information for cell measurement

Active | US20130058234A1 | Efficient power | Efficient quality | Error detection/prevention using signal quality detector | Frequency-division multiplex details | Computer science | User equipment

A method of providing information for cell measurement is provided. In the method, a first cell obtains first pattern information indicating a subframe for performing measurement with respect to a second cell. The first cell configures a subframe for performing measurement, which is different from that for performing measurement with respect to the second cell. The first cell transmits second pattern information on the subframe configured by the first cell to a user equipment (UE).

Owner:LG ELECTRONICS INC

Small volume in vitro analyte sensor

Inactive | US20050278945A1 | Lower the volume | Accurate and efficient measurement | Immobilised enzymes | Bioreactor/fermenter combinations | Electrolysis | Electron transfer

A sensor utilizing a non-leachable or diffusible redox mediator is described. The sensor includes a sample chamber to hold a sample in electrolytic contact with a working electrode, and in at least some instances, the sensor also contains a non-leachable or a diffusible second electron transfer agent. The sensor and / or the methods used produce a sensor signal in response to the analyte that can be distinguished from a background signal caused by the mediator. The invention can be used to determine the concentration of a biomolecule, such as glucose or lactate, in a biological fluid, such as blood or serum, using techniques such as coulometry, amperometry, and potentiometry. An enzyme capable of catalyzing the electrooxidation or electroreduction of the biomolecule is typically provided as a second electron transfer agent.

Owner:ABBOTT DIABETES CARE INC

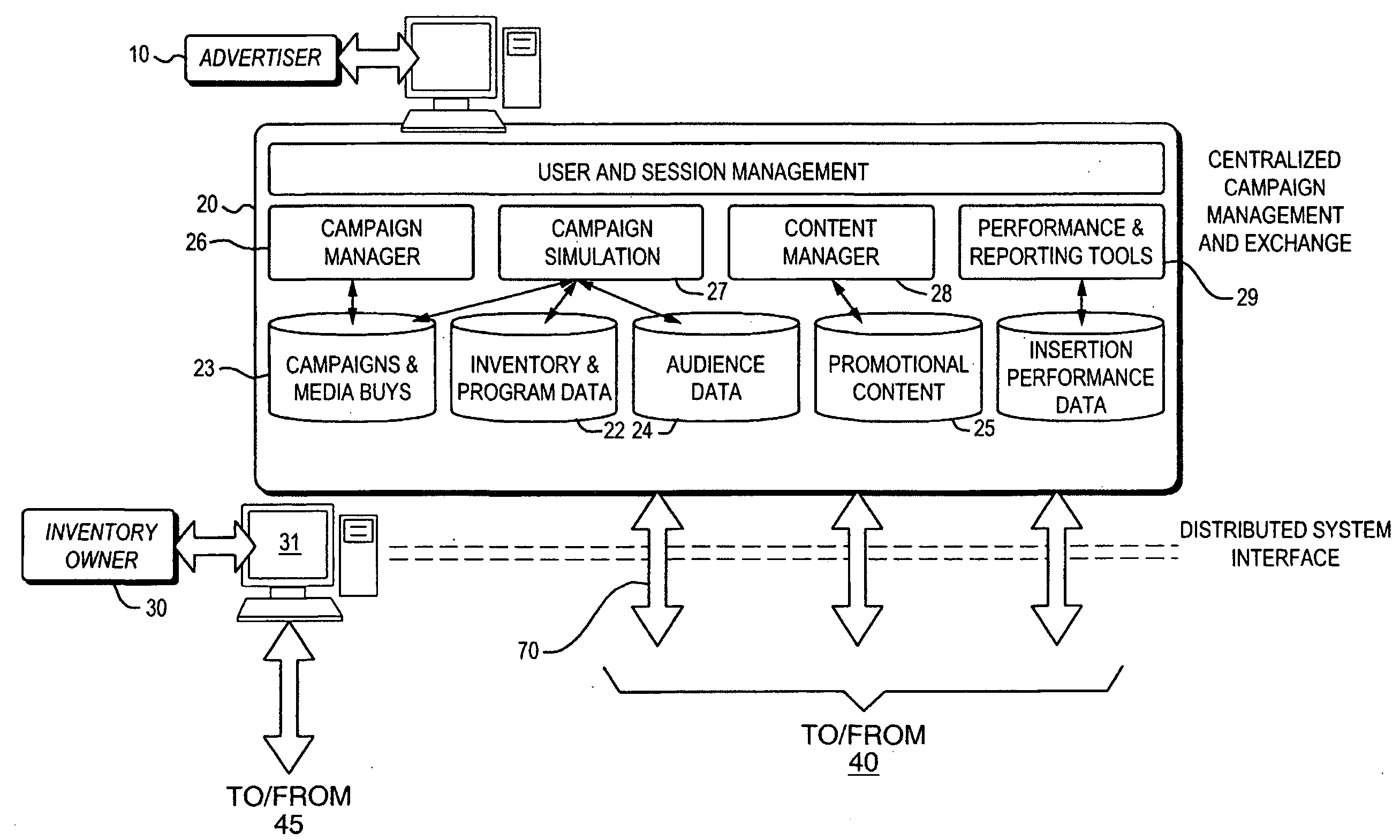

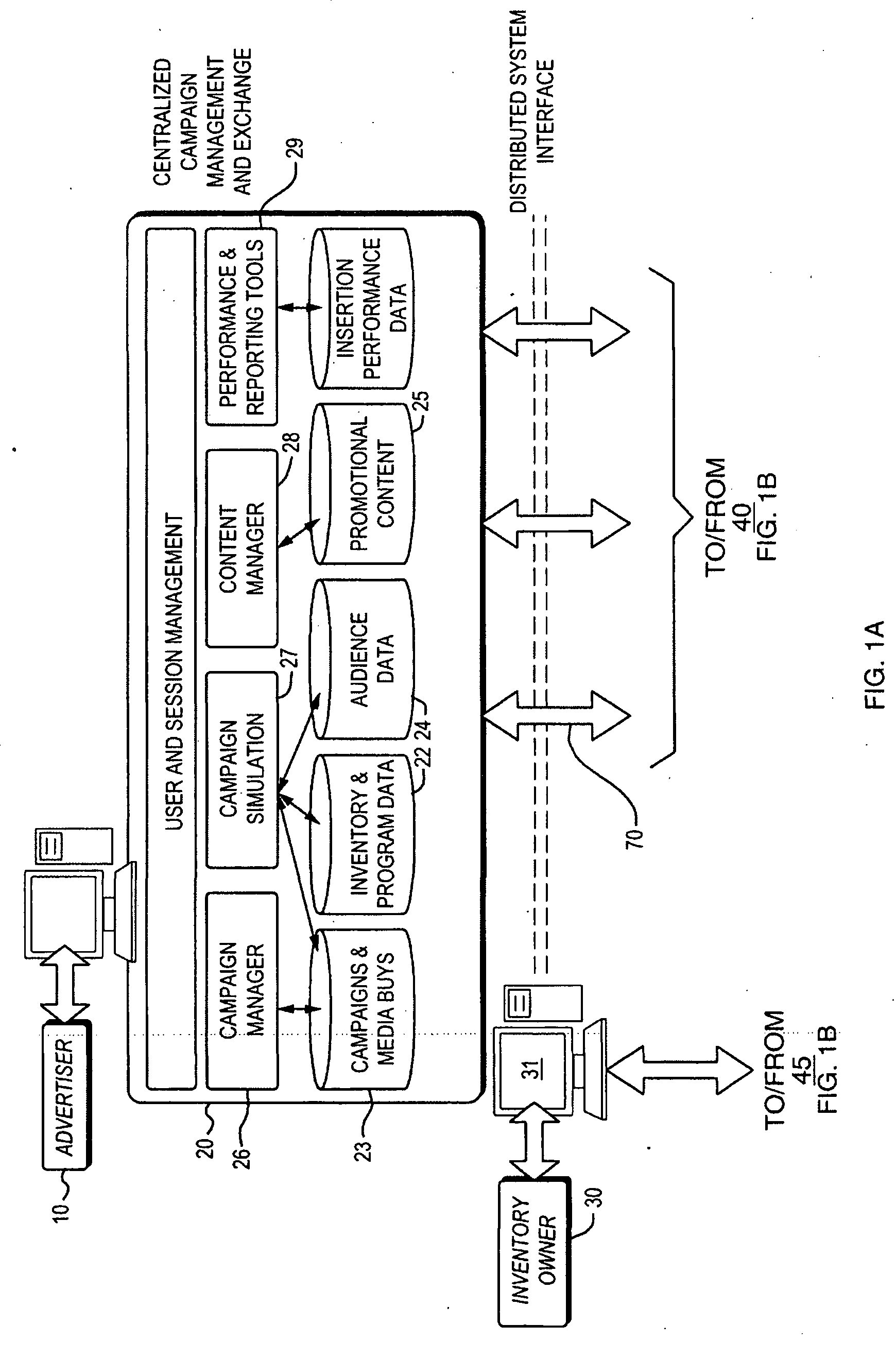

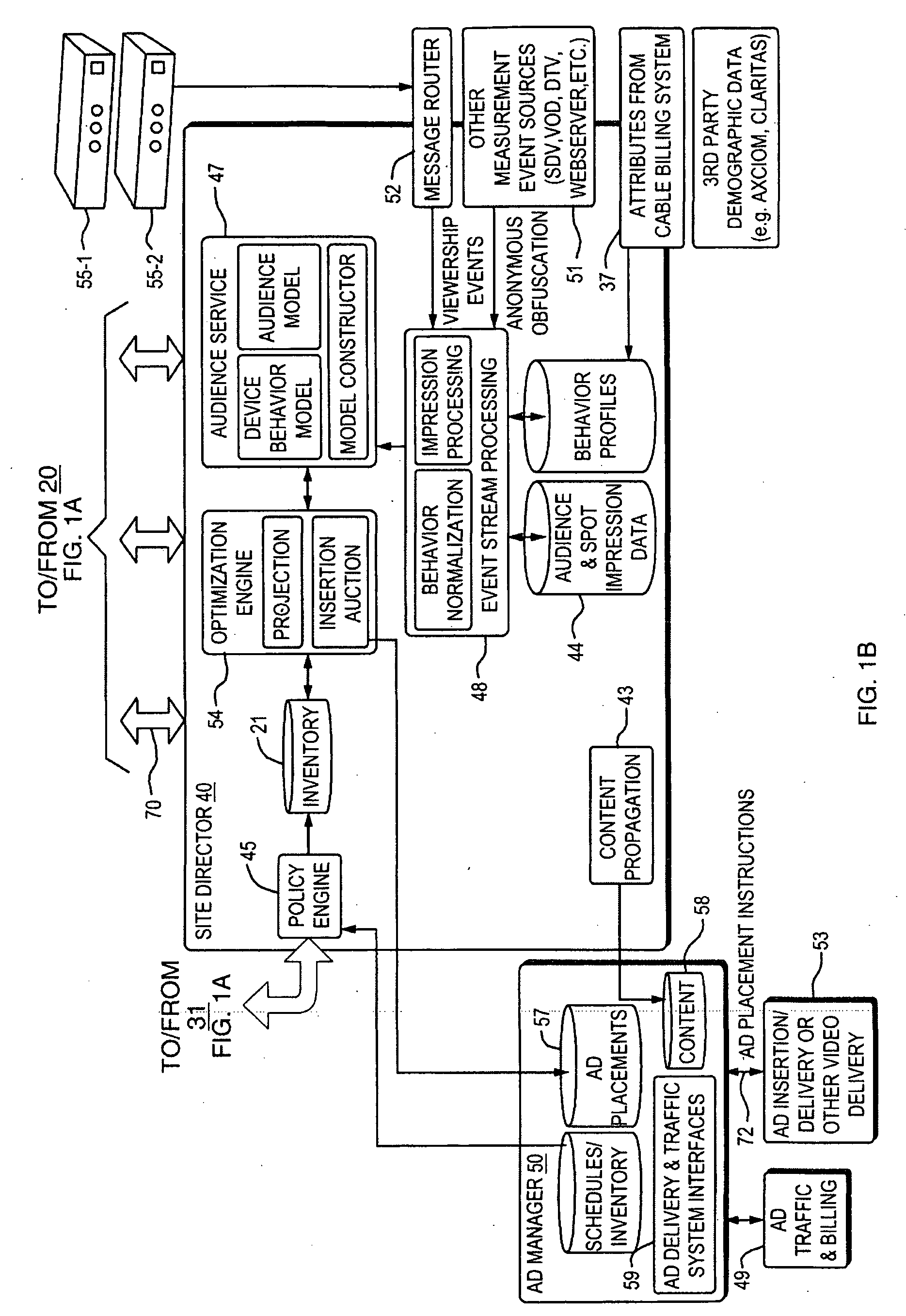

Negotiated access to promotional insertion opportunity

Inactive | US20080271070A1 | Without overhead | Effective placement | Electrical cable transmission adaptation | Selective content distribution | Technology assessment | World Wide Web

A technique (and corresponding system) for controlling access to insertion opportunities in a multi-channel streaming media system is provided. The technique receives parameters for access to the insertion opportunities from multiple advertisers, such as desired audience viewership profile characteristics. The technique evaluates the received parameters to select which advertisers gain access to the insertion opportunities to place promotional content. The technique analyzes an audience of the placed promotional content and identifies which of the possible promotional content optimizes the value of the insertion opportunities or other maxima. Unlike traditional advertising in which advertisers pay per expected viewership, the technique may be arranged to charge advertisers only for a targeted audience that viewed the placed promotional content. The technique thus enables advertisers to access disparate insertion opportunities and to target an audience at lower cost without having to establish relationships with owners of insertion opportunities.

Owner:MICROSOFT TECH LICENSING LLC +1

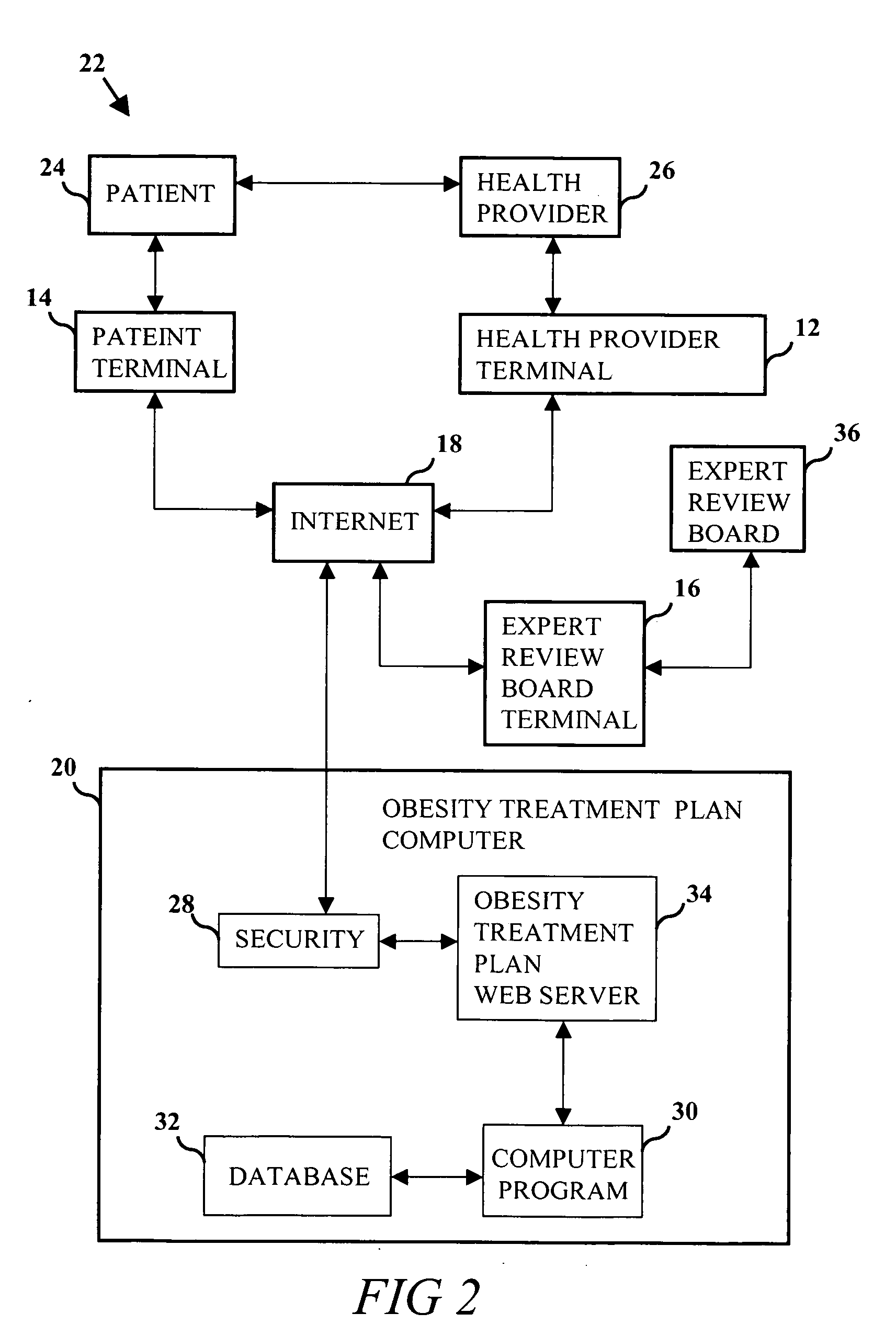

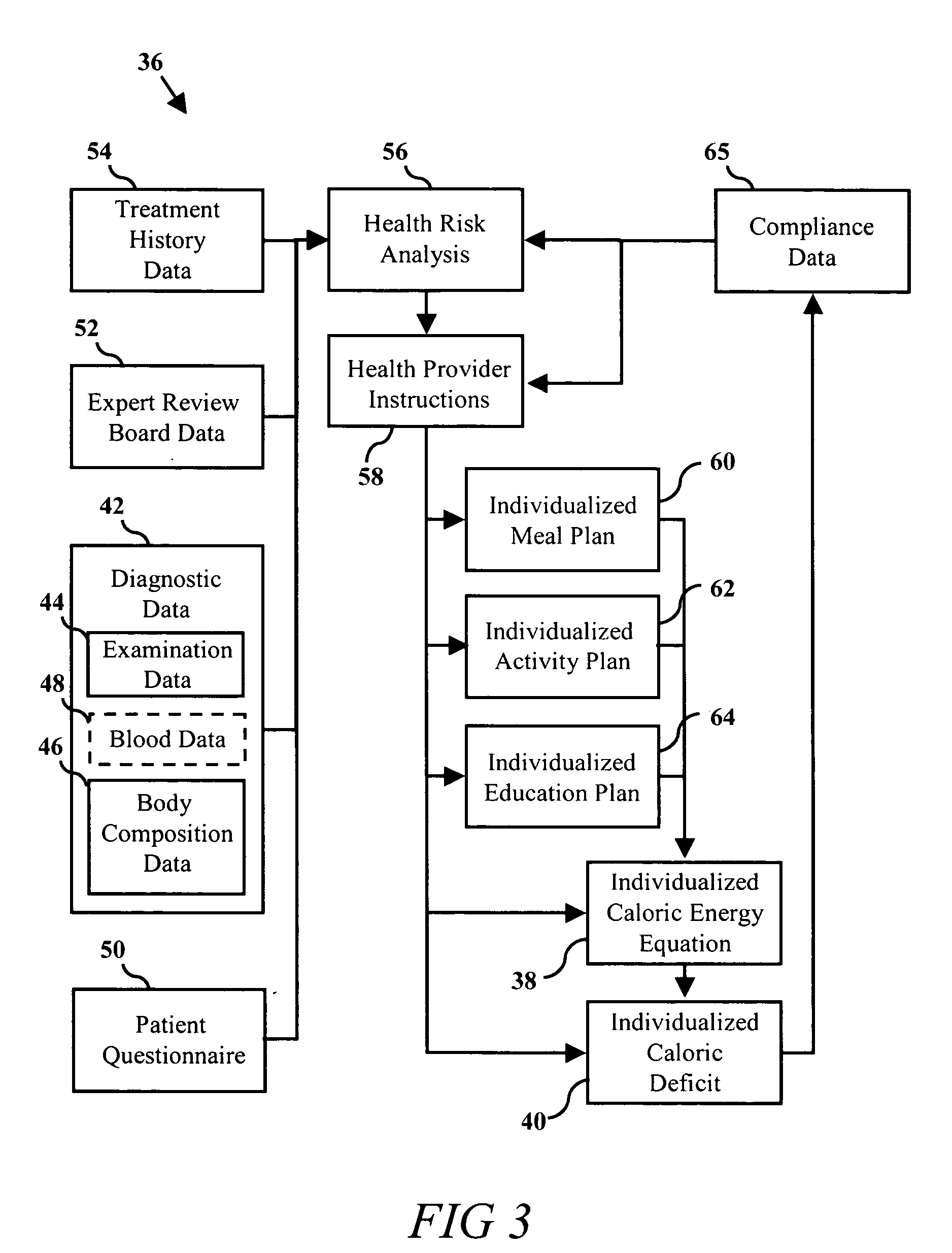

System and method of implementing multi-level marketing of weight management products

Inactive | US20050240434A1 | Maximize chance | Reducing attrition | Medical communication | Health-index calculation | Weight management | Personalization

A system and method of driving weight management product sales in a multi-level marketing environment using a body impedance data acquisition device, a weight management software program, nutritional supplements and a standardized sales pathway software program, resulting in direct sales, lead generation and new distributor sign up. A prospect's personal information and lean body mass data are input to the weight management computer software program for determining an individualized weight management plan, where the lean body mass data are obtained using the body impedance data acquisition device. The prospect is presented weight management product packages for purchase, individualized according to the derived weight management plan and becomes a client upon purchasing a product package. The new customer is presented a business opportunity in becoming a new distributor of the weight management products and, if enlisted, is provided product discounts and sales software tools for facilitating weight management product sales.

Owner:HEALTHPORT

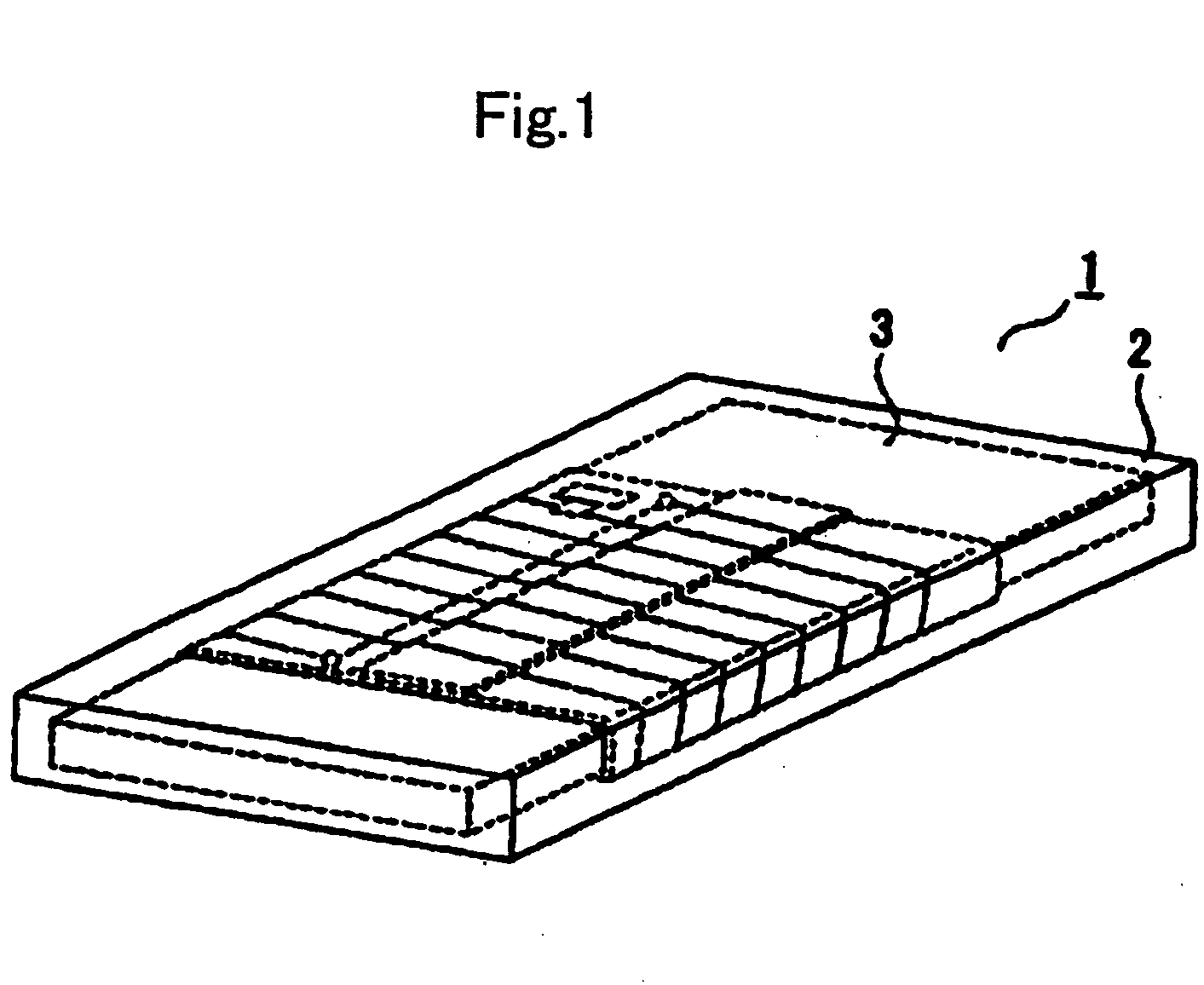

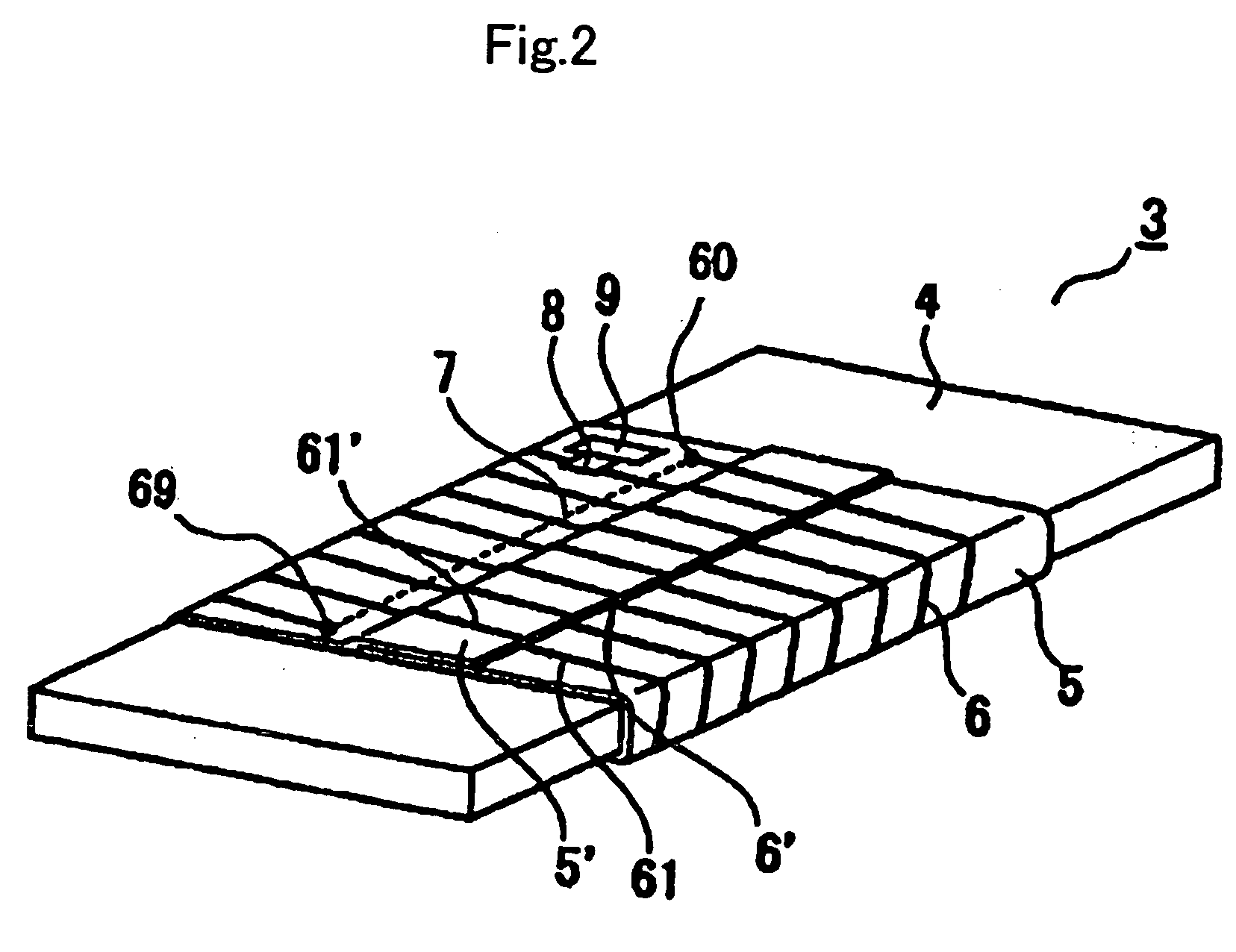

RFID tag and its manufacturing method

Inactive | US20050179552A1 | Efficient measurement | Increase in cost | Loop antennas with ferromagnetic core | Line/current collector details | Electrical conductor | Engineering

An RFID tag which is provided with a coil-shaped antenna such that a conductor is placed on the periphery of a magnetic core, the RFID comprising: the magnetic core, an FPC wound on the periphery of the magnetic core, two or more linear conductor patterns formed in parallel with one another on the FPC, an IC that is connected to the linear conductor patterns and disposed on the FPC, a crossover pattern that electrically connects one end and the other end of outermost linear conductor patterns among the linear conductor patterns formed in parallel with one another, where in the two or more linear conductor patterns, adjacent linear conductor patterns in a joint portion in the wound FPC are electrically connected in respective start edges and end edges.

Owner:FURUKAWA ELECTRIC CO LTD

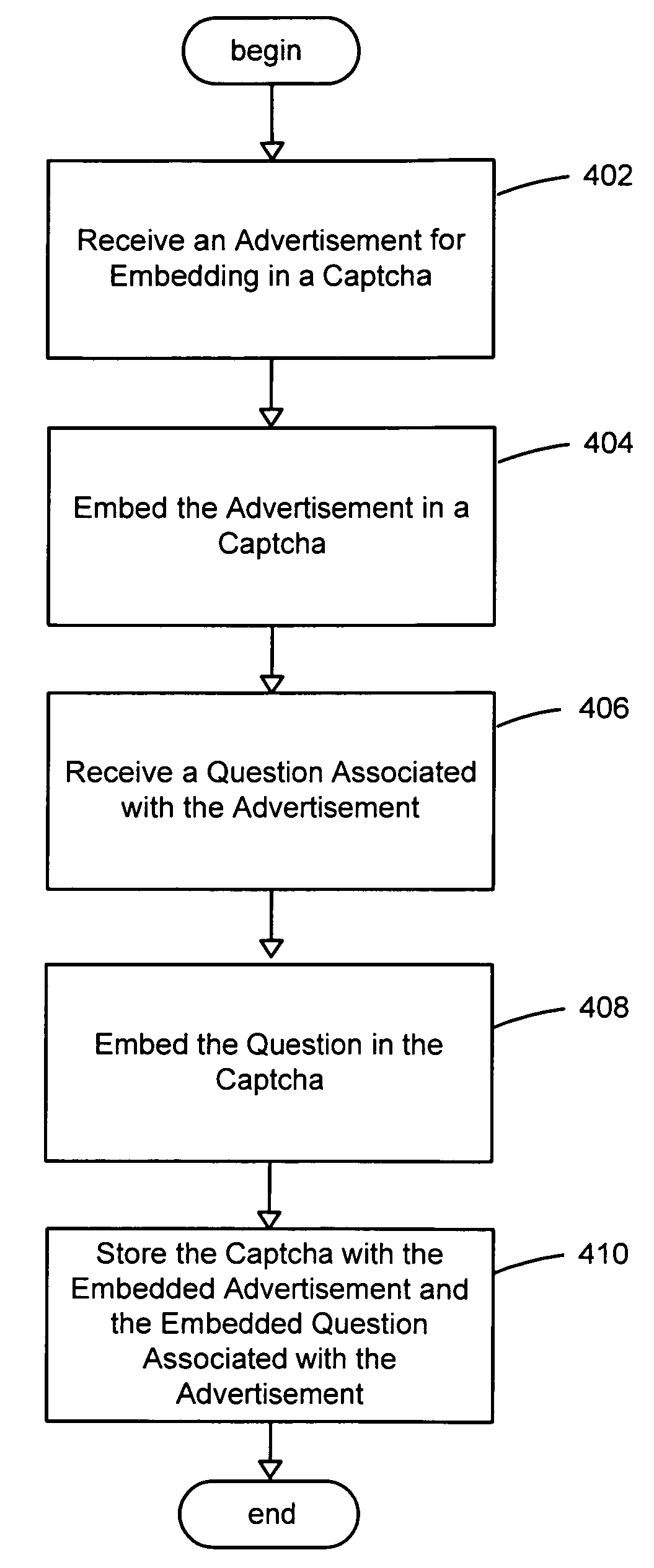

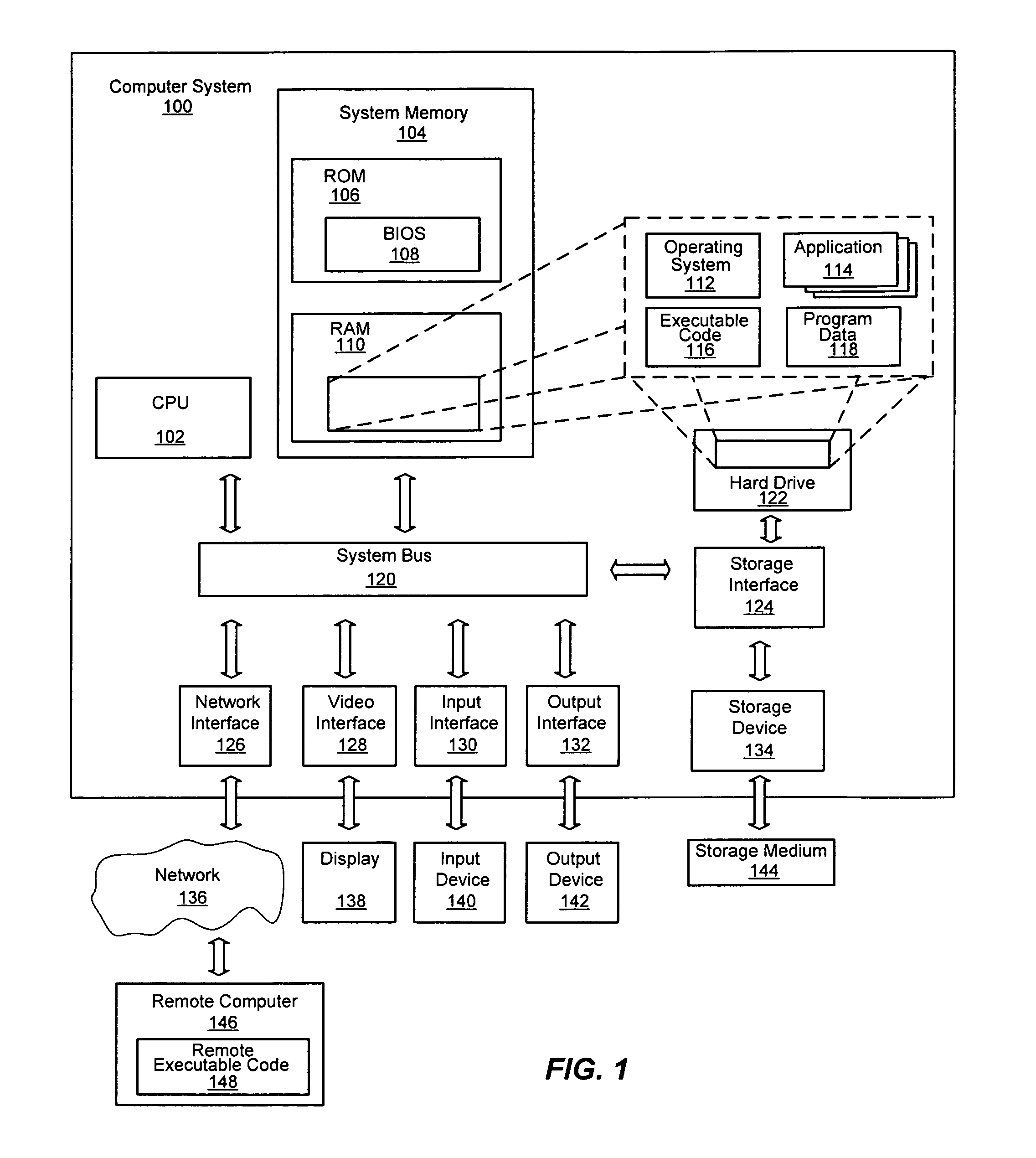

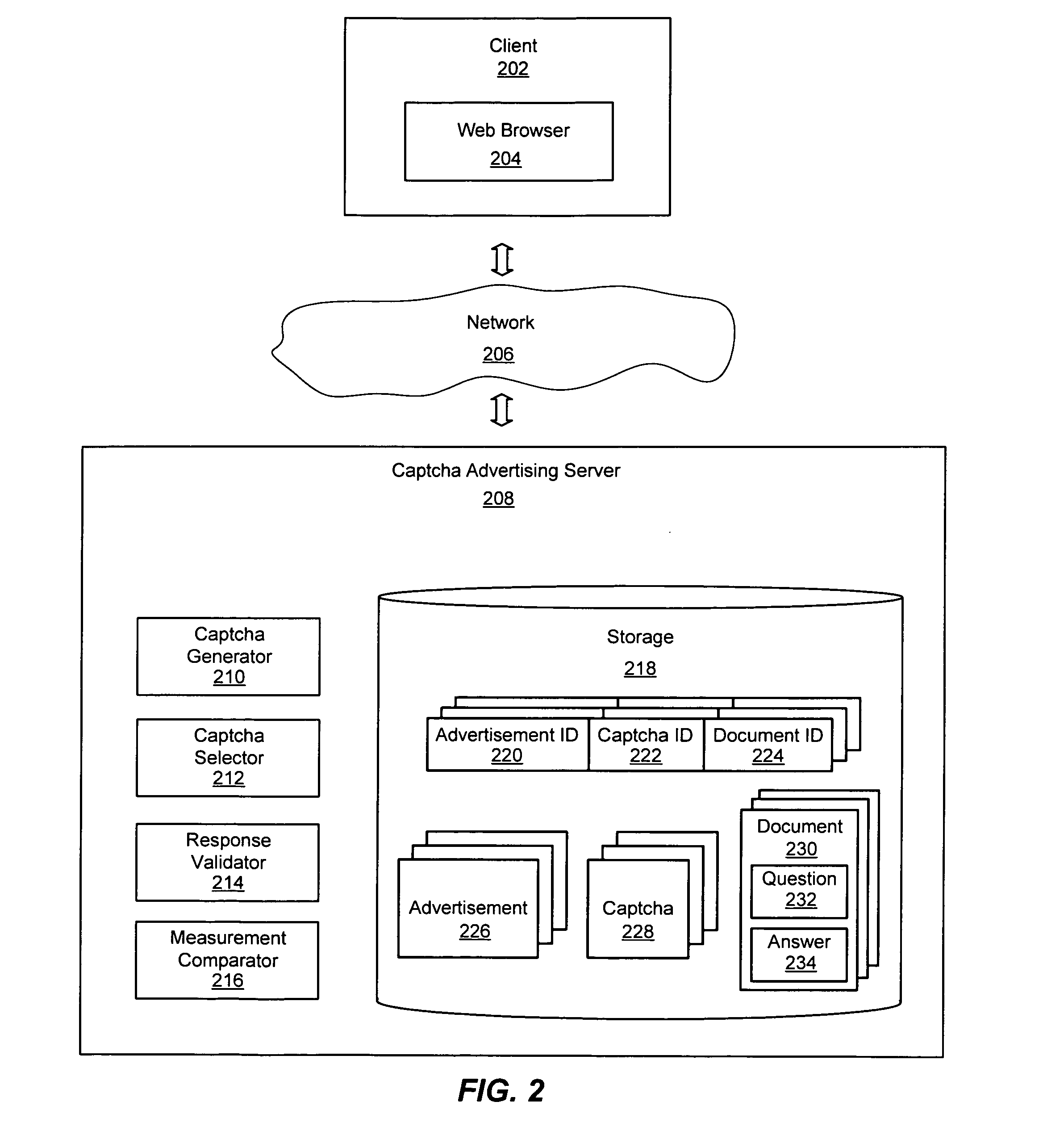

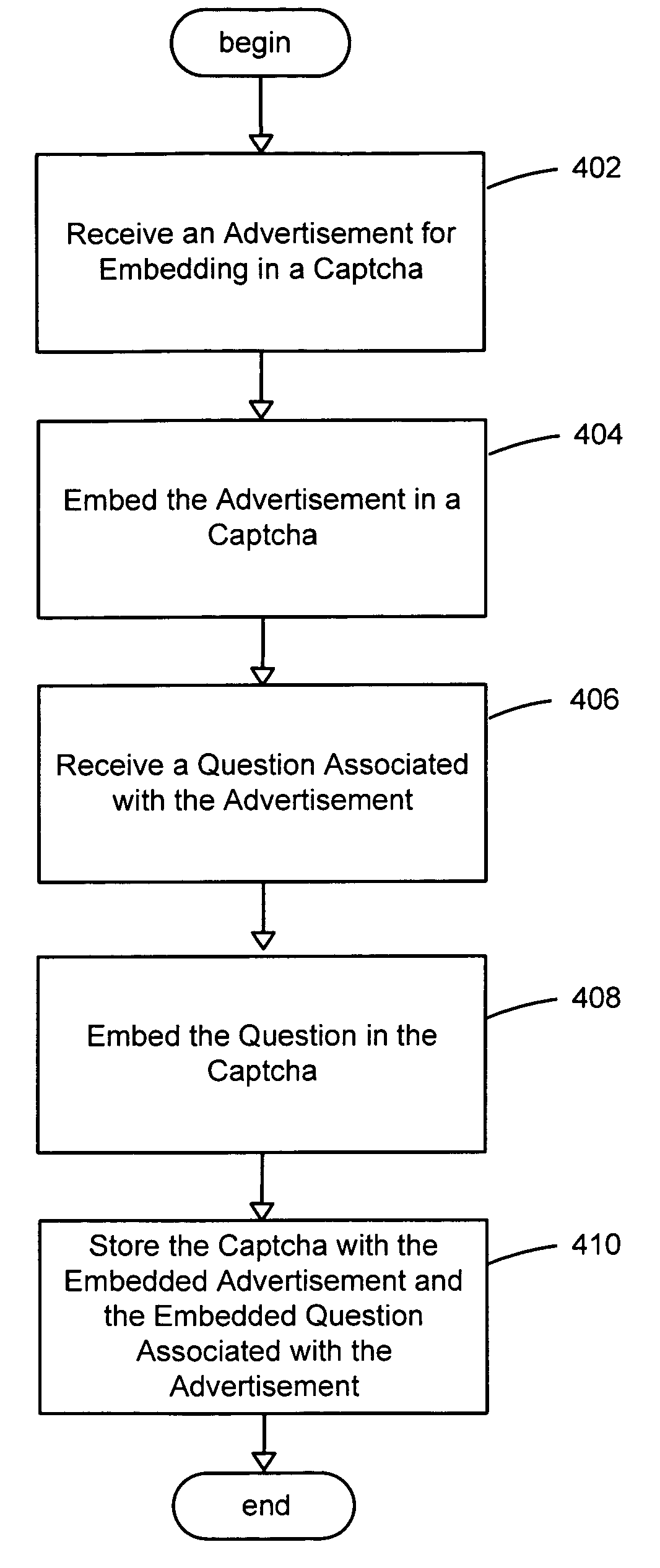

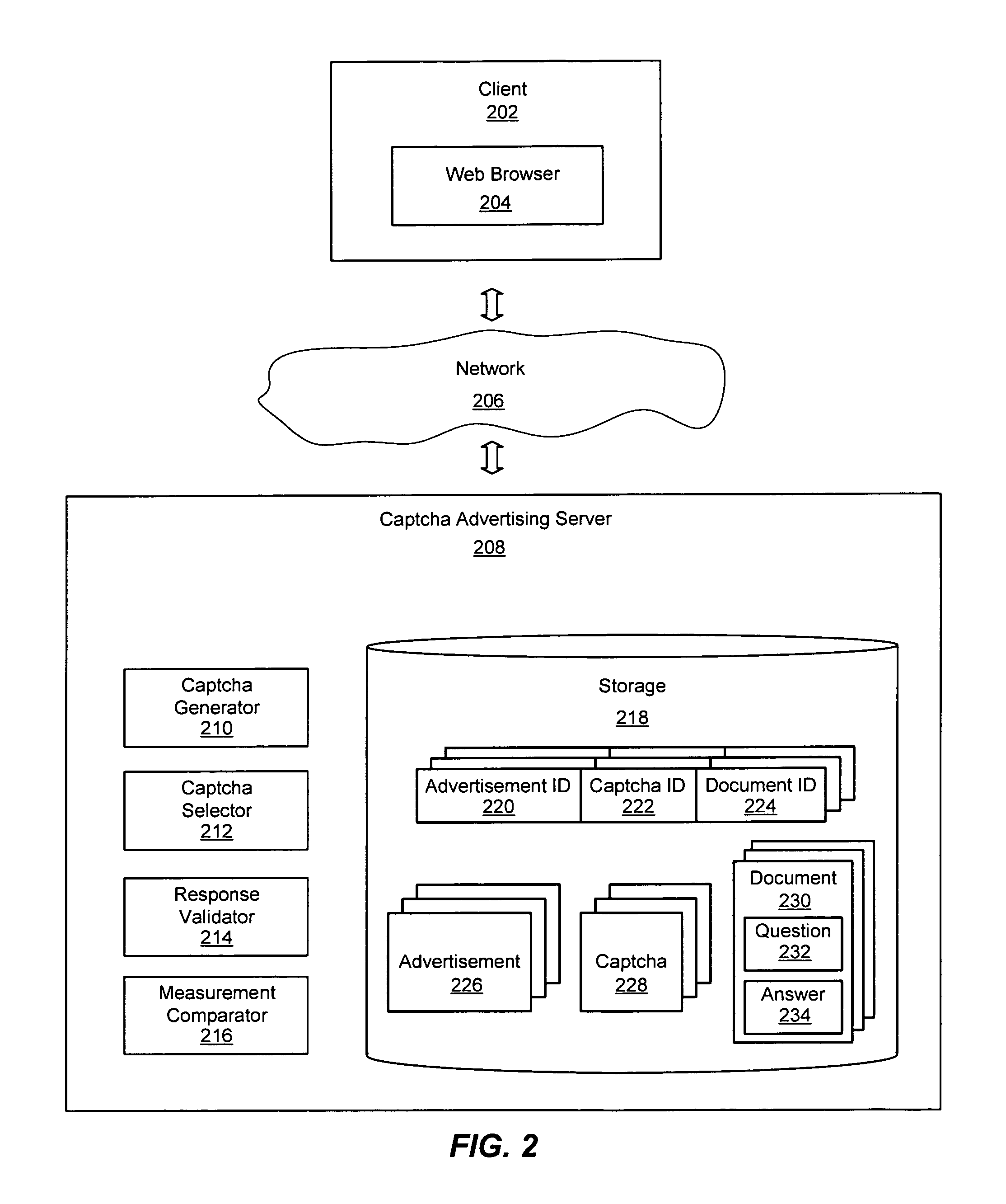

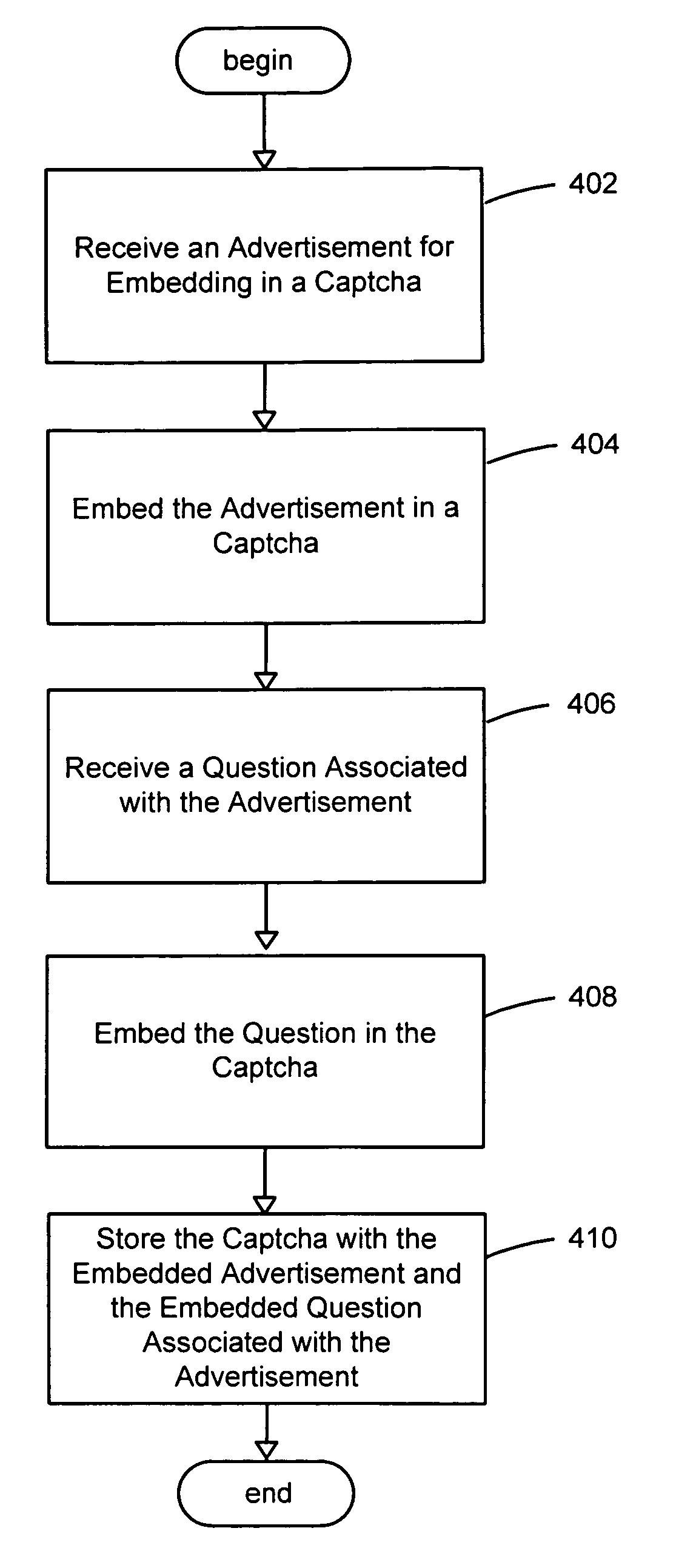

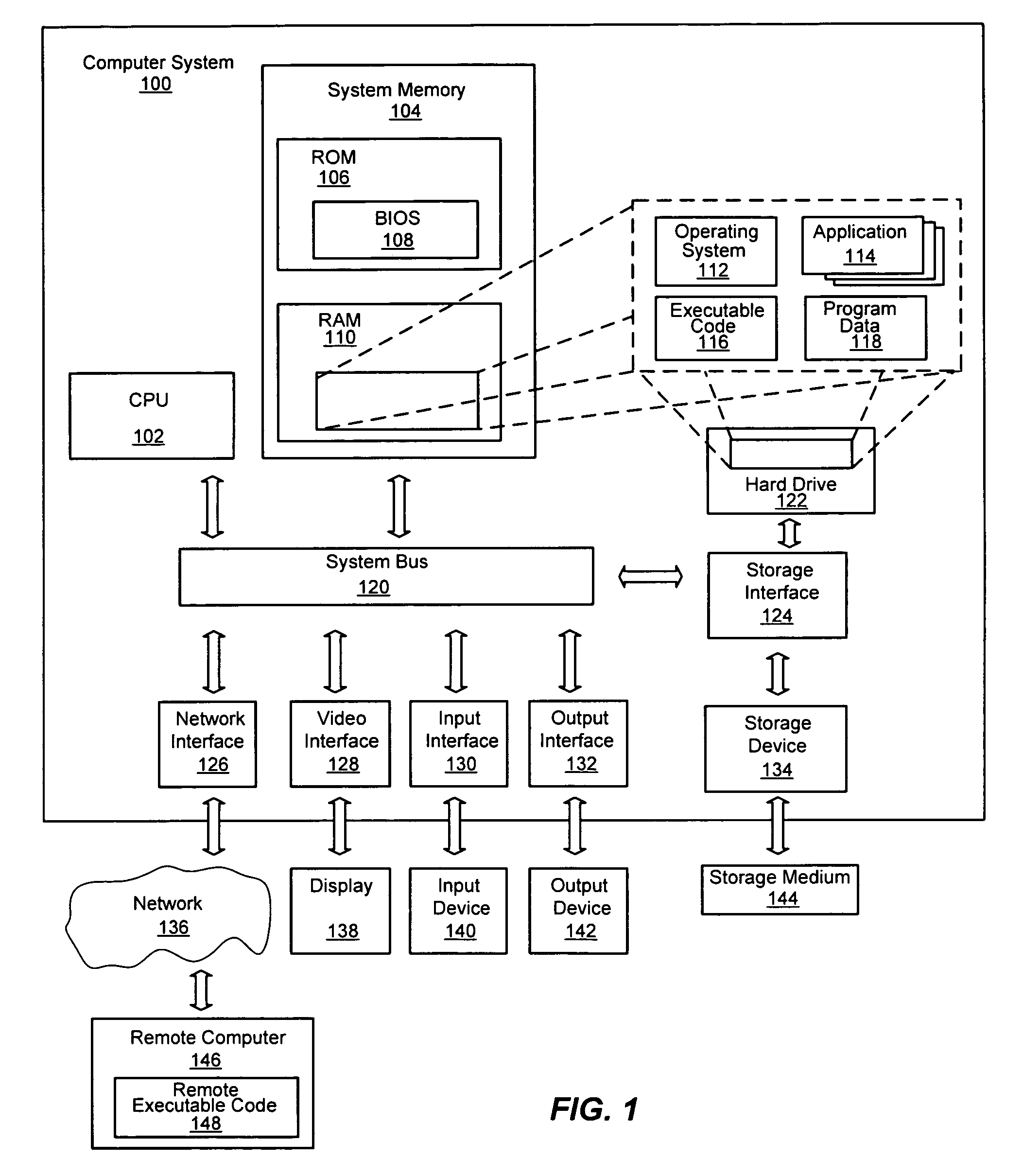

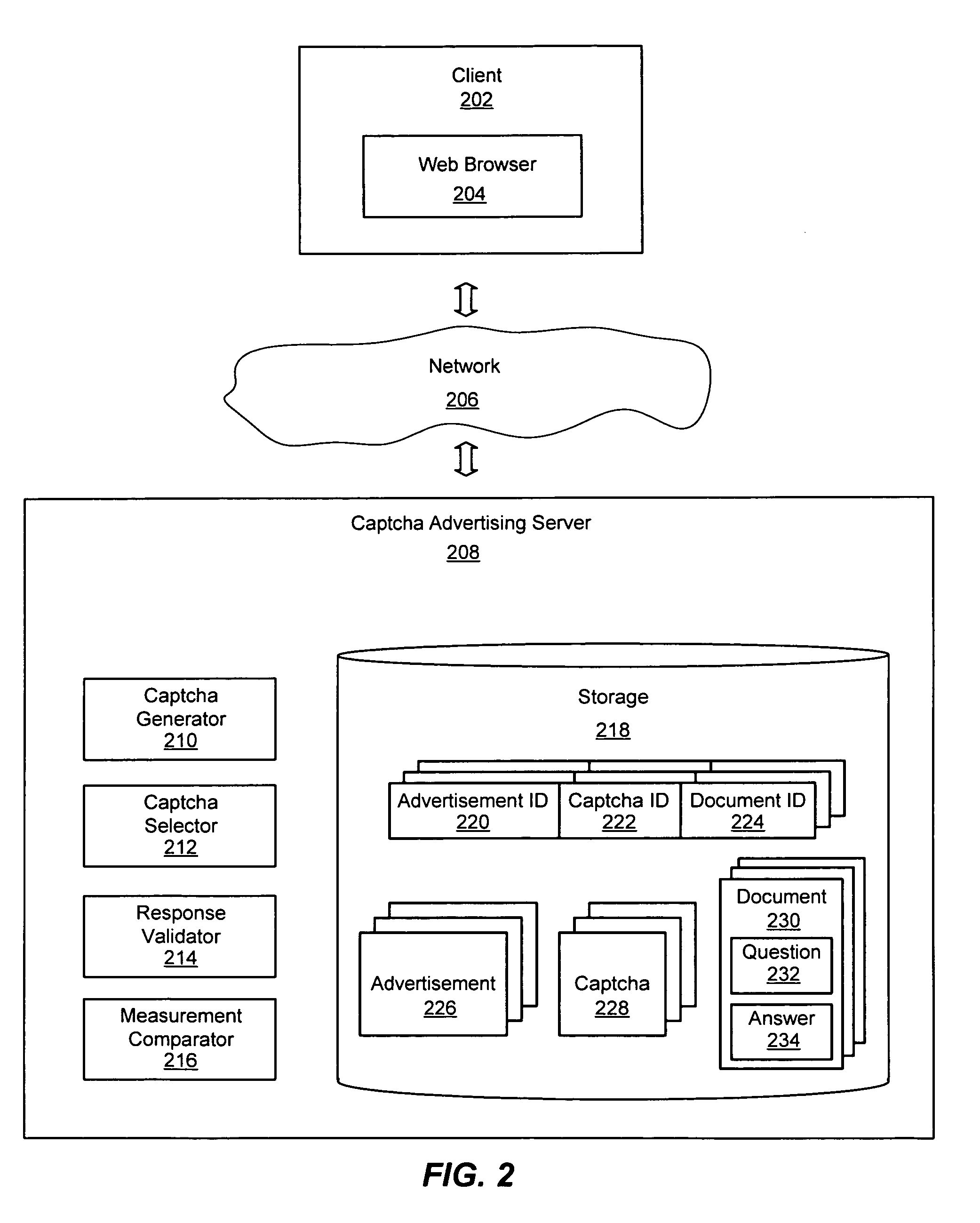

System and method for measuring awareness of online advertising using captchas

An improved system and method for providing and using captchas for online advertising is provided. An advertisement may be received, embedded in a captcha and stored for use in online advertising. A question about the advertisement may also be stored along with a valid answer for use in verifying a user has received an impression of the advertisement. In response to a request received for sending a captcha with an embedded advertisement to a web browser operating on a client, a captcha with an embedded advertisement may be selected and sent to the web browser for display as part of a web page. Upon verifying the response to the question from the user is a valid answer, receipt of an impression of the advertisement may be recorded. Additionally, the awareness of an online advertisement may be measured for a target audience and reported to advertisers.

Owner:OATH INC

System and method for providing semantic captchas for online advertising

Inactive | US20080133347A1 | Efficient measurement | Advertisements | Web data indexing | Web browser | Native advertising

An improved system and method for providing and using captchas for online advertising is provided. An advertisement may be received, embedded in a captcha and stored for use in online advertising. A question about the advertisement may also be stored along with a valid answer for use in verifying a user has received an impression of the advertisement. In response to a request received for sending a captcha with an embedded advertisement to a web browser operating on a client, a captcha with an embedded advertisement may be selected and sent to the web browser for display as part of a web page. Upon verifying the response to the question from the user is a valid answer, receipt of an impression of the advertisement may be recorded. Additionally, the awareness of an online advertisement may be measured for a target audience and reported to advertisers.

Owner:OATH INC

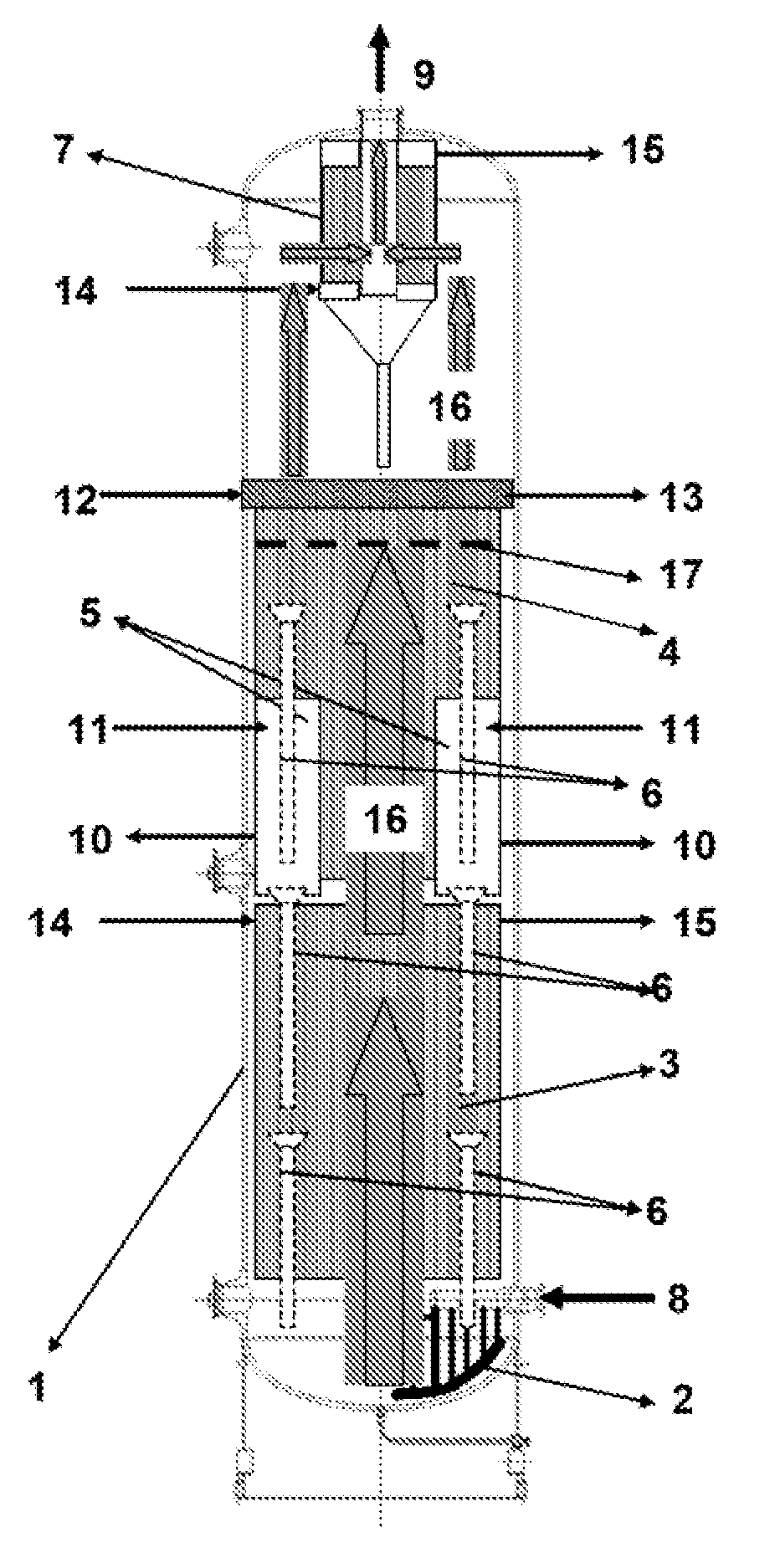

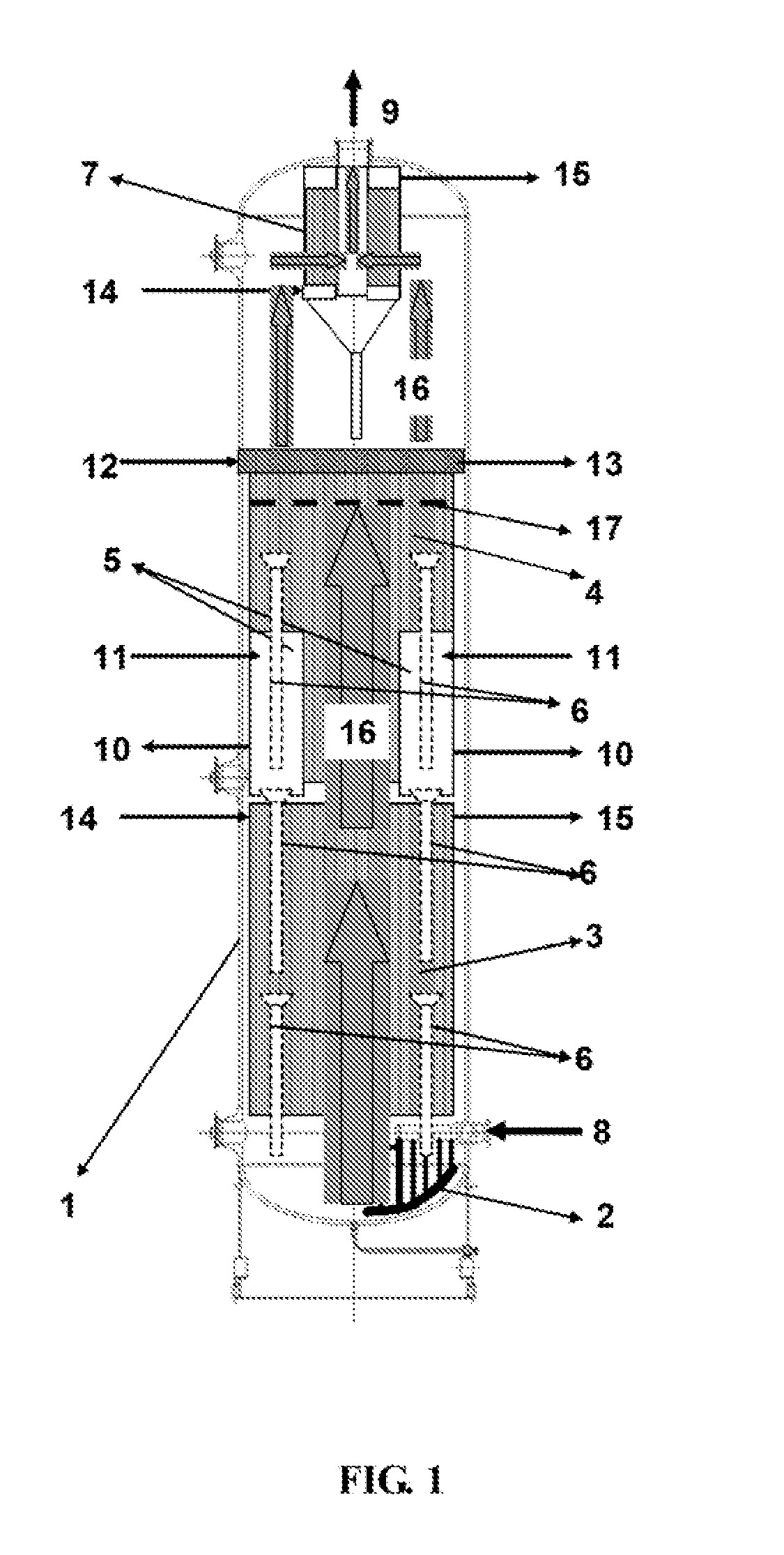

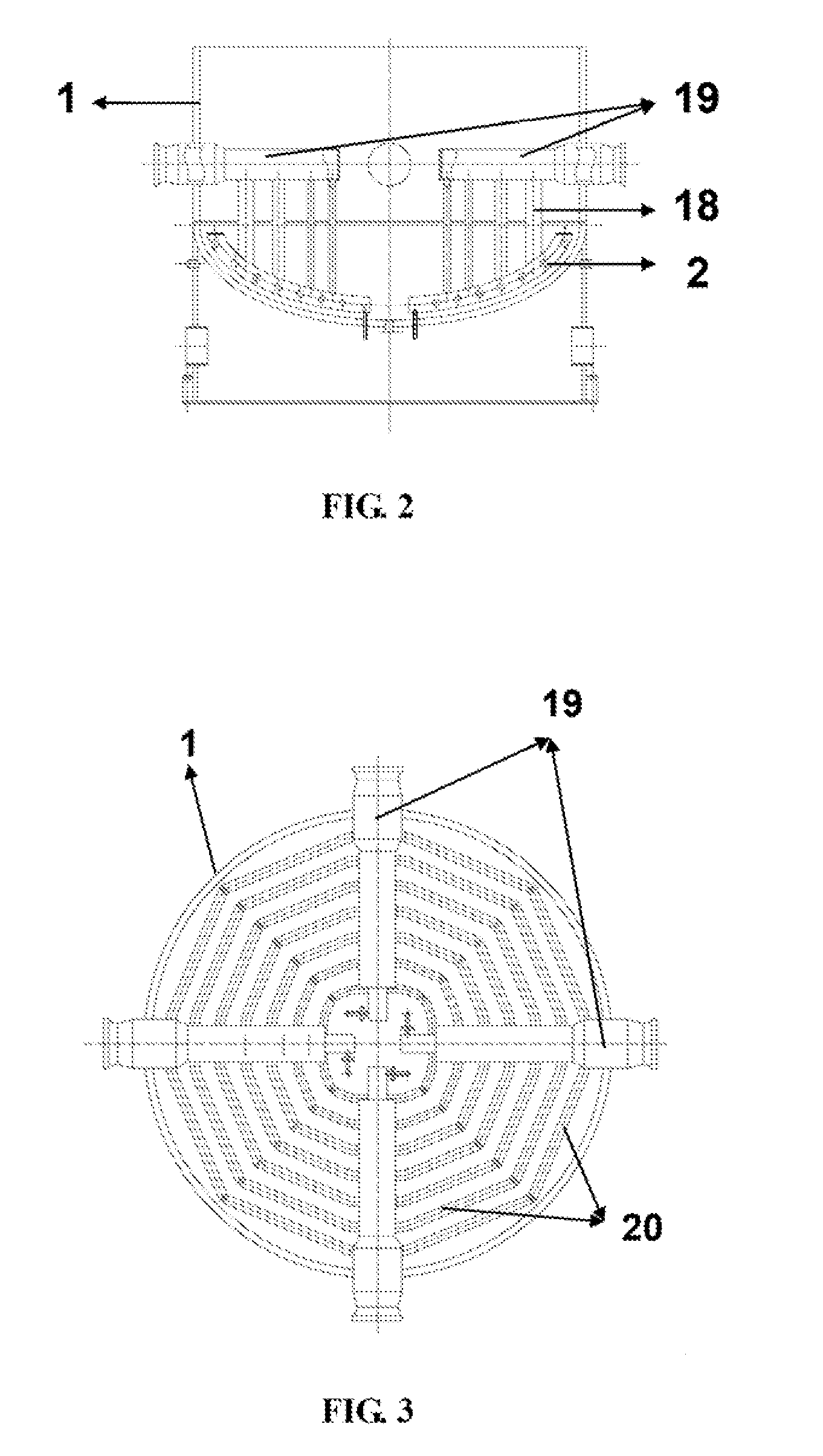
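The verification step in the captcha abstracts above (check the user's response against a stored valid answer, then record an impression) can be sketched in a few lines. This is a simplified illustration under stated assumptions: the `AdCaptcha` and `ImpressionLog` types, the case-insensitive matching, and the in-memory counter are all hypothetical and are not described in the patents.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class AdCaptcha:
    """An advertisement embedded in a captcha, with a question about it."""
    ad_id: str
    question: str
    valid_answers: FrozenSet[str]  # stored in lowercase (assumption)


@dataclass
class ImpressionLog:
    """In-memory impression counter, keyed by advertisement id."""
    counts: Dict[str, int] = field(default_factory=dict)

    def record(self, ad_id: str) -> None:
        self.counts[ad_id] = self.counts.get(ad_id, 0) + 1


def verify_and_record(captcha: AdCaptcha, response: str, log: ImpressionLog) -> bool:
    """Record an impression only when the response is a valid answer."""
    if response.strip().lower() in captcha.valid_answers:
        log.record(captcha.ad_id)
        return True
    return False


captcha = AdCaptcha("ad-1", "Which brand is shown?", frozenset({"acme"}))
log = ImpressionLog()
accepted = verify_and_record(captcha, "  Acme ", log)
rejected = verify_and_record(captcha, "other", log)
```

Awareness for a target audience could then be reported as the ratio of recorded impressions to captchas served, although the abstract does not specify the metric.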

Gas-liquid-solid three-phase suspension bed reactor for fischer-tropsch synthesis and its applications

Active | US20100216896A1 | High activity | Simple structure | Hydrocarbon from carbon oxides | Physical/chemical process catalysts | Gas phase | Filtration

A Fischer-Tropsch synthesis three-phase suspension bed reactor (“suspension bed” is also called “slurry bed”) and its supplemental systems may include: 1) structure and dimension design of the F-T synthesis reactor, 2) a gas distributor located at the bottom of the reactor, 3) structure and arrangement of heat exchanger members inside the reactor, 4) a liquid-solid filtration separation device inside the reactor, 5) a flow guidance device inside the reactor, 6) a condensate flux and separation member located in the gas phase space at the top of the reactor, 7) a pressure stabilizer, a cleaning system for the separation device; an online cleaning system for the gas distributor; an ancillary system for slurry deposition and a pre-condensate and mist separation system located at the outlet of the upper reactor. This reactor is suitable for industrial scale application of Fischer-Tropsch synthesis.

Owner:SYNFUELS CHINA TECH CO LTD

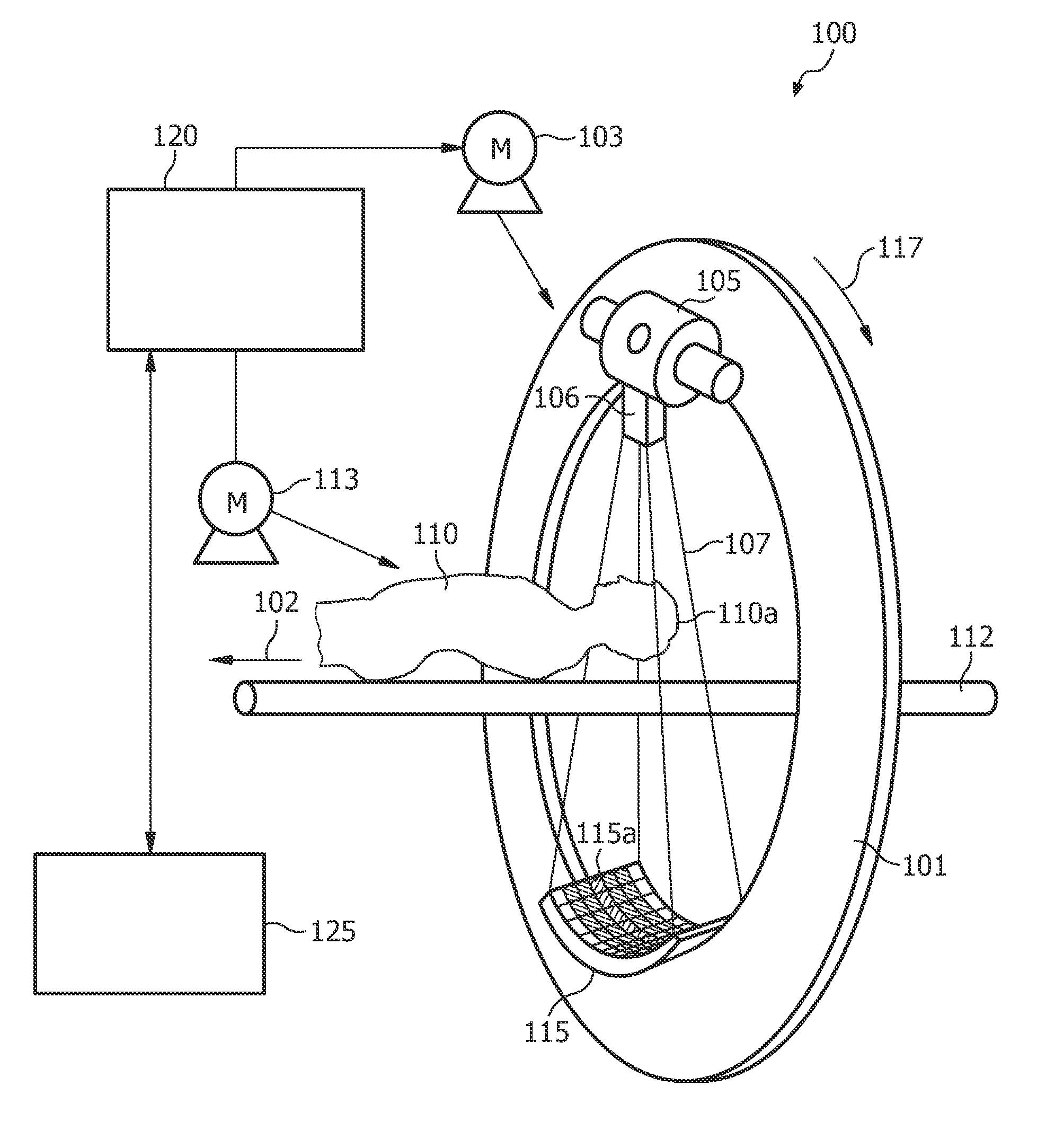

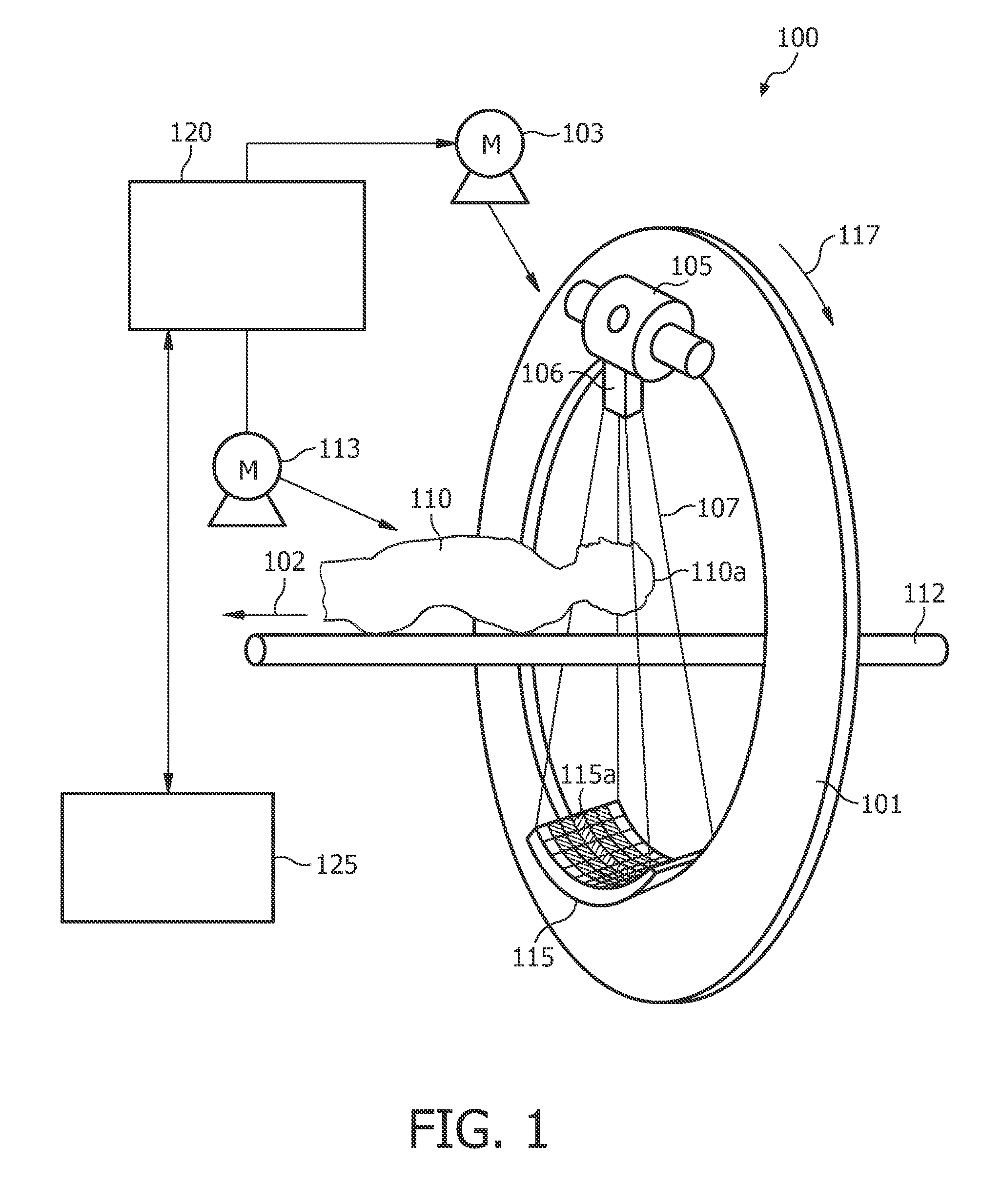

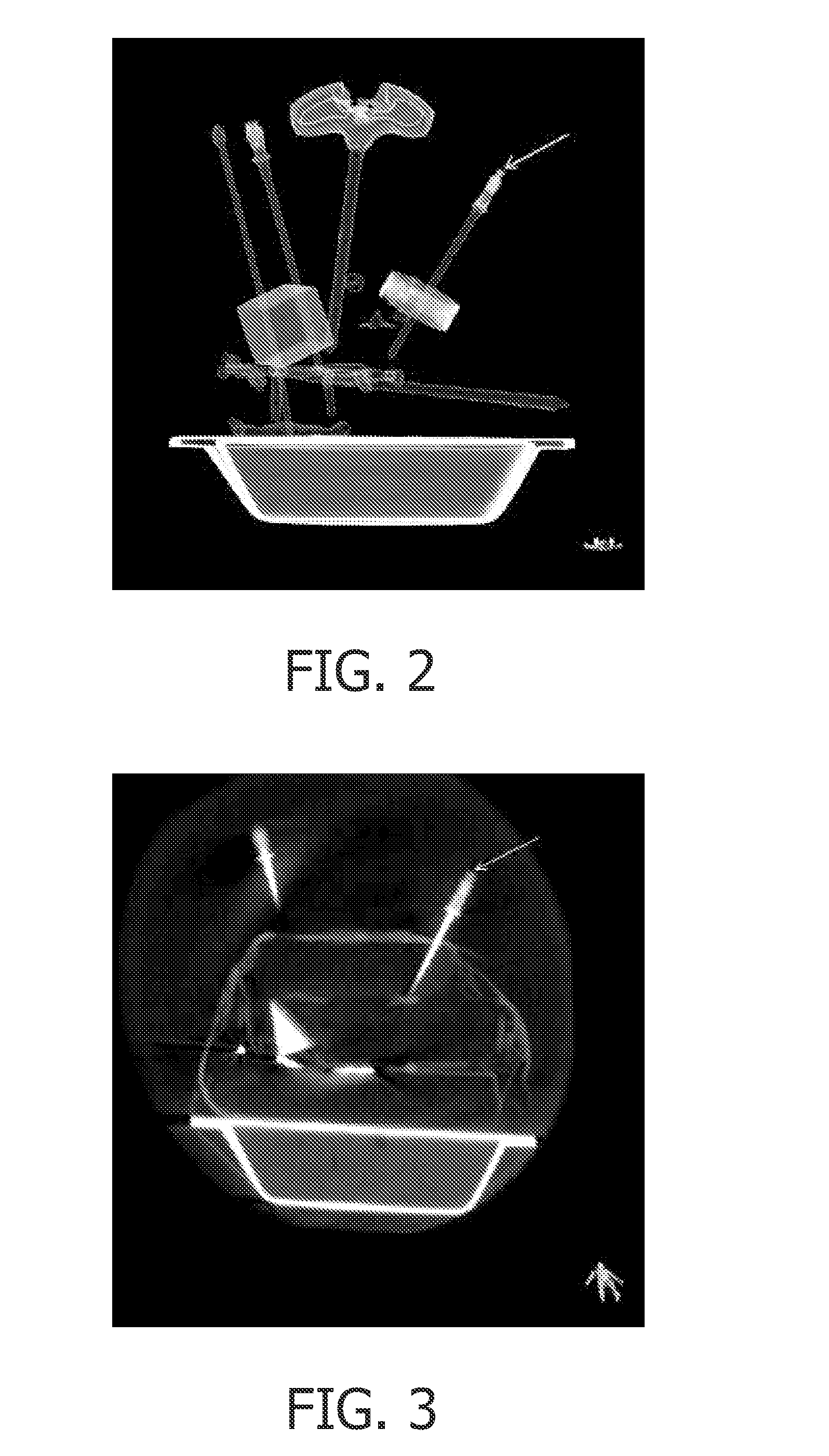

Targeting method, targeting device, computer readable medium and program element

Active | US8798339B2 | Simple and fast and effective targeting | Efficient reconstruction | Surgical needles | Character and pattern recognition | X-ray | Program unit

This invention introduces a fast and effective target approach planning method, preferably for needle guided percutaneous interventions using a rotational X-ray device. According to an exemplary embodiment, a targeting method for targeting a first object in an object under examination is provided, wherein the method comprises selecting a first two-dimensional image of a three-dimensional data volume representing the object under examination, determining a target point in the first two-dimensional image, and displaying an image of the three-dimensional data volume with the selected target point. Furthermore, the method comprises positioning the said image of the three-dimensional data volume by scrolling and / or rotating such that a suitable path of approach crossing the target point has a first direction parallel to an actual viewing direction of the said image of the three-dimensional data volume and generating a second two-dimensional image out of the three-dimensional data volume, wherein a normal of the plane of the second two-dimensional image is oriented parallel to the first direction and crosses the target point.

Owner:KONINK PHILIPS ELECTRONICS NV

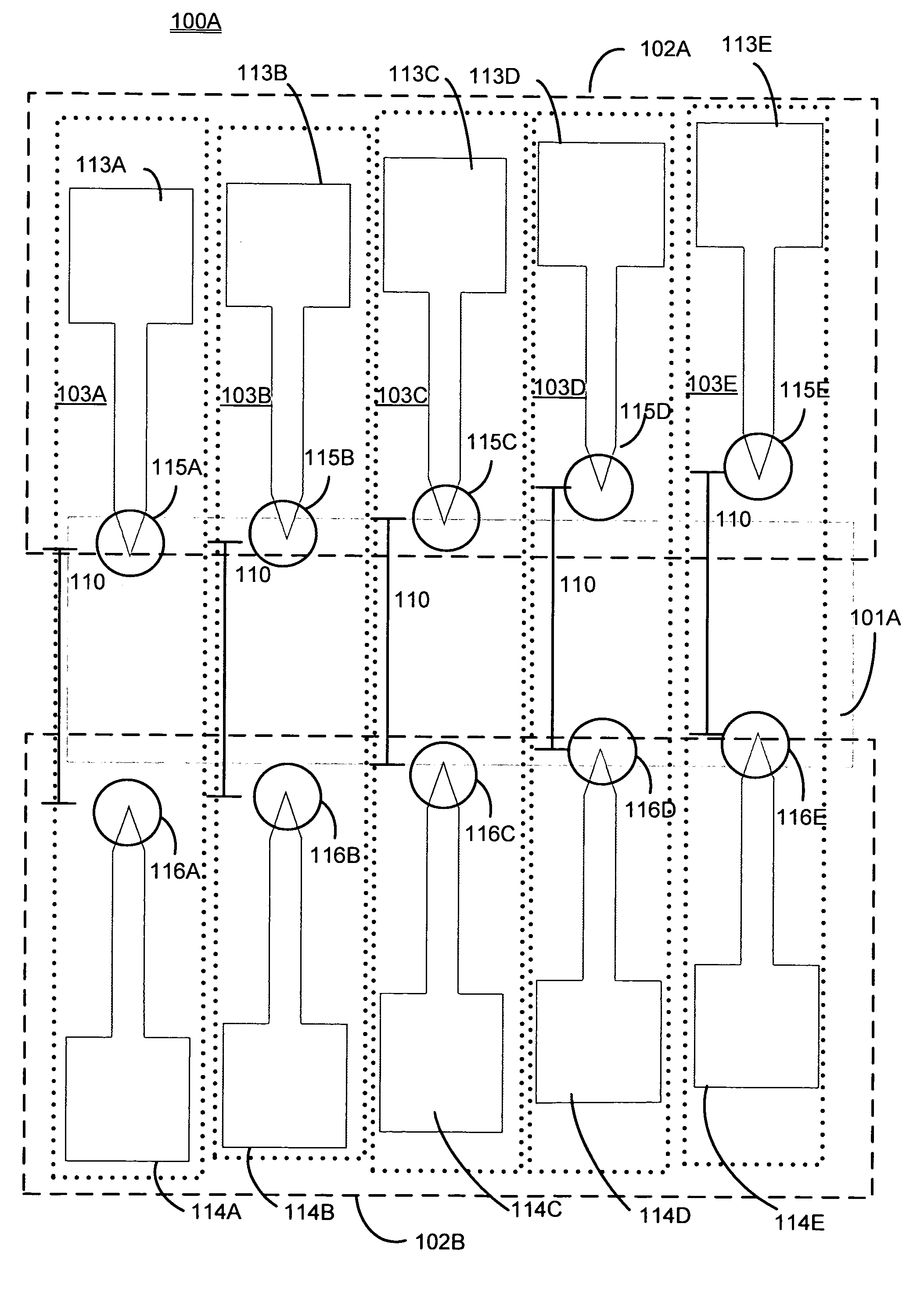

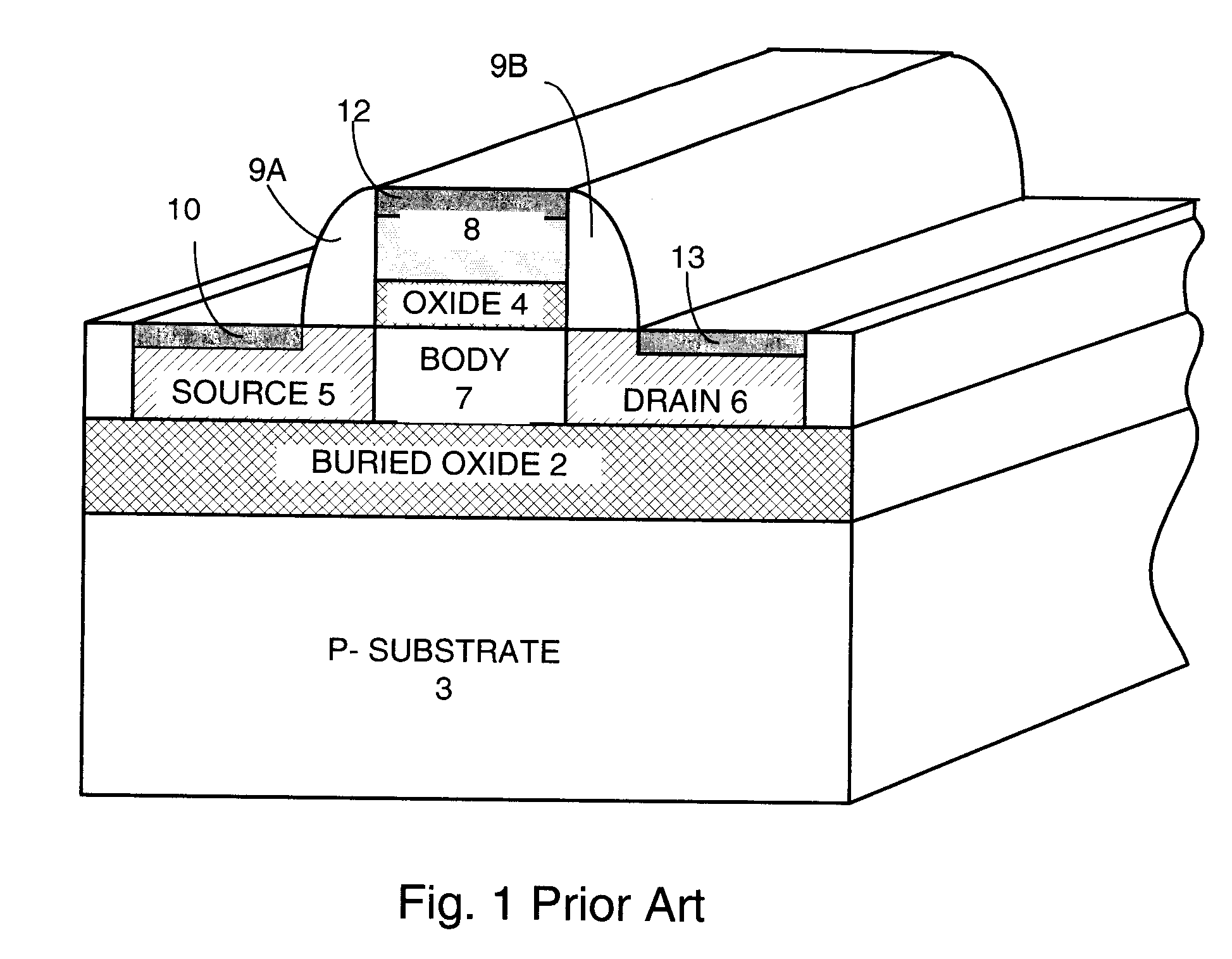

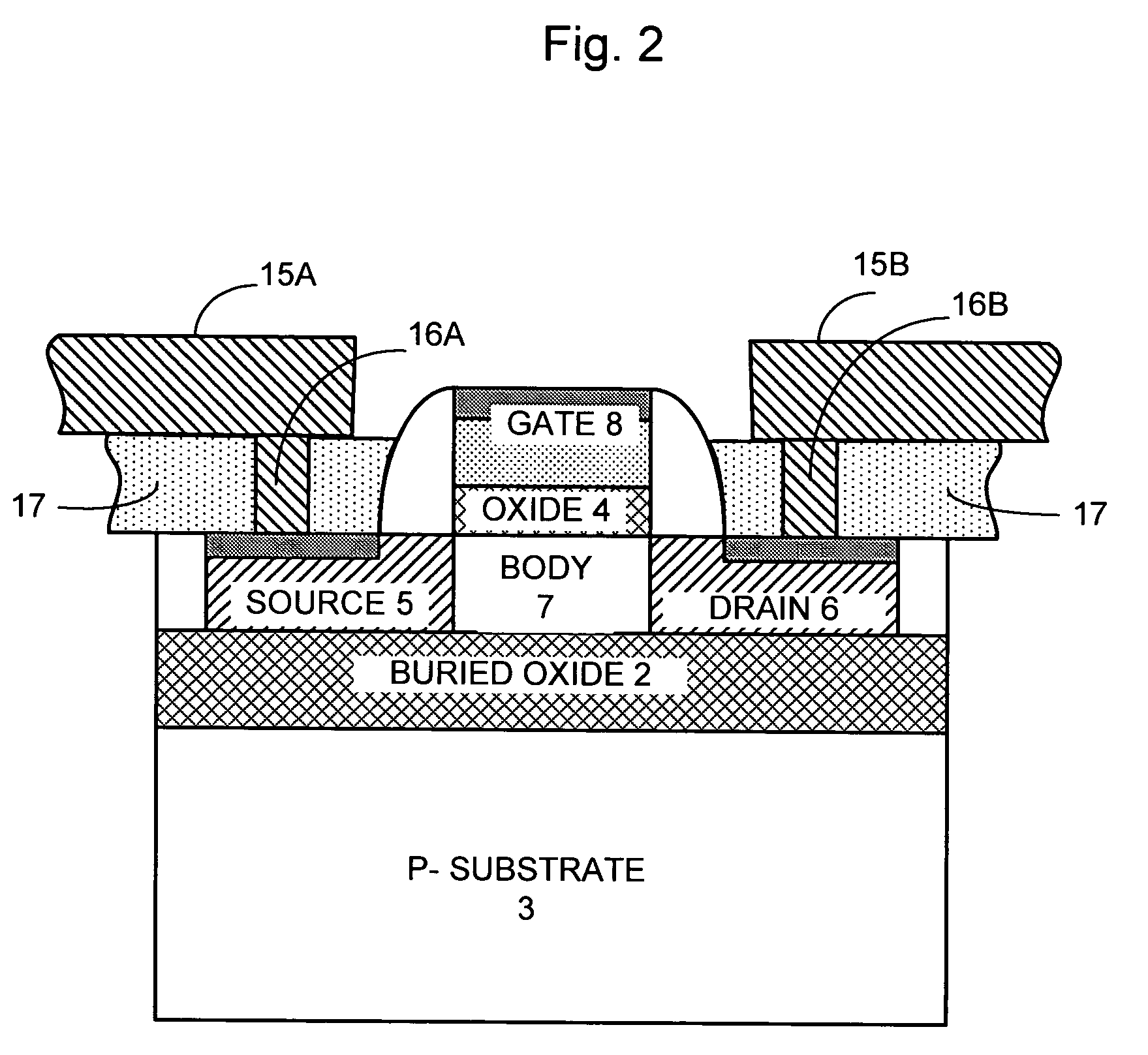

Electrical open/short contact alignment structure for active region vs. gate region

Inactive | US7183780B2 | Efficient measurement | Less design space | Semiconductor/solid-state device testing/measurement | Resistance/reactance/impedance | Polycrystalline silicon | Physics

An apparatus for measuring alignment of polysilicon shapes to a silicon area. Each polysilicon shape in a first plurality of polysilicon shapes has a bridging vertex positioned near the silicon area. Each polysilicon shape in a second plurality of polysilicon shapes has a bridging vertex positioned near the silicon area. The second plurality of silicon shapes is positioned on the opposite side of the silicon area from the first plurality of silicon shapes. An electrical measurement of how many of the polysilicon shapes in the first plurality of polysilicon shapes and in the second plurality of polysilicon shapes provides a measurement of alignment of the polysilicon shapes and the silicon area.

Owner:IBM CORP

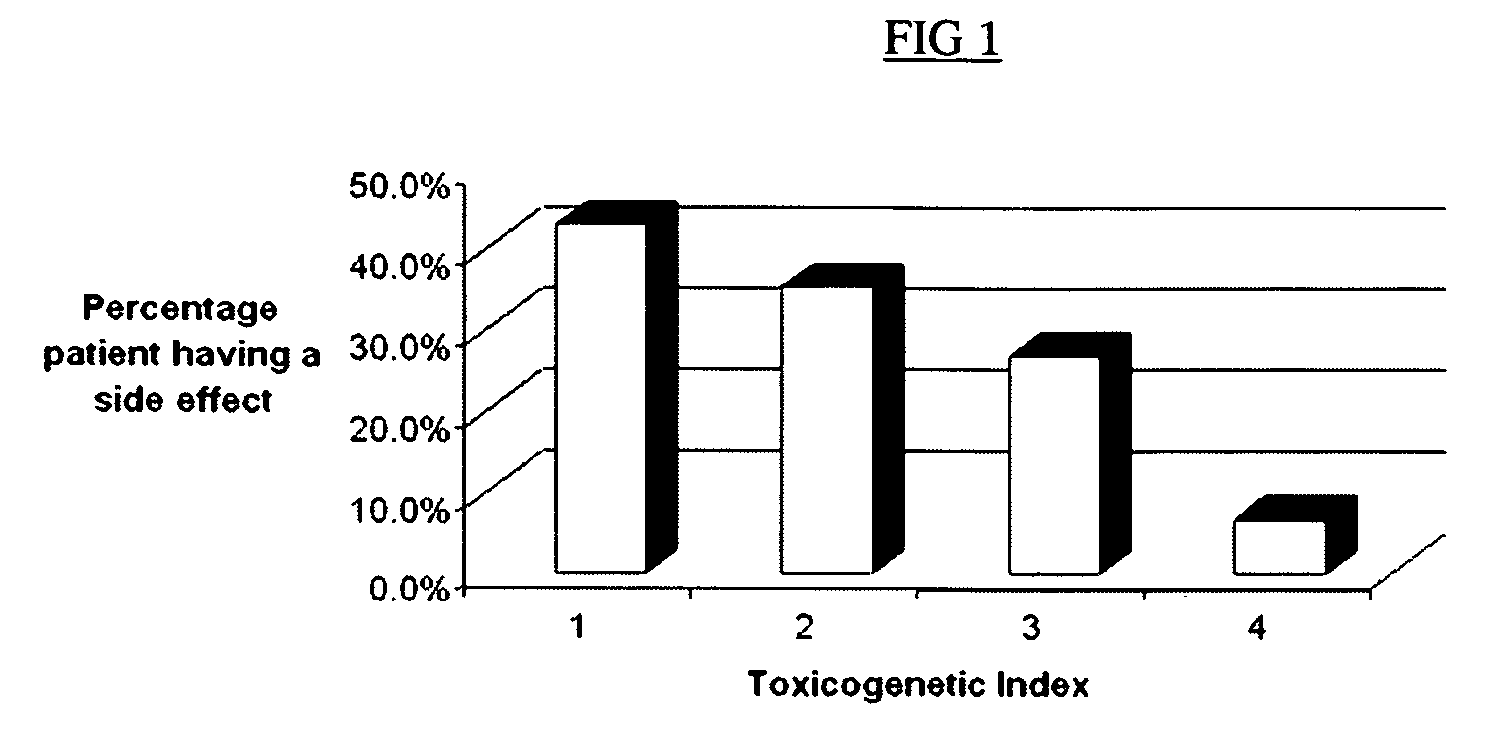

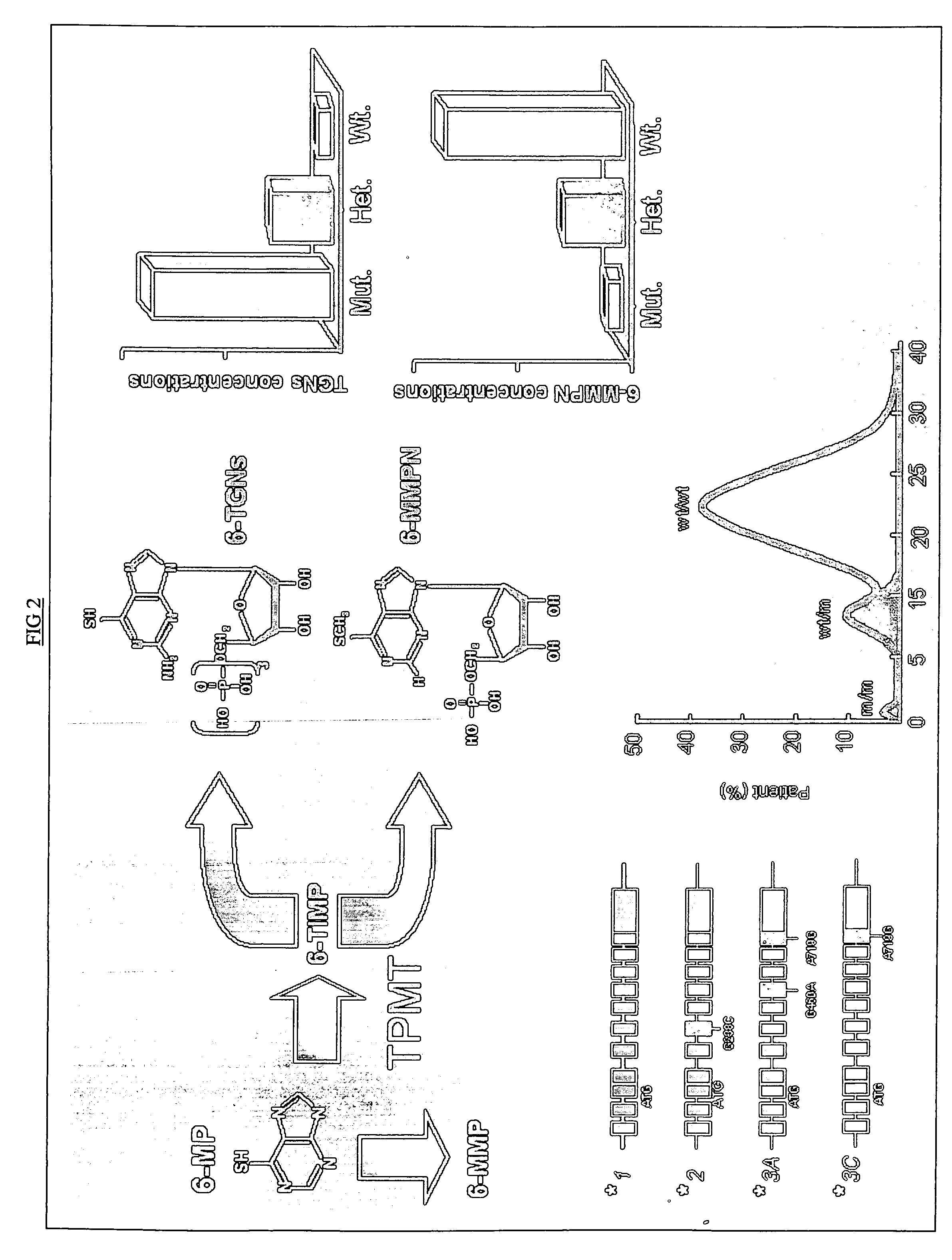

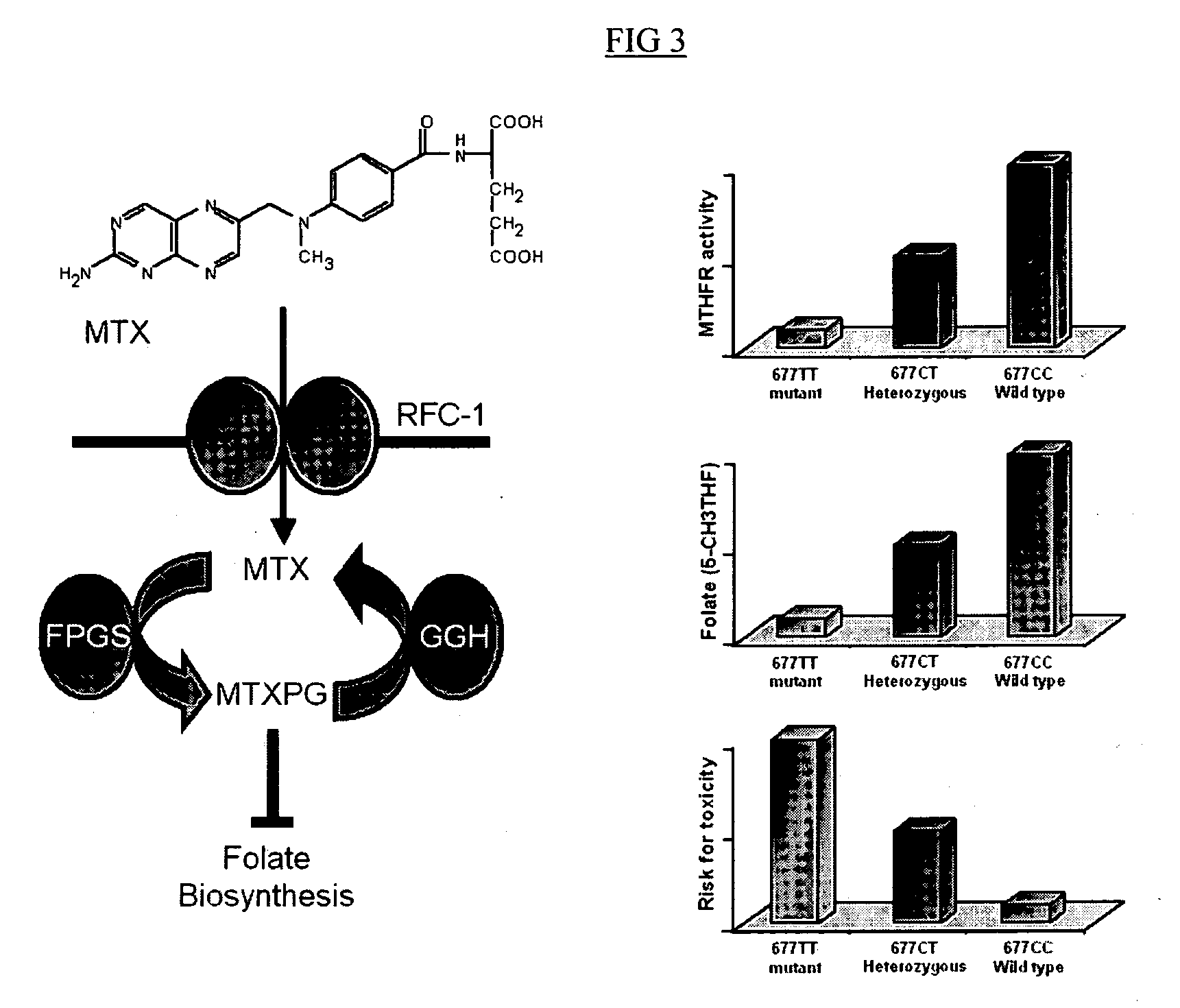

Method to optimize drug selection, dosing and evaluation and to help predict therapeutic response and toxicity from immunosuppressant therapy

Inactive | US20060253263A1 | Good curative effect | Minimizing toxic side effect | Microbiological testing/measurement | Proteomics | Maintenance therapy | Autoimmune condition

The present invention provides a method of effectively measuring risk for therapeutic toxicity of a subject having an autoimmune disorder or cancer and predicting and evaluating therapeutic efficacy of immunosuppressive therapies for autoimmune diseases and cancers before or after starting therapy. The present invention also provides for determining a drug metabolite level of a subject during therapy and periodically measuring the drug metabolite level of a subject on maintenance therapy to ensure treatment compliance and continued therapeutic response by measuring minimal clinically important differences (MCID) in the drug metabolite levels. The present invention also provides a method to effectively optimize the selection and dose of immunosuppressive therapies of a subject having an autoimmune disease or cancer to improve therapeutic efficacy and reduce therapeutic toxicity prior to starting concomitant biologic therapy and before or after the subject has failed to respond to the at least one immunosuppressive agent.

Owner:MESHKIN BRIAN JAVAADE

System and method for delivering online advertisements using captchas

An improved system and method for providing and using captchas for online advertising is provided. An advertisement may be received, embedded in a captcha and stored for use in online advertising. A question about the advertisement may also be stored along with a valid answer for use in verifying a user has received an impression of the advertisement. In response to a request received for sending a captcha with an embedded advertisement to a web browser operating on a client, a captcha with an embedded advertisement may be selected and sent to the web browser for display as part of a web page. Upon verifying the response to the question from the user is a valid answer, receipt of an impression of the advertisement may be recorded. Additionally, the awareness of an online advertisement may be measured for a target audience and reported to advertisers.

Owner:OATH INC

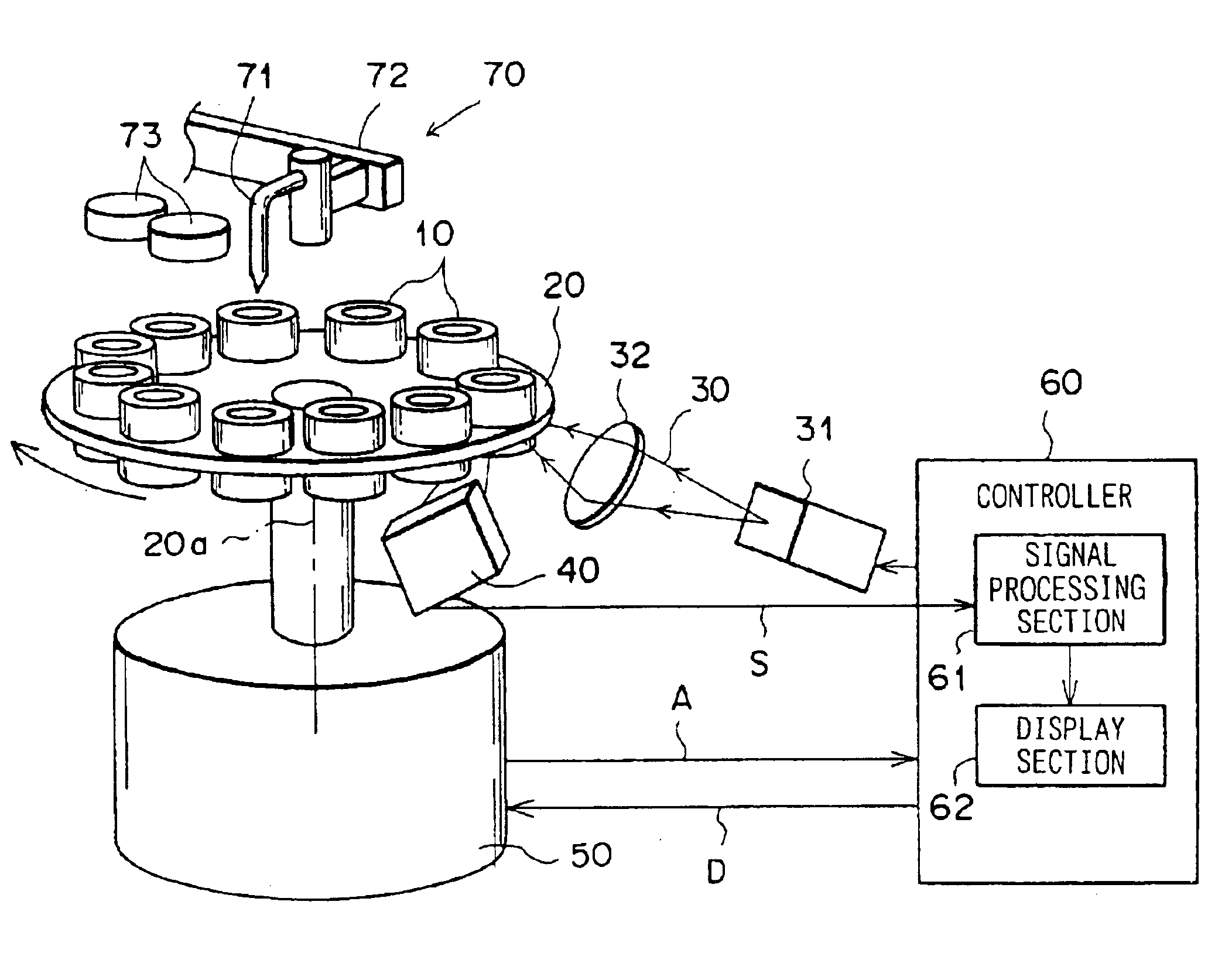

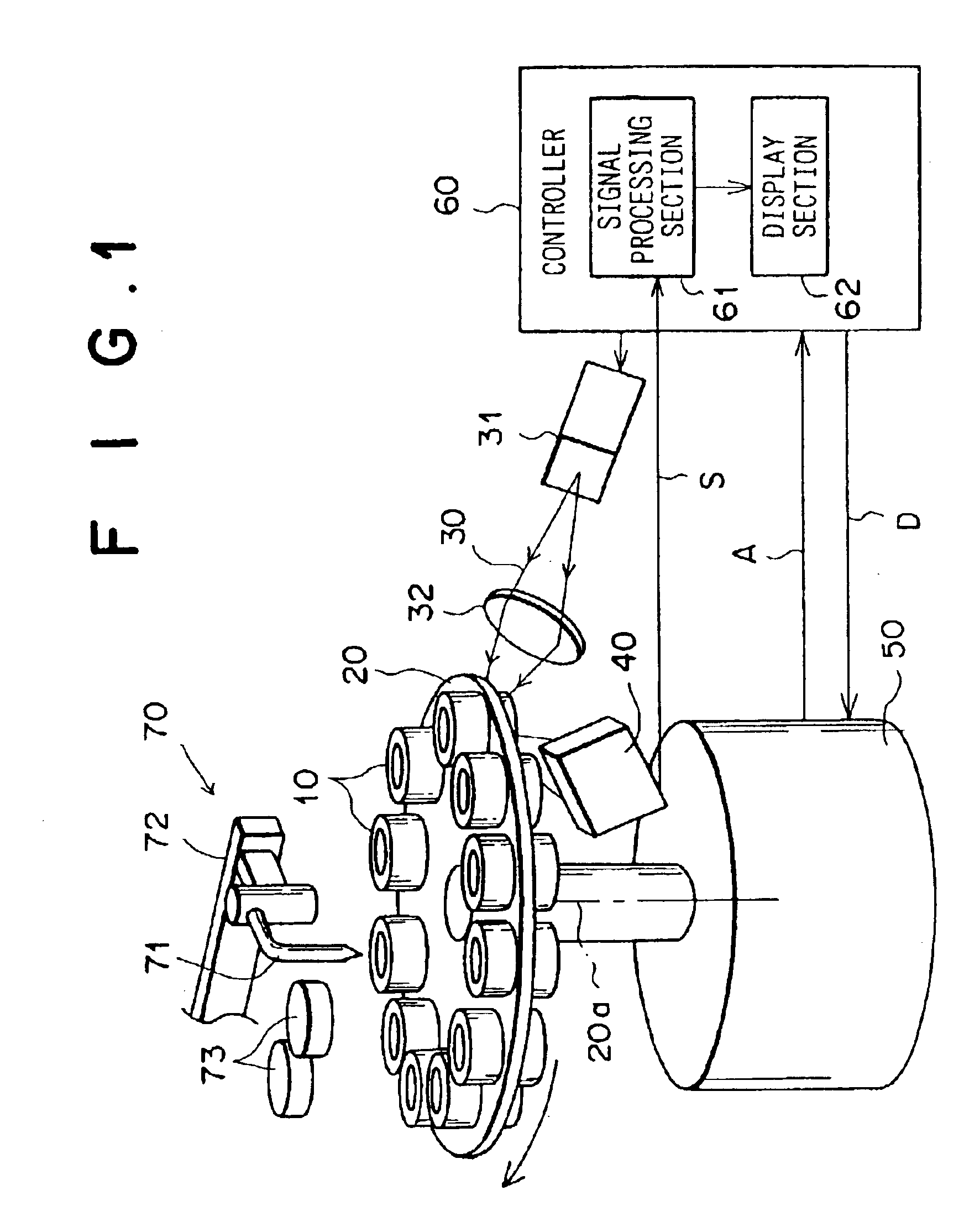

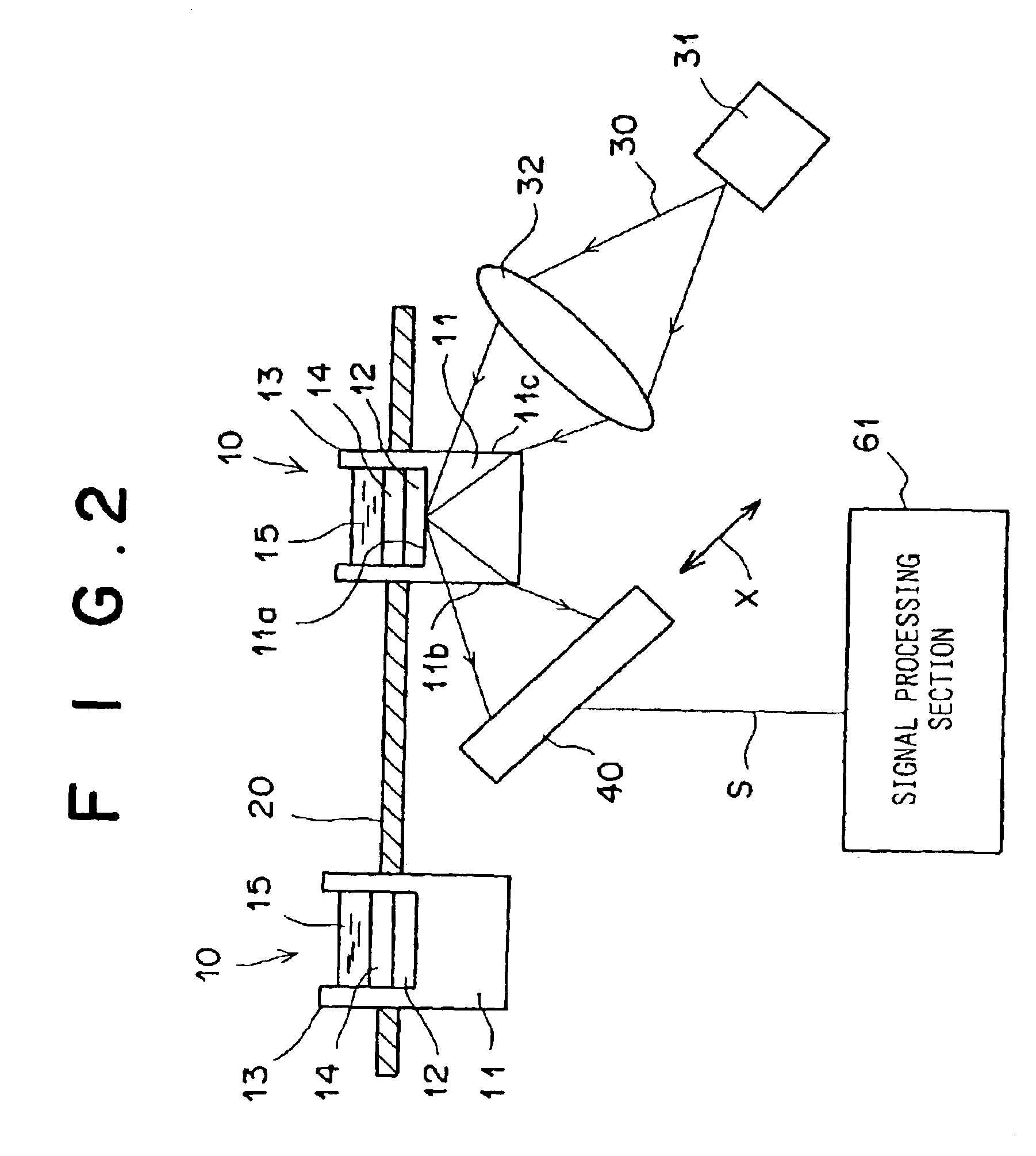

Measuring method and apparatus using attenuation in total reflection

Inactive | US6864984B2 | Increase support | Accuracy deteriorates | Scattering properties measurements | Color/spectral properties measurements | Photodetector | Opto electronic

A plurality of measuring units each comprising a dielectric block, a metal film layer which is formed on a surface of the dielectric block and a sample holder are supported on a support. The support is moved by a support drive means to bring in sequence the measuring units to a measuring portion comprising an optical system which projects a light beam emitted from a light source, and a photodetector which detects attenuation in total internal reflection by detecting the intensity of the light beam which is reflected in total internal reflection at the interface between the dielectric block and the metal film layer. In this measuring apparatus, lots of samples can be measured in a short time.

Owner:FUJIFILM HLDG CORP +1

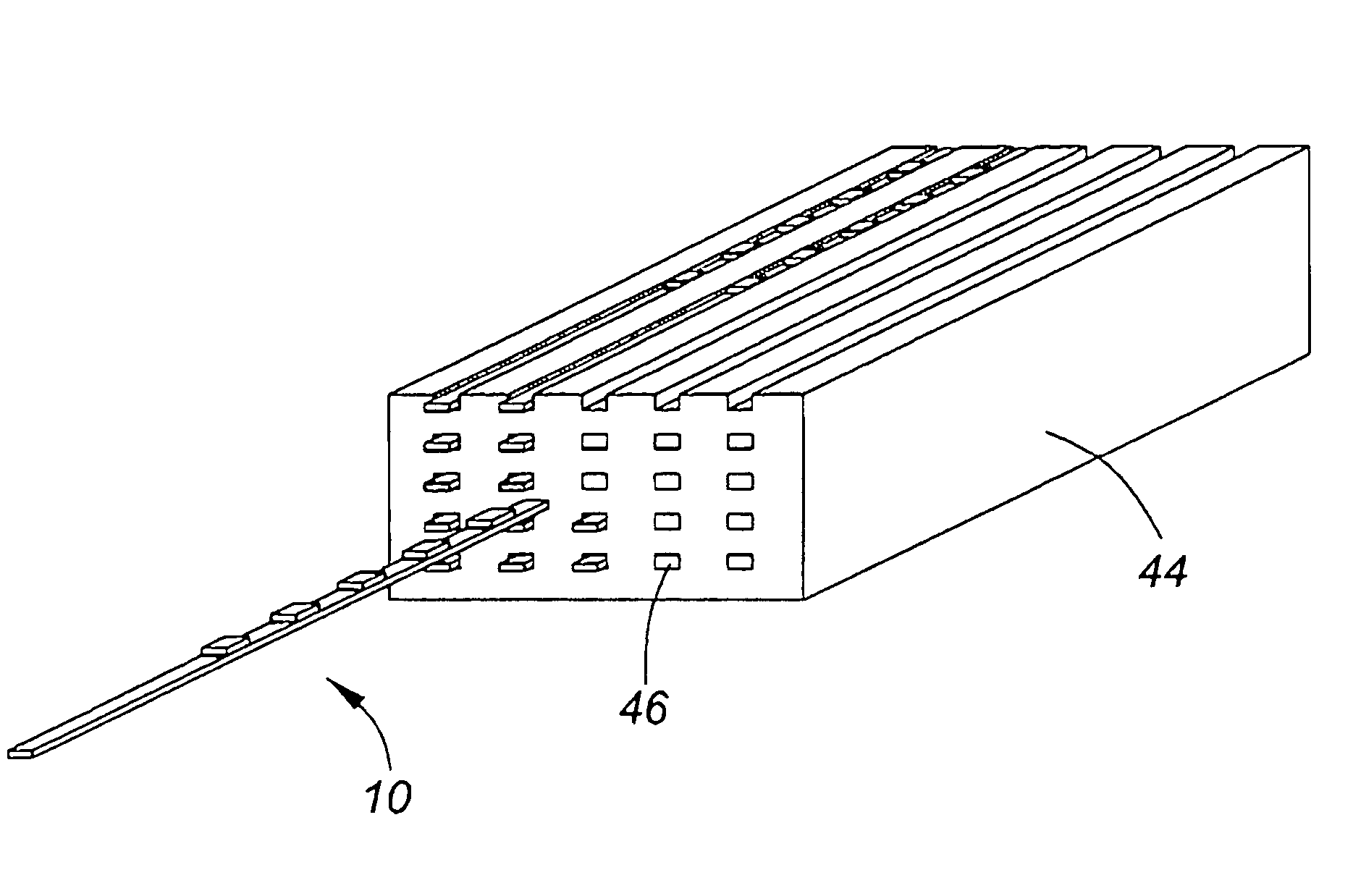

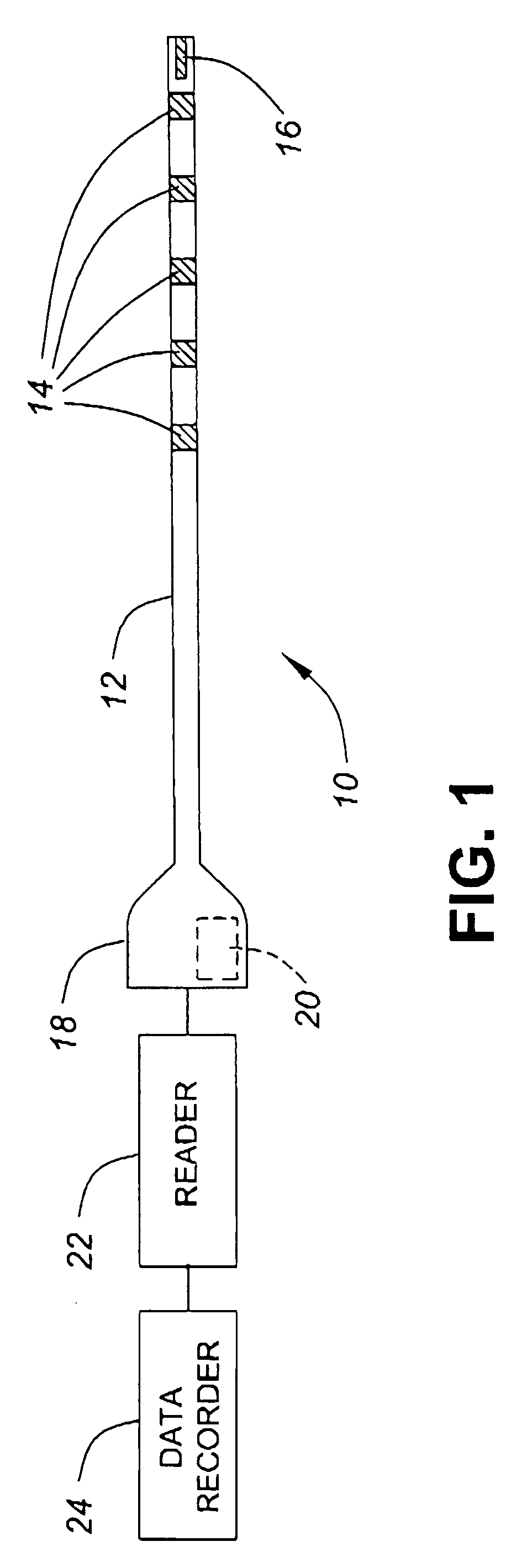

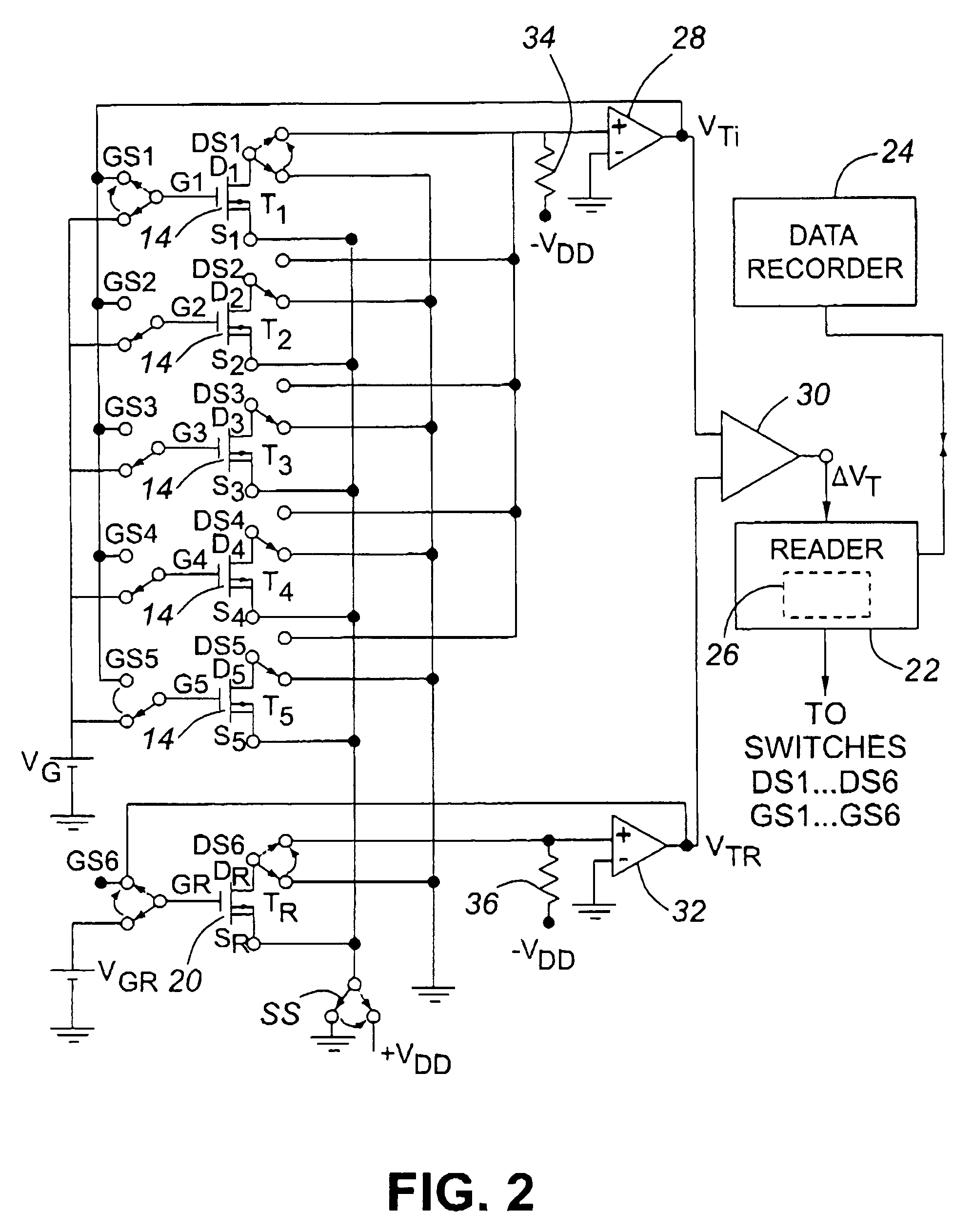

Dosimeter having an array of sensors for measuring ionizing radiation, and dosimetry system and method using such a dosimeter

Inactive | US20060027756A1 | Limit reduction of width and thickness | Efficient measurement | Dosimeters | Material analysis by optical means | Sensor array | Electrical conductor

In a dosimeter for measuring levels of ionizing radiation, for example during radiotherapy, a plurality of radiation sensors, such as insulated gate field effect transistors (IGFETs), are spaced apart at predetermined intervals on a support, for example a flexible printed circuit strip, and connected to a connector which can be coupled to a reader for reading the sensors. The sensors may each be connected to a reference device, which may also be an insulated gate field effect transistor, and the absorbed radiation dose may be determined by measuring, before and after the irradiation, the difference between the threshold voltages of the individual sensors and the threshold voltage of the reference device. Corresponding terminals of the sensors may be connected to the connector by a single conductor, thereby reducing the number of conductors required.

Owner:BEST THERATRONICS +1

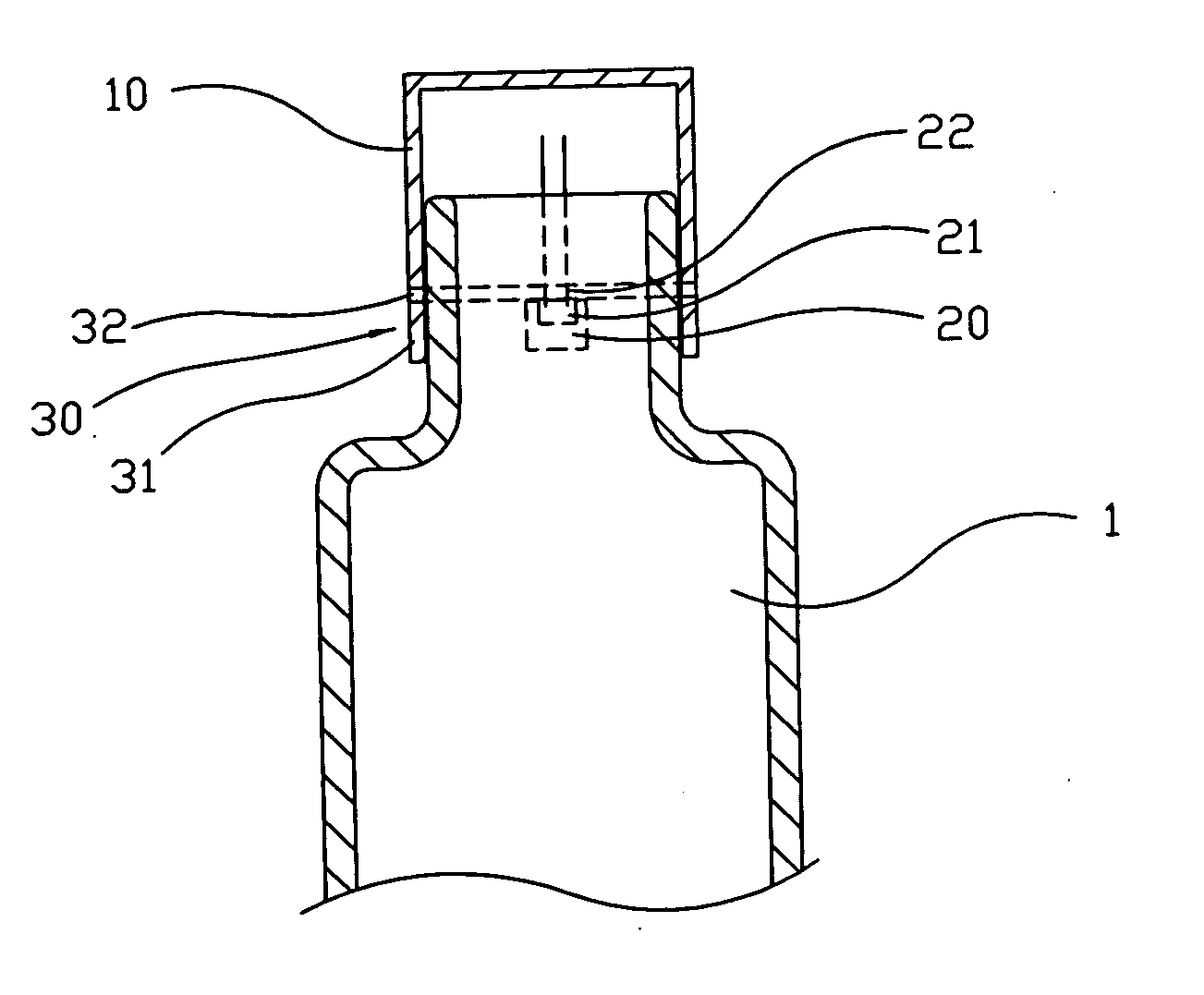

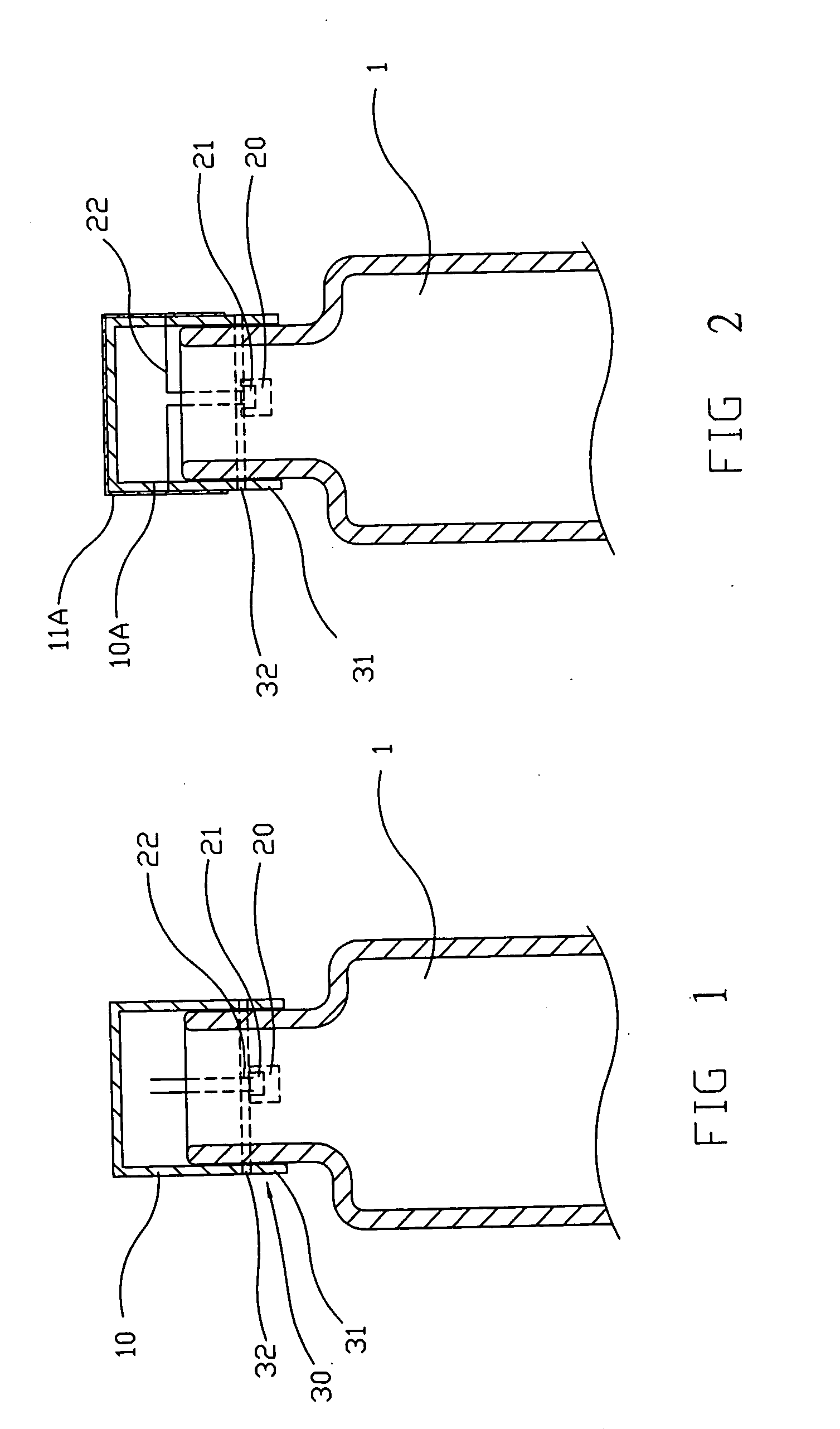

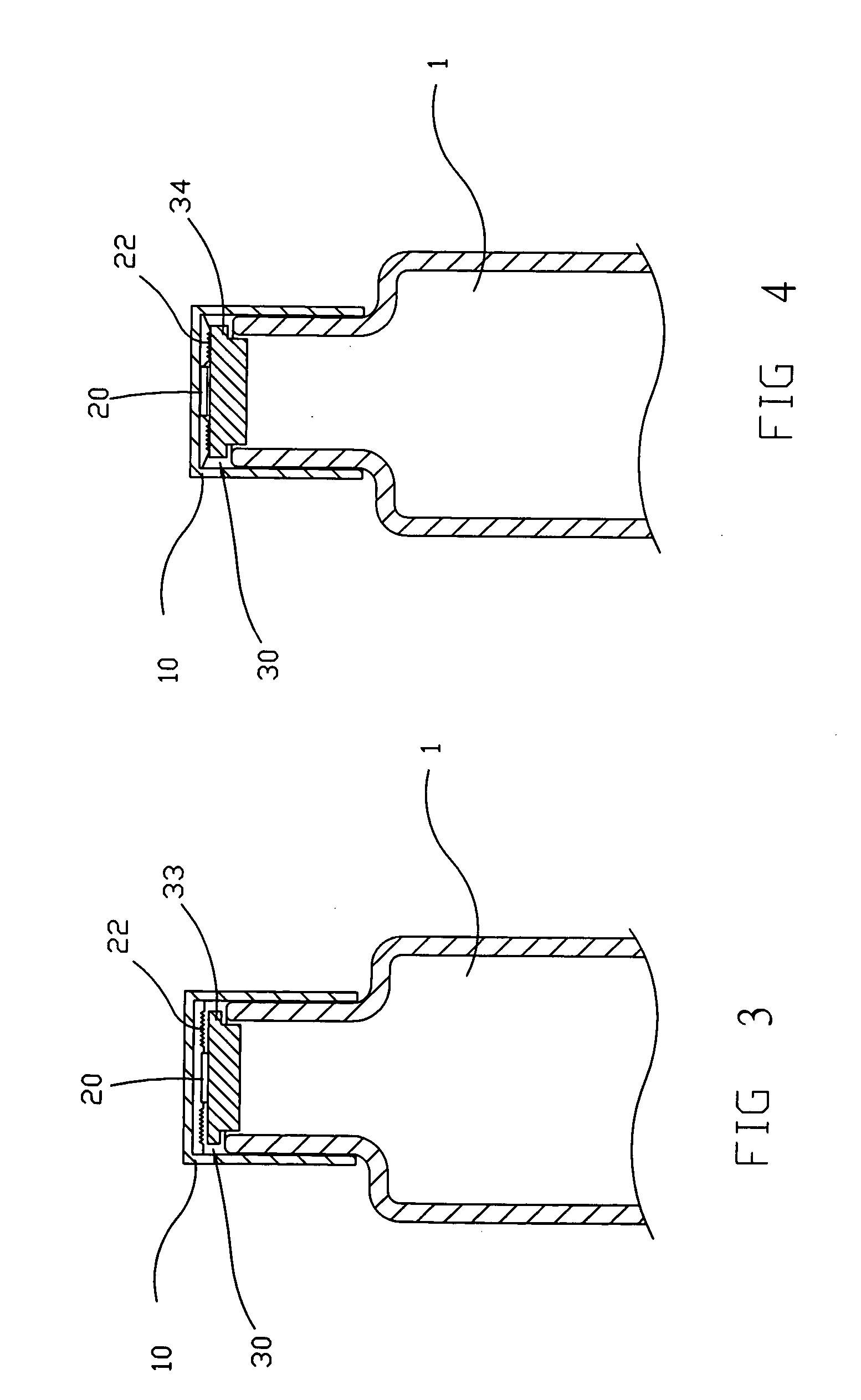
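The differential readout described in the dosimeter abstract above can be worked through numerically. The abstract says only that the sensor-minus-reference threshold voltage difference is measured before and after irradiation. Converting the resulting shift into a dose with a constant sensitivity in volts per gray is an added assumption (a linear response), and the function names and all numeric values are hypothetical.

```python
def threshold_shift(vs_before: float, vref_before: float,
                    vs_after: float, vref_after: float) -> float:
    """Change in (sensor - reference) threshold voltage across irradiation.

    Subtracting the reference cancels drift common to both devices.
    """
    return (vs_after - vref_after) - (vs_before - vref_before)


def absorbed_dose_gy(shift_v: float, sensitivity_v_per_gy: float) -> float:
    """Dose estimate assuming a linear response (assumption, not from the abstract)."""
    if sensitivity_v_per_gy <= 0:
        raise ValueError("sensitivity must be positive")
    return shift_v / sensitivity_v_per_gy


# Hypothetical readings in volts for one sensor and its reference device.
shift = threshold_shift(vs_before=2.000, vref_before=2.000,
                        vs_after=2.150, vref_after=2.050)
dose = absorbed_dose_gy(shift, sensitivity_v_per_gy=0.05)
```

With these made-up readings the shift is about 0.1 V, which corresponds to roughly 2 Gy at the assumed sensitivity. For an array, the same calculation would be repeated for each sensor on the strip.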

Anti-counterfeit sealing cap with identification capability

Active | US20060049948A1 | High detection sensitivity | Efficient measurement | Container decorations | Level indications | Electricity | Engineering

A sealing cap with an anti-counterfeit and identification capability comprises a cap body, an identification chip with a signal emitting device generating an identification signal, and a destructive device, characterized in that the cap body is electrically connected with the signal generating device and serves as an antenna of a relatively large area and in that the destructive device after dismounting of the cap destroys the capability to emit radiation and thus prevents said identifying chip from being dismounted and reused.

Owner:MEDIATEK INC

Blood information measuring apparatus and method

Owner:FUJIFILM CORP

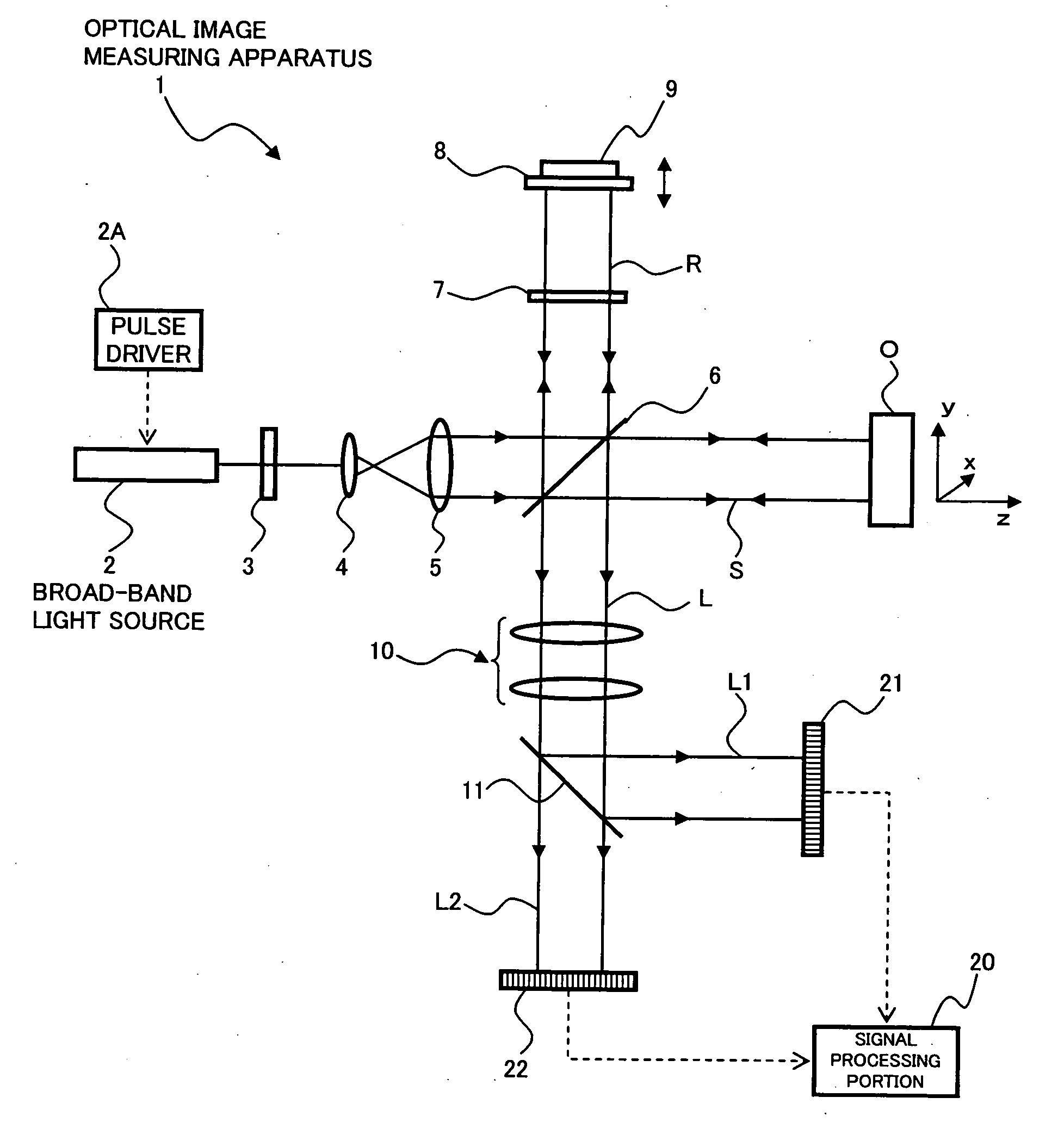

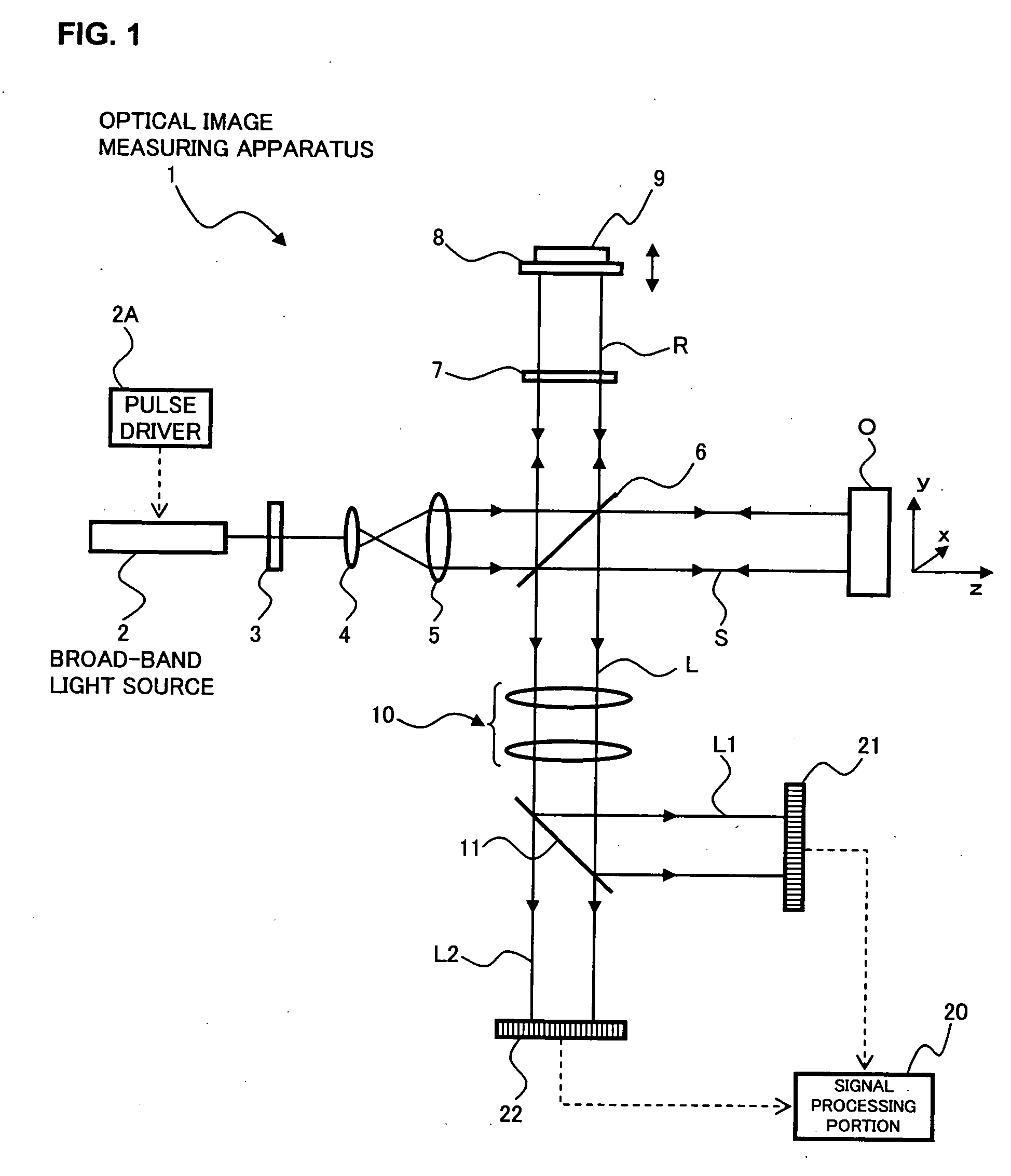

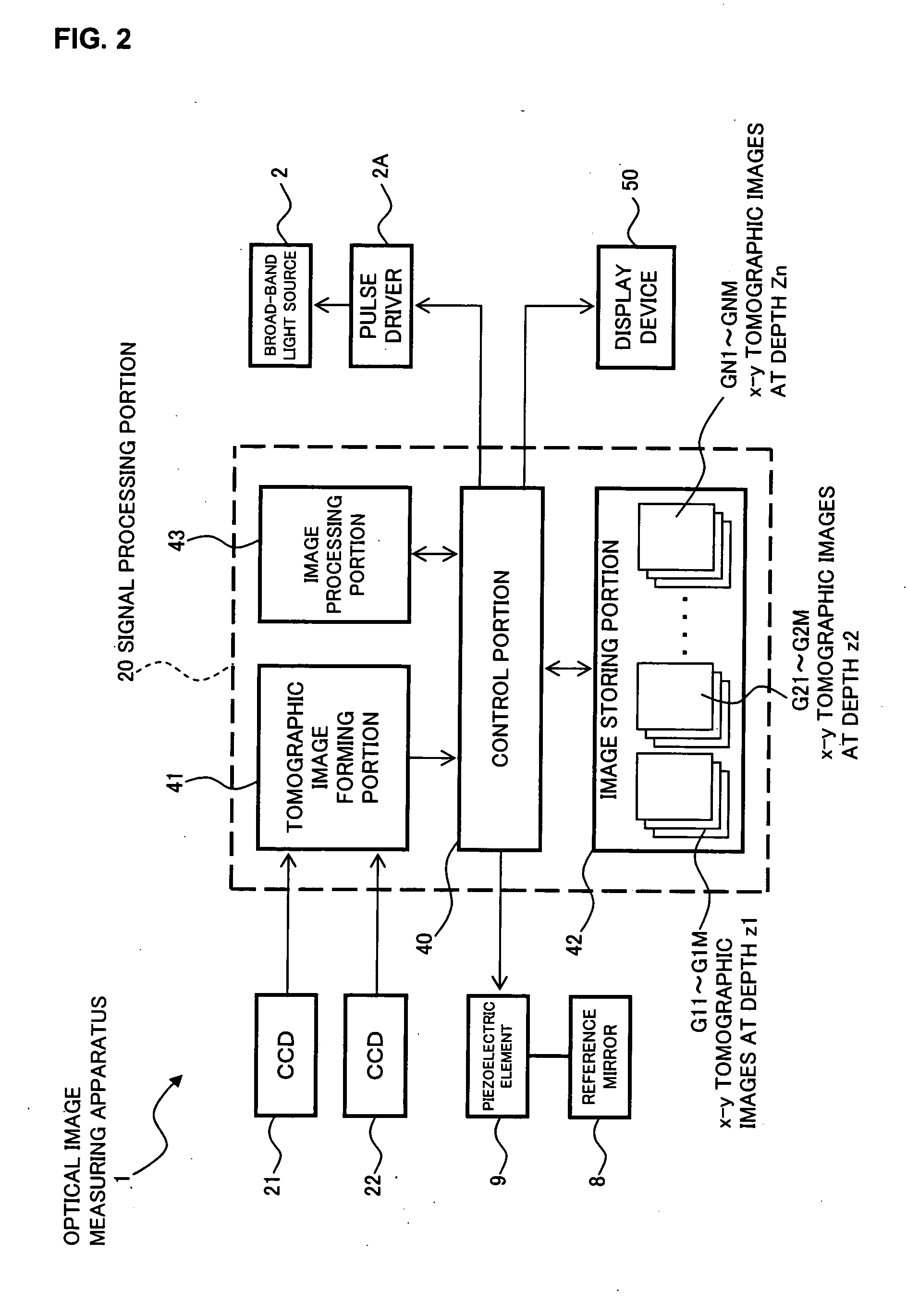

Optical image measuring apparatus and optical image measuring method

Inactive | US20060100528A1 | Efficient measurement | Diagnostics using light | Interferometers | Signal light | Intensity modulation

Provided is an optical image measuring apparatus capable of speedily measuring a velocity distribution image of a moving matter. Including a broad-band light source, means for increasing a beam diameter, a polarizing plate converting the light beam to linearly polarized light, and a half mirror, a wavelength plate converting the reference light to circularly polarized light, the half mirror superimposing the signal light whose frequency is partially shifted by the moving matter in the object and the reference light is circularly polarized light to produce superimposed light including interference light, CCDs for receiving different polarized light components of the interference light, and outputting detection signals including interference frequency components corresponding to beat frequencies of the interference light, and a signal processing portion for forming the velocity distribution image based on interference frequency component corresponding to a beat frequency equal to an intensity modulation frequency of the light beam.

Owner:KK TOPCON
